185 results about "Massively parallel" patented technology

In computing, massively parallel refers to the use of a large number of processors (or separate computers) to perform a set of coordinated computations in parallel (simultaneously). In one approach, grid computing, the processing power of many computers in distributed, diverse administrative domains is used opportunistically whenever a computer is available. An example is BOINC, a volunteer-based, opportunistic grid system in which the grid provides computing power only on a best-effort basis.

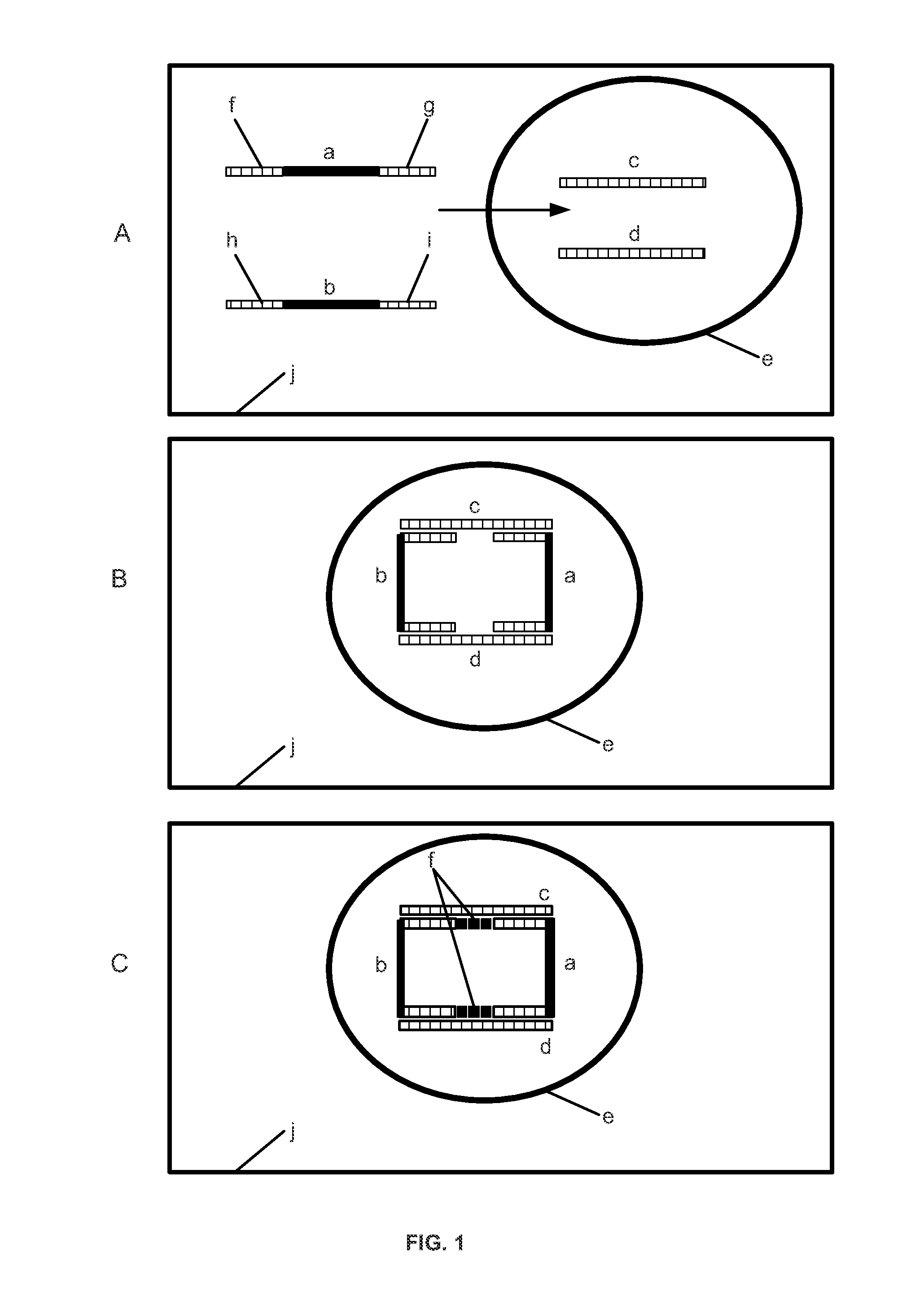

Non-invasive fetal genetic screening by digital analysis

Active | US20070202525A1 | Enriching fetal DNA | Microbiological testing/measurement | Fermentation | Chorionic villi | Massively parallel

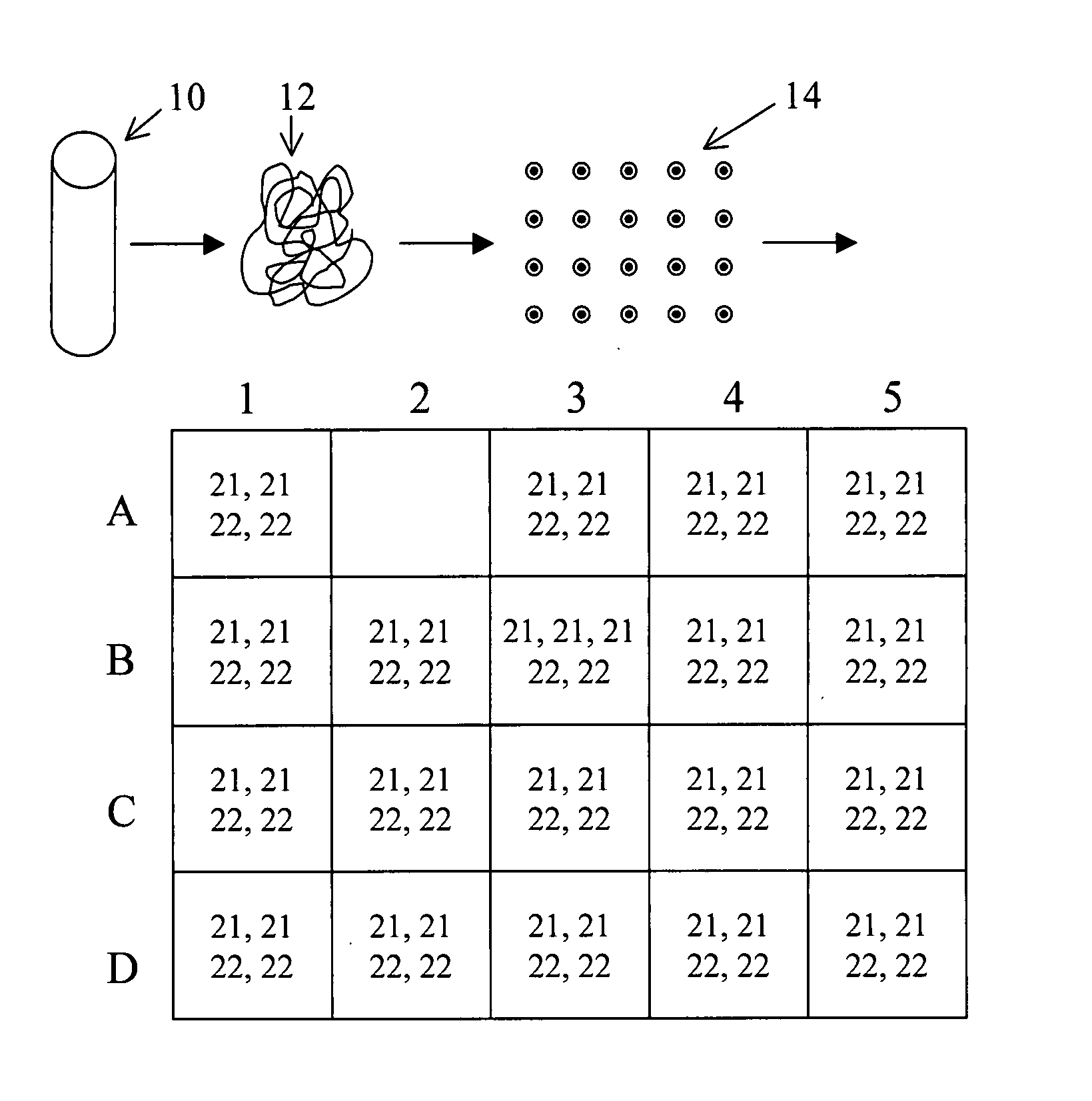

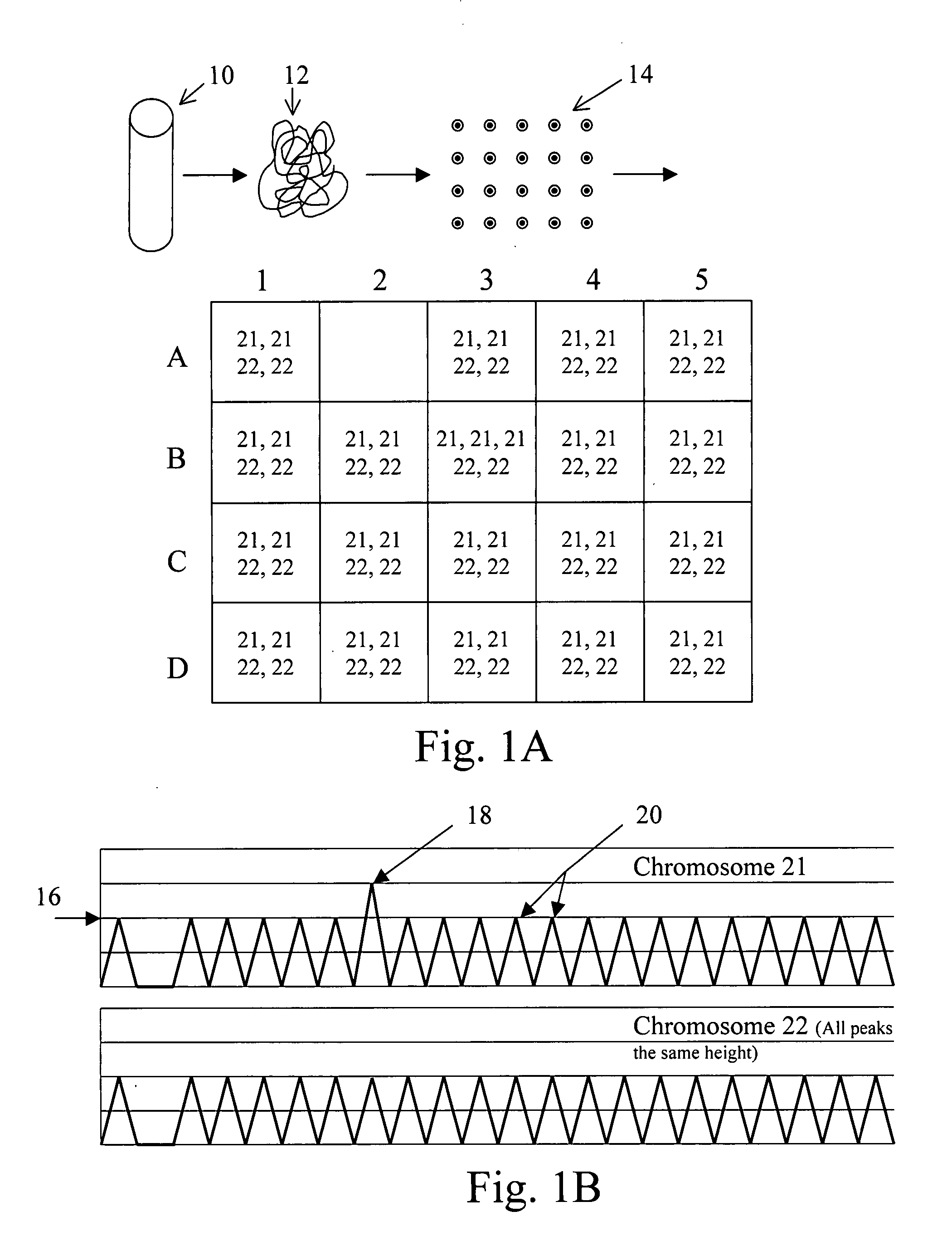

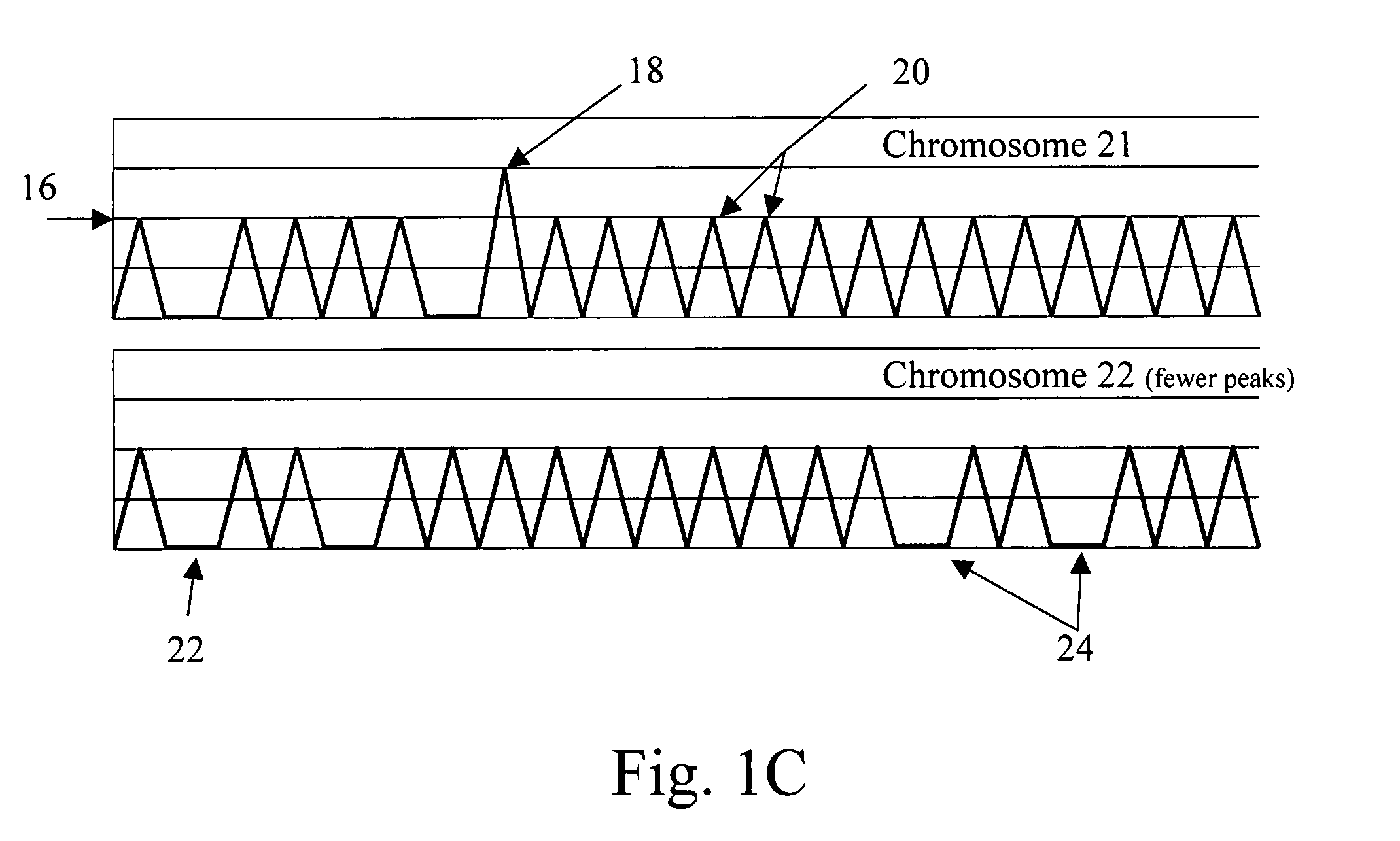

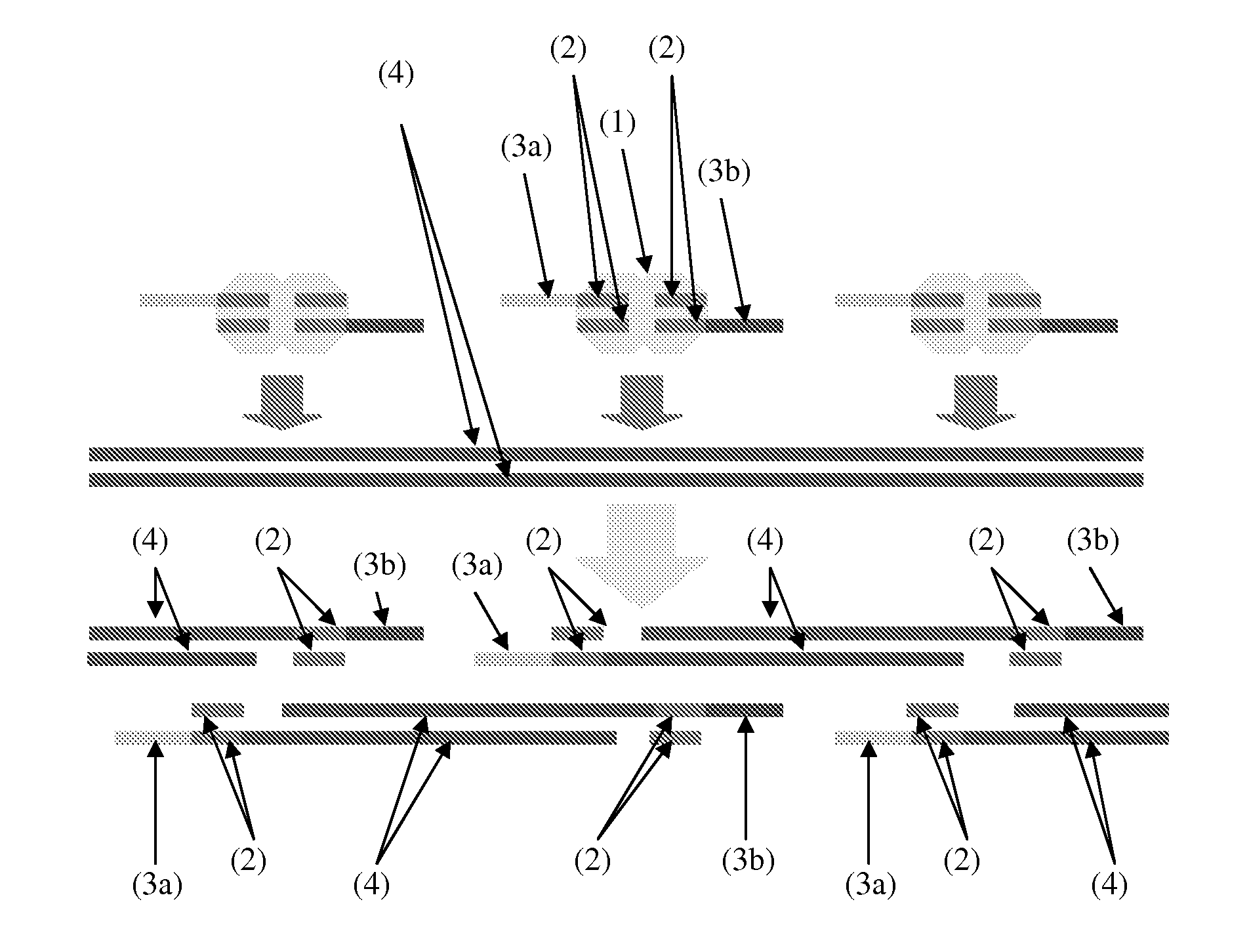

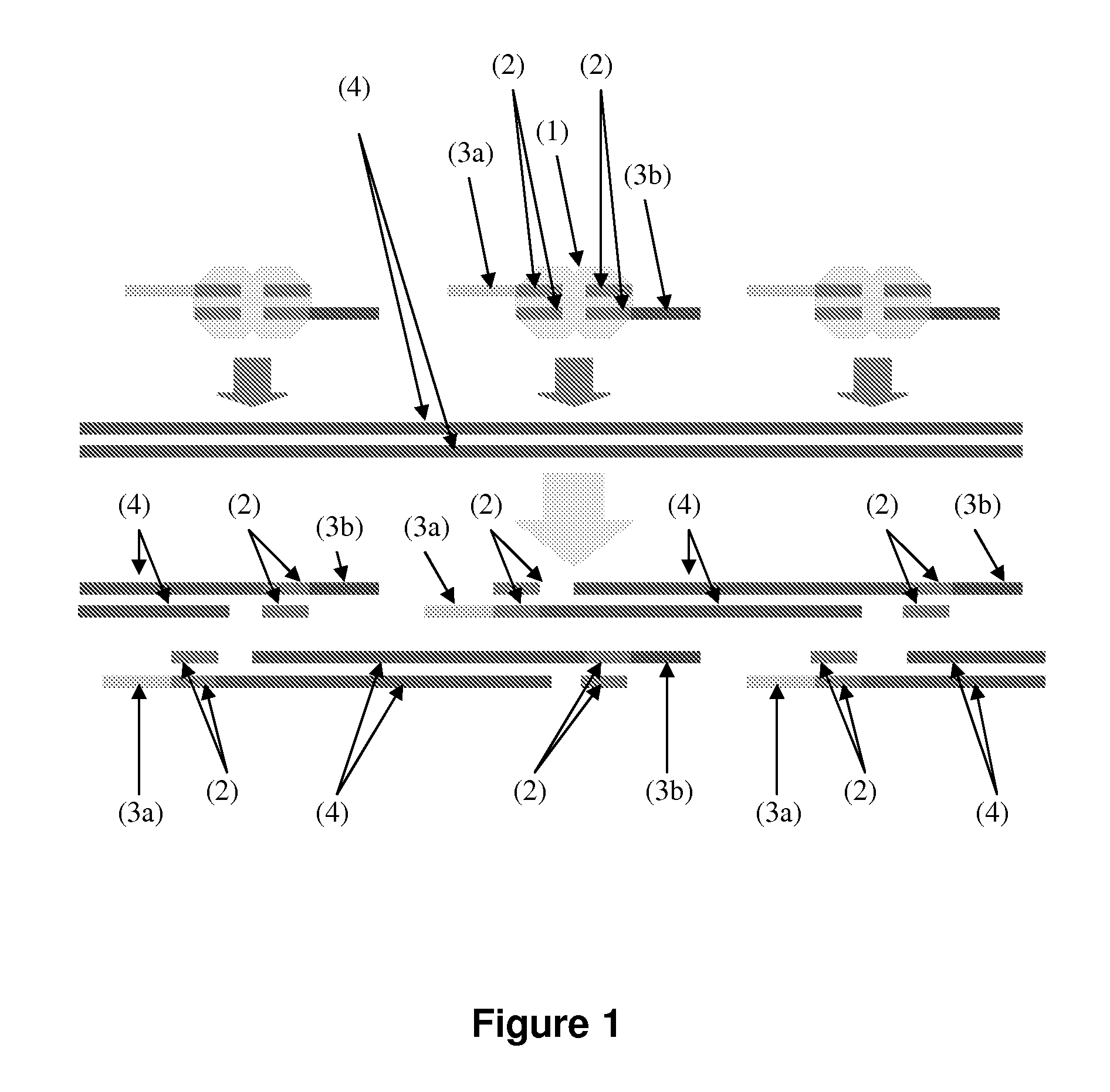

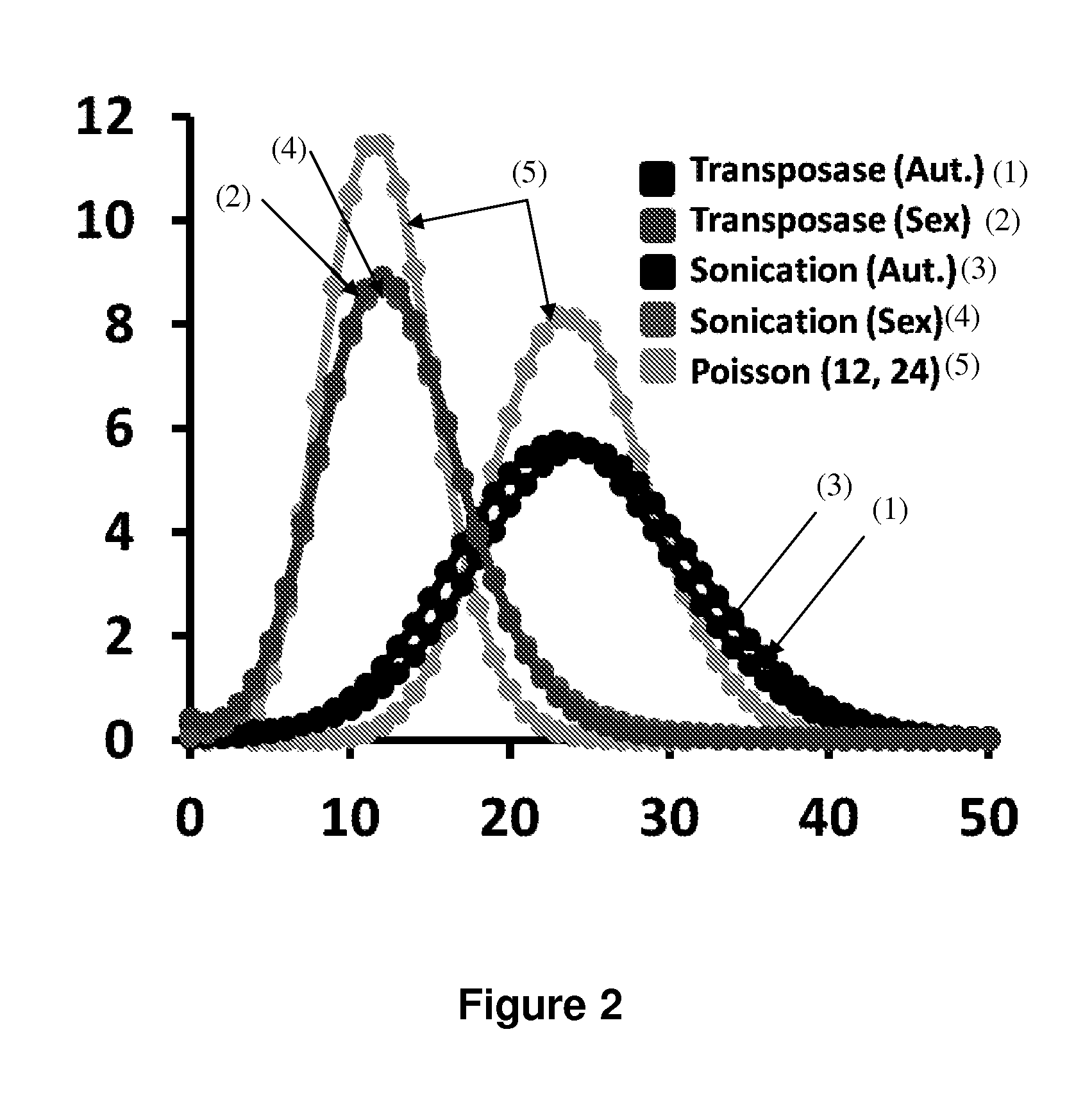

The present methods are exemplified by a process in which maternal blood containing fetal DNA is diluted to a nominal value of approximately 0.5 genome equivalent of DNA per reaction sample. Digital PCR is then used to detect aneuploidy, such as the trisomy that causes Down Syndrome. Since aneuploidies do not present a mutational change in sequence, and are merely a change in the number of chromosomes, it has not been possible to detect them in a fetus without resorting to invasive techniques such as amniocentesis or chorionic villi sampling. Digital amplification allows the detection of aneuploidy using massively parallel amplification and detection methods, examining, e.g., 10,000 genome equivalents.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV
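
As a rough illustration of the dilution step in the abstract above, the sketch below assumes that template molecules are distributed across reaction wells according to a Poisson distribution. That statistical model is an assumption, not something the abstract states, and the function names are hypothetical.

```python
import math


def positive_fraction(mean_copies_per_well: float) -> float:
    """Expected fraction of wells holding at least one template copy.

    Assumes a Poisson distribution of copies per well (illustrative assumption).
    """
    return 1.0 - math.exp(-mean_copies_per_well)


def mean_copies_from_fraction(fraction_positive: float) -> float:
    """Invert the relation: estimate mean copies per well from the positive fraction."""
    if not 0.0 <= fraction_positive < 1.0:
        raise ValueError("fraction_positive must be in [0, 1)")
    return -math.log(1.0 - fraction_positive)


if __name__ == "__main__":
    fraction = positive_fraction(0.5)  # dilution level named in the abstract
    print(f"expected positive wells: {fraction:.3f}")
    print(f"recovered mean copies:   {mean_copies_from_fraction(fraction):.3f}")
```

Under this assumption, a mean of 0.5 copies per well leaves roughly 39% of wells positive, so counting positive wells for two chromosomes gives a digital estimate of their relative abundance.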

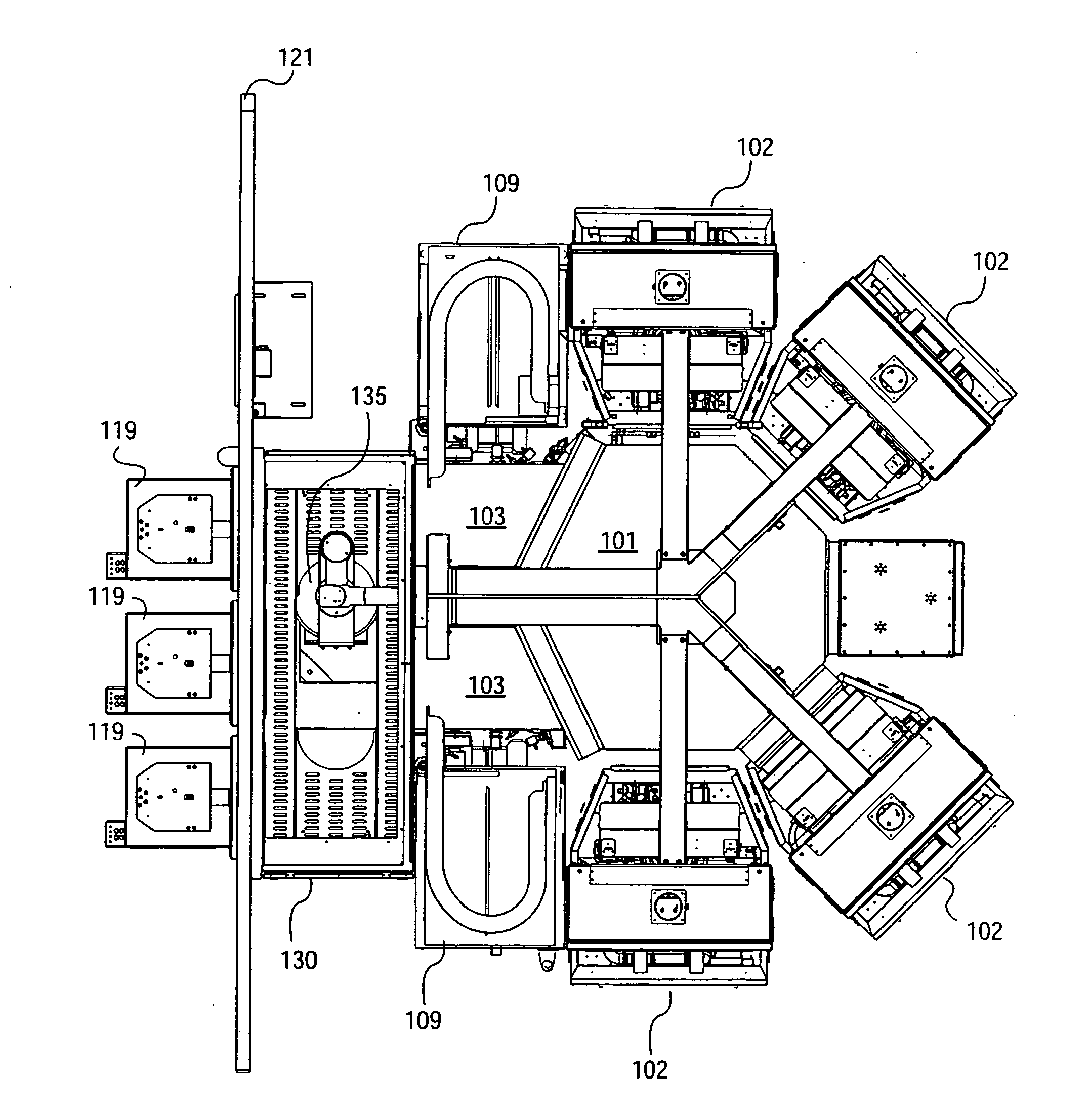

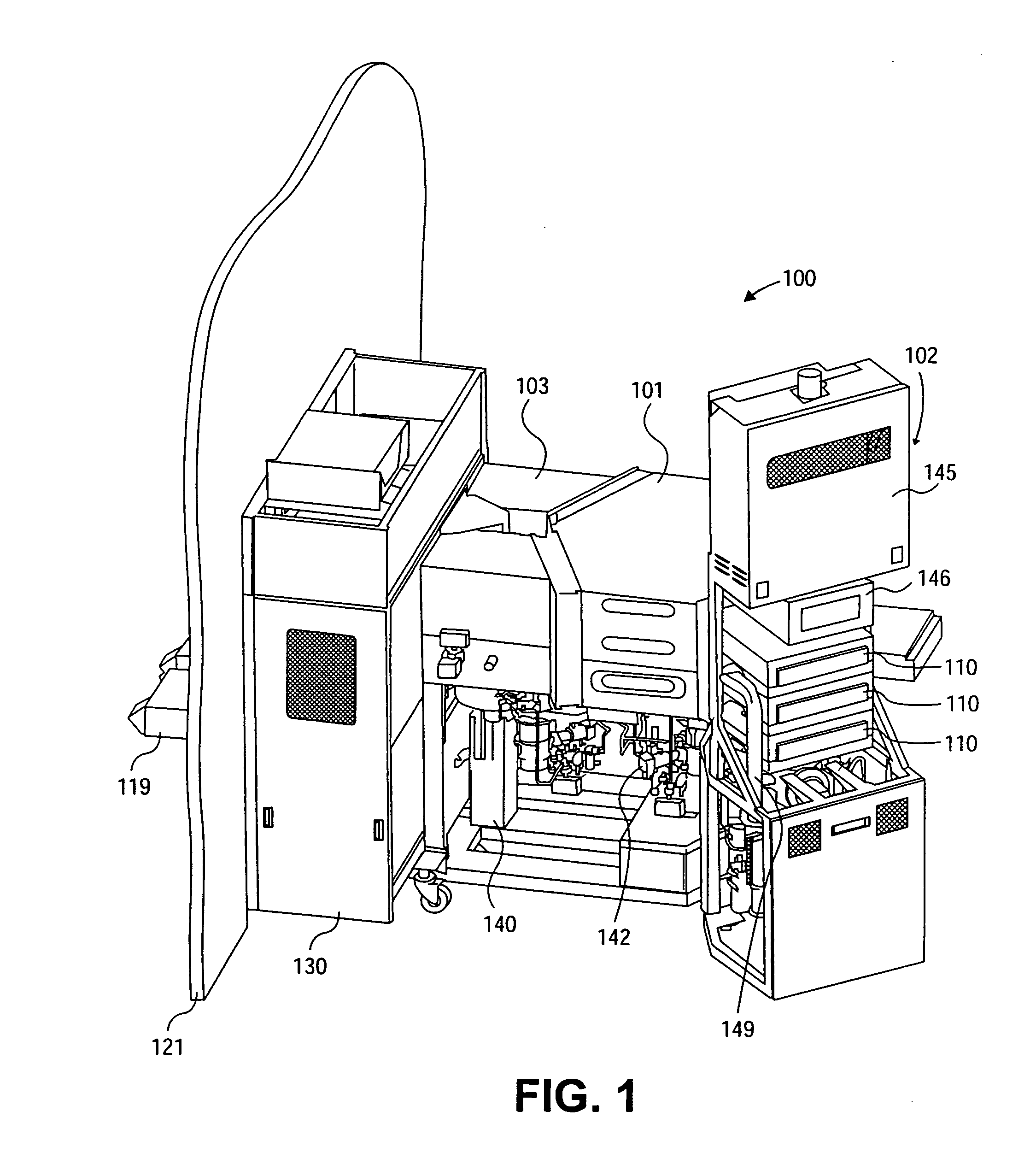

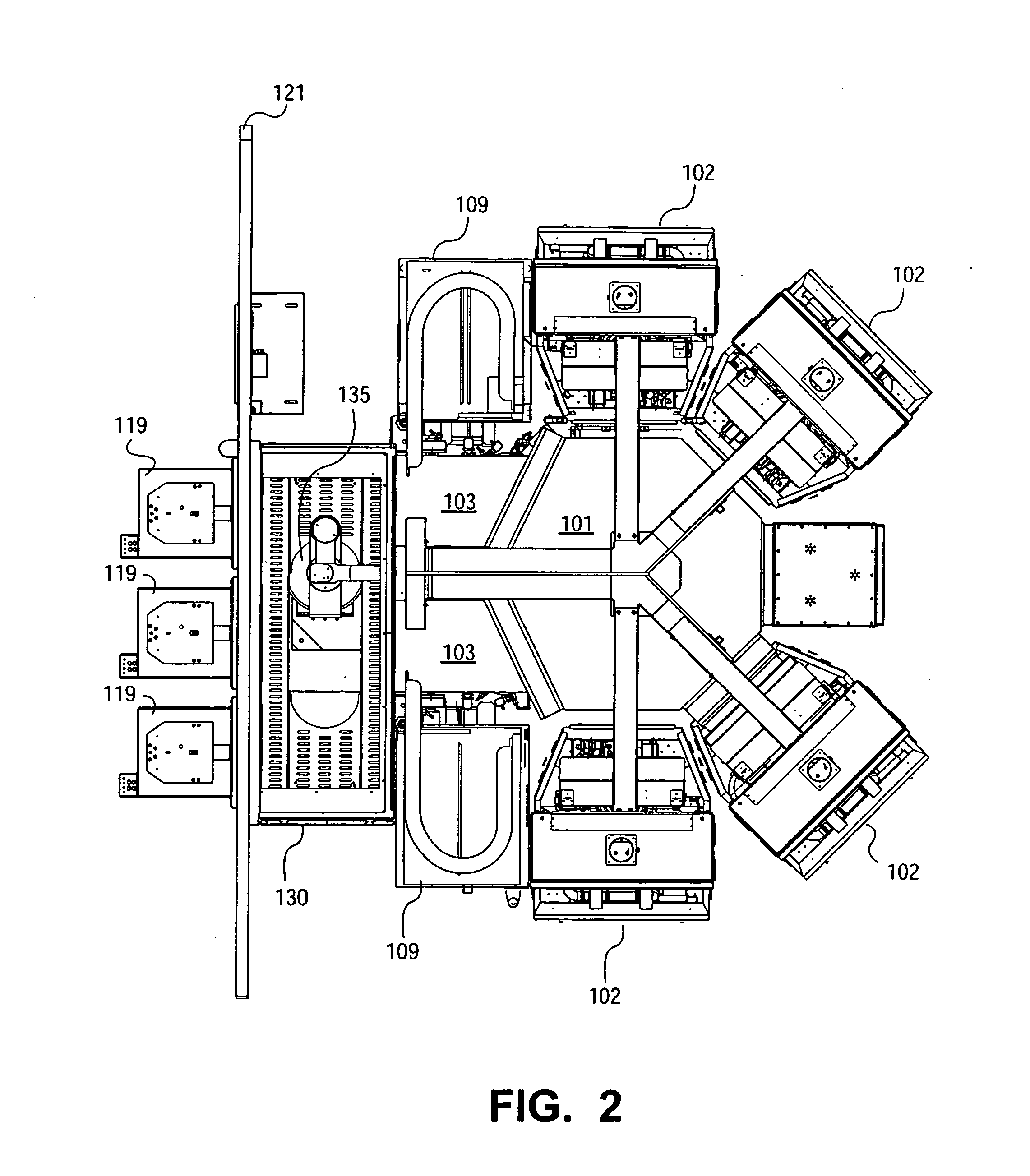

Massively parallel atomic layer deposition/chemical vapor deposition system

Inactive | US20050274323A1 | Improve generally axi-symmetric gas flow | Minimizing vertical height | Chemical vapor deposition coating | Massively parallel | Gas phase

A method and apparatus for the use of individual vertically stacked ALD or CVD reactors. Individual reactors are independently operable and maintainable. The gas inlet and output are vertically configured with respect to the reactor chamber for generally axi-symmetric process control. The chamber design is modular, and the cover and base plates forming the reactor have an improved flow design.

Owner:AIXTRON INC

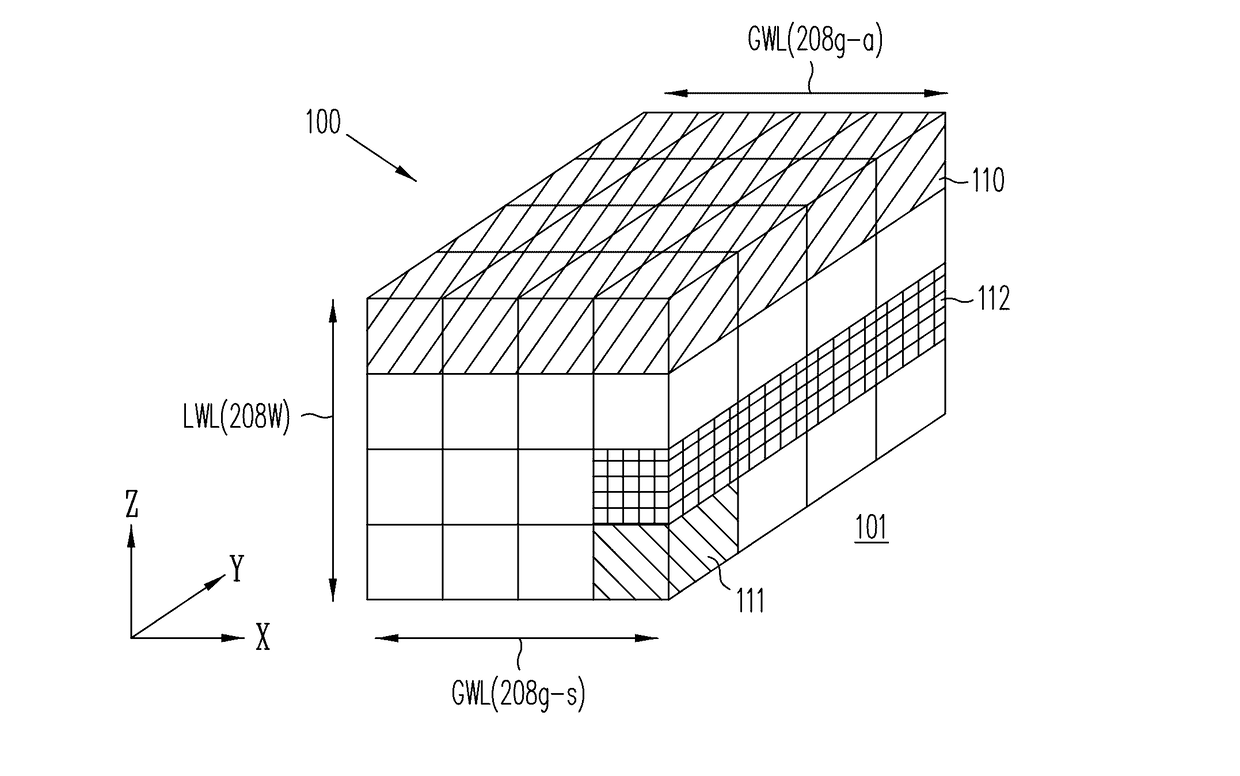

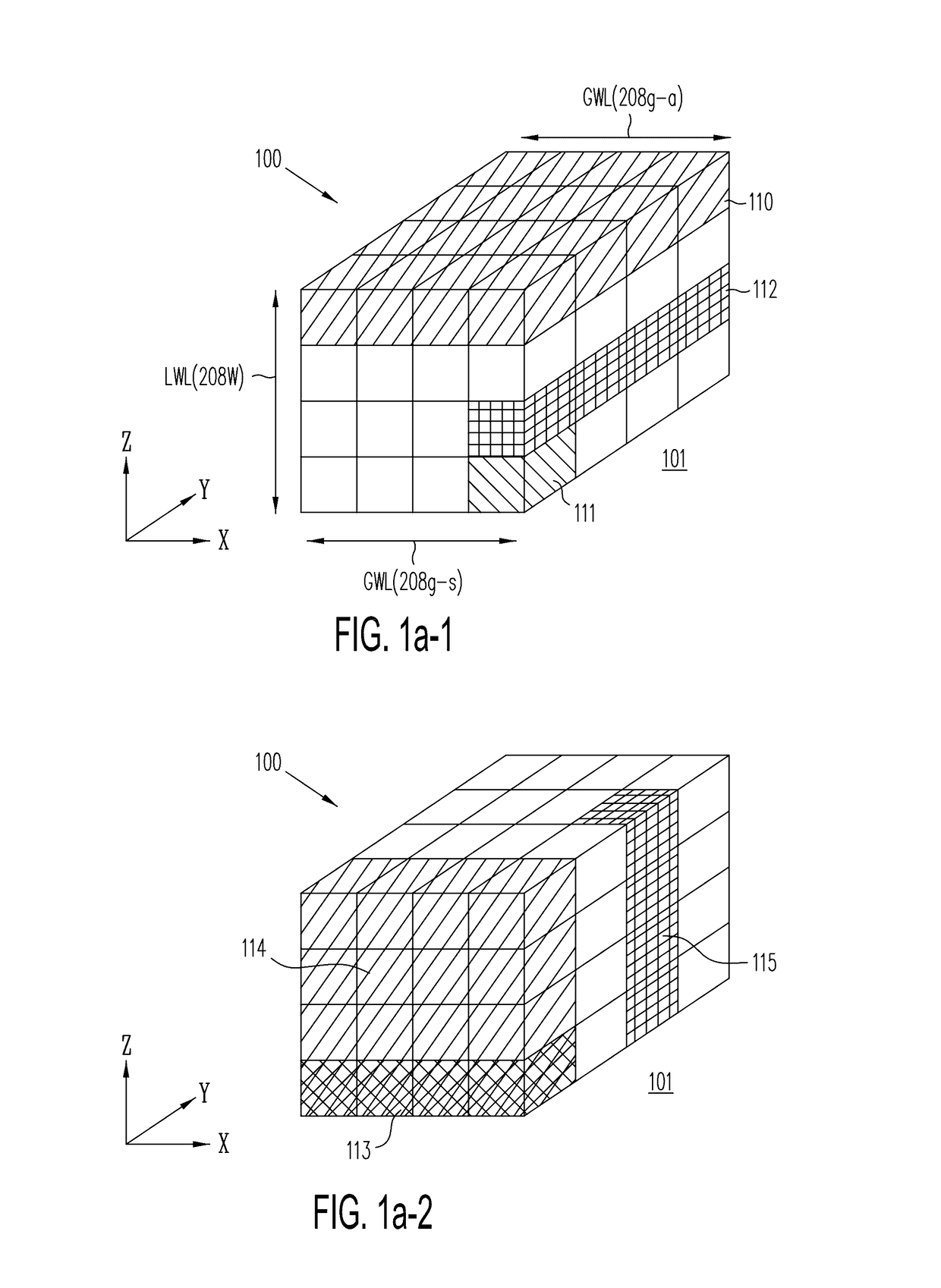

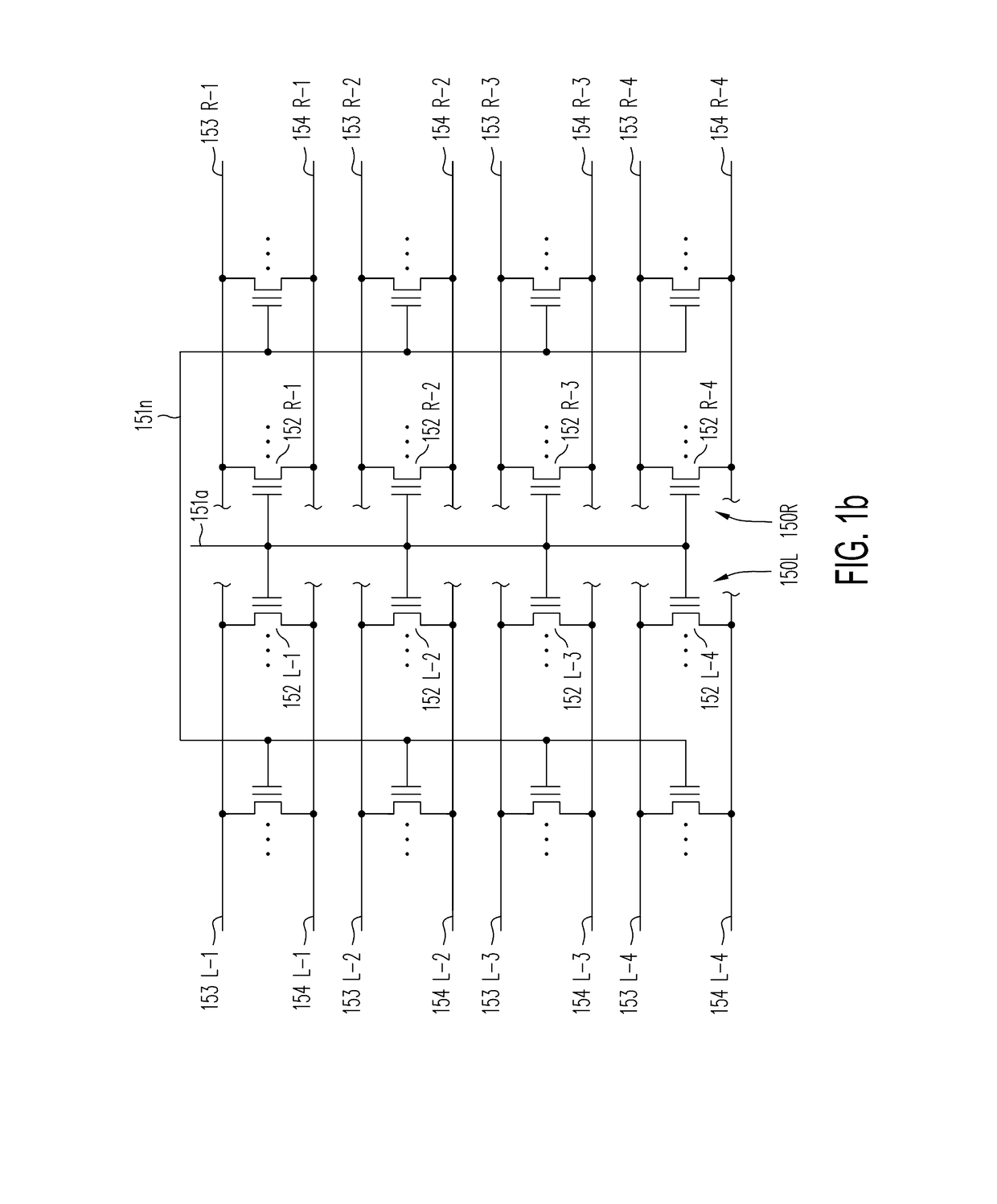

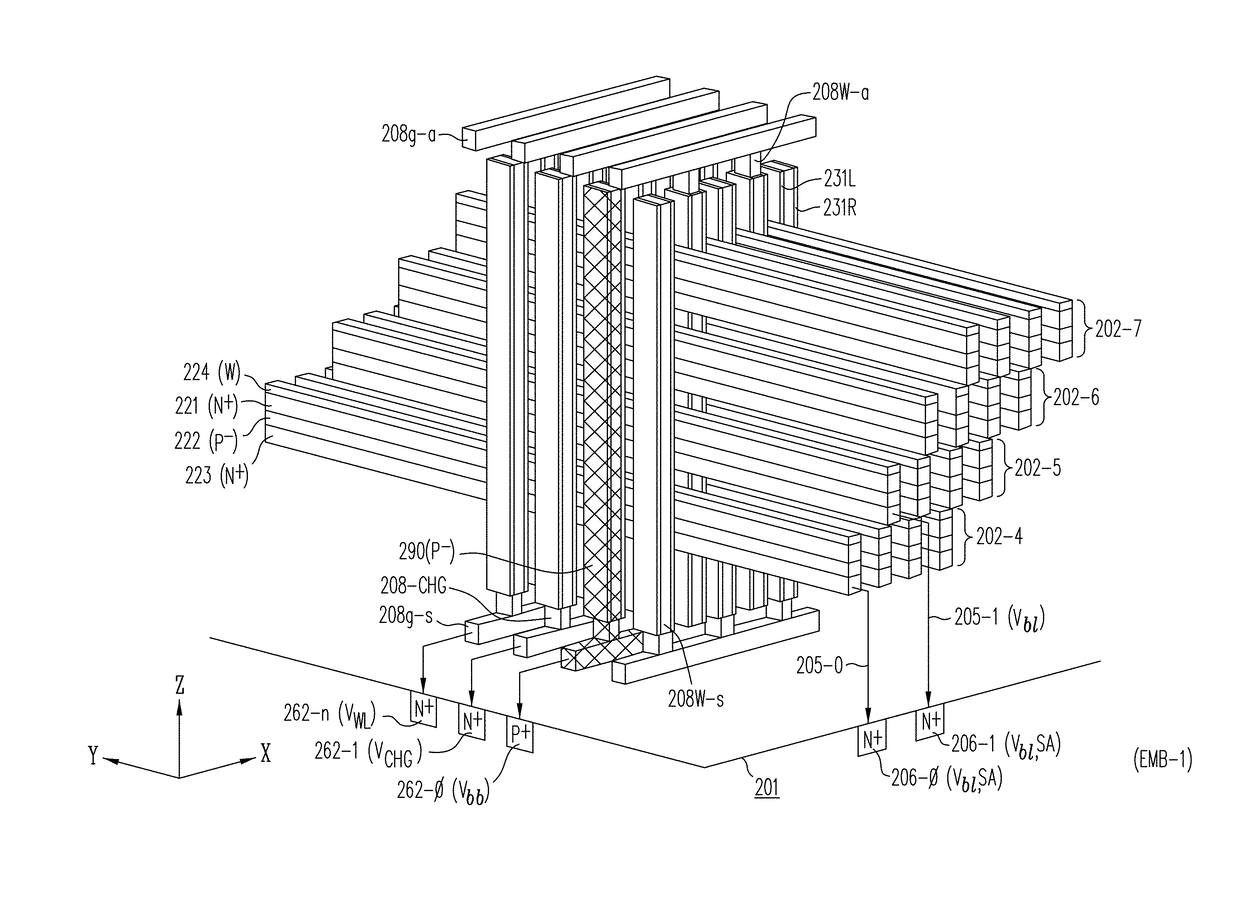

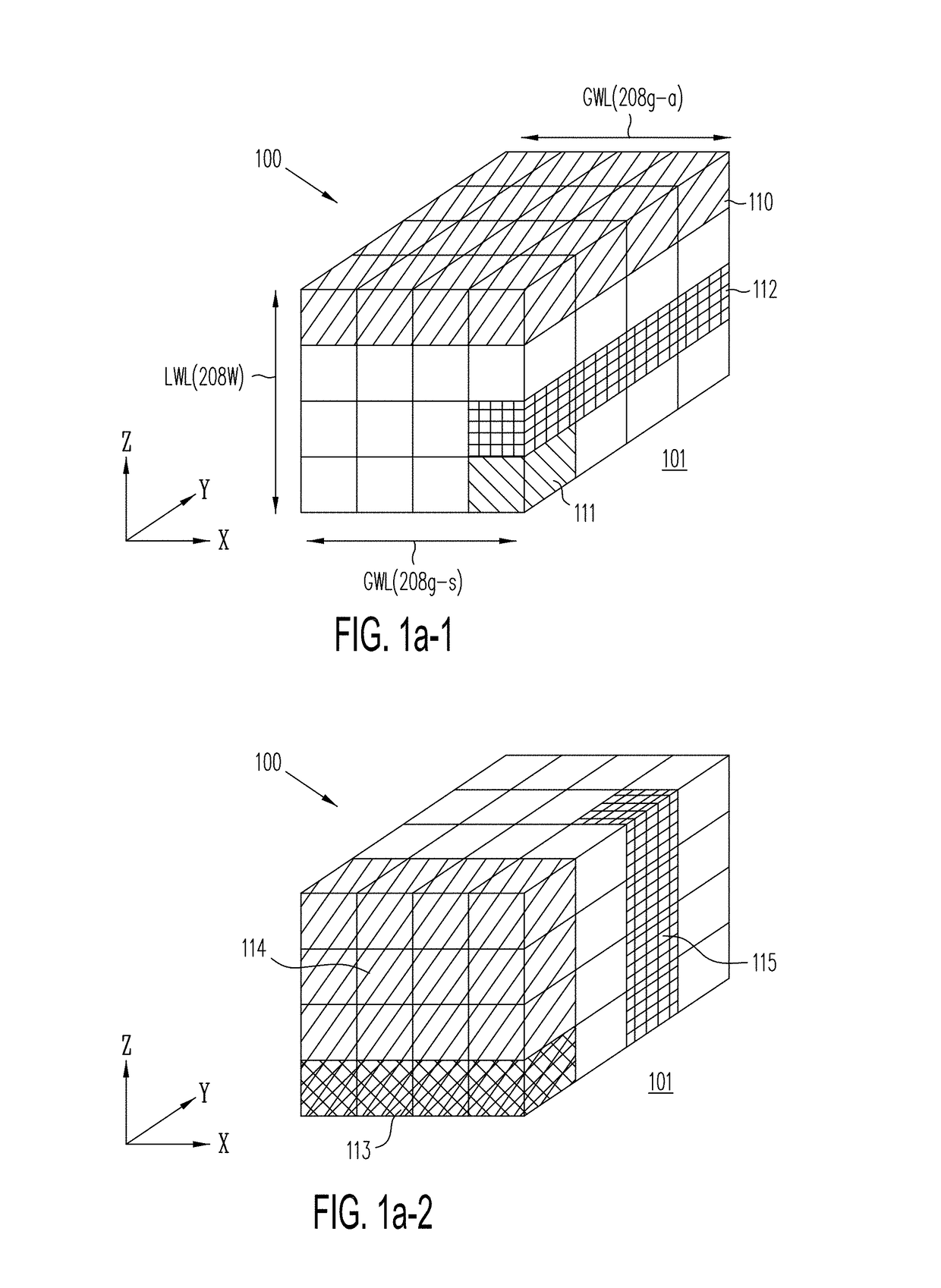

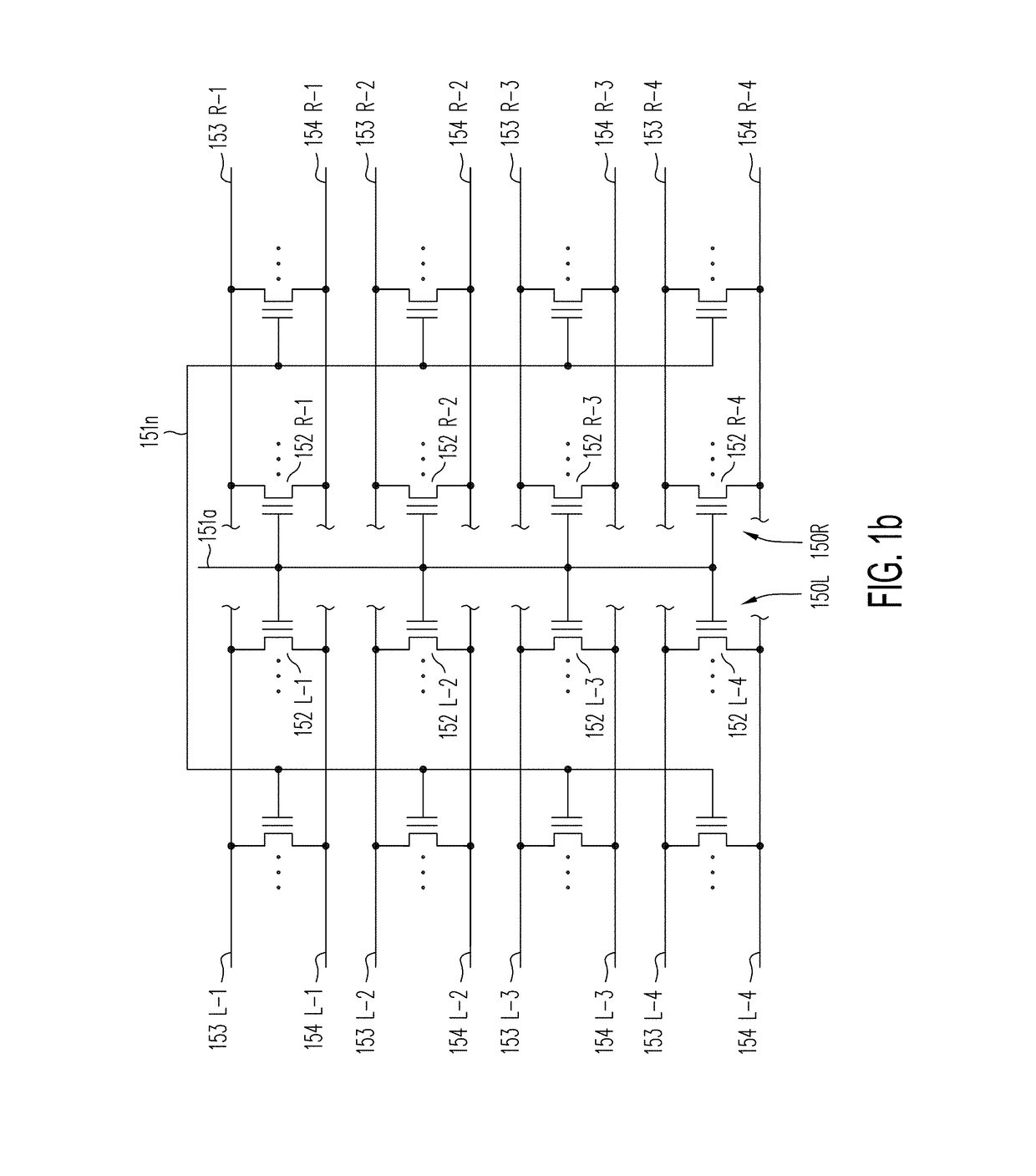

Capacitive-coupled non-volatile thin-film transistor strings in three dimensional arrays

Active | US20170092371A1 | Improve storage density | Lower read latency | Transistor | Solid-state devices | Capacitive coupling | Parasitic capacitance

Multi-gate NOR flash thin-film transistor (TFT) string arrays are organized as three-dimensional stacks of active strips. Each active strip includes a shared source sublayer and a shared drain sublayer that is connected to substrate circuits. Data storage in the active strip is provided by charge-storage elements between the active strip and a multiplicity of control gates provided by adjacent local word-lines. The parasitic capacitance of each active strip is used to eliminate the hard-wired ground connection to the shared source, making it a semi-floating, or virtual, source. Pre-charge voltages temporarily supplied from the substrate through a single port per active strip provide the appropriate voltages on the source and drain required during read, program, program-inhibit and erase operations. TFTs on multiple active strips can be pre-charged separately and then read, programmed or erased together in a massively parallel operation.

Owner:SUNRISE MEMORY CORP

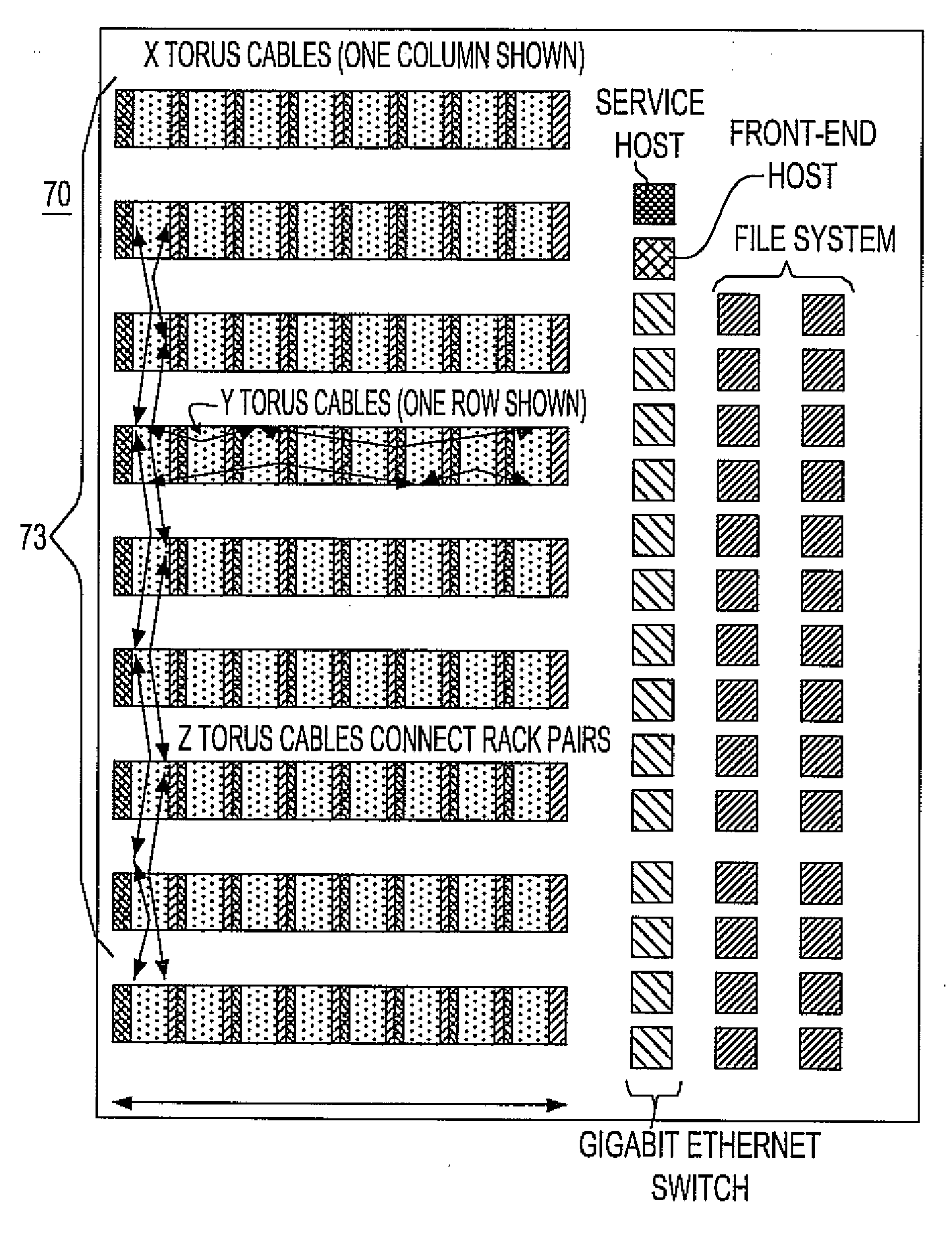

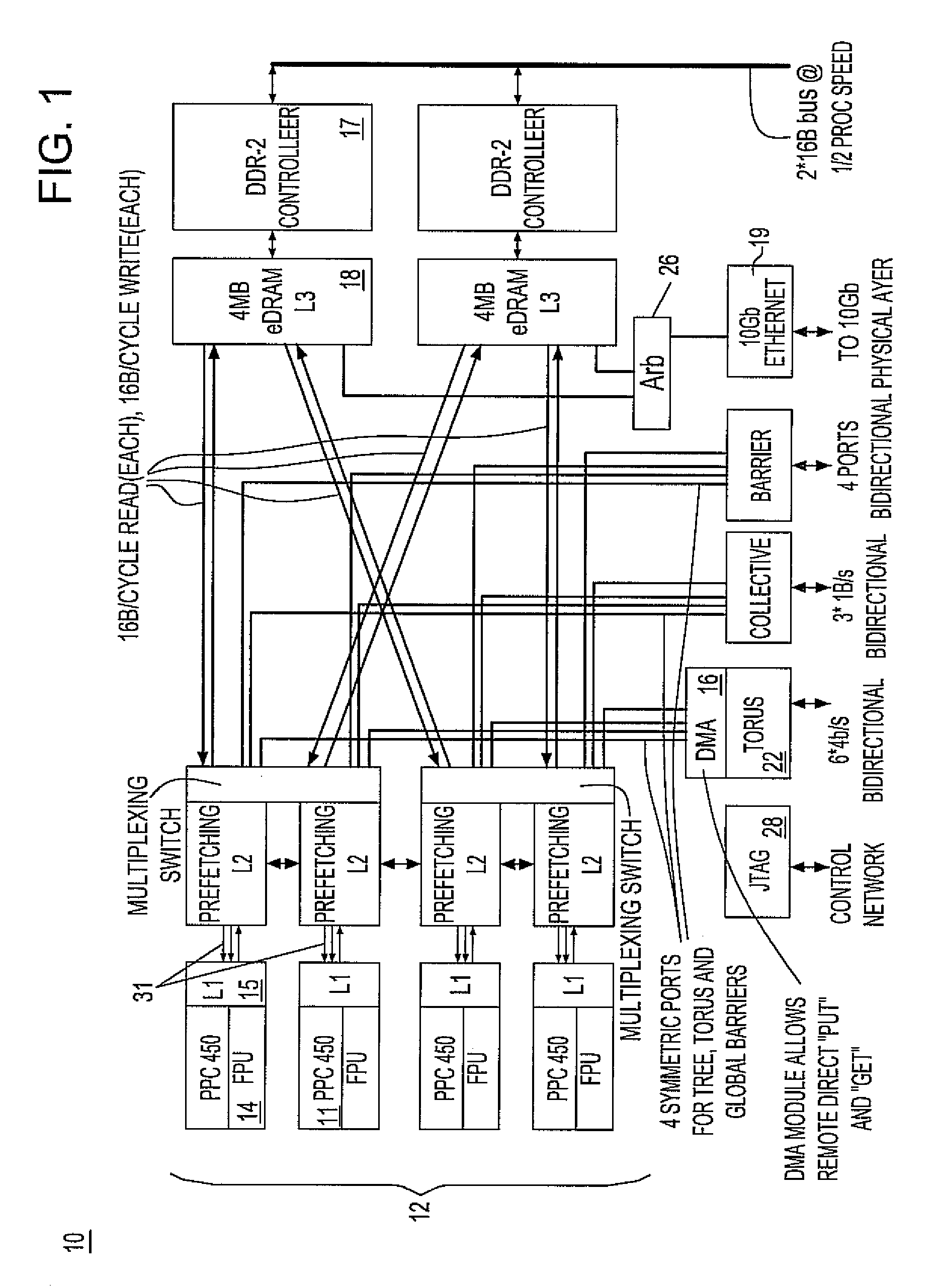

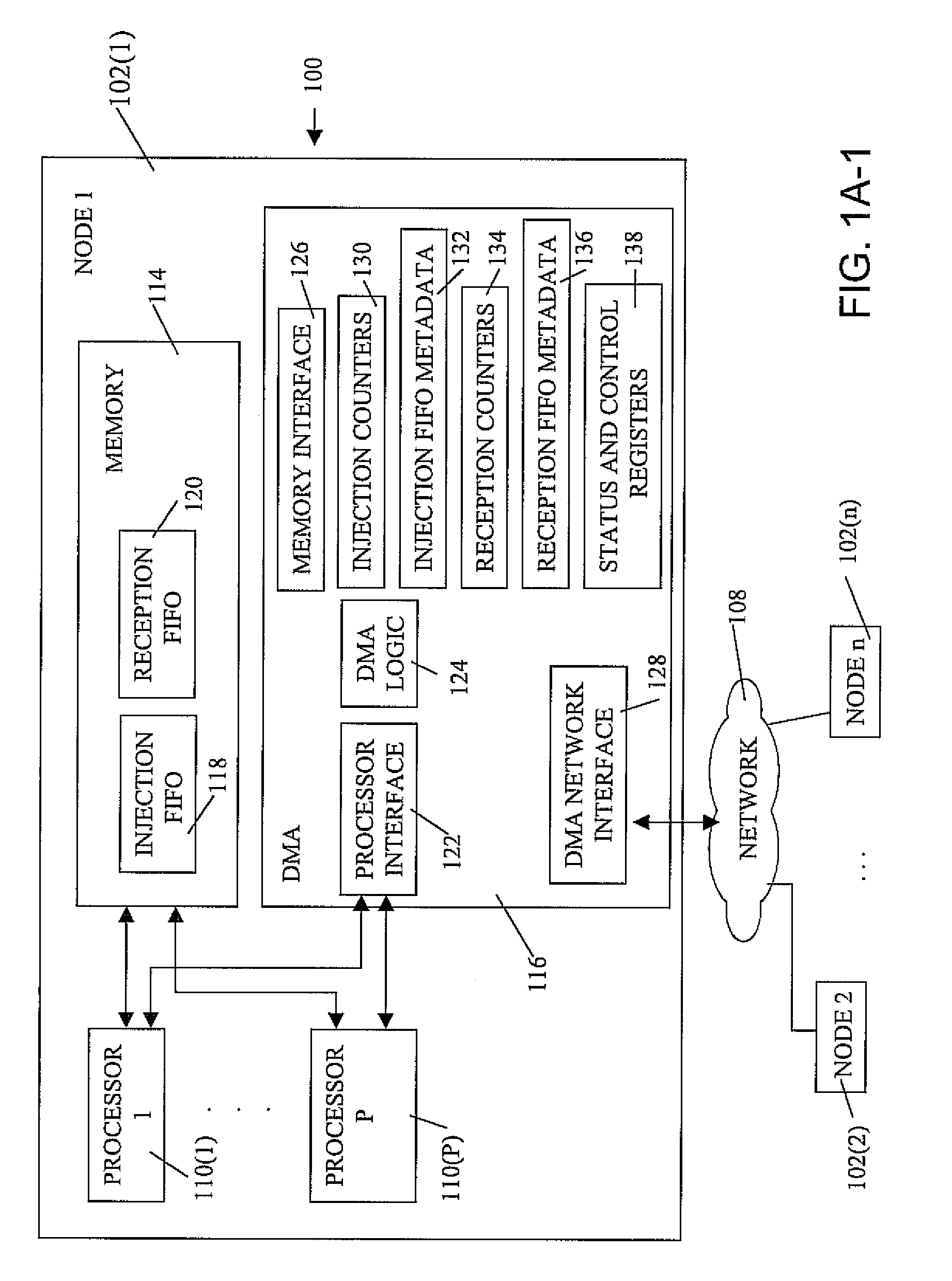

Novel massively parallel supercomputer

Inactive | US20090259713A1 | Low cost | Reduced footprint | Error prevention | Program synchronisation | Supercomputer | Packet communication

Owner:INT BUSINESS MASCH CORP

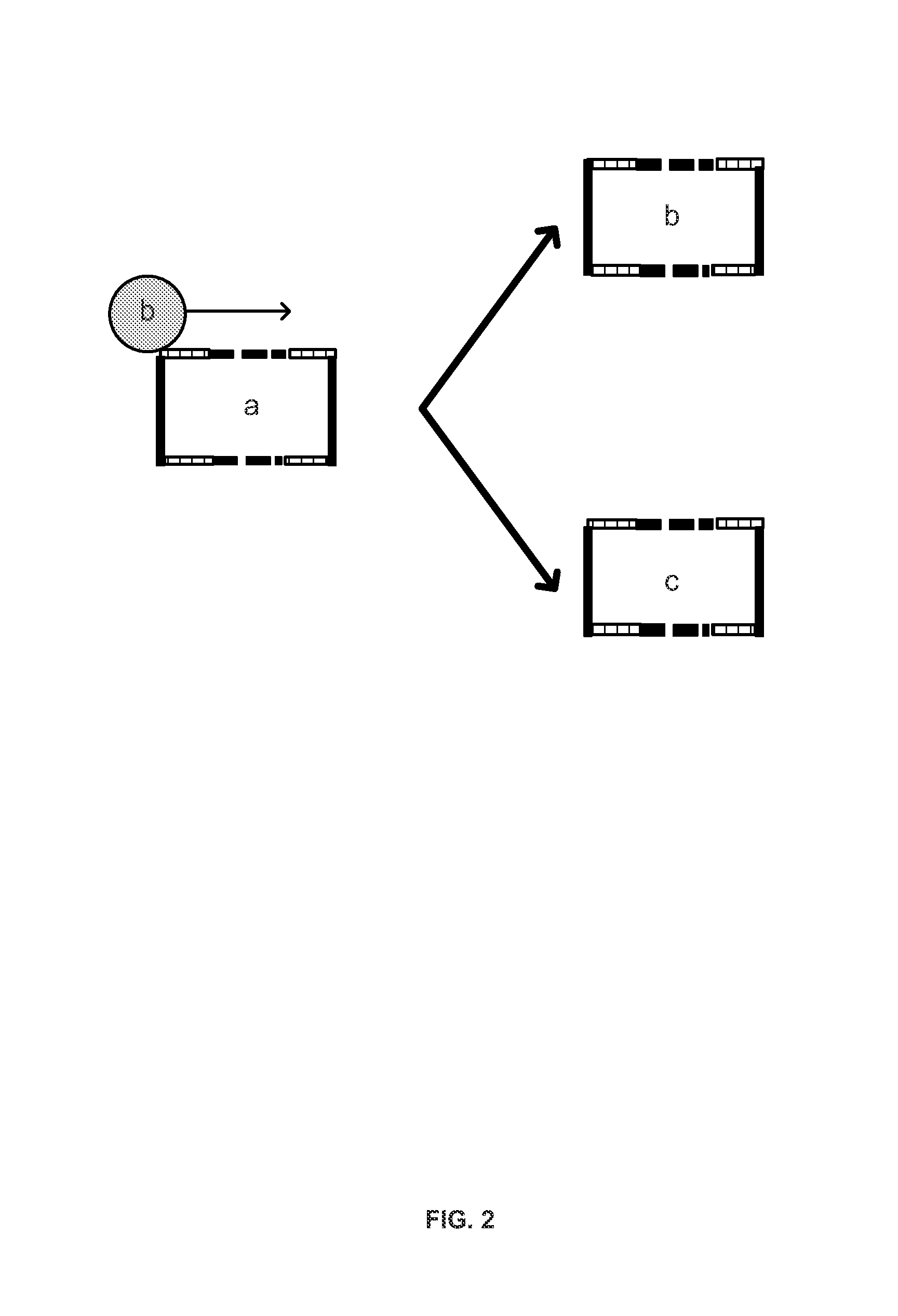

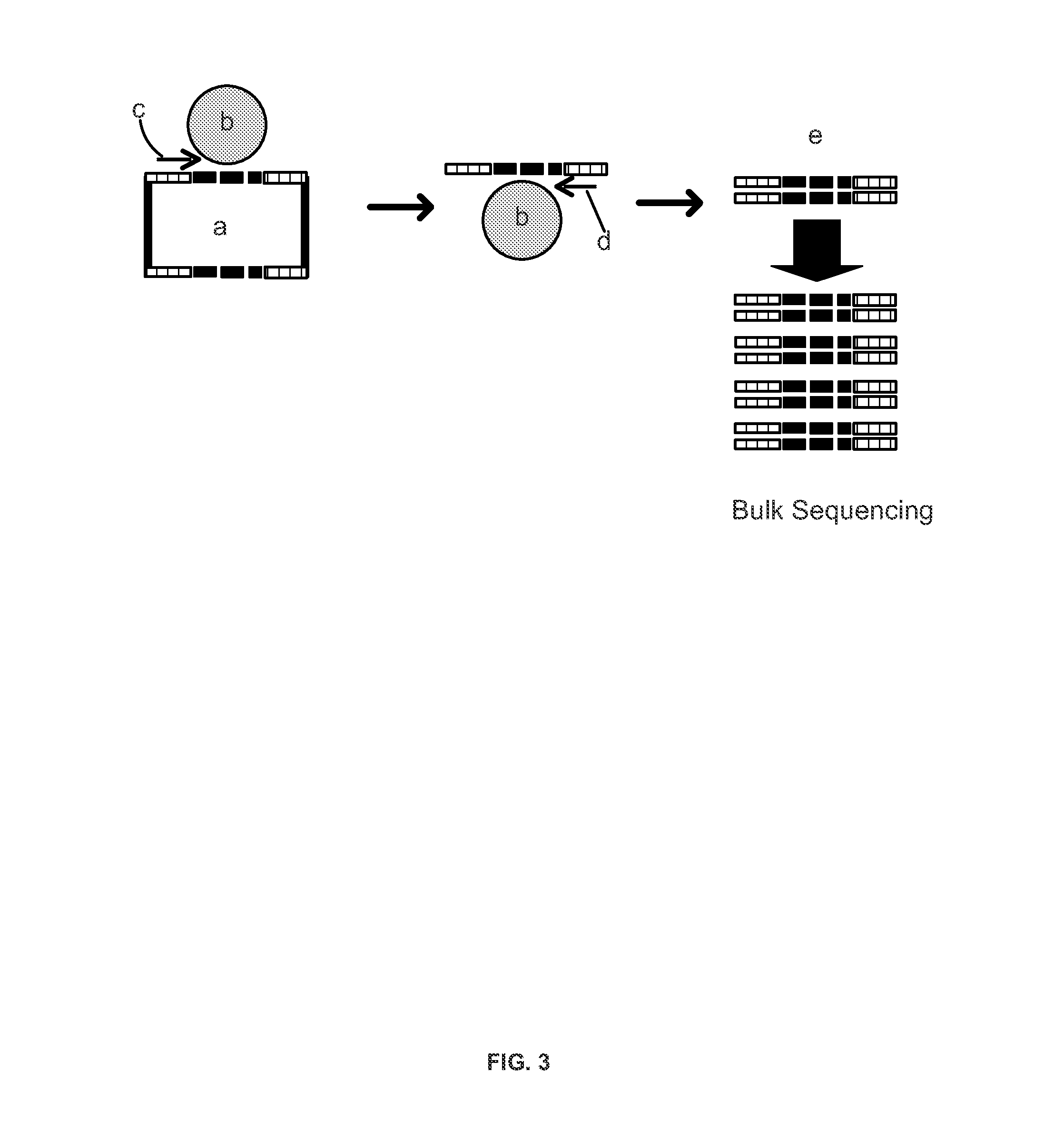

System and Methods for Massively Parallel Analysis of Nucleic Acids in Single Cells

Inactive | US20140057799A1 | Microbiological testing/measurement | Library screening | Genetic analysis | Population

Methods and systems are provided for massively parallel genetic analysis of single cells in emulsion droplets or reaction containers. Genetic loci of interest are targeted in a single cell using a set of probes, and a fusion complex is formed by molecular linkage and amplification techniques. Methods are provided for high-throughput, massively parallel analysis of the fusion complex in a single cell in a population of at least 10,000 cells. Also provided are methods for tracing genetic information back to a cell using barcode sequences.

Owner:GIGAGEN

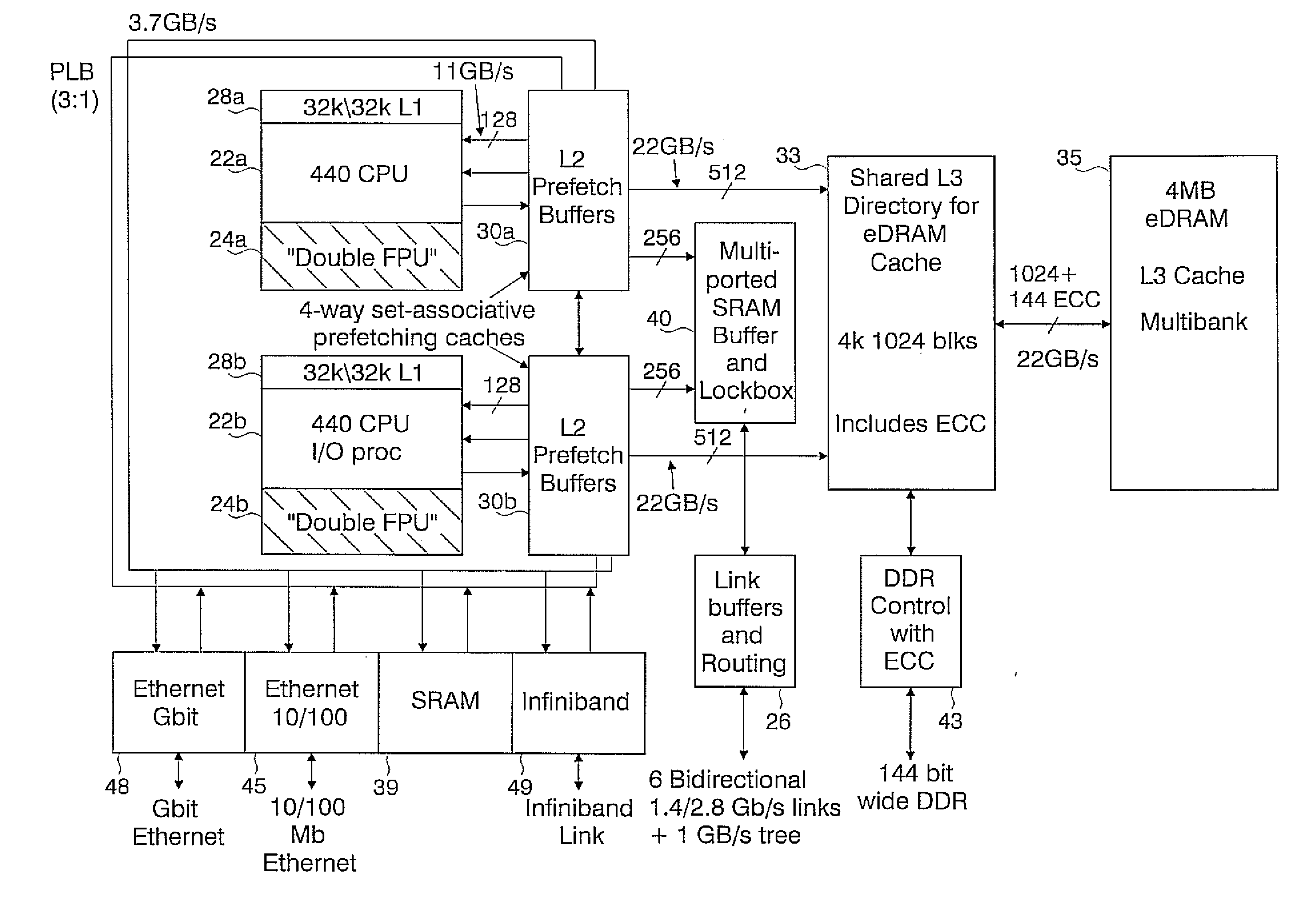

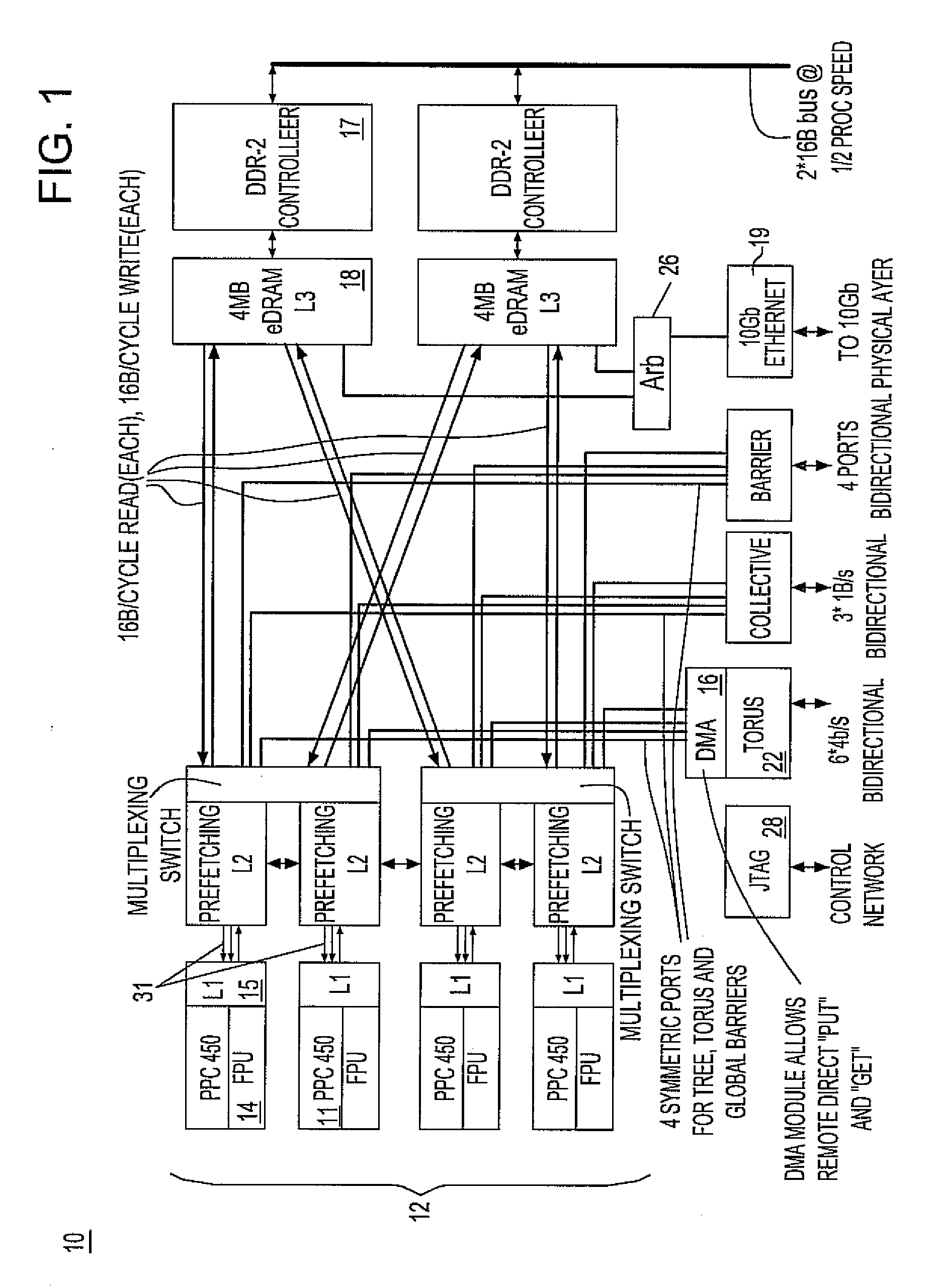

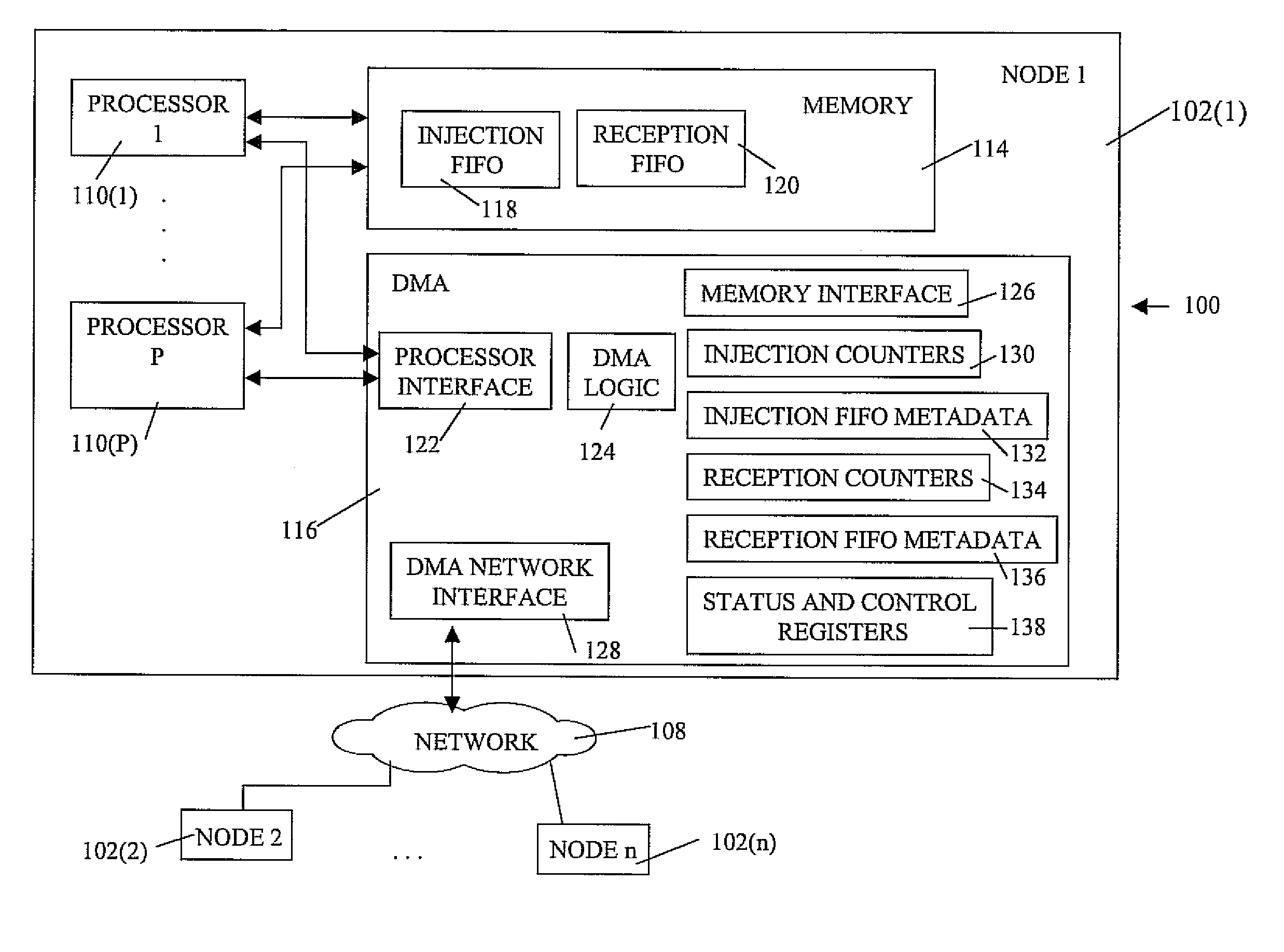

Ultrascalable petaflop parallel supercomputer

Inactive | US20090006808A1 | Massive level of scalability | Unprecedented level of scalability | Program control using stored programs | Architecture with multiple processing units | Message passing | Packet communication

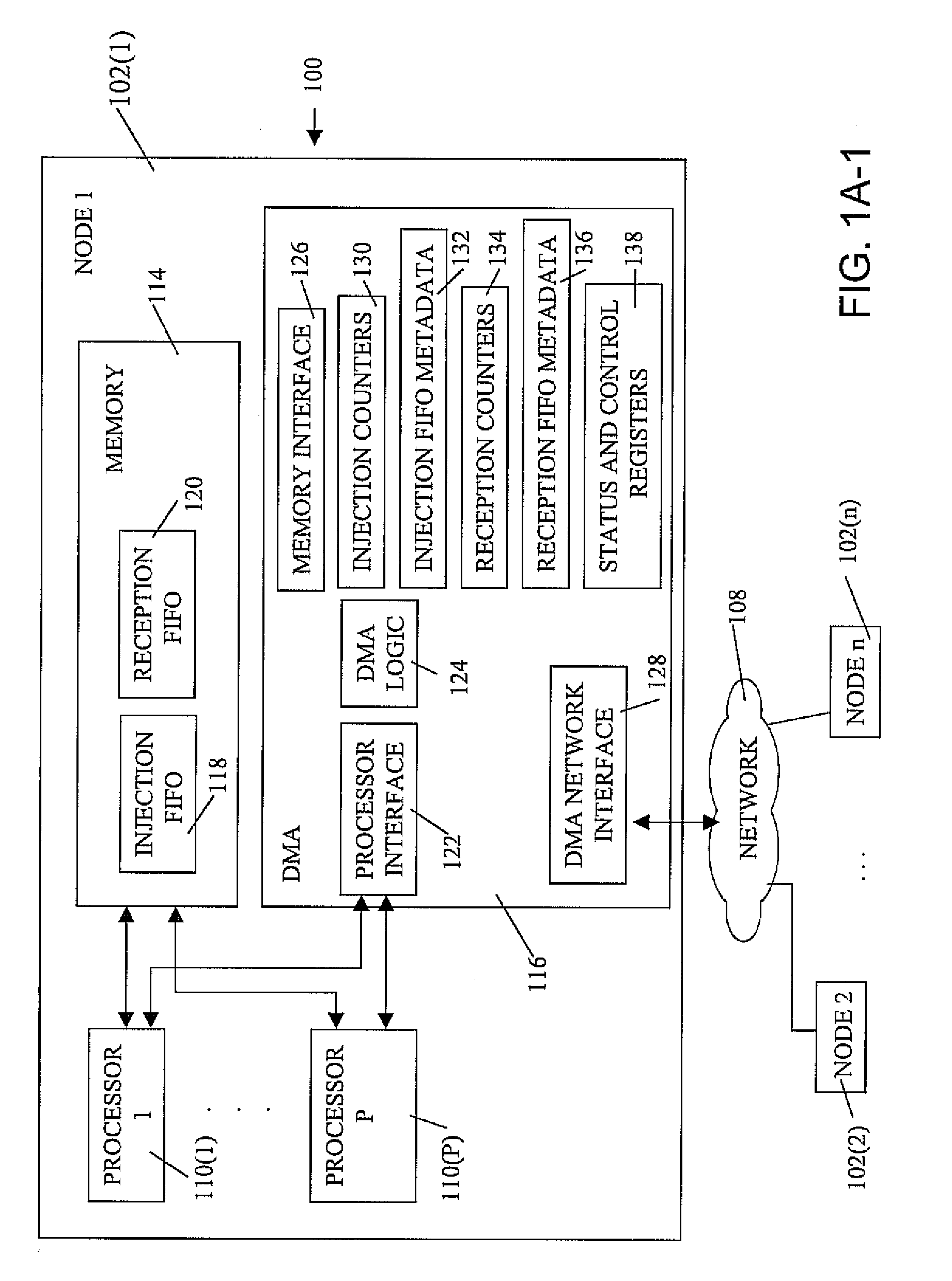

A novel massively parallel supercomputer of petaOPS-scale includes node architectures based upon System-On-a-Chip technology, where each processing node comprises a single Application Specific Integrated Circuit (ASIC) having up to four processing elements. The ASIC nodes are interconnected by multiple independent networks that optimally maximize the throughput of packet communications between nodes with minimal latency. The multiple networks may include three high-speed networks for parallel algorithm message passing including a Torus, collective network, and a Global Asynchronous network that provides global barrier and notification functions. These multiple independent networks may be collaboratively or independently utilized according to the needs or phases of an algorithm for optimizing algorithm processing performance. Novel use of a DMA engine is provided to facilitate message passing among the nodes without the expenditure of processing resources at the node.

Owner:IBM CORP
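
The abstract above names a Torus as one of the message-passing networks. The sketch below shows how nearest neighbors can be found with wraparound links; the three-dimensional layout and the function name are assumptions made for illustration, since the abstract does not give the torus dimensions.

```python
from typing import List, Tuple

Coord = Tuple[int, int, int]


def torus_neighbors(node: Coord, dims: Coord) -> List[Coord]:
    """Return the six nearest neighbors of `node` in a 3D torus of size `dims`.

    The 3D shape is a hypothetical choice for this example.
    """
    neighbors: List[Coord] = []
    for axis in range(3):
        for step in (-1, 1):
            coords = list(node)
            # The modulo implements the wraparound link at each edge of the torus.
            coords[axis] = (coords[axis] + step) % dims[axis]
            neighbors.append((coords[0], coords[1], coords[2]))
    return neighbors


if __name__ == "__main__":
    for neighbor in torus_neighbors((0, 0, 0), (4, 4, 4)):
        print(neighbor)
```

Because every node has the same number of links, no node sits at an "edge" of the machine, which is one reason torus topologies suit nearest-neighbor parallel algorithms.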

Massively parallel contiguity mapping

Active | US20130203605A1 | Reducing sequence | Limited to sequence | Library member identification | Chemical recycling | Human DNA sequencing | Mammalian genome

Contiguity information is important to achieving high-quality de novo assembly of mammalian genomes and the haplotype-resolved resequencing of human genomes. The methods described herein pursue cost-effective, massively parallel capture of contiguity information at different scales.

Owner:UNIV OF WASHINGTON CENT FOR COMMERCIALIZATION

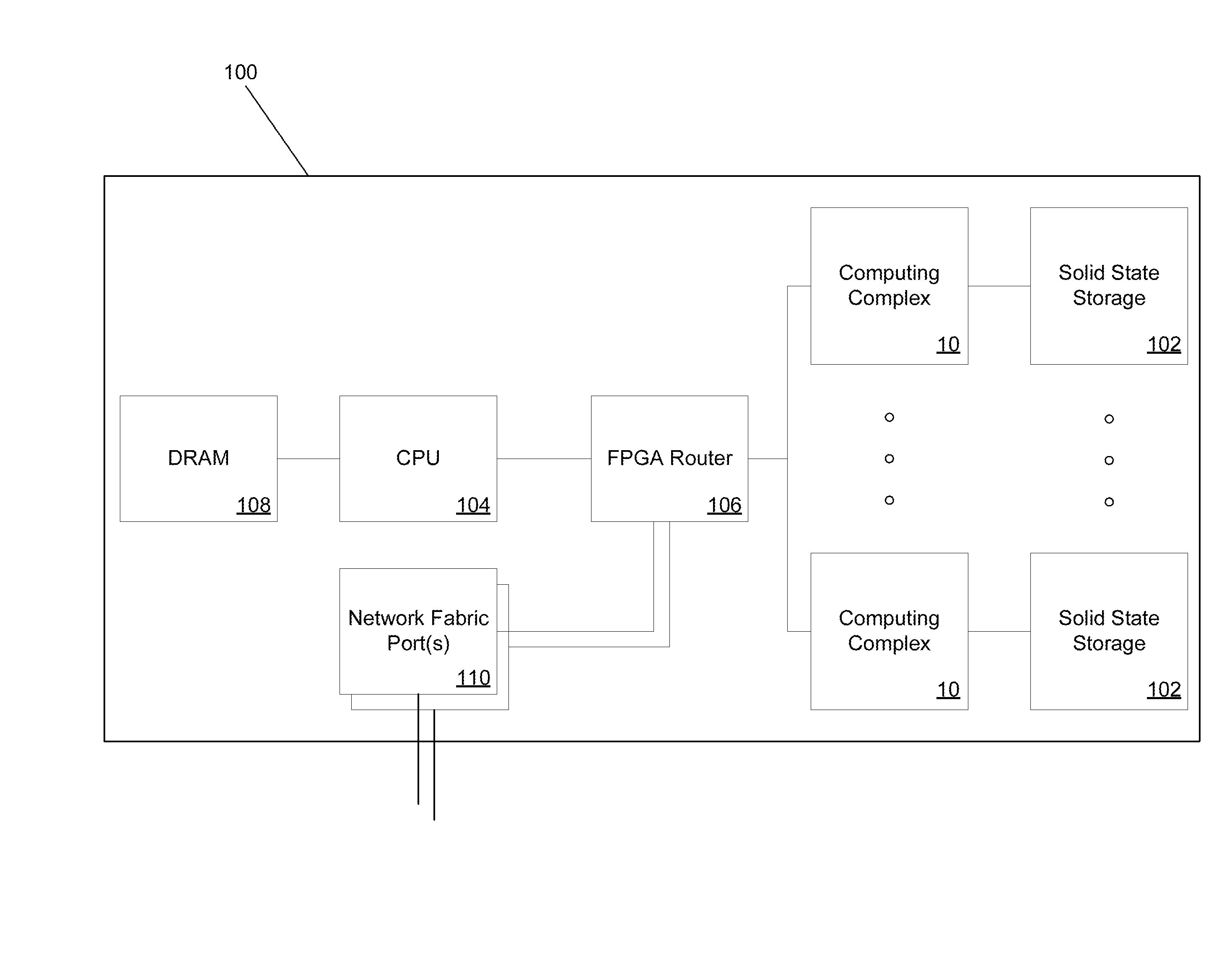

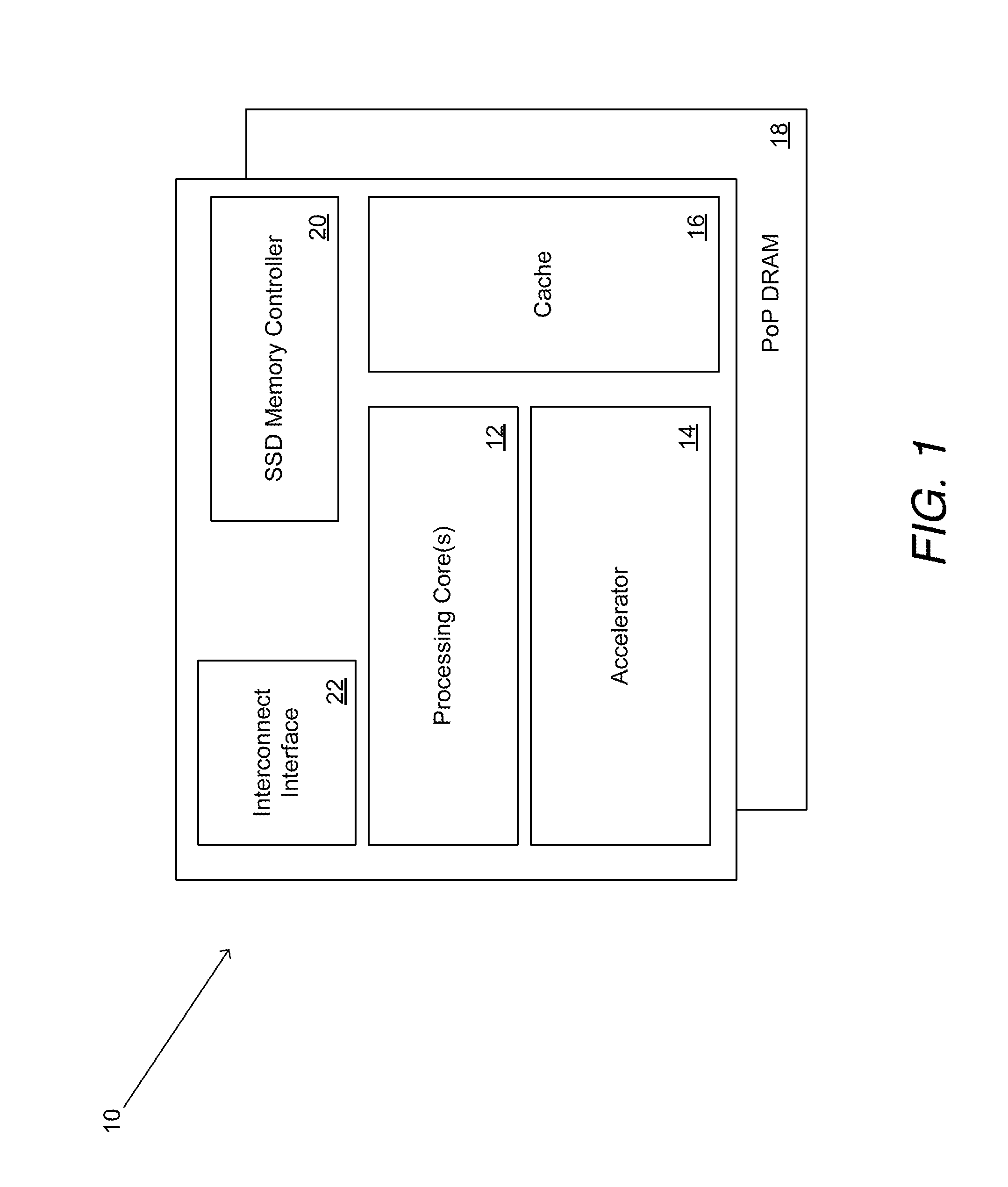

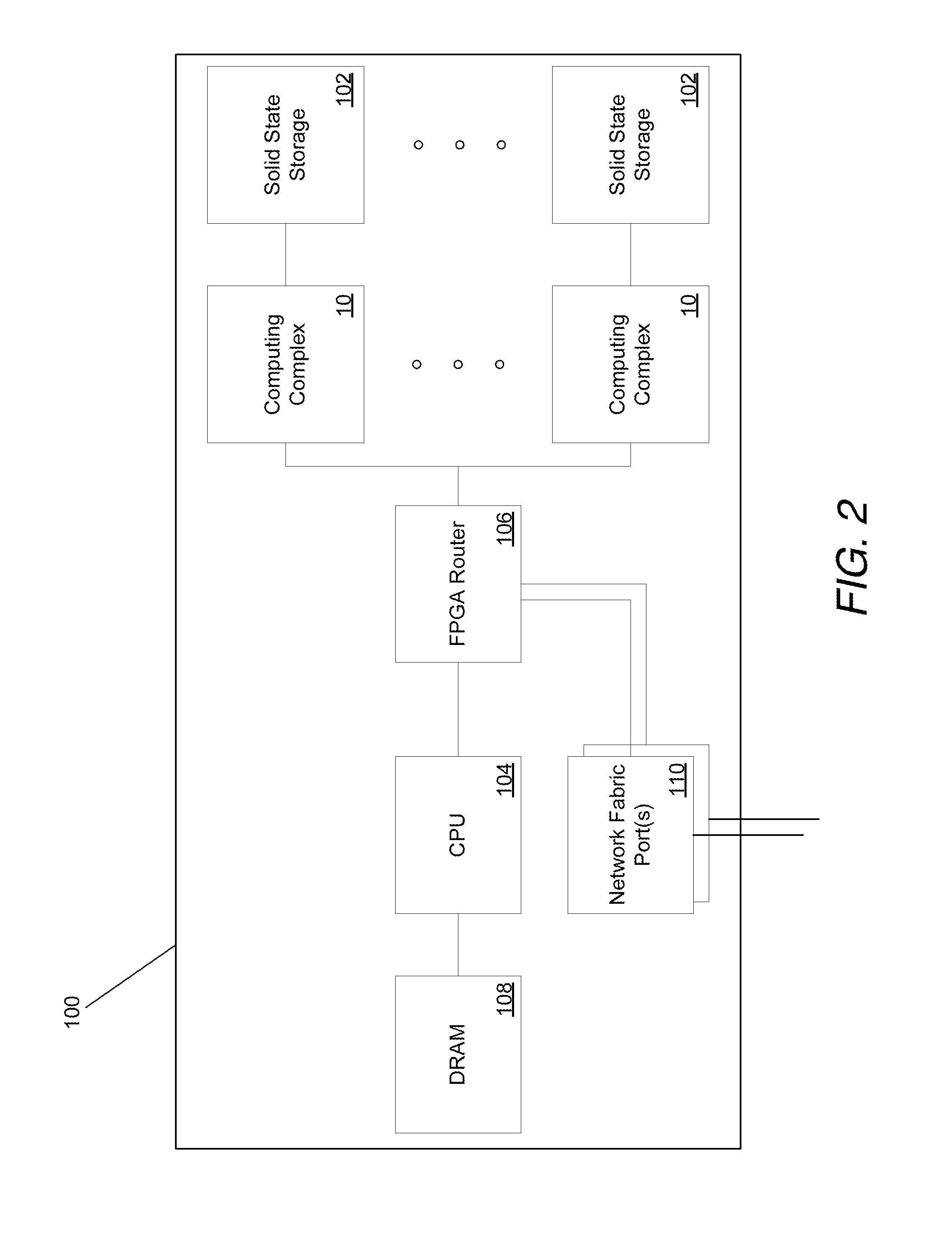

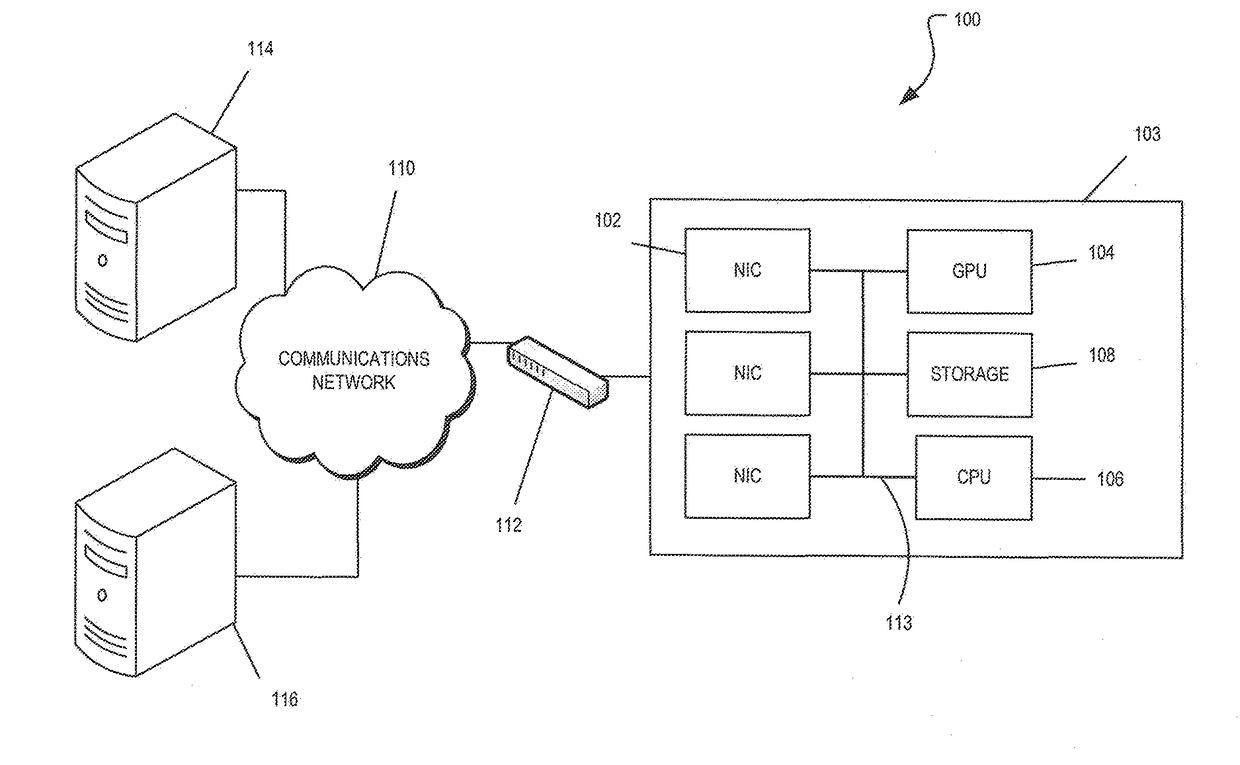

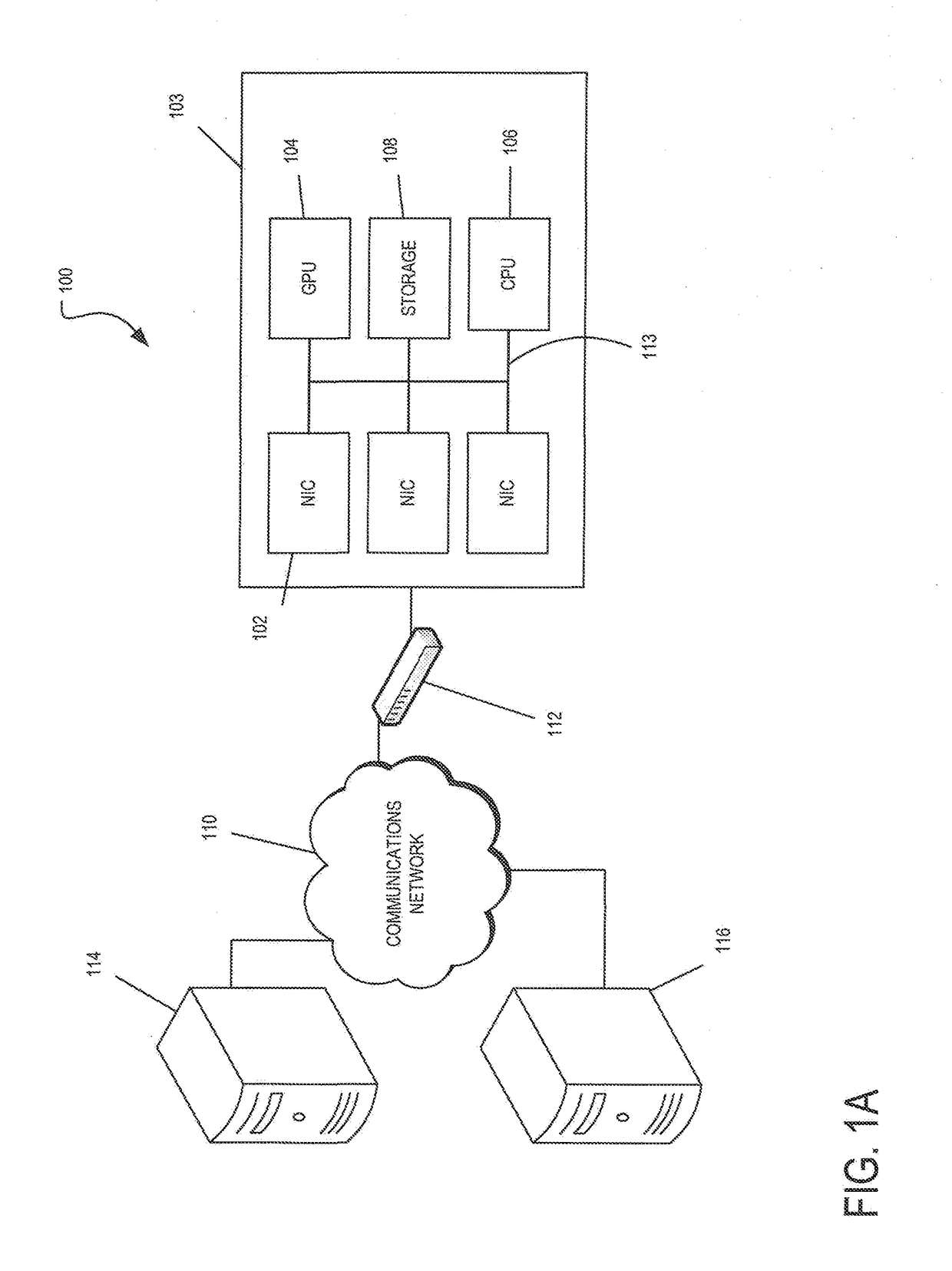

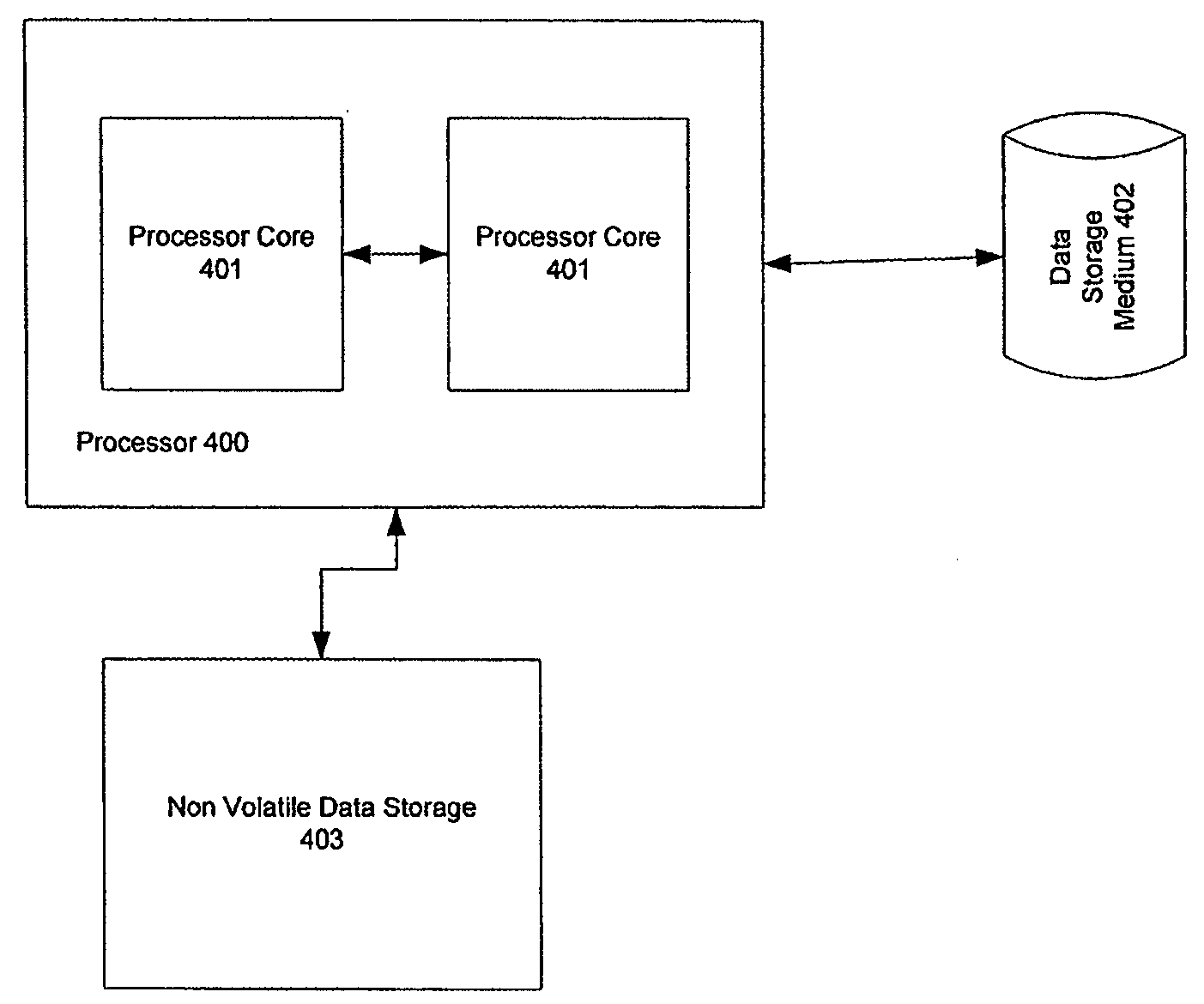

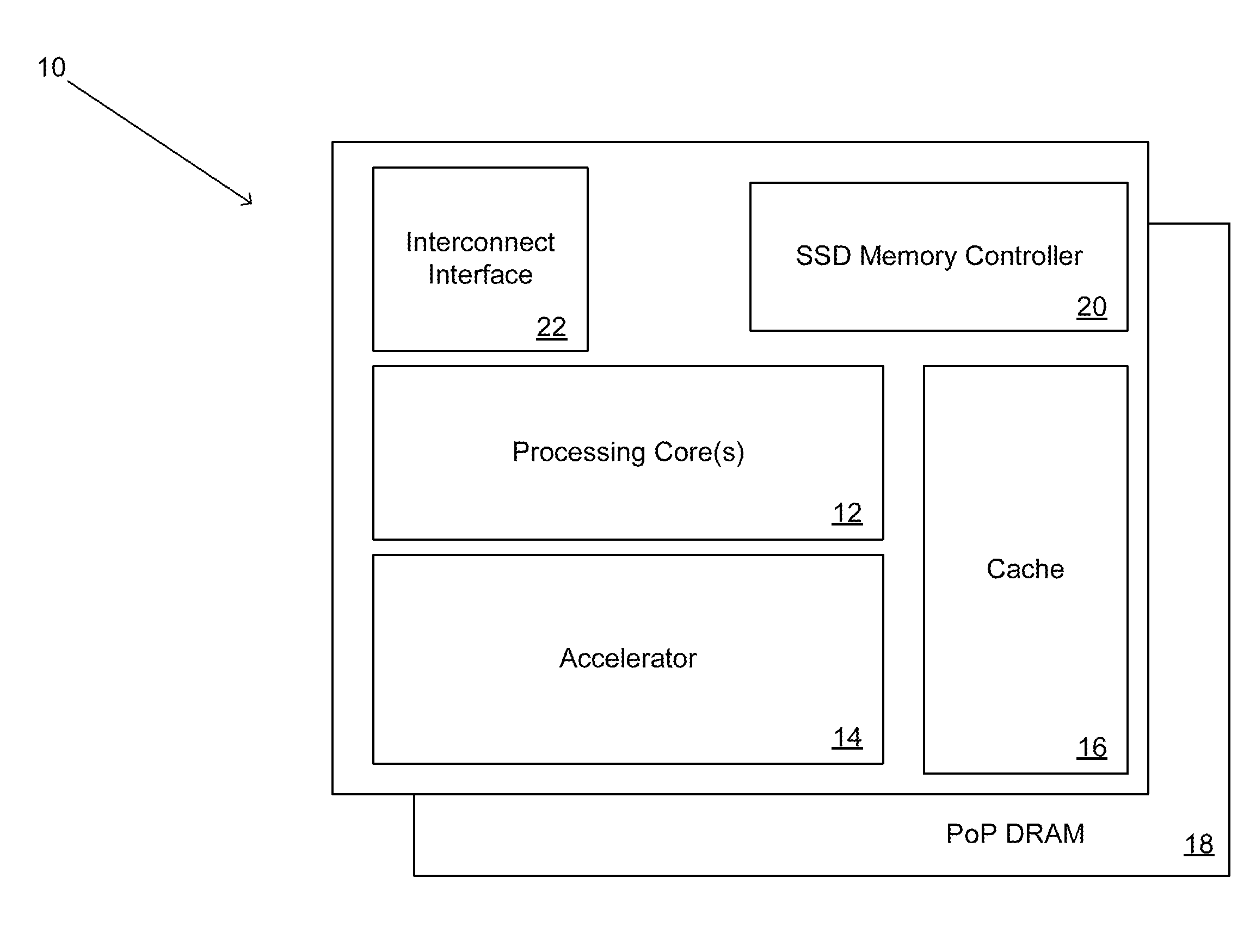

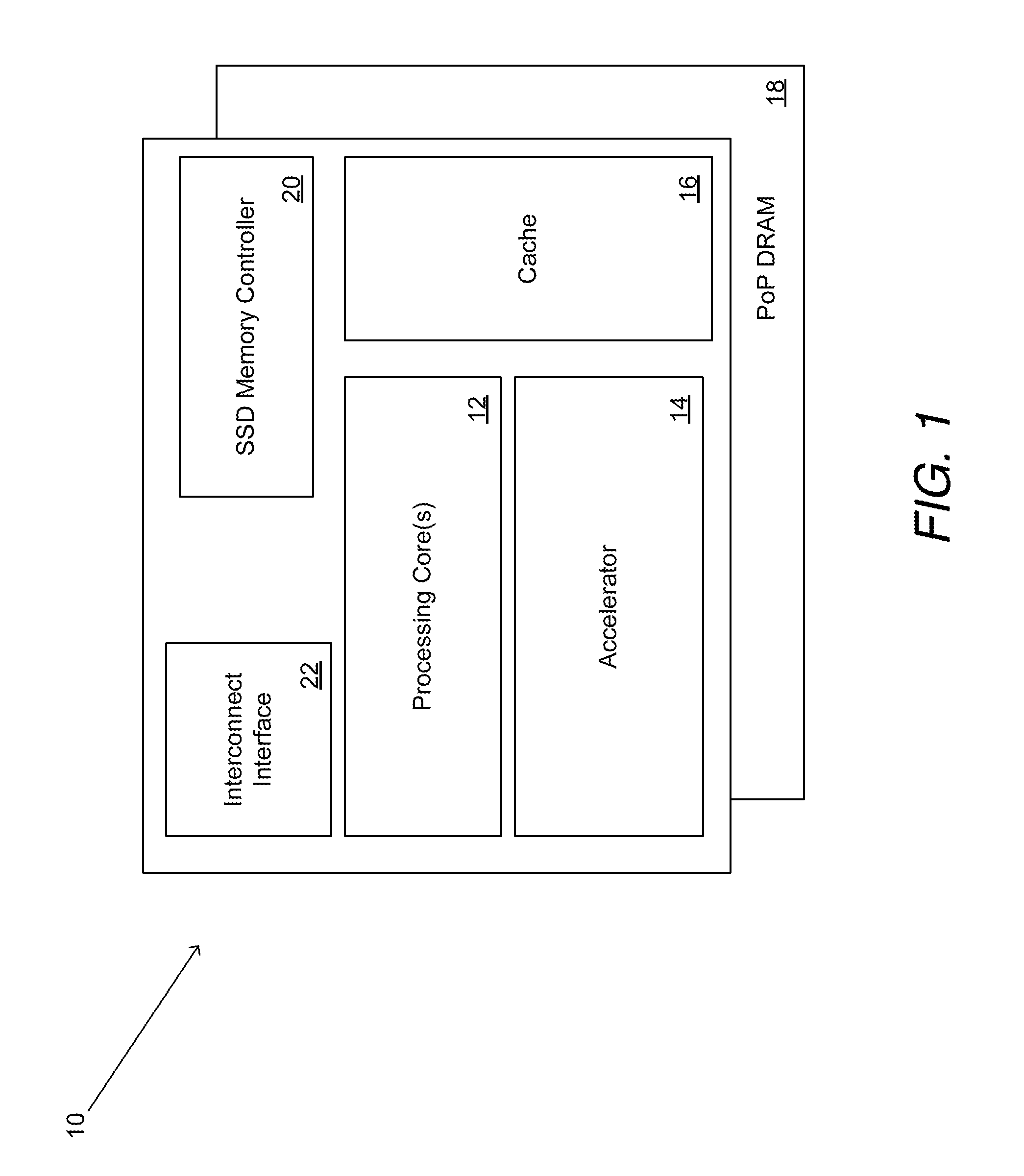

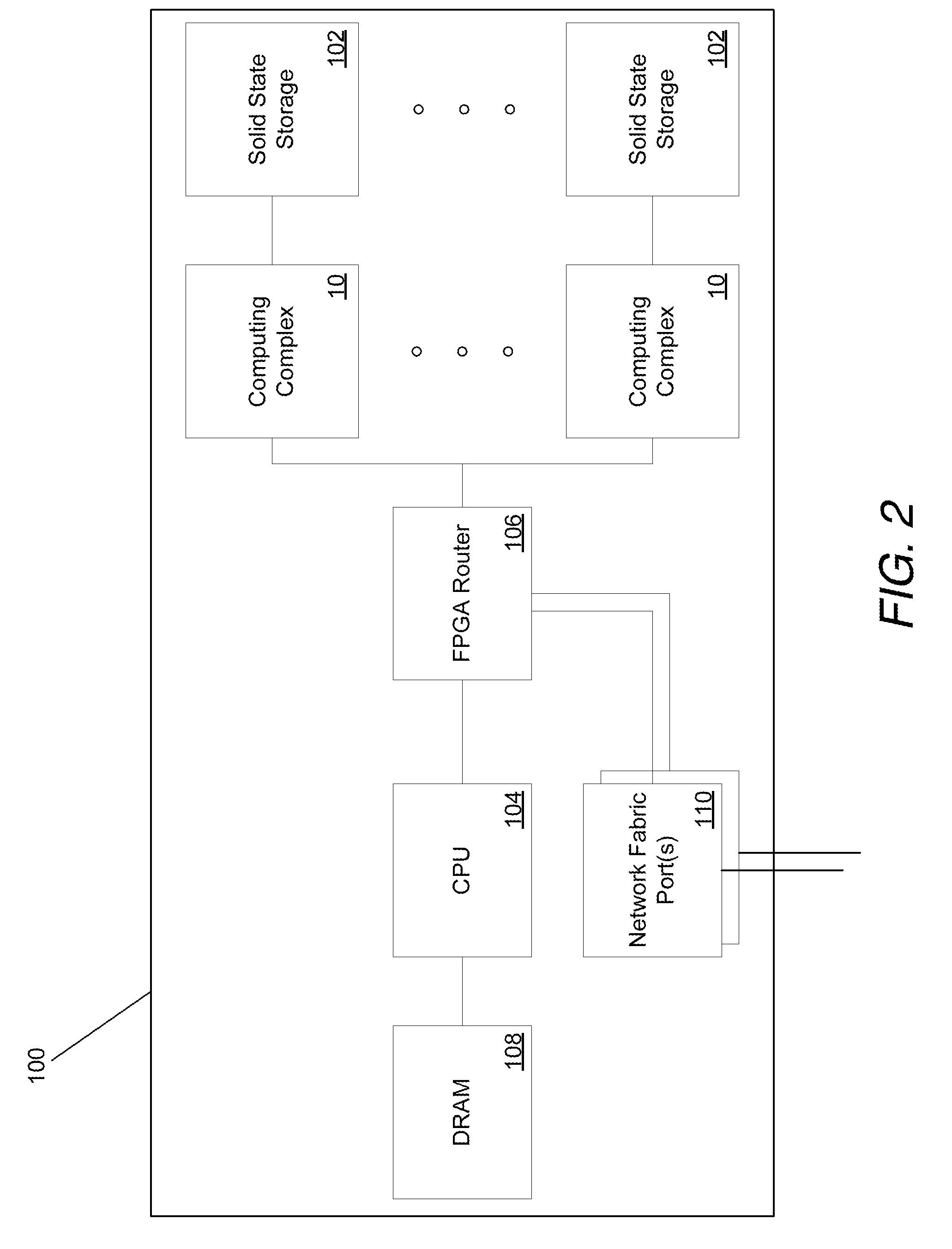

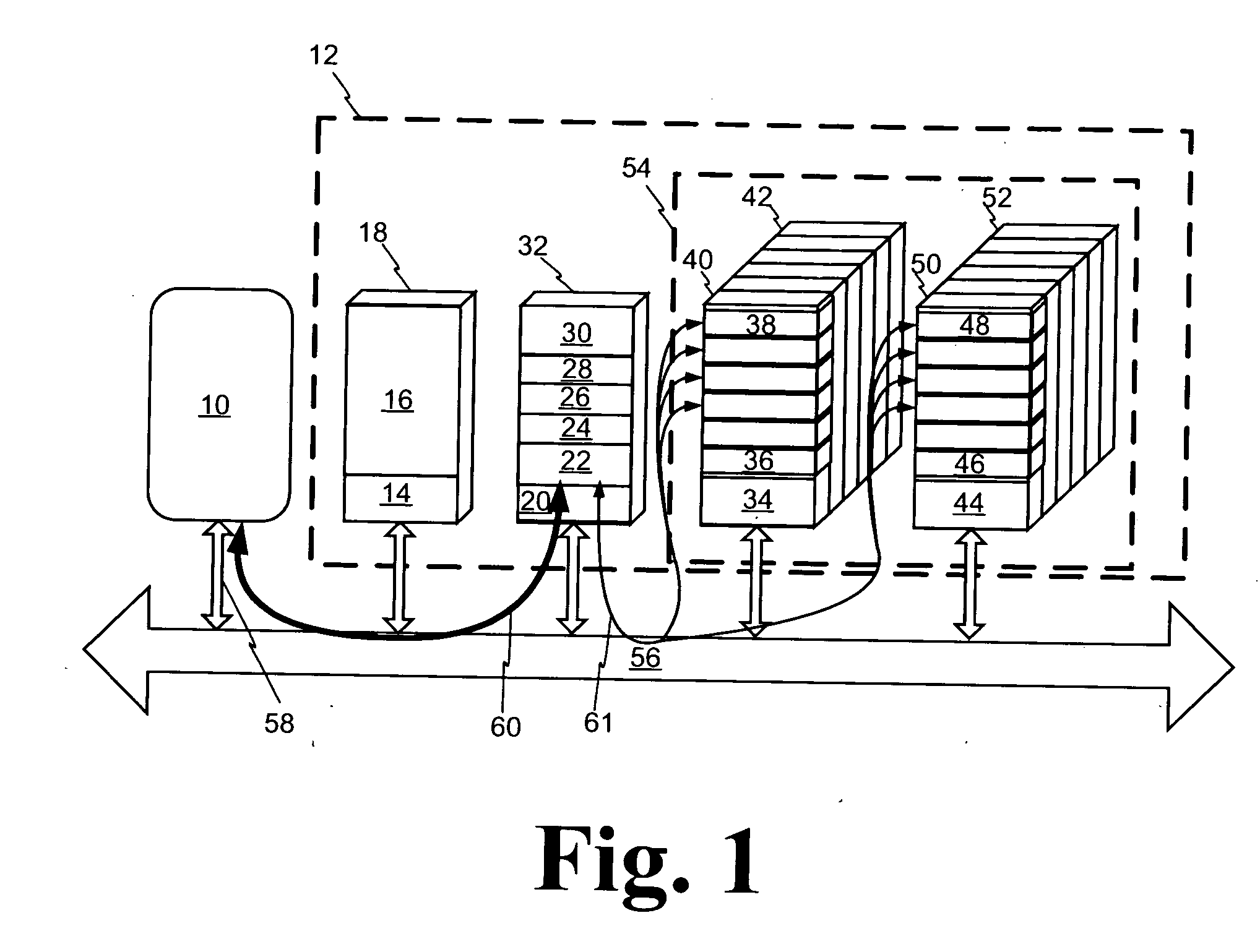

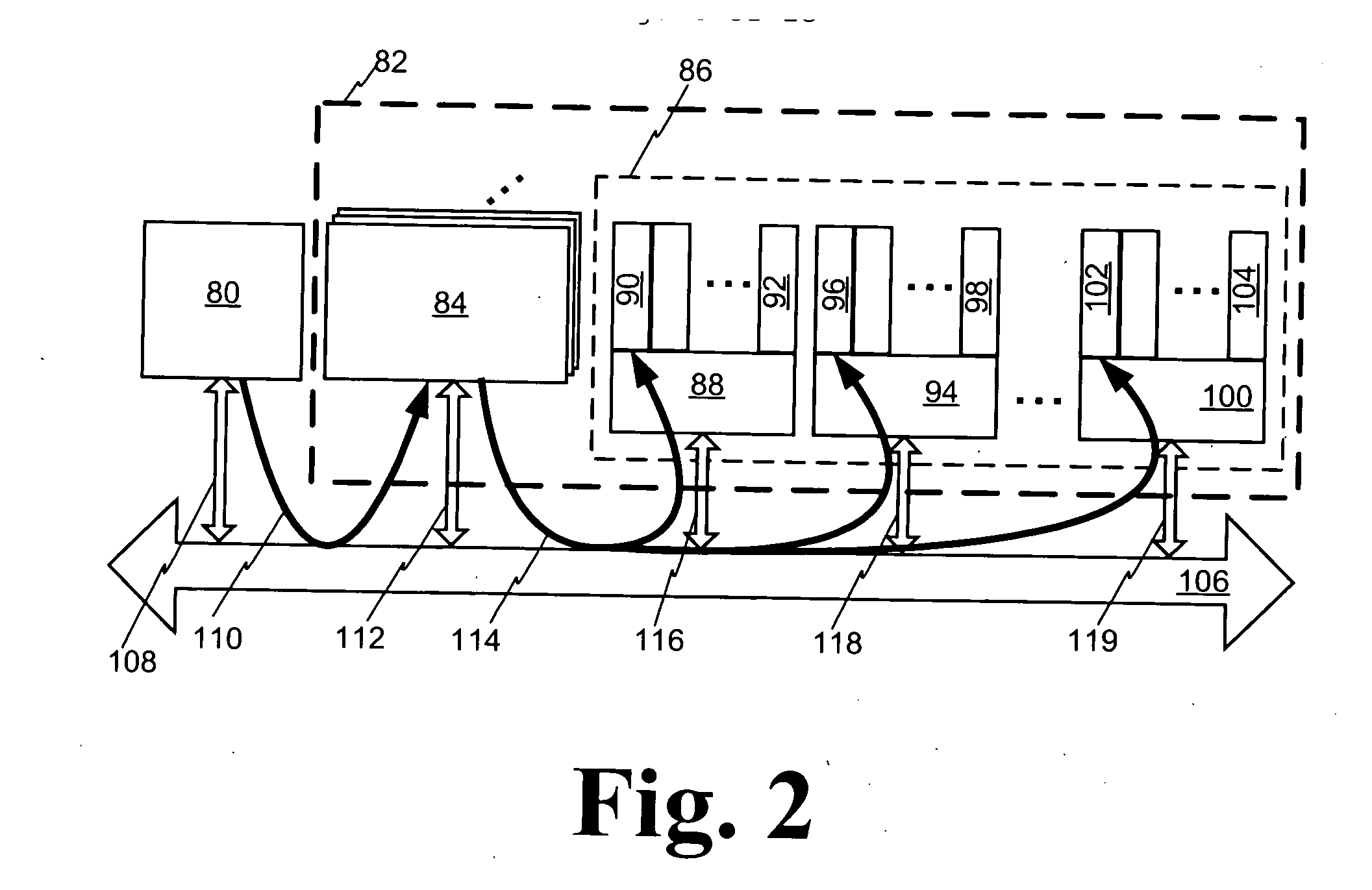

Systems and methods for rapid processing and storage of data

Active | US9552299B2 | Faster bandwidth | Improve latency | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Massively parallel | Processing core

Systems and methods of building massively parallel computing systems using low power computing complexes in accordance with embodiments of the invention are disclosed. A massively parallel computing system in accordance with one embodiment of the invention includes at least one Solid State Blade configured to communicate via a high performance network fabric. In addition, each Solid State Blade includes a processor configured to communicate with a plurality of low power computing complexes interconnected by a router, and each low power computing complex includes at least one general processing core, an accelerator, an I/O interface, and cache memory and is configured to communicate with non-volatile solid state memory.

Owner:CALIFORNIA INST OF TECH

Ultrascalable petaflop parallel supercomputer

Inactive | US7761687B2 | Maximize throughput | Delay minimization | General purpose stored program computer | Electric digital data processing | Supercomputer | Packet communication

A massively parallel supercomputer of petaOPS-scale includes node architectures based upon System-On-a-Chip technology, where each processing node comprises a single Application Specific Integrated Circuit (ASIC) having up to four processing elements. The ASIC nodes are interconnected by multiple independent networks that optimally maximize the throughput of packet communications between nodes with minimal latency. The multiple networks may include three high-speed networks for parallel algorithm message passing including a Torus, collective network, and a Global Asynchronous network that provides global barrier and notification functions. These multiple independent networks may be collaboratively or independently utilized according to the needs or phases of an algorithm for optimizing algorithm processing performance. The use of a DMA engine is provided to facilitate message passing among the nodes without the expenditure of processing resources at the node.

Owner:INT BUSINESS MASCH CORP

Capacitive-coupled non-volatile thin-film transistor NOR strings in three-dimensional arrays

Active | US10121553B2 | High density | Lower read latency | Transistor | Solid-state devices | Capacitive coupling | Parasitic capacitance

Owner:SUNRISE MEMORY CORP

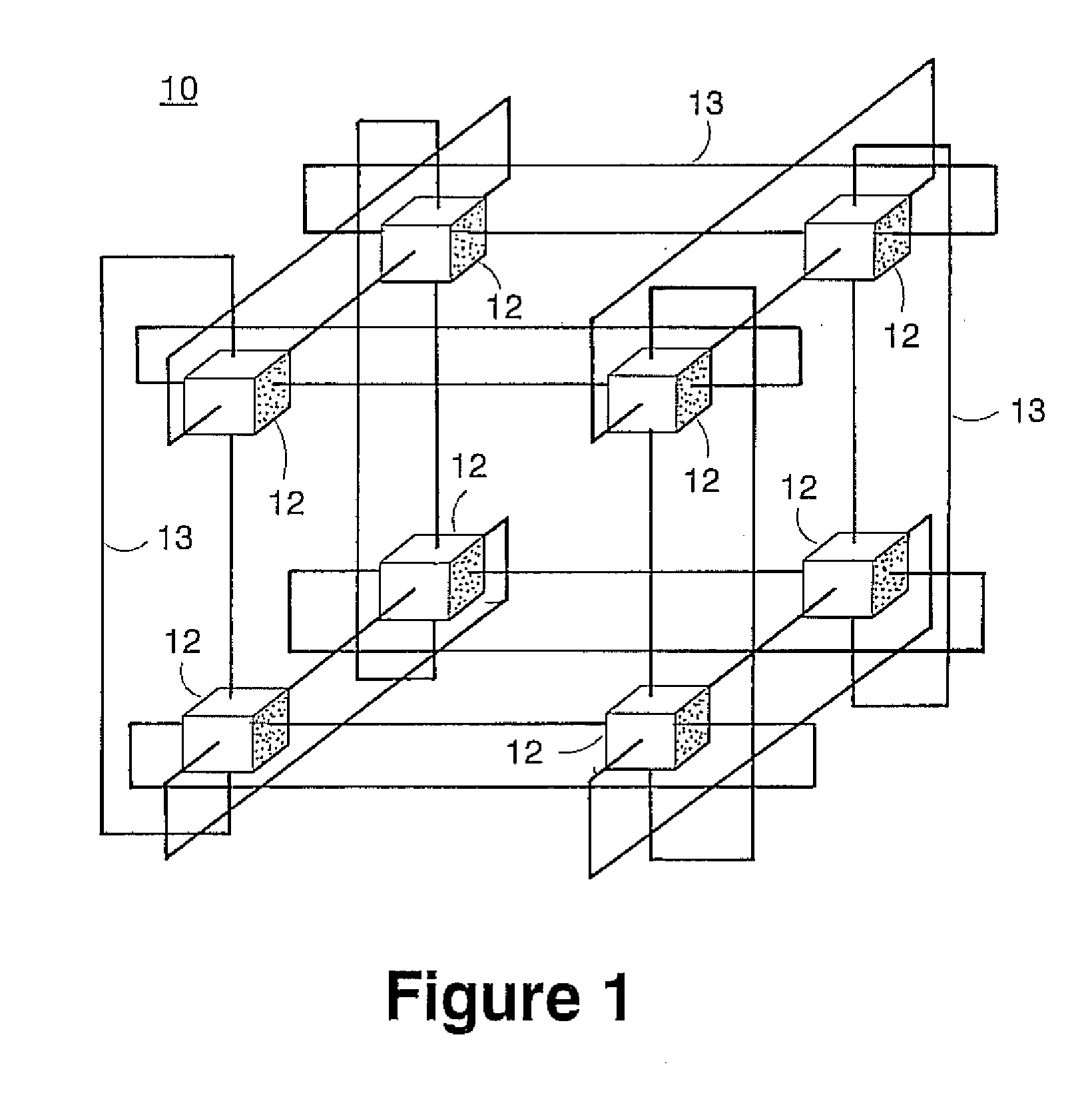

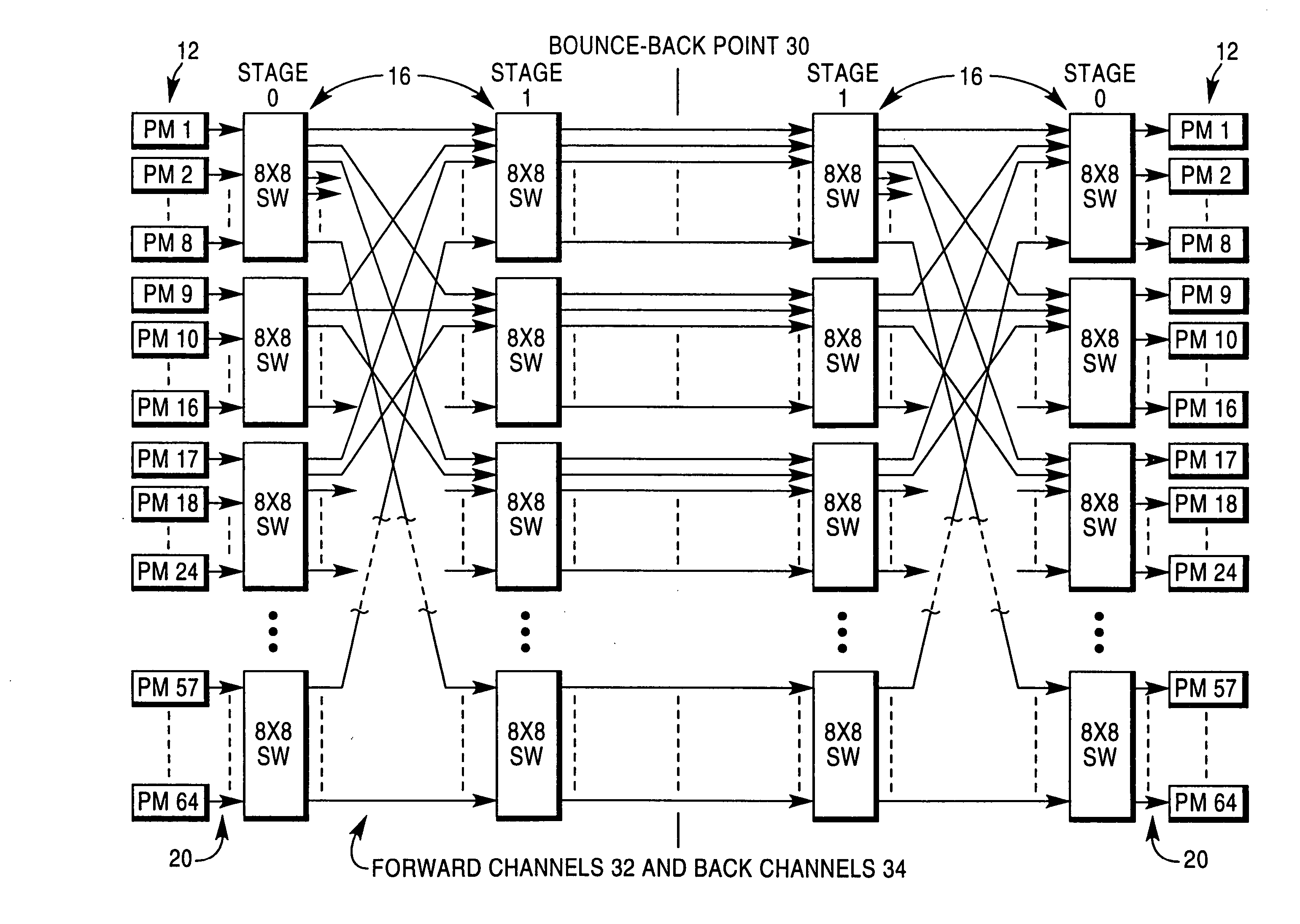

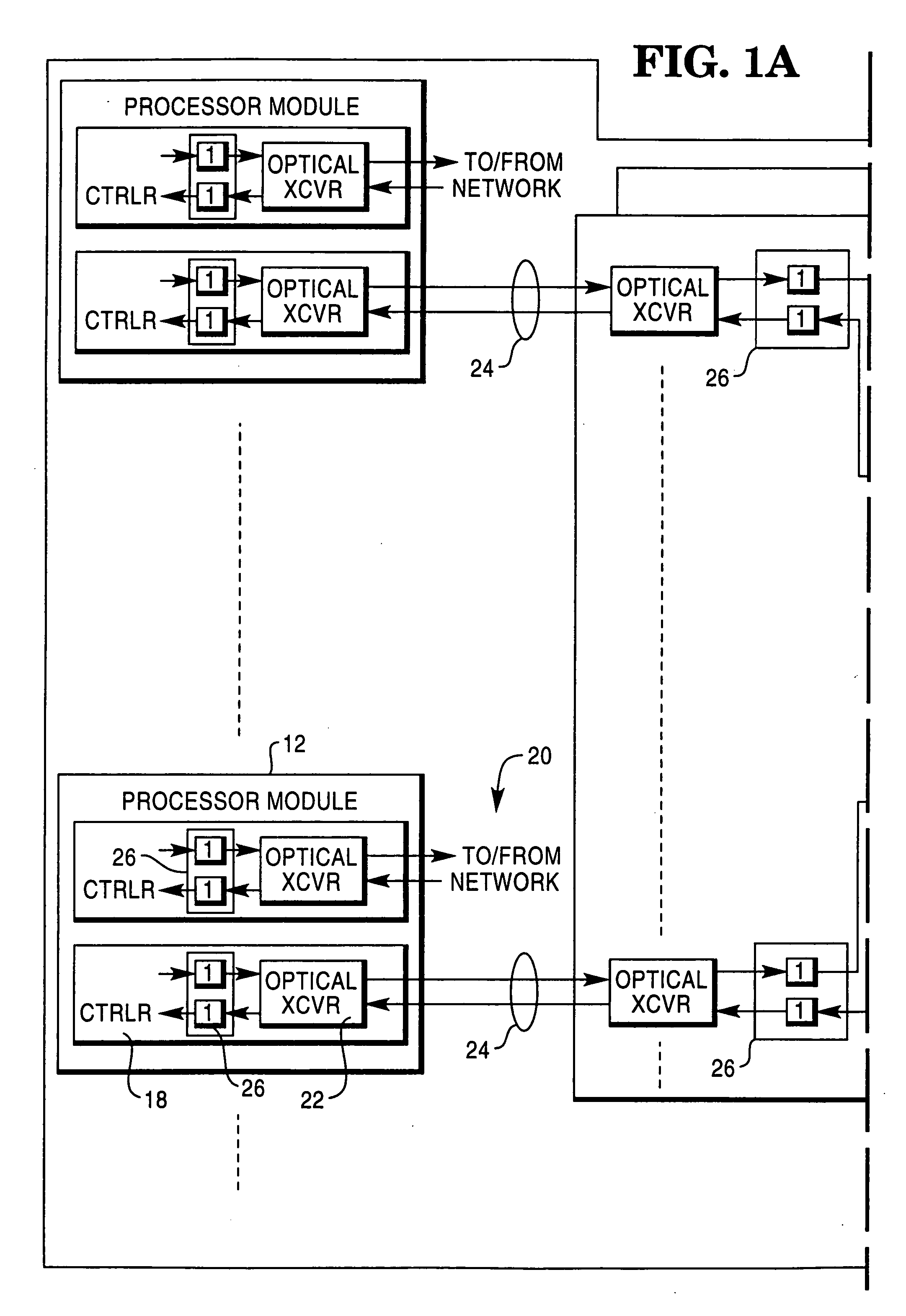

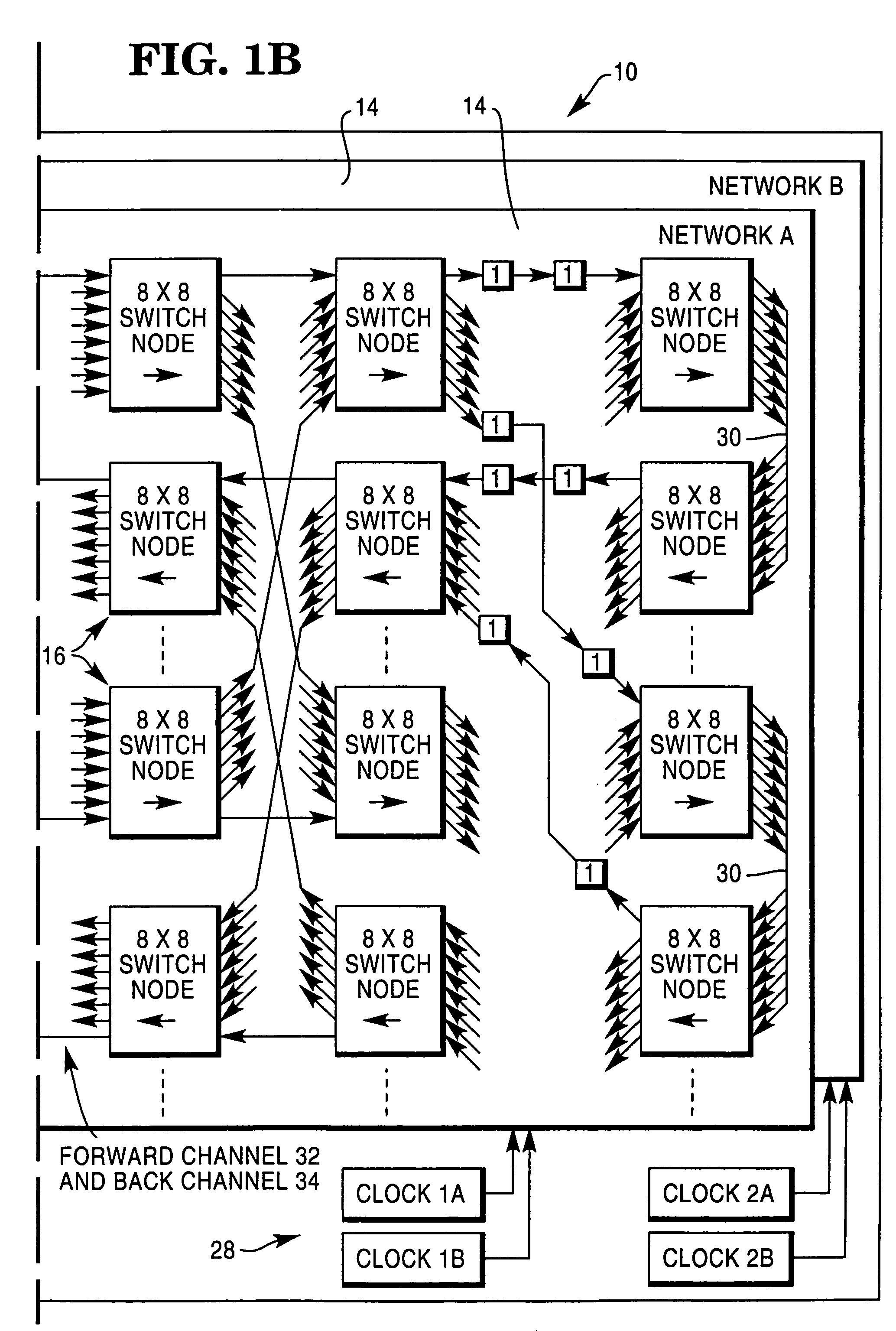

Reconfigurable, fault tolerant, multistage interconnect network and protocol

Inactive | US20060013207A1 | Improve fault tolerance | Reduce contention | Multiplex system selection arrangements | Radiation pyrometry | Fault tolerance | Massively parallel

A multistage interconnect network (MIN) capable of supporting massive parallel processing, including point-to-point and multicast communications between processor modules (PMs) which are connected to the input and output ports of the network. The network is built using interconnected switch nodes arranged in $2\lceil \log_b N \rceil$ stages, wherein $b$ is the number of switch node input/output ports, $N$ is the number of network input/output ports and $\lceil \log_b N \rceil$ indicates a ceiling function providing the smallest integer not less than $\log_b N$. The additional stages provide additional paths between network input ports and network output ports, thereby enhancing fault tolerance and lessening contention.

Owner:TERADATA US
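
The stage count in the abstract above, twice the ceiling of the base-b logarithm of N, can be worked out numerically. The sketch below is an illustration only; the function names are hypothetical, and integer arithmetic is used to avoid floating-point rounding in the logarithm.

```python
def ceil_log(n: int, base: int) -> int:
    """Smallest integer k such that base**k >= n, using integer arithmetic only."""
    if n < 1 or base < 2:
        raise ValueError("need n >= 1 and base >= 2")
    k, power = 0, 1
    while power < n:
        power *= base
        k += 1
    return k


def stage_count(network_ports: int, switch_ports: int) -> int:
    """Number of switch stages: 2 * ceil(log_b N), as given in the abstract."""
    return 2 * ceil_log(network_ports, switch_ports)


if __name__ == "__main__":
    for n_ports, b_ports in [(64, 8), (100, 8), (8, 2)]:
        print(n_ports, b_ports, stage_count(n_ports, b_ports))
```

For example, 64 network ports built from 8-port switch nodes give 2 * 2 = 4 stages, while 100 ports need 2 * 3 = 6 because the logarithm is rounded up.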

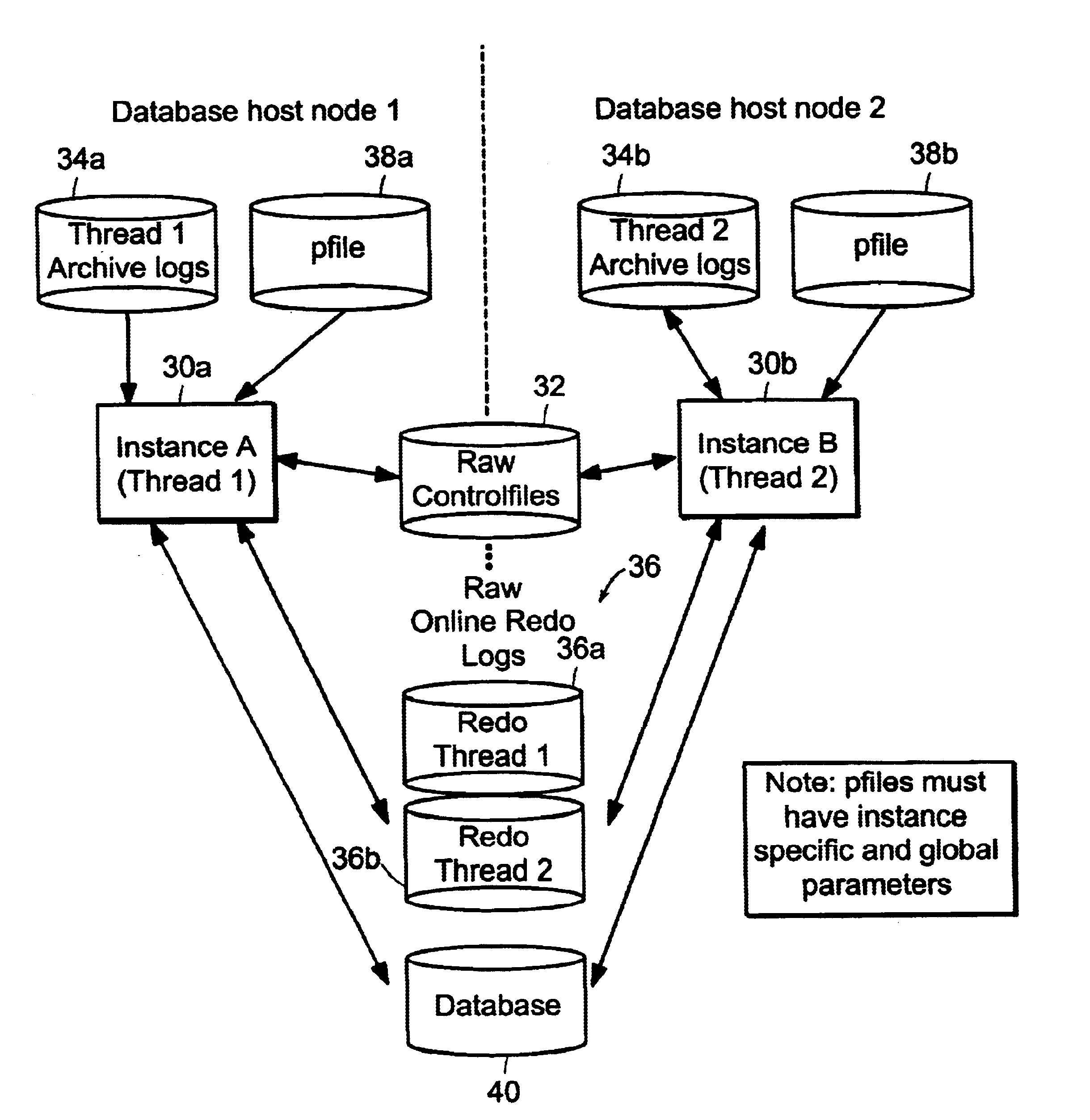

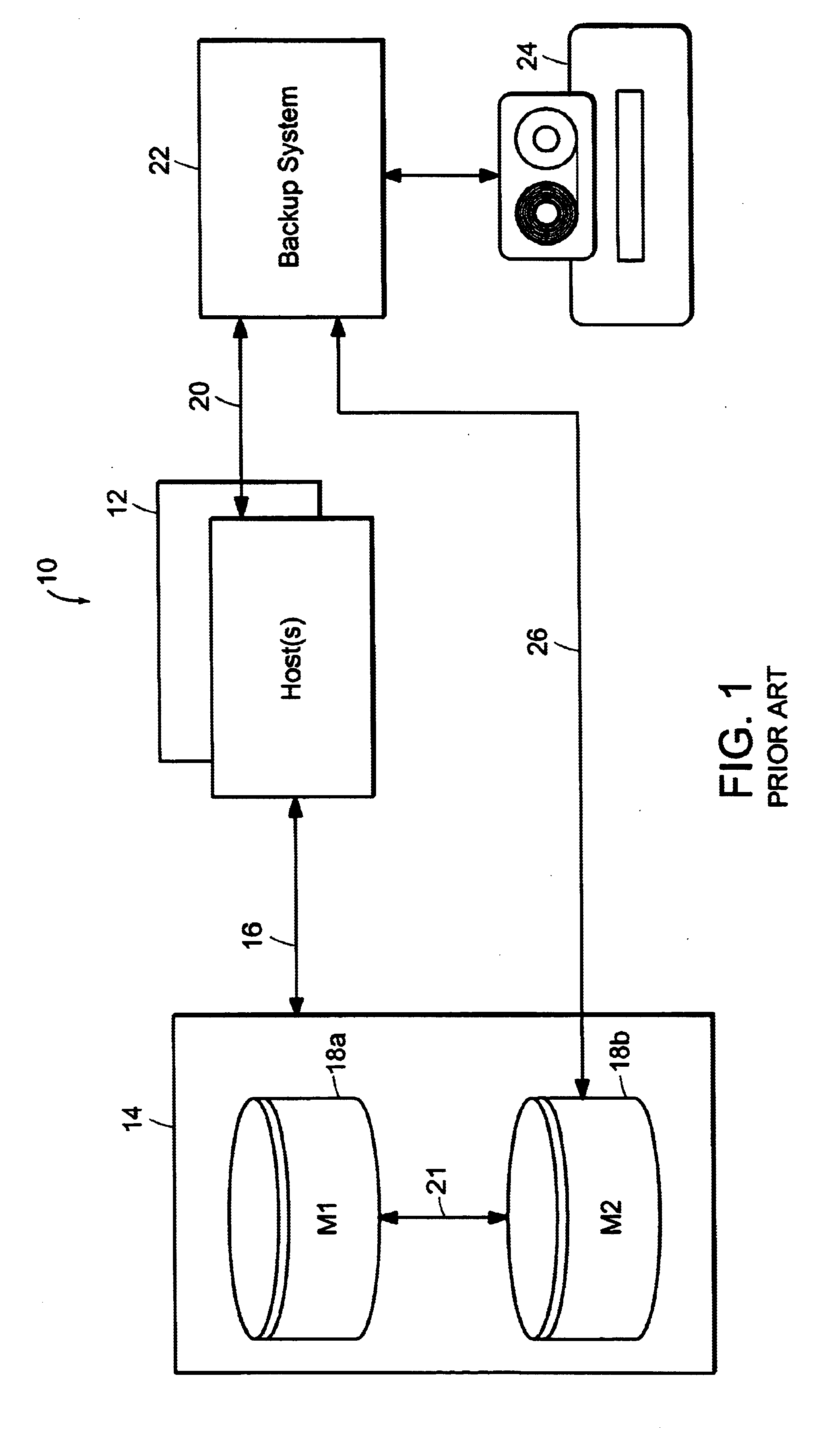

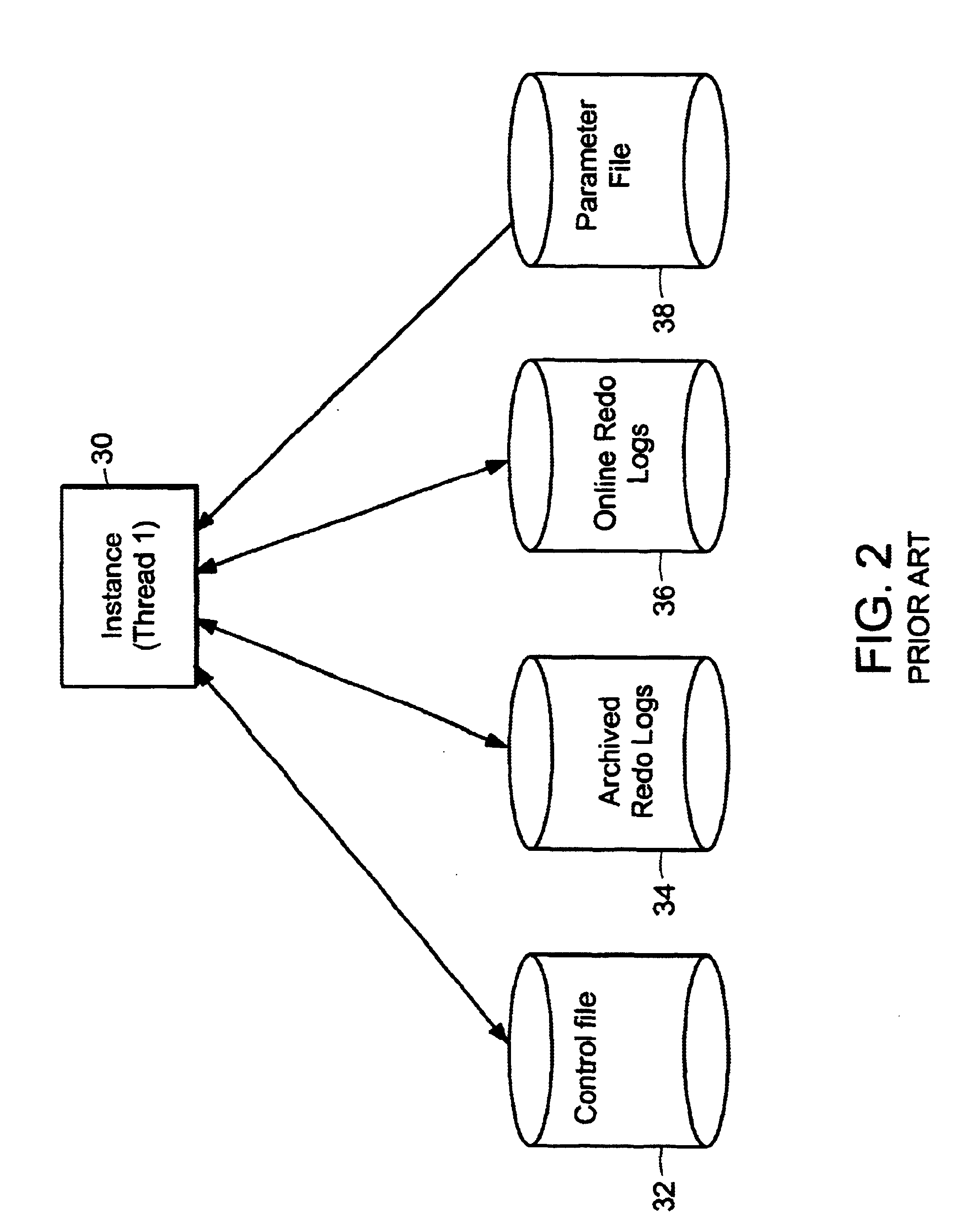

System and method for backup a parallel server data storage system

Inactive | US6658589B1 | Safe and effective backup | Safe and effective restore | Data processing applications | Redundant operation error correction | Multi processor | Parallel processing

A system and method for safe and effective backup and restore of parallel server databases stored in data storage systems. Parallel server databases allow multiple nodes in MPP (Massively Parallel Processor) or SMP (Symmetric Multi-Processor) systems to simultaneously access a database. Each node is running an instance (thread) which provides access to the database. The present invention allows for online or offline backup to be performed from any node in the system, with proper access to all control files and logs, both archived and online, whether the files are stored in raw partitions in the data storage system, or local on certain nodes. Two different types of external restore are supported: complete external restore and partial external restore. In a complete external restore, all spaces will be restored to the most recent checkpoint that was generated while creating an external backup. If users lose only a portion of the data (which is more typically the case), a partial external restore may be performed.

Owner:EMC IP HLDG CO LLC

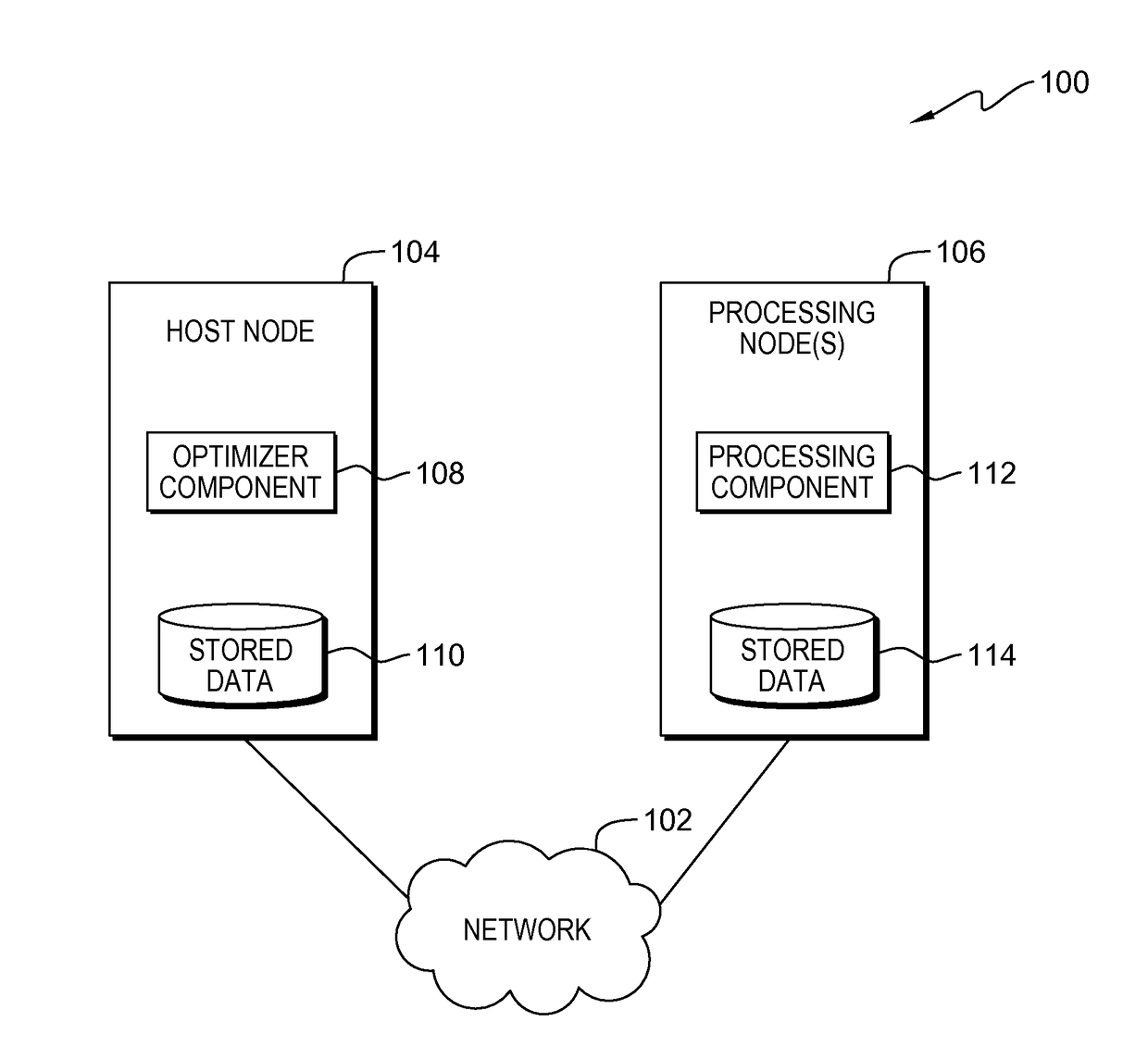

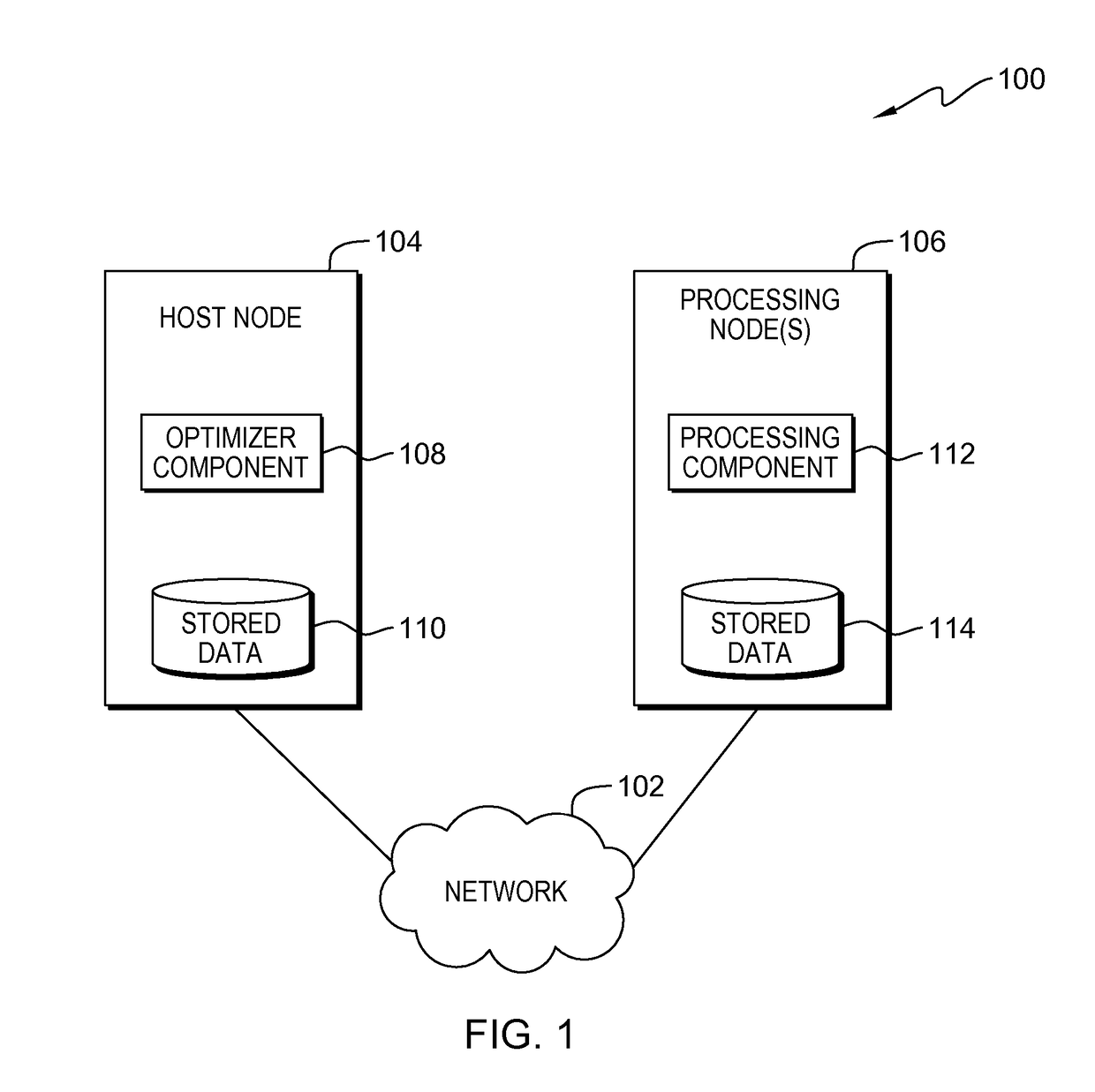

Parallel preparation of a query execution plan in a massively parallel processing environment based on global and low-level statistics

Inactive | US20170147640A1 | Low cost of execution | Low cost | Digital data information retrieval | Special data processing applications | Execution plan | Parallel processing

In an approach to preparing a query execution plan, a host node receives a query implicating one or more data tables. The host node broadcasts one or more implicated data tables to one or more processing nodes. The host node receives a set of node-specific query execution plans and execution cost estimates associated with each of the node-specific query execution plans, which have been prepared in parallel based on global statistics and node-specific low level statistics. The host node selects an optimal query execution plan based on minimized execution cost.

Owner:IBM CORP
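
The final step in the abstract above, where the host node picks the plan with the lowest estimated cost, can be sketched as follows. The `NodePlan` record, its fields, and the sample plans are hypothetical; the abstract does not describe how plans or costs are represented.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class NodePlan:
    """A node-specific plan with its cost estimate (hypothetical structure)."""
    node_id: str
    plan: str
    estimated_cost: float


def select_plan(candidates: Iterable[NodePlan]) -> NodePlan:
    """Host-side selection: return the candidate with the minimum estimated cost."""
    plans = list(candidates)
    if not plans:
        raise ValueError("no candidate plans received")
    return min(plans, key=lambda p: p.estimated_cost)


if __name__ == "__main__":
    received = [
        NodePlan("node-1", "hash join, then aggregate", 120.0),
        NodePlan("node-2", "merge join, then aggregate", 95.5),
        NodePlan("node-3", "nested loop join", 410.0),
    ]
    print(select_plan(received))
```

In this sketch the processing nodes do the expensive planning work in parallel, and the host only compares the returned estimates.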

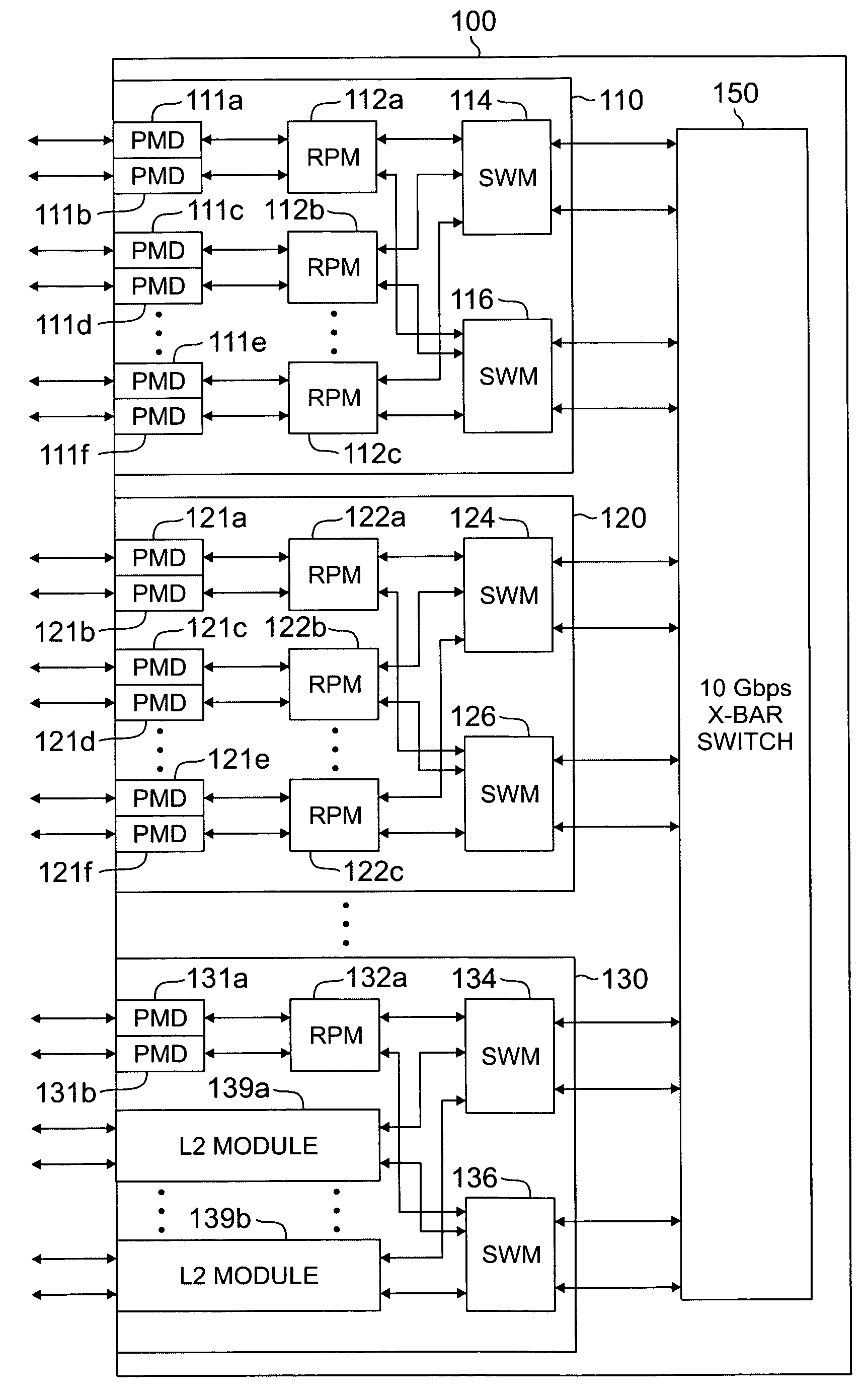

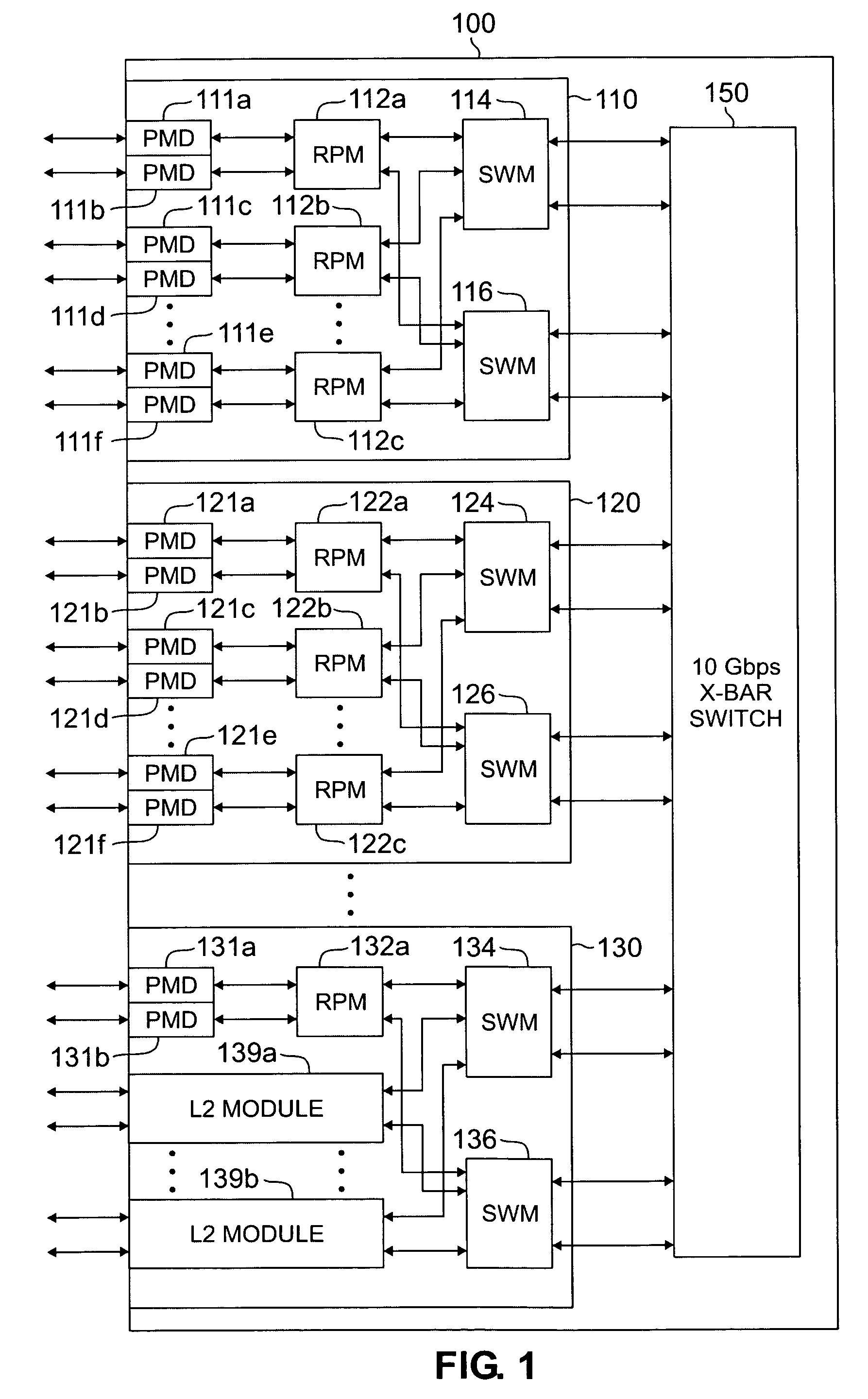

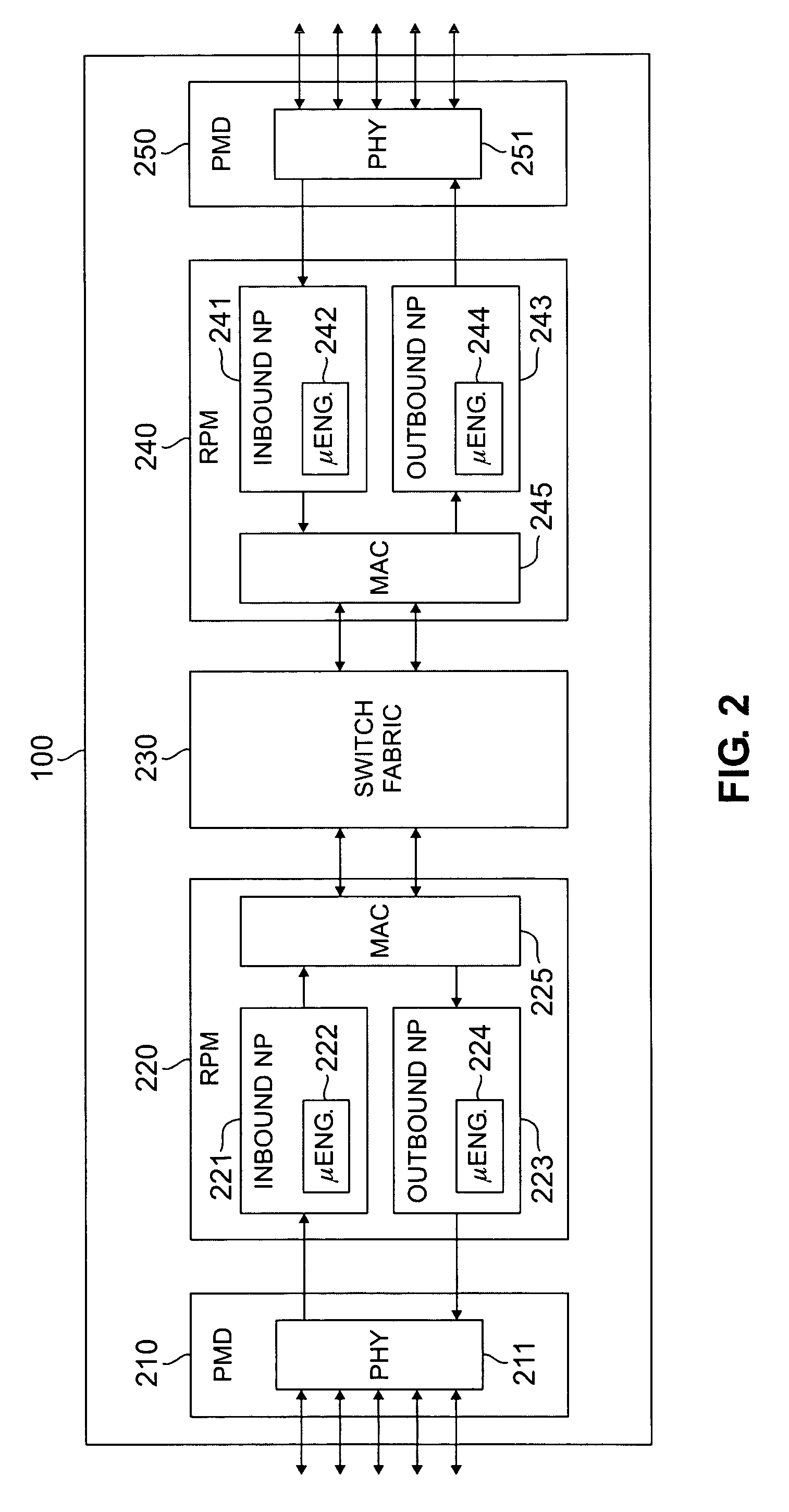

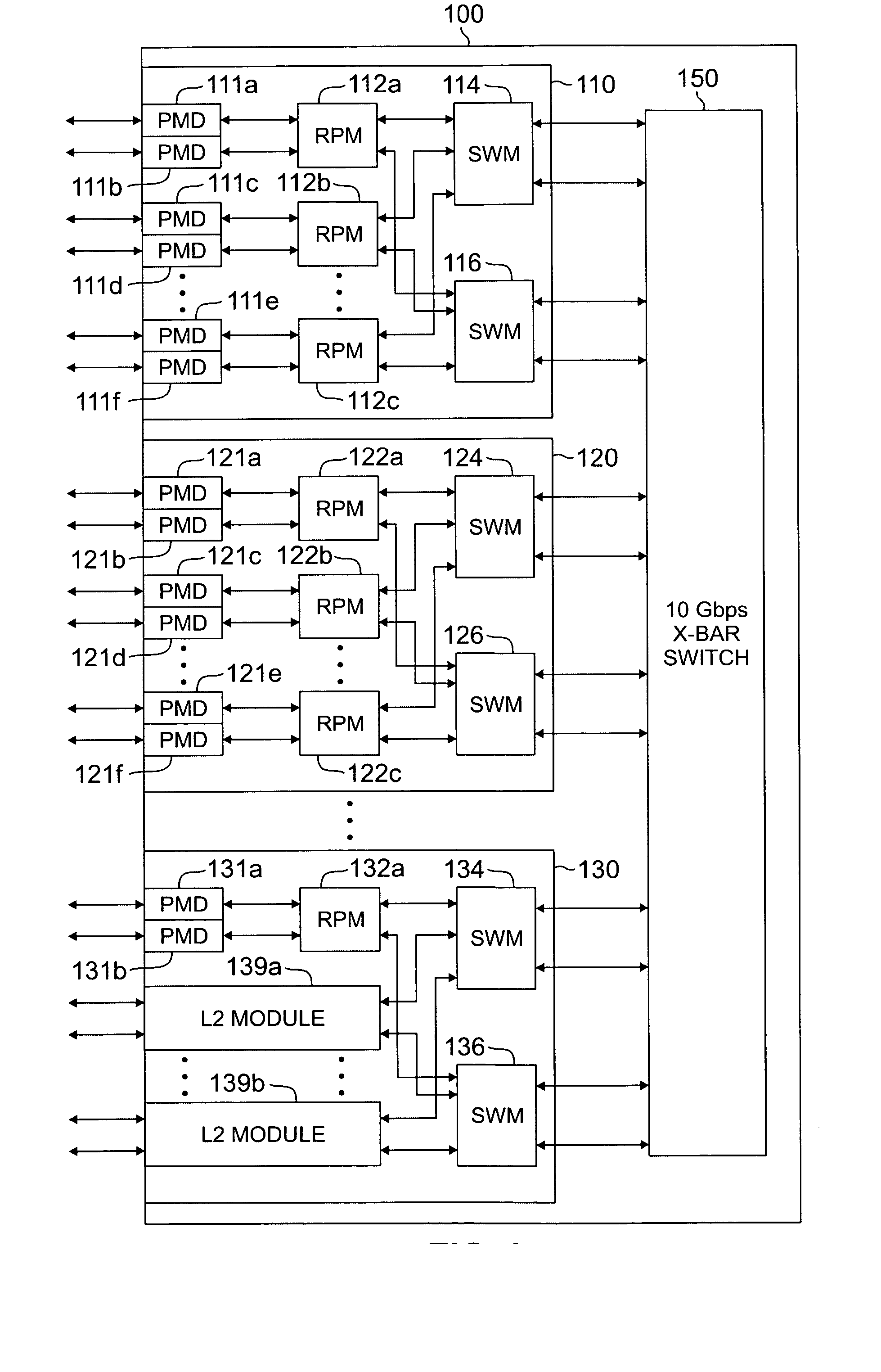

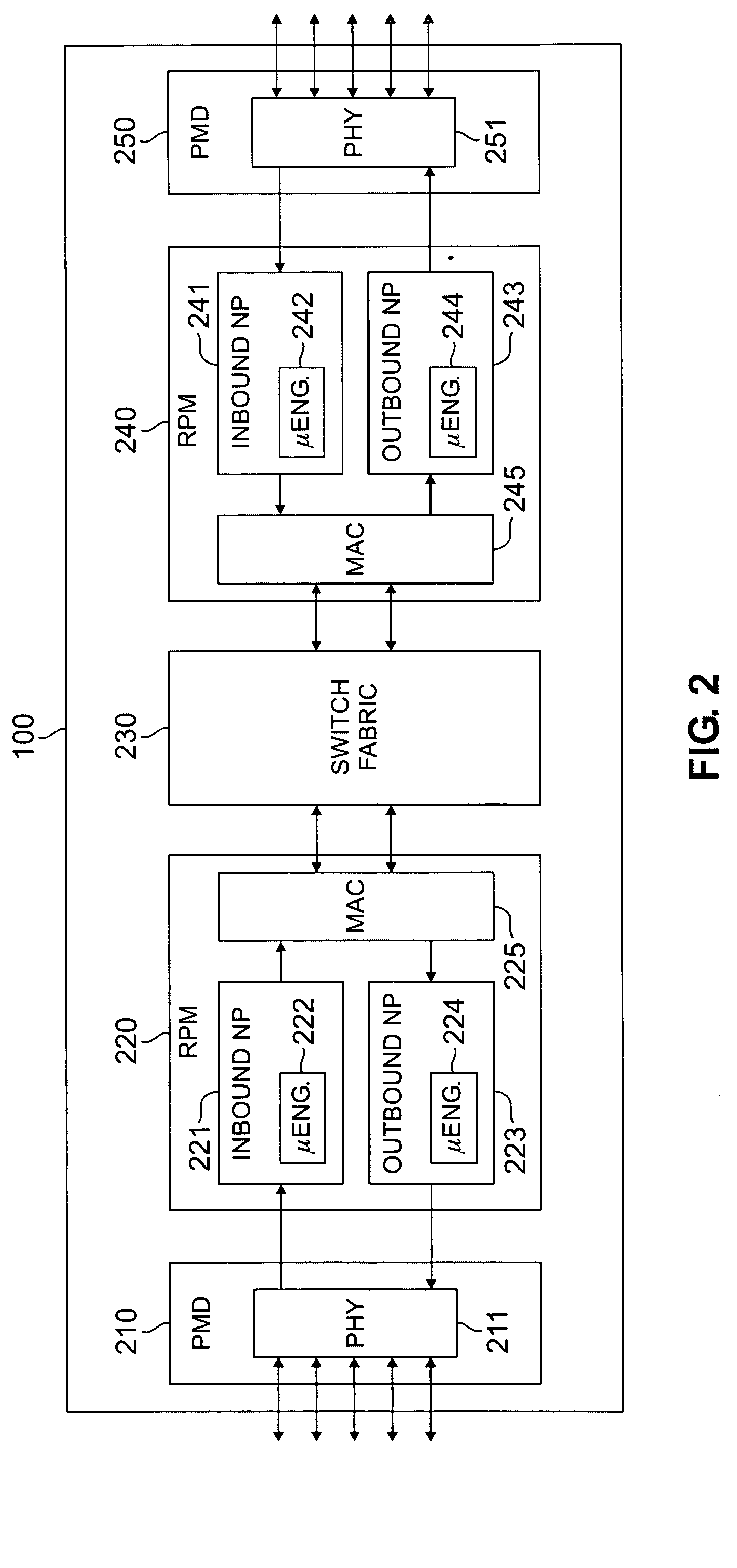

Apparatus and method for route summarization and distribution in a massively parallel router

Inactive | US7369561B2 | Limited bandwidth | Reduced space requirements | Error prevention | Transmission systems | Massively parallel | Computer network

A router for interconnecting external devices coupled to the router. The router comprises a switch fabric and a plurality of routing nodes coupled to the switch fabric. Each routing node is capable of transmitting data packets to, and receiving data packets from, the external devices and is further capable of transmitting data packets to, and receiving data packets from, other routing nodes via the switch fabric. The router also comprises a control processor for comparing the N most significant bits of a first subnet address associated with a first external port of a first routing node with the N most significant bits of a second subnet address associated with a second external port of the first routing node. The router determines a P-bit prefix of similar leading bits in the first and second subnet addresses and transmits the P-bit prefix to other routing nodes.

Owner:SAMSUNG ELECTRONICS CO LTD
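
The prefix comparison described in the abstract above can be sketched with a bitwise XOR. This is a minimal illustration assuming 32-bit IPv4 subnet addresses; the function names and sample addresses are hypothetical and not taken from the patent.

```python
import ipaddress
from typing import Tuple


def common_prefix_length(addr_a: int, addr_b: int, n_bits: int, width: int = 32) -> int:
    """Count the matching leading bits among the n_bits most significant bits."""
    diff = (addr_a ^ addr_b) >> (width - n_bits)  # XOR restricted to the top n_bits
    return n_bits - diff.bit_length()


def summarize(addr_a: int, addr_b: int, n_bits: int, width: int = 32) -> Tuple[int, int]:
    """Return (prefix_value, P), where P is the length of the shared leading-bit prefix."""
    p = common_prefix_length(addr_a, addr_b, n_bits, width)
    mask = ((1 << p) - 1) << (width - p)  # P leading ones, then zeros
    return addr_a & mask, p


if __name__ == "__main__":
    a = int(ipaddress.IPv4Address("192.168.4.0"))
    b = int(ipaddress.IPv4Address("192.168.6.0"))
    prefix, length = summarize(a, b, n_bits=24)
    print(f"{ipaddress.IPv4Address(prefix)}/{length}")
```

Advertising one shared prefix instead of two separate subnets is what lets a routing node shrink the forwarding information it sends to the other nodes.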

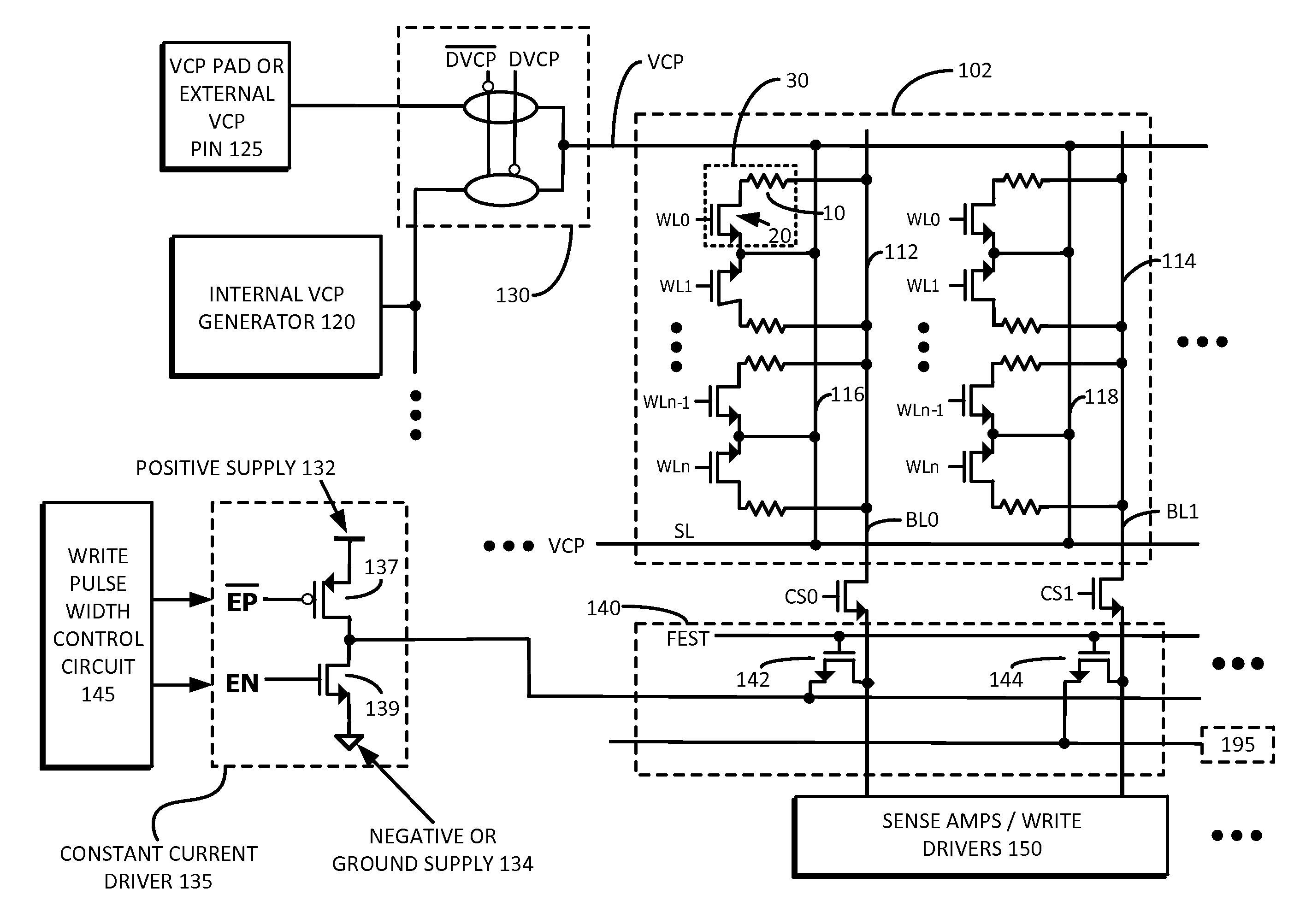

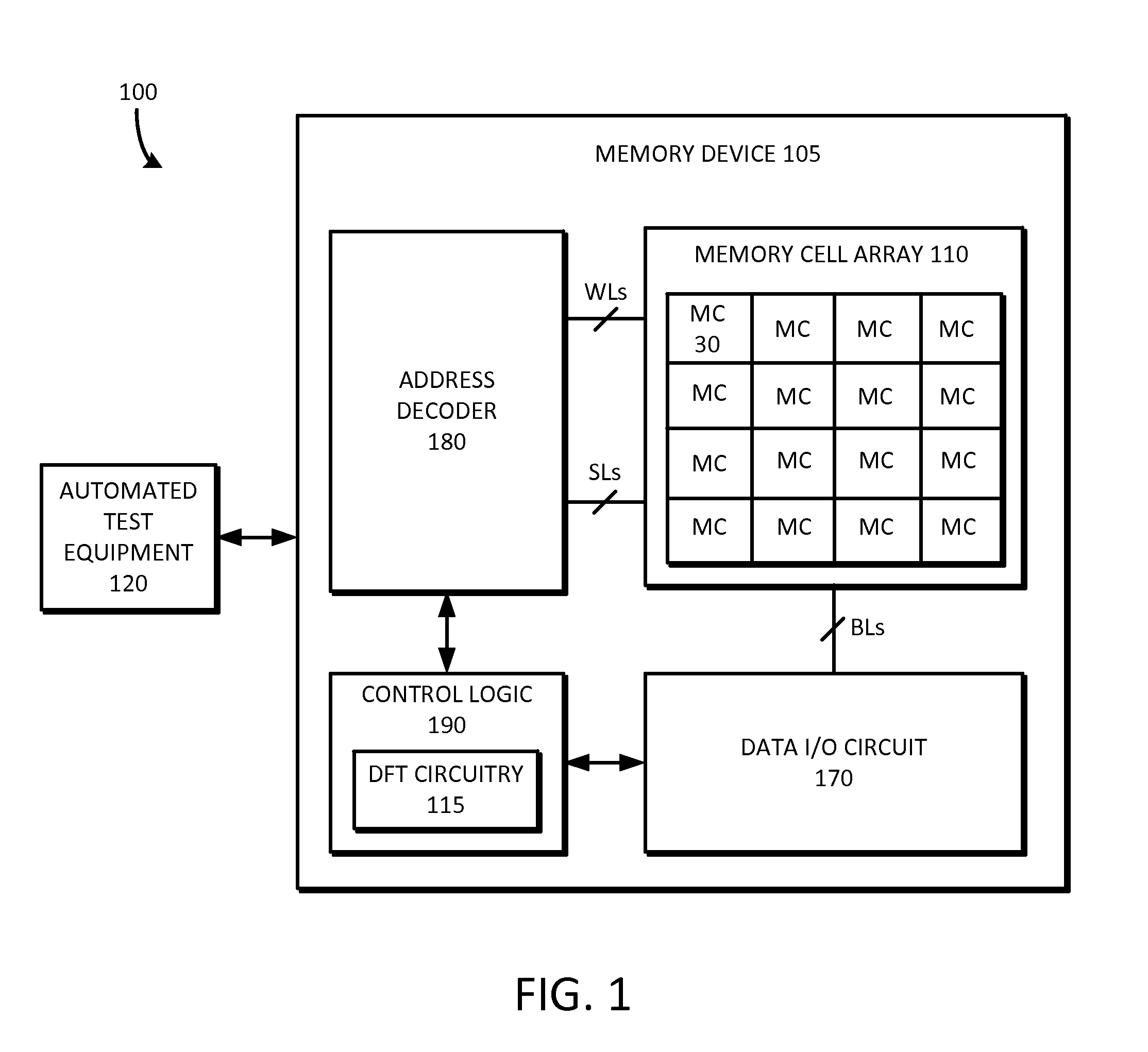

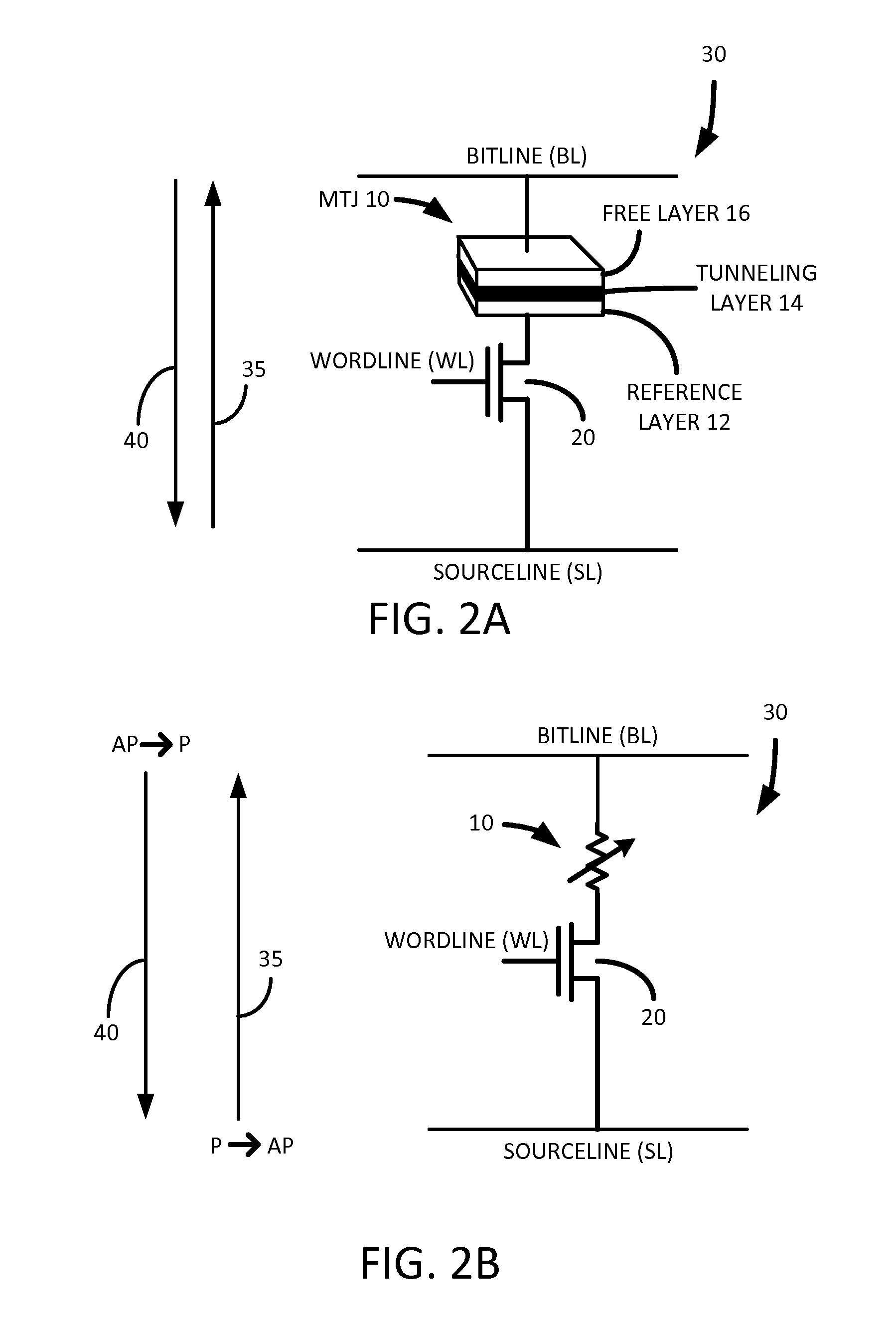

Architecture, system and method for testing resistive type memory

Example embodiments include a method for massive parallel stress testing of resistive type memories. The method can include, for example, disabling one or more internal analog voltage generators, configuring memory circuitry to use a common plane voltage (VCP) pad or external pin, connecting bit lines of the memory device to a constant current driver, which works in tandem with the VCP pad or external pin to perform massive parallel read or write operations. The inventive concepts include fast test setup and initialization of the memory array. The data can be retention tested or otherwise verified using similar massive parallel testing techniques. Embodiments also include a memory test system including a memory device having DFT circuitry configured to perform massive parallel stress testing, retention testing, functional testing, and test setup and initialization.

Owner:SAMSUNG ELECTRONICS CO LTD

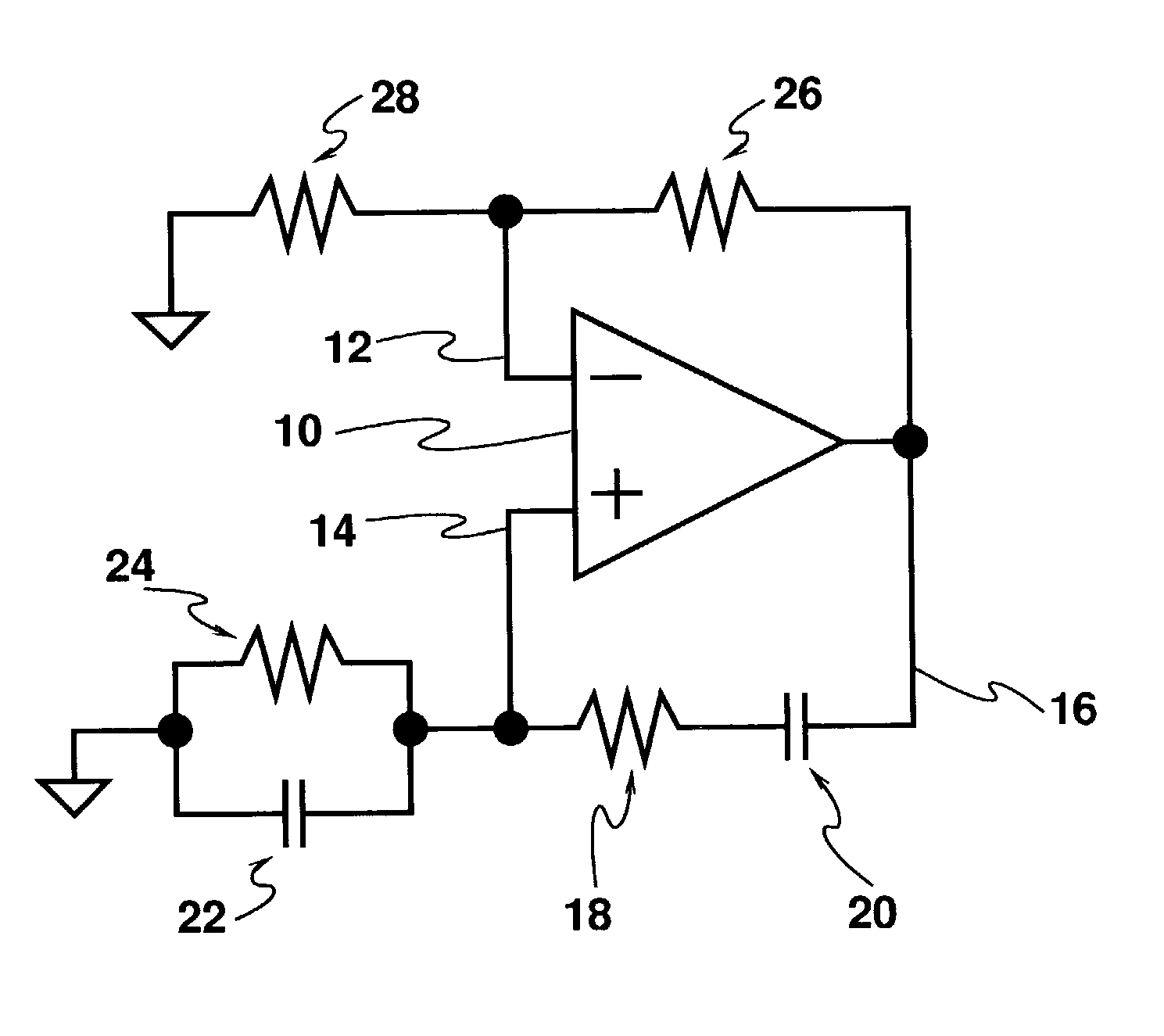

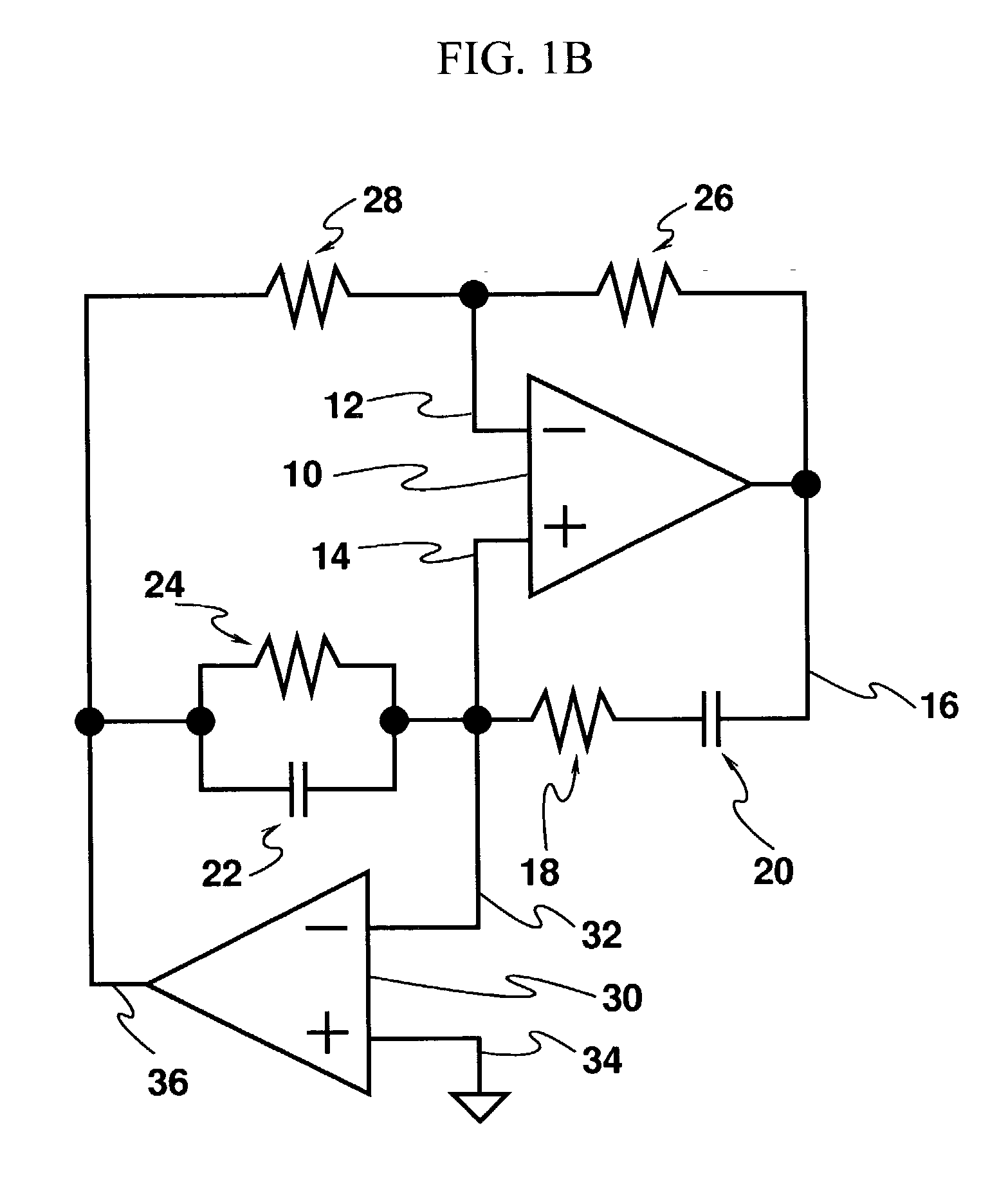

Non-linear reference waveform generators for data conversion and other applications

A machine for generating piece-wise non-linear reference waveforms at low cost, particularly in integrated circuit technologies. The reference waveform can be a distorted sinusoidal waveform such as a truncated sinusoid. Provided that local distortion is low enough in some waveform segments, for instance, in the transitions between saturation levels, parts of the waveform can be used as non-linear reference segments in data converters based on comparison of inputs. The distorted sinusoidal waveform can also be filtered to reduce distortion. In the preferred embodiment of the invention, suitable sinusoids with high or low distortion can be generated using an op-amp configured with a Wien Bridge providing positive feedback and a resistor bridge providing negative feedback. The invention differs from prior art Wien Bridge oscillators in having negative feedback gain that forces the op-amp output into saturation. One filter stage is provided by the positive feedback network itself. Additional filtering can allow further distortion reduction. The specification suggests a cascade of servo-grounded Wien Bridge stages as a simple and efficient approach to the filtering. The invention can be fabricated using a small number of parts which are easy to fabricate with existing integrated circuit technologies such as CMOS, so that low-cost, low-power implementations can be included in mixed-signal chips as parts of A/D converters, D/A converters, or calibration signal generators. The invention is also amenable to massively parallel and shared implementations, for instance, on a CMOS image sensor array chip or on an image display device.

Owner:MURPHY CHARLES DOUGLAS

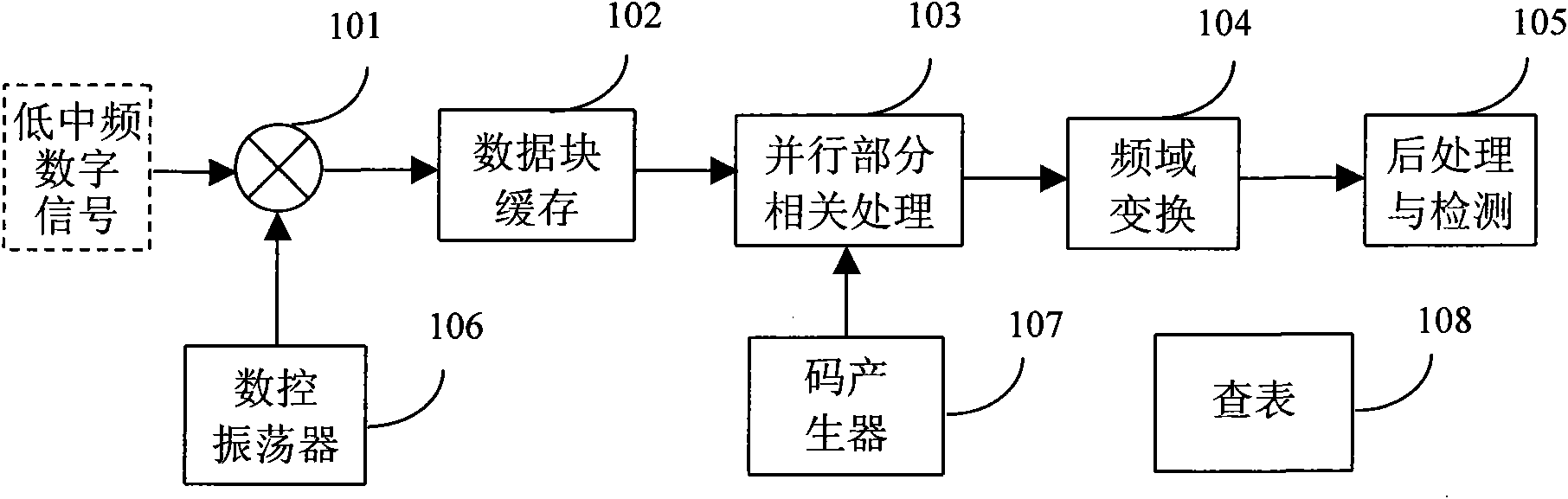

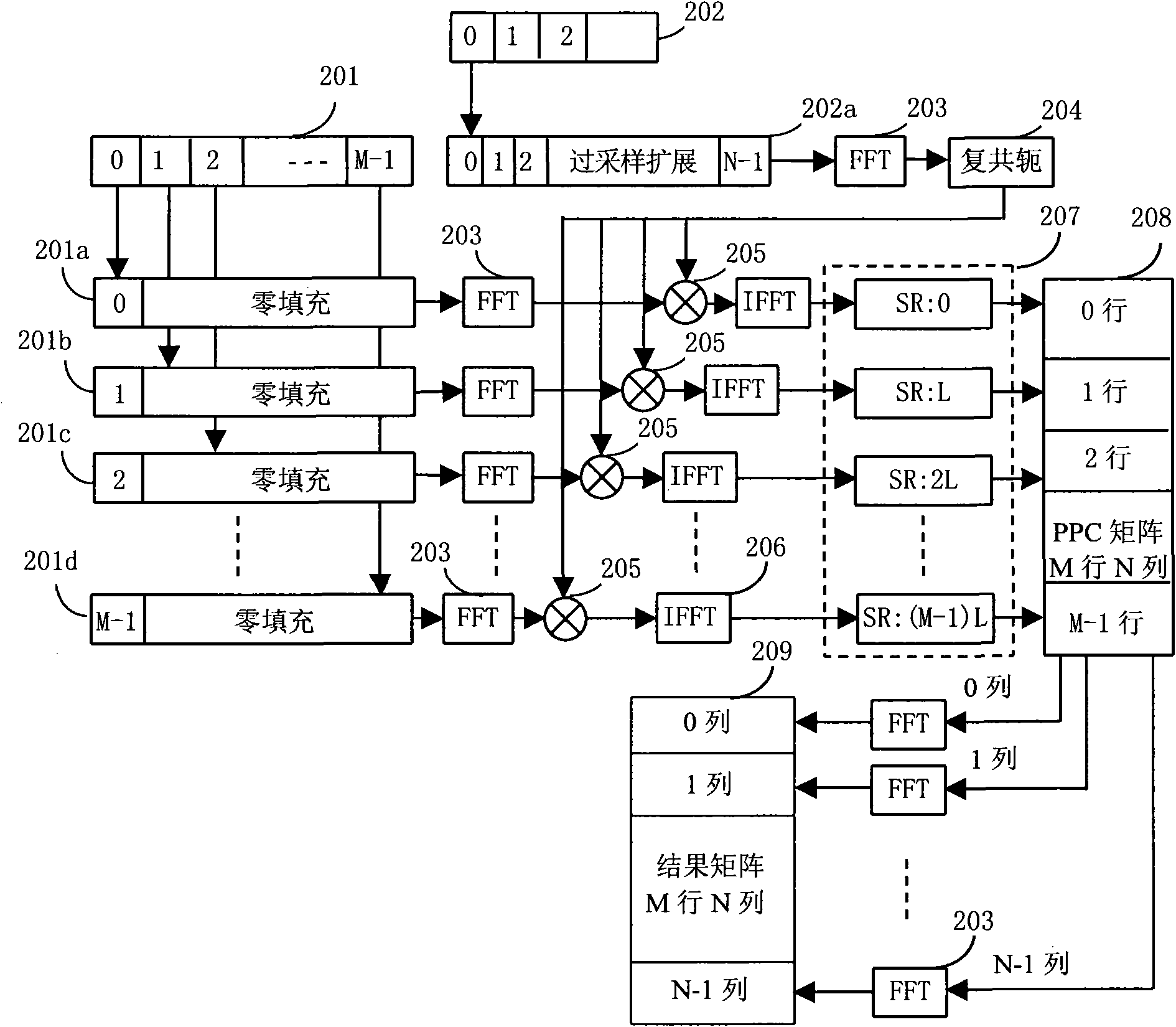

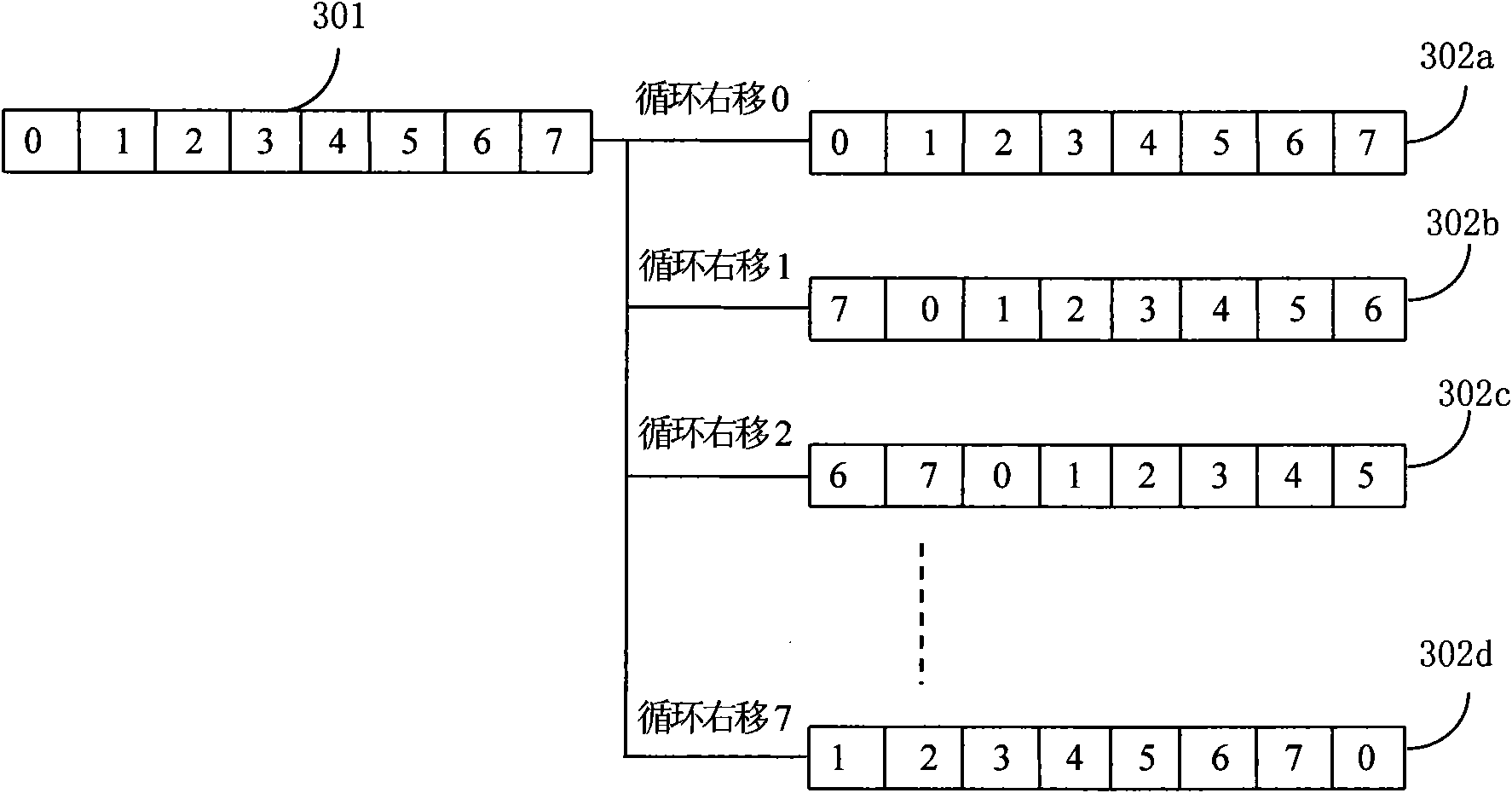

GPS signal large-scale parallel quick capturing method and module thereof

Active | CN101625404A | Low cost | Capture flexible | Beacon systems | Transmission | Data segment | Intermediate frequency

The invention discloses a large-scale parallel fast acquisition method for GPS signals. The method comprises the following steps: configuring, in a system CPU, large-scale parallel fast-acquisition module firmware comprising submodules such as a multiplier, a data block cache, parallel partial correlation processing, frequency-domain transformation, post-processing, a numerically controlled oscillator and a code generator; converting low intermediate-frequency digital signals into baseband signals and combining them into a data block; zero-padding each equal-length data segment in the data block; performing FFT-based parallel partial correlation (PPC) processing on each extended data segment and the local spreading code, and performing an FFT on each row of the resulting PPC matrix to obtain a result matrix; and performing coherent or non-coherent integration on the result matrices obtained from several data blocks to increase the processing gain, improve the acquisition sensitivity, coarsely determine the code phase and the Doppler frequency of the GPS signals, and achieve two-dimensional parallel fast acquisition of the GPS signals. The method has high processing efficiency and high acquisition speed, and can be applied to various GPS positioning and navigation aids.

Owner:杭州中科微电子有限公司
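
The core idea in the abstract above, partial correlation over short segments followed by a frequency transform across the partial sums, can be sketched for a single code phase. This is a simplified illustration, not the patented method: it uses a direct DFT instead of an FFT, omits zero-padding and integration over several blocks, and every function name and the toy spreading code are hypothetical.

```python
import cmath
from typing import List, Sequence


def simulate_signal(code: Sequence[int], phase: int, bin_index: int) -> List[complex]:
    """Toy baseband signal: the code delayed by `phase`, with a Doppler of `bin_index` cycles per block."""
    n = len(code)
    return [code[(i - phase) % n] * cmath.exp(2j * cmath.pi * bin_index * i / n) for i in range(n)]


def partial_correlations(signal: Sequence[complex], code: Sequence[int],
                         phase: int, seg_len: int) -> List[complex]:
    """Correlate each segment of the block with the local code at one candidate code phase."""
    n = len(code)
    if len(signal) != n or n % seg_len != 0:
        raise ValueError("signal and code must match and split evenly into segments")
    sums = []
    for start in range(0, n, seg_len):
        sums.append(sum(signal[i] * code[(i - phase) % n] for i in range(start, start + seg_len)))
    return sums


def doppler_magnitudes(signal: Sequence[complex], code: Sequence[int],
                       phase: int, seg_len: int) -> List[float]:
    """DFT across the partial sums: one row of the result matrix, indexed by Doppler bin."""
    sums = partial_correlations(signal, code, phase, seg_len)
    k = len(sums)
    return [abs(sum(s * cmath.exp(-2j * cmath.pi * b * i / k) for i, s in enumerate(sums)))
            for b in range(k)]


def doppler_bin(signal: Sequence[complex], code: Sequence[int], phase: int, seg_len: int) -> int:
    """Index of the strongest Doppler bin for the given candidate code phase."""
    mags = doppler_magnitudes(signal, code, phase, seg_len)
    return max(range(len(mags)), key=mags.__getitem__)


if __name__ == "__main__":
    toy_code = [1, -1, 1, 1, -1, -1, 1, -1, 1, 1, 1, -1, -1, 1, -1, -1]
    received = simulate_signal(toy_code, phase=5, bin_index=1)
    print(doppler_bin(received, toy_code, phase=5, seg_len=4))
```

Repeating `doppler_magnitudes` for every candidate code phase would fill the two-dimensional search matrix, whose largest entry gives a coarse code phase and Doppler estimate.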

System and method for accelerating network applications using an enhanced network interface and massively parallel distributed processing

Inactive | US20170180272A1 | Image memory management | Processor architectures/configuration | Massively parallel | Extensibility

The amount of data being delivered across networks is constantly increasing. This disclosure describes an improved system and method for establishing secure network connections with increased scalability and reduced latency. The approach also includes arbitrary segmentation of incoming network traffic, and dynamic assignment of parallel processing resources to execute application code specific to the segmented packets. The method uses a modified network state model to optimize the delivery of information and compensate for overall network latencies by eliminating excessive messaging. Network data is application-generated and encoded into pixel values in a shared framebuffer using many processors in parallel. These pixel values are transported over existing high-speed video links to the Advanced Network Interface Card, where the network data is extracted and placed directly onto high-speed network links.

Owner:BERNATH TRACEY

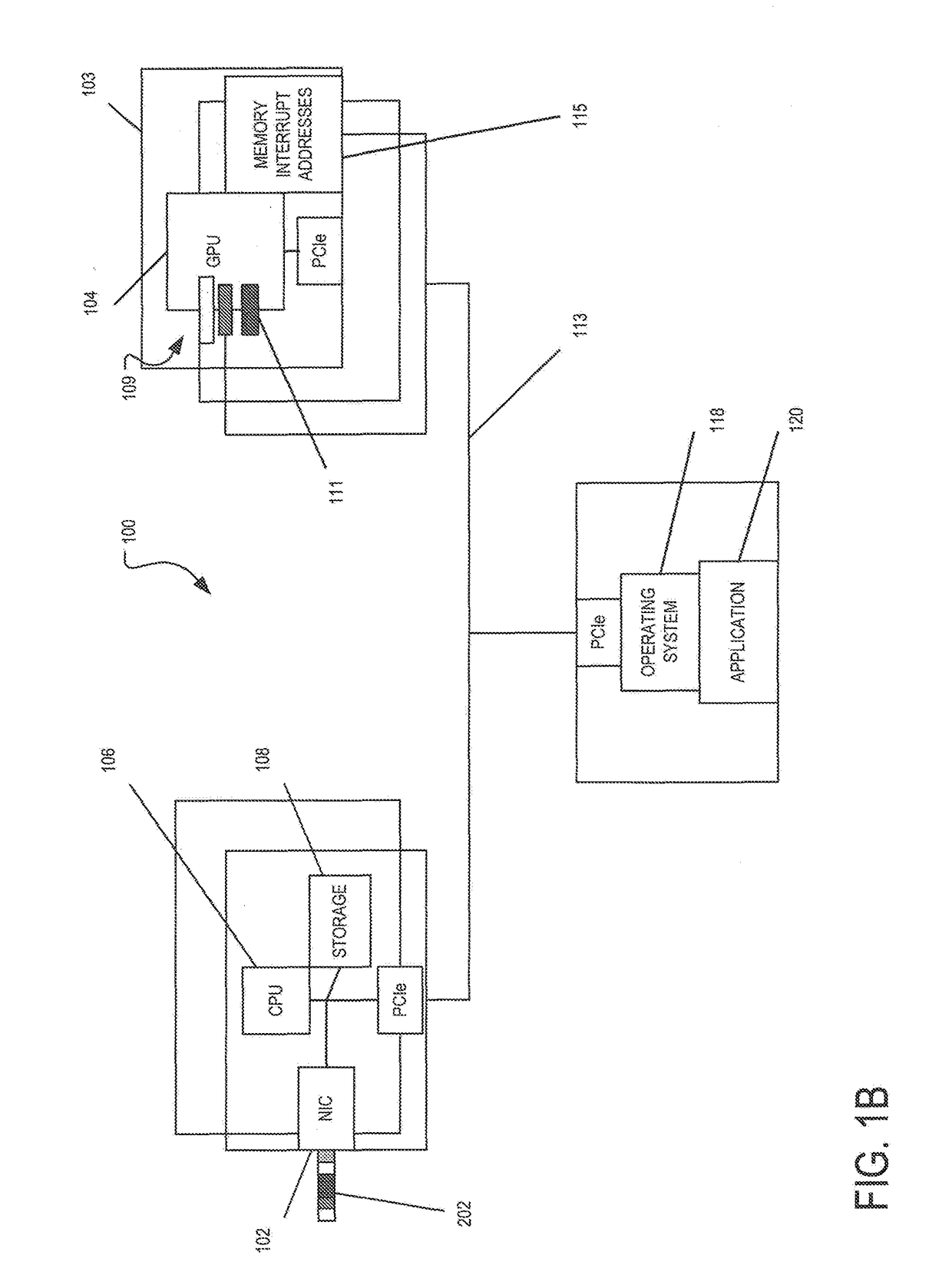

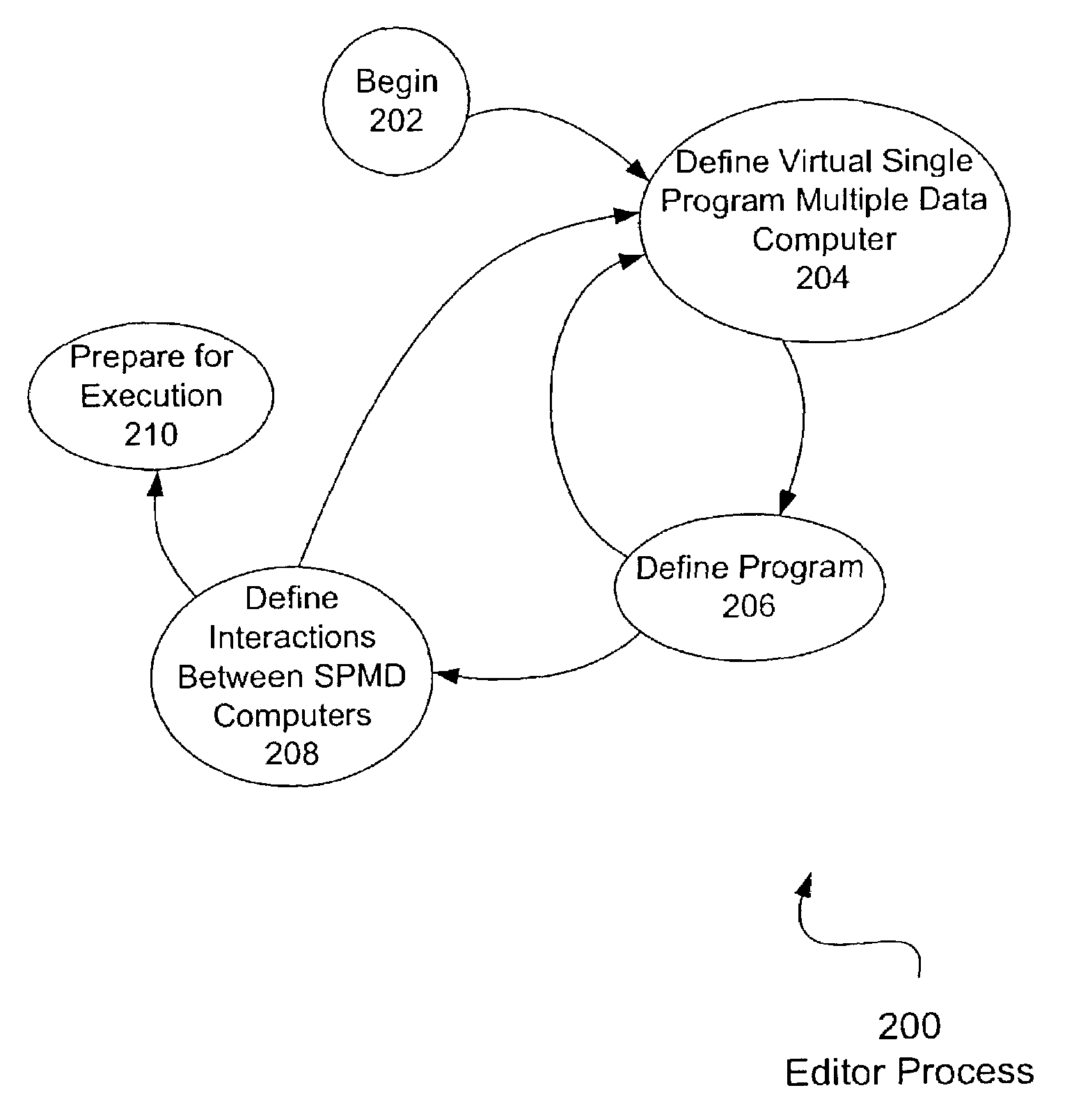

Scalable parallel processing on shared memory computers

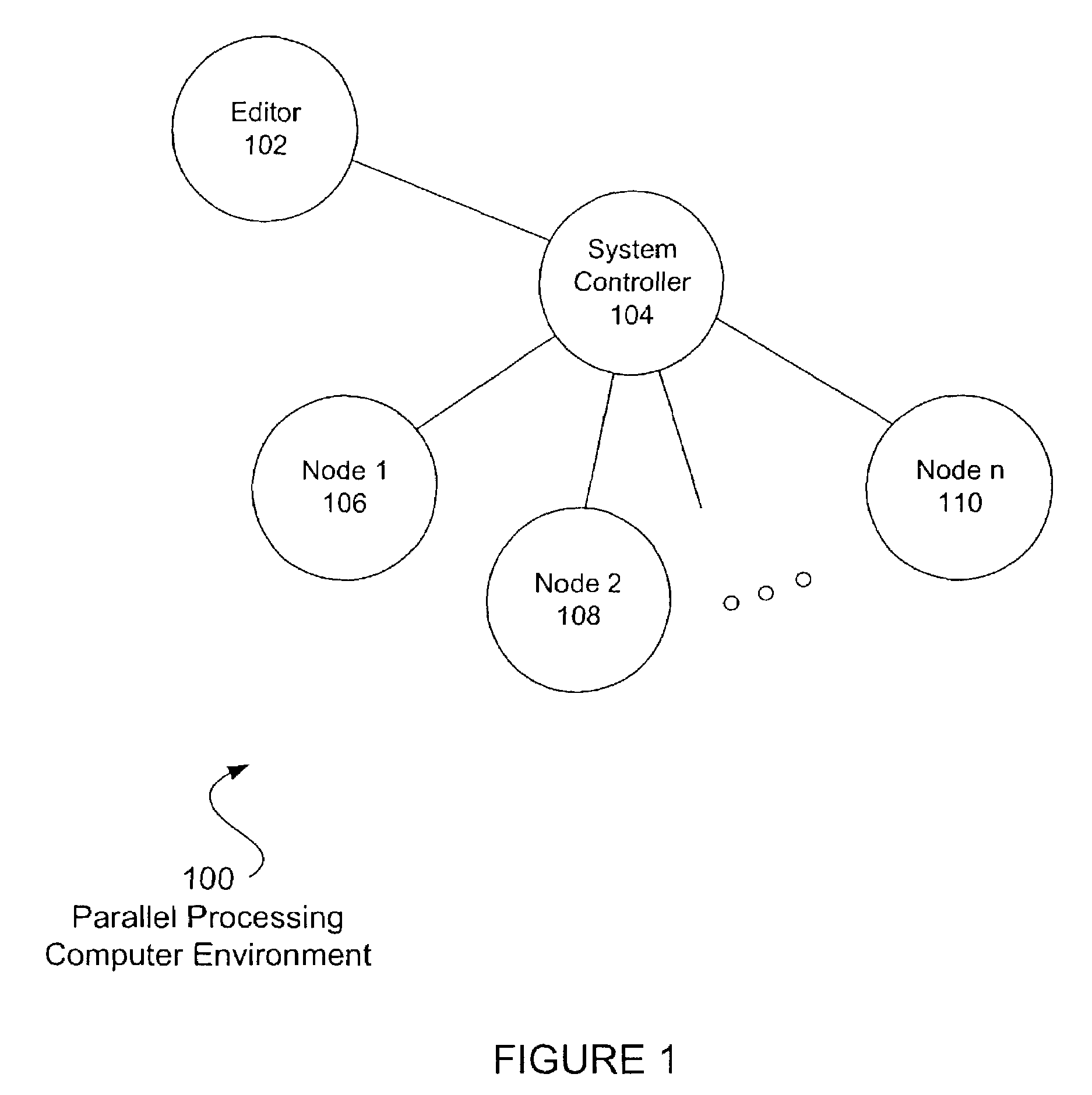

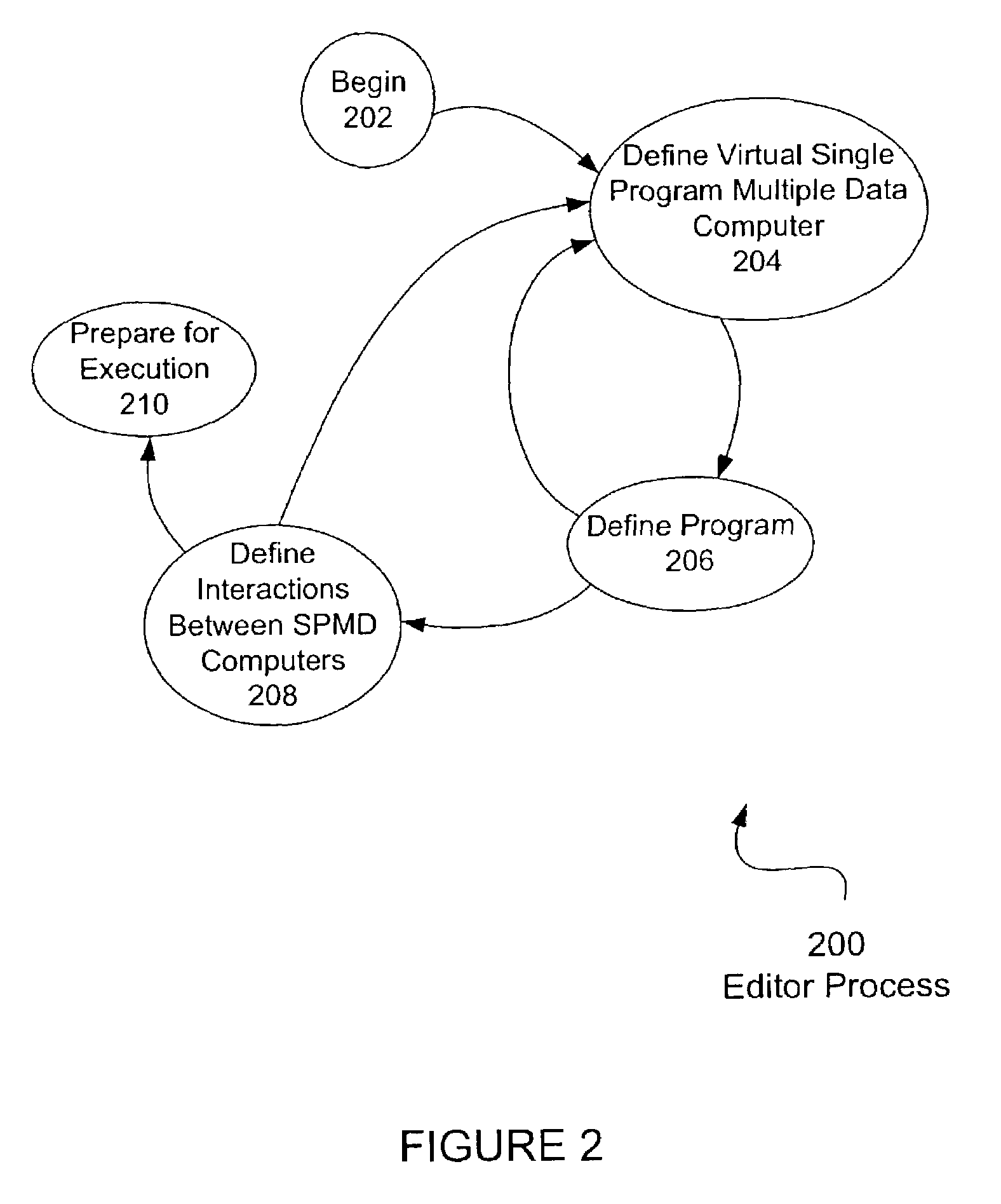

Active | US7454749B2 | Resource allocation | General purpose stored program computer | Massively parallel | Hardware architecture

A virtual parallel computer is created within a programming environment comprising both shared memory and distributed memory architectures. At run time, the virtual architecture is mapped to a physical hardware architecture. In this manner, a massively parallel computing program may be developed and tested on a first architecture and run on a second architecture without reprogramming.

Owner:INNOVATIONS IN MEMORY LLC +1

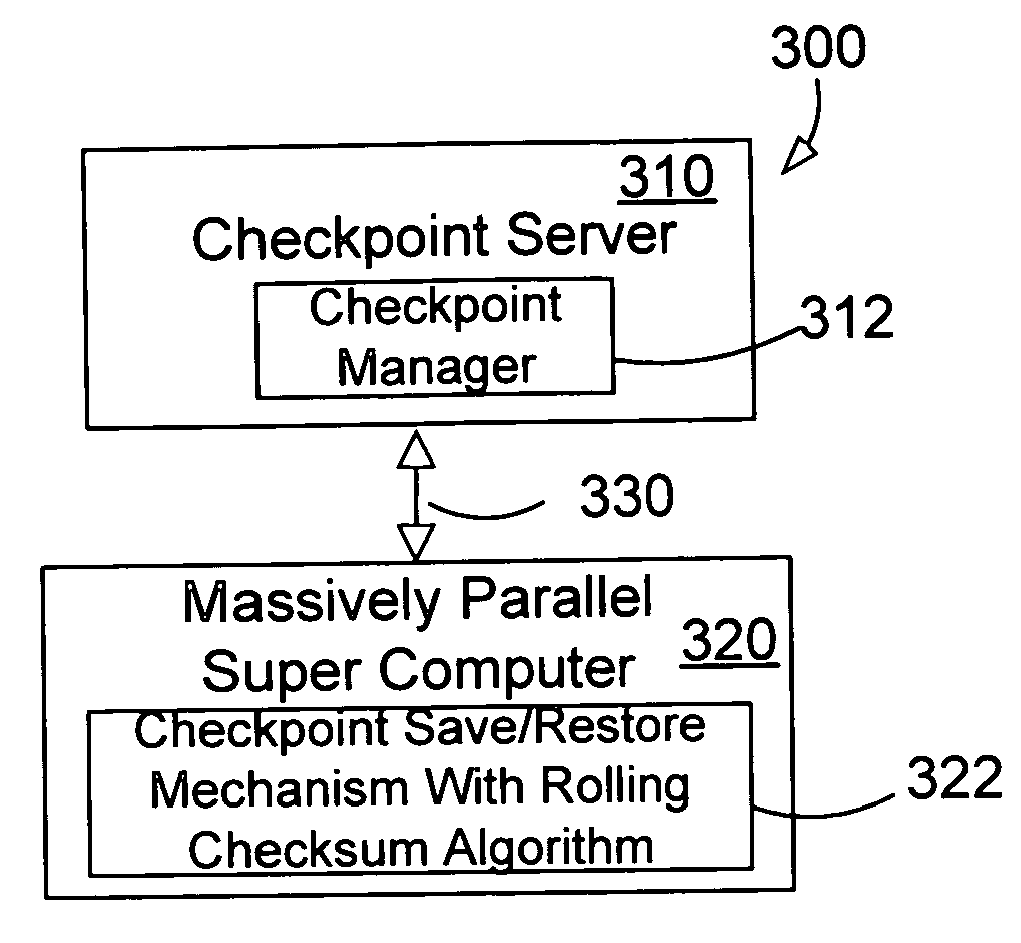

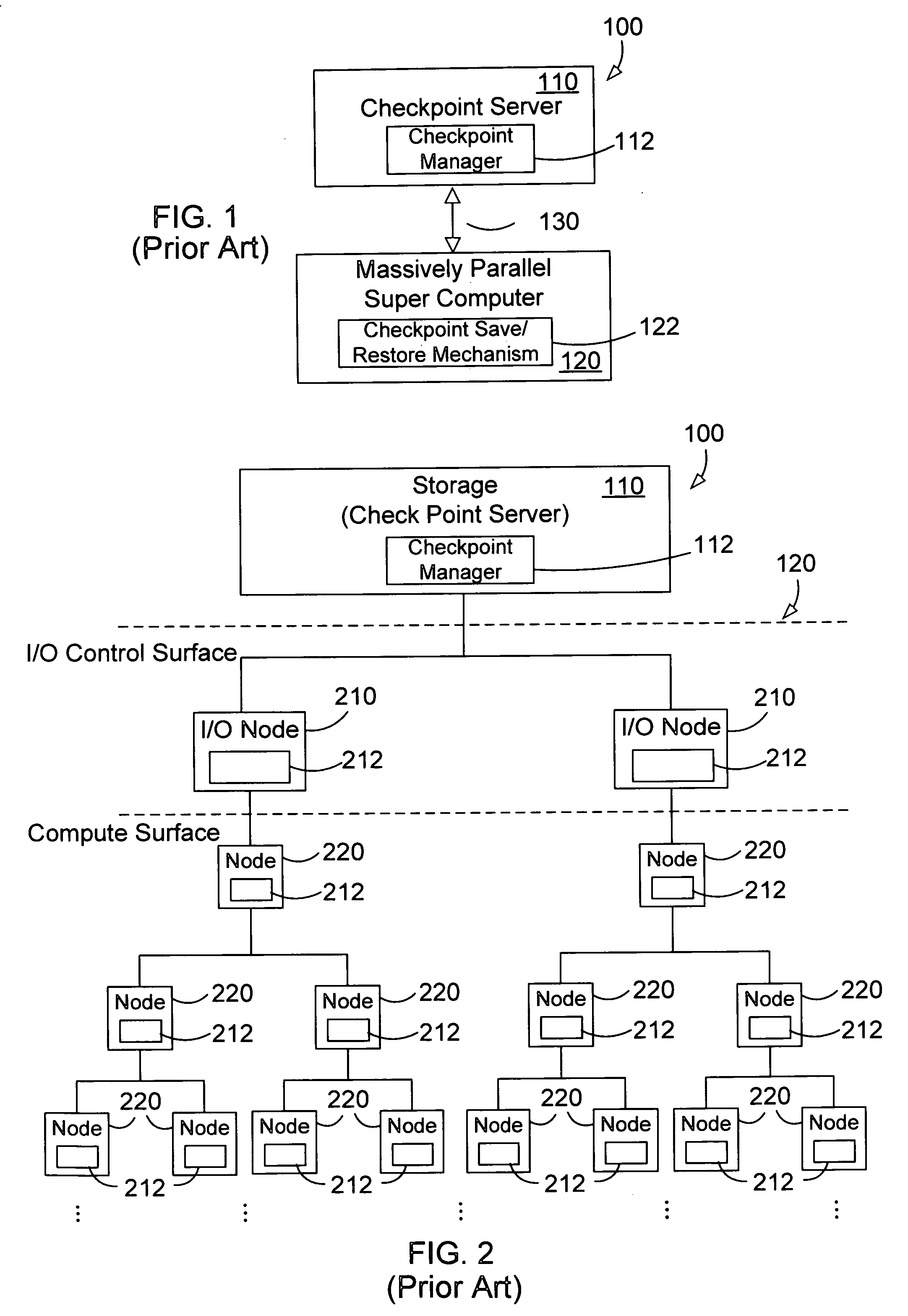

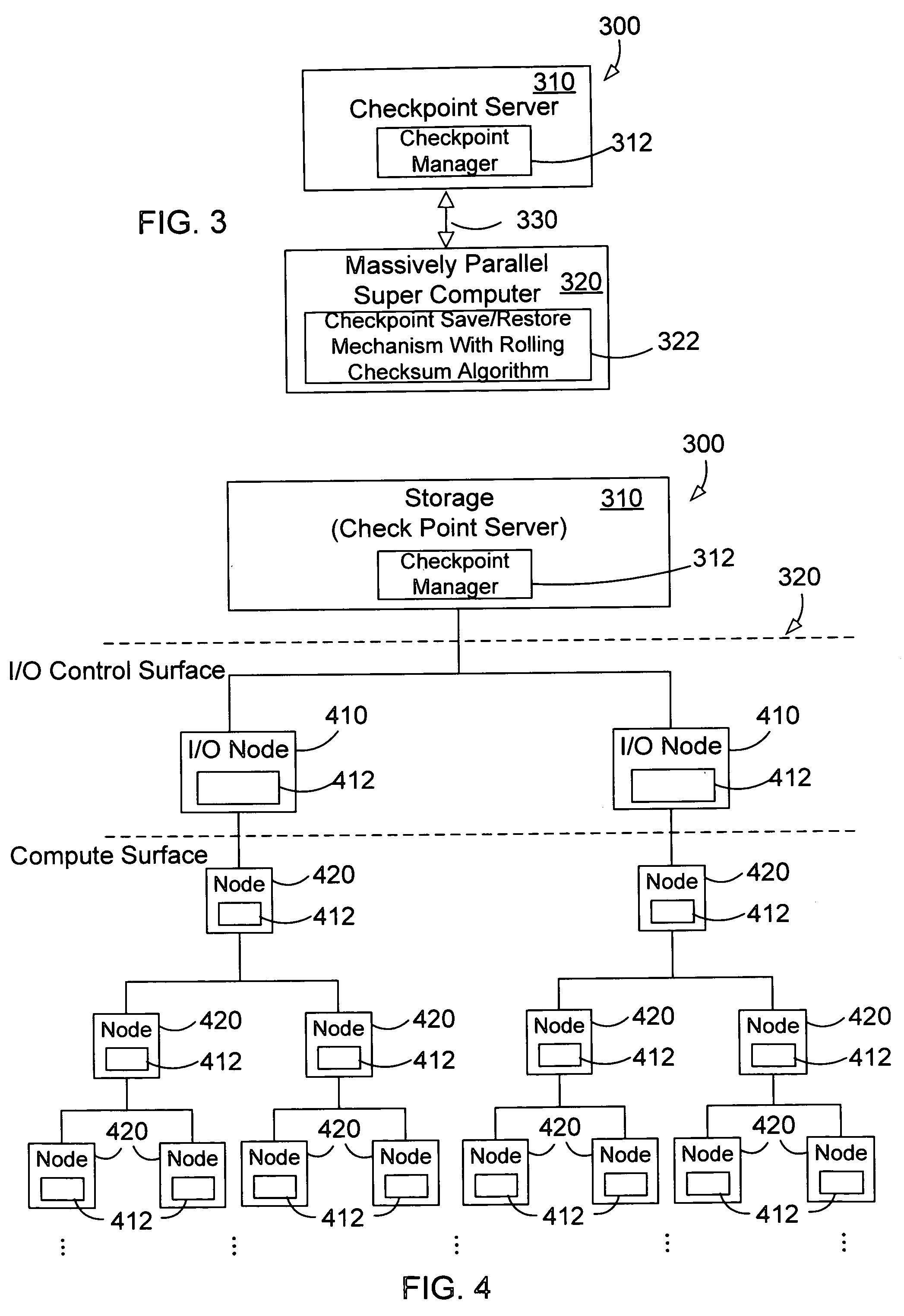

Method and apparatus for template based parallel checkpointing

Inactive | US20060236152A1 | Reduce the amount required | Improve efficiency | Error detection/correction | Data compression | Parallel computing

A method and apparatus for a template-based parallel checkpoint save for a massively parallel supercomputer system using a parallel checksum algorithm such as rsync. In preferred embodiments, the checkpoint data for each node is compared to a template checkpoint file that resides in the storage and that was previously produced. Embodiments herein greatly decrease the amount of data that must be transmitted and stored for faster checkpointing and increased efficiency of the computer system. Embodiments are directed to a parallel computer system with nodes arranged in a cluster with a high-speed interconnect that can perform broadcast communication. The checkpoint contains a set of actual small data blocks with their corresponding checksums from all nodes in the system. The data blocks may be compressed using conventional non-lossy data compression algorithms to further reduce the overall checkpoint size.

Owner:IBM CORP
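
The comparison against a template checkpoint described above can be sketched with per-block checksums. This is a simplification under stated assumptions: it uses fixed-size blocks hashed with SHA-256 rather than rsync's rolling checksum, the tiny block size is for readability only, and all names are hypothetical.

```python
import hashlib
from typing import Dict, List

BLOCK_SIZE = 4  # unrealistically small, chosen so the example is easy to follow


def block_checksums(data: bytes, block_size: int = BLOCK_SIZE) -> List[str]:
    """Checksum of every fixed-size block in `data`."""
    return [hashlib.sha256(data[i:i + block_size]).hexdigest()
            for i in range(0, len(data), block_size)]


def delta_against_template(node_data: bytes, template: bytes,
                           block_size: int = BLOCK_SIZE) -> Dict[int, bytes]:
    """Return only the blocks of `node_data` whose checksum differs from the template's."""
    template_sums = block_checksums(template, block_size)
    delta: Dict[int, bytes] = {}
    for index, start in enumerate(range(0, len(node_data), block_size)):
        block = node_data[start:start + block_size]
        changed = index >= len(template_sums) or \
            hashlib.sha256(block).hexdigest() != template_sums[index]
        if changed:
            delta[index] = block
    return delta


def restore(template: bytes, delta: Dict[int, bytes], total_blocks: int,
            block_size: int = BLOCK_SIZE) -> bytes:
    """Rebuild a node's checkpoint from the template plus its stored delta blocks."""
    return b"".join(delta.get(i, template[i * block_size:(i + 1) * block_size])
                    for i in range(total_blocks))


if __name__ == "__main__":
    template = b"AAAABBBBCCCC"
    node = b"AAAAXXXXCCCC"
    changed_blocks = delta_against_template(node, template)
    print(changed_blocks)
    print(restore(template, changed_blocks, total_blocks=3) == node)
```

Only the differing blocks need to be transmitted and stored per node, which is where the reduction in checkpoint volume comes from.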

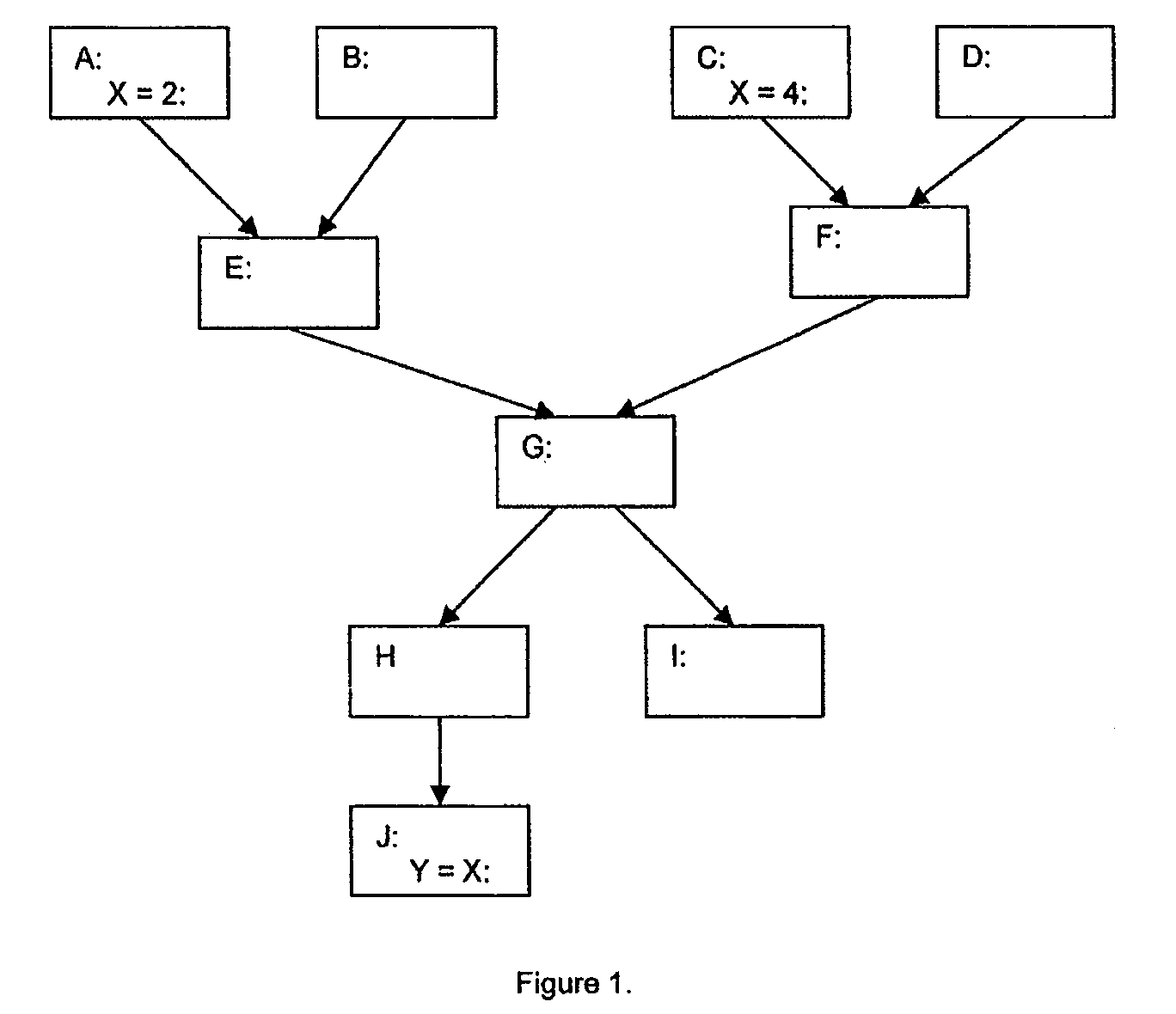

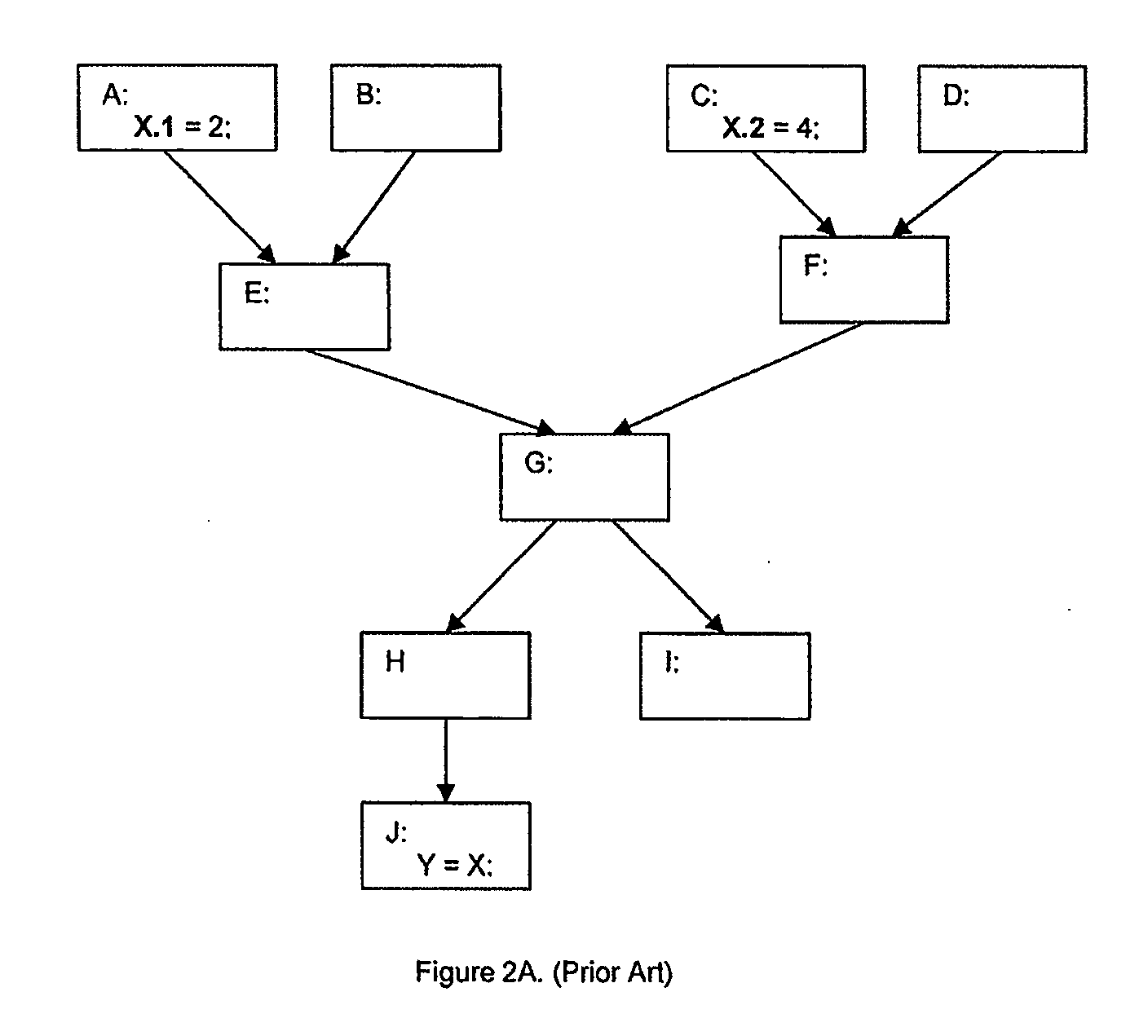

Highly scalable parallel static single assignment for dynamic optimization on many core architectures

A method, system, and computer readable medium for converting a series of computer executable instructions in control flow graph form into an intermediate representation, of a type similar to Static Single Assignment (SSA), used in the compiler arts. The intermediate representation may facilitate compilation optimizations such as constant propagation, sparse conditional constant propagation, dead code elimination, global value numbering, partial redundancy elimination, strength reduction, and register allocation. The method, system, and computer readable medium are capable of operating on the control flow graph to construct an SSA representation in parallel, thus exploiting recent advances in multi-core processing and massively parallel computing systems. Other embodiments may be employed, and other embodiments are described and claimed.

Owner:INTEL CORP
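
For readers unfamiliar with Static Single Assignment, the sketch below renames variables in a single straight-line block so that each name is assigned exactly once. It is deliberately limited: it handles no branches, inserts no phi functions, and does not attempt the parallel construction the abstract describes. The statement format and function name are hypothetical.

```python
from typing import Dict, List, Tuple

Statement = Tuple[str, List[str]]  # (assigned variable, variables read)


def to_ssa(statements: List[Statement]) -> List[Statement]:
    """Rename variables in one basic block so every assignment gets a fresh version."""
    version: Dict[str, int] = {}
    result: List[Statement] = []
    for target, operands in statements:
        # Reads refer to the latest version; names never assigned here stay as inputs.
        renamed = [f"{name}_{version[name]}" if name in version else name
                   for name in operands]
        version[target] = version.get(target, 0) + 1
        result.append((f"{target}_{version[target]}", renamed))
    return result


if __name__ == "__main__":
    block = [("x", ["a", "b"]), ("x", ["x", "c"]), ("y", ["x"])]
    for statement in to_ssa(block):
        print(statement)
```

Once each value has a unique name, optimizations such as constant propagation and dead code elimination can track definitions without scanning for reassignments.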

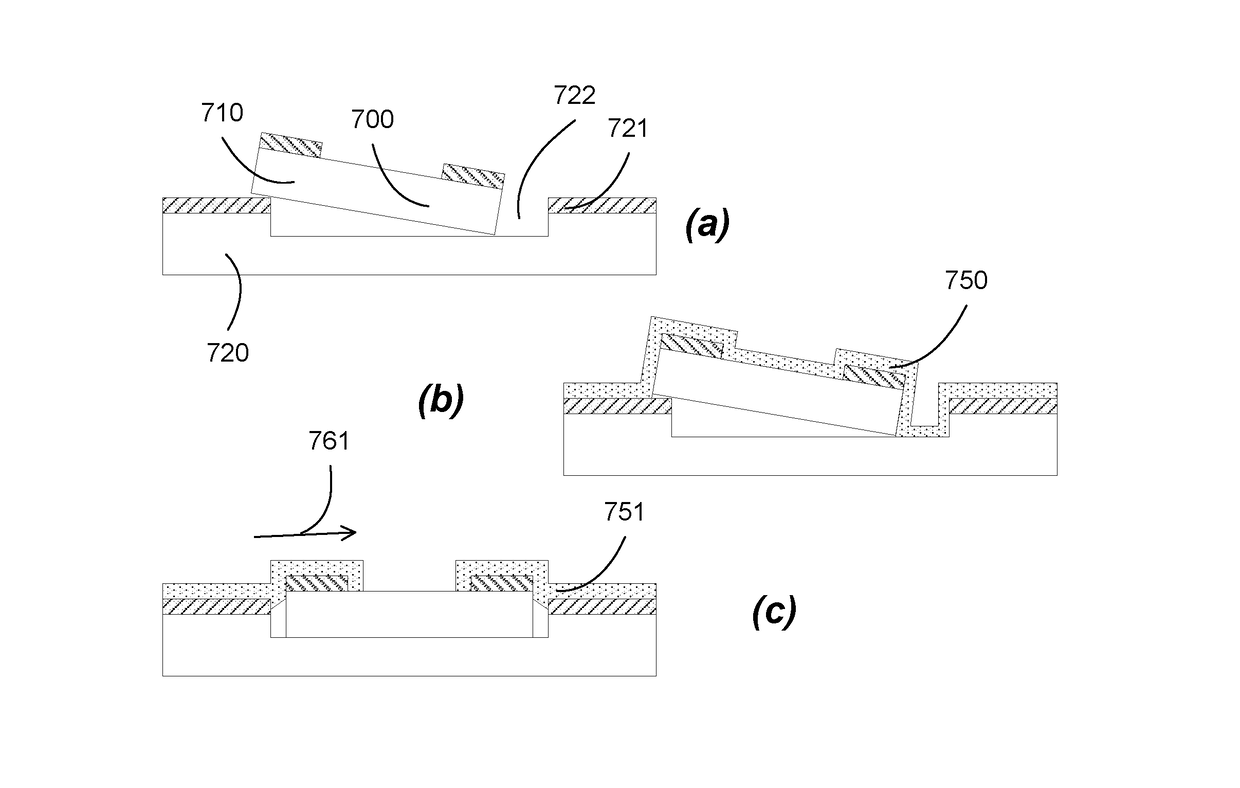

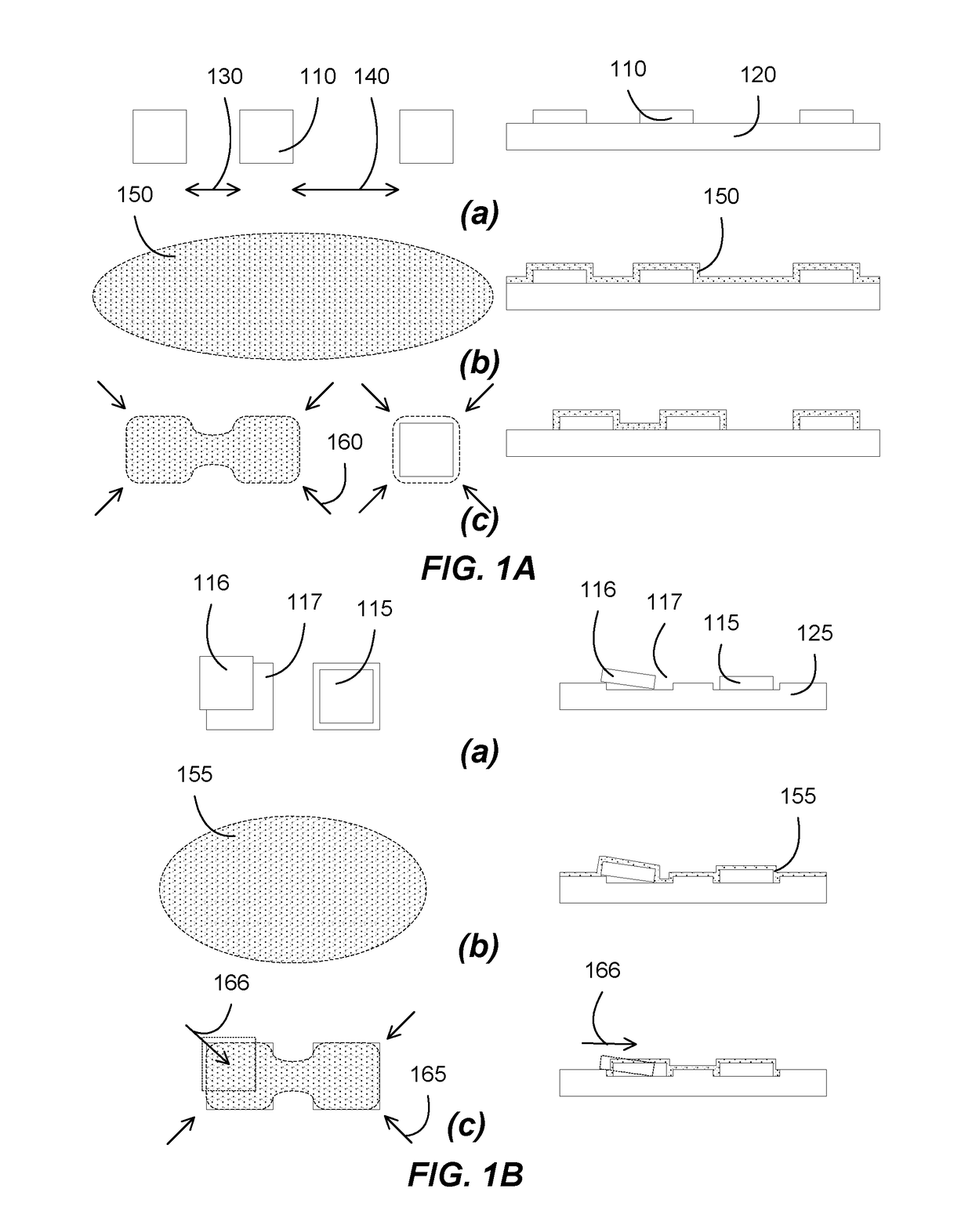

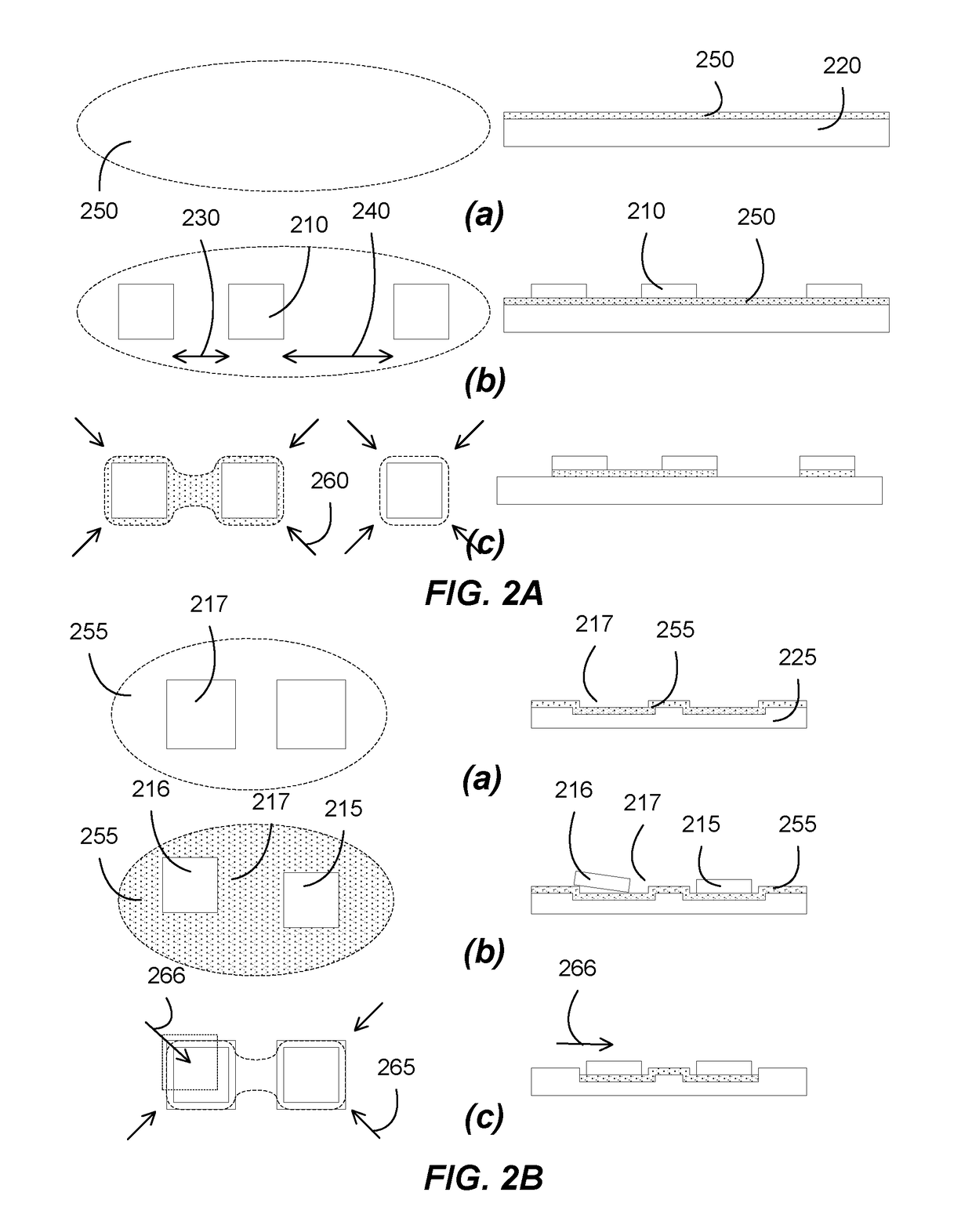

Massively parallel transfer of microLED devices

Inactive | US20180190614A1 | Correct misalignment | Semiconductor/solid-state device details | Solid-state devices | Massively parallel | Display device

MicroLED devices can be transferred in large numbers to form microLED displays using processes such as pick-and-place, thermal adhesion transfer, or fluidic transfer. A blanket solder layer can be applied to connect the bond pads of the microLED devices to the terminal pads of a support substrate. After heating, the solder layer can connect the bond pads with the terminal pads in the vicinity of each other. The heated solder layer can correct misalignments of the microLED devices due to the transfer process.

Owner:KUMAR ANANDA H +2

Apparatus and method for route summarization and distribution in a massively parallel router

Inactive | US20050013308A1 | Limited bandwidth | Reduces forwarding table space requirement | Data switching by path configuration | Massively parallel | Computer network

A router for interconnecting external devices coupled to the router. The router comprises a switch fabric and a plurality of routing nodes coupled to the switch fabric. Each routing node is capable of transmitting data packets to, and receiving data packets from, the external devices and is further capable of transmitting data packets to, and receiving data packets from, other routing nodes via the switch fabric. The router also comprises a control processor for comparing the N most significant bits of a first subnet address associated with a first external port of a first routing node with the N most significant bits of a second subnet address associated with a second external port of the first routing node. The router determines a P-bit prefix of similar leading bits in the first and second subnet addresses and transmits the P-bit prefix to other routing nodes.

Owner:SAMSUNG ELECTRONICS CO LTD

Systems and methods for rapid processing and storage of data

Active | US20110307647A1 | Faster bandwidth | Improve latency | Memory architecture accessing/allocation | Transmission | Massively parallel | Processing core

Systems and methods of building massively parallel computing systems using low power computing complexes in accordance with embodiments of the invention are disclosed. A massively parallel computing system in accordance with one embodiment of the invention includes at least one Solid State Blade configured to communicate via a high performance network fabric. In addition, each Solid State Blade includes a processor configured to communicate with a plurality of low power computing complexes interconnected by a router, and each low power computing complex includes at least one general processing core, an accelerator, an I/O interface, and cache memory and is configured to communicate with non-volatile solid state memory.

Owner:CALIFORNIA INST OF TECH

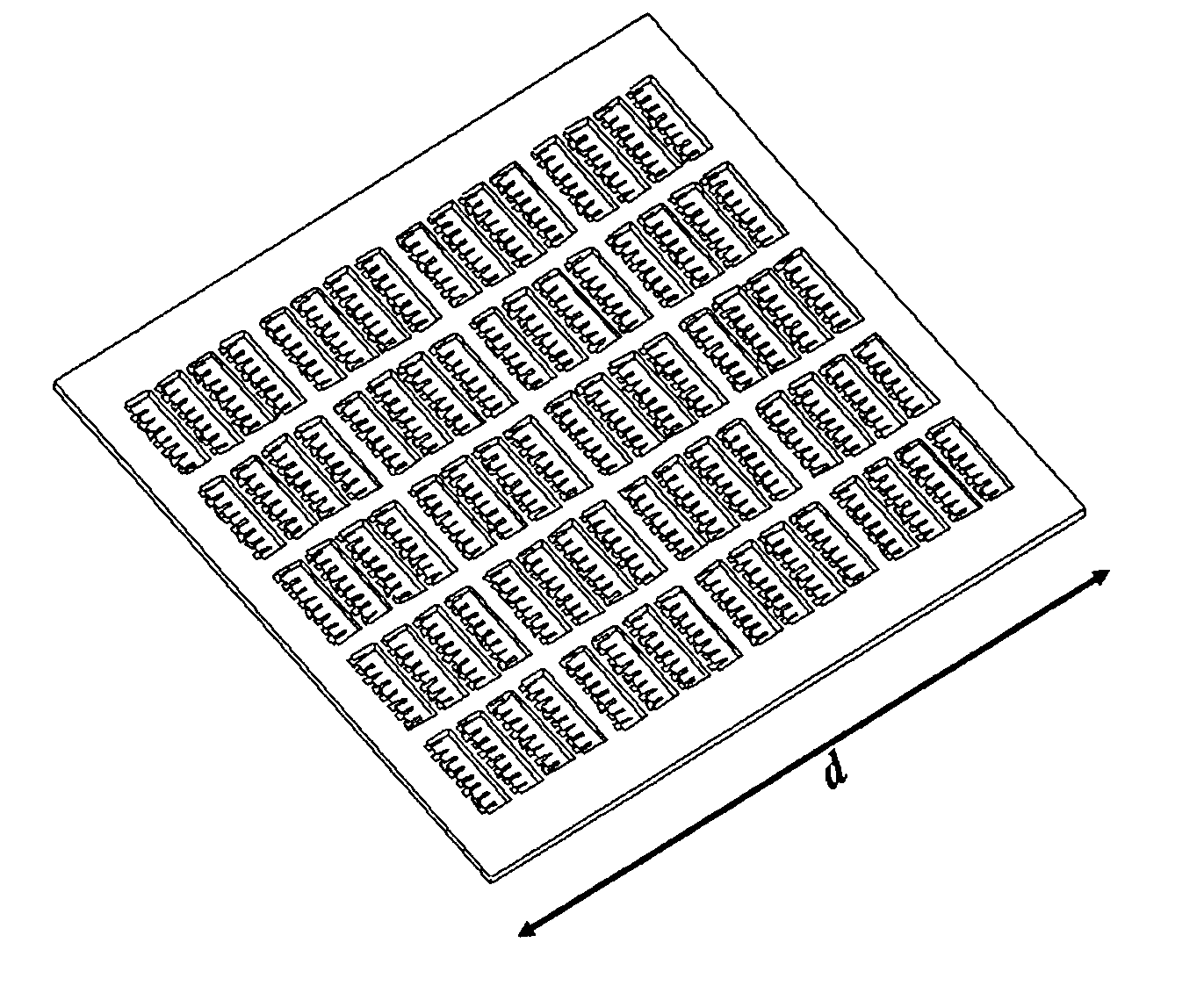

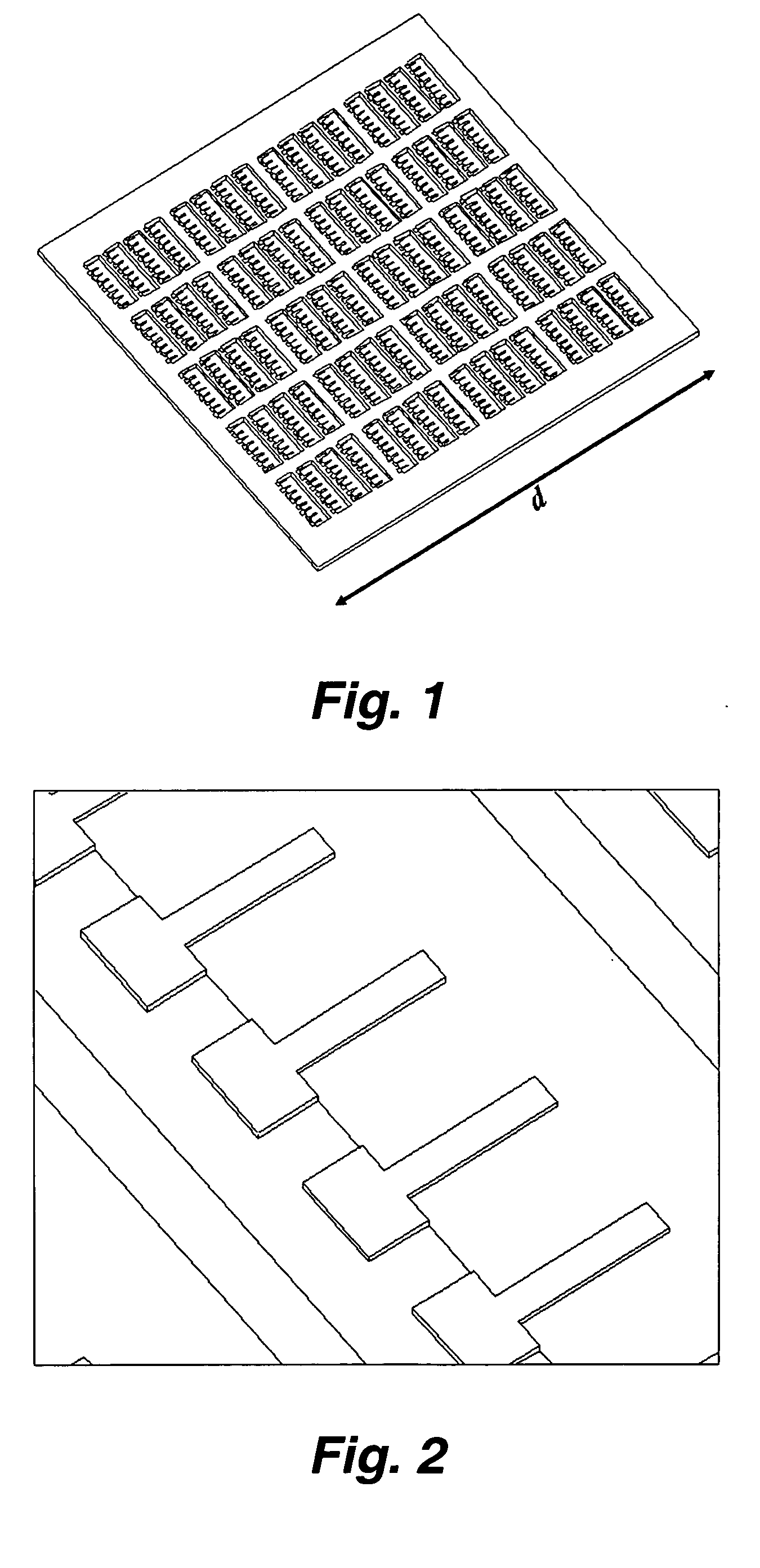

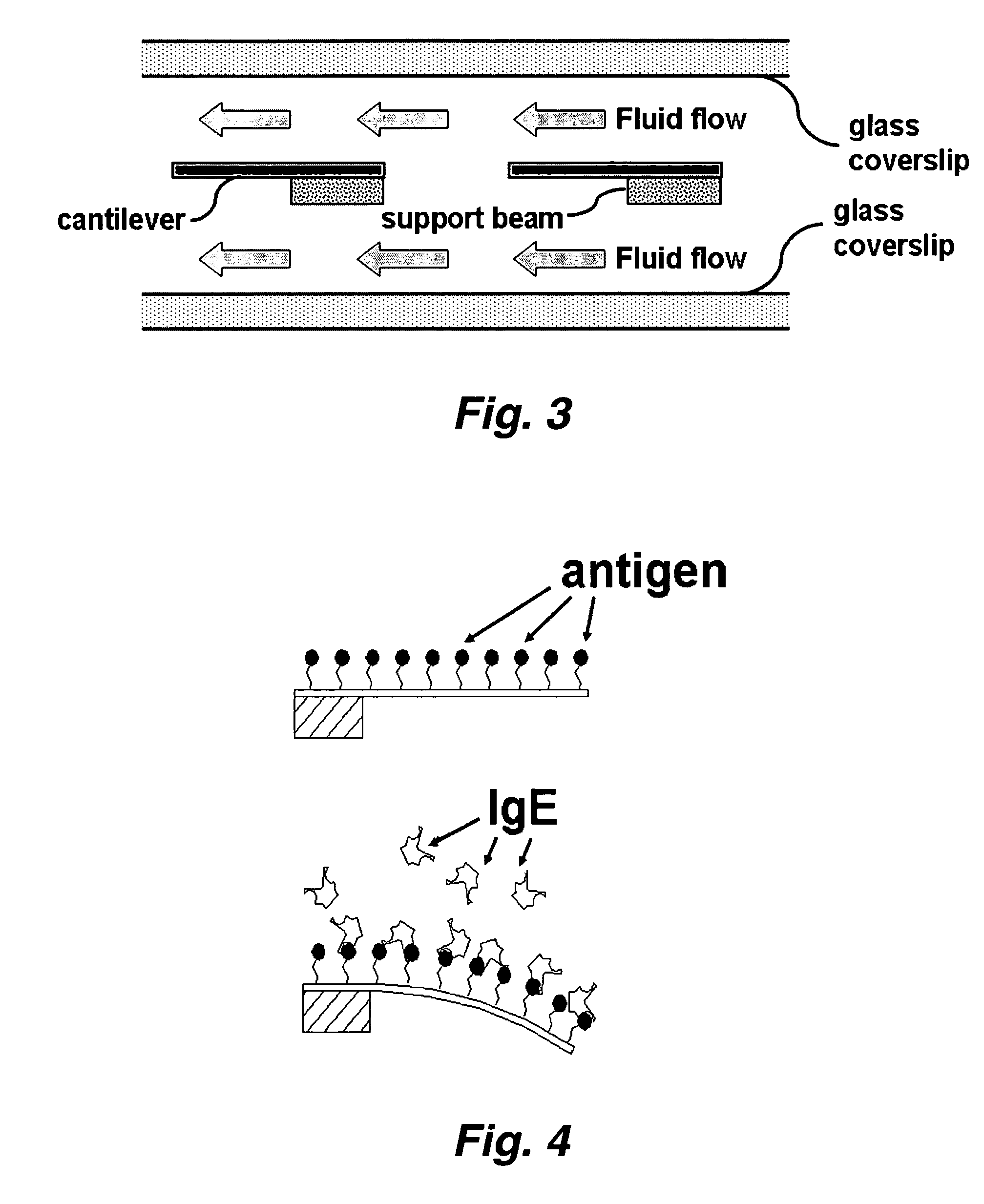

Large scale parallel immuno-based allergy test and device for evanescent field excitation of fluorescence

Inactive | US20070117217A1 | Quick check | Rapid diagnosis | Material analysis by optical means | Disease diagnosis | Antigen | Massively parallel

This invention provides a device and methods for the rapid detection and/or diagnosis and/or characterization of one or more allergies (e.g., causes of IgE-mediated allergic reaction (immediate hypersensitivity)) in a mammal (e.g., a human or a non-human mammal). In certain embodiments, the device comprises a microcantilever array where different cantilevers comprising the array bear different antigens. Binding of IgE to the antigen on a cantilever causes bending of the cantilever, which can be readily detected.

Owner:RGT UNIV OF CALIFORNIA

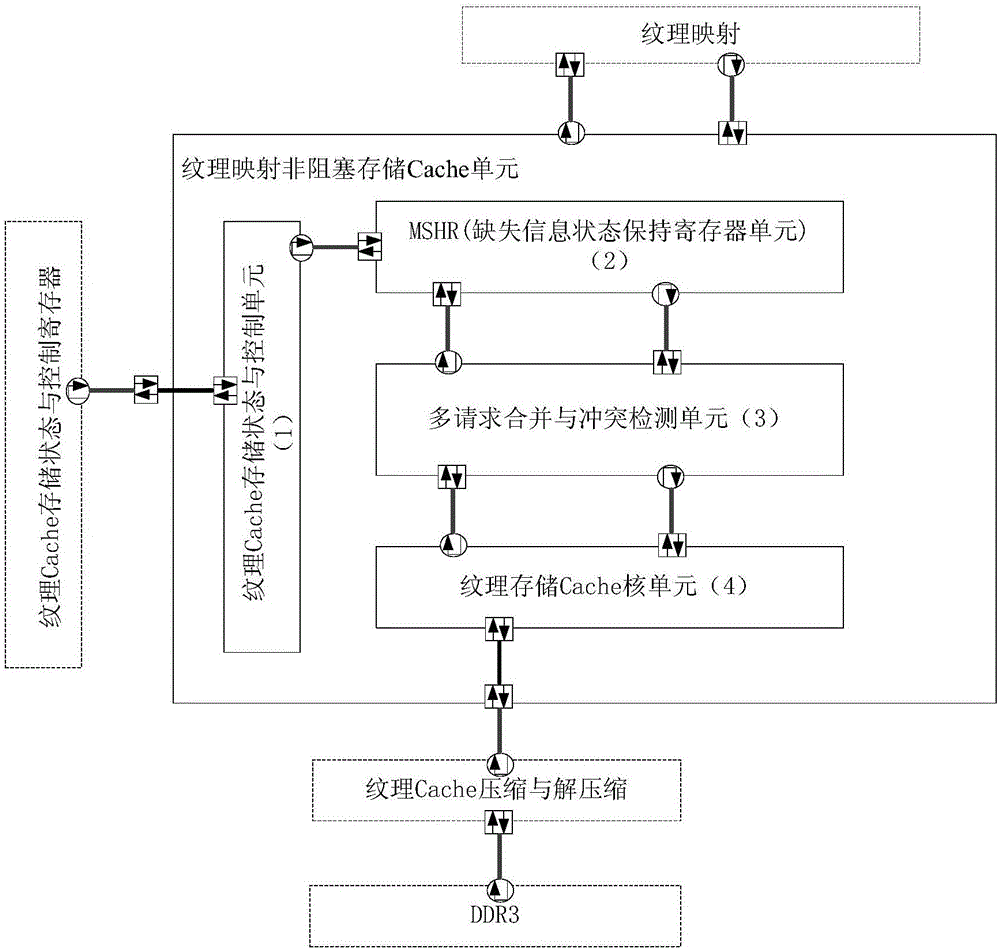

Modeling structure of GPU texture mapping non-blocking memory Cache

Active | CN106683158A | Achieving massive parallelism | Realize requirements | Image memory management | Processor architectures/configuration | Collision detection | Signal design

The invention belongs to the field of computer graphics and provides a modeling structure for a GPU texture mapping non-blocking memory Cache. The modeling structure comprises a texture Cache storage status and control unit (1), a miss status handling register (MSHR) storage unit (2), a multi-request merging and collision detection unit (3), and a texture memory Cache core unit (4). Cycle-accurate hardware modeling is performed on the texture memory Cache access process, and parallel processing of texture access request data is achieved through several mechanisms, including input request collision detection, request merging and splitting, multiple ports, multiple Banks and non-blocking pipelining, so that the large-scale parallelism and high throughput demands of texture data access are met effectively. Moreover, the modeling structure avoids complex circuit signal design and allows rapid evaluation of large-scale hardware systems, is suitable for early-stage system-level circuit design and development, and provides a useful reference for products and functions of the same kind.

Owner:XIAN AVIATION COMPUTING TECH RES INST OF AVIATION IND CORP OF CHINA

Distributed processing RAID system

Inactive | US20060168398A1 | Reduce component utilization | Reduces system power requirement | Energy efficient ICT | Memory loss protection | RAID | Massively parallel

A distributed processing RAID data storage system utilizing optimized methods of data communication between elements. In a preferred embodiment, such a data storage system will utilize efficient component utilization strategies at every level. Additionally, component interconnect bandwidth will be effectively and efficiently used; system power will be rationed; system component utilization will be rationed; enhanced data-integrity and data-availability techniques will be employed; physical component packaging will be organized to maximize volumetric efficiency; and control logic will be implemented that maximally exploits the massively parallel nature of the component architecture.

Owner:CADARET PAUL

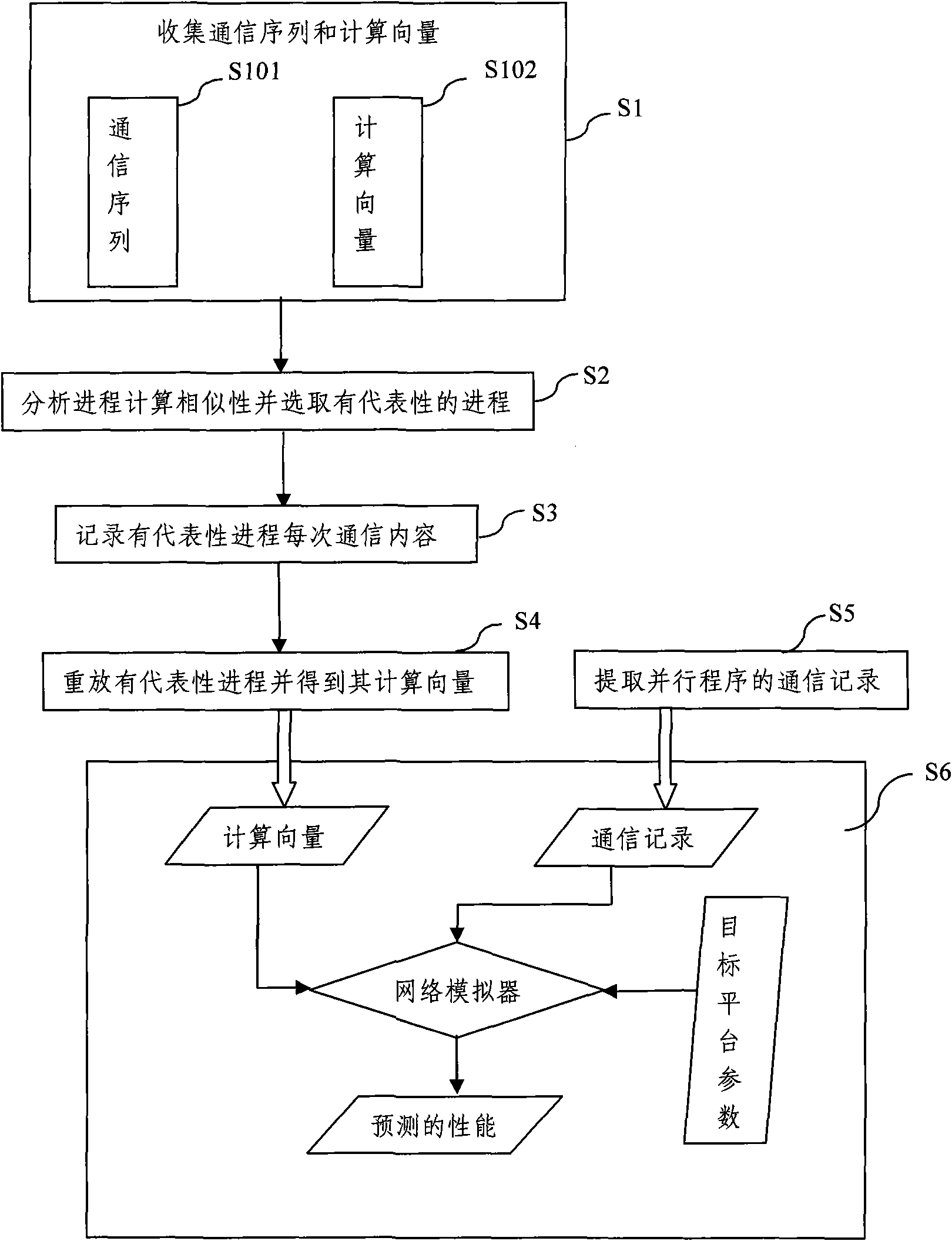

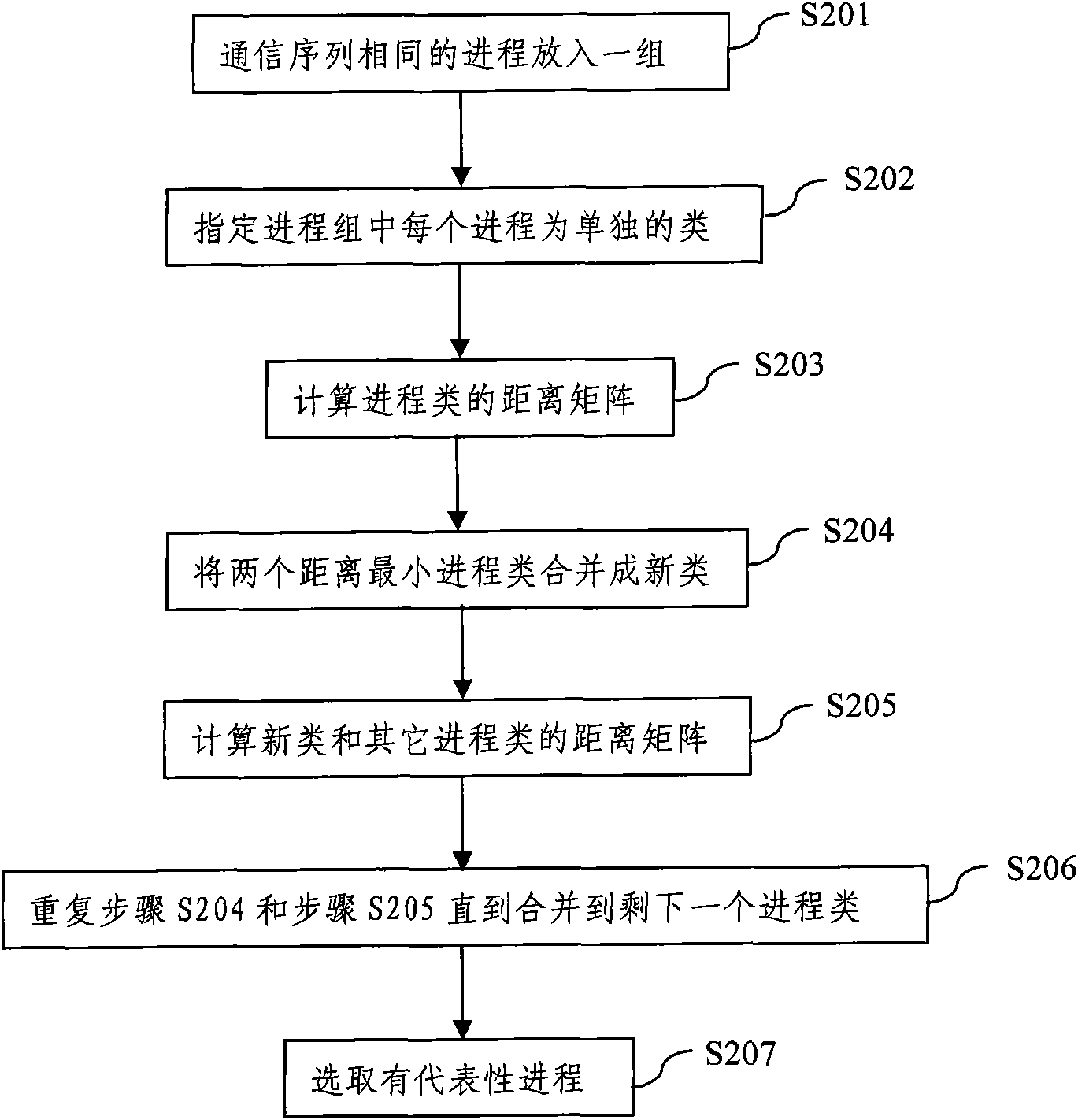

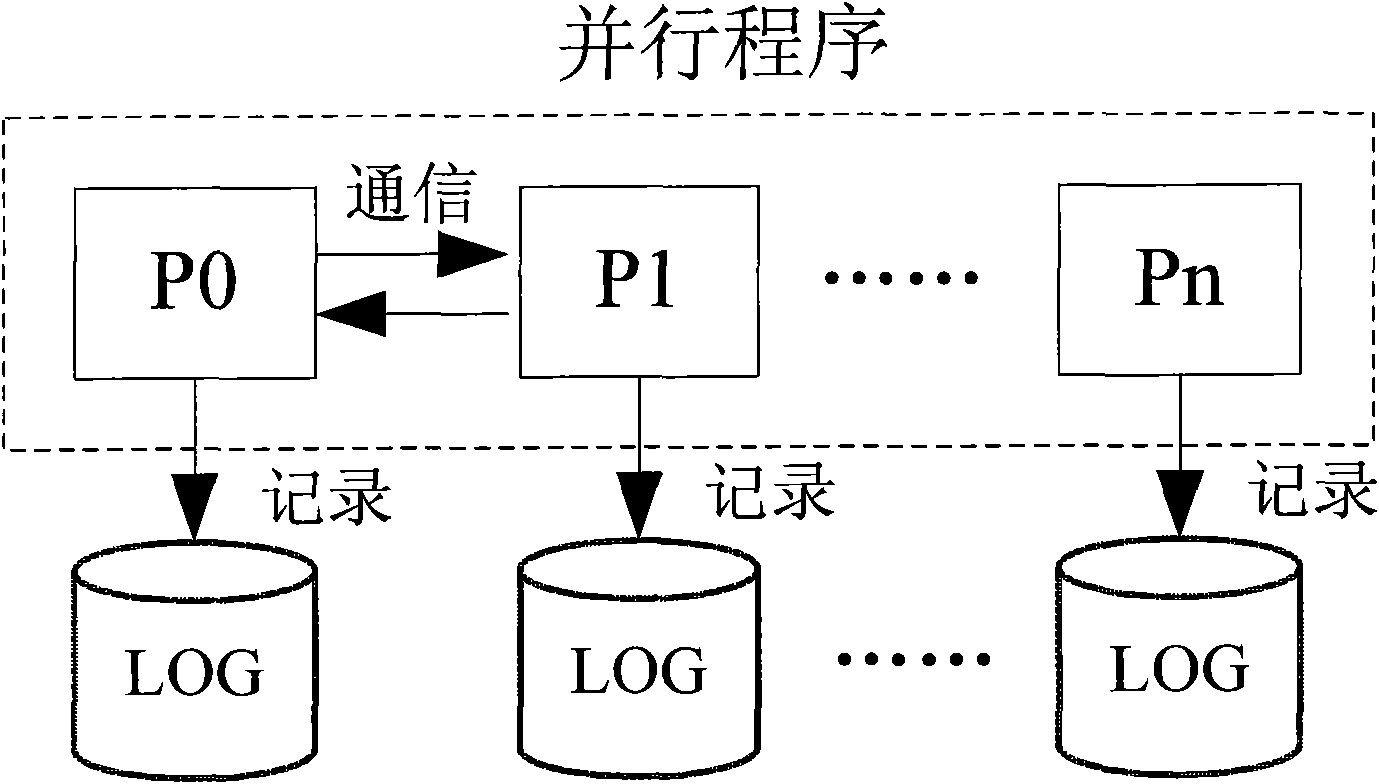

Large-scale parallel program property-predication realizing method

Active | CN101650687A | Save resources | No intervention required | Concurrent instruction execution | Software testing/debugging | Parallel computing | Sequential computation

Owner:TSINGHUA UNIV

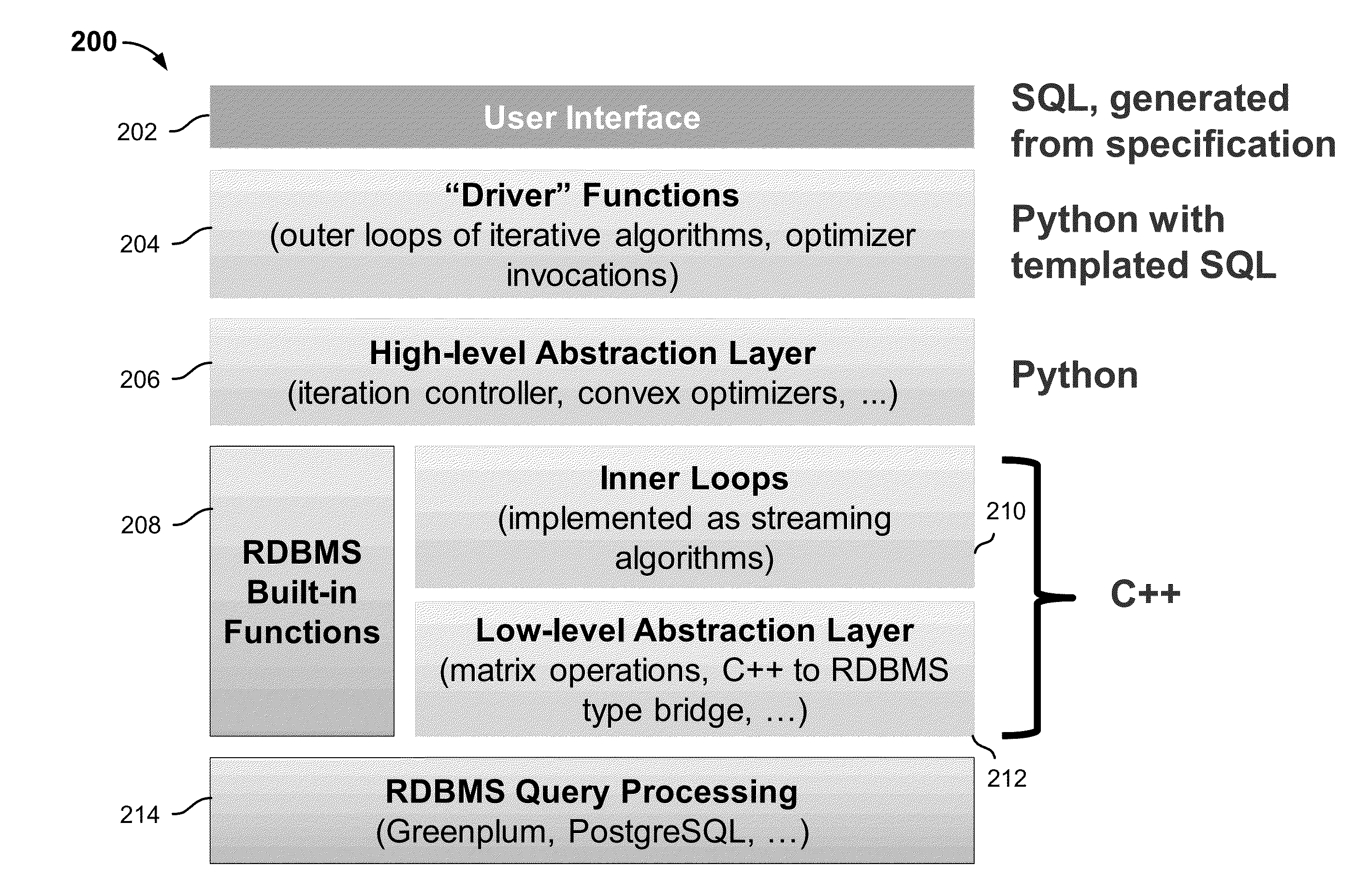

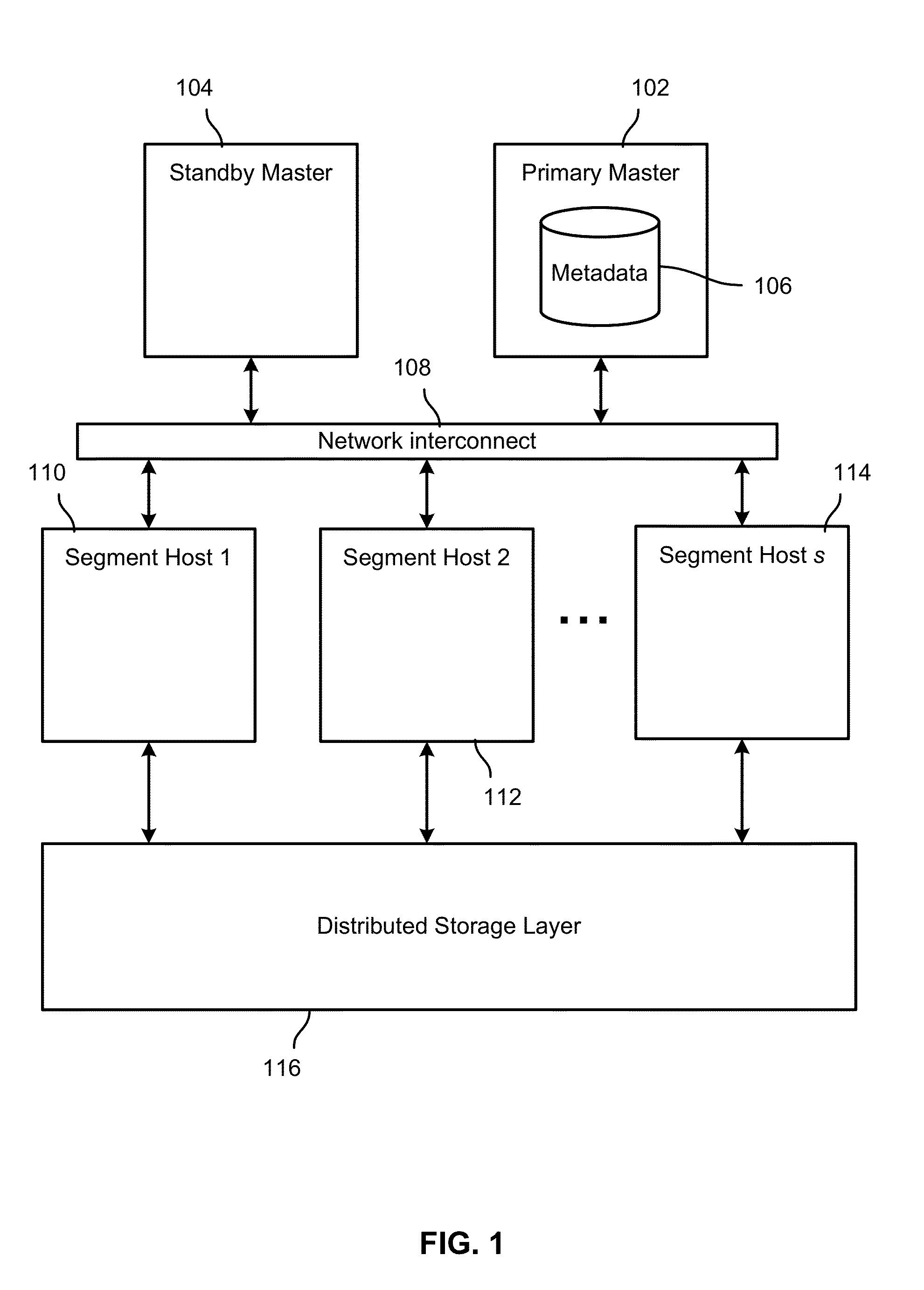

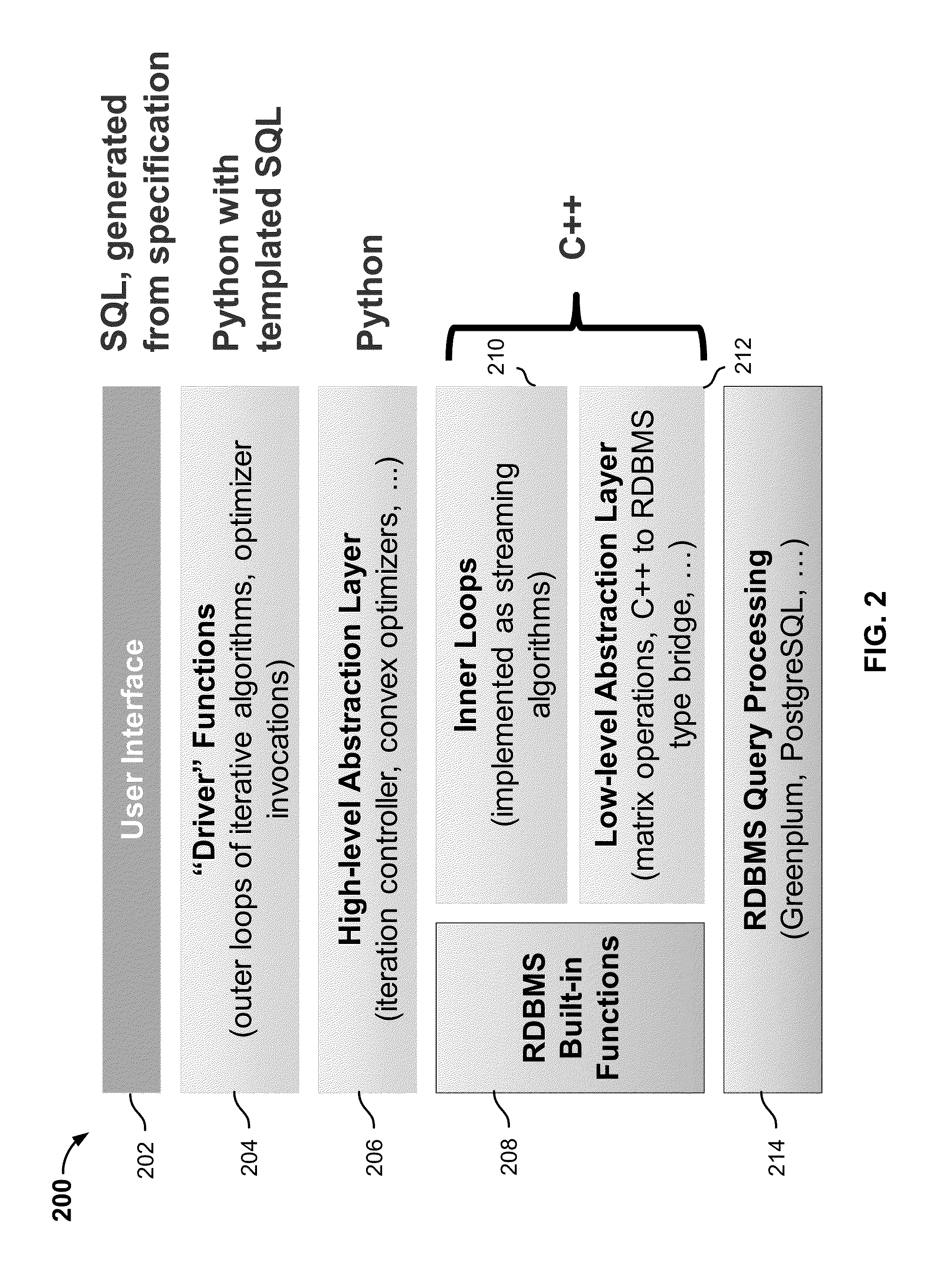

Data analytics platform over parallel databases and distributed file systems

Active | US20140244701A1 | Challenge can be overcome | Multimedia data querying | Database distribution/replication | Massively parallel | Distributed File System

Performing data analytics processing in the context of a large scale distributed system that includes a massively parallel processing (MPP) database and a distributed storage layer is disclosed. In various embodiments, a data analytics request is received. A plan is created to generate a response to the request. A corresponding portion of the plan is assigned to each of a plurality of distributed processing segments, including by invoking as indicated in the assignment one or more data analytical functions embedded in the processing segment.

Owner:EMC IP HLDG CO LLC

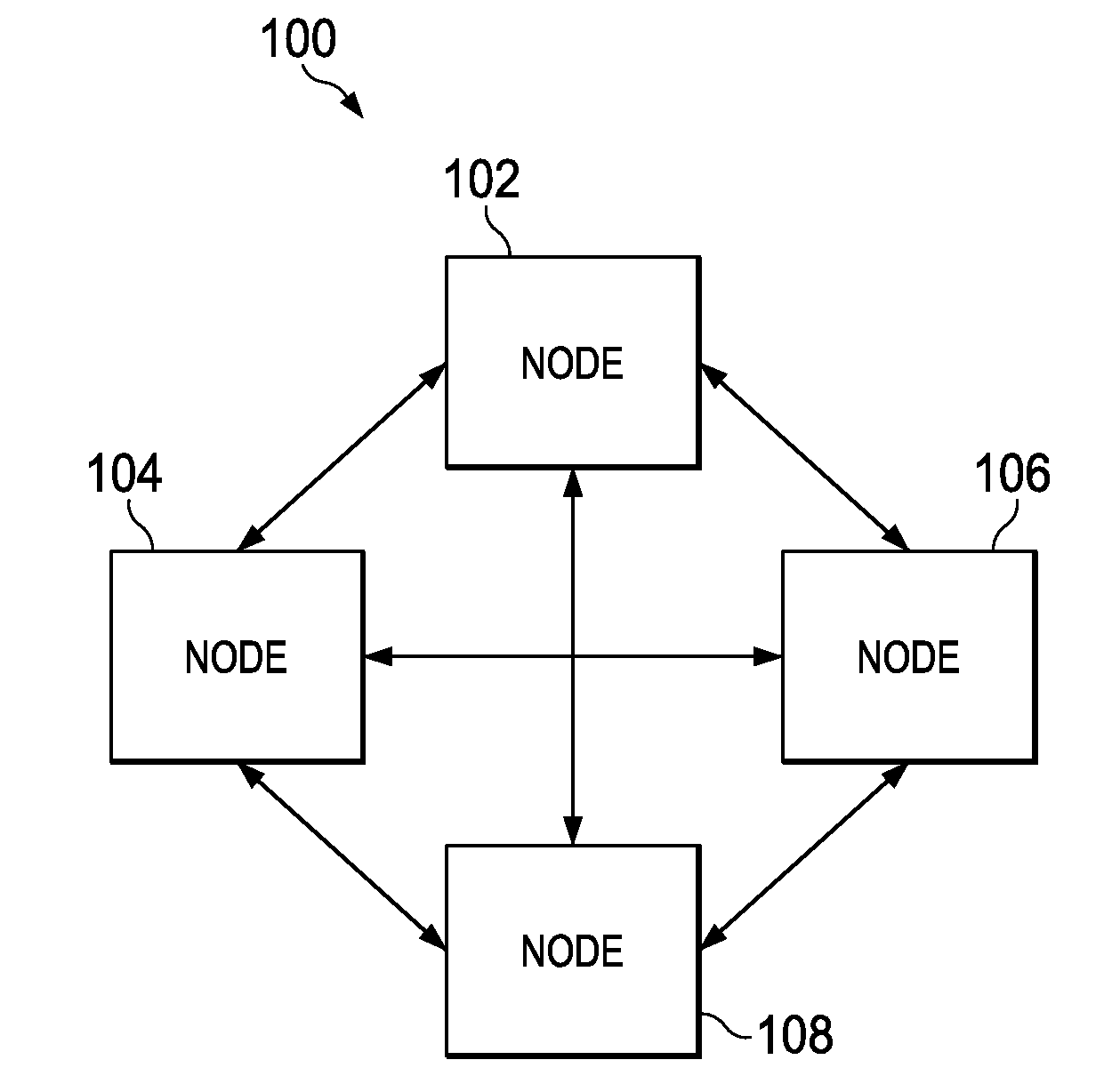

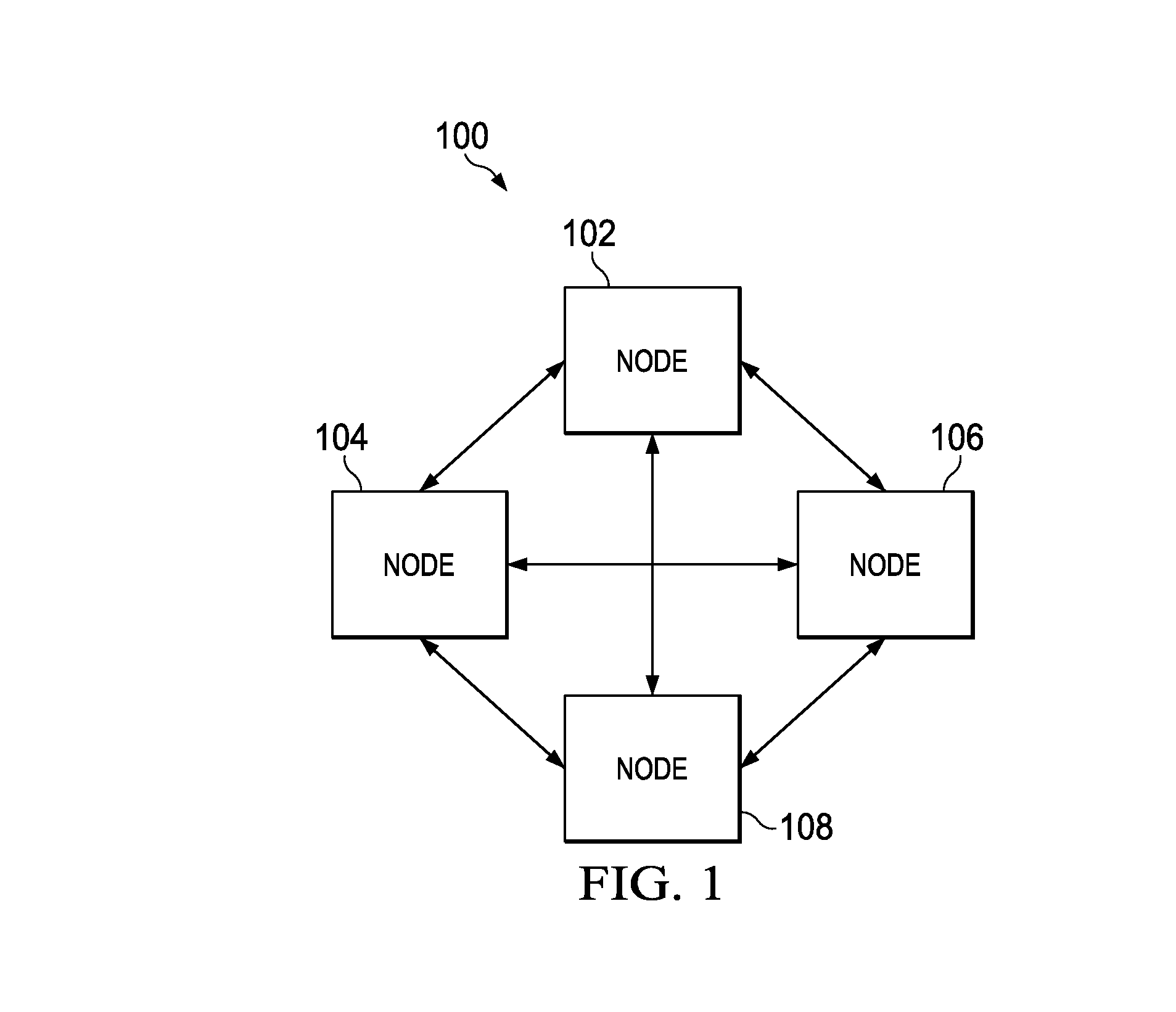

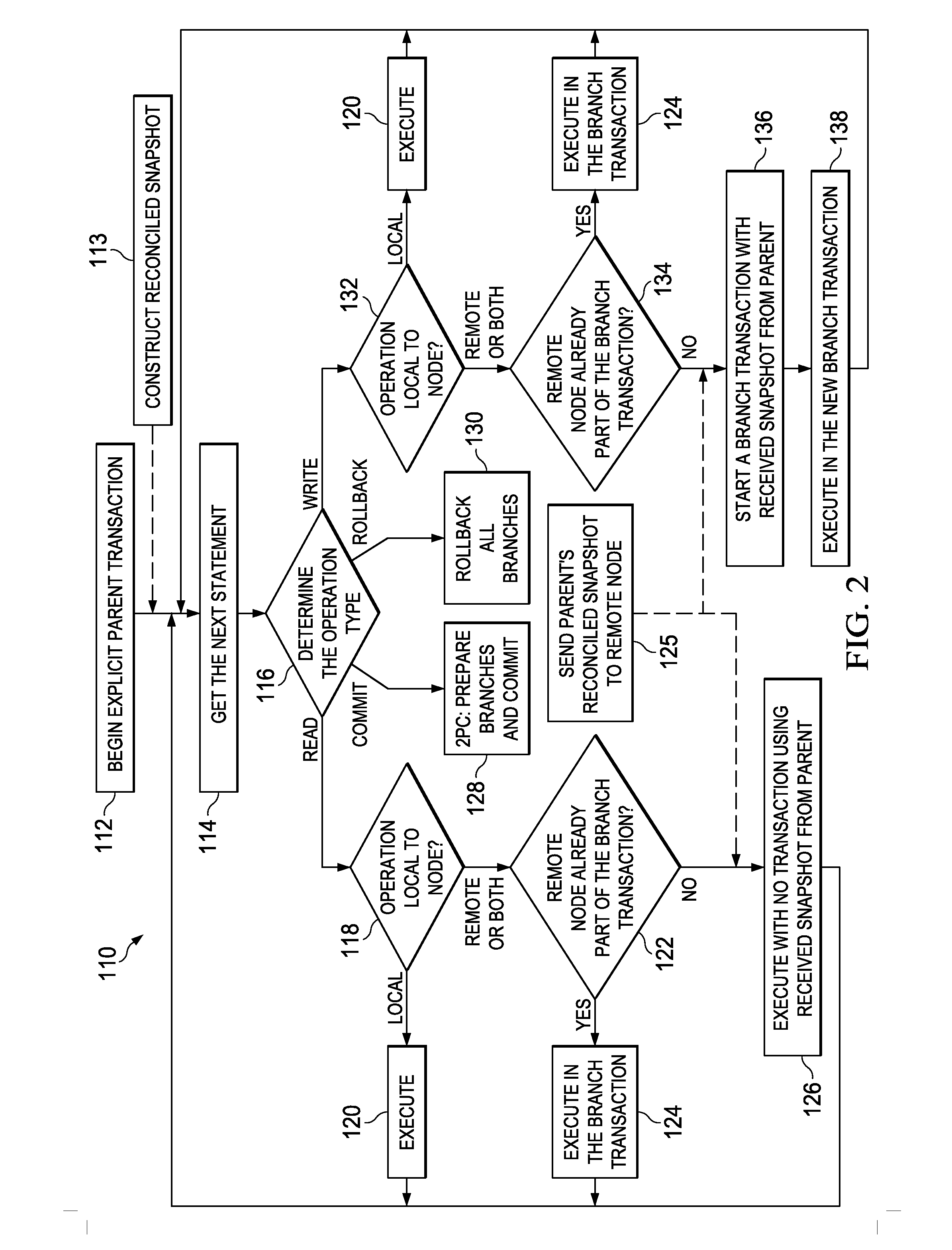

System and Method for Creating a Distributed Transaction Manager Supporting Repeatable Read Isolation level in a MPP Database

Inactive | US20150120645A1 | Easy to use | Digital data information retrieval | Digital data processing details | Massively parallel | Distributed transaction

Embodiments provide a distributed transaction manager supporting the repeatable read isolation level in Massively Parallel Processing (MPP) database systems without a centralized component. Before starting a transaction, a first node identifies a second node involved in the transaction, and requests from the second node a snapshot of current transactions at the second node. After receiving the snapshot from the second node, the first node combines the snapshot of transactions from the second node with the current transactions at the first node into a reconciled snapshot. The first node then transmits the reconciled snapshot to the second node and starts the transaction using the reconciled snapshot. A branch transaction is then started at the second node in accordance with the reconciled snapshot. Upon ending the transaction and the branch transaction, the first node and the second node perform a two-phase commit (2PC) protocol.

Owner:FUTUREWEI TECH INC
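
The snapshot exchange in the abstract above can be sketched as a small simulation. The sketch assumes that "combining" the two snapshots means taking the union of the active transaction IDs on both nodes; that interpretation, the `Node` class, and the transaction IDs are all hypothetical, and the two-phase commit step is left out.

```python
from typing import FrozenSet, Iterable, Optional


class Node:
    """A database node that tracks its currently active transaction IDs (hypothetical model)."""

    def __init__(self, name: str, active: Iterable[int]) -> None:
        self.name = name
        self.active = set(active)
        self.snapshot: Optional[FrozenSet[int]] = None

    def current_snapshot(self) -> FrozenSet[int]:
        """Snapshot of the transactions active on this node right now."""
        return frozenset(self.active)

    def begin_with(self, snapshot: FrozenSet[int]) -> None:
        """Start a (branch) transaction that reads according to the given snapshot."""
        self.snapshot = snapshot


def start_distributed_transaction(first: Node, second: Node) -> FrozenSet[int]:
    """Follow the flow in the abstract: request, reconcile, transmit, then start on both nodes."""
    remote = second.current_snapshot()             # first node requests the second node's snapshot
    reconciled = remote | first.current_snapshot()  # assumed meaning of "combine": set union
    first.begin_with(reconciled)                   # transaction starts on the first node
    second.begin_with(reconciled)                  # branch transaction starts on the second node
    return reconciled


if __name__ == "__main__":
    n1 = Node("n1", {101, 104})
    n2 = Node("n2", {104, 207})
    print(sorted(start_distributed_transaction(n1, n2)))
```

Because both nodes read through the same reconciled snapshot, neither needs a central coordinator to agree on which transactions were in flight when the distributed transaction began.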
