
31,346 results about "Memory addressing/allocation/relocation" patented technology


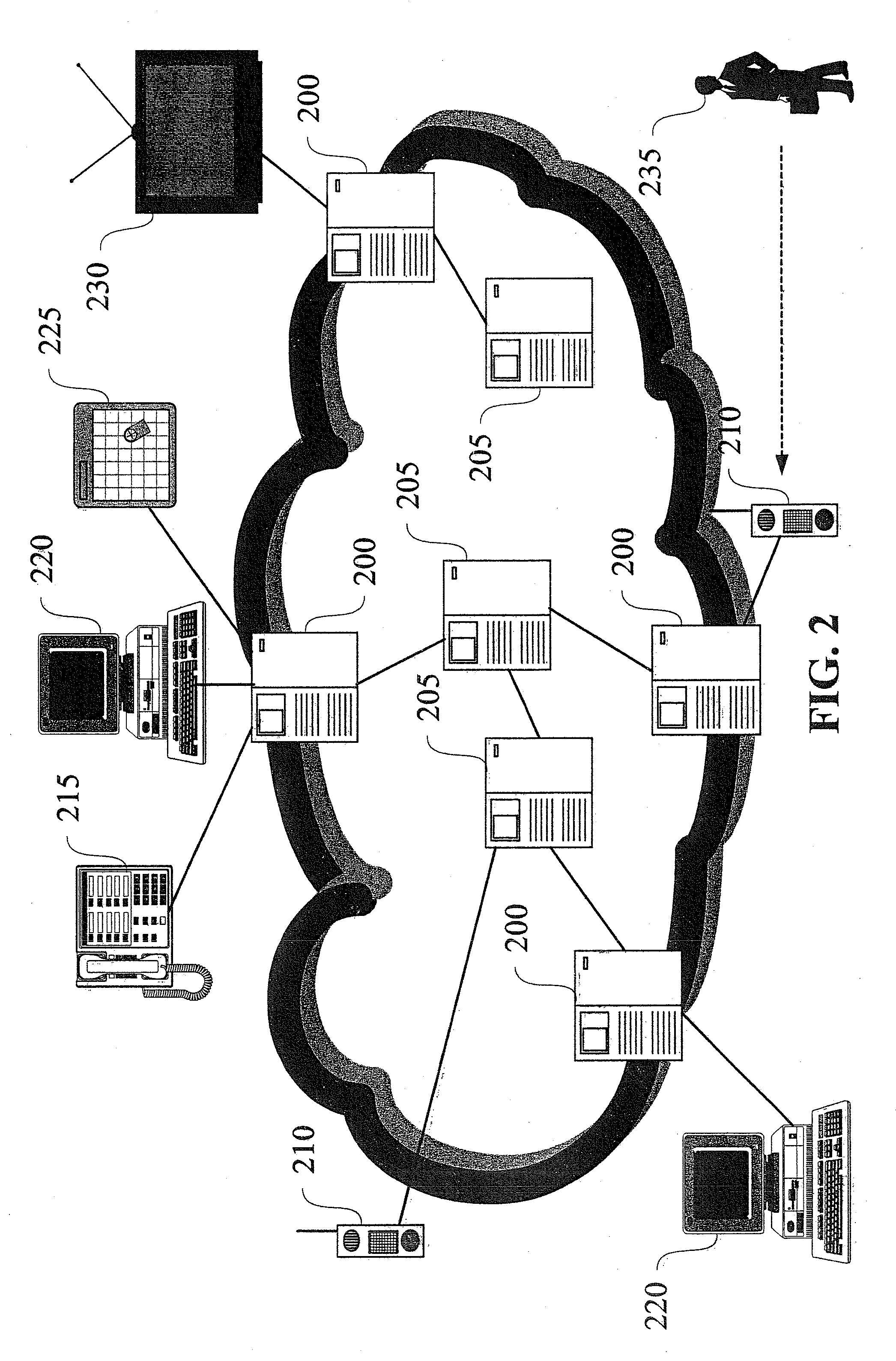

Computer system and process for transferring multiple high bandwidth streams of data between multiple storage units and multiple applications in a scalable and reliable manner

Inactive | US7111115B2 | Improve reliability | Improve scalability | Input/output to record carriers | Data processing applications | High bandwidth | Data segment

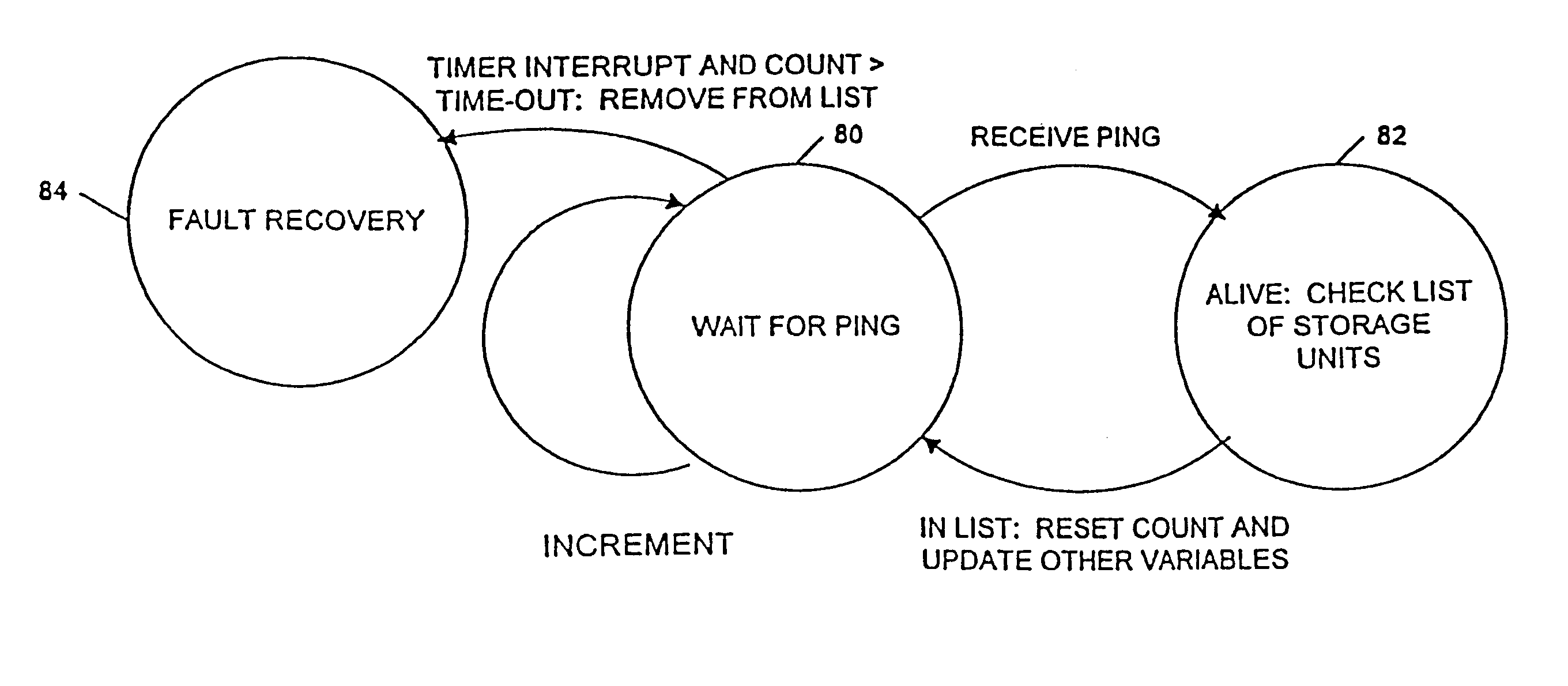

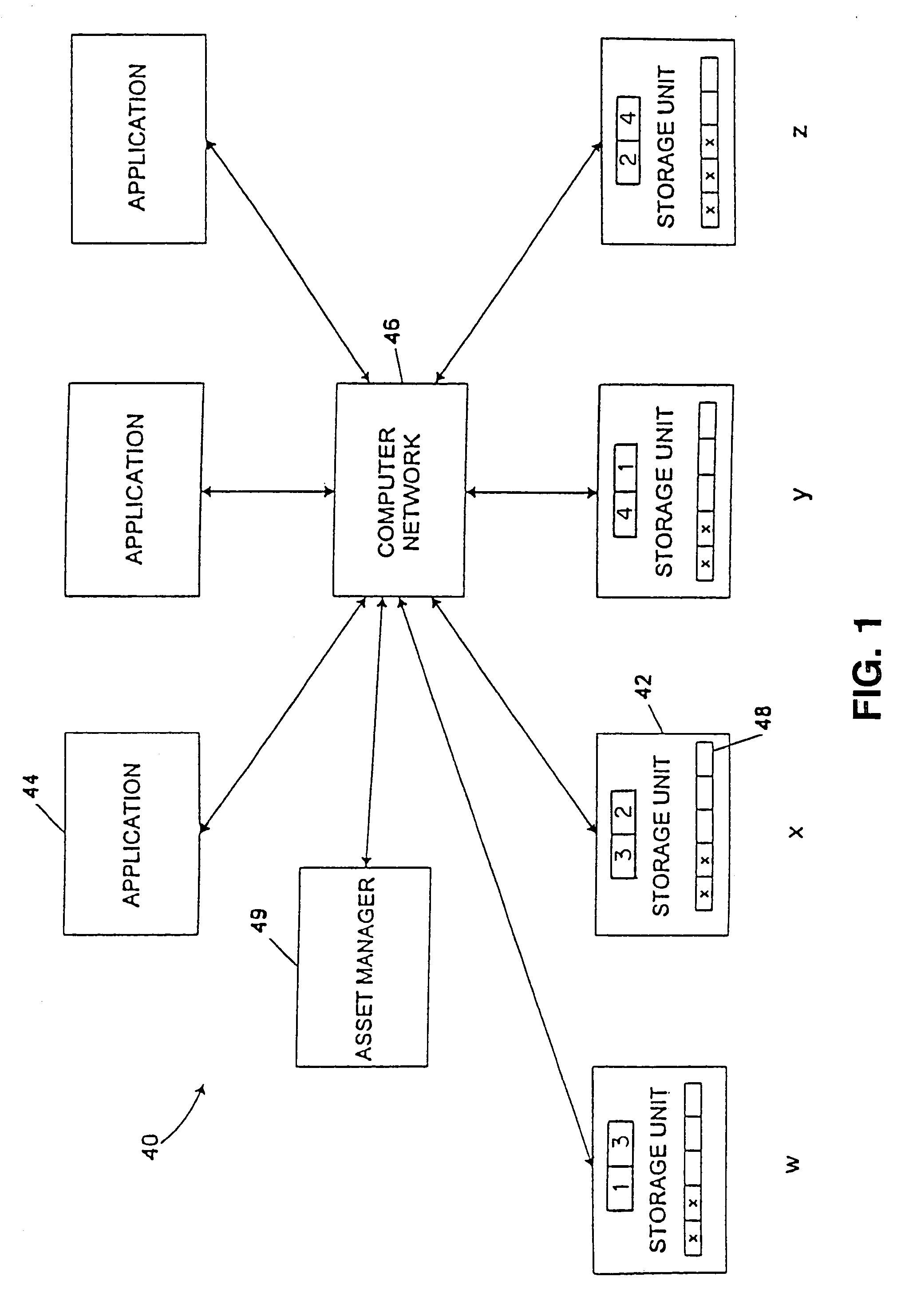

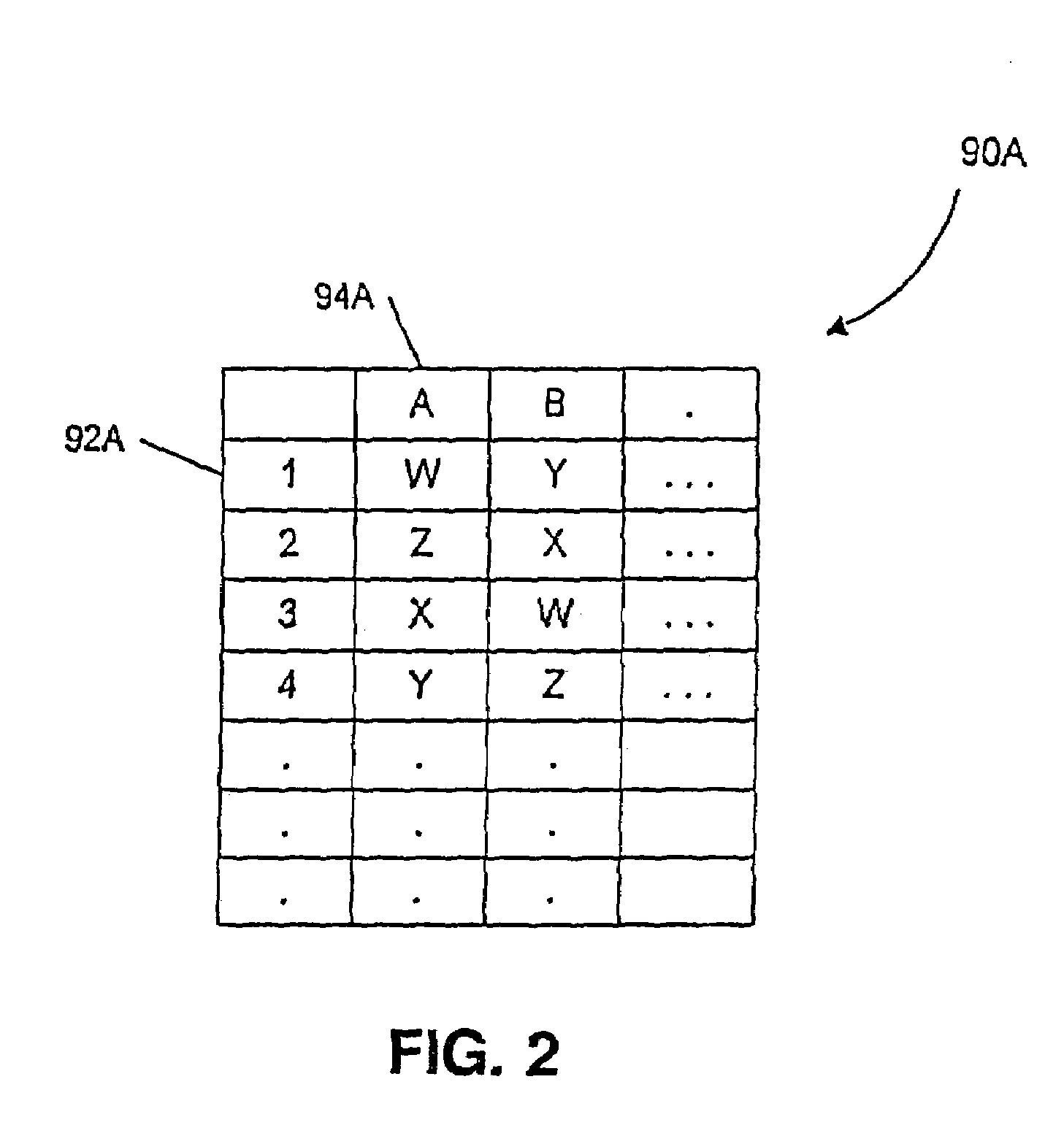

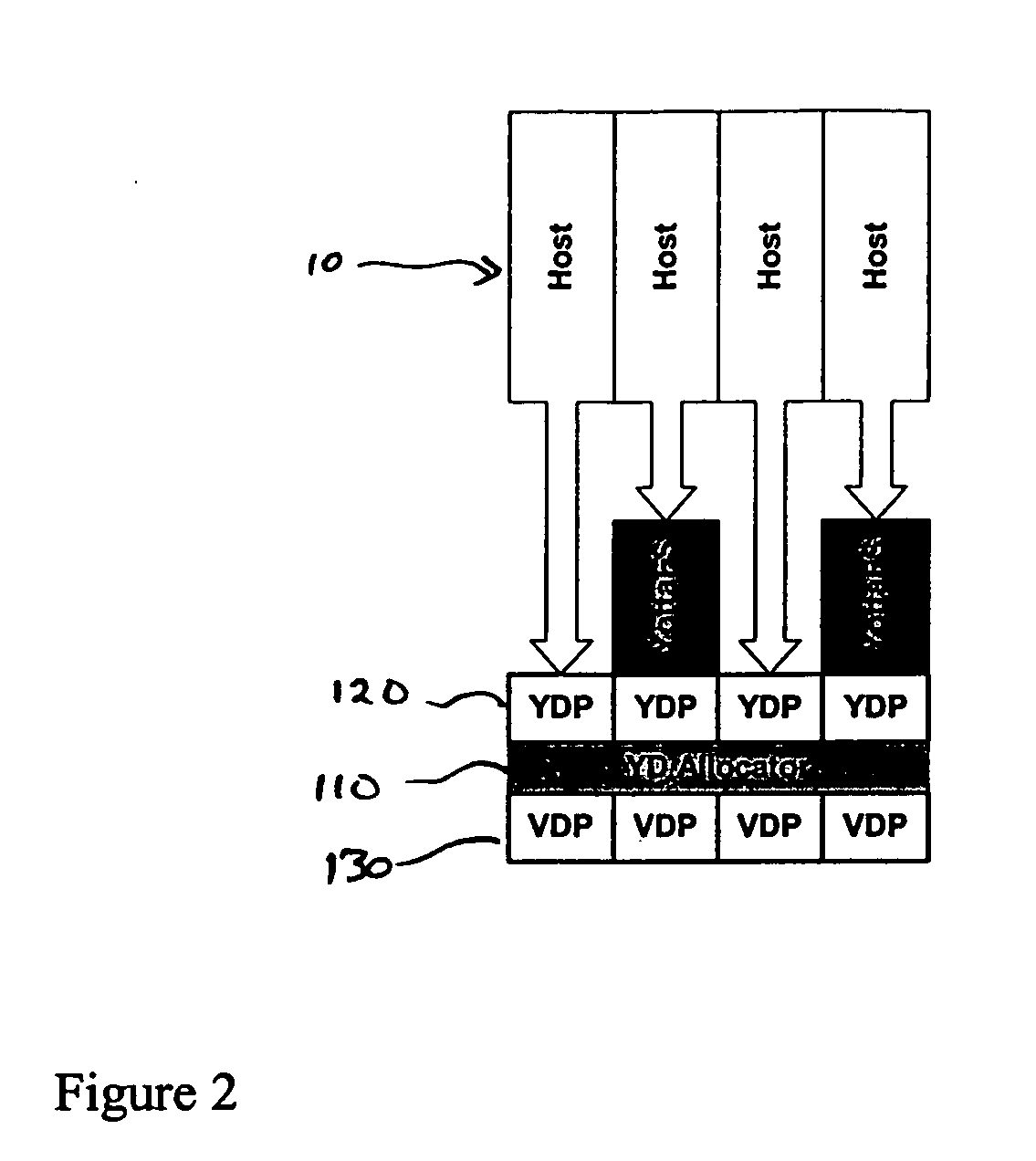

Multiple applications request data from multiple storage units over a computer network. The data is divided into segments and each segment is distributed randomly on one of several storage units, independent of the storage units on which other segments of the media data are stored. At least one additional copy of each segment also is distributed randomly over the storage units, such that each segment is stored on at least two storage units. This random distribution of multiple copies of segments of data improves both scalability and reliability. When an application requests a selected segment of data, the request is processed by the storage unit with the shortest queue of requests. Random fluctuations in the load applied by multiple applications on multiple storage units are balanced nearly equally over all of the storage units. This combination of techniques results in a system which can transfer multiple, independent high-bandwidth streams of data in a scalable manner in both directions between multiple applications and multiple storage units.

Owner:AVID TECHNOLOGY
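
The placement and routing scheme described in the abstract above can be illustrated with a minimal sketch. This is not the patented implementation: the function names `place_segments` and `route_request`, the fixed seed, and the in-memory queue counters are assumptions made only for illustration.

```python
import random

def place_segments(num_segments, num_units, copies=2, seed=0):
    """Assign each segment to `copies` distinct, randomly chosen storage units."""
    rng = random.Random(seed)  # seeded only so the sketch is repeatable
    return {seg: rng.sample(range(num_units), copies)
            for seg in range(num_segments)}

def route_request(segment, placement, queue_lengths):
    """Send a request to whichever holder of the segment has the shortest queue."""
    unit = min(placement[segment], key=lambda u: queue_lengths[u])
    queue_lengths[unit] += 1  # the chosen unit now has one more pending request
    return unit

# Hypothetical workload: 1000 segments spread over 8 storage units.
placement = place_segments(num_segments=1000, num_units=8)
queues = [0] * 8
for seg in range(1000):
    route_request(seg, placement, queues)
```

Because every segment has two randomly placed holders, each request has a choice of units, which is what lets the shortest-queue rule even out load fluctuations.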

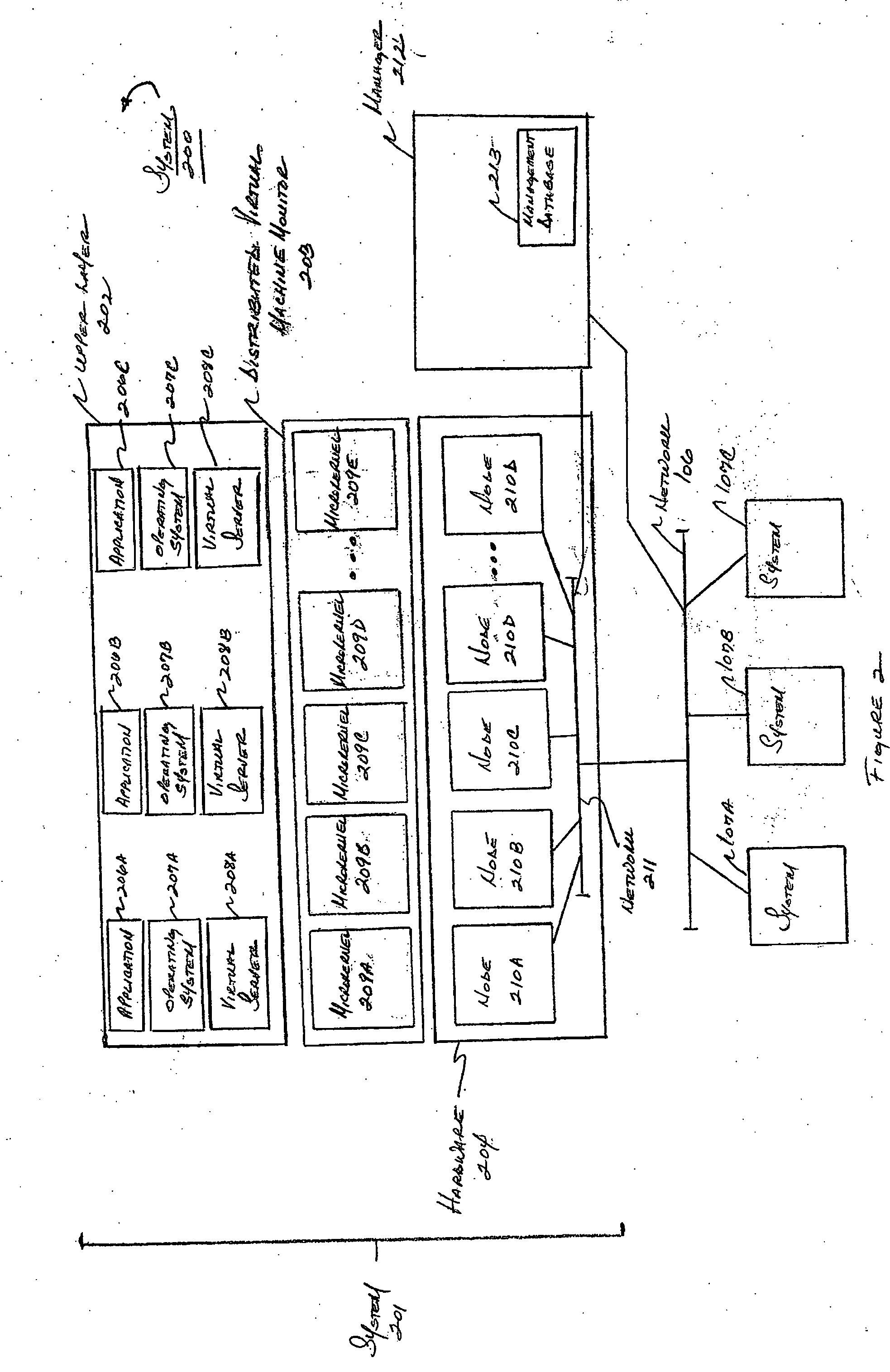

System and method for managing virtual servers

Active | US20050120160A1 | Grow and shrink capability | Maximize use | Resource allocation | Memory addressing/allocation/relocation | Operational system | Primitive state

A management capability is provided for a virtual computing platform. In one example, this platform allows interconnected physical resources such as processors, memory, network interfaces and storage interfaces to be abstracted and mapped to virtual resources (e.g., virtual mainframes, virtual partitions). Virtual resources contained in a virtual partition can be assembled into virtual servers that execute a guest operating system (e.g., Linux). In one example, the abstraction is unique in that any resource is available to any virtual server regardless of the physical boundaries that separate the resources. For example, any number of physical processors or any amount of physical memory can be used by a virtual server even if these resources span different nodes. A virtual computing platform is provided that allows for the creation, deletion, modification, control (e.g., start, stop, suspend, resume) and status (i.e., events) of the virtual servers which execute on the virtual computing platform and the management capability provides controls for these functions. In a particular example, such a platform allows the number and type of virtual resources consumed by a virtual server to be scaled up or down when the virtual server is running. For instance, an administrator may scale a virtual server manually or may define one or more policies that automatically scale a virtual server. Further, using the management API, a virtual server can monitor itself and can scale itself up or down depending on its need for processing, memory and I/O resources. For example, a virtual server may monitor its CPU utilization and invoke controls through the management API to allocate a new processor for itself when its utilization exceeds a specific threshold. Conversely, a virtual server may scale down its processor count when its utilization falls. Policies can be used to execute one or more management controls.
More specifically, a management capability is provided that allows policies to be defined using a management object's properties, events and/or method results. A management policy may also incorporate external data (e.g., an external event) in its definition. A policy may be triggered, causing the management server or other computing entity to execute an action. An action may utilize one or more management controls. In addition, an action may access external capabilities such as sending notification e-mail or sending a text message to a telephone paging system. Further, management capability controls may be executed using a discrete transaction referred to as a “job.” A series of management controls may be assembled into a job using one or more management interfaces. Errors that occur when a job is executed may cause the job to be rolled back, allowing affected virtual servers to return to their original state.

Owner:ORACLE INT CORP
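
The threshold-based scaling policy and the rollback behaviour of a "job" described in the abstract above might look roughly like the following sketch. The class `VirtualServer`, the functions `apply_scaling_policy` and `run_job`, and the 80%/20% thresholds are hypothetical; the abstract does not specify an API or threshold values.

```python
class VirtualServer:
    """Hypothetical stand-in for a virtual server's scalable state."""
    def __init__(self, processors=1):
        self.processors = processors

def apply_scaling_policy(server, cpu_utilization, high=0.80, low=0.20):
    """Add a processor above `high` utilization, remove one below `low`."""
    if cpu_utilization > high:
        server.processors += 1
    elif cpu_utilization < low and server.processors > 1:
        server.processors -= 1
    return server.processors

def run_job(server, controls):
    """Run a series of controls as one job; restore the original state on error."""
    original = server.processors
    try:
        for control in controls:
            control(server)
    except Exception:
        server.processors = original  # roll back to the pre-job state
        return False
    return True
```

In this sketch a job either applies all of its controls or none of them, which mirrors the abstract's statement that a failed job lets affected virtual servers return to their original state.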

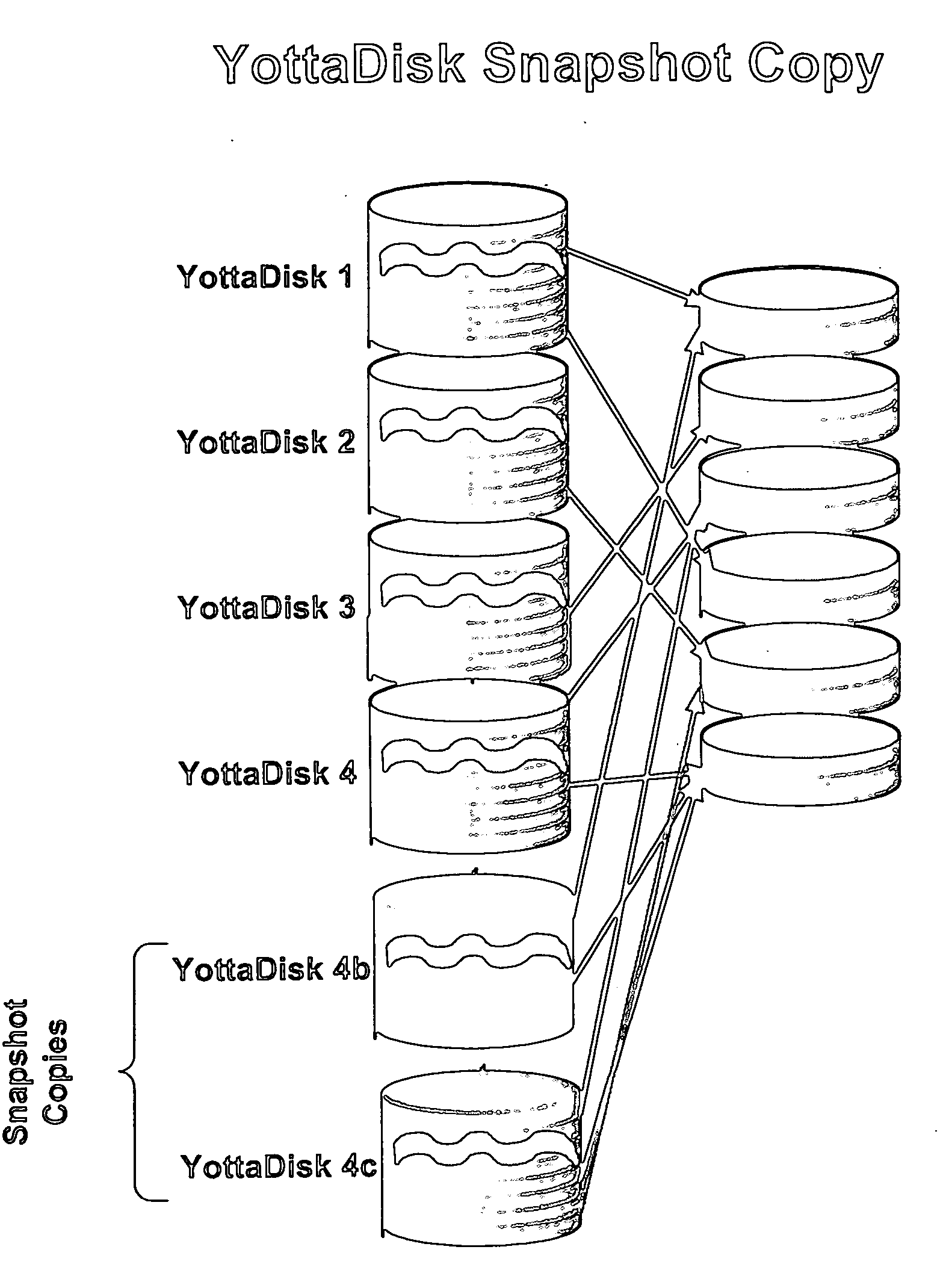

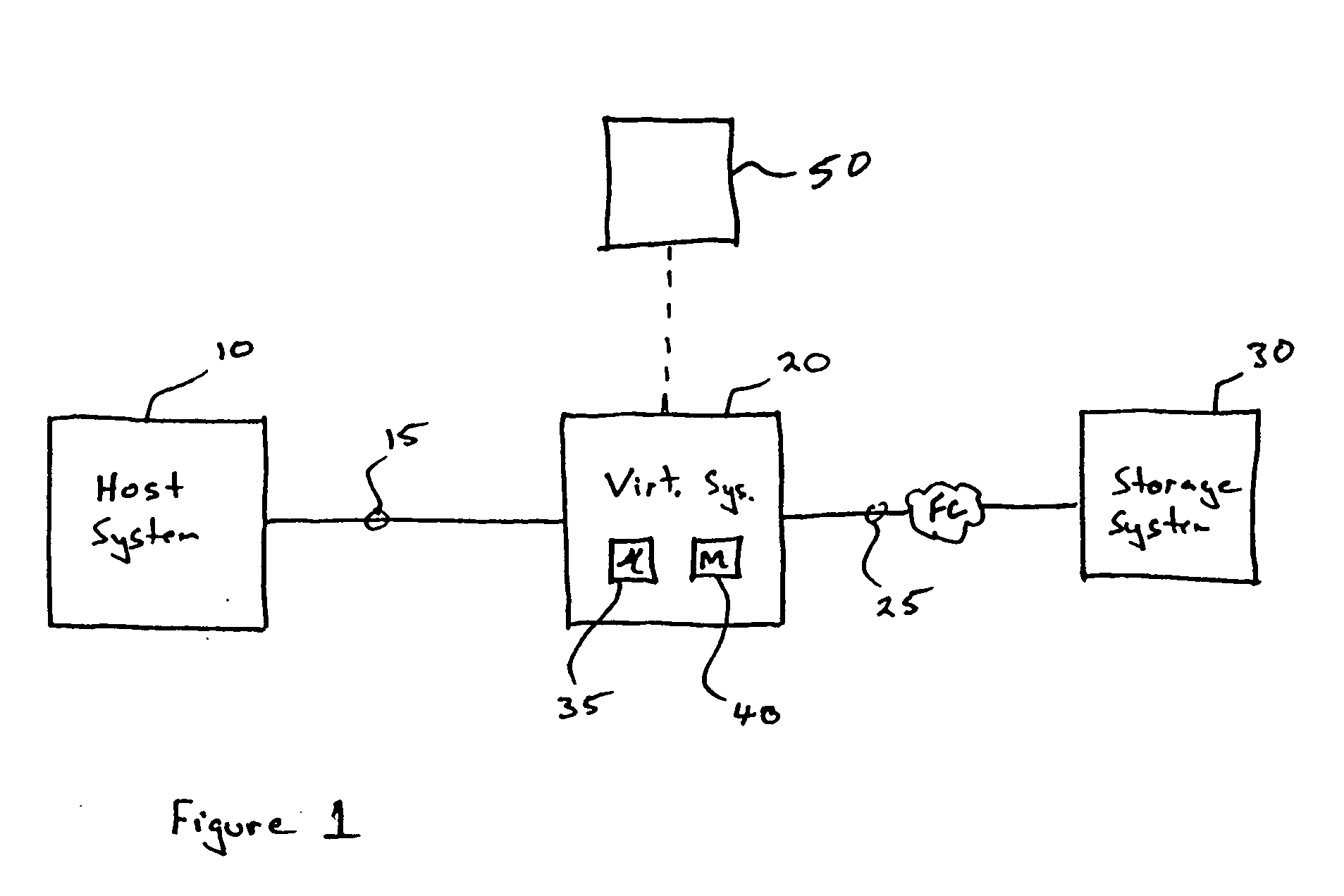

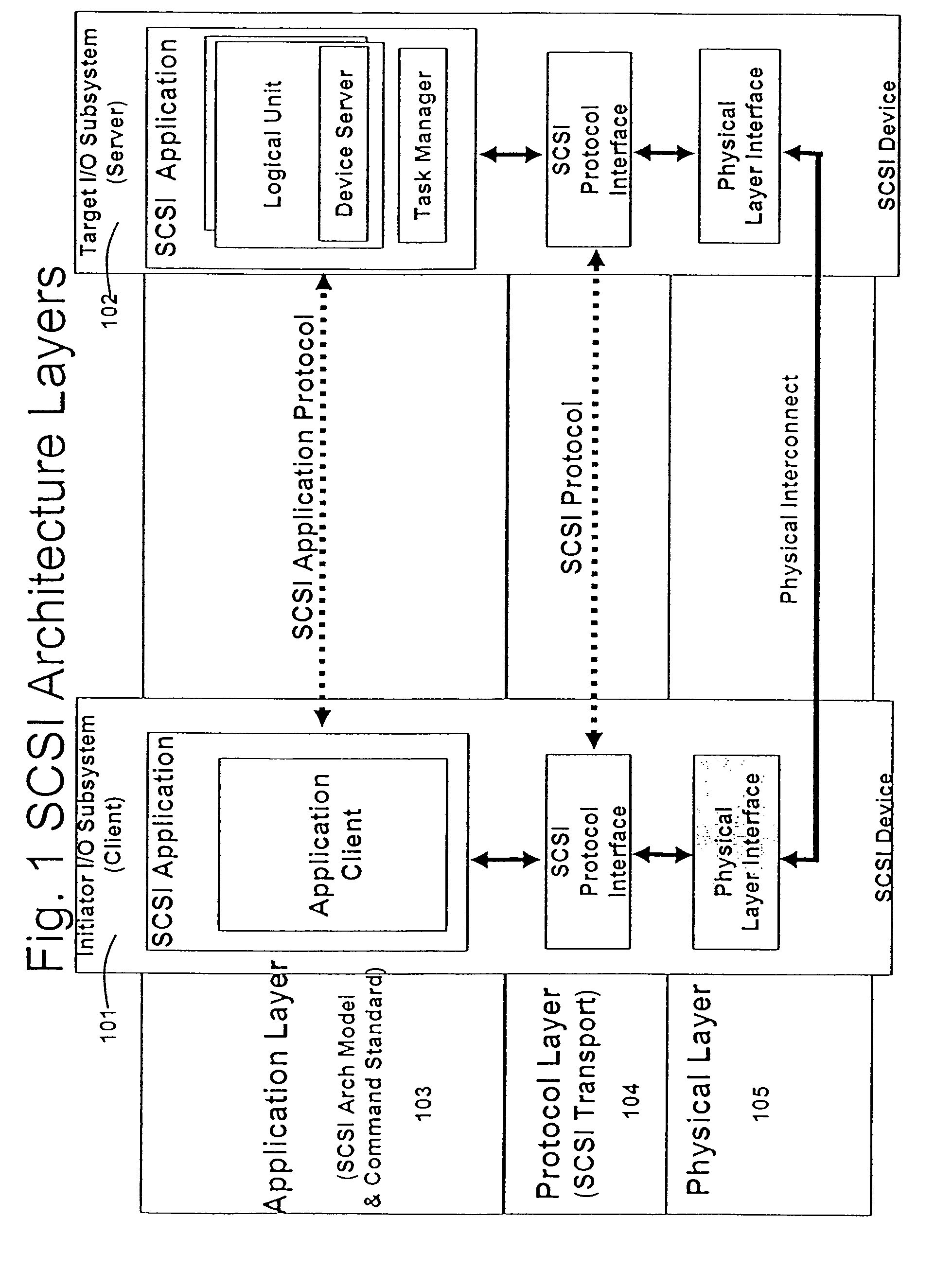

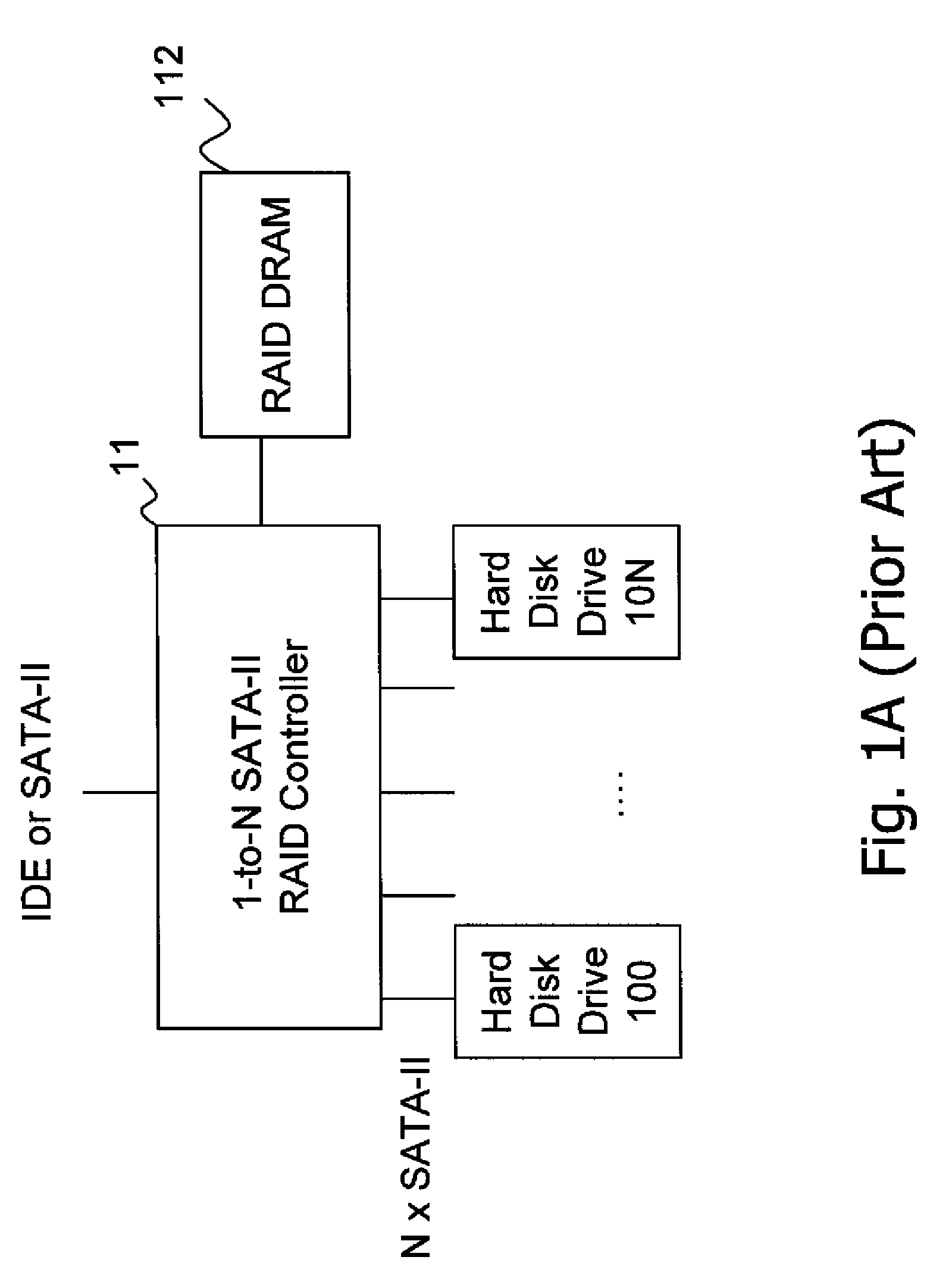

Storage virtualization system and methods

Inactive | US20050125593A1 | Reduce consumption | Without impact | Input/output to record carriers | Memory addressing/allocation/relocation | RAID | Islanding

Storage virtualization systems and methods that allow customers to manage storage as a utility rather than as islands of storage which are independent of each other. A demand mapped virtual disk image of up to an arbitrarily large size is presented to a host system. The virtualization system allocates physical storage from a storage pool dynamically in response to host I/O requests, e.g., SCSI I/O requests, allowing for the amortization of storage resources through a disk subsystem while maintaining coherency amongst I/O RAID traffic. In one embodiment, the virtualization functionality is implemented in a controller device, such as a controller card residing in a switch device or other network device, coupled to a storage system on a storage area network (SAN). The resulting virtual disk image that is observed by the host computer is larger than the amount of physical storage actually consumed.

Owner:EMC IP HLDG CO LLC
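
The demand-mapped virtual disk in the abstract above allocates physical storage only when the host writes. A minimal sketch of that idea follows; the class `ThinVirtualDisk`, its block-granular mapping, and the choice to return `None` for never-written blocks are illustrative assumptions, not details from the patent.

```python
class ThinVirtualDisk:
    """Large virtual disk backed by a small pool, mapped on first write."""
    def __init__(self, virtual_blocks, pool_blocks):
        self.virtual_blocks = virtual_blocks
        self.free = list(range(pool_blocks))  # unallocated physical blocks
        self.mapping = {}                     # virtual block -> physical block
        self.store = {}                       # physical block -> data

    def write(self, vblock, data):
        if not 0 <= vblock < self.virtual_blocks:
            raise IndexError("block outside virtual disk")
        if vblock not in self.mapping:        # allocate only on first write
            if not self.free:
                raise RuntimeError("storage pool exhausted")
            self.mapping[vblock] = self.free.pop()
        self.store[self.mapping[vblock]] = data

    def read(self, vblock):
        phys = self.mapping.get(vblock)
        return None if phys is None else self.store[phys]

    def physical_blocks_used(self):
        return len(self.mapping)
```

A disk created with a billion virtual blocks and a four-block pool consumes pool space only for the blocks actually written, so the image the host sees is larger than the storage consumed.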

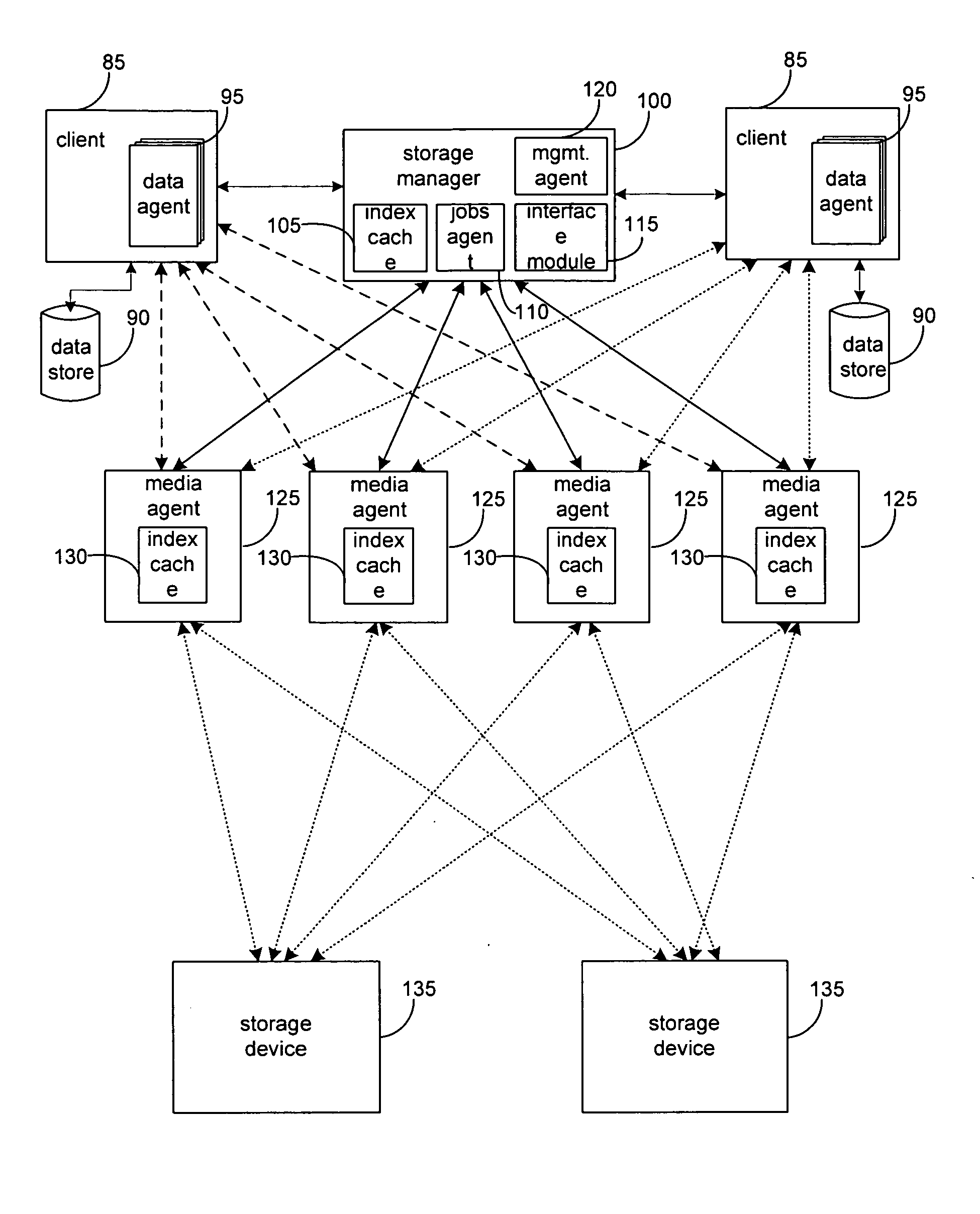

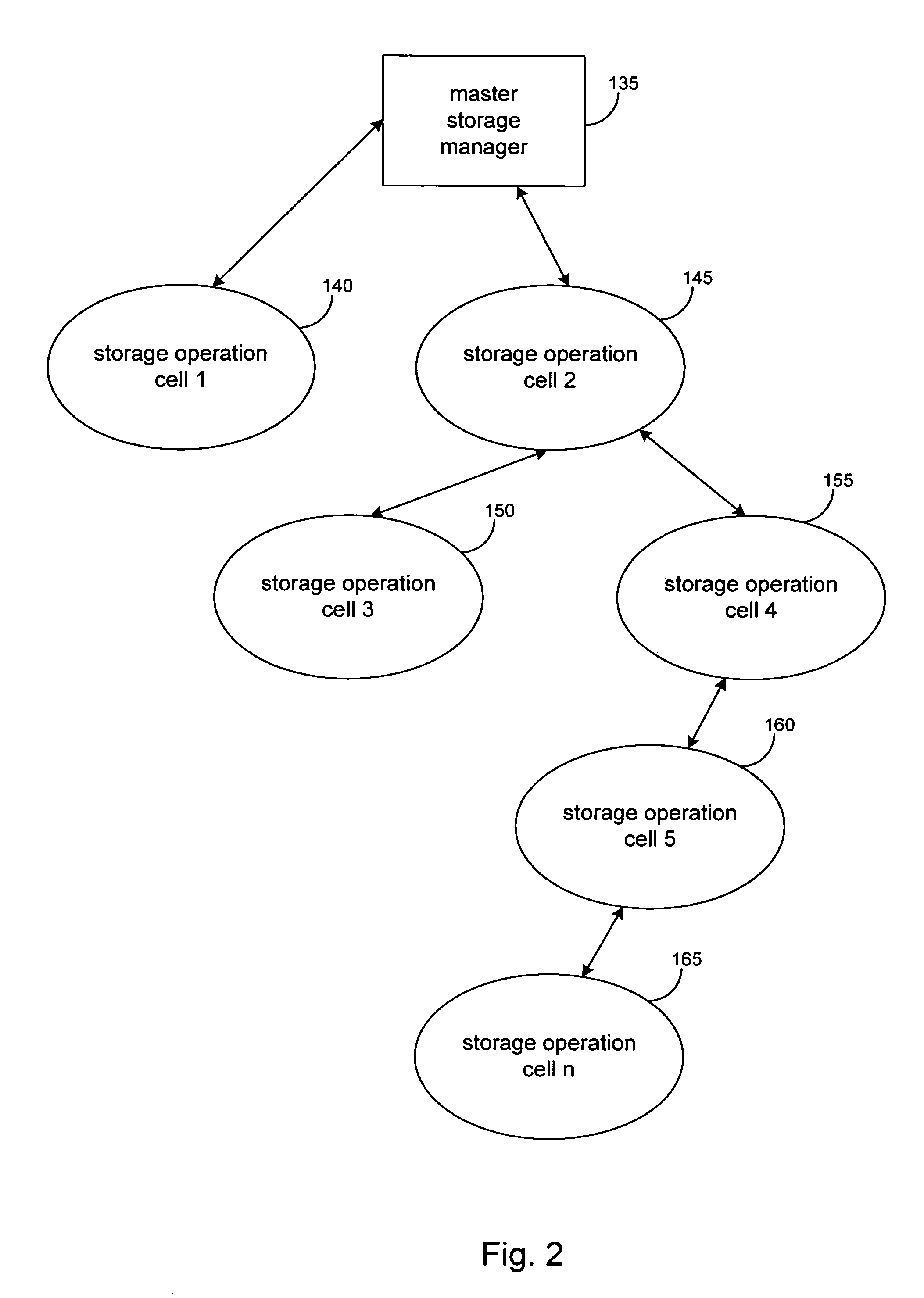

Hierarchical backup and retrieval system

Inactive | US7395282B1 | Data processing applications | Digital data processing details | Software agent | Administrative controls

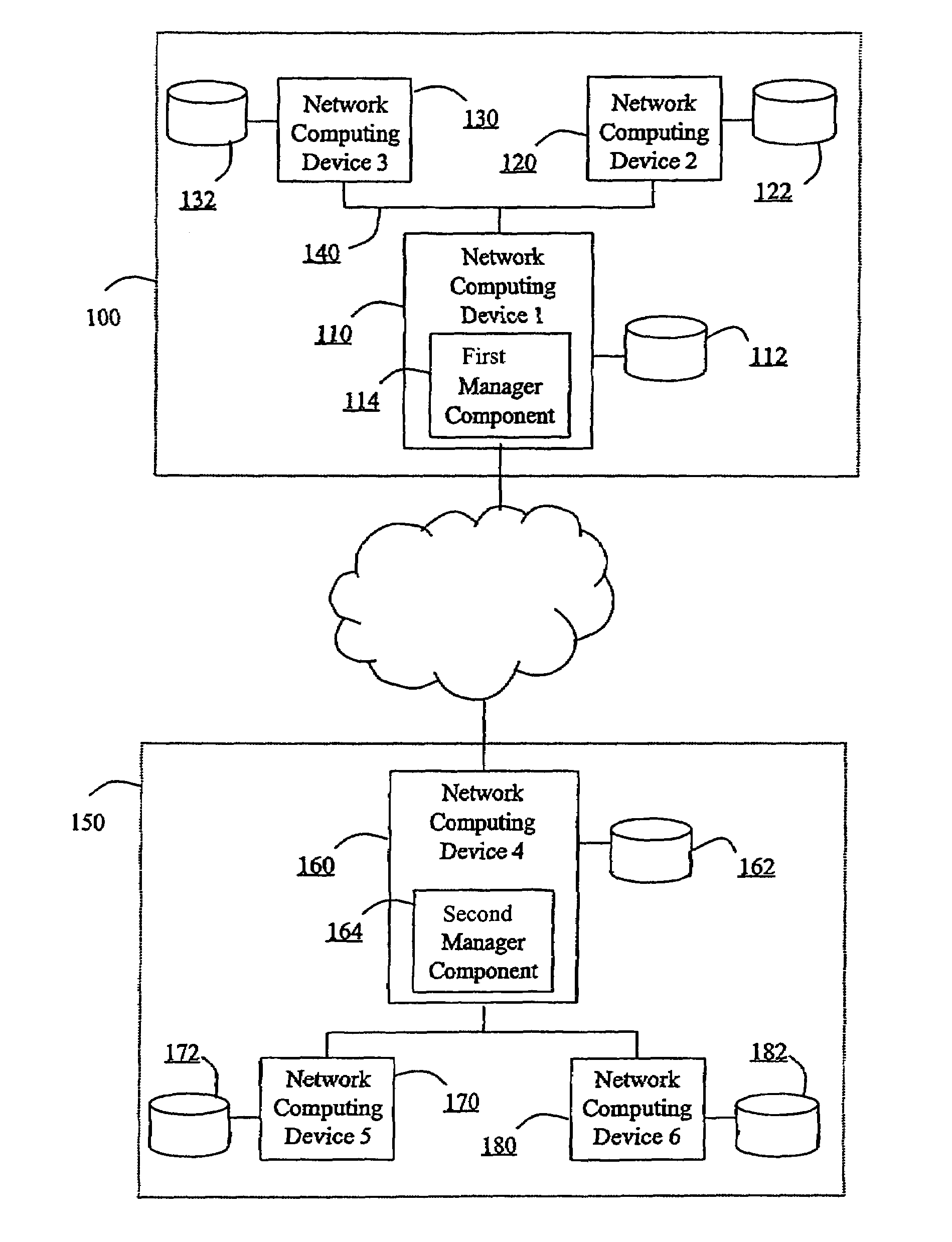

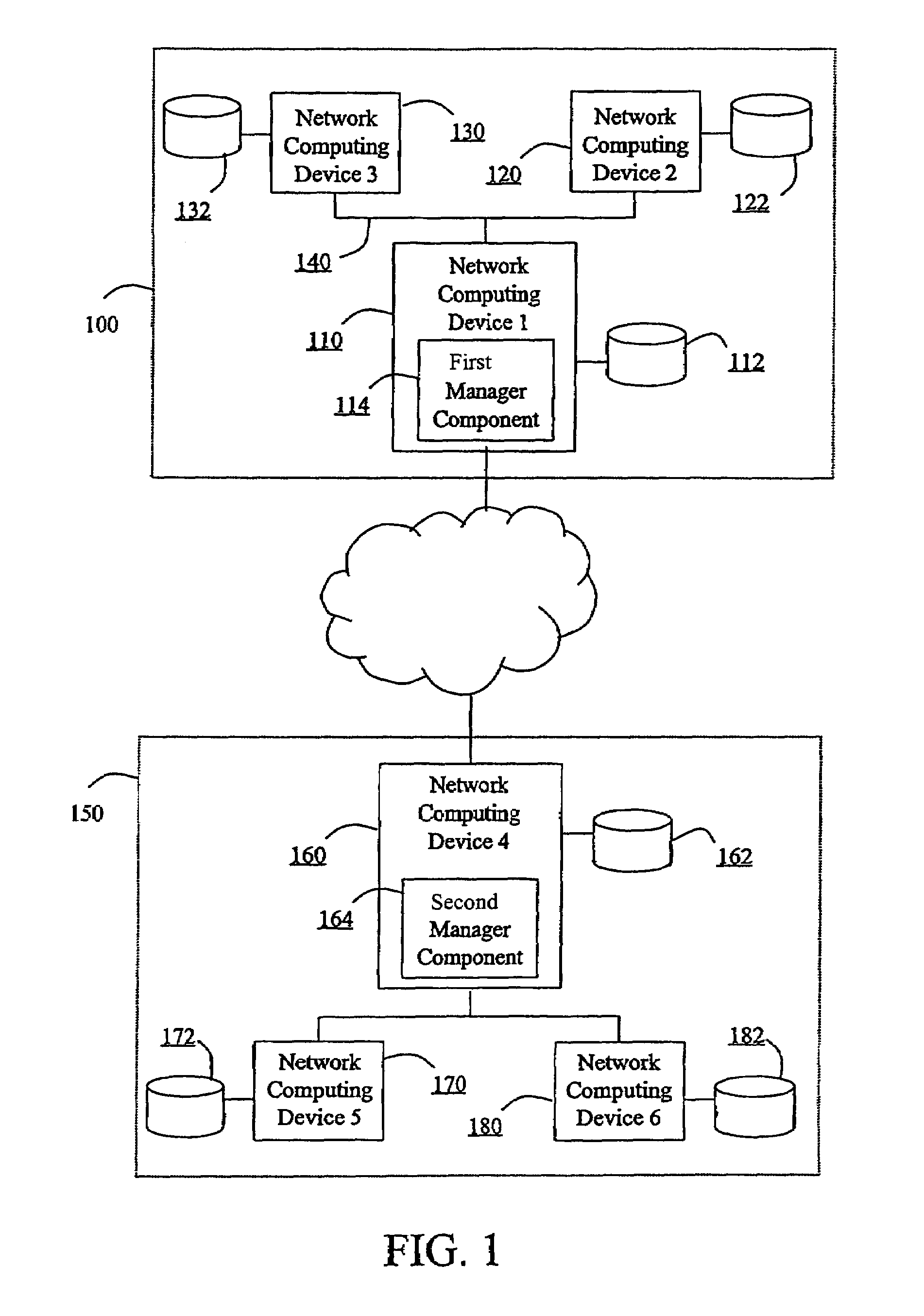

The invention is a hierarchical backup system. The interconnected network computing devices are put into groups of backup cells. A backup cell has a manager software agent responsible for maintaining and initiating a backup regime for the network computing devices in the backup cell. The backups are directed to backup devices within the backup cell. Several backup cells can be defined. A manager software agent for a particular cell may be placed into contact with the manager software agent of another cell, by which information about the cells may be passed back and forth. Additionally, one of the software agents may be given administrative control over another software agent with which it is in communication.

Owner:COMMVAULT SYST INC

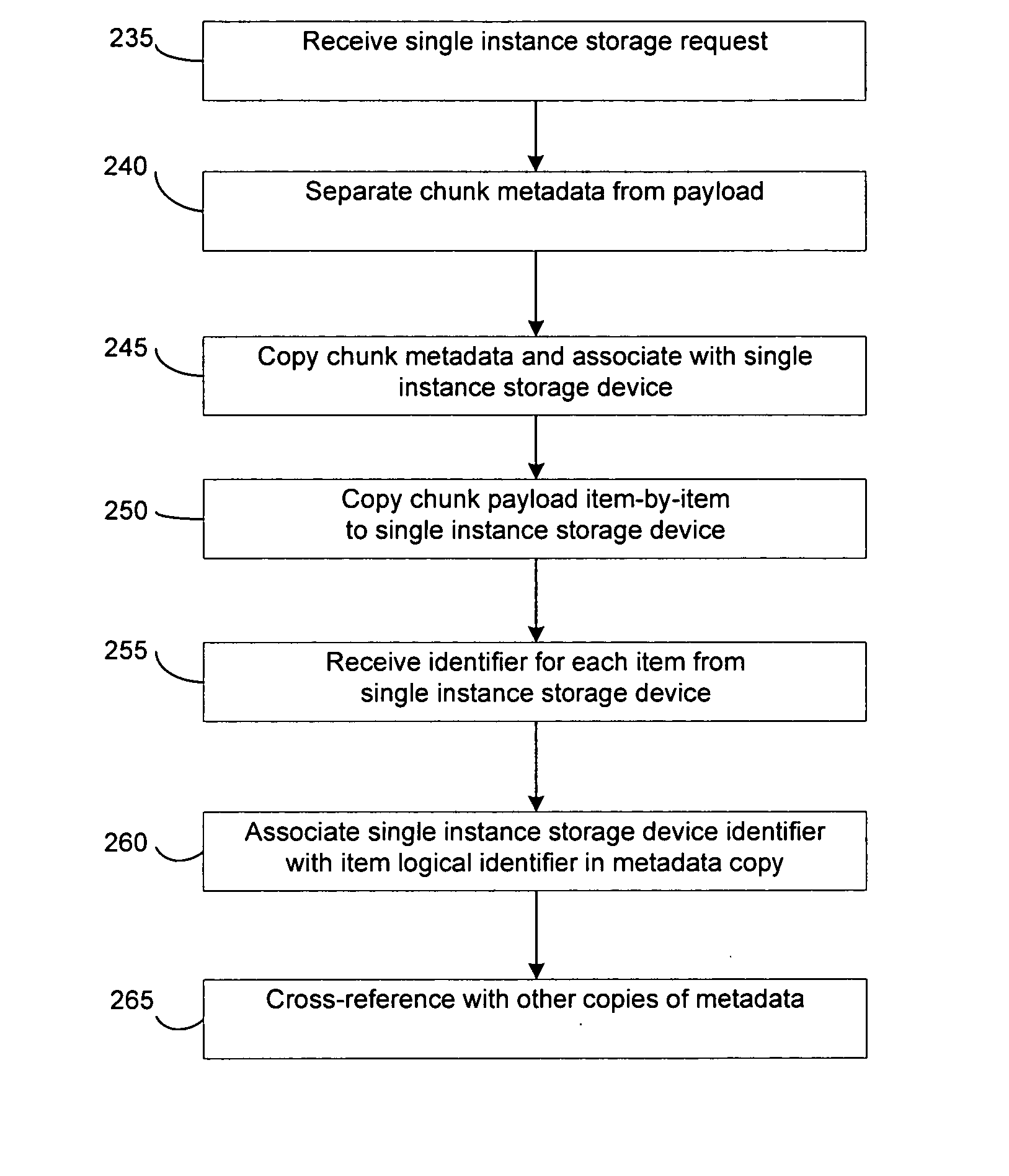

System and method to support single instance storage operations

Systems and methods for single instance storage operations are provided. Systems constructed in accordance with the principles of the present invention may process data containing a payload and associated metadata. Often, chunks of data are copied to traditional archive storage wherein some or all of the chunk, including the payload and associated metadata, are copied to the physical archive storage medium. In some embodiments, chunks of data are designated for storage in single instance storage devices. The system may remove the encapsulation from the chunk and may copy the chunk payload to a single instance storage device. The single instance storage device may return a signature or other identifier for items copied from the chunk payload. The metadata associated with the chunk may be maintained in separate storage and may track the association between the logical identifiers and the signatures for the individual items of the chunk payload which may be generated by the single instance storage device.

Owner:COMMVAULT SYST INC
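
The single instance storage flow in the abstract above (payload items go to a store that returns a signature, while metadata kept elsewhere maps logical identifiers to signatures) can be sketched as follows. The use of SHA-256 as the signature, the class `SingleInstanceStore`, and the function `store_chunk` are assumptions for illustration; the abstract only says "a signature or other identifier".

```python
import hashlib

class SingleInstanceStore:
    """Keeps one copy of each distinct payload item, keyed by its signature."""
    def __init__(self):
        self.items = {}

    def put(self, payload: bytes) -> str:
        signature = hashlib.sha256(payload).hexdigest()  # assumed signature scheme
        self.items.setdefault(signature, payload)        # store only if new
        return signature

    def get(self, signature: str) -> bytes:
        return self.items[signature]

def store_chunk(chunk, store, metadata_index):
    """Copy each payload item to the store; record logical id -> signature.

    `chunk` is a dict of logical identifier -> payload bytes, standing in
    for a chunk whose encapsulation has already been removed.
    """
    for logical_id, payload in chunk.items():
        metadata_index[logical_id] = store.put(payload)
```

Two logical items with identical bytes end up with the same signature in the metadata index and occupy a single entry in the store.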

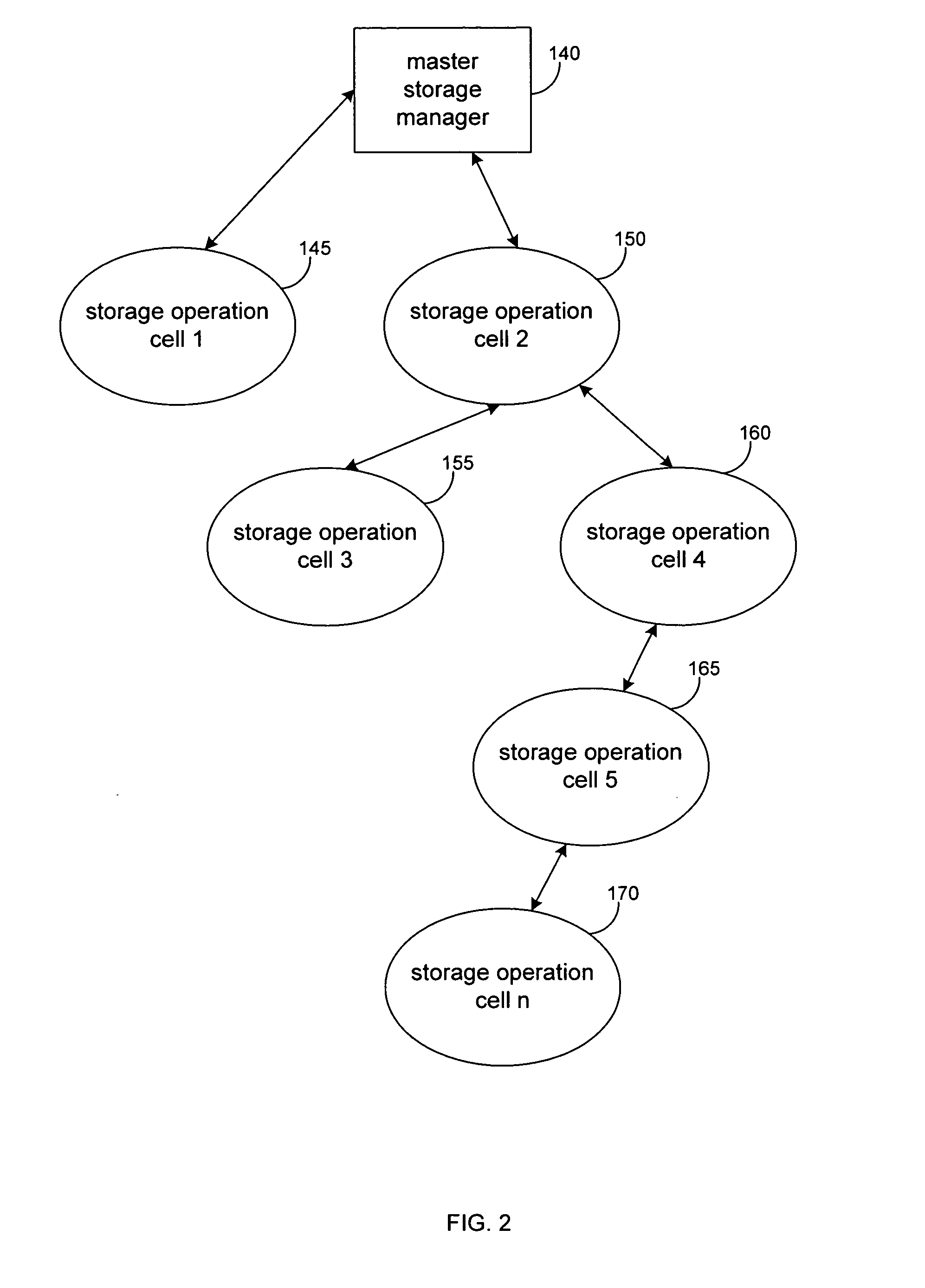

Hierarchical systems and methods for providing a unified view of storage information

The present invention provides systems and methods for data storage. A hierarchical storage management architecture is presented to facilitate data management. The disclosed system provides methods for evaluating the state of stored data relative to enterprise needs by using weighted parameters that may be user defined. Also disclosed are systems and methods for evaluating costing and risk management associated with stored data.

Owner:COMMVAULT SYST INC

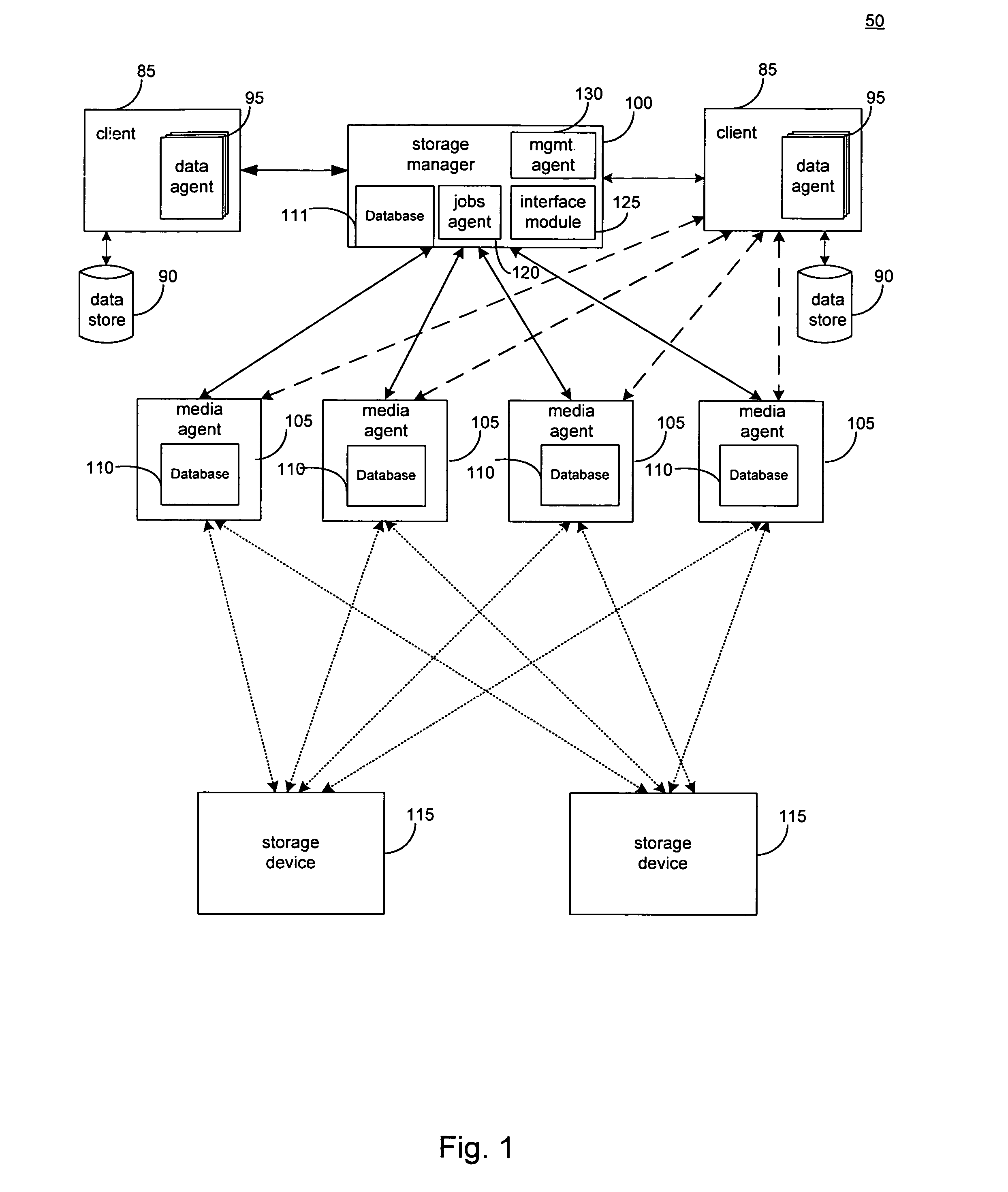

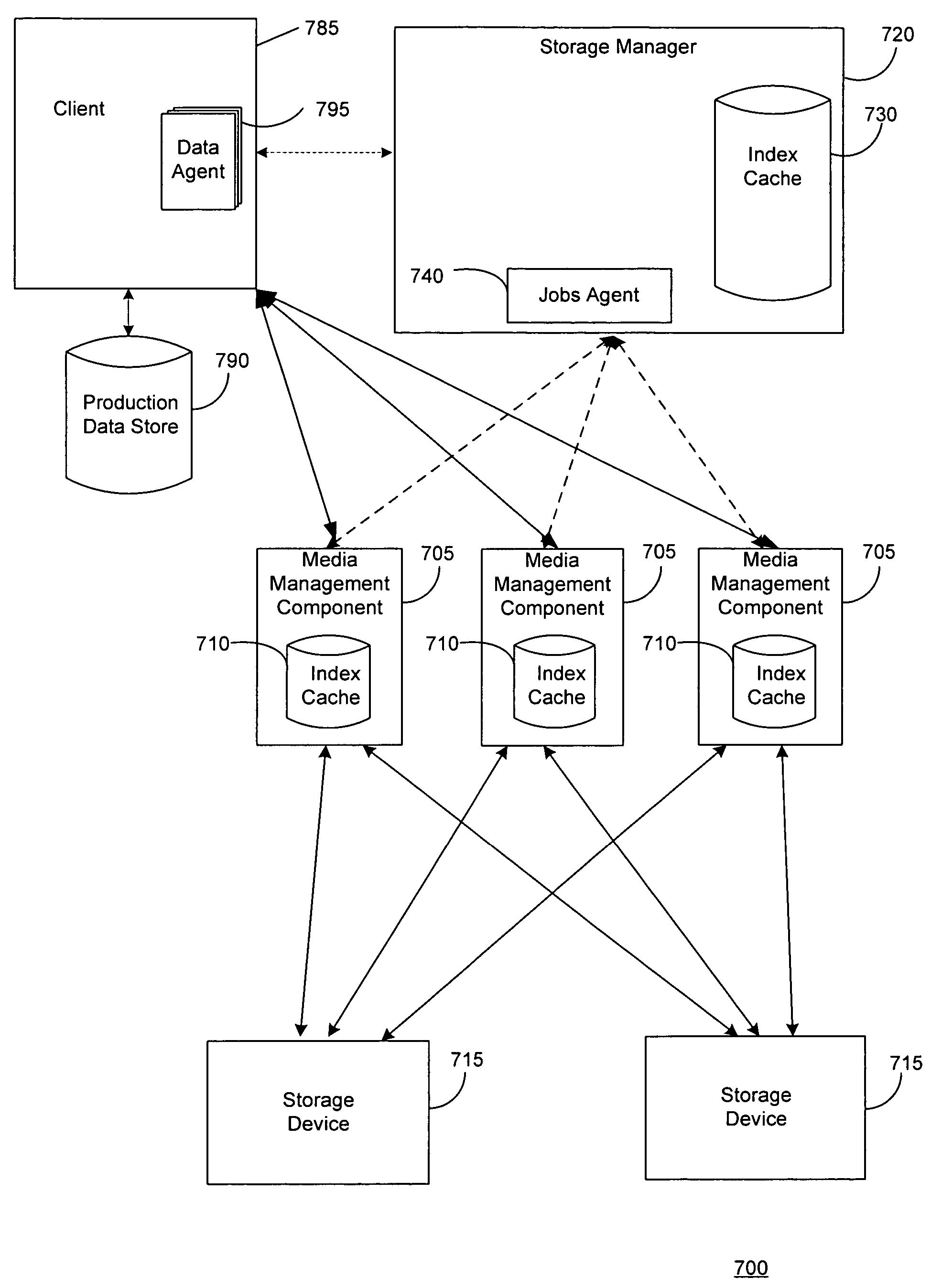

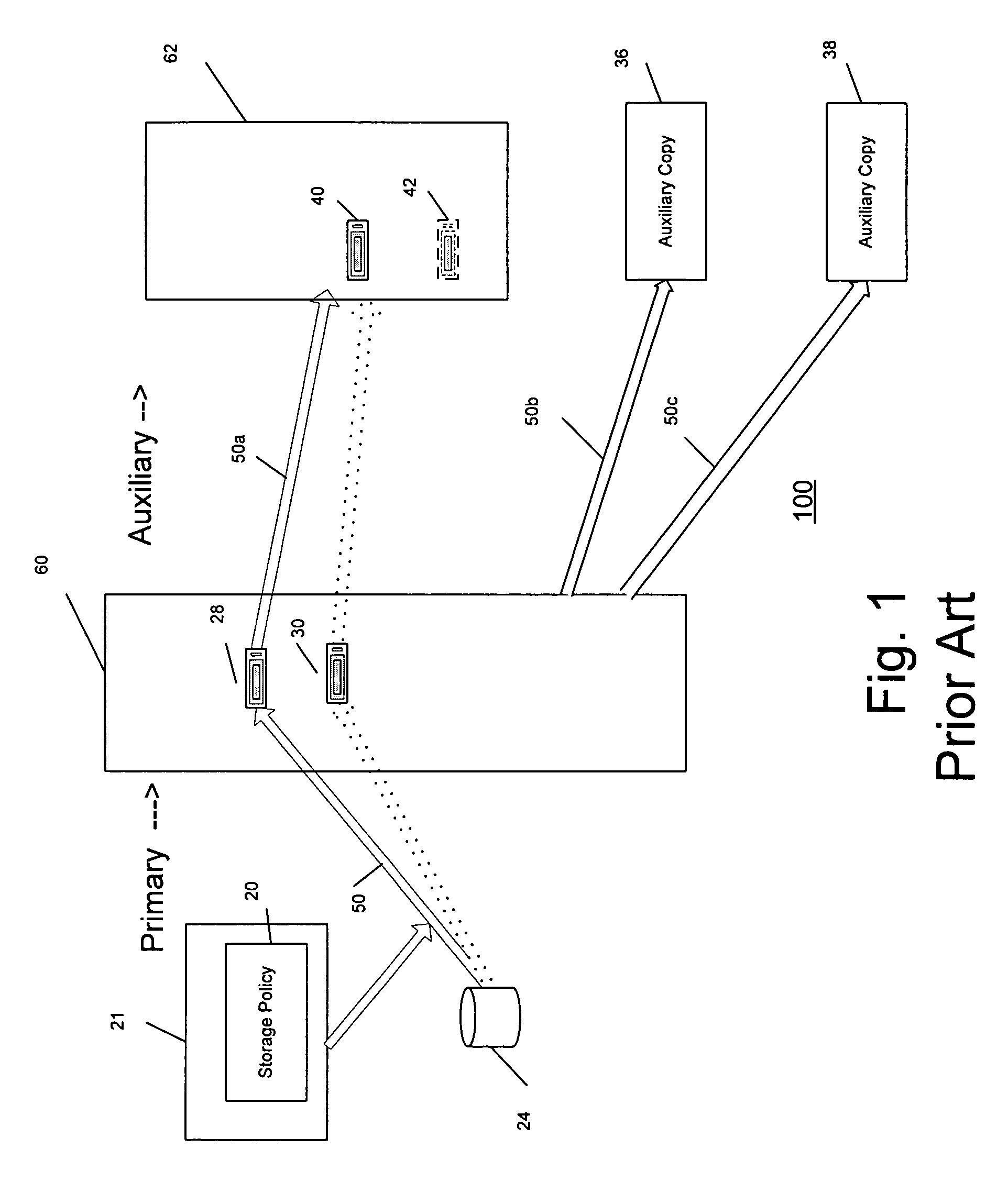

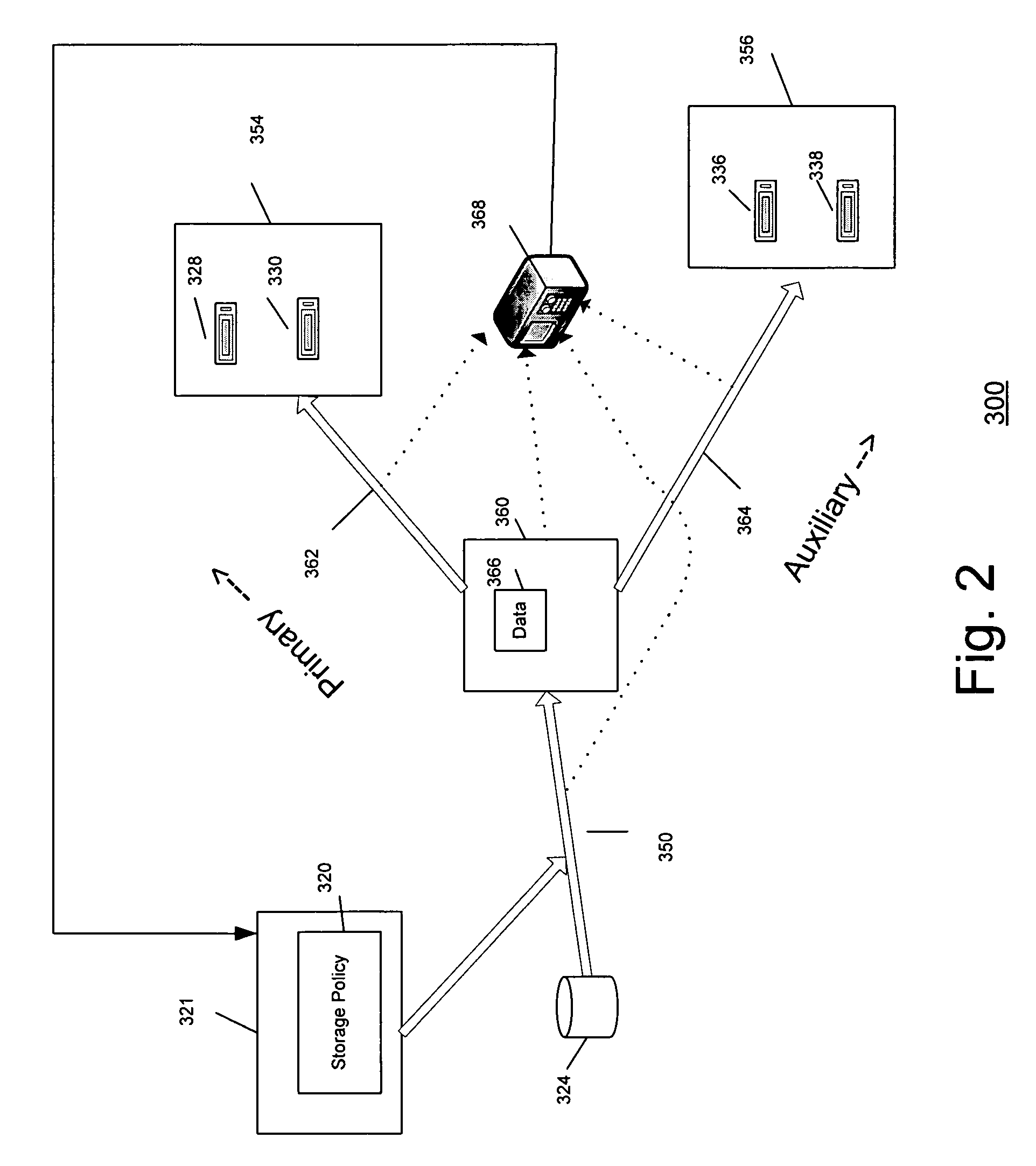

System and method for performing auxiliary storage operations

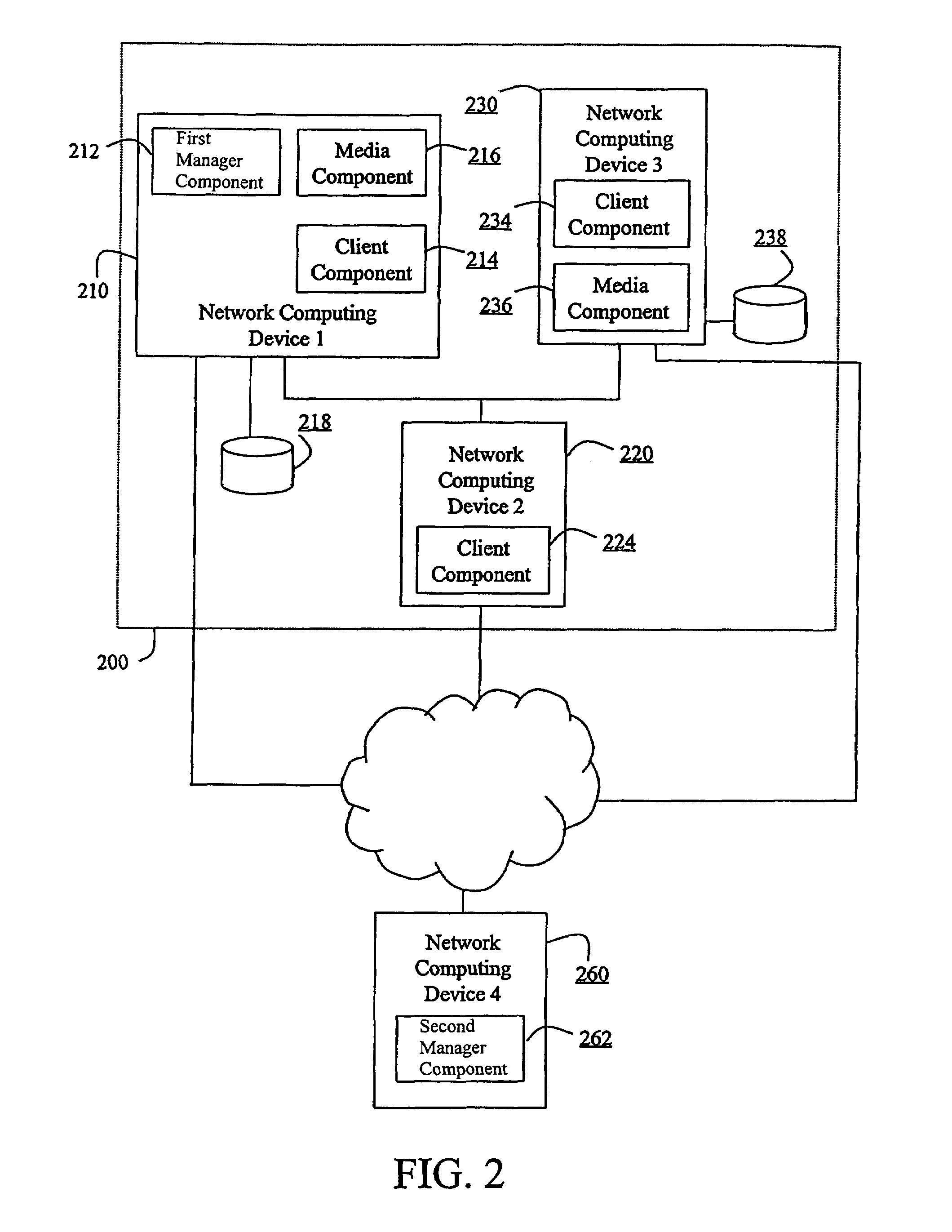

Systems and methods for protecting data in a tiered storage system are provided. The storage system comprises a management server, a media management component connected to the management server, a plurality of storage media connected to the media management component, and a data source connected to the media management component. Source data is copied from a source to a buffer to produce intermediate data. The intermediate data is copied to both a first and second medium to produce a primary and auxiliary copy, respectively. An auxiliary copy may be made from another auxiliary copy. An auxiliary copy may also be made from a primary copy right before the primary copy is pruned.

Owner:COMMVAULT SYST INC

Method and system to reduce system boot loader download time for SPI based flash memories

Inactive | US20140115229A1 | High frequency | Memory addressing/allocation/relocation | Digital storage | Load time | Peripheral

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

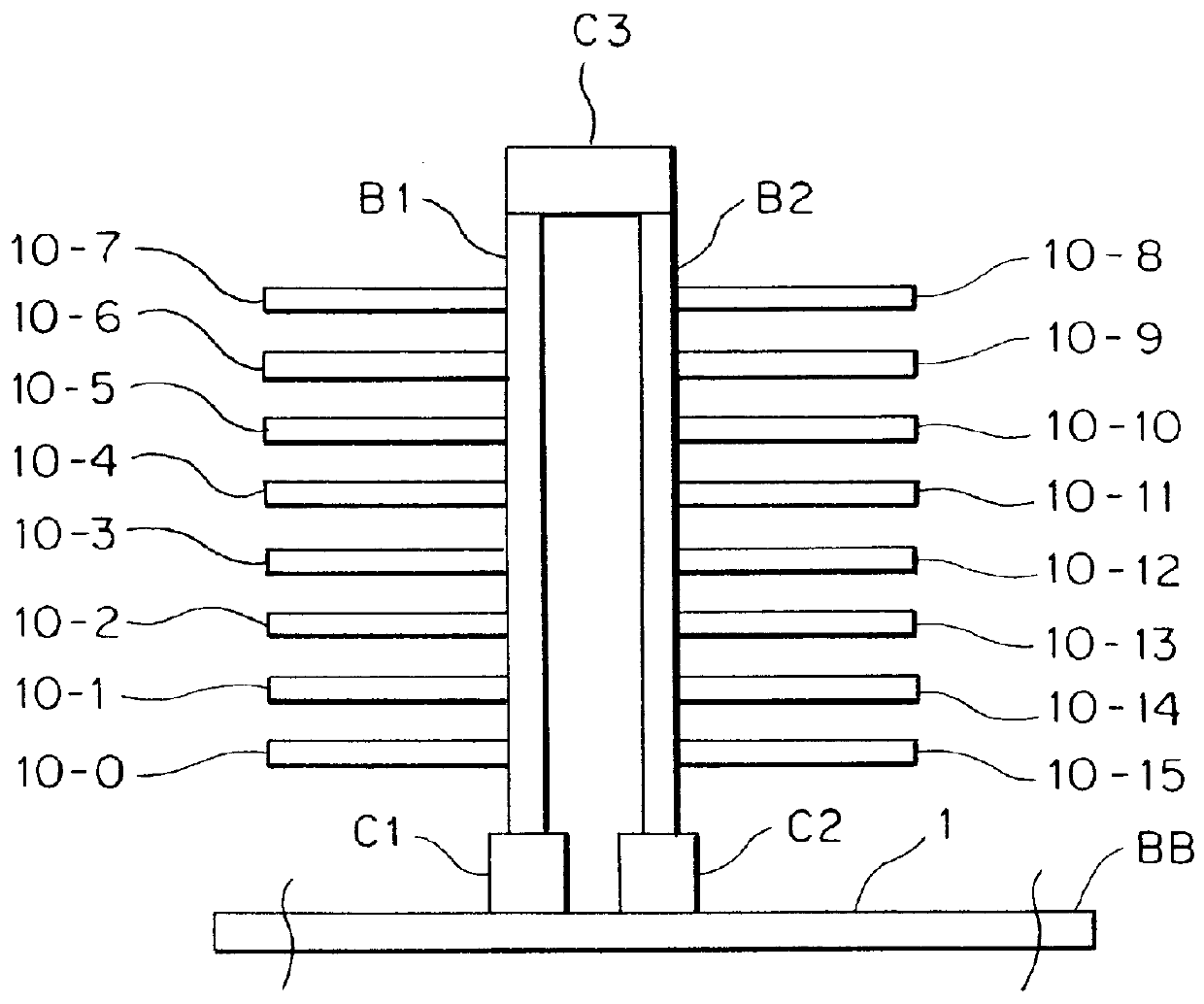

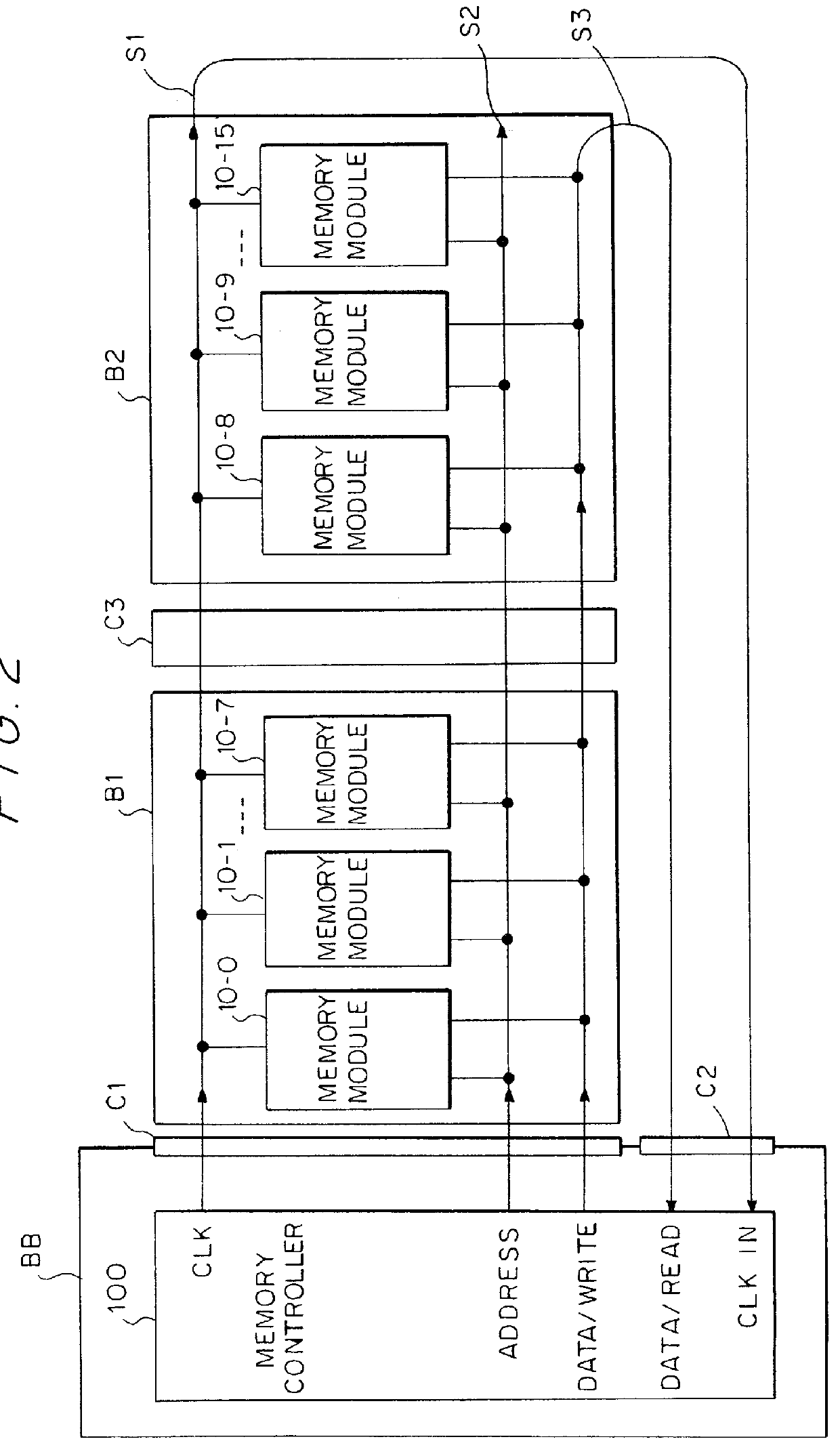

Source-clock-synchronized memory system and memory unit

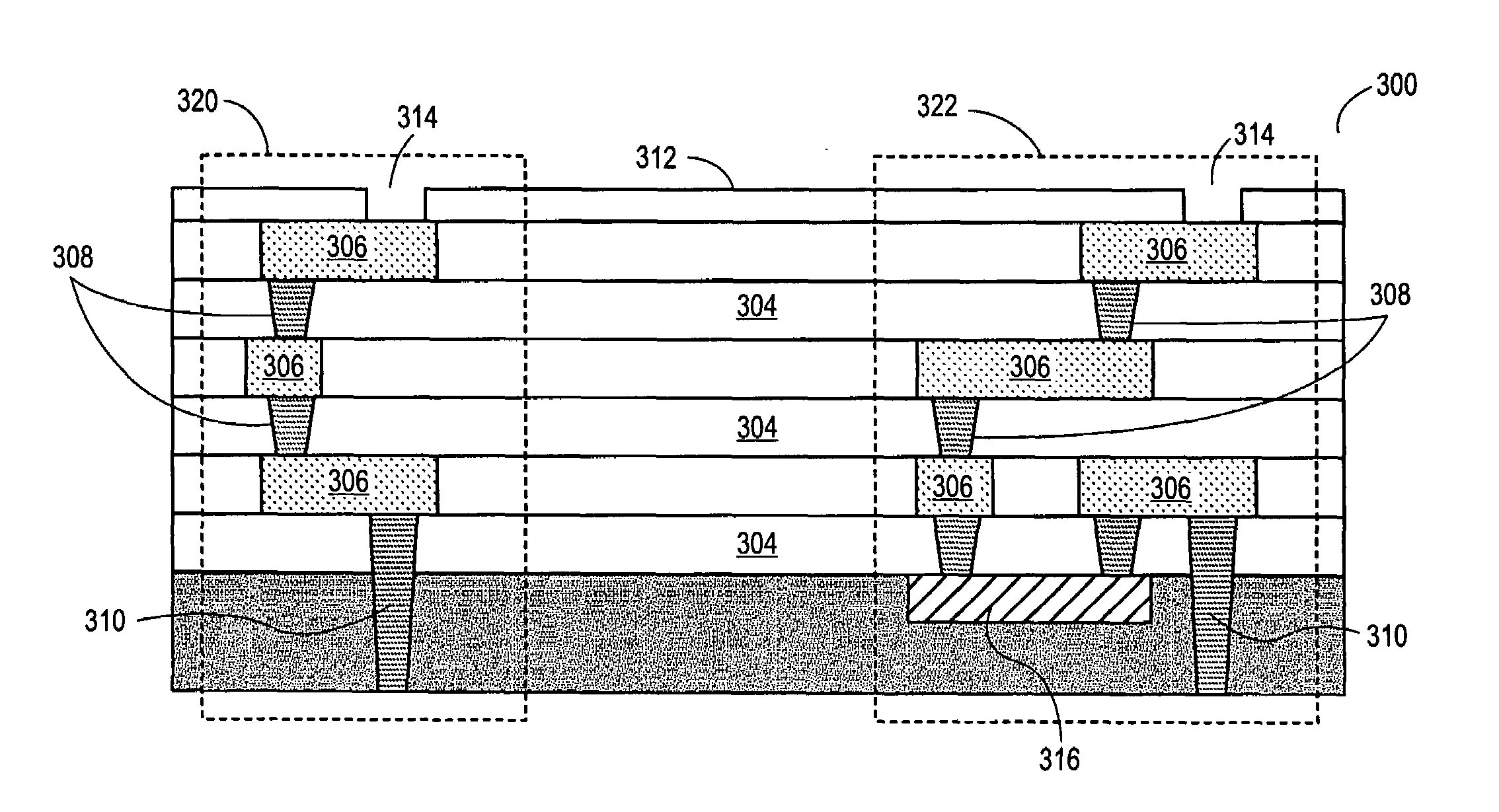

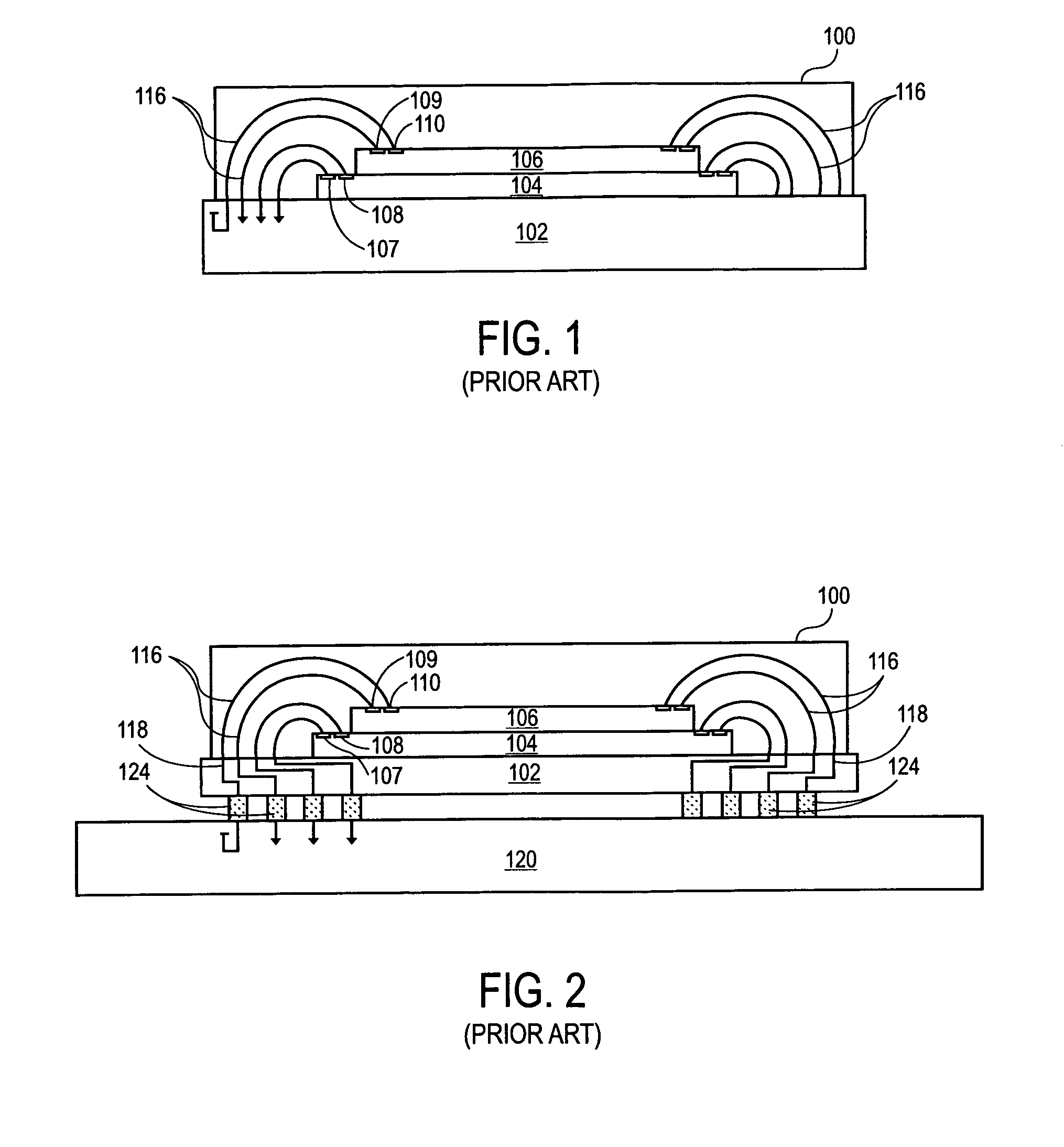

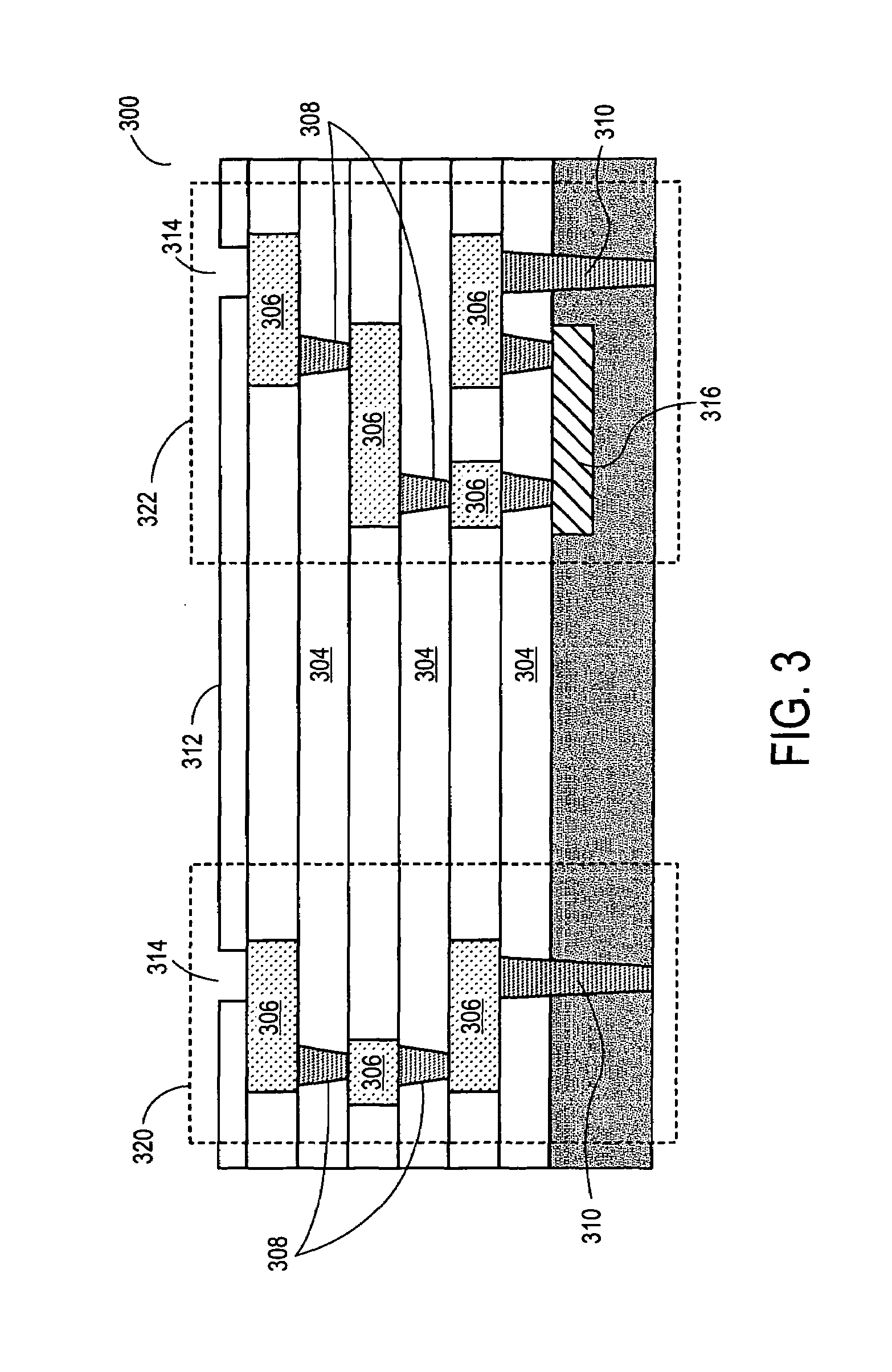

Inactive | US6034878A | Large data storage capacity per memory | Improve installation density | Memory addressing/allocation/relocation | Digital storage | Memory bank | Computer module

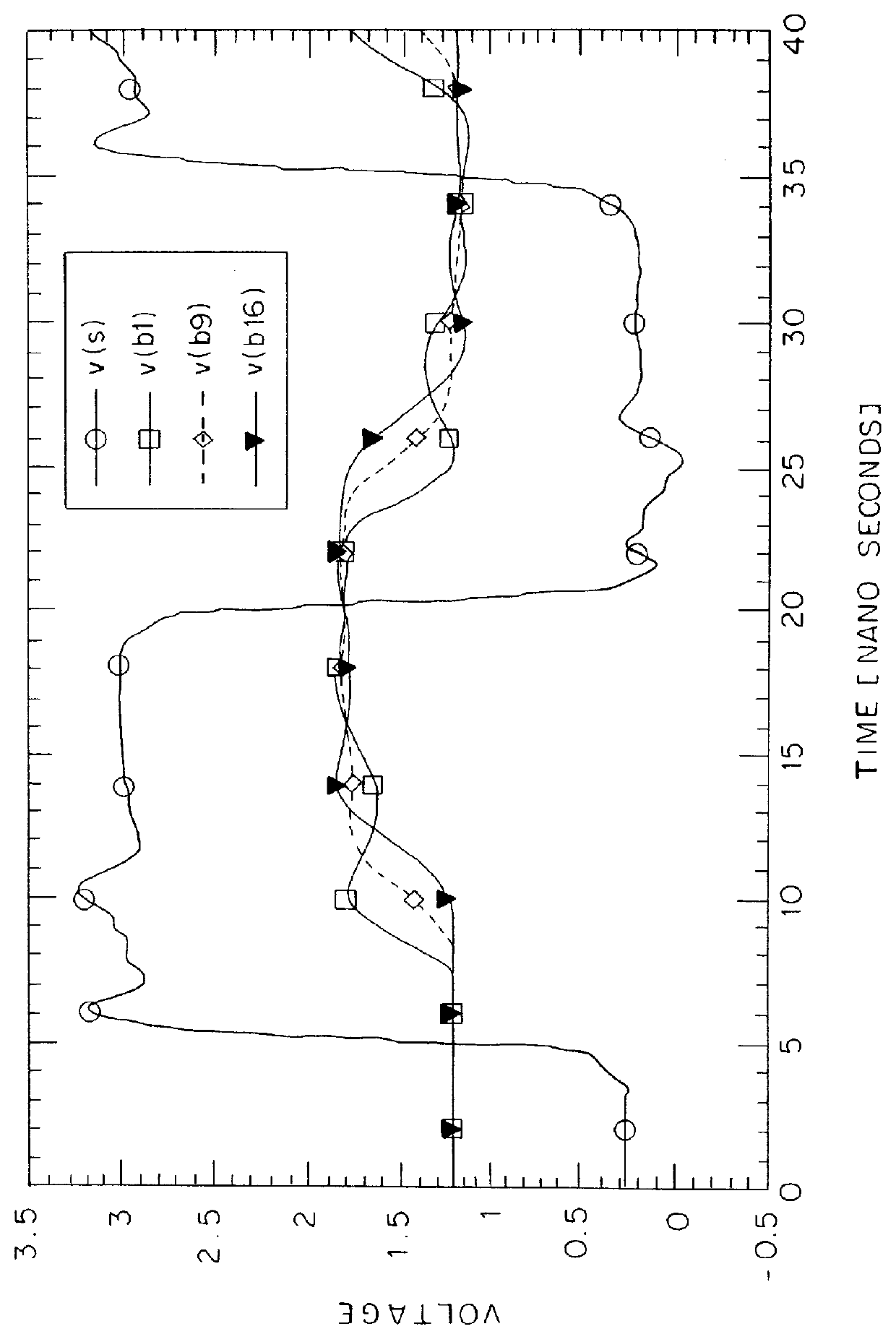

A source-clock-synchronized memory system having a large data storage capacity per memory bank and a high mounting density. The invention includes a memory unit having a first memory riser board B1 mounted on a base board BB through a first connector C1 and a second memory riser board B2 mounted on the base board BB through a second connector C2. The first memory riser board has a plurality of first memory modules mounted on the front surface thereof and the second memory riser board has a plurality of second memory modules mounted on the front surface thereof. The first and second memory riser boards are arranged in such a way that the back surface of the first memory riser board faces the back surface of the second memory riser board. The invention further includes a board linking connector for connecting signal lines on the first memory riser board to corresponding signal lines on the second memory riser board.

Owner:DELTA KOGYO CO LTD +1

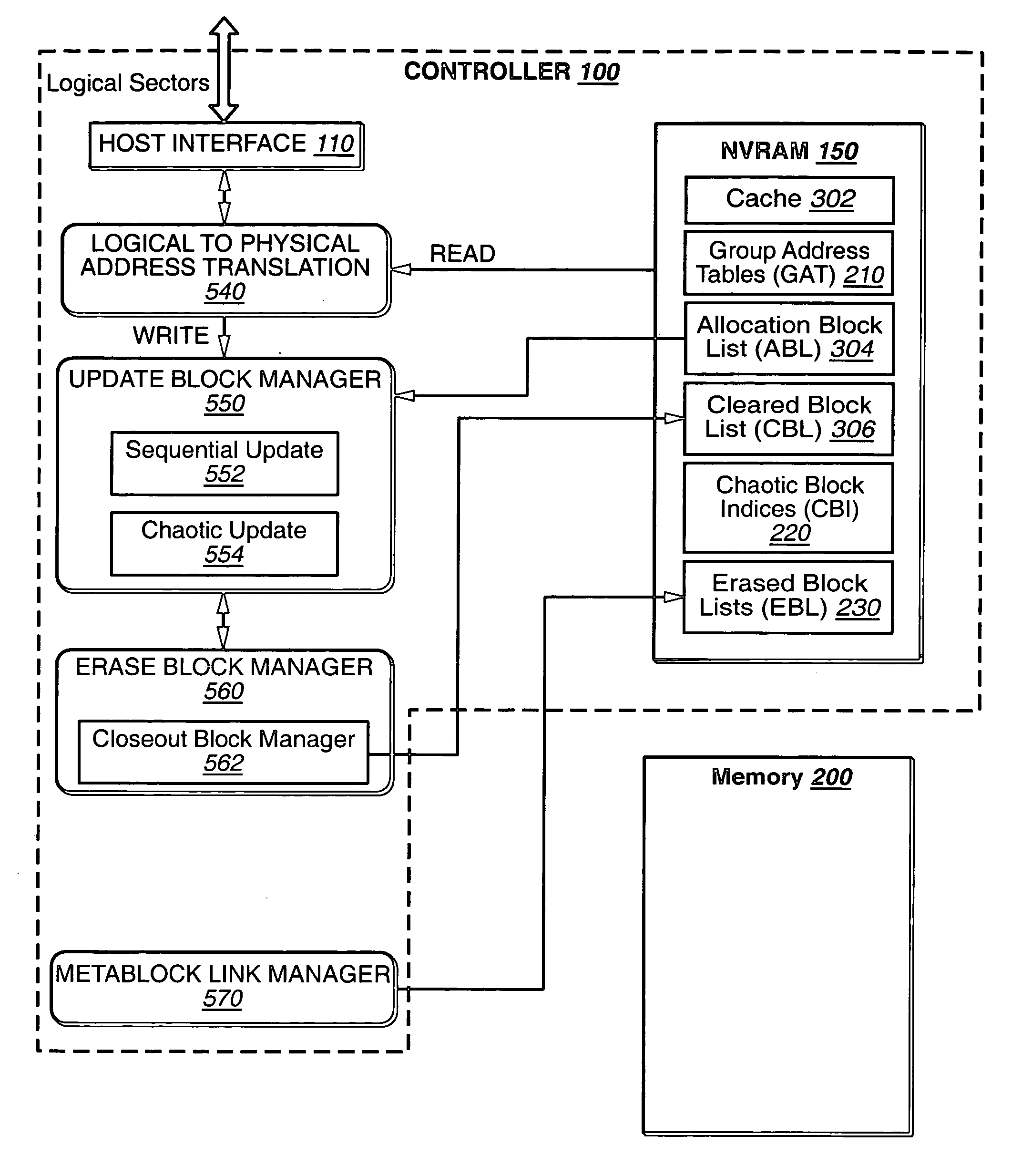

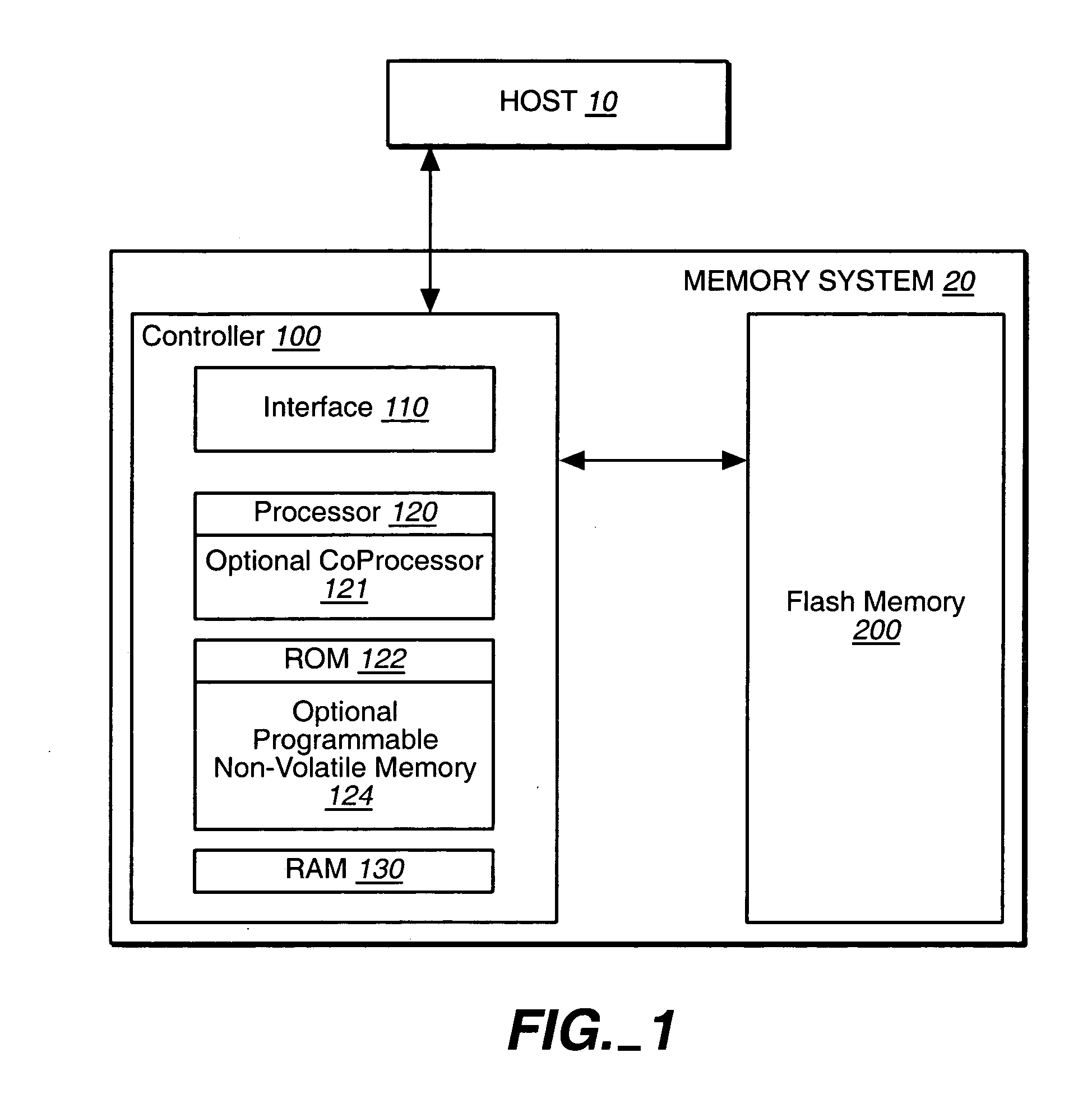

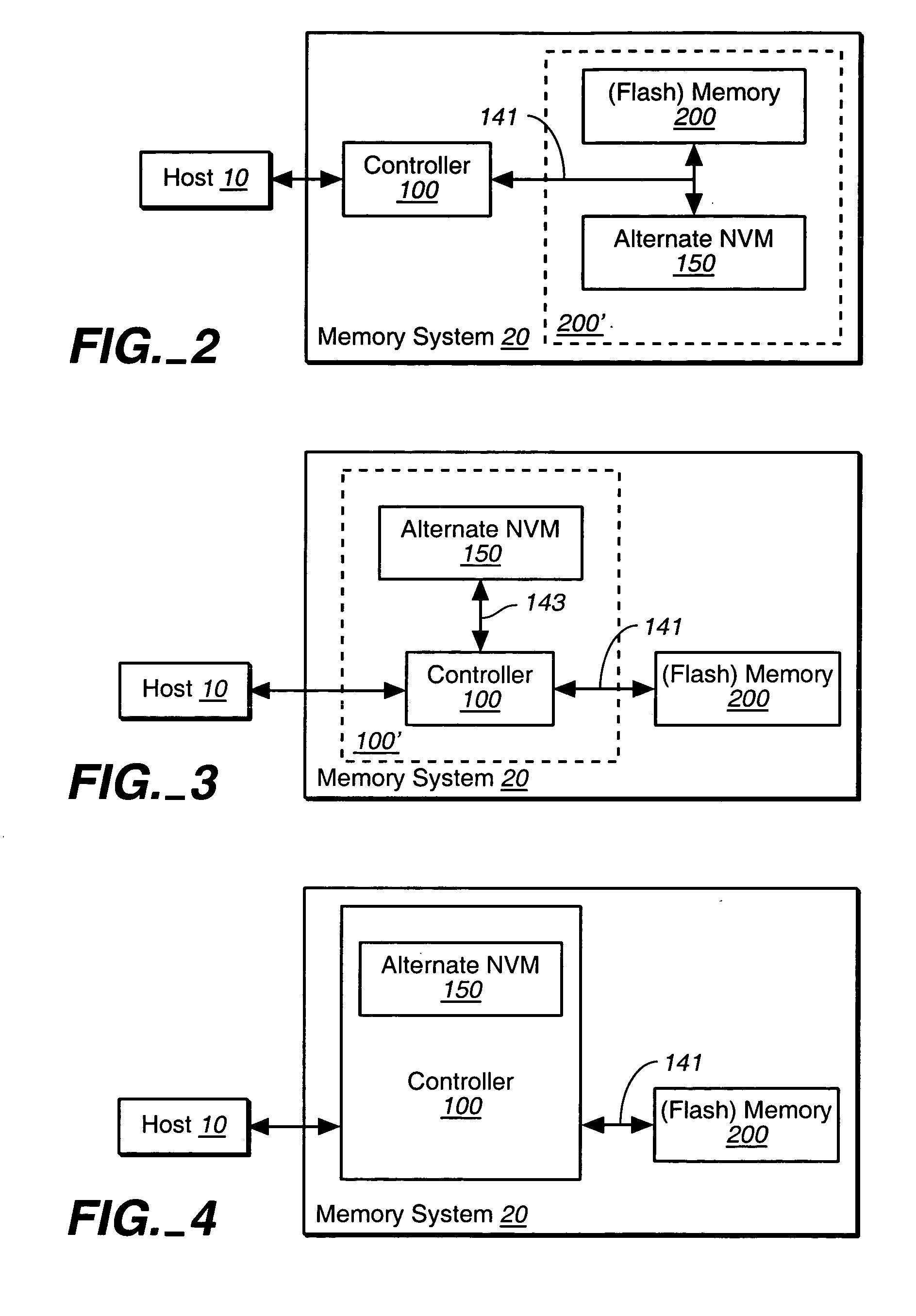

Hybrid non-volatile memory system

Inactive | US20050251617A1 | Easy access | Memory architecture accessing/allocation | Input/output to record carriers | Data control | Control data

The present invention presents a hybrid non-volatile system that uses non-volatile memories based on two or more different non-volatile memory technologies in order to exploit the relative advantages of each of these technologies with respect to the others. In an exemplary embodiment, the memory system includes a controller and a flash memory, where the controller has a non-volatile RAM based on an alternate technology such as FeRAM. The flash memory is used for the storage of user data and the non-volatile RAM in the controller is used for system control data used by the controller to manage the storage of host data in the flash memory. The use of an alternate non-volatile memory technology in the controller allows for a non-volatile copy of the most recent control data to be accessed more quickly as it can be updated on a bit by bit basis. In another exemplary embodiment, the alternate non-volatile memory is used as a cache where data can safely be staged prior to its being written to the memory or read back to the host.

Owner:SANDISK TECH LLC

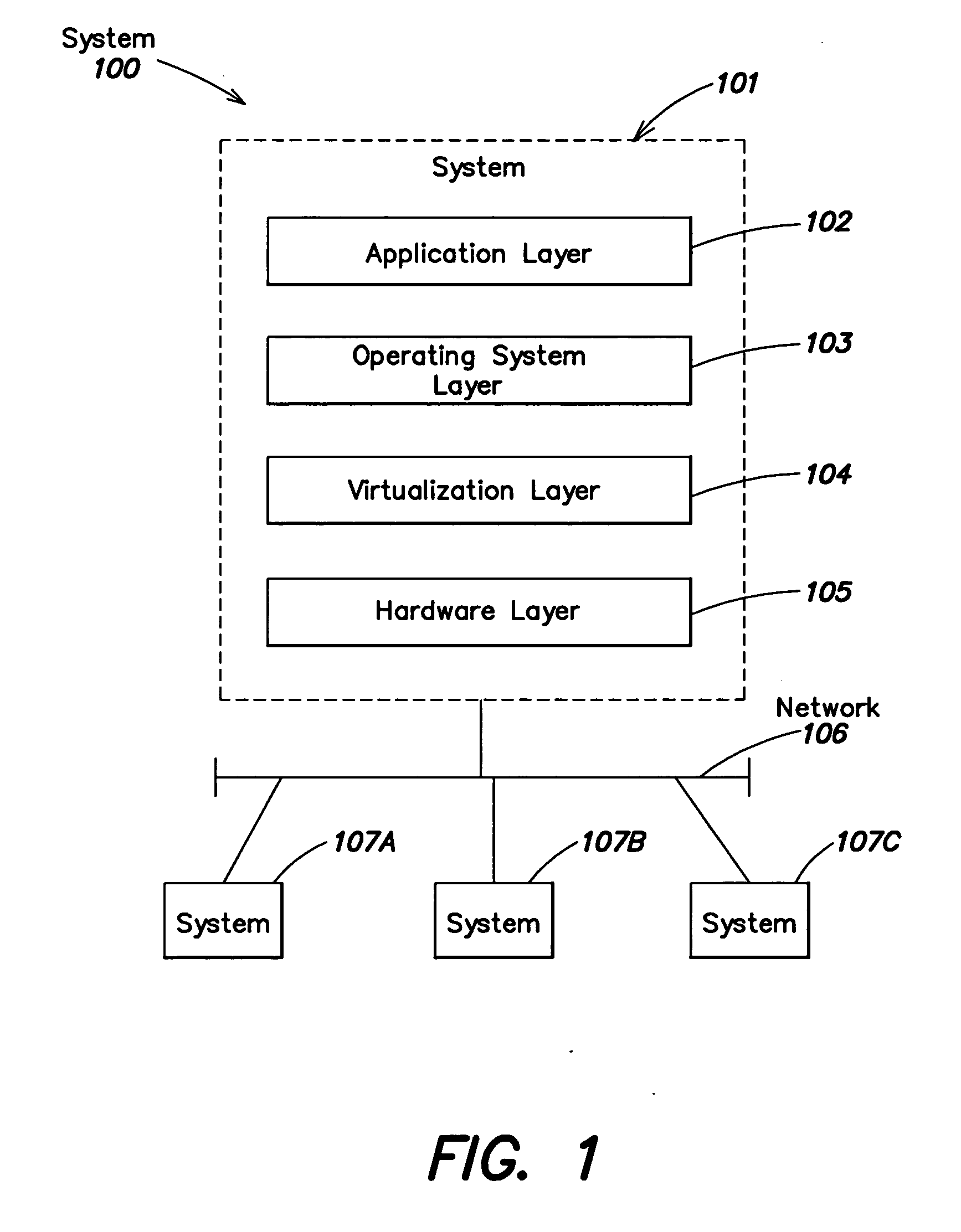

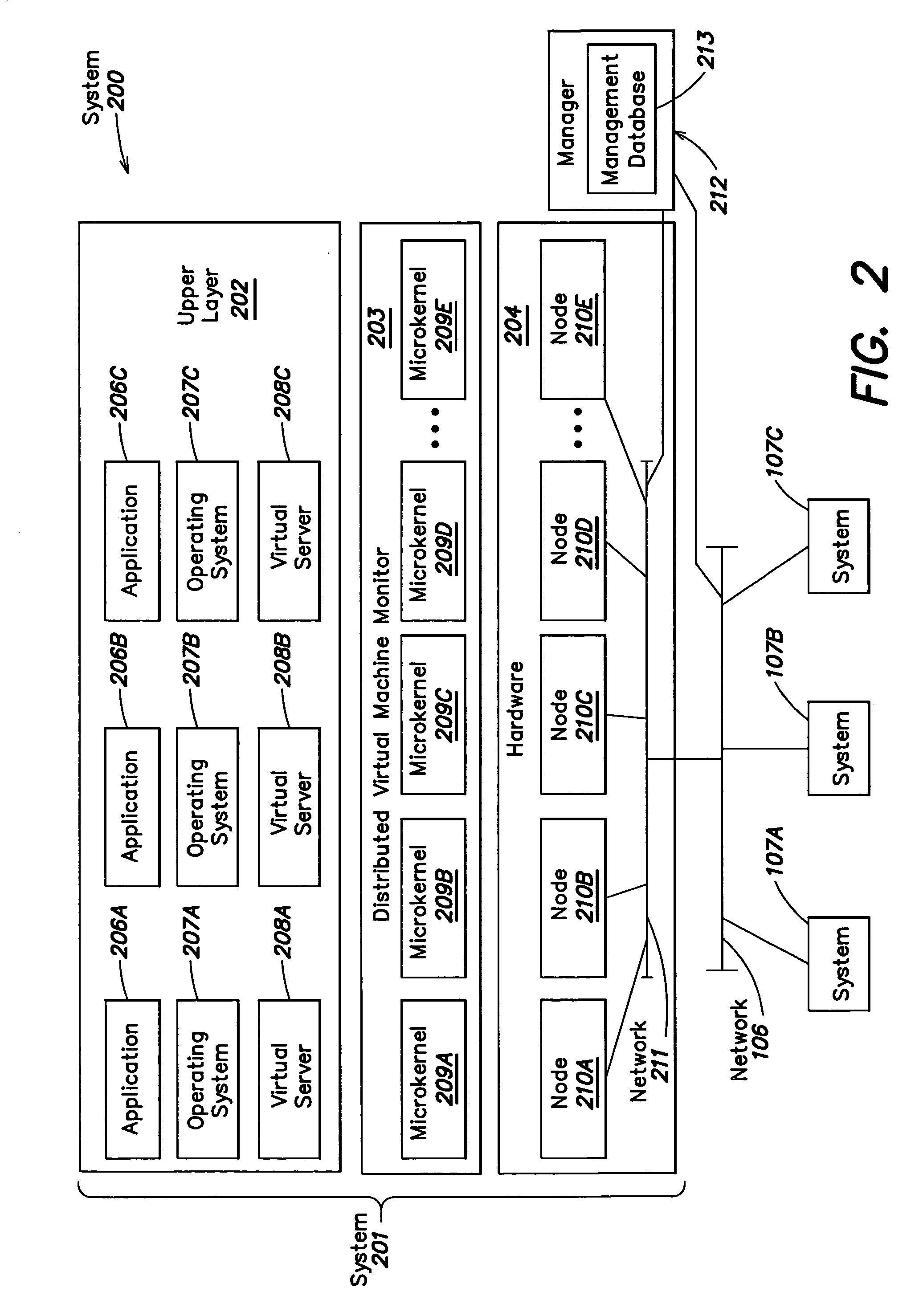

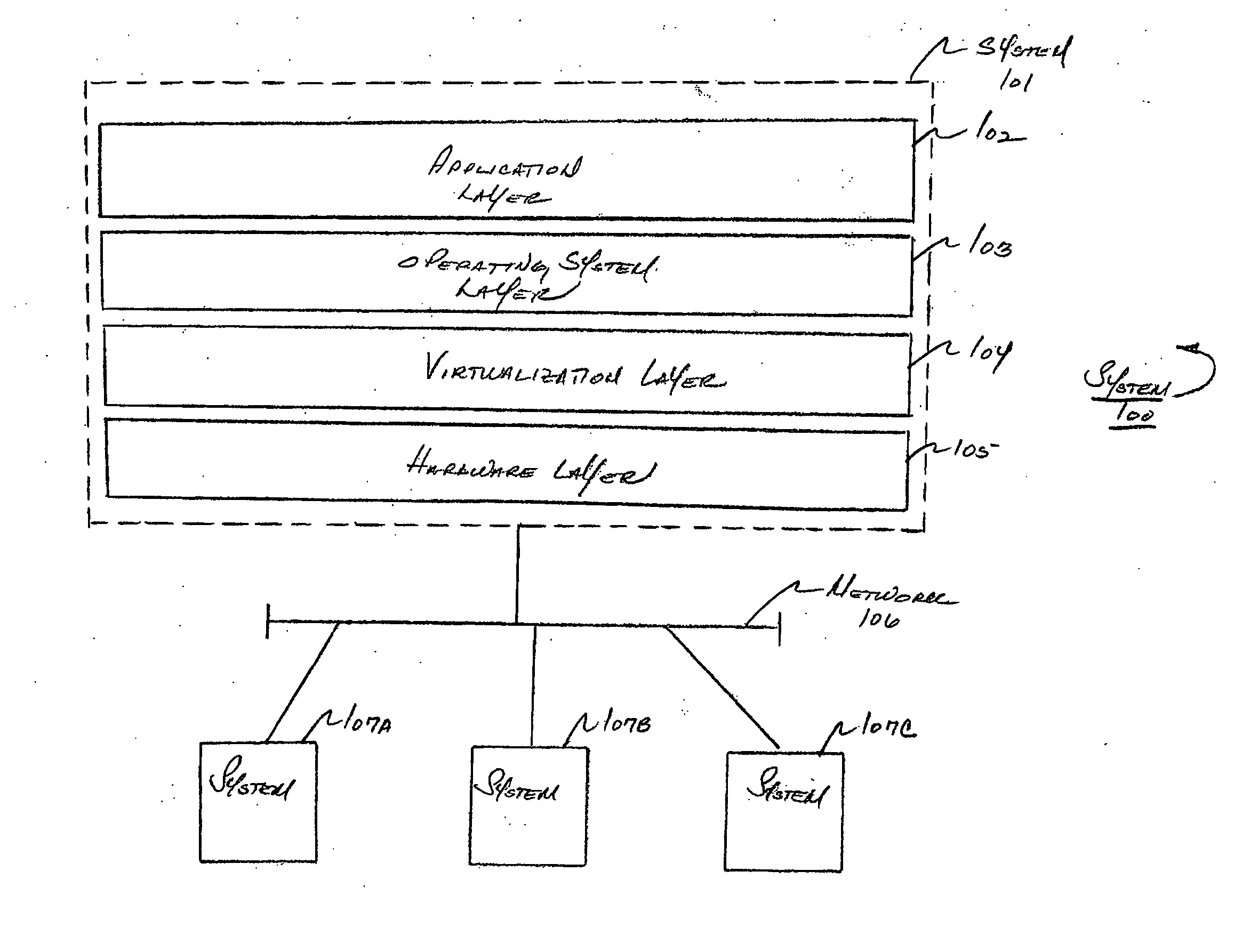

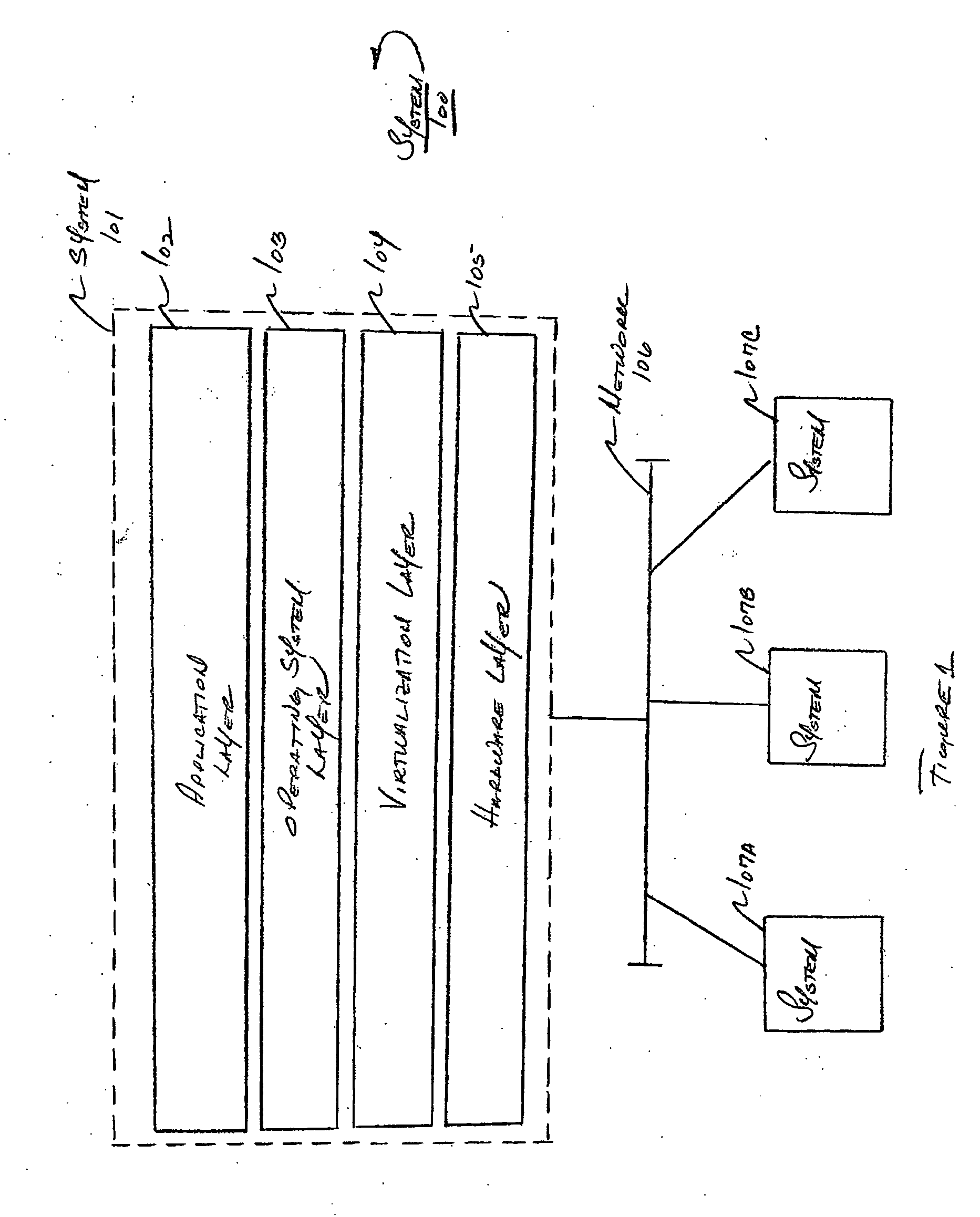

Method and apparatus for providing virtual computing services

Inactive | US20050044301A1 | Easy to manage | Low cost | Resource allocation | Memory addressing/allocation/relocation | Virtualization | Operational system

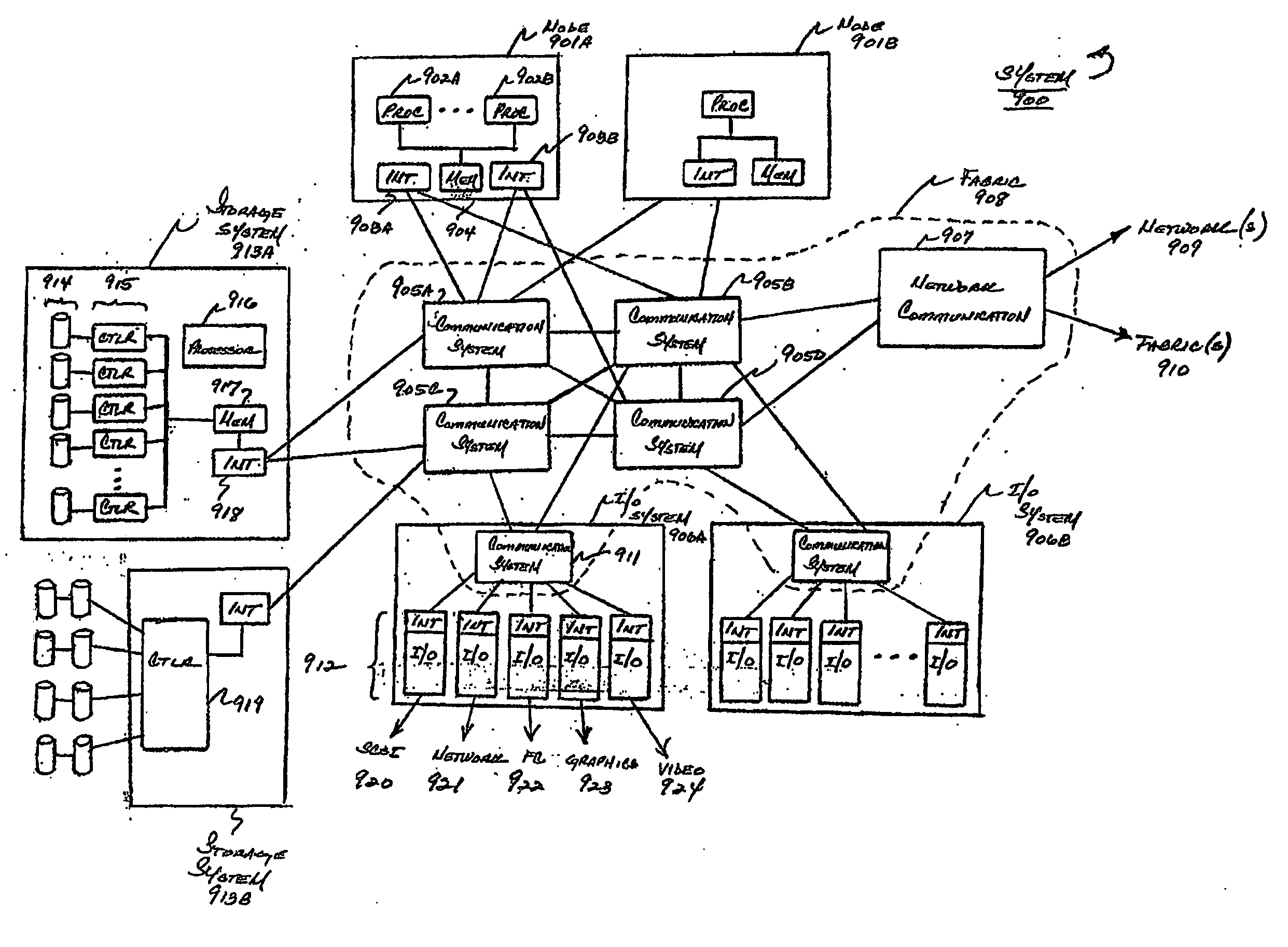

A level of abstraction is created between a set of physical processors and a set of virtual multiprocessors to form a virtualized data center. This virtualized data center comprises a set of virtual, isolated systems separated by a boundary referred to as a partition. Each of these systems appears as a unique, independent virtual multiprocessor computer capable of running a traditional operating system and its applications. In one embodiment, the system implements this multi-layered abstraction via a group of microkernels, each of which communicates with one or more peer microkernels over a high-speed, low-latency interconnect and forms a distributed virtual machine monitor. Functionally, a virtual data center is provided, including the ability to take a collection of servers and execute a collection of business applications over a compute fabric comprising commodity processors coupled by an interconnect. Processor, memory and I/O are virtualized across this fabric, providing a single system, scalability and manageability. According to one embodiment, this virtualization is transparent to the application, and therefore, applications may be scaled to increasing resource demands without modifying the application.

Owner:ORACLE INT CORP

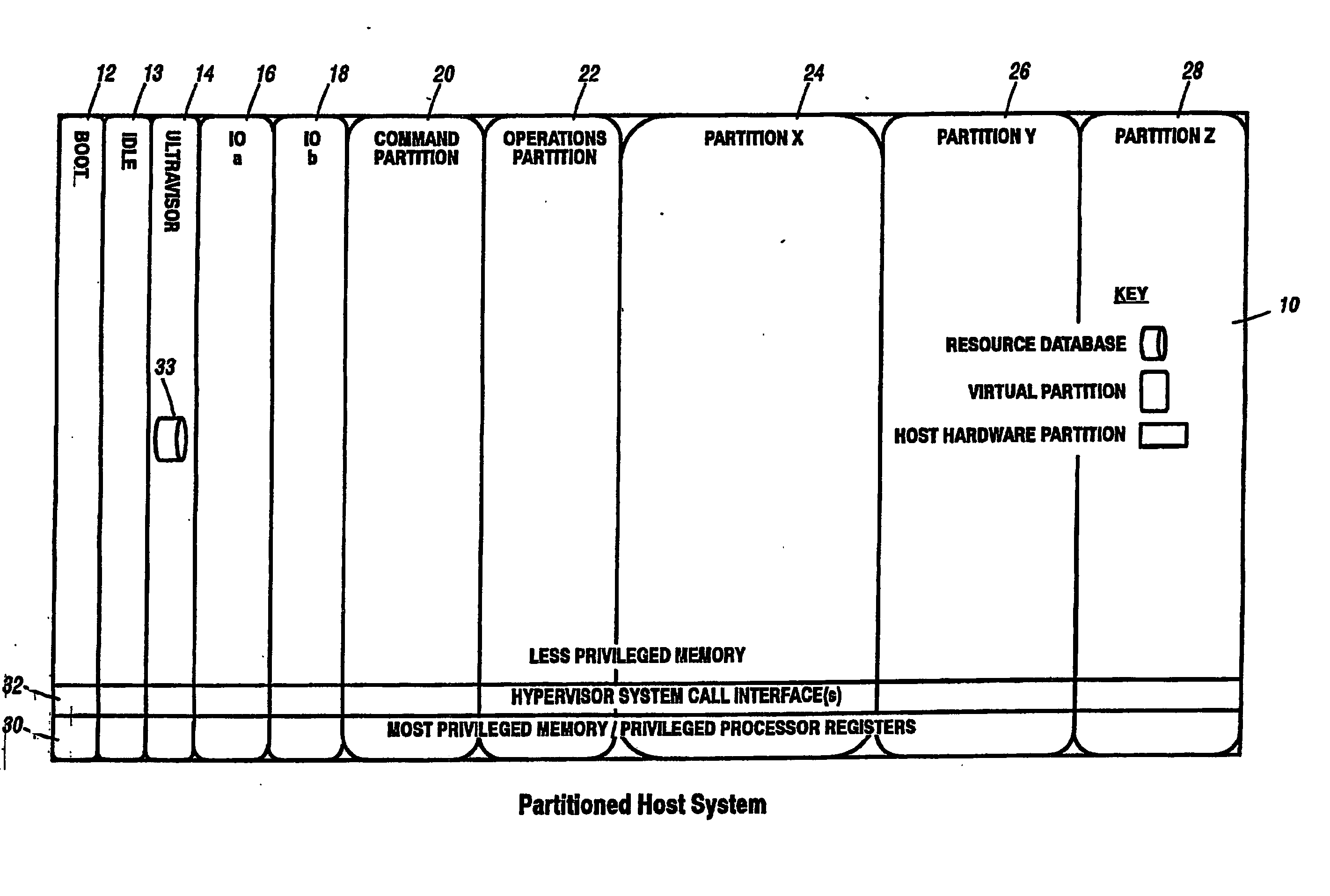

Virtual data center that allocates and manages system resources across multiple nodes

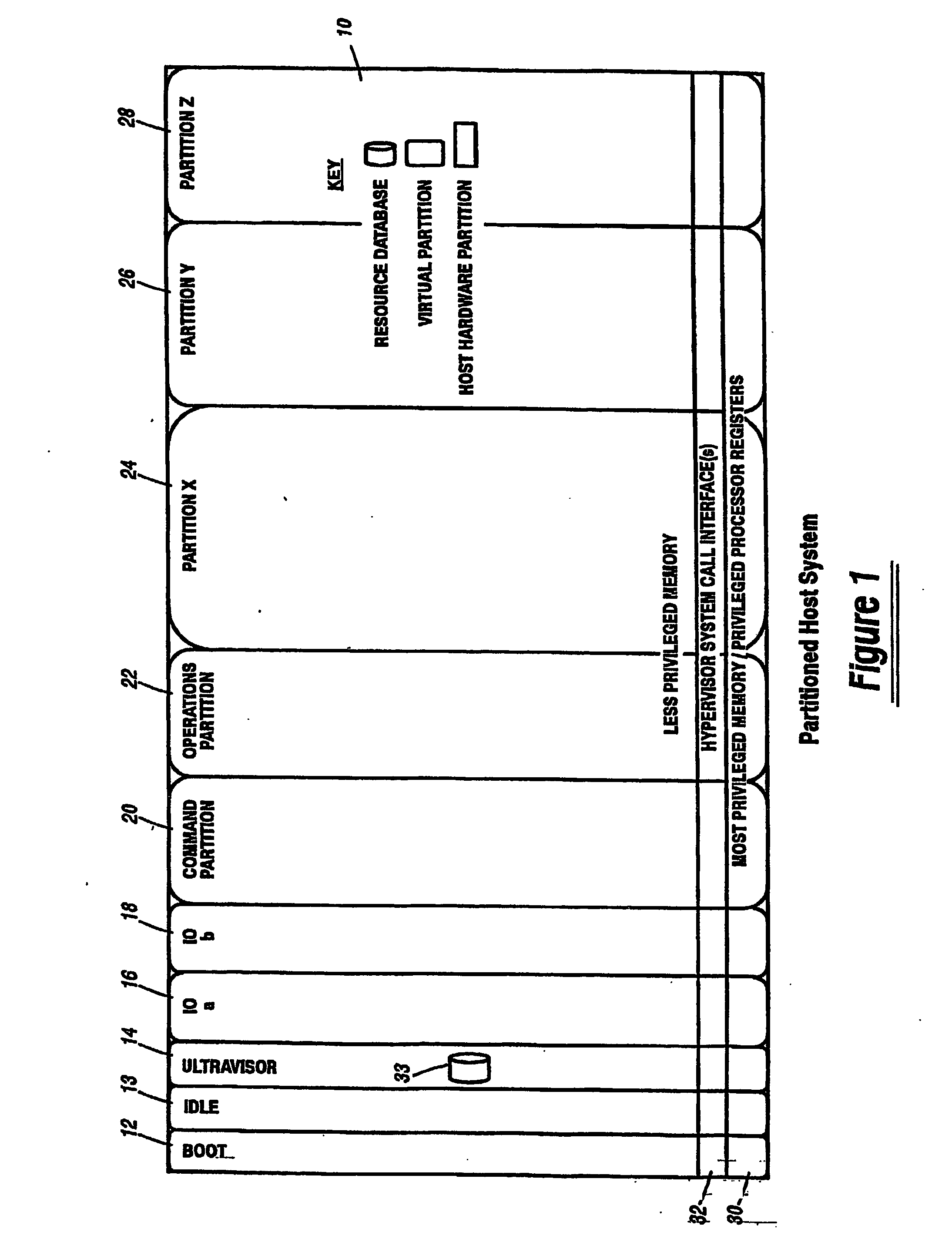

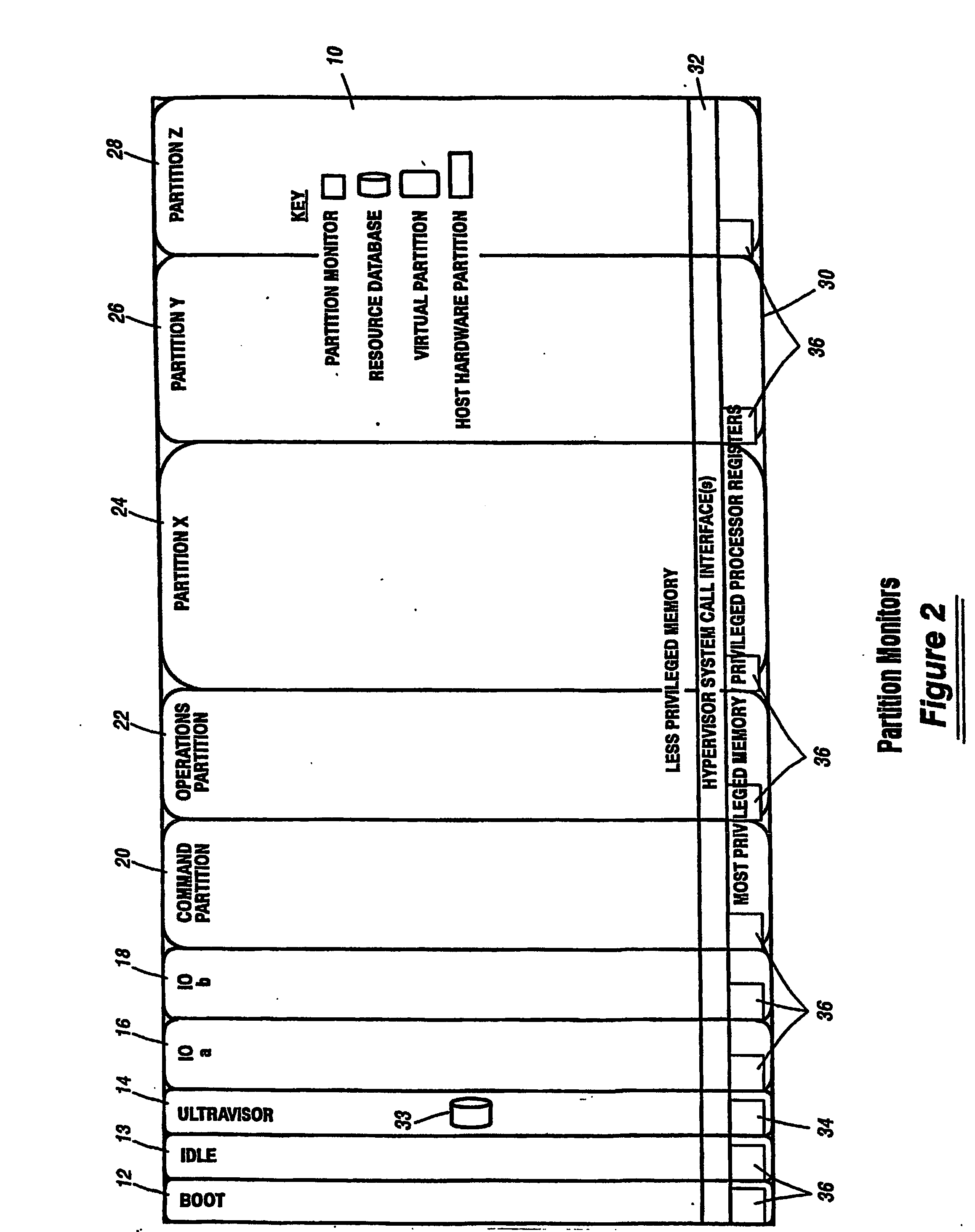

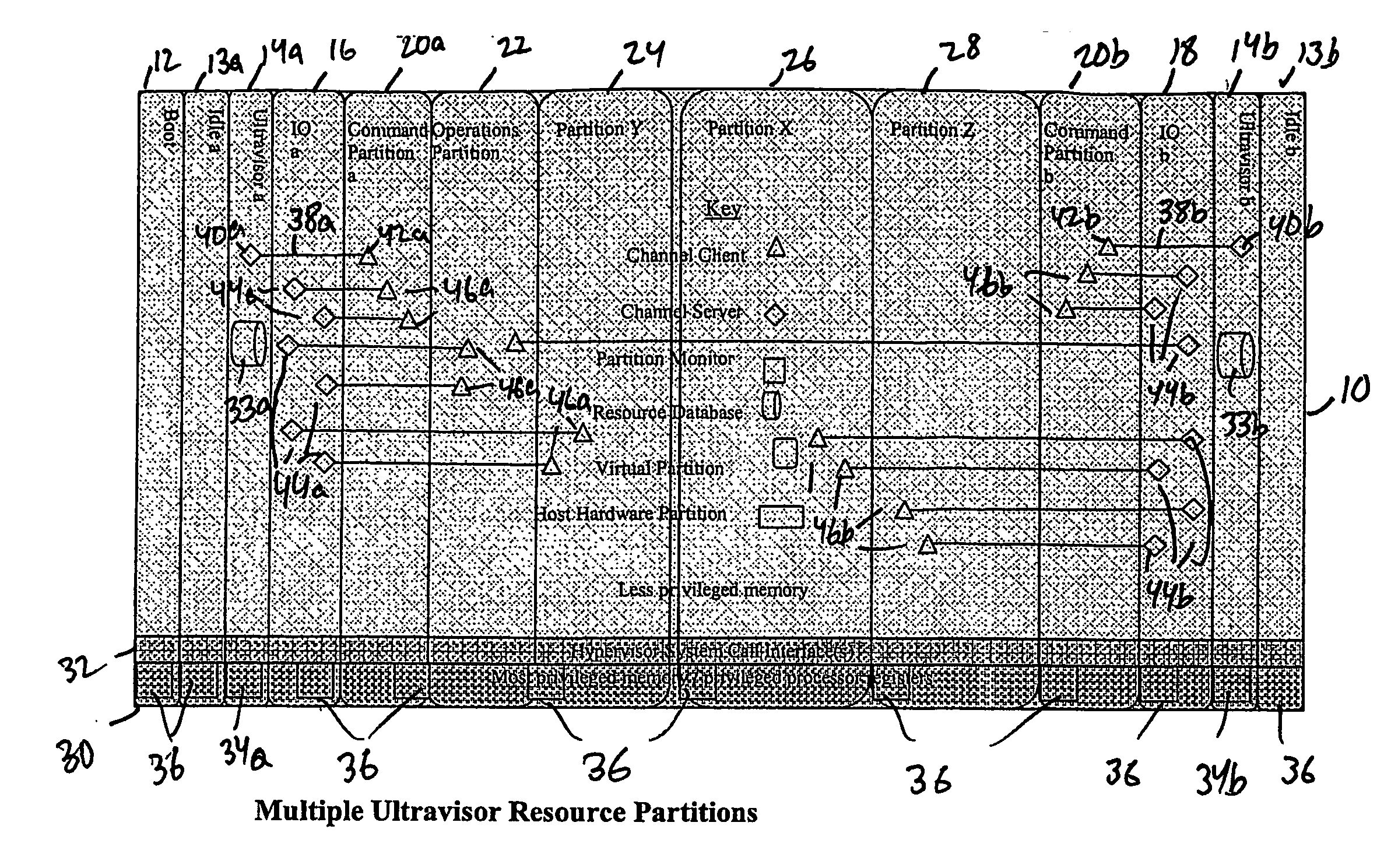

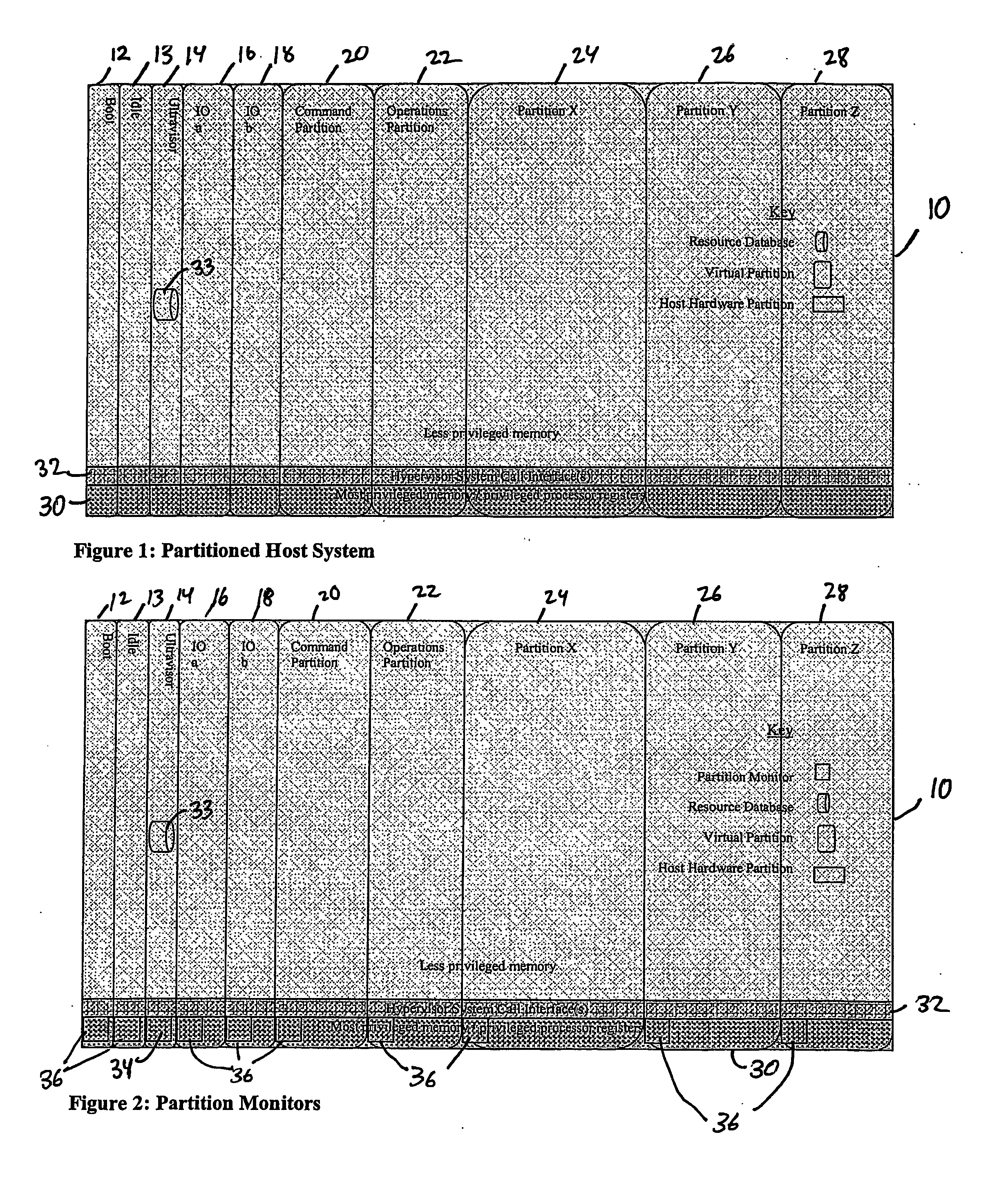

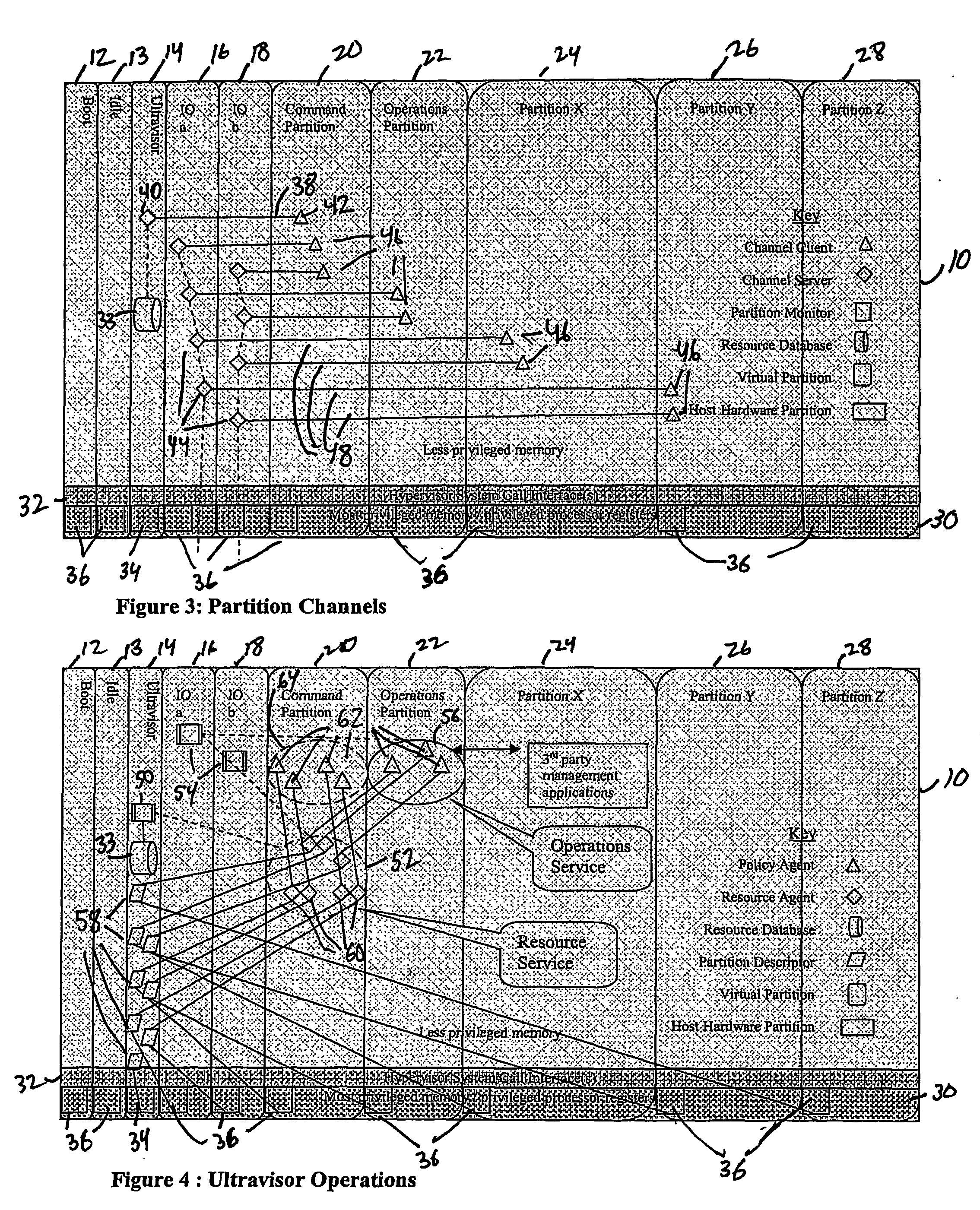

Active | US20070067435A1 | Improve security | Excessive removal | Error detection/correction | Memory addressing/allocation/relocation | Operational system | Data center

A virtualization infrastructure that allows multiple guest partitions to run within a host hardware partition. The host system is divided into distinct logical or virtual partitions and special infrastructure partitions are implemented to control resource management and to control physical I/O device drivers that are, in turn, used by operating systems in other distinct logical or virtual guest partitions. Host hardware resource management runs as a tracking application in a resource management “ultravisor” partition, while host resource management decisions are performed in a higher level command partition based on policies maintained in a separate operations partition. The conventional hypervisor is reduced to a context switching and containment element (monitor) for the respective partitions, while the system resource management functionality is implemented in the ultravisor partition. The ultravisor partition maintains the master in-memory database of the hardware resource allocations and serves a command channel to accept transactional requests for assignment of resources to partitions. It also provides individual read-only views of individual partitions to the associated partition monitors. Host hardware I/O management is implemented in special redundant I/O partitions. Operating systems in other logical or virtual partitions communicate with the I/O partitions via memory channels established by the ultravisor partition. The guest operating systems in the respective logical or virtual partitions are modified to access monitors that implement a system call interface through which the ultravisor, I/O, and any other special infrastructure partitions may initiate communications with each other and with the respective guest partitions. The guest operating systems are modified so that they do not attempt to use the “broken” instructions in the x86 system that complete virtualization systems must resolve by inserting traps.
System resources are separated into zones that are managed by a separate partition containing resource management policies that may be implemented across nodes to implement a virtual data center.

Owner:UNISYS CORP

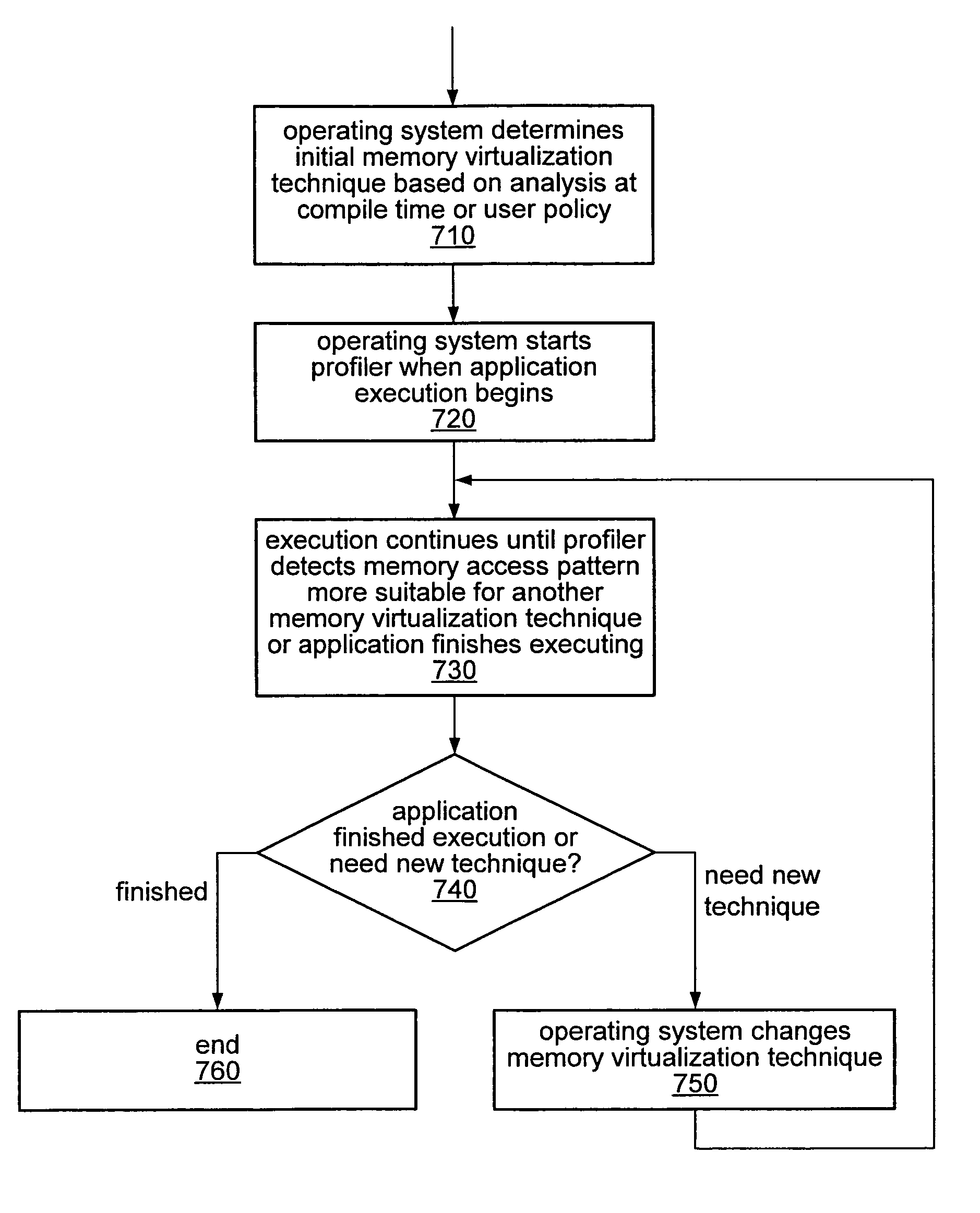

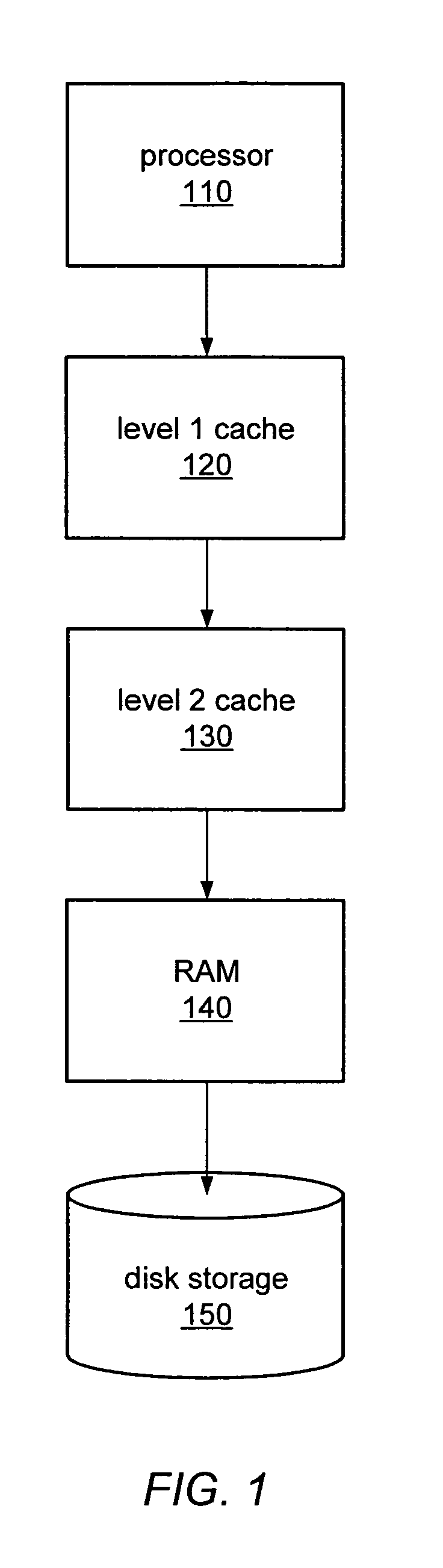

Dynamic selection of memory virtualization techniques

Active | US7752417B2 | Error detection/correction | Memory addressing/allocation/relocation | Virtualization | Computerized system

A computer system may be configured to dynamically select a memory virtualization and corresponding virtual-to-physical address translation technique during execution of an application and to dynamically employ the selected technique in place of a current technique without re-initializing the application. The computer system may be configured to determine that a current address translation technique incurs a high overhead for the application's current workload and may be configured to select a different technique dependent on various performance criteria and/or a user policy. Dynamically employing the selected technique may include reorganizing a memory, reorganizing a translation table, allocating a different block of memory to the application, changing a page or segment size, or moving to or from a page-based, segment-based, or function-based address translation technique. A selected translation technique may be dynamically employed for the application independent of a translation technique employed for a different application.

Owner:ORACLE INT CORP

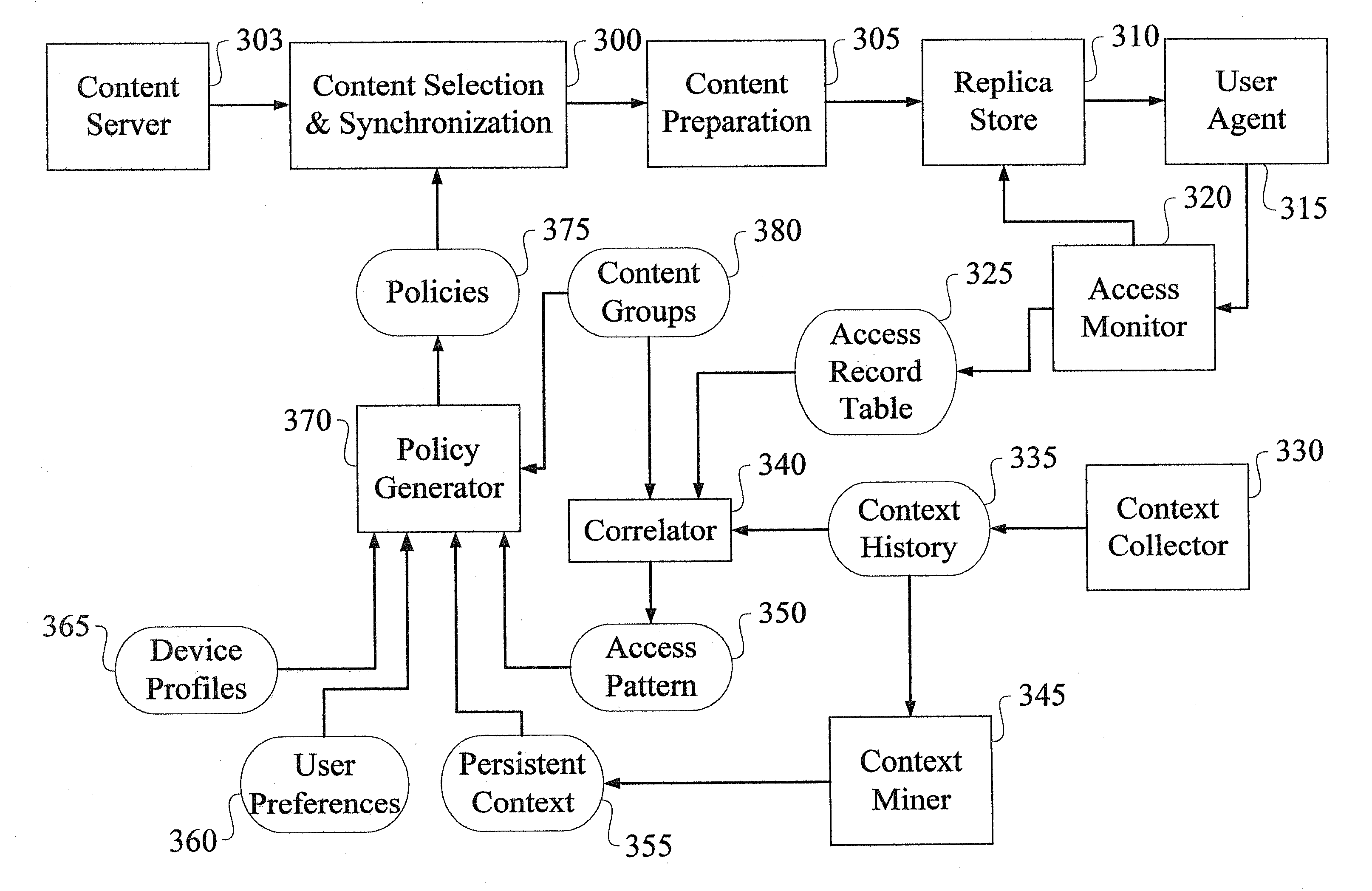

Method and Apparatus for Content Pre-Fetching and Preparation

Inactive | US20090287750A1 | Improve responsiveness | Memory addressing/allocation/relocation | Information format | Information processing | Program planning

A method of pre-fetching and preparing content in an information processing system is provided. The method includes the steps of generating at least one content pre-fetching policy and at least one content preparation policy, wherein each of the policies is at least in part a function of context information associated with a user. The content is pre-fetched based on information contained within the at least one content pre-fetching policy. Once the content has been pre-fetched, it is prepared based on information contained within the at least one content preparation policy. The context information associated with the user includes at least one of the user's usage patterns, current location, future plans and preferences.

Owner:IBM CORP

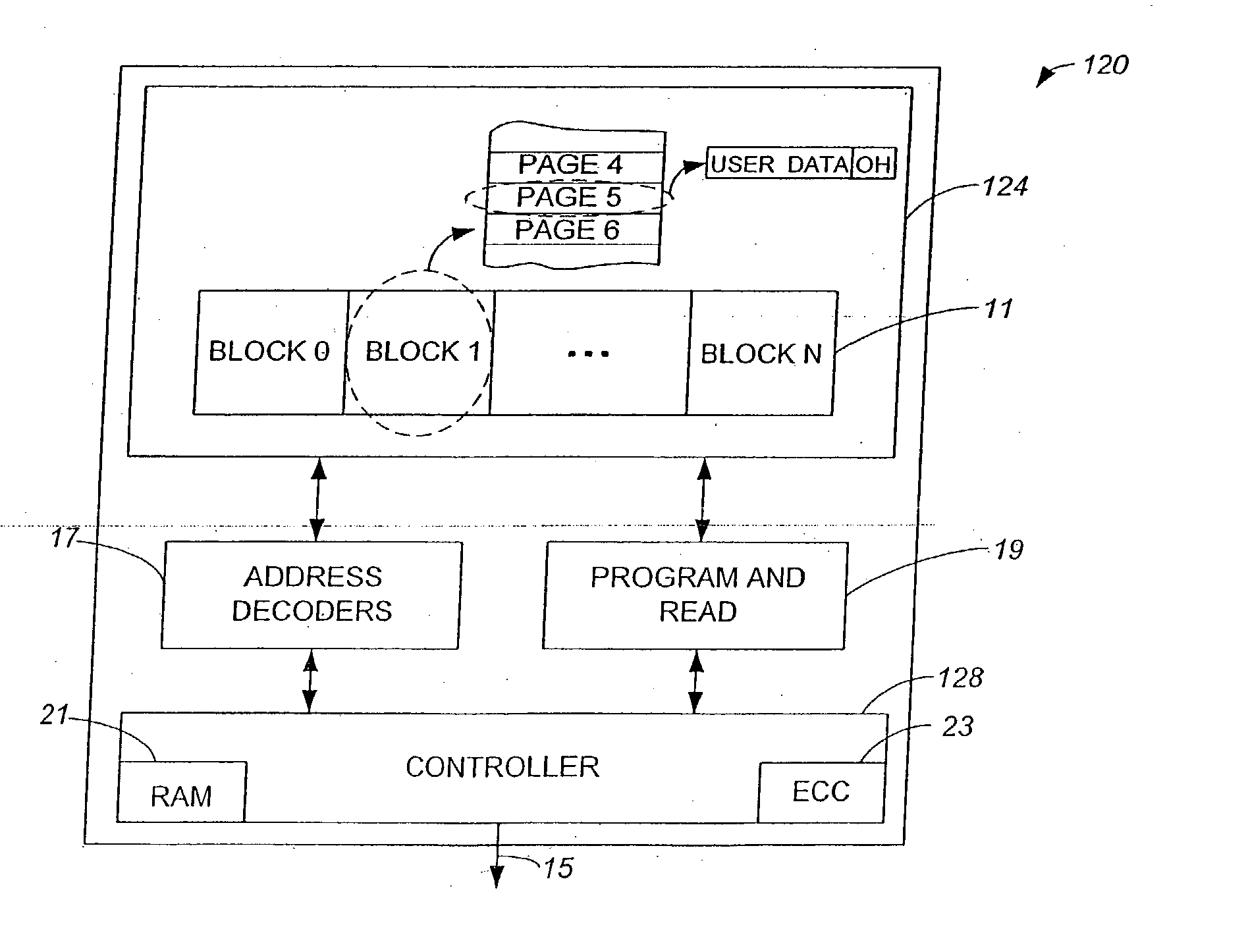

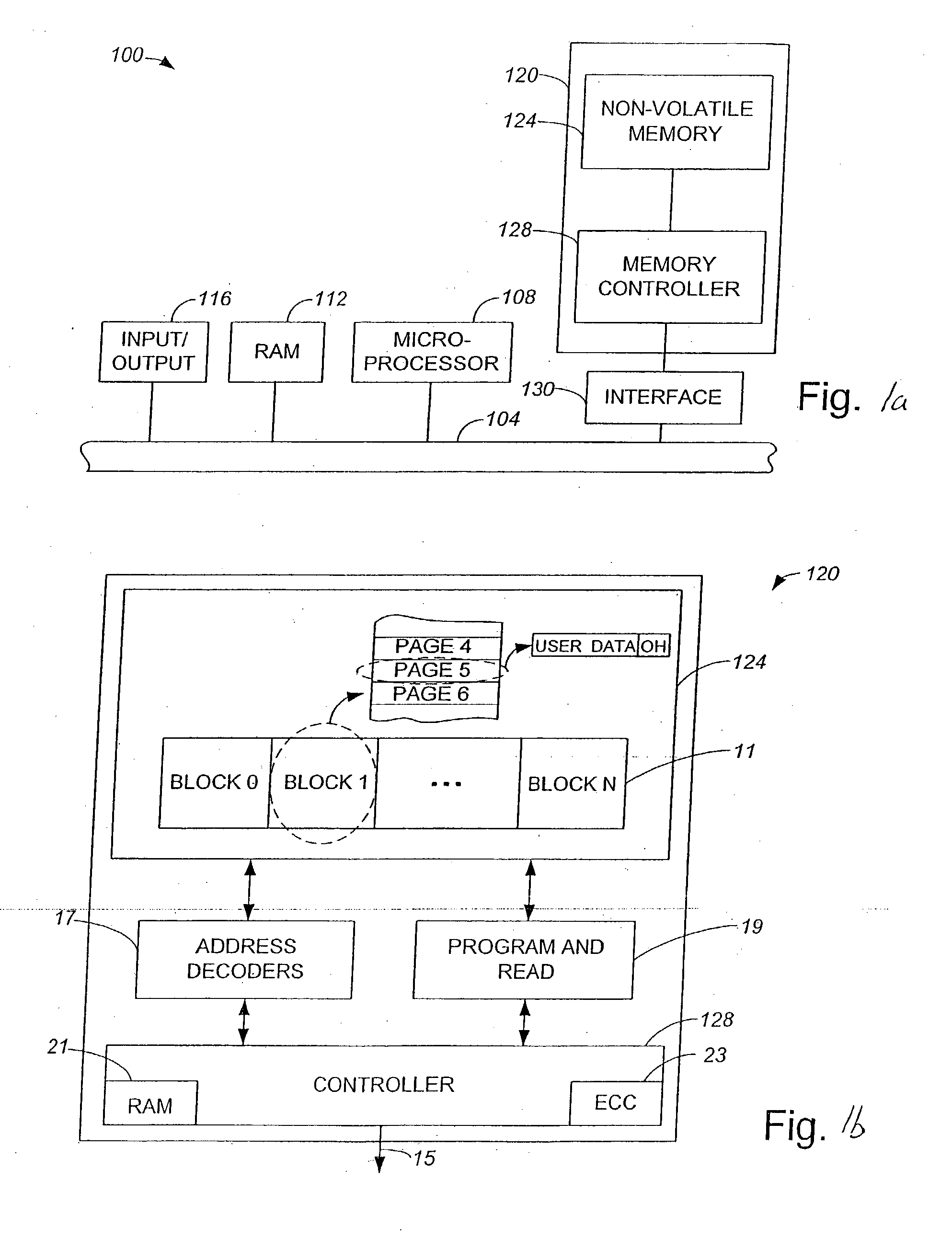

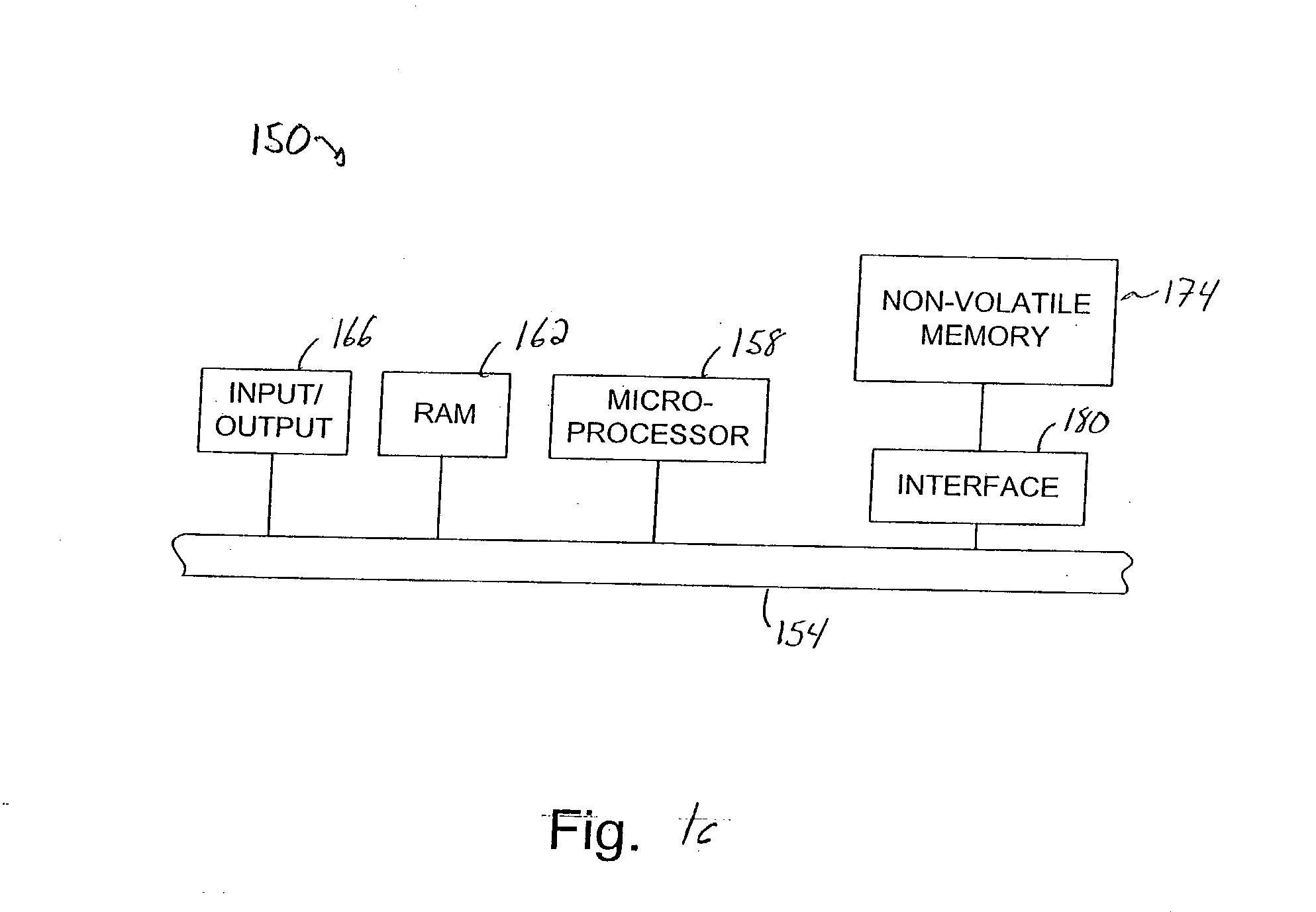

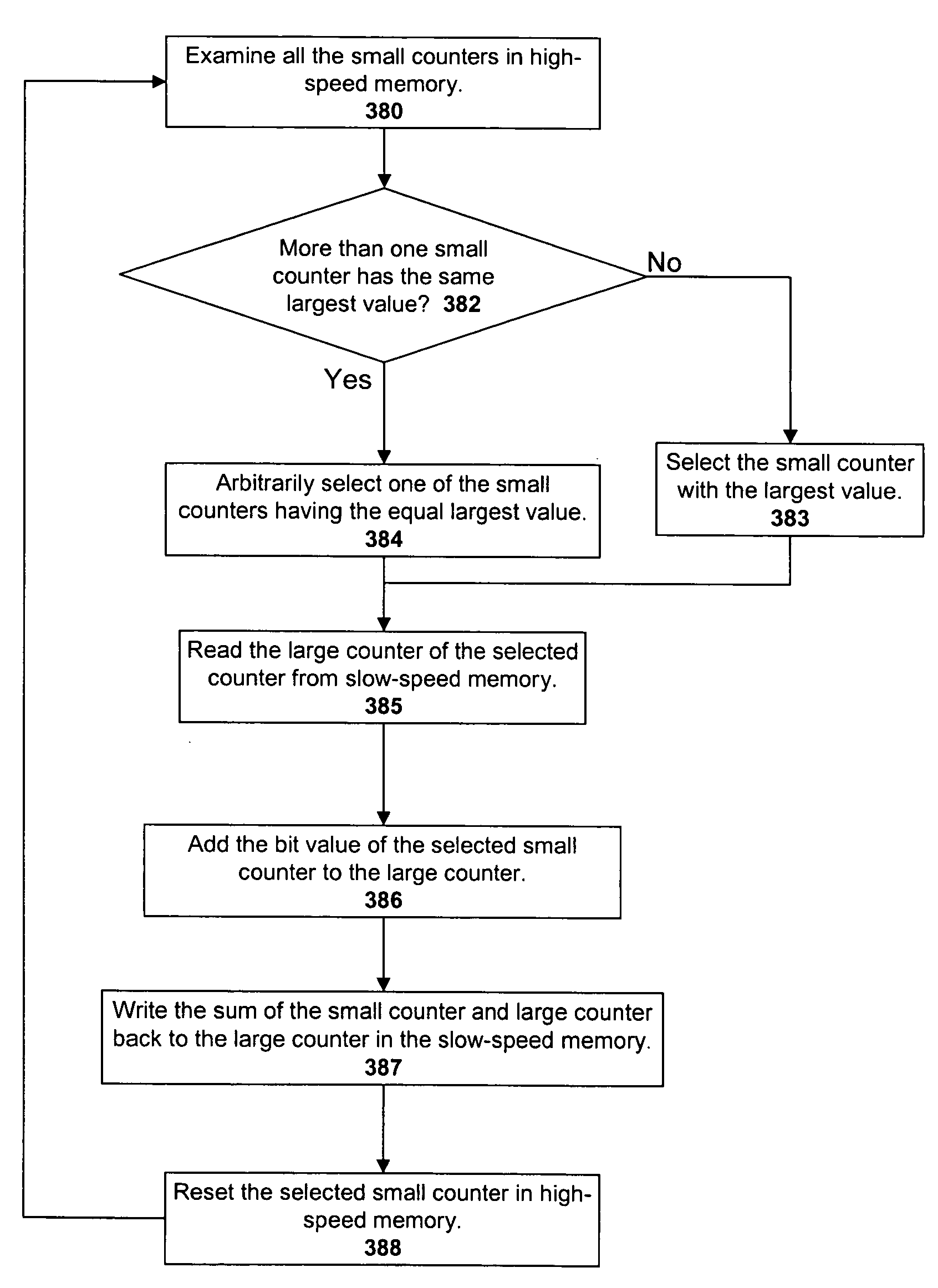

Maintaining erase counts in non-volatile storage systems

Inactive | US20040080985A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data structure | Volatile memory

Methods and apparatus for storing erase counts in a non-volatile memory of a non-volatile memory system are disclosed. According to one aspect of the present invention, a data structure in a non-volatile memory includes a first indicator that provides an indication of a number of times a first block of a plurality of blocks in a non-volatile memory has been erased. The data structure also includes a header that is arranged to contain information relating to the blocks in the non-volatile memory.

Owner:SANDISK TECH LLC

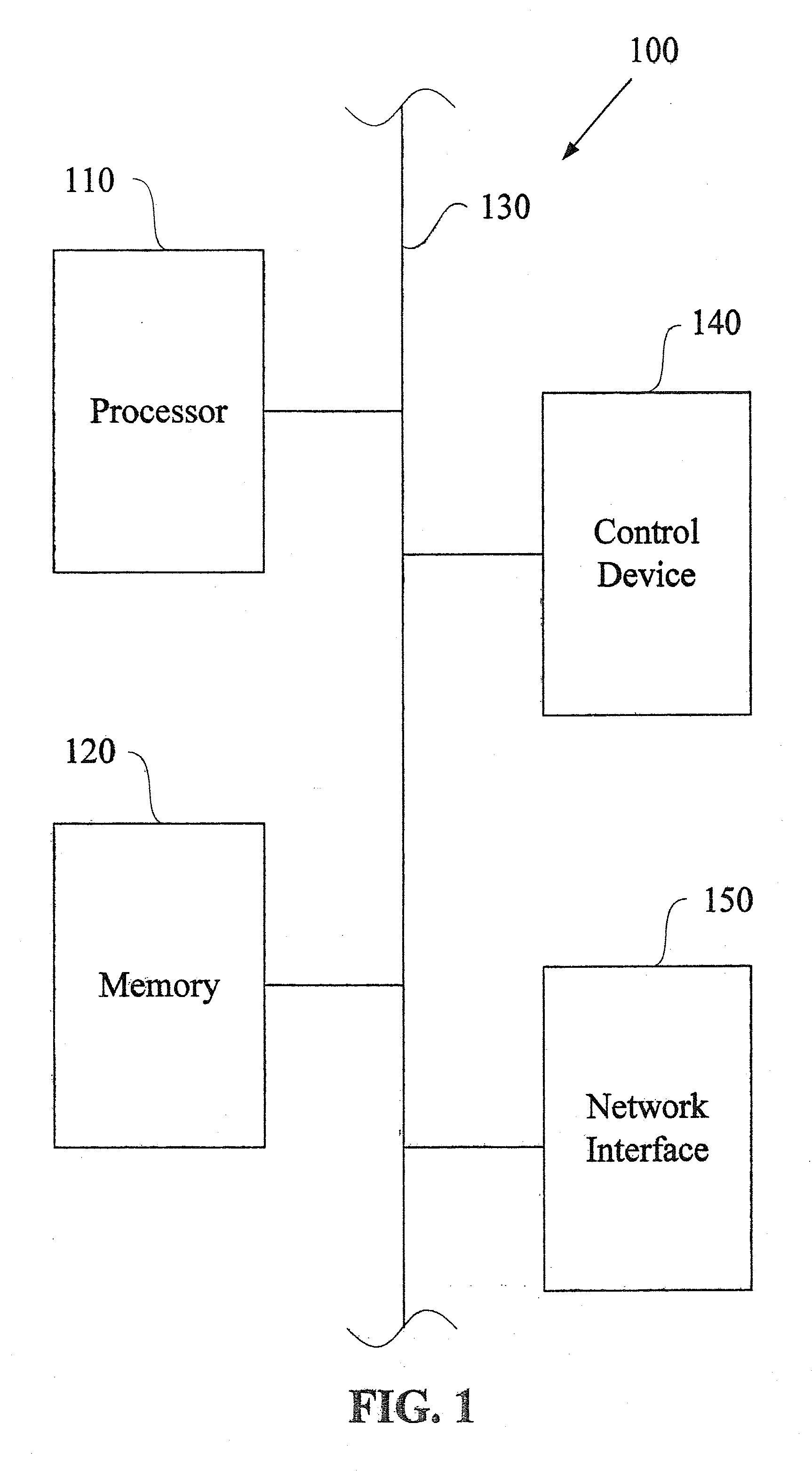

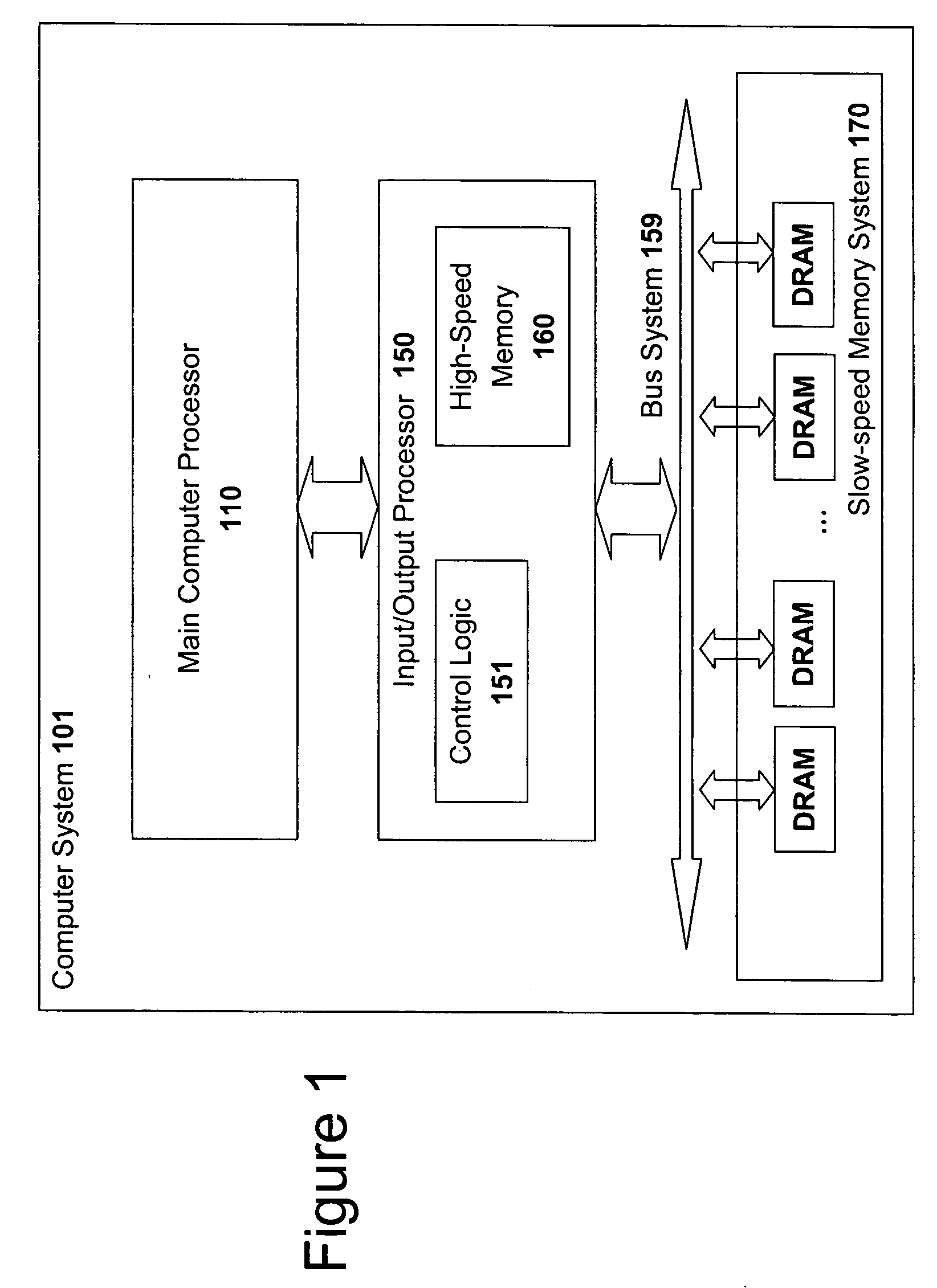

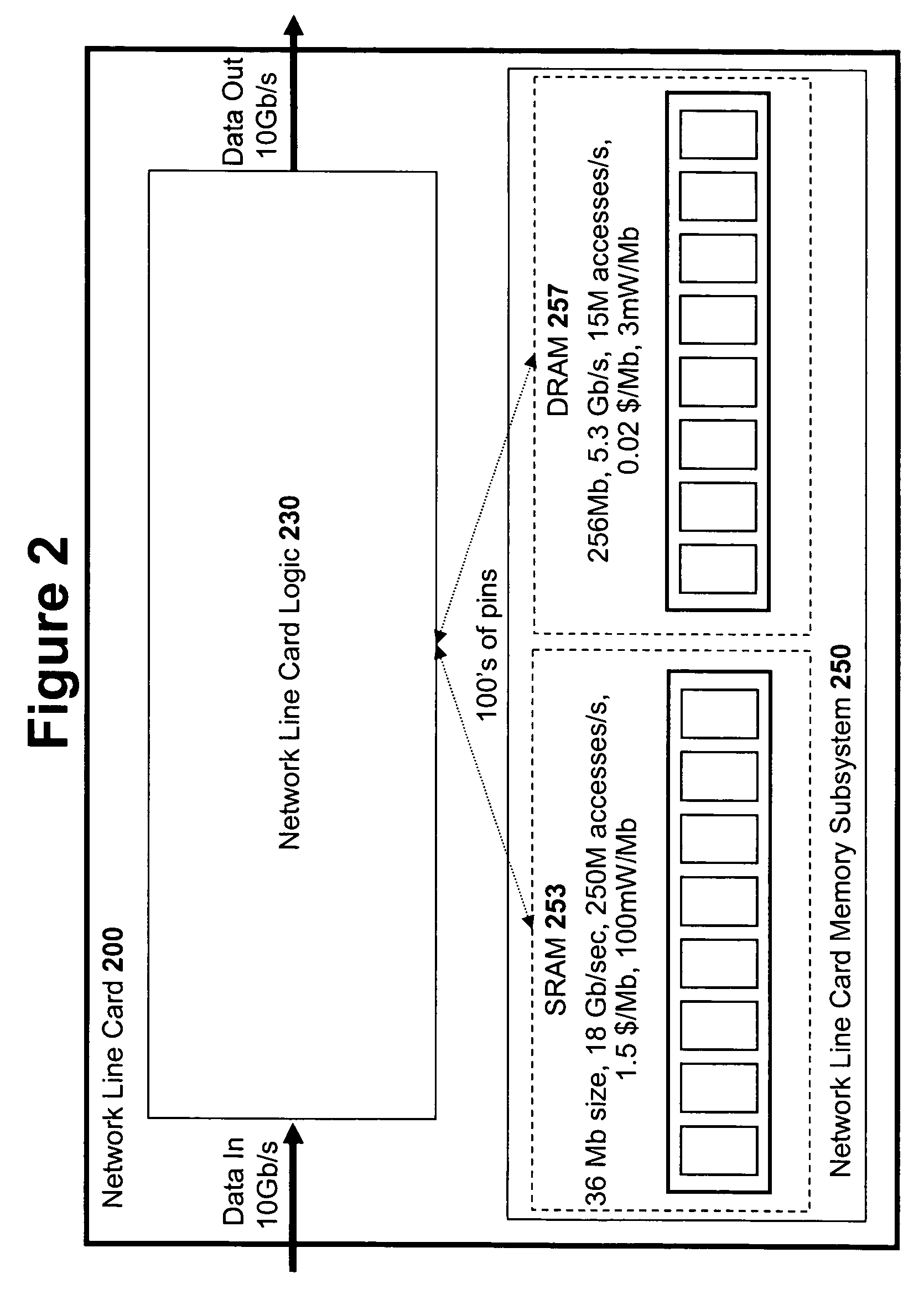

High speed memory control and I/O processor system

Active | US20050240745A1 | Easy to handle | Simplify memory access task | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | High speed memory | Tailored approach

An input/output processor for speeding the input/output and memory access operations for a processor is presented. The key idea of an input/output processor is to functionally divide input/output and memory access operations tasks into a compute intensive part that is handled by the processor and an I/O or memory intensive part that is then handled by the input/output processor. An input/output processor is designed by analyzing common input/output and memory access patterns and implementing methods tailored to efficiently handle those commonly occurring patterns. One technique that an input/output processor may use is to divide memory tasks into high frequency or high-availability components and low frequency or low-availability components. After dividing a memory task in such a manner, the input/output processor then uses high-speed memory (such as SRAM) to store the high frequency and high-availability components and a slower-speed memory (such as commodity DRAM) to store the low frequency and low-availability components. Another technique used by the input/output processor is to allocate memory in such a manner that all memory bank conflicts are eliminated. By eliminating any possible memory bank conflicts, the maximum random access performance of DRAM memory technology can be achieved.

Owner:CISCO TECH INC

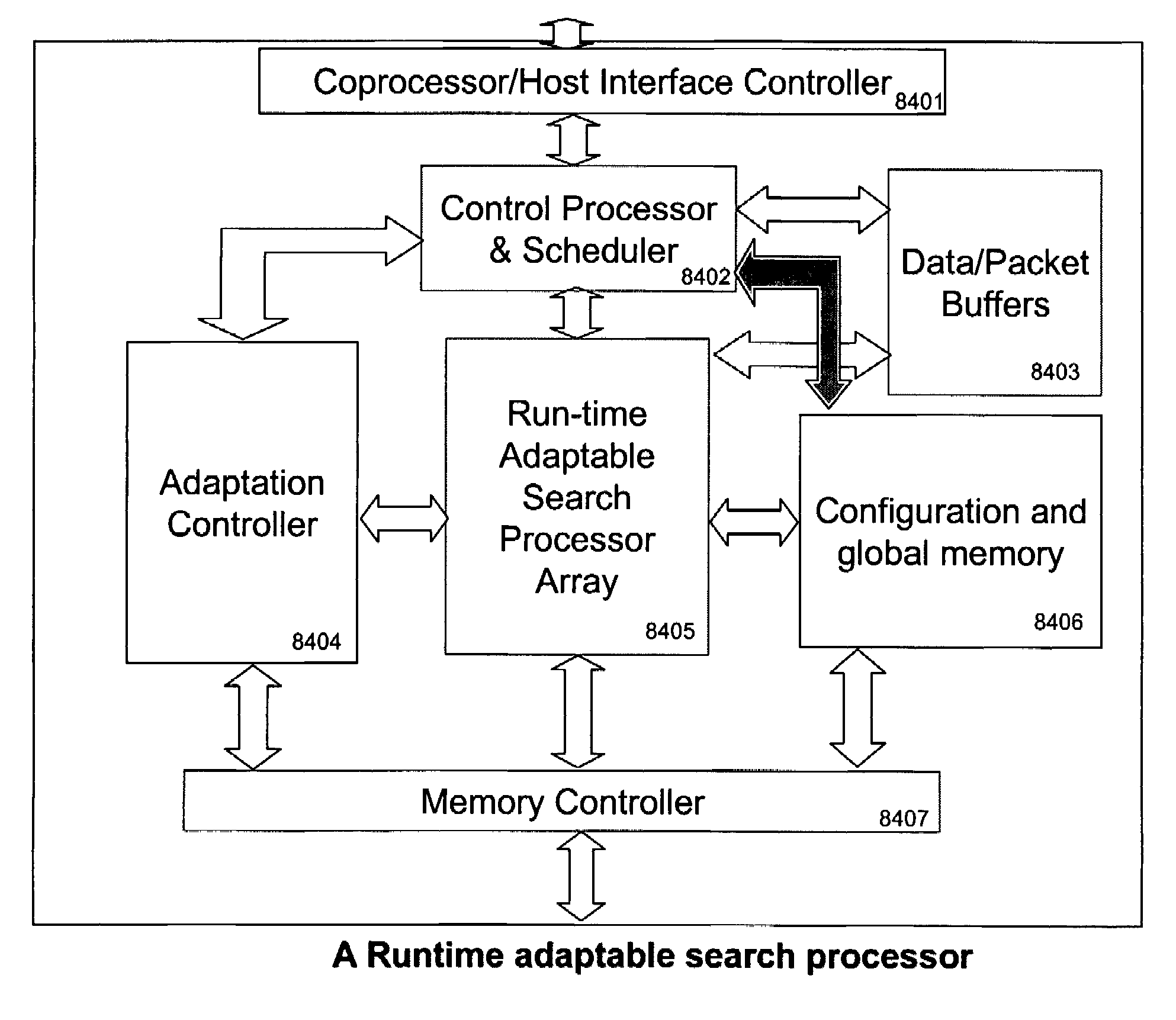

Runtime adaptable search processor

Active | US7685254B2 | Improve application performance | Large capacity | Web data indexing | Memory addressing/allocation/relocation | Packet scheduling | Schema for Object-Oriented XML

A runtime adaptable search processor is disclosed. The search processor provides high speed content search capability to meet the performance need of network line rates growing to 1 Gbps, 10 Gbps and higher. The search processor provides a unique combination of NFA and DFA based search engines that can process incoming data in parallel to perform the search against the specific rules programmed in the search engines. The processor architecture also provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through transport protocol layer and may also provide packet inspection through Layer 7. Further, a runtime adaptable processor is coupled to the protocol processing hardware and may be dynamically adapted to perform hardware tasks as per the needs of the network traffic being sent or received and/or the policies programmed or services or applications being supported. A set of engines may perform pass-through packet classification, policy processing and/or security processing enabling packet streaming through the architecture at nearly the full line rate. A high performance content search and rules processing security processor is disclosed which may be used for application layer and network layer security. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored to and retrieved from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer.
A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner using a protocol processing hardware with appropriate security features.

Owner:MEMORY ACCESS TECH LLC

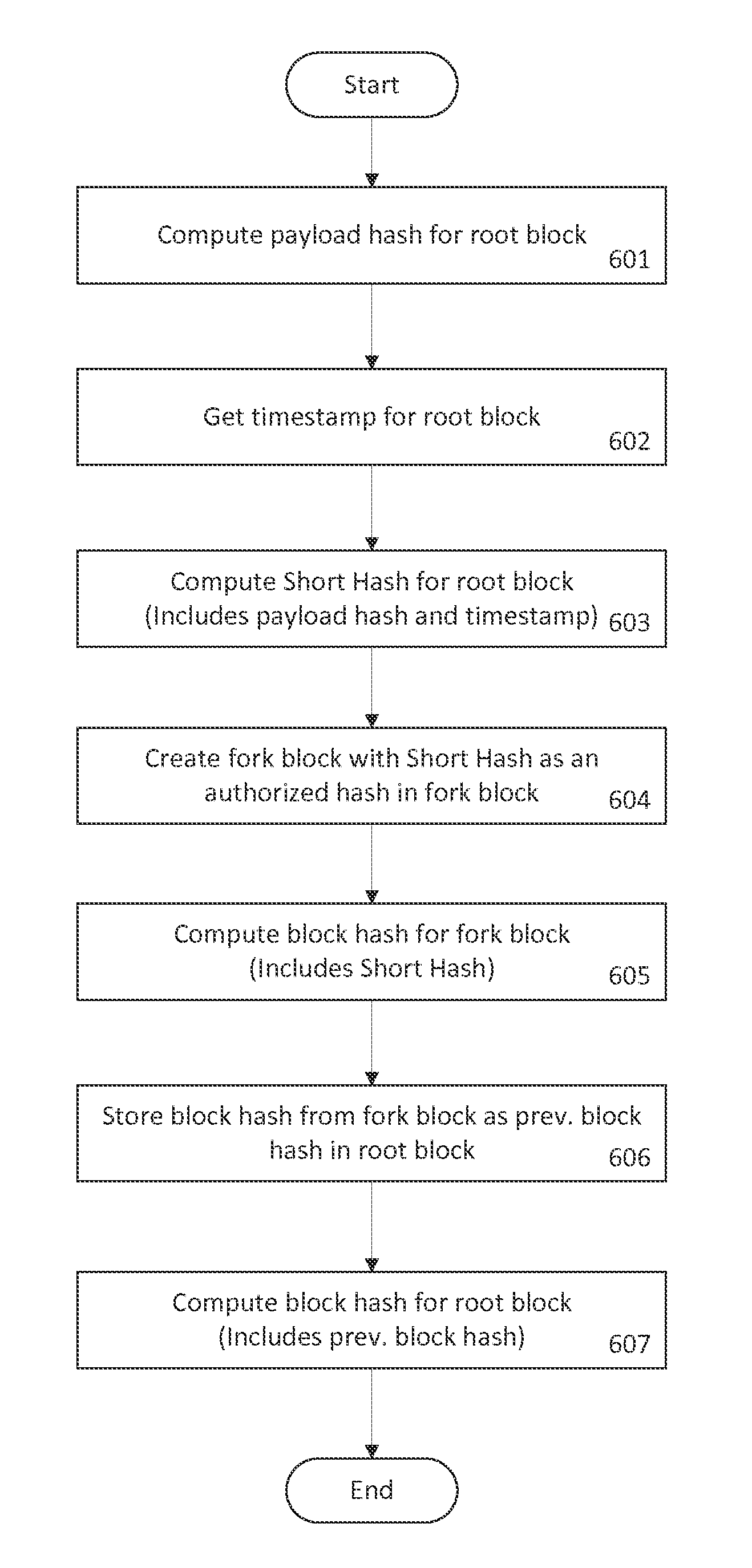

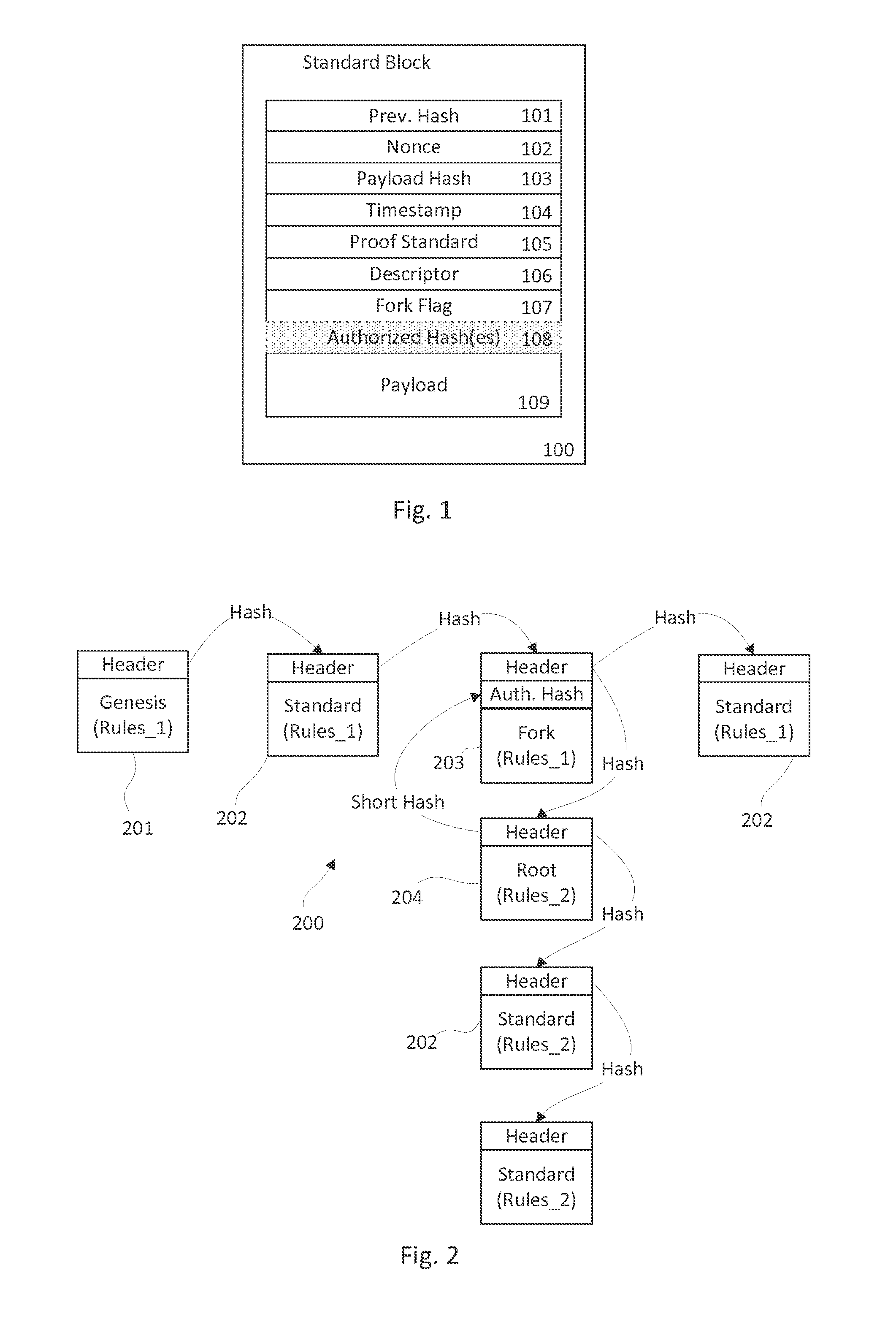

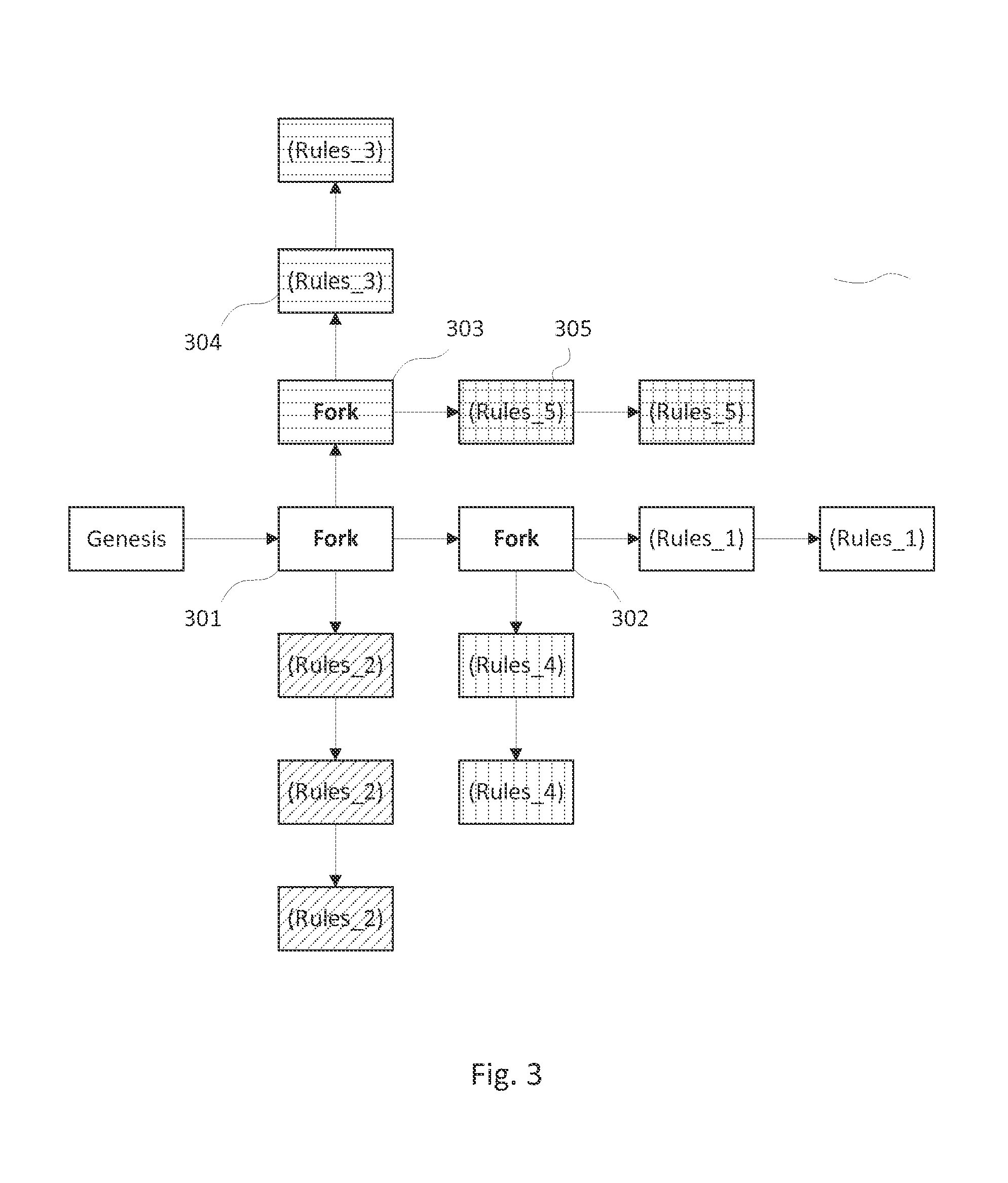

System and method for creating a multi-branched blockchain with configurable protocol rules

Active | US20160028552A1 | Improve security | Quickly become obsolete | Memory architecture accessing/allocation | User identity/authority verification | Primitive state | Emerging technologies

The present invention generally relates to blockchain technology. Specifically, this invention relates to creating a blockchain called a slidechain that allows for multiple valid branches or forks to propagate simultaneously with a customized set of protocol rules embedded in and applied to each fork chain that branches from another chain. The invention generally provides a computer-implemented method for accessing, developing and maintaining a decentralized database through a peer-to-peer network, to preserve the original state of data inputs while adapting to changing circumstances, user preferences, and emerging technological capabilities.

Owner:BLOCKTECH LLC

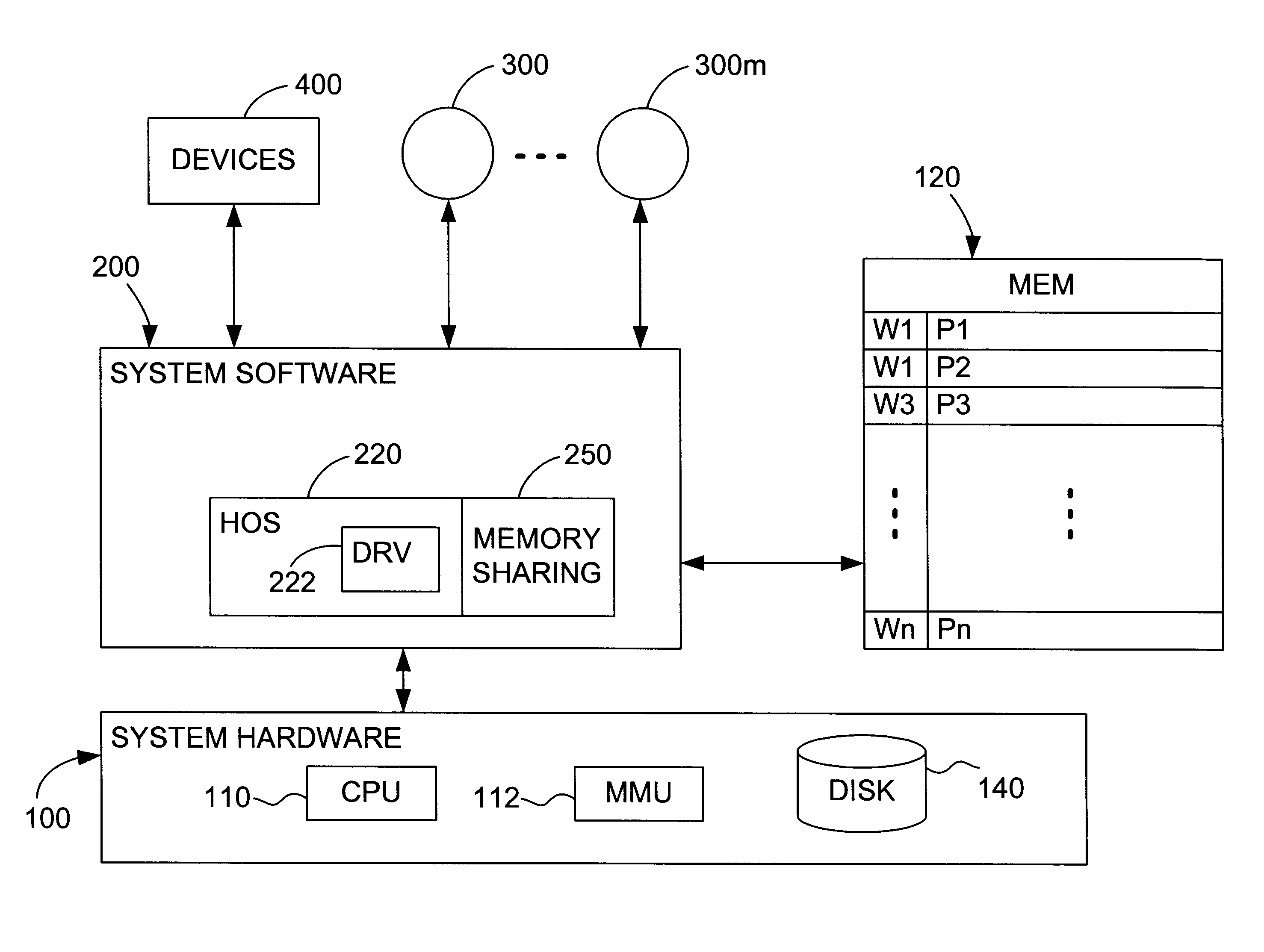

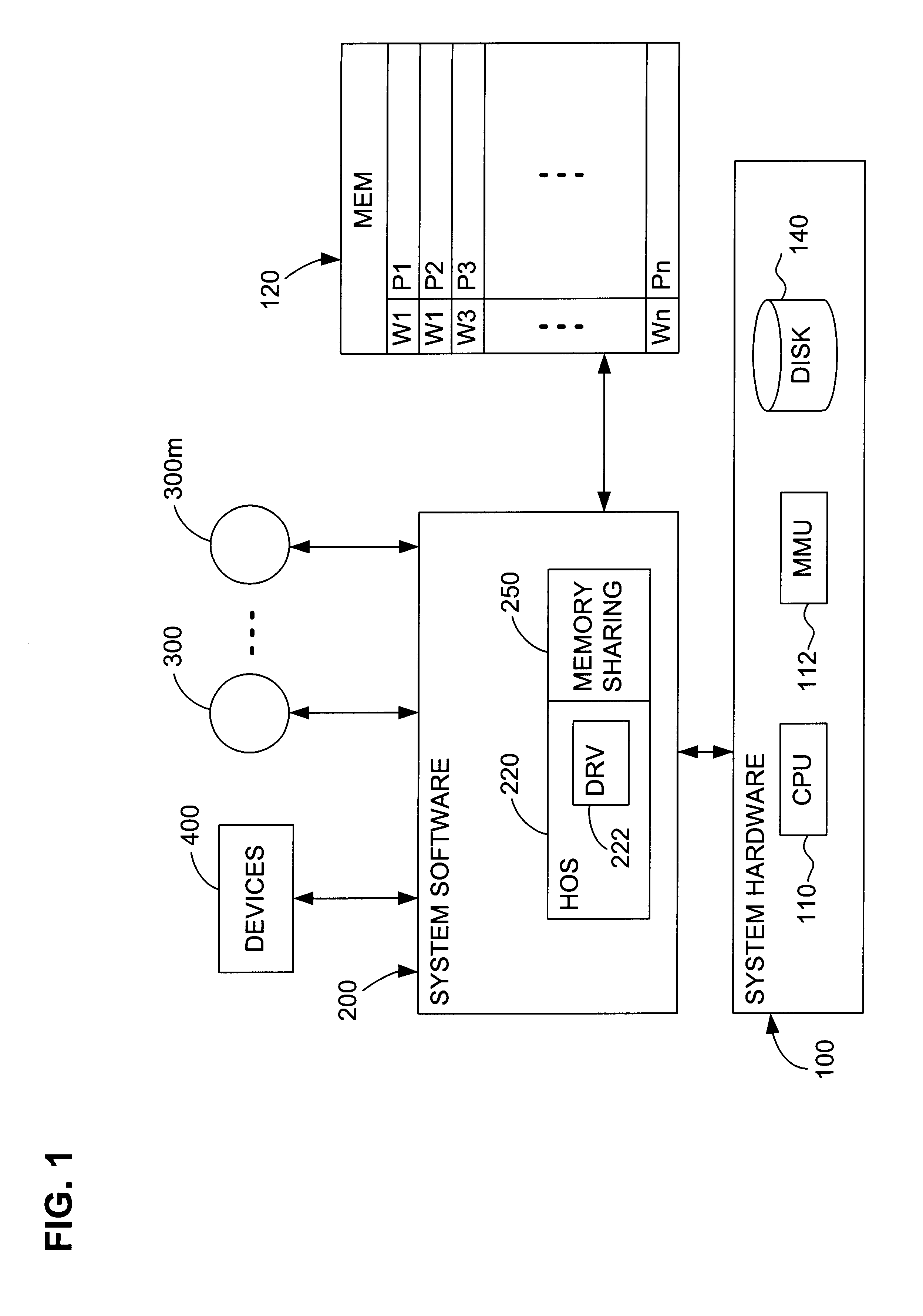

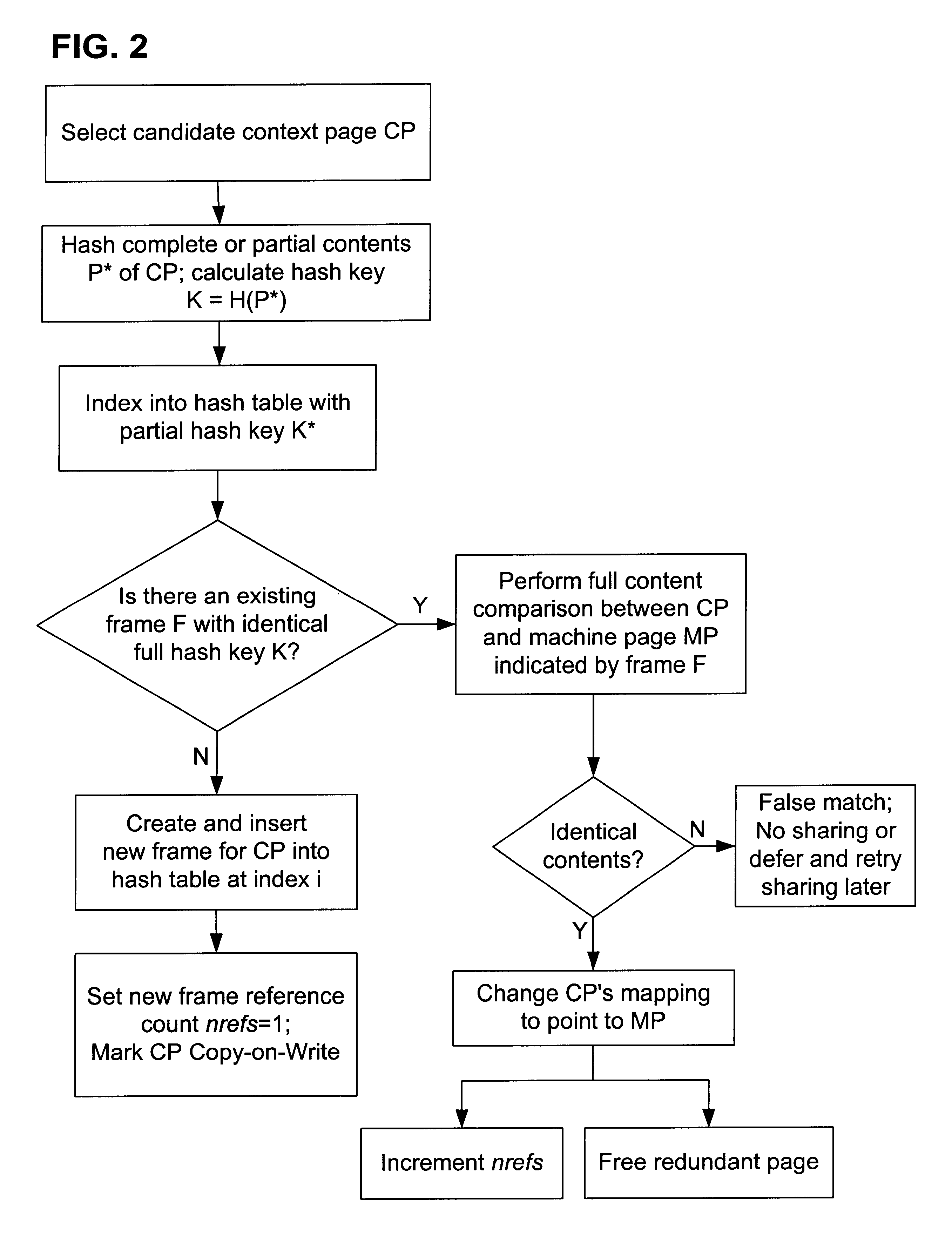

Content-based, transparent sharing of memory units

Inactive | US6789156B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Computer hardware | Multiple context

A computer system has one or more software contexts that share use of a memory that is divided into units such as pages. In the preferred embodiment of the invention, the contexts are, or include, virtual machines running on a common hardware platform. The contents, as opposed to merely the addresses or page numbers, of virtual memory pages that are accessible to one or more contexts are examined. If two or more context pages are identical, then their memory mappings are changed to point to a single, shared copy of the page in the hardware memory, thereby freeing the memory space taken up by the redundant copies. The shared copy is then preferably marked copy-on-write. Sharing is preferably dynamic, whereby the presence of redundant copies of pages is preferably determined by hashing page contents and performing full content comparisons only when two or more pages hash to the same key.

Owner:VMWARE INC
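
The hash-then-compare sharing scheme in the abstract above can be sketched in a few lines. This is an illustration under stated assumptions, not VMware's implementation: the function `share_identical_pages`, the dictionary-based page tables, and the use of SHA-256 as the page hash are all hypothetical choices.

```python
import hashlib

def share_identical_pages(page_tables, memory):
    """Remap identical pages to one shared frame and free the duplicates.

    page_tables: {context: {virtual_page: frame}}; memory: {frame: bytes}.
    Returns the set of frames that should be marked copy-on-write.
    """
    by_hash = {}        # content hash -> frames already seen with that hash
    copy_on_write = set()
    for table in page_tables.values():
        for vpage, frame in table.items():
            key = hashlib.sha256(memory[frame]).digest()
            for shared in by_hash.setdefault(key, []):
                # Full comparison only for pages whose hashes collide.
                if shared != frame and memory[shared] == memory[frame]:
                    table[vpage] = shared
                    copy_on_write.add(shared)
                    break
            else:
                if frame not in by_hash[key]:
                    by_hash[key].append(frame)
    in_use = {f for t in page_tables.values() for f in t.values()}
    for frame in list(memory):
        if frame not in in_use:
            del memory[frame]   # reclaim the redundant copy
    return copy_on_write
```

Hashing keeps the common case cheap, since byte-for-byte comparison runs only when two pages land in the same hash bucket, as the abstract describes.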

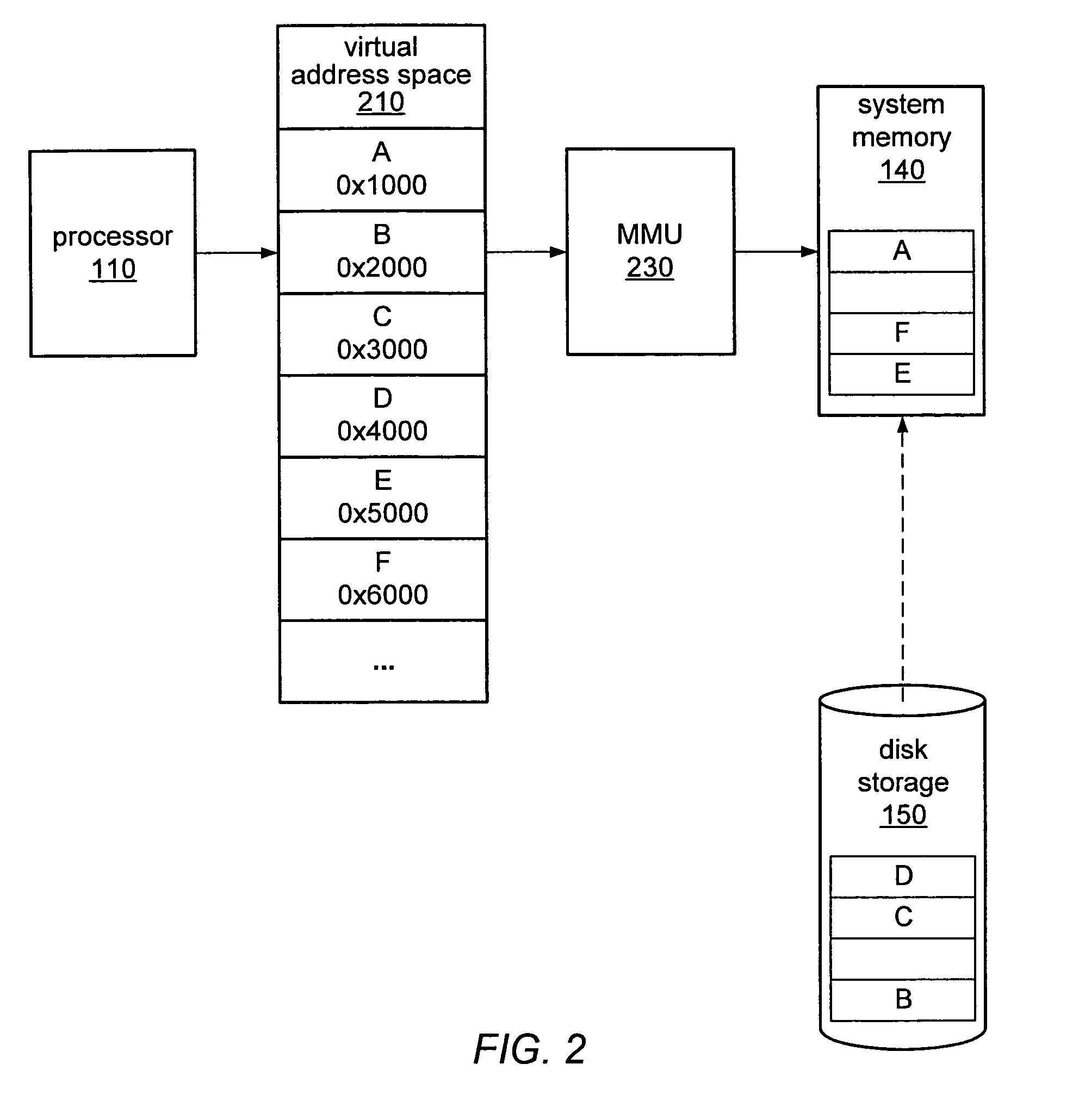

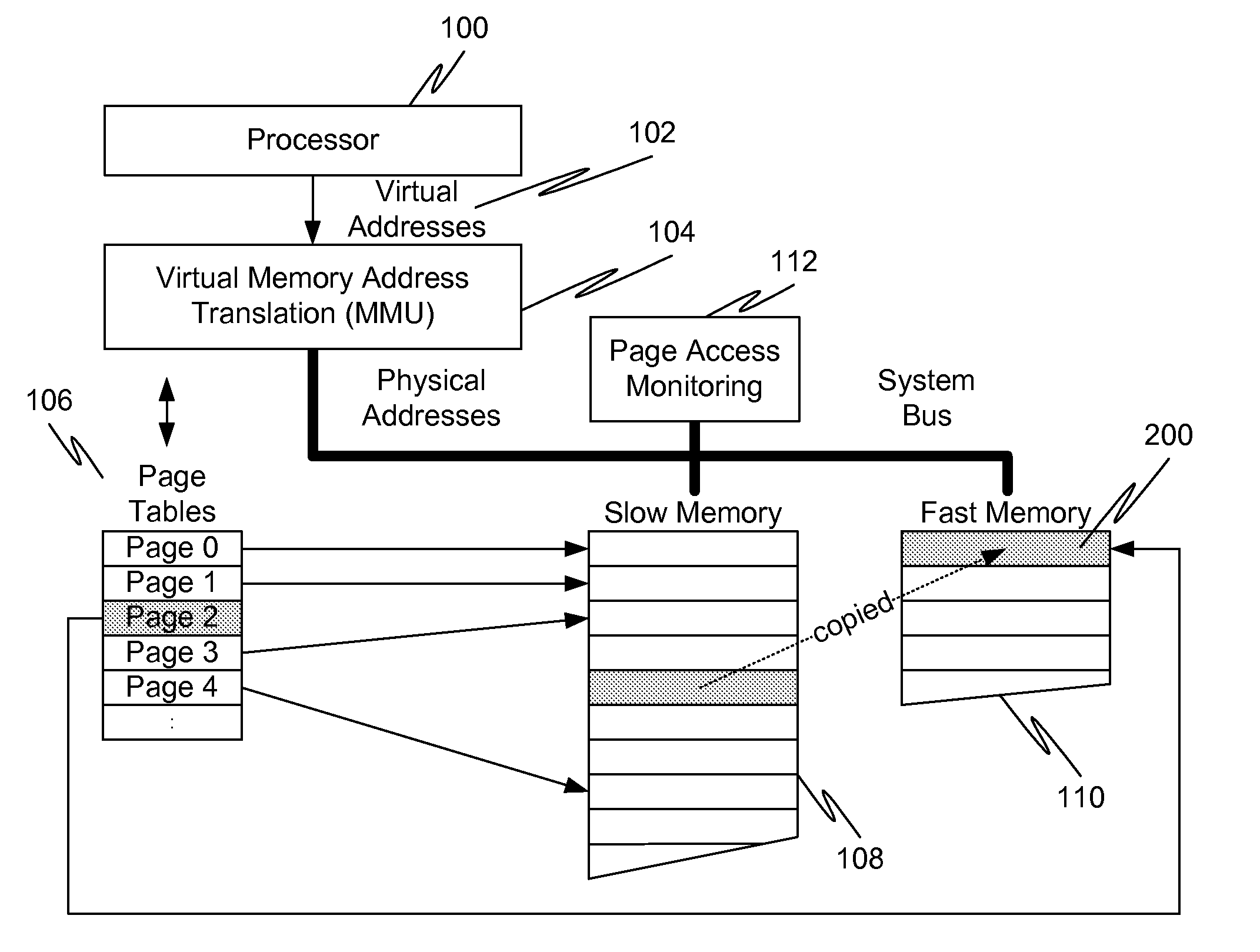

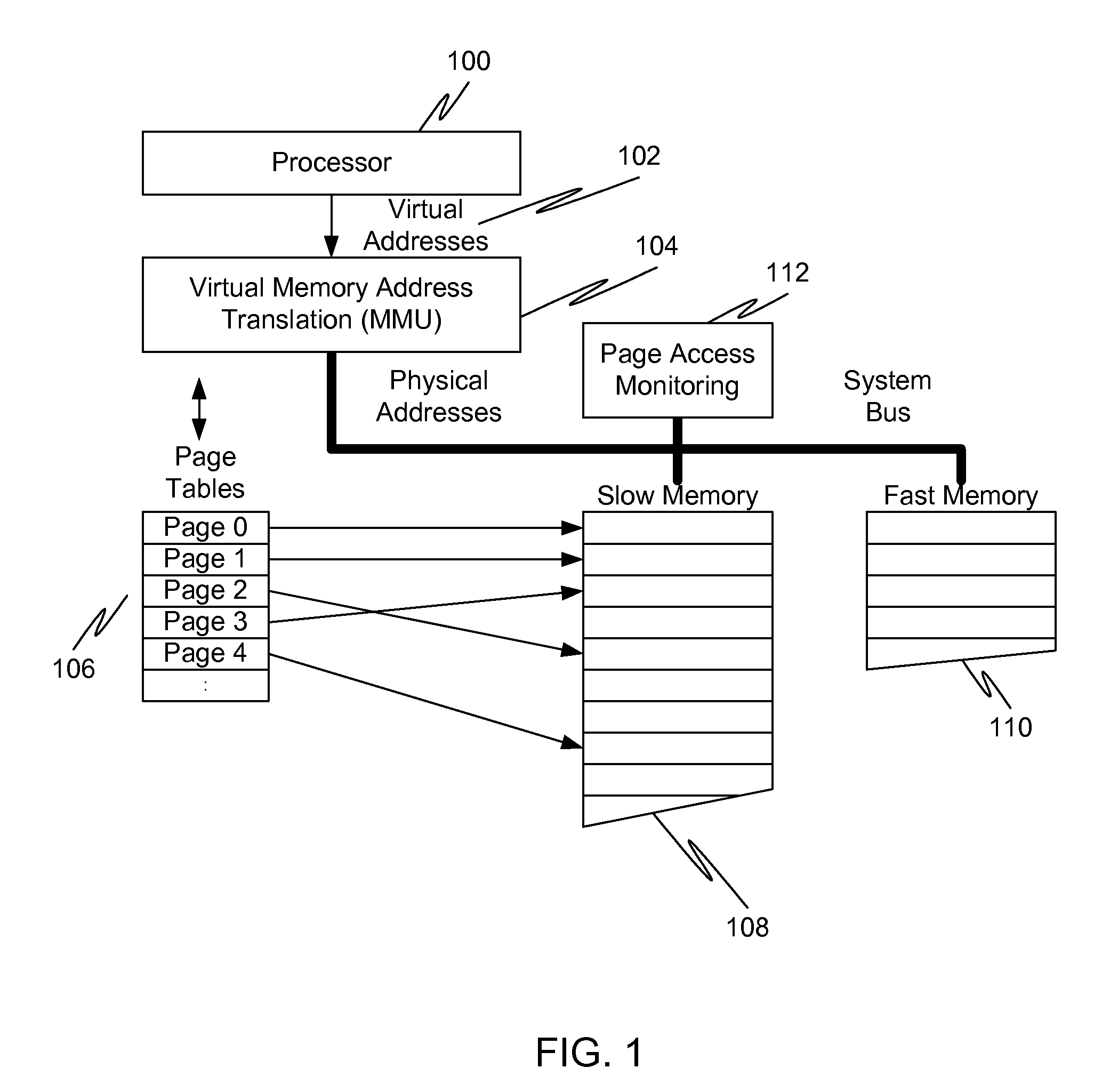

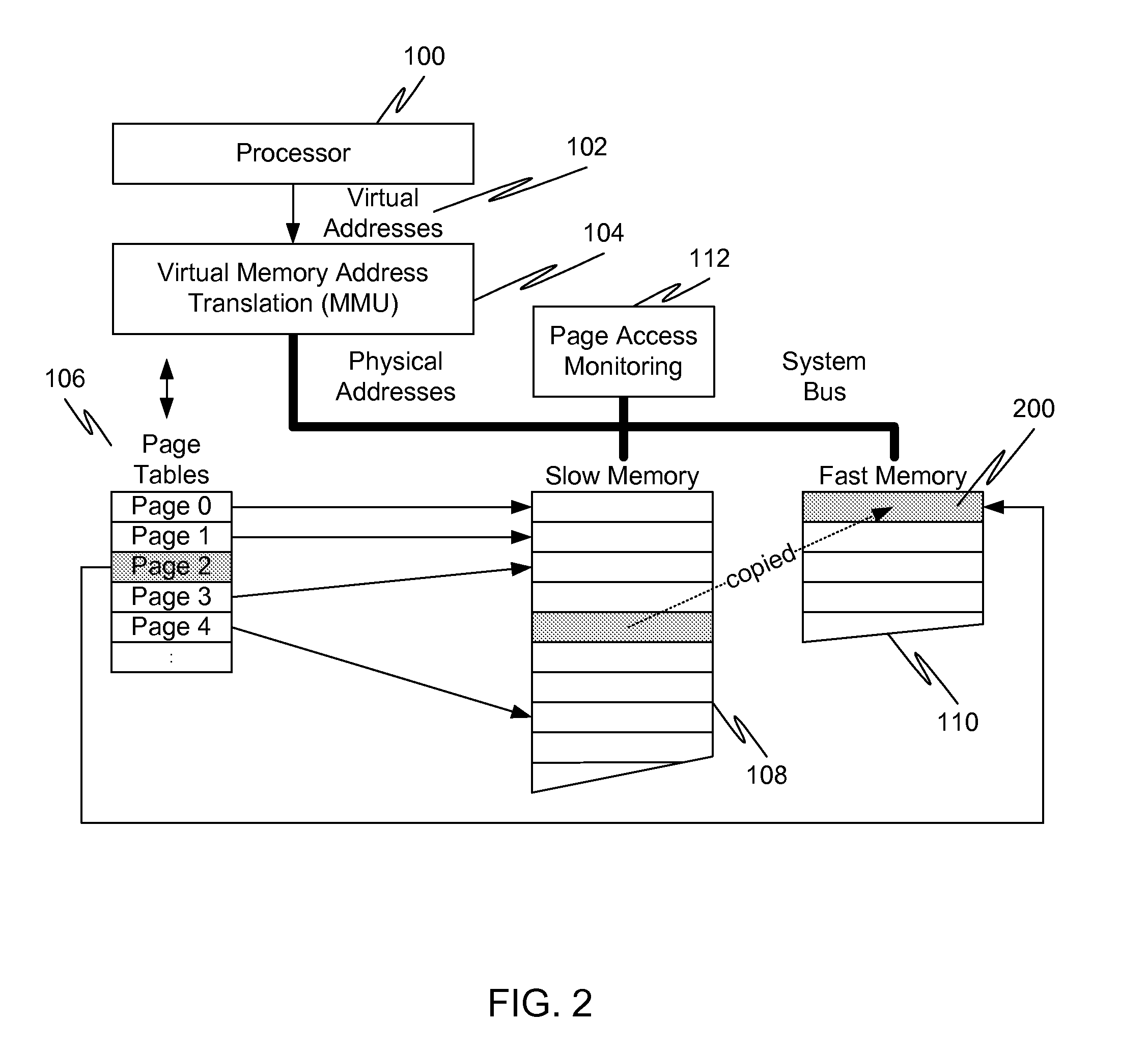

Caching using virtual memory

Inactive | US20120017039A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Virtual memory | Page table

In a first embodiment of the present invention, a method for caching in a processor system having virtual memory is provided, the method comprising: monitoring slow memory in the processor system to determine frequently accessed pages; and, for a frequently accessed page in slow memory: copying the frequently accessed page from slow memory to a location in fast memory; and updating virtual address page tables to reflect the location of the frequently accessed page in fast memory.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
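
The three steps in the abstract above (monitor slow memory, copy a hot page to fast memory, update the page table) can be sketched as follows. The class `TieredMemory`, the access-count threshold, and the tuple-based page table entries are assumptions made for illustration; the abstract does not say how "frequently accessed" is measured.

```python
from collections import Counter

class TieredMemory:
    """Promotes pages from slow to fast memory after repeated slow reads."""
    def __init__(self, fast_frames, threshold=3):
        self.page_table = {}                 # vpage -> (tier, frame)
        self.slow, self.fast = {}, {}
        self.free_fast = list(range(fast_frames))
        self.hits = Counter()                # slow-memory accesses per page
        self.threshold = threshold           # assumed definition of "frequent"

    def map_slow(self, vpage, frame, data):
        self.slow[frame] = data
        self.page_table[vpage] = ("slow", frame)

    def read(self, vpage):
        tier, frame = self.page_table[vpage]
        if tier == "fast":
            return self.fast[frame]
        self.hits[vpage] += 1                # monitor slow memory
        data = self.slow[frame]
        if self.hits[vpage] >= self.threshold and self.free_fast:
            new_frame = self.free_fast.pop()
            self.fast[new_frame] = data      # copy the page to fast memory
            self.page_table[vpage] = ("fast", new_frame)  # update the mapping
        return data
```

Callers keep using the same virtual page number throughout; only the page table entry changes when a page is promoted.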

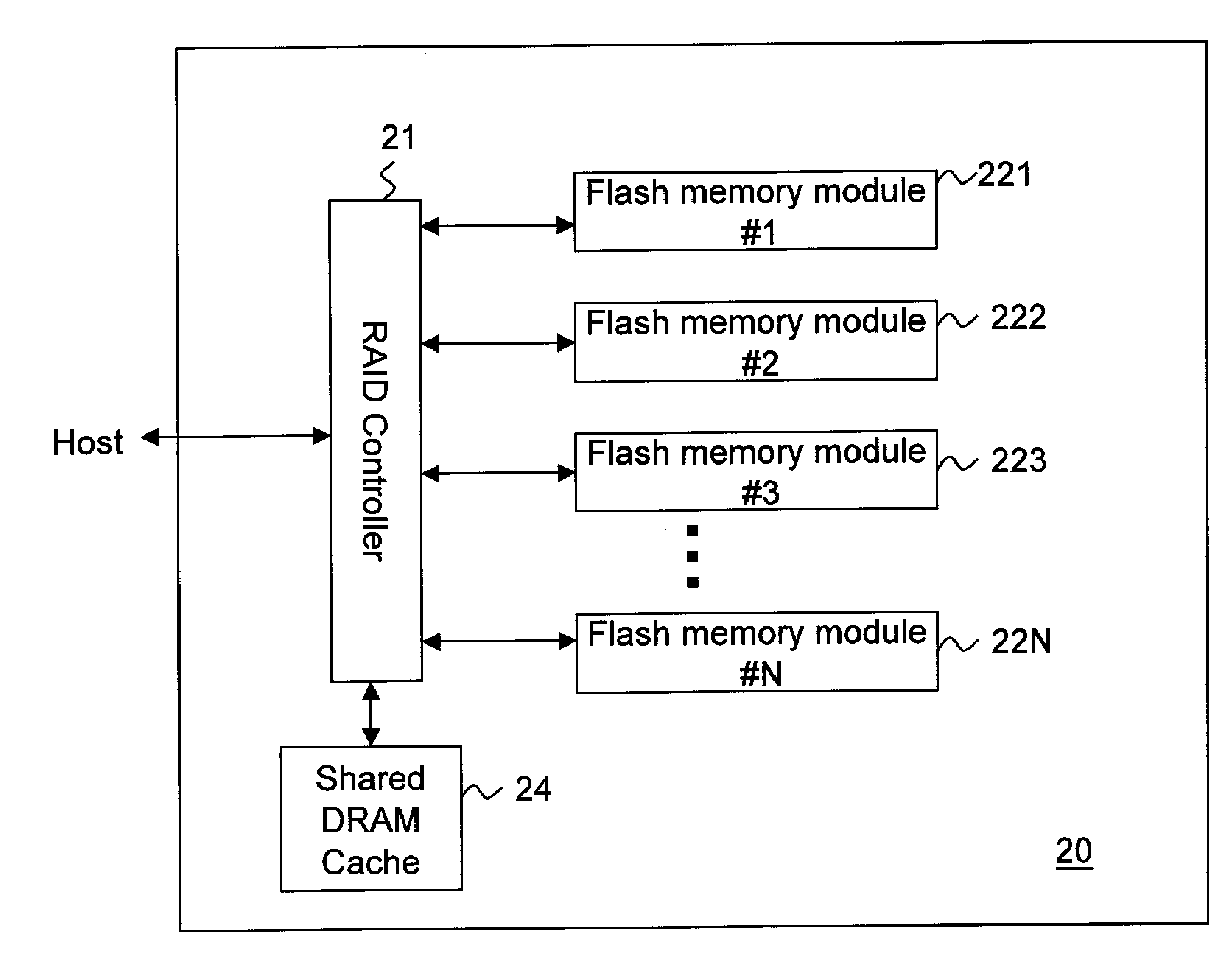

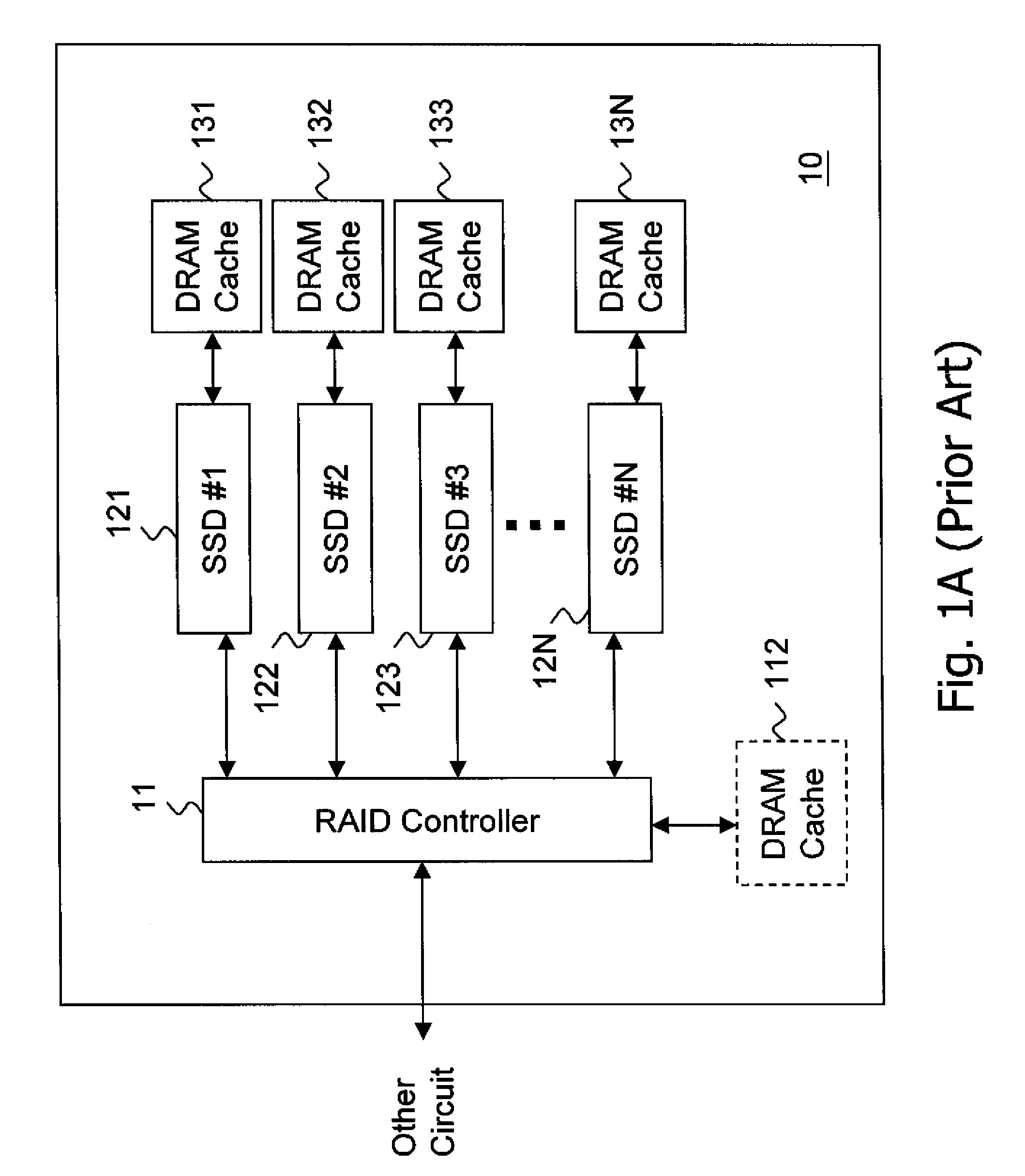

Non-volatile memory storage system

Inactive | US20100125695A1 | Speed up data transfer | Cost-effective structure | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | RAID | Computer science

The present invention discloses a flash memory storage system, comprising at least one RAID controller; a plurality of flash memory cards electrically connected with the RAID controller; and a cache memory electrically connected with the RAID controller and shared by the RAID controller and the flash memory cards. The cache memory efficiently enhances the system performance. The storage system may comprise more RAID controllers to construct a nested RAID architecture.

Owner:NANOSTAR CORP

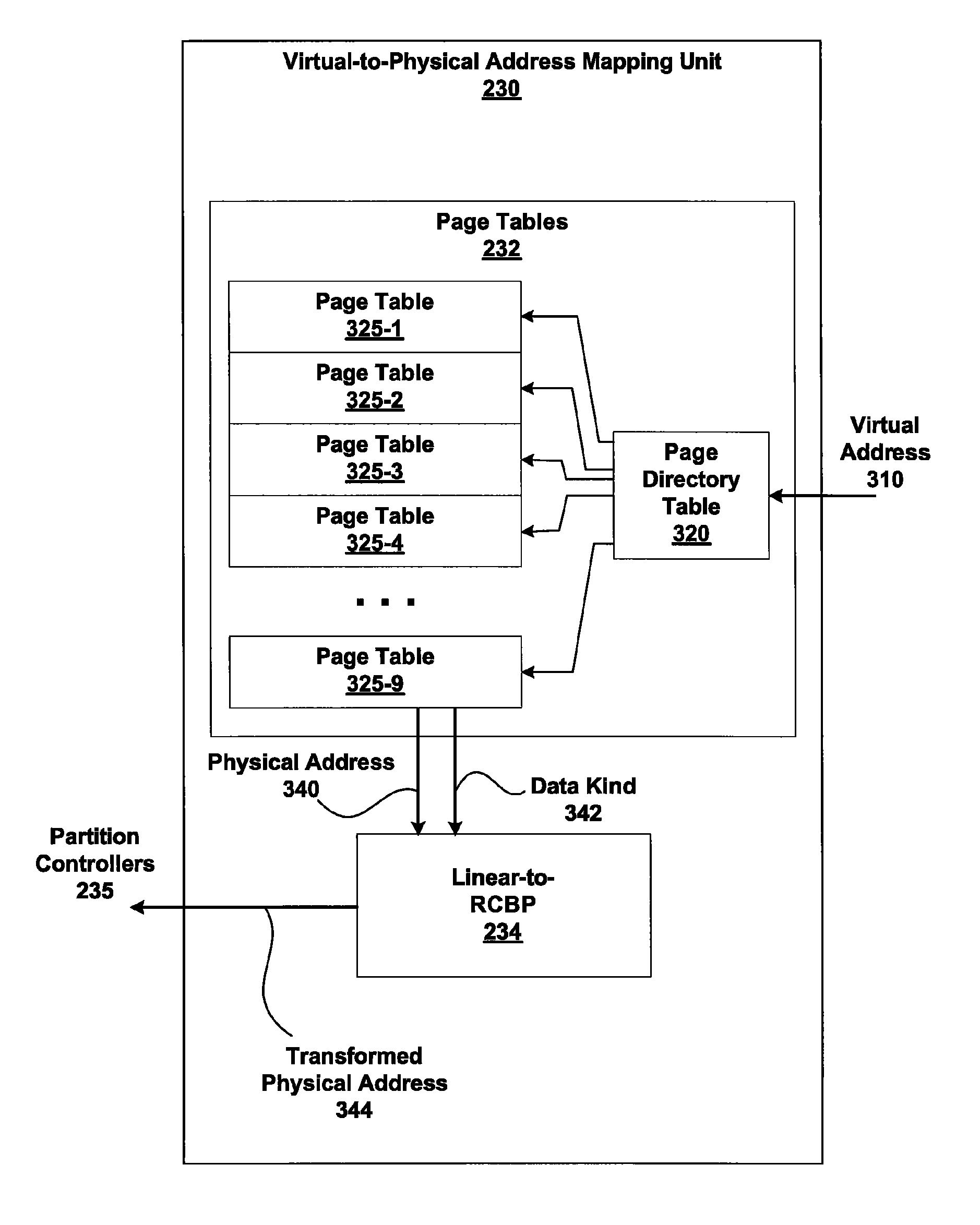

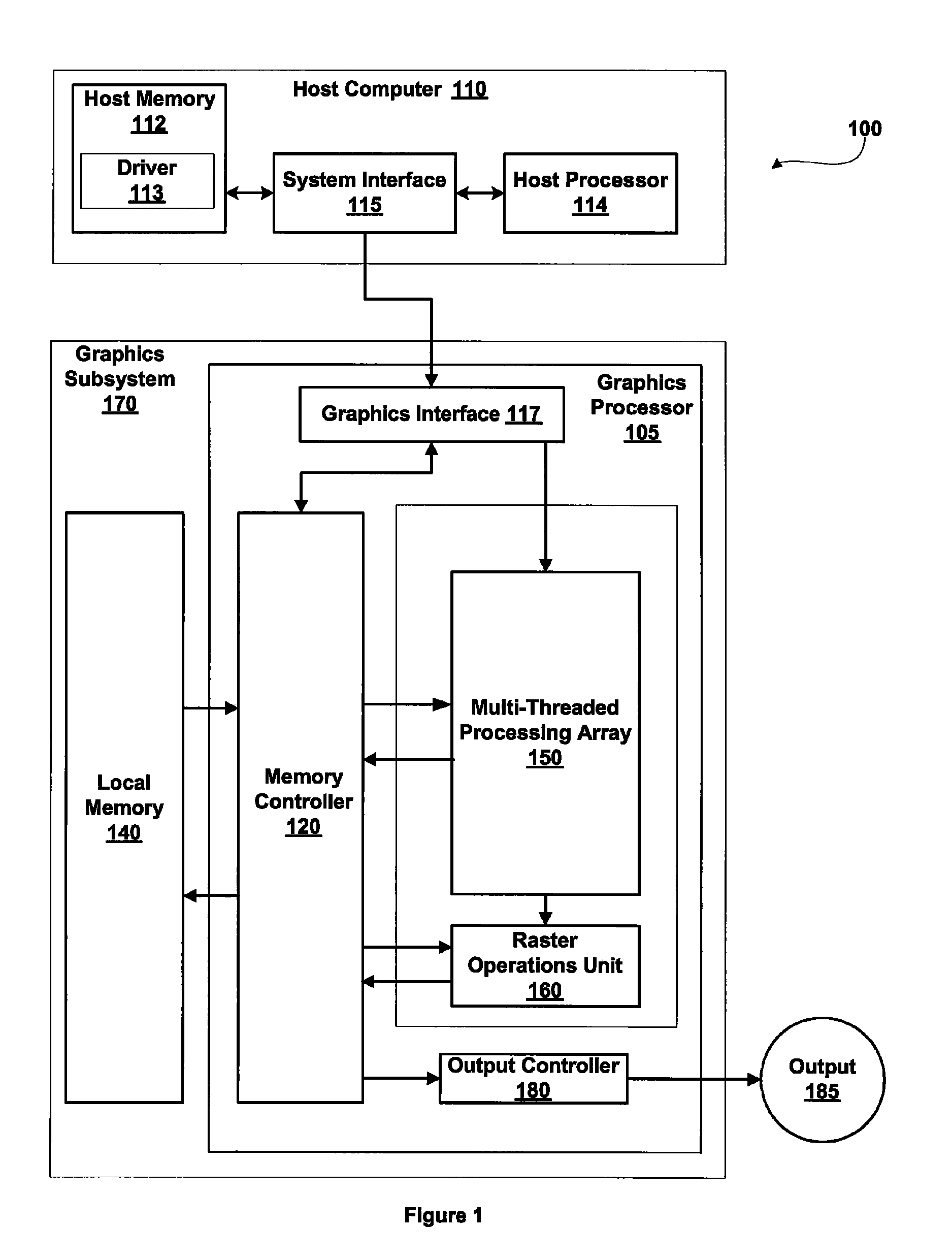

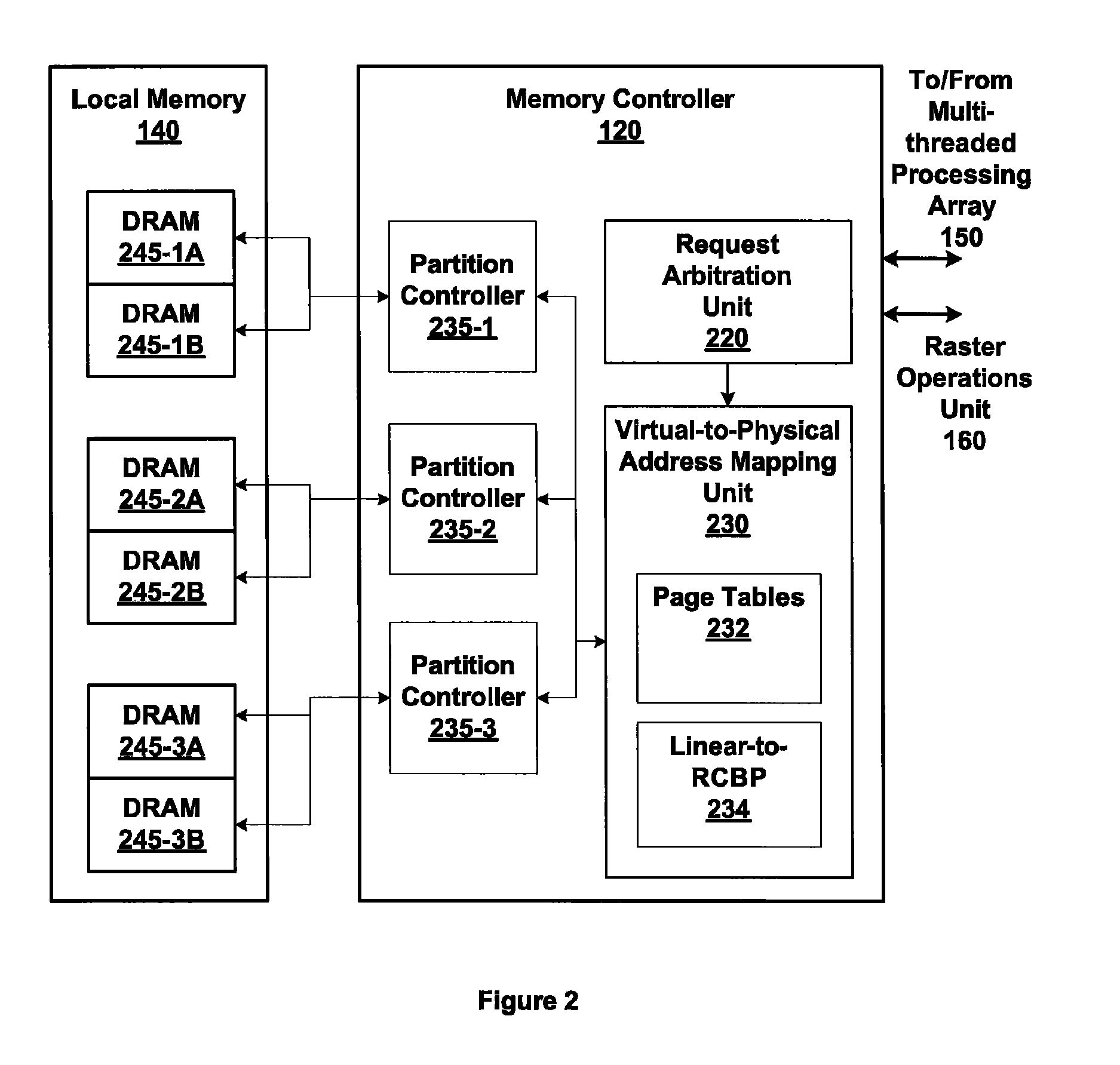

Memory addressing controlled by PTE fields

Active | US7805587B1 | Reduce access | Memory addressing/allocation/relocation | Computer security arrangements | Memory address | Dram memory

Embodiments of the present invention enable virtual-to-physical memory address translation using optimized bank and partition interleave patterns to improve memory bandwidth by distributing data accesses over multiple banks and multiple partitions. Each virtual page has a corresponding page table entry that specifies the physical address of the virtual page in linear physical address space. The page table entry also includes a data kind field that is used to guide and optimize the mapping process from the linear physical address space to the DRAM physical address space, which is used to directly access one or more DRAM. The DRAM physical address space includes a row, bank and column address. The data kind field is also used to optimize the starting partition number and partition interleave pattern that defines the organization of the selected physical page of memory within the DRAM memory system.

Owner:NVIDIA CORP

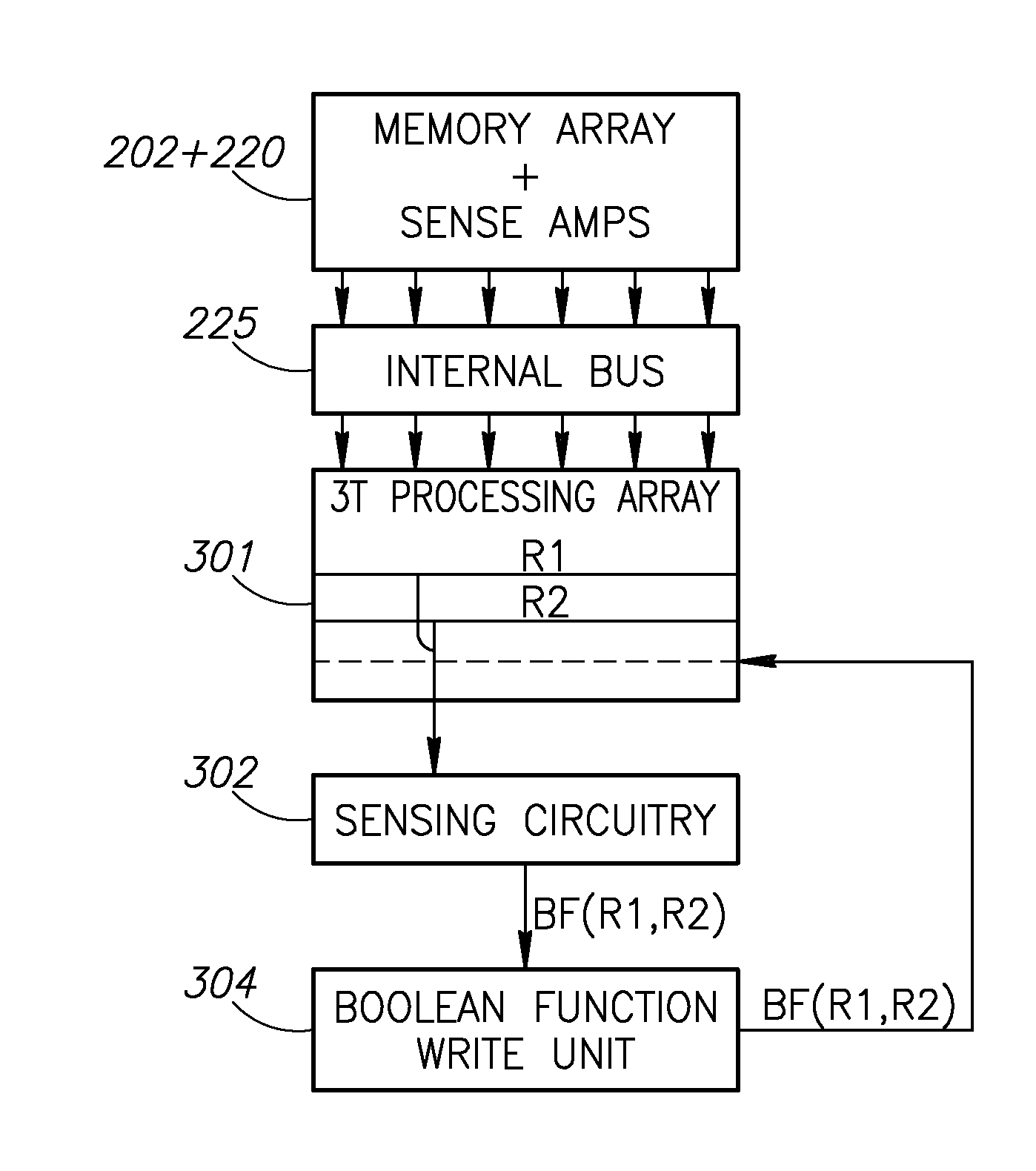

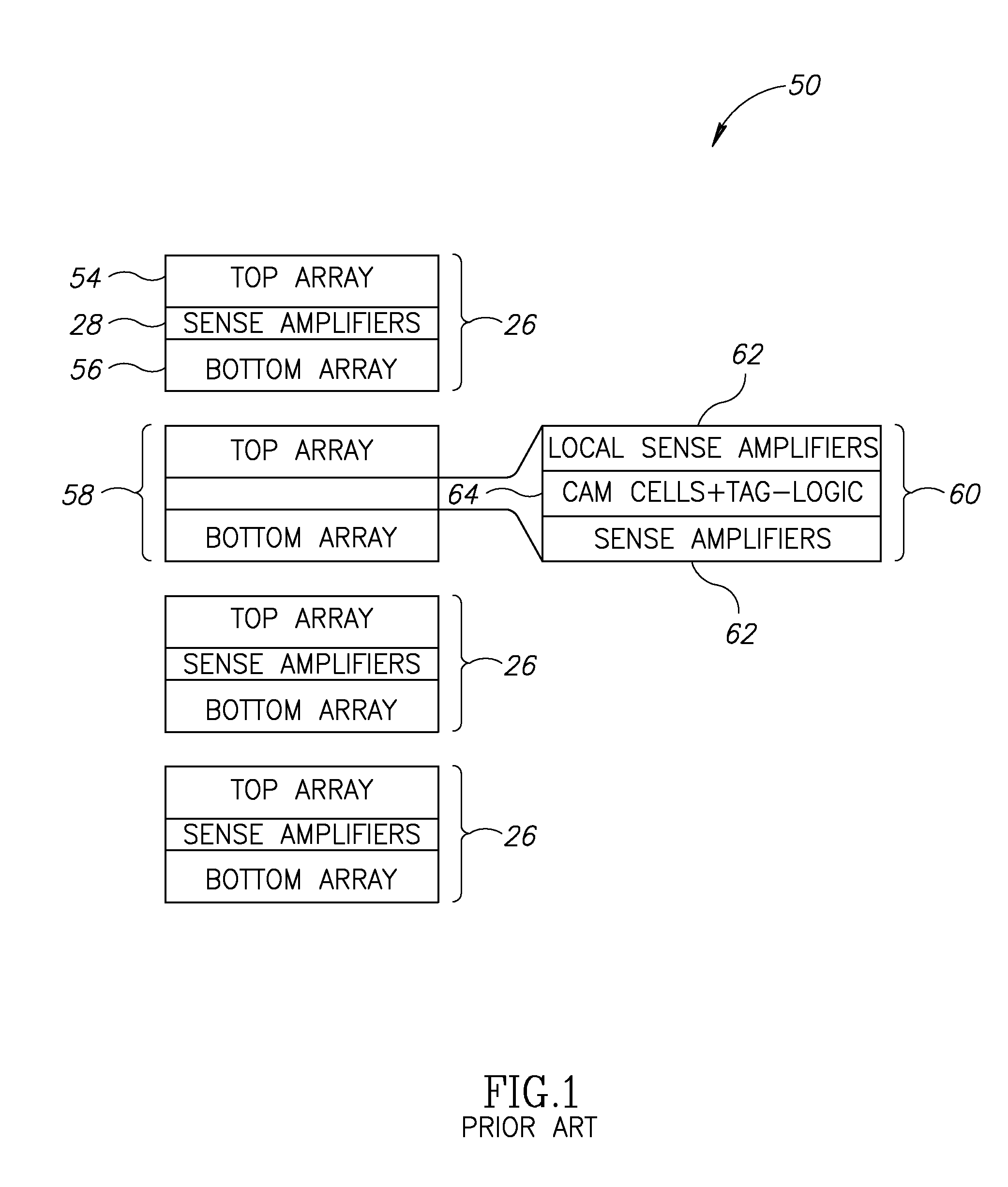

Neighborhood operations for parallel processing

Inactive | US20120246380A1 | Memory addressing/allocation/relocation | Digital storage | Processing element | Parallel processing

A memory device includes a plurality of storage units in which to store data of a bank, wherein the data has a logical order prior to storage and a physical order different than the logical order within the plurality of storage units and a within-device reordering unit to reorder the data of a bank into the logical order prior to performing on-chip processing. In another embodiment, the memory device includes an external device interface connectable to an external device communicating with the memory device, an internal processing element to process data stored on the device and multiple banks of storage. Each bank includes a plurality of storage units and each storage unit has two ports, an external port connectable to the external device interface and an internal port connected to the internal processing element.

Owner:GSI TECH

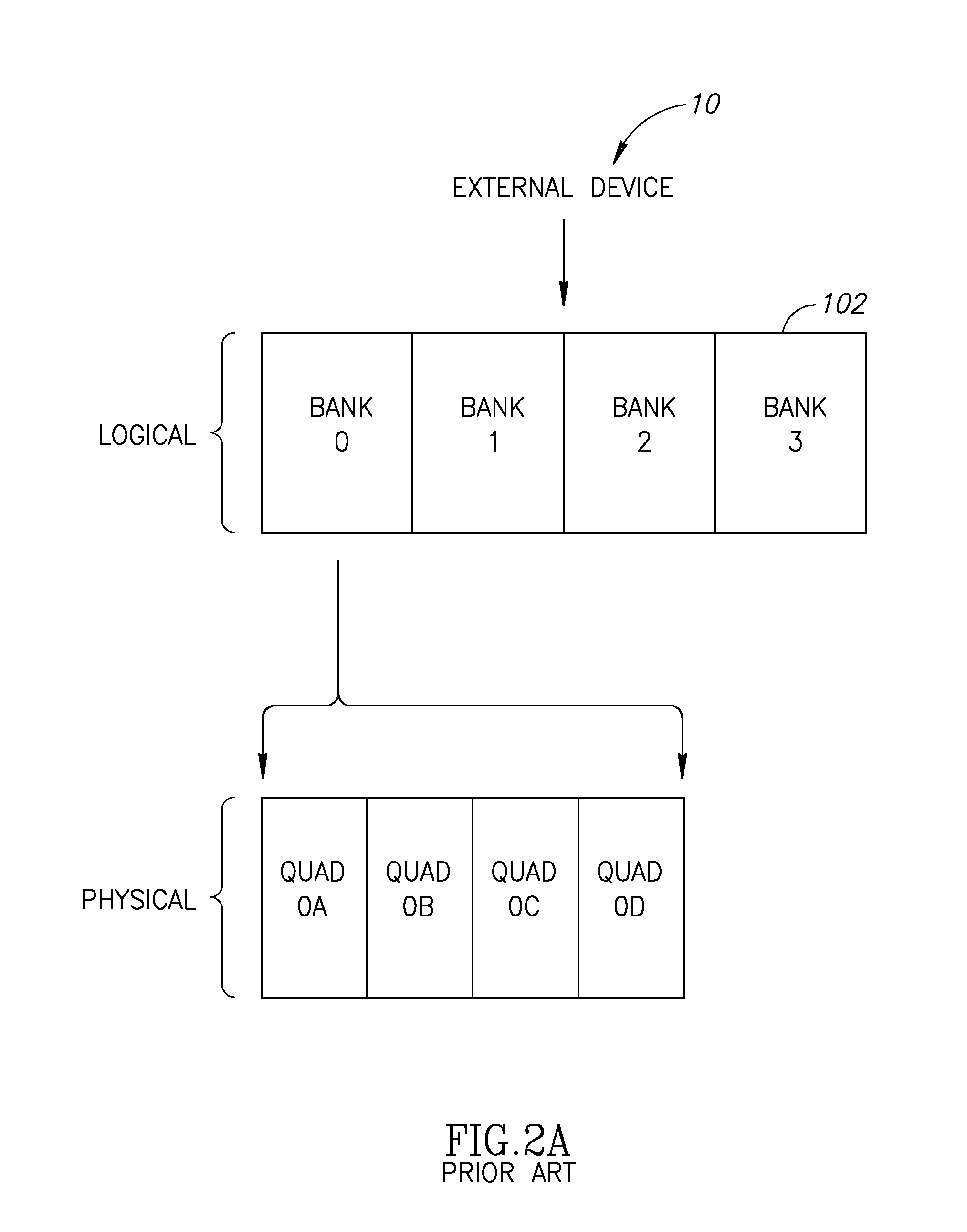

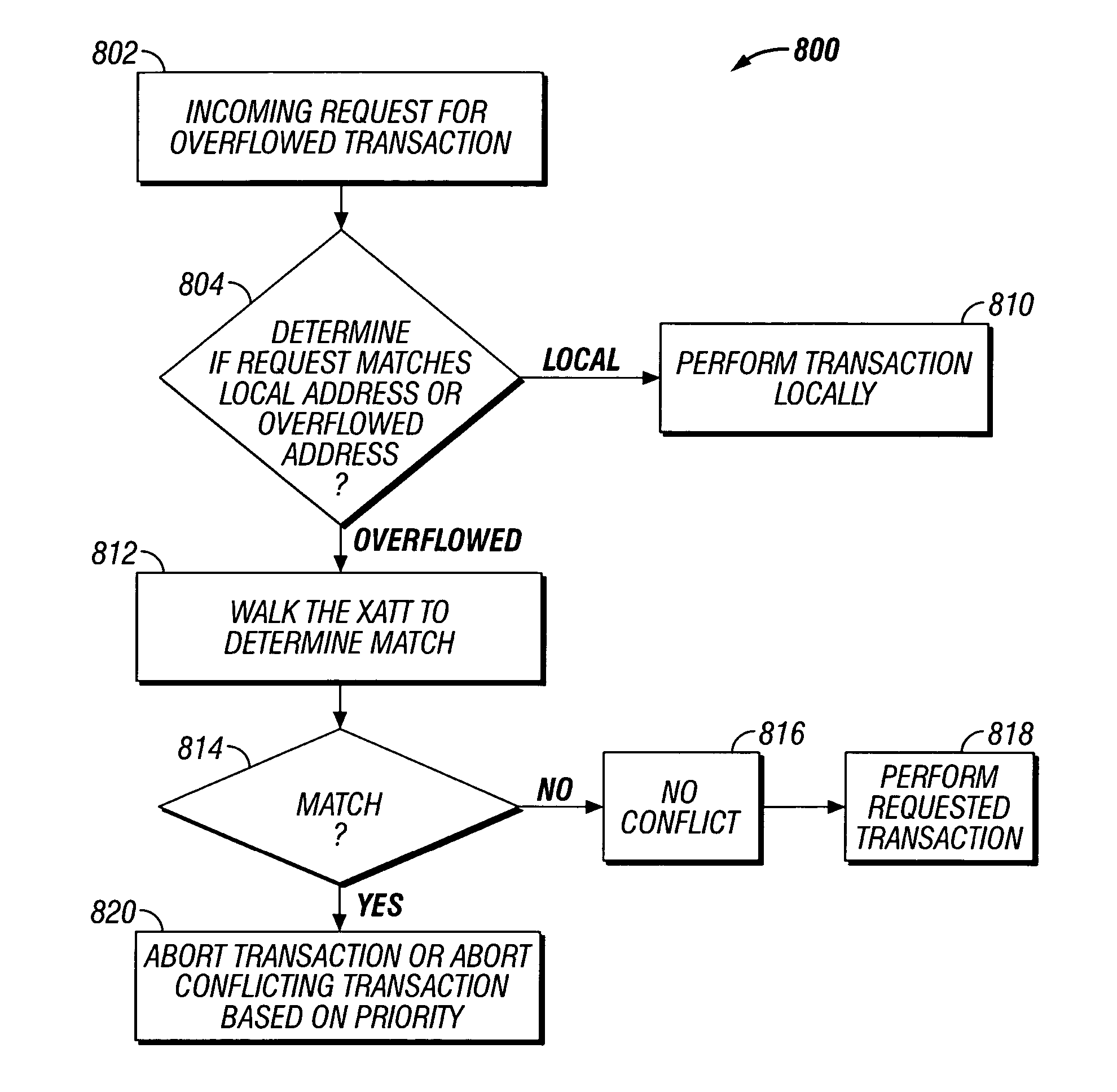

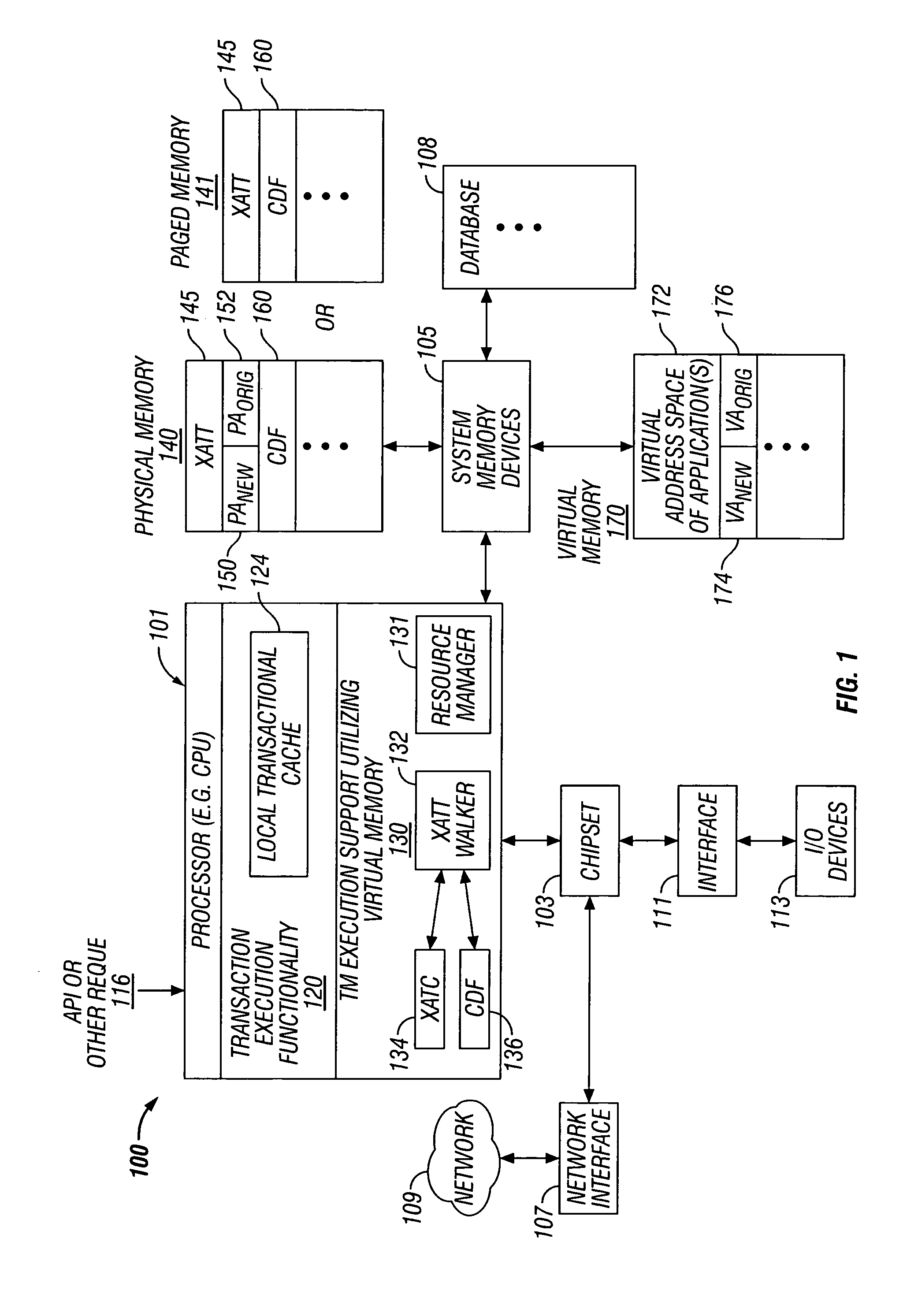

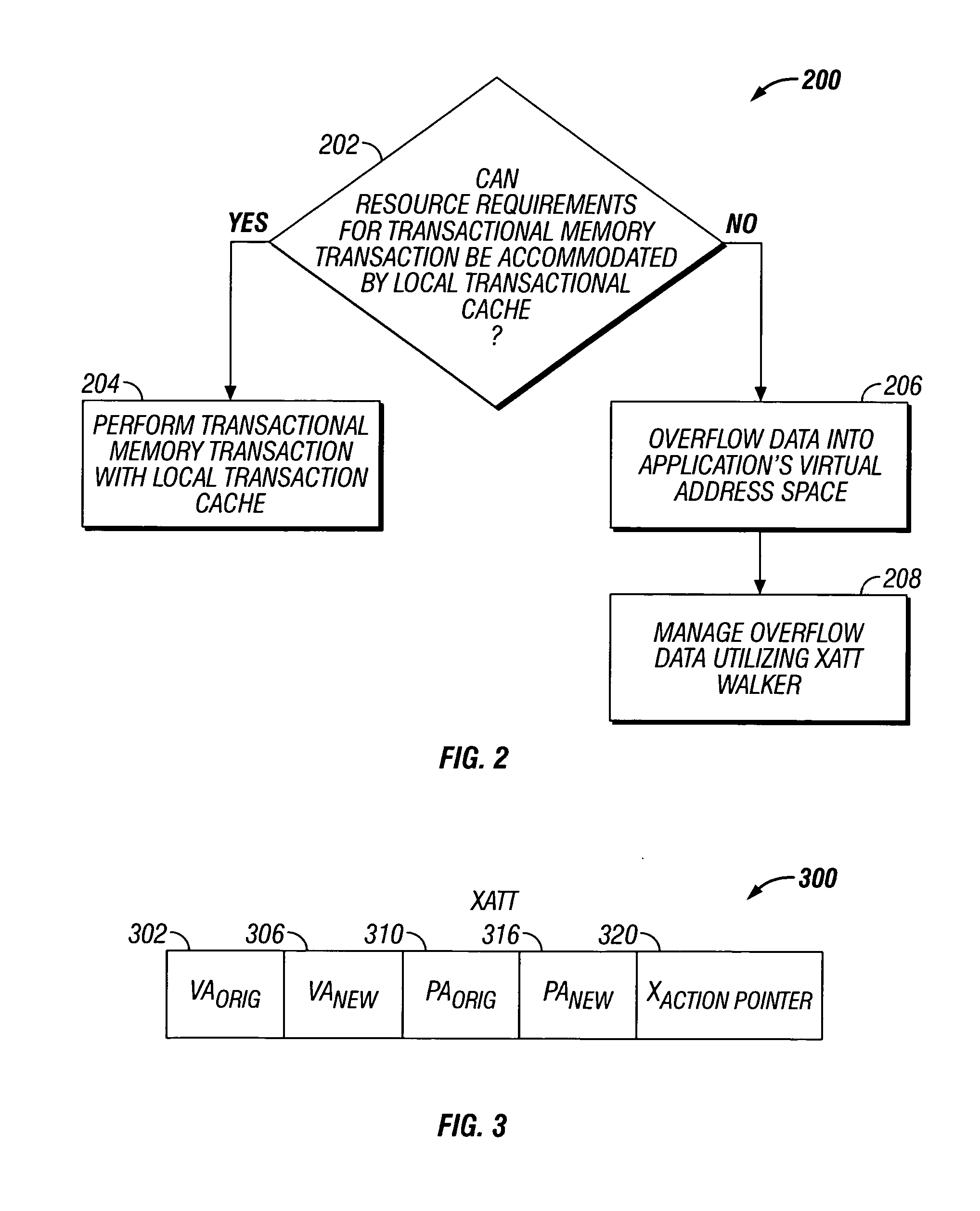

Transactional memory execution utilizing virtual memory

Active | US7685365B2 | Memory addressing/allocation/relocation | Transaction processing | Virtual memory | Transactional memory

Embodiments of the invention relate to transactional memory execution utilizing virtual memory. A processor includes a local transactional cache and a resource manager. The resource manager, responsive to a transactional memory transaction request from a requesting thread, determines whether the local transactional cache is capable of accommodating the transactional memory transaction request and, if so, the local transactional cache performs the transactional memory transaction. However, if the local transactional cache is not capable of accommodating the transactional memory transaction request, data for the transactional memory transaction request is overflowed into an application's virtual address space associated with the requesting thread.

Owner:INTEL CORP

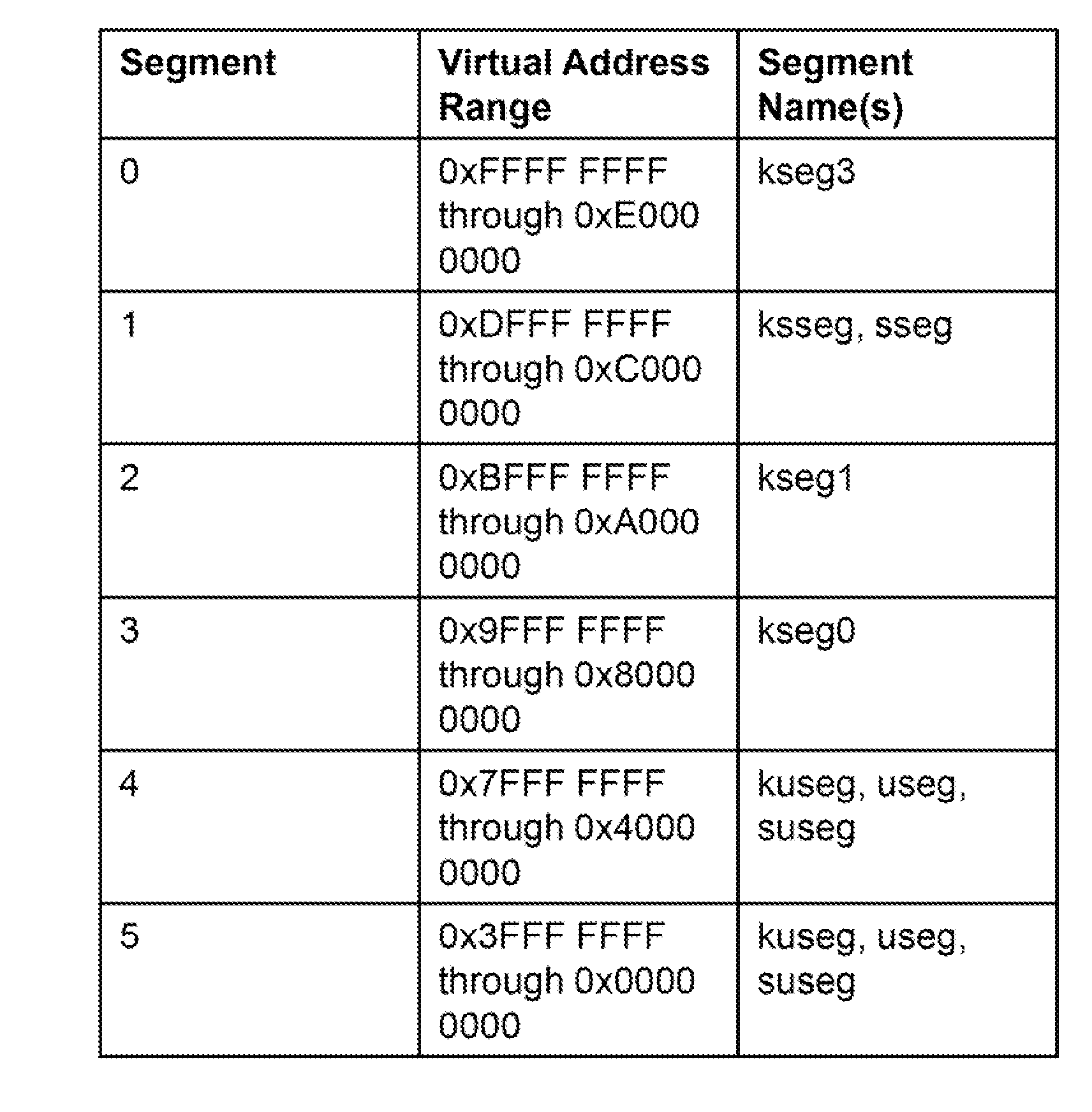

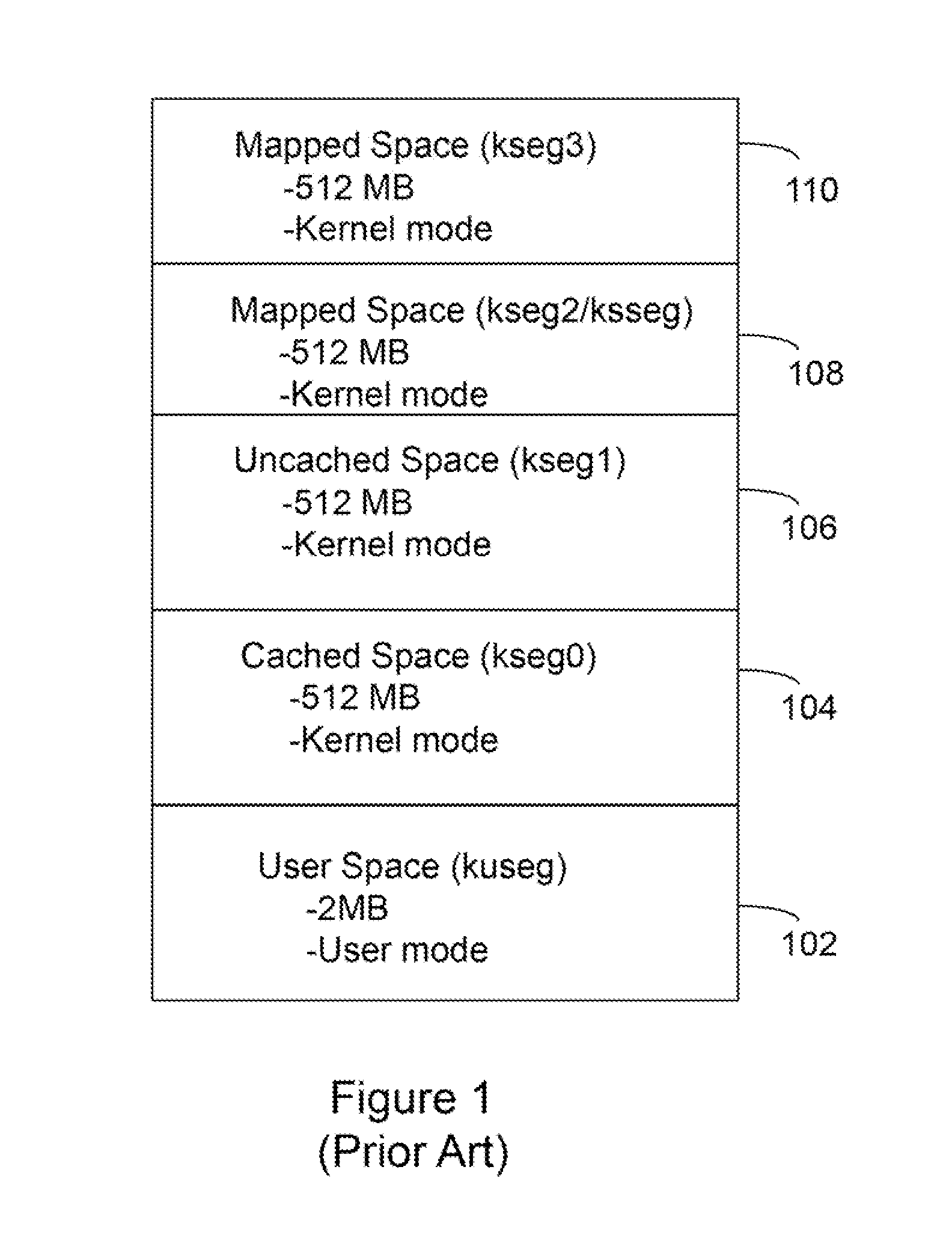

Processor with Kernel Mode Access to User Space Virtual Addresses

Active | US20130132702A1 | Enhanced kernel mode memory capacity | Increase memory capacity | Memory addressing/allocation/relocation | Unauthorized memory use protection | Memory address | Parallel computing

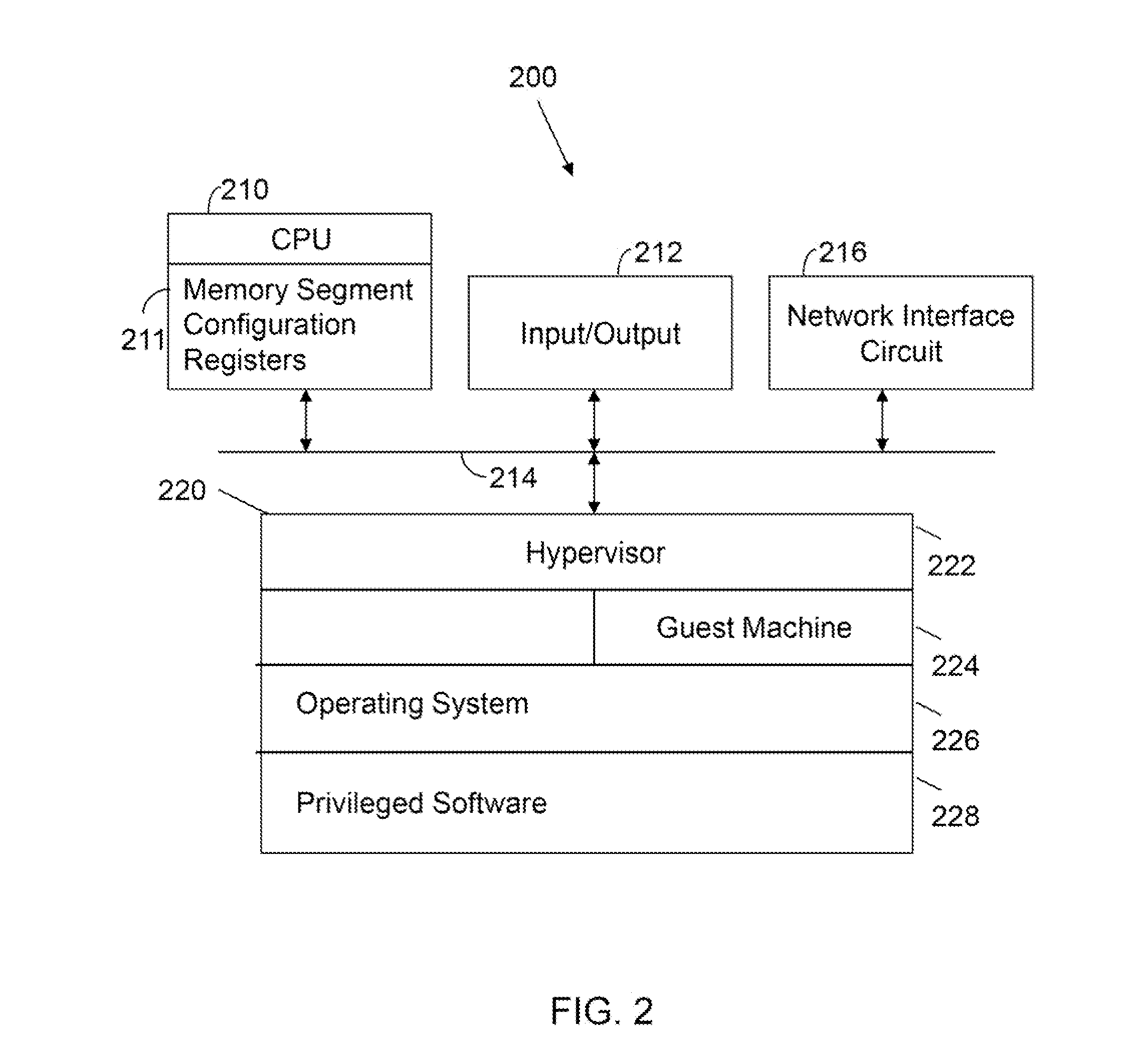

A computer includes a memory and a processor connected to the memory. The processor includes memory segment configuration registers to store defined memory address segments and defined memory address segment attributes such that the processor operates in accordance with the defined memory address segments and defined memory address segment attributes to allow kernel mode access to user space virtual addresses for enhanced kernel mode memory capacity.

Owner:MIPS TECH INC

Computer system para-virtualization using a hypervisor that is implemented in a partition of the host system

Active | US20070028244A1 | Improve security | Excessive removal | Error detection/correction | Memory addressing/allocation/relocation | Operational system | System call

A virtualization infrastructure that allows multiple guest partitions to run within a host hardware partition. The host system is divided into distinct logical or virtual partitions and special infrastructure partitions are implemented to control resource management and to control physical I/O device drivers that are, in turn, used by operating systems in other distinct logical or virtual guest partitions. Host hardware resource management runs as a tracking application in a resource management “ultravisor” partition, while host resource management decisions are performed in a higher level command partition based on policies maintained in a separate operations partition. The conventional hypervisor is reduced to a context switching and containment element (monitor) for the respective partitions, while the system resource management functionality is implemented in the ultravisor partition. The ultravisor partition maintains the master in-memory database of the hardware resource allocations and serves a command channel to accept transactional requests for assignment of resources to partitions. It also provides individual read-only views of individual partitions to the associated partition monitors. Host hardware I/O management is implemented in special redundant I/O partitions. Operating systems in other logical or virtual partitions communicate with the I/O partitions via memory channels established by the ultravisor partition. The guest operating systems in the respective logical or virtual partitions are modified to access monitors that implement a system call interface through which the ultravisor, I/O, and any other special infrastructure partitions may initiate communications with each other and with the respective guest partitions. The guest operating systems are modified so that they do not attempt to use the “broken” instructions in the x86 system that complete virtualization systems must resolve by inserting traps.

Owner:UNISYS CORP

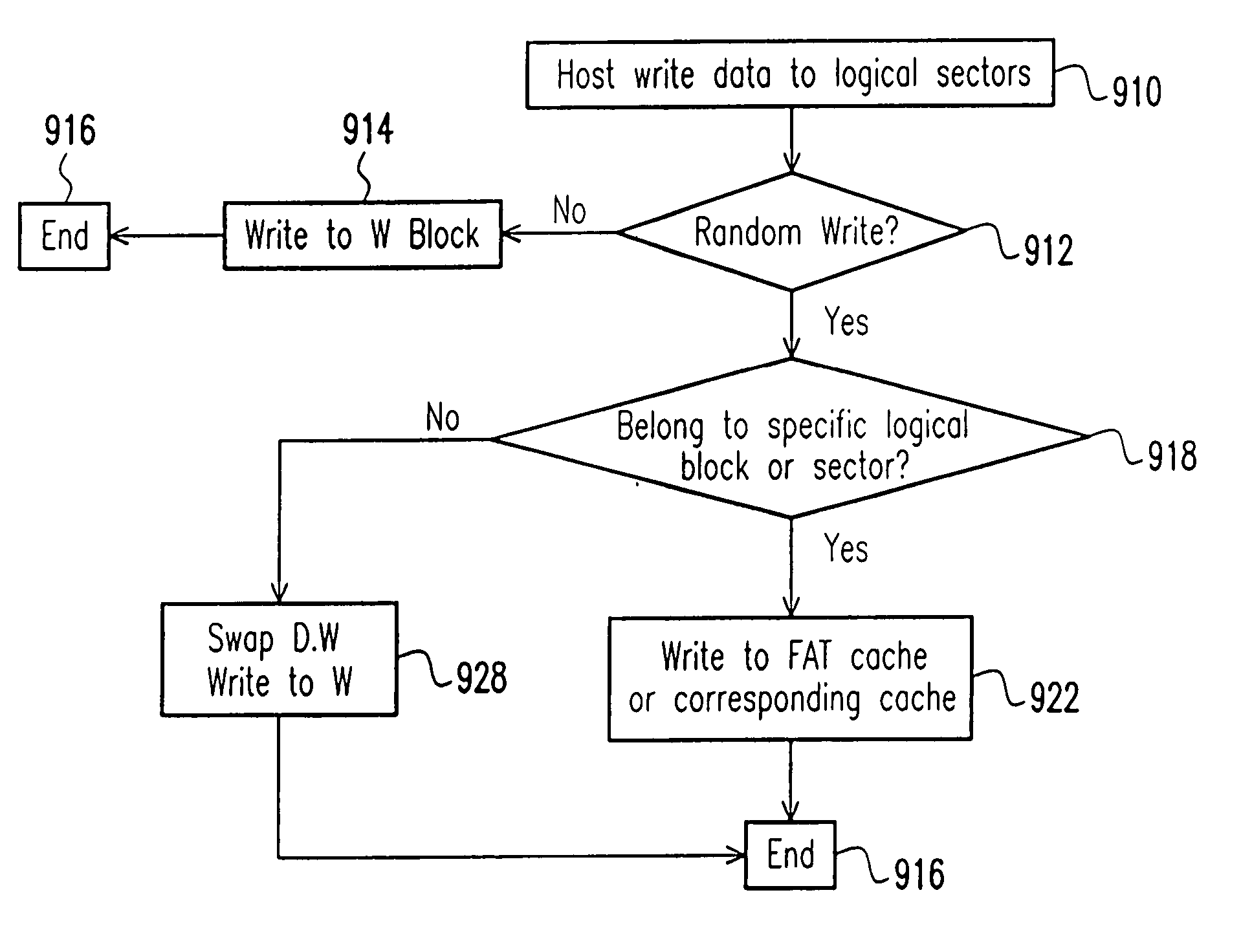

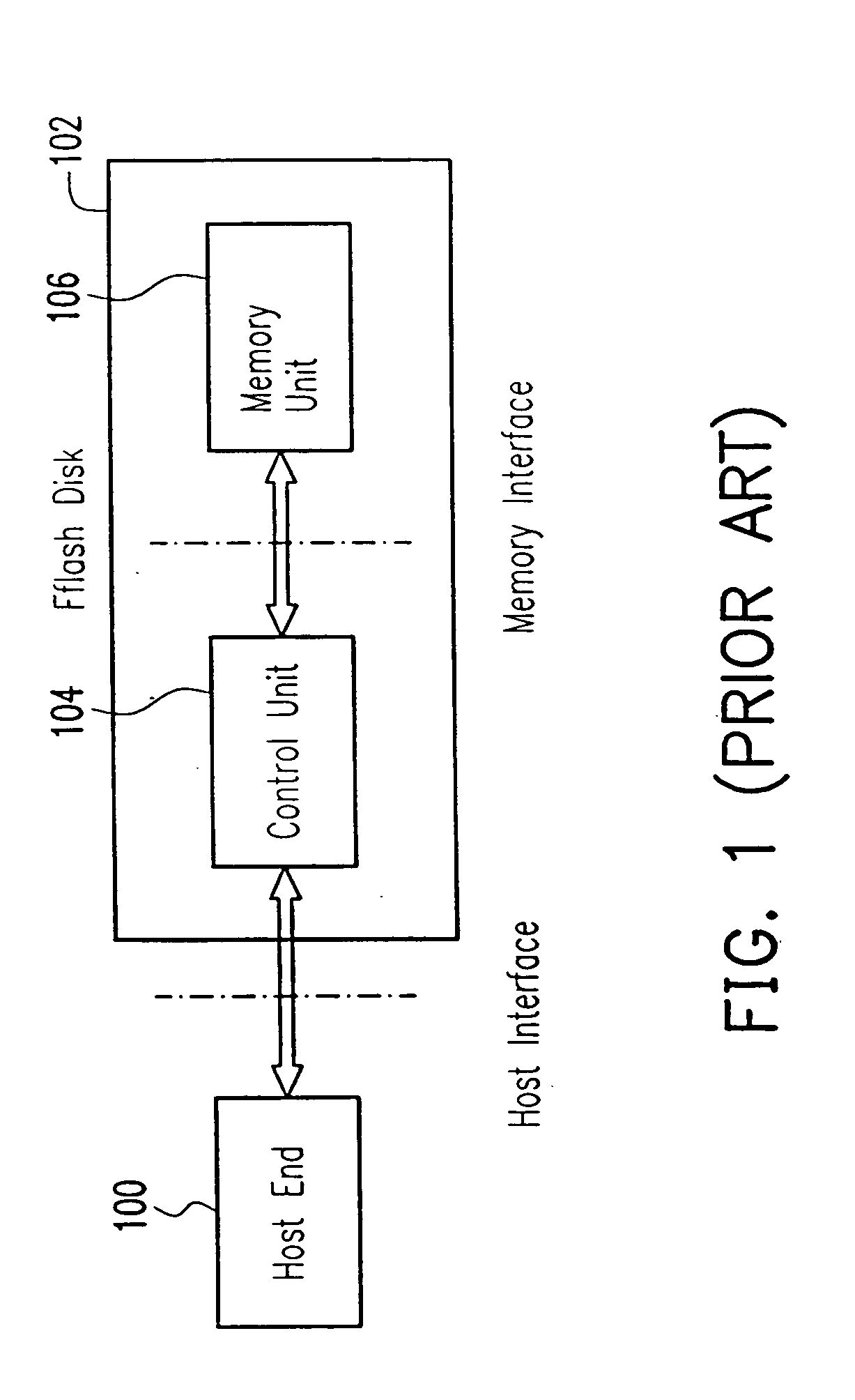

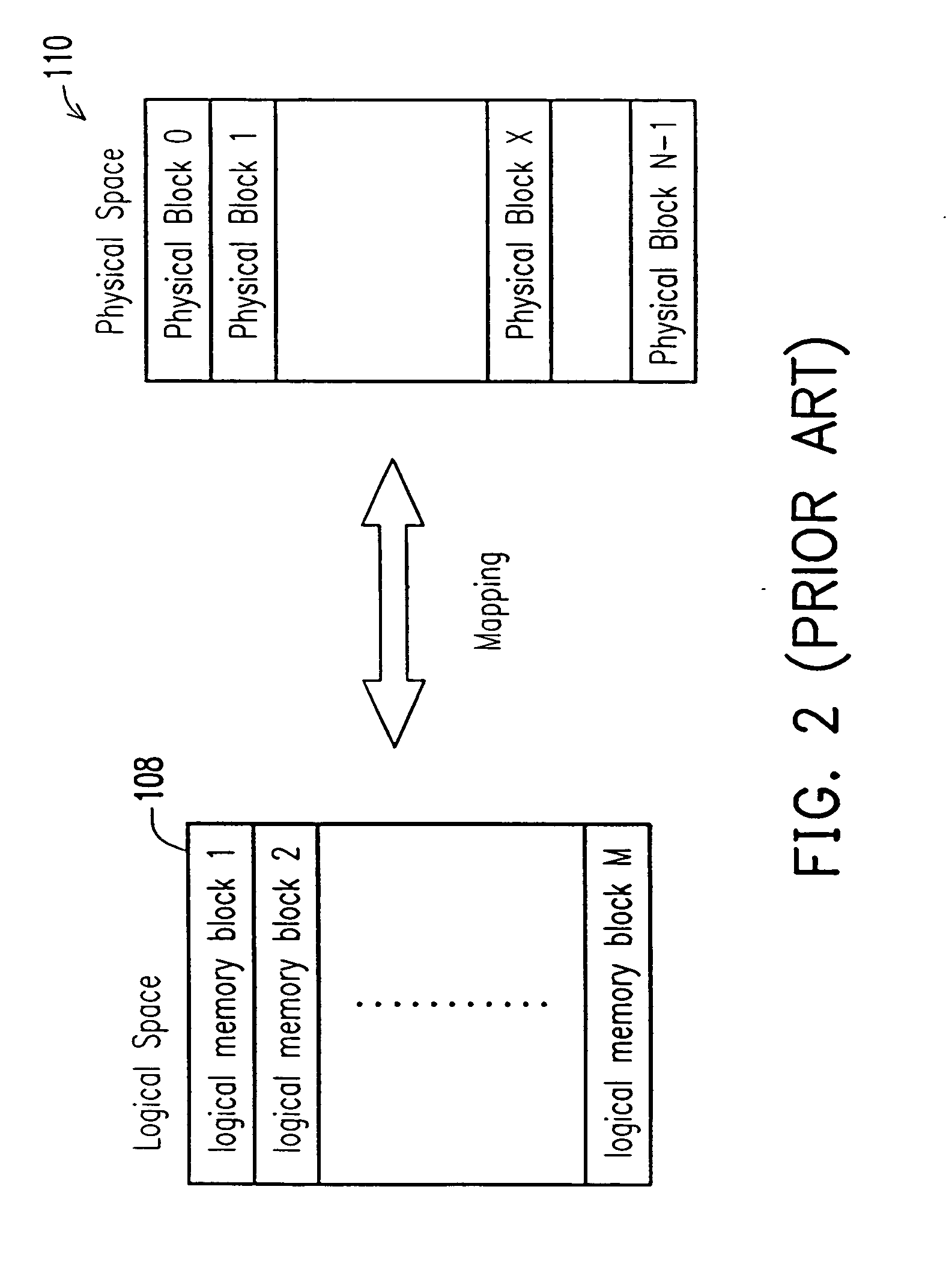

Nonvolatile memory unit with specific cache

Inactive | US20050015557A1 | Reduce frequency | Avoid action | Memory architecture accessing/allocation | Input/output to record carriers | Operating system | Volatile memory

The invention provides a method for organizing a writing operation to a nonvolatile memory. The method comprises setting a specific cache area, into which specific data belonging to a specific group of logical blocks is to be written. It is determined whether or not the writing operation is a random write. If the writing operation is a random write, then the following steps are performed: determining whether or not the writing operation is to write data belonging to the specific group of logical blocks; and writing the data into the specific cache area if the data belongs to the specific group of logical blocks. As a result, a swap action between a data block and a writing block can be avoided during a random write operation. A storage structure in a nonvolatile memory device is organized to perform the foregoing writing operation.

Owner:SOLID STATE SYST
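
The two-step check in the abstract above (is the write random, and does it target the specific group of logical blocks) can be sketched as a small routing function. This is an illustration under assumptions: the function name `route_write`, the string labels it returns, and the idea of passing the random-write determination in as a boolean are all hypothetical, since the abstract does not say how a random write is detected.

```python
def route_write(logical_block, is_random, specific_group):
    """Decide where a write goes in this toy model.

    logical_block:  logical block number targeted by the write
    is_random:      True if the write was classified as a random write
                    (the classification itself is outside this sketch)
    specific_group: set of logical blocks served by the specific cache area
    """
    if is_random and logical_block in specific_group:
        # Random write to a block in the specific group: use the specific cache area,
        # which avoids the data-block / writing-block swap mentioned in the abstract.
        return "specific_cache"
    # Any other write follows the ordinary write path.
    return "normal_path"


specific_group = {0, 1, 2, 3}  # hypothetical group of frequently rewritten blocks
print(route_write(2, is_random=True, specific_group=specific_group))
print(route_write(2, is_random=False, specific_group=specific_group))
print(route_write(9, is_random=True, specific_group=specific_group))
```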

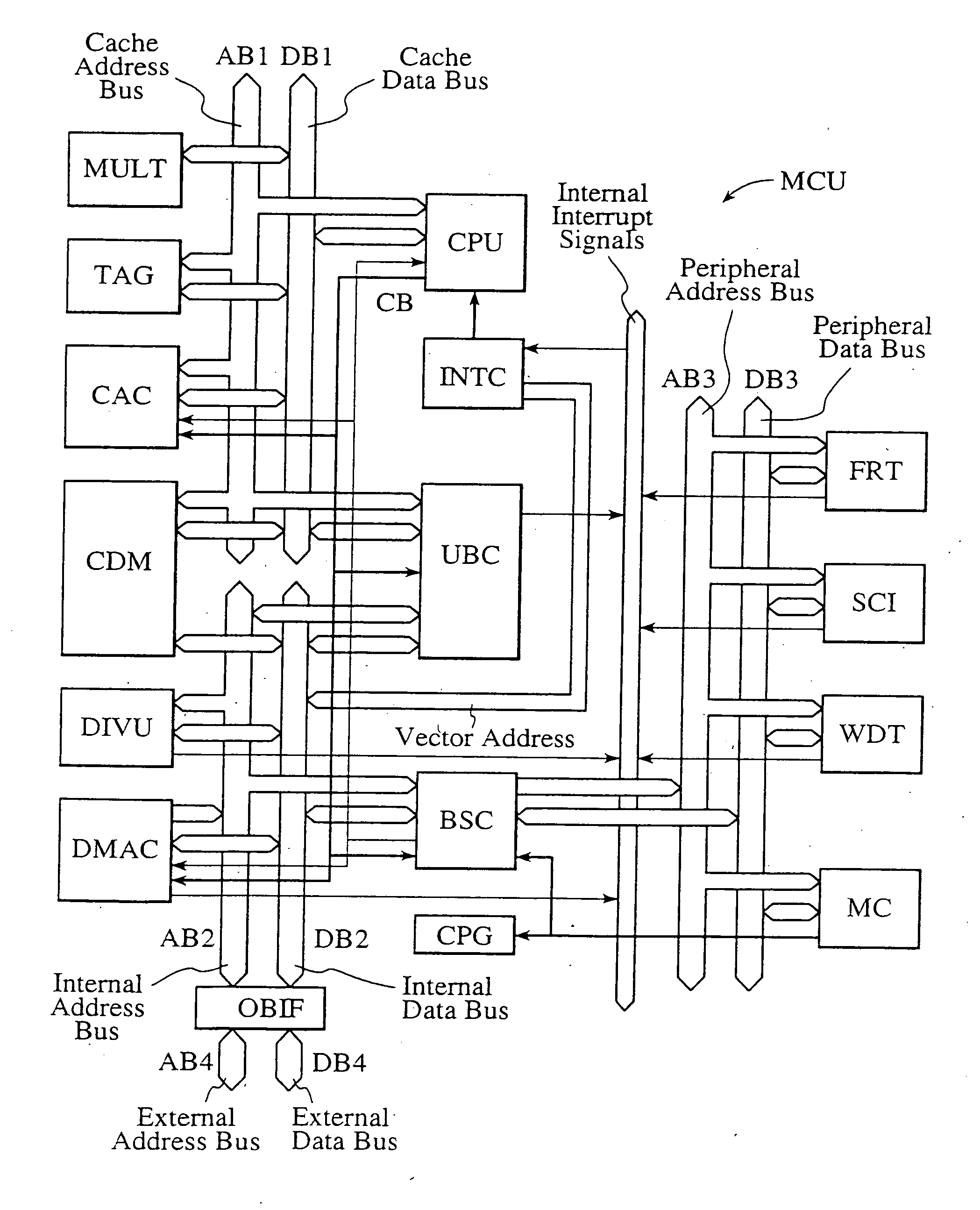

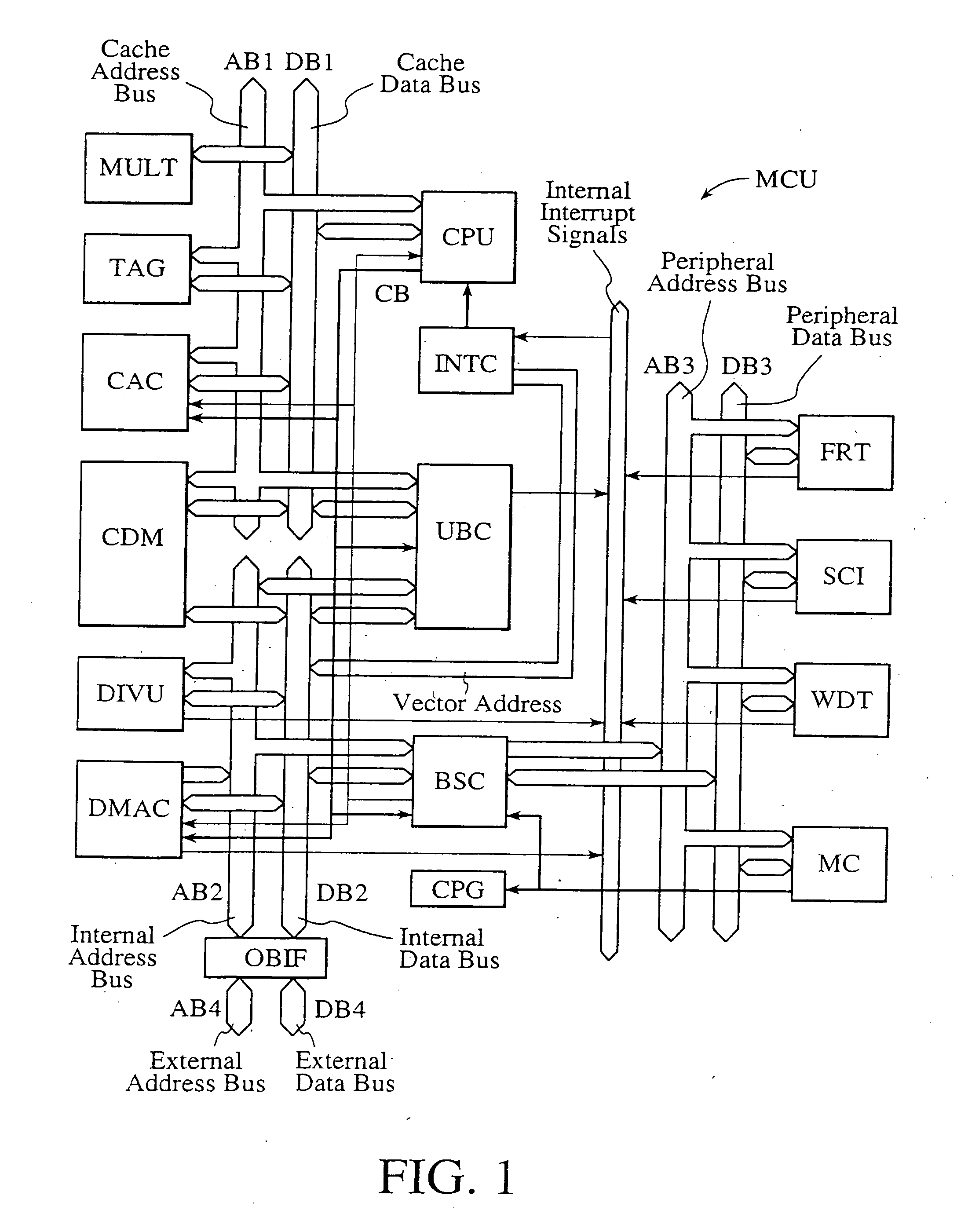

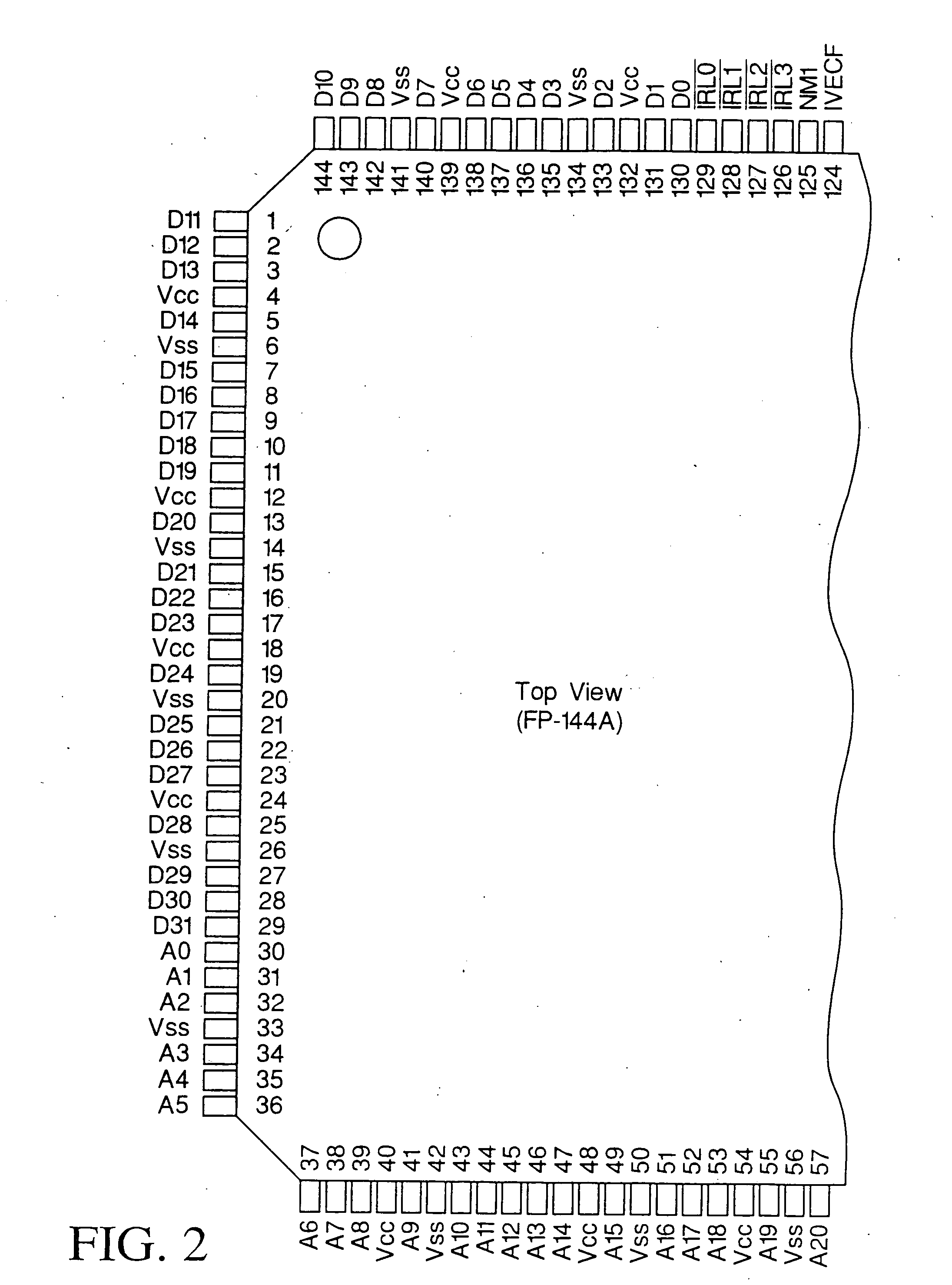

Single-chip microcomputer

Inactive | US20120023281A1 | Multiple functions | Performance multiple | Power management | Energy efficient ICT | Microcontroller | Microcomputer

A single-chip microcomputer comprising: a first bus having a central processing unit and a cache memory connected therewith; a second bus having a dynamic memory access control circuit and an external bus interface connected therewith; a break controller for connecting the first bus and the second bus selectively; a third bus having a peripheral module connected therewith and having a lower-speed bus cycle than the bus cycles of the first and second buses; and a bus state controller for effecting a data transfer and a synchronization between the second bus and the third bus. The single-chip microcomputer has three divided internal buses to reduce the load capacity on the signal transmission paths so that signal transmission can be accomplished at a high speed. Moreover, the peripheral module, which does not require a high operation speed, is isolated so that the power dissipation can be reduced.

Owner:KAWASAKI SHUMPEI +8

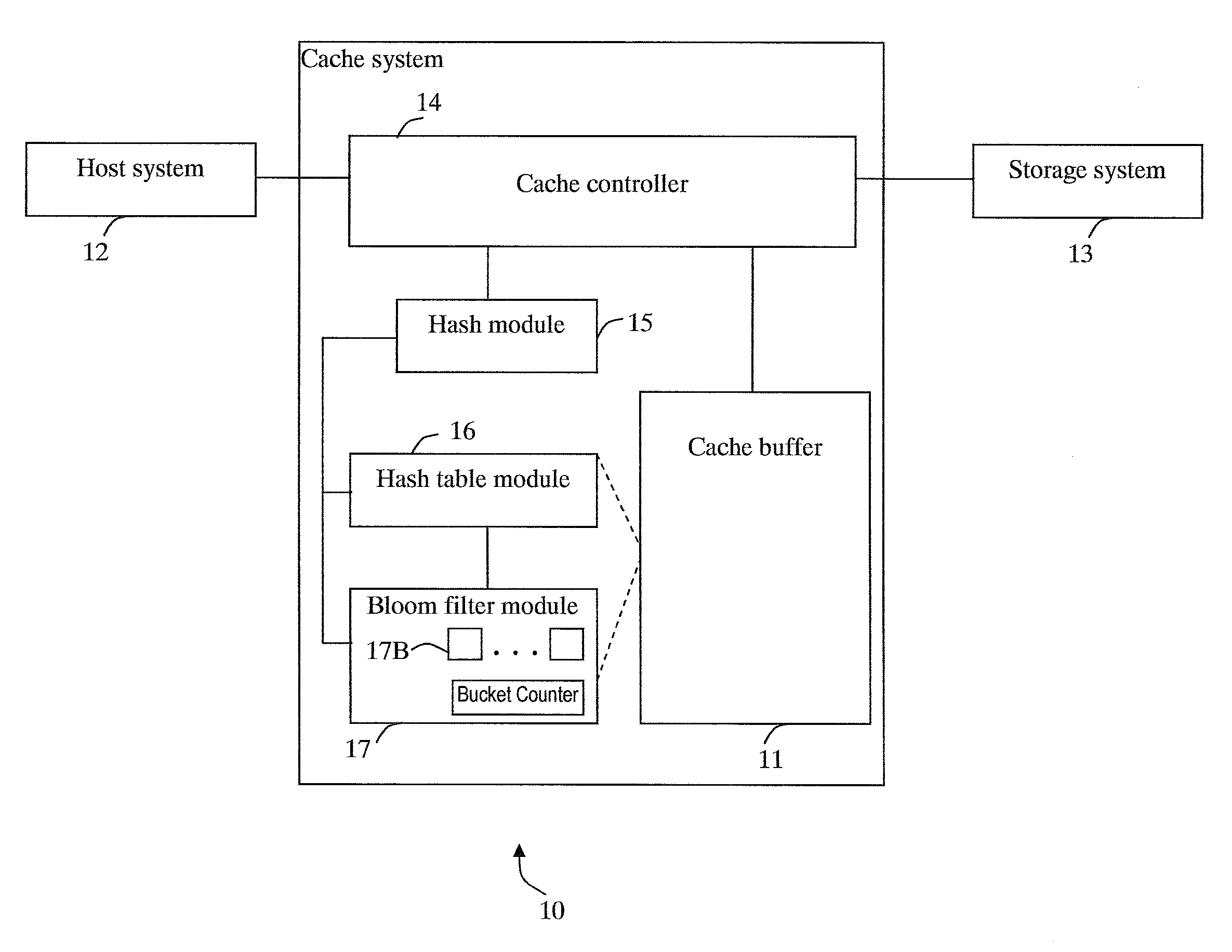

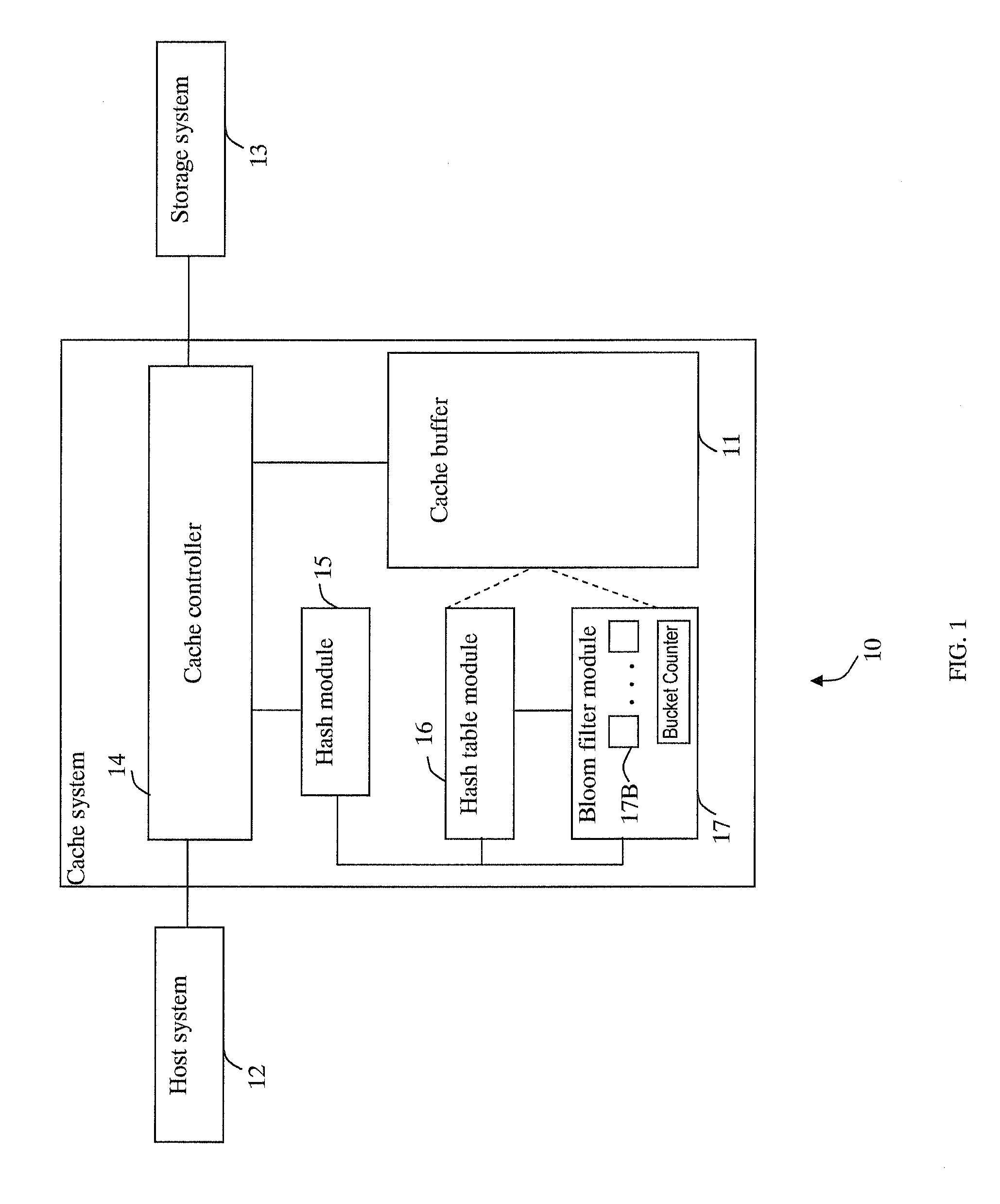

Cache system optimized for cache miss detection

Active | US20130326154A1 | Memory architecture accessing/allocation | Memory adressing/allocation/relocation | Logical block addressing | Bloom filter

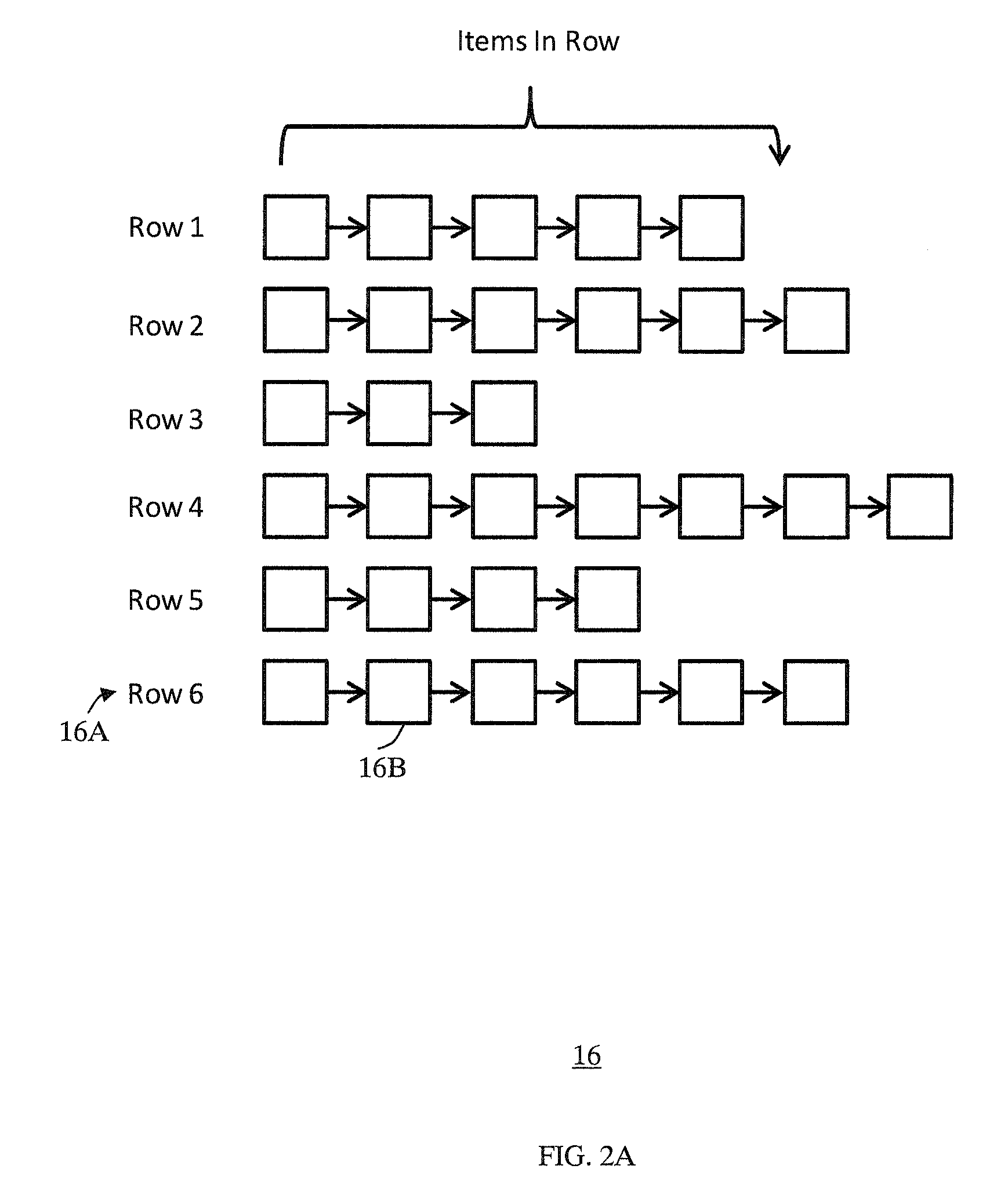

According to an embodiment of the invention, cache management comprises maintaining a cache comprising a hash table including rows of data items in the cache, wherein each row in the hash table is associated with a hash value representing a logical block address (LBA) of each data item in that row. Searching for a target data item in the cache includes calculating a hash value representing an LBA of the target data item, and using the hash value to index into a counting Bloom filter that indicates either that the target data item is not in the cache, indicating a cache miss, or that the target data item may be in the cache. If a cache miss is not indicated, the hash value is used to select a row in the hash table, and a cache miss is indicated if the target data item is not found in the selected row.

Owner:SAMSUNG ELECTRONICS CO LTD
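
The lookup path in the abstract above (hash the LBA, consult a counting Bloom filter, then search one hash-table row) can be sketched as follows. This is a minimal model under assumptions, not the patented implementation: the class name `BloomFrontedCache`, the multiplicative hash, the table sizes, and the use of a single counter per item are all illustrative choices.

```python
class BloomFrontedCache:
    """Toy cache: a counting Bloom filter screens lookups before a hash-table row search."""

    def __init__(self, num_rows=8, num_counters=64):
        self.rows = [dict() for _ in range(num_rows)]   # each row maps LBA -> data
        self.counters = [0] * num_counters              # counting Bloom filter

    def _hash(self, lba):
        # Hypothetical hash: 32-bit multiplicative hashing of the LBA.
        return (lba * 2654435761) & 0xFFFFFFFF

    def insert(self, lba, data):
        h = self._hash(lba)
        row = self.rows[h % len(self.rows)]
        if lba not in row:
            self.counters[h % len(self.counters)] += 1  # count each cached item once
        row[lba] = data

    def remove(self, lba):
        h = self._hash(lba)
        row = self.rows[h % len(self.rows)]
        if lba in row:
            del row[lba]
            self.counters[h % len(self.counters)] -= 1  # counters allow deletion

    def lookup(self, lba):
        """Return (data, stage), where stage is 'bloom_miss', 'row_miss', or 'hit'."""
        h = self._hash(lba)
        if self.counters[h % len(self.counters)] == 0:
            return None, "bloom_miss"                   # definitely not cached
        row = self.rows[h % len(self.rows)]
        if lba in row:
            return row[lba], "hit"
        return None, "row_miss"                         # filter said "maybe", row said no


cache = BloomFrontedCache()
cache.insert(42, b"block-42")
print(cache.lookup(42))
print(cache.lookup(7))
```

The point of the filter is that a zero counter settles a miss without touching the hash table; a nonzero counter only means the item may be present, so the row search still decides.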
