260 results about how to "Improve application performance" patented technology

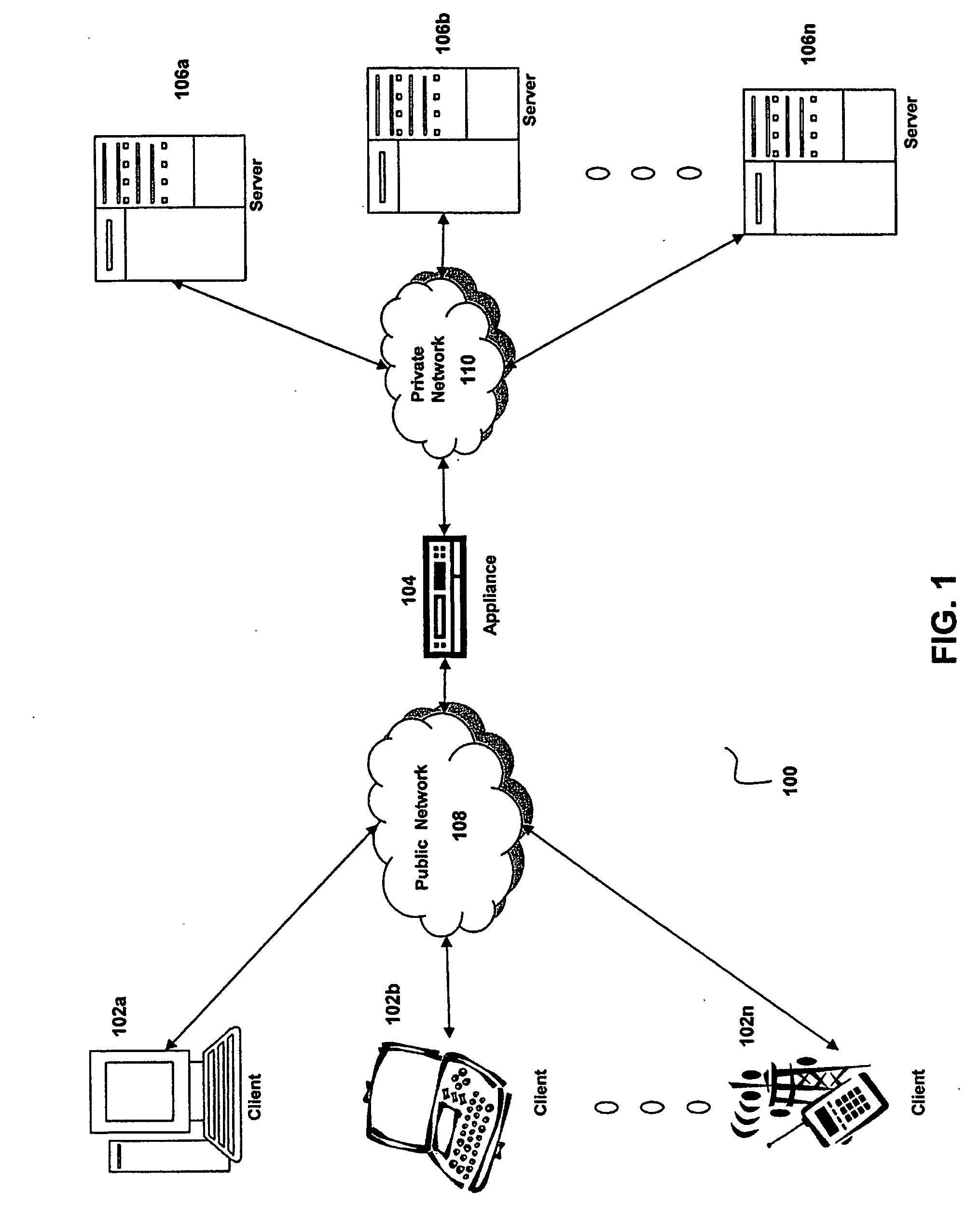

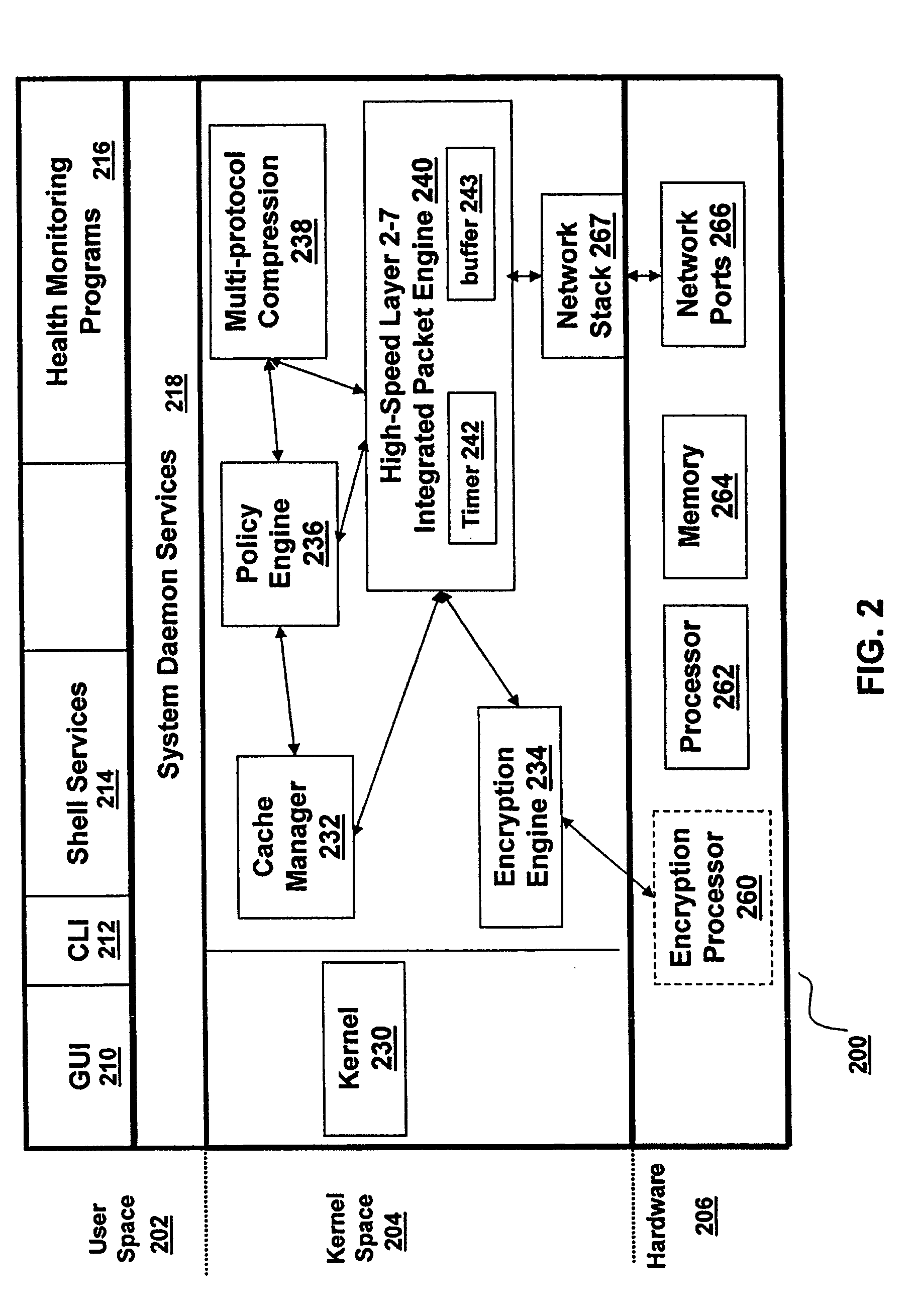

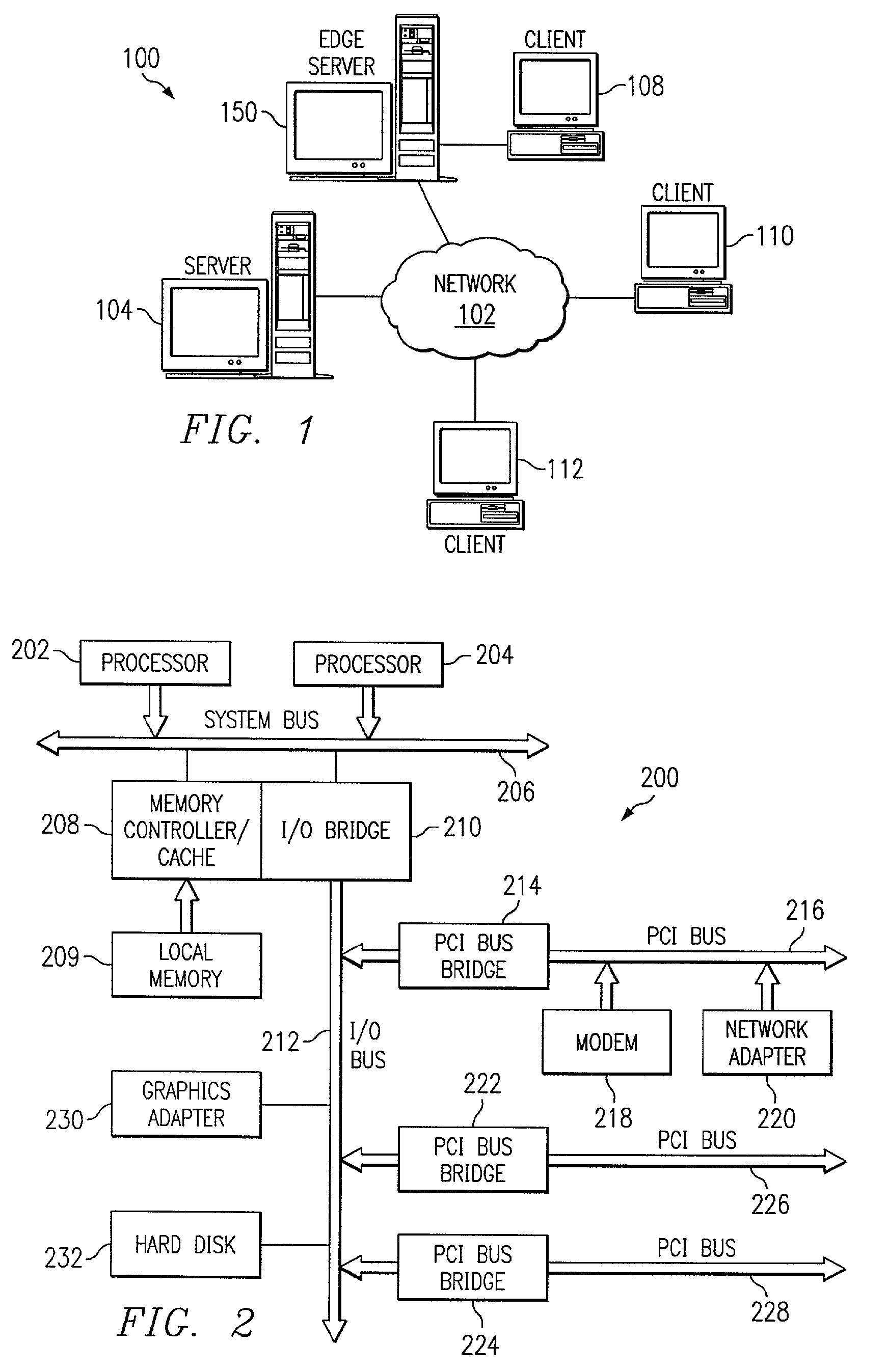

Runtime adaptable search processor

Active | US20060136570A1 | Reduce stacking process | Improving host CPU performance | Web data indexing | Multiple digital computer combinations | Data pack | Internal memory

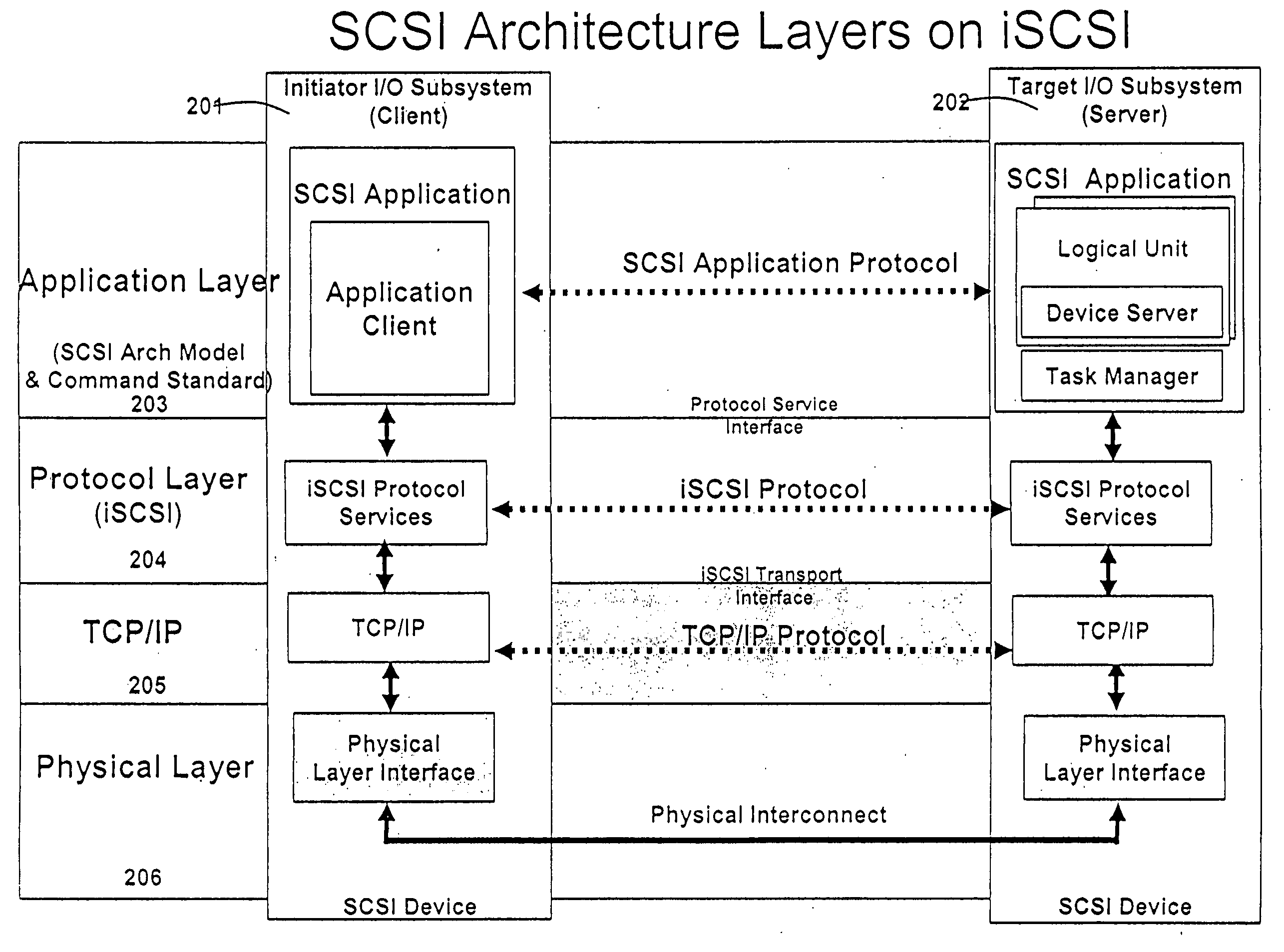

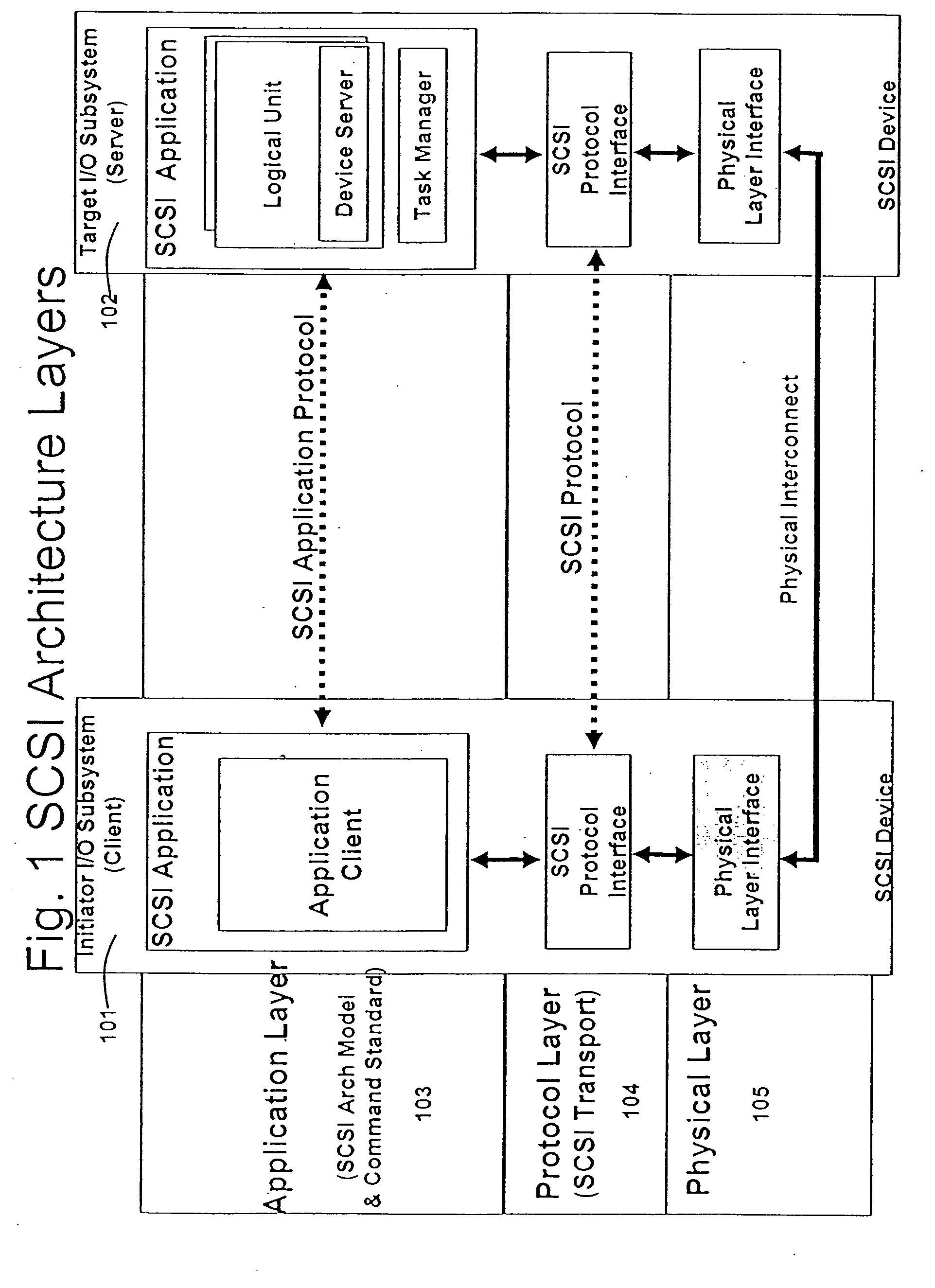

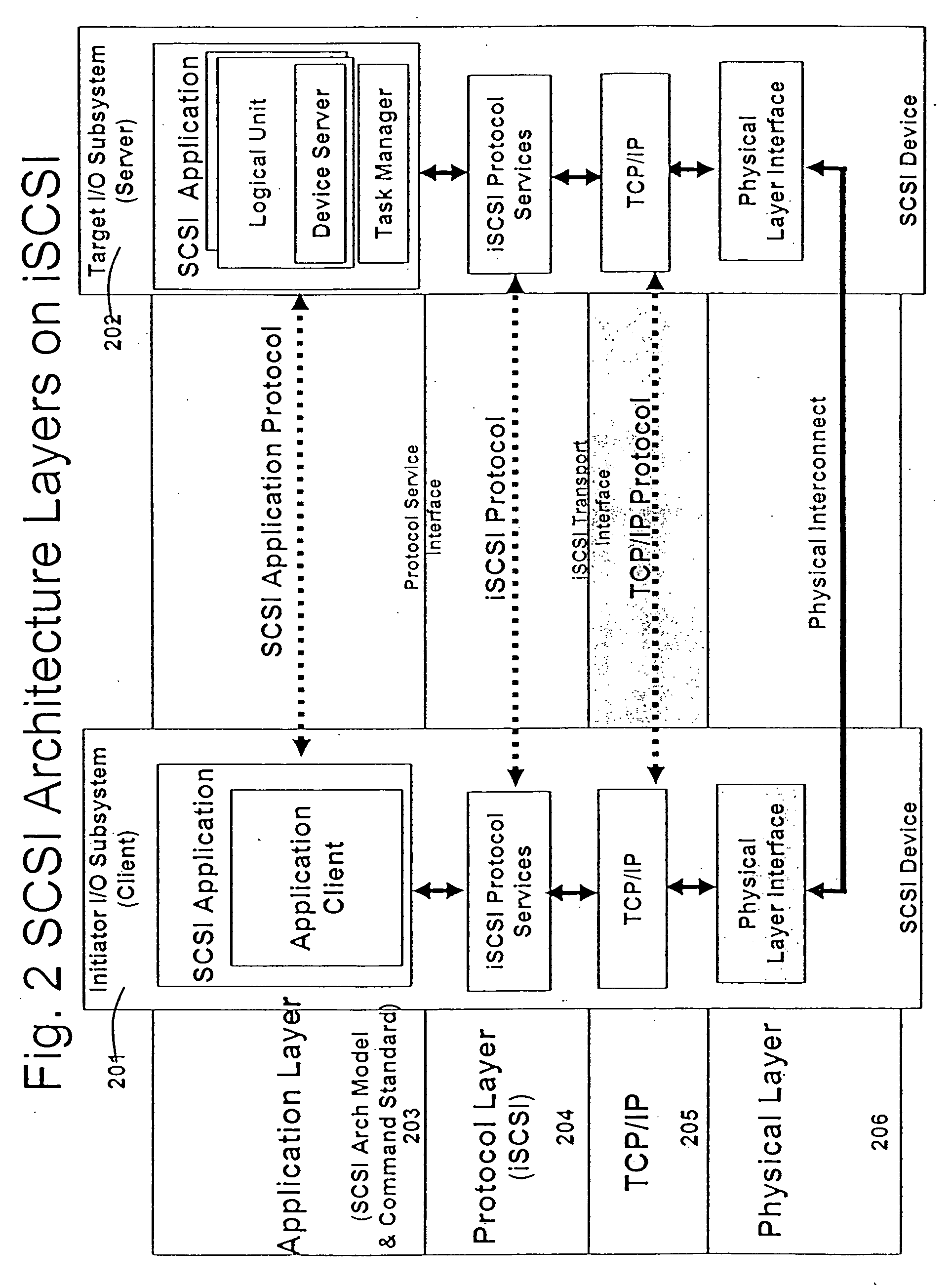

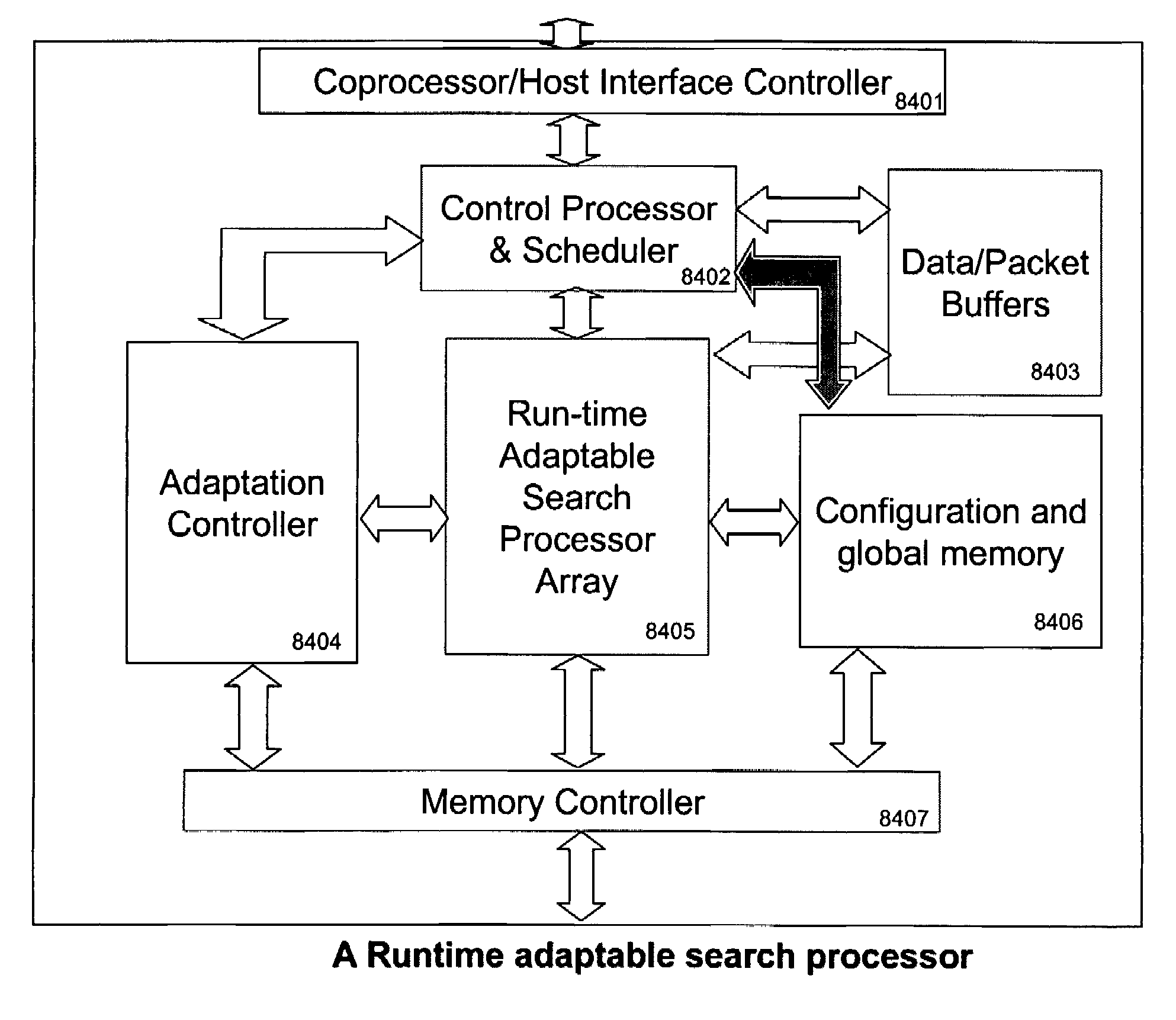

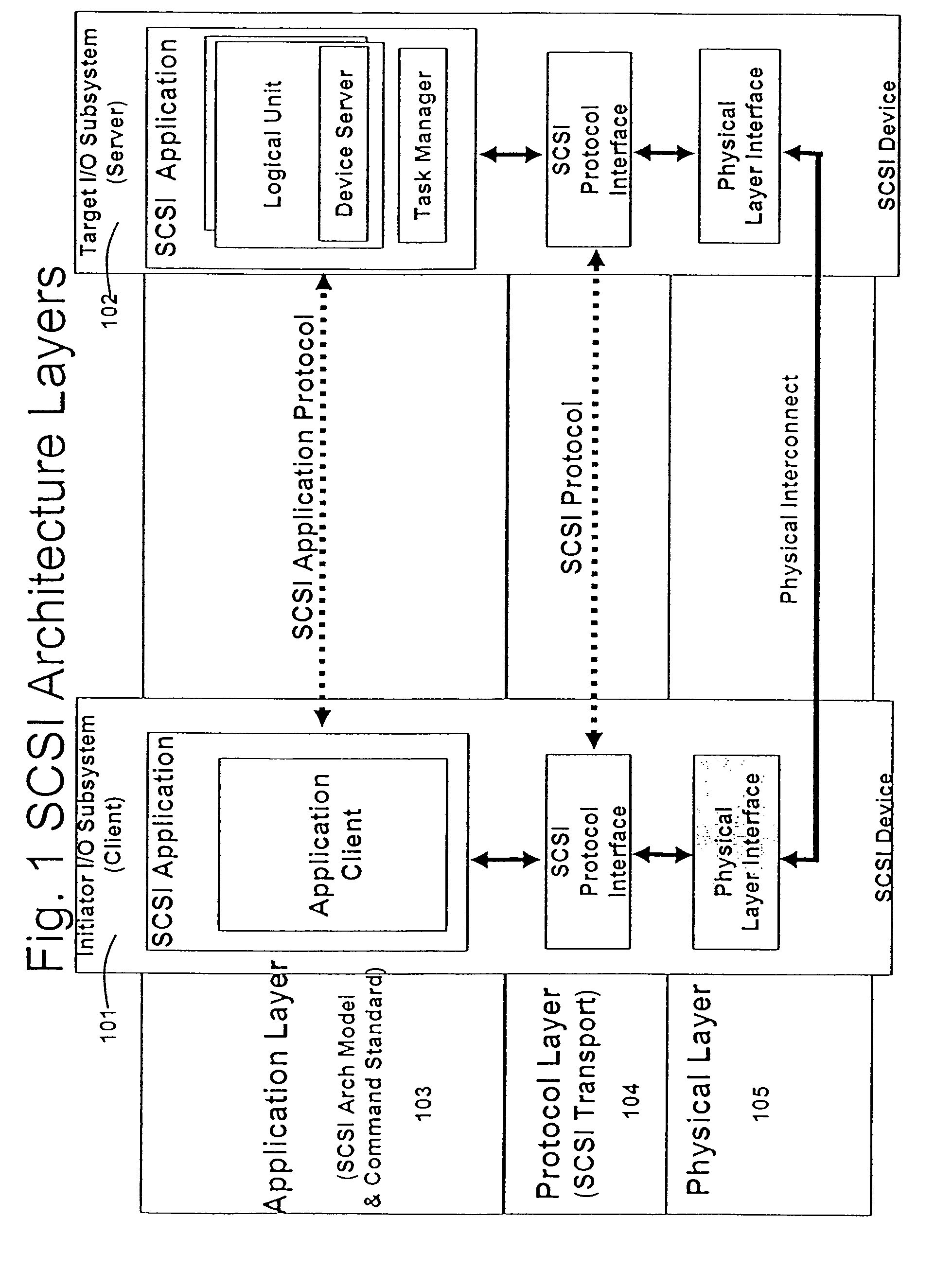

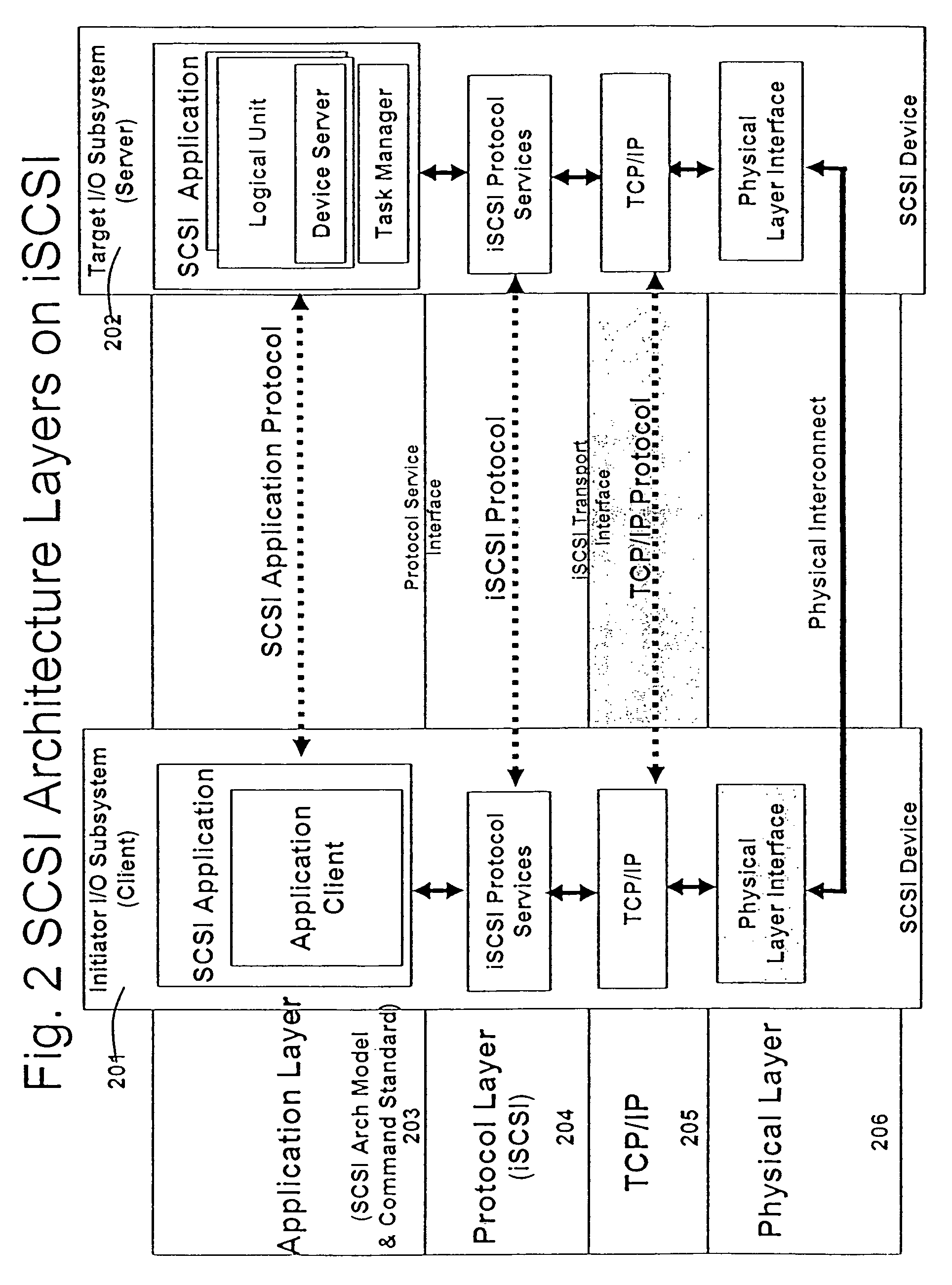

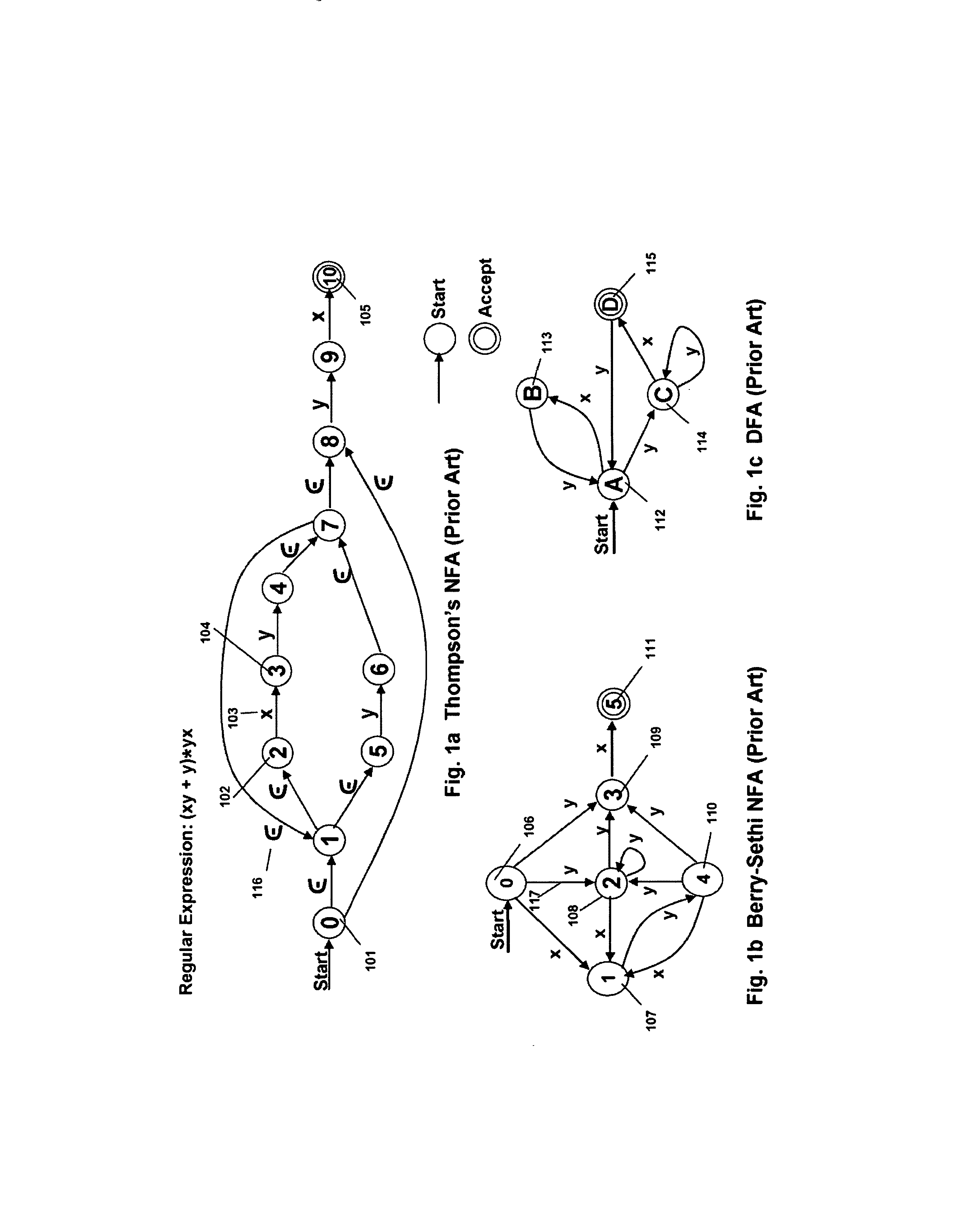

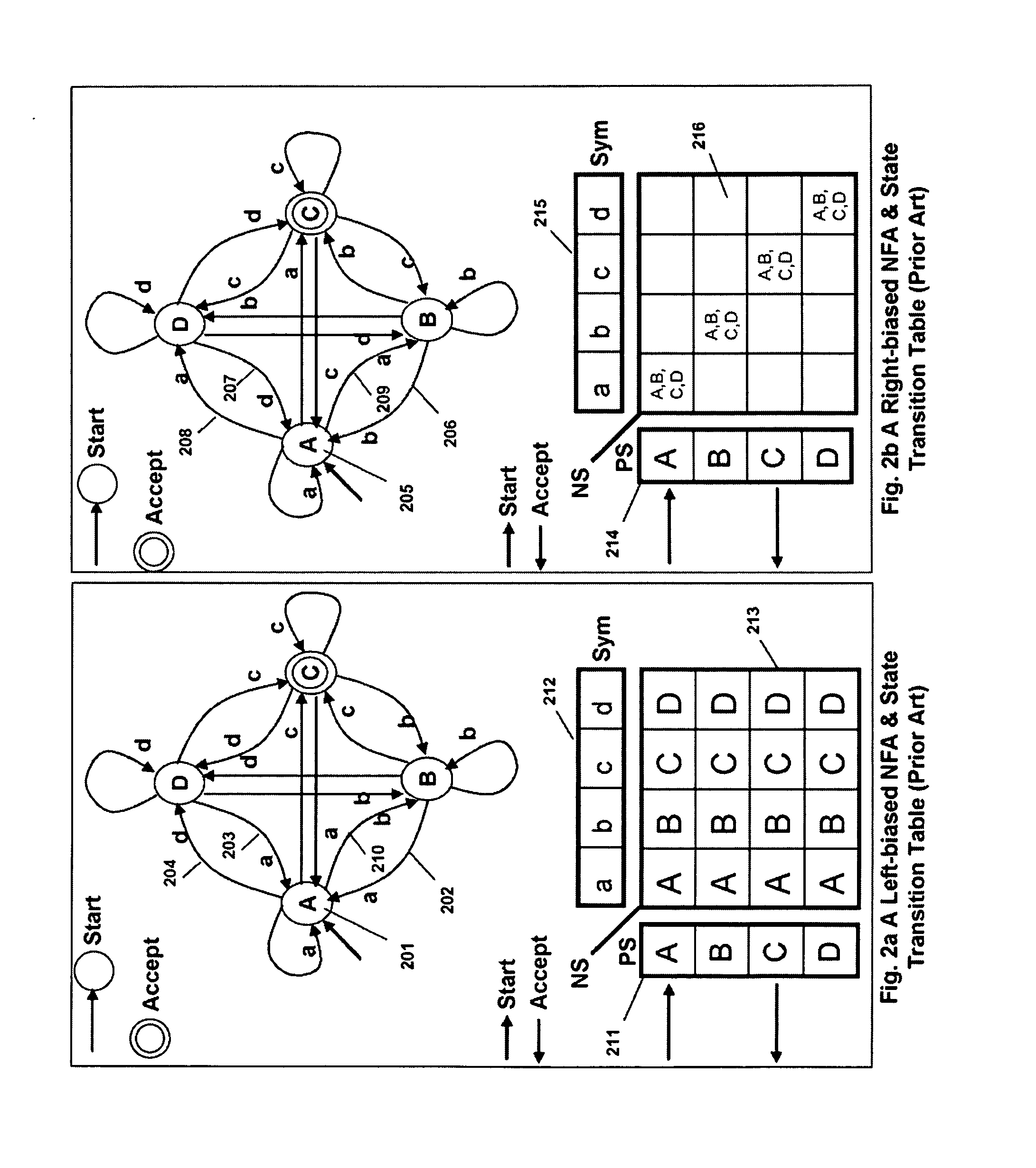

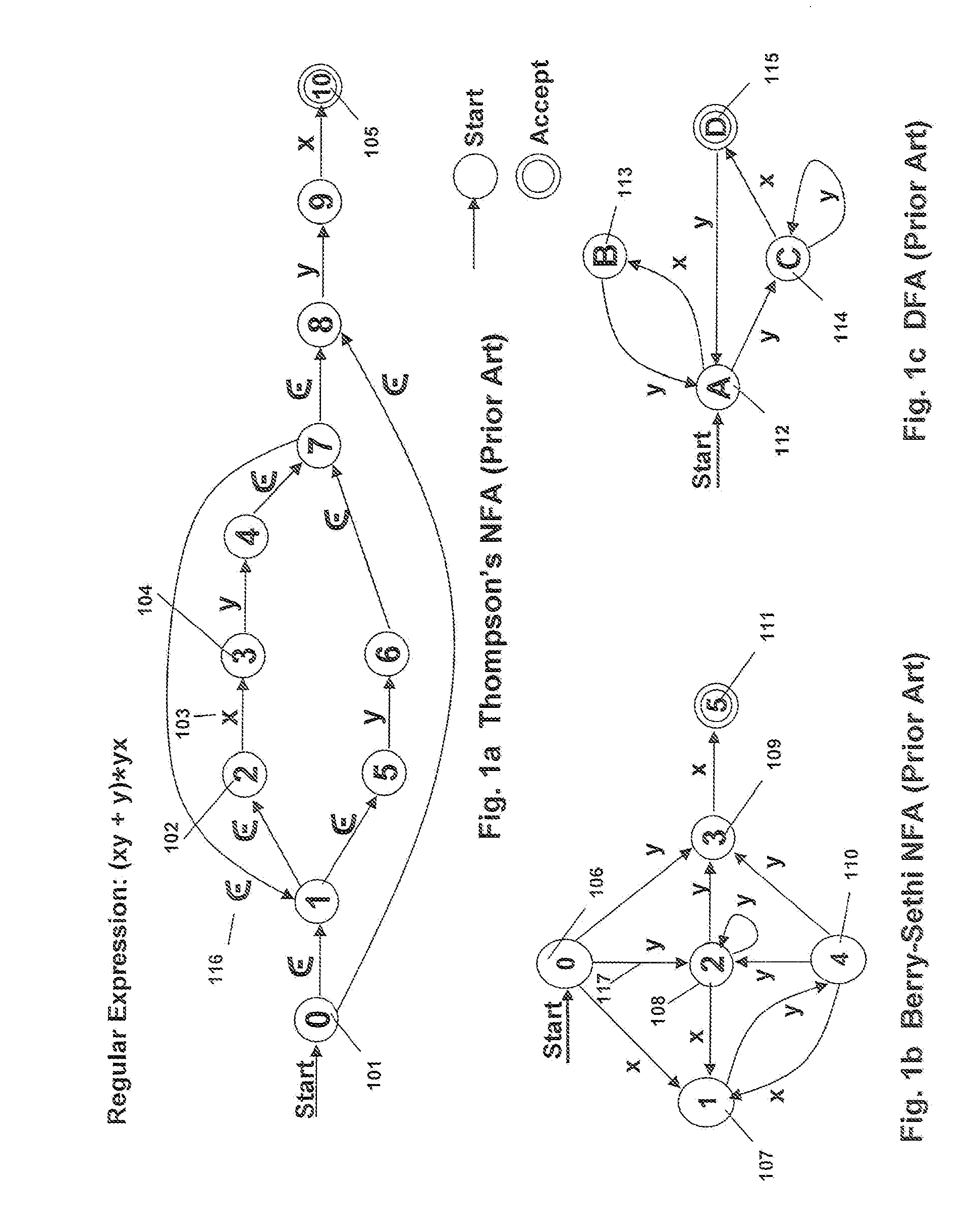

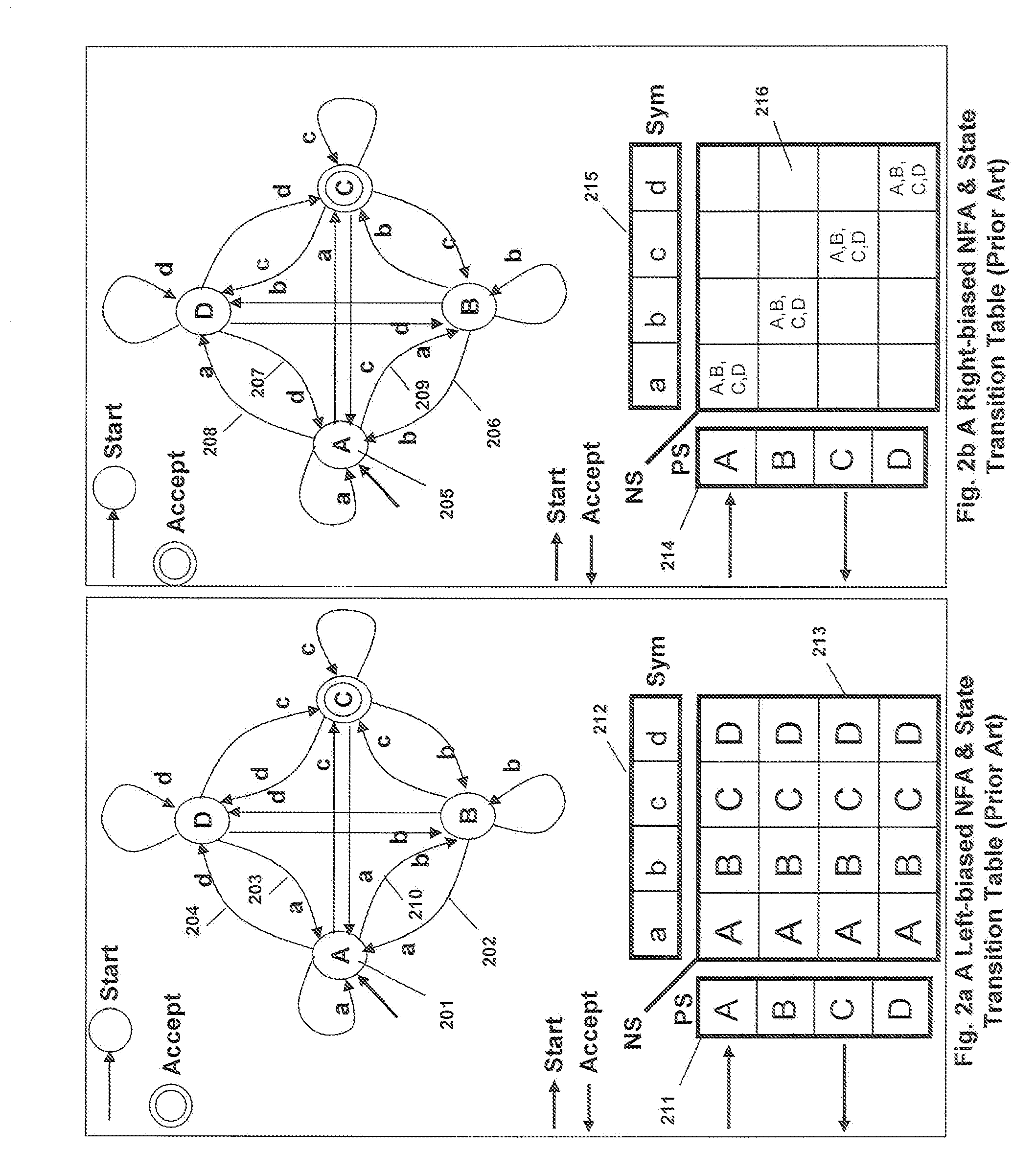

A runtime adaptable search processor is disclosed. The search processor provides high speed content search capability to meet the performance need of network line rates growing to 1 Gbps, 10 Gbps and higher. The search processor provides a unique combination of NFA and DFA based search engines that can process incoming data in parallel to perform the search against the specific rules programmed in the search engines. The processor architecture also provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through transport protocol layer and may also provide packet inspection through Layer 7. Further, a runtime adaptable processor is coupled to the protocol processing hardware and may be dynamically adapted to perform hardware tasks as per the needs of the network traffic being sent or received and/or the policies programmed or services or applications being supported. A set of engines may perform pass-through packet classification, policy processing and/or security processing, enabling packet streaming through the architecture at nearly the full line rate. A high performance content search and rules processing security processor is disclosed which may be used for application layer and network layer security. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored to and retrieved from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer.
A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner using a protocol processing hardware with appropriate security features.

Owner:MEMORY ACCESS TECH LLC

Runtime adaptable search processor

Active | US7685254B2 | Improve application performance | Large capacity | Web data indexing | Memory addressing/allocation/relocation | Packet scheduling | Schema for Object-Oriented XML

A runtime adaptable search processor is disclosed. The search processor provides high speed content search capability to meet the performance need of network line rates growing to 1 Gbps, 10 Gbps and higher. The search processor provides a unique combination of NFA and DFA based search engines that can process incoming data in parallel to perform the search against the specific rules programmed in the search engines. The processor architecture also provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through transport protocol layer and may also provide packet inspection through Layer 7. Further, a runtime adaptable processor is coupled to the protocol processing hardware and may be dynamically adapted to perform hardware tasks as per the needs of the network traffic being sent or received and/or the policies programmed or services or applications being supported. A set of engines may perform pass-through packet classification, policy processing and/or security processing, enabling packet streaming through the architecture at nearly the full line rate. A high performance content search and rules processing security processor is disclosed which may be used for application layer and network layer security. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored to and retrieved from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer.
A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner using a protocol processing hardware with appropriate security features.

Owner:MEMORY ACCESS TECH LLC

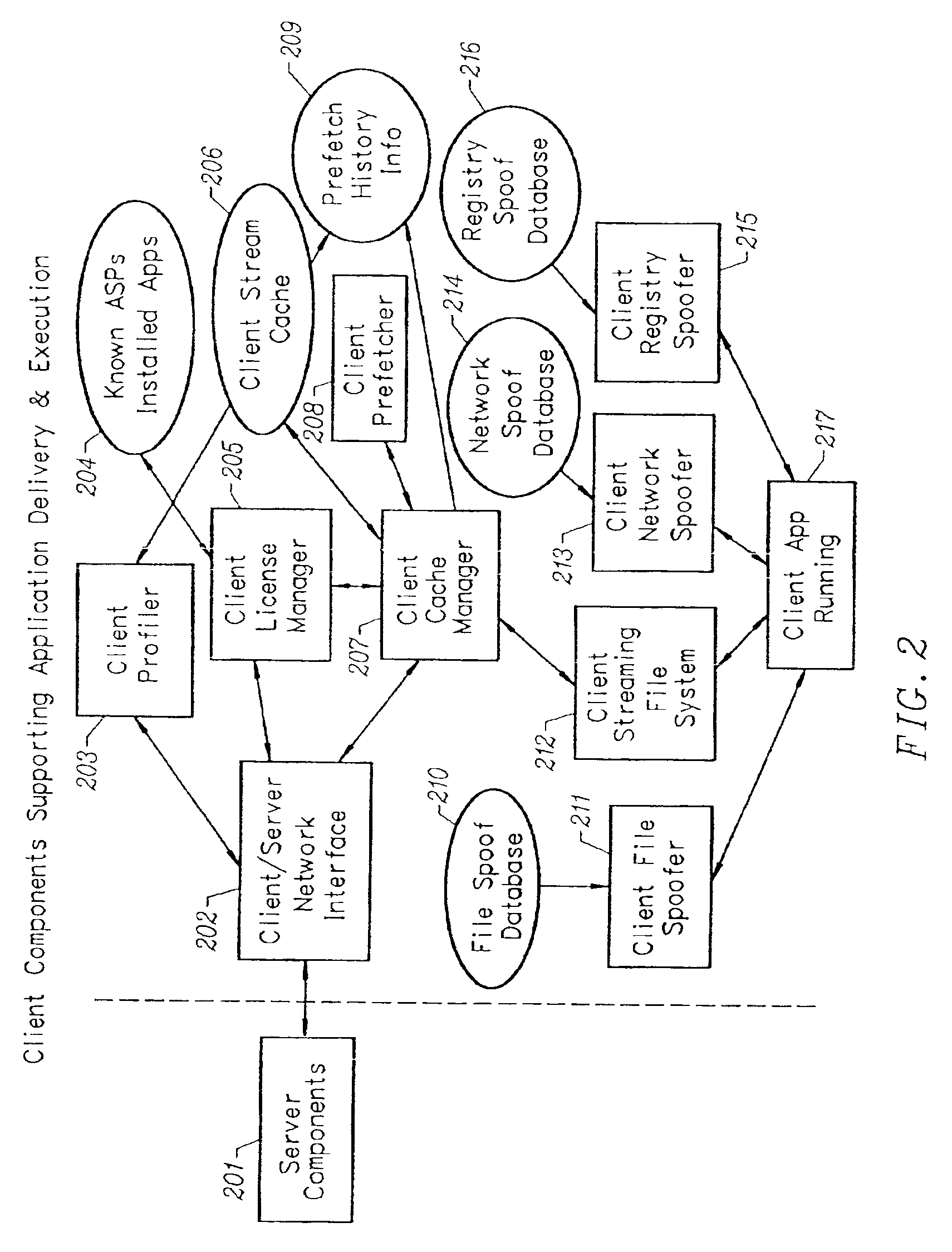

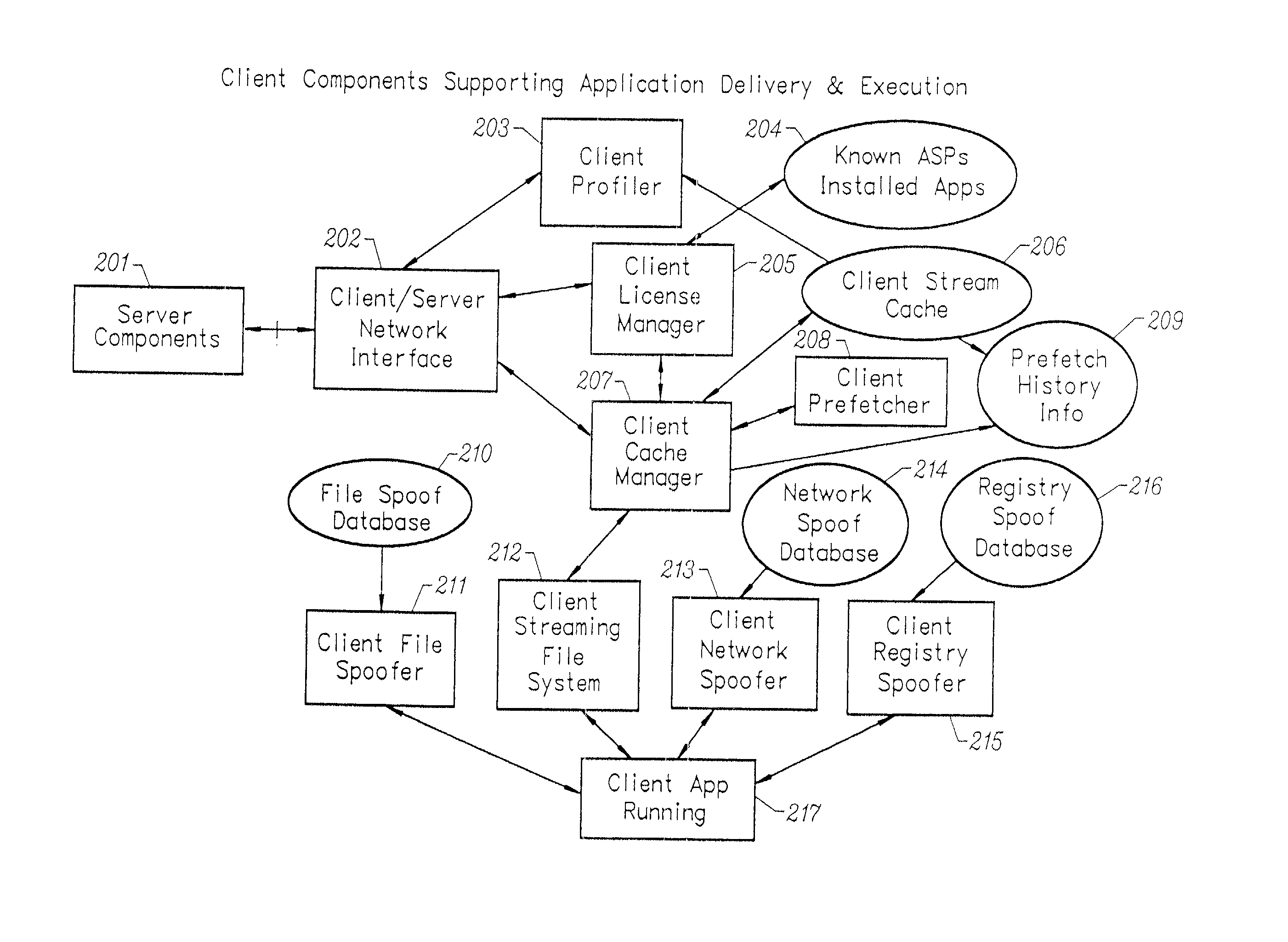

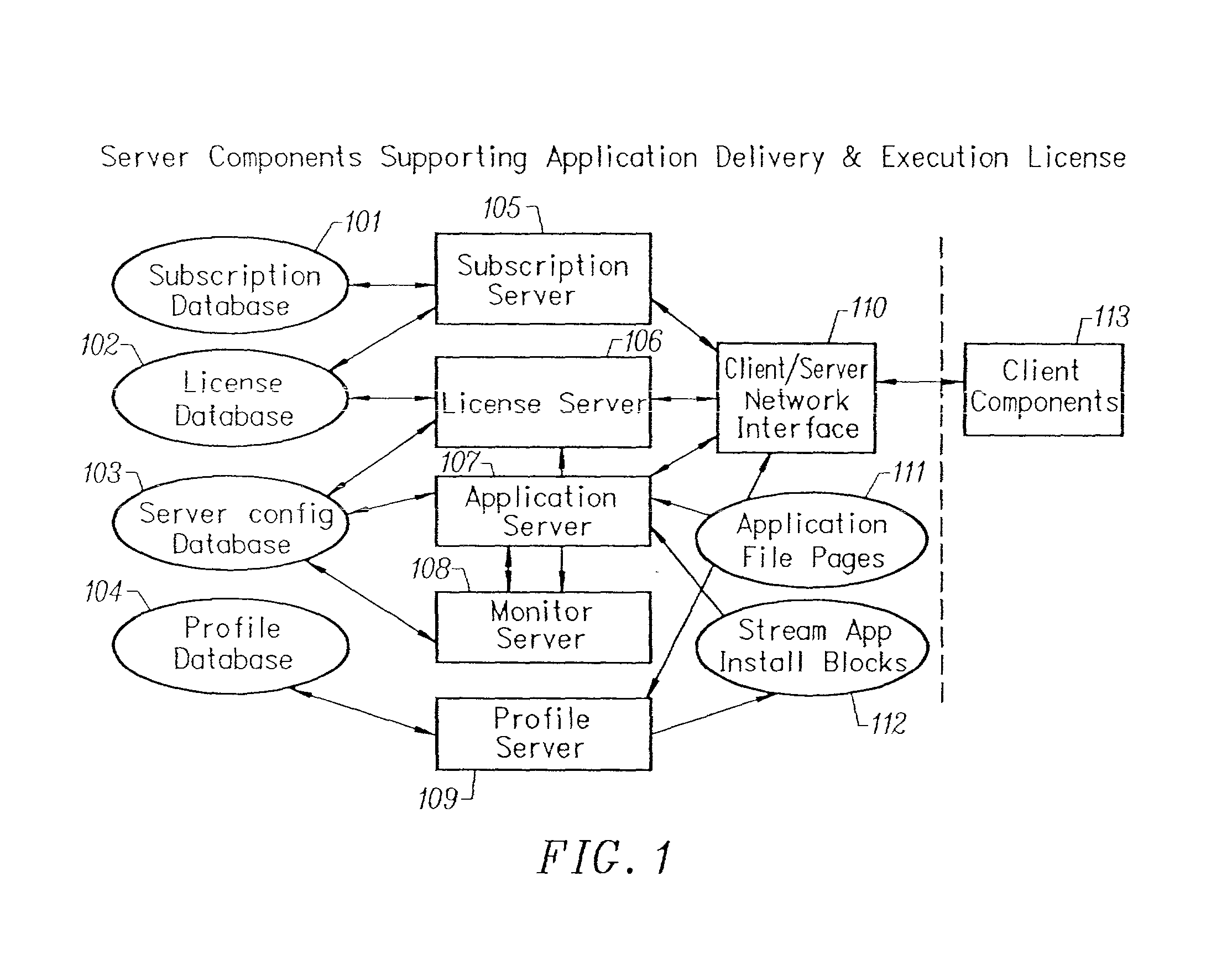

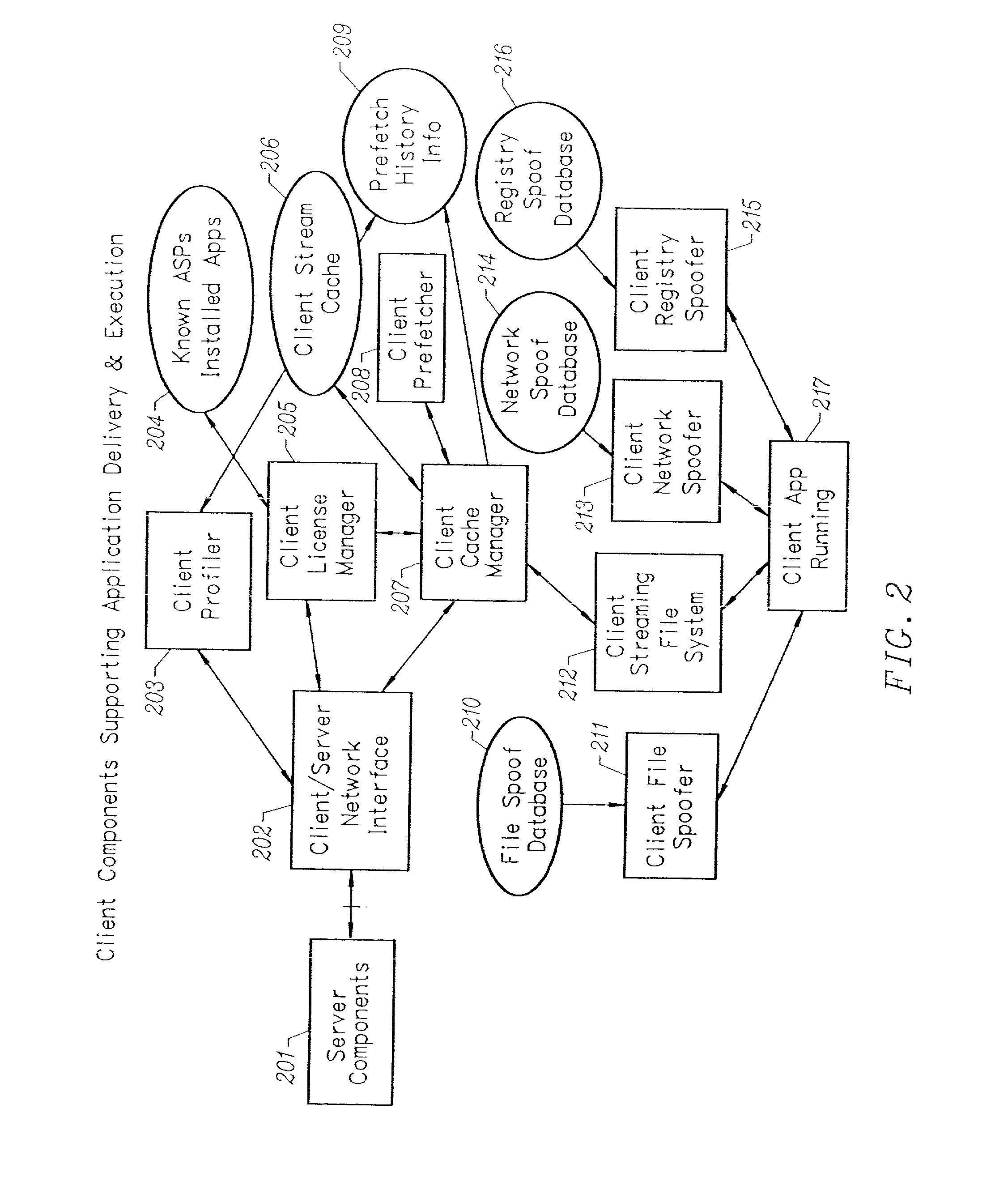

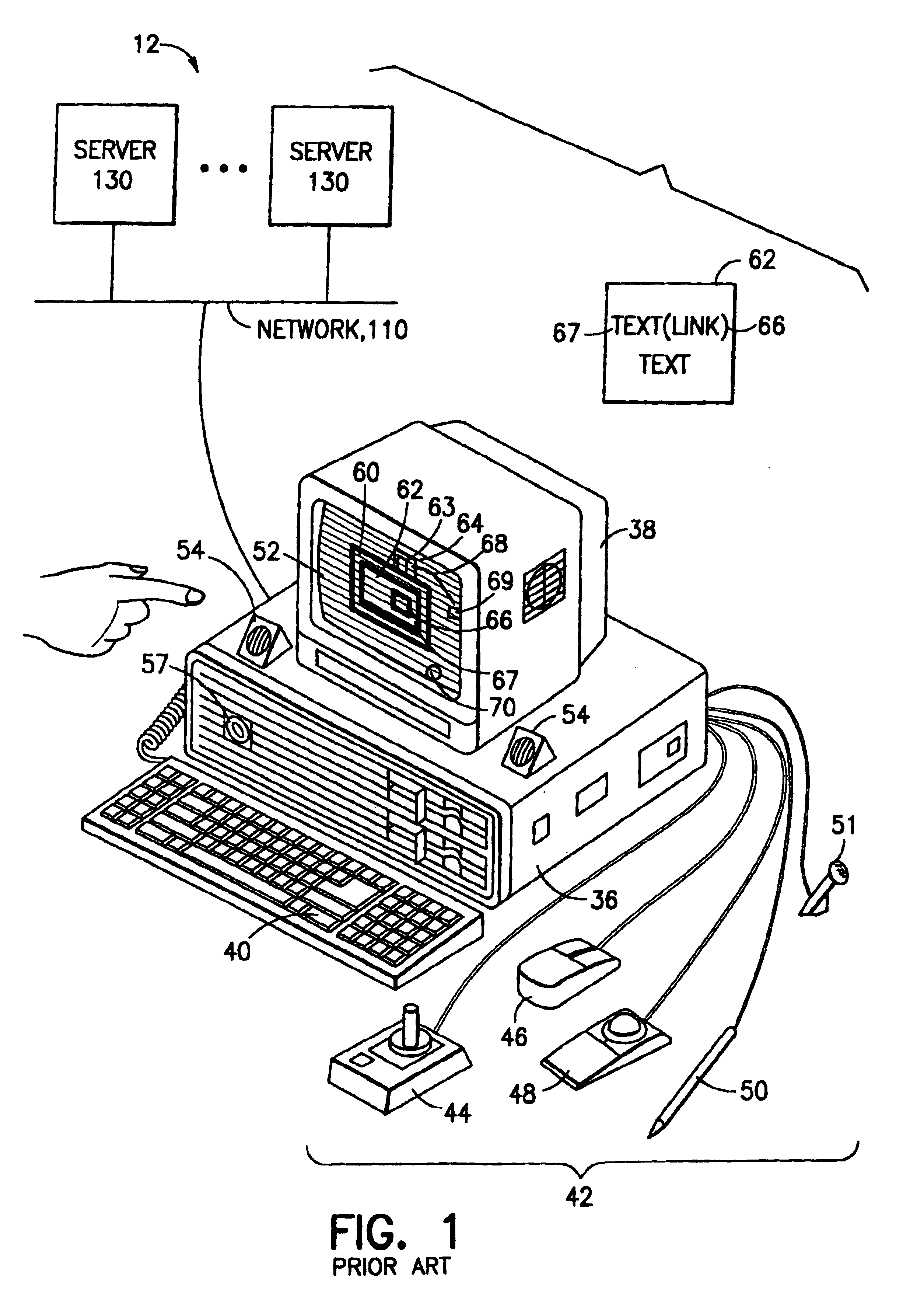

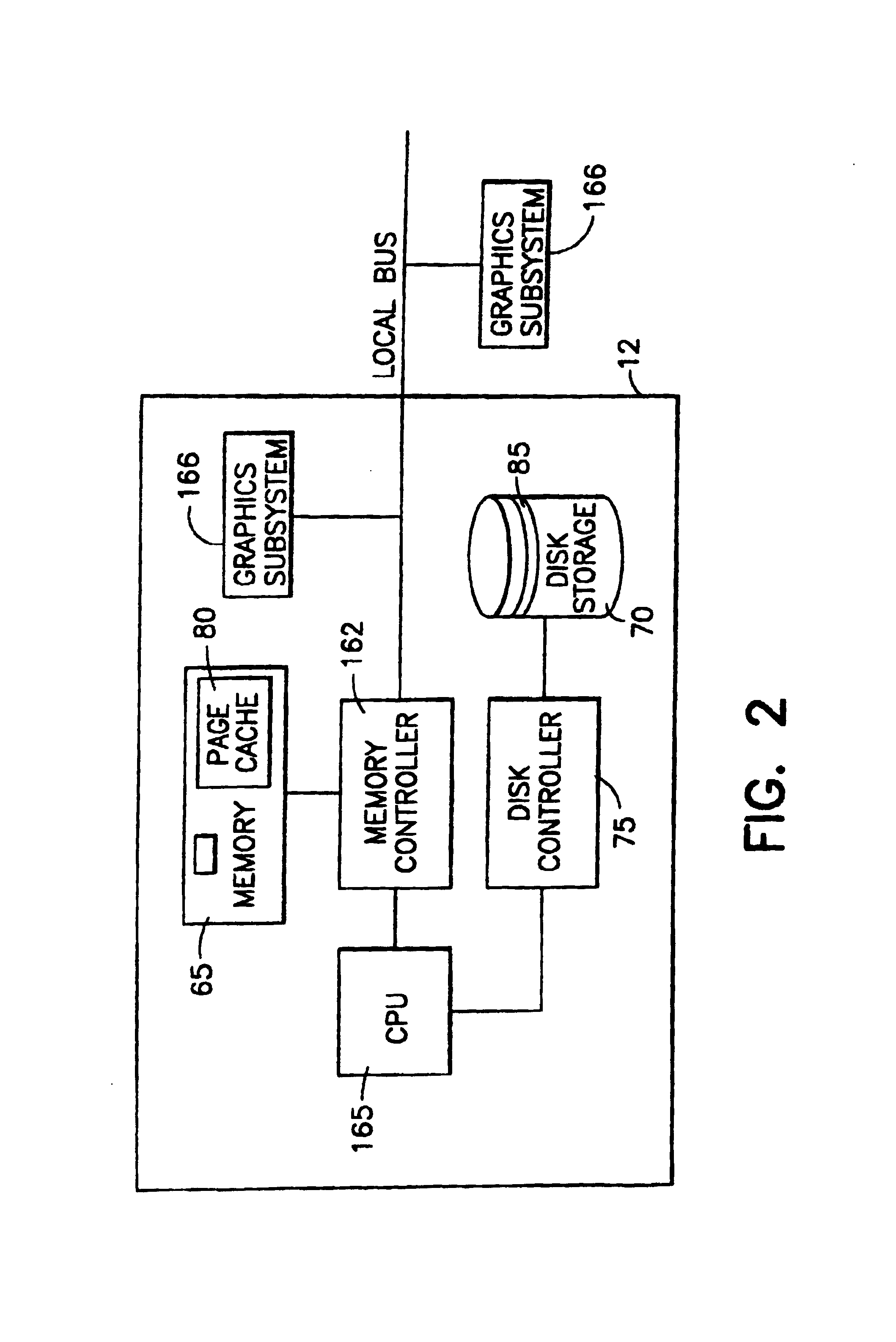

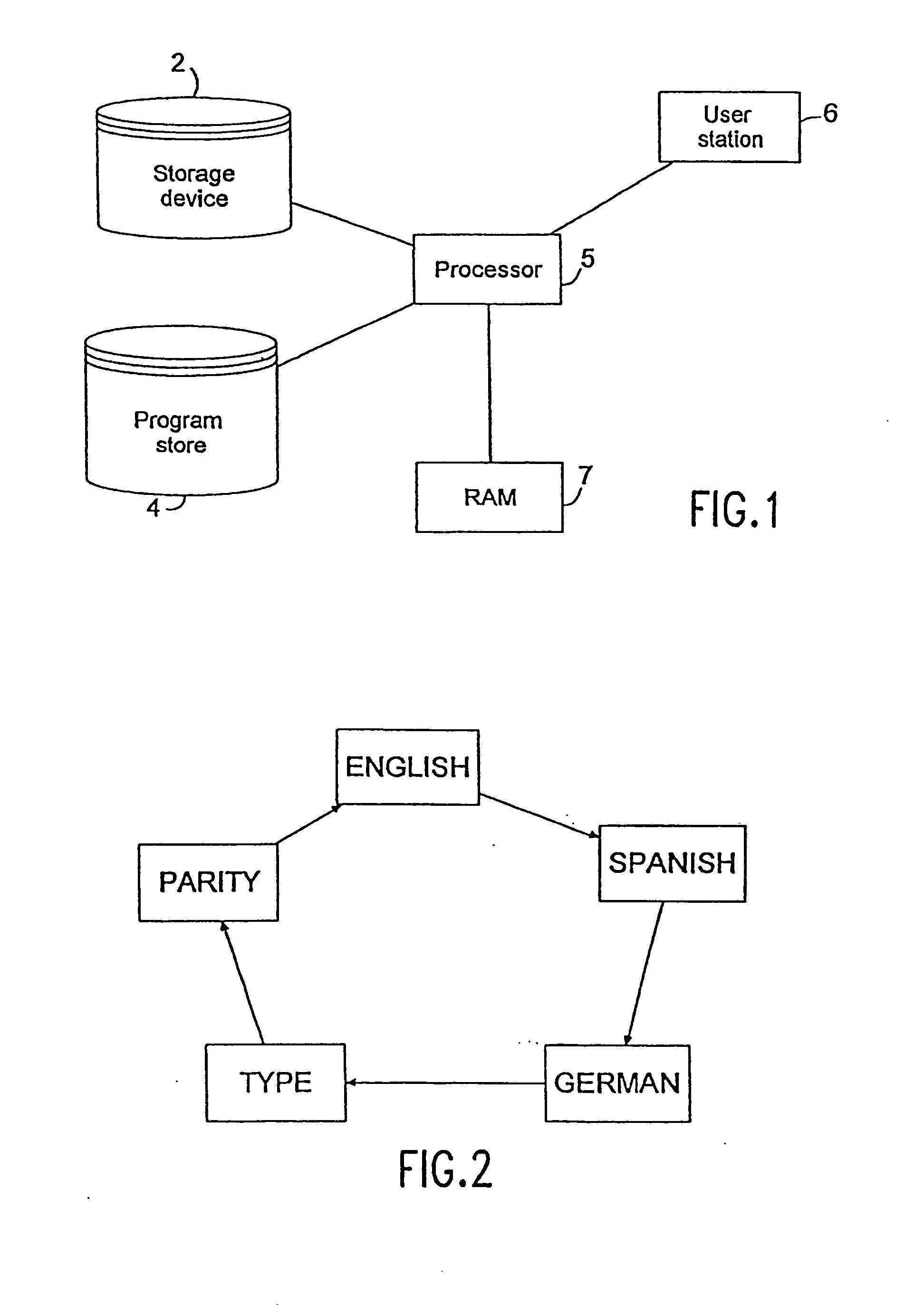

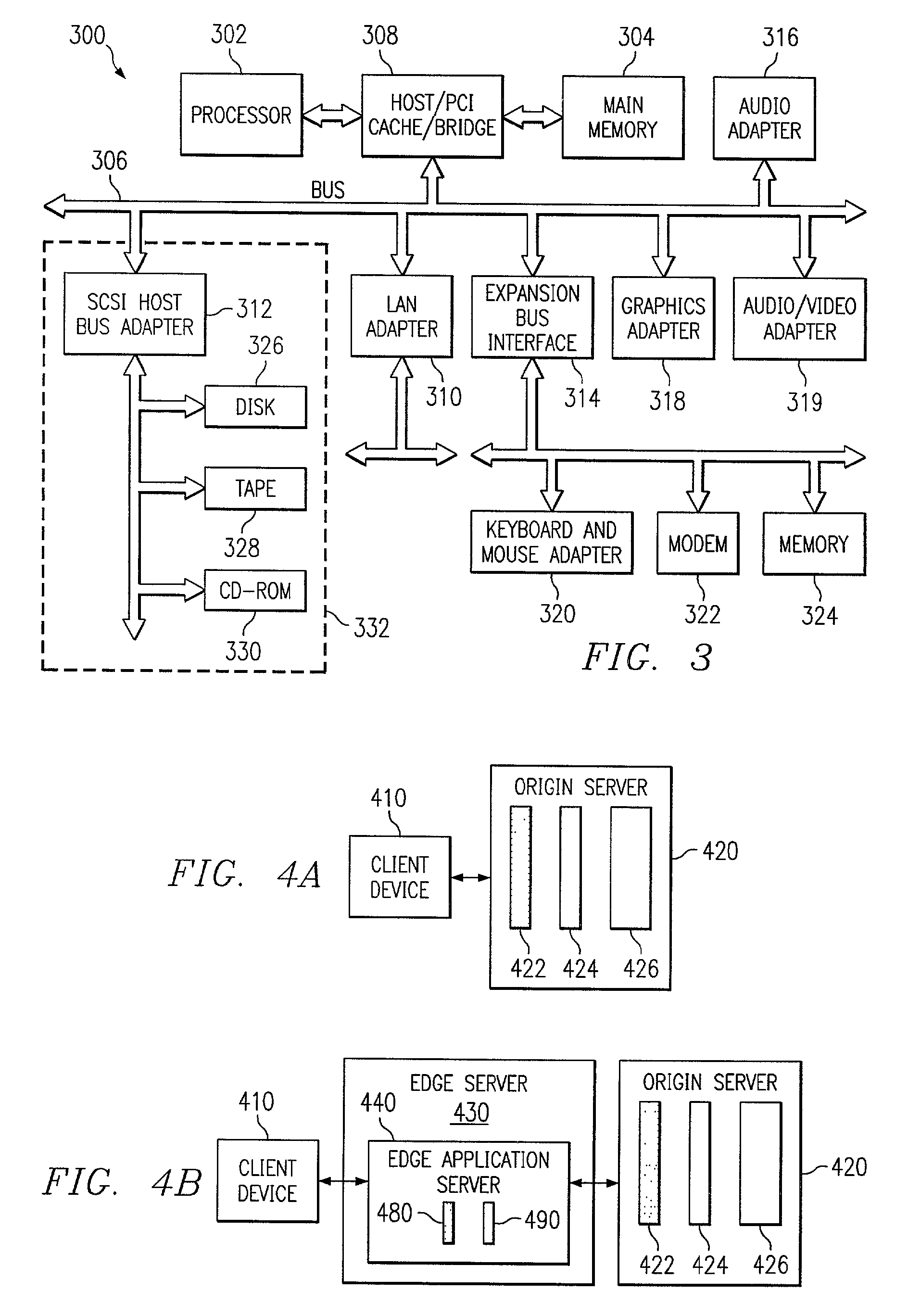

Client-side performance optimization system for streamed applications

Inactive | US6959320B2 | Efficiently stream and execute | Easily integrates into client system's operating system | Multiple digital computer combinations | Program loading/initiating | Application server | Data file

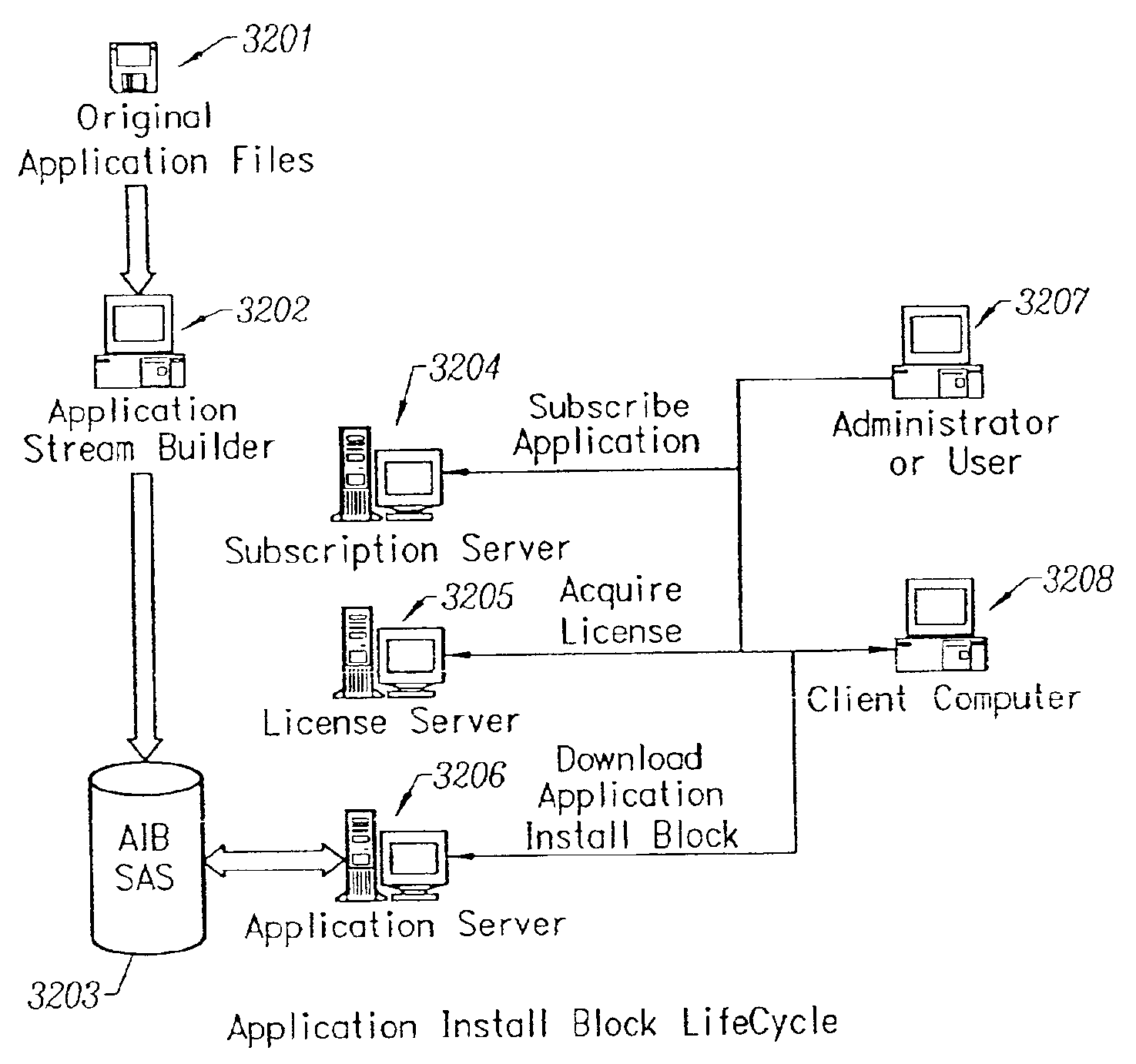

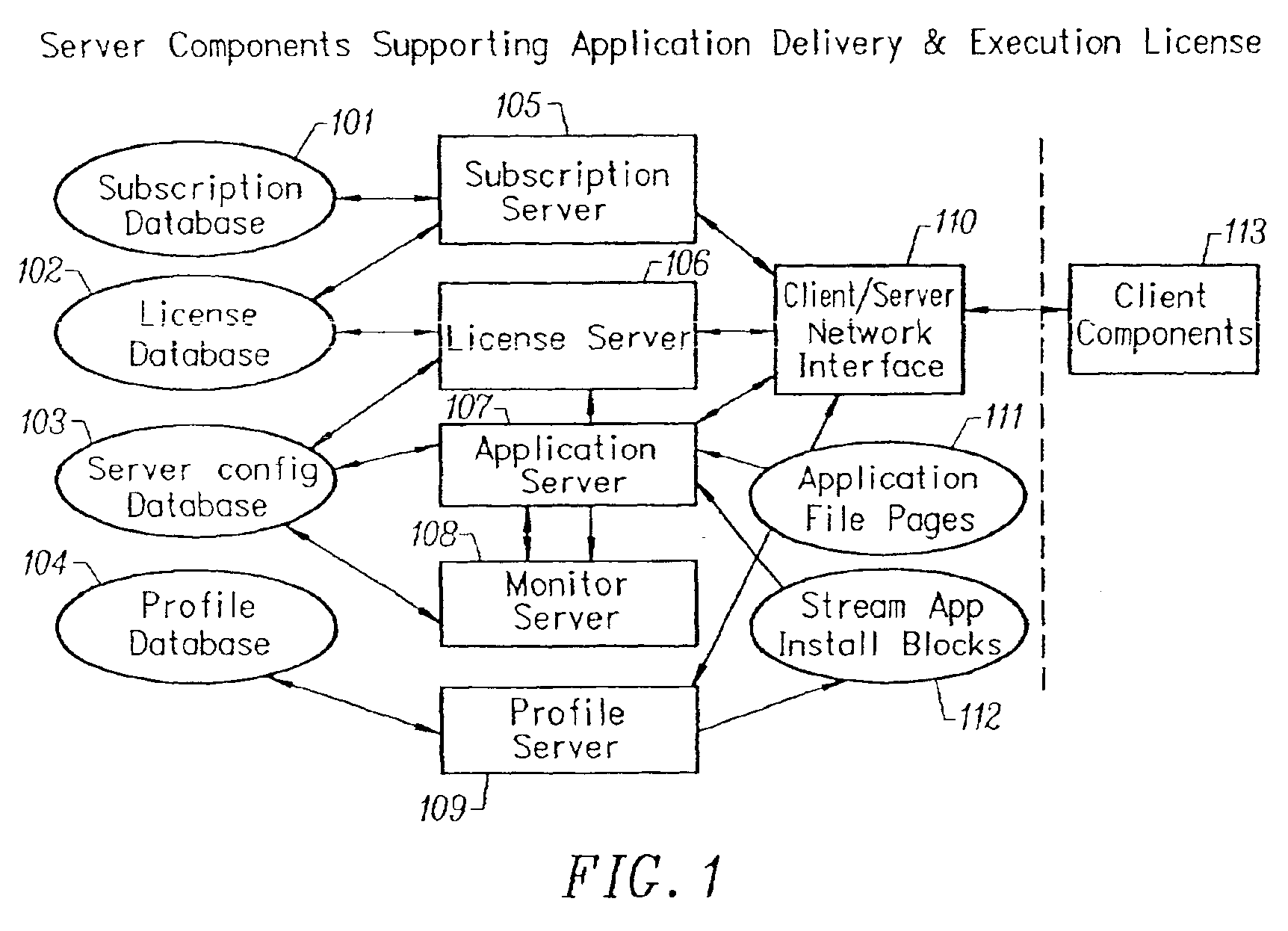

A client-side performance optimization system for streamed applications provides several approaches for fulfilling client-side application code and data file requests for streamed applications. A streaming file system or file driver is installed on the client system that receives and fulfills application code and data requests from a persistent cache or the streaming application server. The client or the server can initiate the prefetching of application code and data to improve interactive application performance. A client-to-client communication mechanism allows local application customization to travel from one client machine to another without involving server communication. Applications are patched or upgraded via a change in the root directory for that application. The server can notify the client of application upgrades, which can be marked as mandatory, in which case the client will force the application to be upgraded. The server broadcasts an application program's code and data, and any client that is interested in that particular application program stores the broadcast code and data for later use.

Owner:NUMECENT HLDG

Client-side performance optimization system for streamed applications

Inactive | US20020091763A1 | Improve application performance | Multiple digital computer combinations | Program loading/initiating | Data file | Application software

A client-side performance optimization system for streamed applications provides several approaches for fulfilling client-side application code and data file requests for streamed applications. A streaming file system or file driver is installed on the client system that receives and fulfills application code and data requests from a persistent cache or the streaming application server. The client or the server can initiate the prefetching of application code and data to improve interactive application performance. A client-to-client communication mechanism allows local application customization to travel from one client machine to another without involving server communication. Applications are patched or upgraded via a change in the root directory for that application. The server can notify the client of application upgrades, which can be marked as mandatory, in which case the client will force the application to be upgraded. The server broadcasts an application program's code and data, and any client that is interested in that particular application program stores the broadcast code and data for later use.

Owner:NUMECENT HLDG

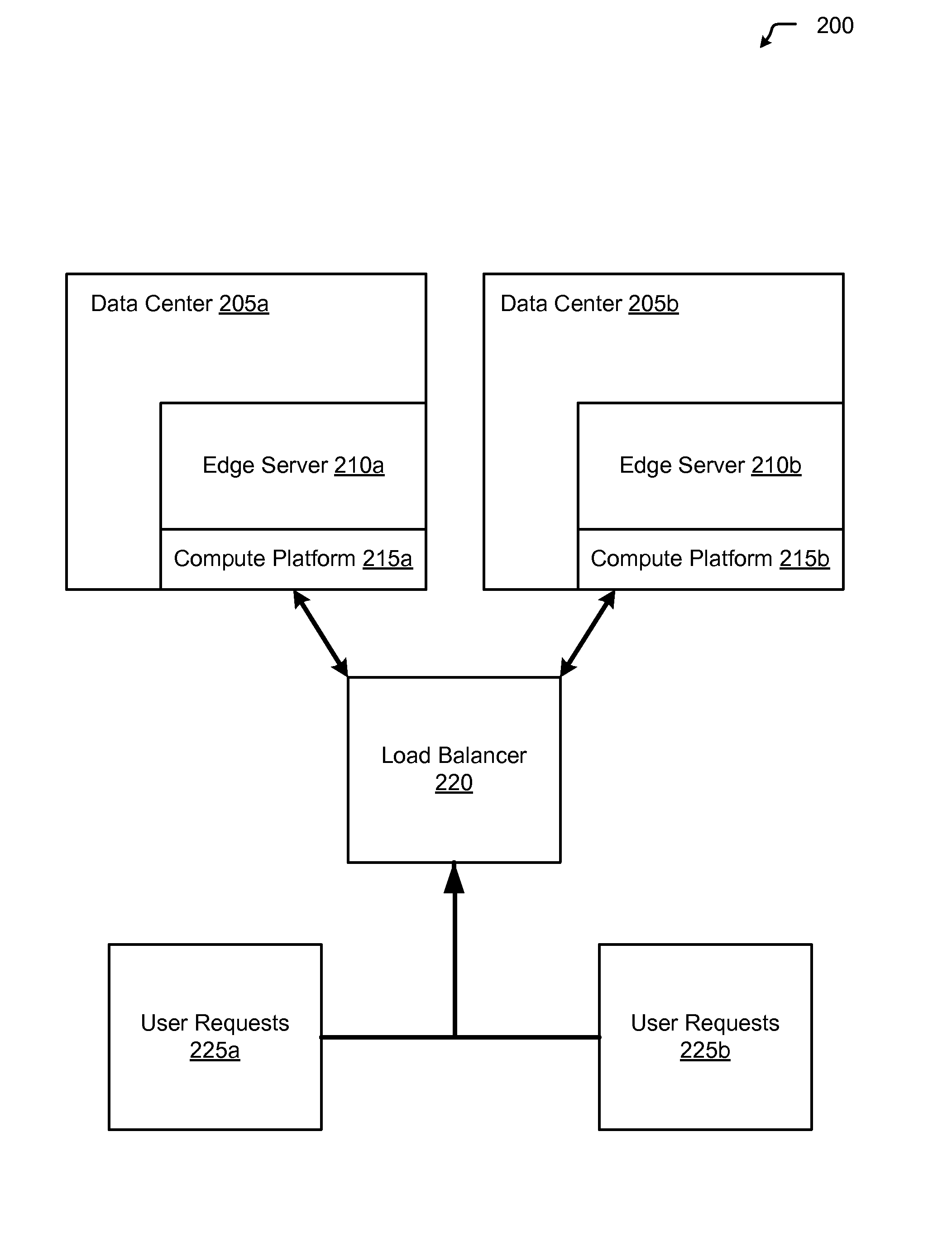

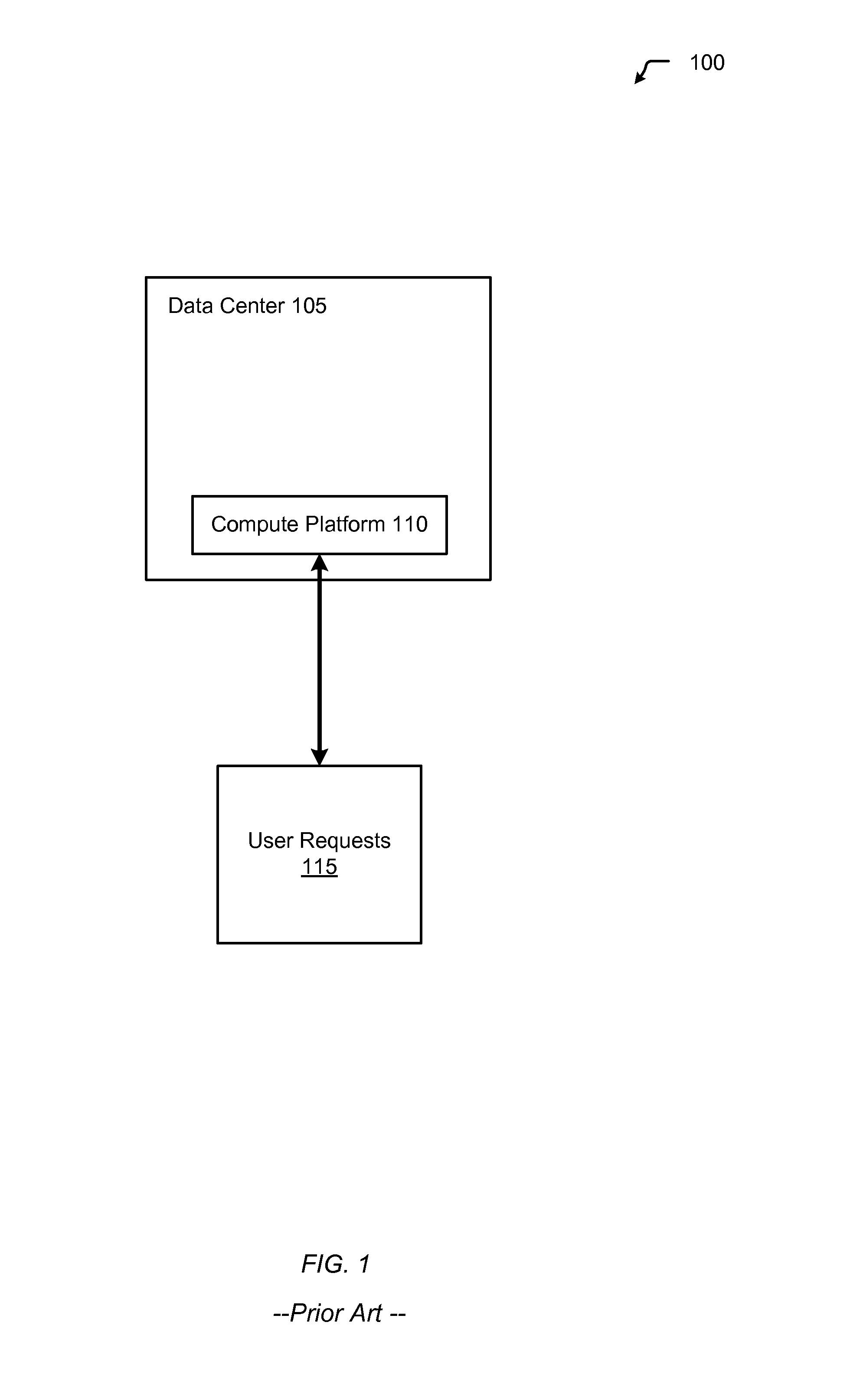

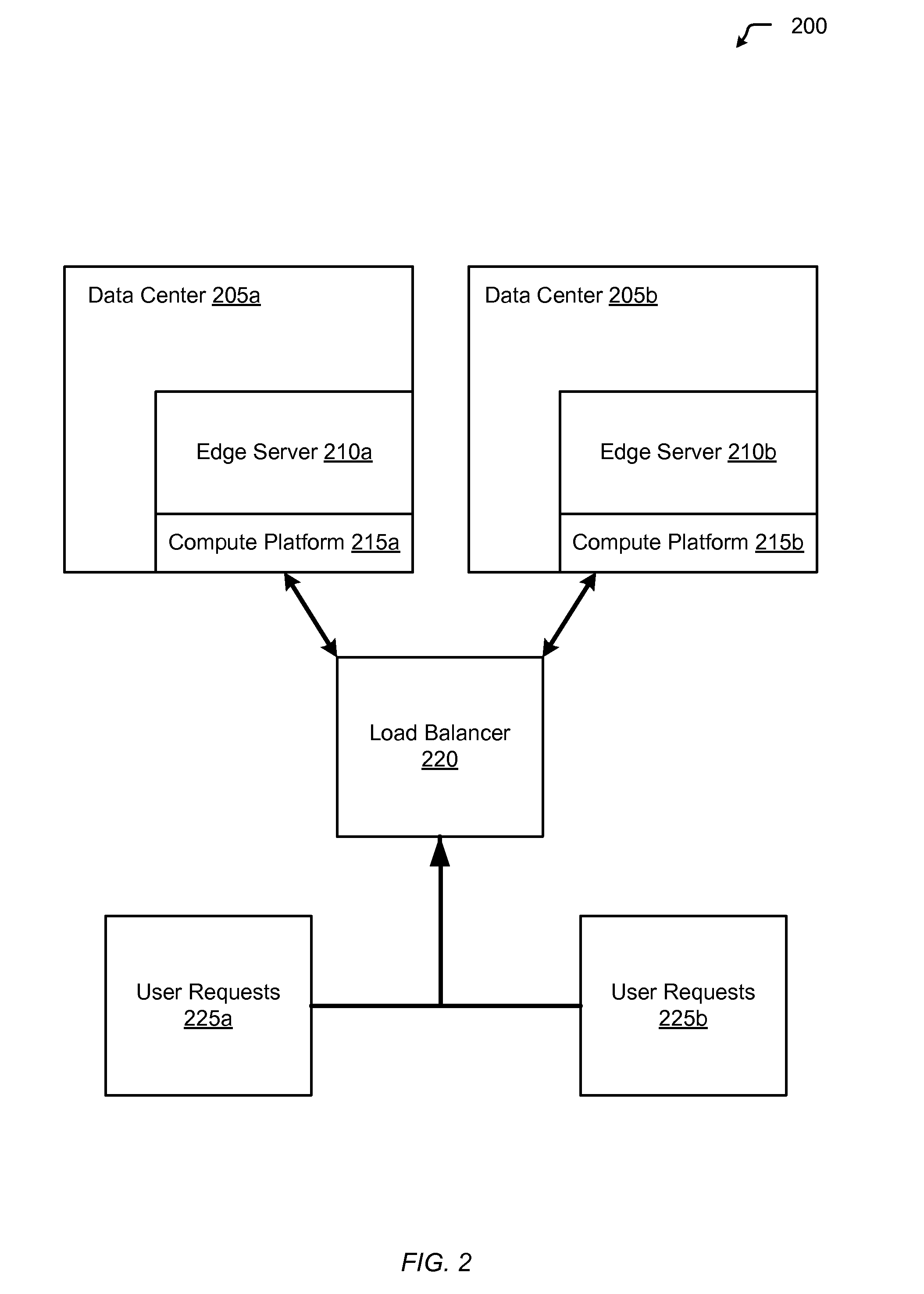

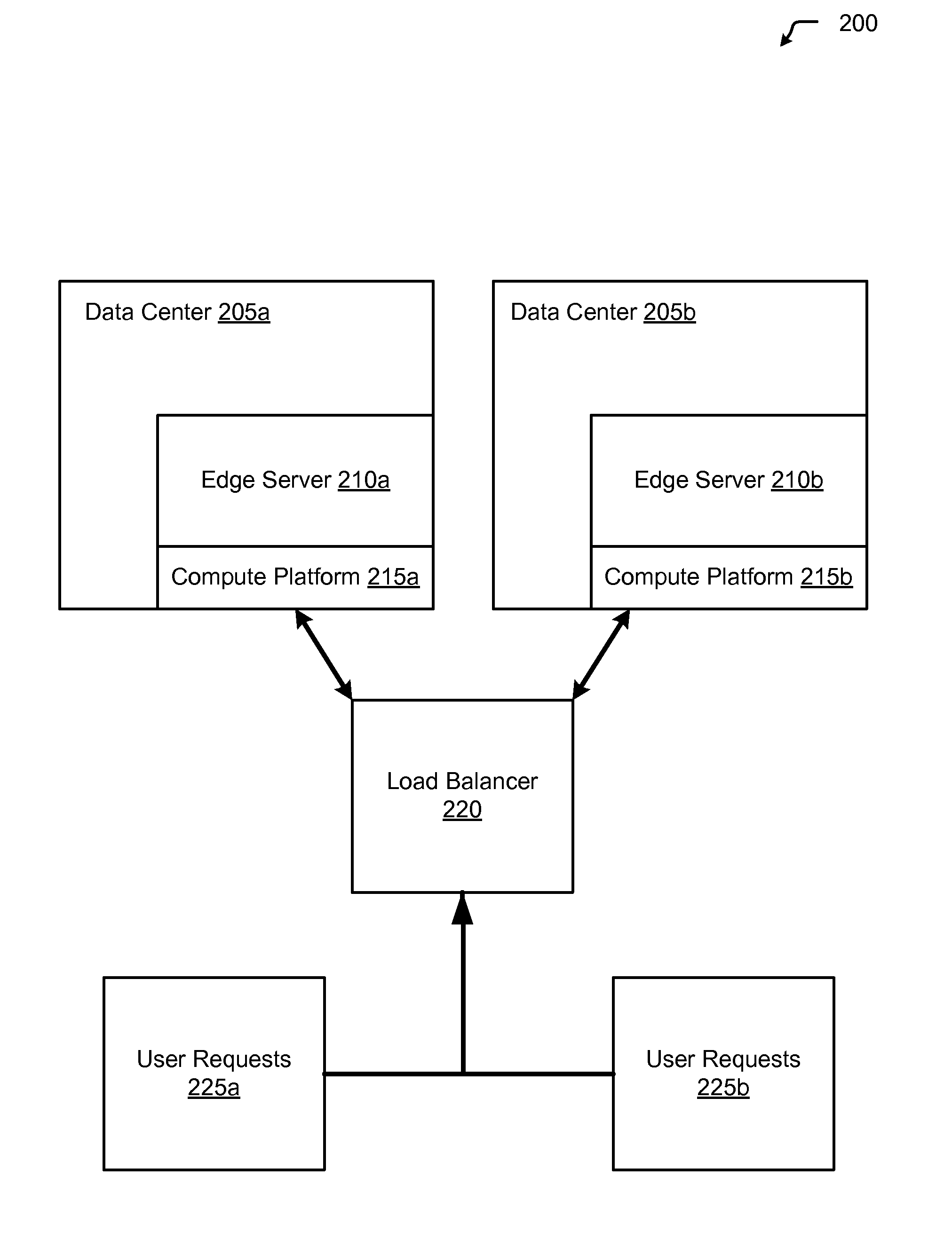

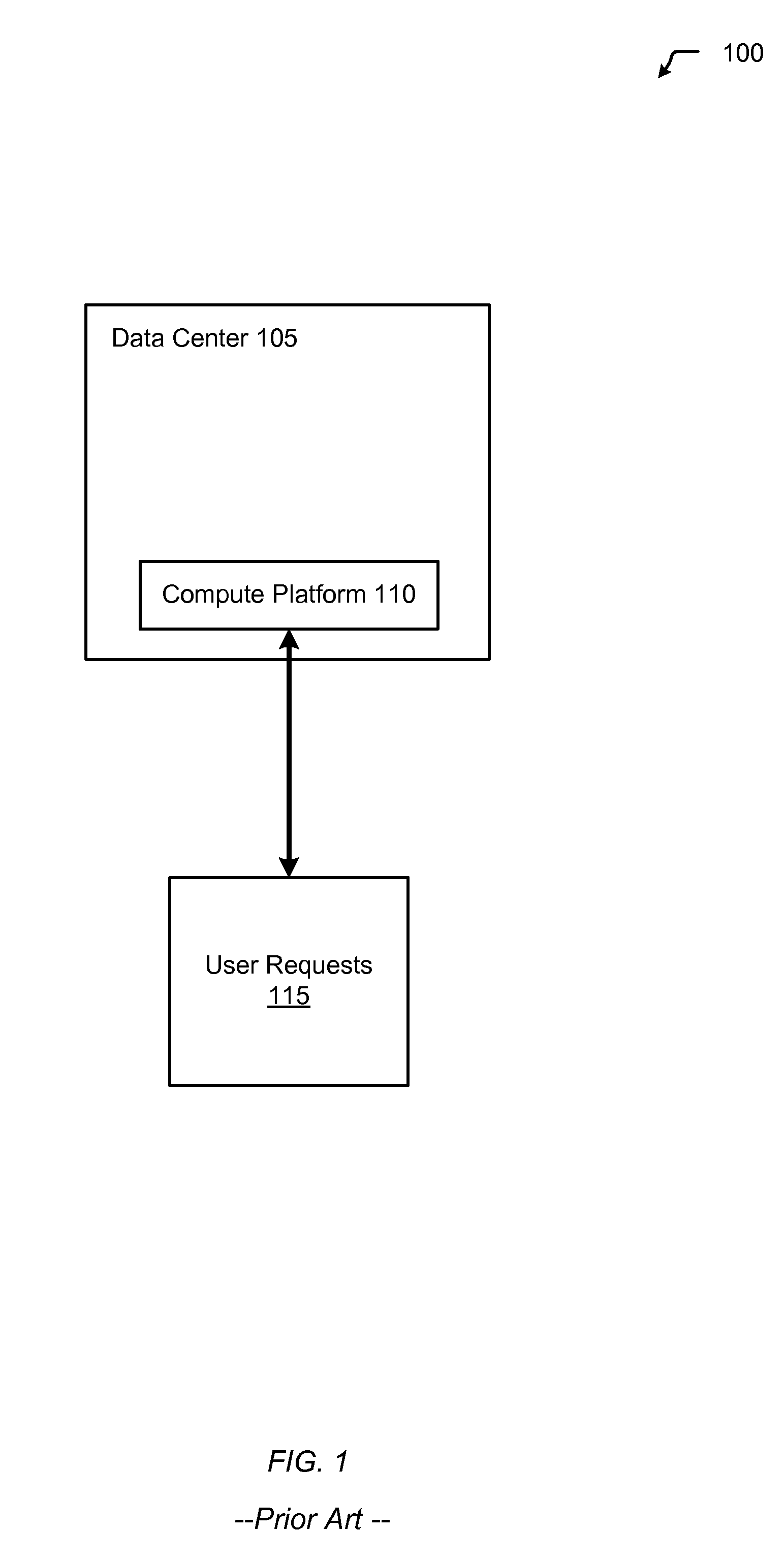

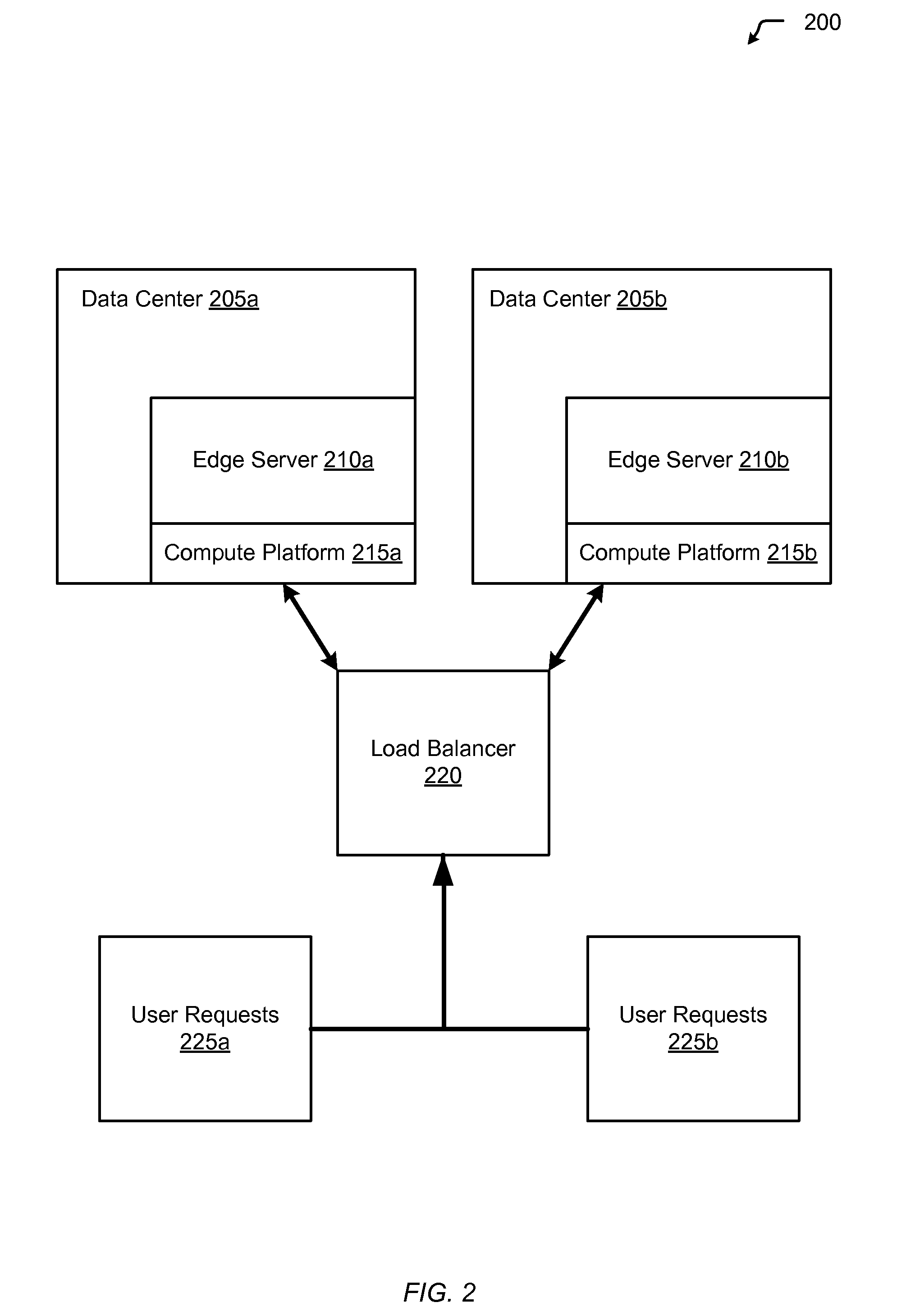

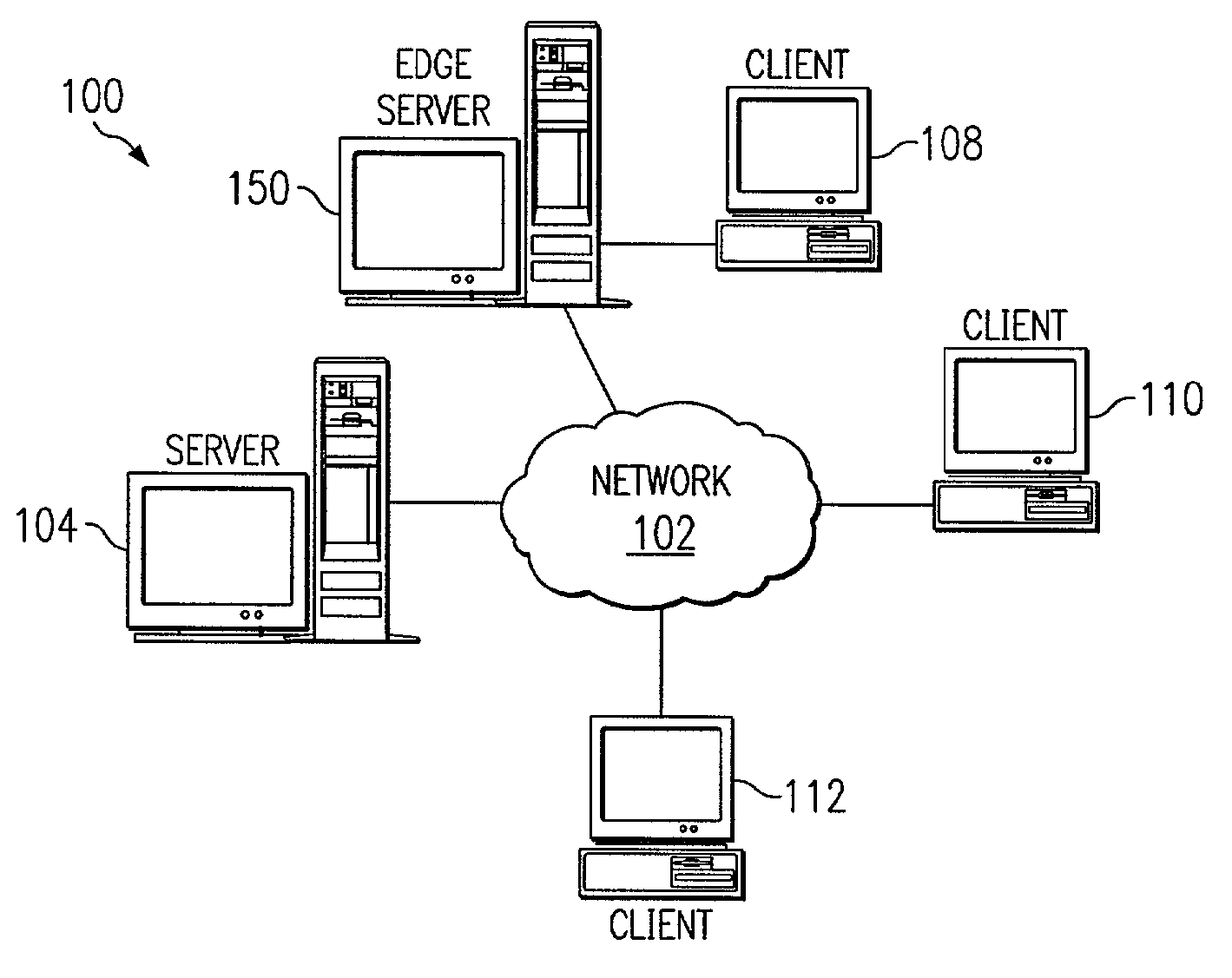

Dynamic route requests for multiple clouds

Inactive | US20130080623A1 | Affect service quality | Improve application performance | Digital computer details | Program control | Edge server | User device

Aspects of the present invention include a method of dynamically routing requests within multiple cloud computing networks. The method includes receiving a request for an application from a user device, forwarding the request to an edge server within a content delivery network (CDN), and analyzing the request to gather metrics about responsiveness provided by the multiple cloud computing networks running the application. The method further includes analyzing historical data for the multiple cloud computing networks regarding performance of the application, based on the performance metrics and the historical data, determining an optimal cloud computing network within the multiple cloud computing networks to route the request, routing the request to the optimal cloud computing network, and returning the response from the optimal cloud computing network to the user device.

Owner:LIMELIGHT NETWORKS
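
The routing decision described in the abstract above (combine current responsiveness metrics with historical performance data, then pick one cloud) might be sketched as follows. This is only an illustration: the function name `pick_cloud`, the latency-only metric, and the `live_weight` blending factor are assumptions made for the example, not details taken from the patent.

```python
from statistics import mean


def pick_cloud(live_ms, history_ms, live_weight=0.7):
    """Return the cloud with the lowest blended latency score.

    live_ms:    {cloud name: latency measured for the current request, in ms}
    history_ms: {cloud name: list of past latencies, in ms}
    live_weight is a hypothetical tuning knob, not a value from the patent.
    """
    scores = {}
    for cloud, live in live_ms.items():
        past = history_ms.get(cloud, [])
        # With no history for a cloud, fall back to the live measurement.
        hist = mean(past) if past else live
        scores[cloud] = live_weight * live + (1 - live_weight) * hist
    return min(scores, key=scores.get)


live = {"cloud_a": 120.0, "cloud_b": 90.0}
history = {"cloud_a": [100.0, 110.0], "cloud_b": [300.0, 280.0]}
best = pick_cloud(live, history)
```

In this made-up data `cloud_b` answers faster right now, but its poor history outweighs that, so the blended score favors `cloud_a`.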

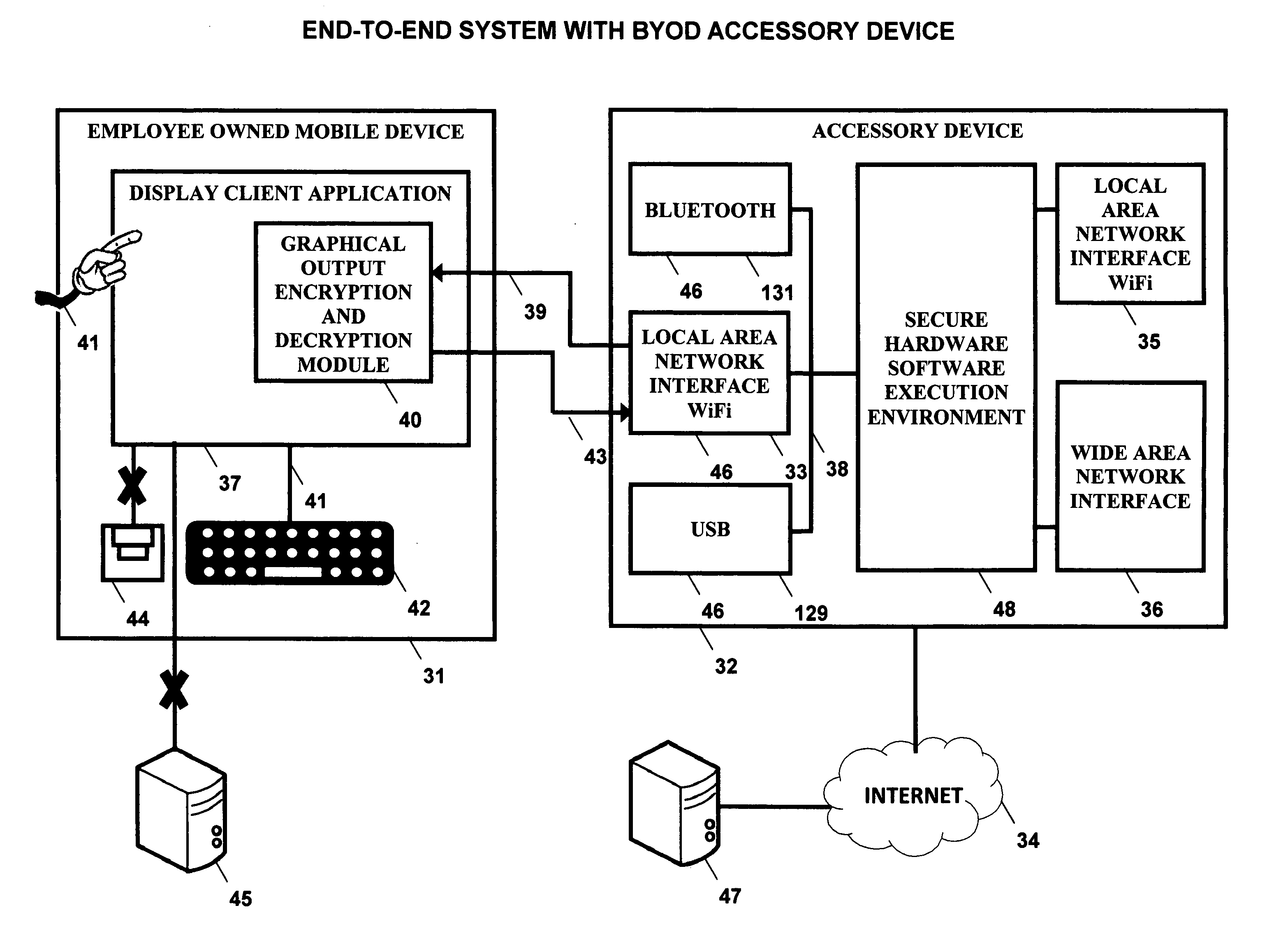

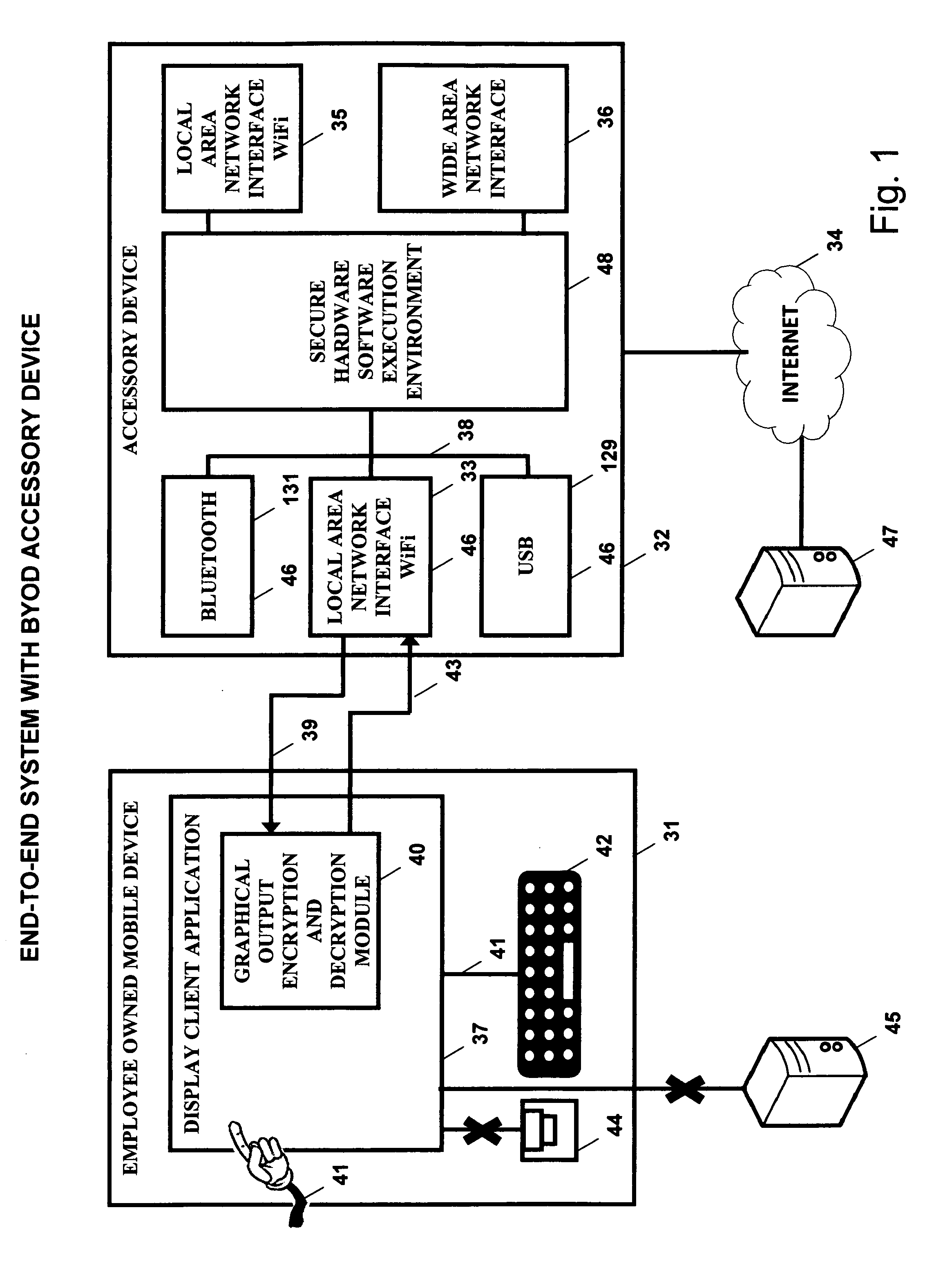

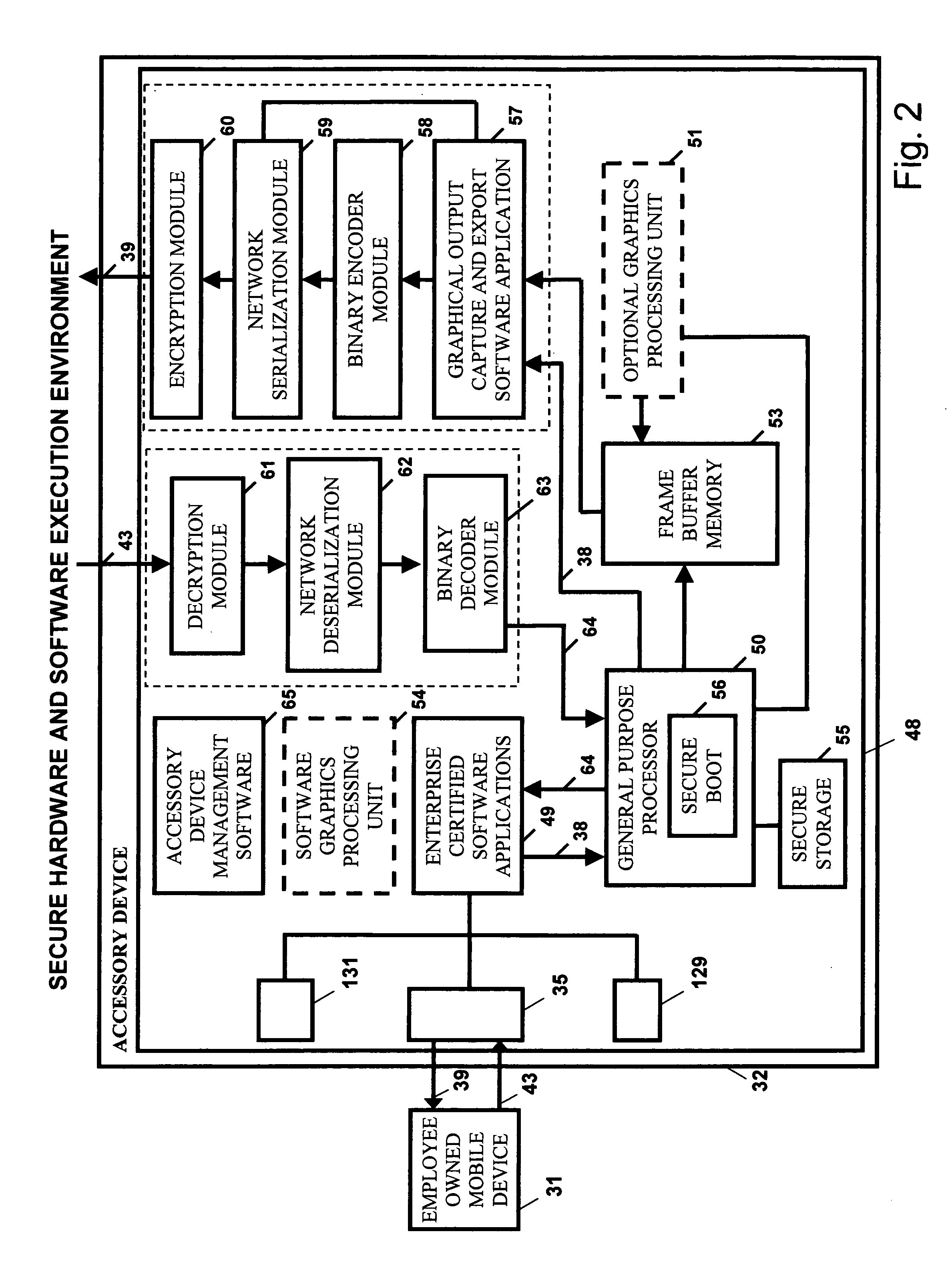

Bring your own device system using a mobile accessory device

Inactive | US20140173692A1 | Improve application performance | Improve security | Digital data processing details | Multiple digital computer combinations | Corporate network | Internet network

A BYOD solution using a combination device is described. This combination device comprises an employee-owned smart mobile device (31) and an accessory device (32) used together over a wireless local area network (46). The mobile device (31) is an employee-owned device that serves as a remote display for the output of enterprise-certified applications (49) executing on the accessory device (32). The accessory device (32) comprises a general-purpose processor, an optional graphics processing unit, one or more wireless local area network interfaces that connect the mobile device (31) to the accessory device (32), and one or more Internet network interfaces (52) that connect the accessory device to the enterprise network. The BYOD accessory device acts as a secure hardware gateway that connects the mobile device to the corporate network. It also acts as a secure execution environment for corporate applications and provides secure storage of corporate data.

Owner:SRINIVASAN SUDHARSHAN +2

Edge-based resource spin-up for cloud computing

Active | US8244874B1 | Affect service quality | Improve application performance | Energy efficient ICT | Digital computer details | Virtualization | Web service

Aspects of the present invention include distributing new resources closer to end users who are making increased demands by spinning up additional virtualized instances (as part of cloud provisioning) within servers that are physically near the network equipment (i.e., web servers, switches, routers, load balancers) that is receiving the requests.

Owner:LIMELIGHT NETWORKS

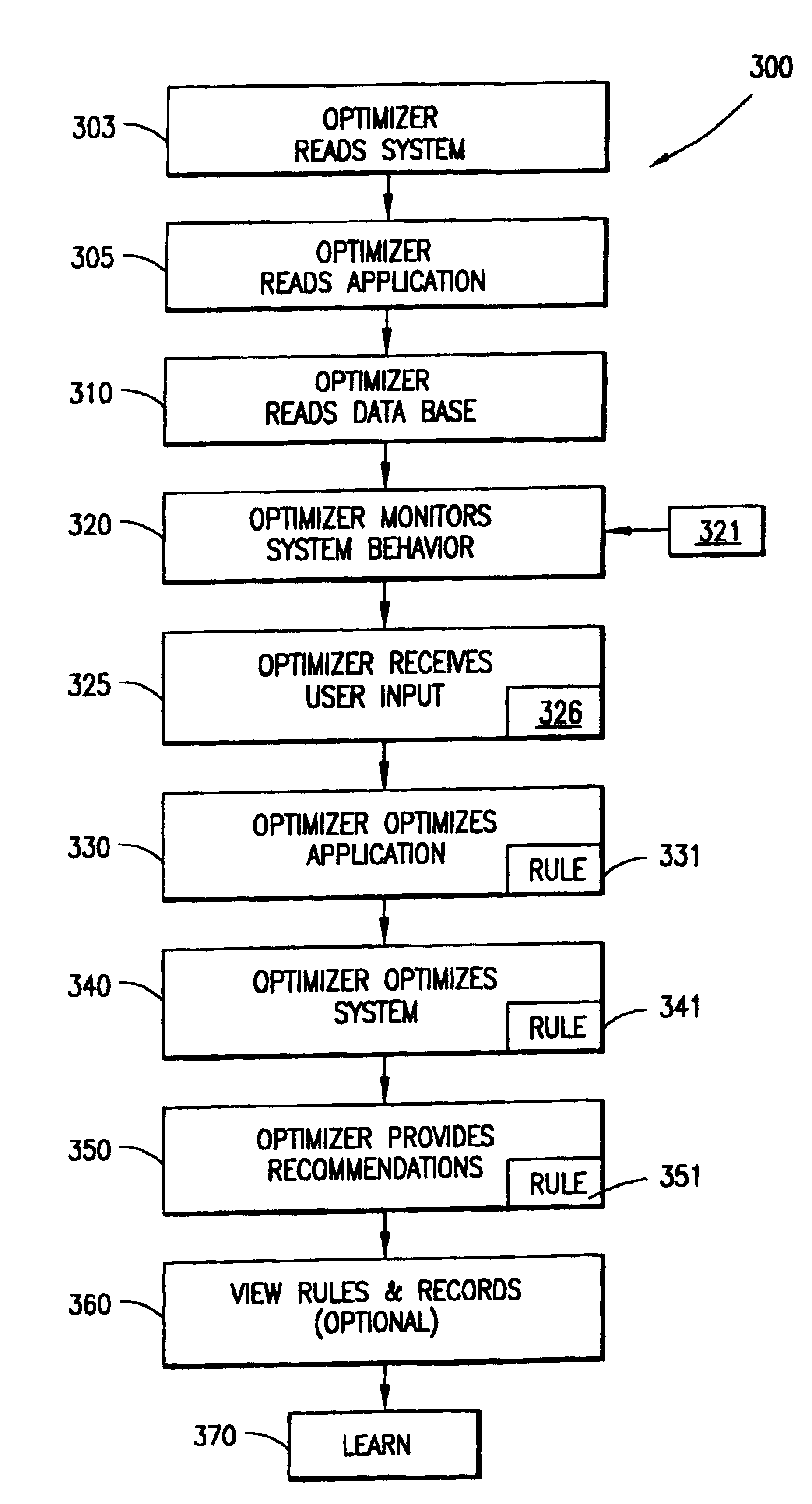

System and method for optimizing computer software and hardware

Inactive | USRE38865E1 | Increase apparent speed | Run efficiently | Data processing applications | Software engineering | Application software | Computer software

A method is described for optimizing the operation of a computer system in running application programs in accordance with system capabilities, user preferences and configuration parameters of the application program. More specifically, an optimizing program gathers information on the system capabilities, user preferences and configuration parameters of the application program to optimize the operation of the application program or computer system. Further, user-selected rules of operation can be chosen by dragging rule icons to a target optimizer icon.

Owner:ACTIVISION PUBLISHING

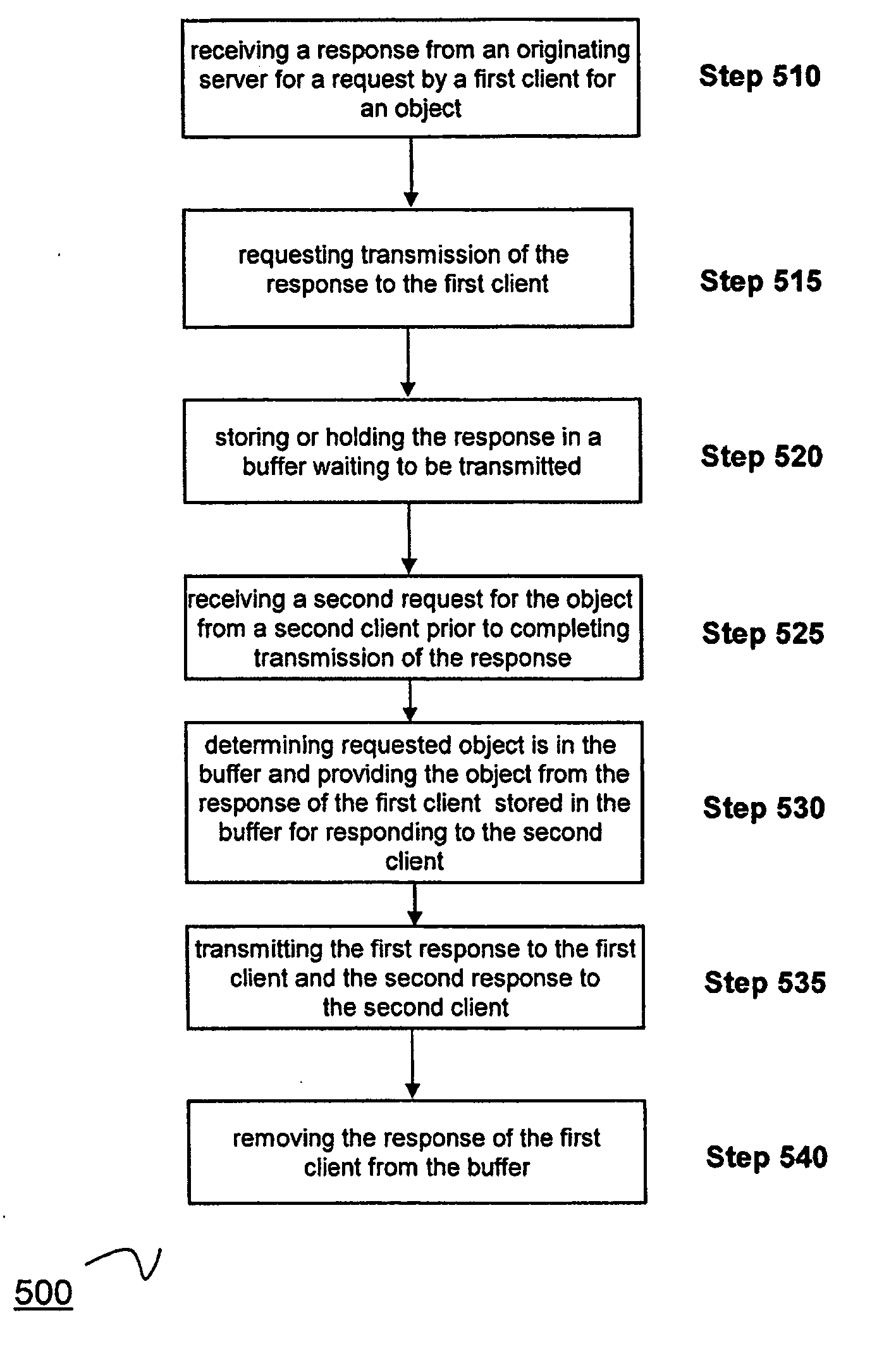

Method and device for performing caching of dynamically generated objects in a data communication network

Active | US20070156965A1 | Improve abilities | Easy to handle | Digital data information retrieval | Transmission | Object store | Client-side

A method for maintaining a cache of dynamically generated objects. The method includes storing in the cache dynamically generated objects previously served from an originating server to a client. A communication between the client and server is intercepted by the cache. The cache parses the communication to identify an object determinant and to determine whether the object determinant indicates whether a change has occurred or will occur in an object at the originating server. The cache marks the object stored in the cache as invalid if the object determinant so indicates. If the object has been marked as invalid, the cache retrieves the object from the originating server.

Owner:CITRIX SYST INC
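
The invalidation flow in the abstract above (serve from cache, watch intercepted traffic for an object determinant, mark the object invalid, refetch on next use) could look roughly like the sketch below. The class name `DynamicObjectCache` and the choice of "a write-style HTTP method on the same key" as the object determinant are assumptions made for illustration; the abstract does not say what form the determinant takes.

```python
class DynamicObjectCache:
    """Toy cache that invalidates entries based on intercepted requests."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin  # callable: key -> object (stand-in for the origin server)
        self._store = {}                 # key -> (object, is_valid)

    def observe(self, method, key):
        """Inspect an intercepted request.

        Assumption: a POST/PUT/DELETE on a key signals that the object
        at the origin has changed or is about to change.
        """
        if method in {"POST", "PUT", "DELETE"} and key in self._store:
            obj, _ = self._store[key]
            self._store[key] = (obj, False)  # mark as invalid, keep the slot

    def get(self, key):
        entry = self._store.get(key)
        if entry is None or not entry[1]:
            # Missing or marked invalid: retrieve from the origin again.
            obj = self._fetch(key)
            self._store[key] = (obj, True)
            return obj
        return entry[0]


origin_calls = []


def origin(key):
    origin_calls.append(key)
    return f"{key}-v{len(origin_calls)}"


cache = DynamicObjectCache(origin)
first = cache.get("cart")      # fetched from the origin
second = cache.get("cart")     # served from the cache
cache.observe("POST", "cart")  # intercepted write marks the entry invalid
third = cache.get("cart")      # refetched from the origin
```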

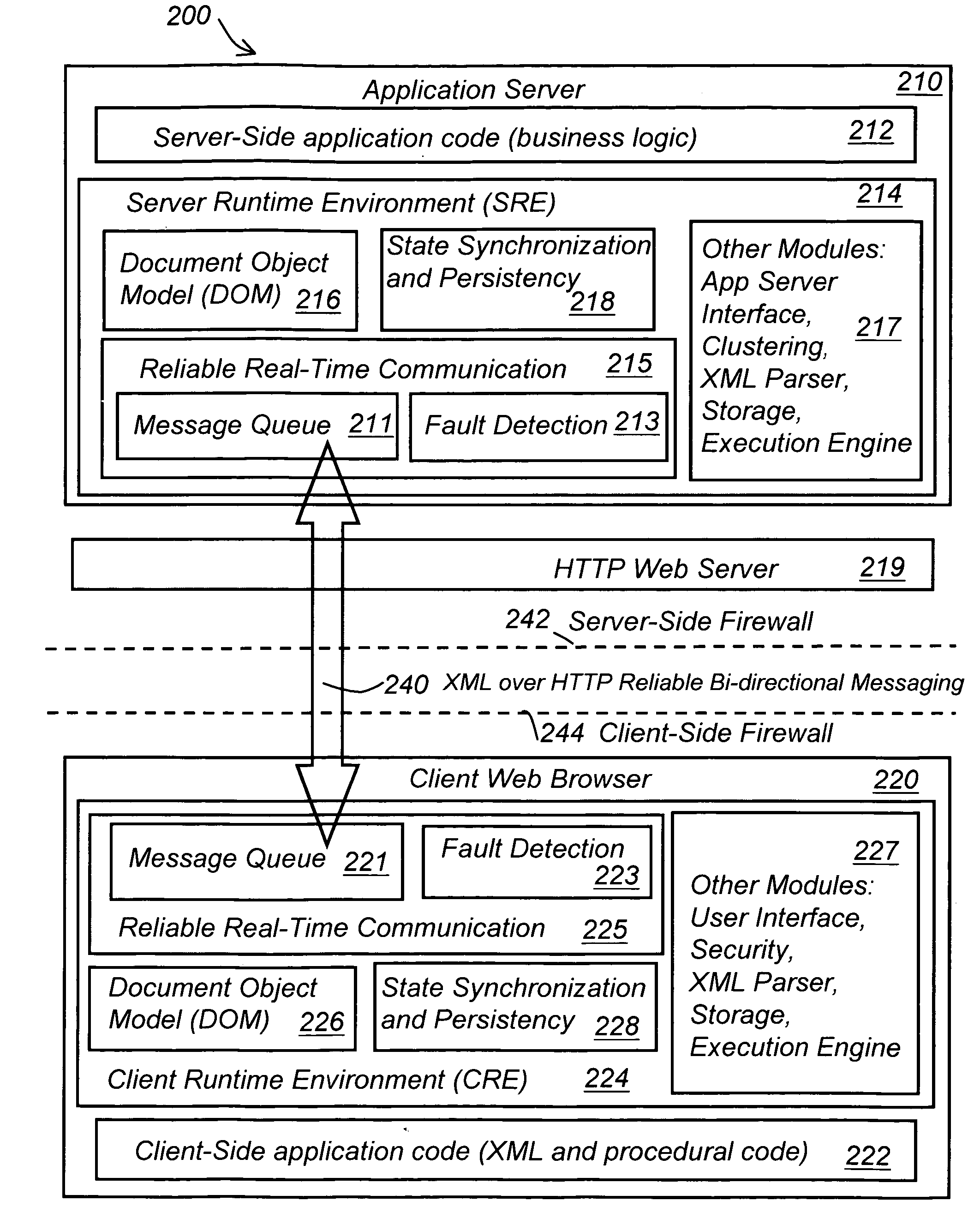

System and method for stateful web-based computing

Inactive | US20050198365A1 | Reduce waiting time | Fast transfer | Natural language data processing | Multiple digital computer combinations | Receipt | Client-side

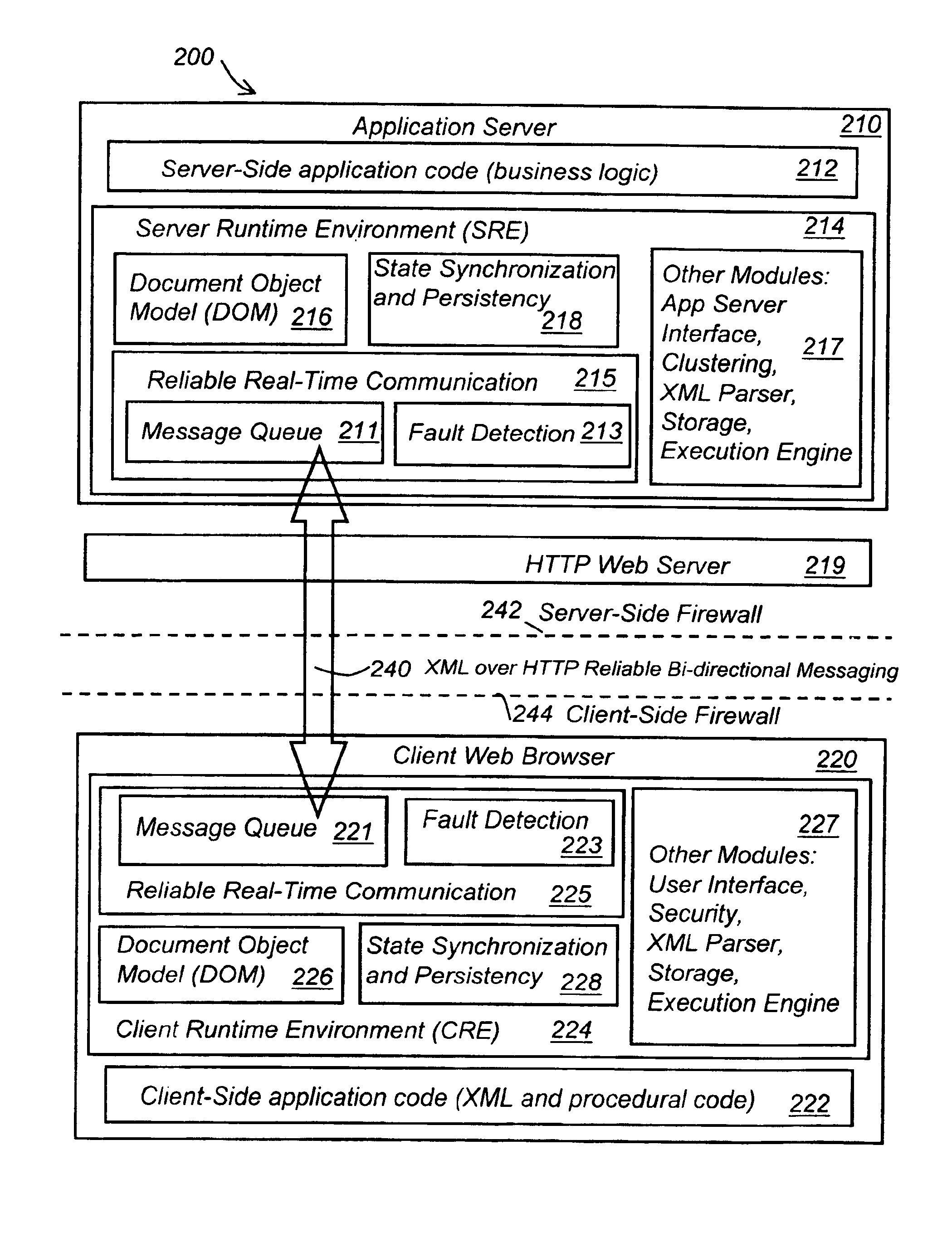

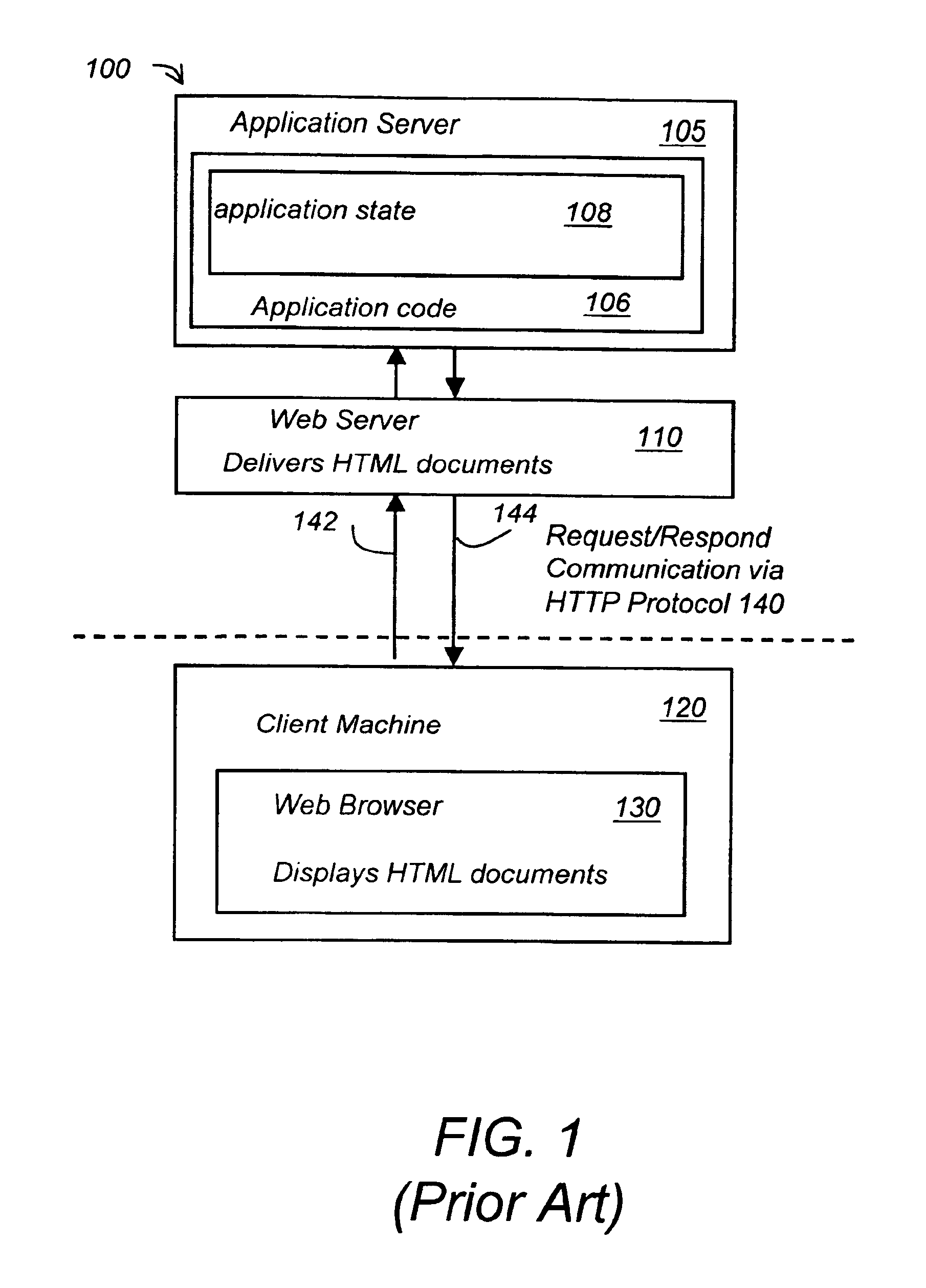

A method for providing “guaranteed message delivery” for network based communications between a client machine and a server. The client machine includes a Client Runtime Environment (CRE) and the server includes a Server Runtime Environment (SRE). The method includes the following steps. A first message queue is maintained in the CRE. A first unique identification is attached to the first message from the first message queue and the first message is sent from the CRE to the SRE via a network communication. The SRE receives the first message and sends an acknowledgement of the receipt of the first message to the CRE. Upon receiving the acknowledgement within a certain time threshold, the CRE removes the first message from the first message queue in the CRE. Also described is a method of providing “server-push” of messages from the server to the client machine utilizing a push Servlet and a push API.

Owner:NEXAWEB
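
The client-side steps listed in the abstract above (queue the message, attach a unique identification, send, remove on acknowledgement) can be sketched as below. The class `ClientQueue`, the `send` callback, and the `resend_unacked` method are hypothetical names for this example. Timers are left out: the sketch assumes the caller invokes `resend_unacked` once the time threshold expires, and resending is itself an assumption, since the abstract only states what happens when the acknowledgement arrives in time.

```python
import itertools


class ClientQueue:
    """Toy client-side message queue with acknowledgement tracking."""

    def __init__(self, send):
        self._send = send               # callable(msg_id, payload); hypothetical transport
        self._ids = itertools.count(1)  # source of unique message identifications
        self.pending = {}               # msg_id -> payload awaiting acknowledgement

    def post(self, payload):
        msg_id = next(self._ids)
        self.pending[msg_id] = payload  # keep the message until it is acknowledged
        self._send(msg_id, payload)
        return msg_id

    def on_ack(self, msg_id):
        # Acknowledgement received: the message can leave the queue.
        self.pending.pop(msg_id, None)

    def resend_unacked(self):
        # Assumed behavior when the time threshold passes without an ack.
        for msg_id, payload in list(self.pending.items()):
            self._send(msg_id, payload)


sent = []
client = ClientQueue(lambda msg_id, payload: sent.append((msg_id, payload)))
first_id = client.post("hello")
second_id = client.post("world")
client.on_ack(first_id)    # server acknowledged the first message only
client.resend_unacked()    # threshold expired for the second message
```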

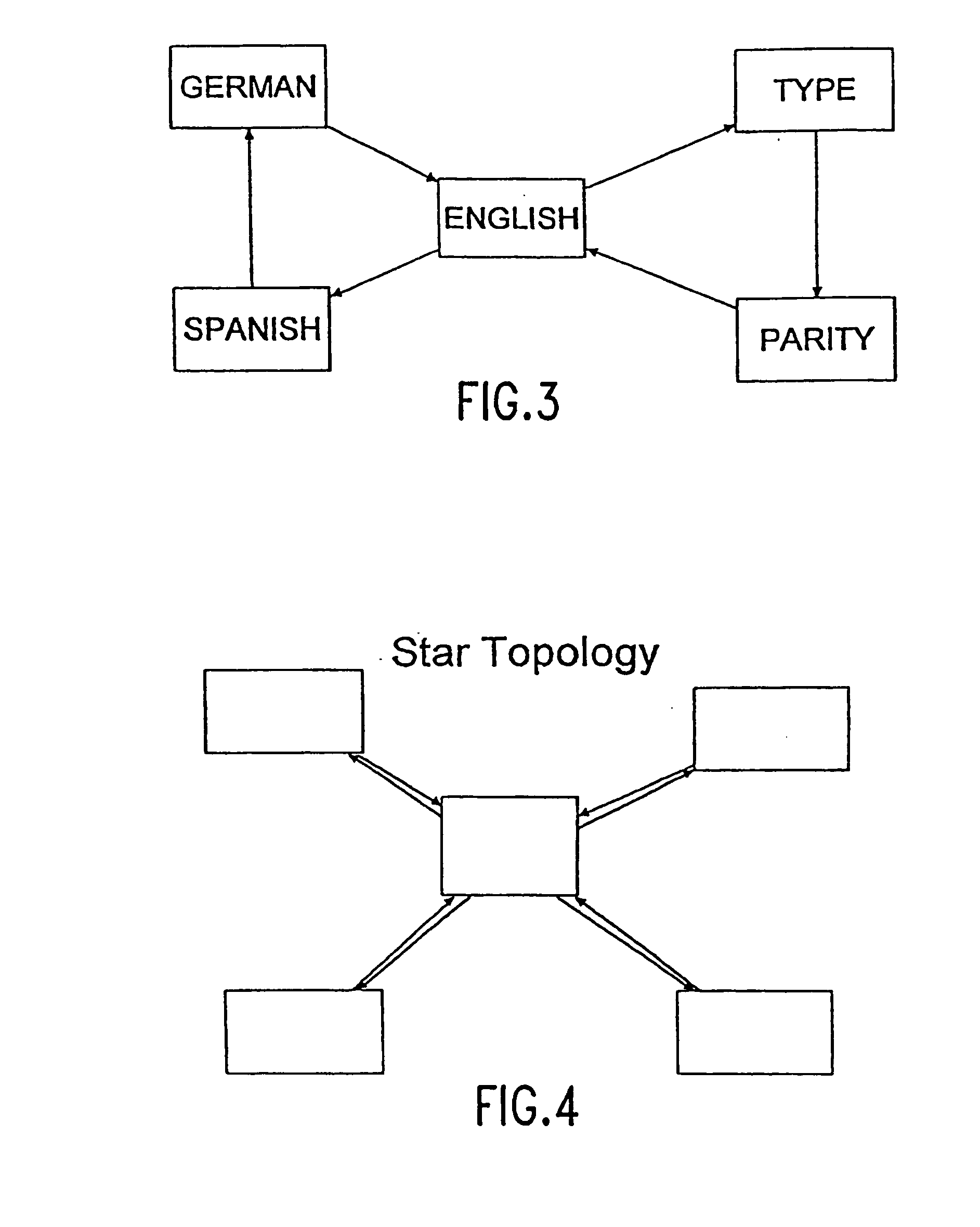

System and method for stateful web-based computing

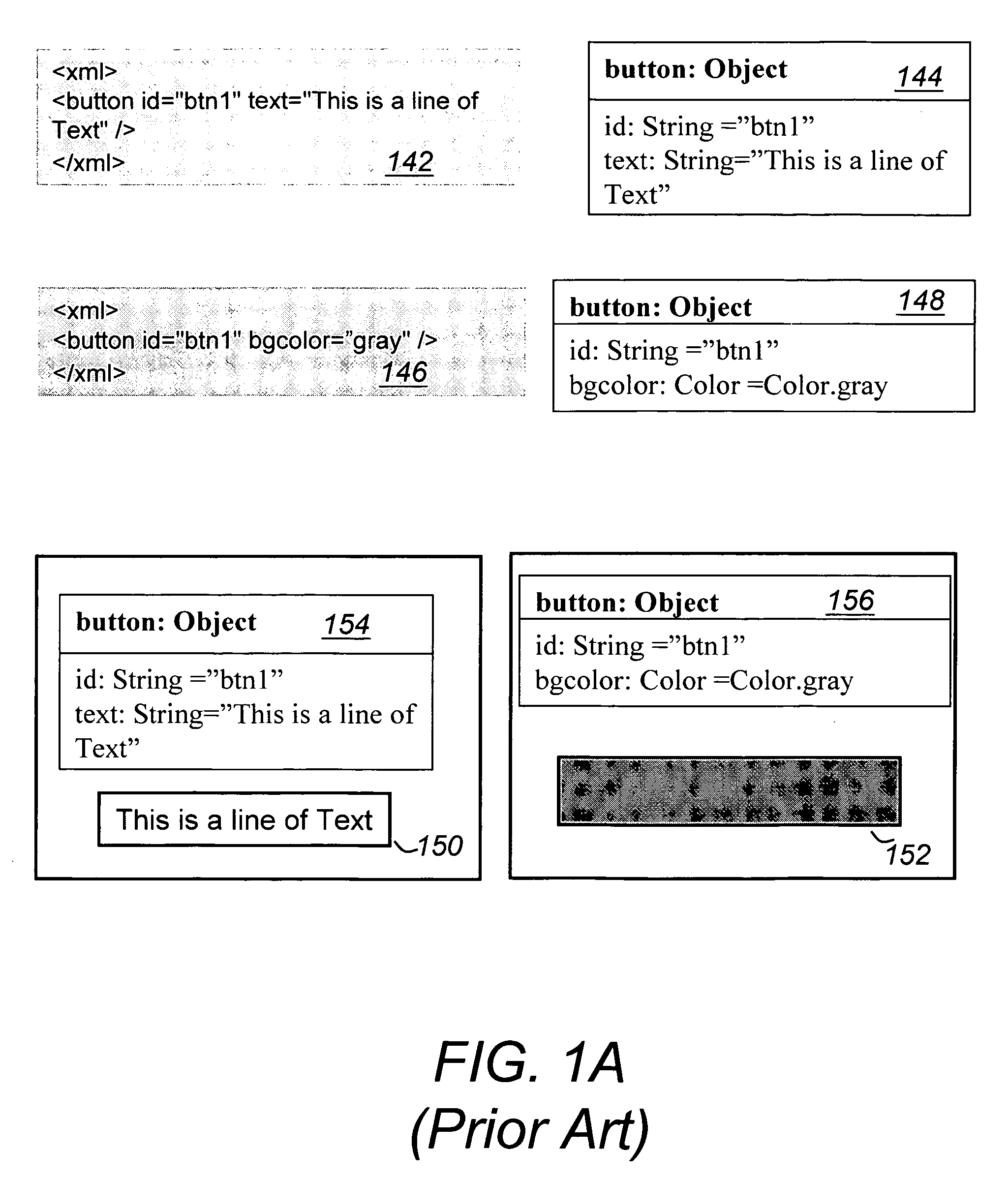

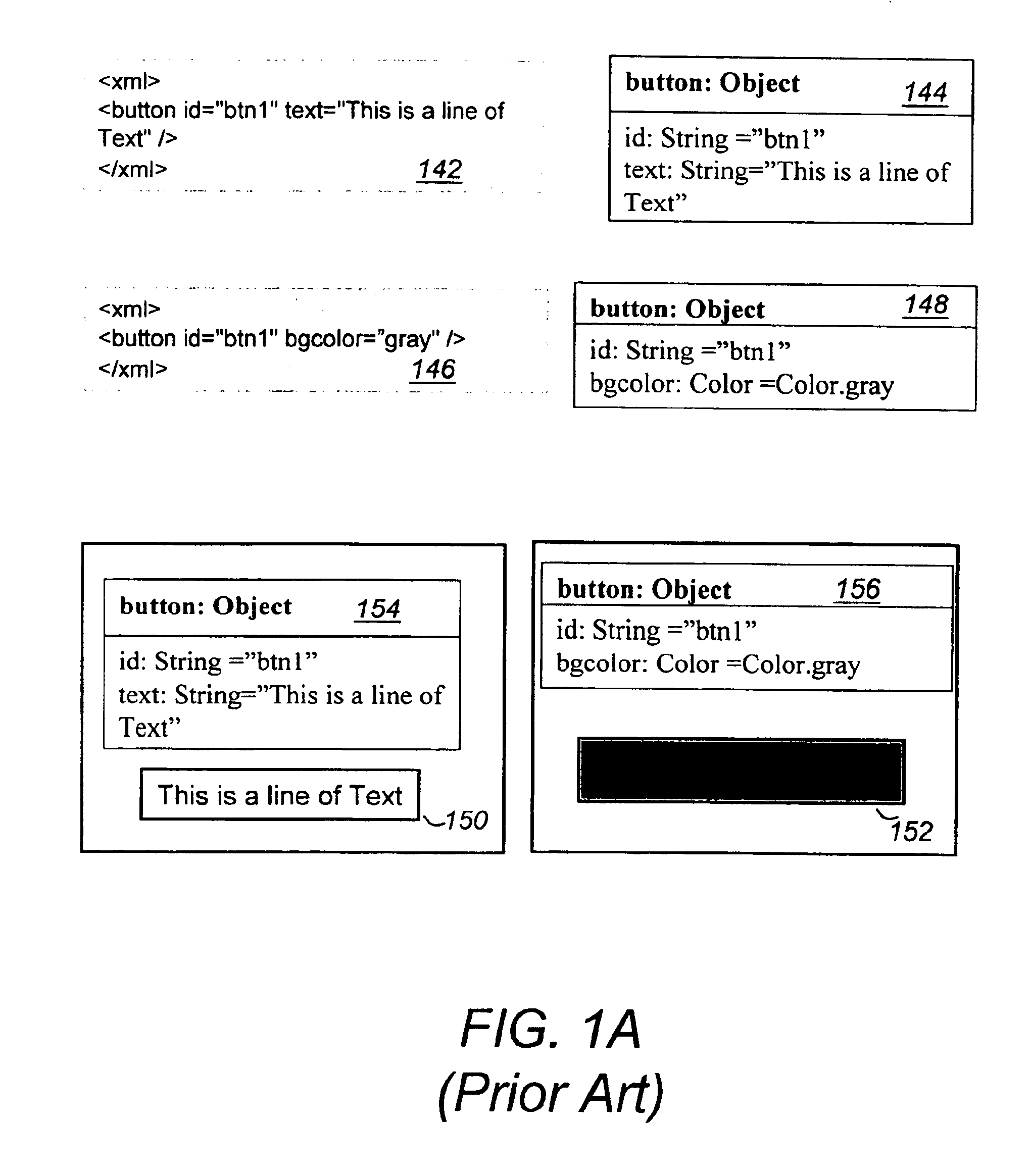

Active | US6886169B2 | Reduce waiting time | Fast transfer | Interprogram communication | Natural language data processing | Client-side | Application software

A computing system for performing stateful distributed computing includes a client machine having a Client Runtime Environment (CRE) that is adapted to maintain the state of an application in the client machine. The CRE maintains state of the application by first retrieving a first markup document of the application, creating and storing a first object oriented representation of information contained in the first markup document. The first object oriented representation defines a first state of the application. Next, retrieving a second markup document, creating and storing a second object oriented representation of information contained in the second markup document. Finally merging the first and second object oriented representations thereby forming a new object oriented representation of information contained in the first or the second markup documents. This new object oriented representation defines a new state of said application. The CRE may further update the new state of the application by retrieving one or more additional markup documents, creating and storing one or more additional object oriented representations of information contained in the one or more additional markup documents, respectively, and merging the one or more additional object oriented representations with the new object oriented representation thereby forming an updated state of the application.

Owner:EMC CORP +1

Value-instance connectivity computer-implemented database

Inactive | US20080059412A1 | Reduce memory usage | Shorten the time | Multi-dimensional databases | Special data processing applications | Multi dimensional data | Data value

A computer-implemented database and method providing an efficient, ordered reduced space representation of multi-dimensional data. The data values for each attribute are stored in a manner that provides an advantage in, for example, space usage and / or speed of access, such as in condensed form and / or sort order. Instances of each data value for an attribute are identified by instance elements, each of which is associated with one data value. Connectivity information is provided for each instance element that uniquely associates each instance element with a specific instance of a data value for another attribute. In accordance with one aspect of the invention, low cardinality fields (attributes) may be combined into a single field (referred to as a “combined field”) having values representing the various combinations of the original fields. In accordance with another aspect of the invention, the data values for several fields may be stored in a single value list (referred to as a “union column”). Still another aspect of the invention is to apply redundancy elimination techniques, utilizing in some cases union columns, possibly together with combined fields, in order to reduce the space needed to store the database.

Owner:TARIN STEPHEN A

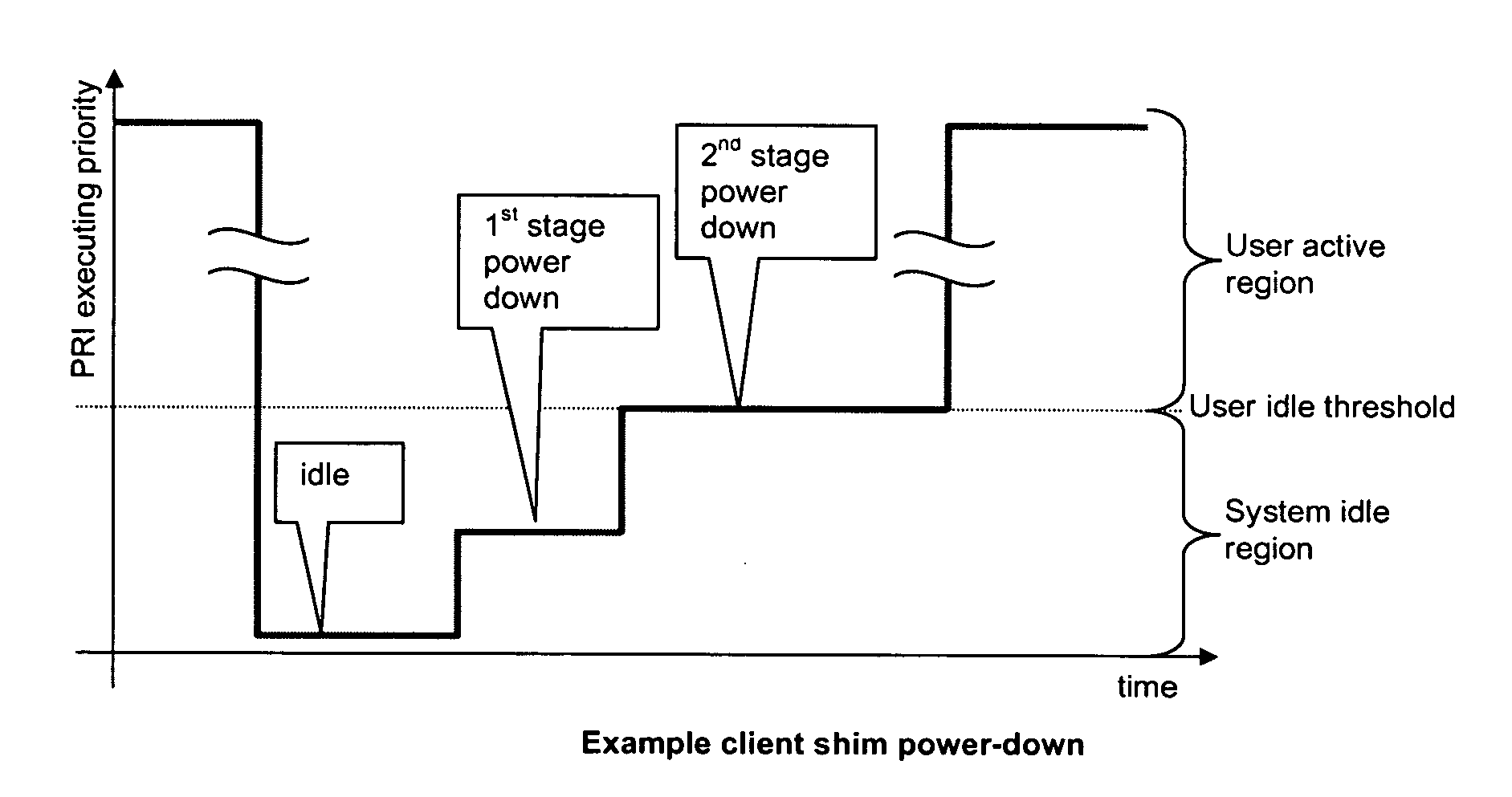

Managing power consumption in a multicore processor

Active | US20070220294A1 | Improve application performance | Increase speed | Energy efficient ICT | Volume/mass flow measurement | Processor element | Multi-core processor

A method and computer-usable medium including instructions for performing a method of managing power consumption in a multicore processor comprising a plurality of processor elements with at least one power saving mode. The method includes listing, using at least one distribution queue, a portion of the executable transactions in order of eligibility for execution. A plurality of executable transaction schedulers are provided. The executable transaction schedulers are linked together to provide a multilevel scheduler. The most eligible executable transaction is output from the multilevel scheduler to the at least one distribution queue. One or more of the plurality of processor elements are placed into a first power saving mode when a number of executable transactions allocated to the plurality of processor elements is such that only a portion of available processor elements are used to execute executable transactions.

Owner:FUJITSU SEMICON LTD +1

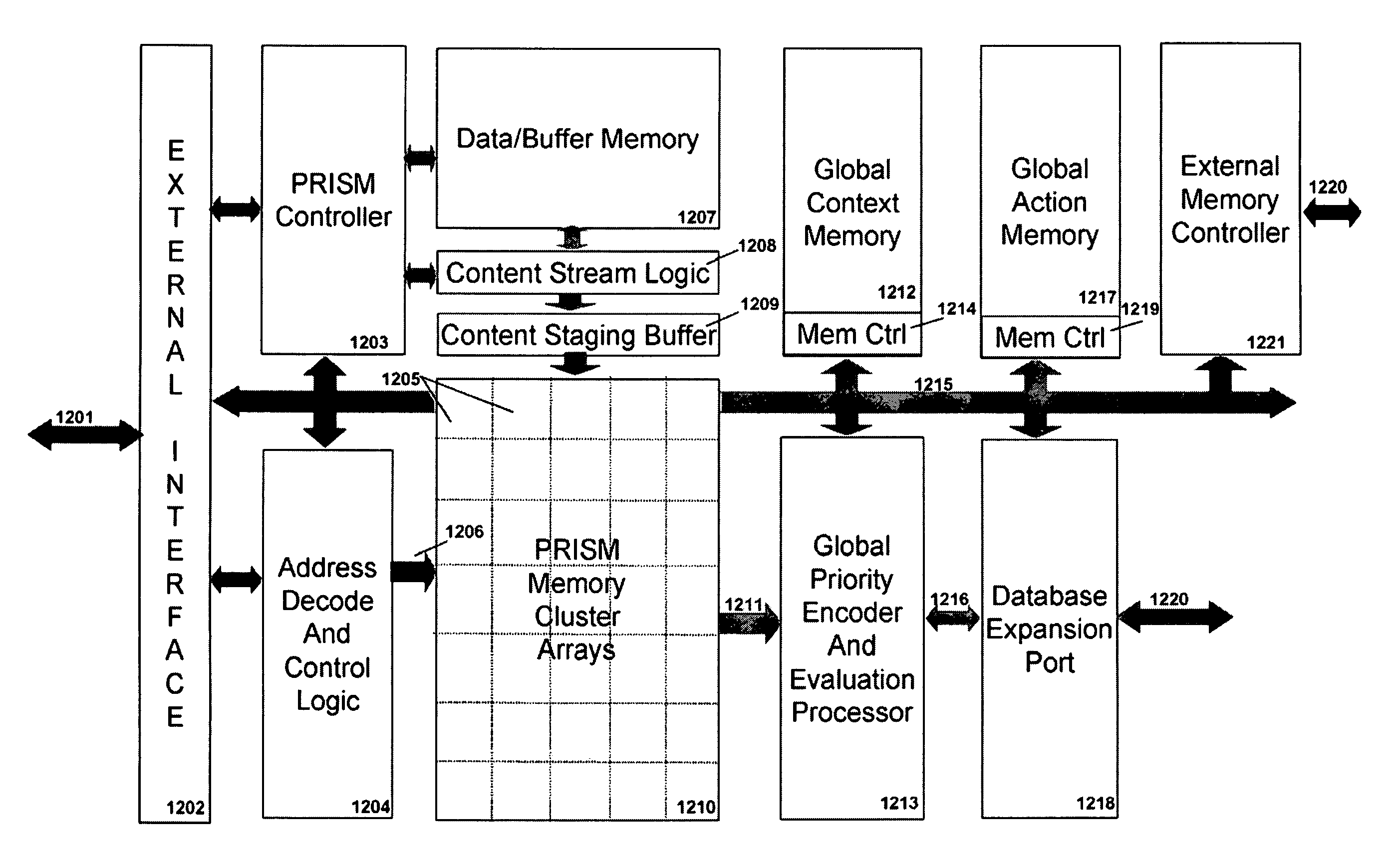

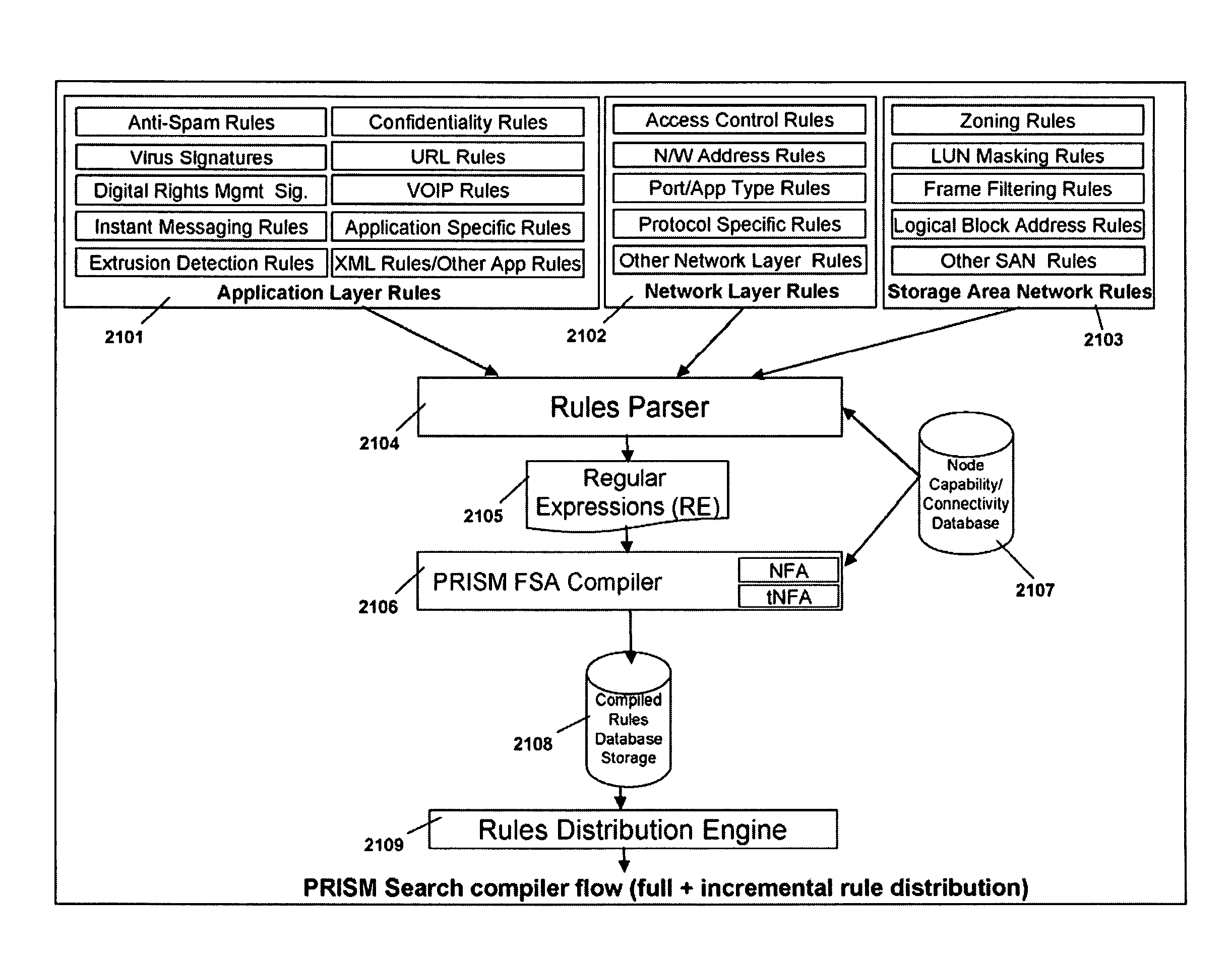

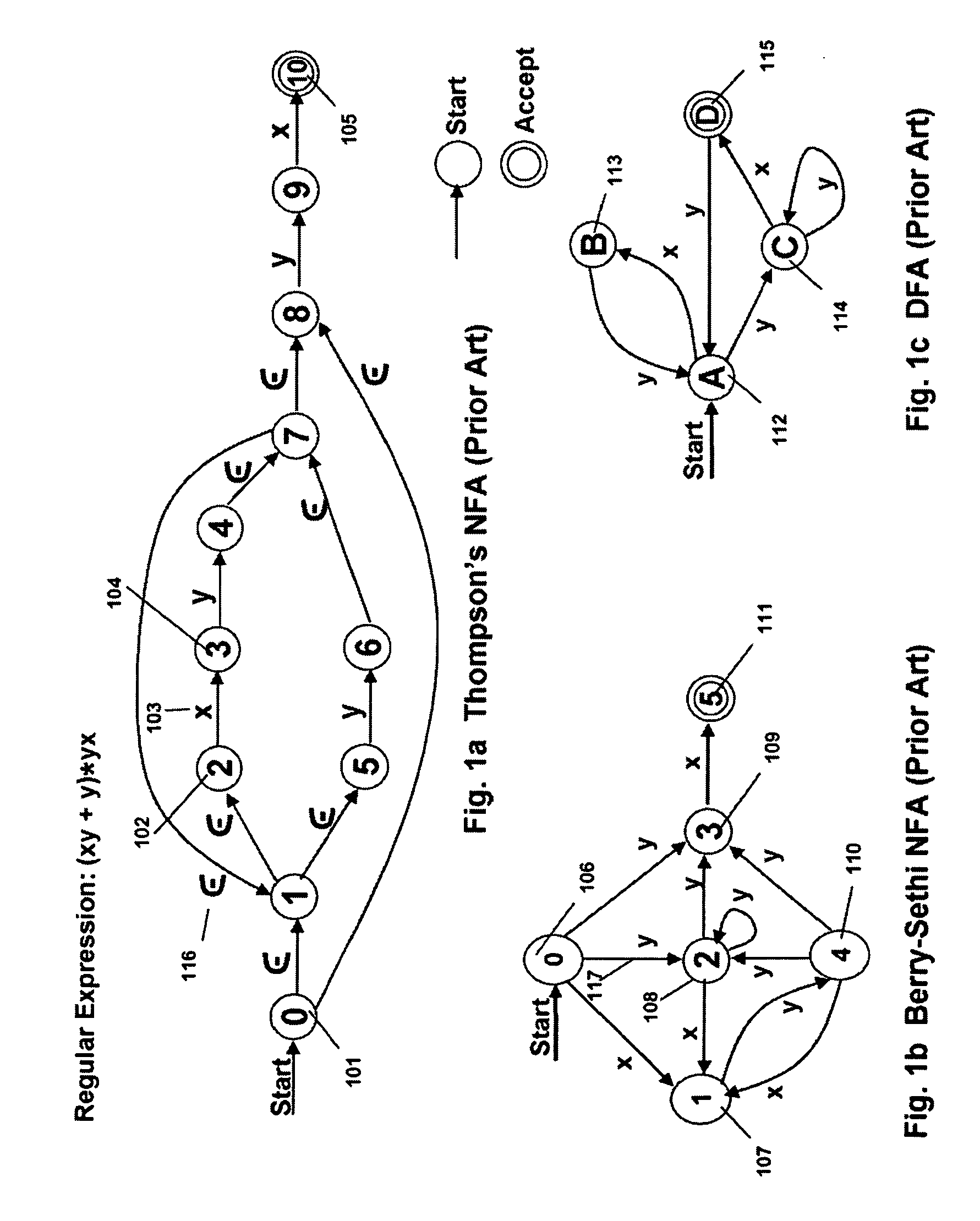

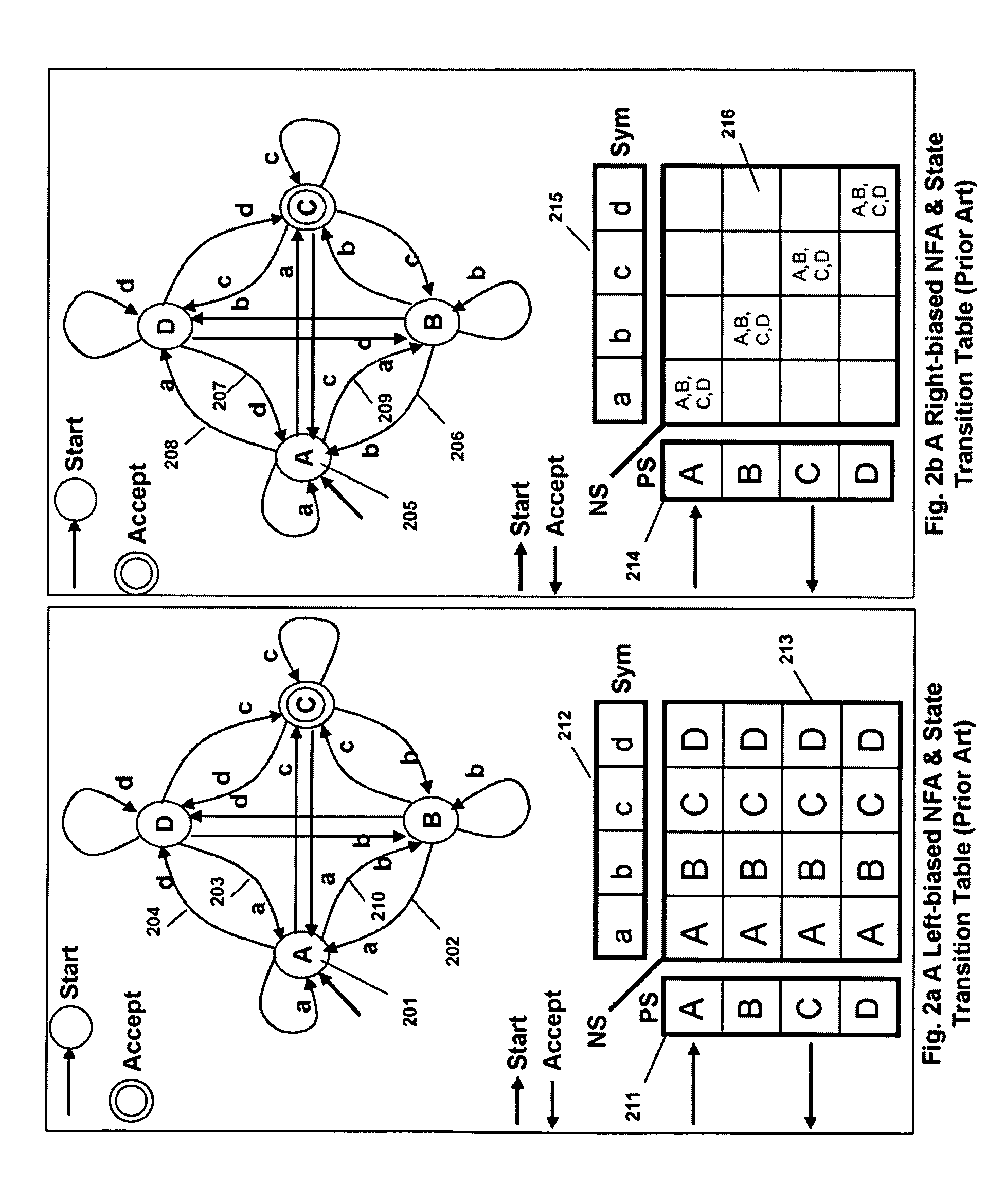

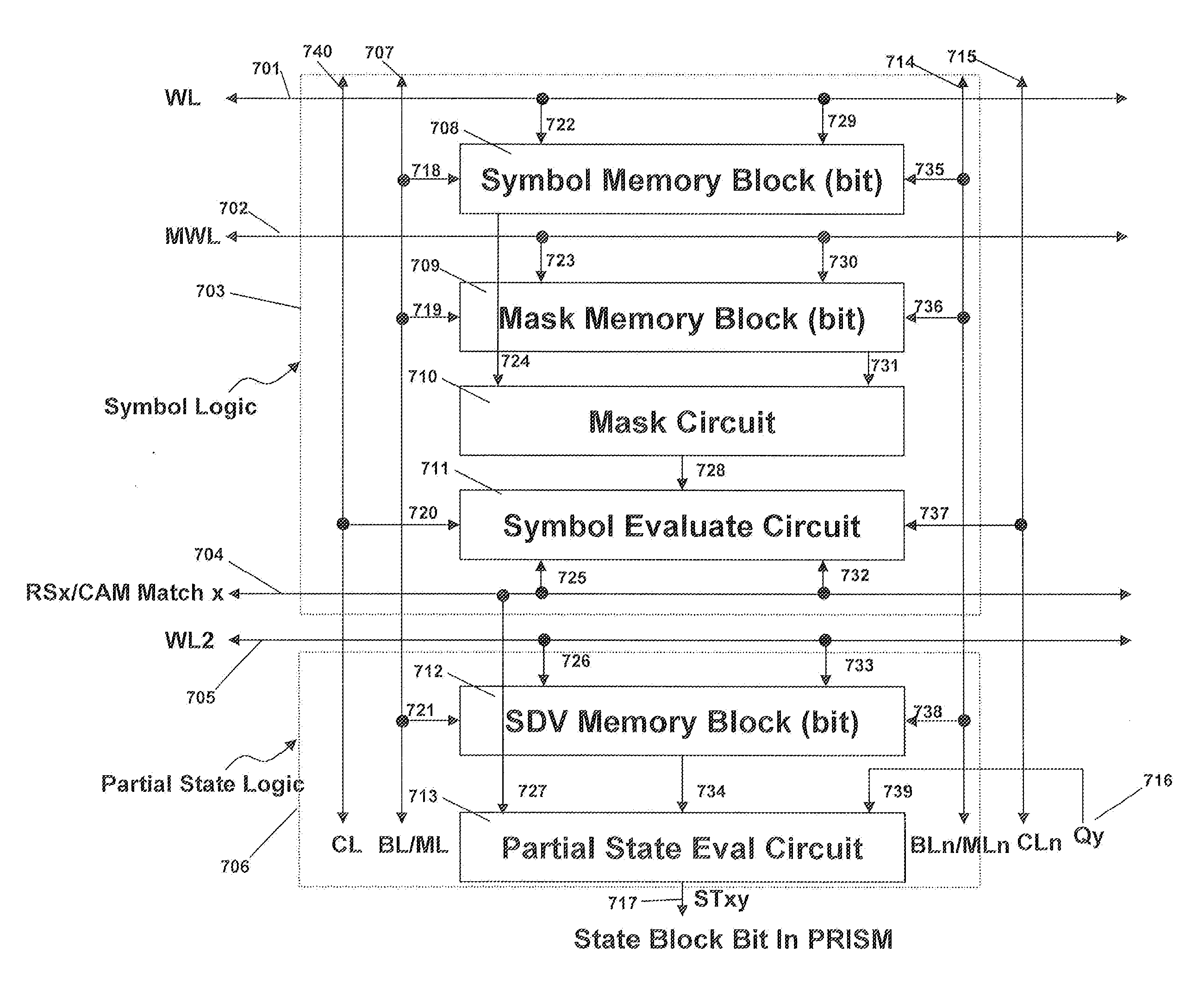

Programmable Intelligent Search Memory

Active | US20080140912A1 | Efficient and compact realization | Improve application performance | Digital data information retrieval | Digital storage | Memory architecture | Automaton

Memory architecture provides capabilities for high performance content search. The architecture creates an innovative memory that can be programmed with content search rules which are used by the memory to evaluate presented content for matching with the programmed rules. When the content being searched matches any of the rules programmed in the Programmable Intelligent Search Memory (PRISM), the action(s) associated with the matched rule(s) are taken. Content search rules comprise regular expressions which are converted to finite state automata and then programmed in PRISM for evaluating content with the search rules.

Owner:INFOSIL INC
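
The idea in the abstract above (a regular expression is converted to a finite state automaton, content is evaluated against it, and an action fires on a match) can be illustrated in software. The sketch below is not the PRISM hardware design: the rule `ab*c`, its hand-built NFA table, the `"alert"` action, and the function `scan` are all assumptions chosen for the example.

```python
# Hypothetical rule: the regular expression ab*c, converted by hand to an NFA.
# State 0 is the start state, state 2 is accepting.
RULE = {
    "start": 0,
    "accept": {2},
    "delta": {(0, "a"): {1}, (1, "b"): {1}, (1, "c"): {2}},
    "action": "alert",
}


def scan(content, rule):
    """Return (end_index, action) for every position where the rule matches."""
    hits = []
    active = {rule["start"]}
    for i, ch in enumerate(content):
        nxt = set()
        for state in active:
            # Follow every transition the current symbol allows.
            nxt |= rule["delta"].get((state, ch), set())
        if nxt & rule["accept"]:
            hits.append((i, rule["action"]))
        # Unanchored search: a new match may begin at any position.
        nxt.add(rule["start"])
        active = nxt
    return hits


matches = scan("xxabbcyyac", RULE)
```

Tracking a set of active states means the content is read once, one symbol at a time, which is the property that makes automaton-based matching attractive for streaming content.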

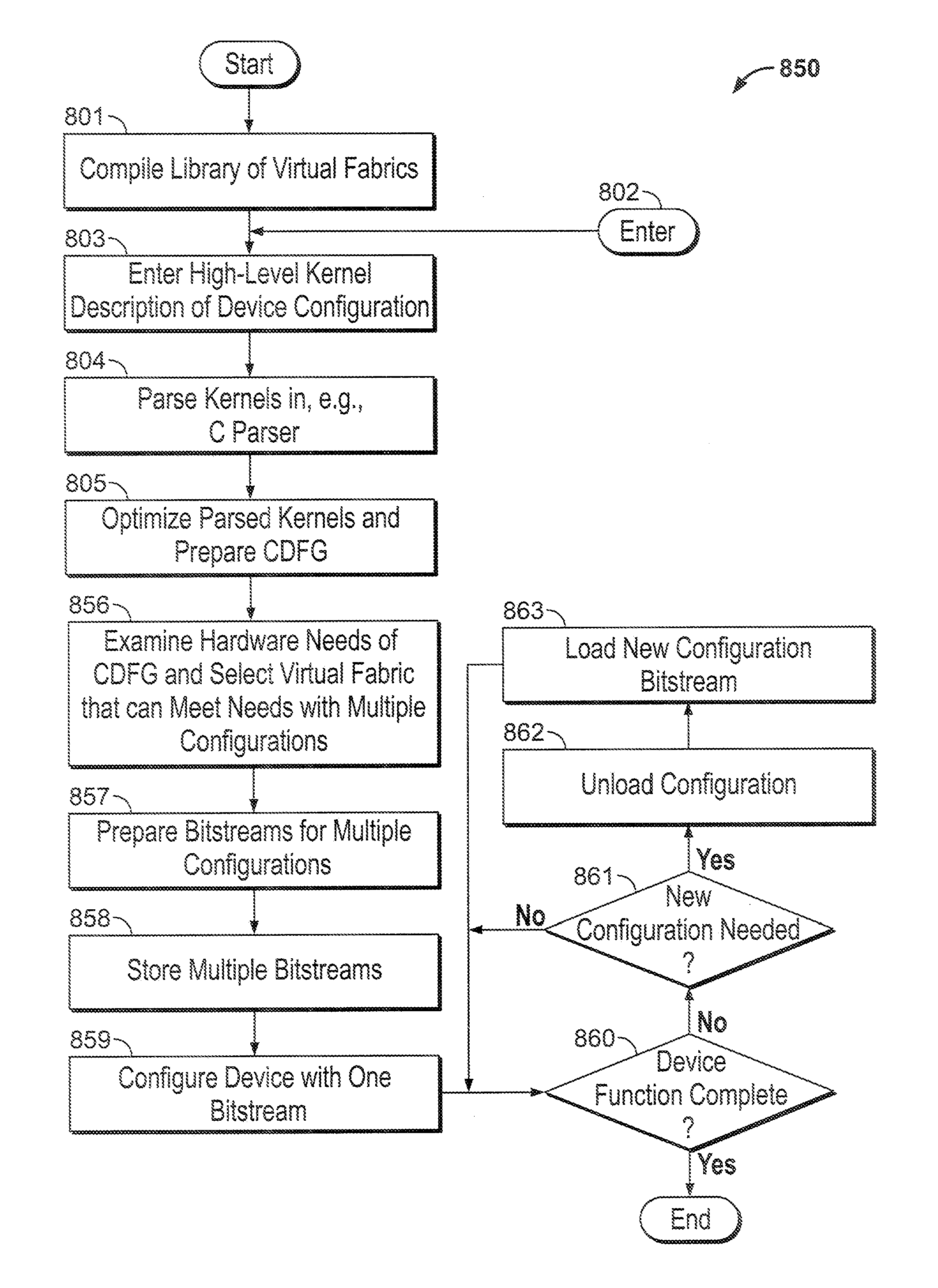

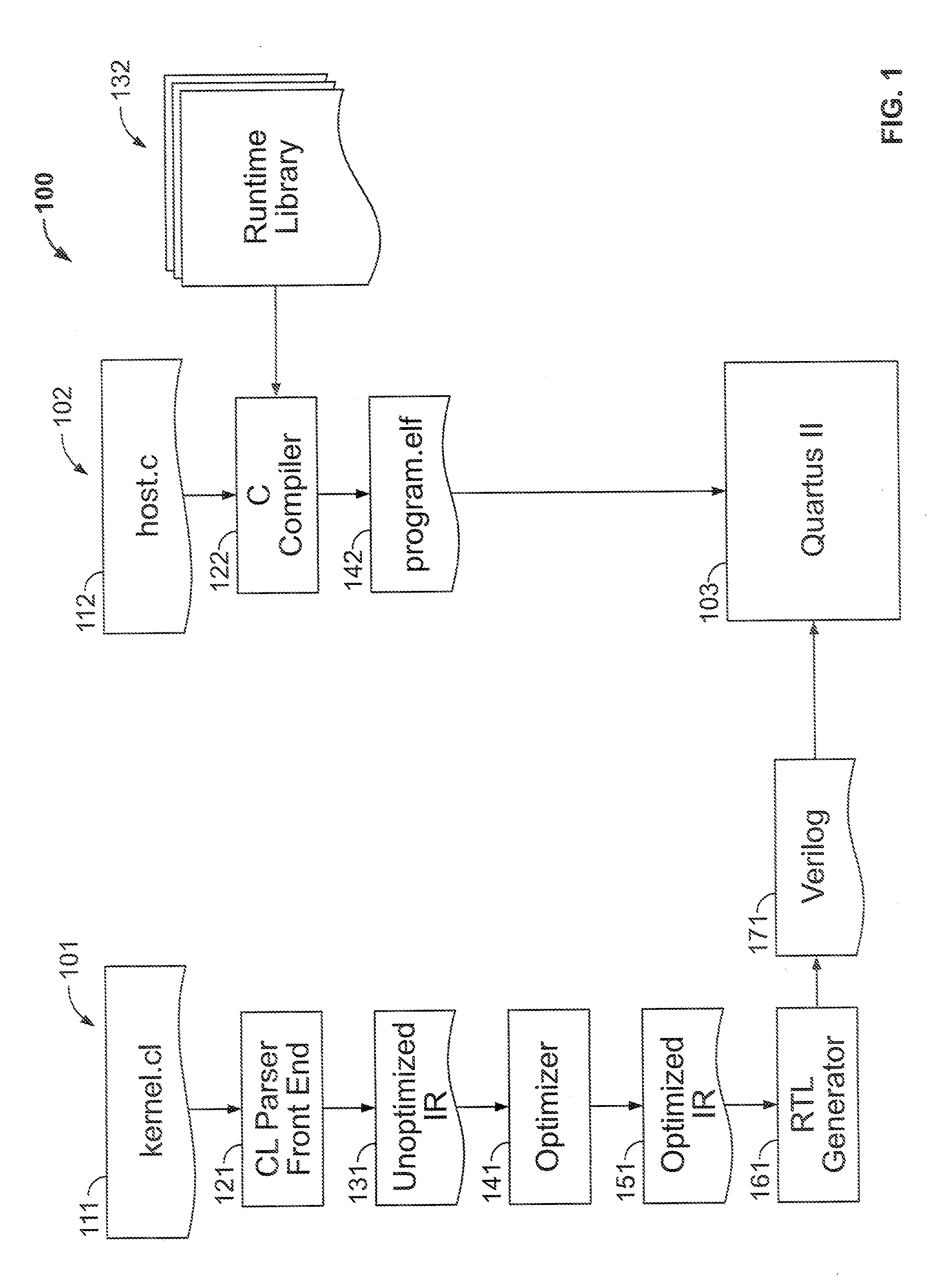

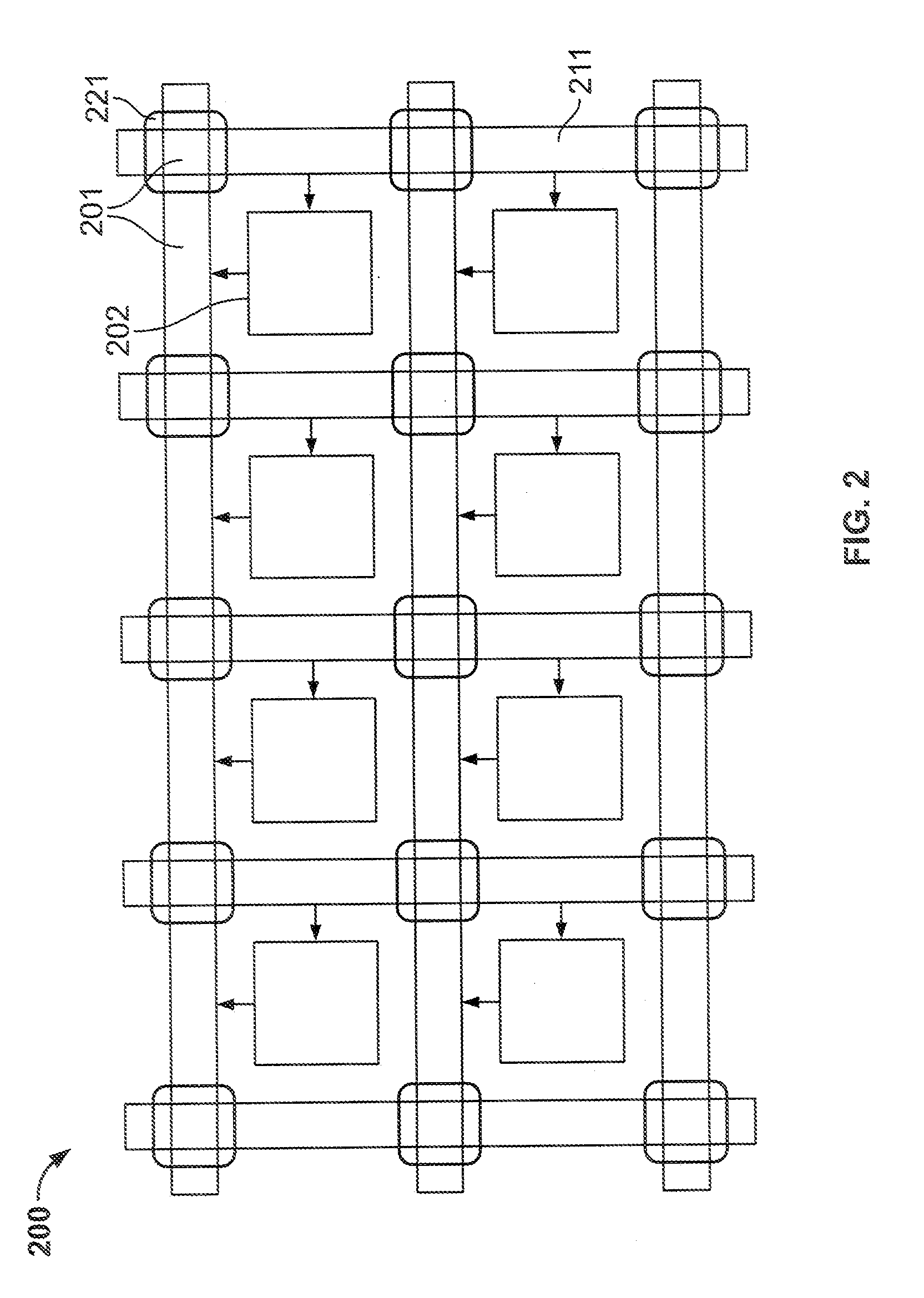

Configuring a programmable device using high-level language

Active | US20130212365A1 | Improve application performance | Digital computer details | Data switching by path configuration | Programmable logic device | Signaling network

A method of preparing a programmable integrated circuit device for configuration using a high-level language includes compiling a plurality of virtual programmable devices from descriptions in said high-level language. The compiling includes compiling configurations of configurable routing resources from programmable resources of said programmable integrated circuit device, and compiling configurations of a plurality of complex function blocks from programmable resources of said programmable integrated circuit device. A machine-readable data storage medium may be encoded with a library of such compiled configurations. A virtual programmable device may include a stall signal network and routing switches of the virtual programmable device may include stall signal inputs and outputs.

Owner:ALTERA CORP

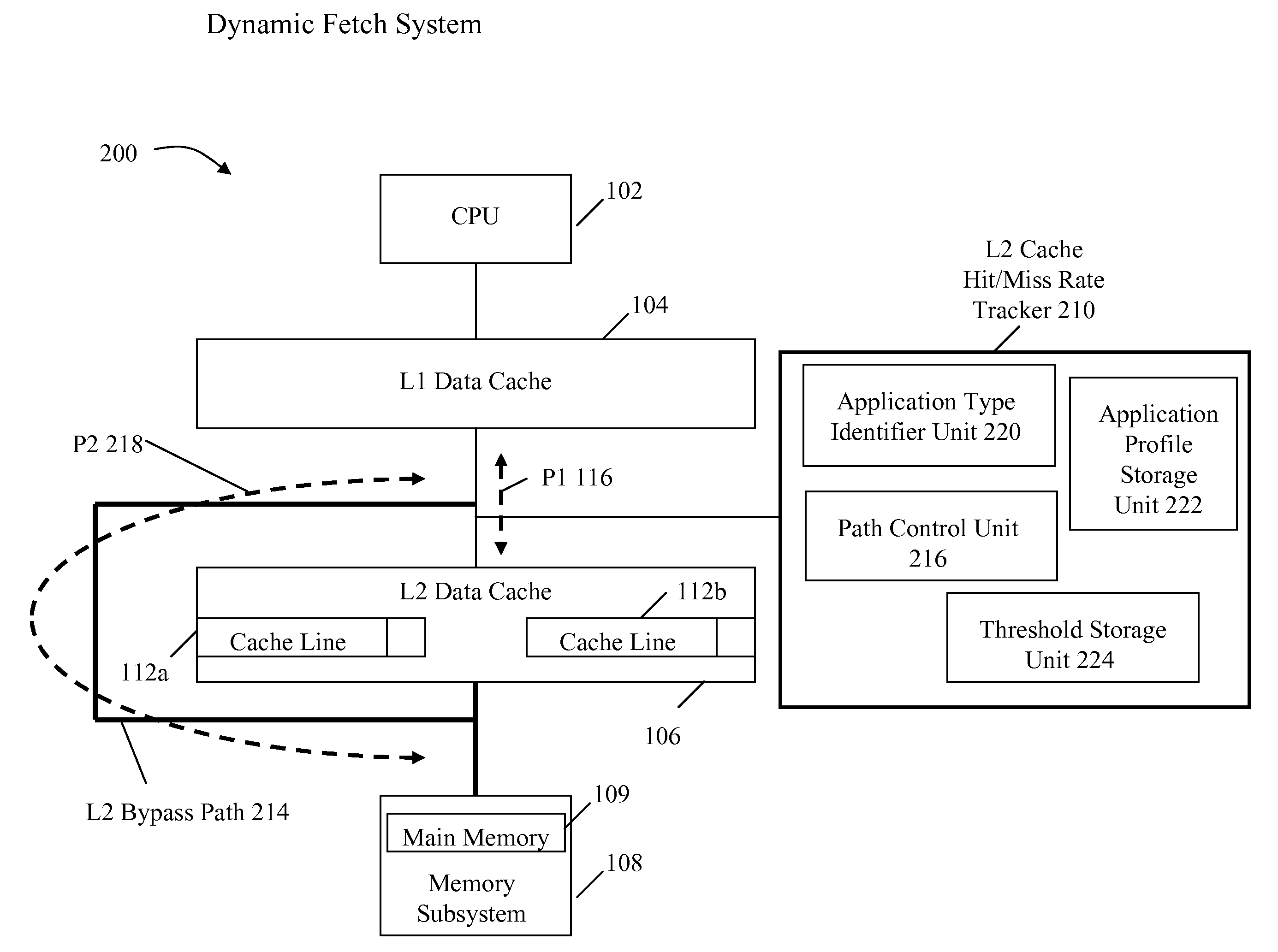

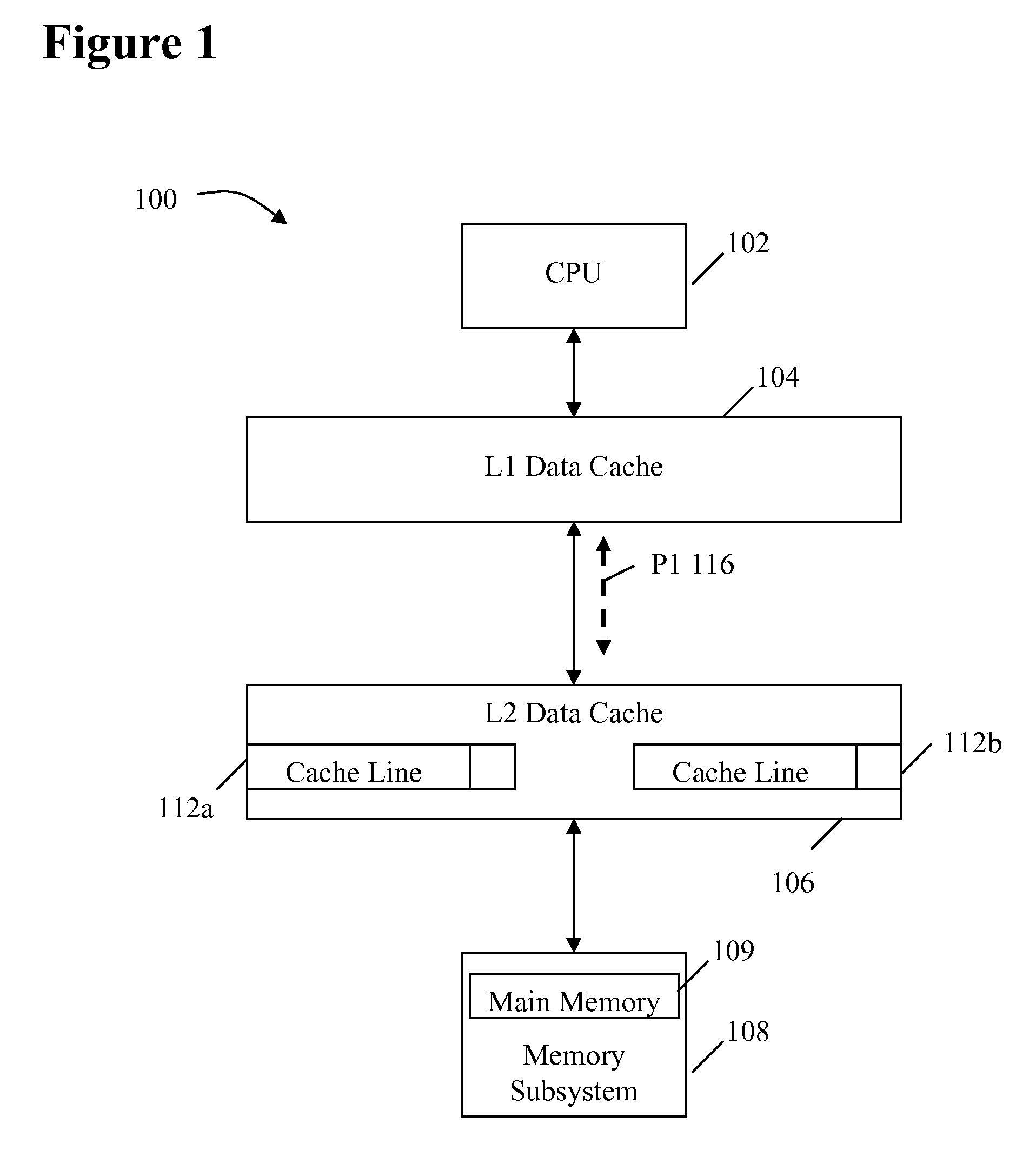

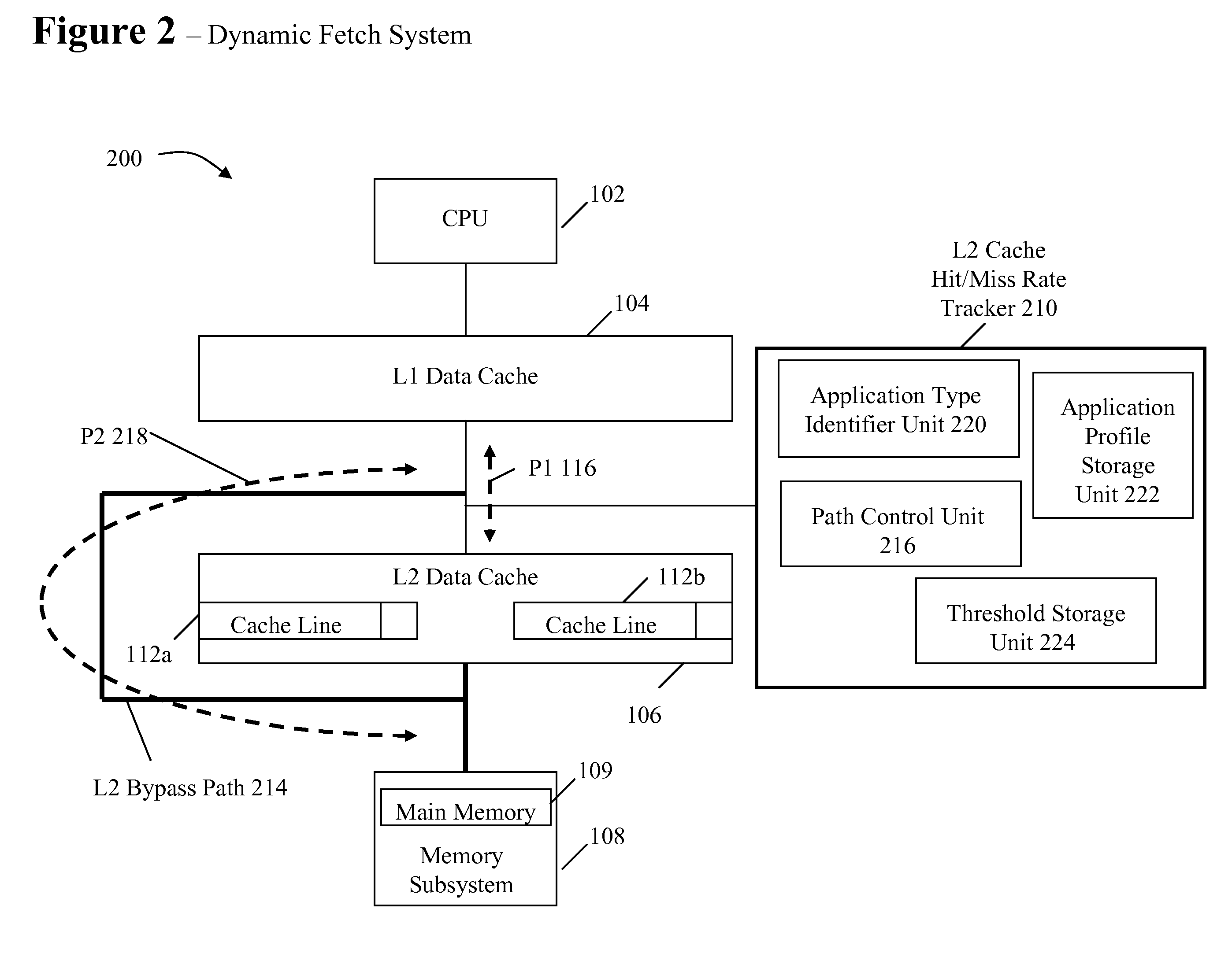

System and method for dynamically selecting the fetch path of data for improving processor performance

Inactive | US20090037664A1 | Improve system performance | Improve latency | Memory addressing/allocation/relocation | Data access | Parallel computing

A system and method for dynamically selecting the data fetch path for improving the performance of the system improves data access latency by dynamically adjusting data fetch paths based on application data fetch characteristics. The application data fetch characteristics are determined through the use of a hit / miss tracker. It reduces data access latency for applications that have a low data reuse rate (streaming audio, video, multimedia, games, etc.) which will improve overall application performance. It is dynamic in a sense that at any point in time when the cache hit rate becomes reasonable (defined parameter), the normal cache lookup operations will resume. The system utilizes a hit / miss tracker which tracks the hits / misses against a cache and, if the miss rate surpasses a prespecified rate or matches an application profile, the hit / miss tracker causes the cache to be bypassed and the data is pulled from main memory or another cache thereby improving overall application performance.

Owner:IBM CORP
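
The hit/miss tracker described in the abstract above might behave like the following sketch: once the recent miss rate passes a threshold the cache is bypassed, and normal lookups resume when the rate becomes reasonable again. The class name `HitMissTracker`, the sliding window, and both threshold values are assumptions for illustration. The sketch also assumes that hit/miss outcomes keep being reported while the cache is bypassed, which the abstract does not spell out.

```python
from collections import deque


class HitMissTracker:
    """Decide whether to bypass a cache based on the recent miss rate."""

    def __init__(self, window=8, bypass_above=0.75, resume_below=0.5):
        self._recent = deque(maxlen=window)  # True = miss, False = hit
        self._bypass_above = bypass_above    # hypothetical "prespecified rate"
        self._resume_below = resume_below    # hypothetical "reasonable" miss rate
        self.bypass = False

    def record(self, miss):
        """Record one lookup outcome and return the current bypass decision."""
        self._recent.append(miss)
        rate = sum(self._recent) / len(self._recent)
        window_full = len(self._recent) == self._recent.maxlen
        if window_full and not self.bypass and rate > self._bypass_above:
            self.bypass = True   # fetch from main memory or another cache instead
        elif self.bypass and rate < self._resume_below:
            self.bypass = False  # resume normal cache lookups
        return self.bypass


tracker = HitMissTracker(window=4)
streaming_phase = [tracker.record(True) for _ in range(4)]  # four misses in a row
reuse_phase = [tracker.record(False) for _ in range(3)]     # then three hits
```

Using two thresholds rather than one keeps the decision from flipping back and forth when the miss rate hovers near a single cutoff; that hysteresis is a design choice of this sketch.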

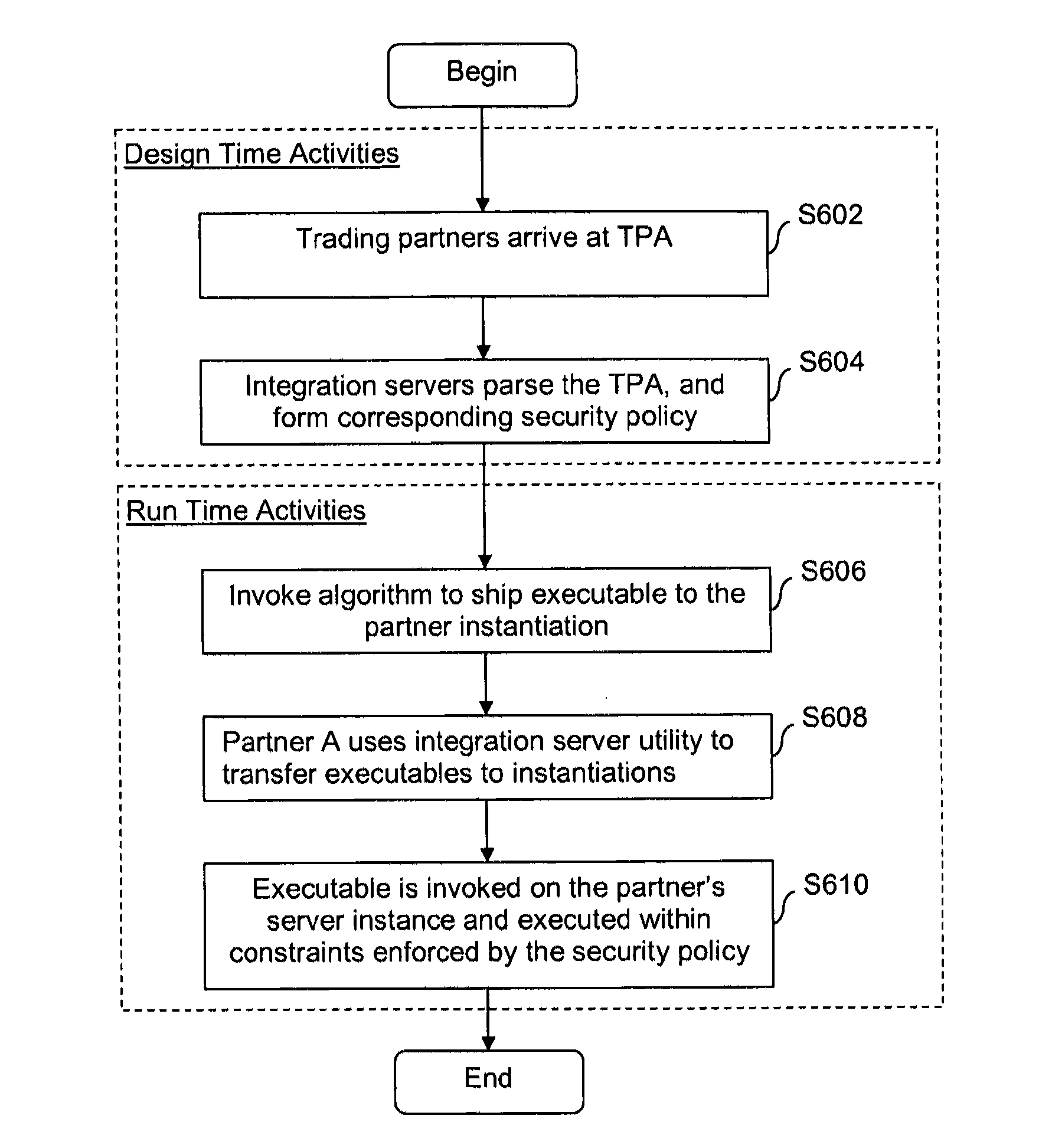

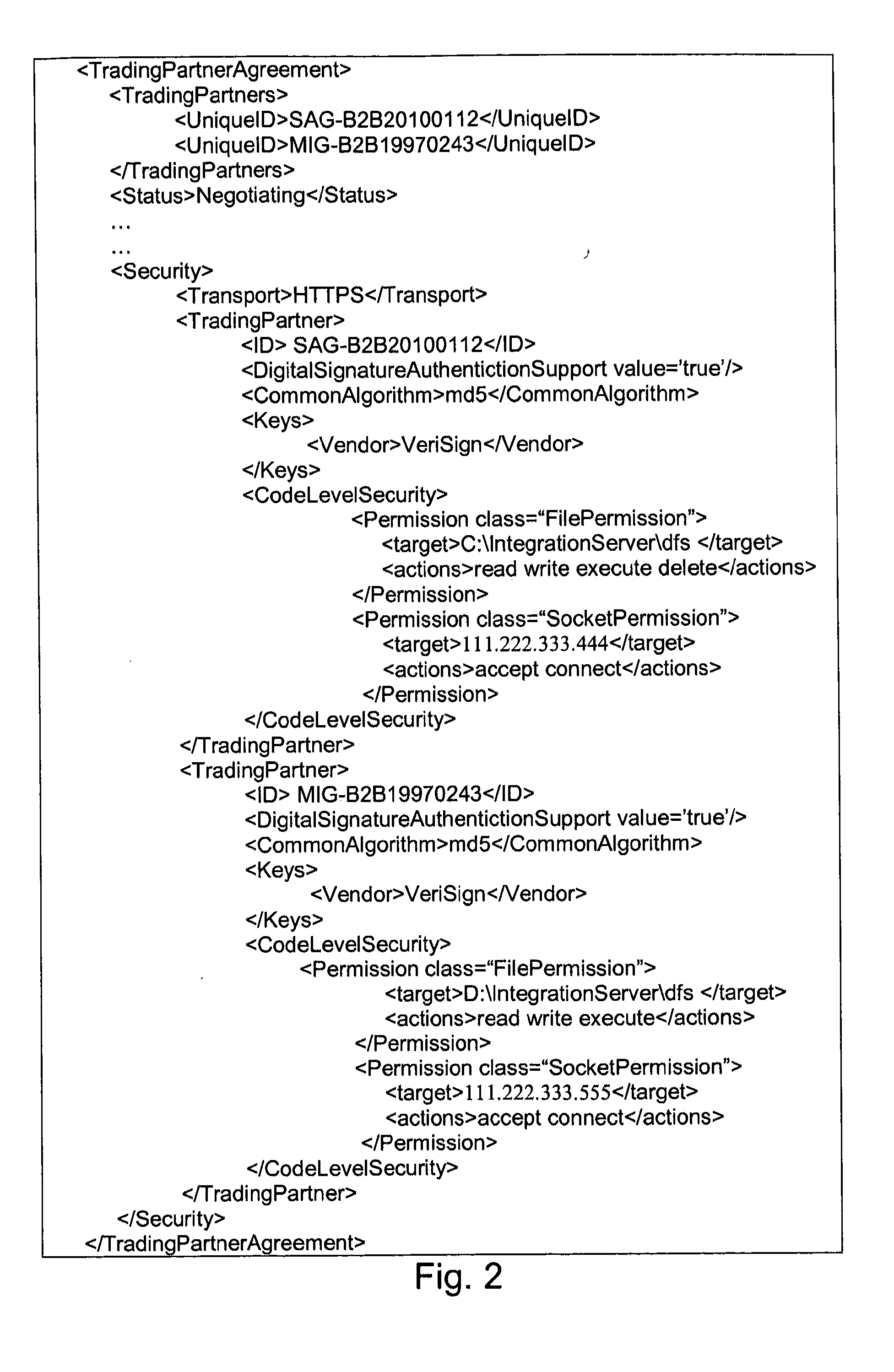

Security systems and/or methods for cloud computing environments

Active | US20120116782A1 | Improve application performance | Quickly deliver business result | Computer security arrangements | Transmission | Security policy | Shared resource

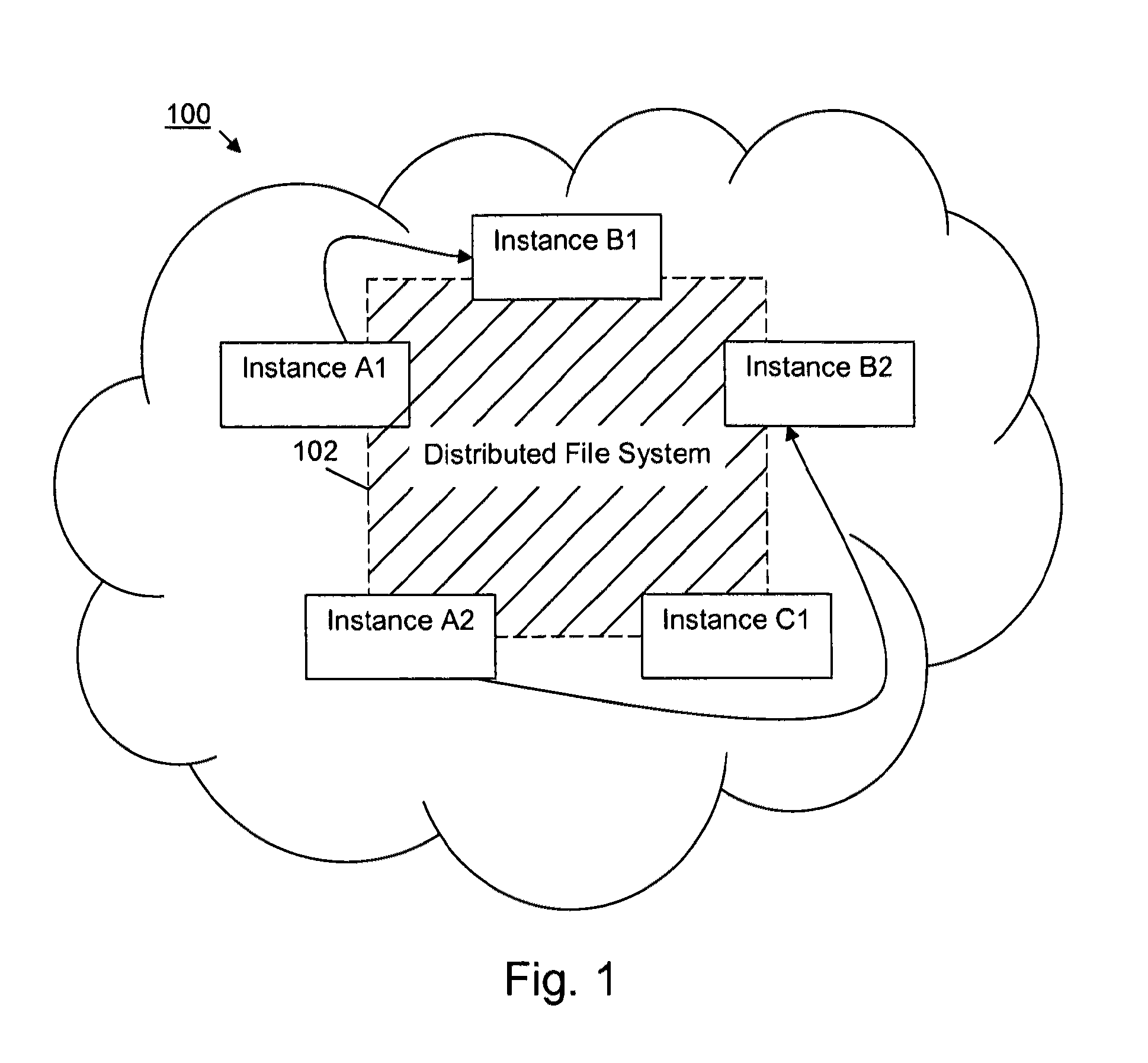

Certain example embodiments described herein relate to security systems and / or methods for cloud computing environments. More particularly, certain example embodiments described herein relate to the negotiation and subsequent use of Trading Partner Agreements (TPAs) between partners in a Virtual Organization, the TPAs enabling resources to be shared between the partners in a secure manner. In certain example embodiments, TPAs are negotiated, an algorithm is executed to determine where an executable is to be run, the resource is transferred to the location where it is to be run, and it is executed—with the TPAs collectively defining a security policy that constrains how and where it can be executed, the resources it can use, etc. The executable may be transferred to a location in a multipart (e.g., SMIME) message, along with header information and rights associated with the executable.

Owner:SOFTWARE AG

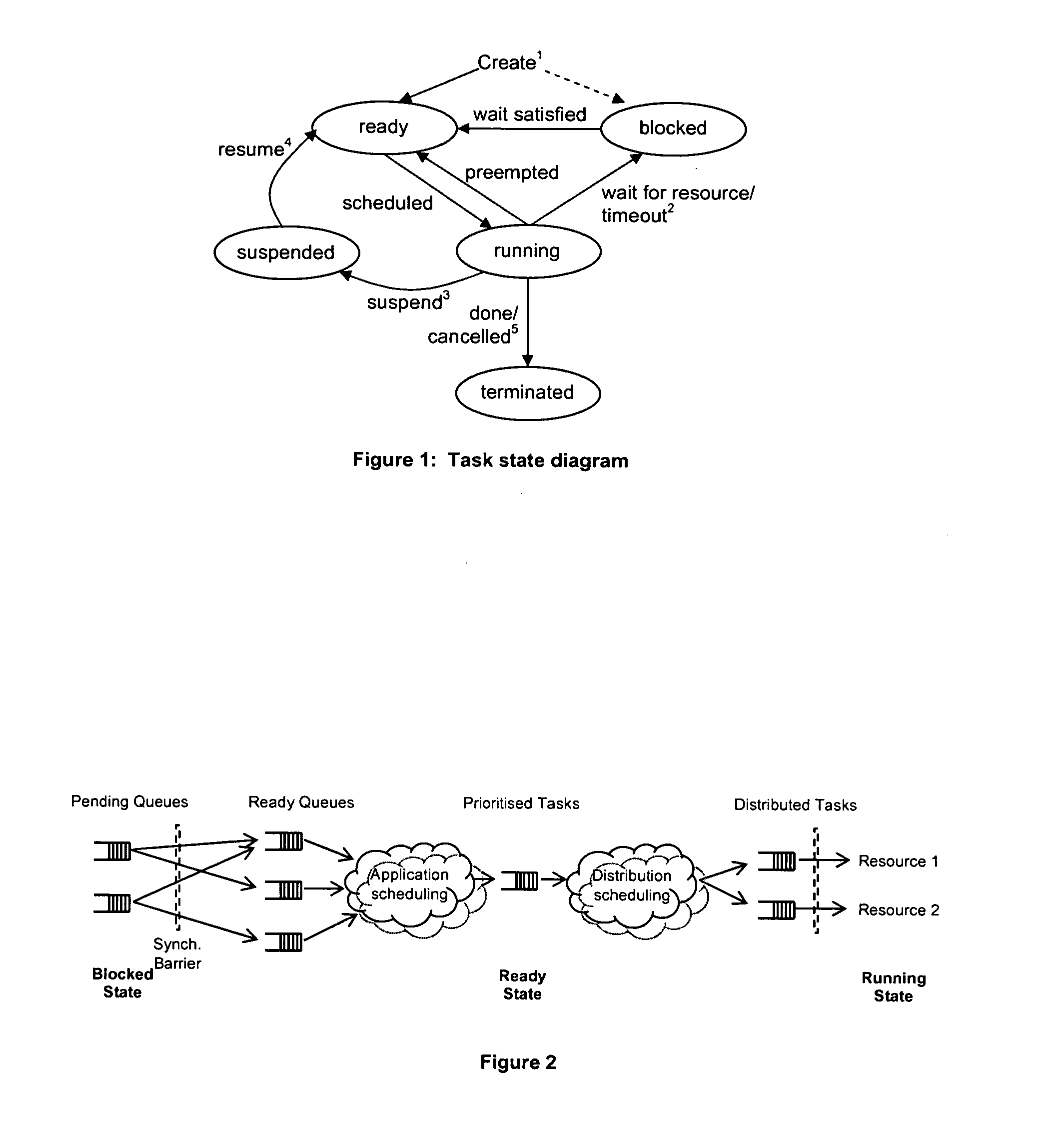

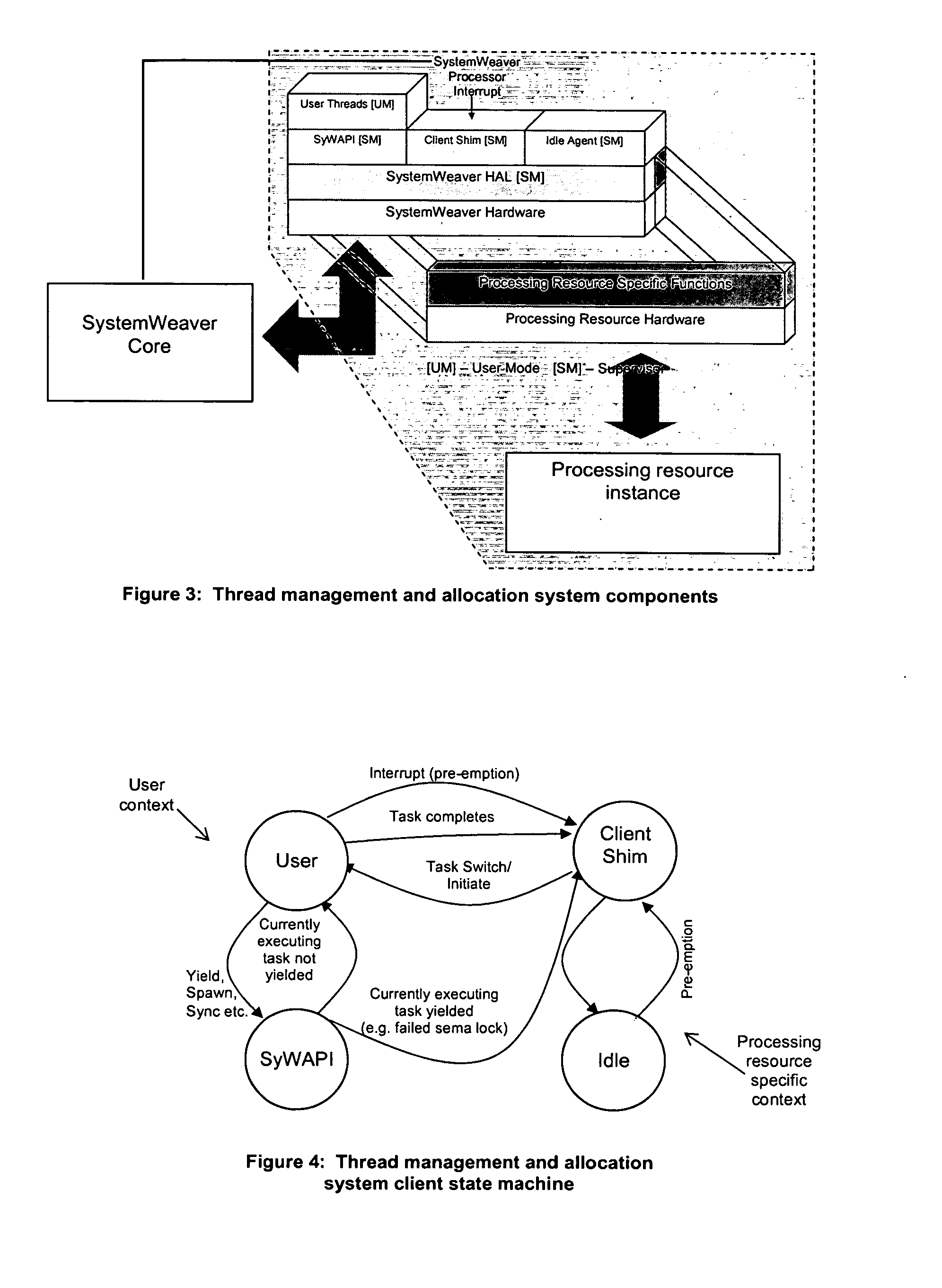

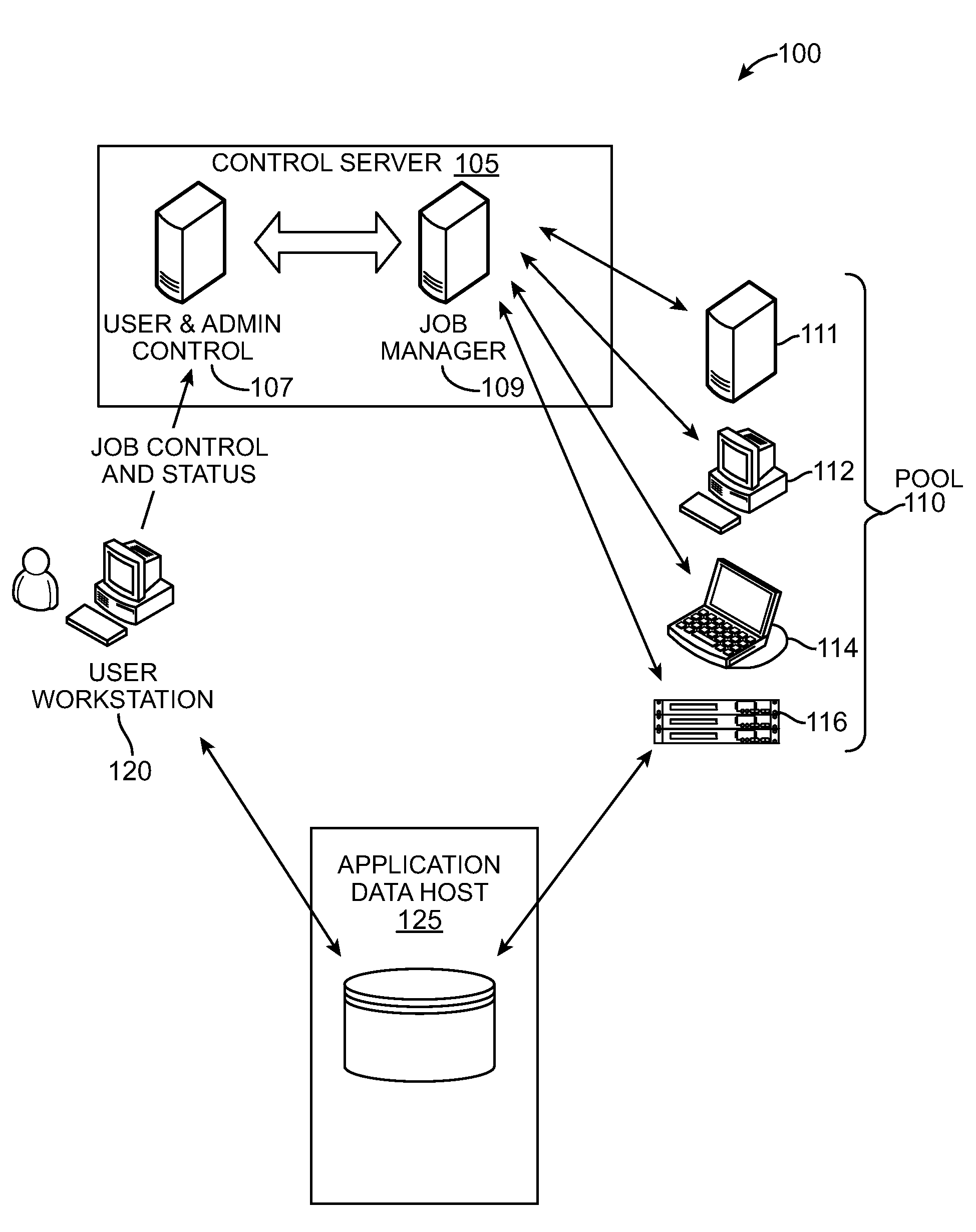

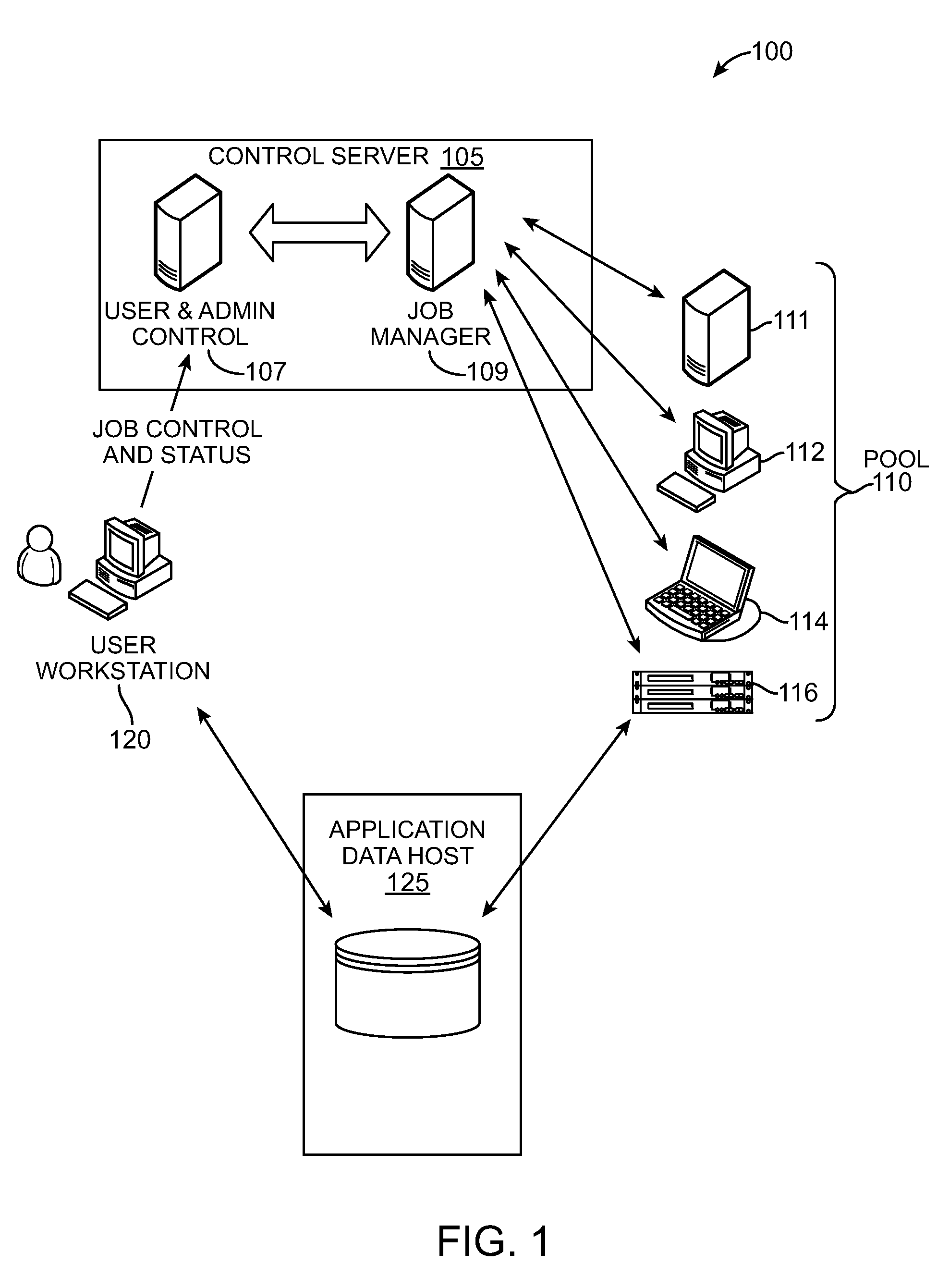

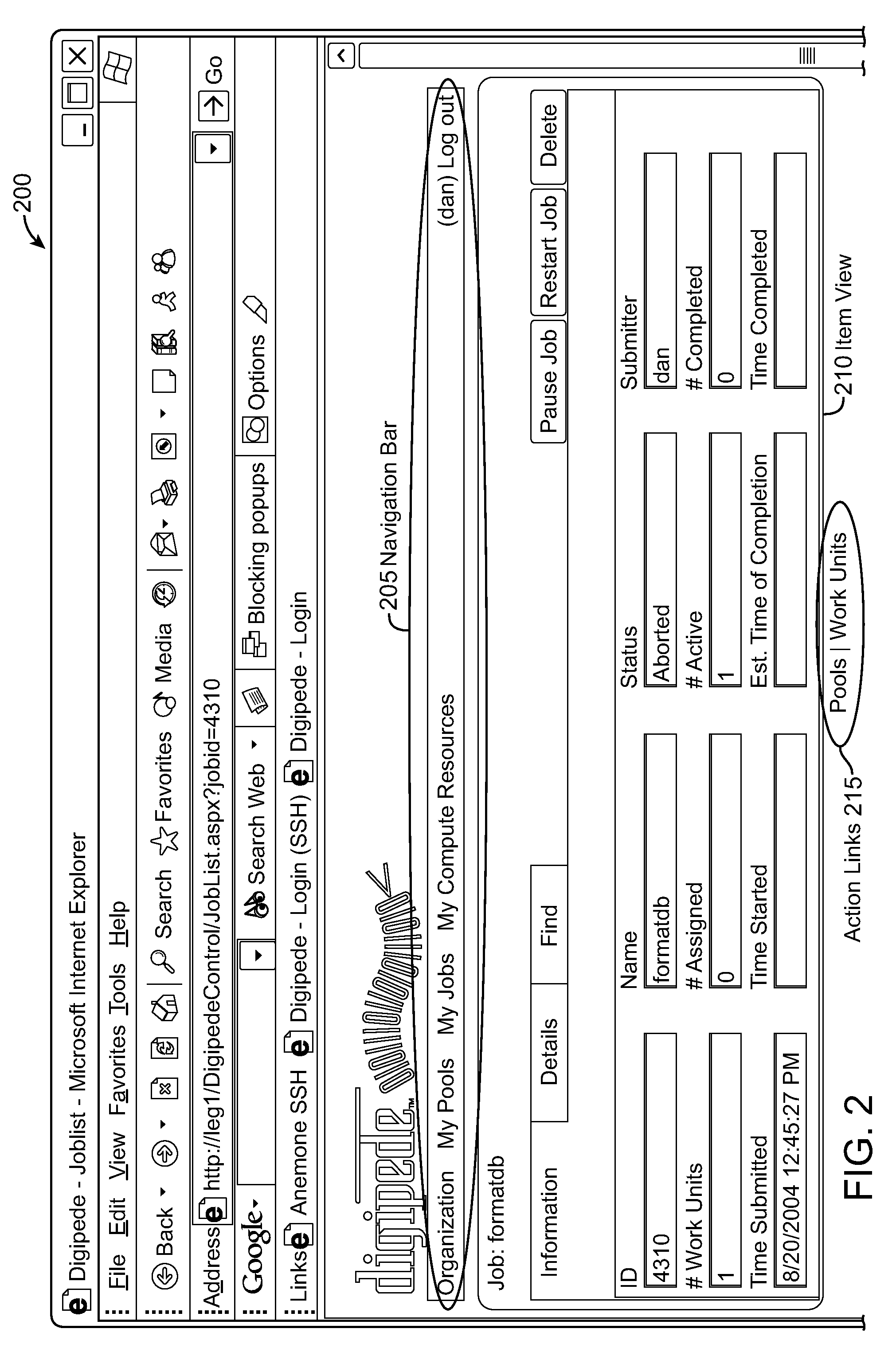

Multicore distributed processing system using selection of available workunits based on the comparison of concurrency attributes with the parallel processing characteristics

Active | US8230426B2 | Improve application performance | Fast communication | General purpose stored program computer | Multiprogramming arrangements | Multi processor | Work unit

Owner:DIGIPEDE TECH LLC

Dynamic Programmable Intelligent Search Memory

Active | US20080140991A1 | Reduce classification overhead | Efficient and compact realization | Digital data information retrieval | General purpose stored program computer | Dynamic storage | Memory circuits

Memory architecture provides capabilities for high performance content search. The architecture creates an innovative memory derived using randomly accessible dynamic memory circuits that can be programmed with content search rules which are used by the memory to evaluate presented content for matching with the programmed rules. When the content being searched matches any of the rules programmed in the dynamic Programmable Intelligent Search Memory (PRISM), the action(s) associated with the matched rule(s) are taken. Content search rules comprise regular expressions which are converted to finite state automata and then programmed in dynamic PRISM for evaluating content with the search rules.

Owner:INFOSIL INC

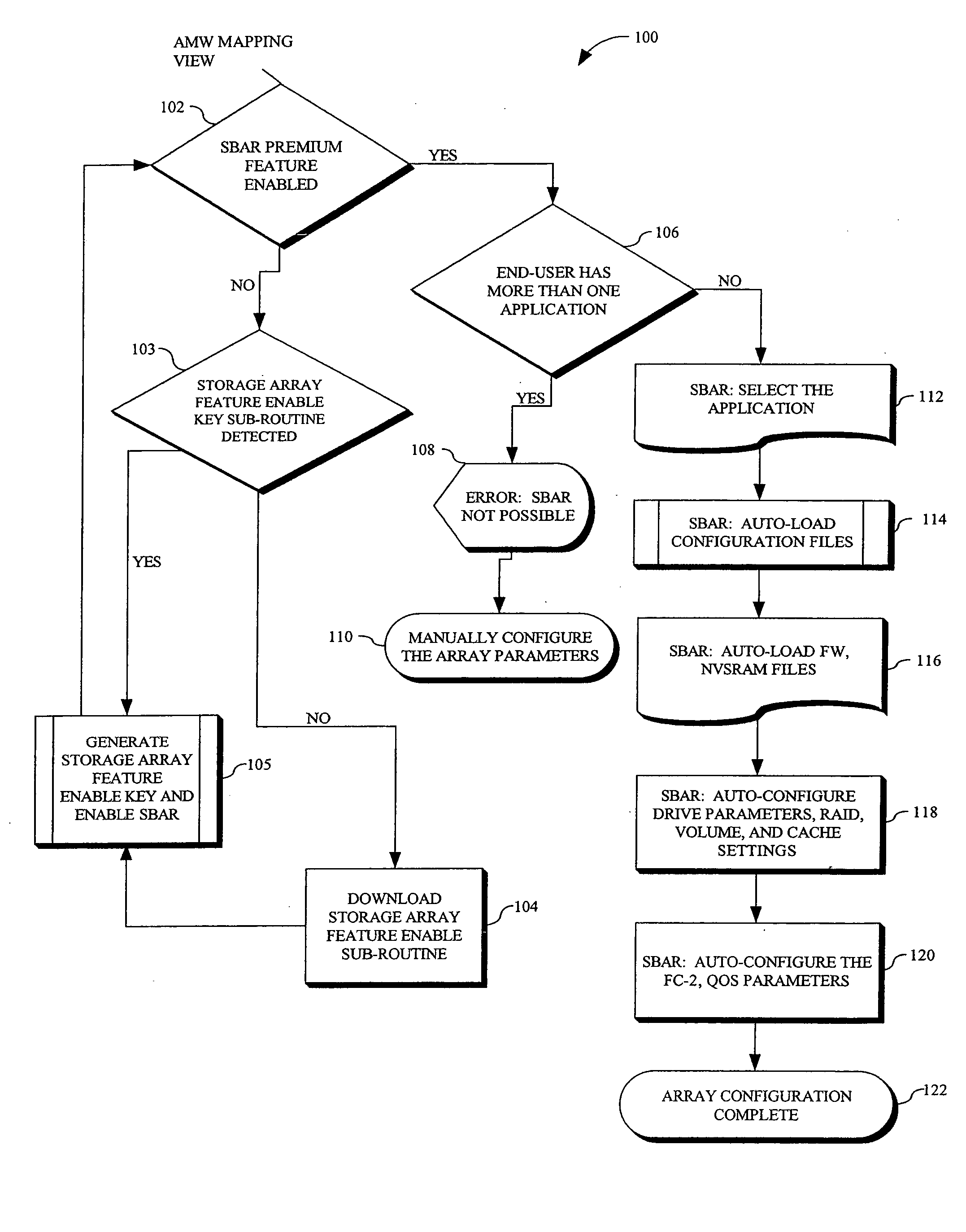

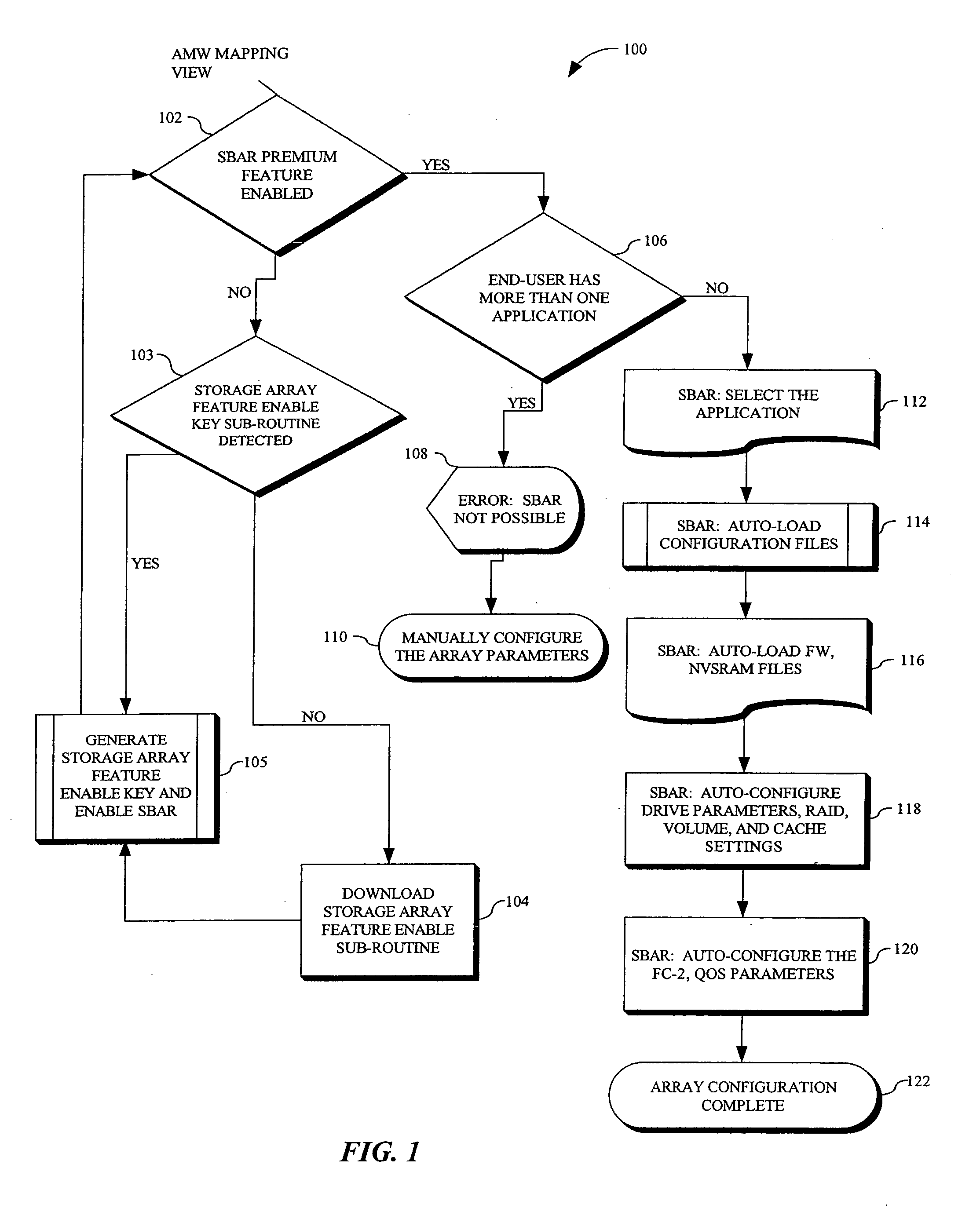

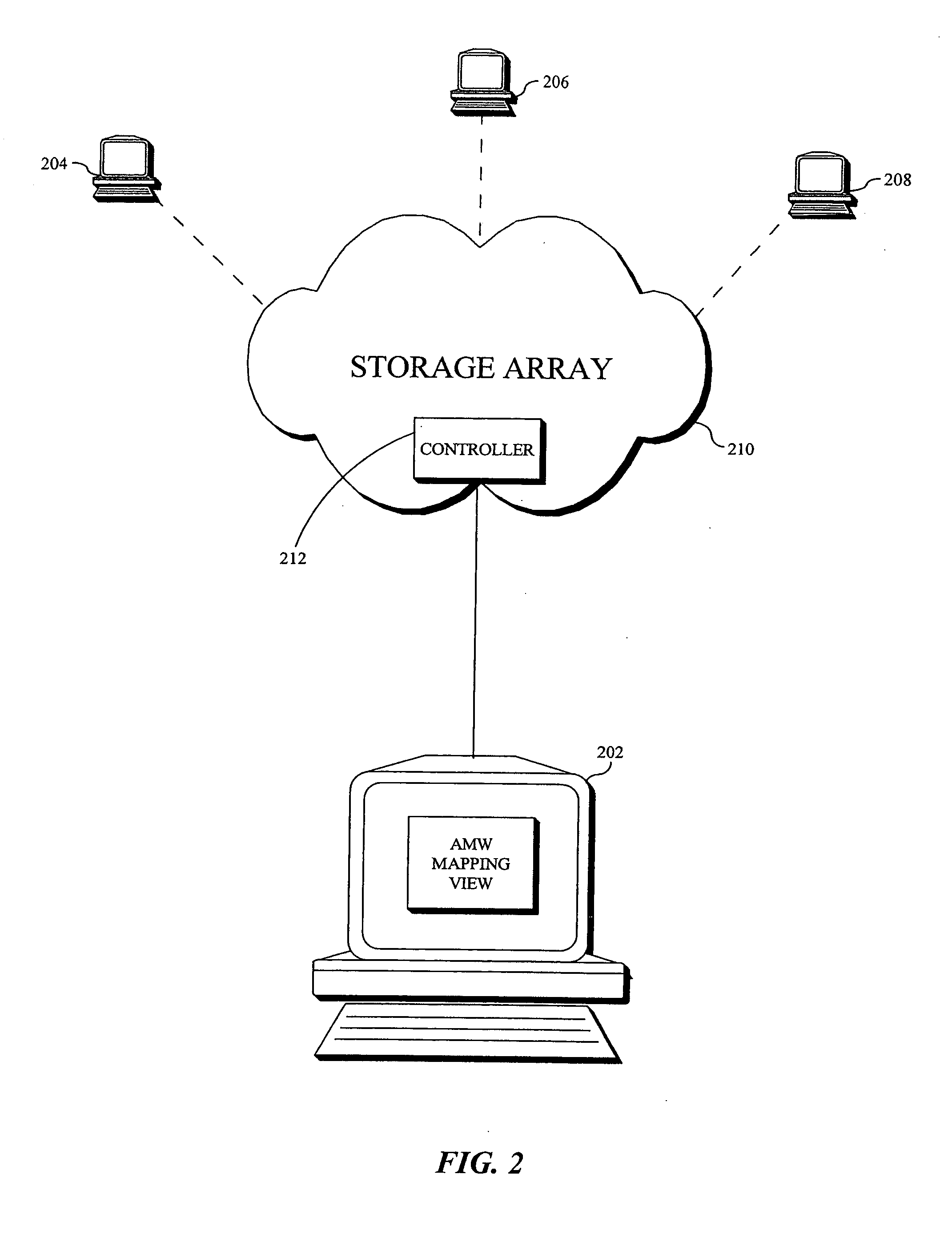

SAN based application recognition (SBAR) for RAID controller

Inactive | US20050289296A1 | Good application effect | Improve application performance | Input/output to record carriers | Memory systems | Software engineering | RAID

Owner:LSI CORPORATION

Method for preparing durable super-hydrophobic material

Inactive | CN103214690A | Reduce manufacturing cost | Good application performance | Metallic material coating processes | Liquid separation | Hydrophobic surfaces | Oil water

The invention relates to a method for preparing a durable super-hydrophobic material. It addresses two problems with conventional preparation methods: high production cost, and super-hydrophobic surfaces that detach easily and thereby lose their hydrophobic performance. The method includes: first, preparing a nanoparticle dispersion liquid; second, preparing a composite material of a substrate and nanoparticles; third, washing and drying; and fourth, soaking, washing and drying, which yields the durable super-hydrophobic material. The invention has the following advantages: first, the method requires neither specialized, expensive equipment nor harsh experimental conditions, so the production cost is reduced; second, the resulting material, when used for oil-water separation, can be reused 300 times or more and has good durability. The invention can be used for preparing durable super-hydrophobic materials.

Owner:HARBIN INST OF TECH

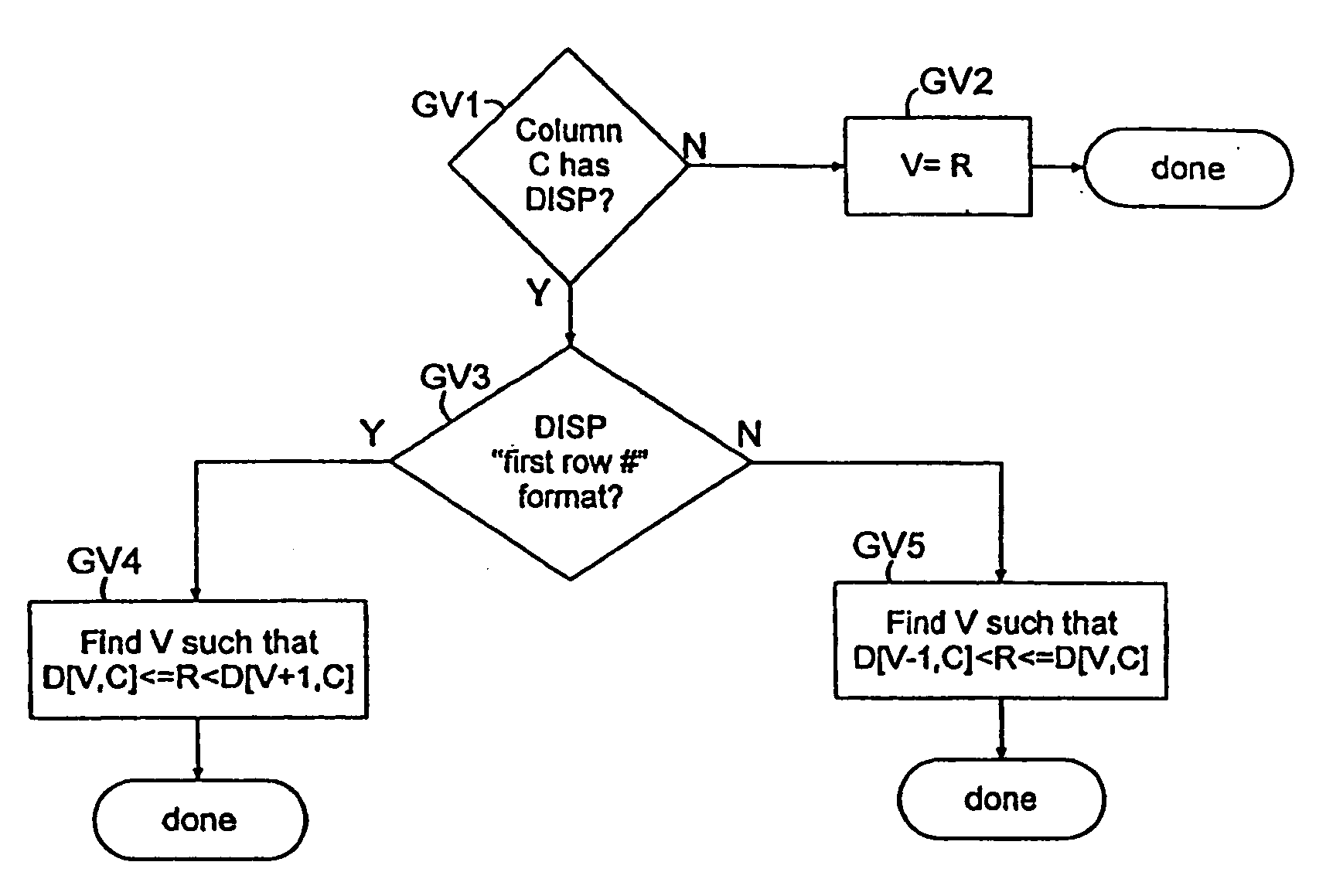

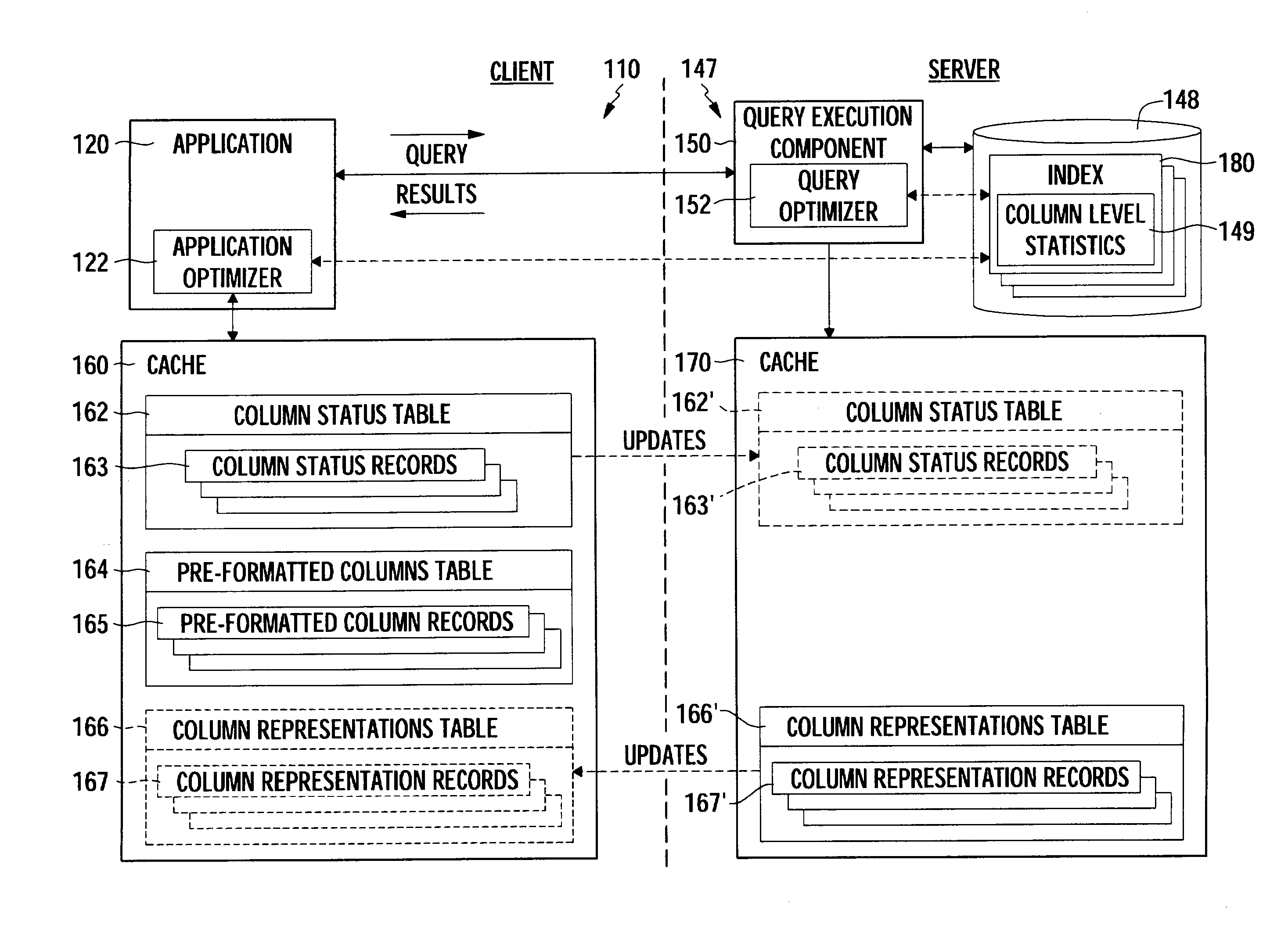

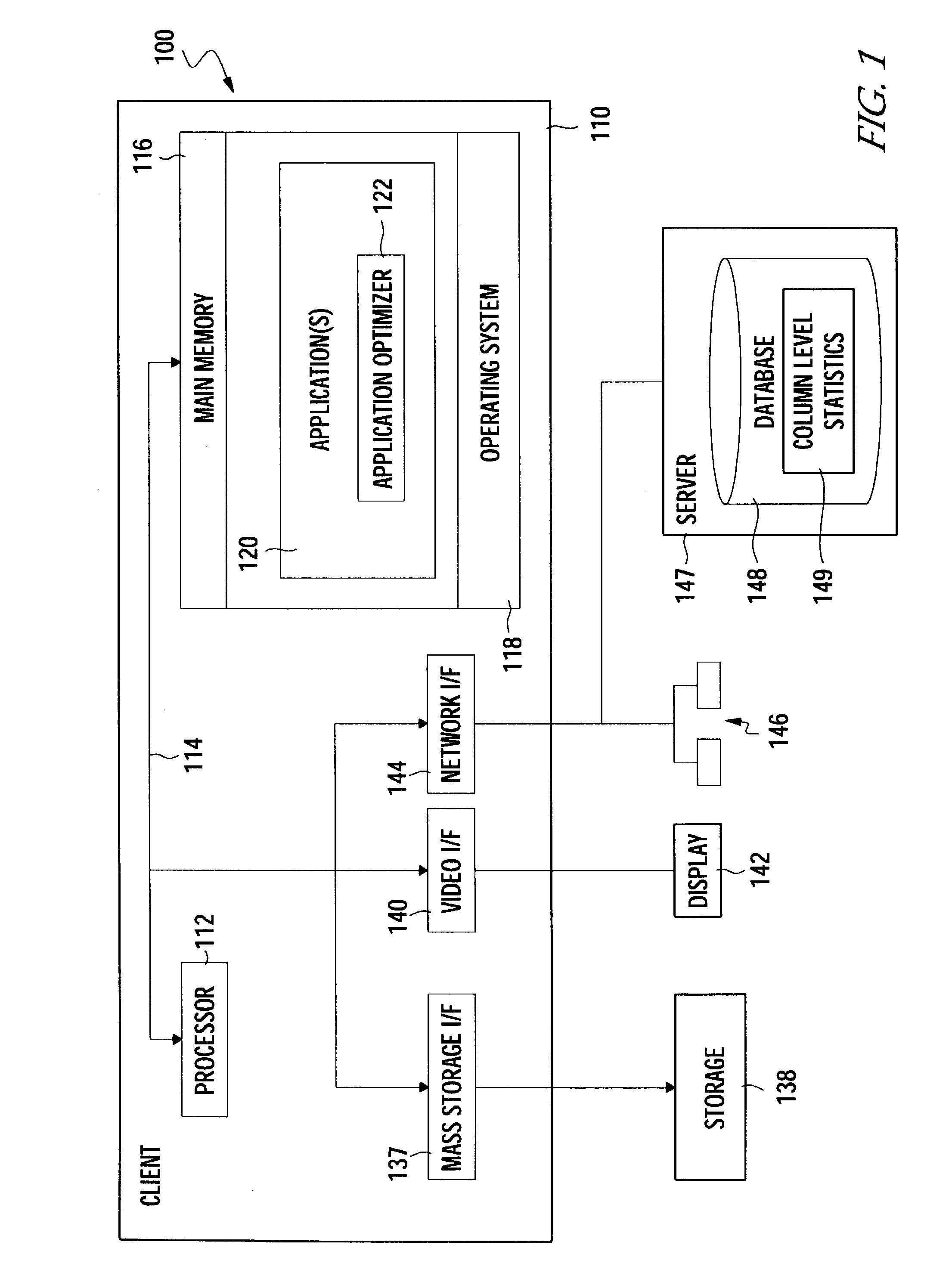

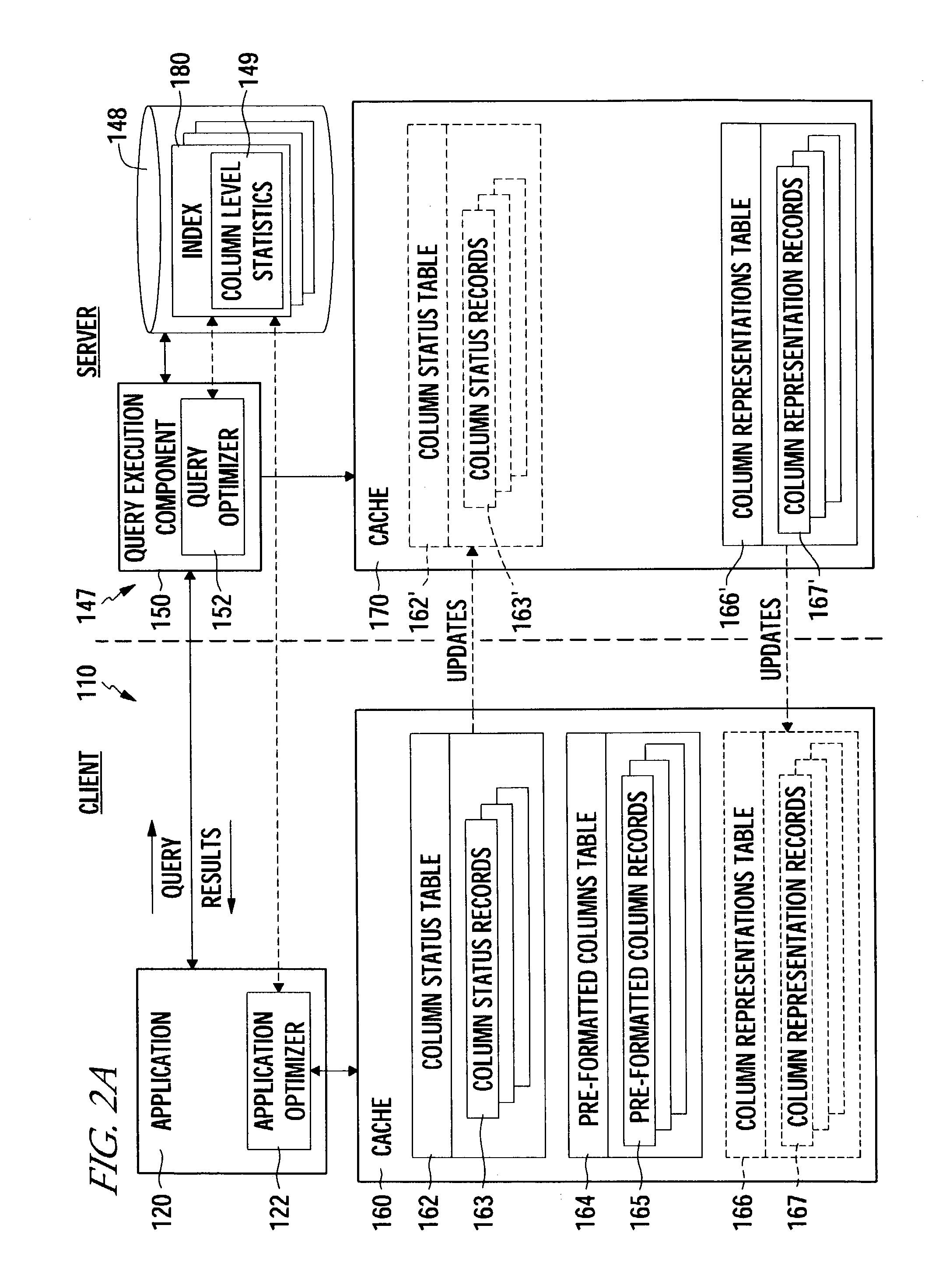

Pre-formatted column-level caching to improve client performance

Inactive | US7117222B2 | Improve application performance | Reduce network traffic | Data processing applications | Digital data information retrieval | Data source | Client-side

Methods, articles of manufacture, and systems for improving the performance of a client accessing data from a database are provided. For some embodiments, the client may access and manipulate data from a data source. The client may obtain information regarding one or more distinct data values in a first format stored in a field of a data source to be queried by the client. Furthermore, the client may, based on the information, generate one or more data objects, each containing one of the distinct data values and a corresponding pre-formatted instance of the distinct data value in a second format, wherein the second format is an output format in which to display data values to a user; wherein the corresponding pre-formatted instances of the distinct data values are output for display instead of the respective distinct data value in each instance of a query result returning the respective distinct data value.

Owner:IBM CORP
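
The caching scheme in the abstract above (format each distinct stored value once, then reuse the pre-formatted instance for every row that returns it) can be sketched as follows. The function names, the ISO date storage format, and the `MM/DD/YYYY` display format are assumptions picked for the example; the abstract does not name specific formats.

```python
from datetime import date


def to_display(raw):
    """Convert a stored ISO date string (first format) to MM/DD/YYYY (second format)."""
    return date.fromisoformat(raw).strftime("%m/%d/%Y")


def build_format_cache(distinct_values, fmt):
    """Format each distinct stored value exactly once, keyed by the raw value."""
    return {raw: fmt(raw) for raw in distinct_values}


def render(rows, cache):
    """Output the pre-formatted instance instead of reformatting every row."""
    return [cache[raw] for raw in rows]


# Hypothetical query result for one column: four rows, two distinct values.
rows = ["2004-01-15", "2004-01-15", "2004-02-01", "2004-01-15"]
cache = build_format_cache(set(rows), to_display)
displayed = render(rows, cache)
```

The saving grows with the ratio of rows to distinct values: here the formatter runs twice for four rows, and a low-cardinality column in a large result set would benefit far more.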

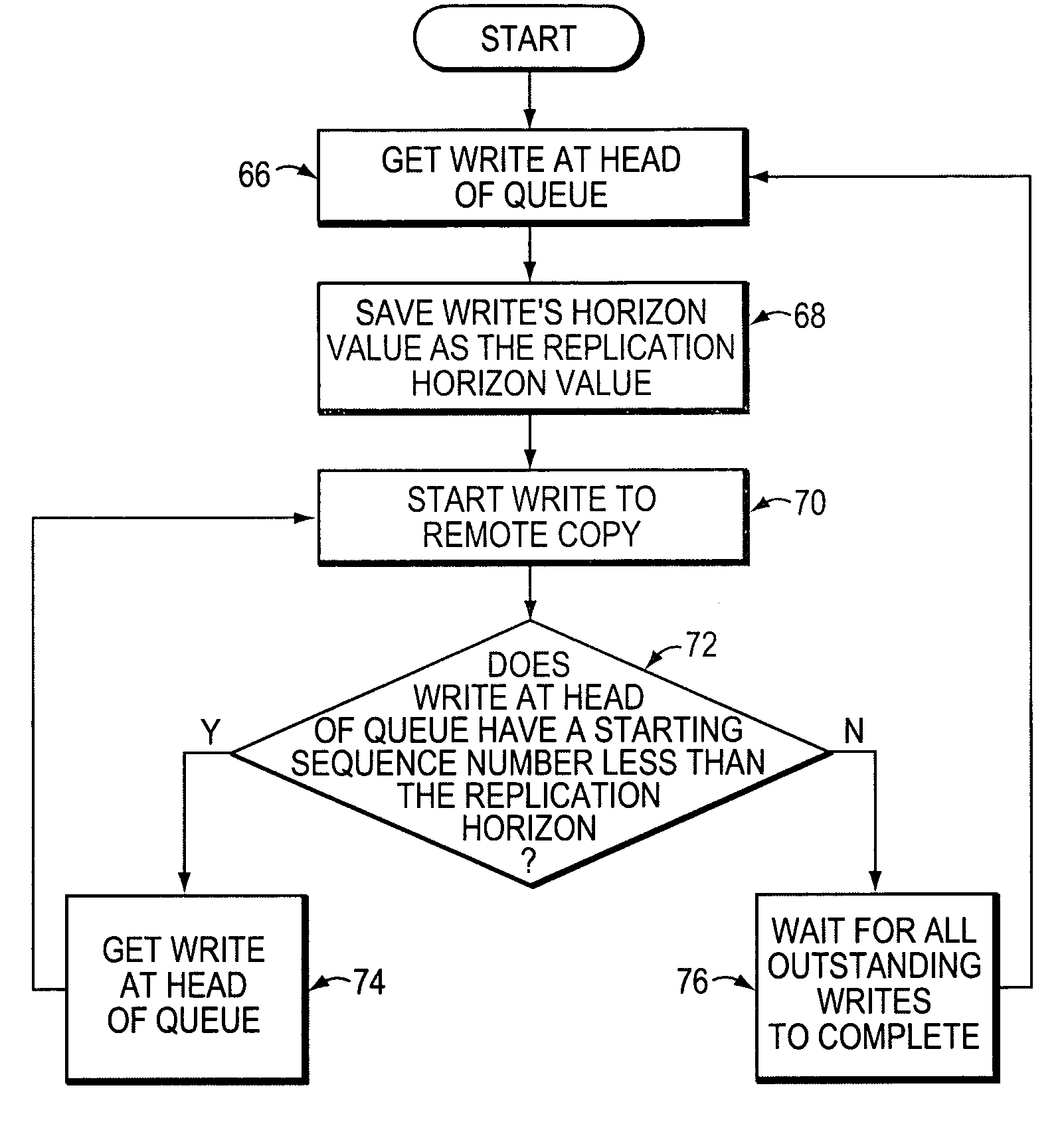

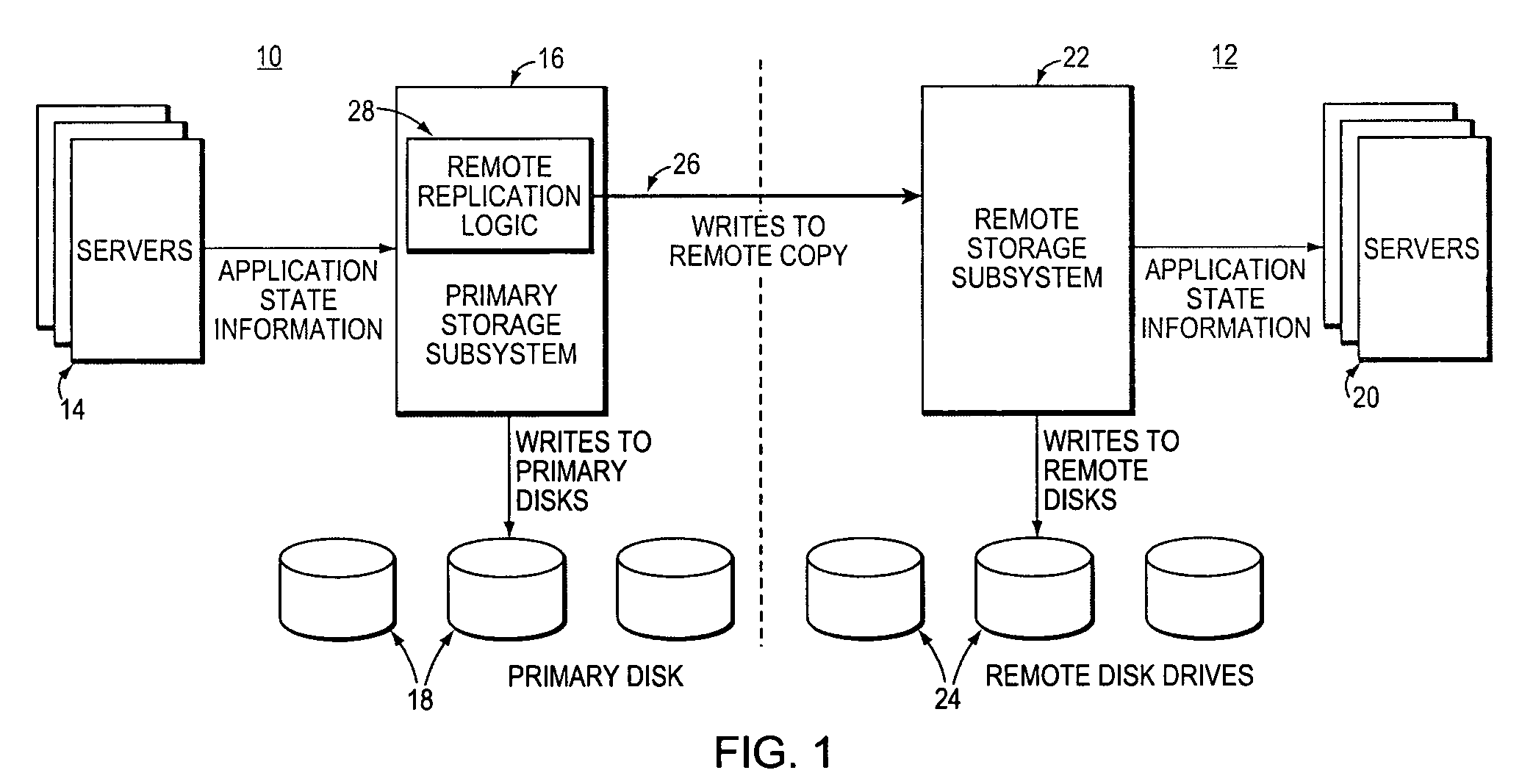

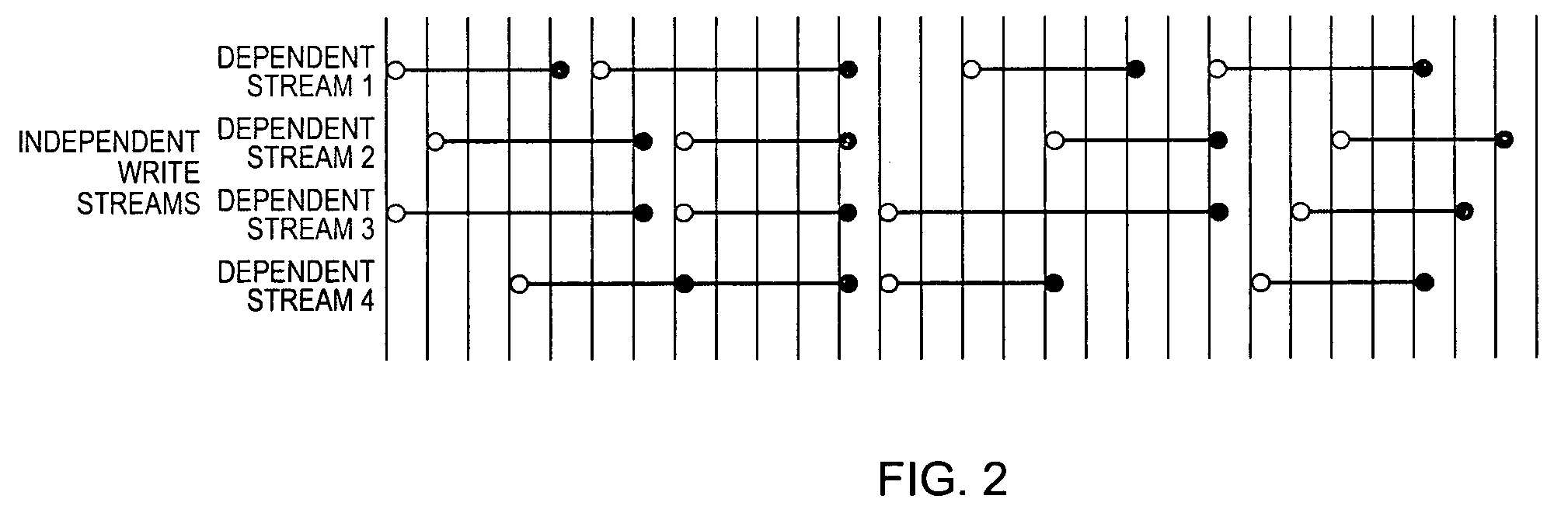

Method for maintaining high performance while preserving relative write I/O ordering for a semi-synchronous remote replication solution

Active | US7254685B1 | Improve application performance | Data processing applications | Memory loss protection | Horizon | Serial code

A remote replication solution for a storage system receives a stream of data including independent streams of dependent writes. The method is able to discern dependent from independent writes. The method discerns dependent from independent writes by assigning a sequence number to each write, the sequence number indicating a time interval in which the write began. It then assigns a horizon number to each write request, the horizon number indicating a time interval in which the first write that started at a particular sequence number ends. A write is caused to be stored on a storage device, and its horizon number is assigned as a replication number. Further writes are caused to be stored on the storage device if the sequence number associated with the writes is less than the replication number.

Owner:EMC IP HLDG CO LLC

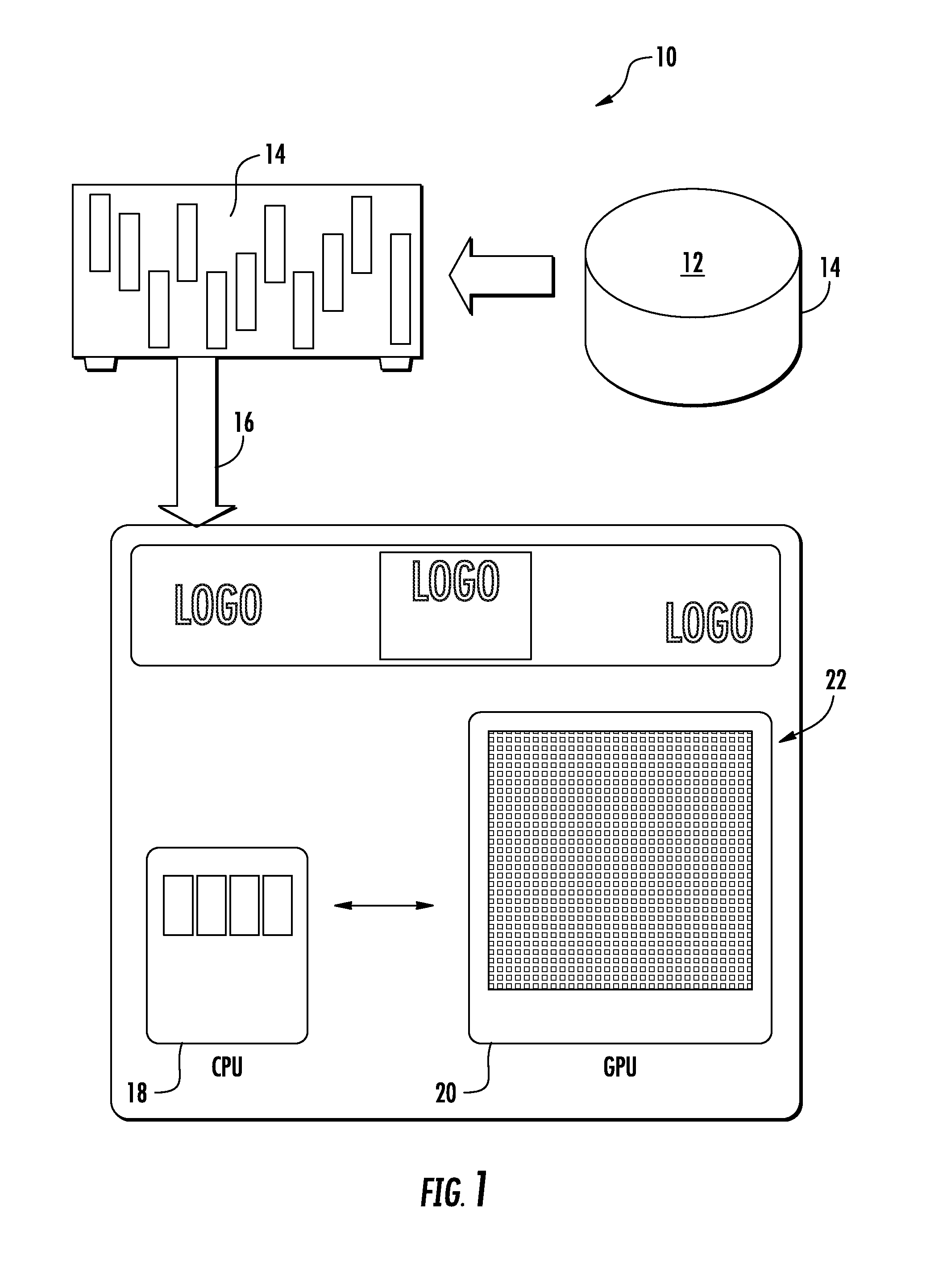

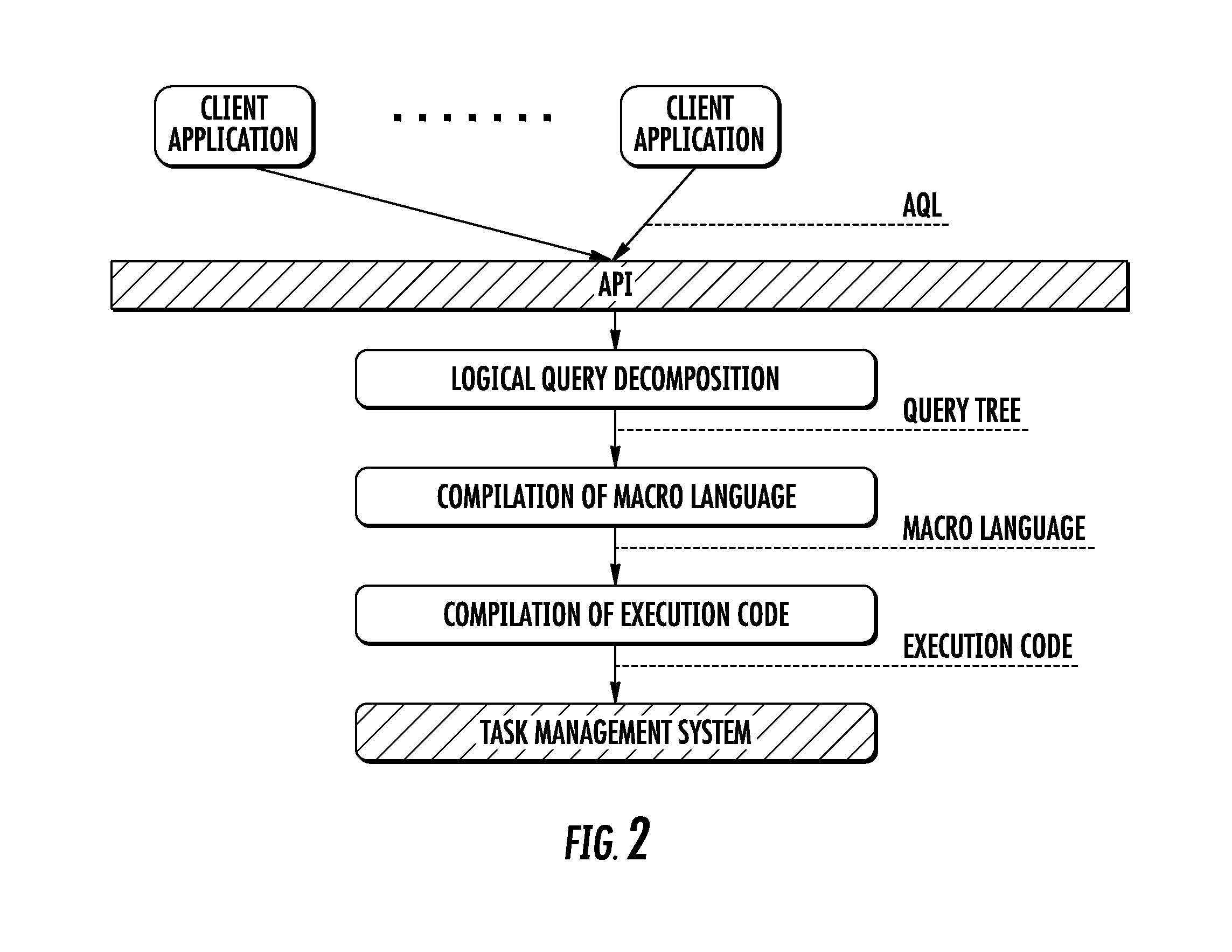

In-memory aggregation system and method of multidimensional data processing for enhancing speed and scalability

Active | US9411853B1 | Accelerates BI application performance | Guaranteed maximum utilization | Multi-dimensional databases | Special data processing applications | General purpose | Multidimensional signal processing

An in-memory aggregation (IMA) system having a massive parallel hardware (HW) accelerated aggregation engine uses the IMA for providing maximal utilization of HW resources of any processing unit (PU), such as a central processing unit (CPU), general purpose GPU, special coprocessors, and like subsystems. The PU accelerates business intelligence (BI) application performance by massive parallel execution of compute-intensive tasks of in-memory data aggregation processes.

Owner:INTELIMEDIX LLC

Programmable intelligent search memory

Inactive | US20110113191A1 | Efficient and compact realization | Improve application performance | Digital data information retrieval | Digital storage | Memory architecture | Automaton

Memory architecture provides capabilities for high performance content search. The architecture creates an innovative memory that can be programmed with content search rules which are used by the memory to evaluate presented content for matching with the programmed rules. When the content being searched matches any of the rules programmed in the Programmable Intelligent Search Memory (PRISM), the action(s) associated with the matched rule(s) are taken. Content search rules comprise regular expressions which are converted to finite state automata and then programmed in PRISM for evaluating content with the search rules.

Owner:PANDYA ASHISH A

Kernel mode graphics driver for dual-core computer system

Active | US7773090B1 | Improve graphic application performance | Improve application performance | Digital computer details | Image data processing details | Processing core | Dual core

A kernel-mode graphics driver (e.g., a D3D driver running under Microsoft Windows) exploits the parallelism available in a dual-core computer system. When an application thread invokes the kernel-mode graphics driver, the driver creates a second (“auxiliary”) thread and binds the application thread to a first one of the processing cores. The auxiliary thread, which generates instructions to the graphics hardware, is bound to a second processing core. The application thread transmits each graphics-driver command to the auxiliary thread, which executes the command. The application thread and auxiliary thread can execute synchronously or asynchronously.

Owner:NVIDIA CORP
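
The hand-off pattern in the abstract above (the application thread transmits each command to an auxiliary thread, which executes it) is a producer-consumer arrangement and can be sketched in user-space Python. This is an analogy only, not driver code: the function `run_driver`, the `None` sentinel, and the `"hw:"` prefix standing in for instructions to the graphics hardware are all assumptions, and binding each thread to a specific processing core is omitted because it is platform-specific.

```python
import queue
import threading


def run_driver(commands):
    """Forward commands from the calling thread to an auxiliary worker thread."""
    inbox = queue.Queue()
    executed = []

    def auxiliary():
        while True:
            cmd = inbox.get()
            if cmd is None:               # sentinel: no more commands
                break
            executed.append(f"hw:{cmd}")  # stand-in for emitting hardware instructions

    worker = threading.Thread(target=auxiliary)
    worker.start()
    for cmd in commands:
        # Application thread: hand the command off and continue immediately
        # (the asynchronous mode mentioned in the abstract).
        inbox.put(cmd)
    inbox.put(None)
    worker.join()                         # wait for the auxiliary thread to drain the queue
    return executed


result = run_driver(["clear", "draw", "present"])
```

A single FIFO queue with one consumer preserves command order, which matters for graphics commands even when the two threads run asynchronously.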

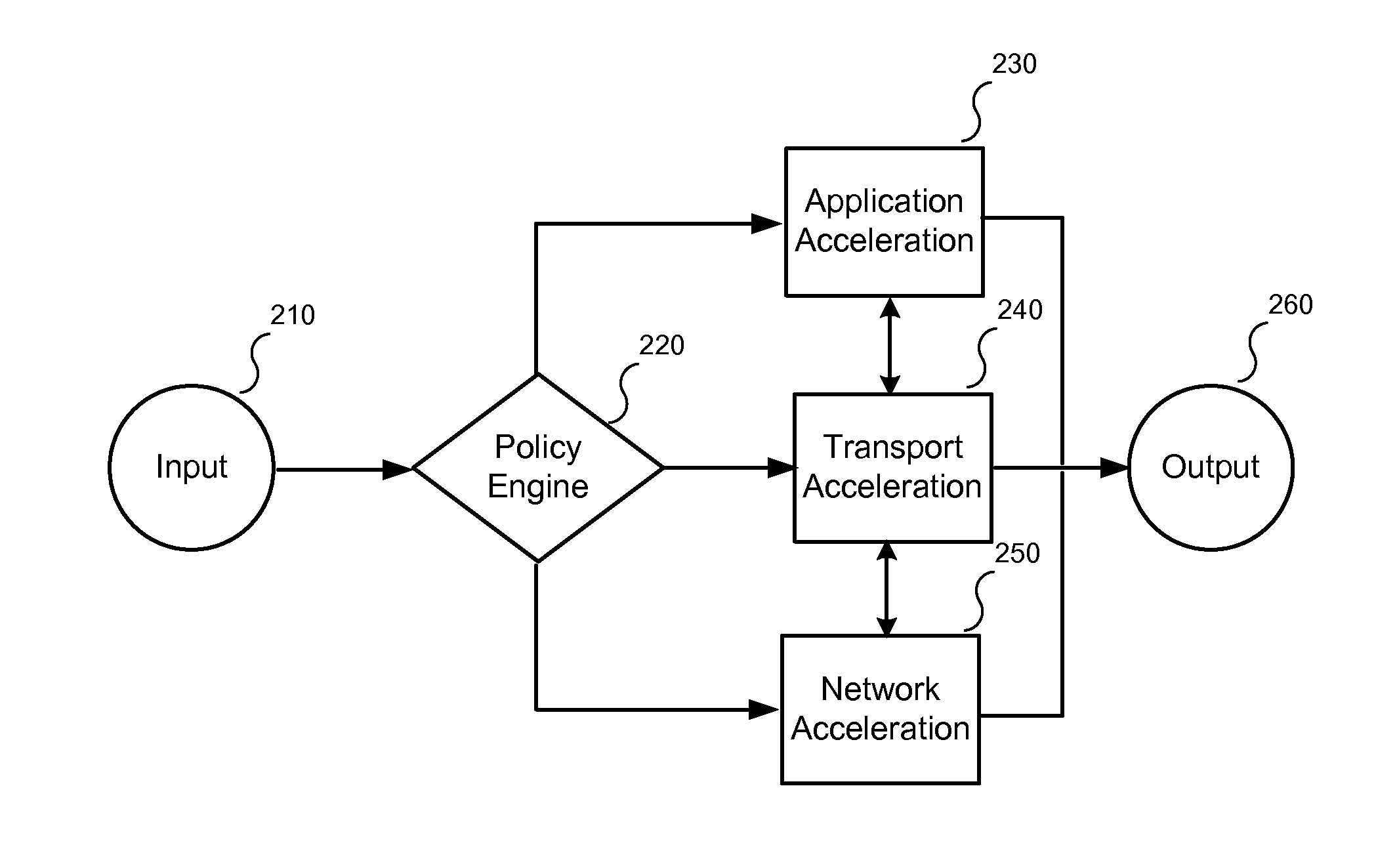

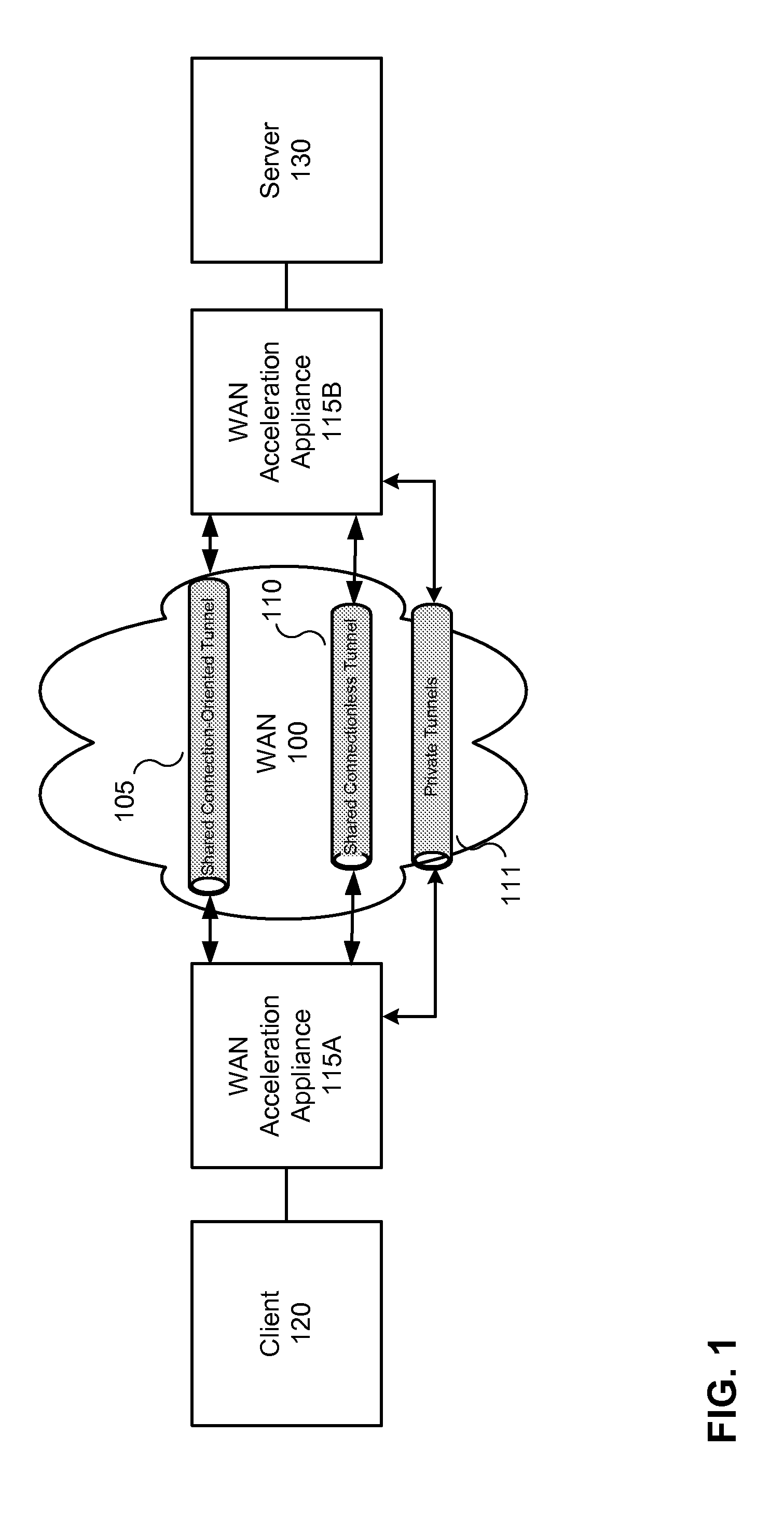

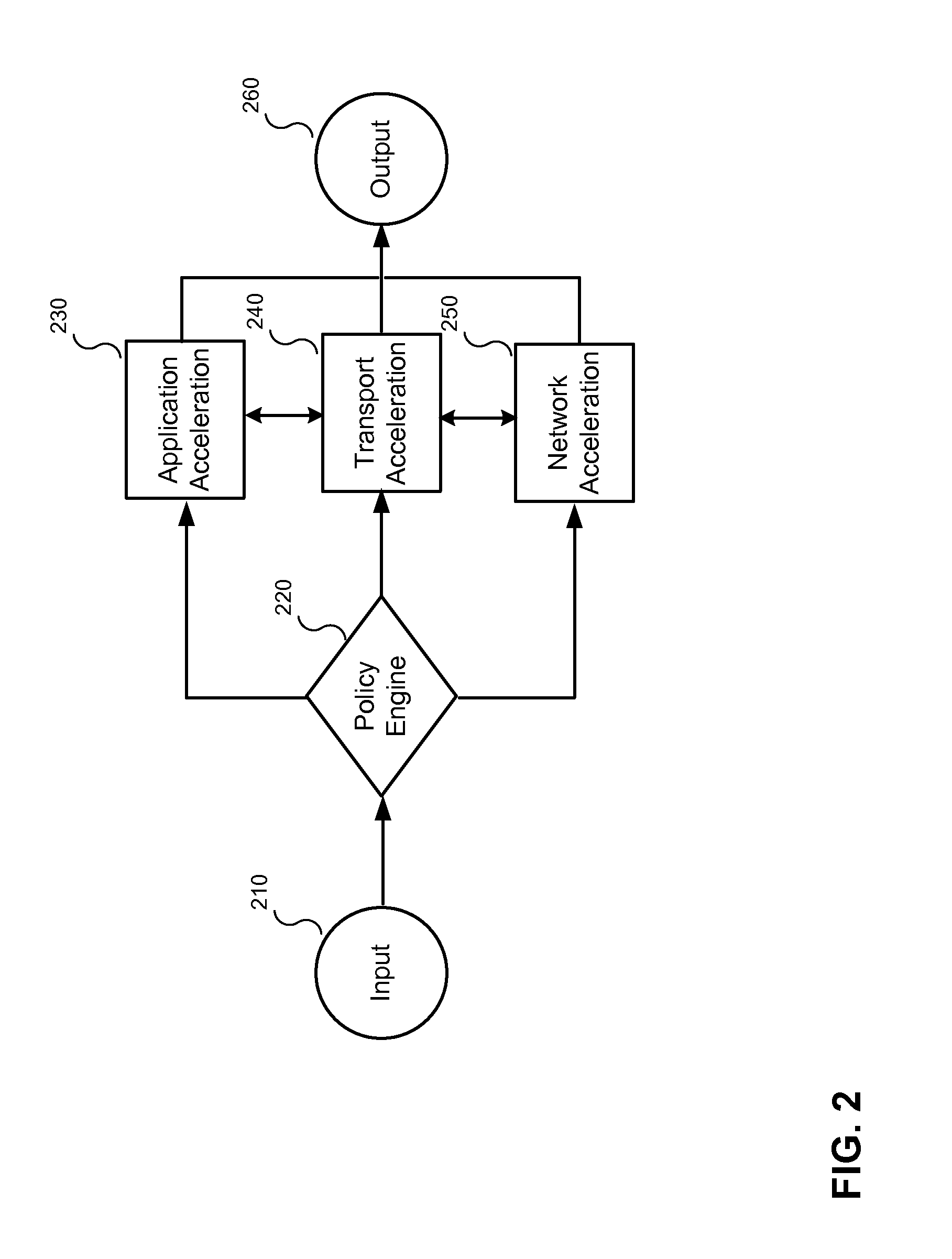

Accelerating data communication using tunnels

Active | US7873060B2 | Improve application performance | Facilitate data communication | Data switching by path configuration | Multiple digital computer combinations | Traffic capacity | Home area network

Methods and systems are provided for increasing application performance and accelerating data communications in a WAN environment. According to one embodiment, a method is provided for securely accelerating network traffic. One or more tunnels are established between a first wide area network (WAN) acceleration device, interposed between a public network and a first local area network (LAN), and a second WAN acceleration device, interposed between a second LAN and the public network. Thereafter, network traffic exchanged between the first LAN and the second LAN is securely accelerated by (i) multiplexing multiple data communication sessions between the first LAN and the second LAN onto the one or more tunnels, (ii) performing one or more of application acceleration, transport acceleration and network acceleration on the data communication sessions and (iii) performing one or more security functions on the data communication sessions.

Owner:FORTINET
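
Step (i), multiplexing several sessions onto one tunnel, can be sketched with a simple framing scheme. The frame layout below (a 4-byte session ID followed by a 2-byte payload length) is an assumption made for illustration and is not taken from the patent. The acceleration and security steps (ii) and (iii) are not modeled.

```python
import struct
from collections import defaultdict

# Hypothetical frame header: session id (4 bytes) and payload length (2 bytes), network byte order.
HEADER = struct.Struct("!IH")


def mux(frames):
    """Pack (session_id, payload) pairs into a single byte stream for one tunnel."""
    out = bytearray()
    for session_id, payload in frames:
        out += HEADER.pack(session_id, len(payload))
        out += payload
    return bytes(out)


def demux(stream):
    """Split a tunnel byte stream back into the concatenated payload of each session."""
    sessions = defaultdict(bytes)
    offset = 0
    while offset < len(stream):
        session_id, length = HEADER.unpack_from(stream, offset)
        offset += HEADER.size
        sessions[session_id] += stream[offset:offset + length]
        offset += length
    return dict(sessions)


tunnel = mux([(1, b"GET /"), (2, b"SELECT 1"), (1, b"index.html")])
print(demux(tunnel))
```

Because every frame carries its session ID, the receiving device can interleave traffic from many sessions on one tunnel and still reassemble each session independently.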

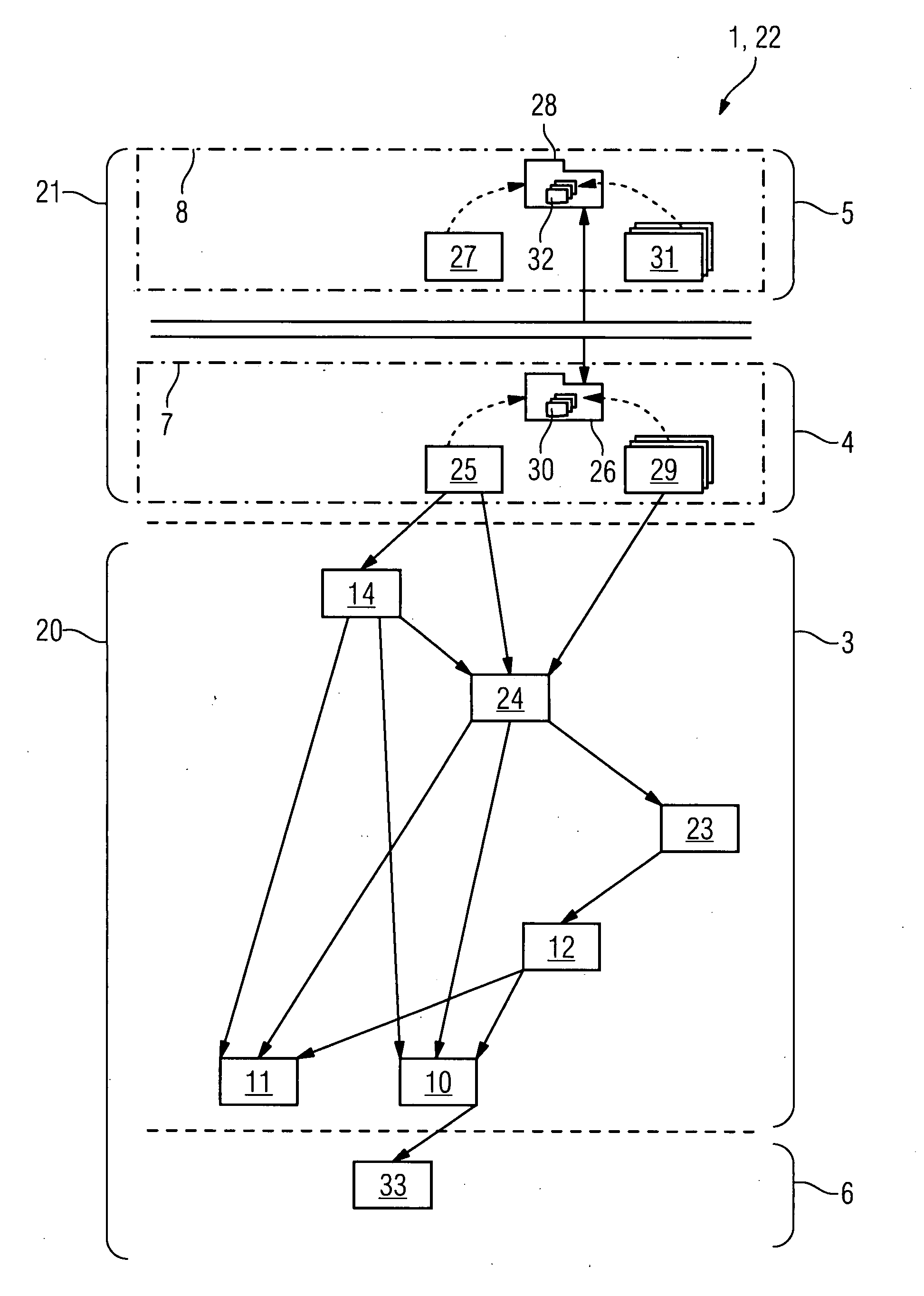

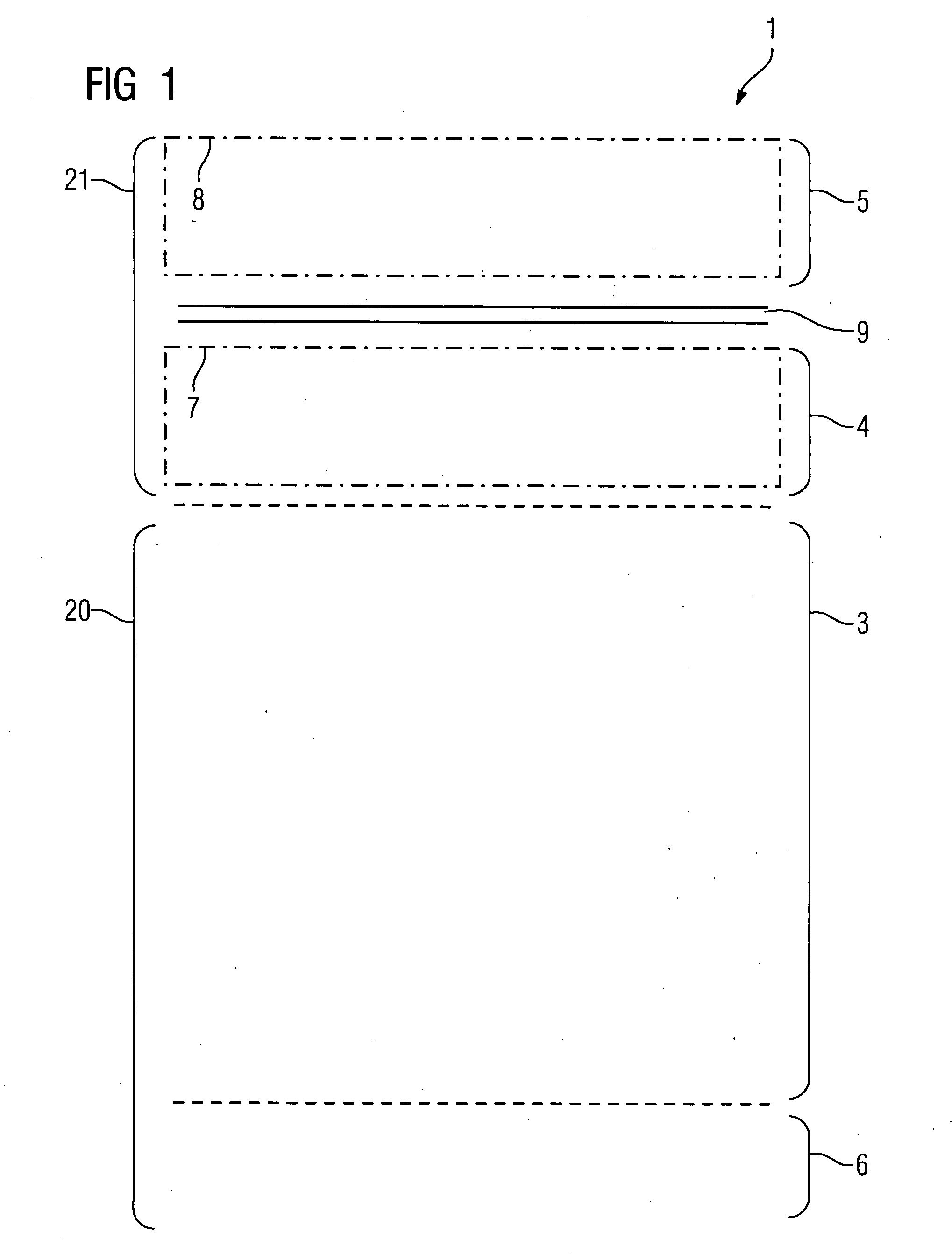

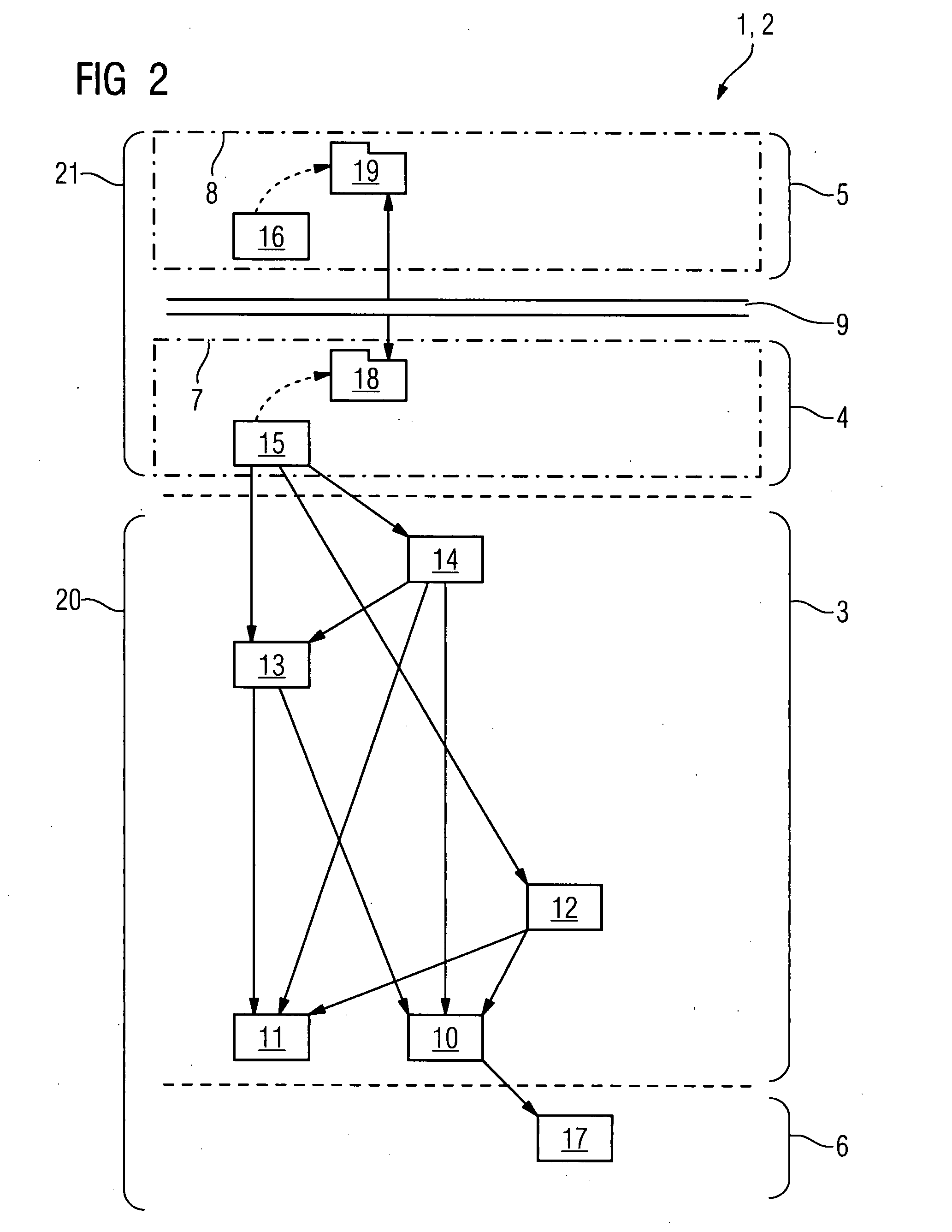

System for creating and running a software application for medical imaging

Inactive | US20080082966A1 | Easy to handle | Simple structure | Character and pattern recognition | Software design | Application programming interface | Medical imaging

An effective and user-friendly system is disclosed for creating and running a software application for medical imaging, having at least one framework which has a service layer and a superordinate toolkit layer as an application programming interface, wherein functions of the toolkit layer and of the service layer are grouped in each case in a number of components which are arranged strictly hierarchically in such a way that any component can only ever be accessed from a superordinate component.

Owner:SIEMENS HEALTHCARE GMBH
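
The strict hierarchy rule, in which a component may only be accessed from a superordinate component, can be expressed as a rank comparison. The component names and rank values below are hypothetical and only illustrate the access check, not the actual framework.

```python
# Hypothetical components with a rank; a higher rank means more superordinate.
RANKS = {
    "application": 3,
    "toolkit.viewer": 2,
    "service.image_loader": 1,
}


def may_access(caller, callee):
    """Allow access only when the caller ranks strictly above the callee."""
    return RANKS[caller] > RANKS[callee]


print(may_access("toolkit.viewer", "service.image_loader"))
print(may_access("service.image_loader", "toolkit.viewer"))
```

A check of this kind could run at build time or load time to reject upward or sideways calls, which is one way to keep the layering strict.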

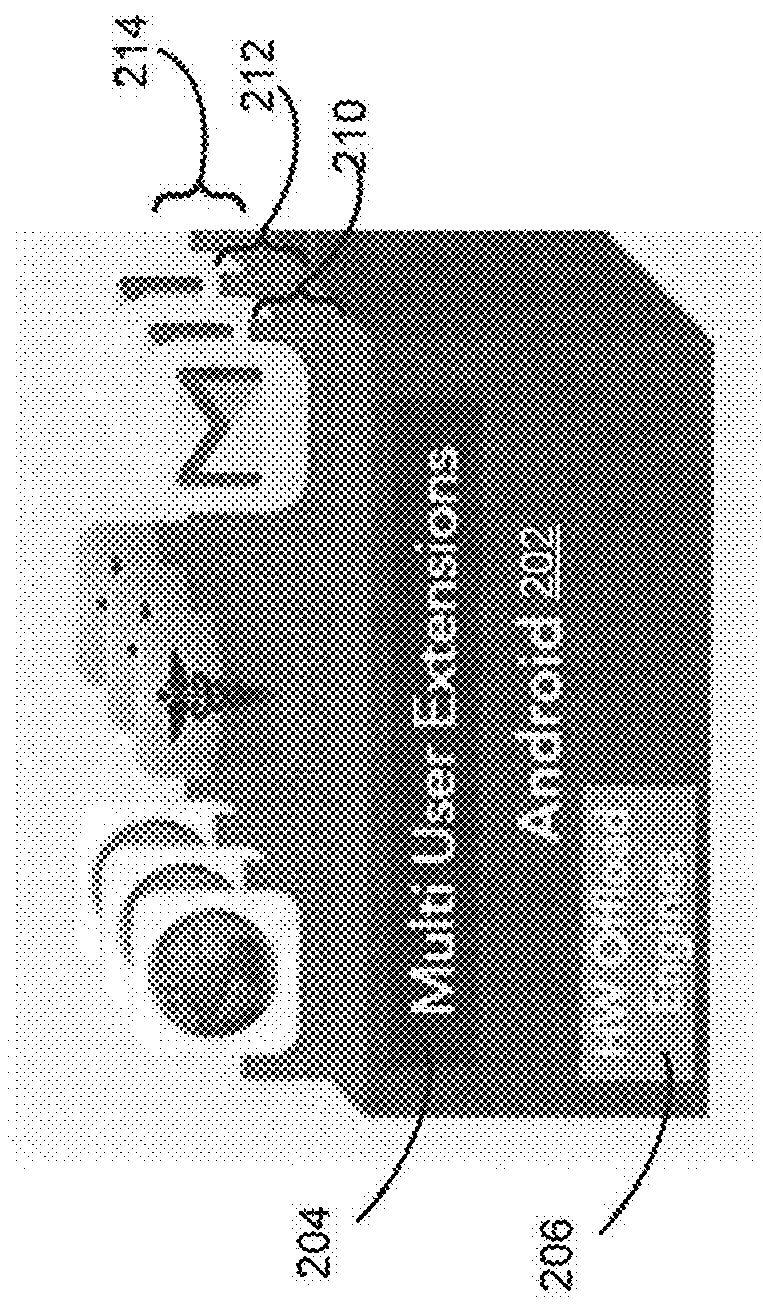

Offloading application components to edge servers

Inactive | US20130254258A1 | Improve application performance | Improve performance | Securing communication | Edge server | Application software

An apparatus and method for off-loading application components to edge servers are provided. An application is made edge-aware by defining which components of the application may be run from an edge server, and which components cannot be run from an edge server. When a request is received that is to be processed by an application on an origin server, a determination is made as to whether the application contains edgable components. If so, an edgified version of the application is created. When a request is received that is handled by a component that may be run on the edge server, the request is handled by that component on the edge server. When a request is received that is handled by a component that is not edgable, the request is passed to a proxy agent which then provides the request to a broker agent on the origin server.

Owner:INT BUSINESS MASCH CORP
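
The request handling described in this abstract amounts to a dispatch decision on the edge server. In the sketch below, the component names, the `EDGABLE` set, and the helper functions are hypothetical. The proxy agent and broker agent are reduced to a single forwarding function.

```python
# Hypothetical set of components declared runnable on an edge server.
EDGABLE = {"catalog", "search"}


def handle_on_edge(component, request):
    """Run the component locally on the edge server."""
    return f"edge:{component}:{request}"


def forward_to_origin(component, request):
    """Stand-in for the proxy agent passing the request to a broker agent on the origin server."""
    return f"origin:{component}:{request}"


def dispatch(component, request):
    """Serve edgable components at the edge and forward everything else to the origin."""
    if component in EDGABLE:
        return handle_on_edge(component, request)
    return forward_to_origin(component, request)


print(dispatch("search", "q=patents"))
print(dispatch("checkout", "order=17"))
```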

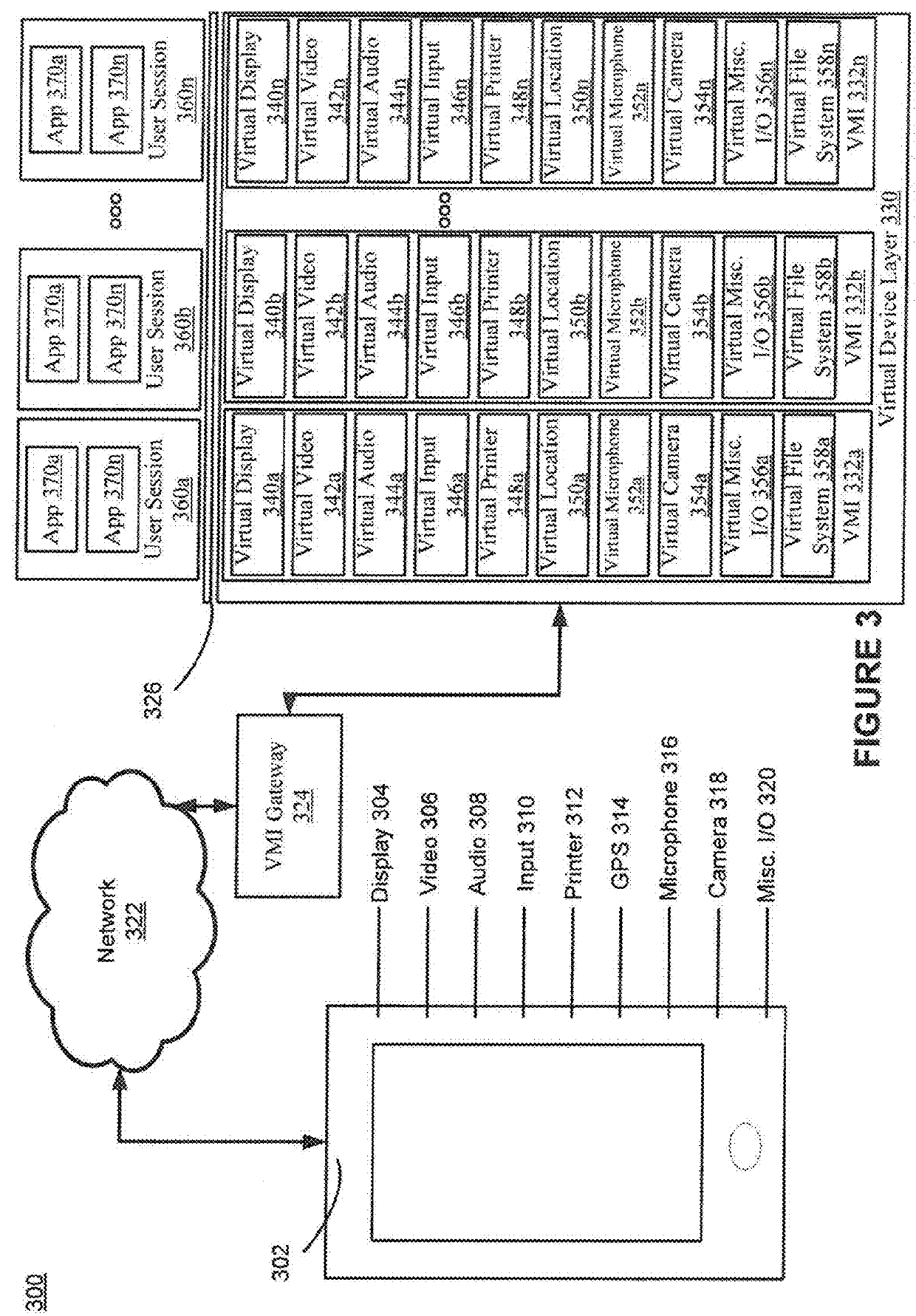

Method for cloud based mobile application virtualization

Active | US20180109625A1 | Reduce in quantity | Improve performance | Transmission | Execution for user interfaces | Virtualization | Cloud base

A system and method for mobile application virtualization. The method includes creating a plurality of user sessions each comprising a unique session ID and allocating server resources to each user session. The method further includes creating a plurality of sets of virtual devices. Each set of the plurality of sets of virtual devices is associated with a respective session ID. The method further includes executing an application of a plurality of applications within a user session of the plurality of user sessions and receiving a request from the application. The method further includes sending the request to a first virtual device of a set of virtual devices based on a session ID. The sending is performed by a single operating system and the single operating system is configured to route requests between applications and the plurality of sets of virtual devices based on the session IDs.

Owner:SIERRAWARE LLC
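
Routing by session ID can be sketched as a lookup from each session to its own set of virtual devices. The `SessionRouter` class, the device names, and the request strings are hypothetical. Server resource allocation and the operating system itself are not modeled.

```python
import uuid


class SessionRouter:
    """Routes application requests to the virtual devices of the matching session."""

    def __init__(self):
        # session id -> {virtual device name: list of requests it has received}
        self._devices = {}

    def create_session(self, device_names):
        """Create a session with a unique ID and its own set of virtual devices."""
        session_id = uuid.uuid4().hex
        self._devices[session_id] = {name: [] for name in device_names}
        return session_id

    def route(self, session_id, device_name, request):
        """Deliver a request to one virtual device, selected by session ID."""
        self._devices[session_id][device_name].append(request)

    def received(self, session_id, device_name):
        """Return a copy of the requests a virtual device has received."""
        return list(self._devices[session_id][device_name])


router = SessionRouter()
alice = router.create_session(["display", "audio"])
bob = router.create_session(["display", "audio"])
router.route(alice, "display", "draw frame 1")
router.route(bob, "audio", "play tone")
print(router.received(alice, "display"), router.received(bob, "display"))
```

Keeping one device set per session ID is what isolates users from each other here: a request tagged with one session can never reach another session's virtual display.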


© 2025 PatSnap. All rights reserved.