5259 results about "Message queue" patented technology

In computer science, message queues and mailboxes are software-engineering components used for inter-process communication (IPC), or for inter-thread communication within the same process. They use a queue for messaging – the passing of control or of content. Group communication systems provide similar kinds of functionality.

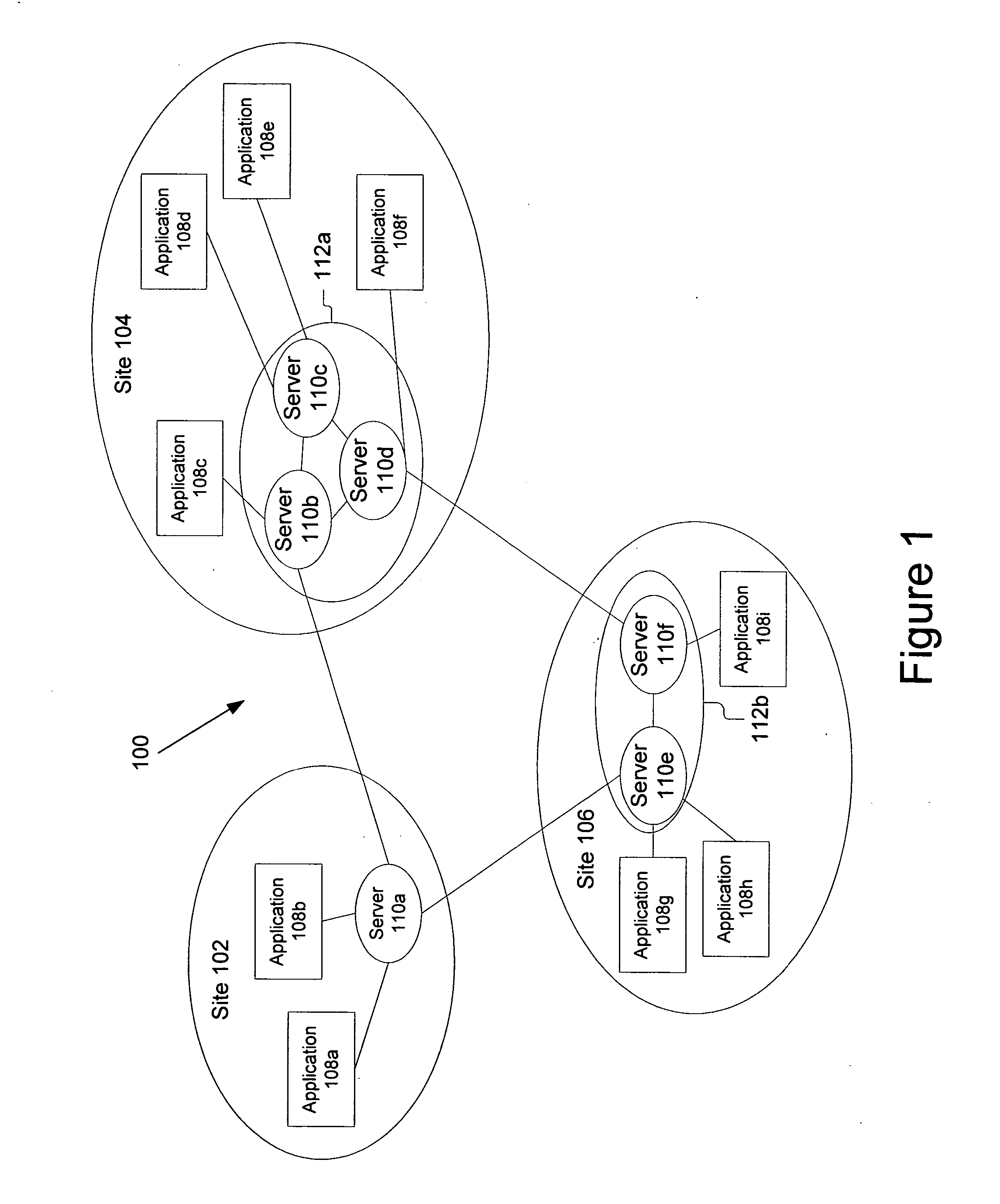

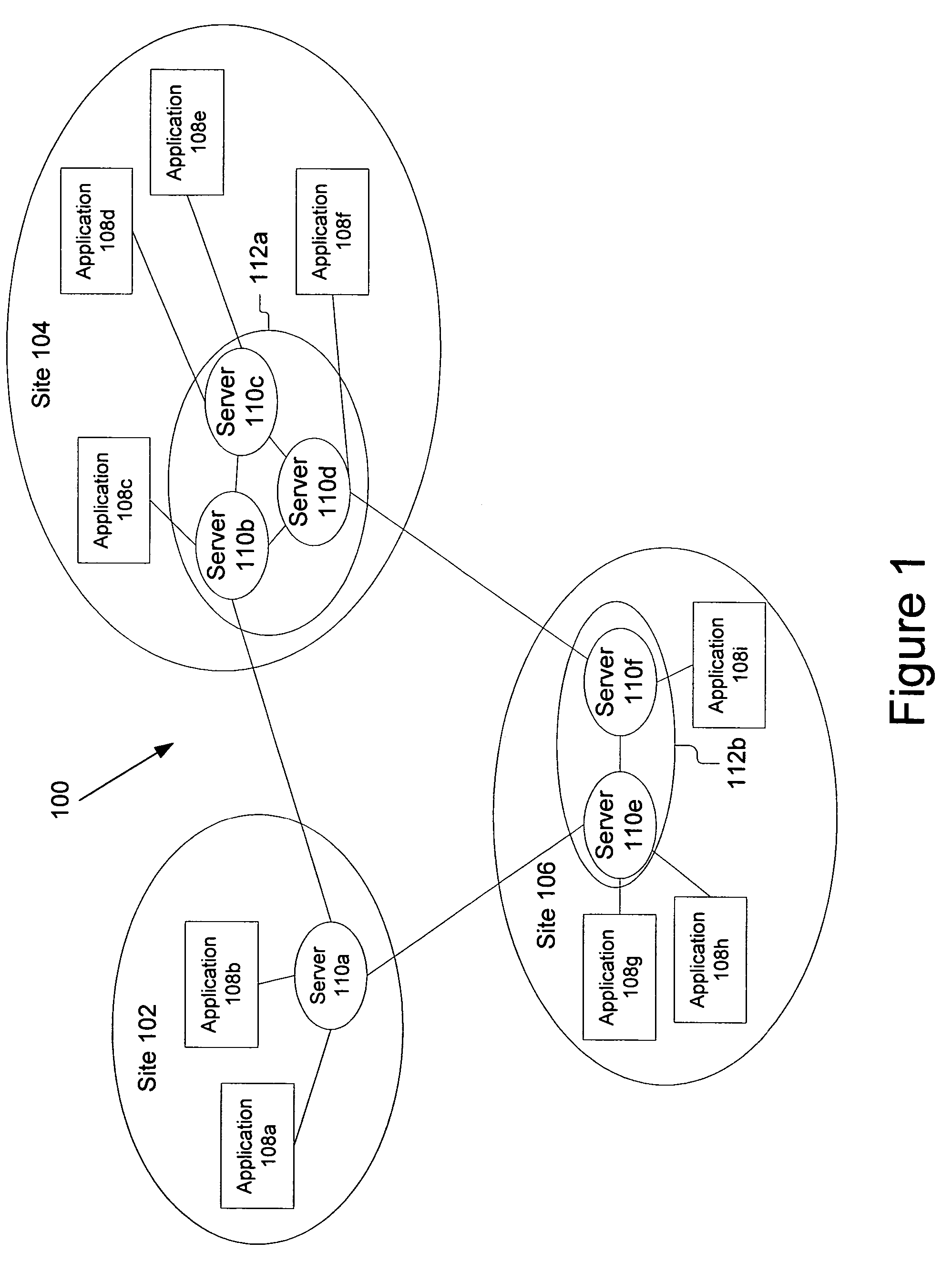

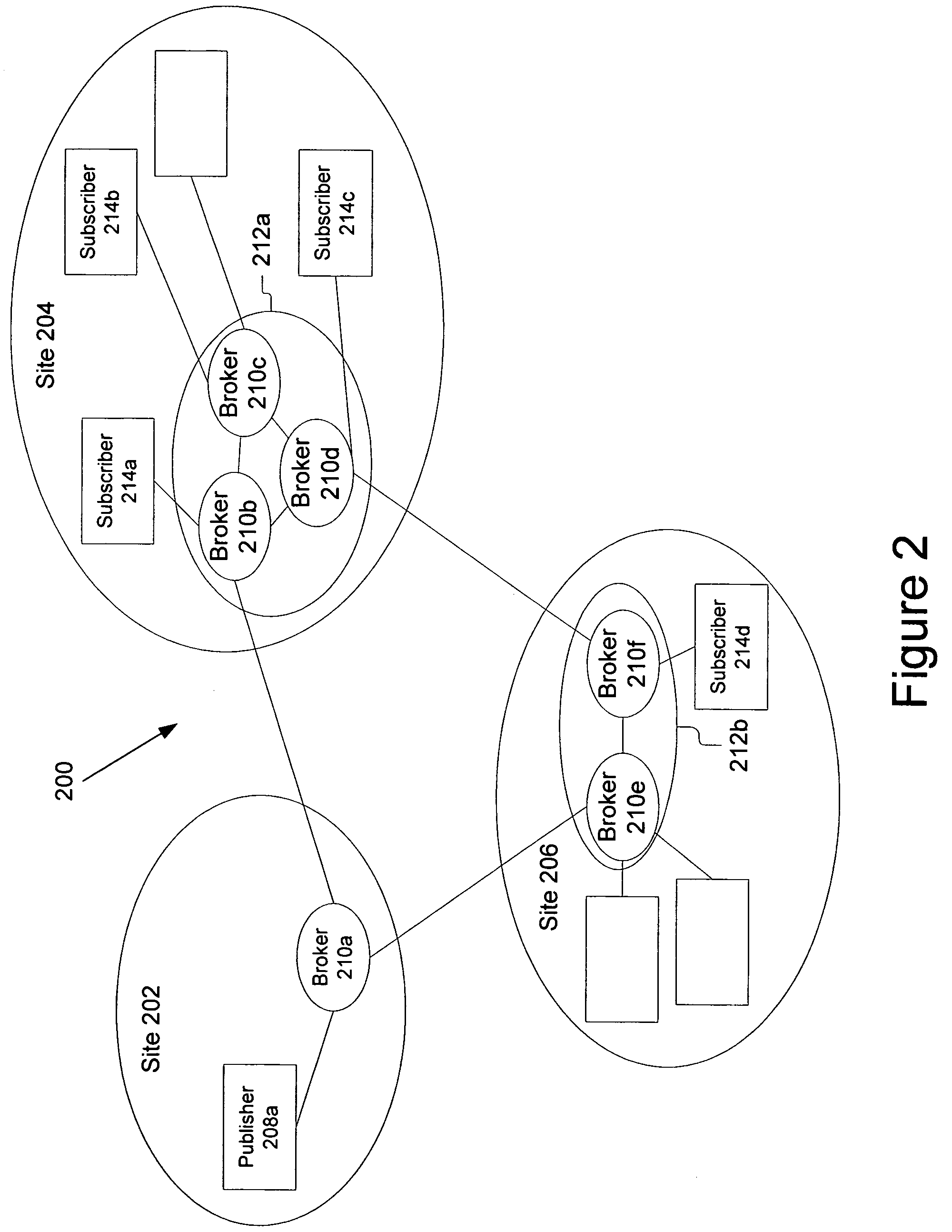

Dynamic subscription and message routing on a topic between publishing nodes and subscribing nodes

Active | US20050021622A1 | Allocation is accurate | Automatically removing subscribers | Multiple digital computer combinations | Data switching networks | Message queue | Message routing

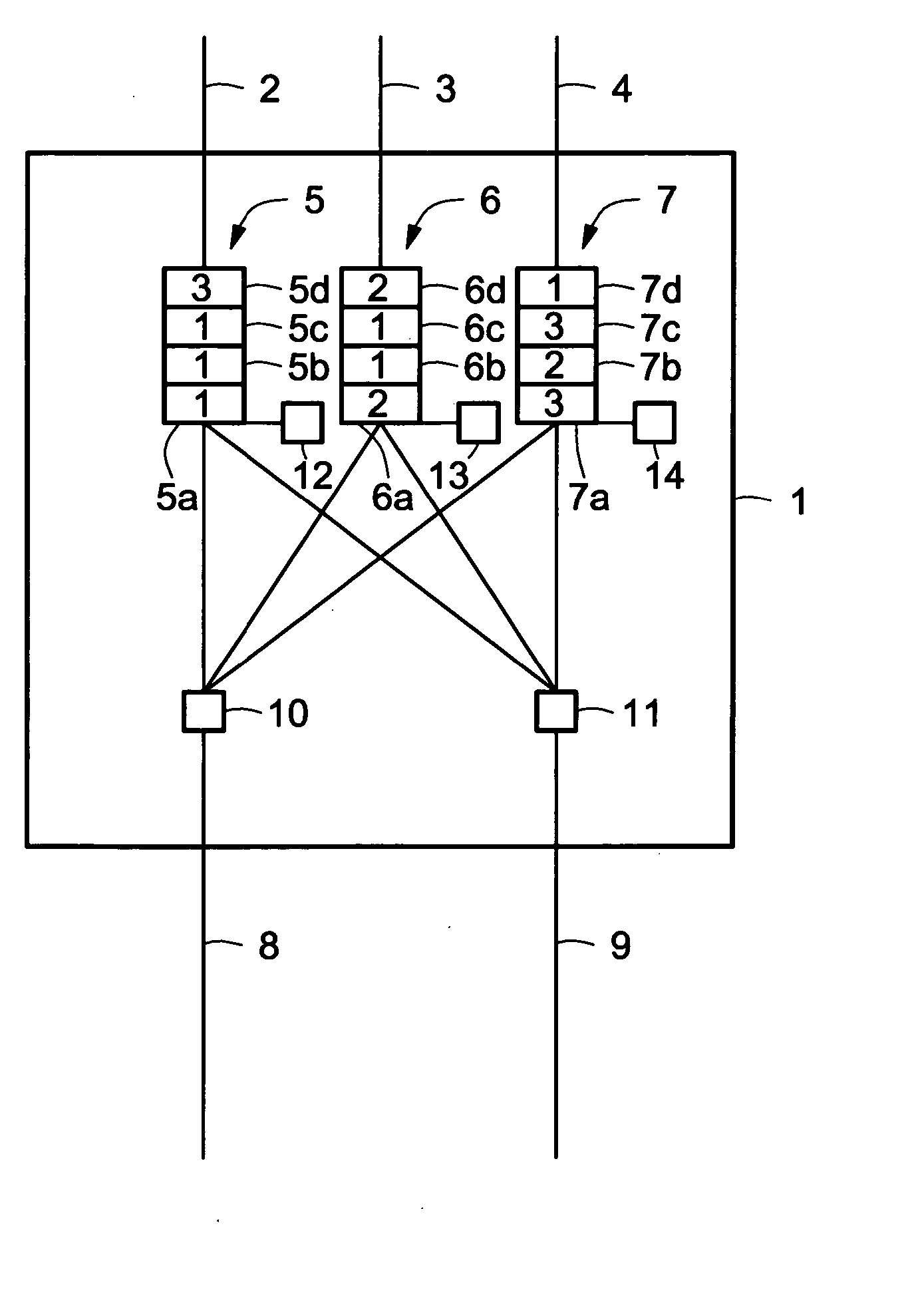

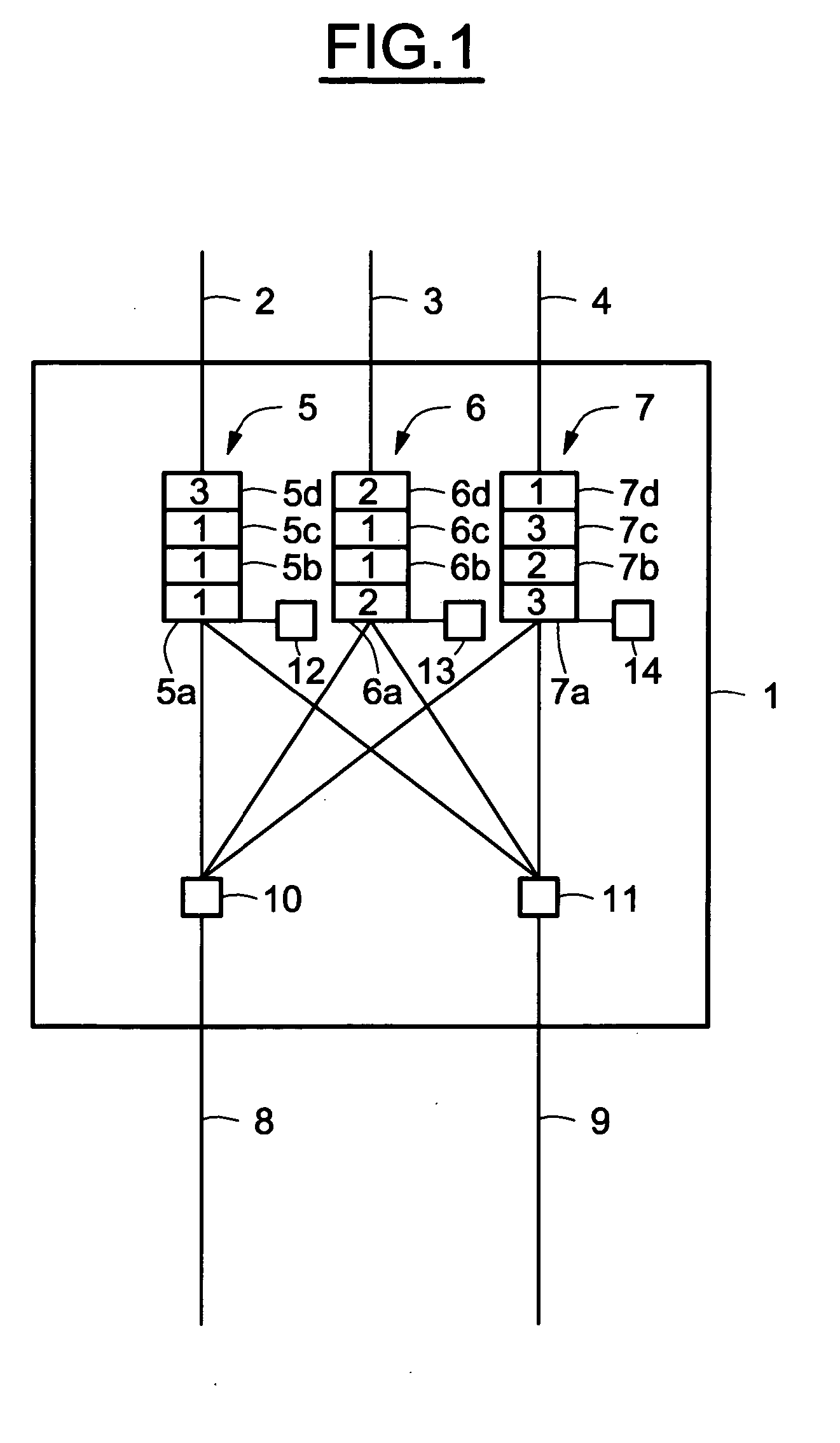

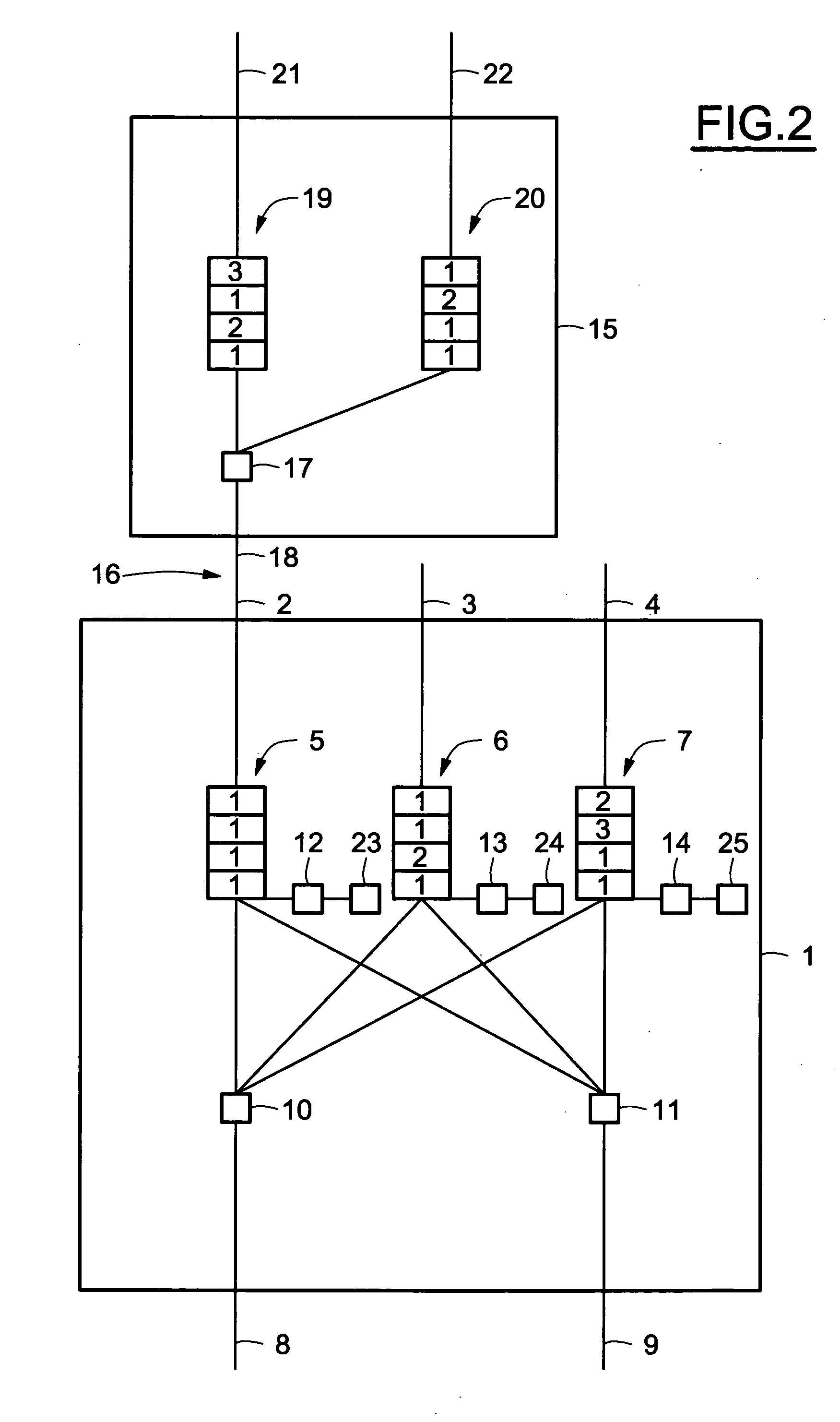

A system for dynamic message routing on a topic between publishing nodes and subscribing nodes includes a plurality of message queues, at least one topic / node table, a subscribing module, a publishing module, and other modules to send messages between one or more publishers and one or more subscribers. These modules are coupled together by a bus in a plurality of nodes and provide for the dynamic message routing on a topic between publishing nodes and subscribing nodes. The message queues store messages at each node for delivery to subscribers local to that node. The topic / node table lists which clients subscribe to which topics, and is used by the other modules to ensure proper distribution of messages. The subscribing module is used to establish a subscription to a topic for that node. The publishing module is used to identify subscribers to a topic and transmit messages to subscribers dynamically. The other modules include various devices to optimize message communication in a publish / subscribe architecture operating on a distributed computing system. The present invention also includes a number of novel methods including: a method for publishing a message on a topic, a method for forwarding a message on a topic, a method for subscribing to messages on a topic, a method for automatically removing subscribers, a method for direct publishing of messages, and methods for optimizing message transmission between nodes.

Owner:AUREA SOFTWARE
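The role of the topic / node table in the abstract above can be illustrated with a minimal Python sketch. It is not the patented implementation: the `Broker` and `Node` classes and all method names are hypothetical, the table is simplified to map each topic to the names of subscribing nodes, and everything runs in one process rather than across a network.

```python
from collections import defaultdict, deque


class Node:
    """A node with a local queue holding messages for its local subscribers."""

    def __init__(self, name):
        self.name = name
        self.queue = deque()


class Broker:
    """Hypothetical in-process stand-in for the routing modules."""

    def __init__(self):
        self.nodes = {}
        # Topic / node table: topic -> set of subscribing node names.
        self.topic_table = defaultdict(set)

    def add_node(self, name):
        self.nodes[name] = Node(name)

    def subscribe(self, node_name, topic):
        self.topic_table[topic].add(node_name)

    def unsubscribe(self, node_name, topic):
        self.topic_table[topic].discard(node_name)

    def publish(self, topic, message):
        # Look up subscribers at publish time, so routing follows the
        # current state of the table.
        targets = sorted(self.topic_table.get(topic, ()))
        for name in targets:
            self.nodes[name].queue.append((topic, message))
        return targets


broker = Broker()
for node_name in ("node-a", "node-b", "node-c"):
    broker.add_node(node_name)
broker.subscribe("node-a", "prices")
broker.subscribe("node-b", "prices")
first = broker.publish("prices", "tick-1")
broker.unsubscribe("node-b", "prices")
second = broker.publish("prices", "tick-2")
```

Because subscribers are resolved on every `publish` call, removing `node-b` from the table takes effect for the next message without any change on the publishing side.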

Message queuing method, system, and program product with reusable pooling component

Inactive | US7240089B2 | Prevent excessive making and breaking of connections and associated overhead | Multiprogramming arrangements | Multiple digital computer combinations | Message queue | Client-side

A pooling mechanism to limit repeated connections in message queuing systems and to prevent excessive making and breaking of connections and associated overhead. The invention does this by providing a layer between a client and the message queuing system where connections are pooled. The pooling mechanism of the invention prevents a system from losing too many resources through the repeated making and breaking of excessive message queuing system connections.

Owner:SNAP INC
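A pooling layer of the kind this abstract describes might look roughly like the sketch below. It assumes a fixed-size pool created up front. `FakeConnection` is a hypothetical stand-in for a real message-queuing connection, and `ConnectionPool` is an illustrative name, not an API from the patent or from any product.

```python
import queue
from contextlib import contextmanager


class FakeConnection:
    """Hypothetical stand-in for a connection to a message queuing system."""

    opened = 0  # how many connections have ever been created

    def __init__(self):
        FakeConnection.opened += 1

    def send(self, message):
        return f"sent:{message}"


class ConnectionPool:
    """Keeps `size` connections open and lends them out to callers."""

    def __init__(self, factory, size):
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())

    @contextmanager
    def connection(self, timeout=None):
        conn = self._idle.get(timeout=timeout)  # waits until one is free
        try:
            yield conn
        finally:
            self._idle.put(conn)  # hand it back instead of closing it


pool = ConnectionPool(FakeConnection, size=2)
results = []
for i in range(5):
    with pool.connection() as conn:
        results.append(conn.send(i))
```

Five sends reuse the two pooled connections, so the cost of making and breaking a connection is paid twice rather than five times.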

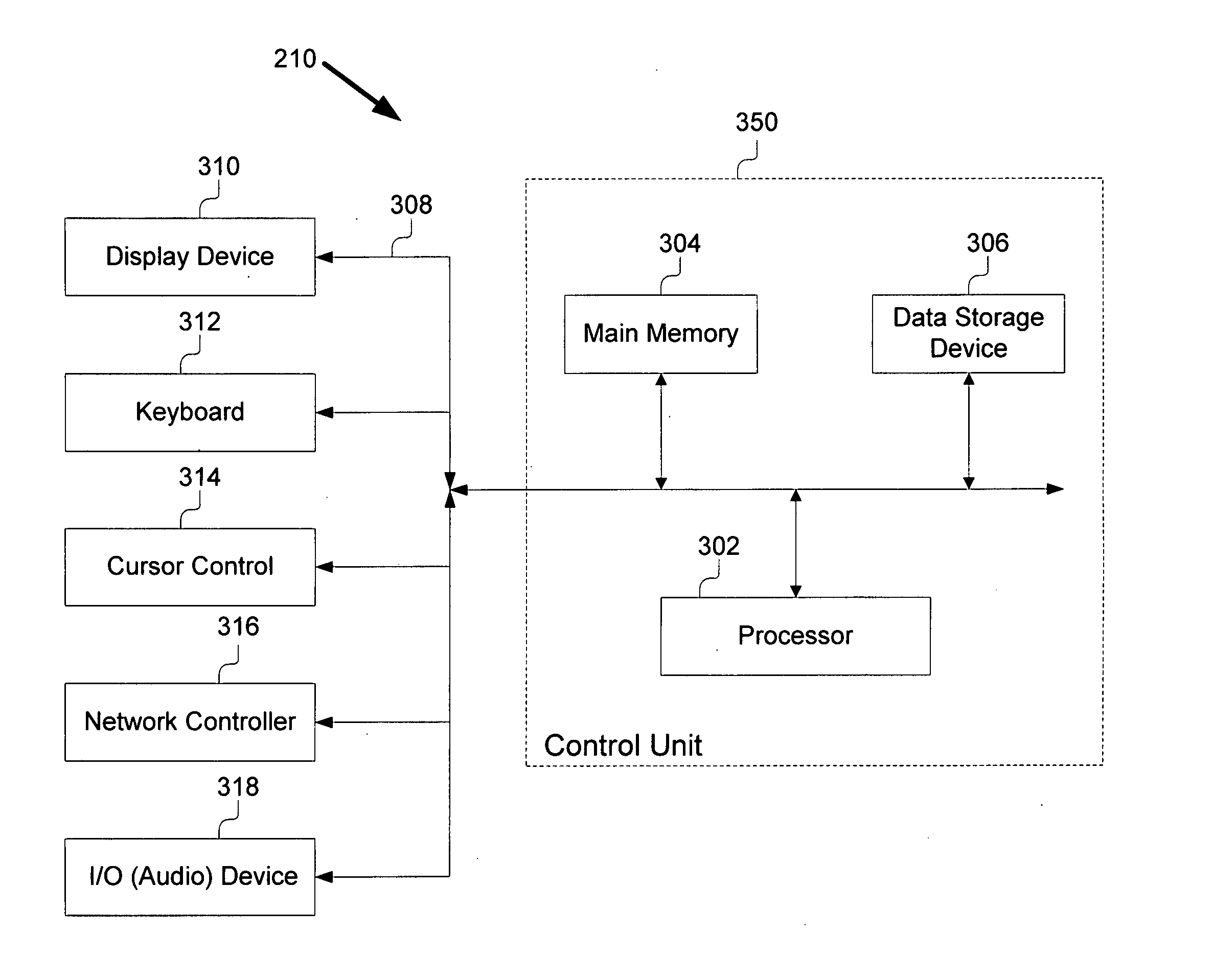

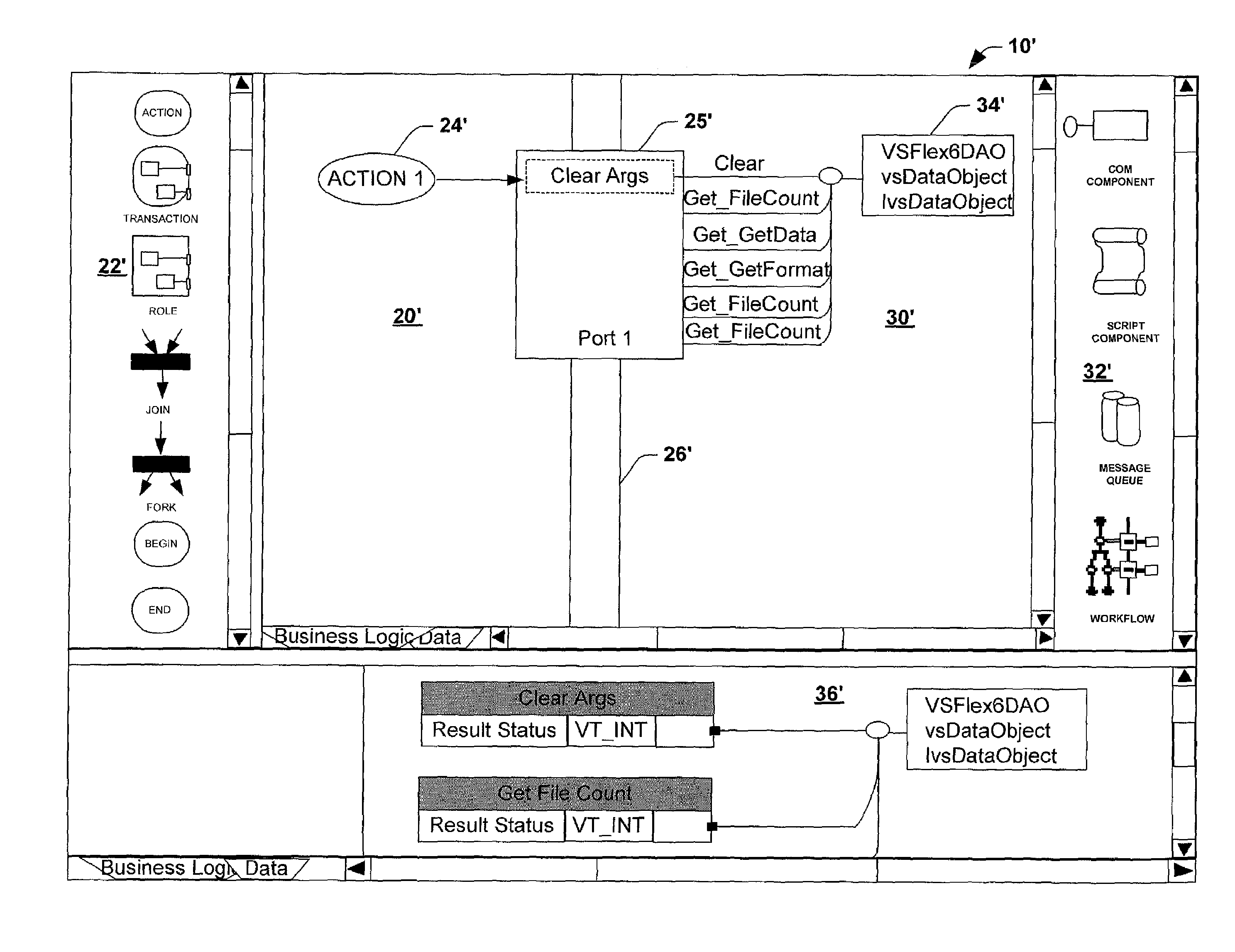

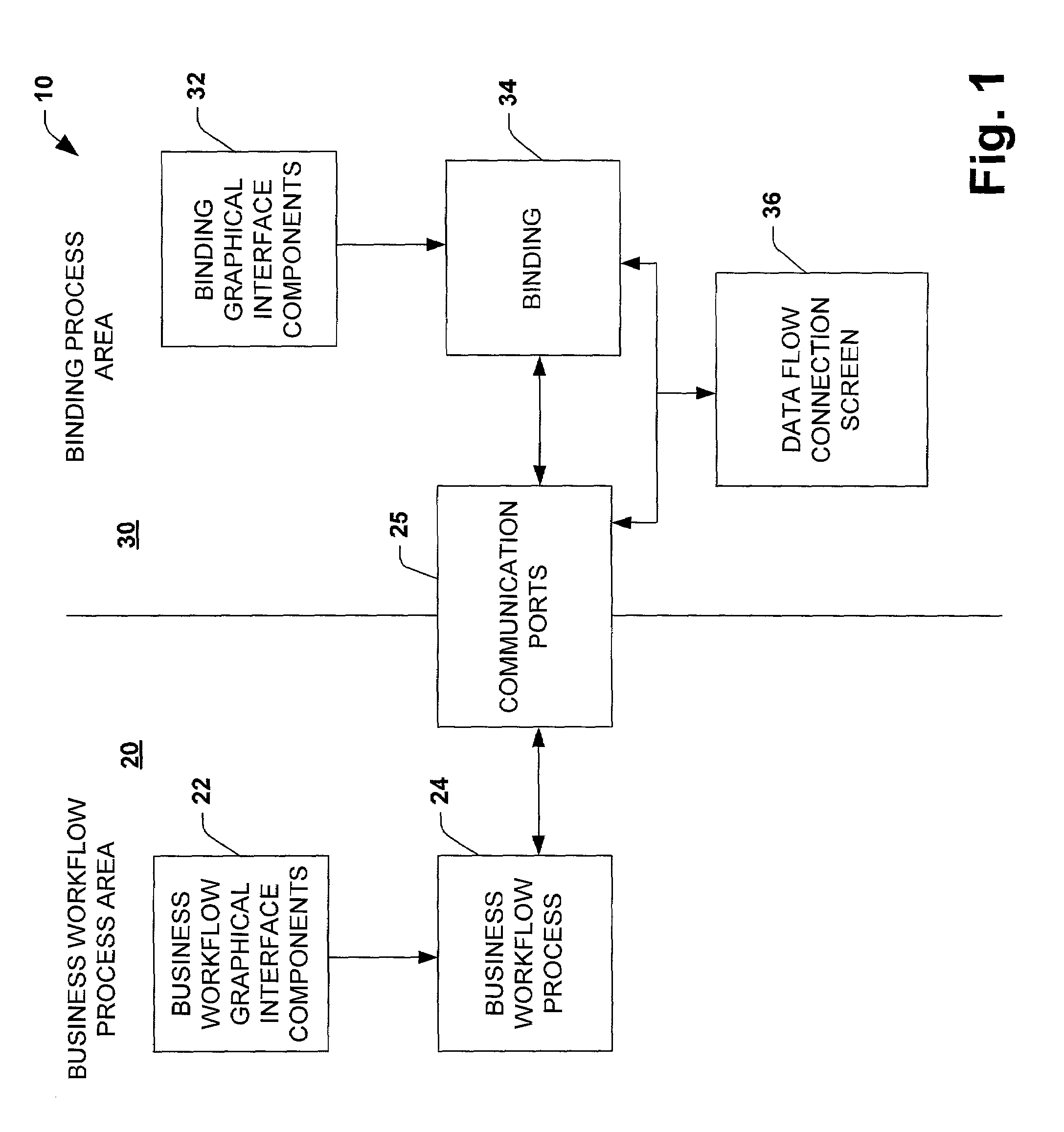

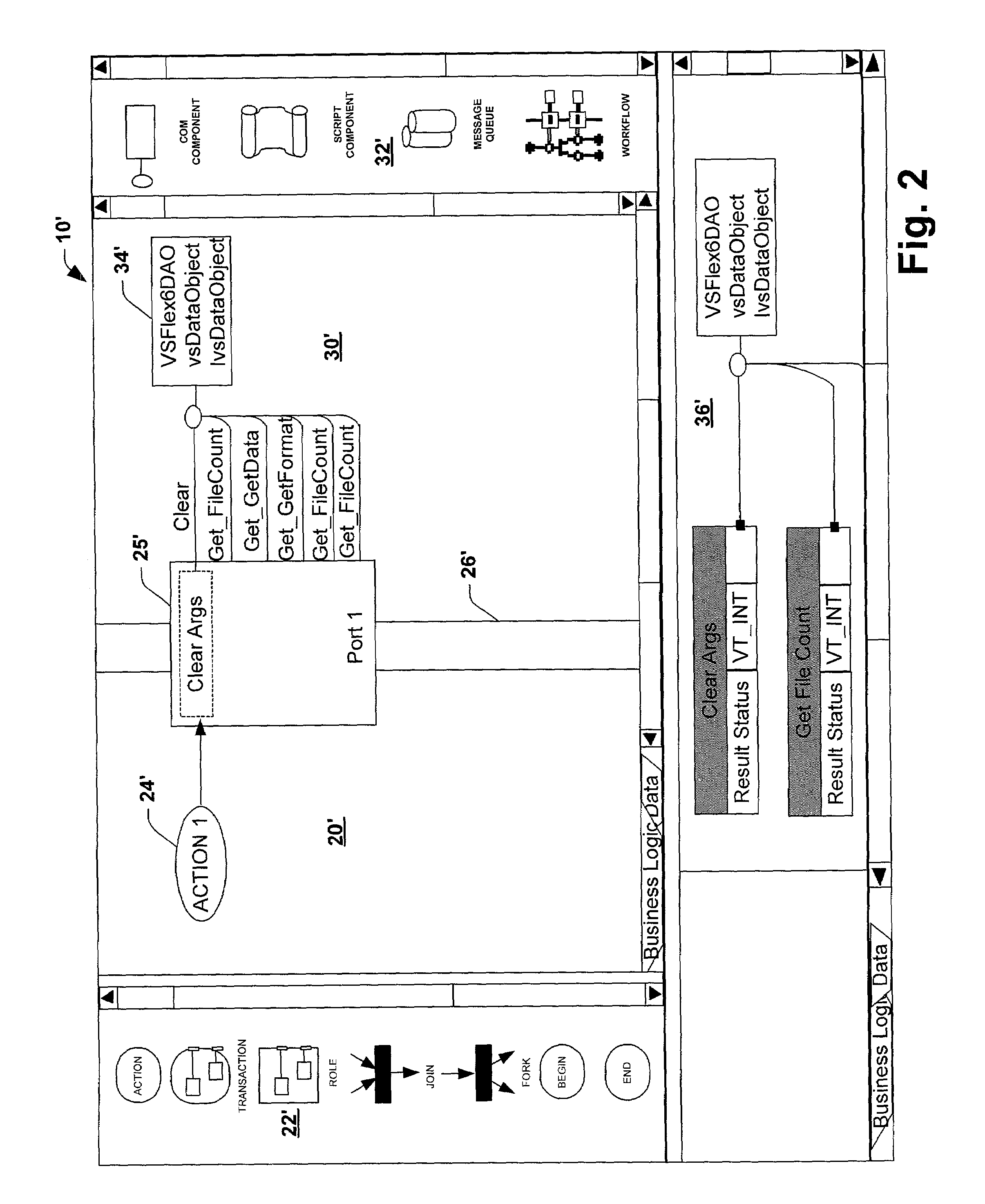

System and method utilizing a graphical user interface of a business process workflow scheduling program

Inactive | US7184967B1 | Promote model | Office automation | Programme total factory control | Software engineering | Business process

A graphical user interface (GUI) scheduler program is provided for modeling business workflow processes. The GUI scheduler program includes tools to allow a user to create a schedule for business workflow processes based on a set of rules defined by the GUI scheduler program. The rules help ensure that deadlock does not occur within the schedule. The program provides tools for creating and defining message flows between entities. Additionally, the program provides tools that allow a user to define a binding between the schedule and components, such as COM components, script components, message queues and other workflow schedules. The scheduler program allows a user to define actions and group actions into transactions using simple GUI scheduling tools. The schedule can then be converted to executable code in a variety of forms such as XML, C, C+ and C++. The executable code can then be converted or interpreted for running the schedule.

Owner:MICROSOFT TECH LICENSING LLC

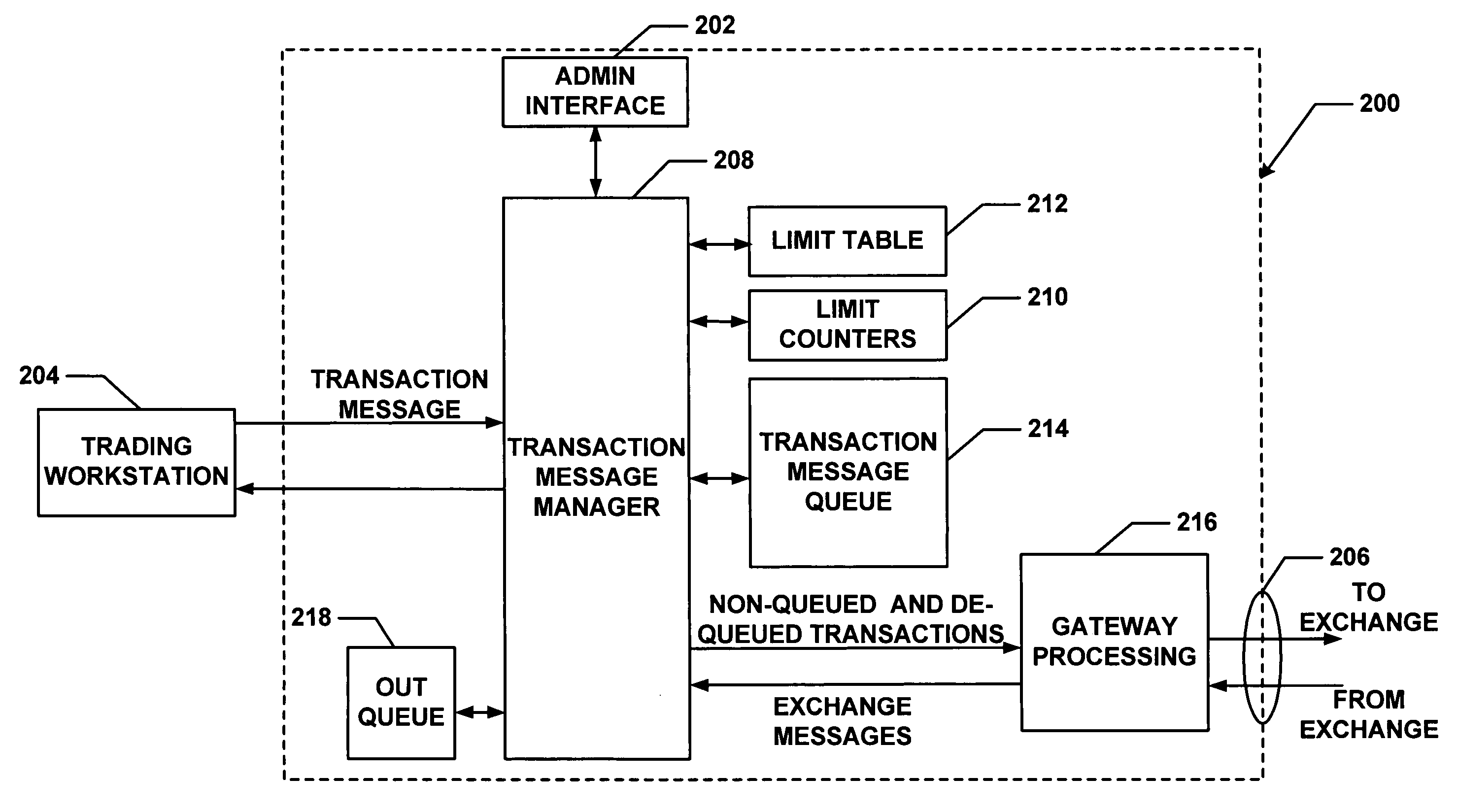

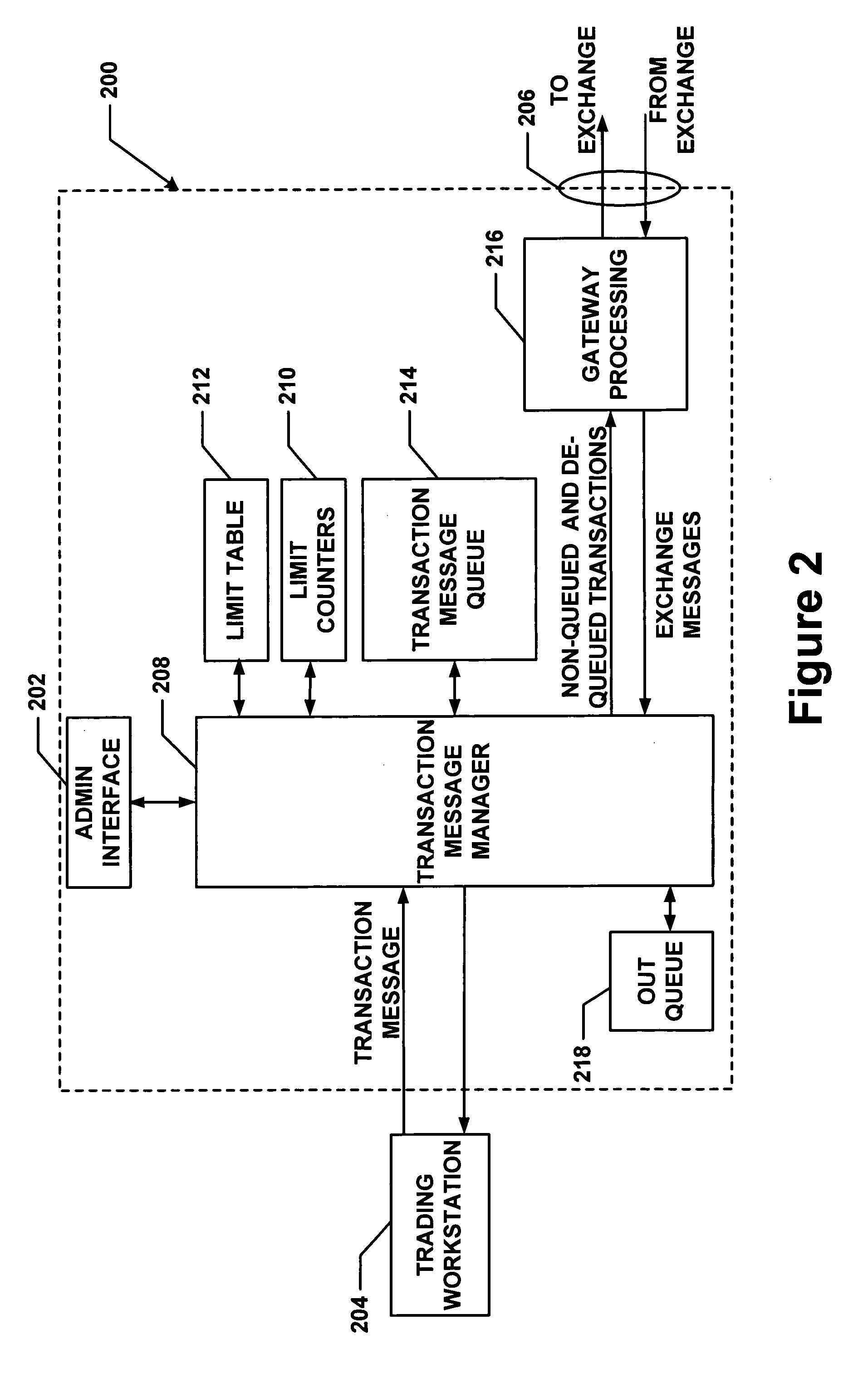

Method and apparatus for message flow and transaction queue management

Management of transaction message flow utilizing a transaction message queue. The system and method are for use in financial transaction messaging systems. The system is designed to enable an administrator to monitor, distribute, control and receive alerts on the use and status of limited network and exchange resources. Users are grouped in a hierarchical manner, preferably including user level and group level, as well as possible additional levels such as account, tradable object, membership, and gateway levels. The message thresholds may be specified for each level to ensure that transmission of a given transaction does not exceed the number of messages permitted for the user, group, account, etc.

Owner:TRADING TECH INT INC
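The per-level message thresholds in this abstract can be sketched as a counter that is checked at every level before a message is allowed through. This is an illustrative reading only: the class name `MessageLimiter`, the two levels shown (user and group), and the limit values are assumptions, and a real system would also reset or age the counts over time.

```python
from collections import Counter


class MessageLimiter:
    """Hypothetical hierarchical limiter: one threshold per (level, name)."""

    def __init__(self, limits):
        # limits maps (level, name) -> maximum number of messages allowed.
        self.limits = dict(limits)
        self.counts = Counter()

    def try_send(self, user, group):
        keys = [("user", user), ("group", group)]
        # Reject the message if any level would exceed its threshold.
        for key in keys:
            limit = self.limits.get(key)
            if limit is not None and self.counts[key] + 1 > limit:
                return False
        # Only count the message once every level has accepted it.
        for key in keys:
            self.counts[key] += 1
        return True


limiter = MessageLimiter({("user", "alice"): 2, ("group", "desk1"): 3})
outcomes = [
    limiter.try_send("alice", "desk1"),  # within both limits
    limiter.try_send("alice", "desk1"),  # alice reaches her user limit
    limiter.try_send("alice", "desk1"),  # rejected at the user level
    limiter.try_send("bob", "desk1"),    # group reaches its limit
    limiter.try_send("bob", "desk1"),    # rejected at the group level
]
```

Further levels such as account or gateway would be added as extra entries in `keys`, with the same check applied to each.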

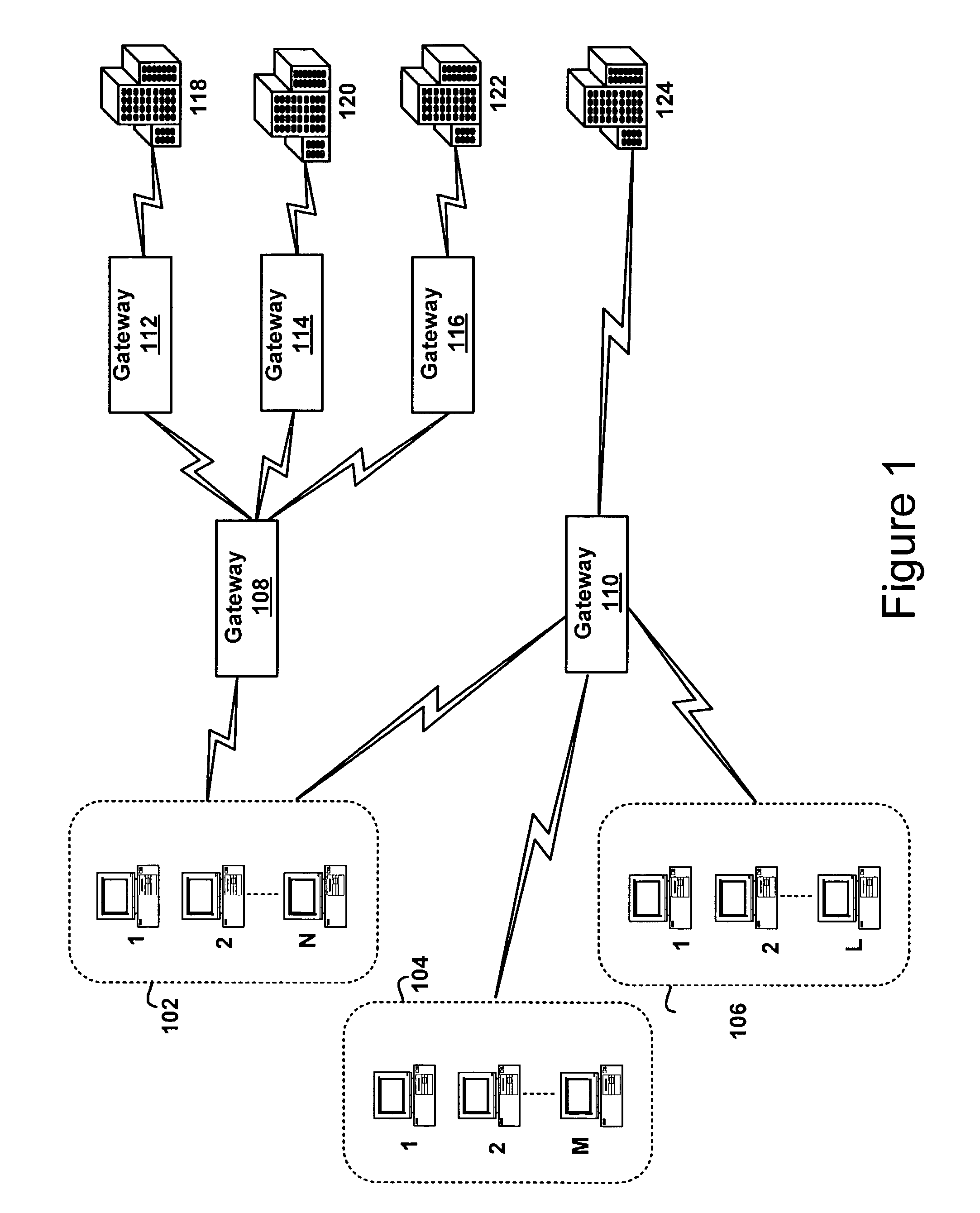

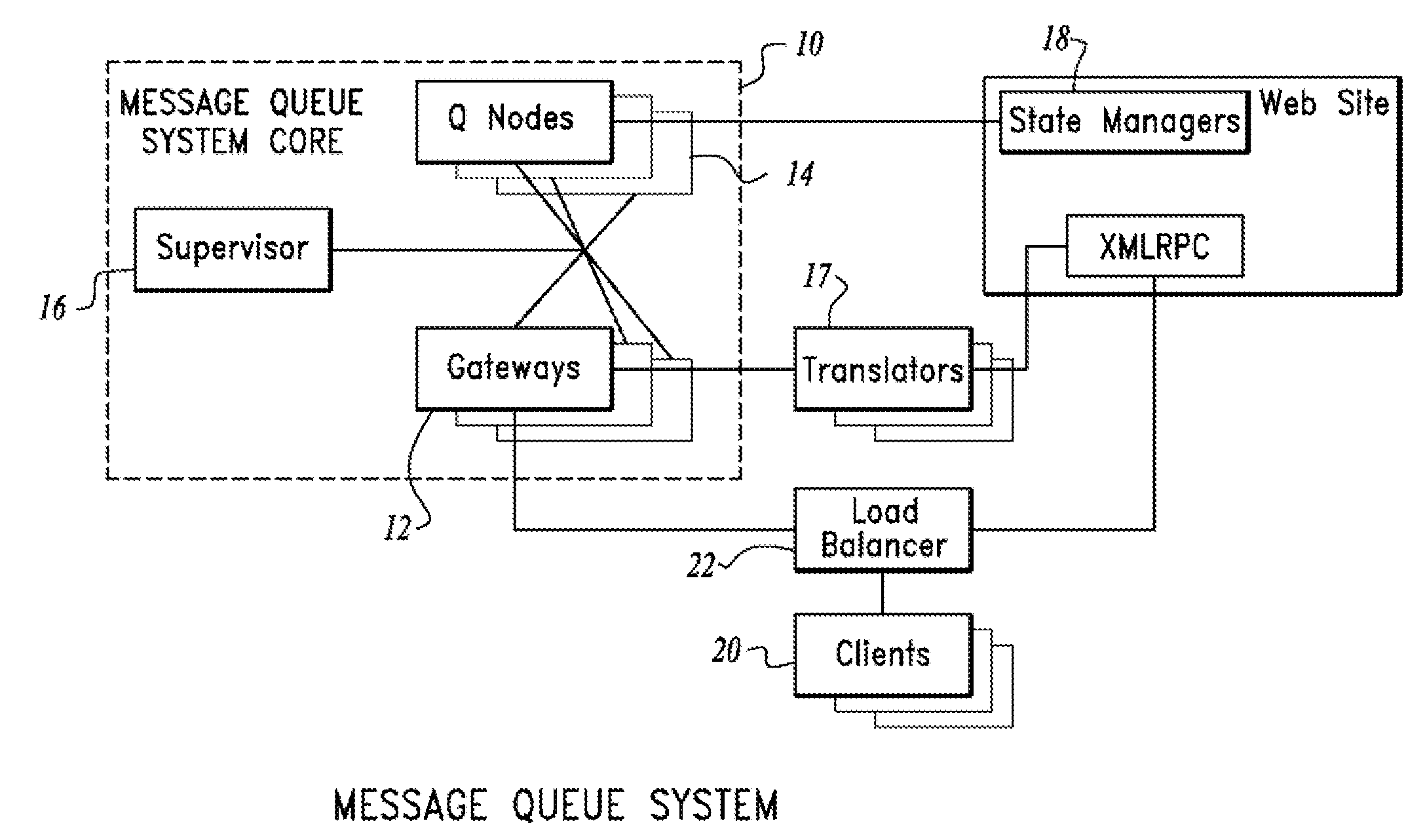

System and method for managing multiple queues of non-persistent messages in a networked environment

Active | US20120198004A1 | Data switching by path configuration | Multiple digital computer combinations | Message queue | Multilevel queue

A system and method for managing multiple queues of non-persistent messages in a networked environment are disclosed. A particular embodiment includes: receiving a non-persistent message at a gateway process, the message including information indicative of a named queue; mapping, by use of a data processor, the named queue to a queue node by use of a consistent hash of the named queue; mapping the message to a queue process at the queue node; accessing, by use of the queue process, a list of subscriber gateways; and routing the message to each of the subscriber gateways in the list.

Owner:IMVU
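The mapping of a named queue to a queue node "by use of a consistent hash" can be sketched with a hash ring. The abstract does not specify the hash function or ring layout, so the SHA-256 hash, the 64 virtual points per node, and all names below (`HashRing`, `route`, the node and gateway names) are assumptions chosen for illustration.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # First 8 bytes of SHA-256, read as an unsigned integer.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring with several virtual points per node."""

    def __init__(self, nodes, replicas=64):
        self._ring = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def node_for(self, queue_name):
        # The first ring point clockwise from the key's hash owns the key.
        idx = bisect.bisect_right(self._points, _hash(queue_name)) % len(self._ring)
        return self._ring[idx][1]


ring = HashRing(["queue-node-a", "queue-node-b", "queue-node-c"])
# Hypothetical subscriber list kept for each named queue.
subscribers = {"chat/room42": ["gateway-1", "gateway-3"]}


def route(queue_name, message):
    """Return (gateway, queue node, message) for every subscriber gateway."""
    node = ring.node_for(queue_name)
    return [(gw, node, message) for gw in subscribers.get(queue_name, [])]


deliveries = route("chat/room42", "hello")
```

The point of a consistent hash over a plain modulo is that removing or adding a queue node only moves the queues that were owned by that node. Queues owned by the other nodes keep their mapping.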

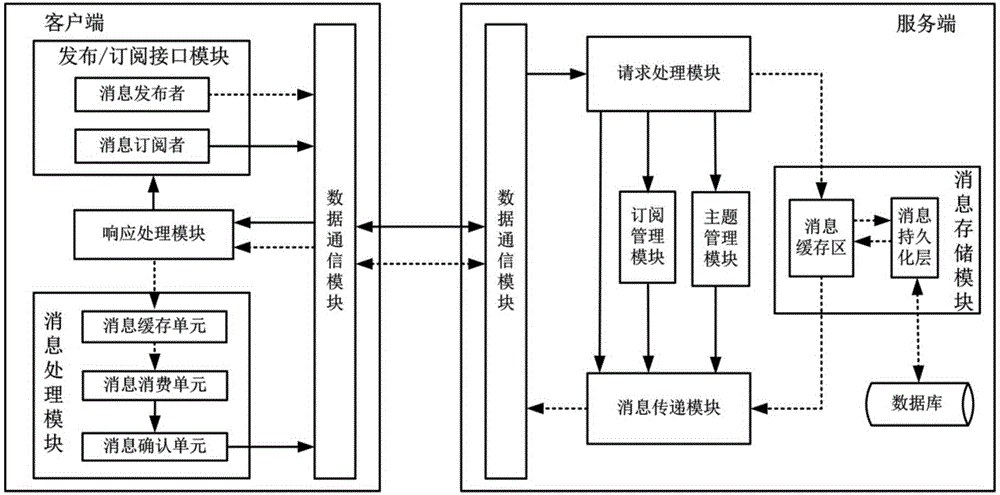

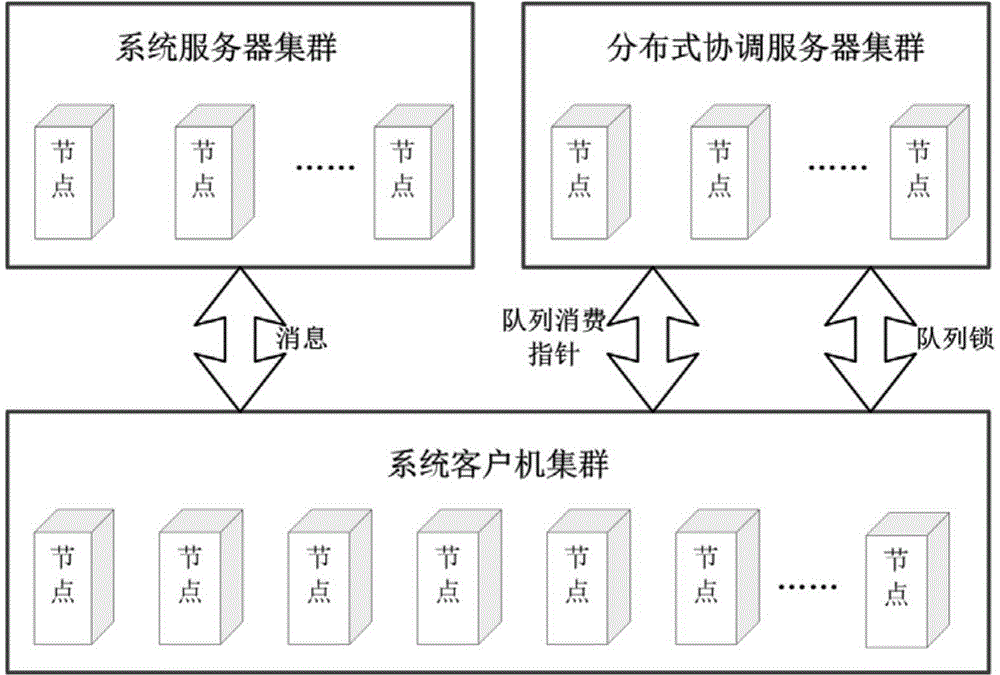

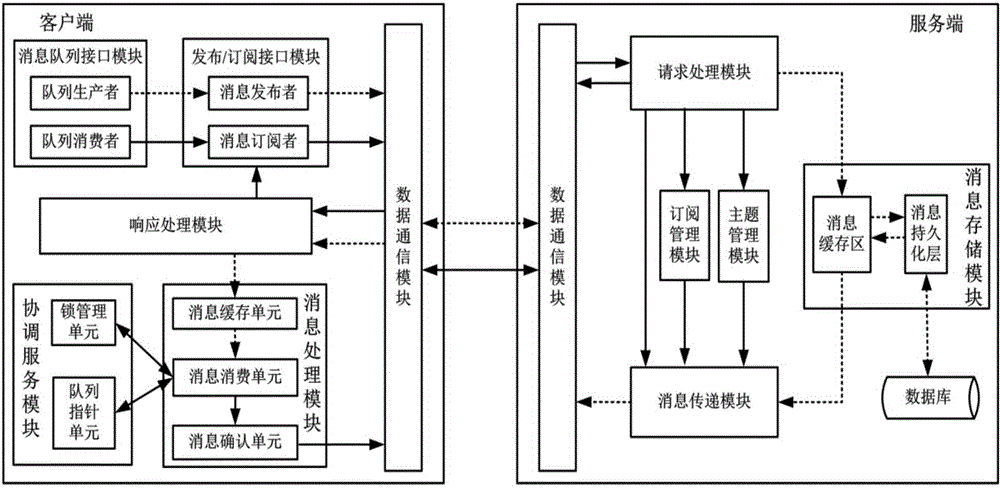

Posting/subscribing system for adding message queue models and working method thereof

Active | CN104092767A | Reduce hardware costs | Achieve mutual compatibility | Transmission | Message queue | Transfer model

The invention provides a posting / subscribing system for adding message queue models and a working method of the posting / subscribing system. The system comprises a distributed message-oriented middleware server-side cluster, a client-side cluster and a distributed coordinate server cluster. Service nodes in the message-oriented middleware server-side cluster are composed of corresponding modules of an original posting / subscribing system, and the function of the message-oriented middleware server-side cluster is the same as the information transferring relation; a client side is simultaneously provided with a calling interface of a posting / subscribing model and a calling interface of a message queue model which are used for achieving the purpose that a user can select and use the two different message transferring models directly without transforming the bottom layer middleware, so that a coordinate service module and a message processing module are additionally arranged on the client side based on an original framework. The system supports the posting / subscribing message transferring model and the message queue message transferring model simultaneously and guarantees that the availability, flexibility and other performance of the two models are all within the acceptable range, and the problem that it is difficult to transform the bottom layer message transferring model for the user is better solved.

Owner:BEIJING UNIV OF POSTS & TELECOMM

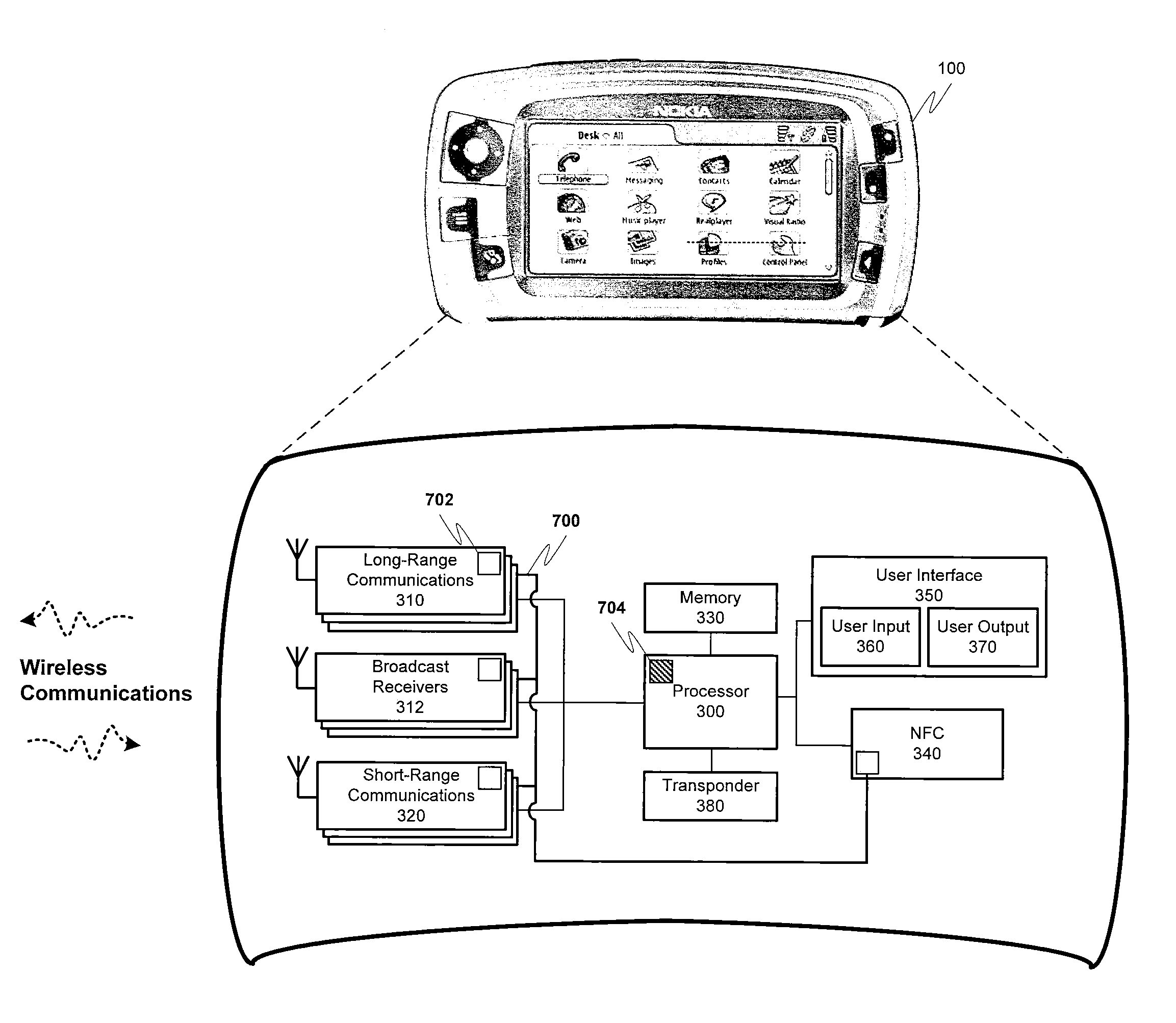

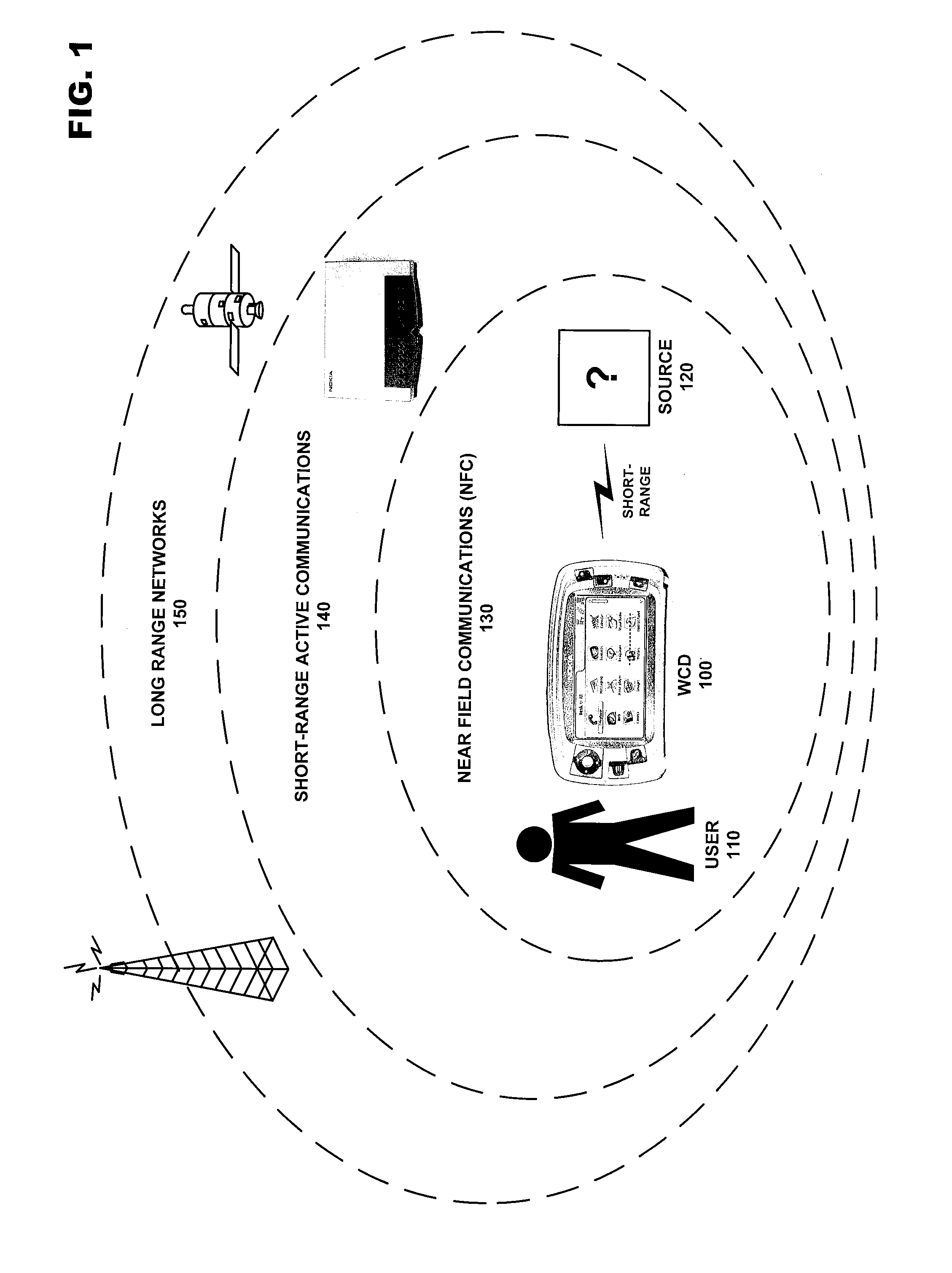

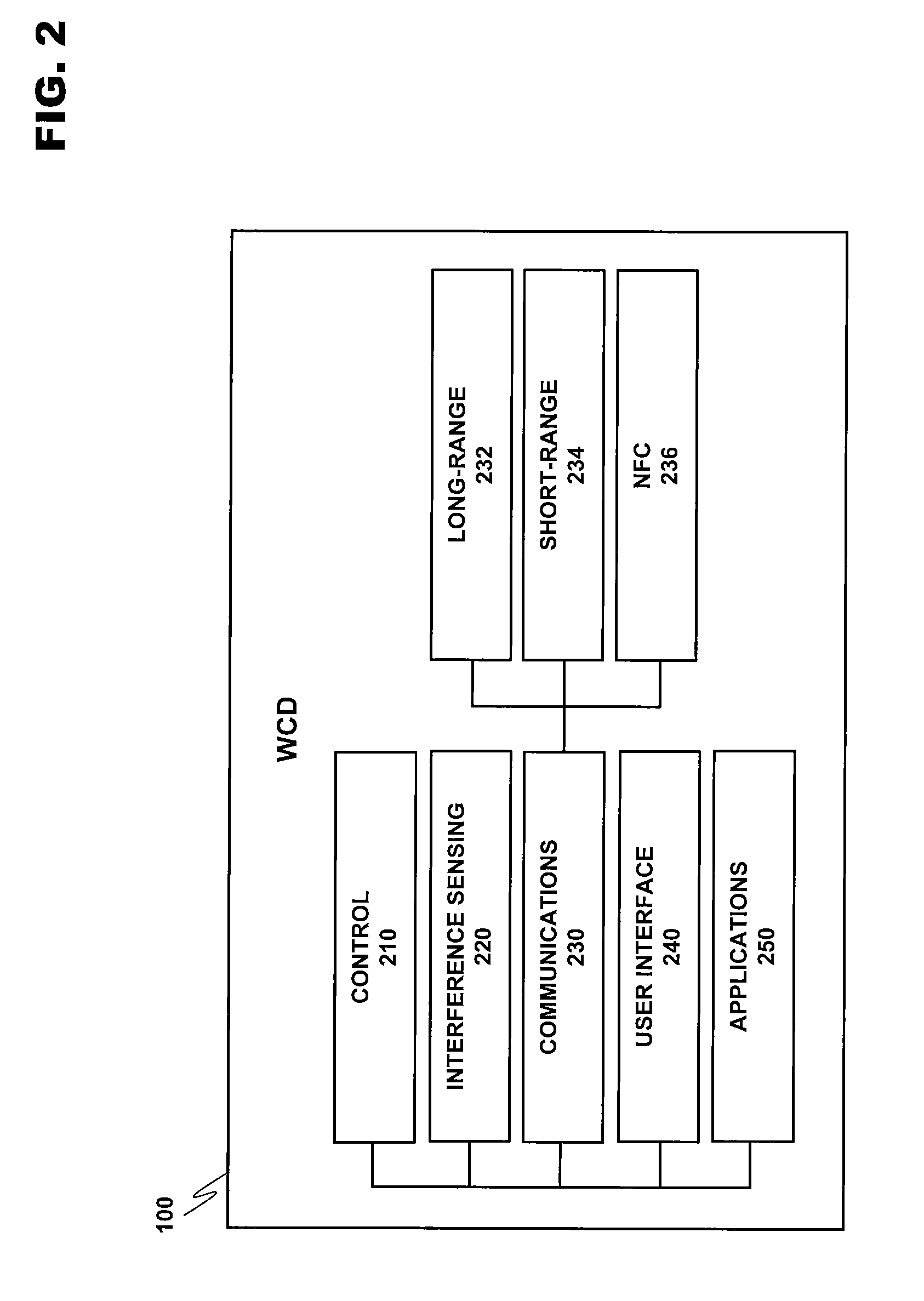

Multiradio priority control based on modem buffer load

Active | US7668565B2 | Improve balance | Alleviate a potential message queue | Network traffic/resource management | Assess restriction | Message queue | Modem device

Owner:NOKIA TECH OY

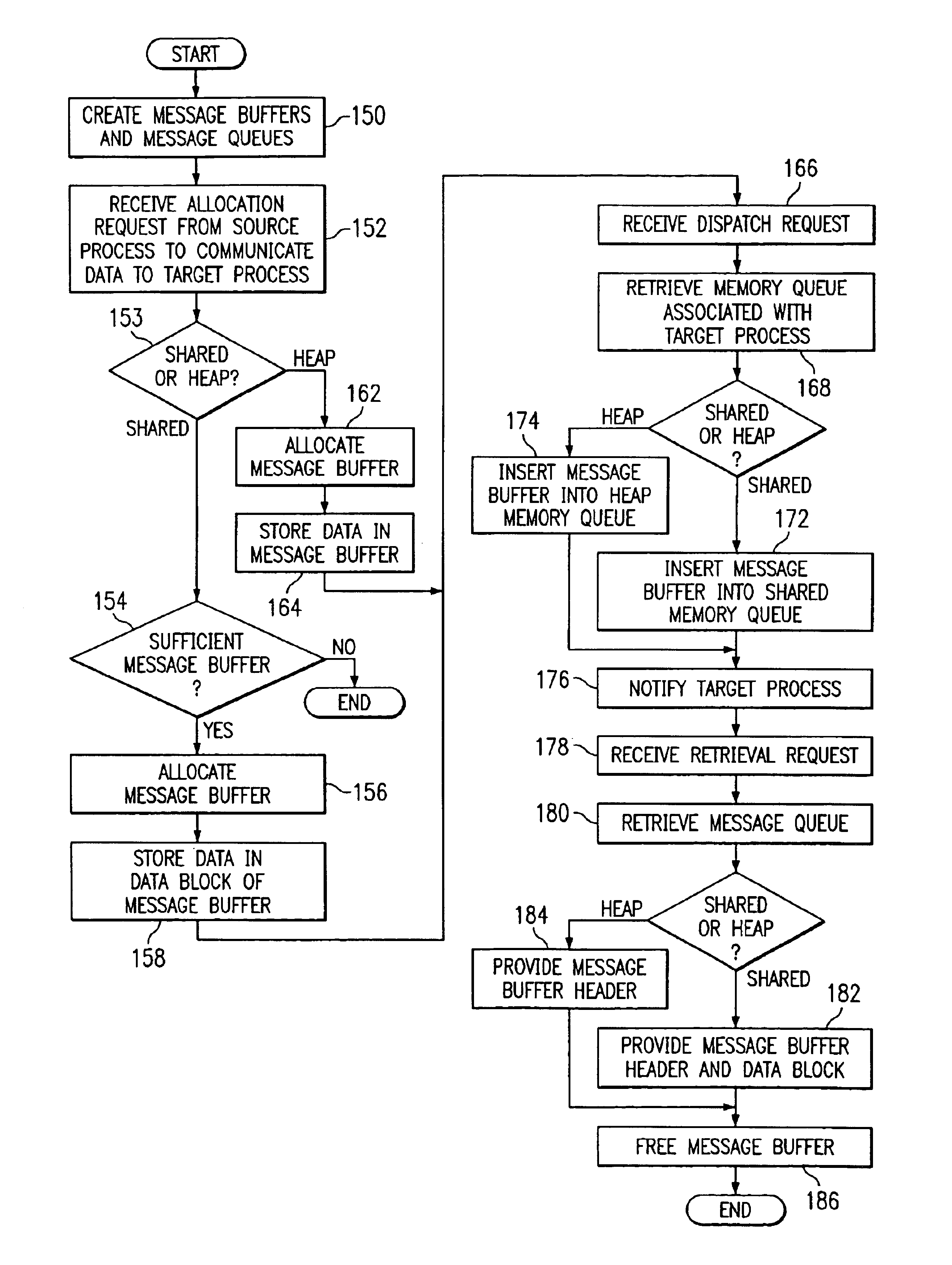

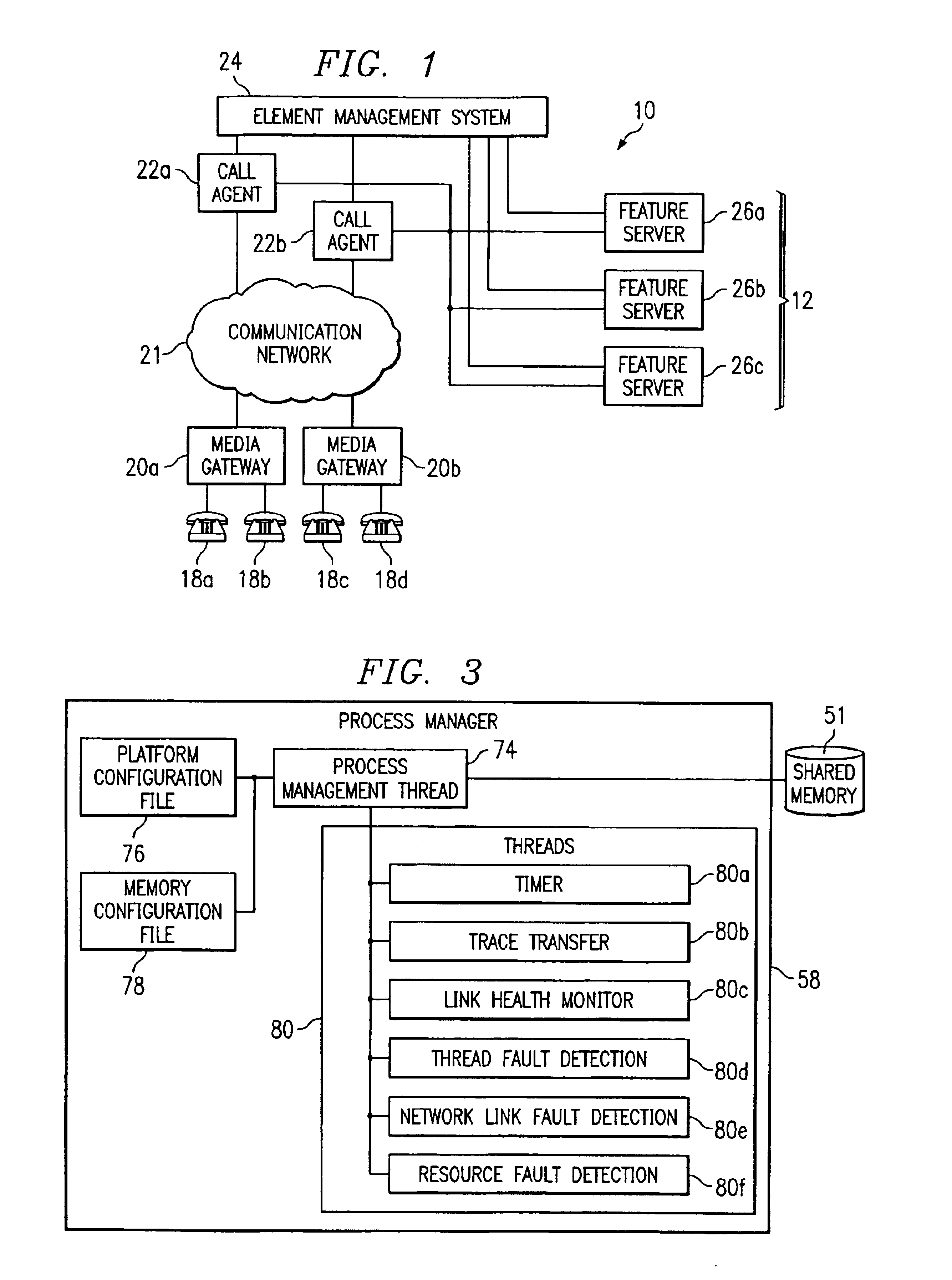

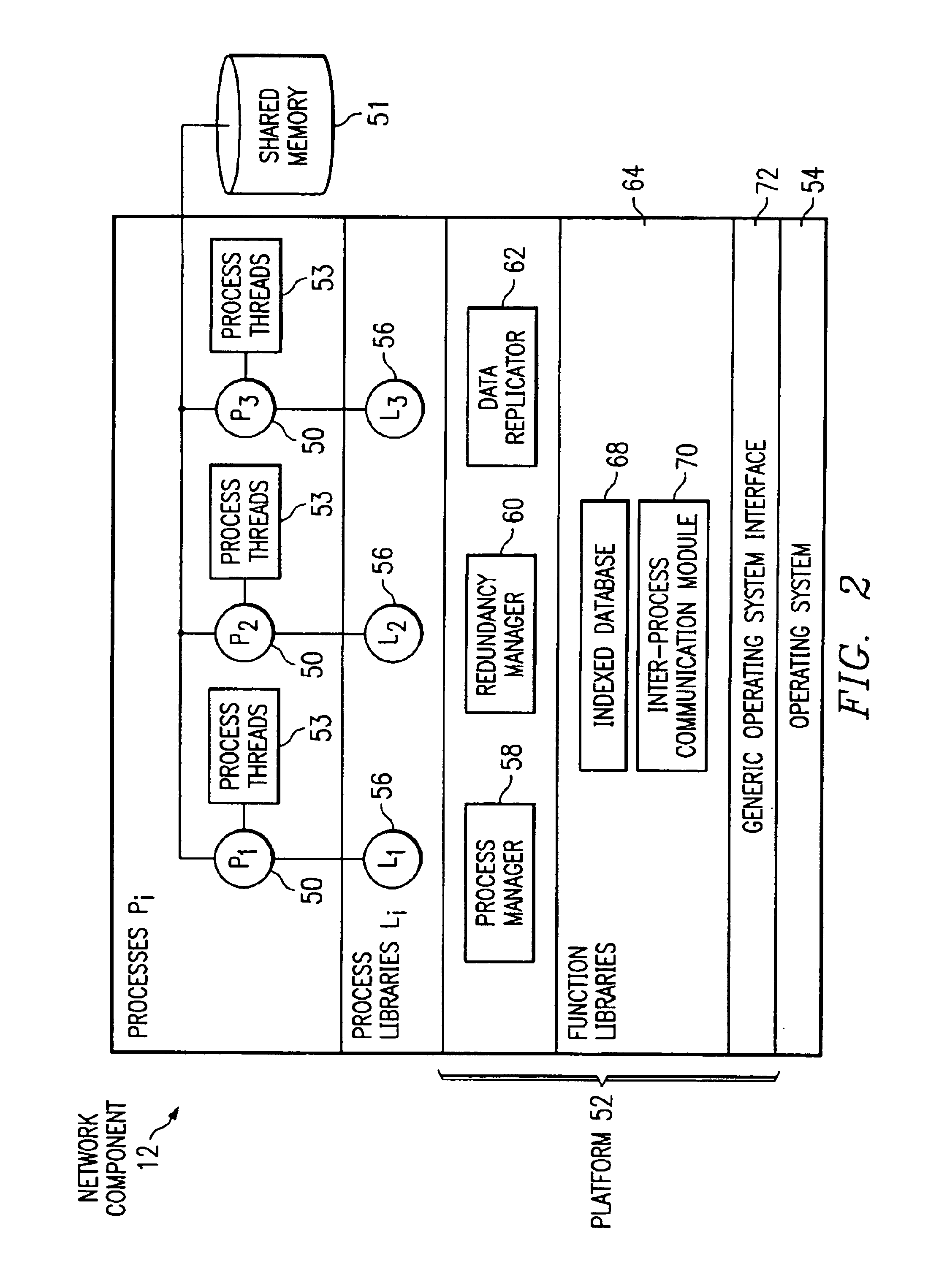

Data communication among processes of a network component

Inactive | US6847991B1 | Disadvantages and reduced eliminated | Easy to handle | Multiple digital computer combinations | Program control | Message queue | Computer module

A method for communicating data among processes of a network component is disclosed. A process communication module receives a request from a first process of a network component. The request requests a message buffer operable to store data associated with the first process. A message buffer is allocated to the first process in response to the request, and the data is stored in the message buffer. The message buffer is inserted into a message queue corresponding to a second process of the network component. The second process is provided access to the message buffer to read the data.

Owner:CISCO TECH INC

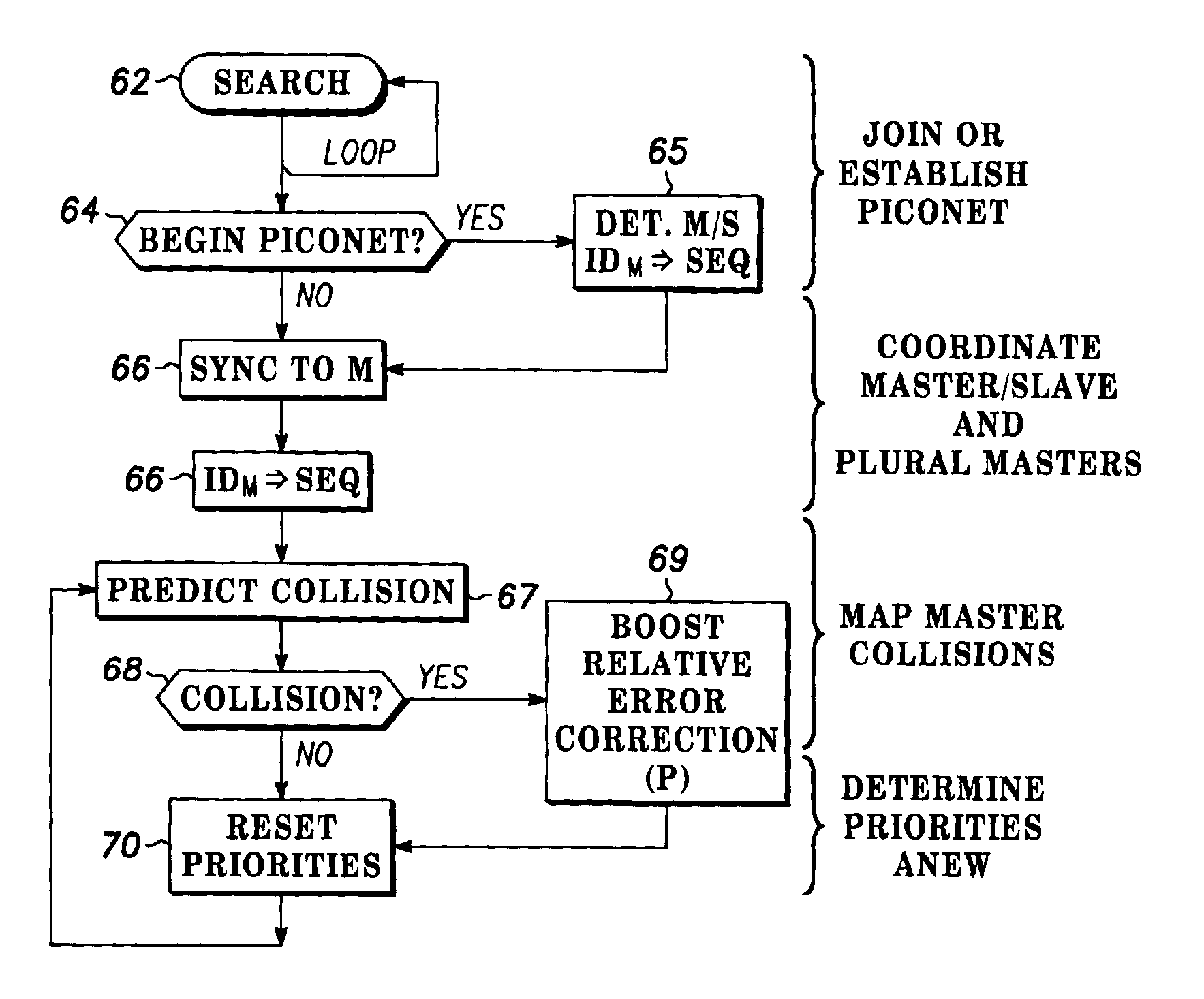

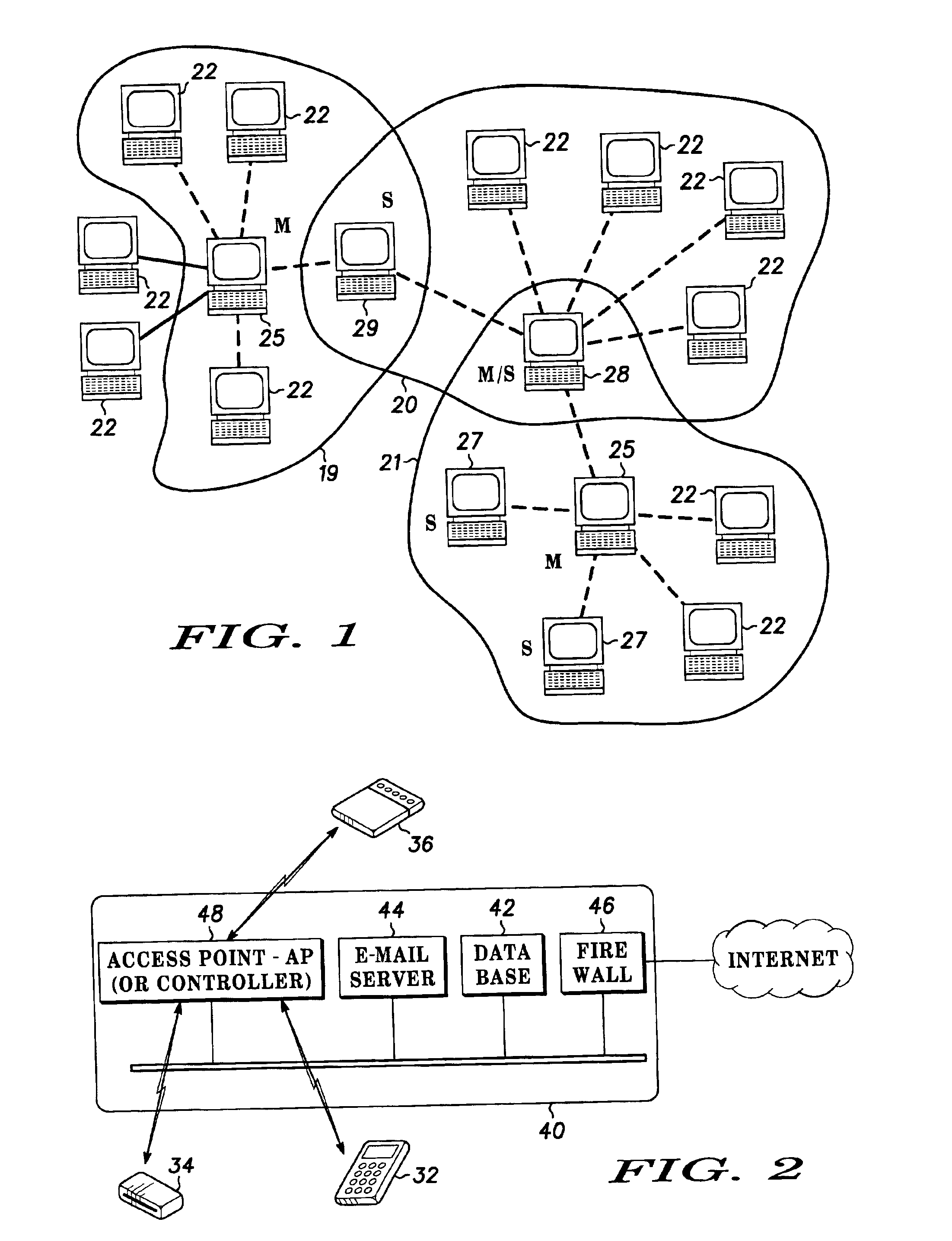

Multiple access frequency hopping network with interference anticipation

Inactive | US6920171B2 | Spreading over bandwidth | Secret communication | Multi-frequency code systems | Message queue | Radio equipment

Spread spectrum packet-switching radio devices (22) are operated in two or more ad-hoc networks or pico-networks (19, 20, 21) that share frequency-hopping channel and time slots that may collide. The frequency hopping sequences (54) of two or more masters (25) are exchanged using identity codes, permitting the devices to anticipate collision time slots (52). Priorities are assigned to the simultaneously operating piconets (19, 20, 21) during collision slots (52), e.g., as a function of their message queue size or latency, or other factors. Lower priority devices may abstain from transmitting during predicted collision slots (52), and / or a higher priority device may employ enhanced transmission resources during those slots, such as higher error correction levels, or various combinations of abstinence and error correction may be applied. Collisions are avoided or the higher priority piconet (19, 20, 21) is made likely to prevail in a collision.

Owner:GOOGLE TECH HLDG LLC +1

Data access, replication or communication system comprising a distributed software application

Active | US20070130255A1 | Improve availability | High bandwidth | Multiple digital computer combinations | Data switching networks | Message queue | Communications system

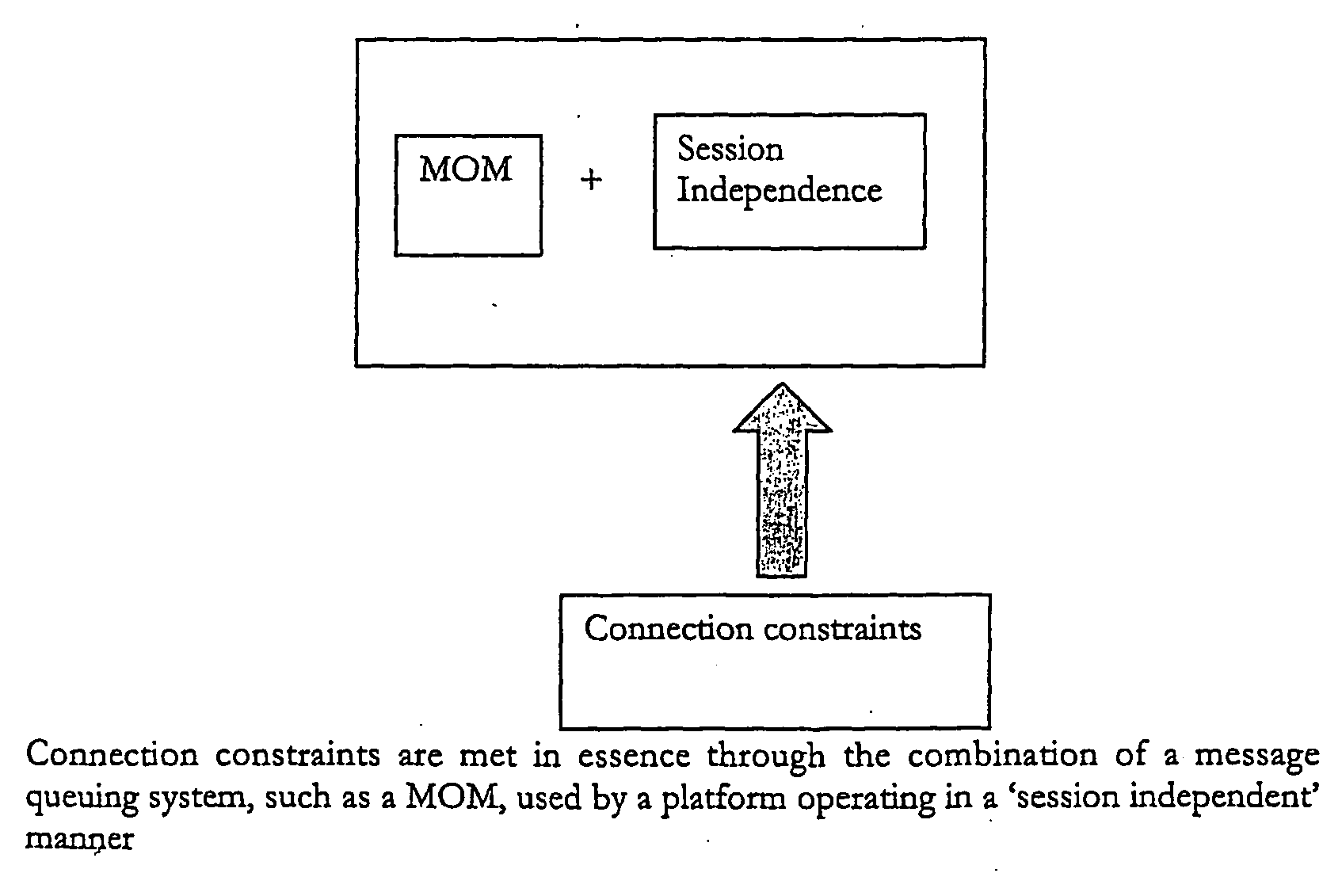

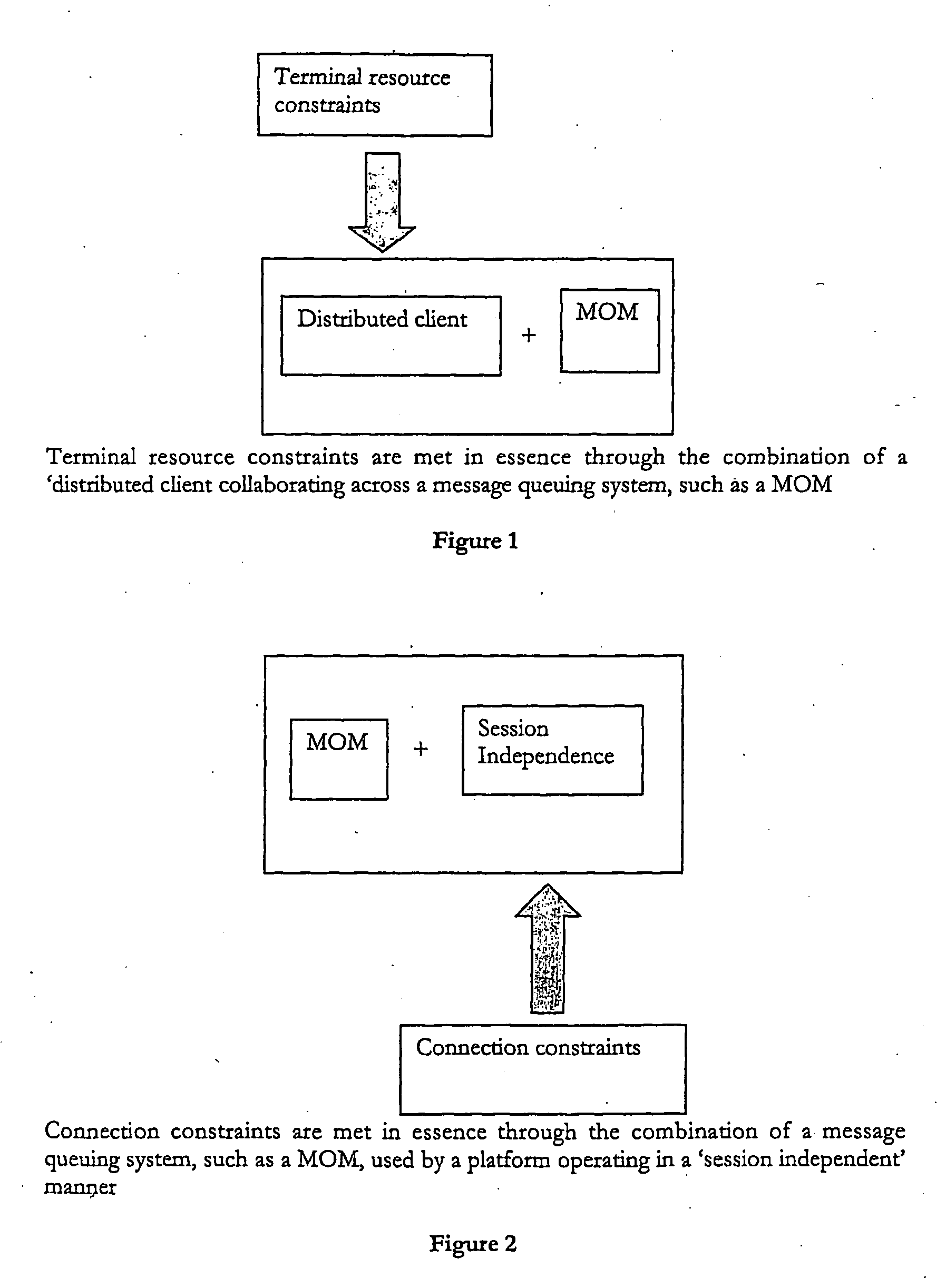

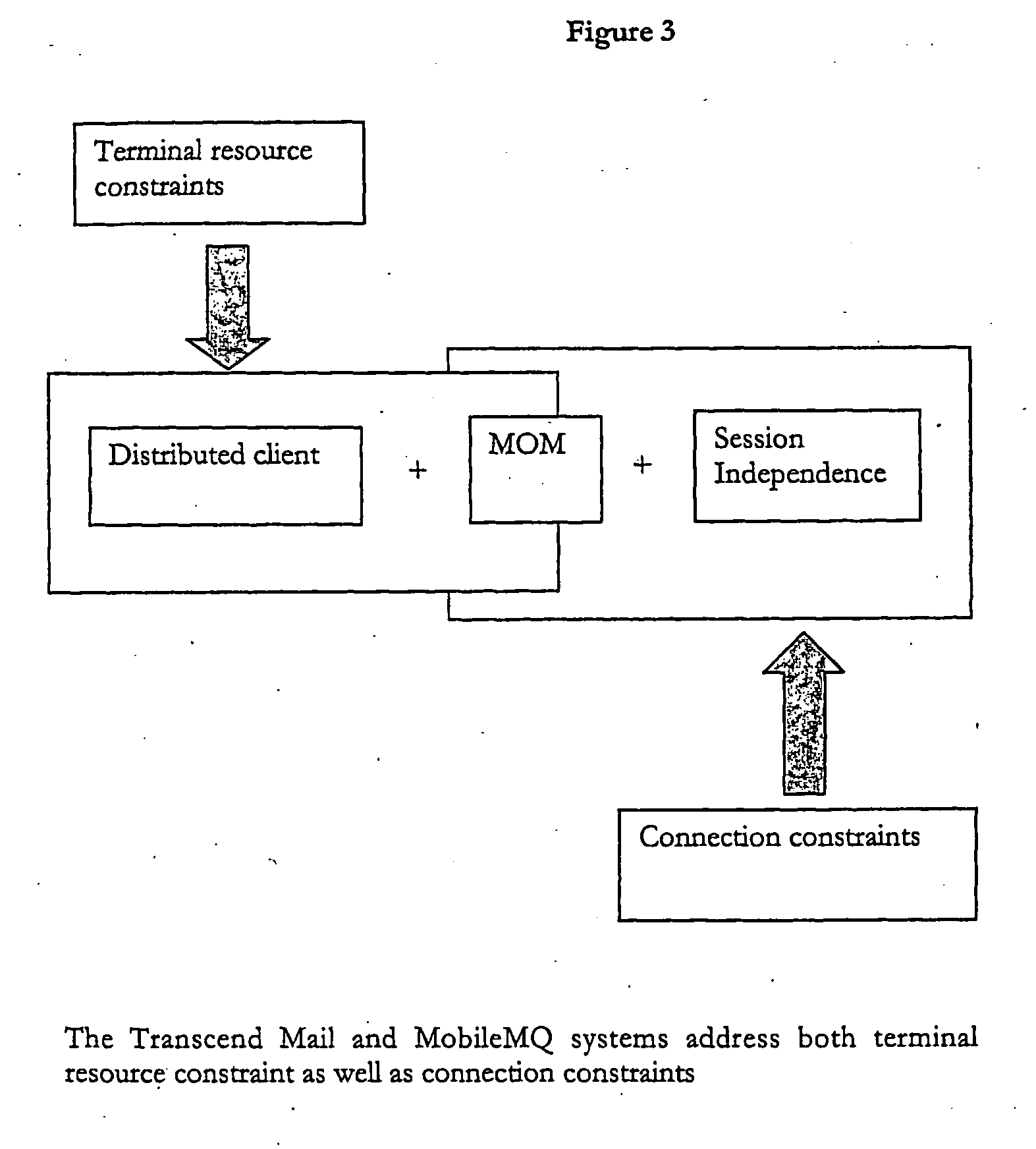

The present invention envisages a data access, replication or communications system comprising a software application that is distributed across a terminal-side component running on a terminal and a server-side component; in which the terminal-side component and the server-side component (i) together constitute a client to a server and (ii) collaborate by sending messages using a message queuing system over a network. Hence, we split (i.e. distribute) the functionality of an application that serves as the client in a client-server configuration into component parts that run on two or more physical devices that communicate with each other over a network connection using a message queuing system, such as message oriented middleware. The component parts collectively act as a client in a larger client-server arrangement, with the server being, for example, a mail server. We call this a ‘Distributed Client’ model. A core advantage of the Distributed Client model is that it allows a terminal, such as a mobile device with limited processing capacity, power, and connectivity, to enjoy the functionality of full-featured client access to a server environment using minimum resources on the mobile device by distributing some of the functionality normally associated with the client onto the server side, which is not so resource constrained.

Owner:MALIKIE INNOVATIONS LTD

Method and system for passing messages between threads

Inactive | US7058955B2 | Multiprogramming arrangements | Specific program execution arrangements | Message passing | Message queue

A method and system for passing messages between threads is provided, in which a sending thread communicates with a receiving thread by passing a reference to the message to a message queue associated with the receiving thread. The reference may be passed without explicitly invoking the inter-process or inter-thread message passing services of the computer's operating system. The sending thread may also have a message queue associated with it, and the sending thread's queue may include a reference to the receiving thread's queue. The sending thread can use this reference to pass messages to the receiving thread's queue.

Owner:MICROSOFT TECH LICENSING LLC
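The idea of passing a reference to a message into the receiving thread's queue can be approximated in Python as below. This is an analogy, not the patented mechanism: it uses the standard-library `queue.Queue` as the per-thread queue, and the `Worker` class and the payload are hypothetical.

```python
import queue
import threading


class Worker(threading.Thread):
    """A thread that owns an inbox queue and records what it receives."""

    def __init__(self):
        super().__init__()
        self.inbox = queue.Queue()
        self.received = []

    def run(self):
        while True:
            msg = self.inbox.get()
            if msg is None:  # sentinel: stop the thread
                break
            self.received.append(msg)


receiver = Worker()
receiver.start()

# The sender holds a reference to the receiver's queue and enqueues a
# reference to the message object; the message itself is not copied.
peer_inbox = receiver.inbox
payload = {"op": "resize", "args": [640, 480]}
peer_inbox.put(payload)
peer_inbox.put(None)
receiver.join()

same_object = receiver.received[0] is payload
```

After the run, `same_object` is `True`: the receiver sees the very object the sender created, which is what makes reference passing cheap for large messages within one process.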

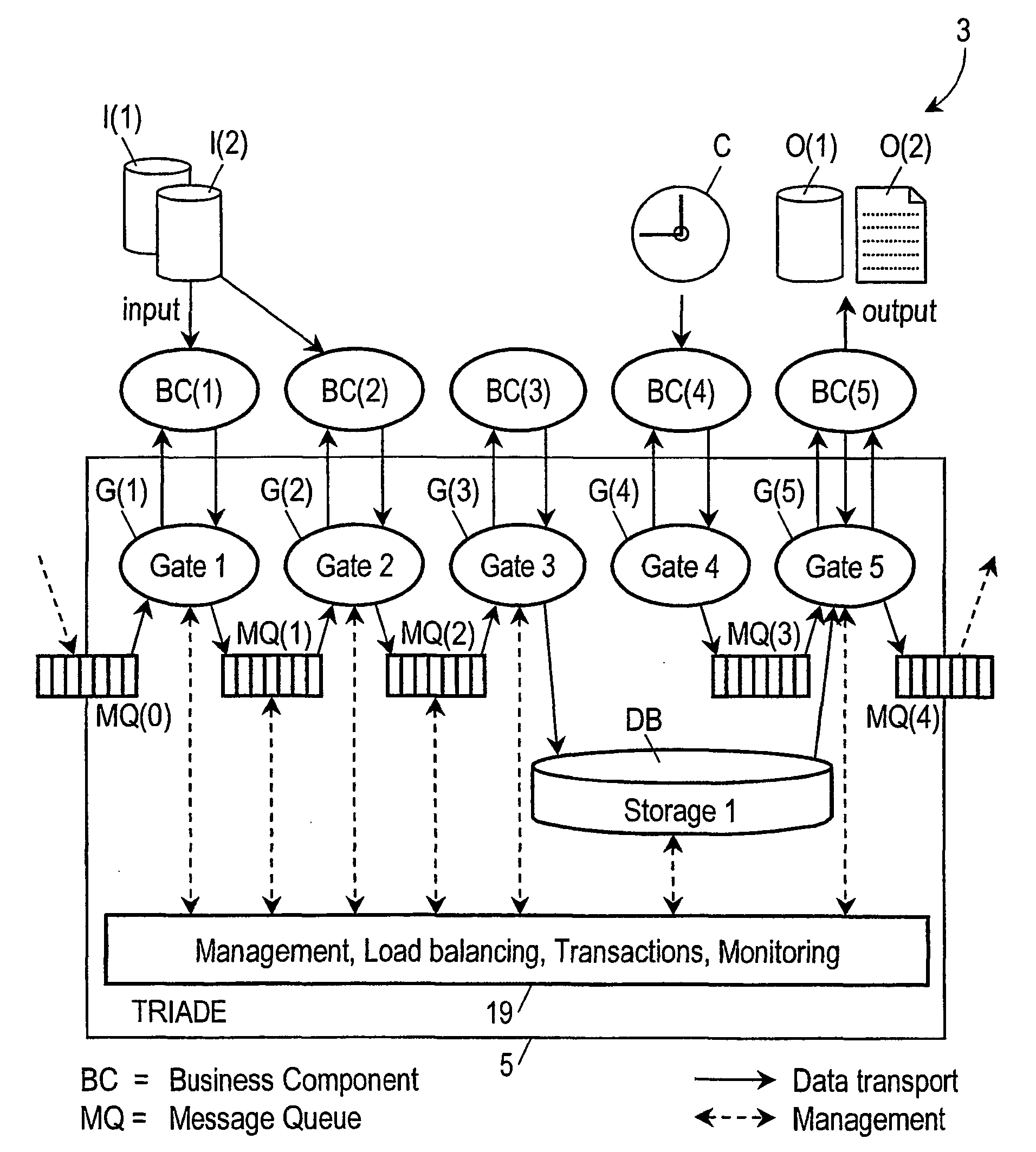

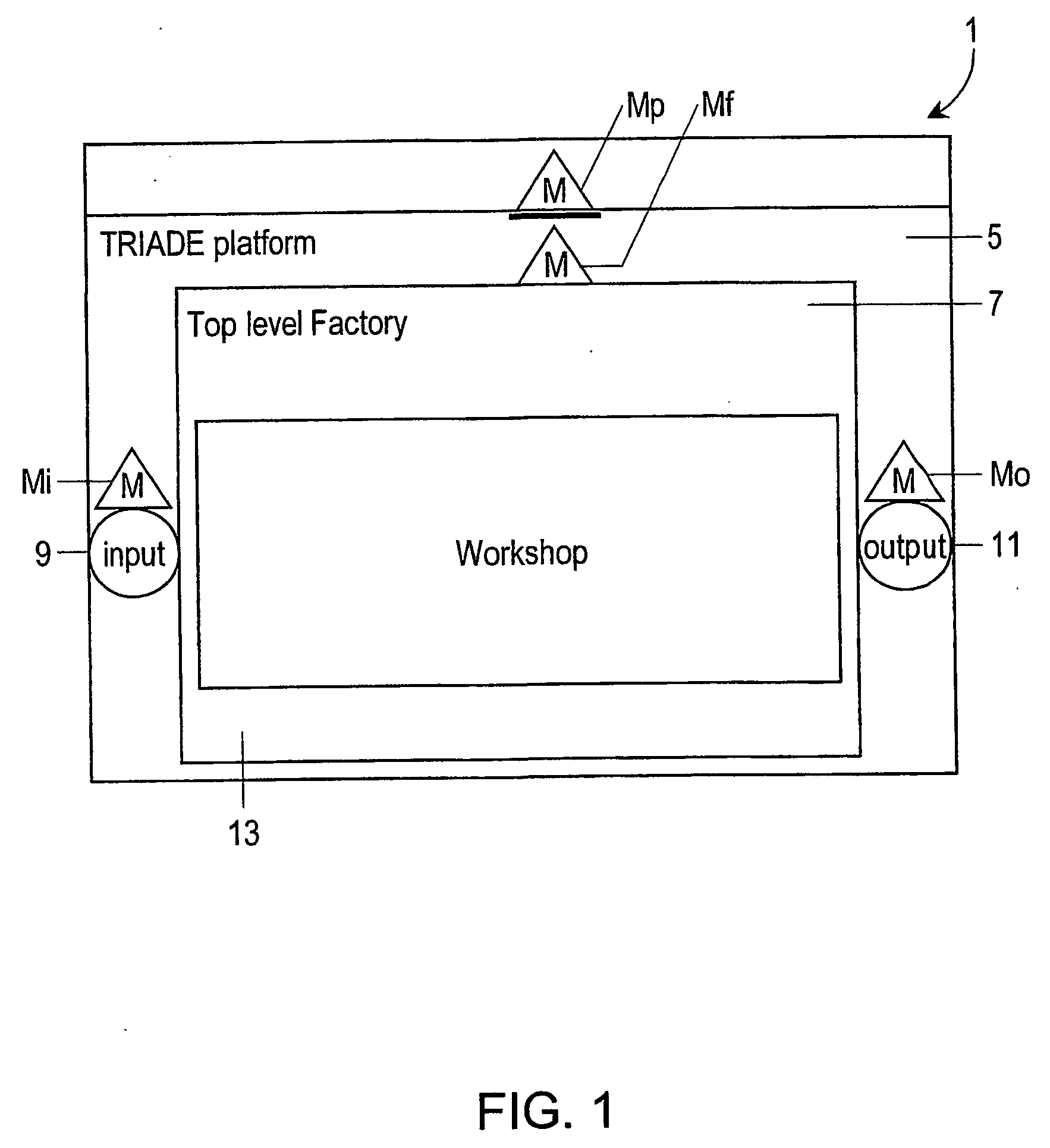

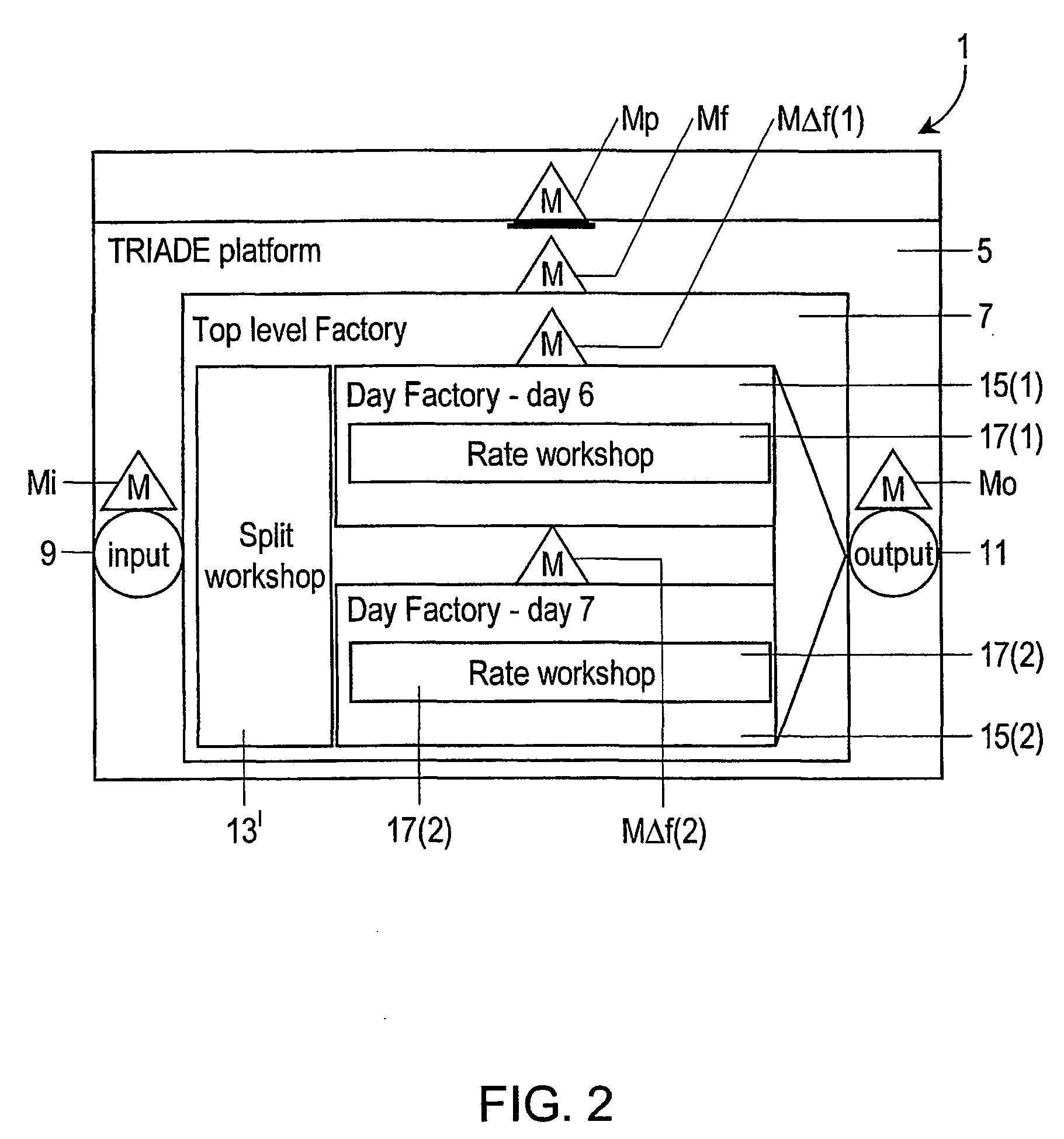

System and method for processing transaction data

Inactive | US20040024626A1 | Finance | Multiple digital computer combinations | Message queue | Software architecture

Computer system provided with a software architecture for processing transaction data which comprises a platform (5) with at least one logical processing unit (21) comprising the following components: a plurality of gates (G(k)); one or more message queues (MQ(k)), these being memories for temporary storage of data; one or more databases (DB); a hierarchical structure of managers in the form of software modules for the control of the gates (G(j)), the message queues (MQ(k)), the one or more databases, the at least one logical processing unit (21) and the platform (5), wherein the gates are defined as software modules with the task of communicating with corresponding business components (BC(j)) located outside the platform (5), which are defined as software modules for carrying out a predetermined transformation on a received set of data.

Owner:NEDERLANDSE ORG VOOR TOEGEPAST-NATUURWETENSCHAPPELIJK ONDERZOEK (TNO)

System and method for providing an actively invalidated client-side network resource cache

A system and method for providing an actively invalidated client-side network resource cache are disclosed. A particular embodiment includes: a client configured to request, for a client application, data associated with an identifier from a server; the server configured to provide the data associated with the identifier, the data being subject to subsequent change, the server being further configured to establish a queue associated with the identifier at a scalable message queuing system, the scalable message queuing system including a plurality of gateway nodes configured to receive connections from client systems over a network, a plurality of queue nodes containing subscription information about queue subscribers, and a consistent hash table mapping a queue identifier requested on a gateway node to a corresponding queue node for the requested queue identifier; the client being further configured to subscribe to the queue at the scalable message queuing system to receive invalidation information associated with the data; the server being further configured to signal the queue of an invalidation event associated with the data; the scalable message queuing system being configured to convey information indicative of the invalidation event to the client; and the client being further configured to re-request the data associated with the identifier from the server upon receipt of the information indicative of the invalidation event from the scalable message queuing system.

Owner:IMVU

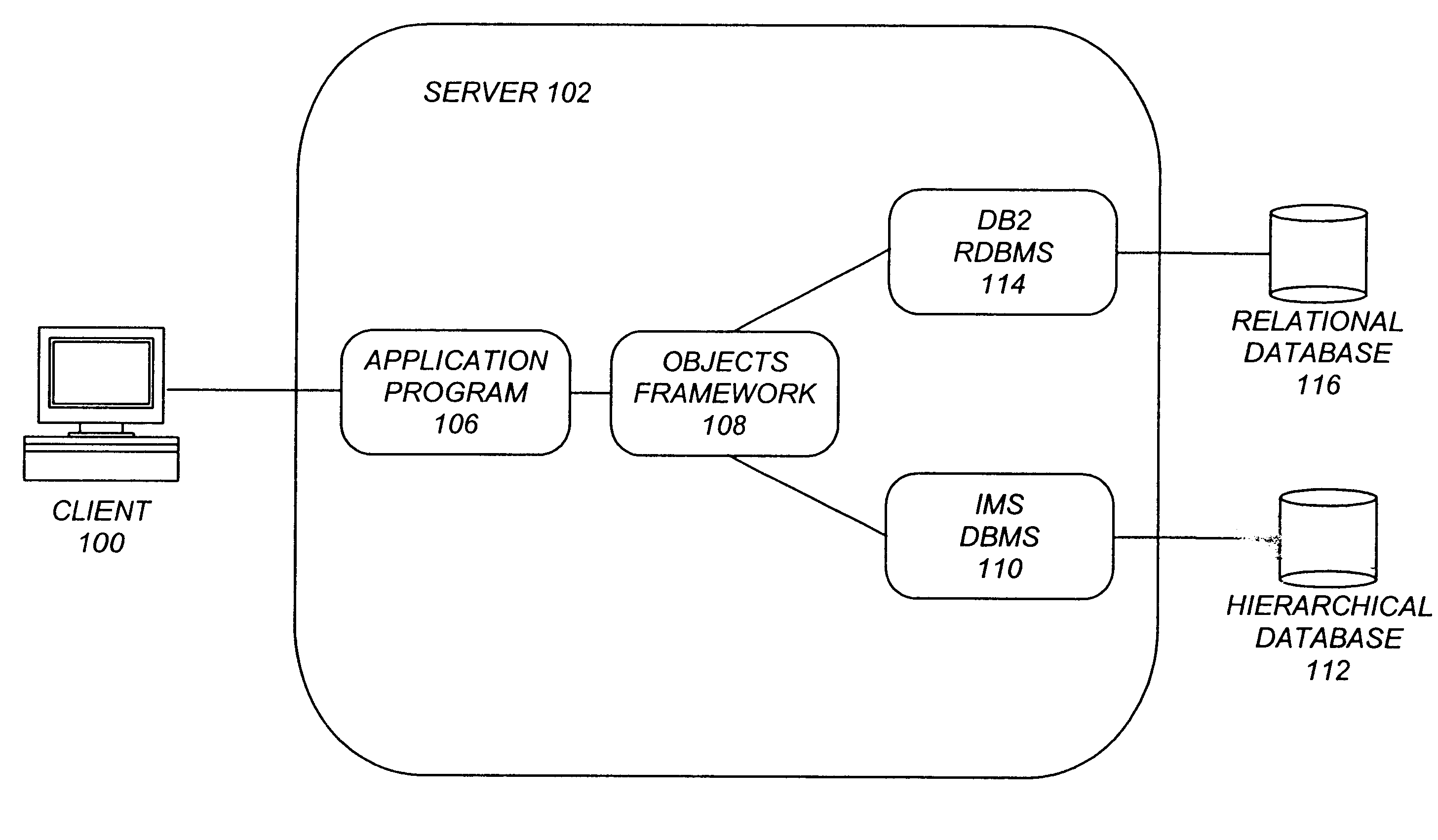

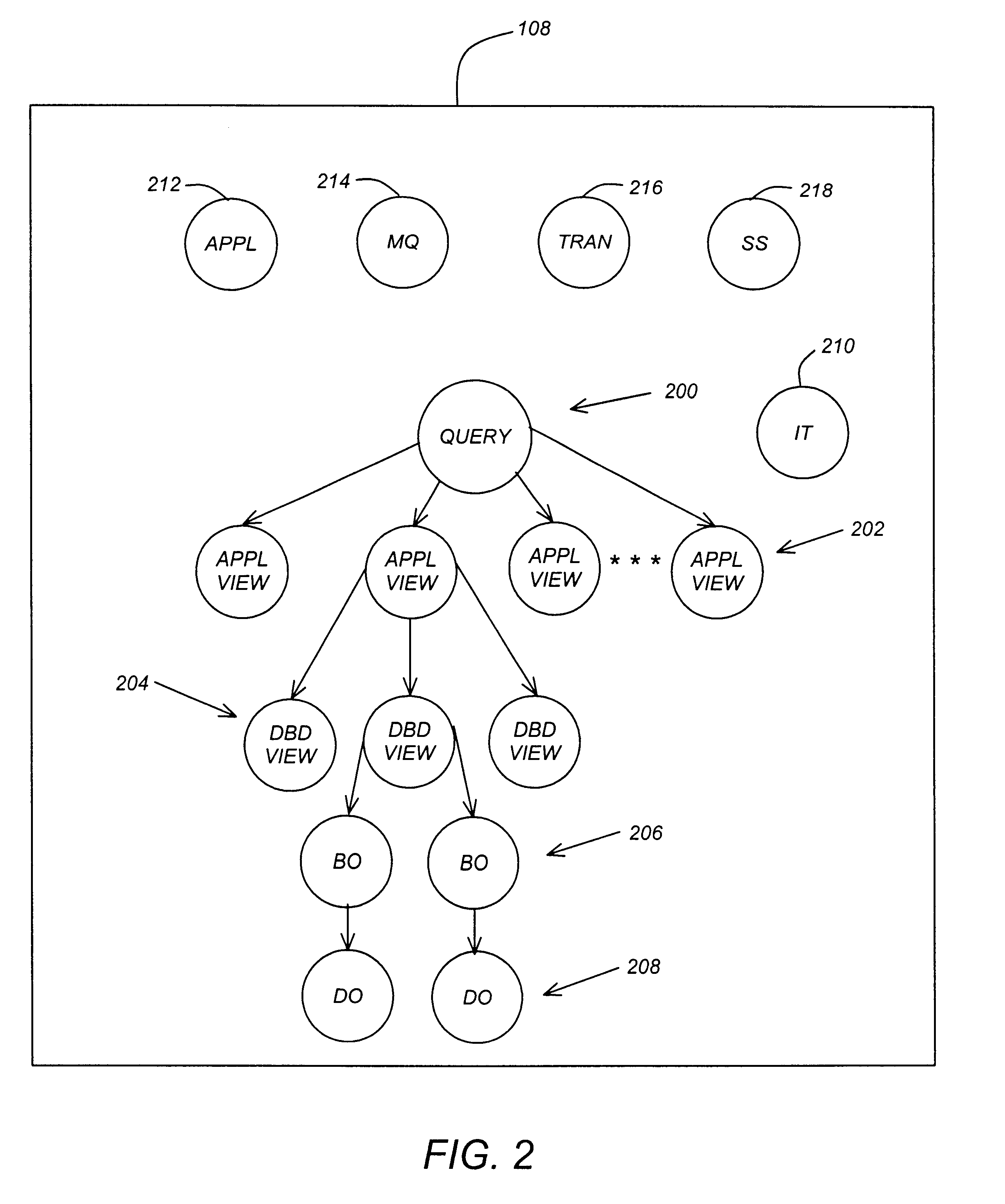

Object-oriented programming model for accessing both relational and hierarchical databases from an objects framework

Inactive | US6539398B1 | Data processing applications | Object oriented databases | Message queue | Relational database

A method, apparatus, and article of manufacture for accessing a database. A hierarchical database system is modeled into an objects framework, wherein the objects framework corresponds to one or more application views, database definitions, and data defined and stored in the hierarchical database system. The objects framework also provides mechanisms for accessing a relational database system, wherein the objects framework provides industry-standard interfaces for attachment to the relational database system. Transactions from an application program for both the hierarchical database system and the relational database system are processed through the objects framework using message queue objects.

Owner:IBM CORP

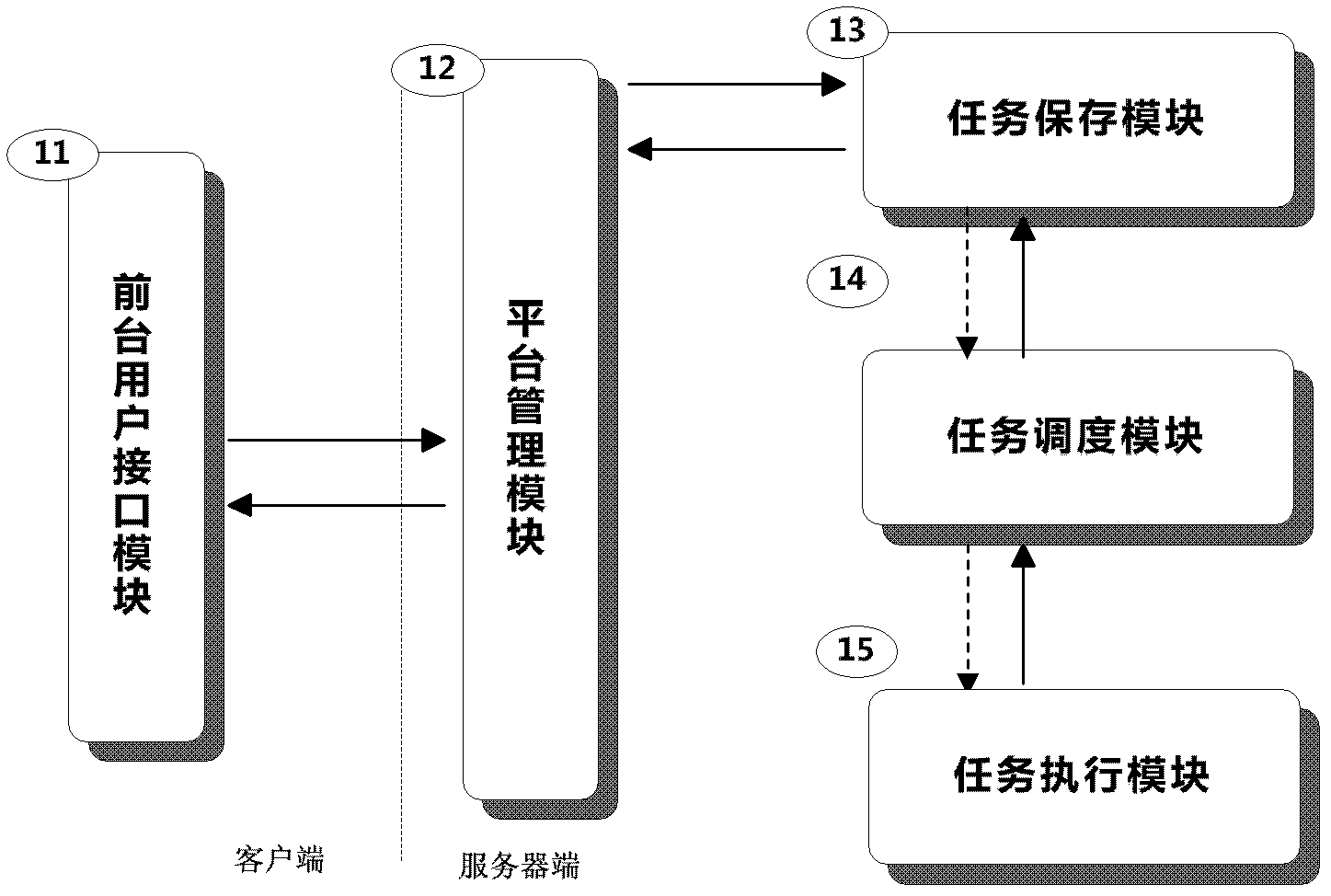

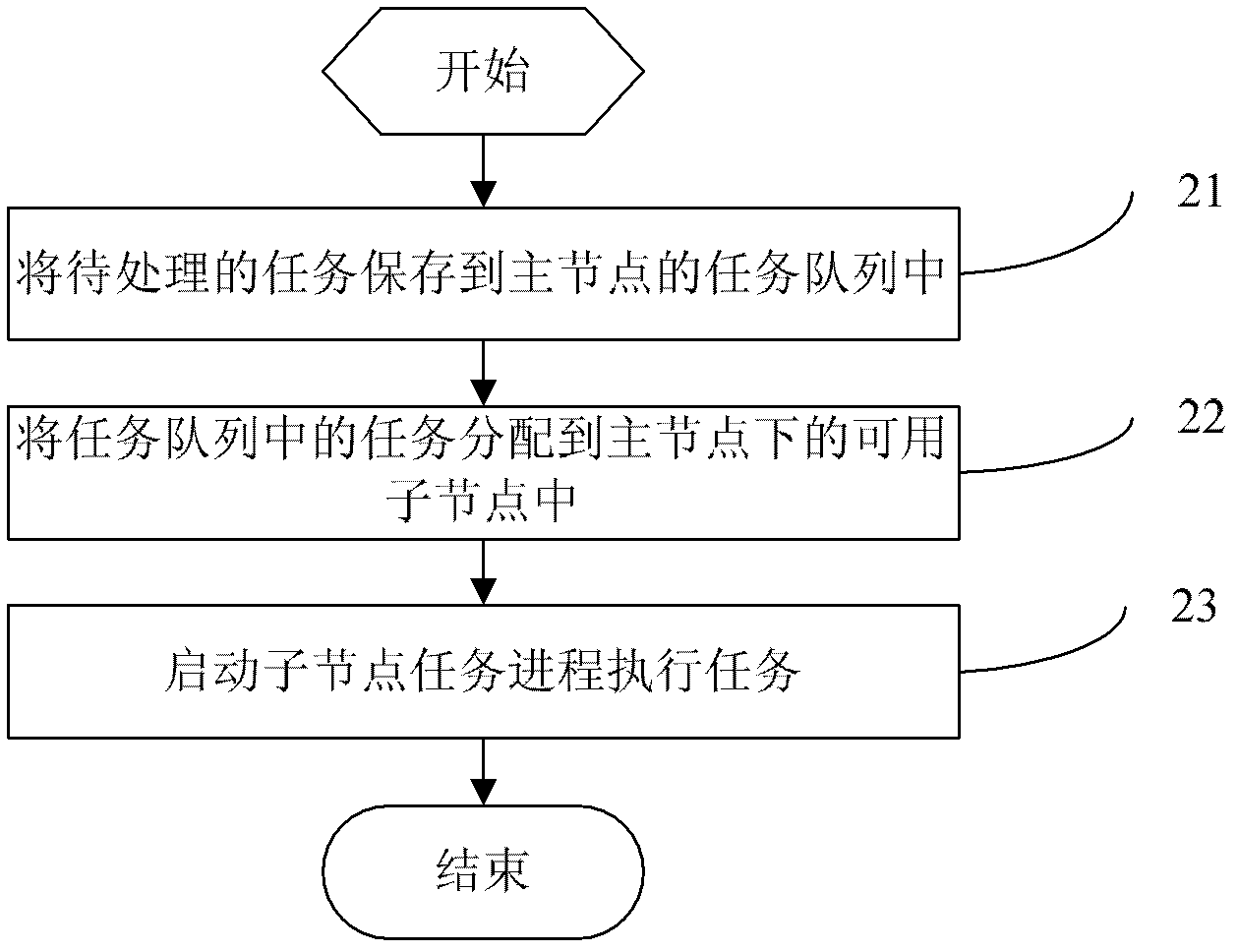

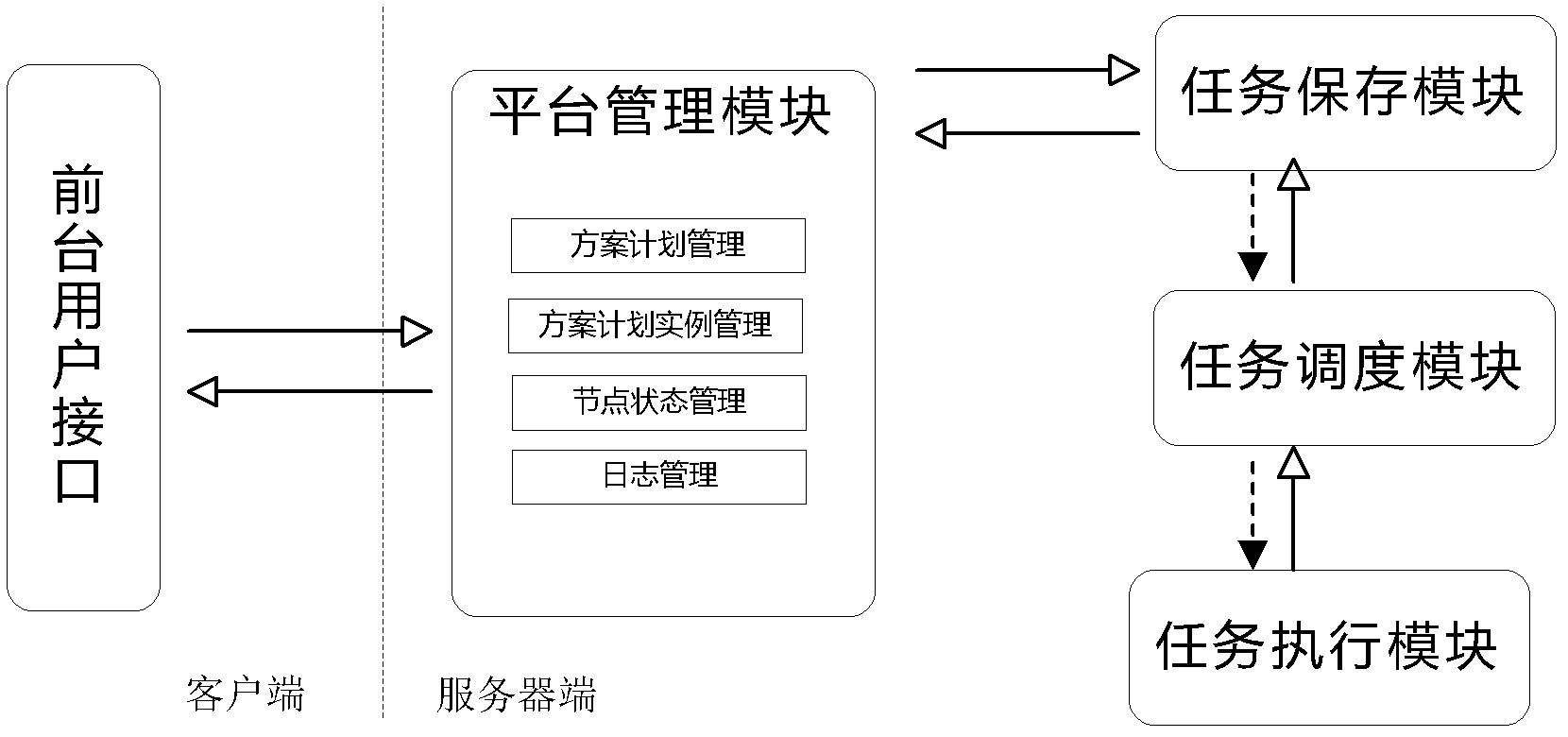

Distributed task scheduling method and system based on messaging middleware

Active | CN102521044A | Guaranteed uptime | Improve the efficiency of task execution | Program initiation/switching | Resource allocation | Message queue | Equalization

The invention discloses a distributed task scheduling method and a system based on messaging middleware, belonging to the field of task scheduling of a distributed system. The scheduling of distributed tasks is carried out via message queuing, the tasks to be implemented are stored in a task queue of a master node at first and are then uniformly distributed into available sub-nodes under the master node through load equalization, and independent task processes are started by the sub-nodes to implement the tasks. During scheduling and implementation of the tasks, the real-time monitoring of the states of the master node and the sub-nodes can be realized through node monitoring. In case of faults, the tasks to be implemented or the tasks under implementation can be switched to the spare master node or the available sub-nodes. By adopting the method and the system, the independence of the tasks can be ensured, and the efficiency of task implementation can be improved. Due to the fault switching mechanism, the reliability of the scheduling and implementation of the tasks can be further ensured.

Owner:BEIJING TUOMING COMM TECH

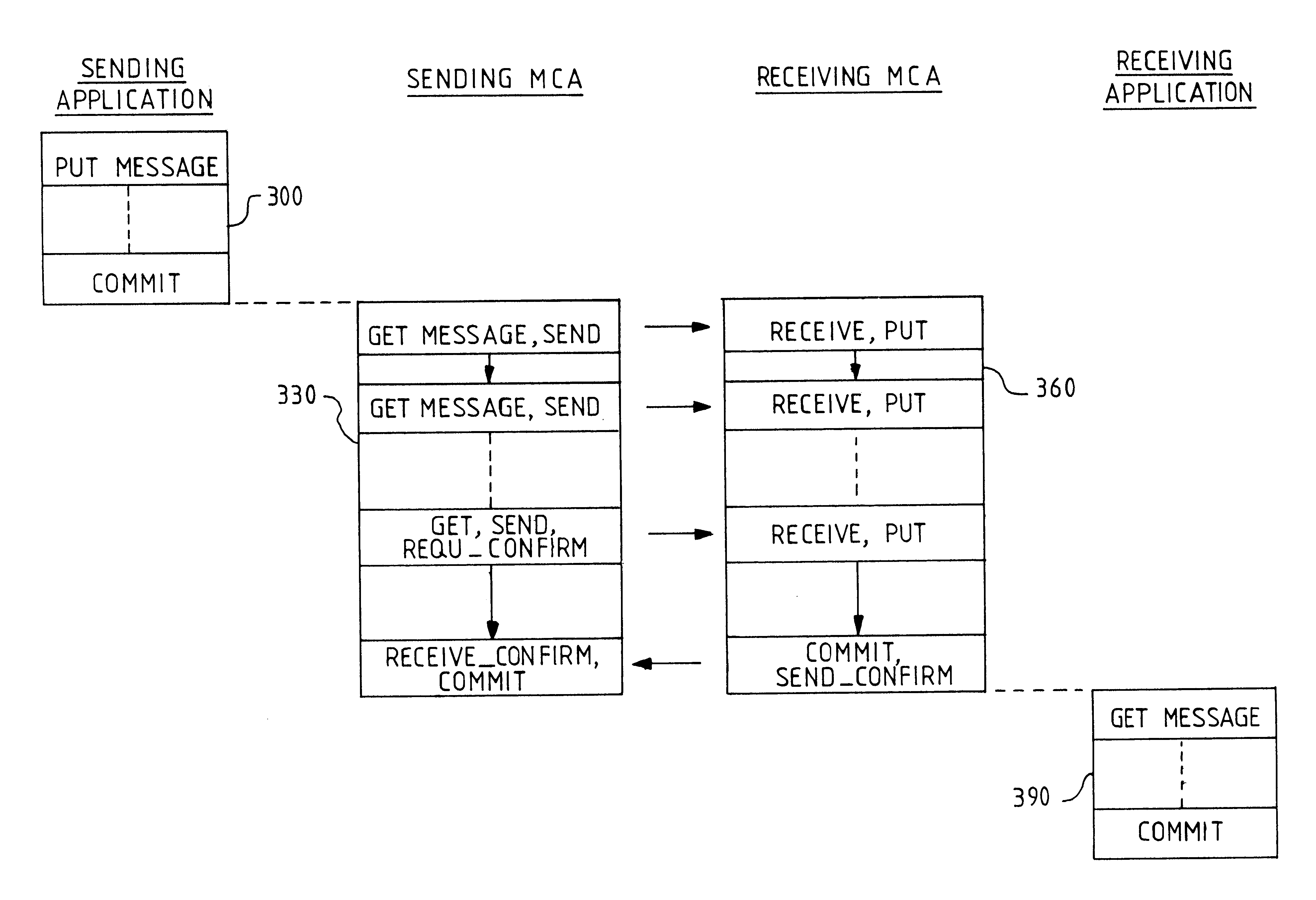

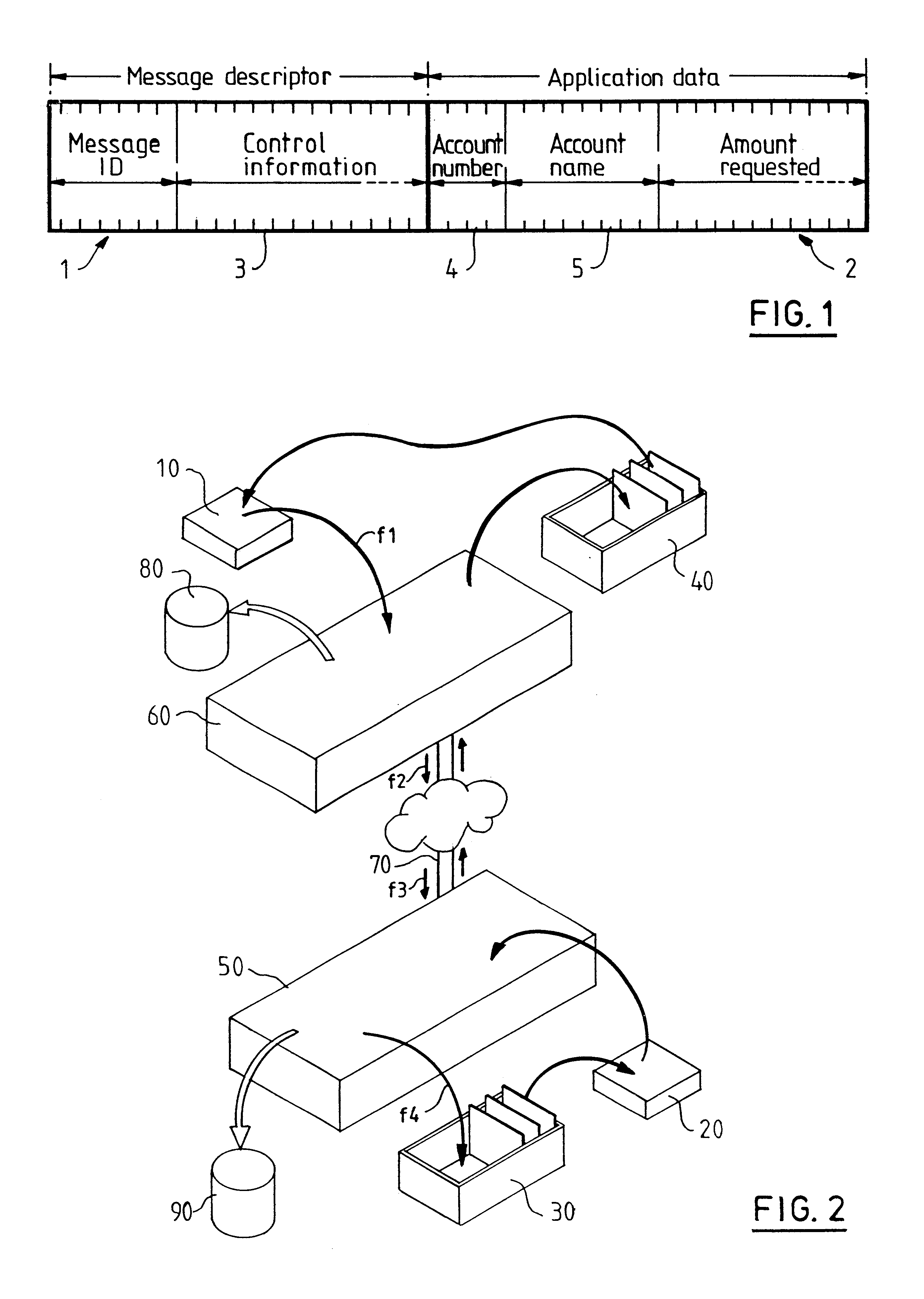

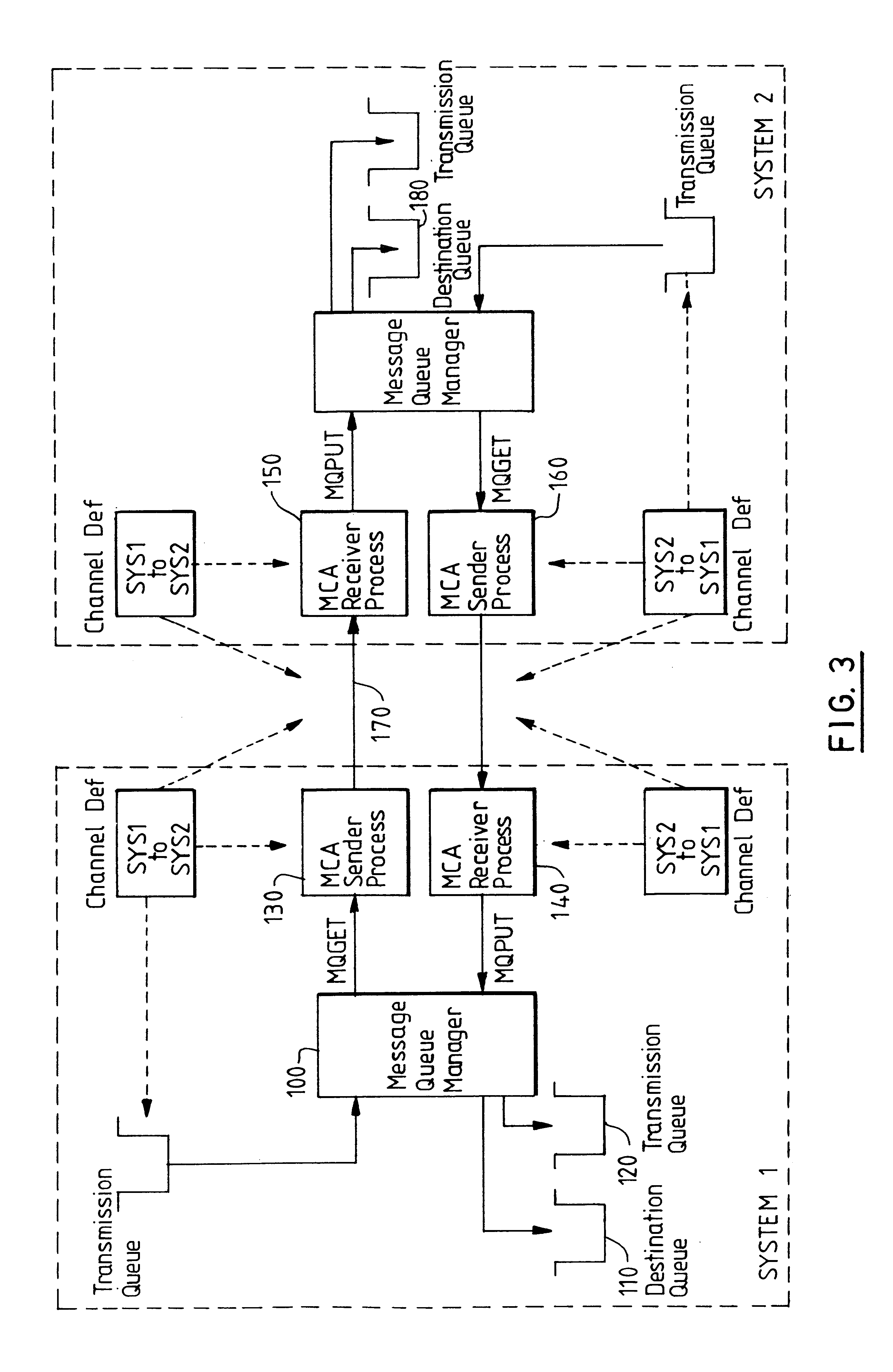

Method of transferring messages between computer programs across a network

A method of delivering messages between application programs is provided which ensures that no messages are lost and none are delivered more than once. The method uses asynchronous message queuing. One or more queue manager programs (100) are located at each computer of a network for controlling the transmission of messages to and from that computer. Messages to be transmitted to a different queue manager are put onto special transmission queues (120). Transmission to an adjacent queue manager comprises a sending process (130) on the local queue manager (100) getting messages from a transmission queue and sending them as a batch of messages within a syncpoint-manager-controlled unit of work. A receiving process (150) on the receiving queue manager receives the messages and puts them within a second syncpoint-manager-controlled unit of work to queues (180) that are under the control of the receiving queue manager. Commitment of the batch is coordinated by the sender transmitting a request for commitment and for confirmation of commitment with the last message of the batch, commit at the sender then being triggered by the confirmation that is sent by the receiver in response to the request. The invention avoids the additional message flow that is a feature of two-phase commit procedures, avoiding the need for resource managers to synchronise with each other. It further reduces the commit flows by permitting batching of a number of messages.

Owner:IBM CORP

Method and apparatus for providing interactive program guide (IPG) and video-on-demand (VOD) user interfaces

Inactive | US7124424B2 | Television system details | Digital computer details | Message queue | Distribution system

User interfaces for a number of services offered by an information distribution system. In one method, first (e.g., interactive program guide) and second (e.g., video-on-demand) applications are provided to support a first and second user interfaces for first and second services, respectively. A control mechanism coordinates the passing of control between the applications. A root application supports communication between the first and second applications and a hardware layer. The control mechanism may be implemented with first and second message queues maintained for the first and second applications, respectively. Control may be passed to an application via a (launch) message provided to the associated message queue. Each application is operable in an active or inactive state. Only one application is typically active at any given moment, and this application processes key inputs at the terminal. The transition between the active and inactive states may be based on occurrence of events.

Owner:COX COMMUNICATIONS

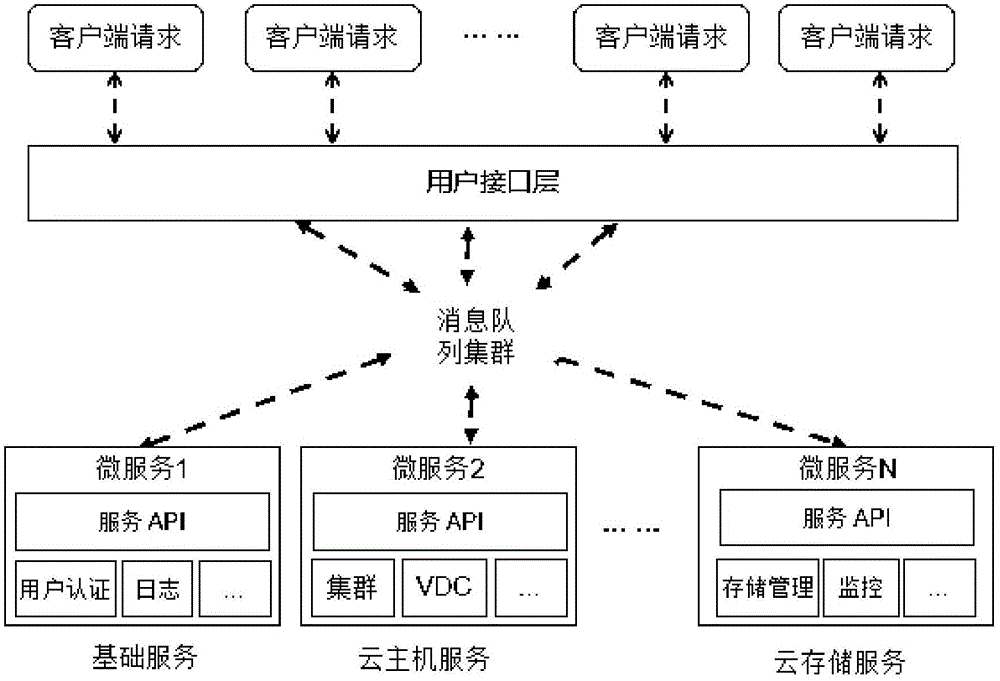

Cloud management platform based on micro-service architecture

Inactive | CN105162884A | Real-time deployment | Improve deployment efficiency | Data switching networks | Message queue | Service Component Architecture

The invention provides a cloud management platform based on a micro-service architecture. The cloud management platform comprises a user interface layer for processing a function request instruction of a terminal user, at least one cloud micro-service module for providing function services for the terminal user via an API interface and a message queue cluster for completing information interaction between the user interface layer and the cloud micro-service modules, wherein the cloud micro-service modules are service components into which cloud application functions are decomposed by a function boundary. In the cloud management platform based on the micro-service architecture, the cloud application functions are decomposed into the service components by the function boundary; each service component corresponds to one cloud micro-service module; the plurality of cloud micro-service modules are utilized to realize the functions of the cloud applications; due to the own characteristics of micro-services, the cloud management platform based on the micro-service architecture is capable of realizing real-time deployment; besides, micro-services have independent running processes so that each cloud micro-service in the cloud management platform can be independently deployed, and therefore, the deployment efficiency of the cloud management platform is improved.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

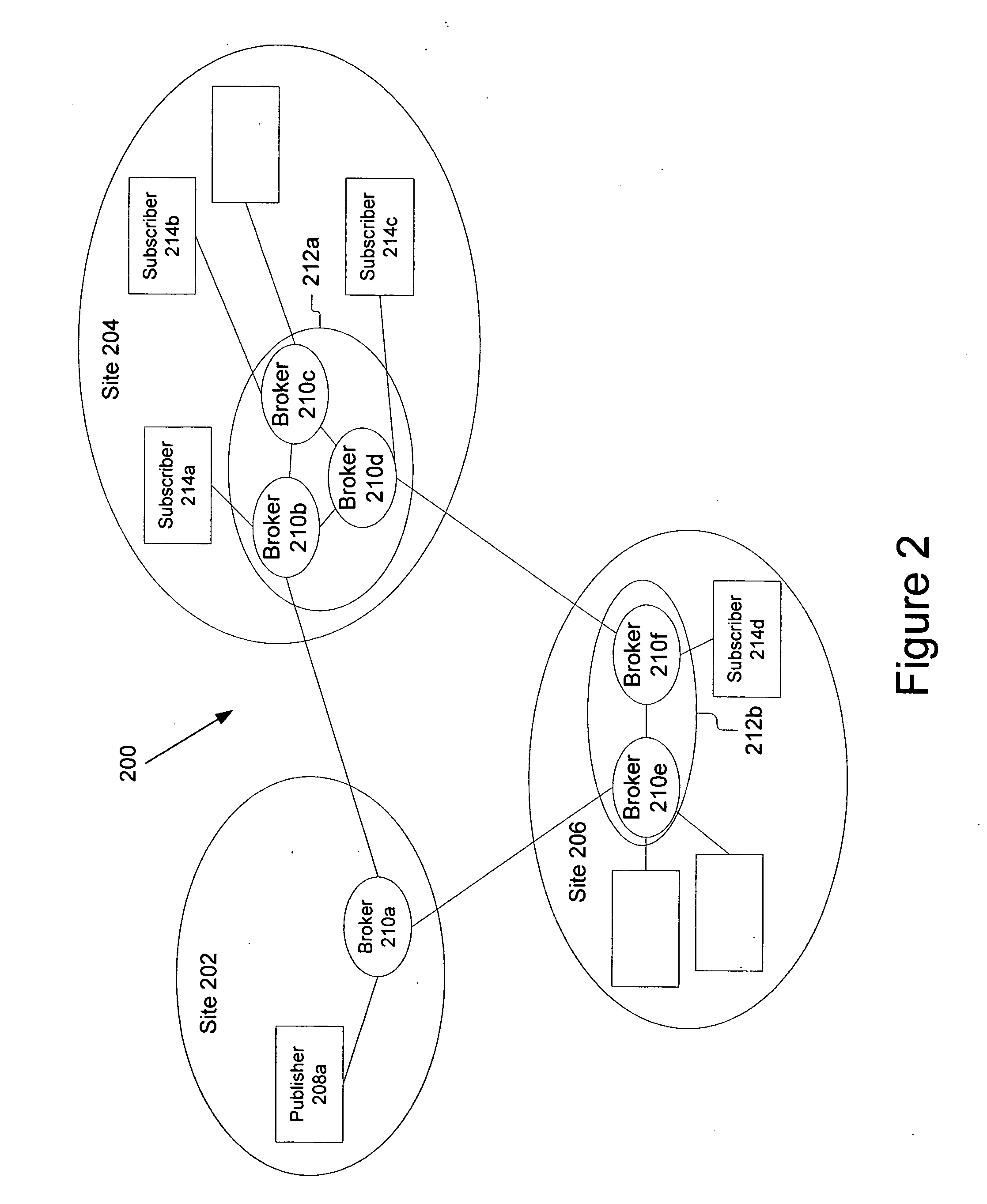

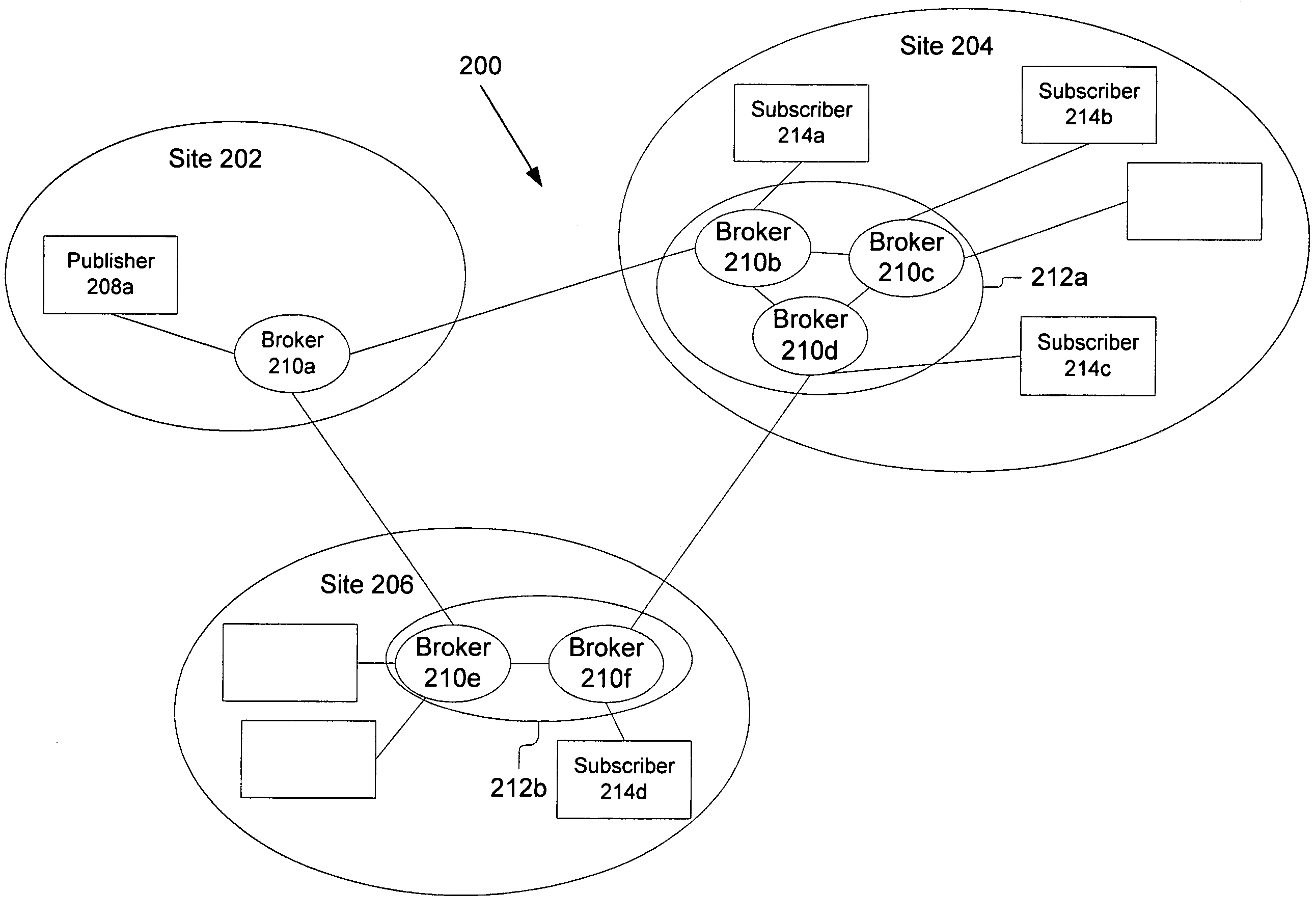

Dynamic subscription and message routing on a topic between publishing nodes and subscribing nodes

Active | US7406537B2 | Allocation is accurate | Digital computer details | Data switching networks | Message queue | Message routing

A system for dynamic message routing on a topic between publishing nodes and subscribing nodes includes a plurality of message queues, at least one topic / node table, a subscribing module, a publishing module, and other modules to send messages between one or more publishers and one or more subscribers. These modules are coupled together by a bus in a plurality of nodes and provide for the dynamic message routing on a topic between publishing nodes and subscribing nodes. The message queues store messages at each node for delivery to subscribers local to that node. The topic / node table lists which clients subscribe to which topics, and is used by the other modules to ensure proper distribution of messages. The subscribing module is used to establish a subscription to a topic for that node. The publishing module is used to identify subscribers to a topic and transmit messages to subscribers dynamically. The other modules include various devices to optimize message communication in a publish / subscribe architecture operating on a distributed computing system. The present invention also includes a number of novel methods including: a method for publishing a message on a topic, a method for forwarding a message on a topic, a method for subscribing to messages on a topic, a method for automatically removing subscribers, a method for direct publishing of messages, and methods for optimizing message transmission between nodes.

Owner:AUREA SOFTWARE

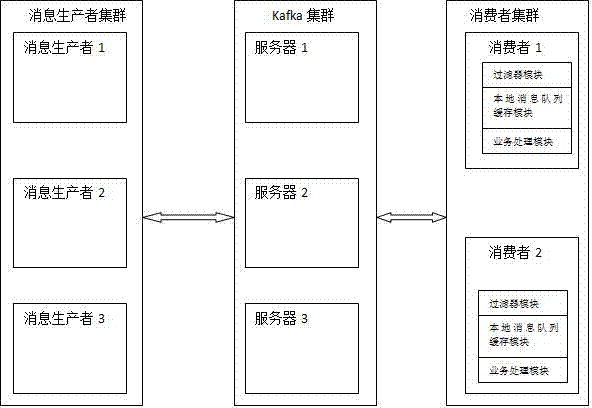

Message processing system and processing method based on kafka

Inactive | CN104754036A | Easy to handle | Reduce visits | Data switching networks | Message queue | Resource consumption

The invention discloses a message processing system and processing method based on kafka and belongs to the technical field of message processing. The message processing system and processing method based on kafka are aimed to solve the problems of the prior art that repeated messages appear in an order system frequently, the processing for each message consumes much system resource, the system resource consumption would greatly increase the database visitor volume at the consumer end, the message processing ability is lowered, and the system stability and service effectiveness are lowered. The message processing system based on kafka comprises a message producer cluster composed of one or more message producers, a kafka cluster, and a consumer cluster composed of one or more consumers, the kafka cluster is composed of one or more servers, and the consumer cluster comprises a filter module, a local message queue caching module and a business processing module. The message processing method realized through the system is used for the system especially the order system which needs to process a large number of messages.

Owner:HEYI INFORMATION TECH BEIJING
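The consumer-side pipeline named in this abstract (filter module, local message queue caching module, business processing module) could be arranged roughly as in the sketch below. The abstract does not say how the filter decides that a message is a repeat, so the sketch assumes each message carries an `id` field and that the filter remembers a bounded number of recent IDs. No Kafka client library is used, and `DedupFilter` and `consume` are hypothetical names.

```python
from collections import OrderedDict, deque


class DedupFilter:
    """Remembers the most recent message IDs, up to `capacity` of them."""

    def __init__(self, capacity=1000):
        self._seen = OrderedDict()
        self._capacity = capacity

    def is_new(self, message_id):
        if message_id in self._seen:
            self._seen.move_to_end(message_id)  # keep recently seen IDs longest
            return False
        self._seen[message_id] = True
        if len(self._seen) > self._capacity:
            self._seen.popitem(last=False)  # evict the oldest ID
        return True


def consume(messages, process, dedup):
    """Filter repeats, buffer survivors locally, then run business logic."""
    local_queue = deque()
    for msg in messages:
        if dedup.is_new(msg["id"]):
            local_queue.append(msg)
    handled = []
    while local_queue:
        handled.append(process(local_queue.popleft()))
    return handled


incoming = [
    {"id": "order-1", "qty": 2},
    {"id": "order-2", "qty": 1},
    {"id": "order-1", "qty": 2},  # repeated delivery
    {"id": "order-3", "qty": 5},
]
handled = consume(incoming, lambda m: m["id"], DedupFilter())
```

Dropping repeats before the business step means the repeated `order-1` never reaches the processing function, which is where the database access would normally happen.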

Systems and methods for providing centralized management of heterogeneous distributed enterprise application integration objects

Inactive | US7383355B1 | Multiple digital computer combinations | Transmission | Enterprise application integration | Message queue

In the distributed enterprise application integration system, modularized components located on multiple hosts are centrally managed so as to facilitate communication among application programs. Collaboration services traditionally associated with a central server, such as, for example, message queues, message publishers / subscribers, and message processes, are instead distributed to multiple hosts and monitored by a central registry service. This system allows configuration management to be performed in a central location using a top-level approach, while implementation and execution tasks are distributed and delegated to various components that communicate with the applications.

Owner:ORACLE INT CORP

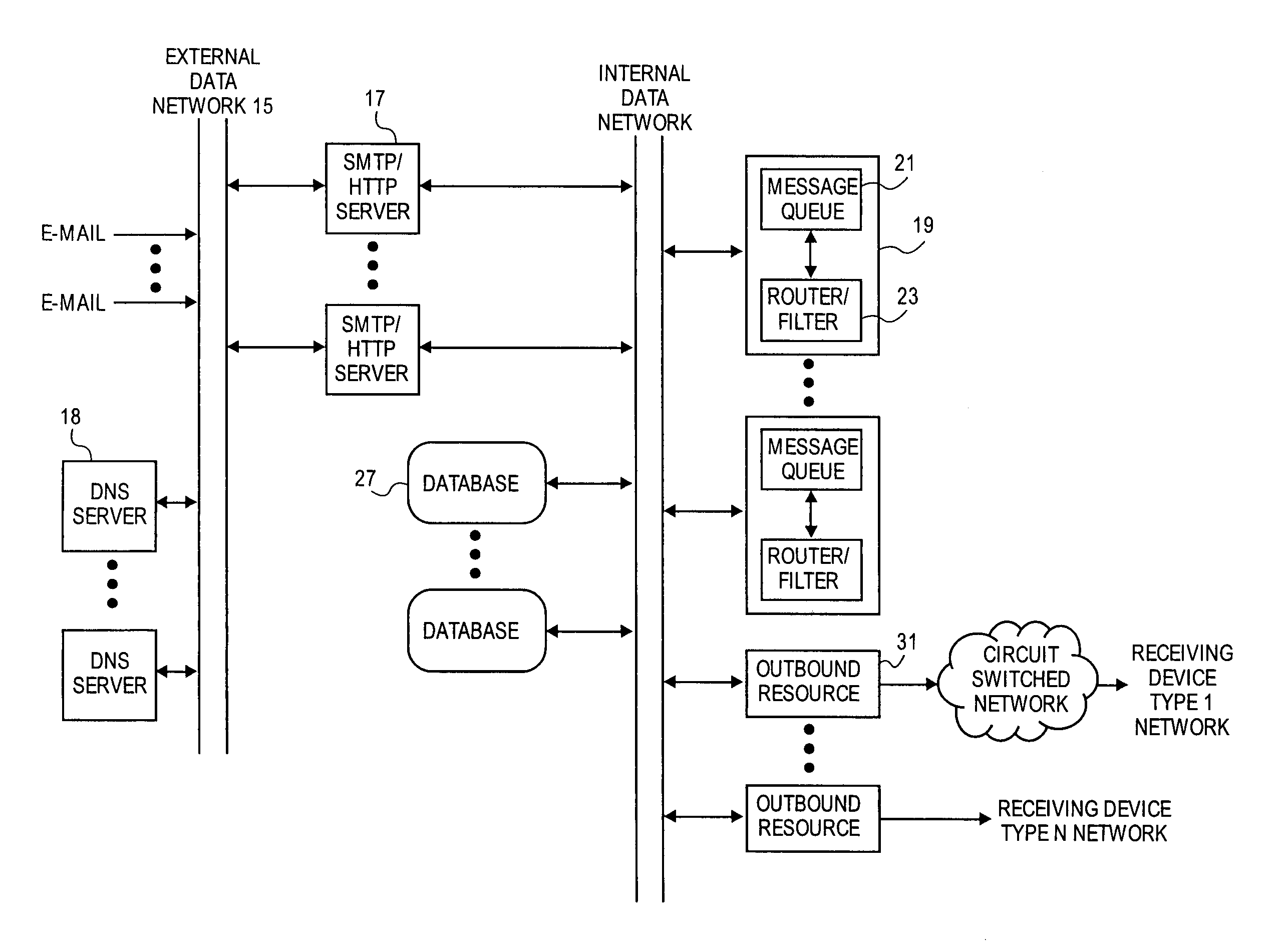

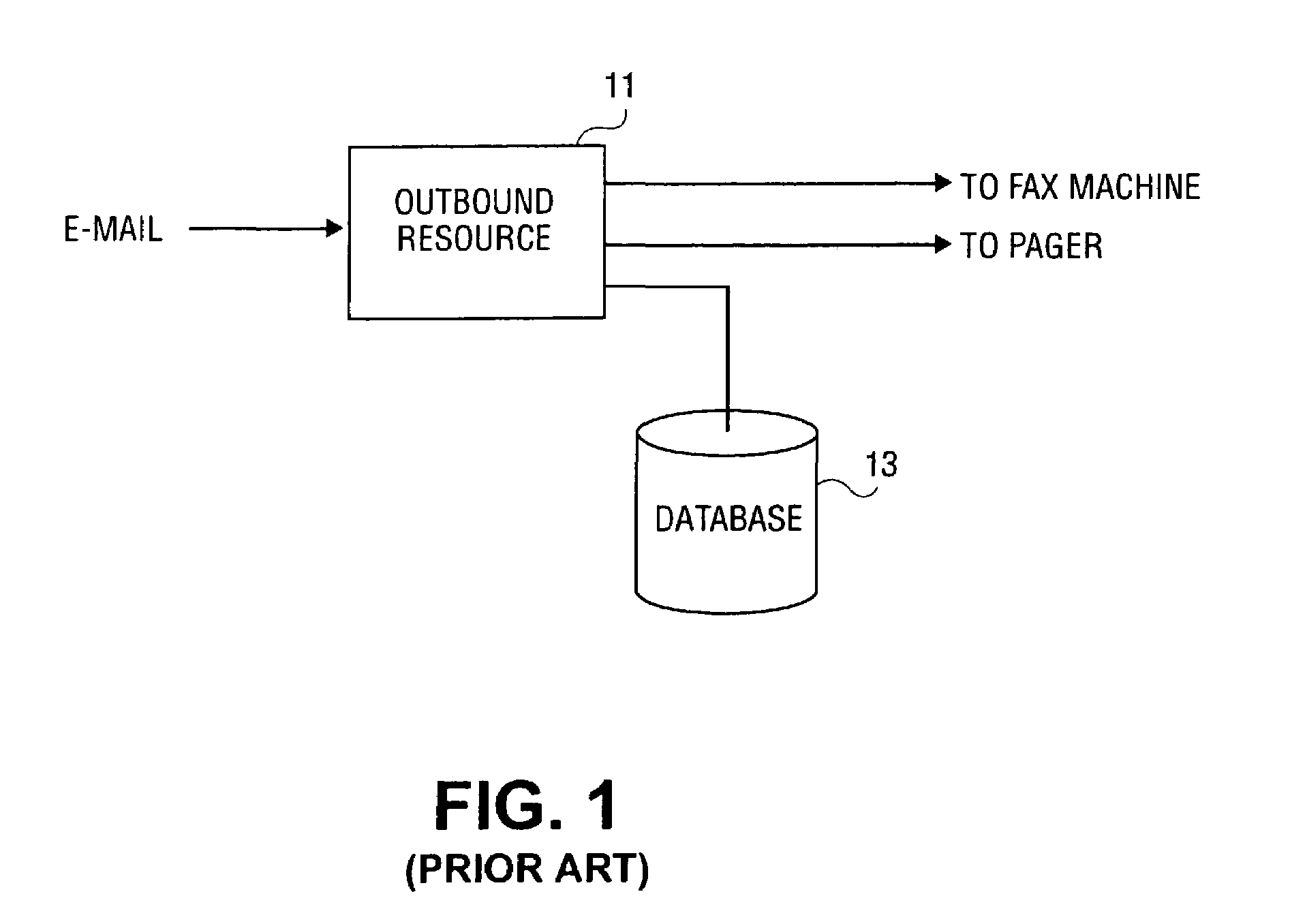

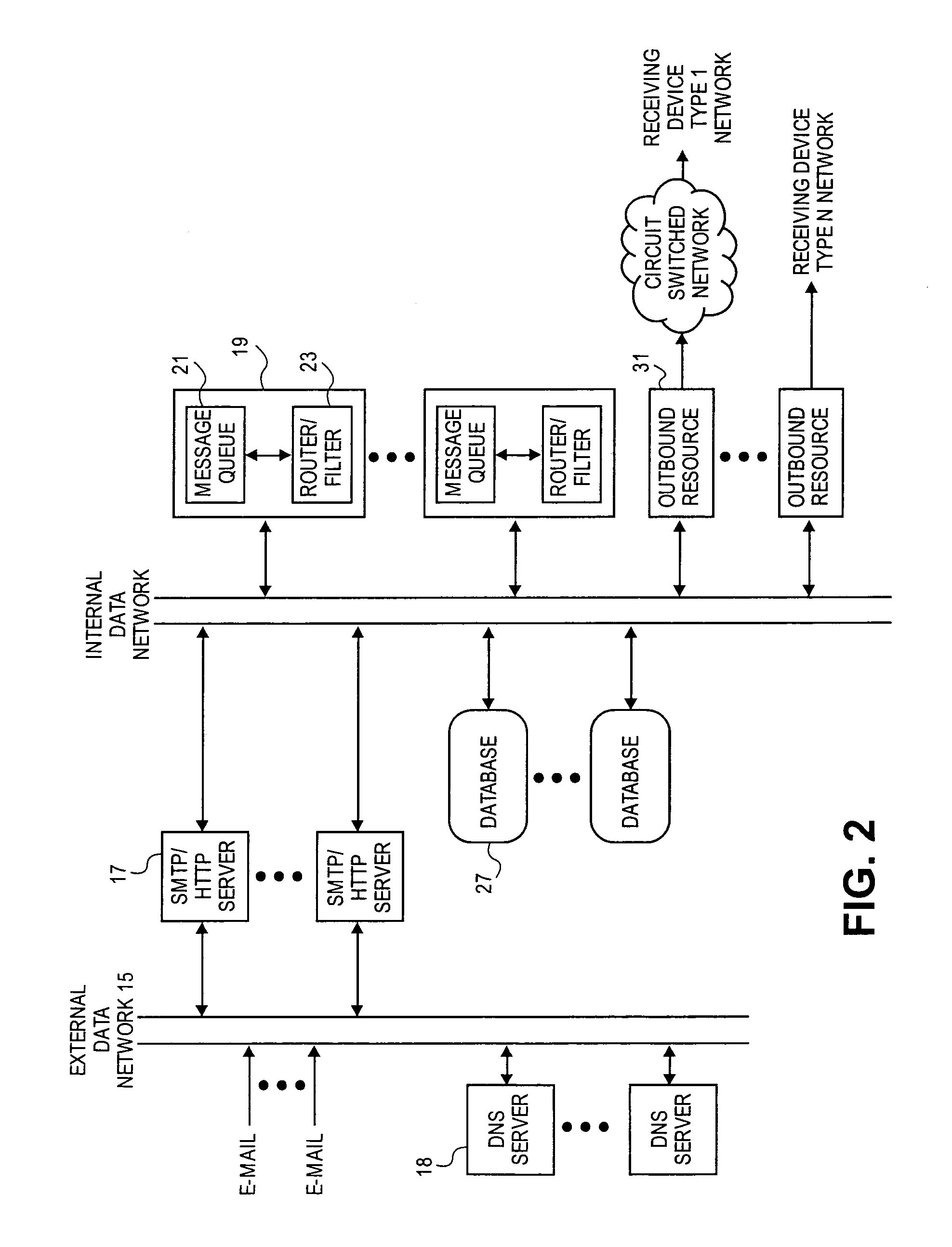

Scalable architecture for transmission of messages over a network

Inactive | US7020132B1 | Improve scalability | High degree | Multiplex system selection arrangements | Hybrid transport | Message queue | Networked Transport of RTCM via Internet Protocol

A method and apparatus is disclosed for delivering messages that utilizes a message queue and a router / filter within a private data network. The private network is connected to an external data network such as the Internet, and has separate outbound resource servers to provide a high degree of scalability for handling a variety of message types.

Owner:J2 CLOUD SERVICES LLC

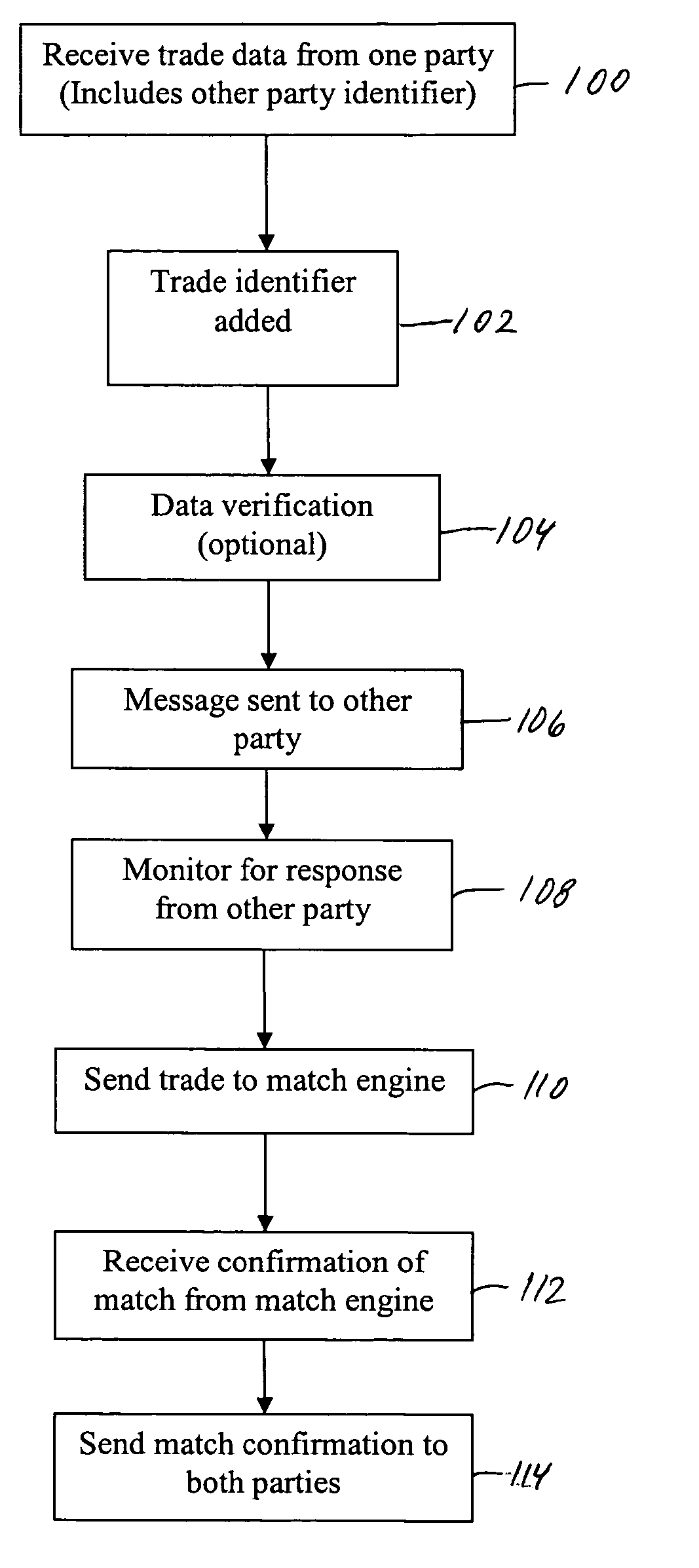

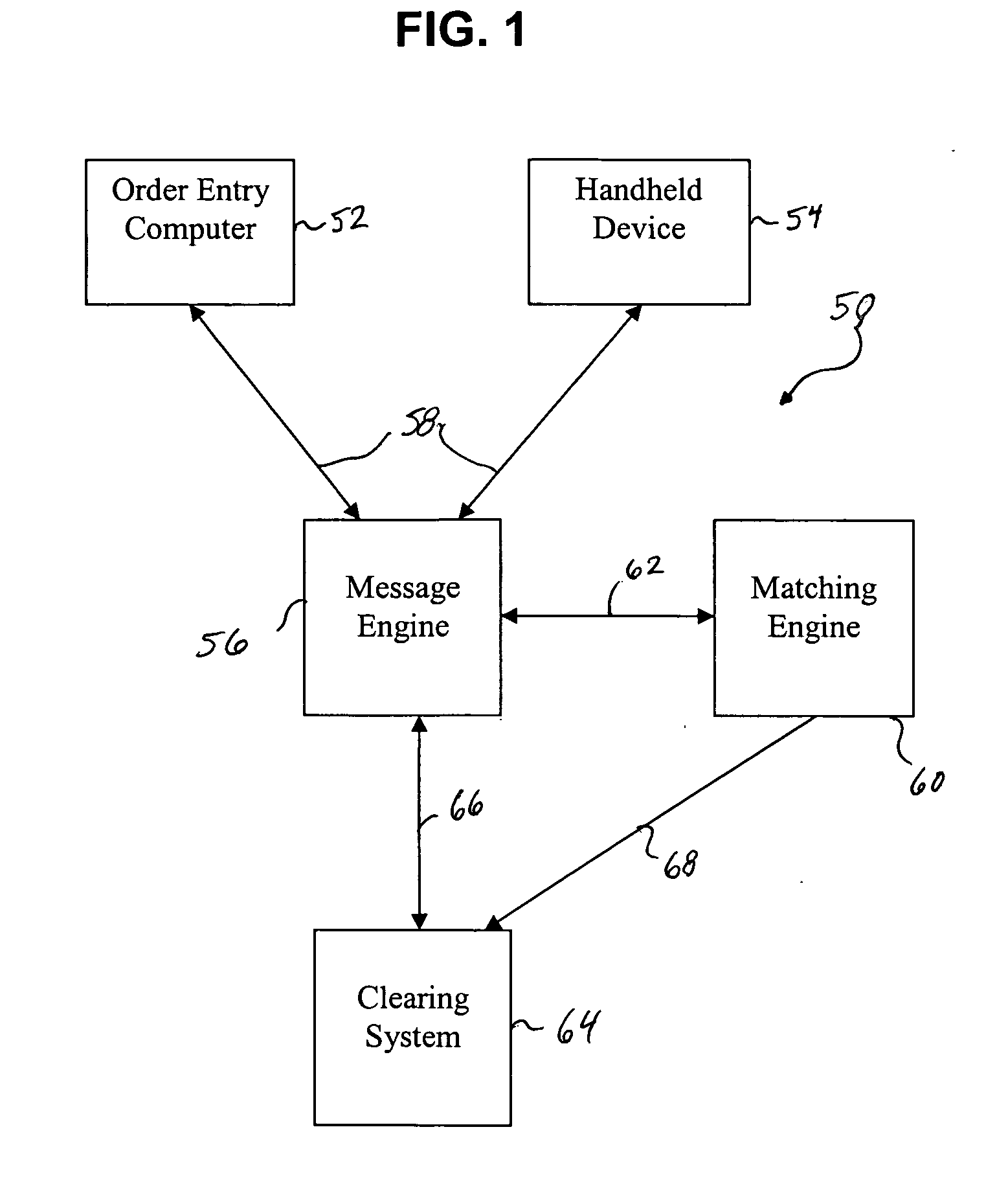

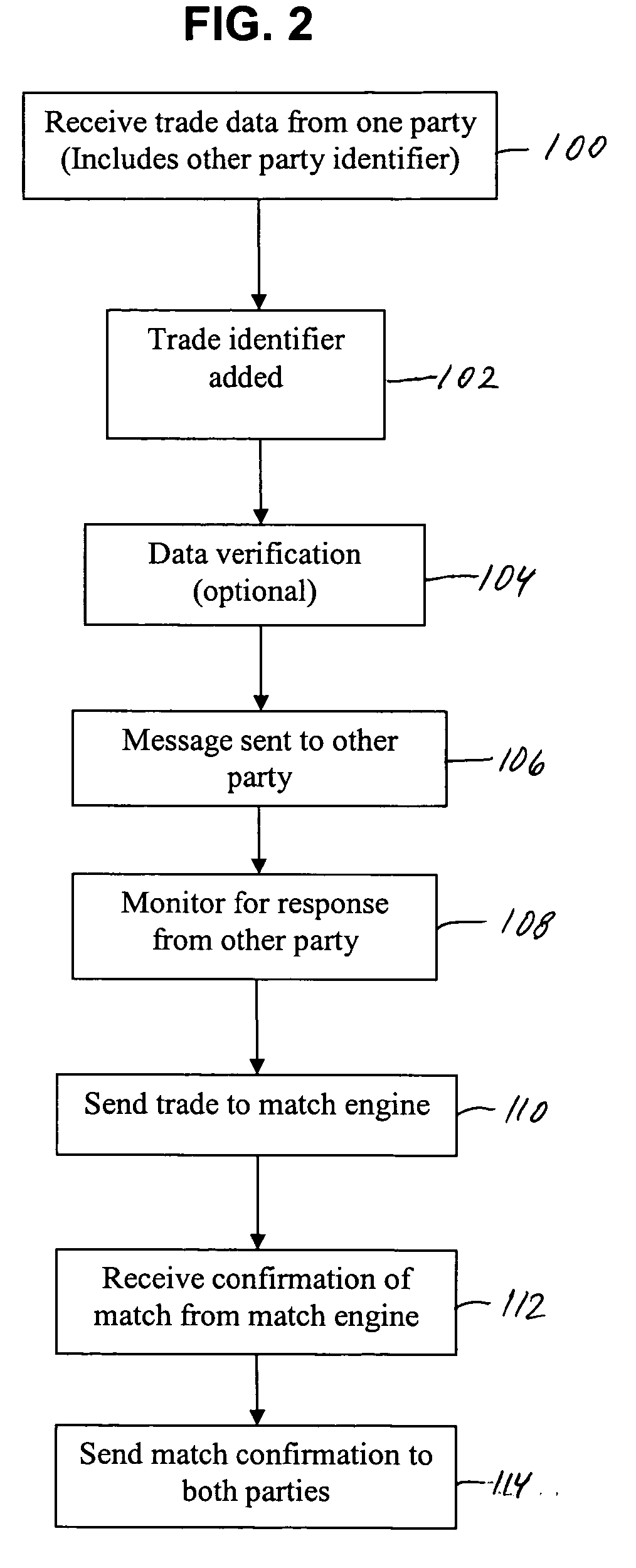

Intra-day matching message system and method

A system and method that provides a message engine to facilitate intra-day receiving of first data relative to the trade transaction on behalf of one party to the trade transaction, wherein the first data include a code identifying an opposing party to the transaction; sending a message to the designated opposing party prior to submitting the trade transaction for matching prior to end-of-day clearing; and monitoring a message queue for a response and sending a message to at least one of the parties based on the response.

Owner:BOARD OF TRADE OF THE CITY OF CHICAGO

Method and apparatus for parallel sequencing of messages between disparate information systems

Active | US20070118601A1 | Facilitating concurrent processing | Conducive to diversification | Multiple digital computer combinations | Data switching networks | Message queue | Parallel sorting

A system and method are provided for coordinating concurrent processing of a plurality of messages communicated over a network between a source system and a target system. The plurality of messages are configured for including a pair of related messages having a common first unique message identifier and at least one unrelated message having a second unique message identifier different from the first unique identifier. The system and method comprise an input queue for storing the plurality of messages when received and an output message queue for storing the plurality of messages during message processing. The output message queue includes a plurality of execution streams for facilitating concurrent processing of the at least one unrelated message with at least one of the pair of related messages. The system and method also include a sequencer module coupled to the output message queue for determining using the first and second unique identifiers which of the plurality of messages are the pair of related messages and which of the plurality of messages are the at least one unrelated message. The sequencer module is further configured for identifying a sequence order for the pair of related messages according to a respective sequence indicator associated with each of the related messages, such that the sequence indicators are configured for use in assigning a first position in the sequence order for a first message of the pair of related messages and for use in assigning a second position in the sequence order for a second message of the pair of related messages. 
The system and method also have a registry coupled to the sequencer module and configured for storing a pending message status indicator for the first message in the output message queue, wherein the sequencer module inhibits the progression of processing through the output message queue for the second message until the pending message status for the first message is removed from the registry while facilitating concurrent processing of the at least one unrelated message through the output message queue.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

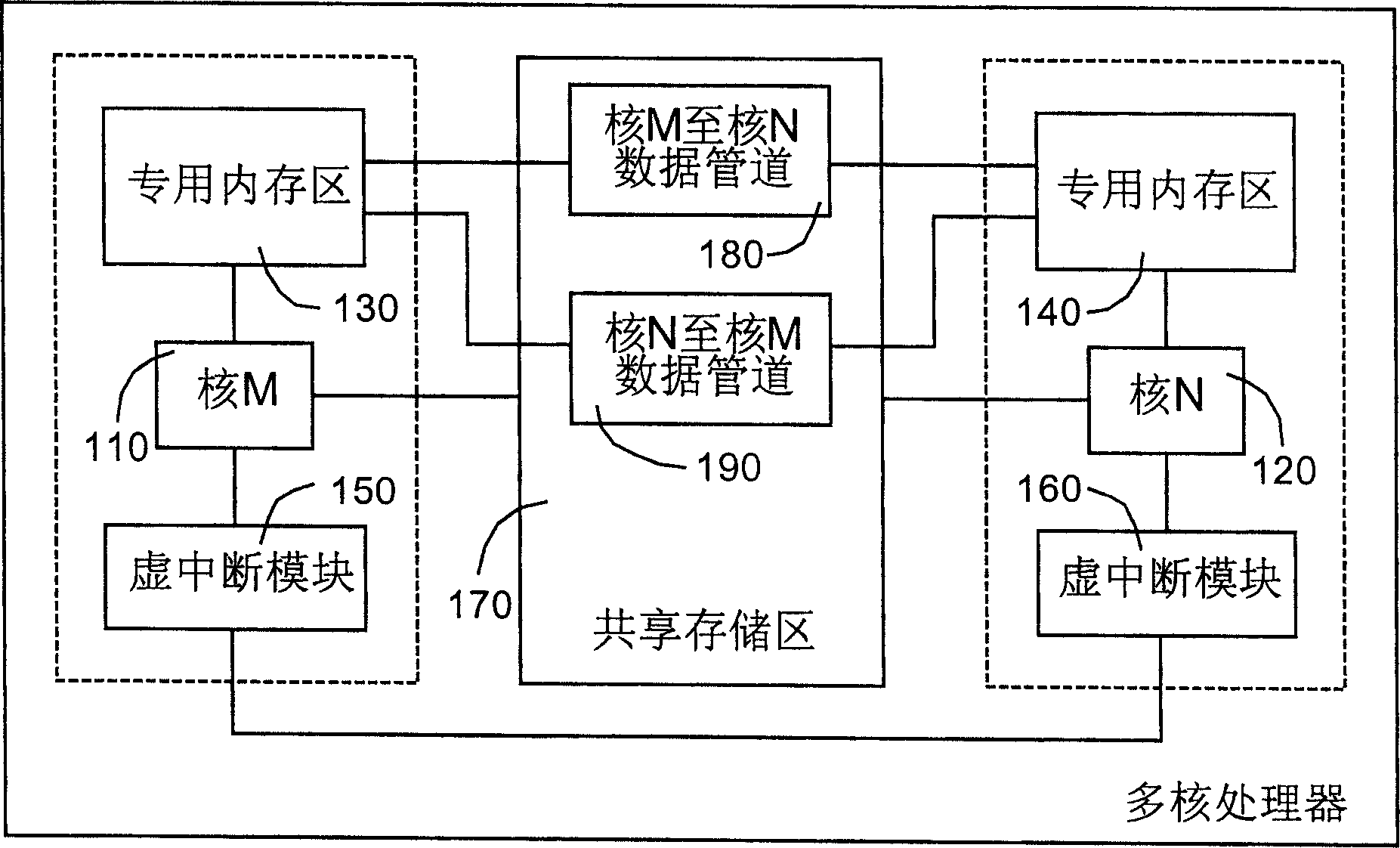

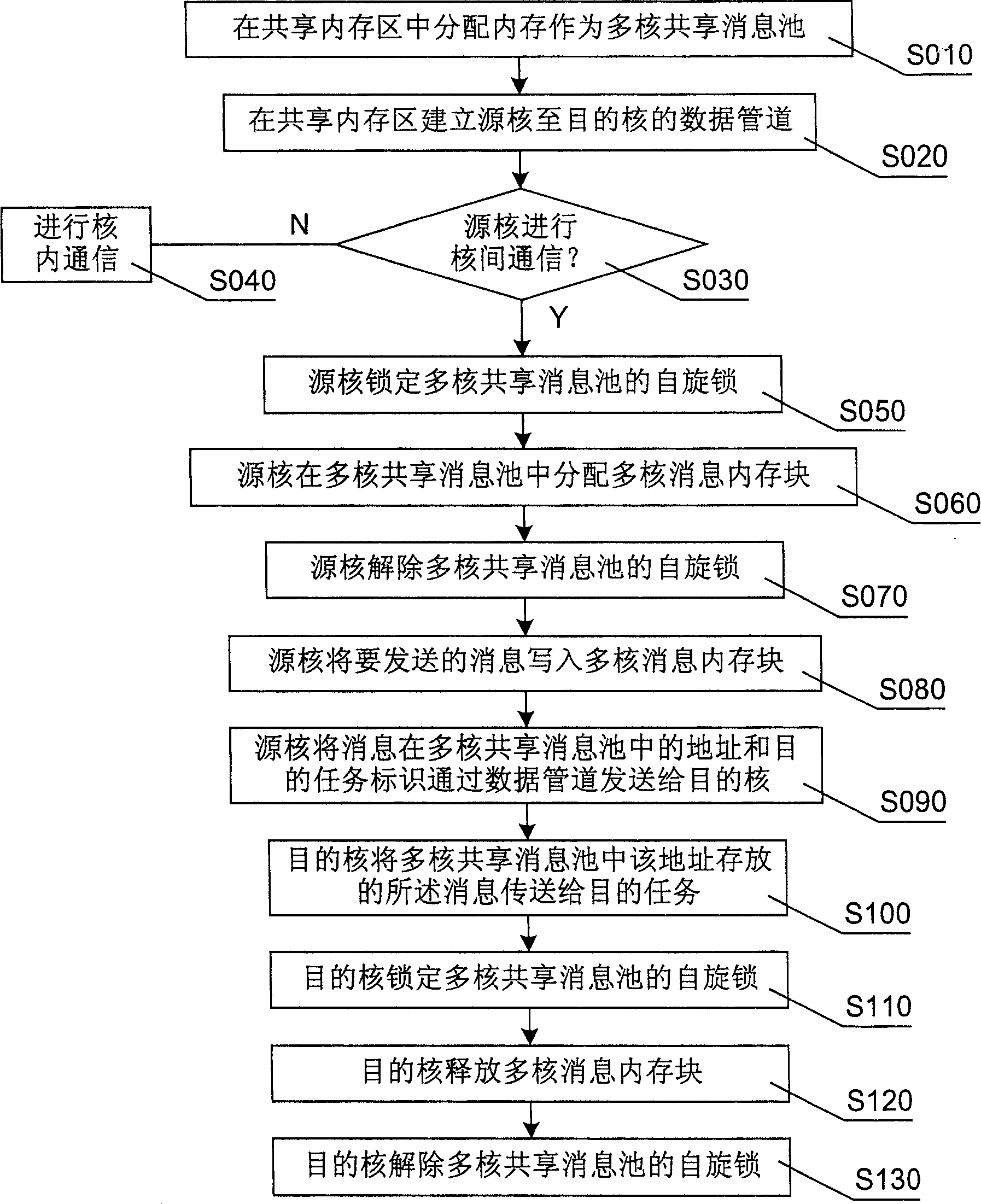

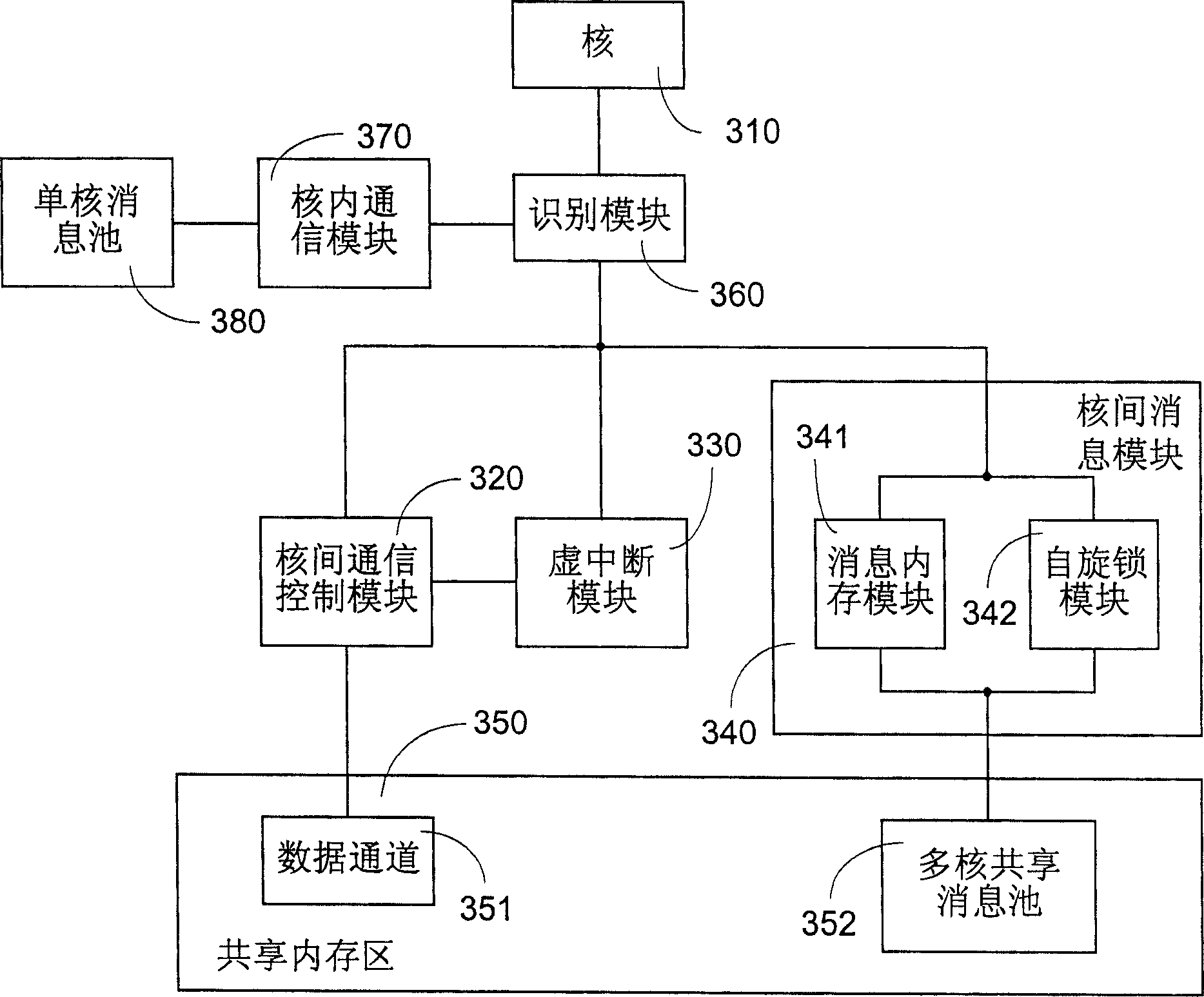

Inter core communication method and apparatus for multi-core processor in embedded real-time operating system

Active | CN1904873A | Prevent copying | Improve efficiency | Digital computer details | Electric digital data processing | Message queue | Distributed memory

The invention discloses an inter-core communication method for a multi-core processor in an embedded real-time operating system. It includes the following steps: allocating memory as a message pool shared among the cores; writing the message that the source core is to send into the pool; sending the message to the target core; and the target core delivering the message stored in the pool to the target task. The invention also discloses an inter-core communication device. It improves inter-core communication efficiency and unifies intra-core and inter-core communication.

Owner:DATANG MOBILE COMM EQUIP CO LTD

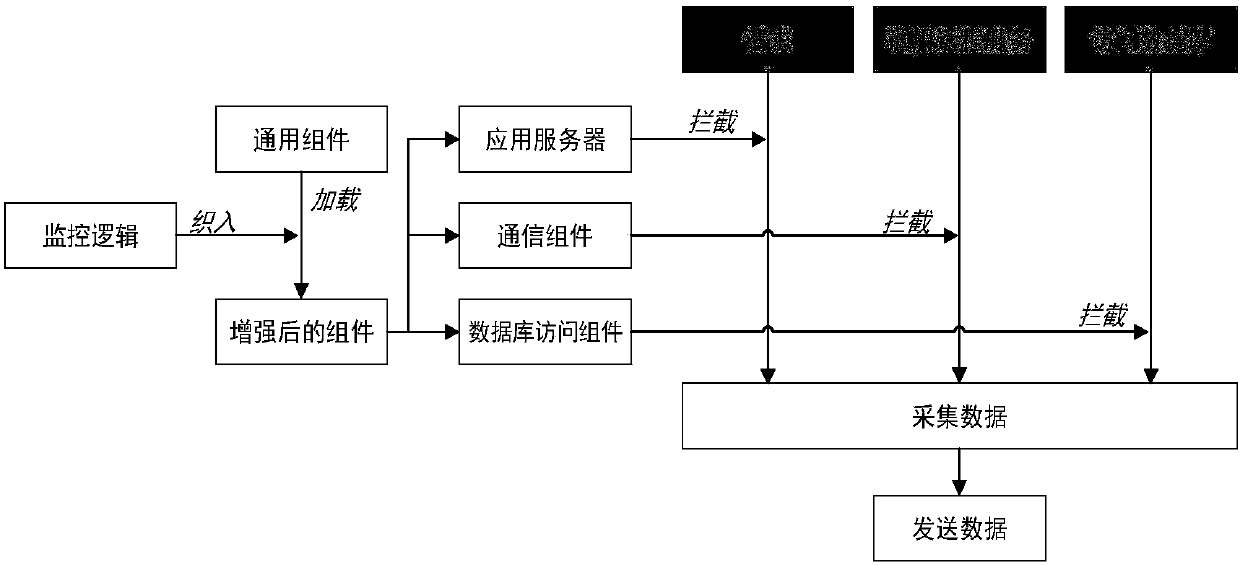

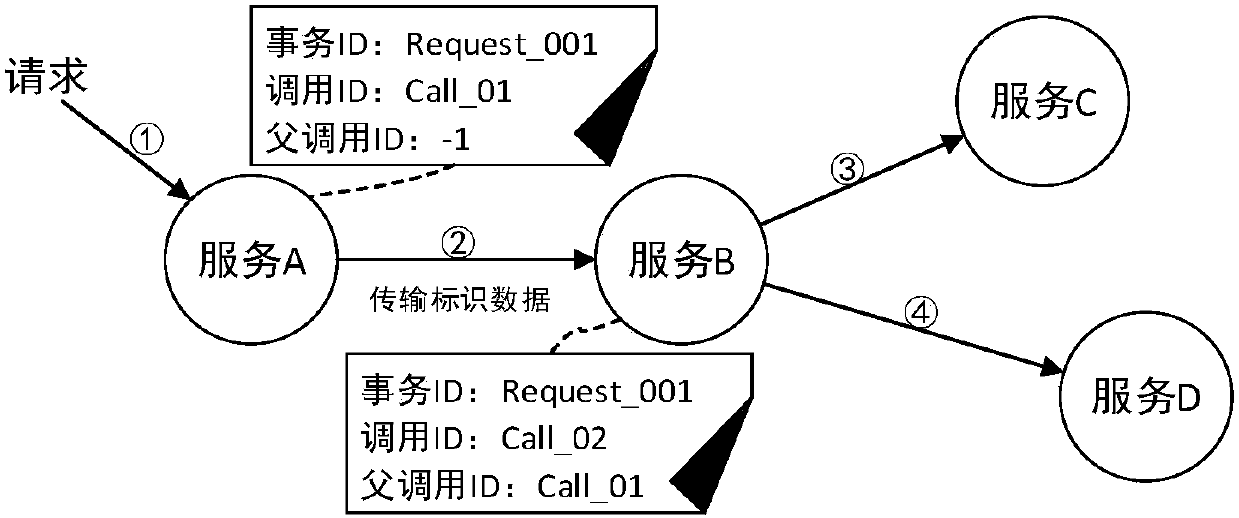

Microservice architecture span process tracking-oriented monitoring system and method

Inactive | CN107766205A | Effective positioning | Interprogram communication | Database distribution/replication | Message queue | Data acquisition

The invention discloses a microservice architecture span process tracking-oriented monitoring system and method. The system mainly includes a data collection module, a data transmission module and a data storage module. The data collection module uses dynamic AOP technology to embed monitoring logic into a common component without modifying service code, which keeps the monitoring function transparent to the application system. The data transmission module works asynchronously on top of a message queue: it uses message middleware to receive and transmit monitoring data, which mitigates the mismatch between data transmission speed and data processing speed and also reduces coupling between the data collection module and the data storage module. For data storage, a user transaction request is used as the unit of record, capturing the multiple service spans that belong to that one request. The monitoring system uses a column-oriented database for data storage.

Owner:WUHAN UNIV
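
The decoupling described in the abstract above can be sketched with Python's standard `queue` and `threading` modules. This is a minimal illustration, not the system's code: an in-process `queue.Queue` stands in for the message middleware, and the names `collector`, `storer`, `request_id` and `service` are hypothetical.

```python
import queue
import threading


def collector(spans, mq):
    """Producer side: enqueue each span without waiting for storage to process it."""
    for span in spans:
        mq.put(span)
    mq.put(None)  # sentinel marking the end of the data (an assumption of this sketch)


def storer(mq, store):
    """Consumer side: group spans by the transaction request they belong to."""
    while (span := mq.get()) is not None:
        store.setdefault(span["request_id"], []).append(span["service"])
```

Running `storer` in a `threading.Thread` while `collector` fills the queue lets the two sides proceed at different speeds, with the queue absorbing the difference.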

Method and device for managing priority during the transmission of a message

Active | US20050117589A1 | Easy to manage | Low cost | Multiple digital computer combinations | Store-and-forward switching systems | Message queue | Interconnection

Method of managing priority during the transmission of a message, in an interconnections network comprising at least one transmission agent which comprises at least one input and at least one output, each input comprising a means of storage organized as a queue of messages. A message priority is assigned during the creation of the message, and a queue priority equal to the maximum of the priorities of the messages of the queue is assigned to at least one queue of messages of an input. A link priority is assigned to a link linking an output of a first transmission agent to an input of a second transmission agent, equal to the maximum of the priorities of the queues of messages of the inputs of said first agent comprising a first message destined for that output of said first agent which is coupled to said link, and the priority of the link is transmitted to that input of said second agent which is coupled to the link.

Owner:QUALCOMM TECHNOLOGIES INC
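
The two priority rules in the abstract above are both maxima, which a short sketch can make concrete. The dictionary keys `priority` and `dest`, the function names, and the default of 0 for an empty queue or an unused link are assumptions made for illustration.

```python
def queue_priority(queue):
    """Queue priority: the maximum priority among the messages in the queue (0 if empty)."""
    return max((msg["priority"] for msg in queue), default=0)


def link_priority(input_queues, output):
    """Link priority for `output`: the maximum queue priority among the input queues
    whose first message is destined for that output (0 if there is none)."""
    return max(
        (queue_priority(q) for q in input_queues if q and q[0]["dest"] == output),
        default=0,
    )
```

Because a queue takes the priority of its most urgent message, a low-priority message at the head of a queue raises the link priority when a high-priority message is waiting behind it.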

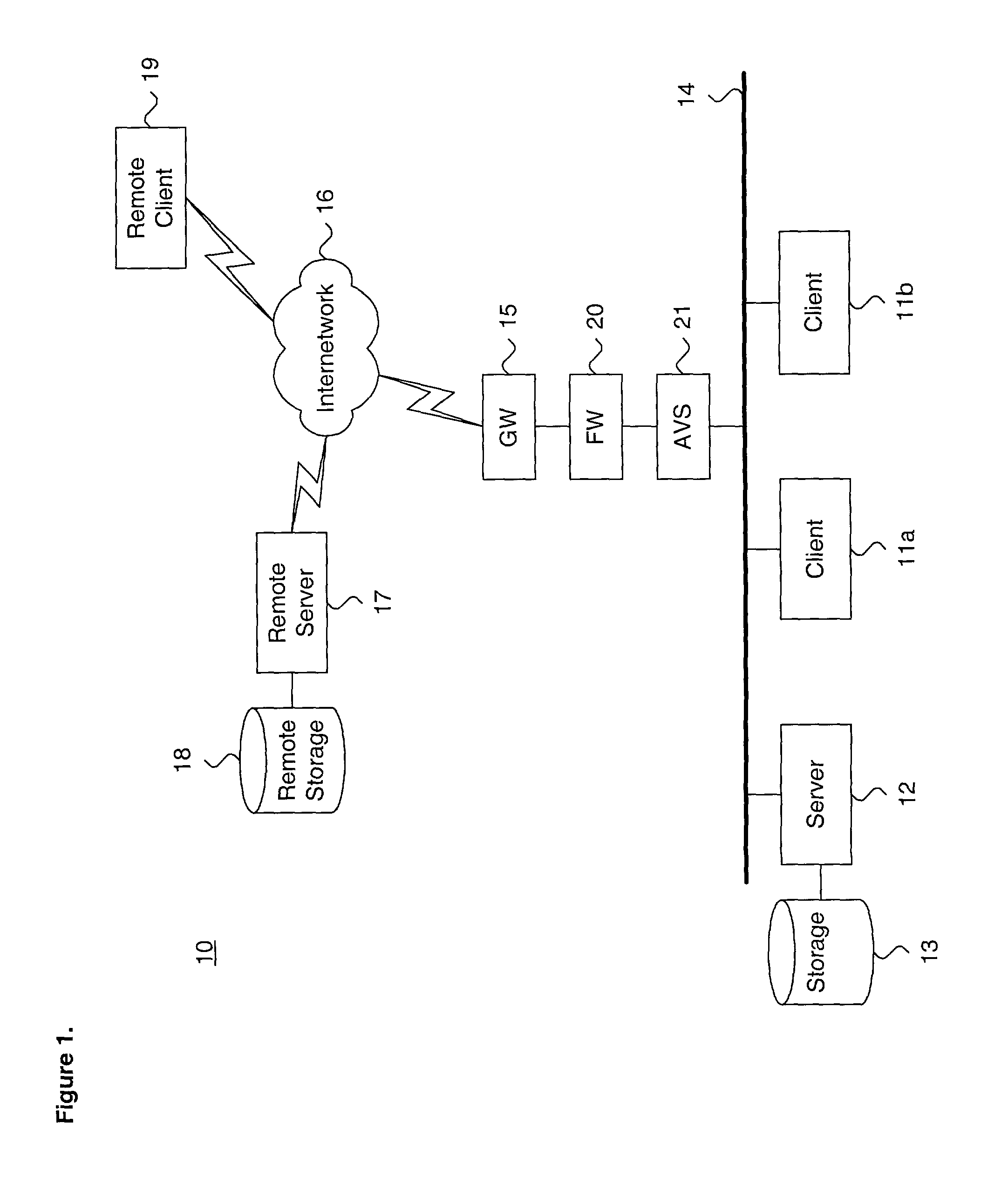

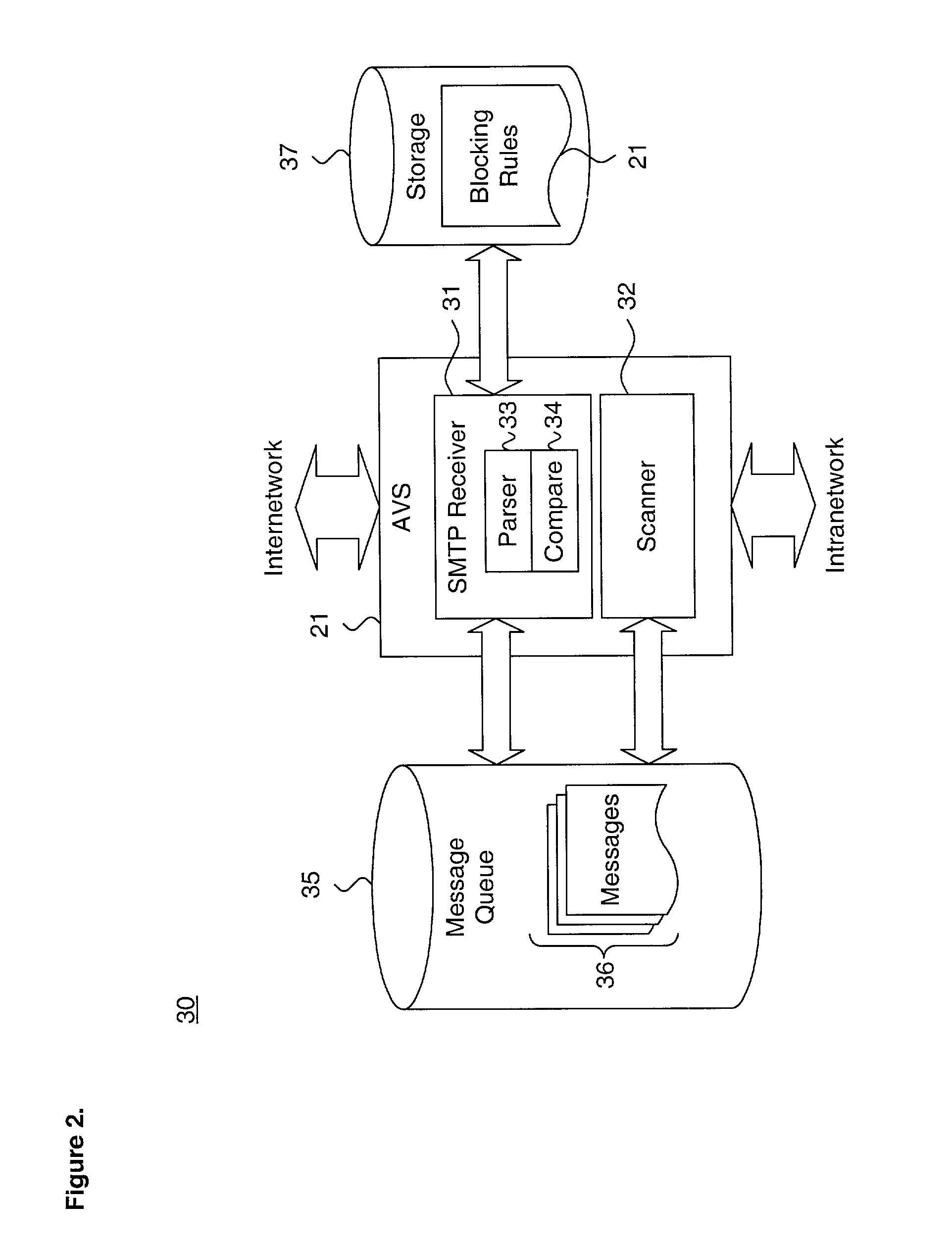

System and method for providing dynamic screening of transient messages in a distributed computing environment

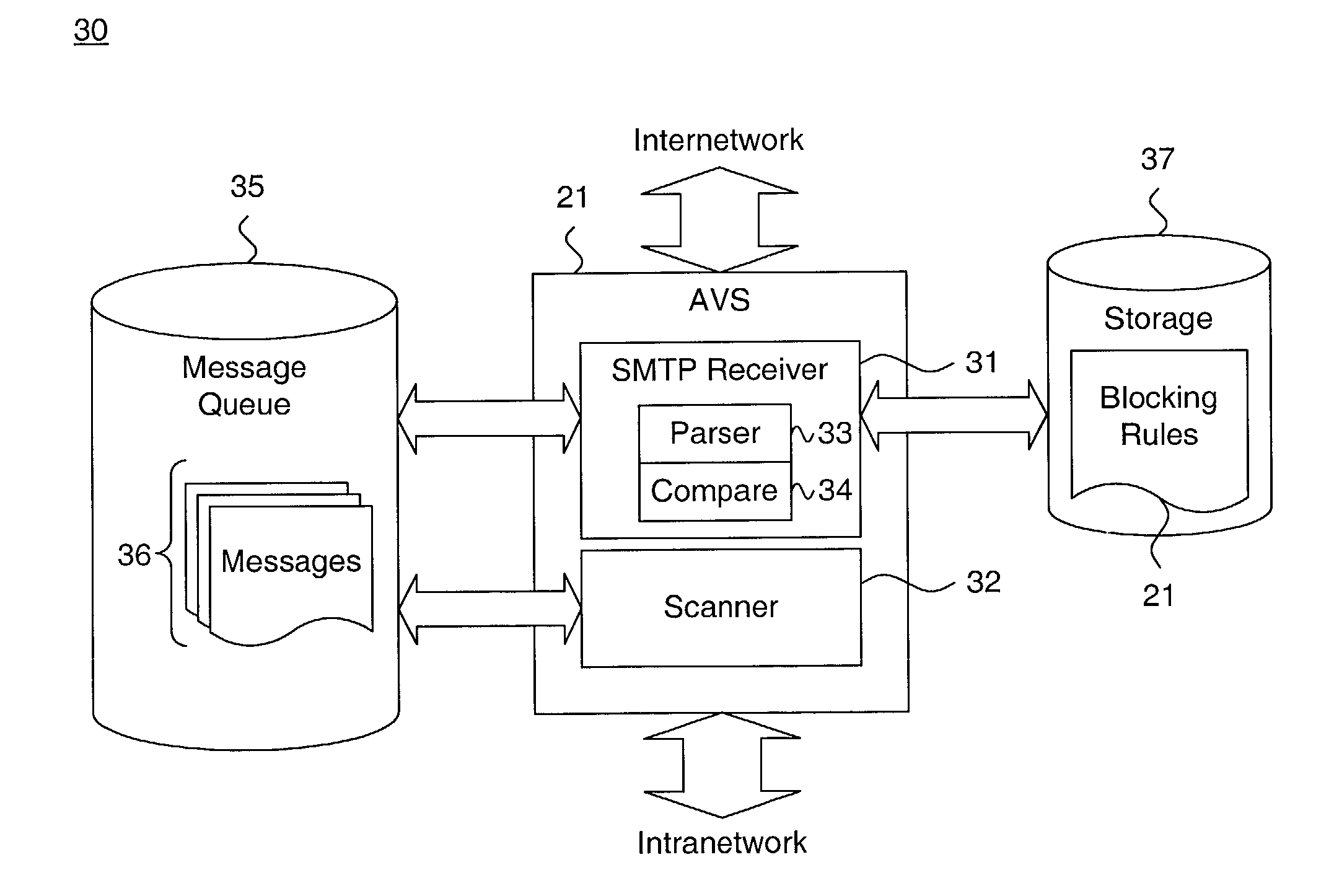

Active | US7117533B1 | Efficient detection | Memory loss protection | Digital computer details | Message queue | Distributed Computing Environment

A system and method for providing dynamic screening of transient messages in a distributed computing environment is disclosed. An incoming message is intercepted at a network domain boundary. The incoming message includes a header having a plurality of address fields, each storing contents. A set of blocking rules is maintained. Each blocking rule defines readily-discoverable characteristics indicative of messages infected with at least one of a computer virus, malware and bad content. The contents of each address field are identified and checked against the blocking rules to screen infected messages and identify clean messages. Each such clean message is staged into an intermediate message queue pending further processing.

Owner:MCAFEE LLC
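
The screening step in the abstract above can be sketched as a check of each address field against a rule set. The rule patterns, the field names in `ADDRESS_FIELDS`, and the function name `screen` are all hypothetical; the abstract does not specify how blocking rules are expressed, so regular expressions are an assumption of this sketch.

```python
import re
from collections import deque

# Hypothetical blocking rules, expressed here as regular expressions.
BLOCKING_RULES = [
    re.compile(r"@known-bad\.example$", re.IGNORECASE),
    re.compile(r"^bulk-sender@", re.IGNORECASE),
]

# Assumed set of header address fields to inspect.
ADDRESS_FIELDS = ("from", "to", "reply-to")


def screen(message, clean_queue):
    """Stage the message and return True if no address field matches a blocking rule."""
    for field in ADDRESS_FIELDS:
        value = message["header"].get(field, "")
        if any(rule.search(value) for rule in BLOCKING_RULES):
            return False  # treated as infected; not staged
    clean_queue.append(message)  # intermediate queue pending further processing
    return True
```

Only header fields are examined in this sketch, which matches the abstract's emphasis on readily discoverable characteristics rather than full content scanning.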

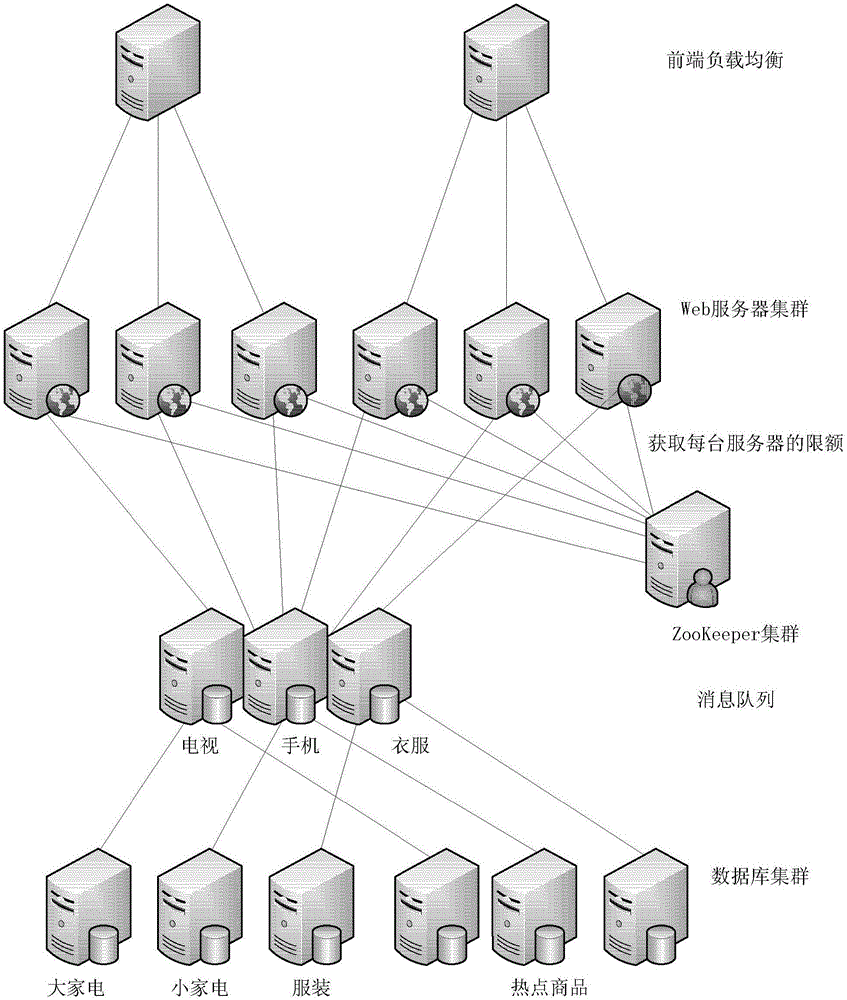

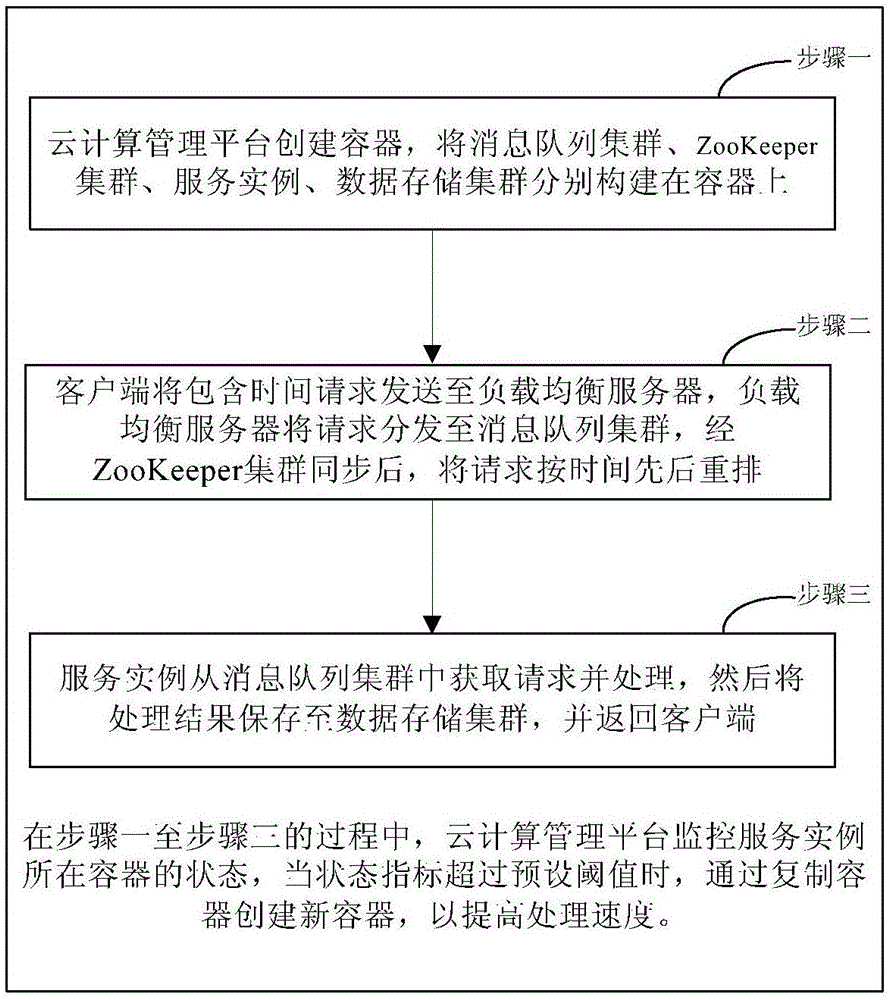

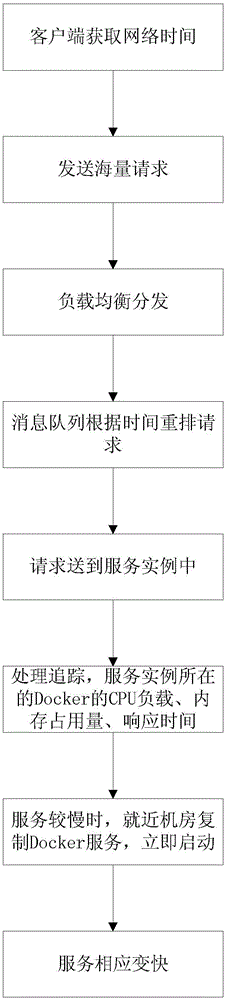

Elastic cloud distributed massive request processing method, device and system

Active | CN106453564A | Create quickly | Improve service processing performance | Transmission | Message queue | Elastic cloud

The invention provides an elastic cloud distributed massive request processing method, device and system, and can instantaneously and automatically carry out expansion when a server cannot respond to a peak value. The method comprises the steps that: S1: a cloud computing management platform creates a container, and a message queue cluster, a ZooKeeper cluster, a service instance and a data storage cluster are respectively constructed on the container; S2: a client sends requests containing time to a load balancing server, the load balancing server distributes the requests to the message queue cluster, and after being synchronized by the ZooKeeper cluster, the requests are rearranged according to a time sequence; S3: the service instance acquires the requests from the message queue cluster, processes the requests, then stores a processing result to the data storage cluster, and returns the processing result to the client; and in the process of S1 to S3, the cloud computing management platform monitors a state of the container where the service instance is positioned, and when a state index exceeds a preset threshold, a new container is created by duplicating the container so as to improve a processing speed.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1
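
Two ideas from the abstract above, reordering requests by time and duplicating a container when a monitored index crosses a threshold, can be sketched as follows. The function names and the dictionary layout are hypothetical, and the sketch leaves out the message queue cluster, ZooKeeper synchronization and the storage cluster entirely.

```python
def order_by_time(requests):
    """Rearrange requests into timestamp order, regardless of arrival order."""
    return sorted(requests, key=lambda r: r["time"])


def autoscale(containers, state_index, threshold):
    """Return the container list, with the last container duplicated when the
    monitored state index exceeds the preset threshold."""
    if state_index > threshold:
        return containers + [dict(containers[-1])]
    return containers
```

`autoscale` returns a new list and leaves its input unchanged, so a monitoring loop can compare the two to see whether a scale-out happened.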

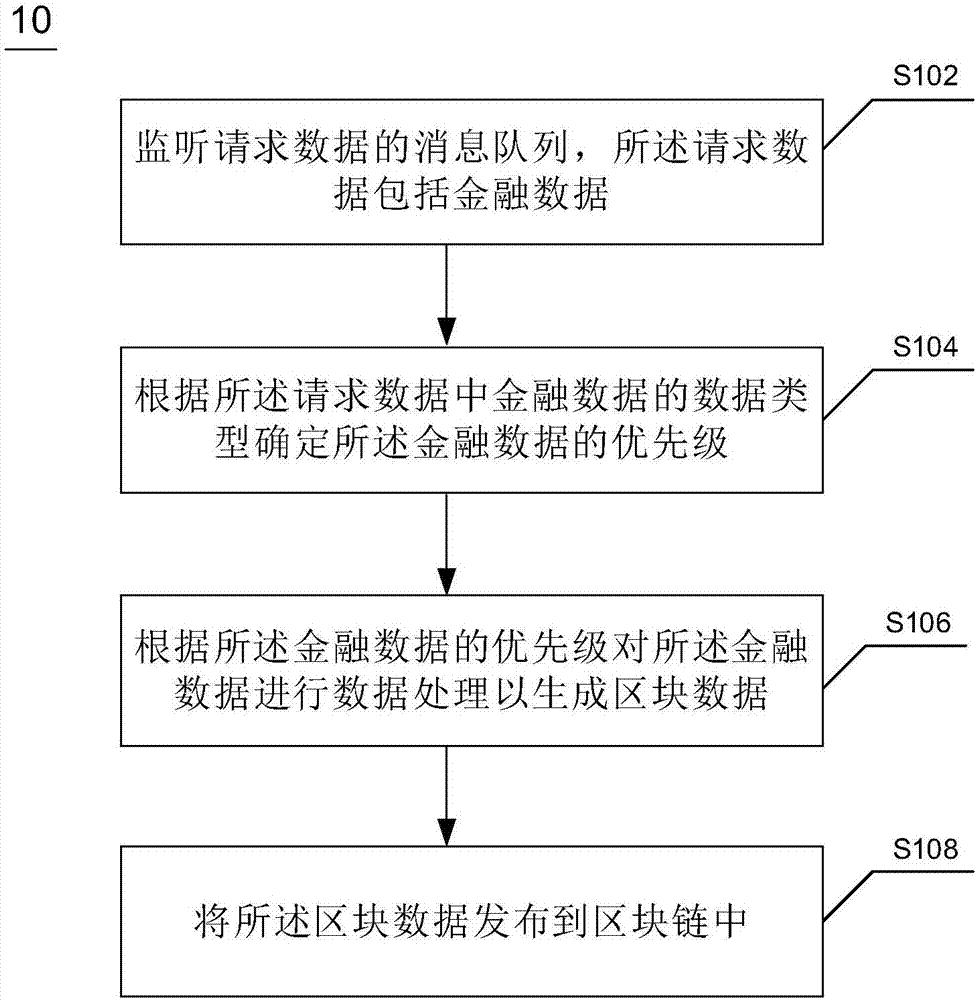

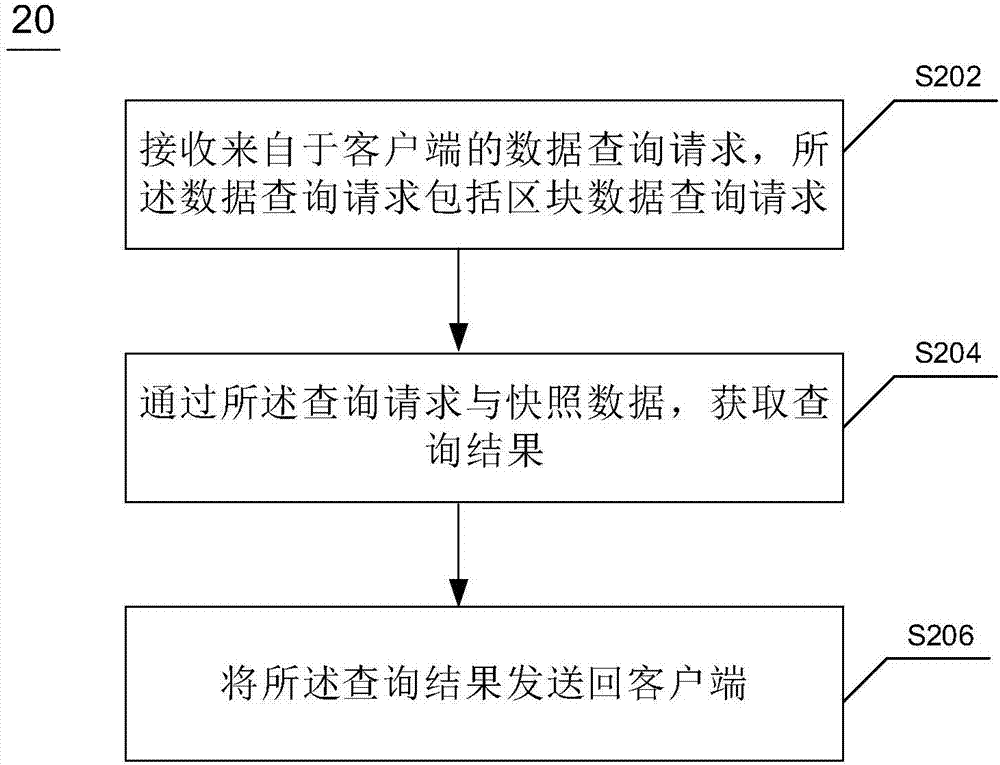

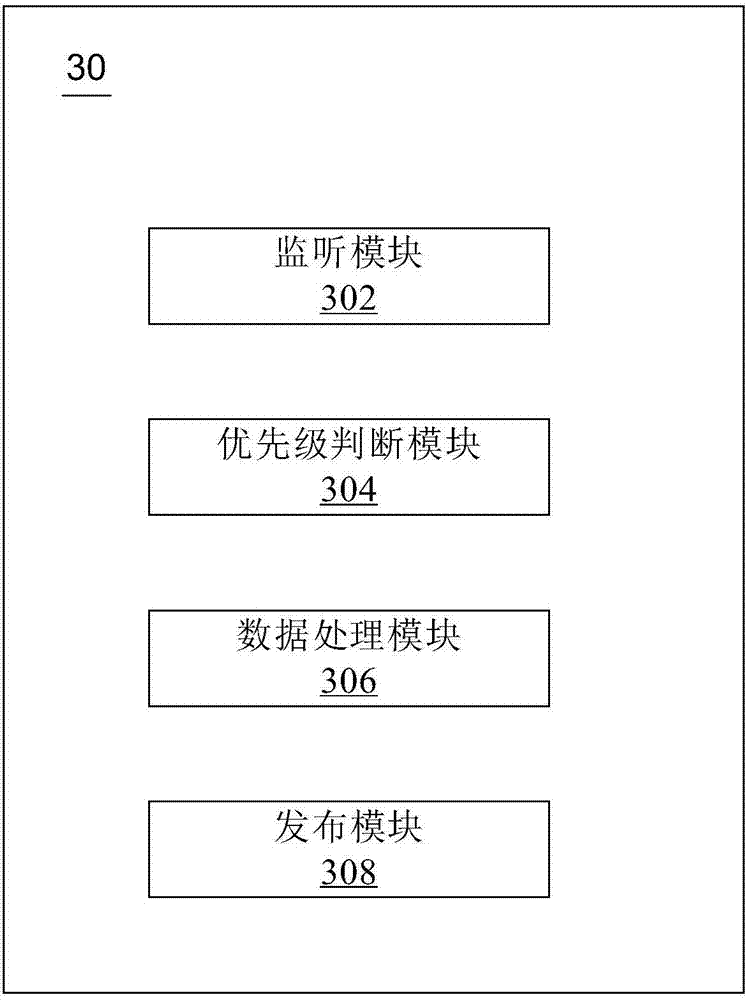

Financial data processing method and device based on blockchain and electronic equipment

Inactive | CN106991164A | Solve high concurrency issues | Database distribution/replication | Special data processing applications | Message queue | Financial data processing

The invention discloses a blockchain-based financial data processing method and device, and electronic equipment. The method includes: monitoring a message queue of request data, where the request data includes financial data; determining the priority of the financial data according to its data type; processing the financial data according to that priority to generate block data; and publishing the block data to the blockchain. The method, device and electronic equipment address the high concurrency that arises when transactions are written to the chain while financial data is being published to the blockchain.

Owner:JINGDONG TECH HLDG CO LTD
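
The priority-by-data-type step in the abstract above can be sketched with a heap. The type names in `TYPE_PRIORITY`, the function name `build_blocks`, and the shape of the generated block data are hypothetical; the abstract does not say which data types exist or how they rank.

```python
import heapq
import itertools

# Hypothetical mapping from data type to priority (lower number is processed first).
TYPE_PRIORITY = {"payment": 0, "settlement": 1, "report": 2}


def build_blocks(requests):
    """Process requests in priority order and return the generated block data."""
    counter = itertools.count()  # tie-breaker that preserves arrival order
    heap = []
    for req in requests:
        # Unknown types get the lowest priority (an assumption of this sketch).
        prio = TYPE_PRIORITY.get(req["type"], len(TYPE_PRIORITY))
        heapq.heappush(heap, (prio, next(counter), req))
    blocks = []
    while heap:
        _, _, req = heapq.heappop(heap)
        blocks.append({"type": req["type"], "data": req["data"]})
    return blocks
```

Publishing the resulting block data to a blockchain is outside the scope of the sketch.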

© 2025 PatSnap. All rights reserved.