505 patent results for "Concurrency"

In computer science, concurrency is the ability of different parts or units of a program, algorithm, or problem to be executed out of order or in partial order without affecting the final outcome. This allows the concurrent units to be executed in parallel, which can significantly improve the overall speed of execution on multi-processor and multi-core systems. In more technical terms, concurrency refers to the decomposability of a program, algorithm, or problem into order-independent or partially ordered components or units.
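
The order independence in this definition can be illustrated with a minimal sketch (an illustrative assumption, not taken from any listed patent). The Python code below squares numbers as independent tasks in a thread pool and adds the results in whatever order the tasks finish; because addition is commutative and associative, the total is the same regardless of completion order. Under CPython's global interpreter lock this demonstrates order independence rather than a multi-core speedup.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def square(n: int) -> int:
    # Each call is an independent unit: it reads only its own input.
    return n * n


def sum_of_squares_concurrently(values: list[int], workers: int = 4) -> int:
    total = 0
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(square, v) for v in values]
        # as_completed yields futures in whatever order they finish;
        # the final total does not depend on that order.
        for fut in as_completed(futures):
            total += fut.result()
    return total


print(sum_of_squares_concurrently(list(range(10))))  # 285
```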

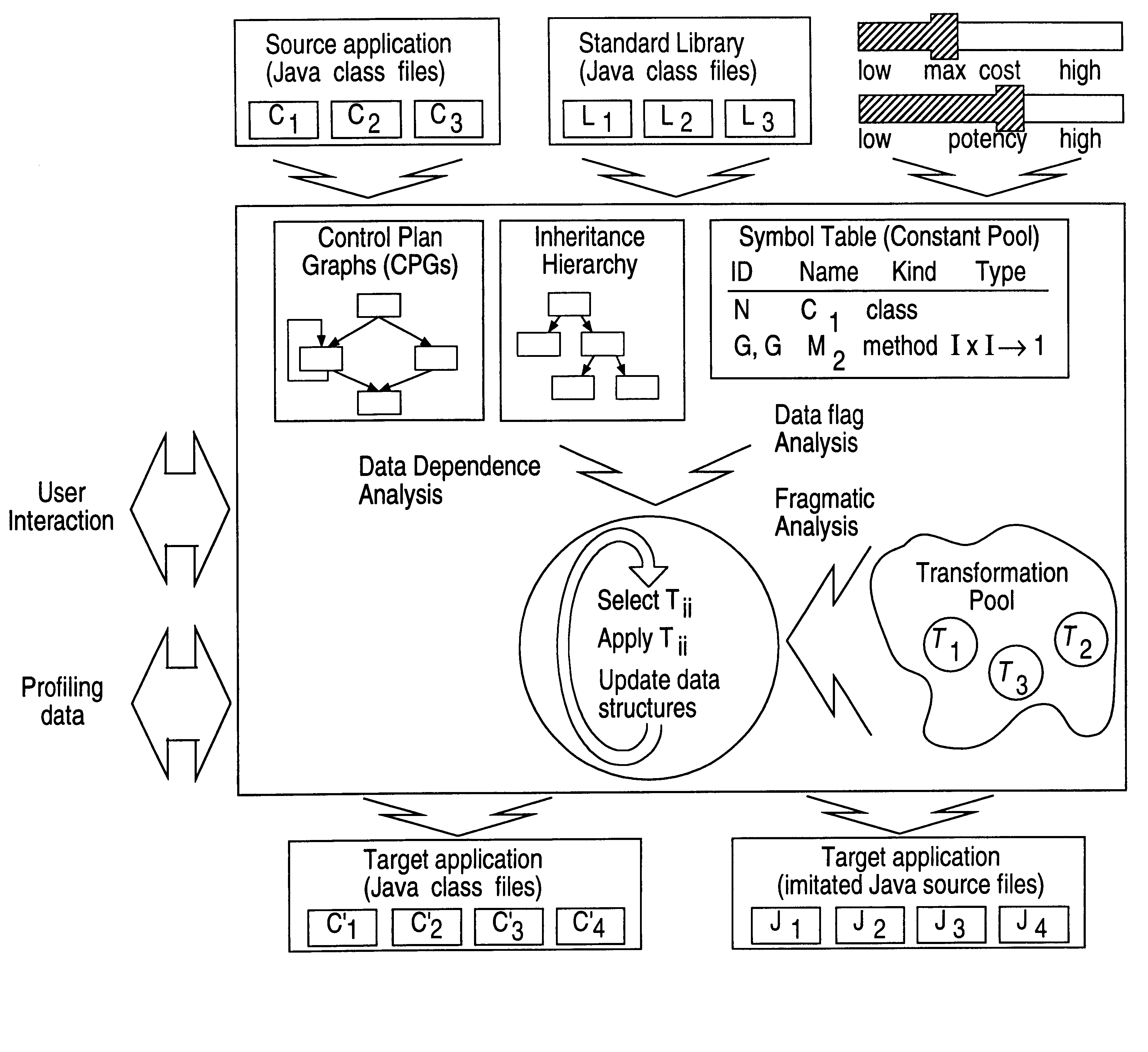

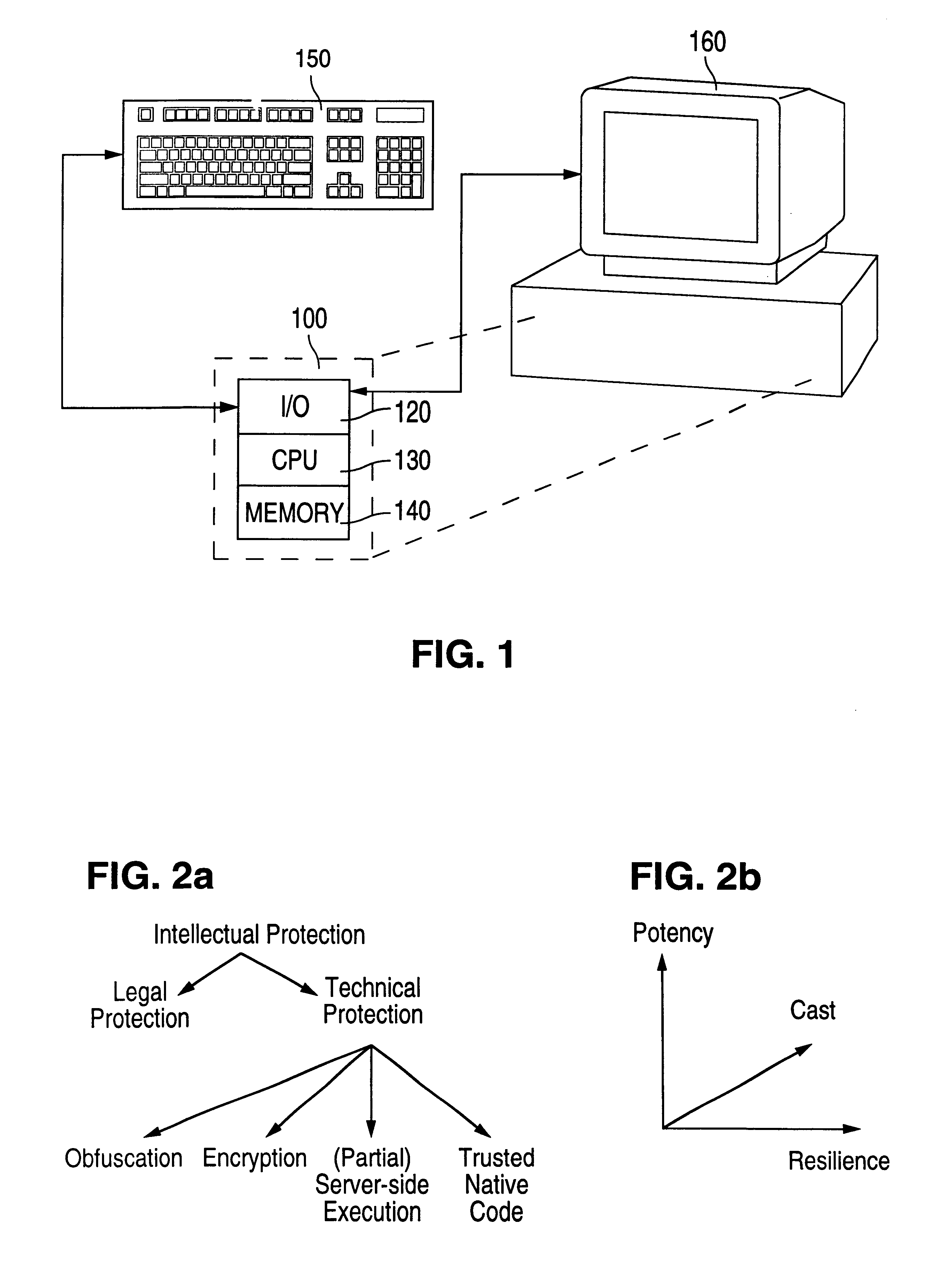

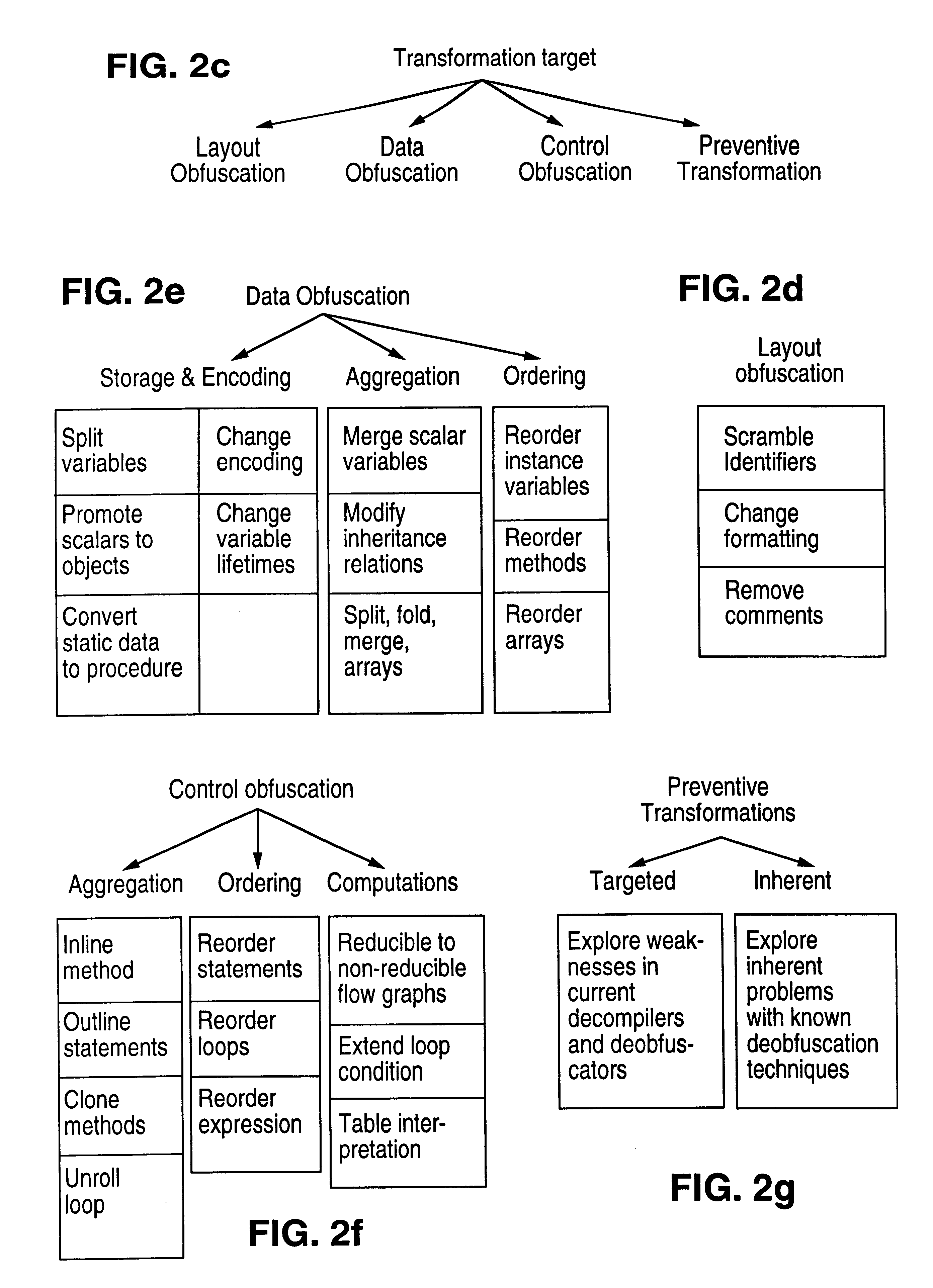

Obfuscation techniques for enhancing software security

Inactive · US6668325B1 · Tags: Guaranteed maximum utilization; Digital data processing details; Unauthorized memory use protection; Obfuscation; Theoretical computer science

The present invention provides obfuscation techniques for enhancing software security. In one embodiment, a method for enhancing software security includes selecting a subset of code (e.g., compiled source code of an application) to obfuscate, and obfuscating the selected subset by applying an obfuscating transformation to it. The transformed code can be weakly equivalent to the untransformed code. The applied transformation can be selected based on a desired level of security (e.g., resistance to reverse engineering), and can include a control transformation created using opaque constructs, which can be built using aliasing and concurrency techniques. Accordingly, the code can be obfuscated for enhanced software security according to a desired level of obfuscation (e.g., a desired potency, resilience, and cost).

Owner:INTERTRUST TECH CORP
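
The abstract mentions control transformations built from opaque constructs. As a minimal sketch, the Python code below swaps in a simple number-theoretic opaque predicate rather than the aliasing- and concurrency-based constructs the abstract describes: `x * (x + 1)` is always even, so the guarded branch always runs and the decoy branch is never taken. The function names `opaquely_true`, `add`, and `add_obfuscated` are hypothetical.

```python
import random


def opaquely_true(x: int) -> bool:
    # x * (x + 1) is a product of two consecutive integers, so it is always
    # even. The predicate is always True, but a naive analyser may not know that.
    return (x * (x + 1)) % 2 == 0


def add(a: int, b: int) -> int:
    """Original, untransformed function."""
    return a + b


def add_obfuscated(a: int, b: int) -> int:
    """Same result as add(), with control flow hidden behind an opaque predicate."""
    x = random.randint(-10**6, 10**6)
    if opaquely_true(x):
        return a + b            # real computation: always executed
    return (a * b) ^ 0x5A       # decoy branch: never executed


print(add_obfuscated(3, 4) == add(3, 4))  # True
```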

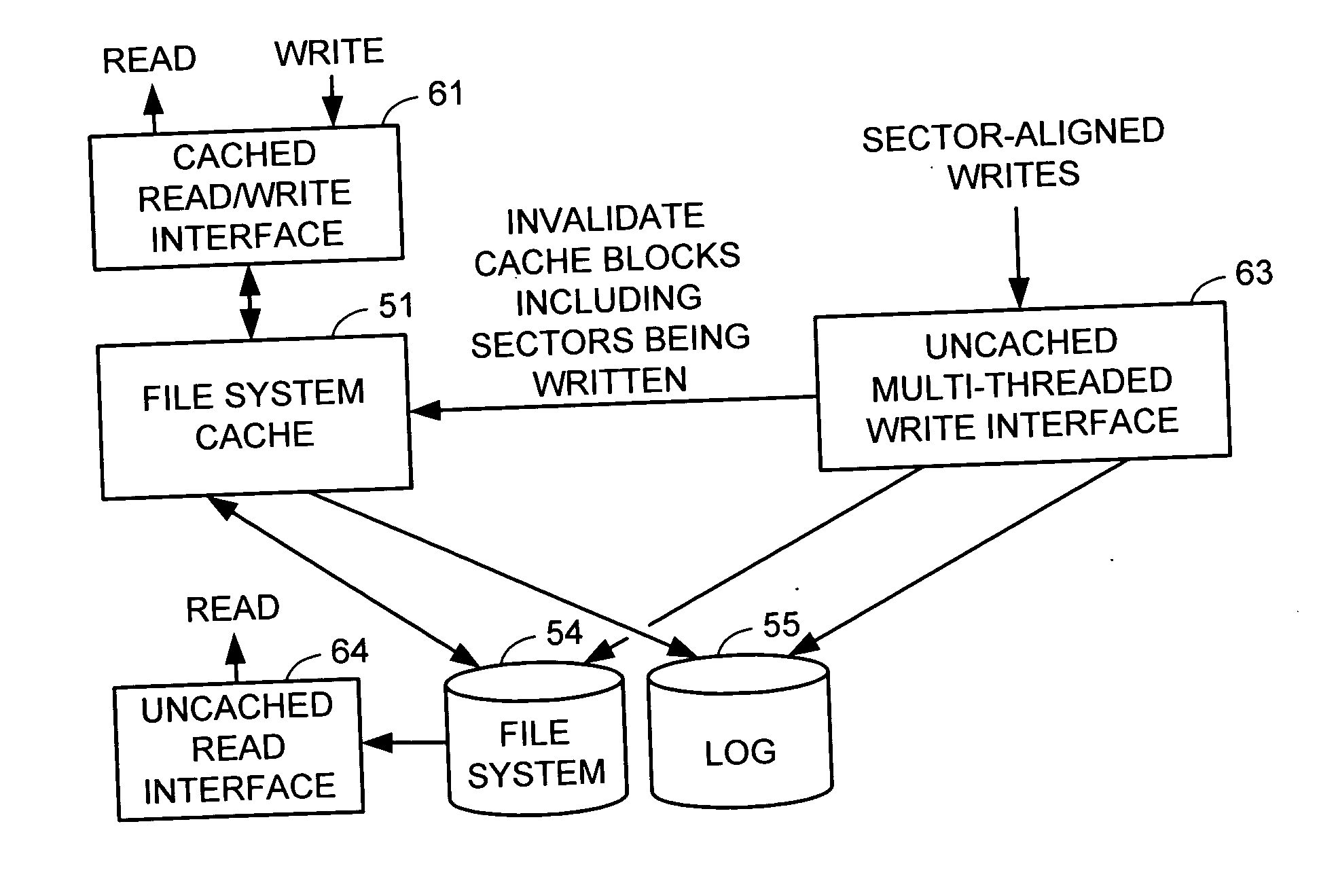

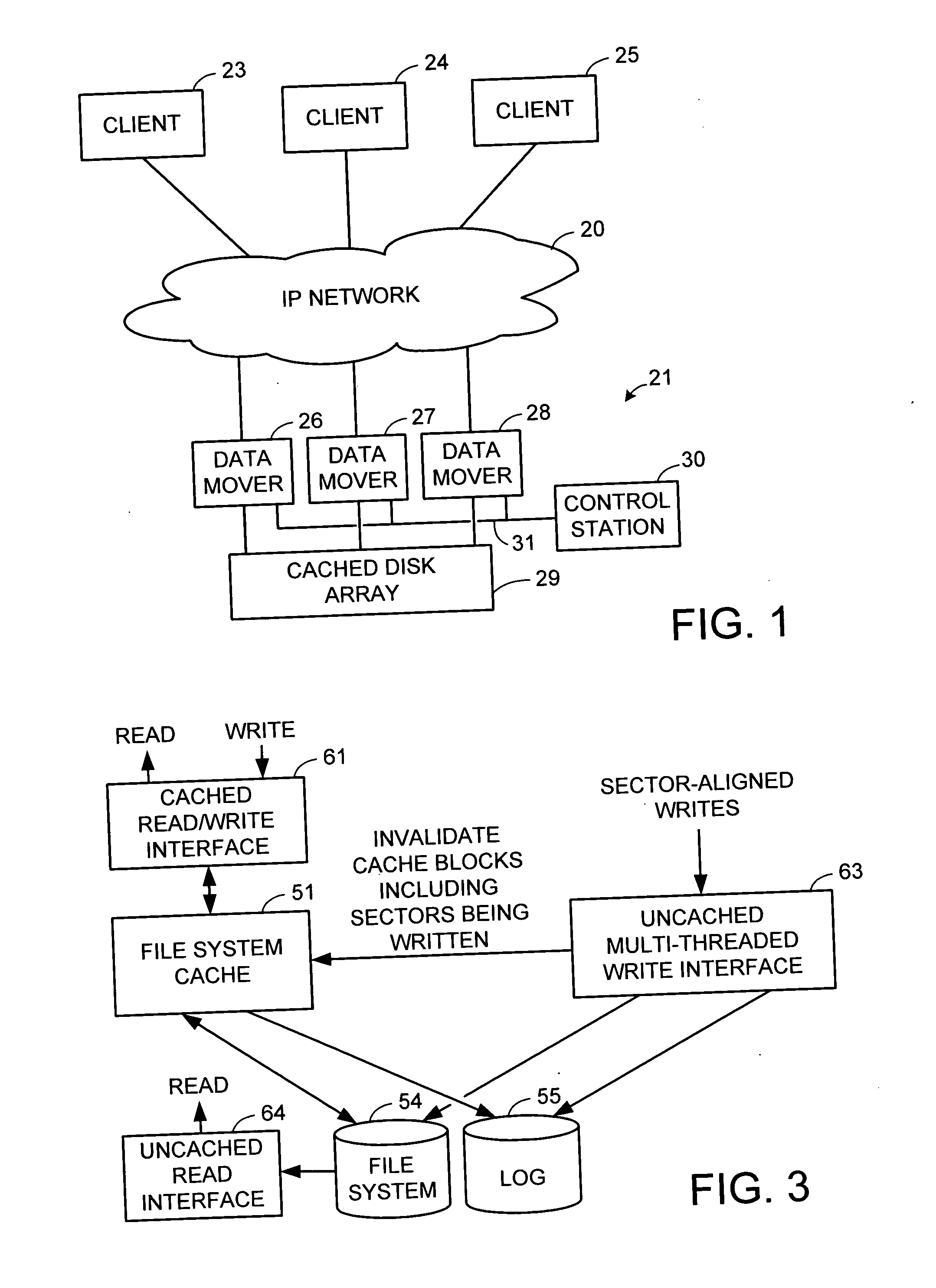

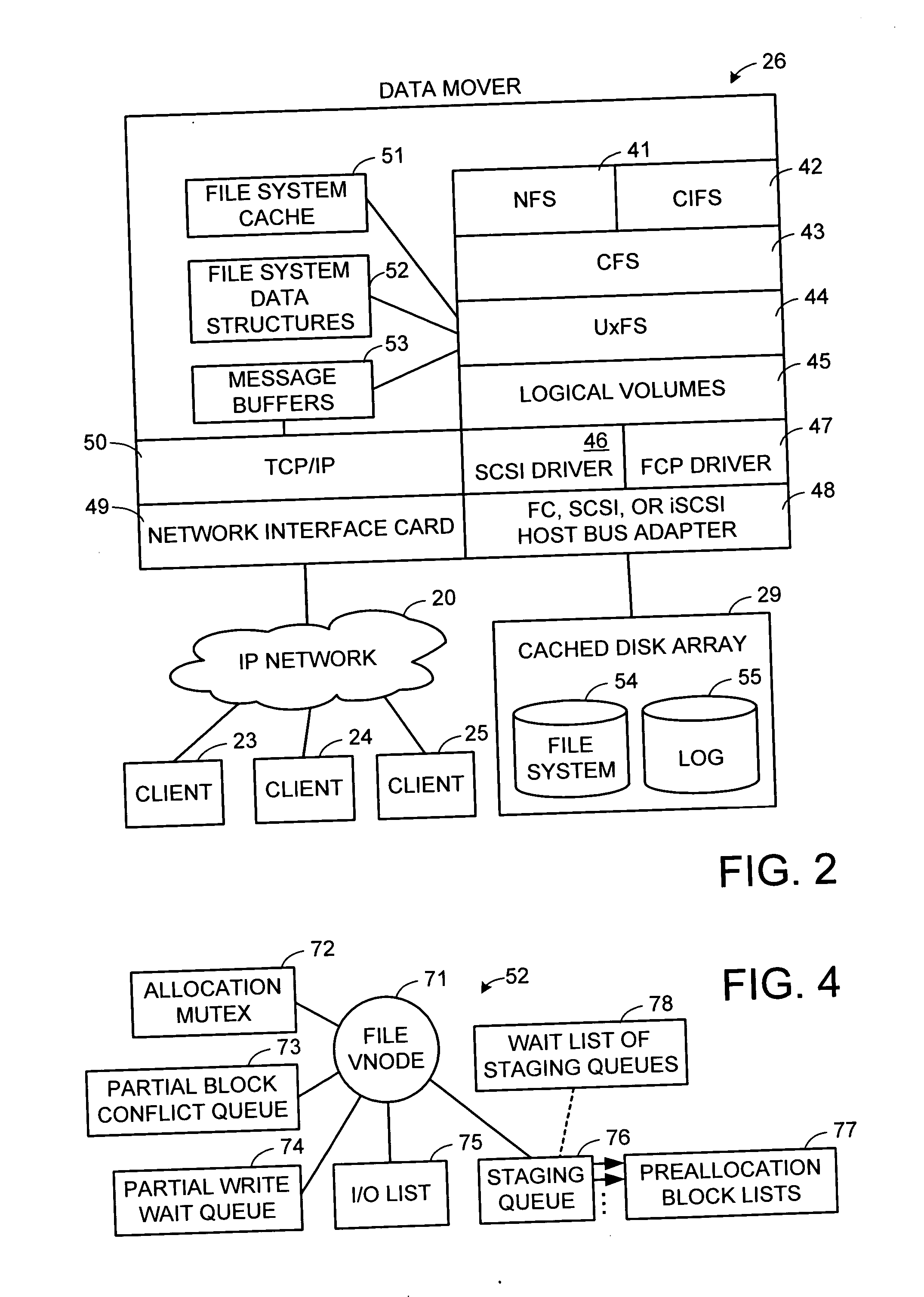

Multi-threaded write interface and methods for increasing the single file read and write throughput of a file server

Active · US20050066095A1 · Tags: Digital data information retrieval; Digital data processing details; Data integrity; File allocation

A write interface in a file server provides permission management for concurrent access to the data blocks of a file, ensures correct use and update of indirect blocks in the file's tree, preallocates file blocks when the file is extended, resolves access conflicts between concurrent reads and writes to the same block, and permits the use of pipelined processors. For example, a write operation includes obtaining a per-file allocation mutex (mutual-exclusion lock), preallocating a metadata block, releasing the allocation mutex, issuing an asynchronous write request for writing to the file, waiting for the asynchronous write request to complete, obtaining the allocation mutex again, committing the preallocated metadata block, and releasing the allocation mutex. Because no locks are held while data is written to on-disk storage, and this data write takes the majority of the time, the method enhances concurrency while maintaining data integrity.

Owner:EMC IP HLDG CO LLC
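
The write sequence in the EMC abstract can be sketched as follows. This is a minimal illustration under stated assumptions: `SketchFile` is a hypothetical in-memory stand-in (a dict plays the disk), and a thread pool plays the asynchronous I/O subsystem. The point it shows is that the allocation mutex is held only around preallocation and commit, never during the slow data write.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class SketchFile:
    """Hypothetical in-memory file used only to illustrate the lock protocol."""

    def __init__(self) -> None:
        self.alloc_mutex = threading.Lock()   # per-file allocation mutex
        self.next_block = 0                   # next metadata block to hand out
        self.pending: set[int] = set()        # preallocated, not yet committed
        self.committed: set[int] = set()      # committed metadata blocks
        self.disk: dict[int, bytes] = {}      # stand-in for on-disk storage

    def _disk_write(self, block: int, payload: bytes) -> None:
        self.disk[block] = payload            # the slow part in a real server

    def write(self, payload: bytes, io: ThreadPoolExecutor) -> int:
        # 1. Hold the allocation mutex only long enough to preallocate a block.
        with self.alloc_mutex:
            block = self.next_block
            self.next_block += 1
            self.pending.add(block)
        # 2. With no lock held, issue the data write asynchronously and wait.
        io.submit(self._disk_write, block, payload).result()
        # 3. Re-acquire the mutex briefly to commit the preallocated block.
        with self.alloc_mutex:
            self.pending.discard(block)
            self.committed.add(block)
        return block


f = SketchFile()
with ThreadPoolExecutor(max_workers=4) as io, ThreadPoolExecutor(max_workers=8) as writers:
    blocks = list(writers.map(lambda i: f.write(bytes([i]), io), range(16)))
print(sorted(blocks) == list(range(16)), f.pending)  # True set()
```

While one writer waits on its disk write, other writers can still allocate and commit, which is where the concurrency gain comes from.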

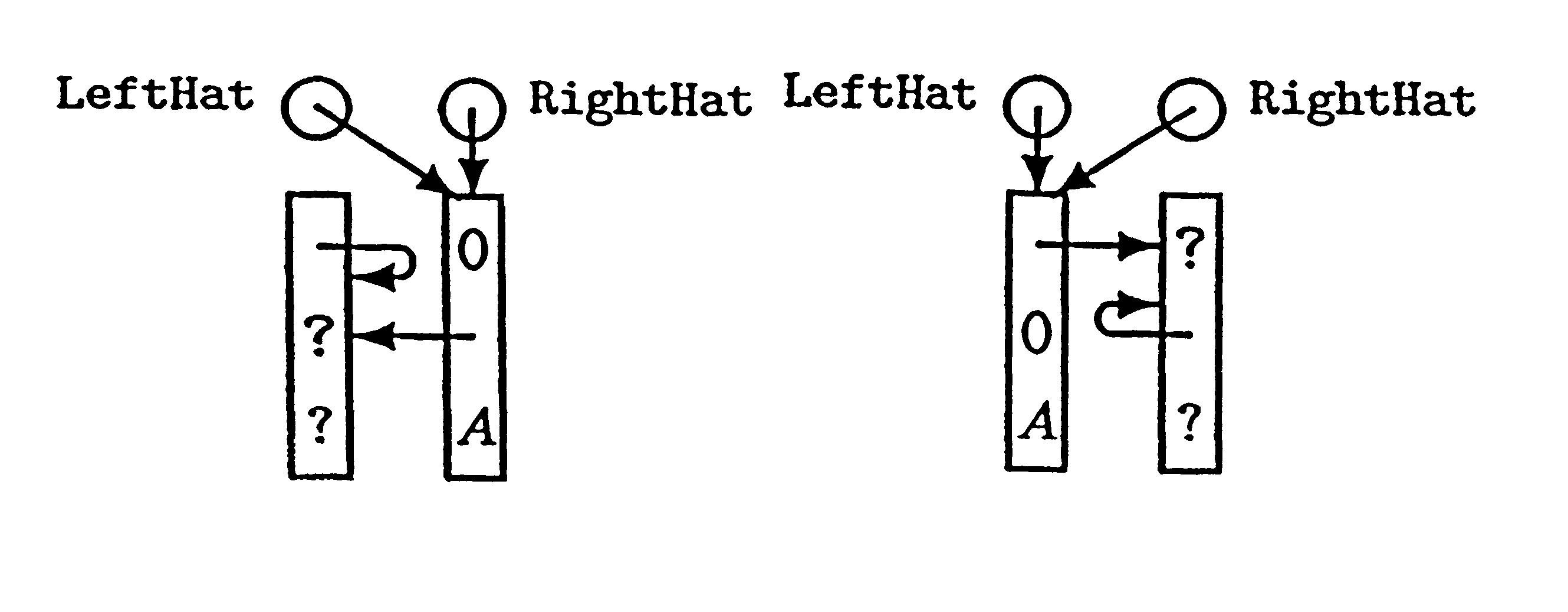

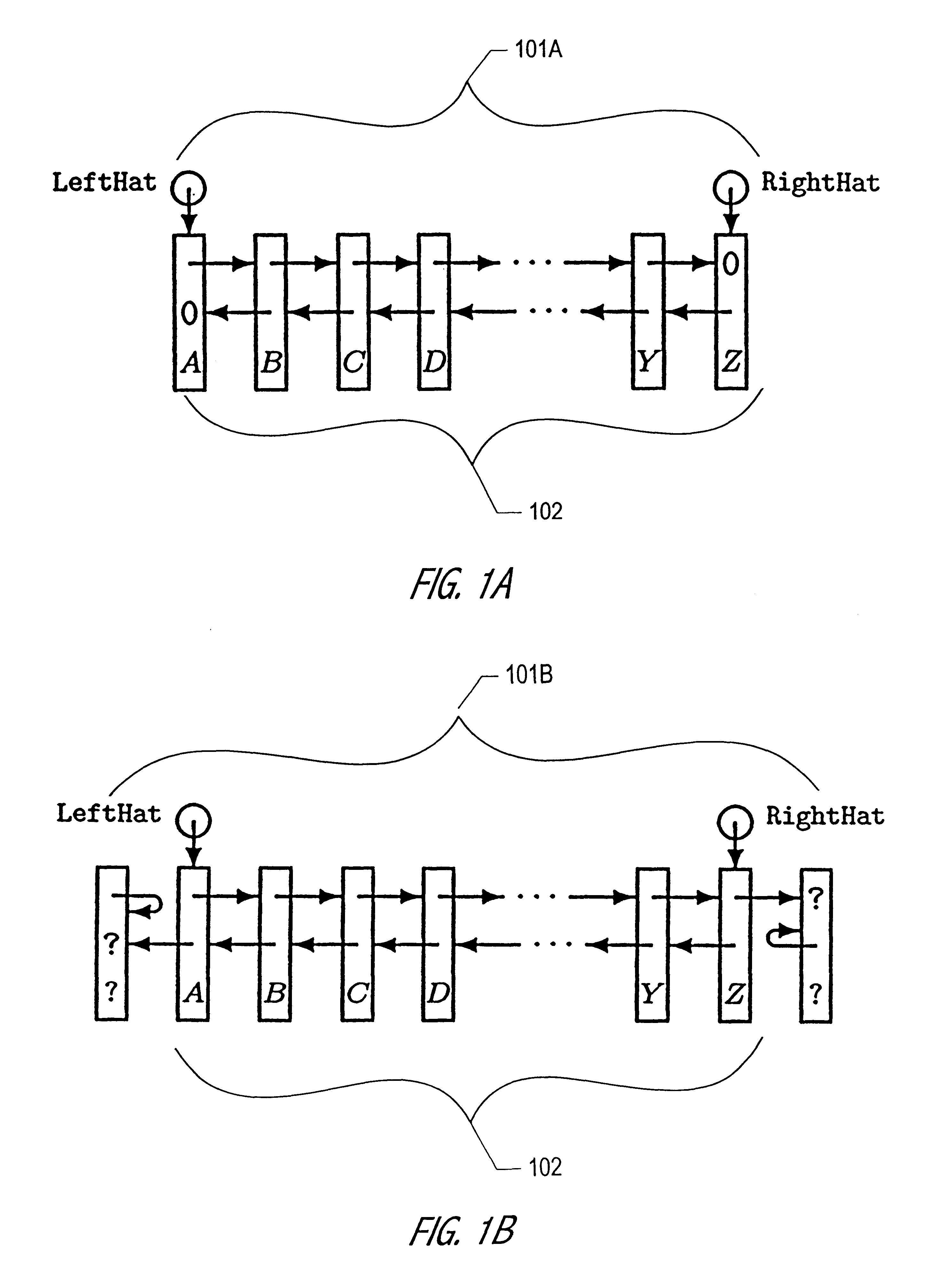

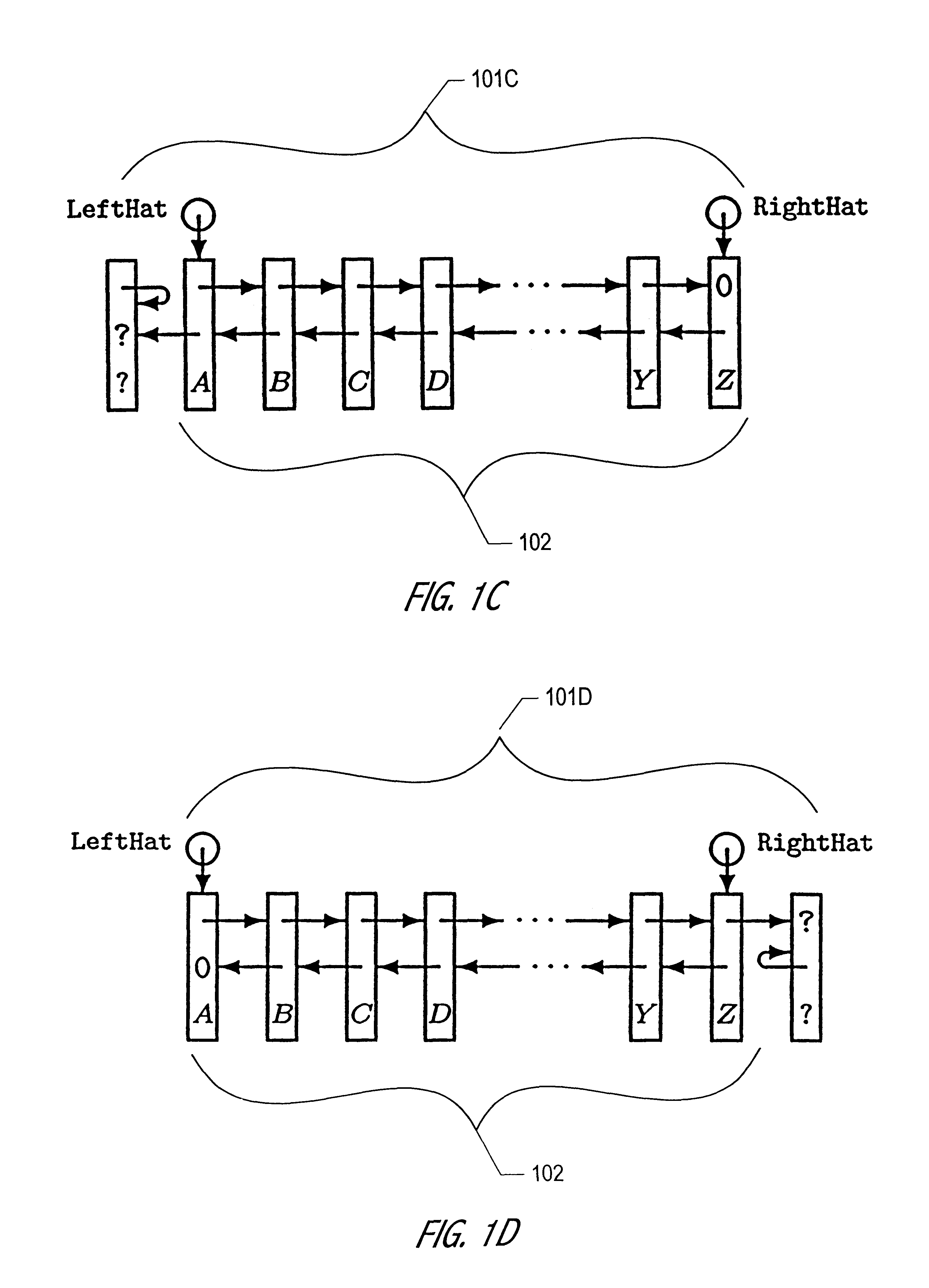

Lock-free implementation of concurrent shared object with dynamic node allocation and distinguishing pointer value

A novel linked-list-based concurrent shared object implementation has been developed that provides non-blocking and linearizable access to the concurrent shared object. In an application of the underlying techniques to a deque, non-blocking completion of access operations is achieved without restricting concurrency in accessing the deque's two ends. In various realizations in accordance with the present invention, the set of values that may be pushed onto a shared object is not constrained by use of distinguishing values. In addition, an explicit reclamation embodiment facilitates use in environments or applications where automatic reclamation of storage is unavailable or impractical.

Owner:ORACLE INT CORP

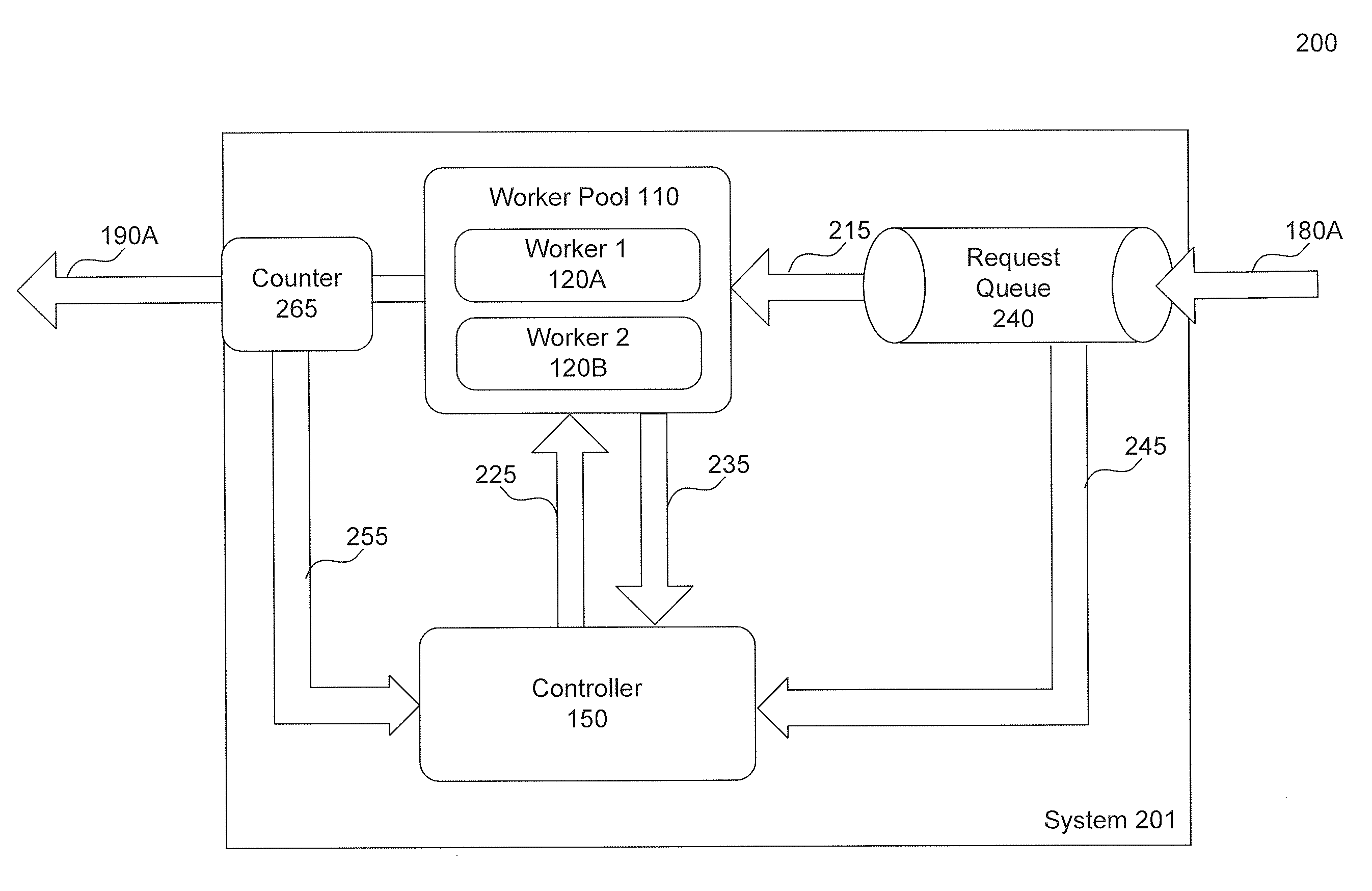

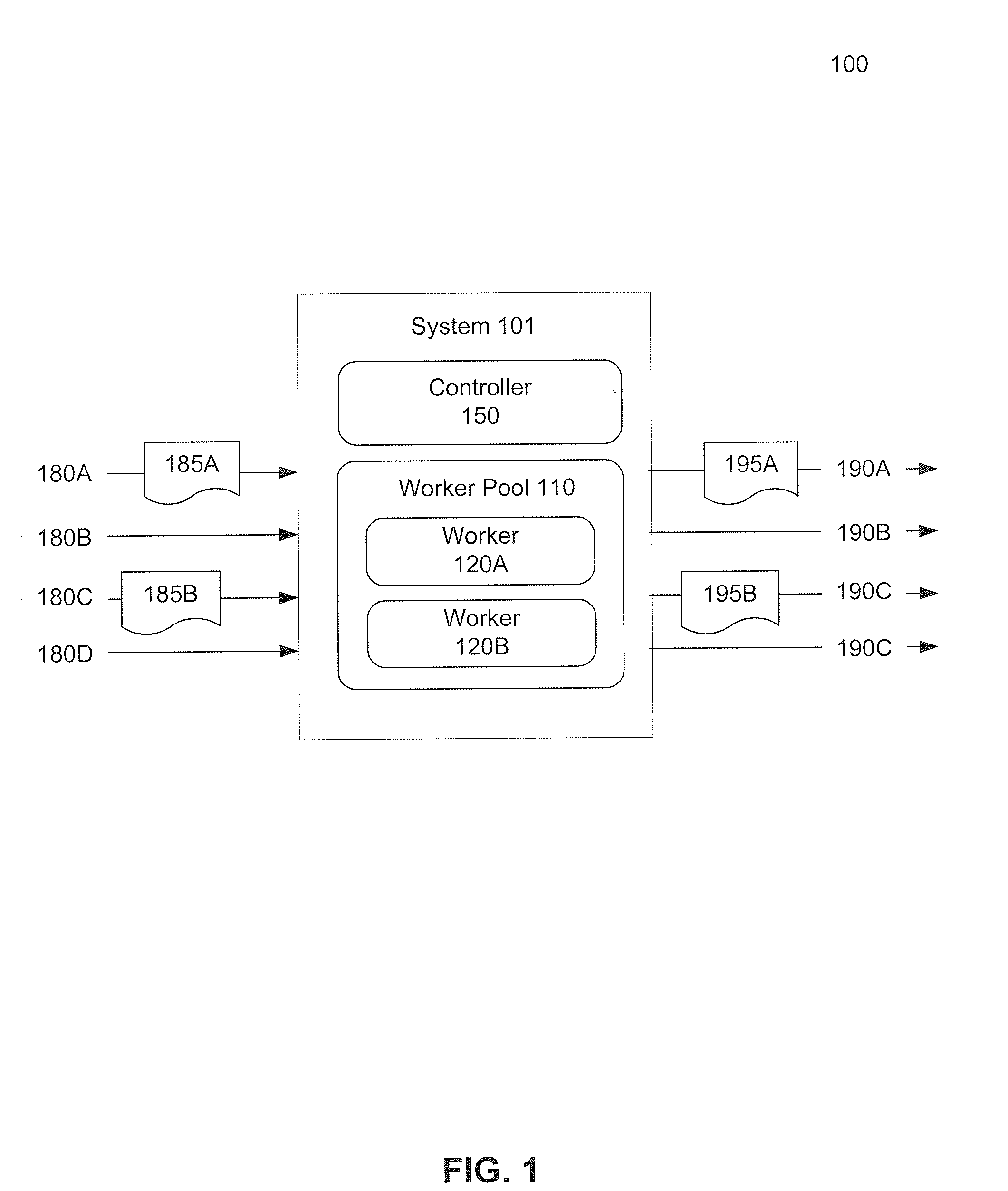

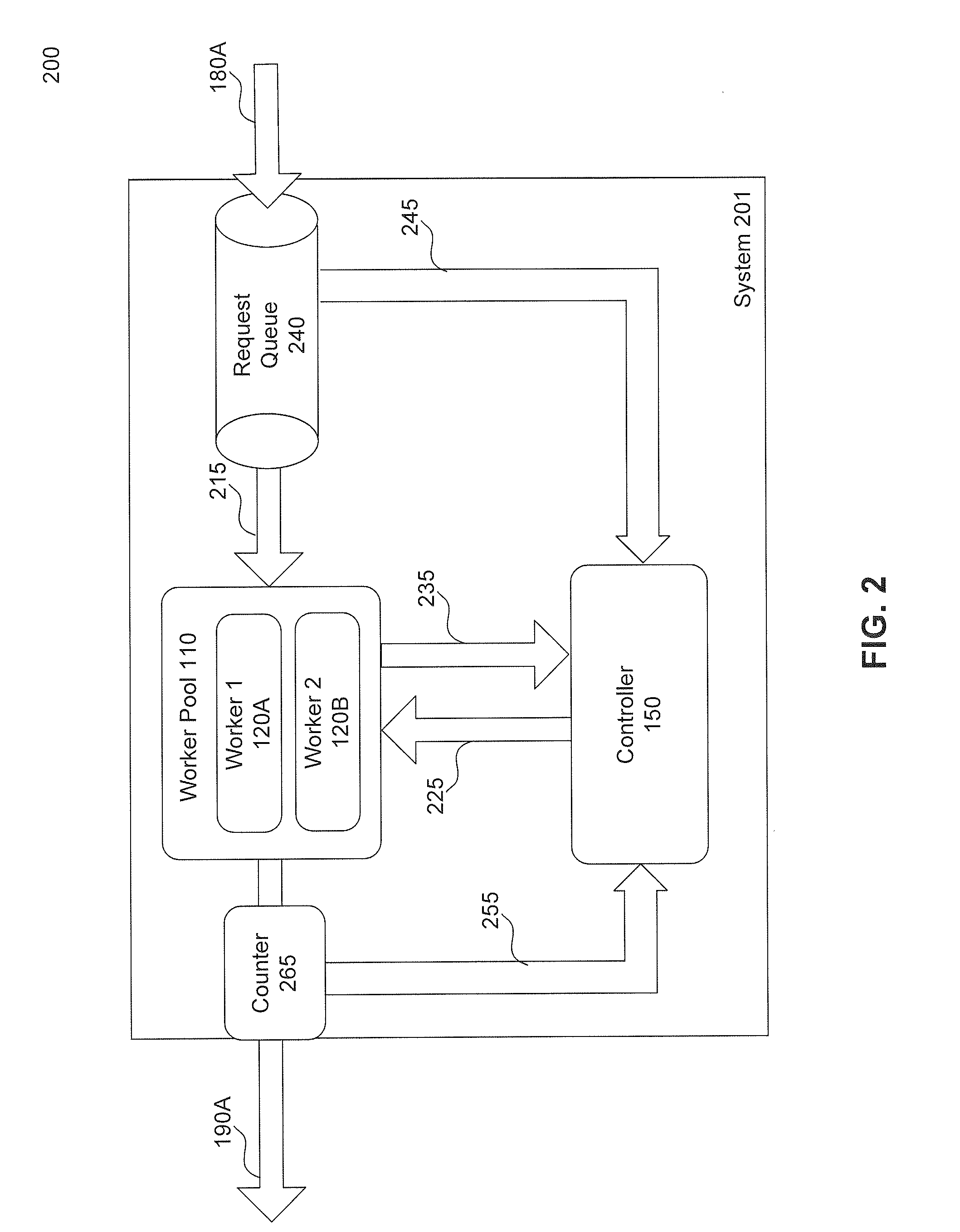

Dynamically Tuning A Server Multiprogramming Level

Methods, apparatus, and computer program products are provided for allocating a number of workers to a worker pool in a multiprogrammable computer, thereby tuning the server multiprogramming level. The method includes monitoring throughput in relation to the workload concurrency level and dynamically tuning the multiprogramming level based on that monitoring. The dynamic tuning includes making a first adjustment for a first interval and a second adjustment for a second interval, where the second adjustment uses data stored from the first adjustment.

Owner:IANYWHERE SOLUTIONS
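
One way to realize "a second adjustment that uses data stored from the first" is simple hill climbing, sketched below. This is an assumed technique, not necessarily the patented one: `measure` is a hypothetical hook that runs one interval with n workers and returns observed throughput, and the step size and bounds are illustrative.

```python
from typing import Callable


def tune_mpl(measure: Callable[[int], float], start: int = 4, step: int = 2,
             intervals: int = 20, lo: int = 1, hi: int = 64) -> int:
    """Hill-climb the multiprogramming level (worker-pool size).

    `measure(n)` is a hypothetical hook that runs one interval with n workers
    and returns the observed throughput.
    """
    mpl = start
    stored_tput = measure(mpl)      # data kept from the previous interval
    direction = +1
    for _ in range(intervals):
        candidate = min(hi, max(lo, mpl + direction * step))
        tput = measure(candidate)
        if tput >= stored_tput:
            # Throughput did not drop: accept and keep moving the same way.
            mpl, stored_tput = candidate, tput
        else:
            # Throughput dropped: stay put and try the other direction next.
            direction = -direction
    return mpl


# Synthetic workload whose throughput peaks at 16 concurrent workers.
print(tune_mpl(lambda n: -(n - 16) ** 2))  # 16
```

A real tuner would also have to cope with noisy measurements and a workload that shifts over time, which this sketch ignores.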

Automated task parallel method suitable for distributed machine learning and system thereof

Active · CN105956021A · Tags: Easy programming; Reduce the burden on; Database distribution/replication; Character and pattern recognition; Coupling; Data access

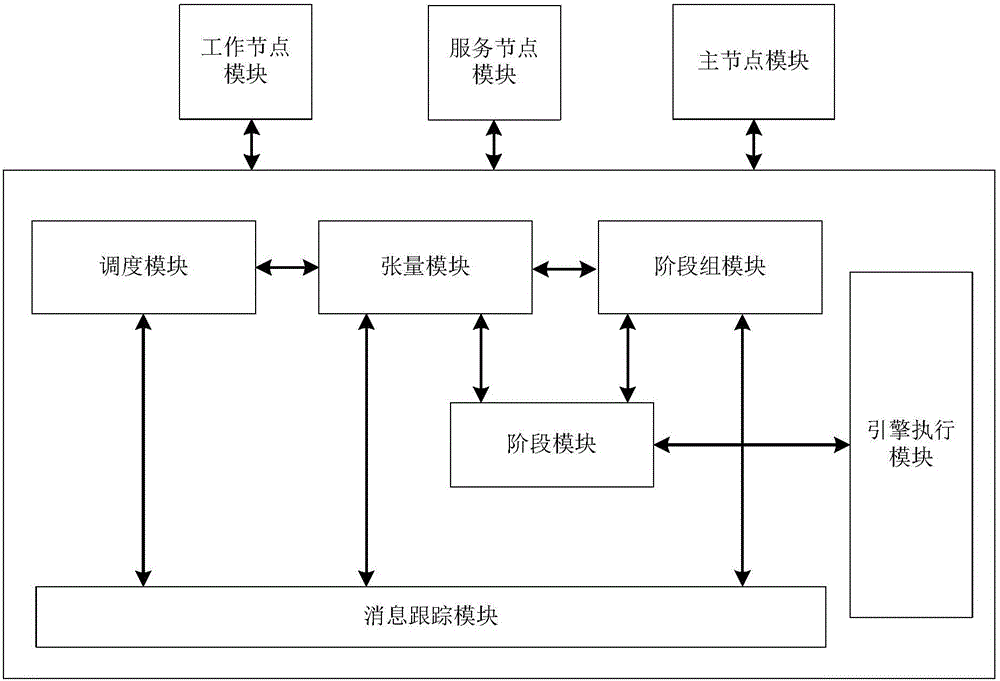

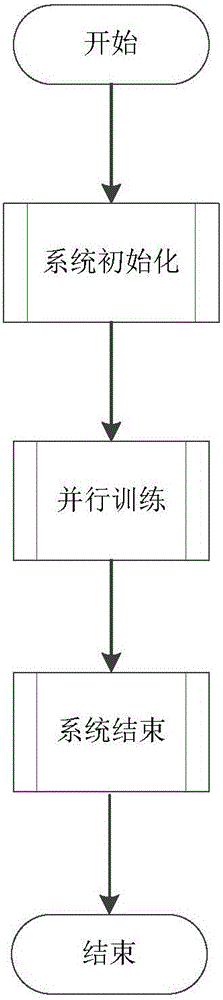

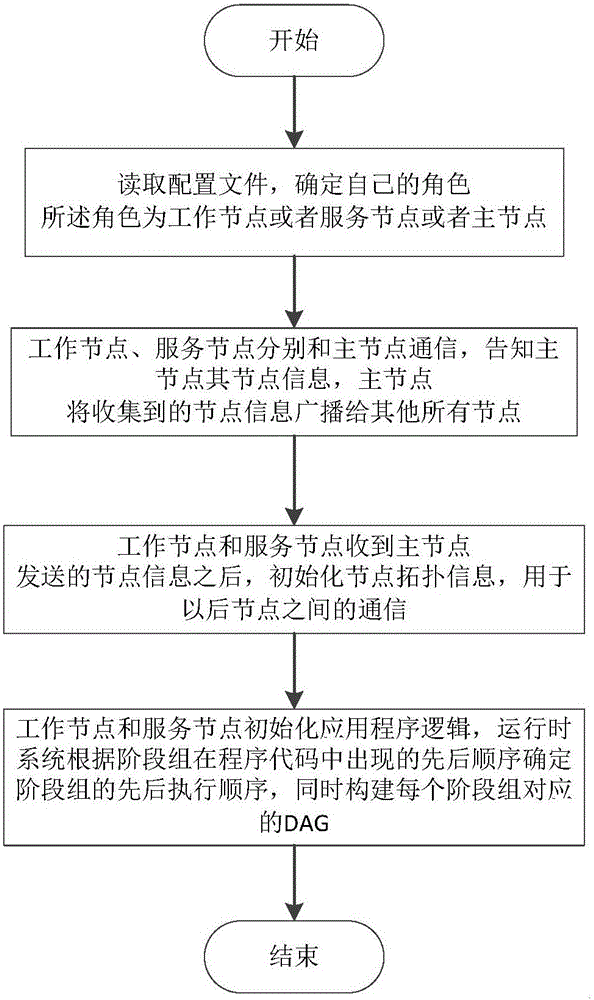

The invention provides an automated task-parallel method suitable for distributed machine learning, and a corresponding system. It addresses a shortcoming of the programming interfaces in existing distributed machine learning systems: by offering only a key-value read/write interface, they tightly couple the system's data-access behavior with application logic. This coupling intensifies competition for network bandwidth in a distributed cluster and makes it difficult for programmers to parallelize tasks. The system comprises a worker node module, a server node module, a host node module, a tensor module, a scheduling module, a message tracking module, a stage module, a stage group module, and an execution engine module. By providing a higher-level programming abstraction, the system decouples read/write access behavior from application logic. At run time, the system first partitions tasks dynamically according to the load on the server nodes and then executes the machine learning tasks in parallel automatically, greatly reducing the burden on programmers who write high-concurrency machine learning applications.

Owner:HUAZHONG UNIV OF SCI & TECH

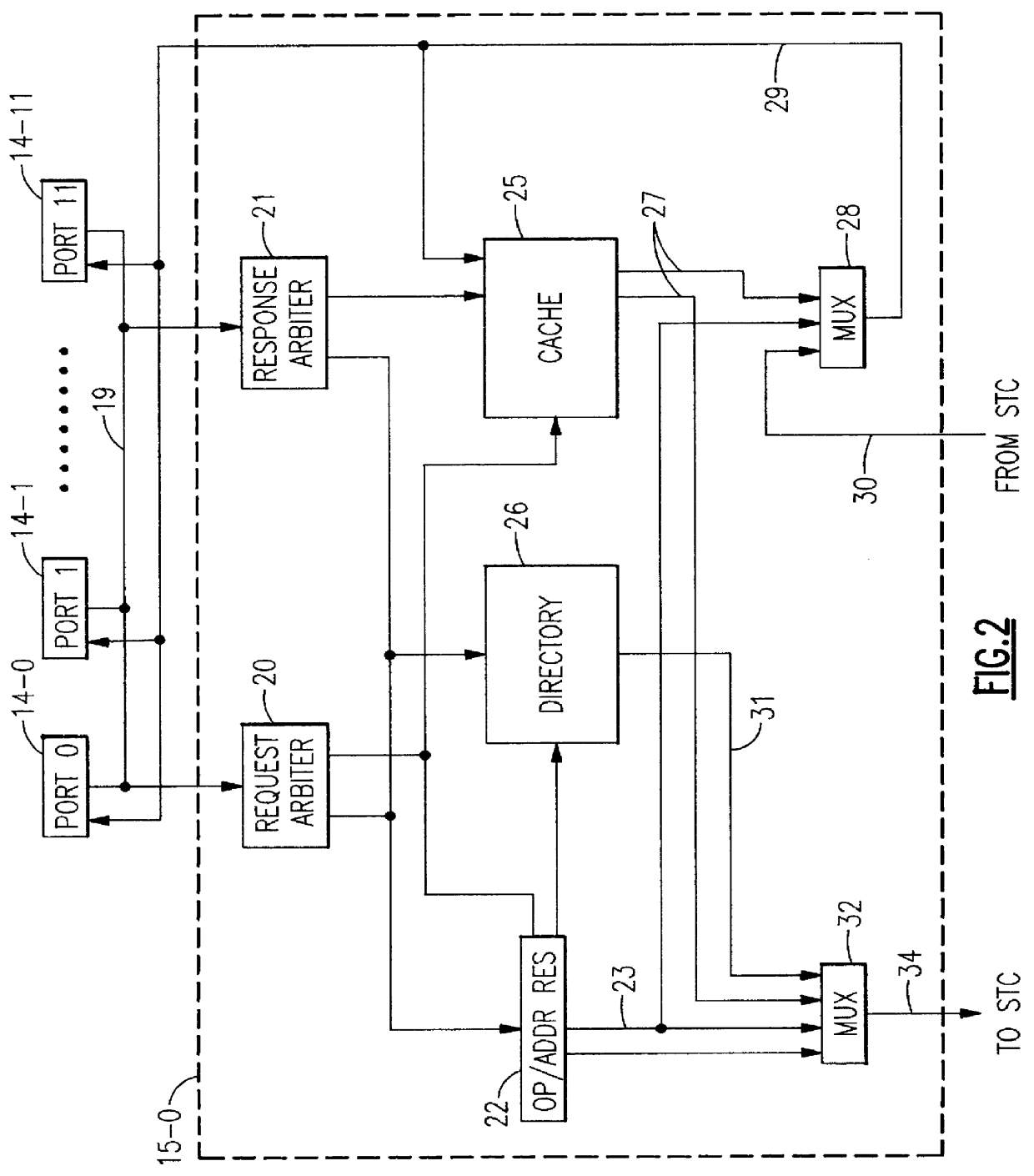

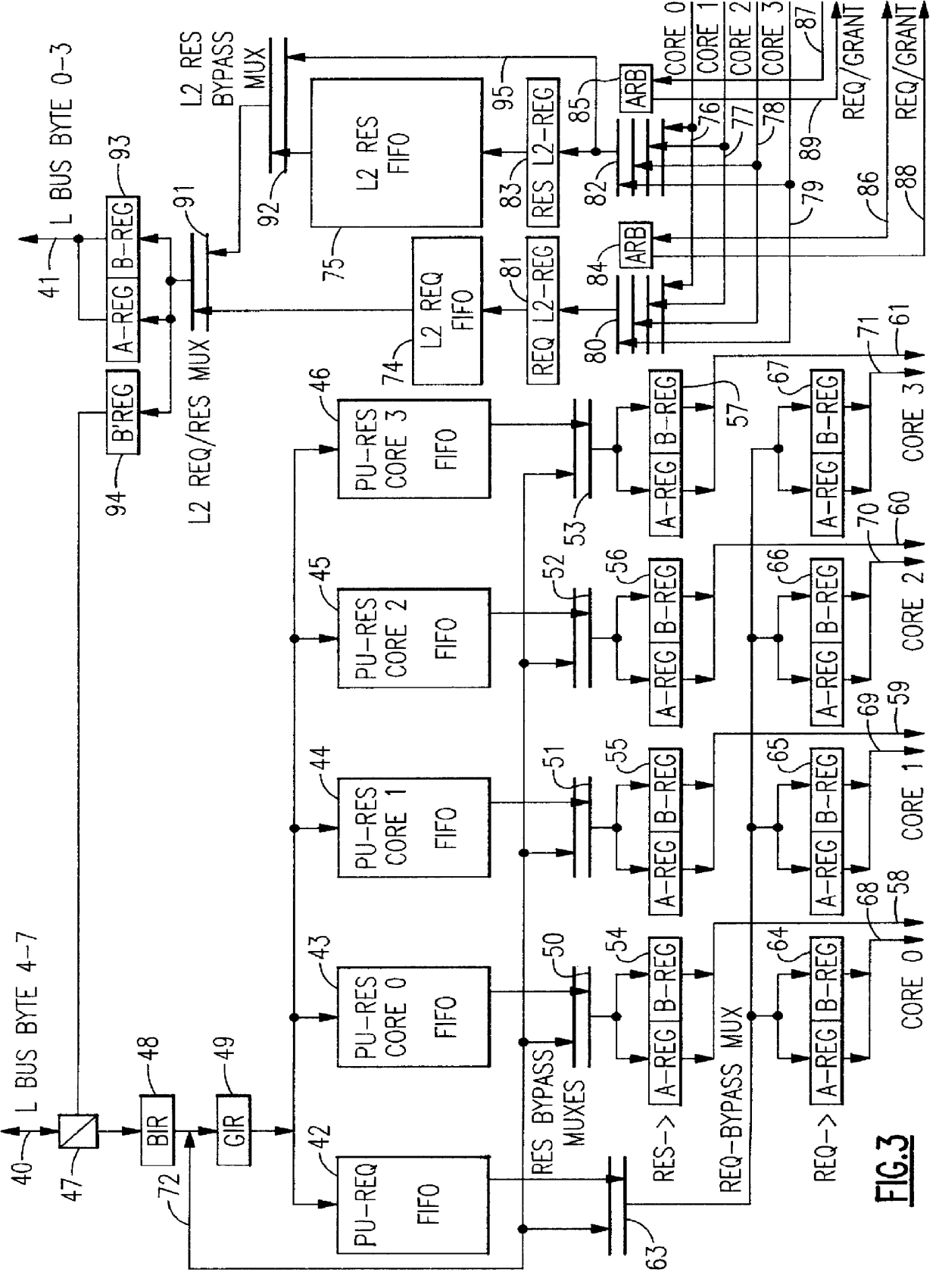

High performance shared cache

Inactive · US6101589A · Tags: Error detection/correction; Memory addressing/allocation/relocation; Computer architecture; Engineering

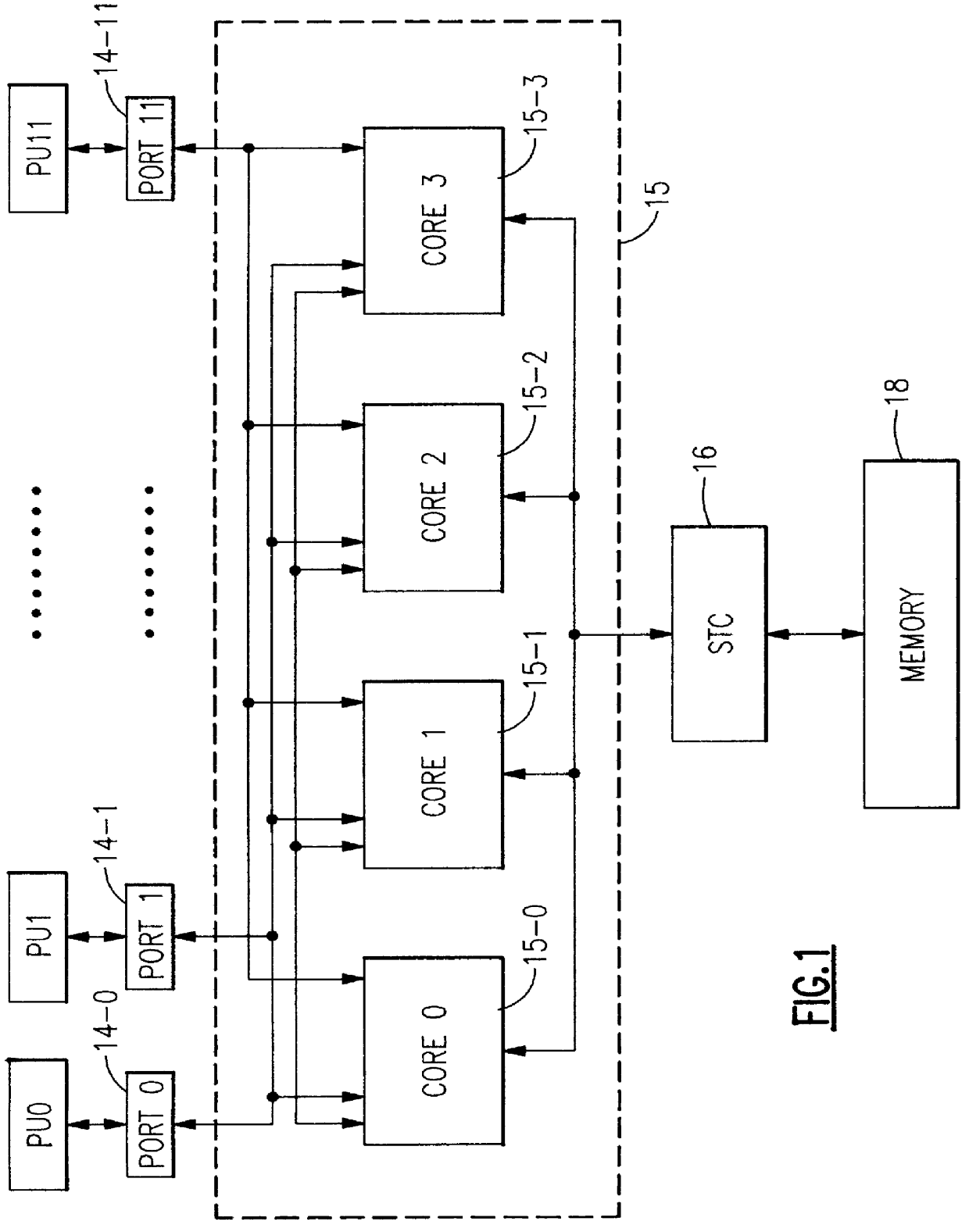

A high-performance cache unit in a multiprocessing computer system comprises a shared level-n cache 15, which is divided into a number of independently operated cache cores, each containing a cache array that serves as a buffer between a plurality of processing units PU0-PU1 and a memory 18. Data requests and response requests issued by the processing units are executed separately in an interleaved mode to achieve a high degree of concurrency. For this purpose, each cache core comprises arbitration circuits 101, 106 that independently select pending data requests and response requests for execution. Selected data requests are identified by a cache directory lookup as linefetch-match or linefetch-miss operations and are stored separately in operation registers 112, 114 during their execution. Selected response requests are stored independently of the data requests in registers 105, 108, 109 and are executed successively during free operation cycles not used by data requests. In this manner, each cache core can concurrently perform a linefetch-match operation, a linefetch-miss operation, and a store operation for one or more processing units.

Owner:IBM CORP

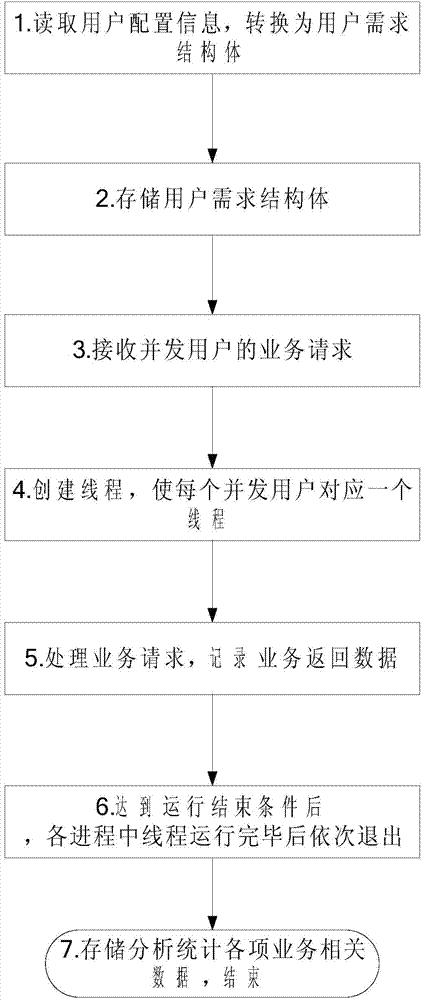

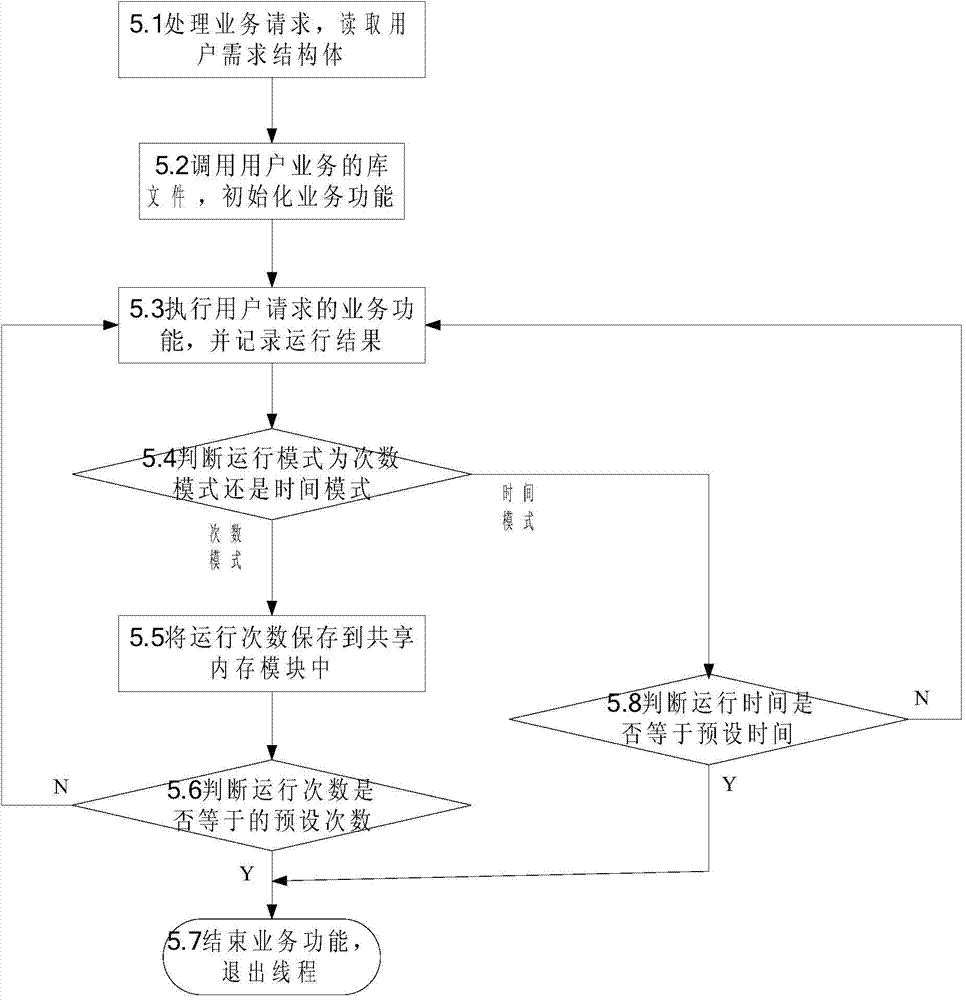

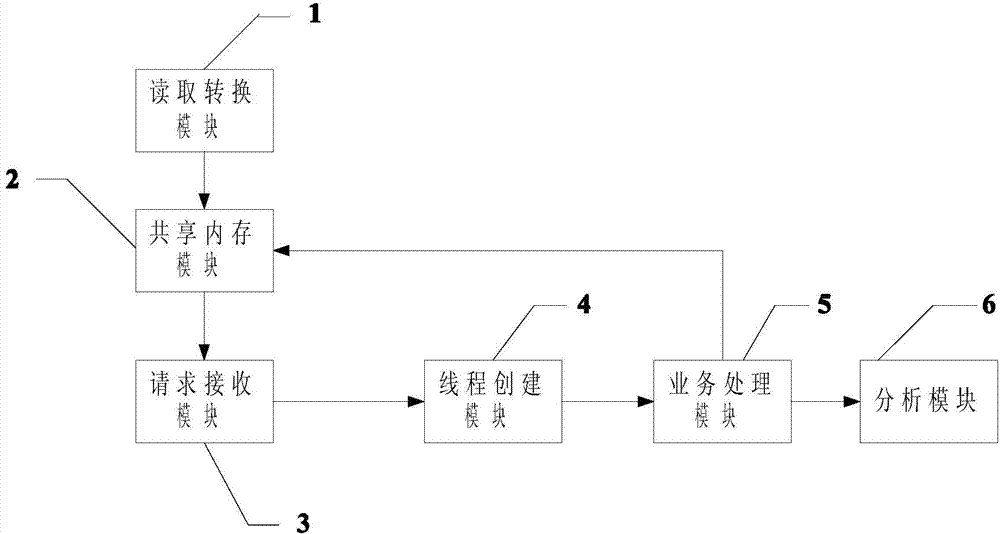

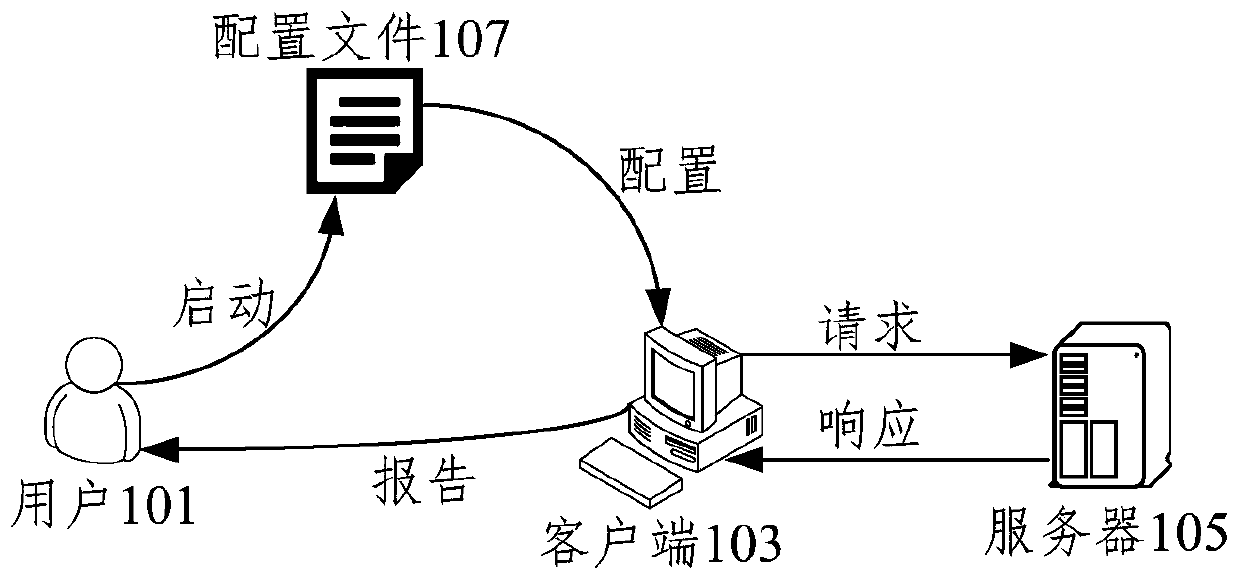

Method and system for test, simulation and concurrence of software performance

Inactive · CN103544103A · Tags: Avoid influence; Avoid interference of response time with each other; Software testing/debugging; User input; Software engineering

The invention relates to a method for software performance testing that simulates concurrent users. The method comprises the following steps: (1) reading configuration information entered by the user; (2) storing a user-requirement structure in a shared memory module and establishing a mapping to it; (3) receiving service requests from concurrent users and creating at least one test process according to the number of concurrent users and the user-requirement structure; (4) creating test threads; (5) having each test thread process the service requests of its corresponding user and stop when a stop condition is met; (6) ending the run, stopping the test threads in each test process after the threads in each process have finished in sequence; and (7) storing, analyzing, and aggregating the data for each service, after which all processes exit. The method and system show how to simulate concurrent users, avoid bottlenecks, and reproduce a high-concurrency scenario with a small amount of hardware; they keep concurrency stable, support different user services, and help locate problems and shorten the development cycle.

Owner:烟台中科网络技术研究所
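
Steps (3) to (7) can be approximated with a thread-per-user load generator. This is a minimal sketch under assumptions: the patent uses multiple processes, threads, and shared memory, whereas this code uses a single thread pool; `stub_service` is a hypothetical placeholder for the service under test, and the stop condition is simply a fixed request count per user.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def stub_service(user_id: int) -> str:
    """Hypothetical service under test; replace with a real request."""
    time.sleep(0.001)
    return "ok"


def run_user(user_id: int, requests_per_user: int, service) -> list[float]:
    """One simulated user: send requests until the stop condition is met."""
    latencies = []
    for _ in range(requests_per_user):          # stop condition: request count
        t0 = time.perf_counter()
        service(user_id)
        latencies.append(time.perf_counter() - t0)
    return latencies


def simulate(users: int, requests_per_user: int, service=stub_service) -> dict:
    """Run `users` simulated users concurrently and aggregate their latencies."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = list(pool.map(lambda u: run_user(u, requests_per_user, service),
                                 range(users)))
    latencies = [x for user in per_user for x in user]
    return {"requests": len(latencies),
            "mean_s": statistics.mean(latencies),
            "max_s": max(latencies)}


stats = simulate(users=8, requests_per_user=5)
print(stats["requests"])  # 40
```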

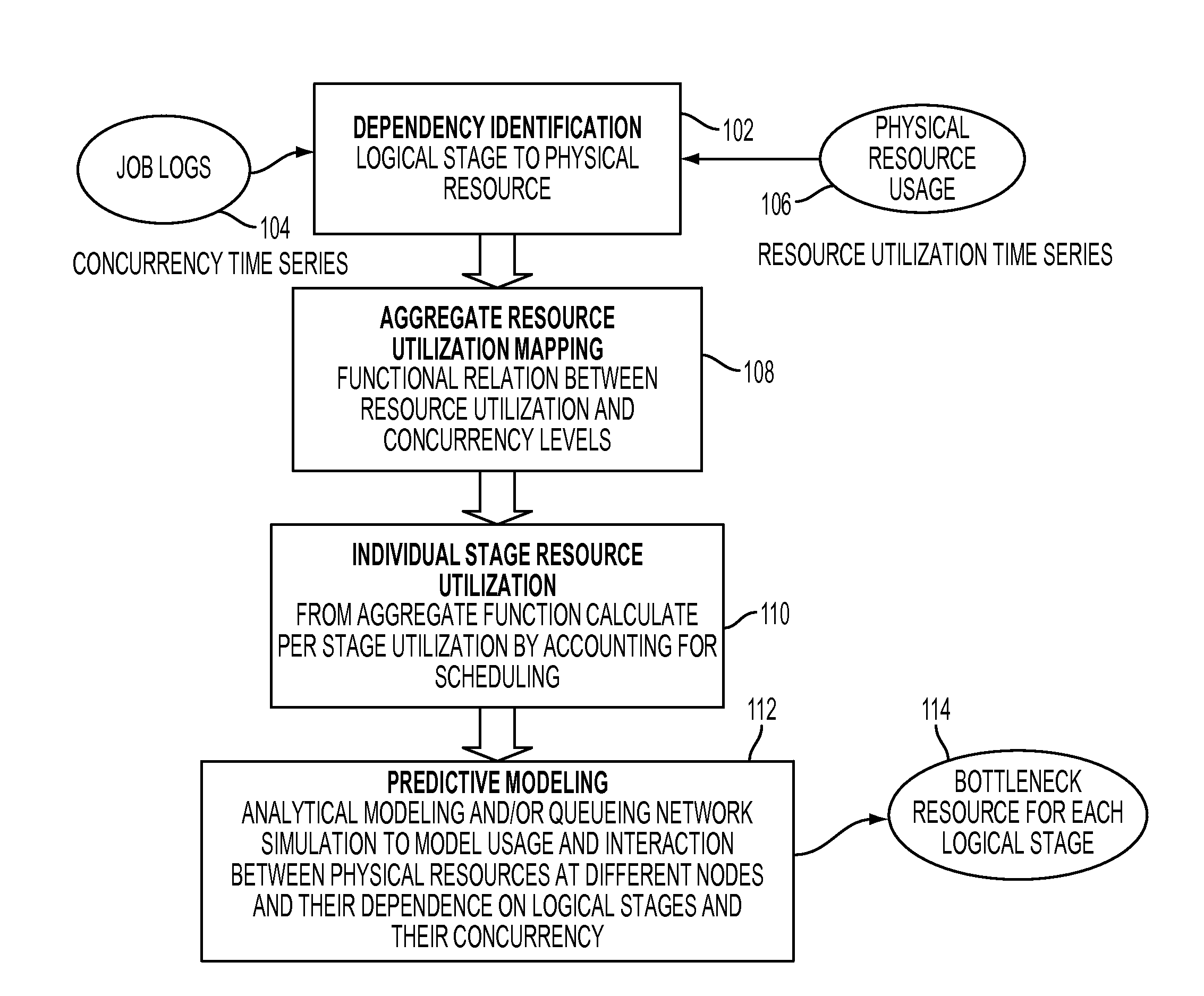

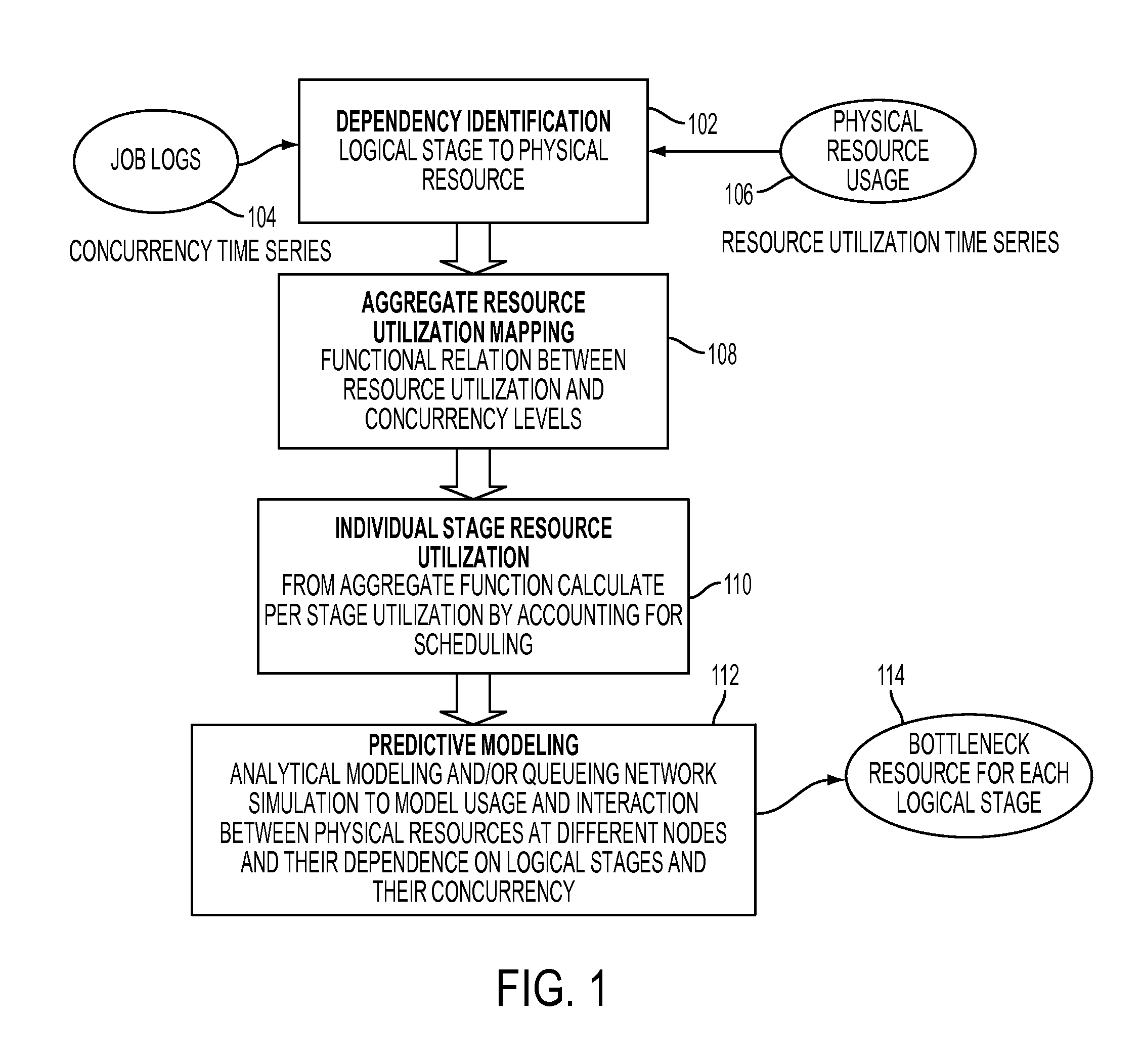

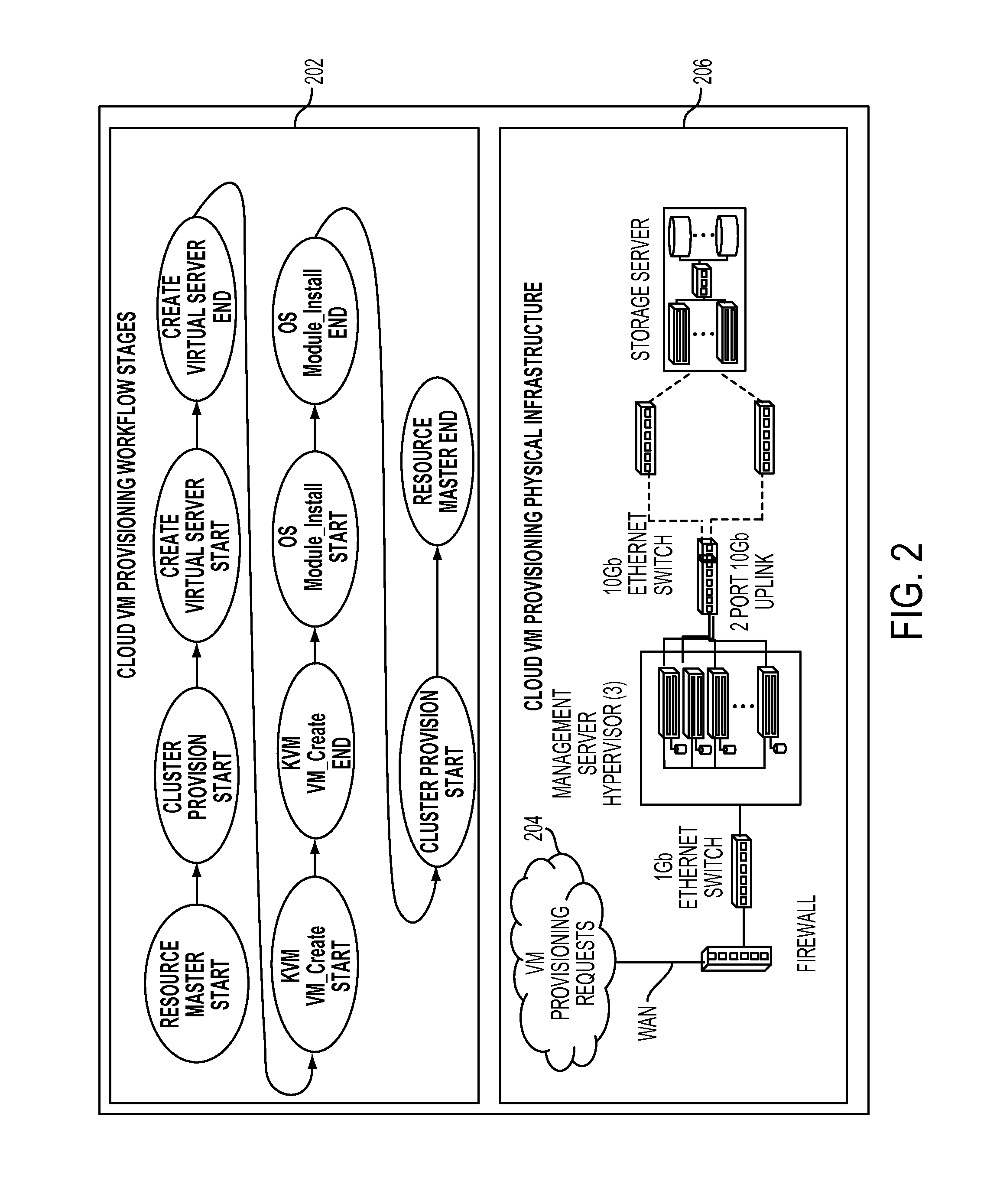

Resource bottleneck identification for multi-stage workflows processing

Inactive · US20150178129A1 · Tags: Program initiation/switching; Design optimisation/simulation; Phases of clinical research; Computing systems

Identifying a resource bottleneck in multi-stage workflow processing may include: identifying dependencies between logical stages and physical resources in a computing system to determine which set of resources each logical stage uses; for each identified dependency, determining a functional relationship between the usage level of a physical resource and the concurrency level of a logical stage; estimating the consumption of physical resources by each logical stage based on the functional relationships so determined; and performing predictive modeling based on the estimated consumption to determine the concurrency level at which each logical stage will become a bottleneck.

Owner:IBM CORP
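
The prediction step can be sketched by assuming that the "functional relationship" is linear, usage = a + b × concurrency, fitted by ordinary least squares. The linear model, the capacity figures, and the stage and resource names below are illustrative assumptions. Solving a + b × c = capacity gives the concurrency level c at which each resource saturates, and the smallest such c marks the stage's bottleneck.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of ys = a + b * xs; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def predict_bottlenecks(samples, capacity):
    """samples: {stage: {resource: [(concurrency, usage), ...]}}
    capacity: {resource: maximum usable amount}
    Returns {stage: (limiting resource, concurrency at which it saturates)}."""
    result = {}
    for stage, per_resource in samples.items():
        limits = []
        for resource, points in per_resource.items():
            a, b = fit_line([c for c, _ in points], [u for _, u in points])
            if b > 0:  # usage grows with concurrency, so it will saturate
                limits.append(((capacity[resource] - a) / b, resource))
        if limits:
            level, resource = min(limits)
            result[stage] = (resource, level)
    return result


samples = {"parse": {"cpu": [(1, 15), (2, 25), (3, 35), (4, 45)],
                     "disk": [(1, 3), (2, 4), (3, 5), (4, 6)]}}
print(predict_bottlenecks(samples, {"cpu": 100, "disk": 100}))
# {'parse': ('cpu', 9.5)}
```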

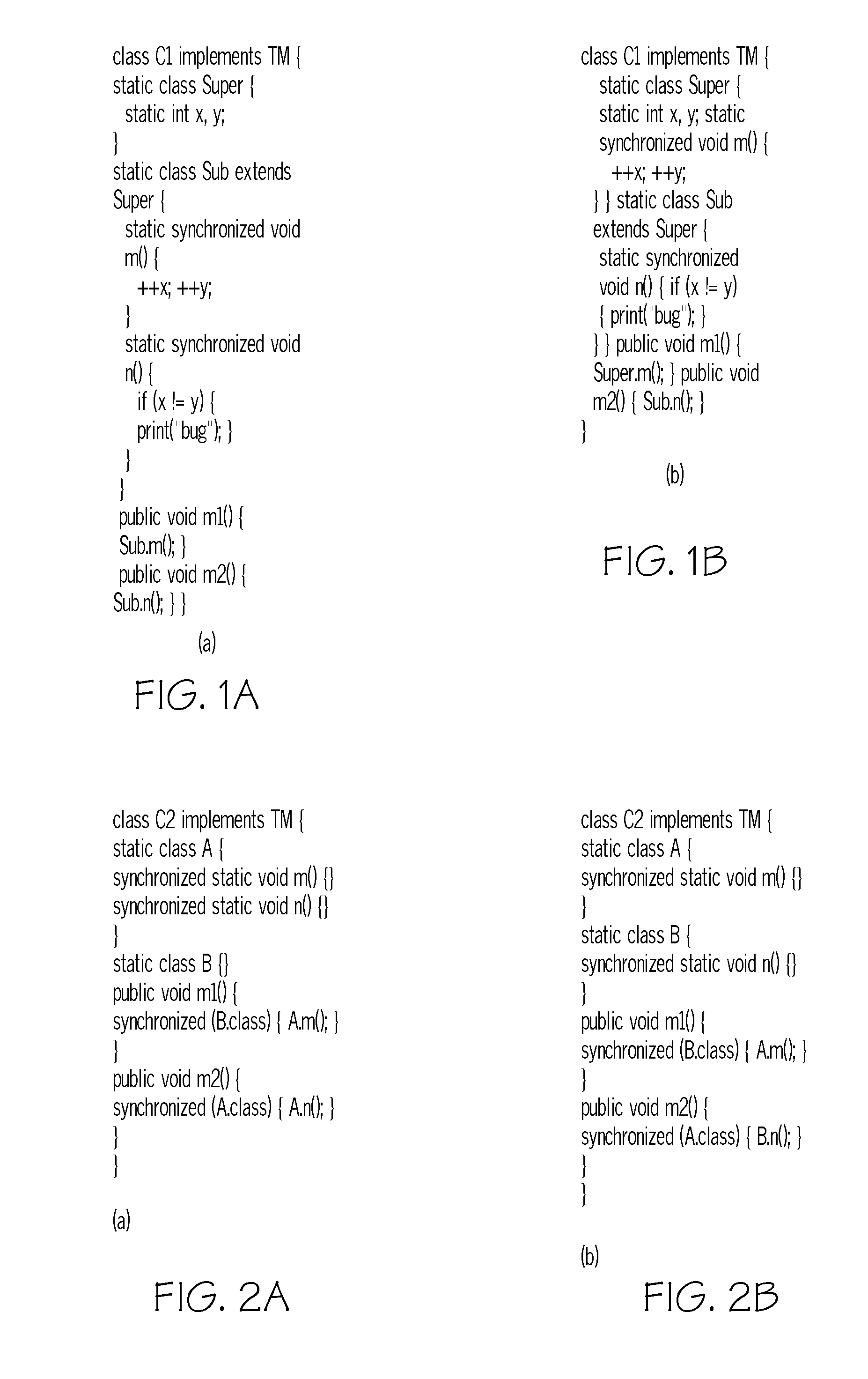

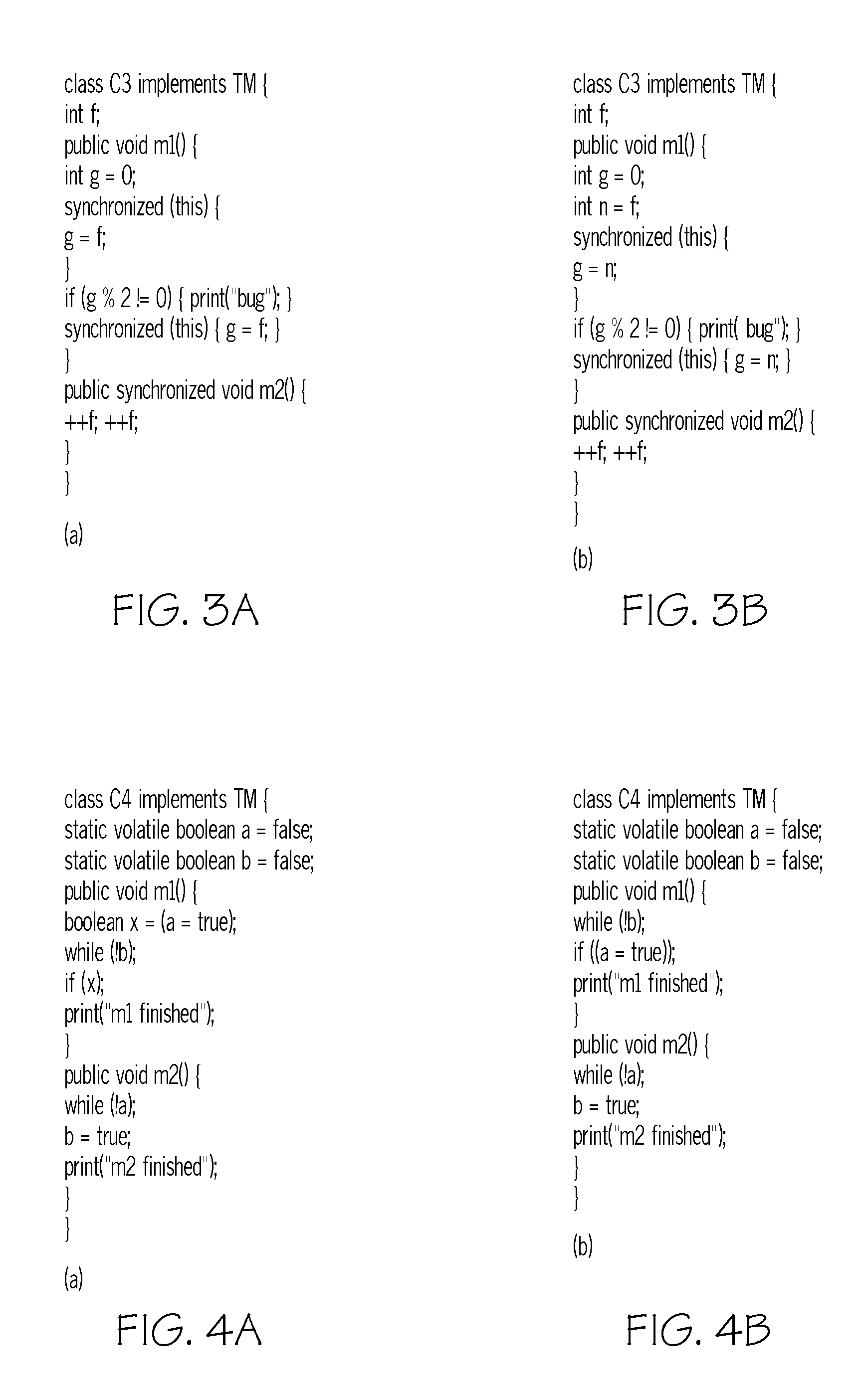

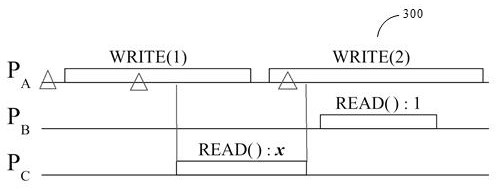

Correct refactoring of concurrent software

Inactive · US20110219361A1 · Tags: Precise correctness result; Easy to implement; Code refactoring; Program control; Language construct; Java Memory Model

Automated refactorings as implemented in modern IDEs for Java usually make no special provisions for concurrent code. Thus, refactored programs may exhibit unexpected new concurrent behaviors. We analyze the types of such behavioral changes caused by current refactoring engines and develop techniques to make them behavior-preserving, ranging from simple techniques to deal with concurrency-related language constructs to a framework that computes and tracks synchronization dependencies. By basing our development directly on the Java Memory Model we can state and prove precise correctness results about refactoring concurrent programs. We show that a broad range of refactorings are not influenced by concurrency at all, whereas other important refactorings can be made behavior-preserving for correctly synchronized programs by using our framework. Experience with a prototype implementation shows that our techniques are easy to implement and require only minimal changes to existing refactoring engines.

Owner:IBM CORP

Technique for dynamically restricting thread concurrency without rewriting thread code

Active · US8245207B1 · Tags: Easy to scale; Reduce the amount required; Software engineering; Digital computer details; Parallel computing; Workload

Owner:NETWORK APPLIANCE INC

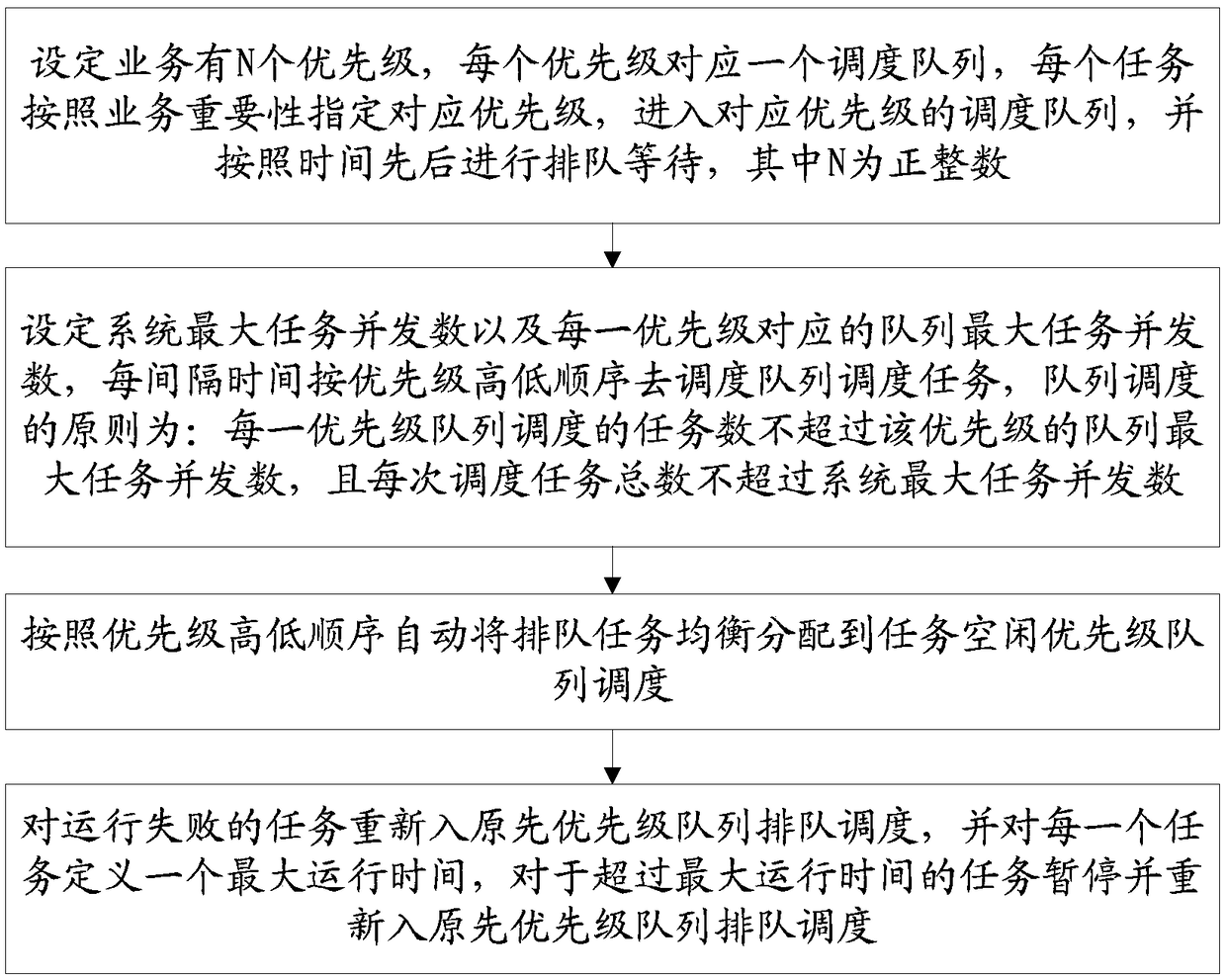

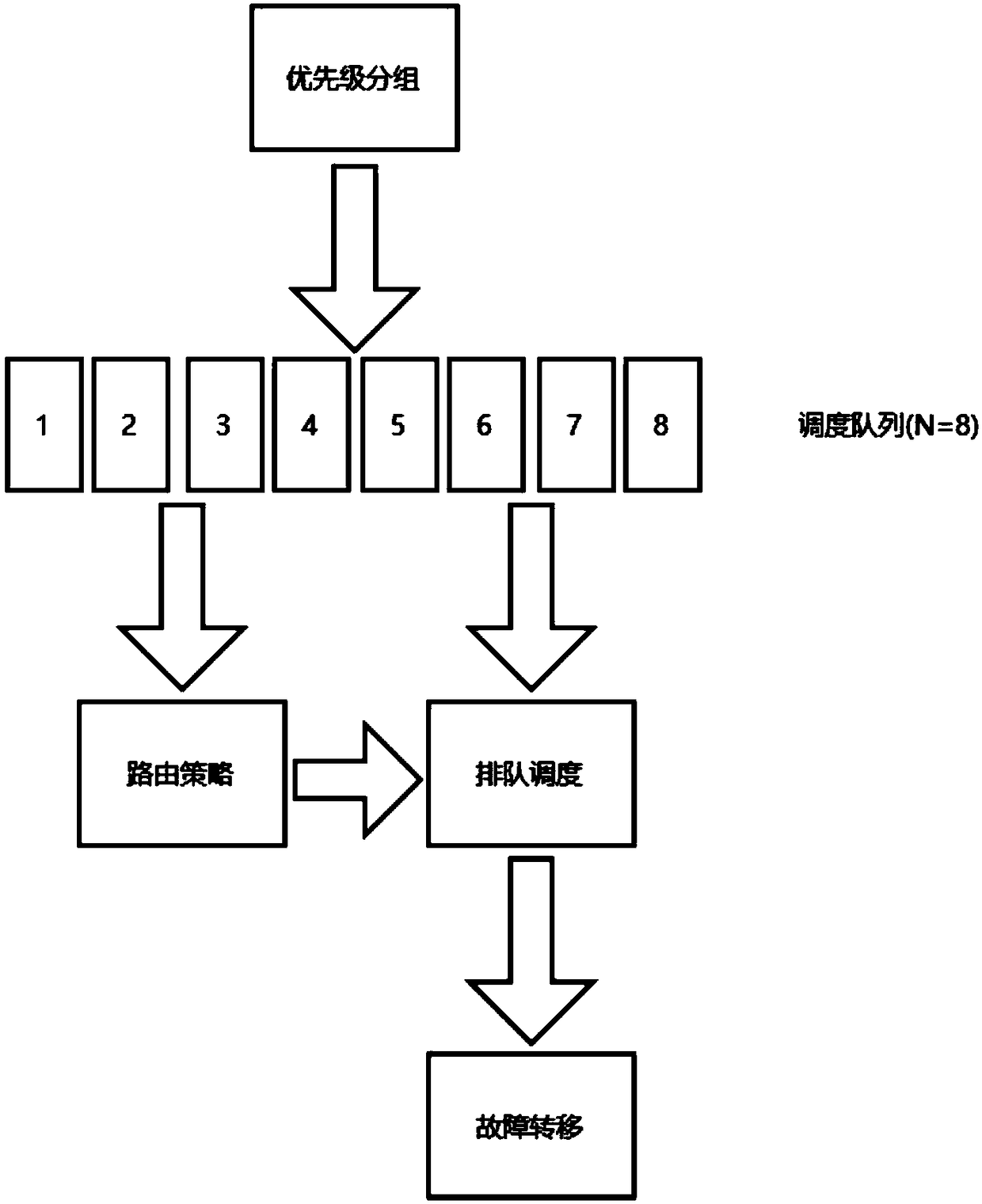

Hadoop cluster-based task scheduling method and computer device

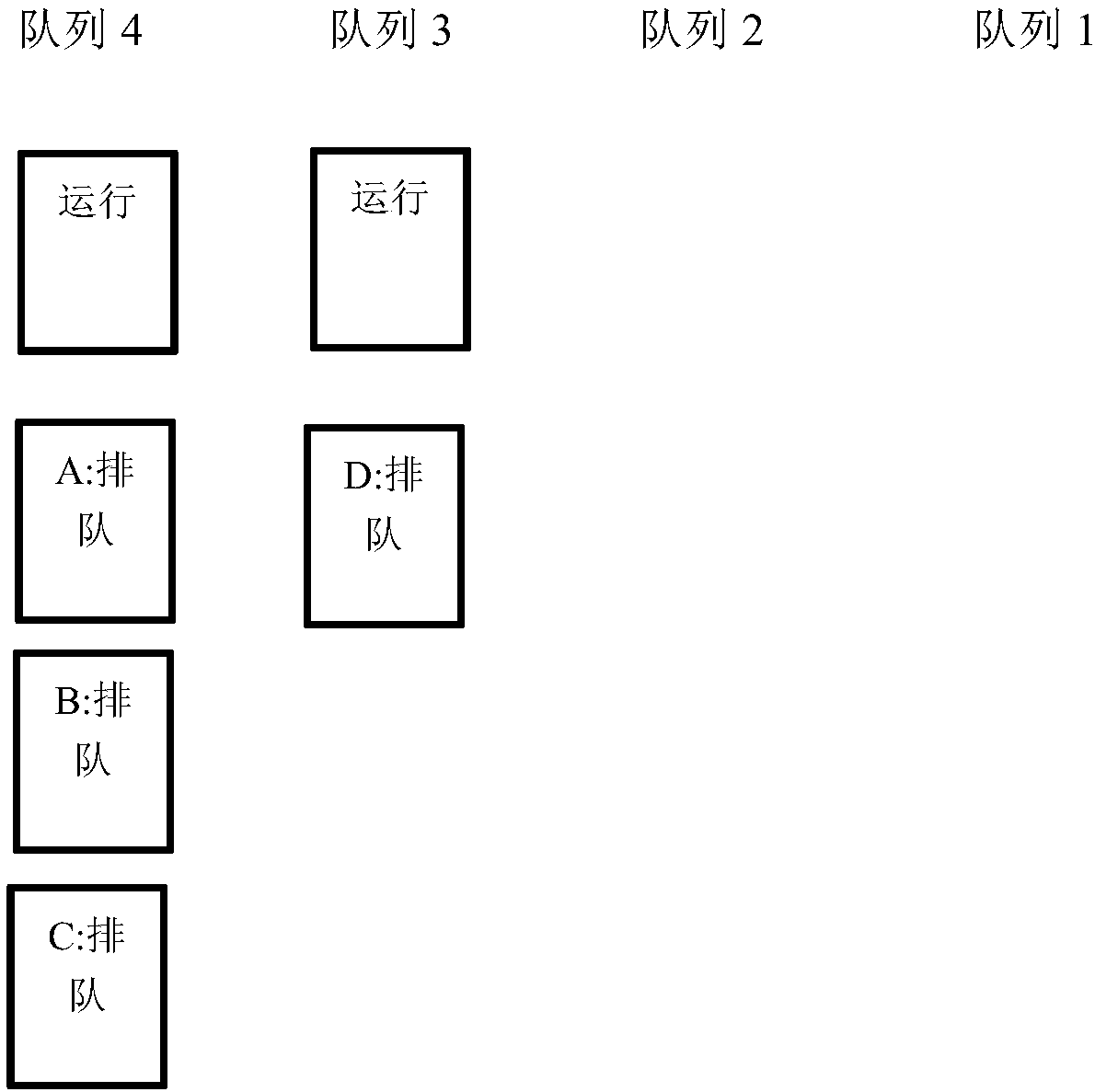

Active · CN108762896A · Tags: Best processing speed; Easy to handle; Program initiation/switching; Failover; Cluster based

The invention provides a Hadoop cluster-based task scheduling method comprising the following steps. Step 10: define N business priorities (N a positive integer), each corresponding to one scheduling queue; each task specifies its priority according to its business importance, enters the scheduling queue for that priority, and is queued in time order. Step 20: set a maximum task concurrency for the system and a maximum task concurrency for each priority queue, and schedule the tasks in the queues at fixed time intervals in order of priority from high to low. Step 30: automatically distribute queued tasks in a balanced manner, from high priority to low, to priority queues that have idle task capacity. Step 40: return tasks that fail or exceed a predetermined maximum running time to their original priority queue for re-queuing and rescheduling. The invention further provides a computer device. Task priority grouping, queued scheduling, routing strategies, and failover are implemented, greatly improving the scheduling efficiency of cluster tasks.

Owner:福建星瑞格软件有限公司
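
Steps 10, 20, and 40 can be sketched as a small in-process scheduler. This is an illustrative assumption, not the patented implementation: `PriorityScheduler` and its methods are hypothetical, priority 0 is taken as the highest, and the balanced redistribution of step 30 is omitted.

```python
from collections import deque


class PriorityScheduler:
    """Sketch of steps 10, 20 and 40: one FIFO queue per priority (0 = highest),
    a global concurrency cap and a per-queue concurrency cap."""

    def __init__(self, global_limit: int, queue_limits: list[int]) -> None:
        self.global_limit = global_limit
        self.queue_limits = queue_limits
        self.queues = [deque() for _ in queue_limits]
        self.running = [0] * len(queue_limits)

    def submit(self, task: str, priority: int) -> None:
        self.queues[priority].append(task)          # queued in arrival order

    def tick(self) -> list[tuple[int, str]]:
        """One scheduling interval: start tasks from high to low priority."""
        started = []
        for p, q in enumerate(self.queues):
            while (q and sum(self.running) < self.global_limit
                   and self.running[p] < self.queue_limits[p]):
                started.append((p, q.popleft()))
                self.running[p] += 1
        return started

    def finish(self, task: str, priority: int, ok: bool = True) -> None:
        self.running[priority] -= 1
        if not ok:  # failed or overran its time limit: re-queue (step 40)
            self.queues[priority].append(task)


s = PriorityScheduler(global_limit=3, queue_limits=[2, 2])
for t in ("a", "b", "c"):
    s.submit(t, 0)
for t in ("d", "e"):
    s.submit(t, 1)
print(s.tick())  # [(0, 'a'), (0, 'b'), (1, 'd')]
s.finish("a", 0, ok=False)
print(s.tick())  # [(0, 'c')]
```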

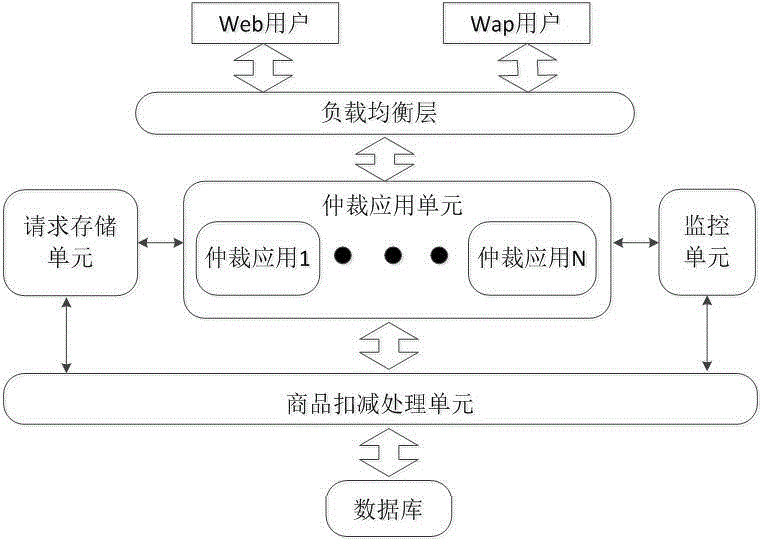

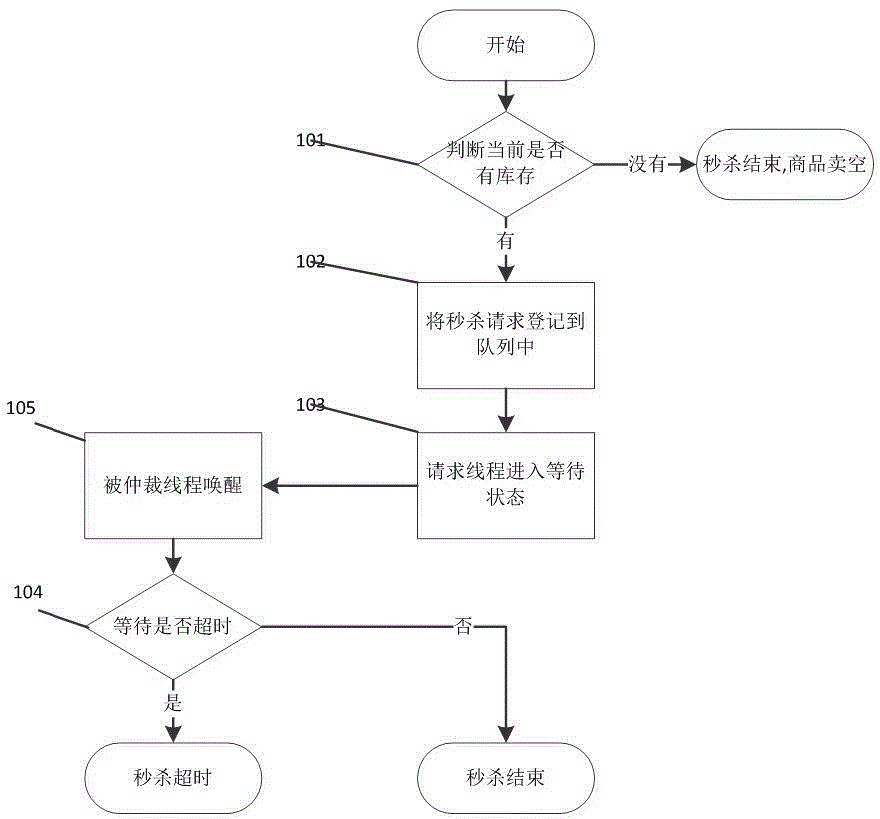

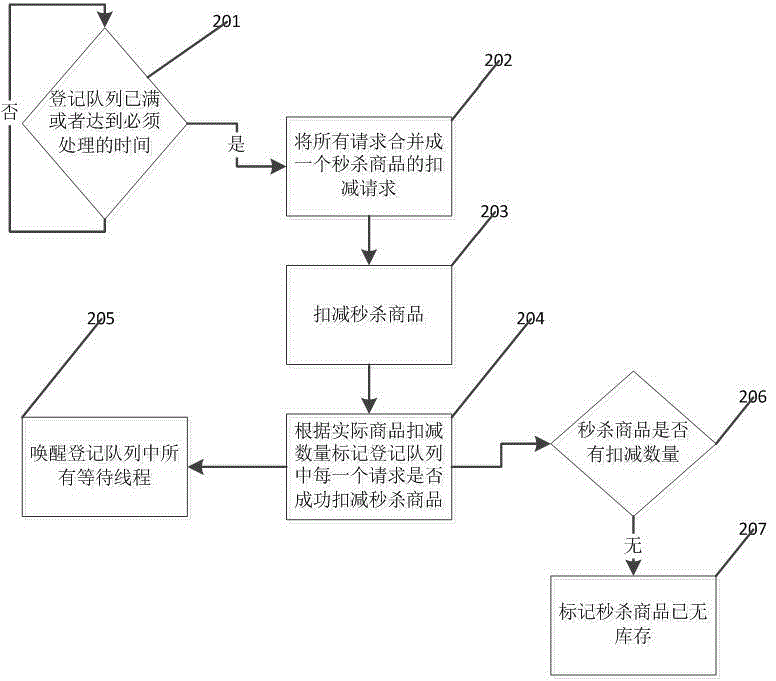

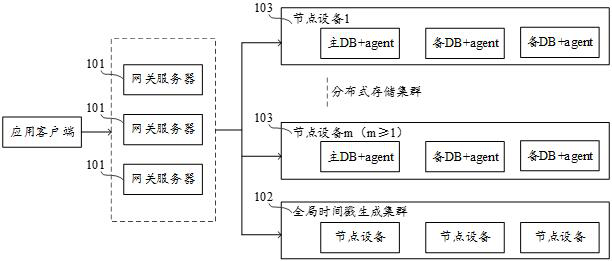

System and method for processing high-concurrency data request

Active · CN104636957A · Tags: Solve bottlenecks; Reduce overhead; Interprogram communication; Data switching networks; E-commerce; Distributed computing

The invention belongs to the technical field of e-commerce and relates in particular to a system and method for processing high-concurrency data requests. The system consists of three parts: a load balancing layer, an arbitration application unit, and a goods deduction processing unit. The load balancing layer distributes large numbers of client requests evenly to the back-end arbitration applications. The arbitration application unit receives the user requests distributed by the load balancing layer, checks each user's eligibility, merges and regroups the requests, and forwards them to the goods deduction processing unit. The goods deduction processing unit receives deduction requests from the arbitration applications and deducts the inventory of flash-sale ("seckill") goods. By processing deductions in single-threaded batches, the system avoids the database bottleneck caused by highly concurrent multi-threaded access while retaining high transaction-handling capacity and keeping system resource costs as low as possible.

Owner:SHANGHAI HANDPAL INFORMATION TECH SERVICE
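
The single-threaded batch deduction can be sketched with a standard-library queue: many producer threads (standing in for the arbitration applications) enqueue requests, and one consumer drains them in batches, so only that consumer ever touches the stock count. The function name, the `(user_id, quantity)` request format, and the `None` stop sentinel are assumptions for illustration; eligibility checks are omitted.

```python
import queue
import threading


def run_deduction(requests: queue.Queue, stock: int, batch_size: int = 100):
    """Single consumer: drain requests in batches and deduct stock.

    Each request is (user_id, quantity); None is a stop sentinel.
    Returns (remaining_stock, {user_id: succeeded}).
    """
    results: dict[str, bool] = {}
    while True:
        batch = [requests.get()]                  # block for the first request
        while len(batch) < batch_size:            # then grab whatever is queued
            try:
                batch.append(requests.get_nowait())
            except queue.Empty:
                break
        # In a real system this would be one database update per batch.
        for req in batch:
            if req is None:
                return stock, results
            user, qty = req
            ok = qty <= stock
            if ok:
                stock -= qty
            results[user] = ok


q = queue.Queue()
producers = [threading.Thread(target=q.put, args=((f"u{i}", 1),)) for i in range(10)]
for t in producers:
    t.start()
for t in producers:
    t.join()
q.put(None)
remaining, outcome = run_deduction(q, stock=3)
print(remaining, sum(outcome.values()))  # 0 3
```

In a deployment the consumer would run in its own long-lived thread concurrently with the producers; here it runs after them only to keep the example deterministic.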

Methods and apparatus for optimizing concurrency in multiple core systems

Inactive · US20110213949A1 · Tags: Improve system performance; Eliminate need; General purpose stored program computer; Multiprogramming arrangements; Computer hardware; Parallel processing

Owner:META PLATFORMS TECH LLC

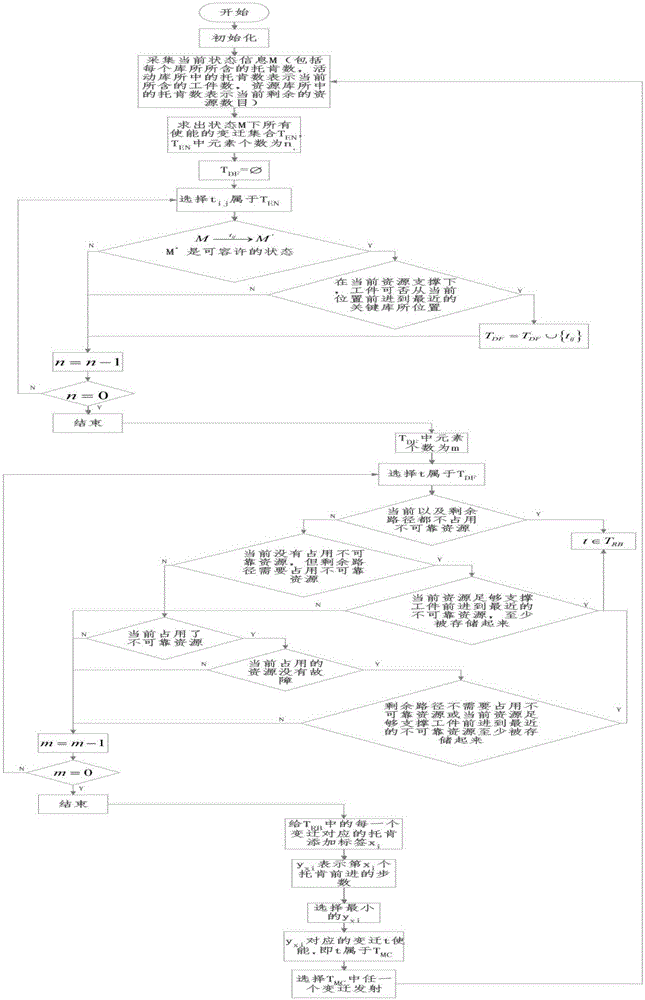

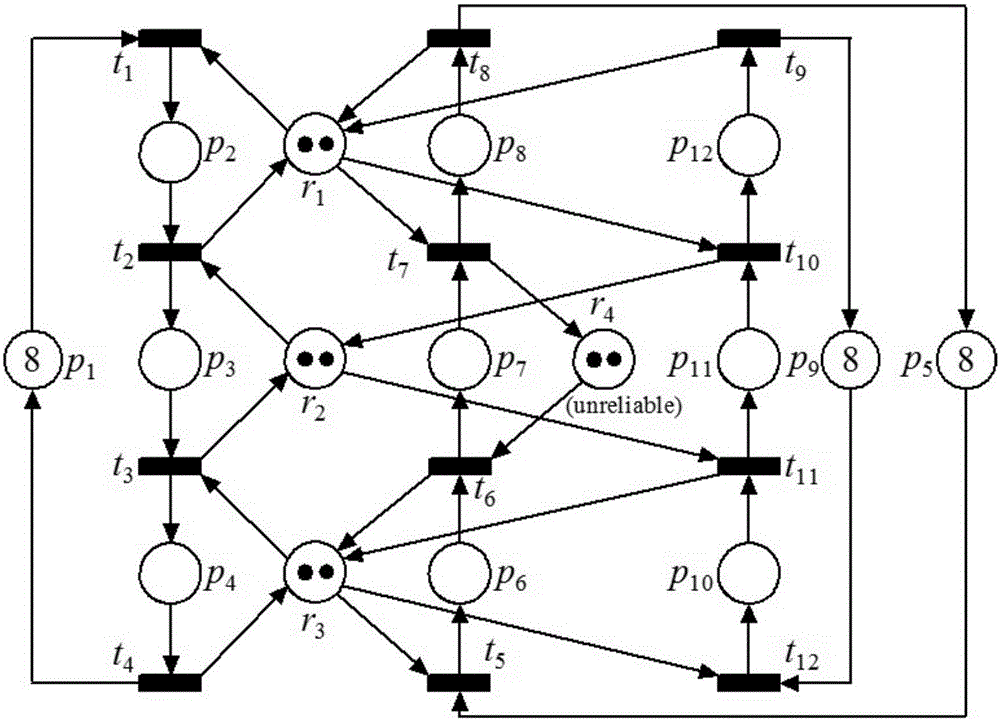

Petri-network-based control method for automatic manufacture system

Active · CN105022377A · Tags: Run fast; Avoid deadlock; Total factory control; Programme total factory control; Improved algorithm; Computer science

The invention provides a Petri-net-based control method for automated manufacturing systems. Running a deadlock avoidance algorithm, a robustness enhancement algorithm, and a concurrency improvement algorithm produces a set of transitions. Firing any transition in this set satisfies the requirements of deadlock freedom, robustness, and improved system concurrency. After each transition fires, the three algorithms are recomputed in turn to produce a new transition set; repeating this cycle dynamically generates an event sequence in real time. When resource failures occur, processes that do not need the failed resources are not blocked by processes that do, so processing can continue smoothly and system concurrency is improved.

Owner:XIDIAN UNIV
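
The deadlock-avoidance part of this loop can be sketched with a standard textbook technique, not necessarily the patent's algorithm: enumerate the reachable markings of a small Petri net, iteratively discard markings from which deadlock cannot be avoided, and let the controller fire only transitions that lead into the remaining safe set. The example net (two processes taking resources R1 and R2 in opposite orders) is a hypothetical illustration; the robustness and concurrency algorithms are not modeled.

```python
from collections import deque

PLACES = ["A0", "A1", "A2", "B0", "B1", "B2", "R1", "R2"]
IDX = {p: i for i, p in enumerate(PLACES)}
# Two processes acquire shared resources R1 and R2 in opposite orders,
# the classic setup that can deadlock. Each entry is (pre-set, post-set).
TRANSITIONS = {
    "a1": ({"A0": 1, "R1": 1}, {"A1": 1}),
    "a2": ({"A1": 1, "R2": 1}, {"A2": 1}),
    "a3": ({"A2": 1}, {"A0": 1, "R1": 1, "R2": 1}),
    "b1": ({"B0": 1, "R2": 1}, {"B1": 1}),
    "b2": ({"B1": 1, "R1": 1}, {"B2": 1}),
    "b3": ({"B2": 1}, {"B0": 1, "R1": 1, "R2": 1}),
}


def enabled(m, t):
    pre, _ = TRANSITIONS[t]
    return all(m[IDX[p]] >= n for p, n in pre.items())


def fire(m, t):
    pre, post = TRANSITIONS[t]
    m = list(m)
    for p, n in pre.items():
        m[IDX[p]] -= n
    for p, n in post.items():
        m[IDX[p]] += n
    return tuple(m)


def reachable(m0):
    seen, todo = {m0}, deque([m0])
    while todo:
        m = todo.popleft()
        for t in TRANSITIONS:
            if enabled(m, t) and (n := fire(m, t)) not in seen:
                seen.add(n)
                todo.append(n)
    return seen


def safe_markings(m0):
    """Largest set of reachable markings from which the controller can
    always keep firing some transition without reaching a dead marking."""
    safe = reachable(m0)
    changed = True
    while changed:
        changed = False
        for m in list(safe):
            if not any(enabled(m, t) and fire(m, t) in safe for t in TRANSITIONS):
                safe.discard(m)
                changed = True
    return safe


def admissible(m, safe):
    """Transitions the controller may fire in marking m."""
    return sorted(t for t in TRANSITIONS if enabled(m, t) and fire(m, t) in safe)


m0 = (1, 0, 0, 1, 0, 0, 1, 1)
safe = safe_markings(m0)
print(admissible(m0, safe))               # ['a1', 'b1']
print(admissible(fire(m0, "a1"), safe))   # ['a2']  (b1 would deadlock)
```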

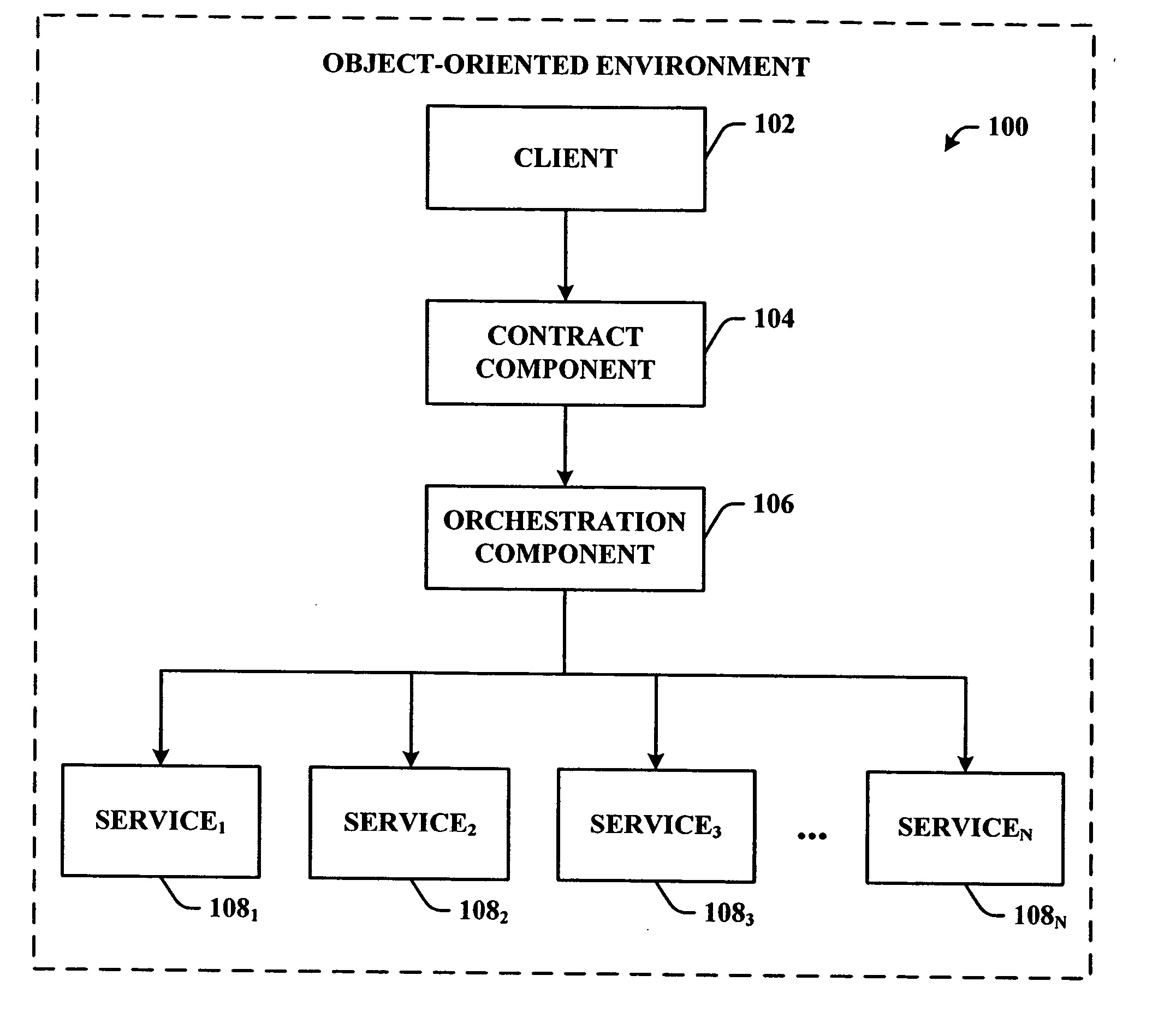

Implementation of concurrent programs in object-oriented languages

Inactive · US20060020446A1 · Tags: Readability of application; Distributed support; Program synchronisation; Interprogram communication; Message switching; Message passing

The present invention adds support for concurrency to a mainstream object-oriented language. Language extensions are provided that enable programs to run in one address space, distributed across several processes on a single computer, or distributed across a local-area or wide-area network, without recoding the program. Central to this approach is the notion of a service, which can execute its own algorithmic (logical) thread. Services do not share memory or synchronize using explicit synchronization primitives. Instead, both data sharing and synchronization are accomplished via message passing: a set of explicitly declared messages is sent between services. Messages can contain shared data, and the pattern of message exchange provides the necessary synchronization.

Owner:MICROSOFT TECH LICENSING LLC
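
The service model can be imitated with ordinary library primitives. This sketch does not reproduce the patent's language extensions; it assumes a thread plus a `queue.Queue` inbox as the "service", and `CounterService` and `send` are hypothetical names. Clients never touch the service's state directly: they only send messages, and the FIFO order of the inbox provides the synchronization.

```python
import queue
import threading


class CounterService:
    """A 'service' with its own logical thread and private state.
    Clients never touch `_count`; they only send messages to `inbox`."""

    def __init__(self) -> None:
        self.inbox: queue.Queue = queue.Queue()
        self._count = 0
        threading.Thread(target=self._run, daemon=True).start()

    def _run(self) -> None:
        while True:
            kind, payload, reply = self.inbox.get()   # one message at a time
            if kind == "add":
                self._count += payload
            elif kind == "get":
                reply.put(self._count)
            elif kind == "stop":
                return


def send(service: CounterService, kind: str, payload=None) -> queue.Queue:
    """Send a message; the returned queue receives the reply, if any."""
    reply: queue.Queue = queue.Queue(maxsize=1)
    service.inbox.put((kind, payload, reply))
    return reply


svc = CounterService()
clients = [threading.Thread(target=lambda: [send(svc, "add", 1) for _ in range(100)])
           for _ in range(4)]
for c in clients:
    c.start()
for c in clients:
    c.join()
print(send(svc, "get").get(timeout=5))  # 400
send(svc, "stop")
```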

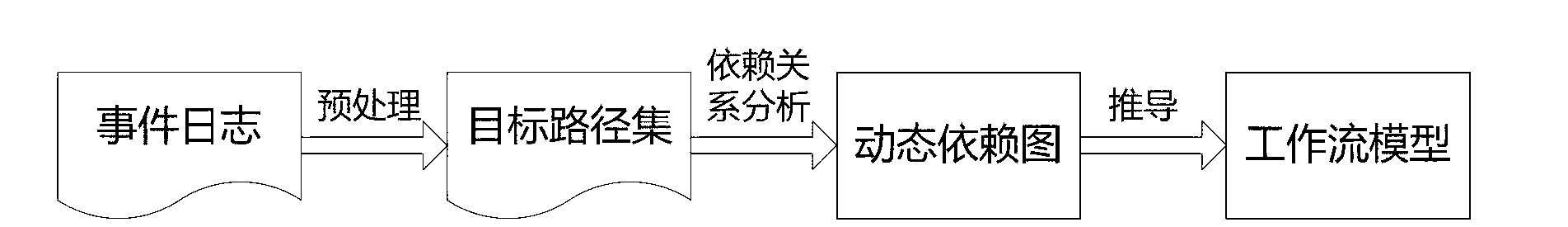

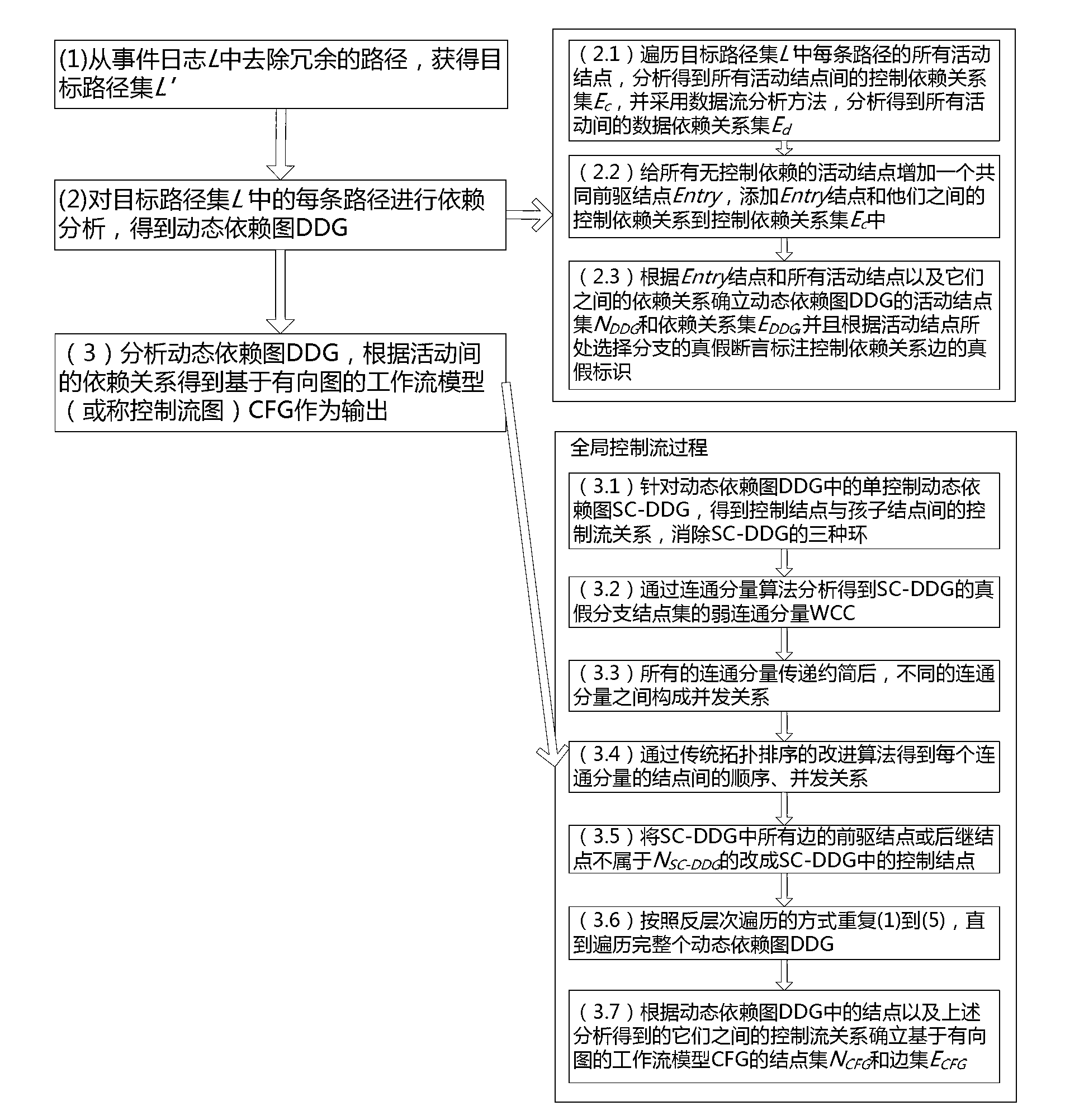

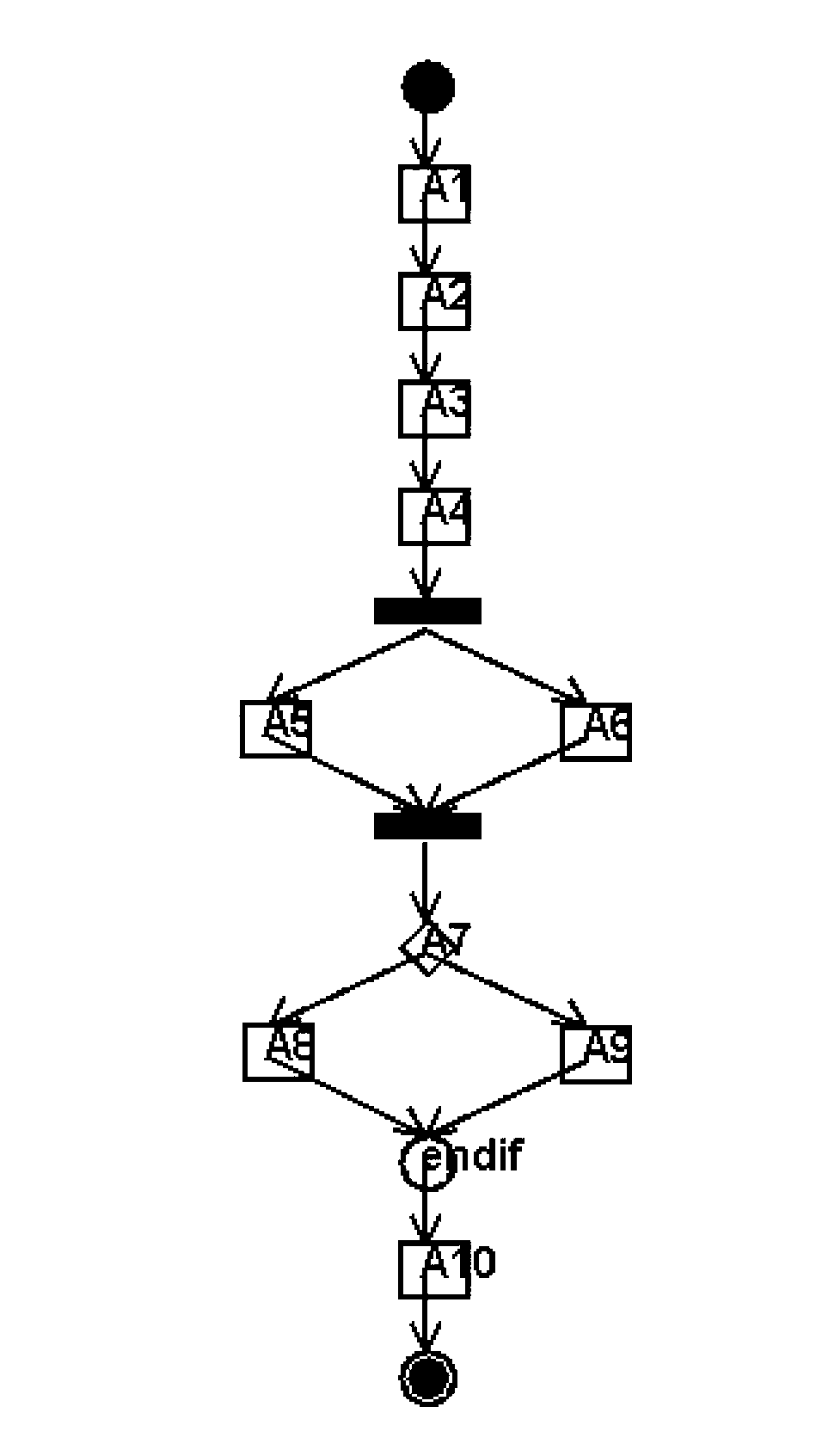

Workflow excavating method based on inter-movement dependency relation analysis

The invention discloses a workflow mining method based on analysis of the dependency relations between activities. The method comprises the following steps: removing redundant paths from an event log L to obtain a target path set L'; analyzing the dependency relation R between the activities of each path in L' to obtain a dynamic dependency graph (DDG); and analyzing the DDG to derive, from the inter-activity dependency relations, a workflow model in the form of a directed control flow graph (CFG), which is taken as the output. The method can effectively identify concurrency relations in a workflow and thereby mine the complete workflow model.

Owner:NANJING UNIV OF SCI & TECH
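
How concurrency relations can be read off an event log is sketched below using the "footprint" relations of the well-known alpha algorithm from process mining. This is a swapped-in standard technique, not the patent's DDG analysis, and removing redundant paths is approximated here by deduplicating identical traces. Two activities are classified as concurrent when each directly follows the other in some trace.

```python
def footprint(log: list[list[str]]) -> dict[tuple[str, str], str]:
    """Classify every pair of activities from an event log:
    '->' causal, '<-' reverse causal, '||' concurrent, '#' unrelated."""
    traces = {tuple(t) for t in log}          # drop duplicate (redundant) paths
    follows = {(a, b) for t in traces for a, b in zip(t, t[1:])}
    activities = sorted({a for t in traces for a in t})
    rel = {}
    for a in activities:
        for b in activities:
            ab, ba = (a, b) in follows, (b, a) in follows
            rel[(a, b)] = "||" if ab and ba else "->" if ab else "<-" if ba else "#"
    return rel


log = [["a", "b", "c", "d"], ["a", "c", "b", "d"], ["a", "b", "c", "d"]]
rel = footprint(log)
print(rel[("b", "c")], rel[("a", "b")], rel[("a", "d")])  # || -> #
```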

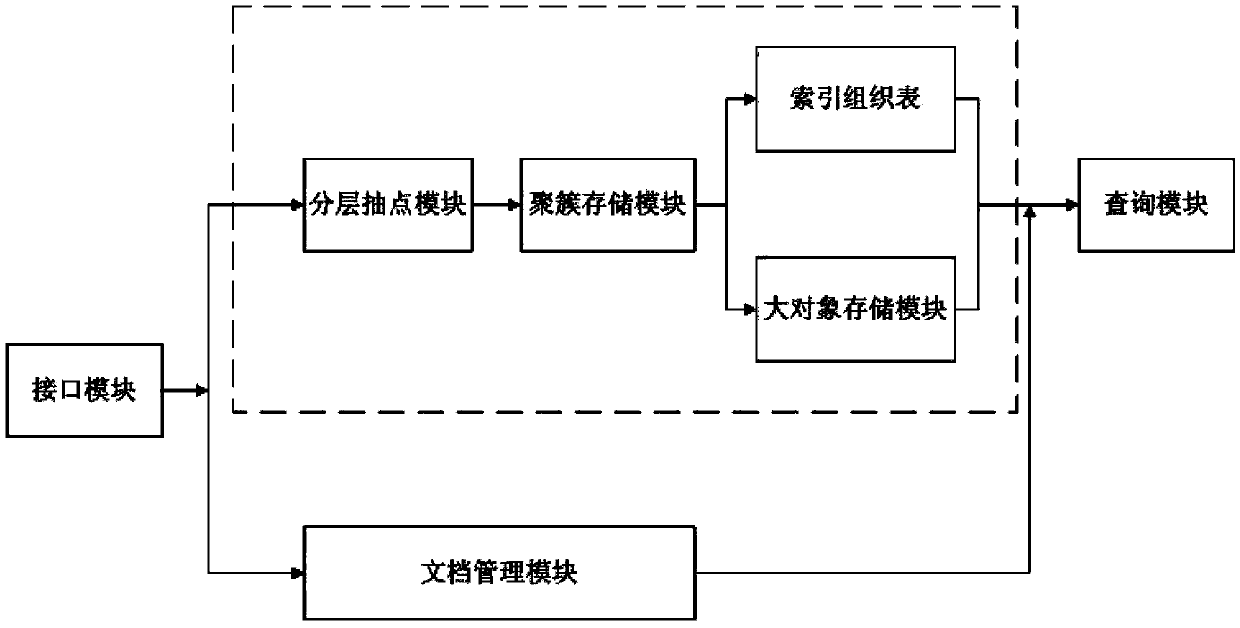

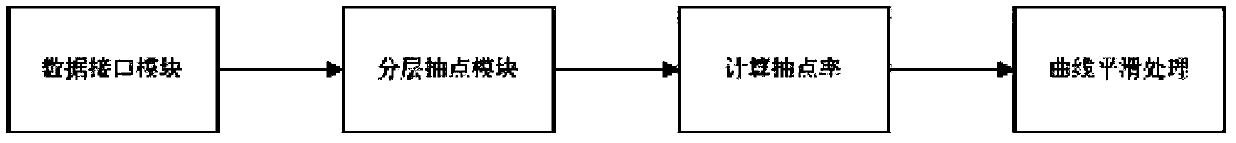

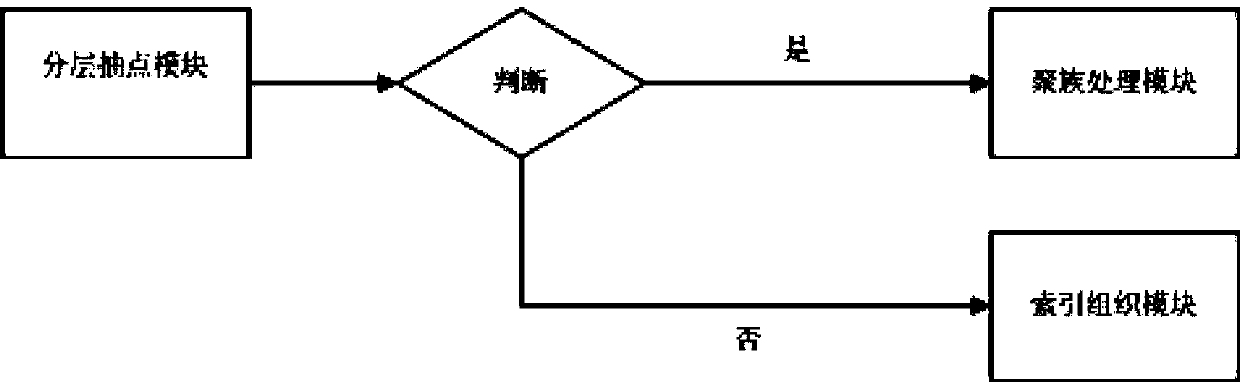

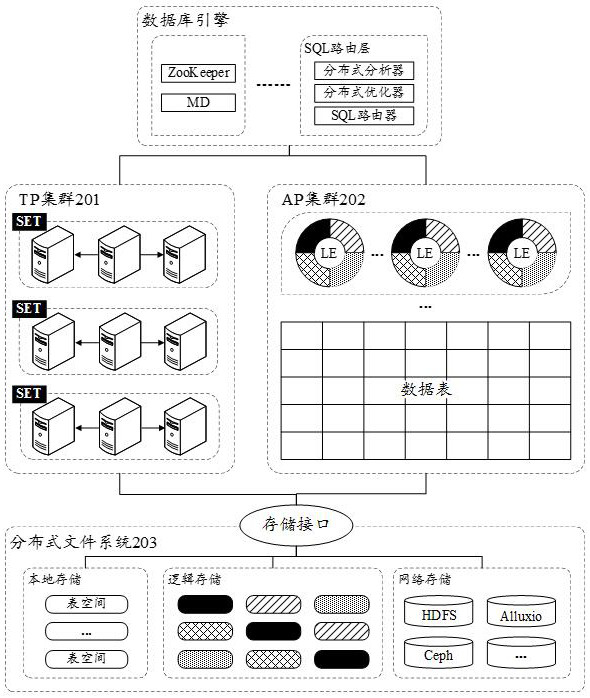

Concurrency OLAP (On-Line Analytical Processing)-oriented test data hierarchy cluster query processing system and method

Active · CN103473260A · Tags: Reduce load; Improve query efficiency; Special data processing applications; Object based; Handling system

The invention discloses a hierarchical clustering query processing system and method for test data under concurrent OLAP (On-Line Analytical Processing). A series of DBMS (Database Management System) techniques, including hierarchical test counting, clustering, index-organized tables, and large-object-based storage, reduce the I/O cost of accessing test data under concurrent OLAP and thereby improve concurrent query processing capacity. The system and method implement concurrent query processing and optimization techniques in a database management system, targeting I/O performance and concurrent OLAP processing performance, and support I/O-oriented optimization and configuration of concurrent OLAP workloads. This improves the predictable performance of test-data waveform display and accelerates test-data queries under large-scale concurrent OLAP.

Owner:BEIJING INST OF CONTROL ENG

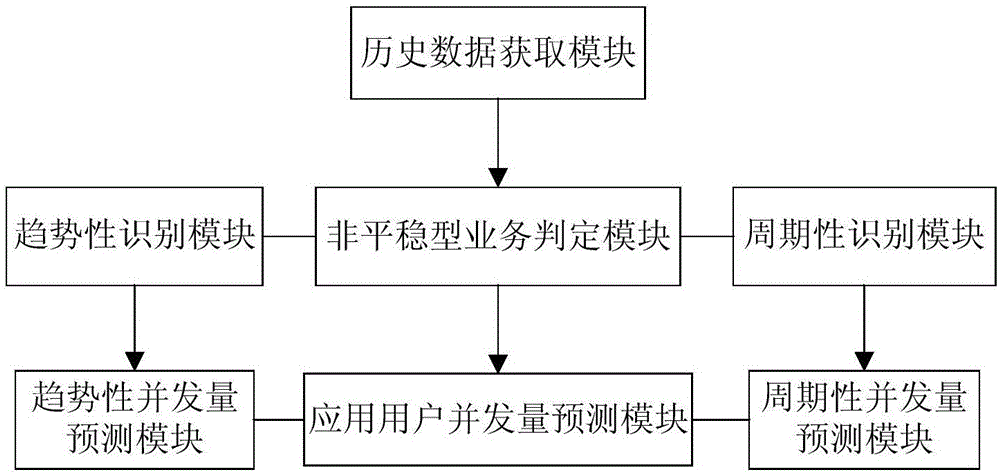

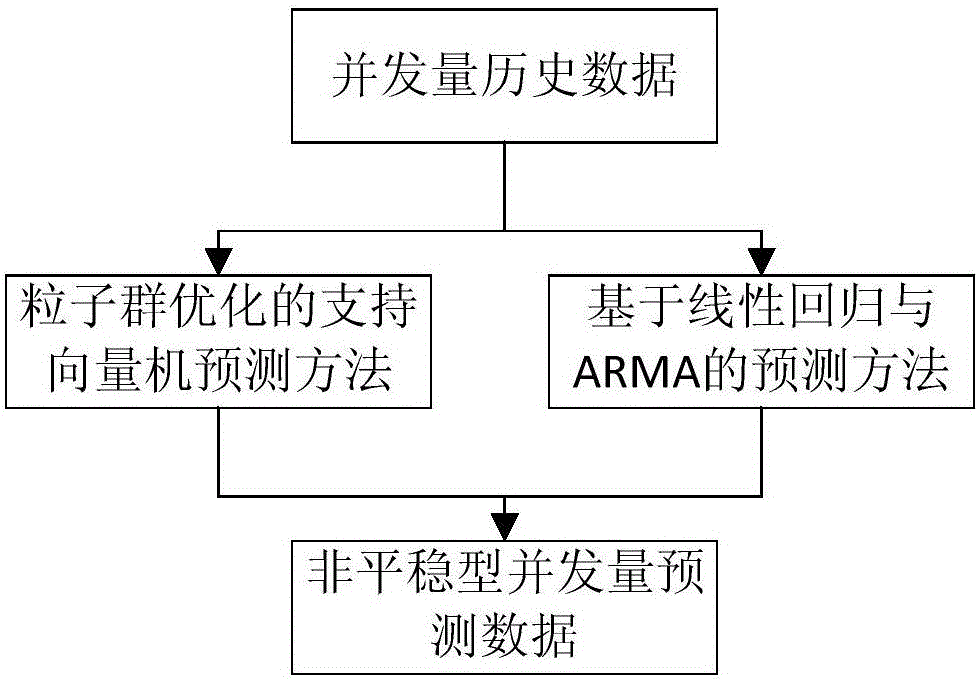

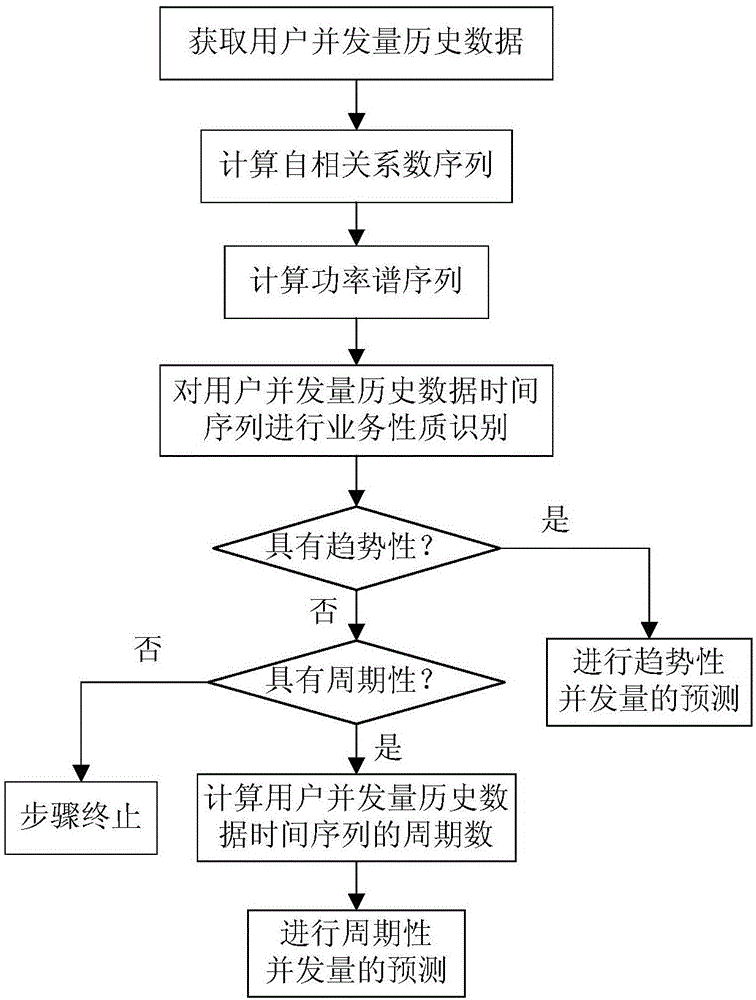

System and method for predicting non-steady application user concurrency in cloud environment

Active · CN106533750A · Tags: Good forecast; Improve accuracy; Data switching networks; Prediction system; Distributed computing

The invention provides a system and method for predicting non-stationary application user concurrency in a cloud environment, in the technical field of service performance optimization in cloud environments. The system comprises a historical data acquisition module, a non-stationary service judgment module, and an application user concurrency prediction module. Historical data is analyzed to identify the properties of the service, that is, to determine whether the concurrency sequence exhibits a trend or periodicity, and prediction is then performed separately for each of the two properties. Because trend-type and periodic user concurrency of non-stationary cloud services have different characteristics, a prediction model is built with a different prediction method for each. The system automatically identifies whether a concurrency sequence is trend-type or periodic and automatically calculates its period, so the prediction process completes without manual intervention, and the accuracy of concurrency prediction for non-stationary services is effectively increased.

Owner:NORTHEASTERN UNIV
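
The abstract does not name its prediction methods, so the sketch below uses simple placeholders as assumptions: the series is first differenced to remove a linear trend, its period is detected from the autocorrelation function (lag with the highest autocorrelation above a threshold), and the next value is forecast either seasonally (repeat the value one period back) or by extending the average slope when no period is found.

```python
def autocorr(xs: list[float], lag: int) -> float:
    n = len(xs)
    mean = sum(xs) / n
    den = sum((x - mean) ** 2 for x in xs)
    if den == 0:
        return 0.0
    num = sum((xs[i] - mean) * (xs[i + lag] - mean) for i in range(n - lag))
    return num / den


def detect_period(xs: list[float], threshold: float = 0.5):
    """Return the lag with the highest autocorrelation above `threshold`
    (a candidate period), or None if the series shows no clear periodicity."""
    best_lag, best_r = None, threshold
    for lag in range(2, len(xs) // 2 + 1):
        r = autocorr(xs, lag)
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag


def forecast_next(xs: list[float]) -> float:
    # Differencing removes a linear trend before looking for a period.
    diffs = [b - a for a, b in zip(xs, xs[1:])]
    period = detect_period(diffs)
    if period is not None:
        return xs[-period]                      # periodic: seasonal-naive forecast
    slope = (xs[-1] - xs[0]) / (len(xs) - 1)    # trend: extend the average slope
    return xs[-1] + slope


print(forecast_next([10, 20, 30, 20] * 6))              # 10
print(forecast_next([100 + 5 * i for i in range(20)]))  # 200.0
```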

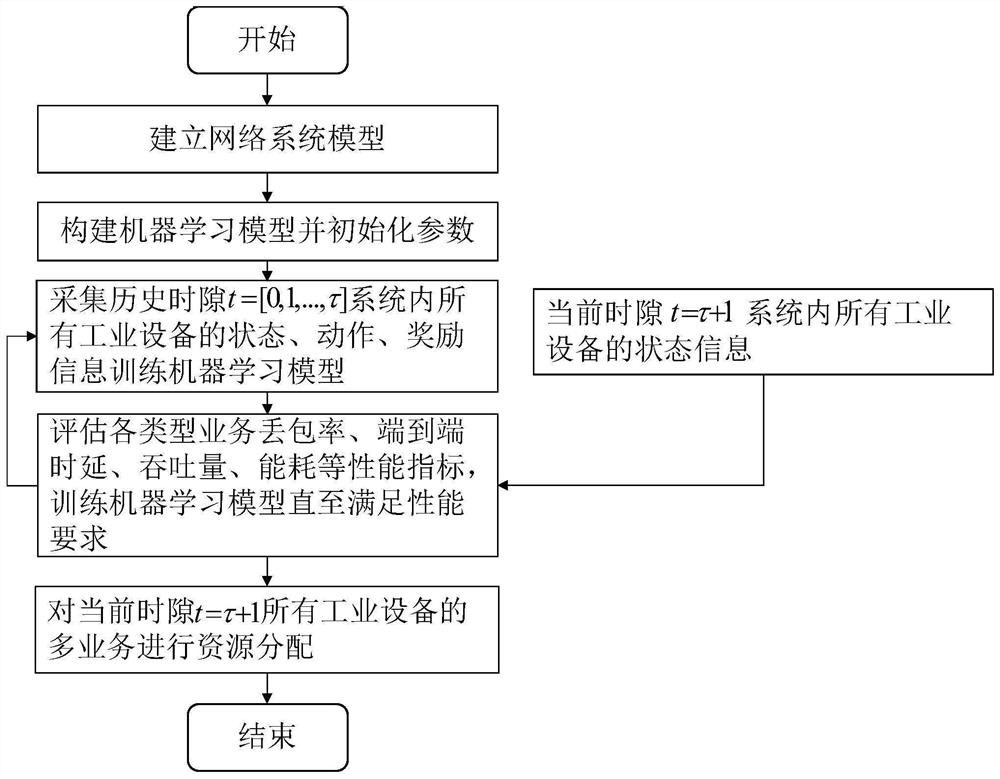

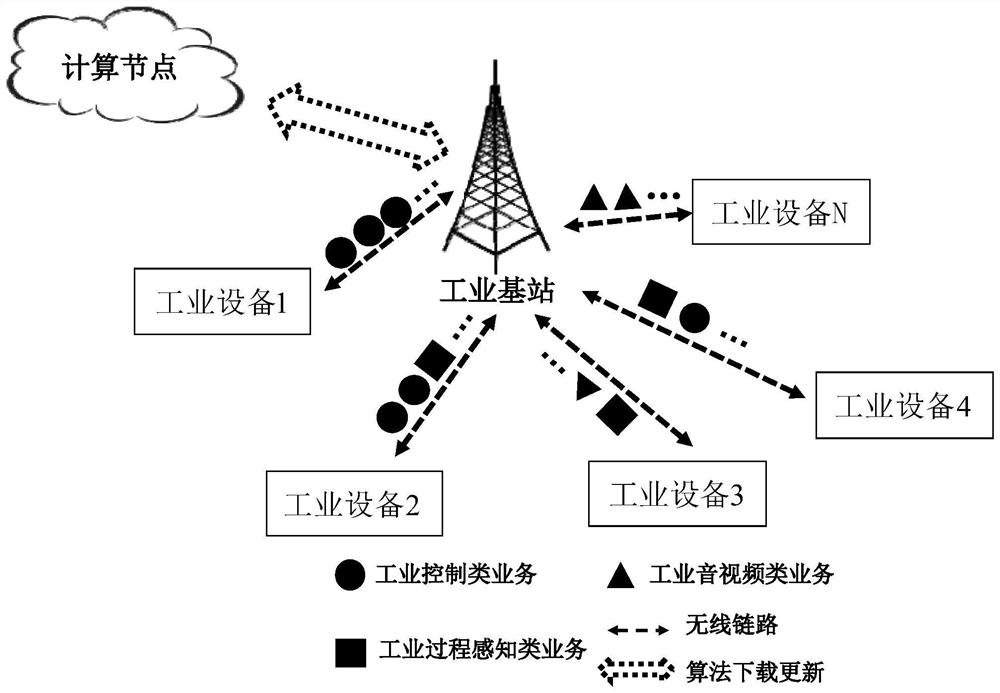

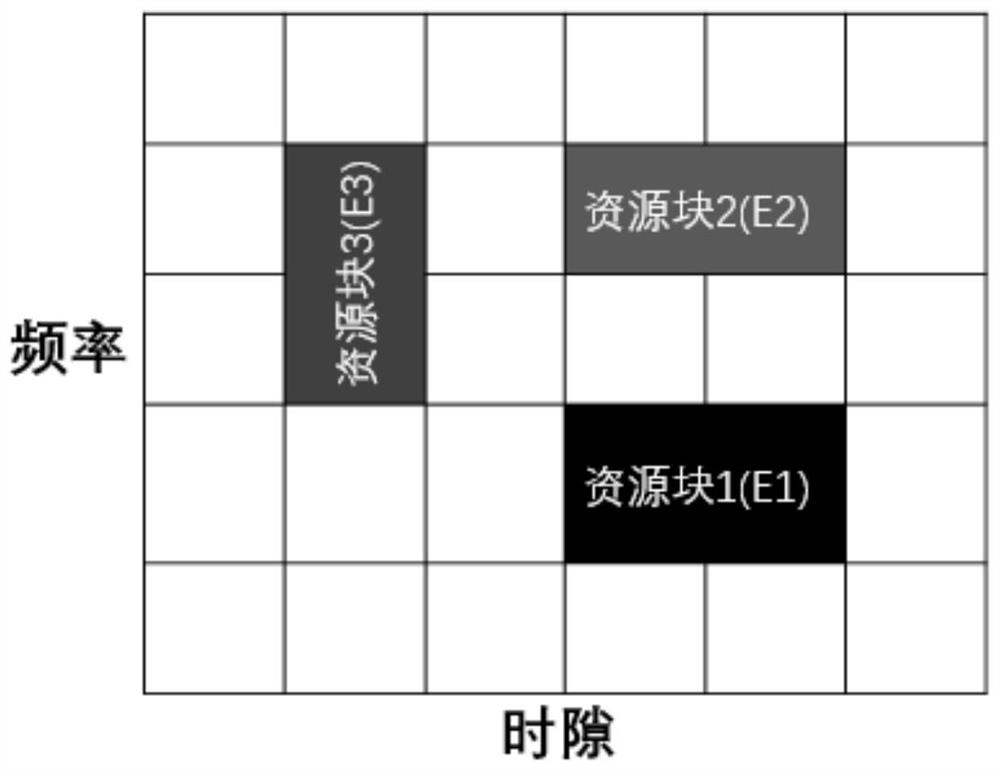

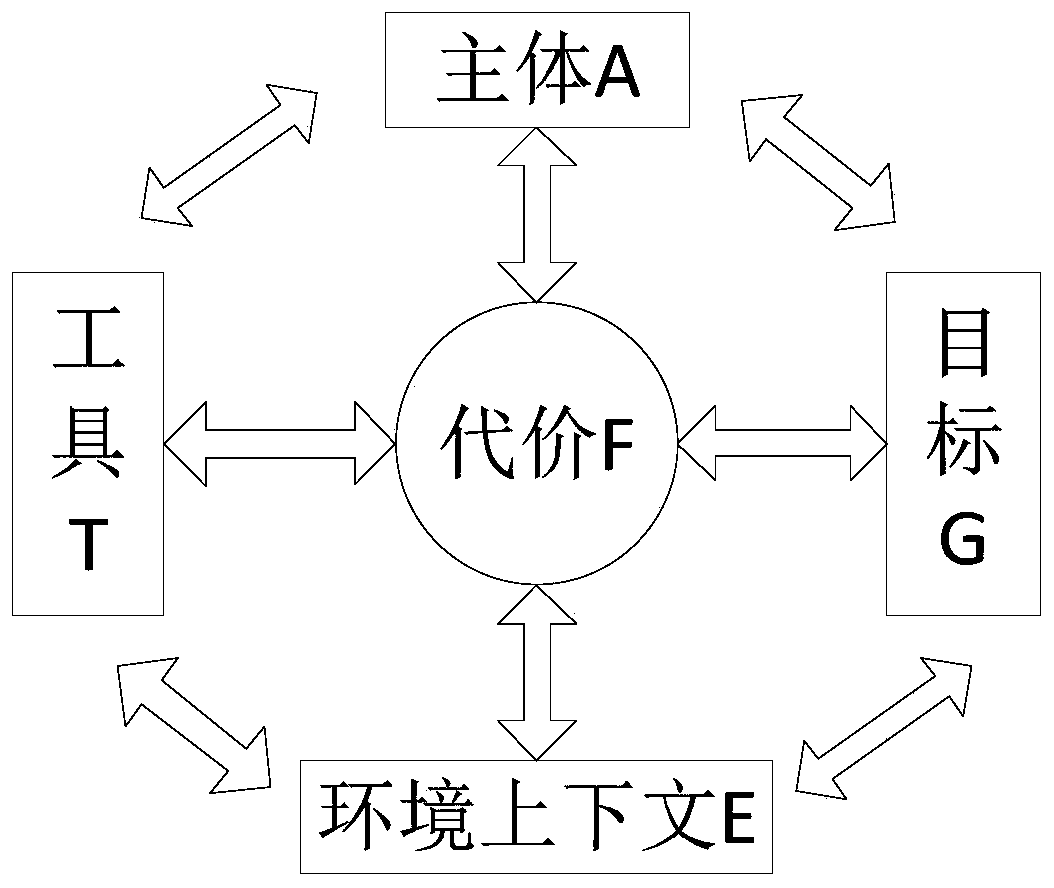

Dynamic resource allocation method for high-concurrency multi-service industrial 5G network

Active · CN111629380A · Tags: Enhanced Mobile Bandwidth; Effective distribution; Neural architectures; Physical realisation; Dynamic resource; Industrial equipment

The invention relates to industrial 5G network technology, in particular to a dynamic resource allocation method for a high-concurrency, multi-service industrial 5G network. The method comprises the following steps: establishing a network system model and constructing a machine learning model for dynamic resource allocation in a high-concurrency, multi-service industrial 5G network; collecting the state, action, and reward information of all industrial devices in the network over different time slots and training the machine learning model; repeatedly evaluating the network performance indicators of the different services and continuing training until the performance requirements are met; and feeding the state information of all industrial devices in the current time slot into the machine learning model to allocate resources to the multiple types of concurrent services of all devices. The invention solves the problem of resource conflicts that arise during concurrent communication among large-scale heterogeneous industrial devices in an industrial 5G network, where different types of services, such as control commands, industrial audio and video, and sensing data, have different requirements for real-time performance, reliability, and throughput.

Owner:SHENYANG INST OF AUTOMATION - CHINESE ACAD OF SCI +2

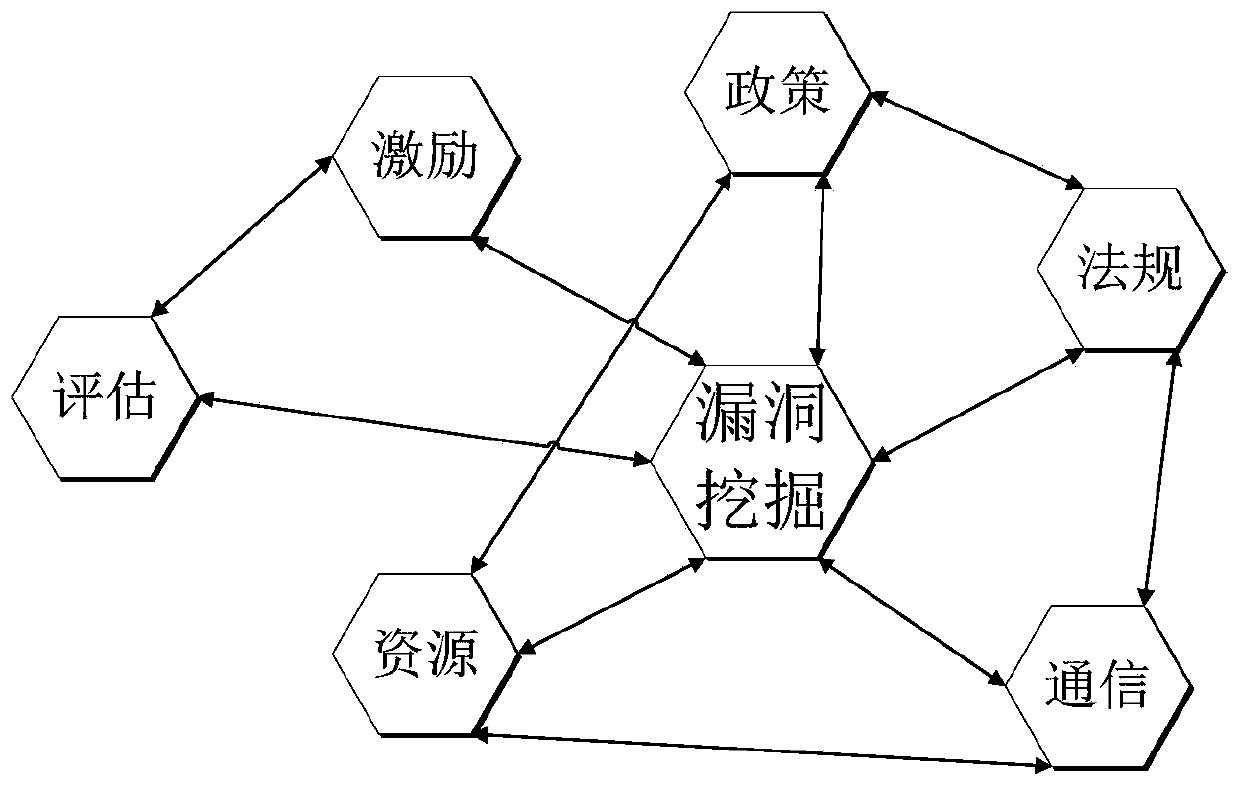

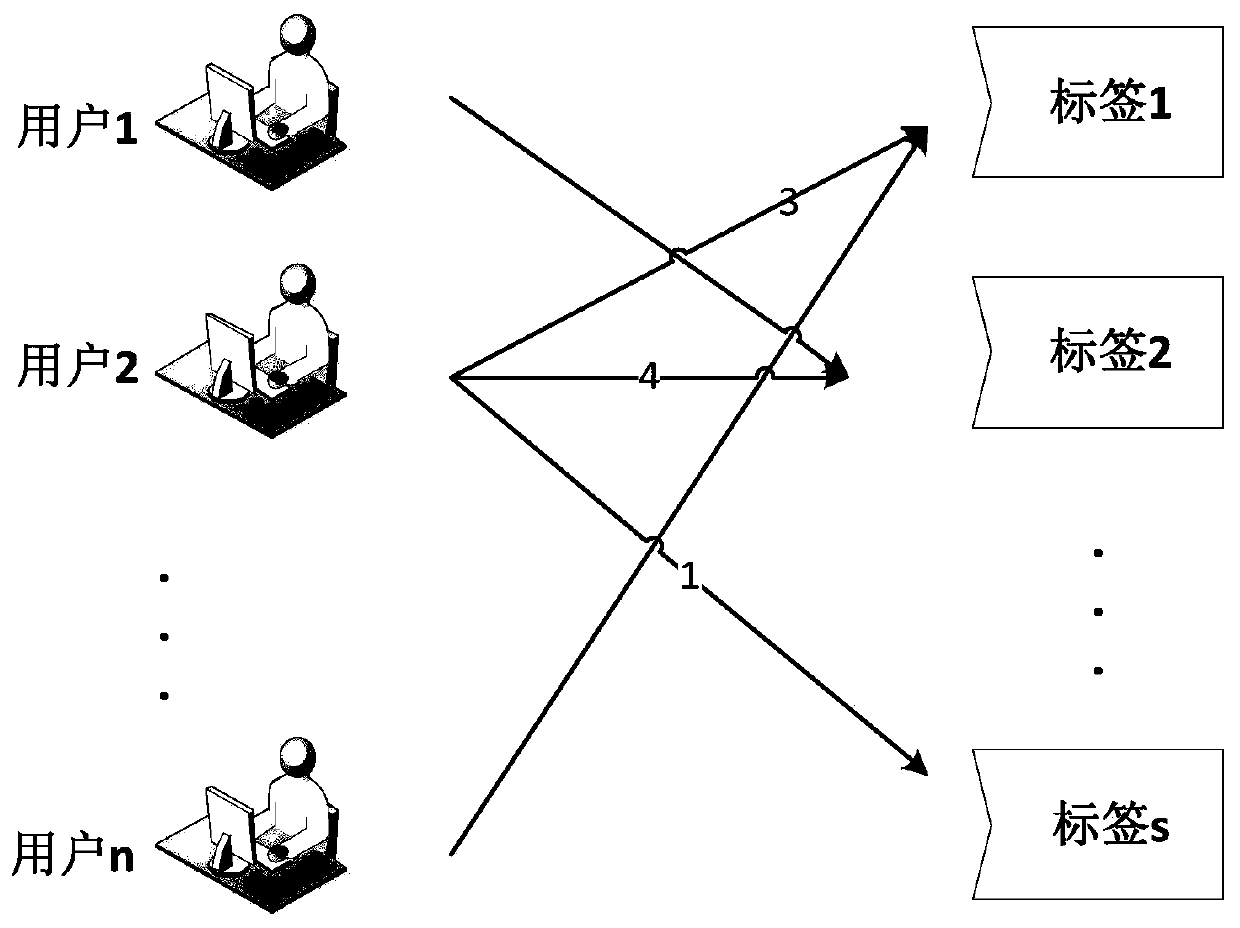

Vulnerability mining model construction method based on swarm intelligence

Active · CN110708279A · Tags: Improve digging efficiency; Reduce excavation costs; Platform integrity maintenance; Transmission; Crowd sourcing; Engineering

The invention discloses a method for constructing a vulnerability mining model based on swarm intelligence, comprising the following steps: step 1, modeling individual users; step 2, intelligently decomposing the vulnerability mining task according to the vulnerability mining scenario; step 3, solving for the optimal vulnerability mining path; and step 4, aggregating and fusing crowdsourced vulnerability mining results and establishing a feedback learning model. Compared with the prior art, the model embodies crowdsourcing concepts such as crowd collaboration, machine collaboration, computing resource collaboration, and tool sharing. The method offers inherent high-concurrency capability, fast and efficient search and solving, strong environmental adaptability, high robustness and self-recovery, strong extensibility, and high flexibility; it effectively improves vulnerability mining efficiency and reduces vulnerability mining cost.

Owner:CHINA ELECTRONICS TECH CYBER SECURITY CO LTD

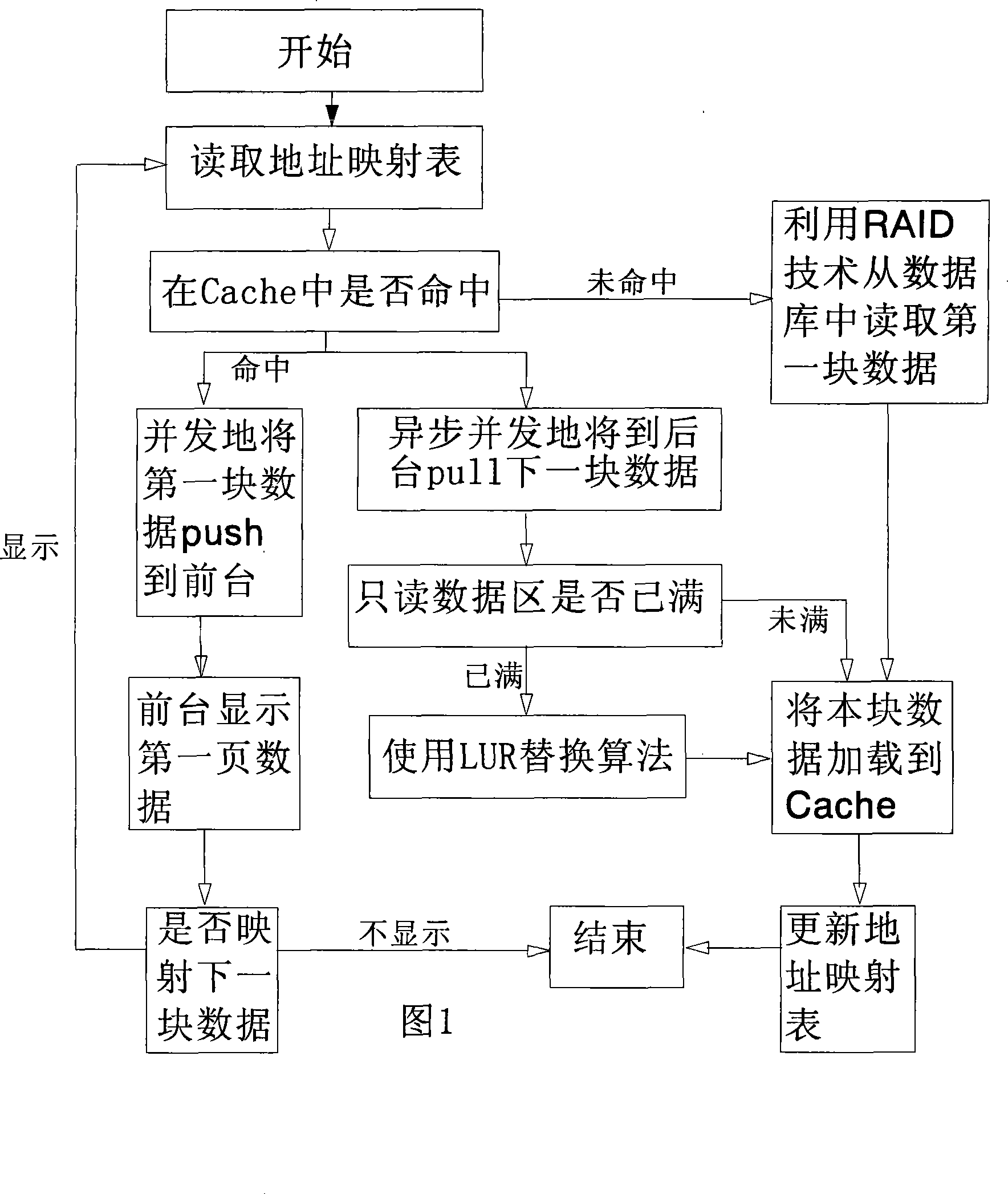

Mass data high performance reading display process

Inactive · CN101236564A · Tags: Improve performance; Improve hit rate; Data switching networks; Special data processing applications; RAID; Time delays

The invention relates to a high-performance method for fetching and displaying mass data that fully exploits the computing capabilities of the client, the cache, and the database. The client is mainly responsible for sending data requests and displaying the returned data. The cache uses an optimized partitioned structure consisting of a mapping table region and a data region. A "jump iterative algorithm" asynchronously pushes retrieved data to the foreground while simultaneously pulling the next data block of a given size in the background. An optimized LRU algorithm is applied to the data region, greatly improving the cache hit ratio; the mean hit ratio exceeds 90 percent. A "soft RAID" technique improves concurrency and thereby significantly improves database access performance. The method solves difficult problems that arise when operating on mass data, such as delay-free display on the client, high-performance concurrent access, and high data throughput, while minimizing the cost of integrating and mining existing resources.

Owner:INSPUR TIANYUAN COMM INFORMATION SYST CO LTD
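
The abstract's "optimized LRU algorithm" is not specified, so the sketch below shows only a plain LRU cache as a baseline, built on `collections.OrderedDict`. The `loader` callable is a hypothetical stand-in for the slow database query, and the class tracks the hit ratio the abstract reports on.

```python
from collections import OrderedDict
from typing import Callable, Hashable


class LRUCache:
    """Plain LRU cache in front of a slow loader (e.g. a database query)."""

    def __init__(self, capacity: int, loader: Callable[[Hashable], object]) -> None:
        self.capacity = capacity
        self.loader = loader
        self.data: OrderedDict = OrderedDict()   # least recently used first
        self.hits = self.misses = 0

    def get(self, key: Hashable) -> object:
        if key in self.data:
            self.hits += 1
            self.data.move_to_end(key)            # mark as most recently used
            return self.data[key]
        self.misses += 1
        value = self.loader(key)
        self.data[key] = value
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)         # evict the least recently used
        return value

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = LRUCache(capacity=2, loader=lambda k: k * 10)
for k in (1, 2, 1, 3, 1):
    cache.get(k)
print(list(cache.data), cache.hit_ratio)  # [3, 1] 0.4
```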

Transaction processing method and device, computer equipment and storage medium

Active · CN112463311A · Tags: Improve the accuracy of anomaly detection; Improve performance; Database distribution/replication; Special data processing applications; Engineering; Database

The invention discloses a transaction processing method and device, computer equipment, and a storage medium, in the technical field of databases. The method obtains the read-write set of a target transaction during its execution phase; during the validation phase, it verifies whether the target transaction satisfies its own concurrency consistency level based on the transaction's logical start time and logical commit time, dynamically updating the logical start time according to a predetermined policy. Once the finally updated logical start and commit times are confirmed not to produce any data anomaly disallowed by the transaction's own concurrency consistency level, the target transaction is committed. Different transactions in the system can therefore run at different concurrency consistency levels, improving the user experience. The accuracy of data anomaly detection is greatly improved, as are transaction processing efficiency and database system performance.

Owner:TENCENT TECH (SHENZHEN) CO LTD

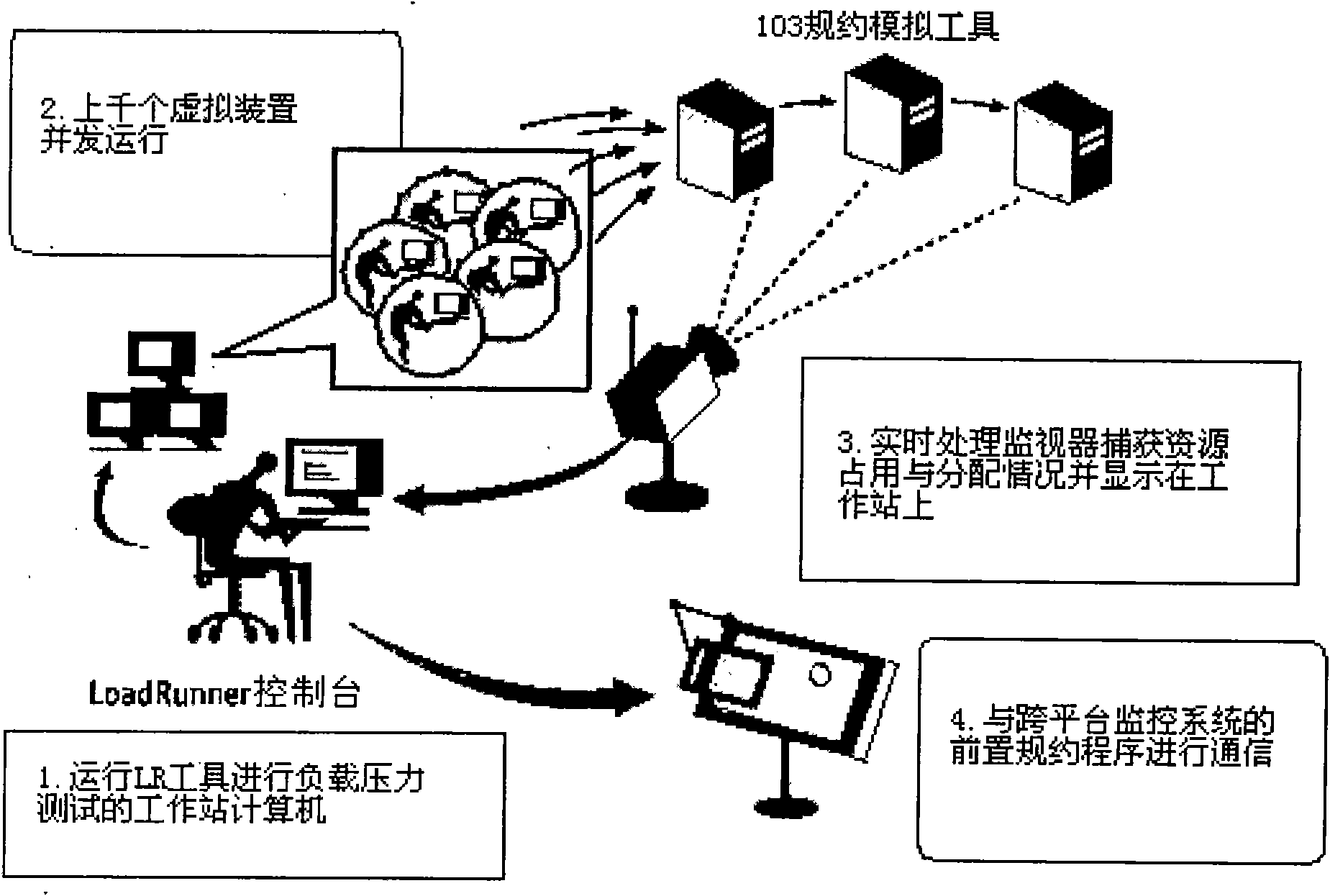

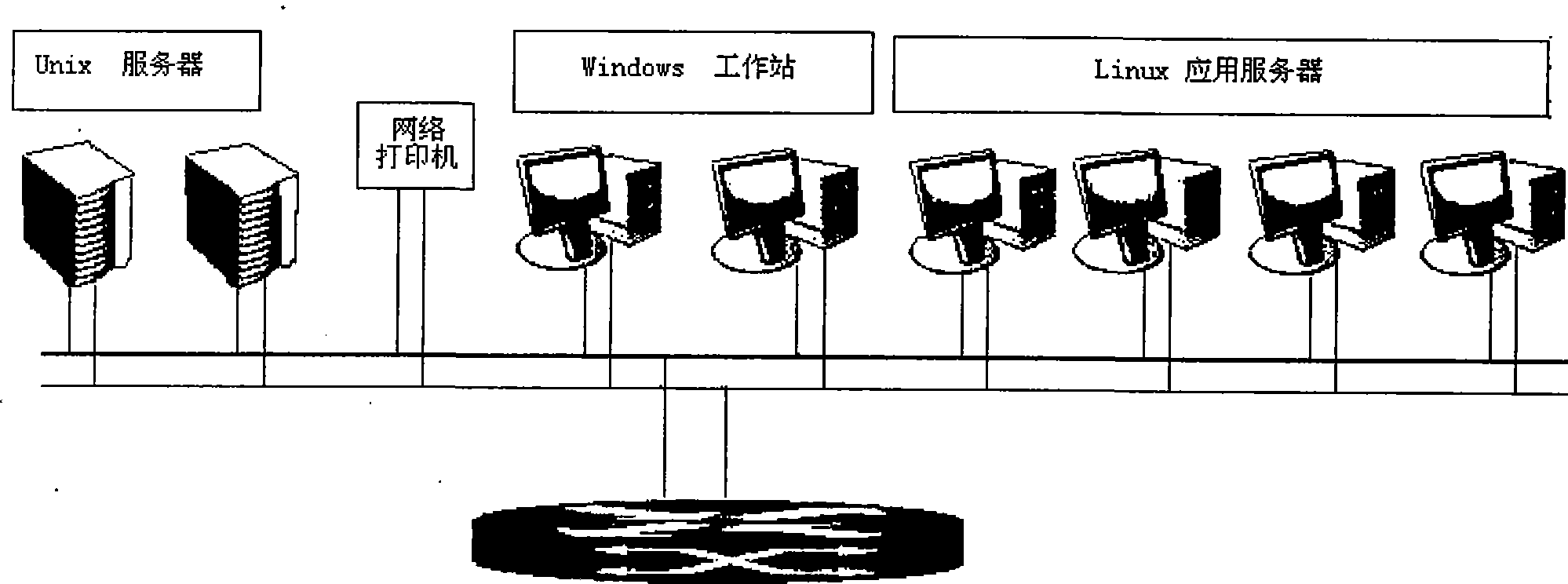

Cross-platform station automatic monitoring system testing method based on concurrency simulating tools

Active · CN101776915A · Tags: Reduce development costs; Efficient and reliable long-term stability simulation testing; Testing/monitoring control systems; Control layer; System testing

The invention relates to a method for testing cross-platform substation automation monitoring systems based on concurrency simulation tools. Two levels of concurrency simulation tools are used to achieve efficient testing: a 103 protocol simulation tool and an LR load/stress testing tool. The method builds a large-scale substation automation monitoring test environment with a minimal number of device-emulation computers running the simulation tools, using several computers to virtualize the substation bay layer into a single data-transmission request-processing center similar to cloud computing; this largely masks the system-level differences of the cross-platform monitoring systems at the station control layer. The invention solves many problems of the traditional, rote laboratory test approach and enables efficient, reliable, long-term stability simulation testing of cross-platform substation automation monitoring systems.

Owner:GUODIAN NANJING AUTOMATION

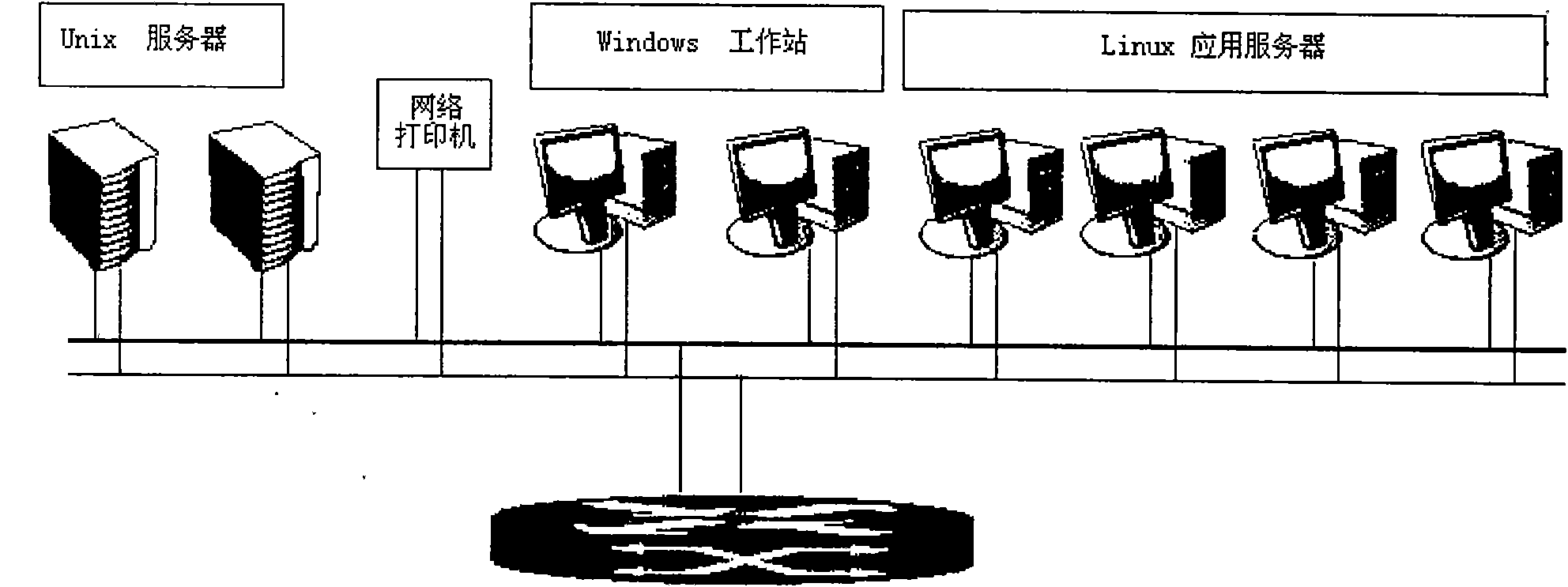

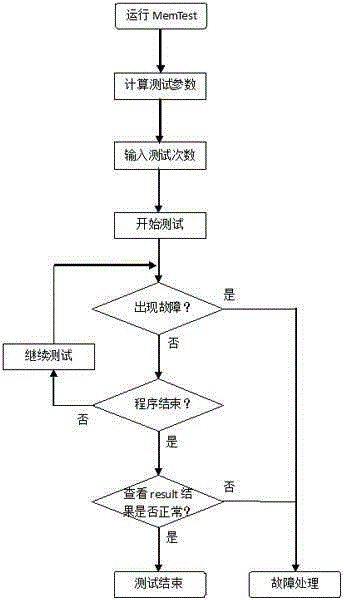

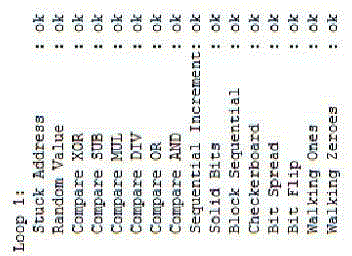

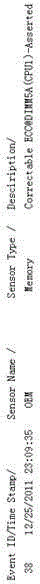

Test method for memory pressure of Linux server

The invention discloses a method for testing memory pressure on a Linux server. The procedure is: 1) copy the MemTest script and the memtester-4.3.0.tar.gz file to any directory on the Linux server to be tested; 2) make the script executable: `chmod 777 MemTest`; 3) run the script: `./MemTest`; 4) analyze the test results. The method requires no third-party software and is simple and practical. Because it is written as a shell script, it is highly portable and can run across platforms. It automatically calculates the optimal test parameters and supports a user-defined number of iterations, making it suitable for different test environments. It runs multiple processes concurrently for high test efficiency, leaves no residual files, and has no impact on the system.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

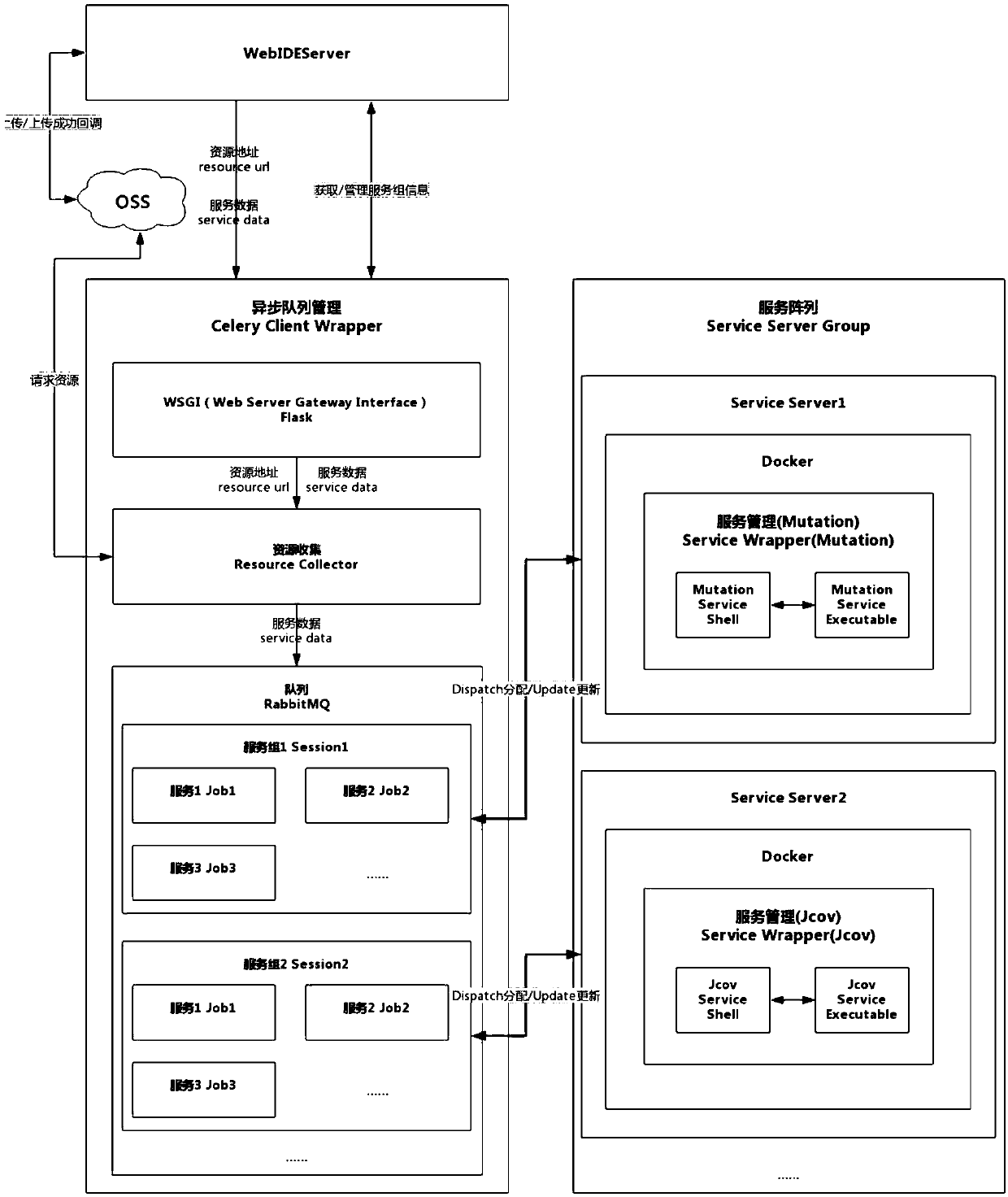

Multi-language-oriented high-concurrency online development support method

Pending · CN110502212A · Tags: Improve fault tolerance; Enable sharing and real-time updates; Resource allocation; Software testing/debugging; Basic mode; Coding block

The invention provides a multi-language, high-concurrency online development support method that offers an online development environment to all users entering the platform, so that they can open a browser anytime and anywhere and develop on the platform. The method includes: providing a code editor with intelligent code completion; providing multiple page types, including a form-style editing interface for JMeter tests and a basic-mode development page; load-balancing and forwarding back-end requests, and implementing a Docker-based code execution mechanism that is compatible with multiple programming languages and project types and easy to extend; and providing asynchronous queue service management to enable distributed computation and improve system performance. The beneficial effects are: intelligent code completion reduces editing and repeated copy-and-paste of simple code blocks, improving developer efficiency; multiple page types and language views meet diverse development needs; request distribution addresses high load and high concurrency; and asynchronous queue service management greatly improves the extensibility of the service and makes scaling possible.

Owner:NANJING MUCE INFORMATION TECH
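The abstract names three back-end pieces: load-balanced forwarding, Docker-based code execution, and an asynchronous queue service. A minimal Python asyncio sketch of how the forwarding and queue pieces could fit together, assuming round-robin as the load-balancing policy; `serve` and the caller-supplied `execute` coroutine (which stands in for running code in a Docker container) are hypothetical names, not the patent's design.

```python
import asyncio
import itertools

async def serve(jobs, backends, execute, workers=4):
    """Queue jobs, assign each a backend round-robin, and drain with a worker pool.

    jobs: iterable of (job_id, code); execute: async callable (backend, code) -> result.
    """
    queue: asyncio.Queue = asyncio.Queue()
    rr = itertools.cycle(backends)          # round-robin "load balancer"
    results = {}

    for job_id, code in jobs:
        await queue.put((job_id, code, next(rr)))

    async def worker():
        while True:
            job_id, code, backend = await queue.get()
            try:
                results[job_id] = await execute(backend, code)
            except Exception as exc:        # record the failure instead of killing the worker
                results[job_id] = exc
            finally:
                queue.task_done()

    tasks = [asyncio.create_task(worker()) for _ in range(workers)]
    await queue.join()                      # wait until every queued job is processed
    for t in tasks:
        t.cancel()
    await asyncio.gather(*tasks, return_exceptions=True)
    return results
```

Decoupling request intake (the queue) from execution (the workers) is what lets such a service absorb bursts: callers return as soon as a job is queued, and capacity grows by adding workers or backends.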

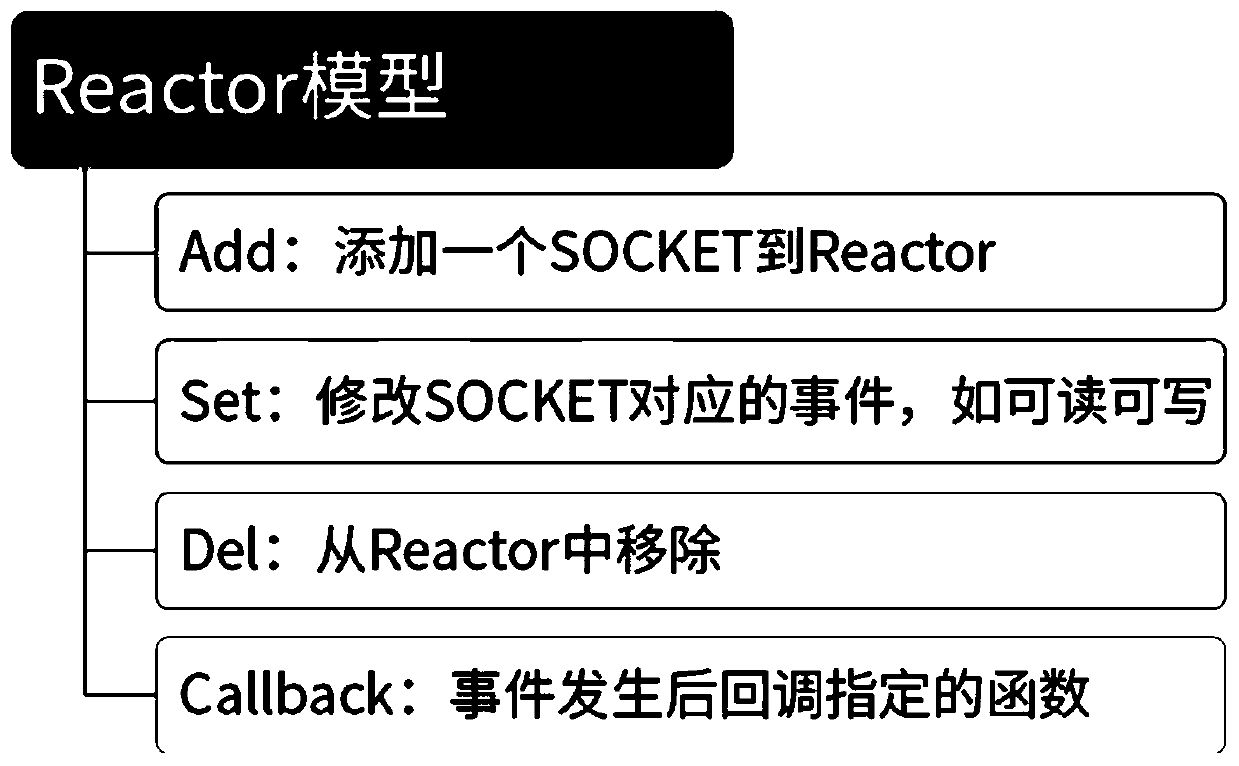

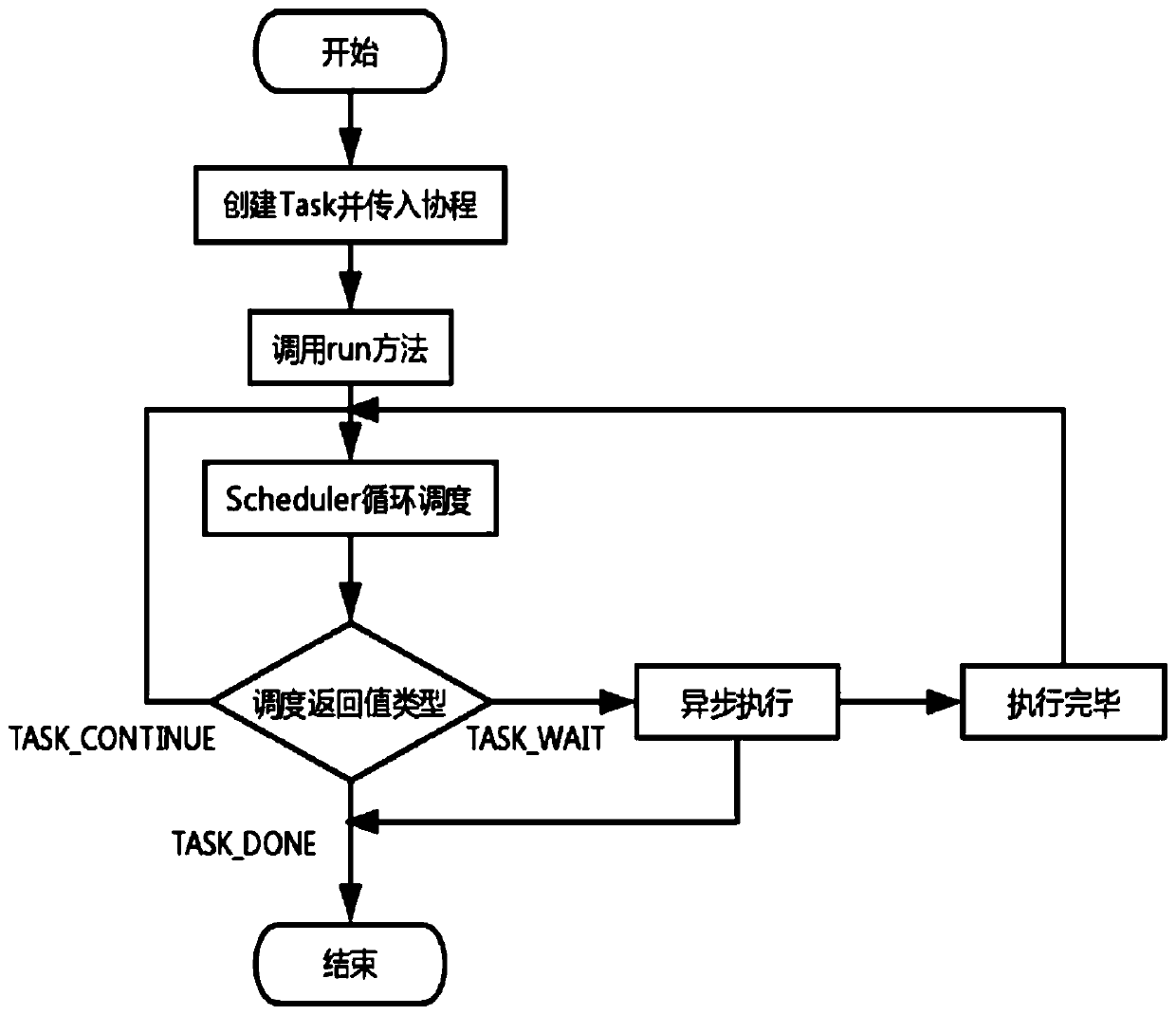

Method for processing high-concurrency IO based on PHP

Pending · CN110795254A · Reduce blocking waits · Easy to handle · Program initiation/switching · Interprogram communication · Logisim · Multithreading

The invention discloses a method for processing high-concurrency IO in PHP. The method comprises: Swoole creating one or more processes according to its configuration; the Master process creating N Reactor threads; creating a coroutine in each Reactor thread; and processing IO tasks asynchronously through coroutine scheduling. The invention uses the Swoole model: a multi-threaded Reactor model (based on epoll) together with multi-process Workers, with a coroutine created in each thread. IO tasks are processed asynchronously through coroutine scheduling: when a time-consuming IO operation is encountered, the scheduler first runs other code logic and resumes the suspended code once the IO completes. The method makes full use of system resources, reduces blocking IO waits and wasted system resources, greatly improves IO throughput under high concurrency, and can resolve the Accept performance bottleneck and the thundering-herd problem.

Owner:Wuhan Zhimei Hulian Technology Co., Ltd. (武汉智美互联科技有限公司)
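Swoole's coroutine scheduling is a PHP feature, so the following is only an analogy in Python's asyncio, not Swoole's API: each handler awaits a simulated IO call (`asyncio.sleep`), and while it waits the event loop runs other handlers instead of blocking. On Linux, asyncio's default selector event loop uses epoll, mirroring the epoll-based Reactor described above. The handler names and delays are made up for illustration.

```python
import asyncio

async def handle_request(name: str, io_seconds: float, log: list[str]) -> str:
    log.append(f"{name} start")
    # Stands in for a DB/HTTP/file read; the coroutine is suspended here and
    # the event loop is free to run other coroutines until the "IO" completes.
    await asyncio.sleep(io_seconds)
    log.append(f"{name} done")
    return name

async def main(log: list[str]) -> list[str]:
    # Three requests with different simulated IO latencies run concurrently.
    return await asyncio.gather(
        handle_request("slow", 0.2, log),
        handle_request("medium", 0.1, log),
        handle_request("fast", 0.0, log),
    )
```

Running `asyncio.run(main(log))` takes roughly as long as the longest delay (about 0.2 s) rather than the sum of all three, and the log shows the fast request finishing first even though it started last.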

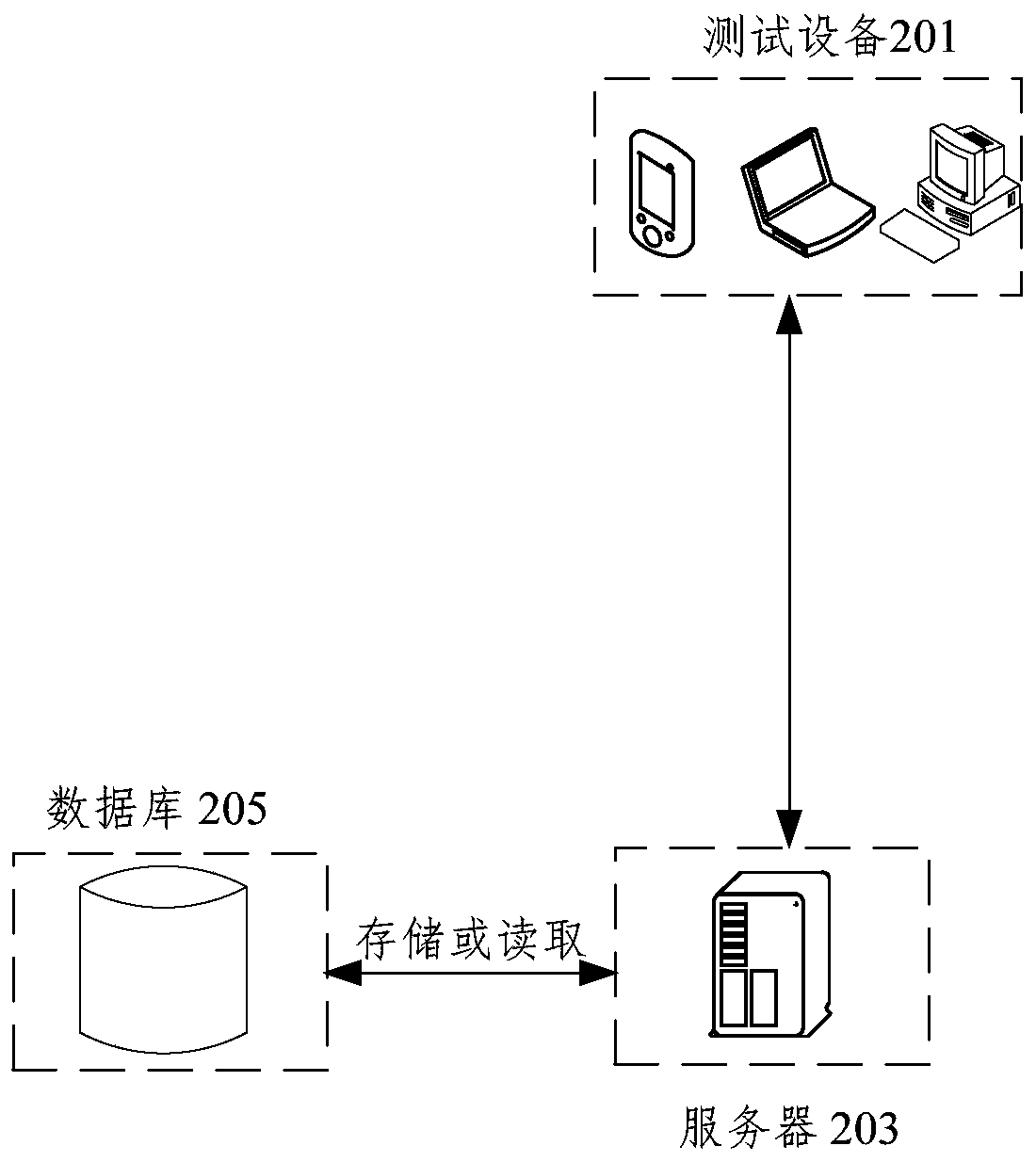

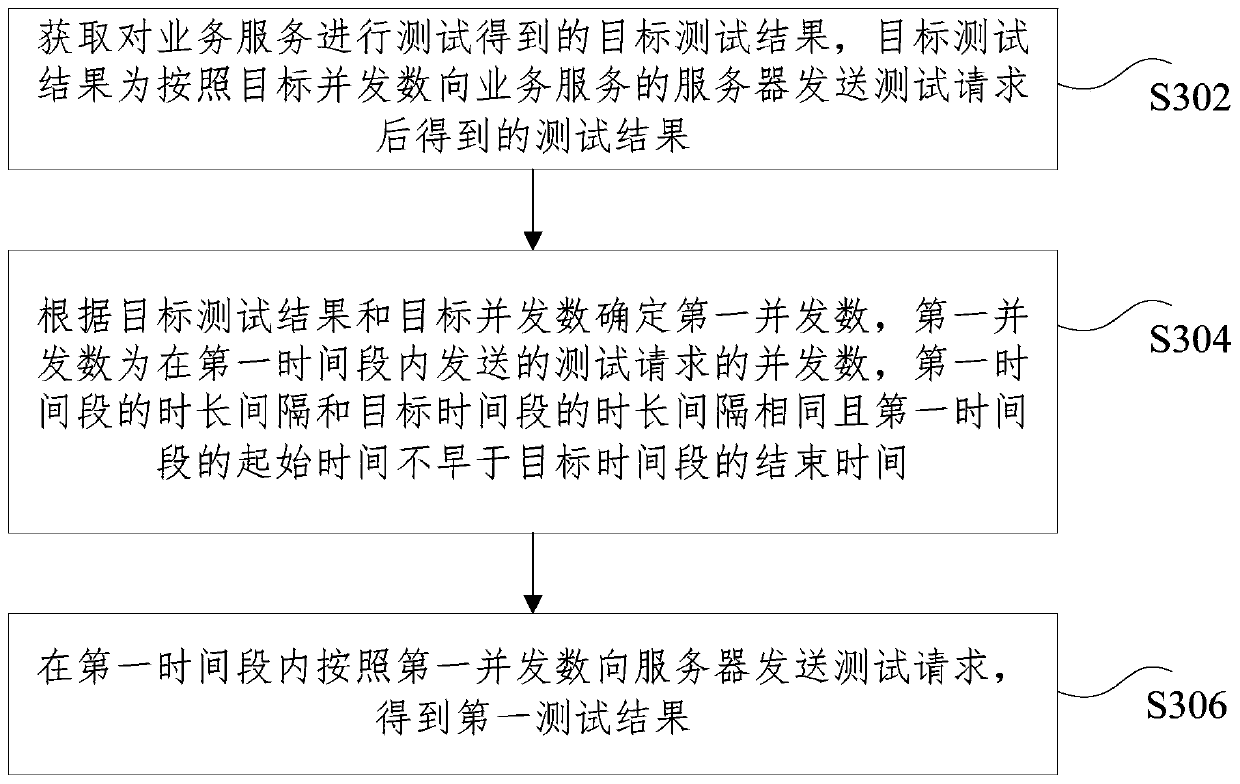

Business service test method and device, storage medium and electronic device

Active · CN111162934A · Solve the technical problem of low efficiency of service testing · Improve test efficiency · Data switching networks · Start time · Concurrency

The invention discloses a business service test method and device, a storage medium, and an electronic device. The method comprises: acquiring a target test result obtained by testing a business service, where the target test result is obtained by sending test requests to the server of the business service at a target concurrency number, and the target concurrency number indicates how many test requests are sent concurrently in a target time period; determining a first concurrency number from the target test result and the target concurrency number, where the first concurrency number is the number of concurrent test requests to send in a first time period, the first time period has the same length as the target time period, and the first time period starts no earlier than the target time period ends; and sending test requests to the server at the first concurrency number during the first time period to obtain a first test result. The invention solves the technical problem of low service-testing efficiency in the related art.

Owner:Weimin Insurance Agency Co., Ltd. (微民保险代理有限公司)
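The abstract states that each window's concurrency is derived from the previous window's result but does not give the rule. This sketch assumes a simple policy: add a fixed step while the error rate and p95 latency stay within limits, and halve otherwise. `WindowResult`, `next_concurrency`, `run_test`, and all thresholds are hypothetical, and `run_window` stands in for whatever actually sends the requests for one fixed-length window.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class WindowResult:
    requests: int
    errors: int
    p95_ms: float

def next_concurrency(current: int, result: WindowResult, *,
                     max_error_rate: float = 0.01, latency_slo_ms: float = 200.0,
                     step: int = 10, floor: int = 1, ceiling: int = 10_000) -> int:
    """Hypothetical rule: grow while healthy, halve when the service degrades."""
    error_rate = result.errors / result.requests if result.requests else 1.0
    if error_rate <= max_error_rate and result.p95_ms <= latency_slo_ms:
        return min(current + step, ceiling)   # healthy: probe a higher load
    return max(current // 2, floor)           # degraded: back off

def run_test(initial: int, windows: int,
             run_window: Callable[[int], WindowResult]) -> list[tuple[int, WindowResult]]:
    """Run back-to-back windows of equal length, adapting concurrency each time."""
    history = []
    concurrency = initial
    for _ in range(windows):
        # Each window starts after the previous one ends, so its result is known.
        result = run_window(concurrency)
        history.append((concurrency, result))
        concurrency = next_concurrency(concurrency, result)
    return history
```

Feeding each window's measured result into the next window's load lets a single test run converge near the service's capacity instead of requiring many separate fixed-concurrency runs.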

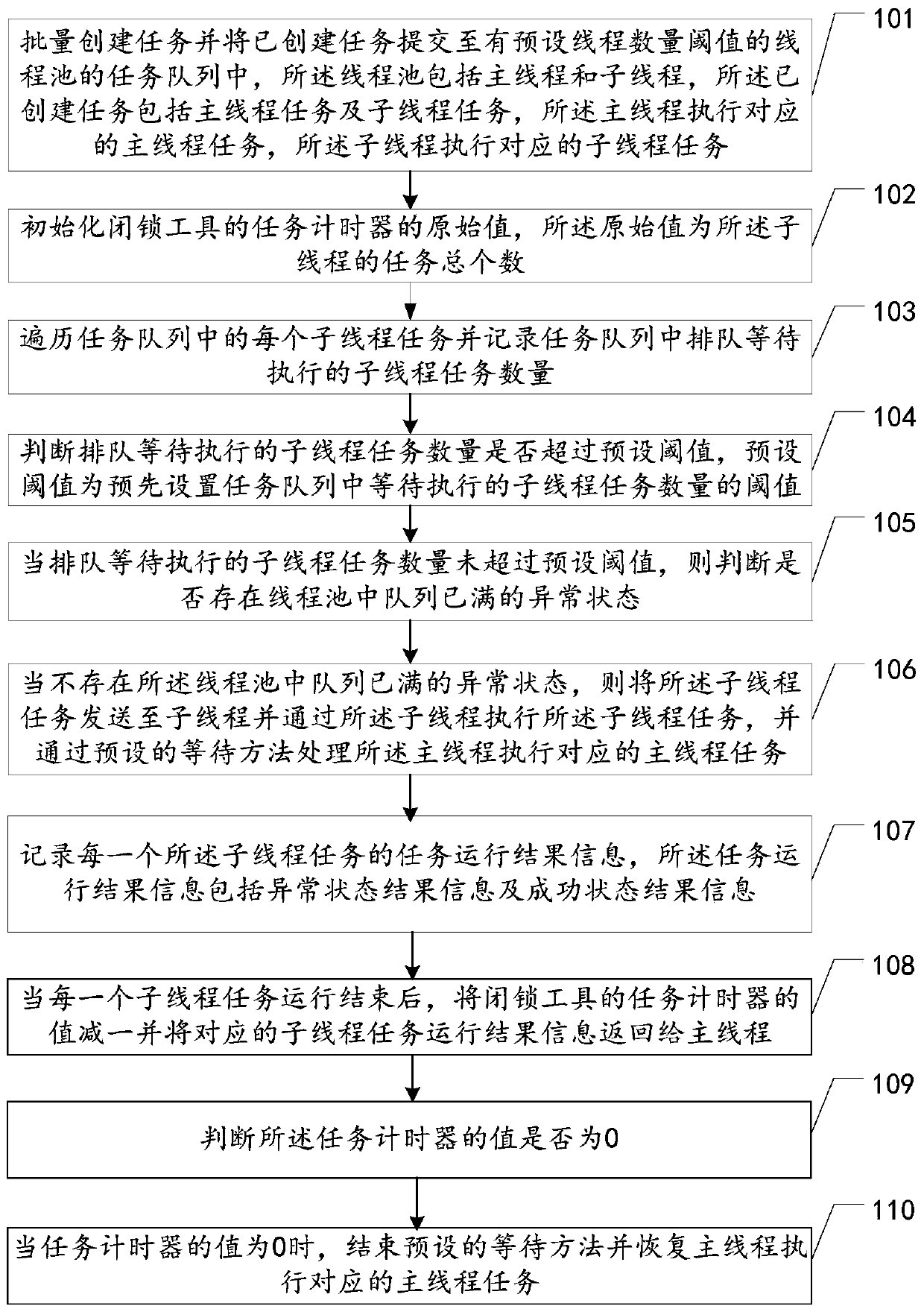

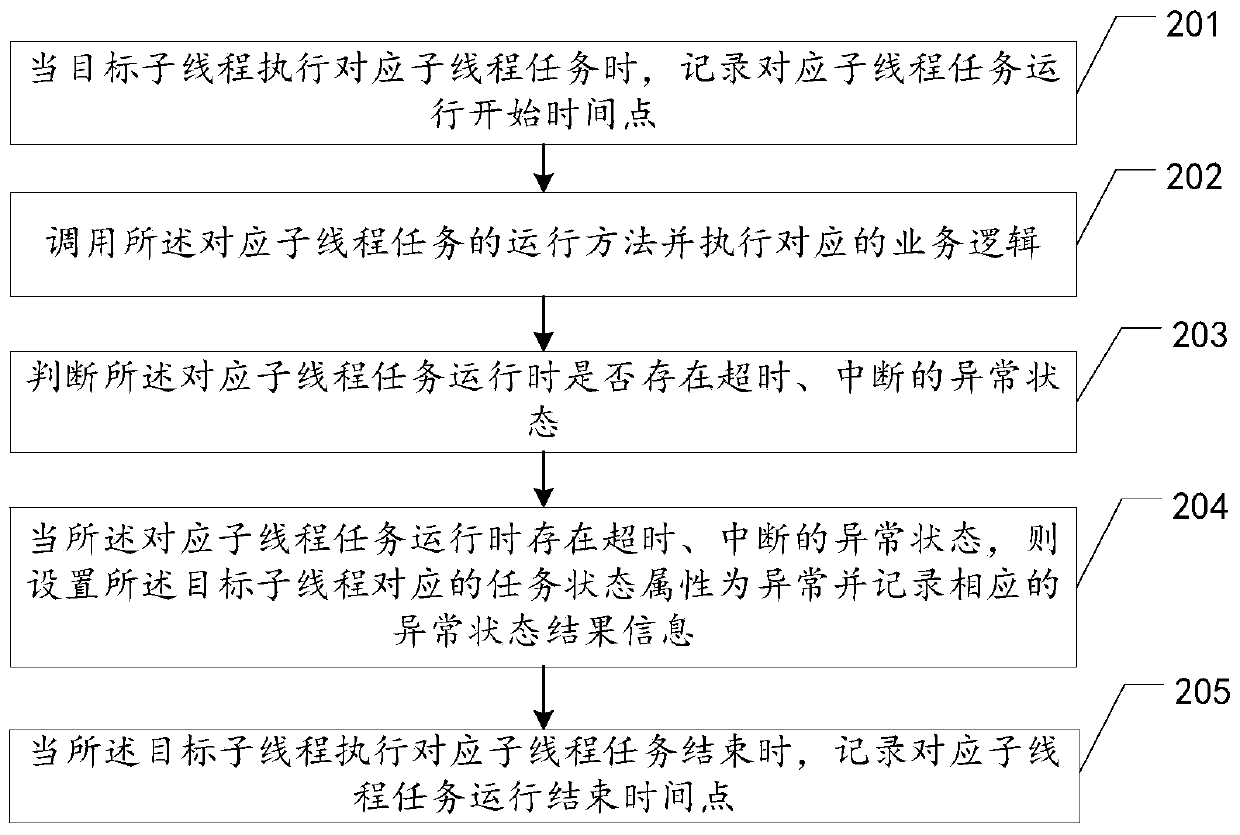

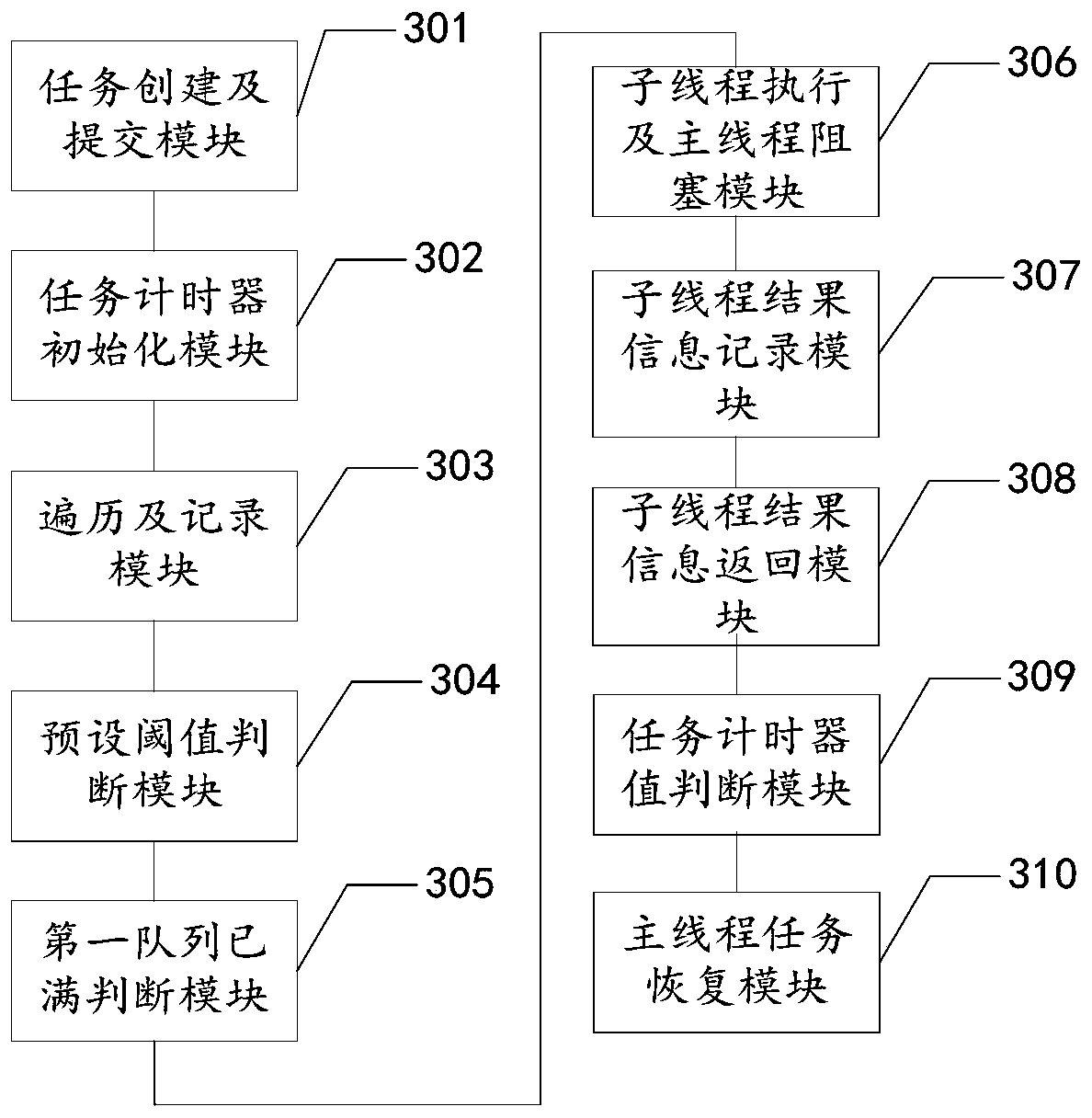

Multi-thread concurrency monitoring method, device and apparatus and storage medium

Pending · CN111488255A · Improve stability · Program initiation/switching · Resource allocation · Parallel computing · Engineering

The invention relates to the technical field of high-concurrency server processing and discloses a multi-thread concurrency monitoring method, device, apparatus, and storage medium, used to prevent memory overflow and slow server responses and to improve the running stability of the server. The method comprises: creating tasks in batches and submitting them to a task queue, with the task counter of a latch initialized to the total number of sub-thread tasks; traversing the sub-thread tasks and recording the number of tasks to be executed; judging whether that number exceeds a preset threshold; if it does not, judging whether the queue is full; if the queue is not full, submitting the sub-thread tasks to the sub-threads and blocking the main thread; recording the result of each task; when each sub-thread finishes its task, decrementing the latch's task counter by one and returning the task's result to the main thread; and when the task counter reaches 0, resuming the main thread to execute the corresponding main-thread task.

Owner:ONE CONNECT SMART TECH CO LTD SHENZHEN
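The latch described above behaves like Java's `CountDownLatch`. Python's standard library has no latch class, so this sketch builds one from `threading.Condition` and uses it with a `ThreadPoolExecutor`. The Python `CountDownLatch` class, `run_batch`, and `max_pending` are hypothetical illustrations; the queue-full check is omitted because the executor's internal queue is unbounded.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Optional

class CountDownLatch:
    """Minimal latch: wait() blocks until count_down() has been called `count` times."""
    def __init__(self, count: int):
        self._count = count
        self._cond = threading.Condition()

    def count_down(self) -> None:
        with self._cond:
            if self._count > 0:
                self._count -= 1
                if self._count == 0:
                    self._cond.notify_all()

    def wait(self, timeout: Optional[float] = None) -> bool:
        with self._cond:
            return self._cond.wait_for(lambda: self._count == 0, timeout)

def run_batch(tasks, max_pending: int, workers: int = 4):
    """tasks: list of zero-argument callables. Returns (status, value) per task, in order."""
    if len(tasks) > max_pending:            # threshold check before submitting anything
        raise RuntimeError(f"{len(tasks)} tasks exceed threshold {max_pending}")
    latch = CountDownLatch(len(tasks))      # counter starts at the number of sub-tasks
    results = [None] * len(tasks)

    def wrap(i, fn):
        try:
            results[i] = ("ok", fn())
        except Exception as exc:
            results[i] = ("error", exc)
        finally:
            latch.count_down()              # each finished sub-task decrements by one

    with ThreadPoolExecutor(max_workers=workers) as pool:
        for i, fn in enumerate(tasks):
            pool.submit(wrap, i, fn)
        latch.wait()                        # main thread blocks until the counter hits 0
    return results
```

Rejecting oversized batches up front is what bounds memory use here; the latch only coordinates when the main thread may continue.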

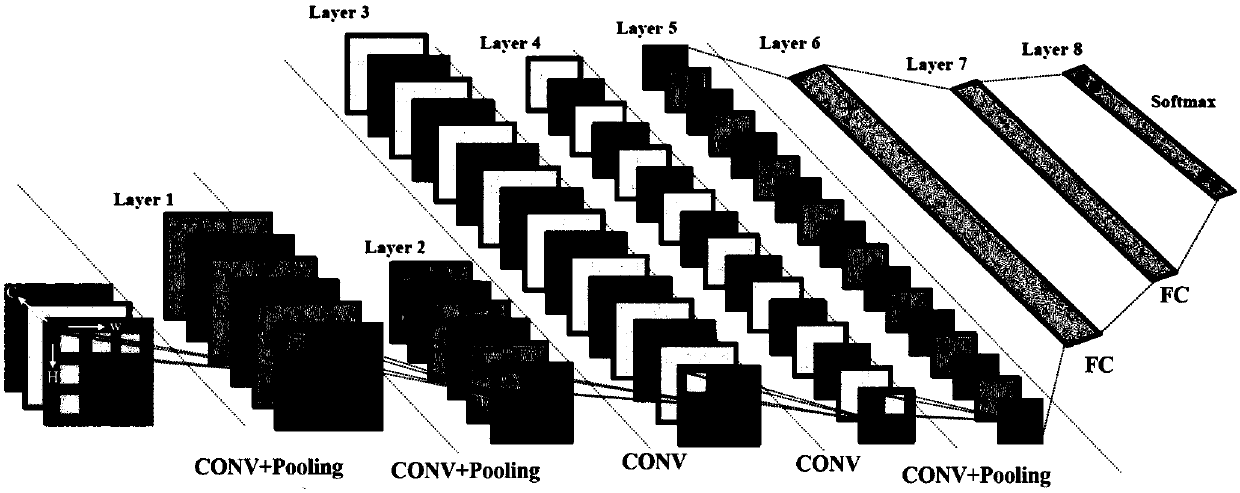

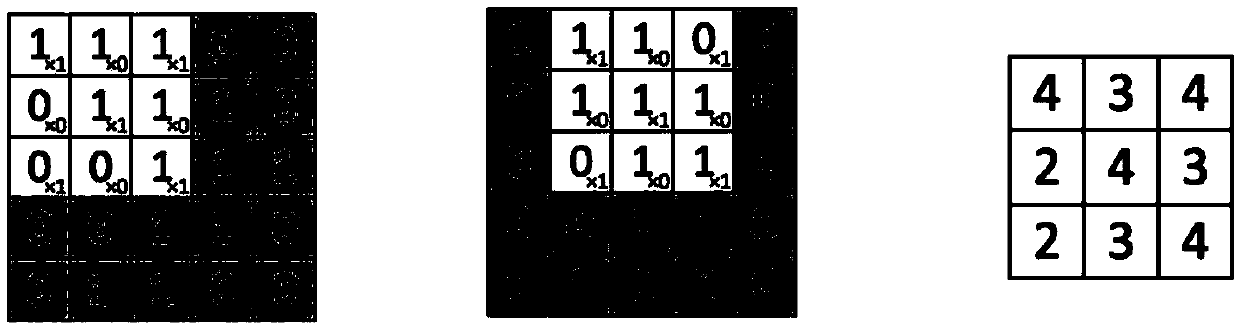

Neural network calculation special circuit and related calculation platform and implementation method thereof

Active · CN110766127A · Improve hardware utilization · Save hardware resources · Processor architectures/configuration · Neural architectures · Computer hardware · Map reading

The invention discloses a special-purpose circuit for neural networks, together with a related computing platform and implementation method. The special circuit comprises: a data reading module, which includes a feature map reading sub-module and a weight reading sub-module that respectively read feature map data and weight data from an on-chip cache into a data calculation module when a depthwise convolution operation is executed, the feature map reading sub-module also reading feature map data from the on-chip cache into the data calculation module when a pooling operation is executed; the data calculation module, which includes a dwconv module that performs depthwise convolution and a pooling module that performs pooling; and a data write-back module that writes the calculation results of the data calculation module back to the on-chip cache. Reusing the read logic and write-back logic for both types of operation reduces hardware resource usage. The circuit also adopts a high-concurrency pipeline design, which further improves computing performance.

Owner:XILINX TECH BEIJING LTD
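The circuit saves hardware by letting depthwise convolution and pooling share one read path and one write-back path. As a software analogy only (the patent describes hardware, not this code), the Python sketch below routes both operations through a single hypothetical window reader `read_windows` and a single output path `run_op`; only the per-window reduction differs. Square kernels and no padding are assumed.

```python
from typing import Callable, Iterator

Matrix = list[list[float]]

def read_windows(fmap: Matrix, k: int, stride: int) -> Iterator[tuple[int, int, Matrix]]:
    """Shared read logic: yield (out_row, out_col, k x k window) for one channel."""
    h, w = len(fmap), len(fmap[0])
    for r in range(0, h - k + 1, stride):
        for c in range(0, w - k + 1, stride):
            yield r // stride, c // stride, [row[c:c + k] for row in fmap[r:r + k]]

def run_op(fmap: Matrix, k: int, stride: int,
           reduce_fn: Callable[[Matrix], float]) -> Matrix:
    """Apply one per-window operation; the output buffer plays the write-back role."""
    h_out = (len(fmap) - k) // stride + 1
    w_out = (len(fmap[0]) - k) // stride + 1
    out = [[0.0] * w_out for _ in range(h_out)]
    for i, j, win in read_windows(fmap, k, stride):
        out[i][j] = reduce_fn(win)
    return out

def depthwise_conv(channels: list[Matrix], kernels: list[Matrix], stride: int = 1) -> list[Matrix]:
    """One k x k kernel per channel, with no summation across channels (depthwise)."""
    def conv_reducer(kern: Matrix) -> Callable[[Matrix], float]:
        return lambda win: sum(win[a][b] * kern[a][b]
                               for a in range(len(kern)) for b in range(len(kern[0])))
    return [run_op(ch, len(kern), stride, conv_reducer(kern))
            for ch, kern in zip(channels, kernels)]

def max_pool(channels: list[Matrix], k: int = 2, stride: int = 2) -> list[Matrix]:
    """Max pooling reuses exactly the same window reader and output path."""
    return [run_op(ch, k, stride, lambda win: max(max(row) for row in win))
            for ch in channels]
```

Because depthwise convolution never mixes channels, its data access pattern per channel matches pooling's, which is what makes sharing the reader possible.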

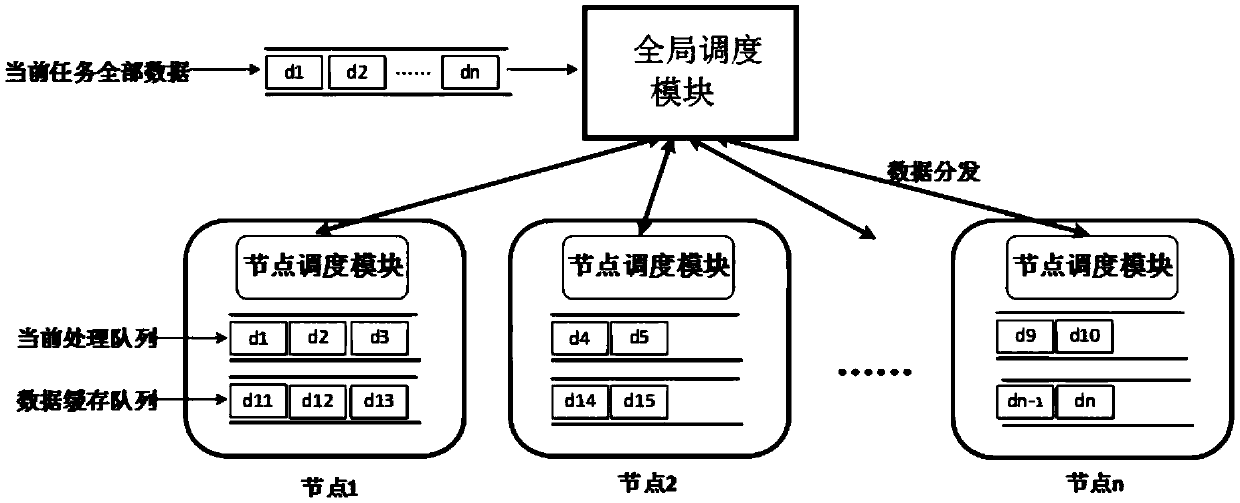

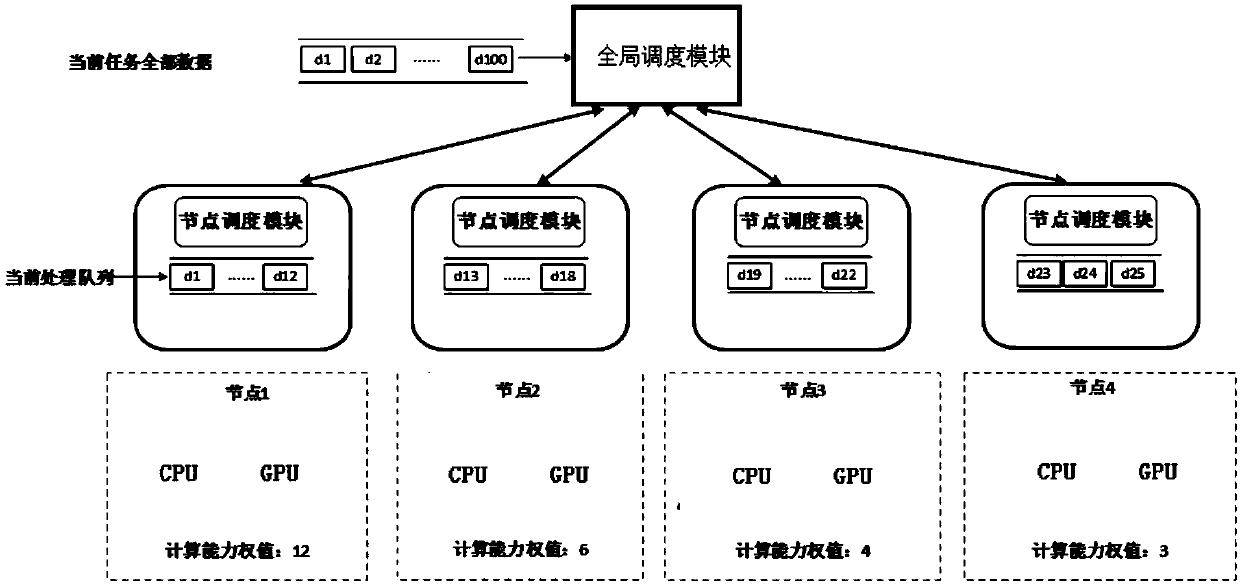

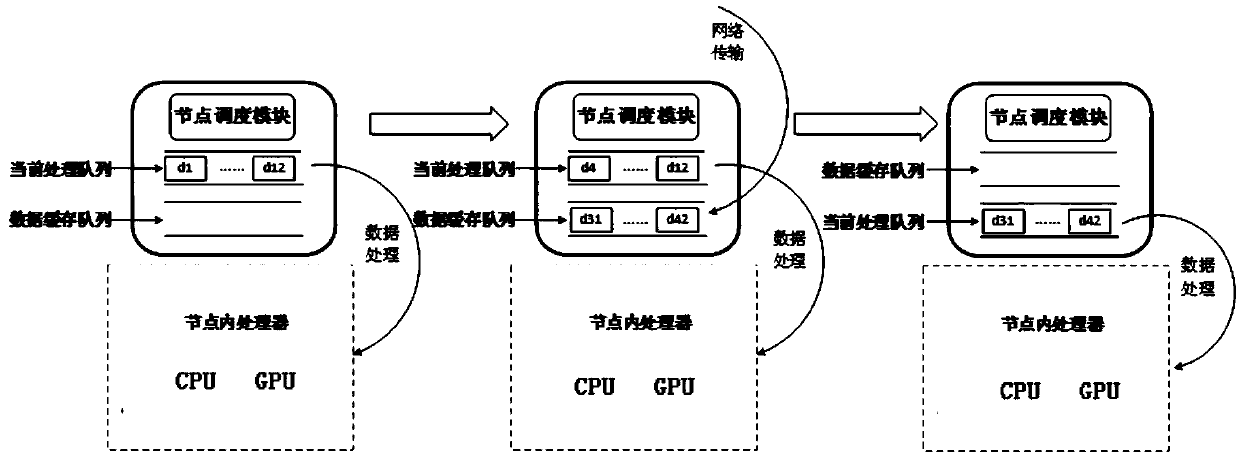

Dynamic scheduling method and system for collaborative computing between CPU and GPU based on two-level scheduling

Active · CN109254846A · Guaranteed concurrency · Reduce task processing time · Resource allocation · Energy efficient computing · Two-level scheduling · Batch Number

The invention discloses a dynamic scheduling method and system for collaborative CPU-GPU computing based on two-level scheduling. The method includes: forecasting the processing capacity of each node in the system; a global scheduling module dynamically distributing data to each node according to that node's processing capacity and the batch number requested by the node scheduling module in each node; when a node scheduling module finds that its queue of data to be processed is empty, requesting the next batch of data from the global scheduling module; and dynamically scheduling tasks within the node according to the processing capacities of its CPU and GPU. By accounting for the heterogeneity of system resources, the invention lets weaker nodes take on fewer tasks and stronger nodes process more, which raises the overall degree of concurrency of the heterogeneous CPU/GPU hybrid parallel system and reduces task completion time.

Owner:NARI TECH CO LTD +4
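A minimal sketch of the two-level idea, assuming capacity forecasts are already available as relative numbers: a hypothetical `GlobalScheduler` hands each node a batch sized by its forecast capacity whenever the node's queue is empty, and each node then splits its batch between CPU and GPU in proportion to their capacities. The batch-sizing rule, the largest-remainder split, and the synchronous round-robin simulation are illustrative assumptions, not the patented method.

```python
def split_by_capacity(total: int, capacities: dict[str, float]) -> dict[str, int]:
    """Split `total` items in proportion to capacity, using largest-remainder rounding."""
    cap_sum = sum(capacities.values())
    raw = {k: total * c / cap_sum for k, c in capacities.items()}
    shares = {k: int(v) for k, v in raw.items()}
    leftover = total - sum(shares.values())
    # Give the remaining items to the entries with the largest fractional parts
    for k in sorted(raw, key=lambda k: raw[k] - shares[k], reverse=True)[:leftover]:
        shares[k] += 1
    return shares

class GlobalScheduler:
    """Level 1: hands out batches sized by each node's forecast capacity."""
    def __init__(self, items: list, node_capacity: dict[str, float], batch_unit: int):
        self.pending = list(items)
        self.node_capacity = node_capacity
        self.batch_unit = batch_unit

    def request_batch(self, node: str) -> list:
        # Called when the node's local queue is empty; stronger nodes get more items
        n = max(1, round(self.batch_unit * self.node_capacity[node]))
        batch, self.pending = self.pending[:n], self.pending[n:]
        return batch

def simulate(items: list, node_capacity: dict[str, float],
             device_capacity: dict[str, dict[str, float]], batch_unit: int):
    """Level 2: each node splits its batch between CPU and GPU by capacity.
    Synchronous round-robin: every node drains its queue before asking again."""
    gs = GlobalScheduler(items, node_capacity, batch_unit)
    assigned = {node: {dev: 0 for dev in devs} for node, devs in device_capacity.items()}
    while gs.pending:
        for node in node_capacity:
            batch = gs.request_batch(node)
            if not batch:
                break
            for dev, n in split_by_capacity(len(batch), device_capacity[node]).items():
                assigned[node][dev] += n
    return assigned
```

Pulling work in batches on demand, rather than pre-assigning everything, lets the system correct for inaccurate capacity forecasts: a node that runs faster than predicted simply asks again sooner.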

© 2025 PatSnap. All rights reserved.