4484 results about "Shared memory" patented technology

In computer science, shared memory is memory that may be simultaneously accessed by multiple programs with an intent to provide communication among them or avoid redundant copies. Shared memory is an efficient means of passing data between programs. Depending on context, programs may run on a single processor or on multiple separate processors.
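
As a minimal sketch of this idea, assuming Python 3.8 or later and its standard `multiprocessing.shared_memory` module, the hypothetical helper `share_bytes` below writes a payload into a named shared block and reads it back through a second handle attached by name, much as a separate program would. Both handles live in one process here only to keep the example self-contained.

```python
from multiprocessing import shared_memory


def share_bytes(payload: bytes) -> bytes:
    """Write payload into a shared block, then read it via a second handle."""
    if not payload:
        raise ValueError("payload must not be empty")
    # The "producer" creates a named block and copies the data in.
    writer = shared_memory.SharedMemory(create=True, size=len(payload))
    try:
        writer.buf[:len(payload)] = payload
        # The "consumer" attaches to the same block by name; no copy is sent.
        reader = shared_memory.SharedMemory(name=writer.name)
        try:
            return bytes(reader.buf[:len(payload)])
        finally:
            reader.close()
    finally:
        writer.close()
        writer.unlink()  # release the block once nobody needs it
```

In a real deployment the block name would be passed to another process, and access would need its own synchronization, which this sketch leaves out.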

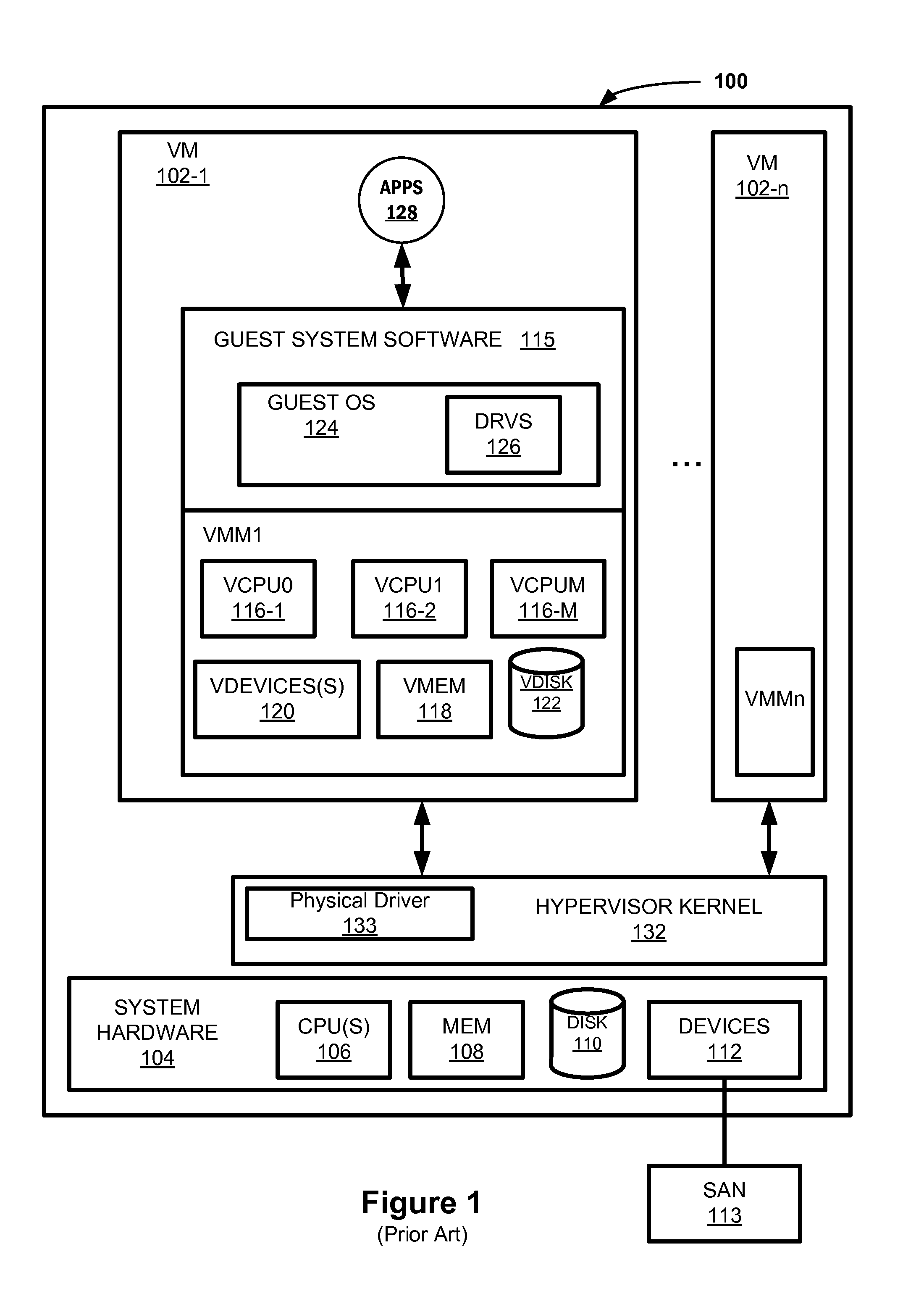

Transparent Memory-Mapped Emulation of I/O Calls

Active · US20090113425A1 · Memory architecture accessing/allocation · Error detection/correction · Semantics · Memory map

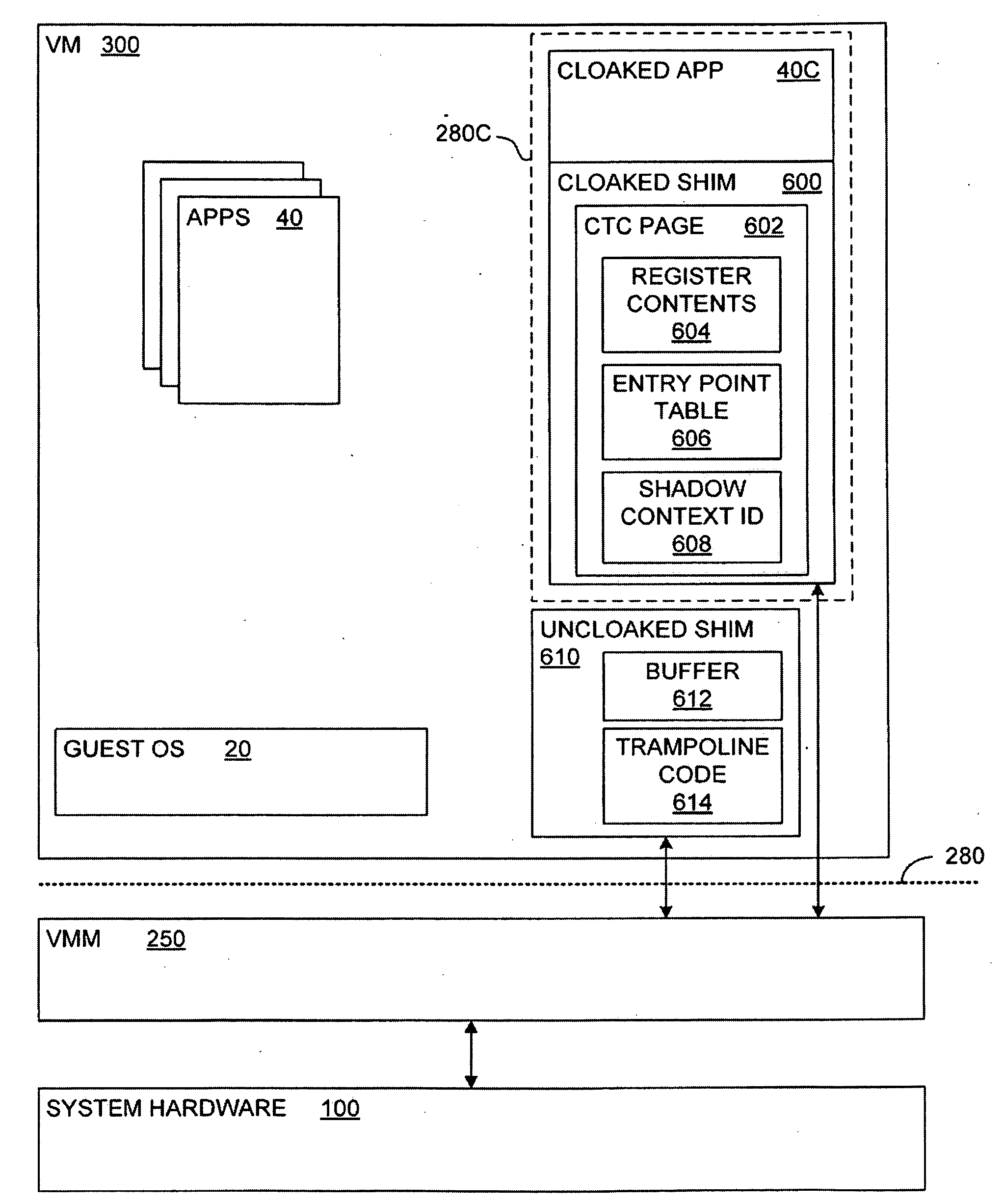

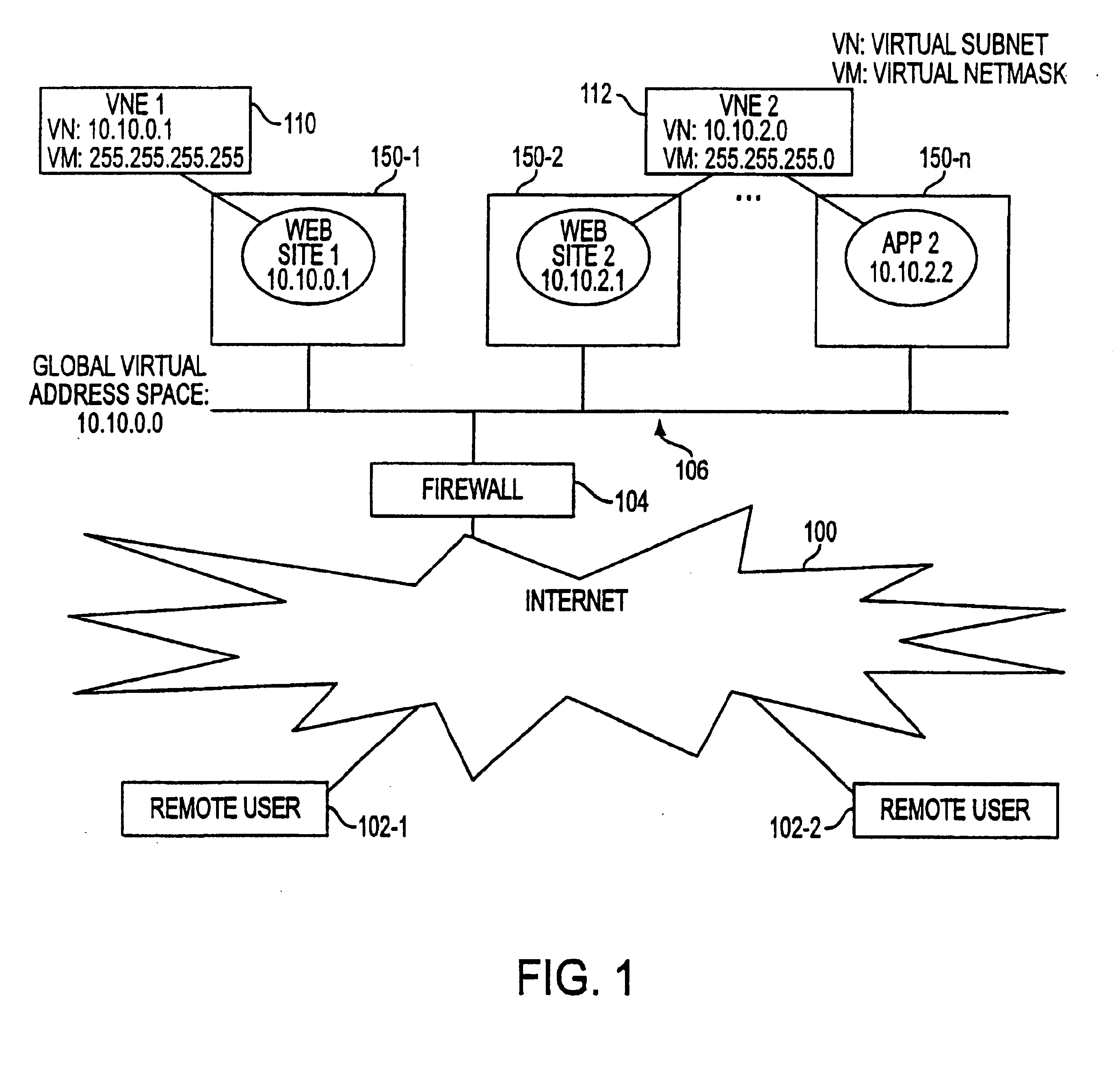

A virtual-machine-based system provides a mechanism to implement application file I/O operations on protected data by implementing the semantics of those I/O operations in a shim layer with memory-mapped regions. The semantics are emulated by using a mapping between a process' address space and a file or shared memory object. Data that is protected from viewing by a guest OS running in a virtual machine may nonetheless be accessed by the process.

Owner: VMWARE INC
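
The general technique of serving file-style calls from a memory-mapped region can be sketched with Python's standard `mmap` module. The class `MappedFile` and its method names are hypothetical and only illustrate the mapping between an address range and a file; they are not the shim layer described in the abstract, and no protection from a guest OS is modeled.

```python
import mmap
import os


class MappedFile:
    """Hypothetical shim: file-style read/write served from a mapped region."""

    def __init__(self, path: str, size: int) -> None:
        self._fd = os.open(path, os.O_RDWR | os.O_CREAT)
        os.ftruncate(self._fd, size)           # give the backing file a fixed size
        self._map = mmap.mmap(self._fd, size)  # map the file into this process
        self._size = size
        self._pos = 0

    def seek(self, pos: int) -> None:
        if not 0 <= pos <= self._size:
            raise ValueError("position outside mapped region")
        self._pos = pos

    def write(self, data: bytes) -> int:
        end = self._pos + len(data)
        if end > self._size:
            raise ValueError("write would exceed mapped region")
        self._map[self._pos:end] = data        # a memory copy into the mapping
        self._pos = end
        return len(data)

    def read(self, count: int) -> bytes:
        data = self._map[self._pos:self._pos + count]
        self._pos += len(data)
        return data

    def close(self) -> None:
        self._map.flush()                      # push changes to the backing file
        self._map.close()
        os.close(self._fd)
```

The fixed region size is a simplification; a fuller emulation would have to grow the mapping when a write passes the end of the file.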

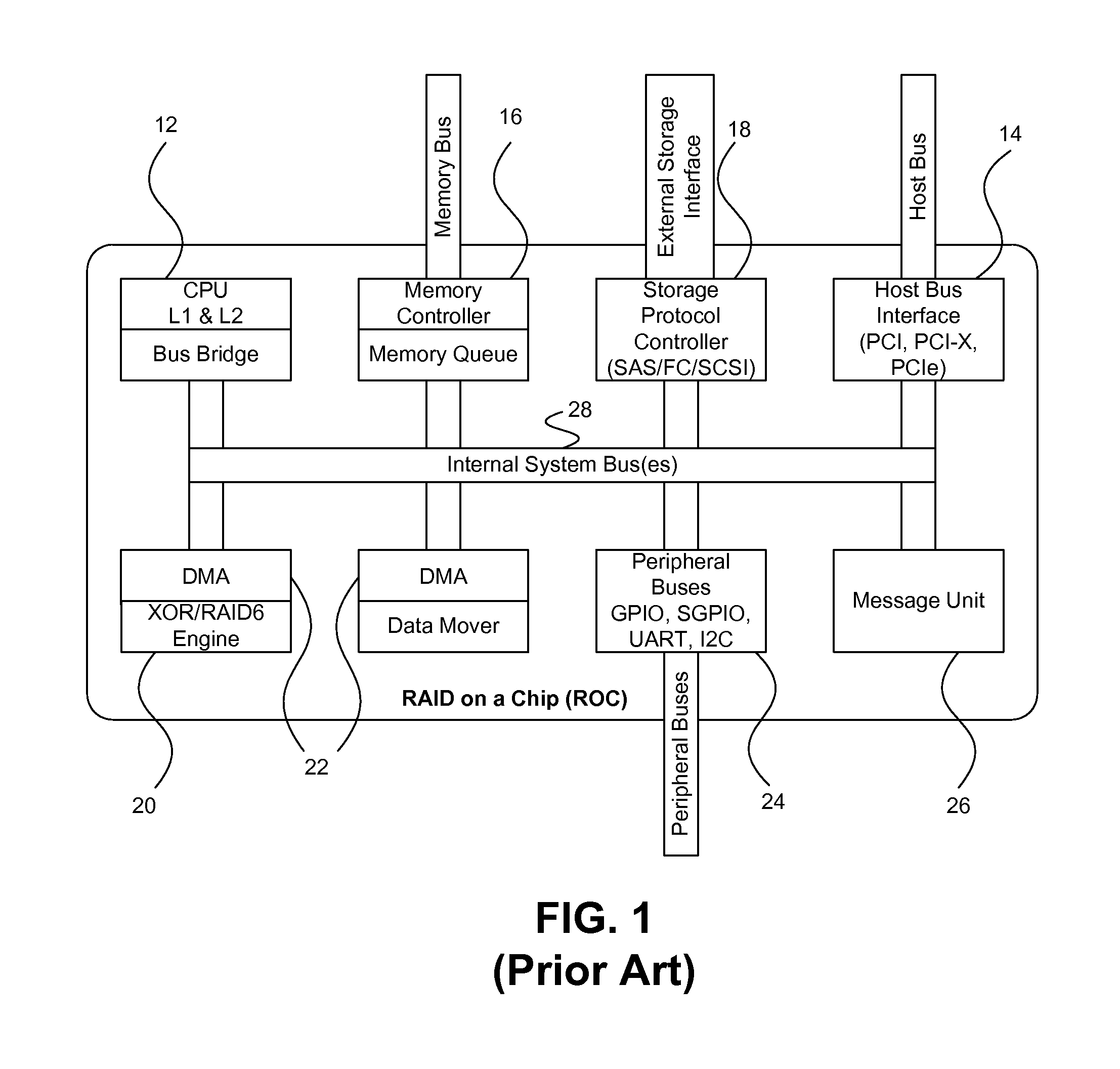

On-chip shared memory based device architecture

ActiveUS7743191B1Reduce disadvantagesLow costRedundant array of inexpensive disk systemsRecord information storageExtensibilityRAID

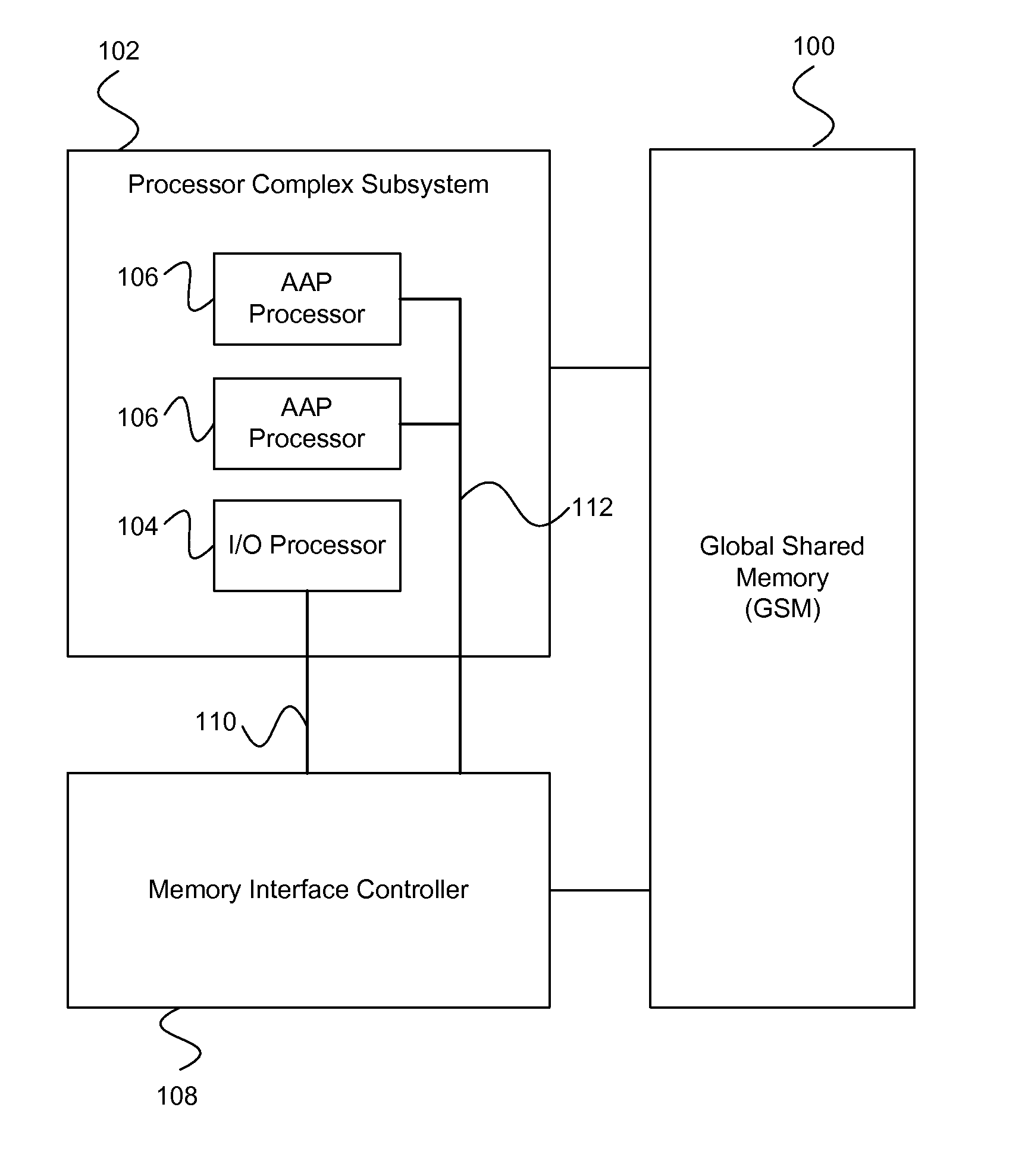

A method and architecture are provided for SOC (System on a Chip) devices for RAID processing, which is commonly referred as RAID-on-a-Chip (ROC). The architecture utilizes a shared memory structure as interconnect mechanism among hardware components, CPUs and software entities. The shared memory structure provides a common scratchpad buffer space for holding data that is processed by the various entities, provides interconnection for process / engine communications, and provides a queue for message passing using a common communication method that is agnostic to whether the engines are implemented in hardware or software. A plurality of hardware engines are supported as masters of the shared memory. The architectures provide superior throughput performance, flexibility in software / hardware co-design, scalability of both functionality and performance, and support a very simple abstracted parallel programming model for parallel processing.

Owner:MICROSEMI STORAGE SOLUTIONS

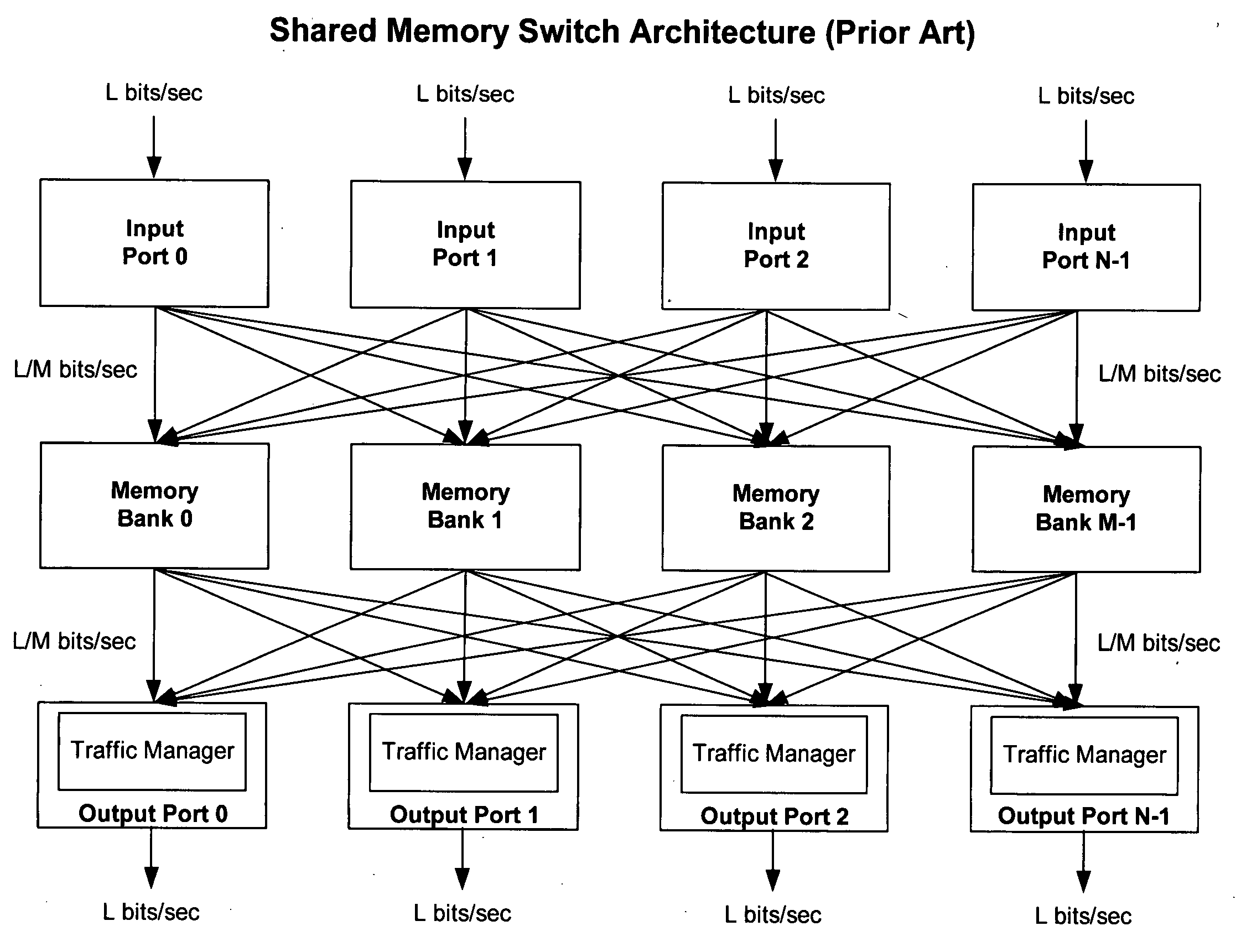

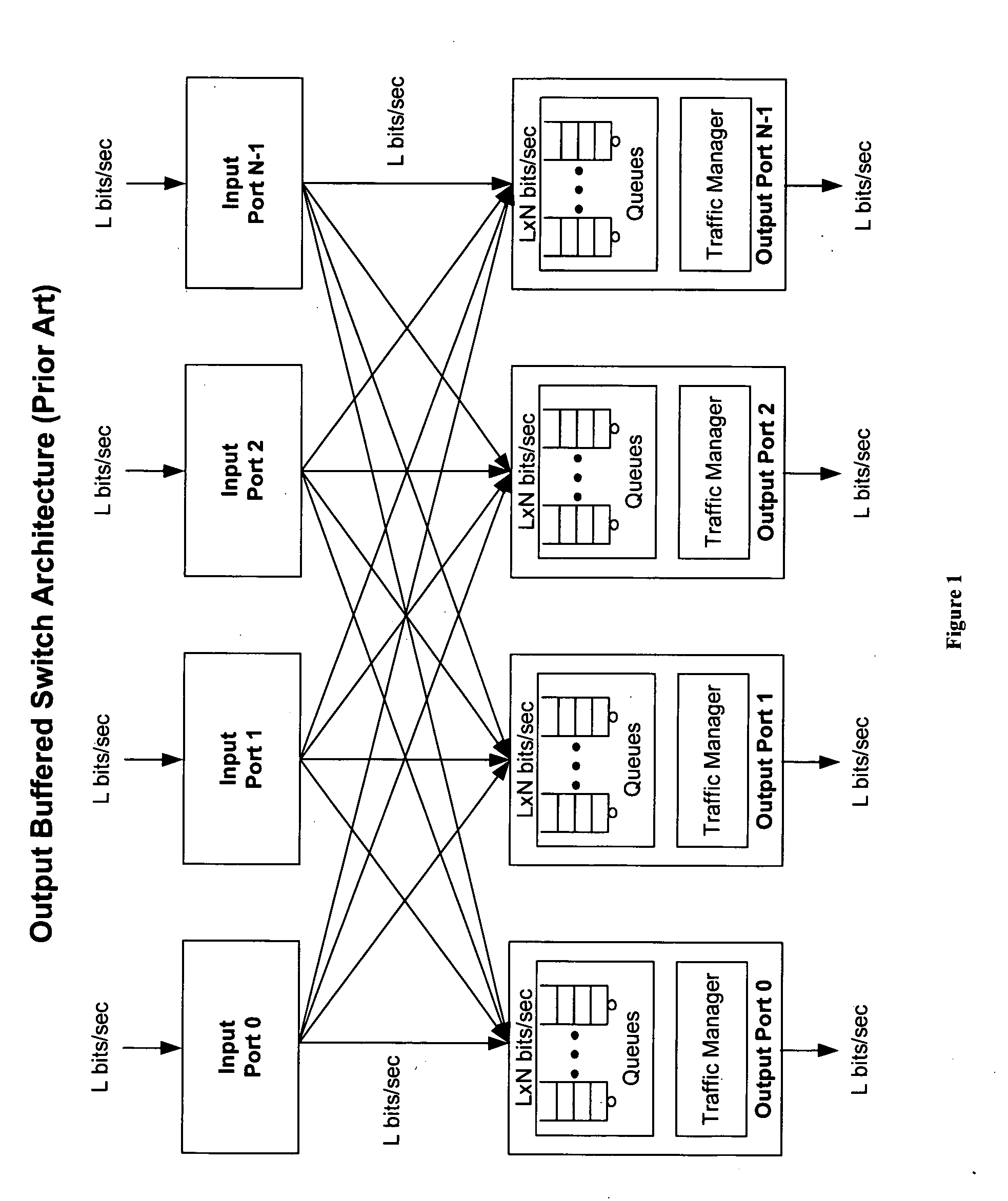

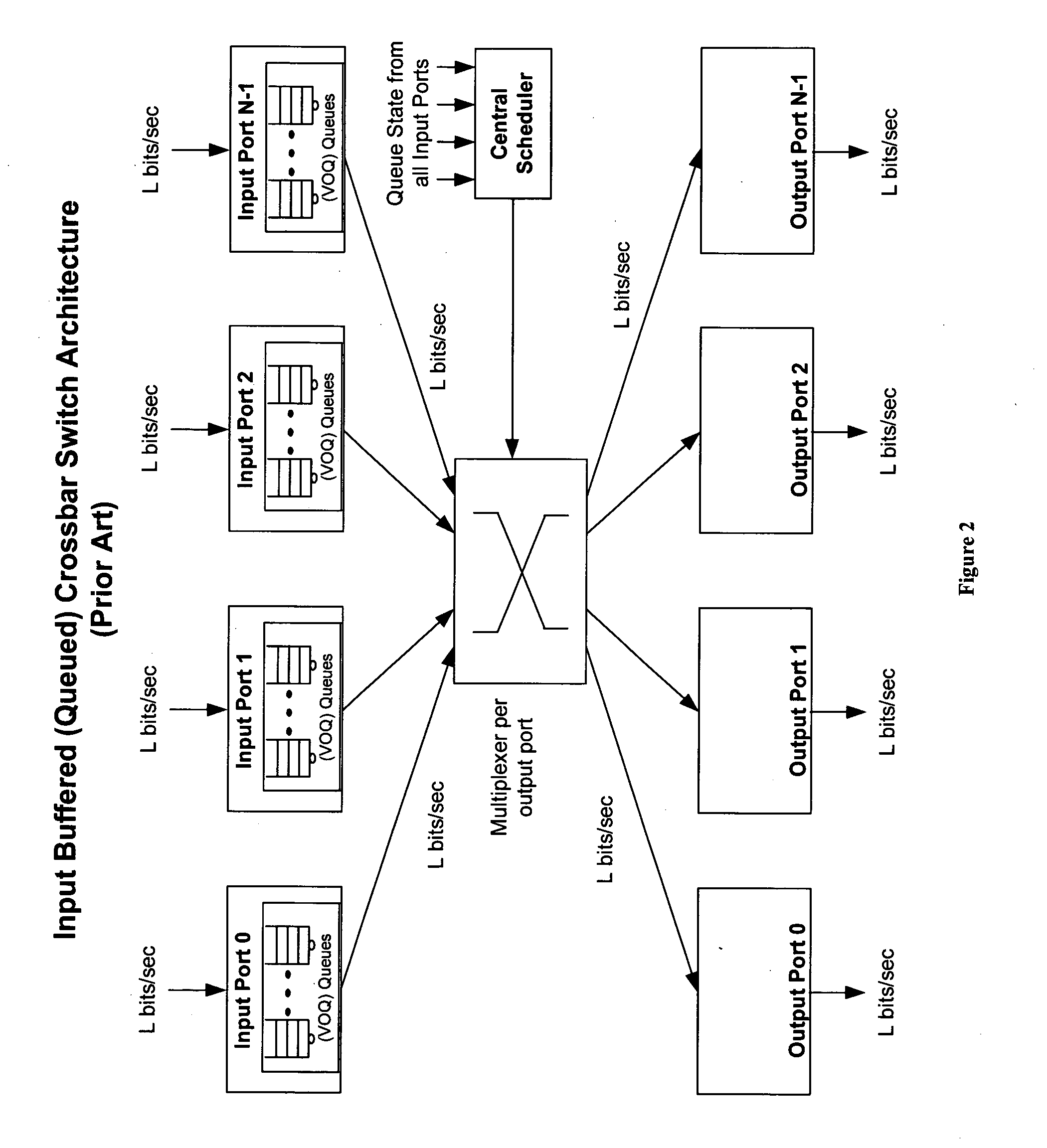

Method of and system for physically distributed, logically shared, and data slice-synchronized shared memory switching

An improved data networking technique and apparatus using a novel physically distributed but logically shared and data-sliced synchronized shared memory switching datapath architecture integrated with a novel distributed data control path architecture to provide ideal output-buffered switching of data in networking systems, such as routers and switches, to support the increasing port densities and line rates with maximized network utilization and with per flow bit-rate latency and jitter guarantees, all while maintaining optimal throughput and quality of services under all data traffic scenarios, and with features of scalability in terms of number of data queues, ports and line rates, particularly for requirements ranging from network edge routers to the core of the network, thereby to eliminate both the need for the complication of centralized control for gathering system-wide information and for processing the same for egress traffic management functions and the need for a centralized scheduler, and eliminating also the need for buffering other than in the actual shared memory itself, all with complete non-blocking data switching between ingress and egress ports, under all circumstances and scenarios.

Owner: QOS LOGIX

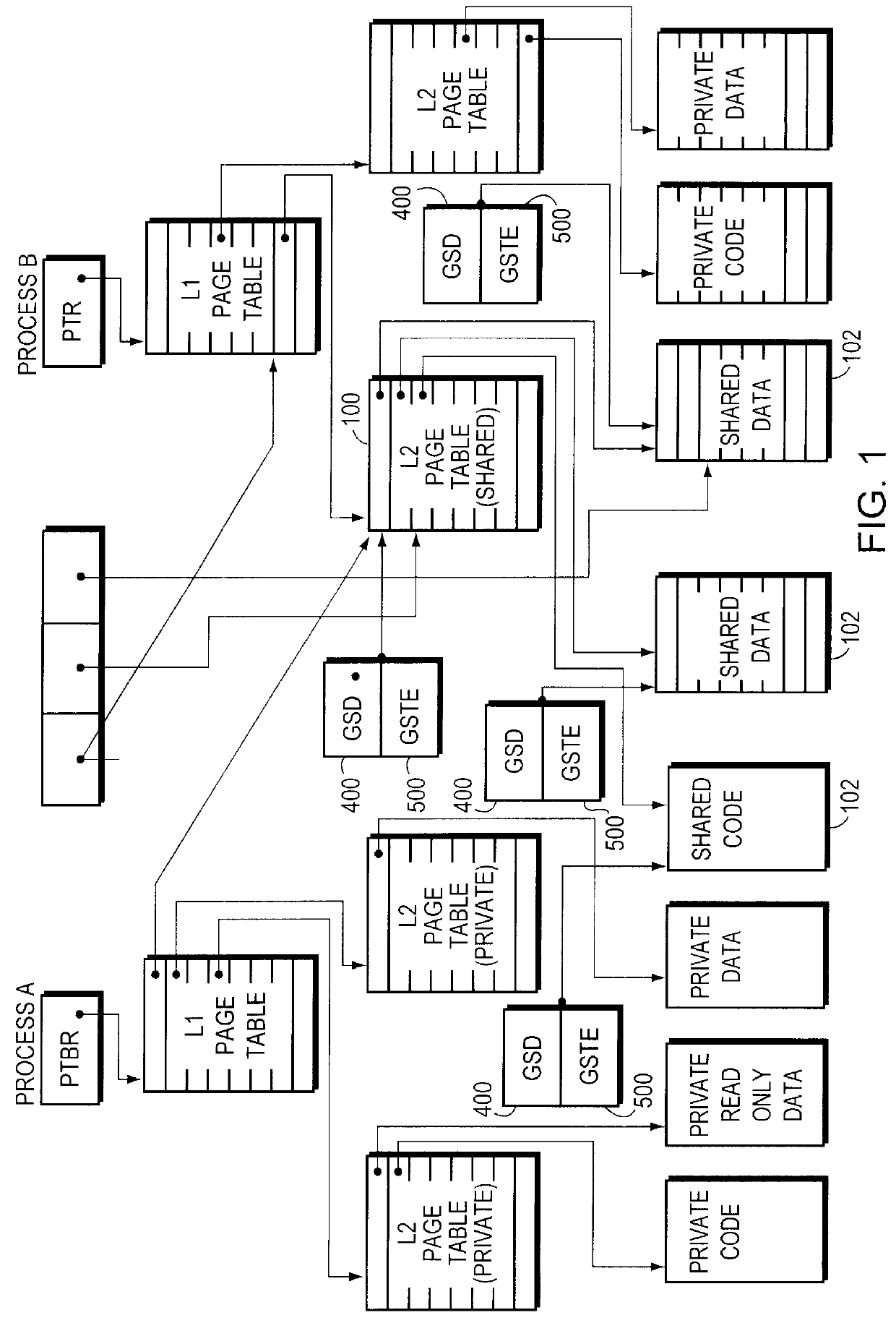

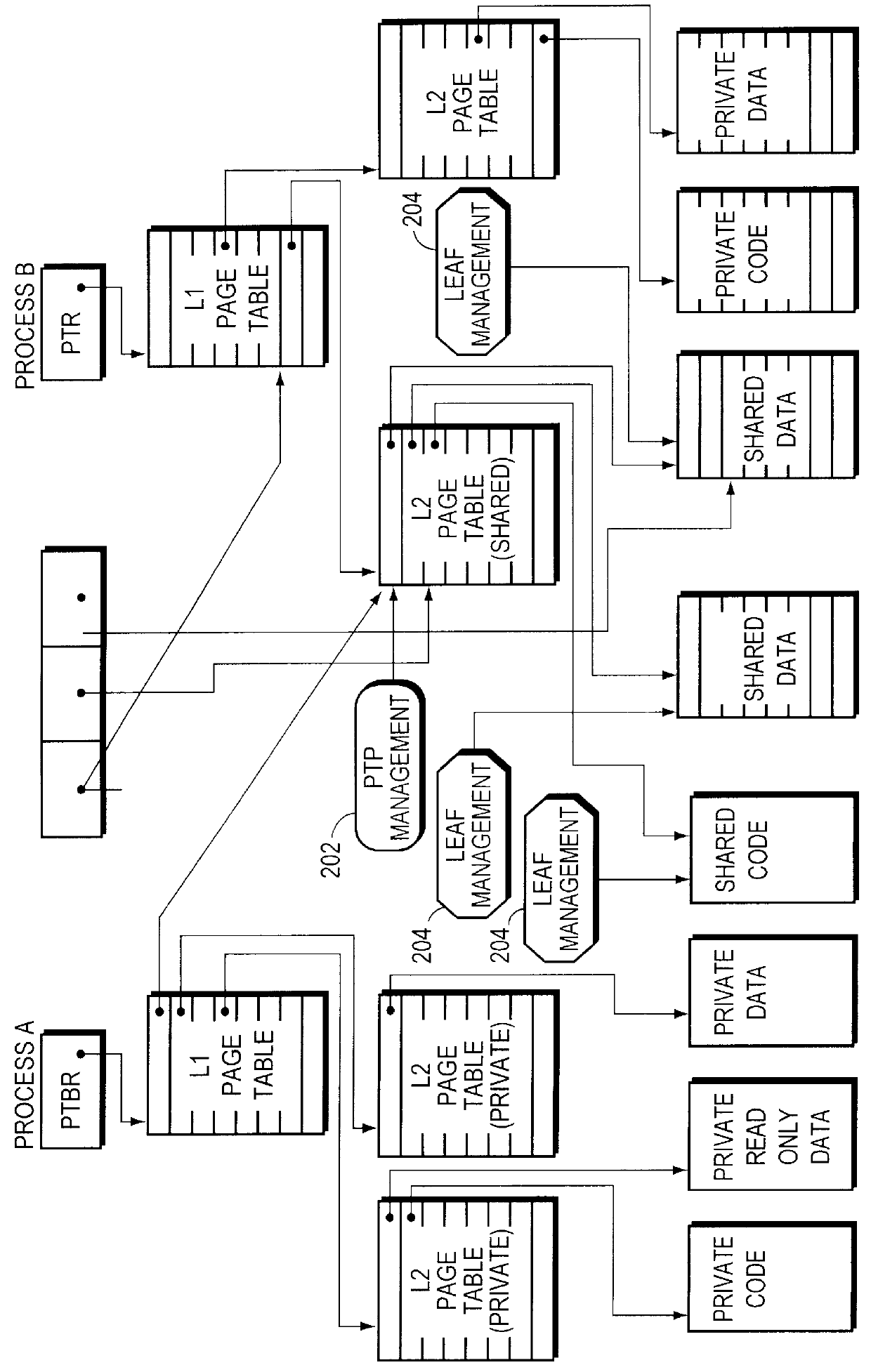

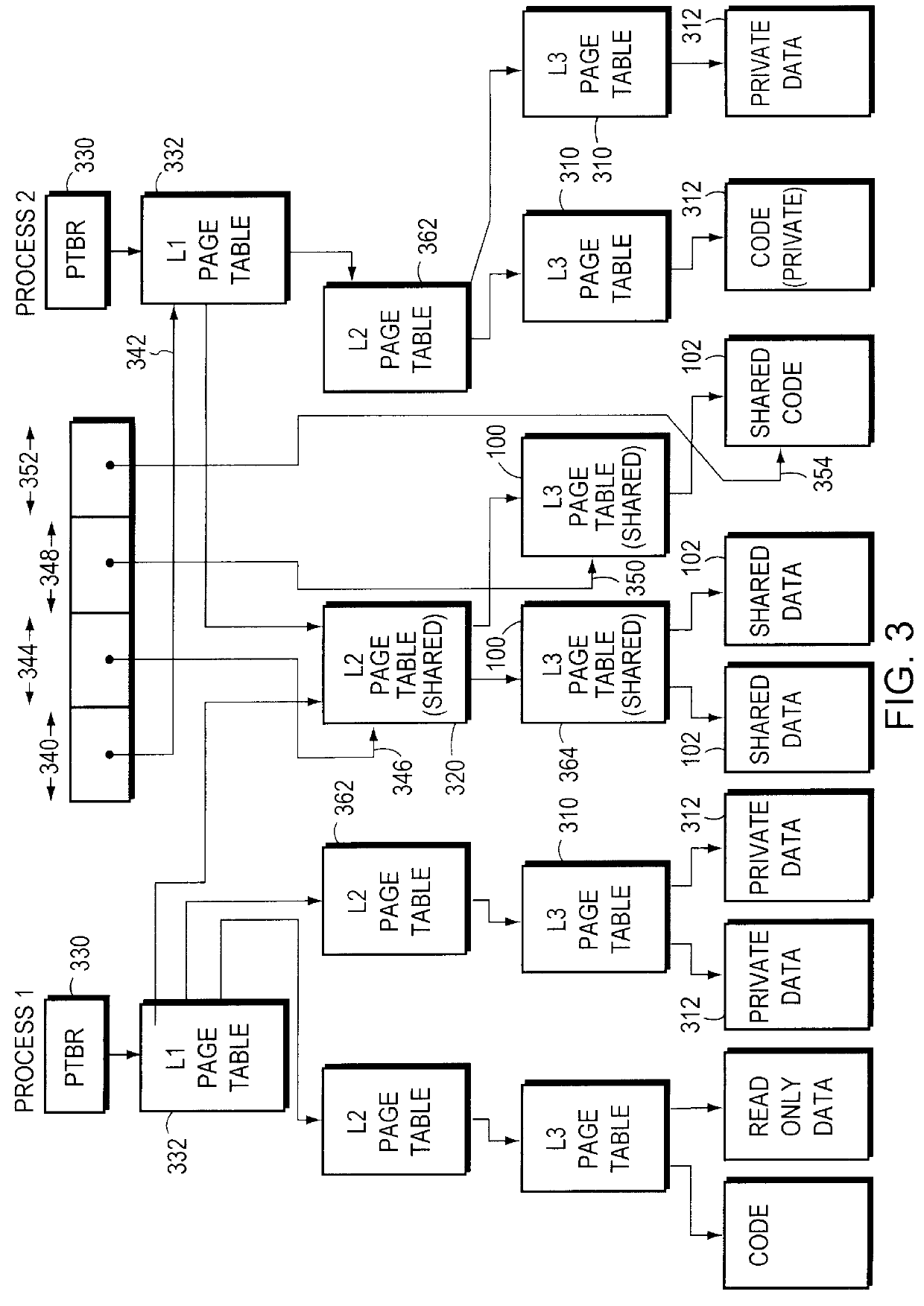

Sharing memory pages and page tables among computer processes

Inactive · US6085296A · Optimization · Reduce fragmentation · Memory addressing/allocation/relocation · Micro-instruction address formation · Page table · Computer memory

A method of managing computer memory pages. The sharing of a program-accessible page between two processes is managed by a predefined mechanism of a memory manager. The sharing of a page table page between the processes is managed by the same predefined mechanism. The data structures used by the mechanism are equally applicable to sharing program-accessible pages or page table pages.

Owner: HEWLETT-PACKARD ENTERPRISE DEV LP +1

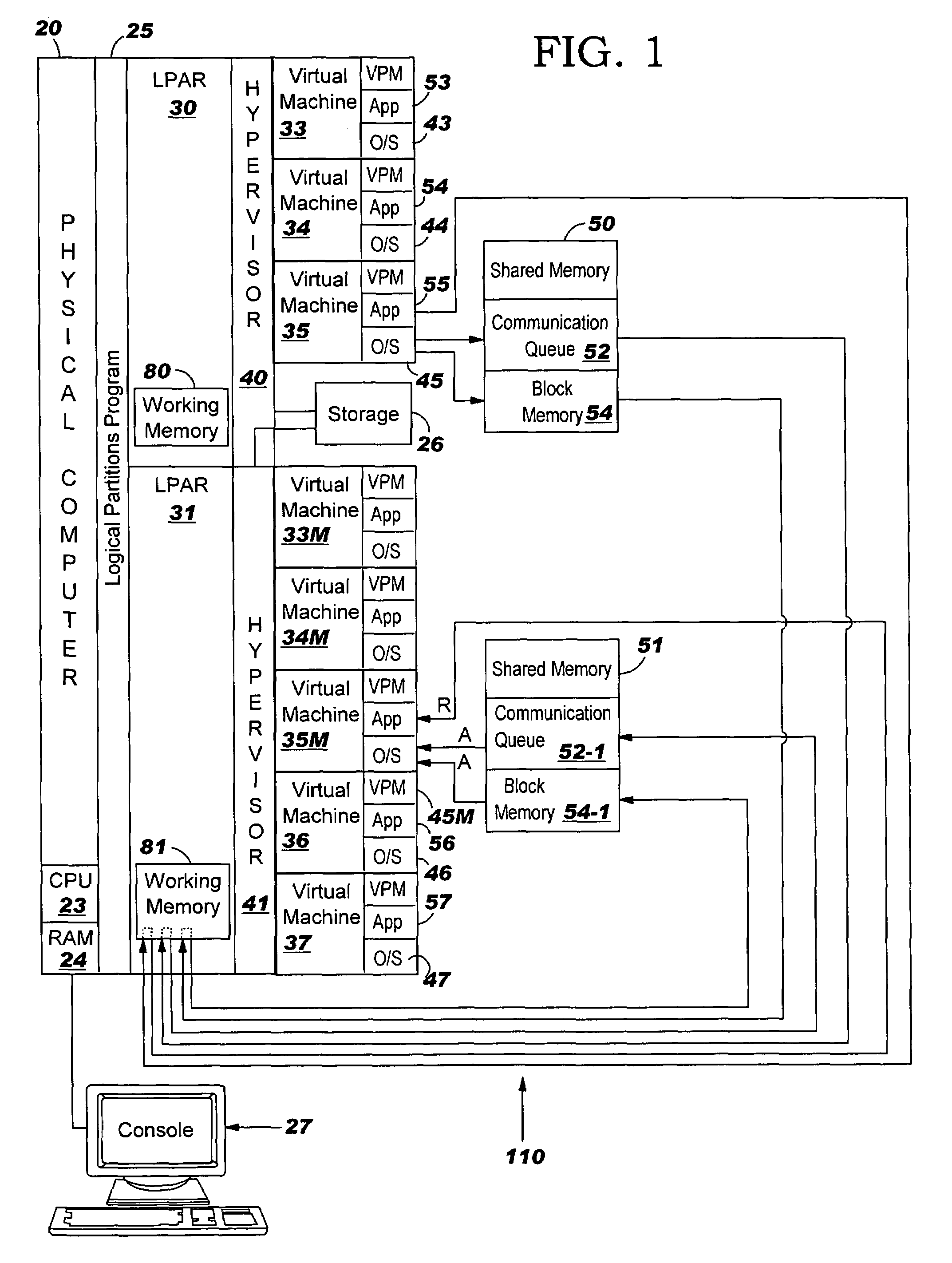

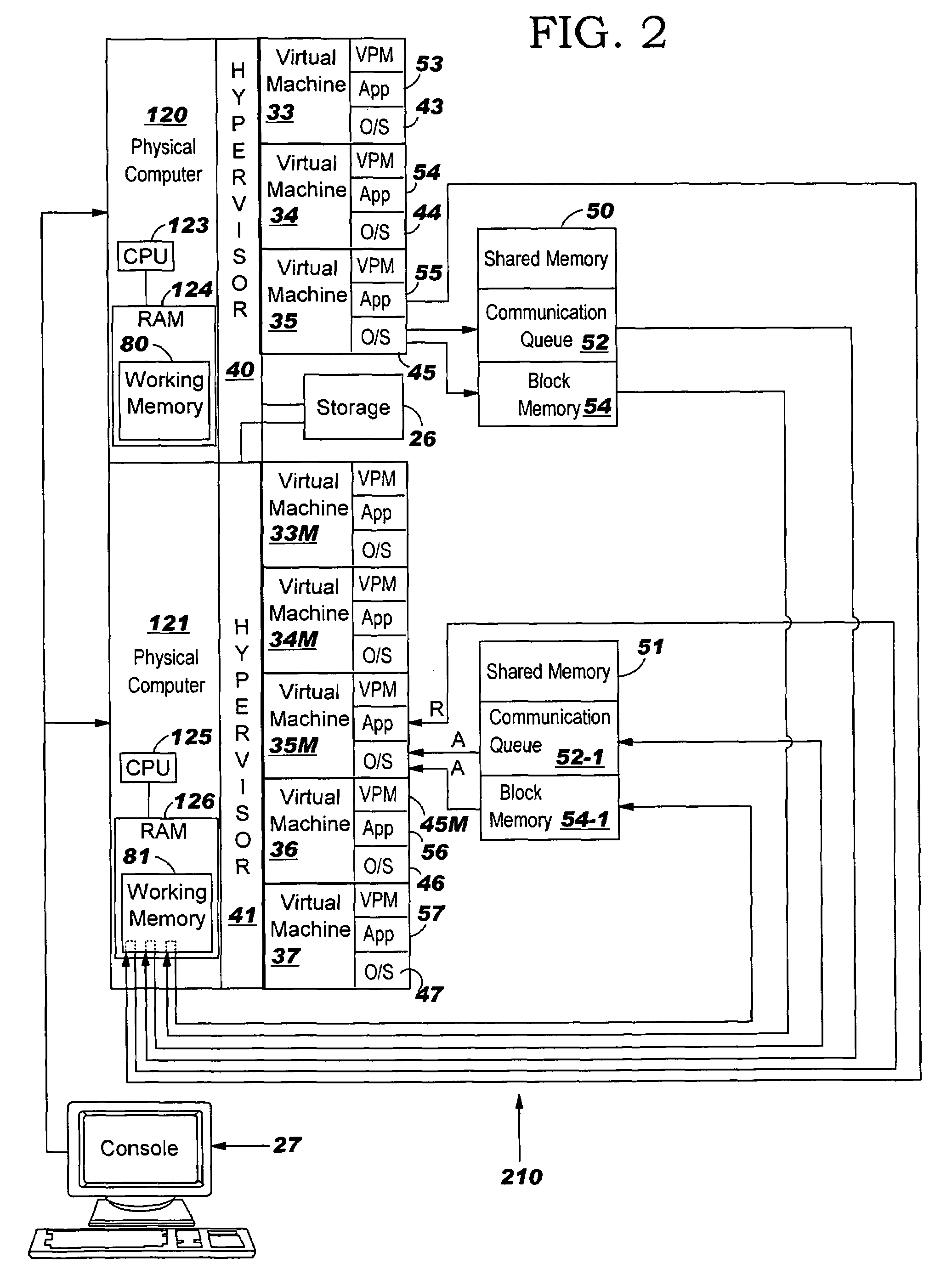

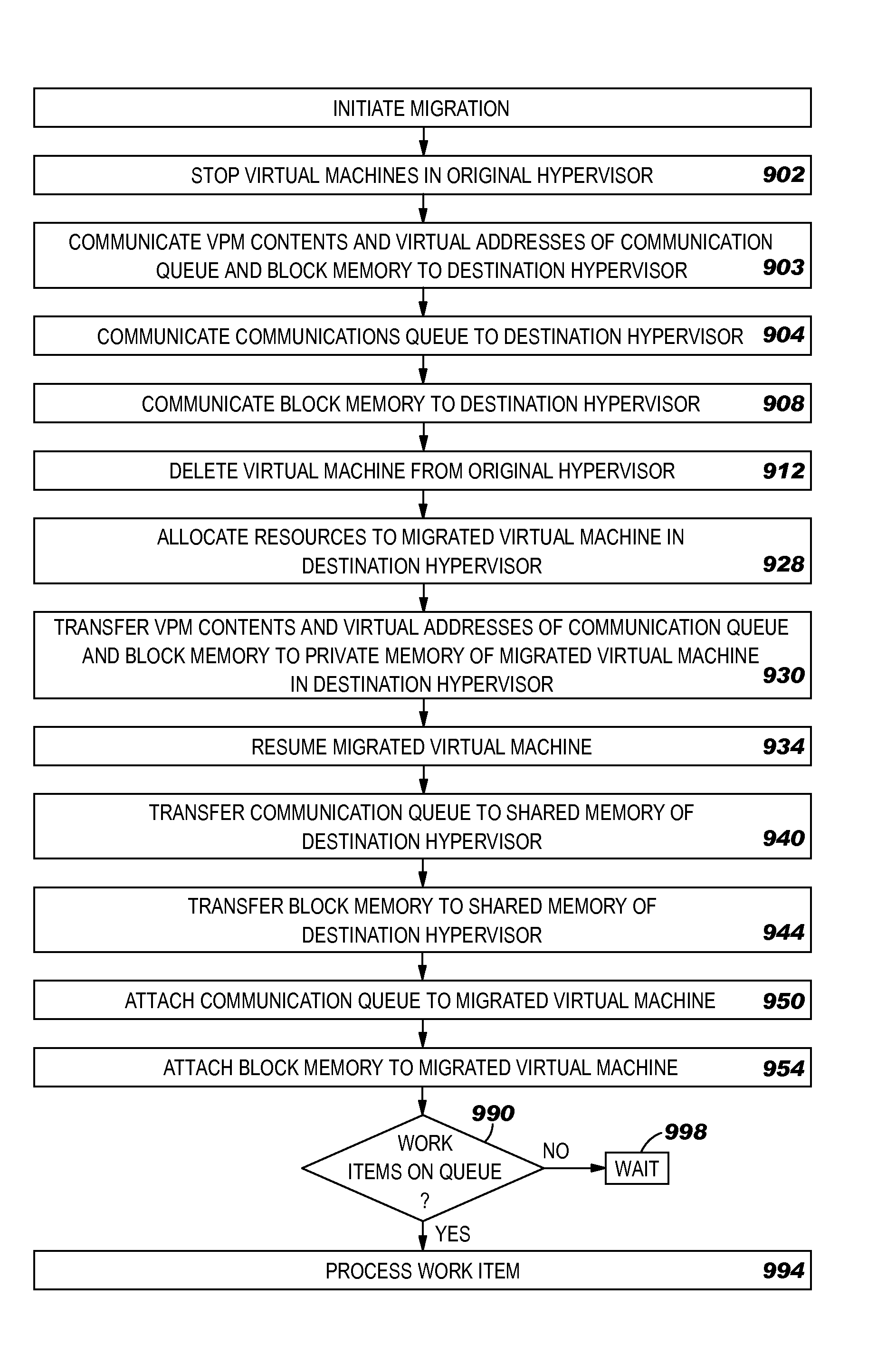

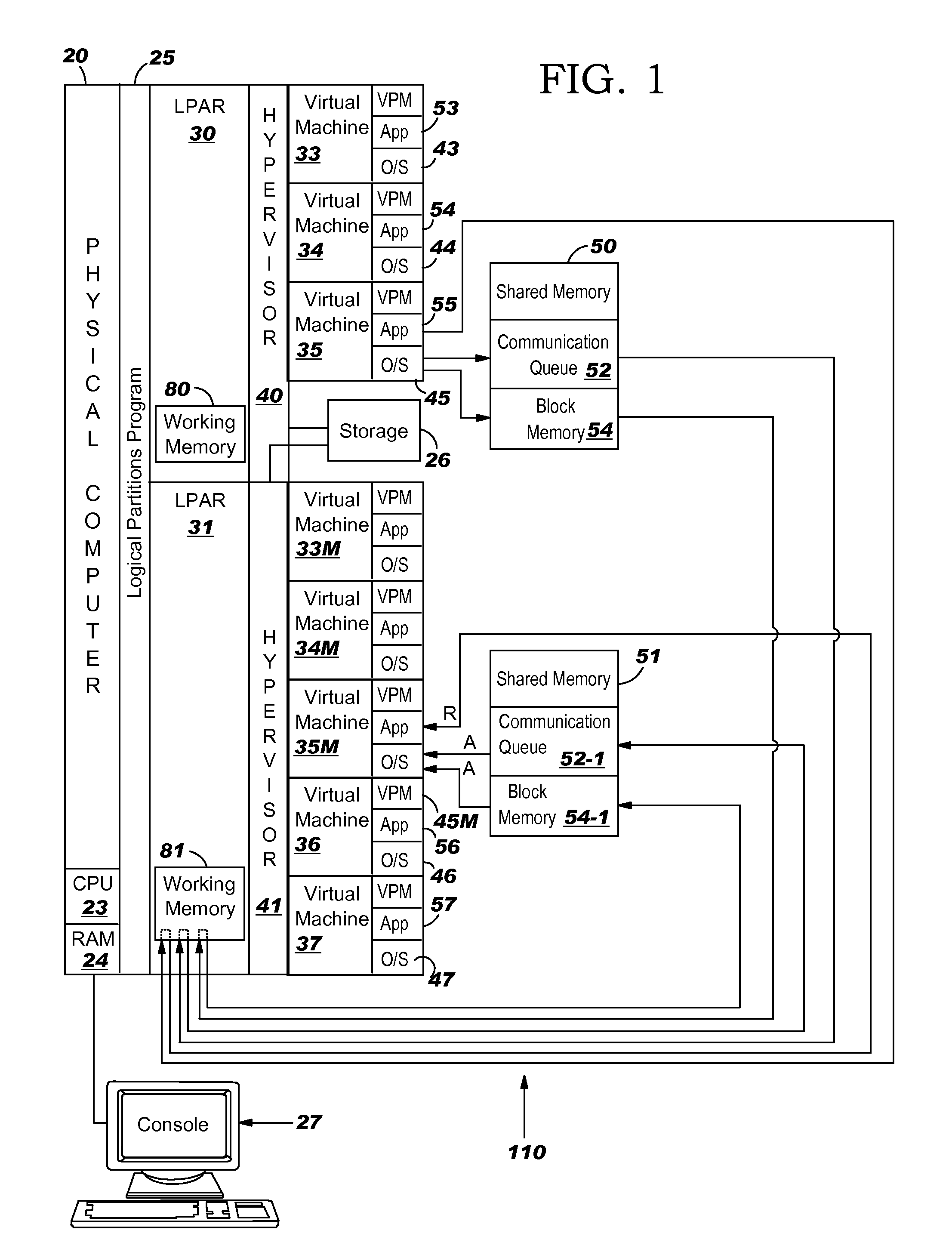

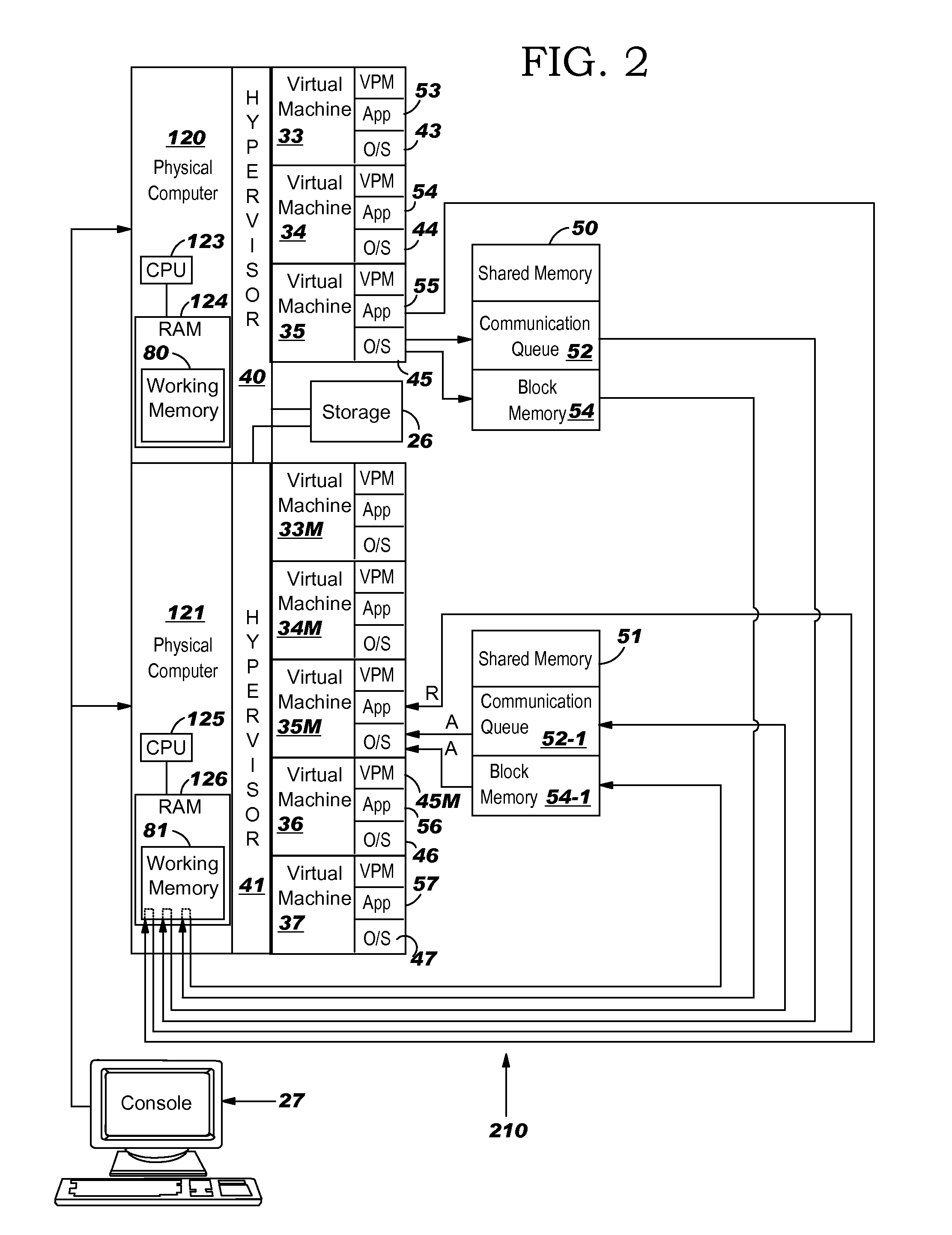

System, method and program to migrate a virtual machine

Active · US7257811B2 · Resource allocation · Software simulation/interpretation/emulation · Operational system · Application software

A system, method and program product for migrating a first virtual machine from a first real computer to a second real computer or from a first LPAR to a second LPAR in a same real computer. Before migration, the first virtual machine comprises an operating system and an application in a first private memory private to the first virtual machine. A communication queue of the first virtual machine resides in a shared memory shared by the first and second computers or the first and second LPARs. The operating system and application are copied from the first private memory to the shared memory. The operating system and application are copied from the shared memory to a second private memory private to the first virtual machine in the second computer or second LPAR. Then, the first virtual machine is resumed in the second computer or second LPAR.

Owner: INT BUSINESS MASCH CORP

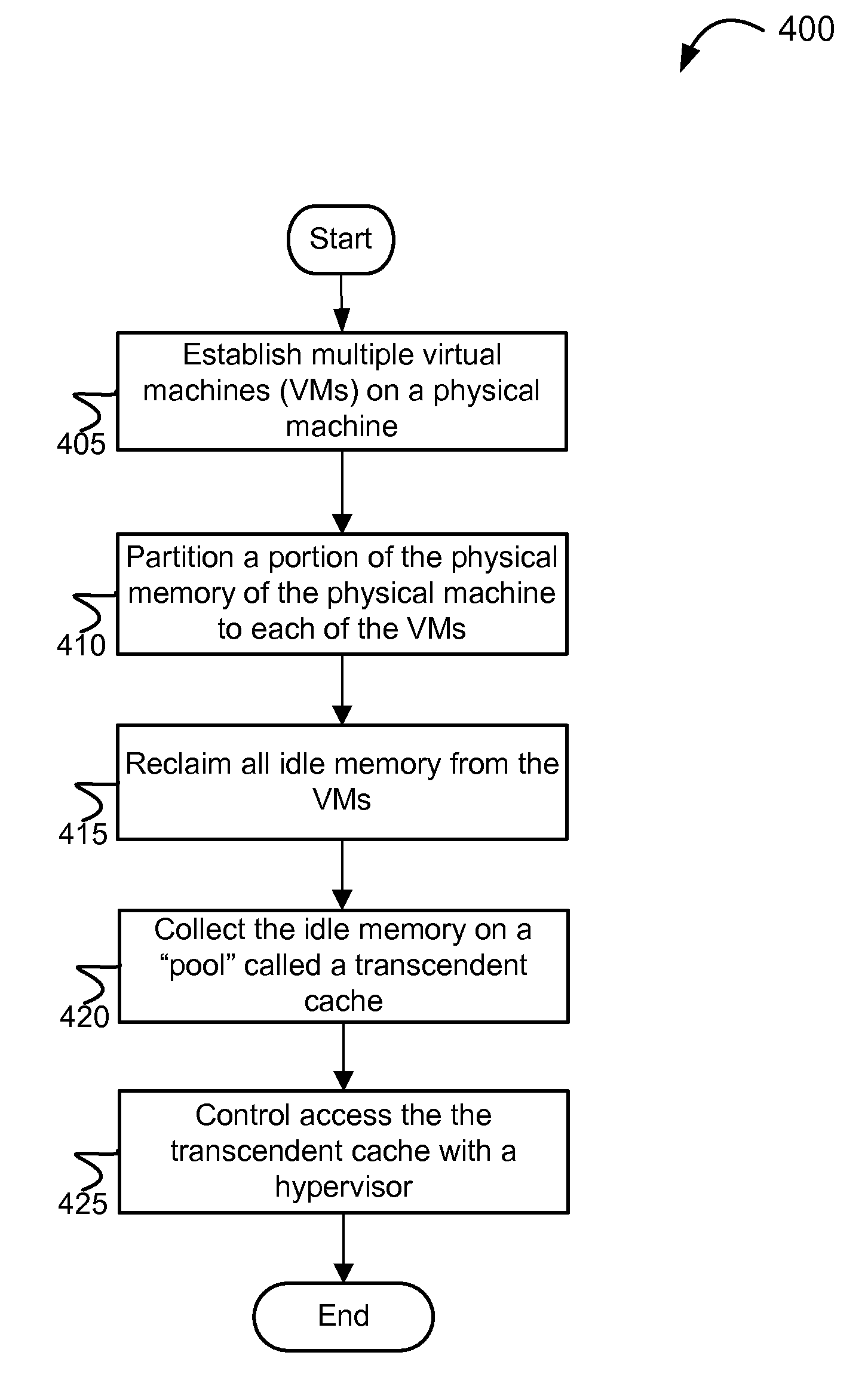

Methods and systems for implementing transcendent page caching

Active · US20100186011A1 · Memory addressing/allocation/relocation · Software simulation/interpretation/emulation · Shared memory · Virtual machine

This disclosure describes, generally, methods and systems for implementing transcendent page caching. The method includes establishing a plurality of virtual machines on a physical machine. Each of the plurality of virtual machines includes a private cache, and a portion of each of the private caches is used to create a shared cache maintained by a hypervisor. The method further includes delaying the removal of the at least one of stored memory pages, storing the at least one of stored memory pages in the shared cache, and requesting, by one of the plurality of virtual machines, the at least one of the stored memory pages from the shared cache. Further, the method includes determining that the at least one of the stored memory pages is stored in the shared cache, and transferring the at least one of the stored shared memory pages to the one of the plurality of virtual machines.

Owner: ORACLE INT CORP

Method and apparatus for reducing inefficiencies in shared memory devices

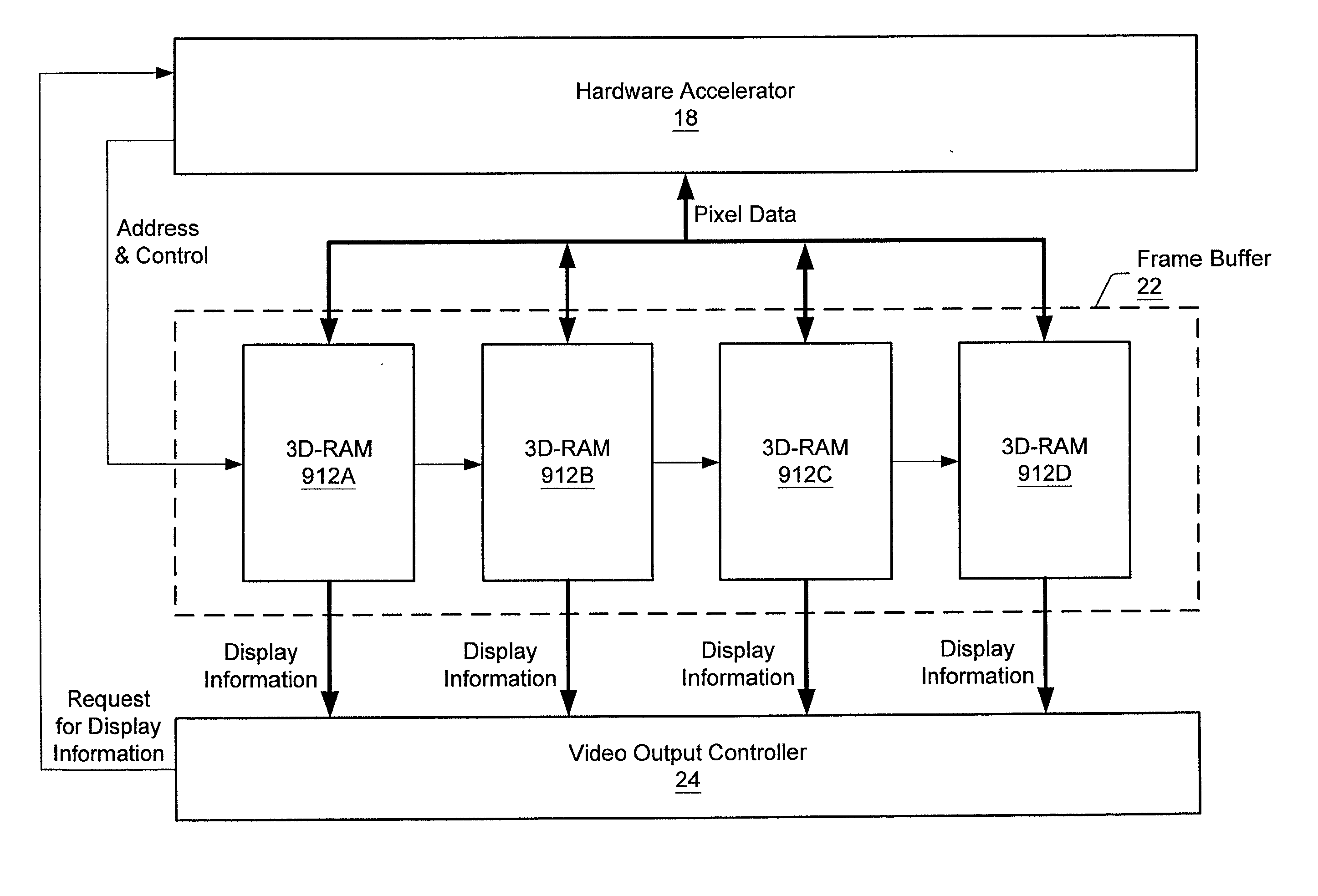

Inactive · US20030043158A1 · Cathode-ray tube indicators · Digital output to display device · Graphics · Graphic system

A graphics system that may be shared between multiple display channels includes a frame buffer, an arbiter, and two pixel output buffers. The arbiter arbitrates between the display channels' requests for display information from the frame buffer and forwards a selected request to the frame buffer. The frame buffer is divided into a first and a second portion. The arbiter alternates display channel requests for data between the first and second portions of the frame buffer. The frame buffer outputs display information in response to receiving the forwarded request, and pixels corresponding to this display information are stored in the output buffers. The arbiter selects which request to forward to the frame buffer based on a relative state of neediness of each of the requesting display channels.

Owner: ORACLE INT CORP

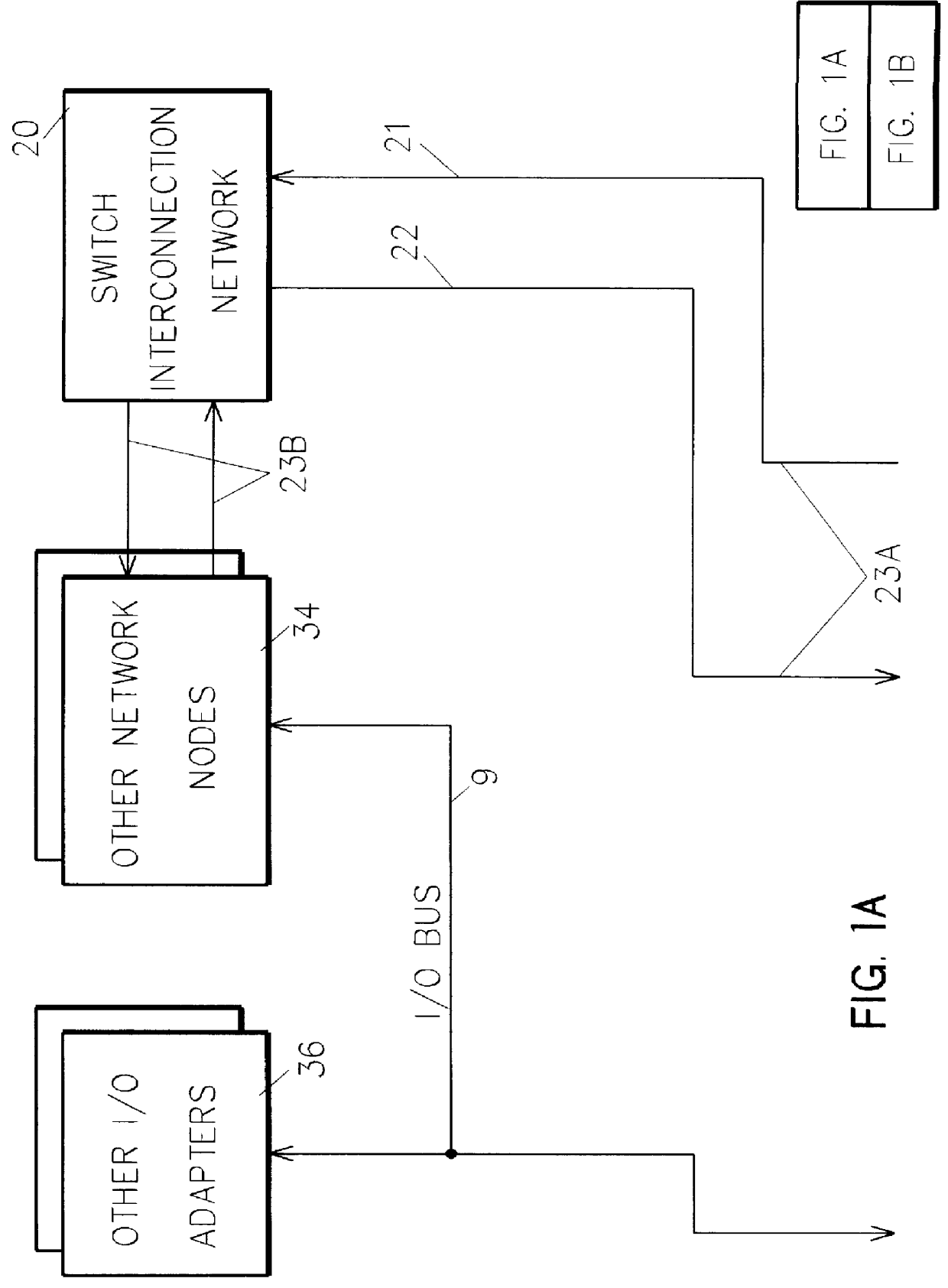

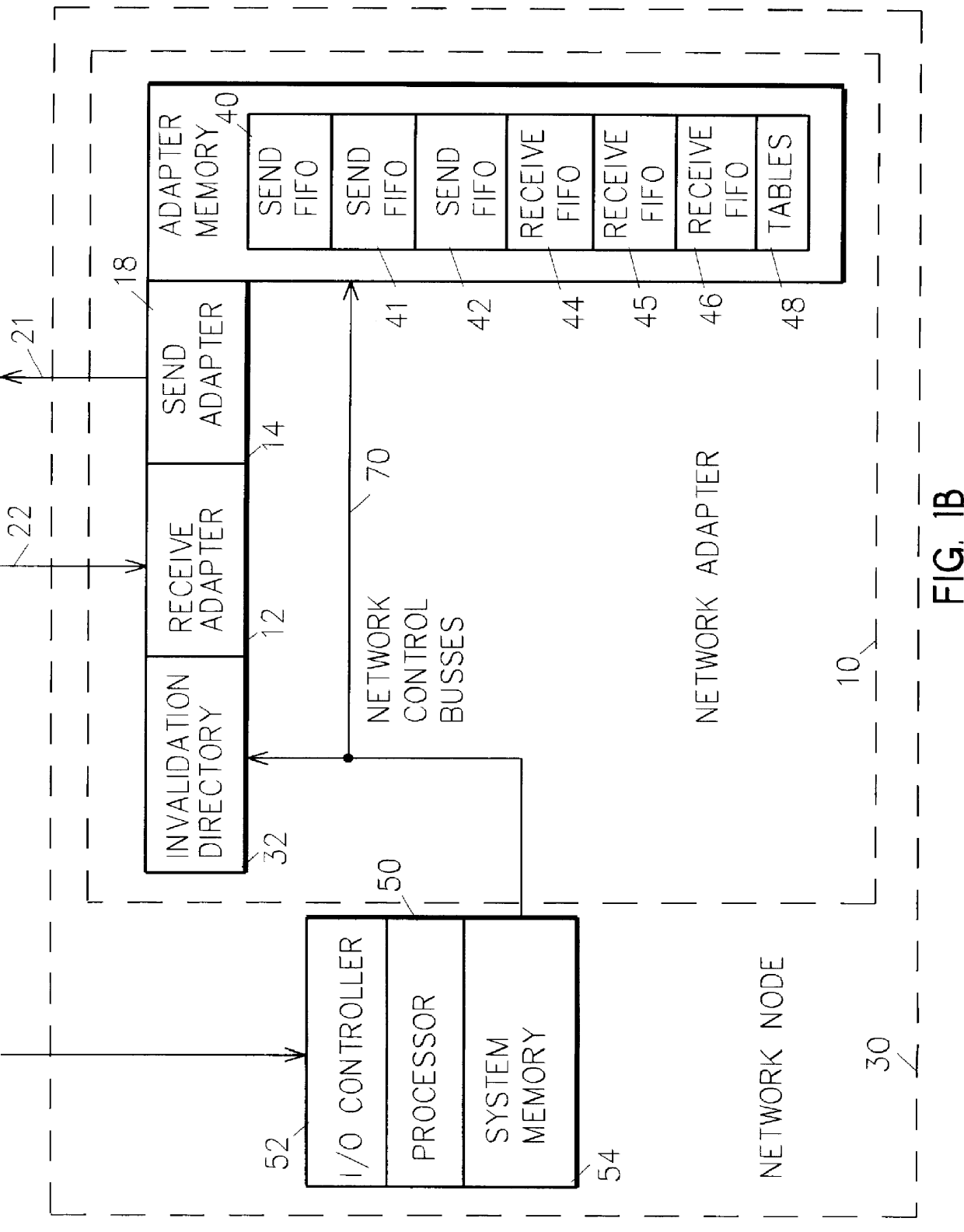

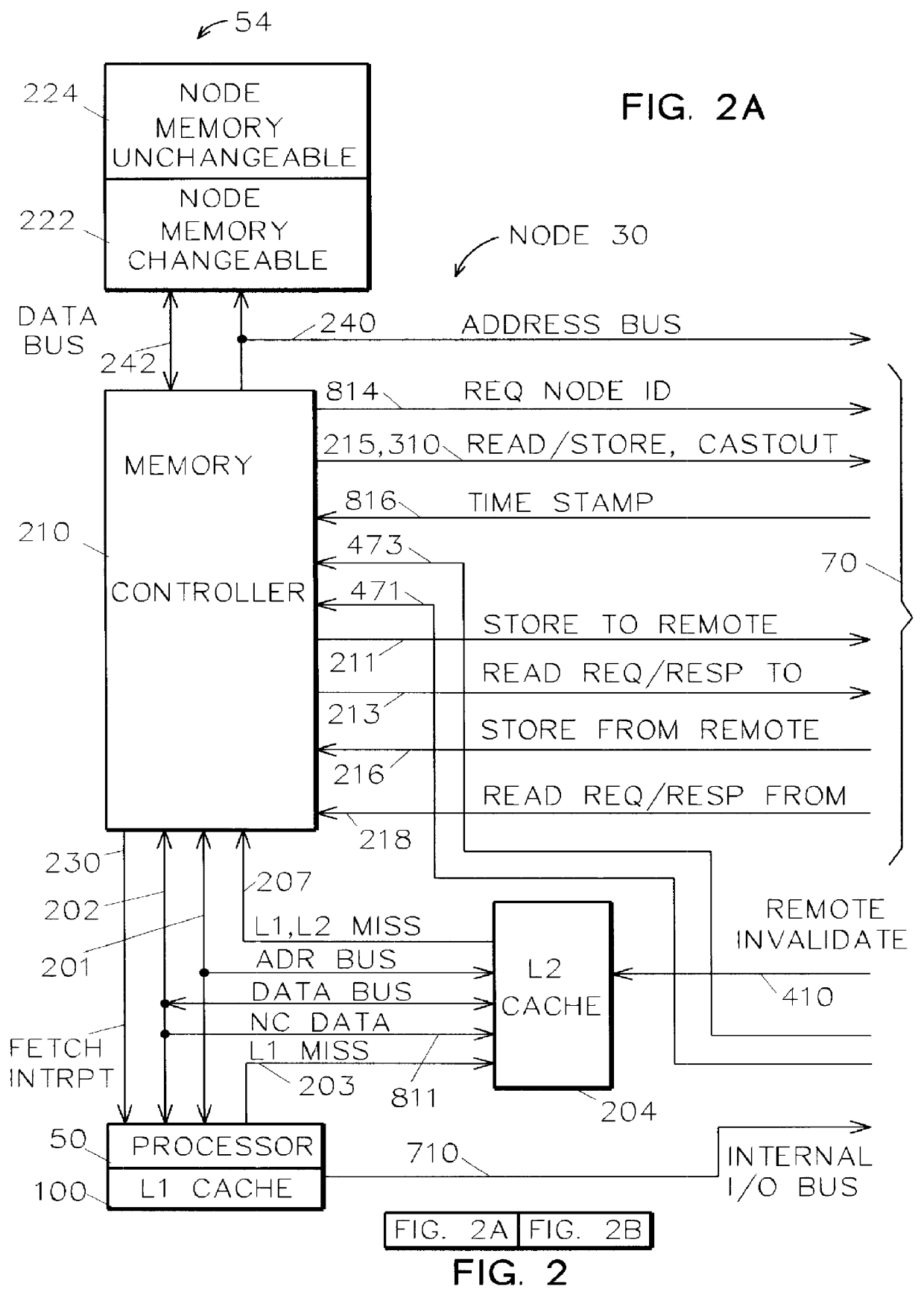

Memory controller for controlling memory accesses across networks in distributed shared memory processing systems

Inactive · US6044438A · More efficient cache coherent system · Data processing applications · Memory addressing/allocation/relocation · Remote memory access · Remote direct memory access

A shared memory parallel processing system interconnected by a multi-stage network combines new system configuration techniques with special-purpose hardware to provide remote memory accesses across the network, while controlling cache coherency efficiently across the network. The system configuration techniques include a systematic method for partitioning and controlling the memory in relation to local versus remote accesses and changeable versus unchangeable data. Most of the special-purpose hardware is implemented in the memory controller and network adapter, which implements three send FIFOs and three receive FIFOs at each node to segregate and handle efficiently invalidate functions, remote stores, and remote accesses requiring cache coherency. The segregation of these three functions into different send and receive FIFOs greatly facilitates the cache coherency function over the network. In addition, the network itself is tailored to provide the best efficiency for remote accesses.

Owner: IBM CORP

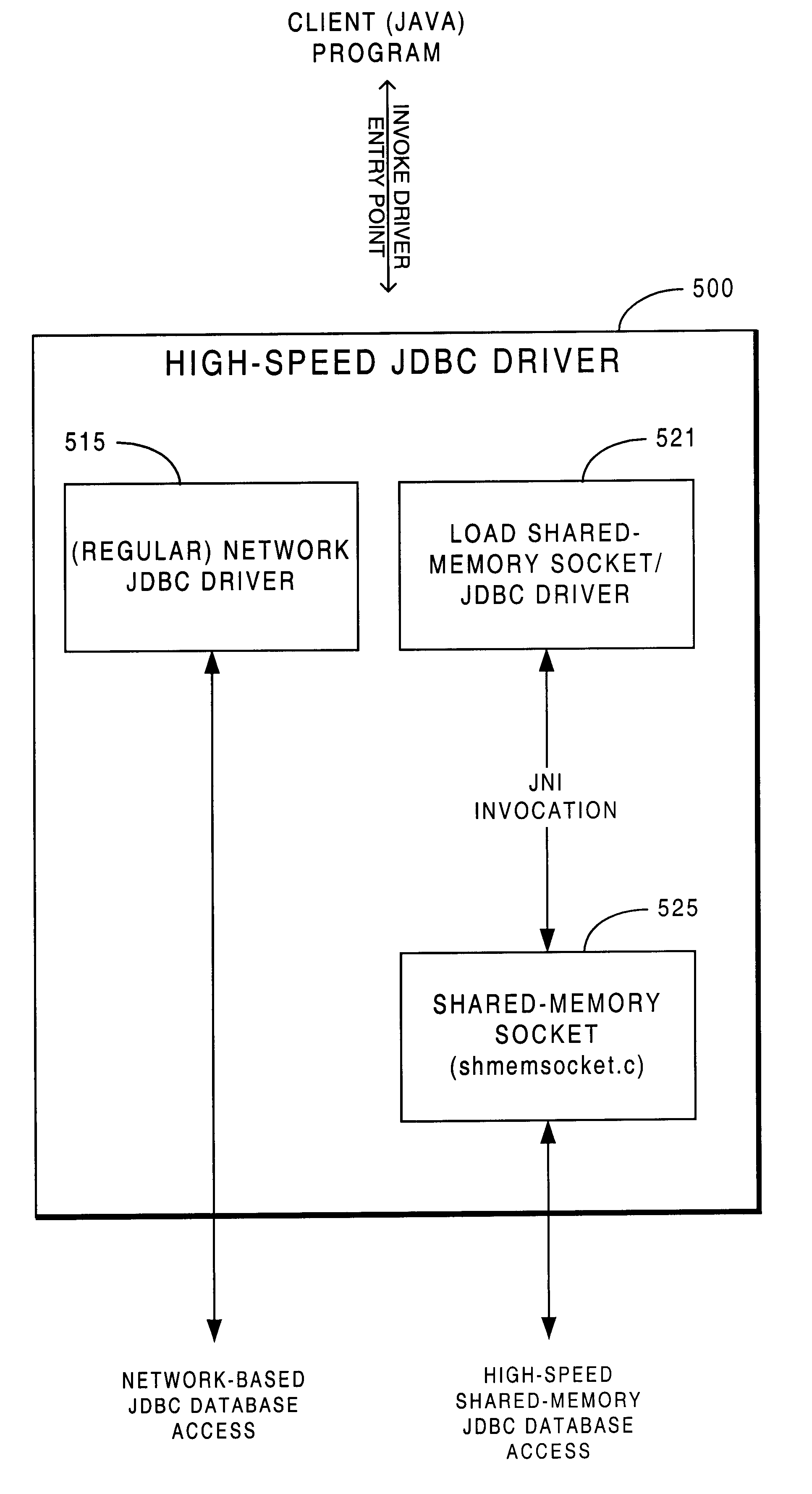

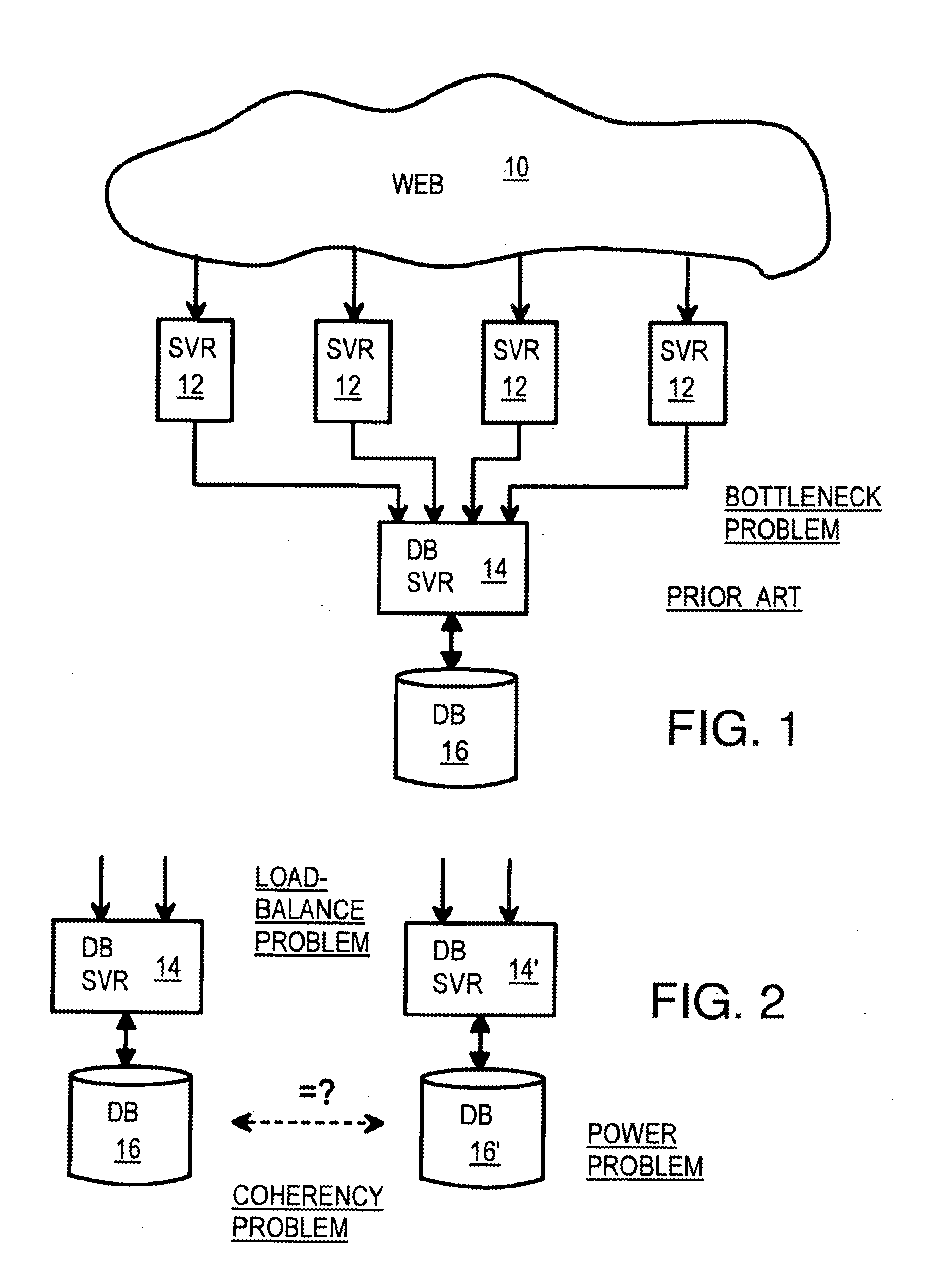

Methodology providing high-speed shared memory access between database middle tier and database server

Inactive · US6687702B2 · Data processing applications · Interprogram communication · Application server · Database server

A multi-tier database system is modified such that a middle-tier application server (EJB server) and a database server run on the same host computer and communicate via shared-memory interprocess communication. The system includes a database (e.g., JDBC) driver thread that attaches to the database server, specifically by attaching to the database server's shared memory segment. Operation of the JDBC driver is modified to provide direct access between the middle tier (i.e., EJB server) and the database server, when the two are operating on the same host computer.

Owner: SYBASE INC

Converting program code with access coordination for a shared memory

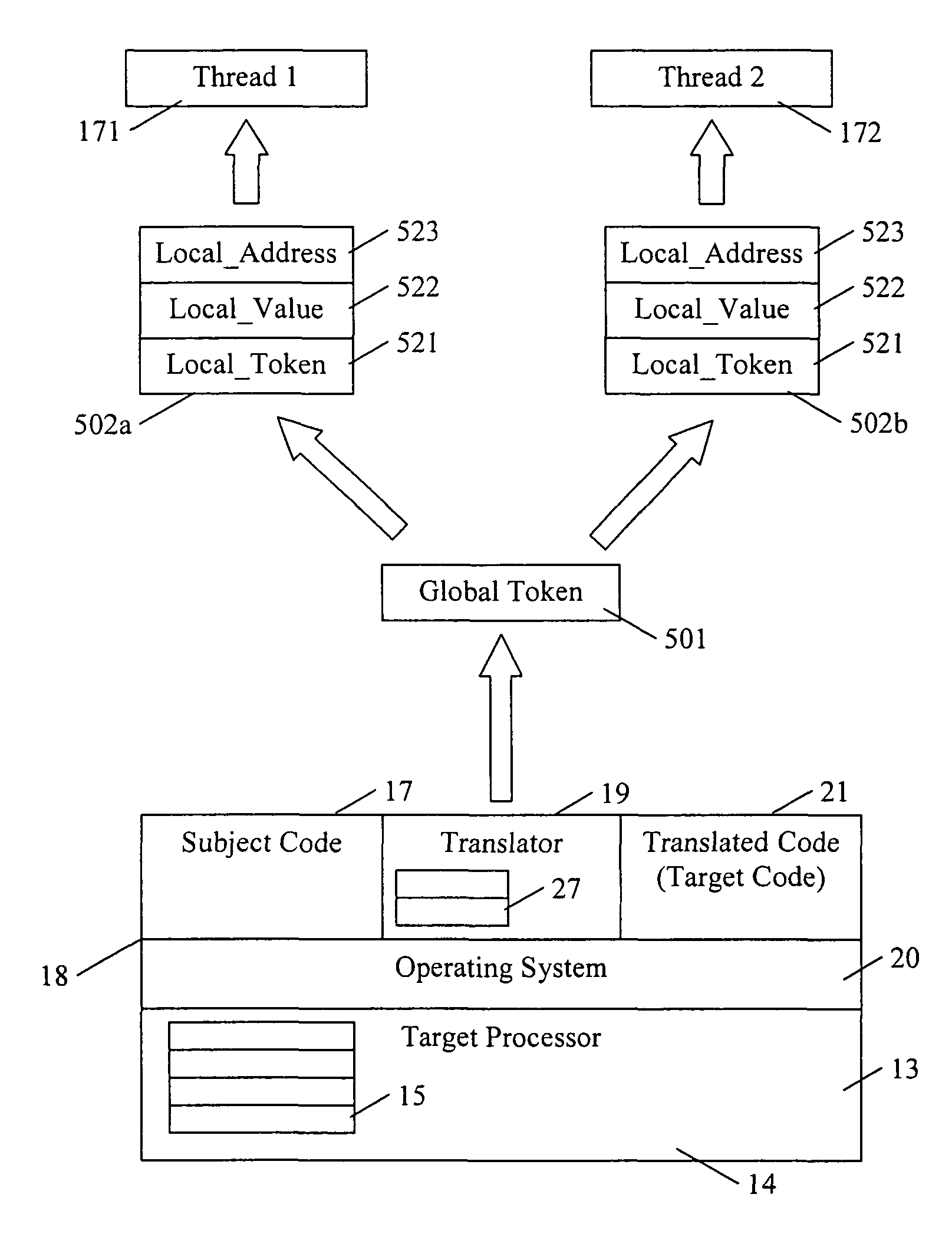

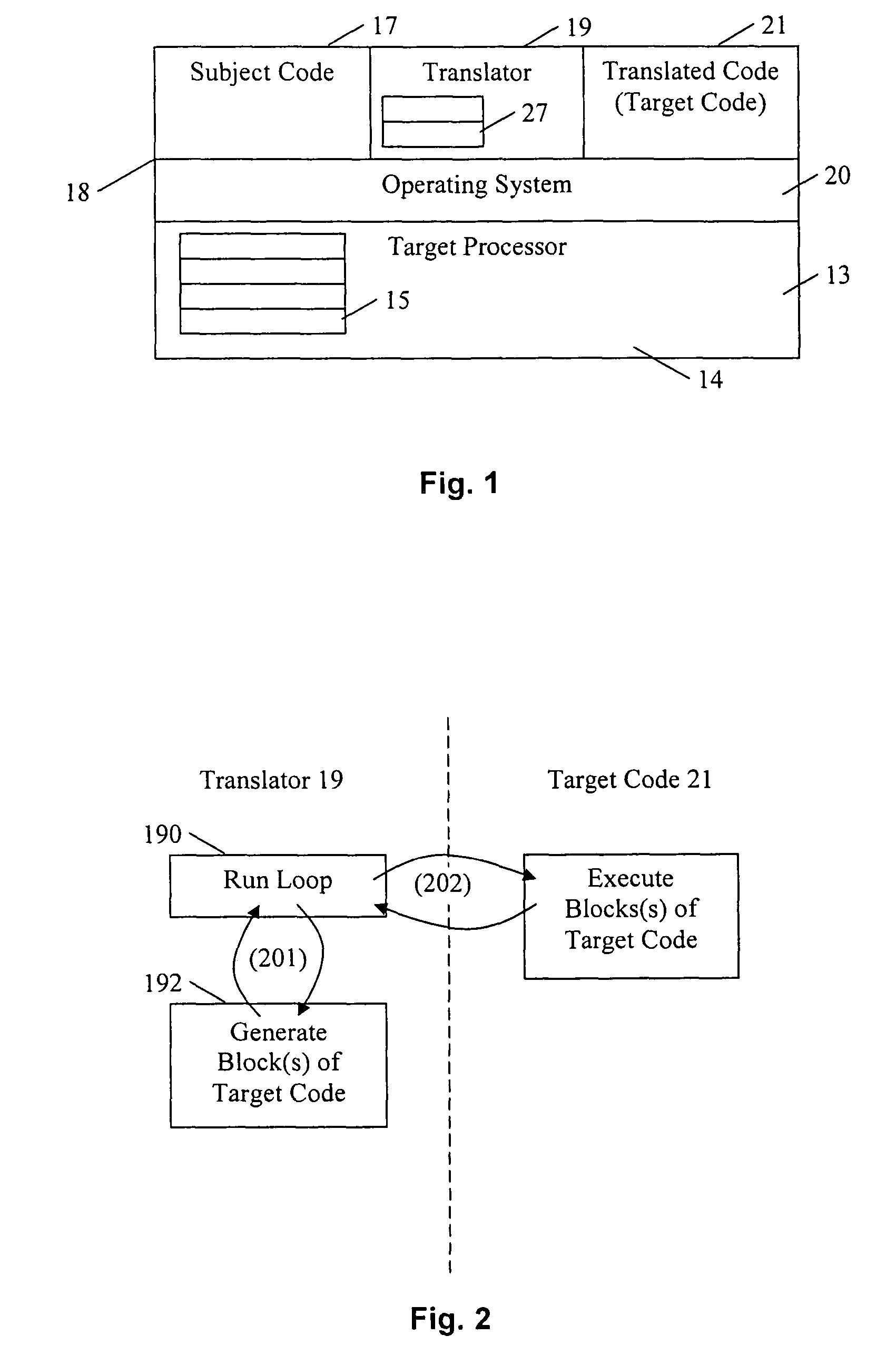

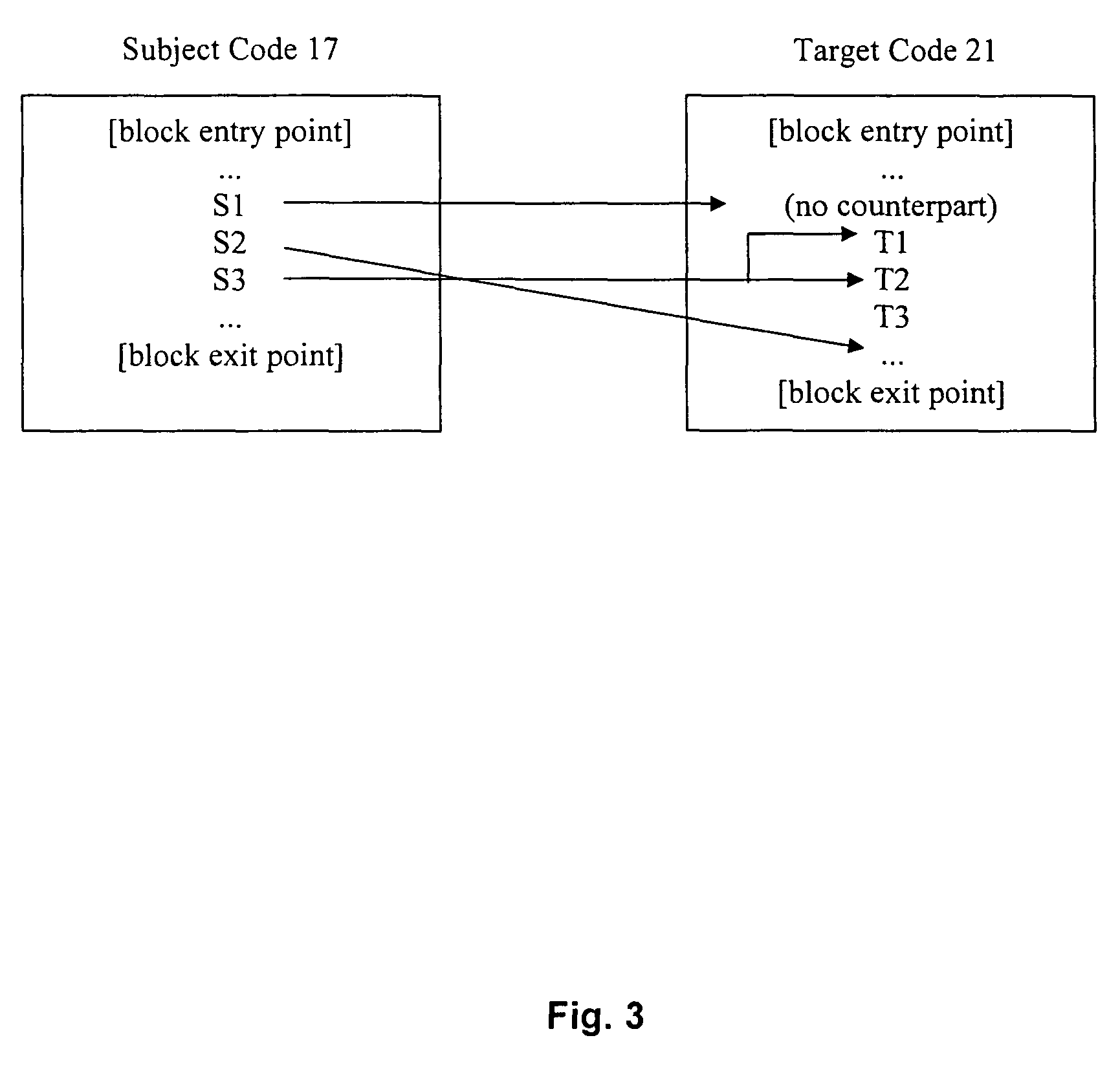

A dynamic binary translator 19 converts a subject program 17 into target code 21 on a target processor 13. For a multi-threaded subject environment, the translator 19 provides a global token 501 common to each thread 171, 172, and one or more sets of local data 502, which together are employed to coordinate access to a memory 18 as a shared resource. Adjusting the global token 501 allows the local data structures 502a,b in each thread to detect potential interference with the shared resource 18.

Owner: IBM CORP
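
One way to picture a global token paired with per-thread local data is a load-linked/store-conditional style check. The sketch below is an assumption made for illustration, not the translator's actual mechanism: the names `SharedCell`, `load_linked`, `store_conditional` and `increment` are hypothetical, and a `threading.Lock` stands in for whatever makes the check-and-update atomic on real hardware.

```python
import threading


class SharedCell:
    """Hypothetical shared resource guarded by a global token."""

    def __init__(self, value: int = 0) -> None:
        self.value = value
        self.token = 0                    # global token, common to all threads
        self._guard = threading.Lock()    # makes check-and-update atomic

    def load_linked(self, local: dict) -> int:
        with self._guard:
            local["token"] = self.token   # remember the token in thread-local data
            return self.value

    def store_conditional(self, local: dict, new_value: int) -> bool:
        with self._guard:
            if local.get("token") != self.token:
                return False              # token moved: another thread interfered
            self.value = new_value
            self.token += 1               # adjust the token so others can detect this
            return True


def increment(cell: SharedCell, times: int) -> None:
    """Retry loop: repeat the read-modify-write until no interference is seen."""
    local: dict = {}
    for _ in range(times):
        while True:
            seen = cell.load_linked(local)
            if cell.store_conditional(local, seen + 1):
                break
```

A store that fails simply retries with a fresh read, so concurrent increments are never lost.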

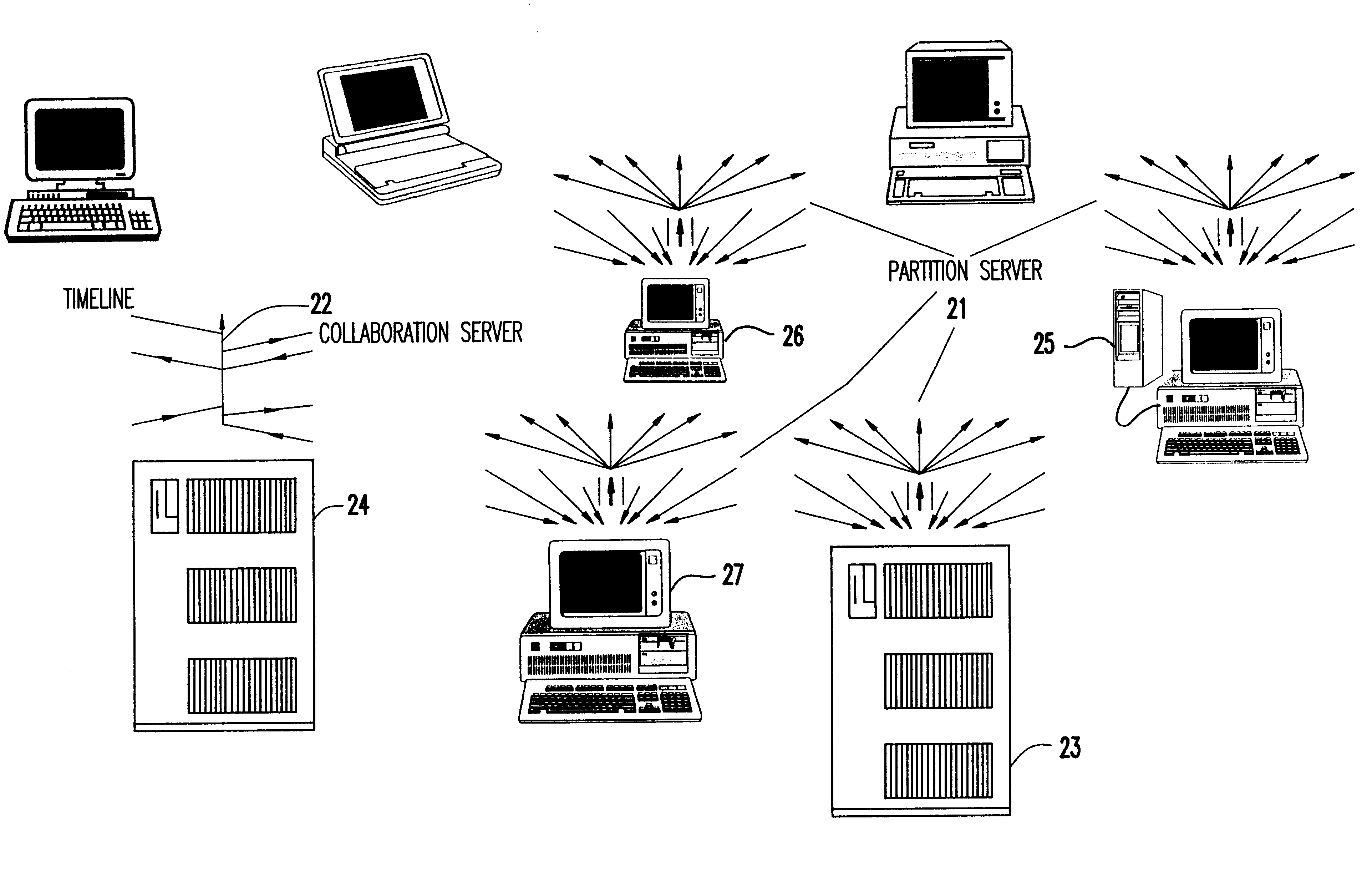

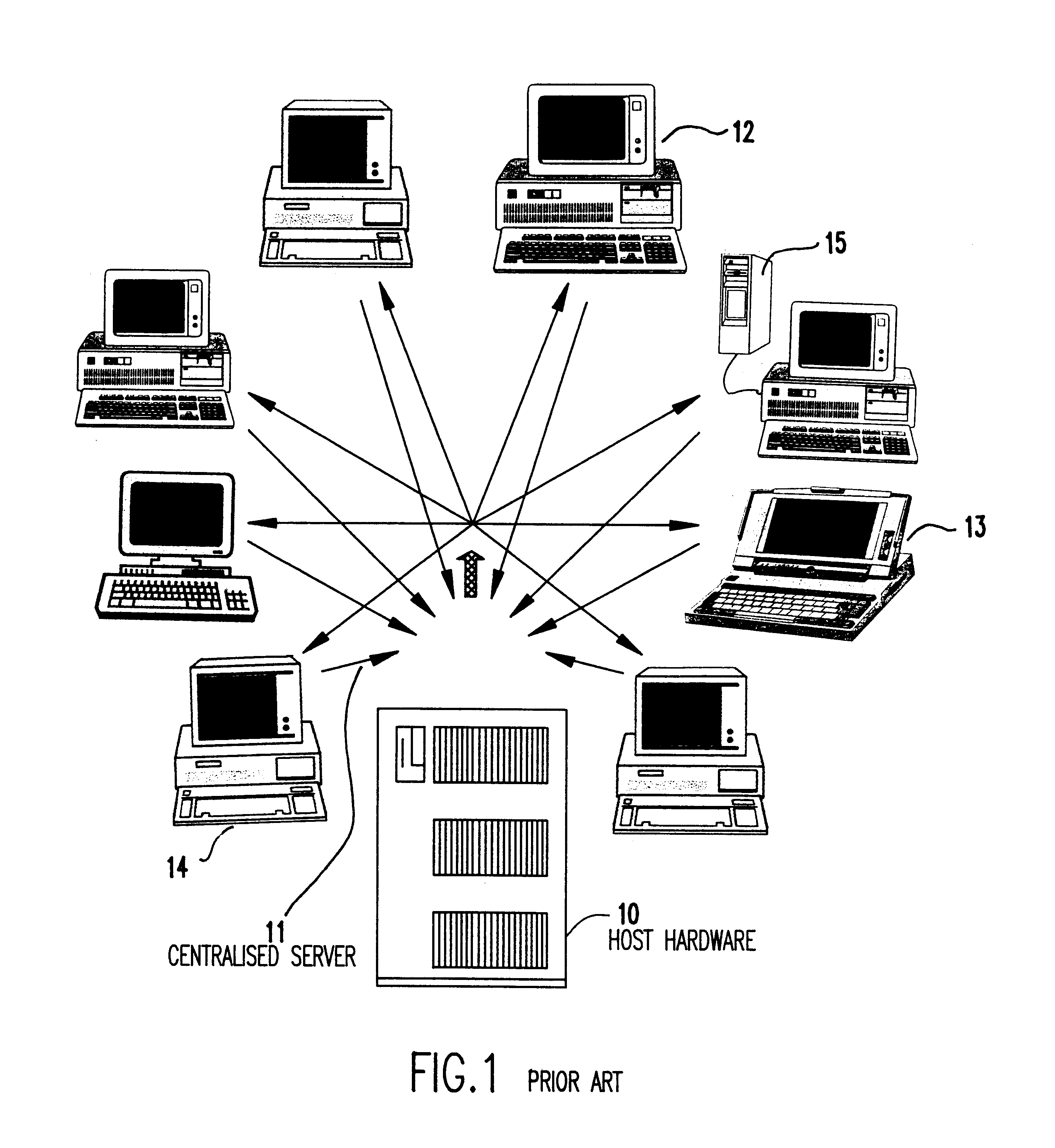

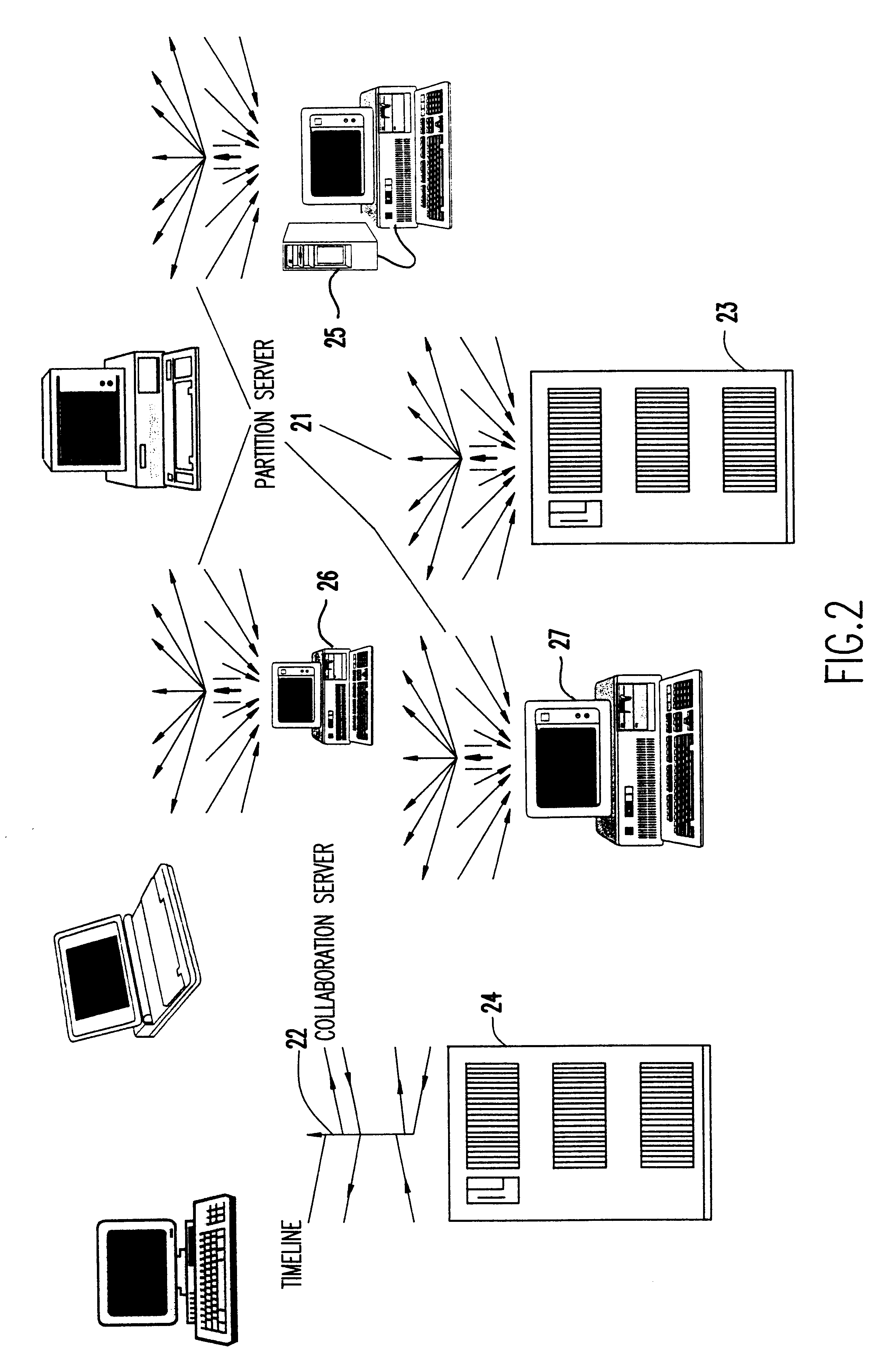

Distributed server for real-time collaboration

Inactive · US6334141B1 · Avoid focus · Special service provision for substation · Digital computer details · Extensibility · Data collaboration

A distributed server for real-time collaboration is substituted for a centralized server to address the problem of the development of unacceptable communication and computation bottlenecks resulting from the use of a one-software-process-based centralized server running somewhere on the available network. The substitute distributed server improves scalability of real-time collaboration by being based on multiple, independently-communicating, asynchronous, independent (i.e., no shared memory, data, variables, etc.) software processes. The processes can be distributed to multiple machines throughout the network and run simultaneously in order to avoid the centralized server's bottlenecks. To be used, a distributed server requires a disjoint, fully covering partitioning of a work space, wherein it can handle partition hierarchies and groups comprehensively. The distributed server solution is general because of the ability of distributed servers to work with different definitions of a modification. The distributed server solution is extensible because of its simple and comprehensive treatment of inter-partition synchronization.

Owner: ACTIVISION PUBLISHING

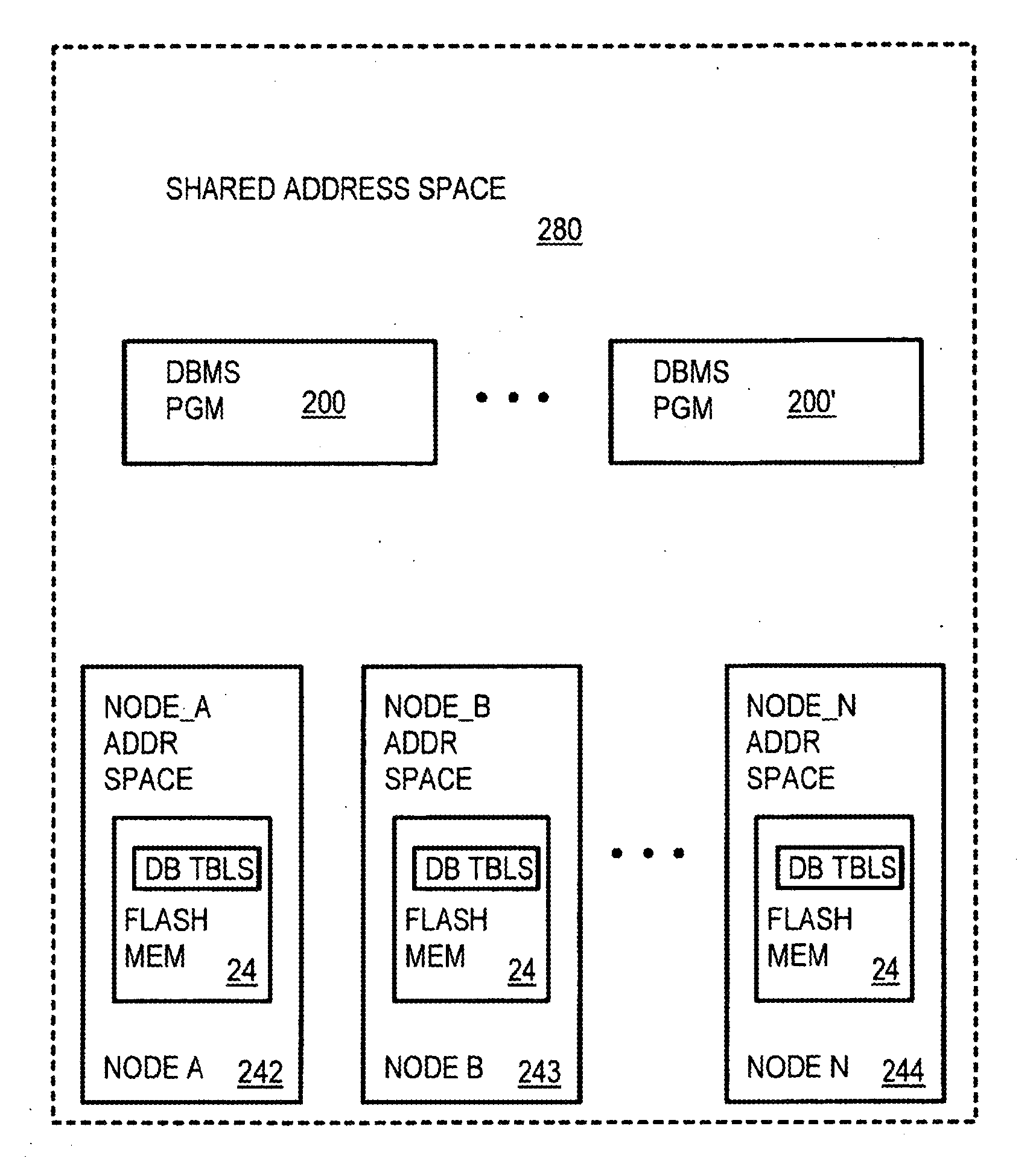

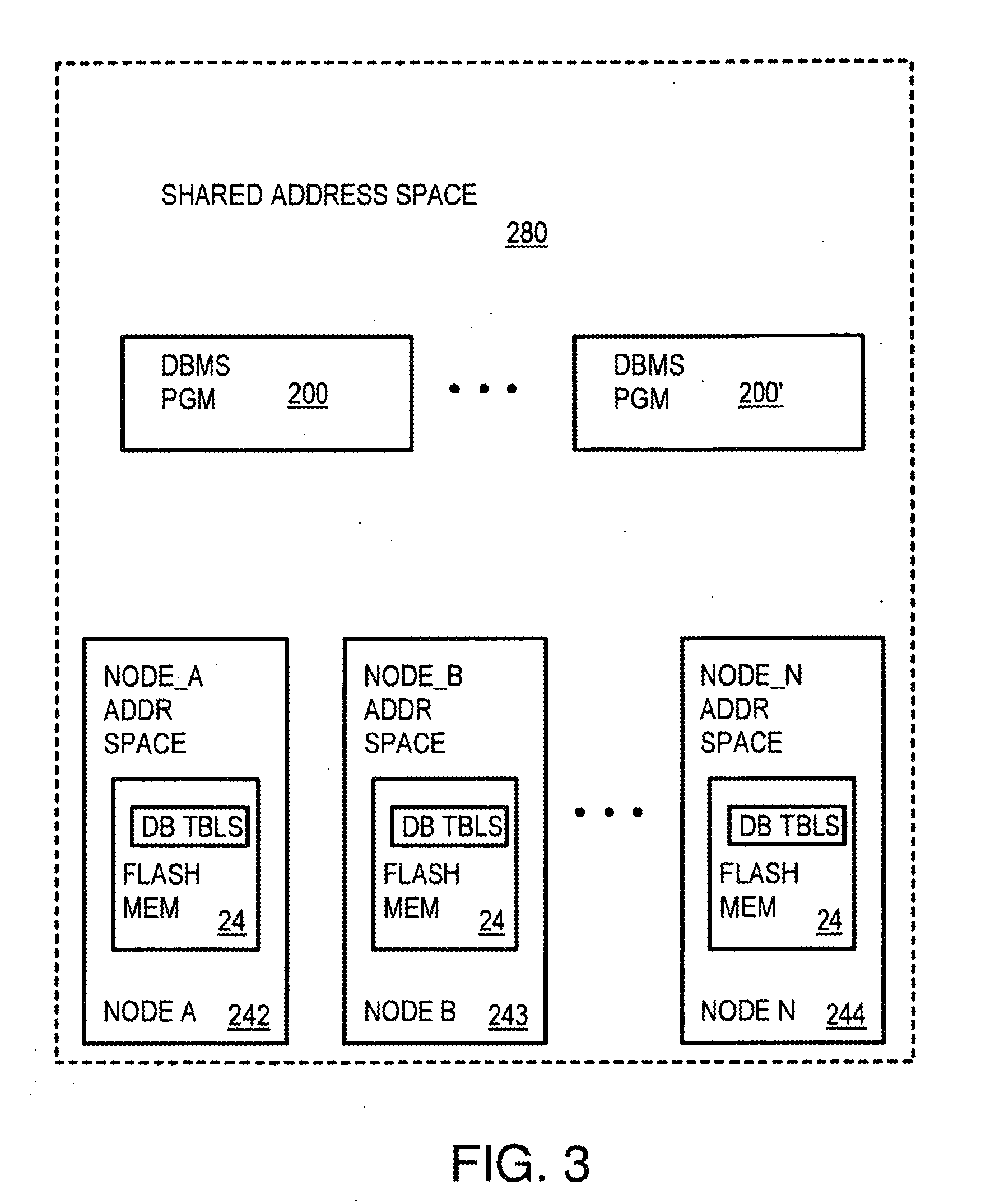

Cluster of processing nodes with distributed global flash memory using commodity server technology

Inactive · US20120017037A1 · Database management systems · Special data processing applications · Dram memory · Multiple applications

Approaches for a distributed storage system that comprises a plurality of nodes. Each node, of the plurality of nodes, executes one or more application processes which are capable of accessing persistent shared memory. The persistent shared memory is implemented by solid state devices physically maintained on each of the plurality of nodes. Each of the one or more application processes, maintained on a particular node, of the plurality of nodes, communicates with a shared data fabric (SDF) to access the persistent shared memory. The persistent shared memory comprises a scoreboard implemented in shared DRAM memory that is mapped to a persistent storage. The scoreboard provides a crash tolerant mechanism for enabling application processes to communicate with the shared data fabric (SDF).

Owner: SANDISK TECH LLC

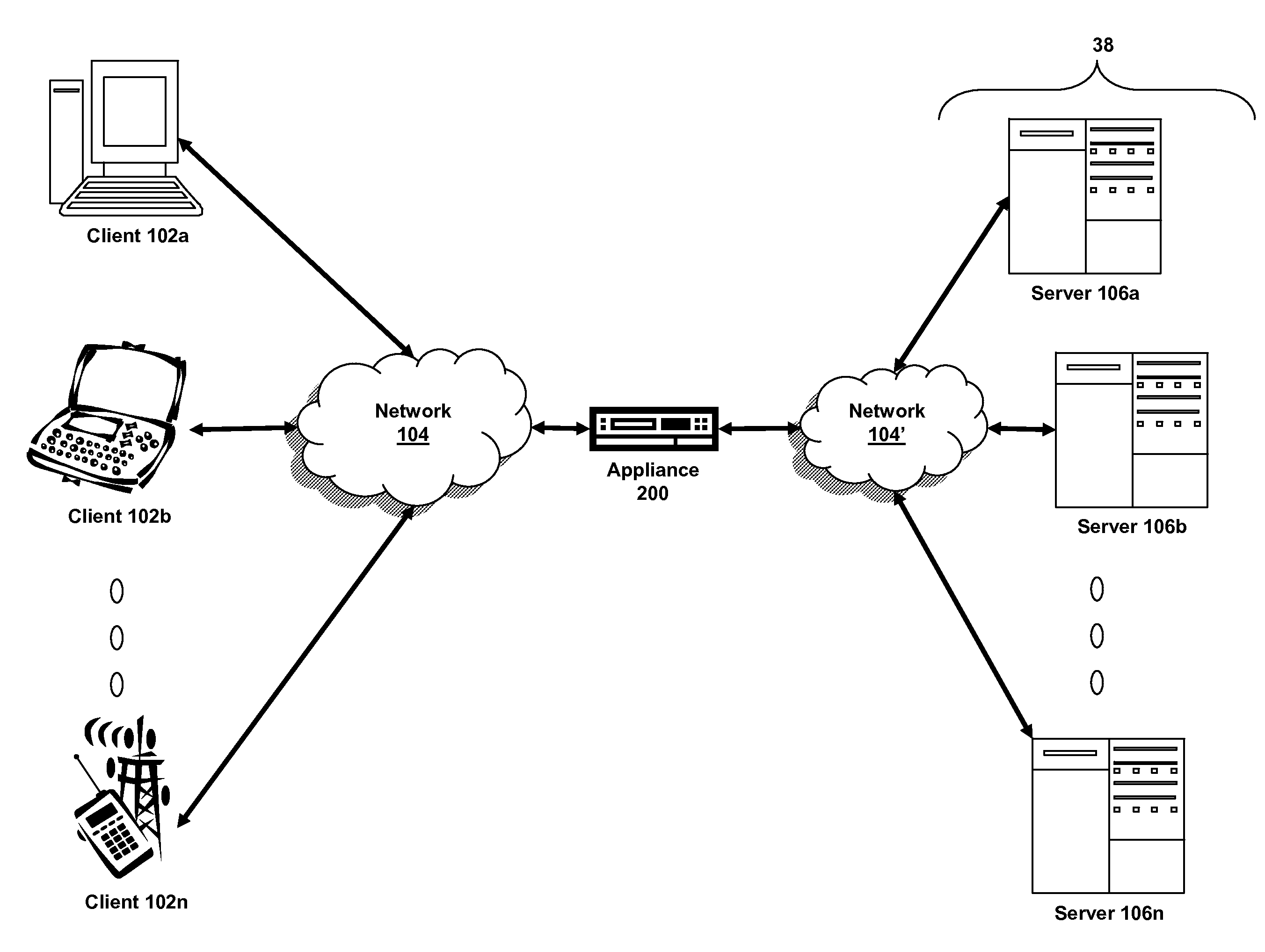

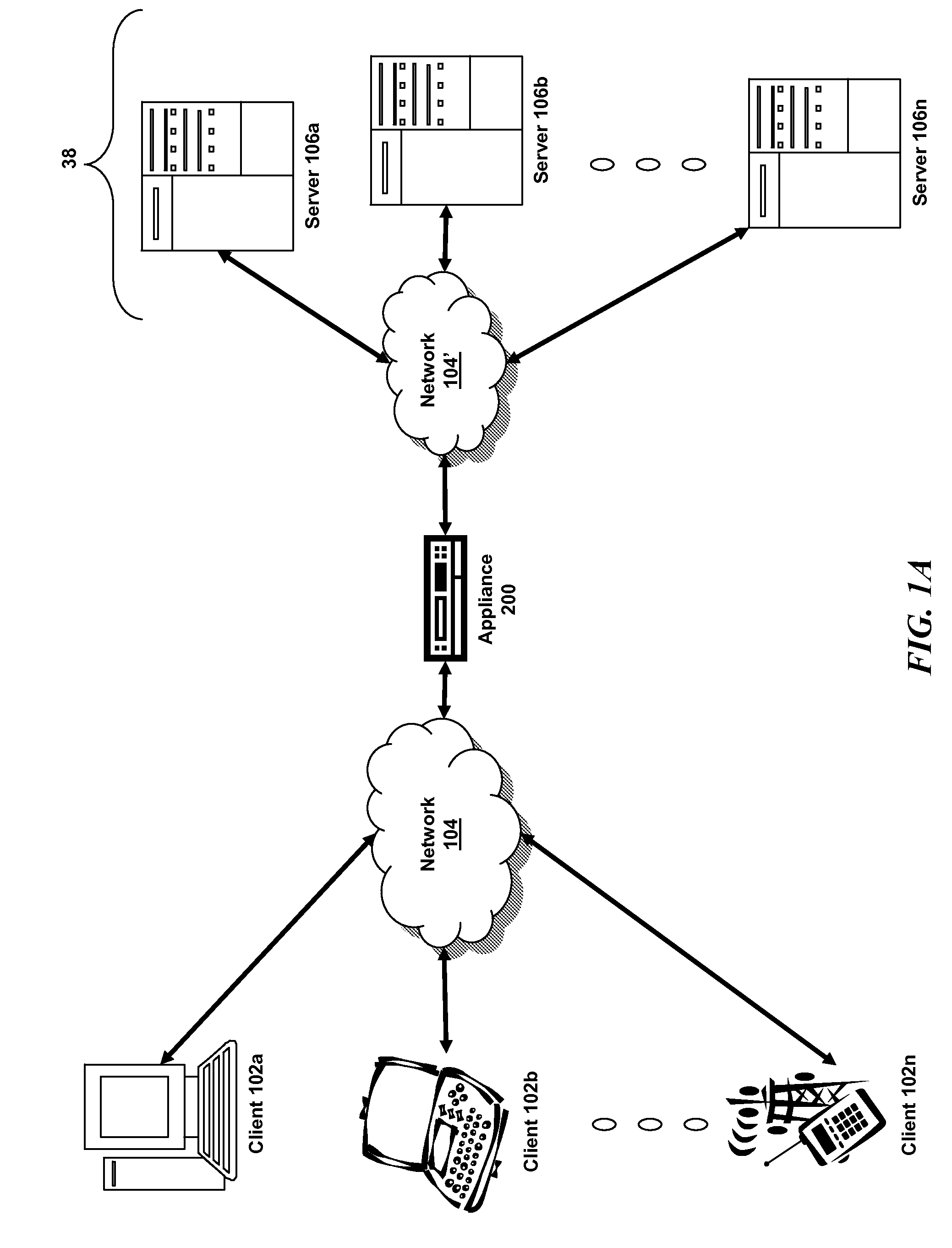

Systems and methods for object rate limiting in multi-core system

The present invention is directed towards systems and methods for managing a rate of request for an object transmitted between a server and one or more clients via a multi-core intermediary device. A first core of the intermediary device can receive a request for an object and assume ownership of the object. The first core can store the object in shared memory along with a rate-related counter for the object and generate a hash to the object and counter. Other cores can obtain the hash from the first core and access the object and counter in shared memory. Policy engines and throttlers in operation on each core can control the rate of access to the stored object.

Owner: CITRIX SYST INC

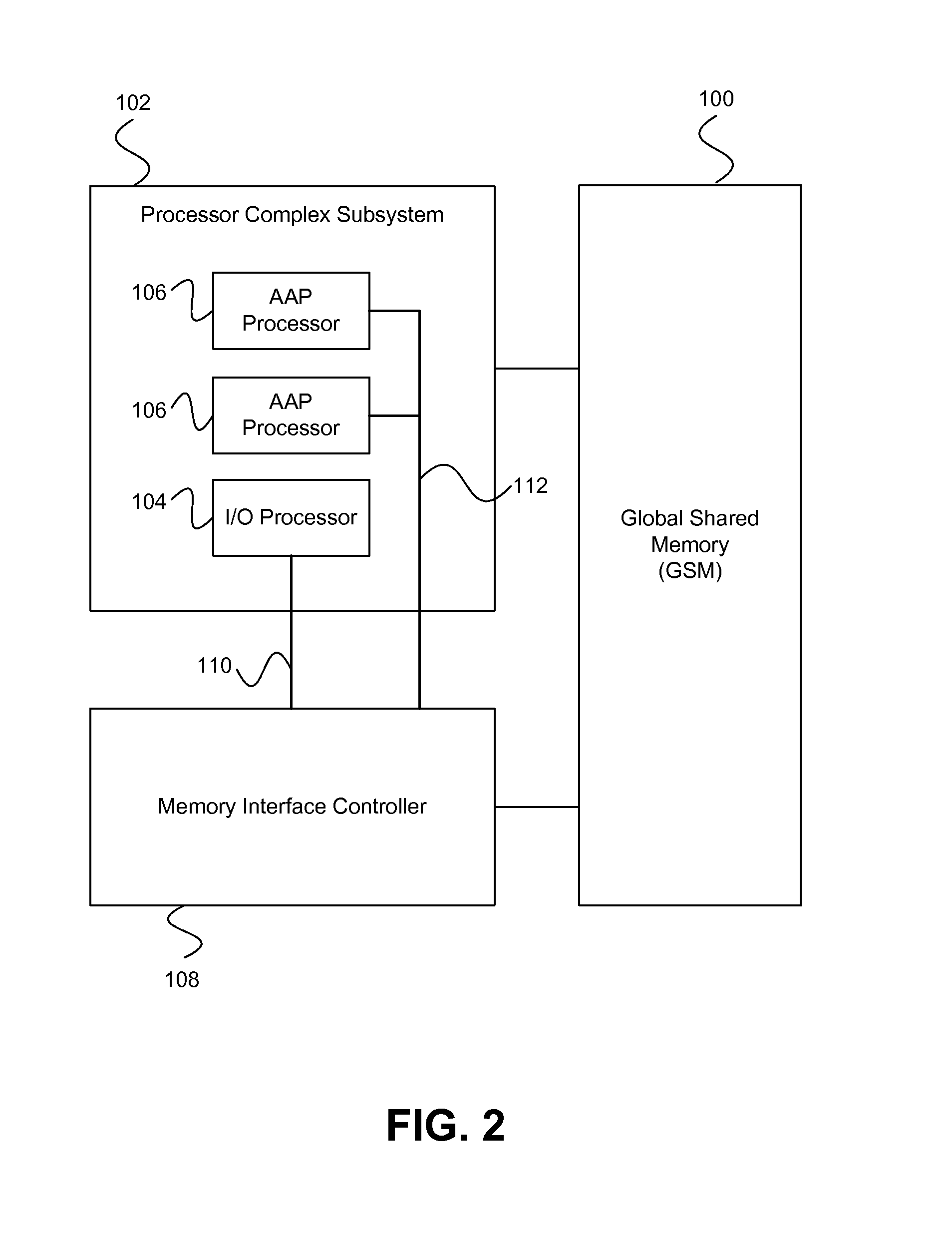

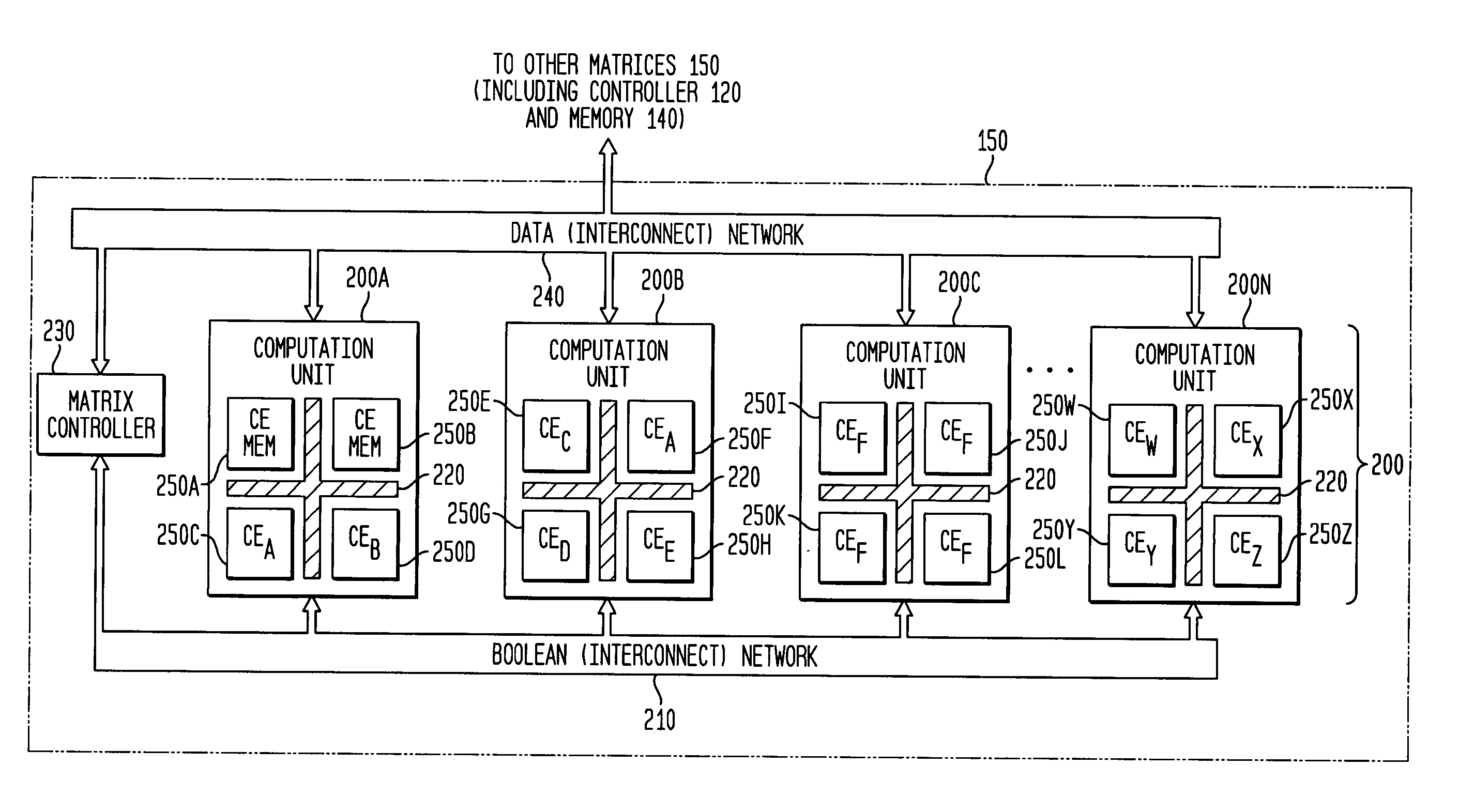

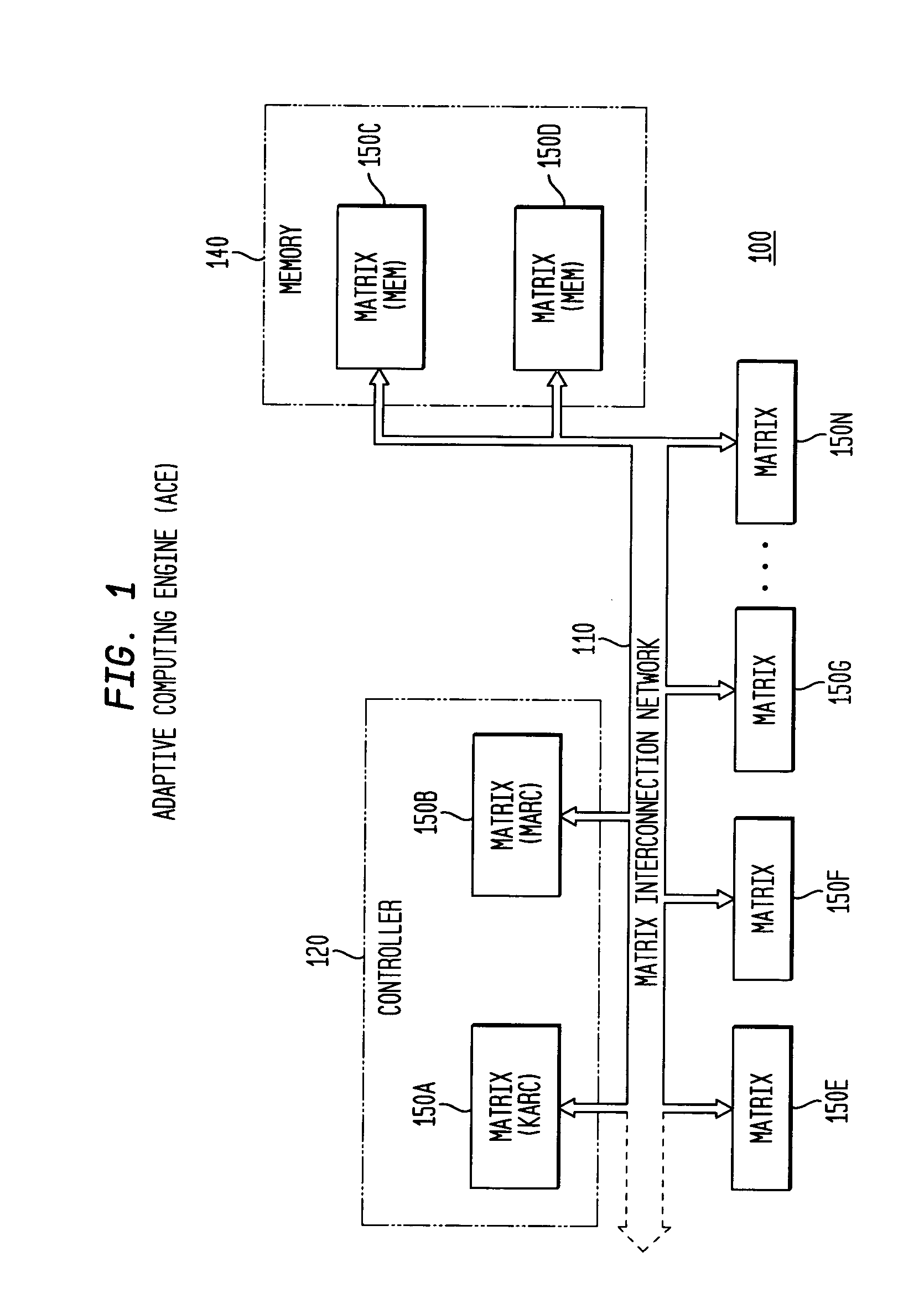

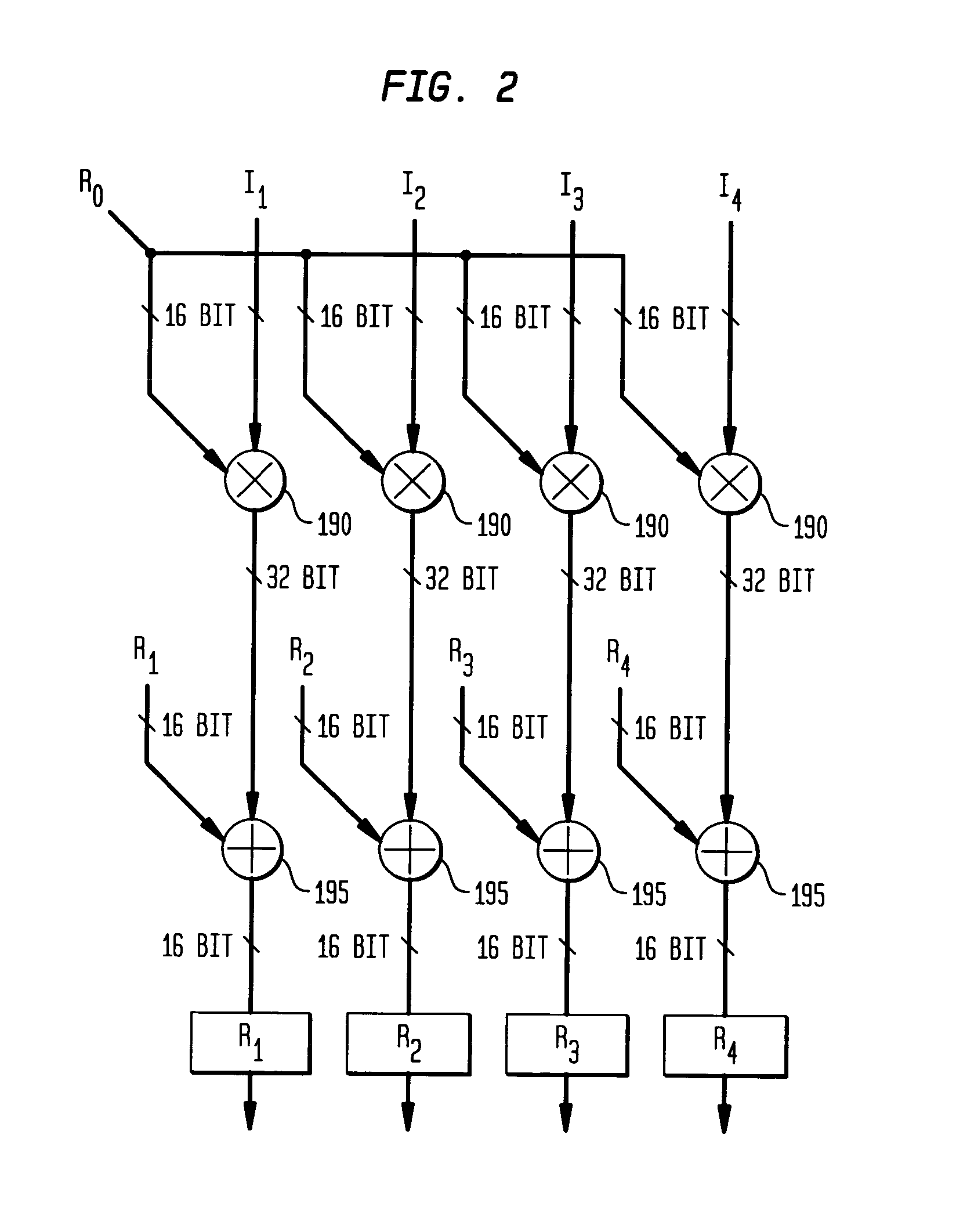

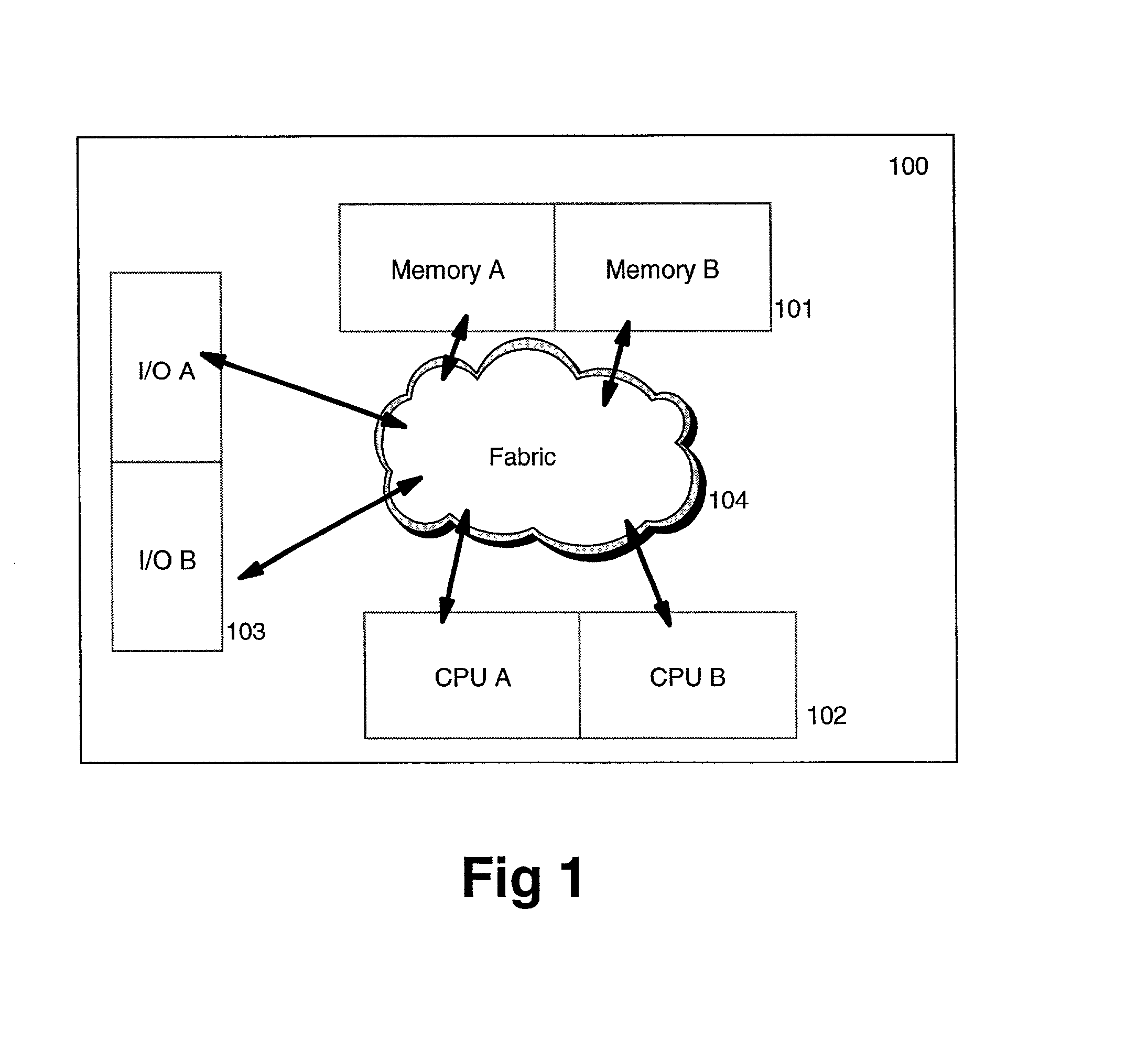

Asynchronous, independent and multiple process shared memory system in an adaptive computing architecture

The present invention provides a system and method for implementation and use of a shared memory. The shared memory may be accessed both independently and asynchronously by one or more processes at corresponding nodes, allowing data to be streamed to multiple processes and nodes without regard to synchronization of the plurality of processes. The various nodes may be adaptive computing nodes, kernel or controller nodes, or one or more host processor nodes. The present invention maintains memory integrity, not allowing memory overruns, underruns, or deadlocks. The present invention also provides for “push back” after a memory read, for applications in which it is desirable to “unread” some elements previously read from the memory.

Owner: NVIDIA CORP
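
The overrun, underrun and "push back" behavior can be illustrated with a small bounded buffer. This is a single-reader sketch under simplifying assumptions, not the adaptive computing architecture itself; `StreamBuffer` and its methods are hypothetical names, and `get` returns `None` when the buffer is empty, so `None` should not be stored as an element.

```python
class StreamBuffer:
    """Hypothetical bounded buffer with overrun/underrun checks and push-back."""

    def __init__(self, capacity: int) -> None:
        self._slots = [None] * capacity
        self._capacity = capacity
        self._written = 0   # total elements ever written
        self._read = 0      # total elements consumed, net of push-backs

    def put(self, item) -> bool:
        if self._written - self._read == self._capacity:
            return False    # full: refuse rather than overrun unread data
        self._slots[self._written % self._capacity] = item
        self._written += 1
        return True

    def get(self):
        if self._read == self._written:
            return None     # empty: refuse rather than underrun
        item = self._slots[self._read % self._capacity]
        self._read += 1
        return item

    def push_back(self, count: int) -> bool:
        """Un-read the last `count` elements if their slots are still intact."""
        restored = self._read - count
        if count < 0 or restored < 0:
            return False
        if self._written - restored > self._capacity:
            return False    # those slots were already overwritten by new data
        self._read = restored
        return True
```

For example, after writing three elements and reading two, `push_back(1)` makes the second element readable again; once a later `put` has reused the first slot, pushing back over it is refused.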

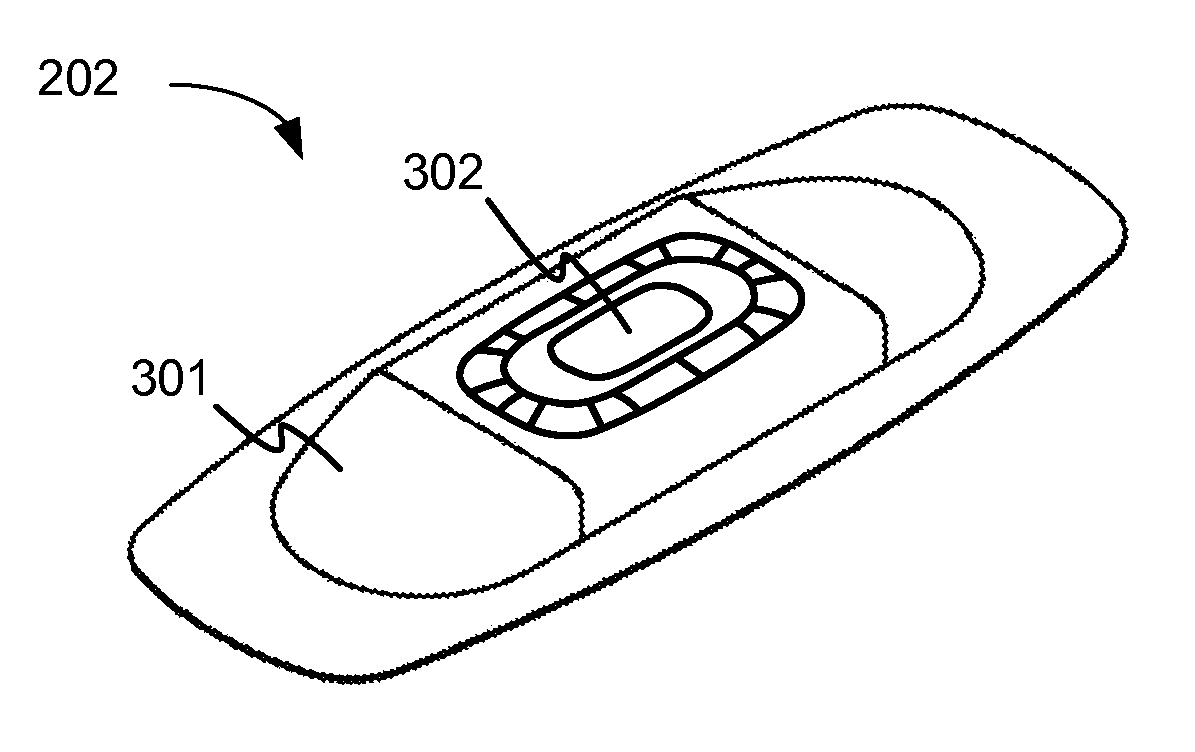

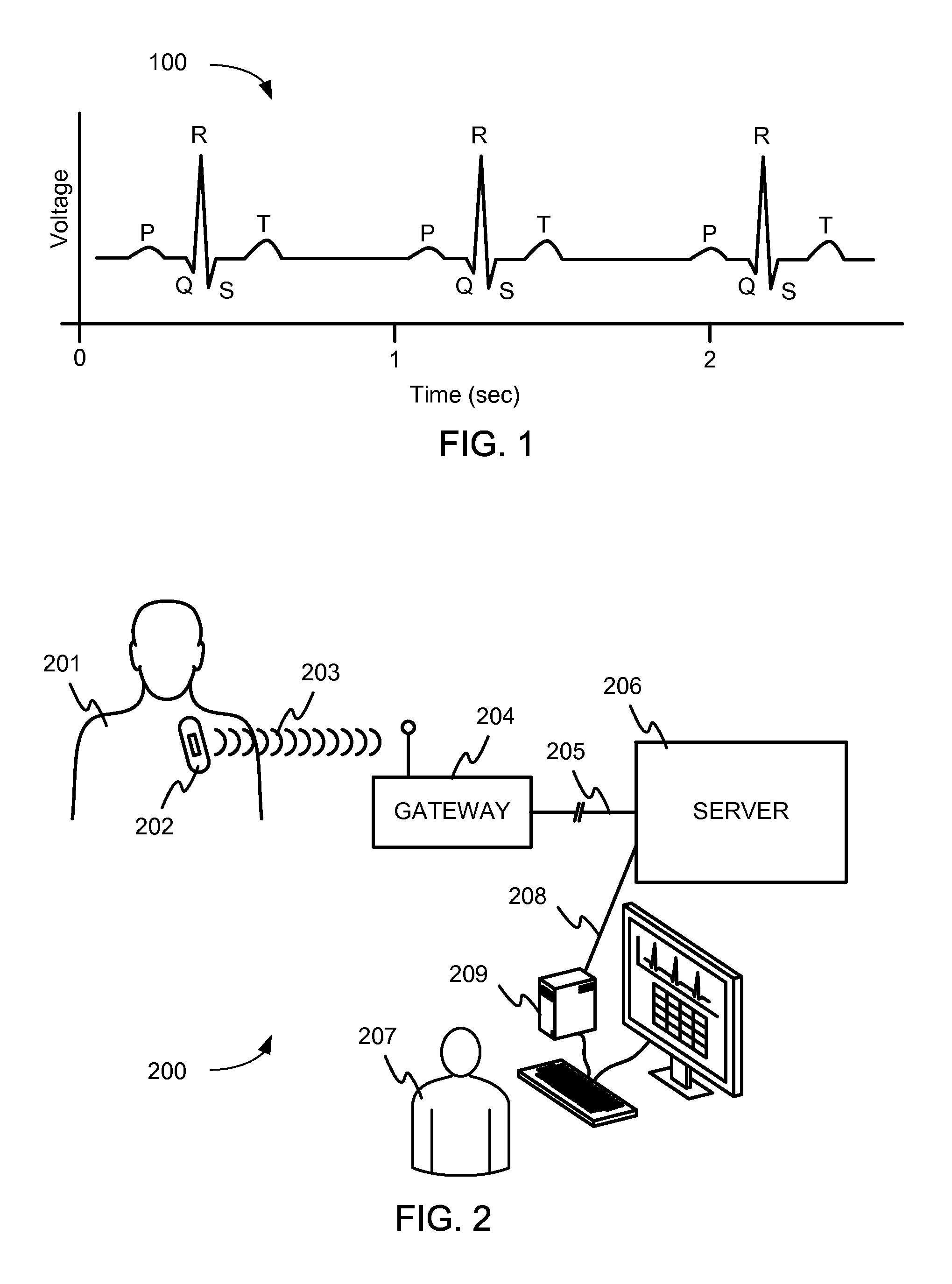

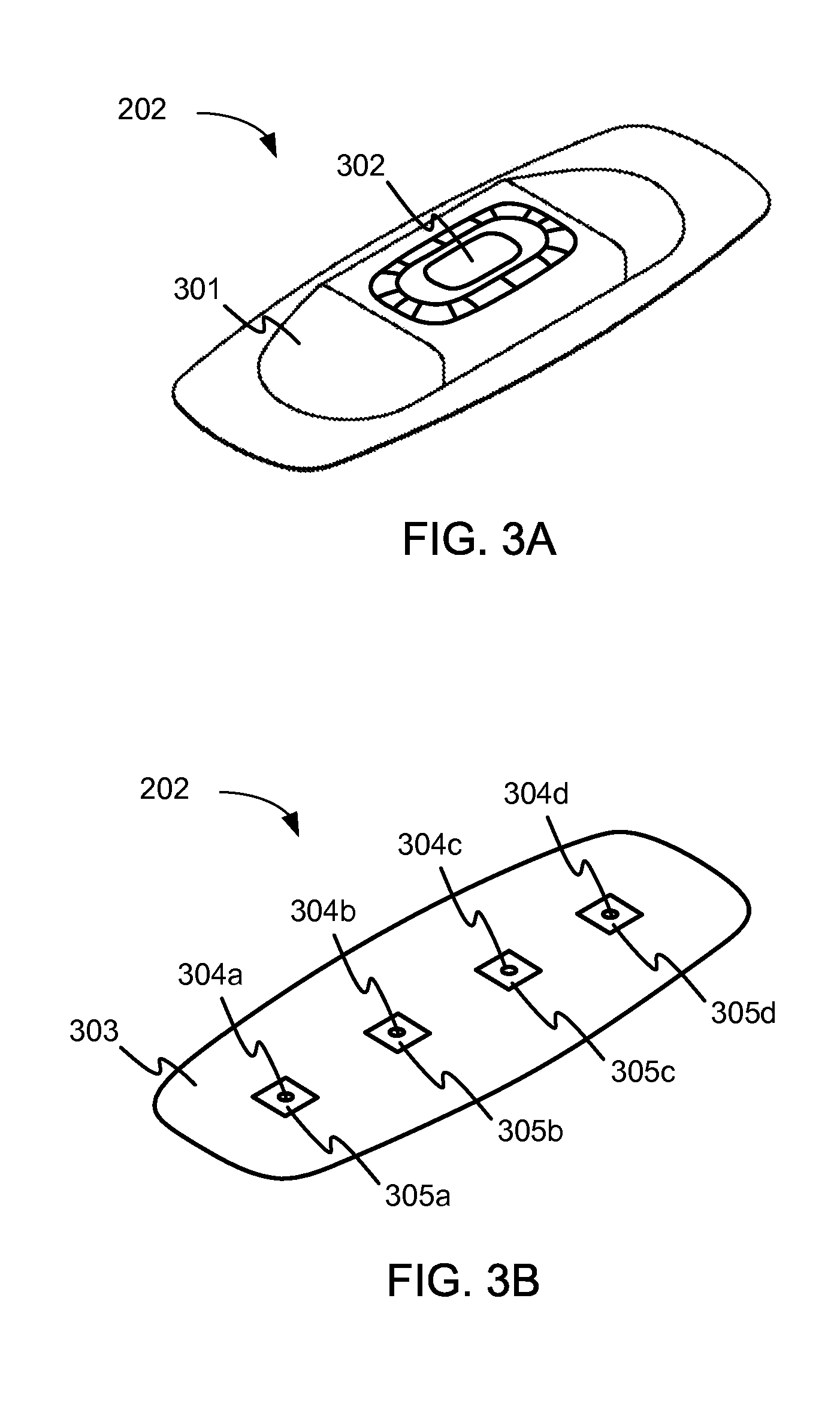

Heuristic management of physiological data

Systems and methods for management of physiological data, for example data obtained from monitoring an electrocardiogram signal of a patient. In one example use, digital data is obtained and episodes of arrhythmias are detected. Snapshots of the digitized ECG signal may be stored for later physician review. One or more techniques may be used to avoid recording of redundant data, while ensuring that at least a minimum number of episodes of each detected arrhythmia can be stored. The system may automatically tailor its data collection to the cardiac characteristics of a particular patient. In one technique, memory is allocated to include for each detectable arrhythmia a memory segment designated to receive ECG snapshots representing only the respective arrhythmia. A shared memory pool may receive additional snapshots as the designated memory segments fill.

Owner: MEDTRONIC MONITORING
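
The allocation scheme in the last two sentences, a designated segment per arrhythmia plus a shared overflow pool, can be sketched as follows. The class `SnapshotStore`, its parameters, and the policy of dropping a snapshot when both areas are full are assumptions made for illustration; the abstract does not say what happens once the shared pool fills.

```python
class SnapshotStore:
    """Hypothetical store: one reserved segment per type plus a shared pool."""

    def __init__(self, kinds, reserved_per_kind: int, shared_slots: int) -> None:
        self.reserved = {kind: [] for kind in kinds}
        self.reserved_limit = reserved_per_kind
        self.shared = []                 # overflow pool used by every kind
        self.shared_limit = shared_slots

    def store(self, kind: str, snapshot) -> str:
        segment = self.reserved[kind]
        if len(segment) < self.reserved_limit:
            segment.append(snapshot)     # guaranteed space for this kind
            return "reserved"
        if len(self.shared) < self.shared_limit:
            self.shared.append((kind, snapshot))
            return "shared"
        return "dropped"                 # assumed policy when everything is full
```

Because the reserved segments are never lent out, a frequent arrhythmia can exhaust the shared pool without taking away the minimum space kept for a rare one.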

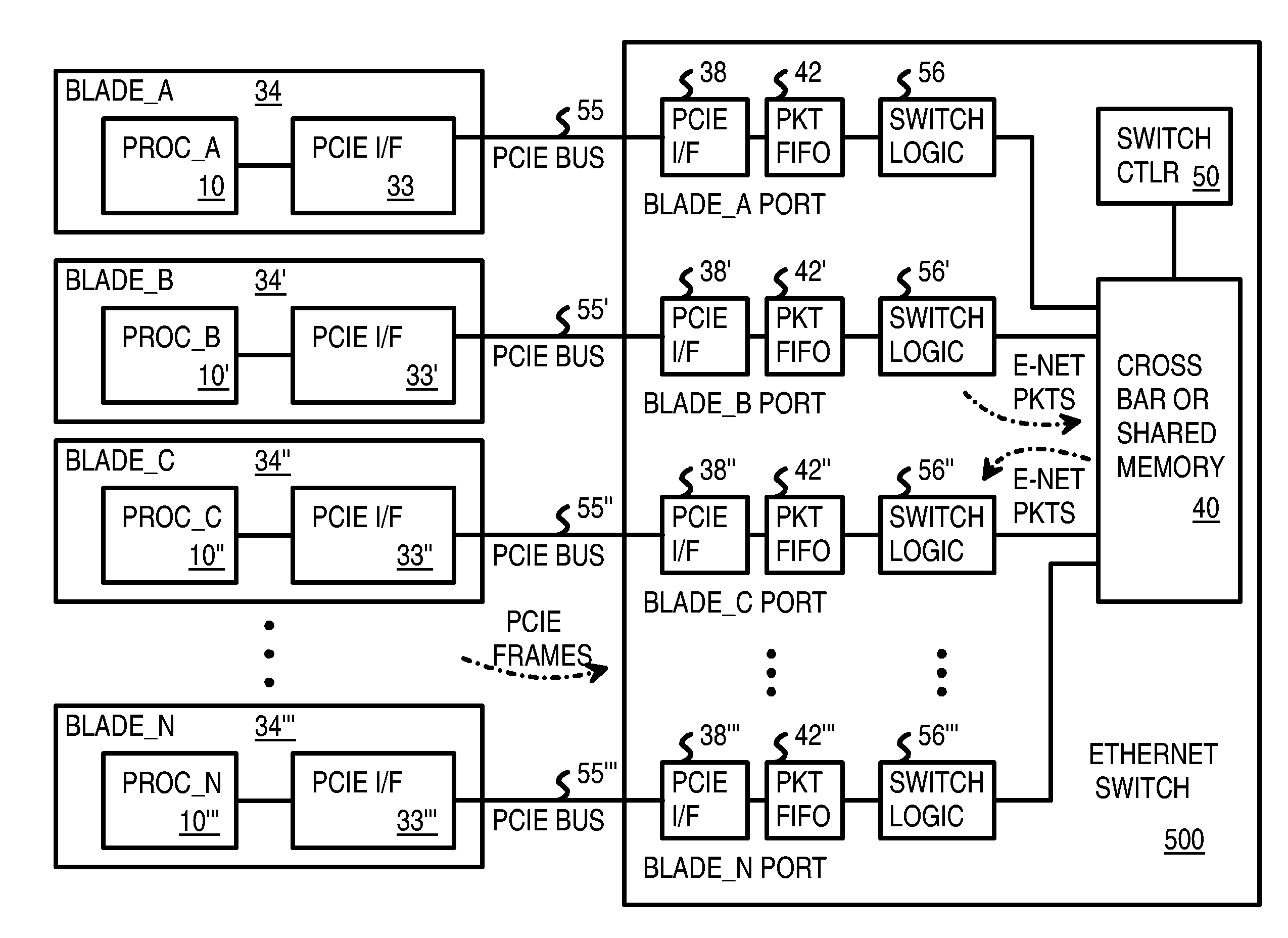

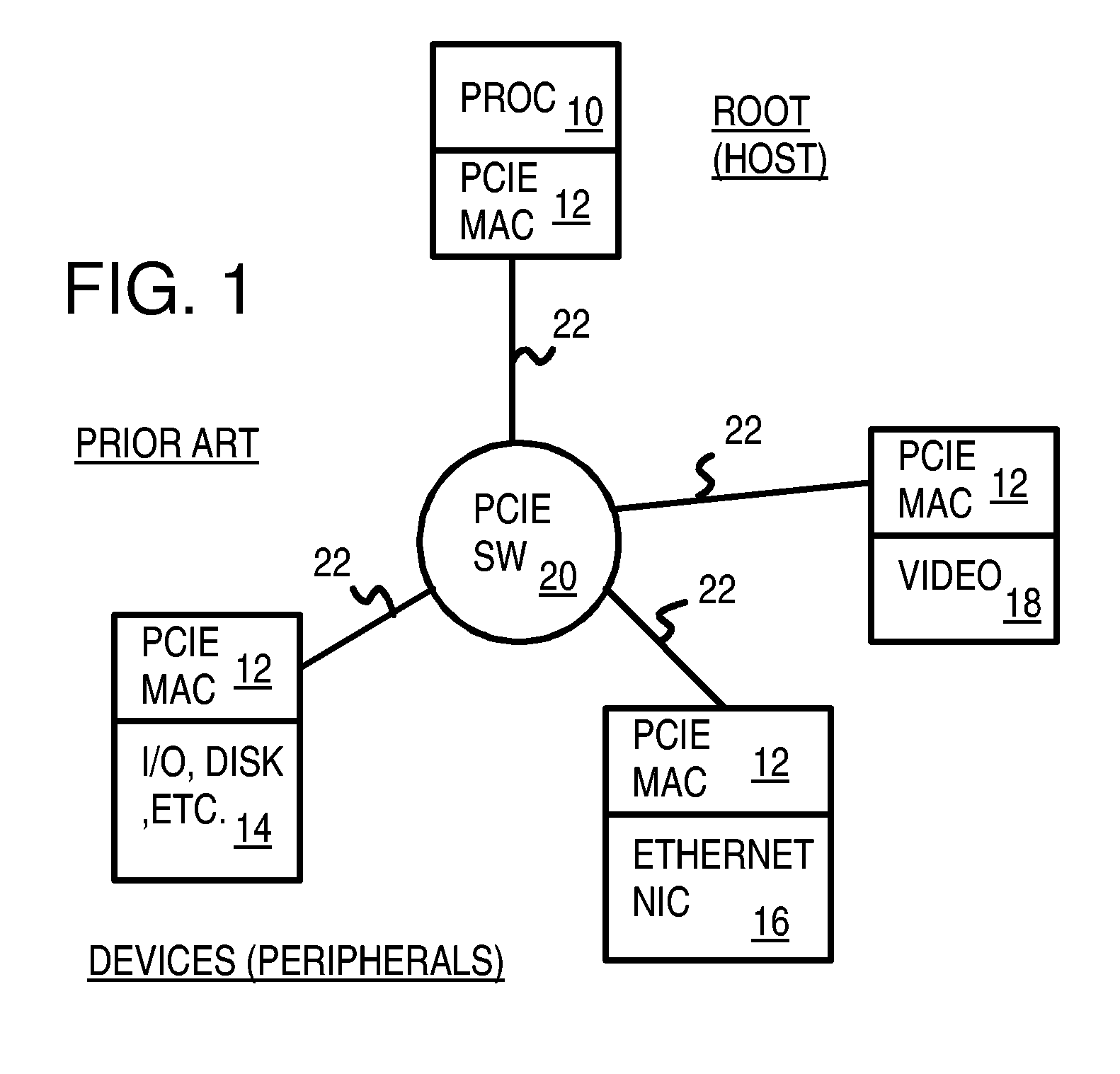

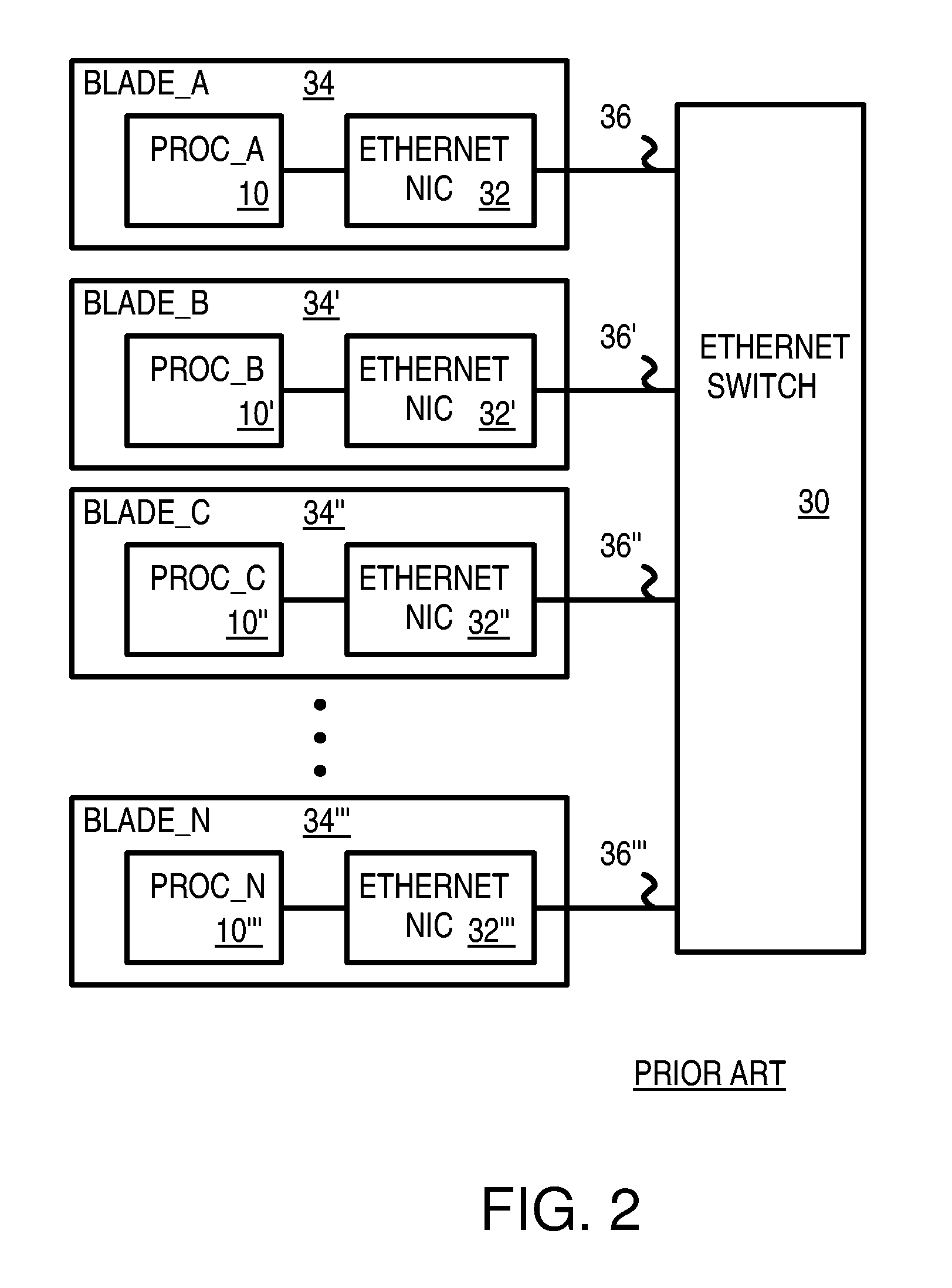

Pseudo-ethernet switch without ethernet media-access-controllers (MAC's) that copies ethernet context registers between PCI-express ports

A Pseudo-Ethernet switch has a routing table that uses Ethernet media-access controller (MAC) addresses to route Ethernet packets through a switch fabric between an input port and an output port. However, the input port and output port have Peripheral Component Interconnect Express (PCIE) interfaces that read and write PCI-Express packets to and from host-processor memories. When used in a blade system, host processor boards have PCIE physical links that connect to the PCIE ports on the Pseudo-Ethernet switch. The Pseudo-Ethernet switch does not have Ethernet MAC and Ethernet physical layers, saving considerable hardware. The switch fabric can be a cross-bar switch or can be a shared memory that stores Ethernet packet data embedded in the PCIE packets. Write and read pointers for a buffer storing an Ethernet packet in the shared memory can be passed from input to output port to perform packet switching.

Owner: DIODES INC

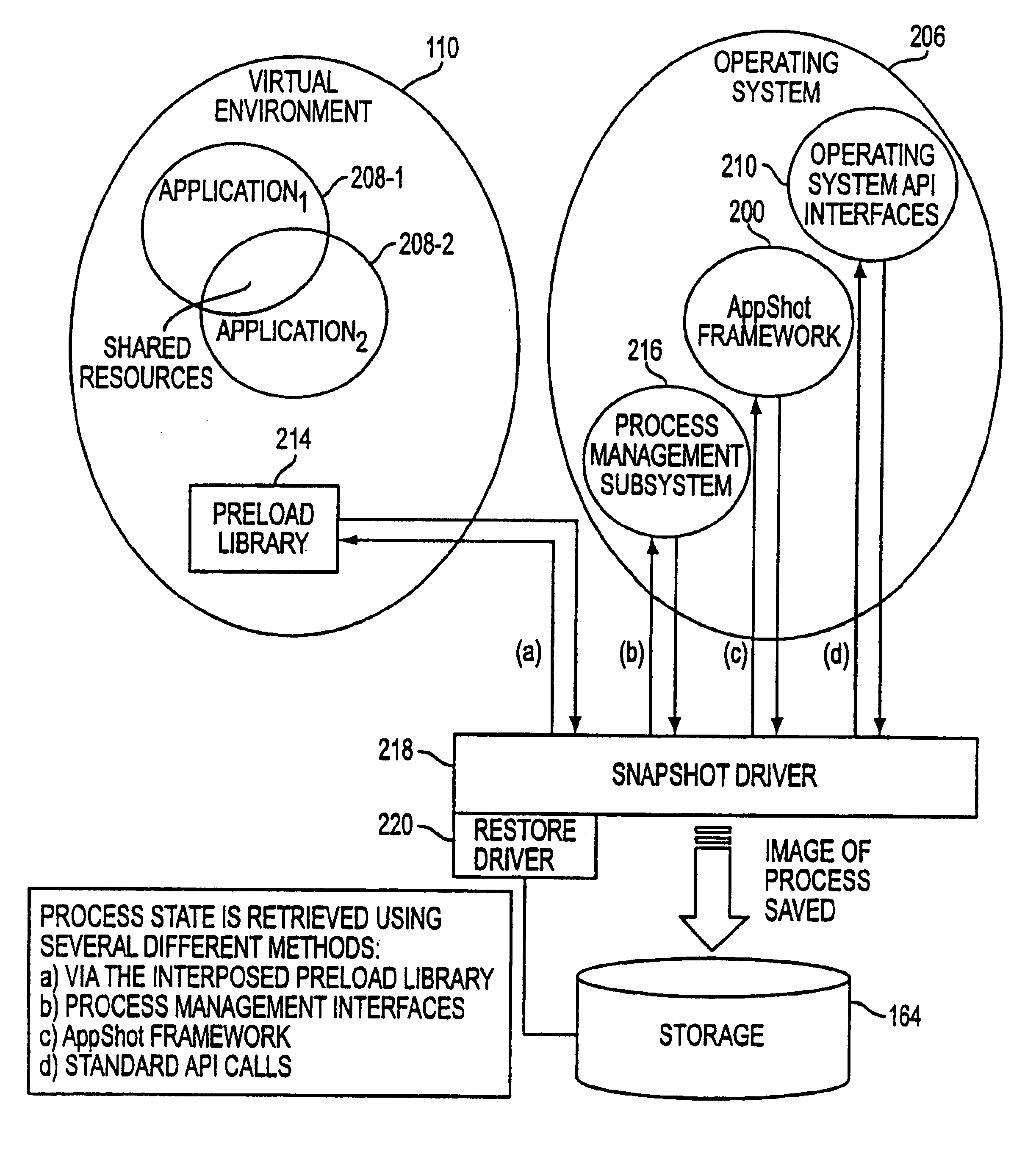

Snapshot restore of application chains and applications

Inactive · US6848106B1 · Data processing applications · Program initiation/switching · Operational system · System call

The present invention saves all process state, memory, and dependencies related to a software application to a snapshot image. Interprocess communication (IPC) mechanisms such as shared memory and semaphores must be preserved in the snapshot image as well. Any resource that is shared between two processes, or any communication mechanism or channel that allows two processes to communicate or interoperate, is a form of IPC. Between snapshots, memory deltas are flushed to the snapshot image, so that only the modified pages need be updated. Software modules are included to track usage of resources and their corresponding handles. At snapshot time, state is saved by querying the operating system kernel, the application snapshot/restore framework components, and the process management subsystem that allows applications to retrieve internal process-specific information not available through existing system calls. At restore time, the reverse sequence of steps for the snapshot procedure is followed and state is restored by making requests to the kernel, the application snapshot/restore framework, and the process management subsystem.

Owner: SYMANTEC OPERATING CORP

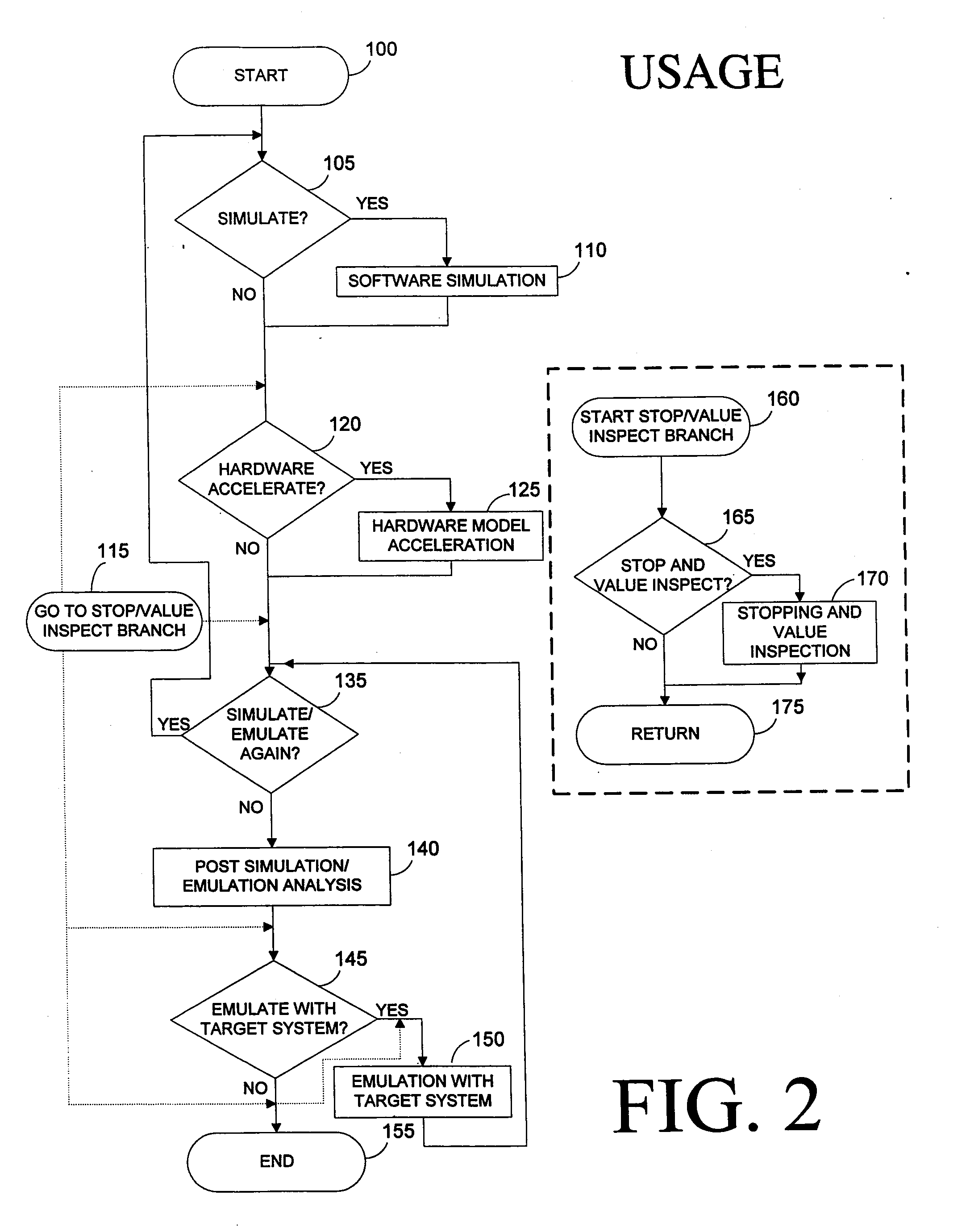

Common shared memory in a verification system

Inactive · US20110307233A1 · Less · Similar performance level · Analogue computers for electric apparatus · CAD circuit design · Coded element · Workstation

The debug system described in this patent specification provides a system that generates hardware elements from normally non-synthesizable code elements for placement on an FPGA device. This particular FPGA device is called a Behavior Processor. This Behavior Processor executes in hardware those code constructs that were previously executed in software. When some condition is satisfied (e.g., If . . . then . . . else loop) which requires some intervention by the workstation or the software model, the Behavior Processor works with an Xtrigger device to send a callback signal to the workstation for immediate response.

Owner: CADENCE DESIGN SYST INC

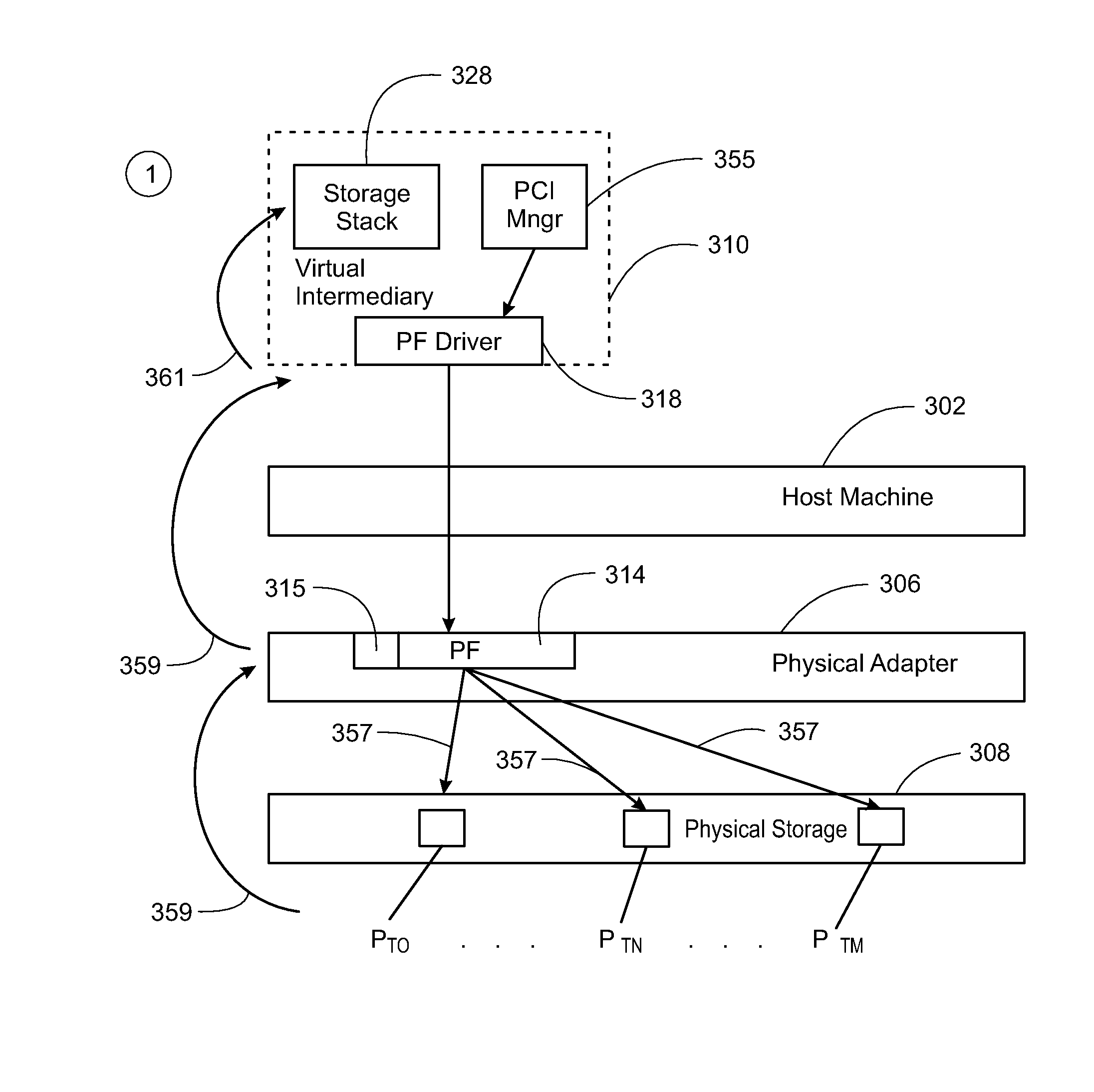

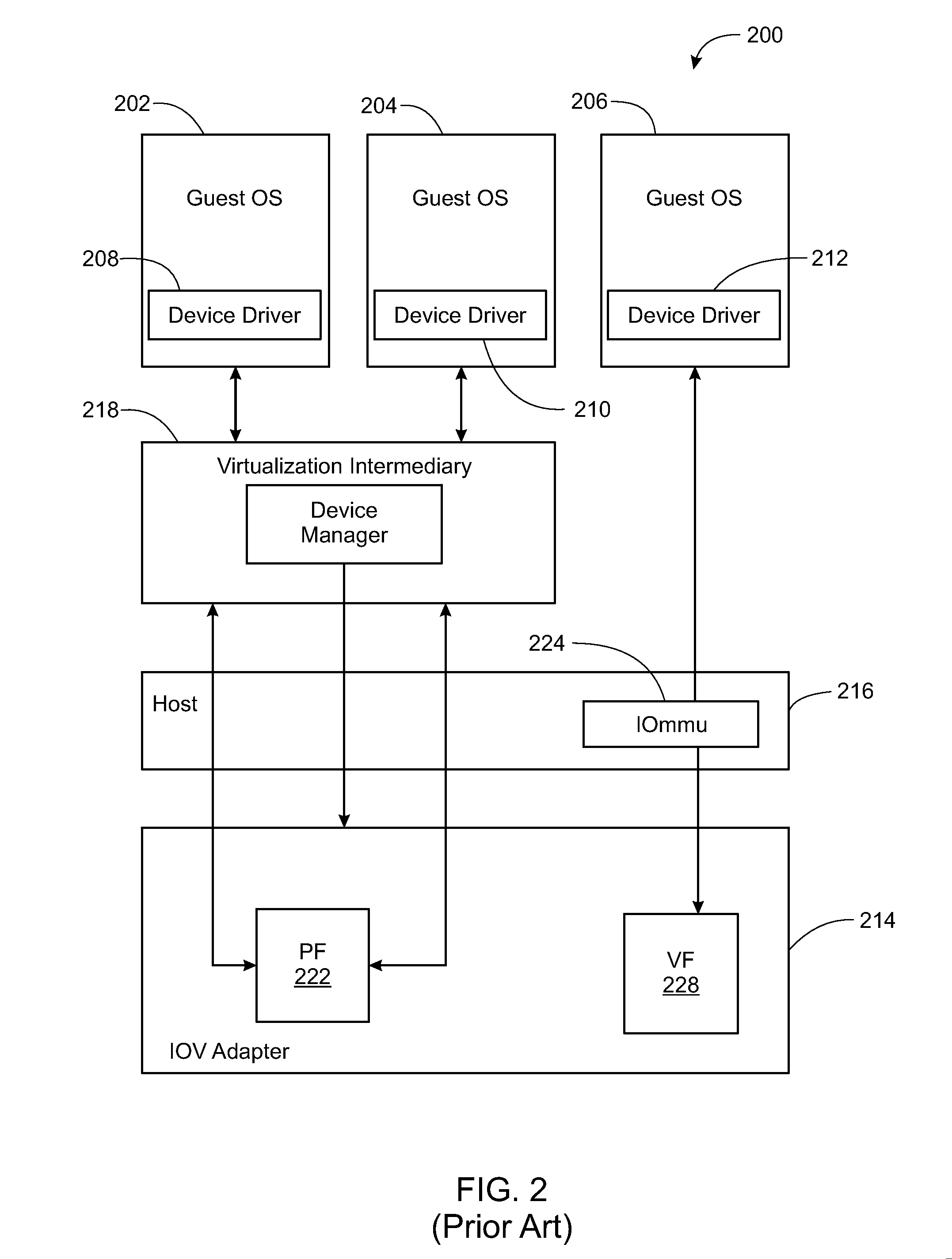

Virtualization intermediary/virtual machine guest operating system collaborative SCSI path management

A method of direct access by a virtual machine (VM) running on a host machine to physical storage via a virtual function (VF) running on an input/output (IO) adapter comprising: providing by a virtualization intermediary running on the host machine an indication of an active path associated with a virtual storage device; obtaining by a guest driver running within a guest operating system of the VM the stored indication of the active path from the shared memory region; dispatching an IO request by the guest driver to the VF that includes an indication of the active path; and sending by the VF an IO request that includes the indicated active path.

Owner: VMWARE INC

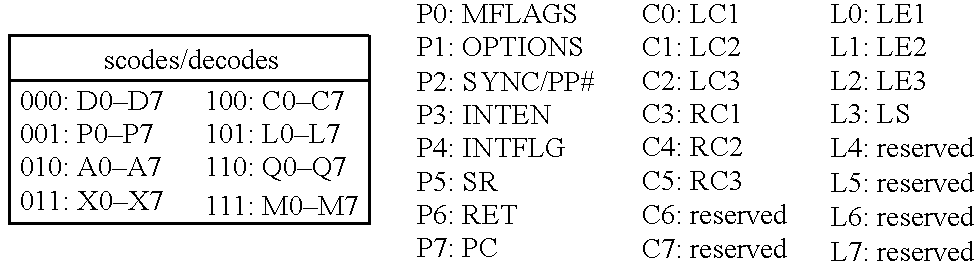

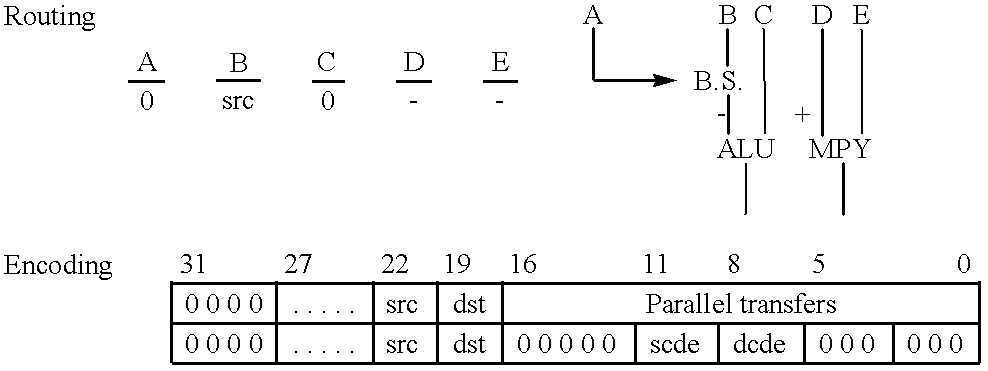

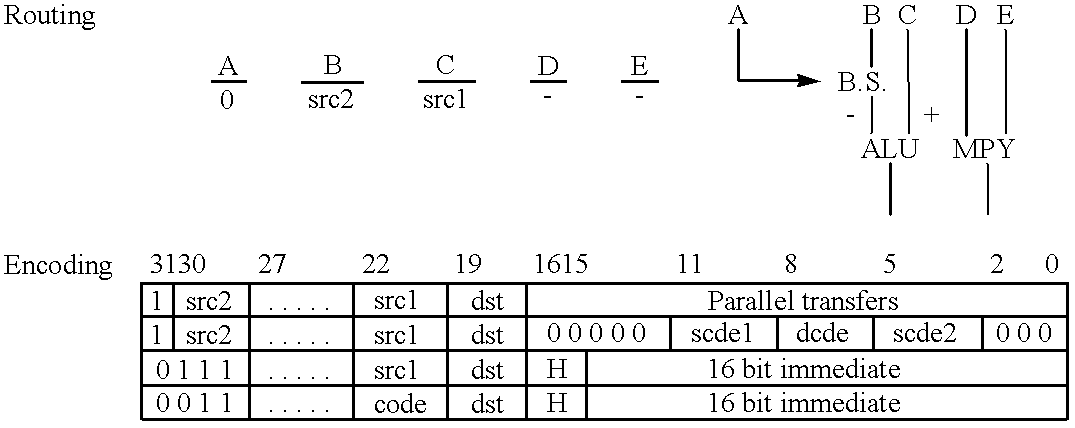

Single integrated circuit embodying a RISC processor and a digital signal processor

Inactive · US6260088B1 · Save space · Improve versatility · General purpose stored program computer · Multiple digital computer combinations · Digital signal processing · Computer image

A single integrated circuit includes first and second data processors operating on different instruction sets independently operating on disjoint programs and data. The single integrated circuit preferably includes an external interface, a shared data transfer controller and shared memory divided into plural independently accessible memory banks. The two data processors are preferably a digital signal processor (DSP) and a reduced instruction set computer (RISC) processor. The DSP and RISC processors are suitably programmed to perform differing aspects of computer image processing.

Owner: TEXAS INSTR INC

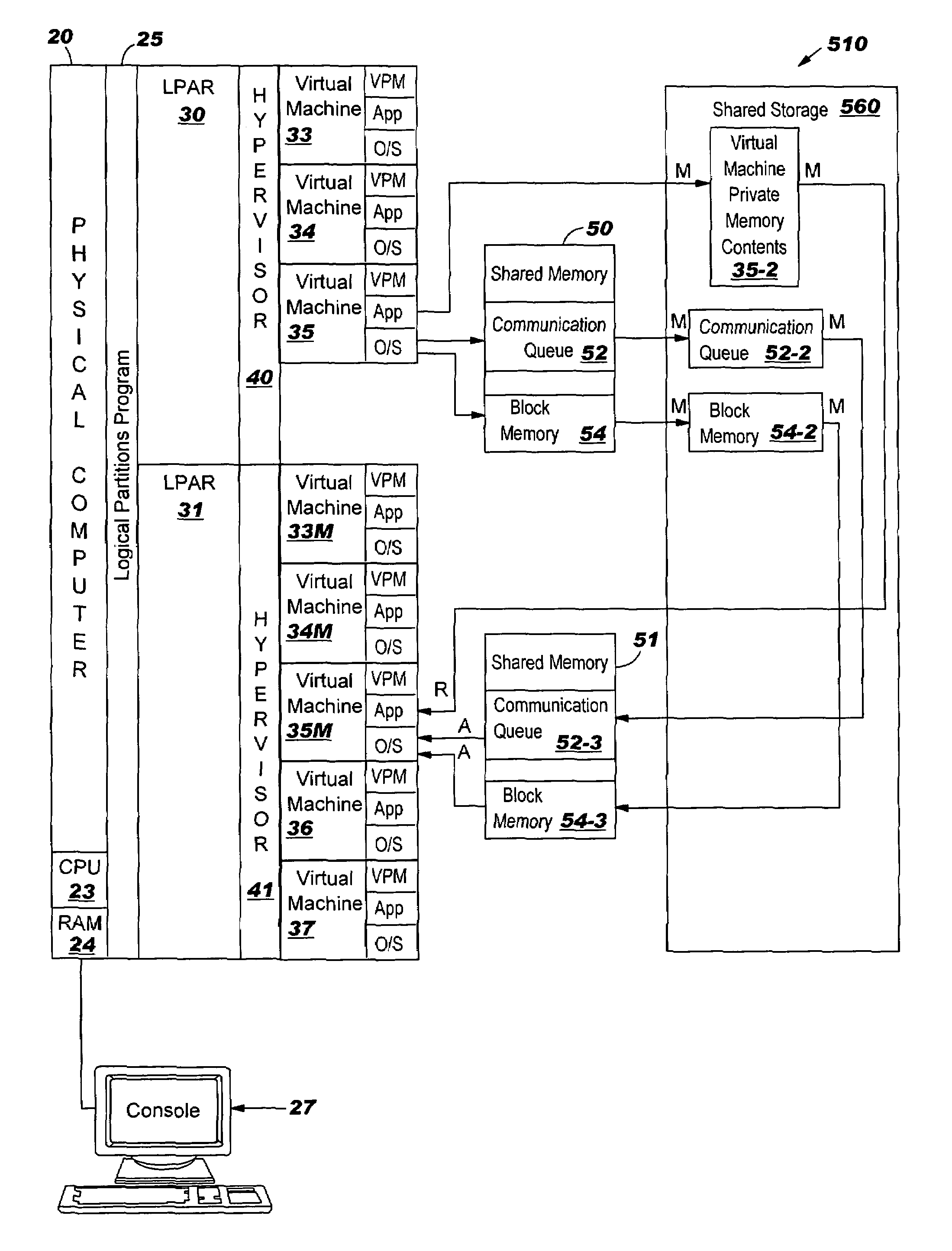

System, method and program to migrate a virtual machine

Active · US20070169121A1 · Resource allocation · Software simulation/interpretation/emulation · Operational system · Application software

A system, method and program product for migrating a first virtual machine from a first real computer to a second real computer or from a first LPAR to a second LPAR in a same real computer. Before migration, the first virtual machine comprises an operating system and an application in a first private memory private to the first virtual machine. A communication queue of the first virtual machine resides in a shared memory shared by the first and second computers or the first and second LPARs. The operating system and application are copied from the first private memory to the shared memory. The operating system and application are copied from the shared memory to a second private memory private to the first virtual machine in the second computer or second LPAR. Then, the first virtual machine is resumed in the second computer or second LPAR.

Owner: INT BUSINESS MASCH CORP

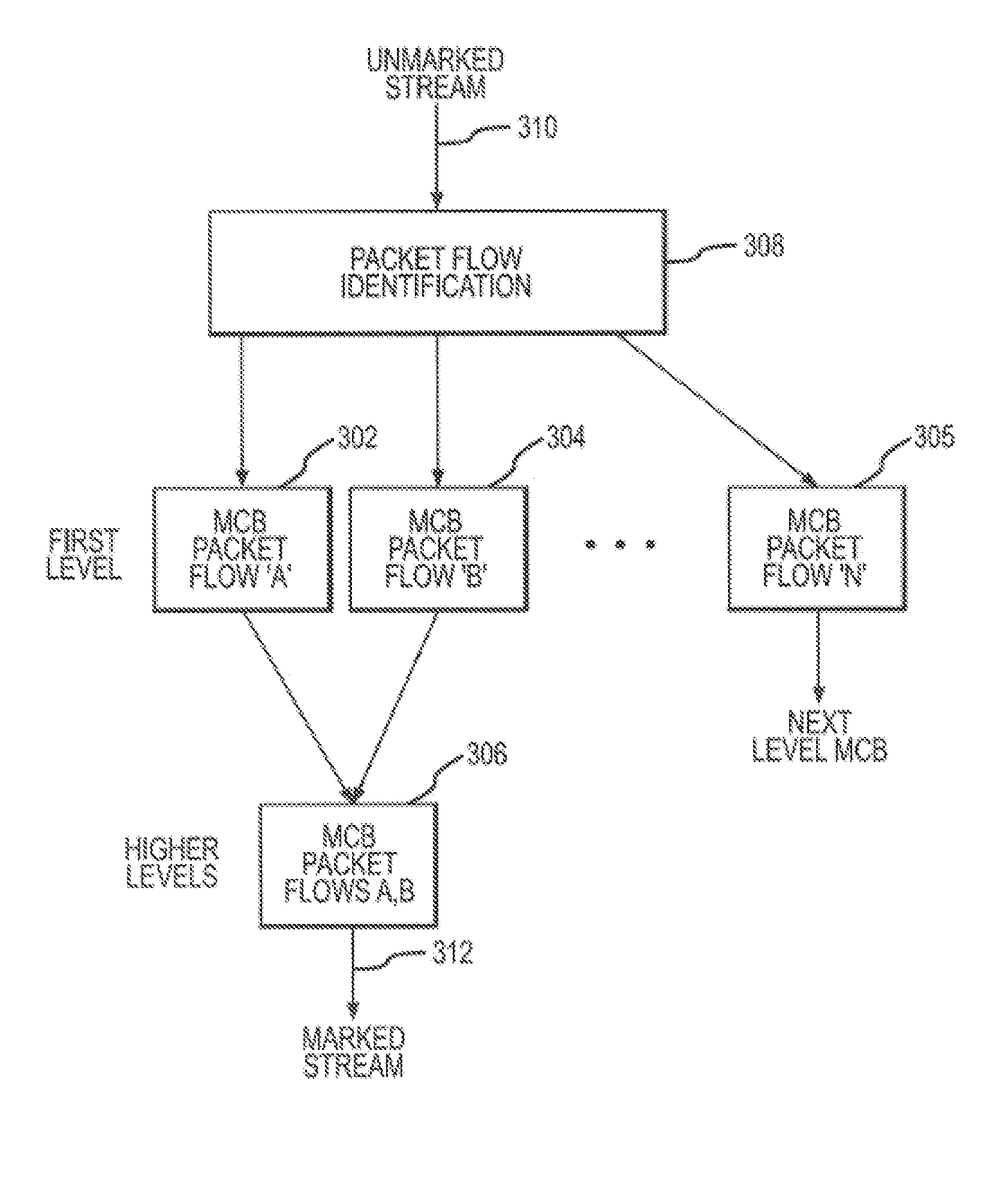

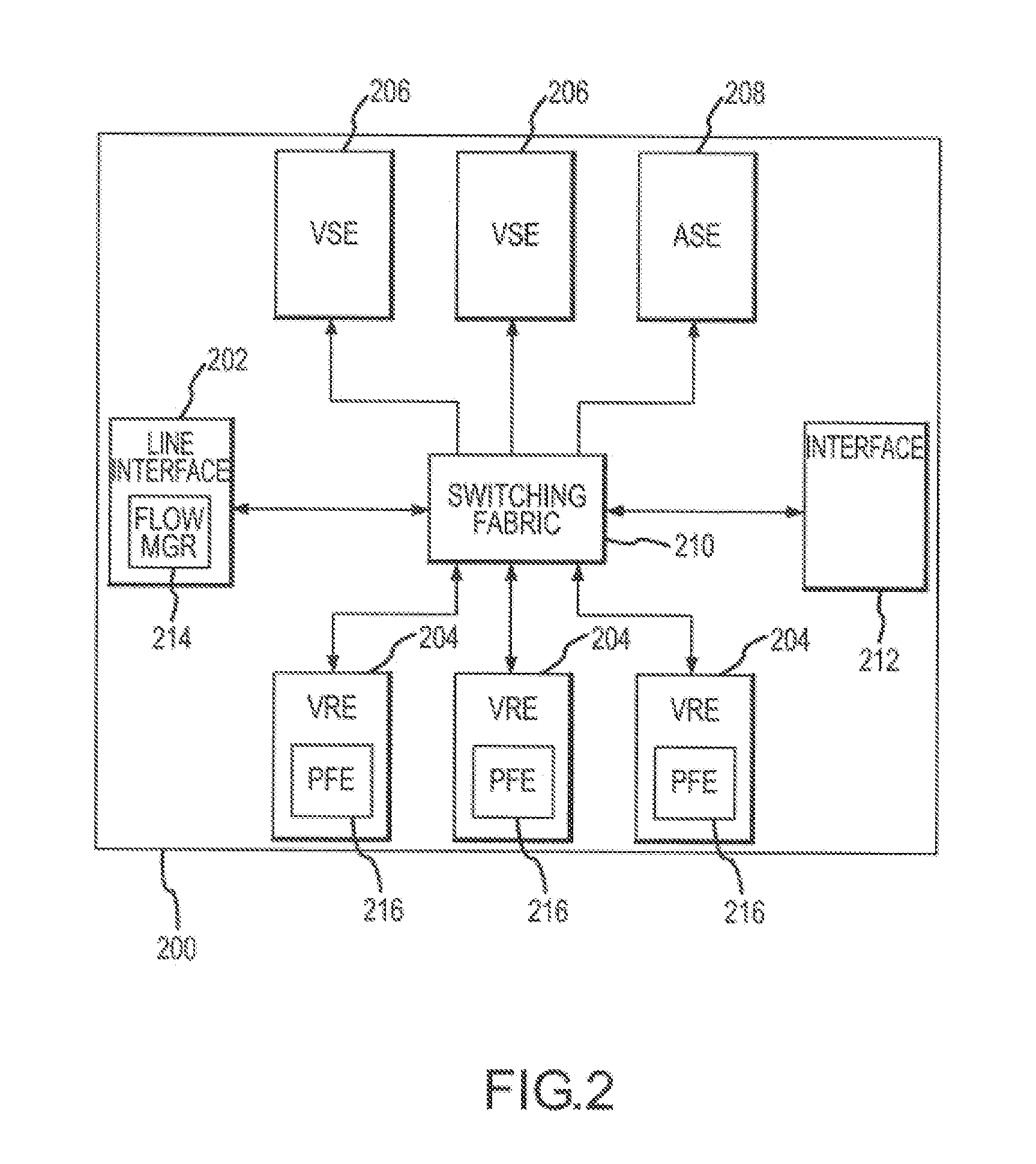

System and method for hierarchical metering in a virtual router based network switch

A virtual routing platform includes a line interface and a plurality of virtual routing engines (VREs) to identify packets of different packet flows and perform a hierarchy of metering including at least first and second levels of metering on the packet flows. A first level of metering may be performed on packets of a first packet flow using a first metering control block (MCB). The first level of metering may be one level of metering in a hierarchy of metering levels. A second level of metering may be performed on the packets of the first packet flow and packets of a second flow using a second MCB. The second level of metering may be another level of metering in the hierarchy. A cache-lock may be placed on the appropriate MCB prior to performing the level of metering. The first and second MCBs may be data structures stored in a shared memory of the virtual routing platform. The cache-lock may be released after performing the level of metering using the MCB. The cache-lock may comprise setting a lock-bit of a cache line index in a cache tag store, which may identify an MCB in the cache memory. The virtual routing platform may be a multiprocessor system utilizing a shared memory having first and second processors to perform levels of metering in parallel. In one embodiment, a virtual routing engine may be shared by a plurality of virtual router contexts running in a memory system of a CPU of the virtual routing engine. In this embodiment, the first packet flow may be associated with one virtual router context and the second packet flow may be associated with a second virtual router context. The first and second routing contexts may be of a plurality of virtual router contexts resident in the virtual routing engine.

Owner: GOOGLE LLC

Wireless communication system and method

Inactive · US20100202346A1 · Network traffic/resource management · Network topologies · Communications system · Inter vehicle communication

Provided in some embodiments is a method of wireless inter-vehicle communication. The method includes storing a message packet in a shared memory location of a first communication device. The shared memory location is wirelessly accessible by one or more other communication devices. The method also includes assessing, at a second communication device, whether or not the message packet stored in the shared memory location of the first communication device is intended to be received at the second communication device and assessing, at the second communication device, whether or not to accept the message packet from the shared memory location of the first communication device. Further, the method includes receiving at least a portion of the message packet at the second communication device if it is determined that the message packet should be accepted from the shared memory location of the first communication device.

Owner: GRAVITY TECH

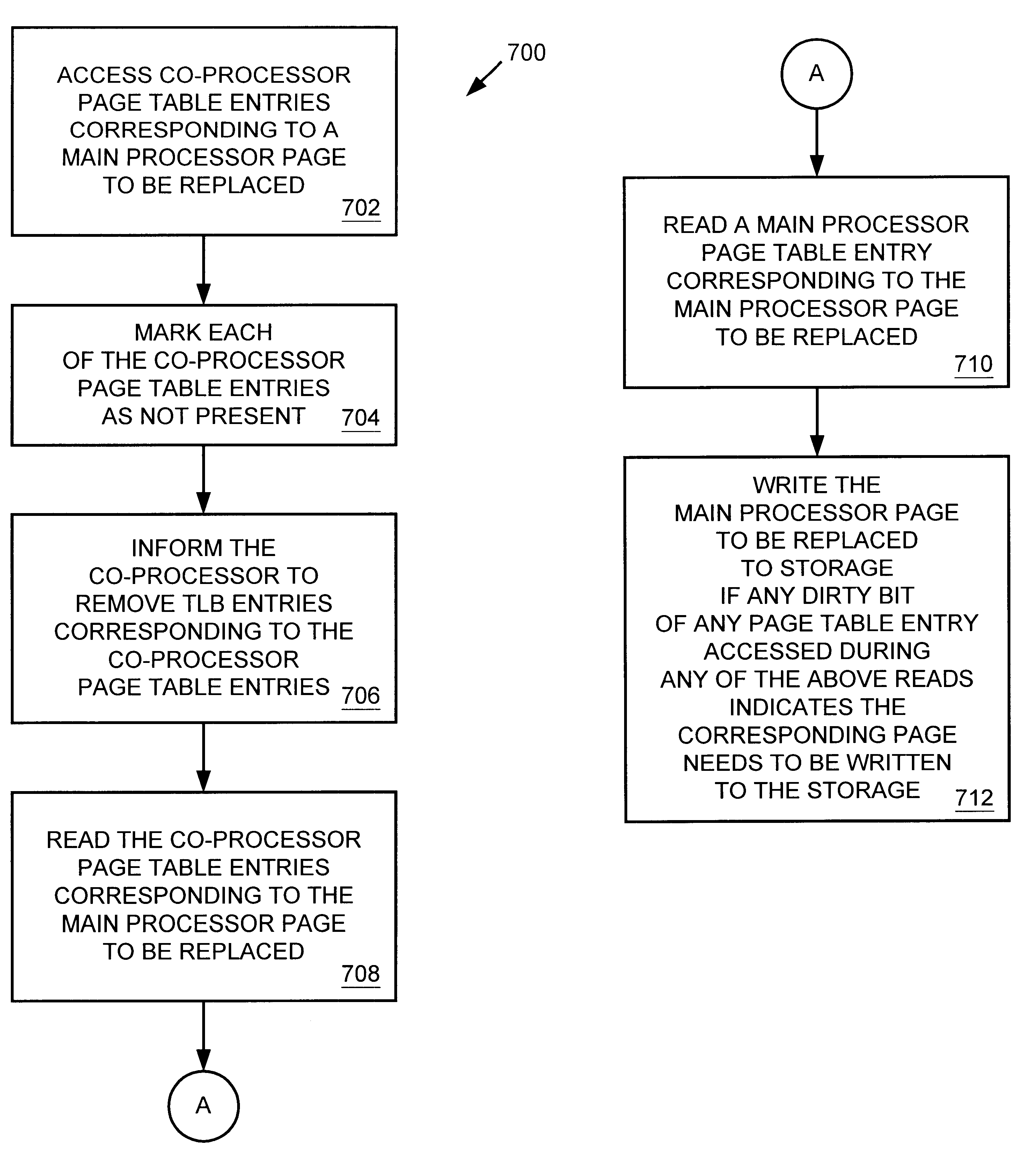

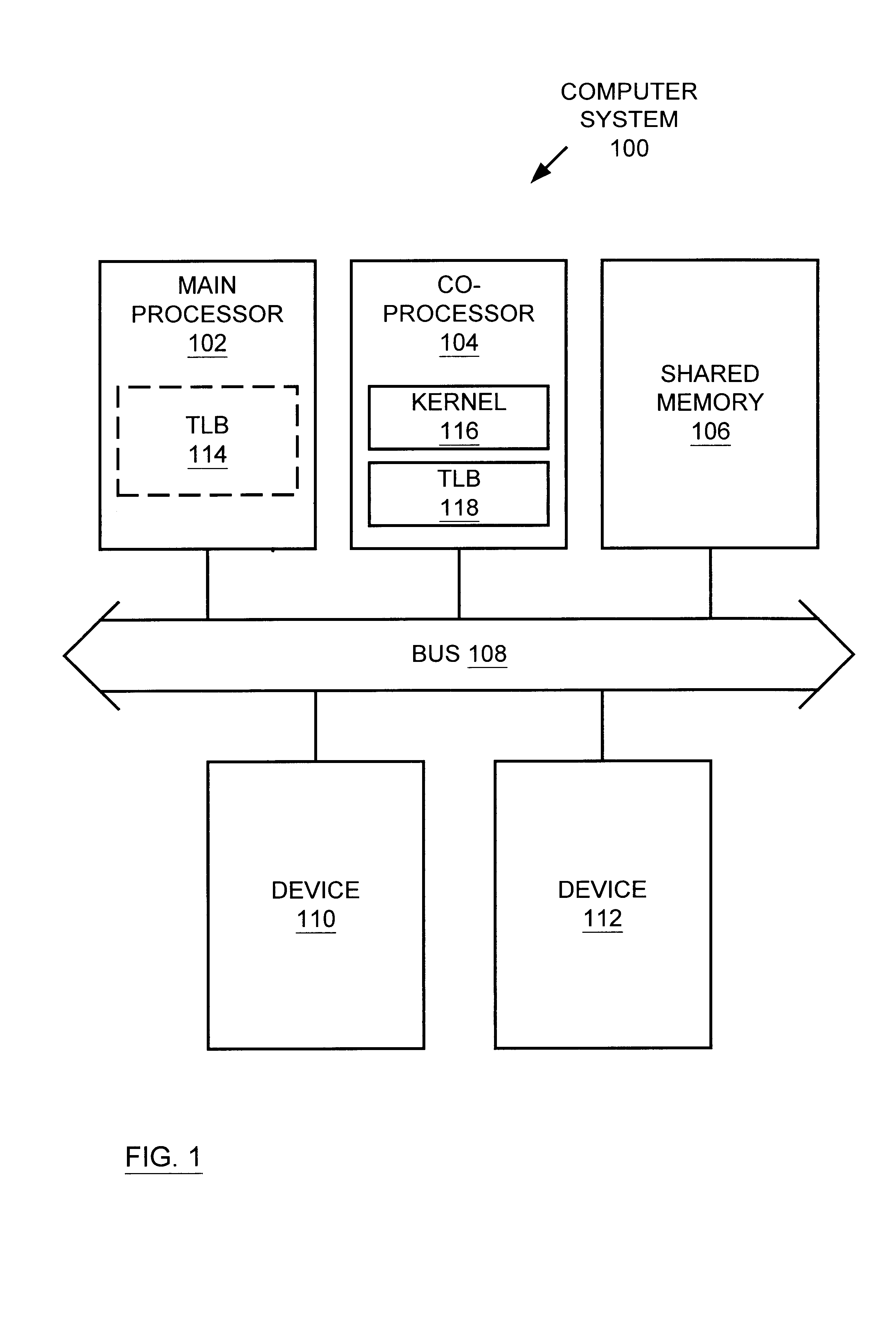

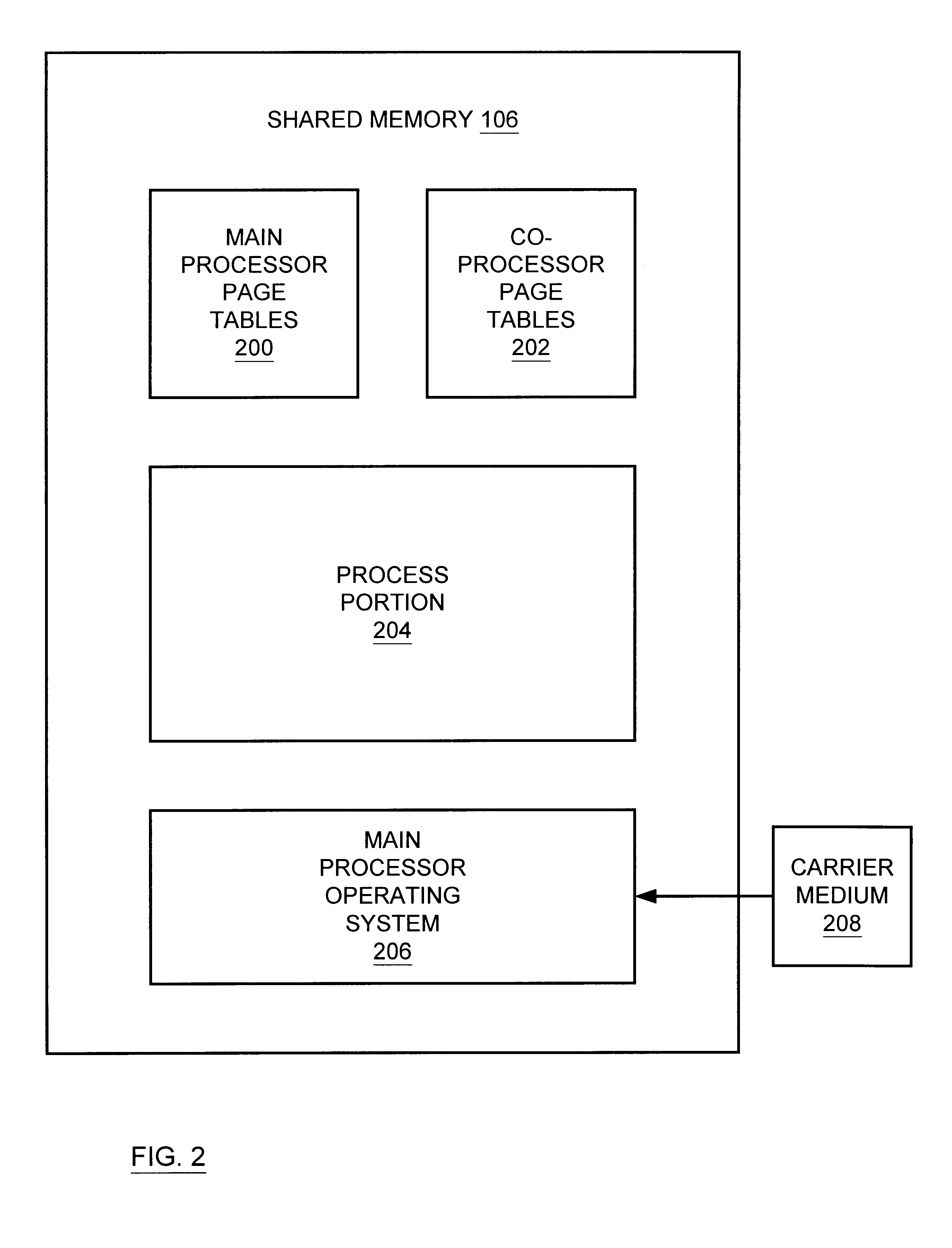

Multiprocessor system implementing virtual memory using a shared memory, and a page replacement method for maintaining paged memory coherence

Inactive · US6684305B1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Virtual memory · Computer architecture

A computer system including a first processor, a second processor in communication with the first processor, a memory coupled to the first and second processors (i.e., a shared memory) and including multiple memory locations, and a storage device coupled to the first processor. The first and second processors implement virtual memory using the memory. The first processor maintains a first set of page tables and a second set of page tables in the memory. The first processor uses the first set of page tables to access the memory locations within the memory. The second processor uses the second set of page tables, maintained by the first processor, to access the memory locations within the memory. A virtual memory page replacement method is described for use in the computer system, wherein the virtual memory page replacement method is designed to help maintain paged memory coherence within the multiprocessor computer system.

Owner: GLOBALFOUNDRIES US INC

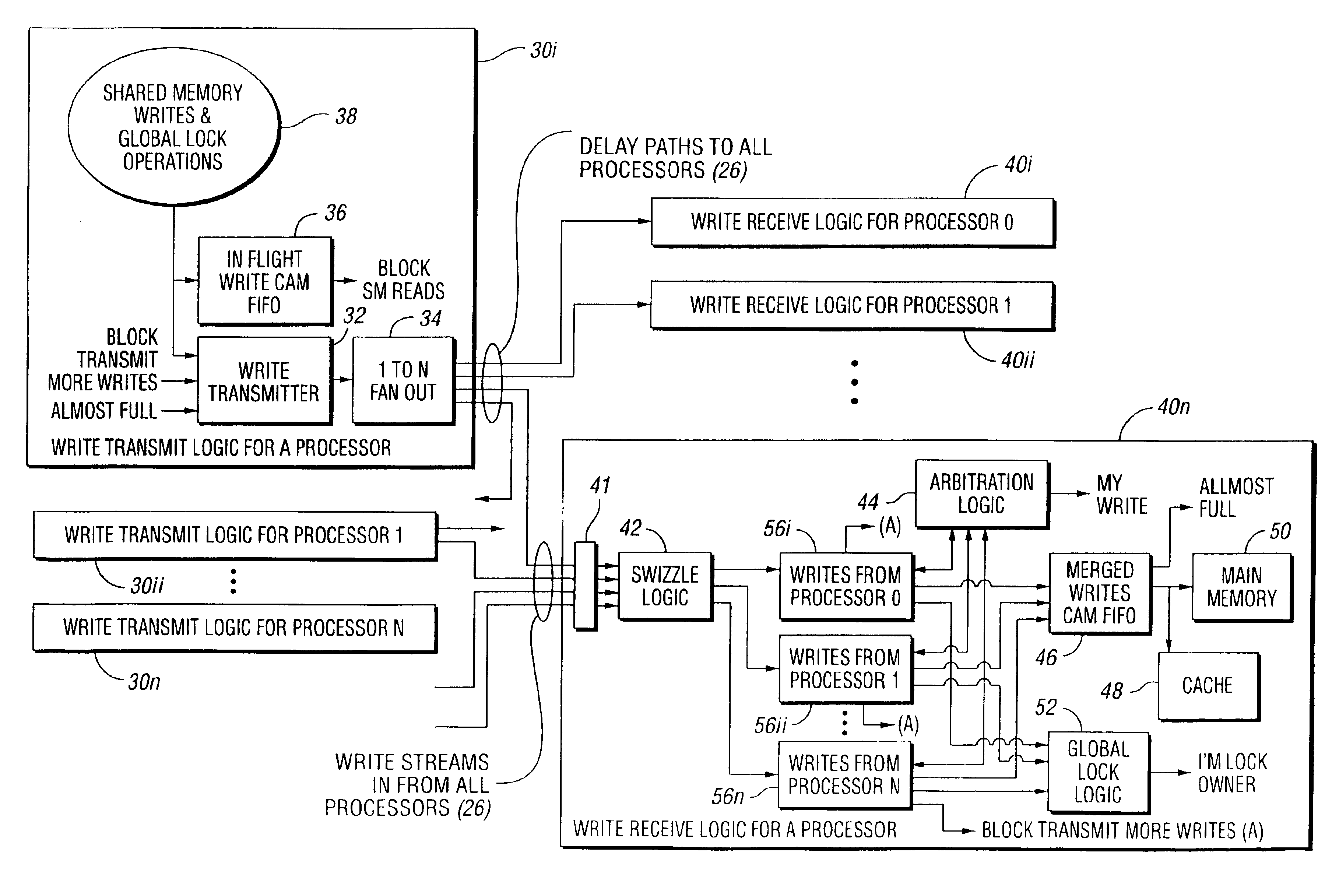

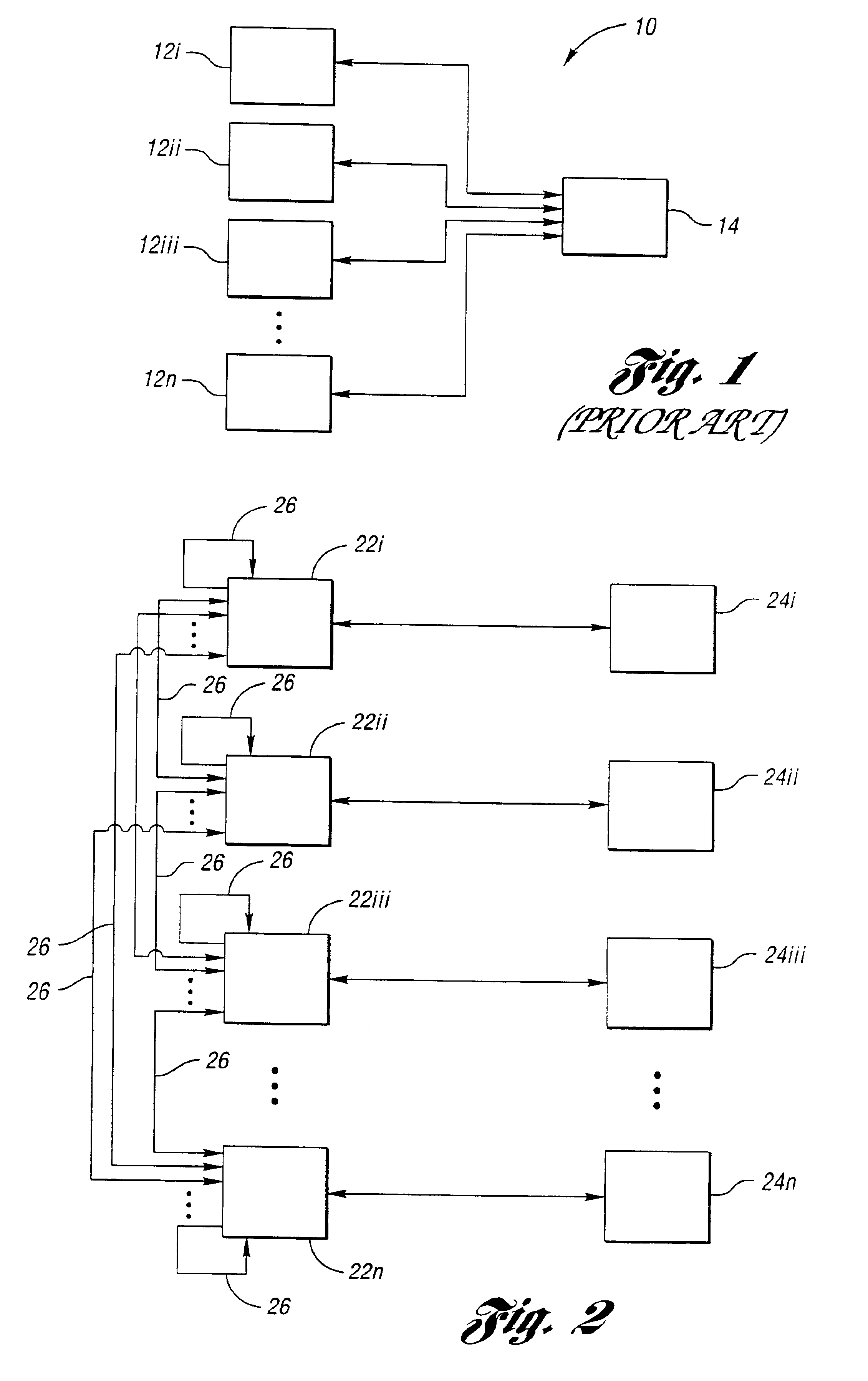

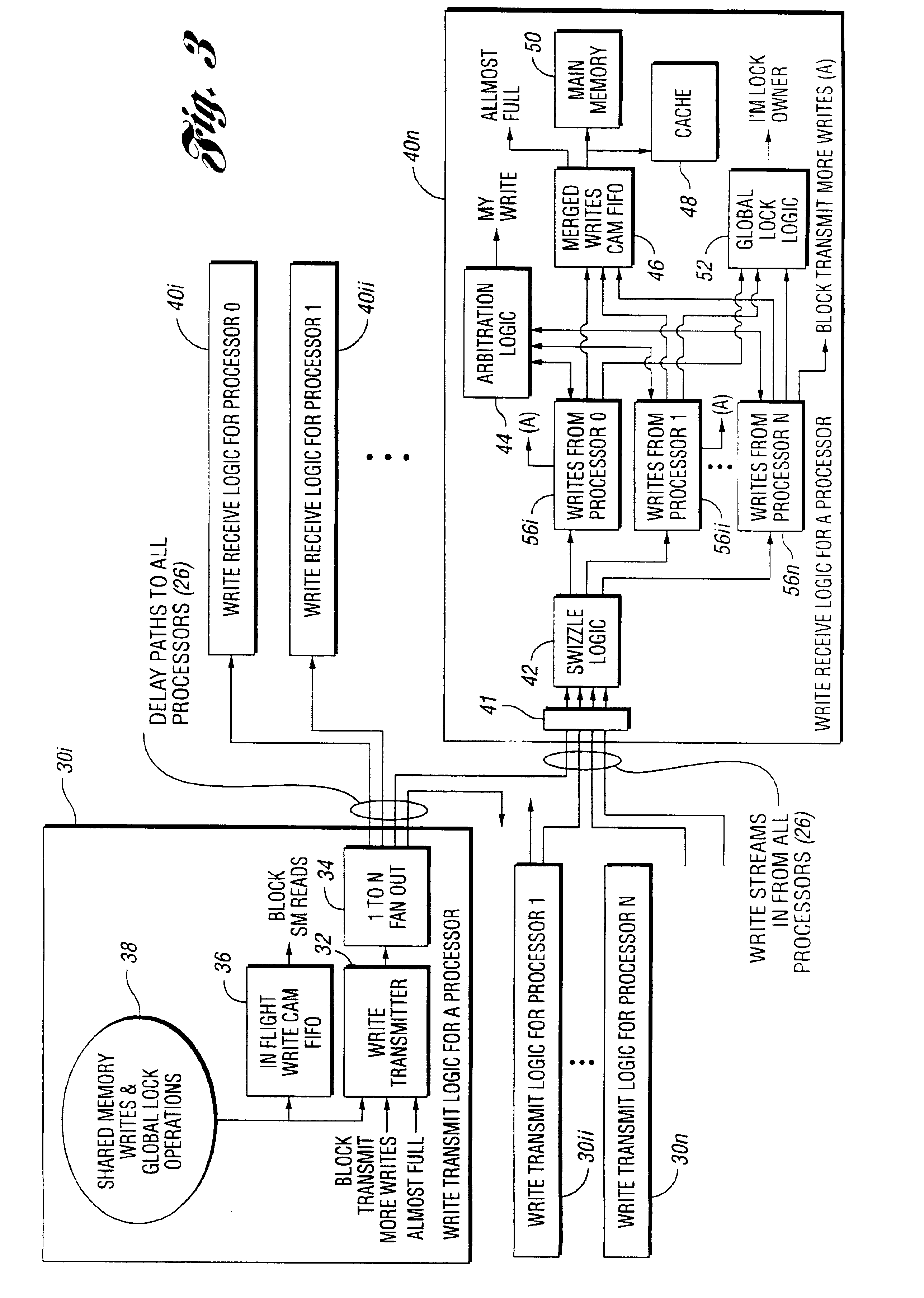

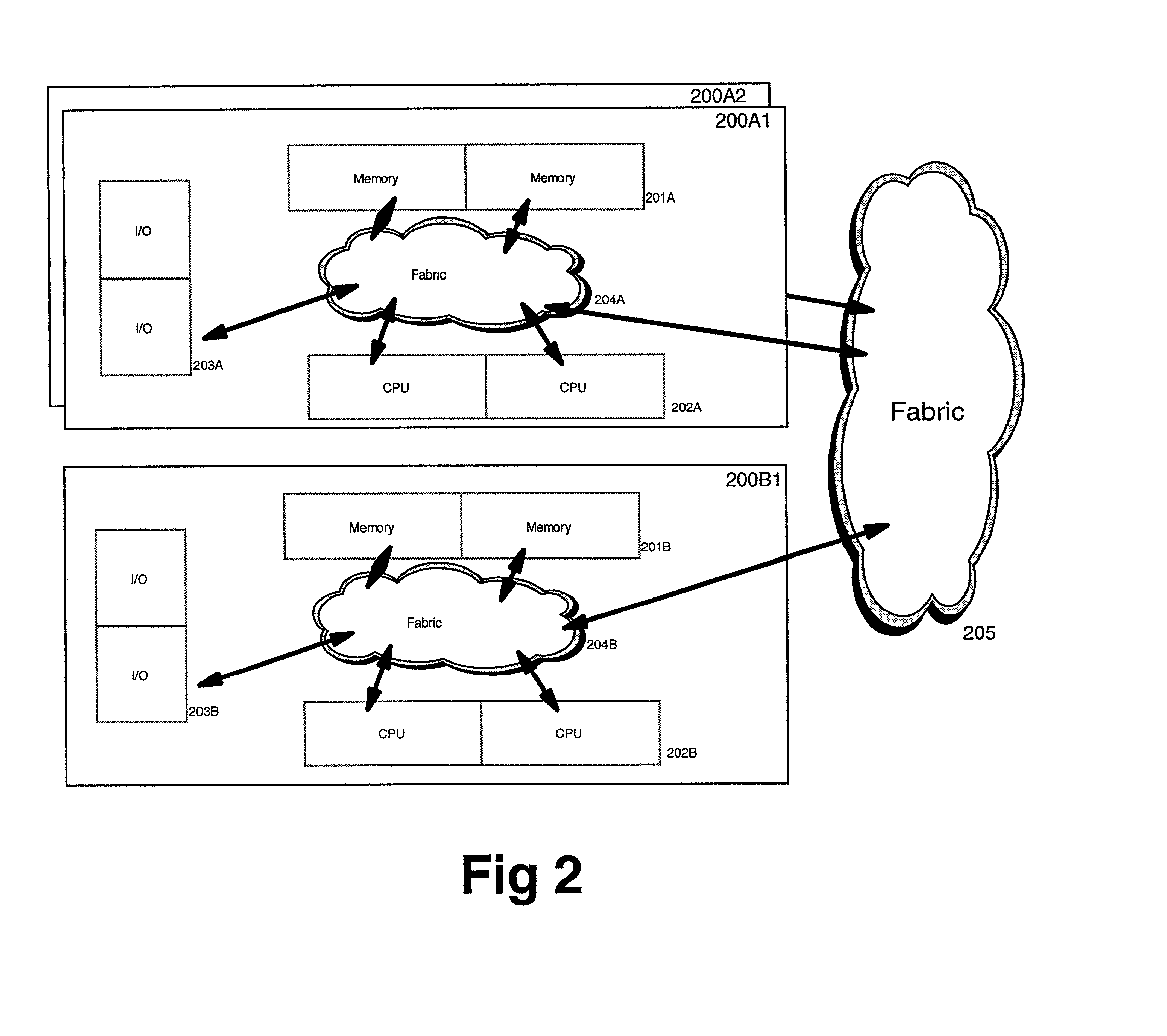

System and method for a distributed shared memory

A system and method for a distributed shared memory. The system includes multiple processors, each processor transmitting write commands issued therefrom concerning a shared memory to each of the processors, such that each processor receives each shared memory write command transmitted. The system also includes multiple local memories, each local memory associated with one of the processors and having a copy of the shared memory, wherein each processor completes each received shared memory write command at its associated local memory such that the copies of the shared memory remain consistent at all times. The method includes transmitting write commands concerning the shared memory to each of the processors, such that each processor receives each shared memory write command transmitted, and completing each received shared memory write command at the associated local memory such that the copies of the shared memory remain consistent at all times.

Owner: ORACLE INT CORP
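
The replication scheme in this abstract, where every write command reaches every processor and each applies it to its own local copy, can be sketched as below. `Processor` and `WriteBroadcast` are hypothetical names, and the sketch assumes that all processors receive the commands in the same order, which the single loop models trivially; the abstract does not describe how ordering is enforced.

```python
class Processor:
    """Hypothetical processor holding its own copy of the shared memory."""

    def __init__(self, name: str, size: int) -> None:
        self.name = name
        self.local_copy = [0] * size

    def complete_write(self, address: int, value: int) -> None:
        # Each received write command is completed at the local memory.
        self.local_copy[address] = value


class WriteBroadcast:
    """Delivers every write command to every processor, issuer included."""

    def __init__(self, processors) -> None:
        self.processors = list(processors)

    def write(self, address: int, value: int) -> None:
        for processor in self.processors:
            processor.complete_write(address, value)


def copies_consistent(processors) -> bool:
    """True when all local copies hold identical contents."""
    first = processors[0].local_copy
    return all(p.local_copy == first for p in processors)
```

Reads then need no network traffic at all, since each processor reads its own copy; the cost is that every write is multiplied by the number of processors.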

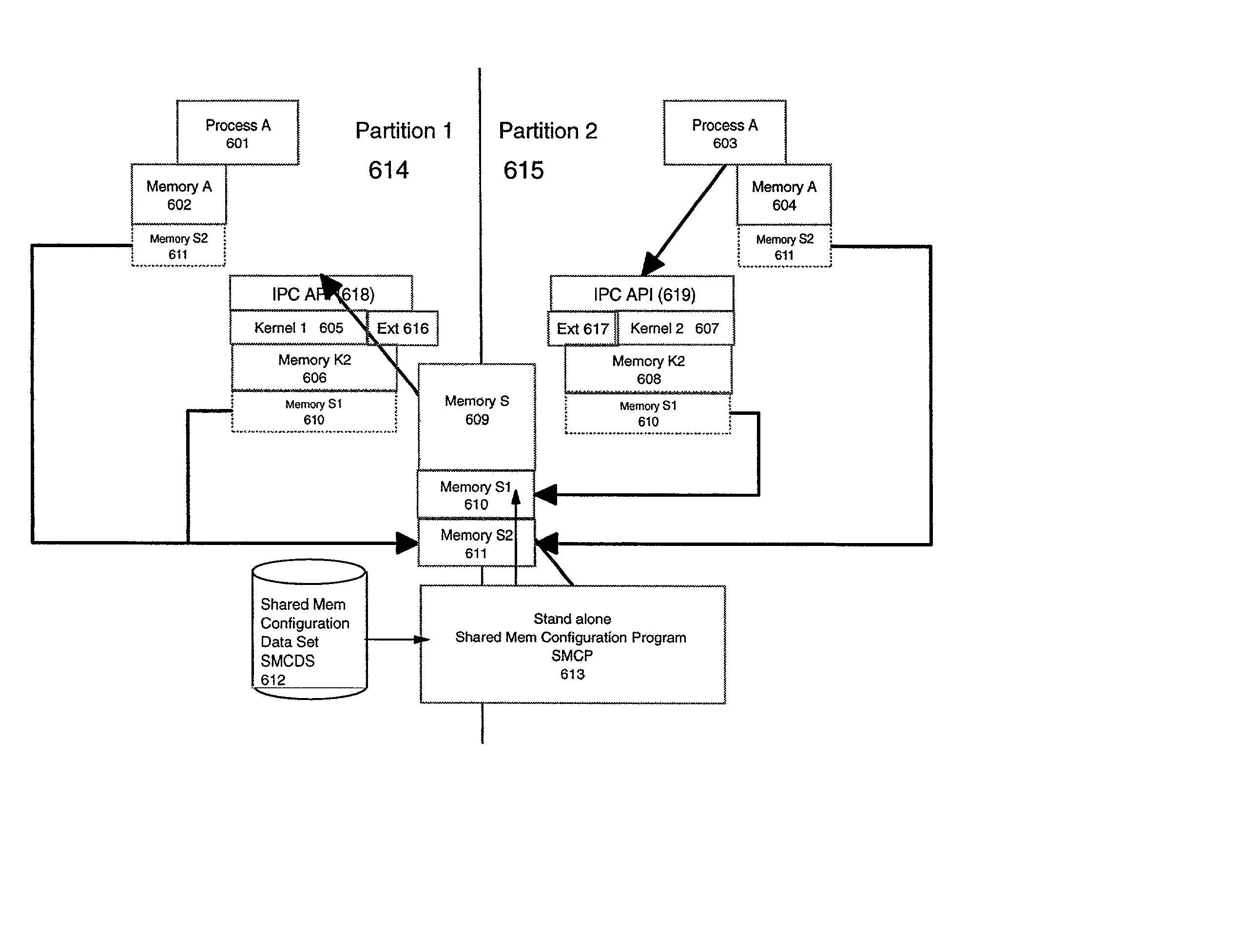

Inter-partition message passing method, system and program product for a security server in a partitioned processing environment

Inactive · US20020129274A1 · Facilitate movement of data · Easy to move · Interprogram communication · Multiple digital computer combinations · Client-side · Handling system

A partitioned processing system is disclosed wherein a common security server is run in a first partition and at least one server client is run in at least one other partition, each partition having a shared memory or memory-to-memory connection to said first partition, which enables security client server communication with the common security server. The partitioned processing system additionally has a main storage having a first portion accessible by the first partition and a second portion accessible by the second partition. Also included is a mechanism connected to the security client for sending a request for authorization by a user to the security client. A first transmitter in the security client sends the request for authorization from the security client to the common security server by way of said main storage. A second transmitter in the common security server sends a response to the request for authorization from the common security server to the security client by way of said main storage. A third transmitter in the security client then sends the response from the security client to the user.

Owner: IBM CORP

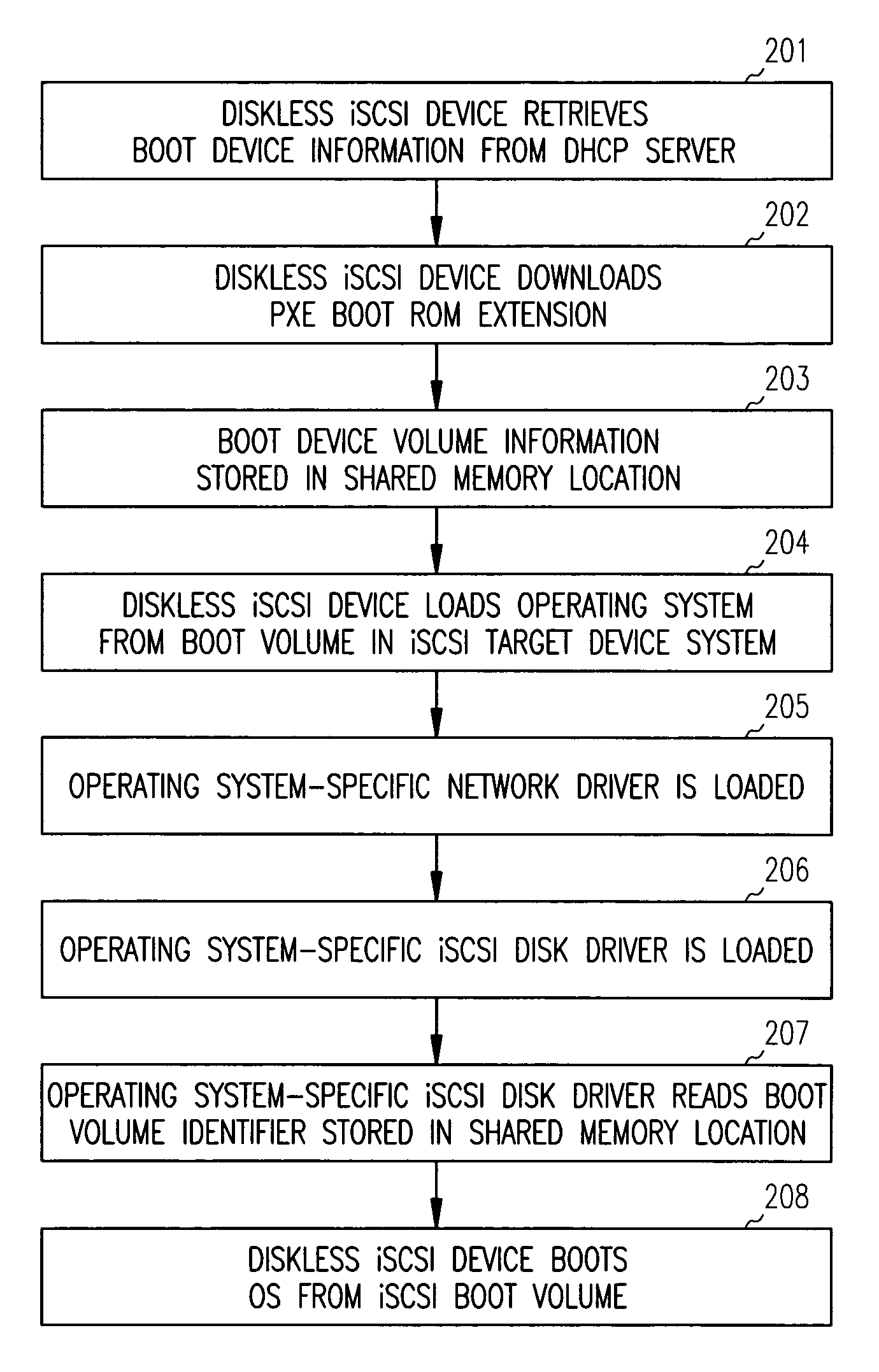

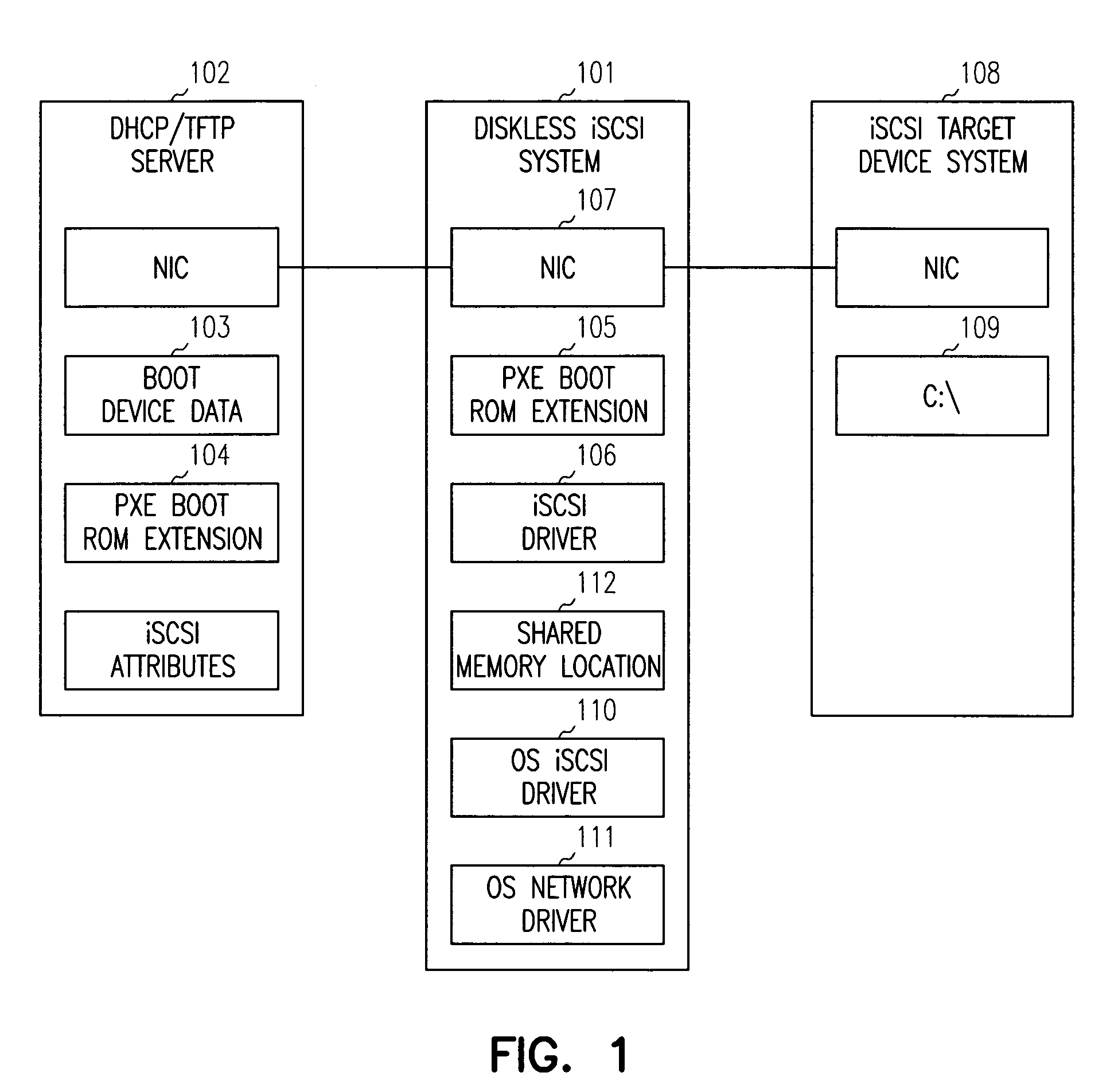

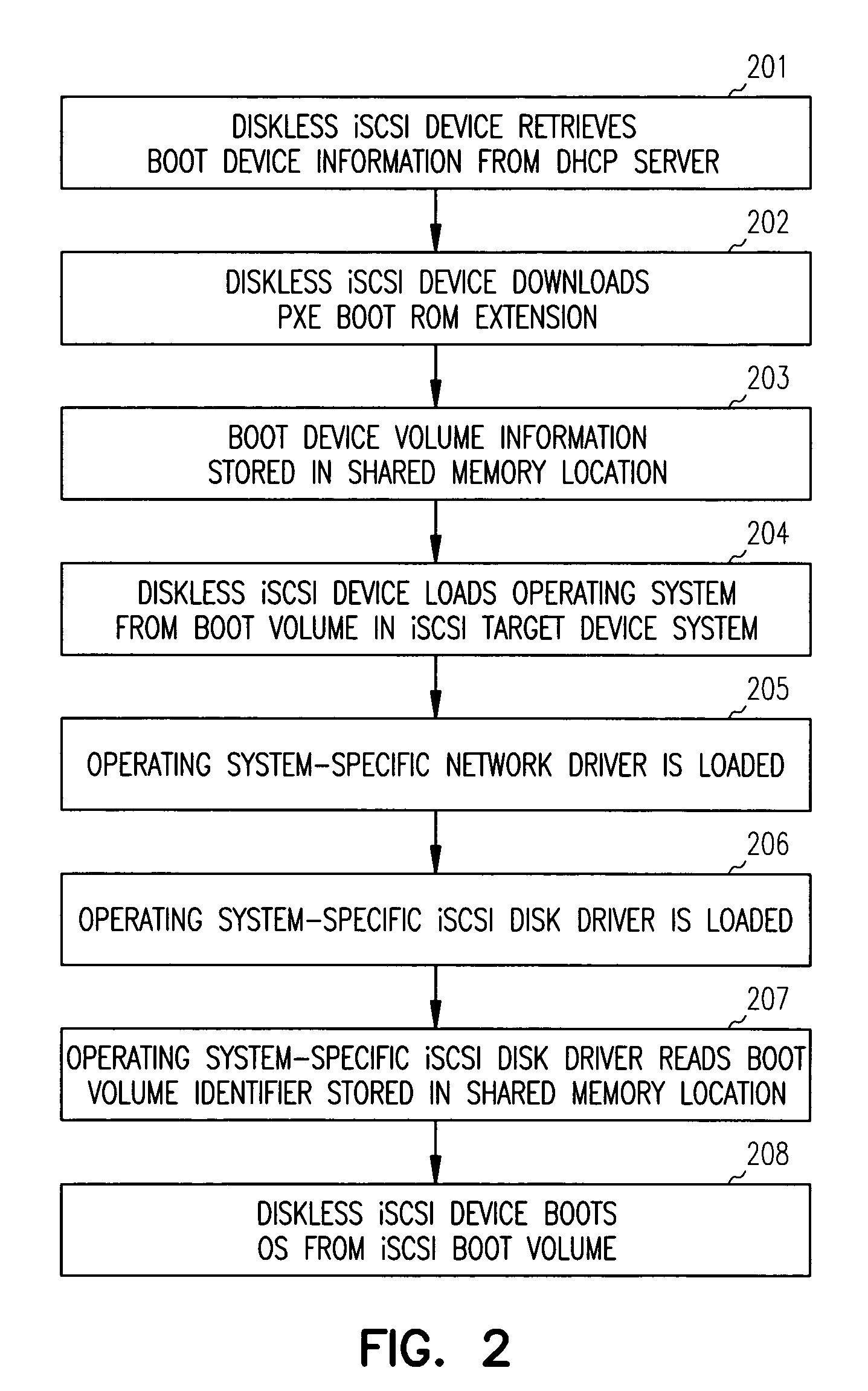

iSCSI system OS boot configuration modification

A Pre-boot Execution Environment (PXE) boot extension is loaded upon system boot and is operable to store an iSCSI boot disk identifier identifying a remote disk drive to a shared memory location. As an operating system is loaded via iSCSI over a network connection to a remote disk, an operating system iSCSI driver is loaded. The operating system iSCSI driver reads the boot disk identifier stored in the shared memory location and uses the boot disk identifier to configure the operating system.

Owner: CISCO TECH INC

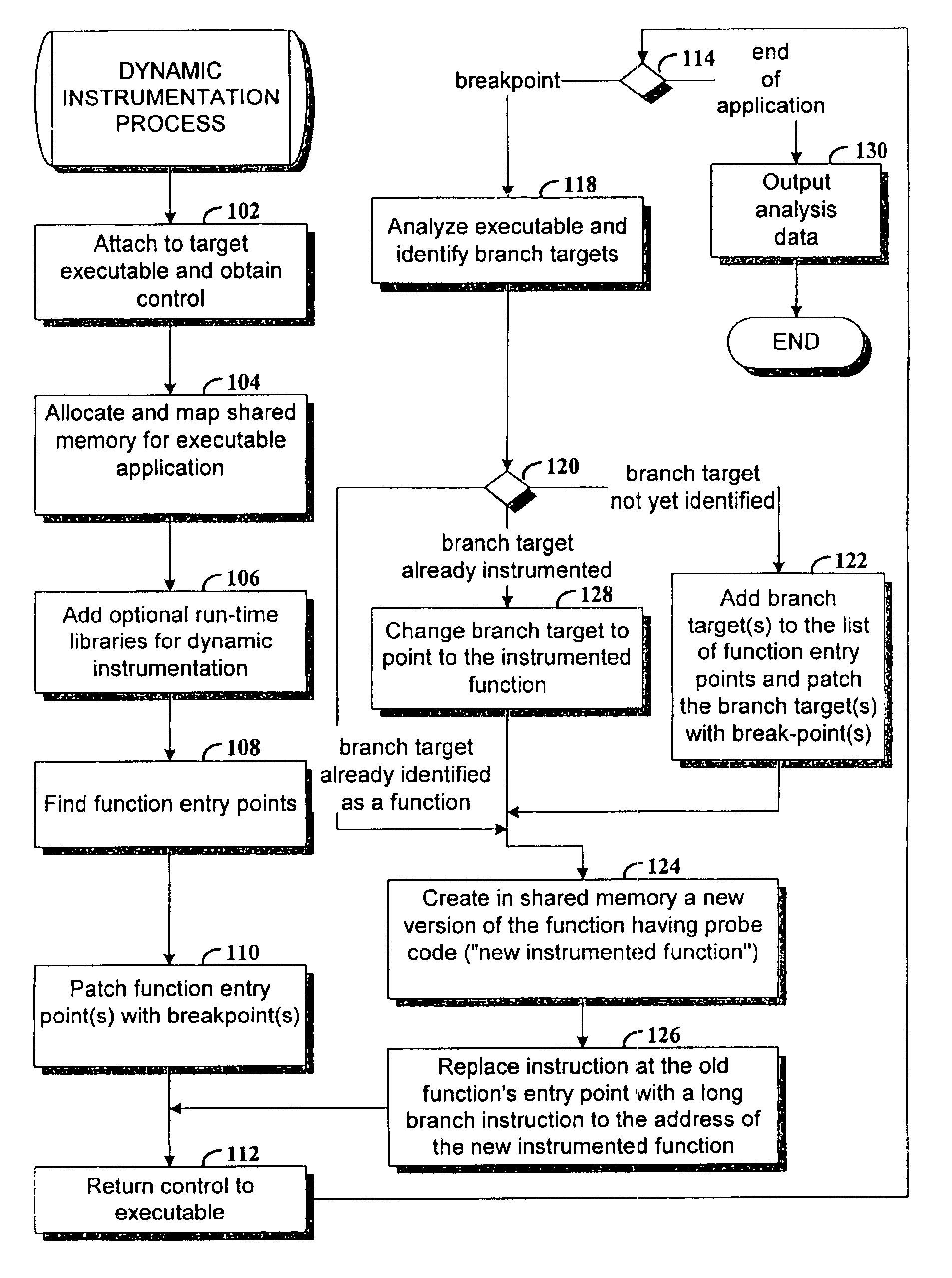

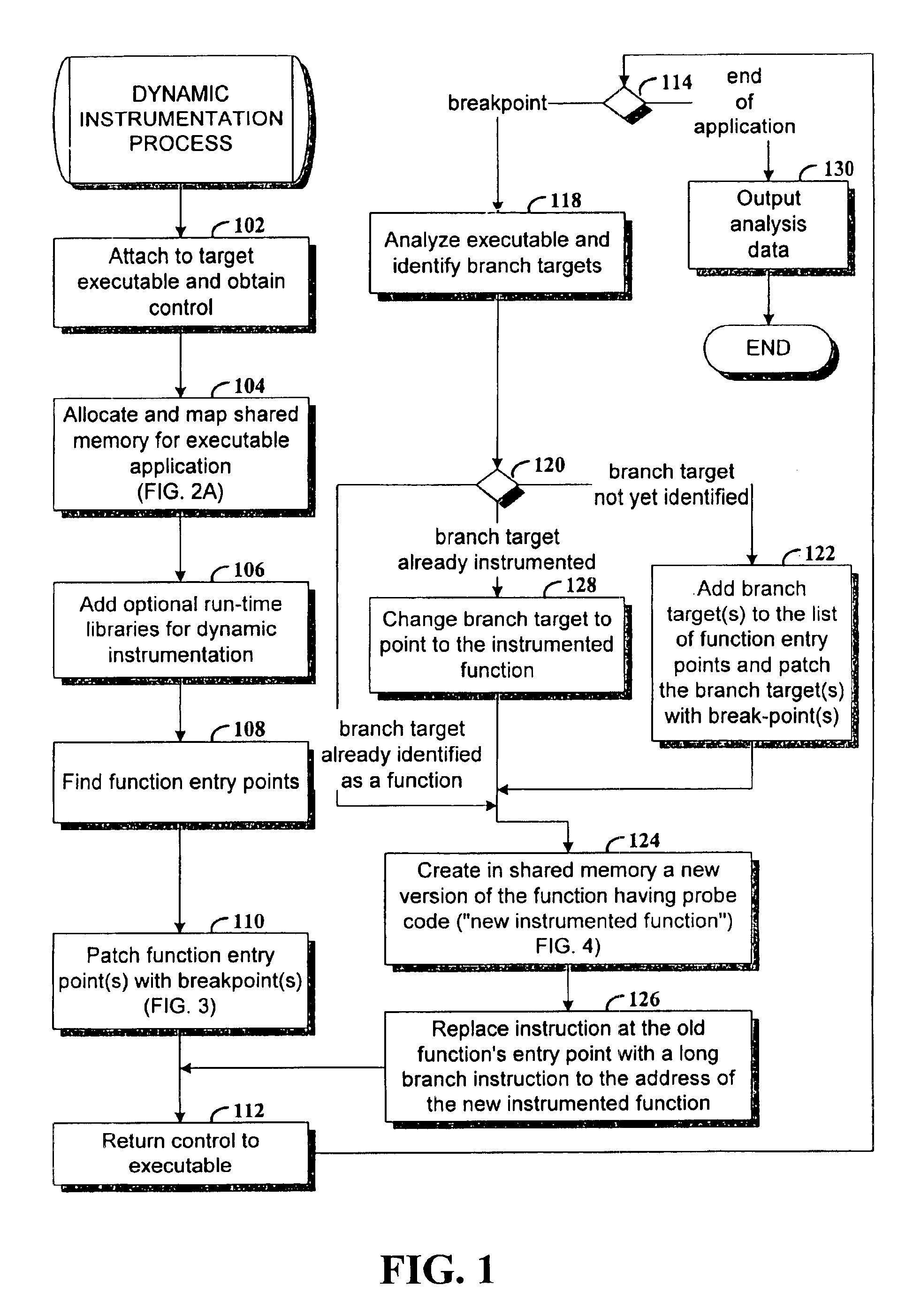

Dynamic instrumentation of an executable program by means of causing a breakpoint at the entry point of a function and providing instrumentation code

Inactive · US6918110B2 · Software testing/debugging · Specific program execution arrangements · Dynamic instrumentation · Entry point

Method and apparatus for dynamic instrumentation of an executable application program. The application program includes a plurality of functions, each function having an entry point and an endpoint. When the application is executed, a shared memory segment is created for an instrumentation program and the application program. Upon initial invocation of the original functions in the application program, corresponding substitute functions are created in the shared memory segment, the substitute versions including instrumentation code. Thereafter, the substitute functions are executed in lieu of the original functions in the application program.

Owner: HEWLETT-PACKARD ENTERPRISE DEV LP +1

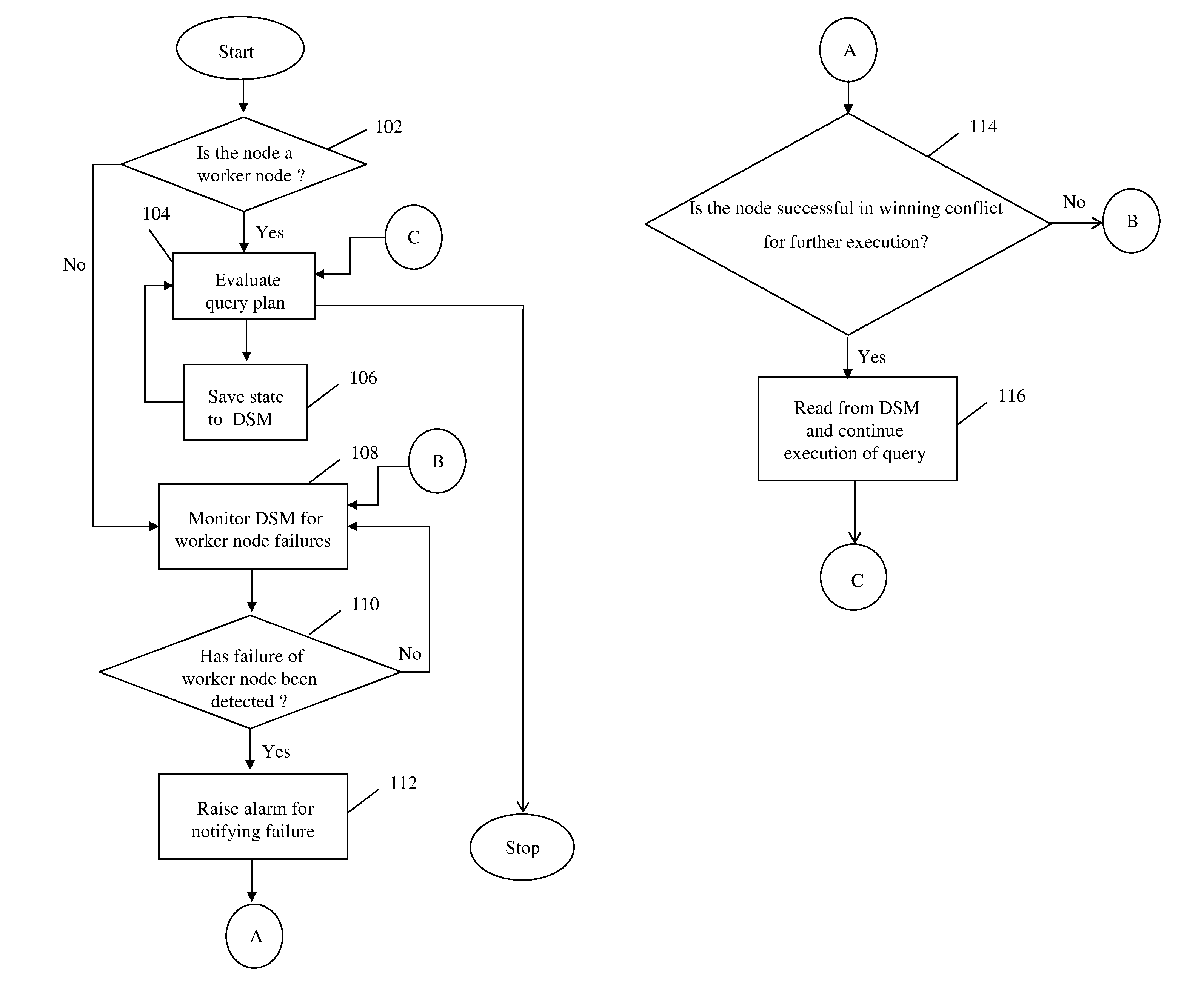

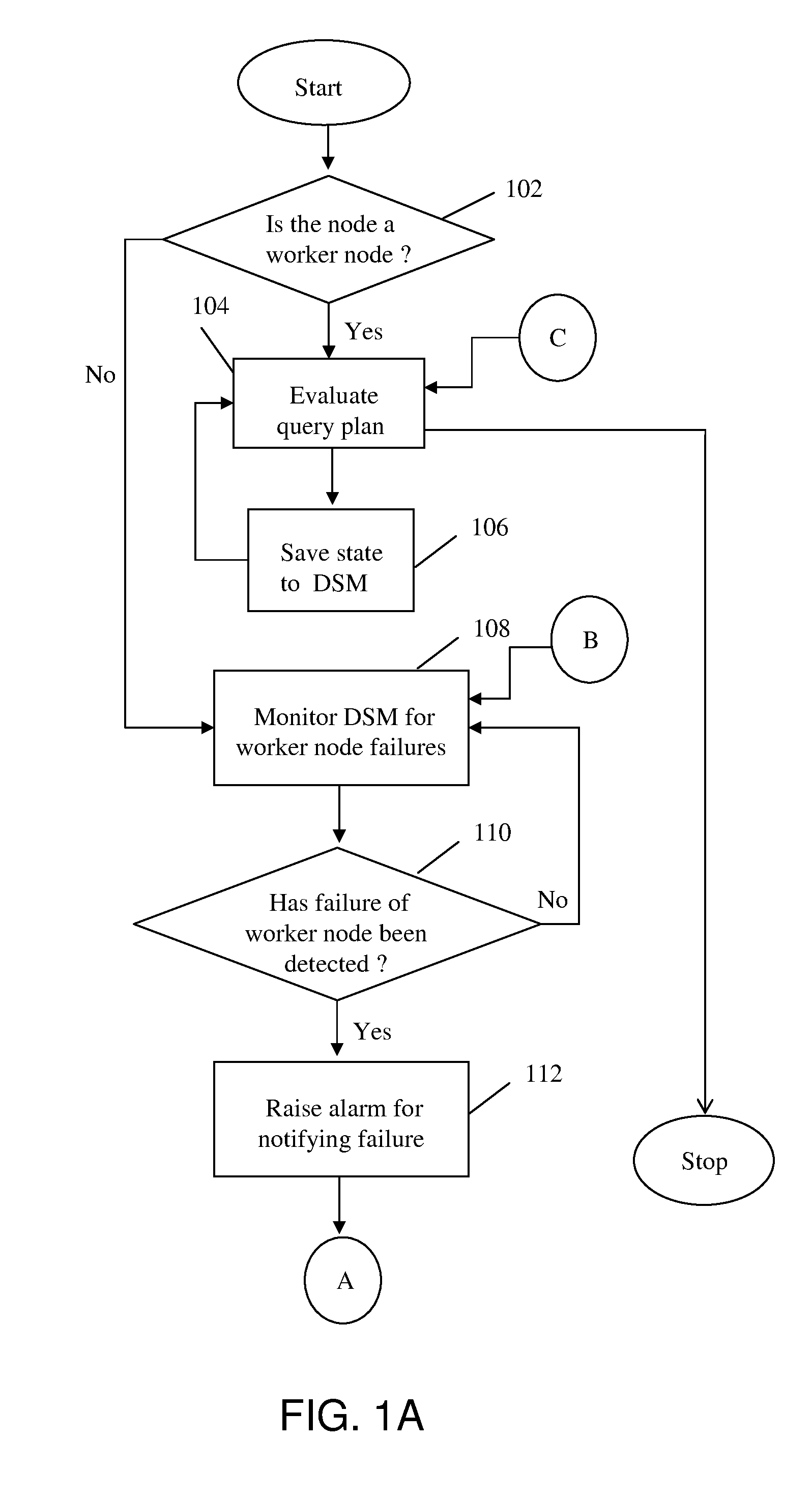

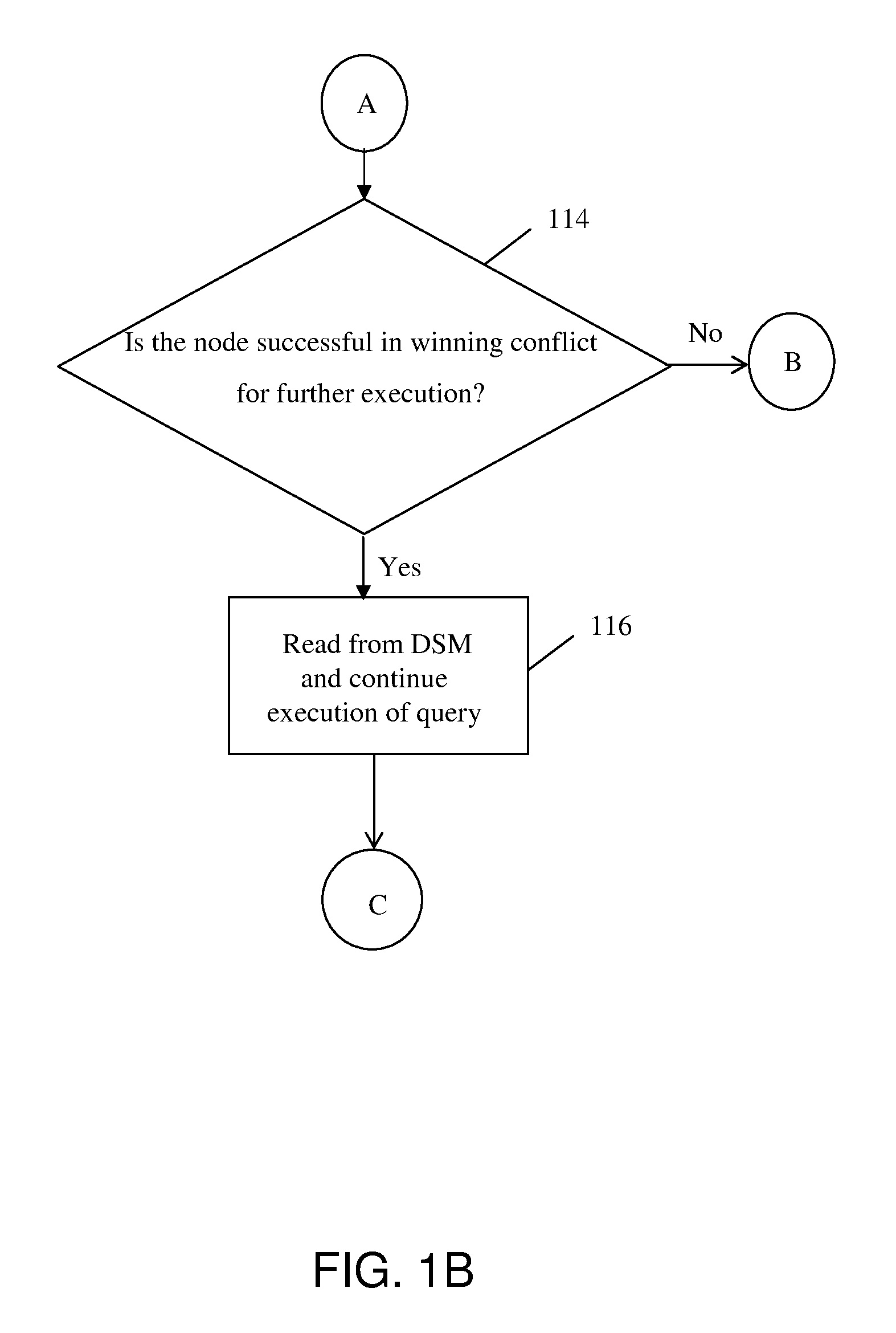

Method and system for automatic failover of distributed query processing using distributed shared memory

A method and system for implementing automatic recovery from failure of resources in a grid-based distributed database is provided. The method includes determining the category of each node in the subgroup of nodes, where the determination identifies each node as at least one of a worker node and an idle node. The method further includes saving state of each worker node engaged in execution of a task in a shared memory at pre-determined time intervals. Each worker node is monitored by one or more idle nodes in each sub-group. Upon detection of no change in state of worker node for a pre-determined period of time, a failure notification is raised by one or more idle nodes that have detected failure of the worker node.

Owner: INFOSYS LTD
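
The detection step, where idle nodes notice that a worker's saved state has not changed for a set period, can be sketched with explicit timestamps. `StateBoard` is a hypothetical stand-in for the shared memory, and passing `now` as an argument instead of reading a clock is a choice made here to keep the example deterministic.

```python
class StateBoard:
    """Hypothetical stand-in for the shared memory holding worker state."""

    def __init__(self) -> None:
        self._entries = {}  # worker -> (state, time the state last changed)

    def save(self, worker: str, state, now: float) -> None:
        previous = self._entries.get(worker)
        # Only a real change of state refreshes the timestamp.
        if previous is None or previous[0] != state:
            self._entries[worker] = (state, now)

    def stalled(self, now: float, timeout: float) -> list:
        """Workers whose state has not changed for at least `timeout`."""
        return sorted(
            worker
            for worker, (_, changed) in self._entries.items()
            if now - changed >= timeout
        )
```

An idle node would call `stalled` periodically and raise a failure notification for each worker it returns.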

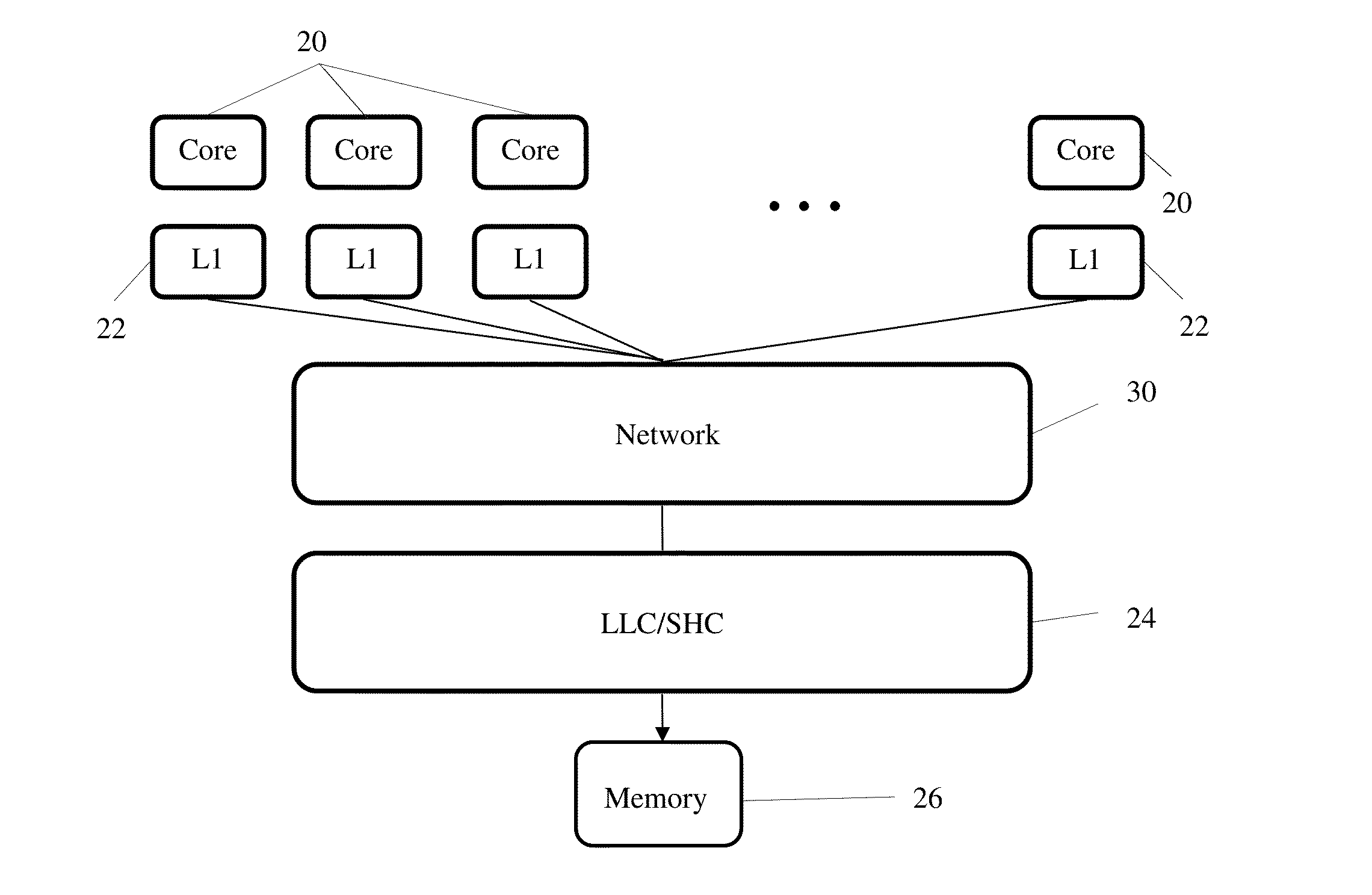

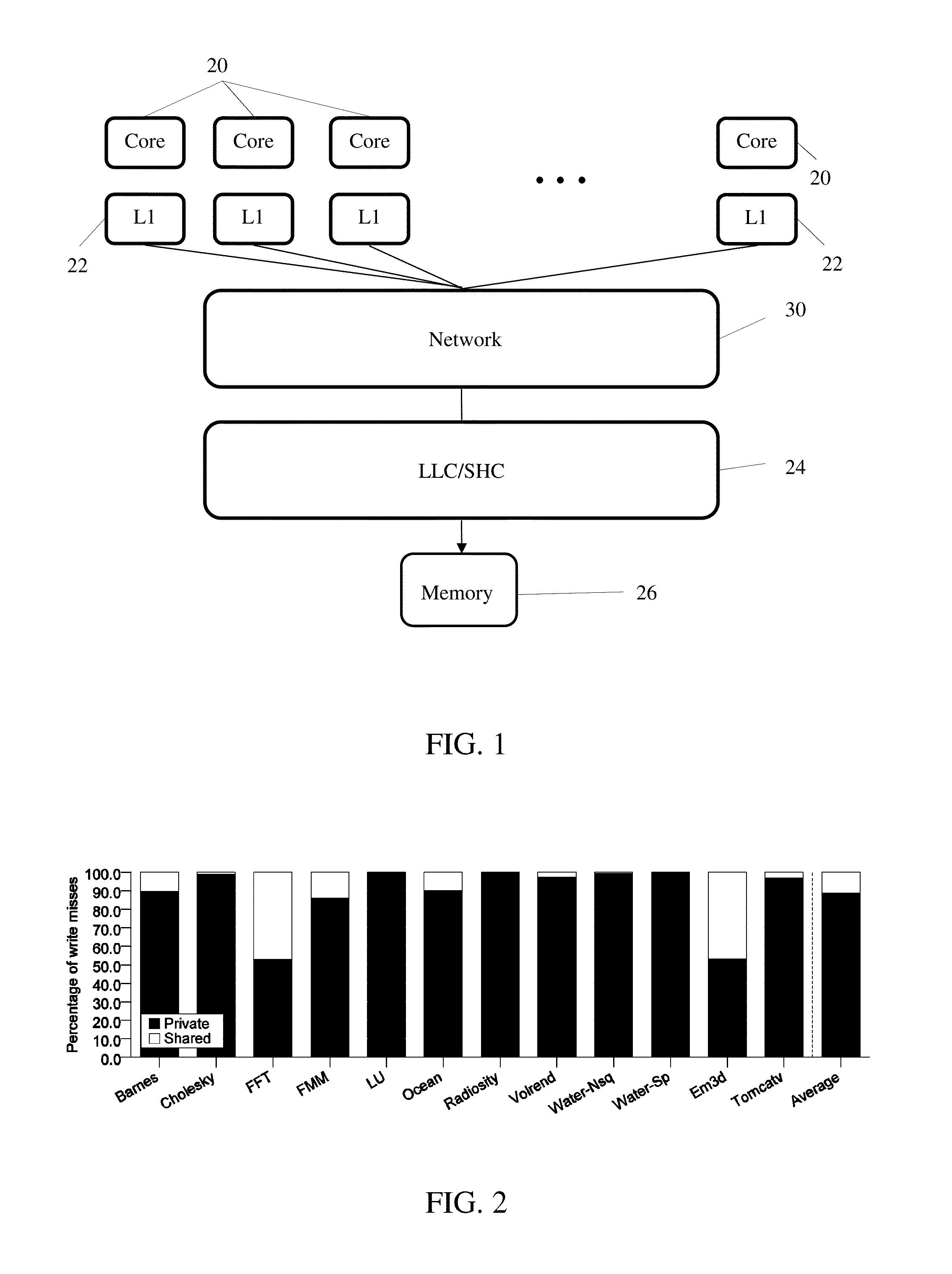

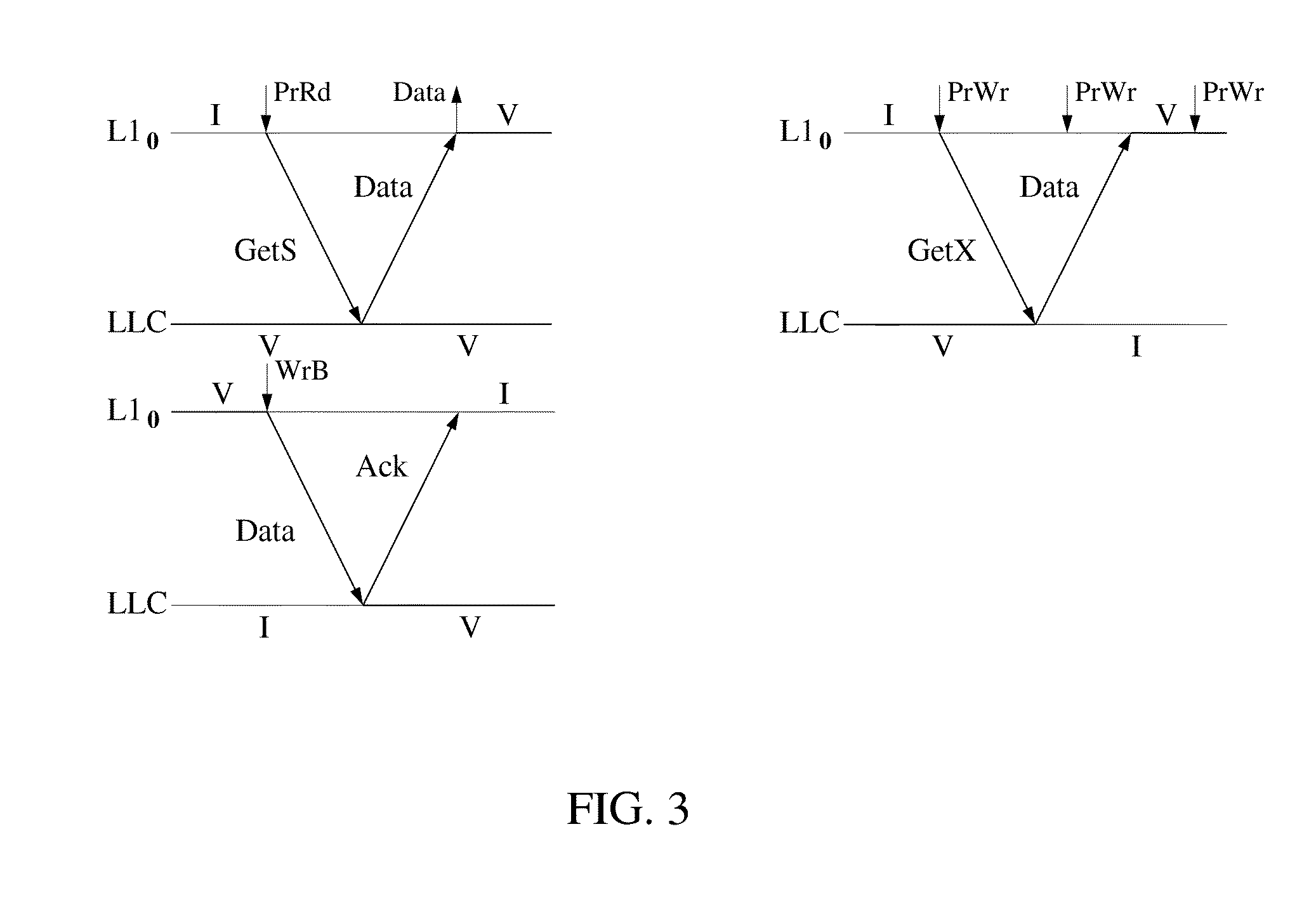

System and method for simplifying cache coherence using multiple write policies

Active · US20130254488A1 · Improve power efficiency · Reduce hardware costs · Energy efficient ICT · Memory addressing/allocation/relocation · Cache hierarchy · Cache coherence

System and methods for cache coherence in a multi-core processing environment having a local/shared cache hierarchy. The system includes multiple processor cores, a main memory, and a local cache memory associated with each core for storing cache lines accessible only by the associated core. Cache lines are classified as either private or shared. A shared cache memory is coupled to the local cache memories and main memory for storing cache lines. The cores follow a write-back to the local memory for private cache lines, and a write-through to the shared memory for shared cache lines. Shared cache lines in local cache memory enter a transient dirty state when written by the core. Shared cache lines transition from a transient dirty to a valid state with a self-initiated write-through to the shared memory. The write-through to shared memory can include only data that was modified in the transient dirty state.

Owner: ETA SCALE AB
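
The two write policies can be contrasted in a toy model. This is a software sketch under simplifying assumptions, not the hardware protocol: `LocalCache` and its methods are hypothetical, cache lines are modeled as dictionaries of words, and the classification of lines as private or shared is supplied up front.

```python
class LocalCache:
    """Hypothetical per-core cache: write-back if private, write-through if shared."""

    def __init__(self, shared_cache: dict, shared_addresses) -> None:
        self.shared_cache = shared_cache        # line address -> {word: value}
        self.shared_addresses = set(shared_addresses)
        self.lines = {}                         # locally cached lines
        self.dirty_words = {}                   # line address -> modified words

    def write(self, line: int, word: int, value: int) -> None:
        self.lines.setdefault(line, {})[word] = value
        self.dirty_words.setdefault(line, set()).add(word)

    def state(self, line: int) -> str:
        if line not in self.lines:
            return "invalid"
        if not self.dirty_words.get(line):
            return "valid"
        return "transient dirty" if line in self.shared_addresses else "dirty"

    def write_through(self, line: int) -> dict:
        """Self-initiated write-through of a shared line: only modified words."""
        if line not in self.shared_addresses:
            raise ValueError("write-through applies to shared lines only")
        modified = self.dirty_words.pop(line, set())
        sent = {word: self.lines[line][word] for word in modified}
        self.shared_cache.setdefault(line, {}).update(sent)
        return sent

    def evict(self, line: int) -> None:
        """Write-back: a dirty line reaches the shared cache only on eviction."""
        data = self.lines.pop(line, {})
        if self.dirty_words.pop(line, None):
            self.shared_cache.setdefault(line, {}).update(data)
```

Sending only the modified words keeps write-through traffic proportional to what actually changed, while private lines generate no traffic until they are evicted.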
