28843 results about "Computer architecture" patented technology

In computer engineering, computer architecture is a set of rules and methods that describe the functionality, organization, and implementation of computer systems. Some definitions of architecture define it as describing the capabilities and programming model of a computer but not a particular implementation. In other definitions computer architecture involves instruction set architecture design, microarchitecture design, logic design, and implementation.

System and method for designing, developing and implementing internet service provider architectures

Active · US20030172145A1 · Metering/charging/billing arrangements · Digital computer details · Computer architecture · Service provision

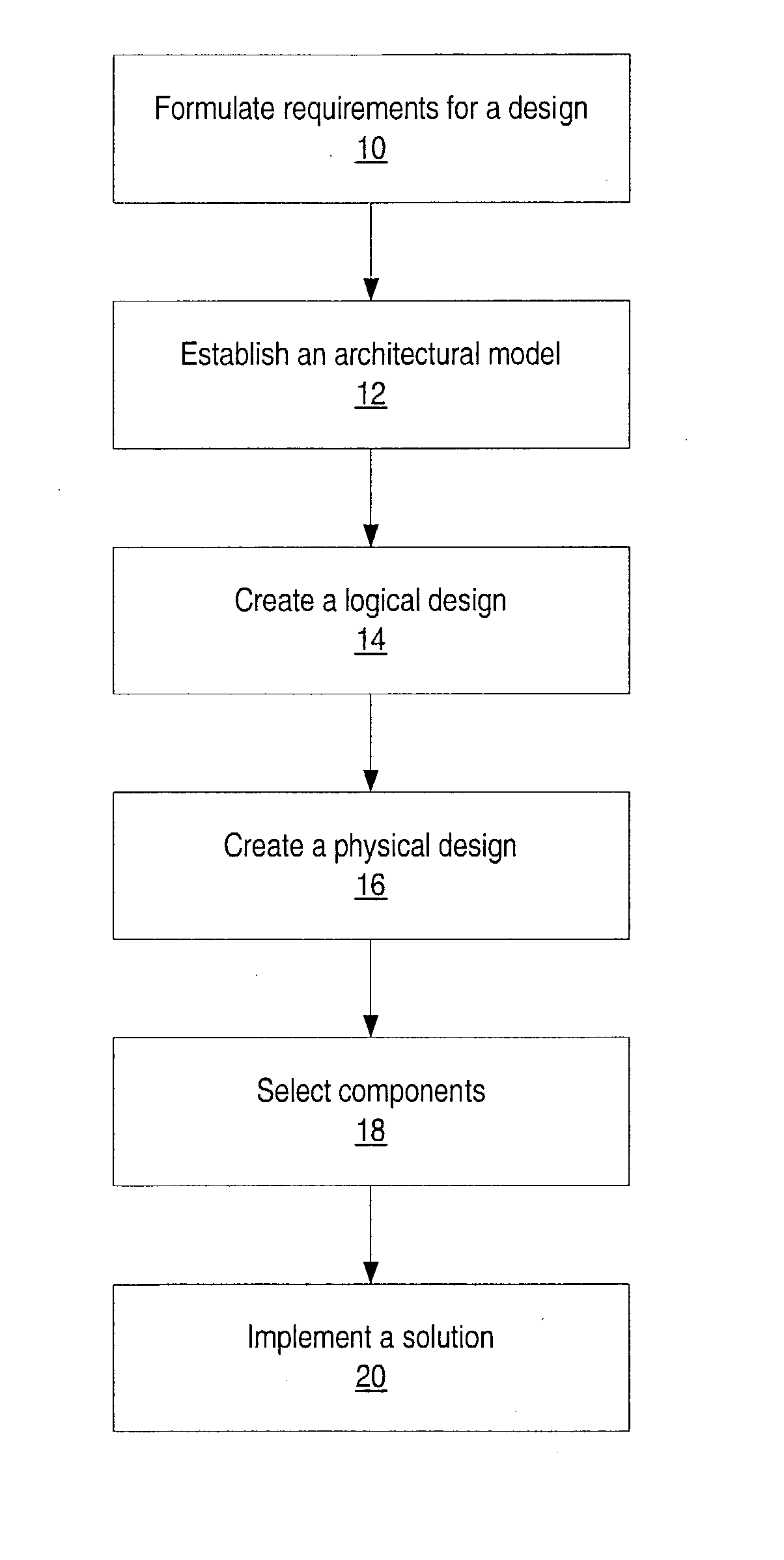

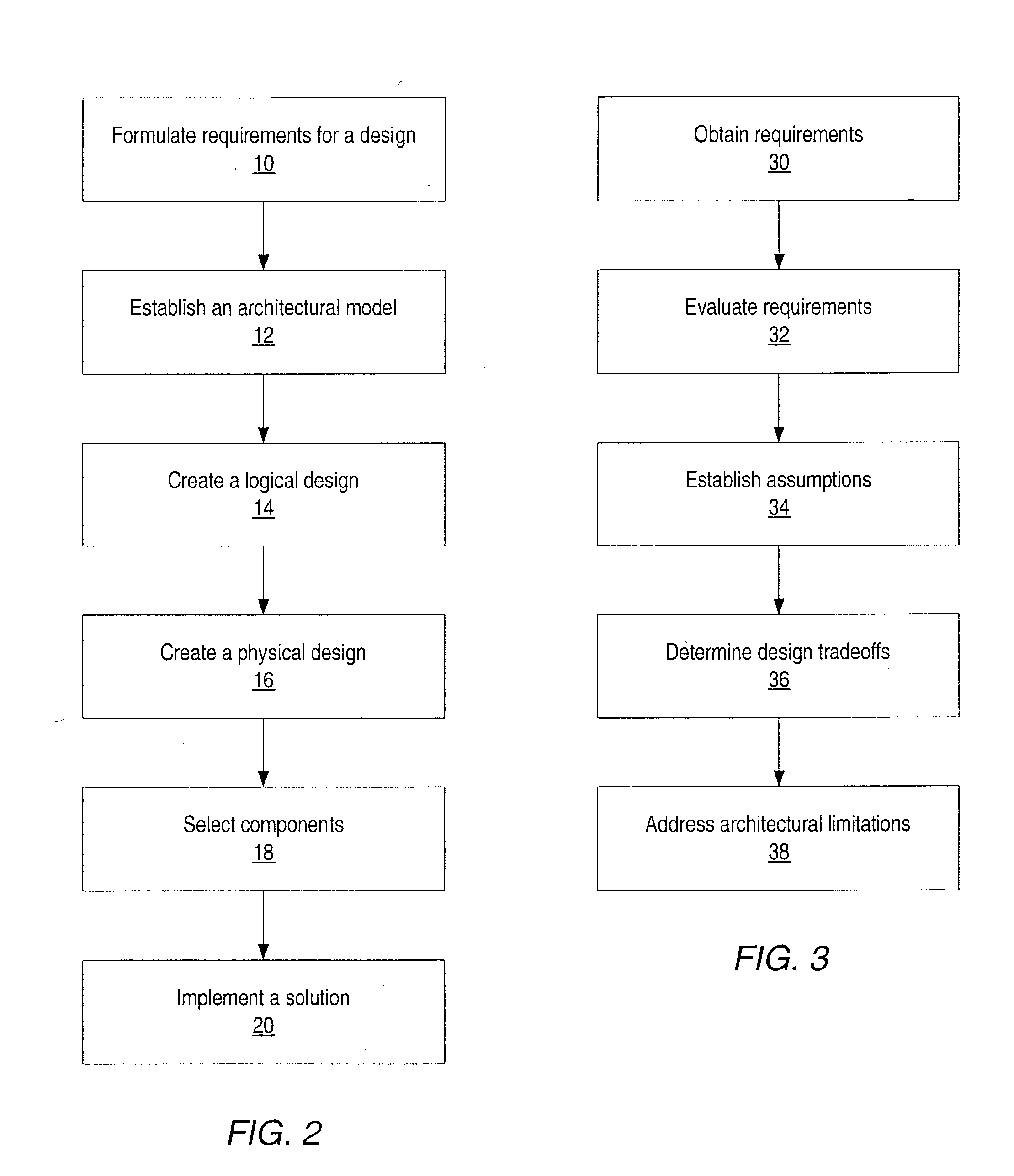

System and method for designing, developing and implementing Internet Service Provider (ISP) architectures. One embodiment of a method for designing and implementing ISP architectures may include formulating a set of design requirements for an ISP architecture, establishing an architectural model for the ISP architecture using the set of design requirements, generating a logical design for the ISP architecture from the architectural model and the set of design requirements, and generating a physical design for the ISP architecture using the architectural model and the logical design. One embodiment may also include selecting one or more components of the ISP architecture and implementing the ISP architecture according to the logical design and the physical design. In one embodiment the system and method for designing, developing and implementing ISP architectures may be used to design, develop and implement an N-tiered ISP architecture.

Owner:SUN MICROSYSTEMS INC

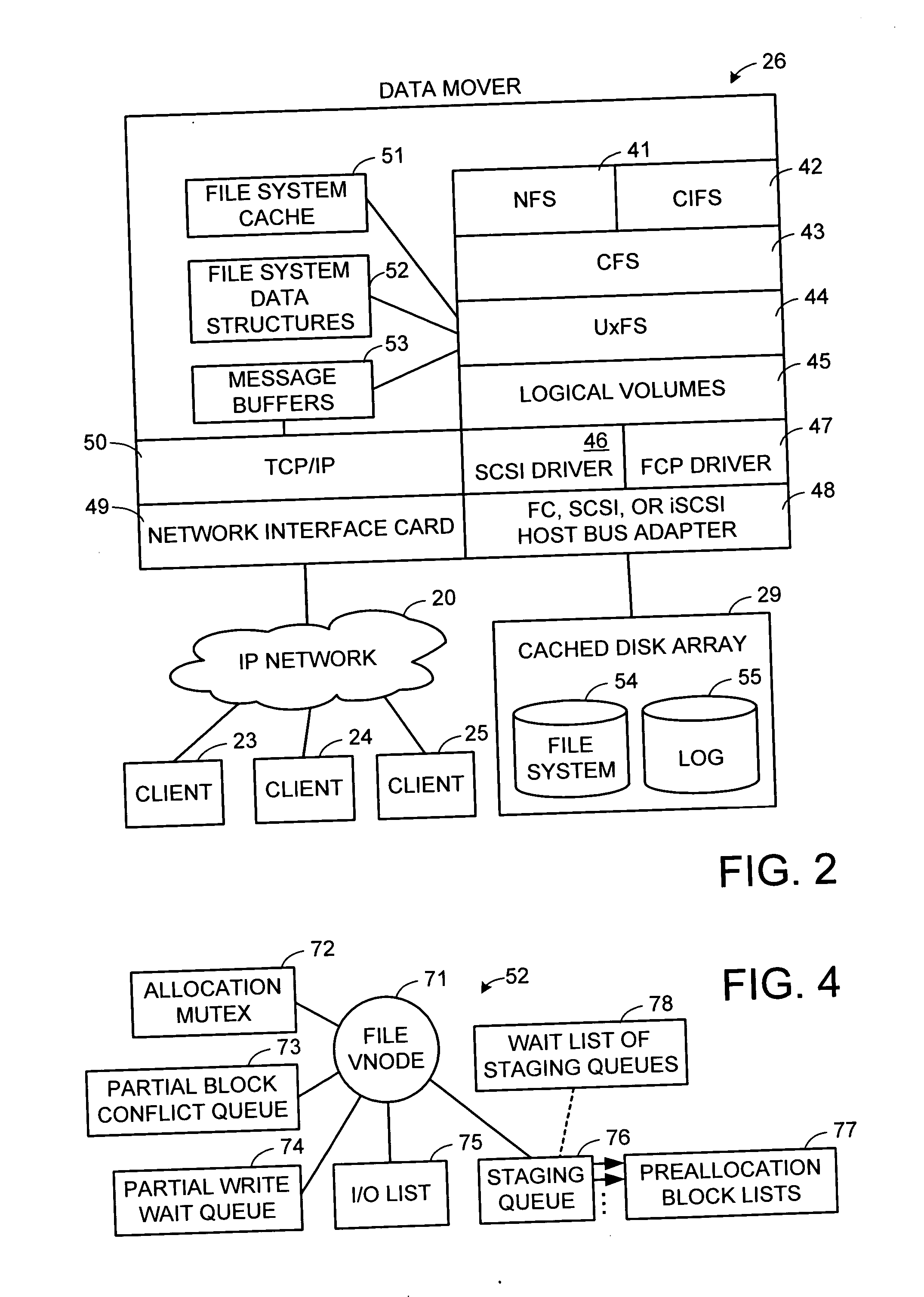

Multi-threaded write interface and methods for increasing the single file read and write throughput of a file server

Active · US20050066095A1 · Digital data information retrieval · Digital data processing details · Data integrity · File allocation

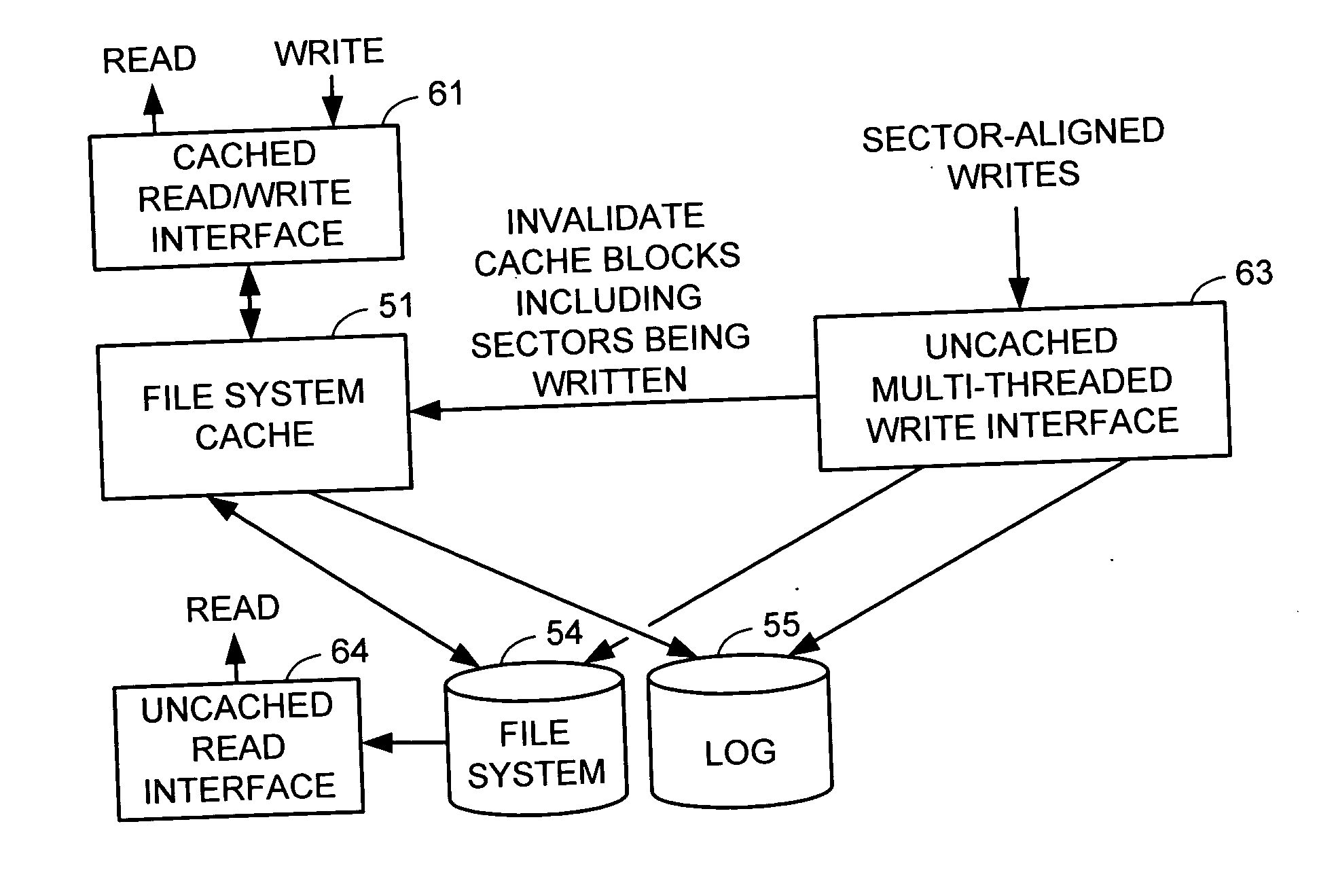

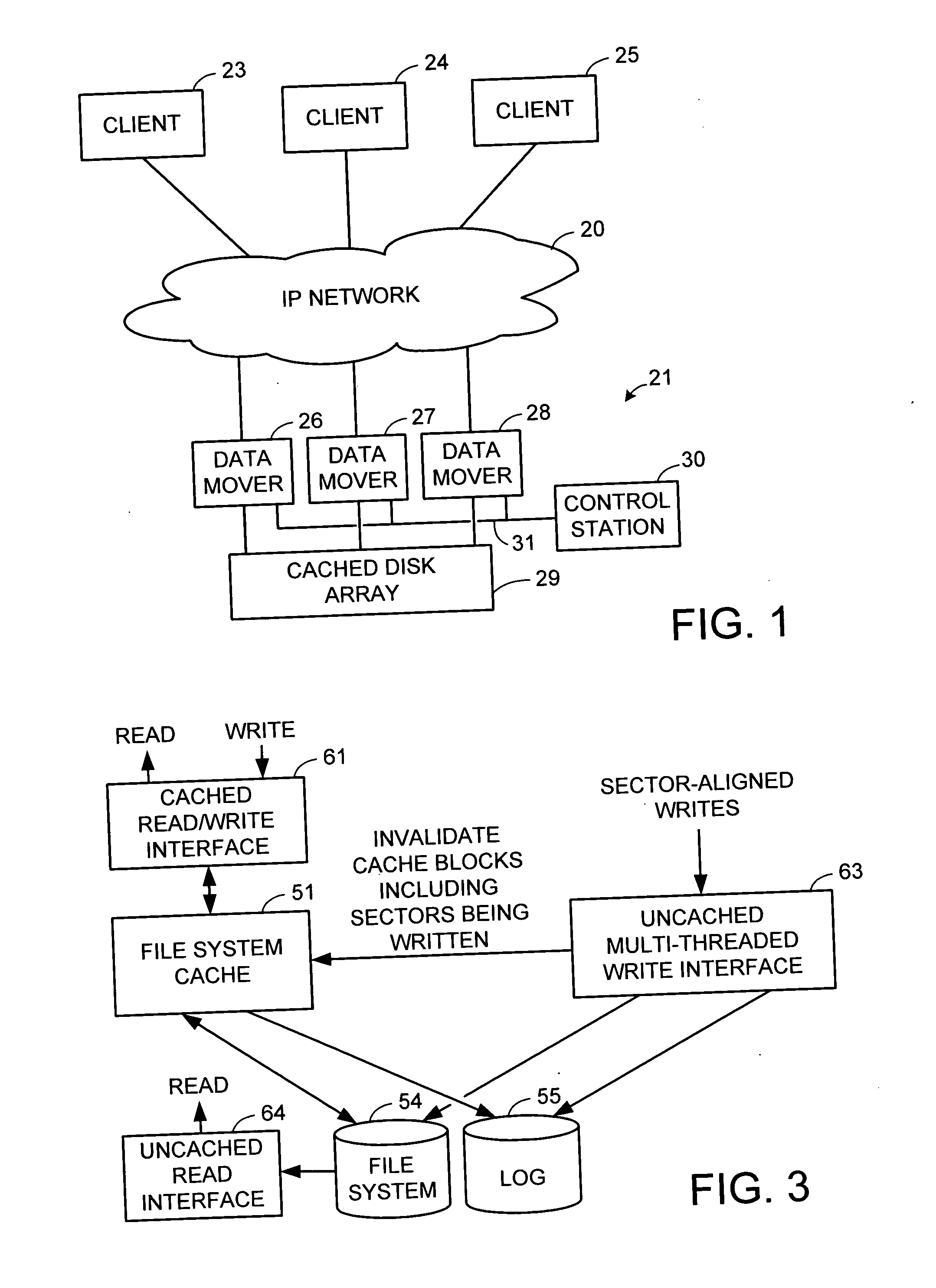

A write interface in a file server provides permission management for concurrent access to data blocks of a file, ensures correct use and update of indirect blocks in a tree of the file, preallocates file blocks when the file is extended, solves access conflicts for concurrent reads and writes to the same block, and permits the use of pipelined processors. For example, a write operation includes obtaining a per file allocation mutex (mutually exclusive lock), preallocating a metadata block, releasing the allocation mutex, issuing an asynchronous write request for writing to the file, waiting for the asynchronous write request to complete, obtaining the allocation mutex, committing the preallocated metadata block, and releasing the allocation mutex. Since no locks are held during the writing of data to the on-disk storage and this data write takes the majority of the time, the method enhances concurrency while maintaining data integrity.
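The write sequence in this abstract can be sketched as follows. This is a minimal illustration, not the patented implementation: `FileState`, `disk_write`, `write_block`, and `run_concurrent_writes` are hypothetical names, the "disk" is a dictionary, and a thread pool stands in for the asynchronous write path.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class FileState:
    """Hypothetical in-memory stand-in for one file on the server."""

    def __init__(self):
        self.alloc_mutex = threading.Lock()  # per-file allocation mutex
        self.next_block = 0                  # next free block number
        self.preallocated = set()            # reserved, not yet committed
        self.committed = set()               # blocks visible in file metadata
        self.disk = {}                       # simulated on-disk storage


def disk_write(f, block, data):
    # Stand-in for the slow on-disk write; runs with no lock held.
    f.disk[block] = data


def write_block(f, io_pool, data):
    # 1. Hold the allocation mutex only while preallocating a block.
    with f.alloc_mutex:
        block = f.next_block
        f.next_block += 1
        f.preallocated.add(block)
    # 2. Issue the asynchronous write and wait for it, lock released.
    io_pool.submit(disk_write, f, block, data).result()
    # 3. Re-acquire the mutex to commit the preallocated block.
    with f.alloc_mutex:
        f.preallocated.remove(block)
        f.committed.add(block)
    return block


def run_concurrent_writes(payloads):
    # One writer thread per payload, all sharing the same file state.
    f = FileState()
    with ThreadPoolExecutor(max_workers=4) as io_pool:
        writers = [threading.Thread(target=write_block, args=(f, io_pool, p))
                   for p in payloads]
        for t in writers:
            t.start()
        for t in writers:
            t.join()
    return f
```

Because the mutex is held only around the two short metadata steps, several writers can have data writes in flight at the same time, which is the concurrency benefit the abstract describes.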

Owner:EMC IP HLDG CO LLC

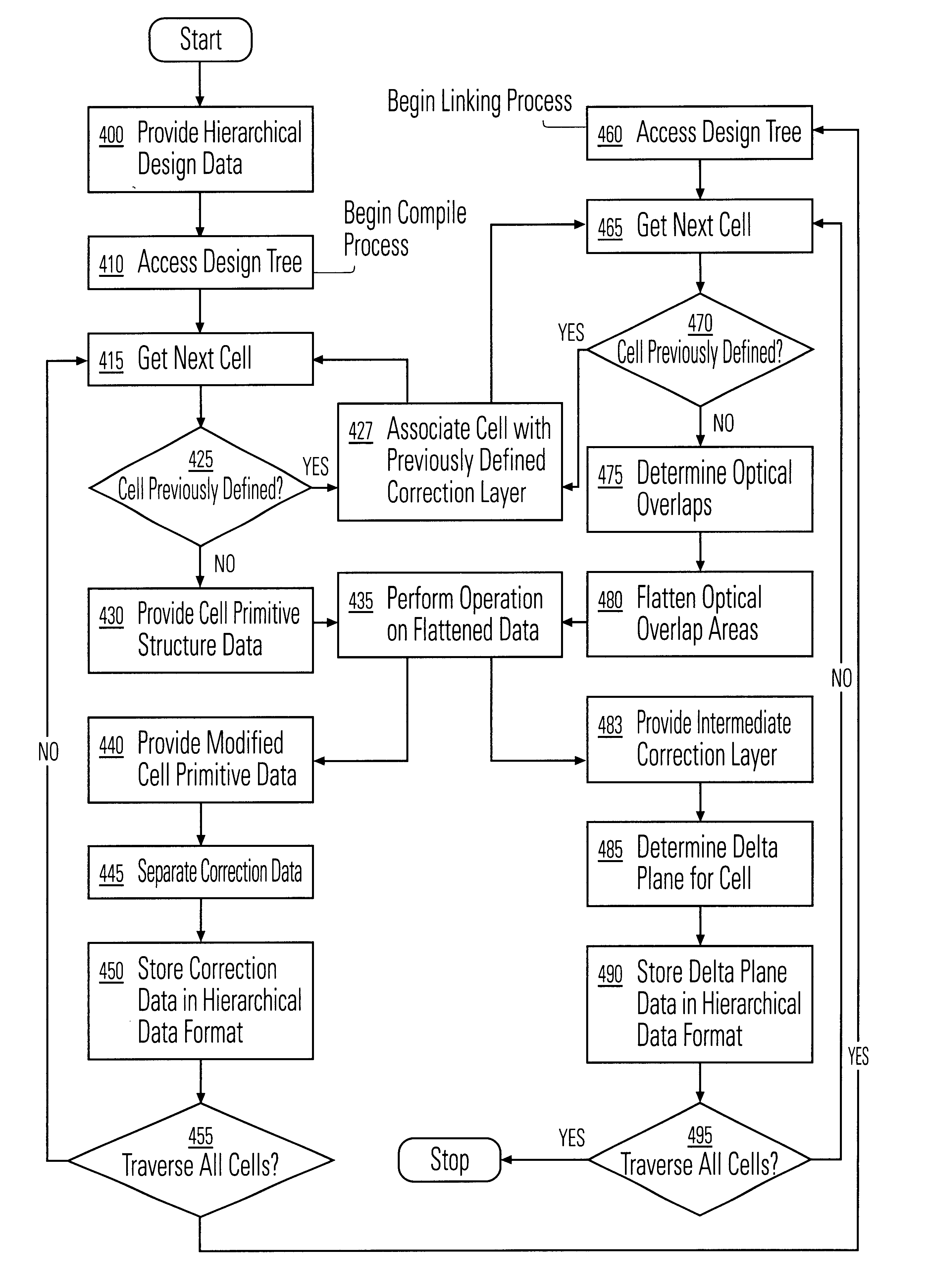

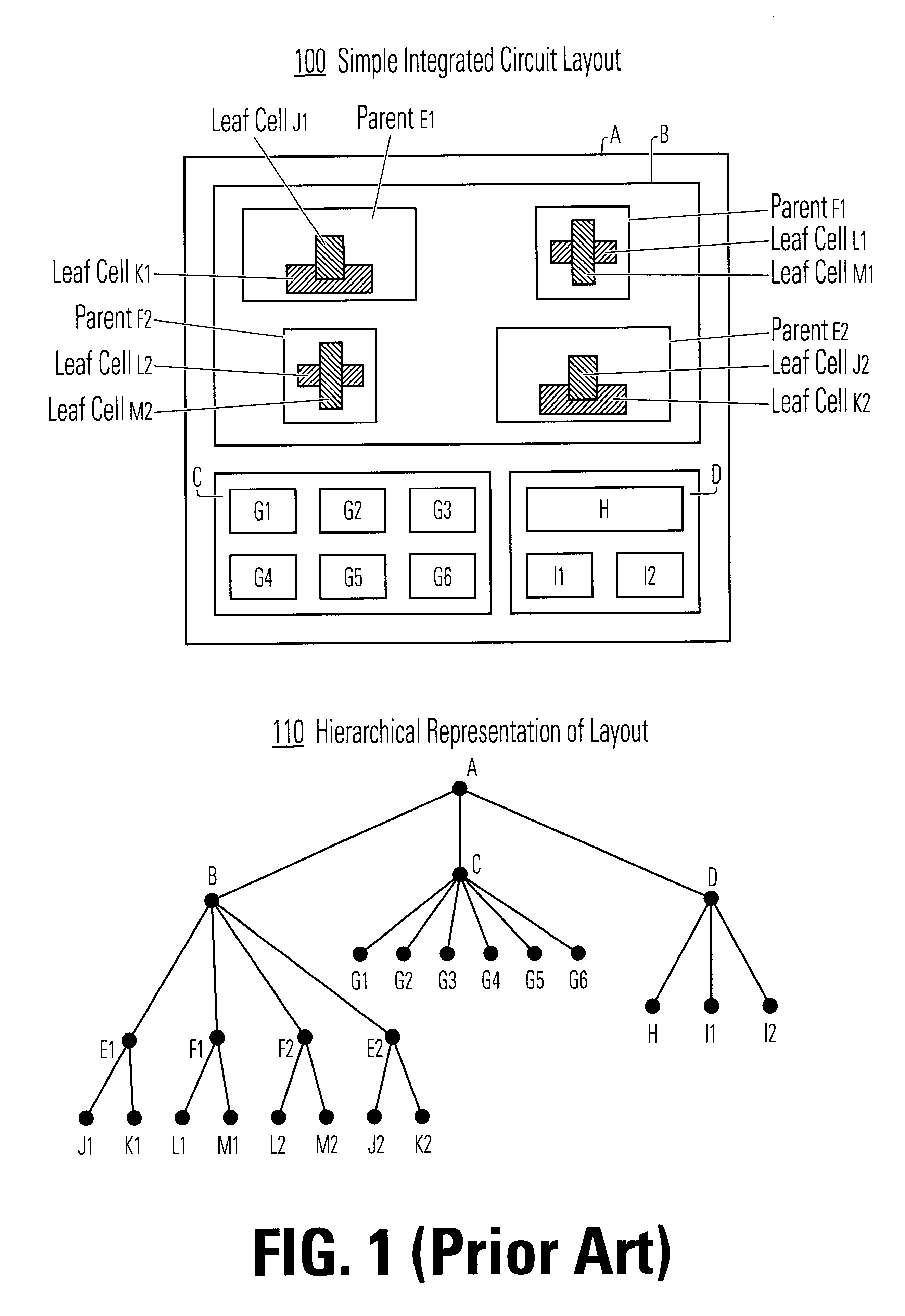

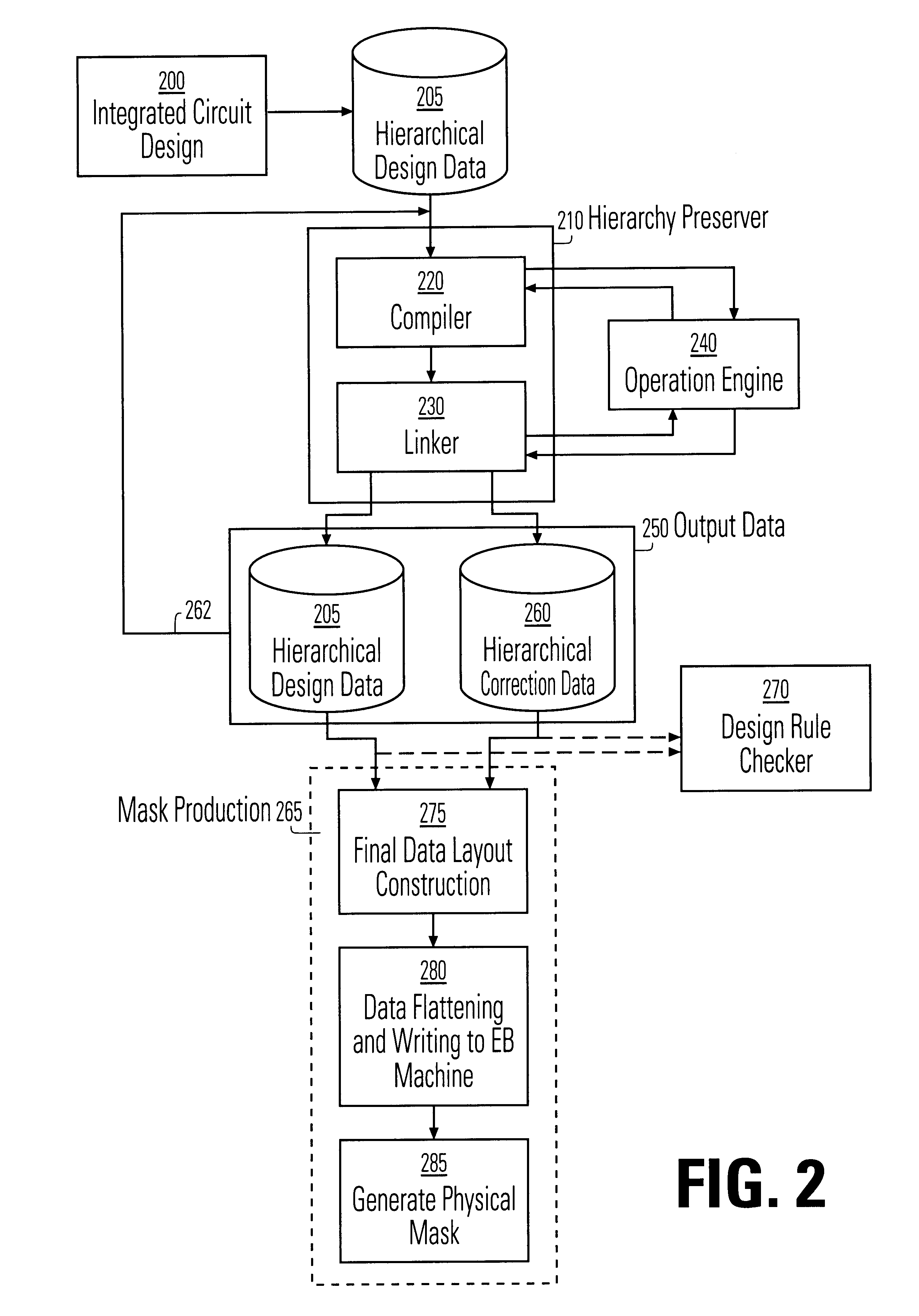

Data hierarchy layout correction and verification method and apparatus

Inactive · US6370679B1 · Computation using non-denominational number representation · Originals for photomechanical treatment · Computer architecture · Validation methods

A method and apparatus for the correction of integrated circuit layouts for optical proximity effects which maintains the original true hierarchy of the original layout is provided. Also provided is a method and apparatus for the design rule checking of layouts which have been corrected for optical proximity effects. The OPC correction method comprises providing a hierarchically described integrated circuit layout as a first input, and a particular set of OPC correction criteria as a second input. The integrated circuit layout is then analyzed to identify features of the layout which meet the provided OPC correction criteria. After the areas on the mask which need correction have been identified, optical proximity correction data is generated in response to the particular set of correction criteria. Finally, a first program data is generated which stores the generated optical proximity correction data in a hierarchical structure that corresponds to the hierarchical structure of the integrated circuit layout. As the output correction data is maintained in true hierarchical format, layouts which are OPC corrected according to this method are able to be processed through conventional design rule checkers with no altering of the data.

Owner:SYNOPSYS INC

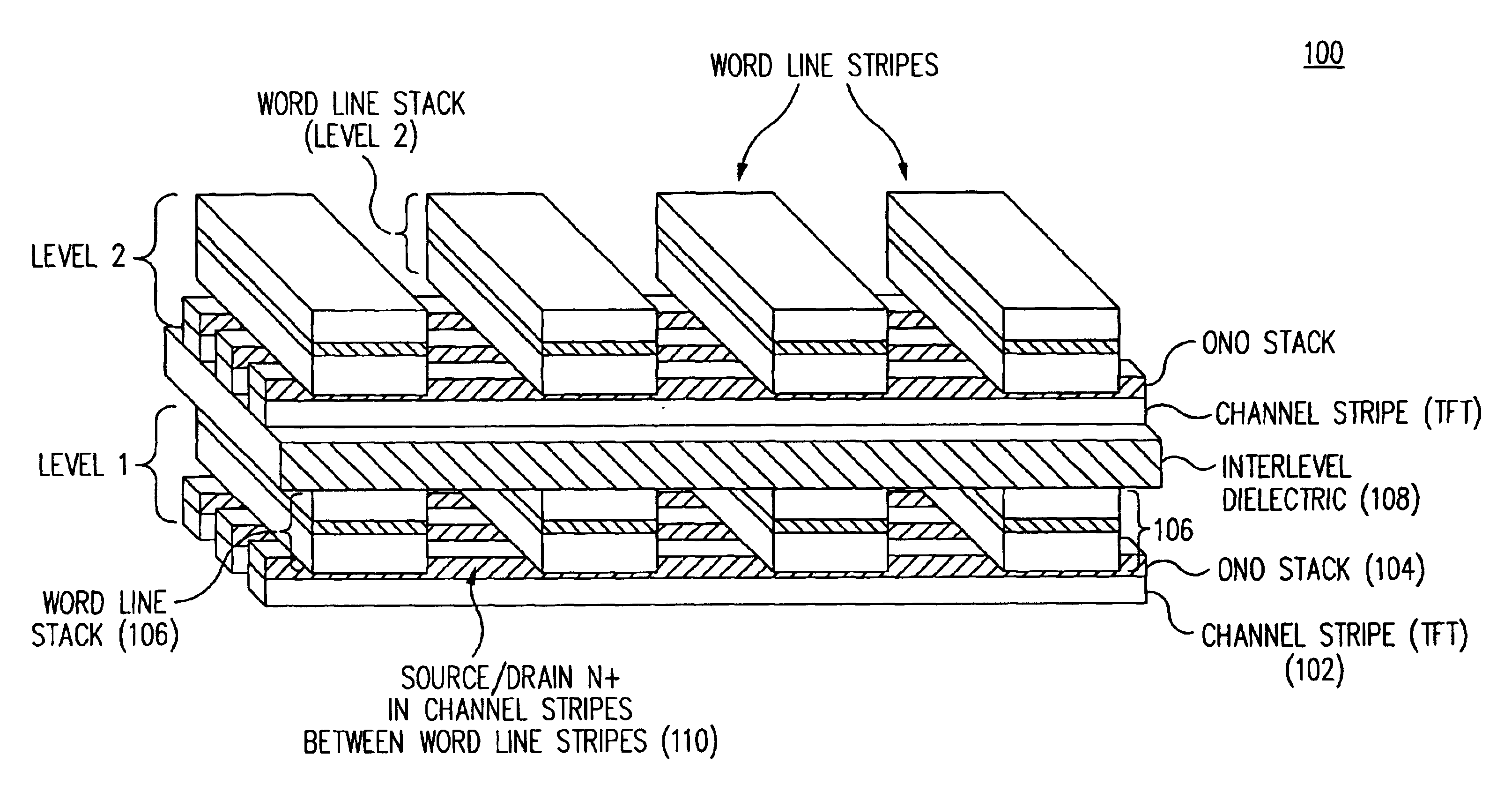

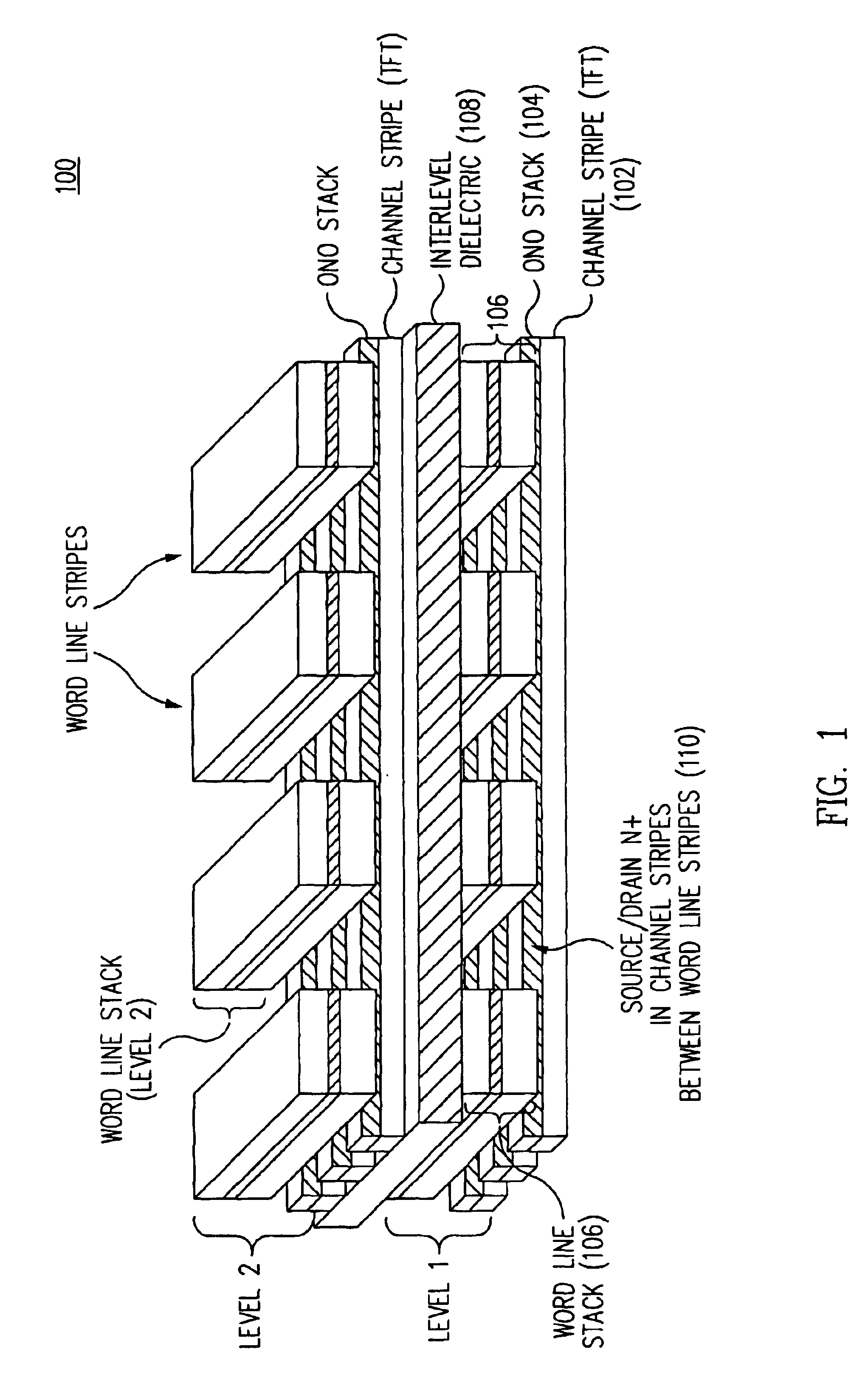

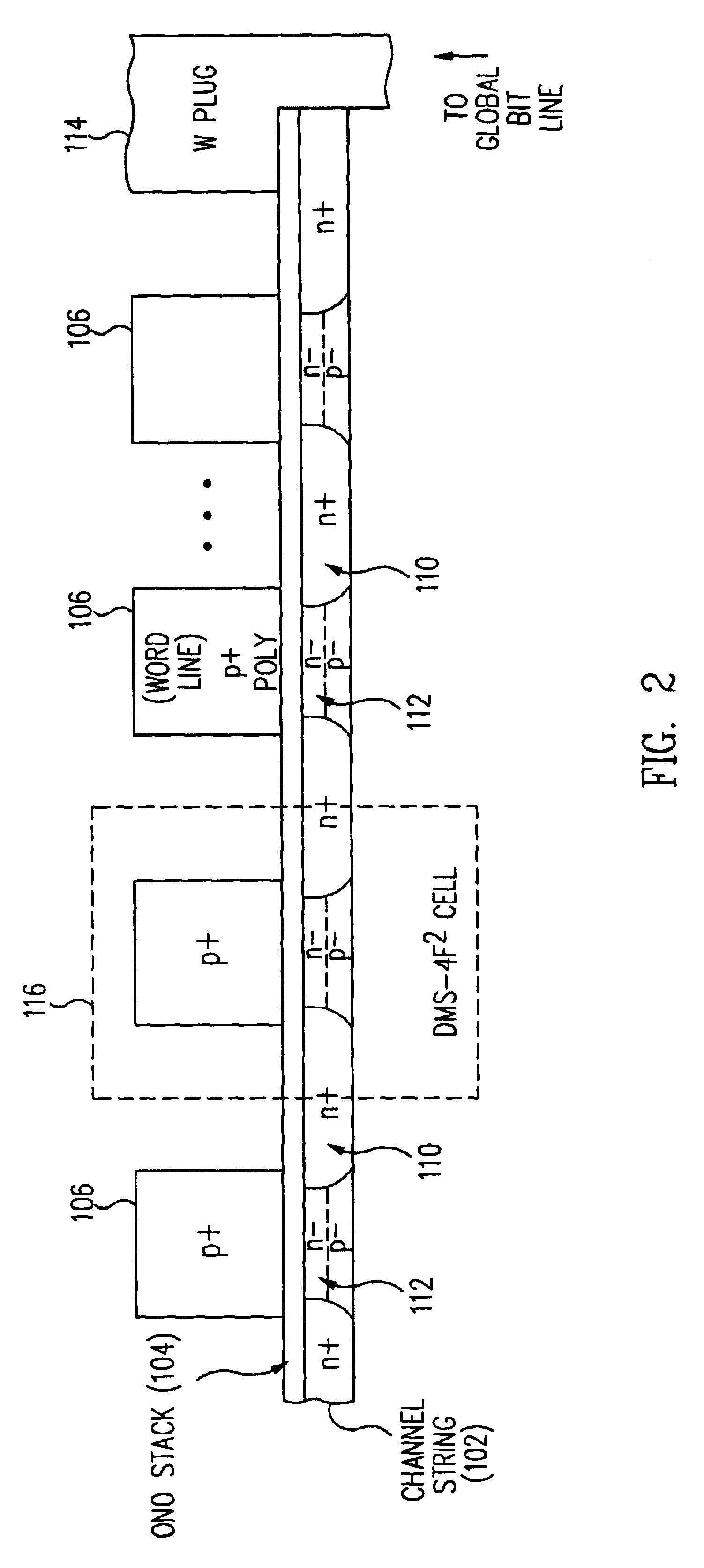

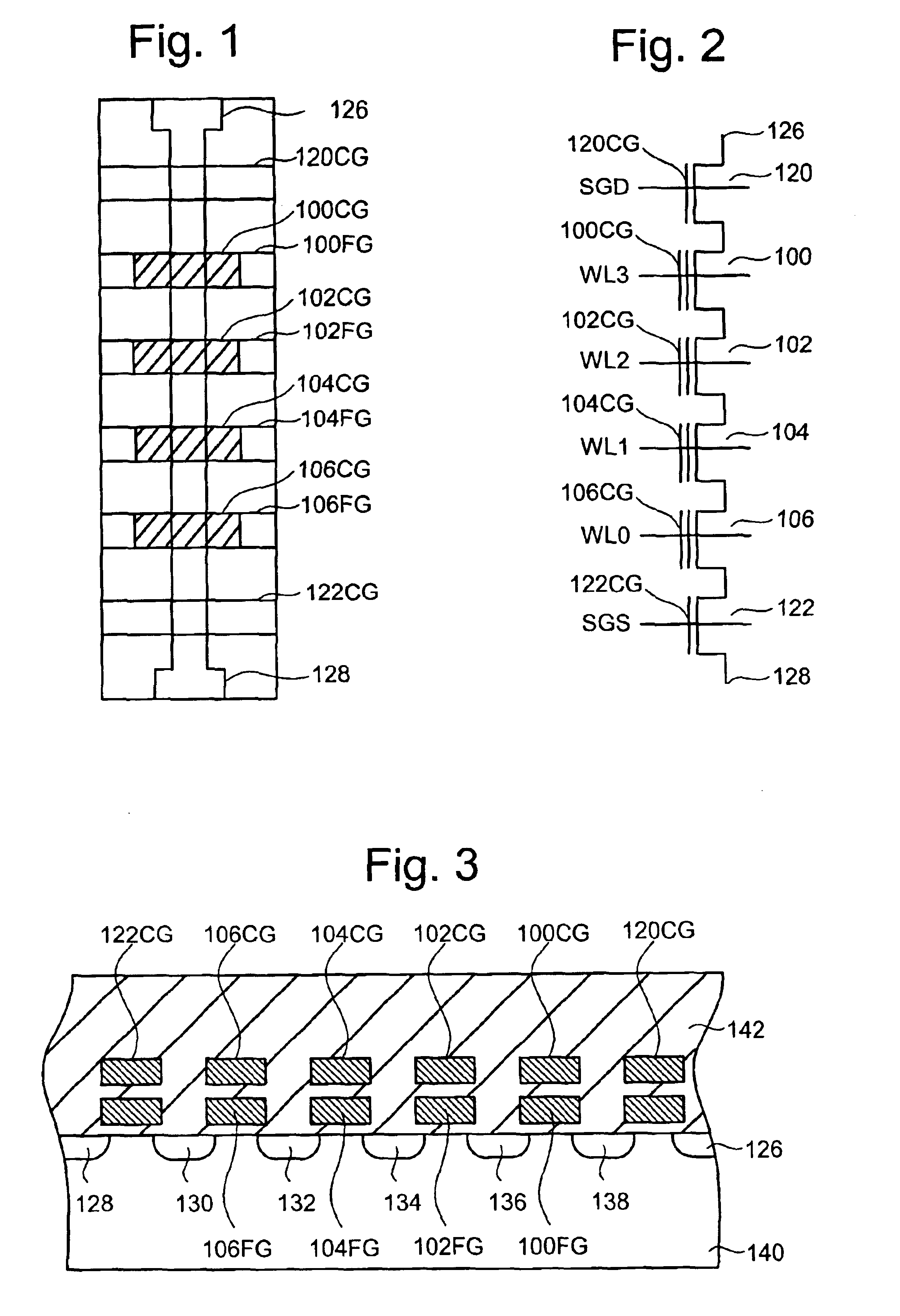

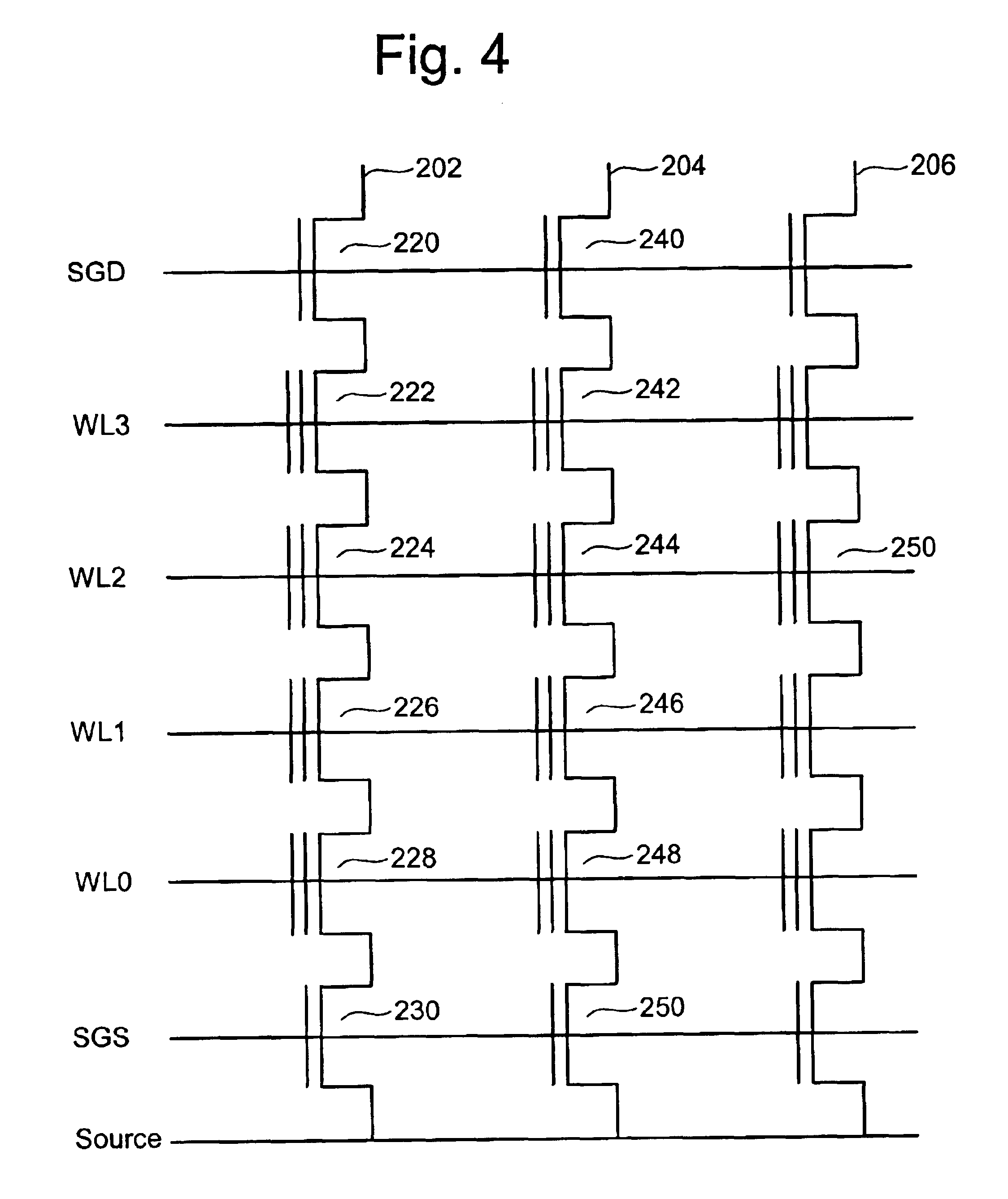

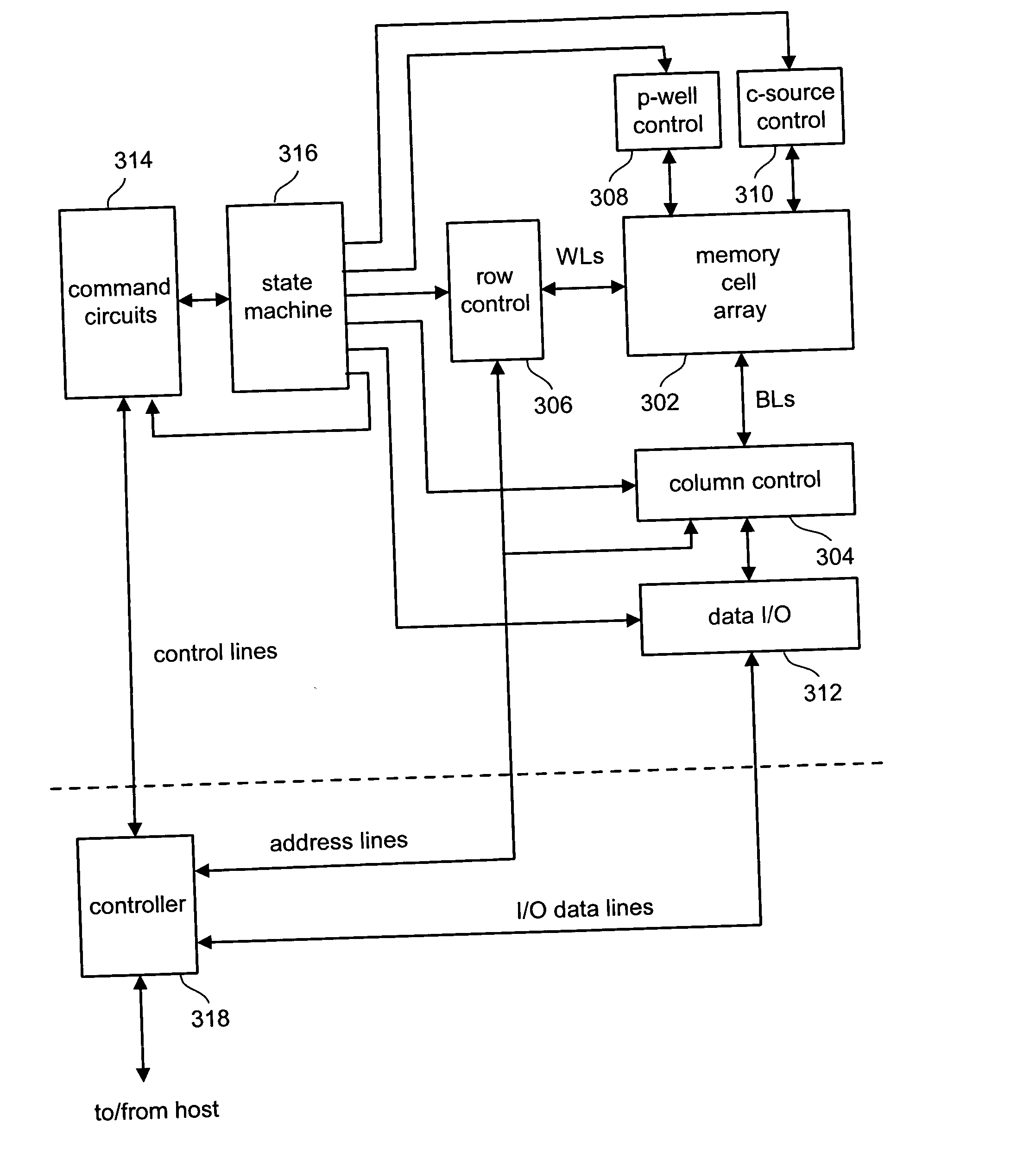

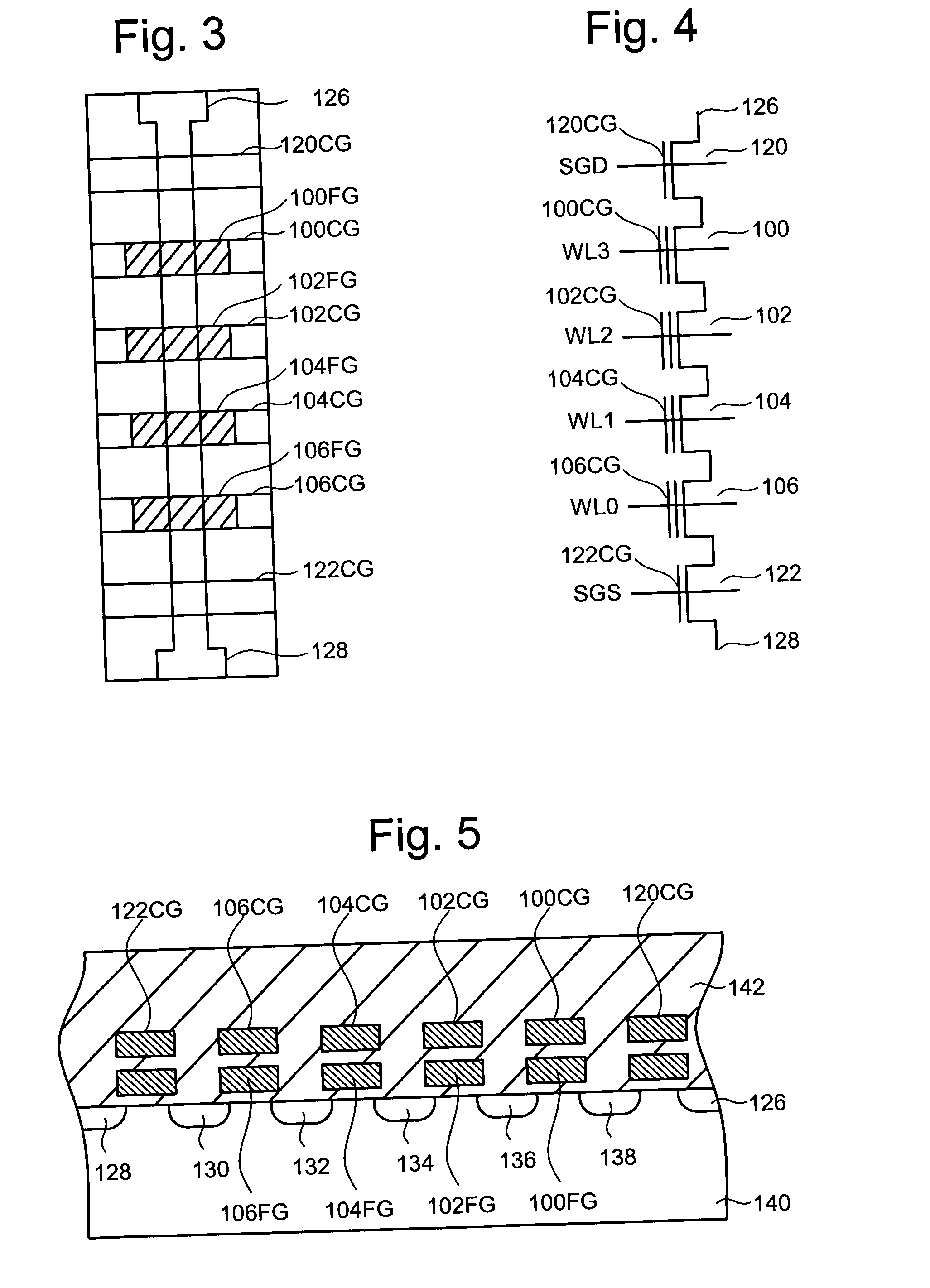

Method for fabricating programmable memory array structures incorporating series-connected transistor strings

Inactive · US7005350B2 · Reduce in quantity · Dense memory array · Solid-state devices · Read-only memories · Bit line · Computer architecture

A three-dimensional flash memory array incorporates thin film transistors having a charge storage dielectric arranged in series-connected NAND strings to achieve a 4F² memory cell layout. The memory array may be programmed and erased using only tunneling currents, and no leakage paths are formed through non-selected memory cells. Each NAND string includes two block select devices for respectively coupling one end of the NAND string to a global bit line, and the other end to a shared bias node. Pairs of NAND strings within a block share the same global bit line. The memory cells are preferably depletion mode SONOS devices, as are the block select devices. The memory cells may be programmed to a near depletion threshold voltage, and the block select devices are maintained in a programmed state having a near depletion mode threshold voltage. NAND strings on more than one layer may be connected to global bit lines on a single layer. By interleaving the NAND strings on each memory level and using two shared bias nodes per block, very little additional overhead is required for the switch devices at each end of the NAND strings. The NAND strings on different memory levels are preferably connected together by way of vertical stacked vias, each preferably connecting to more than one memory level. Each memory level may be produced with less than three masks per level.

Owner:SANDISK TECH LLC

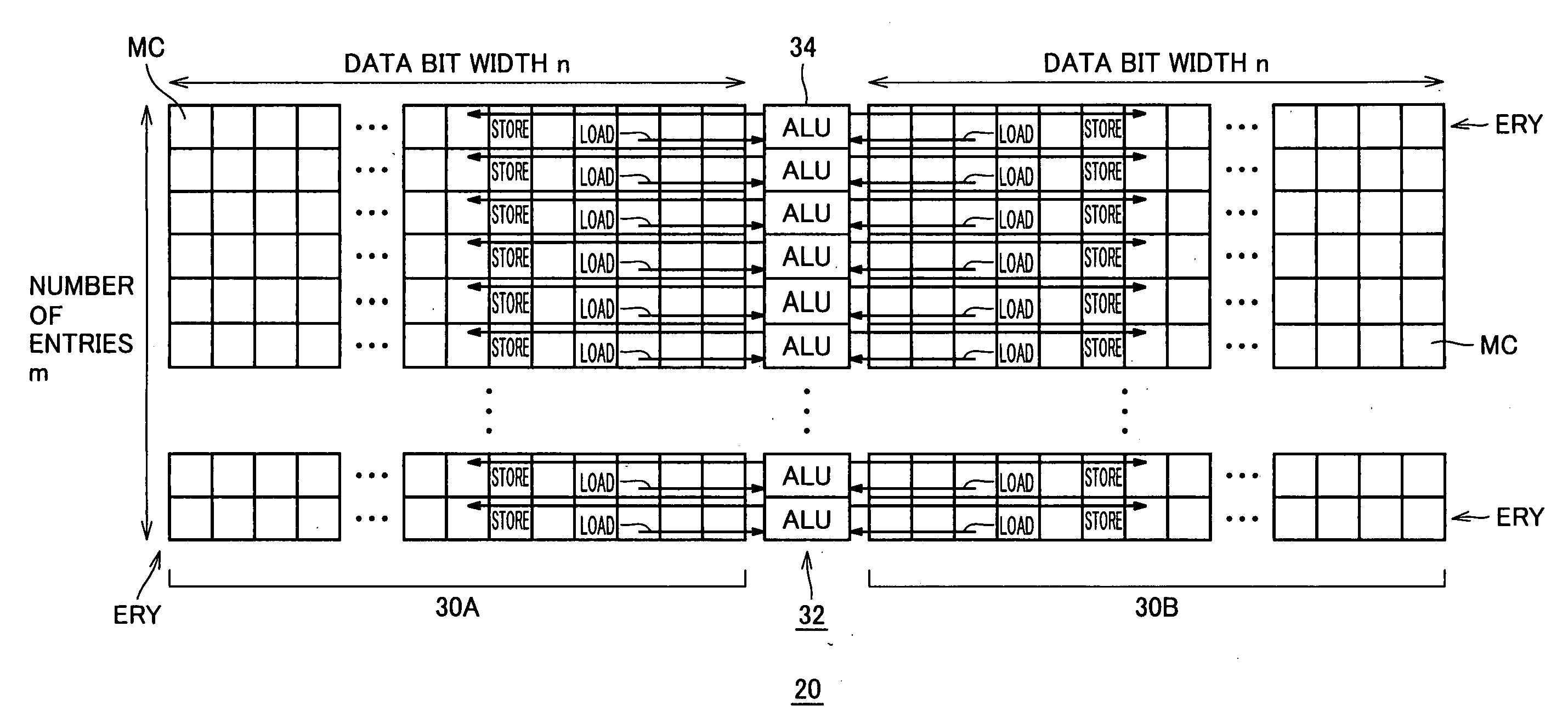

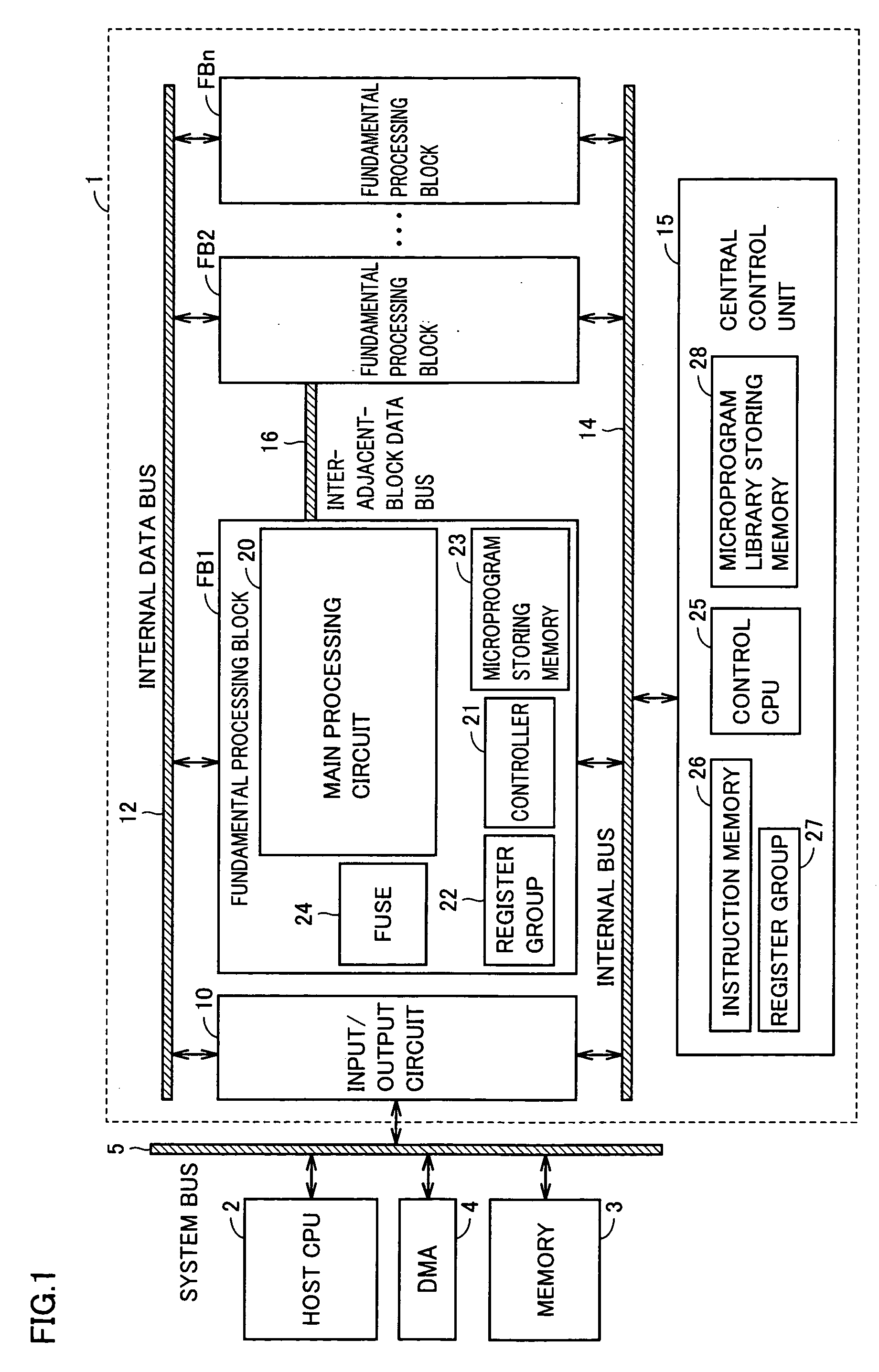

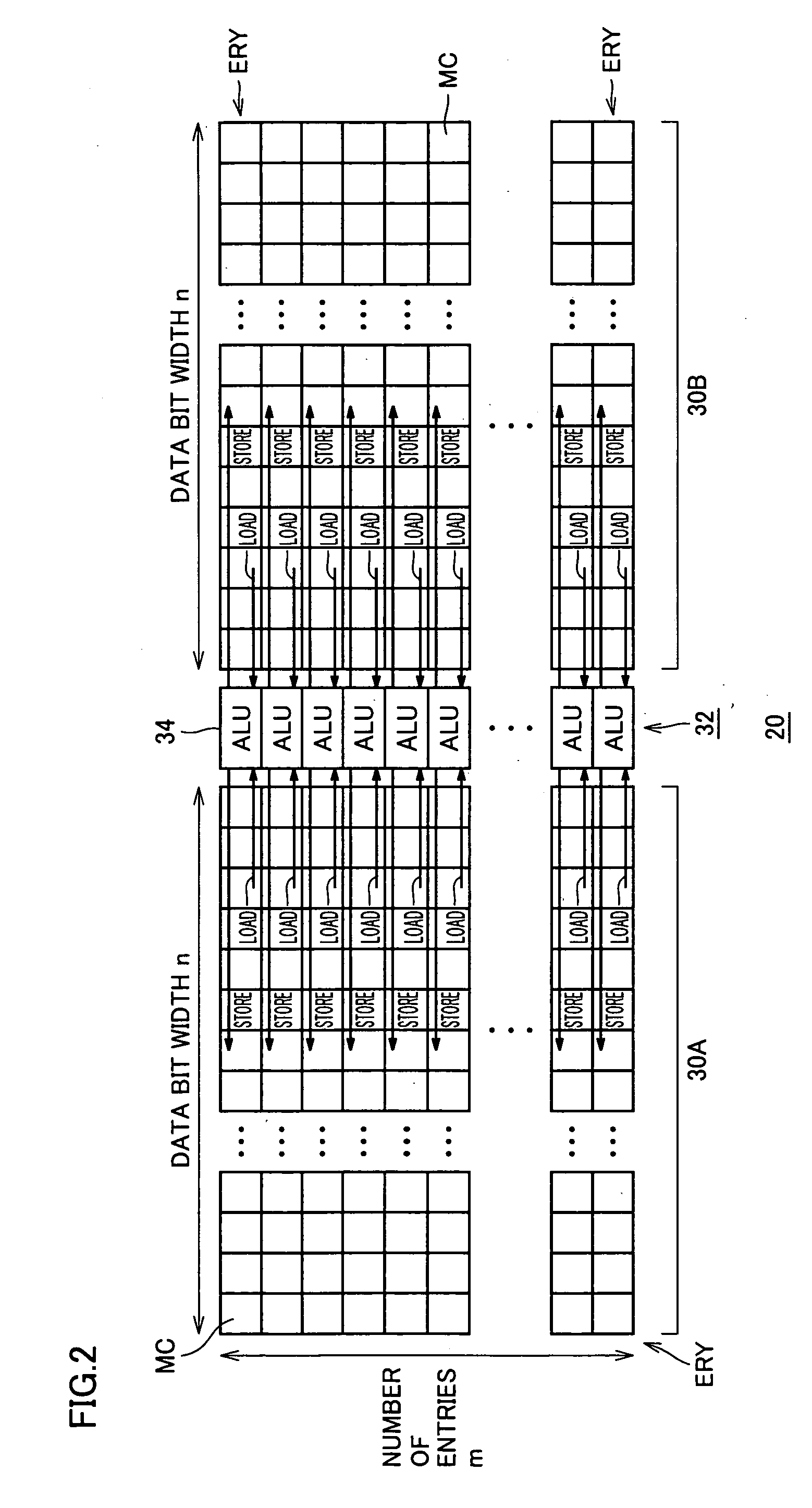

Parallel operational processing device

Inactive · US20070180006A1 · Easy to operate · Reduce power consumption · Energy efficient ICT · Digital data processing details · Audio power amplifier · Parallel computing

In a parallel operational processing device having an operational processing unit arranged between memory blocks each having a plurality of memory cells arranged in rows and columns, the respective columns of each memory block are alternately connected to the operational processing units on the opposite sides of the memory block. By selecting one word line in one memory block, data can be transferred to two operational processing units. The number of word lines selected per operational processing unit is reduced, and power consumption is reduced. The bit operation units and sense amplifiers / write drivers of the operational processing units have relaxed arrangement pitch conditions and are reduced in number, and an isolation region between the memory blocks is not required, so the layout area is reduced. Thus, the parallel operational processing device can achieve fast operation with reduced layout area and power consumption.

Owner:RENESAS ELECTRONICS CORP

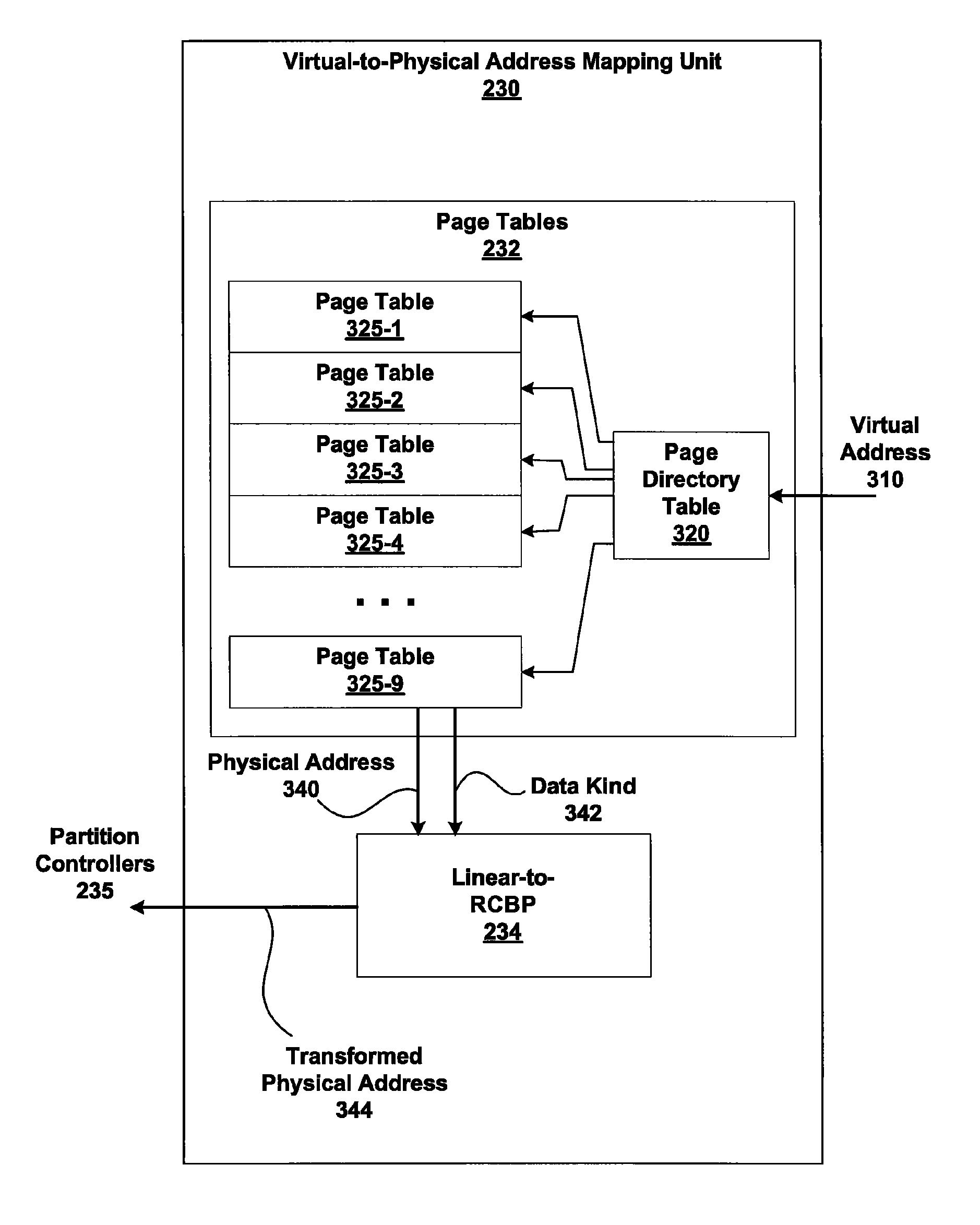

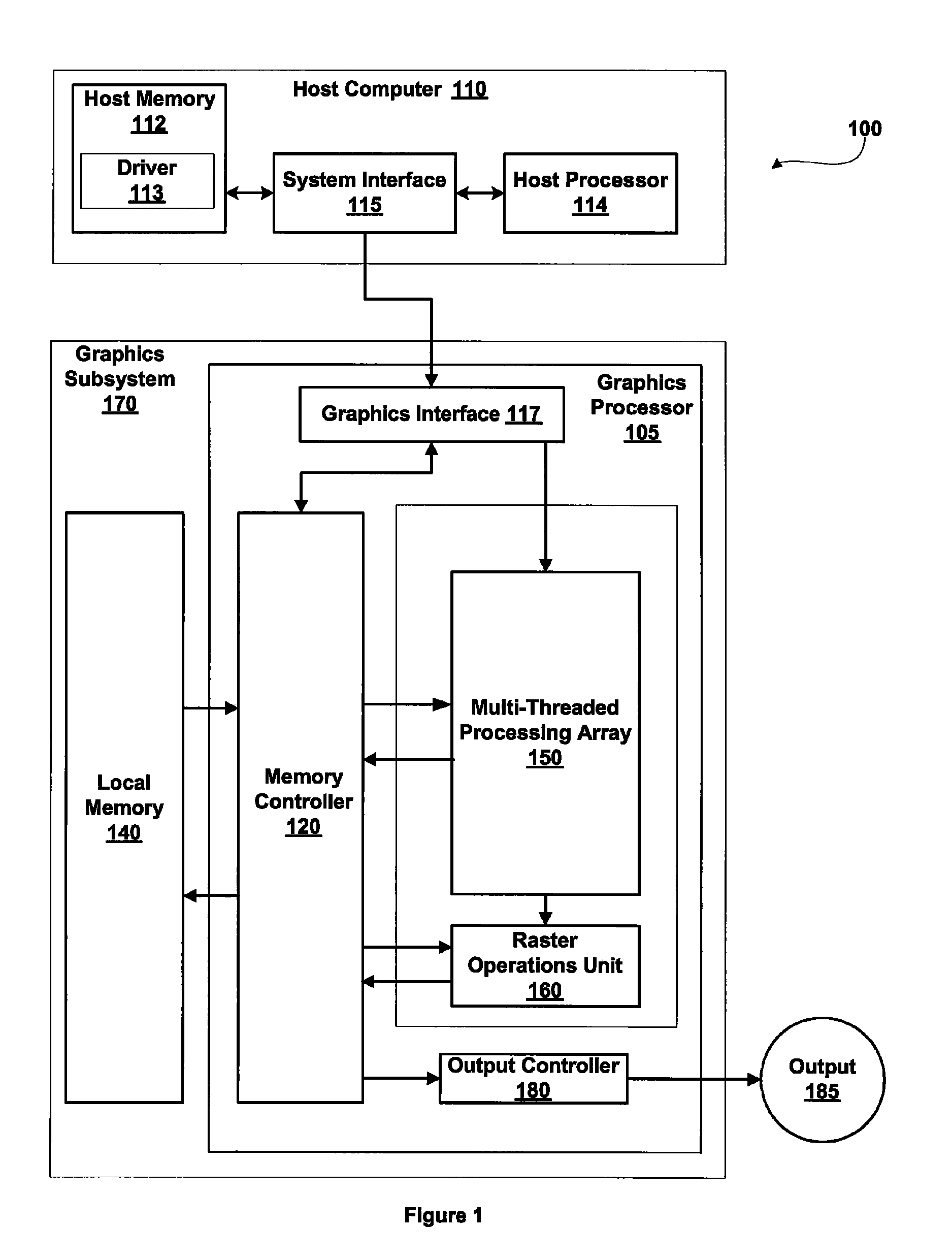

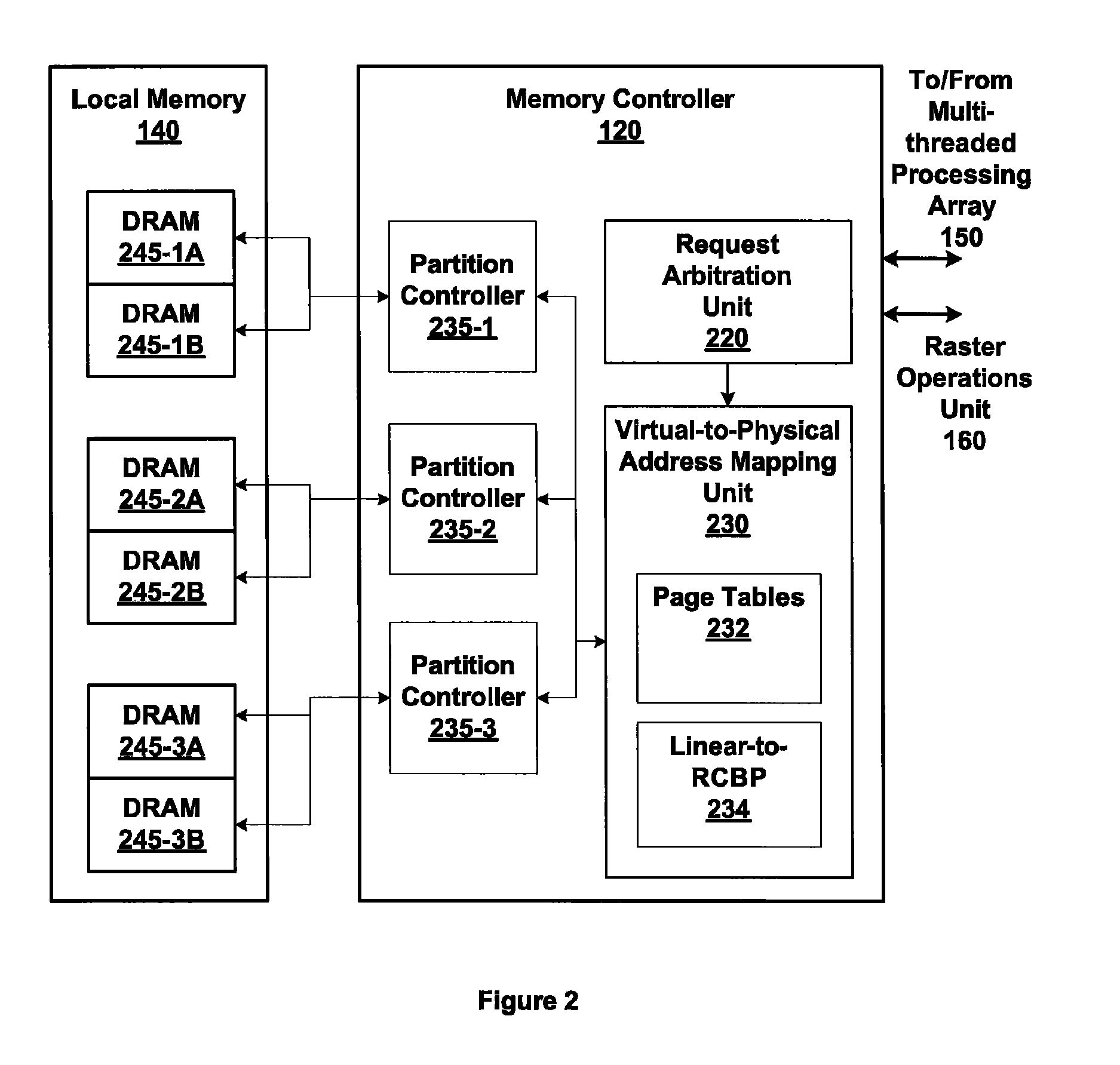

Memory addressing controlled by PTE fields

Active · US7805587B1 · Reduce access · Memory addressing/allocation/relocation · Computer security arrangements · Memory address · DRAM memory

Embodiments of the present invention enable virtual-to-physical memory address translation using optimized bank and partition interleave patterns to improve memory bandwidth by distributing data accesses over multiple banks and multiple partitions. Each virtual page has a corresponding page table entry that specifies the physical address of the virtual page in linear physical address space. The page table entry also includes a data kind field that is used to guide and optimize the mapping process from the linear physical address space to the DRAM physical address space, which is used to directly access one or more DRAM. The DRAM physical address space includes a row, bank and column address. The data kind field is also used to optimize the starting partition number and partition interleave pattern that defines the organization of the selected physical page of memory within the DRAM memory system.
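The mapping from a linear physical address to a partition and a DRAM row, bank, and column can be illustrated with a small sketch. Everything concrete here is an assumption made for illustration and is not taken from the patent: the constants, the function name `map_address`, and in particular the rule that the data kind simply rotates the starting partition.

```python
PARTITIONS = 4          # hypothetical number of memory partitions
BANKS = 8               # hypothetical number of banks per partition
INTERLEAVE_BYTES = 256  # hypothetical interleave granularity
COLUMNS_PER_ROW = 4     # hypothetical interleave units per DRAM row


def map_address(linear_addr, data_kind):
    """Map a linear physical address to a DRAM location.

    data_kind stands in for the page table entry field; here it only
    selects the starting partition (an assumption of this sketch).
    """
    unit = linear_addr // INTERLEAVE_BYTES      # interleave unit index
    offset = linear_addr % INTERLEAVE_BYTES     # byte offset inside the unit
    start_partition = data_kind % PARTITIONS
    partition = (unit + start_partition) % PARTITIONS
    local = unit // PARTITIONS                  # unit index inside the partition
    bank = local % BANKS                        # spread neighbours over banks
    rest = local // BANKS
    column = rest % COLUMNS_PER_ROW
    row = rest // COLUMNS_PER_ROW
    return {"partition": partition, "bank": bank, "row": row,
            "column": column, "offset": offset}
```

With this layout, consecutive 256-byte units land in different partitions first and different banks second, so a sequential access stream is distributed over all of them before any row is revisited.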

Owner:NVIDIA CORP

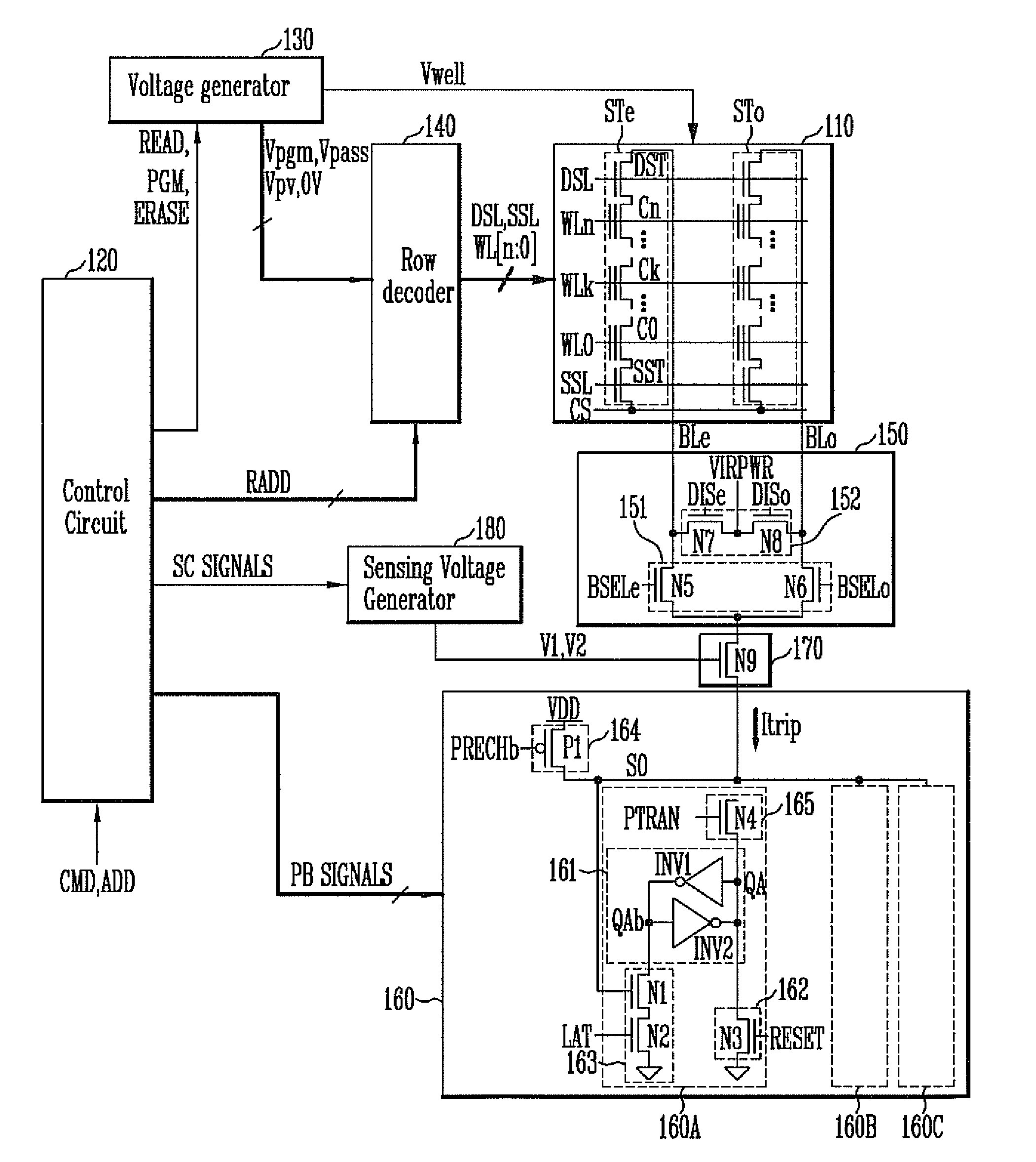

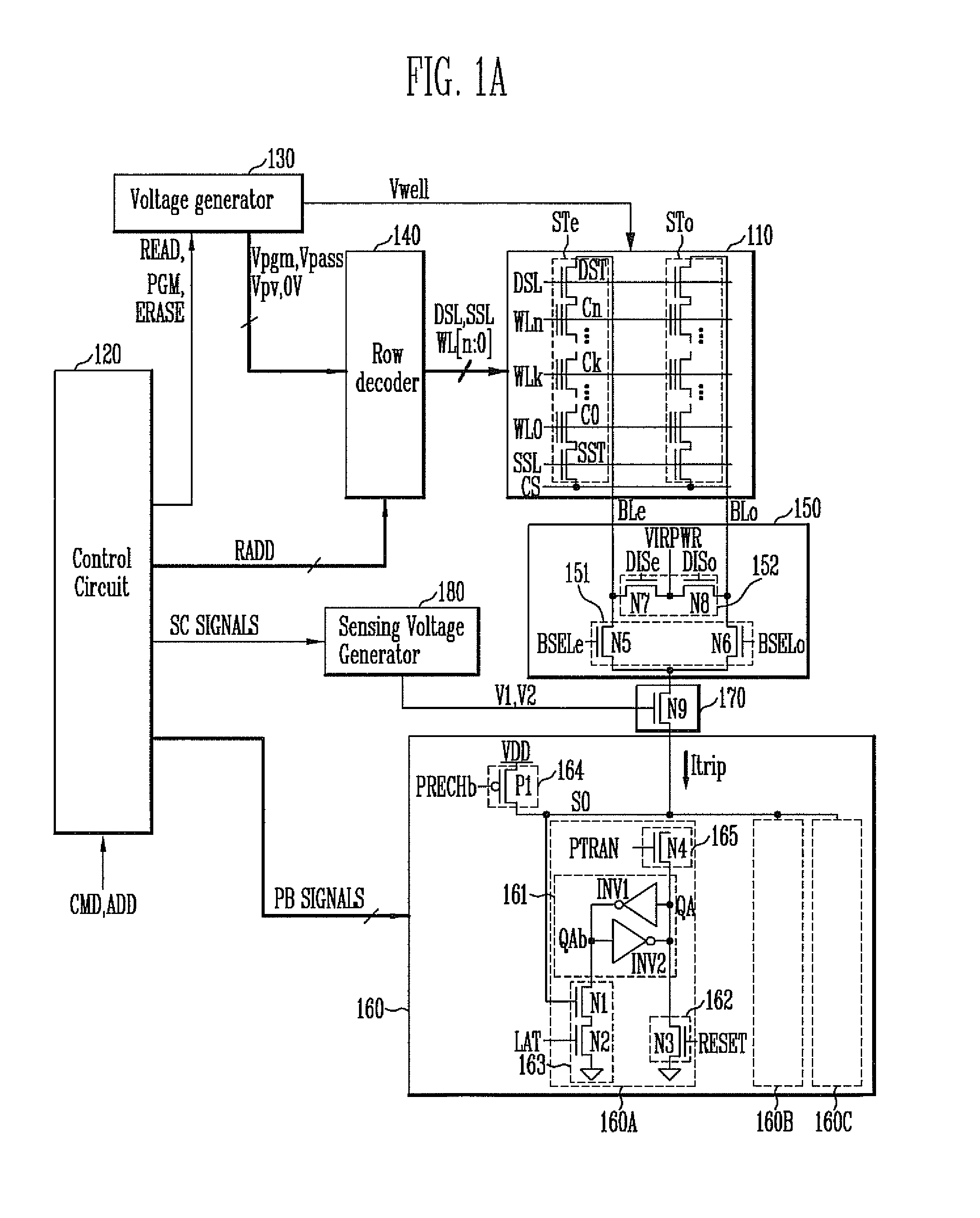

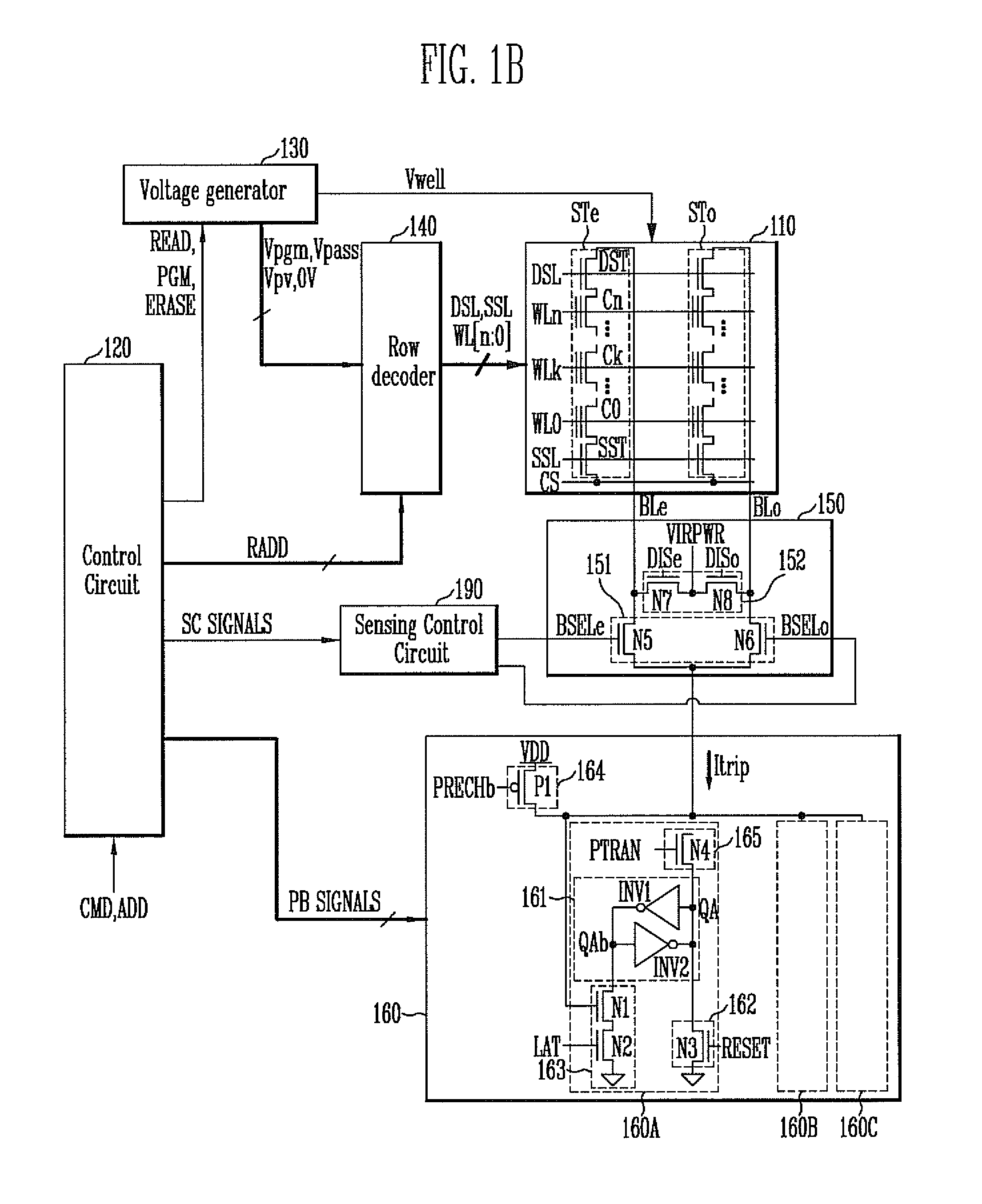

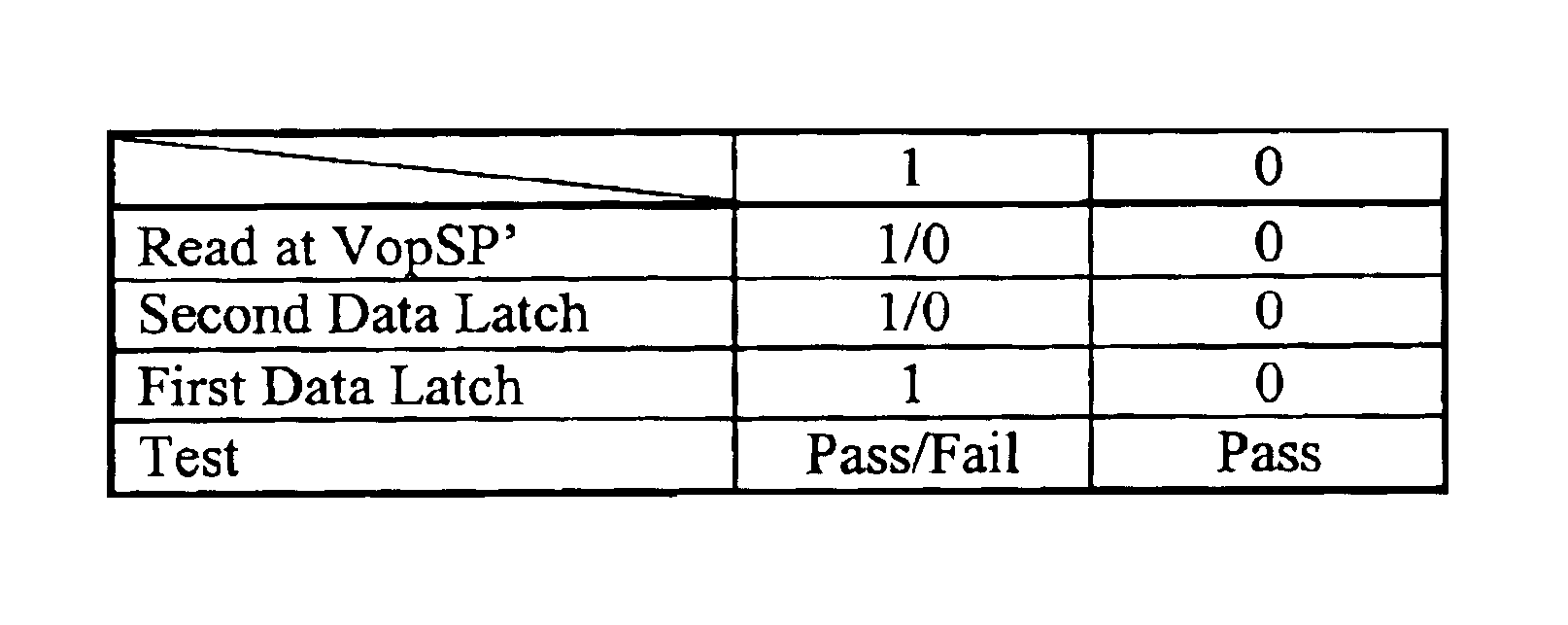

Semiconductor memory device and method of operating the same

Active · US8526239B2 · Minimizing deterioration · Improve reliability · Read-only memories · Digital storage · Computer architecture · Control circuit

A semiconductor memory device includes a memory string coupled to a bit line, a page buffer configured to sense a sensing current of the bit line in an erase verification operation or a program verification operation, and a sensing control circuit configured to differently set a level of the sensing current in the erase verification operation and the program verification operation in order to sense the threshold voltage level of a selected memory cell of the memory string.

Owner:SK HYNIX INC

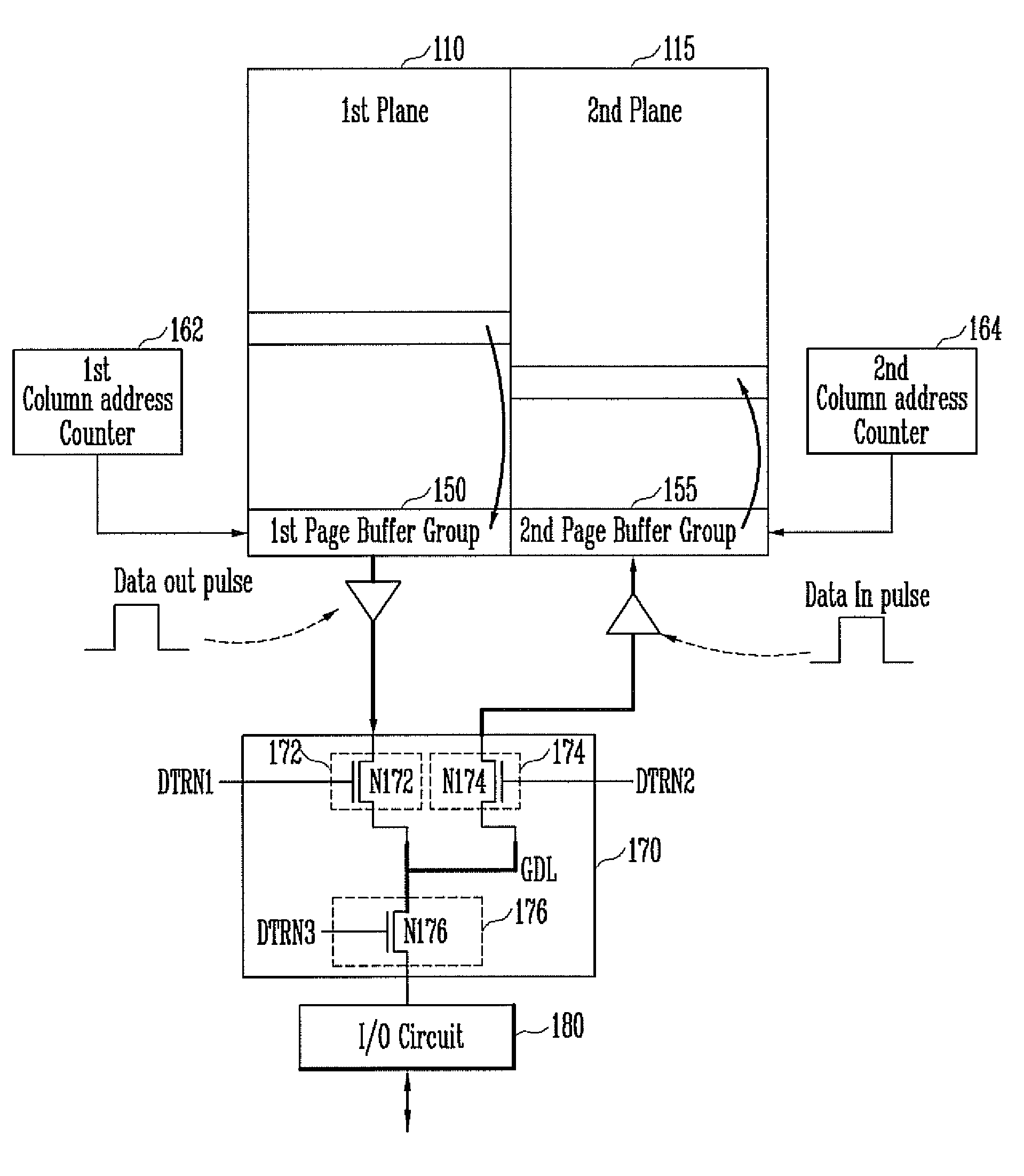

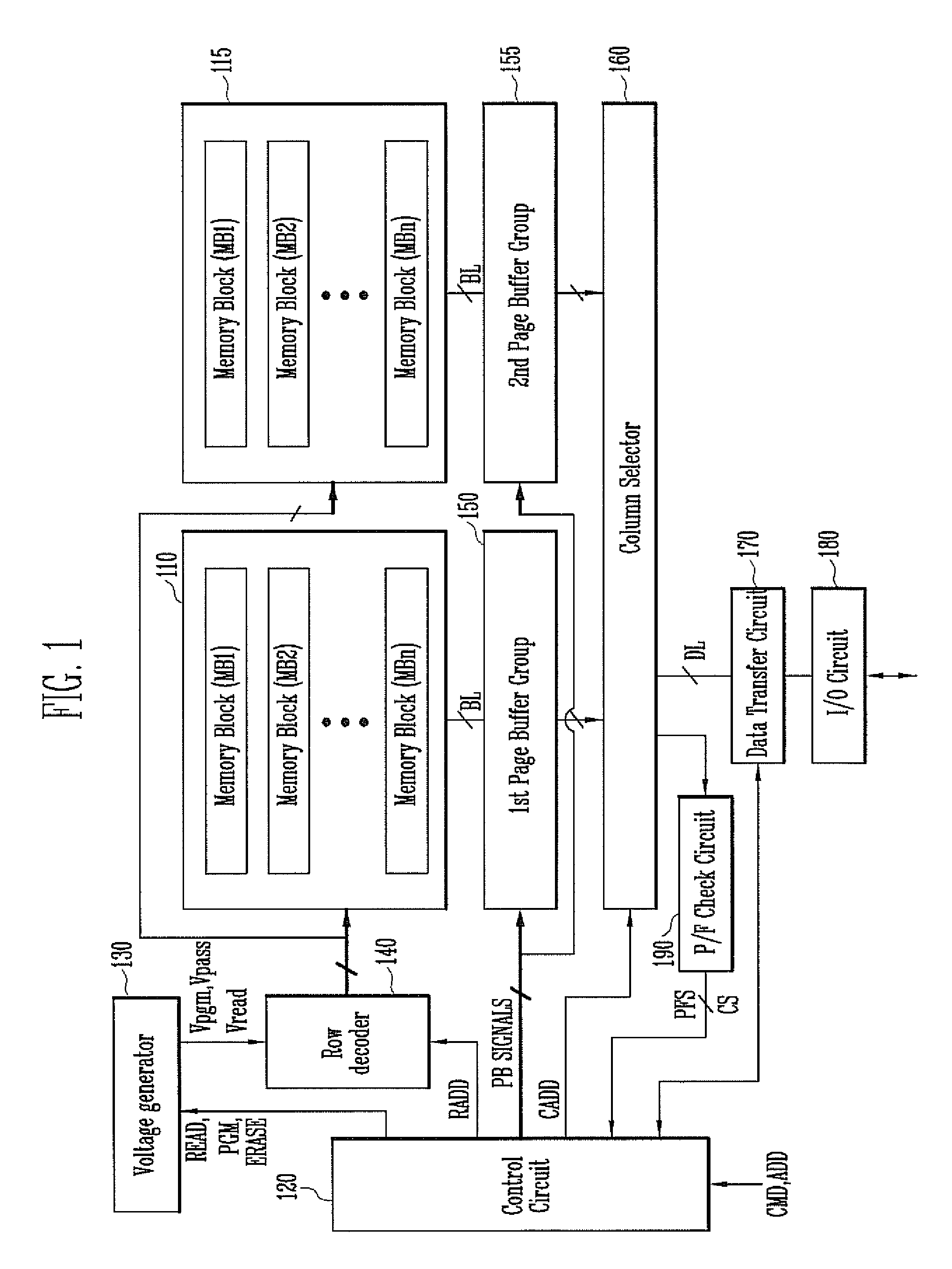

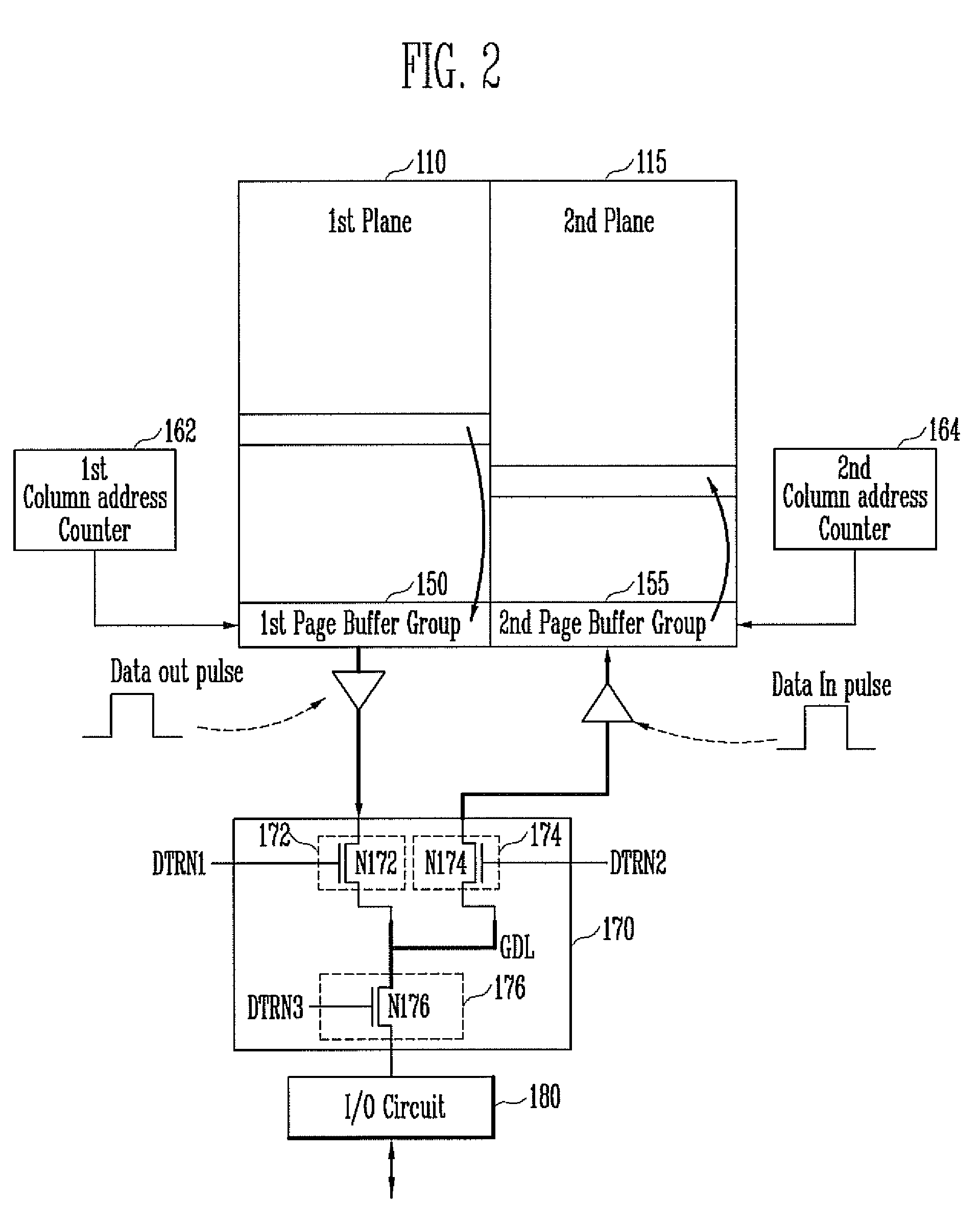

Semiconductor memory device and method of operation the same

A semiconductor memory device includes a first plane and a second plane each configured to include a plurality of memory cells, and a data transfer circuit configured to transfer first data, stored in the memory cells of the first plane, to the second plane and transfer second data, stored in the memory cells of the second plane, to the first plane when a copyback operation is performed and to transfer the first data or the second data to an I / O circuit when a read operation is performed.

Owner:SK HYNIX INC

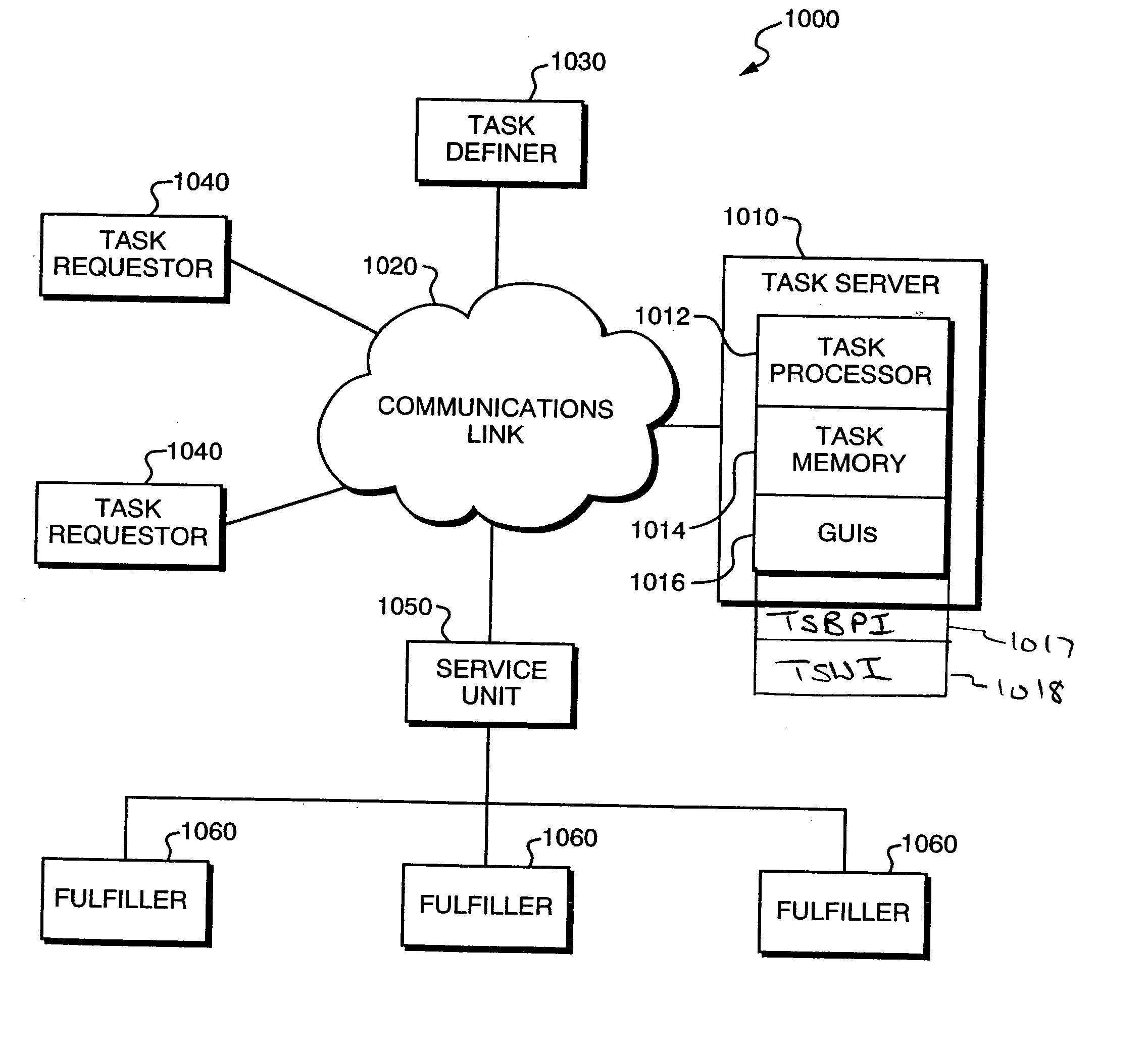

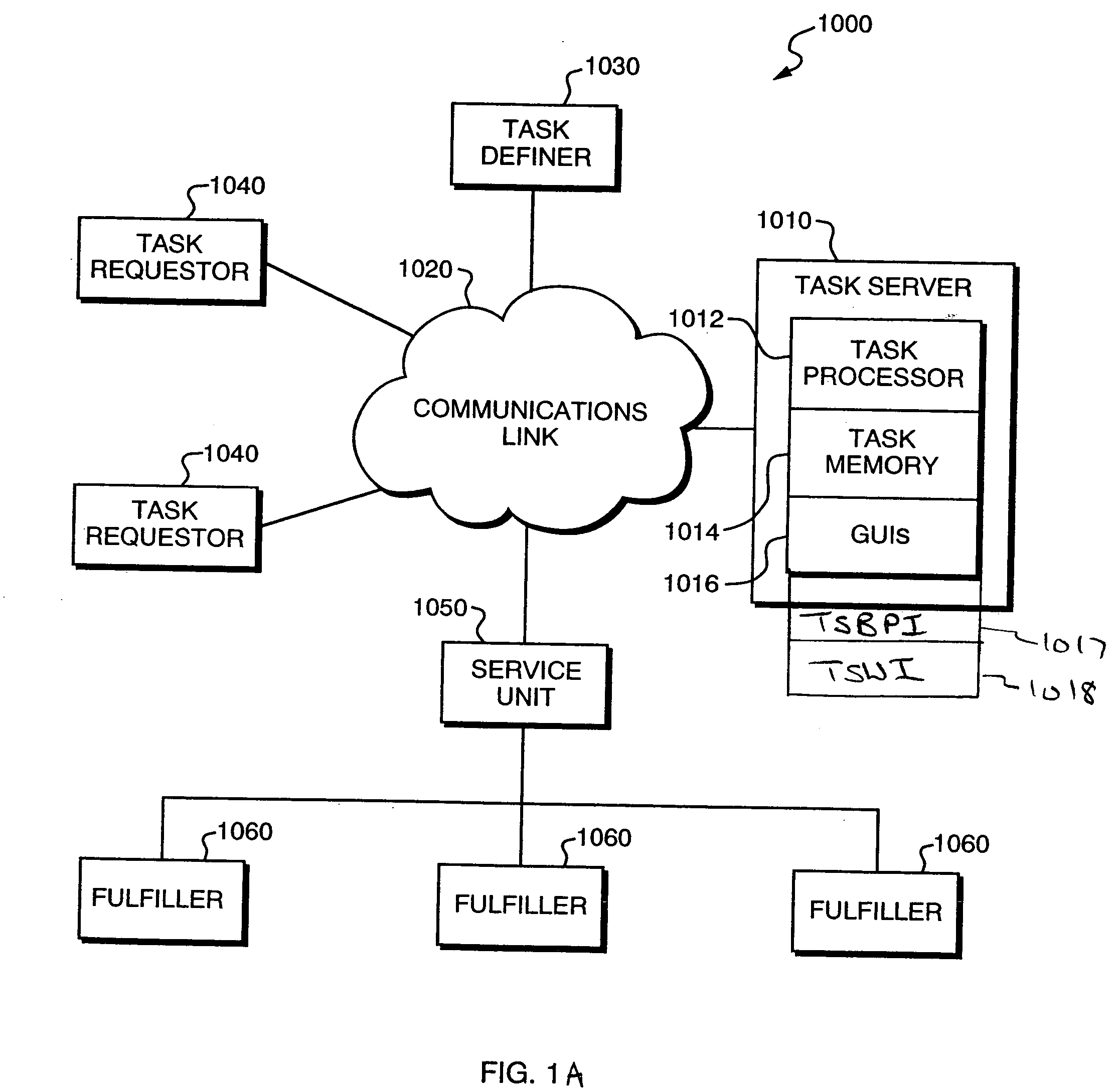

Computer-implemented process management system

Inactive · US20050022198A1 · Easy to operate · Multiprogramming arrangements · Office automation · Graphics · Computer architecture

A task management system including a task server linking a plurality of system users, including at least one task definer, at least one task requester and at least one task fulfiller, all linked over a communications link. The task server includes a task processor for processing tasks, a task memory for storing task definitions and one or more graphical user interfaces (GUIs) for interfacing the system users to the task server to facilitate operation of said task processing system. The GUIs include task view interfaces and task fulfiller interfaces, which are used by task requesters and task fulfillers to request and fulfill tasks, respectively. The GUIs also include a plurality of administrative editor interfaces, which are used by task definers to define, group and sequence tasks.

Owner:TASKSERVER

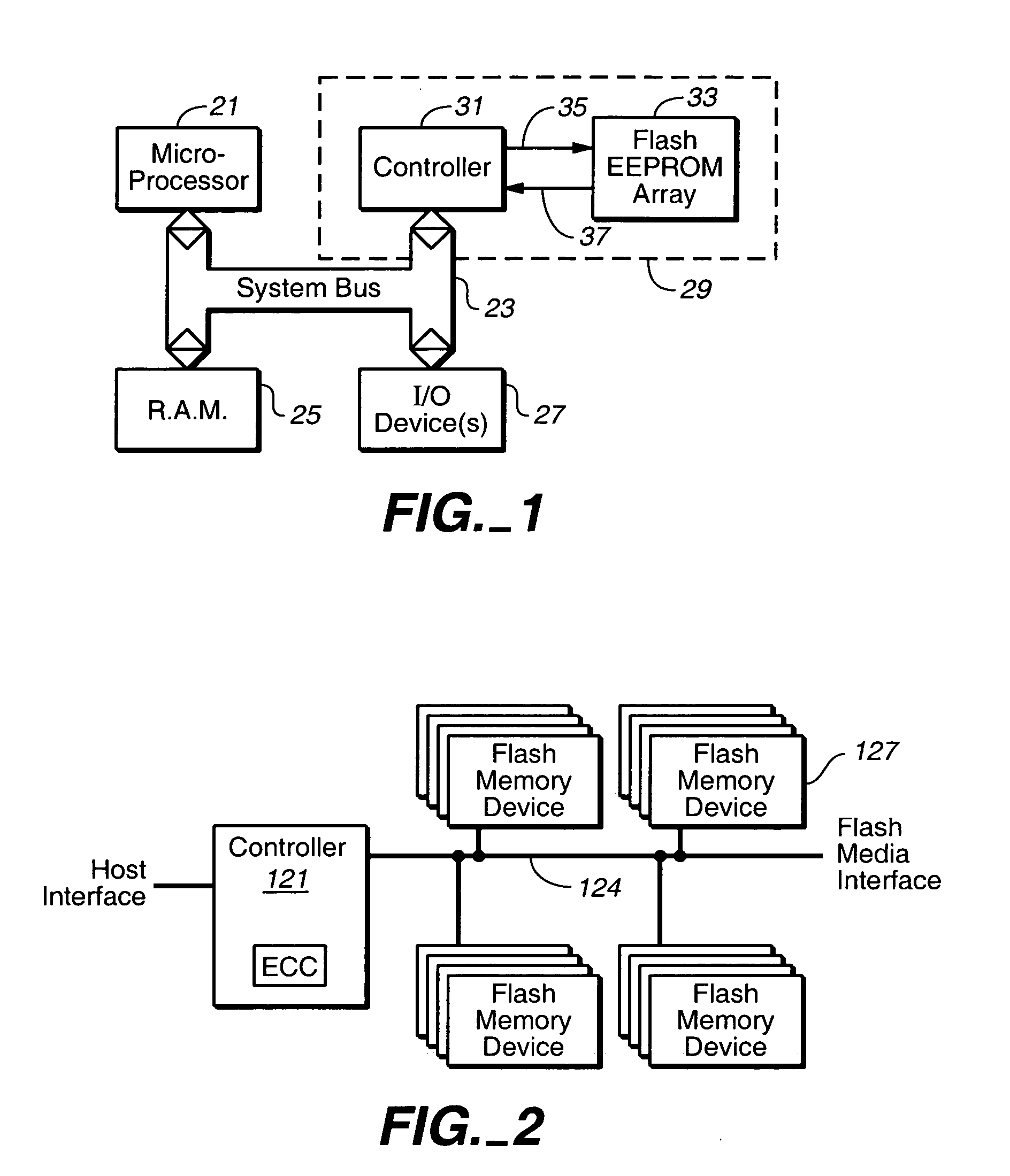

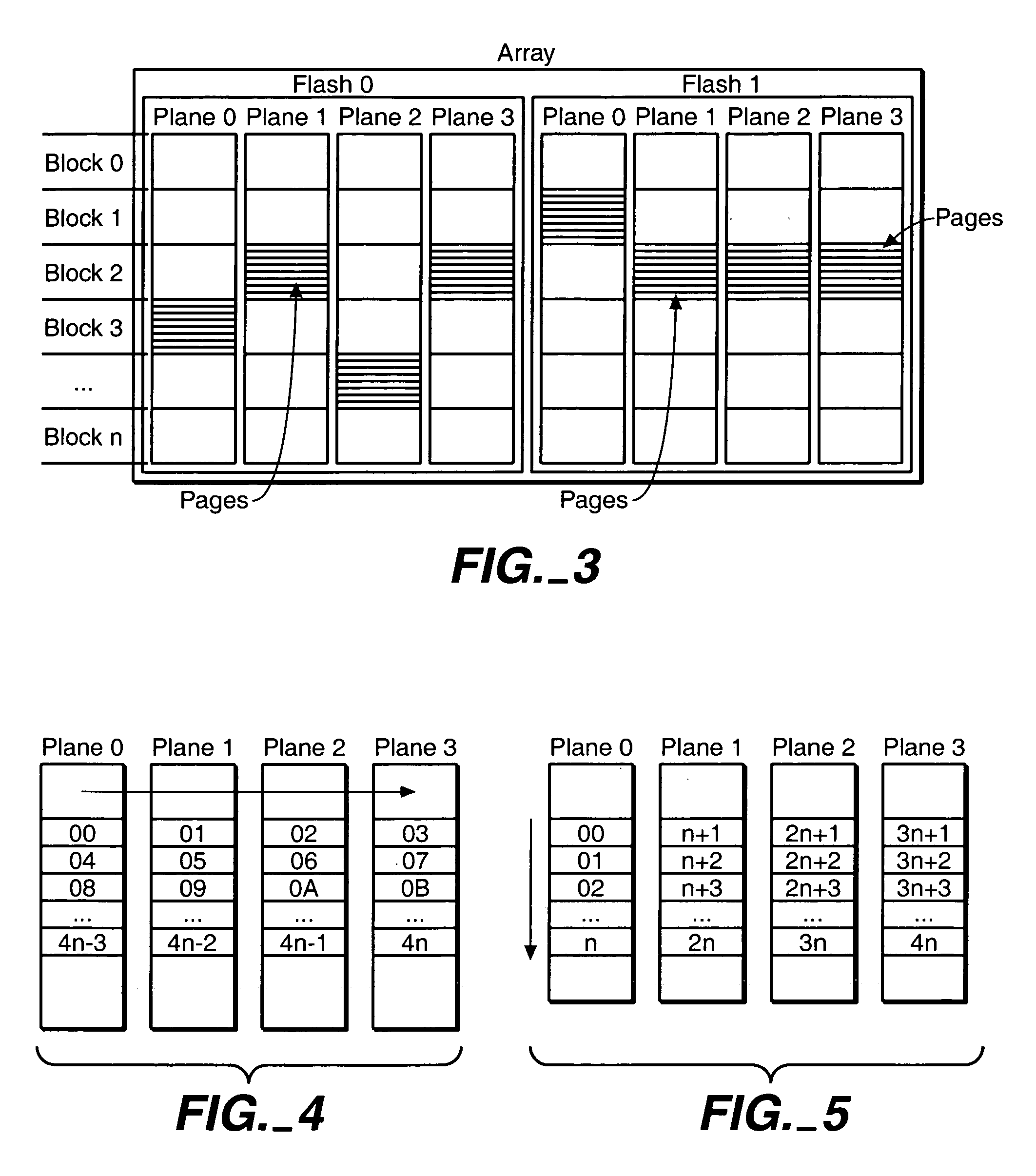

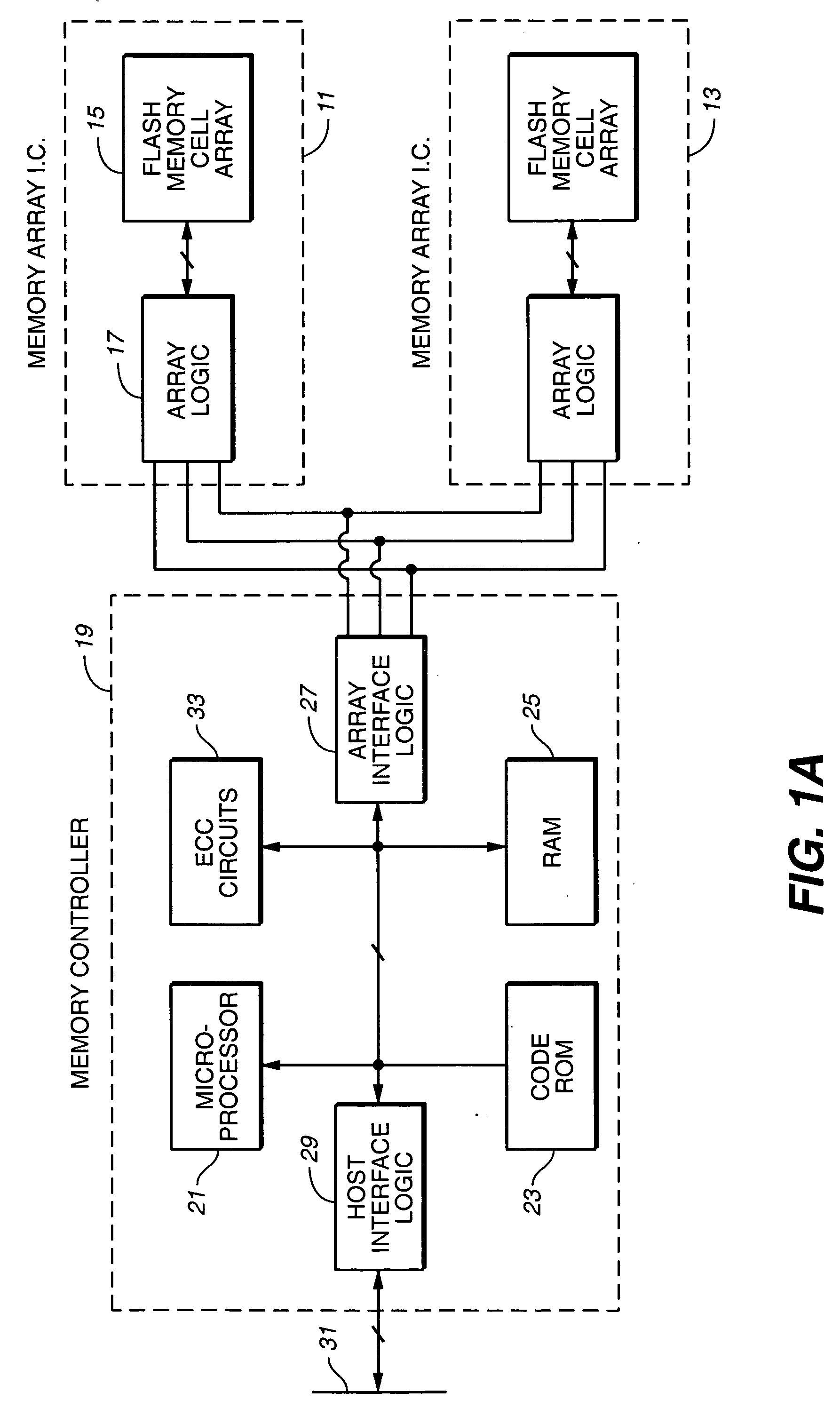

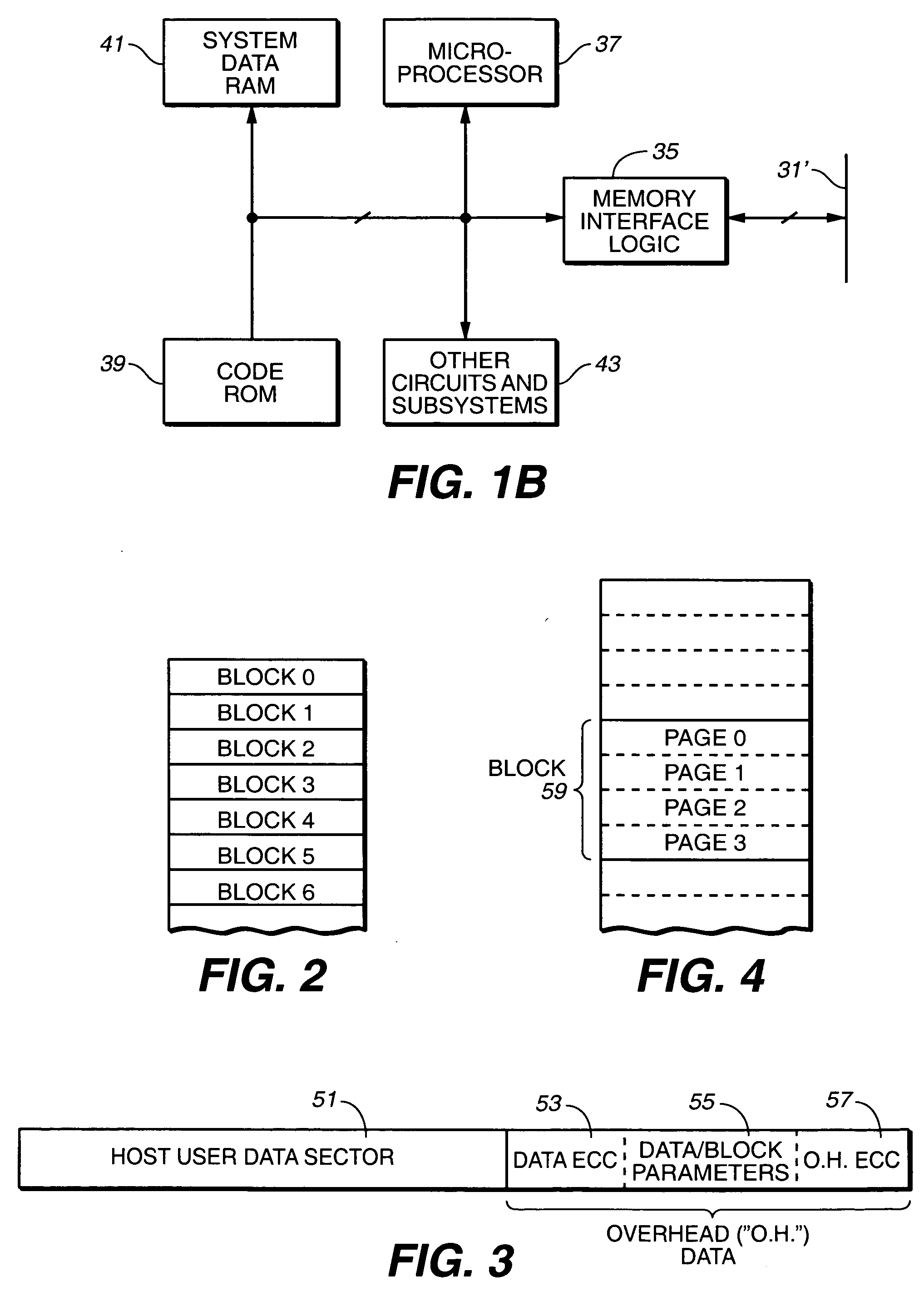

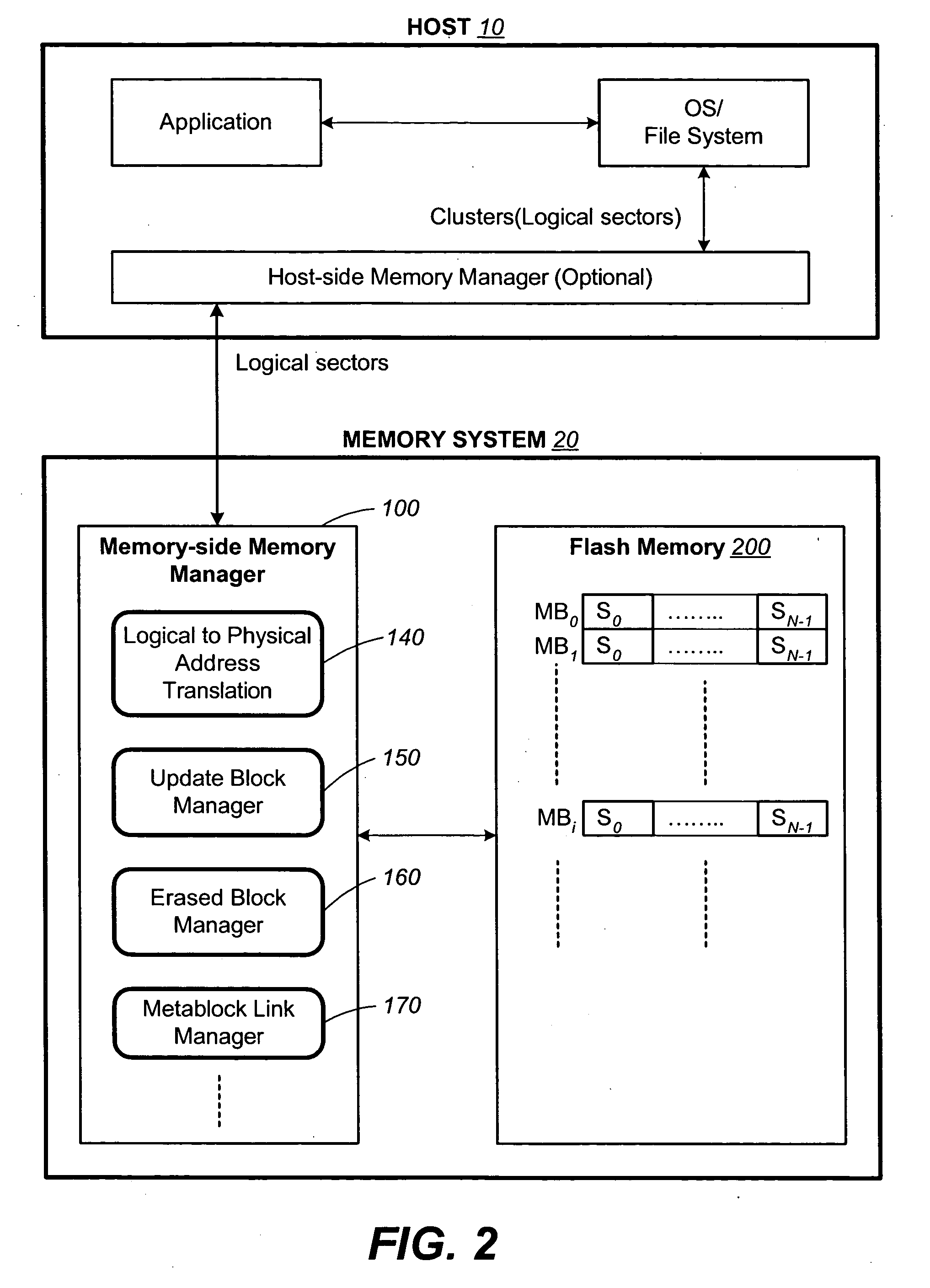

Adaptive mode switching of flash memory address mapping based on host usage characteristics

Active · US20050144361A1 · Reduced useful life · Optimal performance characteristic · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Degree of parallelism · Self adaptive

In a non-volatile memory storage system such as a flash EEPROM system, a controller switches the manner in which data sectors are mapped into blocks and metablocks of the memory in response to host programming and controller data consolidation patterns, in order to improve performance and reduce wear. Data are programmed into the memory with different degrees of parallelism.

Owner:SANDISK TECH LLC

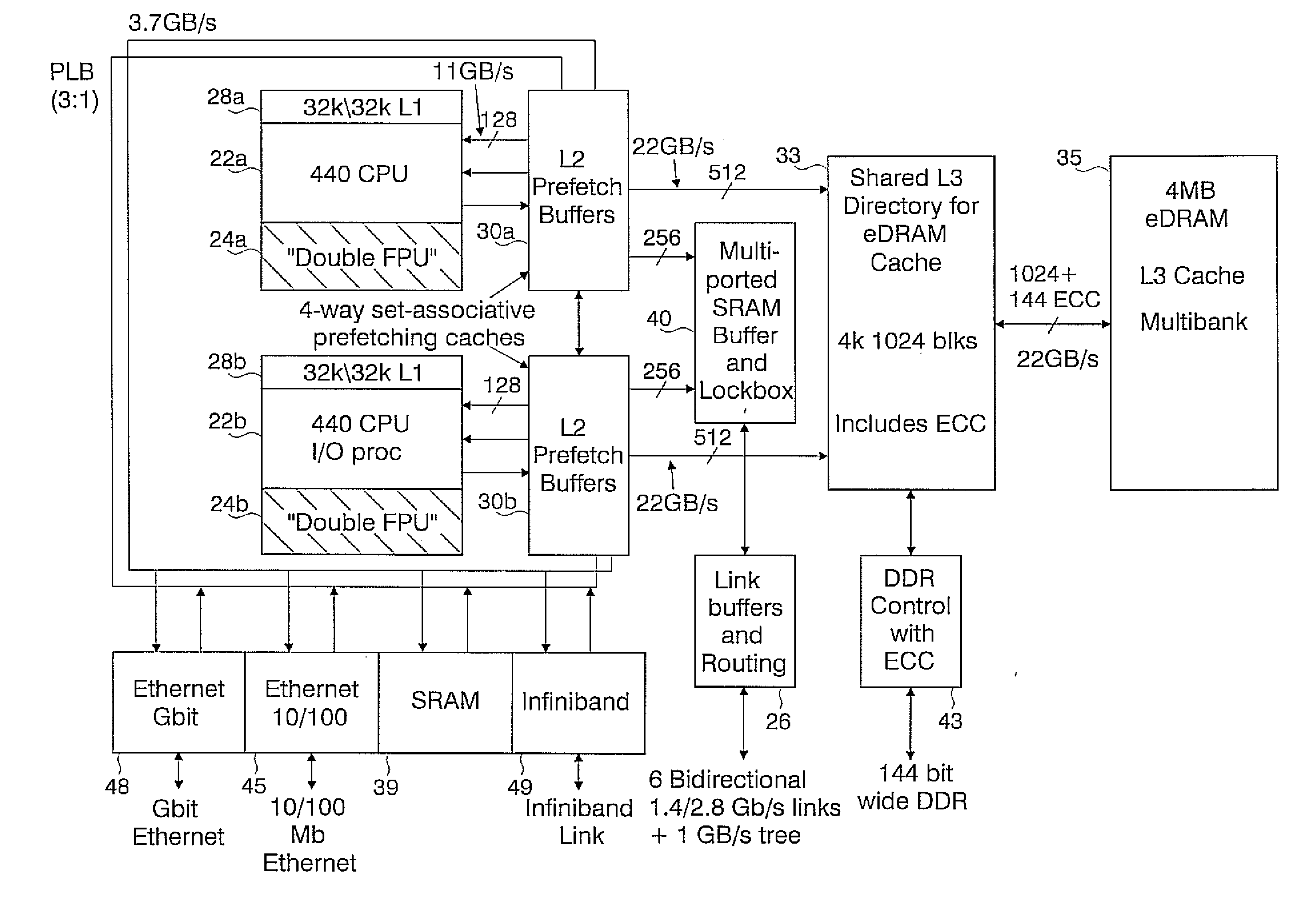

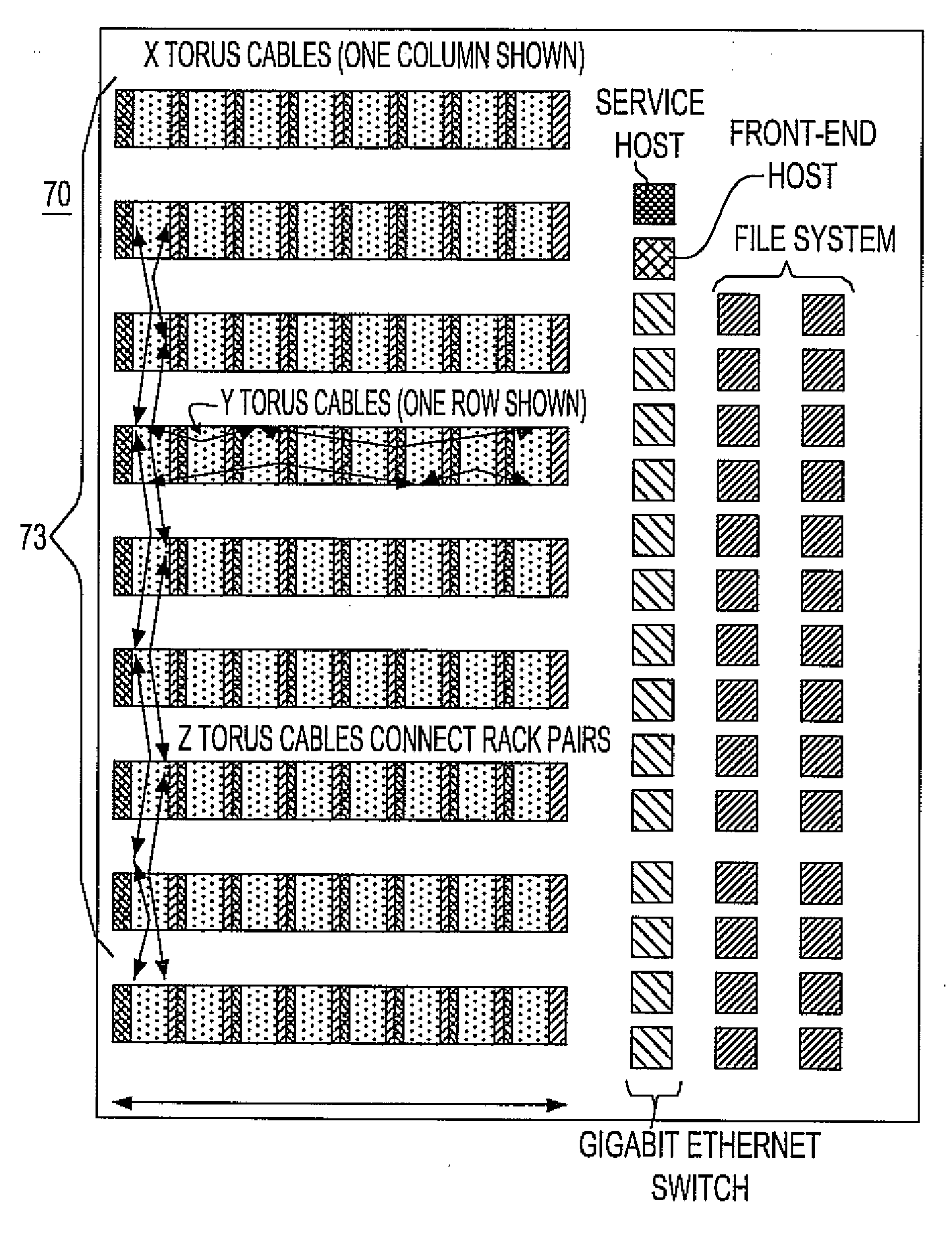

Novel massively parallel supercomputer

Inactive · US20090259713A1 · Low cost · Reduced footprint · Error prevention · Program synchronisation · Supercomputer · Packet communication

Owner:INT BUSINESS MASCH CORP

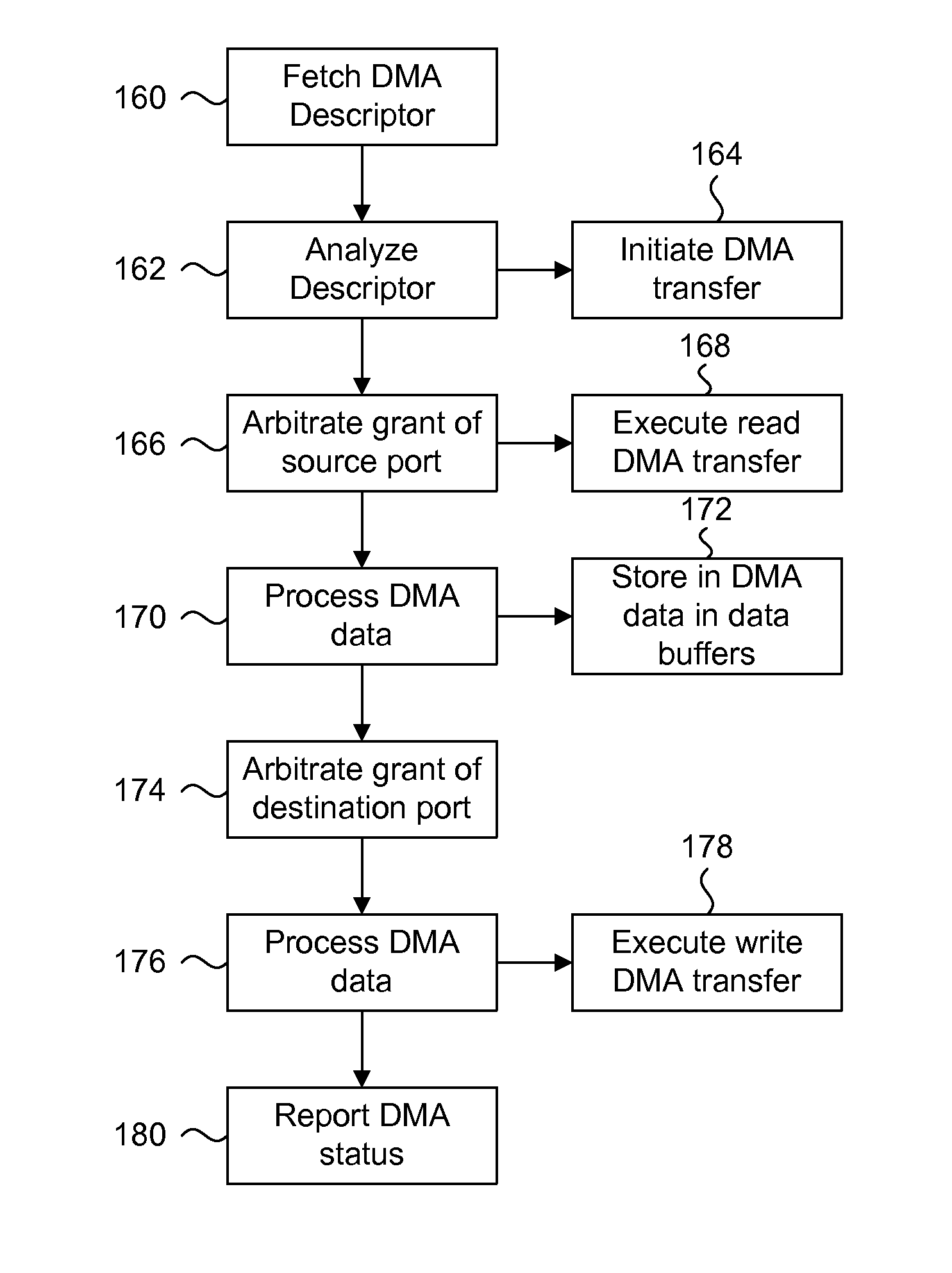

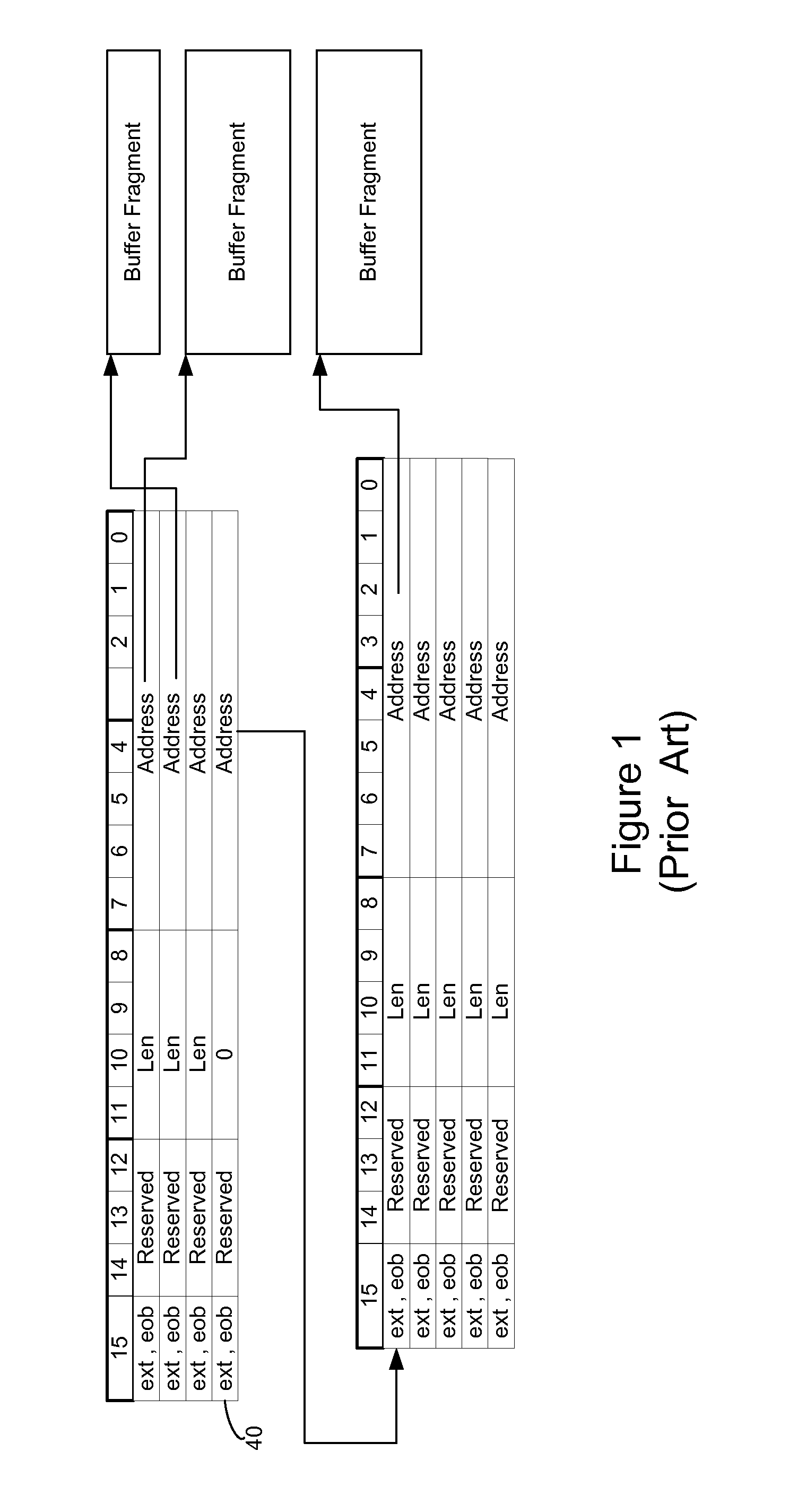

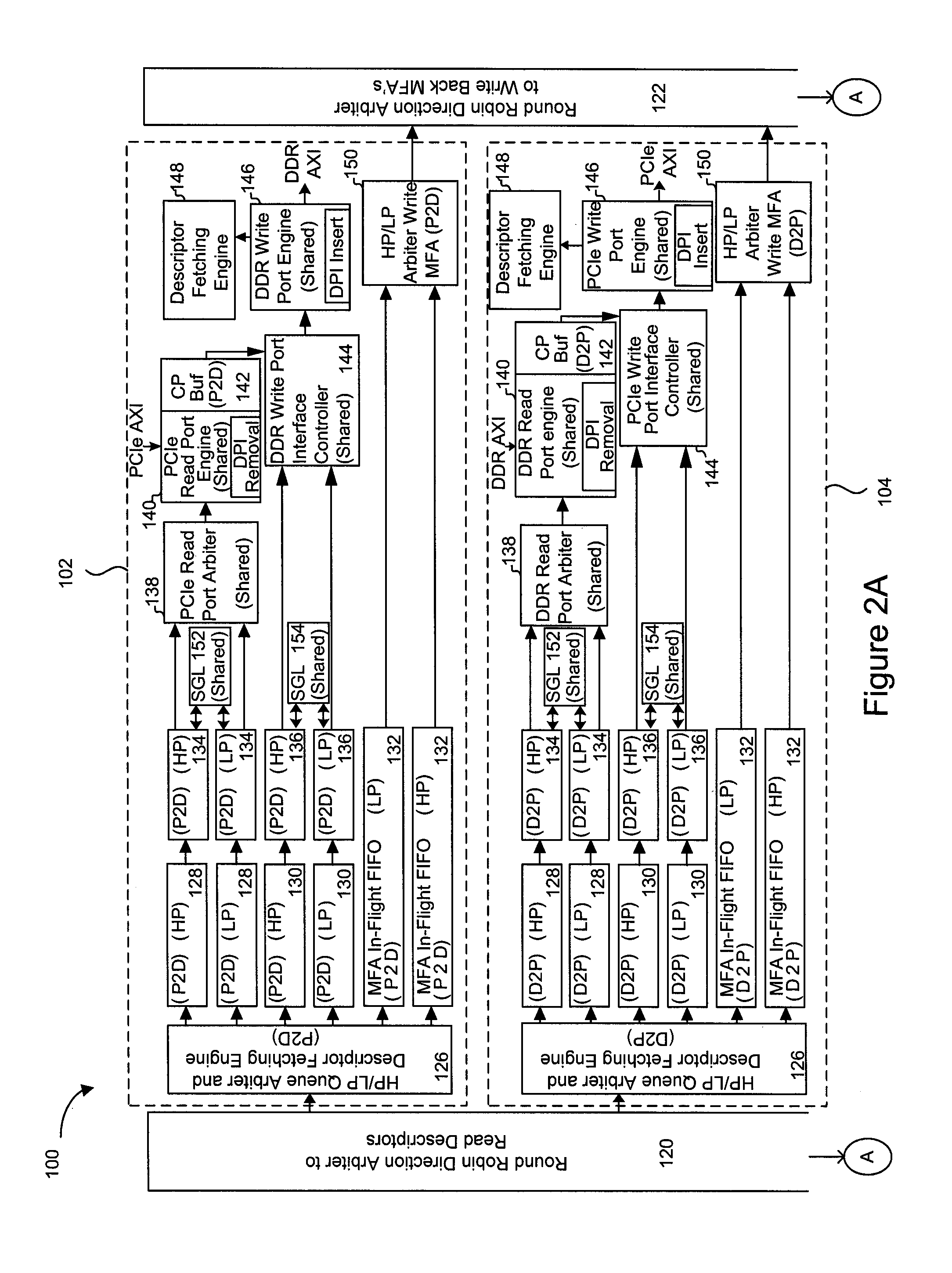

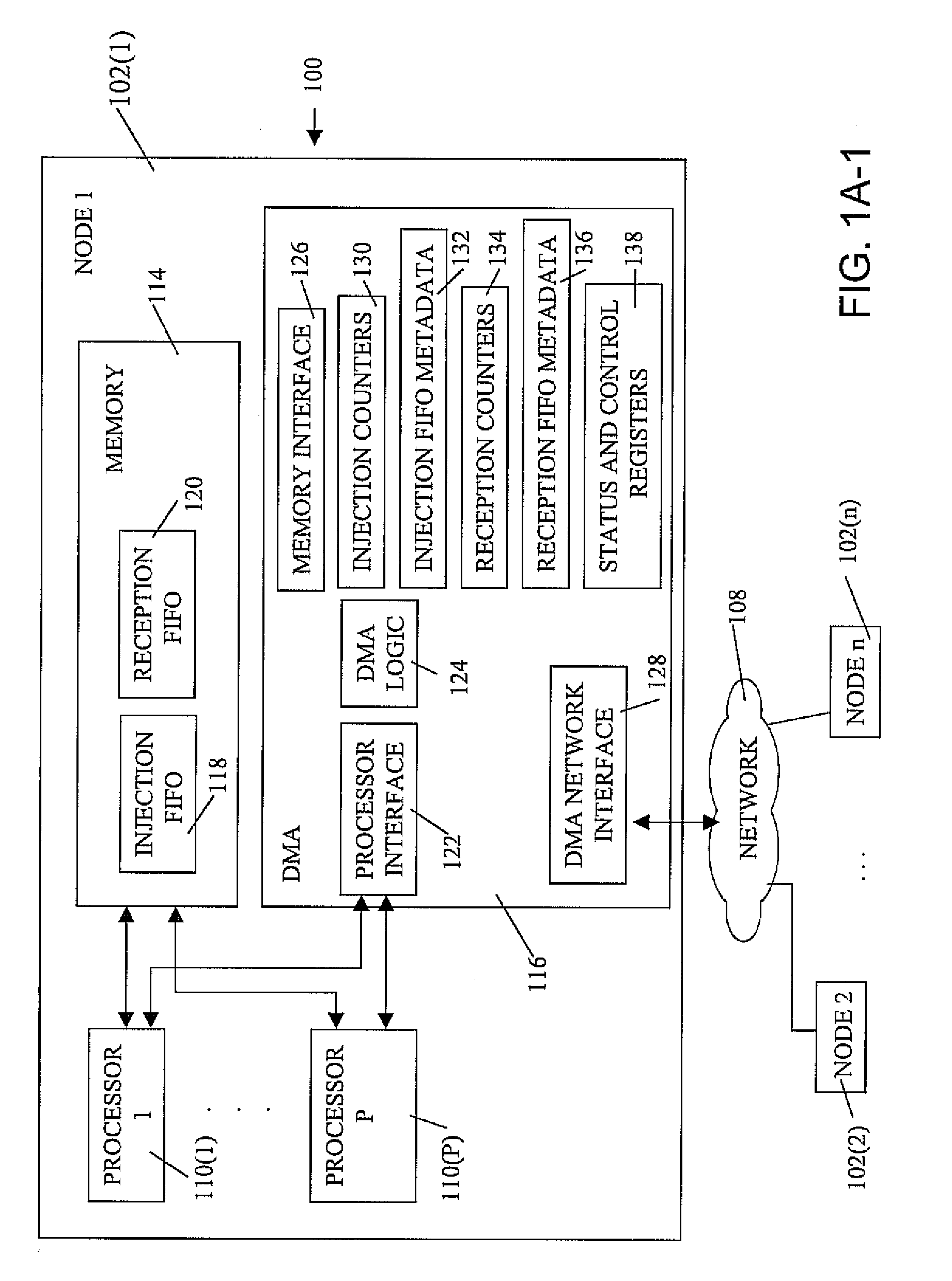

Logical address direct memory access with multiple concurrent physical ports and internal switching

A DMA engine is provided that is suitable for higher performance System On a Chip (SOC) devices that have multiple concurrent on-chip / off-chip memory spaces. The DMA engine operates with either a logical or a physical addressing method and provides random and sequential mapping functions from logical addresses to physical addresses while supporting frequent context switching among a large number of logical address spaces. Embodiments of the present invention utilize per-direction (source-destination) queuing and an internal switch to support non-blocking concurrent transfer of data in multiple directions. A caching technique can be incorporated to reduce the overhead of address translation.

Owner:MICROSEMI SOLUTIONS (US) INC

Detecting over programmed memory

Active · US6917542B2 · Lower requirement · Read-only memories · Digital storage · Computer architecture · Threshold voltage

Owner:SANDISK TECH LLC

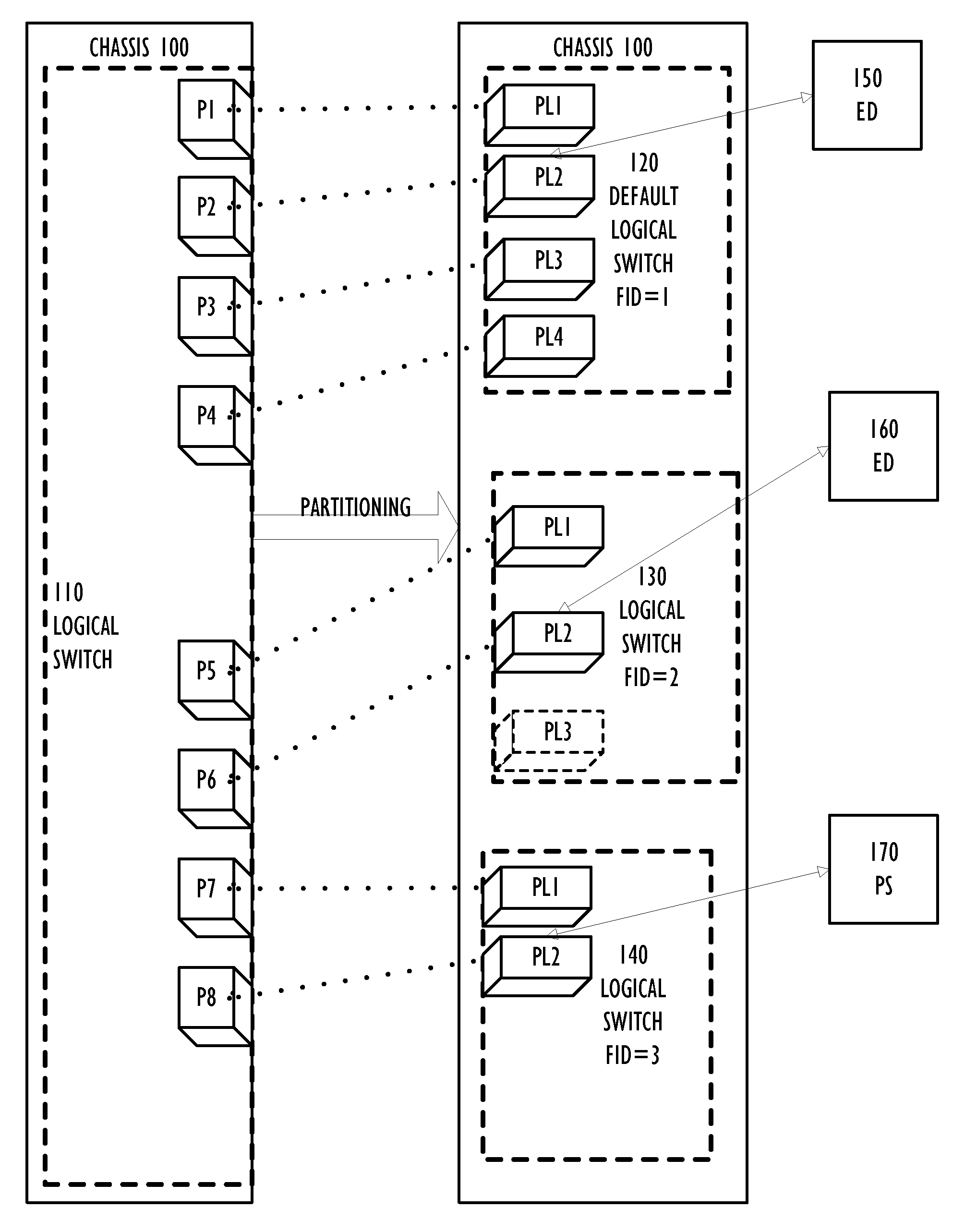

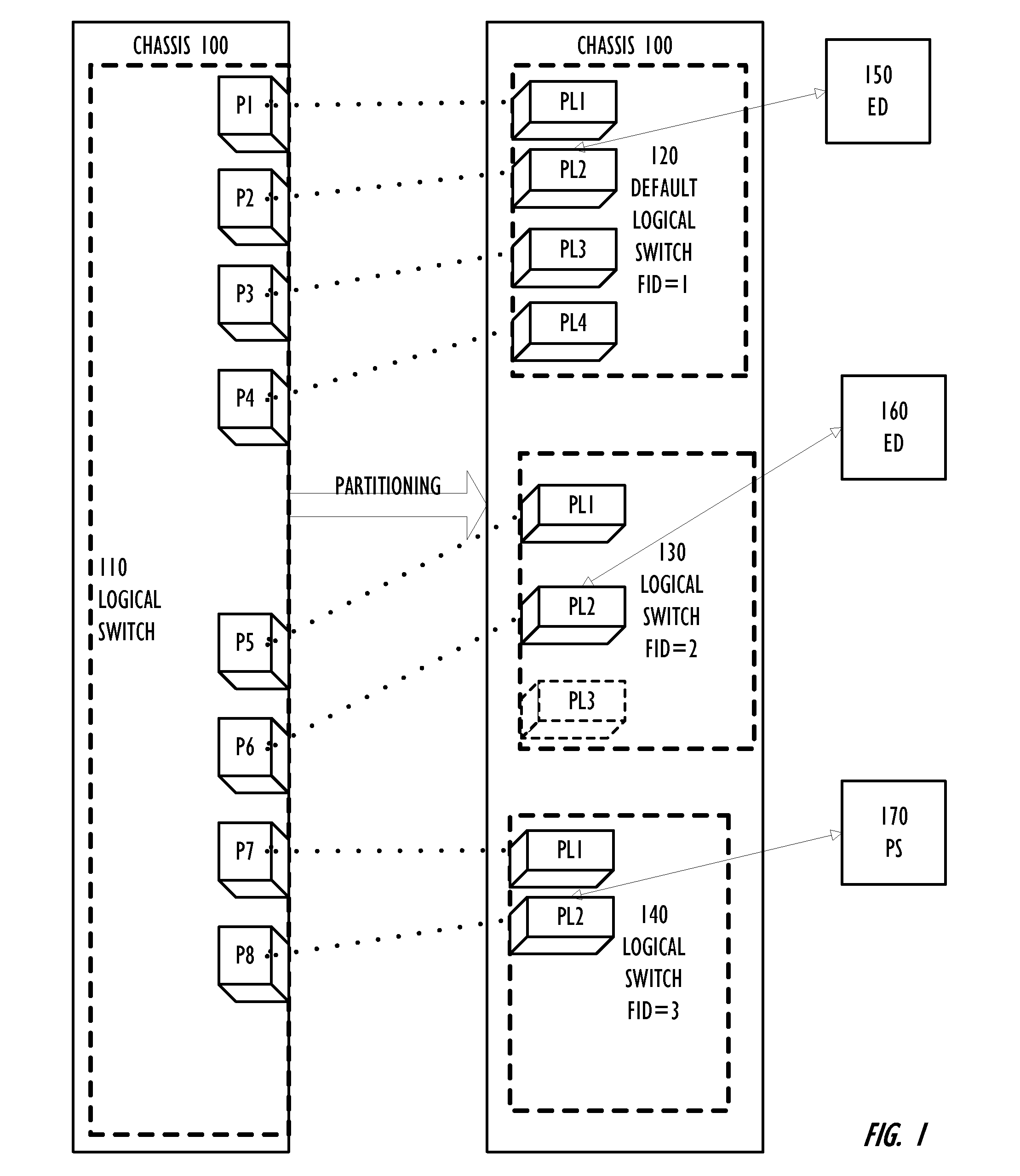

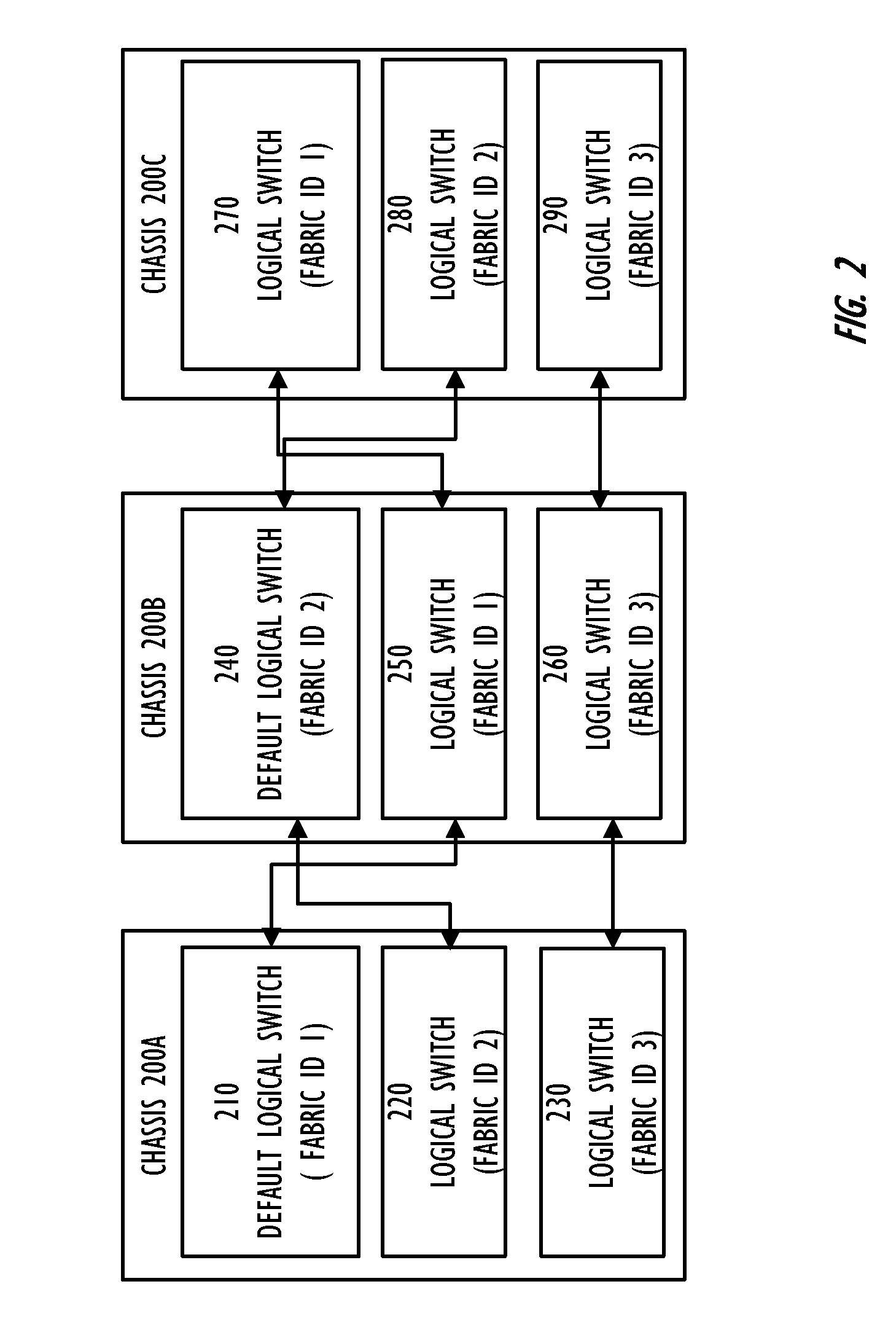

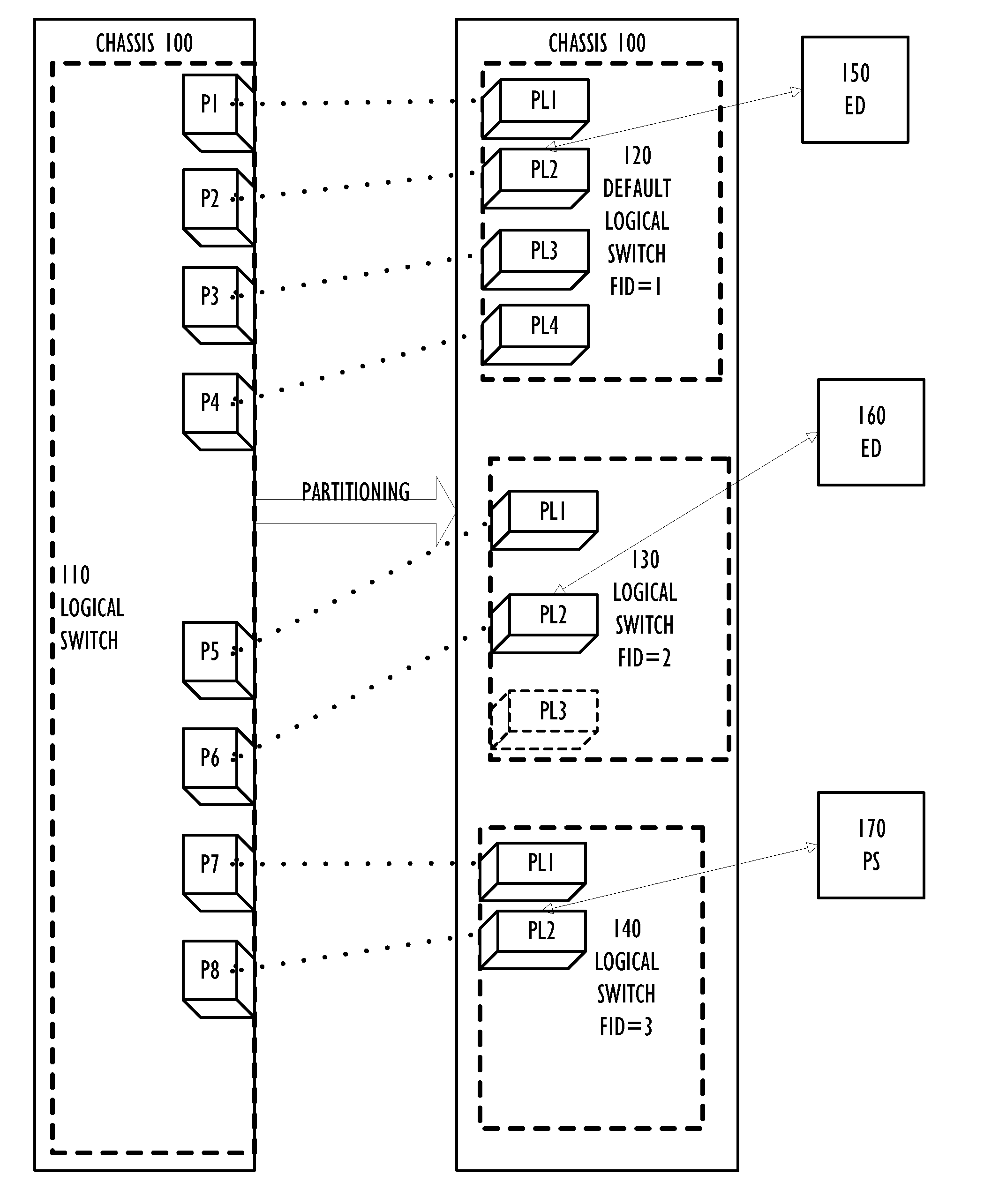

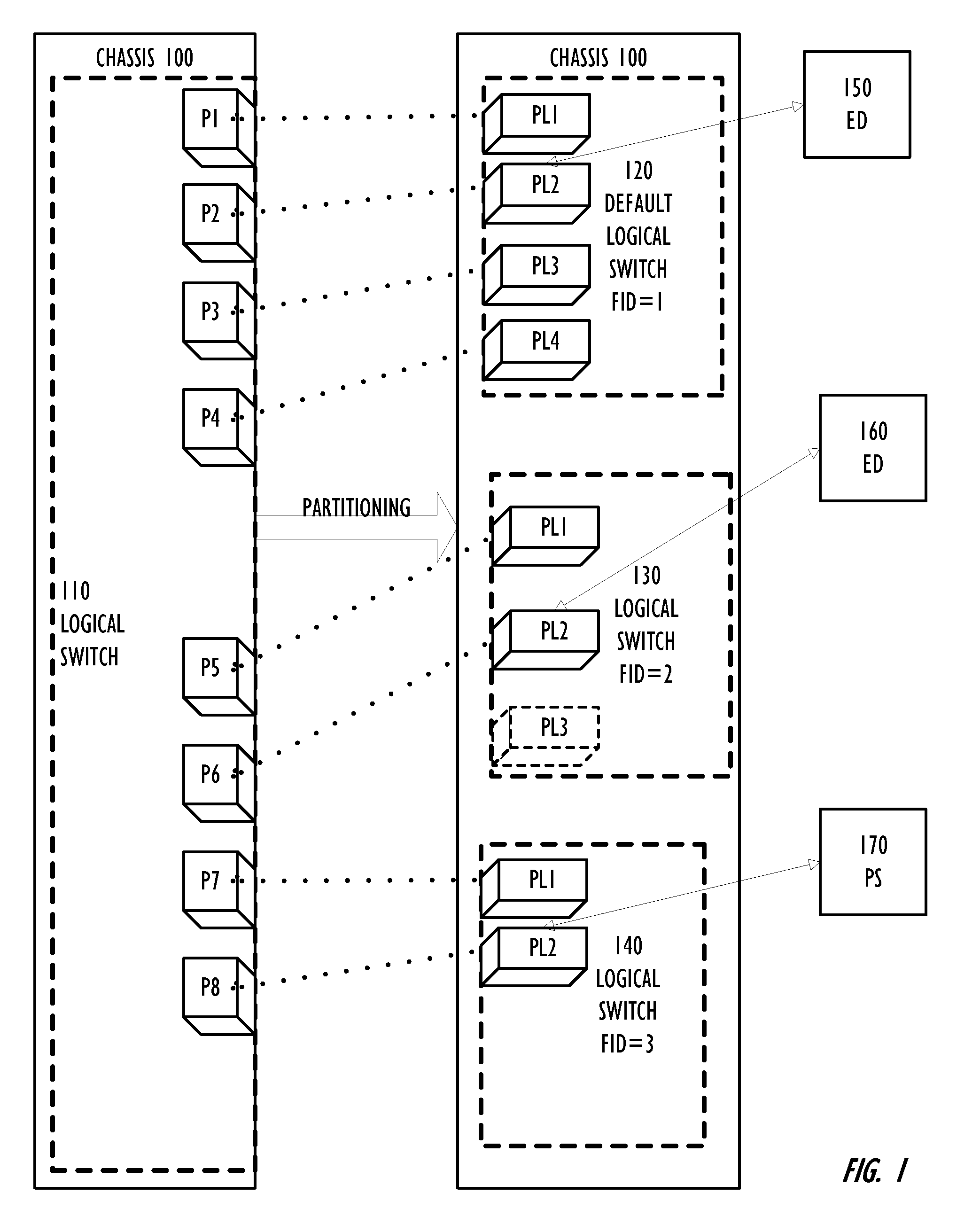

Partitioning of Switches and Fabrics into Logical Switches and Fabrics

A Layer 2 network switch is partitionable into a plurality of switch fabrics. The single-chassis switch is partitionable into a plurality of logical switches, each associated with one of the virtual fabrics. The logical switches behave as complete and self-contained switches. A logical switch fabric can span multiple single-chassis switch chassis. Logical switches are connected by inter-switch links that can be either dedicated single-chassis links or logical links. An extended inter-switch link can be used to transport traffic for one or more logical inter-switch links. Physical ports of the chassis are assigned to logical switches and are managed by the logical switch. Legacy switches that are not partitionable into logical switches can serve as transit switches between two logical switches.

Owner:AVAGO TECH INT SALES PTE LTD

Transit Switches in a Network of Logical Switches

Active · US20110085559A1 · Data switching by path configuration · Wireless communication services · Computer architecture · Tier 2 network

A Layer 2 network switch is partitionable into a plurality of switch fabrics. The single-chassis switch is partitionable into a plurality of logical switches, each associated with one of the virtual fabrics. The logical switches behave as complete and self-contained switches. A logical switch fabric can span multiple single-chassis switch chassis. Logical switches are connected by inter-switch links that can be either dedicated single-chassis links or logical links. An extended inter-switch link can be used to transport traffic for one or more logical inter-switch links. Physical ports of the chassis are assigned to logical switches and are managed by the logical switch. Legacy switches that are not partitionable into logical switches can serve as transit switches between two logical switches.

Owner:AVAGO TECH INT SALES PTE LTD

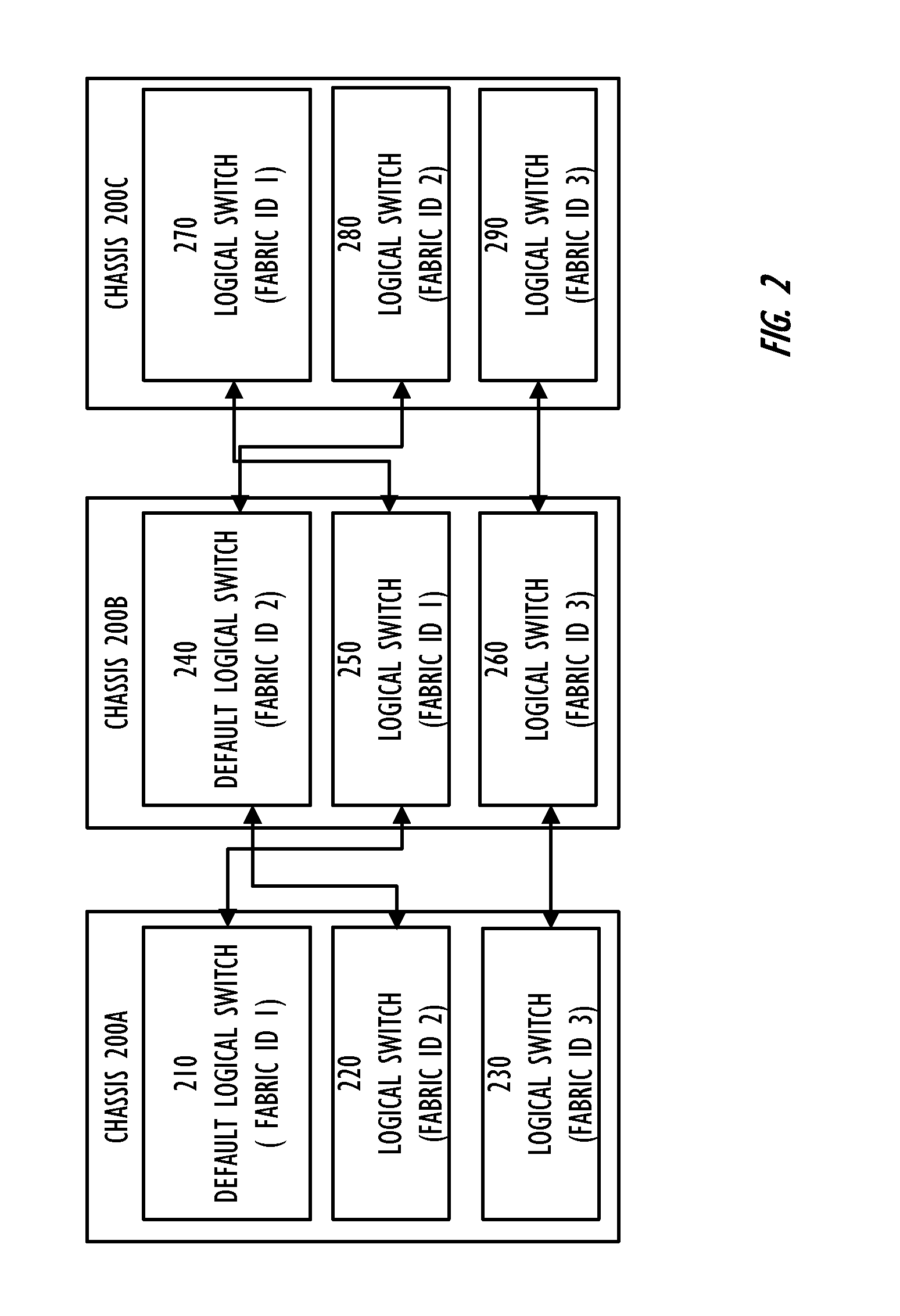

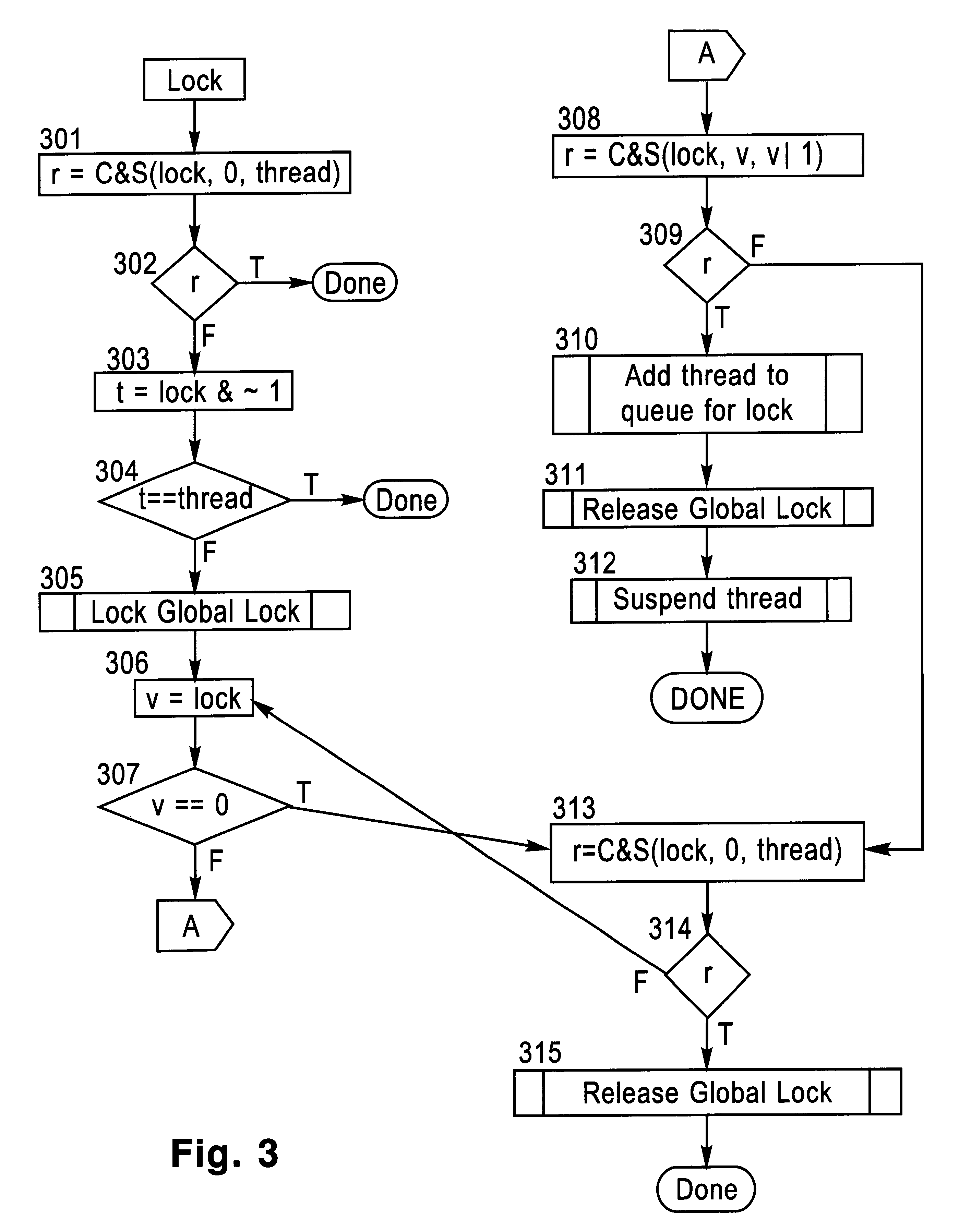

Locking and unlocking mechanism for controlling concurrent access to objects

Inactive · US6247025B1 · Data processing applications · Digital data information retrieval · Normal case · Spin locks

A lock / unlock mechanism to control concurrent access to objects in a multi-threaded computer processing system comprises two parts: a thread pointer (or thread identifier), and a one-bit flag called a "Bacon bit". Preferably, when an object is not locked (i.e., no thread has been granted access to the object), the thread identifier and Bacon bit are set to 0. When an object is locked by a particular thread (i.e., the thread has been granted access to the object), the thread identifier is set to a value that identifies the particular thread; if no other threads are waiting to lock the object, the Bacon bit is set to "0"; however, if other threads are waiting to lock the object, the Bacon bit is set to "1", which indicates that there is a queue of waiting threads associated with the object. To lock an object, a single CompareAndSwap operation is preferably used, much like with spin-locks; if the lock is already held by another thread, enqueueing is handled in out-of-line code. To unlock an object, in the normal case, a single CompareAndSwap operation may be used. This single operation atomically tests that the current thread owns the lock, and that no other threads are waiting for the object (i.e., the Bacon bit is "0"). A global lock is preferably used to change the Bacon bit of the lock. This provides a lock / unlock mechanism which combines many of the desirable features of both spin locking and queued locking, and can be used as the basis for a very fast implementation of the synchronization facilities of the Java language.
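The lock word and its fast paths can be sketched as below. This is an illustrative model under stated assumptions, not the patented code: Python has no native CompareAndSwap, so `AtomicWord` emulates one with an internal lock; the names `encode`, `BaconLock`, `try_lock`, `enqueue_waiter`, and `unlock` are hypothetical; thread identifiers are assumed to be nonzero integers; and handing the lock directly to the first waiter on the slow unlock path is a design choice of this sketch that the abstract does not specify.

```python
import threading


class AtomicWord:
    """Emulates a machine word with CompareAndSwap (assumption: no native CAS)."""

    def __init__(self):
        self._value = 0
        self._guard = threading.Lock()

    def compare_and_swap(self, expected, new):
        with self._guard:
            if self._value == expected:
                self._value = new
                return True
            return False

    def load(self):
        with self._guard:
            return self._value


def encode(thread_id, bacon_bit):
    # Lock word layout: thread identifier in the upper bits, Bacon bit lowest.
    return (thread_id << 1) | bacon_bit


class BaconLock:
    def __init__(self):
        self.word = AtomicWord()             # 0 means unlocked, no waiters
        self.global_lock = threading.Lock()  # guards changes to the Bacon bit
        self.waiters = []                    # queue of waiting thread ids

    def try_lock(self, thread_id):
        # Fast path: one CAS from "unlocked" to "owned, no waiters".
        return self.word.compare_and_swap(0, encode(thread_id, 0))

    def enqueue_waiter(self, waiter_id):
        # Out-of-line path: set the Bacon bit under the global lock.
        with self.global_lock:
            current = self.word.load()
            owner = current >> 1
            if owner == 0:
                return False  # released meanwhile; caller retries try_lock
            if not self.word.compare_and_swap(current, encode(owner, 1)):
                return False  # owner unlocked concurrently; caller retries
            self.waiters.append(waiter_id)
            return True

    def unlock(self, thread_id):
        # Fast path: one CAS tests ownership and a clear Bacon bit together.
        if self.word.compare_and_swap(encode(thread_id, 0), 0):
            return None
        # Slow path: waiters exist, so hand the lock to the first one.
        with self.global_lock:
            if self.word.load() != encode(thread_id, 1):
                raise RuntimeError("unlock by a thread that does not own the lock")
            next_id = self.waiters.pop(0)
            bit = 1 if self.waiters else 0
            self.word.compare_and_swap(encode(thread_id, 1), encode(next_id, bit))
            return next_id
```

In the uncontended case both `try_lock` and `unlock` complete with a single CompareAndSwap, as with a spin lock; the queue and the global lock are touched only when the Bacon bit is involved.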

Owner:IBM CORP

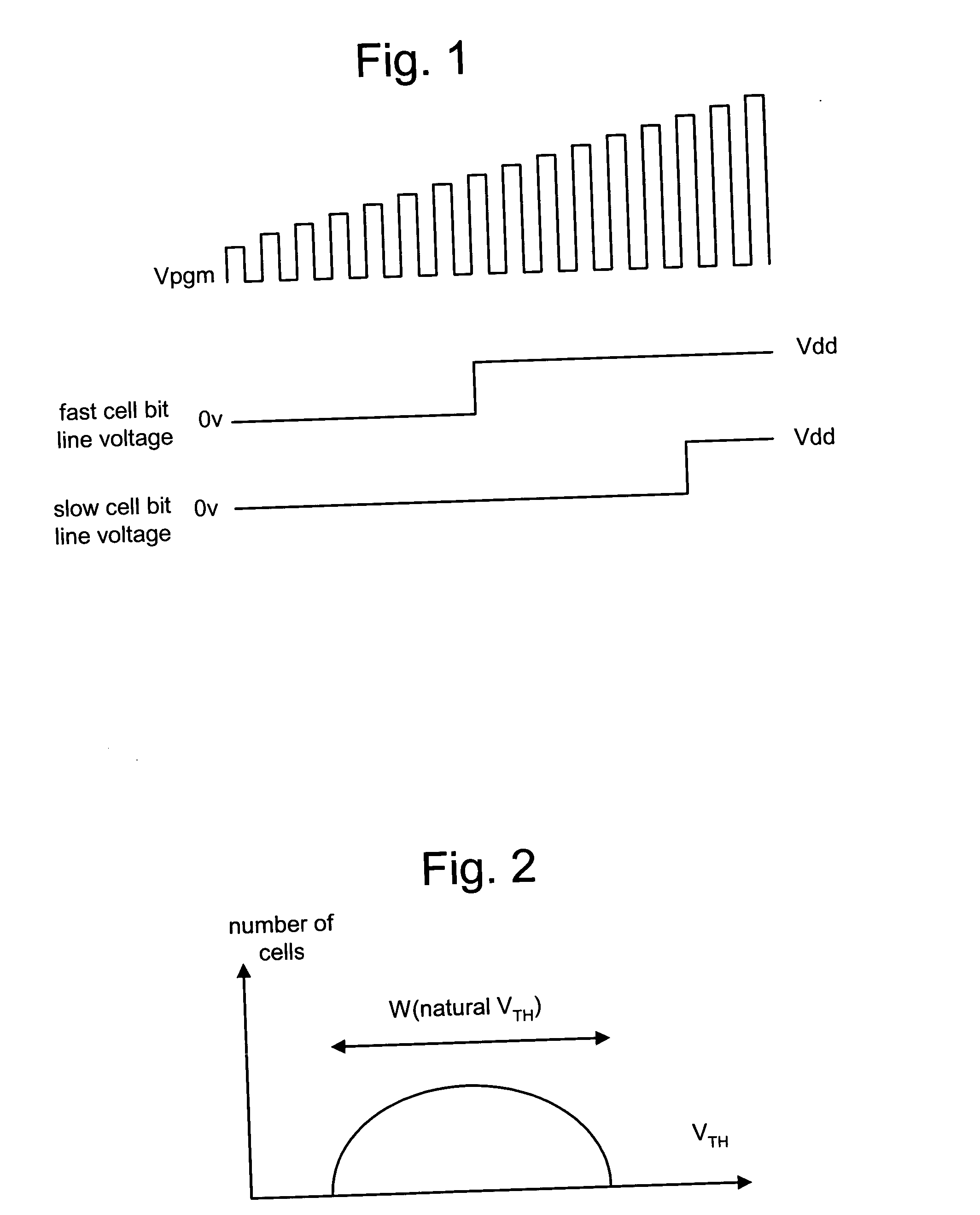

Behavior based programming of non-volatile memory

Active · US20050083735A1 · Speed up the process · Transistor · Solid-state devices · Bit line · Computer architecture

The process for programming a set of memory cells is improved by adapting the programming process based on behavior of the memory cells. For example, a set of program pulses is applied to the word line for a set of flash memory cells. A determination is made as to which memory cells are easier to program and which memory cells are harder to program. Bit line voltages (or other parameters) can be adjusted based on the determination of which memory cells are easier to program and which memory cells are harder to program. The programming process will then continue with the adjusted bit line voltages (or other parameters).

Owner:KIOXIA CORP +1

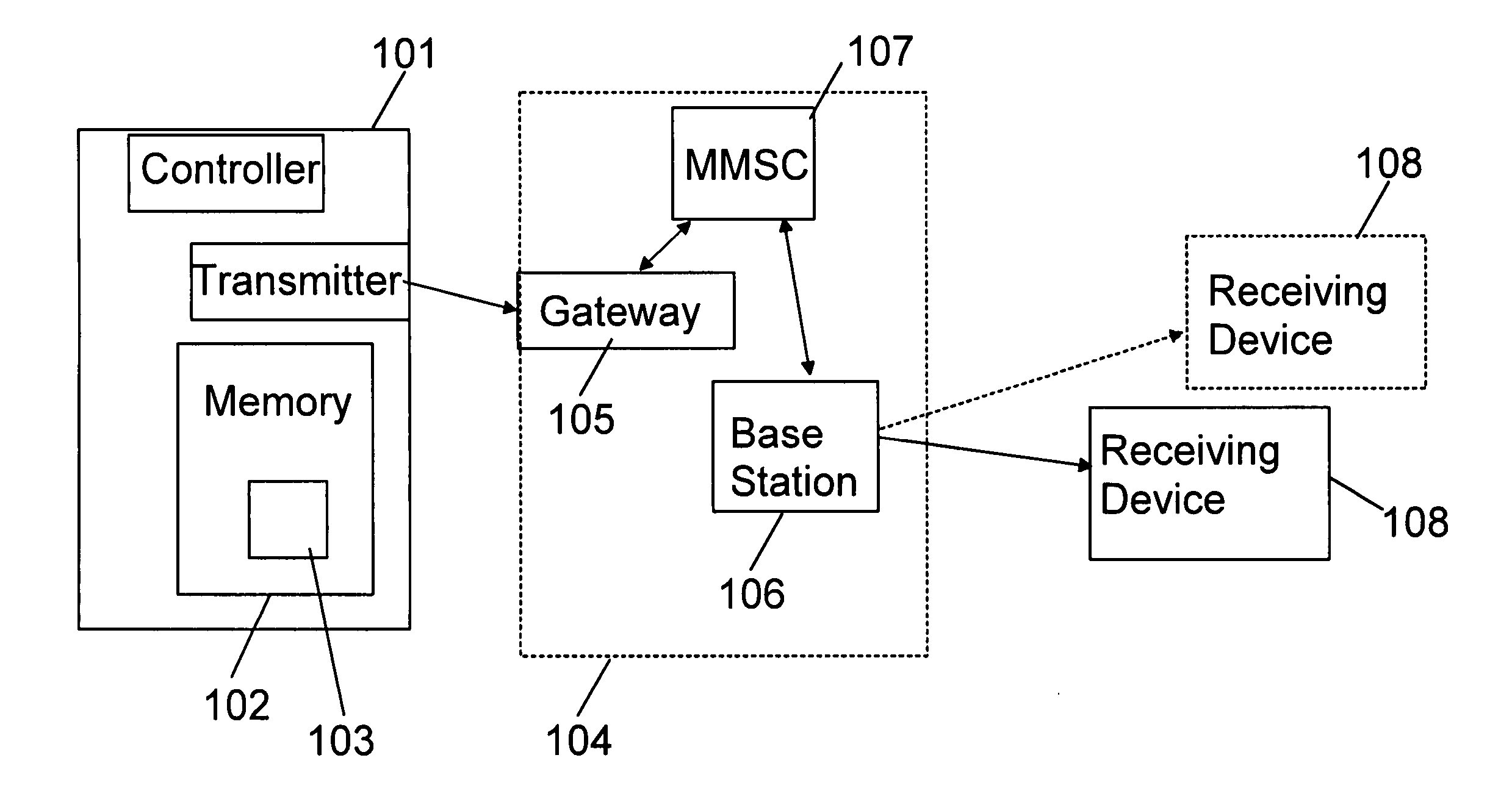

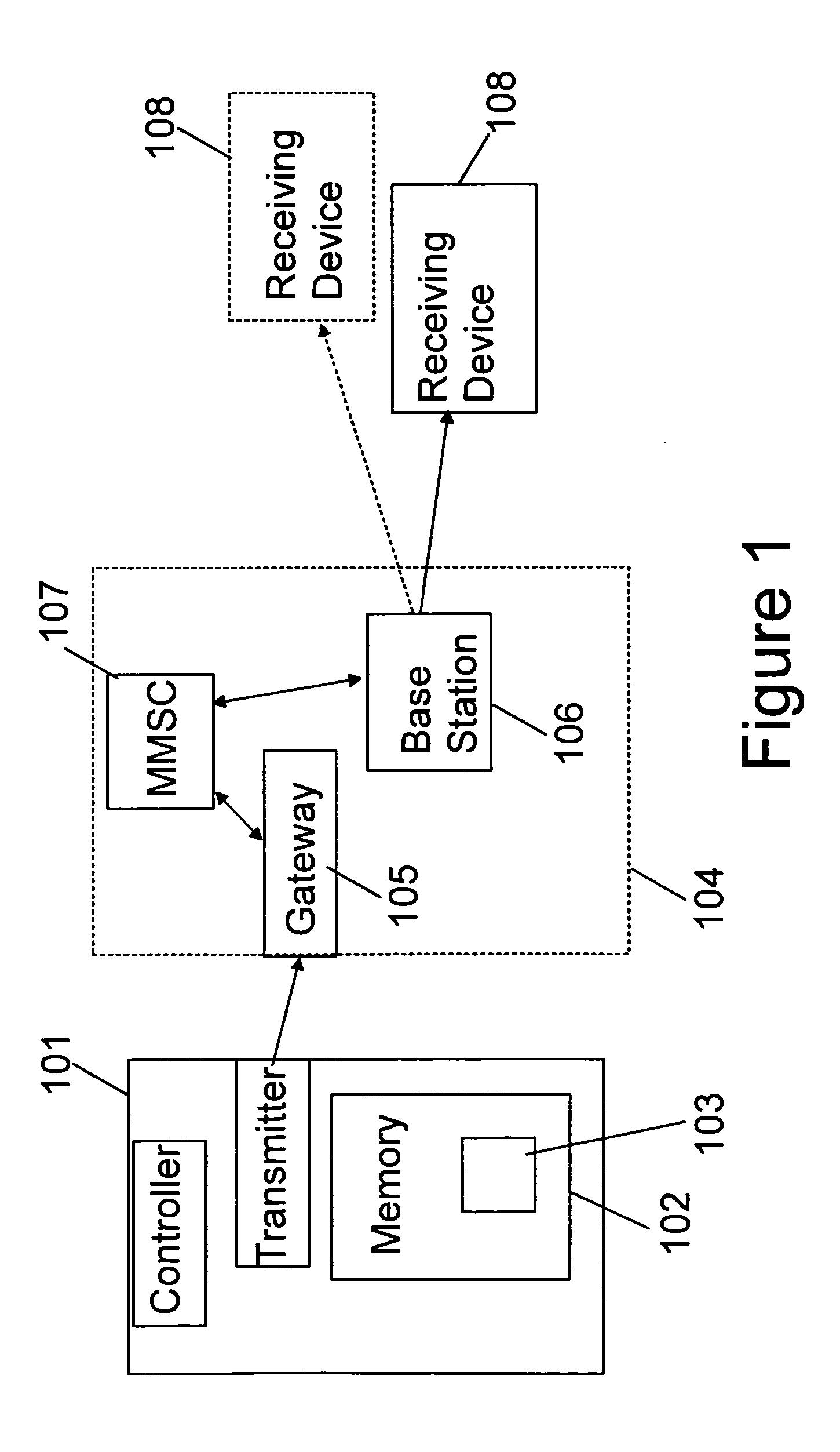

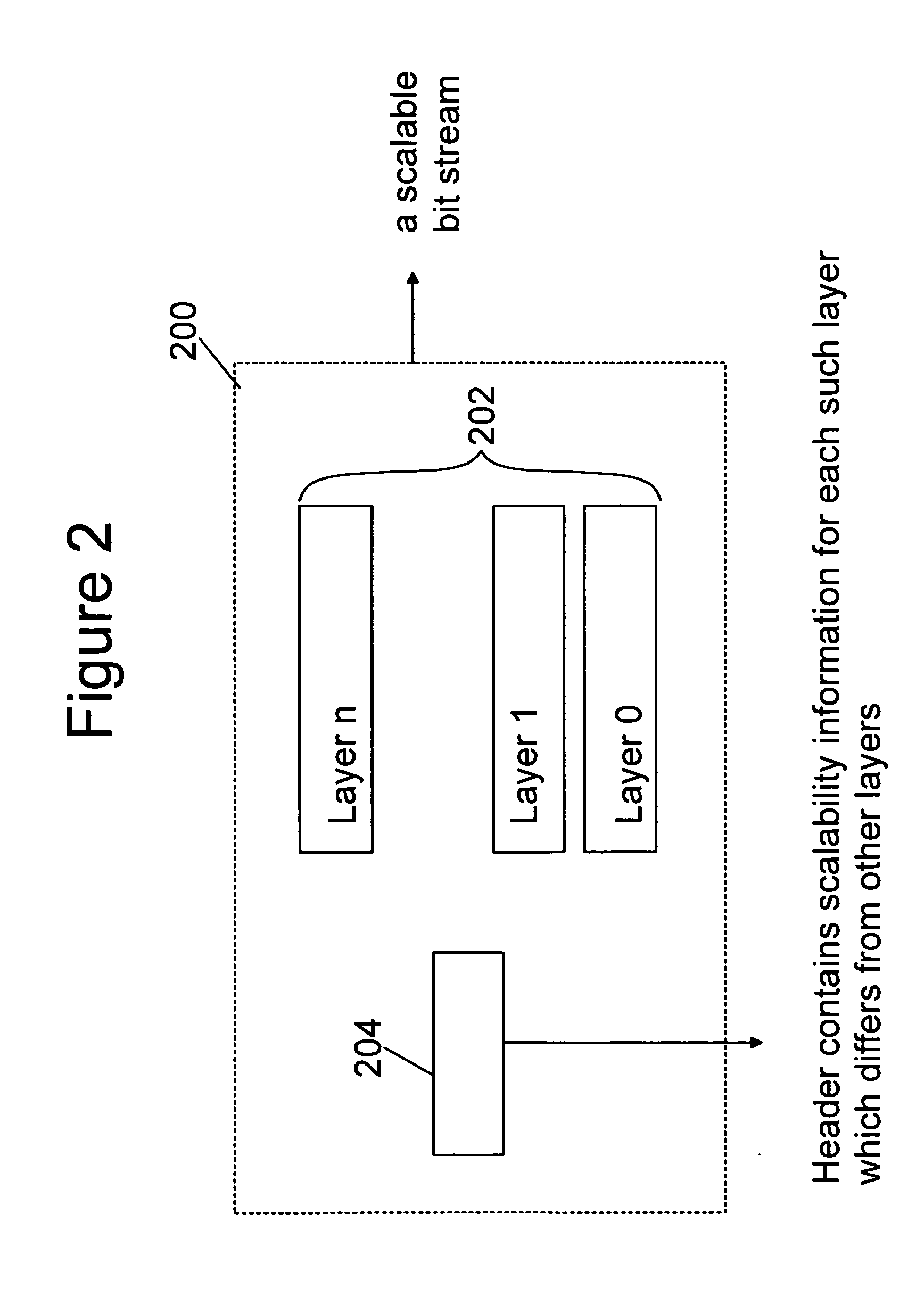

Coding, storage and signalling of scalability information

Active · US20060256851A1 · Reduce computational complexity · Avoid inclusions · Color television with pulse code modulation · Color television with bandwidth reduction · Extensibility · Computer architecture

A method and device for encoding, decoding, storage and transmission of a scalable data stream to include layers having different coding properties including: producing one or more layers of the scalable data stream, wherein the coding properties include at least one of the following: Fine granularity scalability information; Region-of-interest scalability information; Sub-sample scalable layer information; Decoding dependency information; and Initial parameter sets, and signaling the layers with the characterized coding property such that they are readable by a decoder without the need to decode the entire layers. A corresponding method of encoding, decoding, storage, and transmission of a scalable bit stream is also disclosed, wherein at least two scalability layers are present and each layer has a set of at least one property, such as those above identified.

Owner:NOKIA TECHNOLOGIES OY

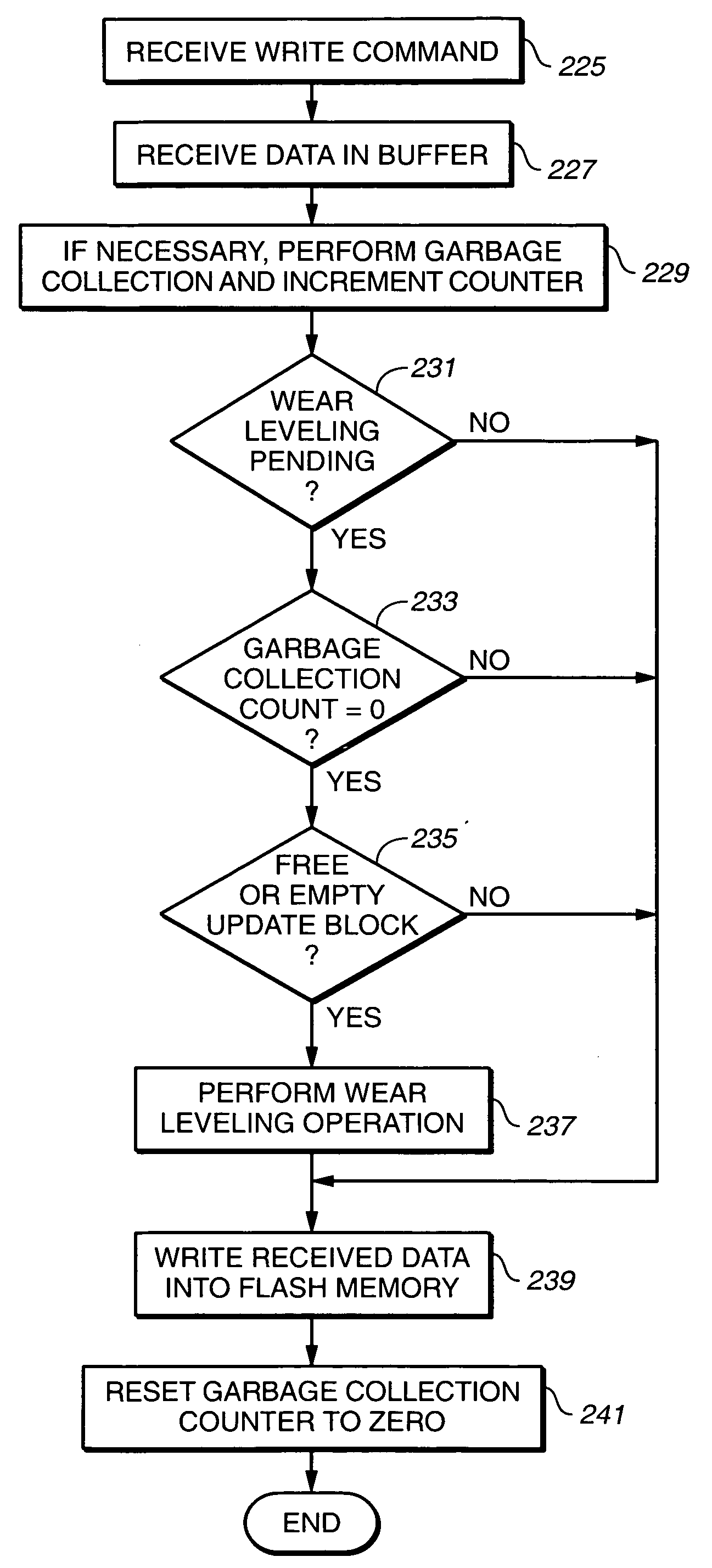

Scheduling of housekeeping operations in flash memory systems

Active · US20060161728A1 · Good performance · Memory architecture accessing/allocation · Read-only memories · EEPROM · Housekeeping

A re-programmable non-volatile memory system, such as a flash EEPROM system, having its memory cells grouped into blocks of cells that are simultaneously erasable is operated to perform memory system housekeeping operations in the foreground during execution of a host command, wherein the housekeeping operations are unrelated to execution of the host command. Both one or more such housekeeping operations and execution of the host command are performed within a time budget established for executing that particular command. One such command is to write data being received to the memory. One such housekeeping operation is to level out the wear of the individual blocks that accumulates through repetitive erasing and re-programming.
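The idea of running housekeeping in the foreground within a per-command time budget can be sketched as follows. The function name `execute_with_housekeeping`, the `(estimated_seconds, task)` queue format, and the injectable `clock` are assumptions of this sketch; the abstract does not say how task durations are estimated or in what order work is scheduled.

```python
import time


def execute_with_housekeeping(host_command, housekeeping_tasks,
                              budget_seconds, clock=time.monotonic):
    """Run a host command, then fit pending housekeeping into its time budget.

    housekeeping_tasks is a list of (estimated_seconds, callable) pairs and
    is consumed from the front; tasks that do not fit stay queued.
    """
    start = clock()
    result = host_command()  # e.g. write the data received from the host
    done = []
    while housekeeping_tasks:
        estimated, task = housekeeping_tasks[0]
        # Stop as soon as the next task would overrun the command's budget.
        if (clock() - start) + estimated > budget_seconds:
            break
        housekeeping_tasks.pop(0)
        task()  # e.g. one wear-leveling exchange, unrelated to the command
        done.append(task.__name__)
    return result, done
```

The host sees a command latency bounded by the budget, while work such as wear leveling still makes progress a piece at a time.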

Owner:SANDISK TECH LLC

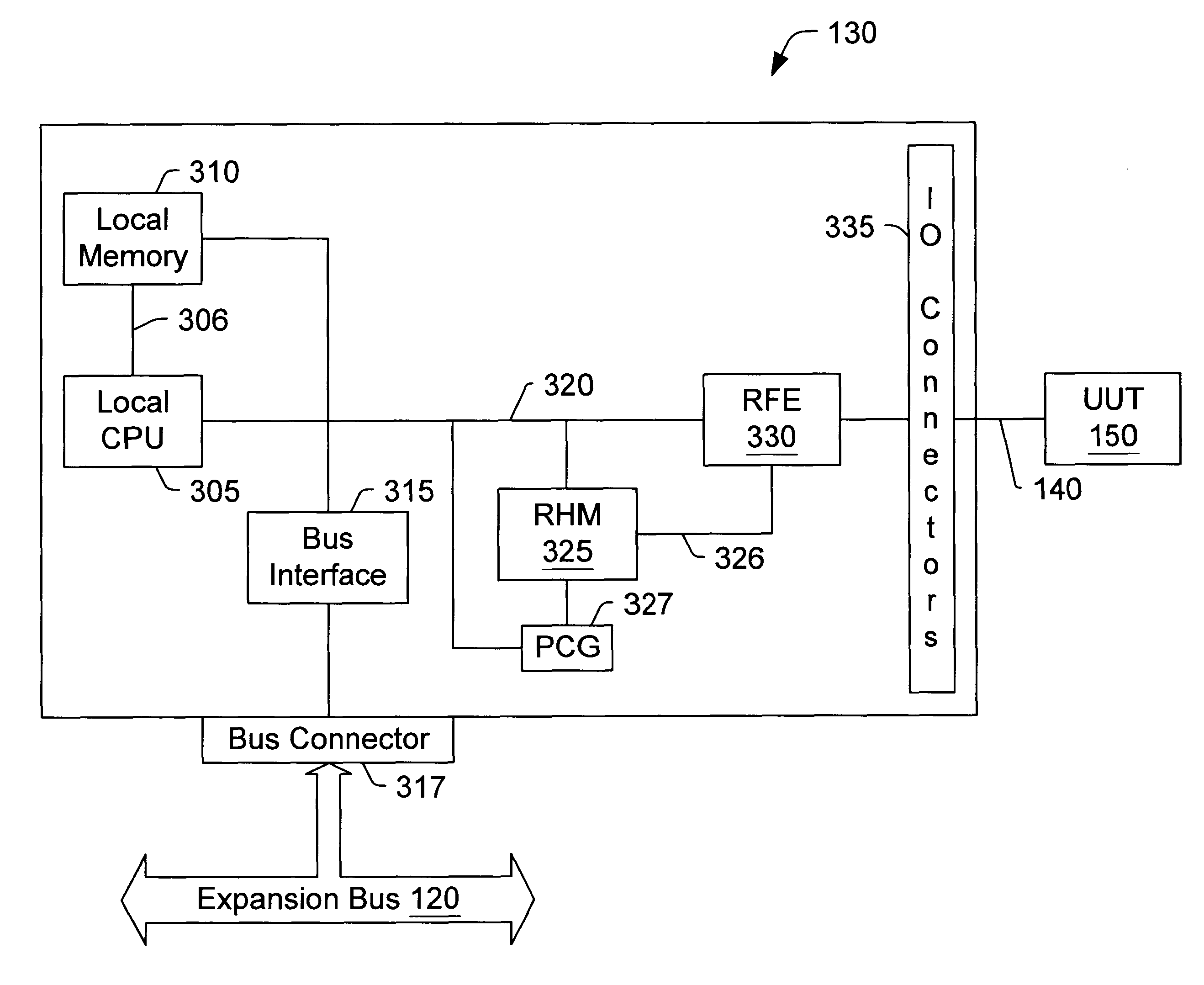

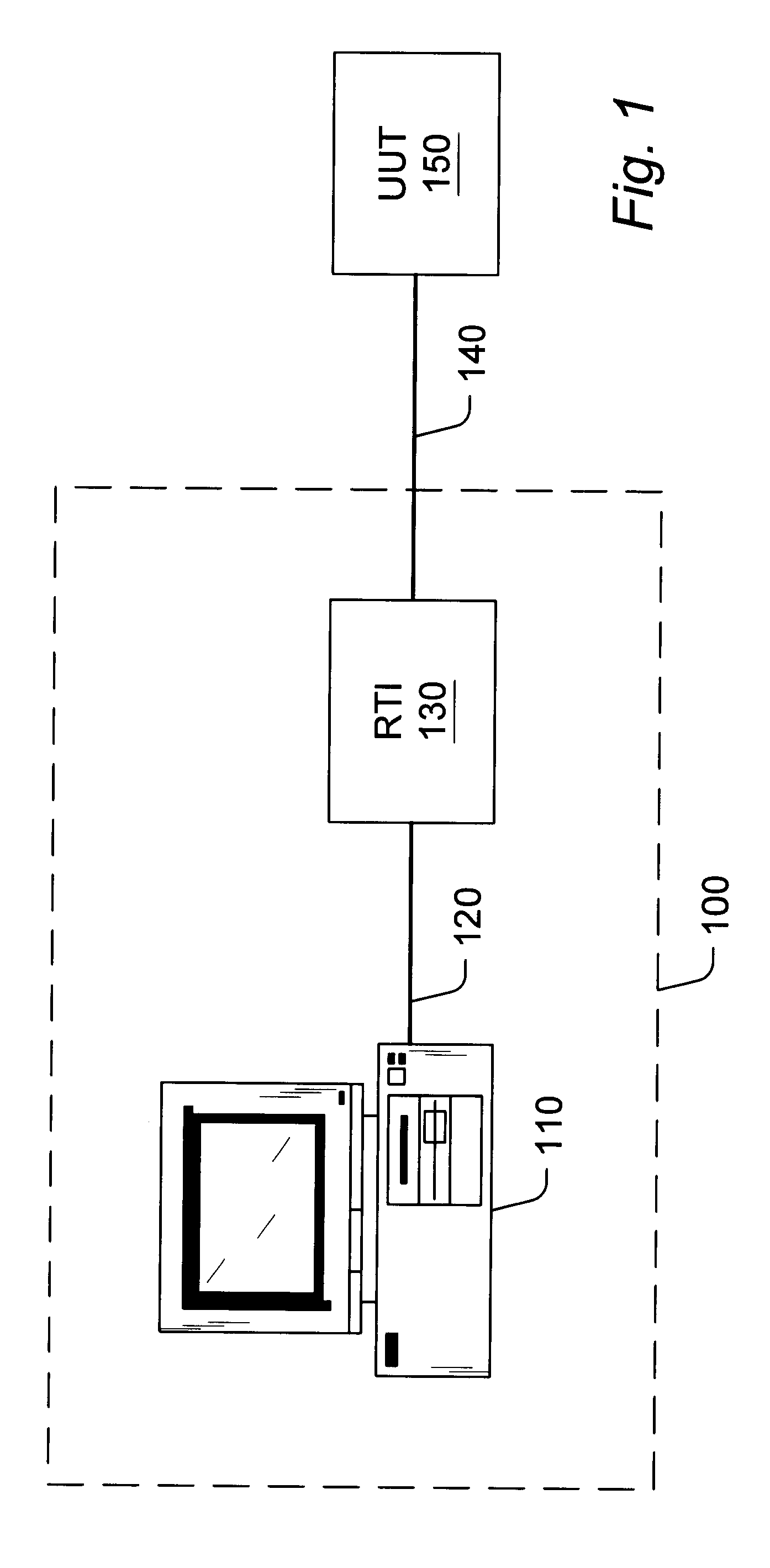

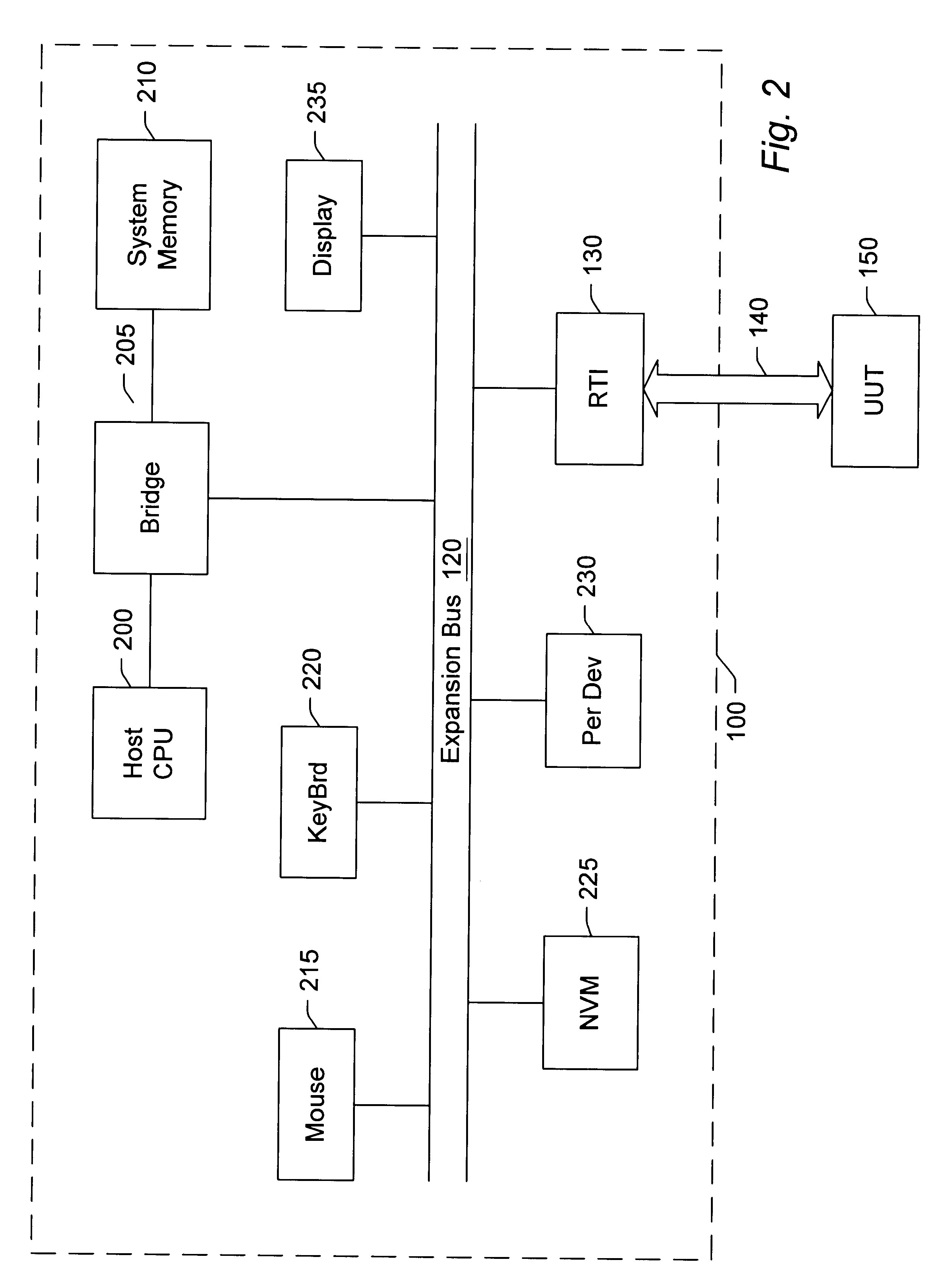

Reconfigurable test system

A reconfigurable test system including a host computer coupled to a reconfigurable test instrument. The reconfigurable test instrument includes reconfigurable hardware (i.e., a reconfigurable hardware module with one or more programmable elements such as Field Programmable Gate Arrays for realizing an arbitrary hardware architecture, and a reconfigurable front end with programmable transceivers for interfacing with any desired physical medium) and, optionally, an embedded processor. A user specifies system features with a software configuration utility which directs a component selector to select a set of software modules and hardware configuration files from a series of libraries. The modules are embedded in a host software driver or downloaded for execution on the embedded CPU. The configuration files are downloaded to the reconfigurable hardware. The entire selection process is performed in real-time and can be changed whenever the user deems necessary. Alternatively, the user may create a graphical program in a graphical programming environment and compile the program into various software modules and configuration files for host execution, embedded processor execution, or programming the reconfigurable hardware.

Owner:NATIONAL INSTRUMENTS

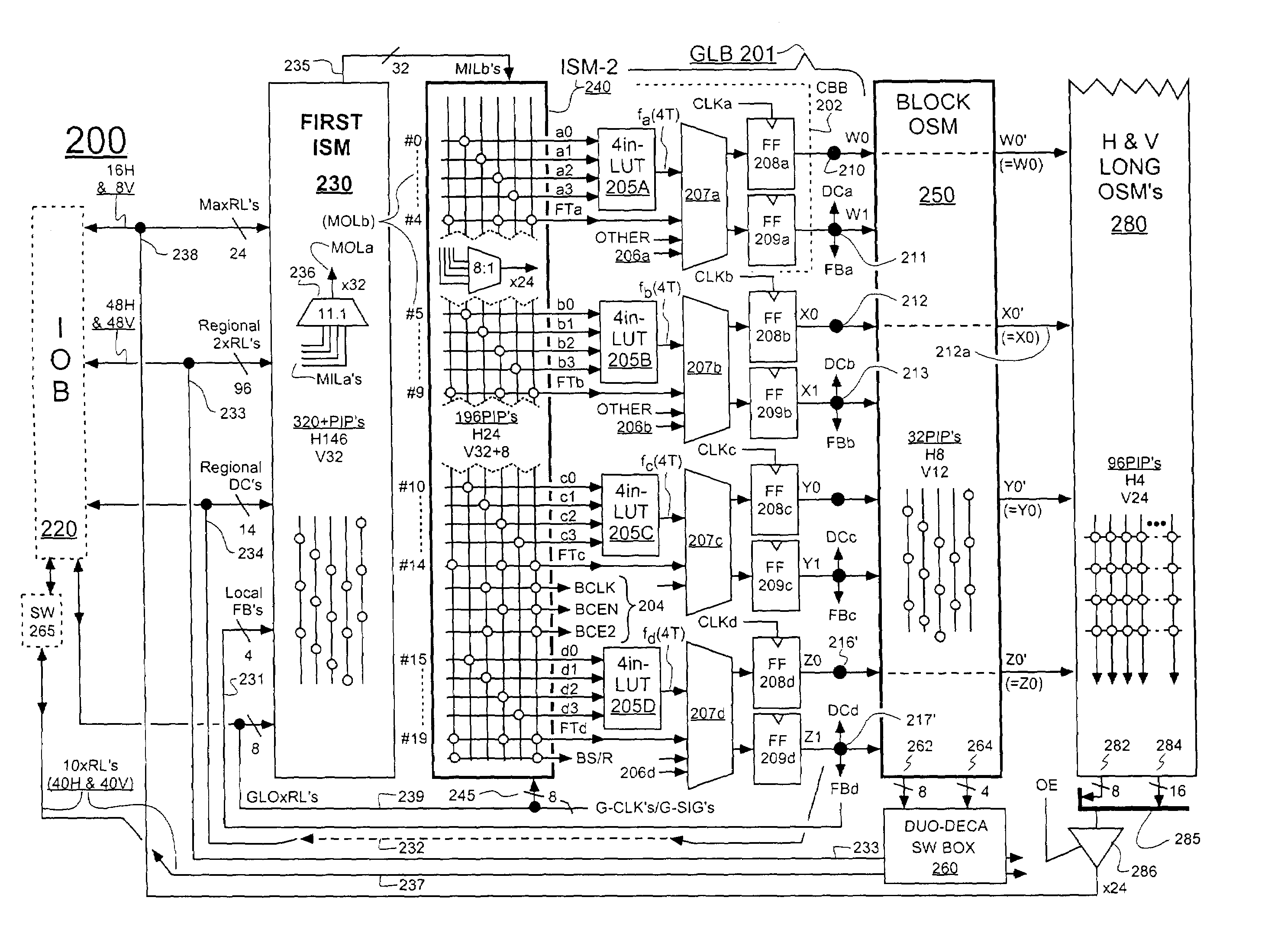

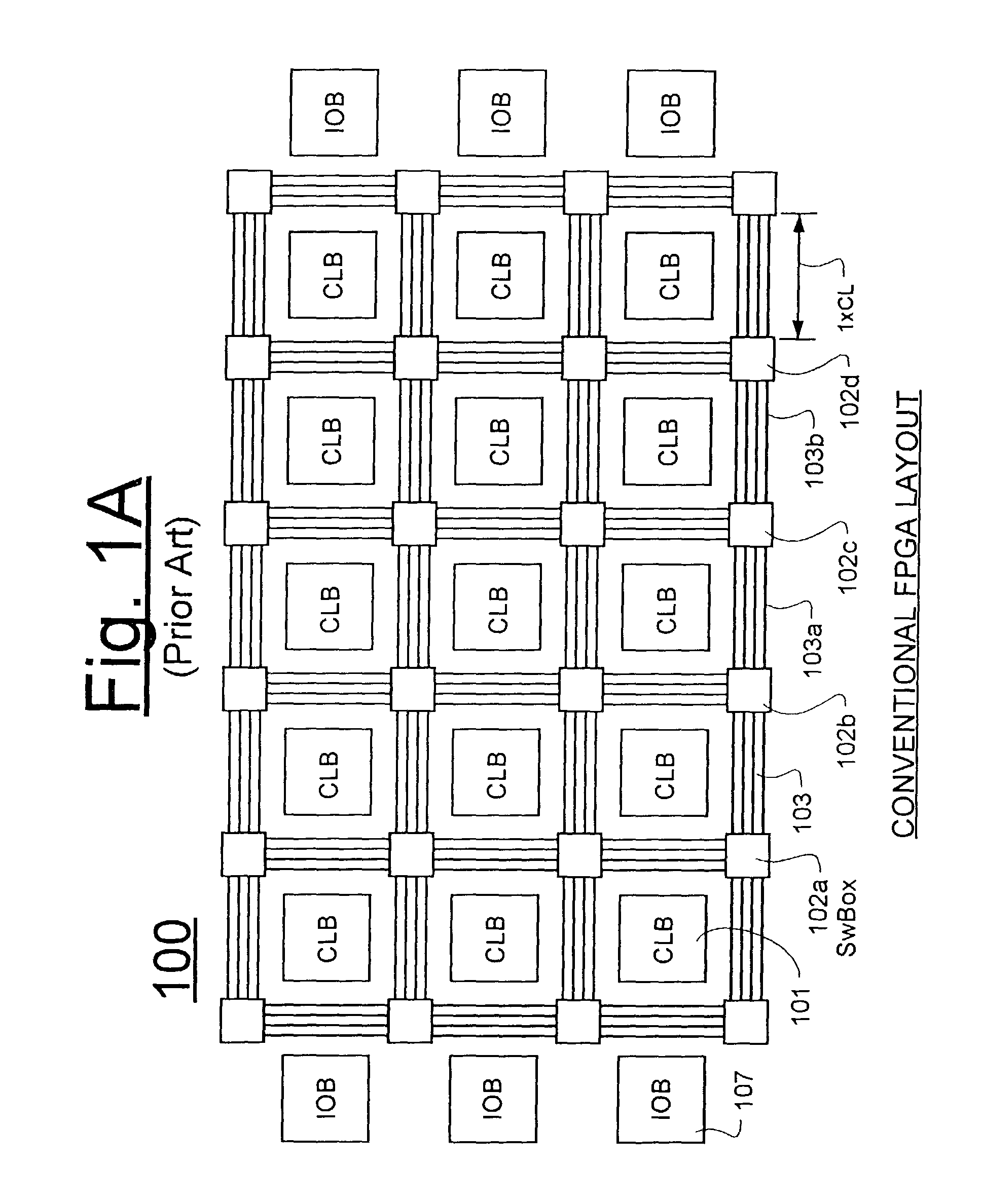

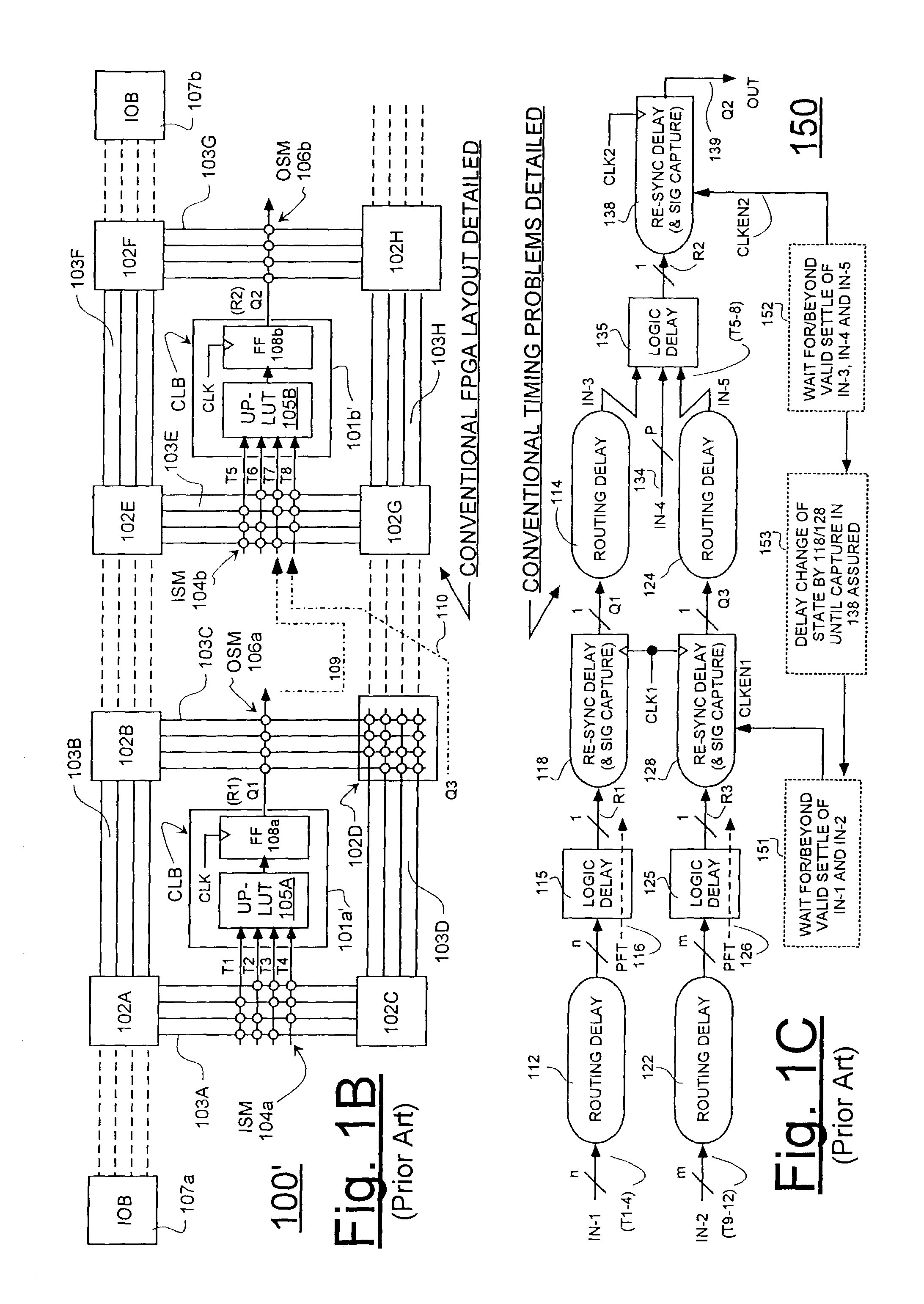

FPGA with register-intensive architecture

Active · US7028281B1 · Minimize resource · Reduce consumption · Solid-state devices · CAD circuit design · Processor register · Multiplexer

Field programmable gate arrays (FPGA's) may be structured in accordance with the disclosure to have a register-intensive architecture that provides, for each of plural function-spawning LookUp Tables (e.g. a 4-input, base LUT's) within a logic block, a plurality of in-block accessible registers. A register-feeding multiplexer means may be provided for allowing each of the plural registers to equivalently capture and store a result signal output by the corresponding, base LUT of the plural registers. Registerable, primary and secondary feedthroughs may be provided for each base LUT so that locally-acquired input signals of the LUT may be fed-through to the corresponding, in-block registers for register-recovery purposes without fully consuming (wasting) the lookup resources of the associated, base LUT. A multi-stage, input switch matrix (ISM) may be further provided for acquiring and routing input signals from adjacent, block-interconnect lines (AIL's) and / or block-intra-connect lines (e.g., FB's) to the base LUT's and / or their respective, registerable feedthroughs. Techniques are disclosed for utilizing the many in-block registers and / or the registerable feedthroughs and / or the multi-stage ISM's for efficiently implementing various circuit designs by appropriately configuring such register-intensive FPGA's.

Owner:LATTICE SEMICON CORP

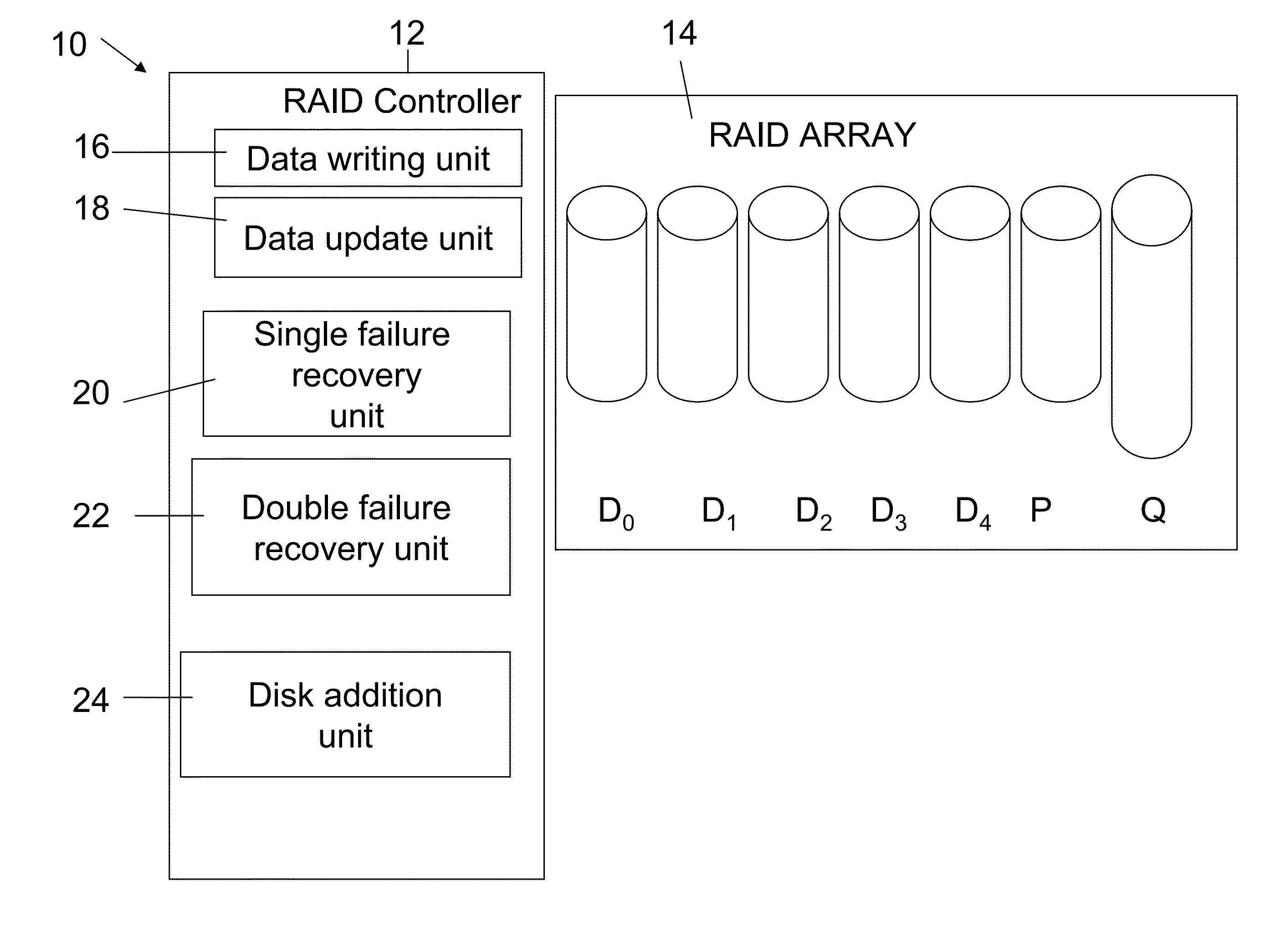

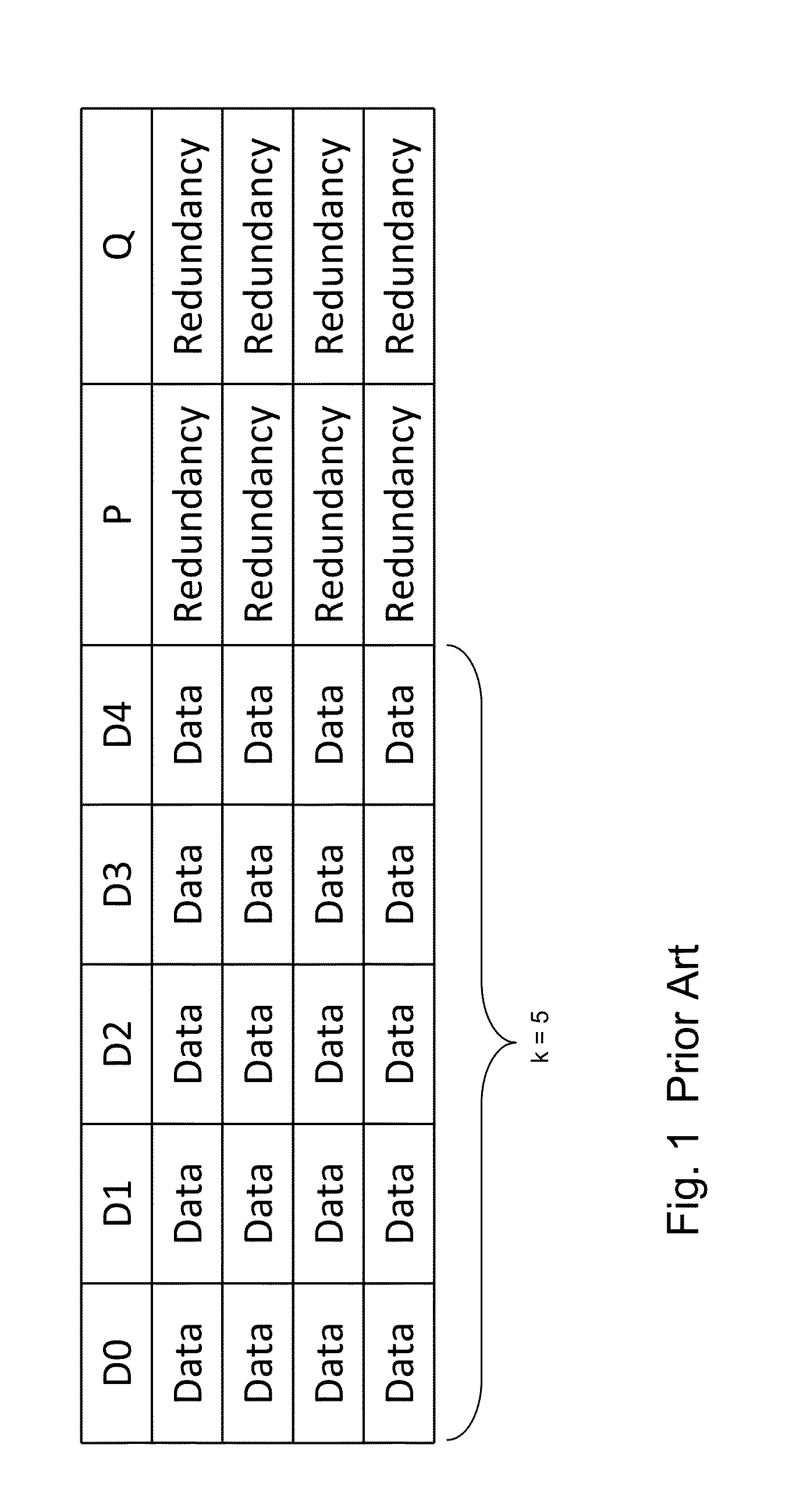

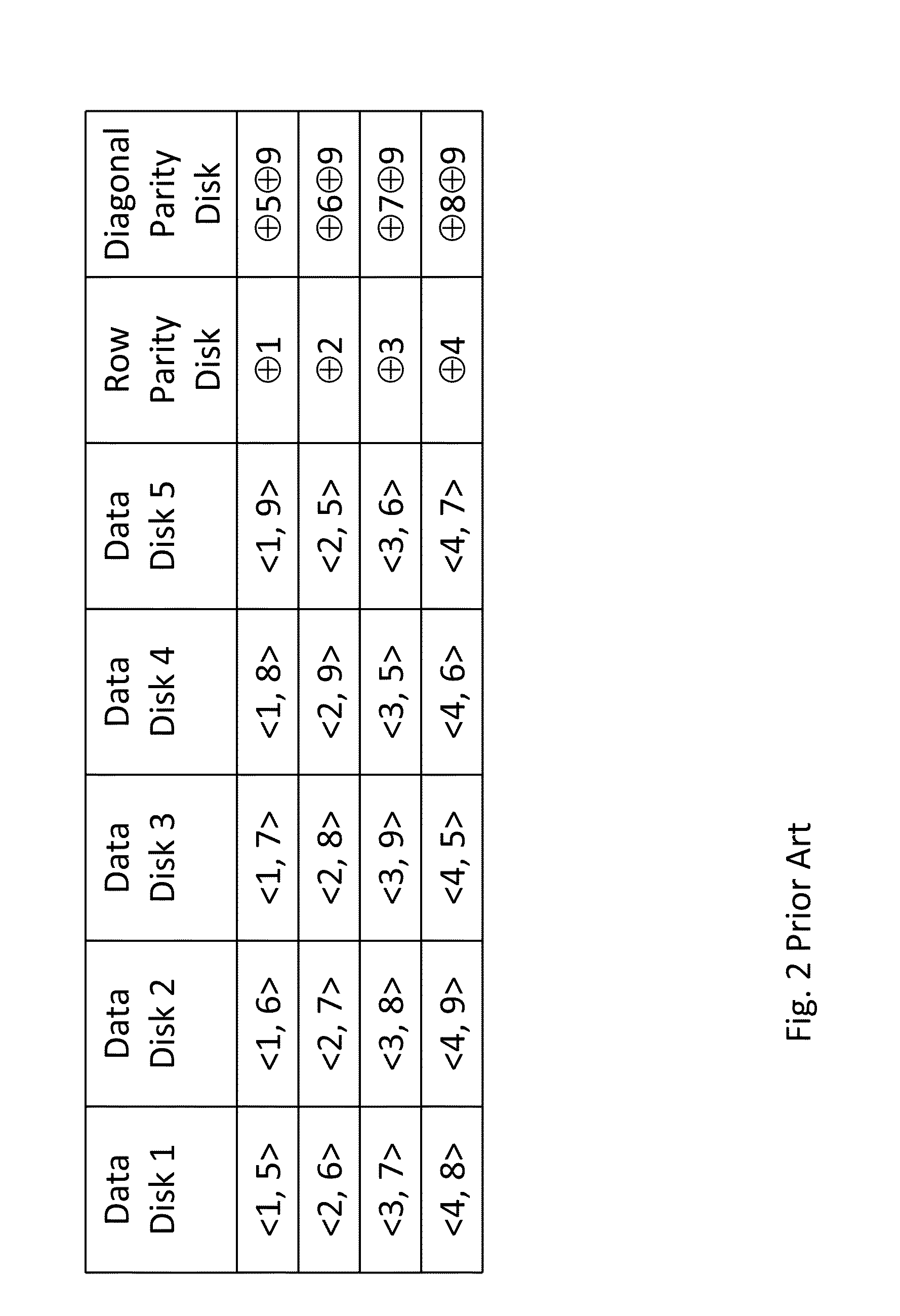

Secure data storage in raid memory devices

Active · US20130124776A1 · Reduce overhead · Utility and advantage · Memory loss protection · Error detection/correction · RAID · Computer architecture

A redundant array of independent disk (RAID) memory storage system comprising data storage blocks arranged in a first plurality of data rows and a second plurality of data columns, wherein parity data is stored in additionally defined parity blocks, and wherein numbers of data blocks in respective columns are different, to accommodate the additional diagonal parity data block that the geometry of the system requires. The system is suitable for an SSD array in which sequential disk readout is not required.

Owner:EMC IP HLDG CO LLC

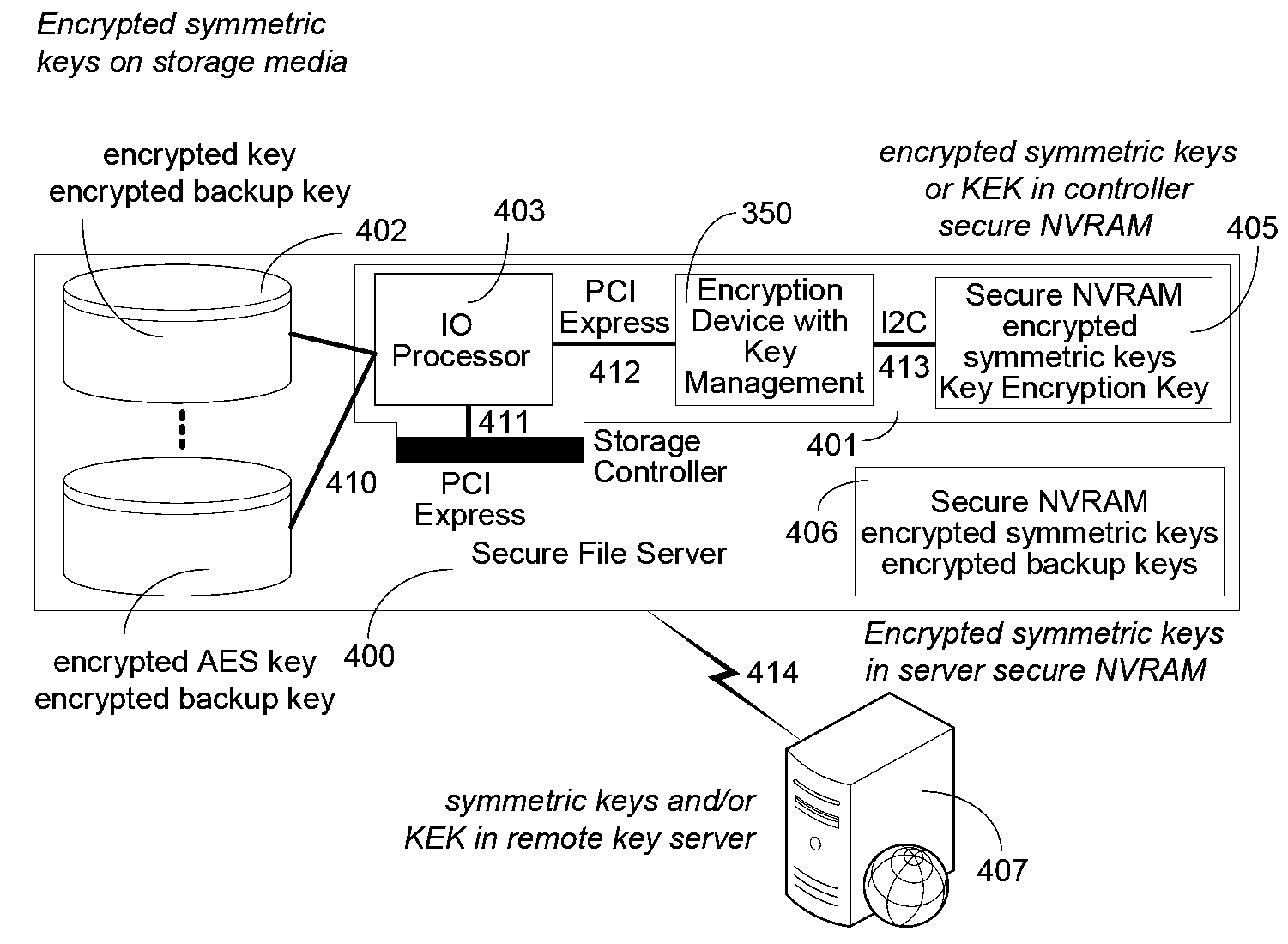

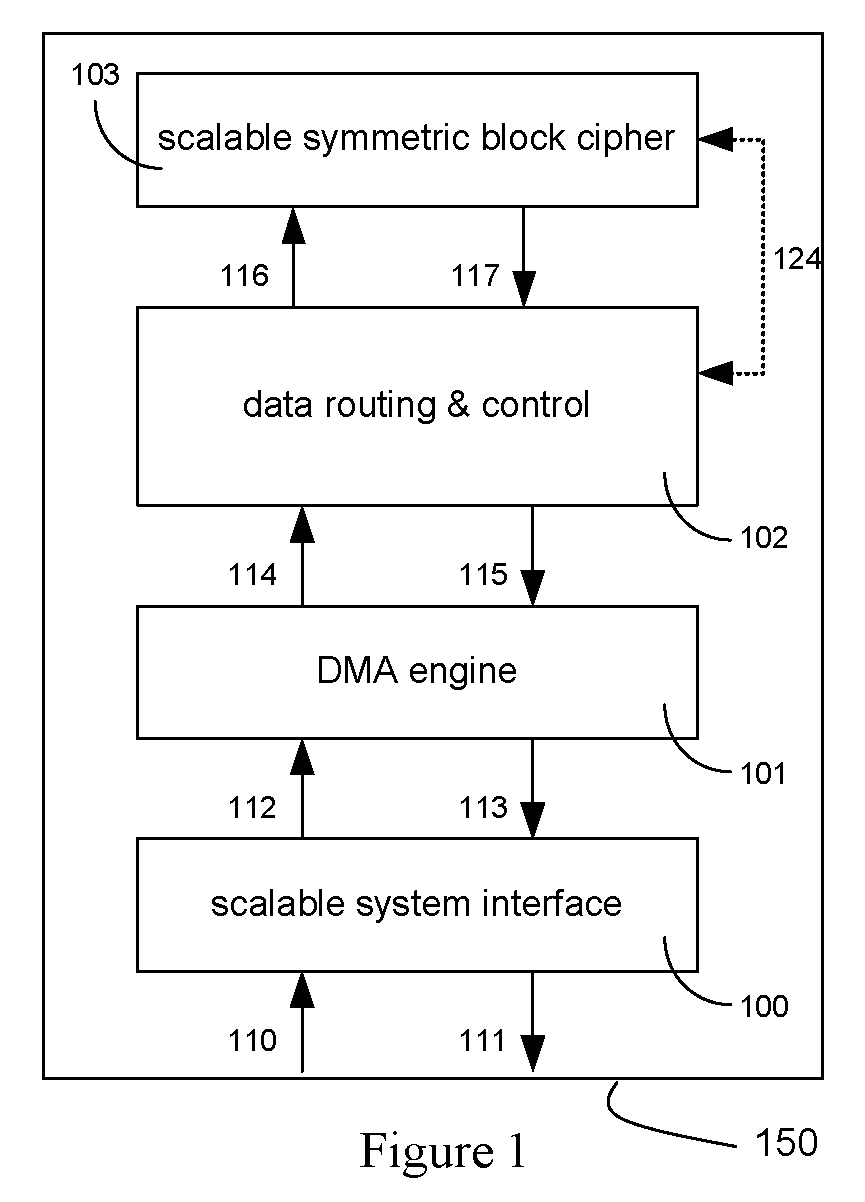

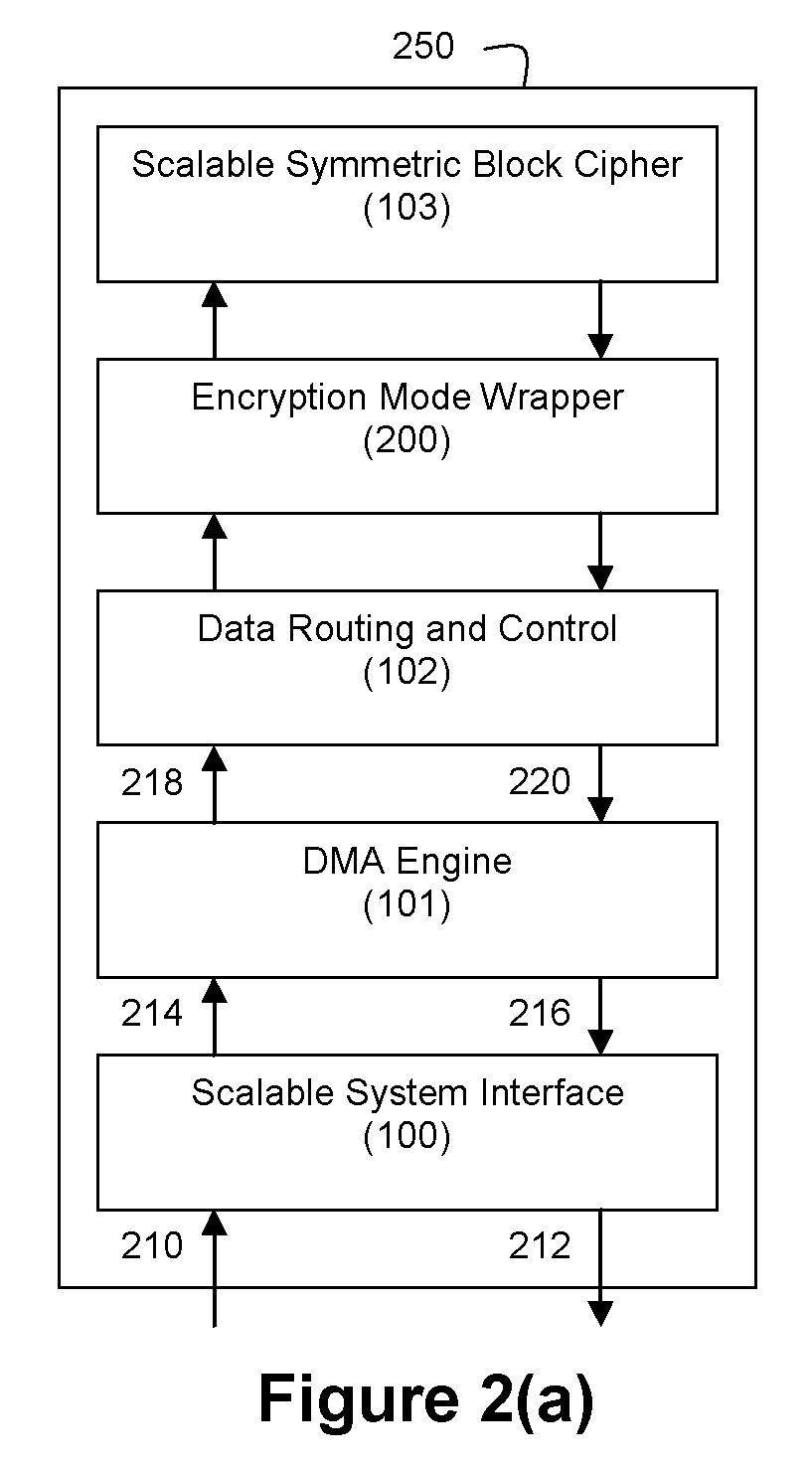

Method and Apparatus for Hardware-Accelerated Encryption/Decryption

Active · US20090060197A1 · Avoid contact · Maximize availability · Encryption apparatus with shift registers/memories · Secret communication · Multiple encryption · Computer hardware

An integrated circuit for data encryption / decryption and secure key management is disclosed. The integrated circuit may be used in conjunction with other integrated circuits, processors, and software to construct a wide variety of secure data processing, storage, and communication systems. A preferred embodiment of the integrated circuit includes a symmetric block cipher that may be scaled to strike a favorable balance among processing throughput and power consumption. The modular architecture also supports multiple encryption modes and key management functions such as one-way cryptographic hash and random number generator functions that leverage the scalable symmetric block cipher. The integrated circuit may also include a key management processor that can be programmed to support a wide variety of asymmetric key cryptography functions for secure key exchange with remote key storage devices and enterprise key management servers. Internal data and key buffers enable the device to re-key encrypted data without exposing data. The key management functions allow the device to function as a cryptographic domain bridge in a federated security architecture.

Owner:IP RESERVOIR

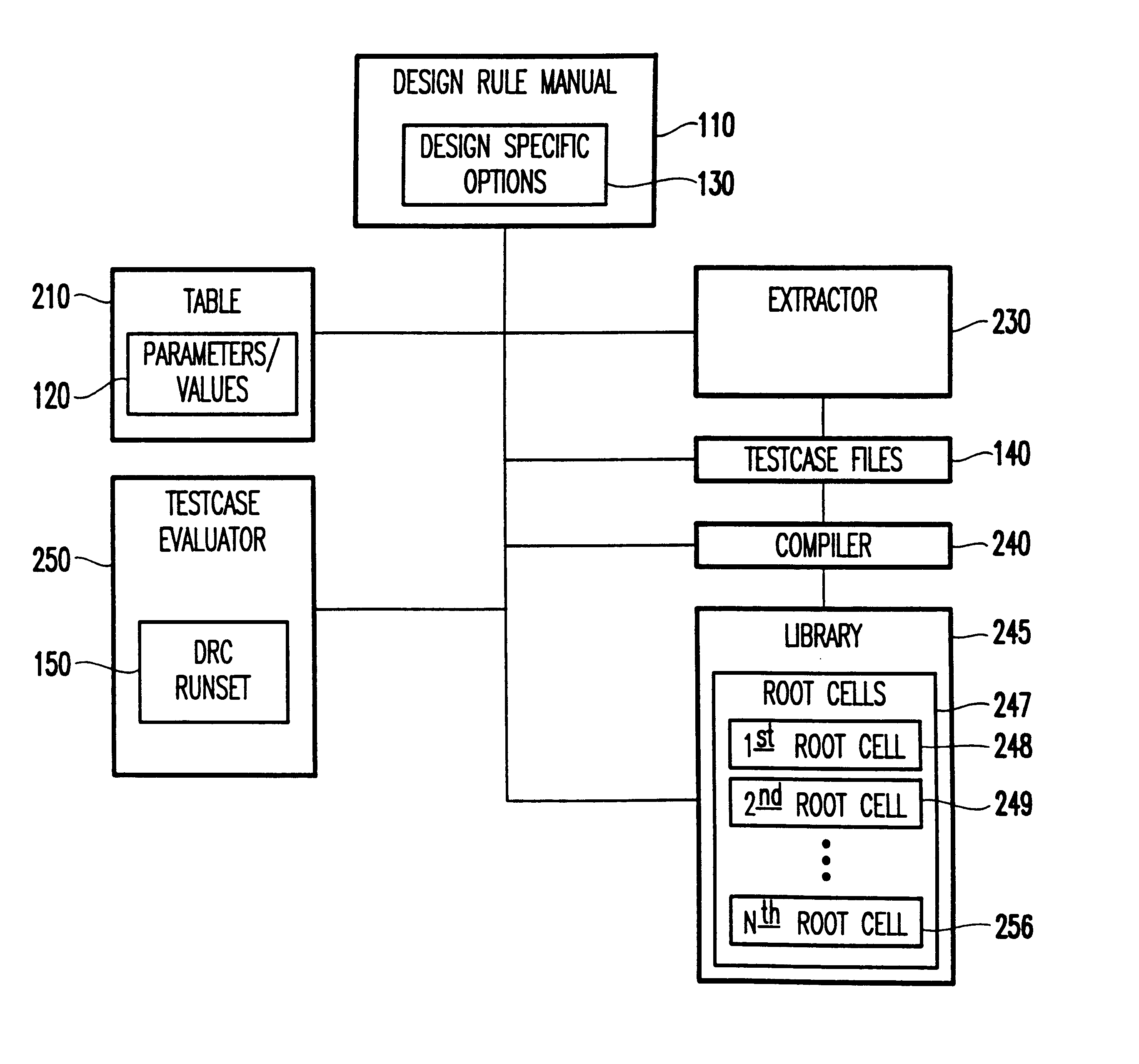

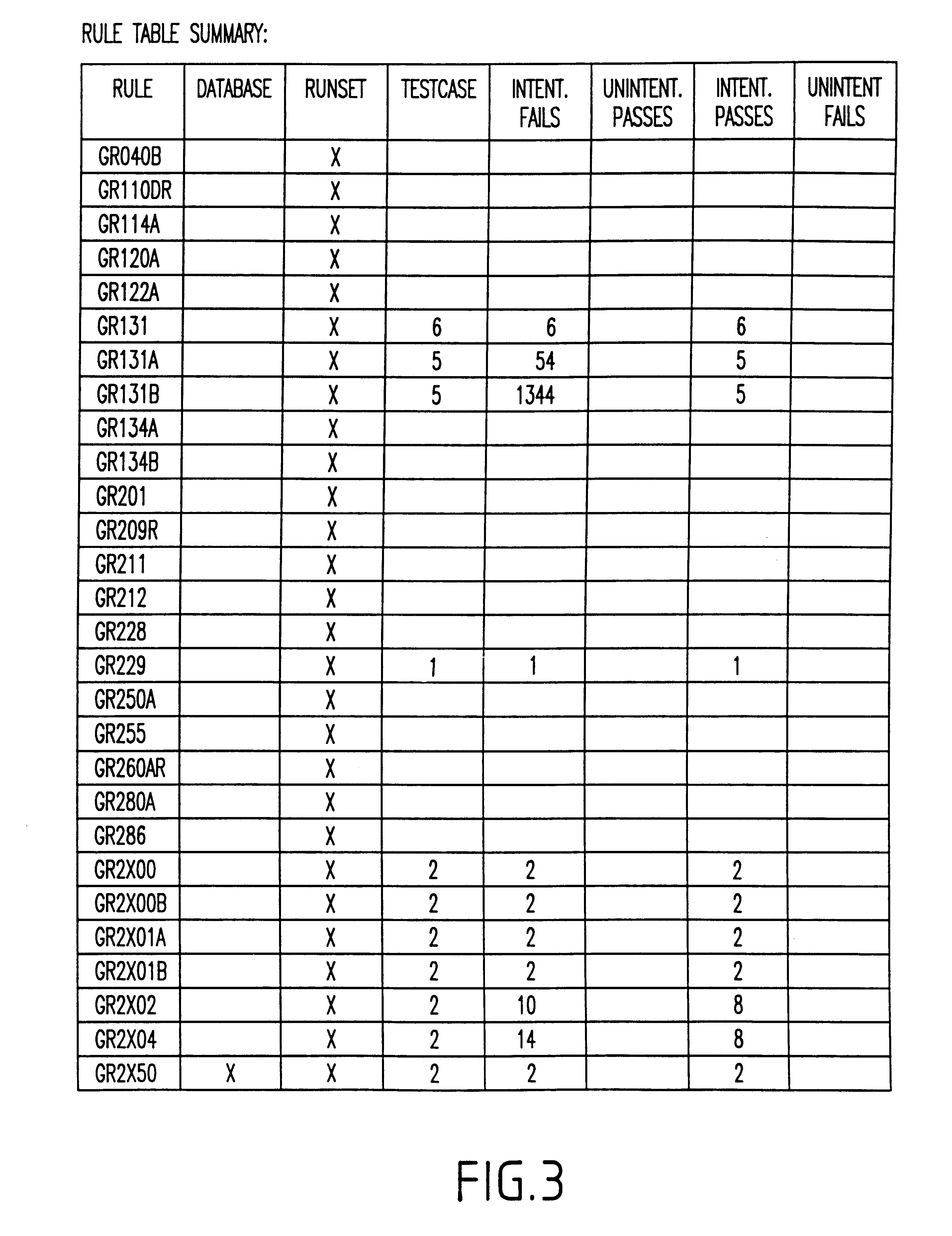

Method for comprehensively verifying design rule checking runsets

Inactive · US6732338B2 · Extraction of information · Computer aided design · Software simulation/interpretation/emulation · Computer architecture · Design rule checking

A system and method for automatically creating testcases for design rule checking comprises first creating a table with a design rule number, a description, and the values from a design rule manual. Next, any design specific options are derived that affect the flow of the design rule checking, including back end of the line stack options. Then, the design rule values and any design specific options (including back end of the line stack options) are extracted into testcases. Next, the testcases are organized such that there is one library with a plurality of root cells, further comprising one root cell for checking all rules pertaining to the front end of the line, and another root cell for checking design specific options including back end of the line stack options. Finally, the DRC runset is run against the testcases to determine if the DRC runset provides for design rule checking.

Owner:IBM CORP

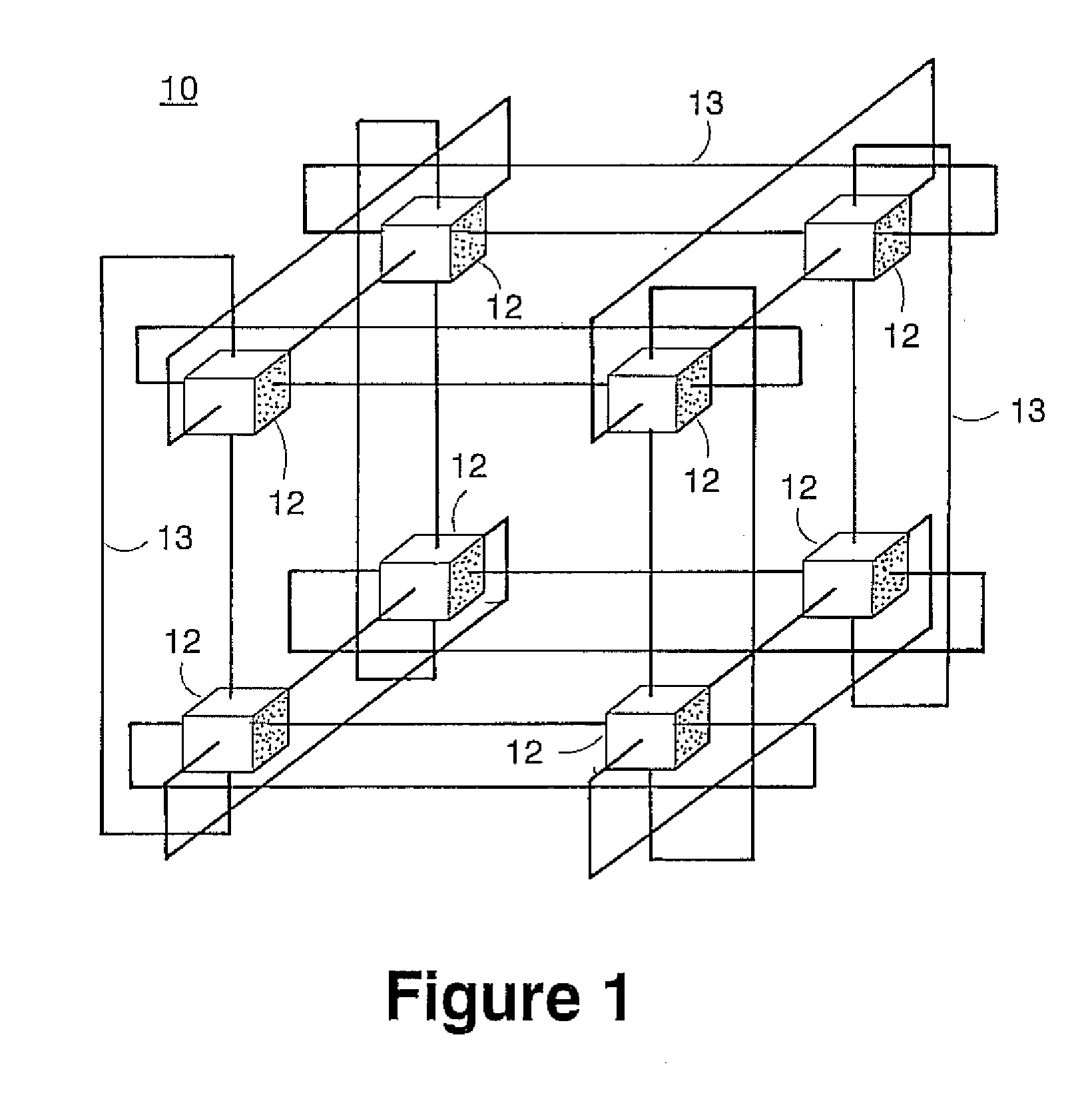

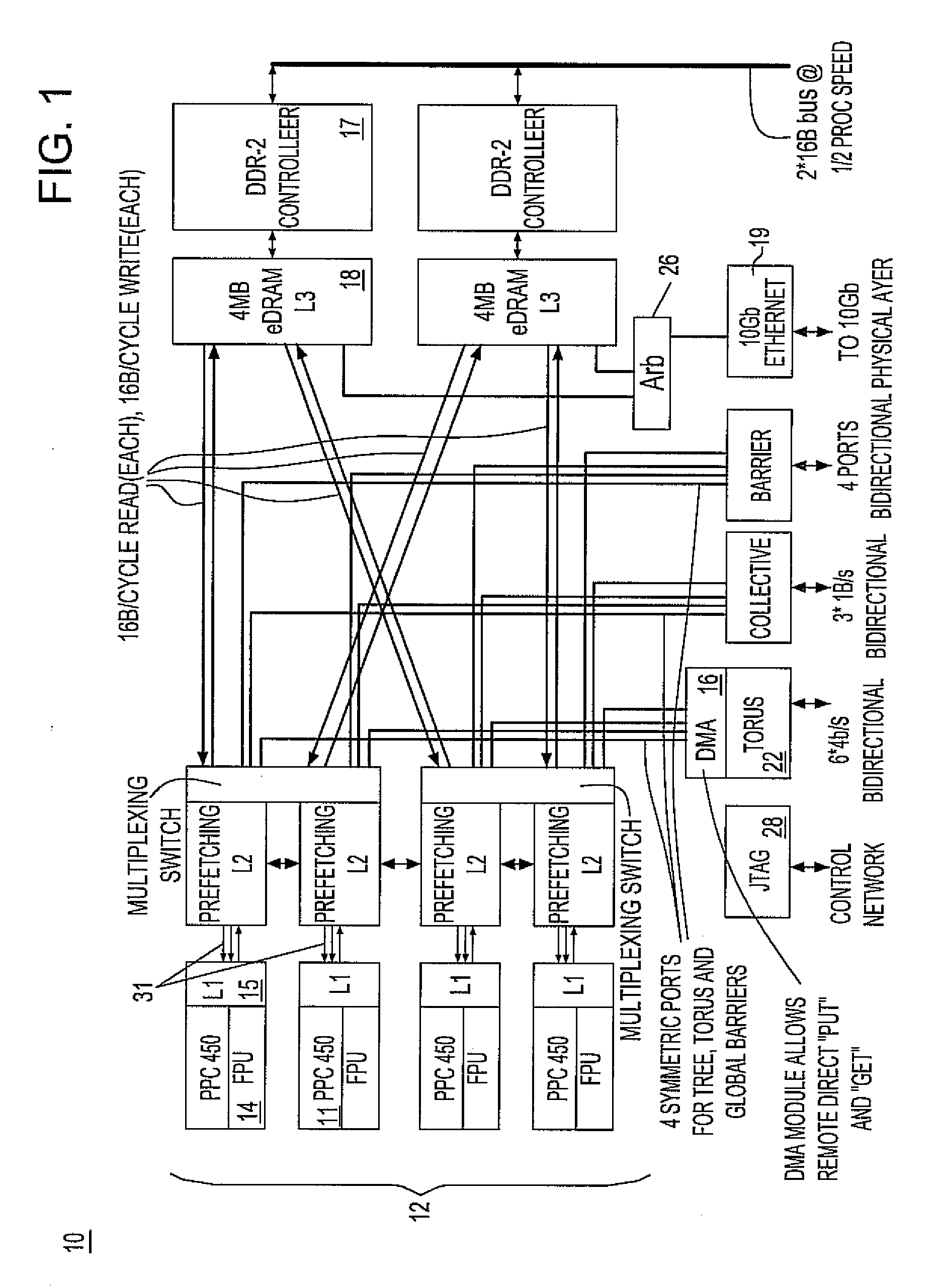

Ultrascalable petaflop parallel supercomputer

Inactive · US20090006808A1 · Massive level of scalability · Unprecedented level of scalability · Program control using stored programs · Architecture with multiple processing units · Message passing · Packet communication

A novel massively parallel supercomputer of petaOPS-scale includes node architectures based upon System-On-a-Chip technology, where each processing node comprises a single Application Specific Integrated Circuit (ASIC) having up to four processing elements. The ASIC nodes are interconnected by multiple independent networks that optimally maximize the throughput of packet communications between nodes with minimal latency. The multiple networks may include three high-speed networks for parallel algorithm message passing including a Torus, collective network, and a Global Asynchronous network that provides global barrier and notification functions. These multiple independent networks may be collaboratively or independently utilized according to the needs or phases of an algorithm for optimizing algorithm processing performance. Novel use of a DMA engine is provided to facilitate message passing among the nodes without the expenditure of processing resources at the node.

Owner:IBM CORP

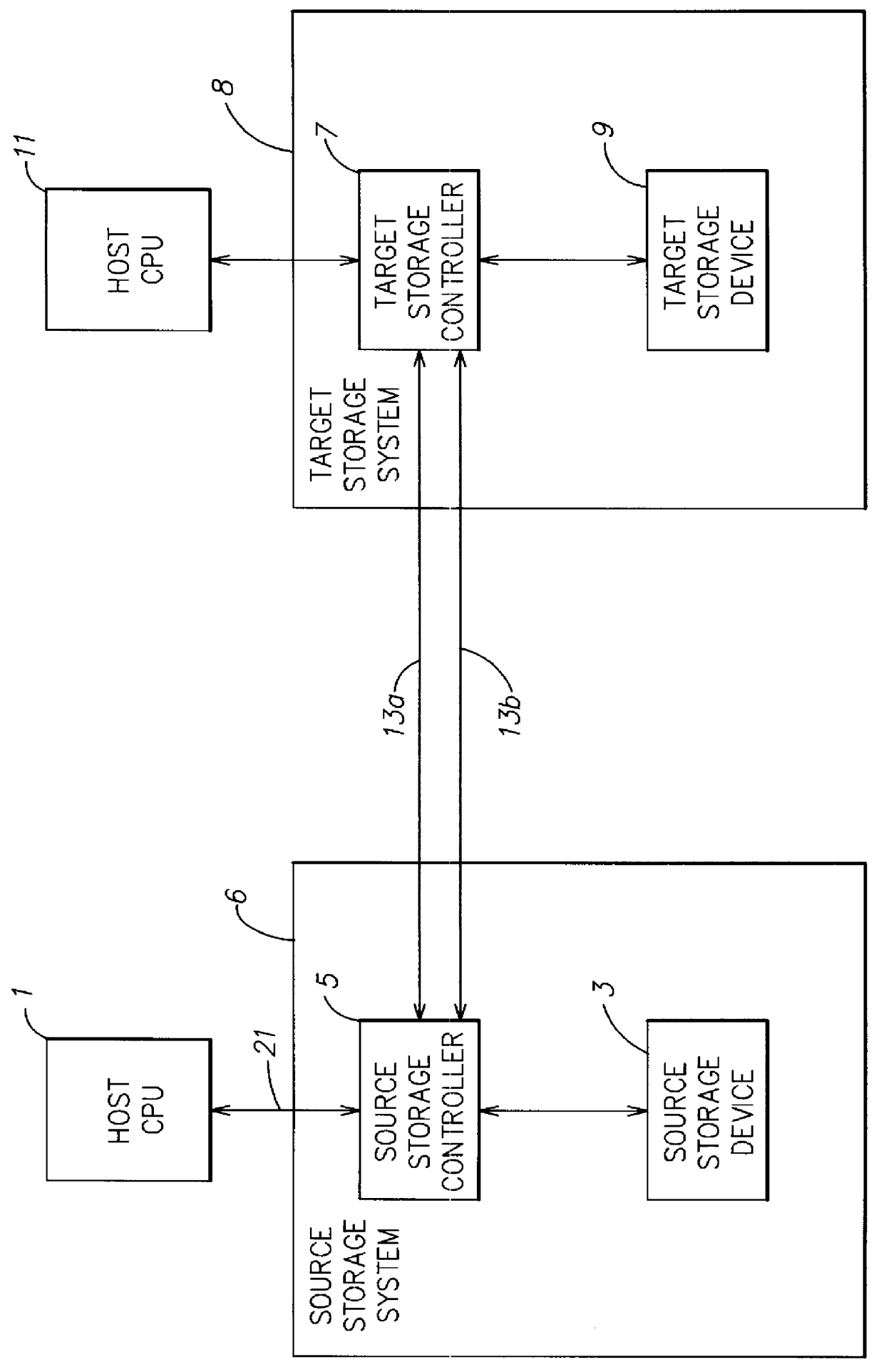

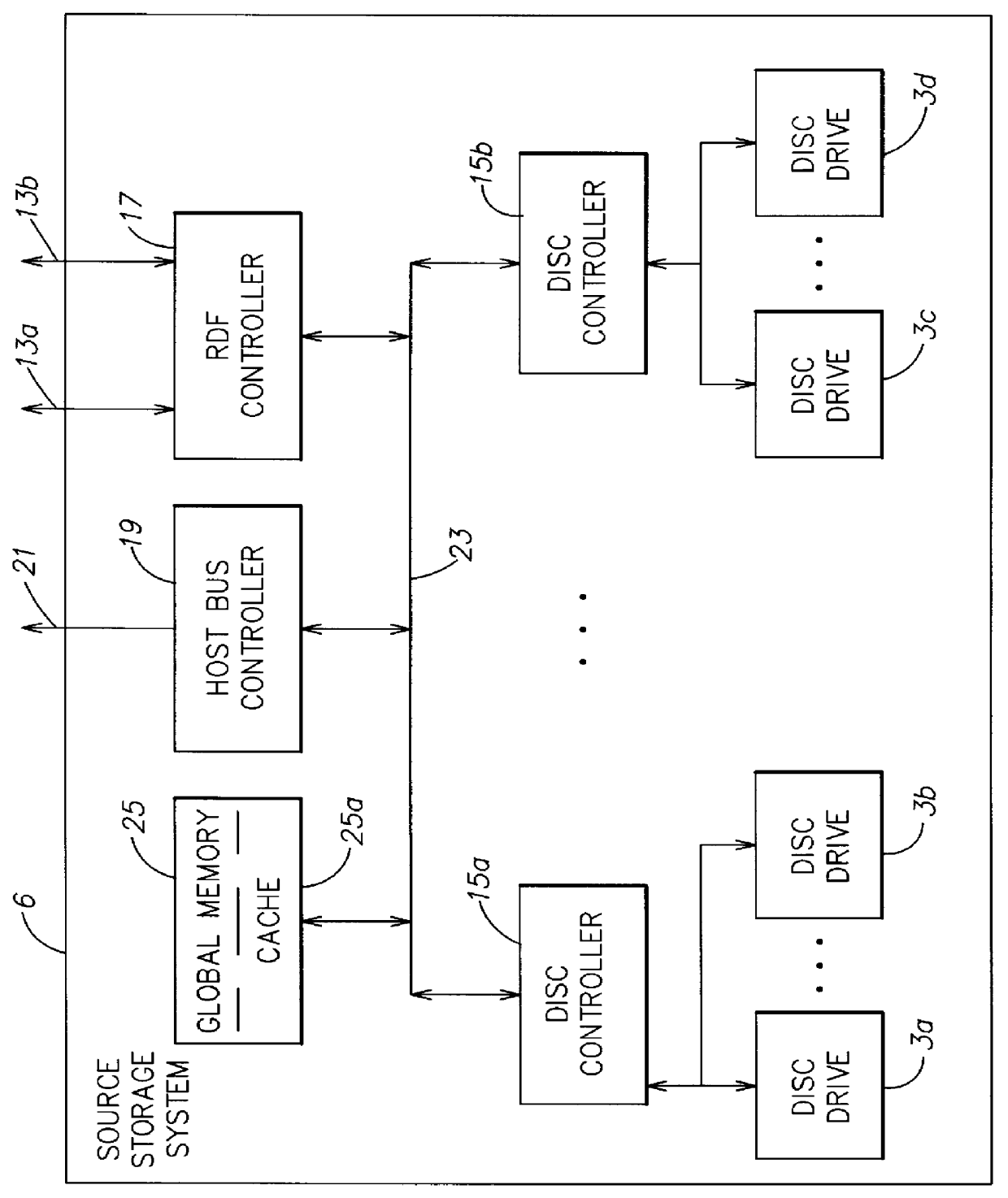

Method and apparatus for asynchronously updating a mirror of a source device

Inactive · US6157991A · Input/output to record carriers · Memory loss protection · Computer hardware · Telecommunications link

In a computer system including a CPU, a first storage system coupled to the CPU, a second storage system, and a communication link coupling the second storage system to the first storage system, a method and apparatus for asynchronously mirroring, to the second storage system, a plurality of units of data written by the CPU to the first storage system. In one aspect of the invention, the CPU writes units of data to the first storage system in a first order, and the units of data are asynchronously transmitted over the communication link from the first storage system to the second storage system in a second order that is different than the first order. In another aspect, the units of data are committed in the second storage system in an order that is independent of the order in which the units of data are received at the second storage system. In a further aspect of the invention, a packet of information is transmitted over the communication link to the target storage system to specify a commitment order in which the units of data should be committed in the target storage system. In another aspect of the invention, a single copy of each of the units of data is written into the first storage device, without buffering a copy to support asynchronous mirroring. In a further aspect, the storage locations in the first storage system are organized into a plurality of consistency sets, and the units of data are asynchronously transmitted over the communication link so that each consistency set has a representation in the second storage system that is consistent with a valid representation of the consistency set in the first storage system at some point in time.

Owner:EMC IP HLDG CO LLC

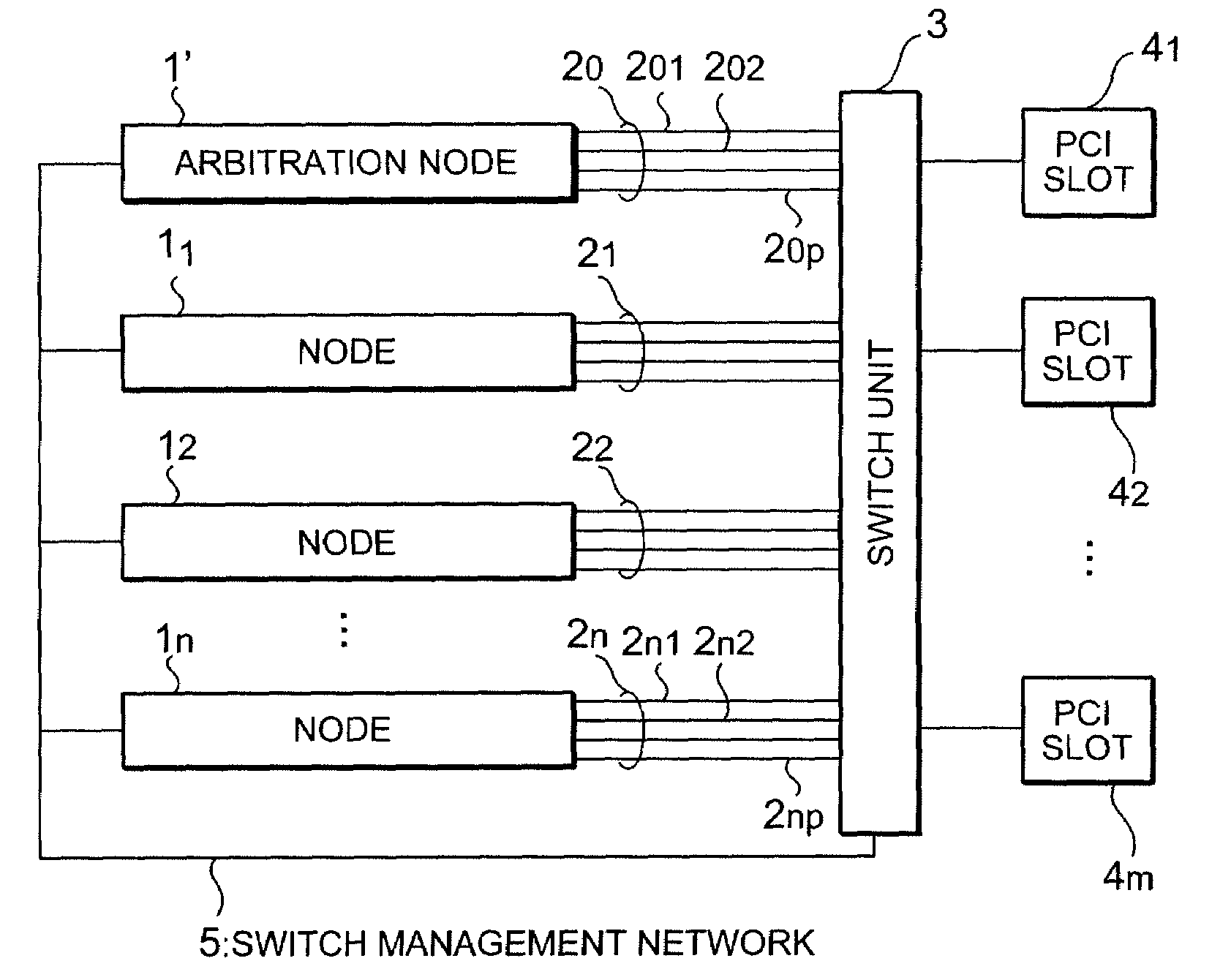

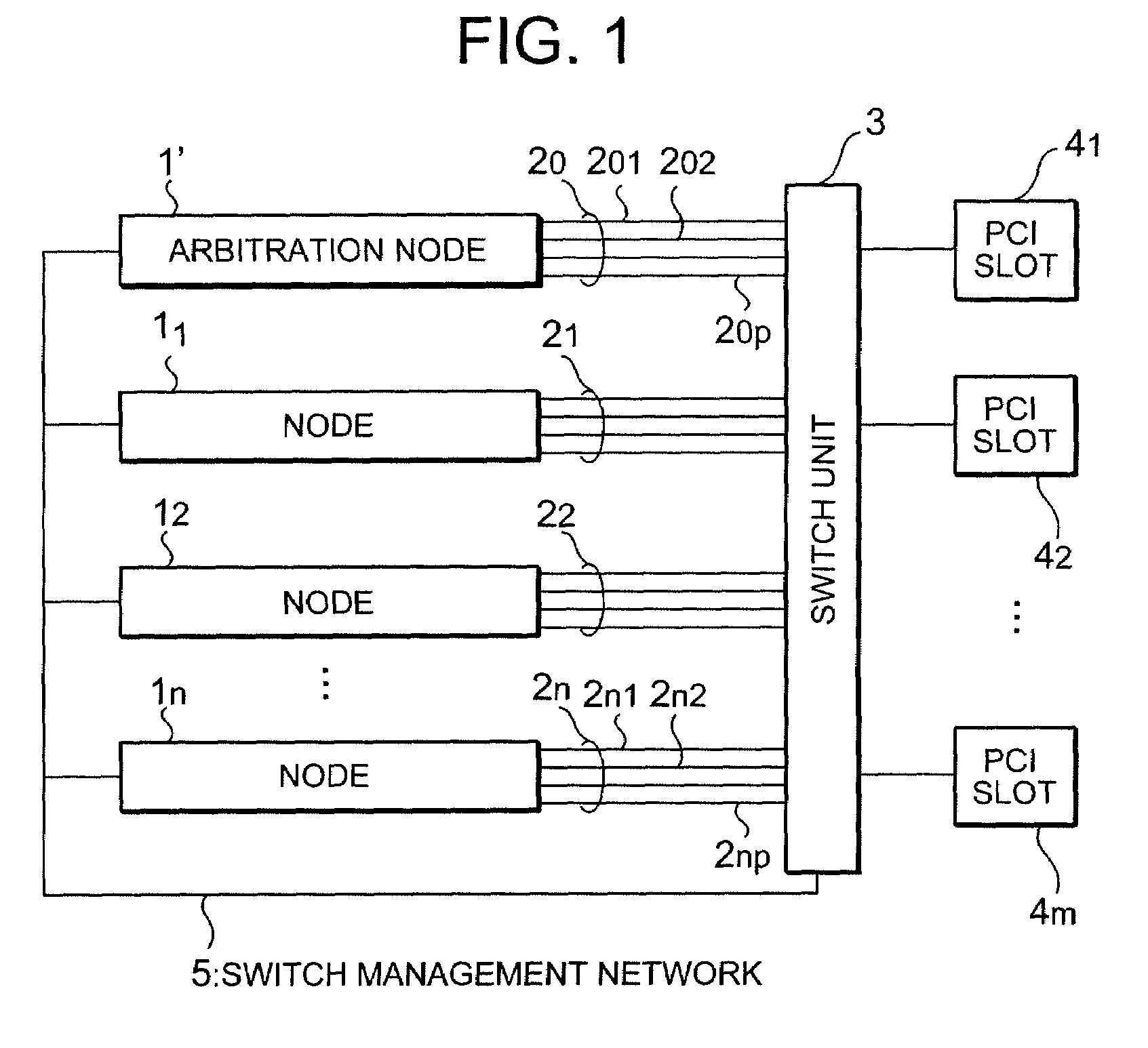

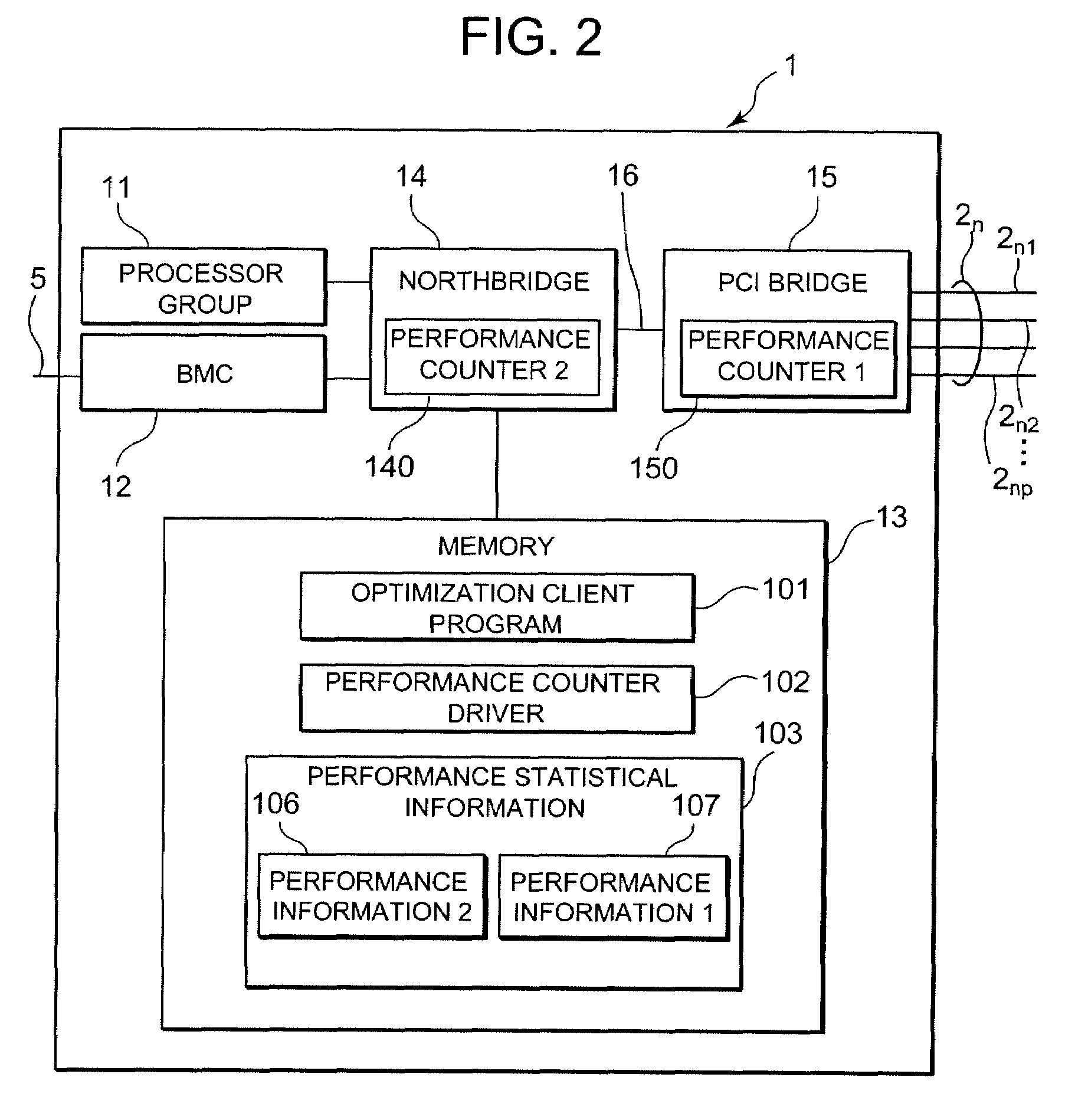

Cluster system, load distribution method, optimization client program, and arbitration server program

Inactive · US7623460B2 · Lower performance requirements · Error prevention · Transmission systems · Computer architecture · Cluster systems

An exemplary cluster system according to the present invention includes a first node including a plurality of paths respectively connected to I / O slots via a switch and a second node including a plurality of paths connected to the switch, wherein the first node includes a first load measuring part which measures a first load for each path thereof, and the switch makes switching to disconnect an I / O slot from the first node and connect the I / O slot to the second node when the first load of the path connected to the I / O slot is higher than a first threshold.
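The threshold rule in this abstract amounts to a simple reassignment step, sketched below. The function name `rebalance`, the node labels, and the dictionary representation of slot ownership and measured load are hypothetical; the abstract does not describe how load is measured or how often the check runs.

```python
def rebalance(slot_owner, path_load, threshold,
              first="node1", second="node2"):
    """Move overloaded I/O slots from the first node to the second.

    slot_owner: dict mapping I/O slot -> node currently connected to it.
    path_load:  dict mapping I/O slot -> load measured on the first node's
                path to that slot.
    Returns the sorted list of slots that were switched.
    """
    moved = []
    for slot in sorted(slot_owner):
        overloaded = path_load.get(slot, 0) > threshold
        if slot_owner[slot] == first and overloaded:
            # The switch disconnects the slot from the first node and
            # connects it to the second node.
            slot_owner[slot] = second
            moved.append(slot)
    return moved
```

For example, with a threshold of 0.8, a slot whose path load is 0.9 moves to the second node, while a slot at 0.5 stays where it is.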

Owner:NEC CORP

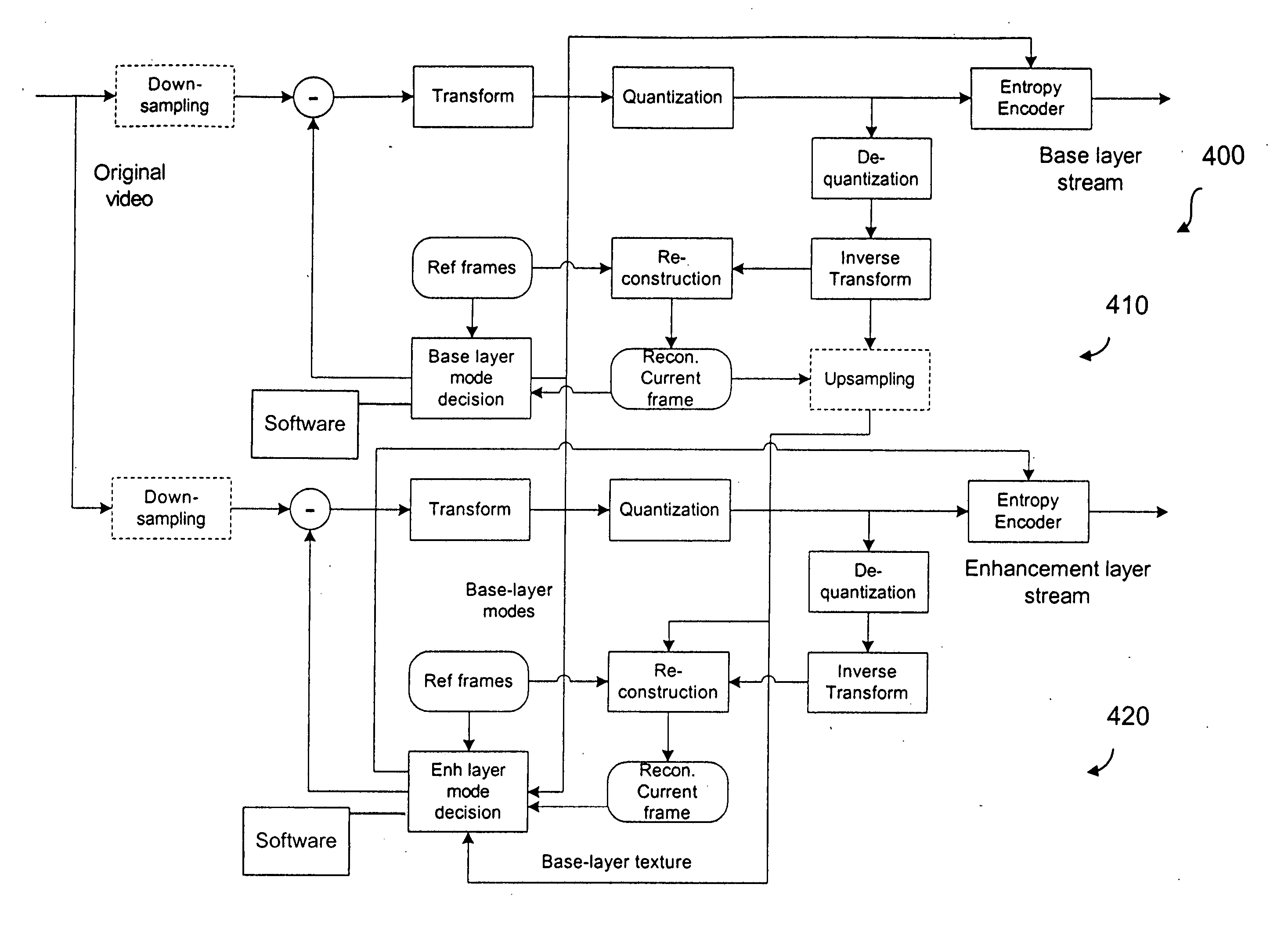

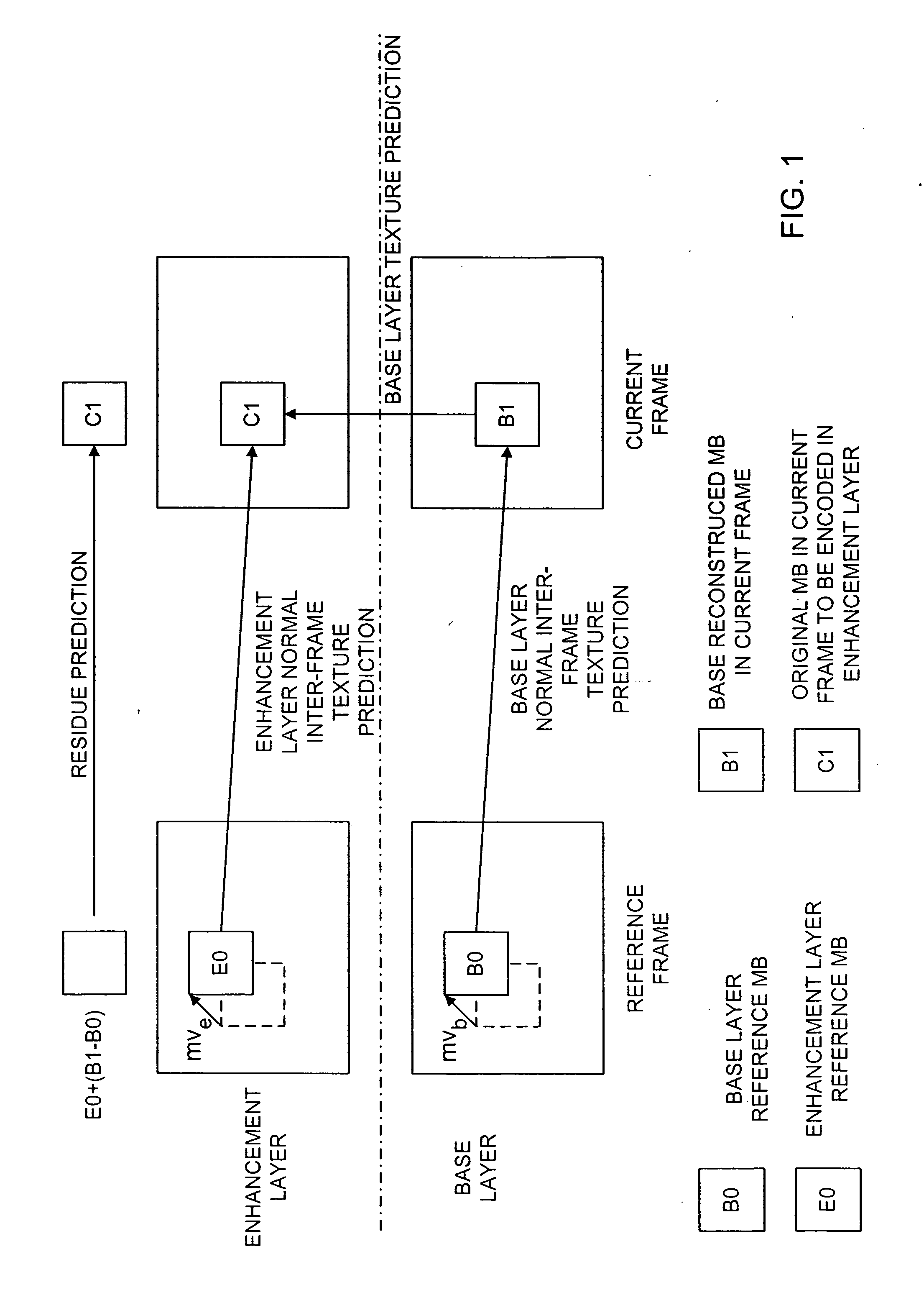

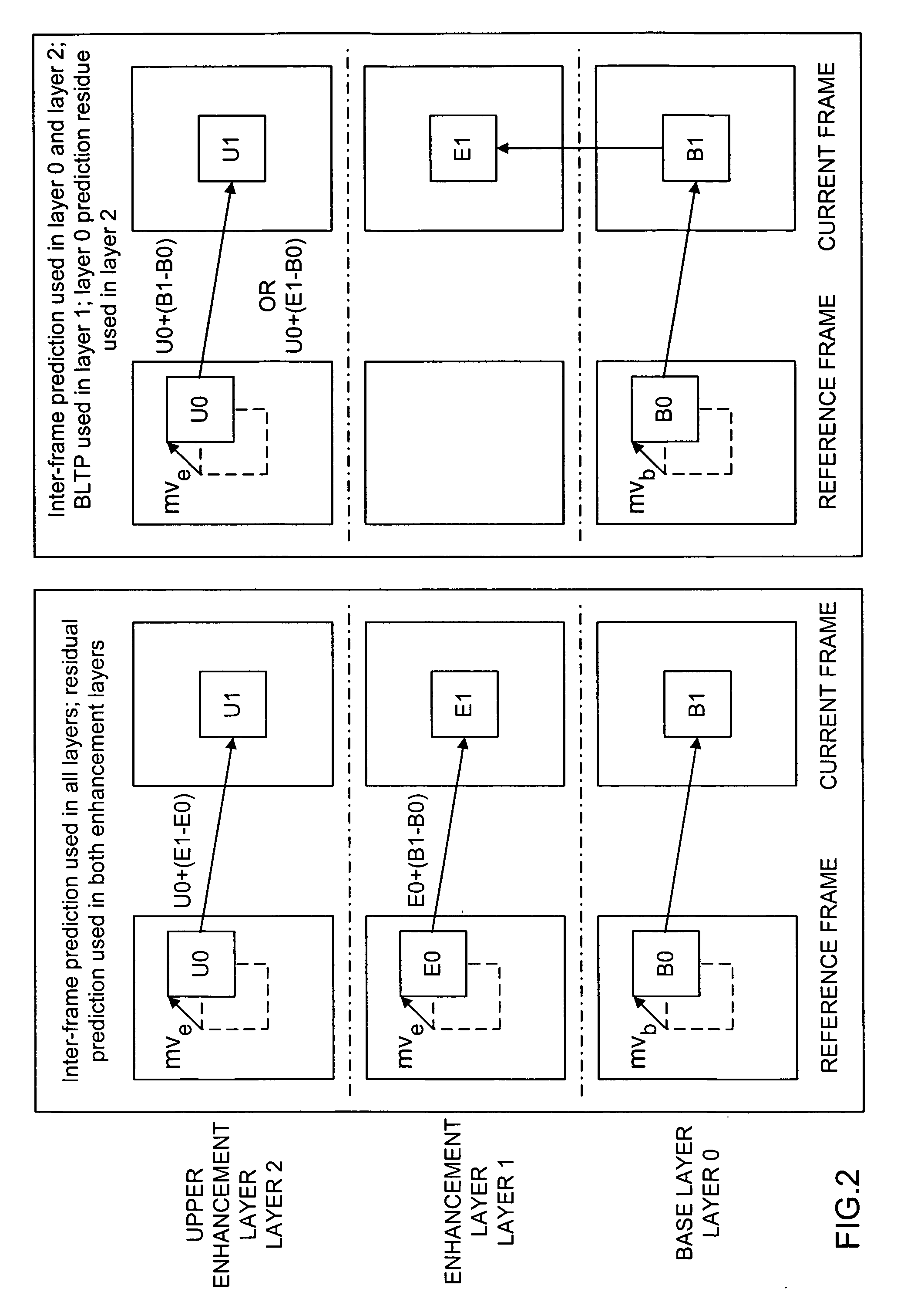

Method and system for inter-layer prediction mode coding in scalable video coding

Inactive · US20060153295A1 · Eliminate redundancy · Improving inter-layer prediction mode · Color television with pulse code modulation · Color television with bandwidth reduction · Computer architecture · Inter layer

The present invention improves residue prediction by using MI even when the base layer MB is encoded in intra mode. The techniques include: copying the intra 4×4 mode of one 4×4 block in the base layer to multiple neighboring 4×4 blocks in the enhancement layer if the base layer resolution is lower than the enhancement layer resolution; using the intra 4×4 mode as intra 8×8 mode if the base layer resolution is lower than the enhancement layer resolution and is half of the enhancement layer resolution in both dimensions; carrying out direct calculation of the base layer prediction residue used in RP; clipping of the prediction residue to reduce the memory requirement; tunneling of the prediction residue in BLTP mode; and conditional coding of the RP flag to save flag bits and reduce implementation complexity.

Owner:NOKIA CORP

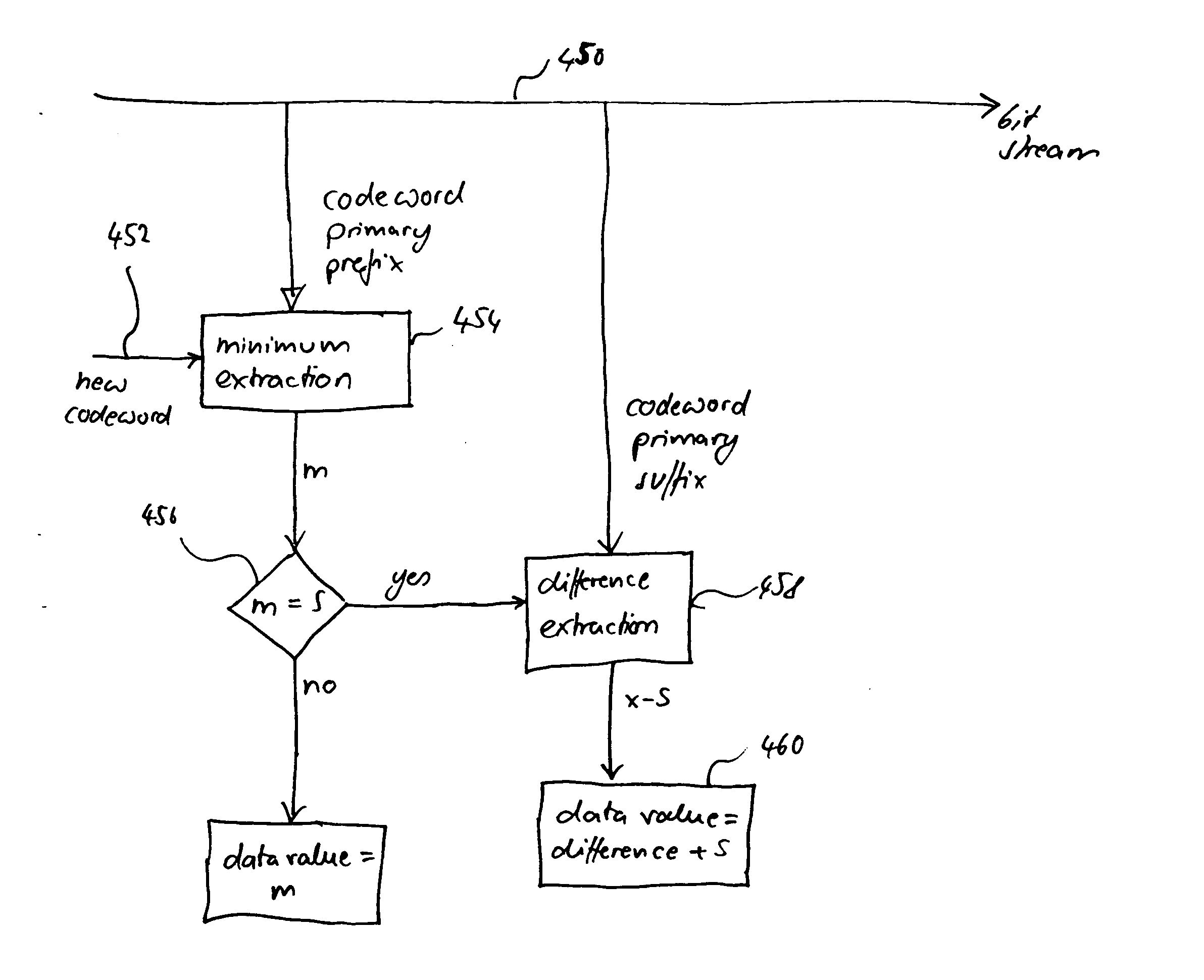

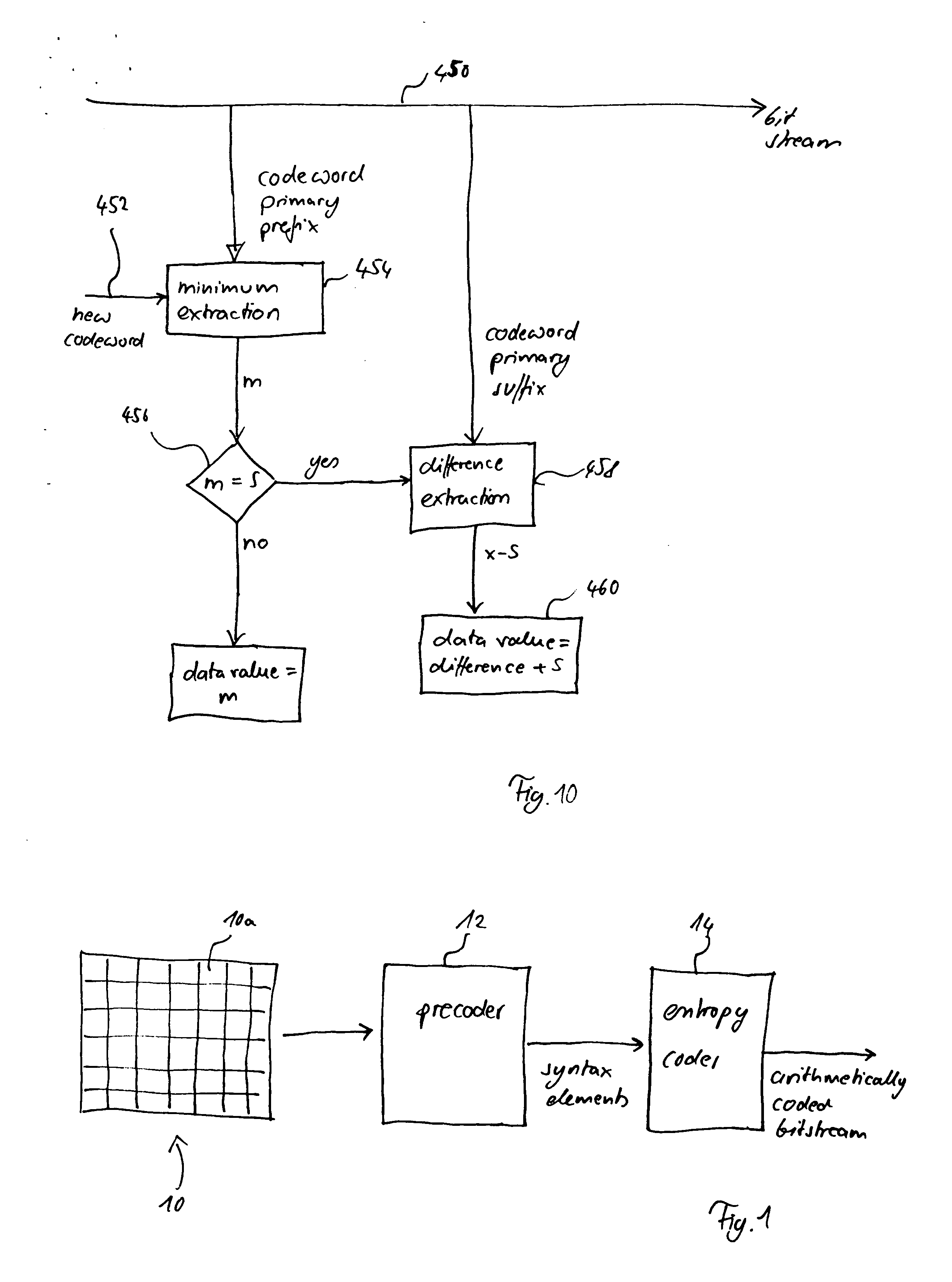

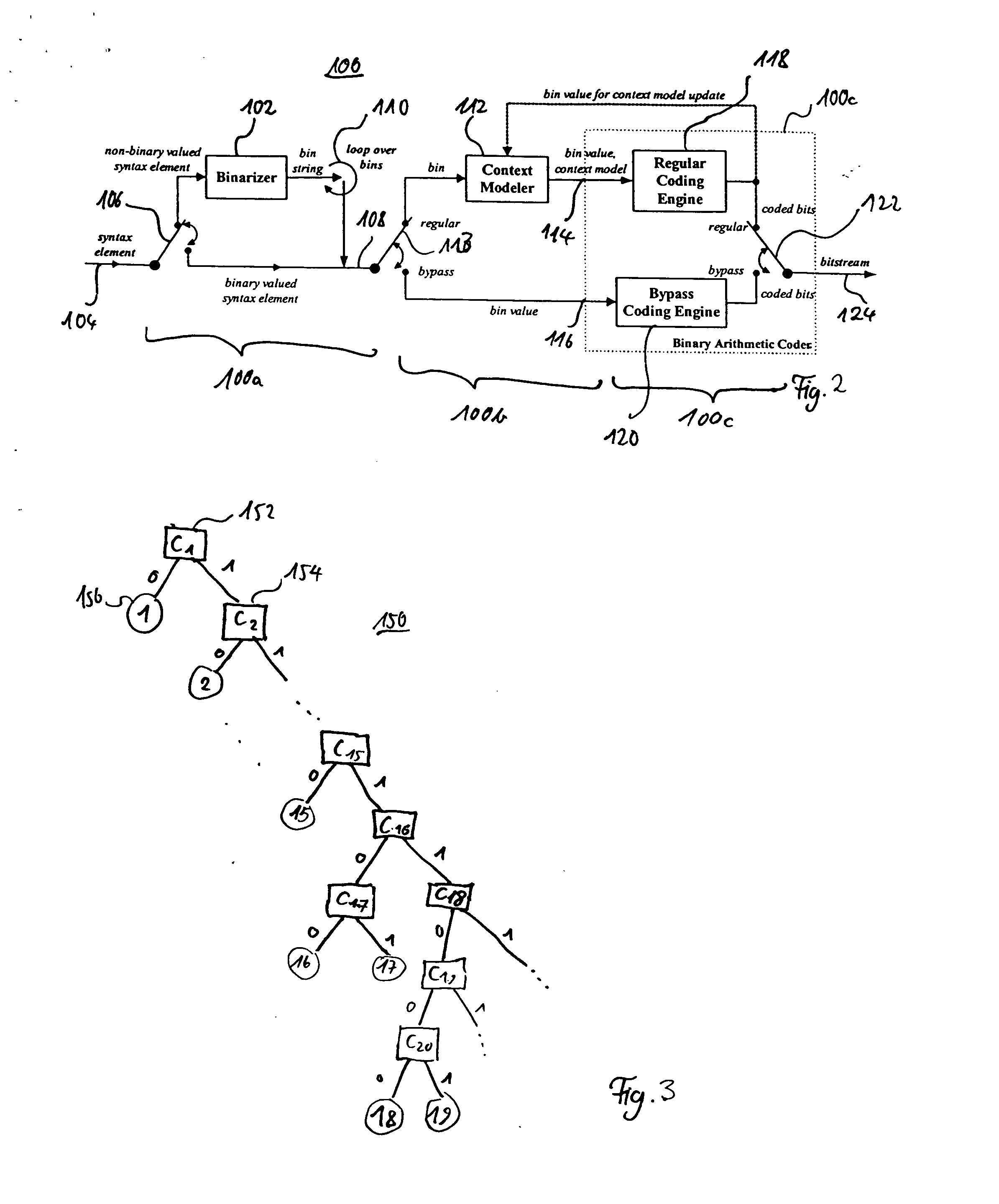

Method and apparatus for binarization and arithmetic coding of a data value

Active · US20050038837A1 · Moderate computational overhead · Efficient compression · Digital data processing details · Digital computer details · Computer architecture · Cut off value

Binarization of a data value comprises binarizing the minimum of the data value and a predetermined cut-off value in accordance with a first binarization scheme, in order to yield a primary prefix. If the data value is greater than the cut-off value, binarizing a difference of the data value minus the predetermined cut-off value in accordance with a second binarization scheme to obtain a binary suffix, the first binarization scheme being different from the second binarization scheme, and appending the primary suffix to the primary prefix is performed. A very effective compression of data values may be achieved by using the binarization scheme for preparing the syntax elements for the arithmetic coding, the binarization scheme substantially being a combination of two different binarization schemes, and by using binary arithmetic coding instead of m-ary arithmetic coding for coding the binarized syntax elements.
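A prefix/suffix binarization of this kind can be sketched as follows. The abstract does not name the two schemes, so this sketch assumes truncated unary for the prefix and order-k Exp-Golomb for the suffix; the function names are hypothetical. One further assumption: the suffix is emitted whenever the value reaches the cut-off (not only when it exceeds it), because with a truncated unary prefix that is what makes the bin string uniquely decodable.

```python
def exp_golomb(value, k):
    """Order-k Exp-Golomb bin string for a non-negative integer."""
    bits = []
    while value >= (1 << k):
        bits.append("1")
        value -= (1 << k)
        k += 1
    bits.append("0")
    for i in reversed(range(k)):
        bits.append(str((value >> i) & 1))
    return "".join(bits)


def binarize(value, cutoff, k=0):
    """Truncated unary prefix of min(value, cutoff), then optional suffix."""
    prefix = "1" * min(value, cutoff)
    if value < cutoff:
        return prefix + "0"  # terminating zero, no suffix needed
    # Prefix is all ones: append the suffix for the excess over the cut-off.
    return prefix + exp_golomb(value - cutoff, k)


def debinarize(bits, cutoff, k=0):
    """Inverse of binarize, used here to check the round trip."""
    pos = 0
    value = 0
    while value < cutoff and bits[pos] == "1":
        value += 1
        pos += 1
    if value < cutoff:
        return value  # stopped on the terminating zero
    while bits[pos] == "1":
        value += (1 << k)
        k += 1
        pos += 1
    pos += 1  # skip the zero that ends the Exp-Golomb unary part
    if k:
        value += int(bits[pos:pos + k], 2)
    return value
```

Small values, which are typically the most frequent, get short unary strings, while large values grow only logarithmically in length through the suffix. Each resulting bin would then be fed to a binary arithmetic coder, which is outside the scope of this sketch.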

Owner:GE VIDEO COMPRESSION LLC

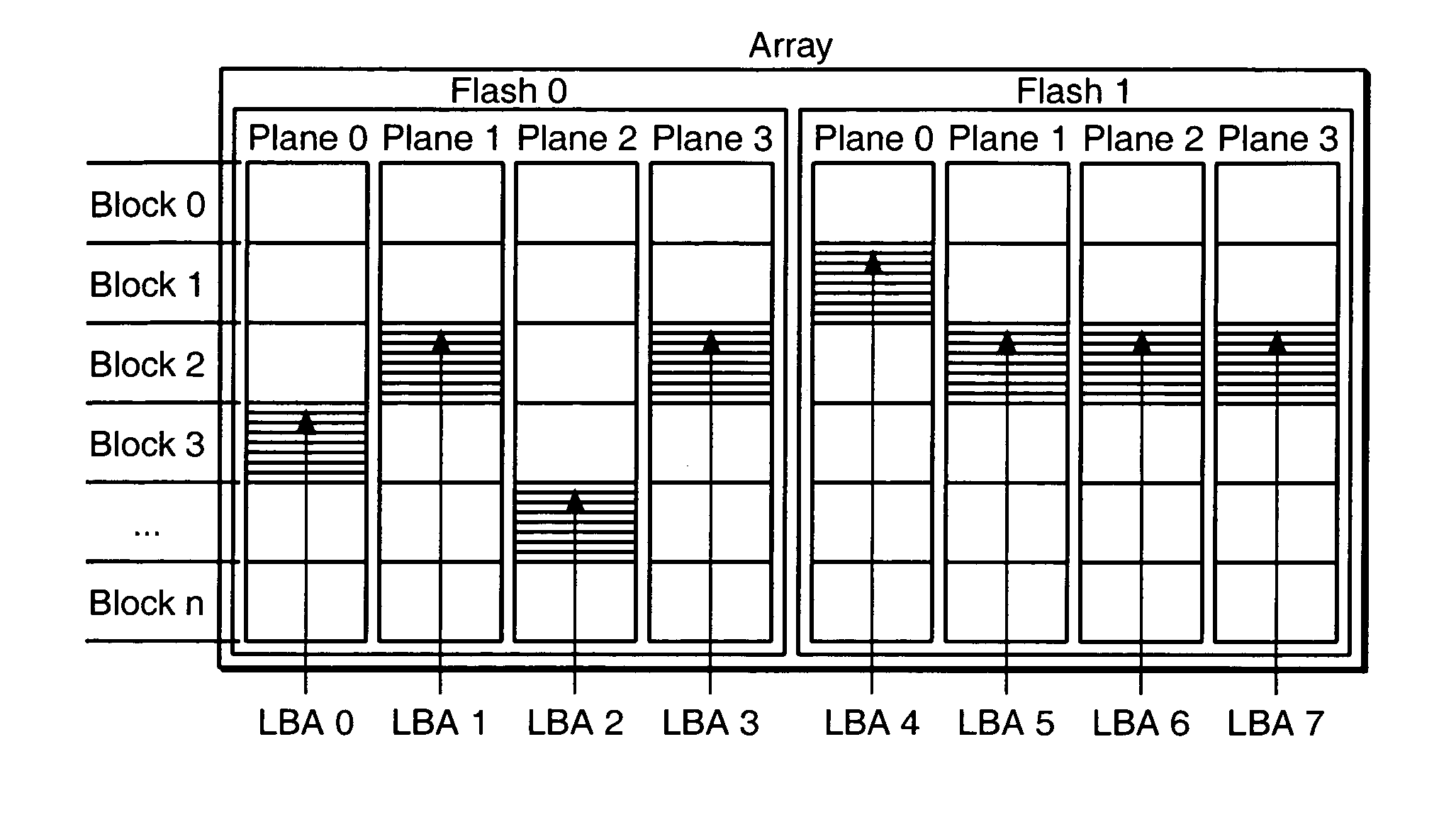

Non-volatile memory and method with memory planes alignment

Inactive · US20050141313A1 · Good ECC · Extra level of reliability · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Computer architecture · Logic cell

A non-volatile memory is constituted from a set of memory planes, each having its own set of read / write circuits so that the memory planes can operate in parallel. The memory is further organized into erasable blocks, each for storing a logical group of logical units of data. In updating a logical unit, all versions of a logical unit are maintained in the same plane as the original. Preferably, all versions of a logical unit are aligned within a plane so that they are all serviced by the same set of sensing circuits. In a subsequent garbage collection operation, the latest version of the logical unit need not be retrieved from a different plane or a different set of sensing circuits, otherwise resulting in reduced performance. In one embodiment, any gaps left after alignment are padded by copying latest versions of logical units in sequential order thereto.

Owner:SANDISK TECH LLC
