Patents

Literature

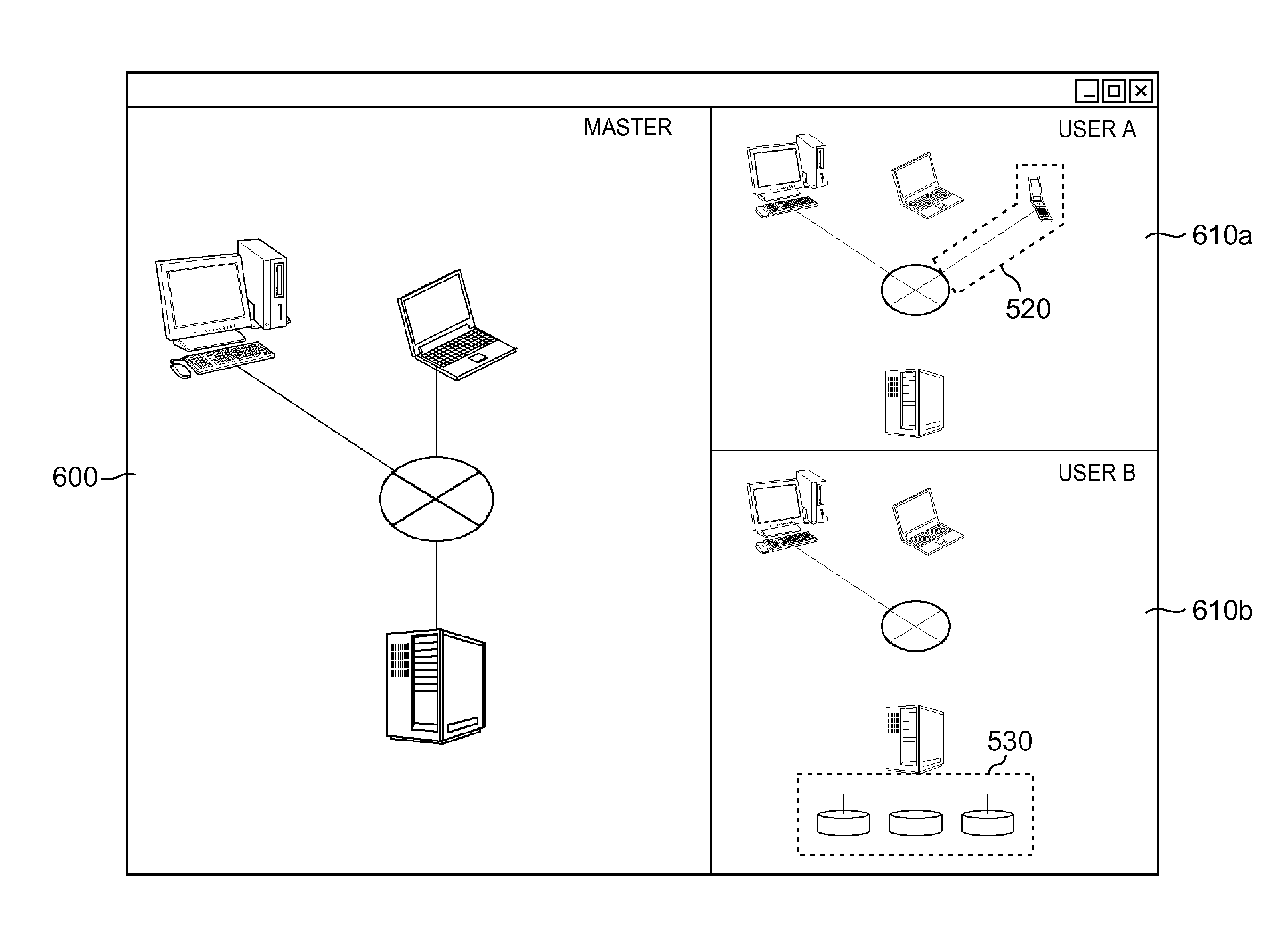

Hiro is an intelligent assistant for R&D personnel, combined with Patent DNA, to facilitate innovative research.

4198 results about "Program synchronisation" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

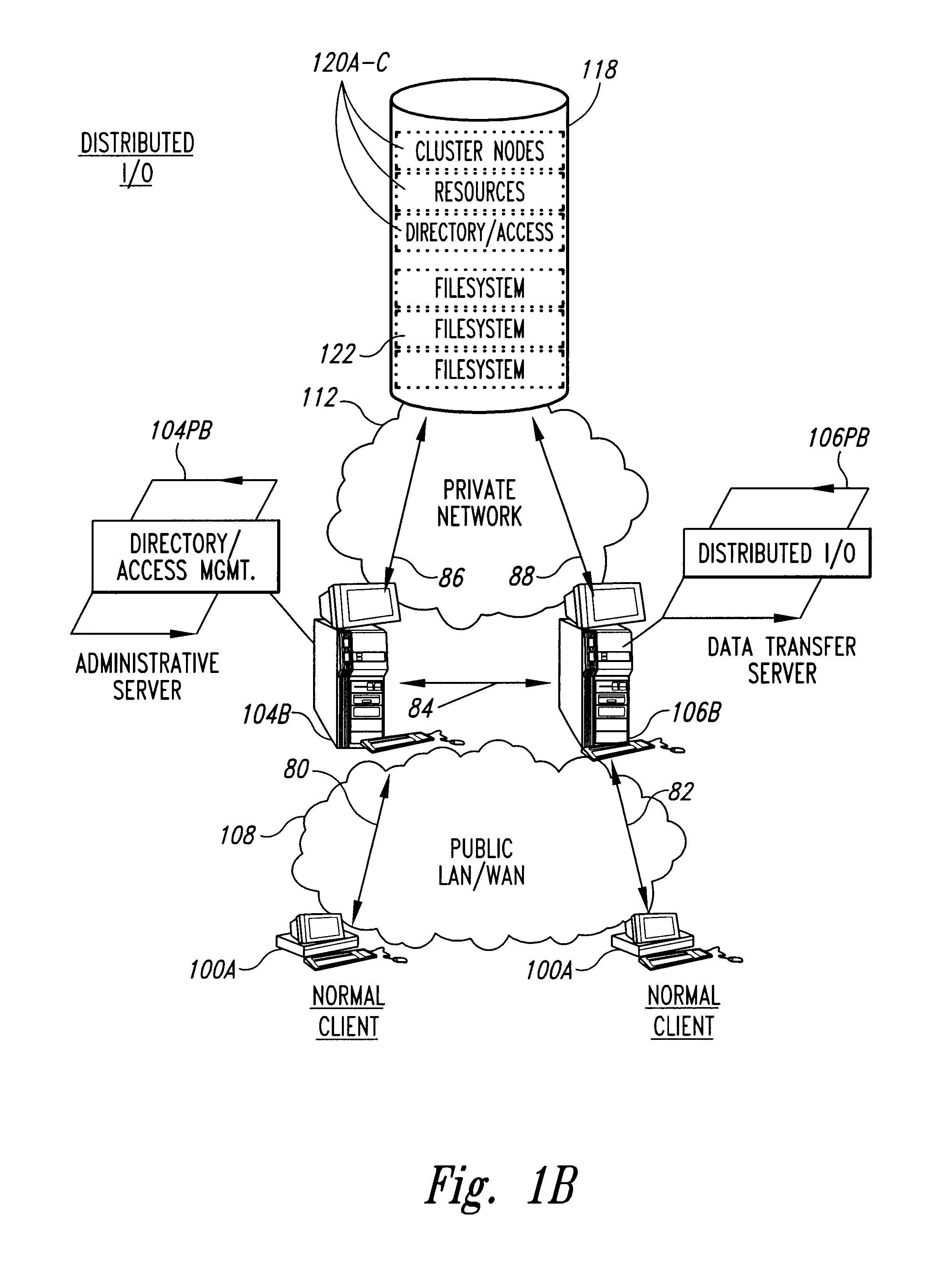

Clustered file management for network resources

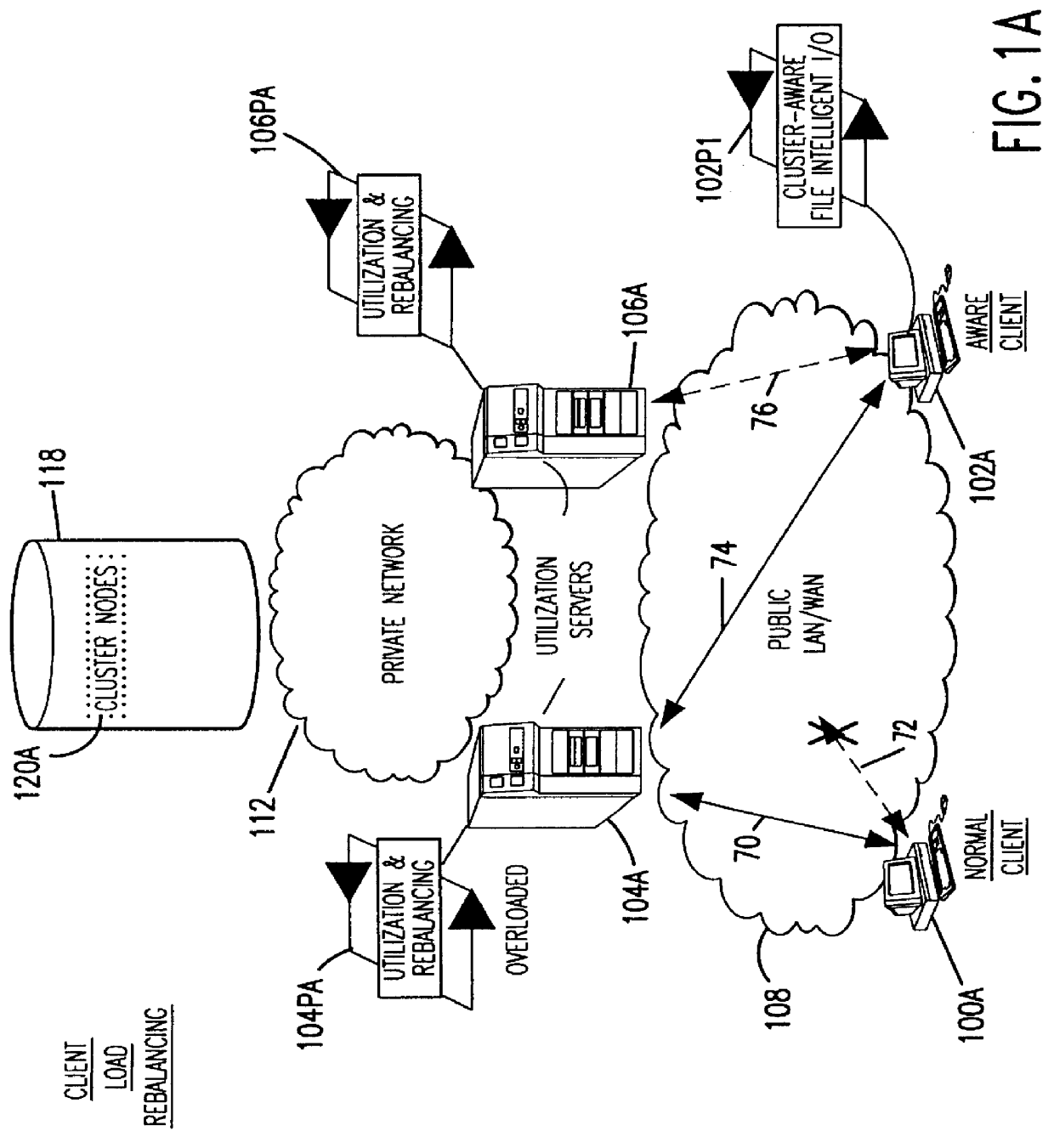

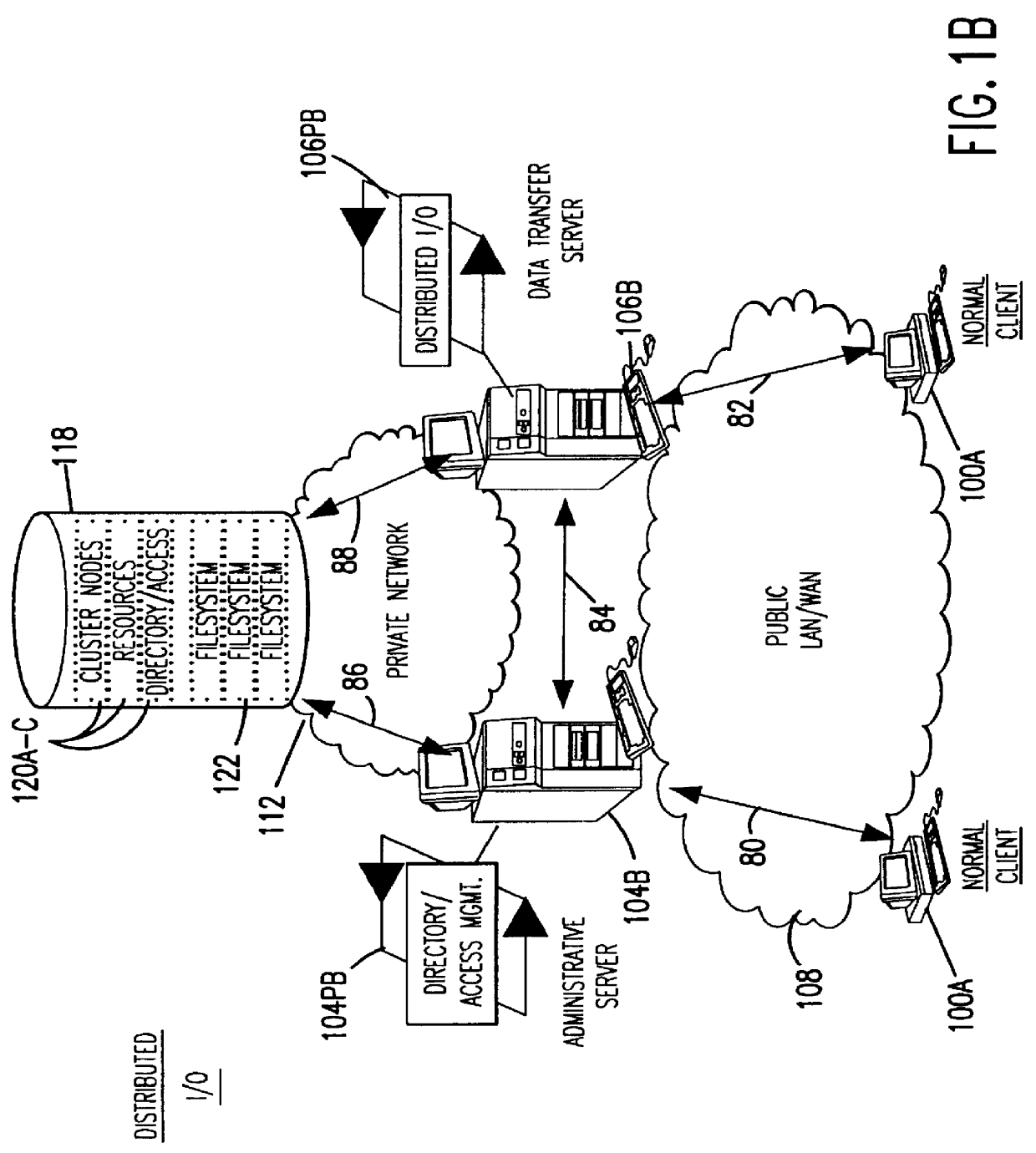

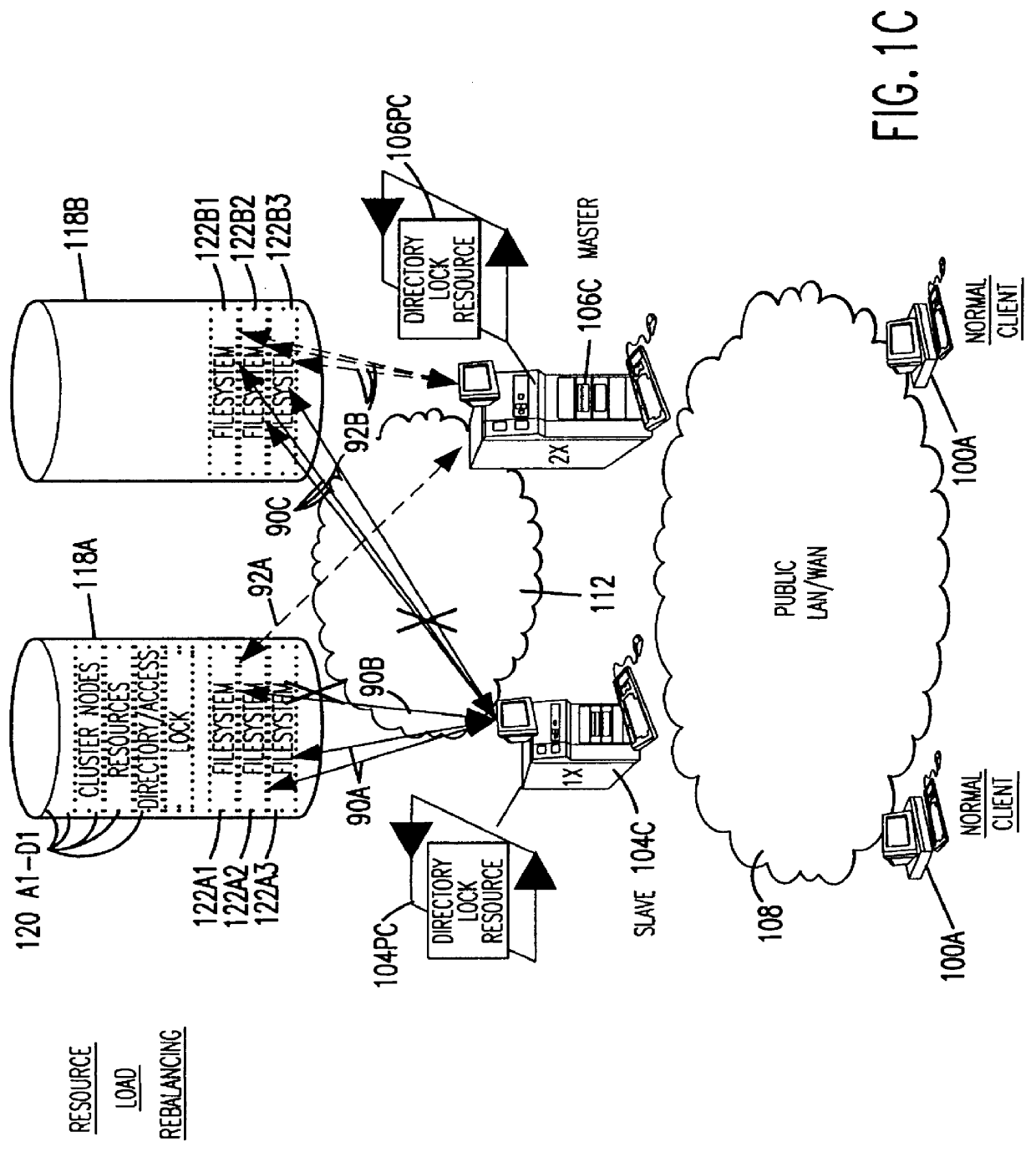

Inactive | US6101508A | Data processing applications | Program synchronisation | File system | Clustered file system

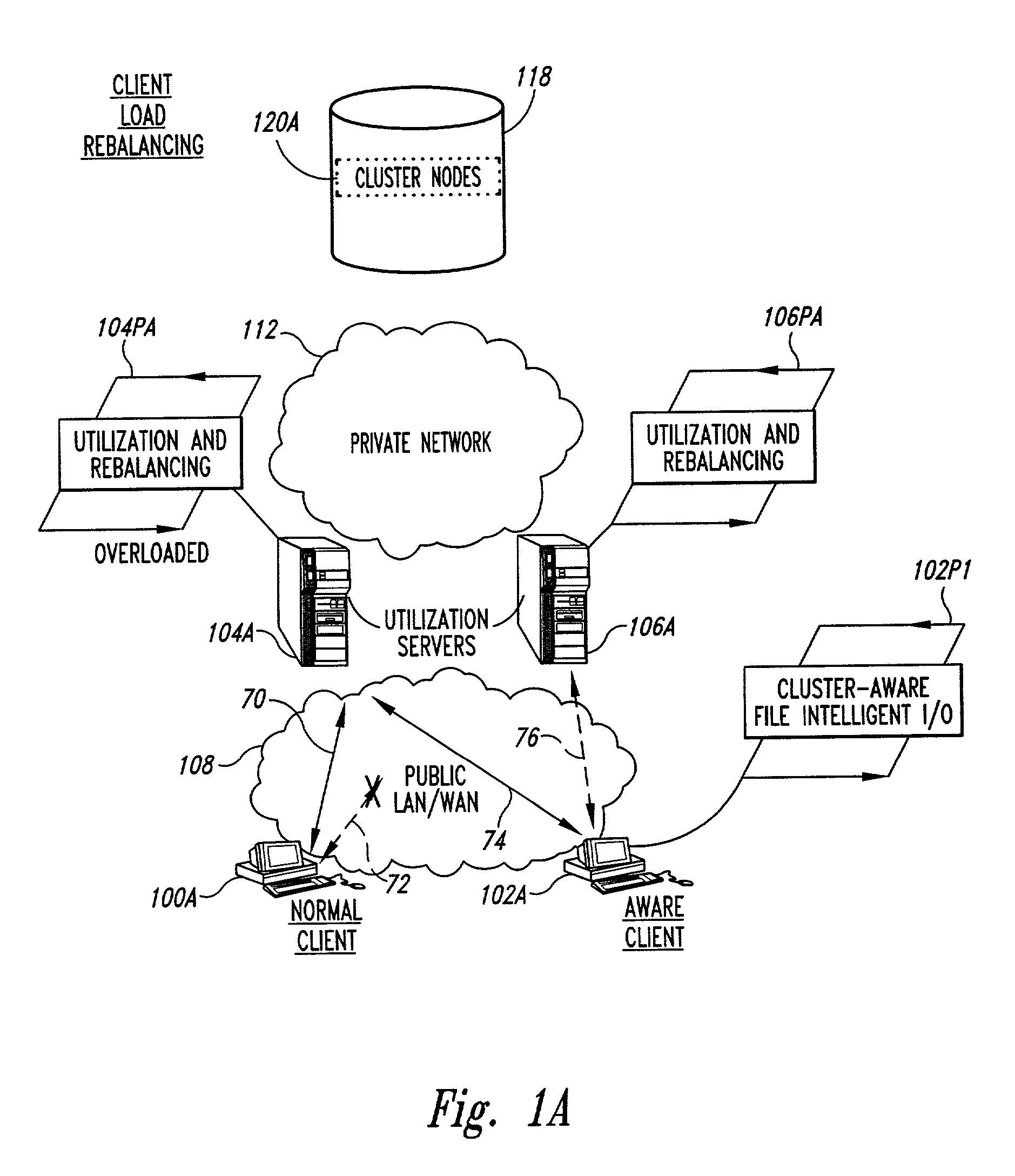

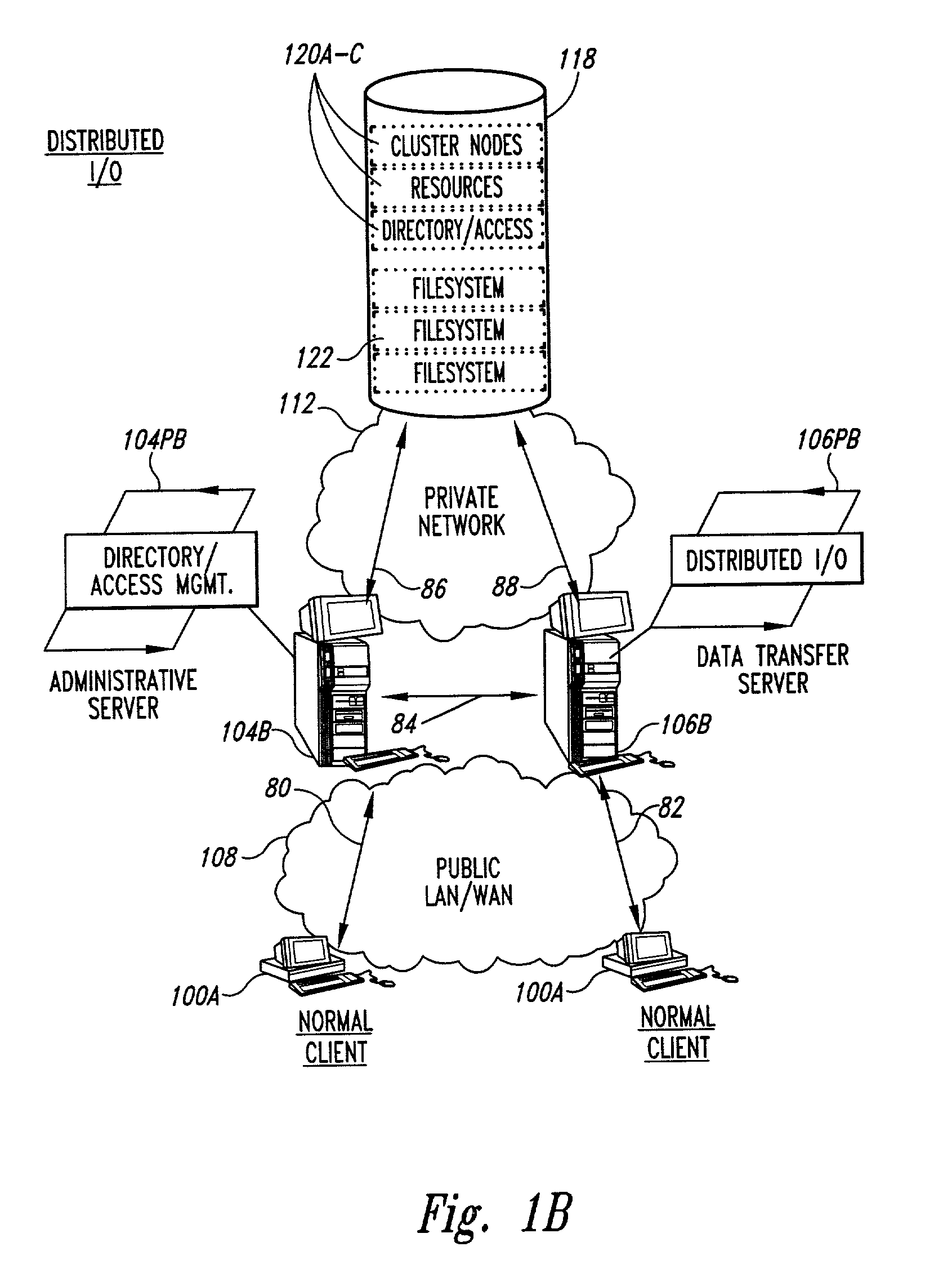

Methods for operating a network as a clustered file system are disclosed. The methods involve client load rebalancing, distributed input/output (I/O), and resource load rebalancing. Client load rebalancing refers to the ability of a client enabled with processes in accordance with the current invention to remap a path through a plurality of nodes to a resource. Distributed I/O refers to the methods on the network which provide concurrent I/O through a plurality of nodes to resources. Resource rebalancing includes remapping of pathways between nodes (e.g. servers) and resources (e.g. volumes or file systems). The network includes client nodes, server nodes, and resources. Each of the resources couples to at least two of the server nodes. The method for operating comprises the acts of: redirecting an I/O request for a resource from a first server node coupled to the resource to a second server node coupled to the resource; splitting the I/O request at the second server node into an access portion and a data transfer portion and passing the access portion to a corresponding administrative server node for the resource; and completing, at the second server node, a data transfer for the resource after receipt of an access grant from the corresponding administrative server node. In an alternate embodiment of the invention, the methods may additionally include the acts of: detecting a change in the availability of the server nodes; and rebalancing the network by applying a load balancing function to re-assign each of the available resources to a corresponding available administrative server node in response to the detecting act.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

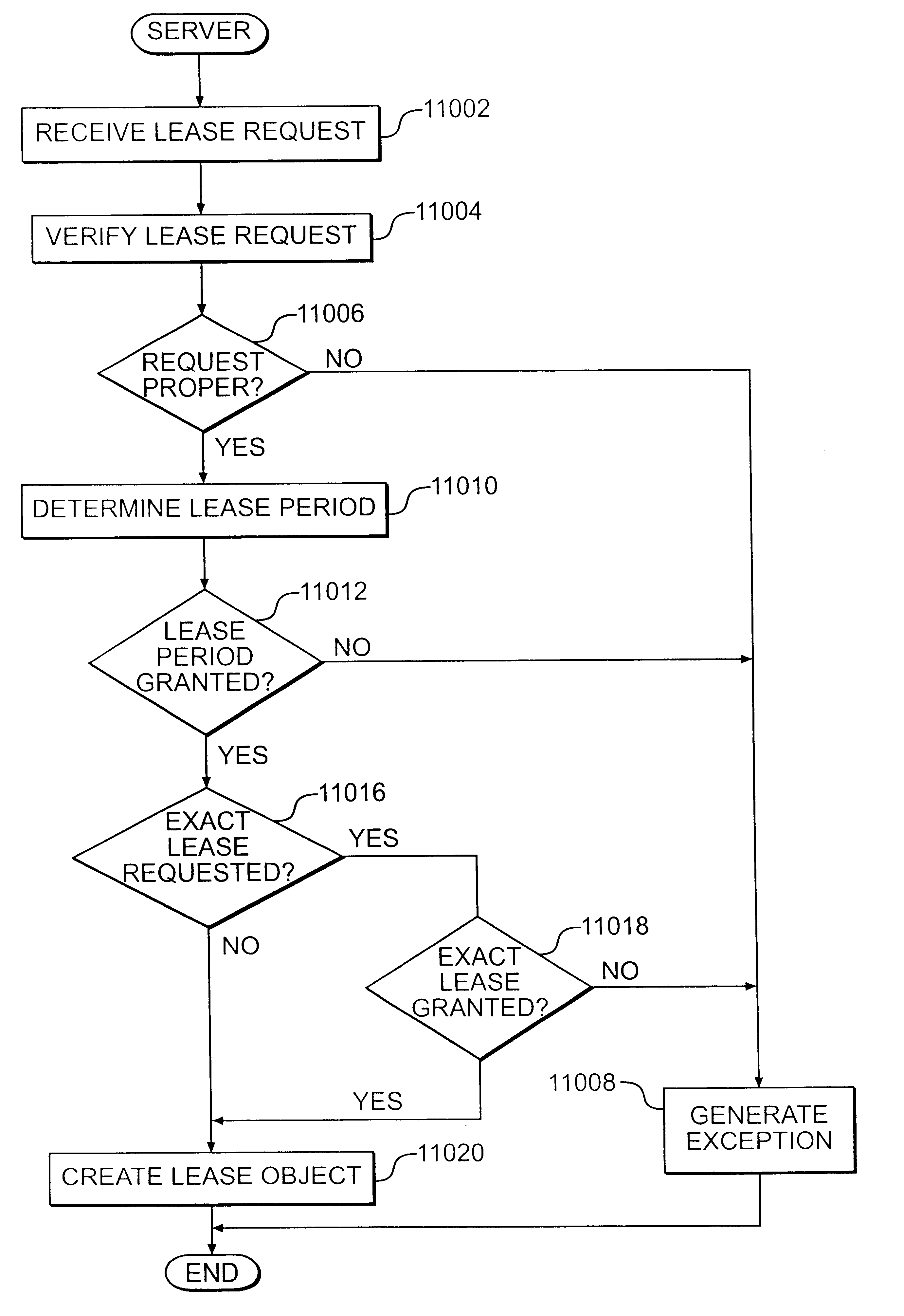

Method and system for leasing storage

A method and system for leasing storage locations in a distributed processing system is provided. Consistent with this method and system, a client requests access to storage locations for a period of time (lease period) from a server, such as the file system manager. Responsive to this request, the server invokes a lease period algorithm, which considers various factors to determine a lease period during which time the client may access the storage locations. After a lease is granted, the server sends an object to the client that advises the client of the lease period and that provides the client with behavior to modify the lease, like canceling the lease or renewing the lease. The server supports concurrent leases, exact leases, and leases for various types of access. After all leases to a storage location expire, the server reclaims the storage location.

Owner:SUN MICROSYSTEMS INC
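The lease flow in this abstract can be illustrated with a small sketch. It is not the patented implementation: the class names `Lease` and `LeaseServer`, the `max_period` cap that stands in for the "lease period algorithm", and the explicit `now` arguments (used instead of a real clock so the example is deterministic) are all assumptions made for illustration.

```python
class Lease:
    """Object handed to the client: it states the lease period and carries
    the behavior (renew, cancel) the client uses to modify the lease."""

    def __init__(self, server, lease_id, location, expires_at):
        self._server = server
        self.lease_id = lease_id
        self.location = location
        self.expires_at = expires_at

    def renew(self, now, requested):
        return self._server._renew(self, now, requested)

    def cancel(self):
        self._server._cancel(self)


class LeaseServer:
    def __init__(self, max_period=30.0):
        self.max_period = max_period   # hypothetical policy cap
        self._next_id = 0
        self._leases = {}              # location -> {lease_id: Lease}

    def _lease_period(self, requested):
        # Stand-in for the lease period algorithm: clamp the request.
        return min(requested, self.max_period)

    def request(self, location, now, requested):
        """Grant a lease; several leases on one location may be concurrent."""
        self._next_id += 1
        lease = Lease(self, self._next_id, location,
                      now + self._lease_period(requested))
        self._leases.setdefault(location, {})[lease.lease_id] = lease
        return lease

    def _renew(self, lease, now, requested):
        if lease.lease_id not in self._leases.get(lease.location, {}):
            raise KeyError("lease is no longer held")
        lease.expires_at = now + self._lease_period(requested)
        return lease.expires_at

    def _cancel(self, lease):
        self._leases.get(lease.location, {}).pop(lease.lease_id, None)

    def reclaim(self, now):
        """Reclaim every location whose leases have all expired or been cancelled."""
        reclaimed = []
        for location in list(self._leases):
            live = {i: l for i, l in self._leases[location].items()
                    if l.expires_at > now}
            if live:
                self._leases[location] = live
            else:
                del self._leases[location]
                reclaimed.append(location)
        return reclaimed
```

In this sketch a location is reclaimed only when no live lease remains on it, which mirrors the last sentence of the abstract; lease types such as "exact" leases are left out.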

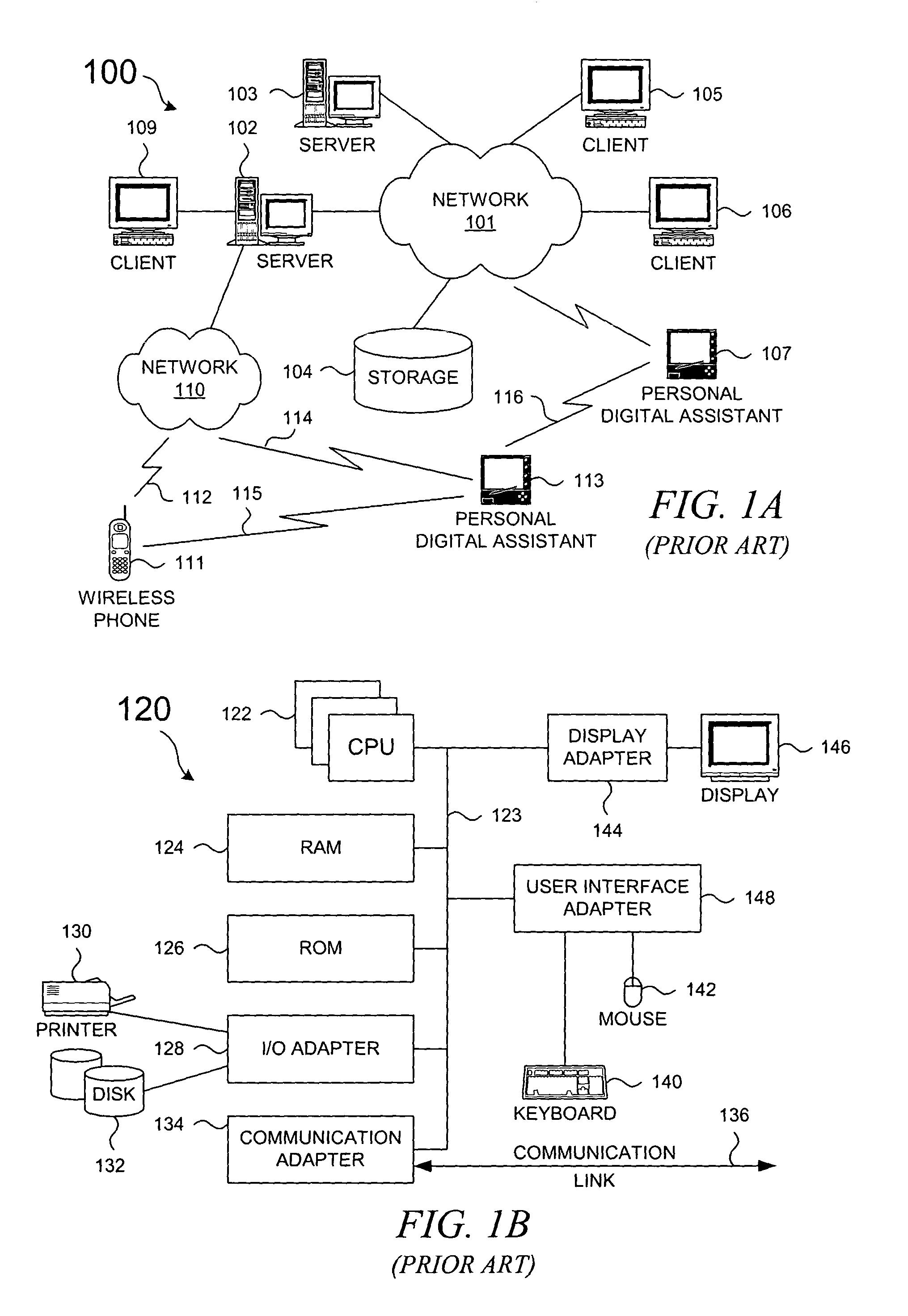

Dynamic load balancing of a network of client and server computer

Inactive | US20030126200A1 | Program synchronisation | Multiple digital computer combinations | Dynamic load balancing | Client-side

Methods for load rebalancing by clients in a network are disclosed. Client load rebalancing allows the clients to optimize throughput between themselves and the resources accessed by the nodes. A network which implements this embodiment of the invention can dynamically rebalance itself to optimize throughput by migrating client I/O requests from overutilized pathways to underutilized pathways. Client load rebalancing refers to the ability of a client enabled with processes in accordance with the current invention to remap a path through a plurality of nodes to a resource. The remapping may take place in response to a redirection command emanating from an overloaded node, e.g. a server. The disclosed embodiments allow more efficient, robust communication between a plurality of clients and a plurality of resources via a plurality of nodes. In an embodiment of the invention, a method for load balancing on a network is disclosed. The network includes at least one client node coupled to a plurality of server nodes, and at least one resource coupled to at least a first and a second server node of the plurality of server nodes. The method comprises the acts of: receiving at a first server node among the plurality of server nodes a request for the at least one resource; determining a utilization condition of the first server node; and re-directing subsequent requests for the at least one resource to a second server node among the plurality of server nodes in response to the determining act. In another embodiment of the invention, the method comprises the acts of: sending an I/O request from the at least one client to the first server node for the at least one resource; determining an I/O failure of the first server node; and re-directing subsequent requests from the at least one client for the at least one resource to another among the plurality of server nodes in response to the determining act.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
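The redirect-on-overload behavior described above can be sketched roughly as follows. This is a toy model under stated assumptions: `ServerNode`, `Client`, the `capacity` threshold used as the "utilization condition", and the least-loaded peer choice are all hypothetical, and request completion (which would lower `active`) is omitted.

```python
class ServerNode:
    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity   # hypothetical utilization threshold
        self.active = 0            # requests accepted so far (never decremented here)

    def handle(self, peers):
        """Serve one request. If this node is now over capacity, return the
        peer that subsequent requests should be redirected to, else None."""
        self.active += 1
        if self.active > self.capacity:
            others = [p for p in peers if p is not self]
            if others:
                # Assumed policy: pick the least-utilized peer.
                return min(others, key=lambda p: p.active / p.capacity)
        return None


class Client:
    def __init__(self, servers_by_resource):
        self.paths = servers_by_resource   # resource -> list of ServerNode
        self.current = {r: nodes[0] for r, nodes in servers_by_resource.items()}

    def request(self, resource):
        """Send a request along the current path; remap the path if the
        serving node answers with a redirection."""
        node = self.current[resource]
        redirect = node.handle(self.paths[resource])
        if redirect is not None:
            self.current[resource] = redirect
        return node.name
```

With two servers of capacity 2 coupled to one resource, the third request still lands on the first server, which then redirects the client, so the fourth request goes to the second server.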

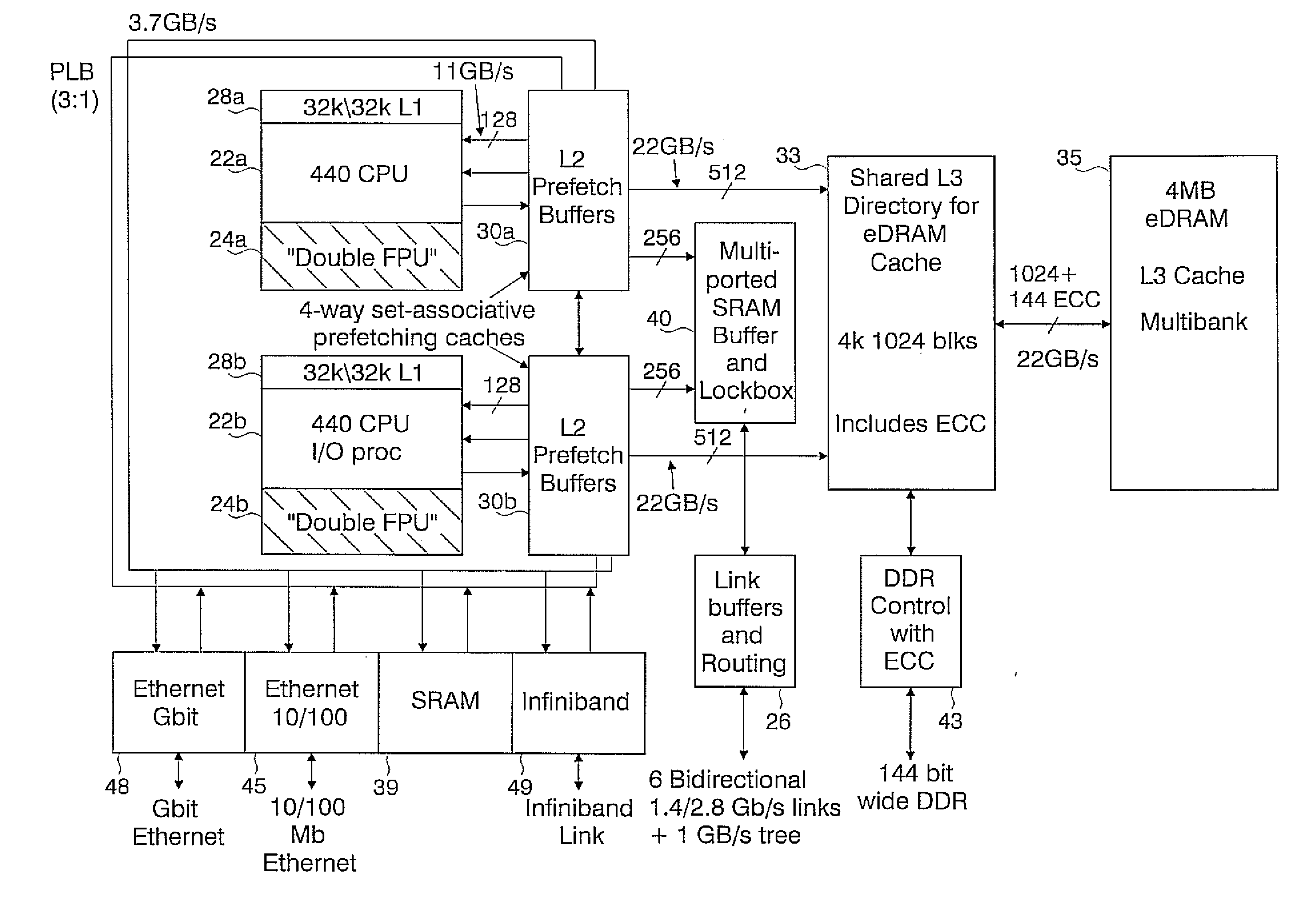

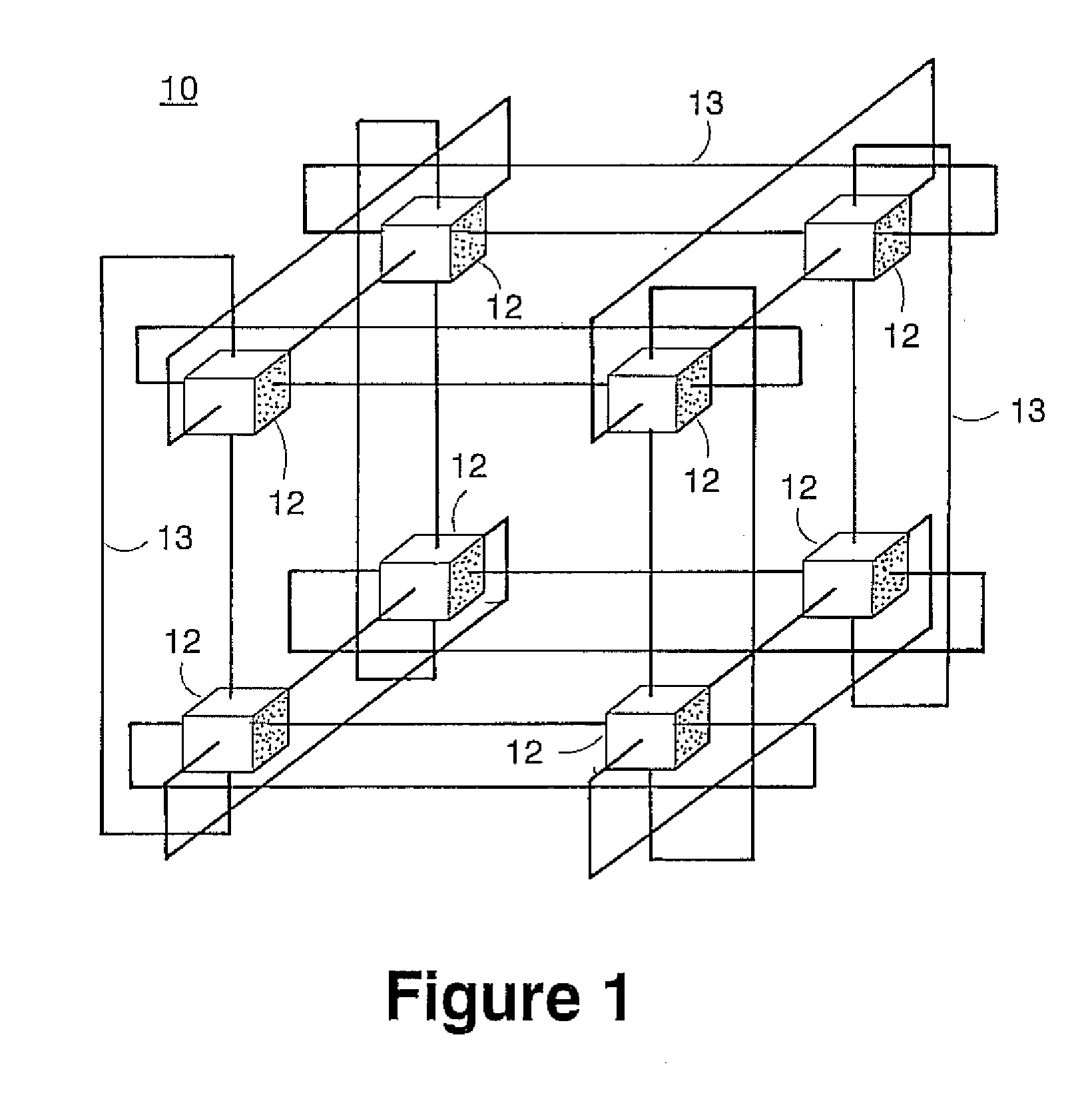

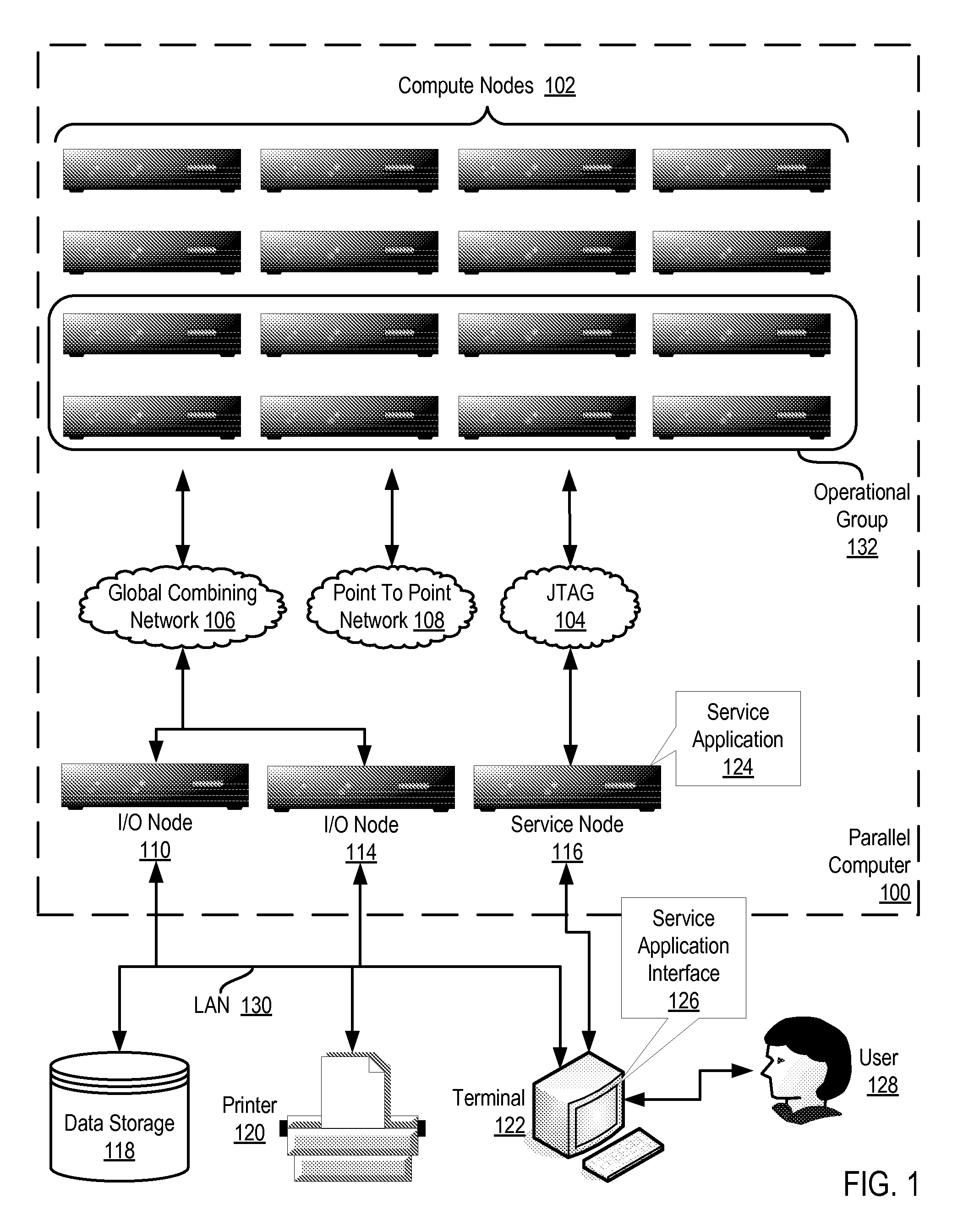

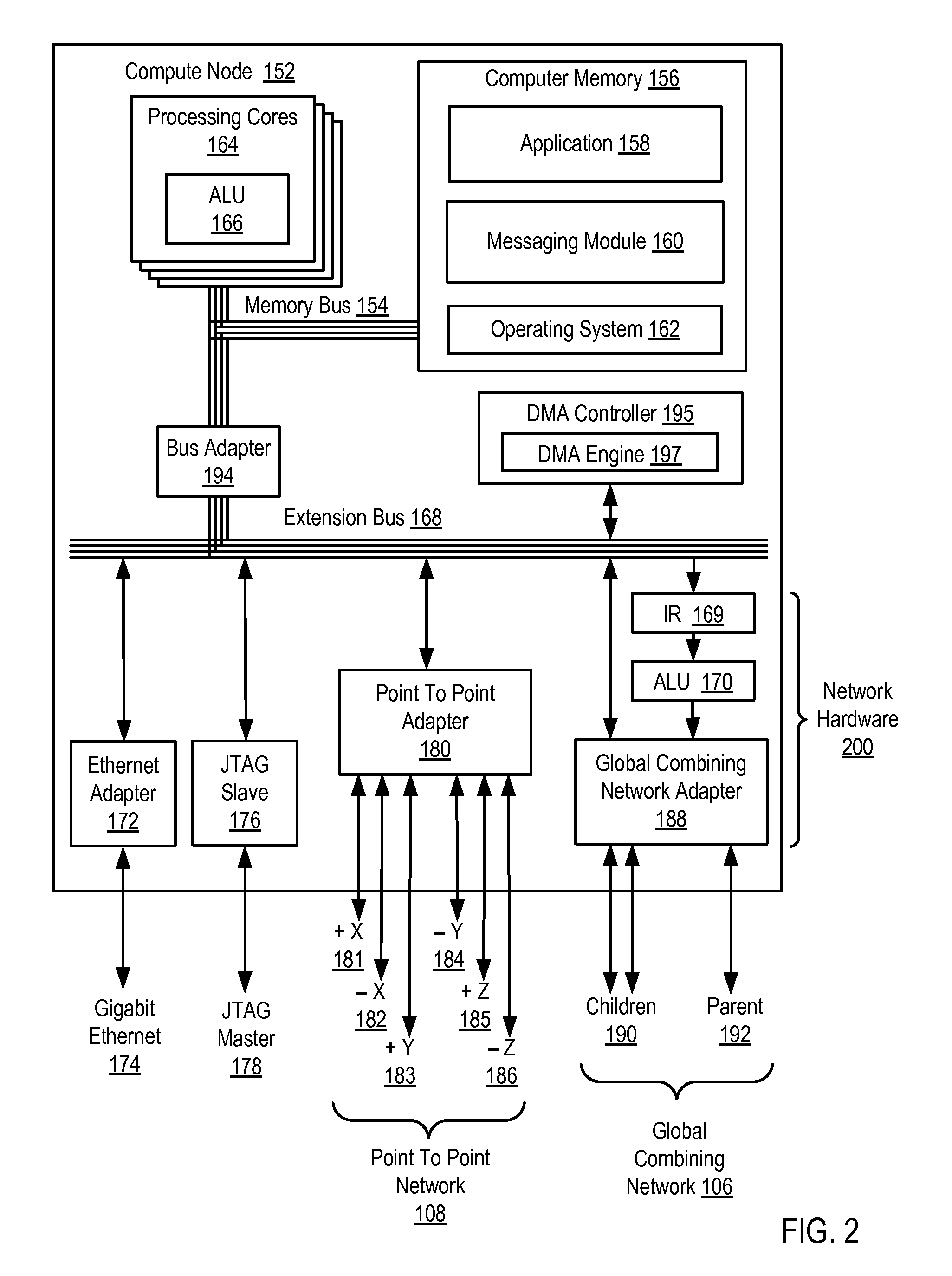

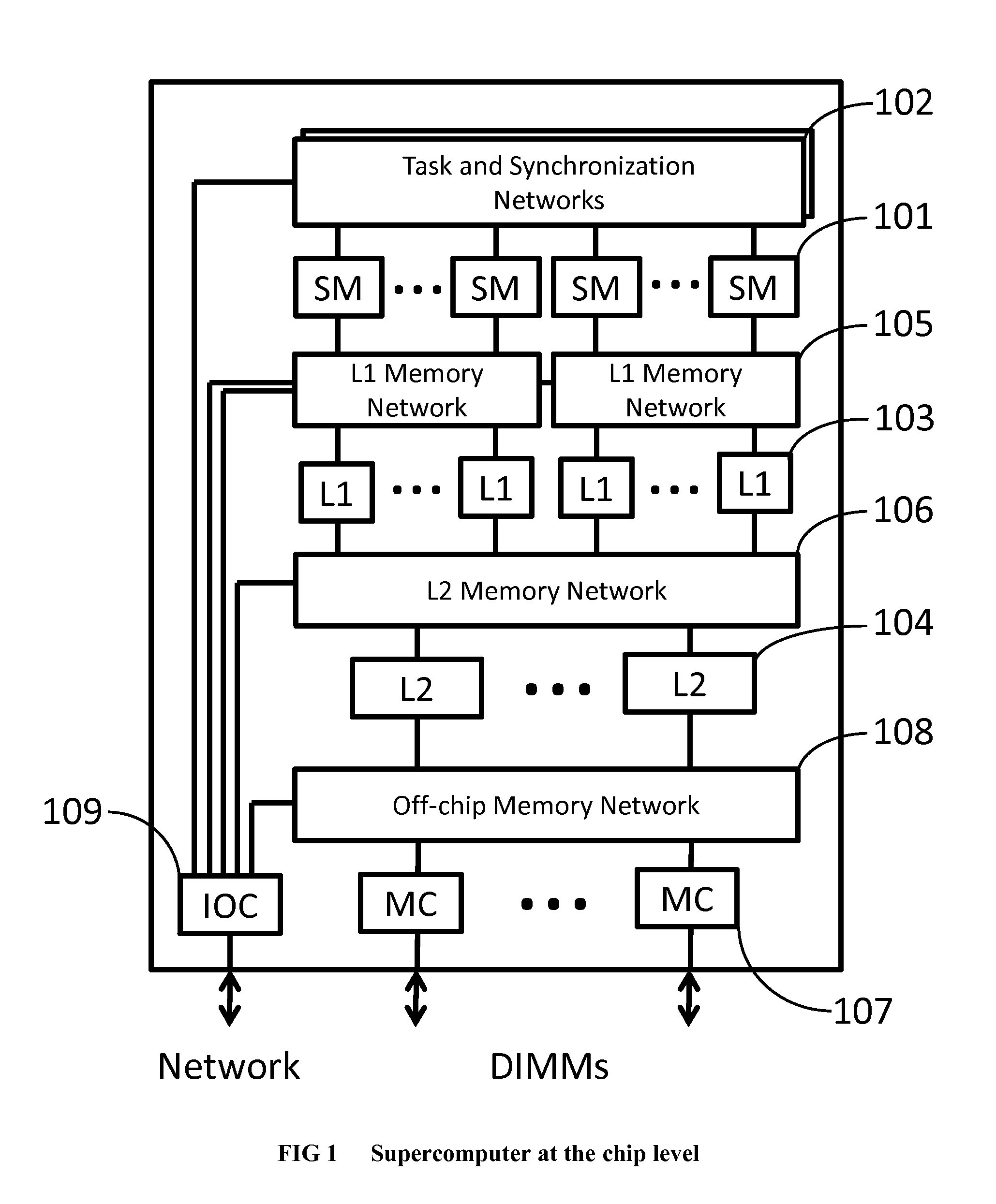

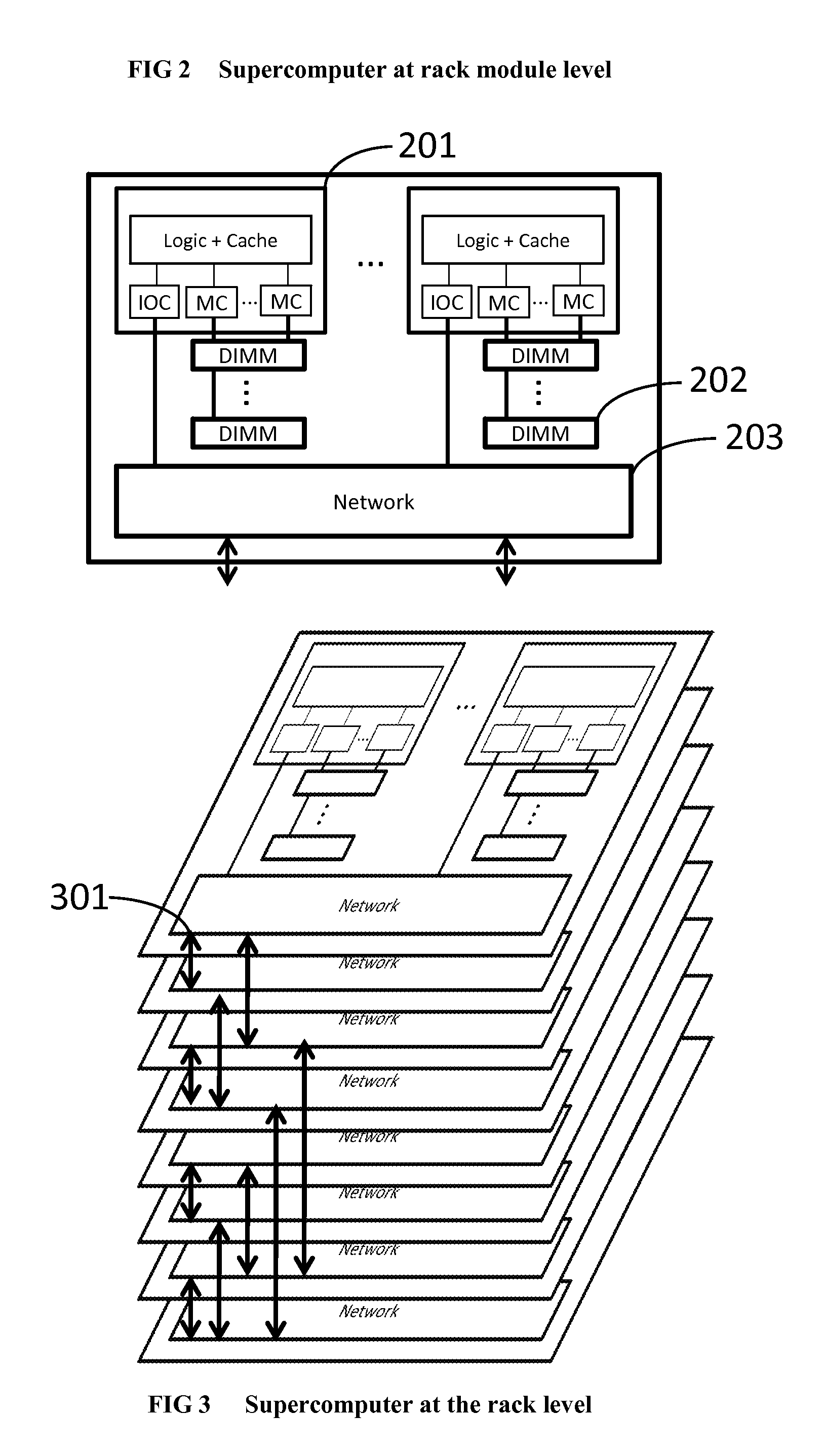

Novel massively parallel supercomputer

Inactive | US20090259713A1 | Low cost | Reduced footprint | Error prevention | Program synchronisation | Supercomputer | Packet communication

Owner:INT BUSINESS MASCH CORP

Apparatus, system, and method for data block usage information synchronization for a non-volatile storage volume

An apparatus, system, and method are disclosed for data block usage information synchronization for a non-volatile storage volume. The method includes referencing first data block usage information for data blocks of a non-volatile storage volume managed by a storage manager. The first data block usage information is maintained by the storage manager. The method also includes synchronizing second data block usage information managed by a storage controller with the first data block usage information maintained by the storage manager. The storage manager maintains the first data block usage information separate from second data block usage information managed by the storage controller.

Owner:UNIFICATION TECH LLC

Apparatus, system, and method for storage space recovery after reaching a read count limit

Active | US20090125671A1 | Shorten the counting process | Reduce bit error rate | Memory architecture accessing/allocation | Program synchronisation | Solid-state storage | Physical address

An apparatus, system, and method are disclosed for storage space recovery after reaching a read count limit. A read module reads data in a storage division of solid-state storage. A read counter module then increments a read counter corresponding to the storage division. A read counter limit module determines if the read count exceeds a maximum read threshold, and if so, a storage division selection module selects the corresponding storage division for recovery. A data recovery module reads valid data packets from the selected storage division, stores the valid data packets in another storage division of the solid-state storage, and updates a logical index with a new physical address of the valid data.

Owner:UNIFICATION TECH LLC
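As a rough illustration of the read-count mechanism in this abstract, the sketch below models storage divisions as Python lists and the logical index as a dictionary. The names (`SolidStateStore`, `max_reads`) and the choice of the next division as the recovery target are assumptions for the example, not details from the patent.

```python
class SolidStateStore:
    def __init__(self, num_divisions, max_reads):
        if num_divisions < 2:
            raise ValueError("recovery needs a second division to copy into")
        self.divisions = [[] for _ in range(num_divisions)]  # lists of (key, data)
        self.read_counts = [0] * num_divisions               # one counter per division
        self.index = {}                                      # key -> (division, slot)
        self.max_reads = max_reads

    def write(self, key, data, division):
        """Append a packet; any older packet for the same key becomes invalid
        because the logical index now points at the new one."""
        self.divisions[division].append((key, data))
        self.index[key] = (division, len(self.divisions[division]) - 1)

    def read(self, key):
        division, slot = self.index[key]
        data = self.divisions[division][slot][1]
        self.read_counts[division] += 1
        if self.read_counts[division] > self.max_reads:
            self._recover(division)
        return data

    def _recover(self, division):
        # Assumed target choice: simply the next division.
        target = (division + 1) % len(self.divisions)
        for slot, (key, data) in enumerate(self.divisions[division]):
            if self.index.get(key) == (division, slot):   # only valid packets move
                self.write(key, data, target)             # also updates the index
        self.divisions[division] = []                     # division is now reusable
        self.read_counts[division] = 0
```

Only packets that the logical index still points to are copied, so stale versions are dropped during recovery.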

Dynamic load balancing of a network of client and server computer

Inactive | US6886035B2 | Improve throughput | Program synchronisation | Multiple digital computer combinations | Dynamic load balancing | Client-side

Methods for load rebalancing by clients in a network are disclosed. Client load rebalancing allows the clients to optimize throughput between themselves and the resources accessed by the nodes. A network that implements this embodiment of the invention can dynamically rebalance itself to optimize throughput by migrating client I/O requests from overutilized pathways to underutilized pathways. Client load rebalancing allows a client to re-map a path through a plurality of nodes to a resource. The re-mapping may take place in response to a redirection command from an overloaded node.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

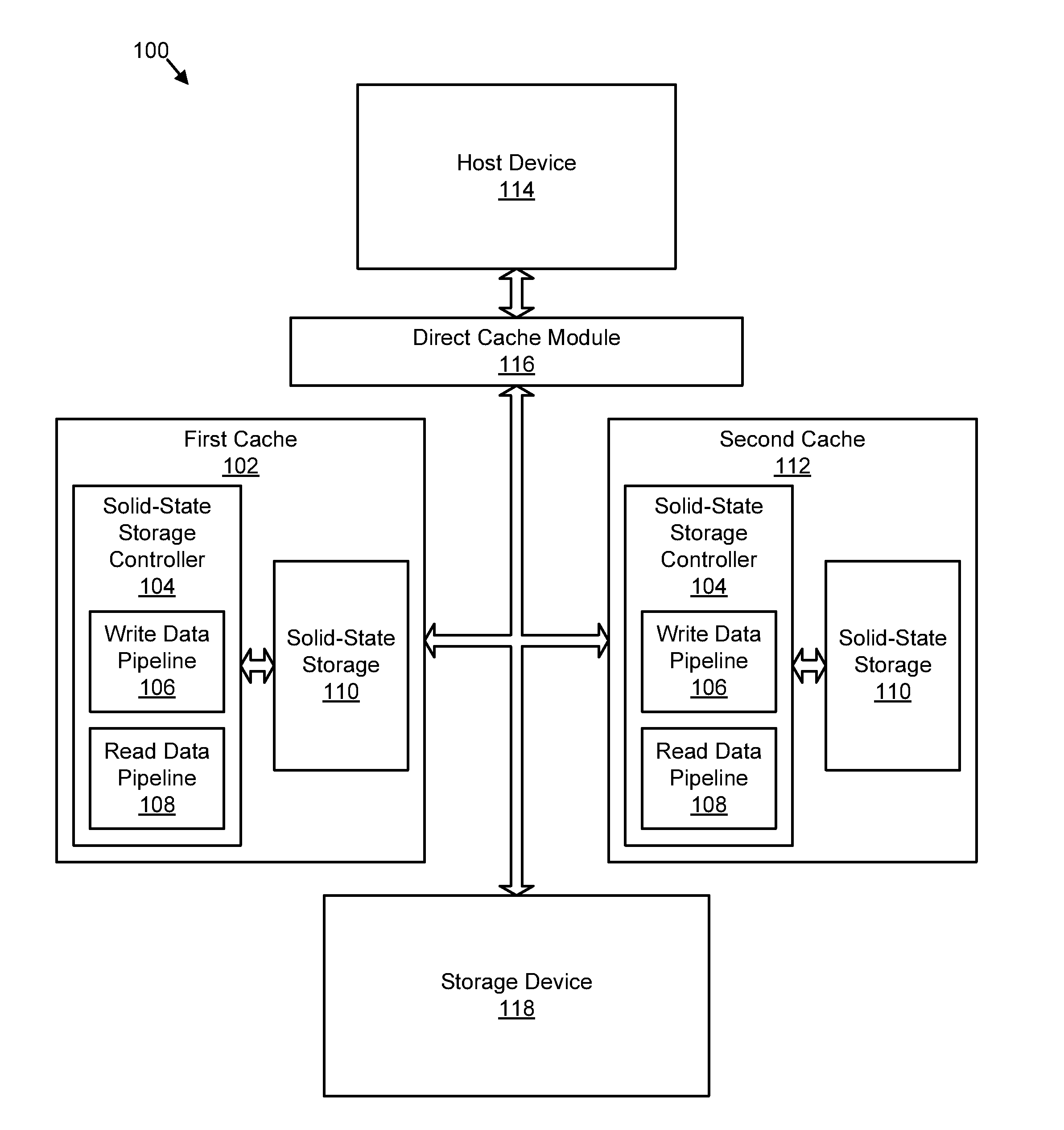

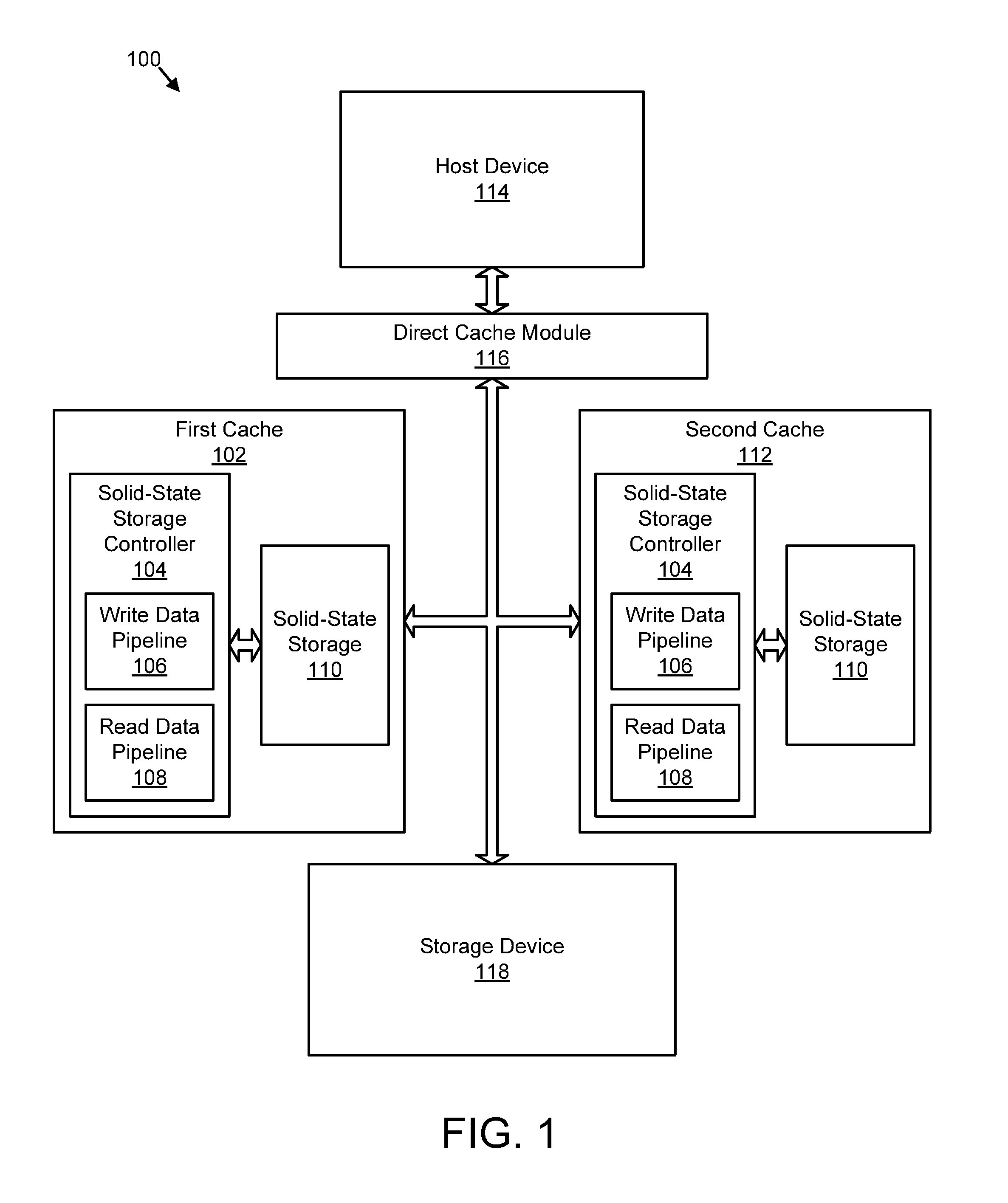

Apparatus, system, and method for redundant write caching

Active | US20110022801A1 | Improve power performance | Avoid data loss | Memory architecture accessing/allocation | Program synchronisation | Operating system

An apparatus, system, and method are disclosed for redundant write caching. The apparatus, system, and method are provided with a plurality of modules including a write request module, a first cache write module, a second cache write module, and a trim module. The write request module detects a write request to store data on a storage device. The first cache write module writes data of the write request to a first cache. The second cache write module writes the data to a second cache. The trim module trims the data from one of the first cache and the second cache in response to an indicator that the storage device stores the data. The data remains available in the other of the first cache and the second cache to service read requests.

Owner:SANDISK TECH LLC
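The write, trim, and read paths described in this abstract can be sketched with three dictionaries. The class name `RedundantWriteCache`, the `destage` method, and the decision to always trim the second cache are assumptions for illustration only.

```python
class RedundantWriteCache:
    def __init__(self):
        self.first_cache = {}
        self.second_cache = {}
        self.storage_device = {}   # stands in for the backing storage device

    def write(self, key, data):
        """Write request: the data lands in both caches for redundancy."""
        self.first_cache[key] = data
        self.second_cache[key] = data

    def destage(self, key):
        """Once the storage device holds the data, one cached copy is
        redundant and is trimmed (here, always the second cache's copy)."""
        self.storage_device[key] = self.first_cache[key]
        self.second_cache.pop(key, None)

    def read(self, key):
        # The remaining cached copy keeps serving reads after the trim.
        for tier in (self.first_cache, self.second_cache, self.storage_device):
            if key in tier:
                return tier[key]
        raise KeyError(key)
```

Until `destage` runs, losing either cache leaves a second copy of the unwritten data, which is the data-loss protection the abstract describes.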

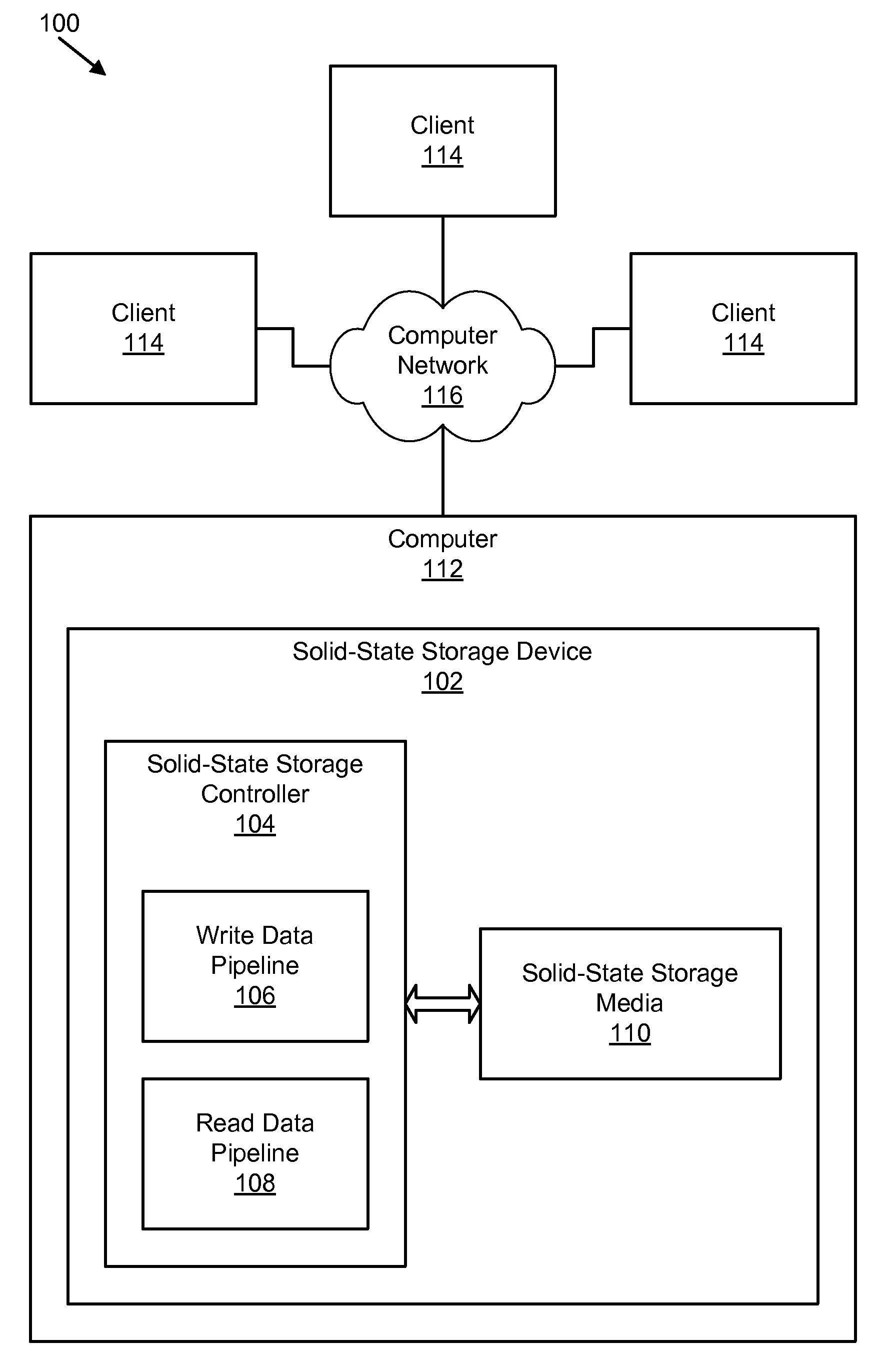

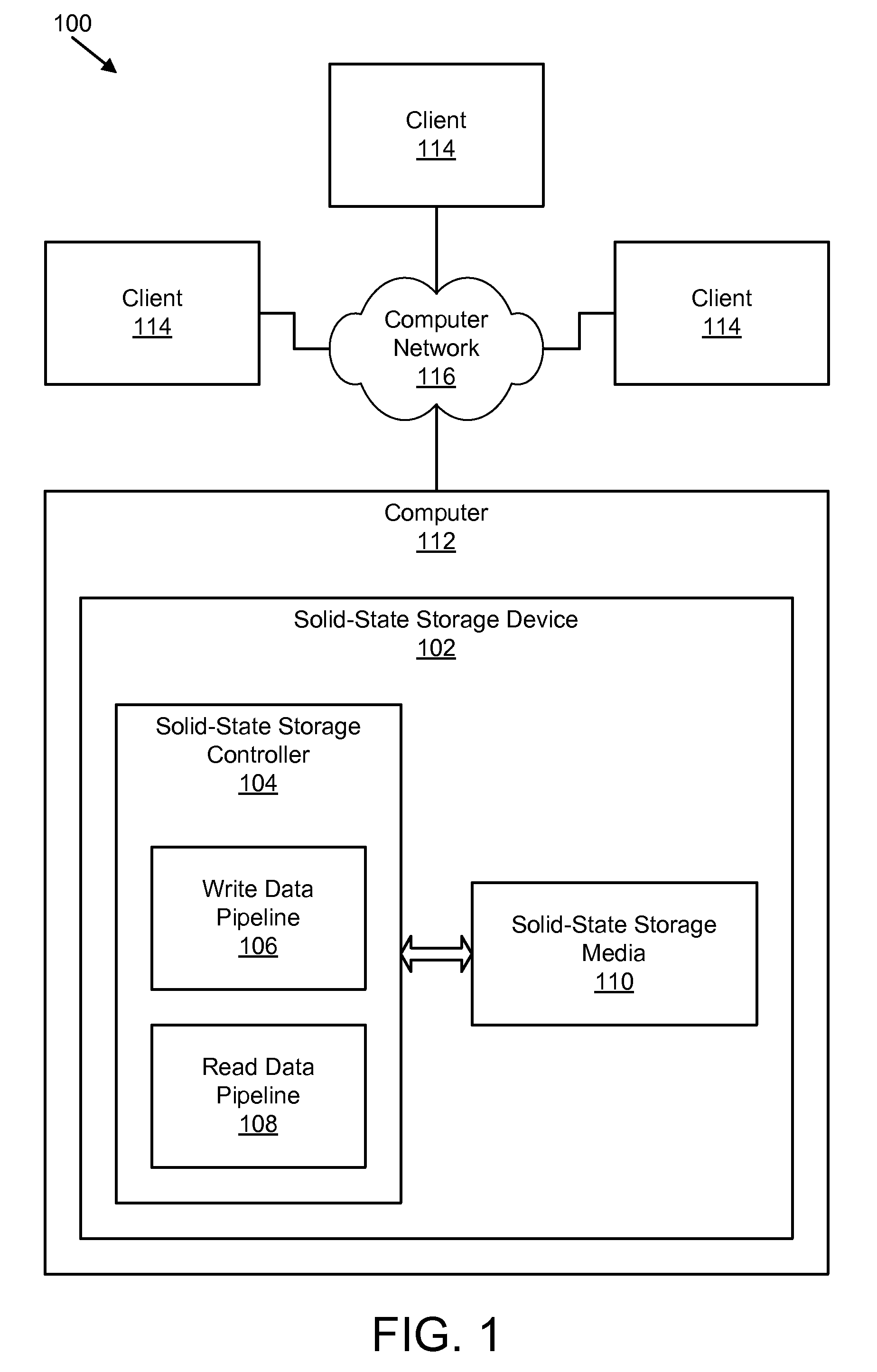

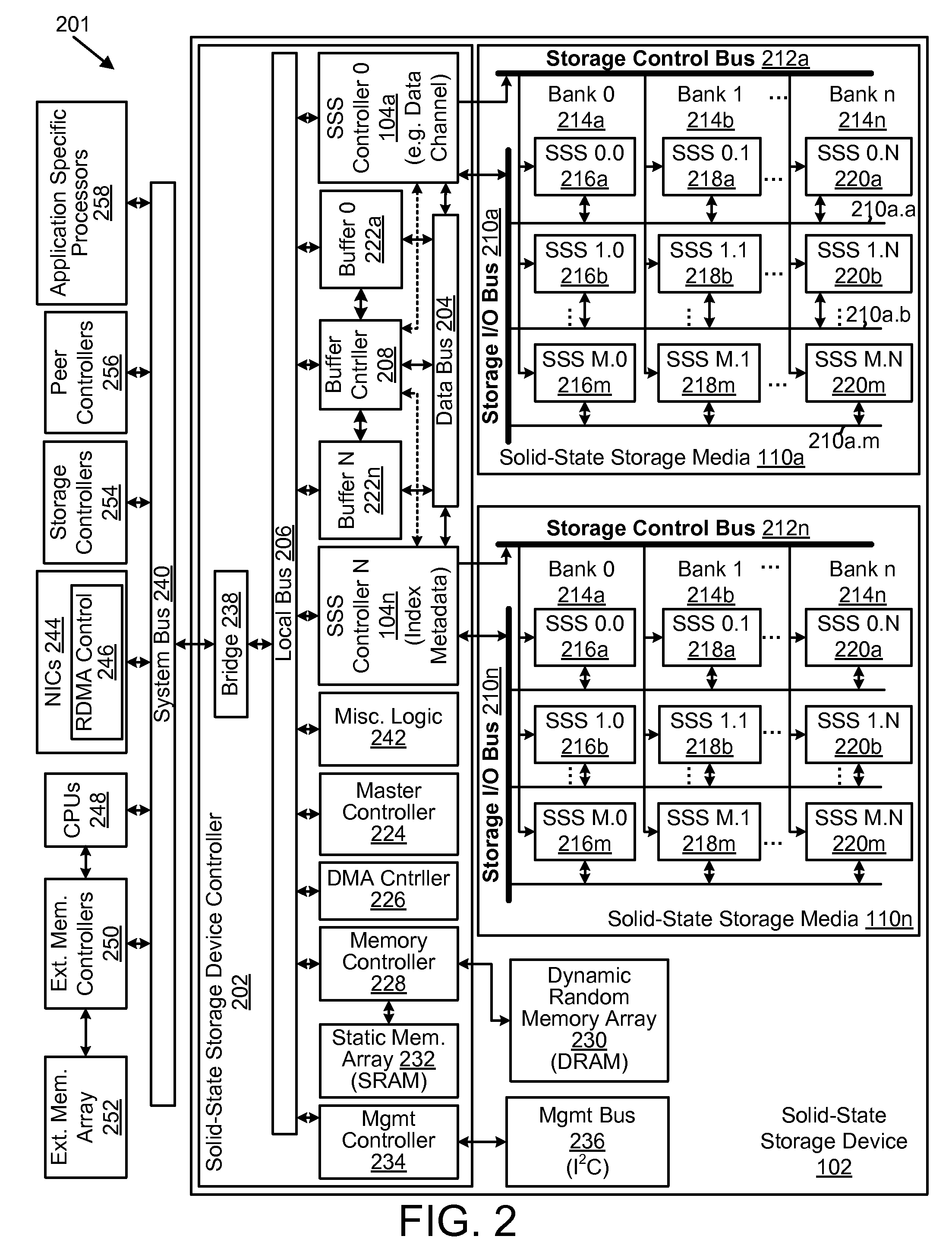

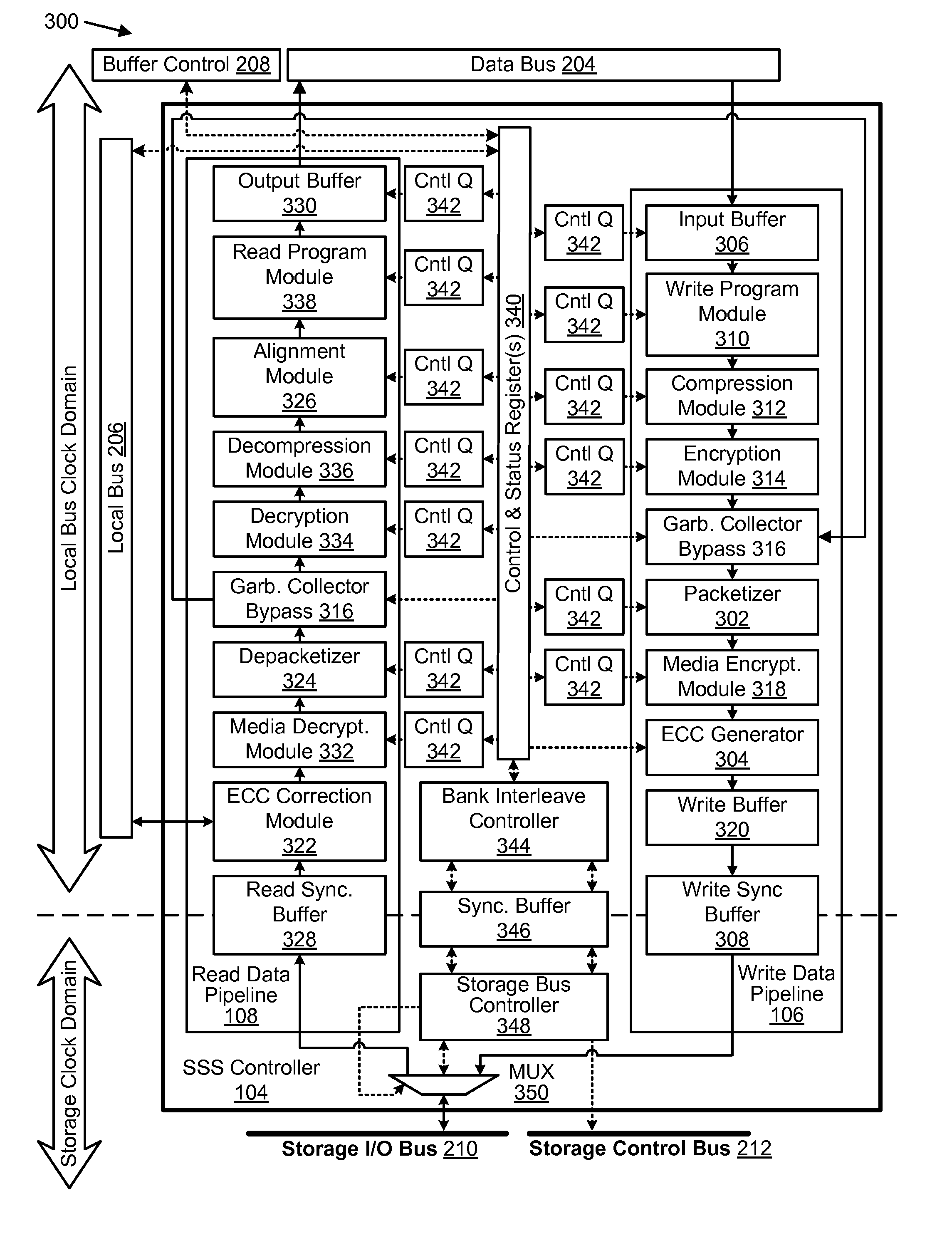

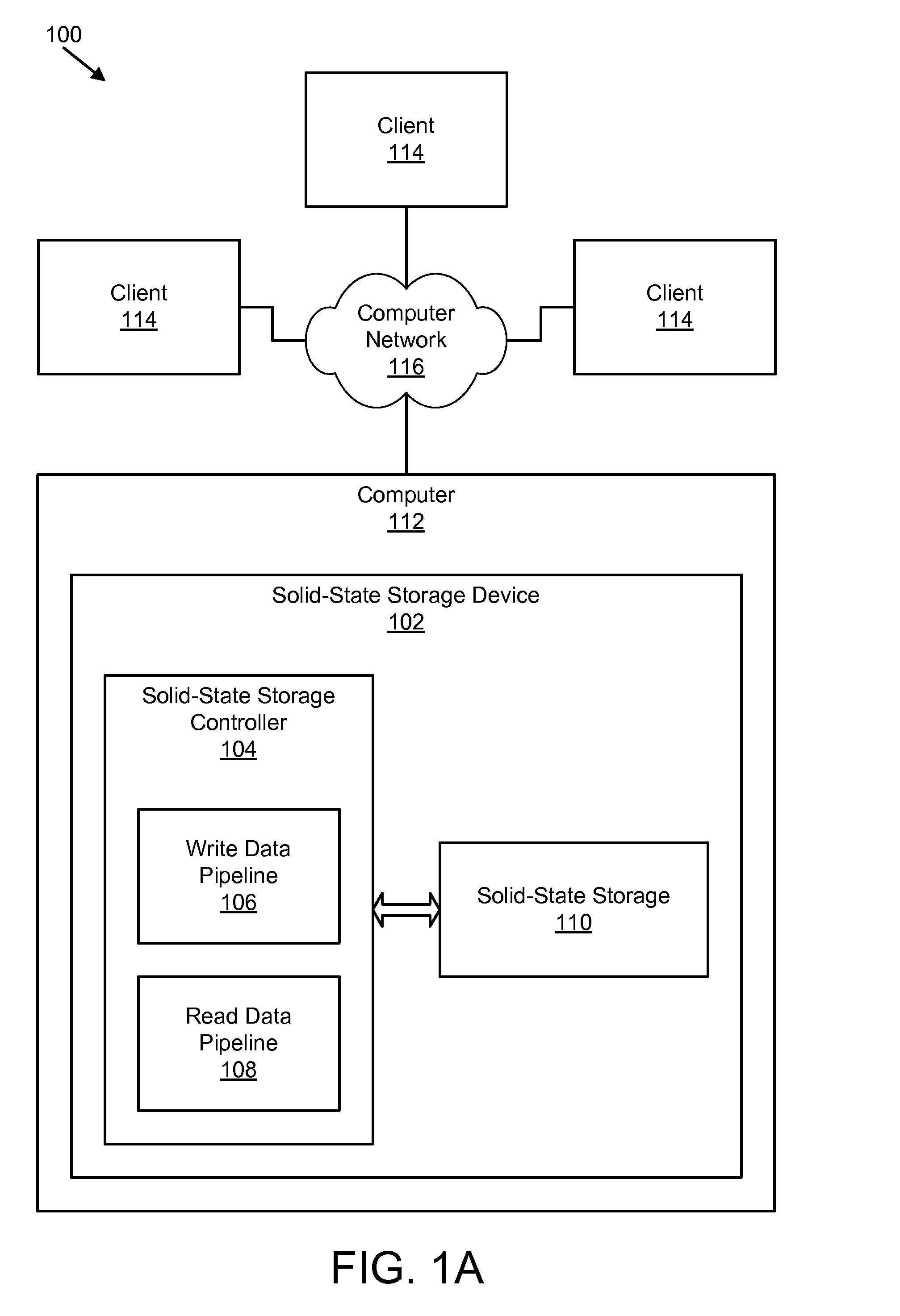

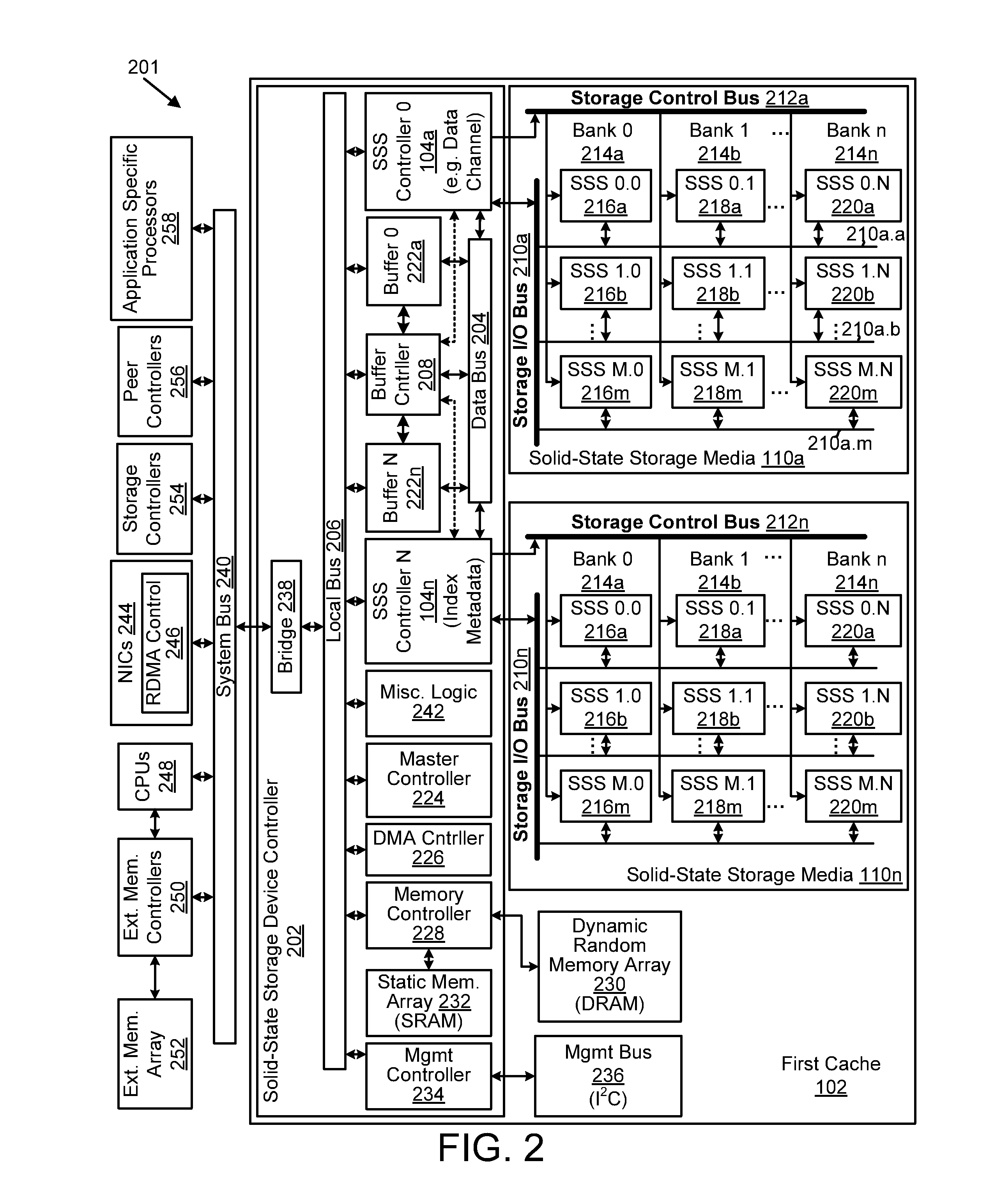

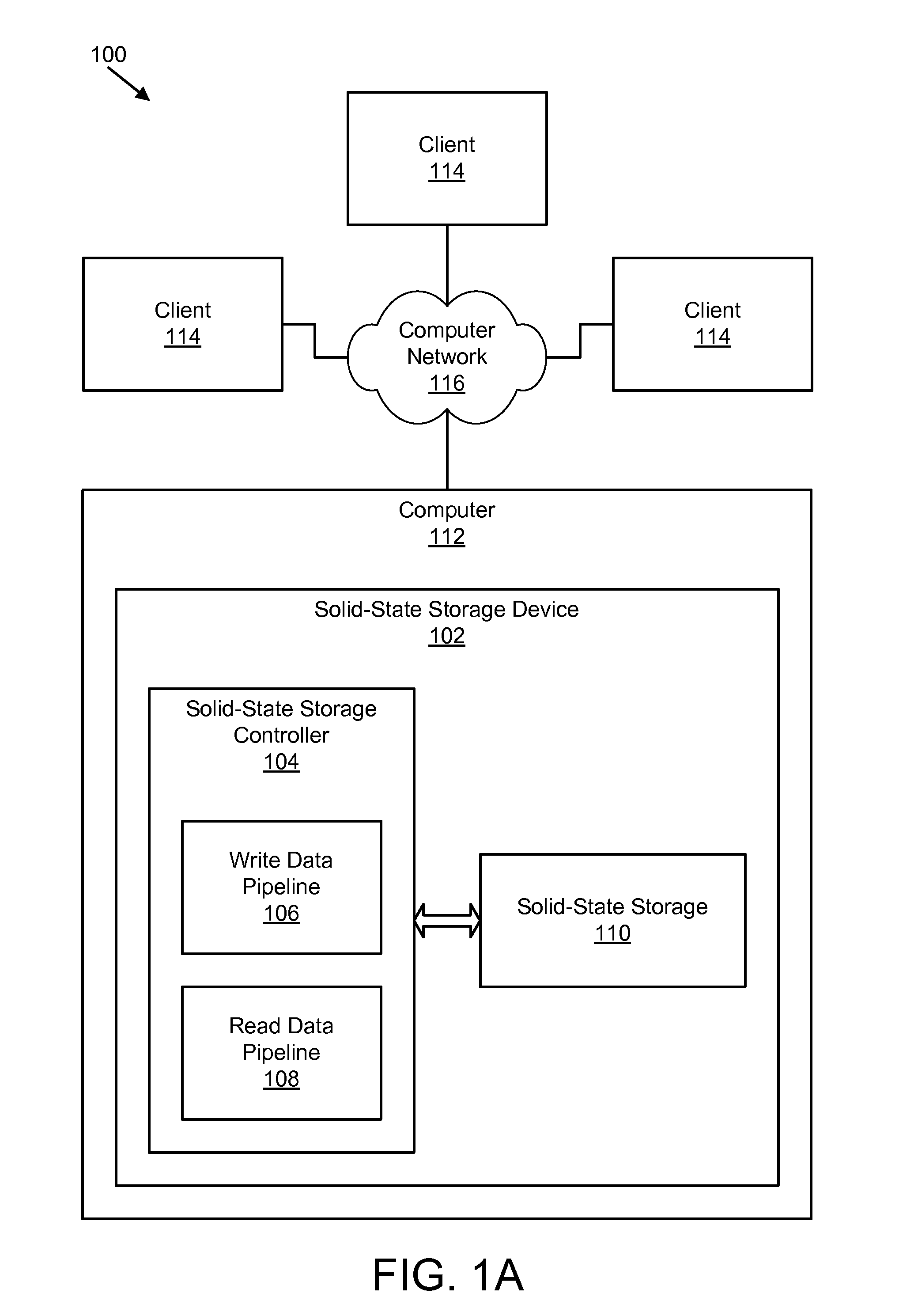

Apparatus, system, and method for managing data using a data pipeline

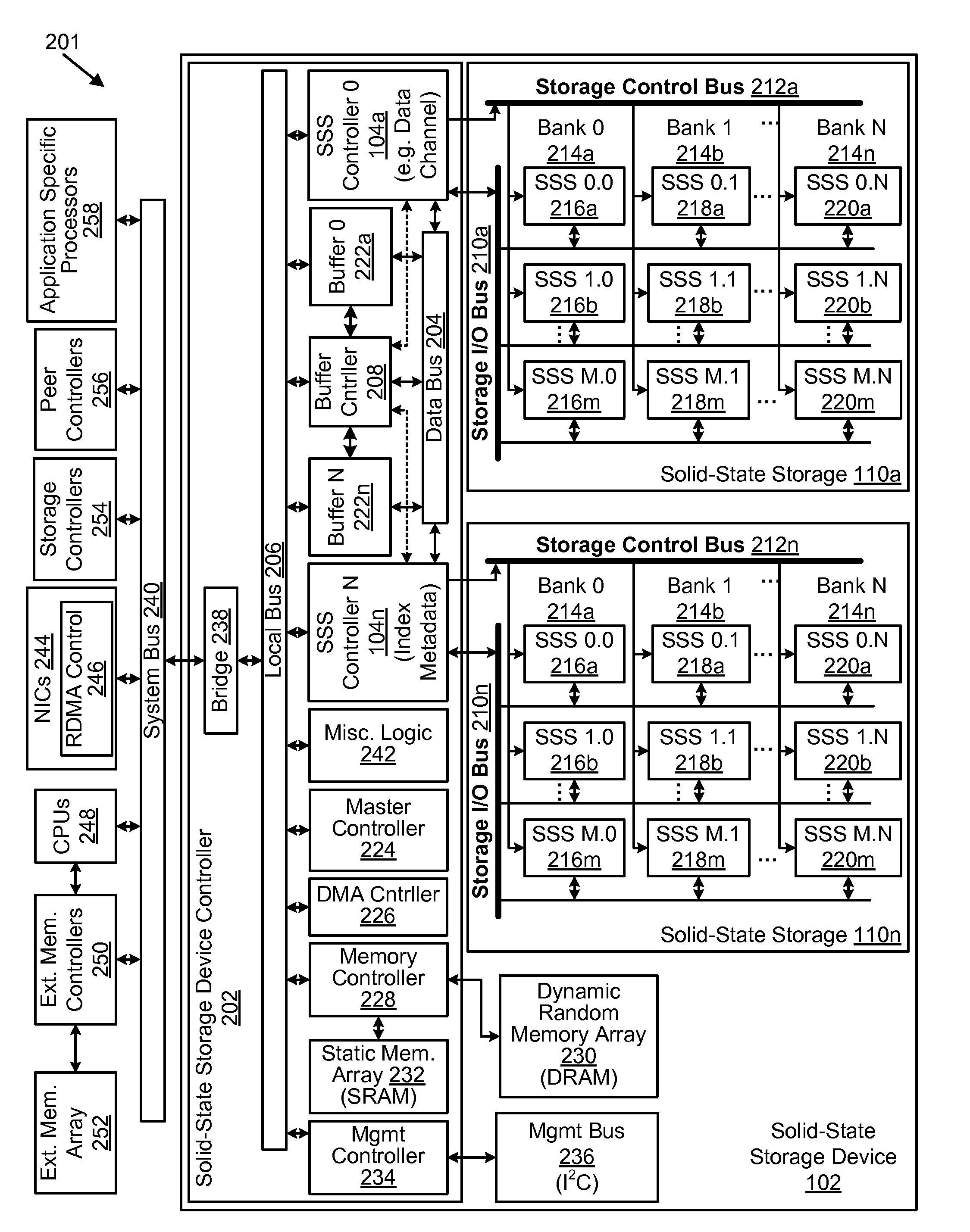

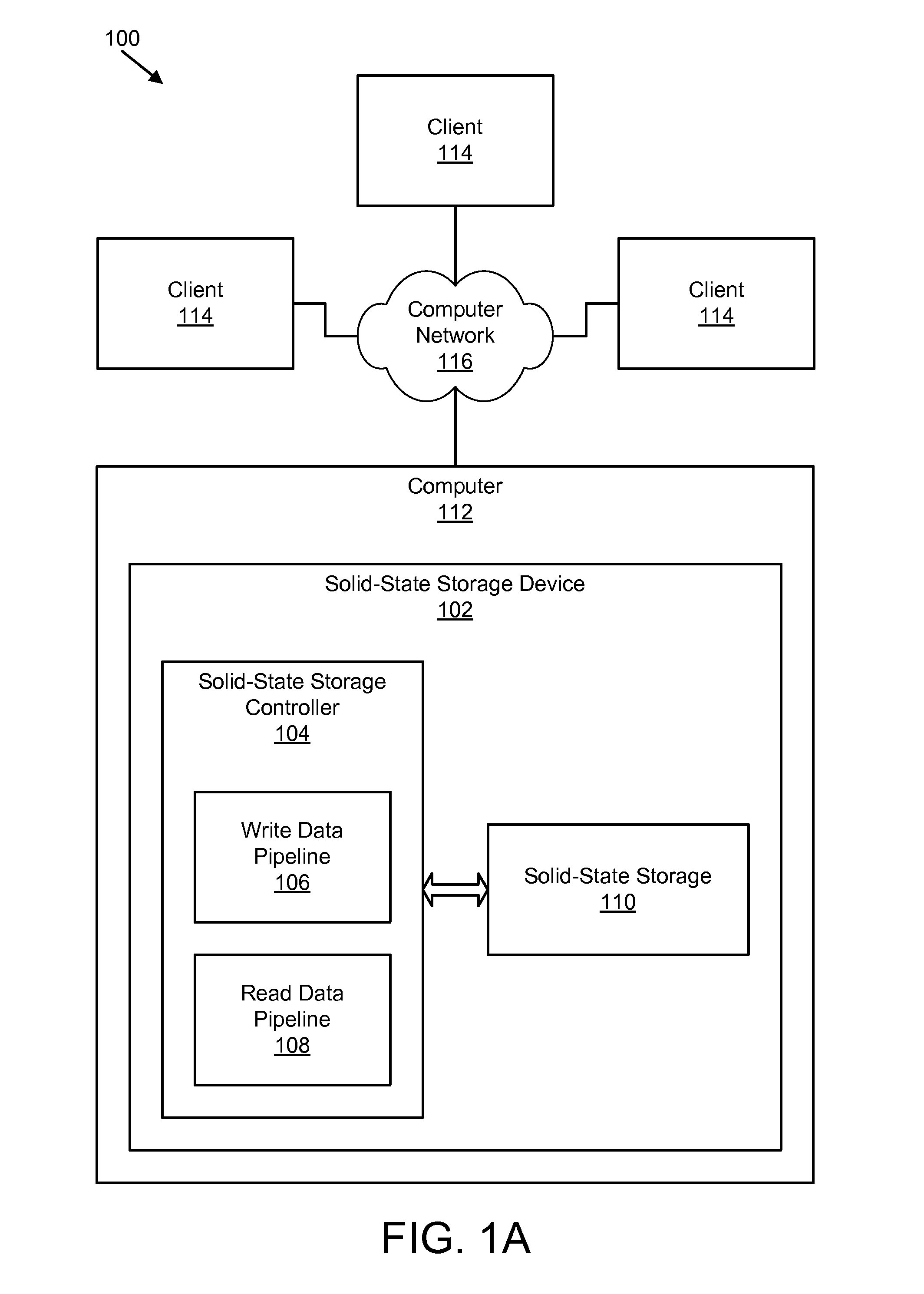

Active | US20080141043A1 | Energy efficient ICT | Input/output to record carriers | Solid-state storage | Data segment

An apparatus, system, and method are disclosed for managing data in a solid-state storage device. A solid-state storage and solid-state controller are included. The solid-state storage controller includes a write data pipeline and a read data pipeline. The write data pipeline includes a packetizer and an ECC generator. The packetizer receives a data segment and creates one or more data packets sized for the solid-state storage. The ECC generator generates one or more error-correcting codes ("ECC") for the data packets received from the packetizer. The read data pipeline includes an ECC correction module, a depacketizer, and an alignment module. The ECC correction module reads a data packet from solid-state storage, determines if a data error exists using the corresponding ECC, and corrects errors. The depacketizer checks and removes one or more packet headers. The alignment module removes unwanted data and re-formats the data as data segments of an object.

Owner:UNIFICATION TECH LLC

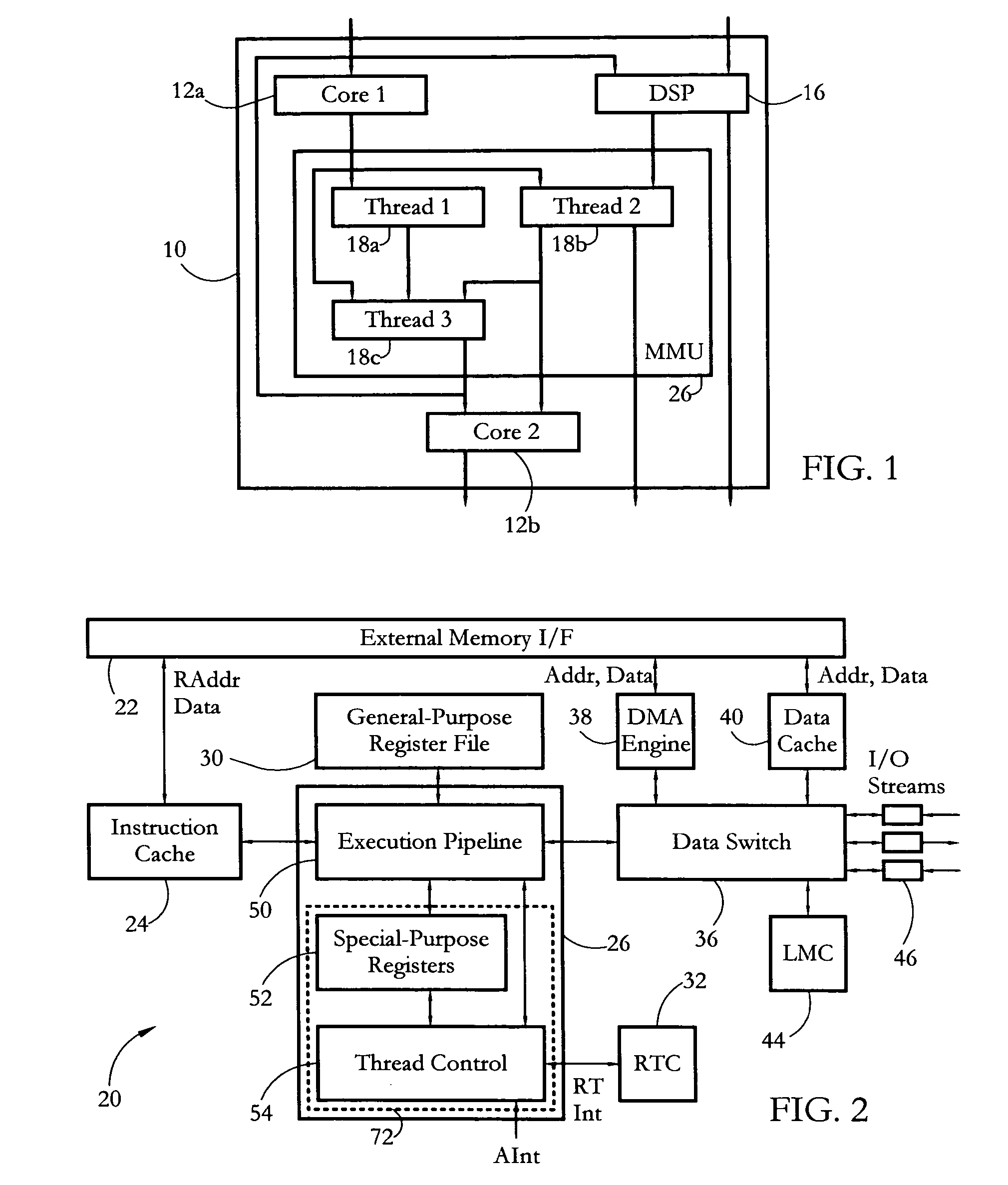

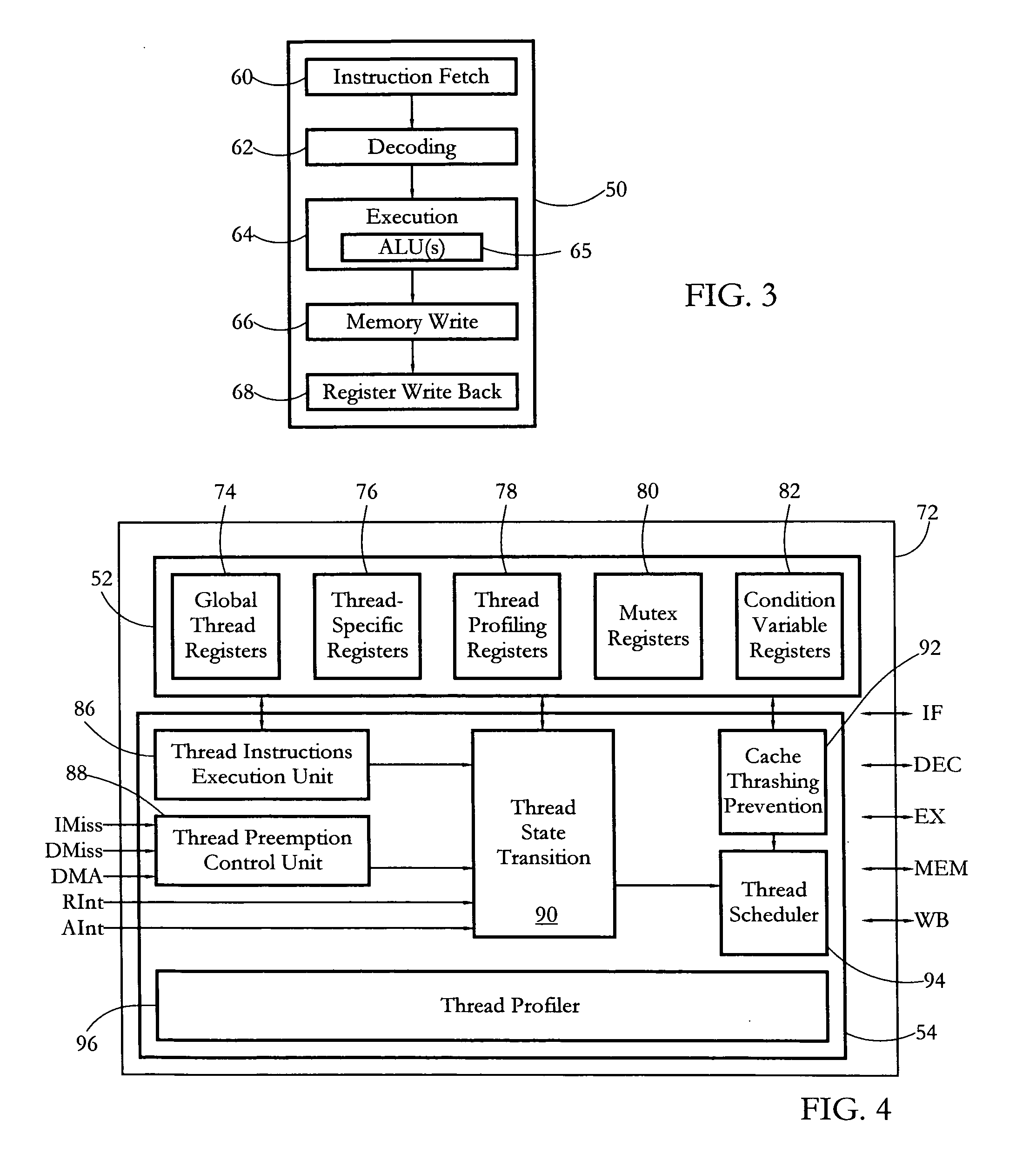

Hardware multithreading systems and methods

According to some embodiments, a multithreaded microcontroller includes a thread control unit comprising thread control hardware (logic) configured to perform a number of multithreading system calls essentially in real time, e.g. in one or a few clock cycles. System calls can include mutex lock, wait condition, and signal instructions. The thread controller includes a number of thread state, mutex, and condition variable registers used for executing the multithreading system calls. Threads can transition between several states including free, run, ready and wait. The wait state includes interrupt, condition, mutex, I-cache, and memory substates. A thread state transition controller controls thread states, while a thread instructions execution unit executes multithreading system calls and manages thread priorities to avoid priority inversion. A thread scheduler schedules threads according to their priorities. A hardware thread profiler including global, run and wait profiler registers is used to monitor thread performance to facilitate software development.

Owner:GEO SEMICONDUCTOR INC

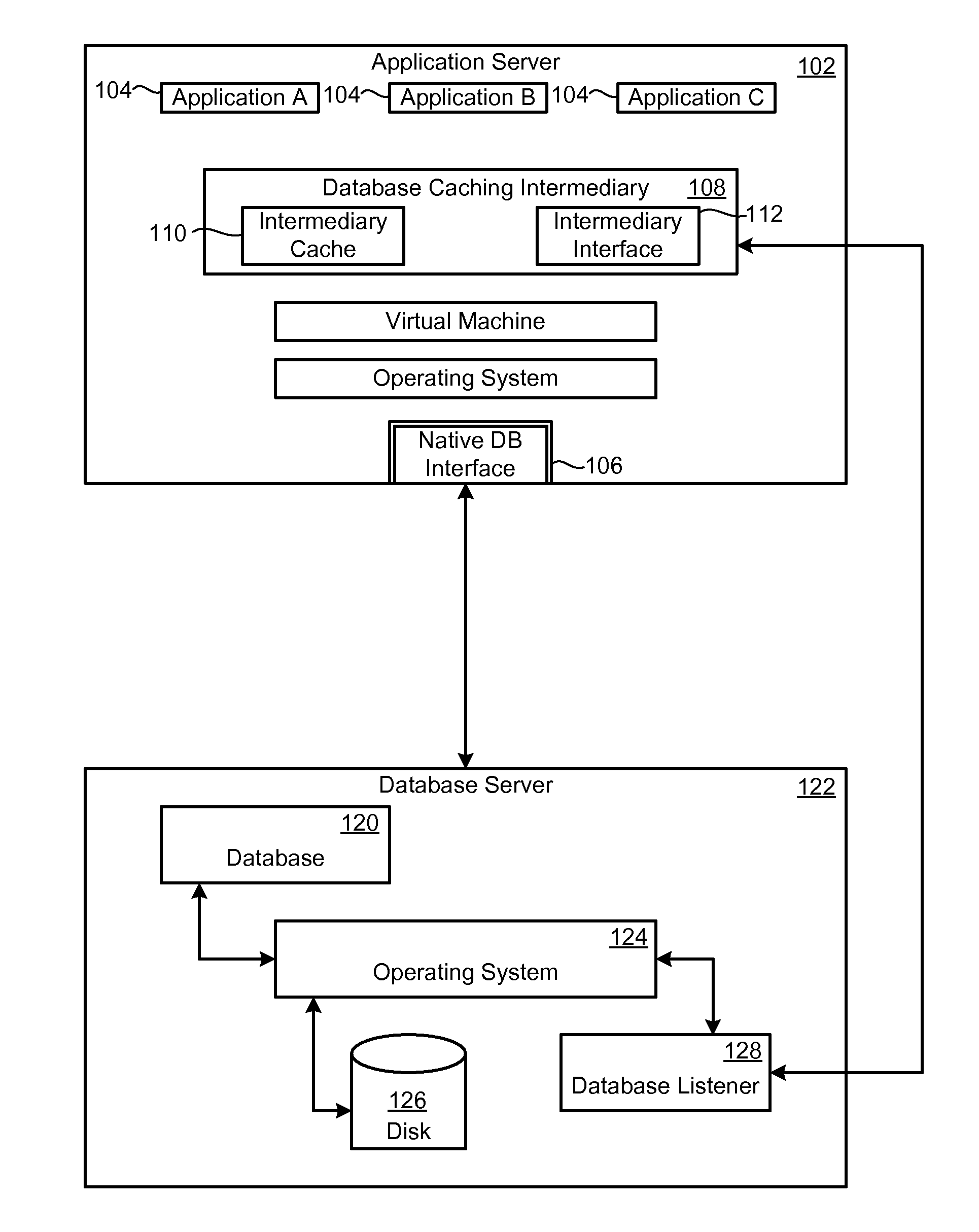

Database Caching and Invalidation using Database Provided Facilities for Query Dependency Analysis

Inactive | US20060271510A1 | Sure easy | Digital data information retrieval | Program synchronisation | Database caching | Database

Database data is maintained reliably and invalidated based on actual changes to data in the database. Updates or changes to data are detected without parsing queries submitted to the database. The dependencies of a query can be determined by submitting a version of the received query to the database through a native facility provided by the database to analyze how query structures are processed. The caching system can access the results of the facility to determine the tables, rows, or other partitions of data a received query is dependent upon or modifies. An abstracted form of the query can be cached with an indication of the tables, rows, etc. that queries of that structure access or modify. The tables a write or update query modifies can be cached with a time of last modification. When a query is received for which the results are cached, the system can readily determine dependency information for the query, the last time the dependencies were modified, and compare this time with the time indicated for when the cached results were retrieved. By passing versions of write queries to the database, updates to the database can be detected.

Owner:TERRACOTTA
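The timestamp comparison at the heart of this abstract can be sketched as below. The `analyze` callable is a hypothetical stand-in for the database's native query-analysis facility: it is assumed to return the sets of tables a query reads and writes. A logical counter replaces wall-clock time so the example is deterministic.

```python
class QueryCache:
    def __init__(self, analyze):
        self.analyze = analyze     # hypothetical: query -> (tables_read, tables_written)
        self.last_modified = {}    # table -> logical time of last write
        self.results = {}          # query -> (time retrieved, rows)
        self.clock = 0             # logical clock, one tick per query

    def execute(self, query, run):
        """Run `query` through `run` unless a cached result is still fresh."""
        self.clock += 1
        reads, writes = self.analyze(query)
        if writes:
            # Record when each dependency changed, then pass the write through.
            for table in writes:
                self.last_modified[table] = self.clock
            return run(query)
        cached = self.results.get(query)
        if cached is not None:
            retrieved_at, rows = cached
            # Fresh only if every table it depends on changed before retrieval.
            if all(self.last_modified.get(t, 0) < retrieved_at for t in reads):
                return rows
        rows = run(query)
        self.results[query] = (self.clock, rows)
        return rows
```

The cache itself never parses SQL; all dependency knowledge comes from whatever `analyze` reports, which is the point the abstract makes about relying on database-provided facilities.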

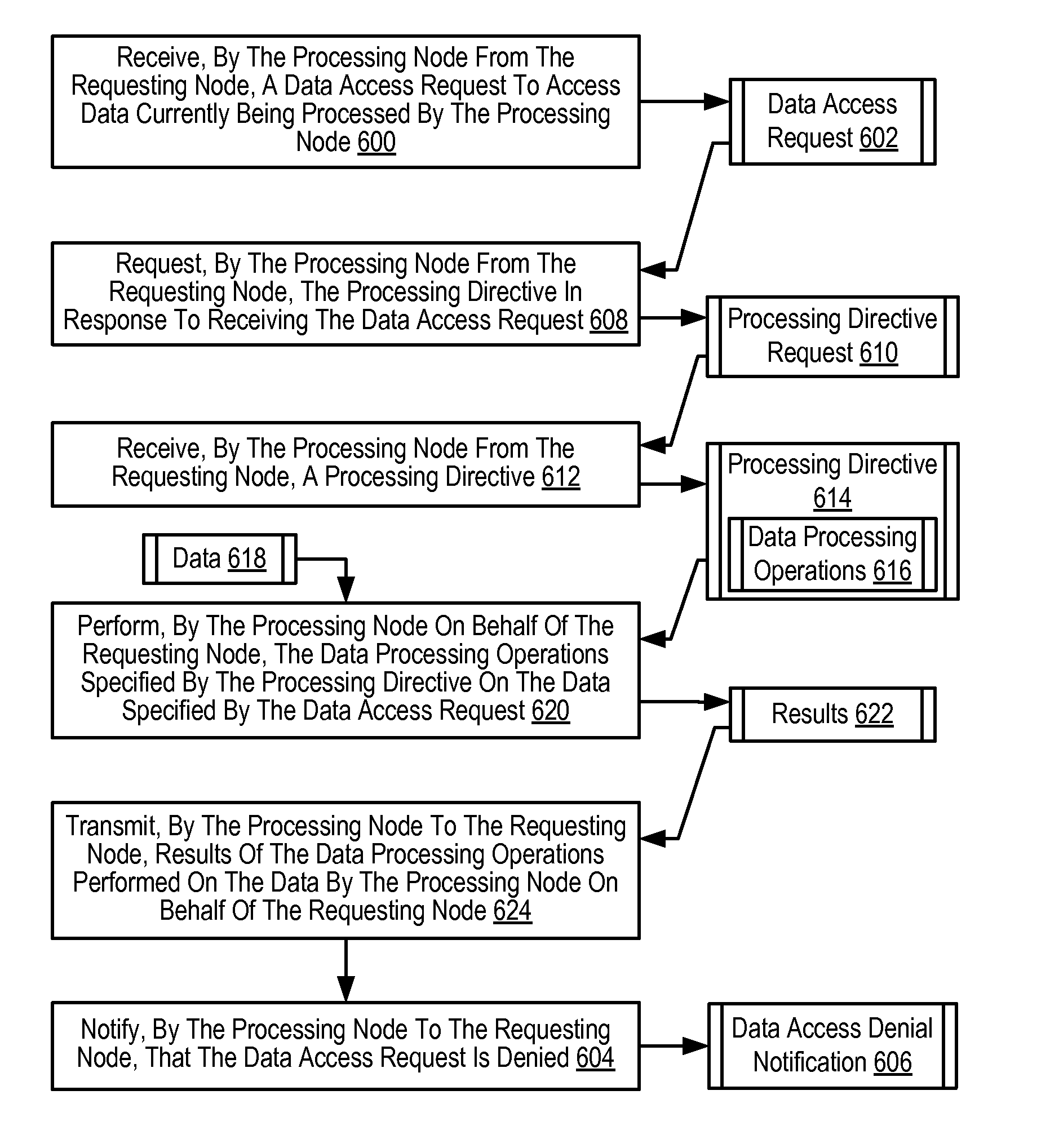

Processing data access requests among a plurality of compute nodes

Inactive | US7895260B2 | Program synchronisation | Multiple digital computer combinations | Data access | Parallel computing

Methods, apparatus, and products are disclosed for processing data access requests among a plurality of compute nodes. One compute node operates as a processing node, and one compute node operates as a requesting node. The processing node receives, from the requesting node, a data access request to access data currently being processed by the processing node. The processing node also receives, from the requesting node, a processing directive. The processing directive specifies data processing operations to be performed on the data specified by the data access request. The processing node performs, on behalf of the requesting node, the data processing operations specified by the processing directive on the data specified by the data access request. The processing node transmits, to the requesting node, results of the data processing operations performed on the data by the processing node on behalf of the requesting node.

Owner:INT BUSINESS MASCH CORP

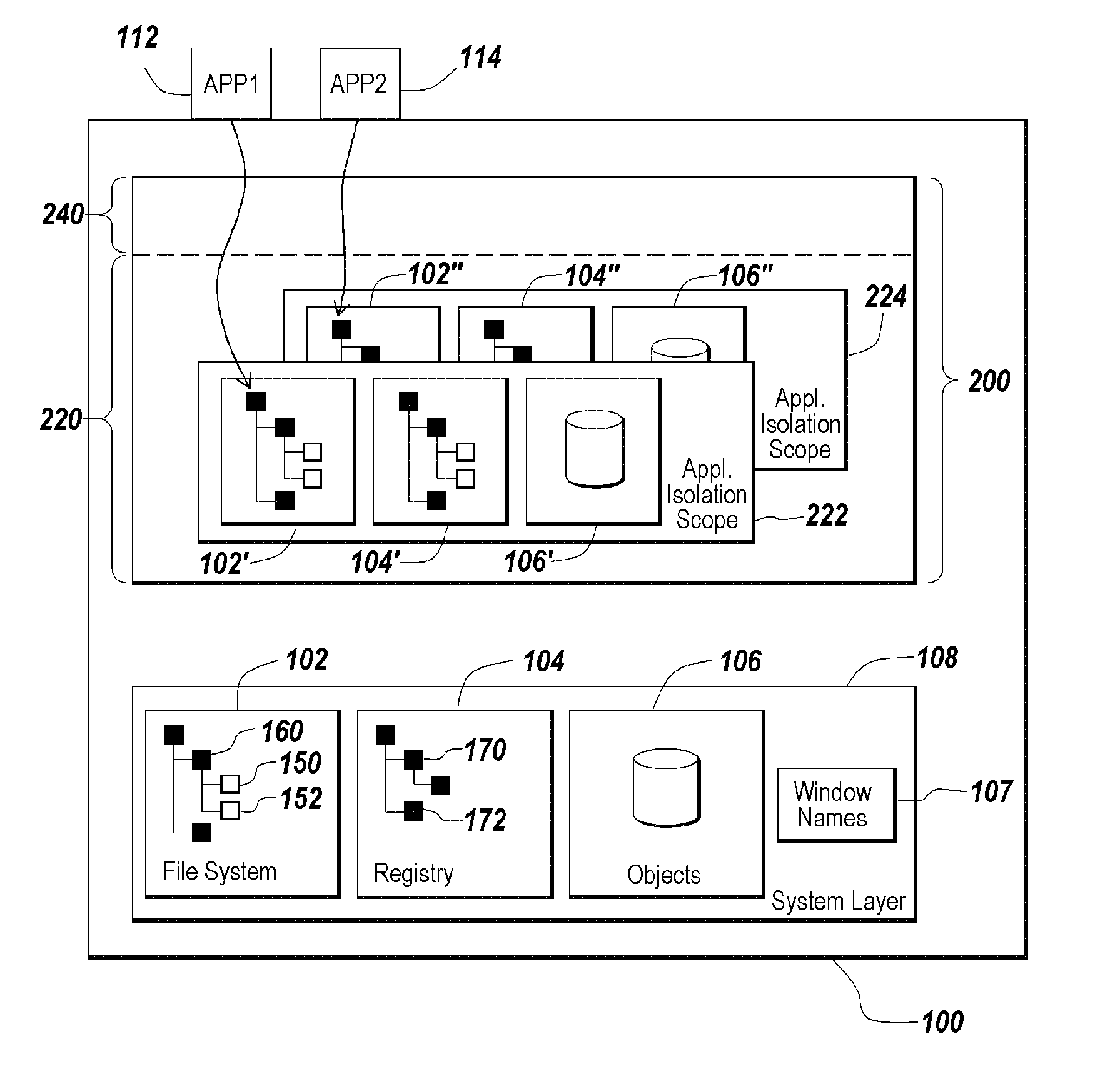

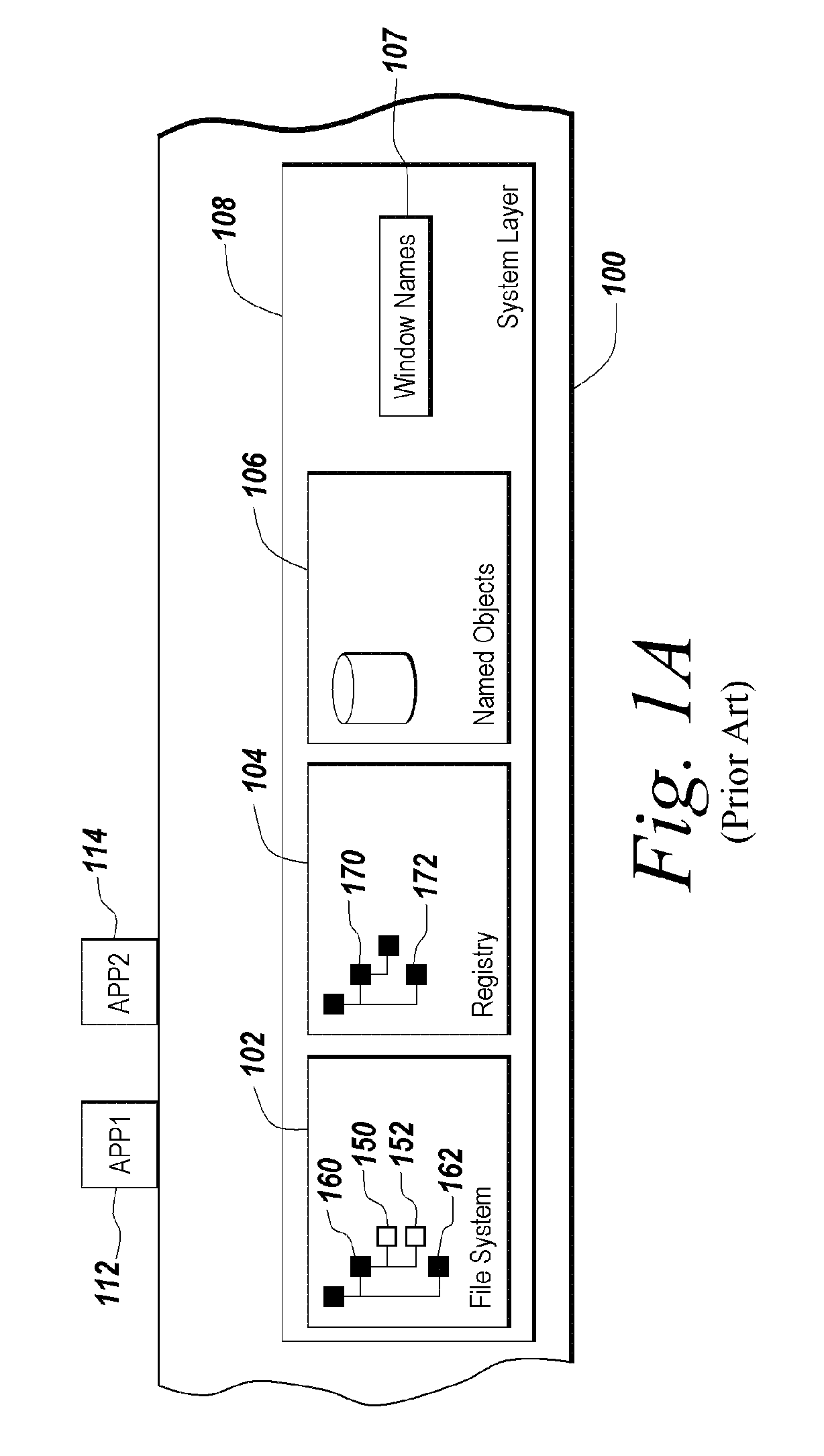

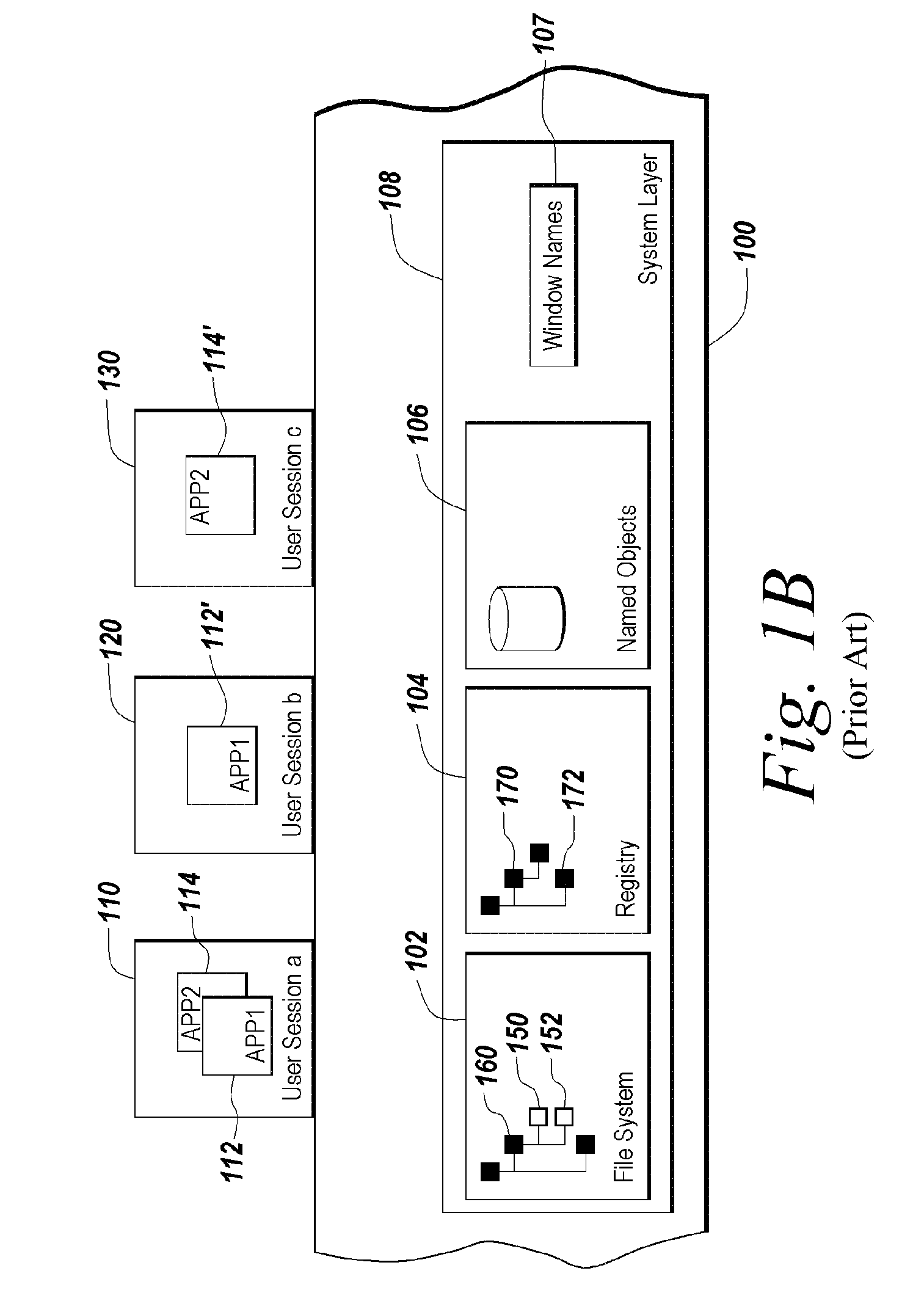

Method and apparatus for isolating execution of software applications

A method for isolating access by application programs to native resources provided by an operating system redirects a request for a native resource made by an application program executing on behalf of a user to an isolation environment. The isolation environment includes a user isolation scope and an application isolation scope. An instance of the requested native resource is located in the user isolation scope corresponding to the user. The request for the native resource is fulfilled using the version of the resource located in the user isolation scope. If an instance of the requested native resource is not located in the user isolation scope, the request is redirected to an application isolation scope. The request for the native resource is fulfilled using the version of the resource located in the application isolation scope. If an instance of the requested native resource is not located in the application isolation scope, the request is redirected to a system scope.

Owner:CITRIX SYST INC
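The lookup order in this abstract (user isolation scope, then application isolation scope, then system scope) resembles a layered mapping. A minimal sketch using the standard library's `collections.ChainMap` follows; modeling each scope as a plain dictionary keyed by resource path, and the function name `isolation_view`, are assumptions for illustration.

```python
from collections import ChainMap


def isolation_view(user_scope, app_scope, system_scope):
    """Return a view that resolves a native resource by searching the user
    isolation scope first, then the application scope, then the system scope."""
    return ChainMap(user_scope, app_scope, system_scope)


# Hypothetical resources: each scope maps a resource path to its instance.
system_scope = {"C:/app/config.ini": "system default", "C:/app/app.dll": "v1"}
app_scope = {"C:/app/config.ini": "app-specific"}
user_scope = {"C:/app/config.ini": "alice's copy"}

view = isolation_view(user_scope, app_scope, system_scope)
resolved_config = view["C:/app/config.ini"]   # found in the user scope
resolved_dll = view["C:/app/app.dll"]         # falls through to the system scope
```

A user with an empty user scope would see the application-scope instance of `config.ini` instead, since the search simply moves to the next layer.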

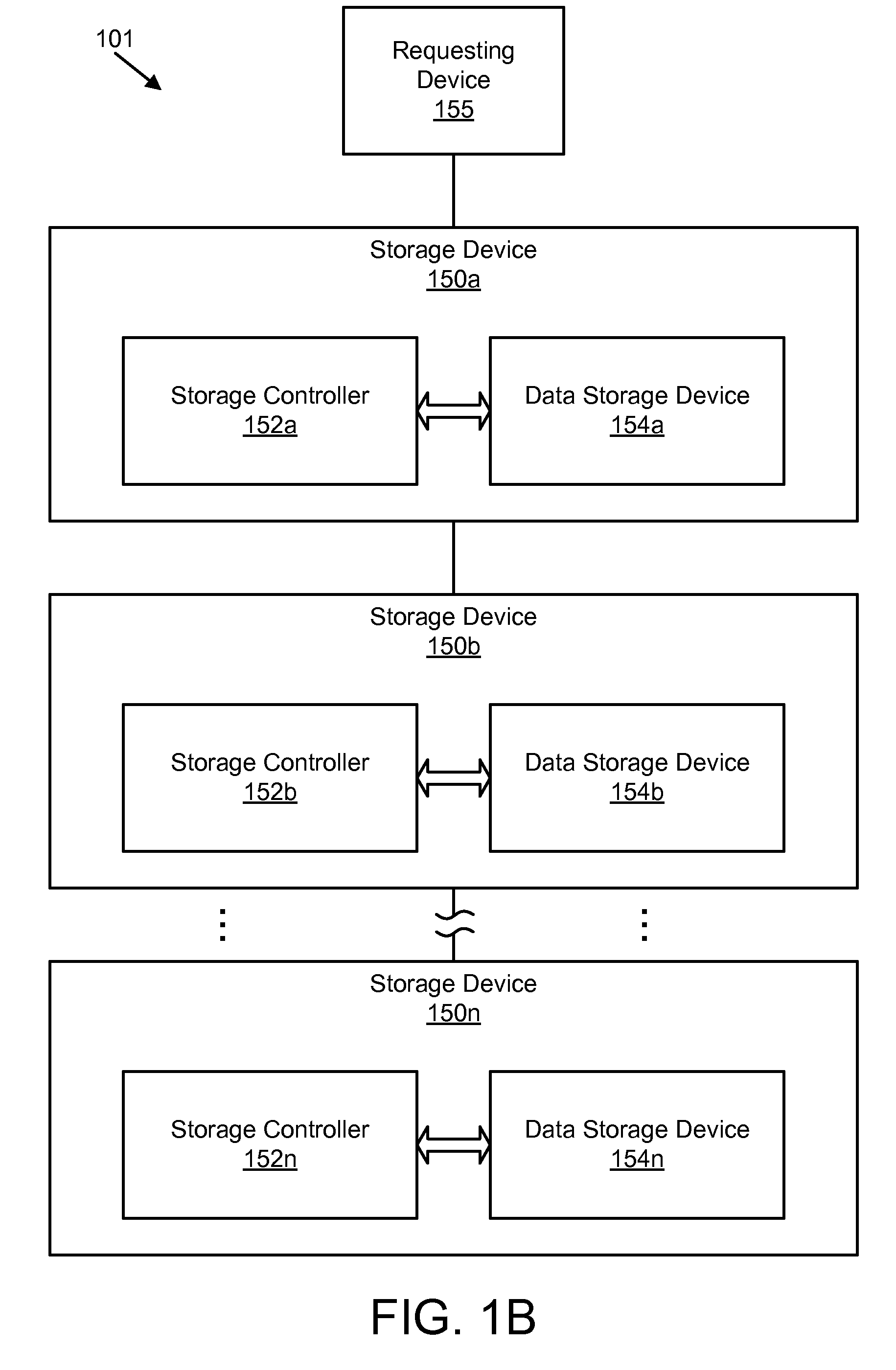

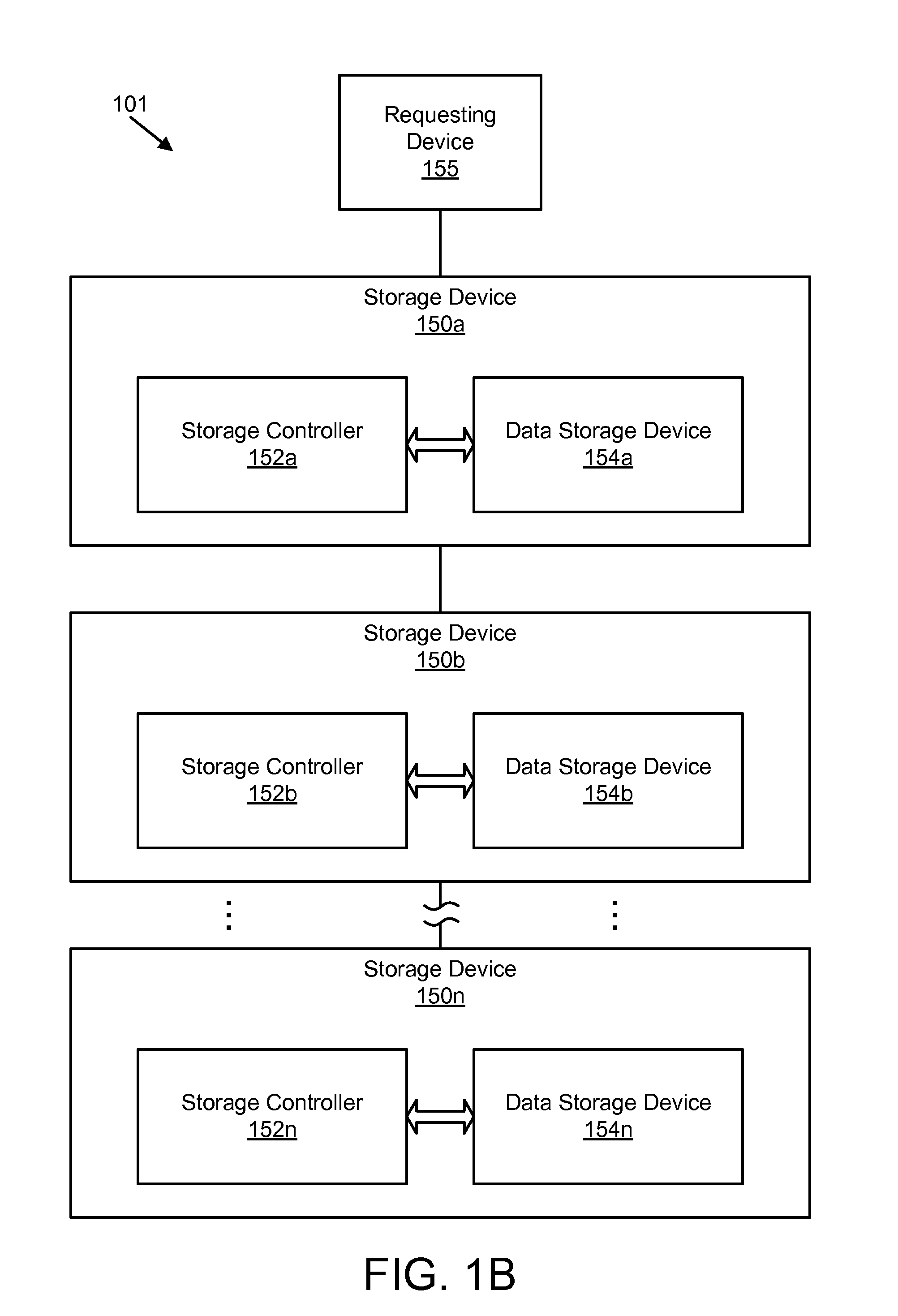

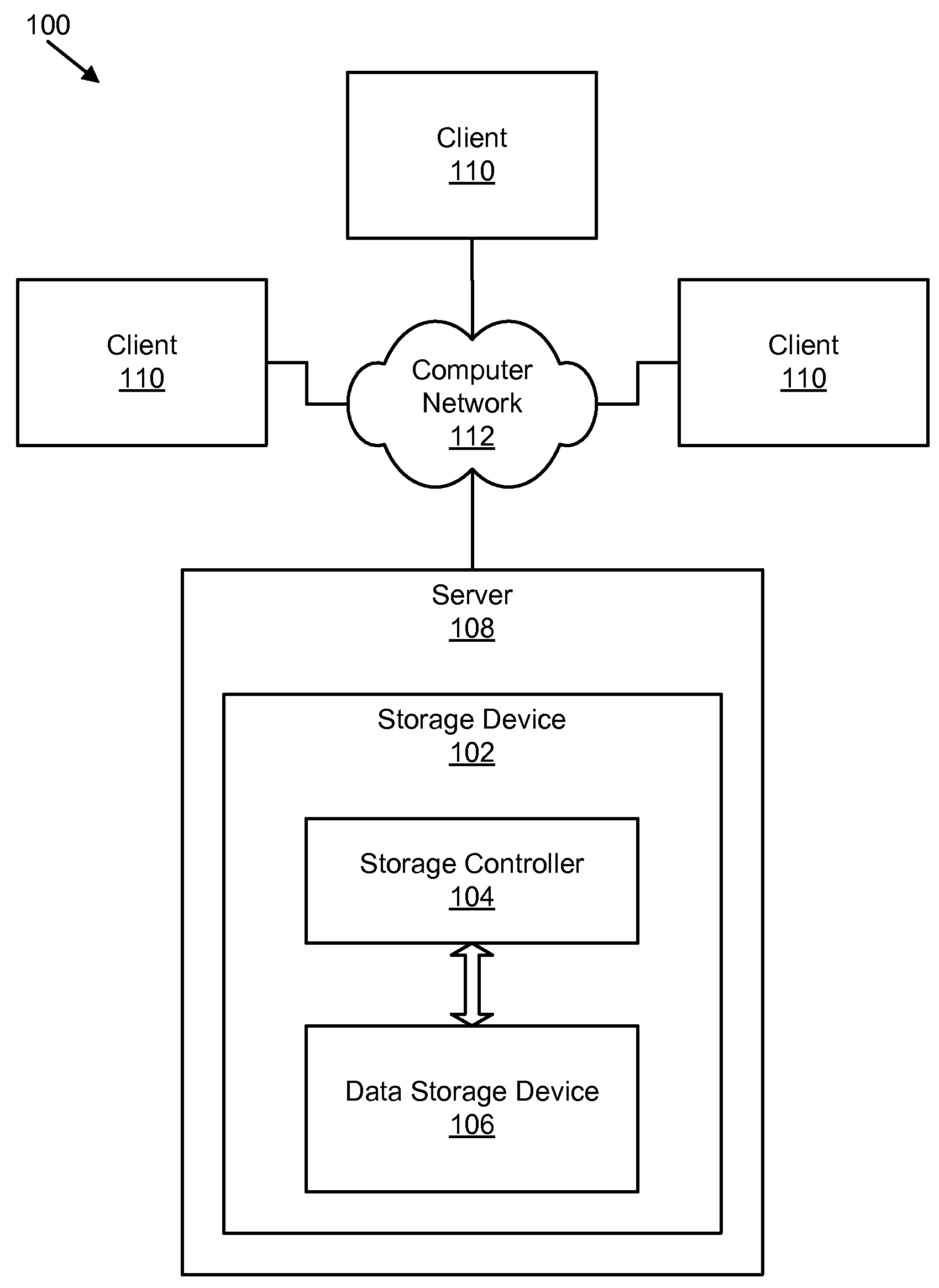

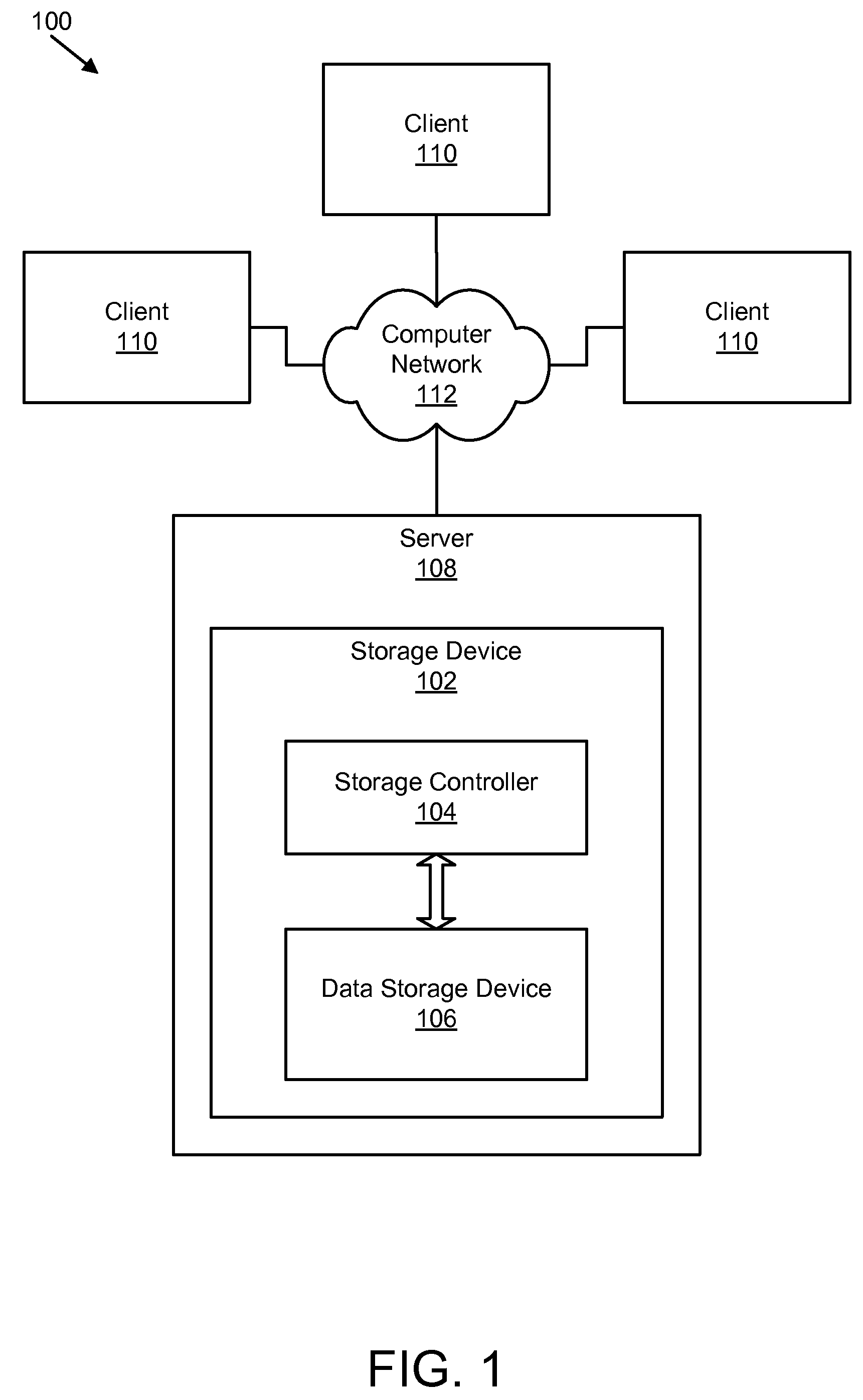

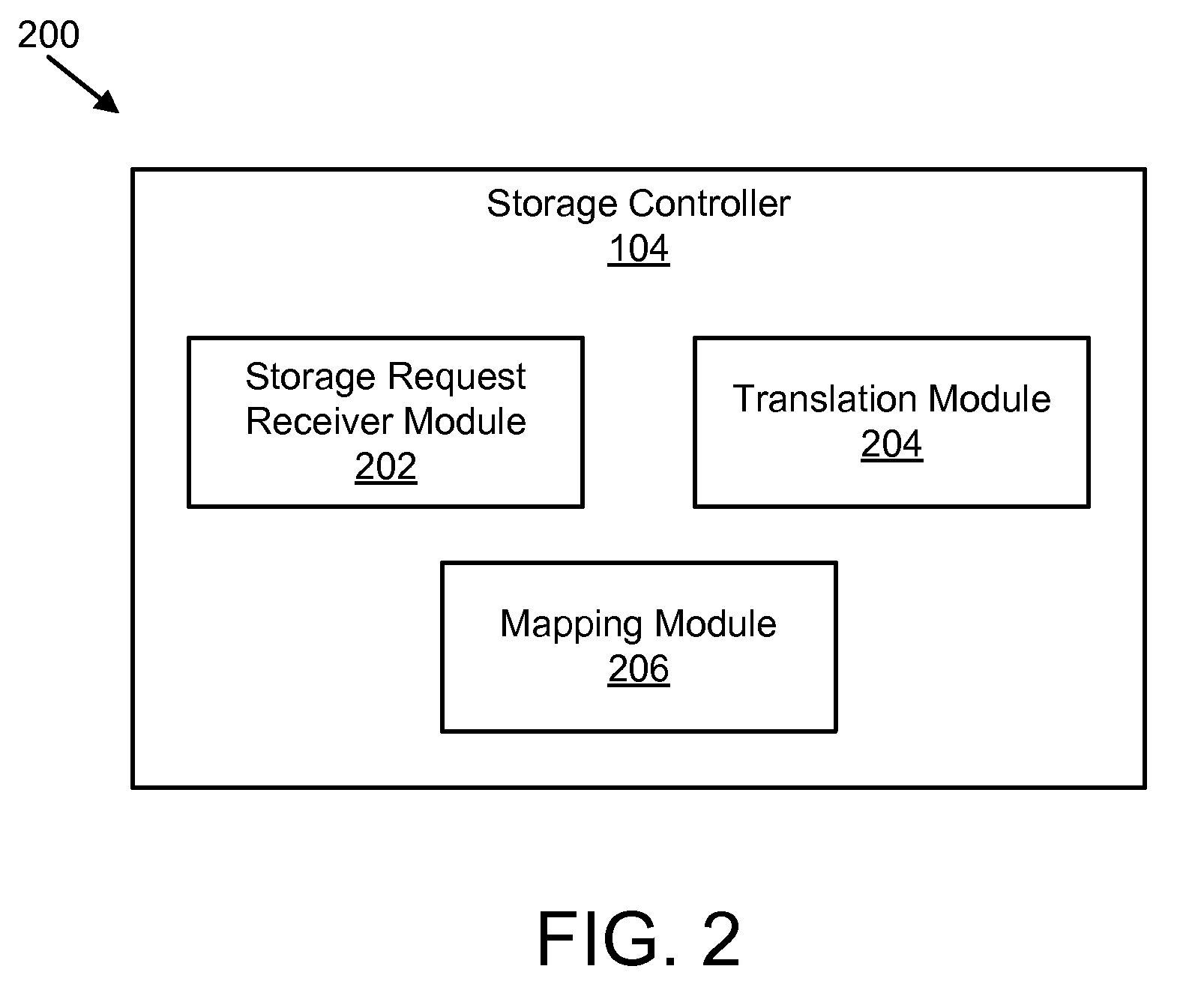

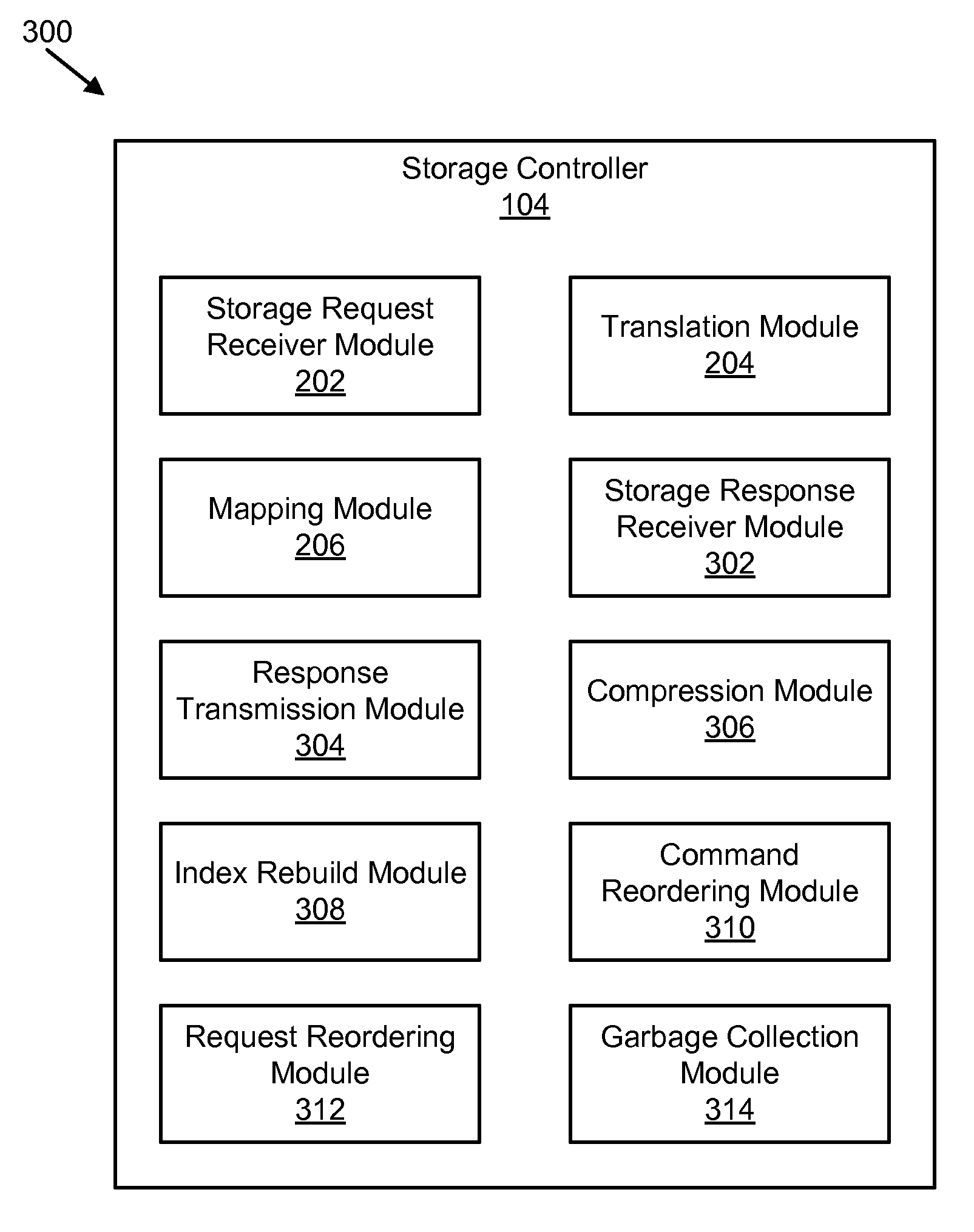

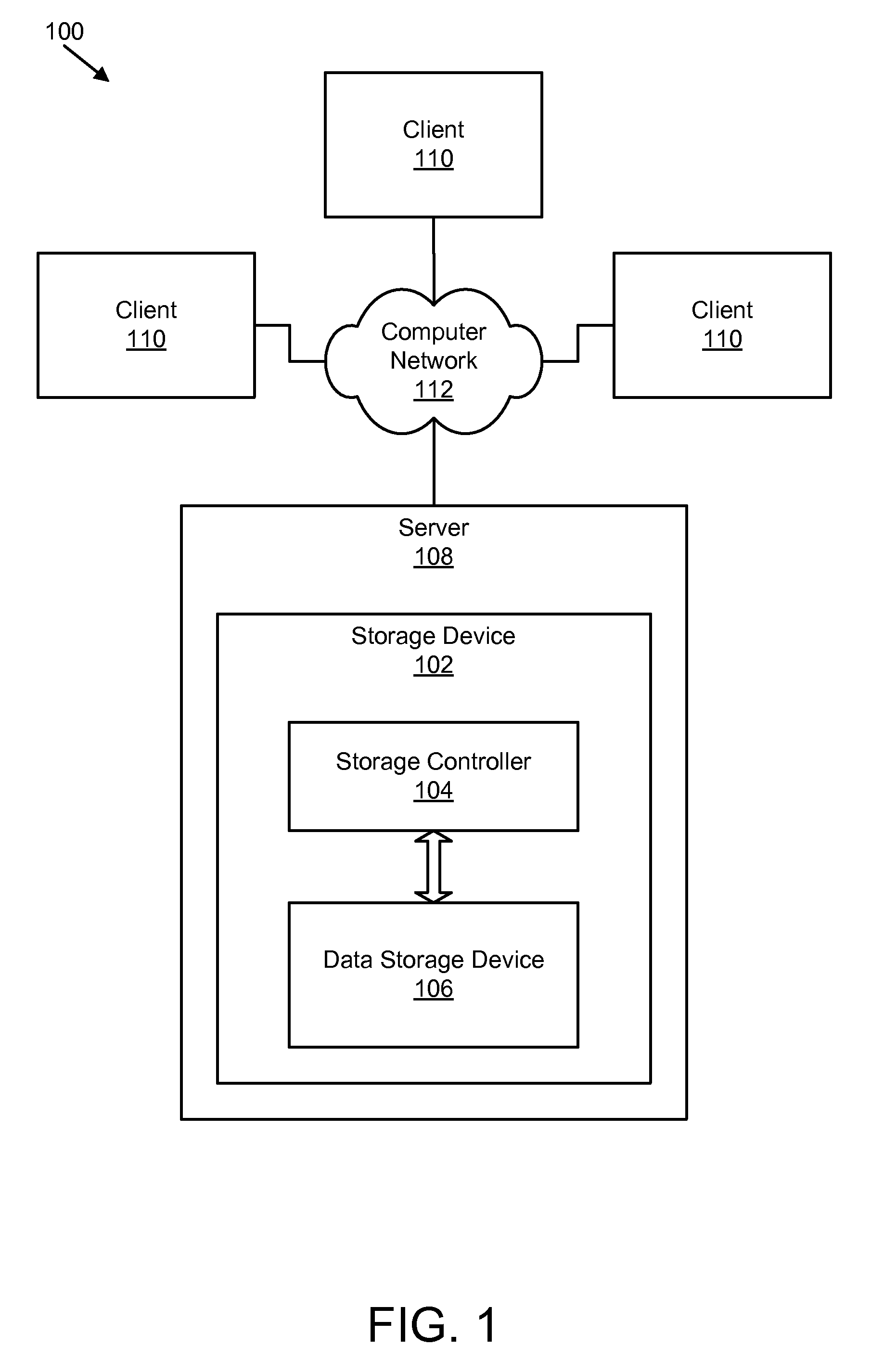

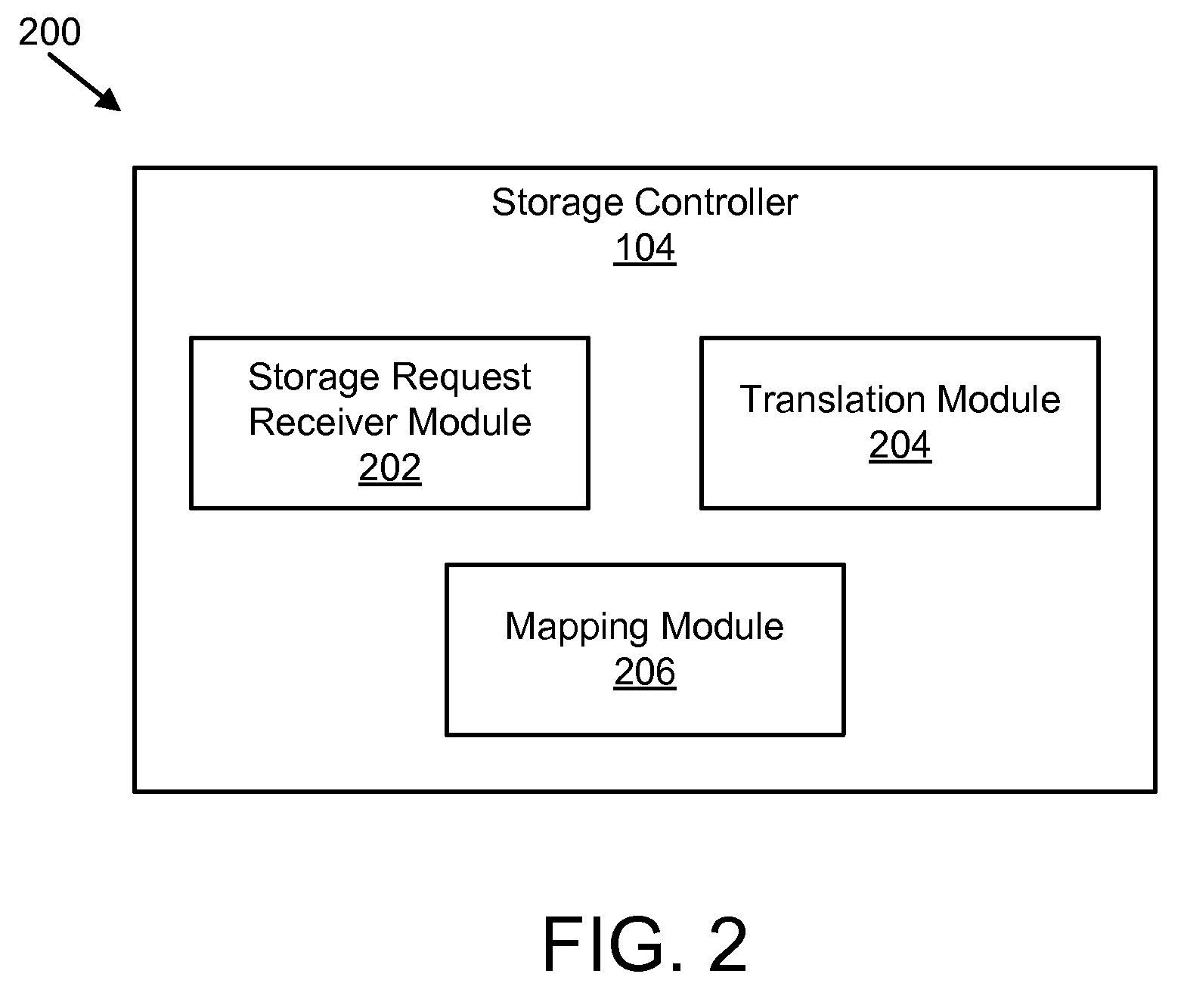

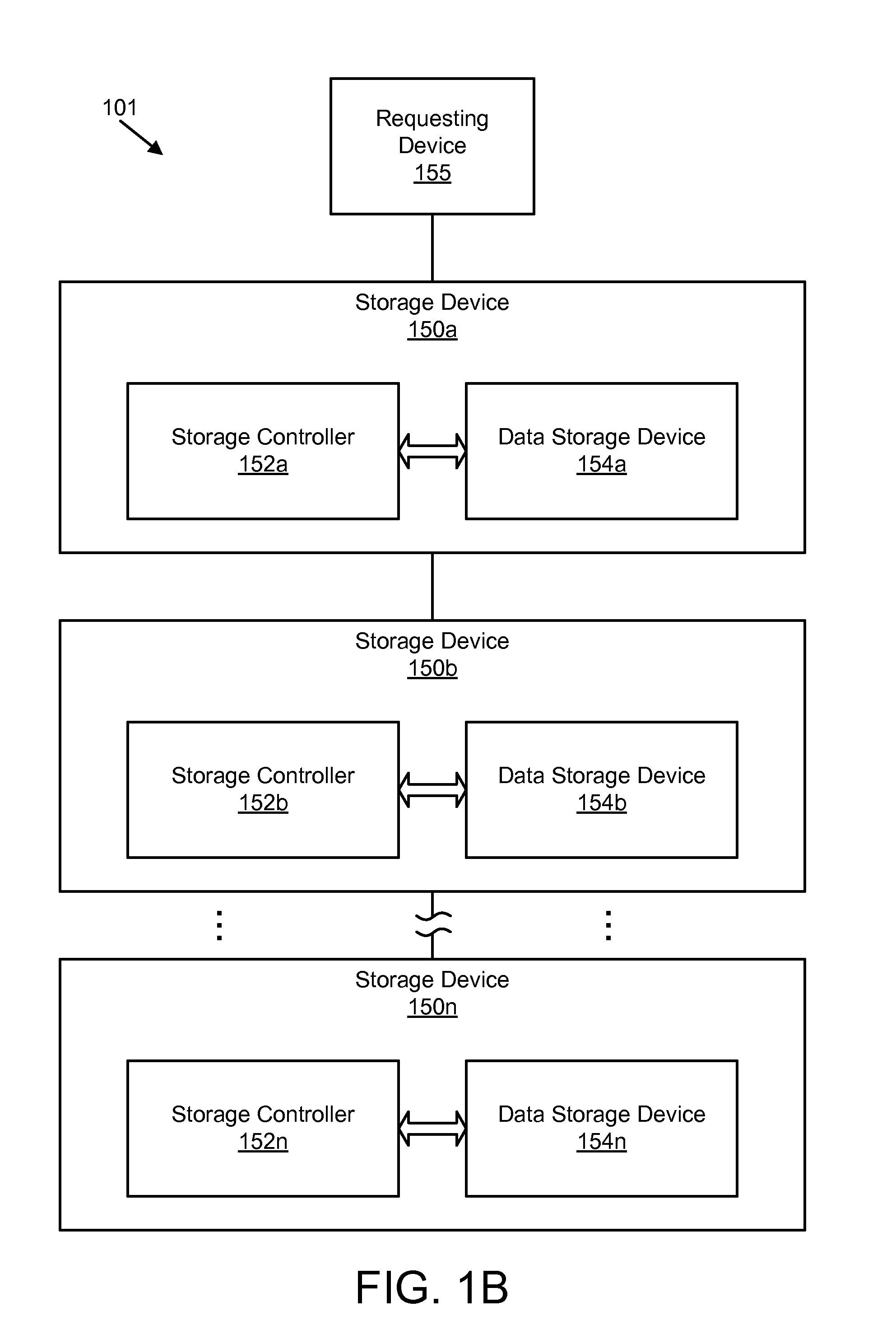

Apparatus, system, and method for converting a storage request into an append data storage command

Active | US20090150605A1 | Shorten write time | Improve efficiency | Memory architecture accessing/allocation | Program synchronisation | Data segment | Network packet

An apparatus, system, and method are disclosed for converting a storage request to an append data storage command. A storage request receiver module receives a storage request from a requesting device. The storage request is to store a data segment onto a data storage device. The storage request includes source parameters for the data segment. The source parameters include a virtual address. A translation module translates the storage request to storage commands. At least one storage command includes an append data storage command that directs the data storage device to store data of the data segment and the one or more source parameters with the data, including a virtual address, at one or more append points. A mapping module maps source parameters of the data segment to locations where the data storage device appended the data packets of the data segment and source parameters.

Owner:UNIFICATION TECH LLC

Apparatus, system, and method for efficient mapping of virtual and physical addresses

Active | US20090150641A1 | Efficient mapping | Efficient identification | Memory architecture accessing/allocation | Program synchronisation | Data segment | Data store

An apparatus, system, and method are disclosed for efficiently mapping virtual and physical addresses. A forward mapping module uses a forward map to identify physical addresses of data of a data segment from a virtual address. The data segment is identified in a storage request. The virtual addresses include discrete addresses within a virtual address space where the virtual addresses sparsely populate the virtual address space. A reverse mapping module uses a reverse map to determine a virtual address of a data segment from a physical address. The reverse map maps the data storage device into erase regions such that a portion of the reverse map spans an erase region of the data storage device erased together during a storage space recovery operation. A storage space recovery module uses the reverse map to identify valid data in an erase region prior to an operation to recover the erase region.

Owner:UNIFICATION TECH LLC

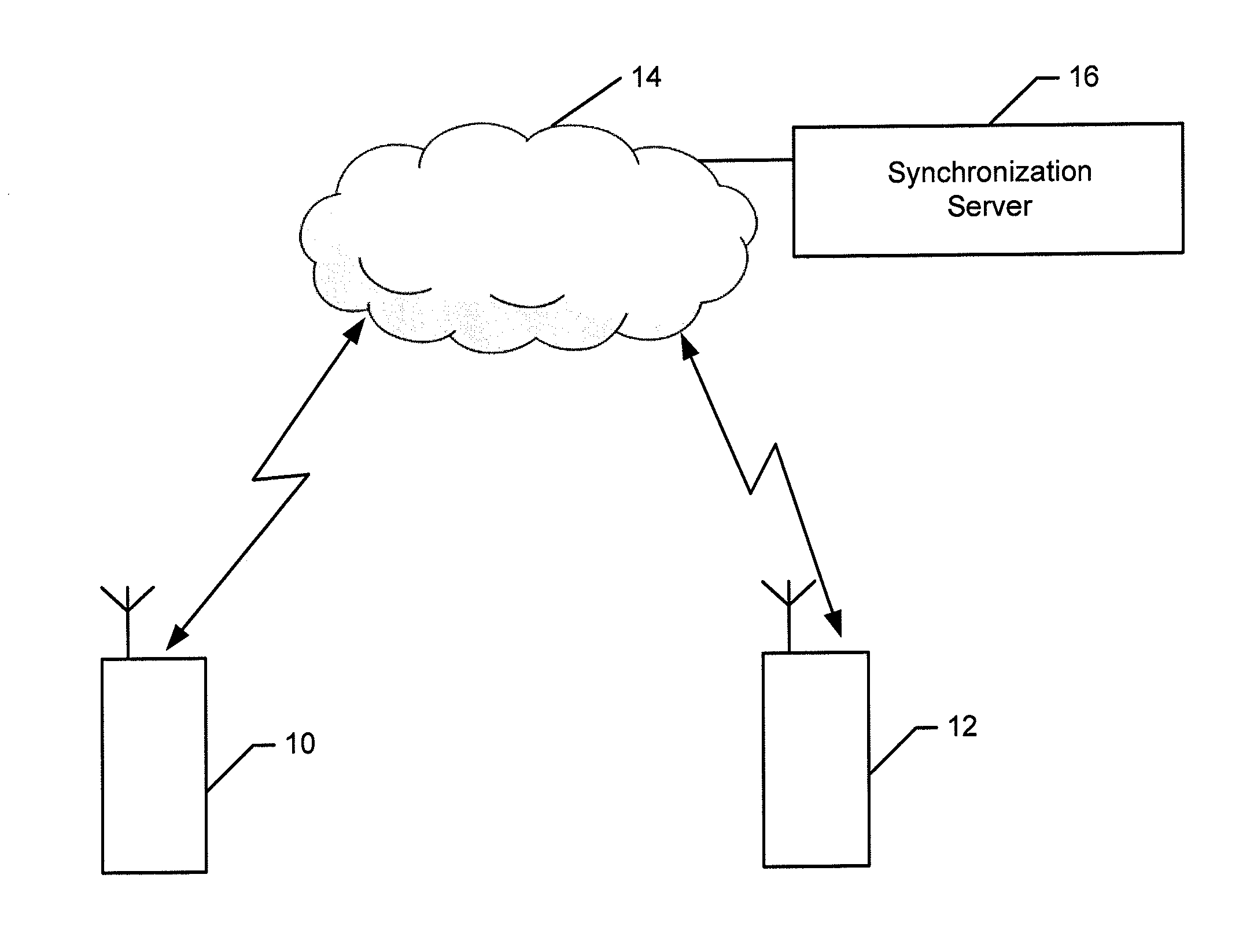

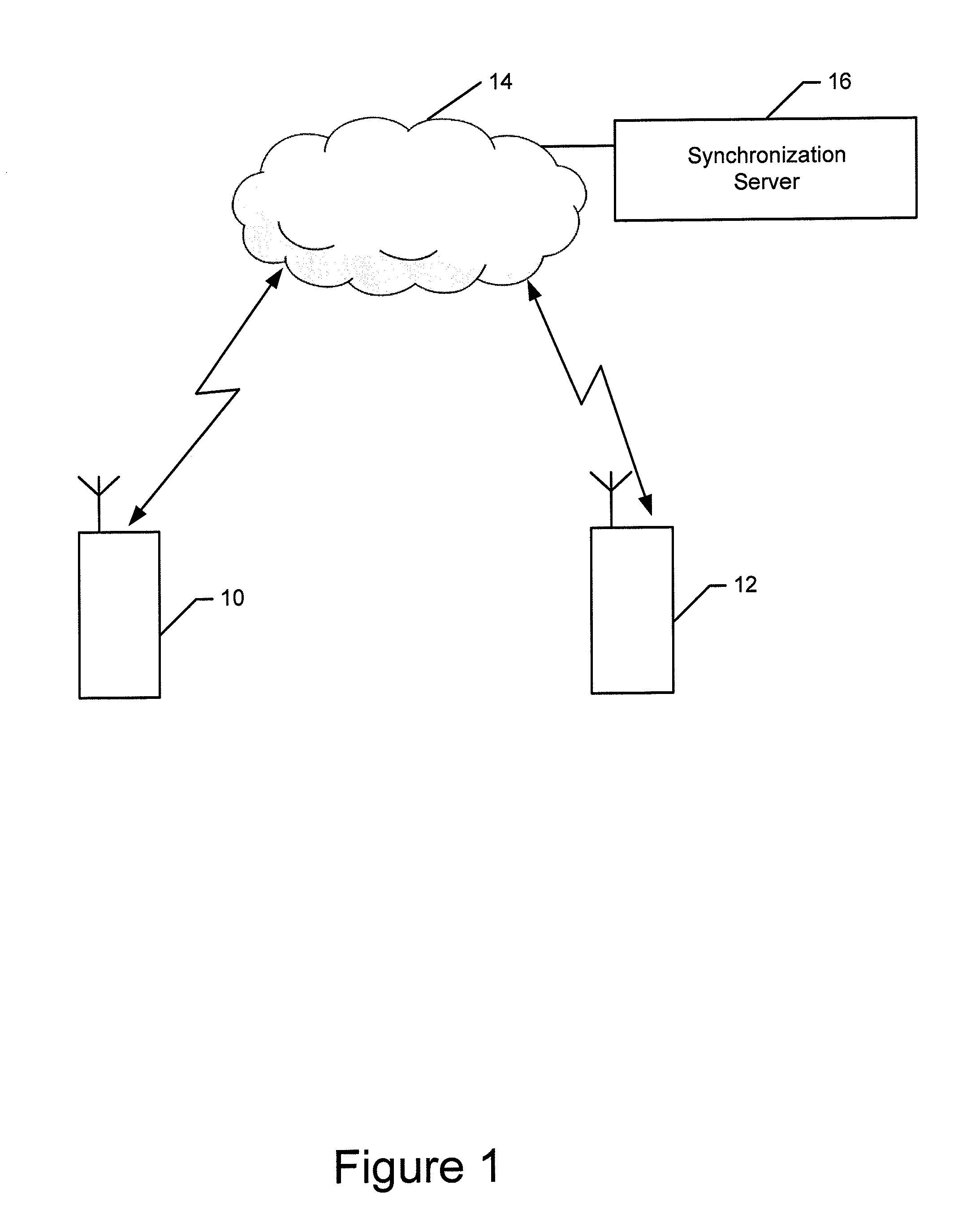

Method and apparatus for synchronizing tasks performed by multiple devices

Active | US20130275625A1 | Good synchronization | To offer comfort | Program synchronisation | Multiple digital computer combinations | Computer program | Real-time computing

A method, apparatus and computer program product are provided to synchronize multiple devices. In regards to a method, an indication is received that a view of a task is presented by a first device. The method causes state information to be provided to a second device to permit the second device to be synchronized with the first device and to present a view of the task, either the same view or a different view than that presented by the first device. The method also receives information relating to a change in state of the task that is provided by one of the devices while a first view of the task is presented thereupon. Further, the method causes updated state information to be provided to another one of the devices to cause the other device to remain synchronized and to update a second view of the task that is presented.

Owner:NOKIA TECHNOLOGIES OY

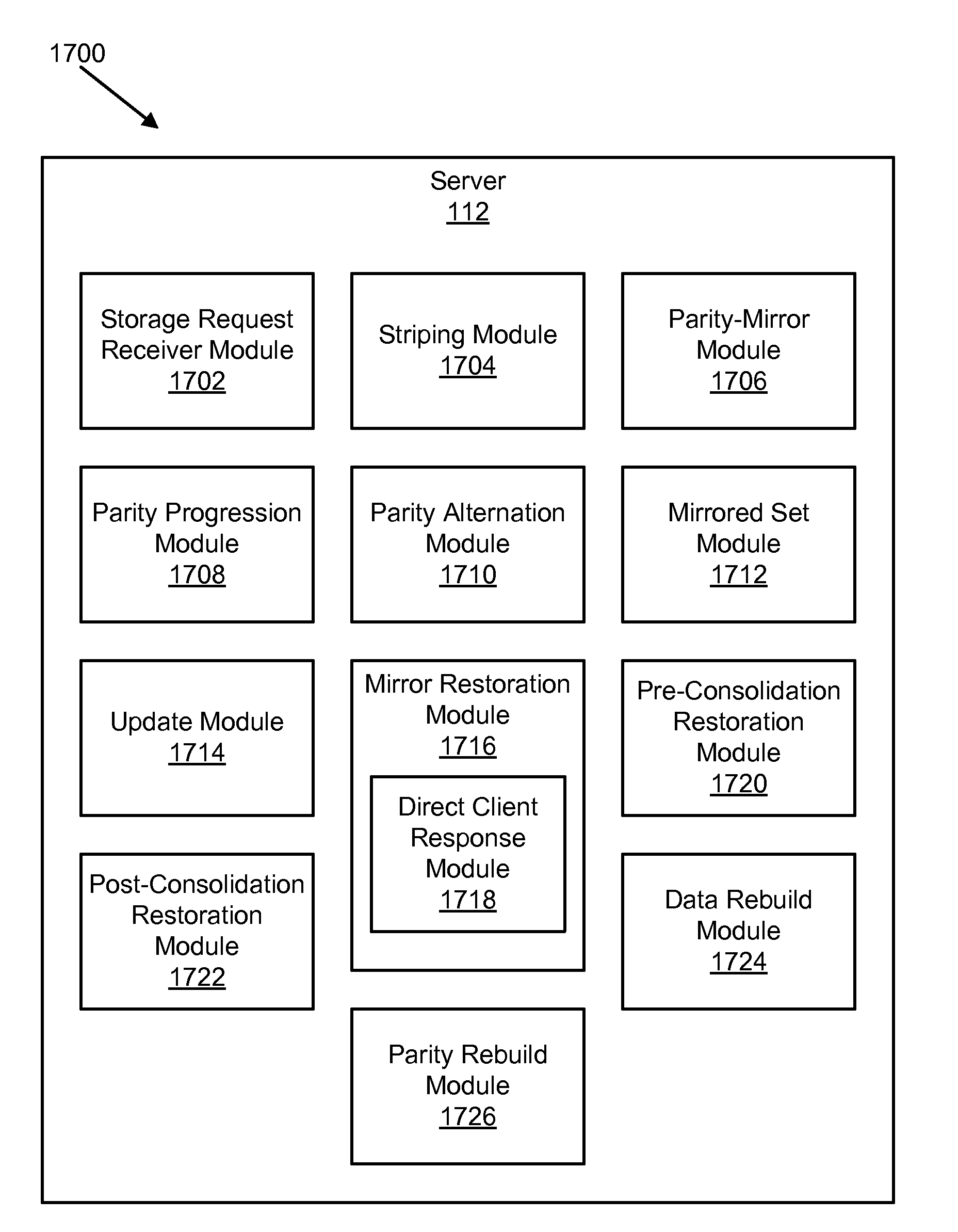

Apparatus, system, and method for data storage using progressive raid

Active | US20080168304A1 | Reliable and reliable | Improve performance | Energy efficient ICT | Input/output to record carriers | RAID | Data segment

Owner:SANDISK TECH LLC

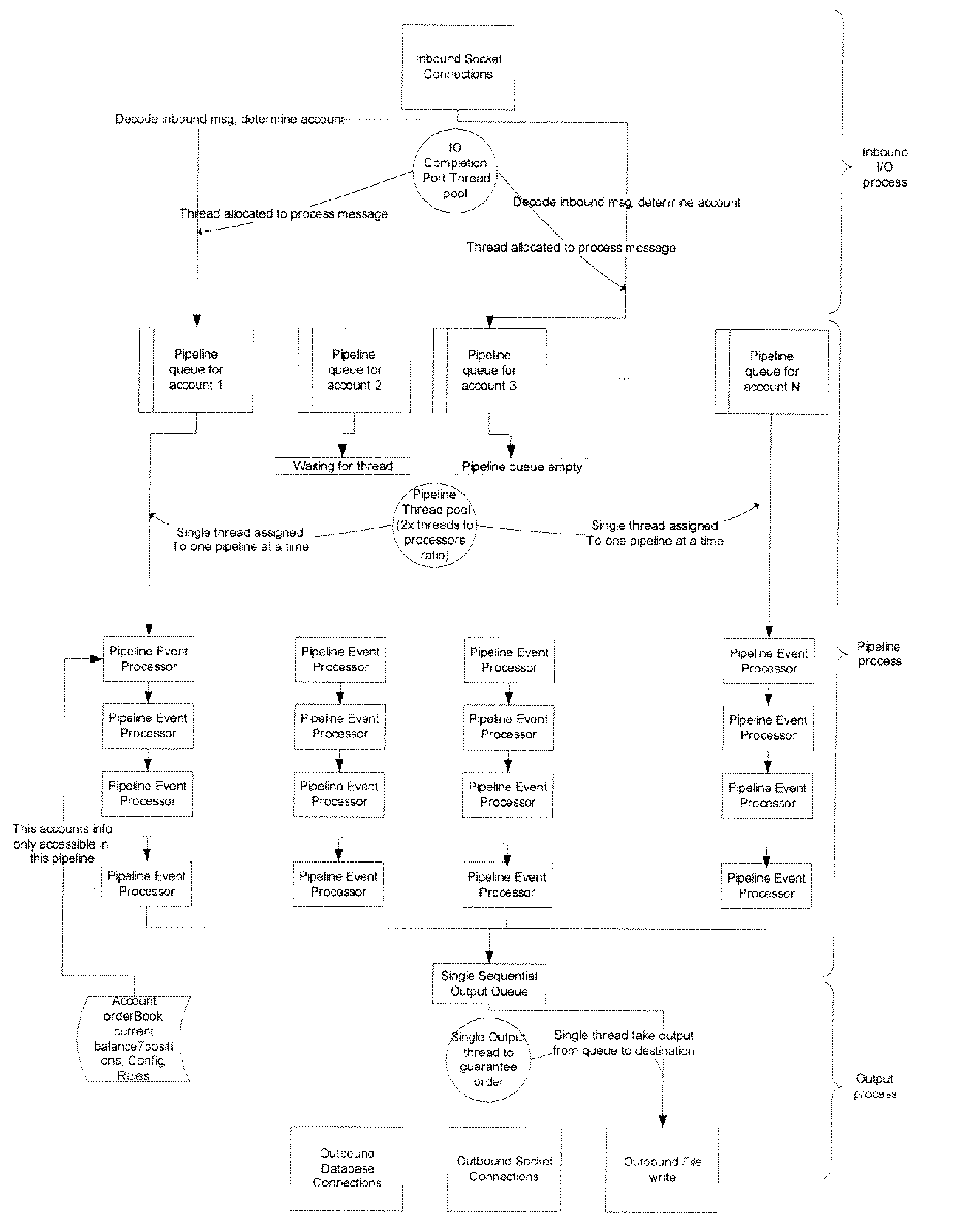

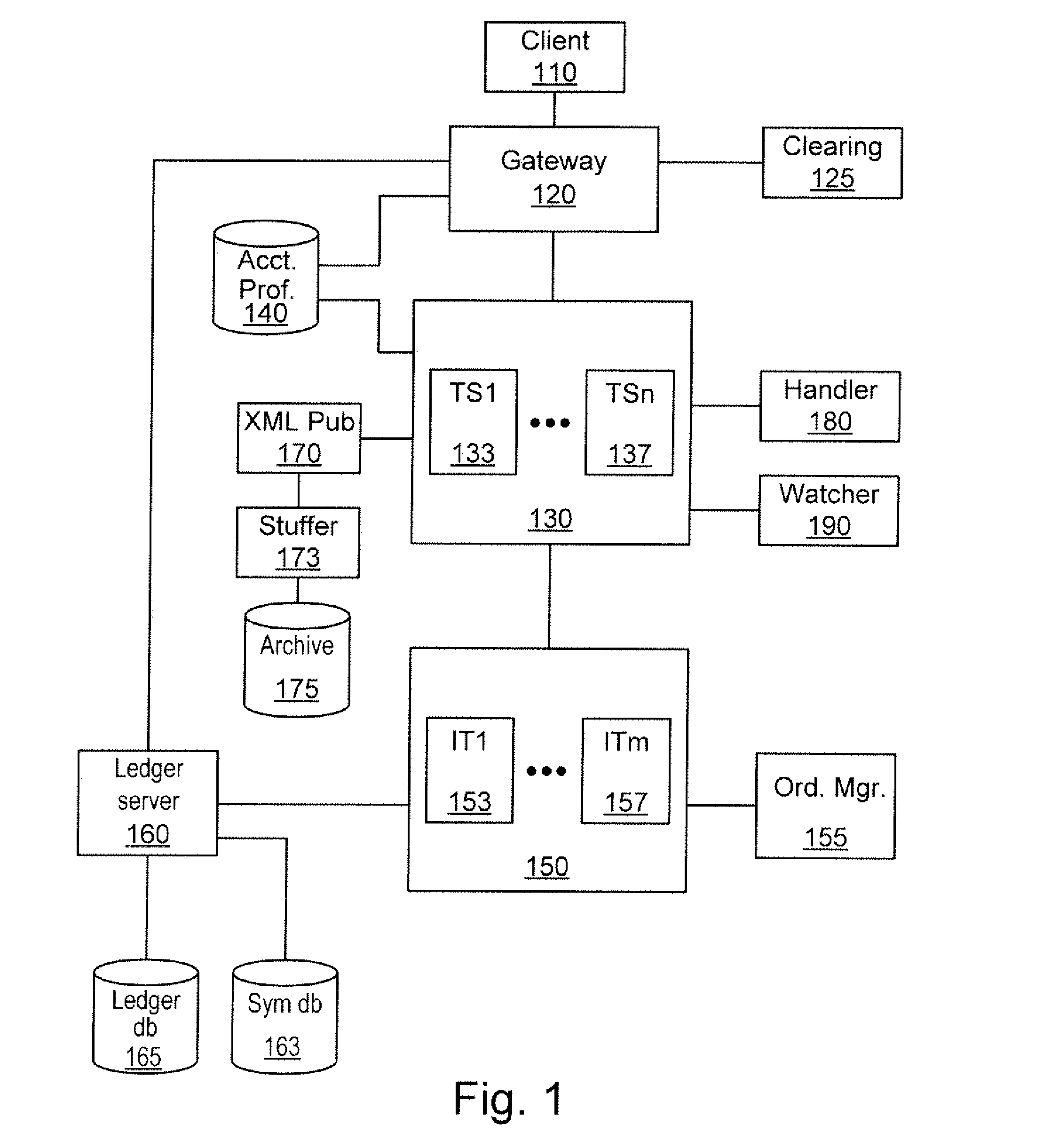

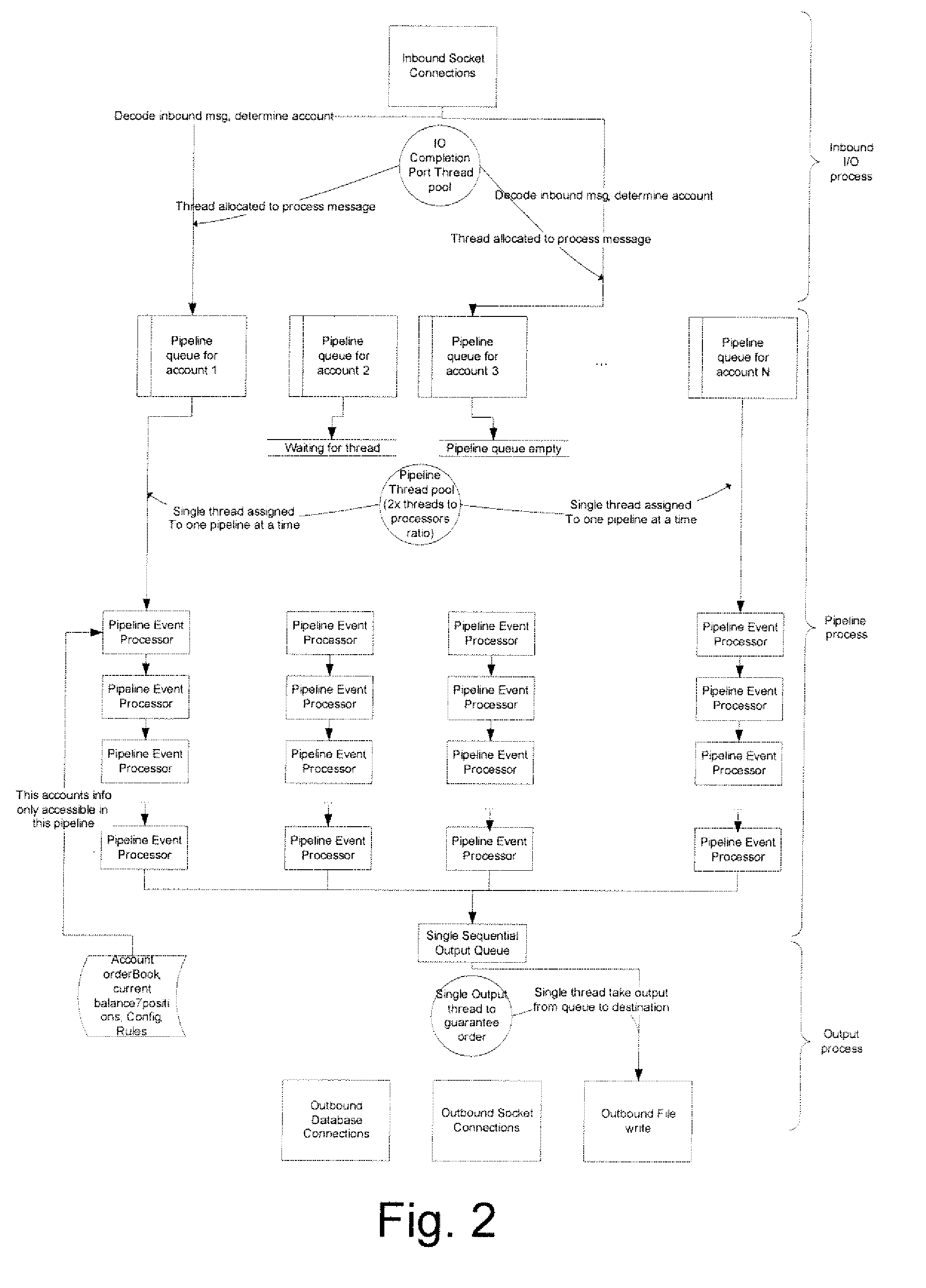

Order Management System and Method for Electronic Securities Trading

Active | US20080015970A1 | Eliminate need | Enhance profit or mitigate loss | Finance | Program synchronisation | Order management system | Client-side

A system includes a communication module to communicate with at least one client; and a server to process a task identified by the client, the task associated with an account, the server including a plurality of account-centric pipelines, one or more account-centric pipelines each configured to process the task.

Owner:EZE CASTLE SOFTWARE

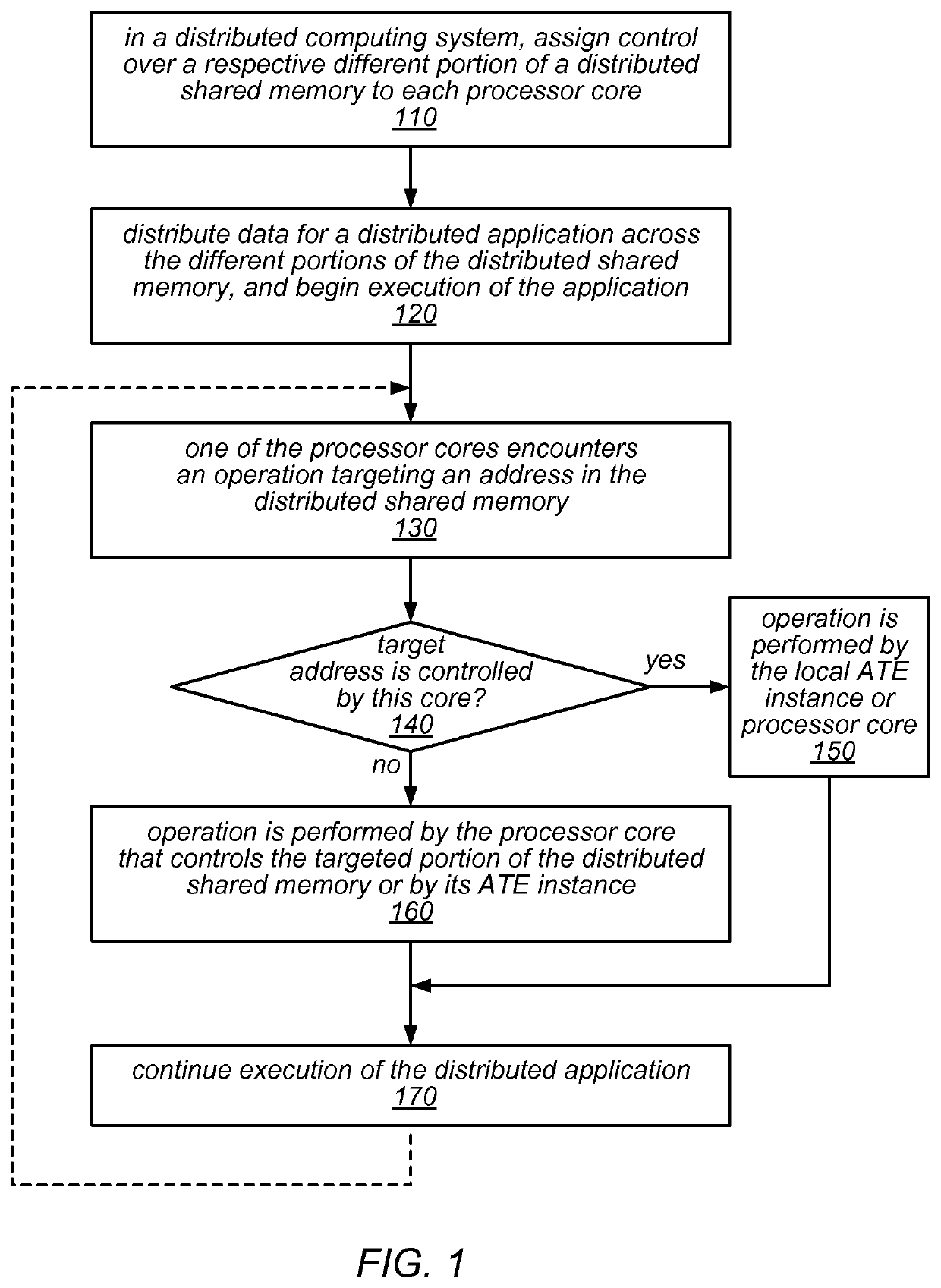

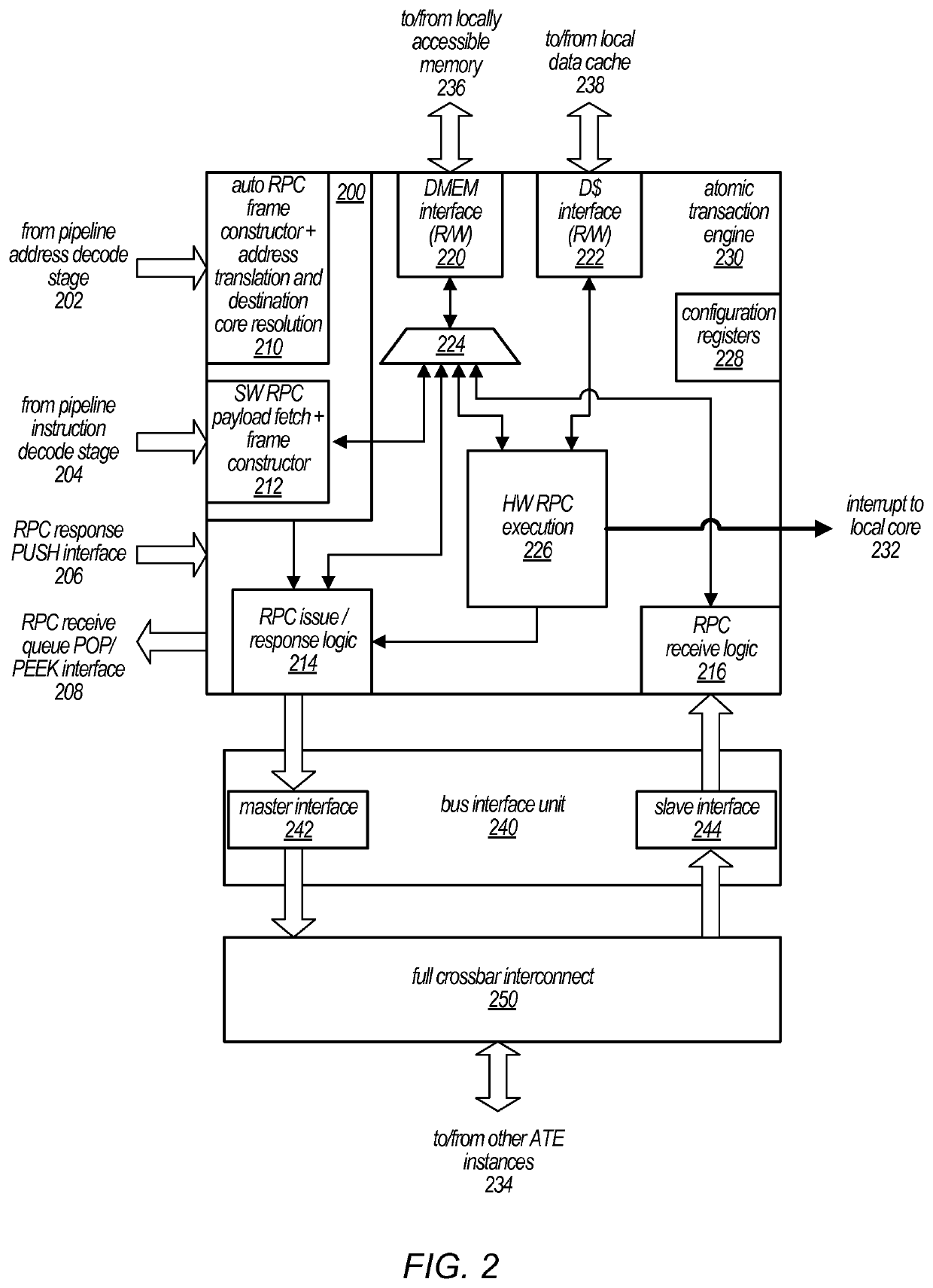

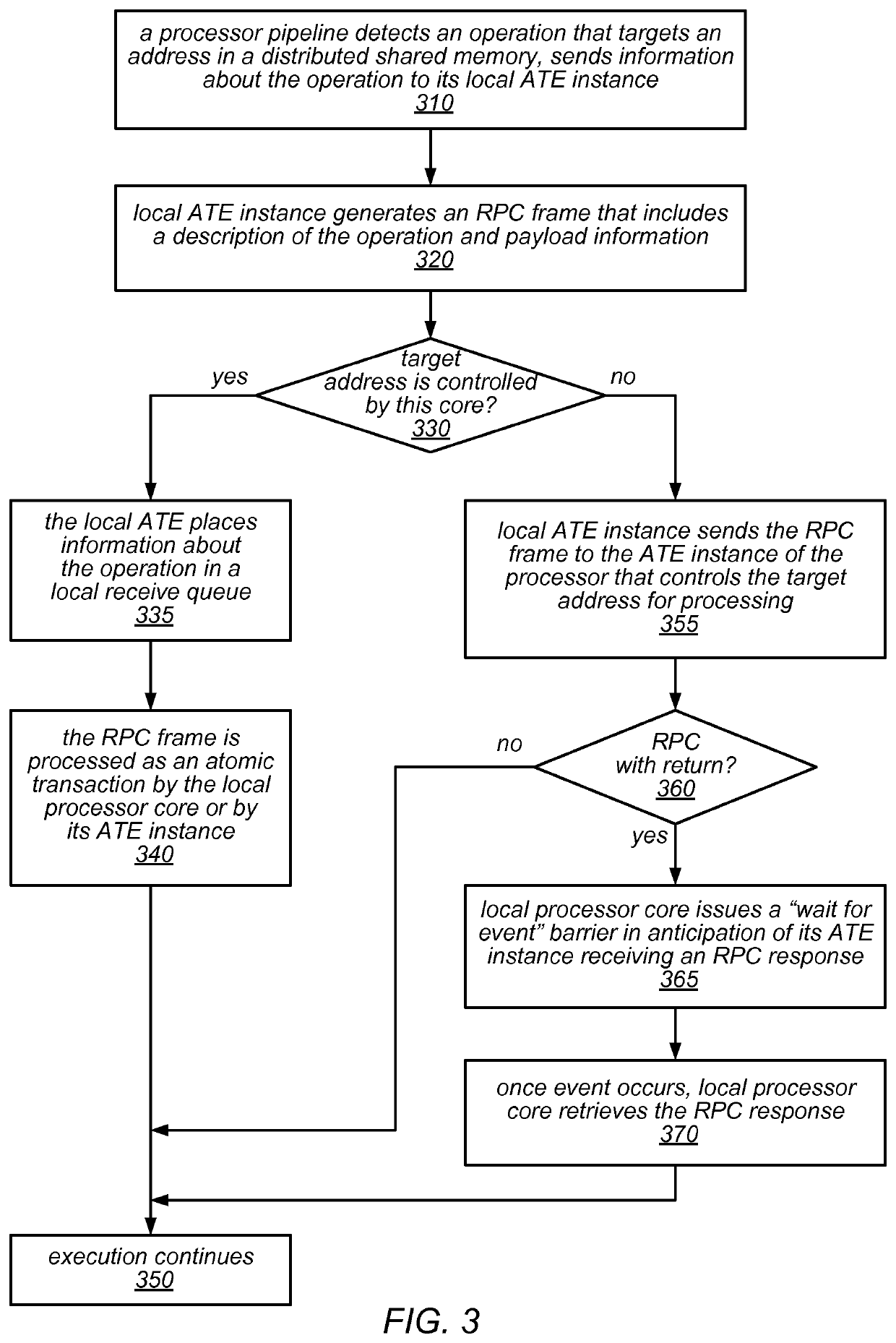

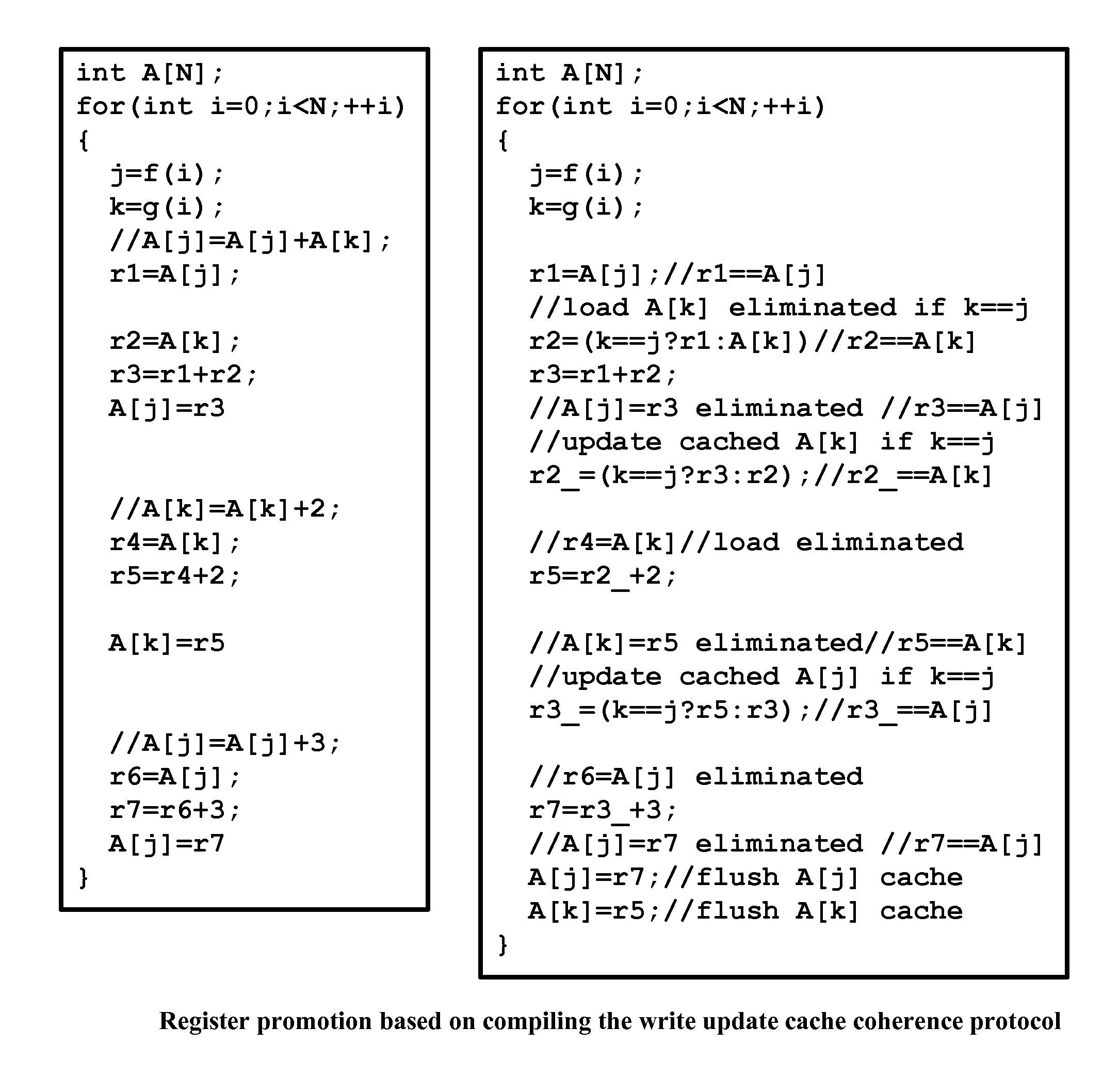

Distributed shared memory using interconnected atomic transaction engines at respective memory interfaces

Active | US10732865B2 | Light weight | Less complex | Input/output to record carriers | Program synchronisation | Computer architecture | Memory interface

A hardware-assisted Distributed Memory System may include software configurable shared memory regions in the local memory of each of multiple processor cores. Accesses to these shared memory regions may be made through a network of on-chip atomic transaction engine (ATE) instances, one per core, over a private interconnect matrix that connects them together. For example, each ATE instance may issue Remote Procedure Calls (RPCs), with or without responses, to an ATE instance associated with a remote processor core in order to perform operations that target memory locations controlled by the remote processor core. Each ATE instance may process RPCs (atomically) that are received from other ATE instances or that are generated locally. For some operation types, an ATE instance may execute the operations identified in the RPCs itself using dedicated hardware. For other operation types, the ATE instance may interrupt its local processor core to perform the operations.

Owner:ORACLE INT CORP

Method and system for converting a single-threaded software program into an application-specific supercomputer

Active | US20130125097A1 | Improve efficiency | Low overhead implementation | Memory architecture accessing/allocation | Transformation of program code | Supercomputer | Computer architecture

The invention comprises (i) a compilation method for automatically converting a single-threaded software program into an application-specific supercomputer, and (ii) the supercomputer system structure generated as a result of applying this method. The compilation method comprises: (a) Converting an arbitrary code fragment from the application into customized hardware whose execution is functionally equivalent to the software execution of the code fragment; and (b) Generating interfaces on the hardware and software parts of the application, which (i) Perform a software-to-hardware program state transfer at the entries of the code fragment; (ii) Perform a hardware-to-software program state transfer at the exits of the code fragment; and (iii) Maintain memory coherence between the software and hardware memories. If the resulting hardware design is large, it is divided into partitions such that each partition can fit into a single chip. Then, a single union chip is created which can realize any of the partitions.

Owner:GLOBAL SUPERCOMPUTING CORP

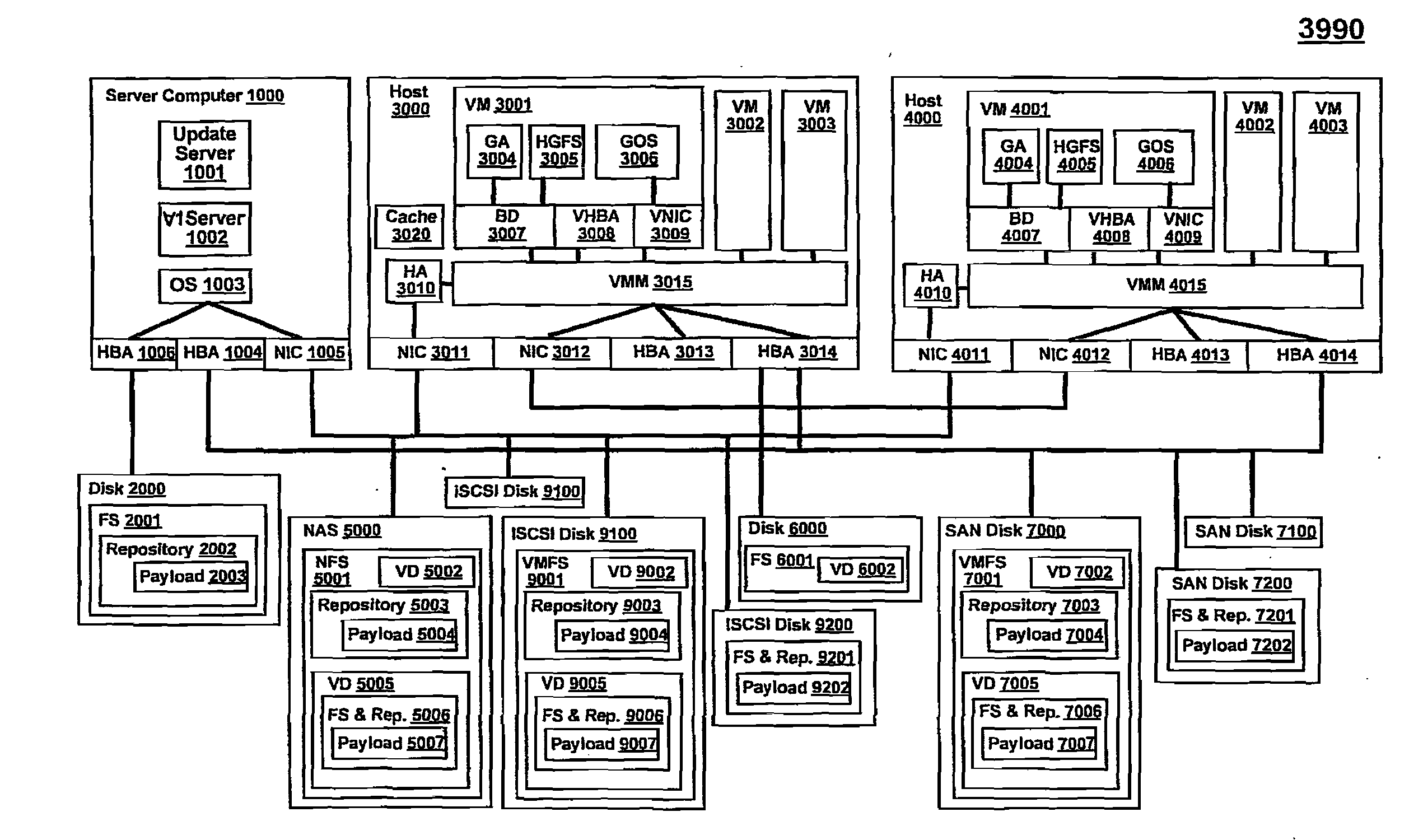

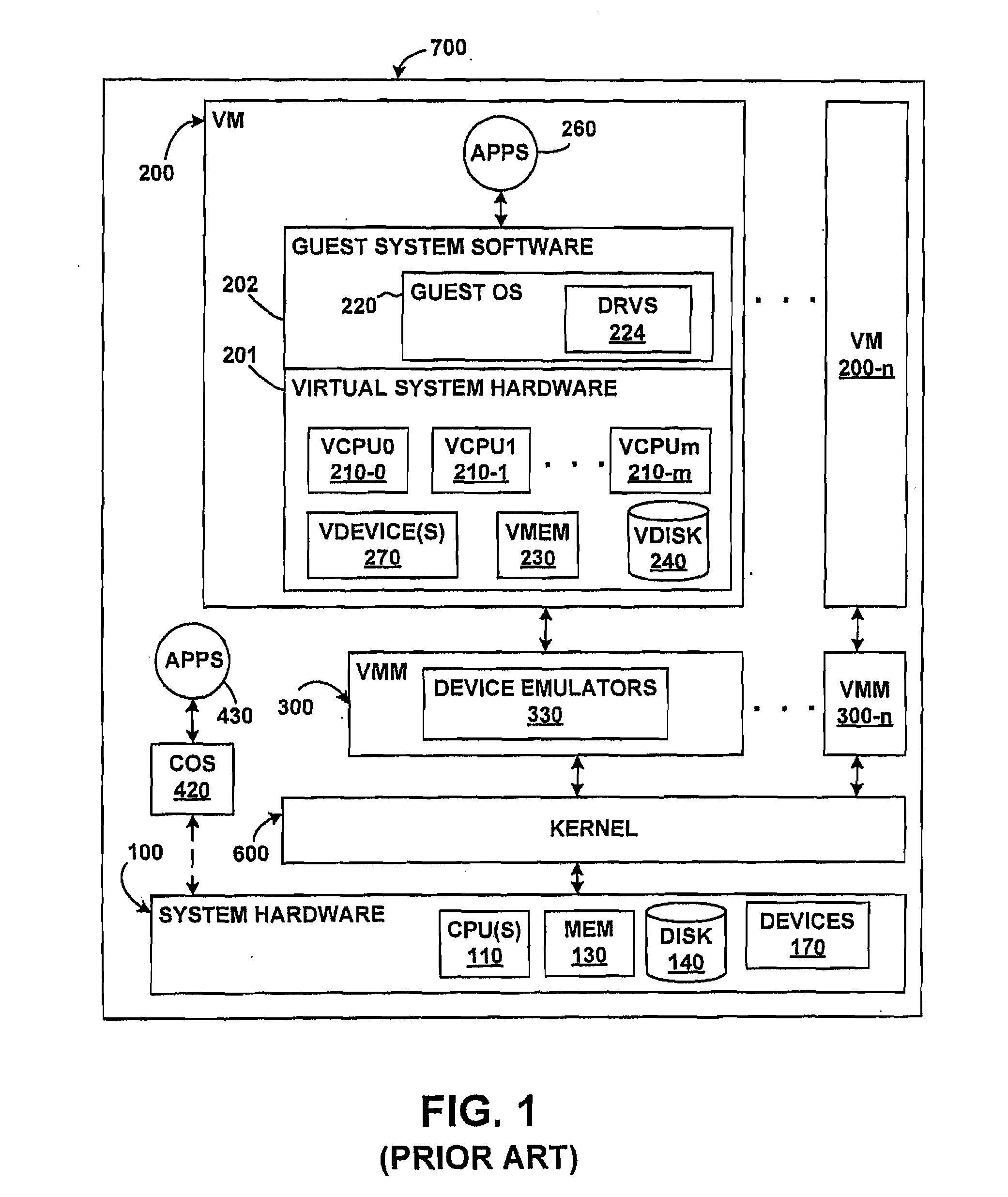

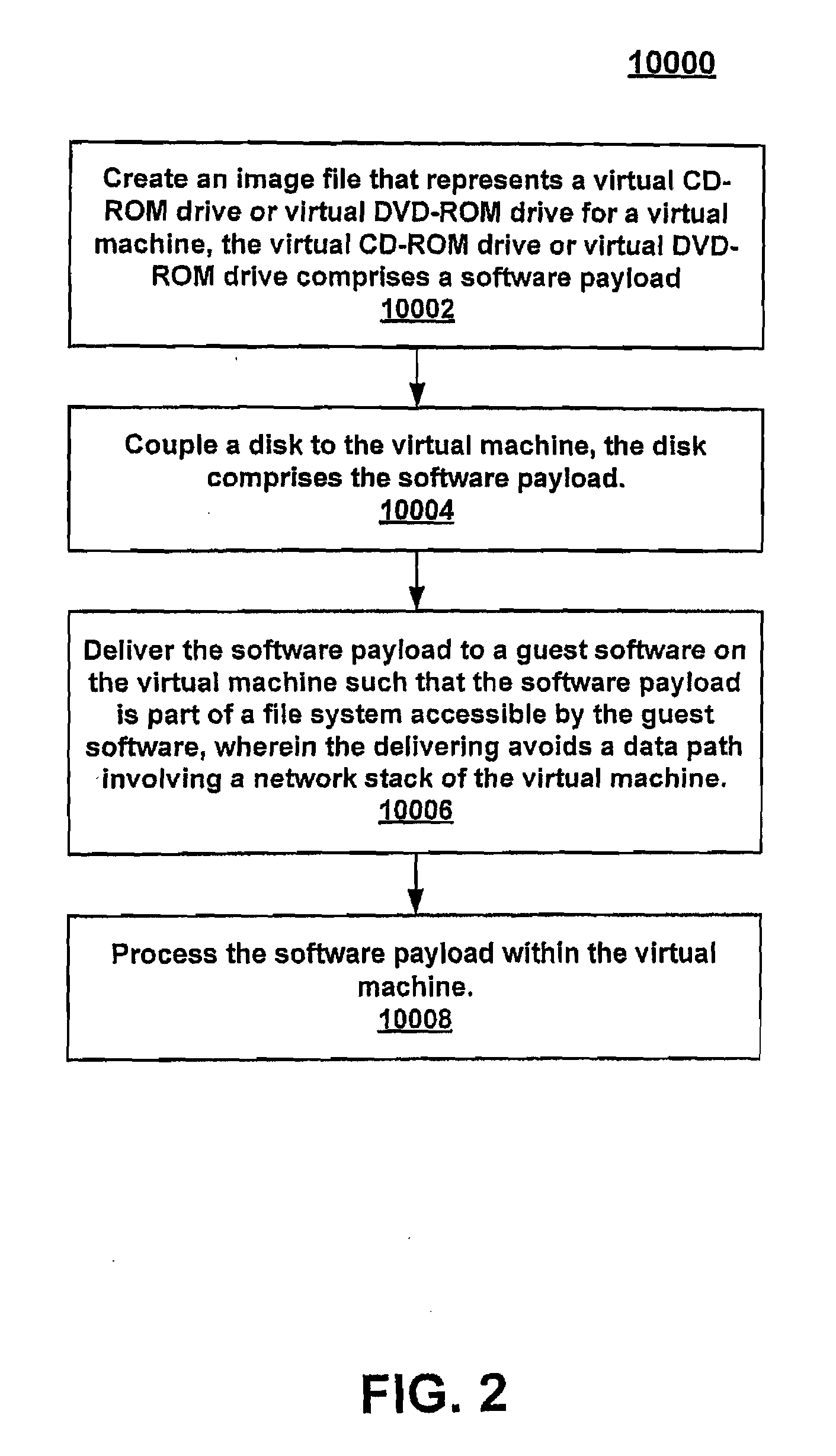

Software delivery for virtual machines

Active | US20080244577A1 | Program synchronisation | Software simulation/interpretation/emulation | File system | Datapath

Owner:VMWARE INC

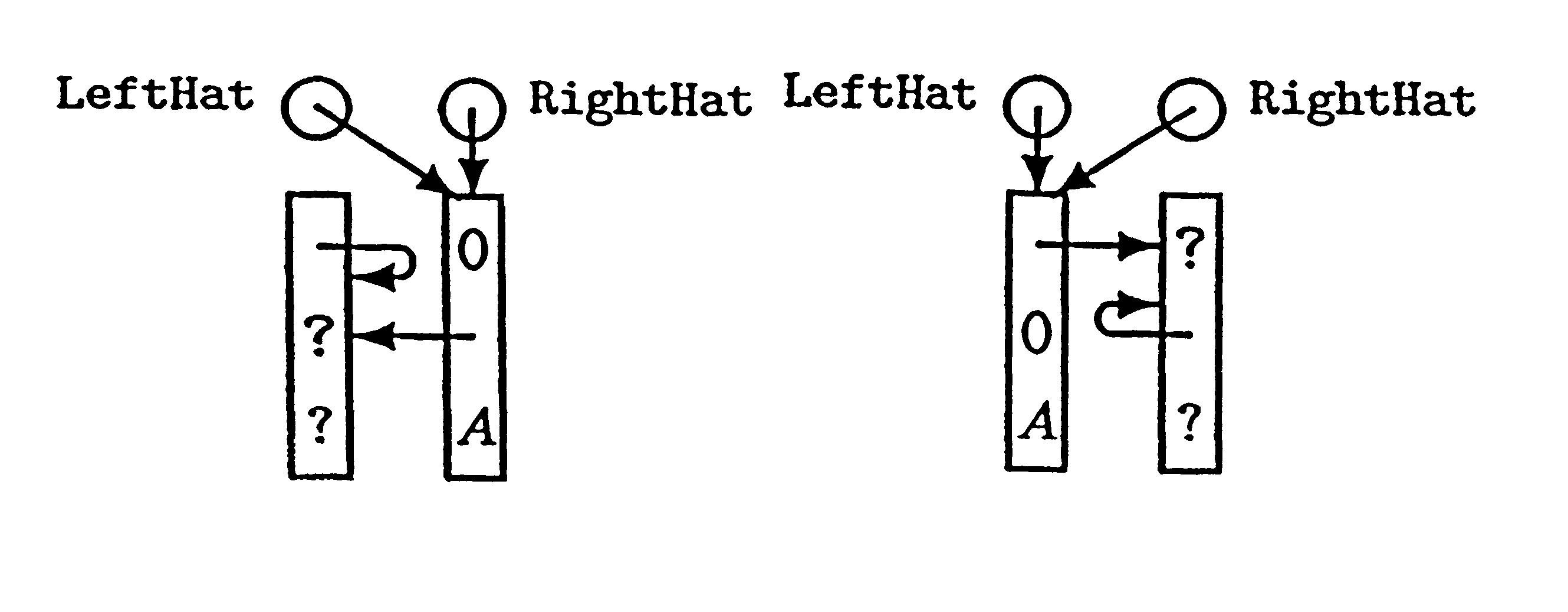

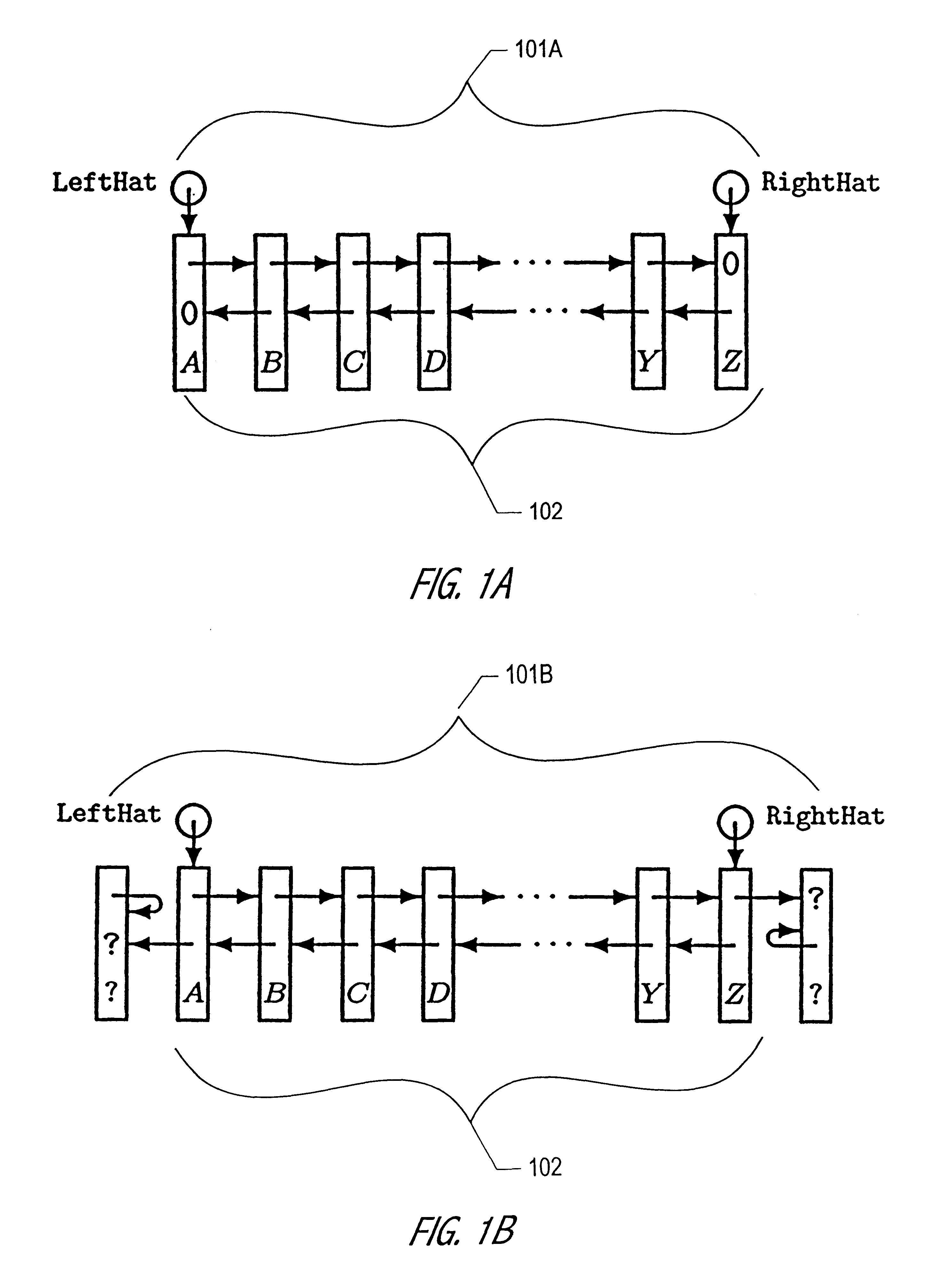

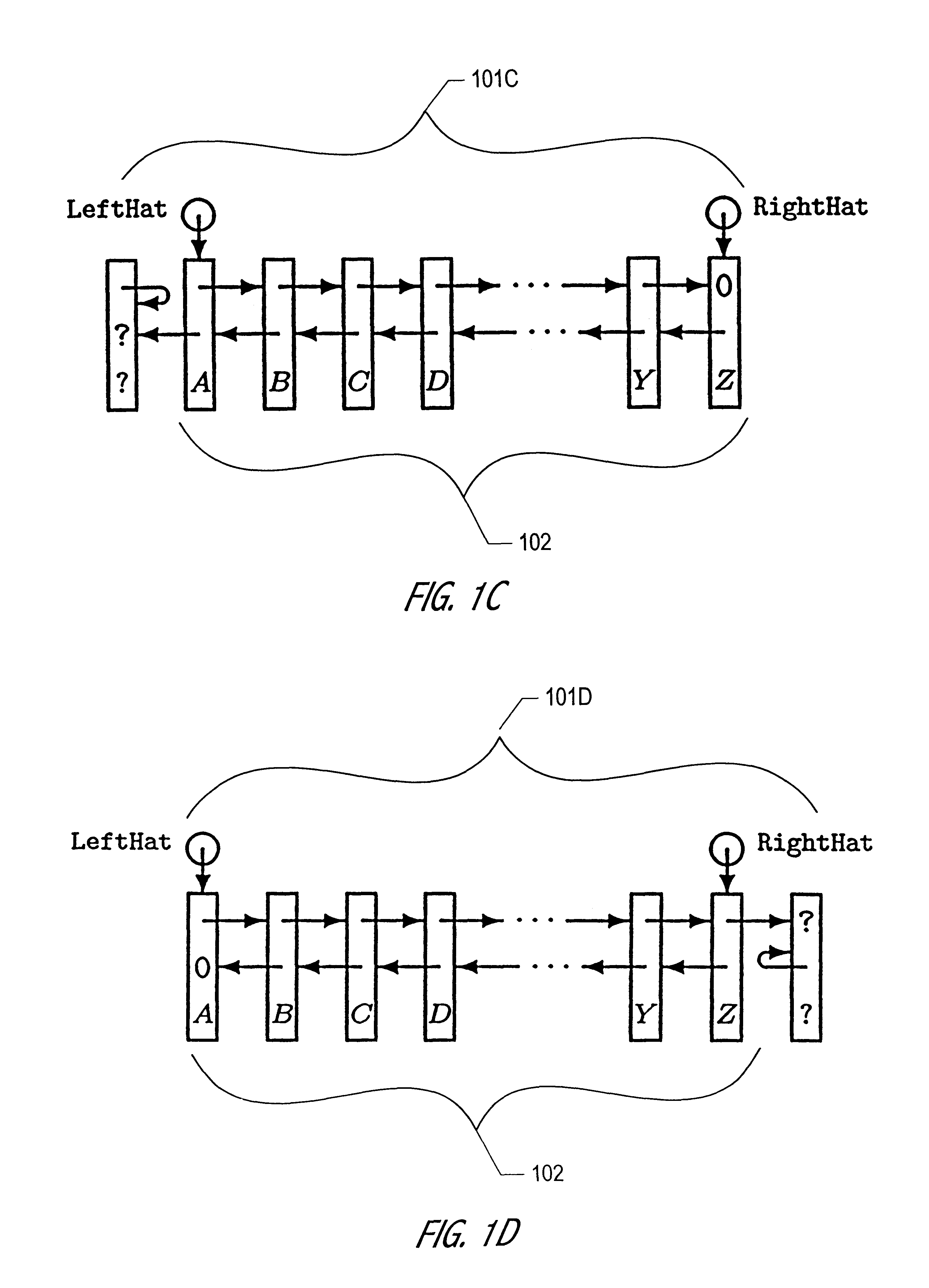

Lock-free implementation of concurrent shared object with dynamic node allocation and distinguishing pointer value

A novel linked-list-based concurrent shared object implementation has been developed that provides non-blocking and linearizable access to the concurrent shared object. In an application of the underlying techniques to a deque, non-blocking completion of access operations is achieved without restricting concurrency in accessing the deque's two ends. In various realizations in accordance with the present invention, the set of values that may be pushed onto a shared object is not constrained by use of distinguishing values. In addition, an explicit reclamation embodiment facilitates use in environments or applications where automatic reclamation of storage is unavailable or impractical.

Owner:ORACLE INT CORP

Joint editing of an on-line document

Inactive | US20100083136A1 | Input/output for user-computer interaction | Program synchronisation | Computer graphics (images)

Owner:IBM CORP

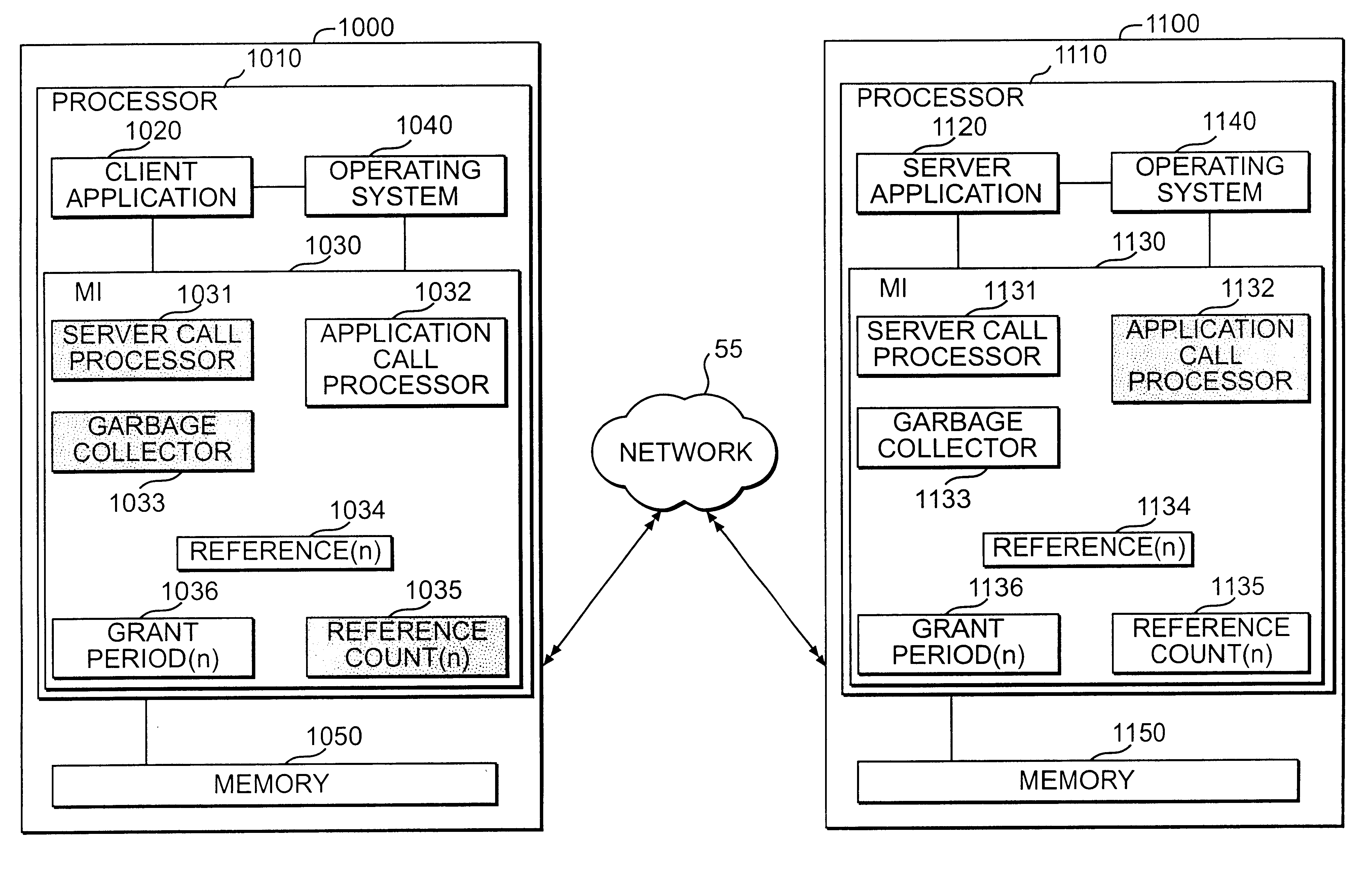

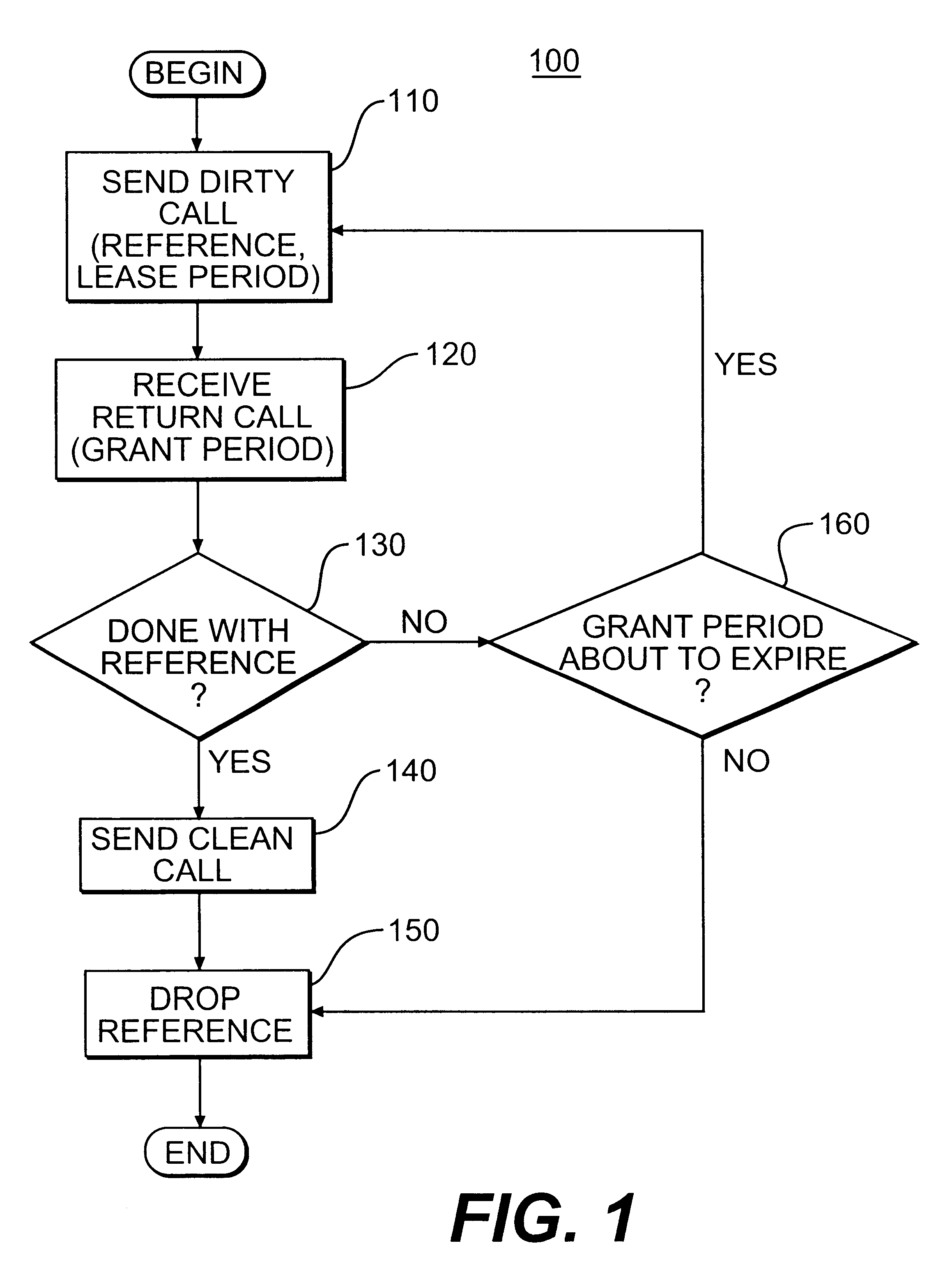

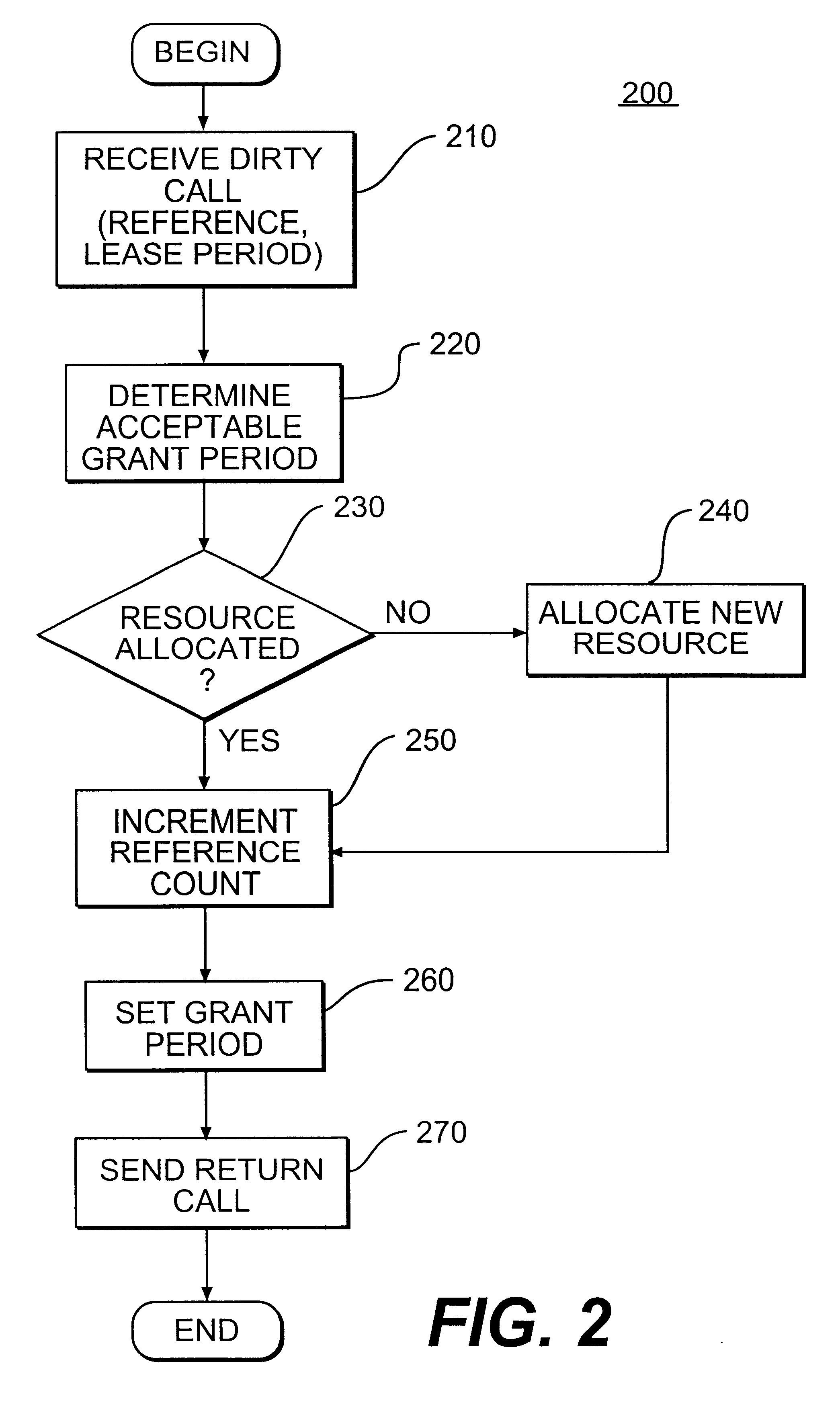

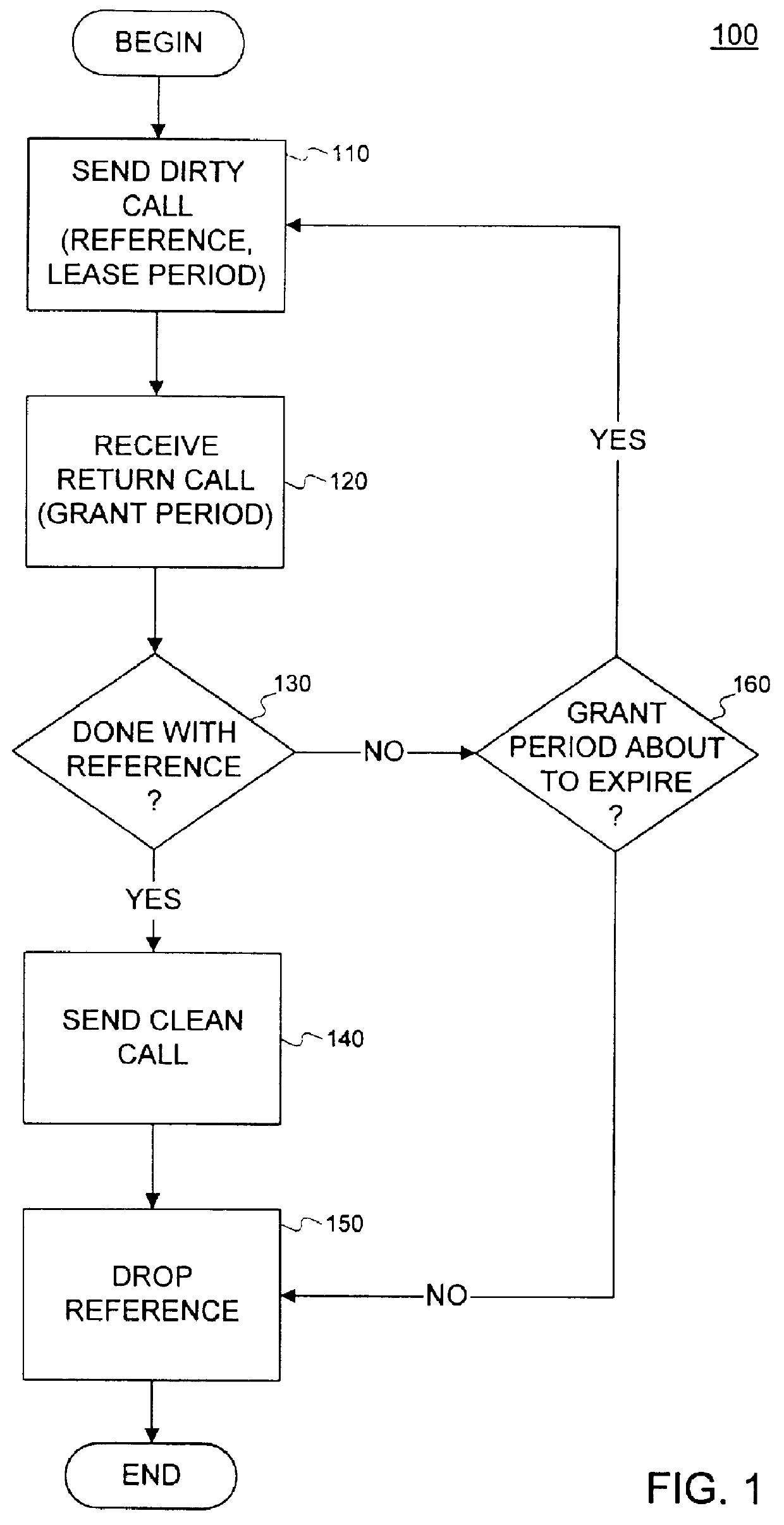

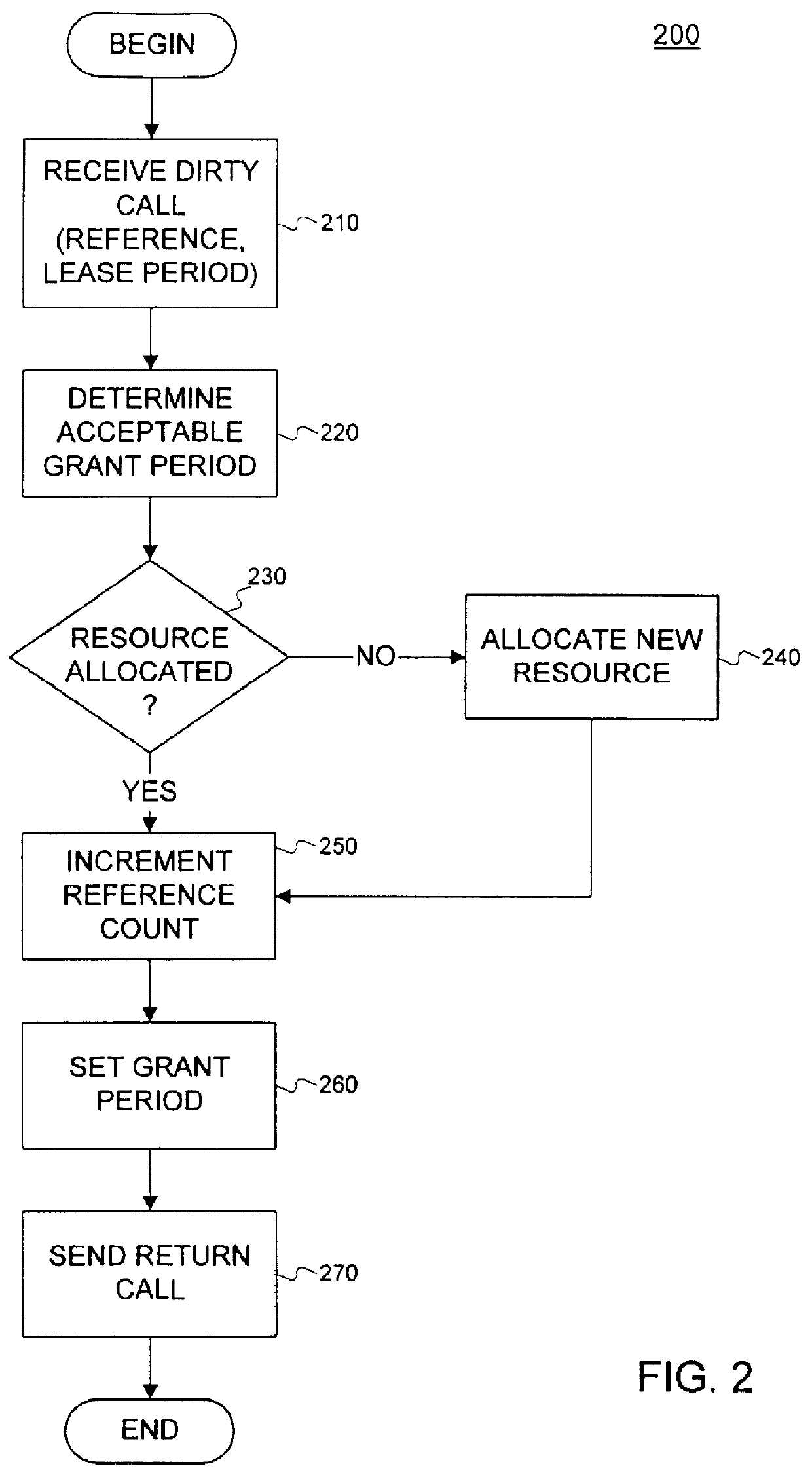

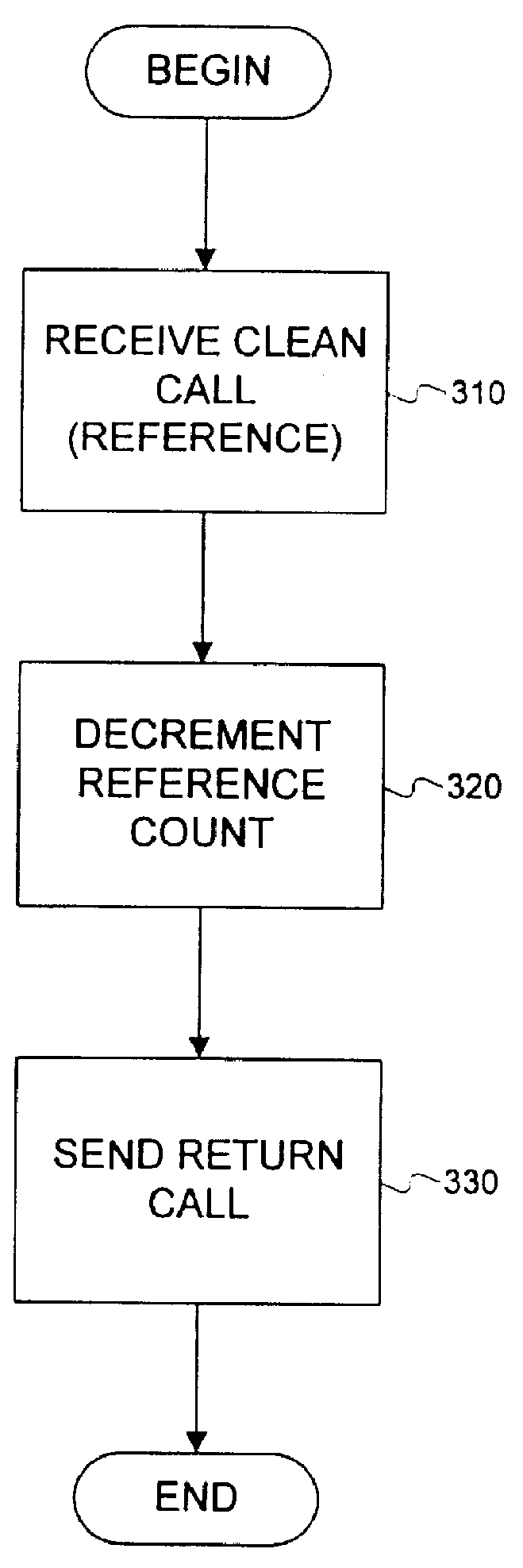

Leasing for failure detection

A system for using a lease to detect a failure and to perform failure recovery is provided. In using this system, a client requests a lease from a server to utilize a resource managed by the server for a period of time. Responsive to the request, the server grants the lease, and the client continually requests renewal of the lease. If the client fails to renew the lease, the server detects that an error has occurred to the client. Similarly, if the server fails to respond to a renew request, the client detects that an error has occurred to the server. As part of the lease establishment, the client and server exchange failure-recovery routines that each invokes if the other experiences a failure.

Owner:SUN MICROSYSTEMS INC
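The server side of this lease-based failure detection can be sketched as follows. The class name `LeaseFailureDetector`, the fixed `period`, and the explicit `now` arguments are assumptions for illustration; the symmetric client-side check (detecting a server that stops answering renewals) is omitted for brevity.

```python
class LeaseFailureDetector:
    def __init__(self, period):
        self.period = period    # assumed fixed lease duration
        self.deadlines = {}     # client -> time at which its lease lapses
        self.recovery = {}      # client -> failure-recovery routine

    def grant(self, client, now, on_failure):
        """Grant a lease and store the recovery routine exchanged at setup."""
        self.deadlines[client] = now + self.period
        self.recovery[client] = on_failure

    def renew(self, client, now):
        if client not in self.deadlines:
            raise KeyError(f"{client} holds no lease")
        self.deadlines[client] = now + self.period

    def check(self, now):
        """Treat every client whose lease lapsed as failed and invoke the
        recovery routine it registered."""
        failed = [c for c, deadline in self.deadlines.items() if deadline <= now]
        for client in failed:
            del self.deadlines[client]
            self.recovery.pop(client)()
        return failed
```

A client that keeps renewing stays alive in the table; one that goes silent is detected at the first `check` after its deadline passes.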

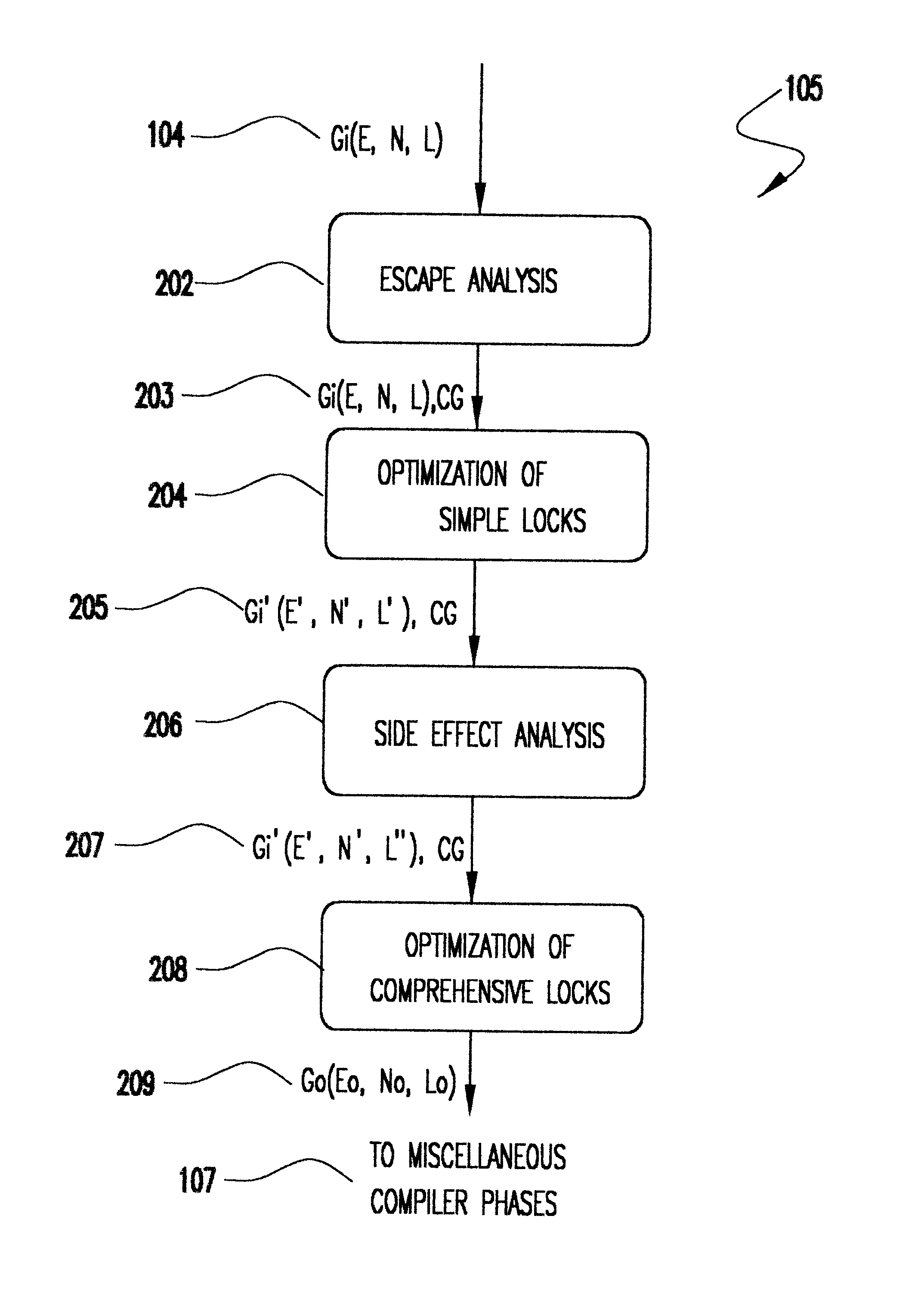

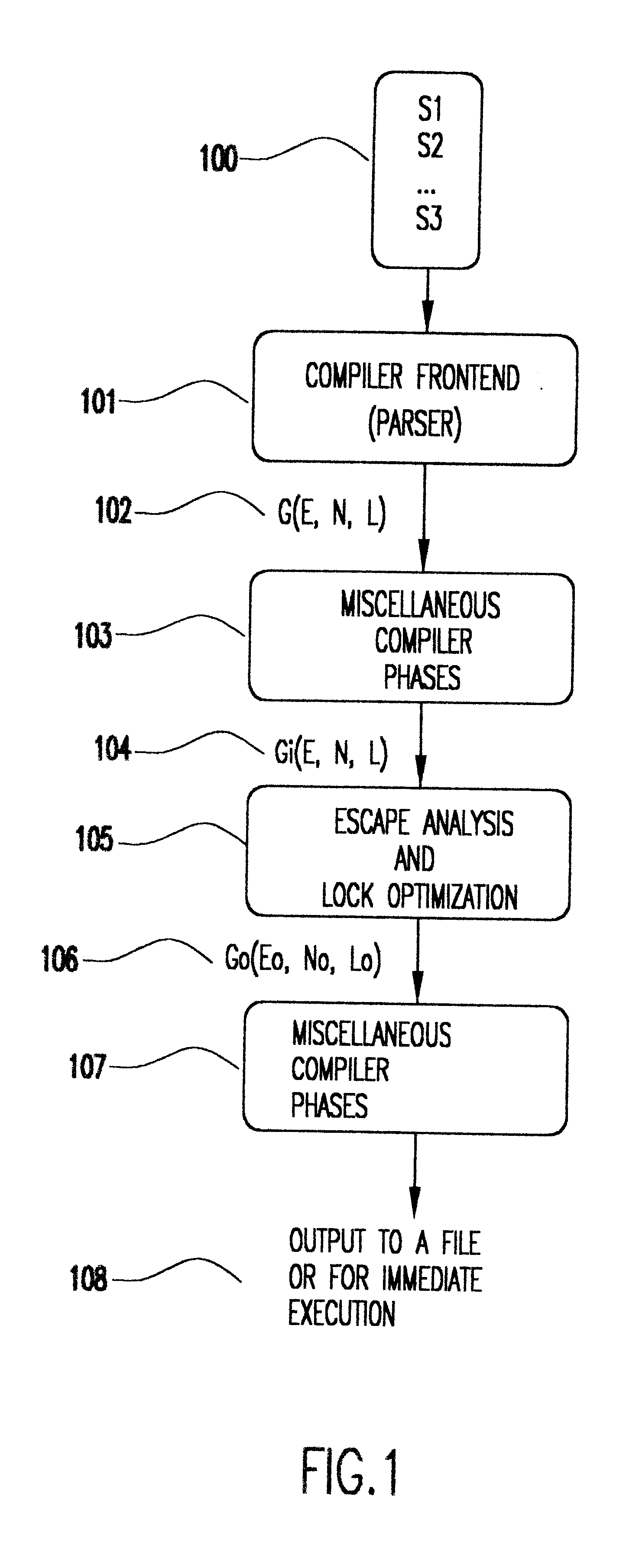

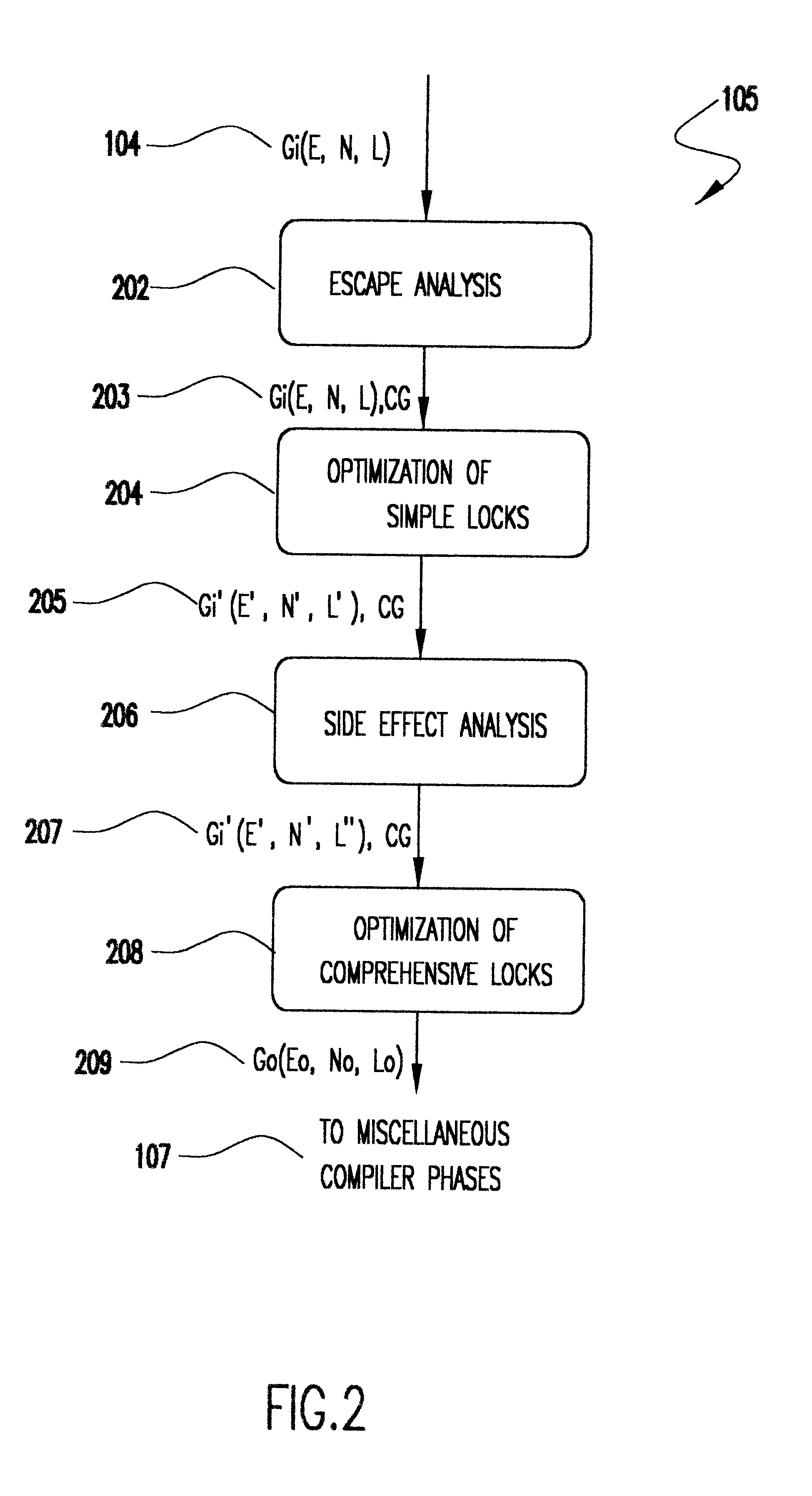

Method for optimizing locks in computer programs

Inactive | US6530079B1 | Easy to operate | Program synchronisation | Software engineering | Program planning | Semantics

A method and several variants are described for using information about the scope of access of objects acted upon by mutual exclusion (mutex) locks to transform a computer program by eliminating or simplifying locking operations while strictly preserving the semantics of the original program. In particular, if a compiler can determine that the locked object can only be accessed by a single thread, it is not necessary to perform the "acquire" or "release" part of the locking operation, and only its side effects must be performed. Likewise, if it can be determined that the side effects of a locking operation acting on a variable which is locked in multiple threads are not needed, then only the locking operation, and not the side effects, needs to be performed. This simplifies the locking operation and leads to faster programs that use fewer processor resources and perform fewer shared memory accesses, which in turn causes not only the optimized program but also other programs executing on the same machine to execute faster. The method also describes how information about the semantics of the locking operation's side effects and about the scope of access can be used to eliminate the side-effect parts of the locking operation as well, thereby eliminating the locking operation completely. The method also describes how to analyze the program to compute the necessary information about the scope of access. Variants of the method show how one or several of the features of the method may be performed.

Owner:IBM CORP

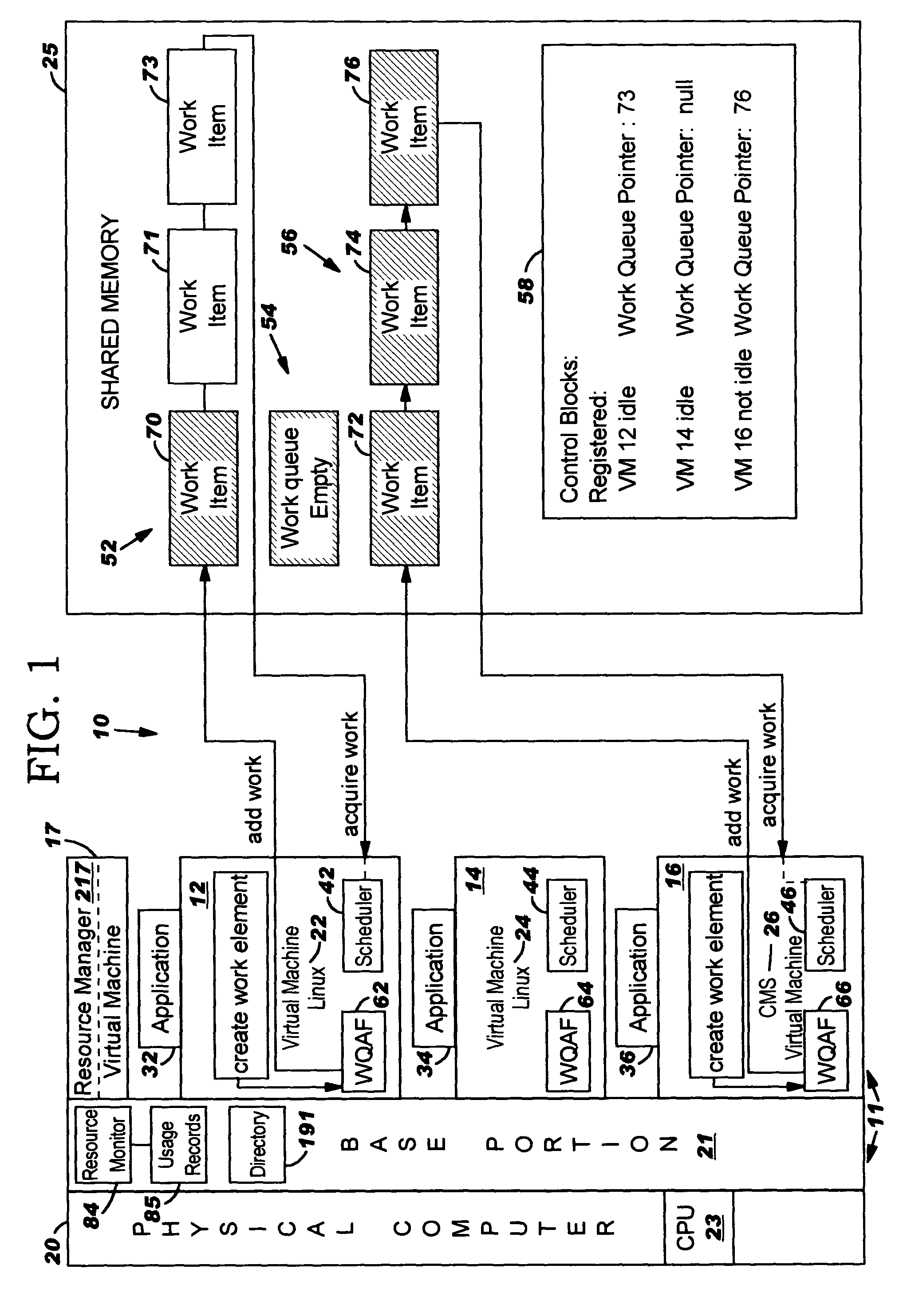

Management of virtual machines to utilize shared resources

A technique for utilizing resources in a virtual machine operating system. The virtual machine operating system comprises a multiplicity of virtual machines. A share of resources is allocated to each of the virtual machines. Utilization by one of the virtual machines of the resources allocated to the one virtual machine is automatically monitored. If the one virtual machine needs additional resources, the one virtual machine is automatically cloned. The clone is allocated a share of the resources taken from the shares of other of the virtual machines, such that the resultant shares allocated to the one virtual machine and the clone together are greater than the share allocated to the one virtual machine before the one virtual machine was cloned. The clone performs work with its resources that would have been performed by the one virtual machine if not for the existence of said clone.

Owner:HUAWEI TECH CO LTD

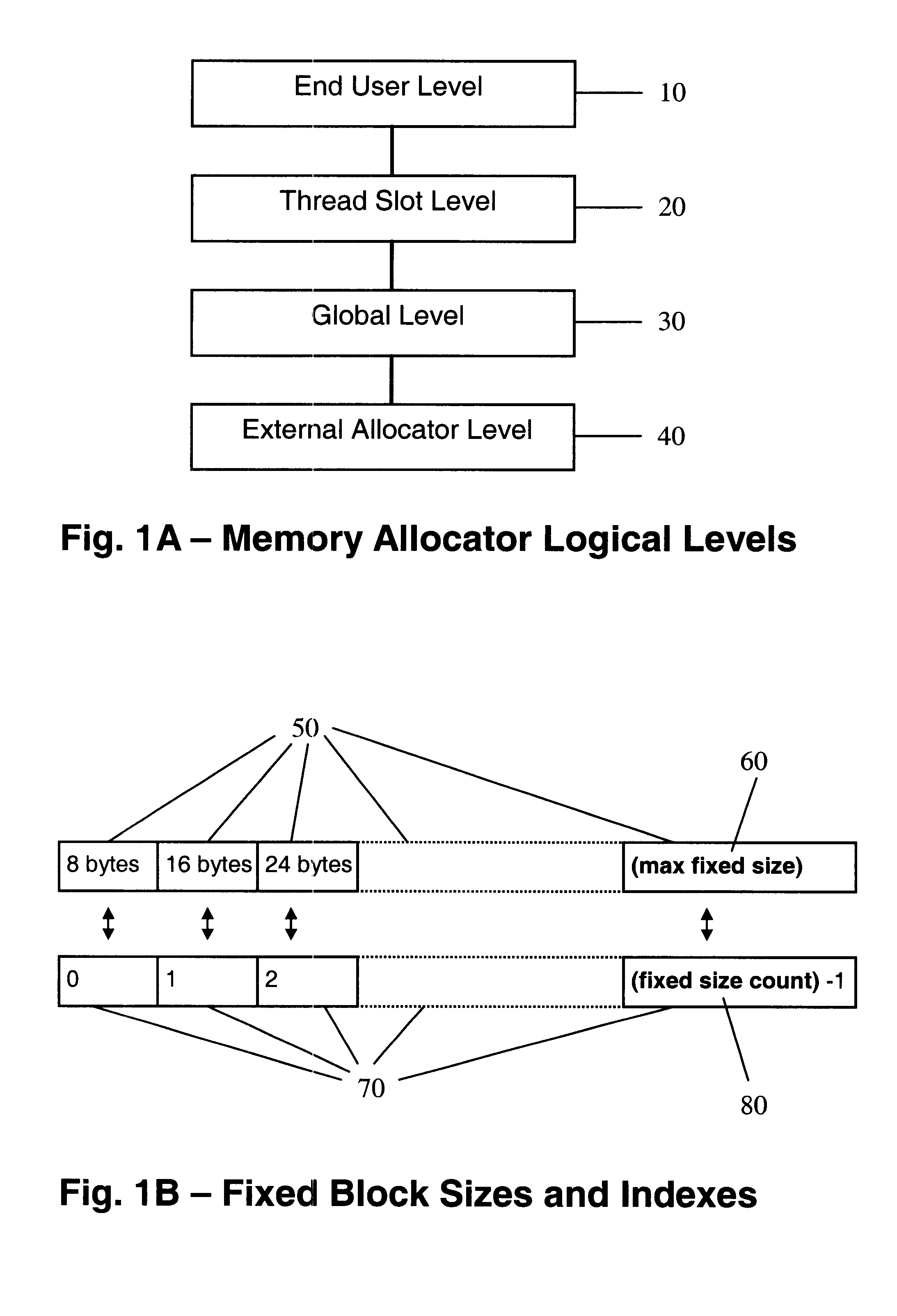

Memory allocator for multithread environment

Inactive | US6539464B1 | Shorten the time | Long delay | Resource allocation | Program synchronisation | General purpose | Serialization

The memory allocator combines private (per-thread) sets of fixed-size free block lists, a global set of fixed-size free block lists common to all threads, and a general-purpose external coalescing allocator. Blocks larger than the maximal fixed size are managed directly by the external allocator. The lengths of the fixed-size lists change dynamically in accordance with the allocation and deallocation workload. A service thread performs updates of all the lists and collects memory associated with terminated user threads. A mutex-free serialization method, utilizing thread suspension, is used in the process.

Owner:GETOV RADOSLAV NENKOV

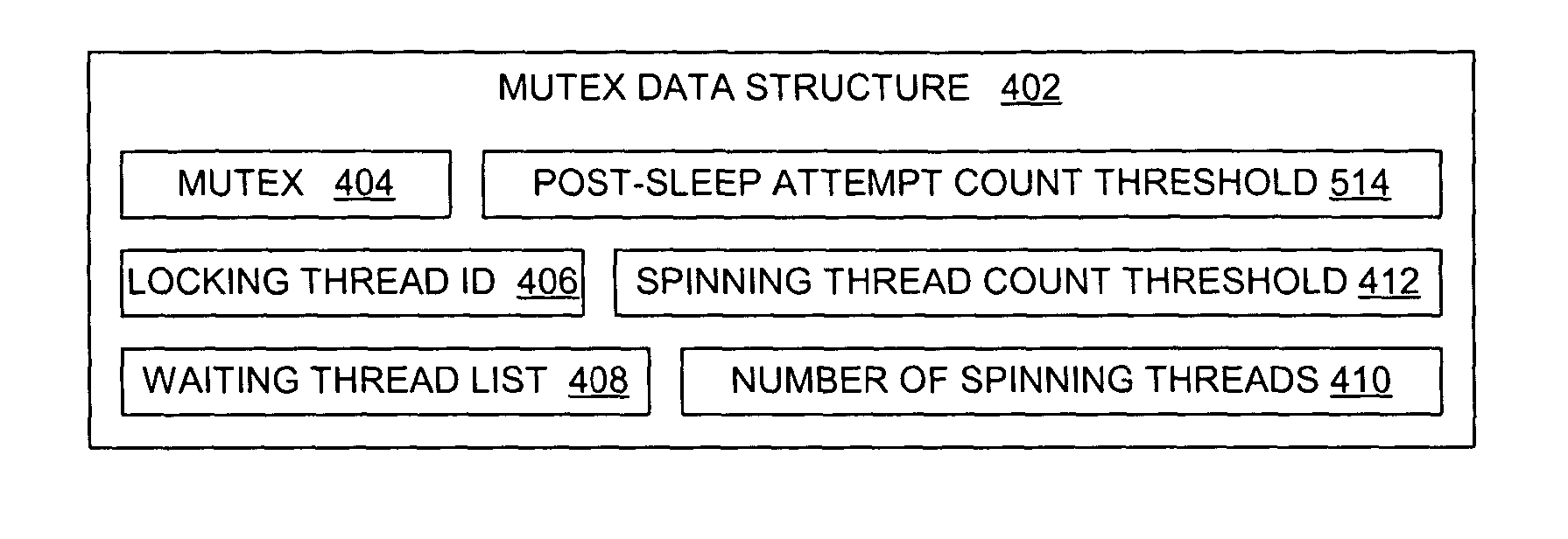

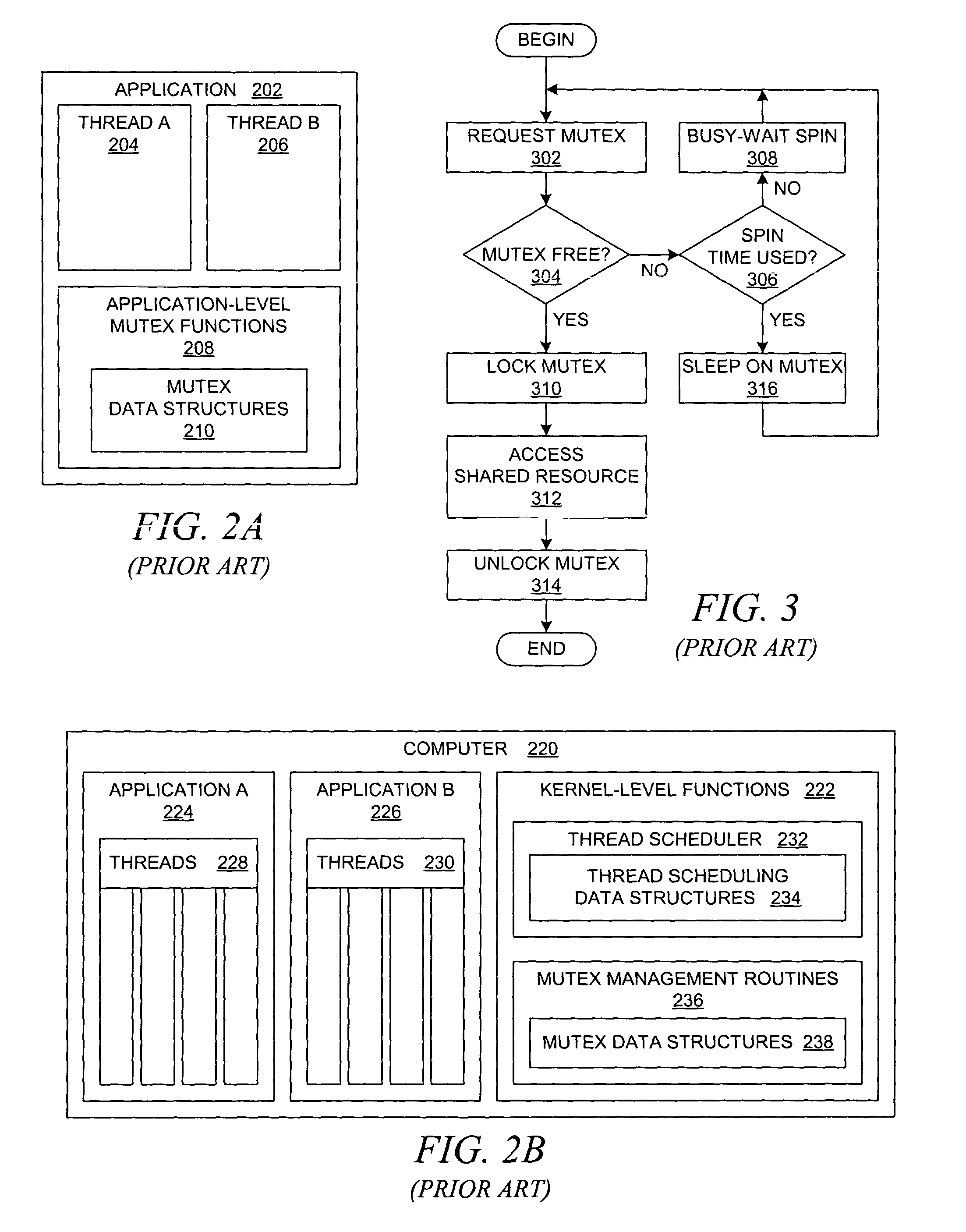

Method and system for dynamically bounded spinning threads on a contested mutex

A method for managing a mutex in a data processing system is presented. For each mutex, a count is maintained of the number of threads that are spinning while waiting to acquire a mutex. If a thread attempts to acquire a locked mutex, then the thread enters a spin state or a sleep state based on restrictive conditions and the number of threads that are spinning during the attempted acquisition. In addition, the relative length of time that is required by a thread to spin on a mutex after already sleeping on the mutex may be used to regulate the number of threads that are allowed to spin on the mutex.

Owner:IBM CORP
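The spin-or-sleep decision in this abstract can be modeled without real threads as per-mutex bookkeeping. Everything below is a sketch under assumptions: the class name `SpinPolicy`, the `min_limit`/`max_limit` bounds, and in particular the feedback rule in `on_woken_acquire` are hypothetical; the abstract only says that spin time after sleeping "may be used to regulate" the number of spinners.

```python
class SpinPolicy:
    """Per-mutex state that bounds how many waiting threads may spin."""

    def __init__(self, max_spinners, min_limit=1, max_limit=8):
        self.max_spinners = max_spinners
        self.min_limit = min_limit   # hypothetical lower bound on the limit
        self.max_limit = max_limit   # hypothetical upper bound on the limit
        self.spinning = 0            # threads currently spinning on this mutex

    def on_contended(self):
        """A thread found the mutex locked: decide whether it spins or sleeps."""
        if self.spinning < self.max_spinners:
            self.spinning += 1
            return "spin"
        return "sleep"

    def on_spin_finished(self):
        """A spinner acquired the mutex or gave up spinning."""
        self.spinning -= 1

    def on_woken_acquire(self, spin_after_sleep, threshold):
        """Assumed feedback rule: if a woken thread still had to spin for a
        long time, allow fewer spinners; otherwise allow one more."""
        if spin_after_sleep > threshold:
            self.max_spinners = max(self.min_limit, self.max_spinners - 1)
        else:
            self.max_spinners = min(self.max_limit, self.max_spinners + 1)
```

A real implementation would update `spinning` atomically; this model leaves synchronization of the counter itself out of scope.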

Method and apparatus for dynamic lock granularity escalation and de-escalation in a computer system

A method and apparatus for dynamic lock granularity escalation and de-escalation in a computer system is provided. Upon receiving a request for a resource, a scope of a previously granted lock is modified. According to one embodiment, hash lock de-escalation is employed. In hash lock de-escalation, the scope of the previously granted lock held on a set of resources is reduced by de-escalating the previously granted lock from a coarser-grain lock to one or more finer-grain locks on members of the set. According to another embodiment, hash lock escalation is employed. In hash lock escalation, the scope of previously granted locks held on one or more members of the set of resources are released and promoted into a coarser-grain lock that covers the set of resources as well as the requested resource.

Owner:ORACLE INT CORP
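A toy model of the escalation and de-escalation described above is sketched below. It is not the patented design: the class name `HashLockTable`, the `touched` set that records which members were used under the coarse lock, and the rule that de-escalation keeps fine-grain locks only on those members are assumptions made to keep the example small.

```python
class HashLockTable:
    def __init__(self, members):
        self.members = set(members)
        self.coarse_owner = None   # holder of the lock covering the whole set
        self.touched = set()       # members actually used under the coarse lock
        self.fine = {}             # member -> owner of a finer-grain lock

    def lock_set(self, owner):
        """Grant a coarse lock over the whole set if nothing else is held."""
        if self.coarse_owner is None and not self.fine:
            self.coarse_owner = owner
            return True
        return False

    def touch(self, owner, member):
        if self.coarse_owner != owner:
            raise PermissionError("owner does not hold the coarse lock")
        self.touched.add(member)

    def request(self, owner, member):
        """Request one member; a conflicting coarse lock is de-escalated first."""
        if self.coarse_owner == owner:
            self.touched.add(member)
            return True
        if self.coarse_owner is not None:
            # De-escalate: shrink the coarse lock to the members in use.
            for m in self.touched:
                self.fine[m] = self.coarse_owner
            self.coarse_owner, self.touched = None, set()
        return self.fine.setdefault(member, owner) == owner

    def release(self, owner, member):
        if self.fine.get(member) == owner:
            del self.fine[member]

    def escalate(self, owner):
        """Promote the owner's fine-grain locks into one coarse lock, which is
        possible only when no other owner holds a lock in the set."""
        if self.coarse_owner is None and all(o == owner for o in self.fine.values()):
            self.touched = set(self.fine)
            self.fine.clear()
            self.coarse_owner = owner
            return True
        return False
```

In this model the request from a second owner is what triggers de-escalation, matching the abstract's "upon receiving a request for a resource, a scope of a previously granted lock is modified".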
