
88 results about "Memory allocator" patented technology


The memory allocator is a very important component that significantly affects both the performance and the stability of the game. Separating it from the application allows the allocator to be developed independently, so that both Bohemia Interactive and the community can fix bugs and improve performance without having to modify the core game files.

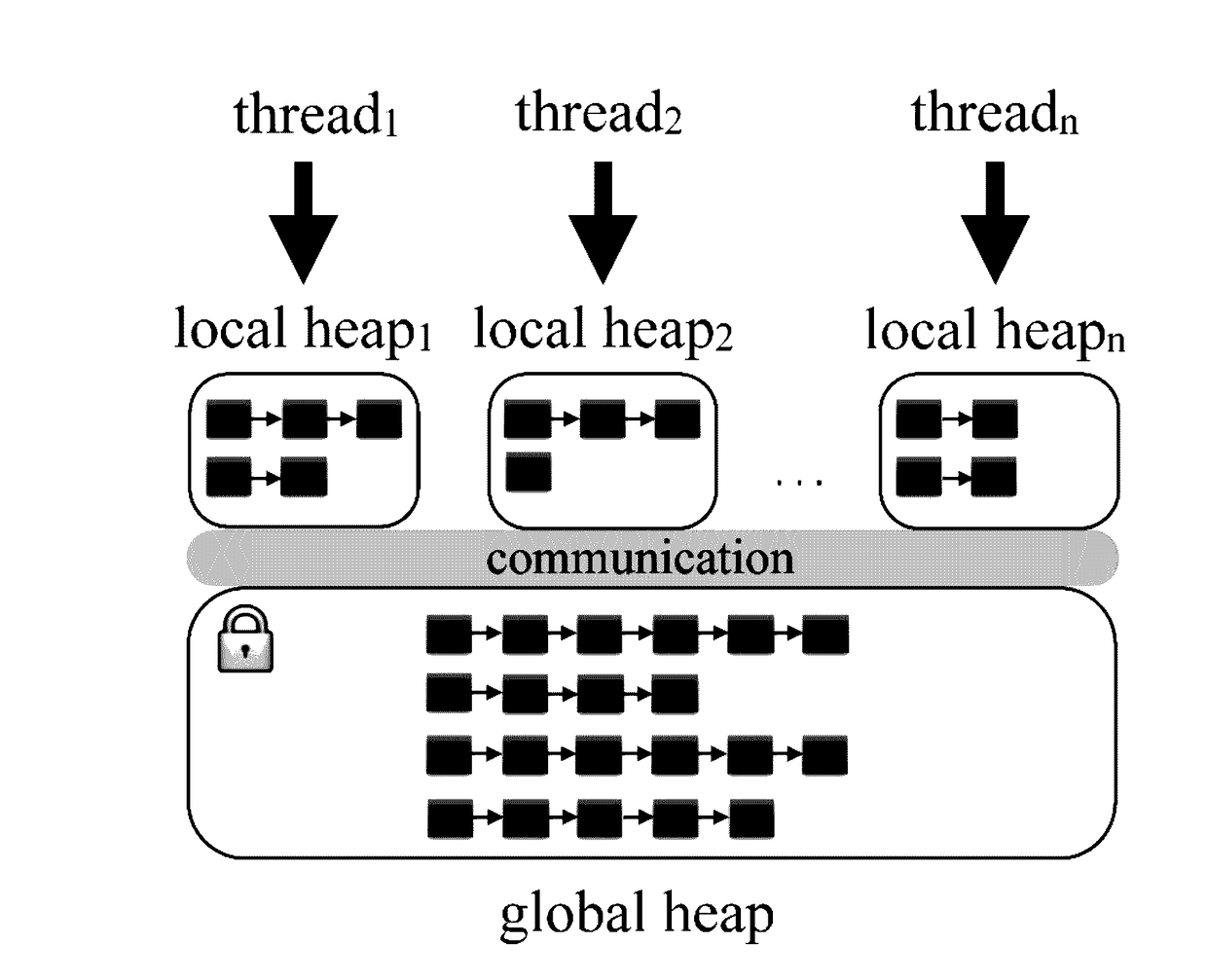

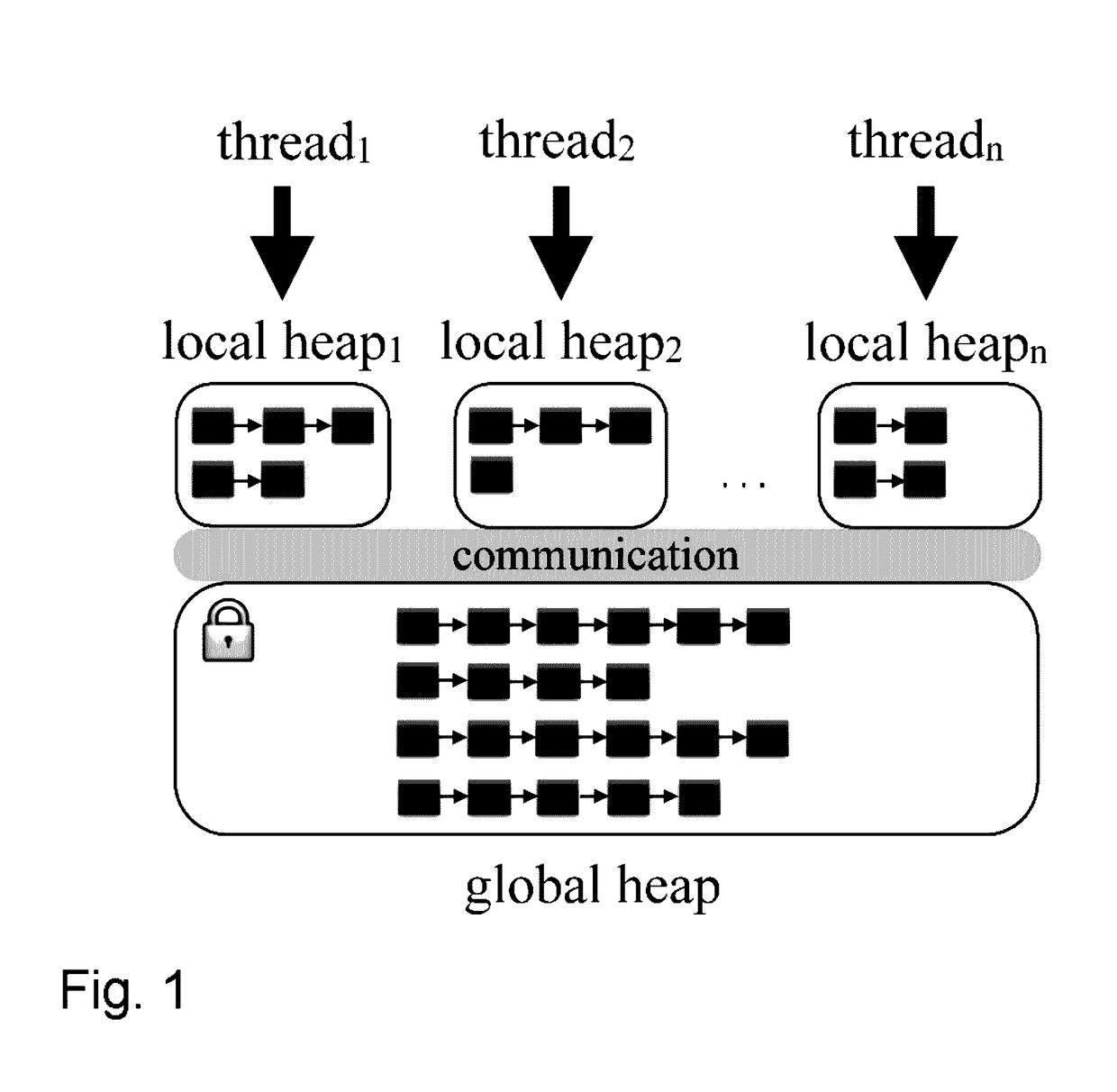

Memory allocator for multithread environment

Inactive | US6539464B1 | Shorten the time | Long delay | Resource allocation | Program synchronisation | General purpose | Serialization

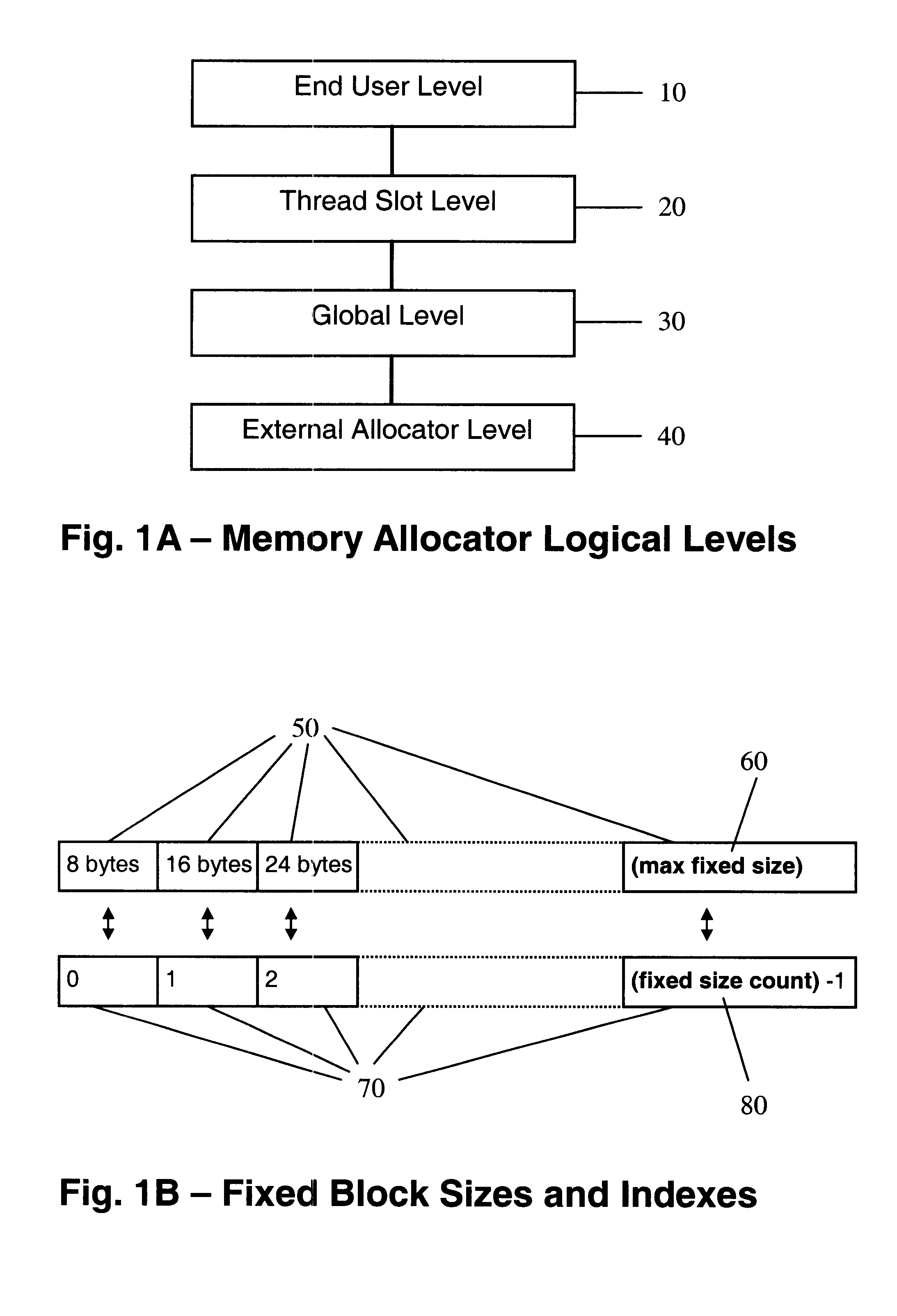

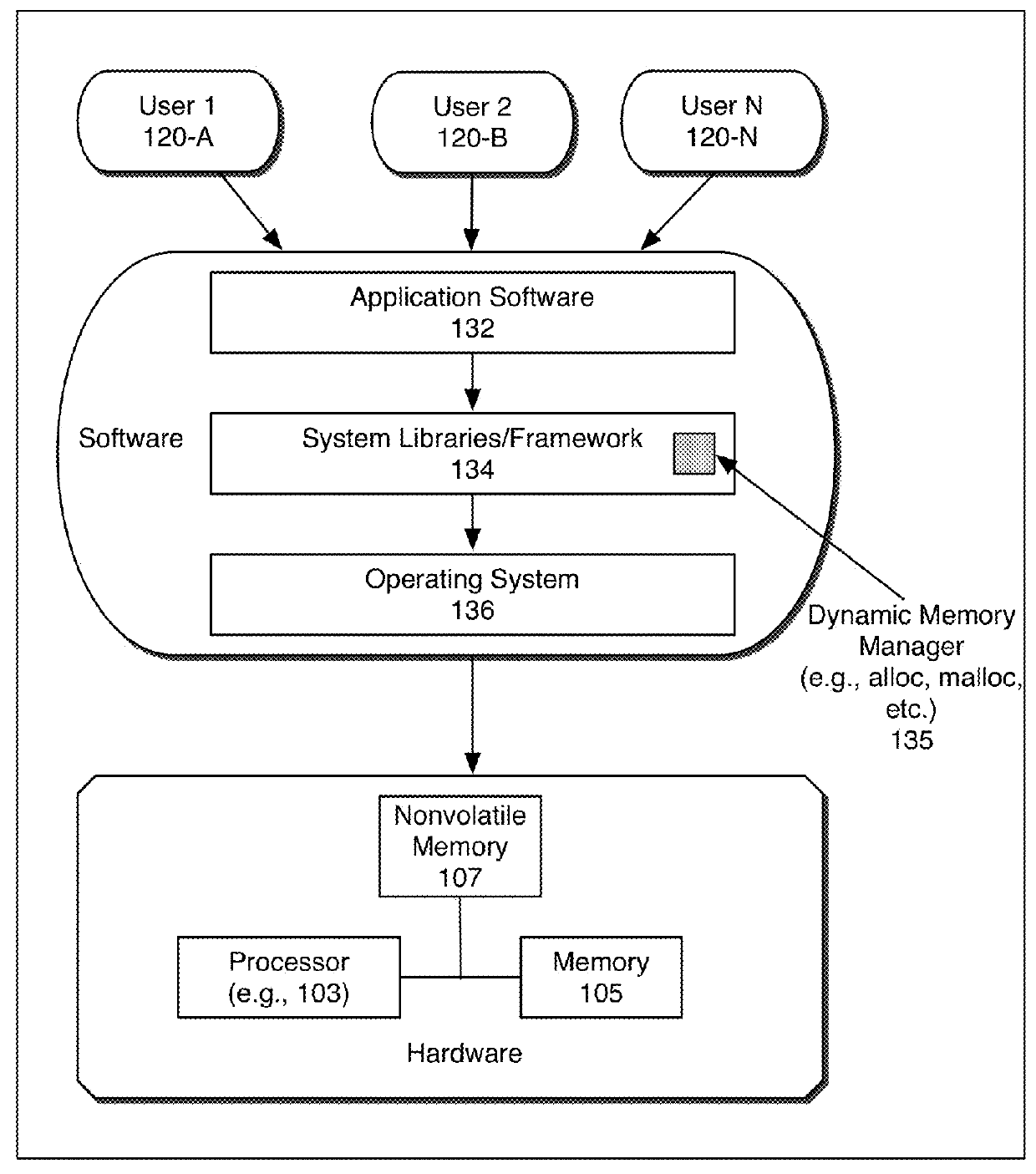

The memory allocator combines private (per-thread) sets of fixed-size free-block lists, a global set of fixed-size free-block lists shared by all threads, and a general-purpose external coalescing allocator. Blocks larger than the maximum fixed size are managed directly by the external allocator. The lengths of the fixed-size lists change dynamically in accordance with the allocation and deallocation workload. A service thread updates all the lists and collects memory associated with terminated user threads. A mutex-free serialization method, utilizing thread suspension, is used in the process.

Owner:GETOV RADOSLAV NENKOV
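The tiered lookup described in this abstract (private per-thread lists first, then the shared lists, then the external allocator) can be illustrated with a small single-threaded simulation. This is only a sketch under stated assumptions, not the patented method: the size classes, the class names `TieredAllocator` and `ExternalAllocator`, and the `retire_thread` helper are all hypothetical, and the service thread and mutex-free serialization are not modelled.

```python
from collections import defaultdict

SIZE_CLASSES = (16, 32, 64, 128)  # hypothetical fixed block sizes


class ExternalAllocator:
    """Stand-in for the general-purpose allocator: hands out fresh offsets."""

    def __init__(self):
        self.next_offset = 0

    def allocate(self, size):
        offset = self.next_offset
        self.next_offset += size
        return offset


class TieredAllocator:
    def __init__(self):
        self.external = ExternalAllocator()
        # size class -> free offsets shared by all threads
        self.global_lists = defaultdict(list)
        # thread id -> size class -> free offsets private to that thread
        self.private_lists = defaultdict(lambda: defaultdict(list))

    @staticmethod
    def size_class(size):
        """Return the smallest fixed size that fits, or None for large blocks."""
        for cls in SIZE_CLASSES:
            if size <= cls:
                return cls
        return None

    def allocate(self, thread_id, size):
        cls = self.size_class(size)
        if cls is None:
            # Larger than the maximum fixed size: go straight to the external allocator.
            return self.external.allocate(size)
        private = self.private_lists[thread_id][cls]
        if private:
            return private.pop()
        shared = self.global_lists[cls]
        if shared:
            return shared.pop()
        return self.external.allocate(cls)

    def free(self, thread_id, offset, size):
        cls = self.size_class(size)
        if cls is not None:
            self.private_lists[thread_id][cls].append(offset)
        # Returning large blocks to the external allocator is omitted in this sketch.

    def retire_thread(self, thread_id):
        """Move a terminated thread's private blocks to the shared lists."""
        for cls, blocks in self.private_lists.pop(thread_id, {}).items():
            self.global_lists[cls].extend(blocks)
```

In this sketch a block freed by one thread becomes visible to other threads only after `retire_thread` moves it to the shared lists, which loosely mirrors the collection of memory from terminated threads mentioned above.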

Method and apparatus for efficient virtual memory management

Inactive | US6886085B1 | Reduce the amount of data | Reduce the number of data | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Waste collection | Virtual memory management

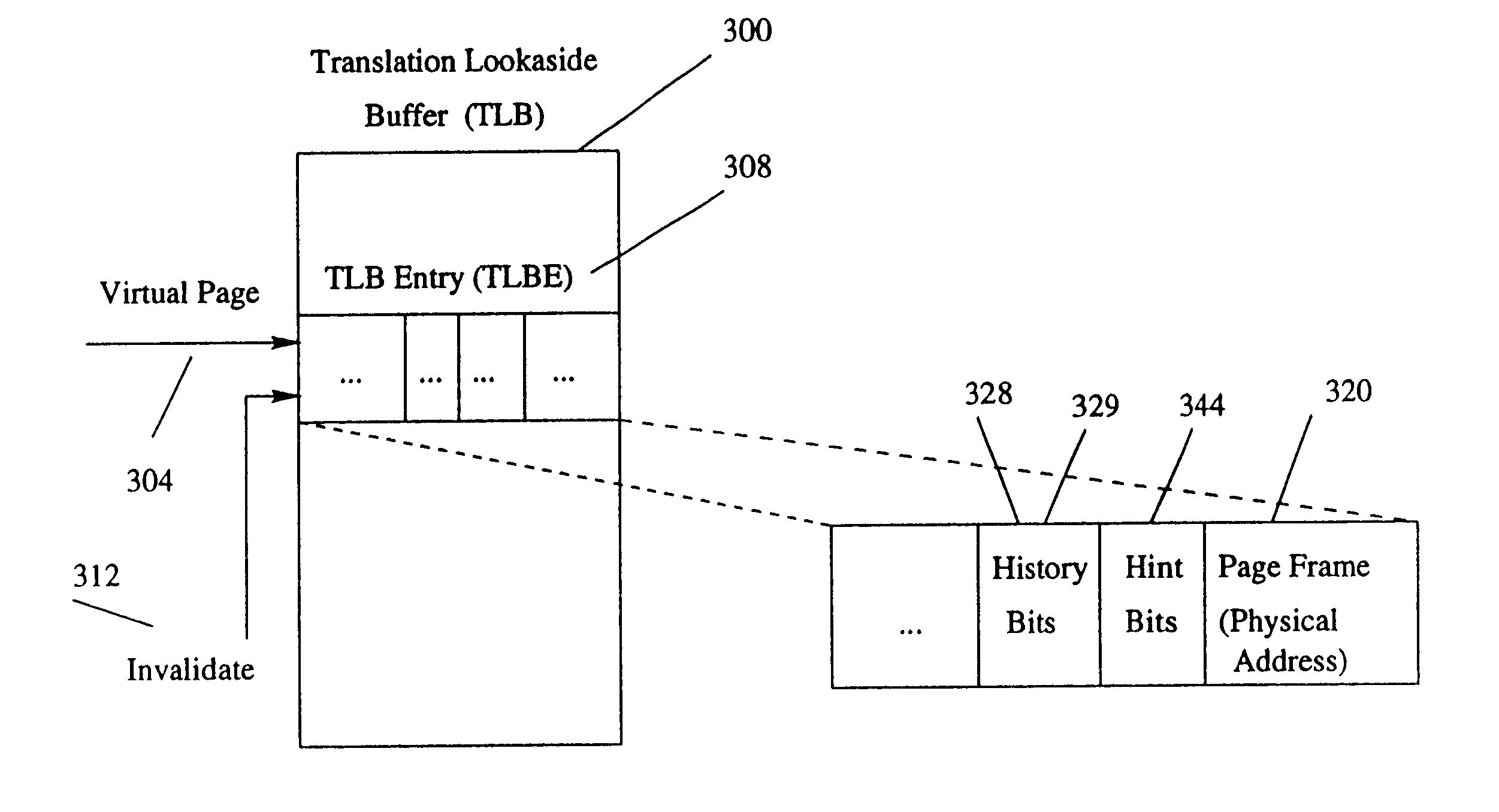

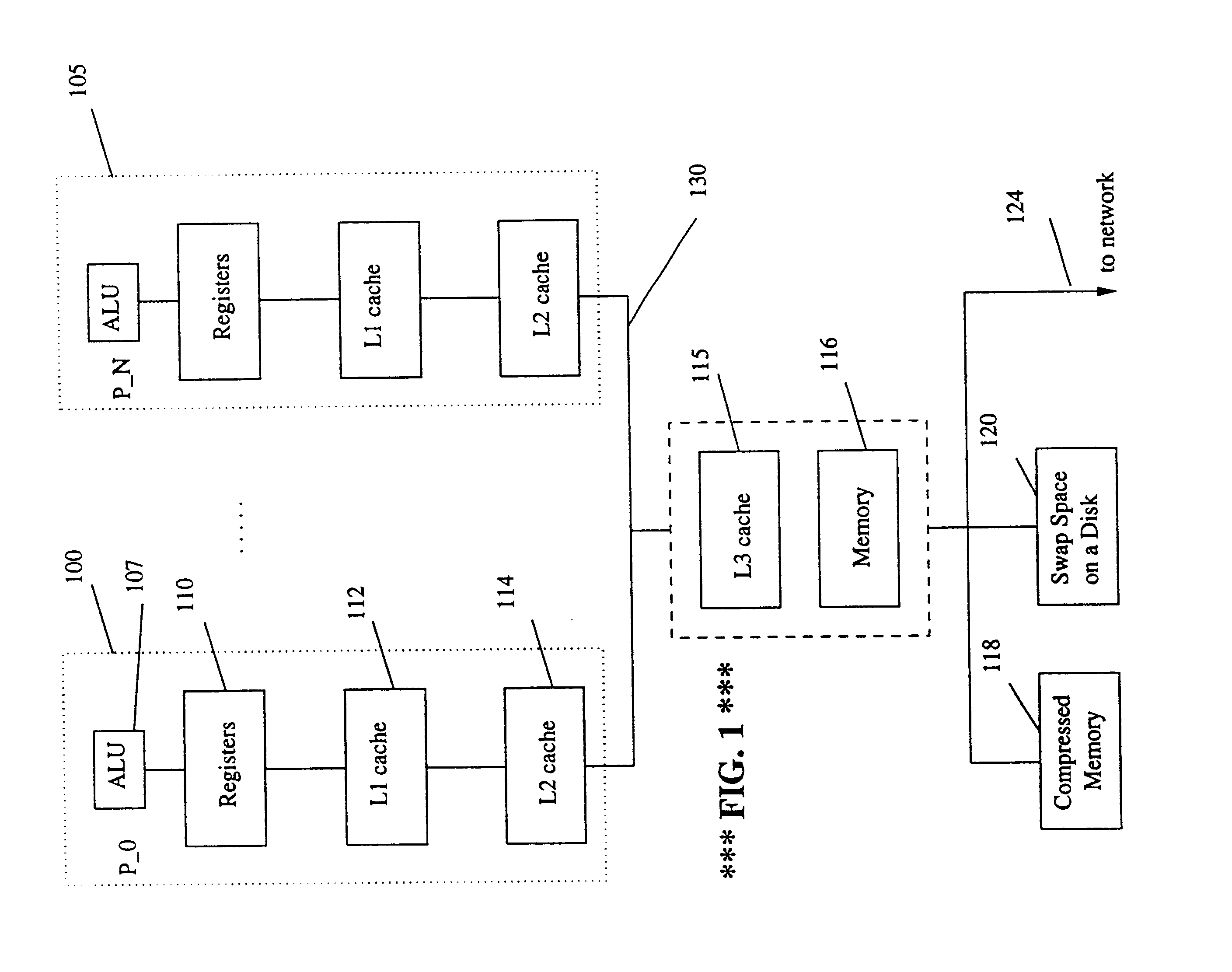

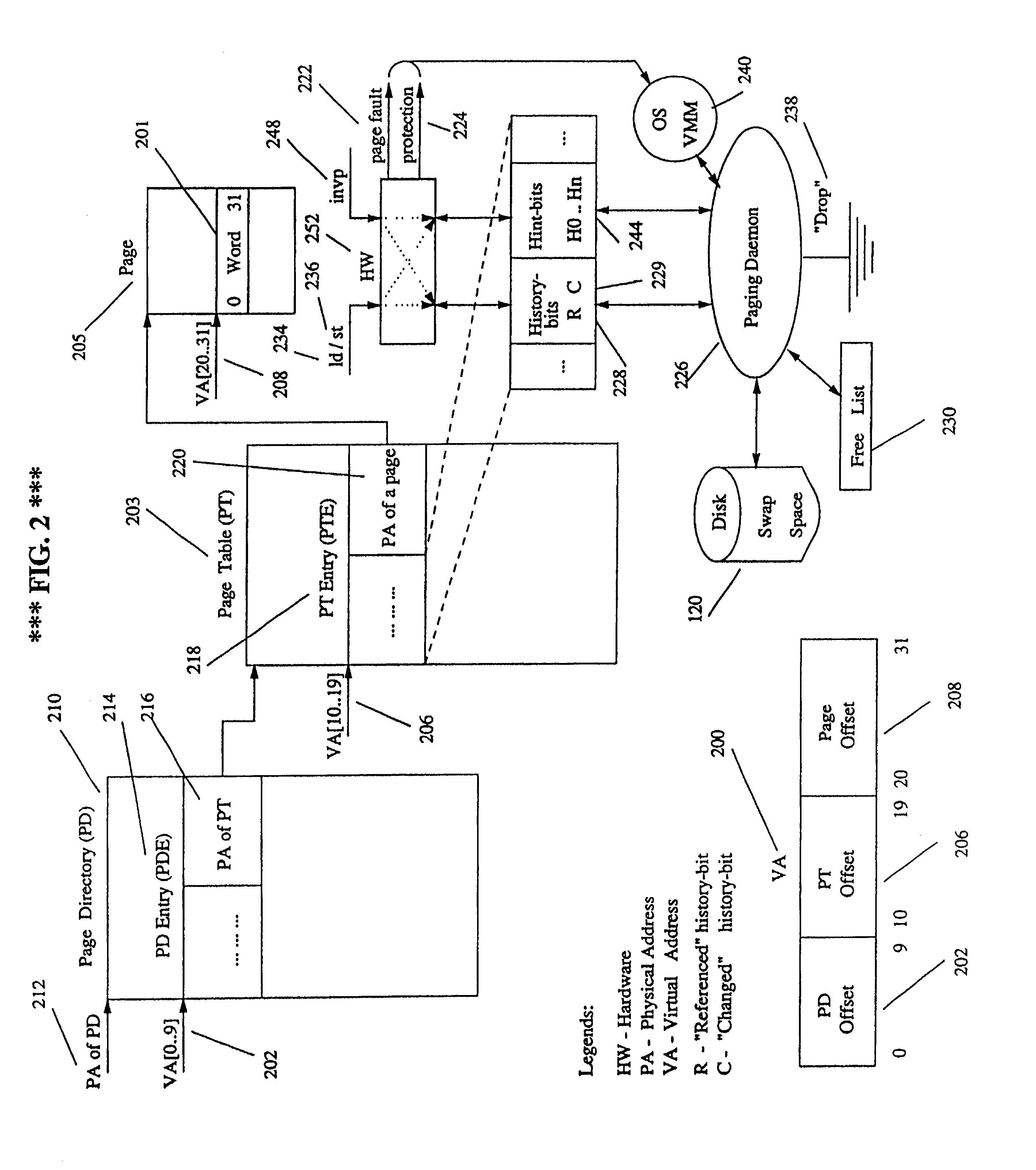

A method and an apparatus that improve virtual memory management. The proposed method and apparatus provide an application with an efficient channel for communicating information about its future behavior, with respect to the use of memory and other resources, to the OS, a paging daemon, and other system software. The state of hint bits, which are integrated into page table entries and TLB entries and are used for communicating information to the OS, can be changed explicitly with a special instruction or implicitly as a result of referencing the associated page. The latter is useful for canceling hints. The method and apparatus enable memory allocators, garbage collectors, and compilers (such as those used by the Java platform) to use a page-aligned heap and a page-aligned stack to assist the OS in effective management of memory resources. This mechanism can also be used in other system software.

Owner:IBM CORP

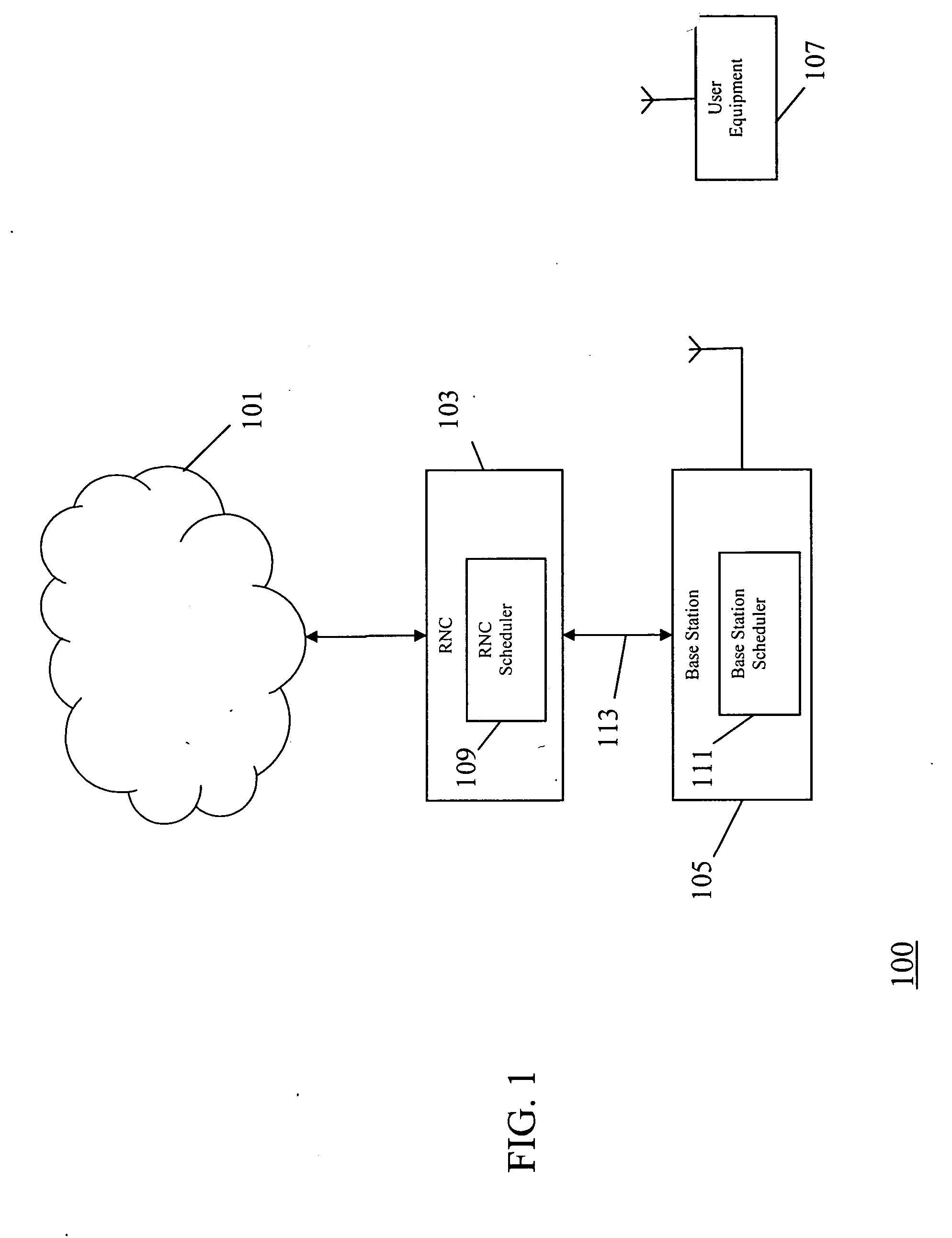

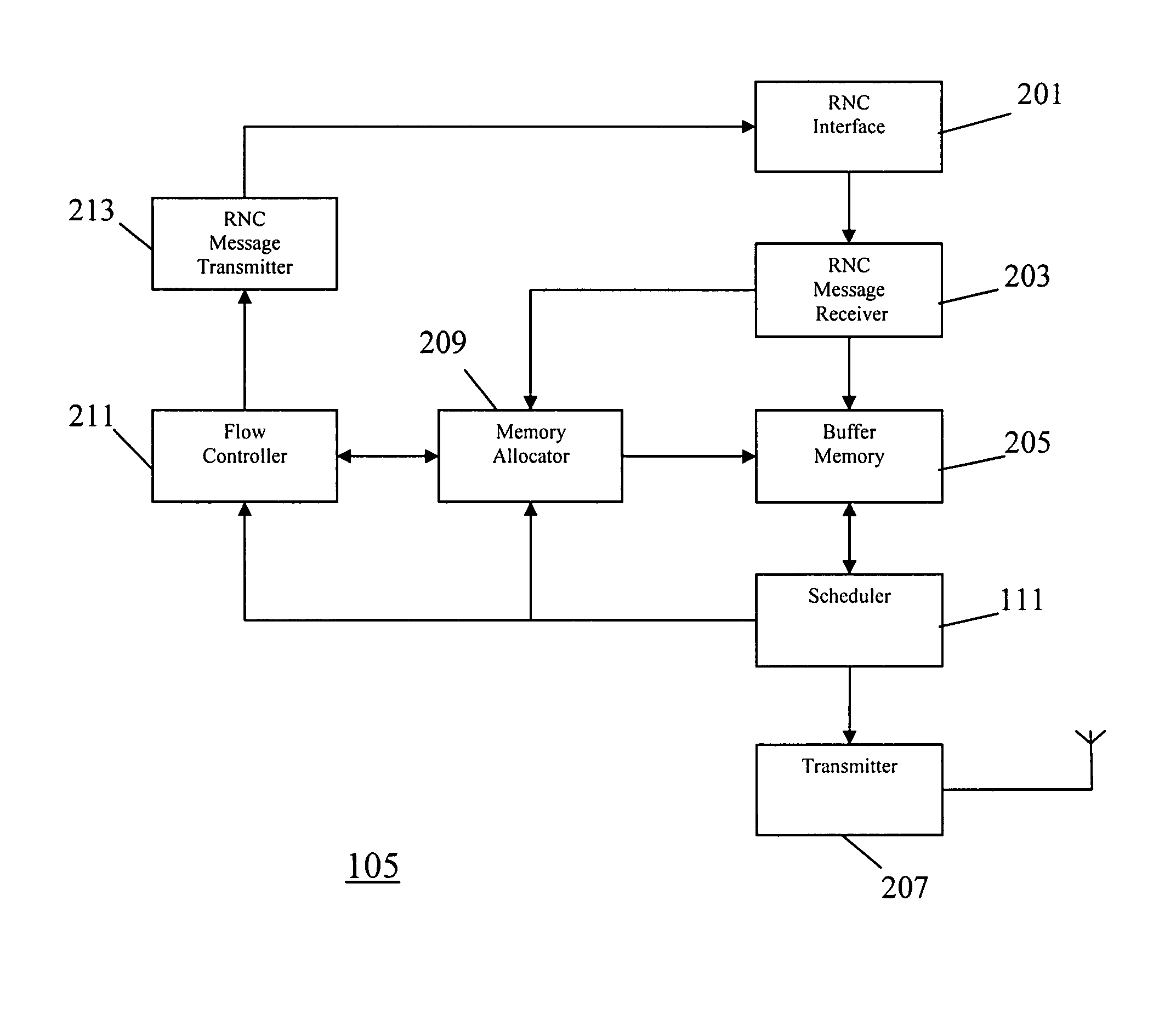

Flow control in a cellular communication system

Inactive | US20060223585A1 | Efficient flow control | Provide compatibility | Network traffic/resource management | Substation equipment | Traffic capacity | Radio networks

A base station comprises an RNC message receiver which receives data packets from a Radio Network Controller (RNC). The data packets are transmitted over air interface channels by a transmitter. Buffer memory buffers the data packets prior to transmission, and a scheduler schedules the data packets for transmission over the air interface channels. A memory allocator determines a first memory allocation of the buffer memory for a first air interface communication with a first user equipment. A flow controller determines a transfer allowance for transferring data from the RNC to the base station in response to the first memory allocation and the current buffer memory usage of the first air interface communication. The transfer allowance is transmitted to the RNC by an RNC message transmitter. The RNC only transmits data packets to the base station if the current transfer allowance is sufficient. An efficient flow control is thereby achieved.

Owner:SONY CORP
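The abstract says the transfer allowance depends on the memory allocation and the current buffer usage, but it does not give a formula. One plausible reading, assumed here purely for illustration, is that the allowance is the unused part of the allocation. The function names `transfer_allowance` and `rnc_may_send` are hypothetical.

```python
def transfer_allowance(allocated_bytes, buffered_bytes):
    """Assumed rule: the allowance is the unused part of the per-user allocation."""
    return max(0, allocated_bytes - buffered_bytes)


def rnc_may_send(packet_bytes, allowance):
    """The RNC sends a packet only if the current allowance covers it."""
    return packet_bytes <= allowance
```

With a 4096-byte allocation and 3000 bytes already buffered, this rule would let the RNC send a 1000-byte packet but hold back a 1500-byte one.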

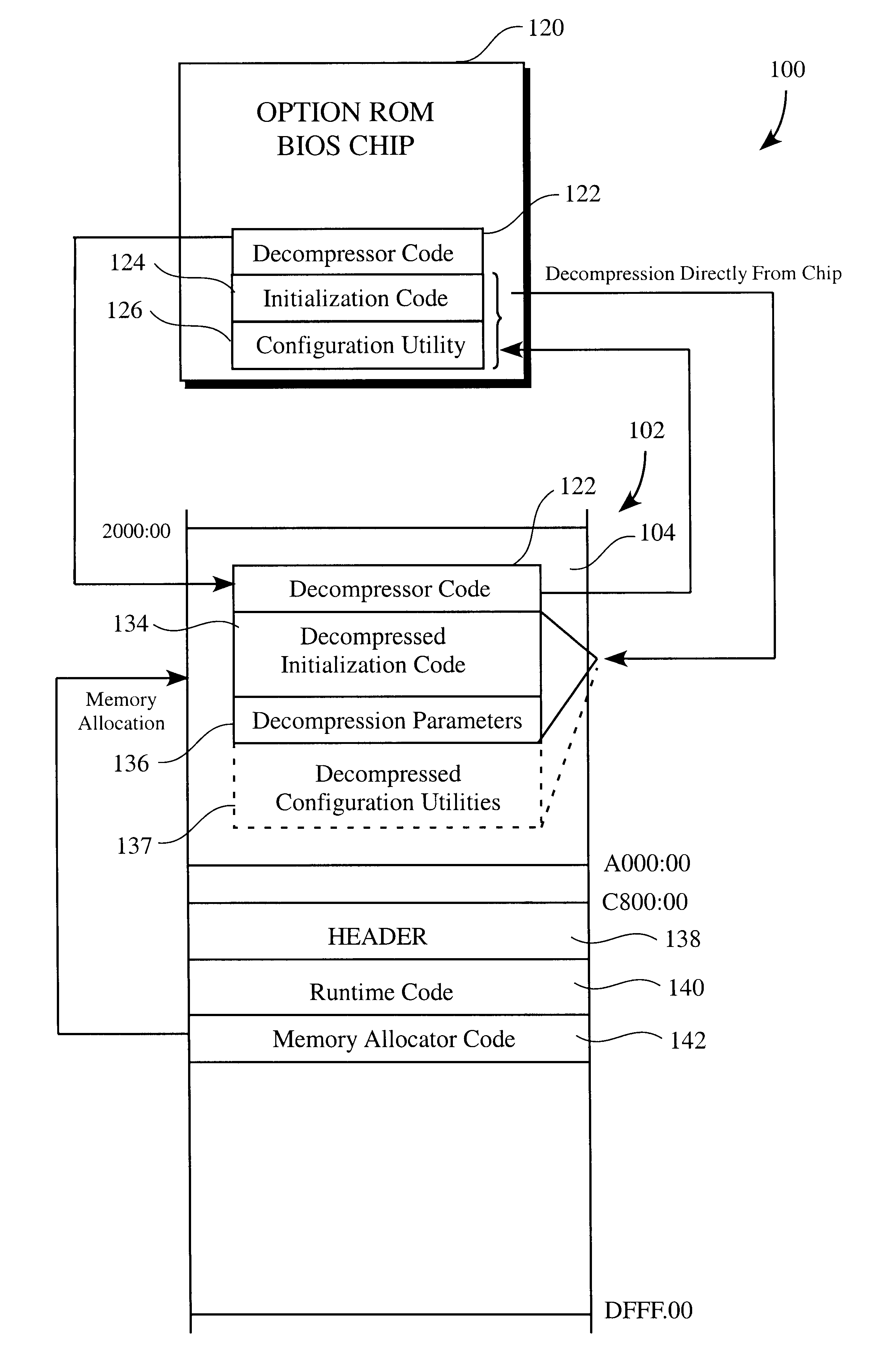

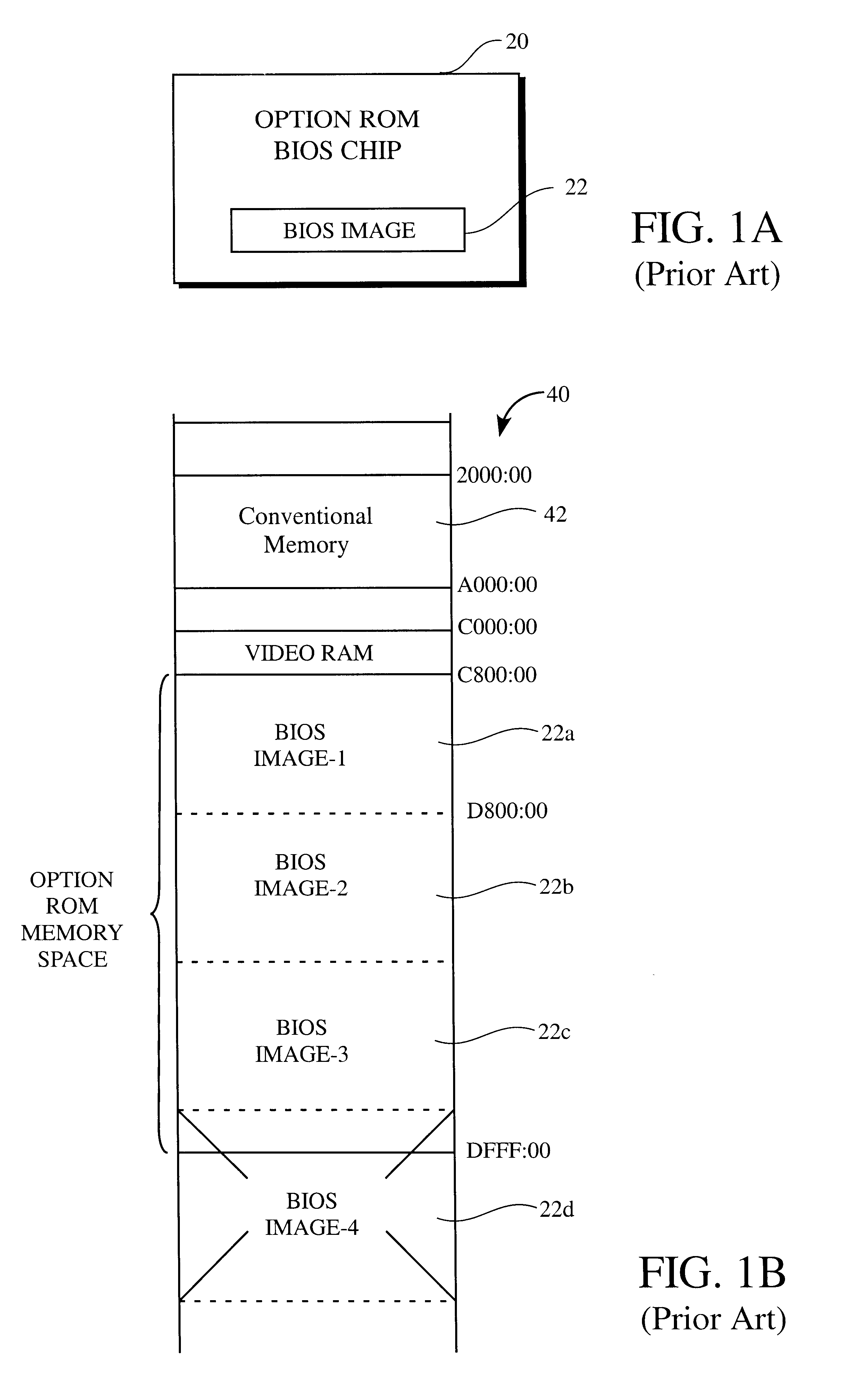

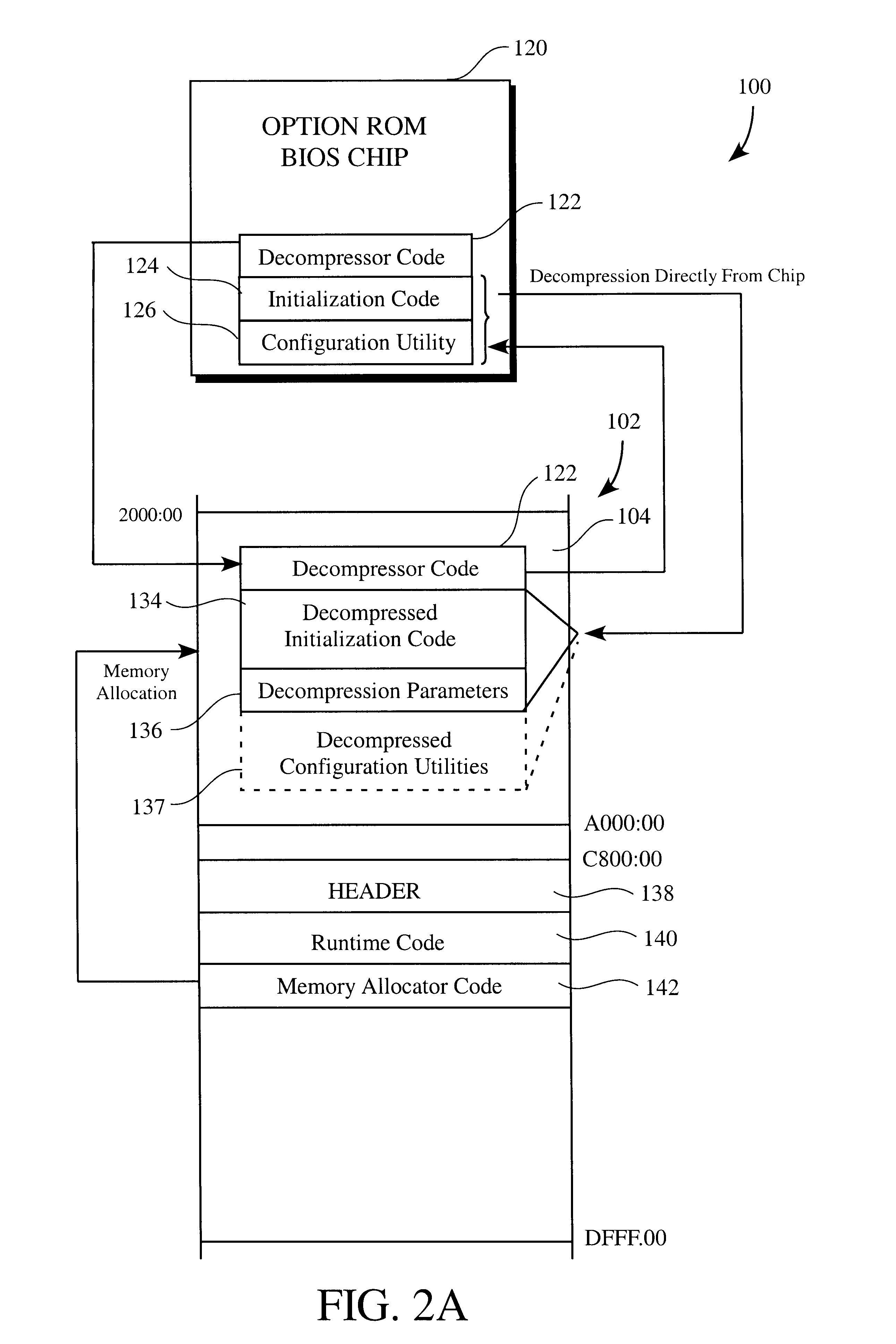

Method of conserving memory resources during execution of system BIOS

Methods of conserving memory resources available to a computer system during execution of a system BIOS are provided. The method includes (a) executing the system BIOS; (b) loading the header, runtime code, and memory allocator code associated with the option ROM BIOS chip into the option ROM memory space; (c) passing control to the memory allocator code; (d) executing the memory allocator code to allocate conventional memory of the system RAM; (e) copying the decompressor code from the option ROM BIOS chip to the allocated conventional memory; (f) passing control to the decompressor code; (g) executing the decompressor code to decompress the compressed initialization code directly from the option ROM BIOS chip and thus loading the decompressed initialization code into the conventional memory; and (h) executing the decompressed initialization code to initialize the adapter card or controller.

Owner:PMC-SIERRA

In-memory space management for database systems

Active | US20090037498A1 | Data processing applications | Digital data processing details | Transaction-level modeling | Space management

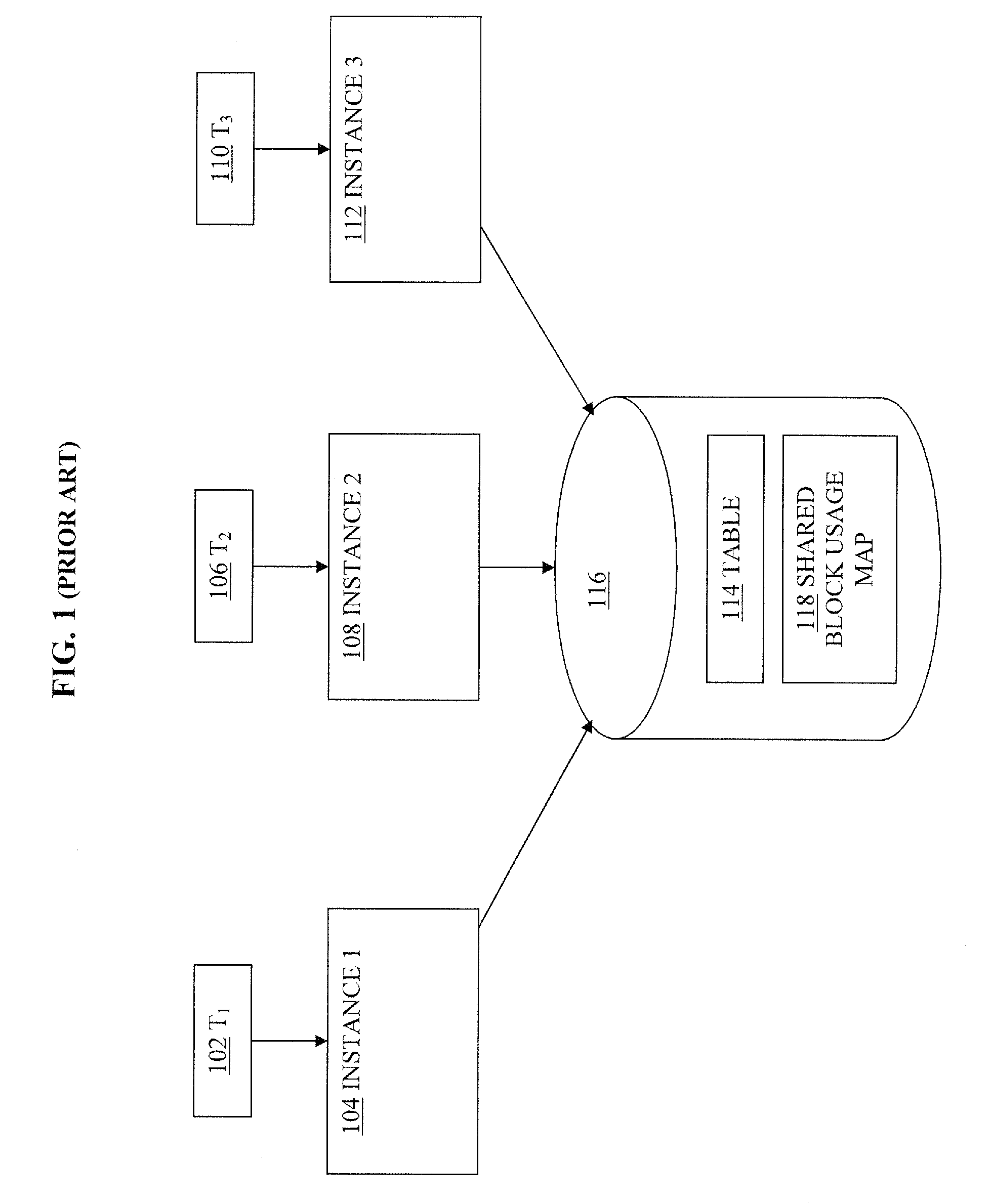

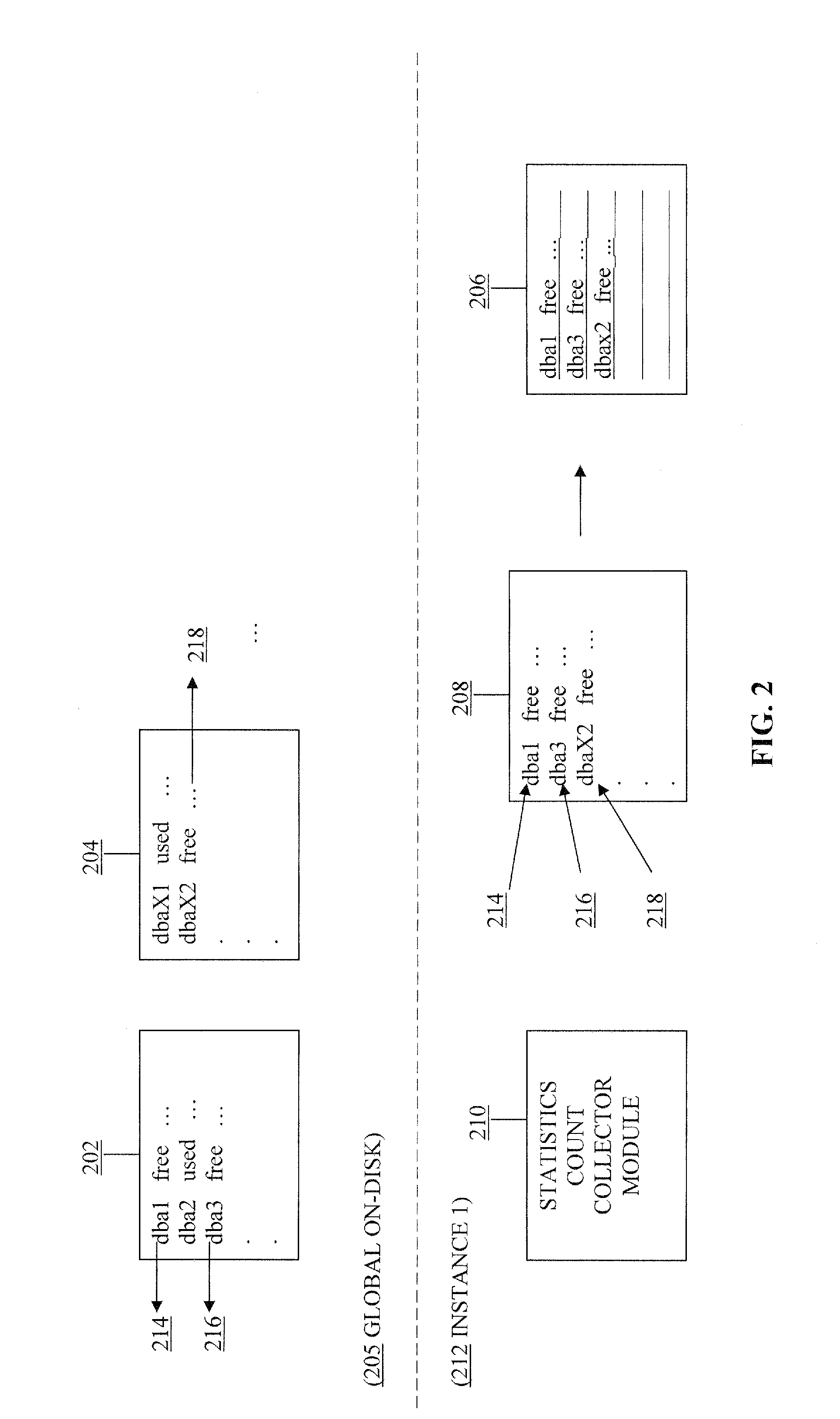

A framework for in-memory space management for content management database systems is provided. A per-instance in-memory dispenser is partitioned. An incoming transaction takes a latch on a partition and obtains sufficient block usage to perform and complete the transaction. Generating redo information is decoupled from transaction level processing and, instead, is performed when block requests are loaded into the in-memory dispenser or synced therefrom to a per-instance on-disk structure.

Owner:ORACLE INT CORP

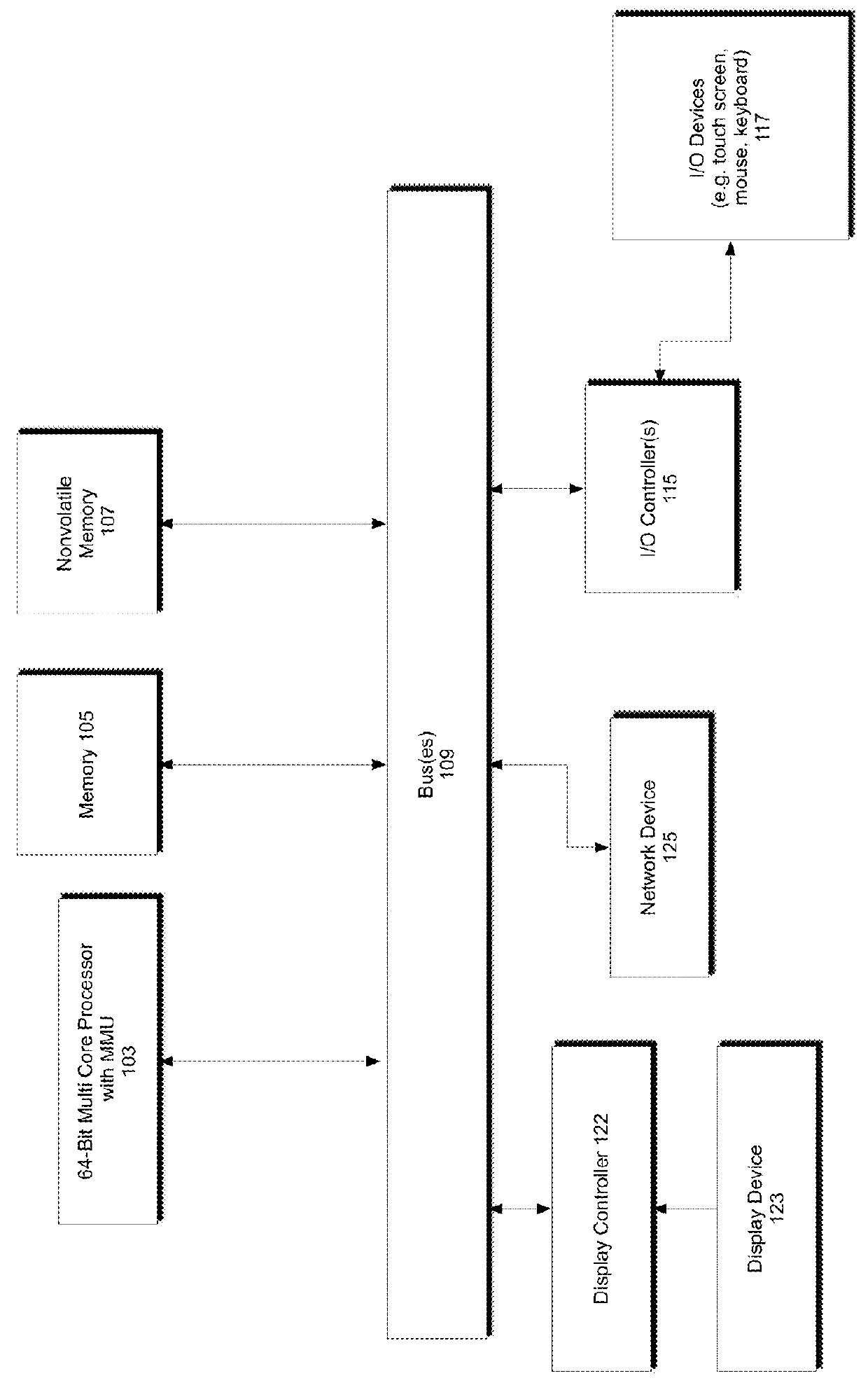

Optimized memory allocator for a multiprocessor computer system

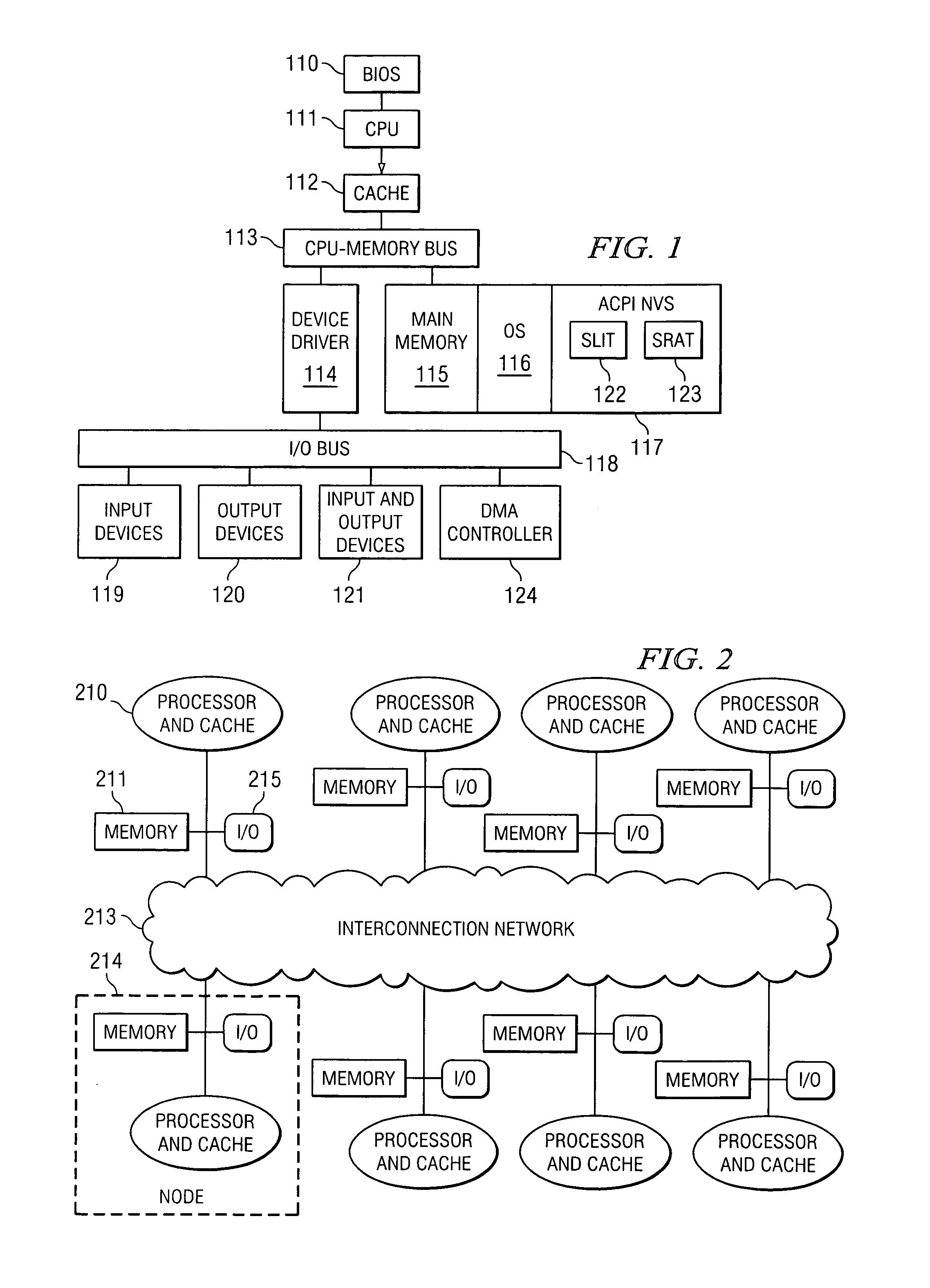

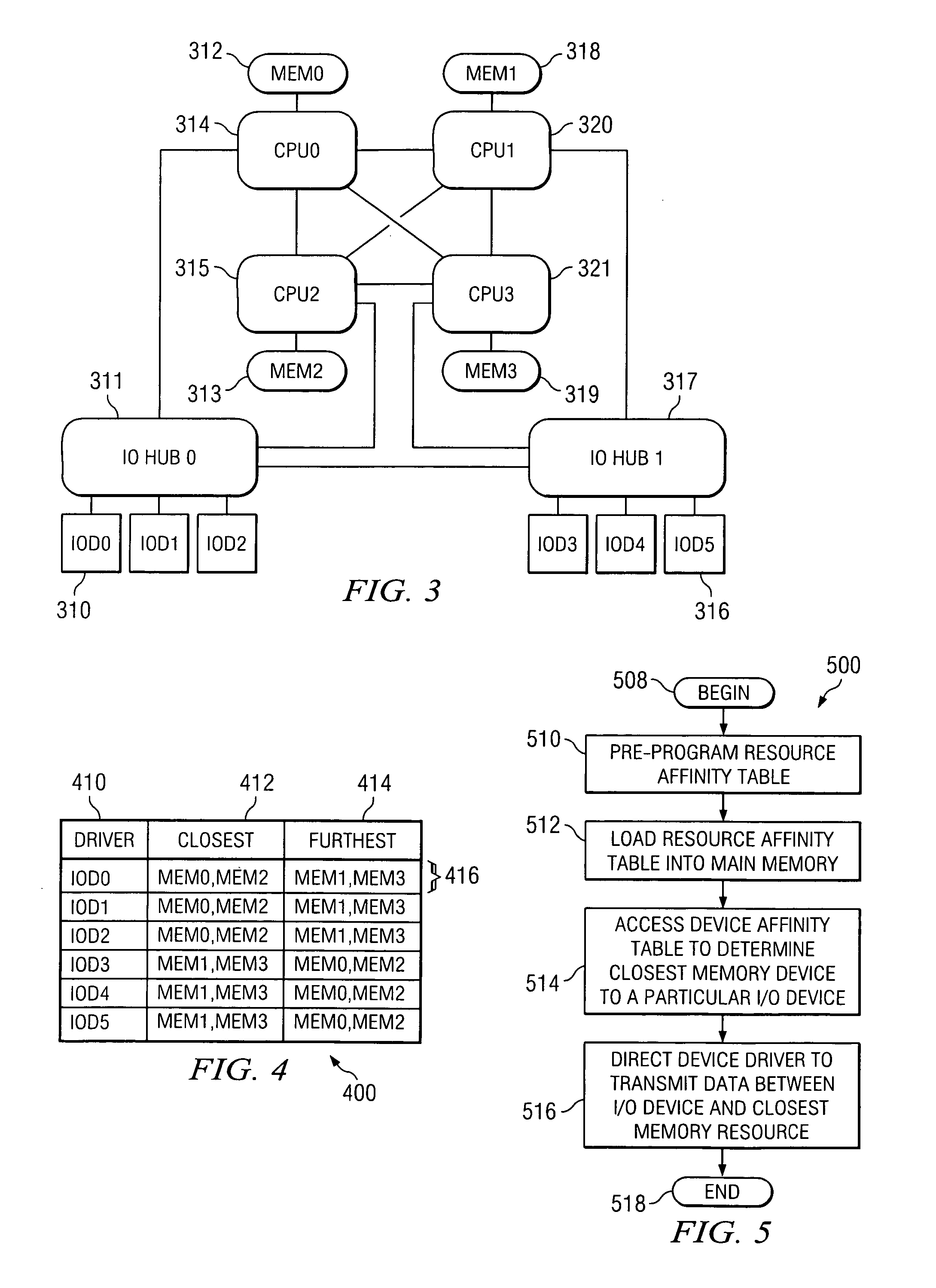

Active | US20070233967A1 | Maximize total data transmitted | Improving I/O performance measure | Memory architecture accessing/allocation | Memory systems | Access time | Multi processor

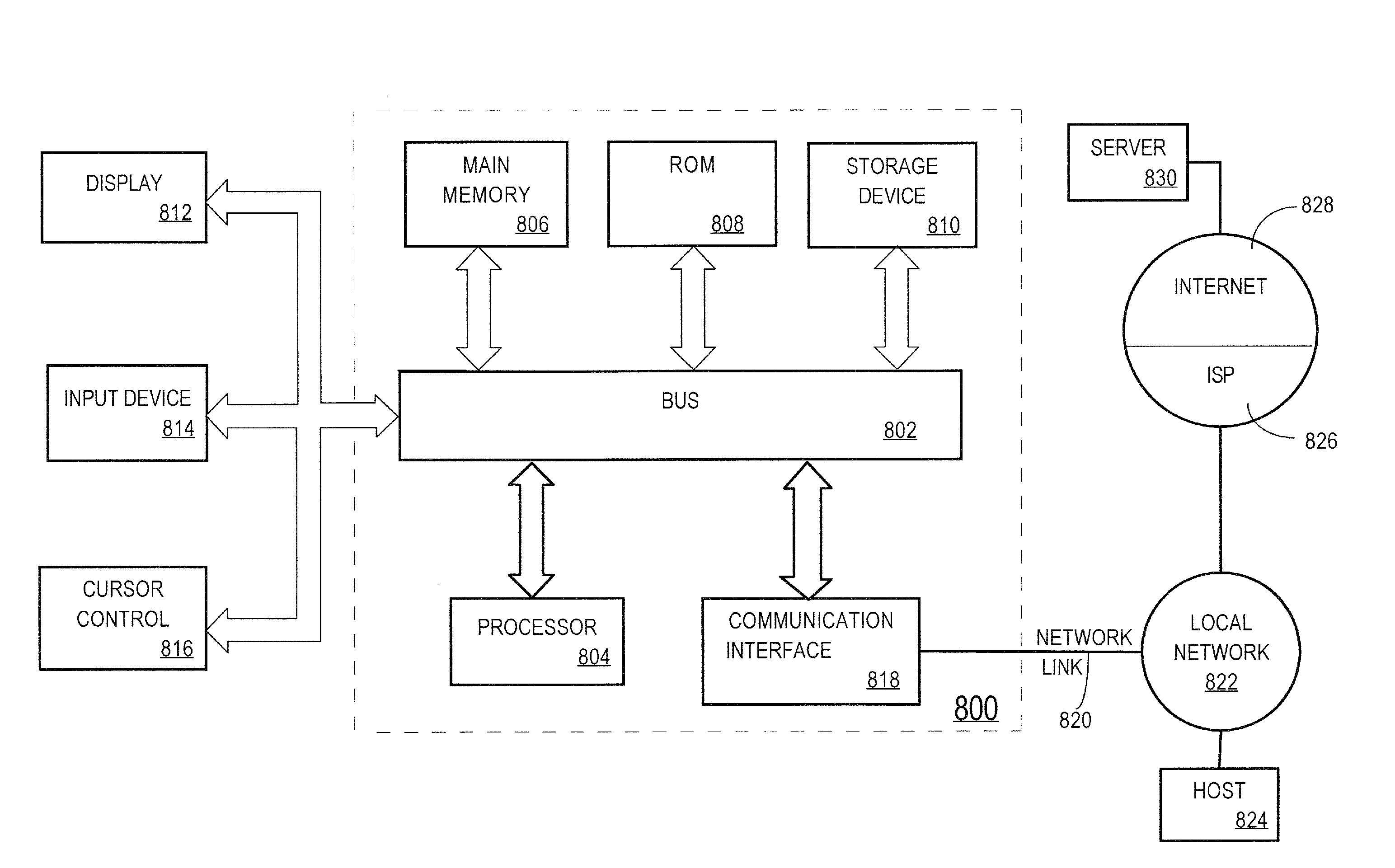

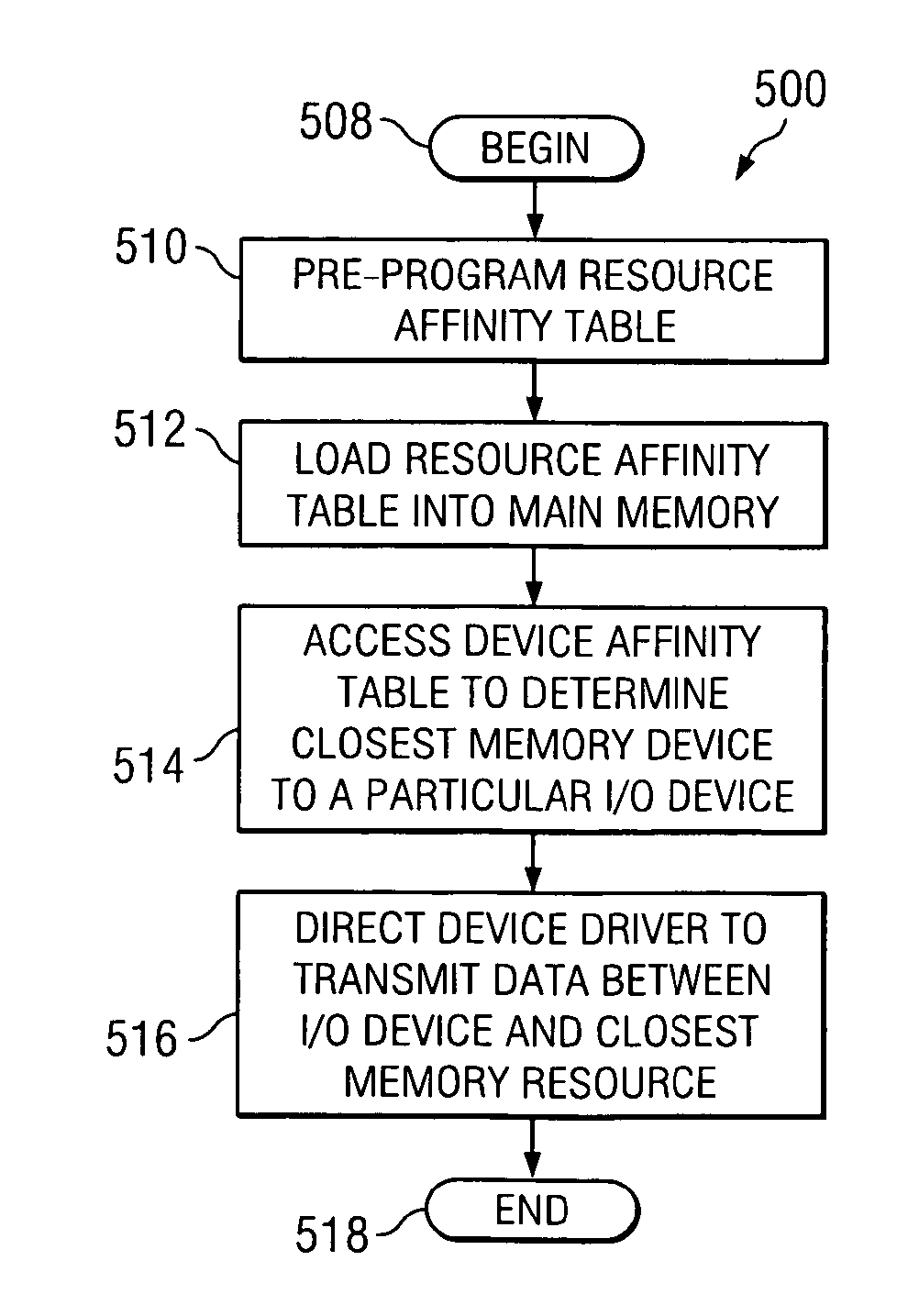

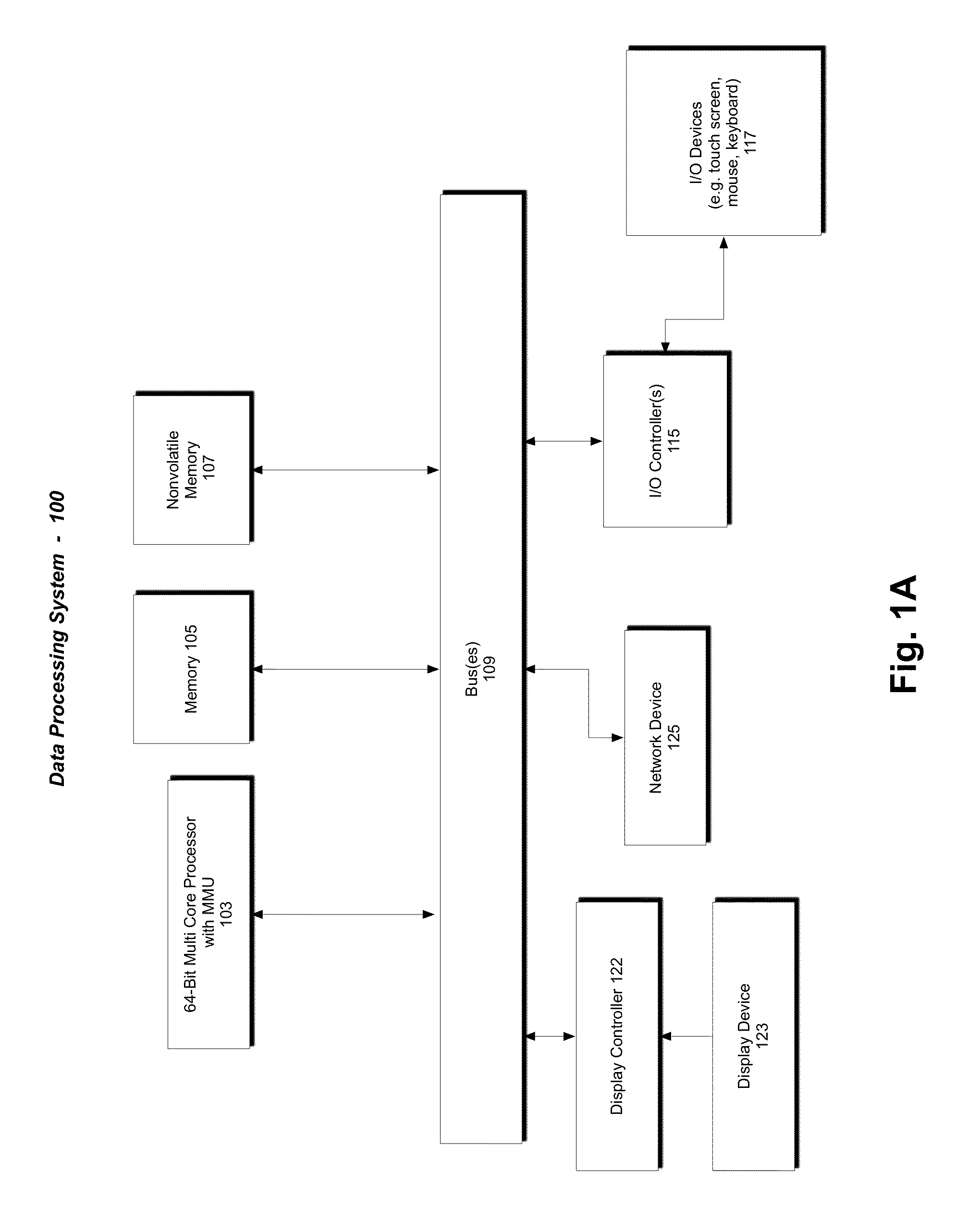

The present disclosure describes systems and methods for allocating memory in a multiprocessor computer system such as a non-uniform memory access (NUMA) machine having distributed shared memory. The systems and methods include allocating memory to input-output devices (I/O devices) based at least in part on which memory resource is physically closest to a particular I/O device. Through these systems and methods, memory is allocated more efficiently in a NUMA machine. For example, allocating memory to an I/O device that is on the same node as a memory resource reduces memory access time, thereby maximizing data transmission. The present disclosure further describes a system and method for improving performance in a multiprocessor computer system by utilizing a pre-programmed device affinity table. The system and method include listing the memory resources physically closest to each I/O device and accessing the device table to determine the closest memory resource to a particular I/O device. The system and method further include directing a device driver to transmit data between the I/O device and the closest memory resource.

Owner:DELL PROD LP
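A device affinity table of the kind described above can be pictured as a mapping from each I/O device to its memory nodes, ordered from closest to farthest. The sketch below is an assumption-laden illustration, not the disclosed implementation: the device names, node numbers, and the `closest_node` helper are invented for the example.

```python
# Hypothetical pre-programmed table: device -> NUMA nodes, closest first.
DEVICE_AFFINITY = {
    "nic0": [0, 1],
    "hba0": [1, 0],
}


def closest_node(device, free_bytes_per_node, request_bytes):
    """Return the closest node that can satisfy the request, or None."""
    for node in DEVICE_AFFINITY[device]:
        if free_bytes_per_node.get(node, 0) >= request_bytes:
            return node
    return None
```

Falling back to the next-closest node when the preferred one is full is a design choice of this sketch; the abstract itself only describes selecting the closest memory resource.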

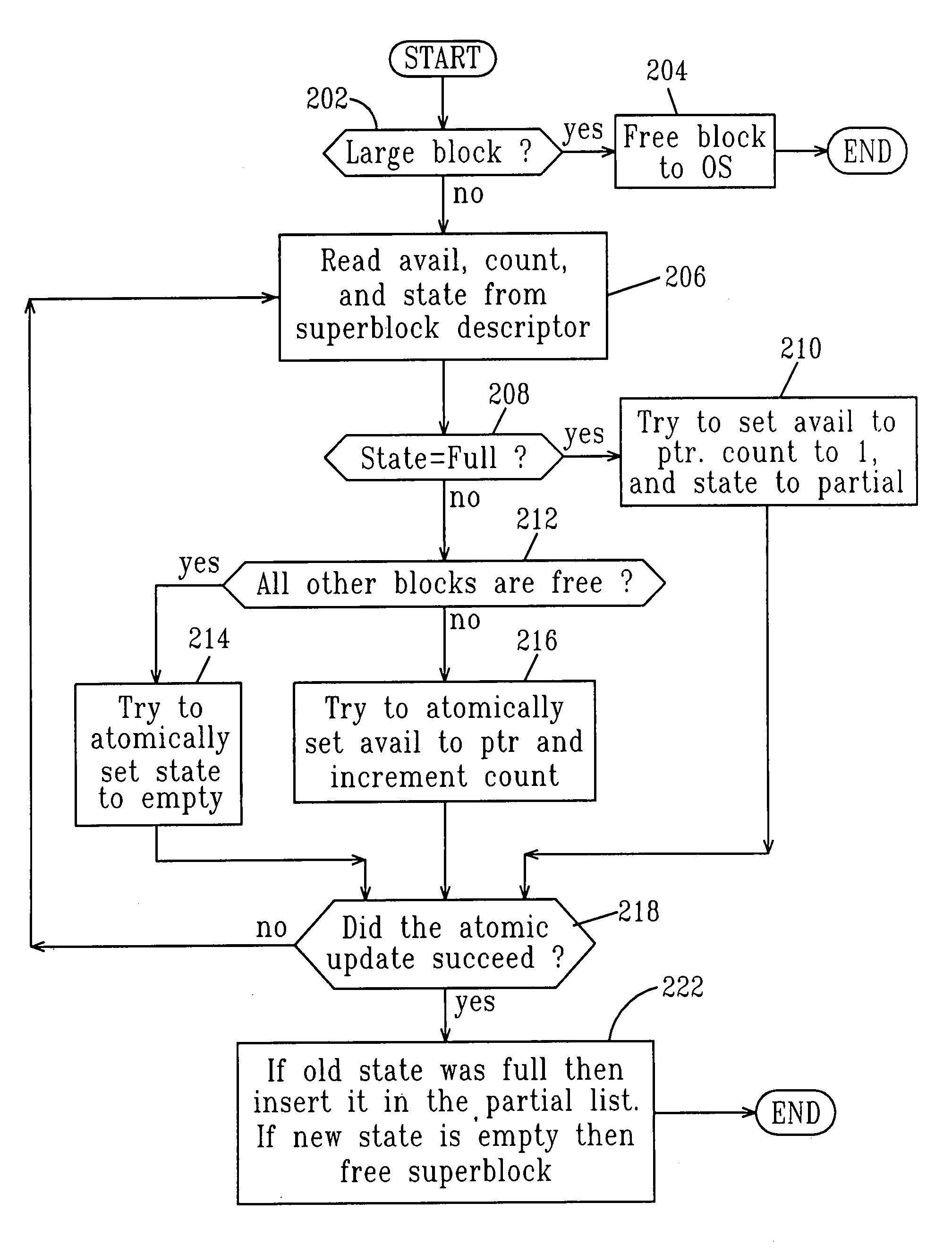

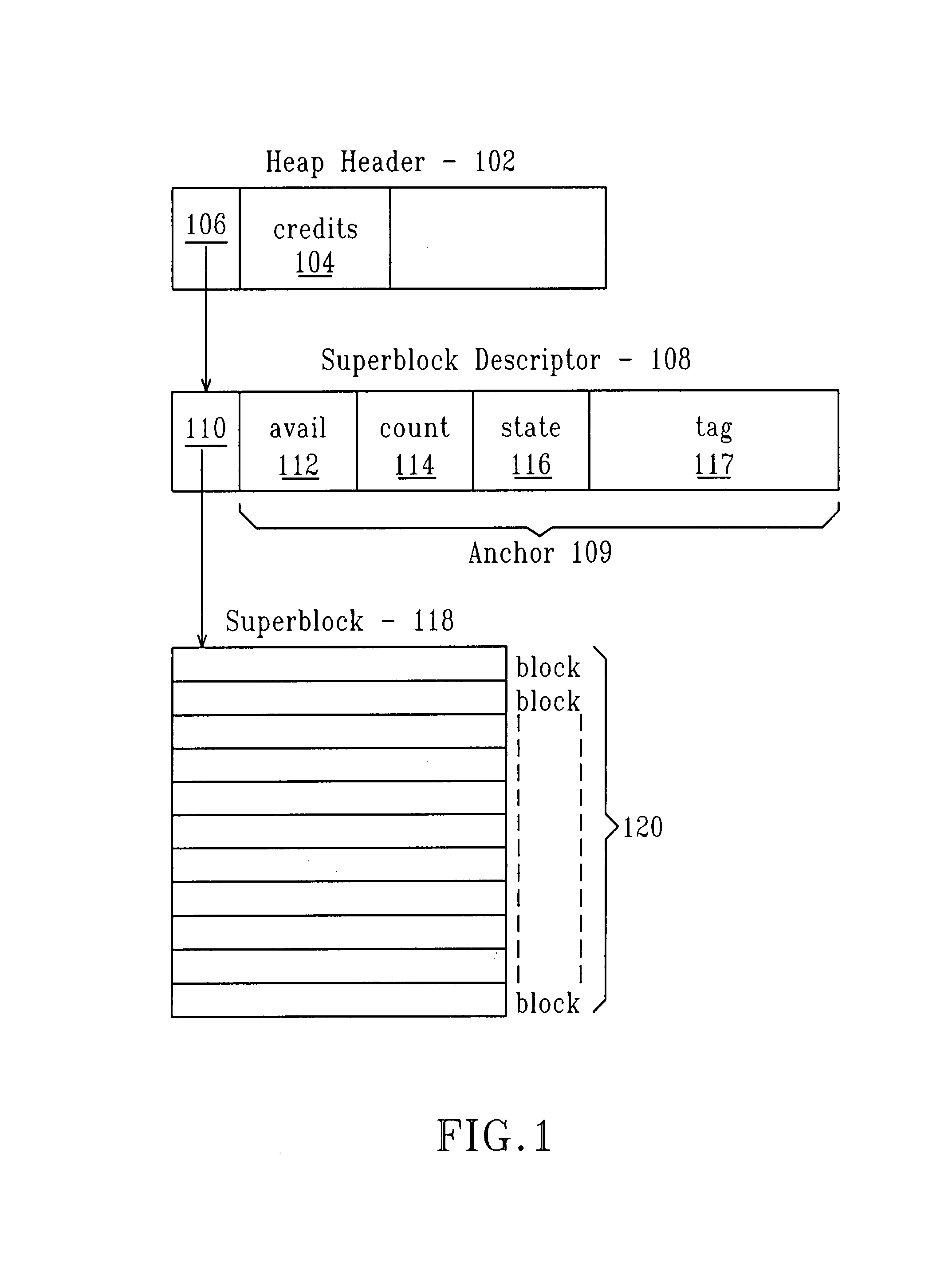

Method for completely lock-free user-level dynamic memory allocation

Active | US20050216691A1 | Memory addressing/allocation/relocation | Program control | General purpose | Thread scheduling

The present invention relates to a method, computer program product and system for a general purpose dynamic memory allocator that is completely lock-free, and immune to deadlock, even when presented with the possibility of arbitrary thread failures and regardless of thread scheduling. Further the invention does not require special hardware or scheduler support and does not require the initialization of substantial portions of the address space.

Owner:GOOGLE LLC

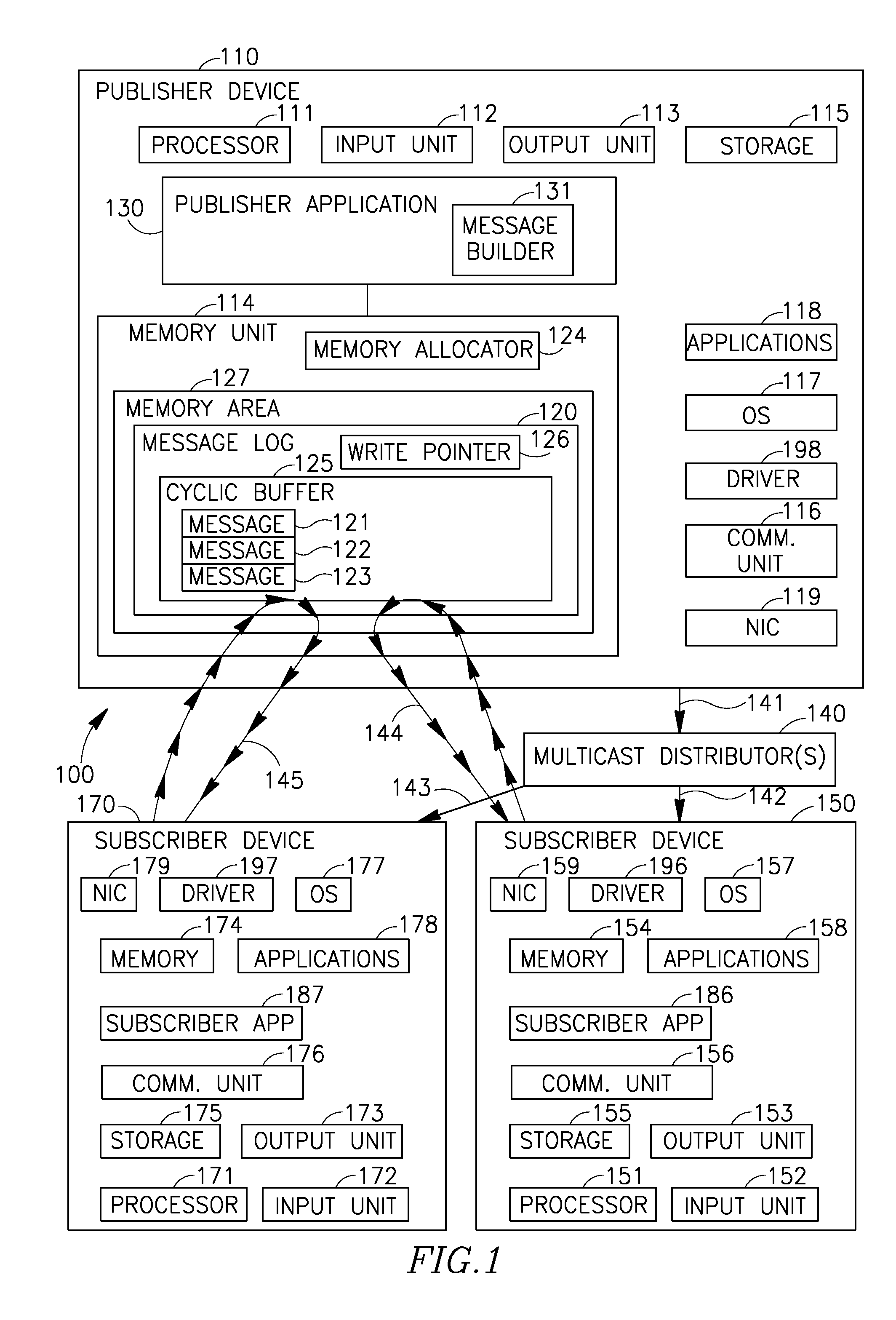

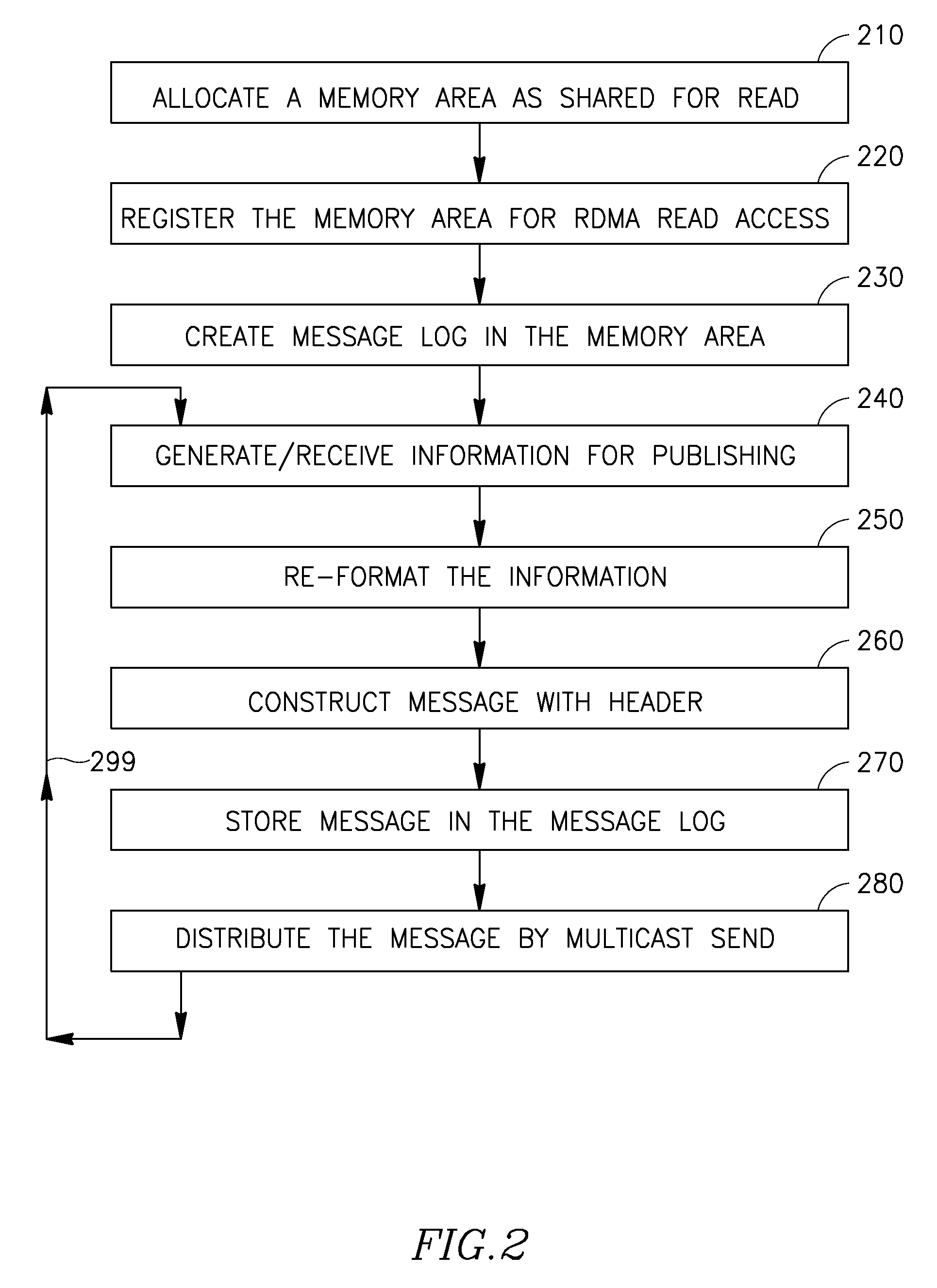

Device, system, and method of distributing messages

Active | US20100049821A1 | Multiple digital computer combinations | Program control | Network structure | Remote direct memory access

Device, system, and method of distributing messages. For example, a data publisher capable of communication with a plurality of subscribers via a network fabric, the data publisher comprising: a memory allocator to allocate a memory area of a local memory unit of the data publisher to be accessible for Remote Direct Memory Access (RDMA) read operations by one or more of the subscribers; and a publisher application to create a message log in said memory area, to send a message to one or more of the subscribers using a multicast transport protocol, and to store in said memory area a copy of said message. A subscriber device handles recovery of lost messages by directly reading the lost messages from the message log of the data publisher using RDMA read operation(s).

Owner:MELLANOX TECH TLV

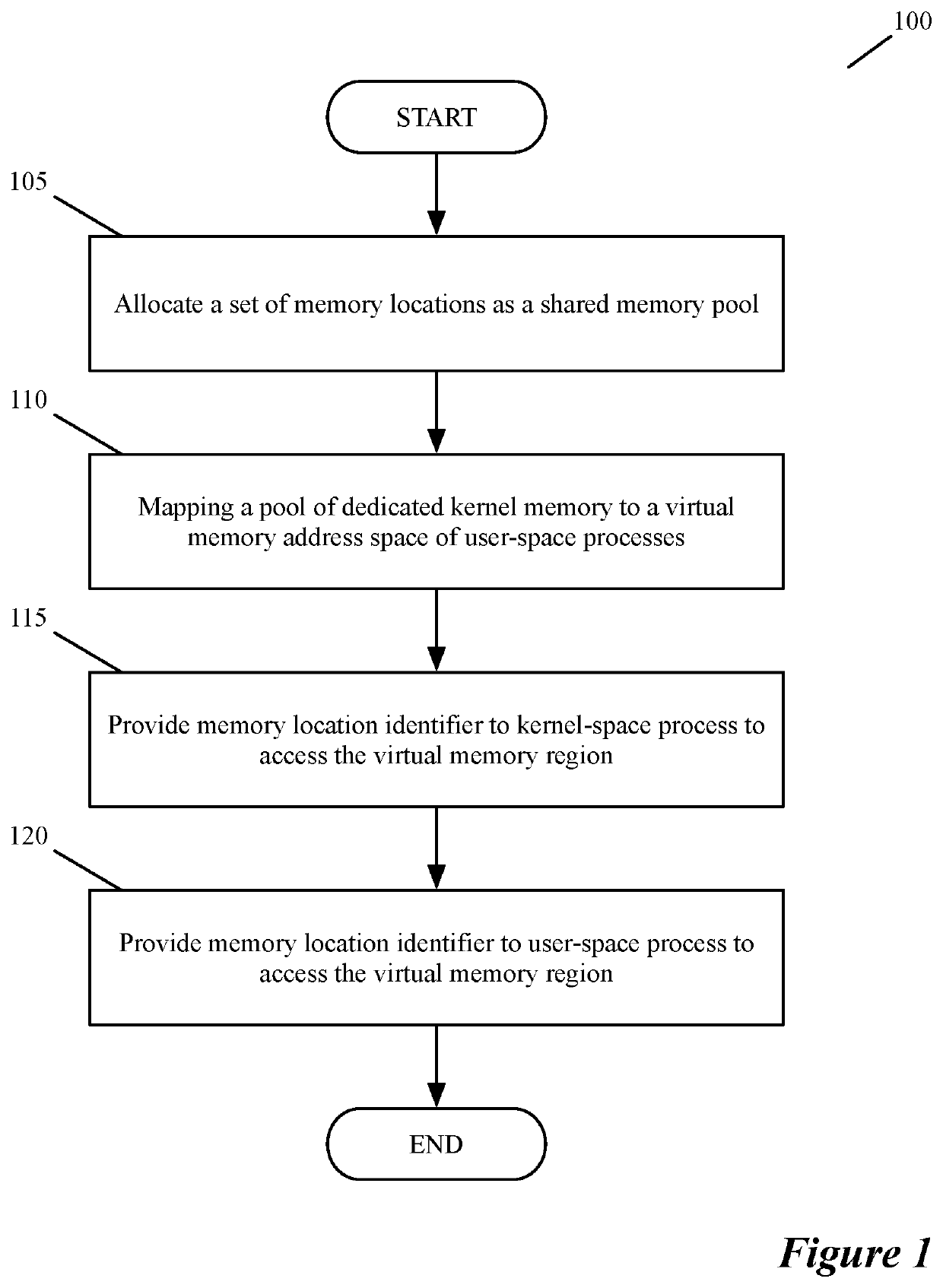

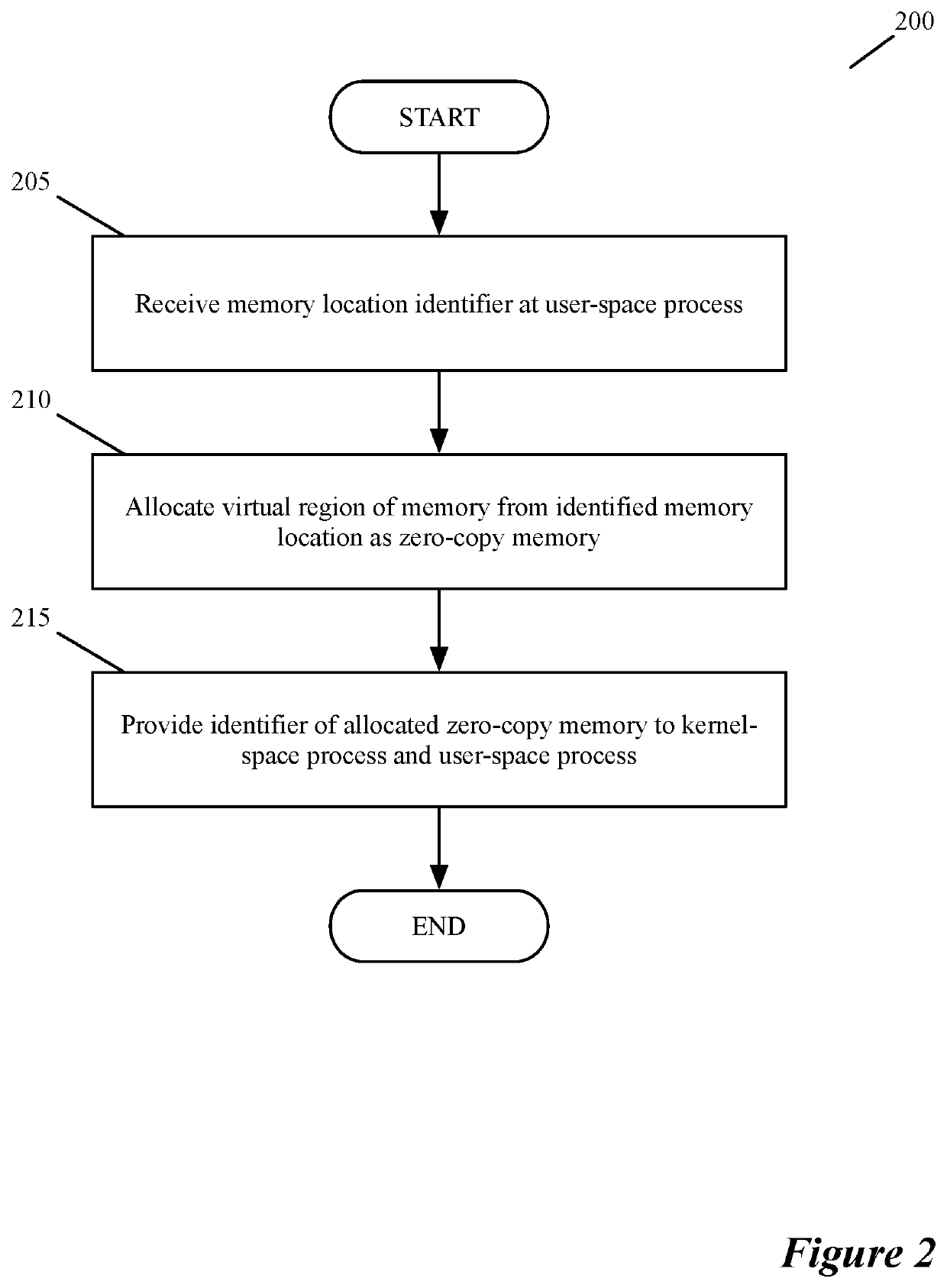

Memory allocator for I/O operations

Active | US20220035673A1 | Performs better | Memory architecture accessing/allocation | Resource allocation | Memory address | Term memory

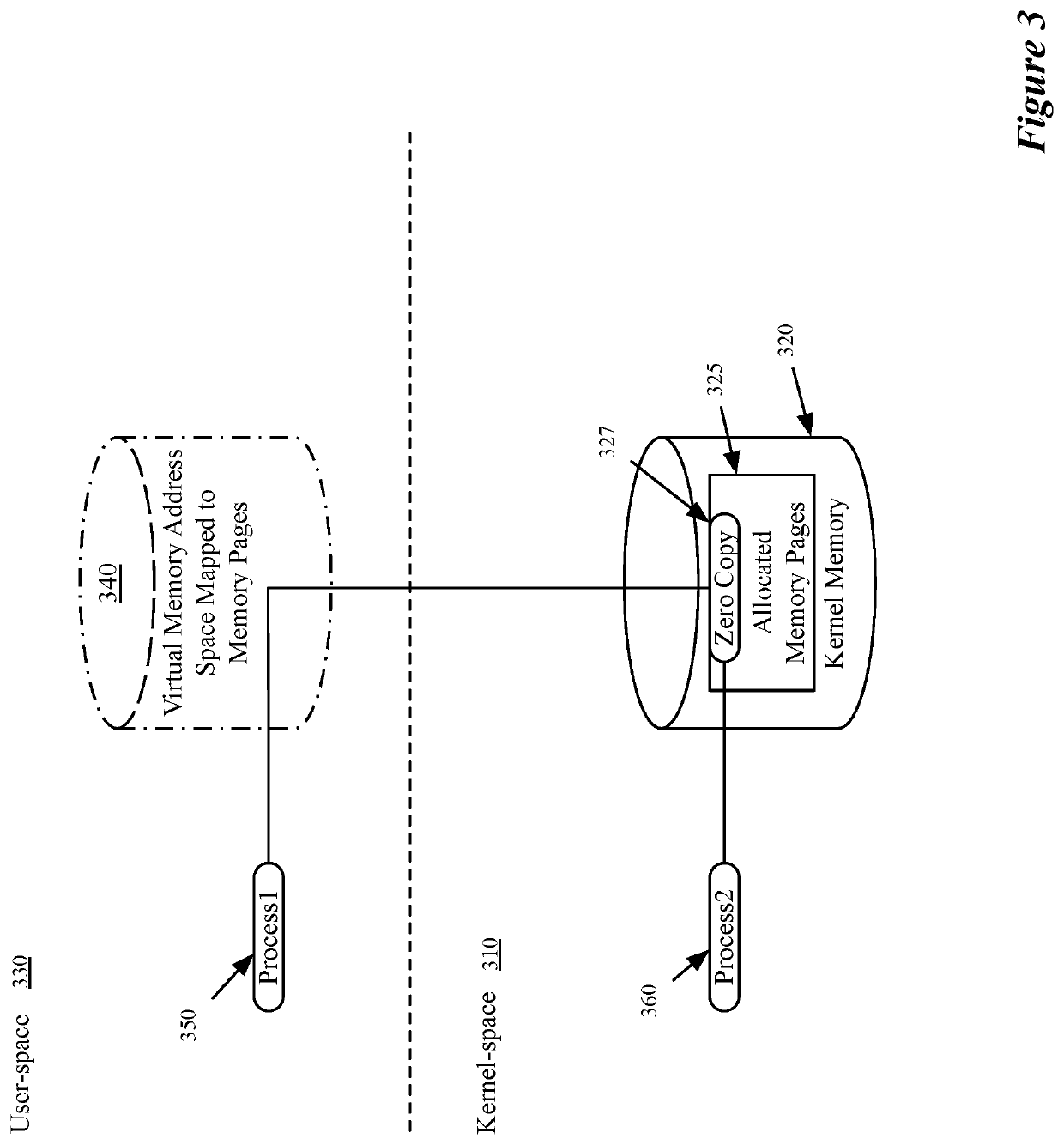

Some embodiments provide a novel method for sharing data between user-space processes and kernel-space processes without copying the data. The method dedicates, by a driver of a network interface controller (NIC), a memory address space for a user-space process. The method allocates a virtual region of the memory address space for zero-copy operations. The method maps the virtual region to a memory address space of the kernel. The method allows access to the virtual region by both the user-space process and a kernel-space process.

Owner:VMWARE INC

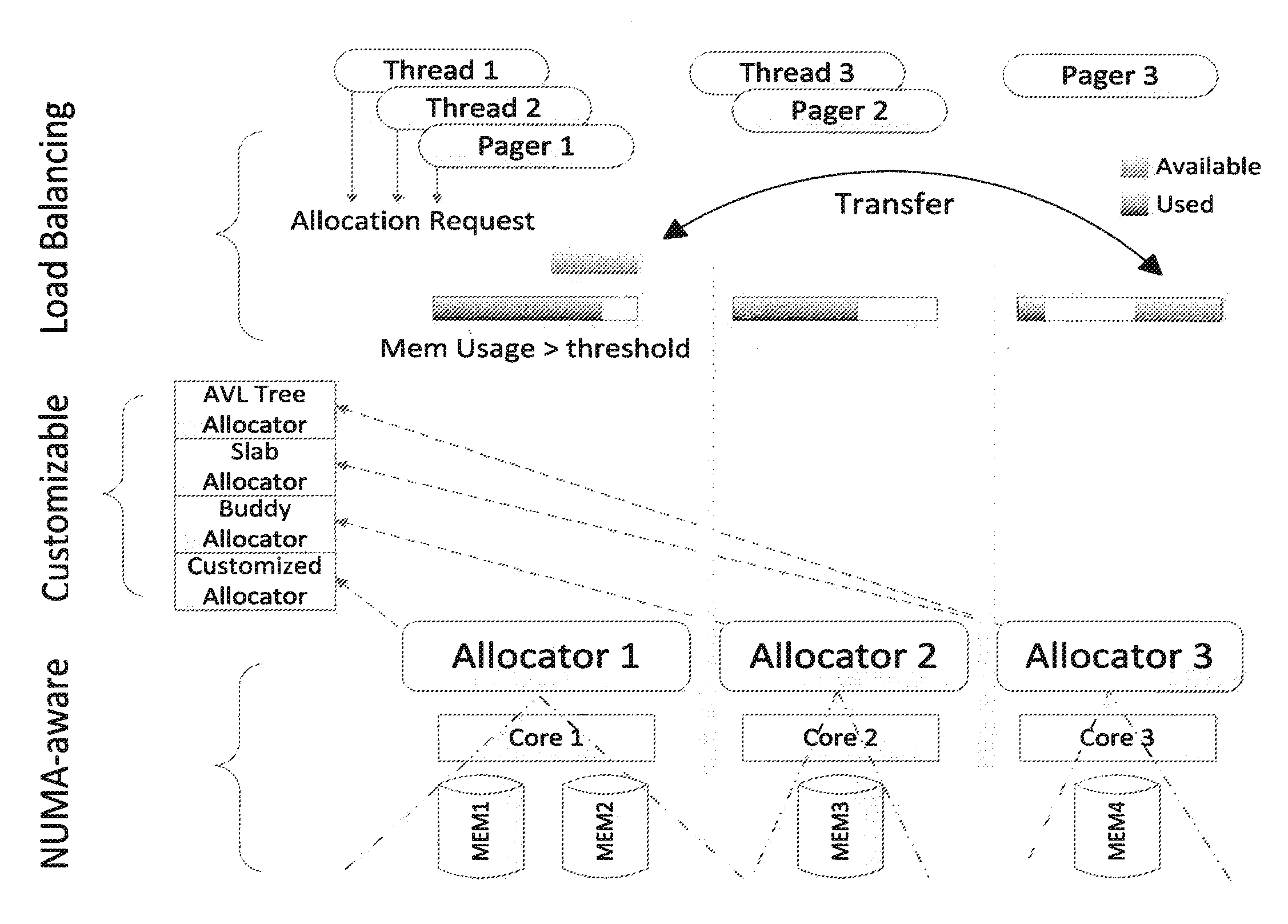

Scalable, customizable, and load-balancing physical memory management scheme

Inactive | US20130232315A1 | Improve scalability | Resource allocation | Memory addressing/allocation/relocation | Load Shedding | Pager

A physical memory management scheme for handling page faults in a multi-core or many-core processor environment is disclosed. A plurality of memory allocators is provided. Each memory allocator may have a customizable allocation policy. A plurality of pagers is provided. Individual threads of execution are assigned a pager to handle page faults. A pager, in turn, is bound to a physical memory allocator. Load balancing may also be provided to distribute physical memory resources across allocators. Allocations may also be NUMA-aware.

Owner:SAMSUNG ELECTRONICS CO LTD

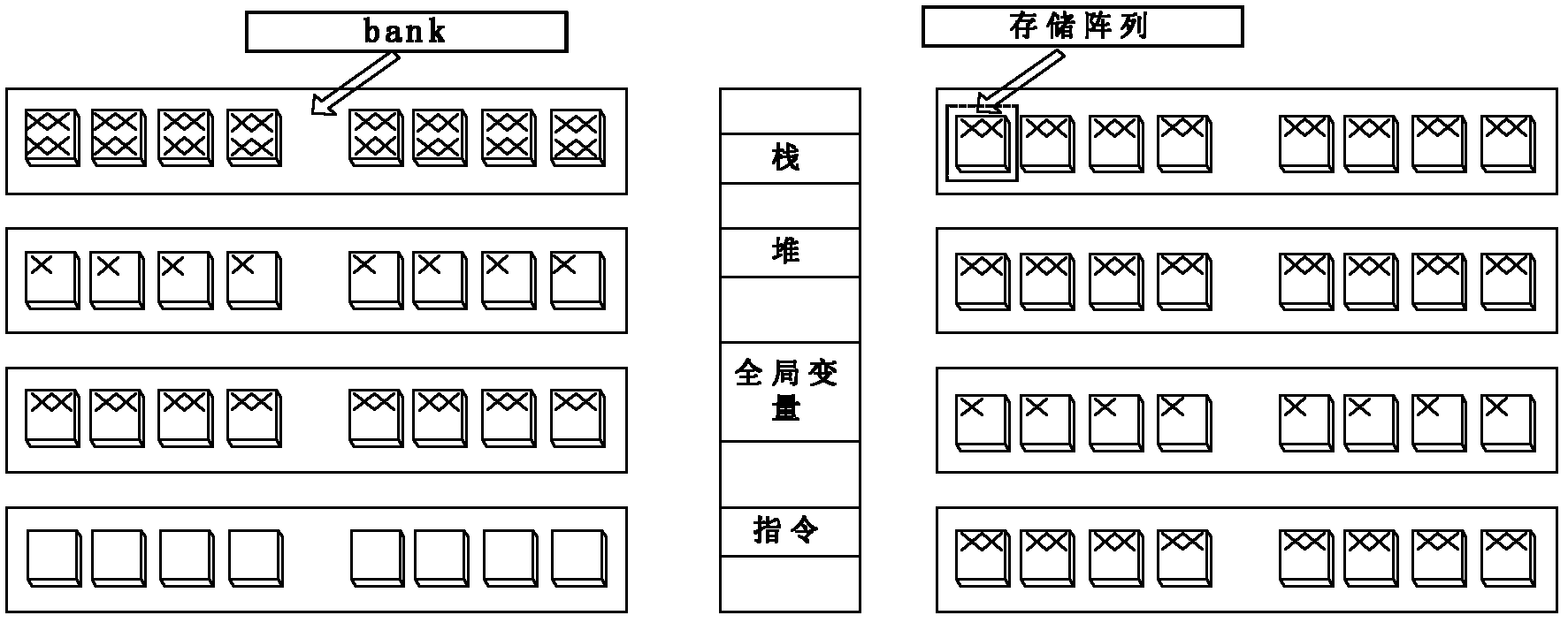

Memory management method and device capable of realizing memory level parallelism

Inactive | CN102662853A | Improve parallelism | Reduce row cache conflicts | Memory addressing/allocation/relocation | Analysis data | Operational system

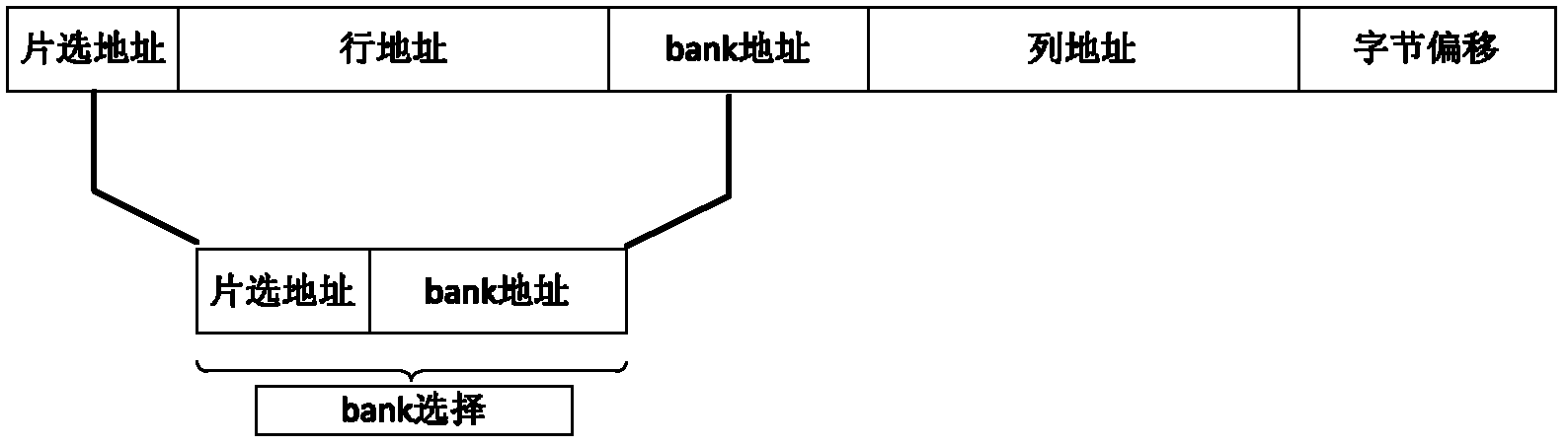

The invention relates to a memory management method and device capable of realizing memory-level parallelism. A bank concept is introduced into the memory allocator, which can identify different banks from their address ranges by building a mapping between addresses and bank groups and the relationships between banks. Data is divided into multiple data units, which are then scattered across all bank groups of main memory, so that the degree of memory access parallelism is improved and row cache conflicts are reduced. At the same time, the memory management device works entirely at the operating system layer: it analyzes the conflict cost between data units using information provided by the compiler and the operating system, and extends the memory allocator according to the actual configuration of main memory, so that the application program does not need to be modified and the memory management device is independent of specific low-level hardware.

Owner:北京北大众志微系统科技有限责任公司

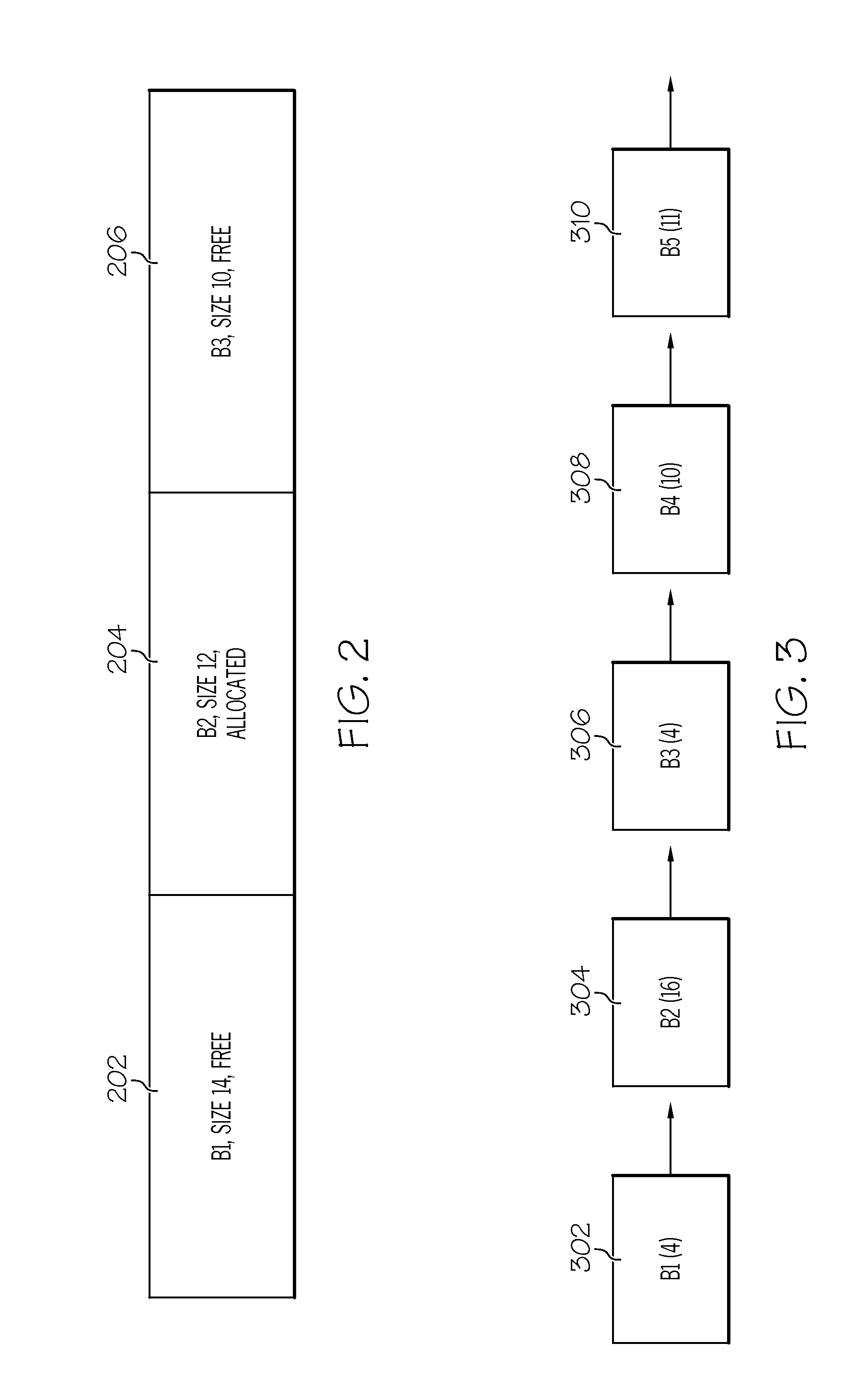

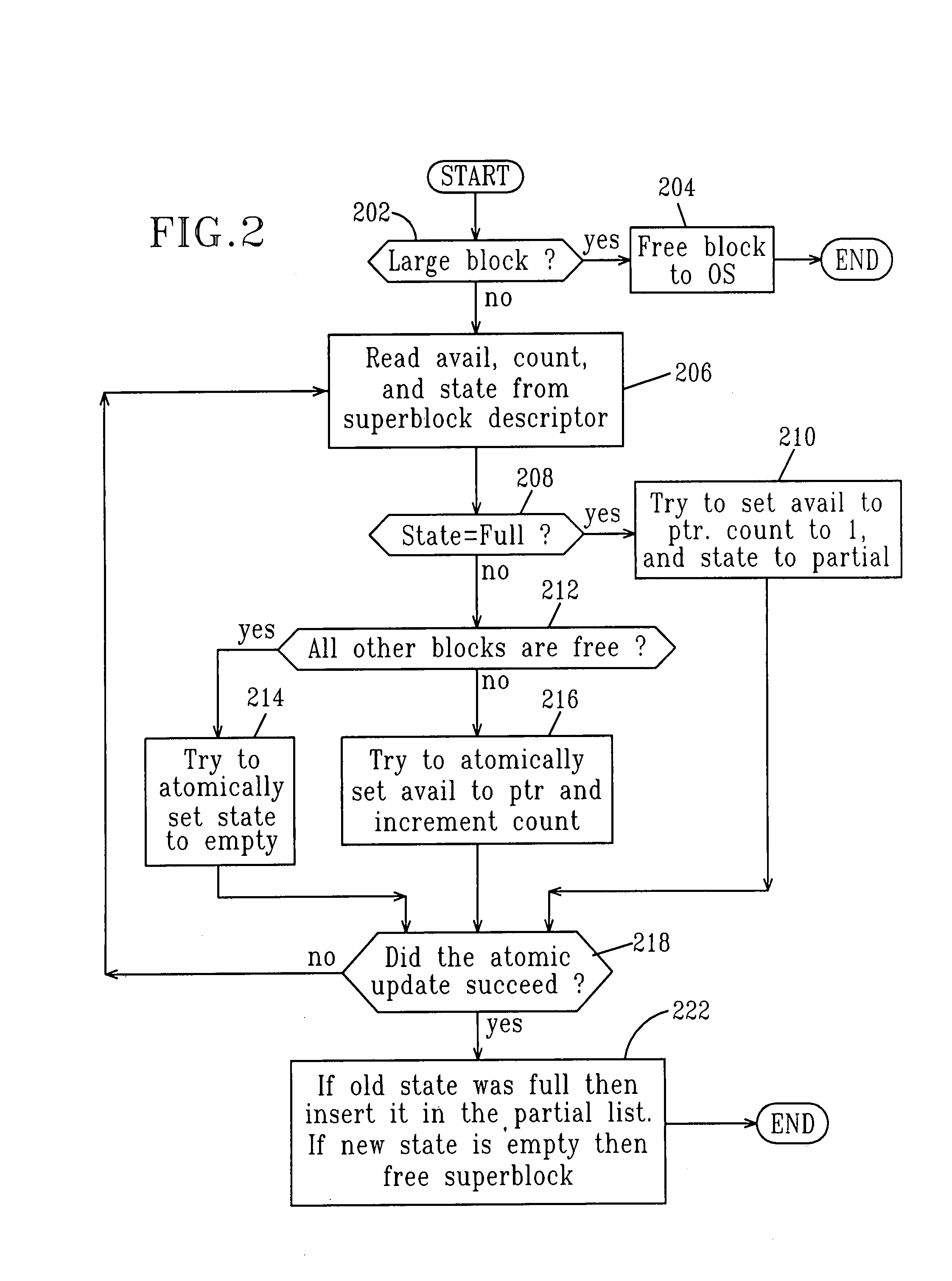

Memory-block coalescing based on run-time demand monitoring

Inactive | US6839822B2 | Exact match | Data processing applications | Memory addressing/allocation/relocation | Computerized system | Running time

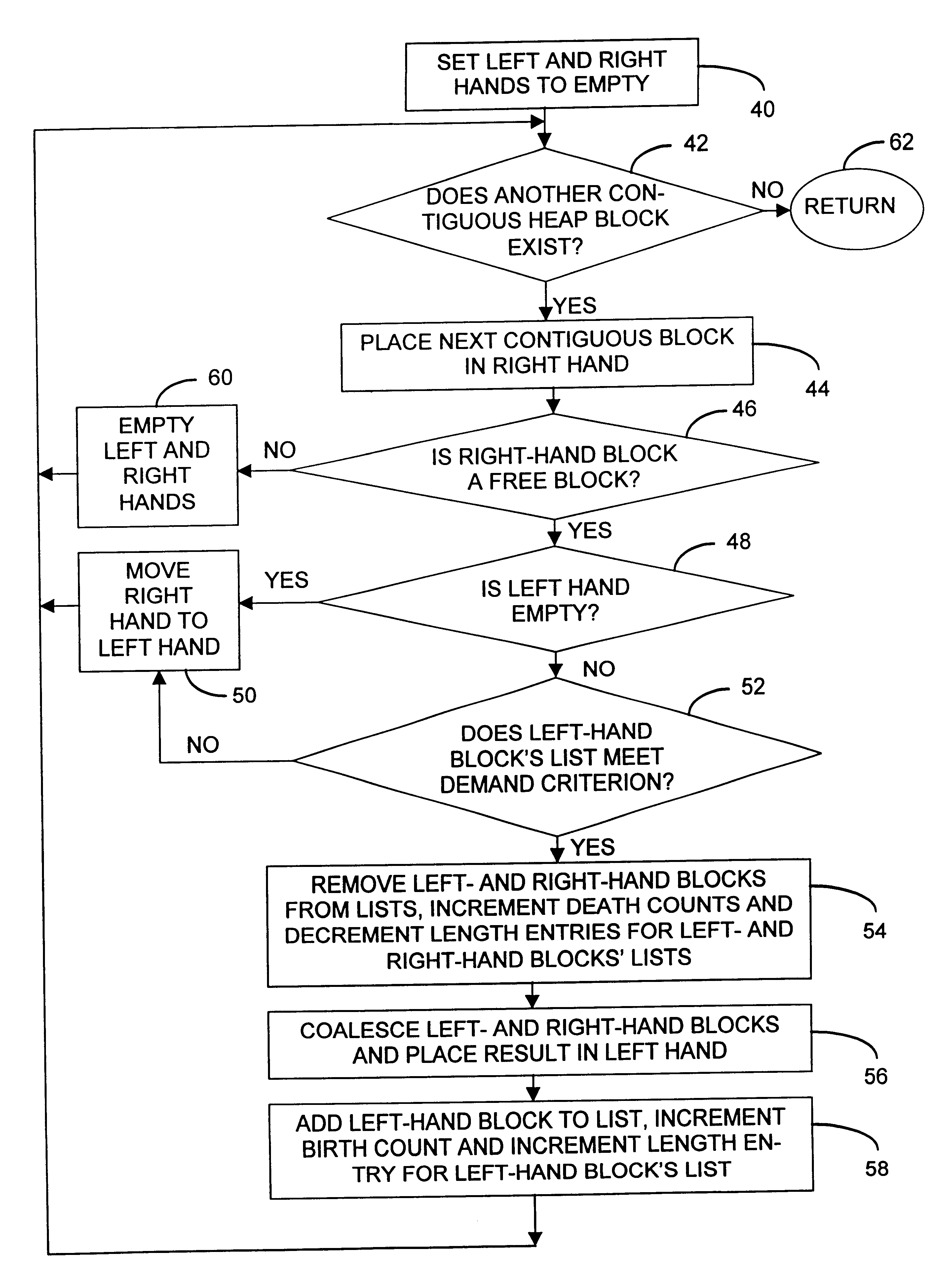

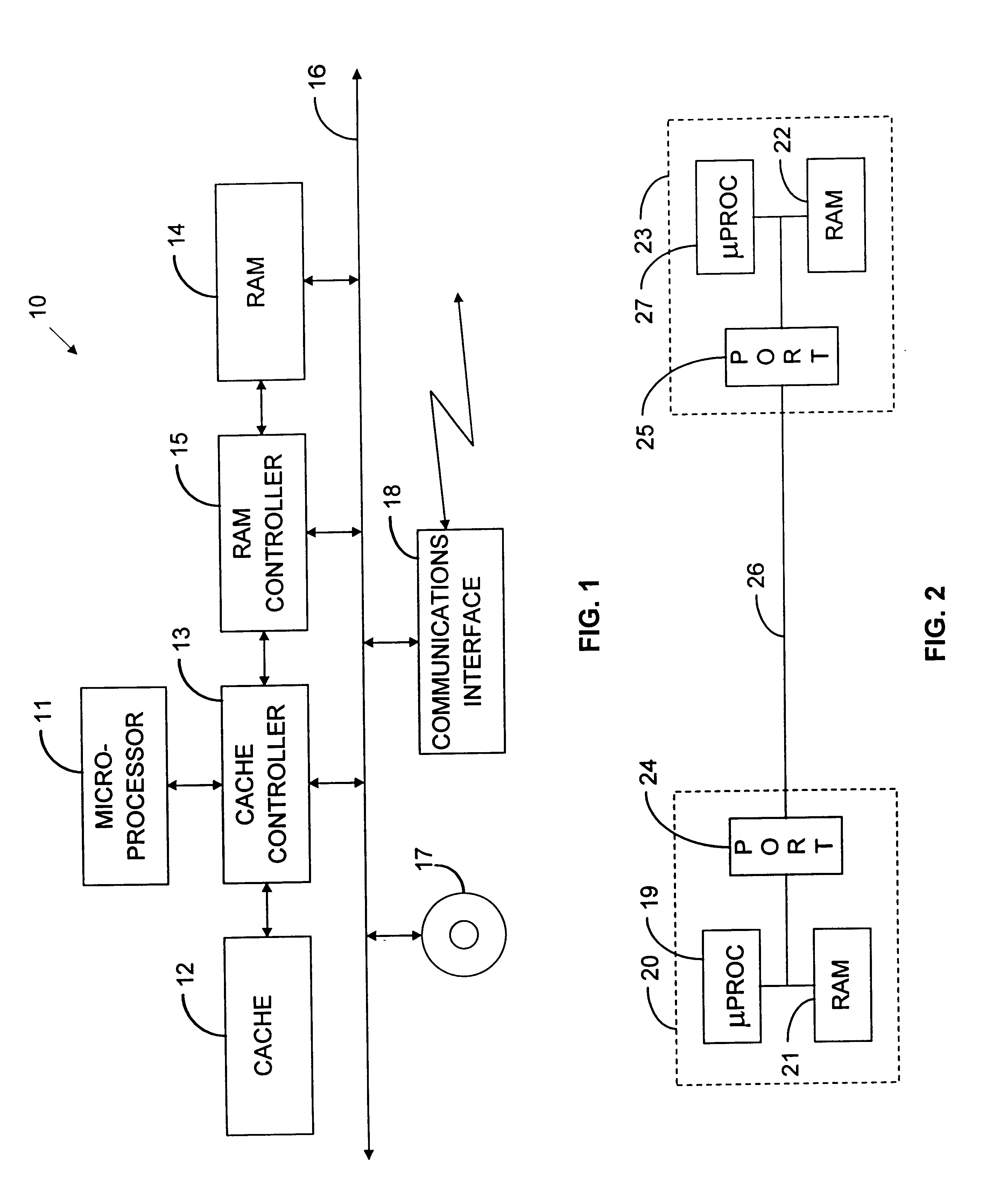

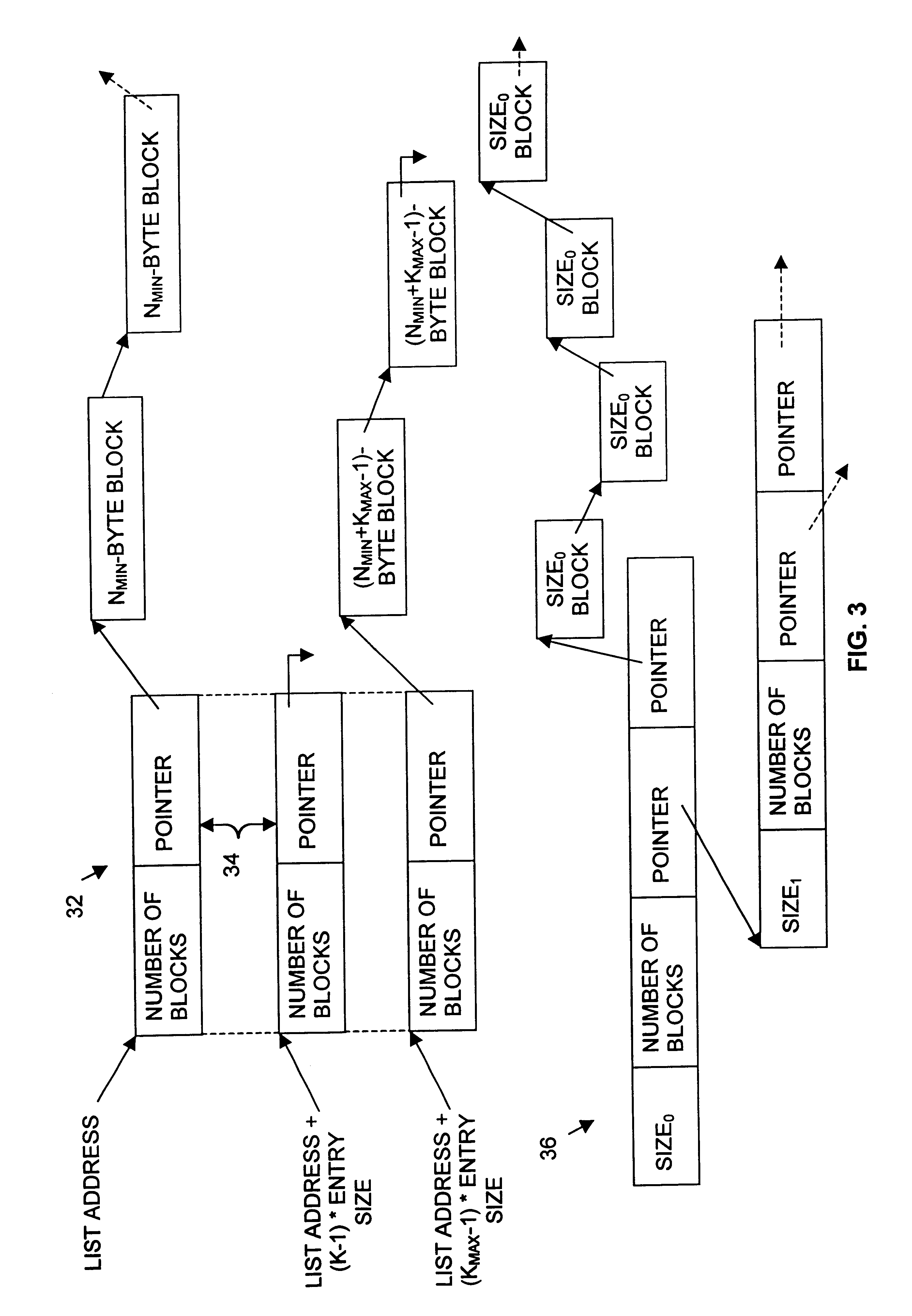

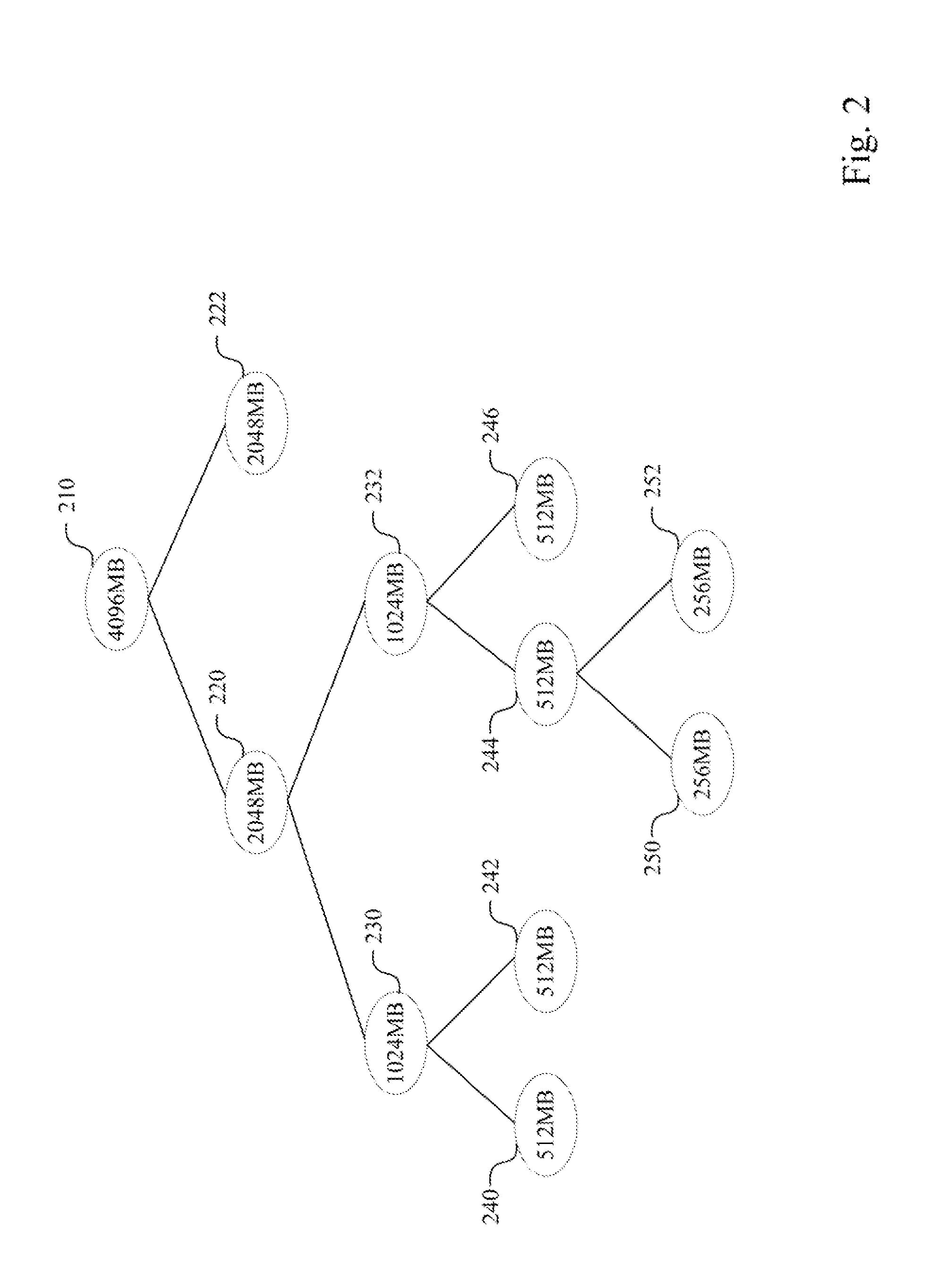

A computer system (10) implements a memory allocator that employs a data structure (FIG. 3) to maintain an inventory of dynamically allocated memory available to receive new data. It receives from one or more programs requests that it allocate memory from a dynamically allocable memory “heap.” It responds to such requests by performing the requested allocation and removing the thus-allocated memory block from the inventory. Conversely, it adds to the inventory memory blocks that the supported program or programs request be freed. In the process, it monitors the frequencies with which memory blocks of different sizes are allocated, and it projects from those frequencies future demand for different-sized memory blocks. When it needs to coalesce multiple smaller blocks to fulfil an actual or expected request for a larger block, it bases its selection of which constituent blocks to coalesce on whether enough free blocks of a constituent block's size exist to meet the projected demand for them.

Owner:ORACLE INT CORP
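The demand-driven coalescing decision in this abstract can be sketched as bookkeeping over allocation frequencies. The projection used below (future demand equals the number of allocations seen so far) is a deliberately naive assumption, and the `DemandMonitor` class and its method names are hypothetical rather than taken from the patent.

```python
from collections import Counter


class DemandMonitor:
    def __init__(self):
        self.alloc_counts = Counter()  # block size -> allocations observed
        self.free_counts = Counter()   # block size -> free blocks in the inventory

    def record_allocation(self, size):
        self.alloc_counts[size] += 1

    def record_free_block(self, size):
        self.free_counts[size] += 1

    def projected_demand(self, size):
        # Naive assumption: the next period looks like the one just observed.
        return self.alloc_counts[size]

    def surplus(self, size):
        """Free blocks of this size beyond what projected demand needs."""
        return max(0, self.free_counts[size] - self.projected_demand(size))

    def can_coalesce(self, size, blocks_needed=2):
        """Only blocks in surplus are candidates for merging into a larger block."""
        return self.surplus(size) >= blocks_needed
```

The point of the check is that a size class in heavy demand keeps its free blocks, while a size class with idle free blocks donates them to larger requests.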

Memory allocation improvements

Active | US20140359248A1 | Memory addressing/allocation/relocation | Program control | Virtual memory | Parallel computing

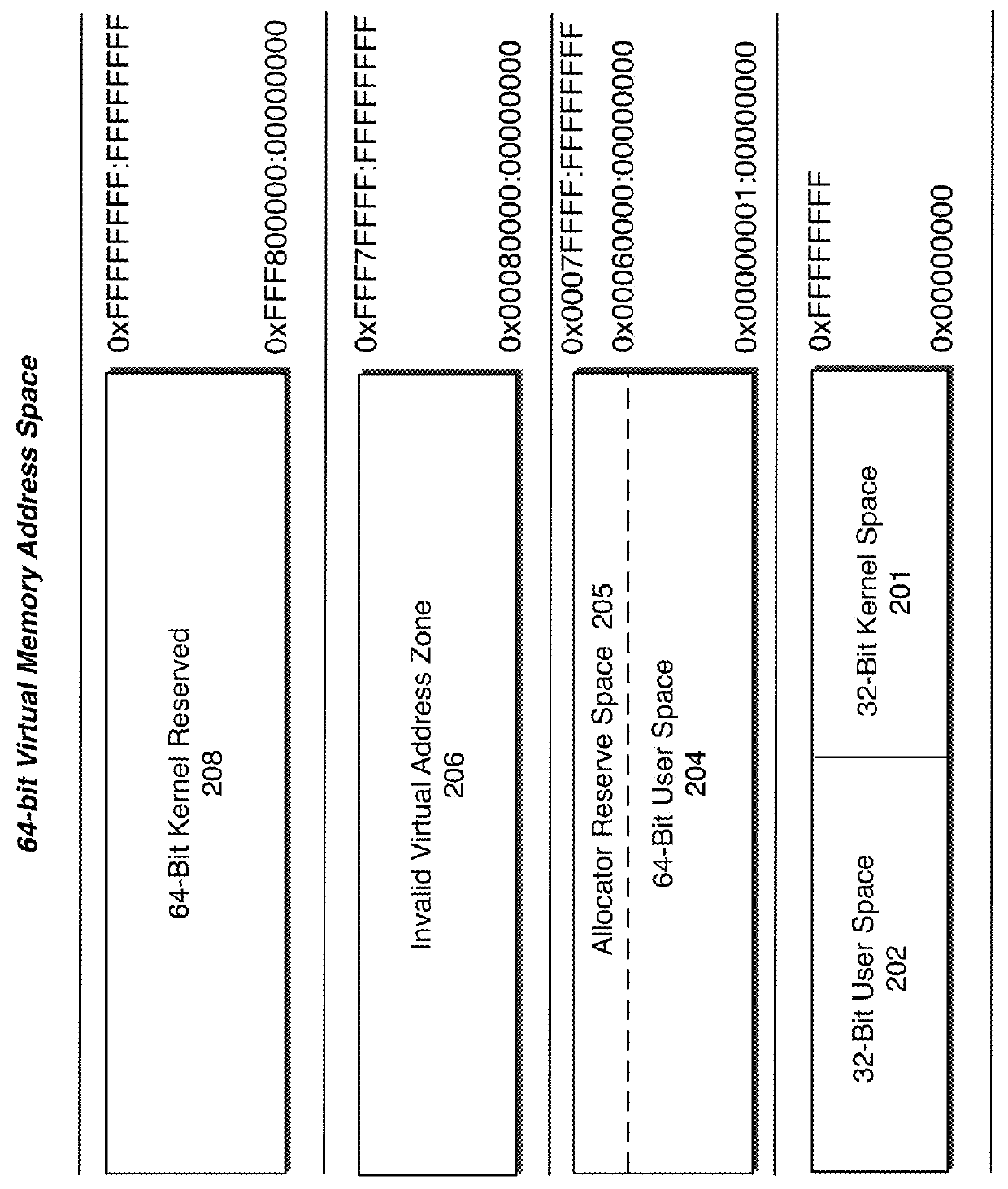

In one embodiment, a memory allocator of a memory manager can service memory allocation requests within a specific size-range from a section of pre-reserved virtual memory. The pre-reserved virtual memory allows allocation requests within a specific size range to be allocated in the pre-reserved region, such that the virtual memory address of a memory allocation serviced from the pre-reserved region can indicate elements of metadata associated with the allocations that would otherwise contribute to overhead for the allocation.

Owner:APPLE INC
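To see how an address inside a pre-reserved region can itself carry metadata, consider a region split into equal sub-ranges, one per size class, so the size class follows from arithmetic on the address and needs no per-allocation header. The base address, sub-range size, size classes, and the `size_class_of` helper below are all assumptions made for this sketch, not details from the patent.

```python
REGION_BASE = 0x1000_0000        # hypothetical start of the pre-reserved region
SUBREGION_BYTES = 1 << 20        # hypothetical span reserved per size class
RESERVED_CLASSES = (16, 32, 64)  # hypothetical size classes, one per sub-range


def size_class_of(address):
    """Recover the size class from the address alone, or None if outside the region."""
    if address < REGION_BASE:
        return None
    index = (address - REGION_BASE) // SUBREGION_BYTES
    if index >= len(RESERVED_CLASSES):
        return None
    return RESERVED_CLASSES[index]
```

Because the size class is implied by the sub-range, a free operation in this scheme would not need to read a size field stored next to the allocation.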

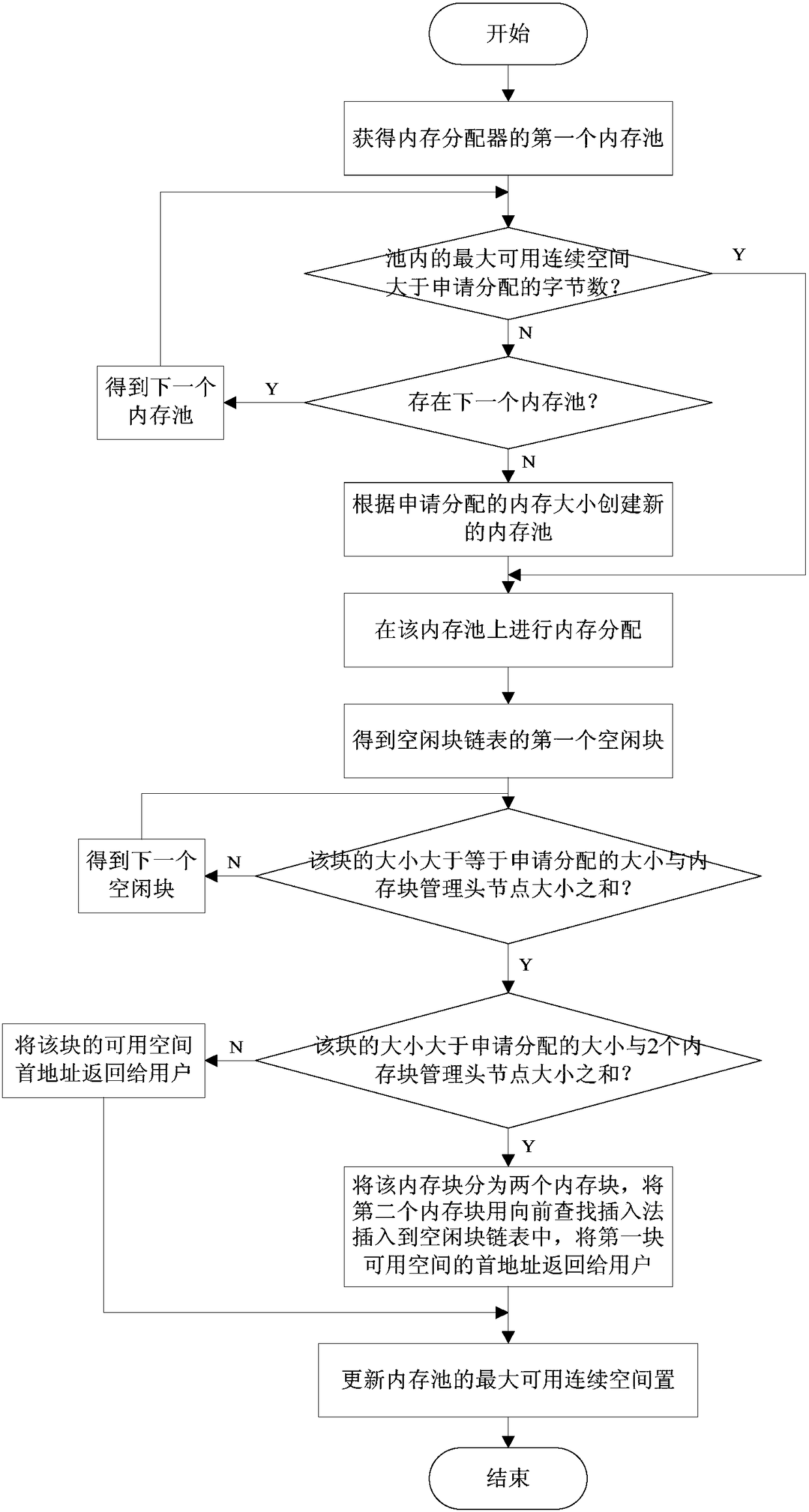

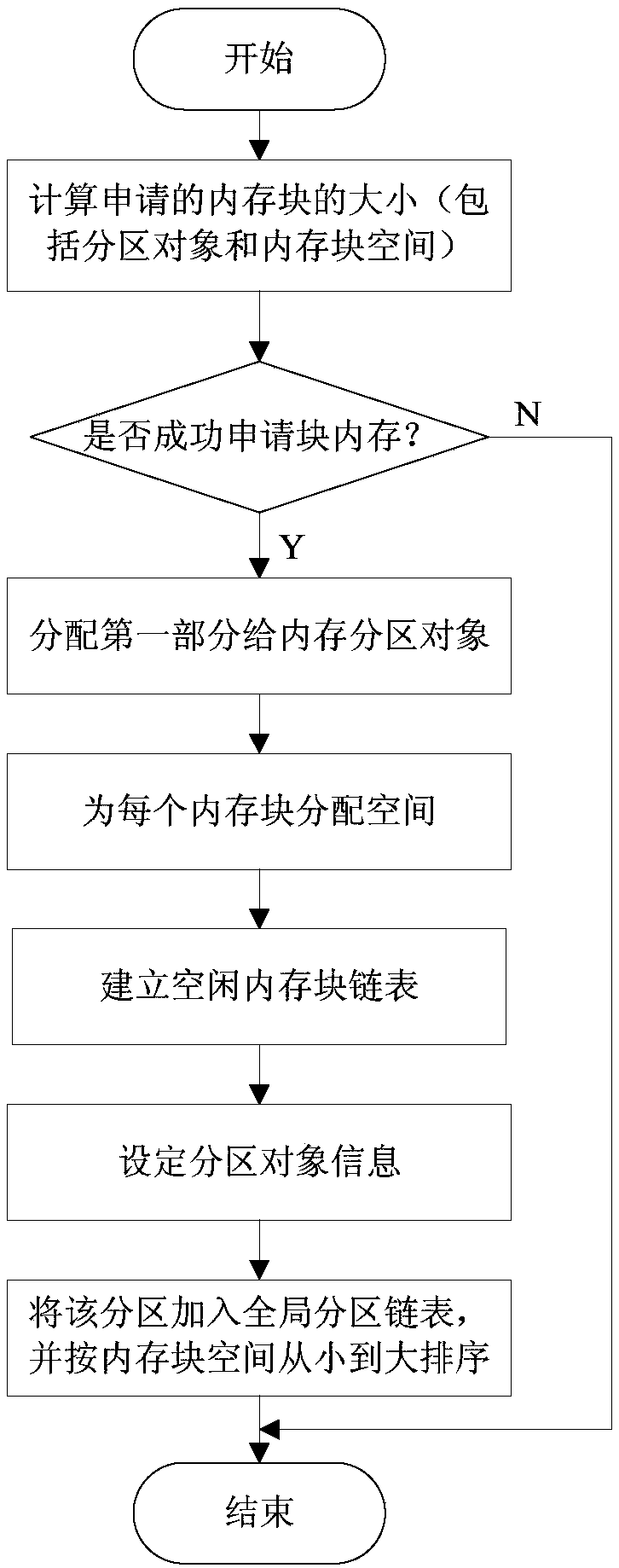

Embedded software memory management system

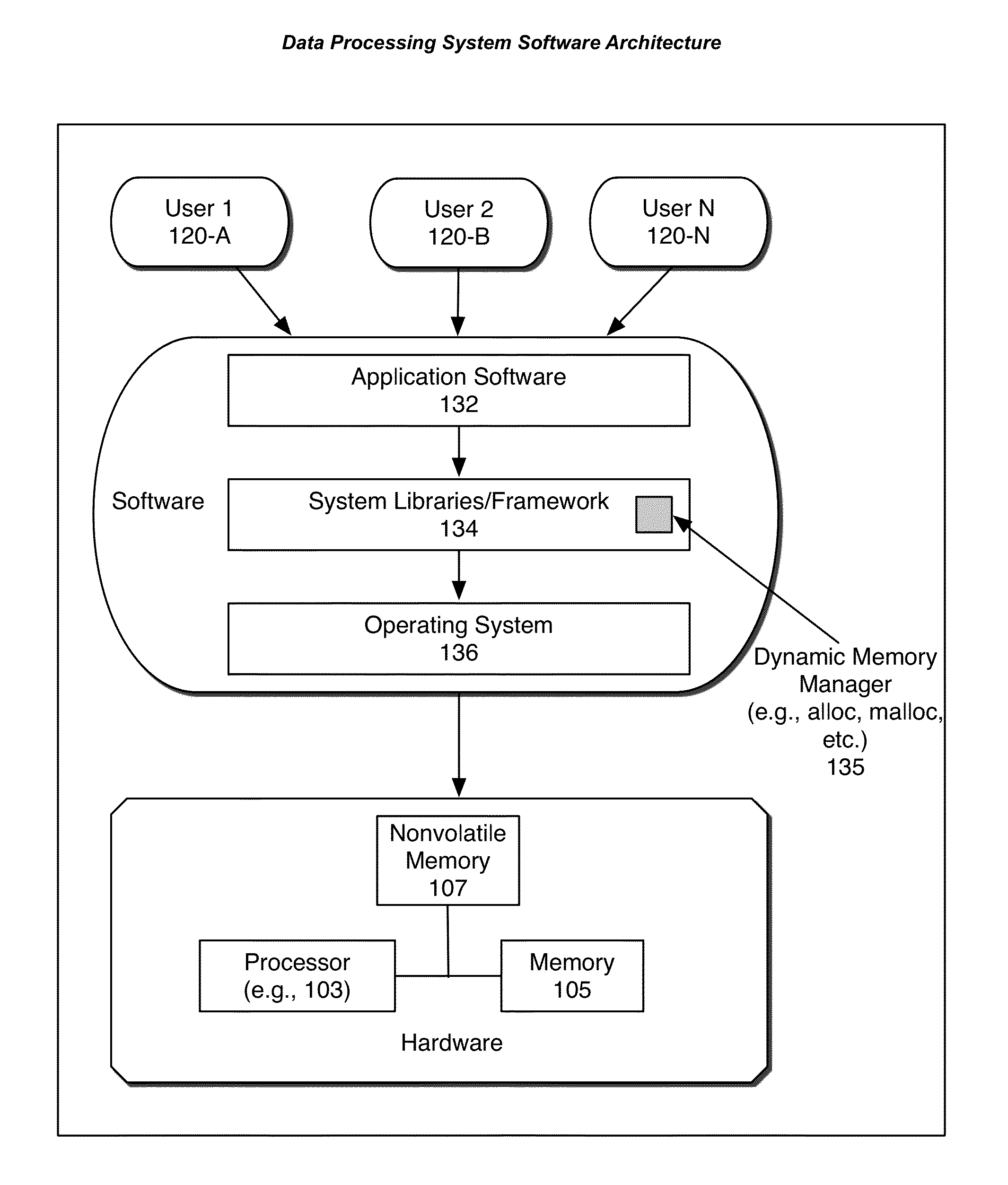

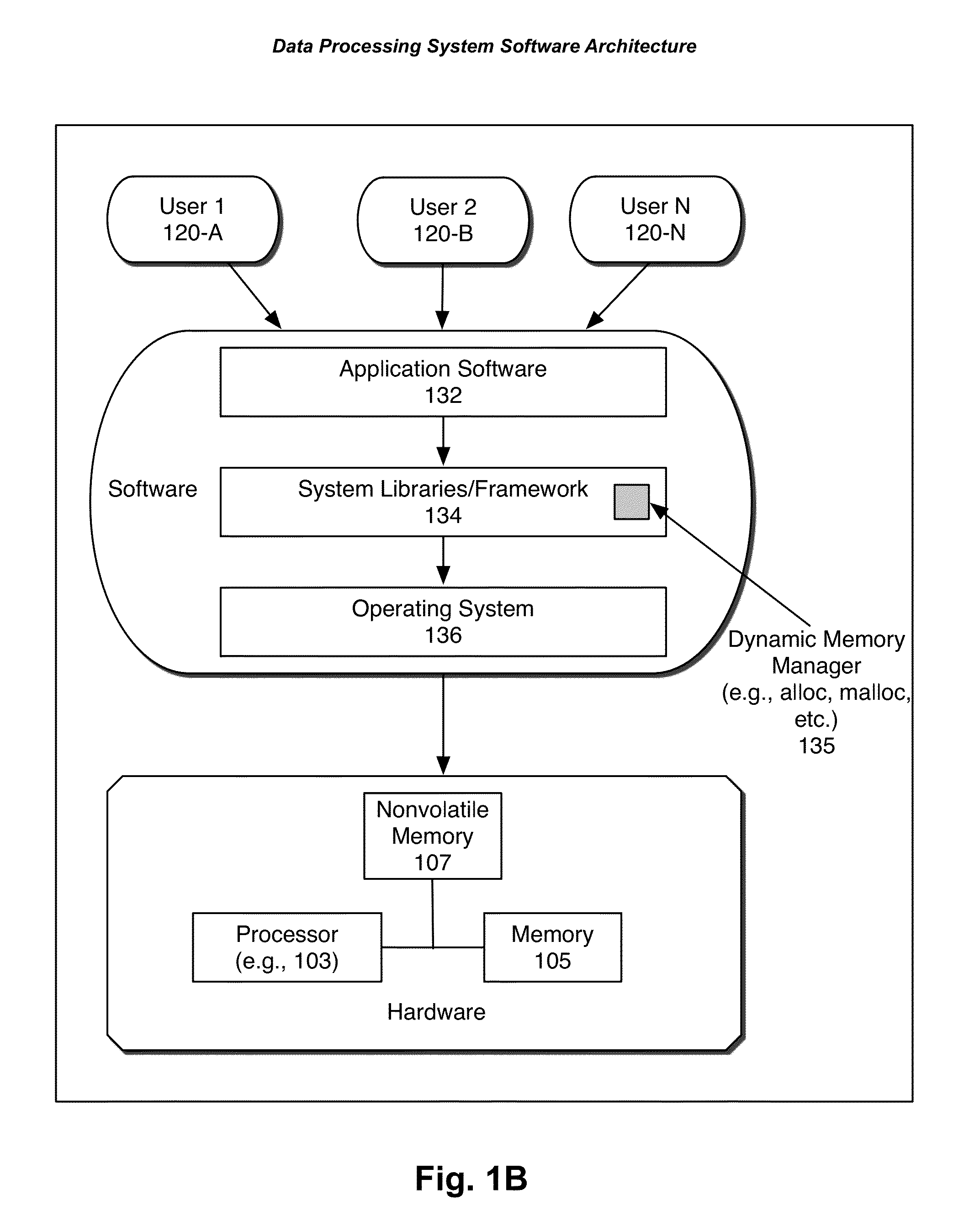

Active | CN108132842A | Improve management efficiency | Generation of restrictions | Resource allocation | Memory addressing/allocation/relocation | Dynamic memory management | Memory allocator

The invention relates to an embedded software memory management system in the technical field of embedded software memory management. The system uses static allocation for the system memory area: the number of partitions and the number and size of memory blocks in each partition are preset. For the user memory area it uses dynamic allocation based on a pool-type memory management mechanism: memory requests and release requests are processed by creating a memory allocator, and the traditional dynamic memory allocation and release algorithms are improved. By combining static allocation of the system memory area with dynamic allocation of the user memory area, the system gains flexibility while limiting the generation of large numbers of fragments and preventing memory leaks. The method also effectively reduces the time spent requesting and releasing memory, so that the management efficiency of dynamic memory is improved.

Owner:TIANJIN JINHANG COMP TECH RES INST
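A pool-type scheme with preset partitions, as described above, can be sketched as a set of partitions that each hold a fixed number of equal-sized blocks. This is a generic pool allocator written under assumptions, not the patented algorithm: the `Partition` and `PoolAllocator` names, the `(partition index, block index)` handle, and the first-fit search across partitions are illustrative choices.

```python
class Partition:
    """A preset group of equal-sized blocks."""

    def __init__(self, block_size, block_count):
        self.block_size = block_size
        self.free_blocks = list(range(block_count))


class PoolAllocator:
    def __init__(self, layout):
        # layout: iterable of (block_size, block_count), fixed at start-up
        self.partitions = sorted(
            (Partition(size, count) for size, count in layout),
            key=lambda partition: partition.block_size,
        )

    def allocate(self, size):
        """Return (partition index, block index) or None when nothing fits."""
        for index, partition in enumerate(self.partitions):
            if size <= partition.block_size and partition.free_blocks:
                return (index, partition.free_blocks.pop())
        return None

    def release(self, handle):
        index, block = handle
        self.partitions[index].free_blocks.append(block)
```

Since blocks are never split or merged, allocation and release take a bounded number of steps and external fragmentation cannot grow, which is the usual motivation for pools in embedded software.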

Flow control in a cellular communication system

Inactive | US8085657B2 | Highly effective flow control | Highly efficient flow control | Error prevention | Transmission systems | Radio networks | Air interface

A base station comprises an RNC message receiver which receives data packets from a Radio Network Controller, RNC. The data packets are transmitted over air interface channels by a transmitter. The base station includes buffer memory for buffering the data packets prior to transmission, a scheduler for scheduling the data packets for transmission over the air interface channels, and a memory allocator for determining a first memory allocation of the buffer memory for a first air interface communication with a first user equipment. The base station further includes a flow controller for determining a transfer allowance for transferring of data from the RNC to the base station in response to the first memory allocation and a current buffer memory usage of the first air interface communication. The transfer allowance is transmitted to the RNC by an RNC message transmitter for achieving an efficient flow control.

Owner:SONY CORP

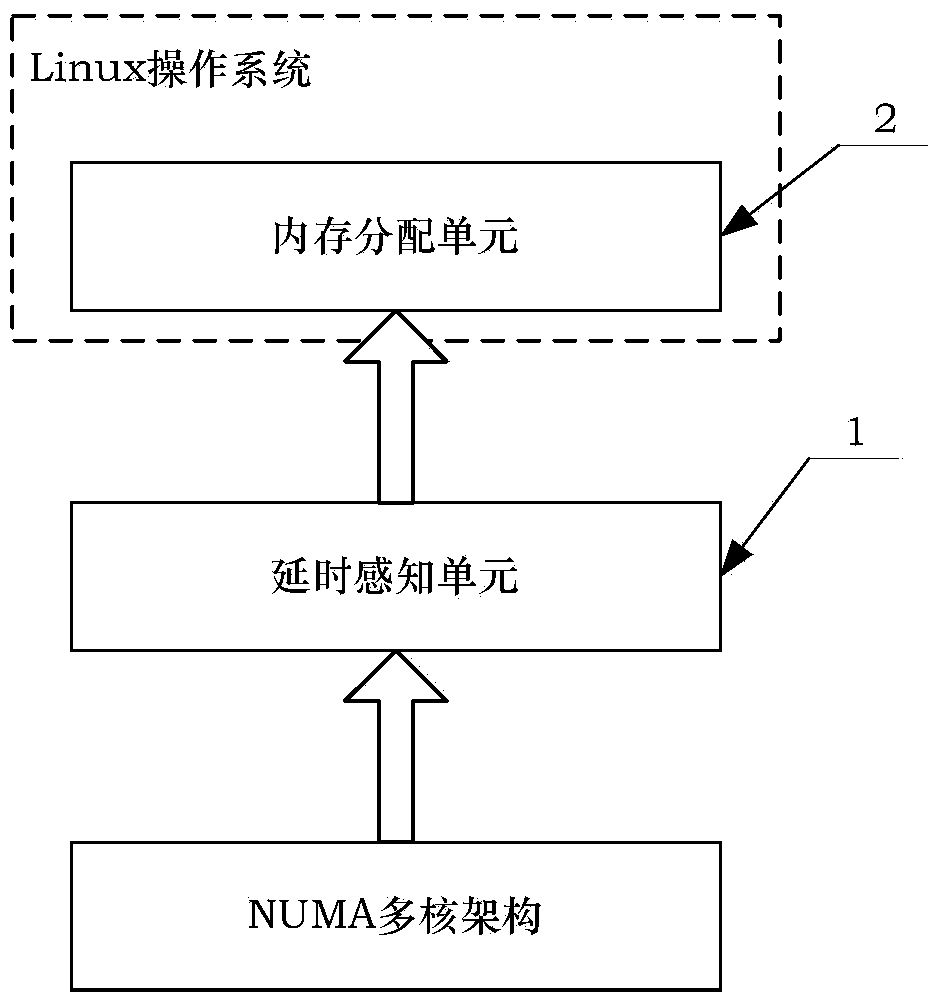

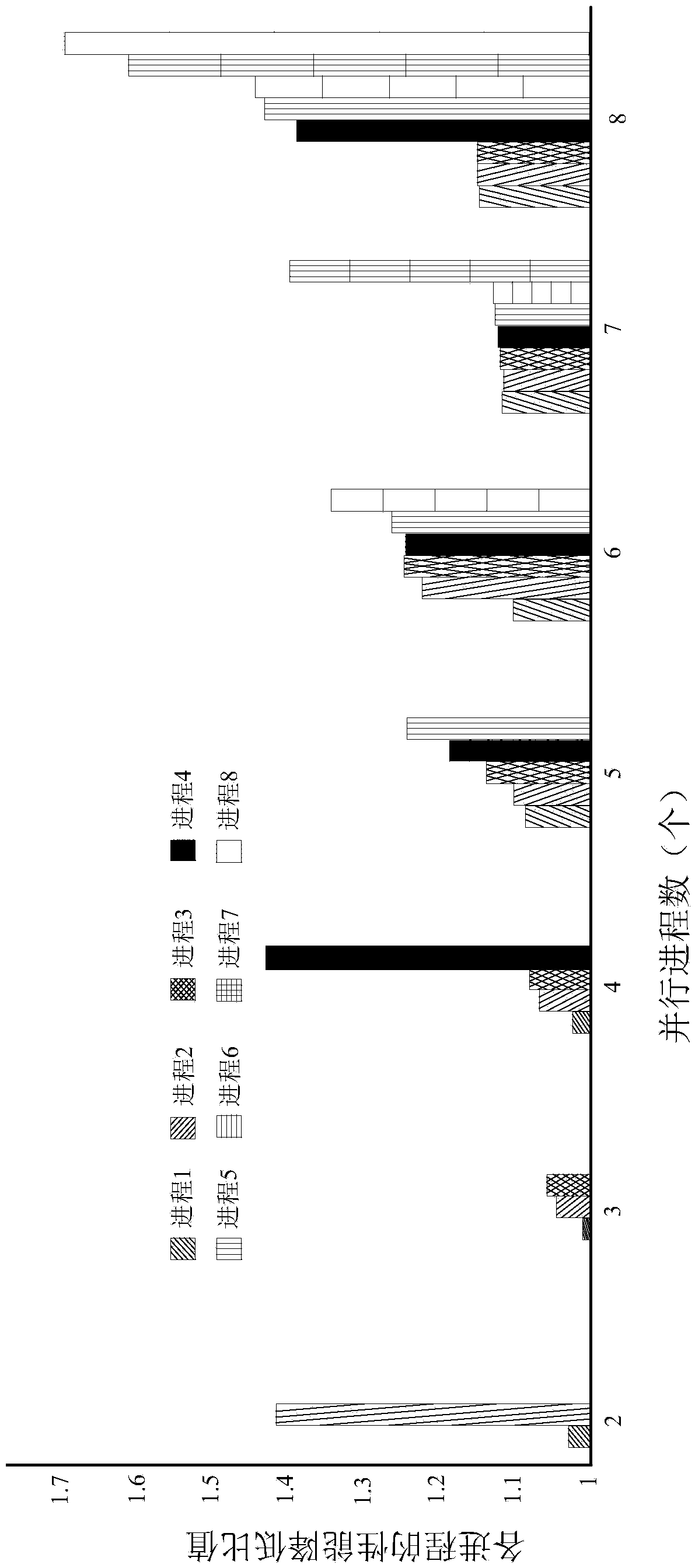

Memory allocation method and delay perception-memory allocation apparatus suitable for memory access delay balance among multiple nodes in NUMA construction

The present invention discloses a memory allocation method and delay perception-memory allocation apparatus suitable for memory access delay balance among multiple nodes in a NUMA construction. The apparatus comprises a delay perception unit (1) embedded inside a GQ unit of the NUMA multi-core construction, and a memory allocation unit (2) embedded inside a Linux operating system. According to the memory allocation method disclosed by the present invention, the delay perception unit (1) periodically perceives the memory access delay among nodes; the memory allocation unit (2) determines whether the memory access delay among nodes is balanced, selects a memory allocation node according to the balance state, and finally outputs it to the Buddy memory allocator of the Linux operating system, thereby realizing physical memory allocation. For a NUMA multi-core construction server, the disclosed apparatus stabilizes application performance and reduces unfairness of shared memory among application processes, on the premise of ensuring memory access delay balance.

Owner:凯习(北京)信息科技有限公司

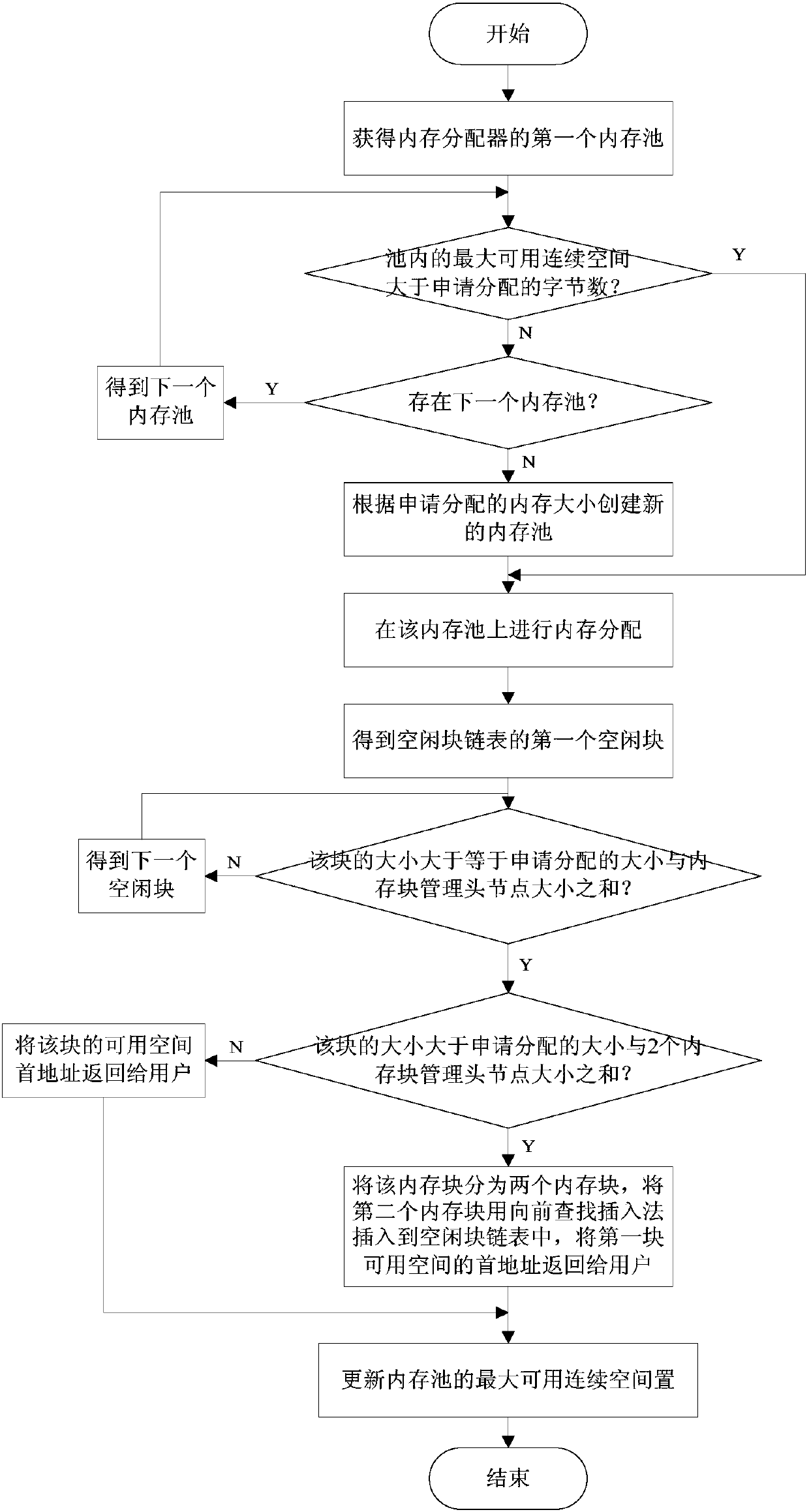

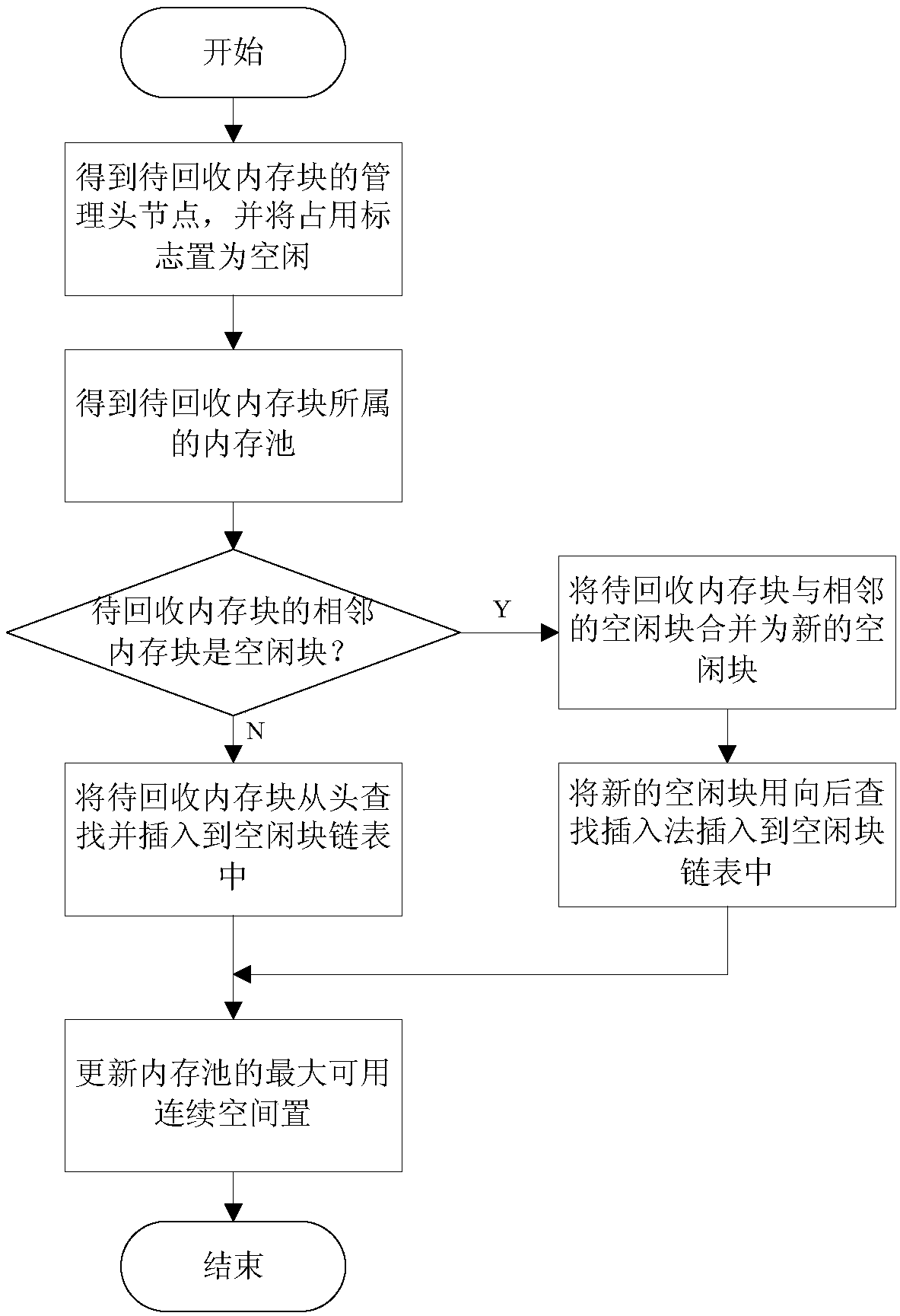

Embedded software memory management method

Active | CN108038002A | Improve management efficiency | Generation of restrictions | Resource allocation | Embedded software | C dynamic memory allocation

The invention relates to the technical field of embedded software memory management, in particular to an embedded software memory management method. By means of static allocation, the number of partitions in the system memory area and the number and size of memory blocks in each partition are preset. A dynamic memory allocation mode is then adopted for the user memory area on the basis of a pool-type memory management mechanism: memory allocation and release requests are processed by creating a memory allocator, and the traditional dynamic memory allocation and release algorithms are improved. By combining static allocation of the system memory area with dynamic allocation of the user area, system flexibility is improved while the generation of a large number of fragments is limited and memory leakage is avoided. In addition, the method effectively reduces the time spent on memory allocation and release, and improves dynamic memory management efficiency.

Owner:TIANJIN JINHANG COMP TECH RES INST

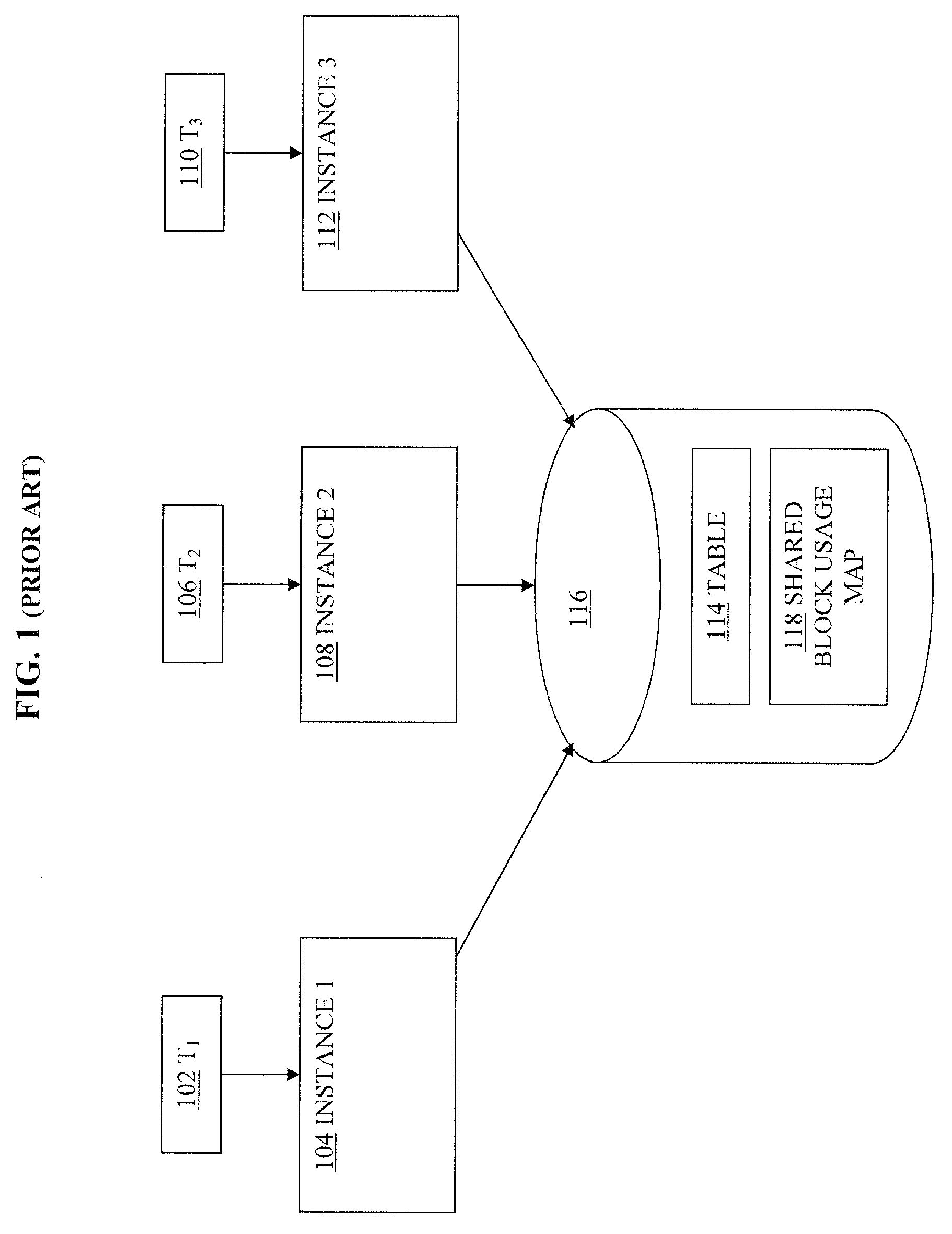

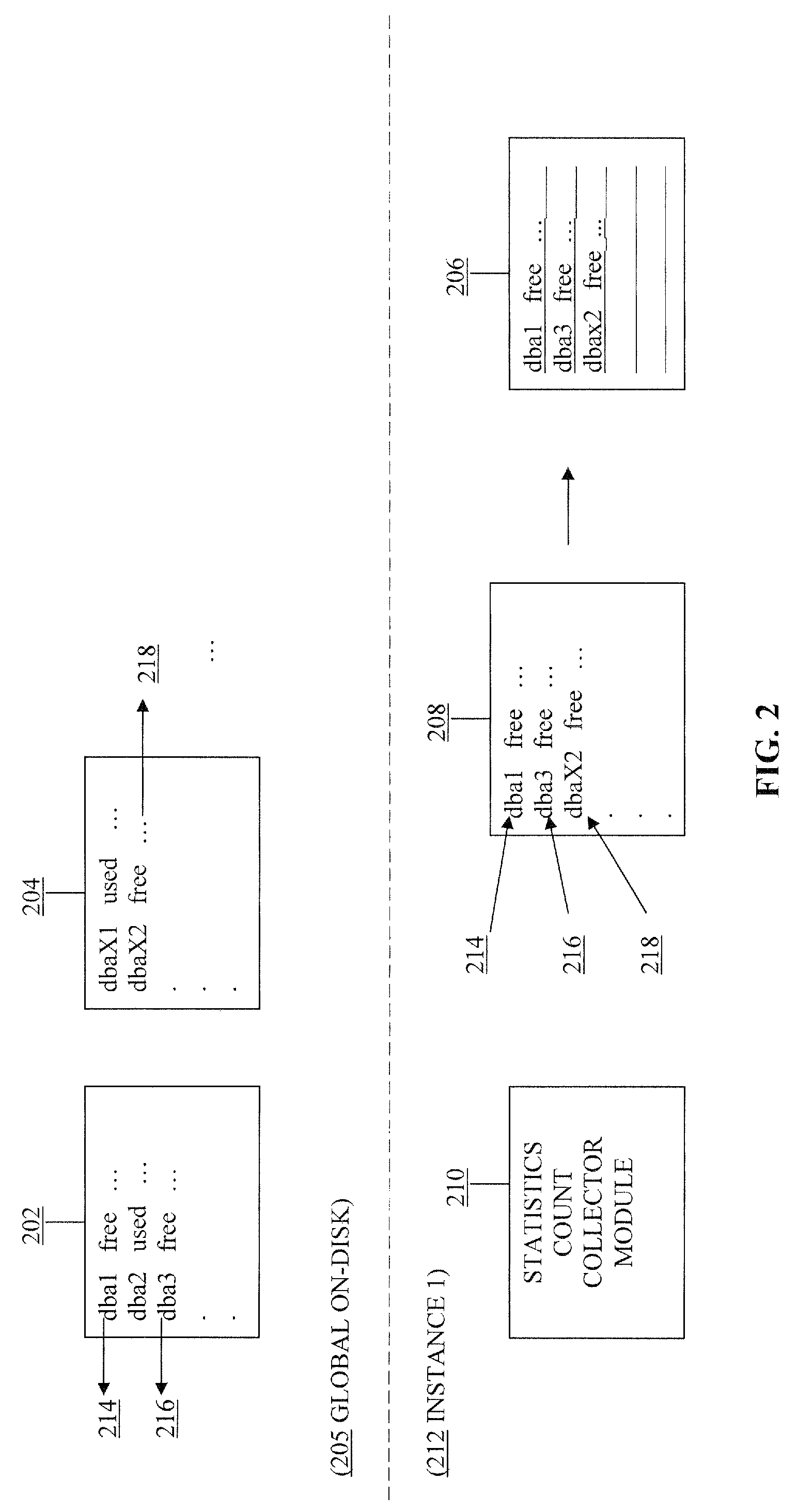

In-memory space management for database systems

Active | US7664799B2 | Data processing applications | Digital data processing details | Transaction-level modeling | Space management

A framework for in-memory space management for content management database systems is provided. A per-instance in-memory dispenser is partitioned. An incoming transaction takes a latch on a partition and obtains sufficient block usage to perform and complete the transaction. Generating redo information is decoupled from transaction level processing and, instead, is performed when block requests are loaded into the in-memory dispenser or synced therefrom to a per-instance on-disk structure.

Owner:ORACLE INT CORP

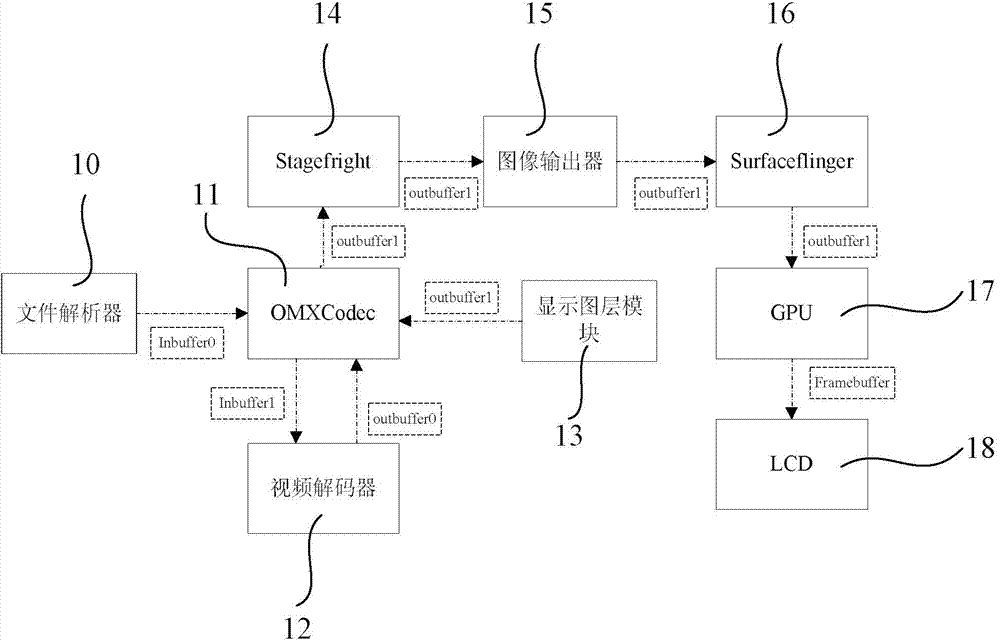

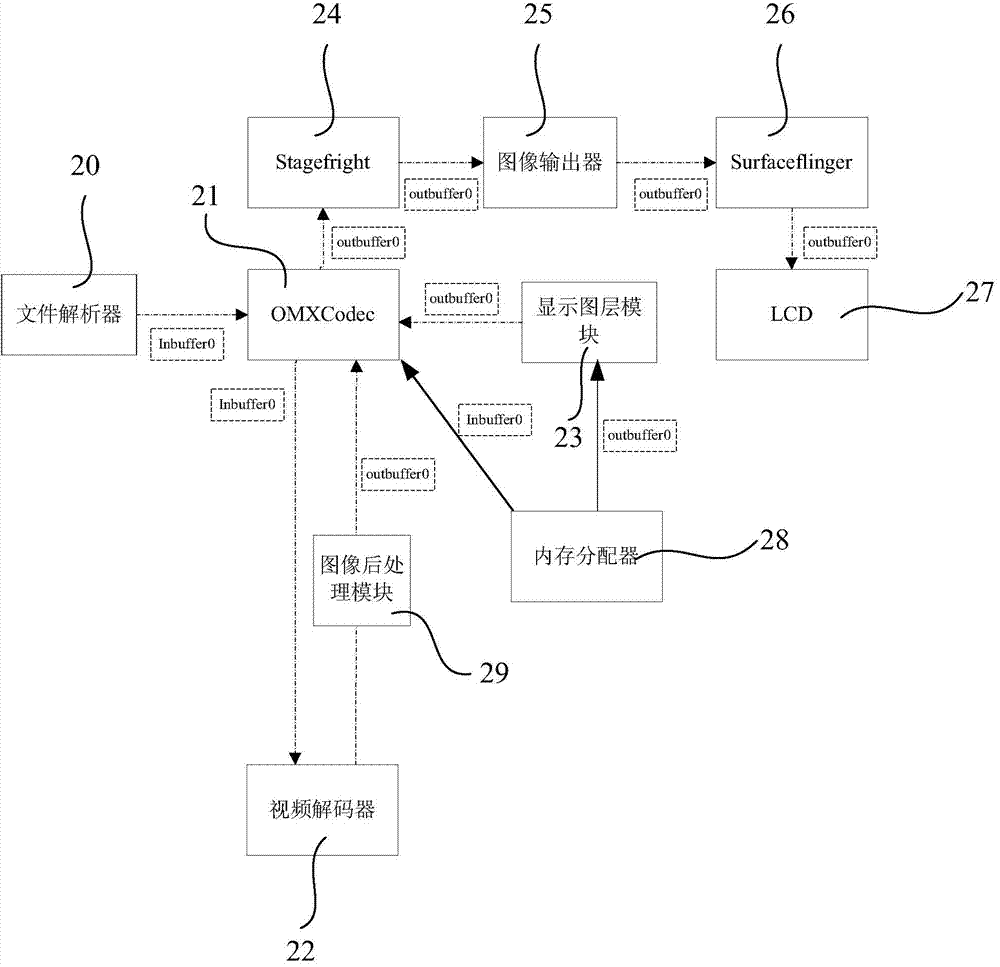

Multimedia video playing system applied to Android platform and multimedia video playing method applied to multimedia video playing system of Android platform

Inactive | CN104754409A | Avoid time | Avoiding Power Consumption Overhead Issues | Selective content distribution | Computer module | Video decoder

The invention provides a multimedia video playing system applied to an Android platform and a multimedia video playing method applied to the multimedia video playing system of the Android platform. According to the invention, two buffer groups (input and output) are provided for a file parser, an OMXCodec, a video decoder, a display image layer module, a Stagefright and an image synthesis module to use through a memory allocator, thereby being capable of avoiding problems in the prior art that the number of buffer groups is large, a requirement for the memory size is high in playing, a large amount of data copy exists among different buffer groups, CPU resources are occupied, and the overall playing performance is affected.

Owner:LEADCORE TECH

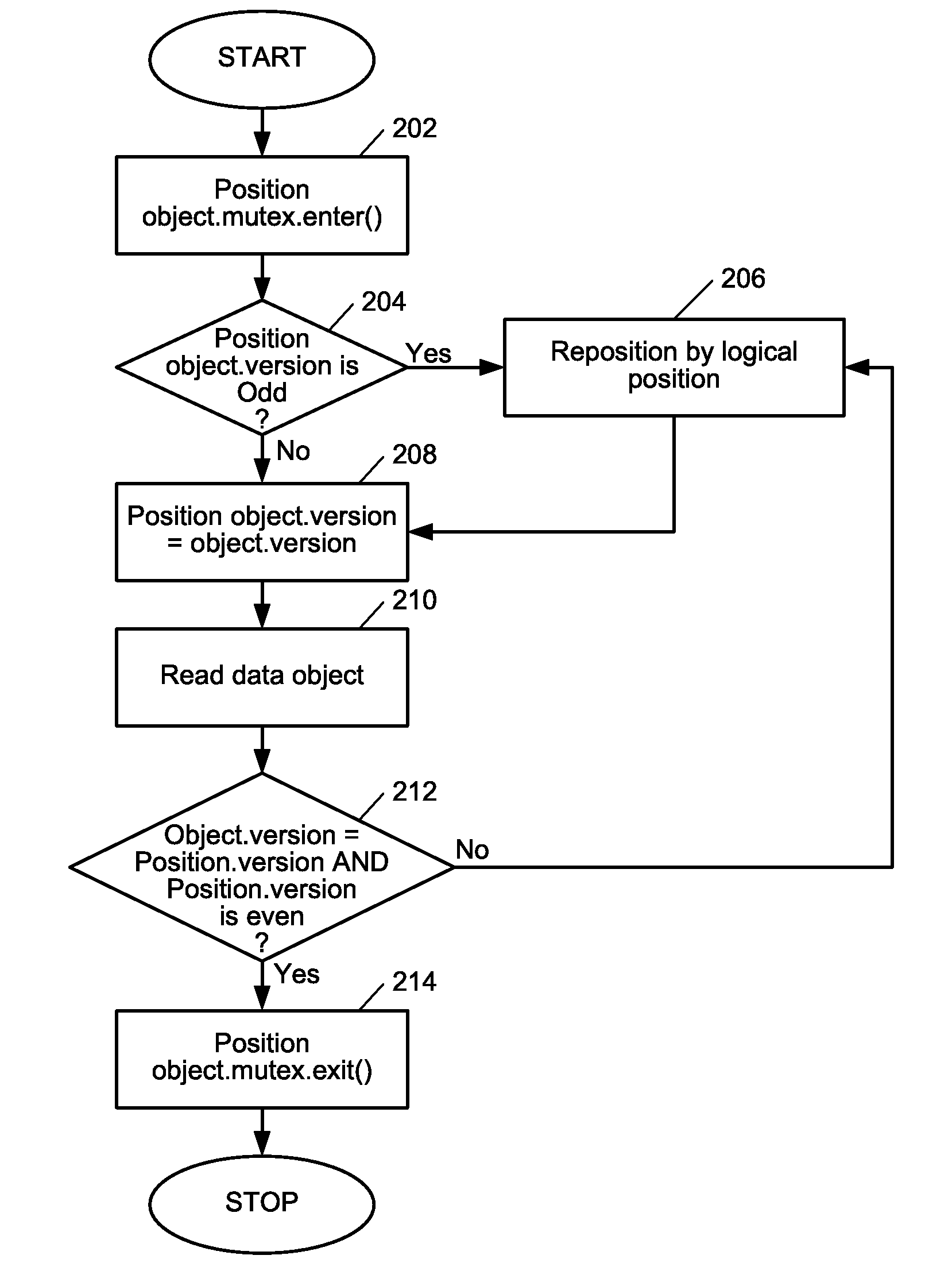

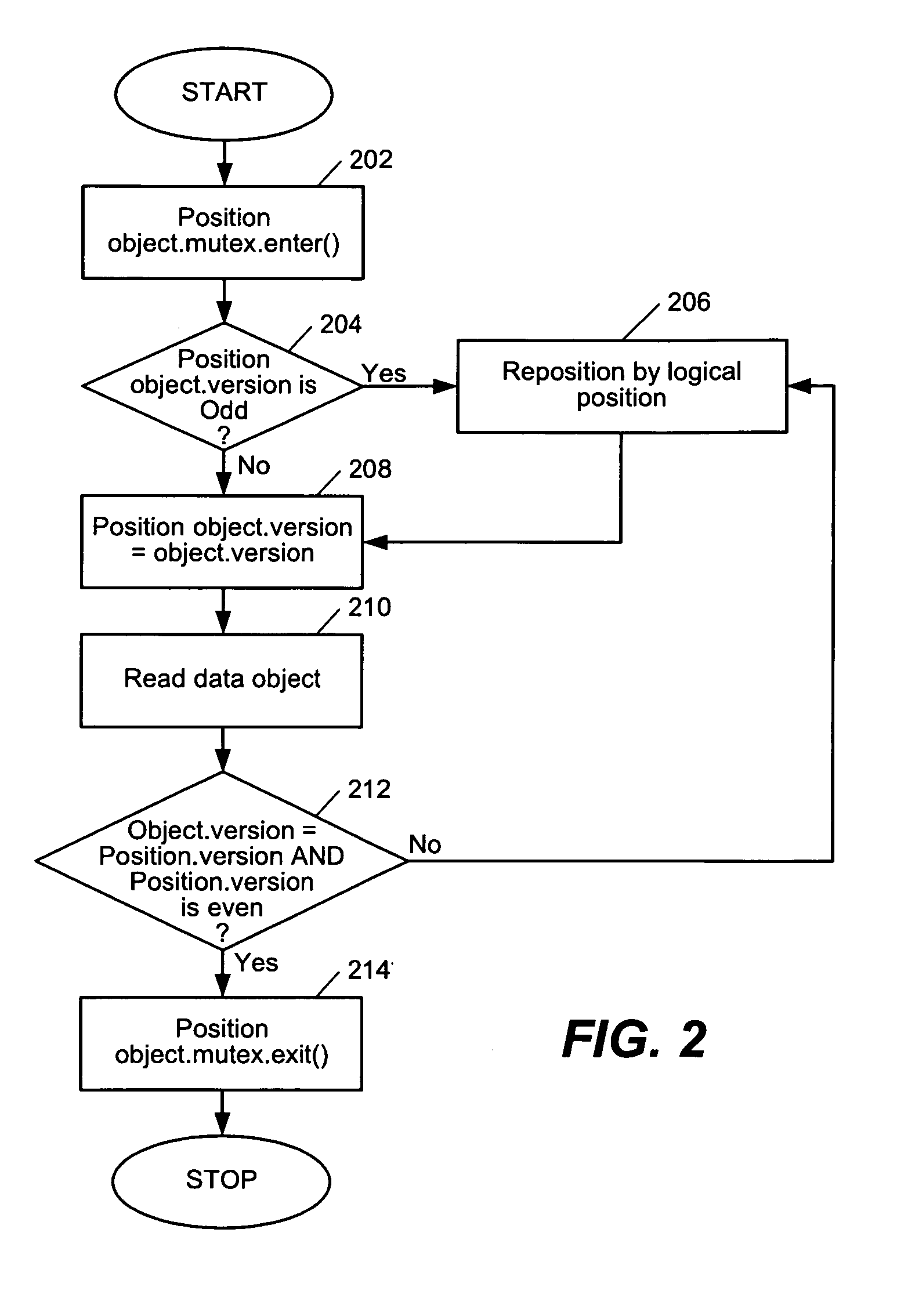

Memory allocator for optimistic data access

A method, system and computer readable media for optimistic access of data objects in a processing system. The method, system and computer readable media comprise providing a list of position objects. Each of the position objects can be associated with a data object. The method, system and computer readable medium include utilizing a thread to mutex a position object of the list of position objects and to associate the position object with a data object, and accessing the data object by the thread. The method, system and computer readable medium record a free level of a memory allocator as a read level of the position object and record a version number of the data object as the version number of the position object after the access has been determined to be safe.

Owner:IBM CORP

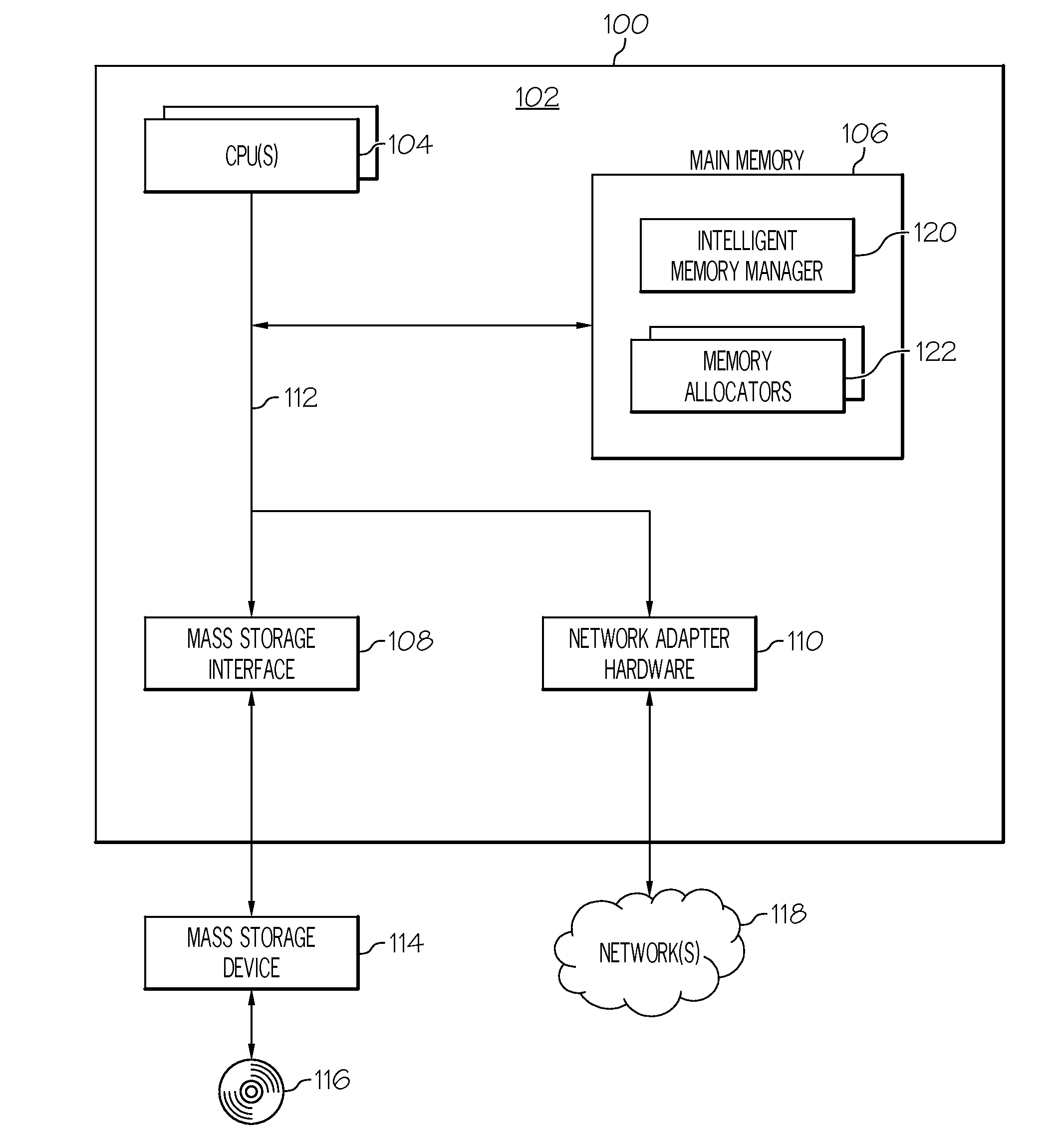

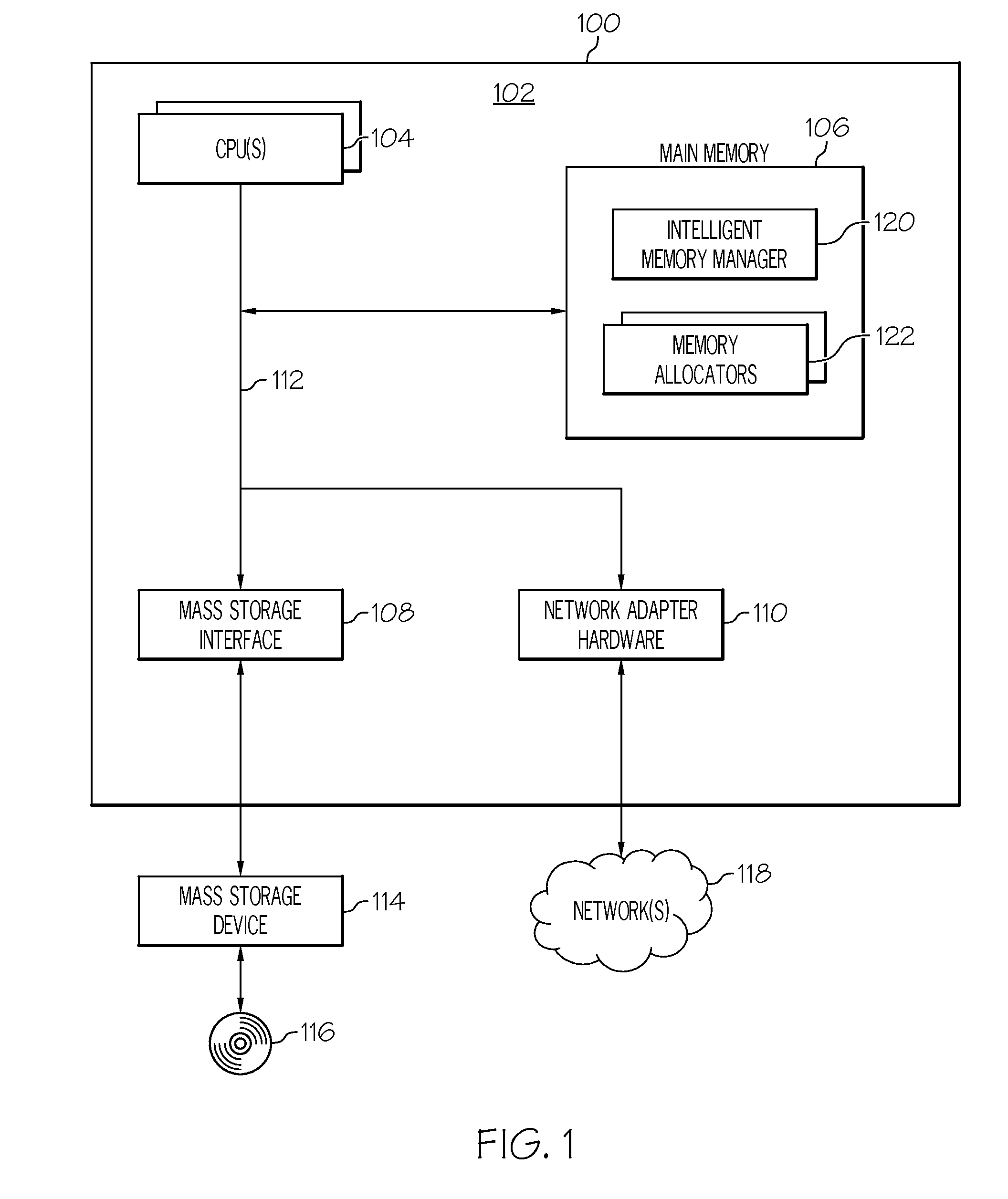

Intelligent computer memory management

Active | US20120072686A1 | Easy to handle | Improve memory utilization | Memory architecture accessing/allocation | Memory loss protection | Parallel computing | Computer memory

A plurality of memory allocators are initialized within a computing system. At least a first memory allocator and a second memory allocator in the plurality of memory allocators are each customizable to efficiently handle a set of different memory request size distributions. The first memory allocator is configured to handle a first memory request size distribution. The second memory allocator is configured to handle a second memory request size distribution. The second memory request size distribution is different than the first memory request size distribution. At least the first memory allocator and the second memory allocator that have been configured are deployed within the computing system in support of at least one application. Deploying at least the first memory allocator and the second memory allocator within the computing system improves at least one of performance and memory utilization of the at least one application.

Owner:IBM CORP

Memory allocation improvements

Active | US9361215B2 | Resource allocation | Memory addressing/allocation/relocation | Virtual memory | Parallel computing

In one embodiment, a memory allocator of a memory manager can service memory allocation requests within a specific size-range from a section of pre-reserved virtual memory. The pre-reserved virtual memory allows allocation requests within a specific size range to be allocated in the pre-reserved region, such that the virtual memory address of a memory allocation serviced from the pre-reserved region can indicate elements of metadata associated with the allocations that would otherwise contribute to overhead for the allocation.

Owner:APPLE INC

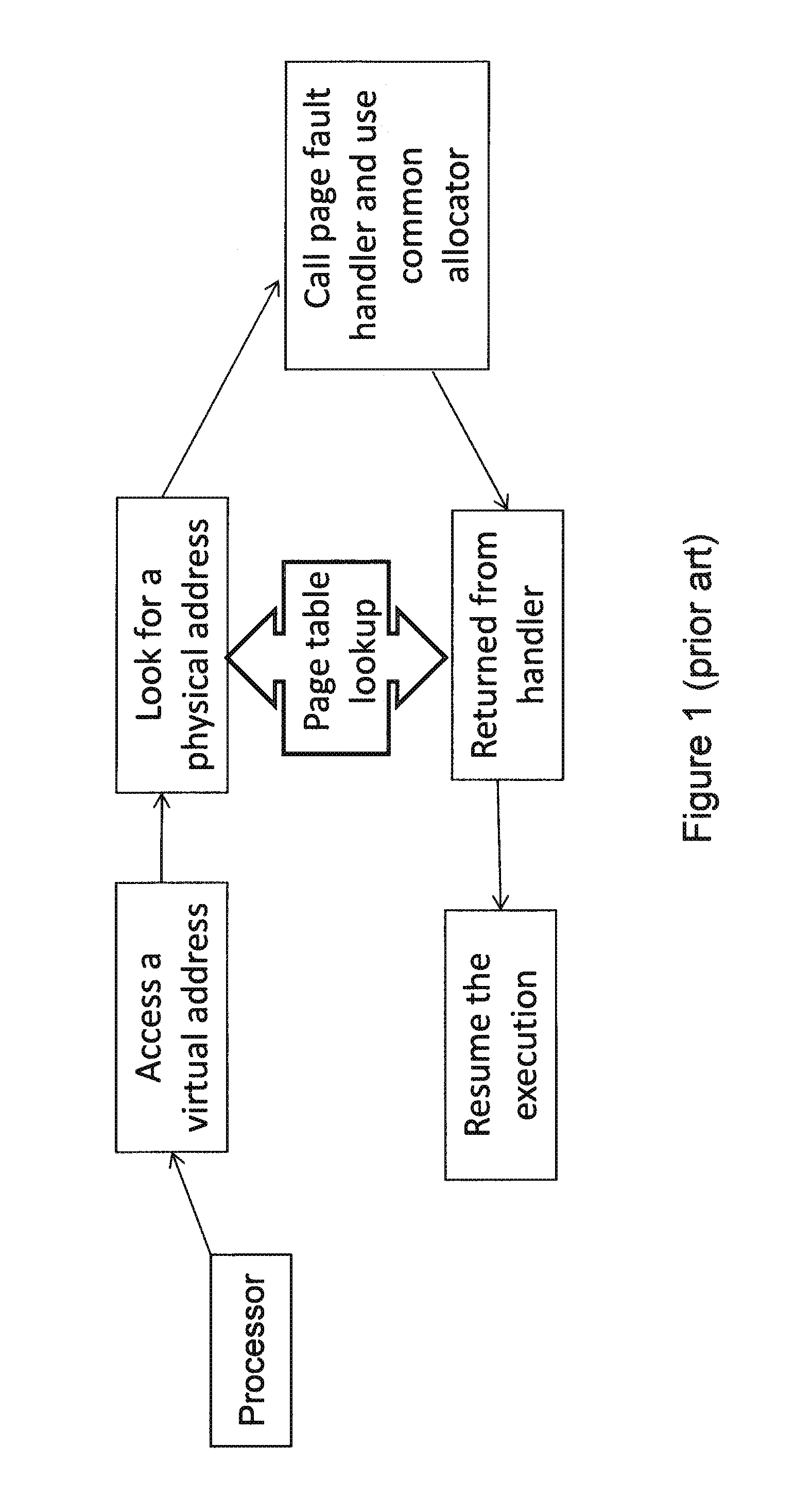

Memory allocation and page address translation system and method

Inactive | US20150154119A1 | Reduce the number of times | Shorten the time | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Management unit | Page table

A memory allocation and page address translation system includes a buddy memory allocator, a plurality of guest page tables, a memory management unit and a buddy translation lookaside buffer. The buddy memory allocator is configured for allocating machine physical memory space to a virtual machine monitor and a plurality of virtual machines. Each of the virtual machine monitor and the virtual machines receives memory chunks with different sizes. The guest page tables are configured for providing virtual address translation references for the virtual machine monitor and the virtual machines. The memory management unit is configured for translating a virtual address into a guest physical address. The buddy translation lookaside buffer is configured for translating the guest physical address into a machine physical address.

Owner:NAT TAIWAN UNIV
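The buddy memory allocator named in this abstract is a well-known technique: memory is split into power-of-two blocks, and a freed block merges with its "buddy" (the neighbouring block of the same size) whenever that buddy is also free. The sketch below shows only that generic technique over abstract offsets. It is not the patented system, and it does not model the guest page tables or the buddy translation lookaside buffer; the `BuddyAllocator` class name and its interface are assumptions.

```python
class BuddyAllocator:
    def __init__(self, total_size, min_block):
        # Both sizes are assumed to be powers of two.
        self.total_size = total_size
        self.min_block = min_block
        self.free = {total_size: {0}}  # block size -> set of free offsets

    def allocate(self, size):
        """Return (offset, block_size) or None if no block is large enough."""
        block = self.min_block
        while block < size:
            block *= 2
        # Find the smallest free block that is at least `block` bytes.
        candidate = block
        while candidate <= self.total_size and not self.free.get(candidate):
            candidate *= 2
        if candidate > self.total_size:
            return None
        offset = min(self.free[candidate])
        self.free[candidate].remove(offset)
        # Split repeatedly, keeping the lower half and freeing the upper half.
        while candidate > block:
            candidate //= 2
            self.free.setdefault(candidate, set()).add(offset + candidate)
        return offset, block

    def release(self, offset, block):
        """Free a block and merge it with its buddy while the buddy is free."""
        while block < self.total_size:
            buddy = offset ^ block  # buddy offset differs only in the `block` bit
            if buddy not in self.free.get(block, set()):
                break
            self.free[block].remove(buddy)
            offset = min(offset, buddy)
            block *= 2
        self.free.setdefault(block, set()).add(offset)
```

The XOR trick works because blocks are aligned to their own size, so the two halves of any parent block differ in exactly one address bit.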

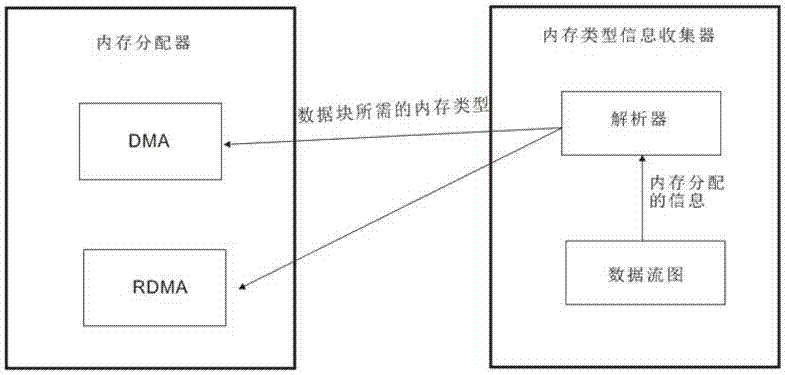

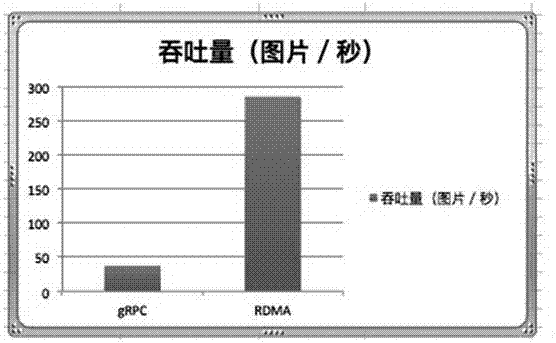

Zero copy data flow based on RDMA

The invention discloses a zero-copy data flow based on RDMA. It mainly comprises a memory allocator and an information collector. The memory allocator implements the allocation rules for different kinds of memory. The information collector analyzes the data flow computation graph and, for every edge in the graph, determines the data source and the data receiving node in order to decide the buffer management rule for every step. The zero-copy data flow based on RDMA achieves high tensor transmission speed and high-speed GPU scaling, and eliminates unnecessary memory copies.

Owner:CLUSTAR TECH LO LTD

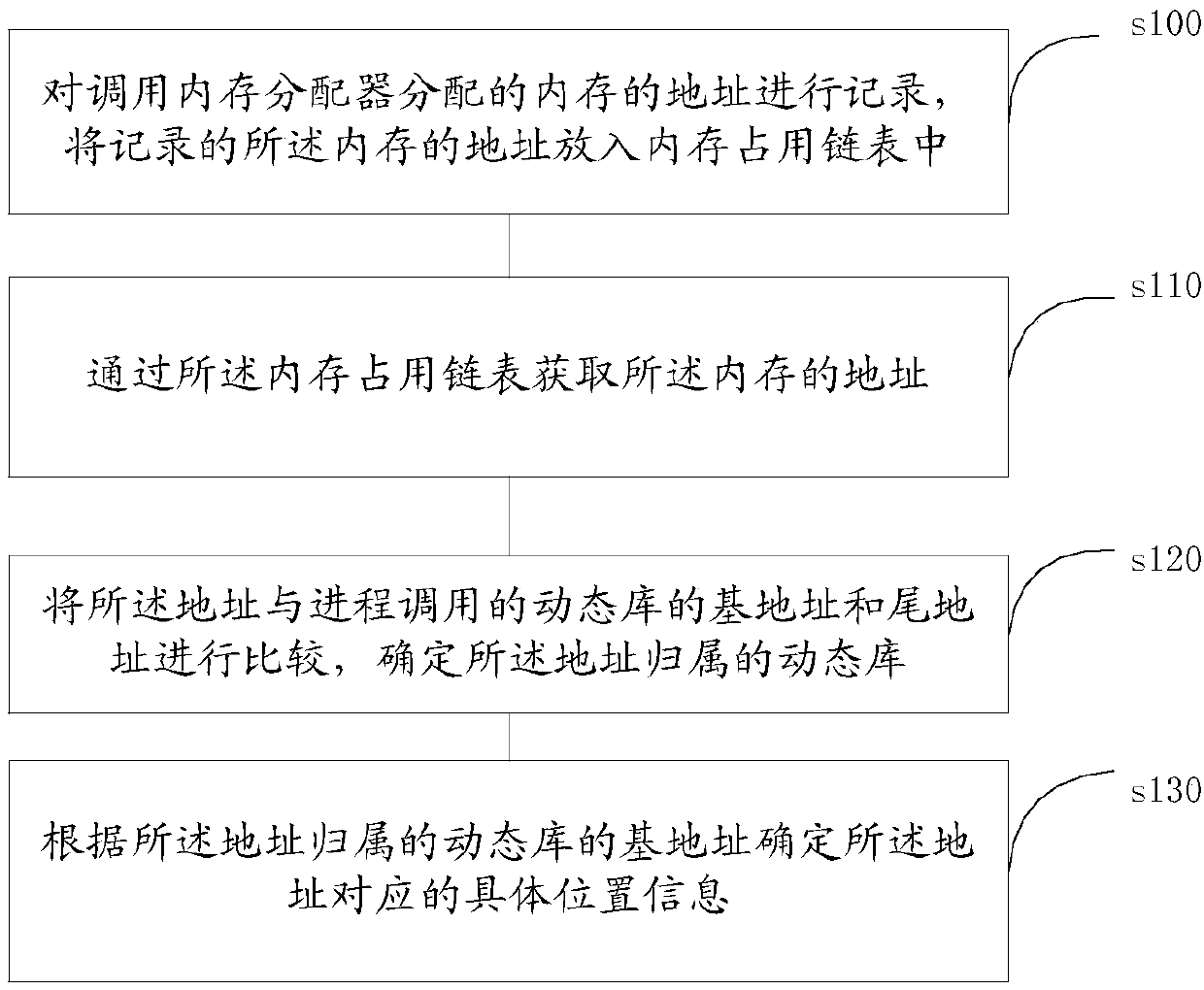

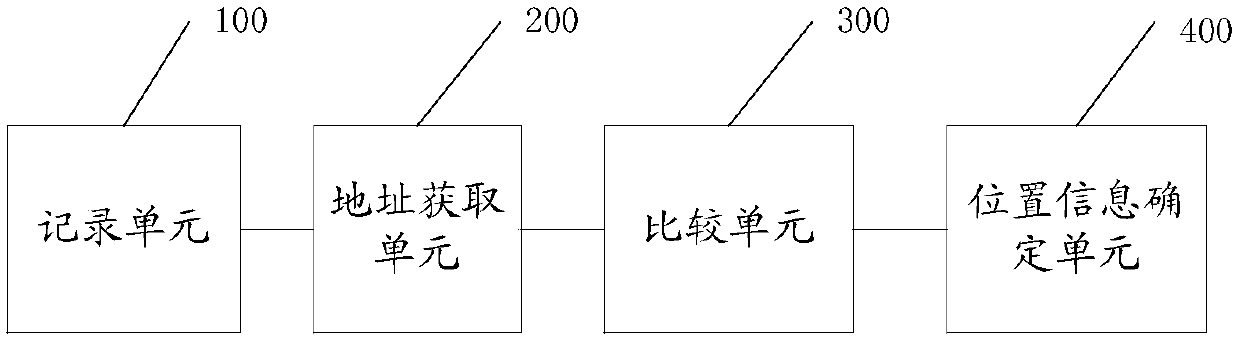

Memory leakage point locating method, apparatus and system, and readable storage medium

Inactive | CN107608885A | Achieve positioning | Effective positioning | Software testing/debugging | Computer hardware | Memory footprint

The invention discloses a memory leakage point locating method. The method comprises the steps of recording address stack information of a memory allocated by a called memory allocator, and putting the recorded address stack information of the memory into a memory occupation link table; obtaining the address stack information of the memory through the memory occupation link table; comparing the address stack information with basic address stack information and tail address stack information of a dynamic library called by a process, and determining the dynamic library which the address stack information belongs to; and according to the basic address stack information of the dynamic library which the address stack information belongs to, determining specific position information corresponding to the address stack information. According to the method, a memory leakage point can be effectively located. The invention furthermore discloses a memory leakage point locating apparatus and system, and a computer readable storage medium, which have the abovementioned beneficial effects.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD
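The step of deciding which dynamic library a recorded address belongs to, by comparing it against each library's base and tail addresses, amounts to a range lookup. The following sketch assumes the libraries are given as non-overlapping `(base, end, name)` tuples sorted by base address, with `end` exclusive; the `locate` function and that tuple layout are hypothetical.

```python
import bisect


def locate(address, libraries):
    """Return (library name, offset within library) or None if no library matches.

    `libraries` is assumed to be a list of (base, end, name) tuples,
    sorted by base address, with `end` exclusive.
    """
    bases = [base for base, _end, _name in libraries]
    index = bisect.bisect_right(bases, address) - 1
    if index < 0:
        return None
    base, end, name = libraries[index]
    if address >= end:
        return None
    return name, address - base
```

The returned offset relative to the library base is the kind of value that a symbolizer would typically need to map an address to a specific position in the library.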

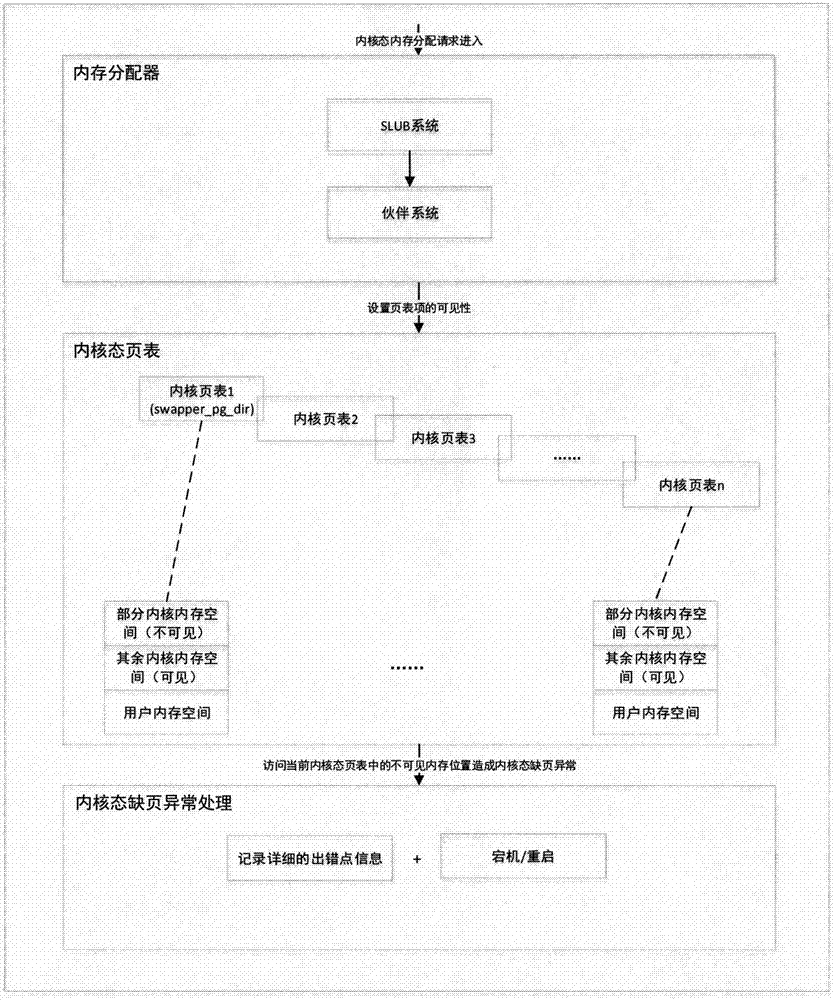

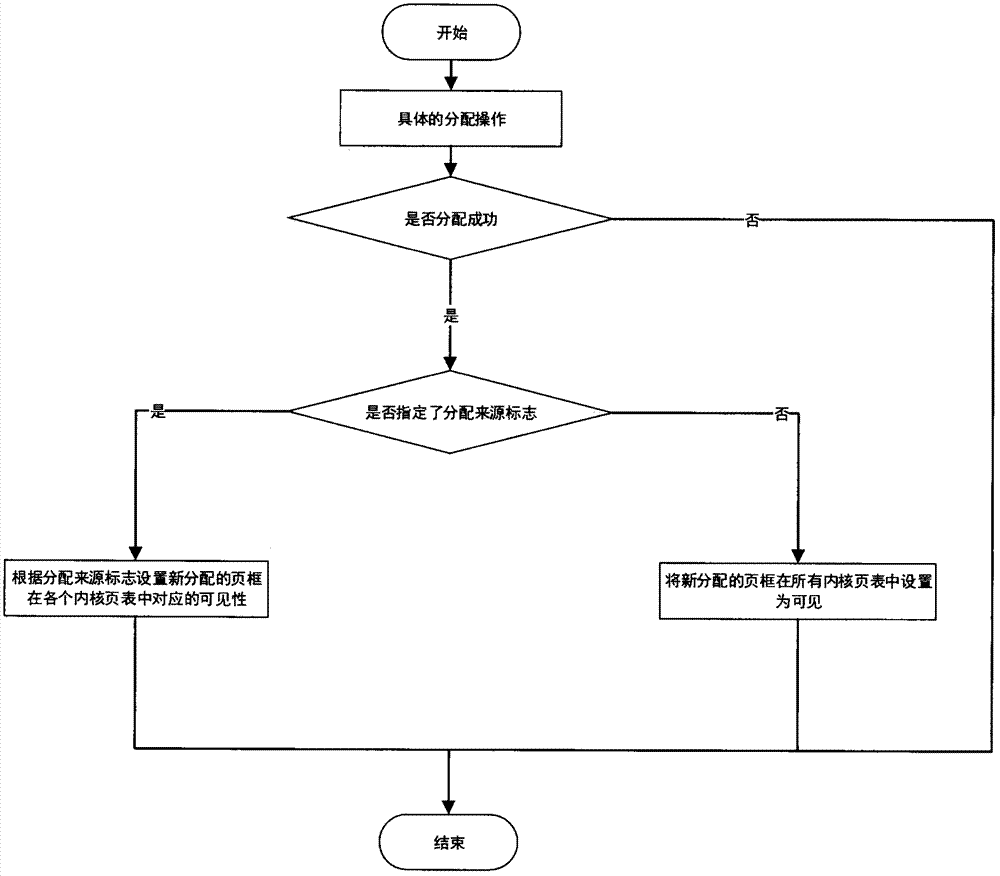

Kernel dynamic data isolation and protection technology based on multi-kernel page table

Inactive | CN107168801A | Troubleshoot mixed page issues | Limit memory space location | Resource allocation | Digital data protection | Linux kernel | Page table

The invention discloses a kernel dynamic data isolation and protection technology based on multiple kernel page tables in the Linux system. Implementing the technology mainly involves page table management, the memory allocator, and page fault handling in the Linux system. The method is as follows. First, multiple sets of kernel page tables are created when the kernel is initialized, so as to construct multiple relatively independent memory views in the Linux kernel; under normal circumstances, correct operation of the kernel is guaranteed by switching among the kernel page tables. Second, the memory allocator in the Linux system is modified: allocation flags identify which memory view the currently allocated memory corresponds to, and the kernel page table corresponding to that memory view is modified so that the allocated memory is visible in that view but invisible to the other memory views. Finally, the page fault handler is modified: new kernel-mode page fault handling logic is added to deal with kernel page fault exceptions caused by the kernel accessing a memory area that is invisible under the current page table.

Owner:NANJING UNIV

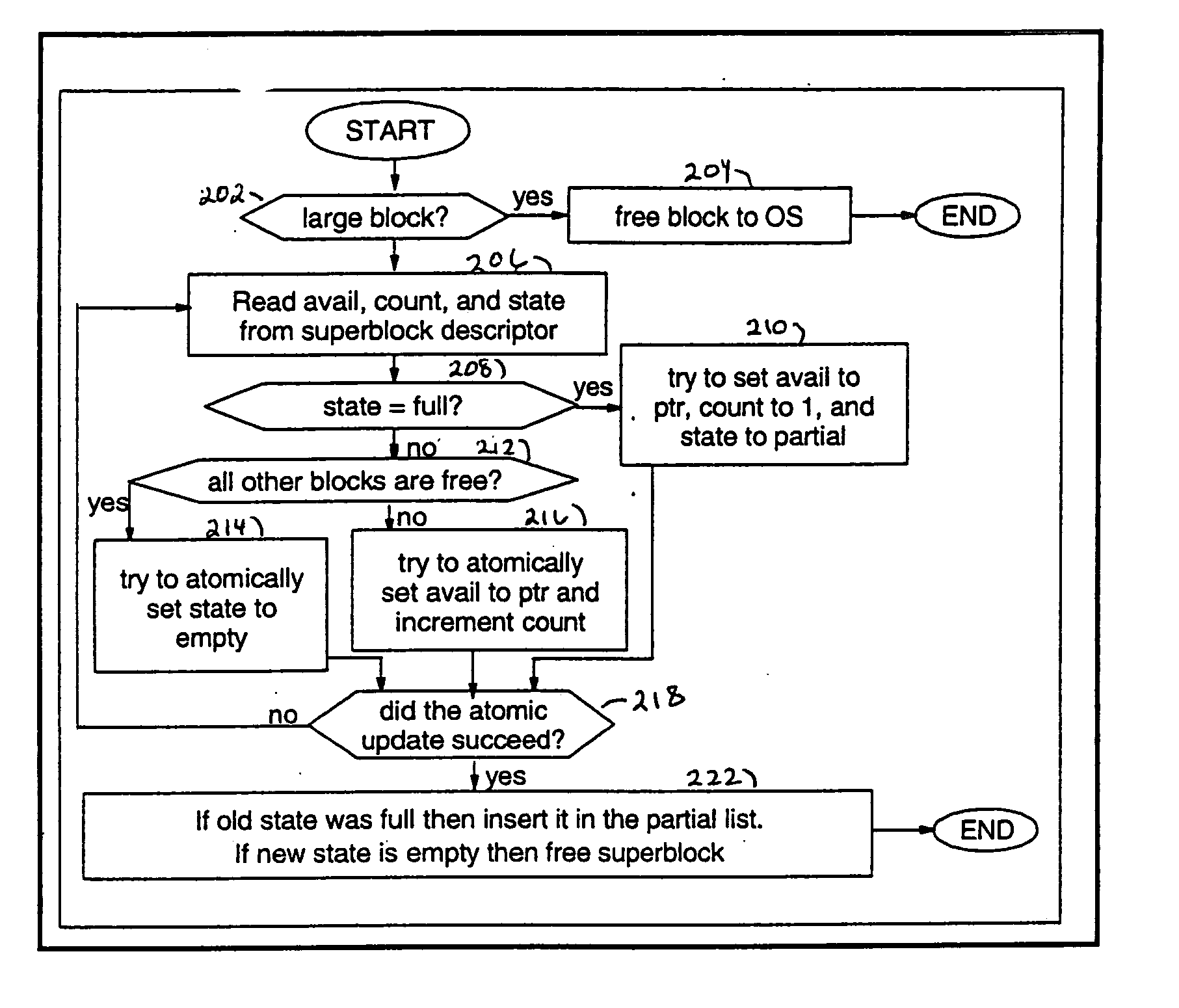

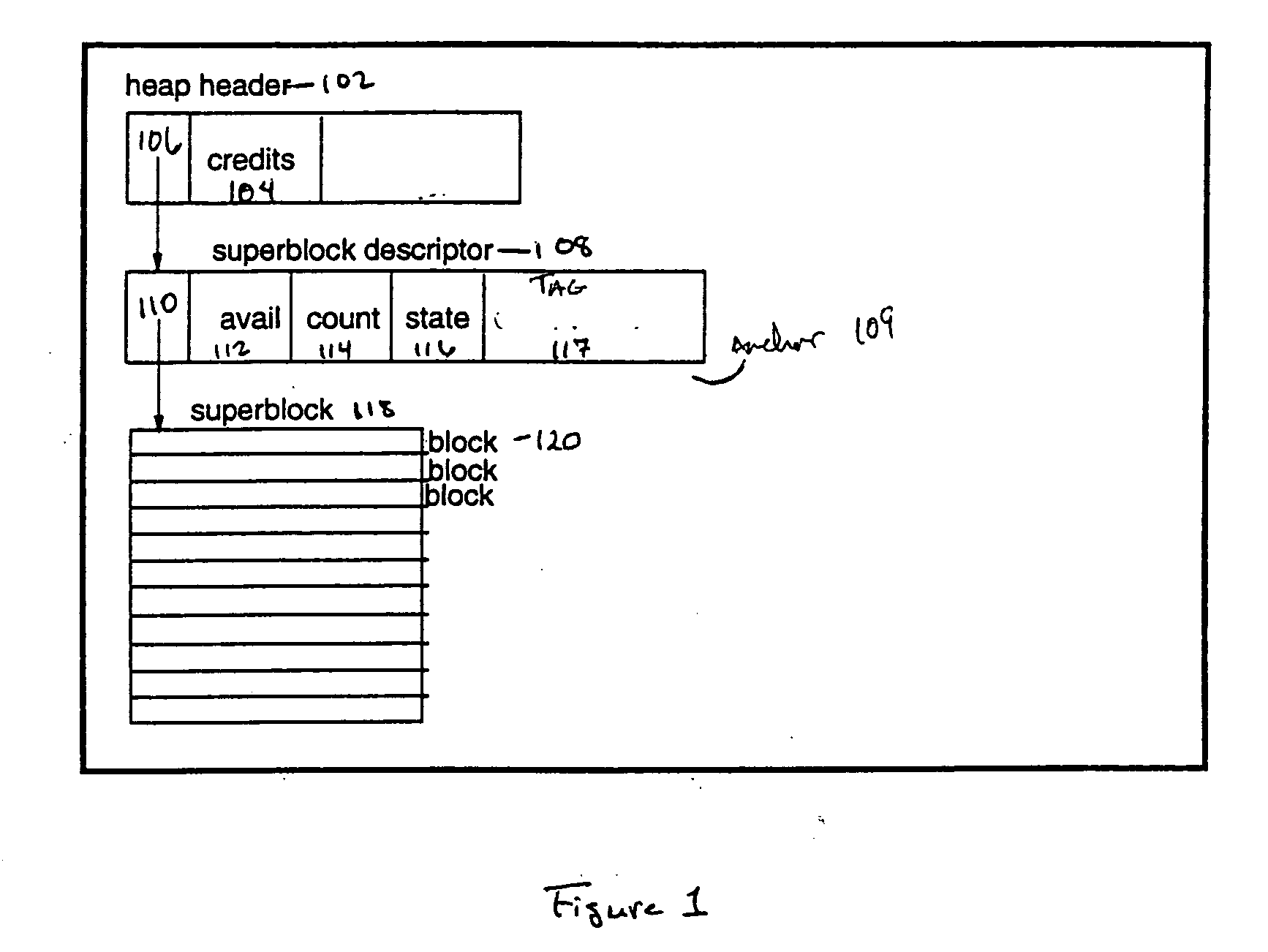

Method for completely lock-free user-level dynamic memory allocation

Active | US7263592B2 | Memory addressing/allocation/relocation | Program control | General purpose | Thread scheduling

The present invention relates to a method, computer program product and system for a general purpose dynamic memory allocator that is completely lock-free, and immune to deadlock, even when presented with the possibility of arbitrary thread failures and regardless of thread scheduling. Further the invention does not require special hardware or scheduler support and does not require the initialization of substantial portions of the address space.

Owner:GOOGLE LLC

Optimized Memory Allocator By Analyzing Runtime Statistics

Inactive | US20110191758A1 | Memory addressing/allocation/relocation | Program control | Parallel computing | Memory allocator

A computer readable storage medium including a set of instructions executable by a processor. The set of instructions is operable to determine memory allocation parameters for a program executing using a standard memory allocation routine, create an optimized memory allocation routine based on the memory allocation parameters, and execute the program using the optimized memory allocation routine.

Owner:WIND RIVER SYSTEMS

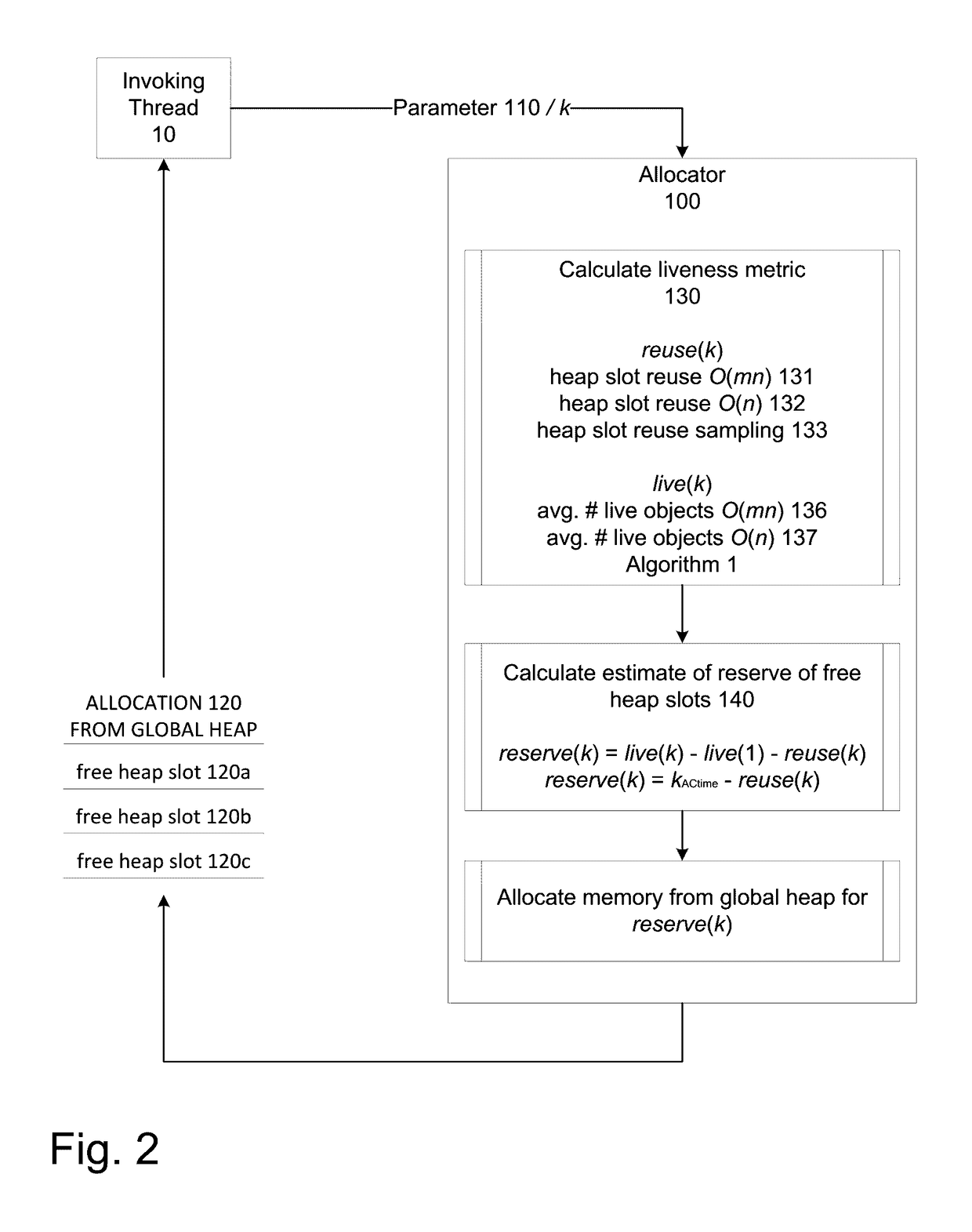

Parallel memory allocator employing liveness metrics

Active | US20170075806A1 | Increase likelihood | Improve severity | Memory architecture accessing/allocation | Input/output to record carriers | Parallel computing | Program Thread

A liveness-based memory allocation module operating so that a program thread invoking the memory allocation module is provided with an allocation of memory including a reserve of free heap slots beyond the immediate requirements of the invoking thread. The module receives a parameter representing a thread execution window from an invoking thread; calculates a liveness metric based upon the parameter; calculates a reserve of memory to be passed to the invoking thread based upon the parameter; returns a block of memory corresponding to the calculated reserve of memory. Equations, algorithms, and sampling strategies for calculating liveness metrics are disclosed, as well as a method for adaptive control of the module to achieve a balance between memory efficiency and potential contention as specified by a single control parameter.

Owner:UNIVERSITY OF ROCHESTER

Increasing memory capacity of a frame buffer via a memory splitter chip

Active | US8489839B1 | Increase memory capacity | Improve performance | Cathode-ray tube indicators | Memory systems | Graphics | Data transmission

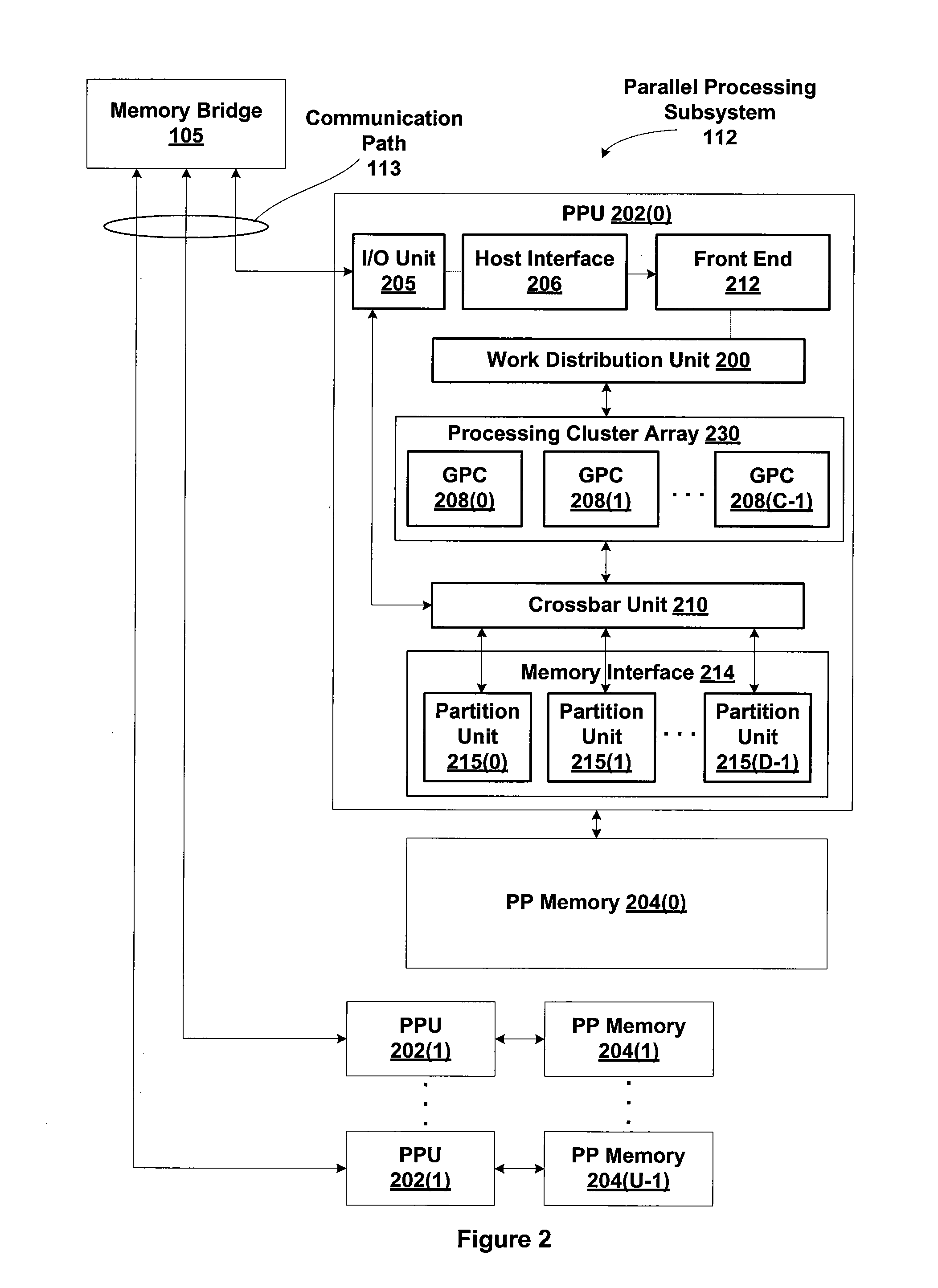

The memory splitter chip couples multiple DRAM units to the PPU, thereby expanding the memory capacity available to the PPU for storing data and increasing the overall performance of the graphics processing system. The memory splitter chip includes logic for managing the transmission of data between the PPU and the DRAM units when the transmission frequencies and the burst lengths of the PPU interface and the DRAM interfaces differ. Specifically, the memory splitter chip implements an overlapping transmission mode, a pairing transmission mode or a combination of the two modes when the transmission frequencies or the burst lengths differ.

Owner:NVIDIA CORP
