62 results about "Uniform memory access" patented technology

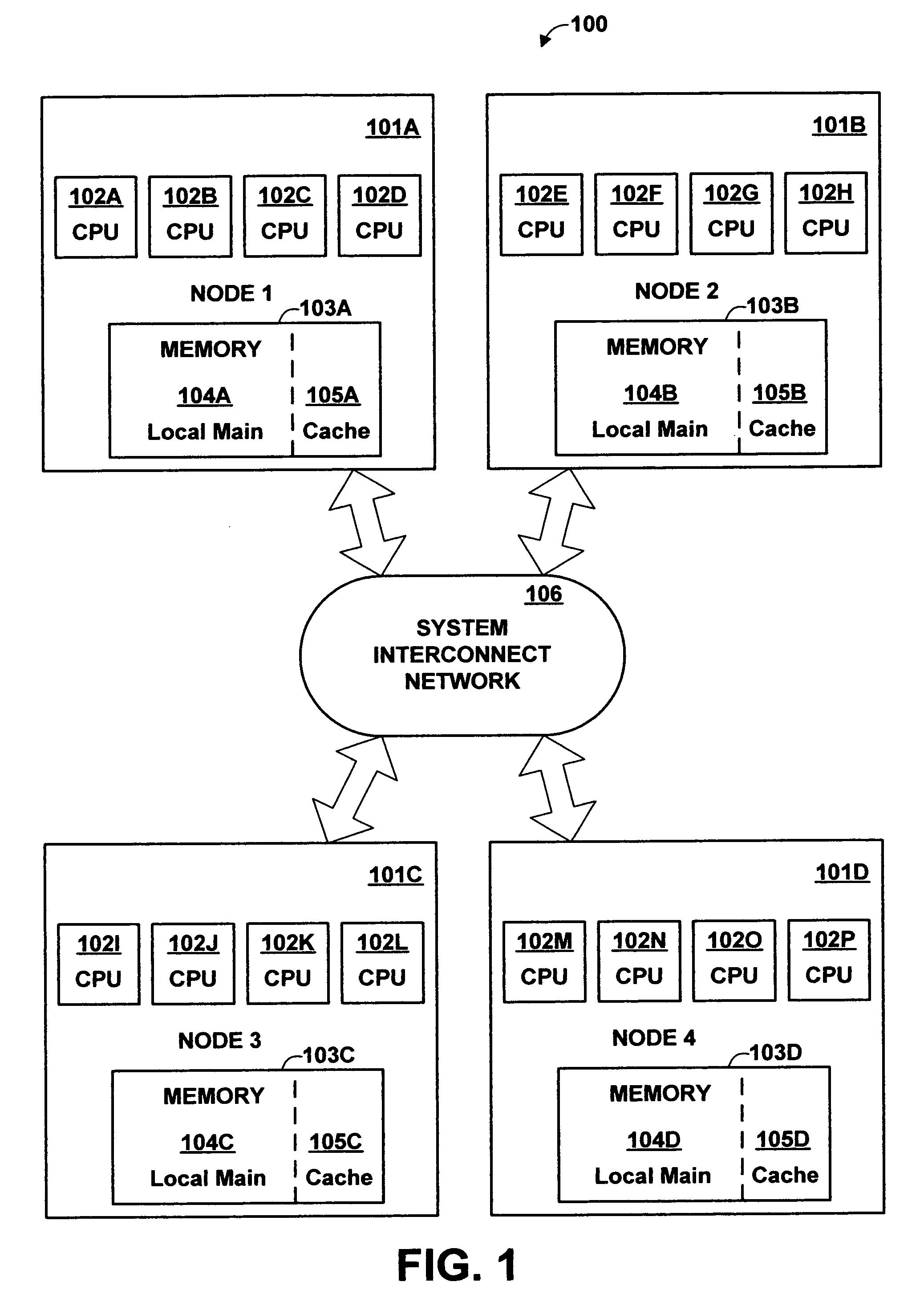

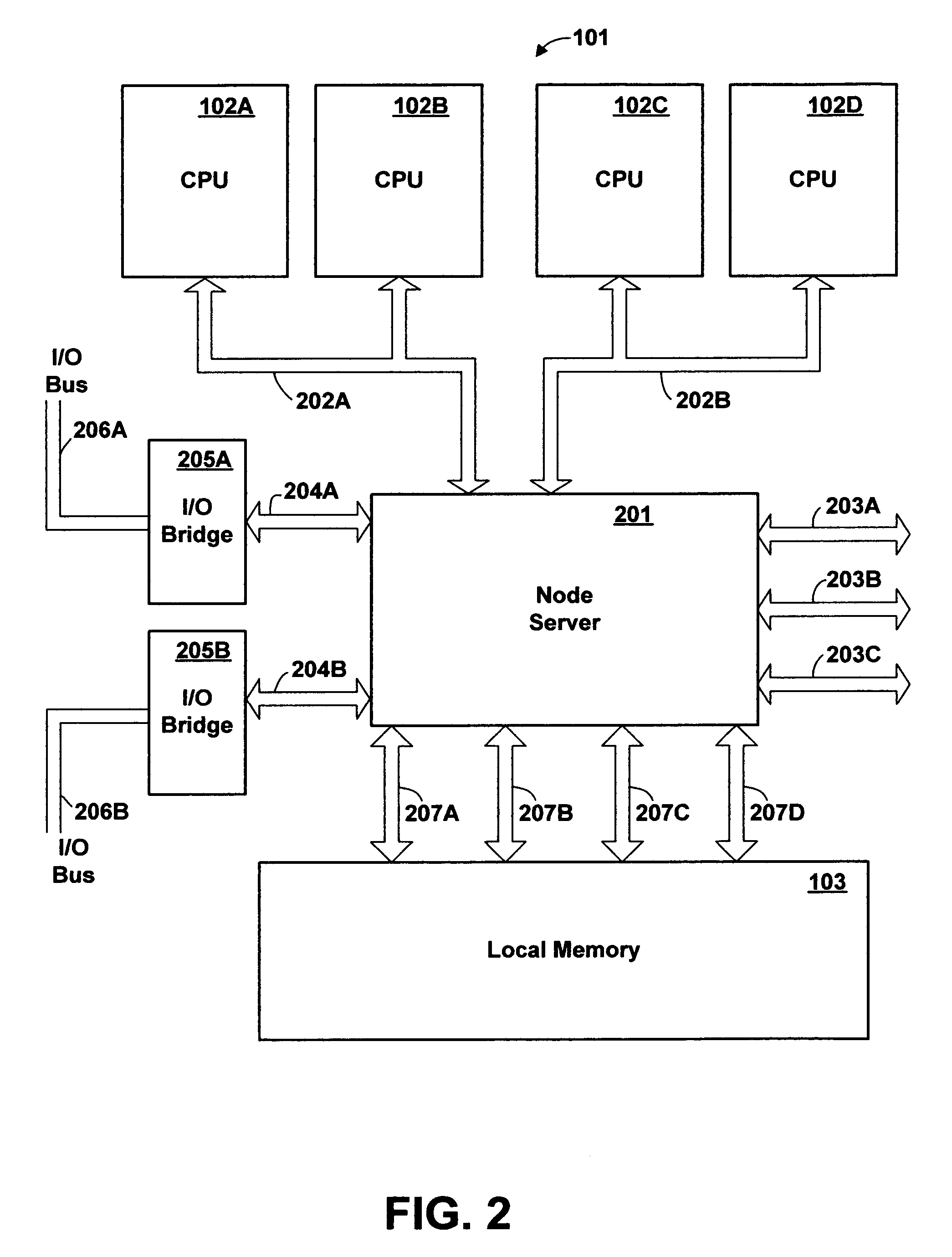

Uniform memory access (UMA) is a shared memory architecture used in parallel computers. All the processors in the UMA model share the physical memory uniformly. In a UMA architecture, access time to a memory location is independent of which processor makes the request and of which memory chip contains the transferred data. UMA architectures are often contrasted with non-uniform memory access (NUMA) architectures. In the UMA architecture, each processor may use a private cache, and peripherals are also shared in some fashion. The UMA model is suitable for general-purpose and time-sharing applications with multiple users. It can also be used to speed up the execution of a single large program in time-critical applications.

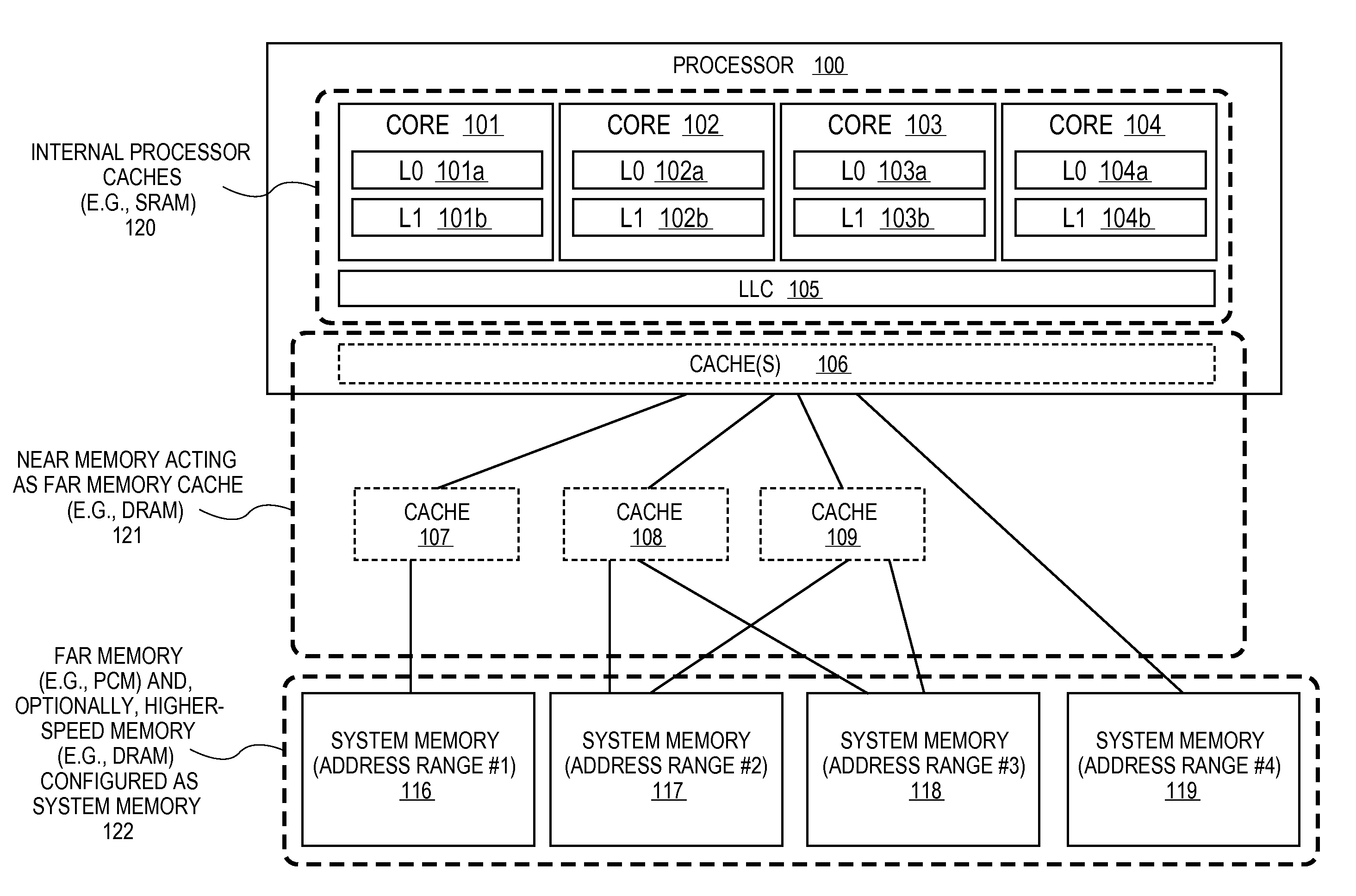

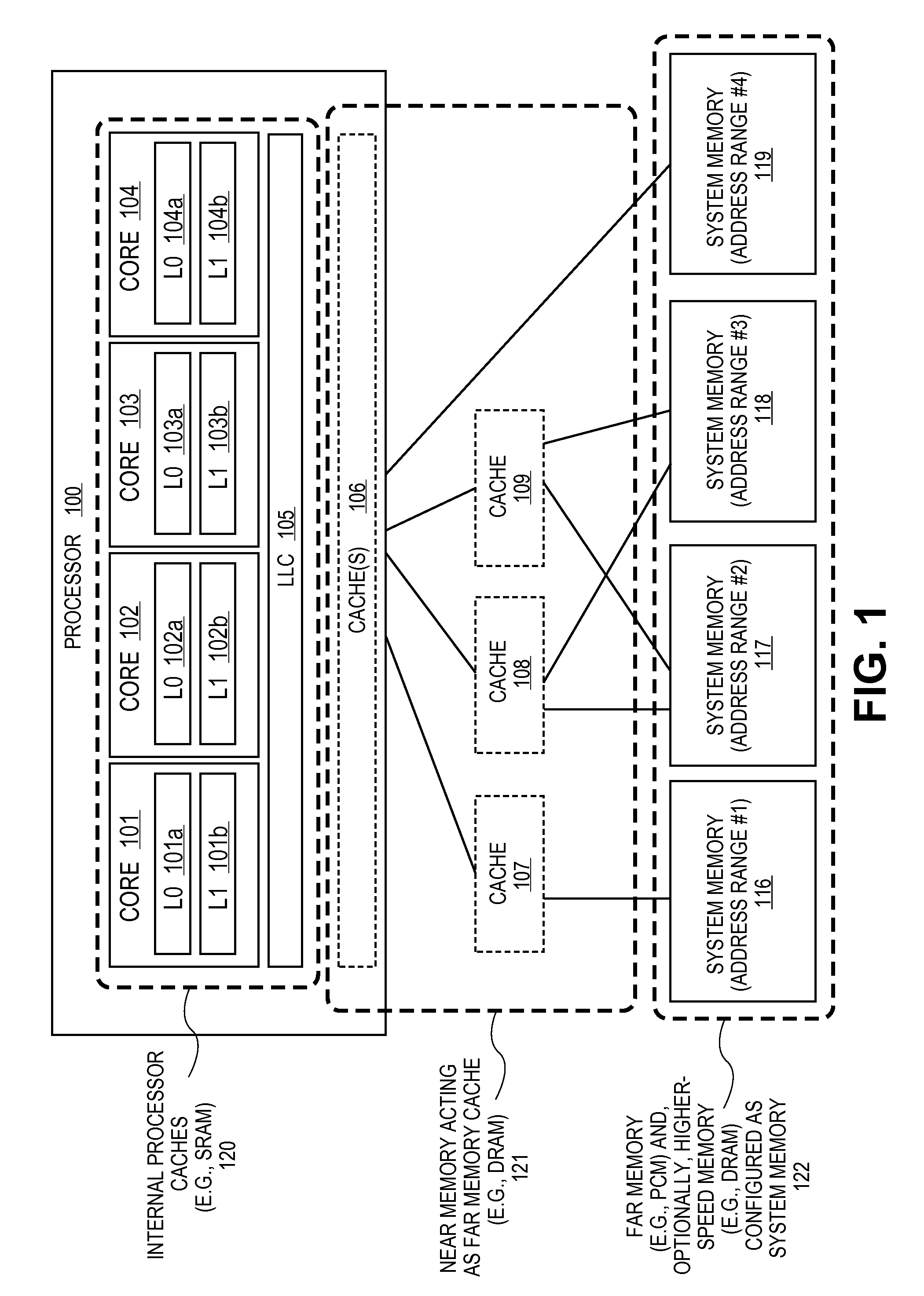

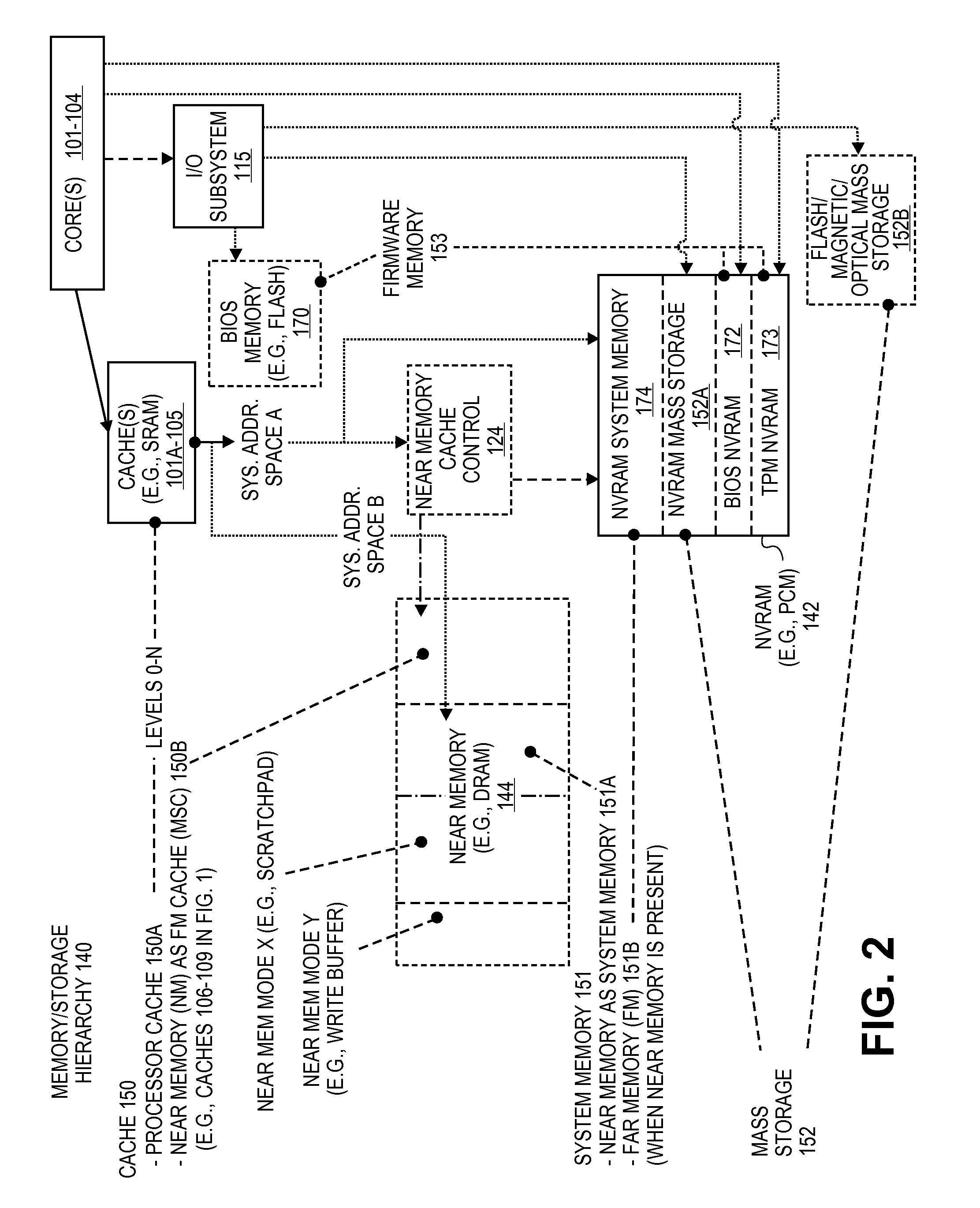

Memory channel that supports near memory and far memory access

Active | US20140040550A1 | Memory architecture accessing/allocation | Error detection/correction | Uniform memory access | Direct memory access

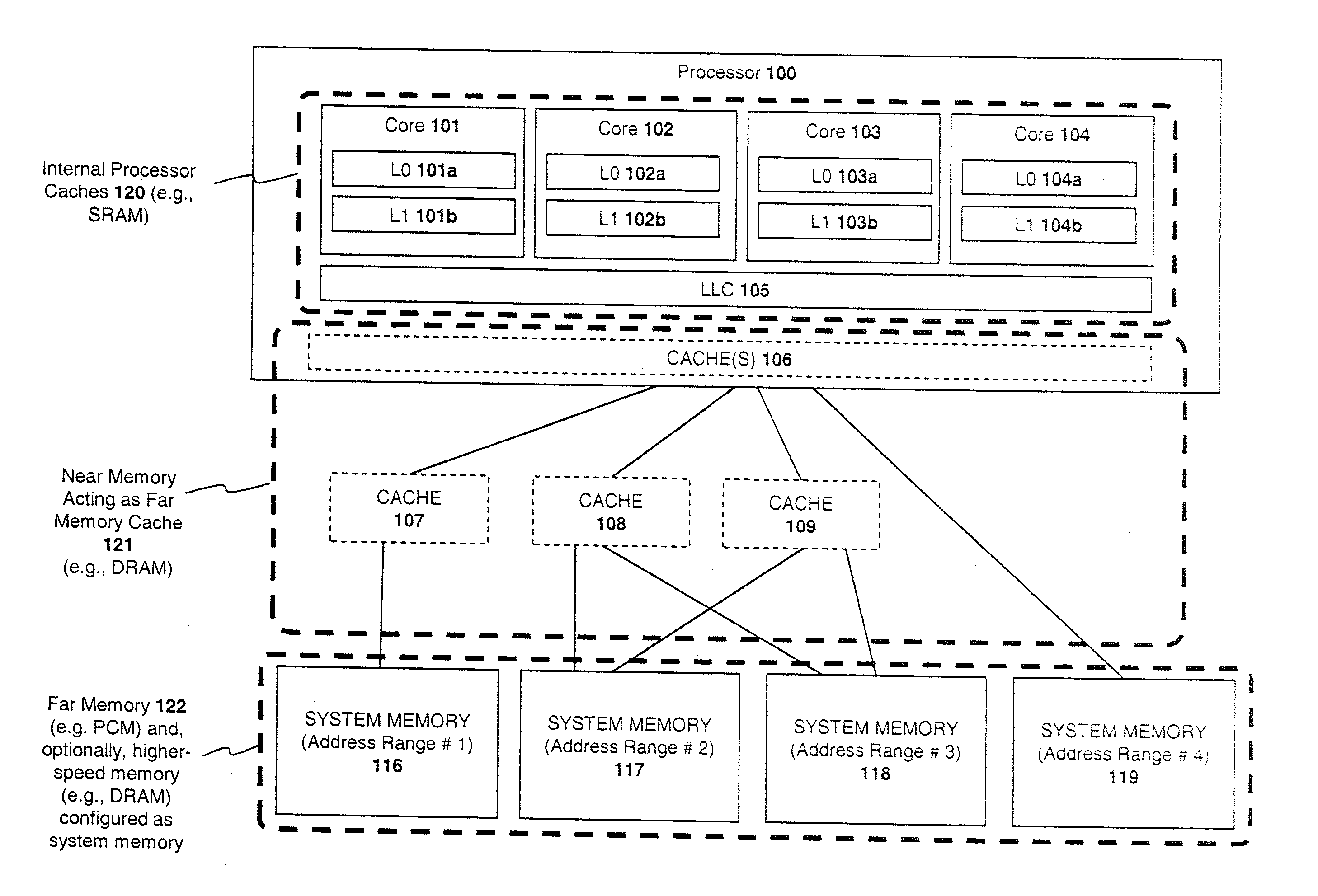

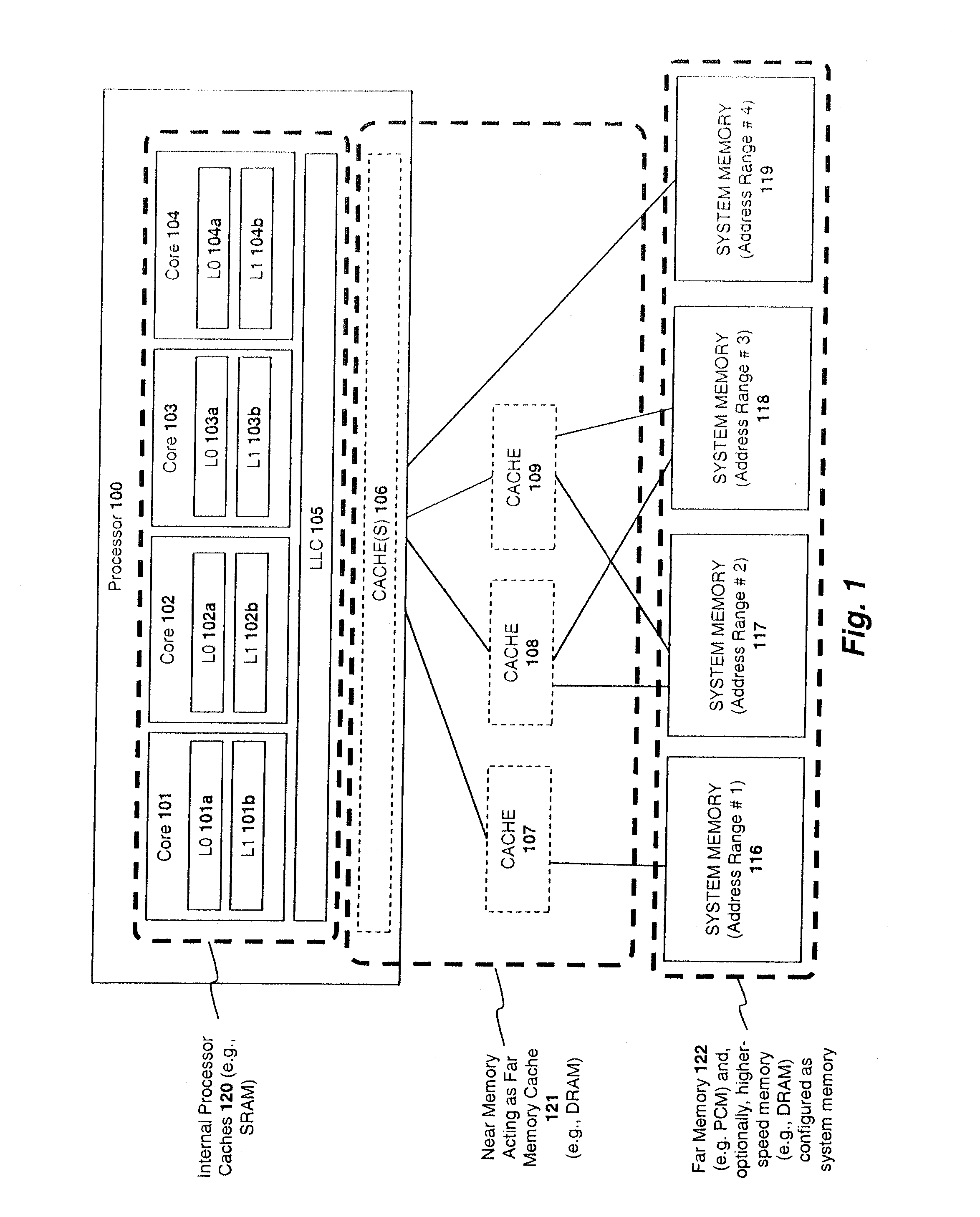

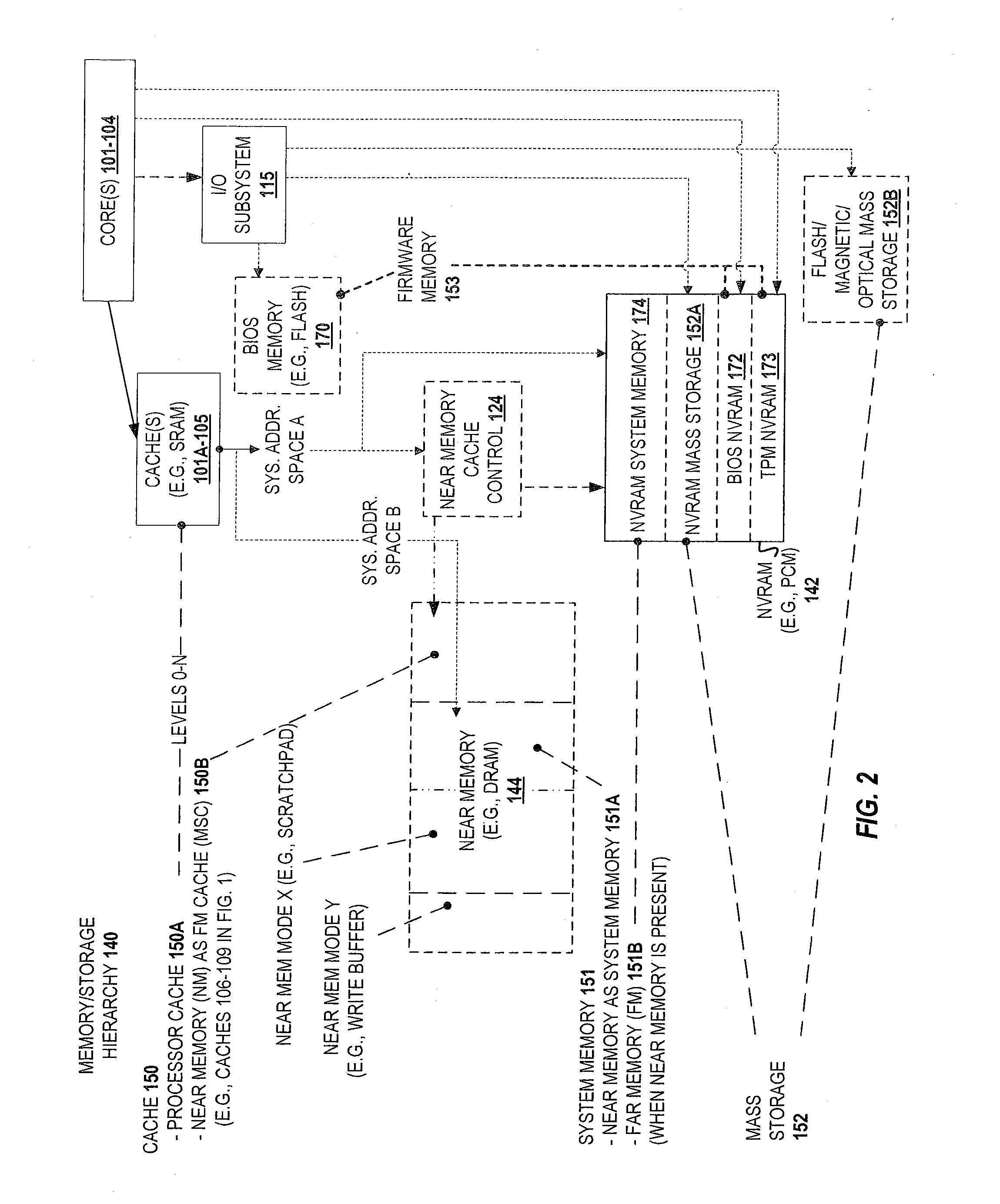

A semiconductor chip comprises memory controller circuitry having interface circuitry to couple to a memory channel. The memory controller includes first logic circuitry to implement a first memory channel protocol on the memory channel. The first memory channel protocol is specific to a first volatile system memory technology. The interface also includes second logic circuitry to implement a second memory channel protocol on the memory channel. The second memory channel protocol is specific to a second non-volatile system memory technology. The second memory channel protocol is a transactional protocol.

Owner:TAHOE RES LTD

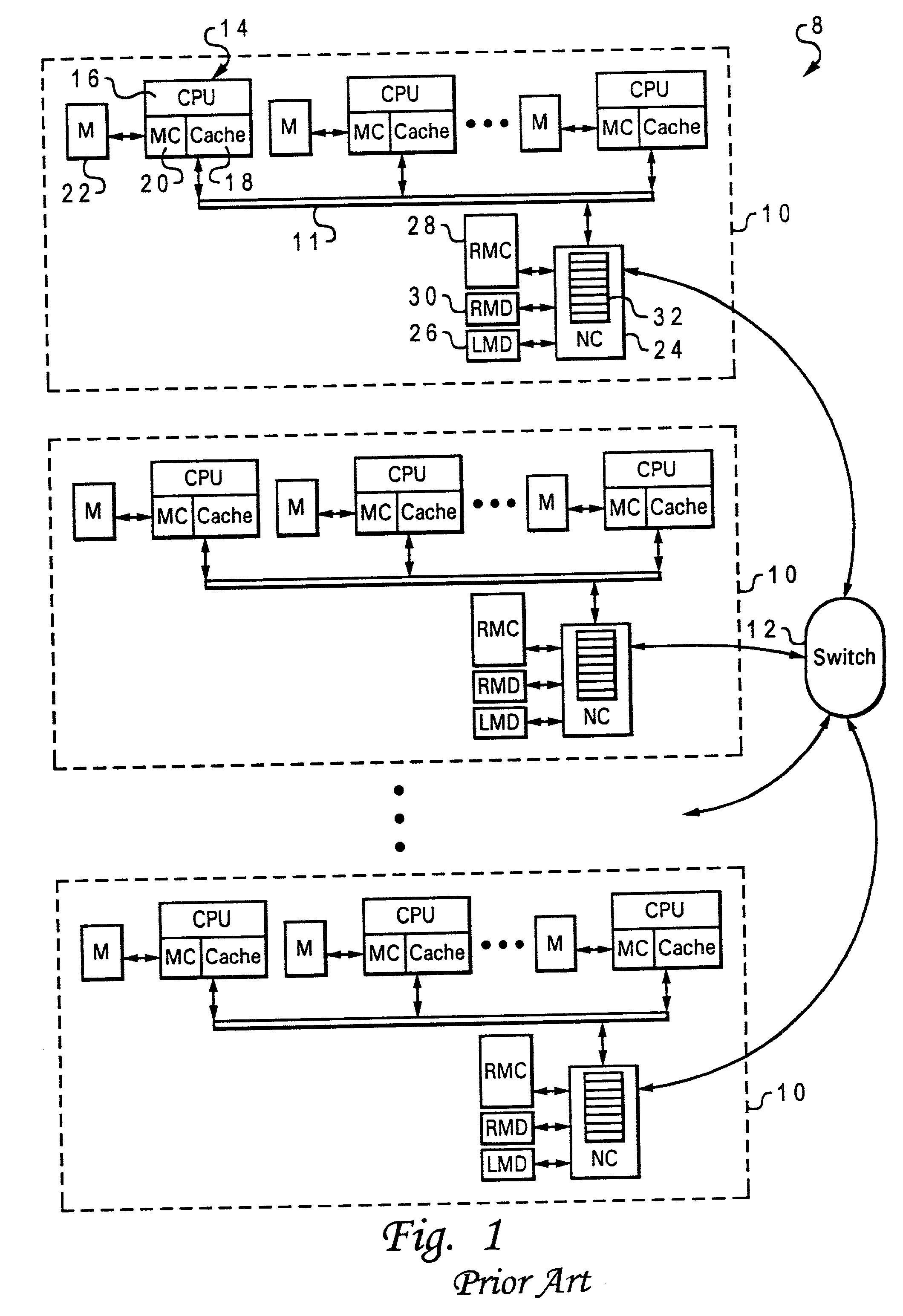

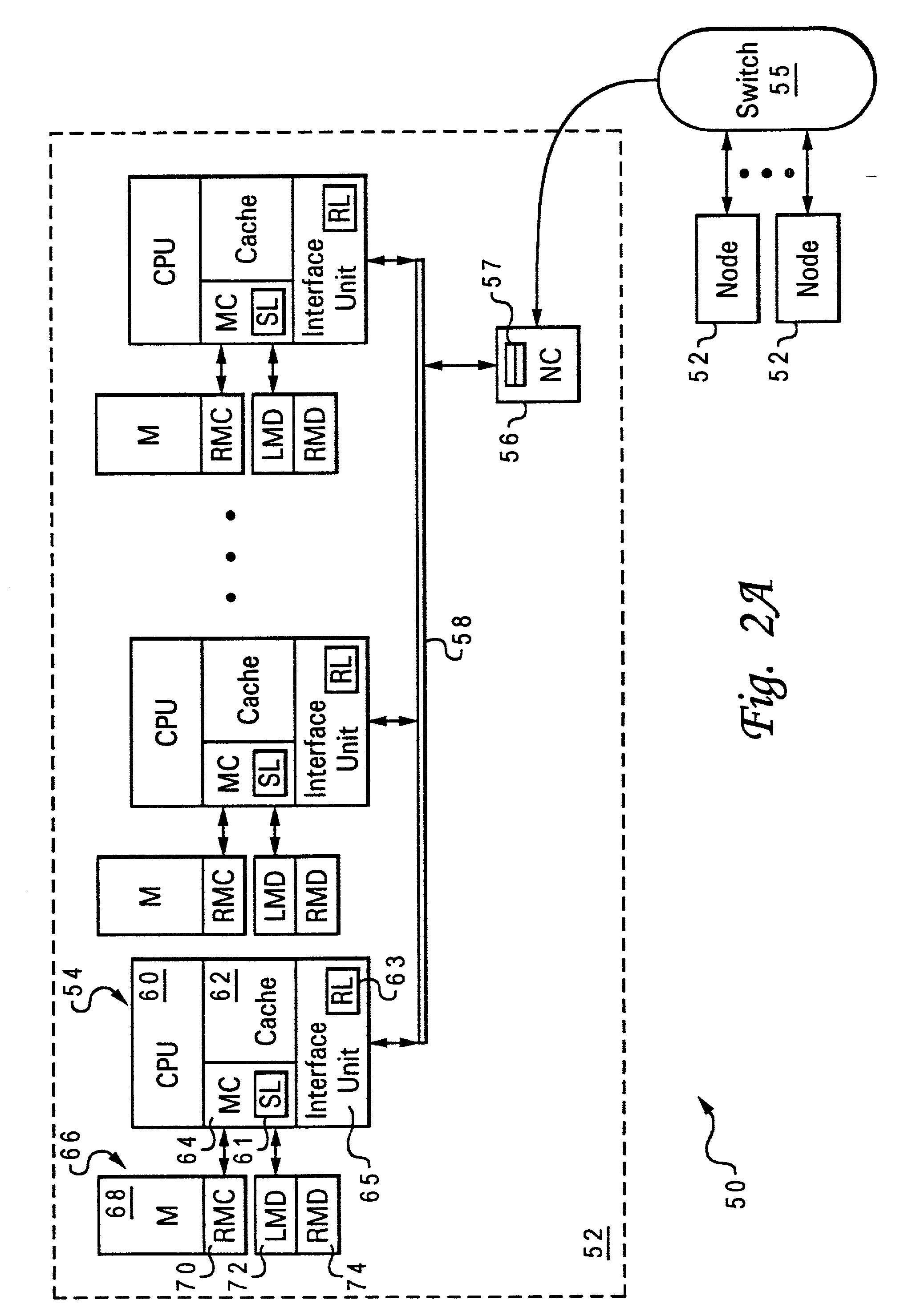

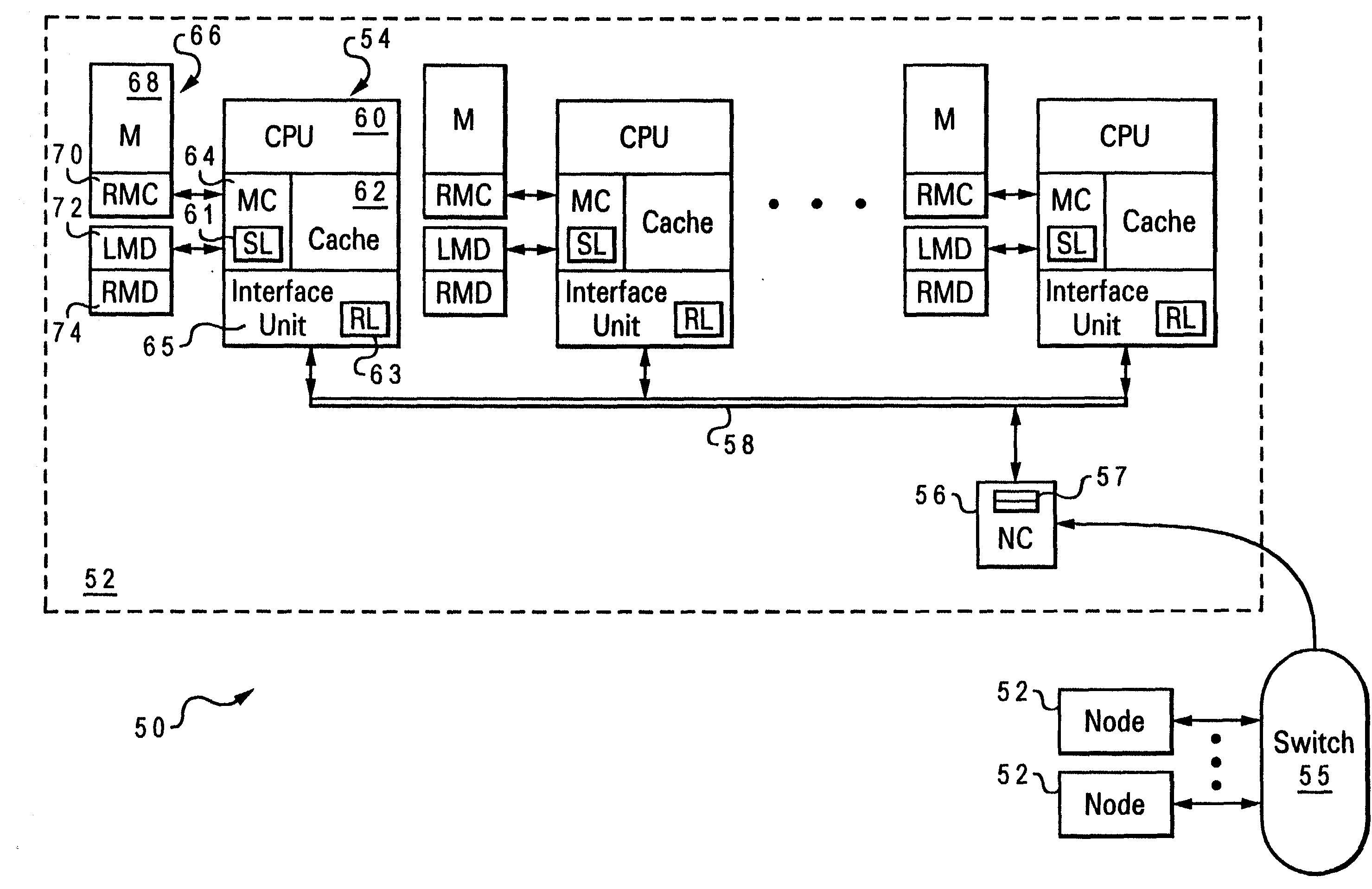

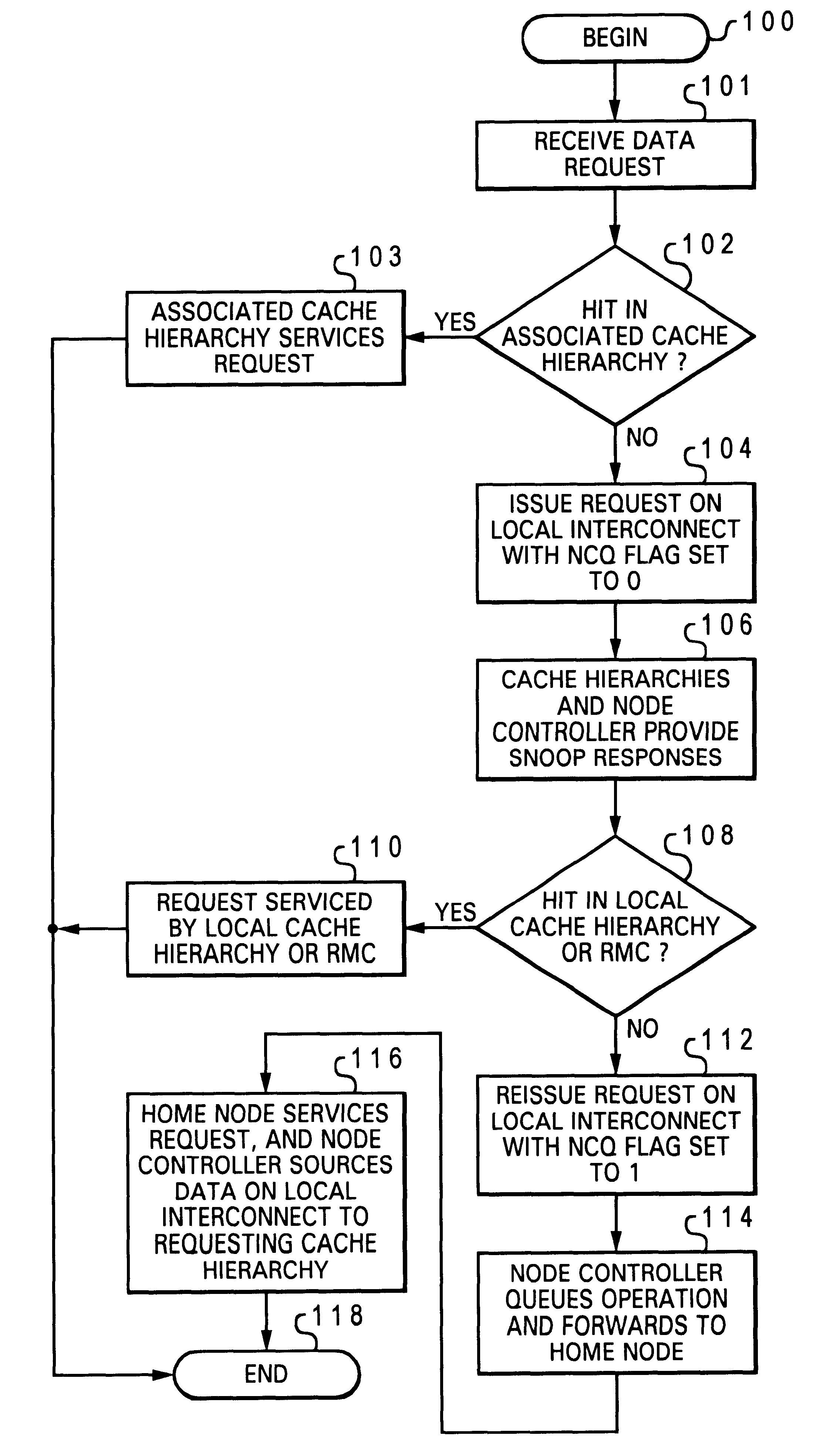

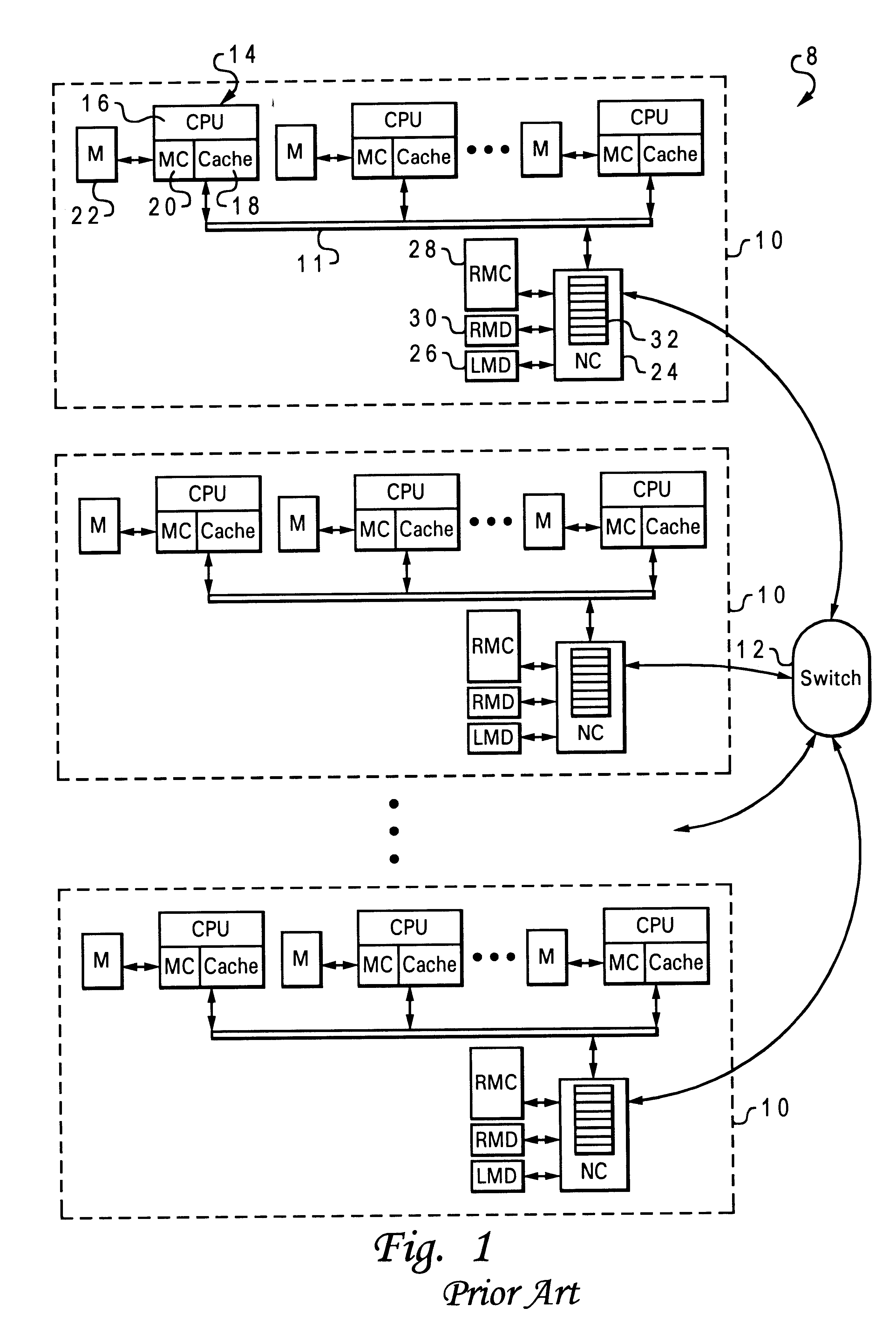

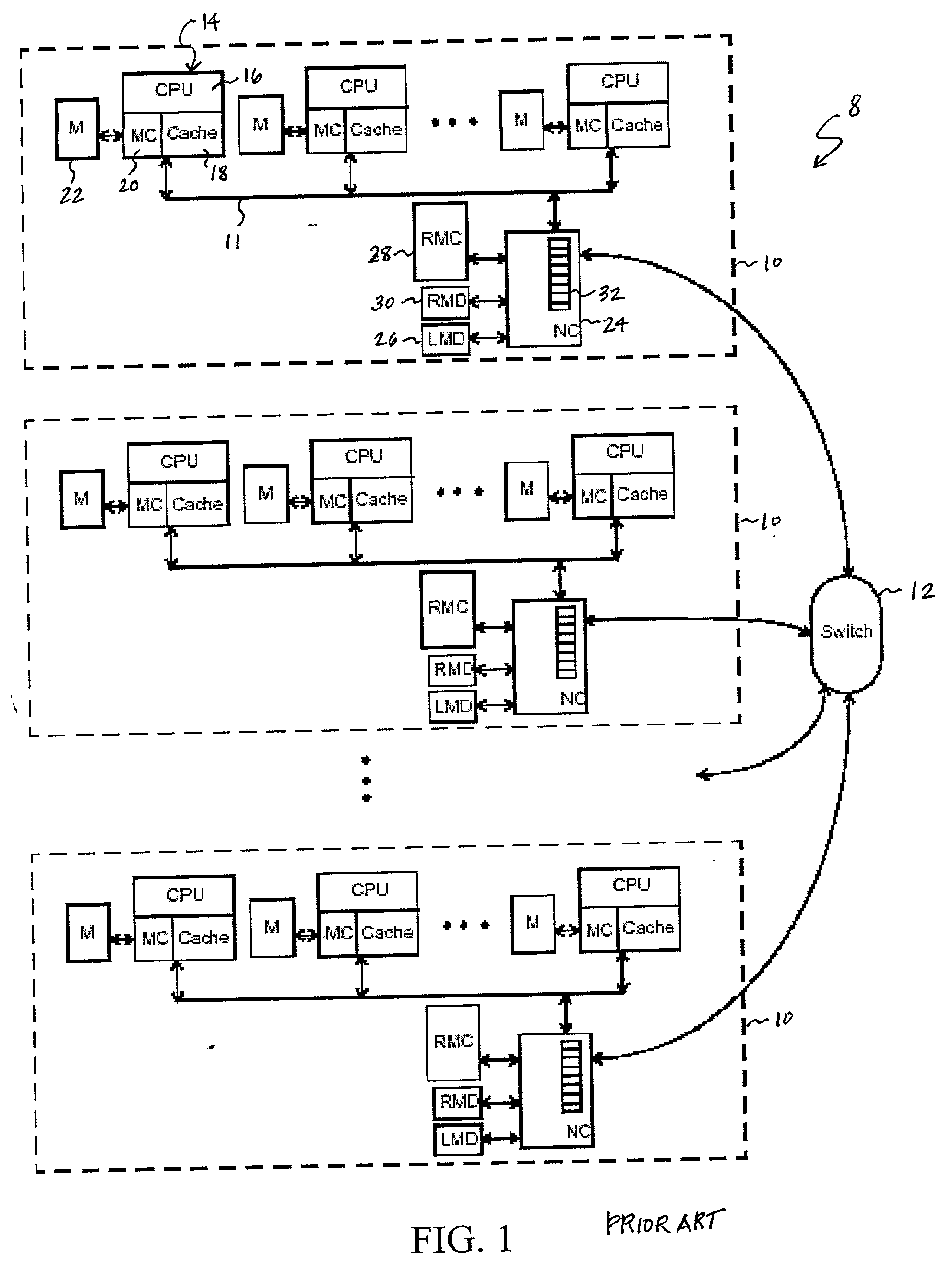

Two-stage request protocol for accessing remote memory data in a NUMA data processing system

Inactive | US20030009643A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Remote memory | Receipt

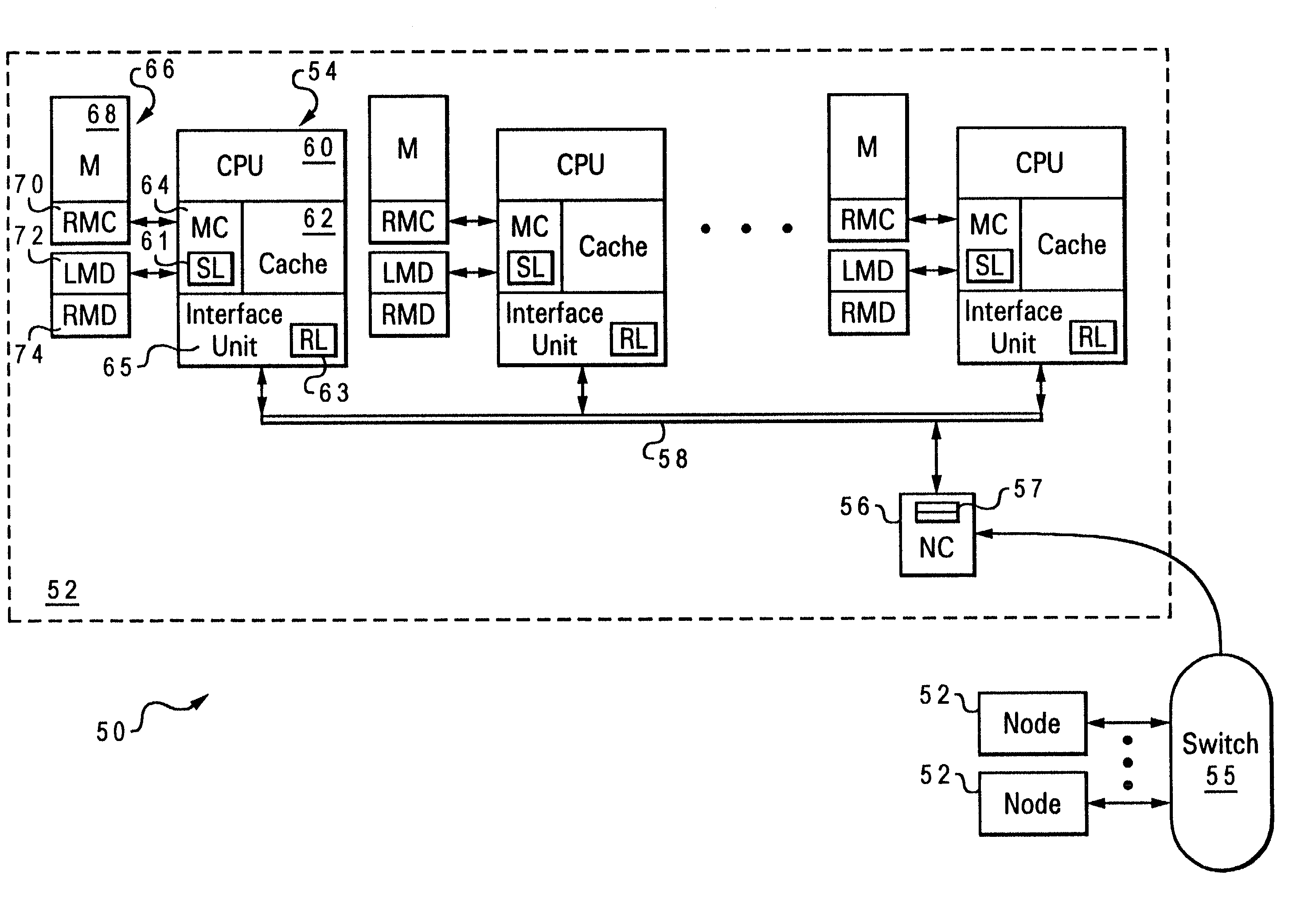

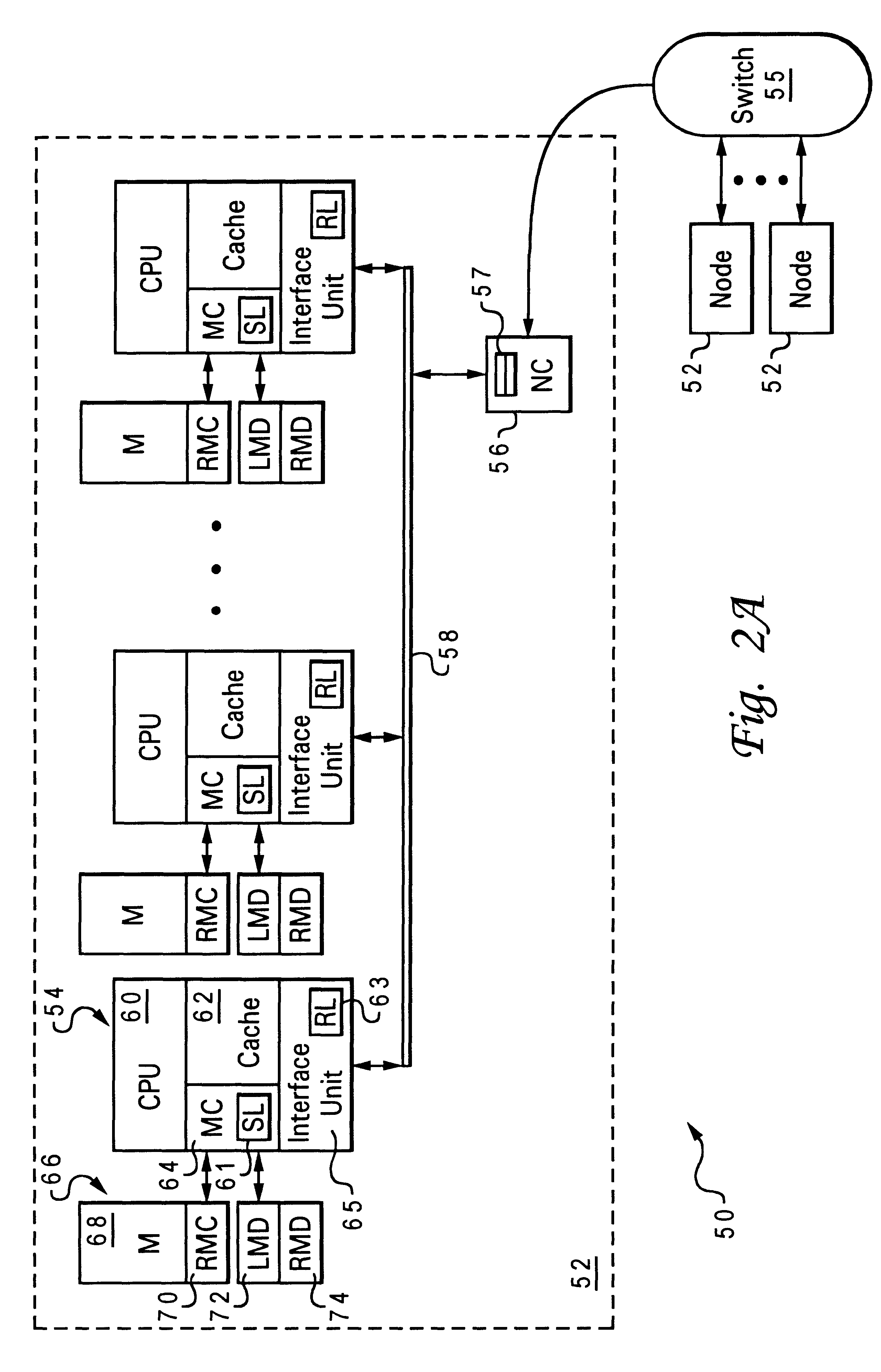

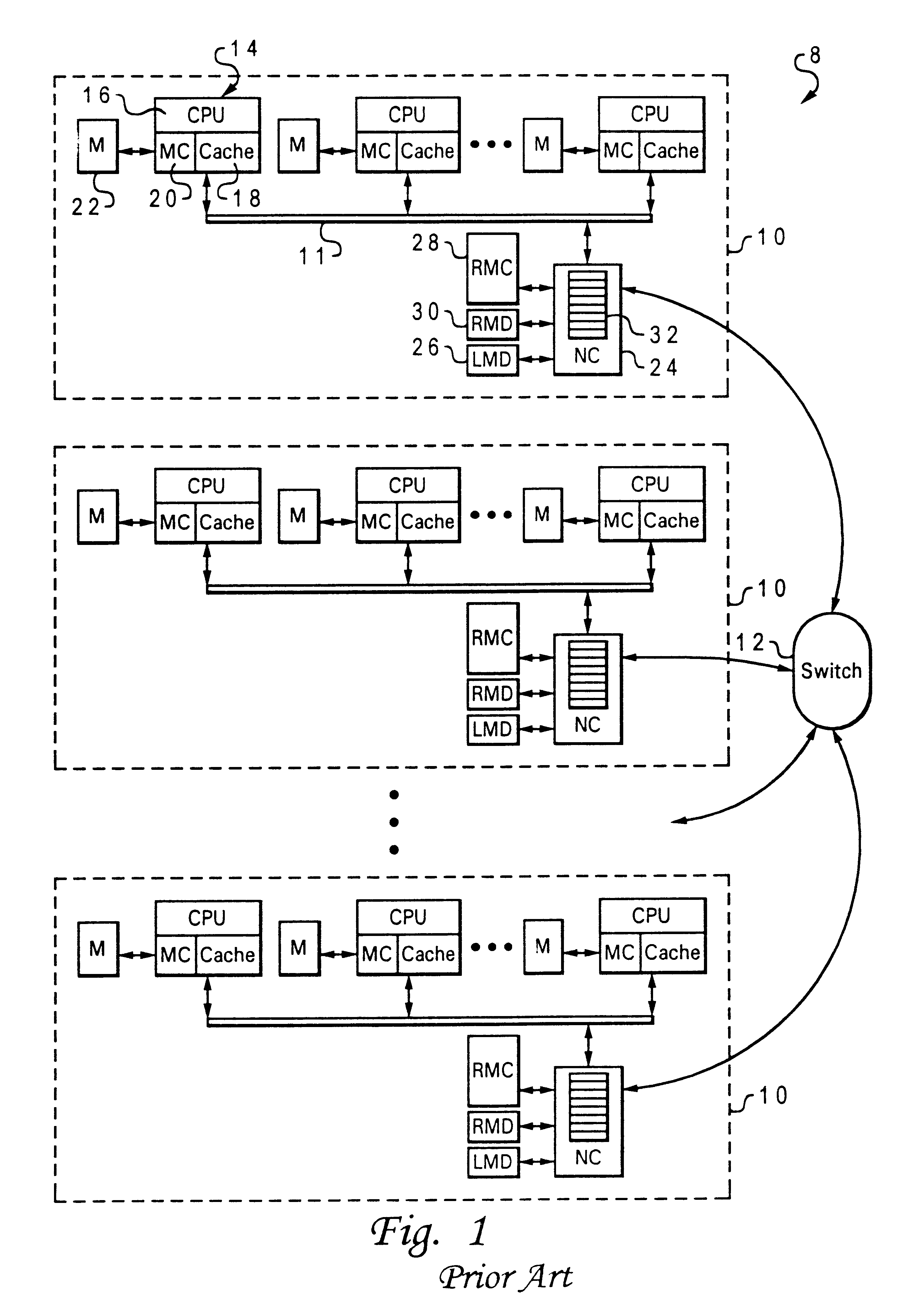

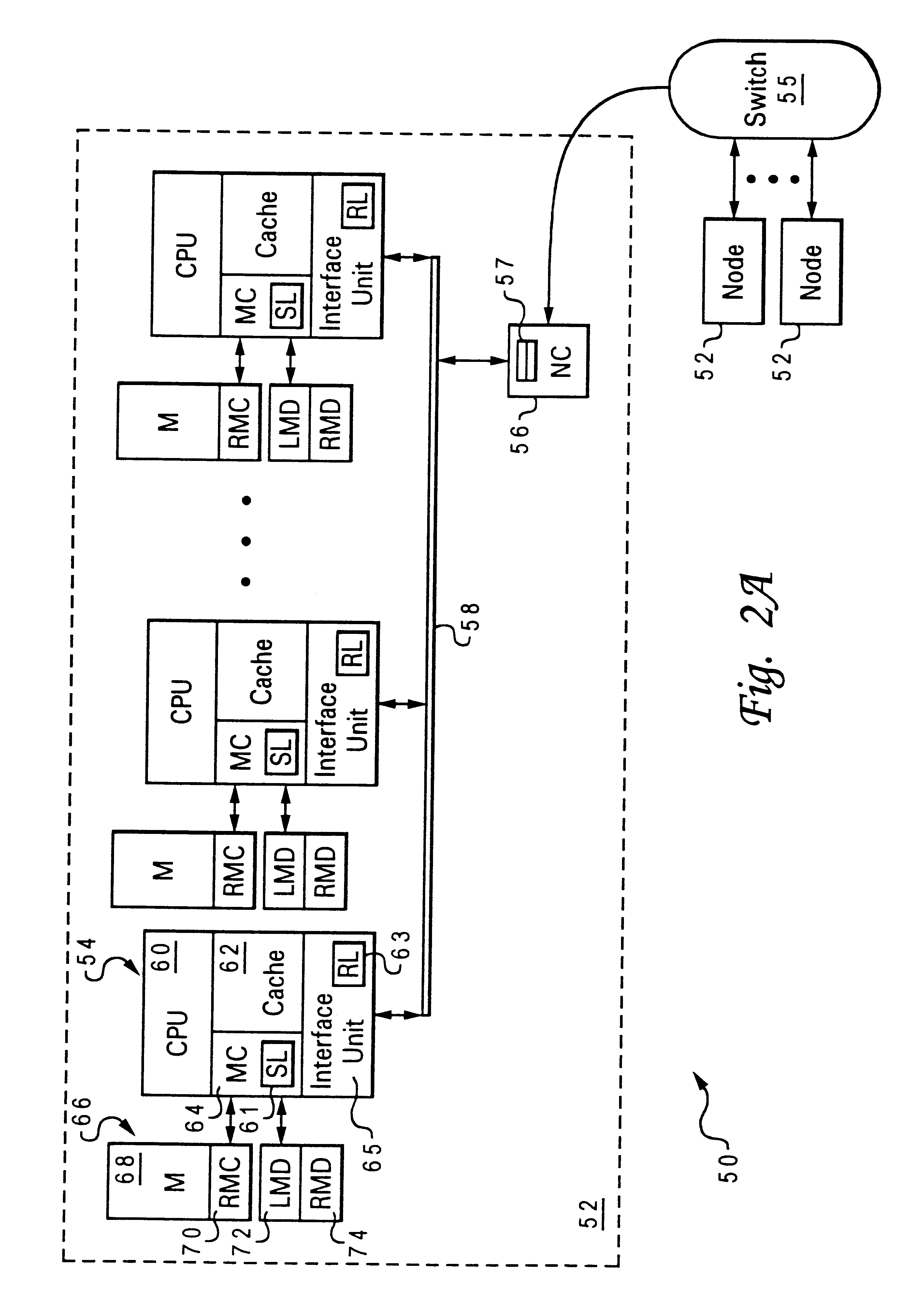

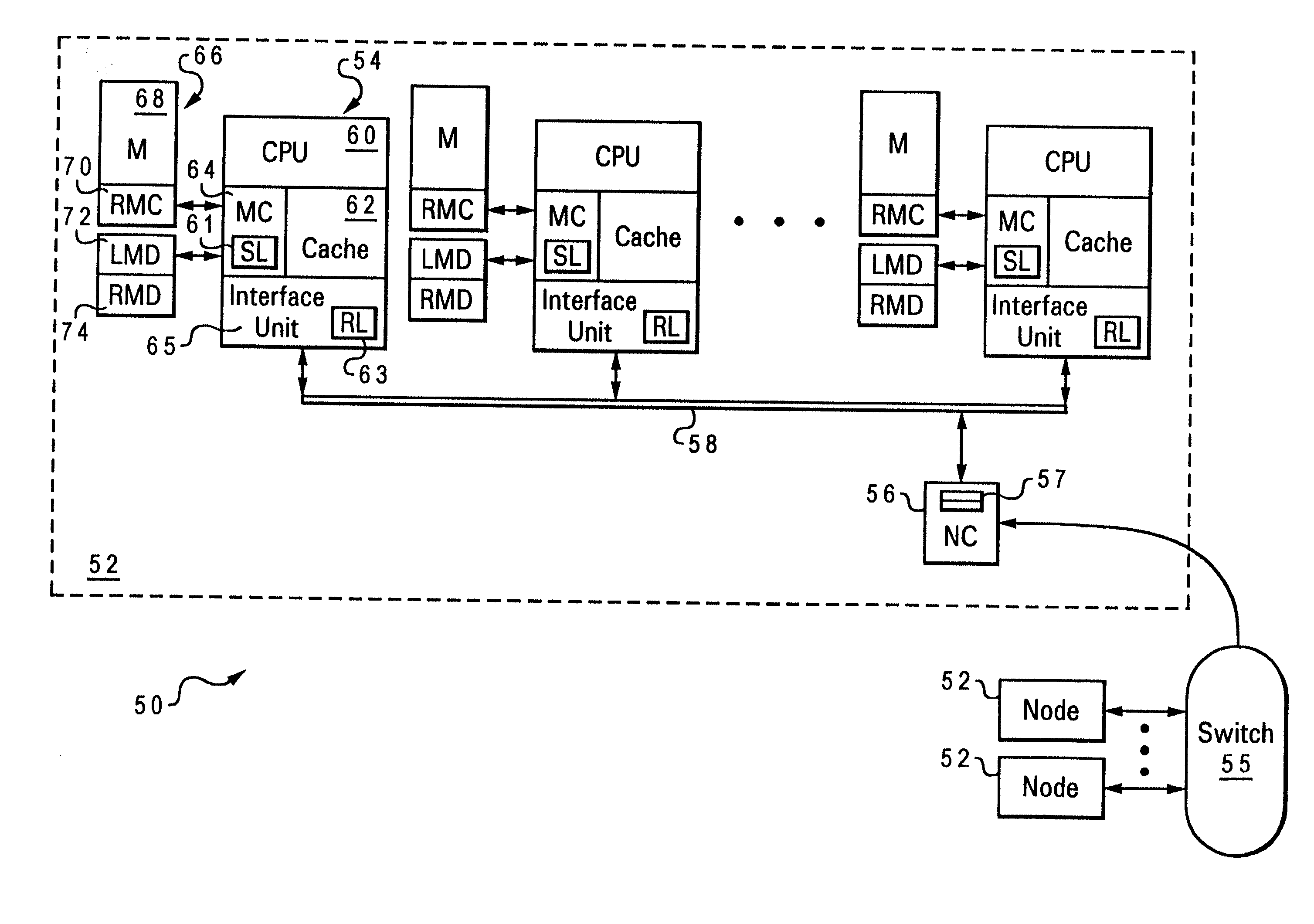

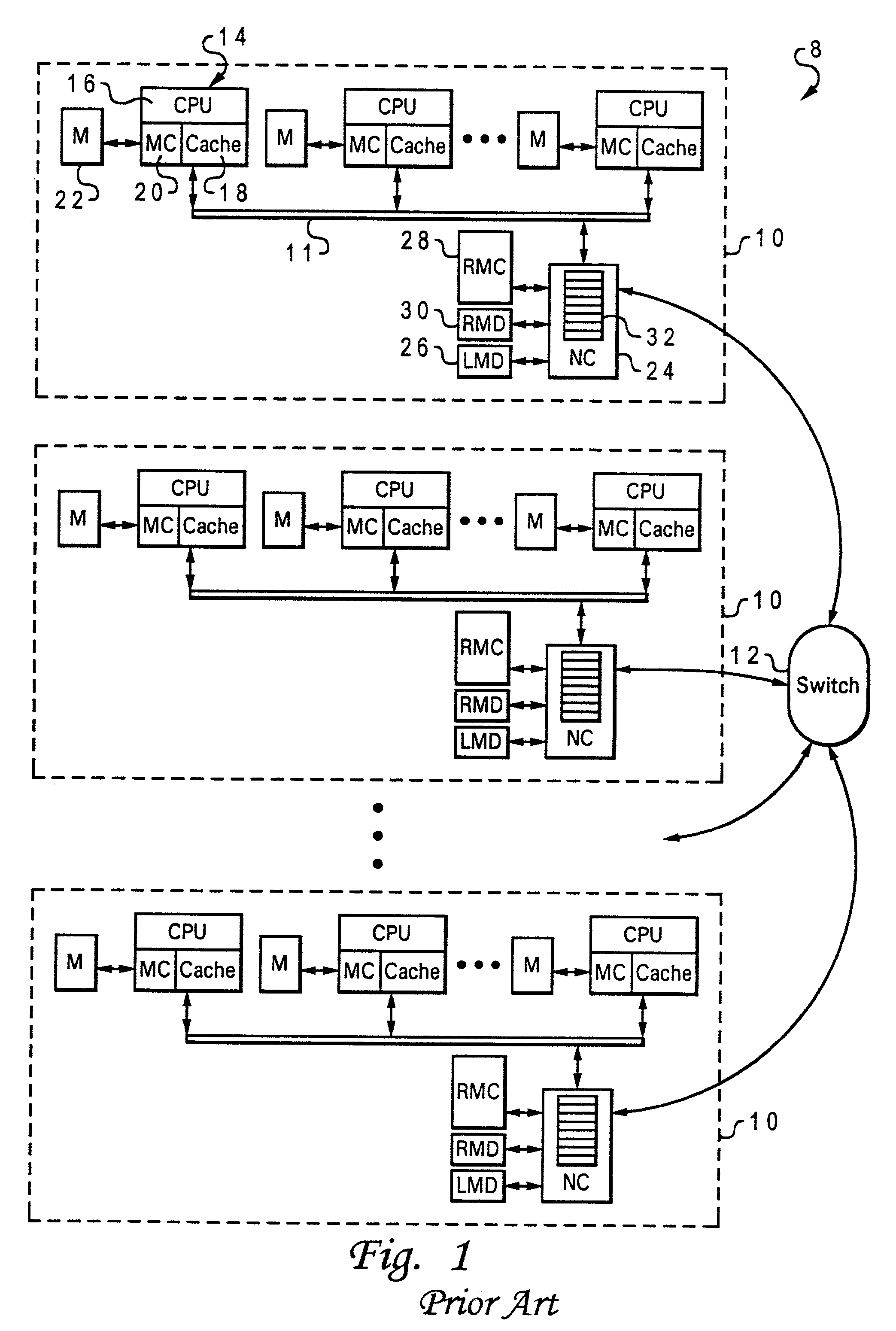

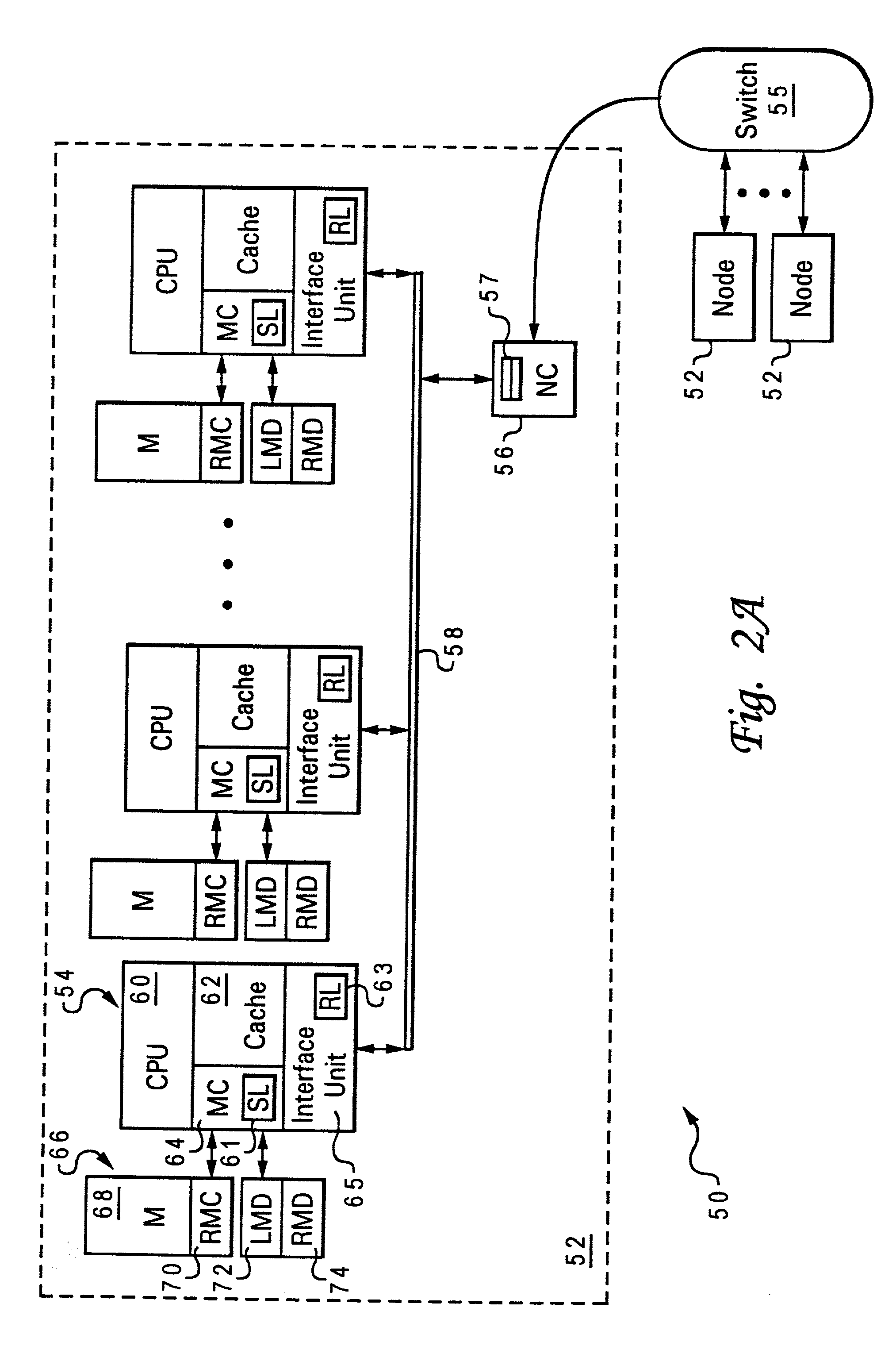

A non-uniform memory access (NUMA) computer system includes a remote node coupled by a node interconnect to a home node having a home system memory. The remote node includes a local interconnect, a processing unit and at least one cache coupled to the local interconnect, and a node controller coupled between the local interconnect and the node interconnect. The processing unit first issues, on the local interconnect, a read-type request targeting data resident in the home system memory with a flag in the read-type request set to a first state to indicate only local servicing of the read-type request. In response to inability to service the read-type request locally in the remote node, the processing unit reissues the read-type request with the flag set to a second state to instruct the node controller to transmit the read-type request to the home node. The node controller, which includes a plurality of queues, preferably does not queue the read-type request until receipt of the reissued read-type request with the flag set to the second state.
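The two-stage request described above can be sketched as a small simulation. This is only an illustration under stated assumptions: the names `Flag`, `NodeController`, `snoop`, and `read` are hypothetical, a Python dict stands in for the home system memory, and the node interconnect transfer is reduced to a dictionary lookup.

```python
from dataclasses import dataclass, field
from enum import Enum


class Flag(Enum):
    LOCAL_ONLY = 1  # first state: service the request inside the remote node only
    FORWARD = 2     # second state: node controller may send it to the home node


@dataclass
class NodeController:
    home_memory: dict                          # stands in for the home system memory
    queue: list = field(default_factory=list)  # stands in for the controller's queues

    def snoop(self, address, flag):
        # A LOCAL_ONLY request is not queued, so no queue entry is consumed.
        if flag is Flag.LOCAL_ONLY:
            return None
        self.queue.append(address)             # queue only the reissued request
        data = self.home_memory[address]       # simplified interconnect transfer
        self.queue.remove(address)
        return data


def read(address, local_cache, controller):
    # Stage 1: issue the read with the flag in the first state.
    controller.snoop(address, Flag.LOCAL_ONLY)
    if address in local_cache:
        return local_cache[address], "local"
    # Stage 2: local servicing failed, so reissue with the flag in the second state.
    data = controller.snoop(address, Flag.FORWARD)
    local_cache[address] = data
    return data, "home"
```

In this sketch the first read of an address is served by the home node and later reads are served locally, while the controller's queue is touched only in the second stage.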

Owner:IBM CORP

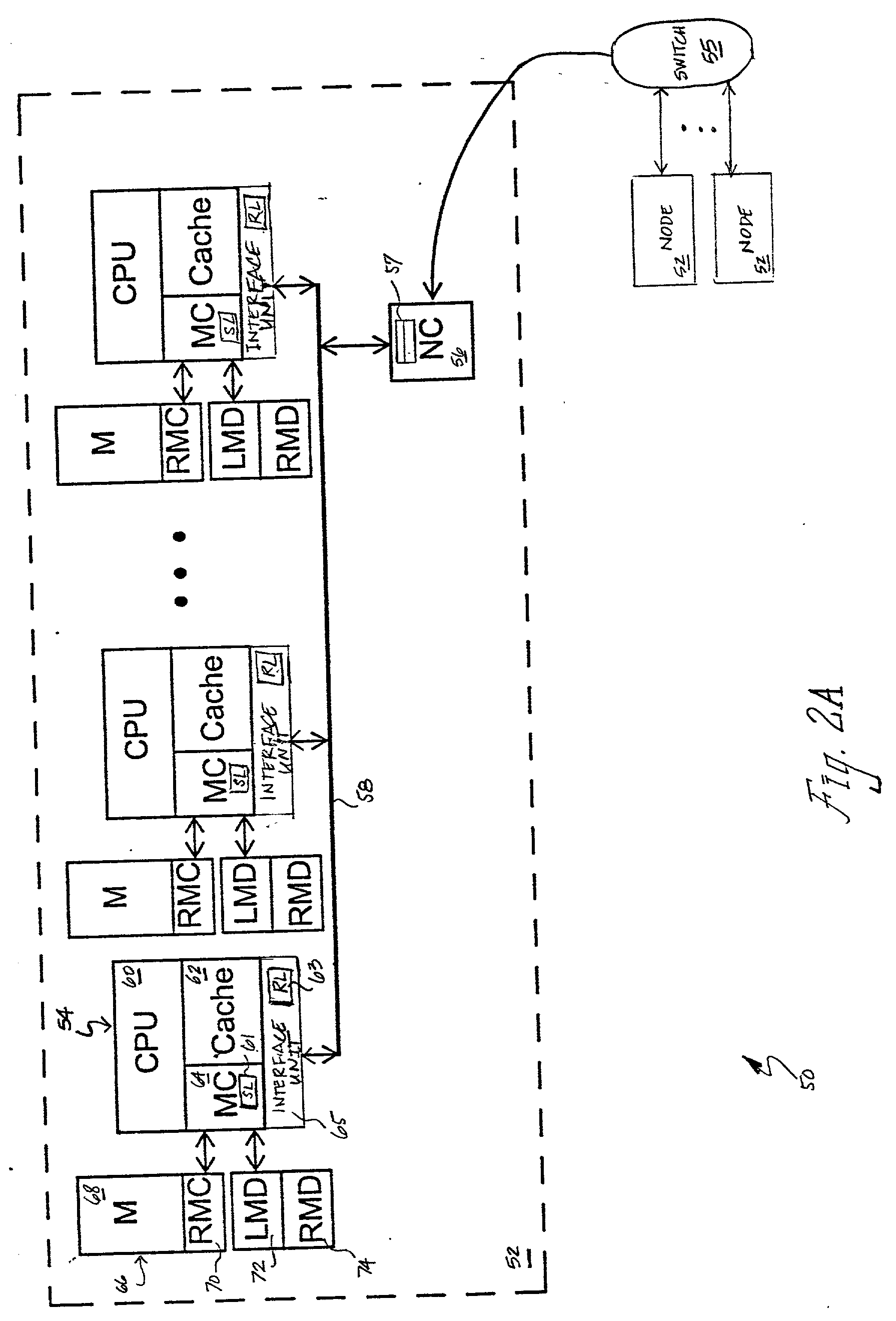

Decentralized global coherency management in a multi-node computer system

Inactive | US20030009637A1 | Memory addressing/allocation/relocation | Digital computer details | Computerized system | Consistency management

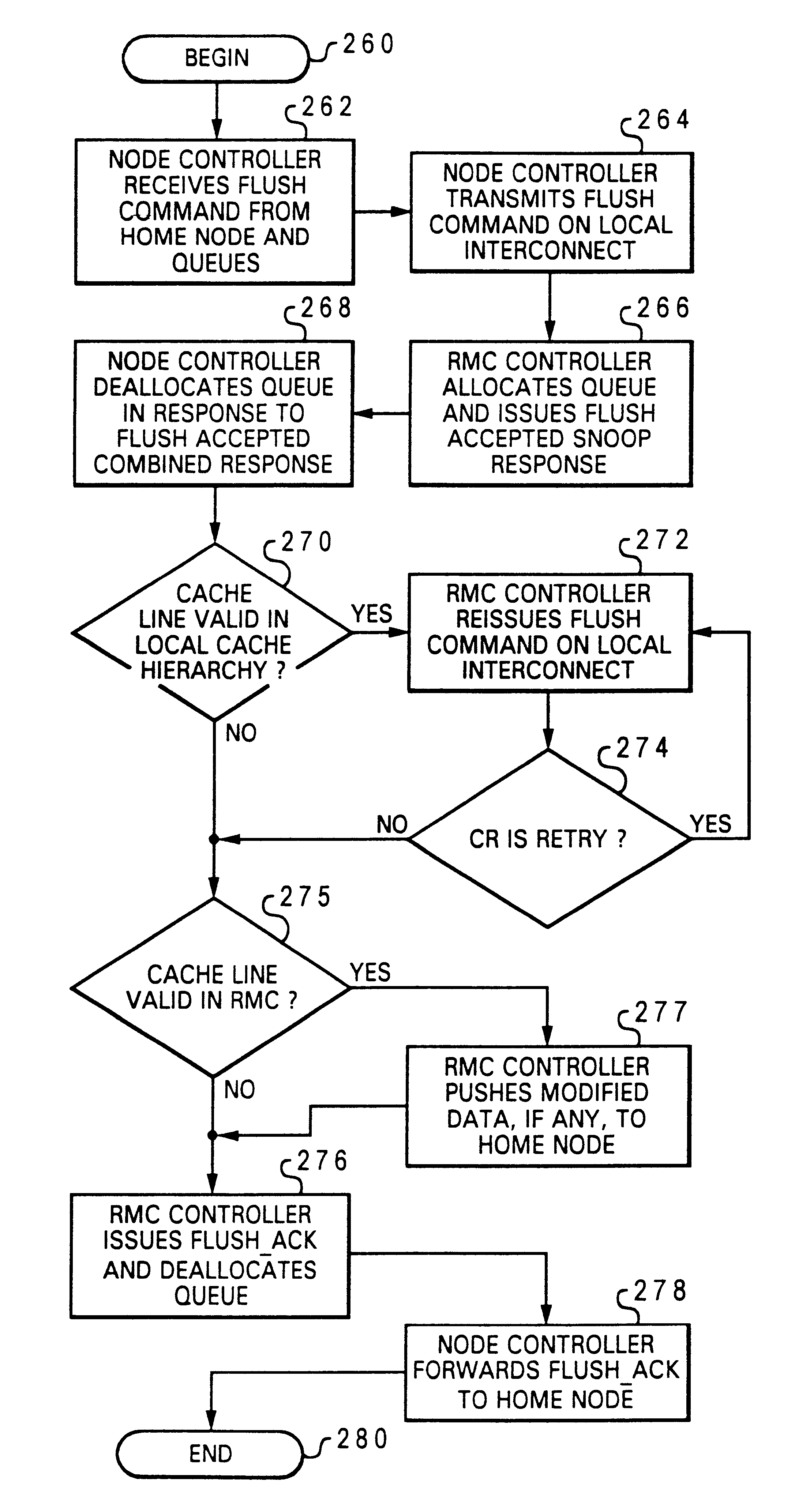

A non-uniform memory access (NUMA) computer system includes a first node and a second node coupled by a node interconnect. The second node includes a local interconnect, a node controller coupled between the local interconnect and the node interconnect, and a controller coupled to the local interconnect. In response to snooping an operation from the first node issued on the local interconnect by the node controller, the controller signals acceptance of responsibility for coherency management activities related to the operation in the second node, performs coherency management activities in the second node required by the operation, and thereafter provides notification of performance of the coherency management activities. To promote efficient utilization of queues within the node controller, the node controller preferably allocates a queue to the operation in response to receipt of the operation from the node interconnect and then deallocates the queue in response to transferring responsibility for coherency management activities to the controller.

Owner:IBM CORP

Memory channel that supports near memory and far memory access

Active | US9342453B2 | Memory architecture accessing/allocation | Energy efficient ICT | Uniform memory access | Direct memory access

A semiconductor chip comprises memory controller circuitry having interface circuitry to couple to a memory channel. The memory controller includes first logic circuitry to implement a first memory channel protocol on the memory channel. The first memory channel protocol is specific to a first volatile system memory technology. The interface also includes second logic circuitry to implement a second memory channel protocol on the memory channel. The second memory channel protocol is specific to a second non-volatile system memory technology. The second memory channel protocol is a transactional protocol.

Owner:TAHOE RES LTD

Two-stage request protocol for accessing remote memory data in a NUMA data processing system

Inactive | US6615322B2 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Computerized system

A non-uniform memory access (NUMA) computer system includes a remote node coupled by a node interconnect to a home node having a home system memory. The remote node includes a local interconnect, a processing unit and at least one cache coupled to the local interconnect, and a node controller coupled between the local interconnect and the node interconnect. The processing unit first issues, on the local interconnect, a read-type request targeting data resident in the home system memory with a flag in the read-type request set to a first state to indicate only local servicing of the read-type request. In response to inability to service the read-type request locally in the remote node, the processing unit reissues the read-type request with the flag set to a second state to instruct the node controller to transmit the read-type request to the home node. The node controller, which includes a plurality of queues, preferably does not queue the read-type request until receipt of the reissued read-type request with the flag set to the second state.

Owner:INT BUSINESS MACHINES CORP

Decentralized global coherency management in a multi-node computer system

Inactive | US6754782B2 | Memory addressing/allocation/relocation | Digital computer details | Computerized system | Consistency management

A non-uniform memory access (NUMA) computer system includes a first node and a second node coupled by a node interconnect. The second node includes a local interconnect, a node controller coupled between the local interconnect and the node interconnect, and a controller coupled to the local interconnect. In response to snooping an operation from the first node issued on the local interconnect by the node controller, the controller signals acceptance of responsibility for coherency management activities related to the operation in the second node, performs coherency management activities in the second node required by the operation, and thereafter provides notification of performance of the coherency management activities. To promote efficient utilization of queues within the node controller, the node controller preferably allocates a queue to the operation in response to receipt of the operation from the node interconnect and then deallocates the queue in response to transferring responsibility for coherency management activities to the controller.

Owner:INT BUSINESS MACHINES CORP

Dynamic history based mechanism for the granting of exclusive data ownership in a non-uniform memory access (numa) computer system

Inactive | US20030009641A1 | Program synchronisation | Memory addressing/allocation/relocation | Exclusive or | Data access

A non-uniform memory access (NUMA) computer system includes at least one remote node and a home node coupled by a node interconnect. The home node contains a home system memory and a memory controller. In response to receipt of a data request from a remote node, the memory controller determines whether to grant exclusive or non-exclusive ownership of requested data specified in the data request by reference to history information indicative of prior data accesses originating in the remote node. The memory controller then transmits the requested data and an indication of exclusive or non-exclusive ownership to the remote node.
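A minimal sketch of a history-based ownership decision follows. The abstract does not say what the history records or how it is evaluated, so the heuristic here (grant exclusive ownership when most of a node's recent reads were followed by a write) is an assumption, as are the names `HomeMemoryController`, `record`, and `request`.

```python
from collections import defaultdict, deque


class HomeMemoryController:
    """Toy model of a home-node memory controller with per-node access history."""

    def __init__(self, memory, history_len=4, threshold=0.5):
        self.memory = memory
        self.threshold = threshold
        # Assumed history format: one boolean per past access from a node,
        # True when the node went on to modify the data it had requested.
        self.history = defaultdict(lambda: deque(maxlen=history_len))

    def record(self, node, wrote_after_read):
        self.history[node].append(bool(wrote_after_read))

    def request(self, node, address):
        past = self.history[node]
        # With no history, fall back to non-exclusive ownership.
        exclusive = bool(past) and sum(past) / len(past) > self.threshold
        ownership = "exclusive" if exclusive else "non-exclusive"
        return self.memory[address], ownership
```

The controller returns the data together with the ownership indication, mirroring the single response described in the abstract.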

Owner:IBM CORP

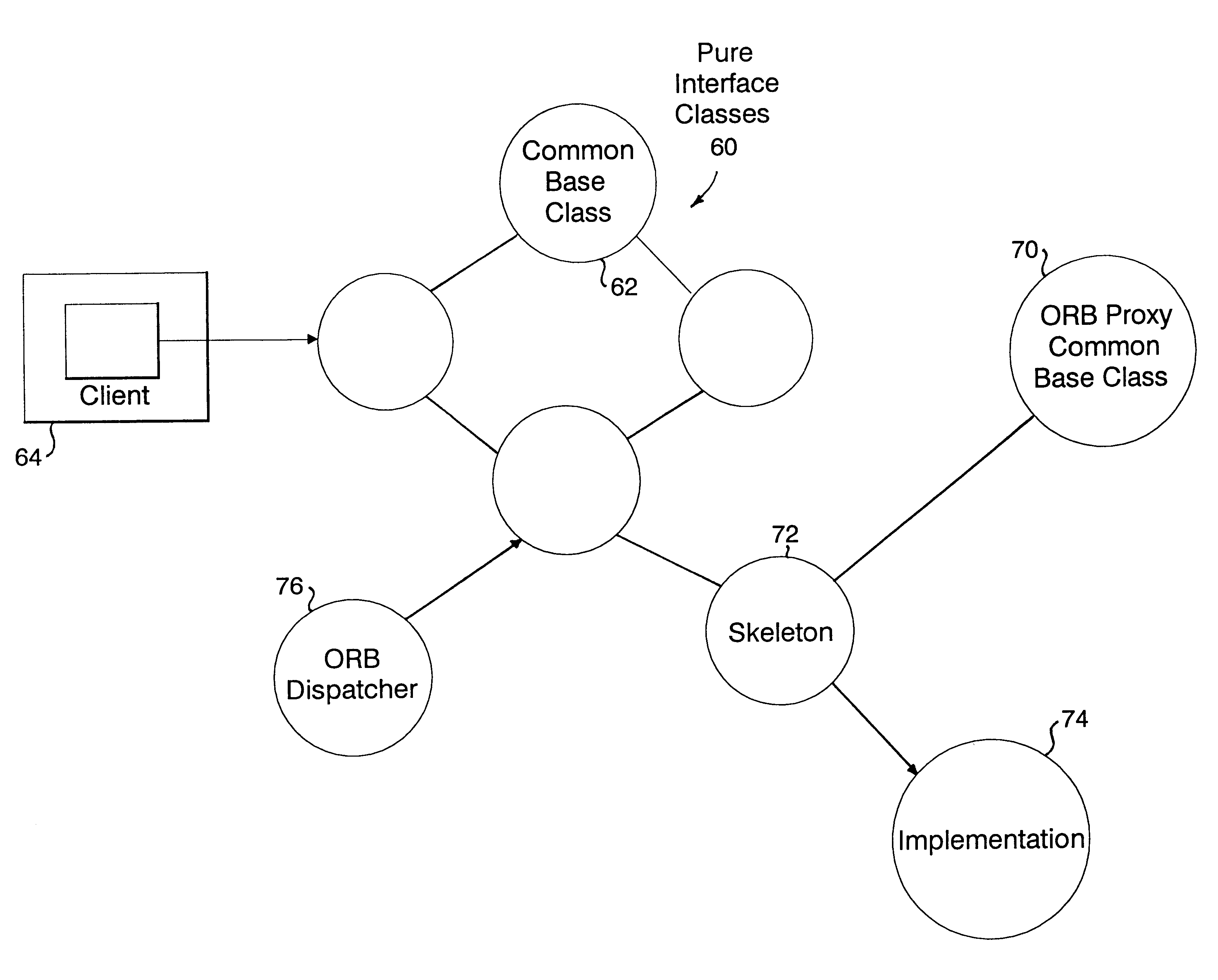

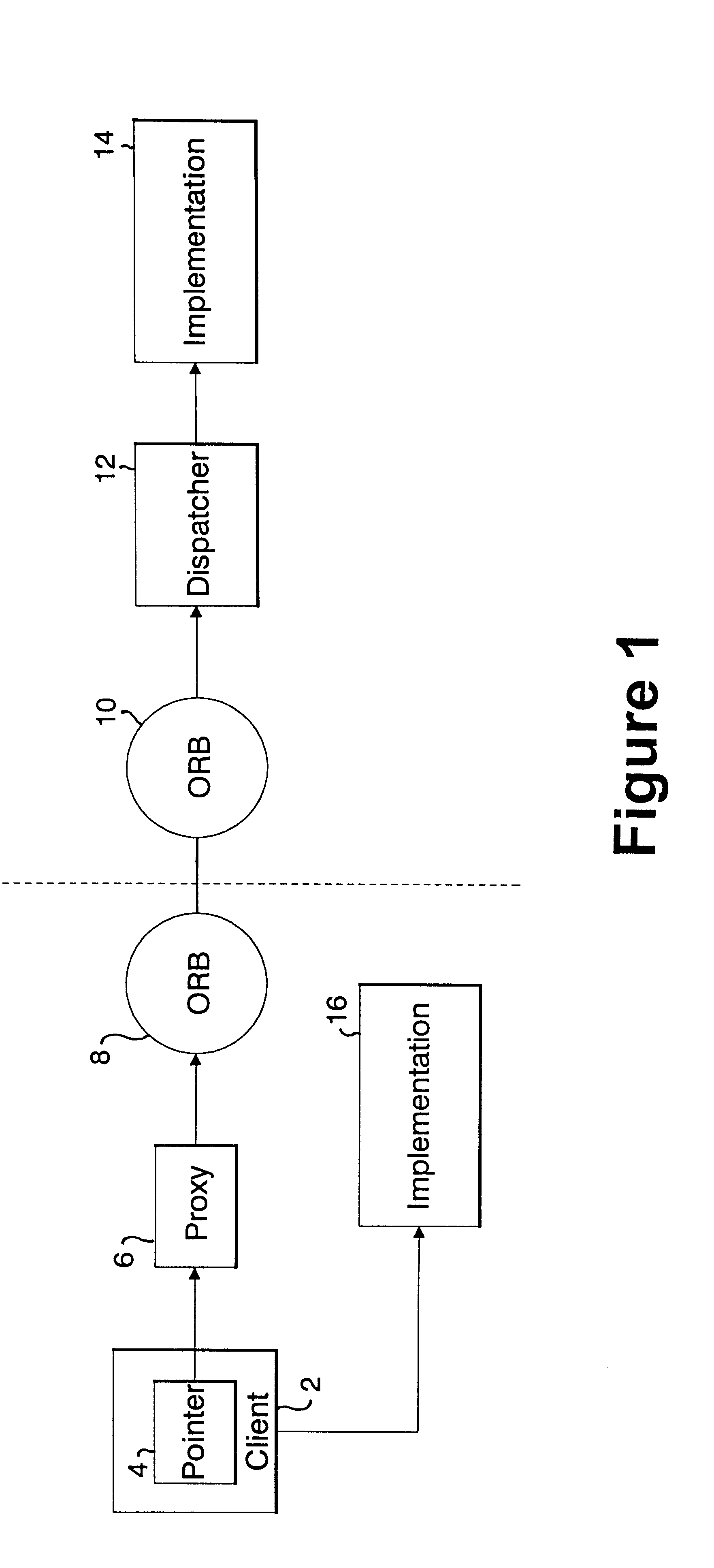

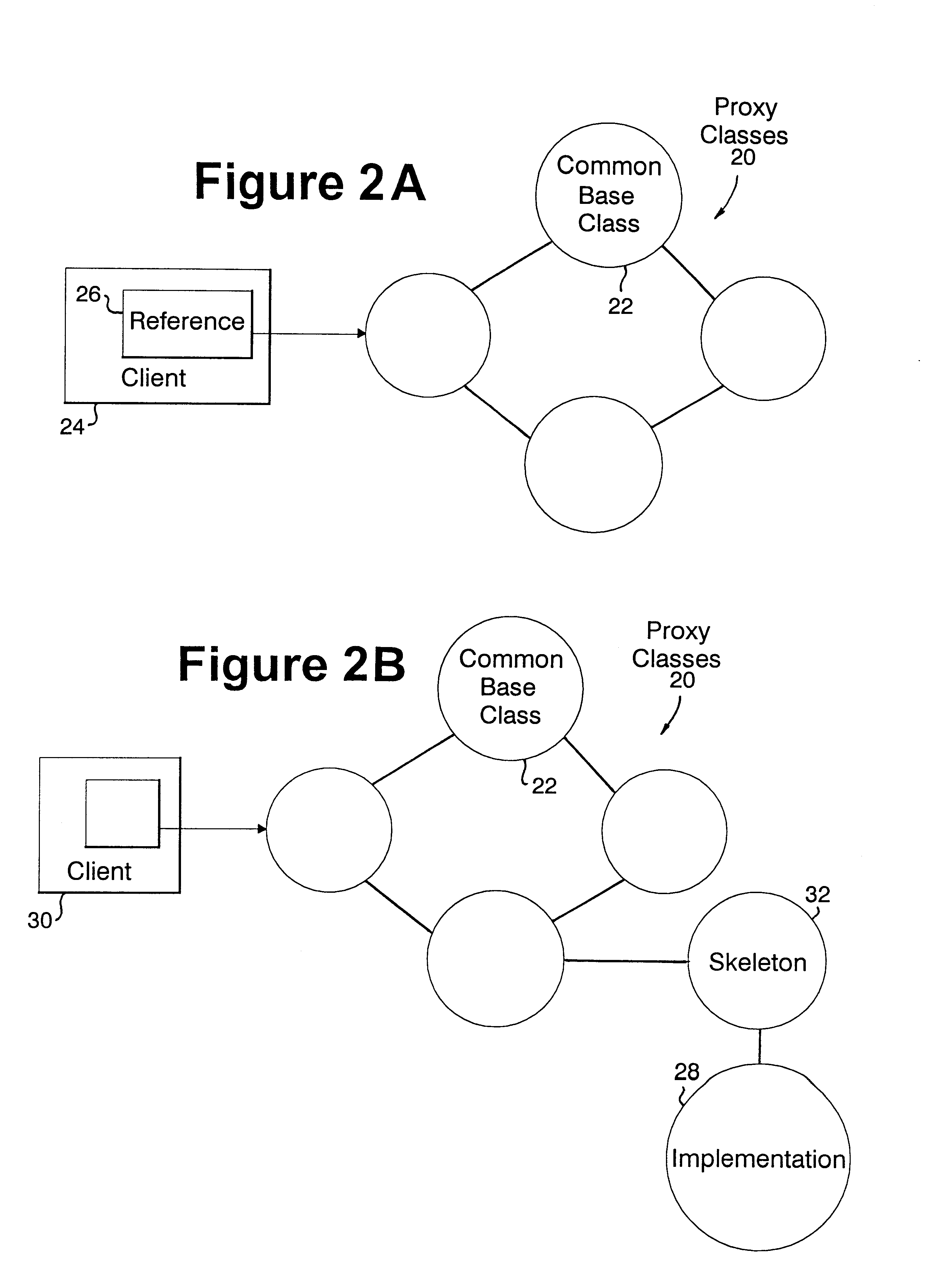

Uniform access to and interchange between objects employing a plurality of access methods

Inactive | US6182155B1 | Efficiently navigate | Multiprogramming arrangements | Memory systems | Programming language | Public interface

Uniform access to and interchange between objects with use in any environment that supports interface composition through interface inheritance and implementation inheritance from a common base class is provided. Proxies are used to provide both cross-language and remote access to objects. The proxies and the local implementations for objects share a common set of interface base classes, so that the interface of a proxy for an object is indistinguishable from a similar interface of the actual implementation. Each proxy is taught how to deal with call parameters that are proxies of the other kind. A roster of language identifiers is developed, and a method is added to each object implementation which, when called, checks whether it matches the language that the object implementation is written in. If so, it returns a direct pointer to the object implementation. Common client coding can then be used to deal with both same-language and cross-language calls.

Owner:IBM CORP

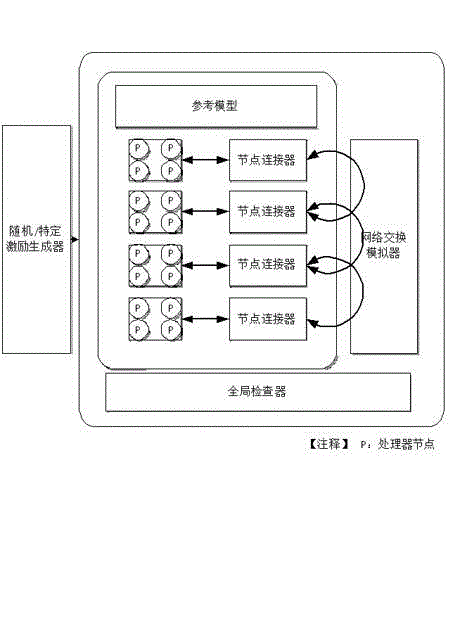

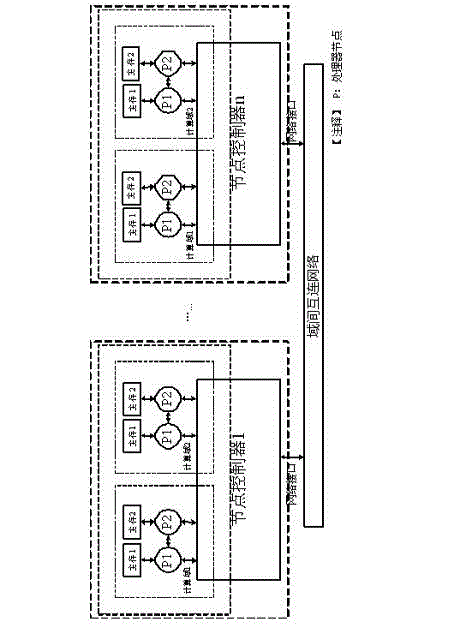

Extension Cache Coherence protocol-based multi-level consistency simulation domain verification and test method

Active | CN103150264A | Improve performance | Reduce Topological Complexity | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Uniform memory access | Multi processor

The invention discloses a simulation verification and test method for multi-level coherence domains based on an extended Cache Coherence protocol. A protocol simulation model is constructed for a multi-level coherence domain CC-NUMA (Cache Coherent Non-Uniform Memory Access) system based on the extended Cache Coherence protocol. A protocol table lookup and state transition mechanism in the key nodes of the system ensures that the Cache Coherence protocol is maintained within a single computing domain and simultaneously among a plurality of computing domains, with accuracy and stability ensured for intra-domain and inter-domain transmission. A credible verification method driven by evaluating the coverage of protocol entry transitions is also provided: transactions are processed by loading an optimized transaction generator stimulus model, a coverage index is obtained after the run ends, and verification efficiency is higher than with a random transaction stimulus mechanism. By constructing a multi-processor, multi-coherence-domain verification system model and carrying out the related simulation verification, the applicability and effectiveness of the method are further confirmed.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

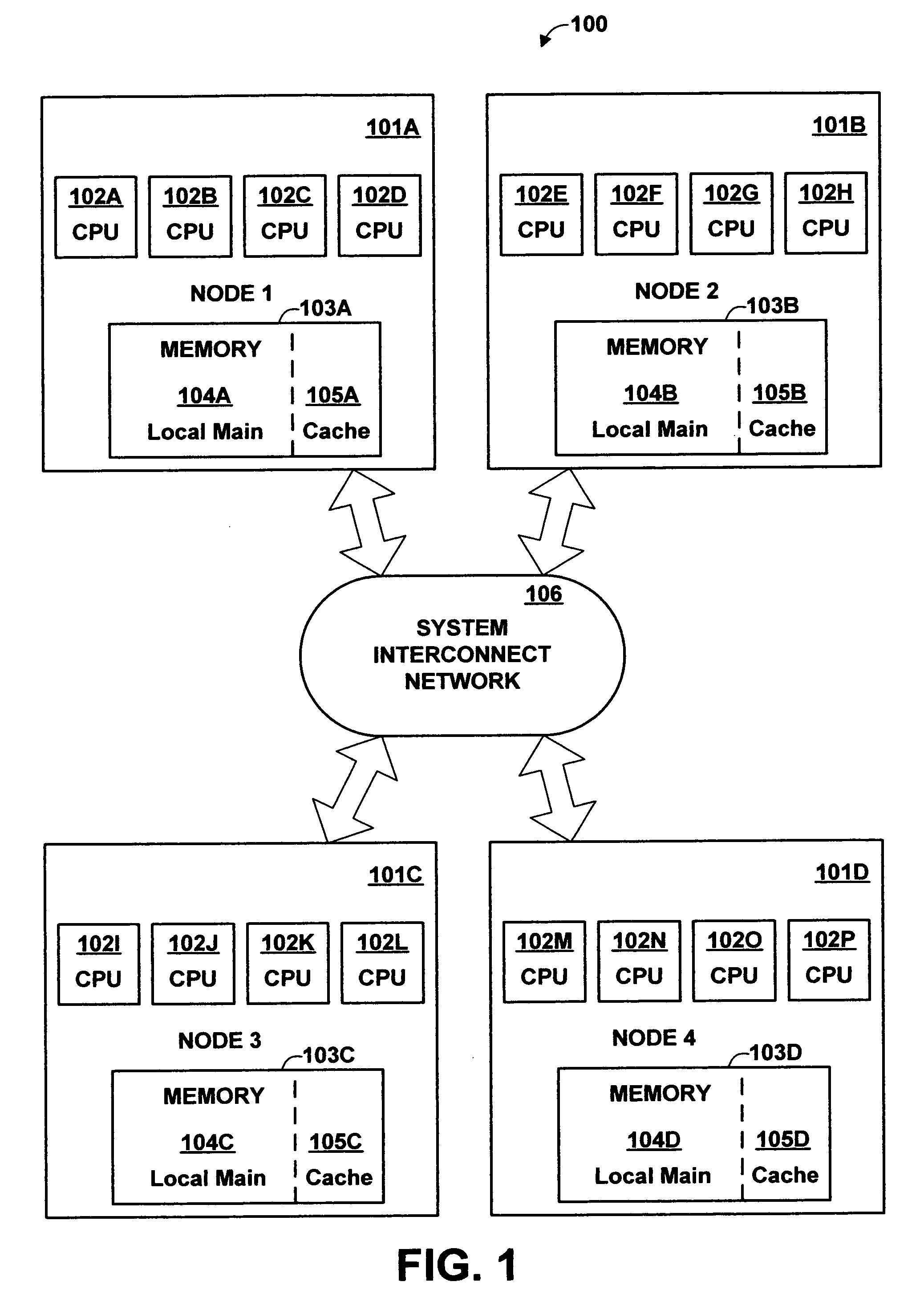

Method and apparatus for dispatching tasks in a non-uniform memory access (NUMA) computer system

Inactive | US7159216B2 | Avoid starvation | Short time | Program initiation/switching | Memory addressing/allocation/relocation | Distributed memory | Access time

A dispatcher for a non-uniform memory access computer system dispatches threads from a common ready queue not associated with any CPU, but favors the dispatching of a thread to a CPU having a shorter memory access time. Preferably, the system comprises multiple discrete nodes, each having a local memory and one or more CPUs. System main memory is a distributed memory comprising the union of the local memories. A respective preferred CPU and preferred node may be associated with each thread. When a CPU becomes available, the dispatcher gives at least some relative priority to a thread having a preferred CPU in the same node as the available CPU over a thread having a preferred CPU in a different node. This preference is relative, and does not prevent the dispatch from overriding the preference to avoid starvation or other problems.
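The dispatch policy above can be illustrated with a short sketch. It assumes a single shared ready queue held as a Python list, a hypothetical `waited` counter per thread, and a `max_wait` threshold as the starvation guard; none of these details come from the patent itself.

```python
from dataclasses import dataclass


@dataclass
class Thread:
    name: str
    preferred_node: int
    waited: int = 0  # dispatch rounds spent in the ready queue (assumed metric)


def dispatch(ready_queue, cpu_node, max_wait=3):
    """Pick a thread from the common ready queue for a free CPU in cpu_node."""
    if not ready_queue:
        return None
    # Starvation guard: a thread that has waited too long overrides the preference.
    starving = [t for t in ready_queue if t.waited >= max_wait]
    if starving:
        chosen = max(starving, key=lambda t: t.waited)
    else:
        # Relative preference: favour threads whose preferred node matches the CPU's.
        local = [t for t in ready_queue if t.preferred_node == cpu_node]
        chosen = (local or ready_queue)[0]
    ready_queue.remove(chosen)
    for t in ready_queue:
        t.waited += 1
    return chosen
```

Because the queue is not tied to any CPU, a thread with a remote preferred node still runs once no local thread is ready or once its wait exceeds the threshold.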

Owner:DAEDALUS BLUE LLC

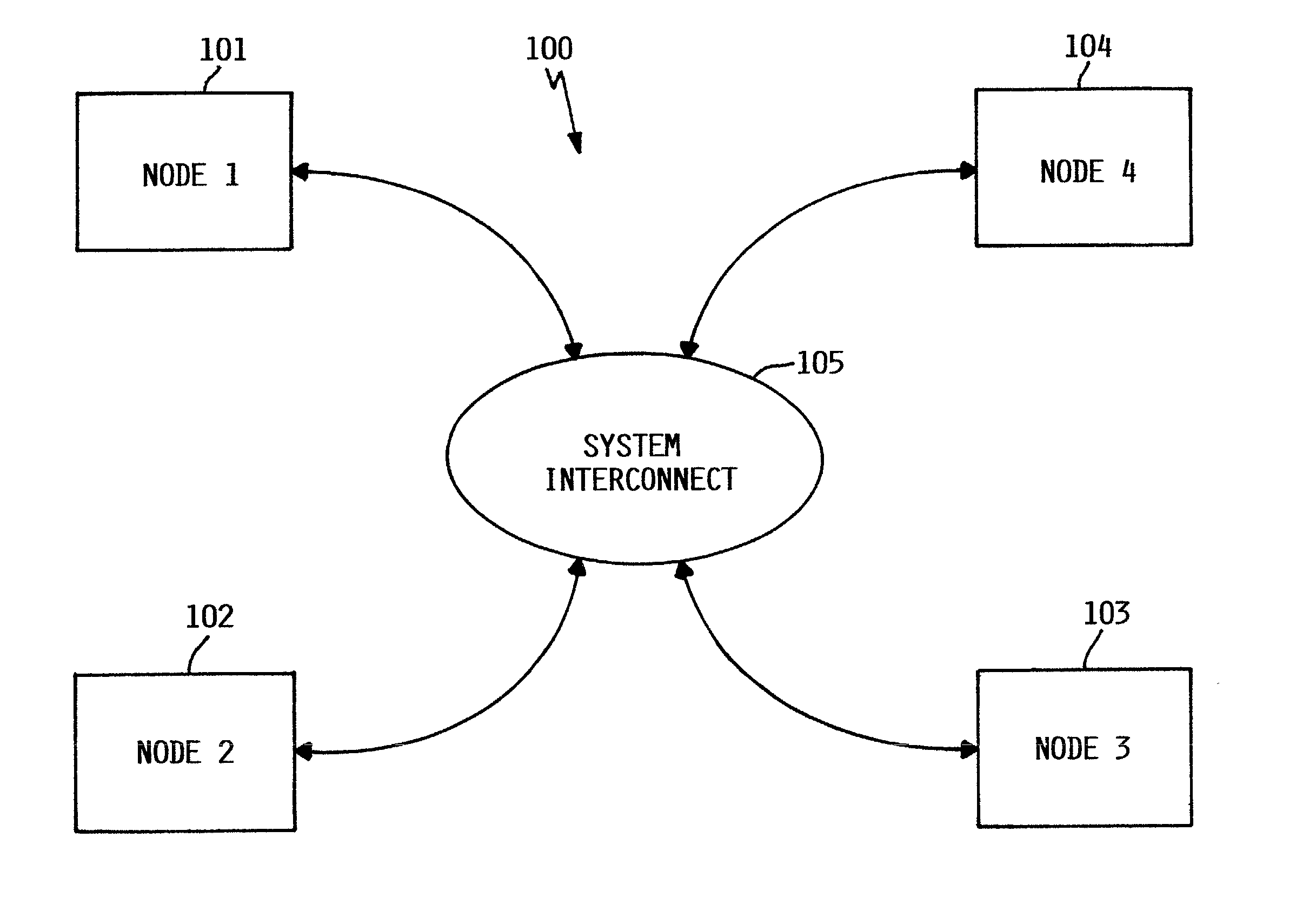

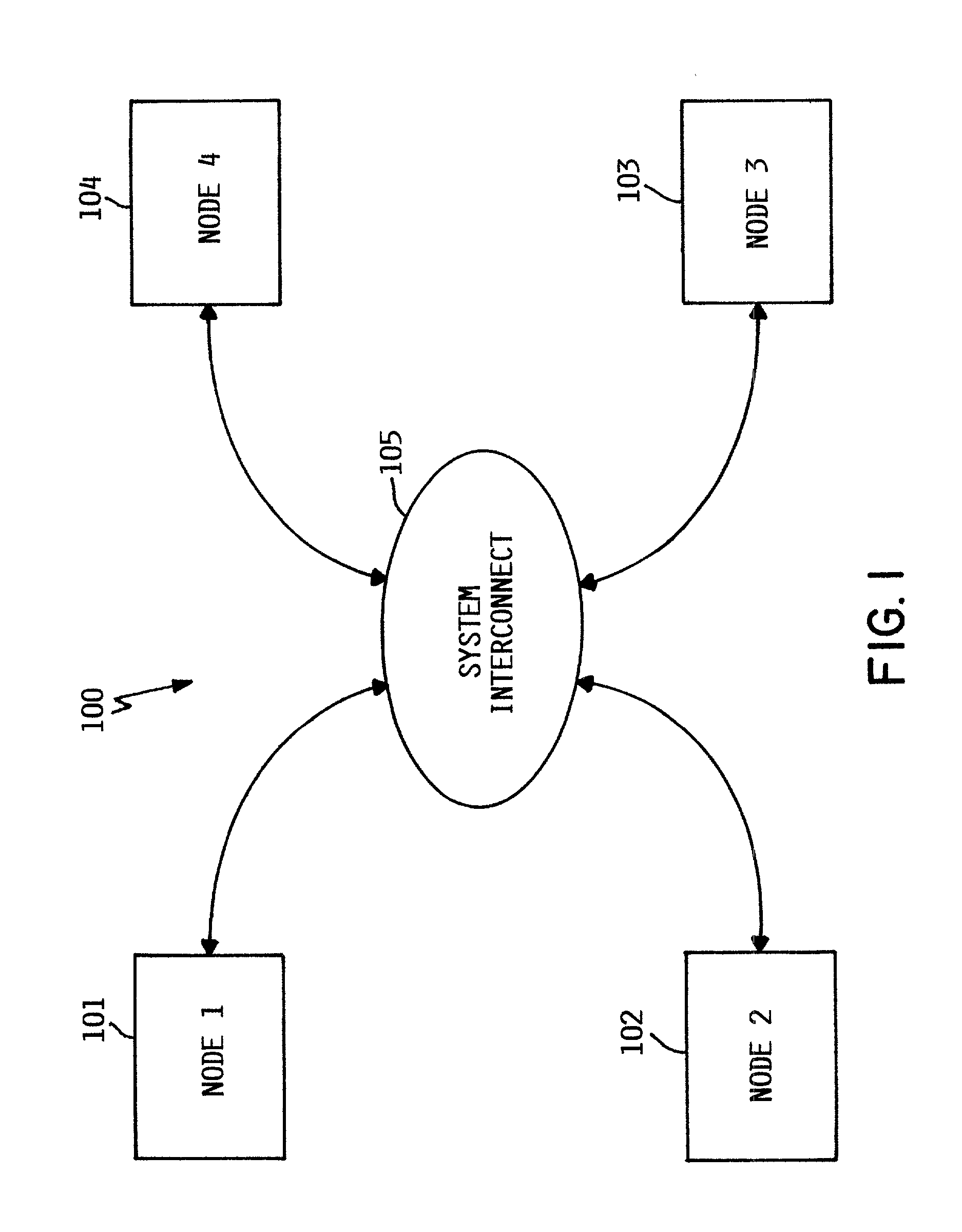

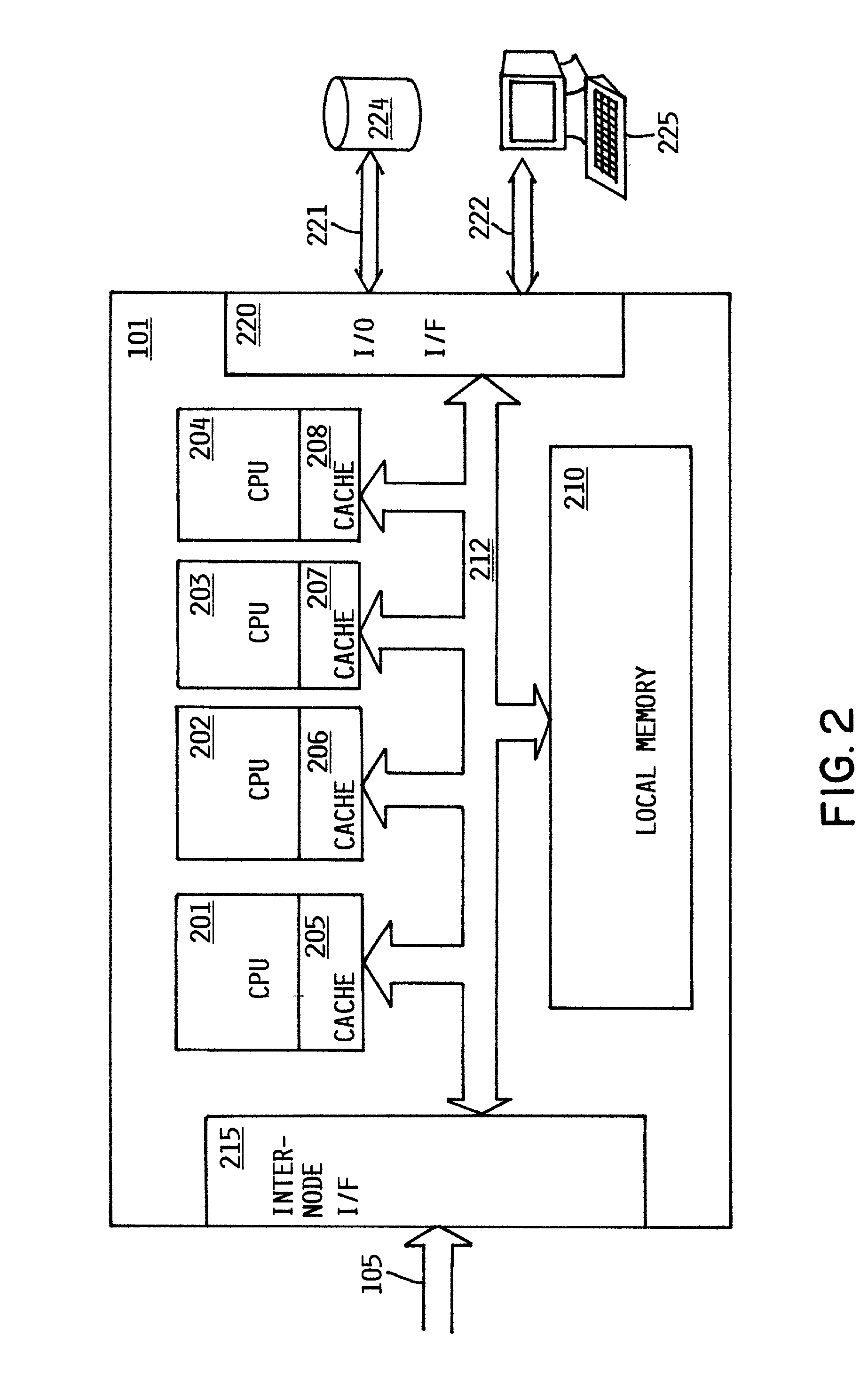

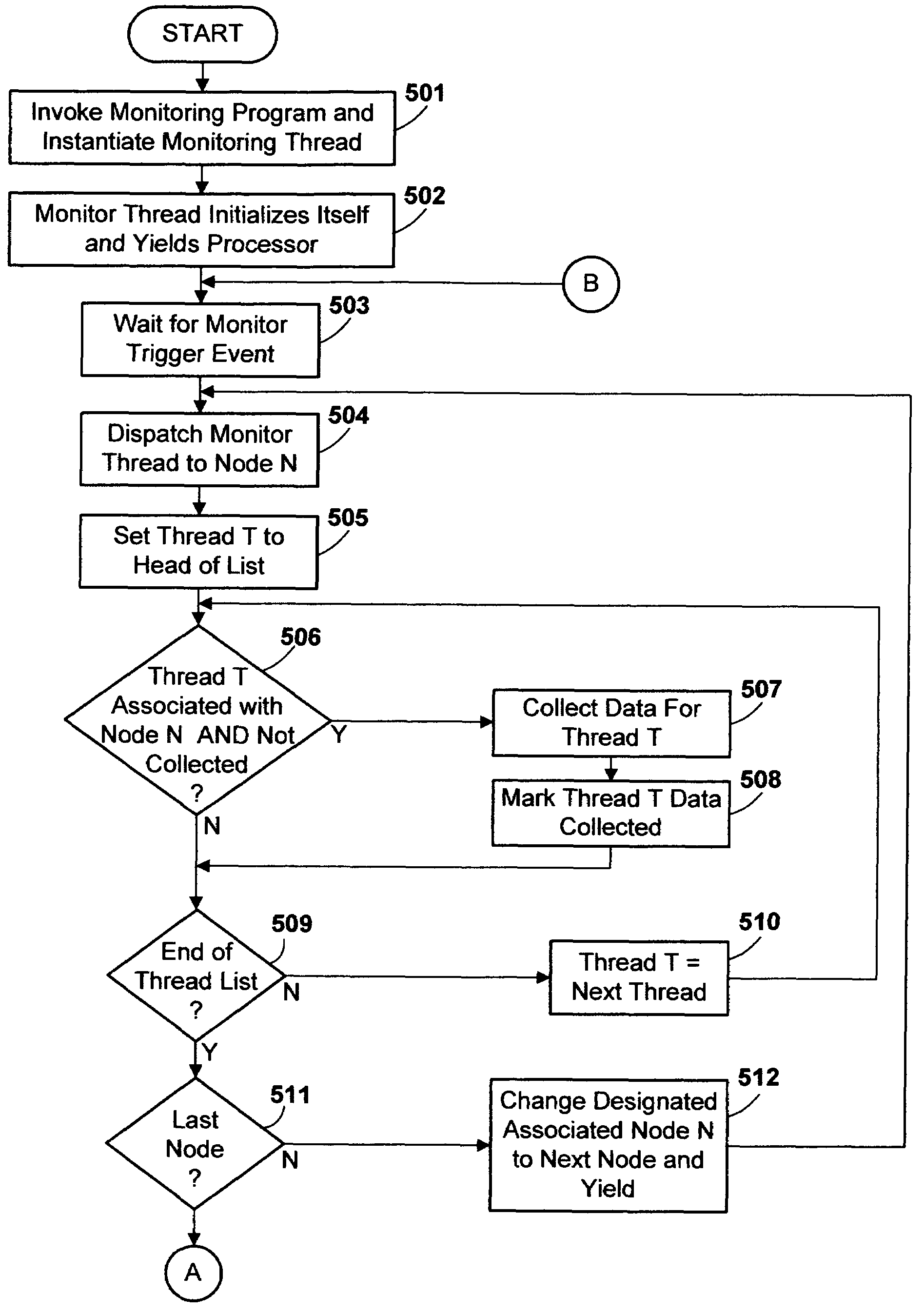

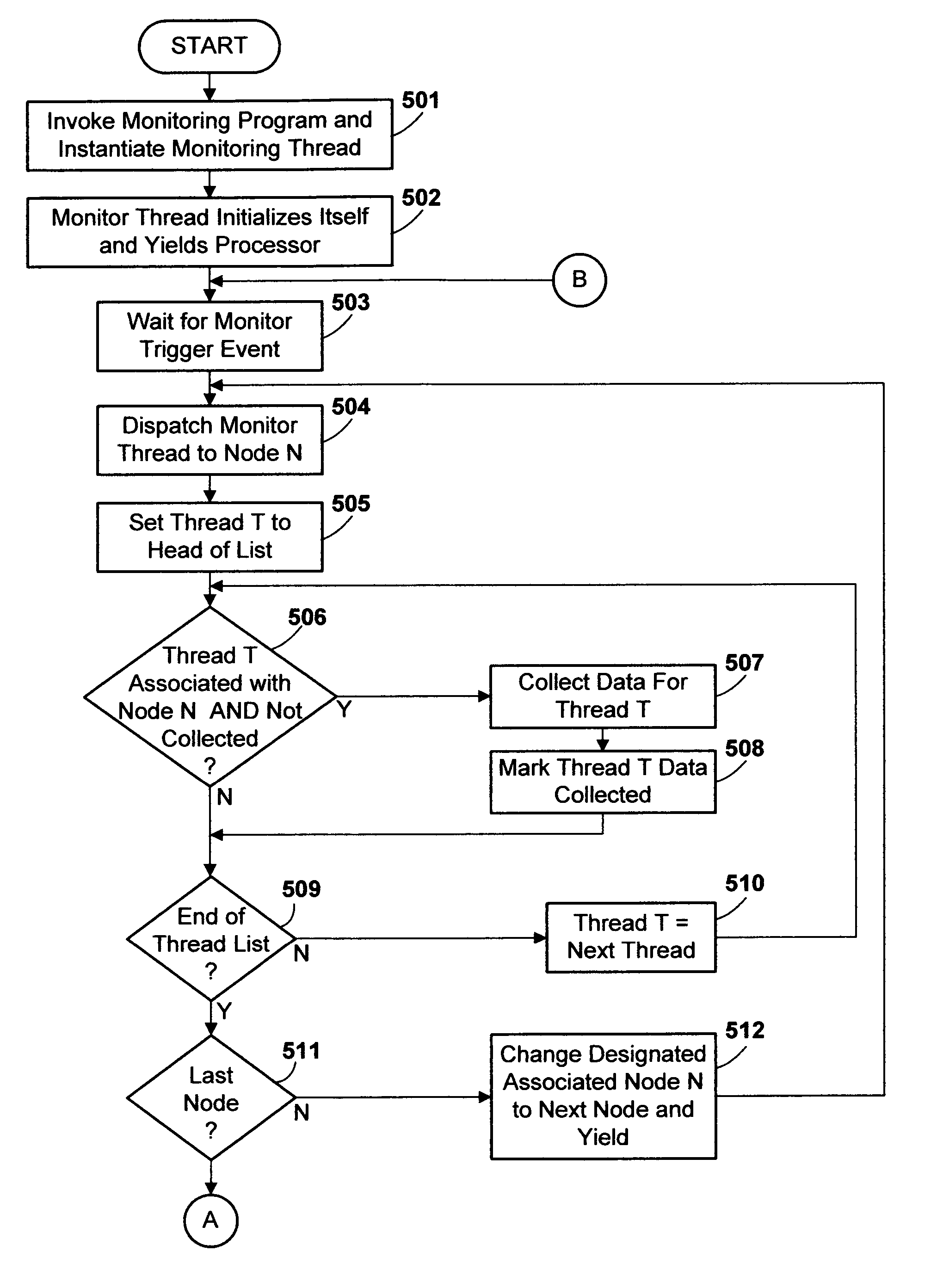

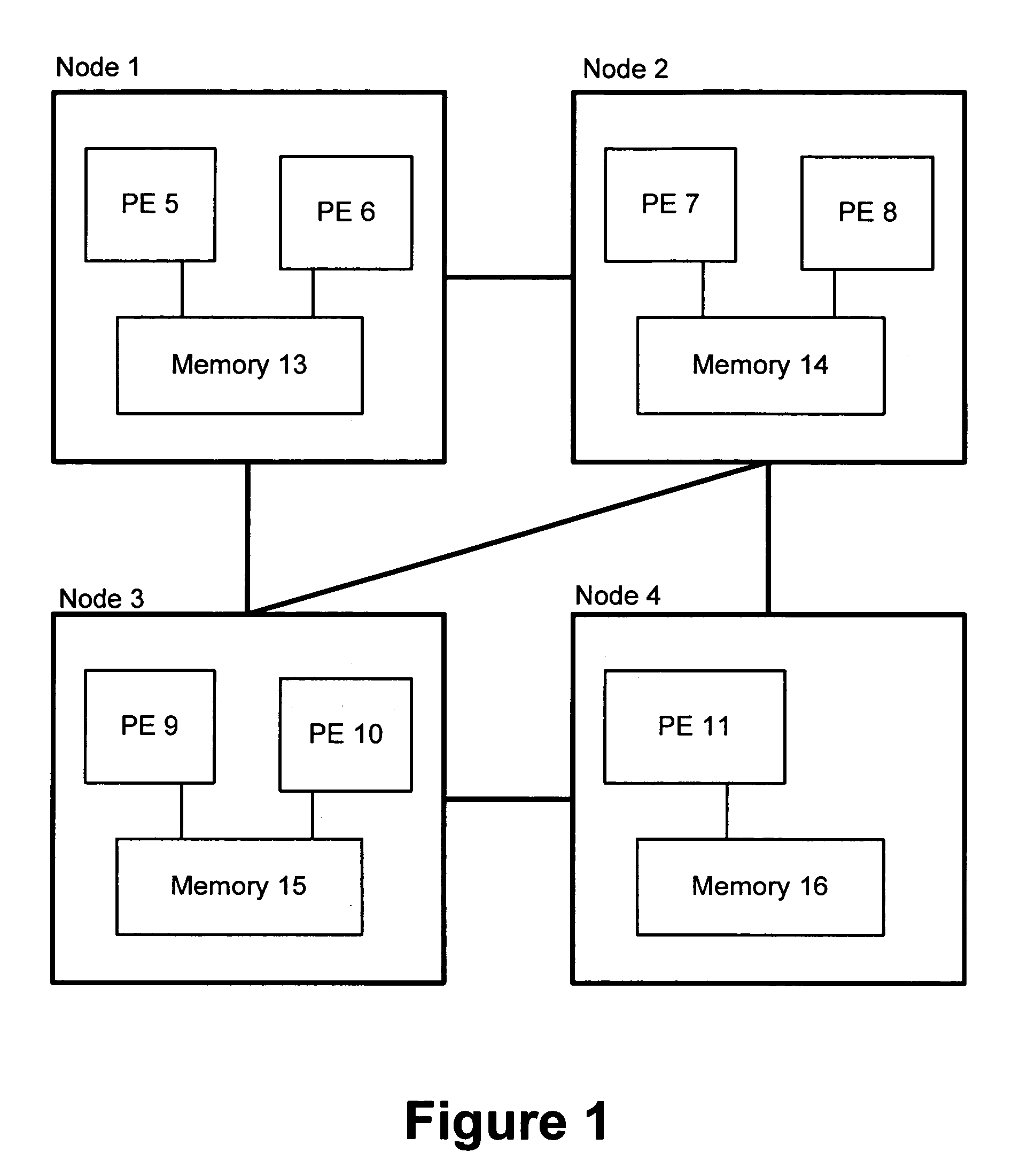

Method and apparatus for monitoring processes in a non-uniform memory access (NUMA) computer system

Active | US7383396B2 | Reduce the ratio | Improve efficiency | Error detection/correction | Multiprogramming arrangements | Uniform memory access | Data access

A monitoring process for a NUMA system collects data from multiple monitored threads executing in different nodes of the system. The monitoring process executes on different processors in different nodes. The monitoring process intelligently collects data from monitored threads according to the node in which it is executing to reduce the proportion of inter-node data accesses. Preferably, the monitoring process has the capability to specify to the dispatcher the node to which it should be dispatched next, and traverses the nodes while collecting data from threads associated with the node in which the monitor is currently executing. By intelligently associating the data collection with the node of the monitoring process, the frequency of inter-node data accesses for purposes of collecting data by the monitoring process is reduced, increasing execution efficiency.

Owner:META PLATFORMS INC

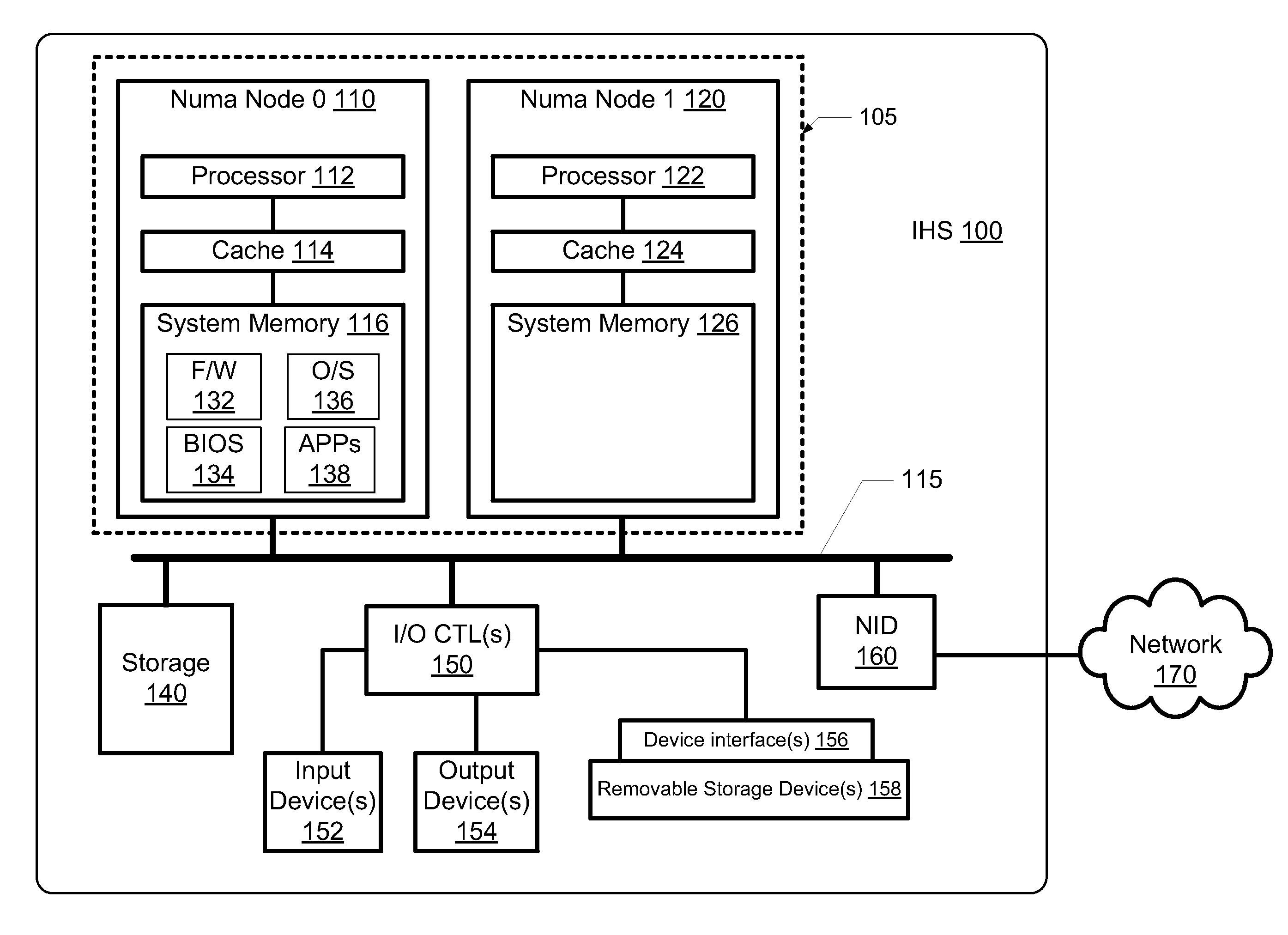

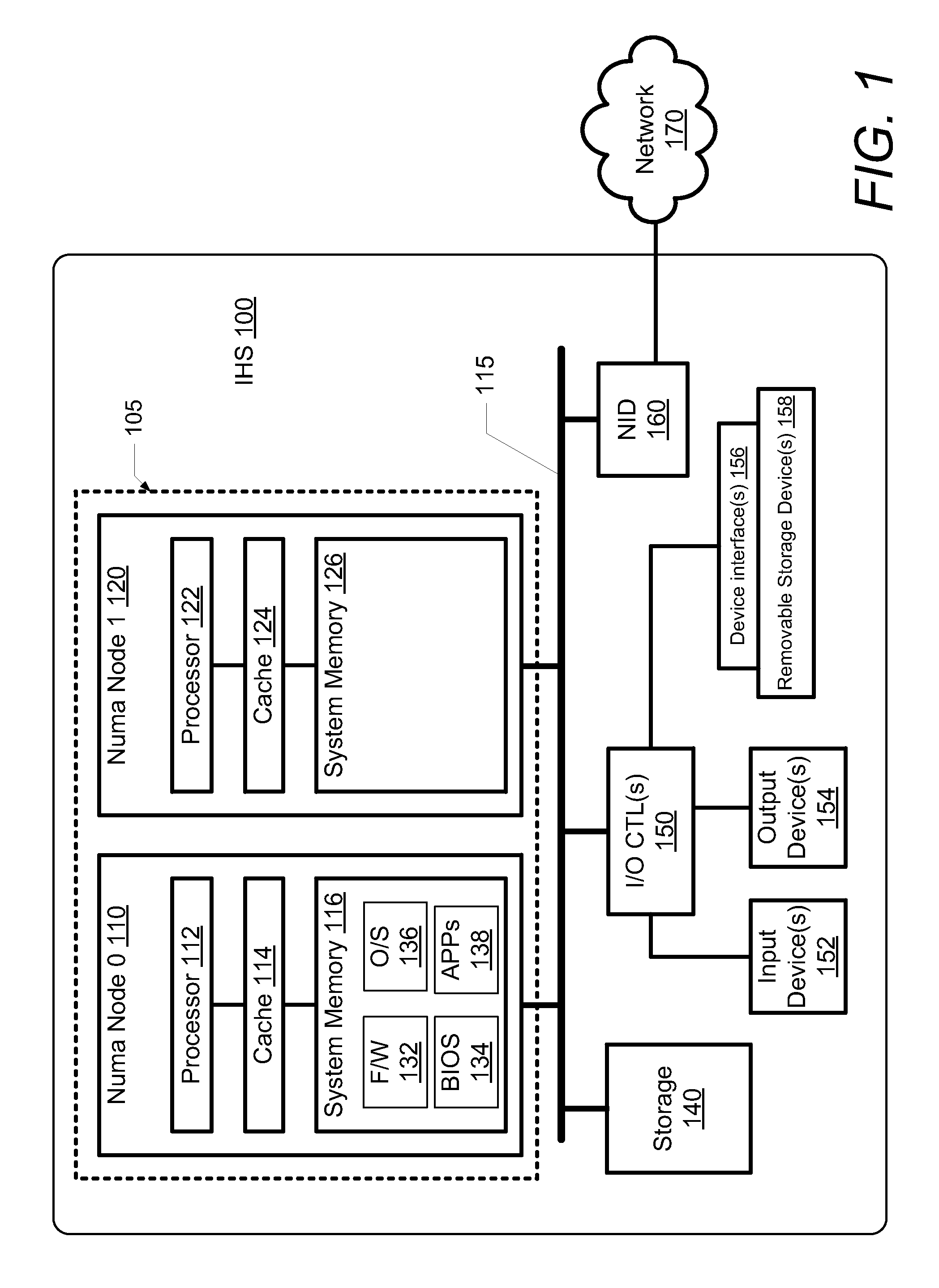

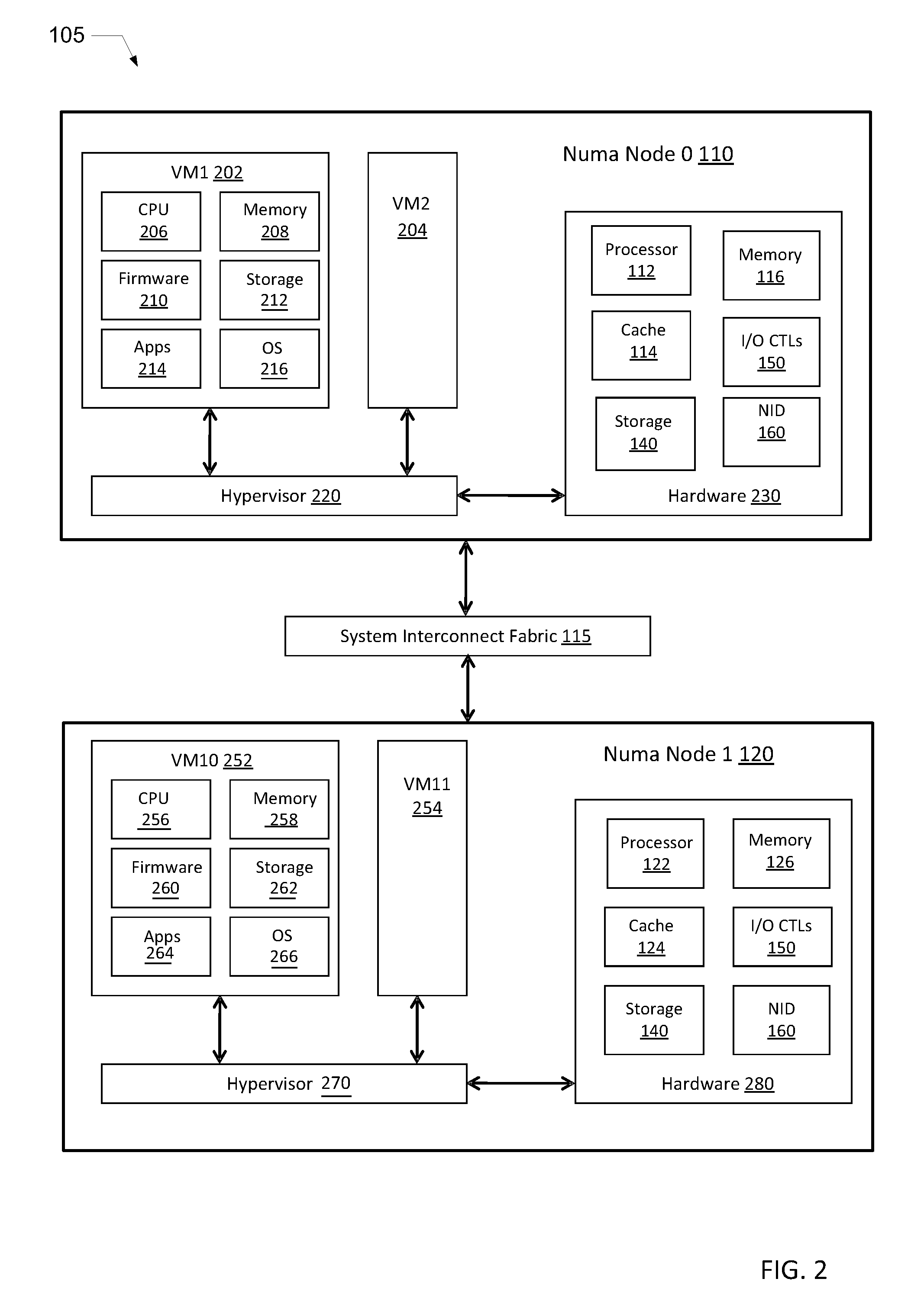

Method of migrating virtual machines between non-uniform memory access nodes within an information handling system

Active | US20150277779A1 | Easy to process | Input/output to record carriers | Software simulation/interpretation/emulation | Uniform memory access | Parallel computing

A method for allocating virtual machines (VMs) to run within a non-uniform memory access (NUMA) system includes a first processing node and a second processing node. A request is received at the first processing node for additional capacity for at least one of (a) establishing an additional VM and (b) increasing processing resources to an existing VM on the first processing node. In response to receiving the request, a migration manager identifies whether the first processing node has the additional capacity requested. In response to identifying that the first processing node does not have the additional capacity requested, at least one VM is selected from an ordered array of the multiple VMs executing on the first processing node. The selected VM has low processor and memory usage relative to the other VMs. The selected VM is migrated from the first processing node to the second processing node for execution.
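The selection step can be sketched as follows. The abstract only says that the migrated VM has low processor and memory usage relative to the others, so ordering by the sum of the two usage fractions is an assumption, as are the names `VM` and `place` and the normalised `capacity` of 1.0 per node.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    cpu: float  # fraction of the node's processor capacity in use
    mem: float  # fraction of the node's memory capacity in use


def place(request_cpu, request_mem, first_node, second_node, capacity=1.0):
    """Return the name of the VM migrated to make room, or None if none is moved."""
    used_cpu = sum(vm.cpu for vm in first_node)
    used_mem = sum(vm.mem for vm in first_node)
    if used_cpu + request_cpu <= capacity and used_mem + request_mem <= capacity:
        return None  # the first node already has the additional capacity
    if not first_node:
        return None  # nothing to migrate
    # Ordered array of the node's VMs, lowest combined usage first (assumed key).
    ordered = sorted(first_node, key=lambda vm: vm.cpu + vm.mem)
    victim = ordered[0]
    first_node.remove(victim)
    second_node.append(victim)
    return victim.name
```

A fuller model would repeat the selection until the request fits; the sketch migrates a single VM per call to stay close to the abstract's wording.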

Owner:DELL PROD LP

Method and apparatus for monitoring processes in a non-uniform memory access (NUMA) computer system

Active | US20060259704A1 | Reduce proportion | Reduce the ratio | Error detection/correction | Multiprogramming arrangements | Collections data | Real-time computing

A monitoring process for a NUMA system collects data from multiple monitored threads executing in different nodes of the system. The monitoring process executes on different processors in different nodes. The monitoring process intelligently collects data from monitored threads according to the node in which it is executing to reduce the proportion of inter-node data accesses. Preferably, the monitoring process has the capability to specify to the dispatcher the node to which it should be dispatched next, and traverses the nodes while collecting data from threads associated with the node in which the monitor is currently executing. By intelligently associating the data collection with the node of the monitoring process, the frequency of inter-node data accesses for purposes of collecting data by the monitoring process is reduced, increasing execution efficiency.

Owner:META PLATFORMS INC

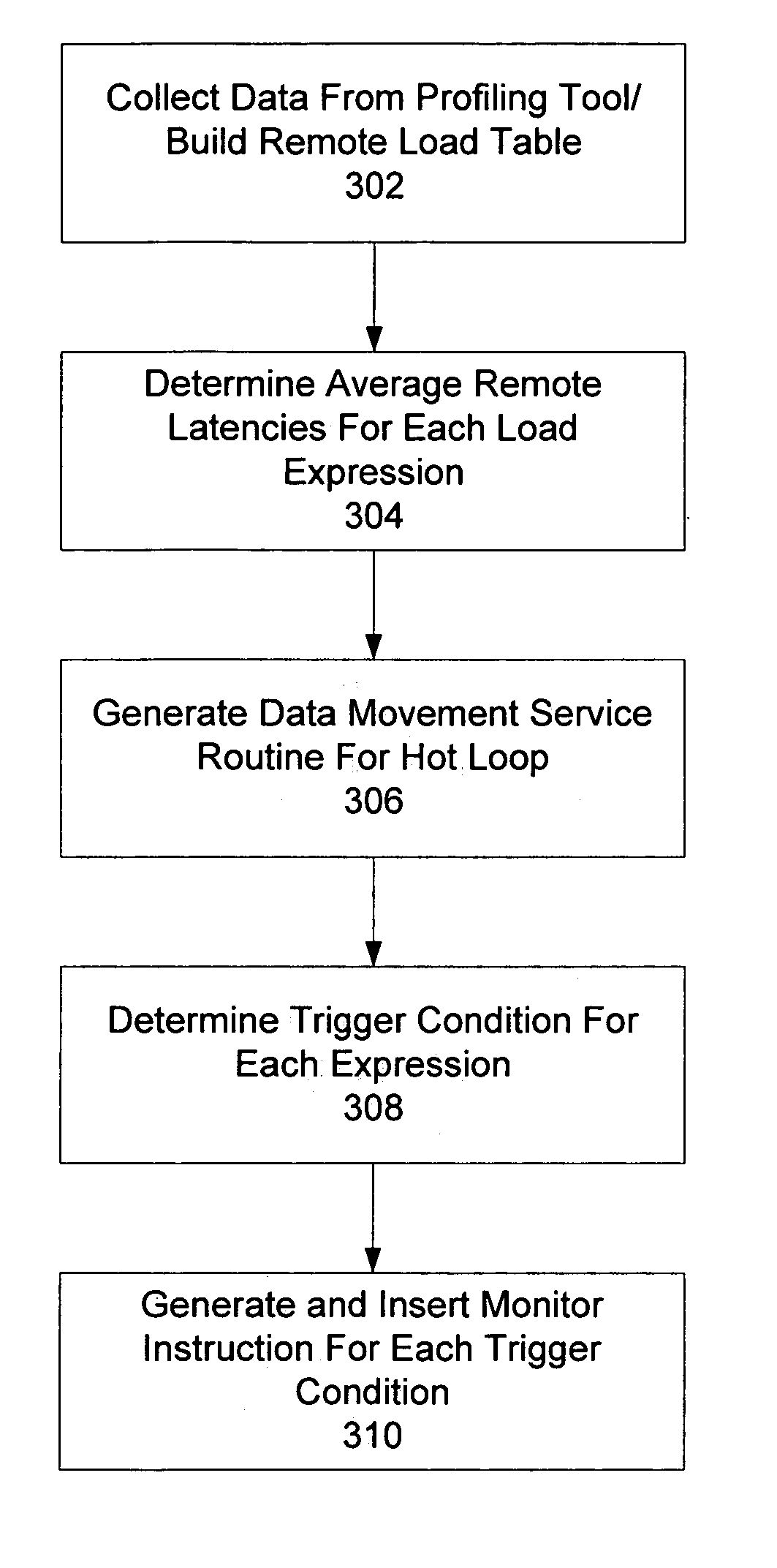

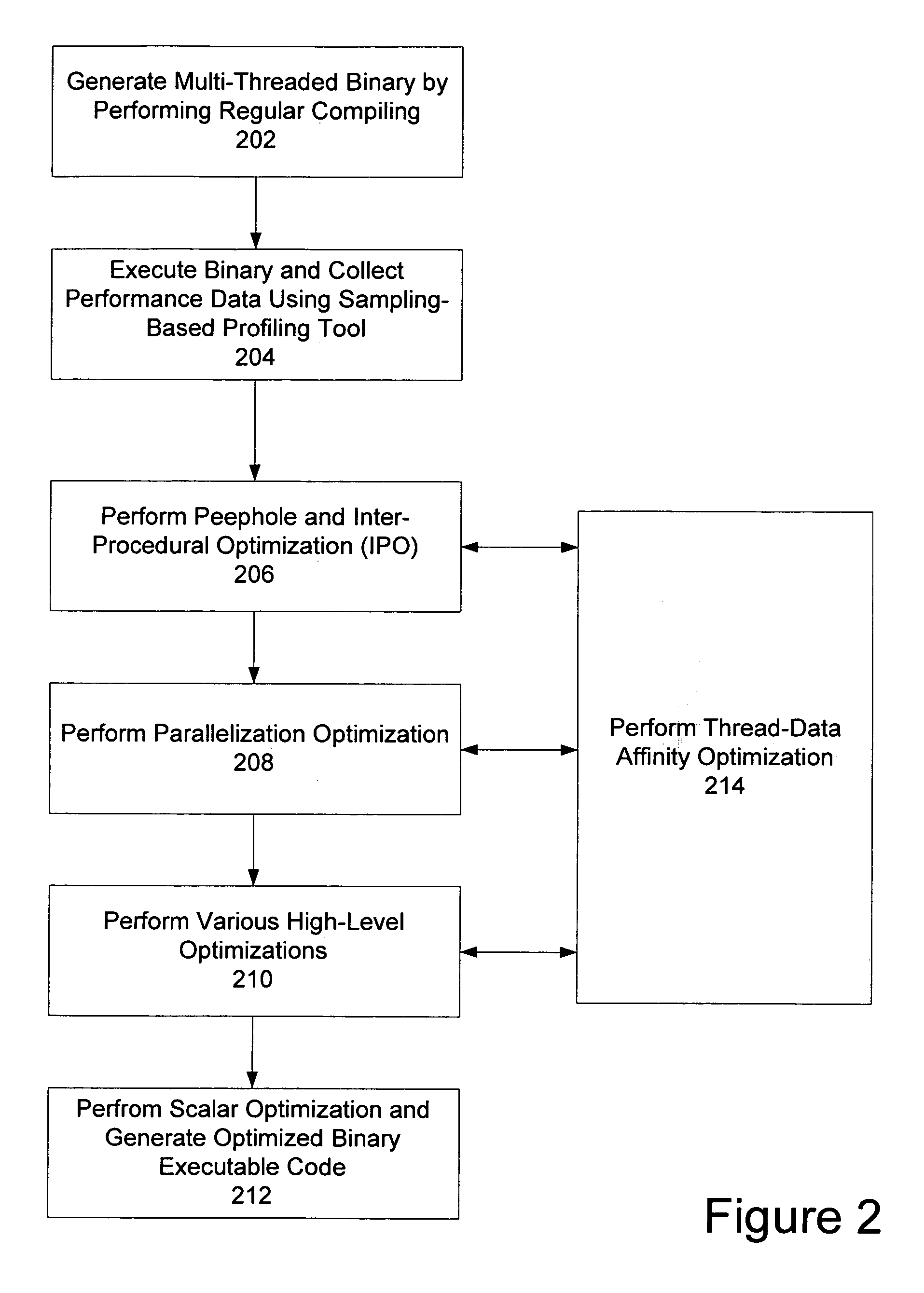

Thread-data affinity optimization using compiler

Inactive | US8037465B2 | Compact integration | Specific program execution arrangements | Memory systems | Uniform memory access | Parallel computing

Thread-data affinity optimization can be performed by a compiler during the compiling of a computer program to be executed on a cache coherent non-uniform memory access (cc-NUMA) platform. In one embodiment, the present invention includes receiving a program to be compiled. The received program is then compiled in a first pass and executed. During execution, the compiler collects profiling data using a profiling tool. Then, in a second pass, the compiler performs thread-data affinity optimization on the program using the collected profiling data.

Owner:INTEL CORP

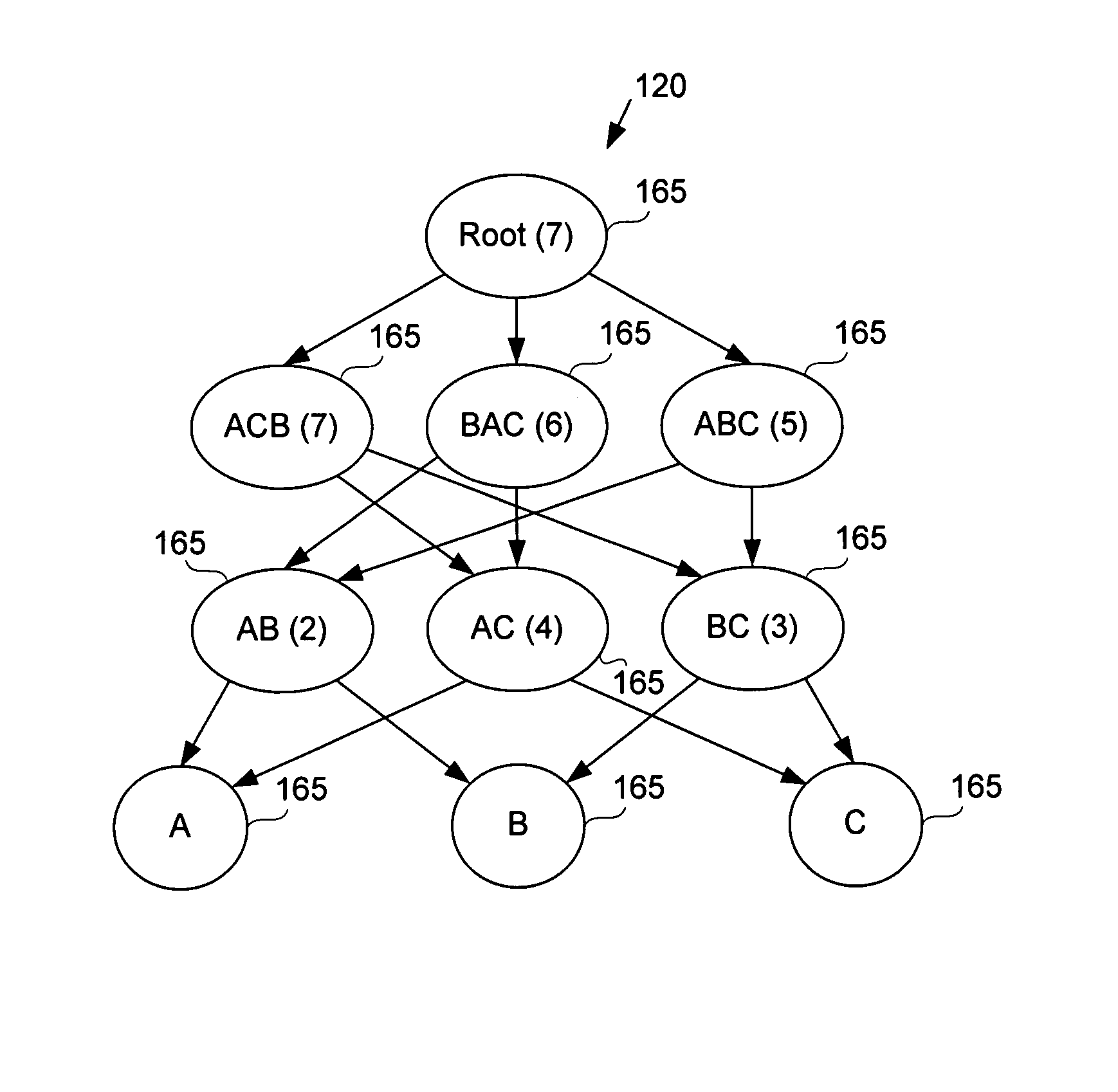

Method and system for managing resource allocation in non-uniform resource access computer systems

Active | US7313795B2 | Easily decide | Facilitate efficient allocation of resource | Resource allocation | Multiple digital computer combinations | Uniform memory access | Computer architecture

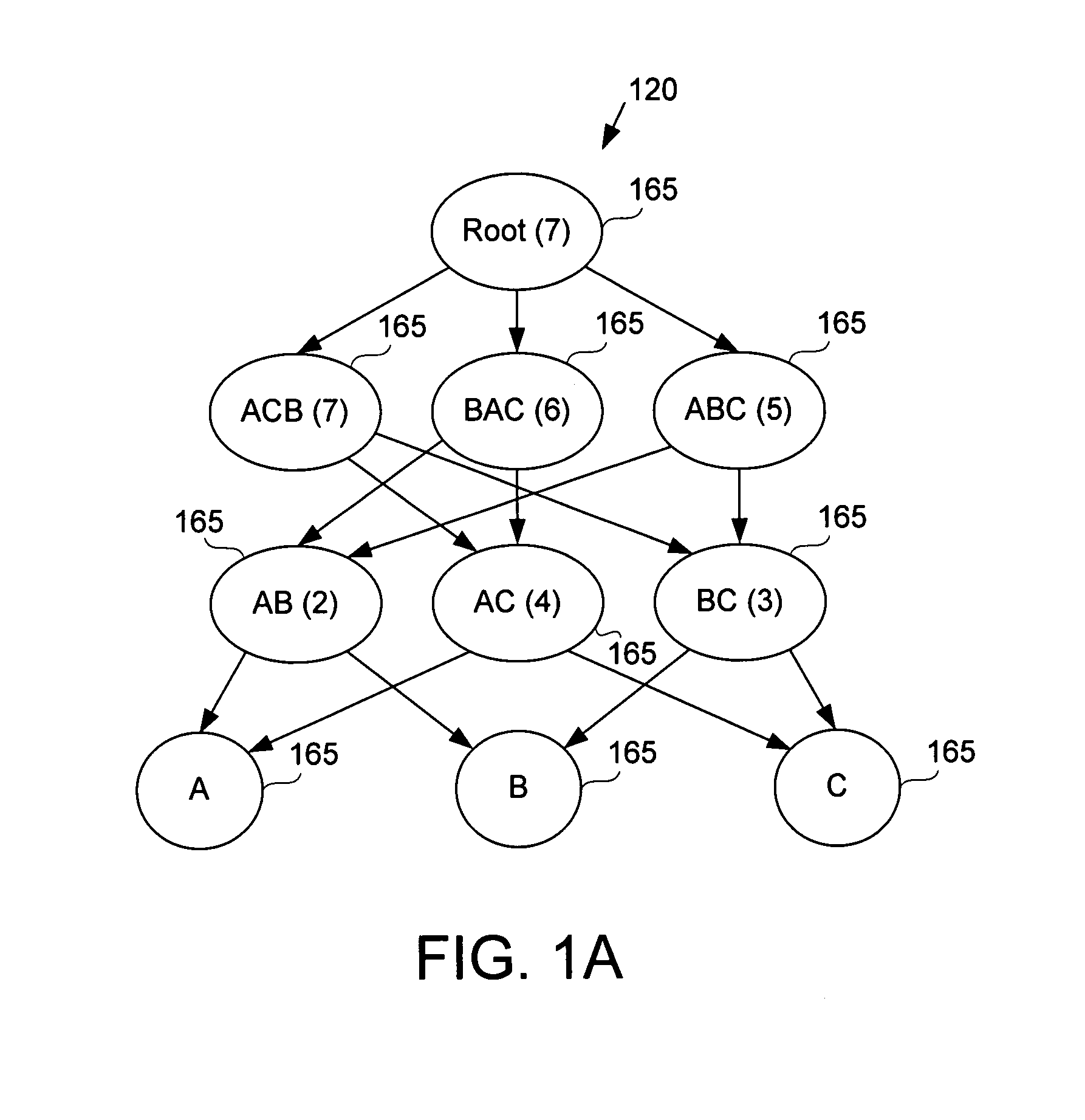

A method and system of managing resource allocation in a non-uniform resource access computer system is disclosed. A method comprises determining access costs between resources in a computer system having non-uniform access costs between the resources. The method also includes constructing a hierarchical data structure comprising the access costs. The hierarchical data structure is traversed to manage a set of the resources.

Owner:ORACLE INT CORP

Uniform network access

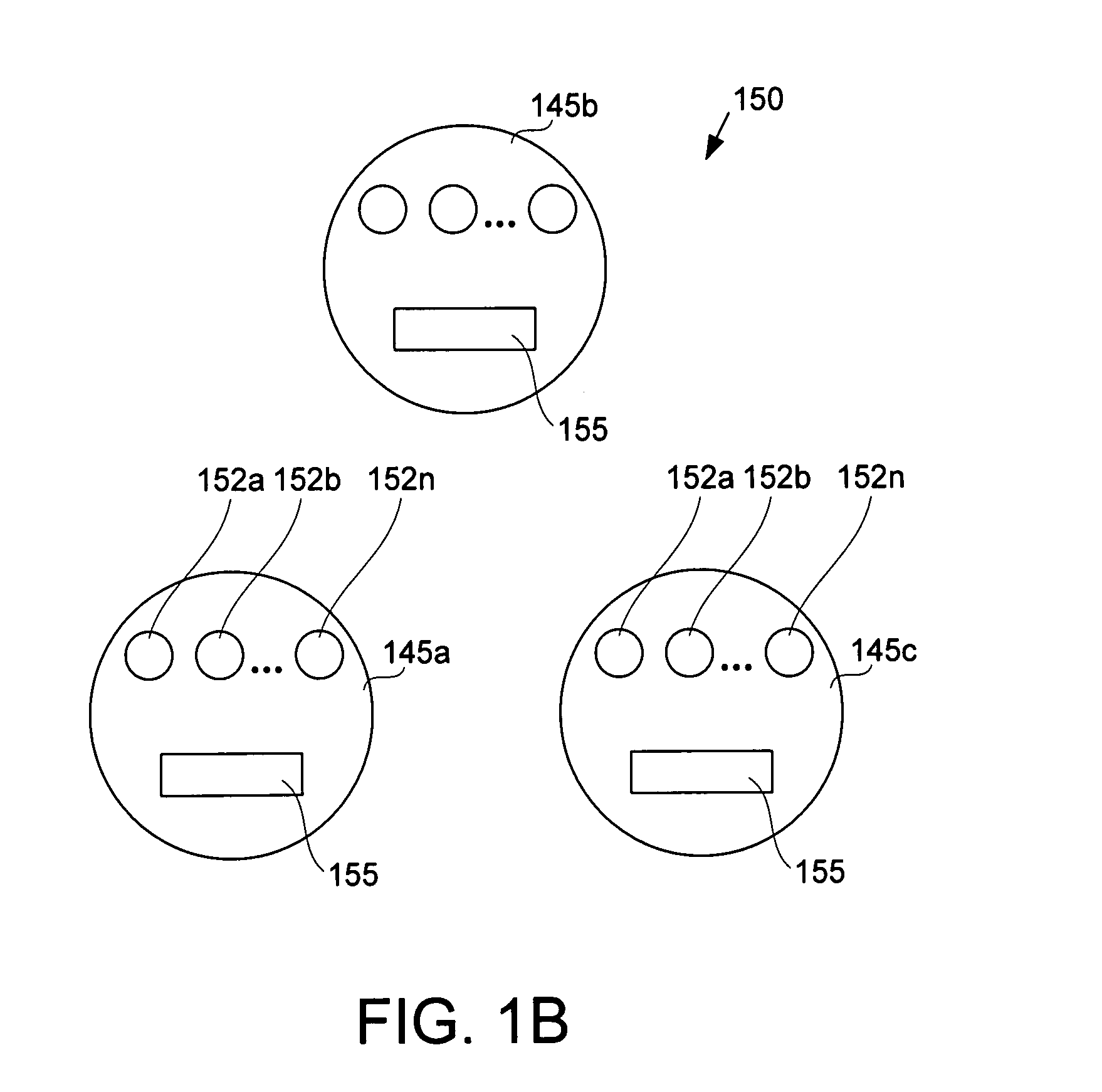

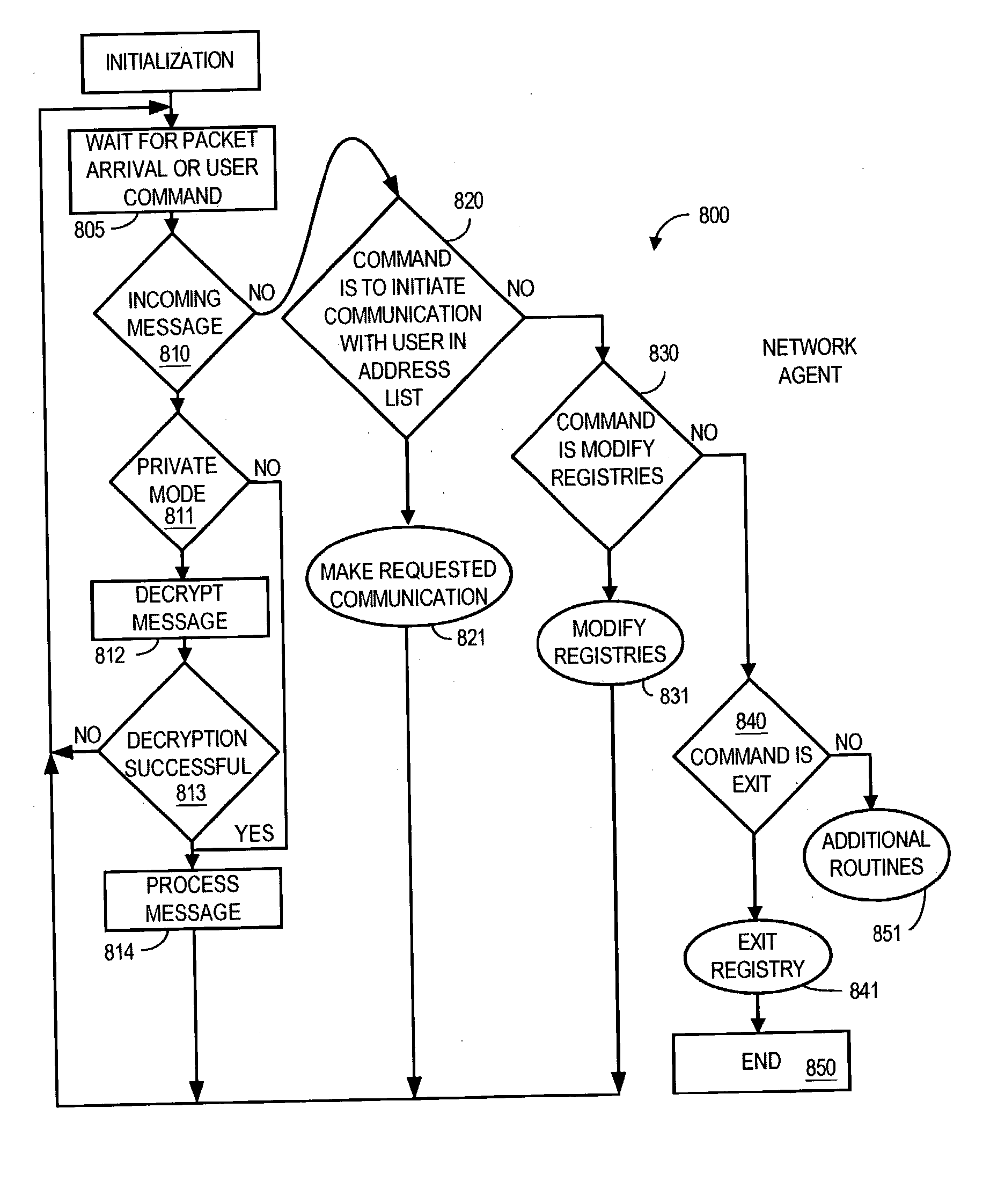

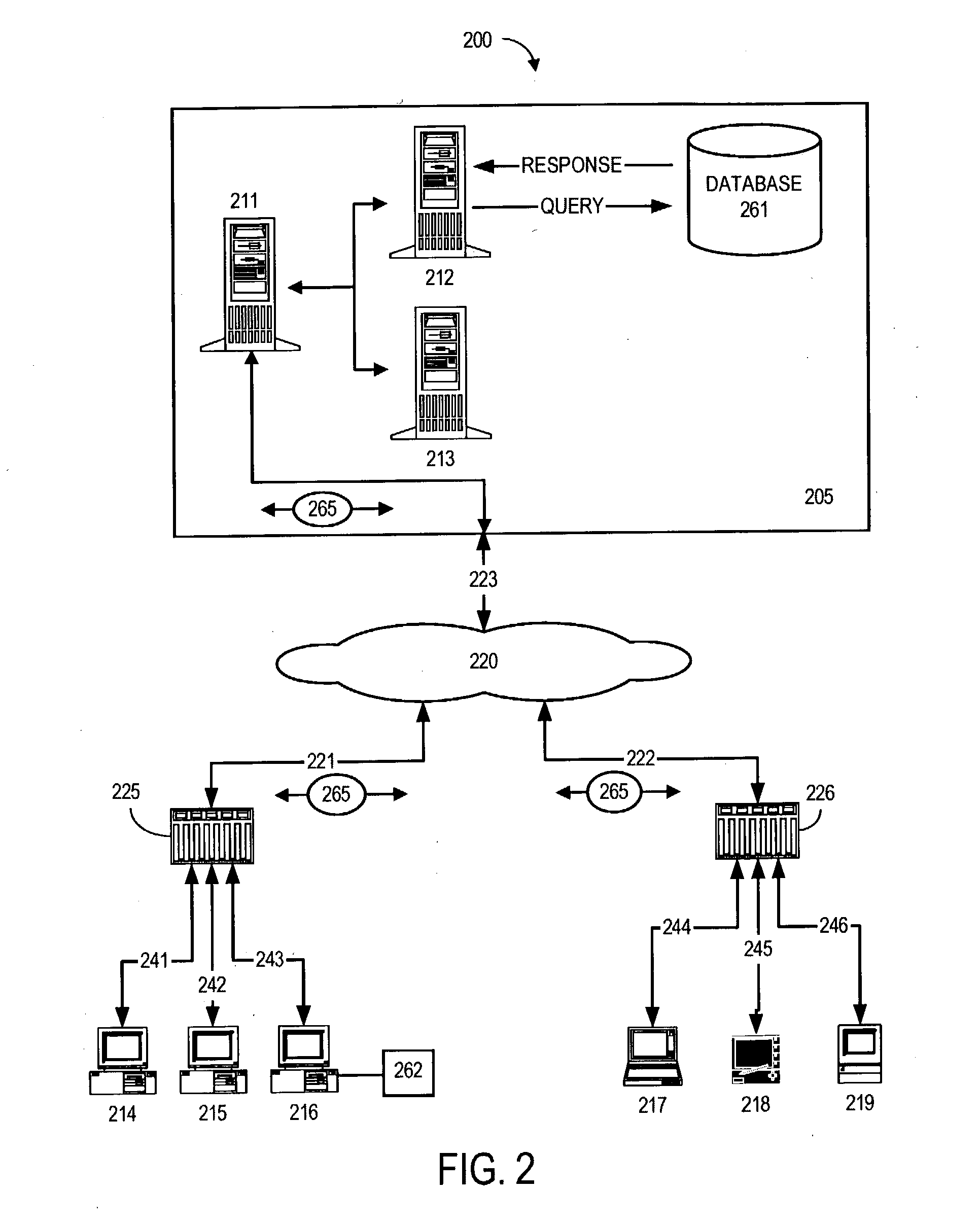

Inactive | US20030135630A1 | Facilitate communication | Multiple digital computer combinations | Securing communication | Uniform memory access | Telecommunications link

According to some embodiments, a registry is displayed. The registry may, for example, indicate resources available from a plurality of remote network access devices via a communications network. Moreover, a personal network address may be associated with each available resource, the personal network address including a destination address portion and an application program identifier portion. A direct communications link may then be established between a first network access device hosting an available resource and a second network address device using the personal network address associated with the resource.

Owner:PEER COMM CORP

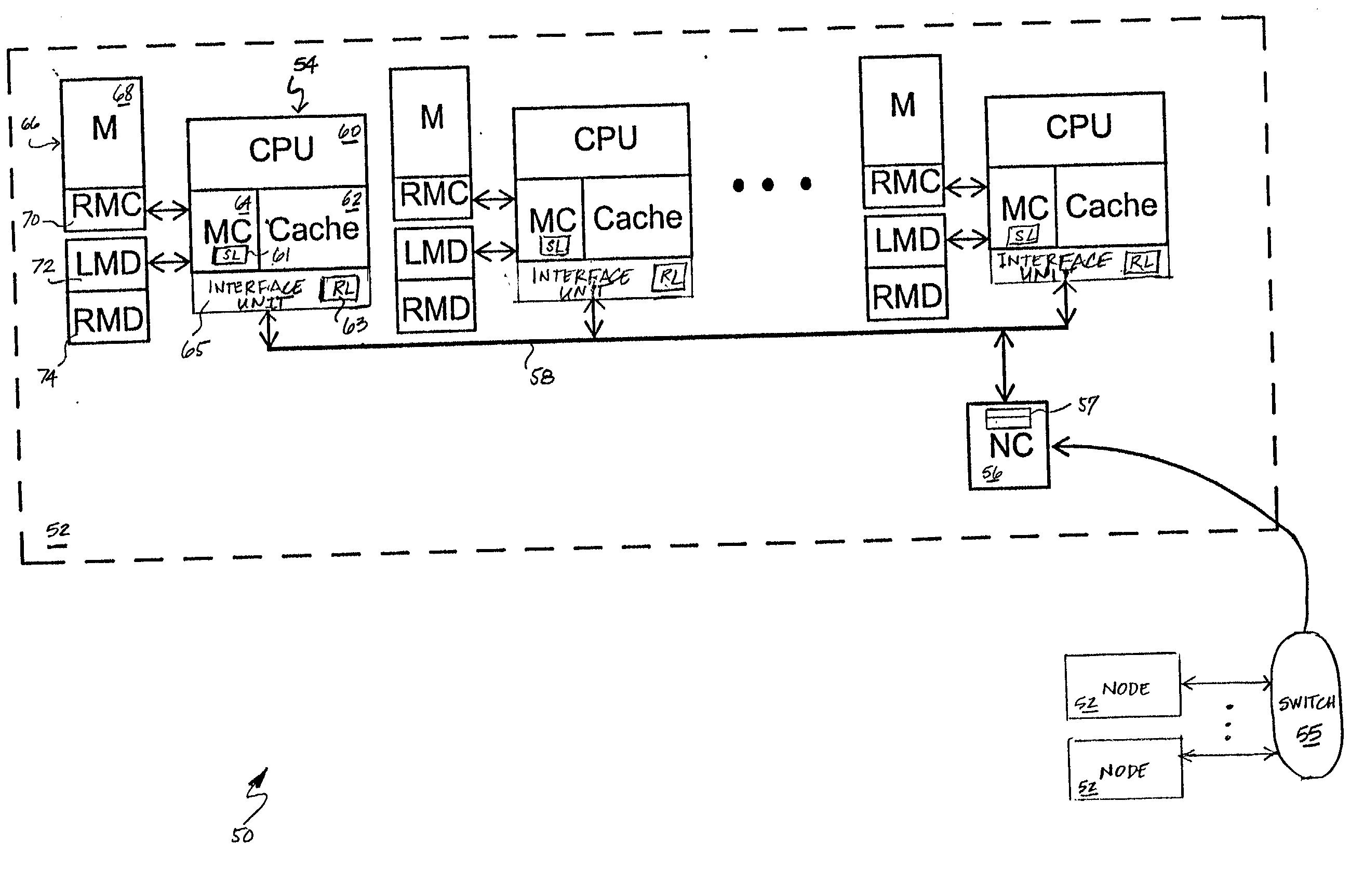

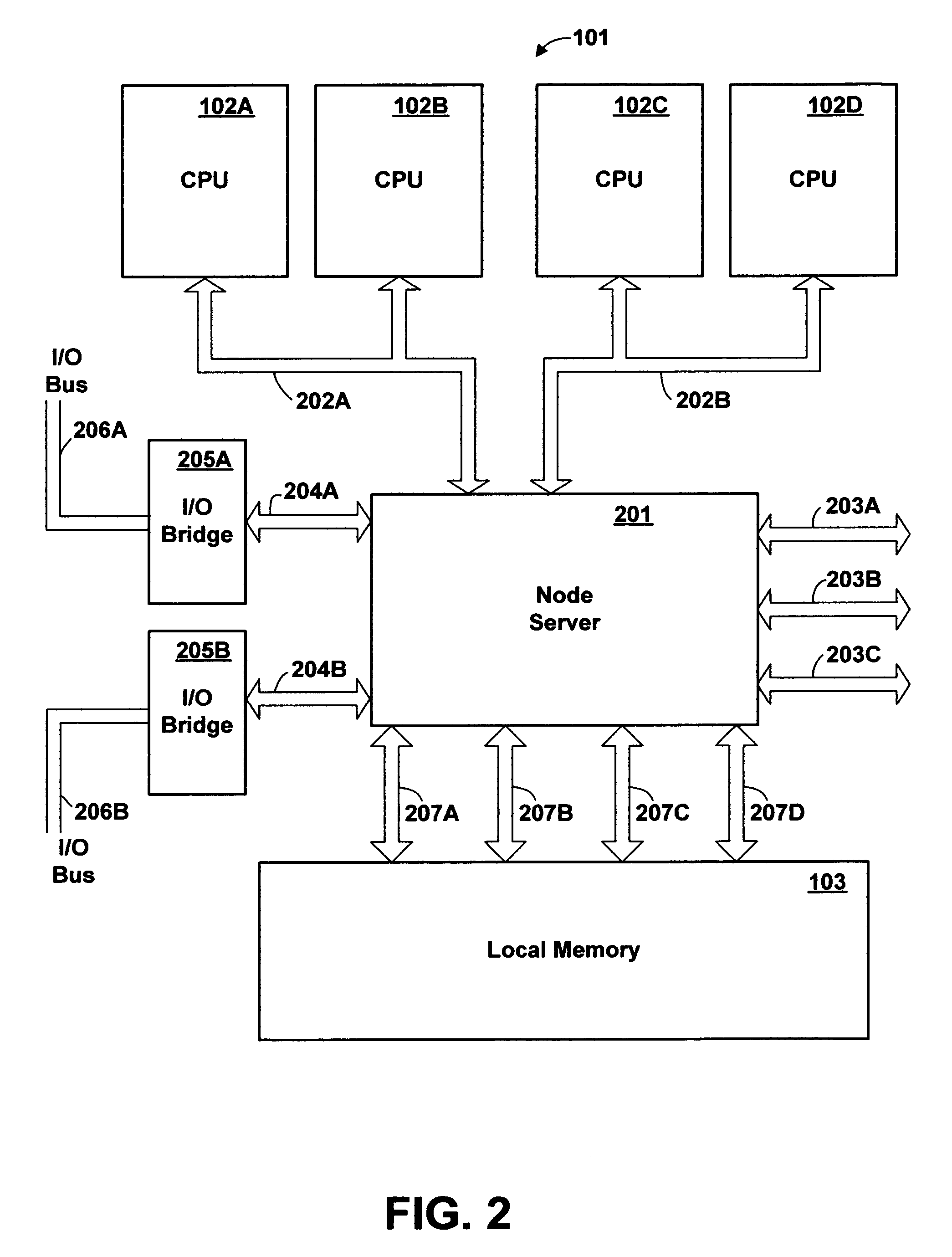

Non-uniform memory access (NUMA) computer system having distributed global coherency management

Inactive | US20030009635A1 | Memory addressing/allocation/relocation | Digital computer details | Memory controller | Receipt

A computer system includes a home node and one or more remote nodes coupled by a node interconnect. The home node includes a local interconnect, a node controller coupled between the local interconnect and the node interconnect, a home system memory, and a memory controller coupled to the local interconnect and the home system memory. In response to receipt of a data request from the remote node, the memory controller transmits requested data from the home system memory to the remote node and, in a separate transfer, conveys responsibility for global coherency management for the requested data from the home node to the remote node. By decoupling responsibility for global coherency management from delivery of the requested data in this manner, the memory controller queue allocated to the data request can be deallocated earlier, thus improving performance.

Owner:IBM CORP

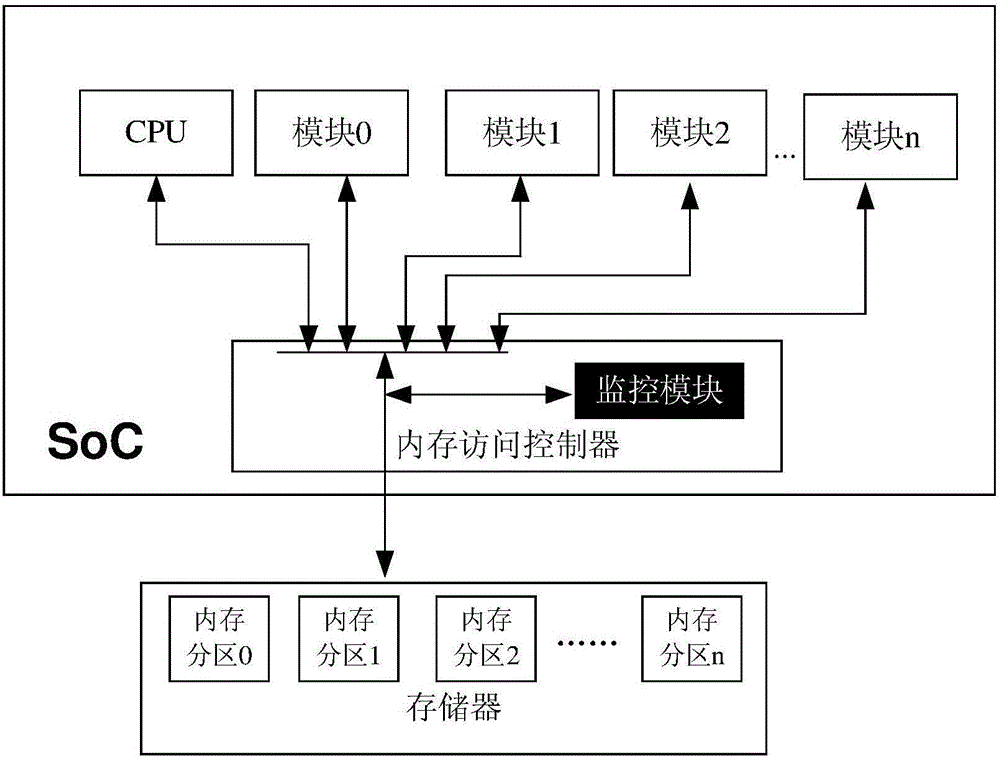

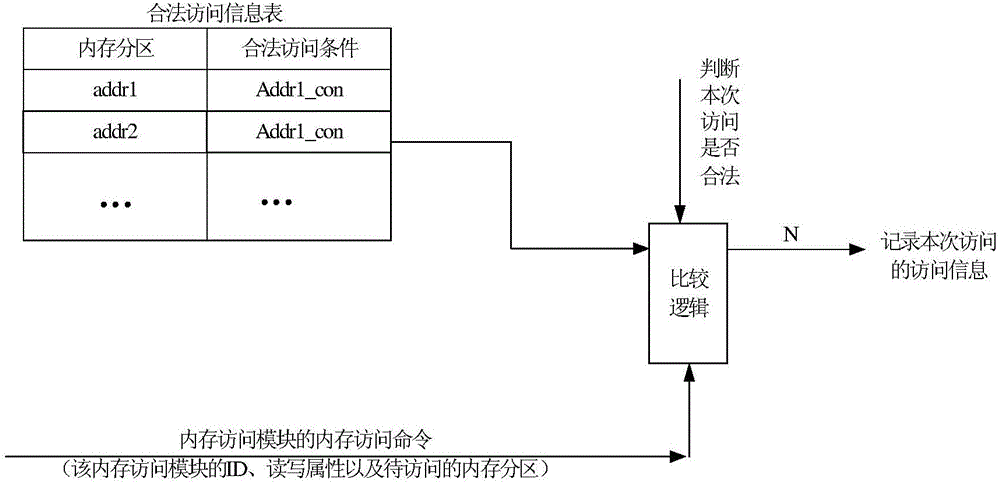

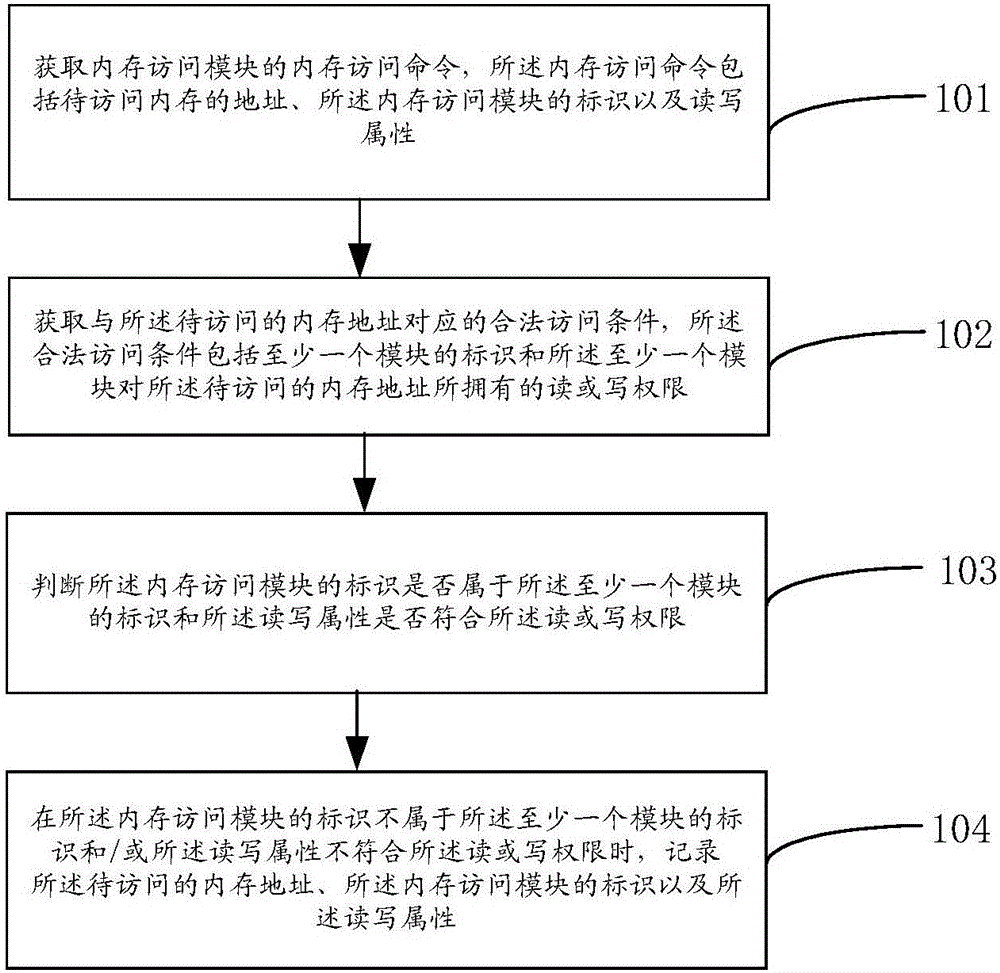

Memory monitor method, memory access controller and SoC system

The invention provides a memory monitor method, a memory access controller and a SoC system, and relates to the communication field. With them, when an abnormal memory access occurs, the module that accessed memory illegally can be located quickly and accurately. The method comprises the following steps: obtaining a memory access command sent by a memory access module, the command comprising the memory address to be accessed, the identification of the memory access module and the read-write attributes; obtaining a legal access condition corresponding to the memory address to be accessed, wherein the condition comprises at least one module identification and at least one read or write authority that a module holds for the memory address to be accessed; determining whether the identification of the memory access module belongs to the at least one module identification and whether the read-write attributes accord with the read or write authorities; and, if the identification of the memory access module does not belong to the at least one module identification and/or the read-write attributes do not accord with the read or write authorities, recording the memory address to be accessed, the identification of the memory access module and the read-write attributes.
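The check-and-record steps above can be sketched in a few lines. This is a software illustration only, under the assumption that the legal access condition is a per-address table mapping module identifiers to permitted operations; the class name `AccessMonitor` and the `"r"`/`"w"` encoding are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AccessMonitor:
    # Assumed rule table: address -> {module id -> permitted operations, e.g. {"r", "w"}}
    rules: dict
    violations: list = field(default_factory=list)

    def check(self, address, module_id, op):
        """Return True for a legal access; otherwise record the offender and return False."""
        allowed = self.rules.get(address, {}).get(module_id, set())
        if op in allowed:
            return True
        # Record the address, the module identification and the read-write attribute.
        self.violations.append((address, module_id, op))
        return False
```

After a fault, the `violations` list identifies which module issued the illegal access and whether it was a read or a write.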

Owner:HUAWEI TECH CO LTD

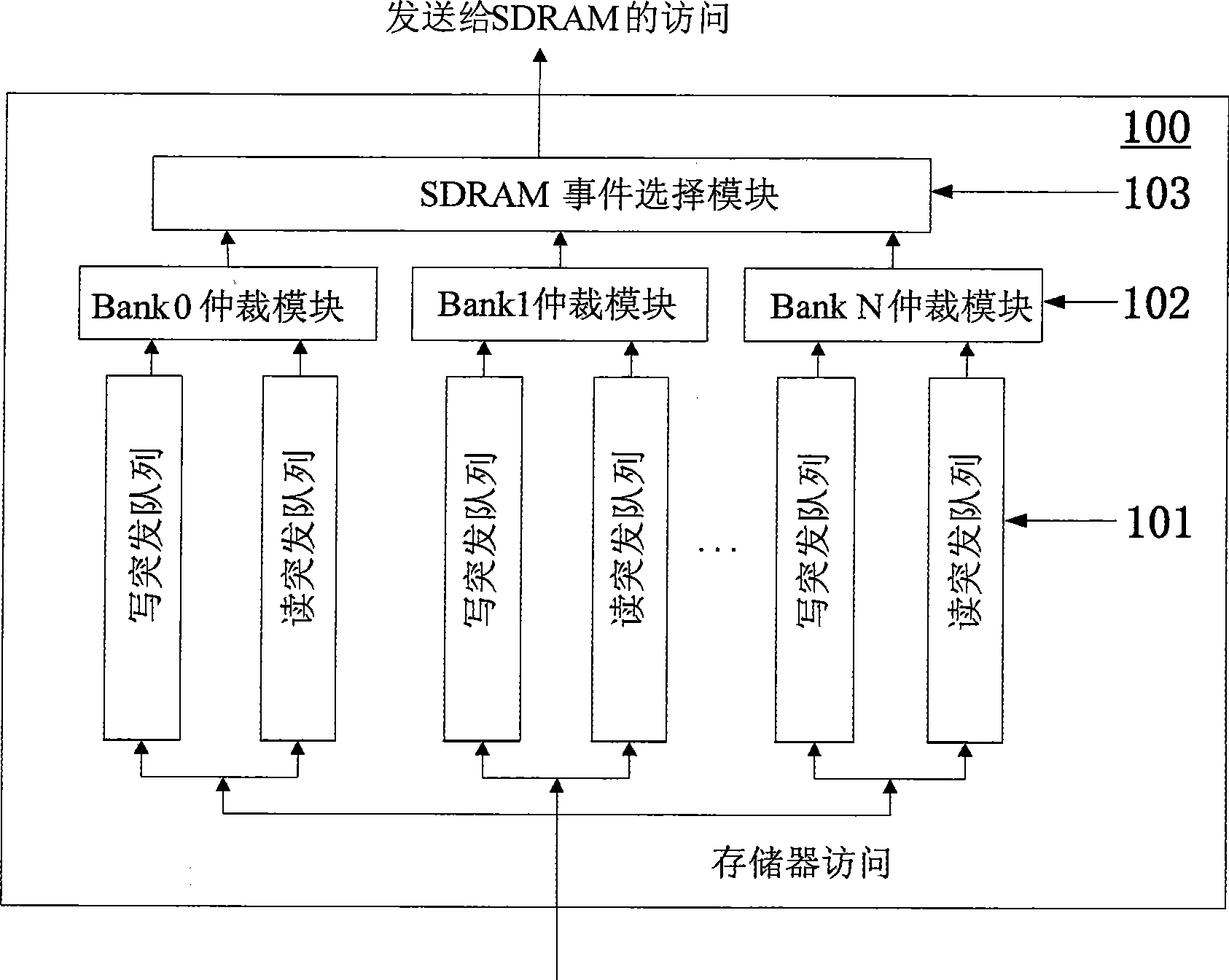

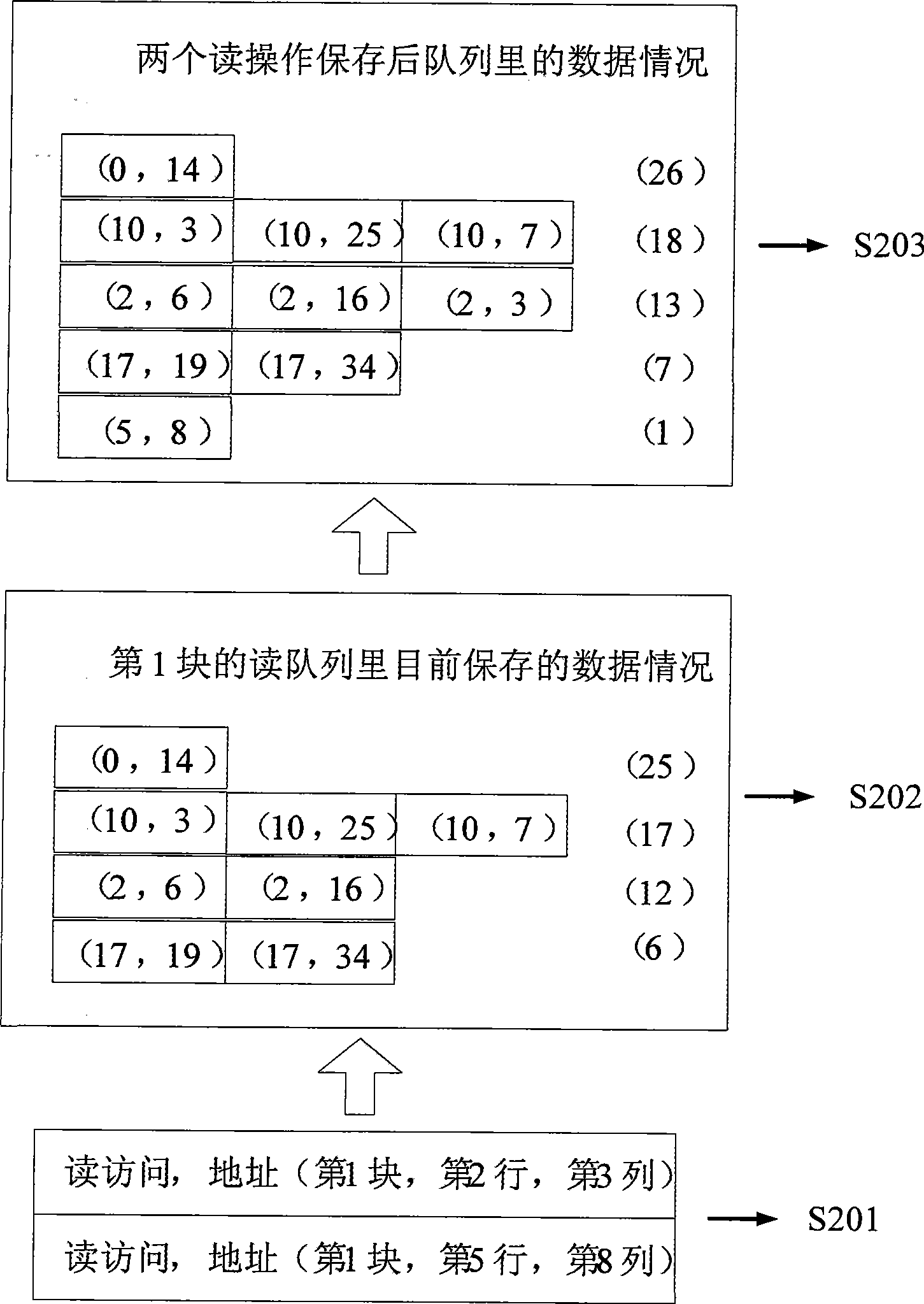

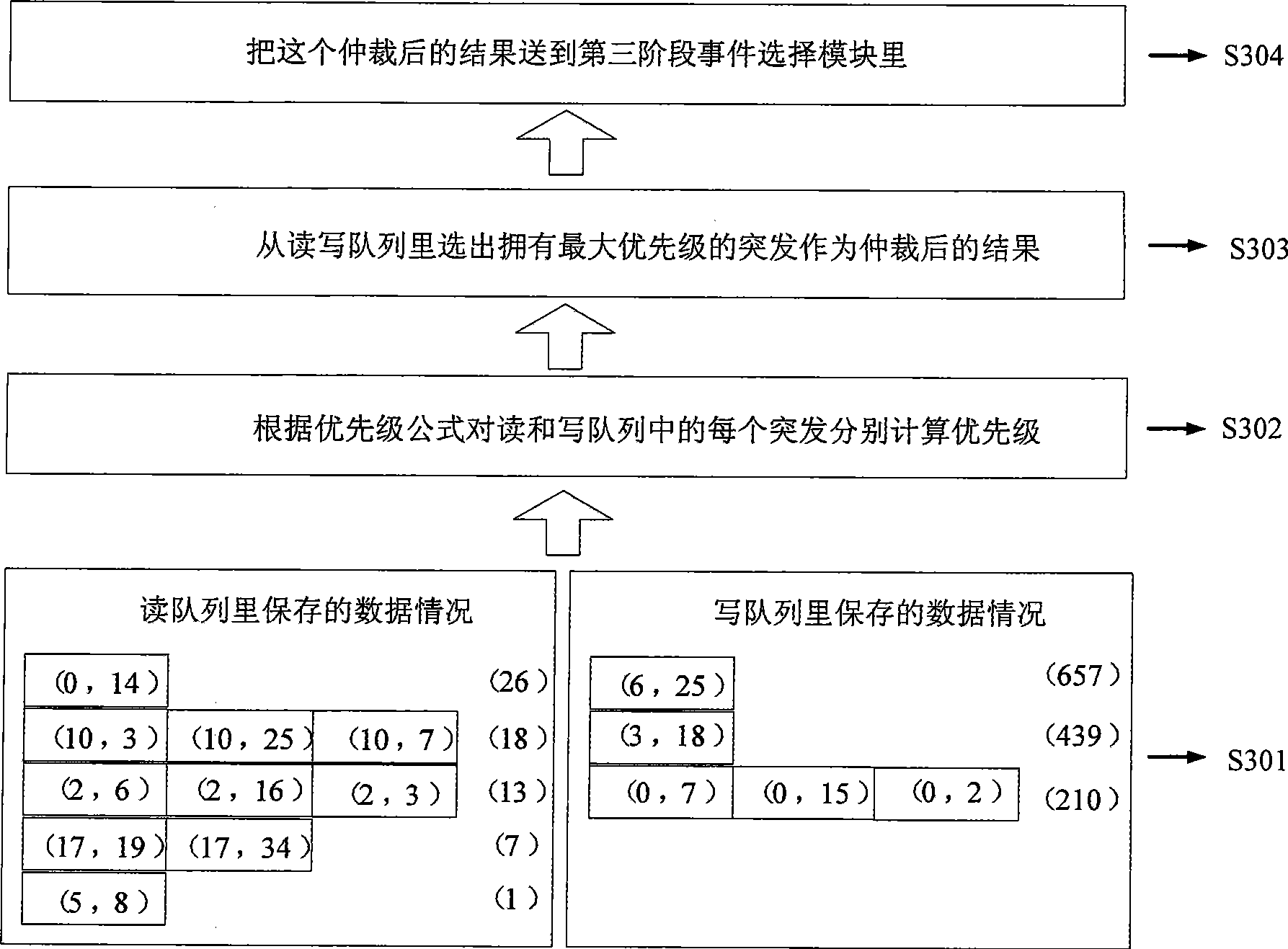

Outburst disorder based memory controller, system and its access scheduling method

Inactive | CN101470678A | Solve Bandwidth Bottlenecks | Increase data bandwidth | Electric digital data processing | Uniform memory access | Direct memory access

The invention provides a memory controller based on burst out-of-order memory access scheduling. The controller comprises a read-write queue module that stores read and write memory accesses from a processor in a two-dimensional arrangement, a block arbitration module that arbitrates one burst memory access from each block in each memory clock period, and an event selection module that selects, from the arbitrated bursts of the blocks, the final memory access operation to send to the memory. By changing the structure of the queue and adopting the priority expression provided by the invention, burst arbitration is performed per block to schedule out-of-order memory accesses, thereby increasing the data bandwidth of the memory and reducing the execution time of the processor.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI

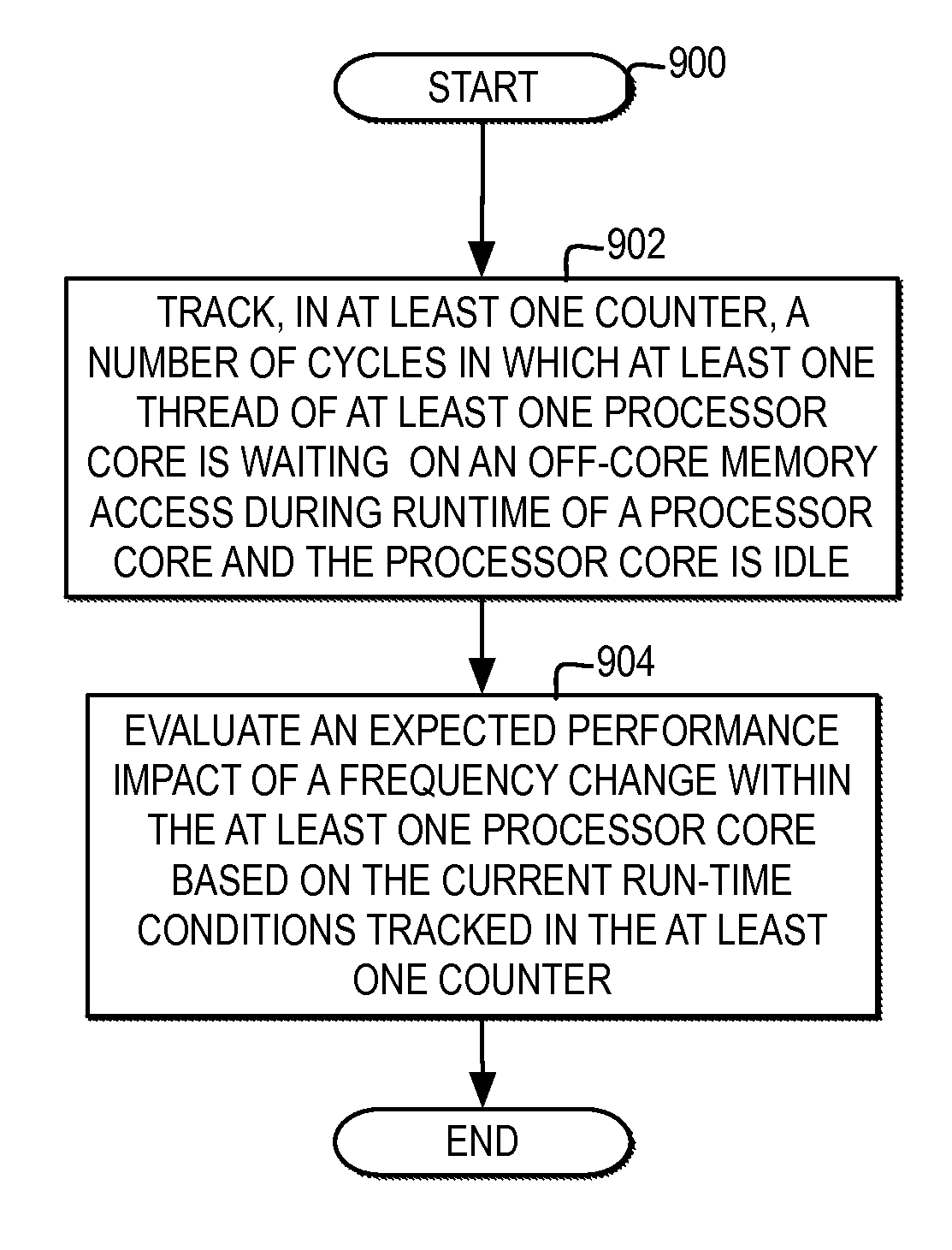

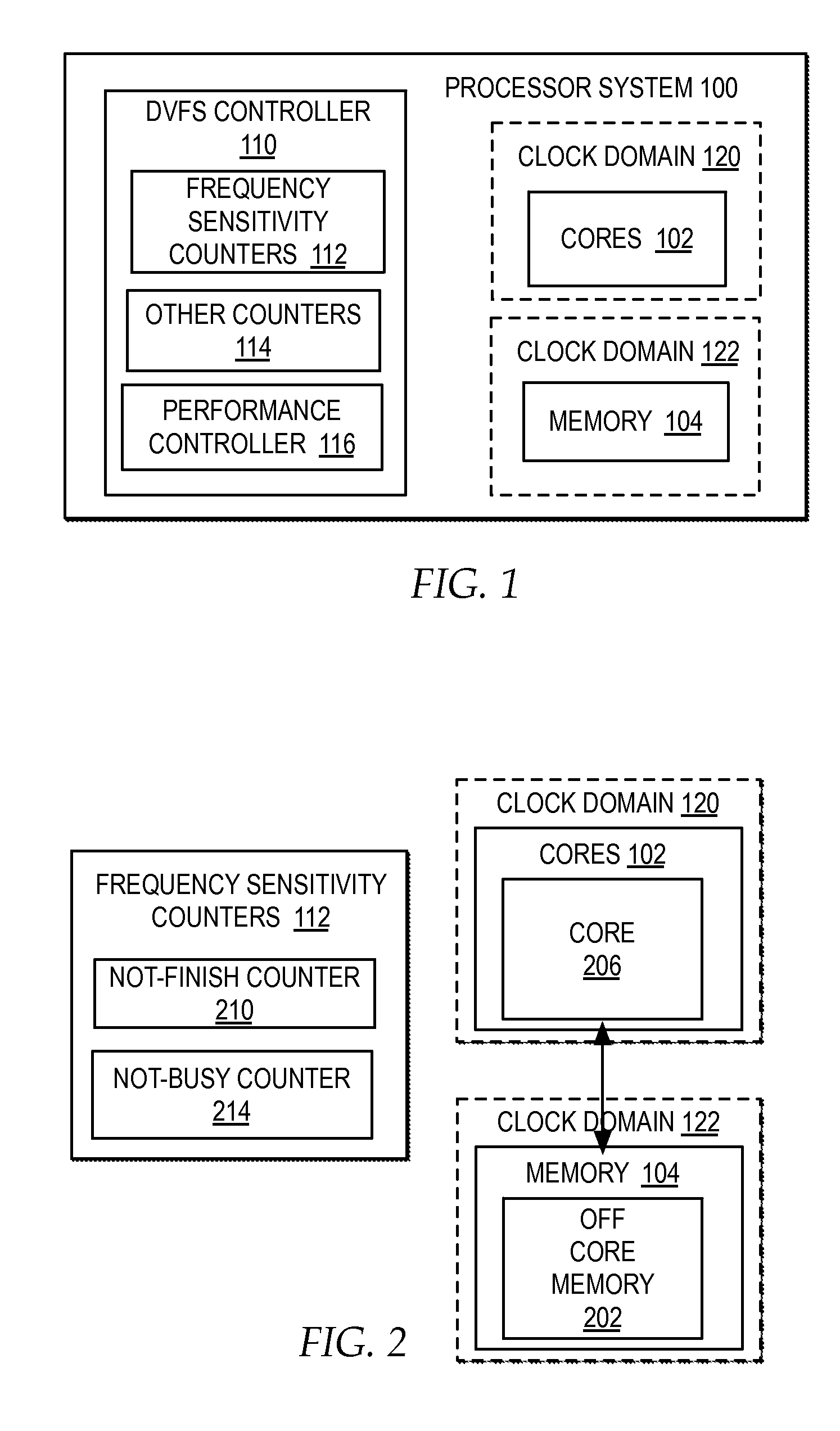

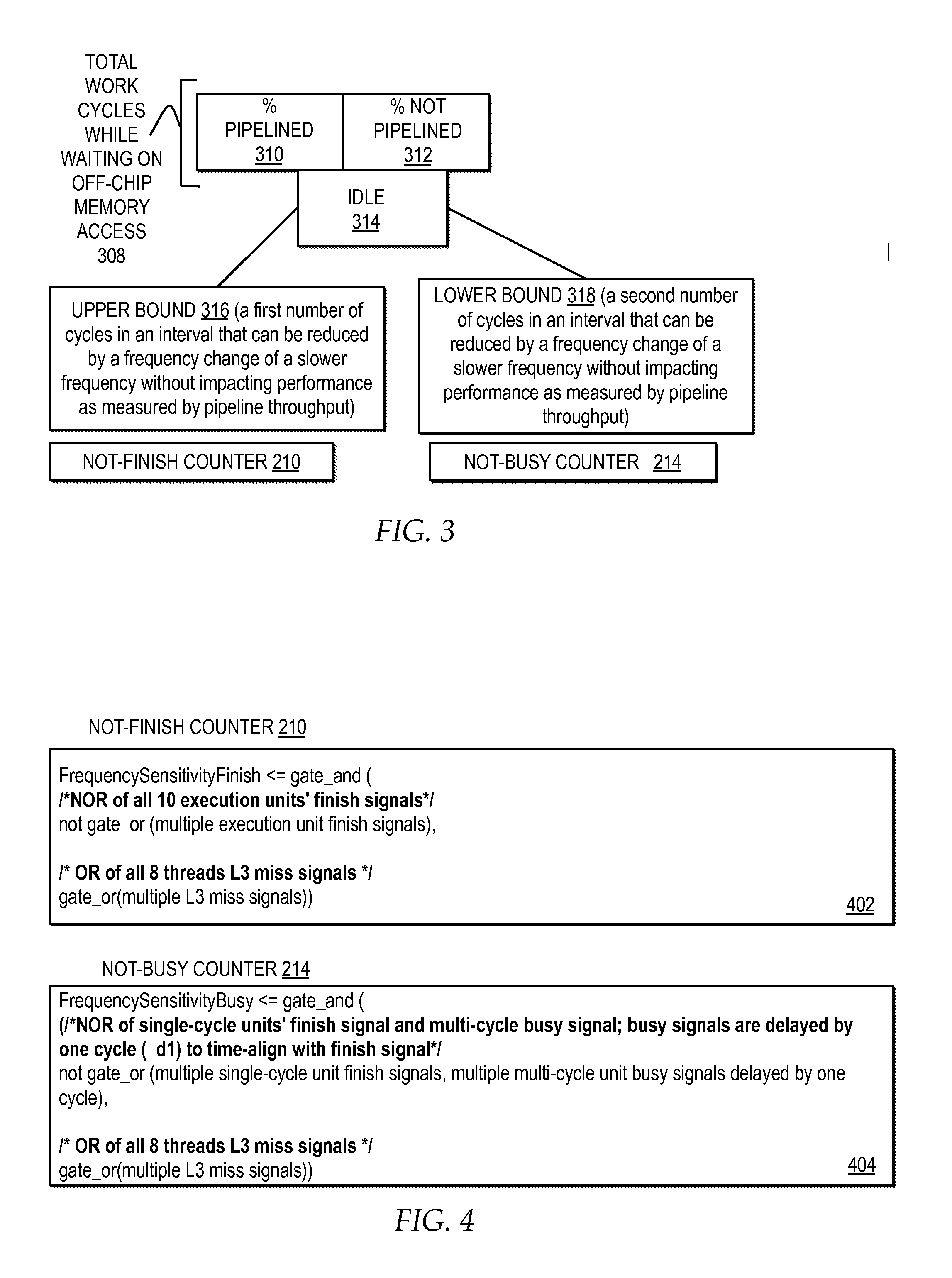

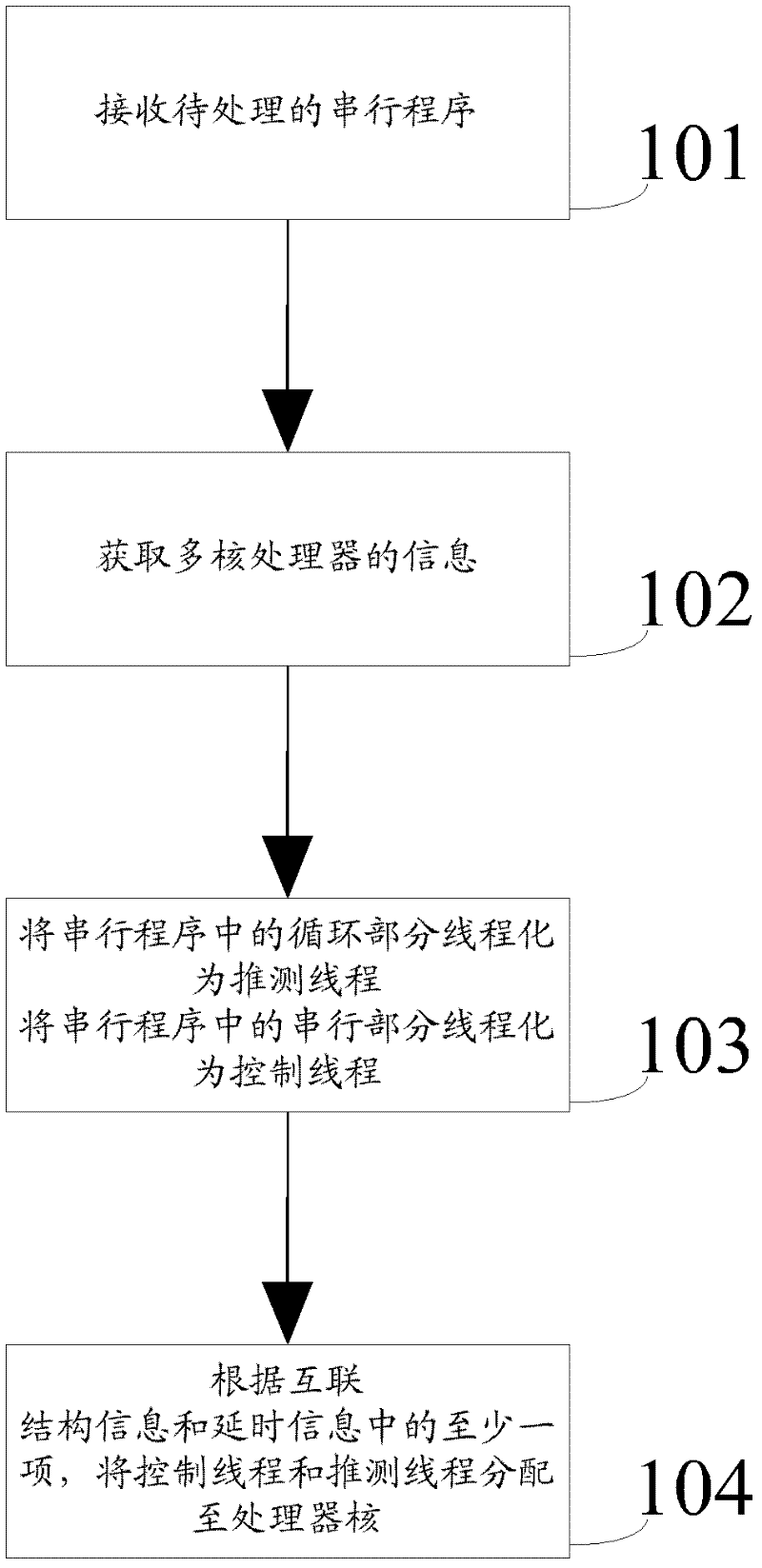

Tracking pipelined activity during off-core memory accesses to evaluate the impact of processor core frequency changes

Active | US20160041594A1 | Volume/mass flow measurement | Hardware monitoring | Uniform memory access | Parallel computing

A processor system tracks, in at least one counter, a number of cycles in which at least one execution unit of at least one processor core is idle and at least one thread of the at least one processor core is waiting on at least one off-core memory access during run-time of the at least one processor core during an interval comprising multiple cycles. The processor system evaluates an expected performance impact of a frequency change within the at least one processor core based on the current run-time conditions for executing at least one operation tracked in the at least one counter during the interval.
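One way to picture the counter and the evaluation step is the sketch below. The abstract does not give the evaluation formula, so the model used here is an assumption: cycles counted as stalled on off-core memory are treated as unaffected by core frequency, while the remaining cycles scale inversely with it. `StallCounter` and `expected_runtime_ratio` are hypothetical names.

```python
class StallCounter:
    """Counts cycles in which an execution unit is idle while a thread waits off-core."""

    def __init__(self):
        self.total = 0
        self.stalled = 0

    def tick(self, exec_unit_idle, waiting_off_core):
        self.total += 1
        if exec_unit_idle and waiting_off_core:
            self.stalled += 1


def expected_runtime_ratio(total_cycles, stalled_cycles, old_freq, new_freq):
    """Estimated runtime at new_freq relative to old_freq (assumed linear model)."""
    if total_cycles == 0:
        return 1.0
    core_bound = total_cycles - stalled_cycles
    # Core-bound work stretches or shrinks with frequency; stalled time does not.
    scaled = core_bound * (old_freq / new_freq) + stalled_cycles
    return scaled / total_cycles
```

Under this model, an interval that is entirely stalled on memory shows no expected slowdown when the core frequency is lowered, which is the situation in which a frequency reduction is cheapest.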

Owner:IBM CORP

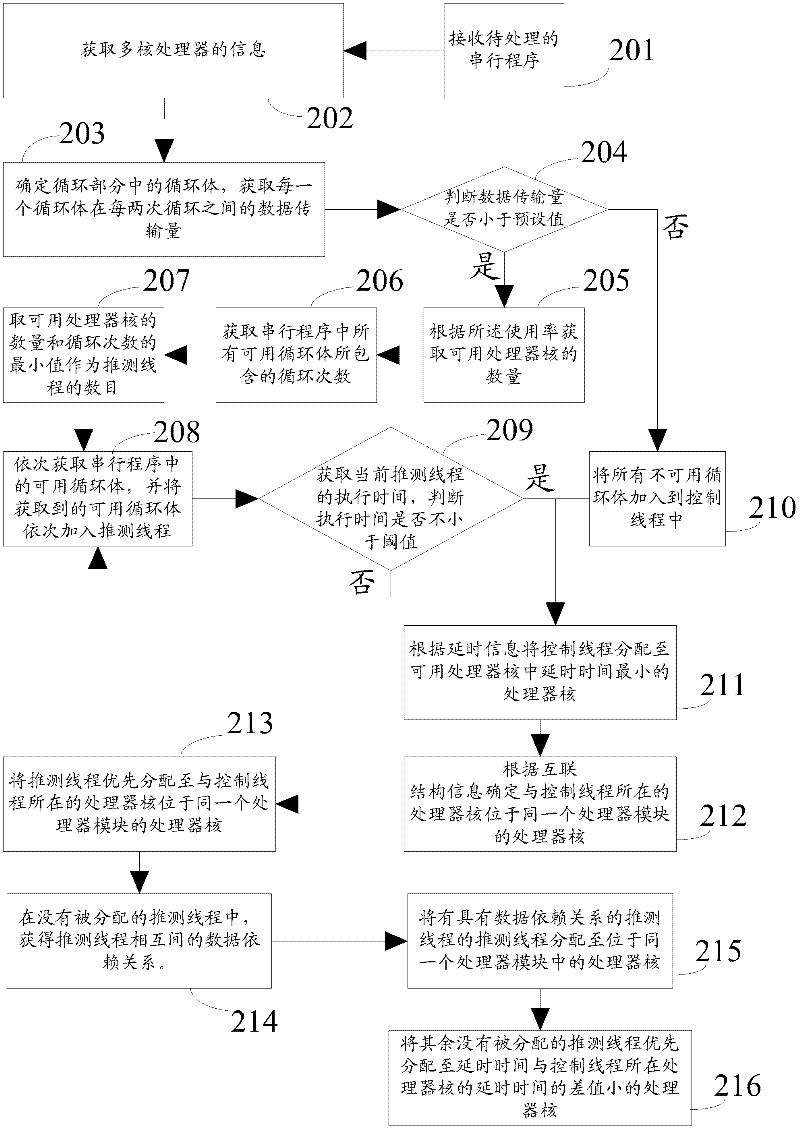

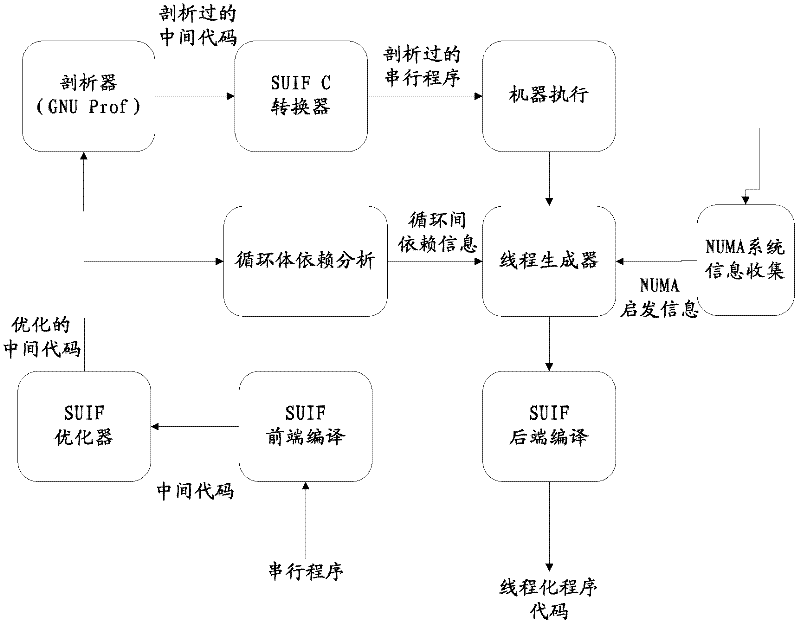

Method and device for threading serial program in nonuniform memory access system

Active | CN102520915A | Reduce performance | Resource allocation | Concurrent instruction execution | Uniform memory access | The Internet

The embodiment of the invention discloses a method and a device for threading a serial program in a non-uniform memory access system. The invention relates to the technical field of multithreading and can mitigate the performance degradation of a multi-core processor caused by differences in memory access latency when a serial program is executed in a non-uniform memory access system. The method comprises the following steps: receiving the serial program to be processed; acquiring information about the multi-core processor, the information comprising at least one of the interconnect structure of the multi-core processor and the memory access latency of each processor core; threading the usable loop part of the serial program into speculative threads, and threading the serial part and the unusable loop part of the serial program into a control thread; and allocating the control thread and the speculative threads to processor cores according to at least one of the interconnect structure information and the latency information. The method and the device are used for executing a serial program in threaded form in a non-uniform memory access system that supports a speculative multithreading mechanism.

Owner:HUAWEI TECH CO LTD +1

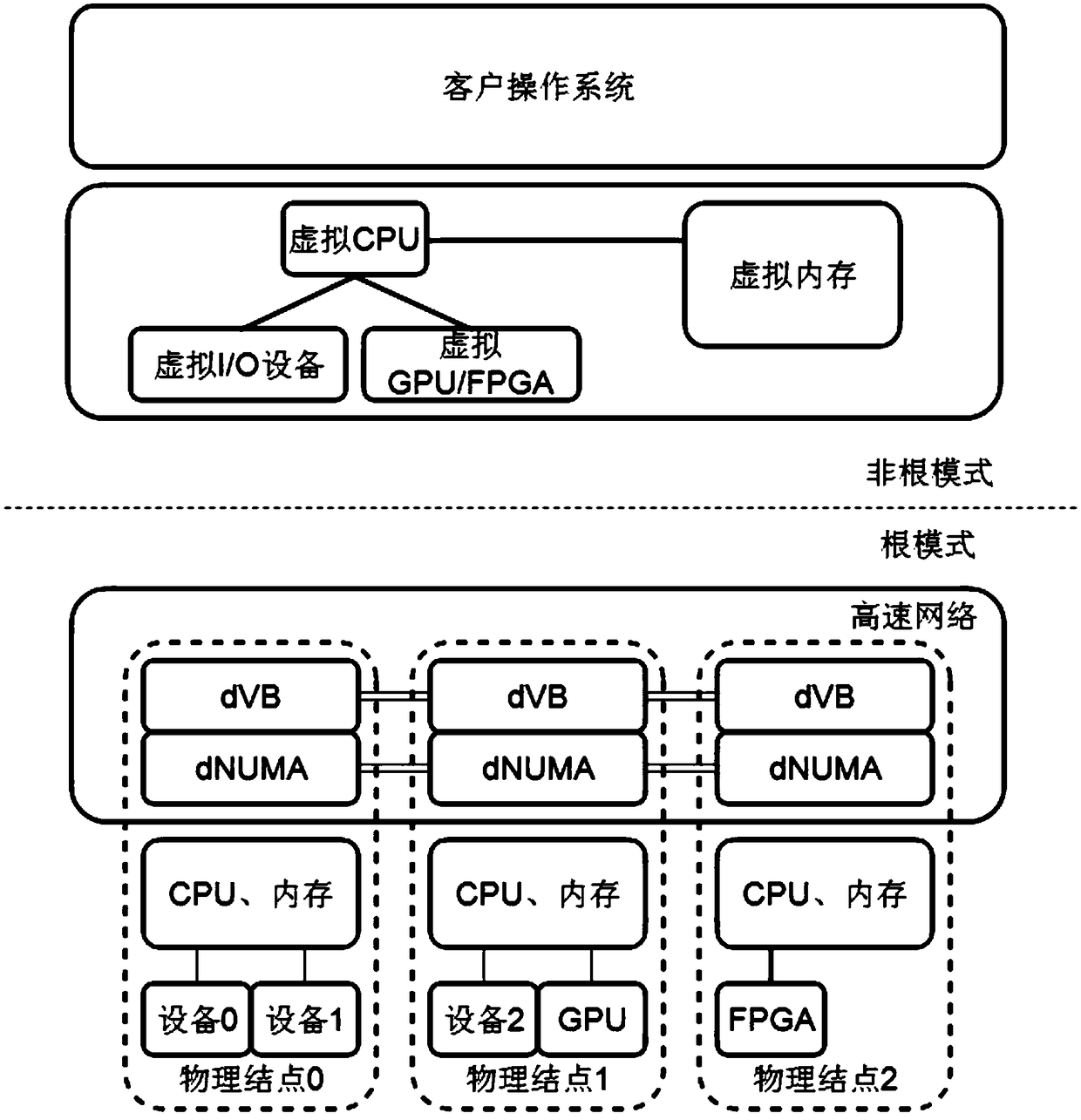

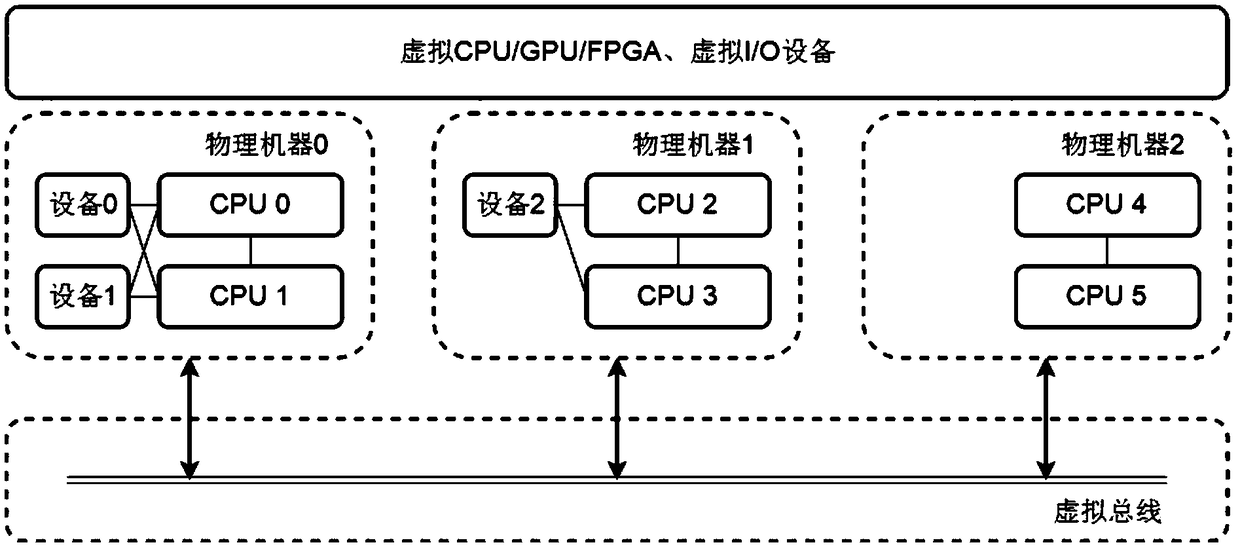

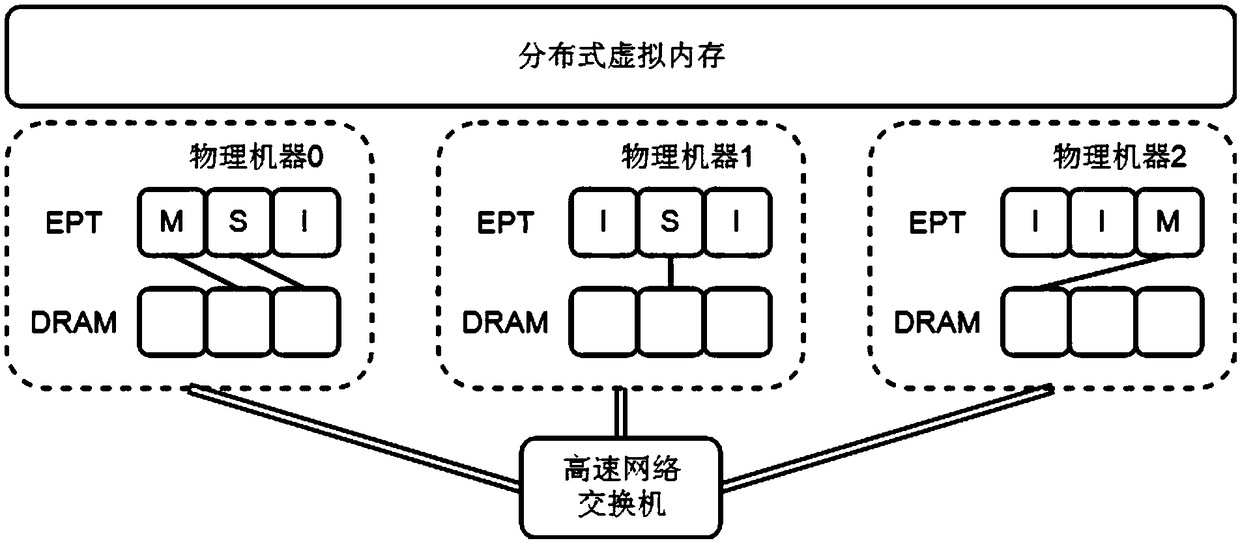

Distributed virtual machine manager

The invention provides a distributed virtual machine manager. The distributed virtual machine manager comprises a virtual machine management module and a client operating system. The virtual machine management module comprises a distributed sharing bus module and a distributed nonuniform memory access module operated on each physical machine node. Through the distributed sharing bus module and the distributed nonuniform memory access module, uniform interfaces of mass resources are abstracted to a virtual machine to provide for an upper layer client operating system. The client operating system is used for the distributed nonuniform memory access module to realize a dNUMA-TSO model and NUMA affinity setting. Through the distributed virtual machine manager, heterogeneous resources on a plurality of physical machines are abstracted to the virtual machine through a virtualization polymerization technology, so as to provide mass resources for the client operating system which is operated on an upper layer, thereby satisfying application scenarios having ultrahigh resource and performance demands.

Owner:SHANGHAI JIAO TONG UNIV

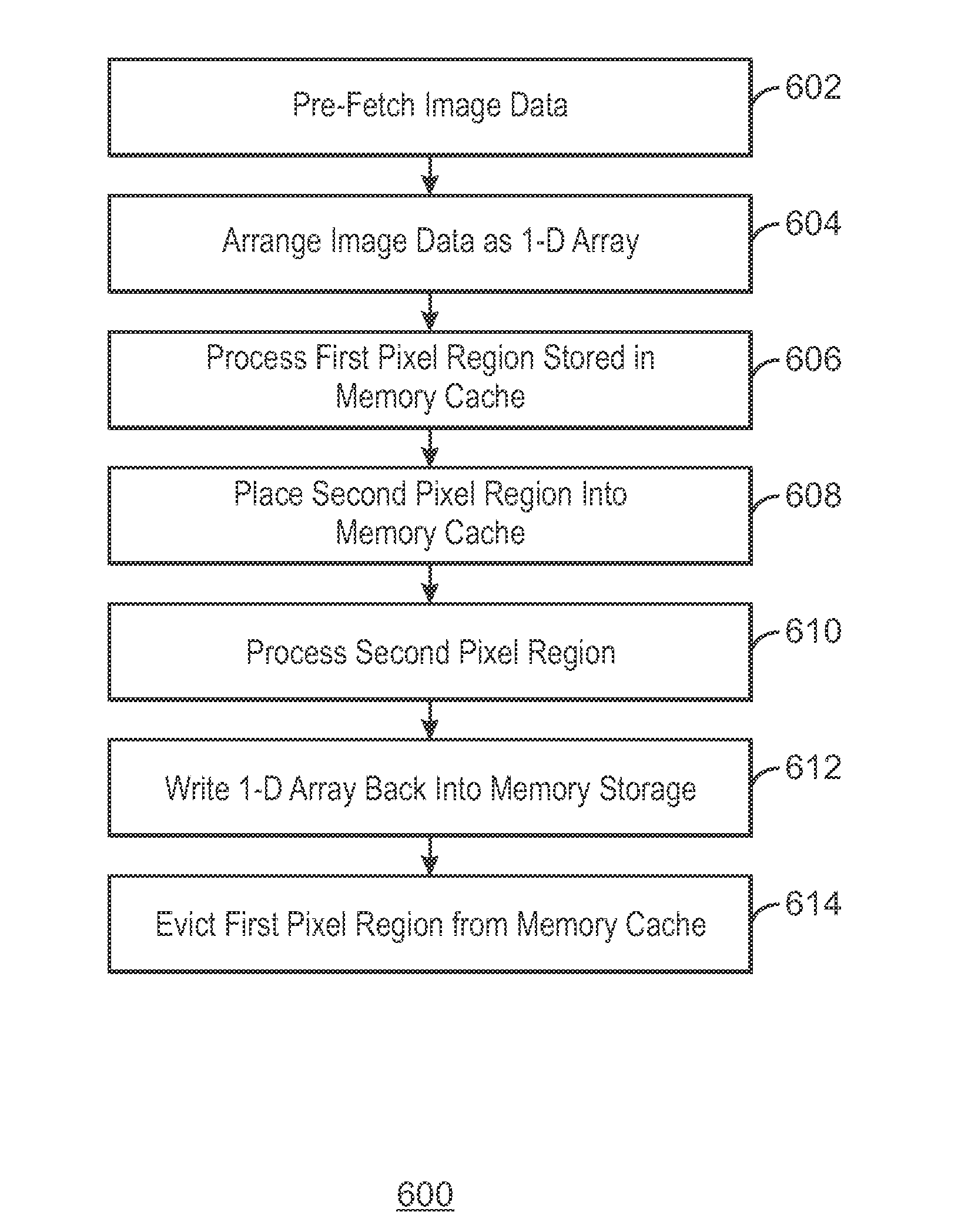

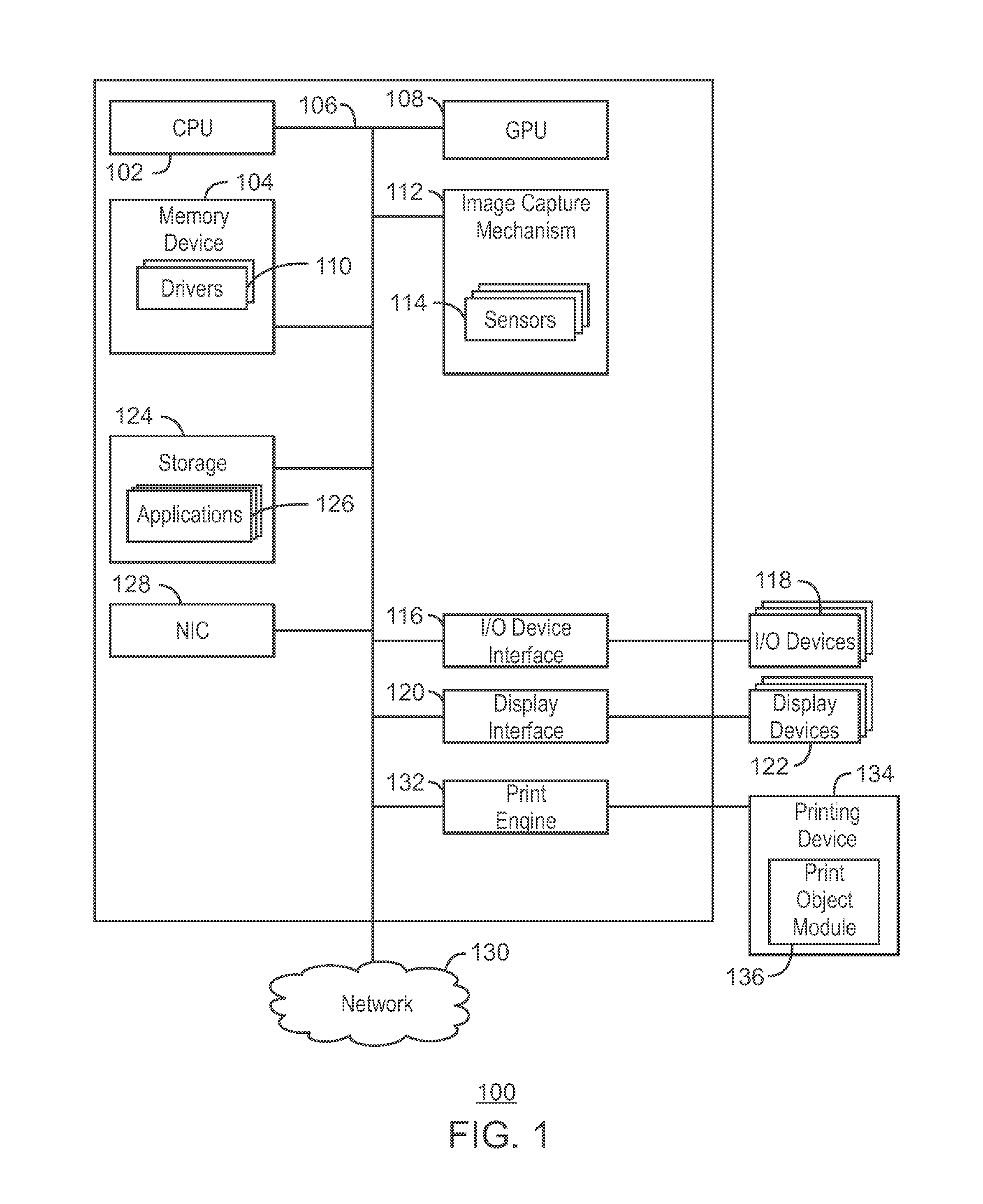

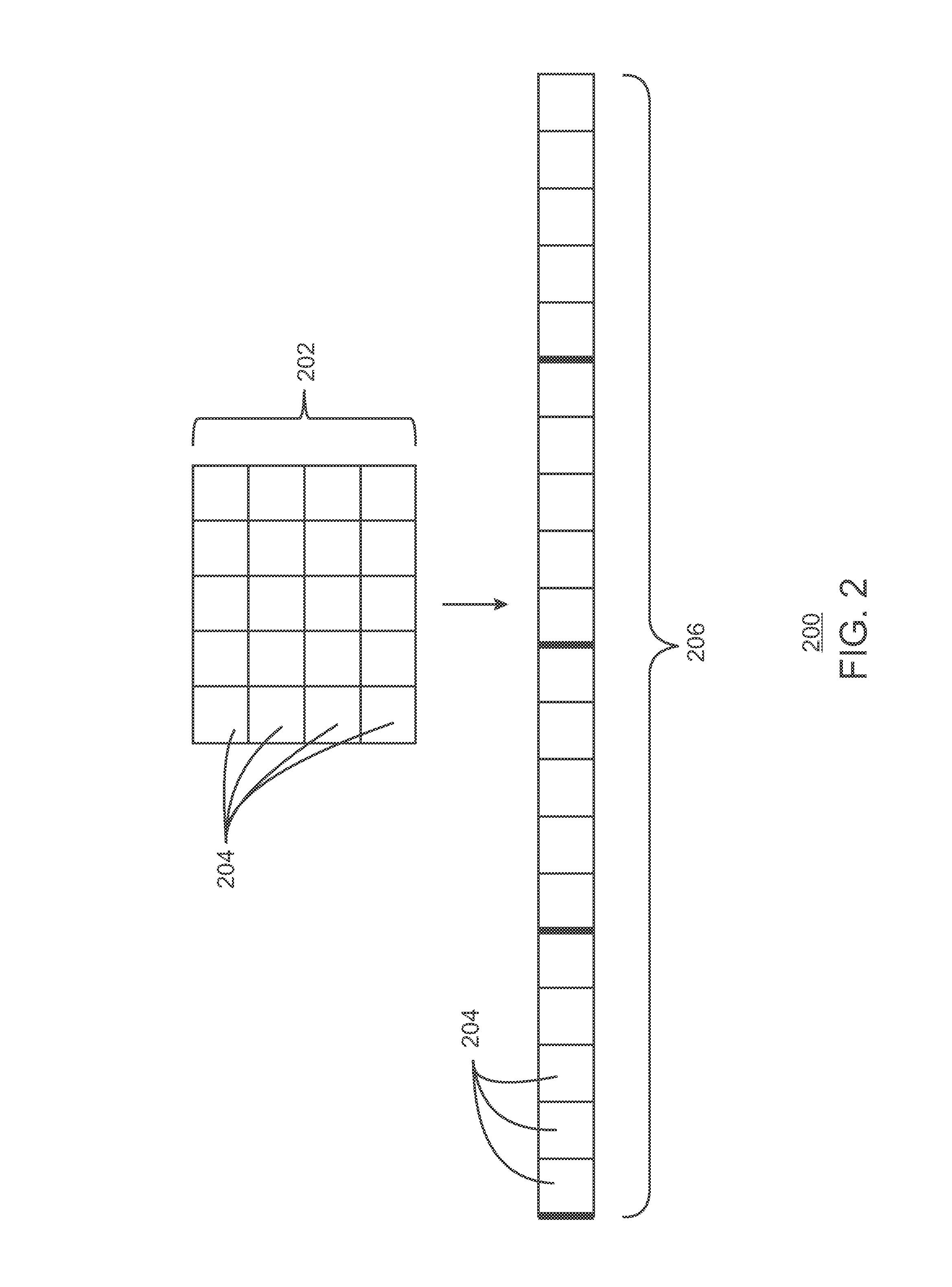

Optimizing image memory access

Inactive | US20140184630A1 | Memory addressing/allocation/relocation | Image memory management | Uniform memory access | Direct memory access

An apparatus and system for accessing an image in a memory storage is disclosed herein. The apparatus includes logic to pre-fetch image data, wherein the image data includes pixel regions. The apparatus also includes logic to arrange the image data as a set of one-dimensional arrays to be linearly processed. The apparatus further includes logic to process a first pixel region from the image data, wherein the first pixel region is stored in a cache. Additionally, the apparatus includes logic to place a second pixel region from the image data into the cache, wherein the second pixel region is to be processed after the first pixel region has been processed, and logic to process the second pixel region. Logic to write the set of one-dimensional arrays back into the memory storage is also provided, and the first pixel region is evicted from the cache.

Owner:INTEL CORP

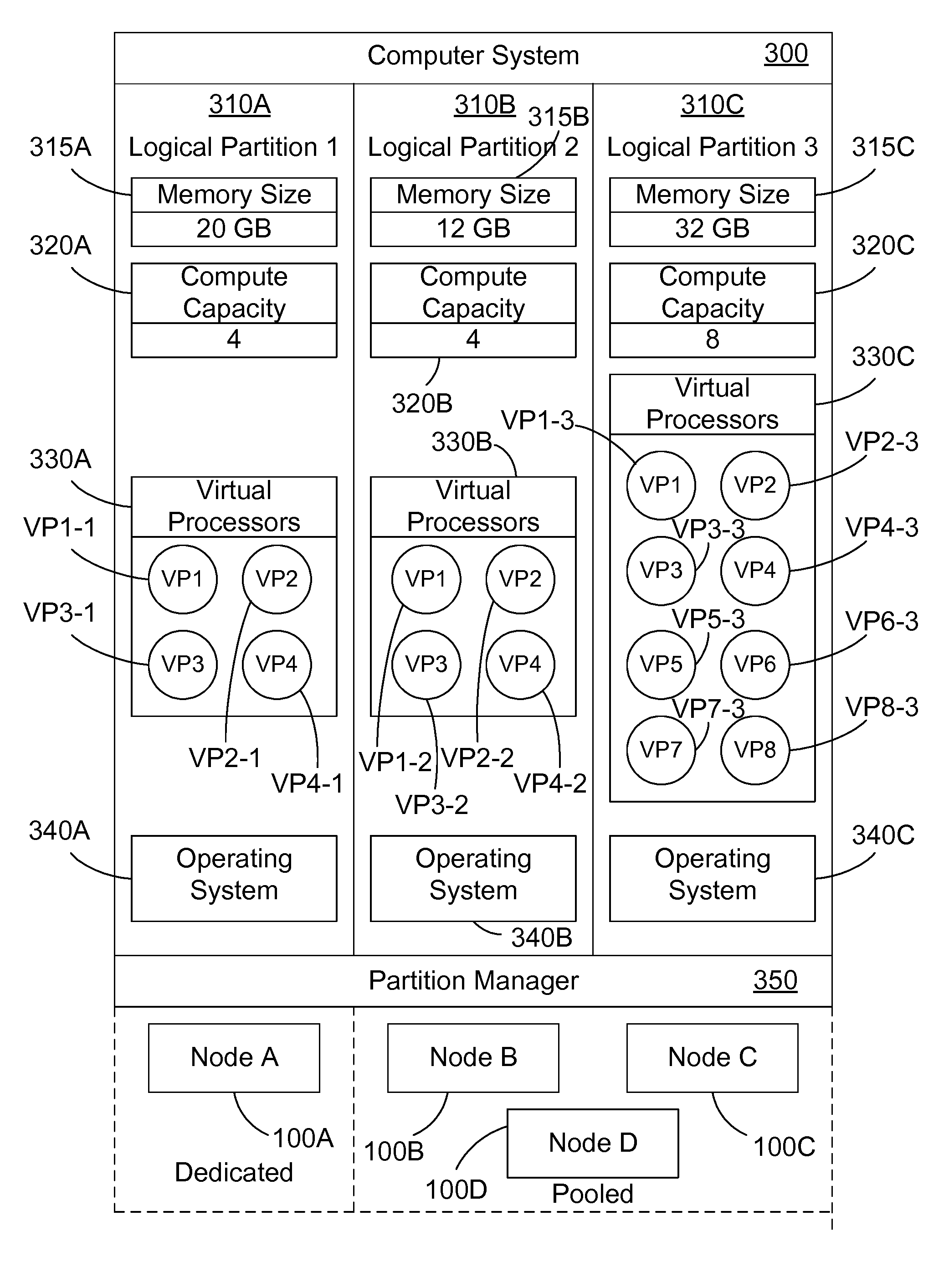

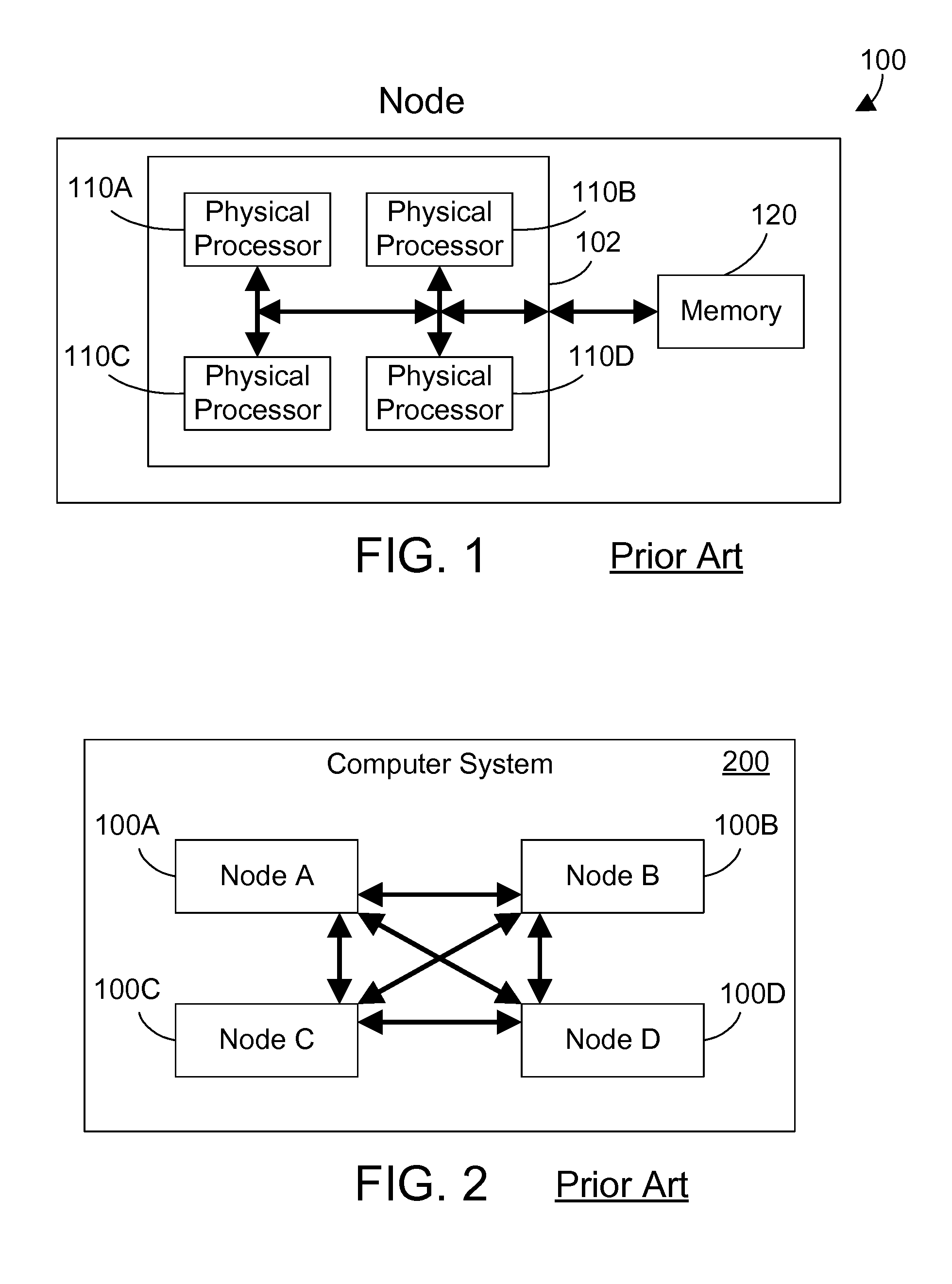

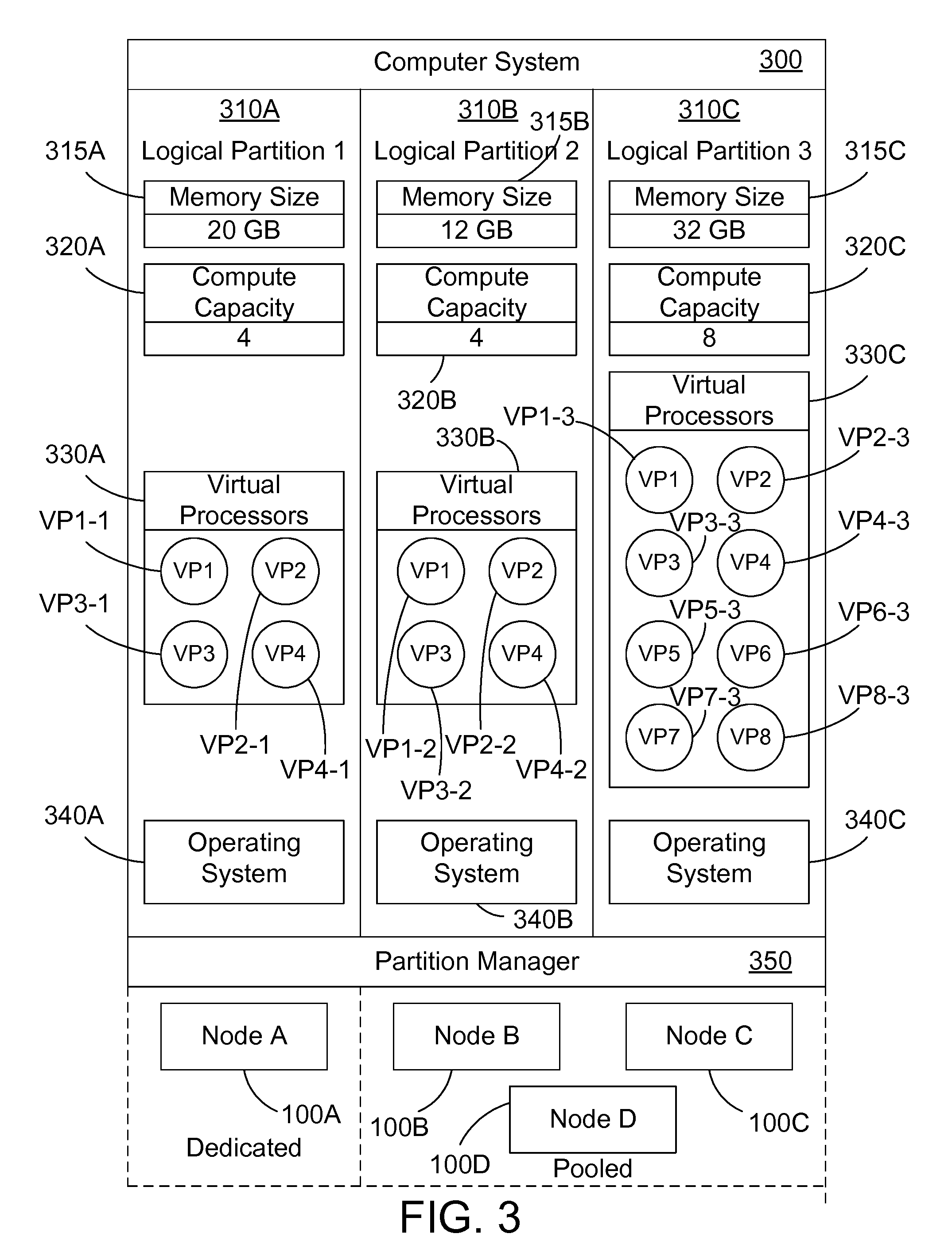

Non-Uniform Memory Access (NUMA) Enhancements for Shared Logical Partitions

Inactive | US20130080712A1 | Memory architecture accessing/allocation | Program control | Uniform memory access | Operational system

In a NUMA-topology computer system that includes multiple nodes and multiple logical partitions, some of which may be dedicated and others of which are shared, NUMA optimizations are enabled in shared logical partitions. This is done by specifying a home node parameter in each virtual processor assigned to a logical partition. When a task is created by an operating system in a shared logical partition, a home node is assigned to the task, and the operating system attempts to assign the task to a virtual processor that has a home node that matches the home node for the task. The partition manager then attempts to assign virtual processors to their corresponding home nodes. If this can be done, NUMA optimizations may be performed without the risk of reducing the performance of the shared logical partition.

Owner:IBM CORP

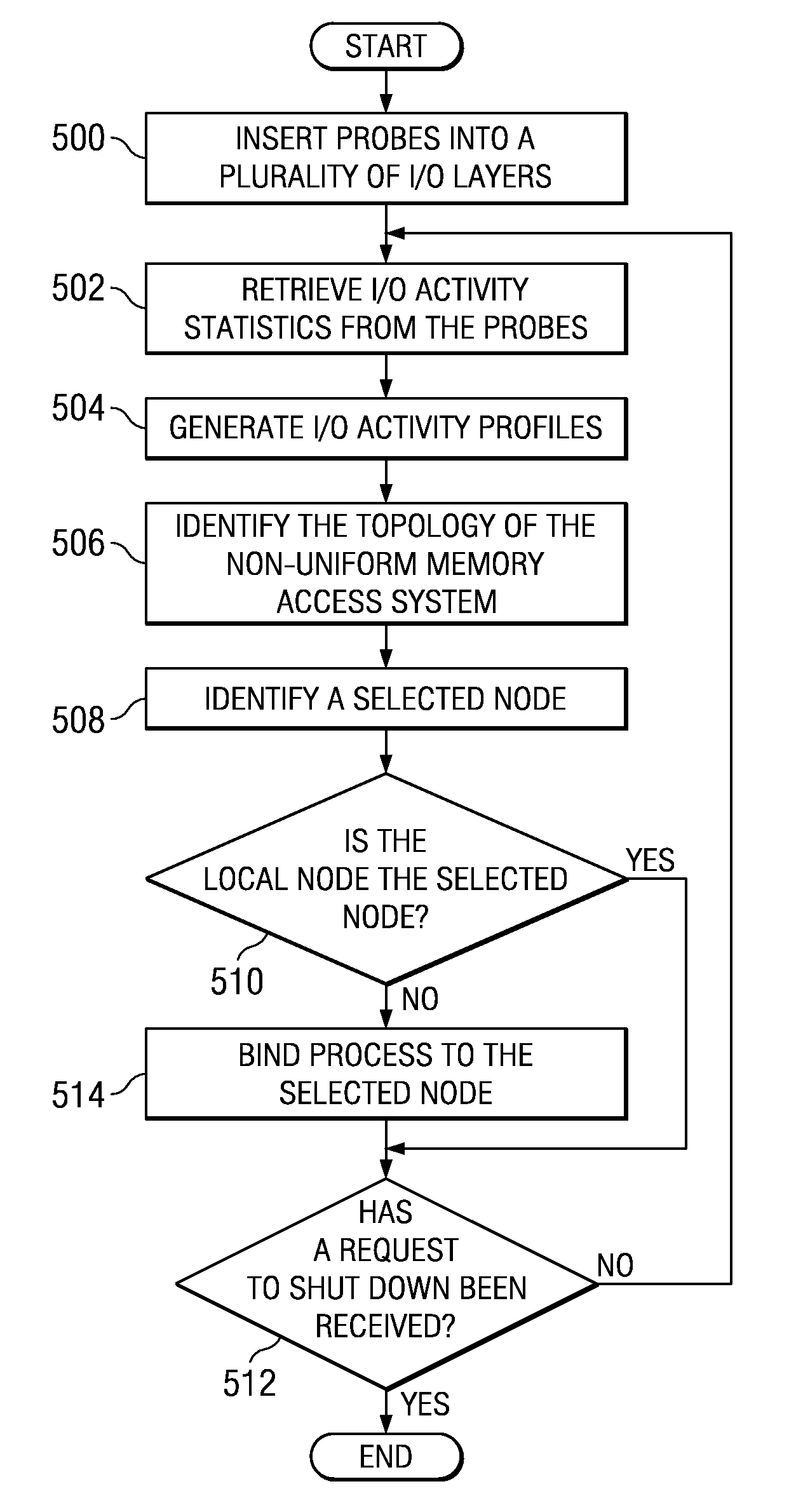

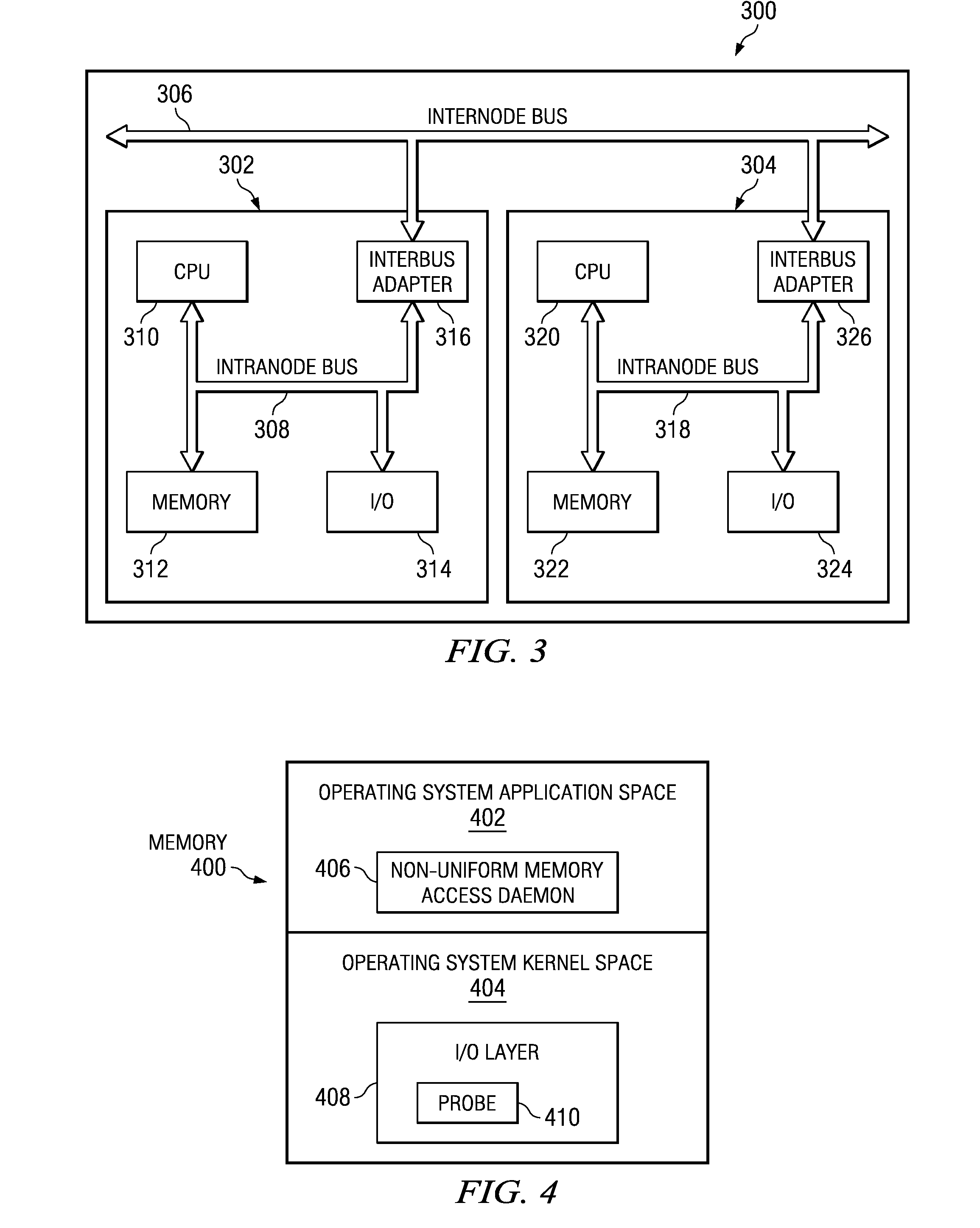

Binding processes in a non-uniform memory access system

A computer implemented method, apparatus, and computer usable program product for binding a process to a selected node of a multi-node system. Input/output activity statistics for a process are retrieved from a set of probes. The set of probes detects a flow of data through an input/output device utilized by the process. A topology of the multi-node system that comprises a location of the input/output device is identified. A node is selected according to a decision policy to form a selected node. The process is bound to the selected node according to the decision policy.

Owner:SAP AG

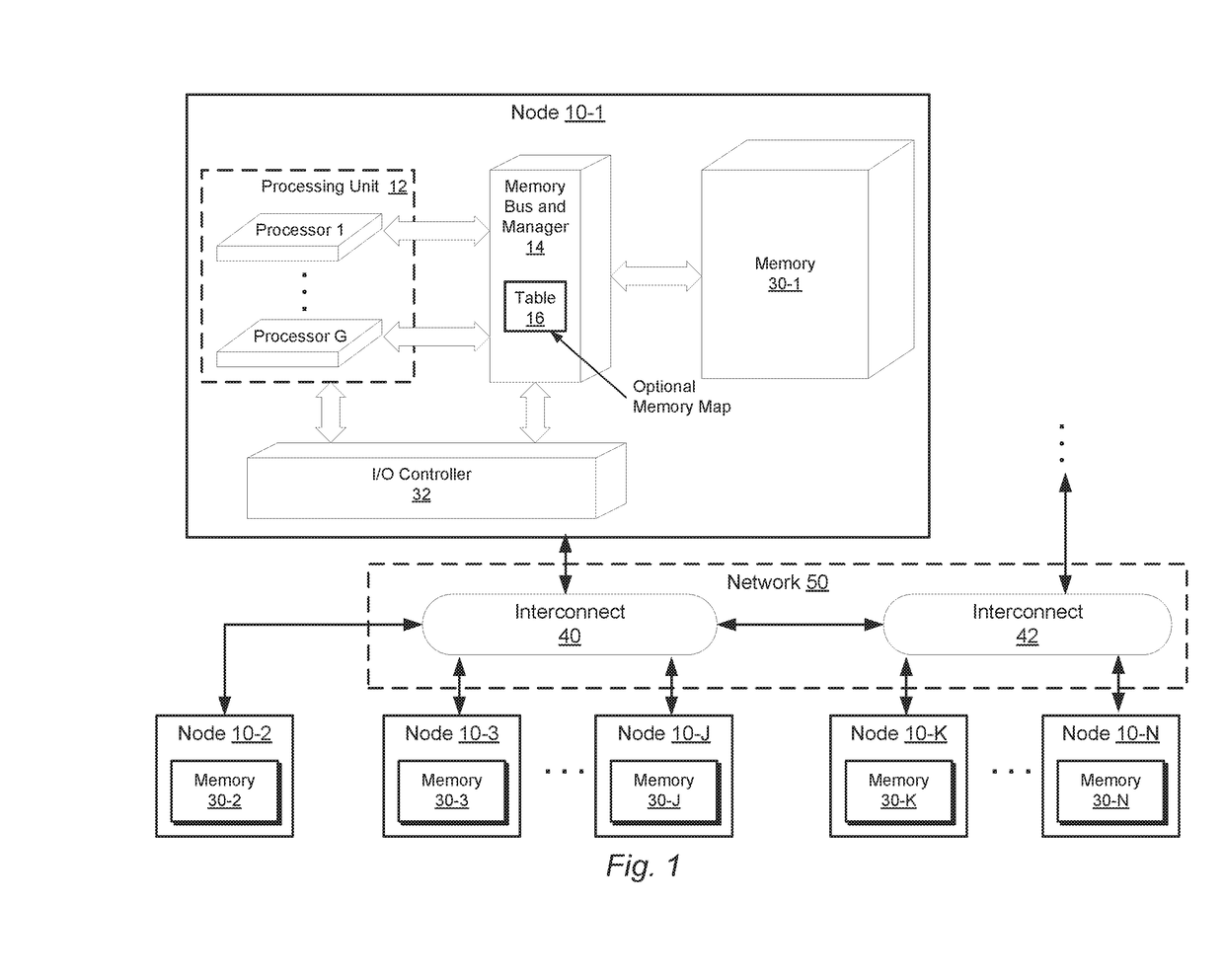

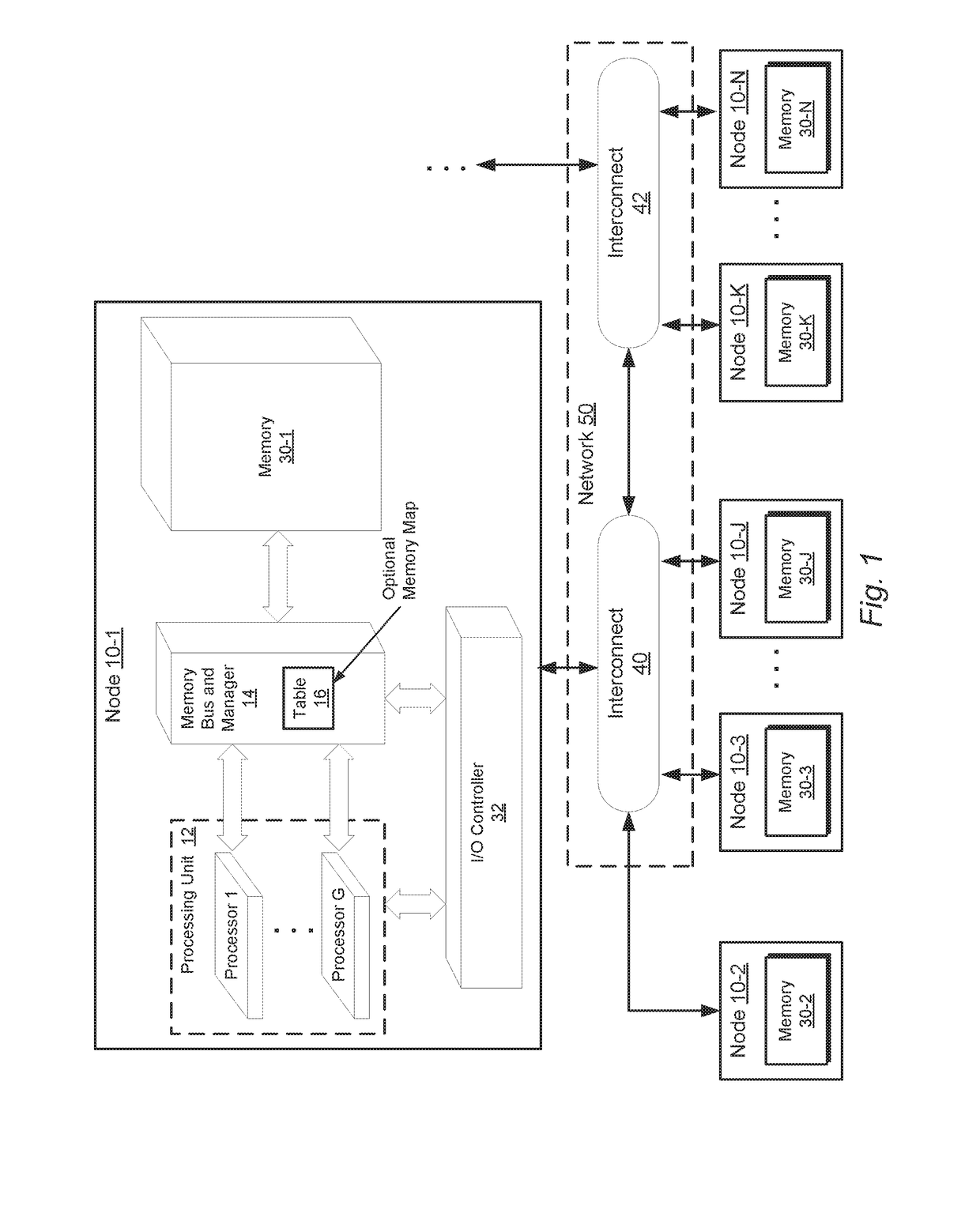

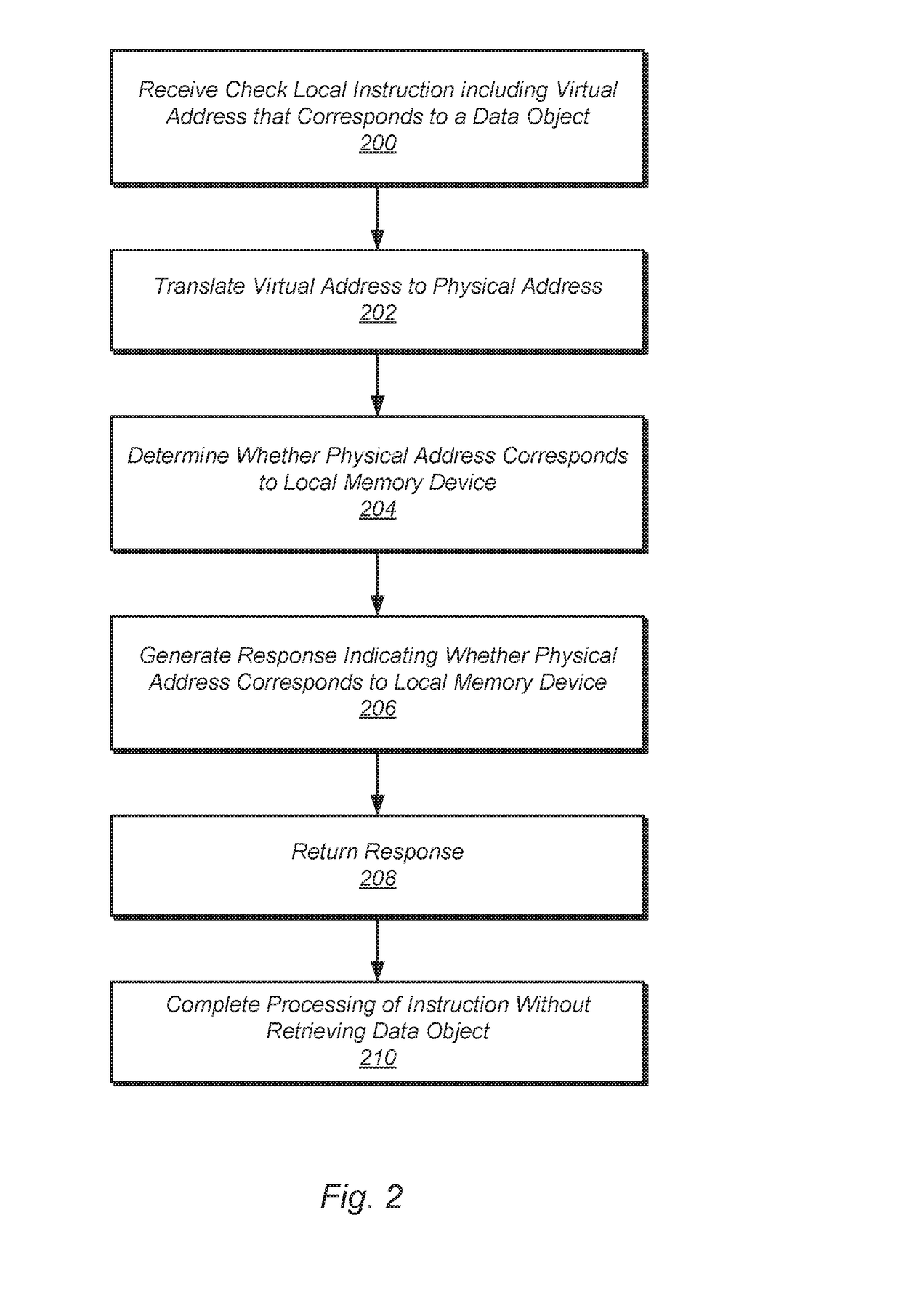

User-level instruction for memory locality determination

Inactive | US20170228164A1 | Handle data efficiently | Efficient processing | Input/output to record carriers | Memory addressing/allocation/relocation | Uniform memory access | Computer architecture

Systems and methods for efficiently processing data in a non-uniform memory access (NUMA) computing system are disclosed. A computing system includes multiple nodes connected in a NUMA configuration. Each node includes a processing unit which includes one or more processors. A processor in a processing unit executes an instruction that identifies an address corresponding to a data location. The processor determines whether a memory device stores data corresponding to the address. A response is returned to the processor. The response indicates whether the memory device stores data corresponding to the address. The processor completes processing of the instruction without retrieving the data.

Owner:ADVANCED MICRO DEVICES INC

Directory cache method for node control chip in cache coherent non-uniform memory access (CC-NUMA) system

Active | CN102708190A | Improve efficiency | Improve acceleration performance | Memory addressing/allocation/relocation | Special data processing applications | Uniform memory access | Parallel computing

The invention provides a directory cache method for a node control chip in a cache coherent non-uniform memory access (CC-NUMA) system. A directory cache module is designed for achieving and optimizing the access control of a memory. In researches and designs of computer architectures, locality of application program access is often considered, wherein the phenomenon that recently visited data are revisited before long is called temporal locality; and based on the characteristic, the cache is introduced in the CC-NUMA system based on a directory for caching directory entries, and a least recently used (LRU) replacement algorithm is utilized, so that visiting pressure of the directory is well reduced, and a bottleneck effect of the visiting of the memory is relieved.
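The LRU replacement idea can be sketched in software with an ordered dictionary. This is not the chip's implementation: `DirectoryCache` is a hypothetical name, a plain dict stands in for the full directory, and the hit and miss counters are added only to make the effect of temporal locality visible.

```python
from collections import OrderedDict


class DirectoryCache:
    def __init__(self, directory, capacity):
        self.directory = directory    # full directory: address -> directory entry
        self.capacity = capacity      # number of entries the cache can hold
        self.entries = OrderedDict()  # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def lookup(self, address):
        if address in self.entries:
            self.entries.move_to_end(address)  # mark as most recently used
            self.hits += 1
            return self.entries[address]
        self.misses += 1
        entry = self.directory[address]        # slow path: access the directory itself
        self.entries[address] = entry
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)   # evict the least recently used entry
        return entry
```

Each hit avoids one access to the underlying directory, which is how the cache relieves pressure on directory storage when recently visited entries are revisited.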

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
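A minimal sketch of an LRU cache placed in front of a coherence directory, as the abstract above describes, might look like the following. The `DirectoryCache` class, its fields, and the use of a plain dictionary as the backing directory are assumptions made for illustration; the patent concerns hardware in a node control chip, and this model only shows the replacement behavior.

```python
from collections import OrderedDict


class DirectoryCache:
    """Hypothetical LRU cache for directory entries, keyed by cache-line address."""

    def __init__(self, capacity, directory):
        self.capacity = capacity
        self.directory = directory    # backing directory: line address -> entry
        self.entries = OrderedDict()  # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def lookup(self, line_addr):
        if line_addr in self.entries:
            # Hit: mark the entry as most recently used.
            self.entries.move_to_end(line_addr)
            self.hits += 1
            return self.entries[line_addr]
        # Miss: fetch from the (slower) full directory and cache the entry.
        self.misses += 1
        entry = self.directory[line_addr]
        self.entries[line_addr] = entry
        if len(self.entries) > self.capacity:
            # Evict the least recently used entry.
            self.entries.popitem(last=False)
        return entry
```

With temporal locality, repeated lookups of the same line are served from `entries`, so the full directory is consulted only on misses.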

Computer systems, methods and programs for providing uniform access to configurable objects derived from disparate sources

Active | US7353237B2 | Avoid wasting | Easy to adjust | Data processing applications | Specific program execution arrangements | Uniform memory access | Programming language

Owner:SAP AG

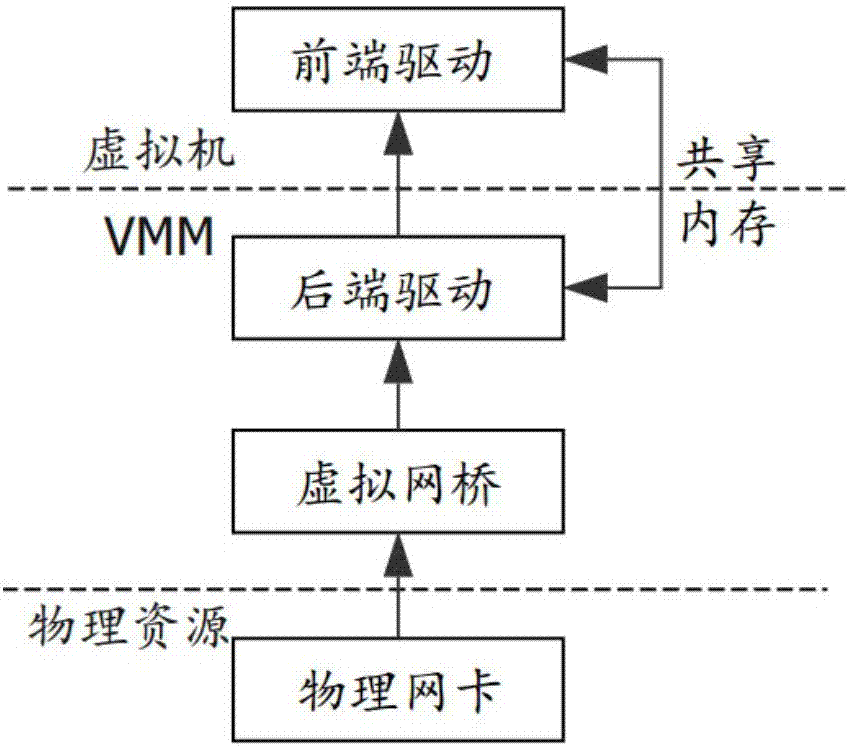

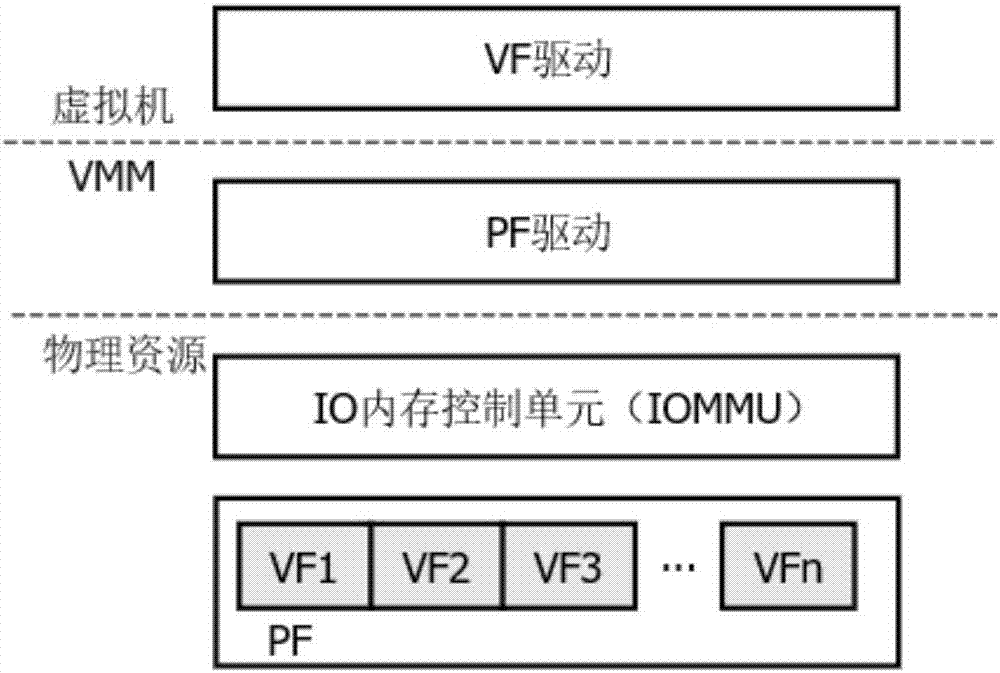

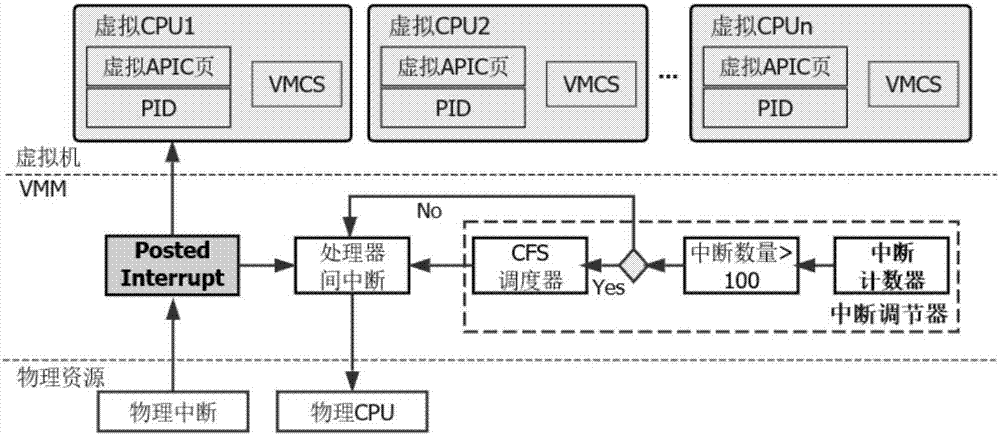

Efficient network IO processing method based on NUMA and hardware assisted technology

Active | CN107038061A | Reduce VM-Exit operations | Reduce Interrupt Delivery Latency | Resource allocation | Software simulation/interpretation/emulation | Uniform memory access | Virtualization

The present invention discloses an efficient network I/O processing method based on NUMA and hardware-assisted technology. In a virtualized environment, when an SR-IOV (Single-Root I/O Virtualization) directly assigned device or a paravirtualized device generates a physical interrupt, the method analyzes the affinity among the CPU that handles the physical interrupt, the interrupt's target CPU, and the NUMA (Non-Uniform Memory Access) node where the underlying network card is located. Combined with information about where the virtual CPUs are running, this optimizes the interrupt processing efficiency of the Intel APICv hardware technology and the Posted-Interrupt mechanism on a multi-core server. While the context-switching load caused by VM-exits is reduced, the delivery and invocation delays between the generation of an interrupt and its handling by the virtual machine are effectively eliminated, so that the I/O response rate of the virtual machine is greatly improved and the packet processing efficiency of the data center network is greatly optimized.

Owner:SHANGHAI JIAO TONG UNIV
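The affinity analysis in the abstract above can be illustrated with one plausible heuristic. This is an assumption-laden sketch, not the patented method: the function `pick_interrupt_cpu`, its parameters, and the fallback order are all hypothetical. It prefers the physical CPU where the target virtual CPU is running when that CPU shares the network card's NUMA node, and otherwise falls back to a CPU on the card's node.

```python
def pick_interrupt_cpu(cpu_to_node, nic_node, vcpu_cpu):
    """Choose a physical CPU to handle a NIC interrupt (hypothetical policy).

    cpu_to_node: dict mapping physical CPU id -> NUMA node id
    nic_node:    NUMA node where the network card is attached
    vcpu_cpu:    physical CPU on which the target virtual CPU is running
    """
    # Best case: the vCPU already runs on the NIC's node, so deliver there.
    if cpu_to_node[vcpu_cpu] == nic_node:
        return vcpu_cpu
    # Otherwise pick the lowest-numbered CPU on the NIC's node, if any.
    local_cpus = sorted(cpu for cpu, node in cpu_to_node.items() if node == nic_node)
    if local_cpus:
        return local_cpus[0]
    # No CPU is local to the NIC: fall back to where the vCPU is running.
    return vcpu_cpu
```

A real implementation would also weigh CPU load and whether the virtual CPU is currently scheduled, which this sketch ignores.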

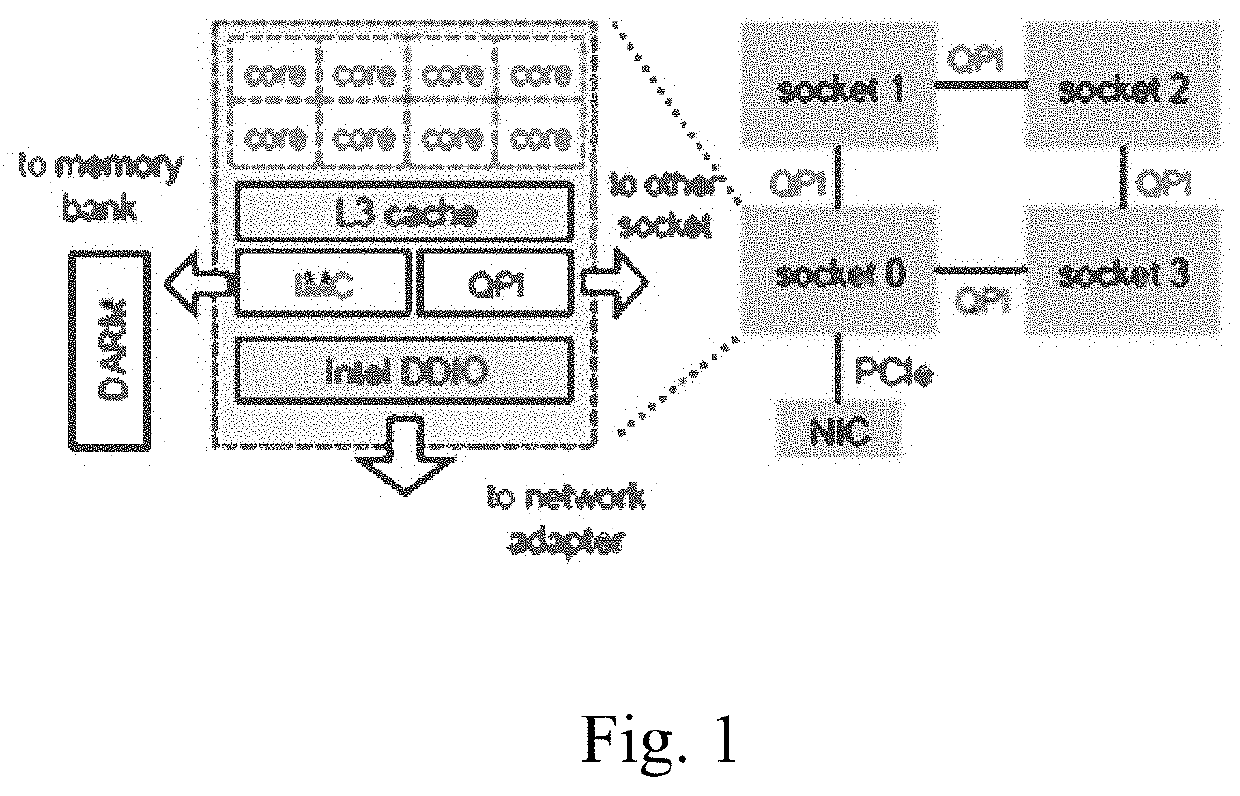

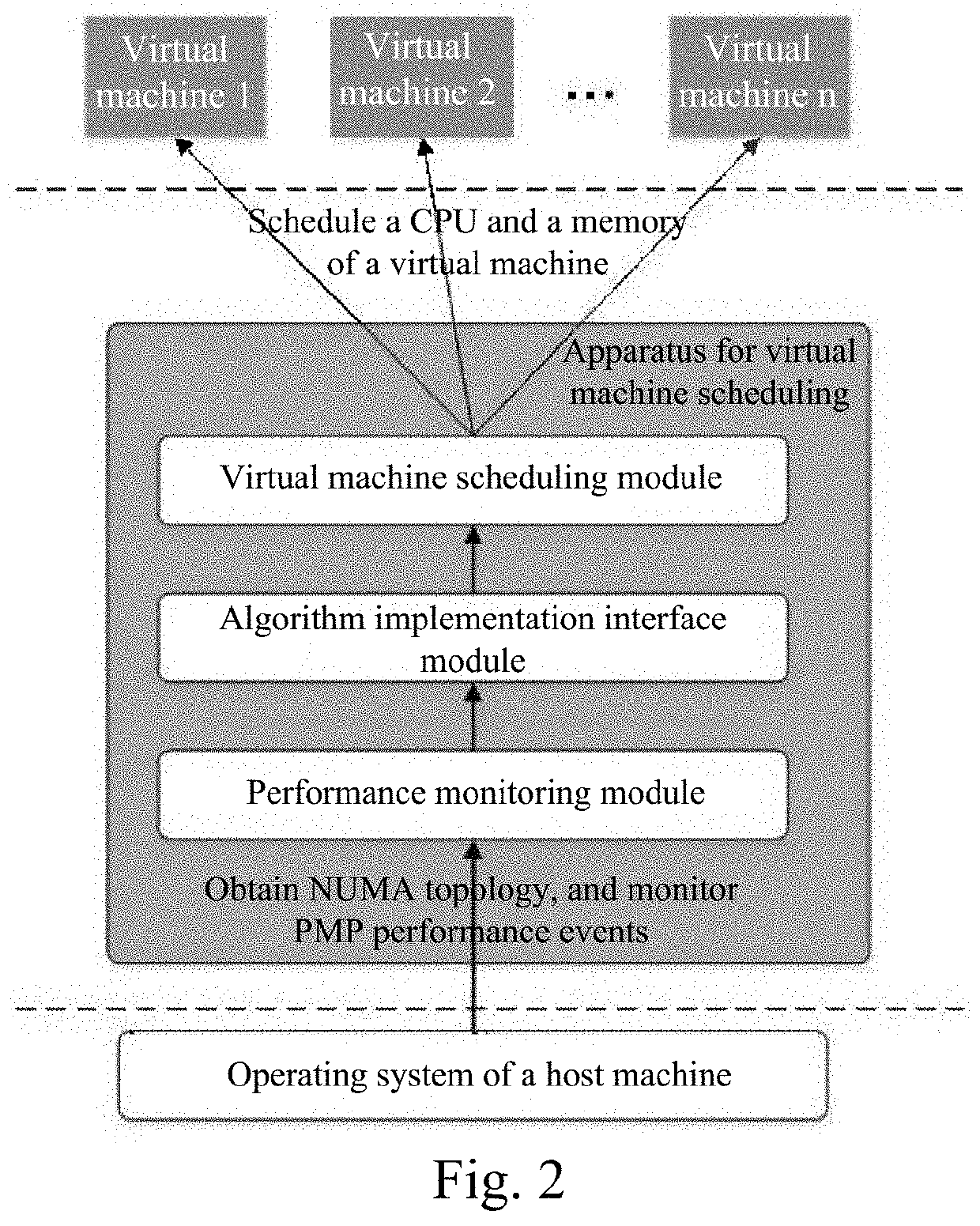

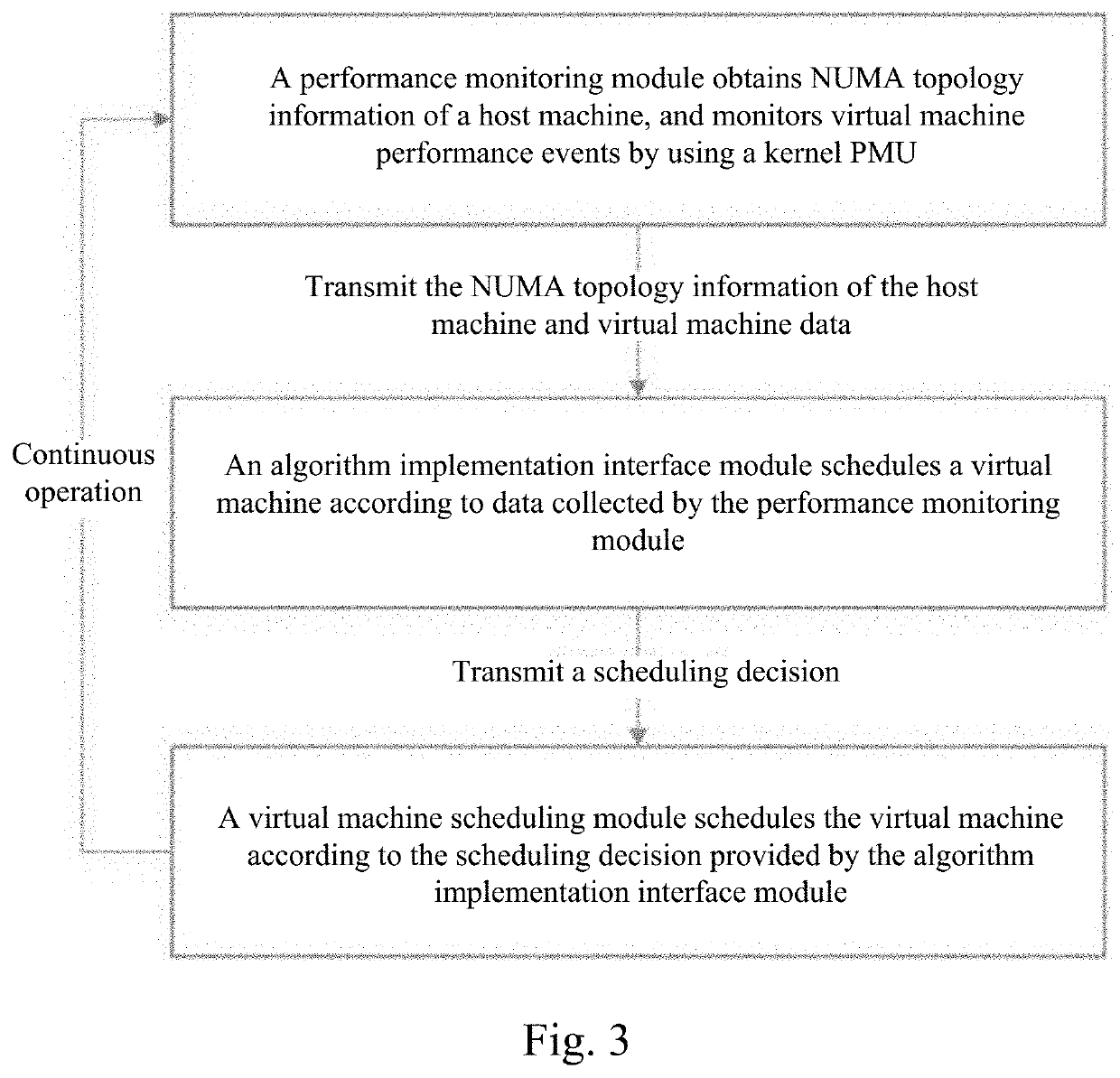

Apparatus and method for virtual machine scheduling in non-uniform memory access architecture

Active | US20200073703A1 | Improve efficiency | Reduce performance overhead | Memory architecture accessing/allocation | Program initiation/switching | Uniform memory access | Topology information

The present invention discloses an apparatus and a method for virtual machine scheduling in a non-uniform memory access (NUMA) architecture. The method includes the following steps: a performance monitoring module obtains the NUMA topology information of the host machine and monitors virtual machine performance events by using a kernel PMU; the NUMA topology information and the virtual machine performance events are transmitted to an algorithm implementation interface module; the algorithm implementation interface module invokes a scheduling algorithm and, after the algorithm finishes executing, transmits the resulting scheduling decision to a virtual machine scheduling module; the virtual machine scheduling module schedules the VCPUs and memory of a virtual machine according to that decision; and after the scheduling of the virtual machine is complete, control returns to performance monitoring of the virtual machine. With this method, a researcher only needs to implement the NUMA scheduling optimization algorithm and does not need to handle details such as collecting virtual machine information and performance data or carrying out the actual scheduling of the virtual machine, which greatly improves research efficiency.

Owner:SHANGHAI JIAO TONG UNIV
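The module structure in the abstract above (monitoring, a pluggable algorithm interface, and a scheduling module) can be sketched as follows. All names here are hypothetical, including `SchedulingFramework`, `most_accessed_node`, and the shape of the topology and event data; the sketch shows only how a researcher could supply an algorithm while the framework handles collection and placement.

```python
from typing import Callable, Dict, List, Tuple

Topology = Dict[int, List[int]]     # NUMA node id -> physical CPU ids
Events = Dict[str, Dict[int, int]]  # VM name -> node id -> memory access count
Decision = Dict[str, int]           # VM name -> target NUMA node


def most_accessed_node(topology: Topology, events: Events) -> Decision:
    """Example pluggable algorithm: place each VM on the node it accesses most."""
    return {vm: max(counts, key=counts.get) for vm, counts in events.items()}


class SchedulingFramework:
    """Hypothetical glue between the monitor, the algorithm, and the scheduler."""

    def __init__(
        self,
        monitor: Callable[[], Tuple[Topology, Events]],
        algorithm: Callable[[Topology, Events], Decision],
        scheduler: Callable[[Decision], None],
    ):
        self.monitor = monitor
        self.algorithm = algorithm
        self.scheduler = scheduler

    def run_once(self) -> Decision:
        topology, events = self.monitor()            # collect topology and events
        decision = self.algorithm(topology, events)  # invoke the plugged-in algorithm
        self.scheduler(decision)                     # apply VCPU and memory placement
        return decision                              # caller then resumes monitoring
```

Swapping in a different policy means passing a different `algorithm` callable; the monitor and scheduler stay unchanged.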

© 2025 PatSnap. All rights reserved.