279 results for "Virtual Processor" patented technology

Tao Virtual Processor (VP) was a virtual machine from Tao Group.

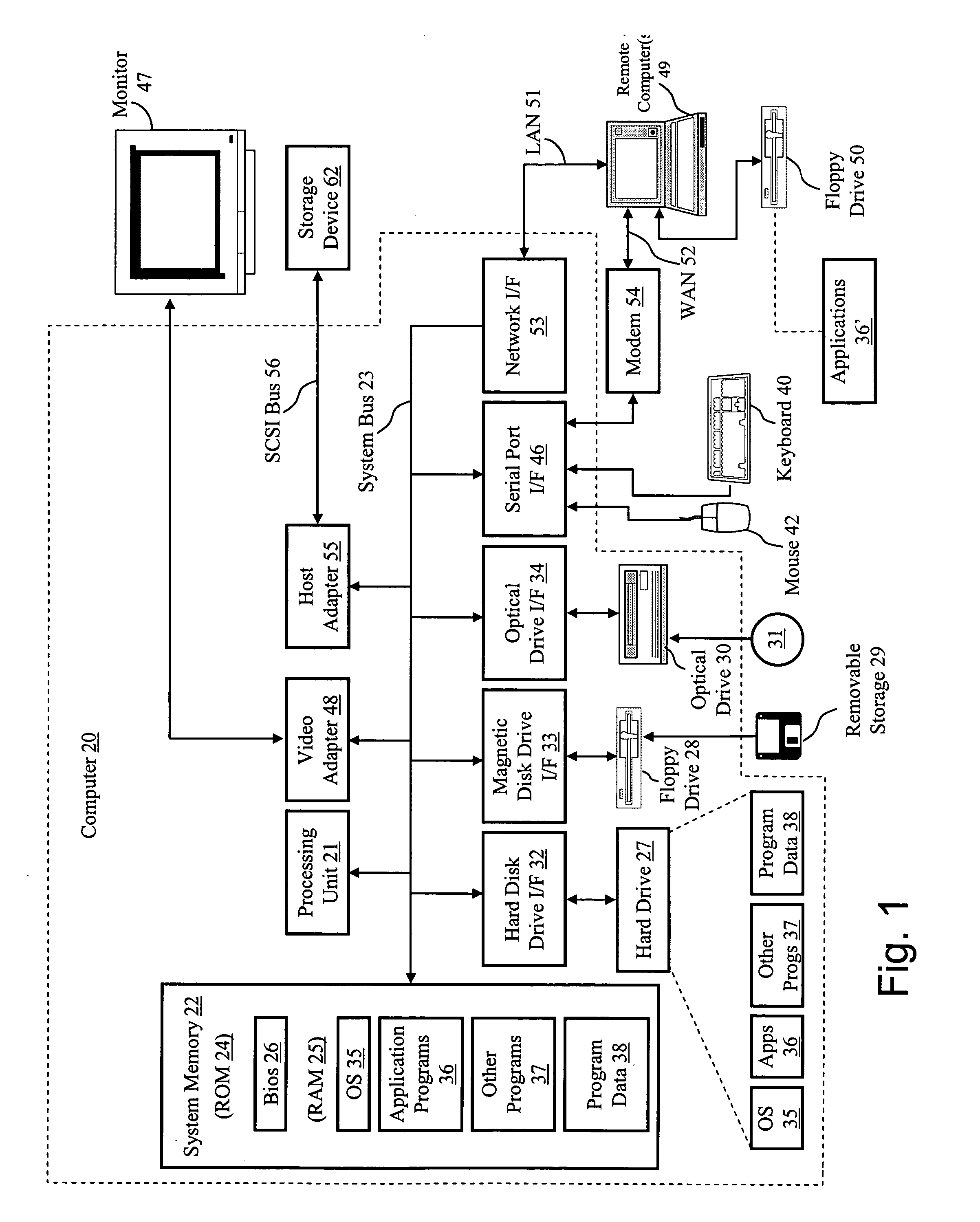

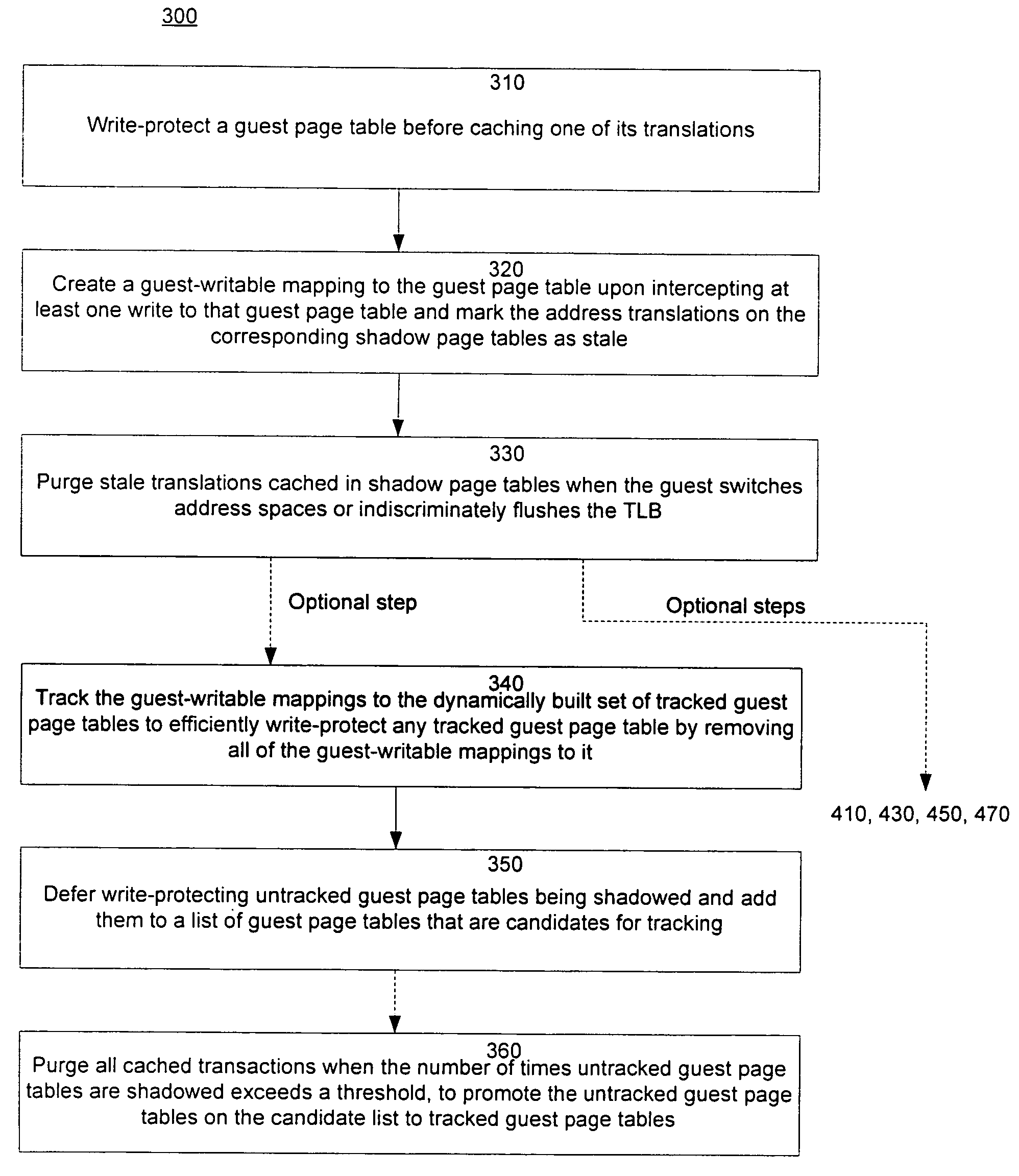

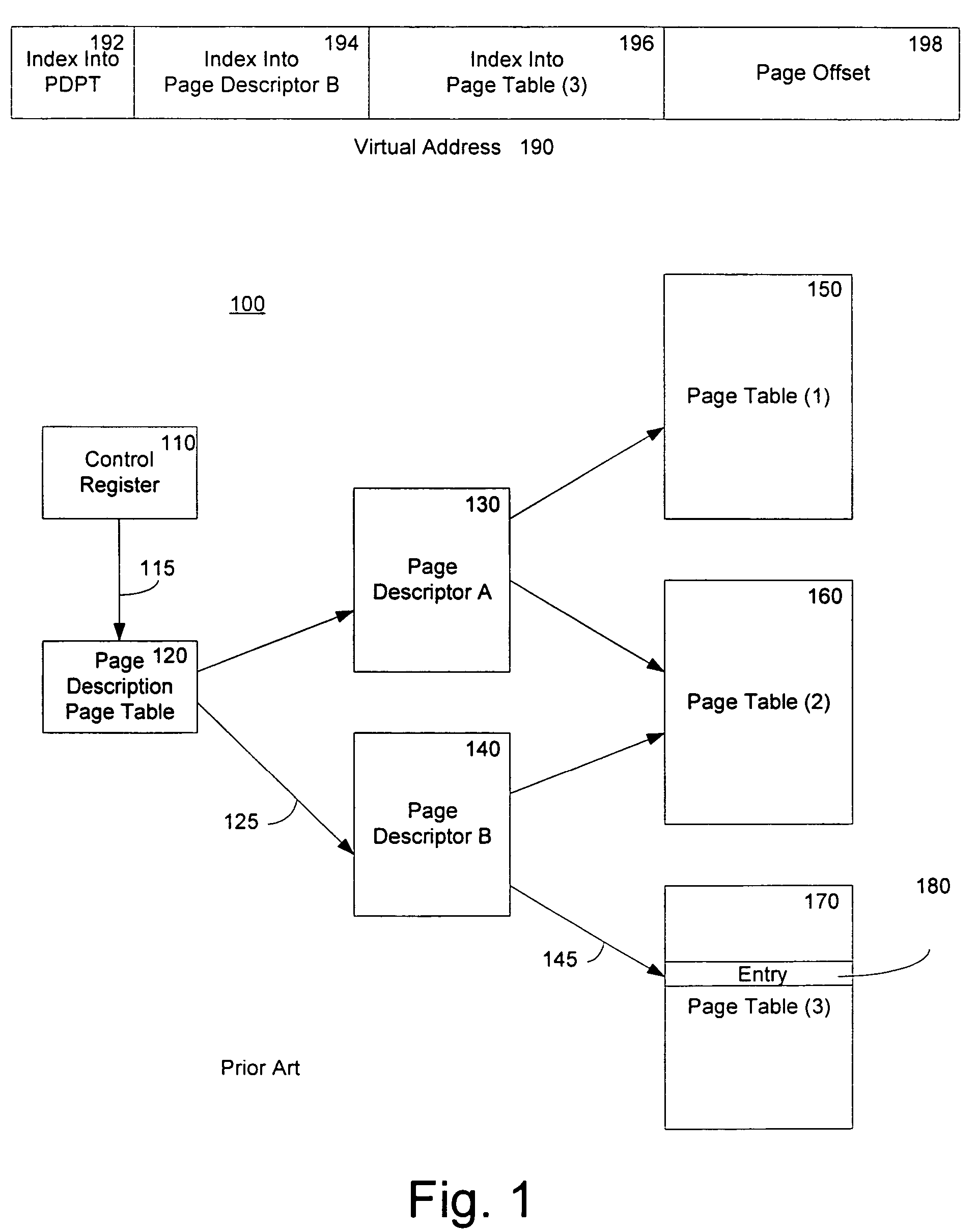

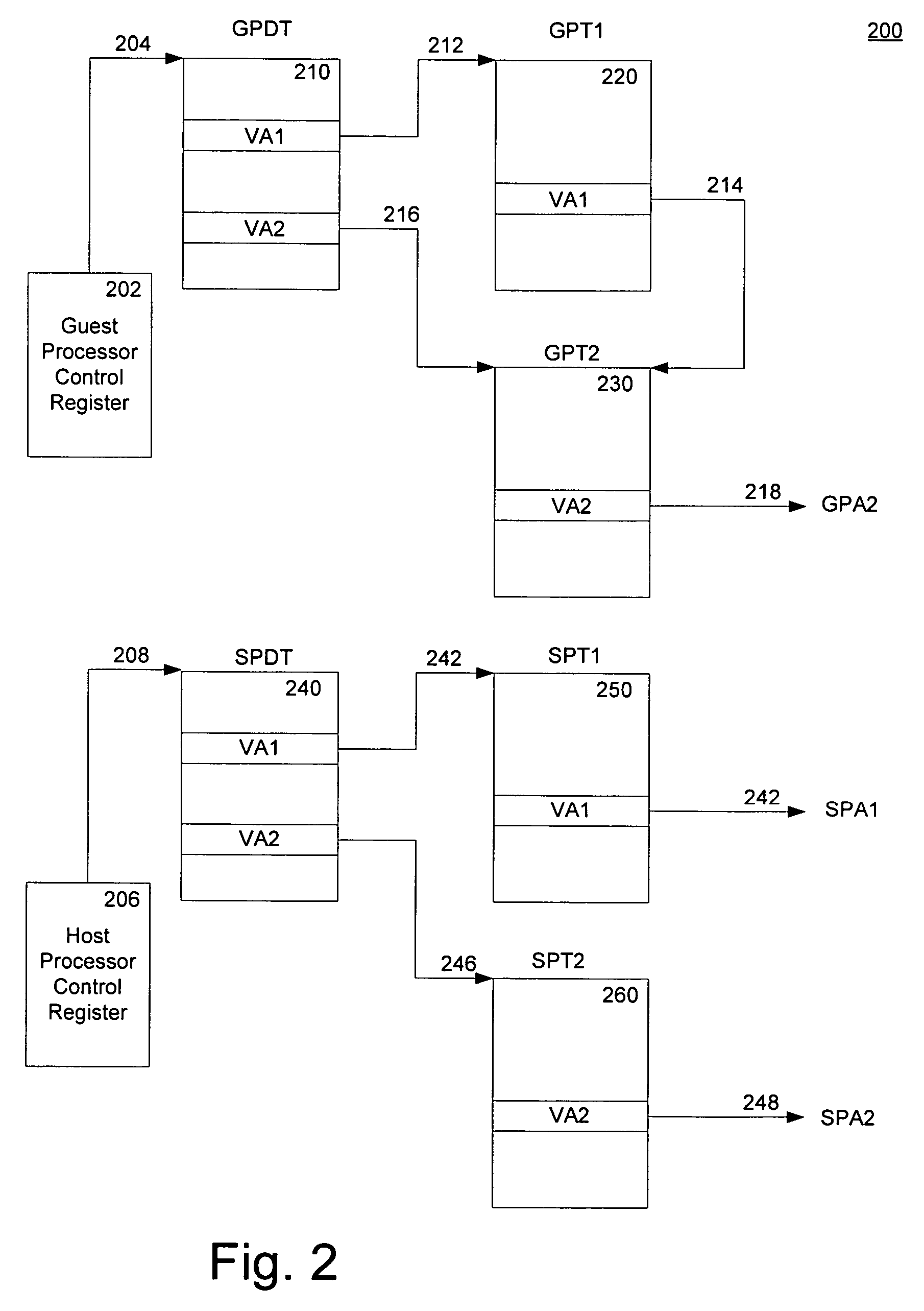

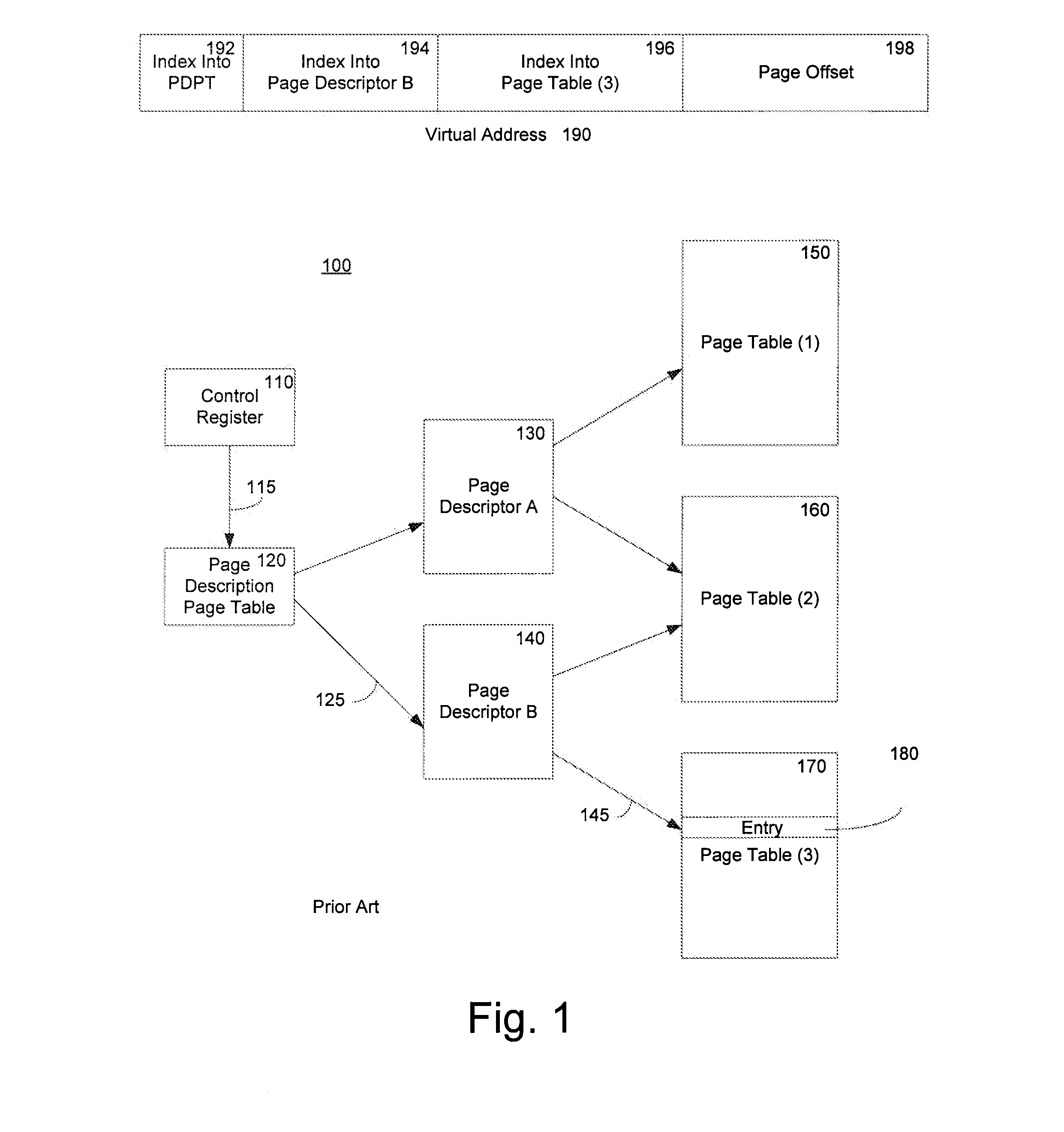

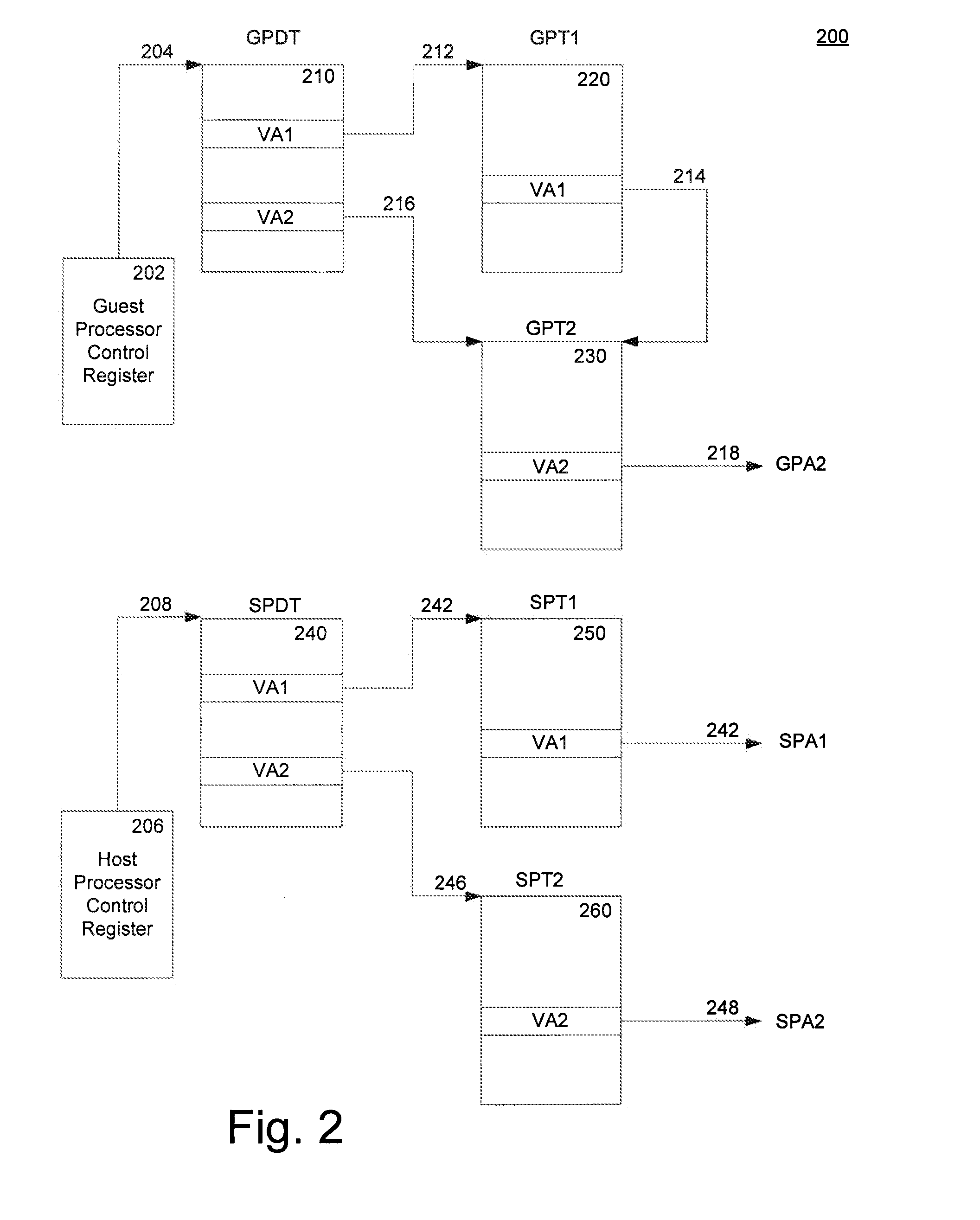

Method and system for caching address translations from multiple address spaces in virtual machines

Status: Inactive | US20060259734A1 | Reduce memory overhead, Low cost, Memory architecture accessing/allocation, Memory systems, Virtualization, Page table

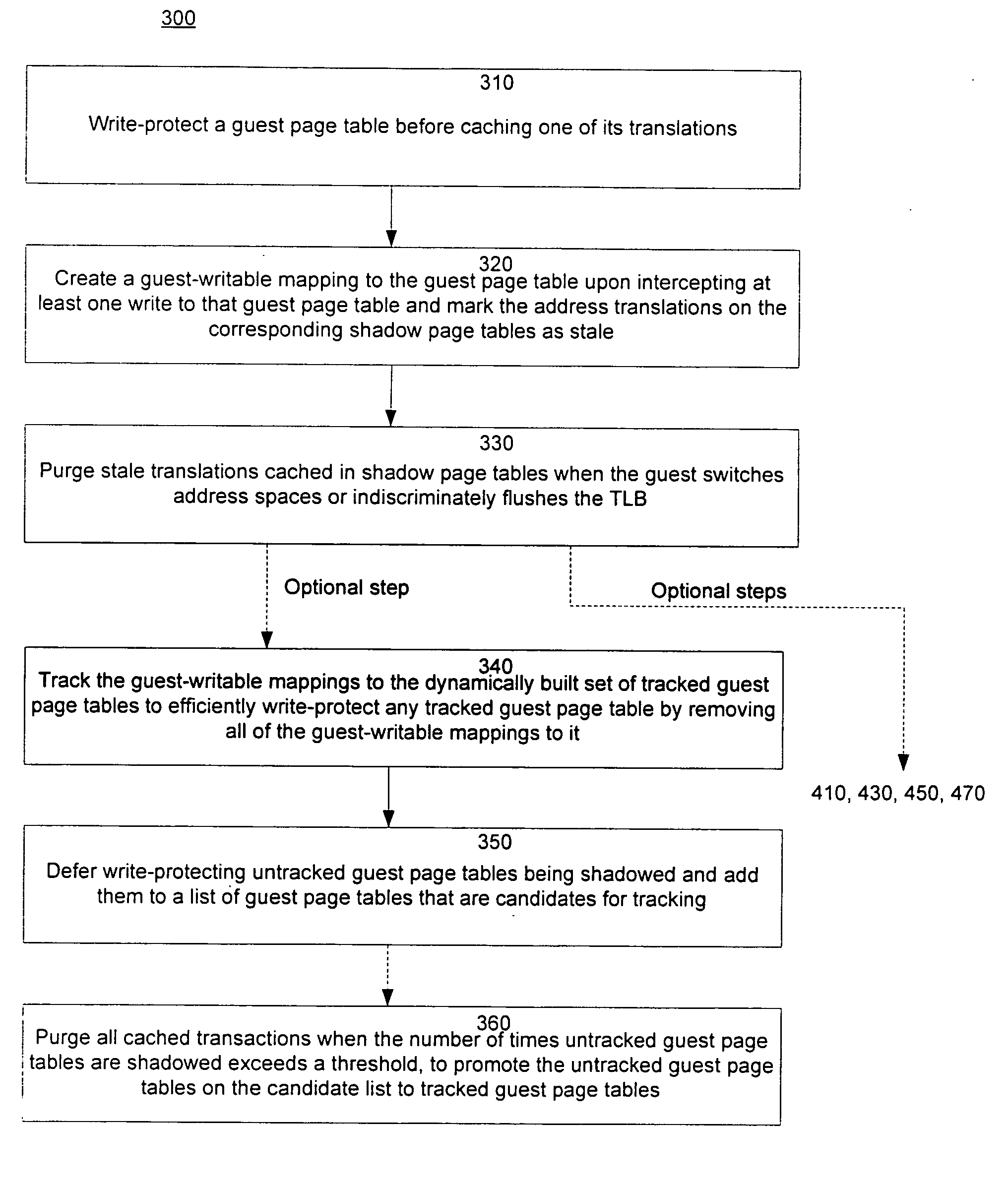

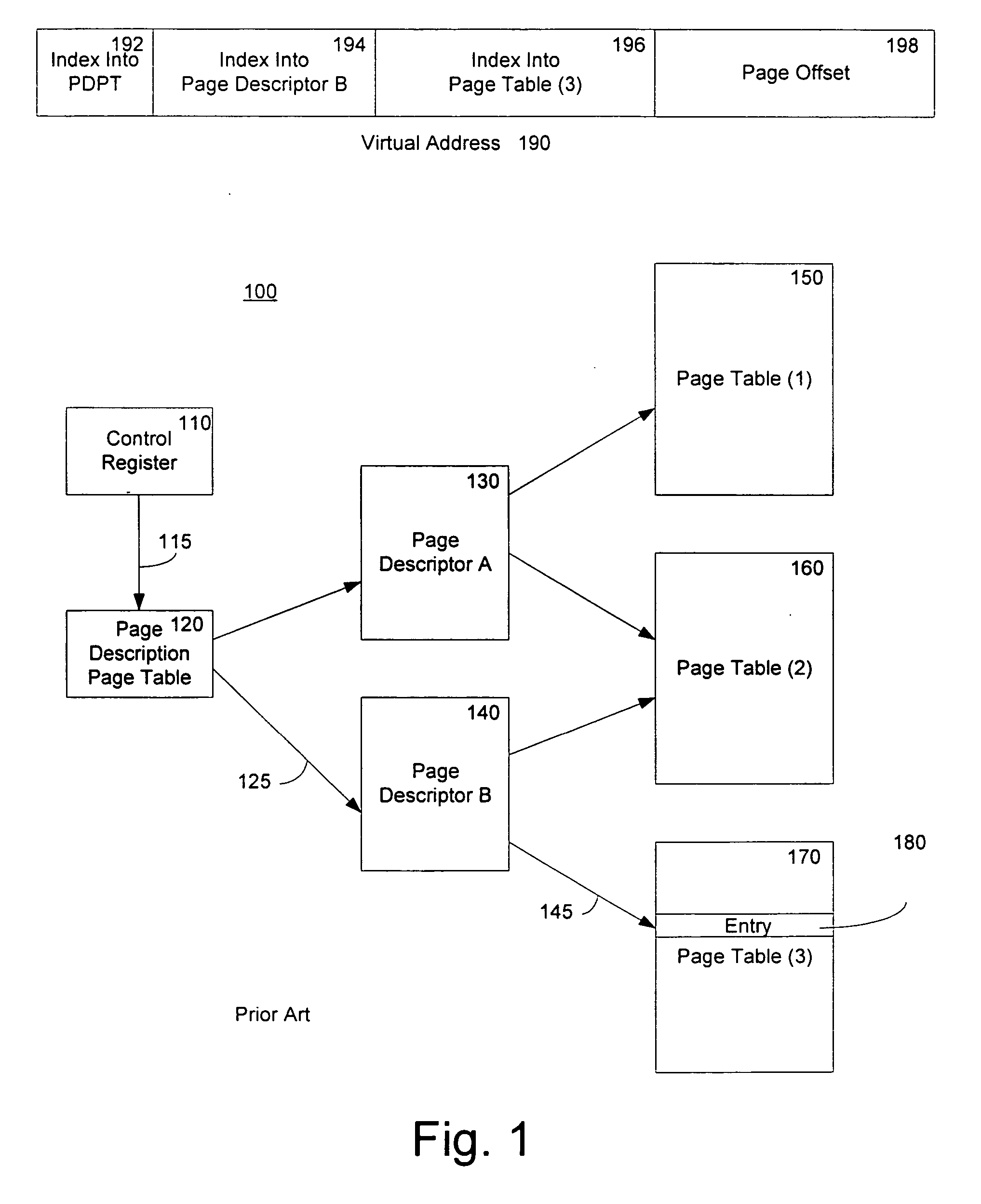

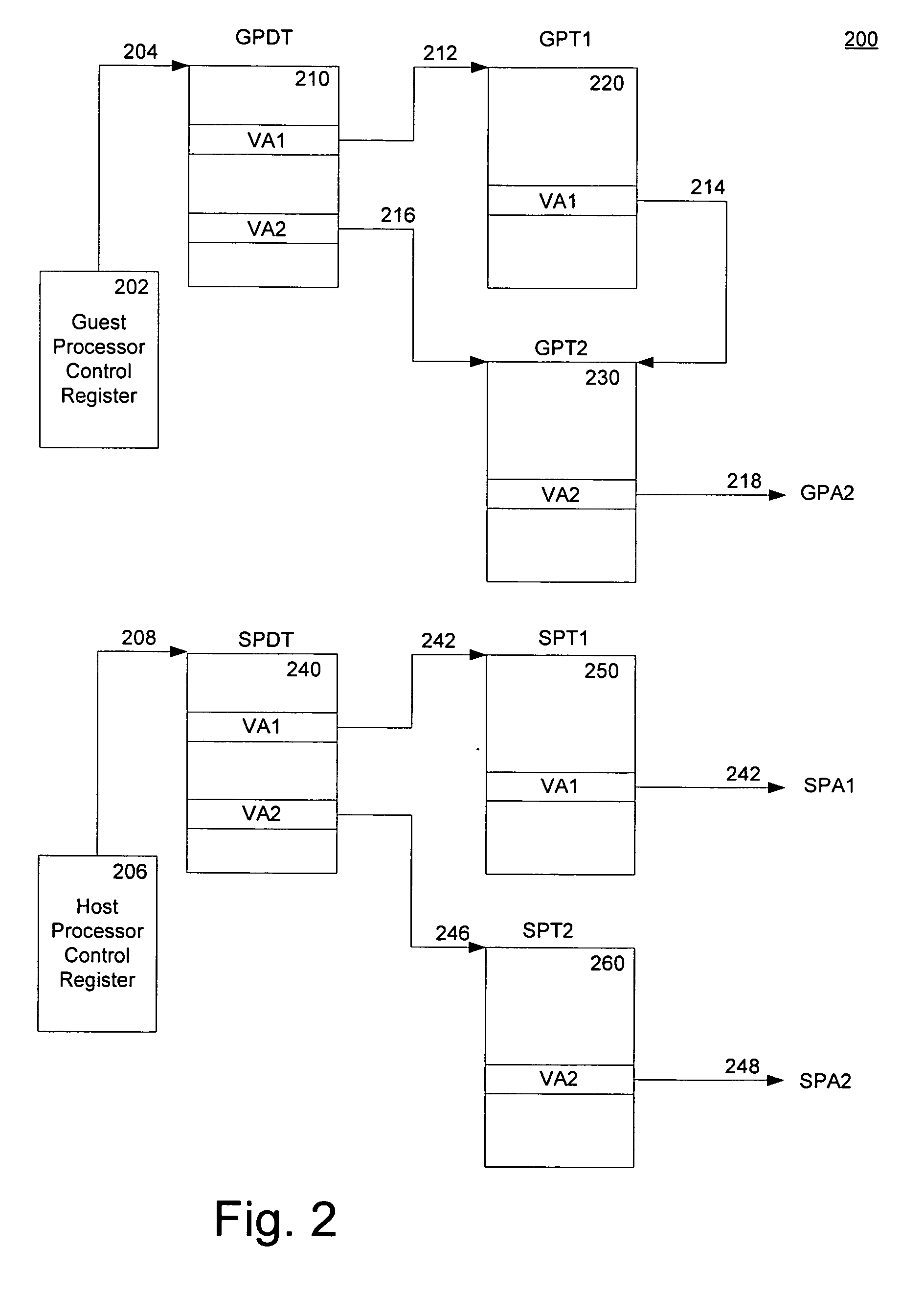

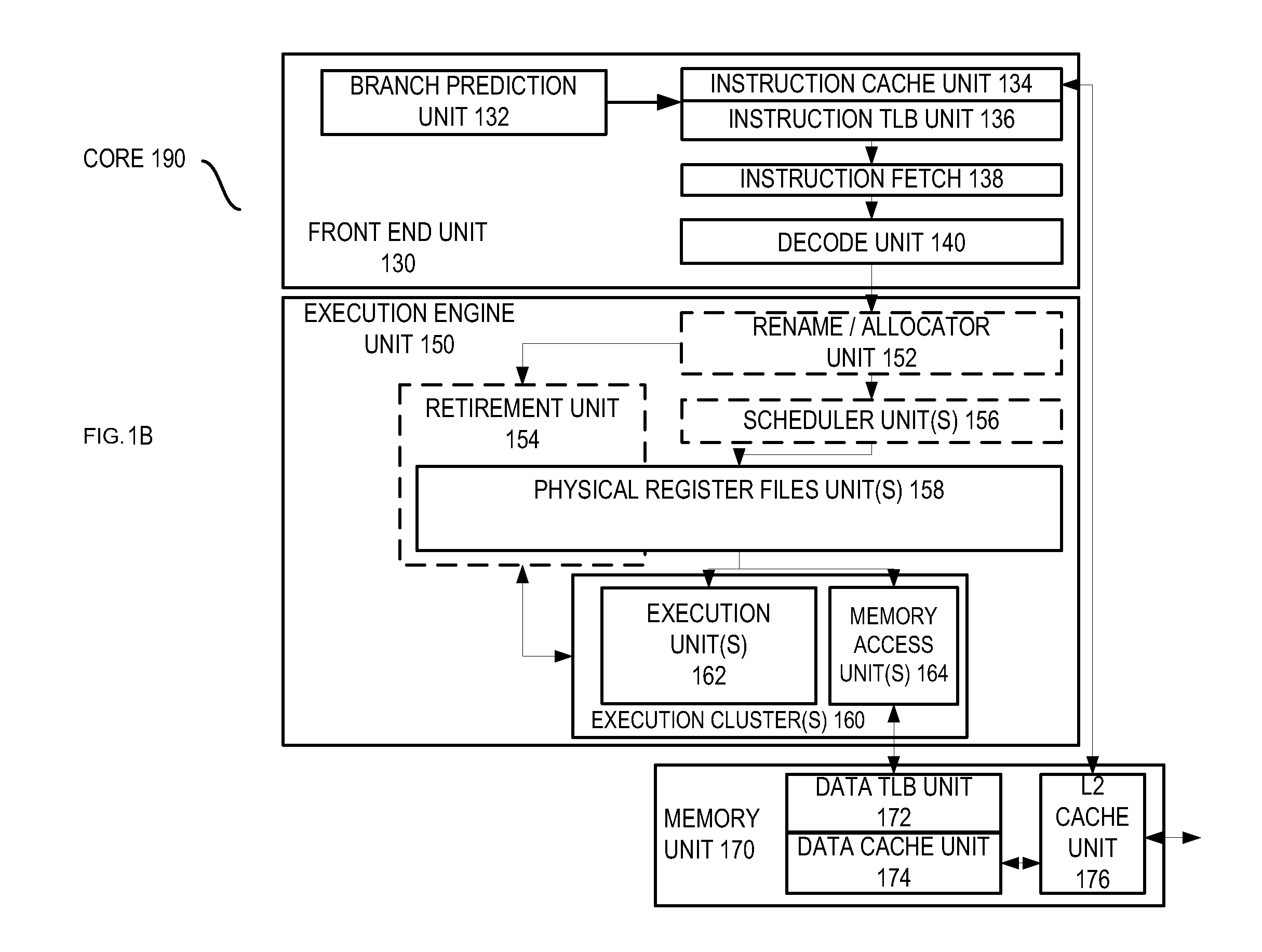

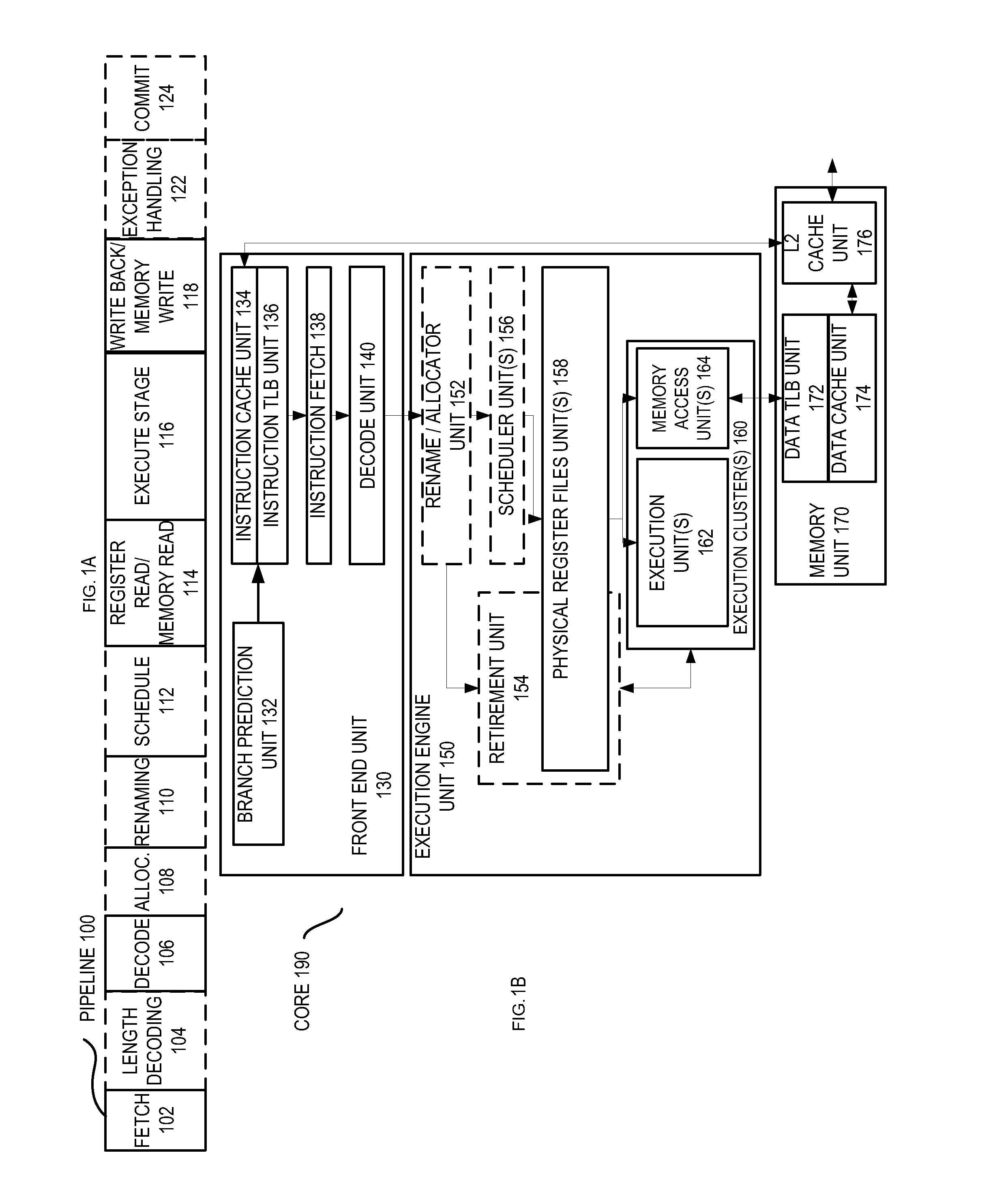

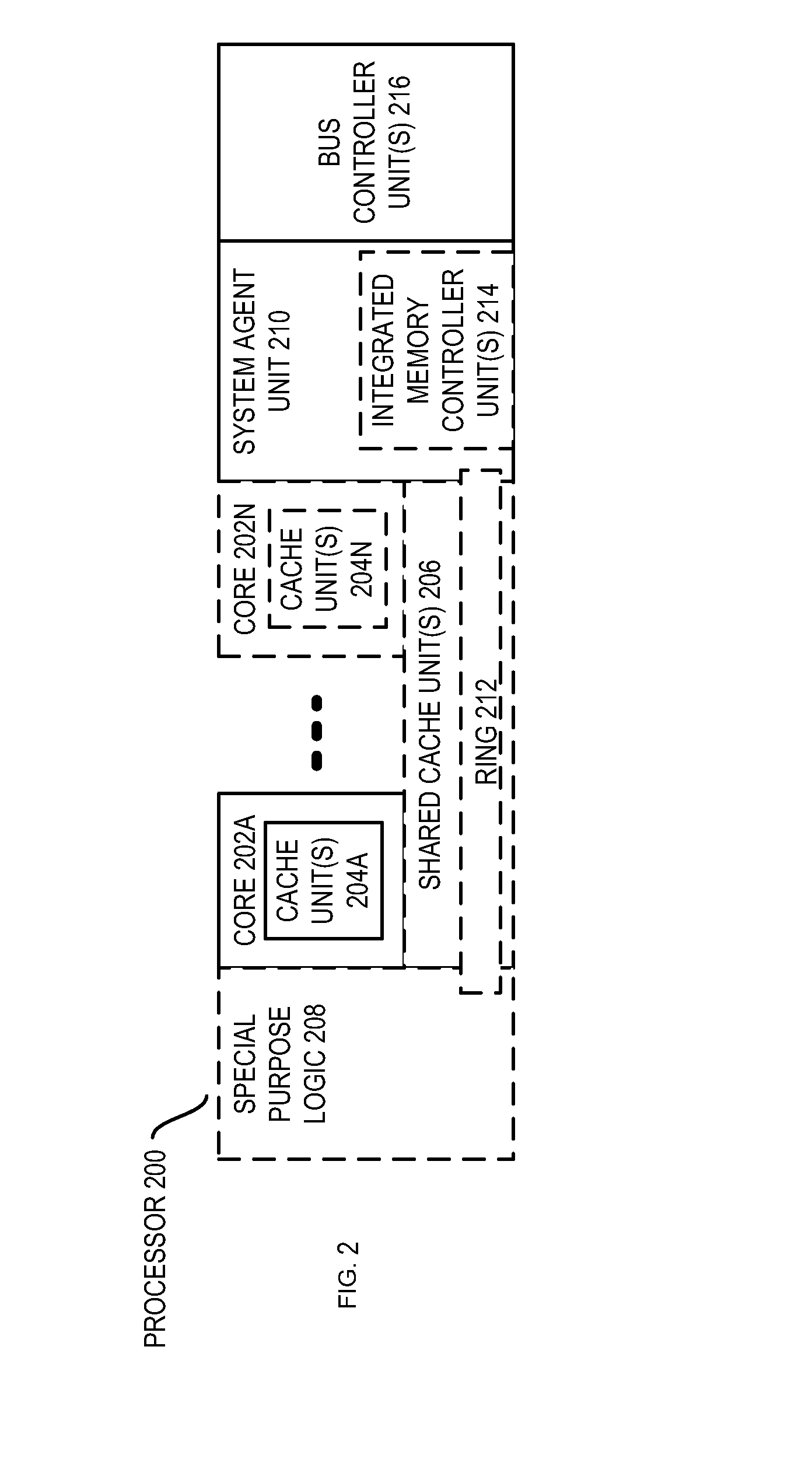

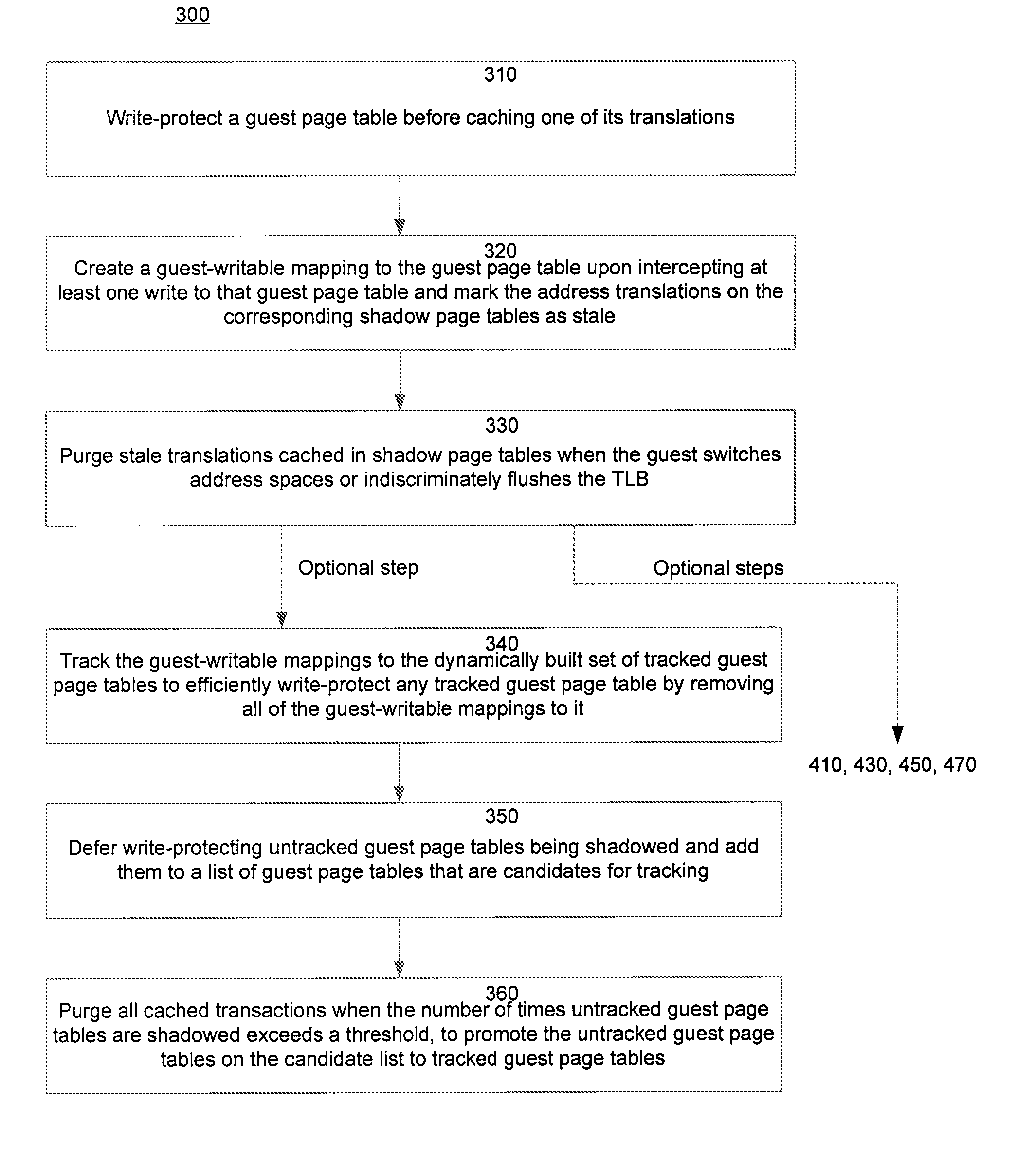

A method of virtualizing memory through shadow page tables that cache translations from multiple guest address spaces in a virtual machine includes a software version of a hardware tagged translation look-aside buffer. Edits to guest page tables are detected by intercepting the creation of guest-writable mappings to guest page tables with translations cached in shadow page tables. The affected cached translations are marked as stale and purged upon an address space switch or an indiscriminate flush of translations by the guest. Thereby, non-stale translations remain cached but stale translations are discarded. The method includes tracking the guest-writable mappings to guest page tables, deferring discovery of such mappings to a guest page table for the first time until a purge of all cached translations when the number of untracked guest page tables exceeds a threshold, and sharing shadow page tables between shadow address spaces and between virtual processors.

Owner:MICROSOFT TECH LICENSING LLC
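
The stale-marking idea in this abstract can be illustrated with a toy software cache. The sketch below is a simplified Python model under stated assumptions, not the patented implementation: `ShadowTLB`, its methods, and the bookkeeping of which guest page-table page each cached translation came from are hypothetical names chosen for illustration. Translations derived from a page table that becomes guest-writable are only marked stale; they are dropped at the next address-space switch or guest flush, while unaffected translations stay cached.

```python
from dataclasses import dataclass, field


@dataclass
class ShadowTLB:
    """Toy software 'tagged TLB' caching translations from several guest address spaces."""
    # (address-space id, guest virtual page) -> guest physical page
    entries: dict = field(default_factory=dict)
    # guest page-table page -> cache keys whose translations were derived from it
    derived_from: dict = field(default_factory=dict)
    # cache keys that may no longer match the guest page tables
    stale: set = field(default_factory=set)

    def fill(self, asid, vpage, ppage, guest_pt_page):
        key = (asid, vpage)
        self.entries[key] = ppage
        self.derived_from.setdefault(guest_pt_page, set()).add(key)

    def lookup(self, asid, vpage):
        return self.entries.get((asid, vpage))

    def on_guest_writable_mapping(self, guest_pt_page):
        # The guest can now edit this page table, so translations cached from it
        # are marked stale (but not yet discarded).
        self.stale |= self.derived_from.get(guest_pt_page, set())

    def purge_stale(self):
        # Called on an address-space switch or an indiscriminate guest flush:
        # stale translations are discarded, all others remain cached.
        for key in self.stale:
            self.entries.pop(key, None)
        for keys in self.derived_from.values():
            keys -= self.stale  # in-place set difference
        self.stale.clear()
```

Deferring the purge mirrors hardware TLB semantics, where a guest may observe an old translation until it switches address spaces or flushes.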

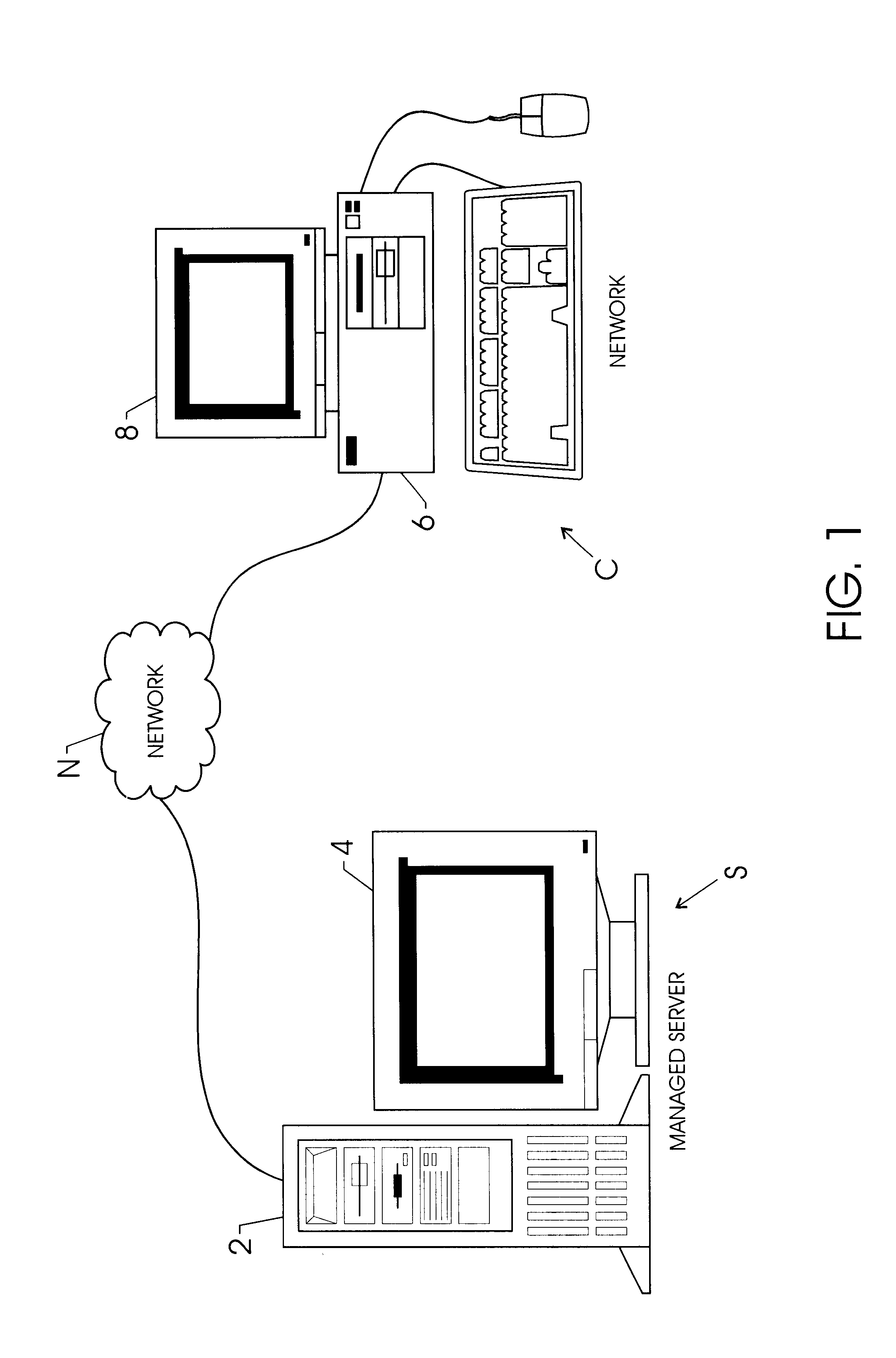

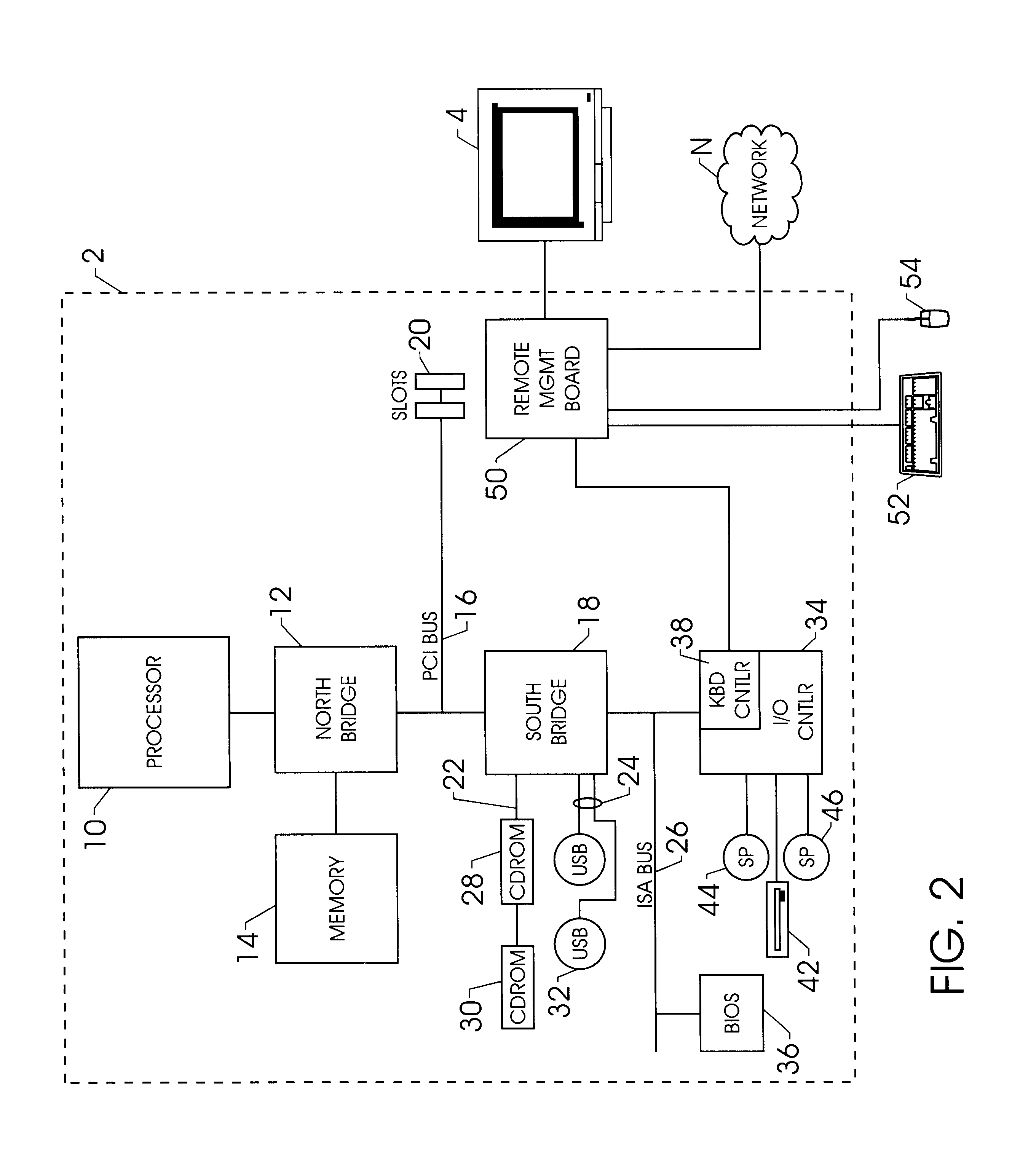

Operating system independent method and apparatus for graphical remote access

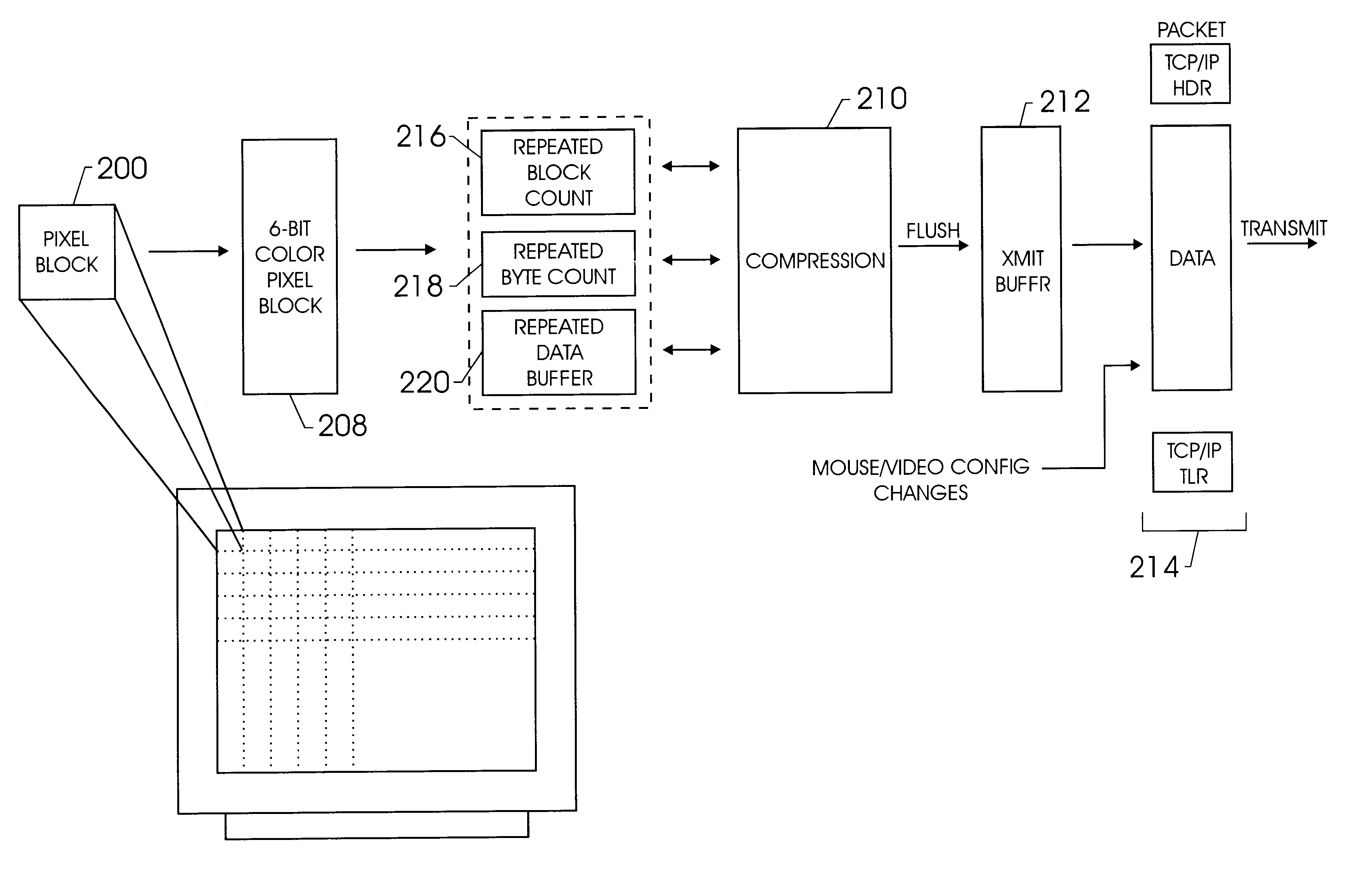

A method and apparatus for updating video graphics changes of a managed server to a remote console independent of an operating system. The screen (e.g. frame buffer) of the managed server is divided into a number of blocks. Each block is periodically monitored for changes by calculating a hash code and storing the code in a hash code table. When the hash code changes, the block is transmitted to the remote console. Color condensing may be performed on the color values of the block before the hash codes are calculated and before transmission. Compression is performed on each block and across blocks to reduce bandwidth requirements on transmission. Periodically, the configuration of a video graphics controller and a pointing device of the managed server are checked for changes, such as changes to resolution, color depth and cursor movement. If changes are found, the changes are transmitted to the remote console. The method and apparatus may be performed by a separate processor as part of a remote management board, a "virtual" processor by causing the processor of the managed server to enter a system management mode, or a combination of the two.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
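
The block-hashing step described above can be sketched as follows. This is an illustrative Python model under stated assumptions: the frame buffer is a row-major `bytearray` with one byte per pixel, the block size `BLOCK` is a hypothetical value, and SHA-1 stands in for whatever hash code the patent uses. Color condensing and compression are omitted.

```python
import hashlib

BLOCK = 4  # hypothetical block edge length in pixels


def block_hashes(frame, width, height, block=BLOCK):
    """Hash each block x block tile of a row-major, 1-byte-per-pixel frame buffer."""
    hashes = {}
    for by in range(0, height, block):
        for bx in range(0, width, block):
            digest = hashlib.sha1()
            for y in range(by, min(by + block, height)):
                row = y * width
                digest.update(frame[row + bx: row + min(bx + block, width)])
            hashes[(bx, by)] = digest.digest()
    return hashes


def changed_blocks(table, frame, width, height):
    """Return origins of blocks whose hash changed, updating the hash table in place."""
    new = block_hashes(frame, width, height)
    dirty = [pos for pos, code in new.items() if table.get(pos) != code]
    table.update(new)
    return dirty  # only these blocks would be sent to the remote console
```

Calling `changed_blocks` periodically transmits every block on the first pass and afterwards only blocks whose contents changed.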

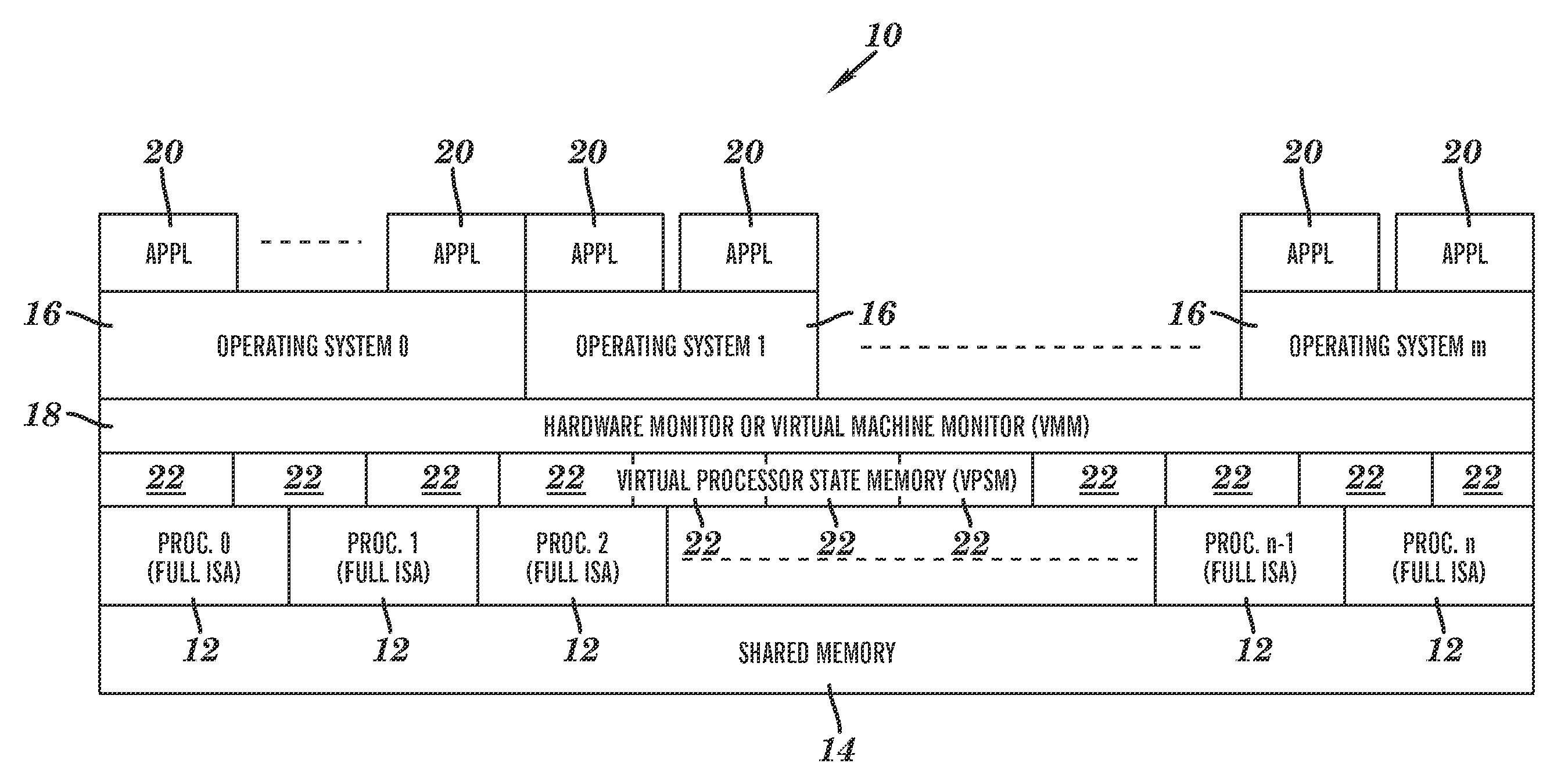

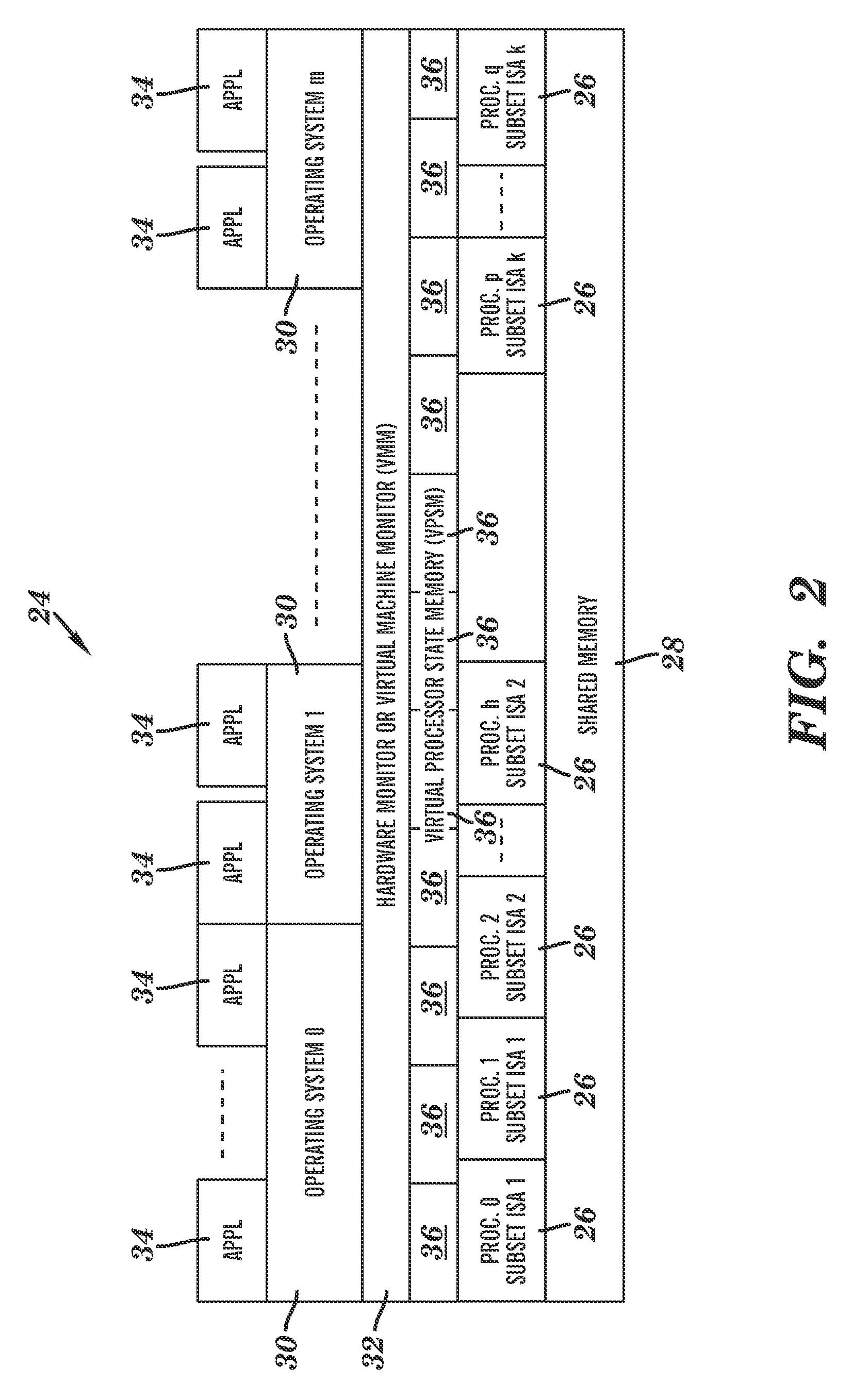

System and method for detecting access to shared structures and for maintaining coherence of derived structures in virtualized multiprocessor systems

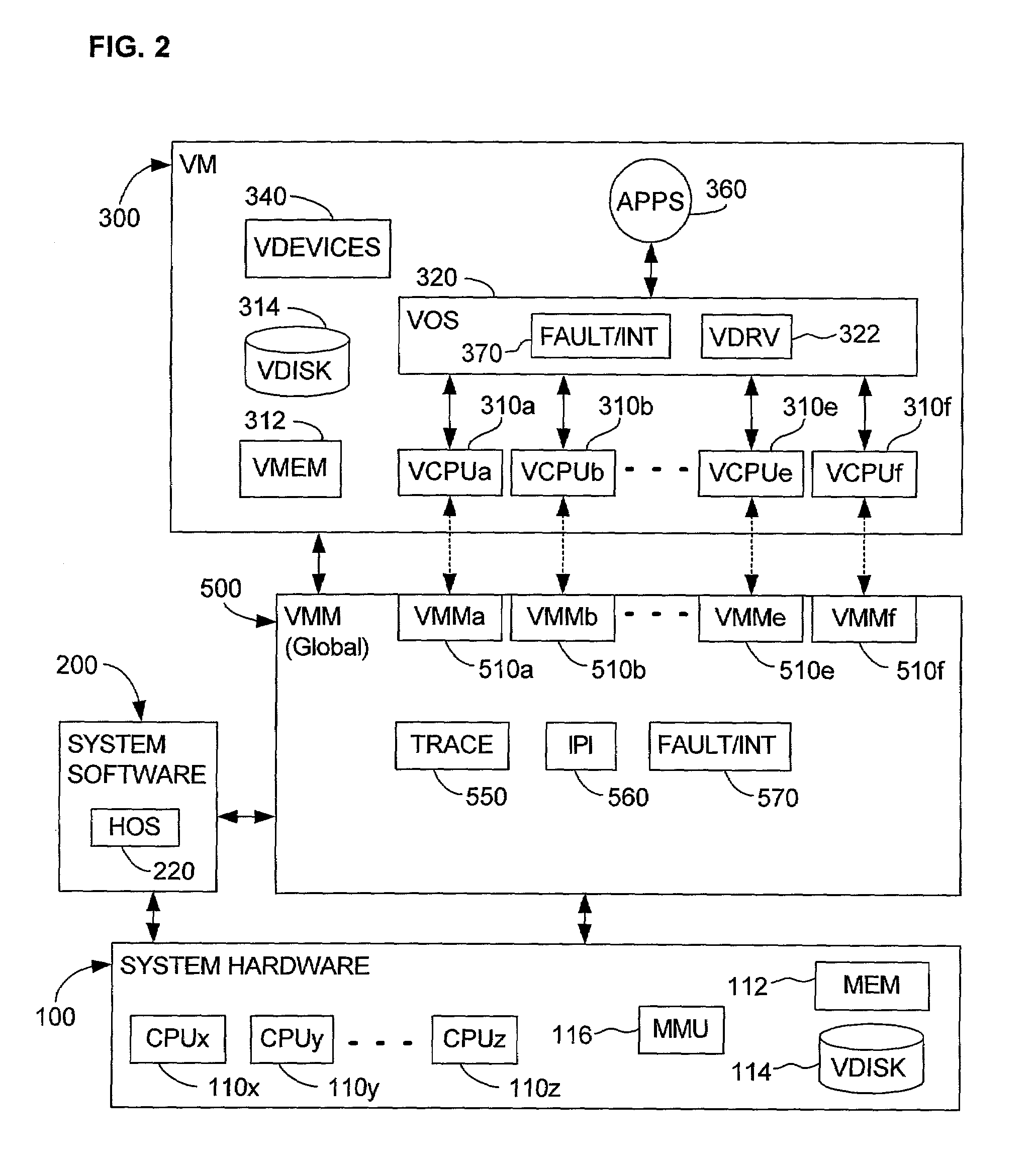

A computer system includes at least one virtual machine that has a plurality of virtual processors all running on an underlying hardware platform. A software interface layer such as a virtual machine monitor establishes traces on primary structures located in a common memory space as needed for the different virtual processors. Whenever any one of the virtual processors generates a trace event, such as accessing a traced structure, then a notification is sent to at least the other virtual processors that have a trace on the accessed primary structure. In some applications, the VMM derives and maintains secondary structures corresponding to the primary structures, such as where the VMM converts, through binary translation, original code intended to run on a virtual processor into code that can be run on an underlying physical processor of the hardware platform. In these applications, the VMM may rederive or invalidate the secondary structures as needed upon receipt of the notification of the trace event. Different semantics are provided for the notification, providing different choices of performance versus guaranteed consistency between primary and secondary structures. In the preferred embodiment of the invention, a dedicated sub-system is included within the VMM for each virtual processor; each sub-system establishes traces, senses trace events, issues the notification, and performs other operations relating specifically to its respective virtual processor.

Owner:VMWARE INC
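
The trace-and-notify pattern can be modeled in a few lines. This is a hypothetical Python sketch, not VMware's design: `TraceManager`, the string structure identifiers, and the per-vCPU inbox are illustrative assumptions. A traced access by one virtual processor queues a notification for every other virtual processor tracing the same primary structure, which would then re-derive or invalidate its secondary structures (for example, binary-translated code).

```python
from collections import defaultdict


class TraceManager:
    """Toy VMM-side registry of which virtual processors trace which primary structures."""

    def __init__(self):
        self.tracers = defaultdict(set)  # structure id -> set of vcpu ids
        self.inbox = defaultdict(list)   # vcpu id -> pending trace notifications

    def install_trace(self, vcpu, structure):
        self.tracers[structure].add(vcpu)

    def on_access(self, vcpu, structure):
        """A trace event by `vcpu` notifies every other vcpu tracing the structure."""
        for other in self.tracers.get(structure, ()):
            if other != vcpu:
                self.inbox[other].append(structure)

    def drain(self, vcpu):
        # Each vcpu's sub-system would re-derive or invalidate its secondary
        # structures for every structure returned here.
        pending, self.inbox[vcpu] = self.inbox[vcpu], []
        return pending
```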

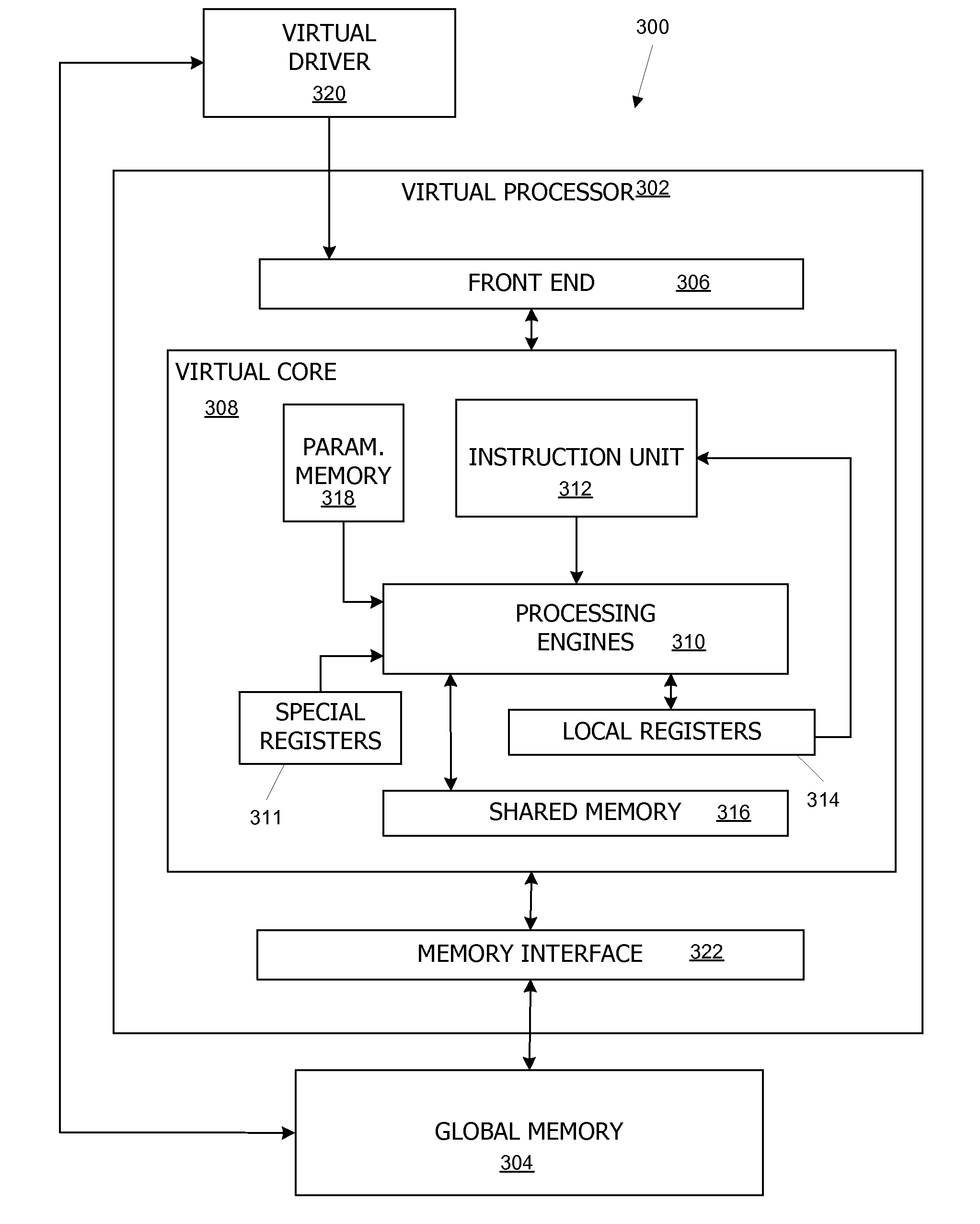

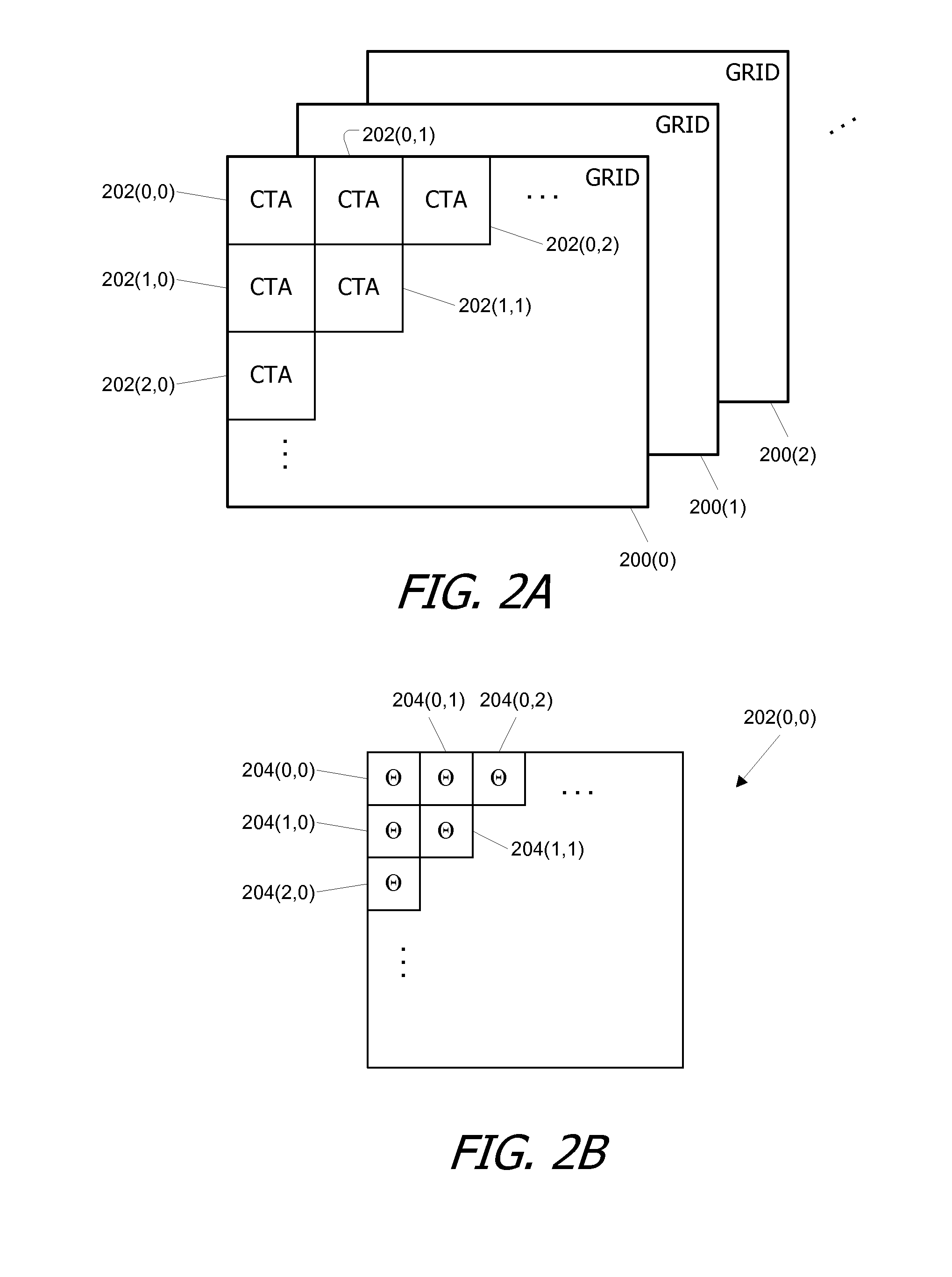

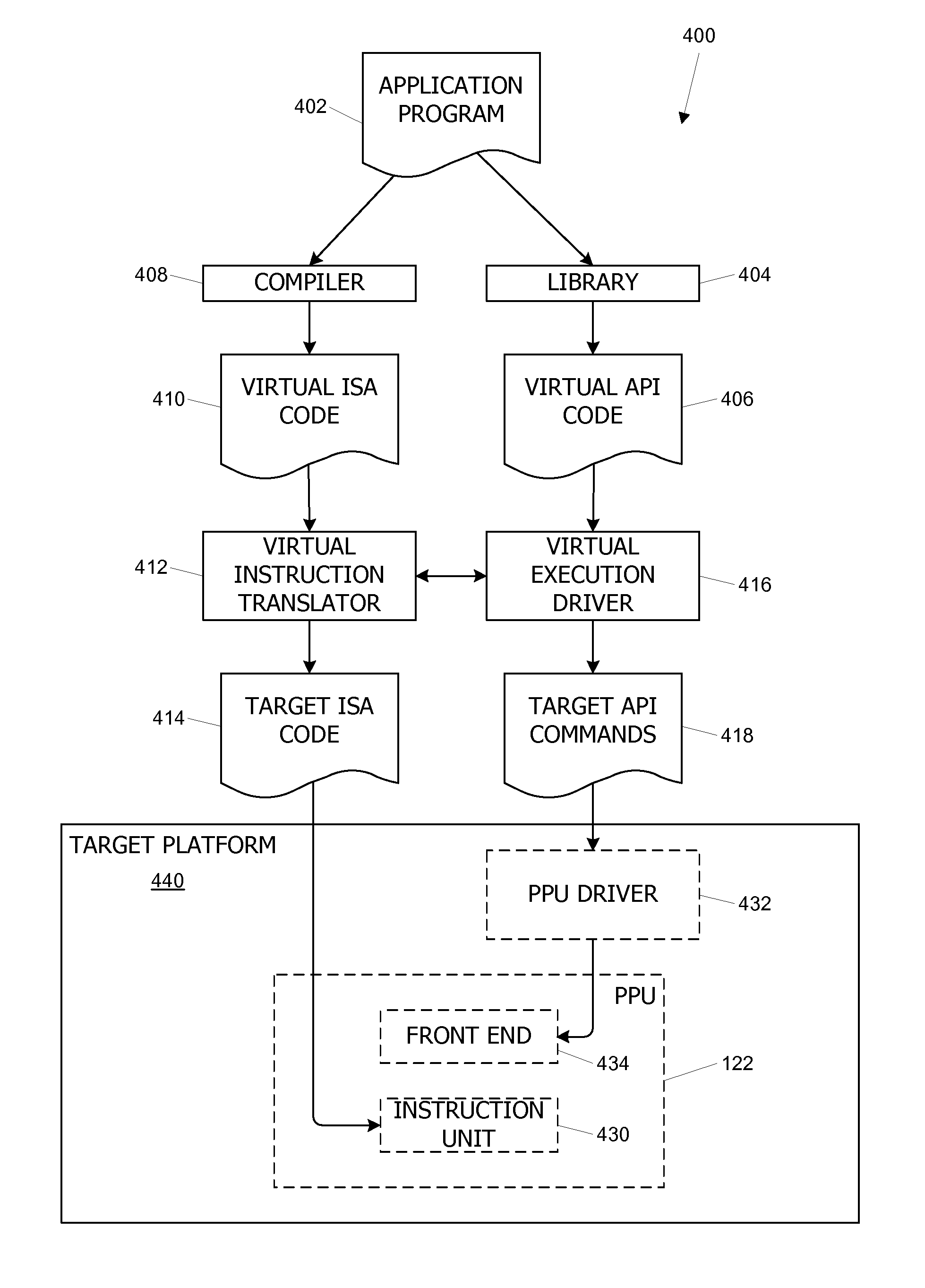

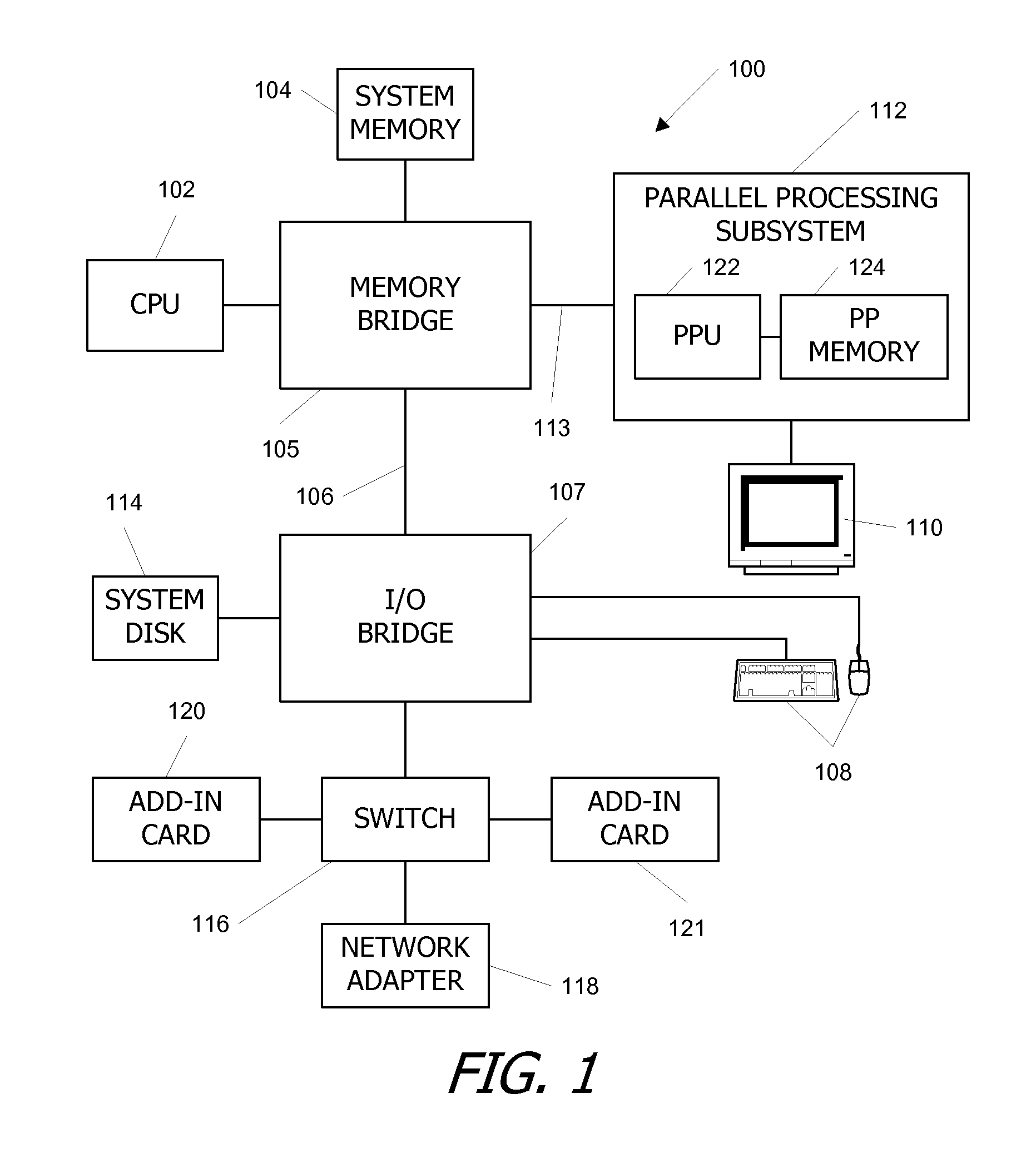

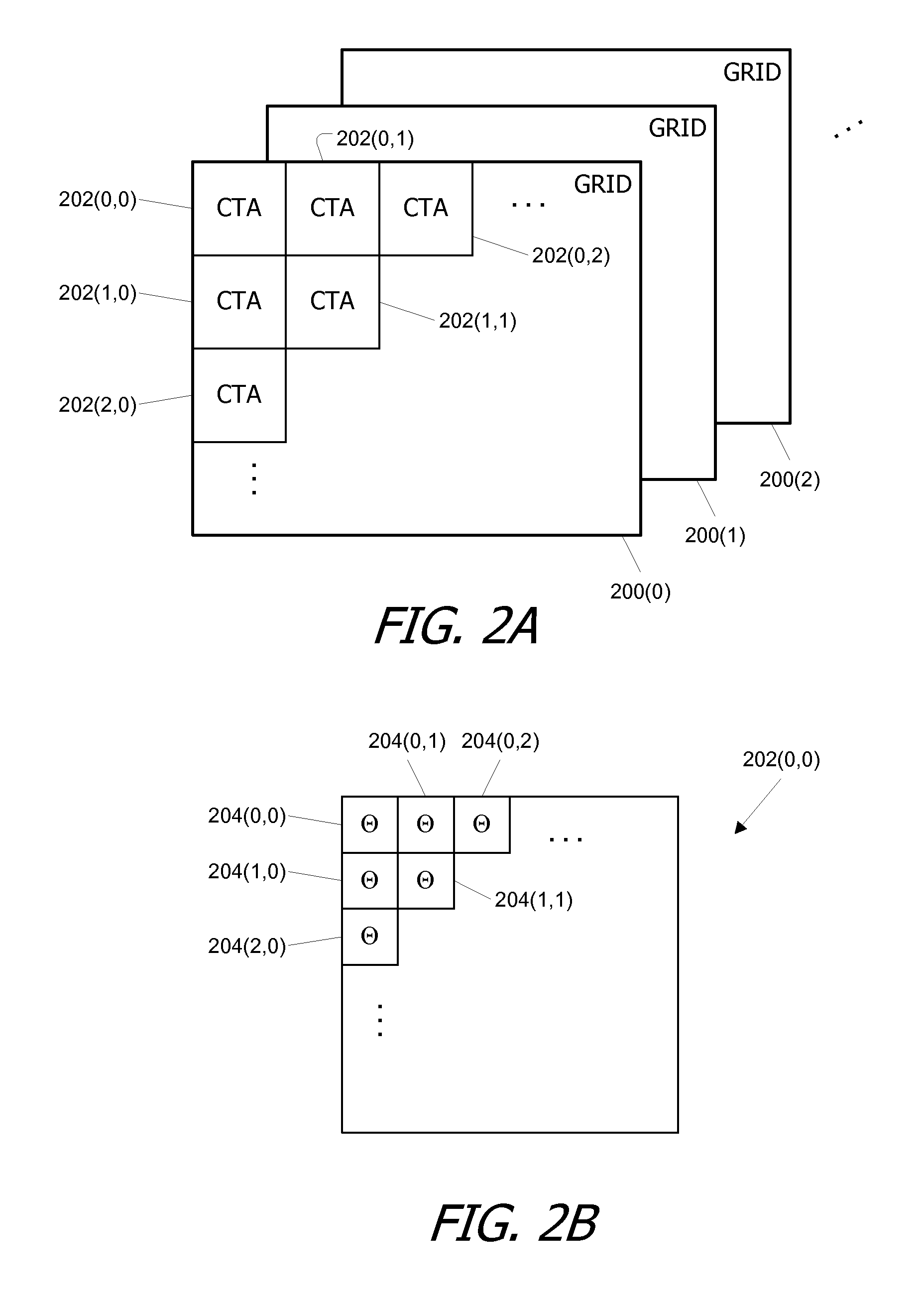

Virtual architecture and instruction set for parallel thread computing

Status: Active | US20080184211A1 | Improve development, Improve portability, Program control using stored programs, Software engineering, Application software, Data sharing

A virtual architecture and instruction set support explicit parallel-thread computing. The virtual architecture defines a virtual processor that supports concurrent execution of multiple virtual threads with multiple levels of data sharing and coordination (e.g., synchronization) between different virtual threads, as well as a virtual execution driver that controls the virtual processor. A virtual instruction set architecture for the virtual processor is used to define behavior of a virtual thread and includes instructions related to parallel thread behavior, e.g., data sharing and synchronization. Using the virtual platform, programmers can develop application programs in which virtual threads execute concurrently to process data; virtual translators and drivers adapt the application code to particular hardware on which it is to execute, transparently to the programmer.

Owner:NVIDIA CORP

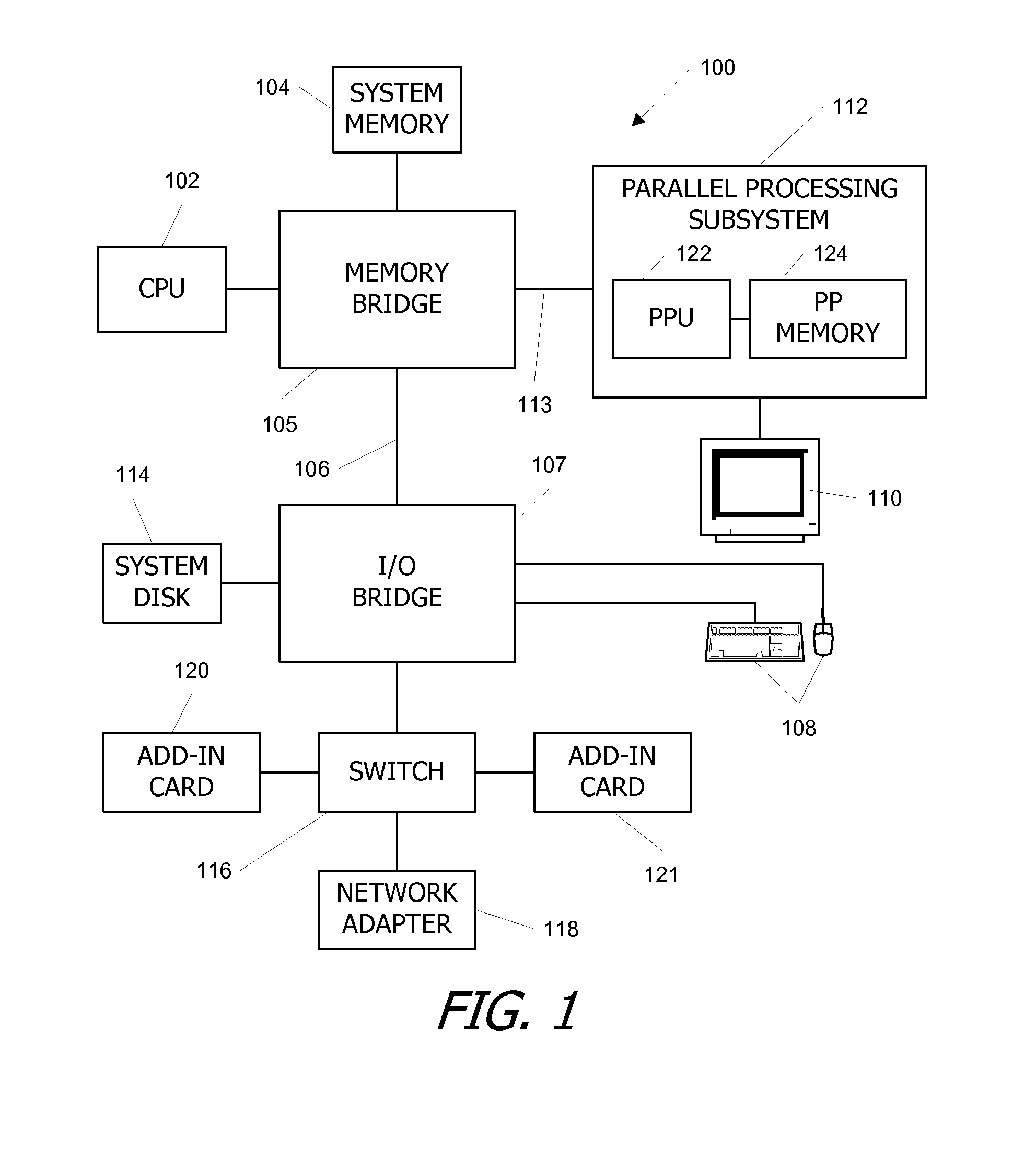

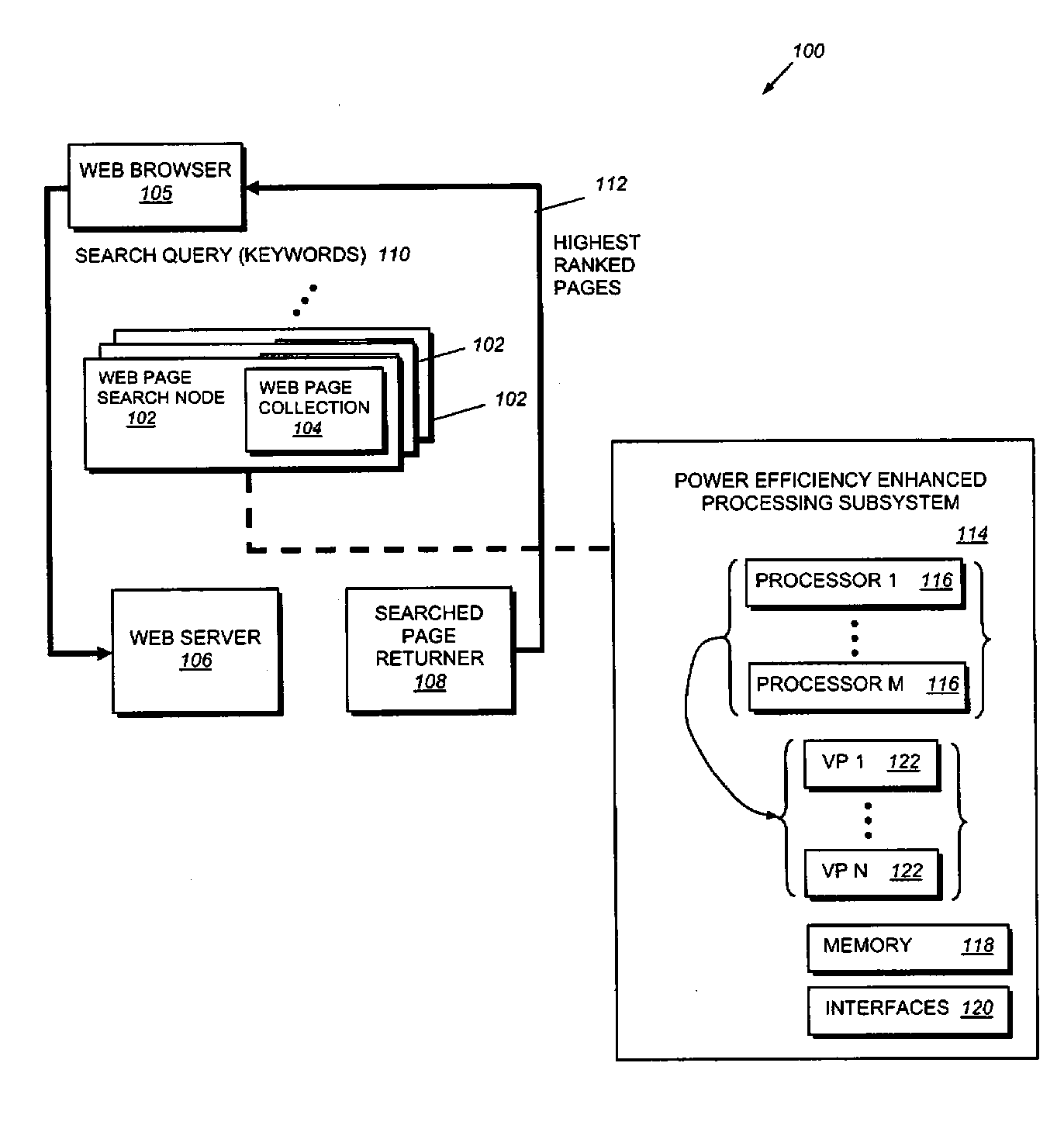

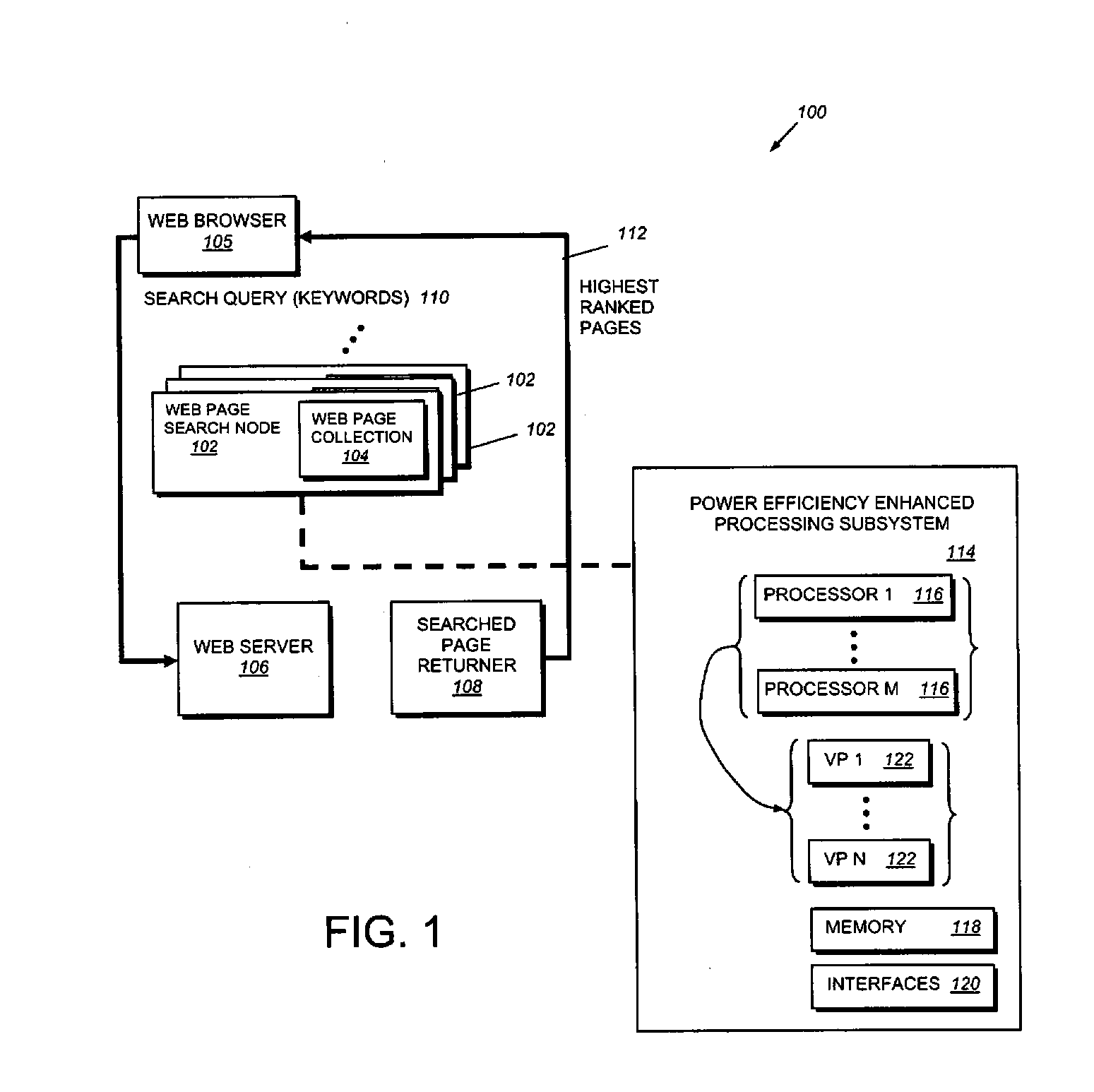

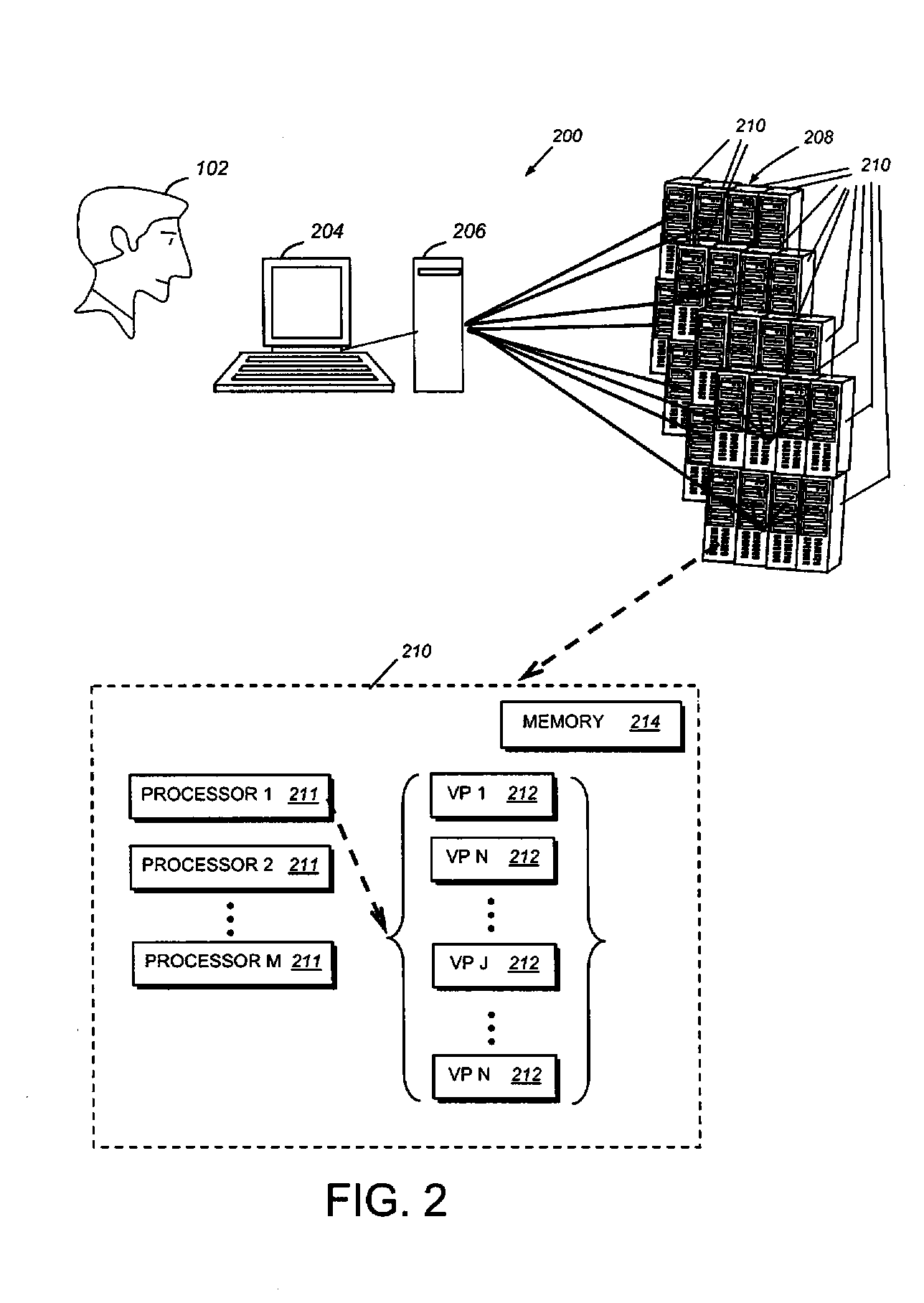

Parallel processing computer systems with reduced power consumption and methods for providing the same

Status: Active | US20090083263A1 | Reduce power consumption, Energy efficient ICT, Web data indexing, Web browser, Web service

This invention provides a computer system architecture, and a method for providing the same, which can include a web page search node including a web page collection. The system and method can also include a web server configured to receive, from a given user via a web browser, a search query including keywords. The node is caused to search pages in its own collection that best match the search query. A search page returner may be provided which is configured to return high-ranked pages to the user. The node may include a power-efficiency-enhanced processing subsystem, which includes M processors. The M processors are configured to emulate N virtual processors, and they are configured to limit the virtual processor memory access rate at which each of the N virtual processors accesses memory. The memory accessed by each of the N virtual processors may be RAM. In select embodiments, the memory accessed by each of the N virtual processors includes DRAM having a high capacity yet lower power consumption than SRAM.

Owner:GRANGER RICHARD

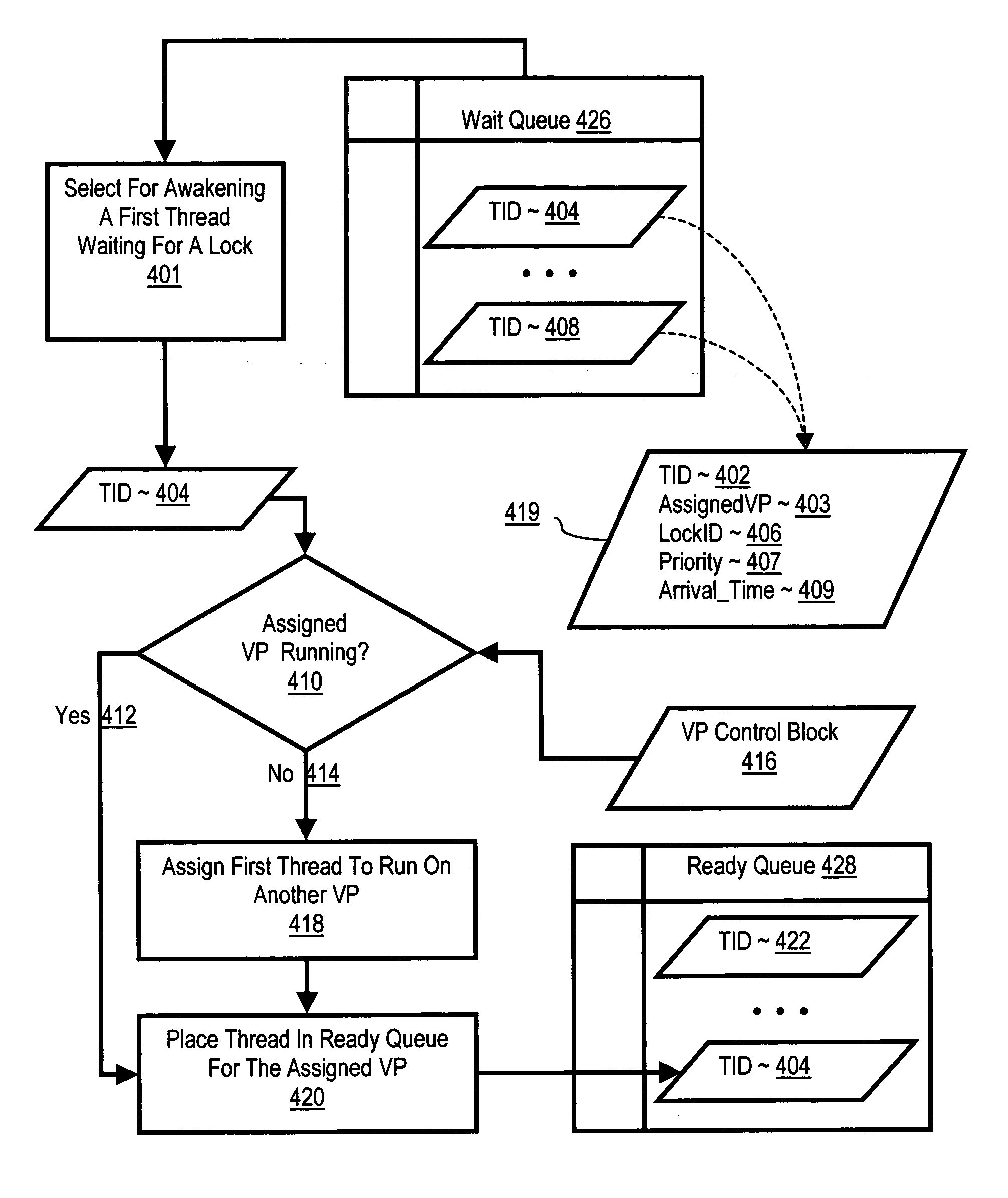

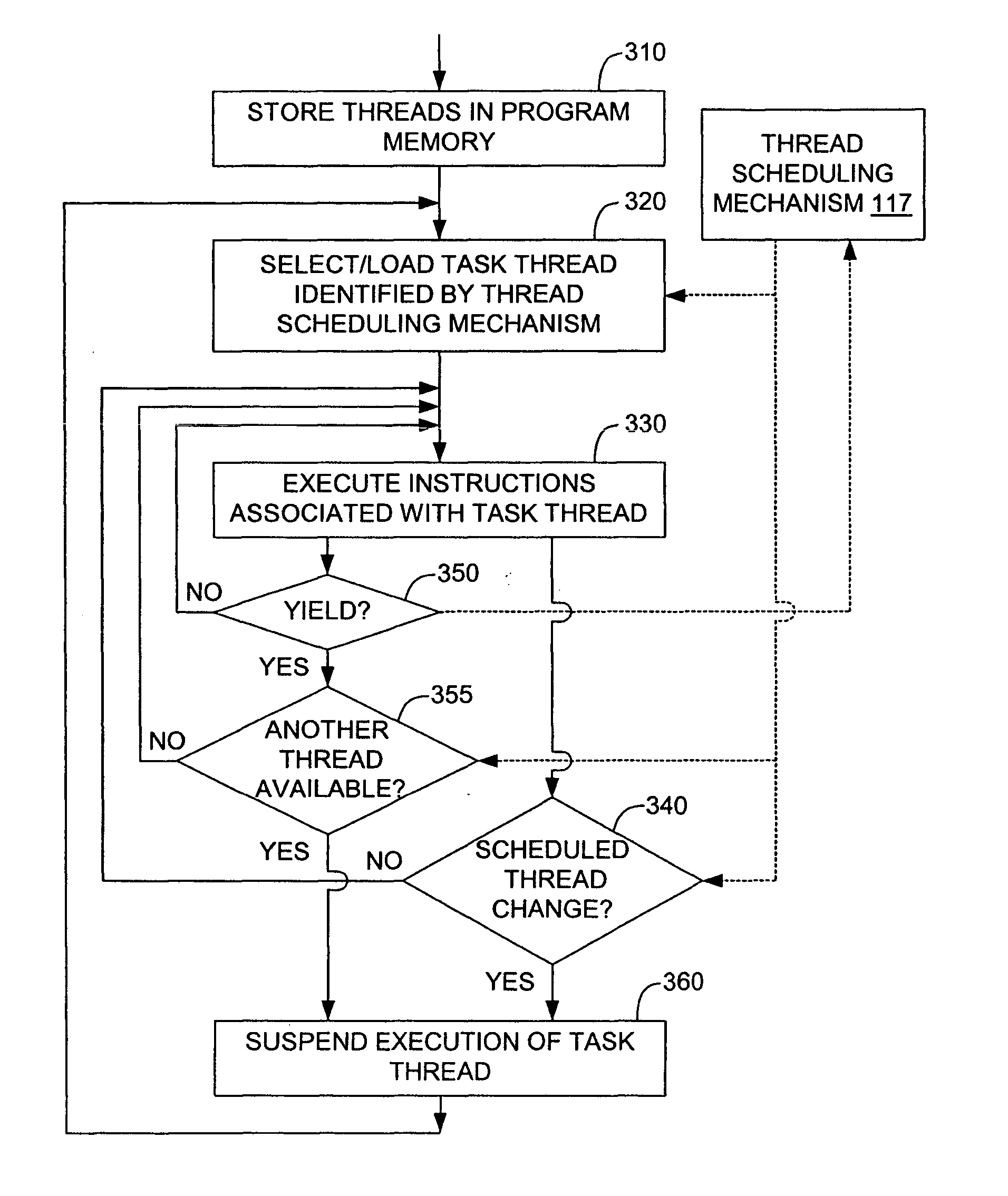

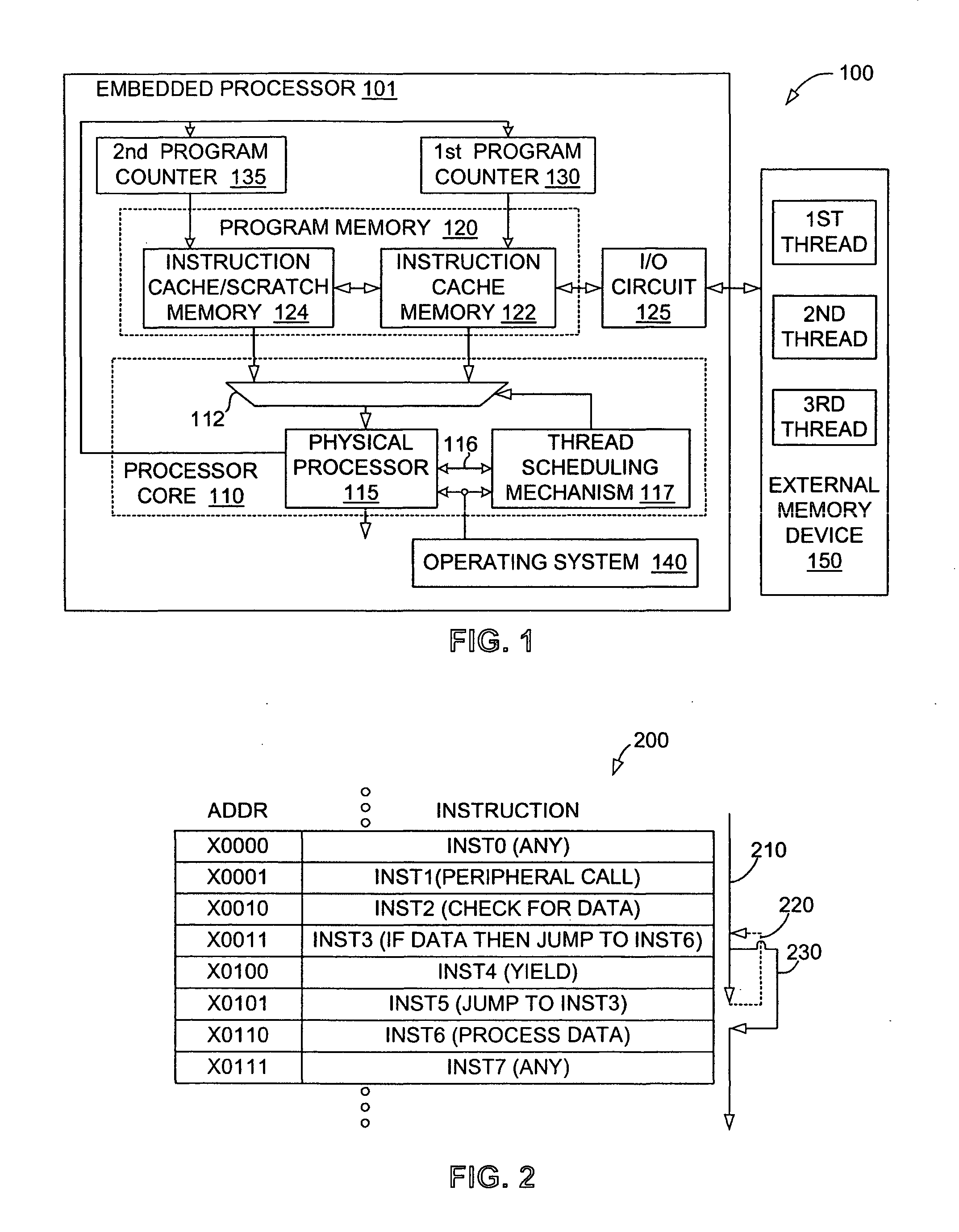

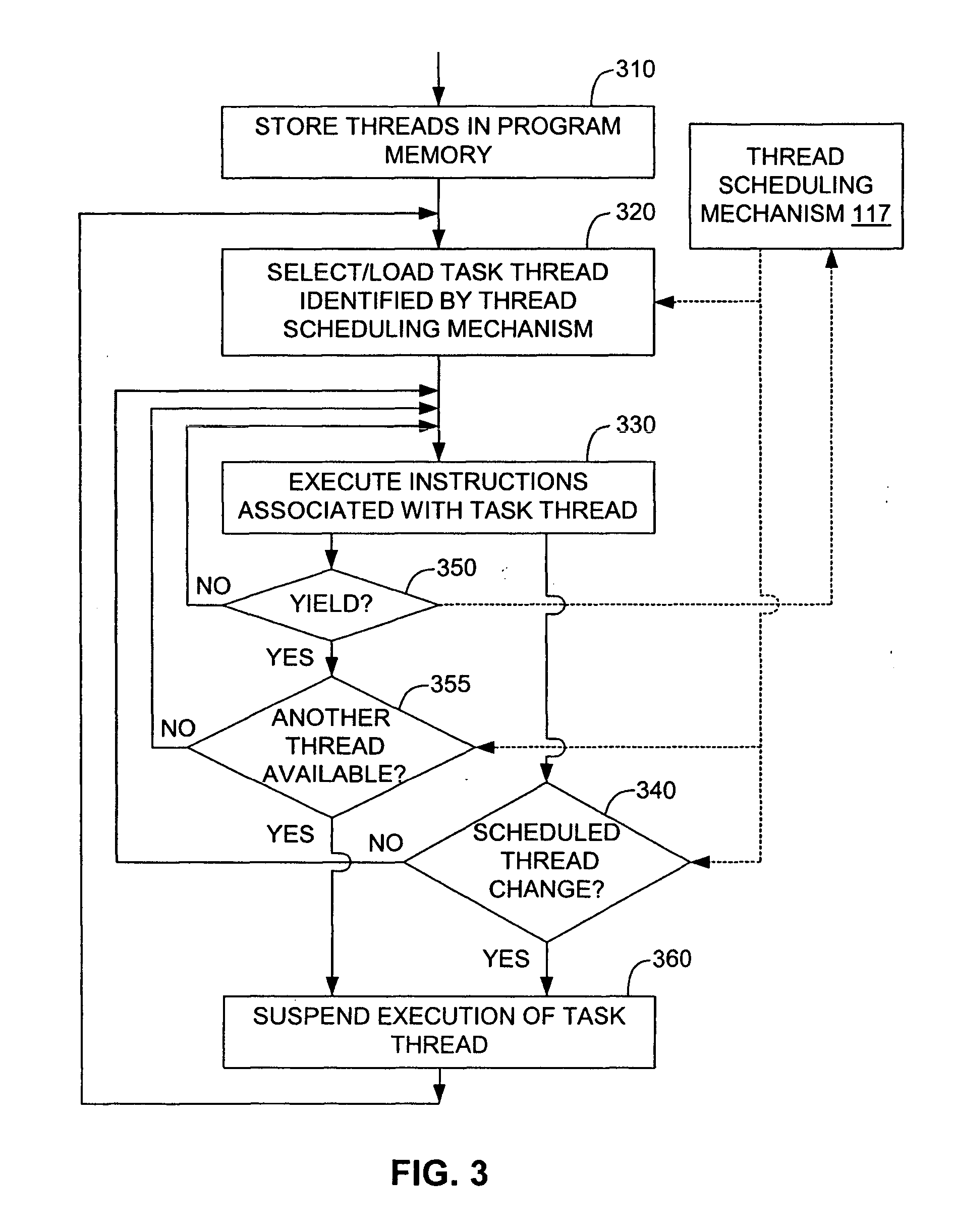

Scheduling threads in a multi-threaded computer

Status: Inactive | US20060130062A1 | Reduce risk, Improve utilization efficiency, Multiprogramming arrangements, Memory systems, Virtual Processor, Real-time computing

Scheduling threads in a multi-threaded computer including selecting for awakening a thread that is waiting for a lock, the thread having an assigned virtual processor; determining whether the assigned virtual processor is running; and if the assigned virtual processor is not running, assigning the thread to run on another virtual processor. Selecting a thread may include selecting the thread according to thread priority or selecting the thread according to sequence of thread arrival in a wait queue. Selecting a thread may include selecting a thread having an assigned virtual processor that is running. Selecting a thread may include selecting a thread having an assigned virtual processor that is running and has at least a predetermined amount of time remaining in its current time slice.

Owner:IBM CORP
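
The selection rules in this abstract can be combined into one wake-up routine. The Python sketch below is an illustration under assumptions, not IBM's implementation: `VirtualProcessor`, `Waiter`, `wake_one`, and the `min_slice_us` threshold are hypothetical. It prefers waiters whose assigned virtual processor is running with enough time left in its slice, breaks ties by priority and then arrival order, and moves the chosen thread to another running virtual processor if its own is not dispatched.

```python
from dataclasses import dataclass


@dataclass
class VirtualProcessor:
    vp_id: int
    running: bool
    slice_left_us: int = 0  # time remaining in the current time slice


@dataclass
class Waiter:
    name: str
    priority: int
    arrival: int  # position of arrival in the lock's wait queue
    vp: VirtualProcessor


def wake_one(waiters, all_vps, min_slice_us=100):
    """Pick a lock waiter to awaken and the virtual processor it should run on."""
    # Prefer threads whose assigned VP is running with enough of its slice left.
    good = [w for w in waiters if w.vp.running and w.vp.slice_left_us >= min_slice_us]
    pool = good or waiters
    # Highest priority first; among equals, earliest arrival (FIFO).
    chosen = min(pool, key=lambda w: (-w.priority, w.arrival))
    target = chosen.vp
    if not target.running:
        # Assigned VP is not running: assign the thread to another running VP.
        target = next((vp for vp in all_vps if vp.running), target)
    waiters.remove(chosen)
    return chosen, target
```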

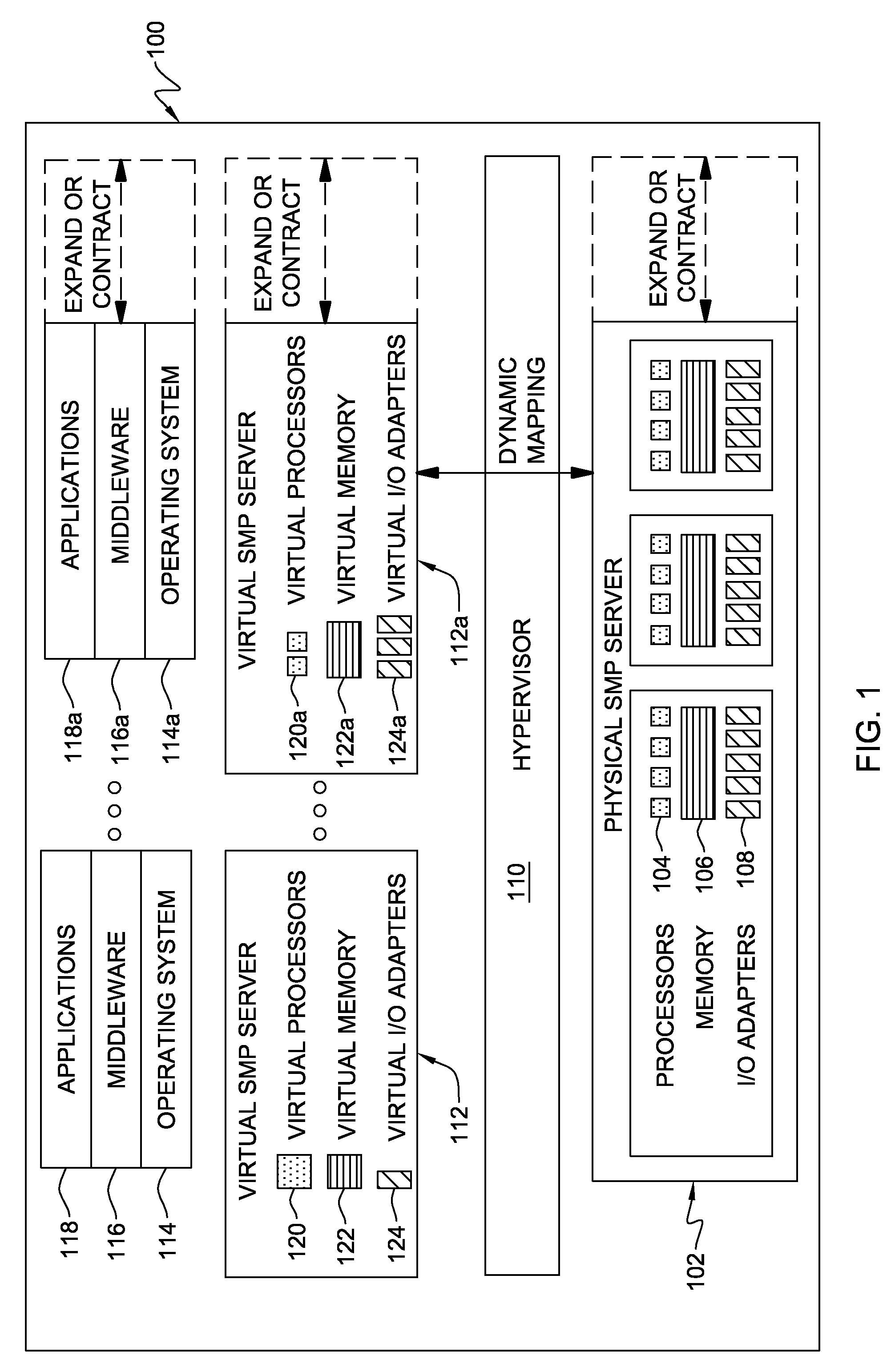

Systems and methods for exposing processor topology for virtual machines

Status: Active | US20060136653A1 | Multiprogramming arrangements, Computer security arrangements, Operational system, Physics processing unit

The present invention is directed to making a guest operating system aware of the topology of the subset of host resources currently assigned to it. At virtual machine boot time a Static Resource Affinity Table (SRAT) will be used by the virtualizer to group guest physical memory and guest virtual processors into virtual nodes. Thereafter, in one embodiment, the host physical memory behind a virtual node can be changed by the virtualizer as necessary, and the virtualizer will provide physical processors appropriate for the virtual processors in that node.

Owner:MICROSOFT TECH LICENSING LLC

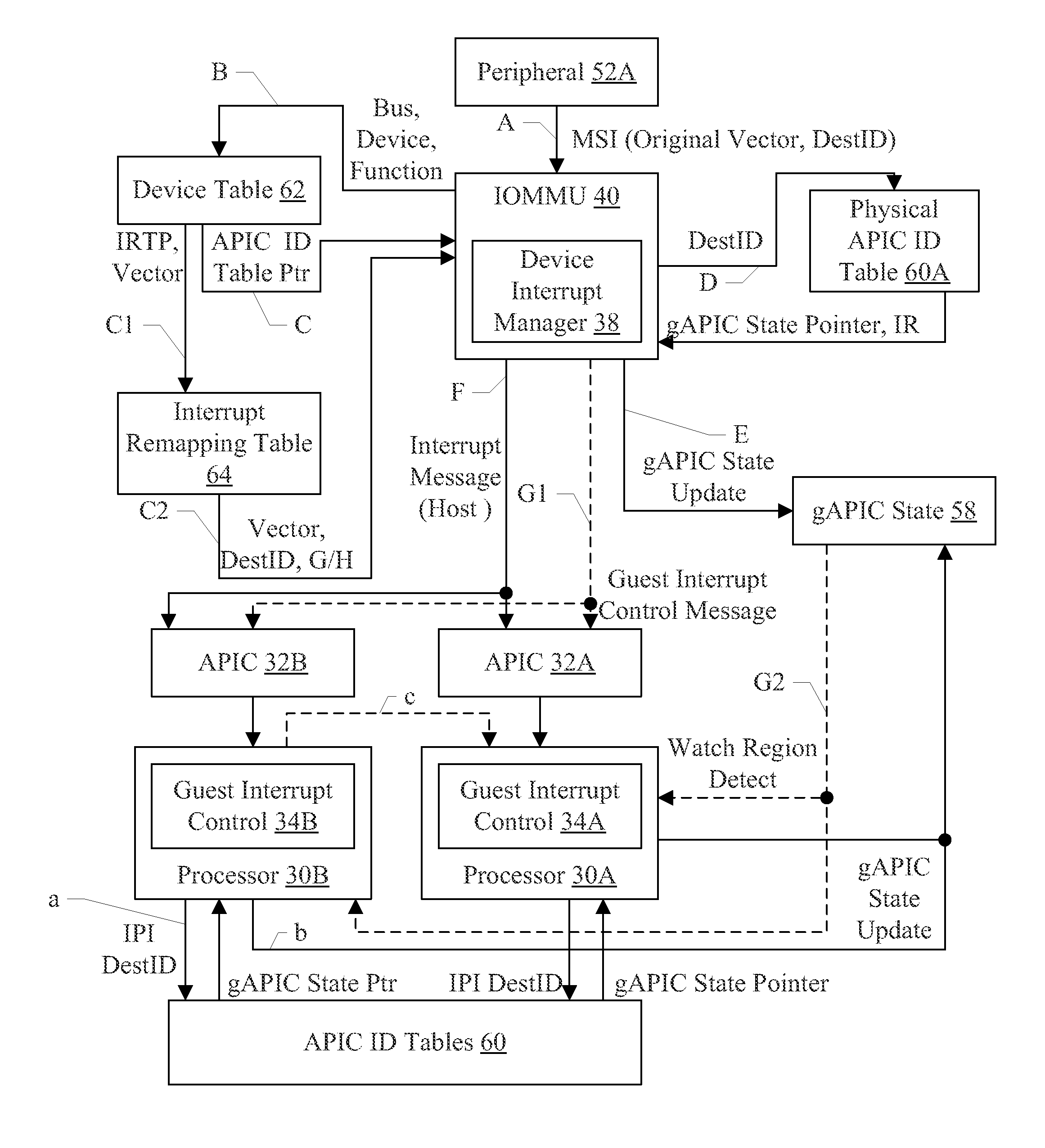

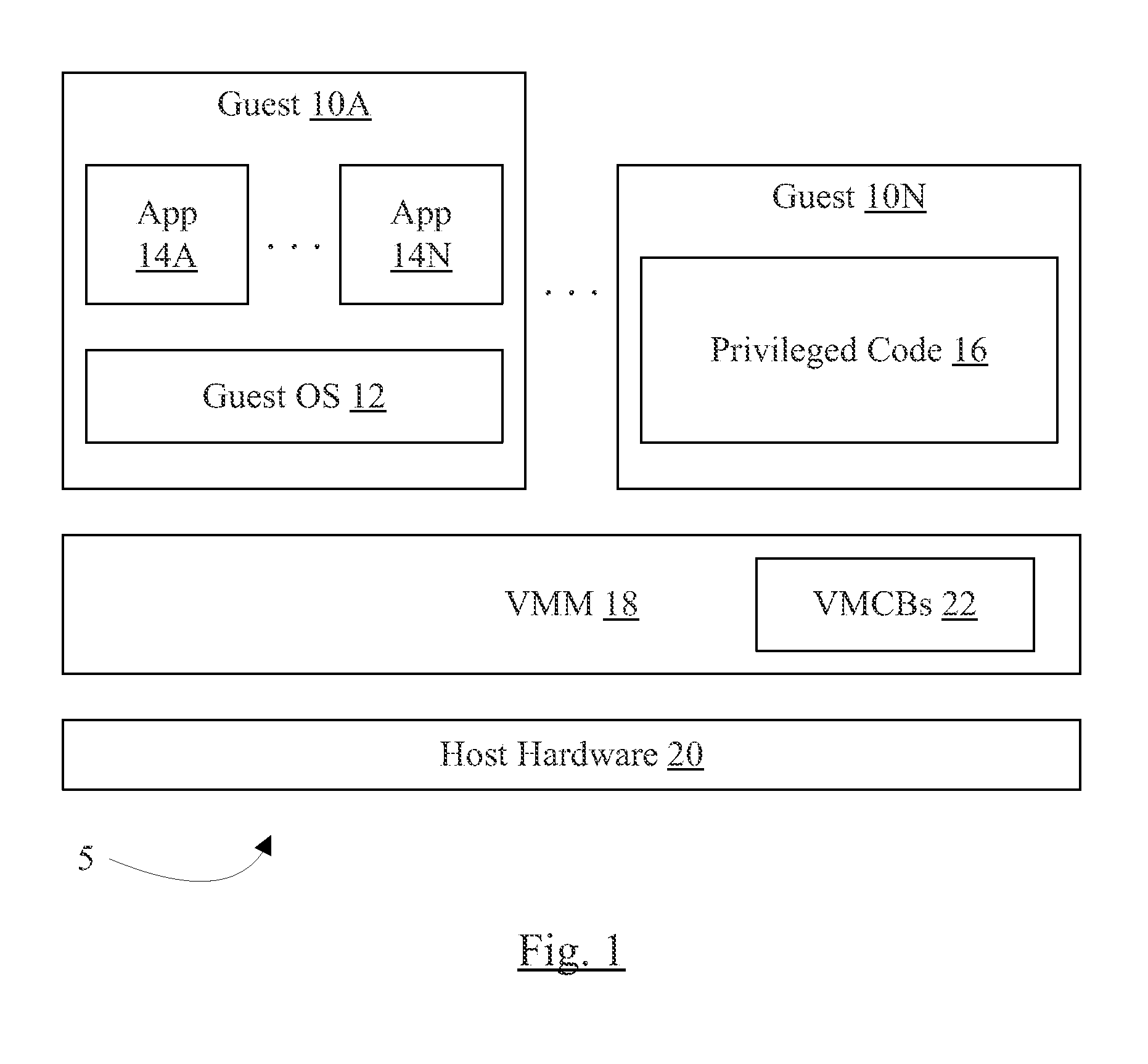

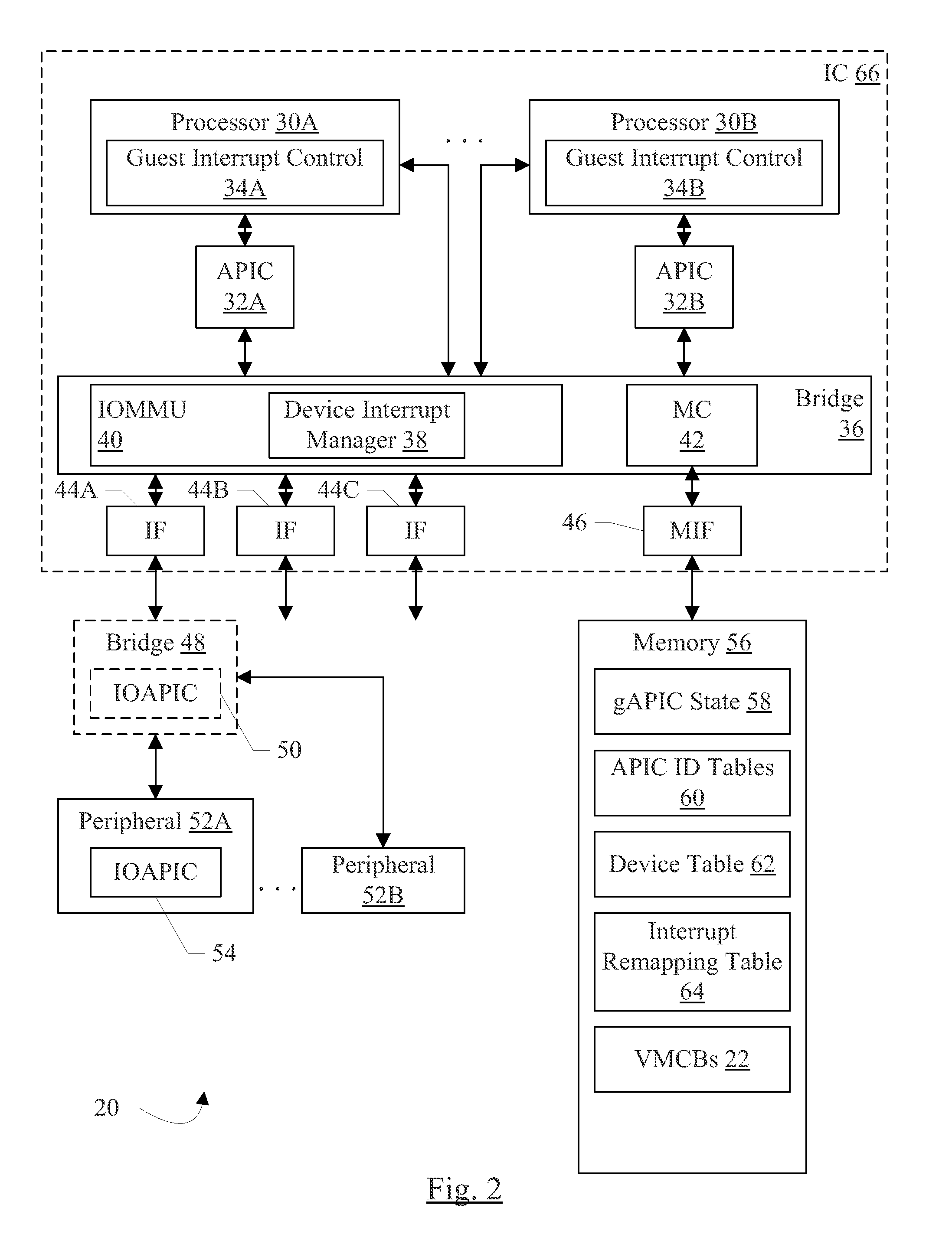

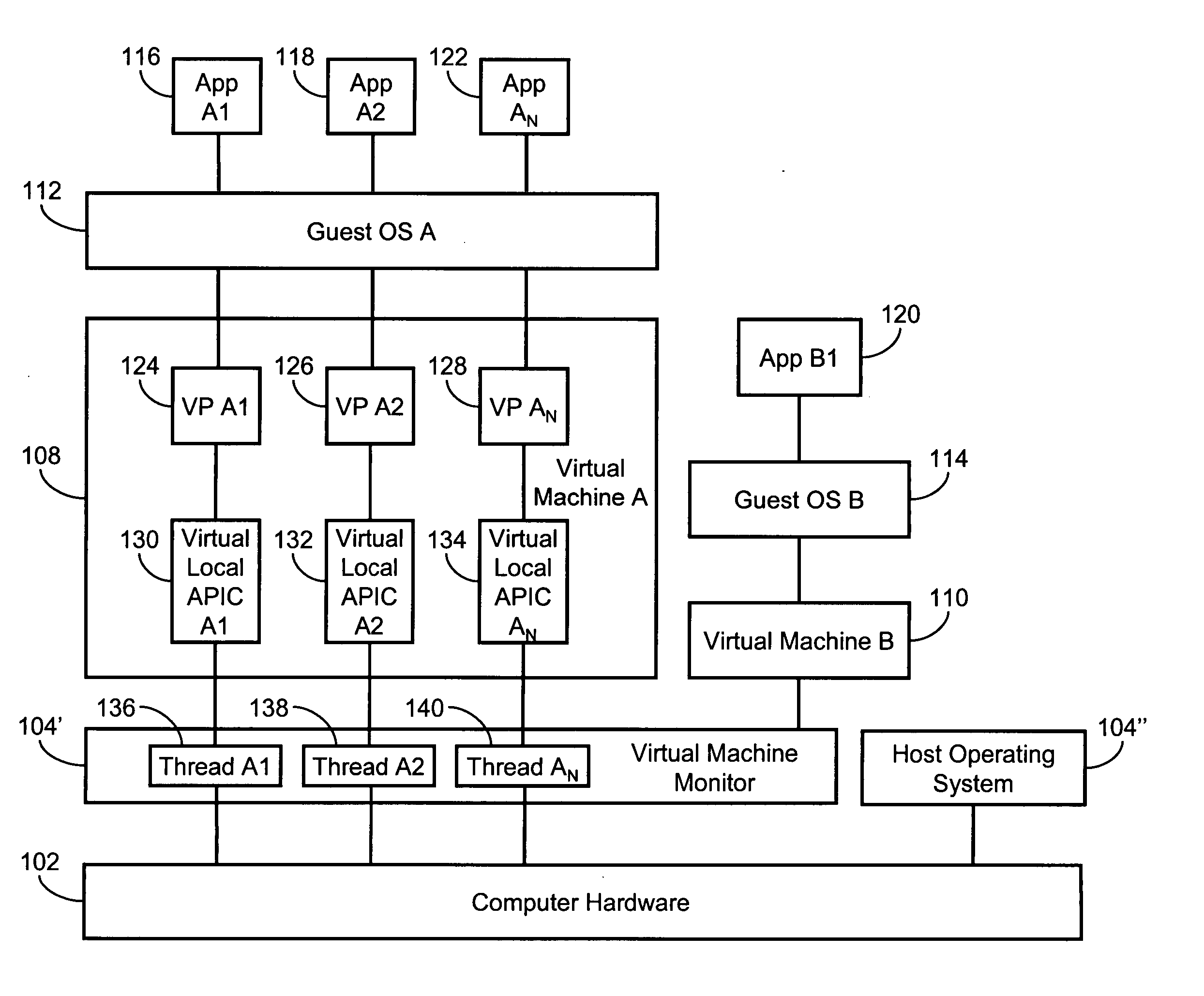

Interrupt Virtualization

Status: Active | US20110197003A1 | Multiprogramming arrangements, Software simulation/interpretation/emulation, Virtualization, Virtual Processor

In an embodiment, a device interrupt manager may be configured to receive an interrupt from a device that is assigned to a guest. The device interrupt manager may be configured to transmit an operation targeted to a memory location in a system memory to record the interrupt for a virtual processor within the guest, wherein the interrupt is to be delivered to the targeted virtual processor. In an embodiment, a virtual machine manager may be configured to detect that an interrupt has been recorded by the device interrupt manager for a virtual processor that is not currently executing. The virtual machine manager may be configured to schedule the virtual processor for execution on a hardware processor, or may prioritize the virtual processor for scheduling, in response to the interrupt.

Owner:ADVANCED MICRO DEVICES INC
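
A toy model of the two roles described above (recording device interrupts in memory, and the virtual machine manager reacting to interrupts pending for descheduled virtual processors) might look like this. It is a hypothetical Python sketch, not AMD's hardware interface: `GuestInterruptLog`, the vector numbers, and the "move to front of run queue" prioritization policy are assumptions.

```python
from collections import defaultdict


class GuestInterruptLog:
    """Toy model: per-vCPU pending-interrupt records kept in system memory."""

    def __init__(self):
        self.pending = defaultdict(set)  # vcpu id -> pending interrupt vectors
        self.running = set()             # vcpus currently on a hardware processor
        self.run_queue = []              # vcpus waiting to run, front = next

    def device_interrupt(self, vcpu, vector):
        # Device interrupt manager: write a record for the targeted vCPU.
        self.pending[vcpu].add(vector)

    def vmm_scan(self):
        # VMM: a vCPU that is not executing but has a recorded interrupt is
        # prioritized by moving it to the front of the run queue.
        for vcpu, vectors in self.pending.items():
            if vectors and vcpu not in self.running:
                if vcpu in self.run_queue:
                    self.run_queue.remove(vcpu)
                self.run_queue.insert(0, vcpu)
        return self.run_queue
```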

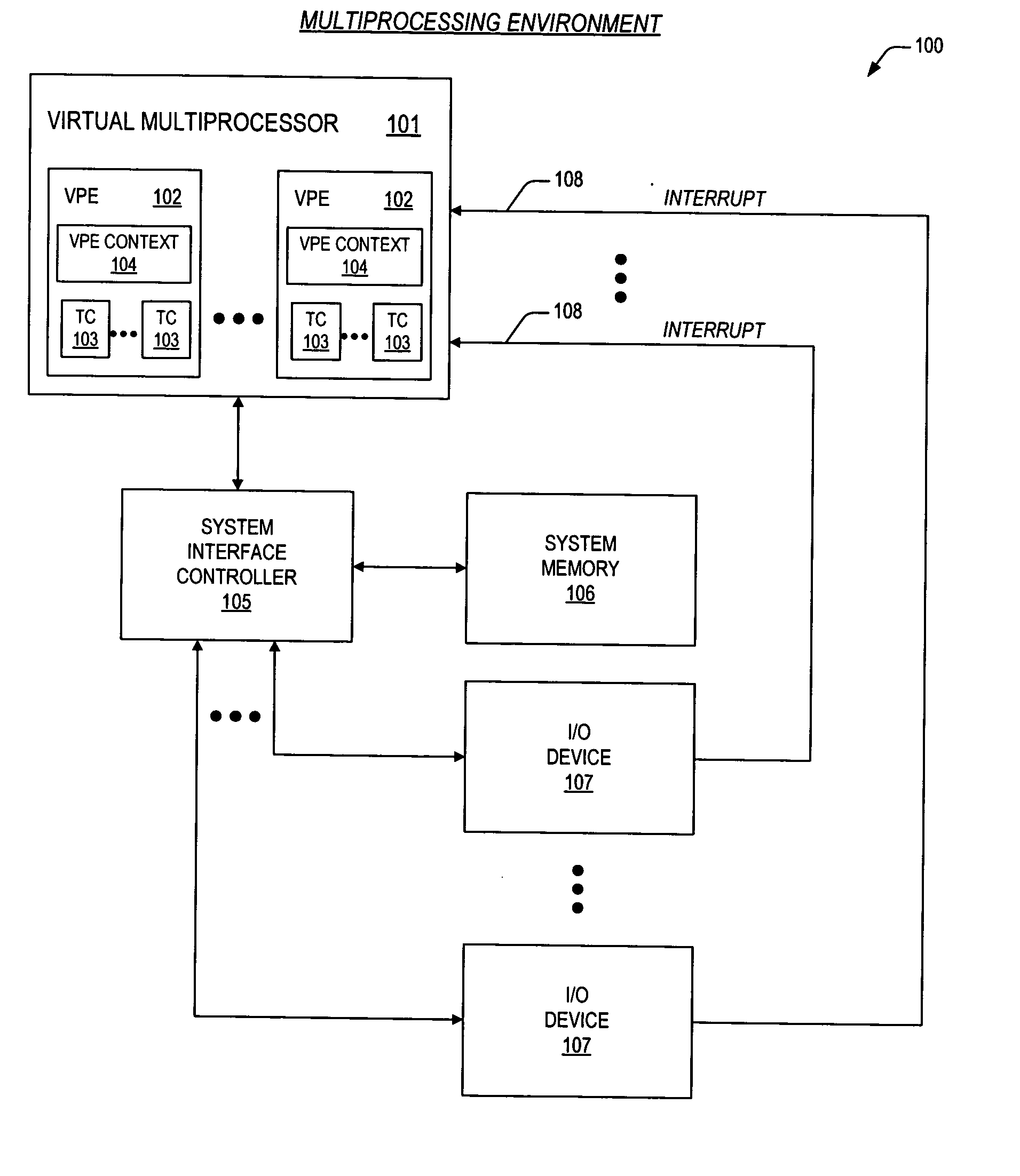

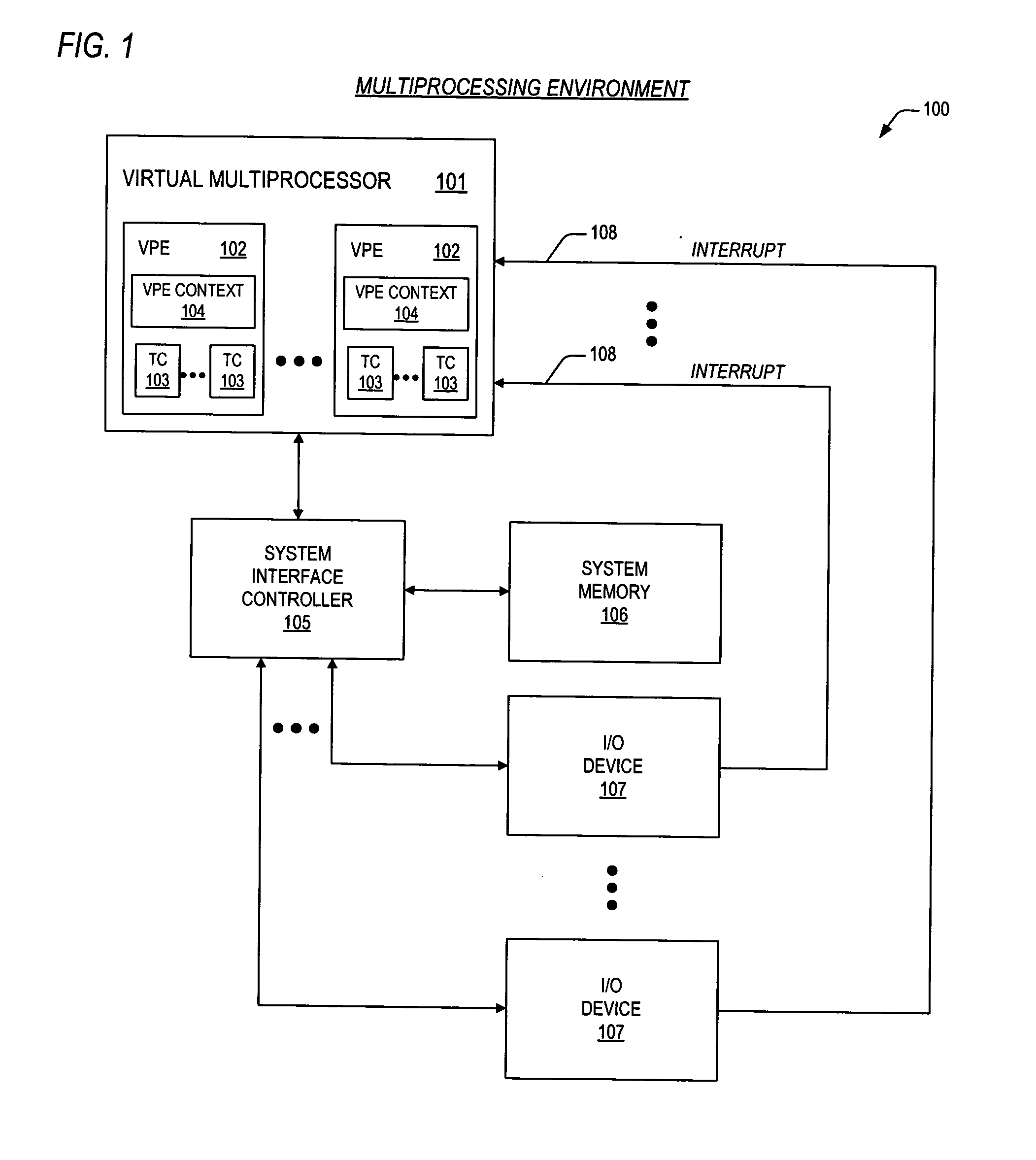

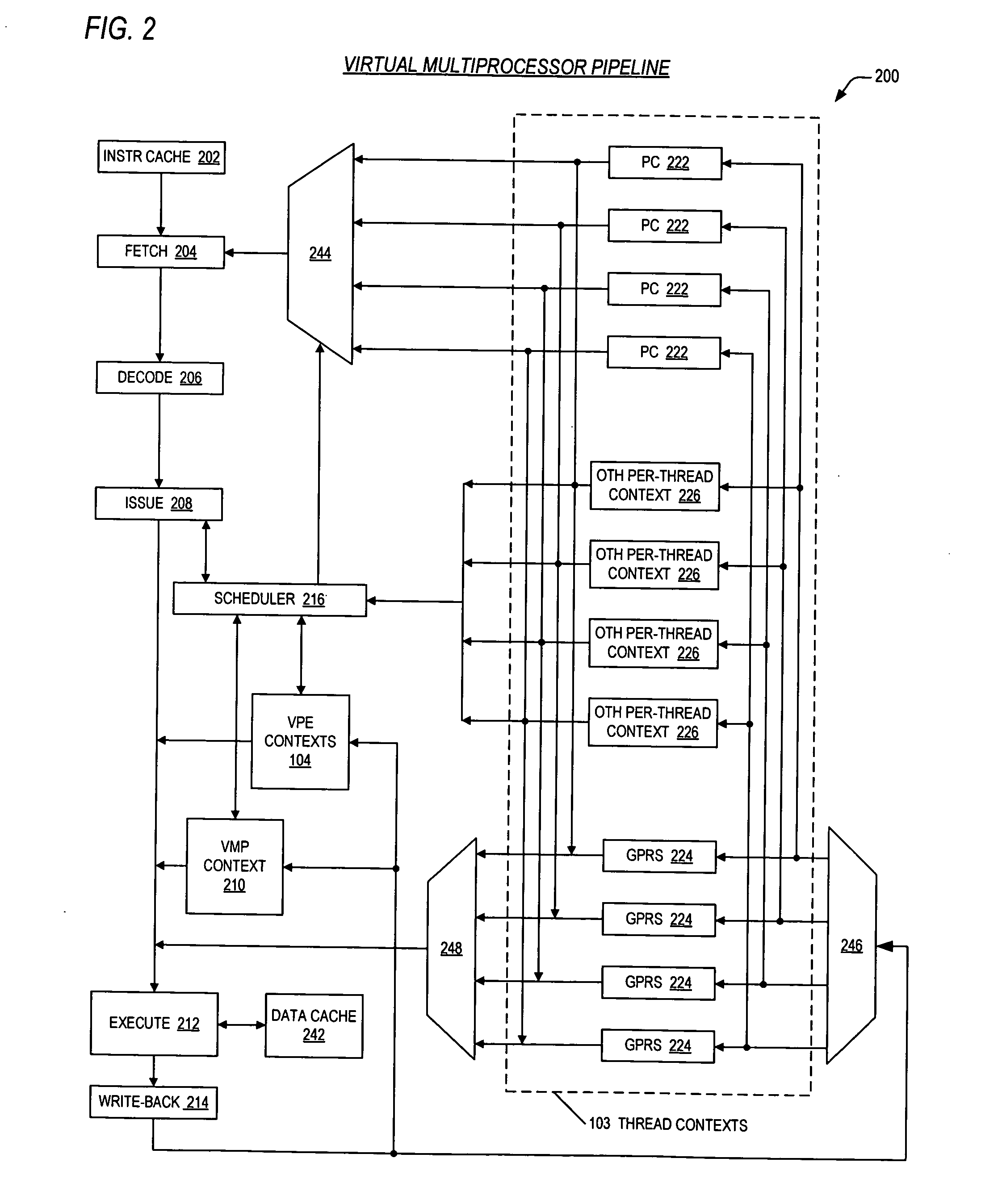

Mechanisms for dynamic configuration of virtual processor resources

Status: Active | US20050125629A1 | Program initiation/switching, Software engineering, Multi processor, Processing element

Mechanisms for dynamically configuring the resources of a virtual multiprocessor are provided. The invention contemplates provision of an apparatus to configure resources for one or more virtual processing elements in a virtual multiprocessor. The apparatus includes a virtual multiprocessor context, one or more virtual processing element contexts, and configuration logic. The virtual multiprocessor context prescribes the resources and controls a configuration state of the virtual multiprocessor. The one or more virtual processing element contexts each exclusively correspond to one of the one or more virtual processing elements. The one or more virtual processing element contexts each have first logic, for prescribing whether the one of the one or more virtual processing elements is permitted to configure the resources; and second logic, for prescribing a subset of the resources that is allocated to said one of the one or more virtual processing elements. The configuration logic is coupled to the virtual multiprocessor context and the one or more virtual processing element contexts. The configuration logic detects whether the one of the one or more virtual processing elements is permitted to configure the resources, updates the virtual multiprocessor context to direct that the virtual multiprocessor enter the configuration state, and configures the resources by updating a prescribed virtual processing element context.

Owner:MIPS TECH INC

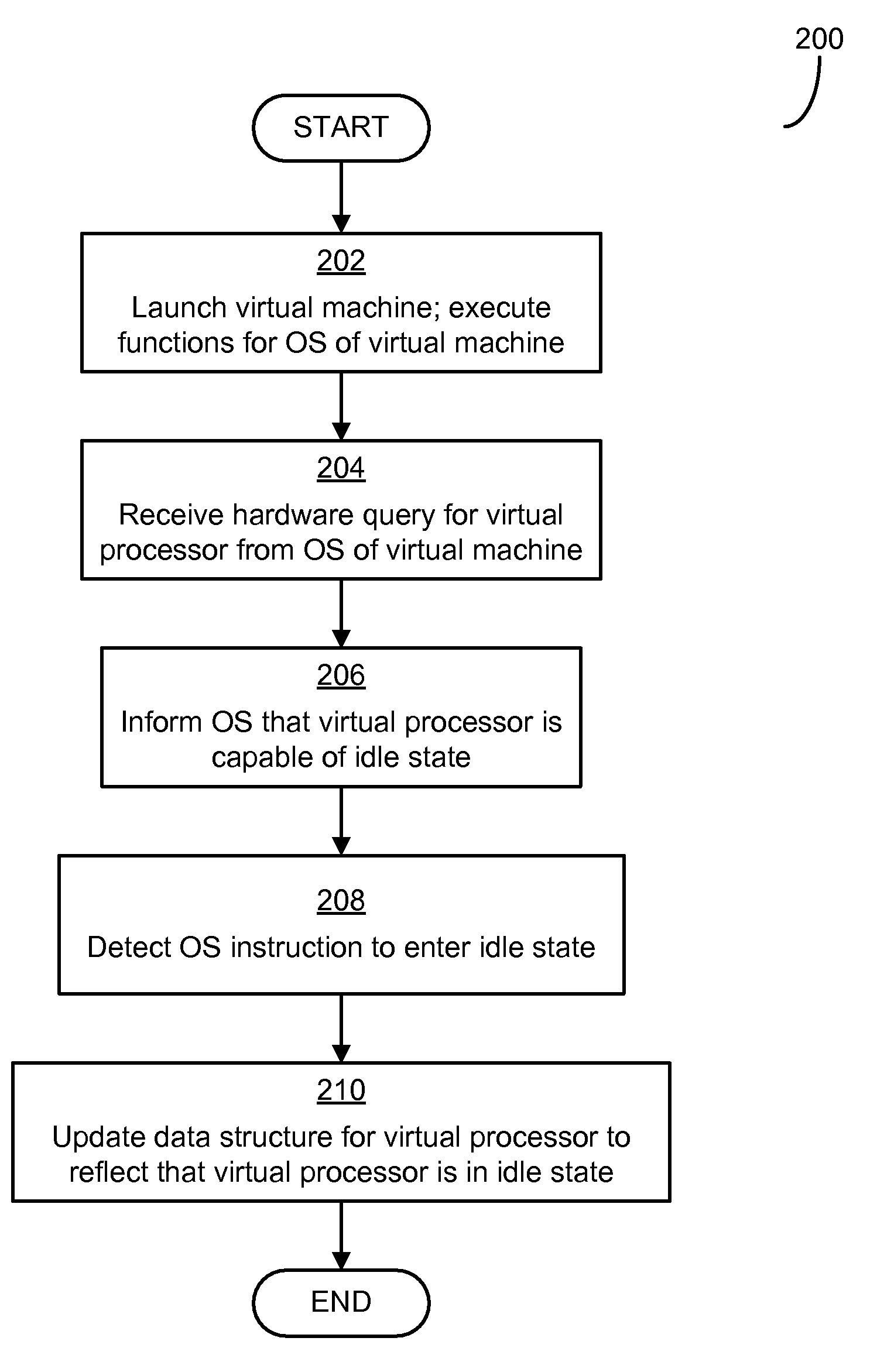

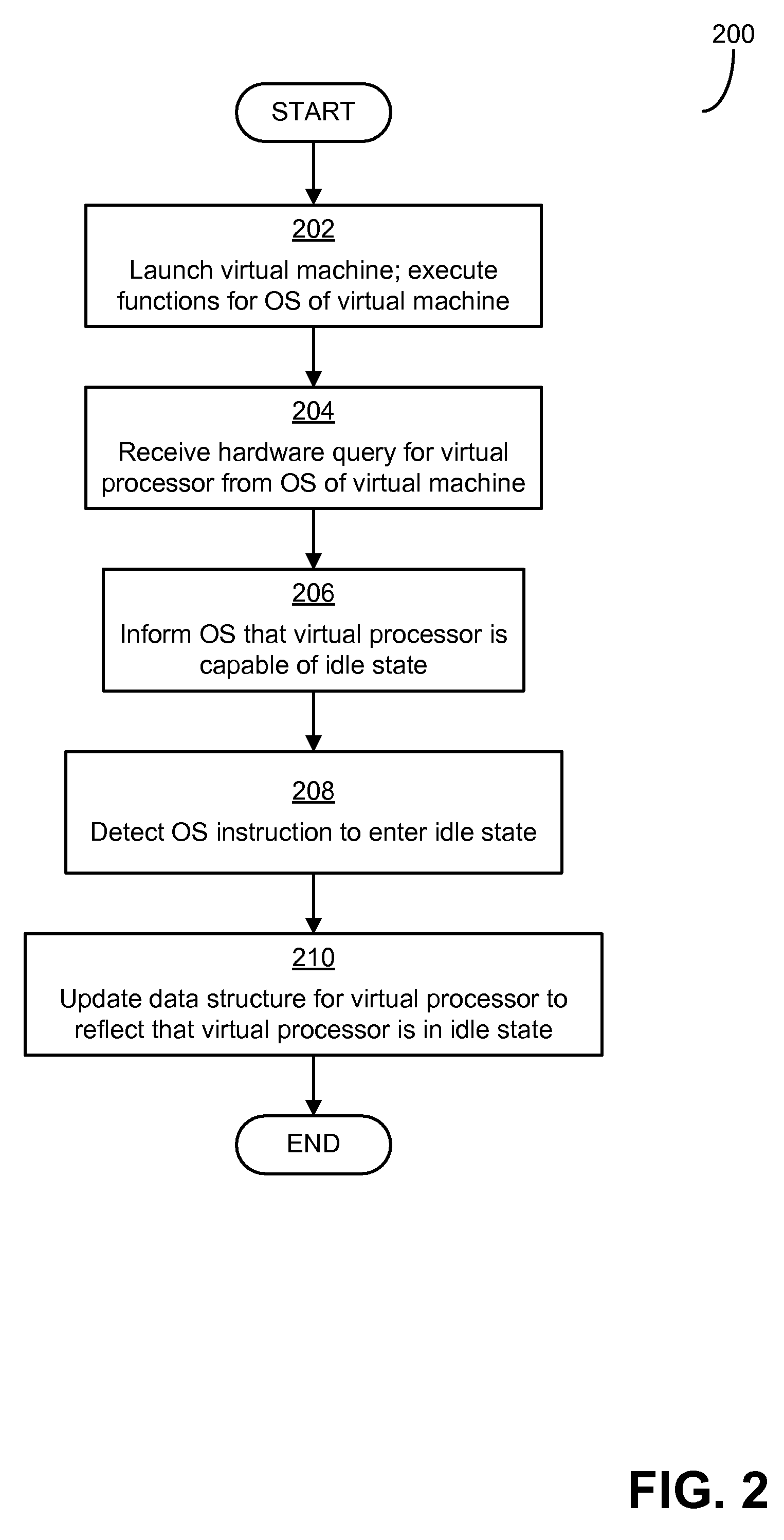

Power-saving operating system for virtual environment

Status: Active | US20100218183A1 | Amount of time, Reduce power consumption, Energy efficient ICT, Efficient power electronics conversion, Operational system, Computer science

Principles for enabling power management techniques for virtual machines. In a virtual machine environment, a physical computer system may maintain management facilities to direct and control one or more virtual machines executing thereon. In some techniques described herein, the management facilities may be adapted to place a virtual processor in an idle state in response to commands from a guest operating system. One or more signaling mechanisms may be supported such that the guest operating system will command the management facilities to place virtual processors in the idle state.

Owner:MICROSOFT TECH LICENSING LLC

Machine instruction for enhanced control of multiple virtual processor systems

Status: Inactive | US20050108711A1 | Facilitates increased processor efficiency, Easy to clean, Multiprogramming arrangements, Concurrent instruction execution, Control system, Thread scheduling

Owner:INFINEON TECH AG

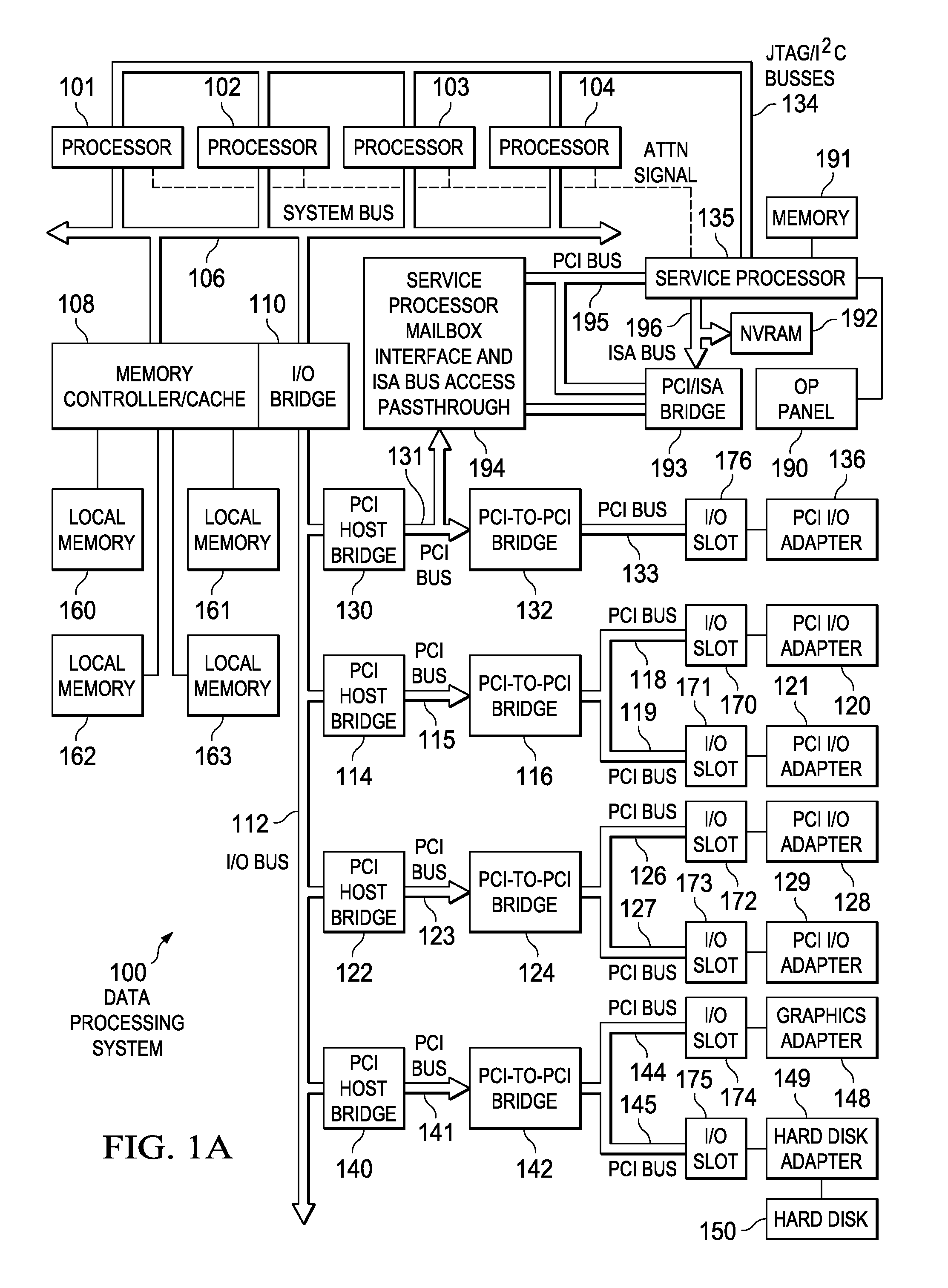

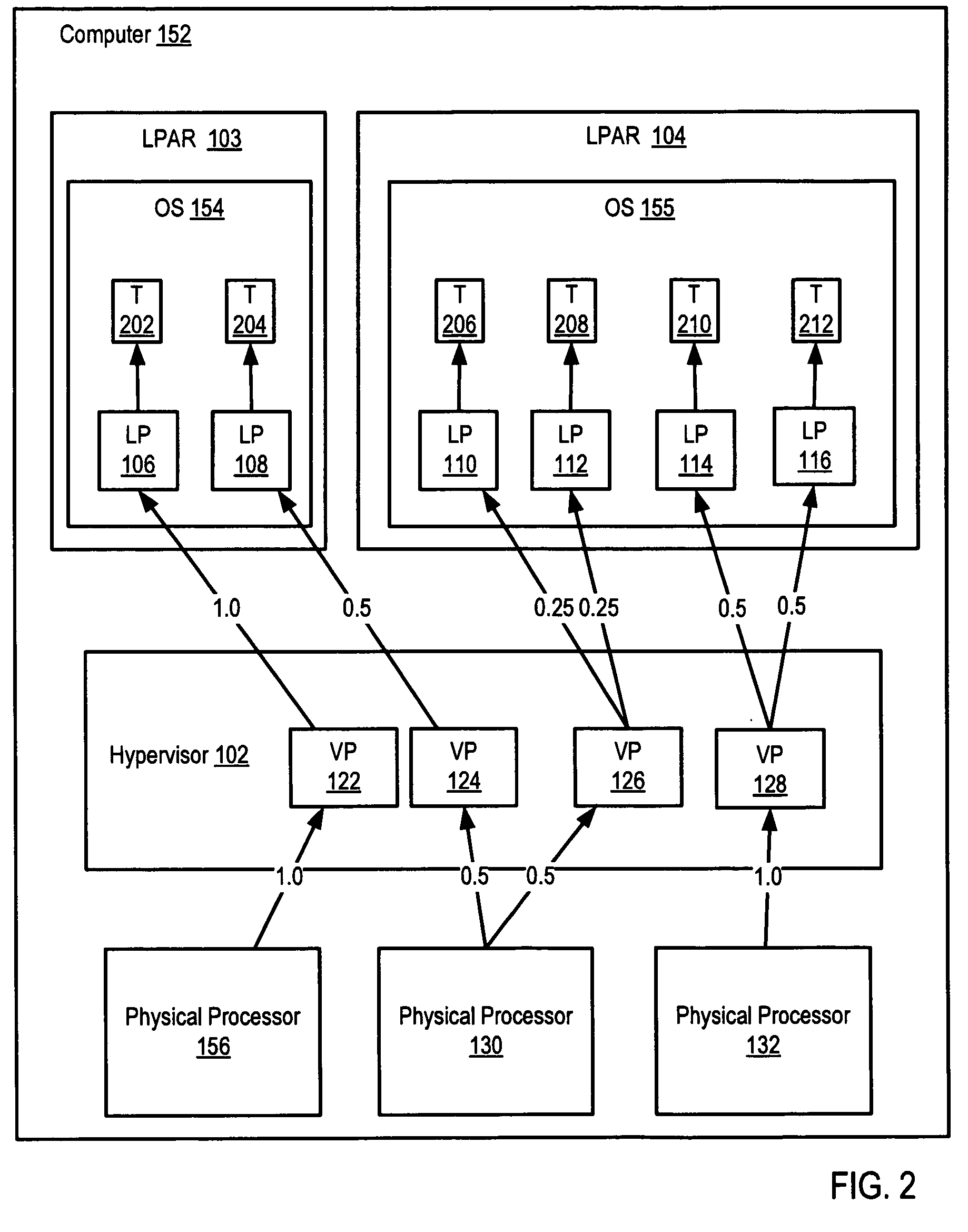

Method and apparatus for virtual processor dispatching to a partition based on shared memory pages

Status: Inactive | US20090204959A1 | Memory architecture accessing/allocation, Multiprogramming arrangements, Data processing system, Virtual Processor

The present invention provides a computer-implemented method, data processing system, and computer program product for mapping and dispatching virtual processors in a data processing system having at least a first partition and a second partition. The data processing system runs the first partition on a virtual processor during a first timeslice. The data processing system identifies at least one physical page used by both the first partition and the second partition, and maps the at least one physical page to the first partition and the second partition. The data processing system determines a fitness value based on the mapping. The data processing system then dispatches the virtual processor to the second partition on a second timeslice, based on the fitness value, where the second timeslice immediately follows the first timeslice, so that the at least one physical page remains in cache during both the first timeslice and the second timeslice.

Owner:IBM CORP
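
The abstract does not define the fitness value, so the Python sketch below assumes the simplest plausible one: the number of physical pages the current partition shares with each candidate. `fitness`, `next_partition`, and the page sets are hypothetical. Dispatching the partition with the most shared pages next raises the chance that those pages are still in cache.

```python
def fitness(current_pages, candidate_pages):
    """Hypothetical fitness: count of physical pages used by both partitions."""
    return len(current_pages & candidate_pages)


def next_partition(current, candidates, pages_used):
    """Choose the partition to dispatch on the virtual processor's next timeslice.

    pages_used maps partition name -> set of physical page numbers it uses.
    Ties go to the earlier candidate in the list.
    """
    cur = pages_used[current]
    return max(candidates, key=lambda p: fitness(cur, pages_used[p]))
```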

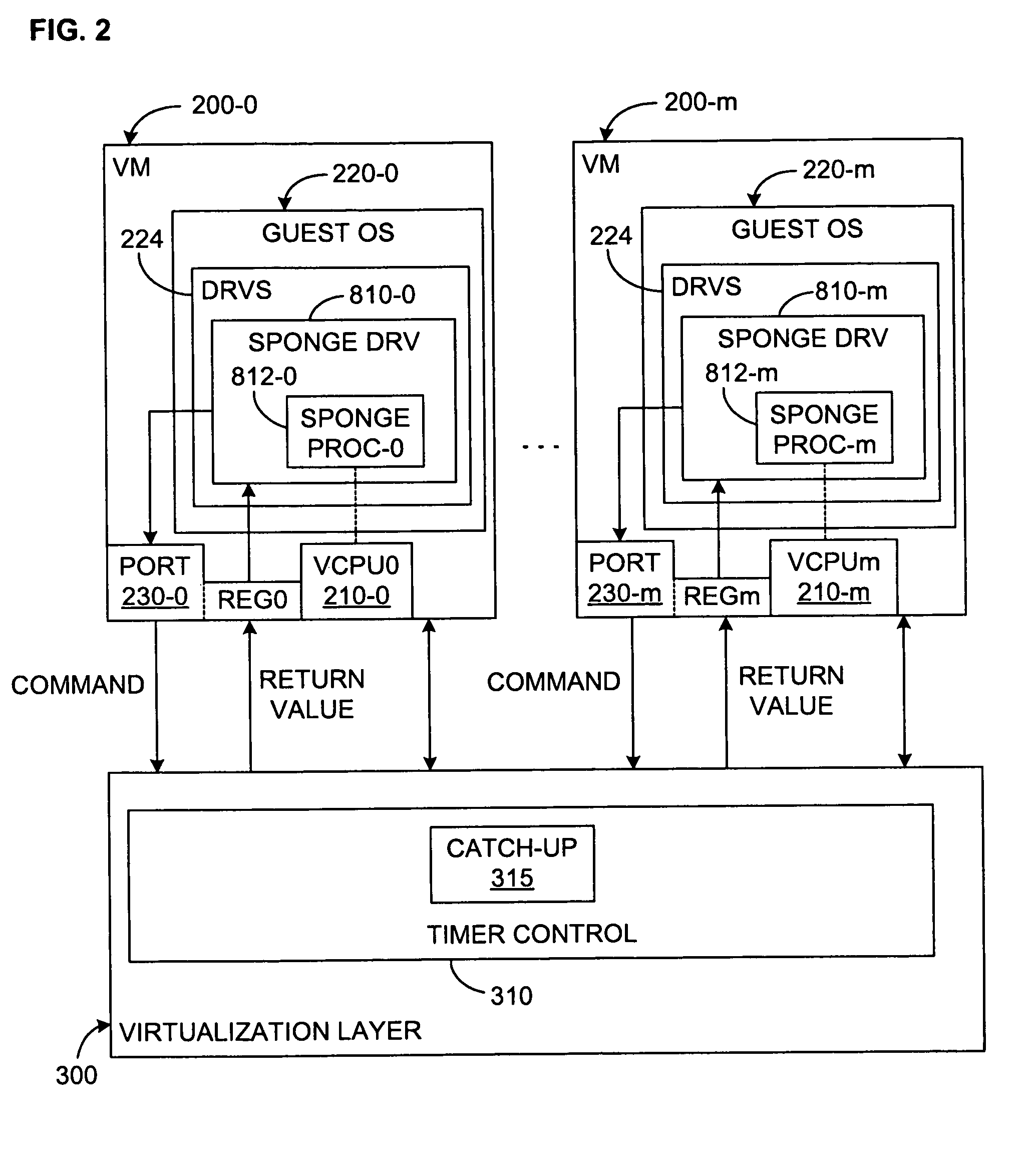

Method and system for improving the accuracy of timing and process accounting within virtual machines

Status: Active | US7945908B1 | Reduces likelihood of descheduling, Avoid violations, Software simulation/interpretation/emulation, Memory systems, Operational system, Virtual computing

A sponge process, for example within a driver in a guest operating system, is associated in a virtual computer system with each virtual processor in one or more virtual machines. When timer interrupts become backlogged, for example because a virtual machine is temporarily descheduled to allow other virtual machines to run, and upon occurrence of a trigger event, a conventional interrupt is disengaged and catch-up interrupts are instead directed into an appropriate one of the sponge processes. The backlogged timer interrupts are thus delivered without unfairly attributing descheduled time to whatever processes happened to be running while the catch-up interrupts are delivered, and without violating typical guest operating system timing assumptions.

Owner:VMWARE INC
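
The accounting effect of the sponge process can be shown with a small tick-charging function. This Python sketch is a deliberately simplified assumption, not VMware's driver: `charge_ticks` and the `trigger` backlog size are hypothetical, and a real system would also change how interrupts are delivered, not just how they are counted. Below the trigger, catch-up ticks are charged normally; at or above it, they are charged to the sponge so descheduled time is not blamed on the process that happens to be running.

```python
from collections import Counter


def charge_ticks(backlog, running_process, trigger=3, sponge="sponge"):
    """Charge `backlog` owed timer ticks, diverting catch-up ticks to a sponge process."""
    charged = Counter()
    if backlog <= 0:
        return charged
    charged[running_process] += 1  # the tick for the current period is charged normally
    catch_up = backlog - 1
    if catch_up:
        # Large backlog (the hypothetical trigger event): catch-up ticks go to the sponge.
        target = sponge if backlog >= trigger else running_process
        charged[target] += catch_up
    return charged
```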

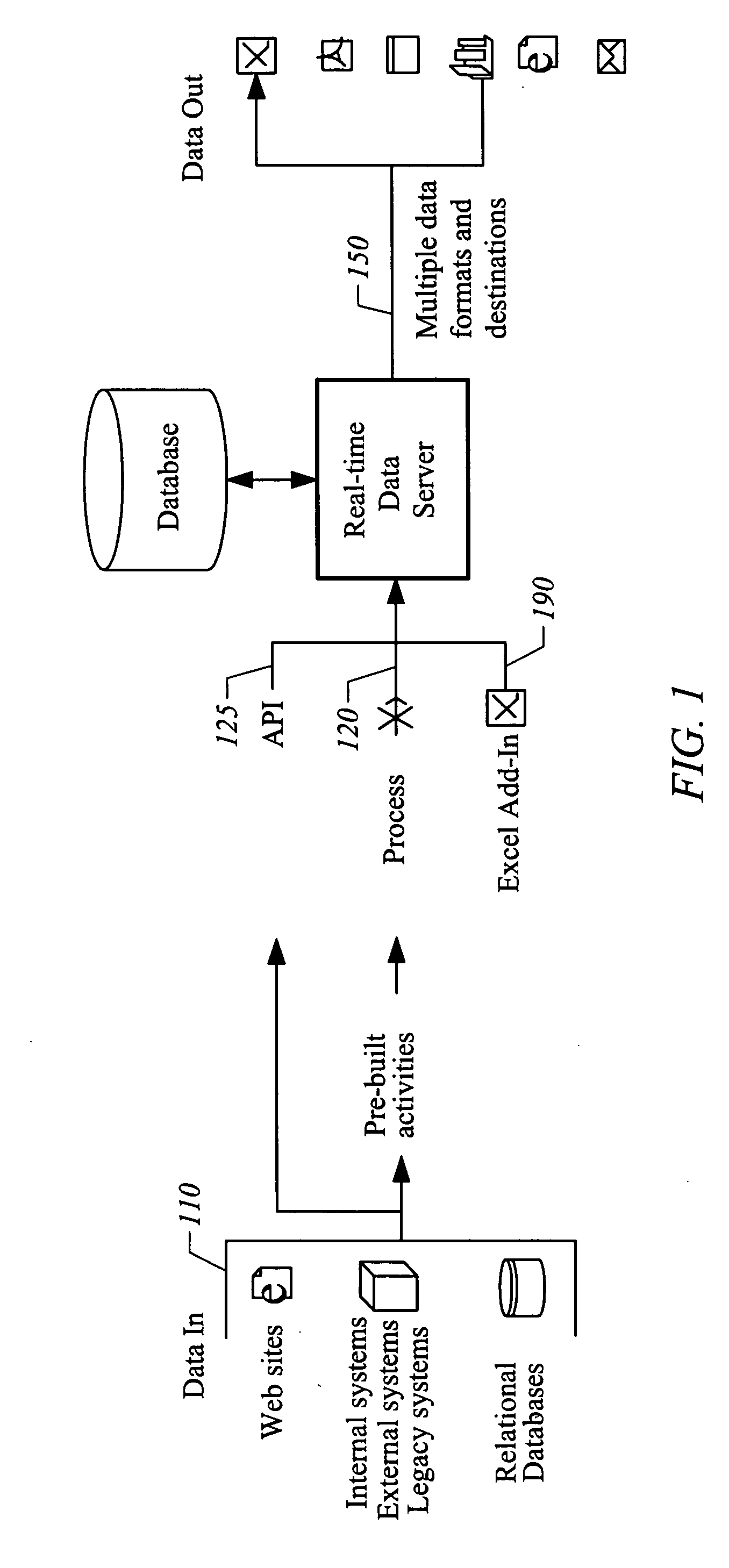

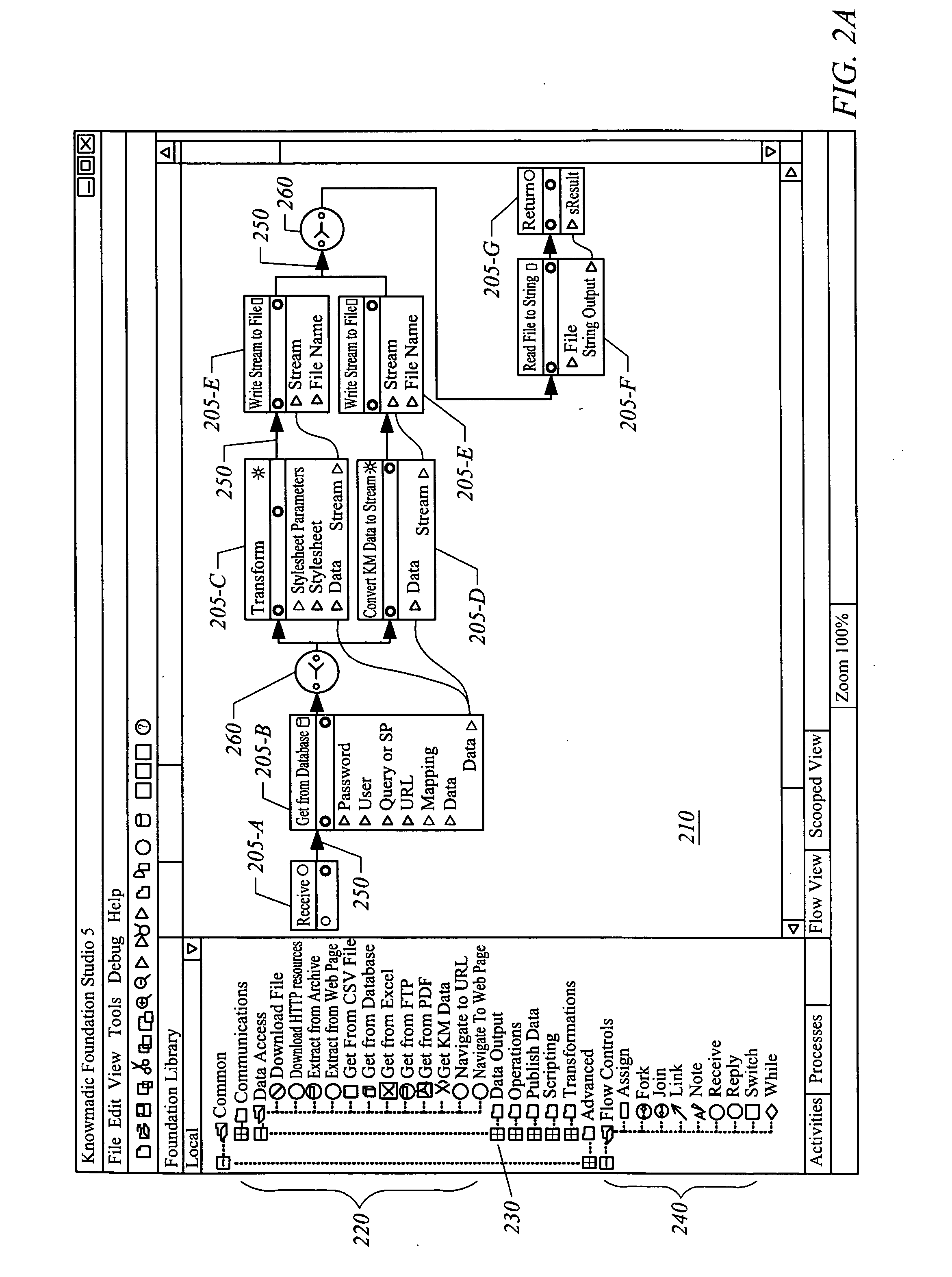

Execution engine for business processes

Status: Inactive | US20060095274A1 | Data processing applications, Resource allocation, Paper document, Document preparation

An execution engine is disclosed for executing business processes. An executable object model is generated for a business process document. Executable object models of business processes are assigned to virtual processors.

Owner:IP3 2017 SERIES 200 OF ALLIED SECURITY TRUST I

Virtual architecture and instruction set for parallel thread computing

Status: Active | US8321849B2 | Improve portability, More portable, Program control using stored programs, Software engineering, Application software, Data sharing

Abstract identical to that of US20080184211A1 (same title, listed above).

Owner:NVIDIA CORP

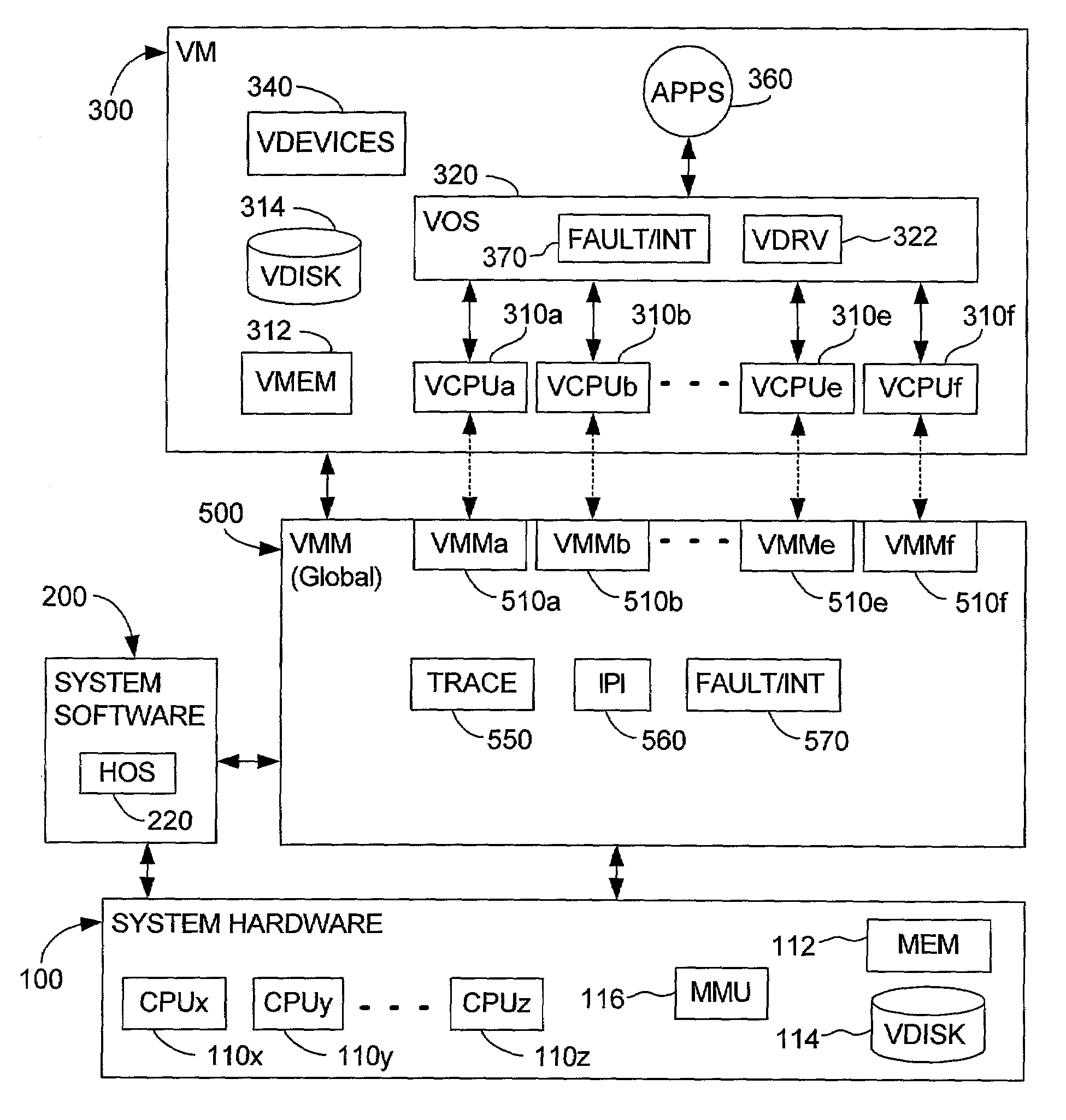

Systems and methods for initializing multiple virtual processors within a single virtual machine

Status: Active | US20060005188A1 | Improve efficiency, Program initiation/switching, Software simulation/interpretation/emulation, Multi processor, Init

The present invention is a system for and method of initializing multiple virtual processors in a virtual machine (VM) environment. The method of initializing multiple virtual processors includes the steps of the host creating a multiple processor VM and activating a “starter virtual processor,” the “starter virtual processor” issuing a startup command to a next virtual processor, the virtual machine monitor (VMM) giving the target virtual processor the highest priority for accessing the hardware resources, the VMM forcing the “starter virtual processor” to relinquish control of the hardware resources, the VMM handing control of the hardware resources to the target virtual processor, the target virtual processor executing and completing its startup routine, the VMM forcing the target virtual processor to relinquish control of the hardware resources, and the VMM handing control of the hardware resources back to the “starter virtual processor” for activating subsequent virtual processors.

Owner:MICROSOFT TECH LICENSING LLC

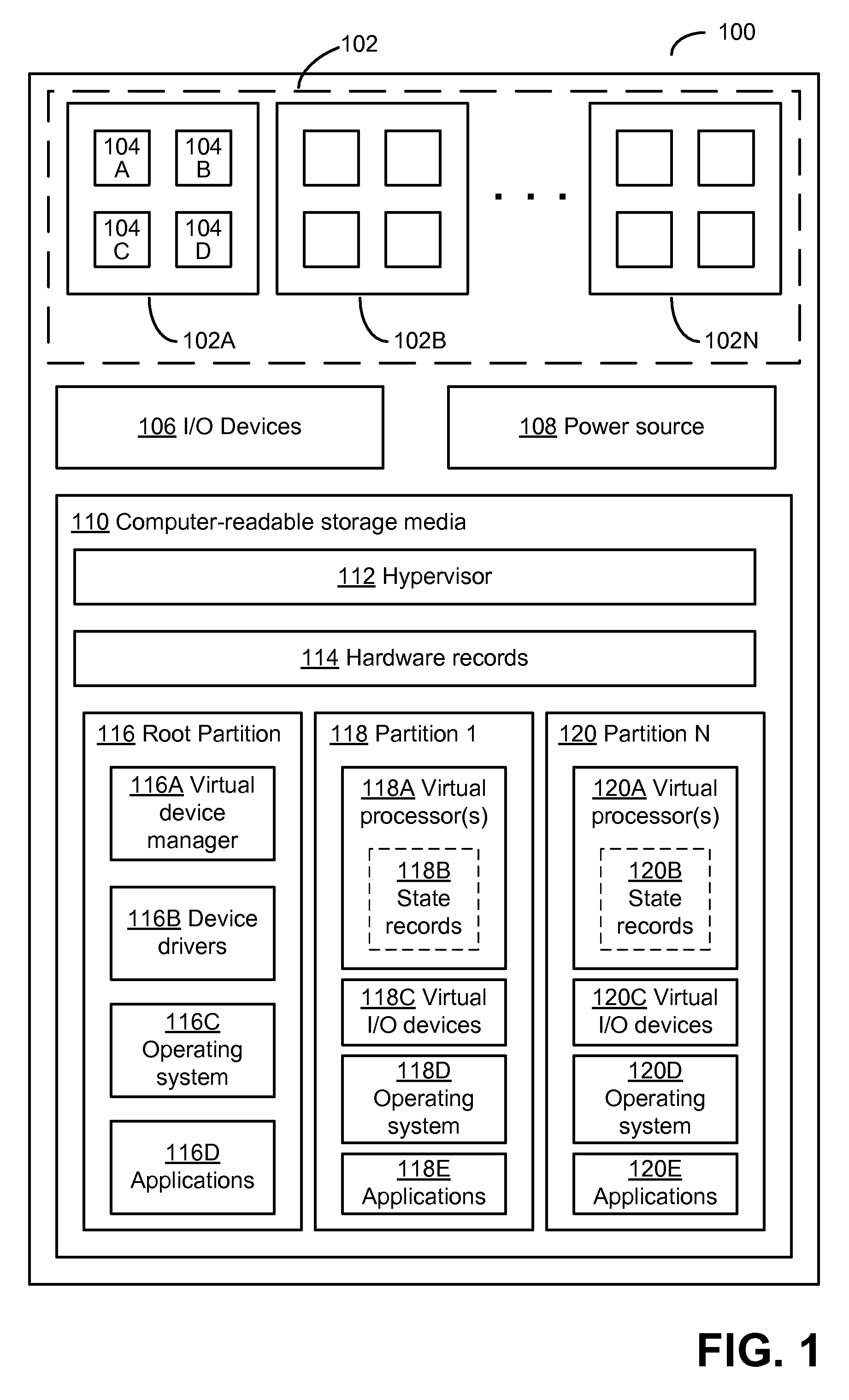

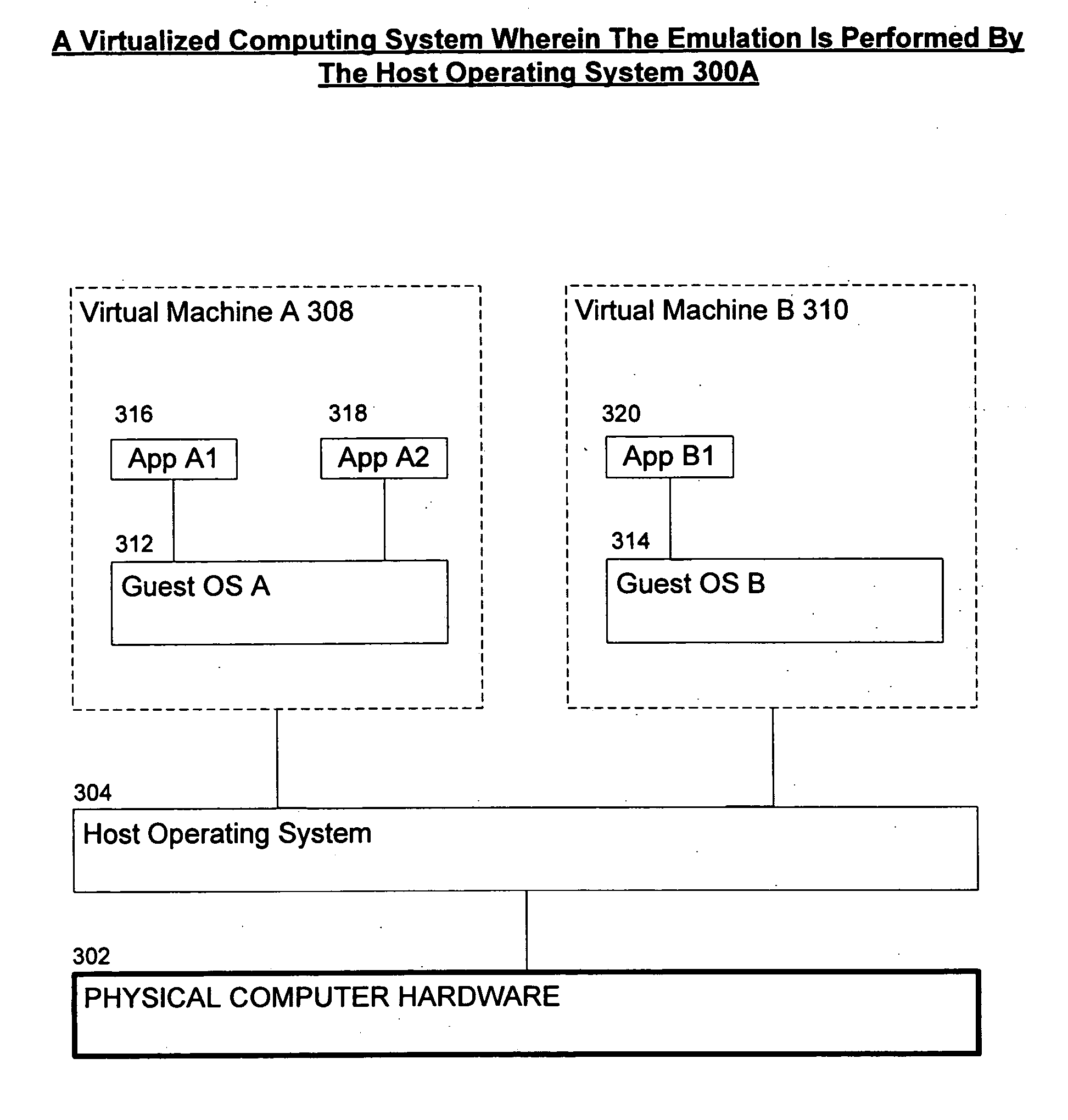

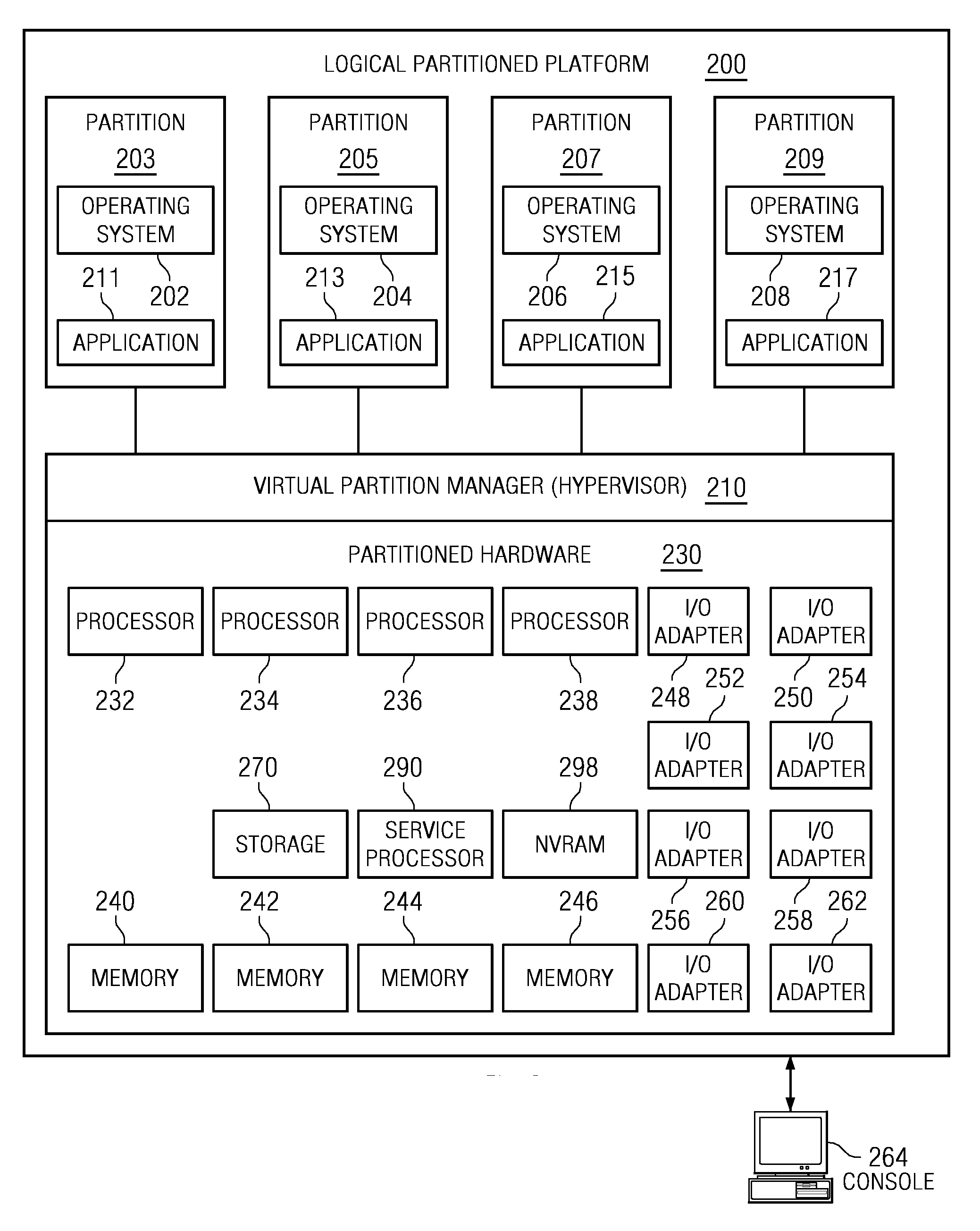

Systems and methods for hypervisor discovery and utilization

Status: Active | US20060248528A1 | Software simulation/interpretation/emulation, Memory systems, Virtual device, Computer science

Systems and methods are provided, whereby partitions may become enlightened and discover the presence of a hypervisor. Several techniques of hypervisor discovery are discussed, such as detecting the presence of virtual processor registers (e.g. model specific registers or special-purpose registers) or the presence of virtual hardware devices. Upon discovery, information (code and / or data) may be injected in a partition by the hypervisor, whereby such injection allows the partition to call the hypervisor. Moreover, the hypervisor may present a versioning mechanism that allows the partition to match up the version of the hypervisor to its virtual devices. Next, once code and / or data is injected, calling conventions are established that allow the partition and the hypervisor to communicate, so that the hypervisor may perform some operations on behalf of the partition. Four exemplary calling conventions are considered: restartable instructions, a looping mechanism, shared memory transport, and synchronous or asynchronous processed packets. Last, cancellation mechanisms are considered, whereby partition requests may be cancelled.

Owner:MICROSOFT TECH LICENSING LLC

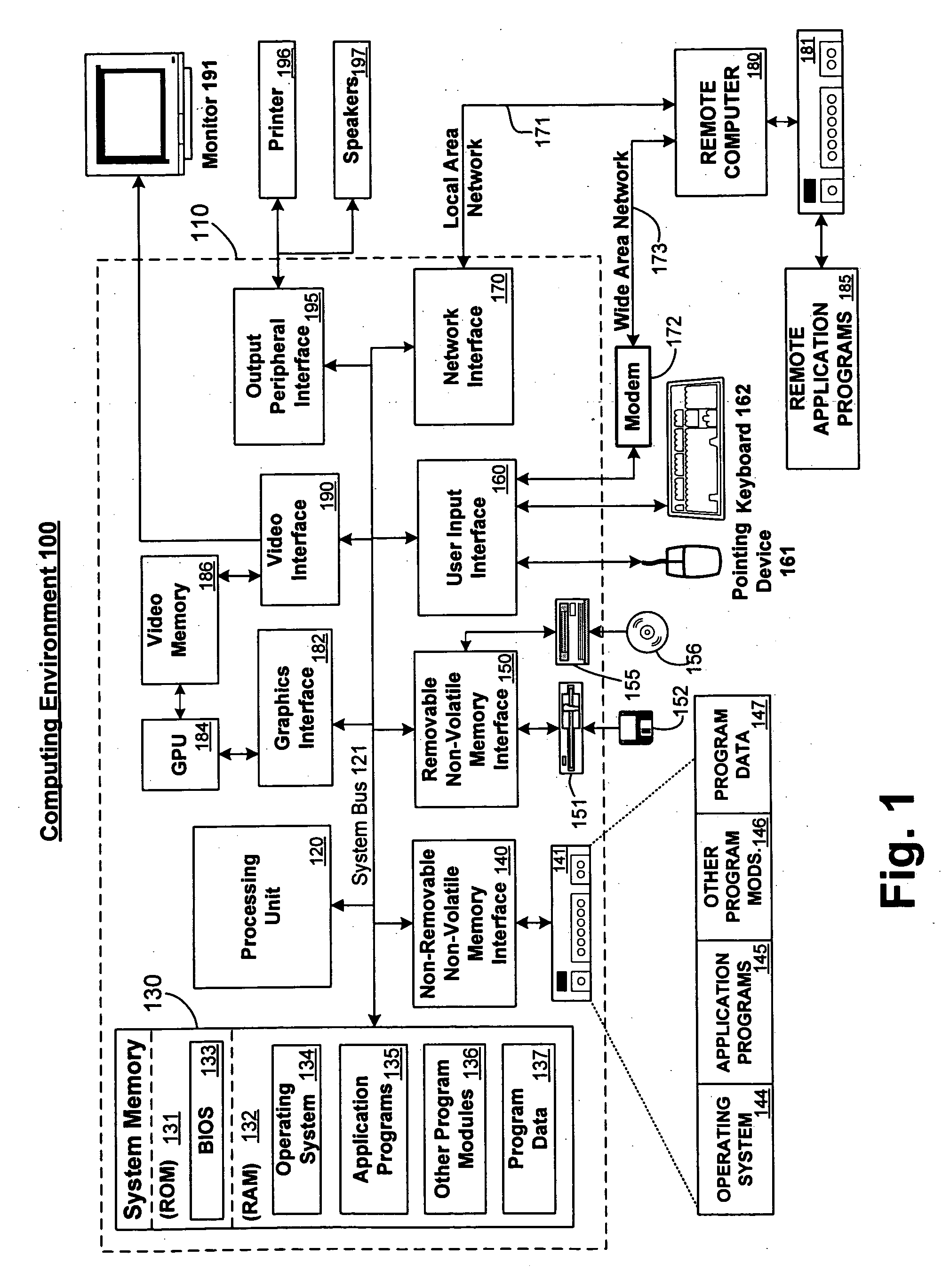

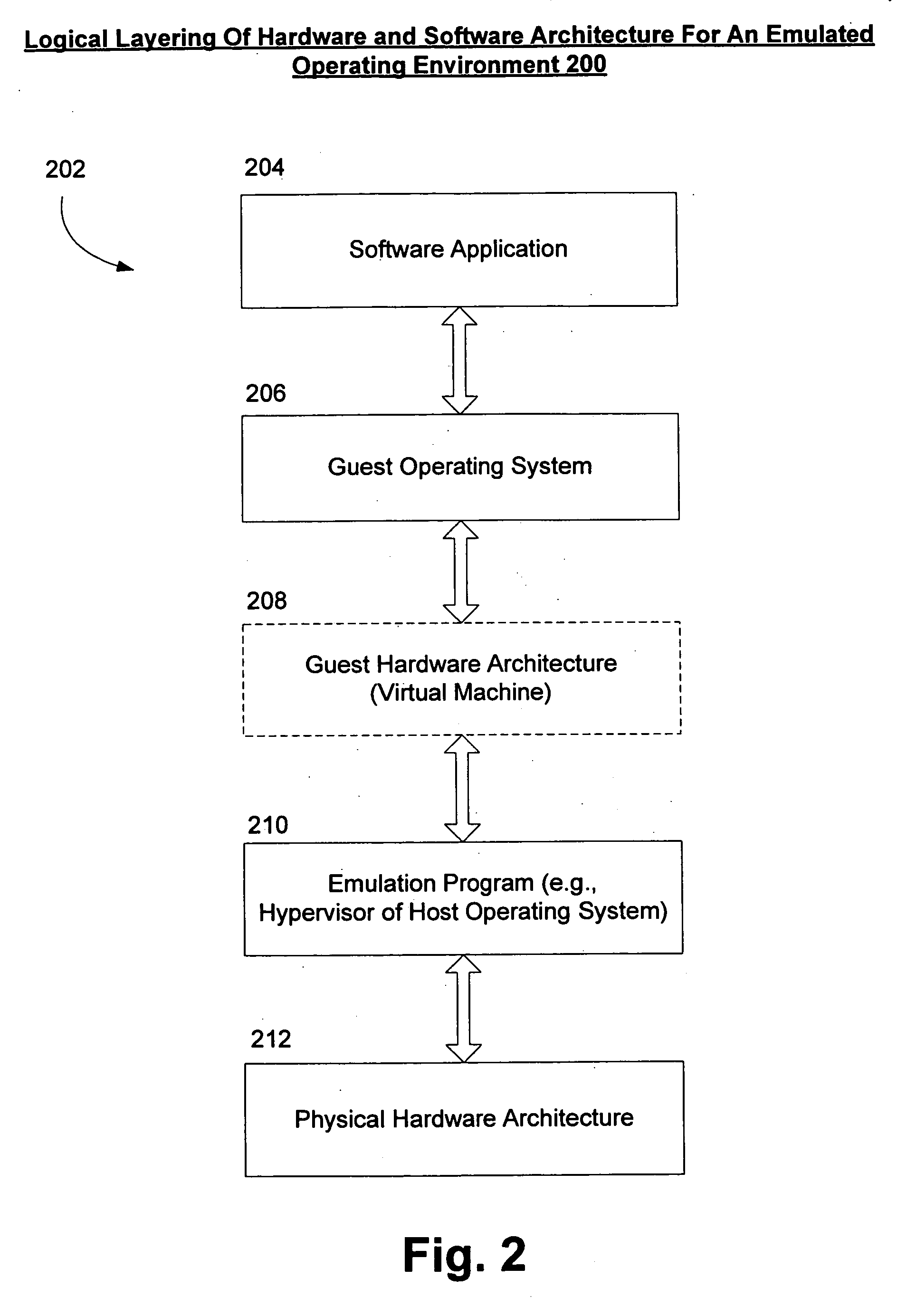

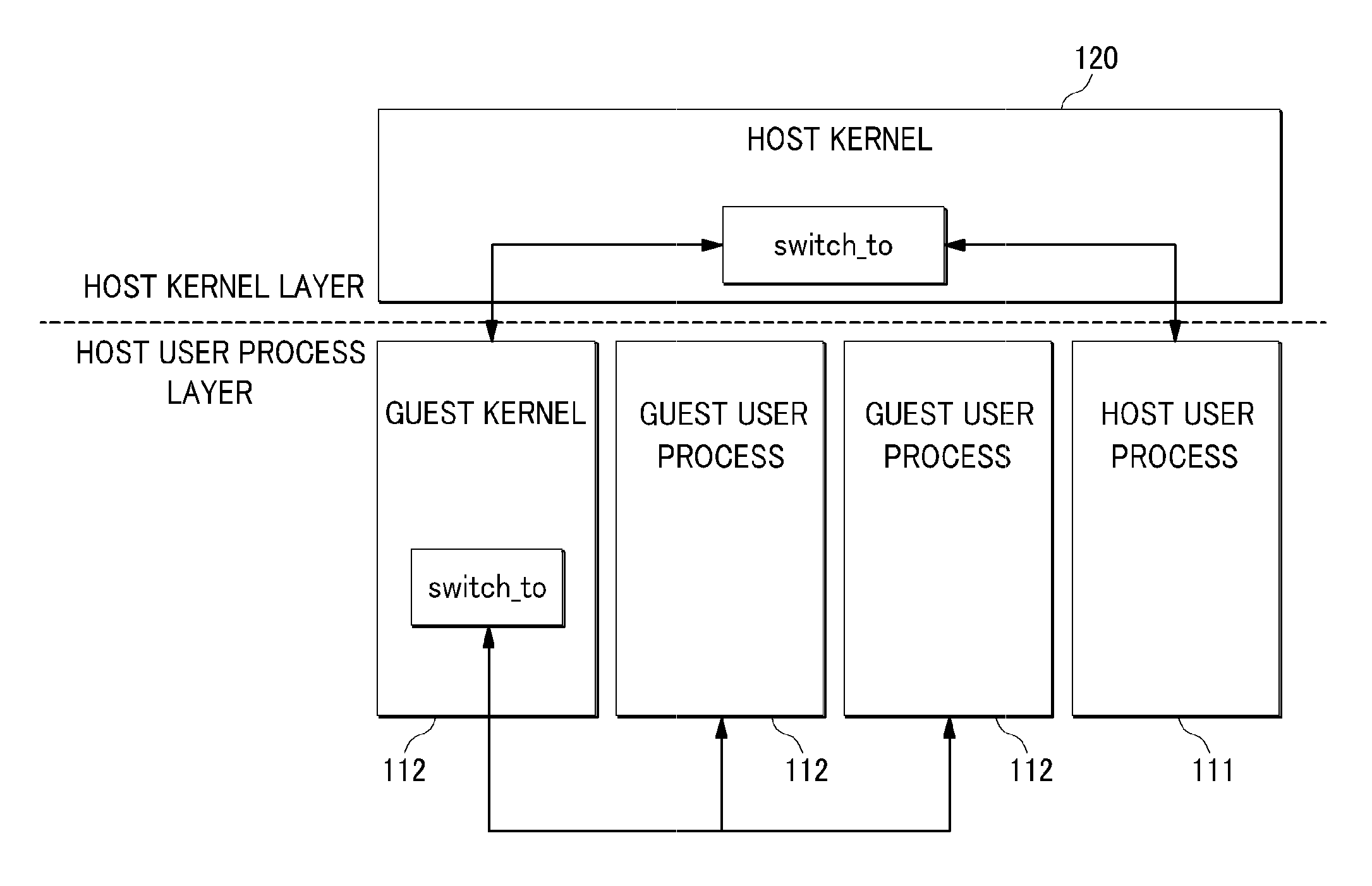

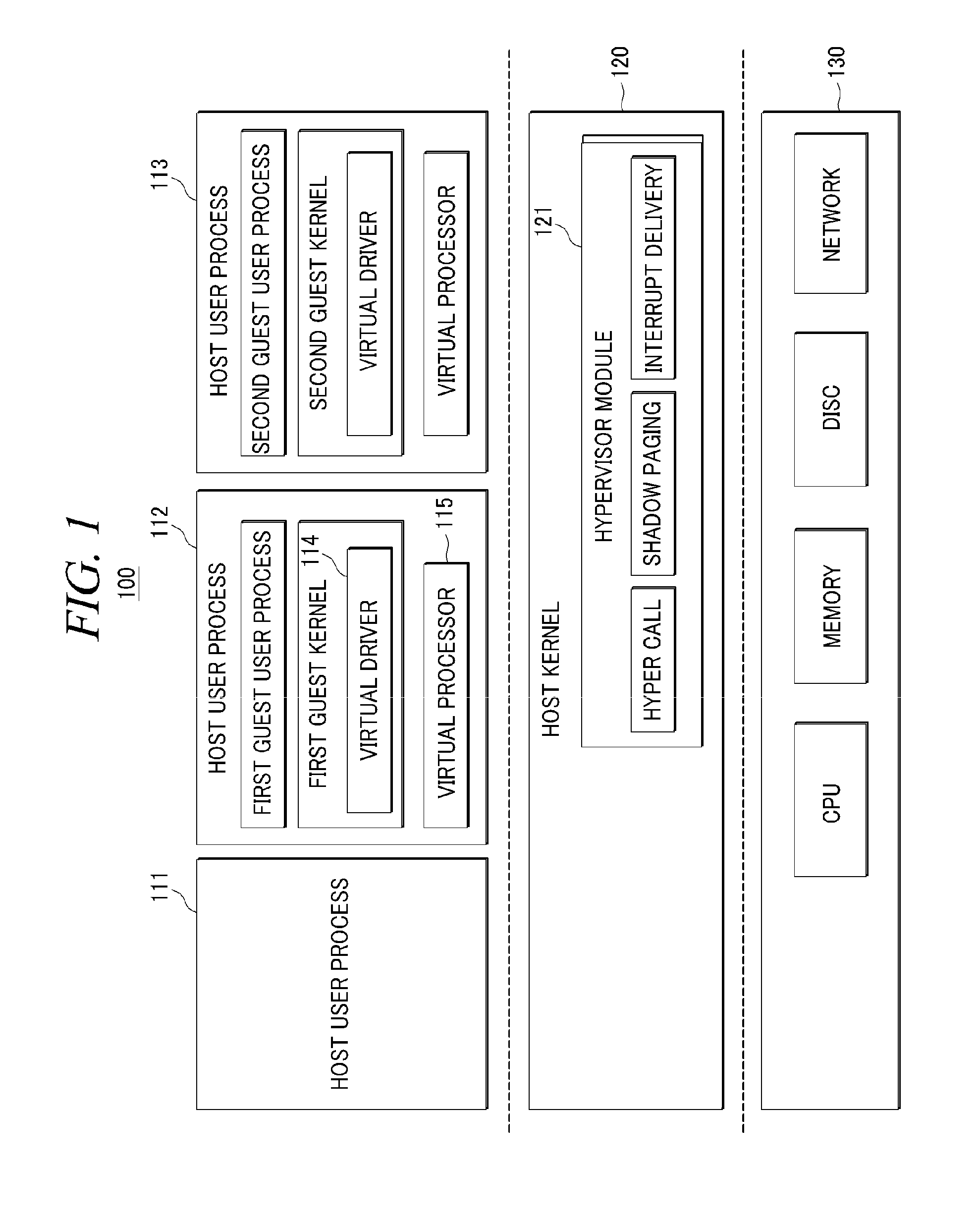

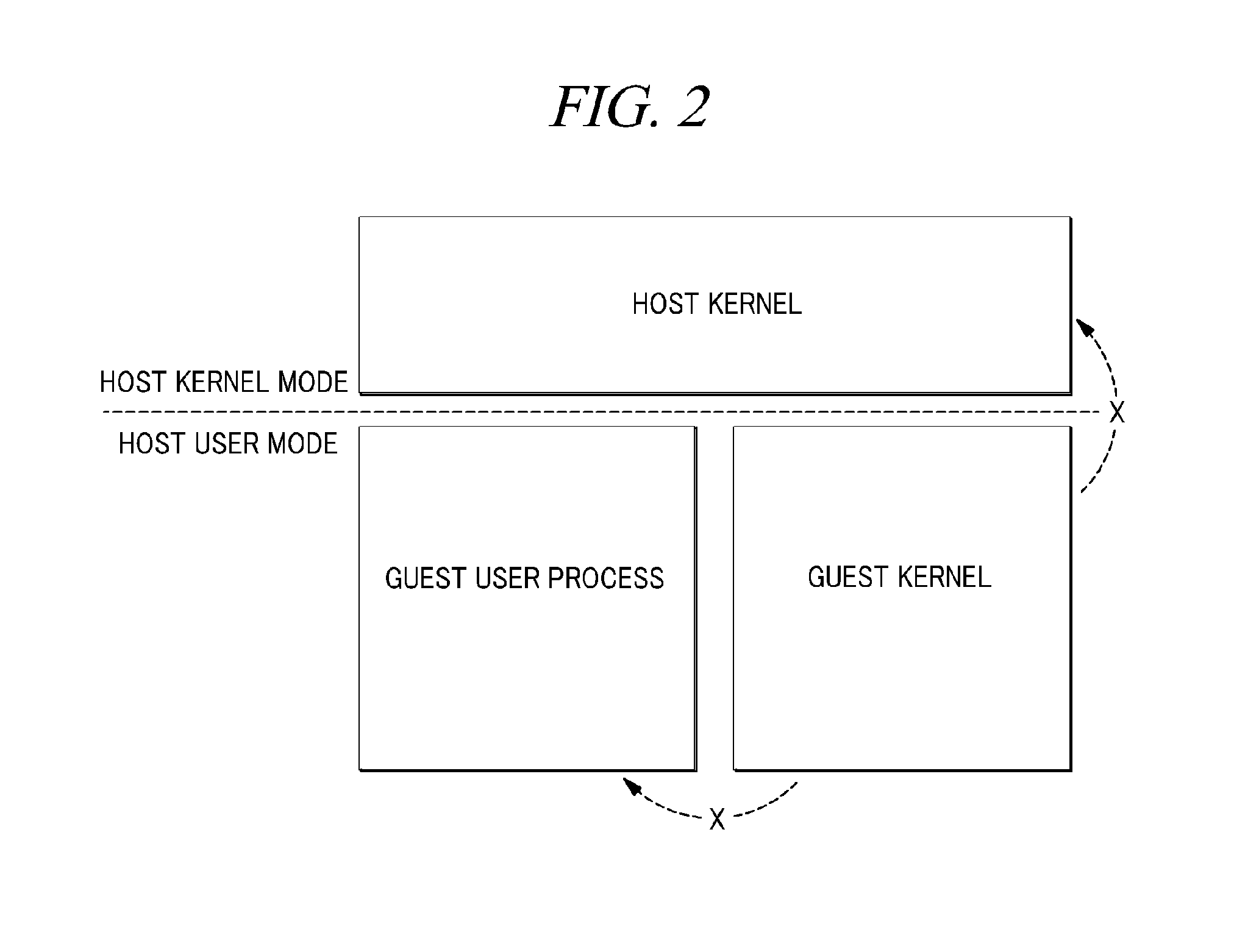

Virtualization apparatus

Status: Inactive | US20110167422A1 | Simple procedure, Increase speed, Digital computer details, Software simulation/interpretation/emulation, Address space, Virtual Processor

A virtualization apparatus includes one or more guest machines each comprised of a guest kernel and a guest user process, a hypervisor module installed in a host kernel and handling a request of the guest machine with regard to the virtualization apparatus, and a virtual processor supporting the guest machine to serve as a host user process and handling an interrupt and a switching of the guest machine, wherein address spaces of the guest kernel and the guest user process are designed to be separated from each other.

Owner:RES & BUSINESS FOUND SUNGKYUNKWAN UNIV

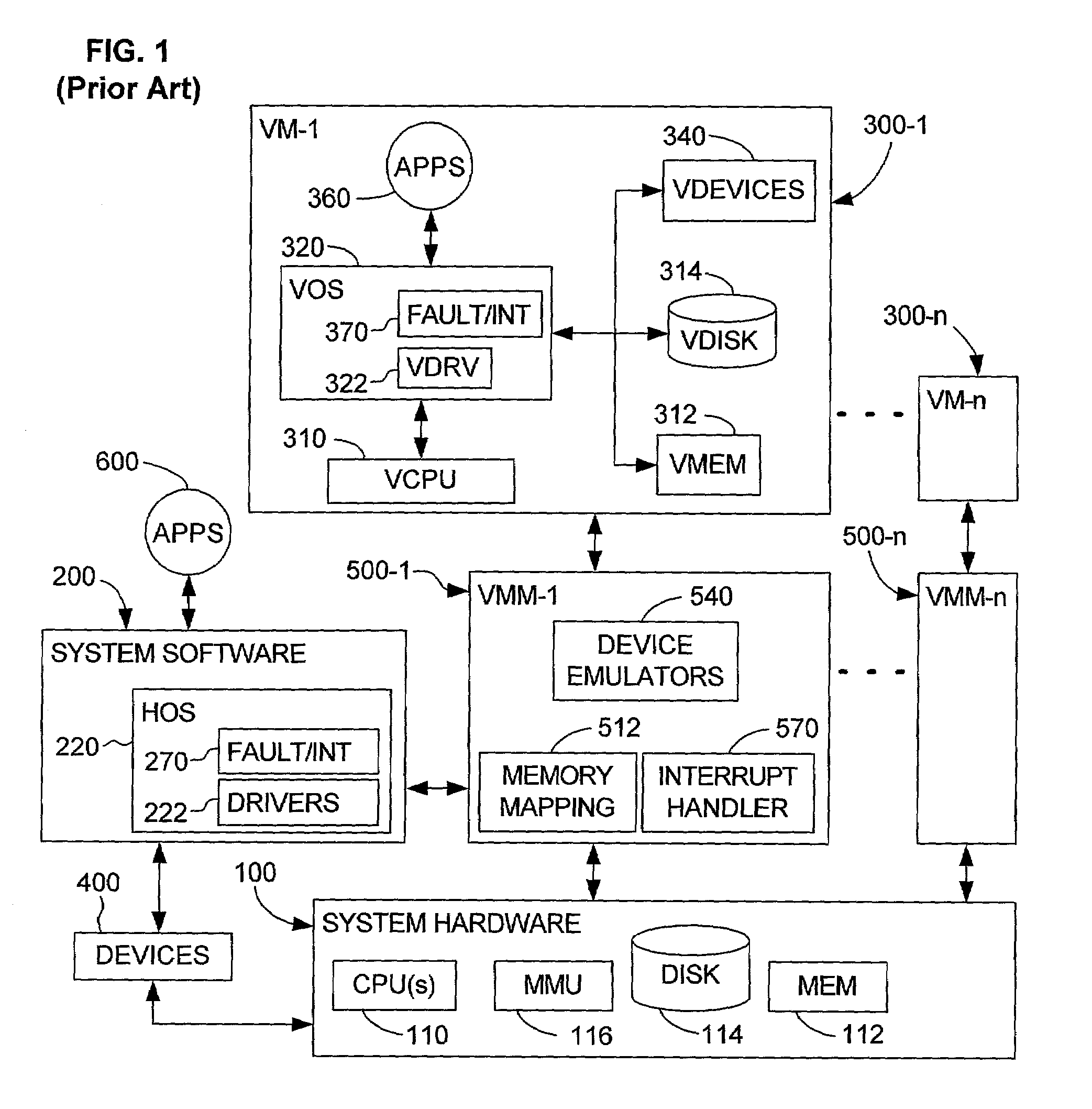

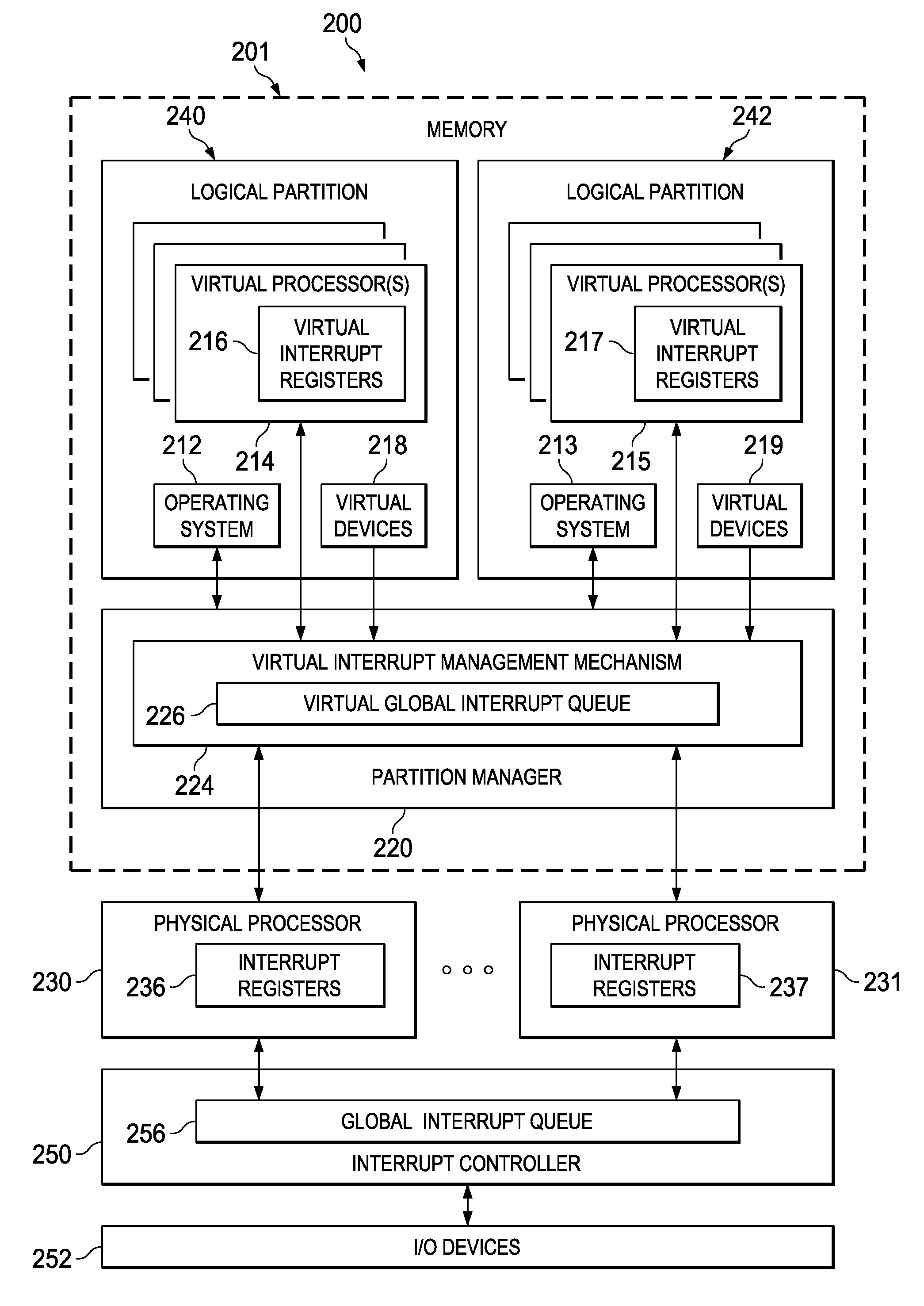

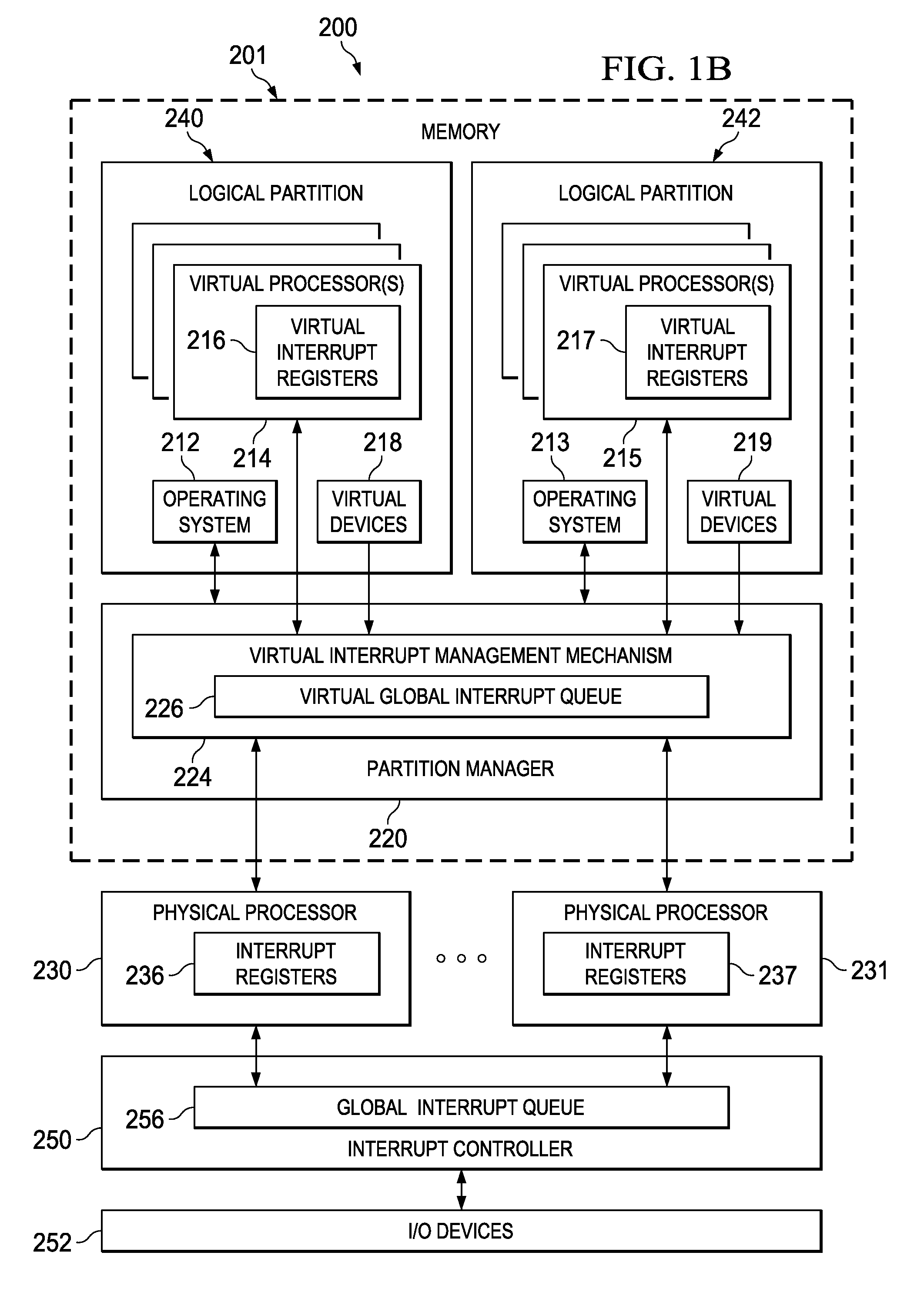

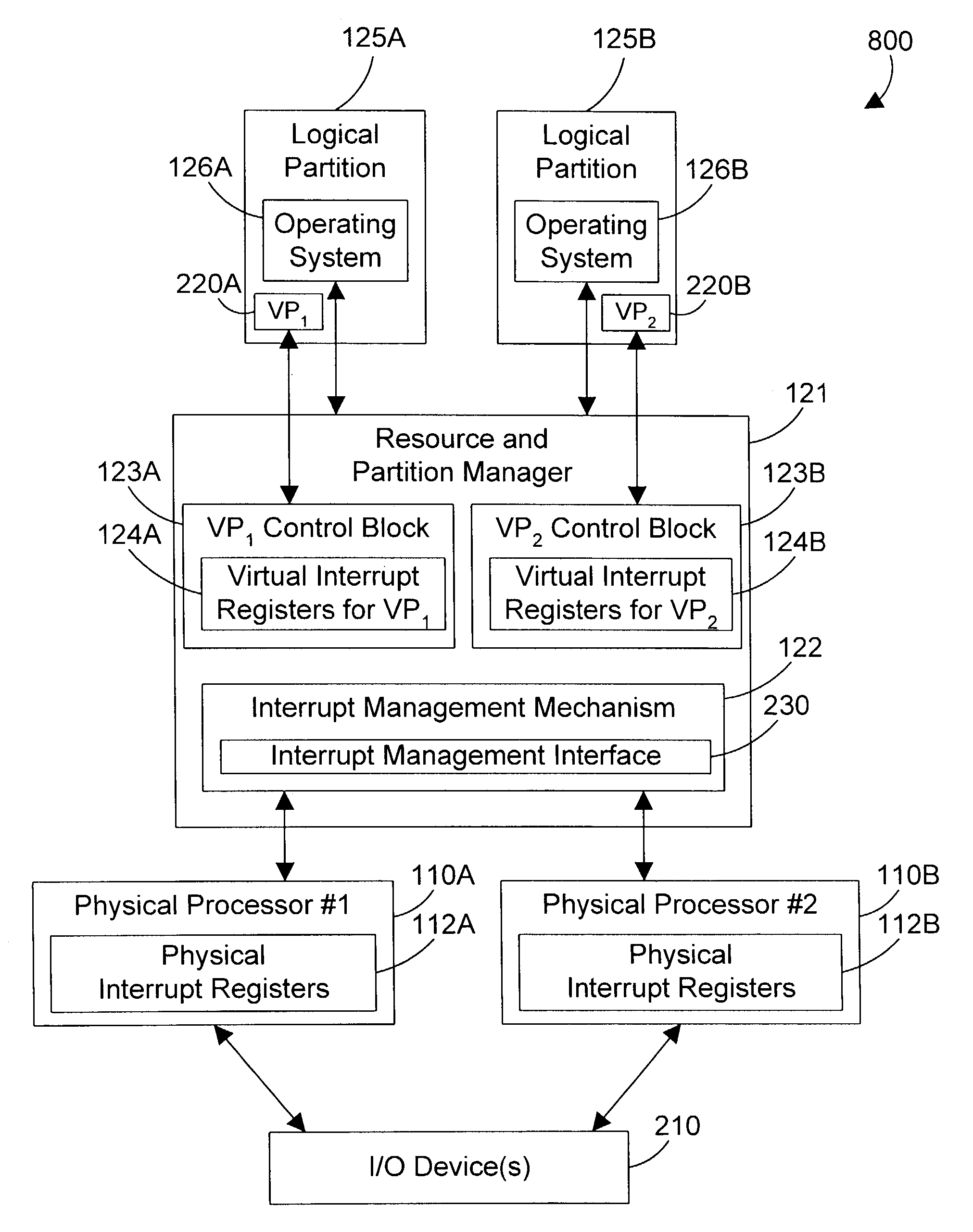

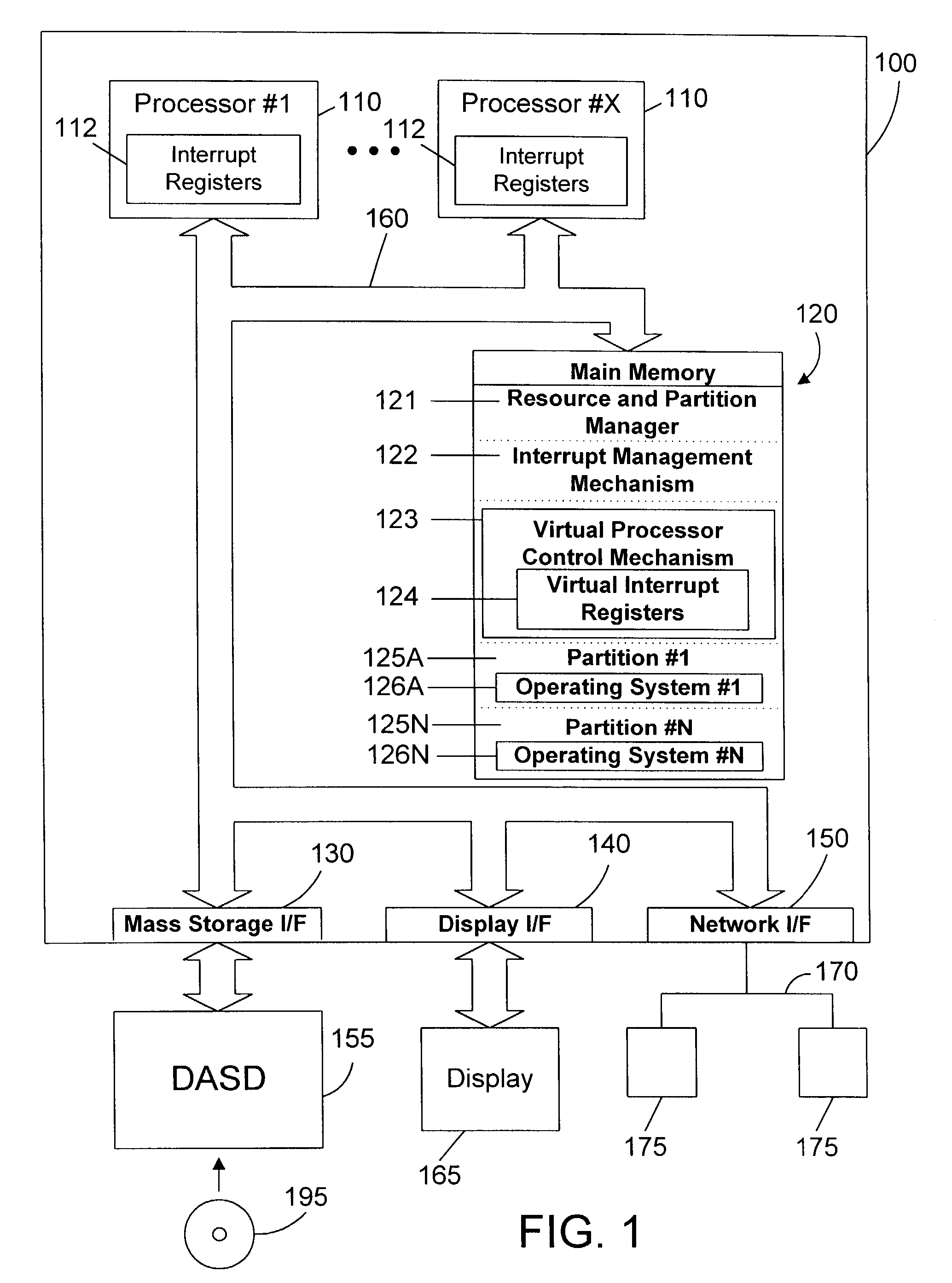

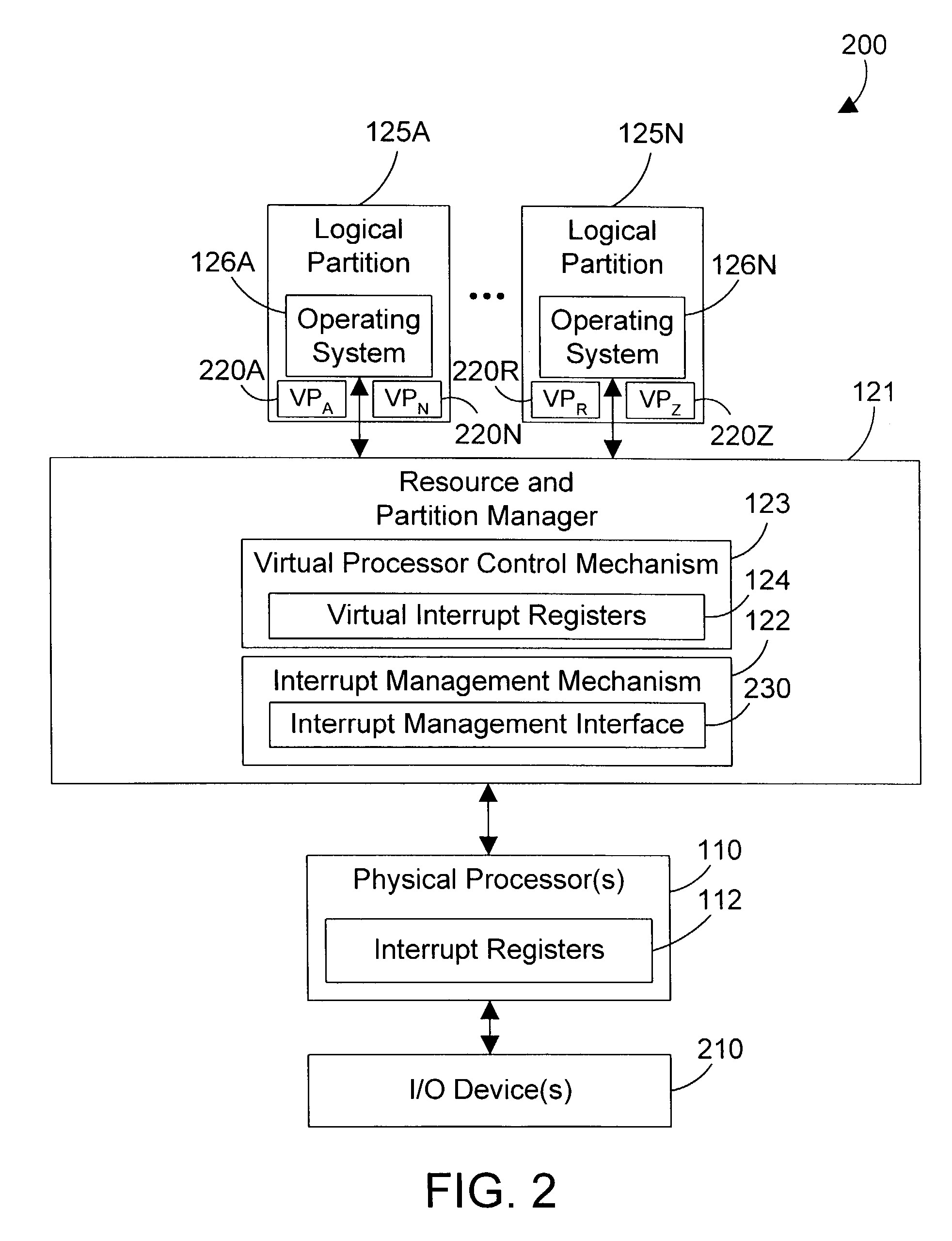

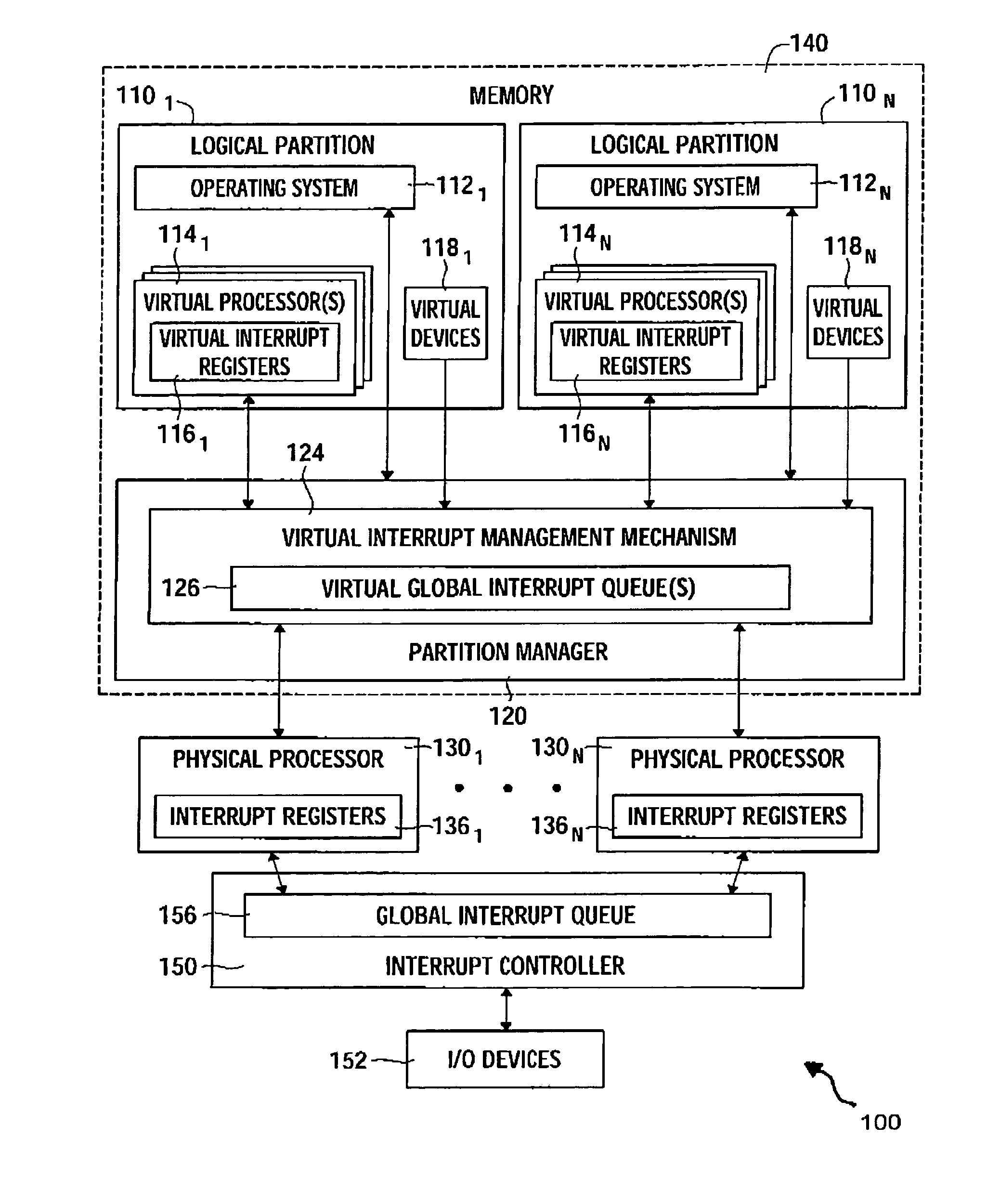

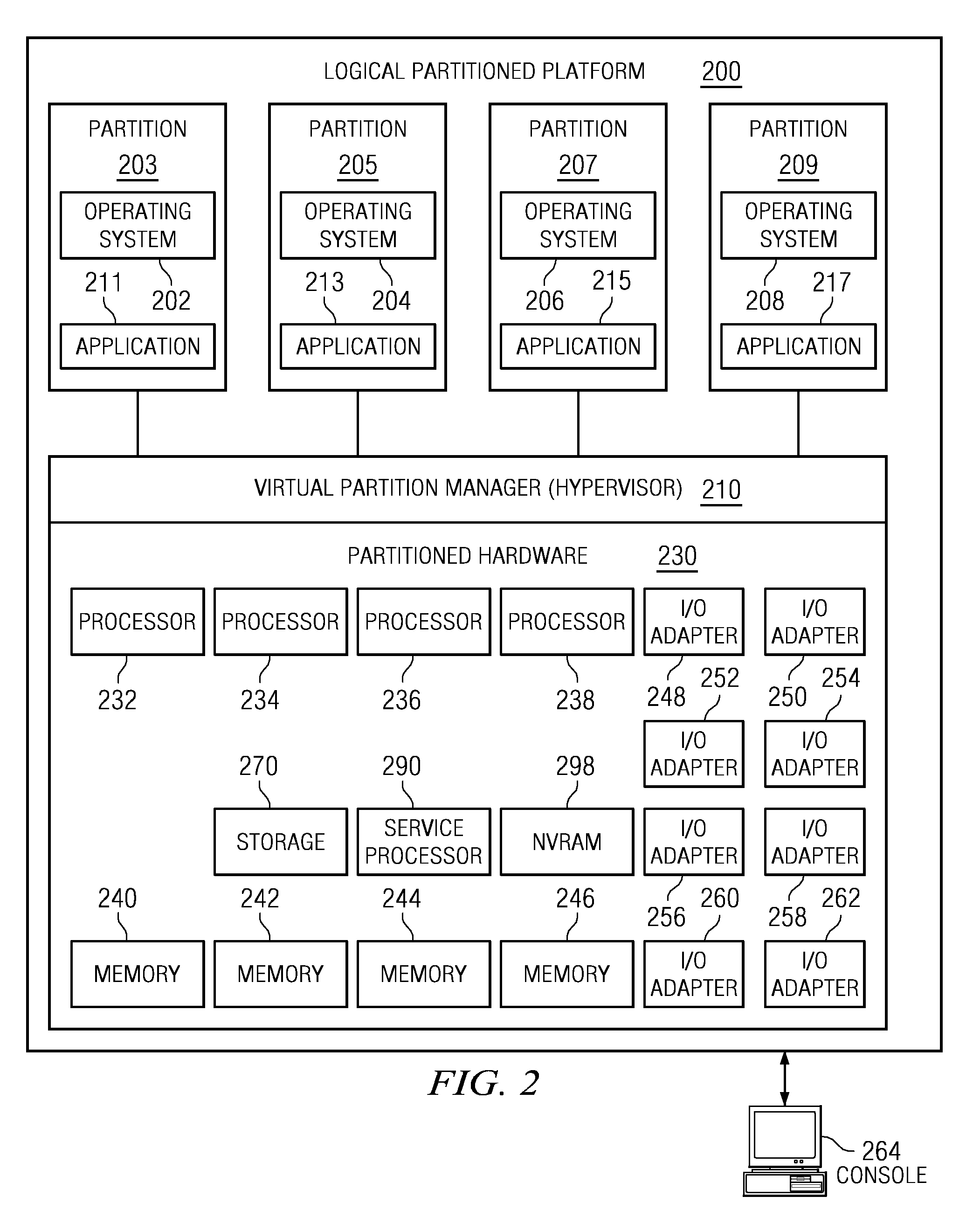

Apparatus and method for virtualizing interrupts in a logically partitioned computer system

A resource and partition manager virtualizes interrupts without using any additional hardware, in a way that does not disturb the interrupt processing model of operating systems running on a logical partition. In other words, the resource and partition manager supports virtual interrupts in a logically partitioned computer system that may include shared processors, with no changes to a logical partition's operating system. A set of virtual interrupt registers is created for each virtual processor in the system. The resource and partition manager uses the virtual interrupt registers to process interrupts for the corresponding virtual processor. In this manner, from the point of view of the operating system, interrupt processing when the operating system runs in a logical partition that may contain shared processors and virtual interrupts is no different than interrupt processing when it runs in a computer system that contains only dedicated processor partitions.

Owner:IBM CORP

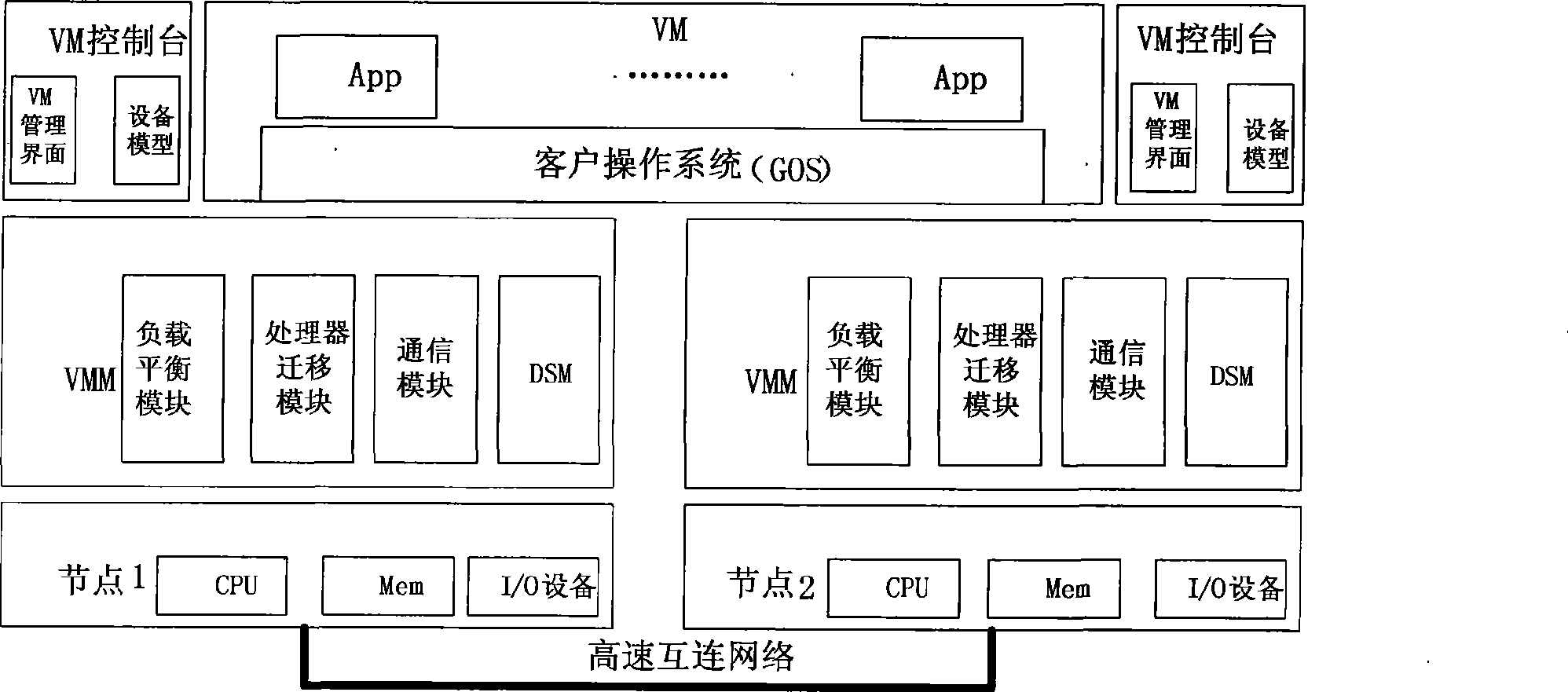

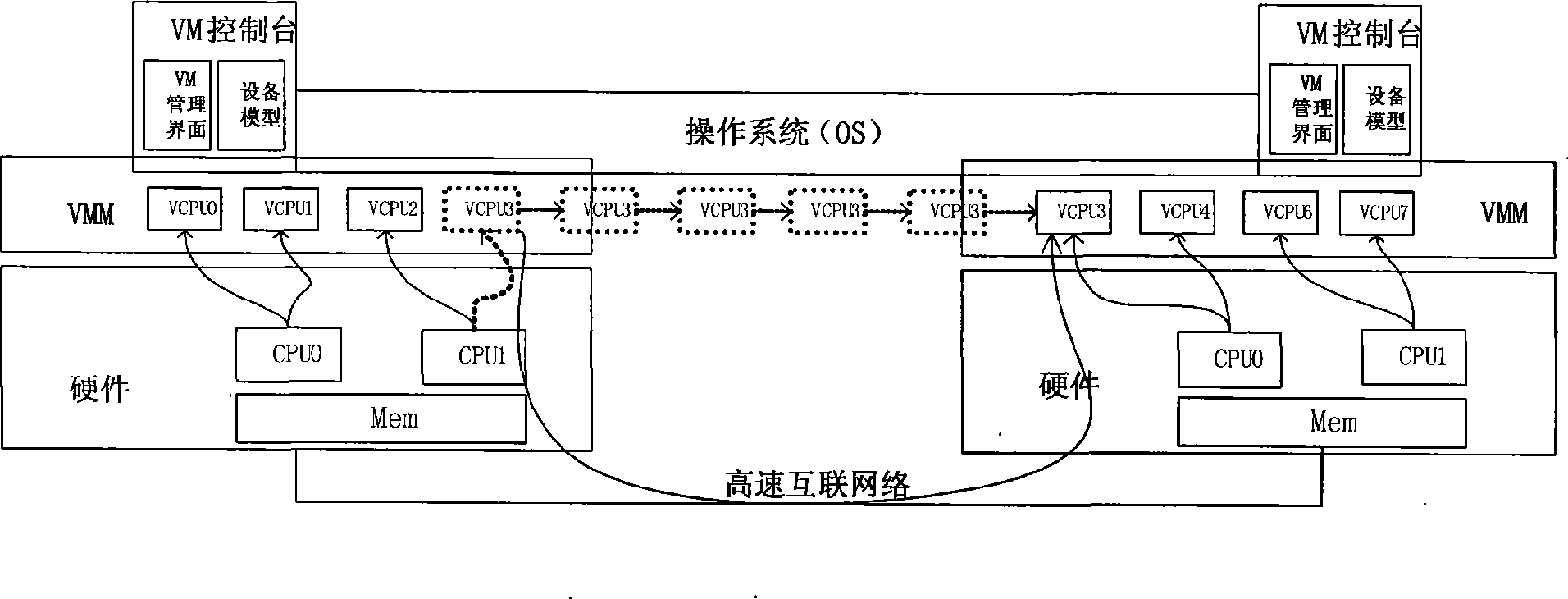

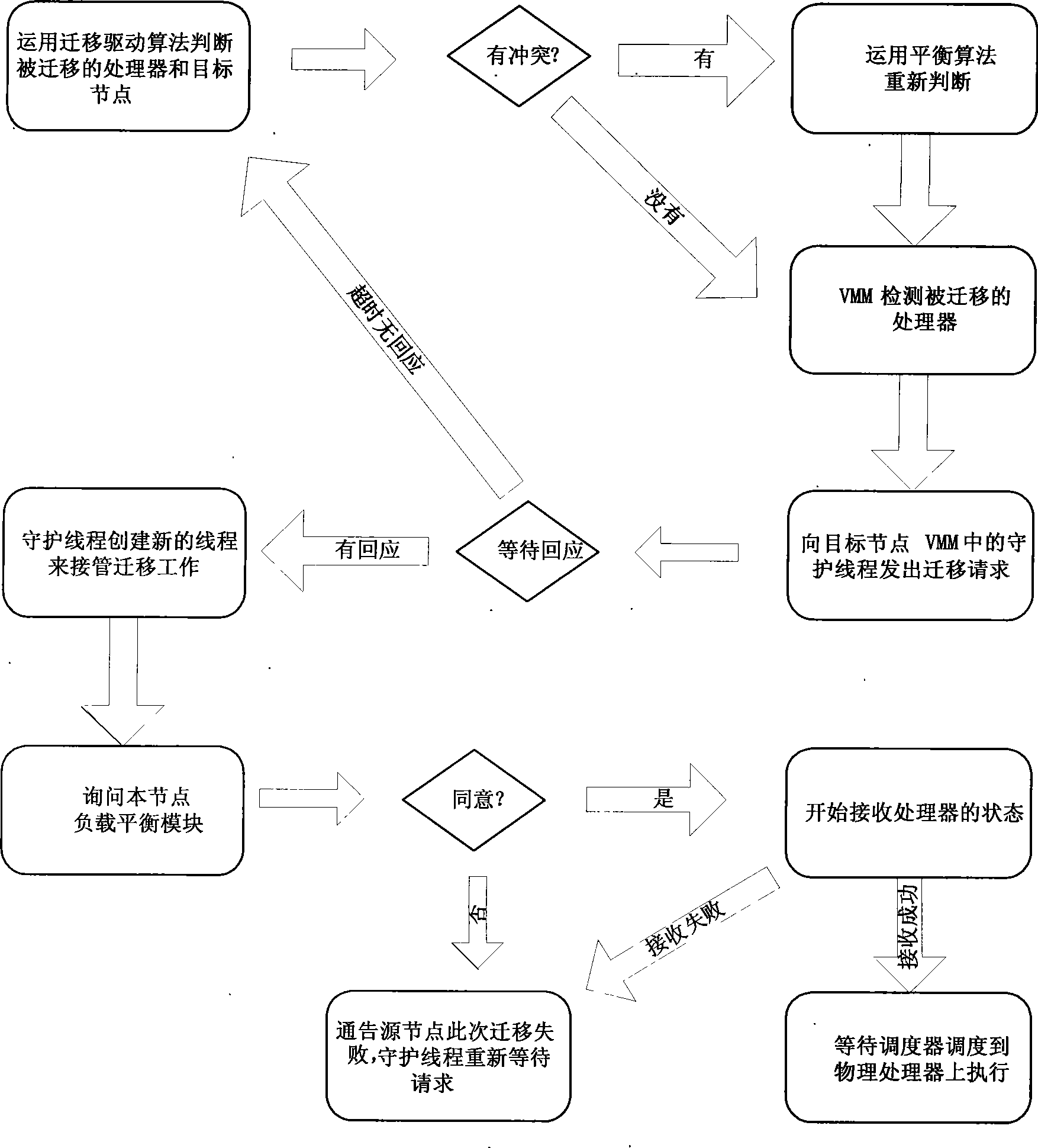

Cluster load balance method transparent for operating system

Status: Inactive | CN101452406A | Implement a load balancing strategy, Increase profit, Resource allocation, Virtualization, Operational system

The present invention provides a cluster load balancing method that is transparent to the operating system. Its main functional modules are a load balancing module, a processor migration module, and a communication module. The method comprises the following steps: 1, driving virtual processor migration; 2, driving balancing migration; 3, sending a migration request to a target node and negotiating; 4, saving and restoring the state of the virtual processor; and 5, communicating. The method addresses the low resource utilization of cluster systems. As cluster systems become more widespread and hardware virtualization technology continues to develop, the method offers a good solution to low resource utilization in cluster systems and has good application prospects.

Owner:HUAWEI TECH CO LTD

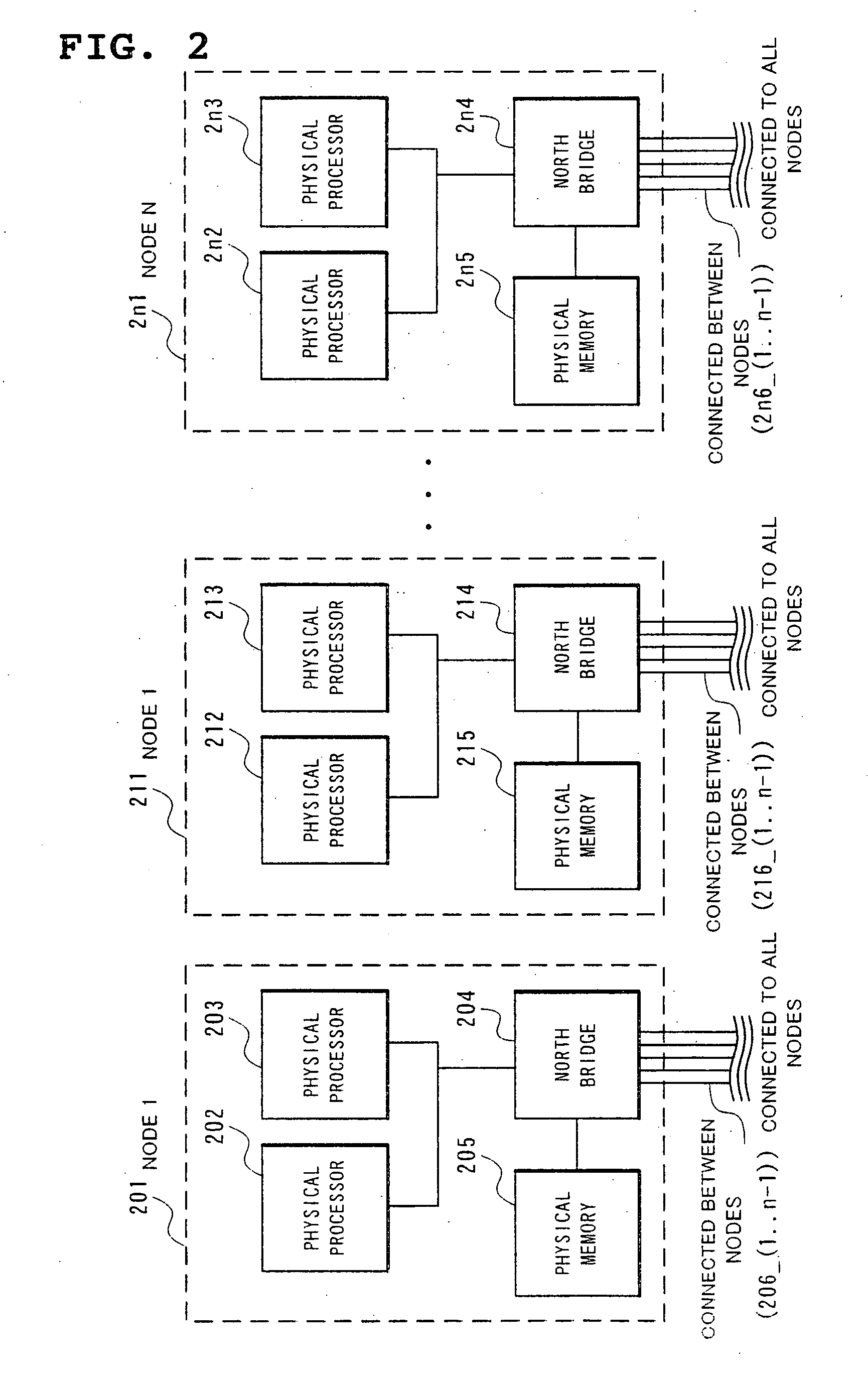

Virtual computer system, and physical resource reconfiguration method and program thereof

Status: Inactive | US20070226449A1 | Easy to use, Improve performance, Error detection/correction, Multiprogramming arrangements, Access time, Delayed time

Provided is a virtual computer system capable of finding a configuration whose total memory access delay is smaller than that of the current configuration. In a virtual computer system in which memory access times differ within a node and between nodes, and in which a hypervisor controls a plurality of virtual processors that execute processes on a plurality of nodes, the hypervisor includes a unit that obtains the total memory access delay time on the virtual machine, based on affinity information indicating the latency or bandwidth of communication between the virtual processors and on the traffic between the virtual processors, and a unit that reconfigures physical resources based on that total delay time.

Owner:NEC CORP
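
The "total sum of memory access delays" can be read as traffic-weighted latency summed over pairs of virtual processors. The Python sketch below assumes exactly that cost model and searches all placements by brute force; `total_delay`, `best_placement`, the node names, and the latency table are hypothetical, and a real hypervisor would need a heuristic rather than exhaustive search for larger systems.

```python
from itertools import permutations


def total_delay(placement, traffic, latency):
    """Sum over vCPU pairs of traffic volume times the latency between their nodes.

    placement: vcpu -> node; traffic: (vcpu_a, vcpu_b) -> volume;
    latency: (node_a, node_b) -> delay per unit of traffic.
    """
    return sum(vol * latency[placement[a], placement[b]]
               for (a, b), vol in traffic.items())


def best_placement(vcpus, slots, traffic, latency):
    """Try every assignment of vCPUs to physical CPU slots (listed by node id)."""
    best = None
    for perm in permutations(slots, len(vcpus)):
        placement = dict(zip(vcpus, perm))
        cost = total_delay(placement, traffic, latency)
        if best is None or cost < best[0]:
            best = (cost, placement)
    return best  # (lowest total delay, placement achieving it)
```

A reconfiguration would be worthwhile only when the best cost is lower than `total_delay` of the current placement.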

Method and system for caching address translations from multiple address spaces in virtual machines

Status: Inactive | US7363463B2 | Low cost, Efficient detection, Memory architecture accessing/allocation, Memory systems, Virtualization, Page table

Abstract identical to that of US20060259734A1 (same title, listed above).

Owner:MICROSOFT TECH LICENSING LLC

Heterogeneous processor apparatus and method

A heterogeneous processor architecture is described. For example, a processor according to one embodiment of the invention comprises: a first set of one or more physical processor cores having first processing characteristics; a second set of one or more physical processor cores having second processing characteristics different from the first processing characteristics; virtual-to-physical (V-P) mapping logic to expose a plurality of virtual processors to software, the plurality of virtual processors to appear to the software as a plurality of homogeneous processor cores, the software to allocate threads to the virtual processors as if the virtual processors were homogeneous processor cores; wherein the V-P mapping logic is to map each virtual processor to a physical processor within the first set of physical processor cores or the second set of physical processor cores such that a thread allocated to a first virtual processor by software is executed by a physical processor mapped to the first virtual processor from the first set or the second set of physical processors.

Owner:INTEL CORP

Method and System For Caching Address Translations From Multiple Address Spaces In Virtual Machines

Status: Active | US20080215848A1 | Low cost, Efficient detection, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Virtualization, Page table

Abstract identical to that of US20060259734A1 (same title, listed above).

Owner:MICROSOFT TECH LICENSING LLC

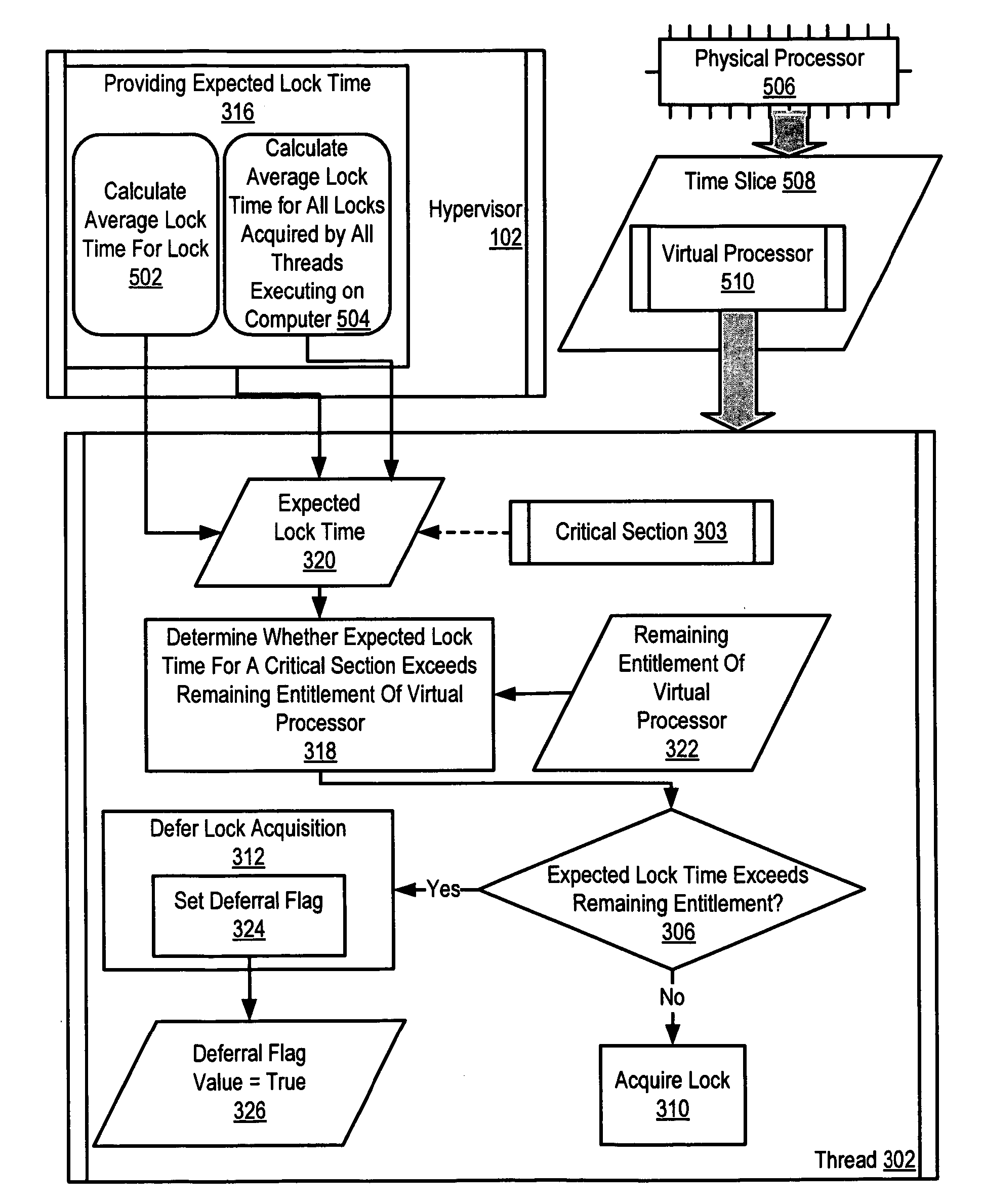

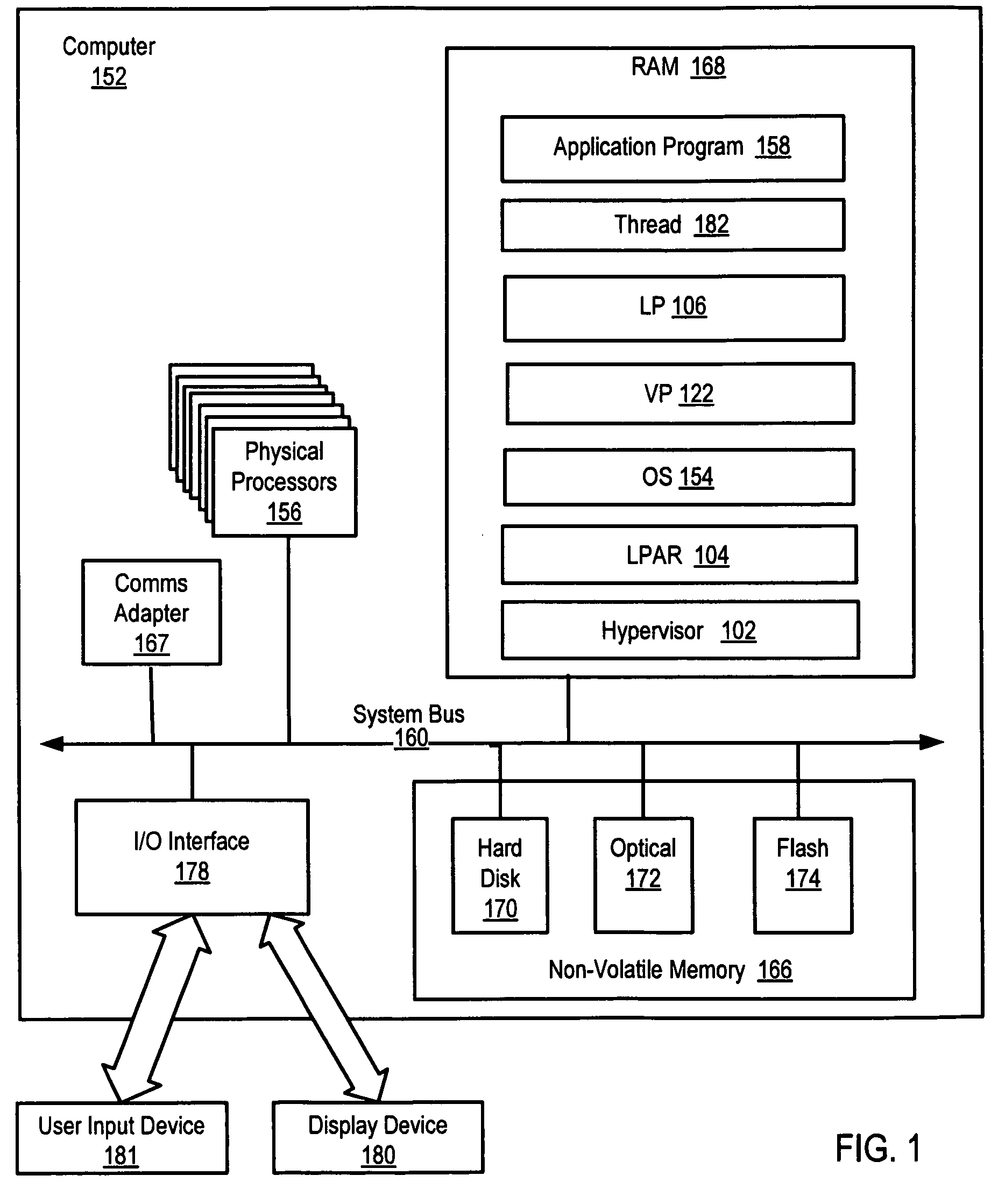

Administration of locks for critical sections of computer programs in a computer that supports a multiplicity of logical partitions

Status: Inactive | US20060277551A1 | Multiprogramming arrangements, Software simulation/interpretation/emulation, Critical section, Physics processing unit

Administration of locks for critical sections of computer programs in a computer that supports a multiplicity of logical partitions, including determining, by a thread executing on a virtual processor that is executing in a time slice on a physical processor, whether an expected lock time for a critical section of the thread exceeds the remaining entitlement of the virtual processor in the time slice, and deferring acquisition of the lock if the expected lock time exceeds the remaining entitlement.

Owner:IBM CORP
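
The deferral rule is a single comparison made before taking the lock. The Python sketch below wraps `threading.Lock` to show it; `EntitlementAwareLock`, `try_enter`, `leave`, and the `remaining_entitlement` callable are hypothetical, since how a thread learns its virtual processor's remaining entitlement is platform-specific. The point of deferring is to avoid being preempted at the end of the time slice while holding a lock that other threads need.

```python
import threading


class EntitlementAwareLock:
    """Lock whose acquisition is deferred near the end of the VP's entitlement.

    `remaining_entitlement` is a hypothetical callable returning the seconds left
    for this thread's virtual processor in the current time slice.
    """

    def __init__(self, remaining_entitlement):
        self._lock = threading.Lock()
        self._remaining = remaining_entitlement

    def try_enter(self, expected_hold_s):
        if expected_hold_s > self._remaining():
            return False  # defer: the critical section would likely outlive the slice
        return self._lock.acquire(blocking=False)

    def leave(self):
        self._lock.release()
```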

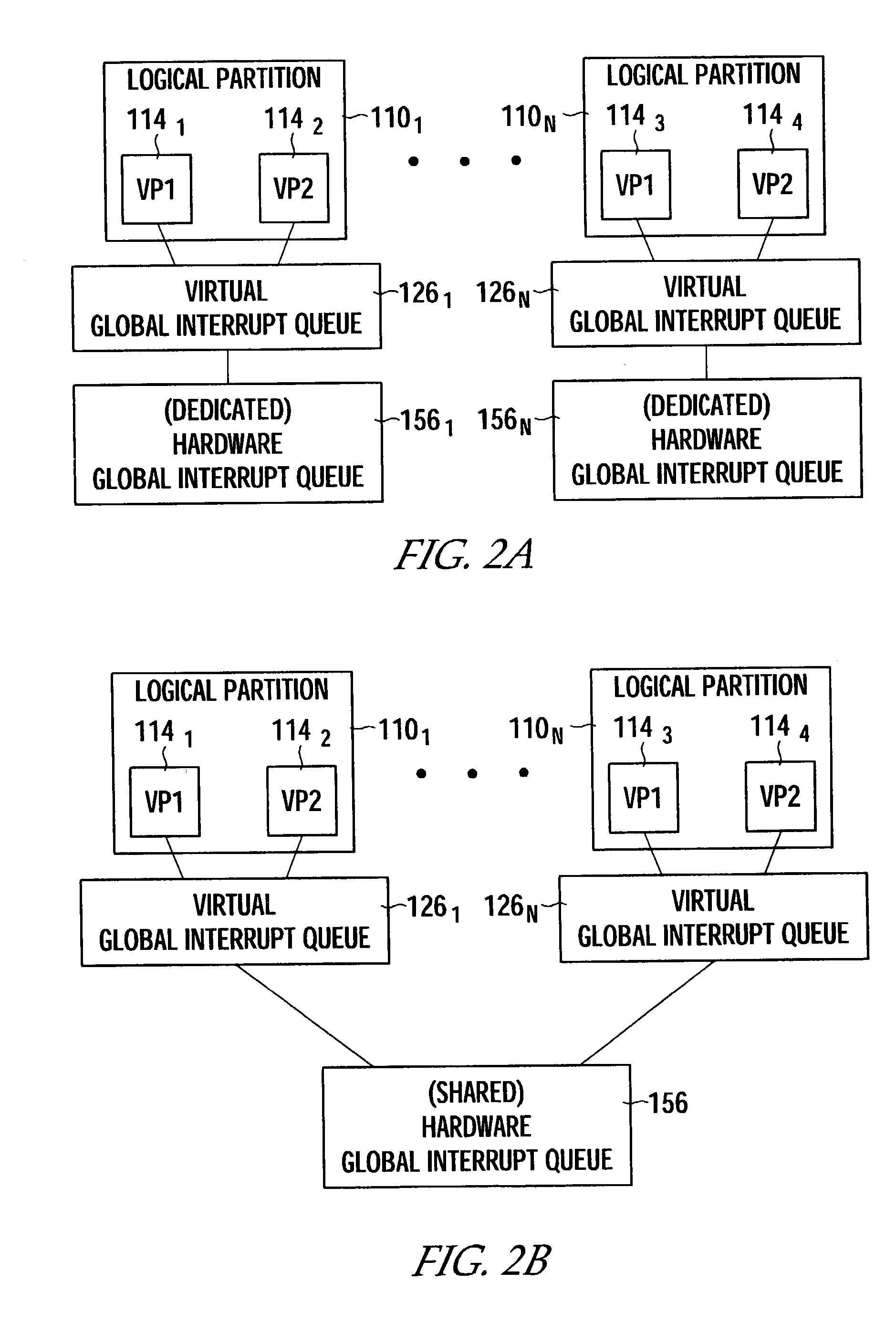

Virtualization of a global interrupt queue

Status: Active | US7281075B2 | Program initiation/switching, Software simulation/interpretation/emulation, Virtualization, Partitioned systems

Owner:INTEL CORP

Optimizing System Performance Using Spare Cores in a Virtualized Environment

Status: Active | US20110010709A1 | Multiprogramming arrangements, Software simulation/interpretation/emulation, Virtualization, Processing core

A mechanism for optimizing system performance using spare processing cores in a virtualized environment. When it is detected that a workload partition needs to run on a virtual processor in the virtualized system, the state of the virtual processor is changed to a wait state. A first node comprising memory that is local to the workload partition is determined. A determination is also made as to whether a non-spare processor core in the first node is available to run the workload partition. If no non-spare processor core is available, a free non-spare processor core in a second node is located, and the state of that free non-spare processor core is changed to an inactive state. The state of a spare processor core in the first node is changed to an active state, and the workload partition is dispatched to the spare processor core in the first node for execution.

Owner:IBM CORP
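
The dispatch flow above keeps the number of active cores constant while moving execution next to the partition's memory. The Python sketch below models it under assumptions: `Core`, `dispatch`, and the `active`/`busy` flags are hypothetical, and the initial change of the virtual processor to a wait state is left to the caller.

```python
from dataclasses import dataclass


@dataclass
class Core:
    cid: int
    node: int
    spare: bool
    active: bool  # counts toward the active core budget
    busy: bool = False


def dispatch(home_node, cores):
    """Return the core a workload partition with memory on `home_node` runs on, or None."""
    local = [c for c in cores if c.node == home_node]
    # 1. A free, active non-spare core on the home node: use it directly.
    for c in local:
        if not c.spare and c.active and not c.busy:
            c.busy = True
            return c
    # 2. Otherwise find a free non-spare core on another node to deactivate ...
    donor = next((c for c in cores if c.node != home_node and not c.spare
                  and c.active and not c.busy), None)
    spare = next((c for c in local if c.spare and not c.active), None)
    if donor is None or spare is None:
        return None  # the partition keeps waiting
    donor.active = False
    # 3. ... and activate a local spare core in its place.
    spare.active = True
    spare.busy = True
    return spare
```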

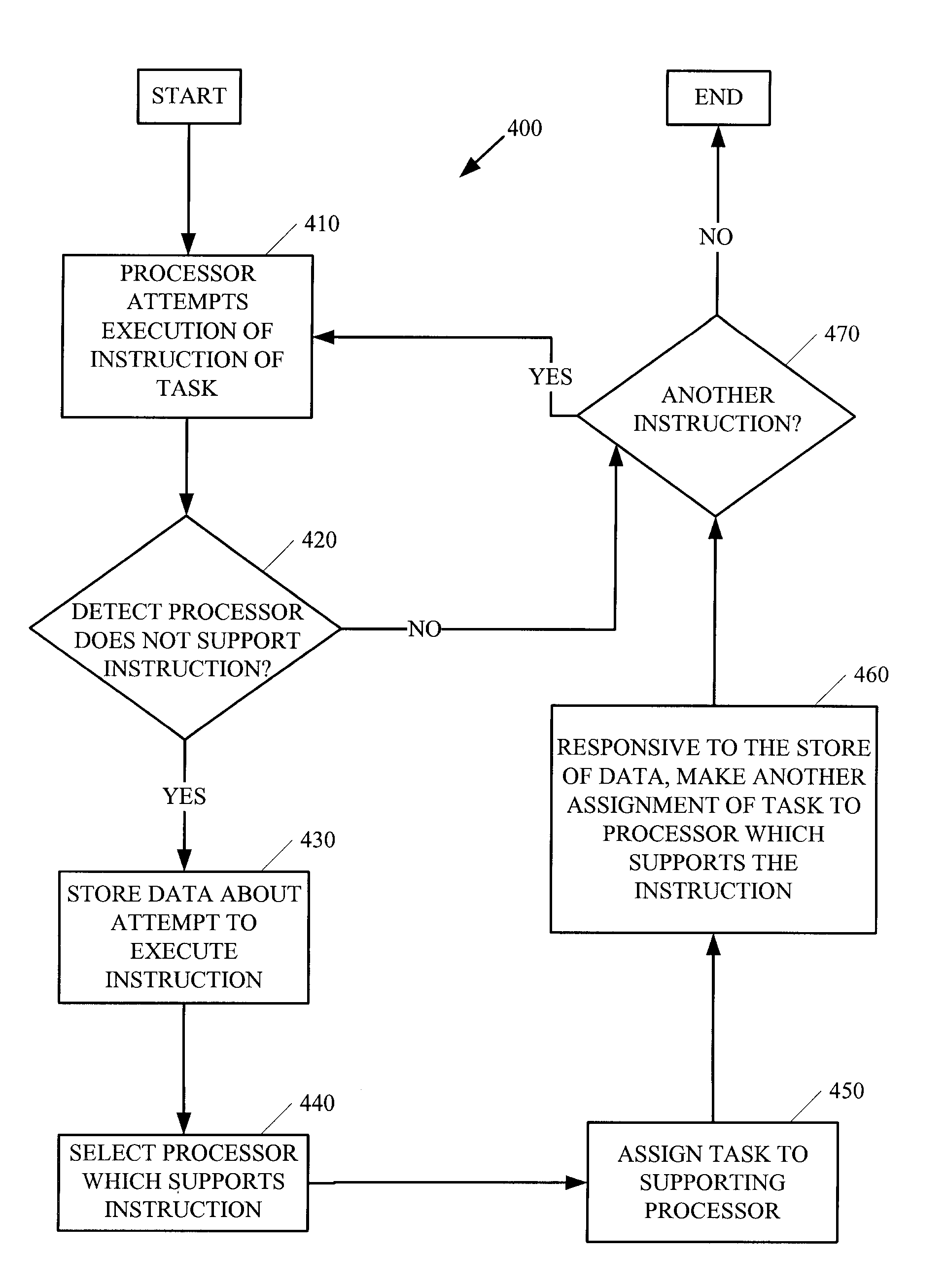

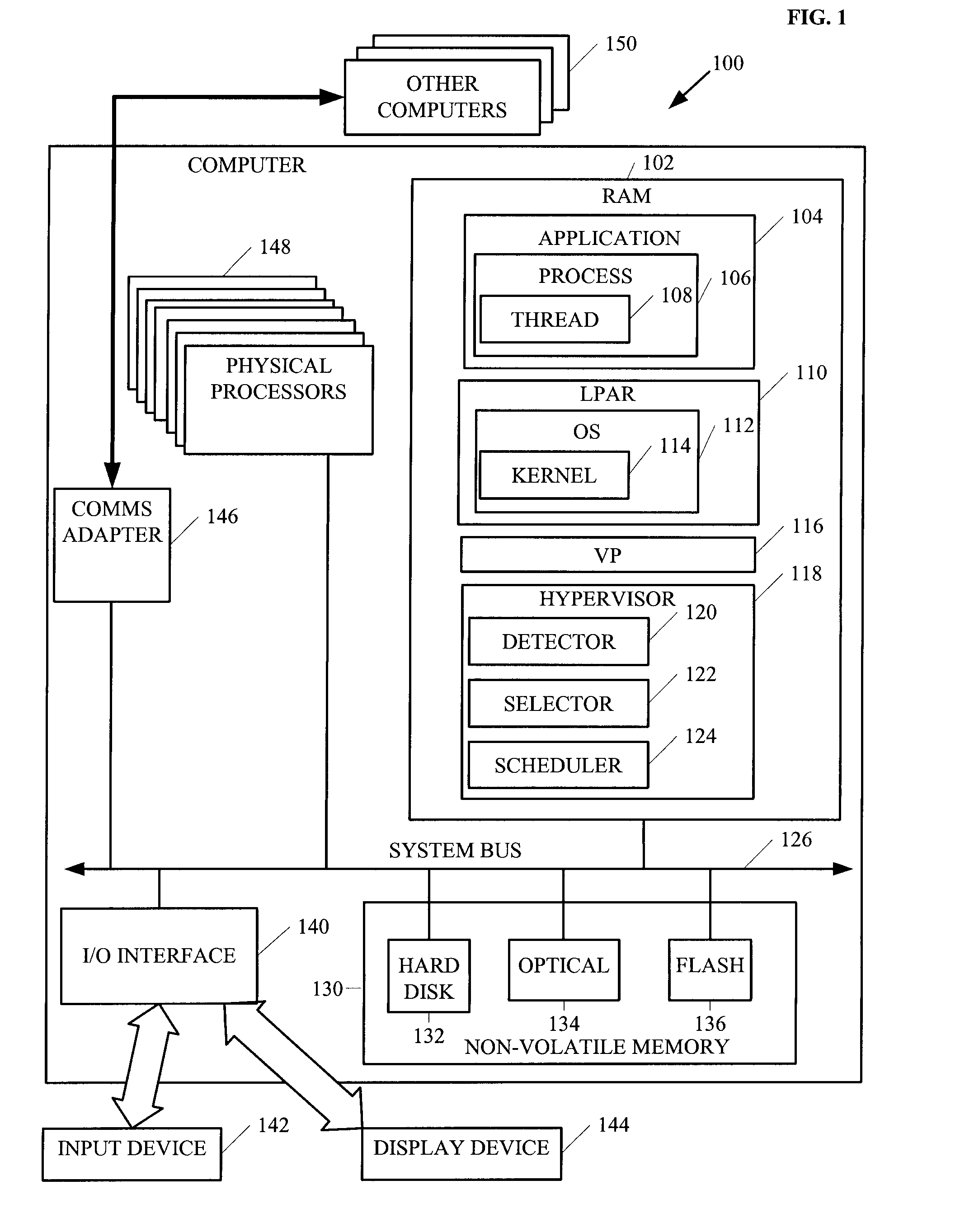

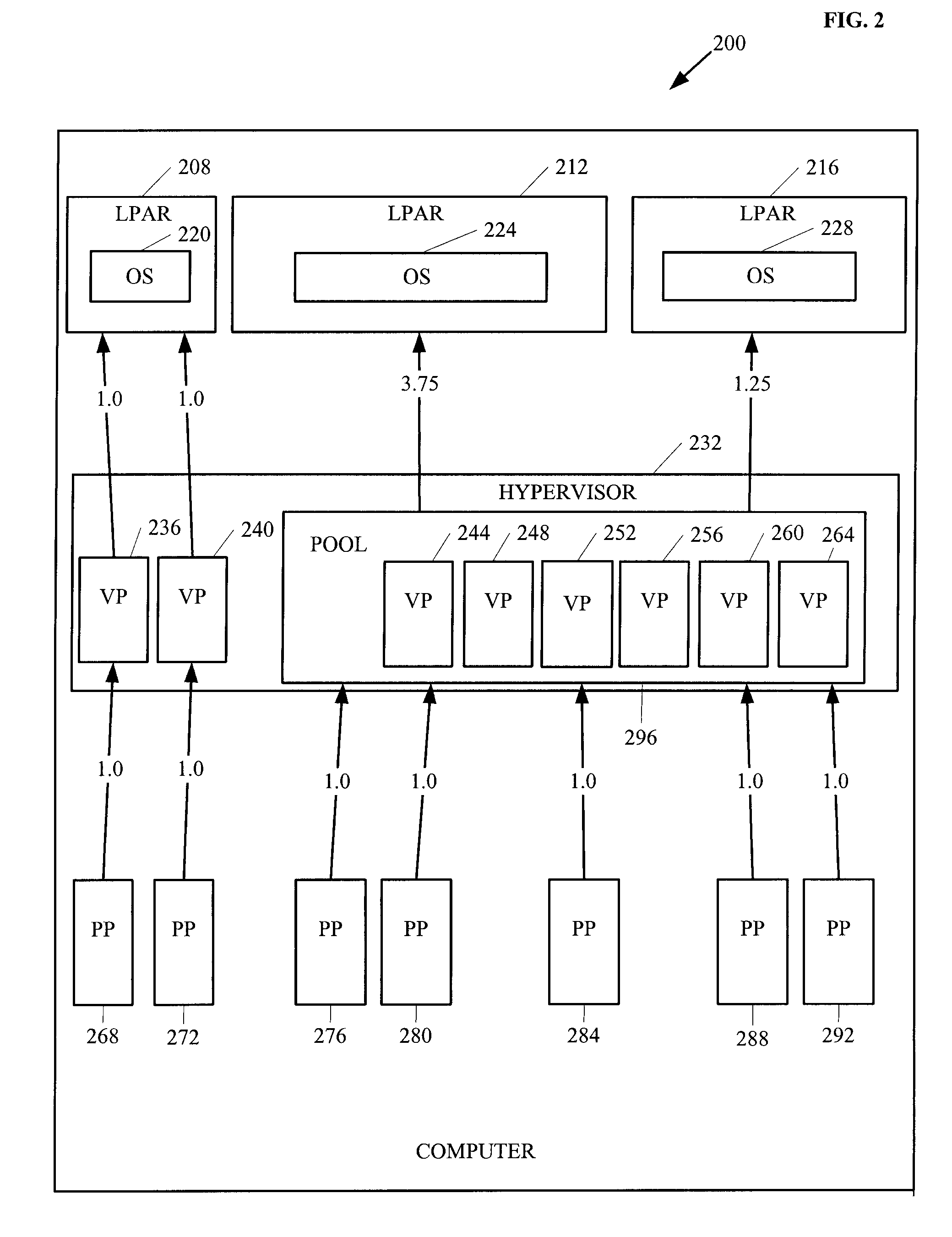

Assigning tasks to processors in heterogeneous multiprocessors

Status: Active | US20090037911A1 | General purpose stored program computer, Multiprogramming arrangements, Physics processing unit, Instruction set

Methods and arrangements of assigning tasks to processors are discussed. Embodiments include transformations, code, state machines or other logic to detect an attempt to execute an instruction of a task on a processor not supporting the instruction (non-supporting processor). The method may involve selecting a processor supporting the instruction (supporting physical processor). In many embodiments, the method may include storing data about the attempt to execute the instruction and, based upon the data, making another assignment of the task to a physical processor supporting the instruction. In some embodiments, the method may include representing the instruction set of a virtual processor as the union of the instruction sets of the physical processors comprising the virtual processor and assigning a task to the virtual processor based upon the representing.

Owner:IBM CORP
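
Two ideas from this abstract can be sketched directly: advertising a virtual processor's instruction set as the union of its physical processors' sets, and using recorded failed attempts to reassign a task to a physical processor that supports everything it needs. The Python below is illustrative only; `virtual_isa`, `reassign_on_fault`, the processor names, and the instruction names are hypothetical.

```python
def virtual_isa(physical_isas):
    """Instruction set of a virtual processor: union of its physical processors' sets."""
    return set().union(*physical_isas.values())


def reassign_on_fault(task, instr, physical_isas, fault_log):
    """Record that `task` faulted on `instr` and pick a physical processor for it.

    fault_log accumulates, per task, every instruction it has faulted on, so the
    chosen processor must support all of them. Returns None if none does.
    """
    fault_log.setdefault(task, set()).add(instr)
    needed = fault_log[task]
    for cpu, isa in sorted(physical_isas.items()):
        if needed <= isa:
            return cpu
    return None
```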

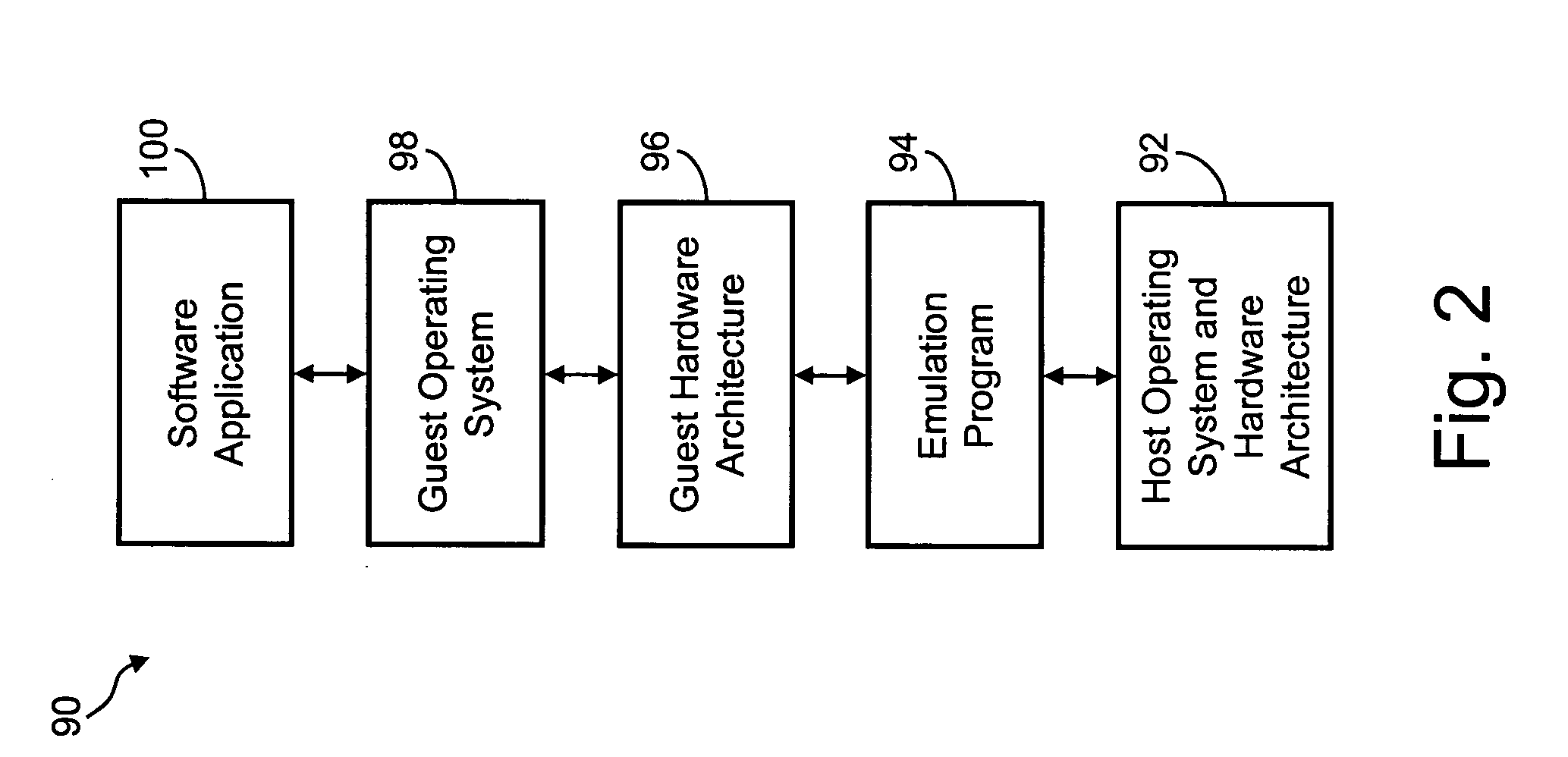

Virtualizing the execution of homogeneous parallel systems on heterogeneous multiprocessor platforms

Status: Inactive | US20080163206A1 | Saving, Closely matched, Energy efficient ICT, Error detection/correction, Virtualization, Physics processing unit

A method of virtual processing includes running a virtual processor (1). When the virtual processor (1) encounters a faulting instruction, the method unmaps the virtual processor (1) from the physical processor (A) and generates a list of the other physical processors that could execute the instruction. It then determines whether one of the physical processors in the list is currently idle and, if so, maps the virtual processor (1) to that idle physical processor (B).

Owner:IBM CORP
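
The unmap, list, and remap steps can be modeled with plain dictionaries and sets. This Python sketch is a hypothetical illustration, not IBM's implementation: `remap_on_fault`, the `supports` table, and the assumption that processor A becomes idle once unmapped are choices made for the example.

```python
def remap_on_fault(vp, instr, mapping, supports, idle):
    """Move virtual processor `vp` to an idle physical processor that can run `instr`.

    mapping: vp -> physical processor; supports: physical processor -> instruction set;
    idle: set of idle physical processors (updated in place).
    """
    current = mapping.pop(vp)  # unmap vp from physical processor A
    idle.add(current)          # assumption: A becomes idle once unmapped
    # List the other physical processors that could execute the instruction.
    candidates = [p for p in sorted(supports) if instr in supports[p] and p != current]
    for p in candidates:
        if p in idle:
            idle.discard(p)
            mapping[vp] = p    # map vp to idle physical processor B
            return p
    return None                # no idle candidate: vp stays unmapped for now
```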

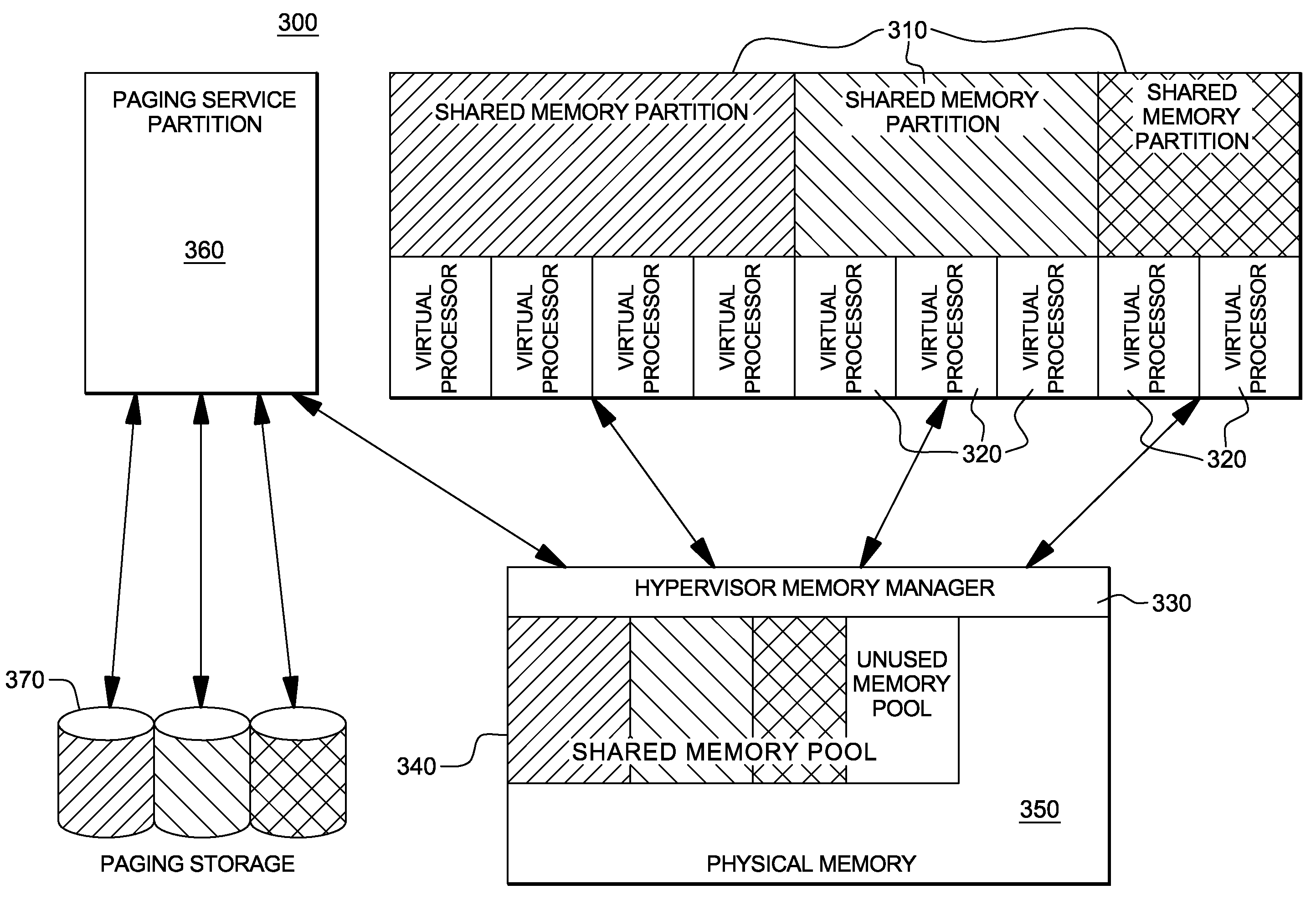

Hypervisor Page Fault Processing in a Shared Memory Partition Data Processing System

Status: Active | US20090307436A1 | Optimize allocation, Memory addressing/allocation/relocation, Multiprogramming arrangements, Virtual Processor, Input/output

Hypervisor page fault processing logic is provided for a shared memory partition data processing system. The logic, responsive to an executing virtual processor of the shared memory partition data processing system encountering a hypervisor page fault, allocates an input / output (I / O) paging request to the virtual processor from an I / O paging request pool and increments an outstanding I / O paging request count for the virtual processor. A determination is then made whether the outstanding I / O paging request count for the virtual processor is at a predefined threshold, and if not, the logic places the virtual processor in a wait state with interrupt wake-up reasons enabled based on the virtual processor's state, otherwise, it places the virtual processor in a wait state with interrupt wake-up reasons disabled.

Owner:IBM CORP
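
The threshold check in this abstract decides whether a faulting virtual processor may be woken by interrupts while its paging I/O is outstanding. The Python sketch below models that decision under assumptions: `VCpu`, `hypervisor_page_fault`, the list used as the request pool, and the default `threshold` are hypothetical. Below the threshold, wake-ups follow the virtual processor's own interrupt state; at the threshold, they are disabled so the virtual processor cannot run and fault again before some I/O completes.

```python
from dataclasses import dataclass


@dataclass
class VCpu:
    outstanding_io: int = 0
    state: str = "running"
    wake_on_interrupt: bool = False
    interrupts_enabled: bool = True  # the vCPU's own interrupt-enable state


def hypervisor_page_fault(vcpu, pool, threshold=4):
    """Handle a hypervisor page fault taken by `vcpu` (threshold is hypothetical)."""
    request = pool.pop()  # allocate an I/O paging request from the pool
    vcpu.outstanding_io += 1
    if vcpu.outstanding_io < threshold:
        # Not at the threshold: wait, with wake-up reasons based on the vCPU's state.
        vcpu.wake_on_interrupt = vcpu.interrupts_enabled
    else:
        # At the threshold: wait with interrupt wake-up reasons disabled.
        vcpu.wake_on_interrupt = False
    vcpu.state = "wait"
    return request
```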

© 2025 PatSnap. All rights reserved.