1180 results about "Low resource" patented technology

Answer: A computer's main resources are processing capacity, disk storage, and memory. A "low on resources" message usually means the computer is running out of memory, and the most direct way to prevent it is to install more RAM.

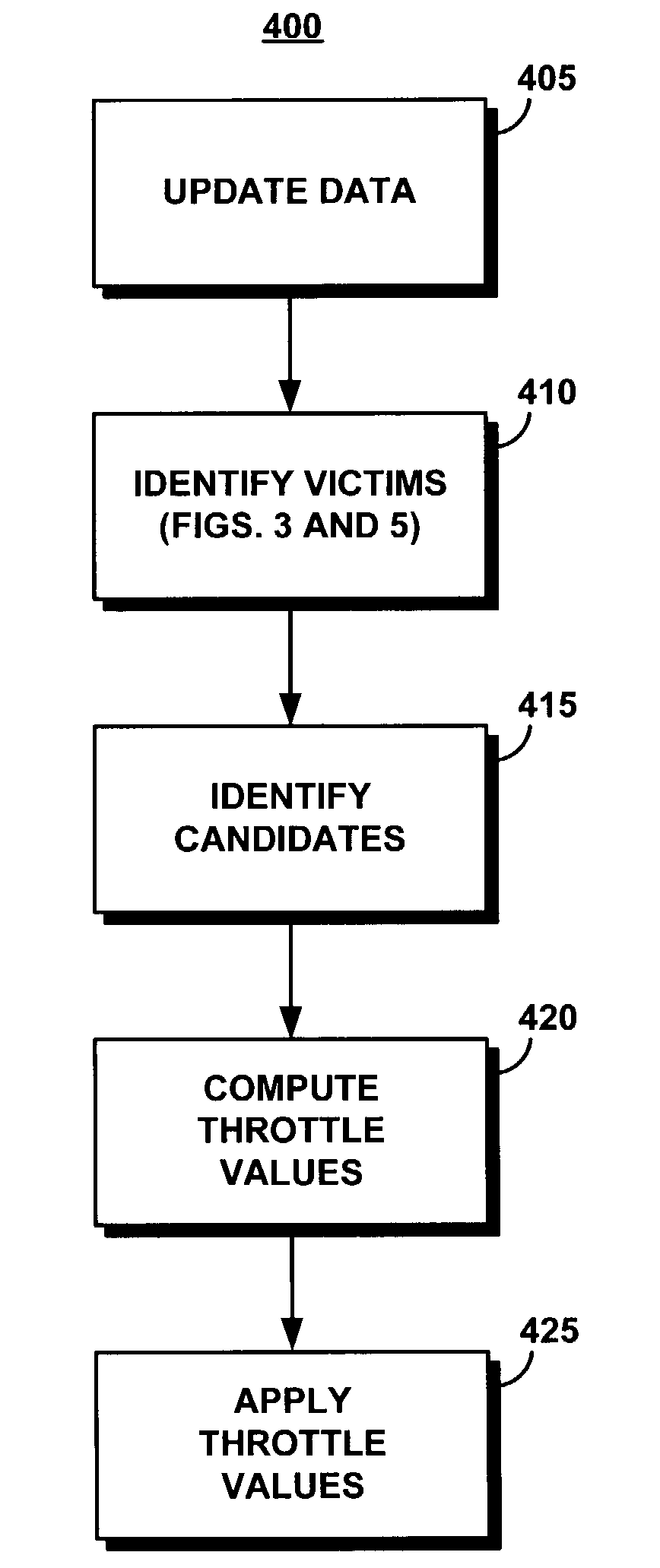

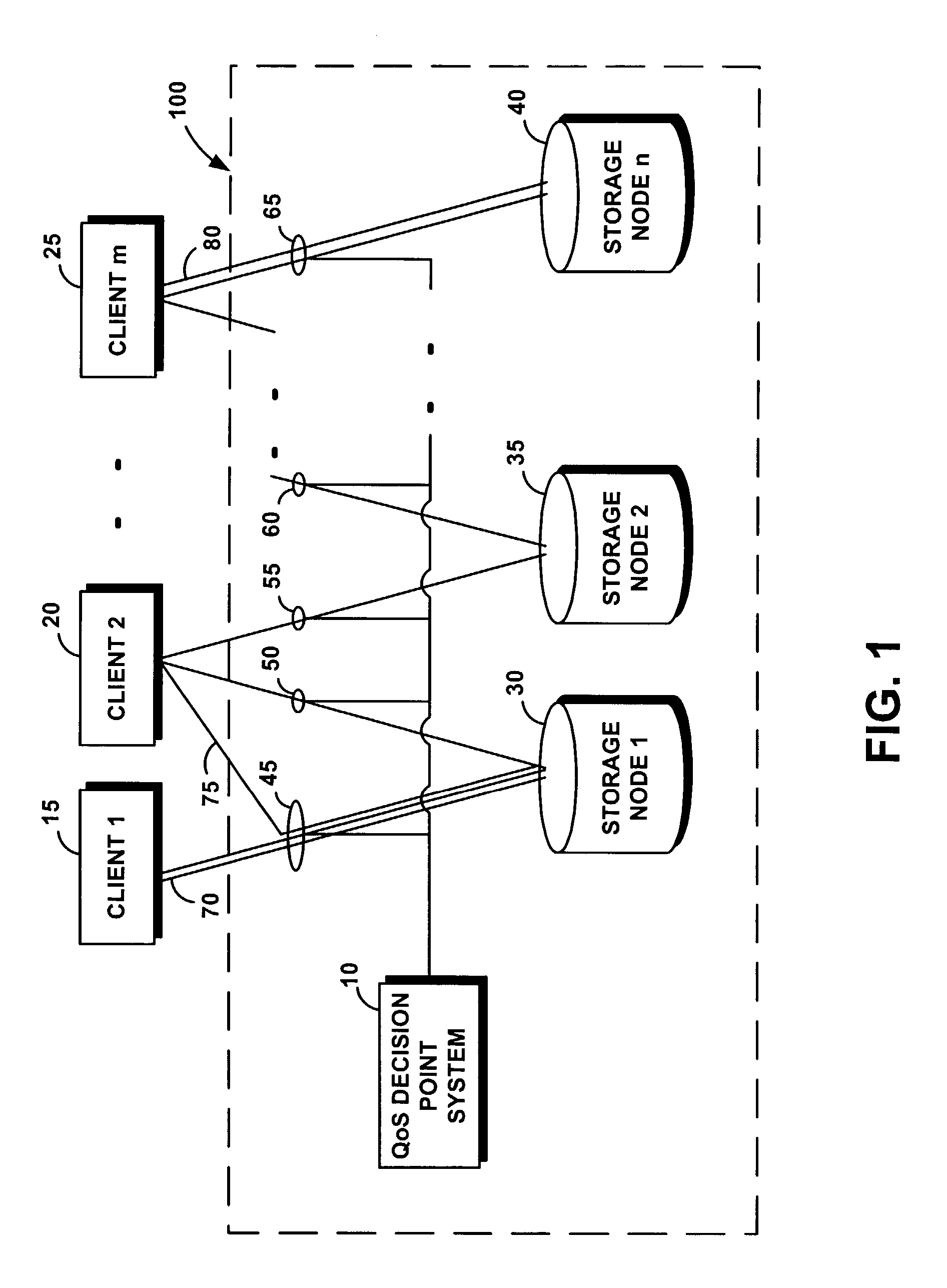

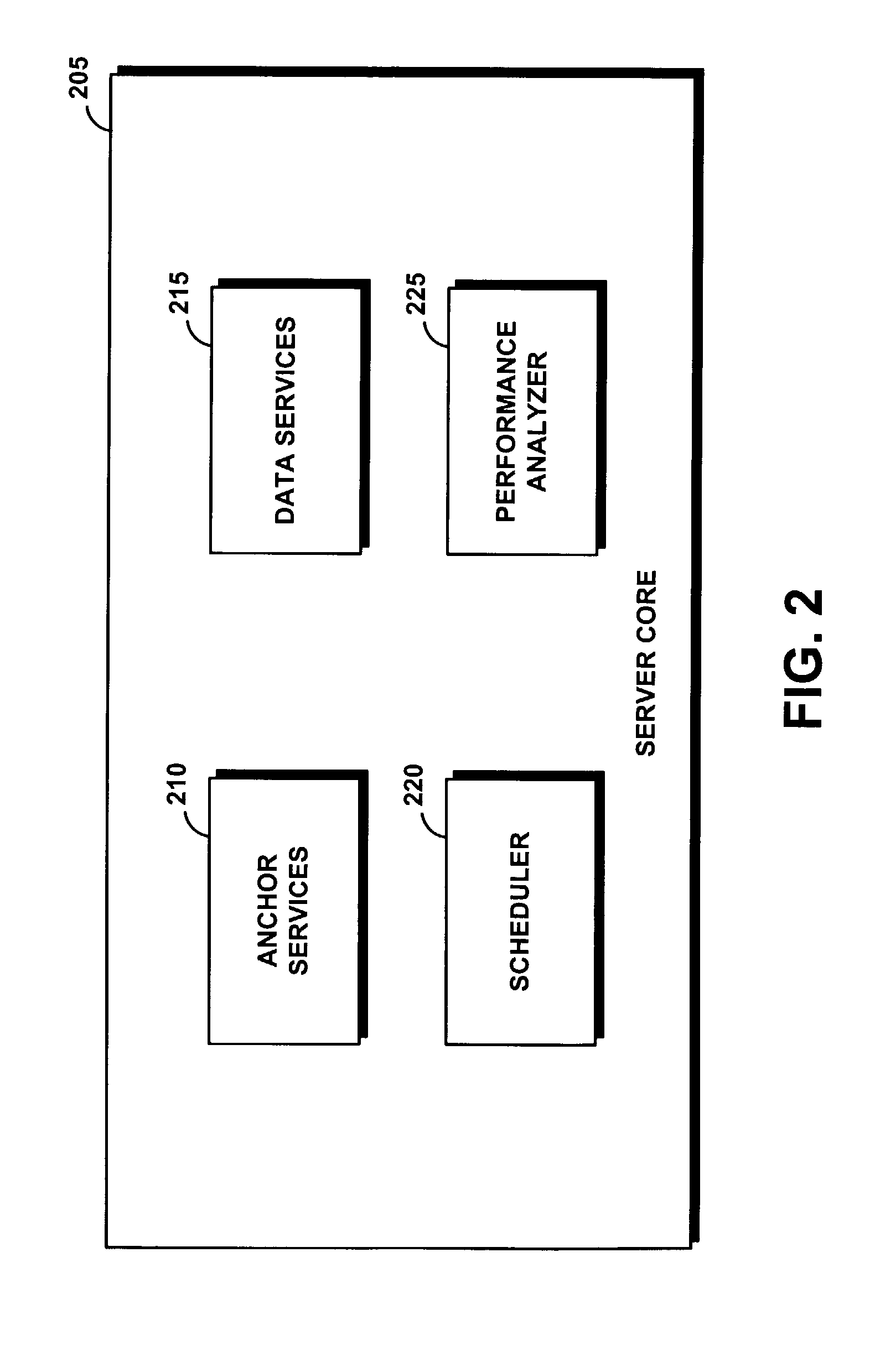

System and method for utilizing informed throttling to guarantee quality of service to I/O streams

Inactive | US7519725B2 | Good decision; Accurate I/O throttling decision; Error prevention; Frequency-division multiplex details; Quality of service; Workload

The present system and associated method address the problem of providing statistical performance guarantees for applications that generate streams of read/write accesses (I/Os) on a shared, potentially distributed storage system with finite resources, by initiating throttling whenever an I/O stream is receiving insufficient resources. The severity of throttling is determined dynamically and adaptively at the storage subsystem level. Global, real-time knowledge about I/O streams is used to apply controls that guarantee quality of service to all I/O streams; this provides dynamic control, rather than a reservation of bandwidth or other resources that is made when an I/O stream is created and then always applied to that stream. The system throttles at control points to distribute resources that are not co-located with the control point. A competition model, together with service time estimators and estimated workload characteristics, is used to determine which I/Os need to be throttled and how much. A decision point issues throttling commands to enforcement points, selecting which streams need to be throttled and to what extent.

Owner:IBM CORP
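
As a rough illustration of what a decision point might compute, the Python sketch below reclaims capacity from streams running above their targets whenever some stream is below its target. It is a minimal sketch under stated assumptions: the `Stream` fields, the `throttle_decisions` function, and the proportional reclaim rule are hypothetical stand-ins, not the patented competition model or its service time estimators.

```python
from dataclasses import dataclass


@dataclass
class Stream:
    name: str
    target_iops: float    # hypothetical QoS target for the stream
    observed_iops: float  # throughput currently measured for the stream


def throttle_decisions(streams: list[Stream]) -> dict[str, float]:
    """Return a rate cap (IOPS) for every stream the decision point throttles.

    Hypothetical rule: if any stream is below its target, streams above their
    targets give back capacity in proportion to their surplus.
    """
    deficit = sum(max(s.target_iops - s.observed_iops, 0.0) for s in streams)
    if deficit == 0.0:
        return {}  # every stream meets its target: no throttling needed
    over = [s for s in streams if s.observed_iops > s.target_iops]
    total_surplus = sum(s.observed_iops - s.target_iops for s in over)
    caps: dict[str, float] = {}
    for s in over:
        surplus = s.observed_iops - s.target_iops
        # Reclaim a proportional share of the deficit, never cutting the
        # stream below its own target.
        reclaim = min(surplus, deficit * surplus / total_surplus)
        caps[s.name] = s.observed_iops - reclaim
    return caps
```

Each returned cap would be handed to the enforcement point for that stream; a live controller would recompute the caps from fresh measurements at every control interval.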

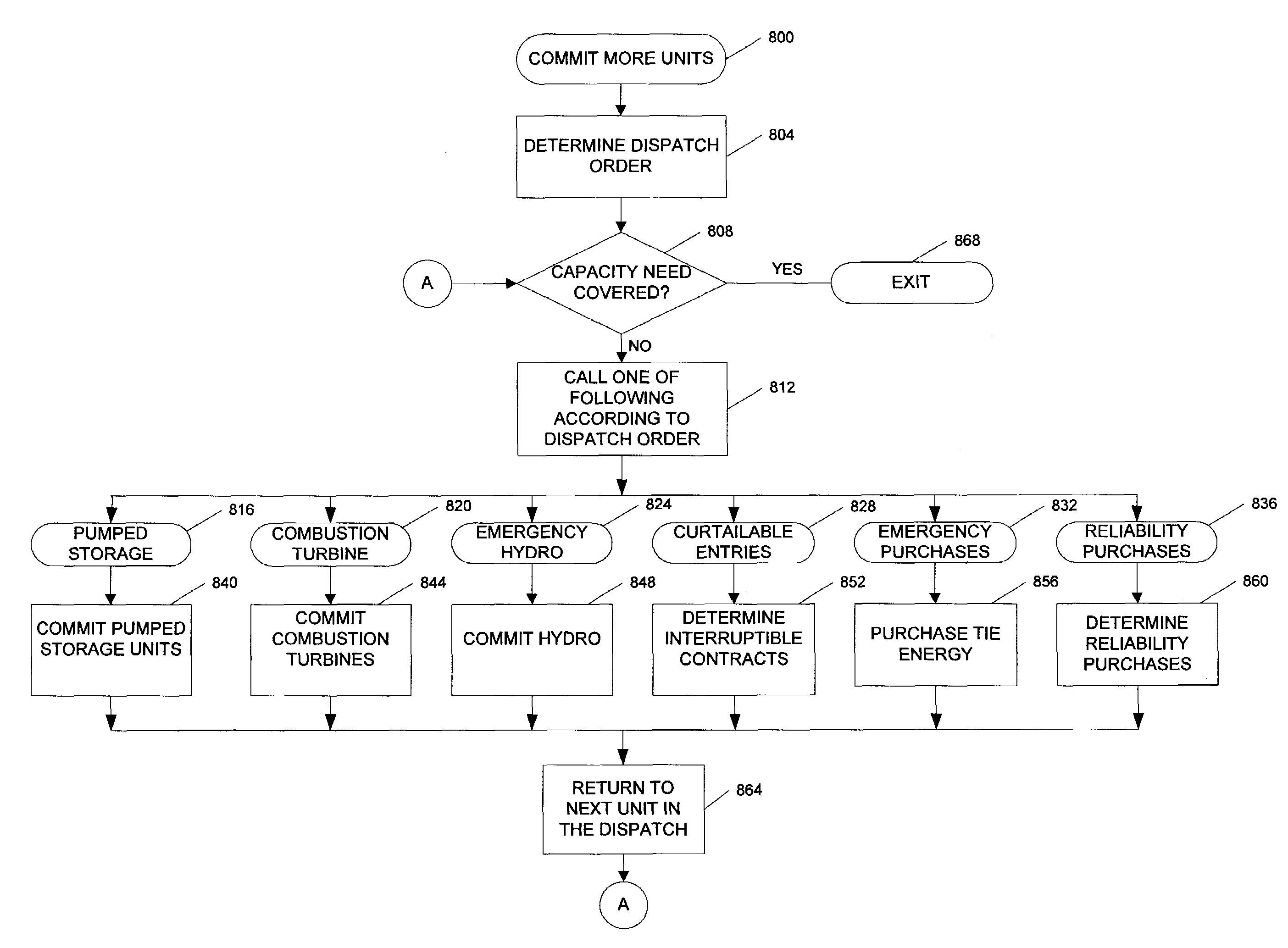

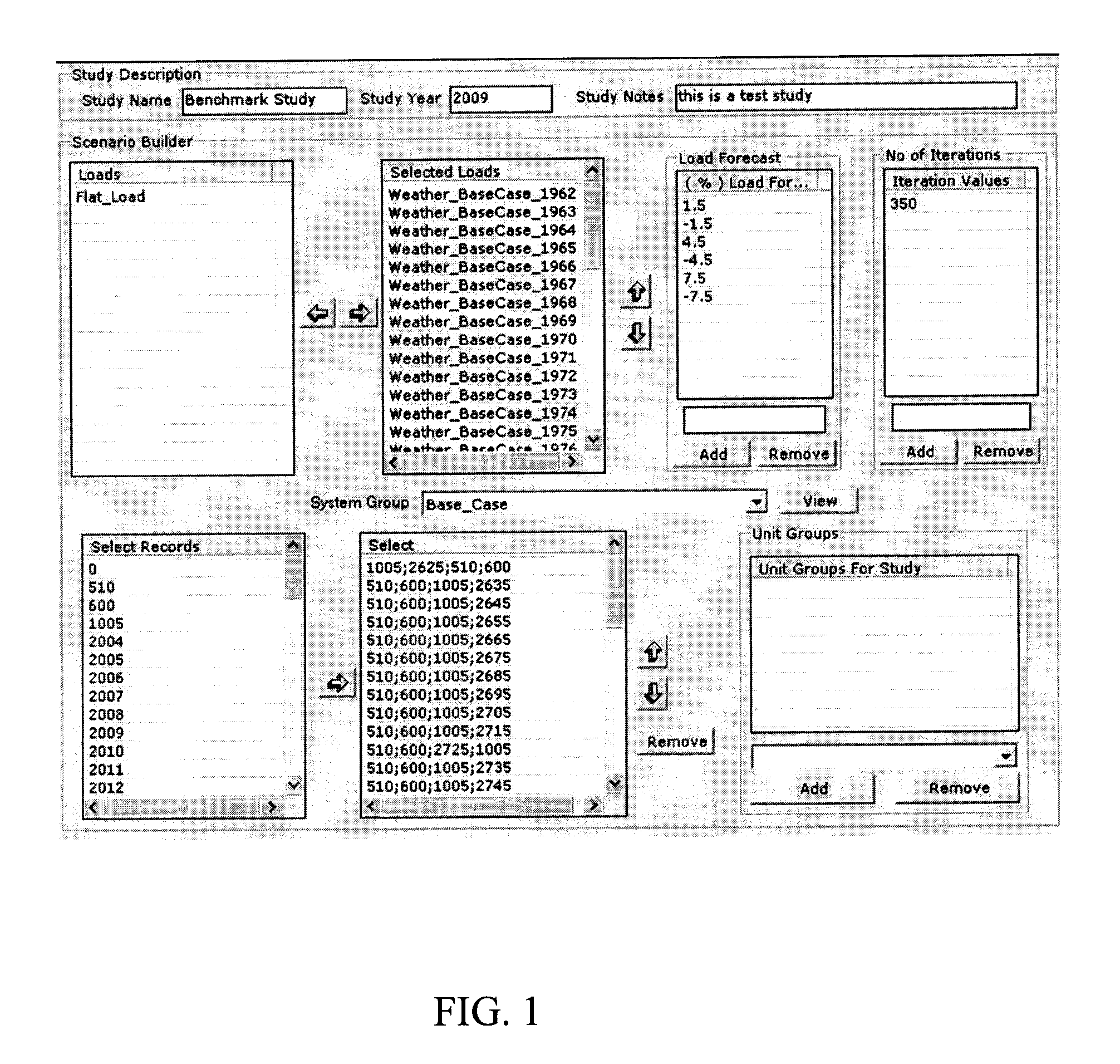

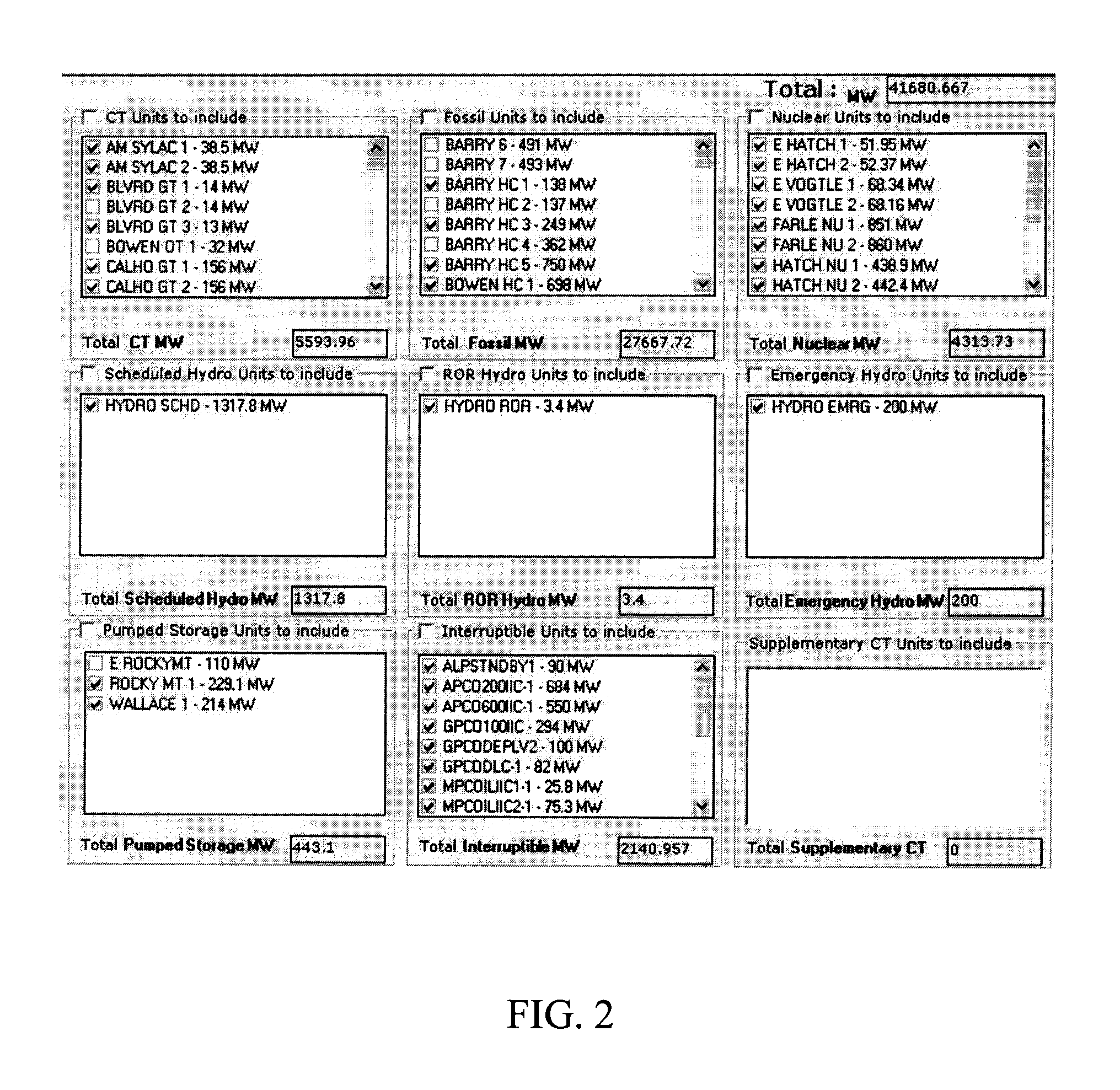

System and method for determining expected unserved energy to quantify generation reliability risks

A method, system and program product for quantifying a risk of an expected unserved energy in an energy generation system using a digital simulation. An energy load demand forecast is generated based at least in part on a weather year model. A plurality of energy generation resources are committed to meet the energy load demand. An operating status is determined for each committed energy generation resource in the energy generation system. A determination is made as to whether or not the committed resources are sufficient to meet the energy load demand. A dispatch order for a plurality of additional energy resources is selected if the committed resources are not sufficient to meet the energy load demand. Additional resources are committed based on the selected dispatch order until the energy load demand is met. The expected unserved energy is determined and an equivalent amount of energy load demand is shed based at least in part on an expected duration of unserved energy and a customer class grouping. An associated cost for the expected unserved energy is also determined.

Owner:SOUTHERN COMPANY SERVICES
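
The simulation loop described above can be sketched as a small Monte Carlo routine. This is an illustrative sketch, not the patented product: the `Unit` class, the per-hour `outage_rate` used to sample each unit's operating status, and the list of hourly demand values standing in for the weather-year load forecast are all assumptions.

```python
import random
from dataclasses import dataclass


@dataclass
class Unit:
    name: str
    capacity_mw: float
    outage_rate: float = 0.0  # hypothetical forced-outage probability per hour


def unserved_mw(demand_mw: float, committed: list[Unit], additional: list[Unit],
                rng: random.Random) -> float:
    """Shortfall for one hour after committing extra units in dispatch order."""
    # Sample the operating status of each committed unit for this hour.
    supply = sum(u.capacity_mw for u in committed if rng.random() >= u.outage_rate)
    for unit in additional:  # list order is the selected dispatch order
        if supply >= demand_mw:
            break
        if rng.random() >= unit.outage_rate:
            supply += unit.capacity_mw
    return max(demand_mw - supply, 0.0)


def expected_unserved_energy(hourly_demand_mw: list[float], committed: list[Unit],
                             additional: list[Unit], trials: int = 1000,
                             seed: int = 0) -> float:
    """Monte Carlo estimate of expected unserved energy (MWh) over the horizon."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        total += sum(unserved_mw(d, committed, additional, rng) for d in hourly_demand_mw)
    return total / trials
```

An associated cost could then be estimated by multiplying the result by a value of lost load per MWh, possibly different for each customer class; the abstract does not give those values.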

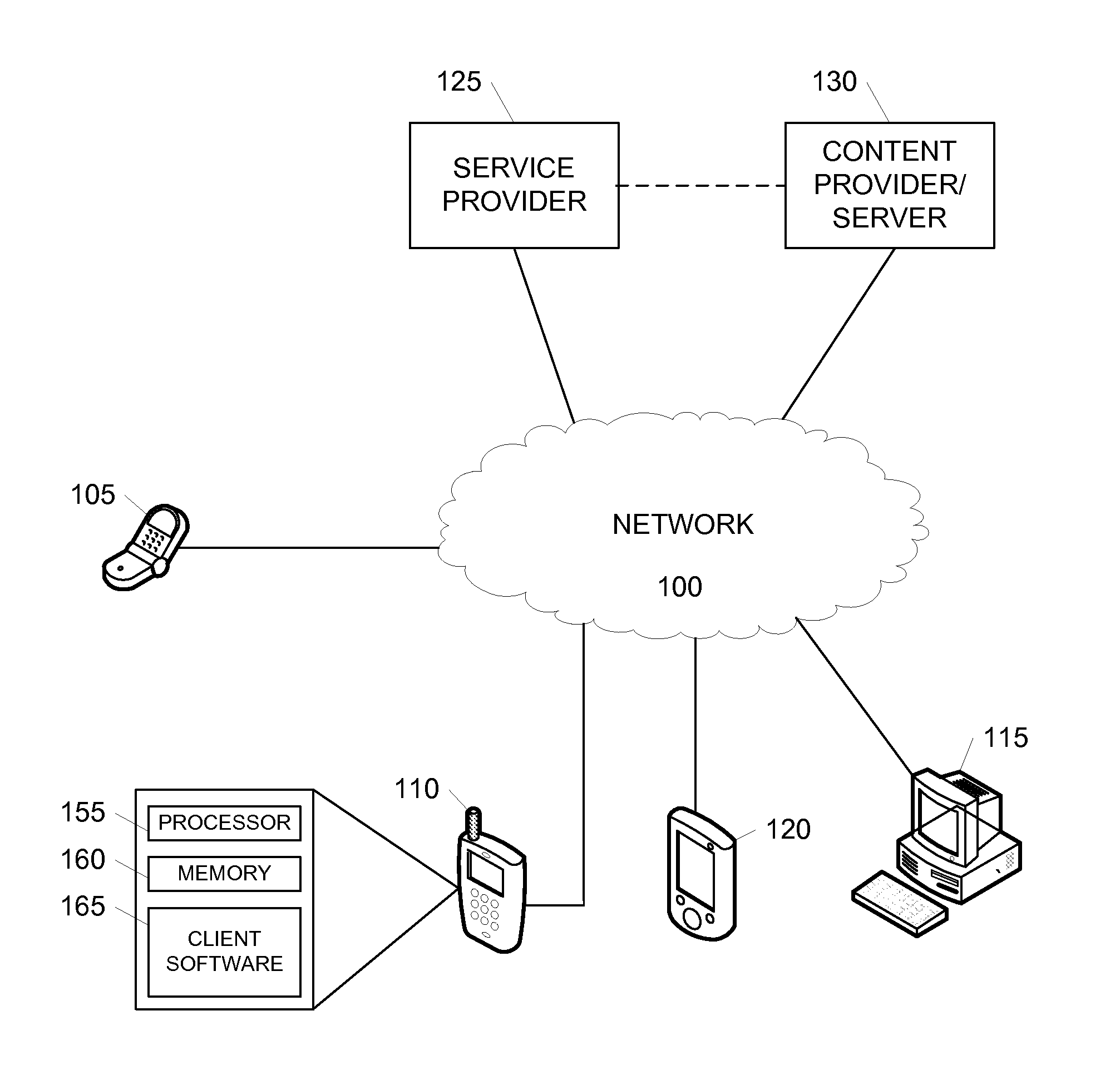

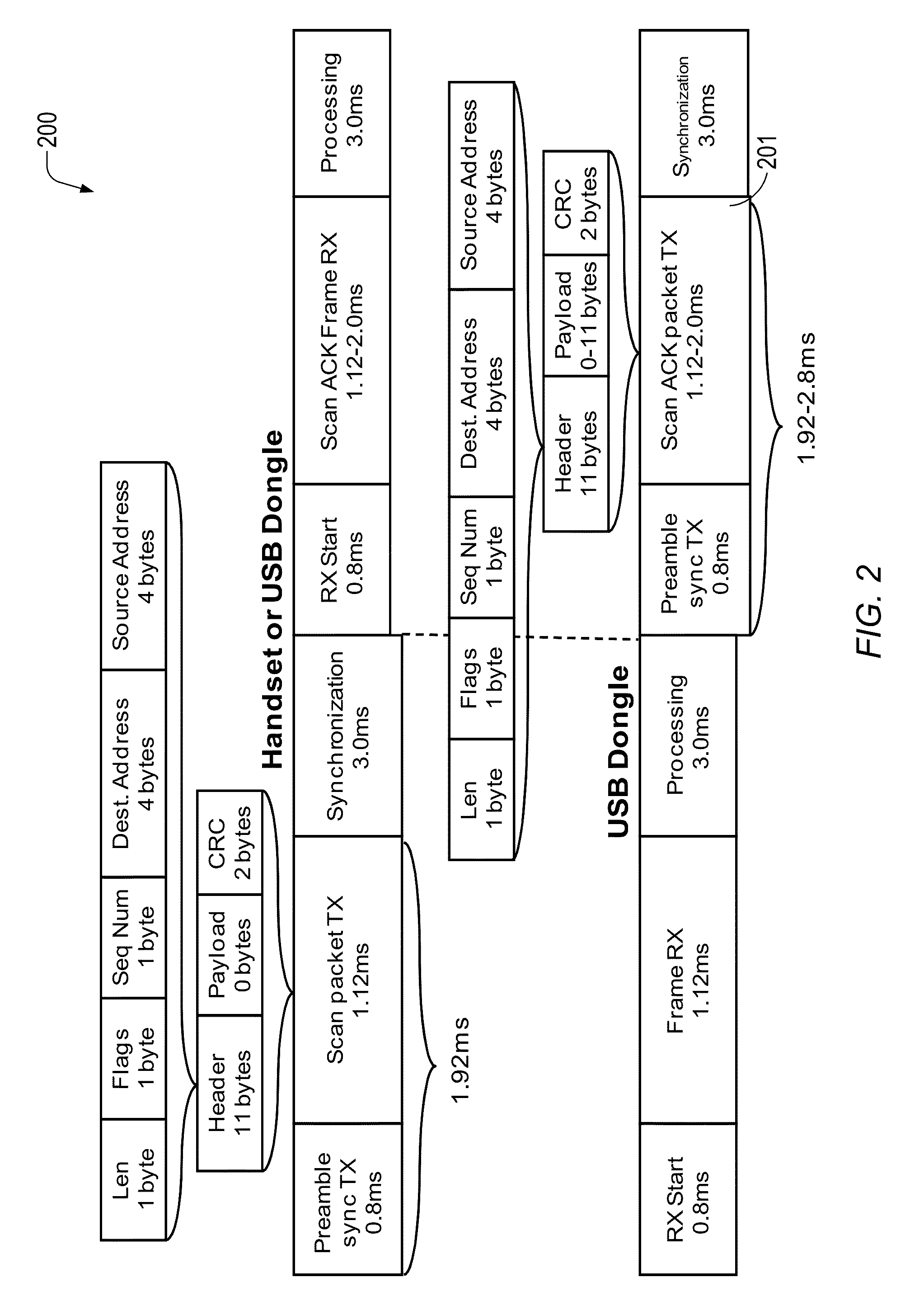

Optimized Polling in Low Resource Devices

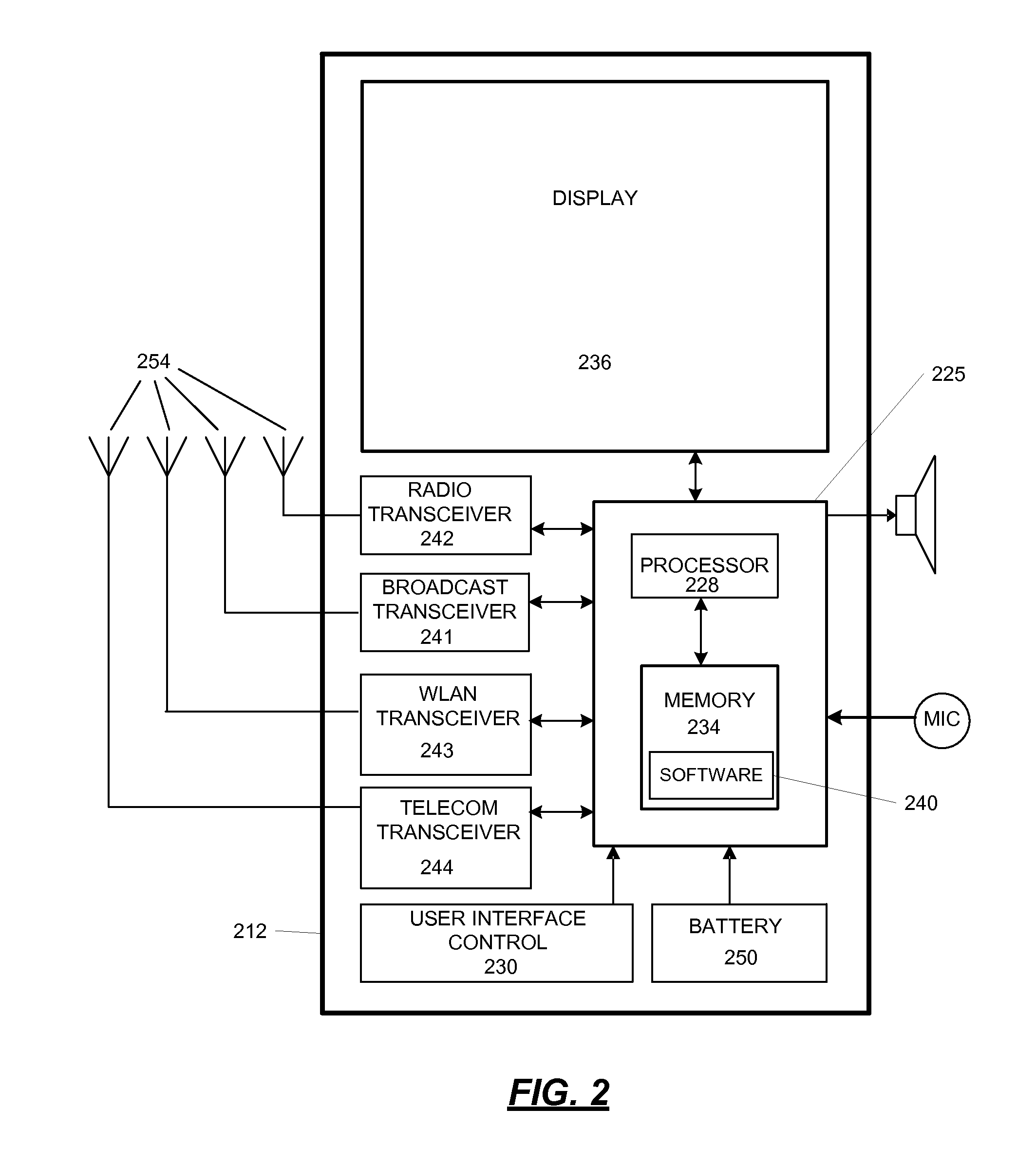

Inactive | US20110208810A1 | Efficient management; Power management; Multiple digital computer combinations; Multiplexing; Traffic capacity

Methods and systems for optimizing server polling by a mobile client are described, allowing mobile terminals to conserve battery life by using resources such as the terminal's processor and transceiver more efficiently. A broker system may be used to minimize the wireless communication traffic used for polling: a broker stub intercepts server polling messages at the client, multiplexes the server requests together, and forwards the multiplexed message to a broker skeleton, which de-multiplexes the messages and forwards them as appropriate. Polling may also be adapted dynamically based on user behavior, or a server guard may monitor changes to data and notify a client to poll its server when the guard detects new or updated data on that server for that client.

Owner:NOKIA CORP
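
The broker stub/skeleton exchange can be sketched in a few lines: many poll requests cross the wireless link as one multiplexed message and are fanned out on the network side. The JSON wire format, the function names, and the `send` callback standing in for the real server connection are assumptions for illustration only.

```python
import json
from typing import Callable

Request = dict
Response = dict


def stub_multiplex(polls: dict[str, Request]) -> str:
    """Client-side broker stub: bundle poll requests for several servers."""
    return json.dumps(polls, sort_keys=True)


def skeleton_handle(message: str, send: Callable[[str, Request], Response]) -> str:
    """Network-side broker skeleton: de-multiplex, forward each poll, re-bundle replies."""
    polls = json.loads(message)
    replies = {server: send(server, request) for server, request in polls.items()}
    return json.dumps(replies, sort_keys=True)


def stub_demultiplex(message: str) -> dict[str, Response]:
    """Client-side broker stub: split the bundled reply back per server."""
    return json.loads(message)
```

In this arrangement only one request and one reply cross the radio link per polling round, however many servers the client polls.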

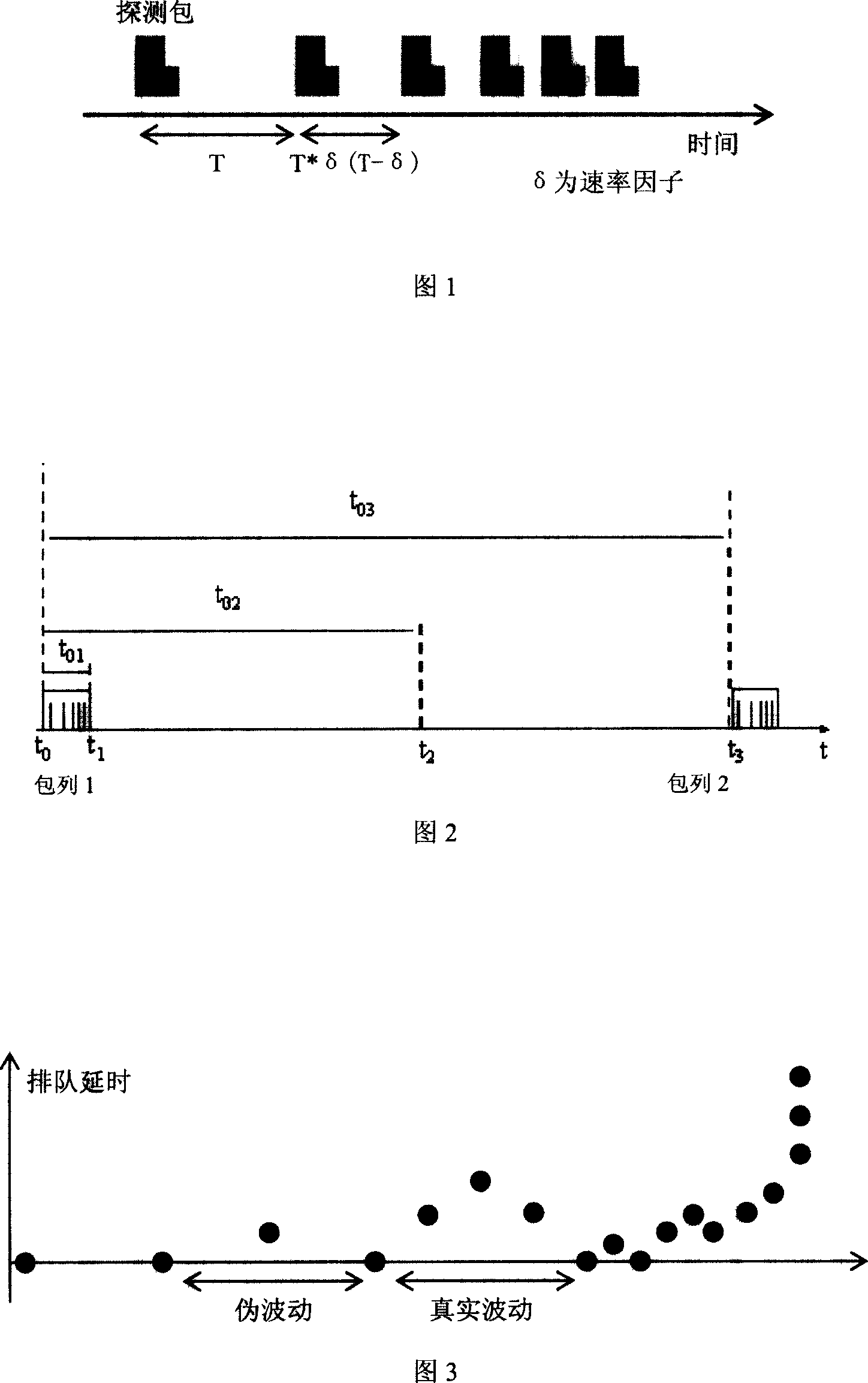

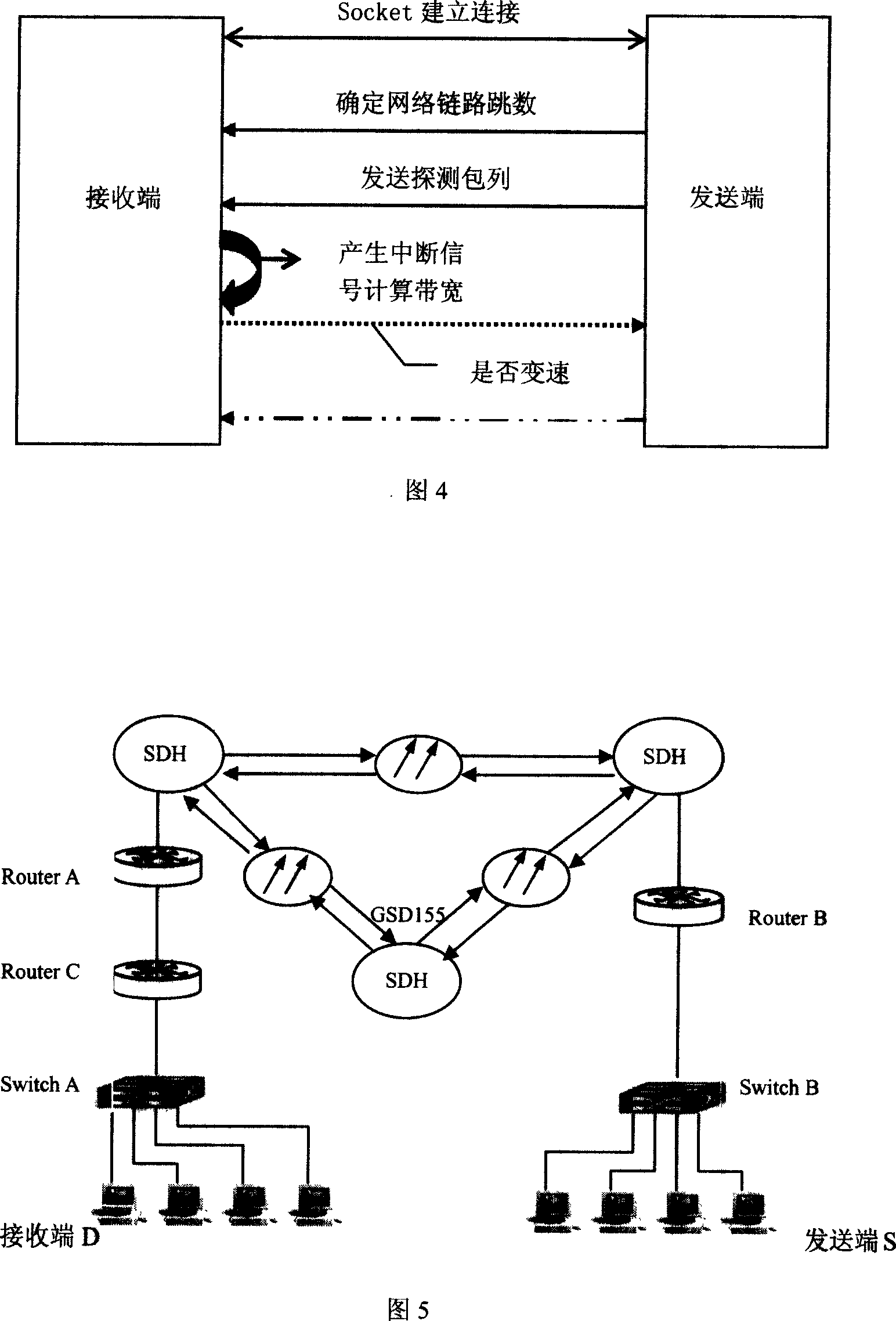

End-to-end low available bandwidth measuring method

Through cooperation between the sending end and the receiving end, the disclosed method performs the measurement. The sending end sends measurement packets at multiple rates, and the receiving end receives them and computes statistics to analyze the bandwidth condition. The method uses a fast self-congestion algorithm for the probe sequence, a pseudo-fluctuation filtering algorithm, a fast feedback algorithm with a self-adaptive detection threshold, and a self-adaptive fast smoothing algorithm for the detected values, among others. Compared with existing tools for measuring available bandwidth, the method not only measures high available bandwidth well but also measures low available bandwidth more accurately and quickly. Its advantages are low consumption of network bandwidth and little influence on the network path being measured.

Owner:SOUTHWEST UNIV OF SCI & TECH

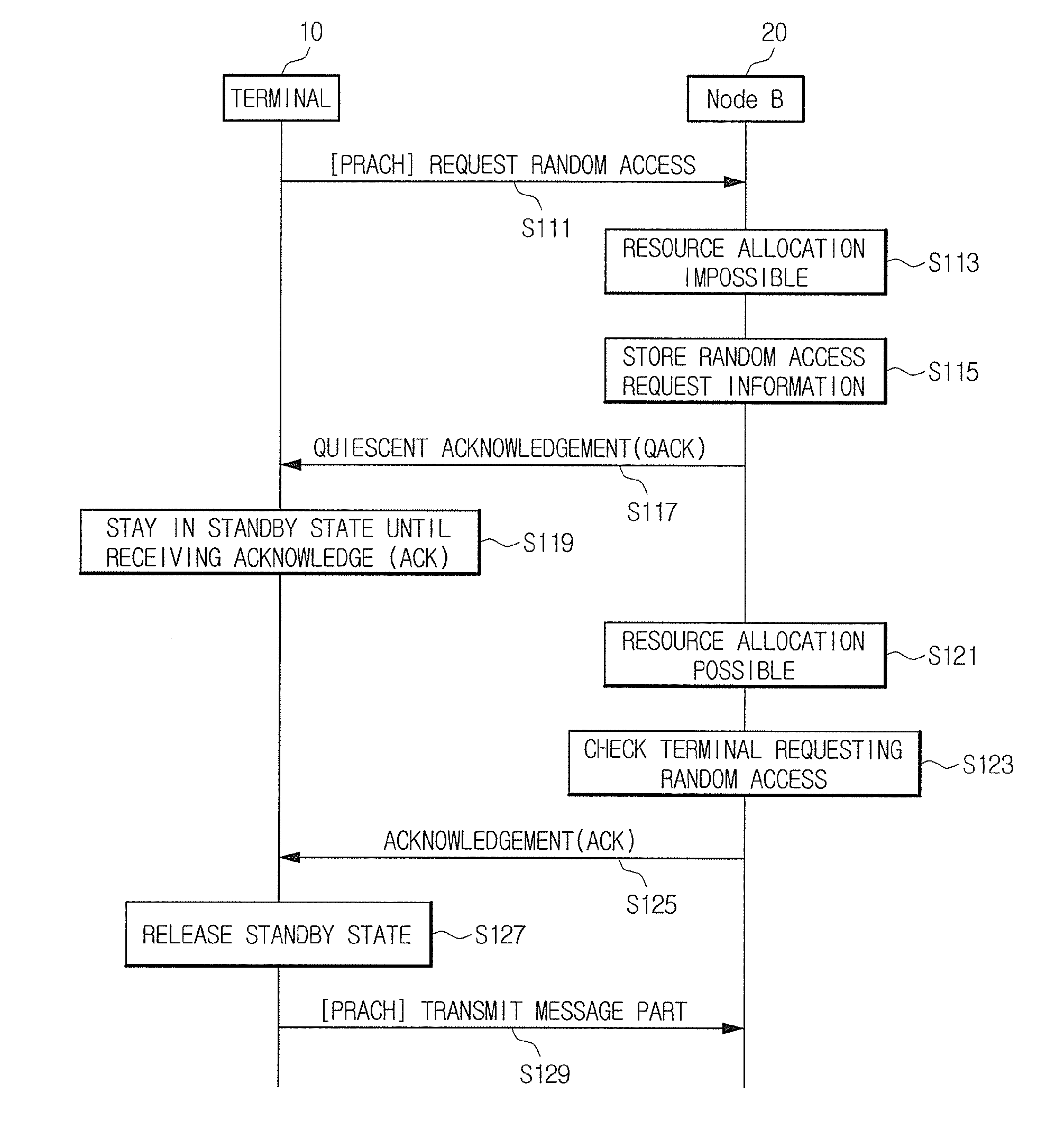

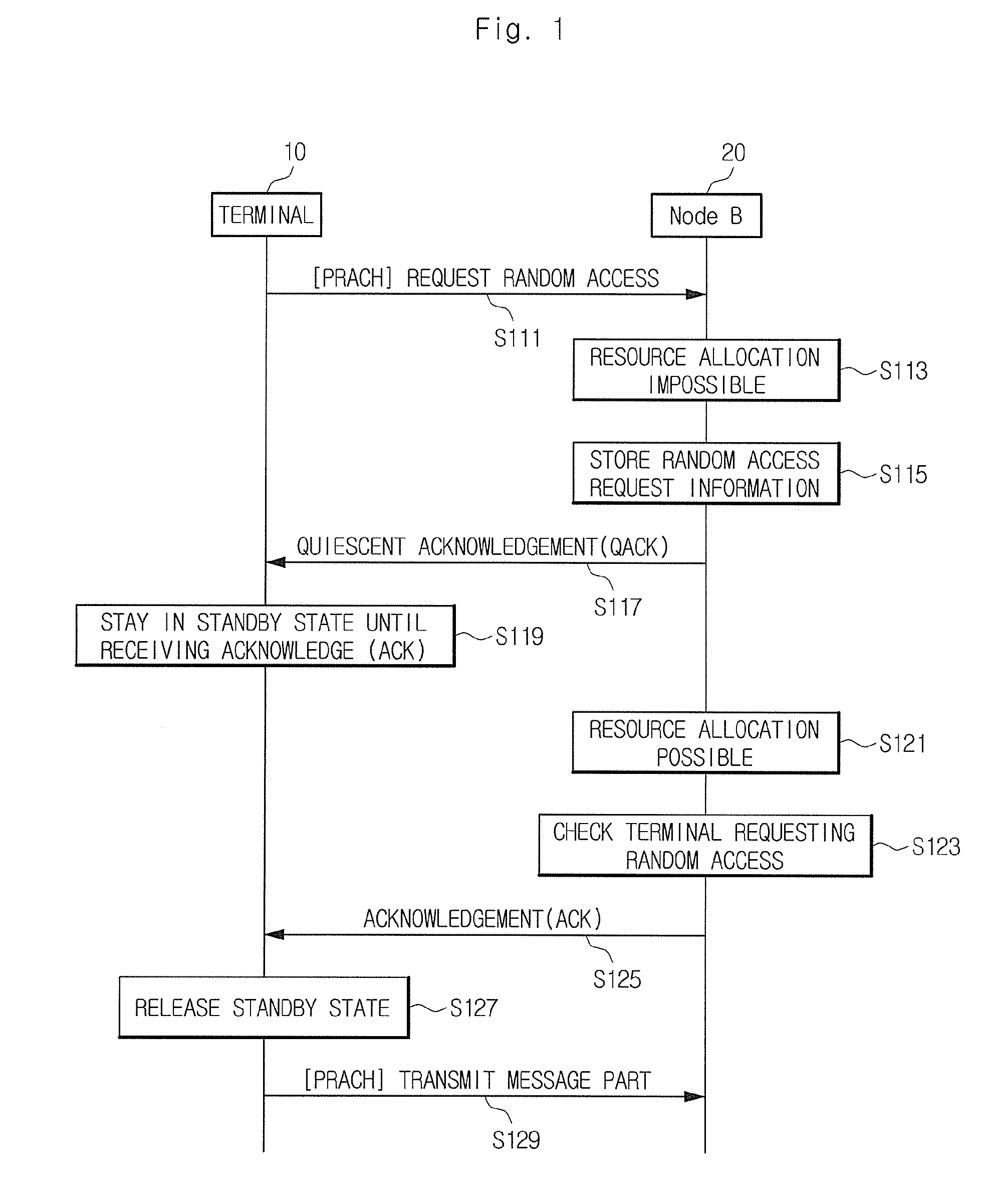

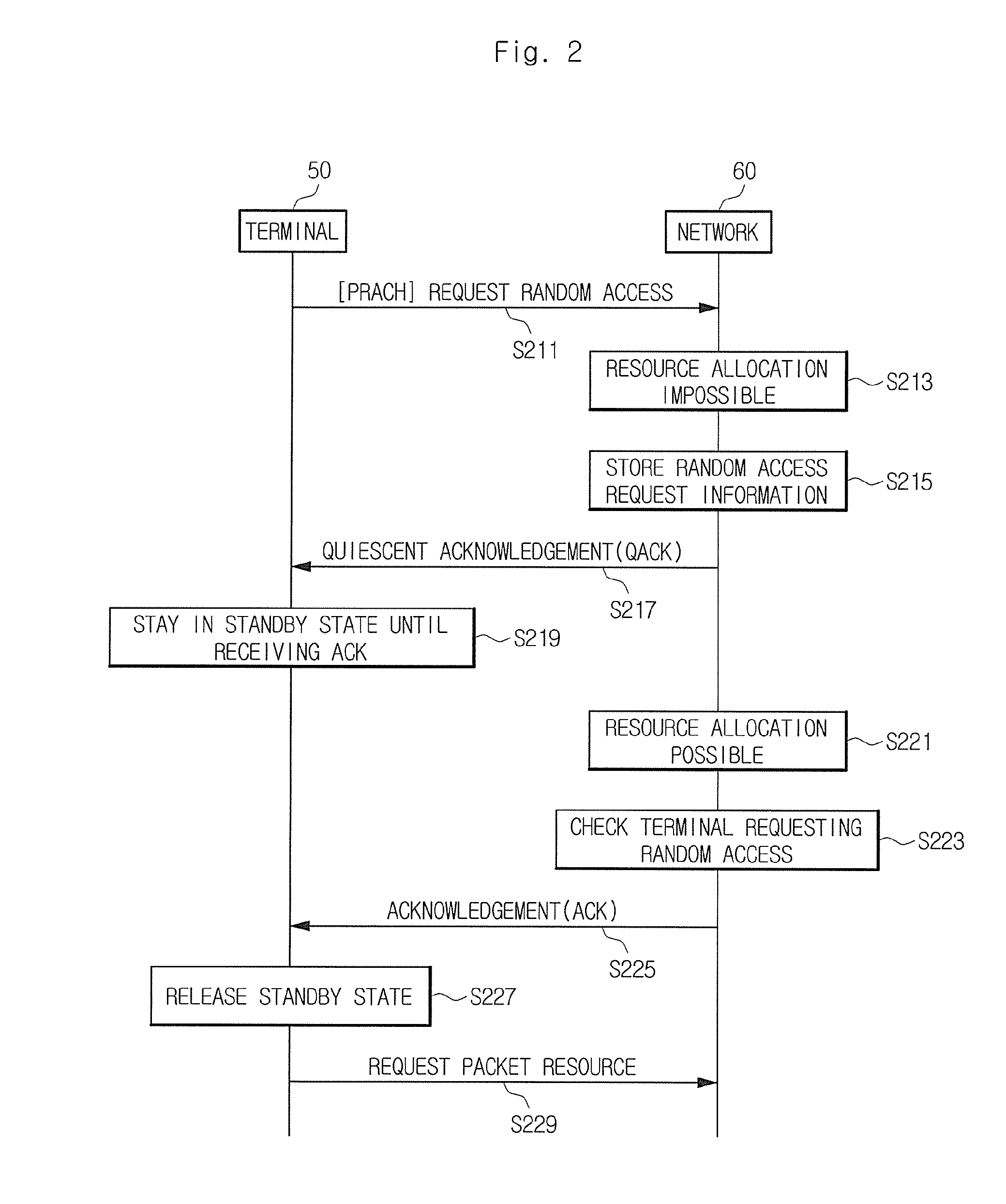

Method for random access process in mobile communication system

Inactive | US20100214984A1 | Avoid random access; Energy efficient ICT; Error prevention/detection by using return channel; Telecommunications; Mobile communication systems

The present invention relates to a method for a random access process in a mobile communication system. According to the exemplary embodiments, when a mobile terminal requests random access to the network and the network cannot promptly allocate a resource for that random access because resources are insufficient, the mobile terminal is kept in a standby state instead of repeatedly requesting random access. This reduces the uplink signal interference caused by repeated random access requests, reduces contention between mobile terminals for access to the mobile communication system, and reduces the power consumption of the mobile terminal.

Owner:PANTECH CO LTD

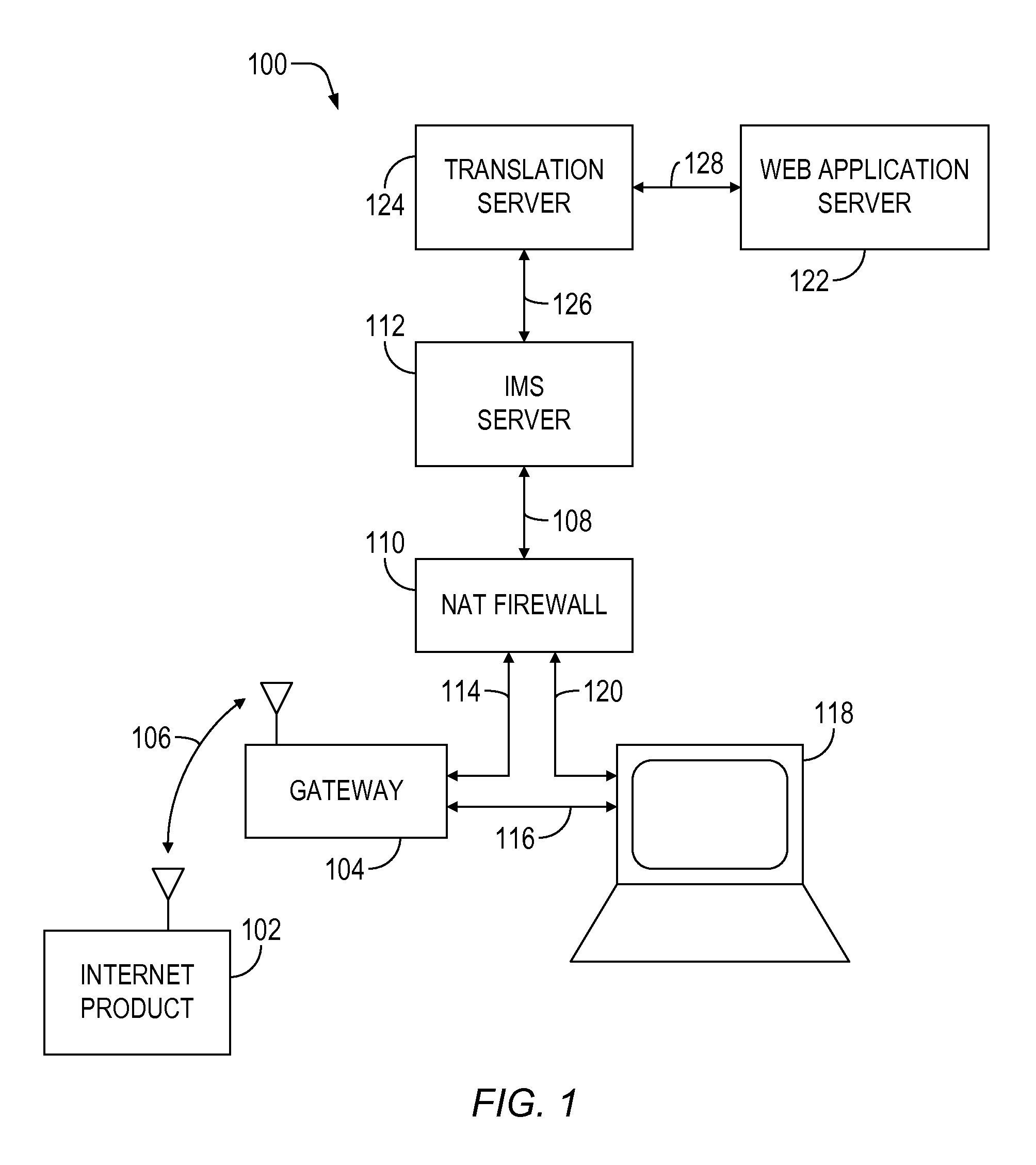

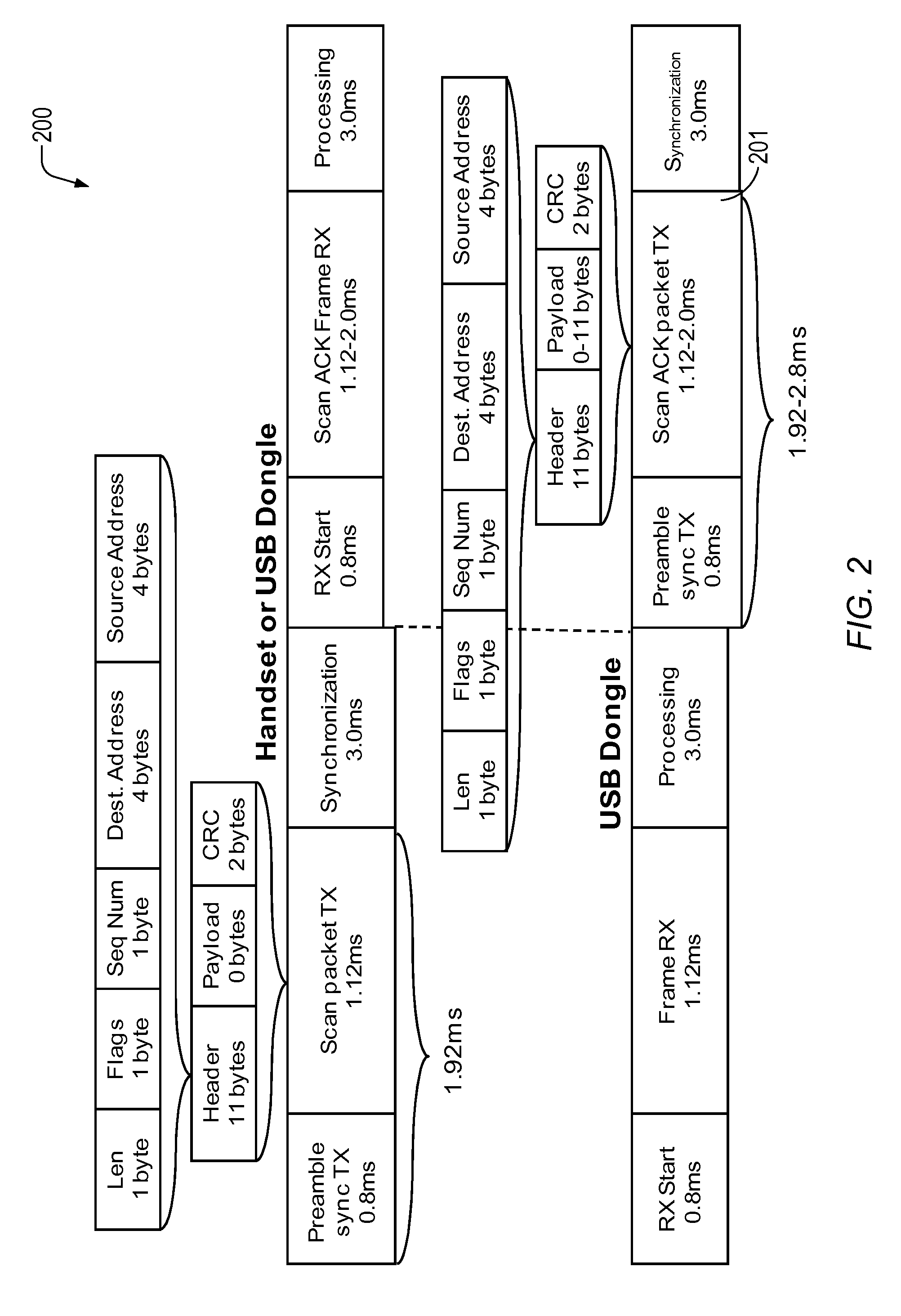

Wireless internet product system

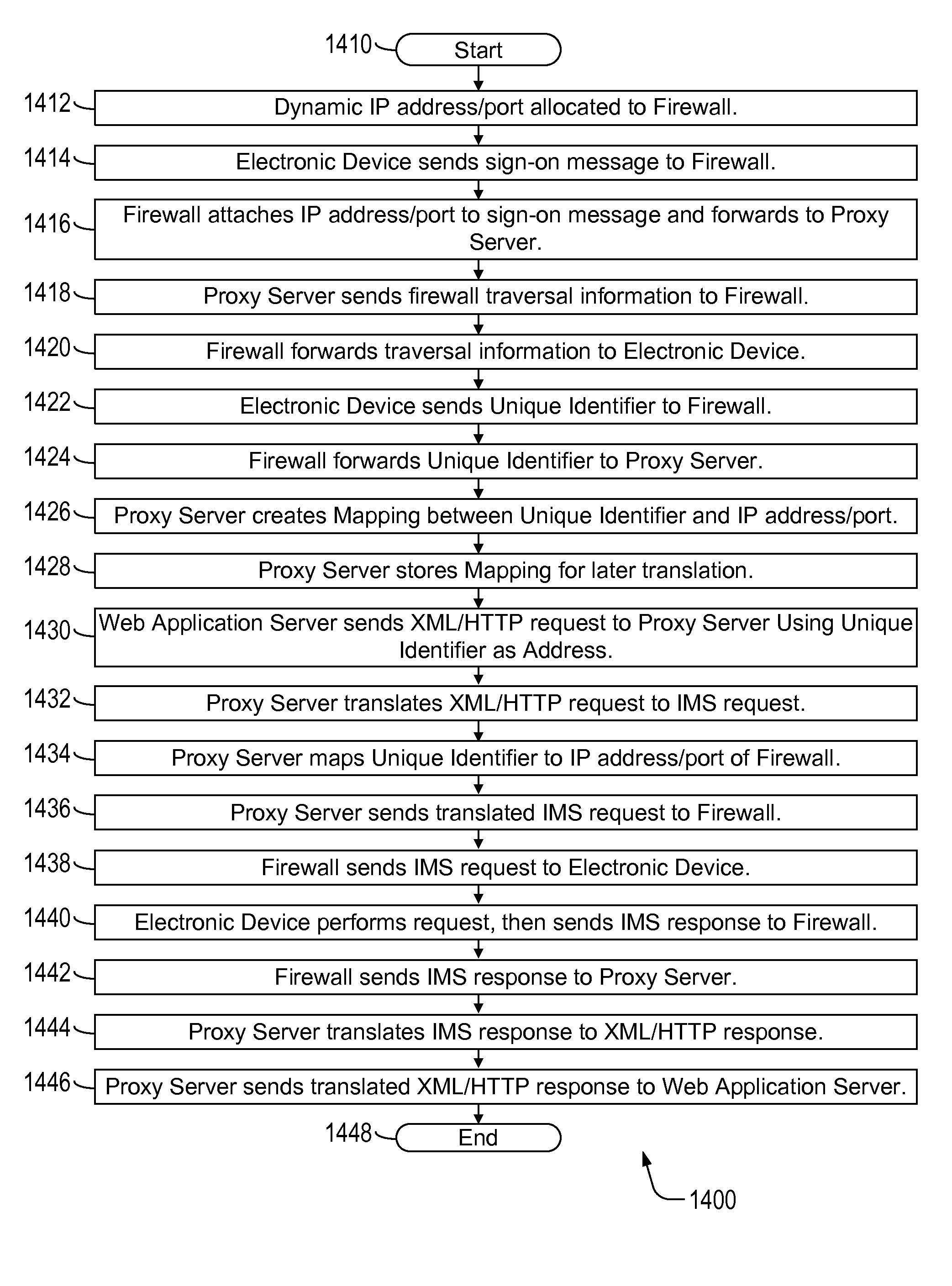

Inactive | US8392561B1 | Reduce system overhead; Reduce power consumption; Multiple digital computer combinations; Two-way working systems; Product system; Web service

Low-resource internet devices, such as consumer electronics products, connect to a web service through a proxy method, so the connected device does not need to maintain the expensive and fragile web service interface itself. Instead, it uses simple low-level protocols to communicate through a gateway, which runs software that translates a low-level proprietary wireless protocol into a proprietary low-level internet protocol able to pass through a firewall to proxy servers. The proxy servers translate the low-level protocols and present an interface that makes the internet device appear to have a full web service interface, enabling communication between the internet devices and the web server.

Owner:BOARD OF TRUSTEES OPERATING MICHIGAN STATE UNIV +1

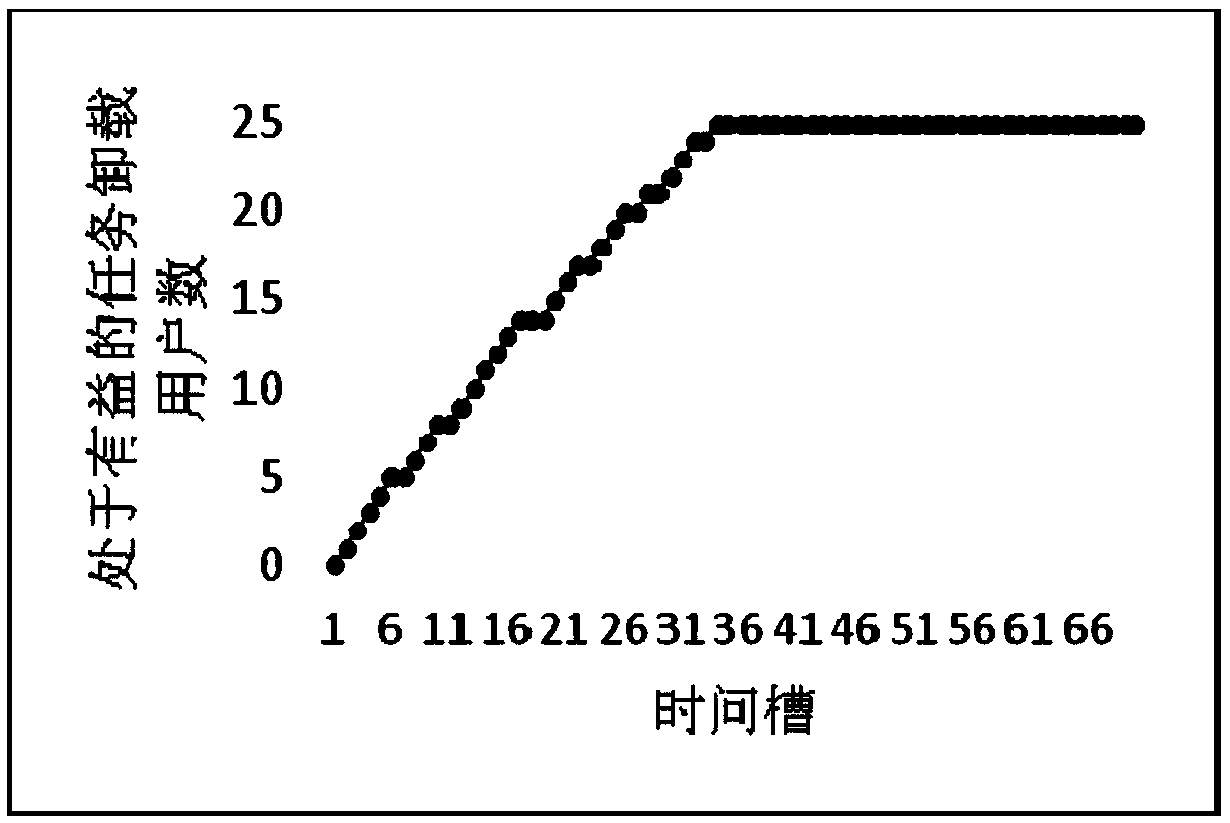

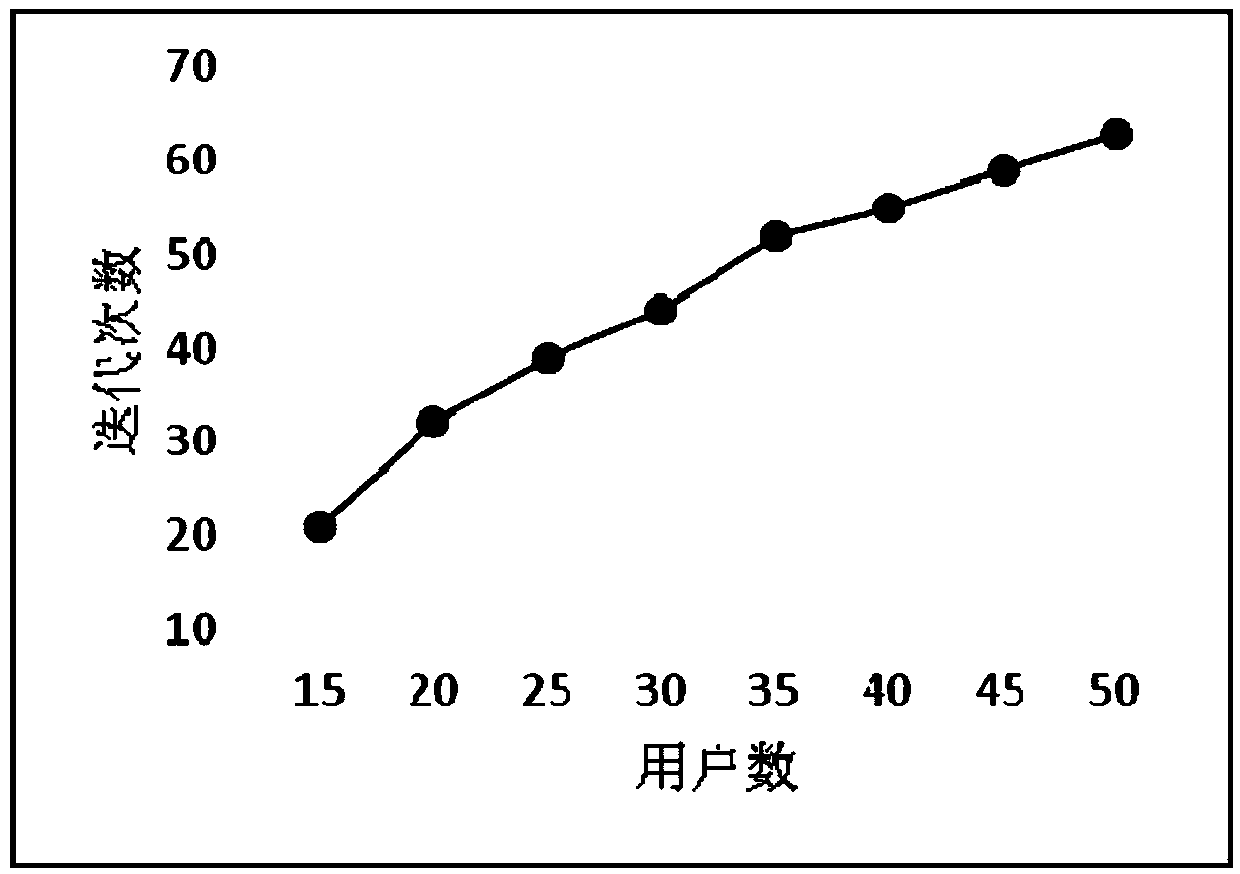

Mobile edge computing task unloading method in multi-user scene

Active | CN108920279A | Guaranteed service quality; Ensure fairness; Program initiation/switching; Resource allocation; Personalization; Mobile edge computing

The invention discloses a task offloading method for mobile edge computing (MEC) in a multi-user scenario and relates to the technical field of mobile computing systems. It aims to reduce the response delay and energy consumption of mobile devices. In the multi-user scenario, multiple mobile devices are connected to an MEC server; each mobile device can select one of several channels to communicate with the MEC server, and the MEC server is connected to a central cloud server through a backbone network. The method comprises constructing a multi-user task offloading model and then applying a two-stage, game-theoretic offloading strategy. In the first stage, it determines whether a task is executed on the mobile device or on the MEC server; in the second stage, when MEC server resources are insufficient, it determines whether the task waits on the MEC server or is executed on the central cloud server. The method takes users' individual requirements into account while guaranteeing quality of service and fairness.

Owner:HARBIN INST OF TECH
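
The two-stage decision can be illustrated for a single user with a weighted delay/energy cost. This sketch deliberately omits the game-theoretic interaction between users and the channel selection the patent describes; the cost weights, the function names, and the assumption that the device spends energy only on local computation or on uplink transmission are hypothetical.

```python
def stage_one(local_delay_s: float, local_energy_j: float,
              tx_delay_s: float, tx_energy_j: float, mec_exec_s: float,
              w_time: float = 0.5, w_energy: float = 0.5) -> str:
    """Stage 1: execute on the device or offload to the MEC server."""
    local_cost = w_time * local_delay_s + w_energy * local_energy_j
    # When offloading, the device only spends energy on the uplink transmission.
    offload_cost = w_time * (tx_delay_s + mec_exec_s) + w_energy * tx_energy_j
    return "local" if local_cost <= offload_cost else "mec"


def stage_two(mec_wait_s: float, mec_exec_s: float,
              backhaul_delay_s: float, cloud_exec_s: float) -> str:
    """Stage 2 (only when MEC resources are insufficient): wait or go to the cloud."""
    if mec_wait_s + mec_exec_s <= backhaul_delay_s + cloud_exec_s:
        return "wait-on-mec"
    return "central-cloud"
```

In this sketch `stage_two` is consulted only when `stage_one` returns `"mec"` and the MEC server lacks free capacity.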

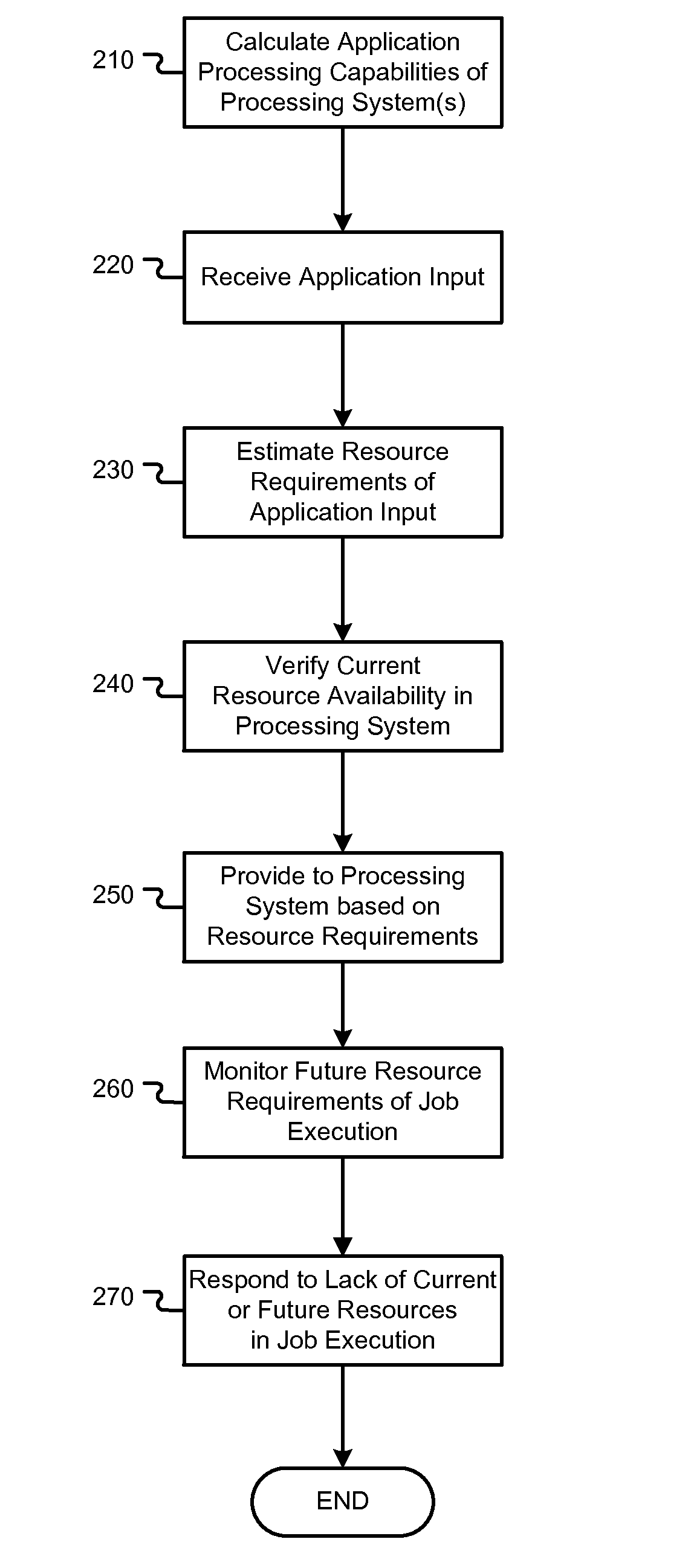

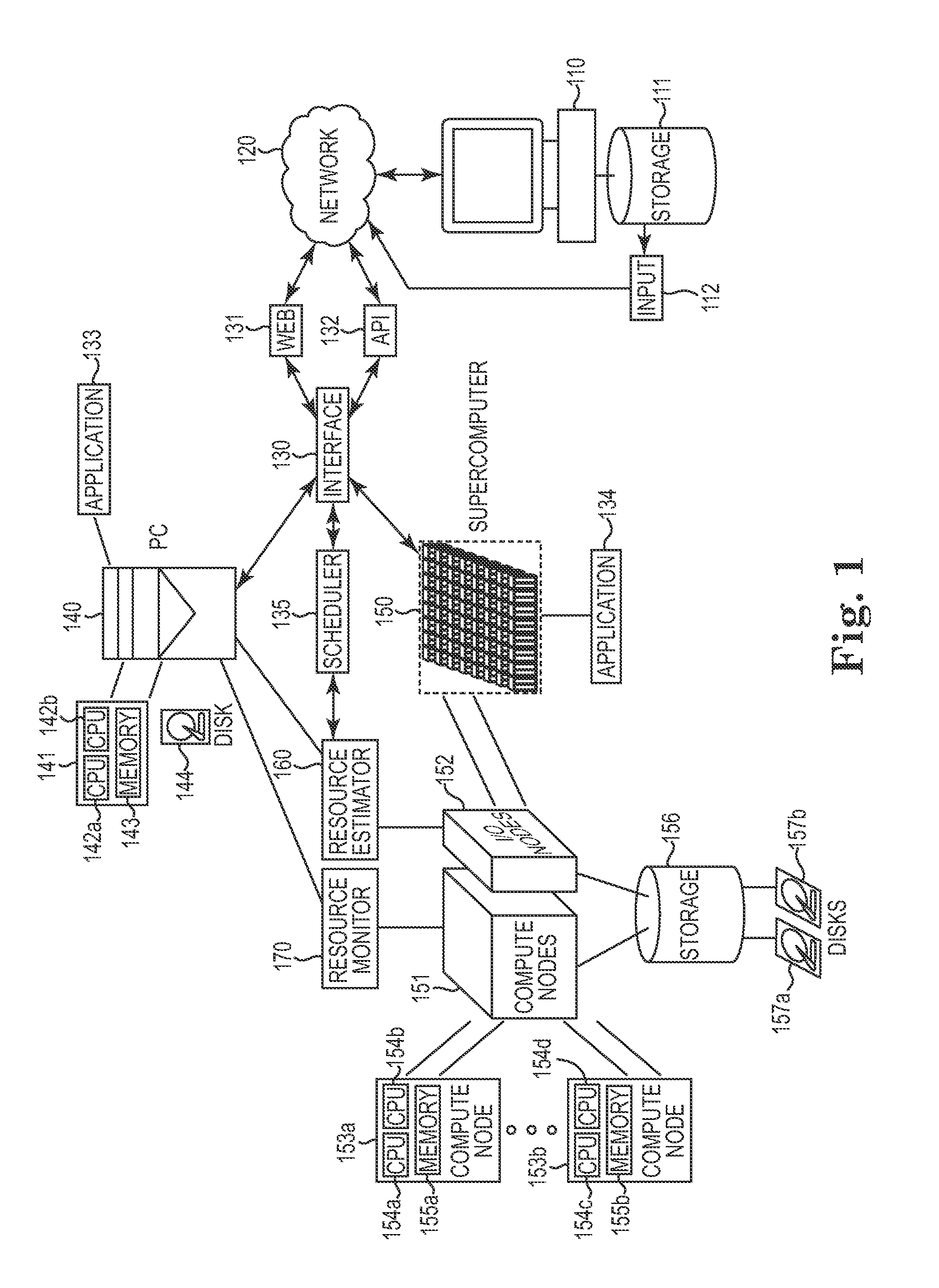

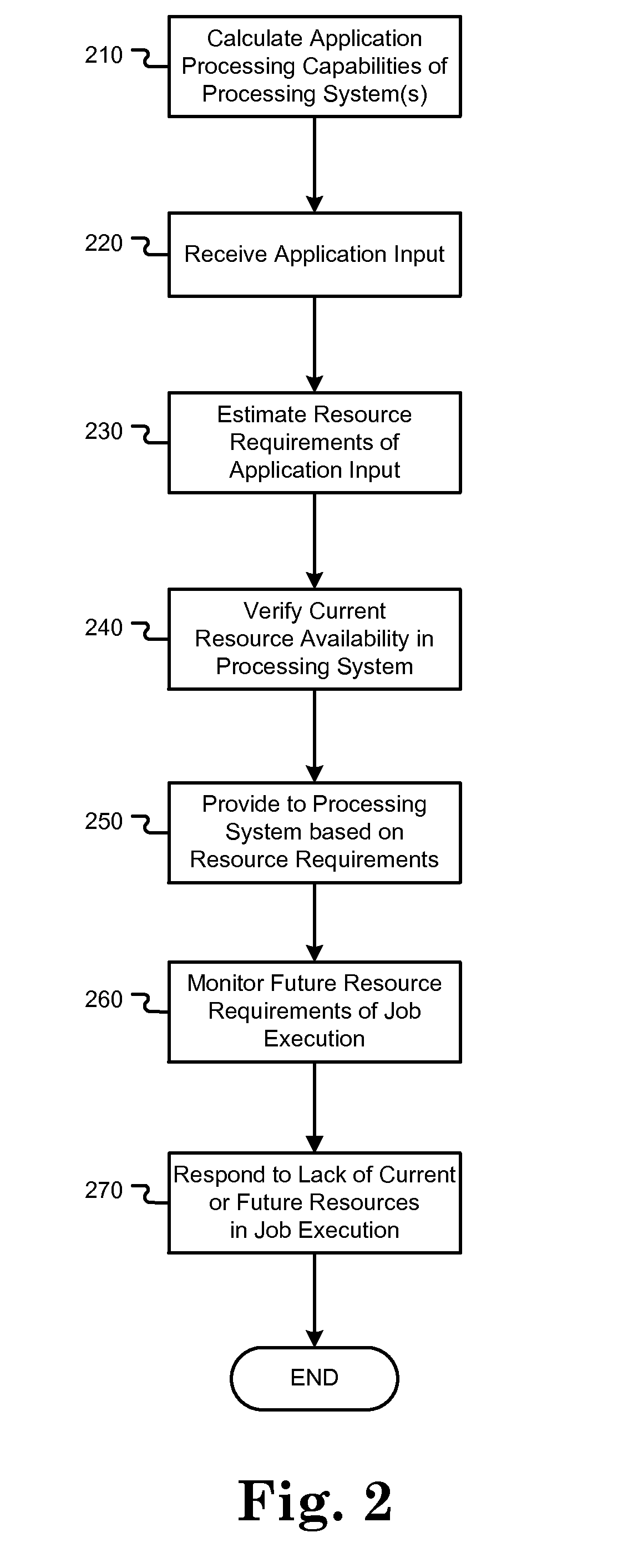

Real-time computing resource monitoring

Inactive | US20120110582A1 | Avoid failure; Save resources; Resource allocation; Error avoidance; Computerized system; Resource based

Techniques for enhancing the execution of long-running or complex software application instances and jobs on computing systems are disclosed. In one embodiment, a real-time, self-predicting job resource monitor predicts inadequate system resources on the computing system and the resulting failure of a job execution. The monitor can determine whether inadequate resources exist before the job executes and can also detect, in real time, whether inadequate resources will be encountered during execution when resource availability has unexpectedly decreased. If a resource deficiency is predicted on the executing computer system, the system may pause the job and automatically take corrective action or alert a user; the job may resume once the deficiency is resolved. Additional embodiments integrate this resource monitoring with the adaptive selection of a computer system or application execution environment based on resource capability predictions and benchmarks.

Owner:IBM CORP
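
One simple way to realize a self-predicting monitor is to extrapolate recent free-resource samples and pause the job when the forecast drops below what the job needs. The linear-trend predictor, the `JobMonitor` class, and the resume rule below are illustrative assumptions, not IBM's implementation.

```python
def predict_free(samples: list[float], steps_ahead: int) -> float:
    """Extrapolate free capacity (e.g. MB of RAM) using the average recent slope.

    Requires at least one sample.
    """
    if len(samples) < 2:
        return samples[-1]
    slope = (samples[-1] - samples[0]) / (len(samples) - 1)
    return samples[-1] + slope * steps_ahead


class JobMonitor:
    """Pauses a job when a resource deficiency is predicted and resumes it later."""

    def __init__(self, required: float, horizon: int = 5) -> None:
        self.required = required  # resource amount the job needs to keep running
        self.horizon = horizon    # how many sampling intervals to look ahead
        self.paused = False

    def update(self, samples: list[float]) -> str:
        predicted = predict_free(samples, self.horizon)
        if not self.paused and predicted < self.required:
            self.paused = True   # deficiency predicted: pause (and alert or correct)
        elif self.paused and min(samples[-1], predicted) >= self.required:
            self.paused = False  # deficiency resolved: resume the job
        return "paused" if self.paused else "running"
```

Requiring both the current sample and the forecast to clear the threshold before resuming is one way to avoid flapping between paused and running states.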

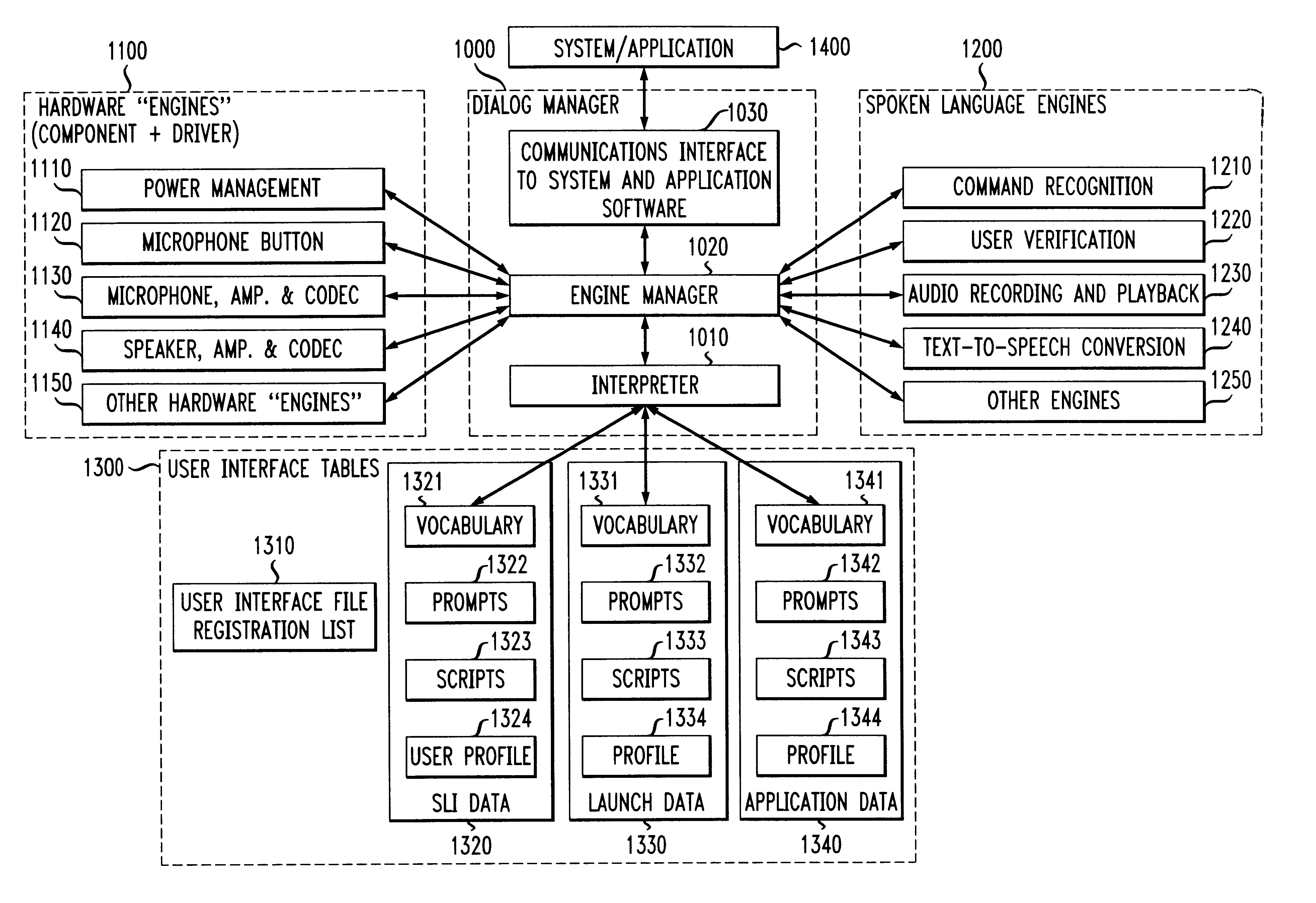

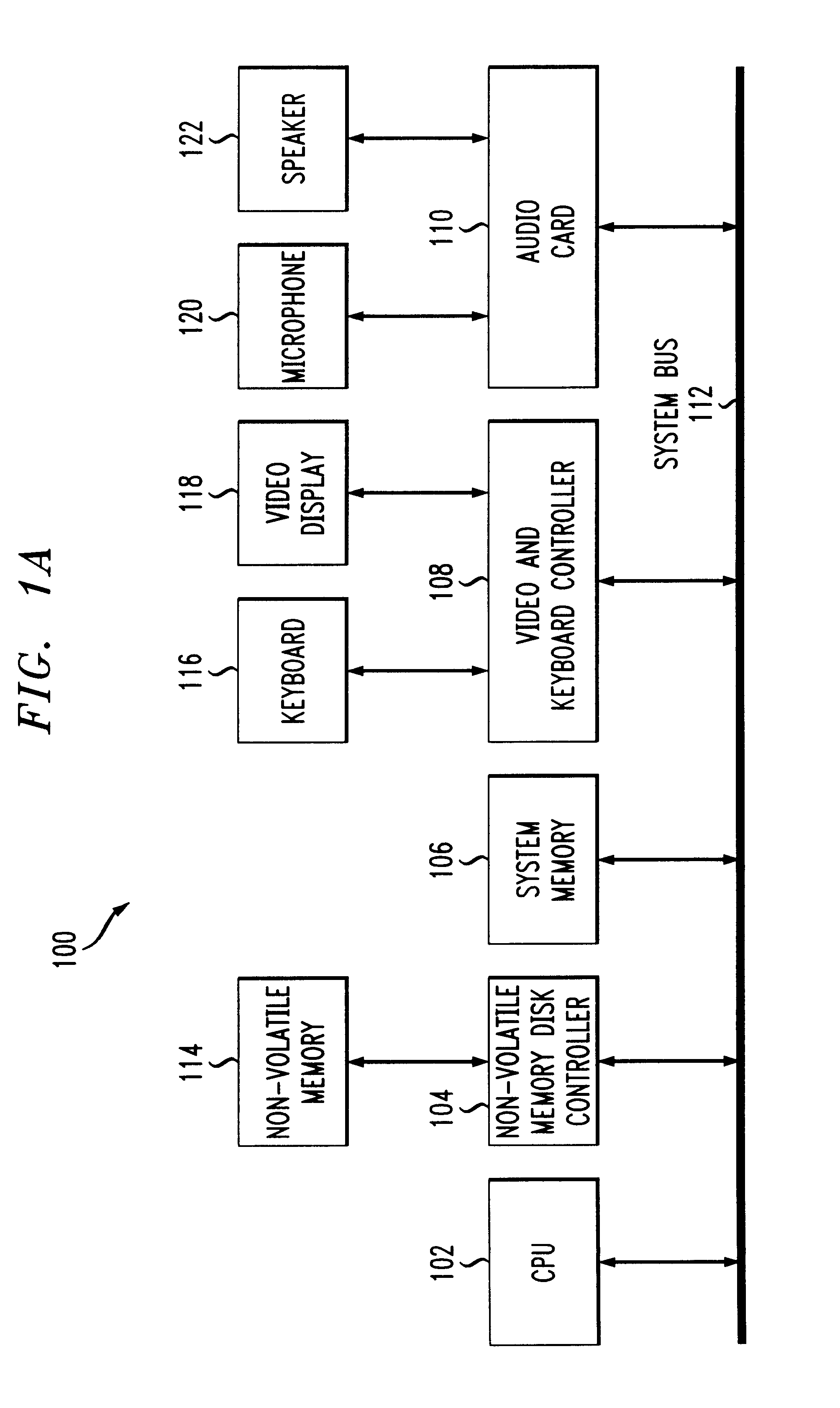

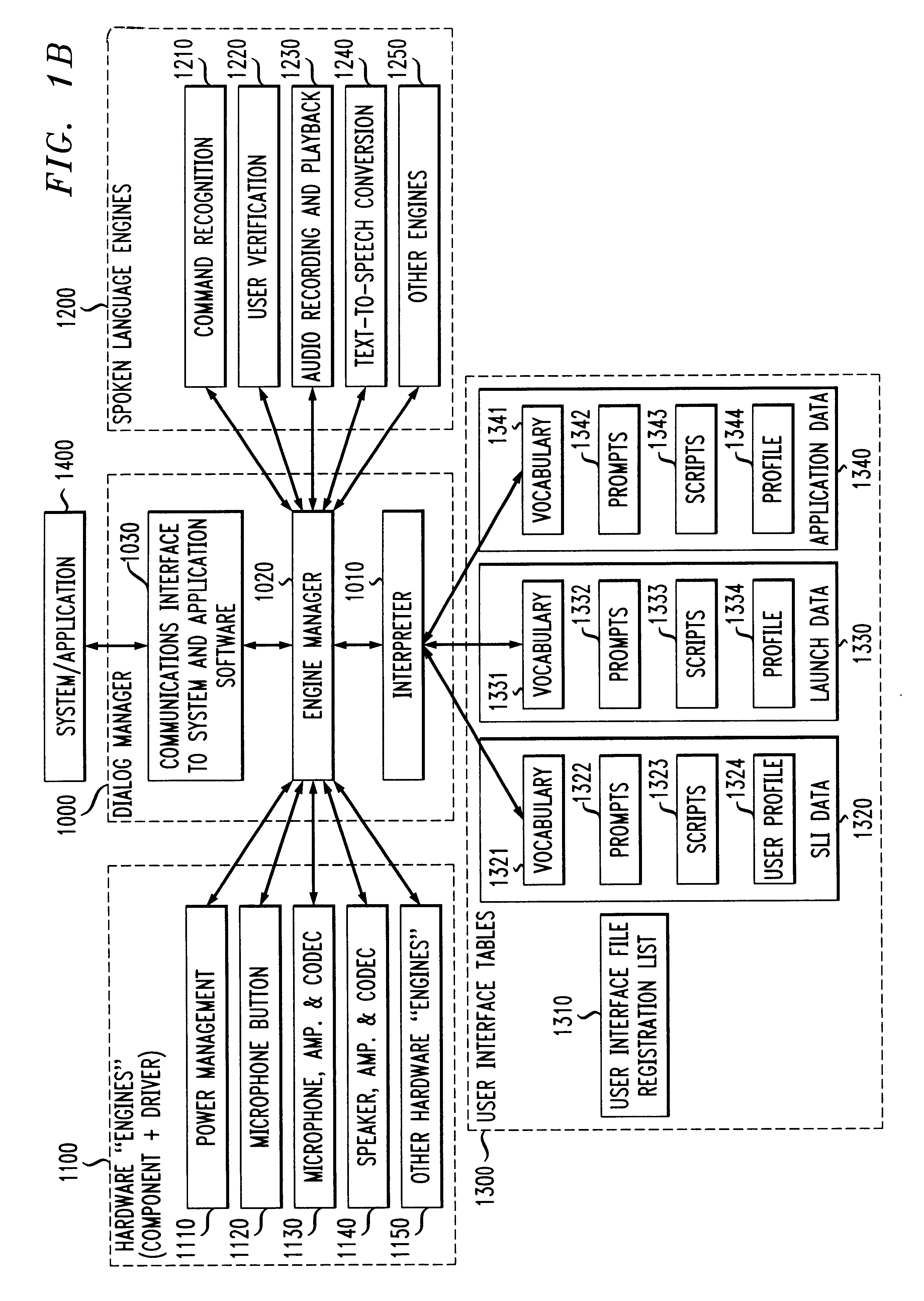

Scalable low resource dialog manager

Inactive | US6513009B1 | Easy to carry; Easily scalable architecture; Speech recognition; Spoken language; Data set

A spoken language interface between a user and at least one application or system includes a dialog manager operatively coupled to the application or system, an audio input system, an audio output system, a speech decoding engine, and a speech synthesizing engine, as well as at least one user interface data set operatively coupled to the dialog manager. The user interface data set represents spoken language interface elements and data recognizable by the application. The dialog manager connects the audio input system to the speech decoding engine, so that a spoken utterance from the user is passed from the audio input system to the speech decoding engine. The speech decoding engine decodes the utterance and returns the decoded output to the dialog manager. The dialog manager uses the decoded output to search the user interface data set for a corresponding spoken language interface element and its data, which are returned to the dialog manager when found, and provides the element's associated data to the application for processing. After processing that element, the application provides a reference to an interface element to be spoken. The dialog manager then connects the audio output system to the speech synthesizing engine, so that the speech synthesizing engine, accepting data from that element, generates a synthesized output expressing it, and the audio output system audibly presents the synthesized output to the user.

Owner:NUANCE COMM INC

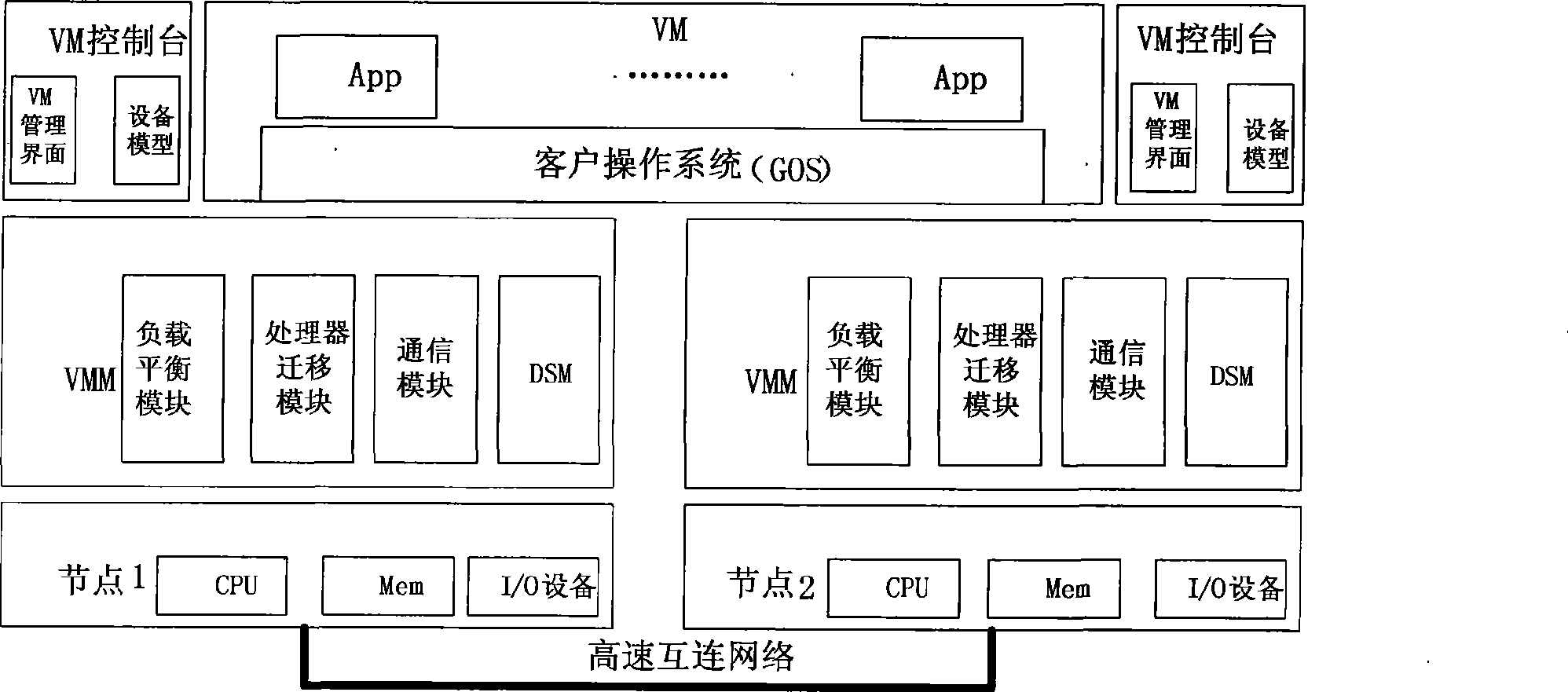

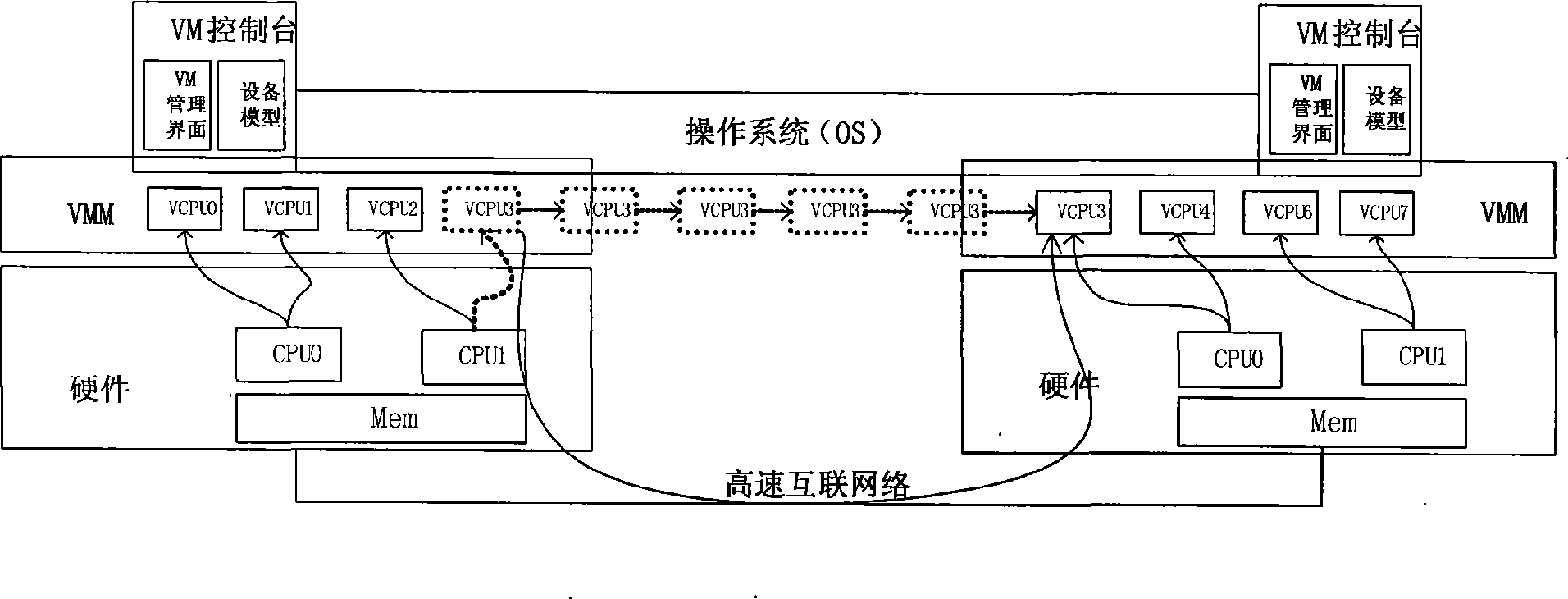

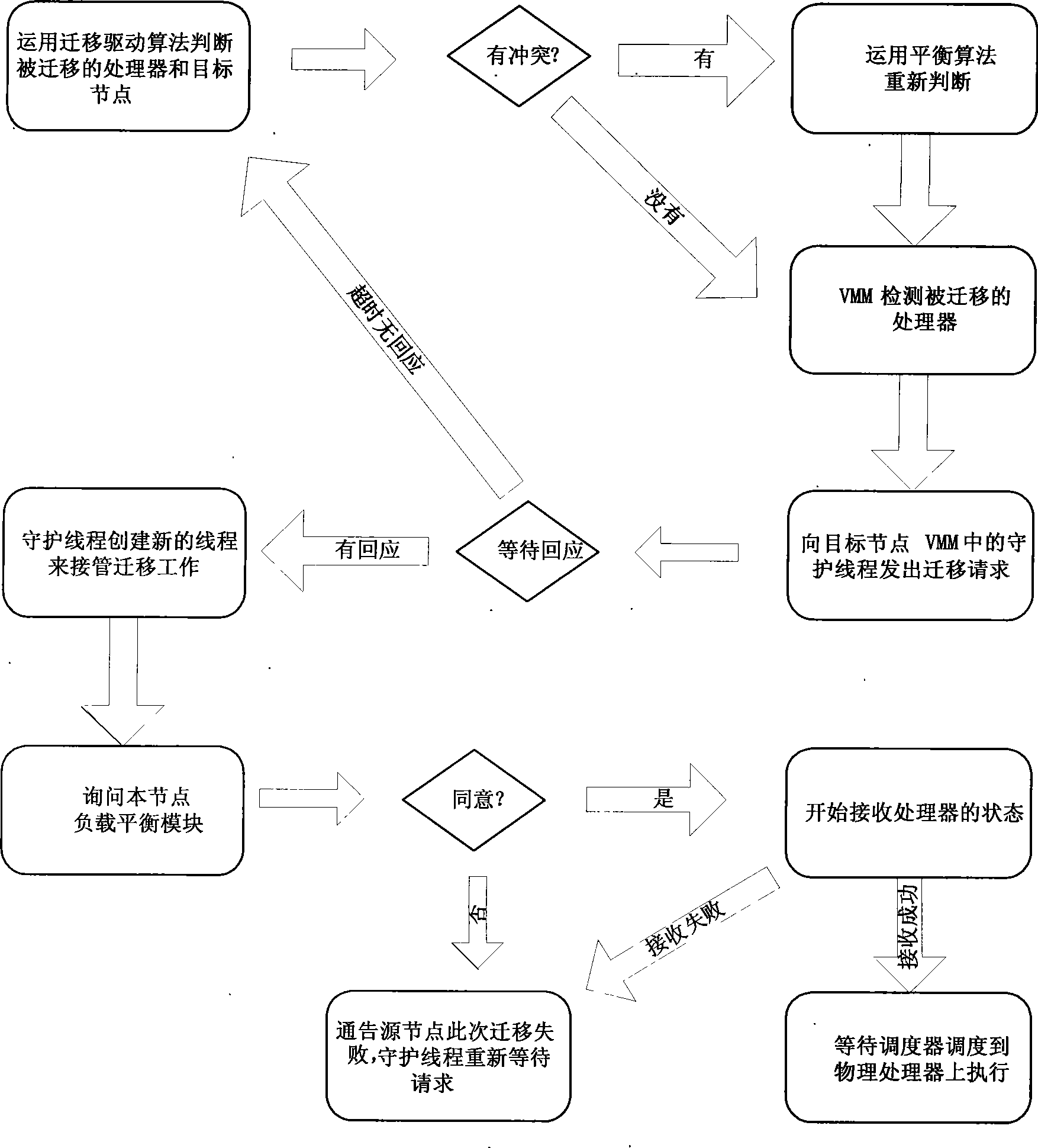

Cluster load balance method transparent for operating system

Inactive | CN101452406A | Implement a load balancing strategy; Increase profit; Resource allocation; Virtualization; Operational system

The present invention provides a cluster load balancing method that is transparent to the operating system. Its main functional modules are a load balancing module, a processor migration module, and a communication module. The method comprises the following steps: 1, driving virtual processor migration; 2, driving balancing migration; 3, sending a migration request to a target node and negotiating; 4, saving and restoring the state of the virtual processor; and 5, communicating. The method addresses the low resource utilization of cluster systems. As cluster systems become more widespread and hardware virtualization technology continues to develop, the method offers a good solution to this problem and has good application prospects.

Owner:HUAWEI TECH CO LTD
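
The balancing decision (though not the transparent migration and state save/restore performed beneath the operating system) can be sketched as a greedy loop that moves virtual processors from the busiest node to the idlest one. The data layout, the `threshold` parameter, and the greedy selection rule are assumptions for illustration.

```python
def rebalance(nodes: dict[str, dict[str, float]],
              threshold: float = 0.1) -> list[tuple[str, str, str]]:
    """Greedily migrate virtual processors until node loads are within `threshold`.

    `nodes` maps node name -> {virtual processor id: load}; it is updated in
    place. Returns the (vcpu, source, target) migrations performed.
    """
    migrations: list[tuple[str, str, str]] = []
    while True:
        totals = {name: sum(vcpus.values()) for name, vcpus in nodes.items()}
        src = max(totals, key=totals.__getitem__)
        dst = min(totals, key=totals.__getitem__)
        gap = totals[src] - totals[dst]
        if gap <= threshold or not nodes[src]:
            break
        # Moving load L from src to dst changes their gap to |gap - 2L|;
        # pick the virtual processor that makes it smallest.
        vcpu = min(nodes[src], key=lambda v: abs(gap - 2 * nodes[src][v]))
        if abs(gap - 2 * nodes[src][vcpu]) >= gap:
            break  # no single migration narrows the imbalance
        nodes[dst][vcpu] = nodes[src].pop(vcpu)
        migrations.append((vcpu, src, dst))
    return migrations
```

Each accepted move carries a load strictly between zero and the current gap, which strictly lowers the sum of squared node loads, so the loop always terminates.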

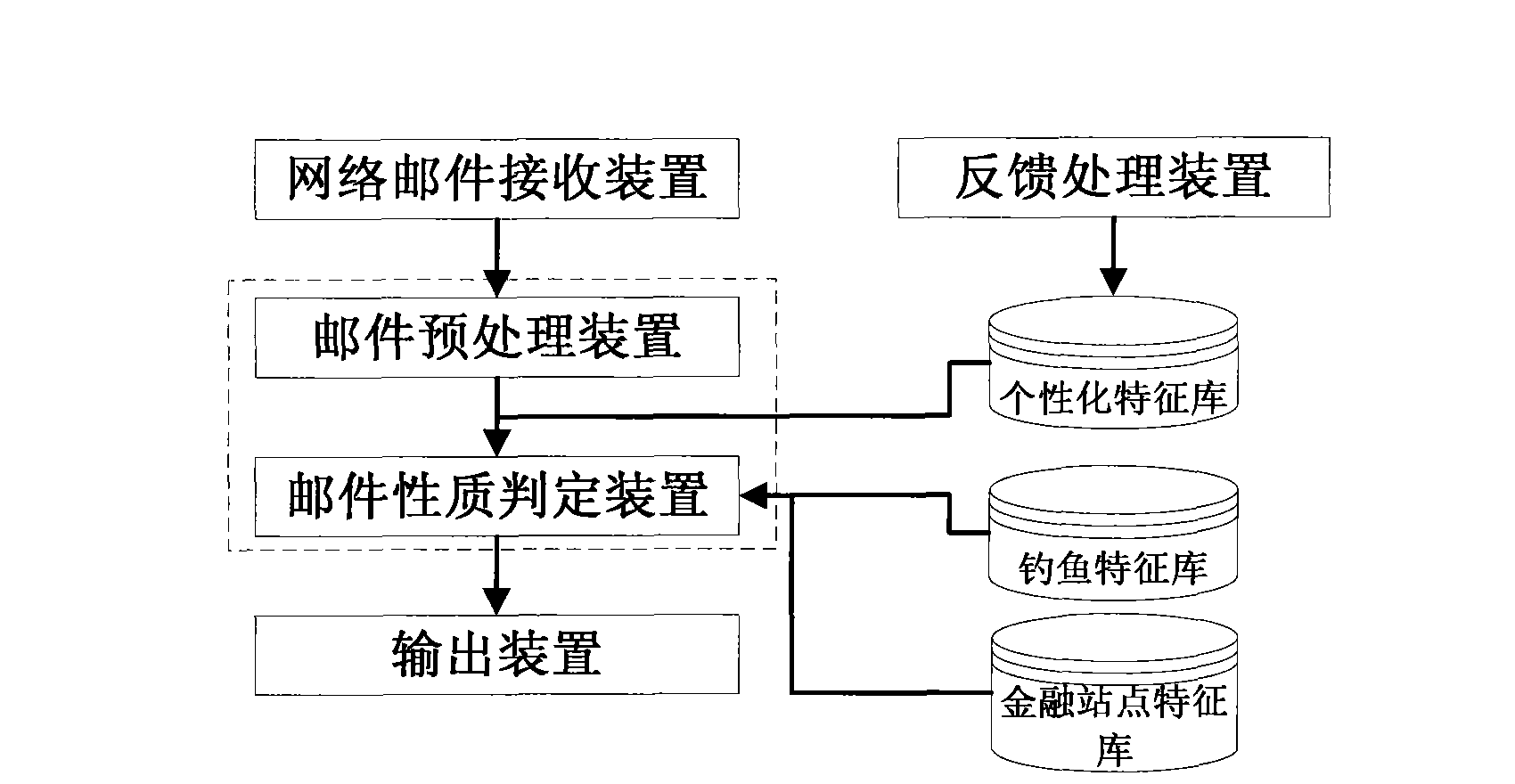

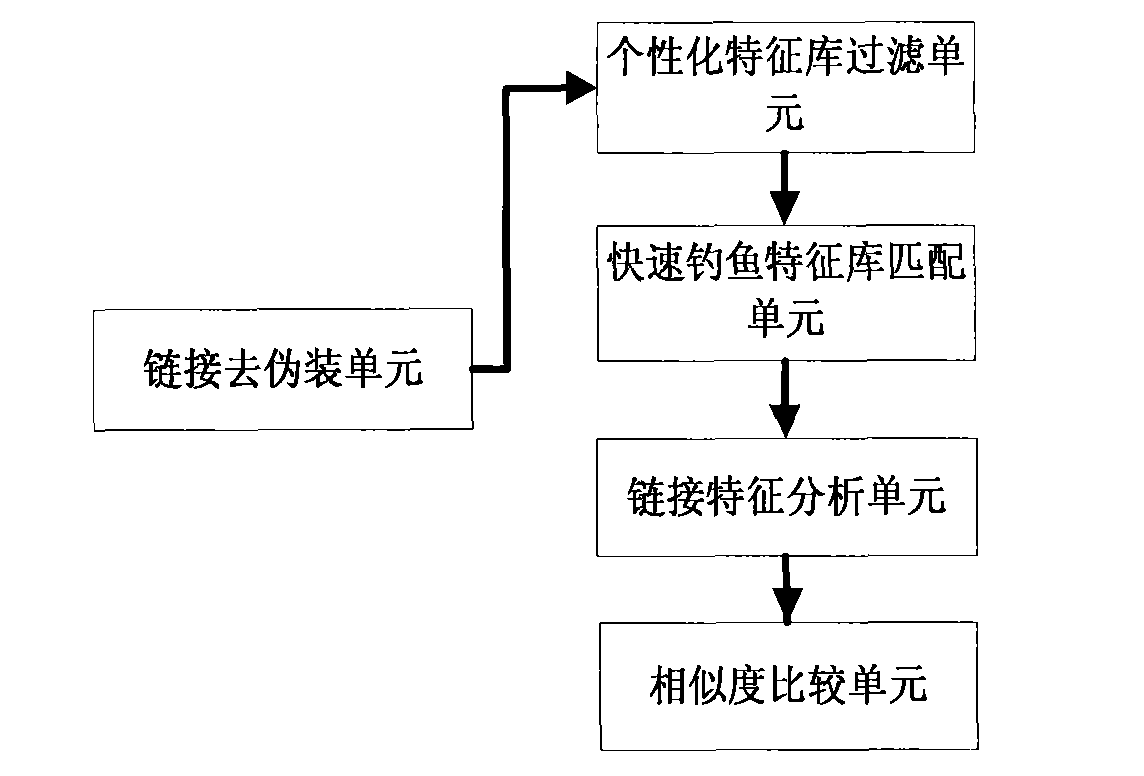

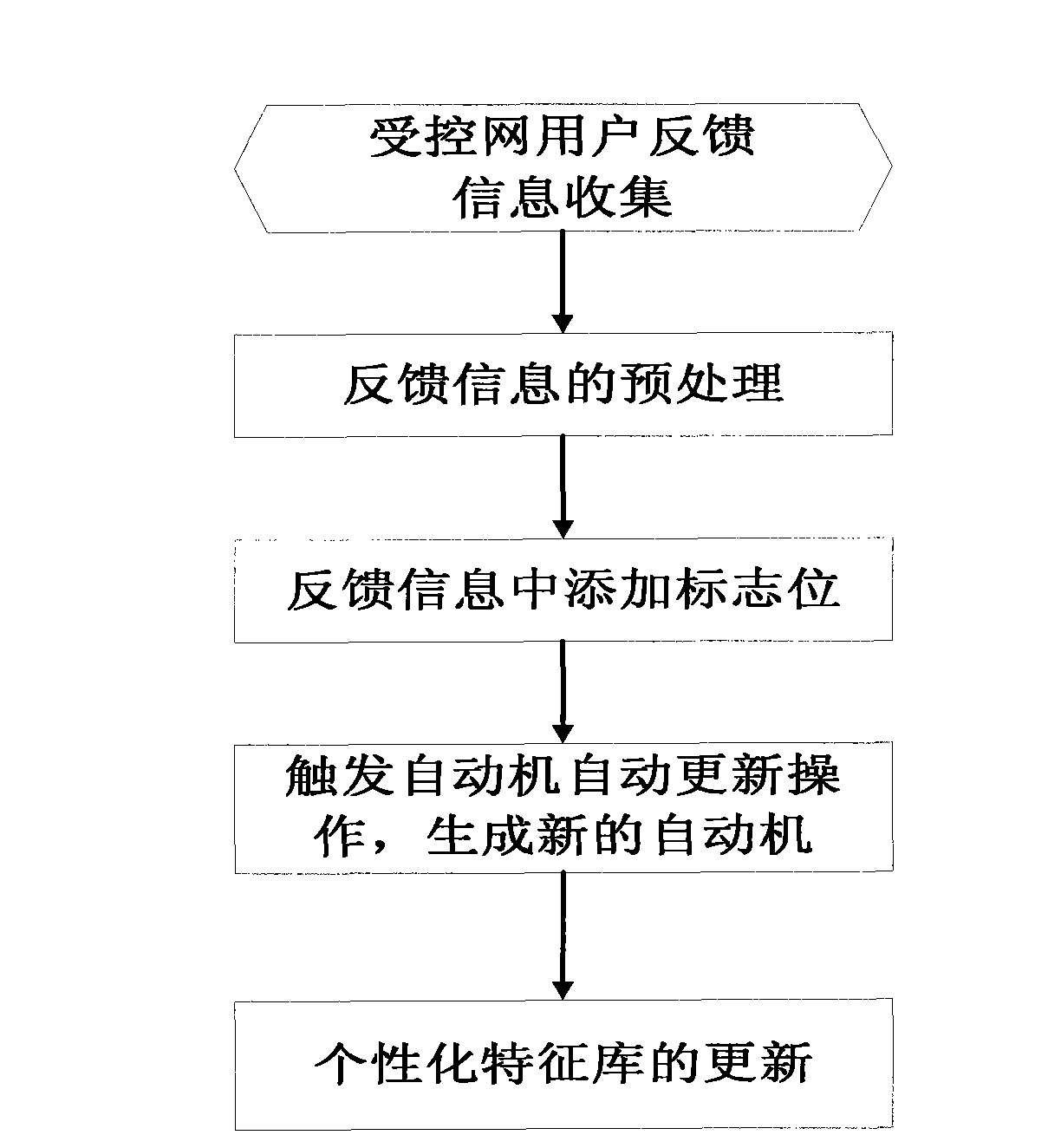

System and method for anti-phishing emails based on link domain name and user feedback

The invention provides a system and a method for detecting phishing emails based on link domain names and user feedback. The system comprises an email receiving device, an email pre-processing device, an email property judging device, an output device, and a feedback processing device. The method analyzes the characteristics of the link domain names in an email and combines them with a feedback strategy from users of the controlled network to identify phishing emails and suspected phishing emails. The invention offers high identification efficiency, low resource consumption, and a zero error rate. It can be deployed on email servers, gateway servers, and similar systems that require high real-time performance; it protects users of the controlled network from being deceived by phishing emails, resists interference from malicious users within the controlled network, and can be widely applied to network email filtering, anti-phishing, and related fields.

Owner:杨辉
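
A core link-domain check can be illustrated by comparing the domain a link displays with the domain it actually points to, combined with a set of domains that users have reported. The two-label `registered_domain` helper is a deliberate simplification (real systems would consult the Public Suffix List), and the classification labels and function names are hypothetical.

```python
from urllib.parse import urlparse


def registered_domain(url: str) -> str:
    """Naive registrable domain: the last two host labels (an assumption)."""
    host = (urlparse(url).hostname or "").rstrip(".")
    return ".".join(host.split(".")[-2:])


def classify_email(links: list[tuple[str, str]], reported: set[str]) -> str:
    """links: (visible text, href) pairs; reported: domains flagged via user feedback."""
    suspicious = False
    for text, href in links:
        actual = registered_domain(href)
        if actual in reported:
            return "phishing"
        # A link whose visible text is a URL for a different domain is suspect.
        if text.startswith(("http://", "https://")) and registered_domain(text) != actual:
            suspicious = True
    return "suspected phishing" if suspicious else "legitimate"
```

User feedback enters only through the `reported` set here; how feedback from malicious users would be filtered is not modeled.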

Design method of hardware accelerator based on LSTM recursive neural network algorithm on FPGA platform

Inactive | CN108090560A | Improve forecast; Improve performance; Neural architectures; Physical realisation; Neural network hardware; Low resource

The invention discloses a method for accelerating an LSTM neural network algorithm on an FPGA platform. The platform comprises a general-purpose processor, a field-programmable gate array (FPGA), and a storage module. The method comprises the following steps: an LSTM neural network is constructed using TensorFlow and its parameters are trained; the LSTM parameters are compressed, which solves the problem of insufficient FPGA storage resources; based on the prediction process of the compressed LSTM network, the computation suitable for running on the FPGA is identified; from that computation, a hardware/software co-computing mode is determined; and, according to the FPGA's logic resources and bandwidth, the number and type of IP cores are determined and hardware operation units perform the acceleration on the FPGA. A hardware processing unit for accelerating the LSTM network can thus be designed quickly to fit the available hardware resources, and it offers higher performance and lower power consumption than the general-purpose processor.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC

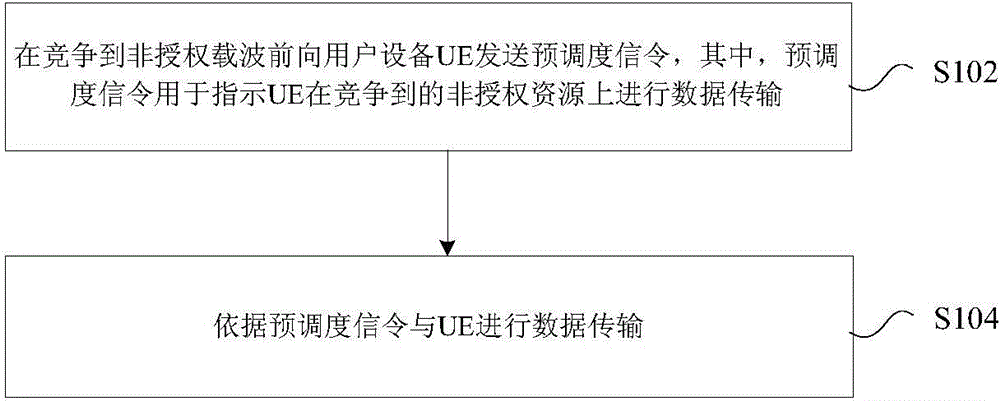

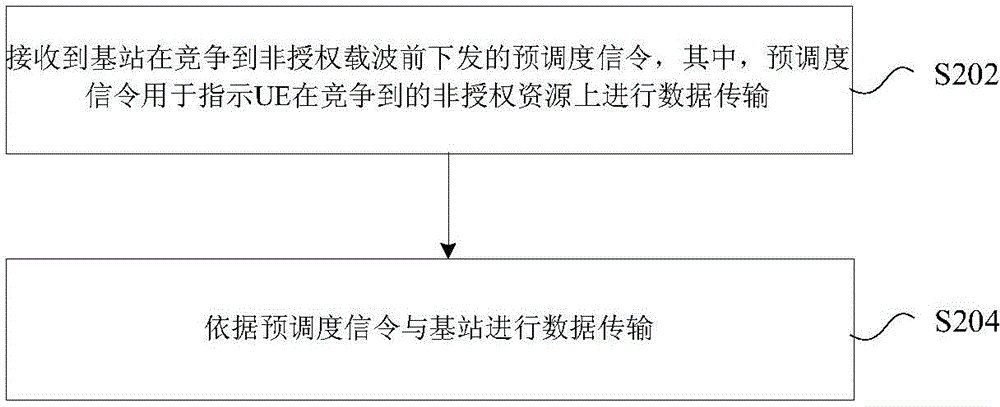

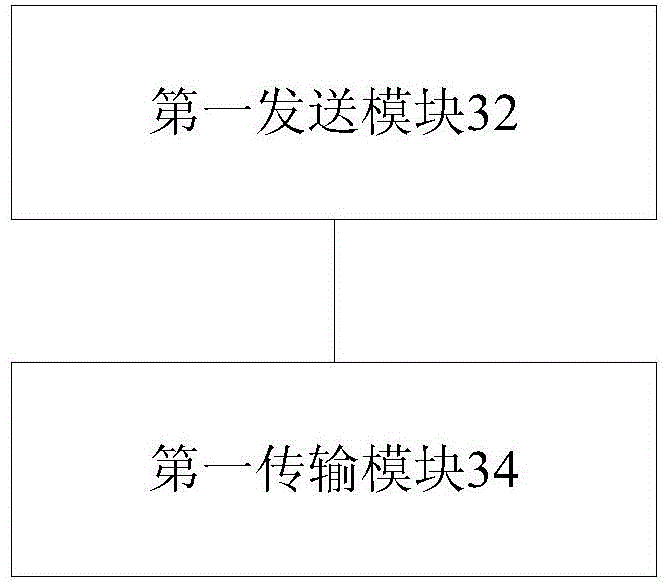

Data transmission method, data transmission device, base station and user equipment

Active | CN105992373A | Reduce data transmission delay; Efficient data transfer; Power management; Signal allocation; Frequency spectrum; Spectral efficiency

The invention discloses a data transmission method, a data transmission device, a base station, and user equipment (UE). The method includes the following steps: before unlicensed carriers are obtained through contention, a pre-scheduling signal is sent to the UE, instructing it to transmit data on the unlicensed resources obtained through contention; data transmission with the UE is then carried out according to the pre-scheduling signal. The method solves the prior-art problems of discontinuous data transmission and low resource utilization, which are caused by resource contention and limited resource holding time when unlicensed carriers are used for data transmission. The limited holding time on unlicensed carriers can be used effectively, spectral efficiency can be improved, and the data transmission delay on unlicensed carriers can be reduced.

Owner:ZTE CORP

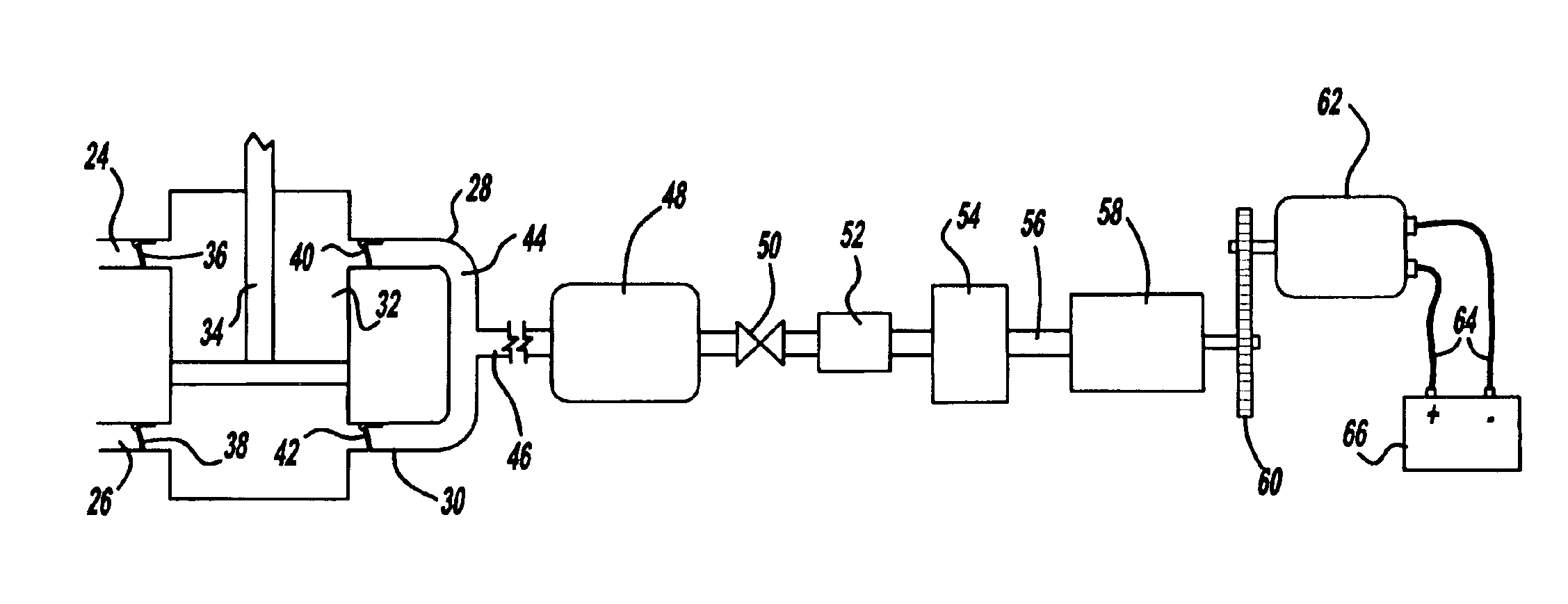

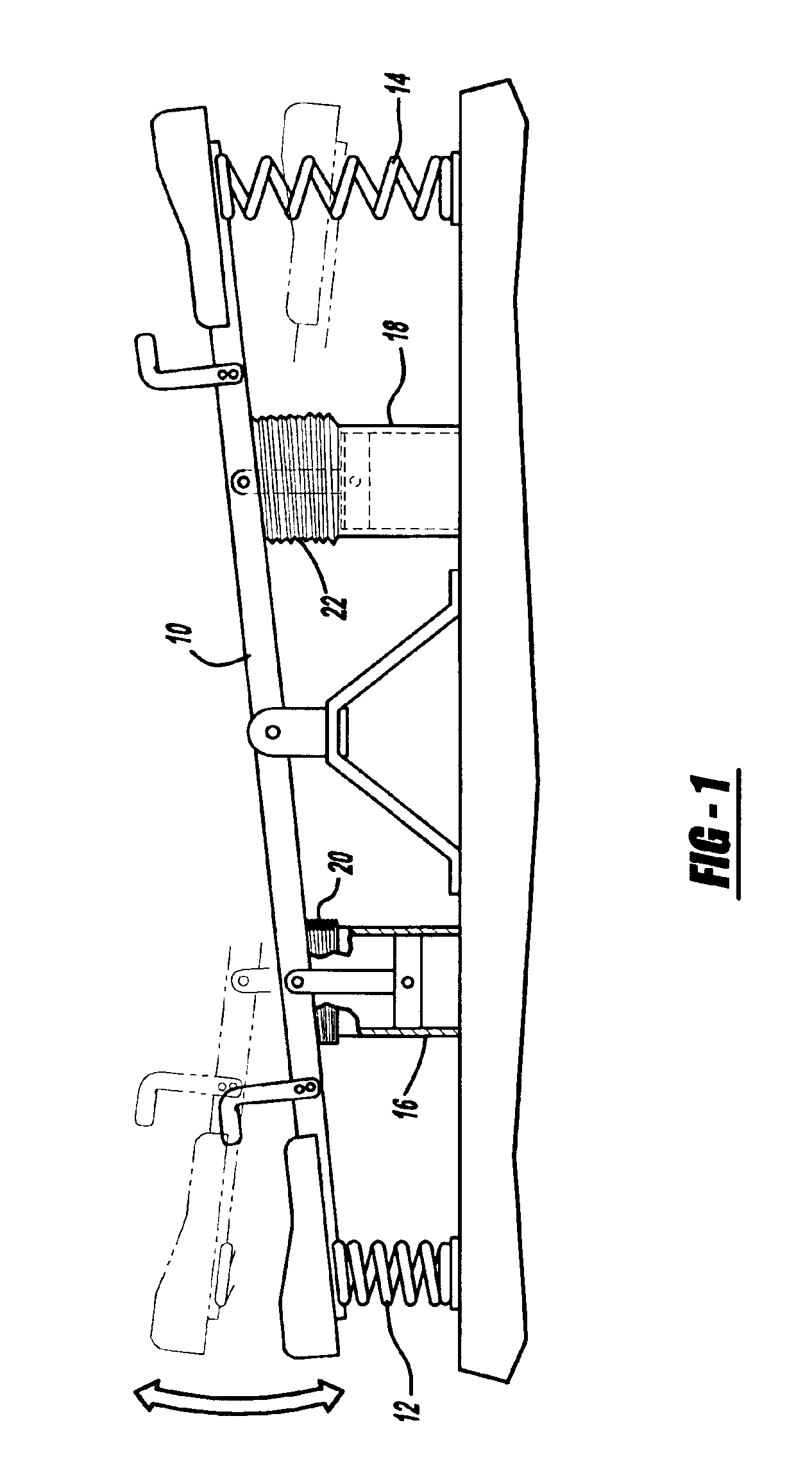

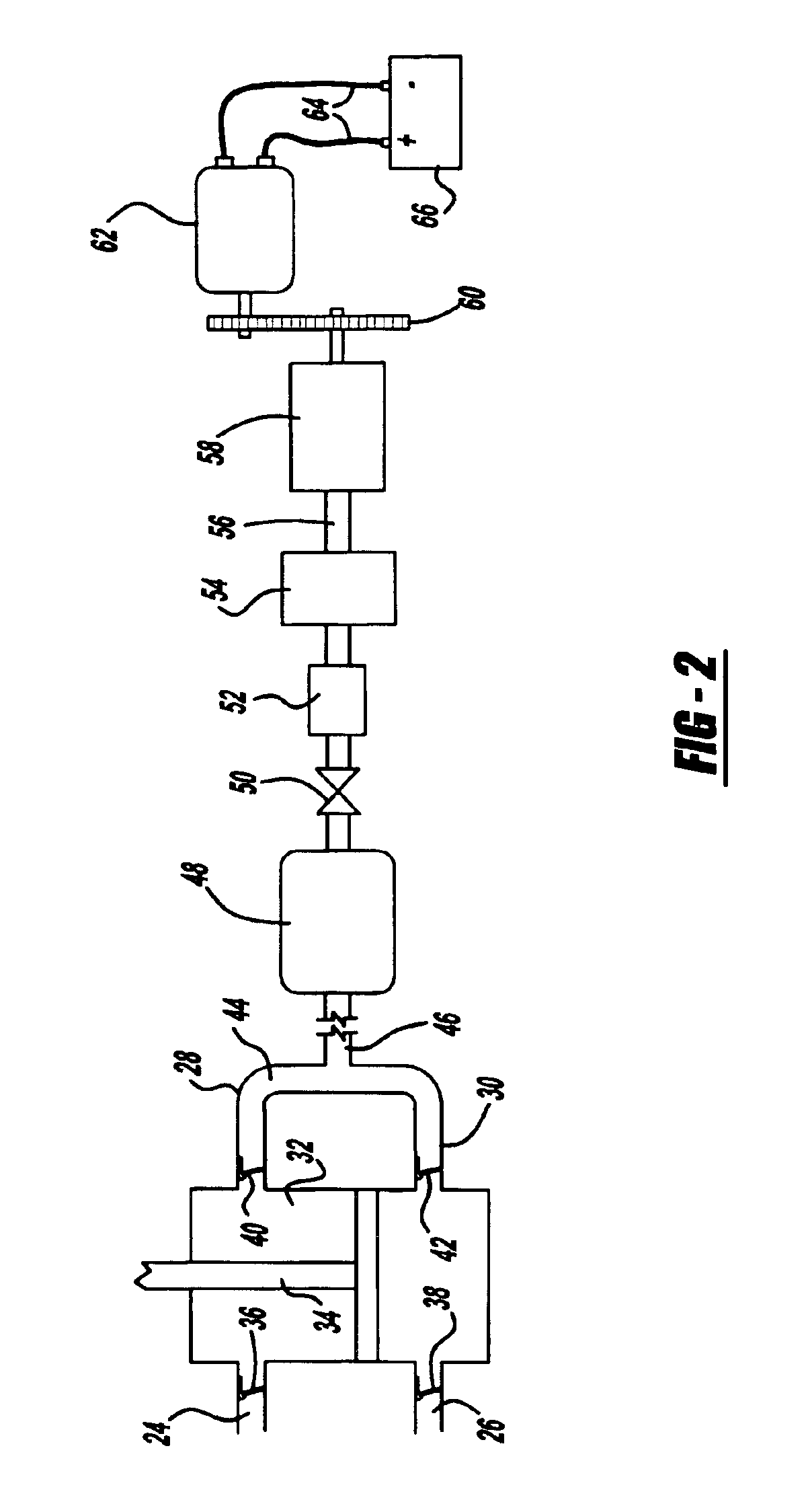

Pneumatic human power conversion system based on children's play

Inactive | US7005757B2 | Low efficiency; Low-cost of operation; From muscle energy; Amusements; Stored energy; Electricity

When large numbers of children play in a playground, part of the power of their play could be harnessed and stored in large amounts. This stored energy can then be converted for basic, low-power applications in the school, such as lighting, communication, or operating fans. Energy can be produced with pneumatic (i.e., compressed air) components such as cylinders, motors, valves, and regulators that convert the human power of children's play in school playgrounds and other public places. The energy of the compressed air can then be converted to electricity for purposes such as lighting and communication. This provides a low-cost, low-resource means of generating electricity, especially for use in developing countries.

Owner:PANDIAN SHUNMUGHAM RAJASEKARA

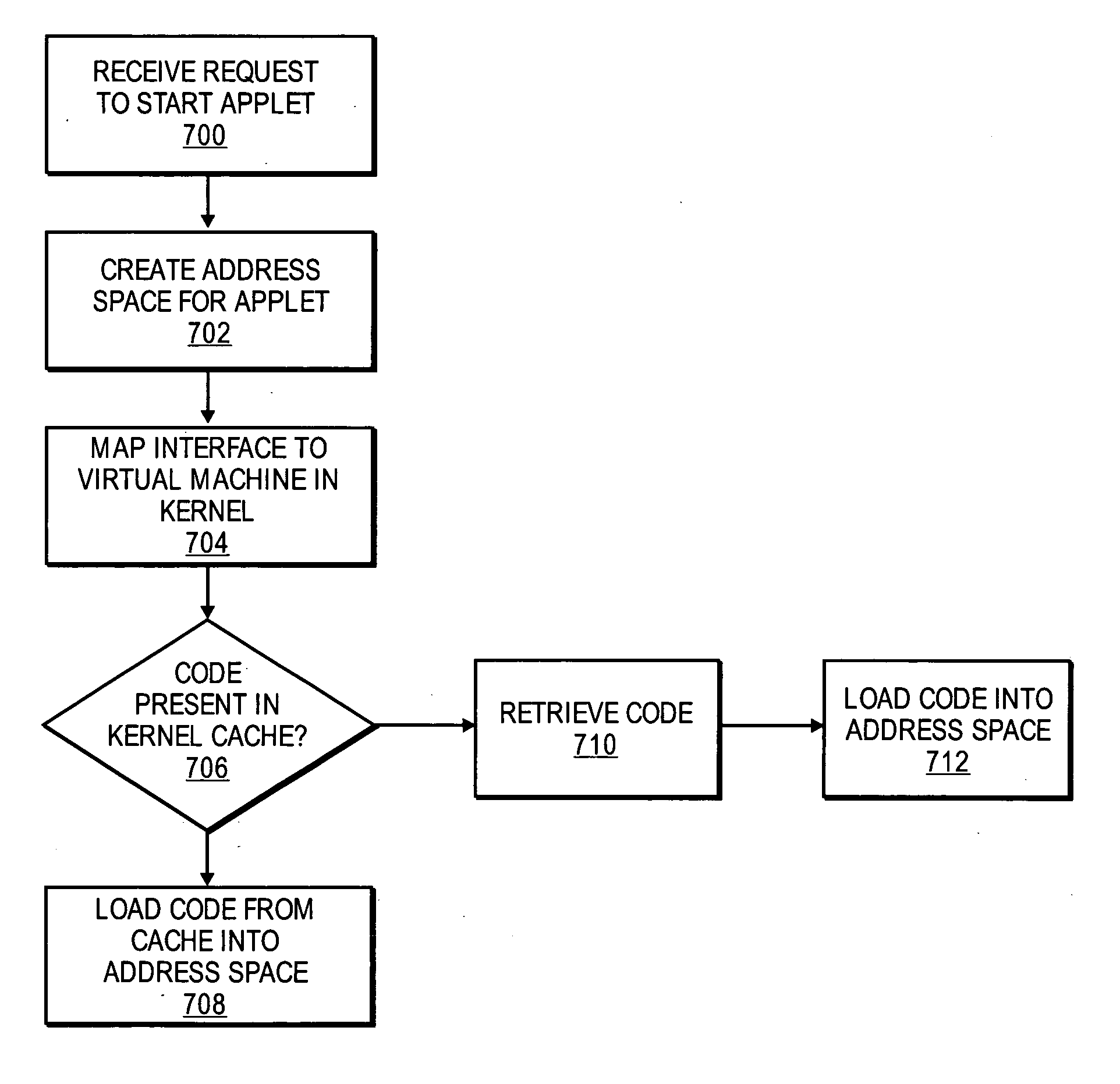

Purpose domain for in-kernel virtual machine for low overhead startup and low resource usage

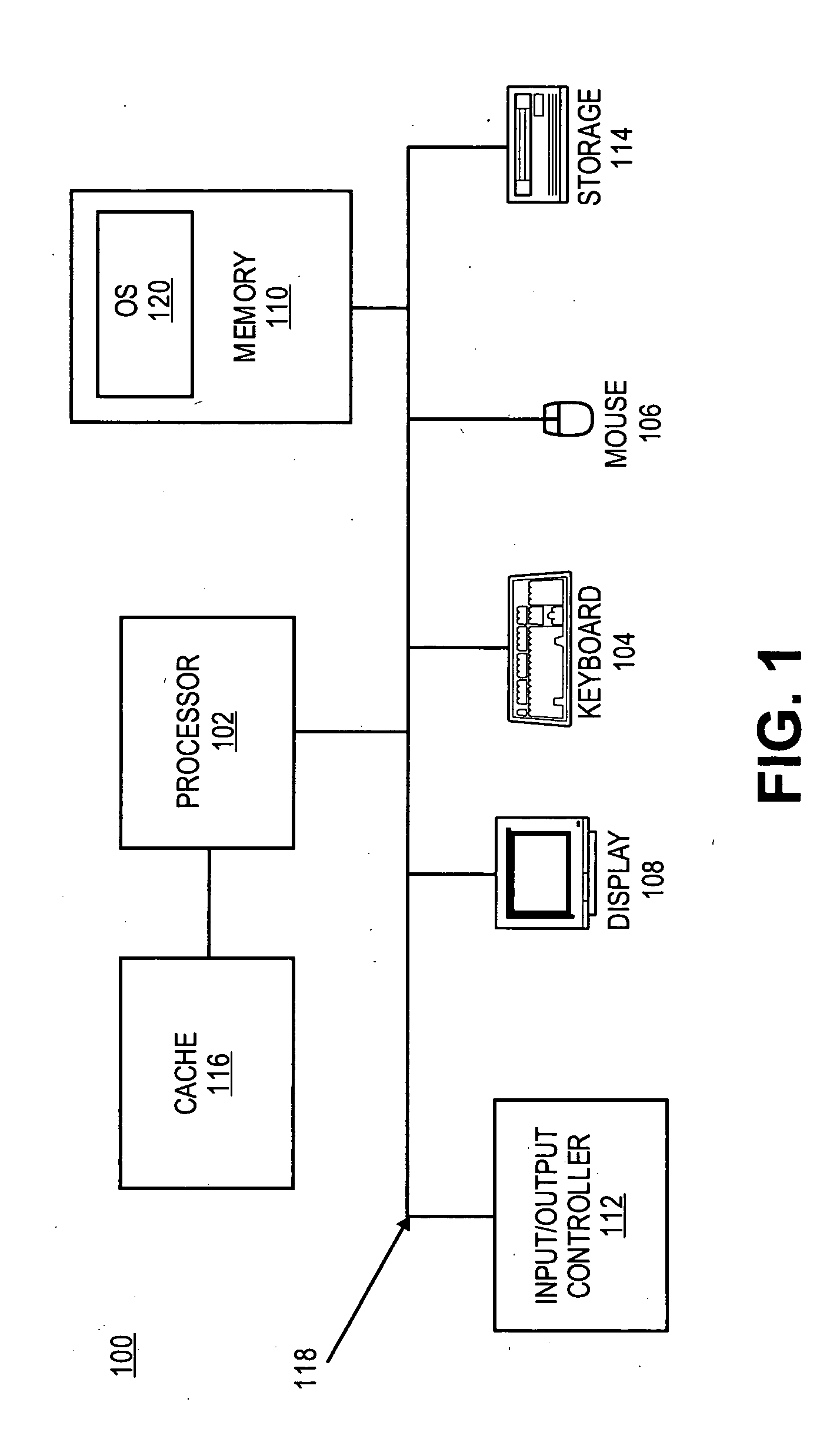

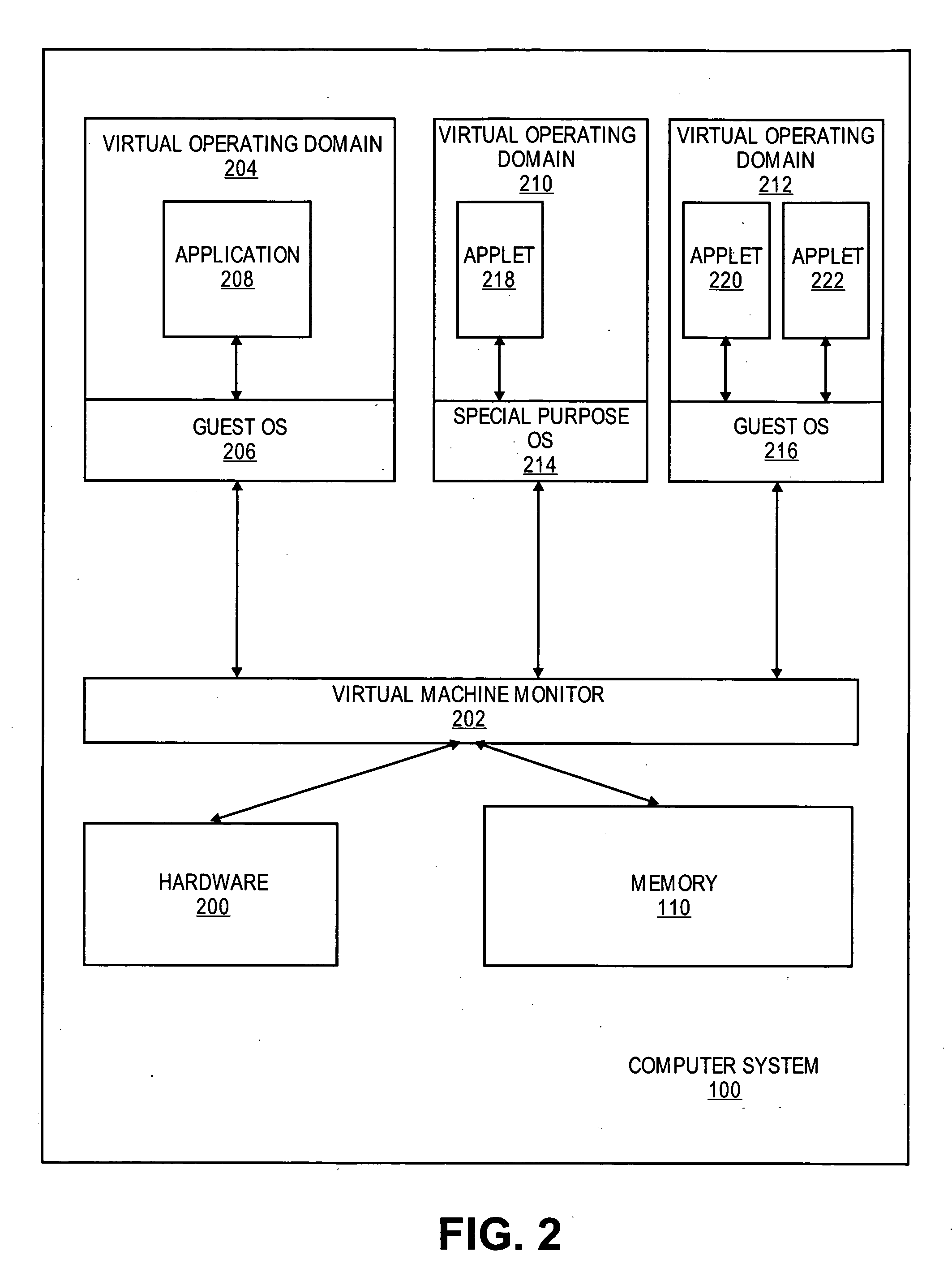

Active | US20070169024A1 | Software simulation/interpretation/emulation; Memory systems; Operational system; Analog computer

Embodiments of the present invention provide an architecture for securely and efficiently executing byte code generated from a general programming language. In particular, a computer system is divided into a hierarchy comprising multiple types of virtual machines. A thin layer of software, known as a virtual machine monitor, virtualizes the hardware of the computer system and emulates the hardware of the computer system to form a first type of virtual machine. This first type of virtual machine implements a virtual operating domain that allows running its own operating system. Within a virtual operating domain, a byte code interpreter may further implement a second type of virtual machine that executes byte code generated from a program written in a general purpose programming language. The byte code interpreter is incorporated into the operating system running in the virtual operating domain. The byte code interpreter implementing the virtual machine that executes byte code may be divided into a kernel component and one or more user level components. The kernel component of the virtual machine is integrated into the operating system kernel. The user level component provides support for execution of an applet and couples the applet to the operating system. In addition, an operating system running in a virtual operating domain may be configured as a special purpose operating system that is optimized for the functions of a particular byte code interpreter.

Owner:RED HAT

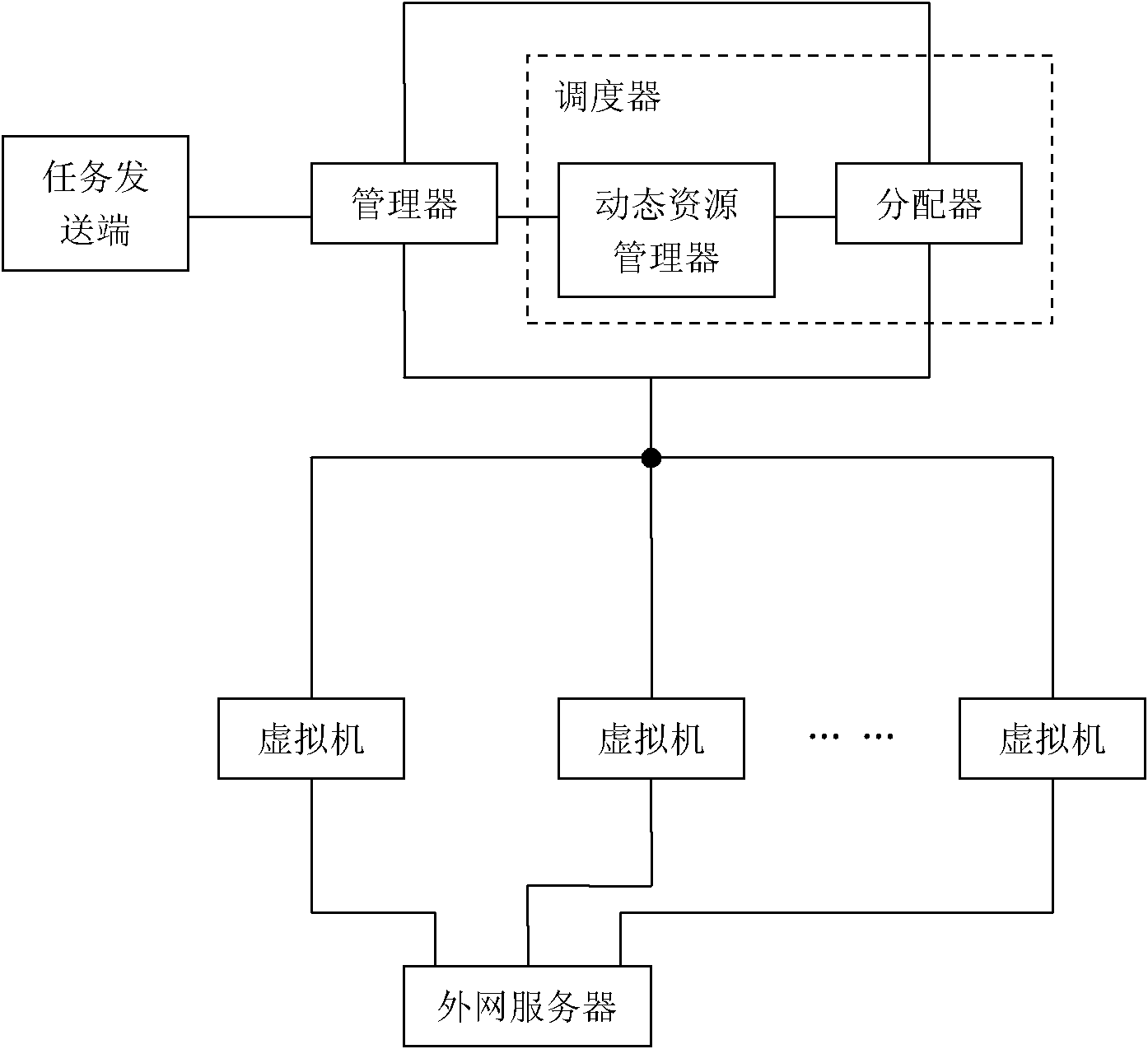

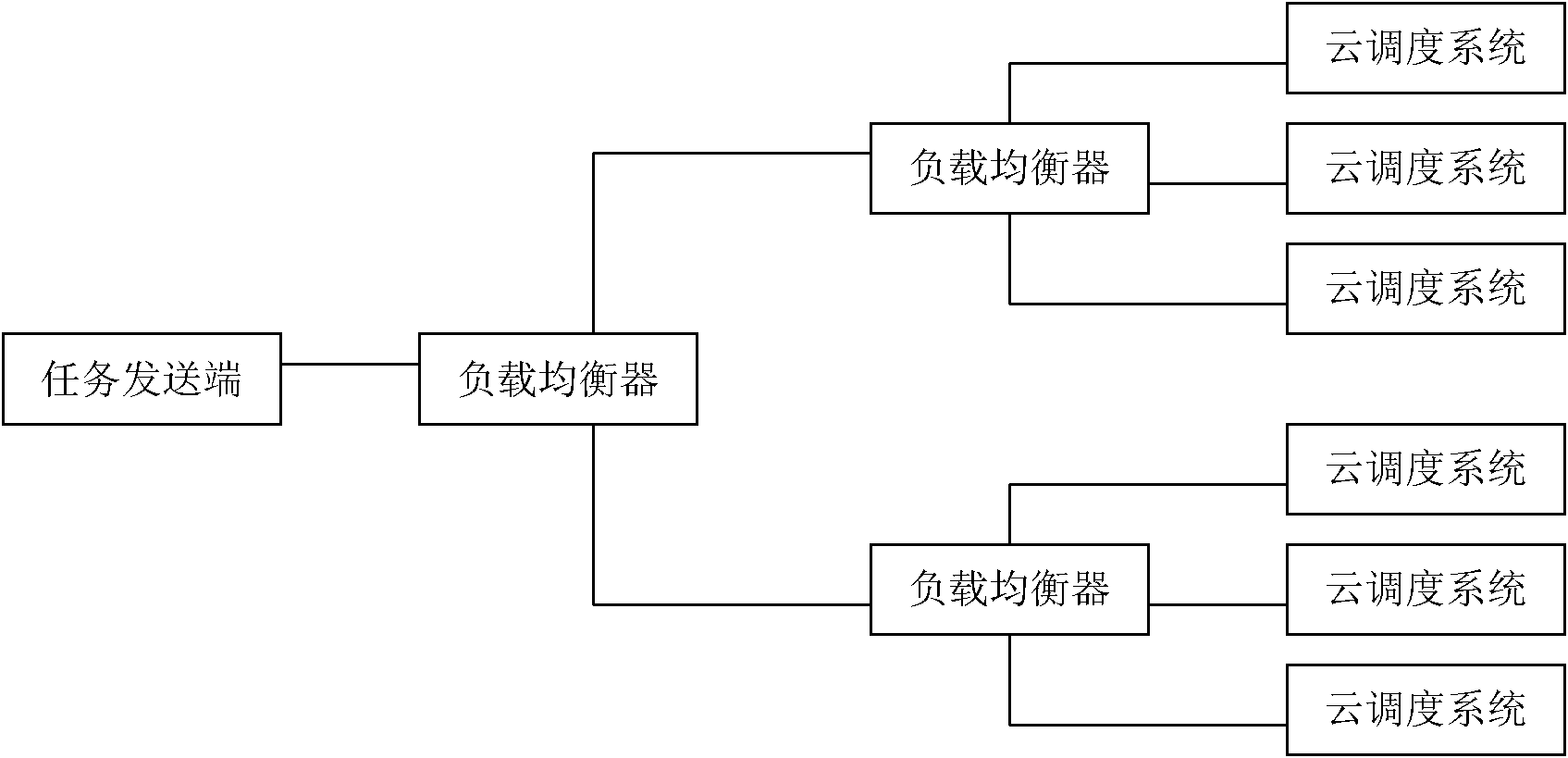

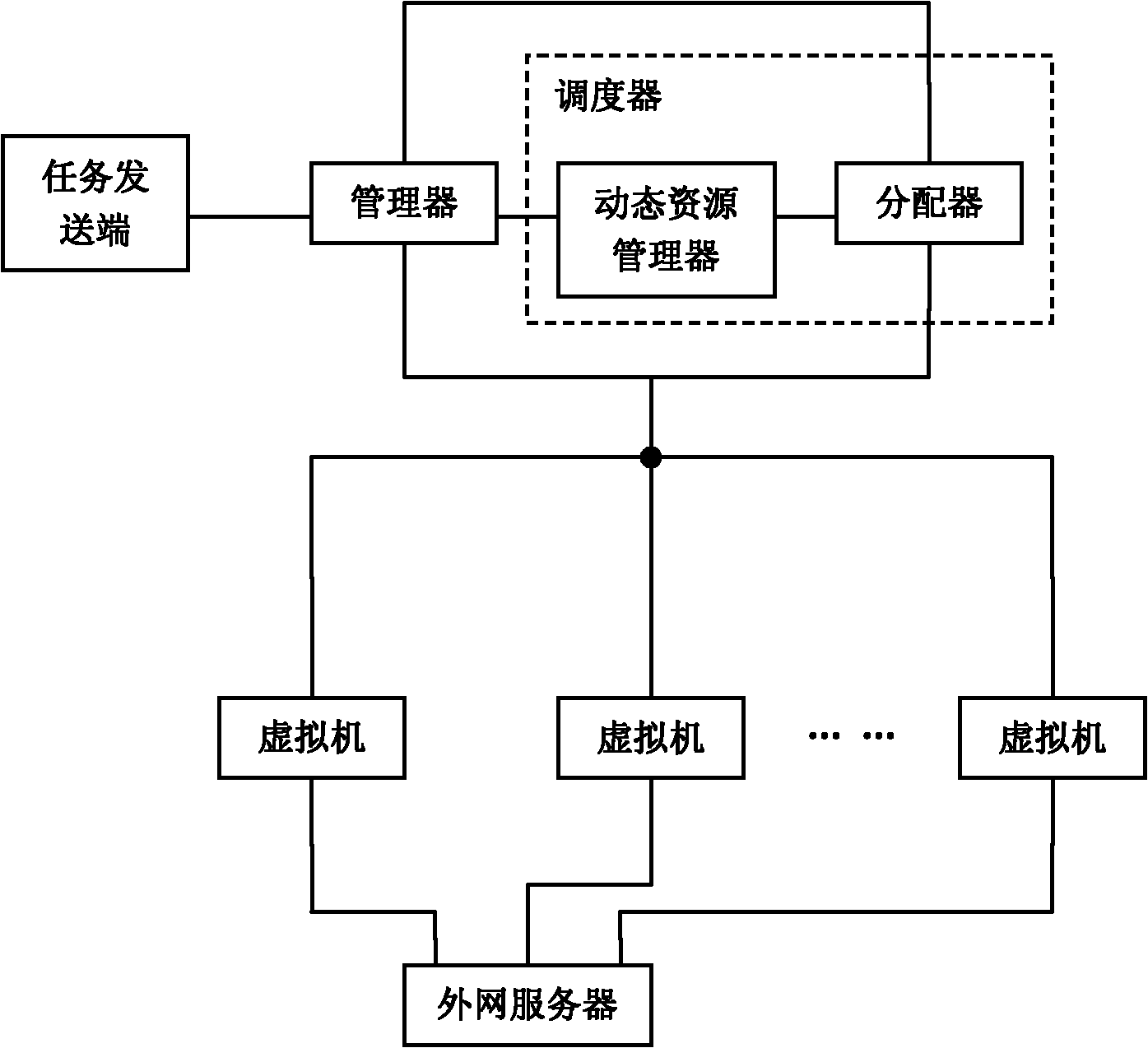

Cloud scheduling system and method and multistage cloud scheduling system

The invention relates to cloud platform technology. It addresses the slow task dispatching and low resource utilization of conventional cloud platforms during scheduling, and provides a cloud scheduling system, a cloud scheduling method, and a multistage cloud scheduling system. In the technical solution, the cloud scheduling system comprises a task transmitting end, a scheduler, a manager, n virtual machines, and an external network server, where n is a positive integer. The scheduler is connected to the manager; the task transmitting end is connected to the manager; the n virtual machines are connected to both the scheduler and the manager; and the external network server is connected to the n virtual machines. The system can schedule large-scale tasks efficiently and quickly, and is applicable to cloud platforms.

Owner:戴元顺

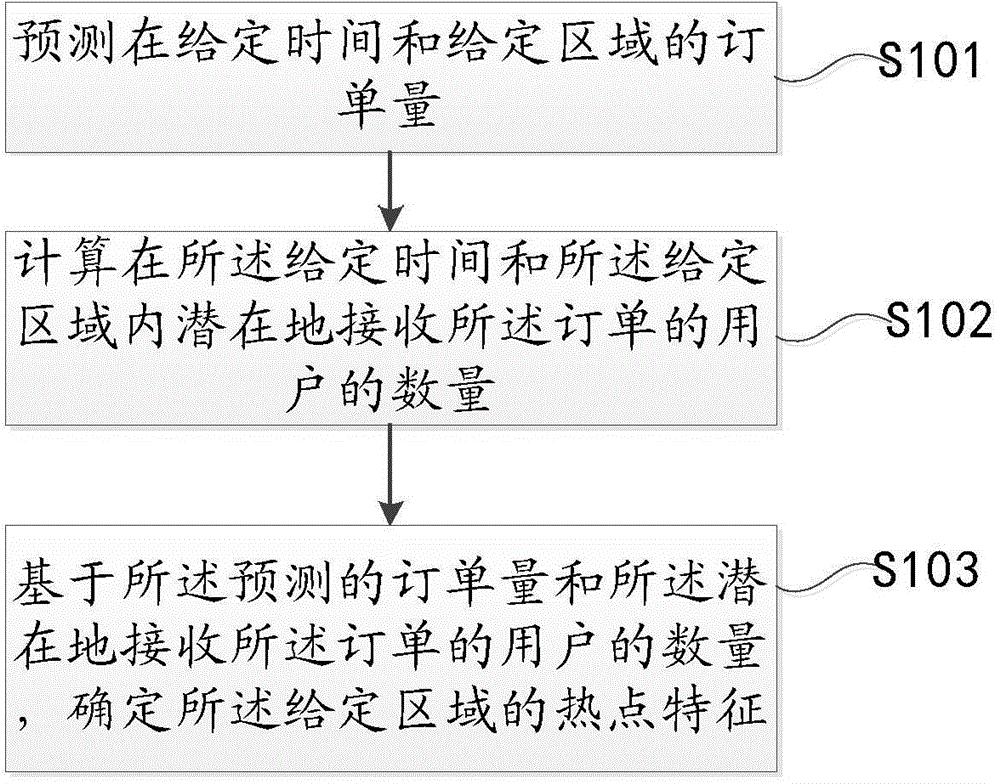

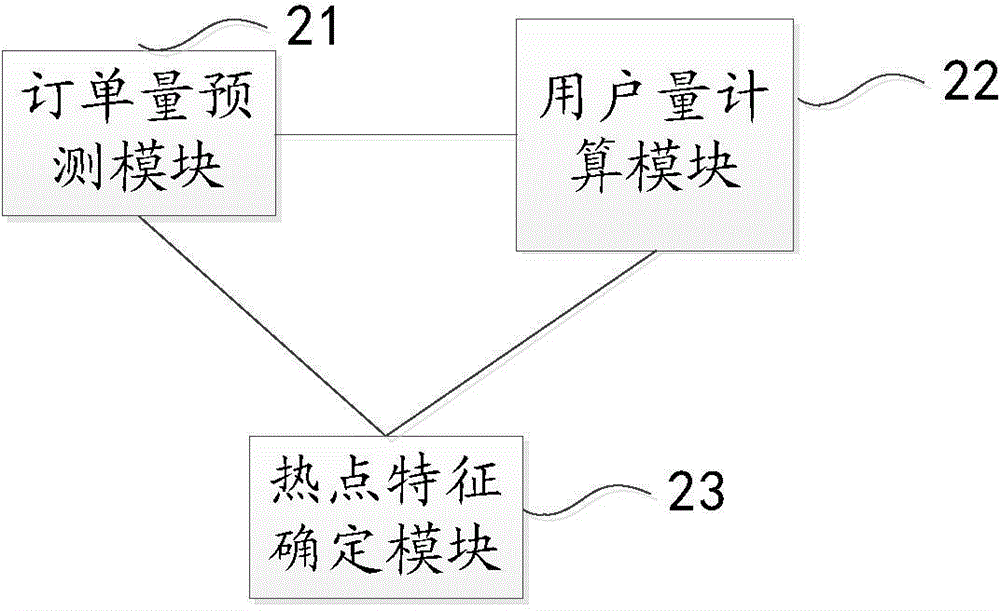

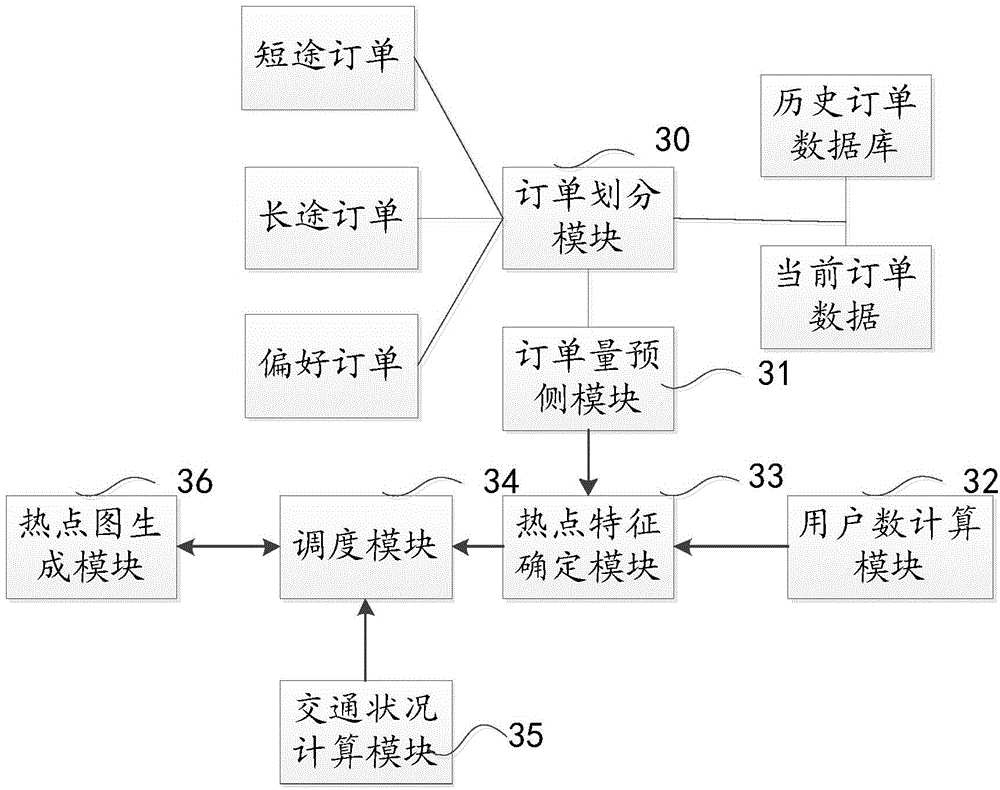

Dispatching method and dispatching system based on orders

Inactive | CN104599088A | Effectively reflect supply and demand; Solve needs; Buying/selling/leasing transactions; Resources; Low resource; Computer science

An embodiment of the invention provides a dispatching method based on orders. The dispatching method includes predicting order quantity within given time and given area; calculating the number of potential users receiving the orders within the given time and the given area; determining hotspot characteristics of the given area on the basis of the predicted order quantity and the potential users receiving the orders. The embodiment of the invention further provides a dispatching system based on the orders. By the dispatching method and the dispatching system based on the orders, dispatching information can be conveniently and visually provided for the users, and the problems of low resource dispatching efficiency and resource waste are solved.

Owner:BEIJING DIDI INFINITY TECH & DEV

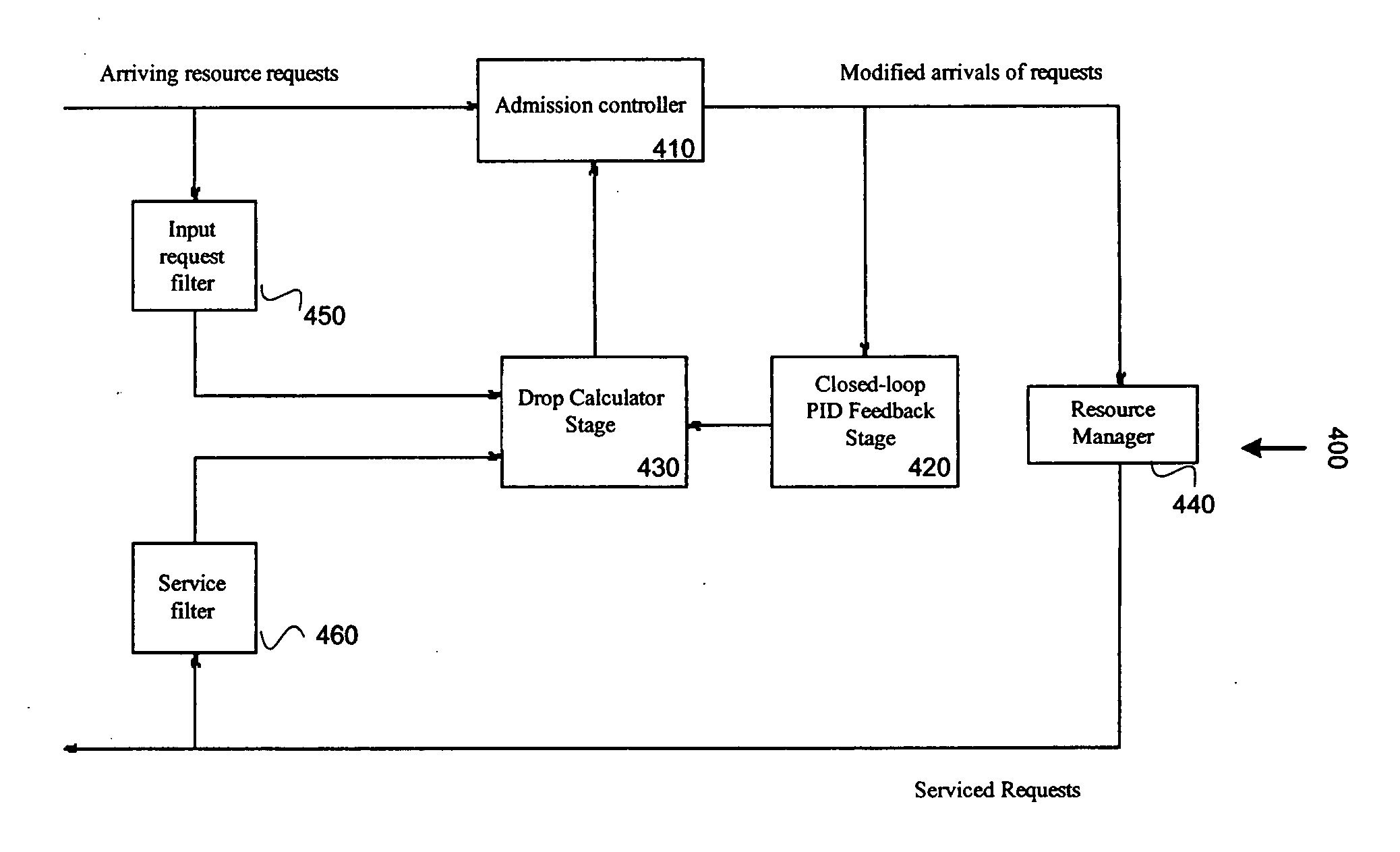

Nonlinear adaptive control of resource-distribution dynamics

Inactive | US20060013132A1 | Improves controller performance; Improve performance; Error prevention; Transmission systems; Real systems; Resource Management System

Nonlinear adaptive resource management systems and methods are provided. According to one embodiment, a controller identifies and prevents resource starvation in resource-limited systems. To function correctly, system processes require resources that can be exhausted under high load; if such load conditions persist, a complete system failure may occur. Controllers according to embodiments of the present invention avoid these failures by shaping the distribution of resource states so that undesirable states are avoided entirely. According to one embodiment, a Markov birth/death chain model of resource usage is built from the structure of the system, with the number of states determined by the amount of resources and the transition probabilities determined by the instantaneous rates of observed consumption and release. A control stage guides a controller that denies some resource requests in real systems in a principled manner, thereby reducing the demand rate and shaping the resulting distribution of resource states.

Owner:CREATIVE TECH CORP
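
The birth/death model lends itself to a short numerical sketch: the stationary probability of each resource-usage state follows from detailed balance, and a controller can search for the largest fraction of requests it may admit while keeping the probability of full exhaustion below a target. The constant request rate, the per-unit release rate, the grid search, and the function names are assumptions; the abstract does not specify the control law.

```python
def stationary(birth: list[float], death: list[float]) -> list[float]:
    """Stationary distribution of a birth/death chain on states 0..N.

    birth[k] is the rate k -> k+1 and death[k] the rate k+1 -> k, so
    detailed balance gives pi[k+1] = pi[k] * birth[k] / death[k].
    """
    weights = [1.0]
    for lam, mu in zip(birth, death):
        weights.append(weights[-1] * lam / mu)
    total = sum(weights)
    return [w / total for w in weights]


def admission_fraction(request_rate: float, release_rate_per_unit: float,
                       capacity: int, max_exhaustion_prob: float,
                       steps: int = 100) -> float:
    """Largest admitted fraction of requests keeping P(all units in use) <= target.

    State k = number of resource units in use; admitted requests arrive at
    a * request_rate and each held unit is released at release_rate_per_unit.
    """
    best = 0.0
    for i in range(steps + 1):
        a = i / steps
        birth = [a * request_rate] * capacity
        death = [(k + 1) * release_rate_per_unit for k in range(capacity)]
        if stationary(birth, death)[-1] <= max_exhaustion_prob:
            best = a  # exhaustion probability grows with a, so keep the largest
    return best
```

Under these particular assumptions the chain is the classic Erlang loss system, so the probability of the full state equals the Erlang B formula; a controller would then deny the remaining fraction 1 - a of requests.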

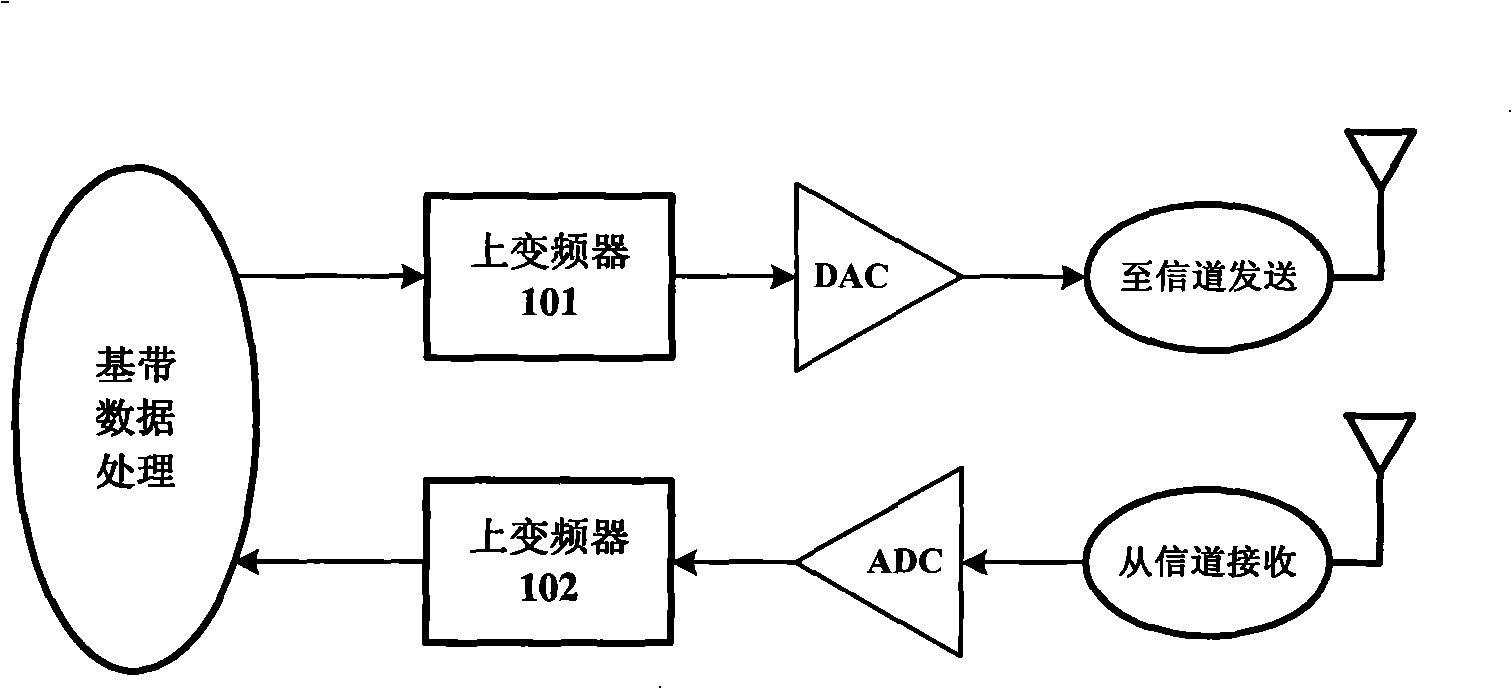

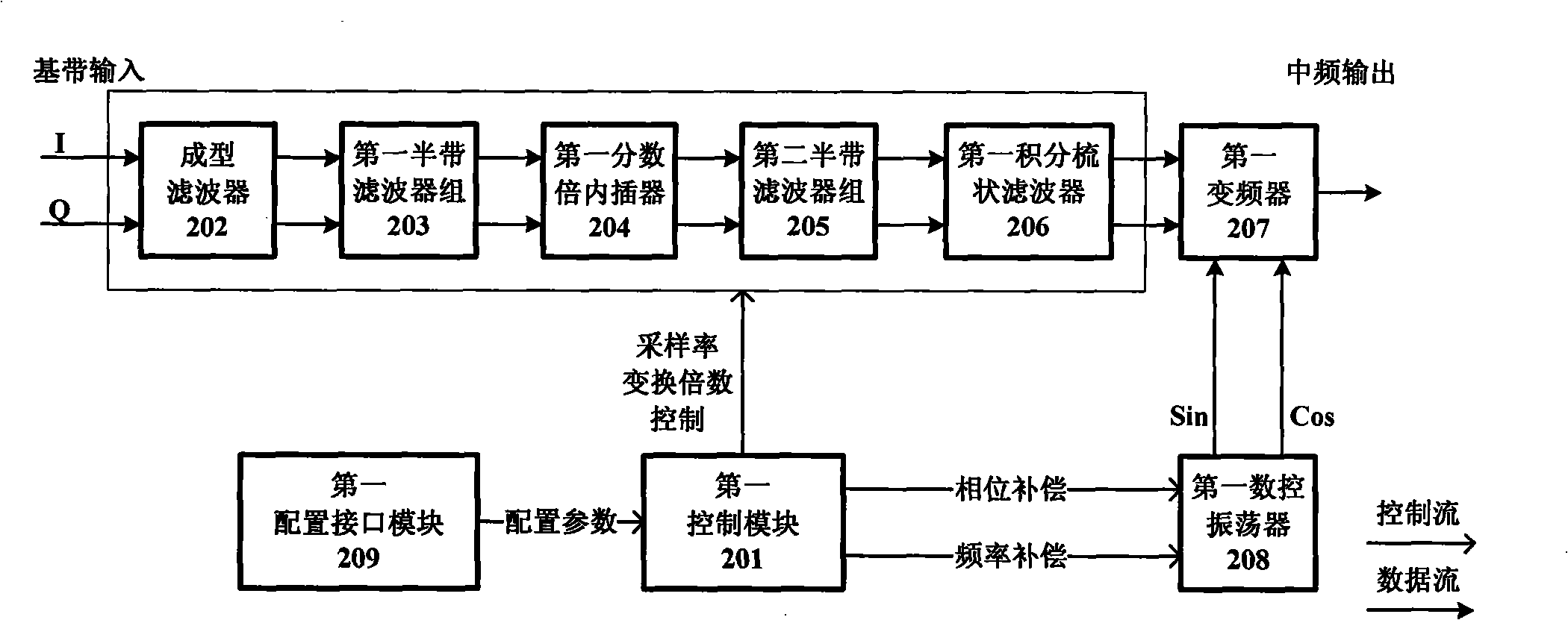

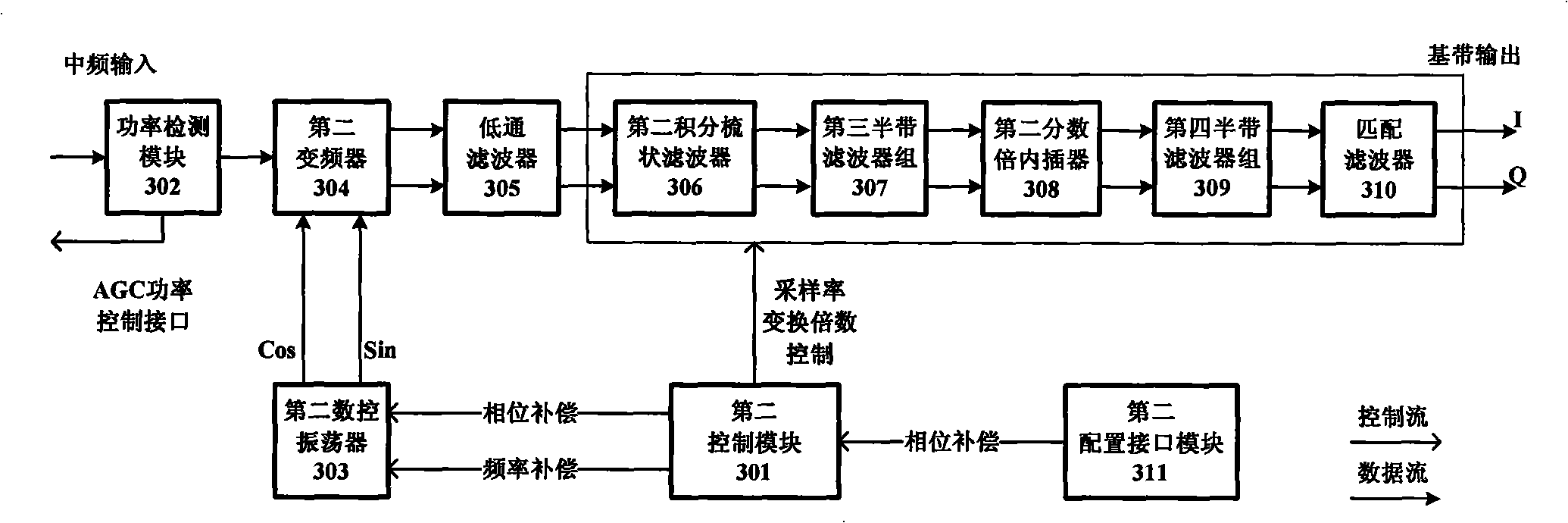

An easy-to-realize method and device for full digital frequency conversion

Inactive | CN101262240A | Improve versatility; Increase working frequency; Modulation transference; Transmission; Control signal; Intermediate frequency

The invention discloses an all-digital frequency conversion method and device that are easy to implement in hardware. They are mainly used for rational-factor sample rate conversion of baseband signals and for conversion between baseband and intermediate-frequency signals in digital communication. Coordinated by control signals and enable signals, the sample rate conversion and the baseband/intermediate-frequency conversion are accomplished by appropriately combining variable integer-factor filtering with fractional interpolation. The system mainly comprises a frequency mixer, a cascaded integrator-comb (CIC) filter, a fractional interpolator, a half-band filter, a signal shaping filter, a power detection module, and a control interface. Its configurable hardware structure supports a variety of modulation schemes, has low resource consumption and good portability, and can be used in various wireless communication systems, such as multilevel phase shift keying (MPSK), orthogonal frequency division multiplexing (OFDM), direct sequence spread spectrum (DSSS), and continuous phase modulation (CPM).

Owner:ZHEJIANG UNIV
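
The cascaded integrator-comb stage mentioned above can be sketched in integer arithmetic, which hints at why CIC filters suit low-resource hardware: they need only adders, delay registers, and a decimator, with no multipliers. This sketch covers only CIC decimation; the mixer, fractional interpolator, half-band and shaping filters are not shown, and the parameter names `R`, `N`, and `M` follow common CIC notation rather than the patent.

```python
def cic_decimate(x: list[int], R: int, N: int, M: int = 1) -> list[int]:
    """N-stage CIC decimator: rate change R, differential delay M (M >= 1)."""
    # Integrator section at the input rate: N cascaded running sums.
    integrators = [0] * N
    decimated: list[int] = []
    for n, sample in enumerate(x):
        acc = sample
        for i in range(N):
            integrators[i] += acc
            acc = integrators[i]
        if n % R == R - 1:
            decimated.append(acc)  # keep every R-th integrator output
    # Comb section at the output rate: y[j] = v[j] - v[j - M], applied N times.
    out = decimated
    for _ in range(N):
        delayed = [0] * M + out[:-M]  # zero initial state in the comb delay line
        out = [cur - old for cur, old in zip(out, delayed)]
    return out
```

For a constant input the settled output equals the filter's DC gain, (R*M)^N; hardware versions use wrap-around register arithmetic and rescale by this gain, whereas Python integers here never overflow.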

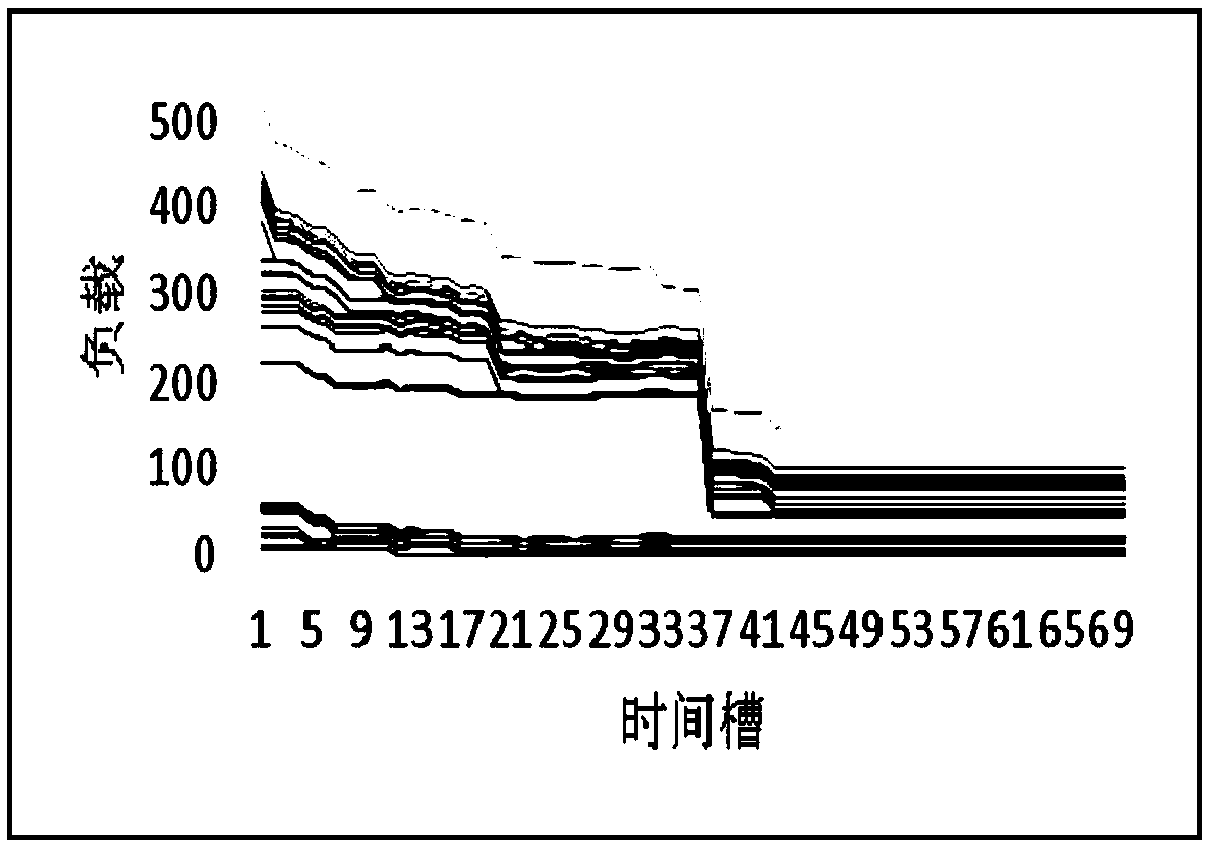

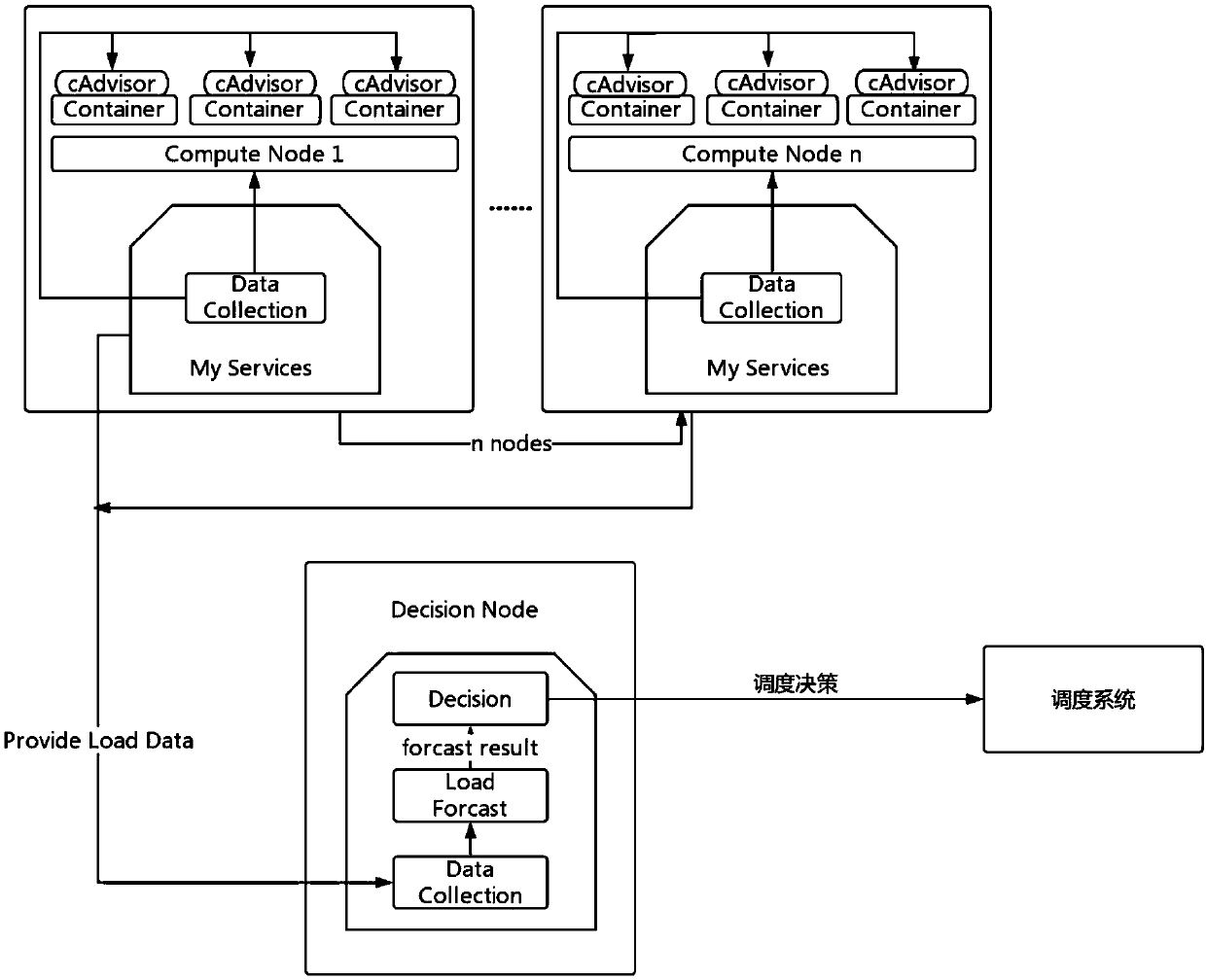

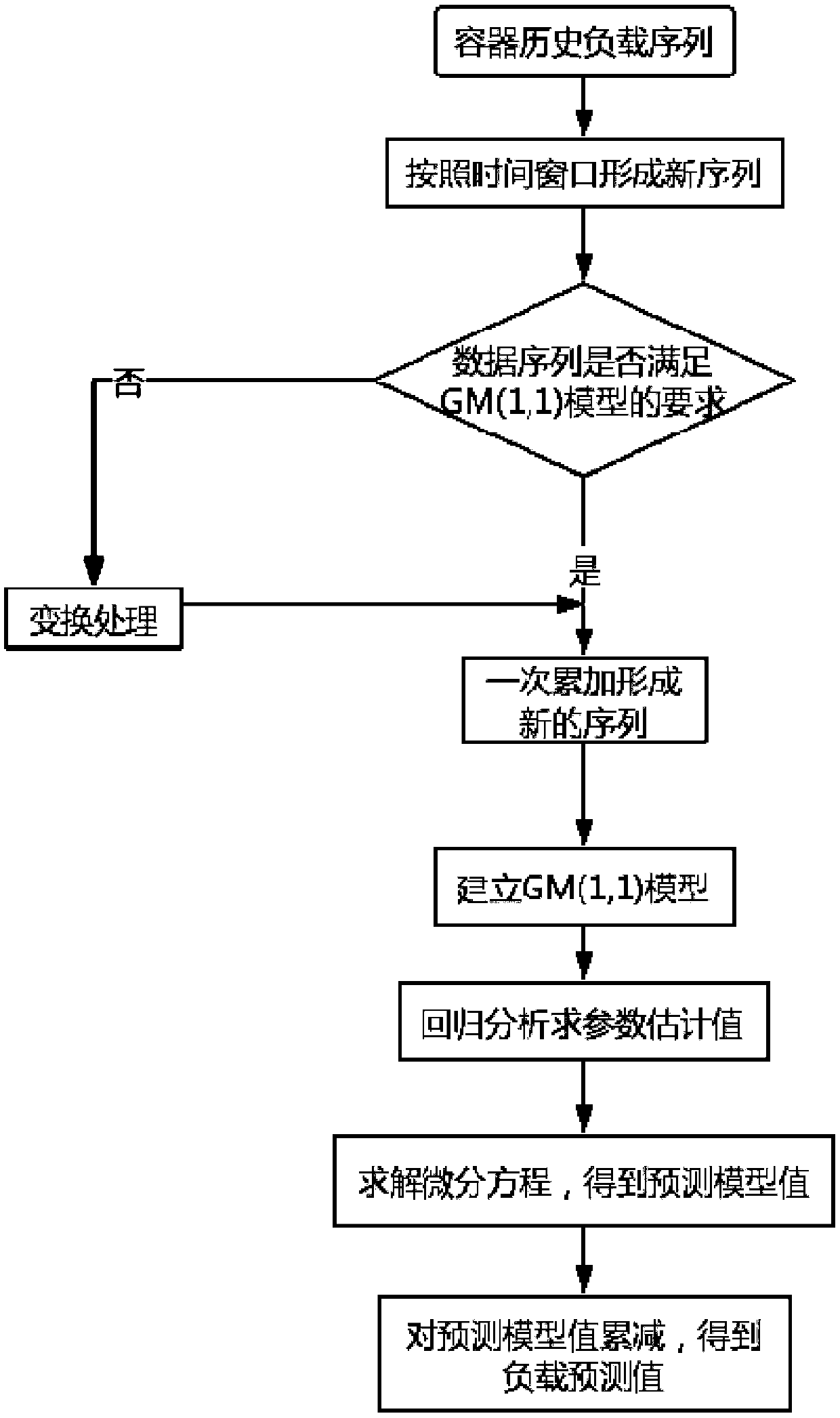

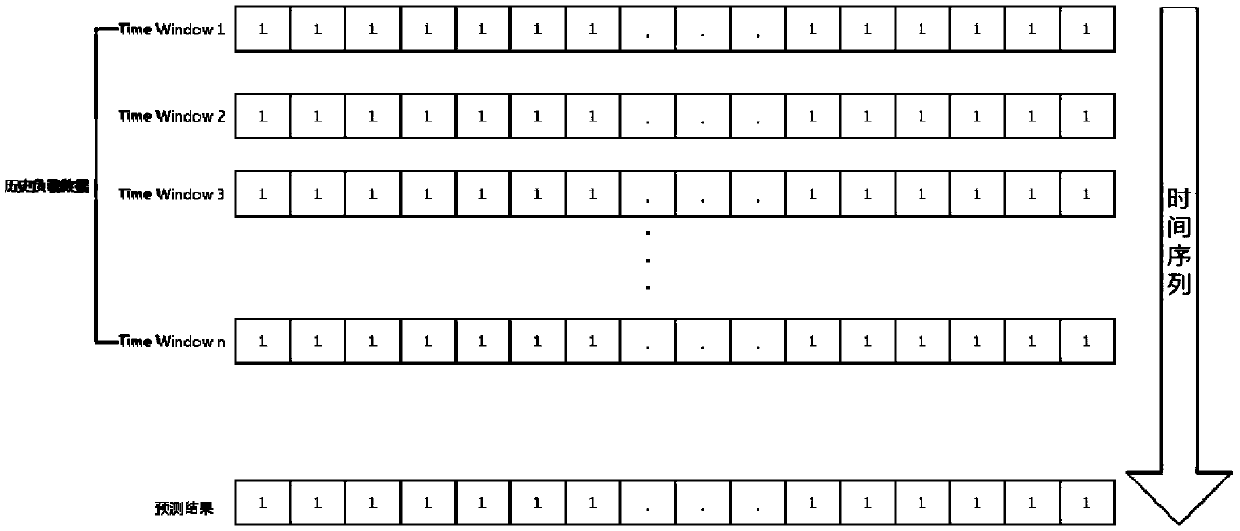

Intelligent resource optimization method of container cloud platform based on load prediction

Active | CN108829494A | Reduce overhead; Reduce performance; Resource allocation; Transmission; Load forecasting; Resource utilization

The invention discloses an intelligent resource optimization method for a container cloud platform based on load prediction, and belongs to the field of container cloud platforms. The method comprises the following steps: based on a grey model, predicting the load of each container instance in the next time window from its historical load; judging whether the load of each physical node is too high or too low from the predicted loads of all containers on that node; and then executing the corresponding scheduling algorithm, migrating some containers from an overloaded node to other nodes so that the node's load returns to a normal range, and migrating all container instances from an underloaded node to other nodes so that the node becomes empty. To address unbalanced resource utilization and delayed resource scheduling in existing data centers, the invention introduces load forecasting so that the data center's load is scheduled and optimized in advance. This avoids both the performance loss caused by overloaded nodes and the low resource utilization caused by underloaded nodes, improving the platform's resource utilization efficiency.

Owner:杭州谐云科技有限公司
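
The prediction and node-classification steps can be sketched with GM(1,1), the most widely used grey model. The abstract does not say which grey model variant is used, so that choice, the utilization thresholds, and the function names below are assumptions.

```python
import math


def gm11_next(x0: list[float]) -> float:
    """Forecast the next value of a positive series with a GM(1,1) grey model.

    Needs at least three points.
    """
    n = len(x0)
    x1 = [sum(x0[: k + 1]) for k in range(n)]             # accumulated series
    z = [0.5 * (x1[k] + x1[k - 1]) for k in range(1, n)]  # background values
    y = x0[1:]
    # Least-squares fit of x0[k] = -a * z[k] + b.
    m = len(z)
    sz, sy = sum(z), sum(y)
    szz = sum(v * v for v in z)
    szy = sum(zv * yv for zv, yv in zip(z, y))
    slope = (m * szy - sz * sy) / (m * szz - sz * sz)
    a, b = -slope, (sy - slope * sz) / m
    if abs(a) < 1e-12:
        return b  # flat series: the model reduces to a constant

    def x1_hat(k: int) -> float:
        return (x0[0] - b / a) * math.exp(-a * k) + b / a

    return x1_hat(n) - x1_hat(n - 1)


def classify_node(histories: list[list[float]], capacity: float,
                  high: float = 0.8, low: float = 0.2) -> str:
    """Sum the predicted loads of a node's containers and compare with thresholds."""
    utilization = sum(gm11_next(h) for h in histories) / capacity
    if utilization > high:
        return "overloaded"   # migrate some containers away
    if utilization < low:
        return "underloaded"  # migrate all containers away and empty the node
    return "normal"
```

GM(1,1) needs only a handful of recent samples per container, which fits the per-time-window forecasting described above.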

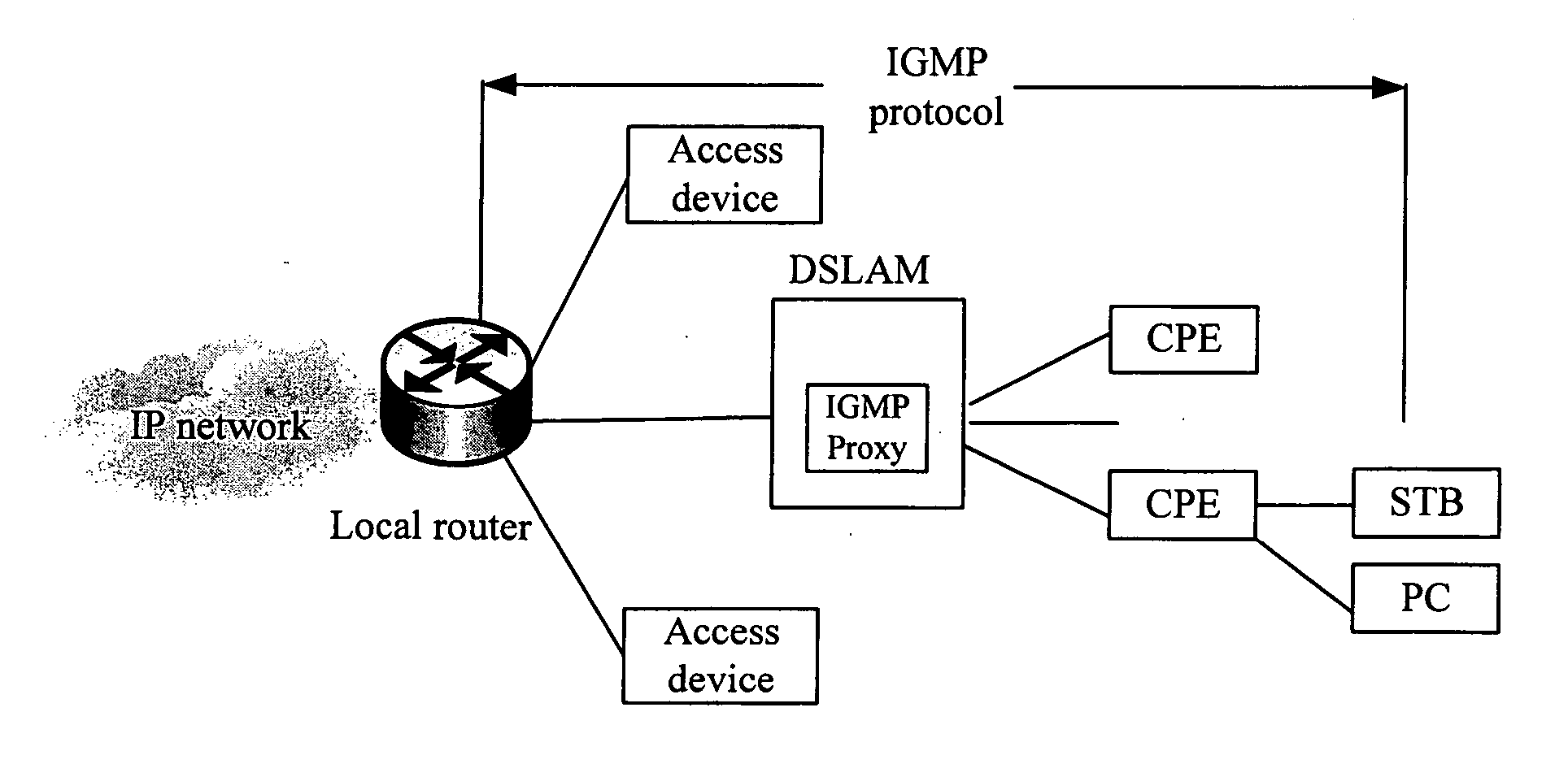

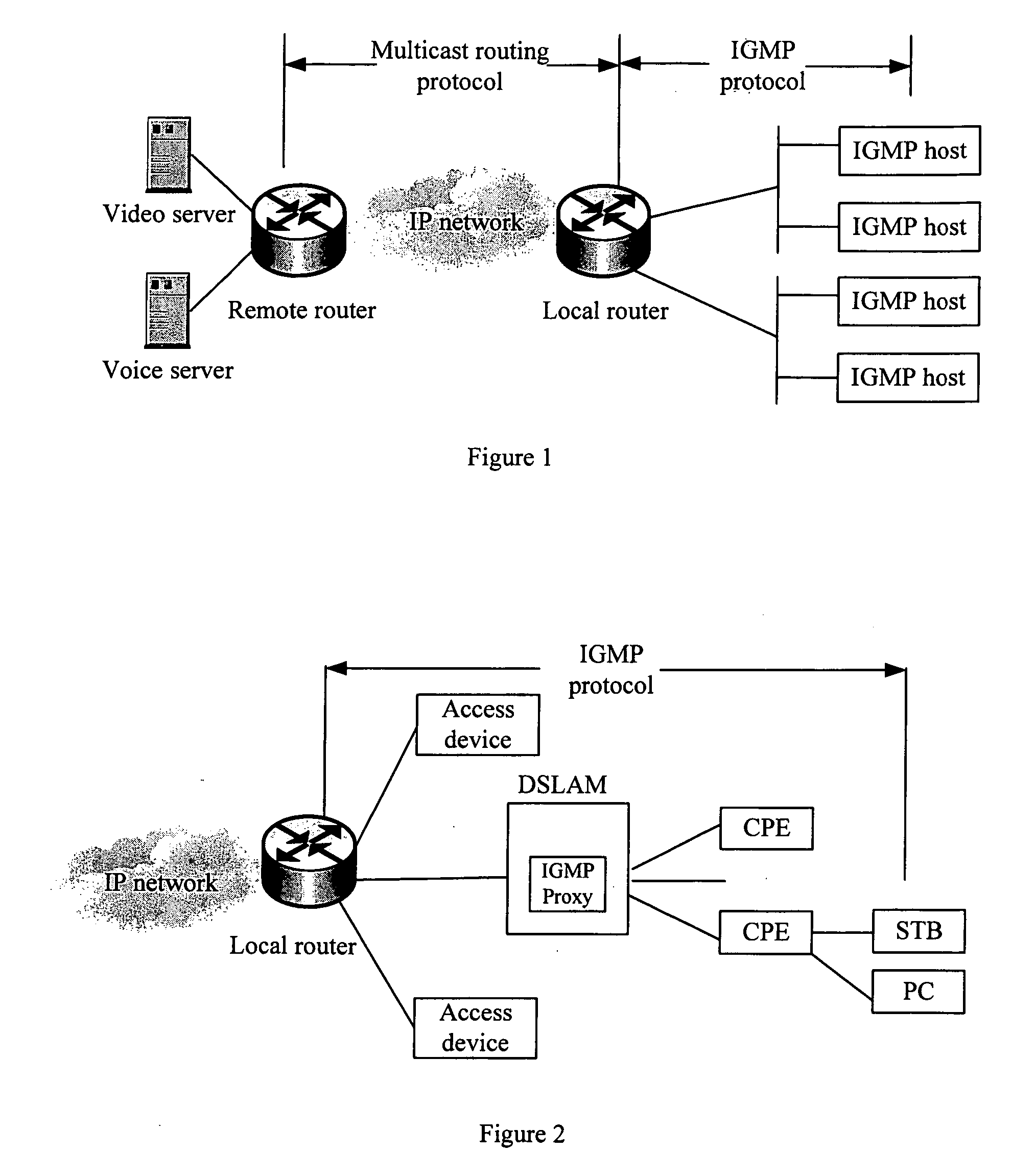

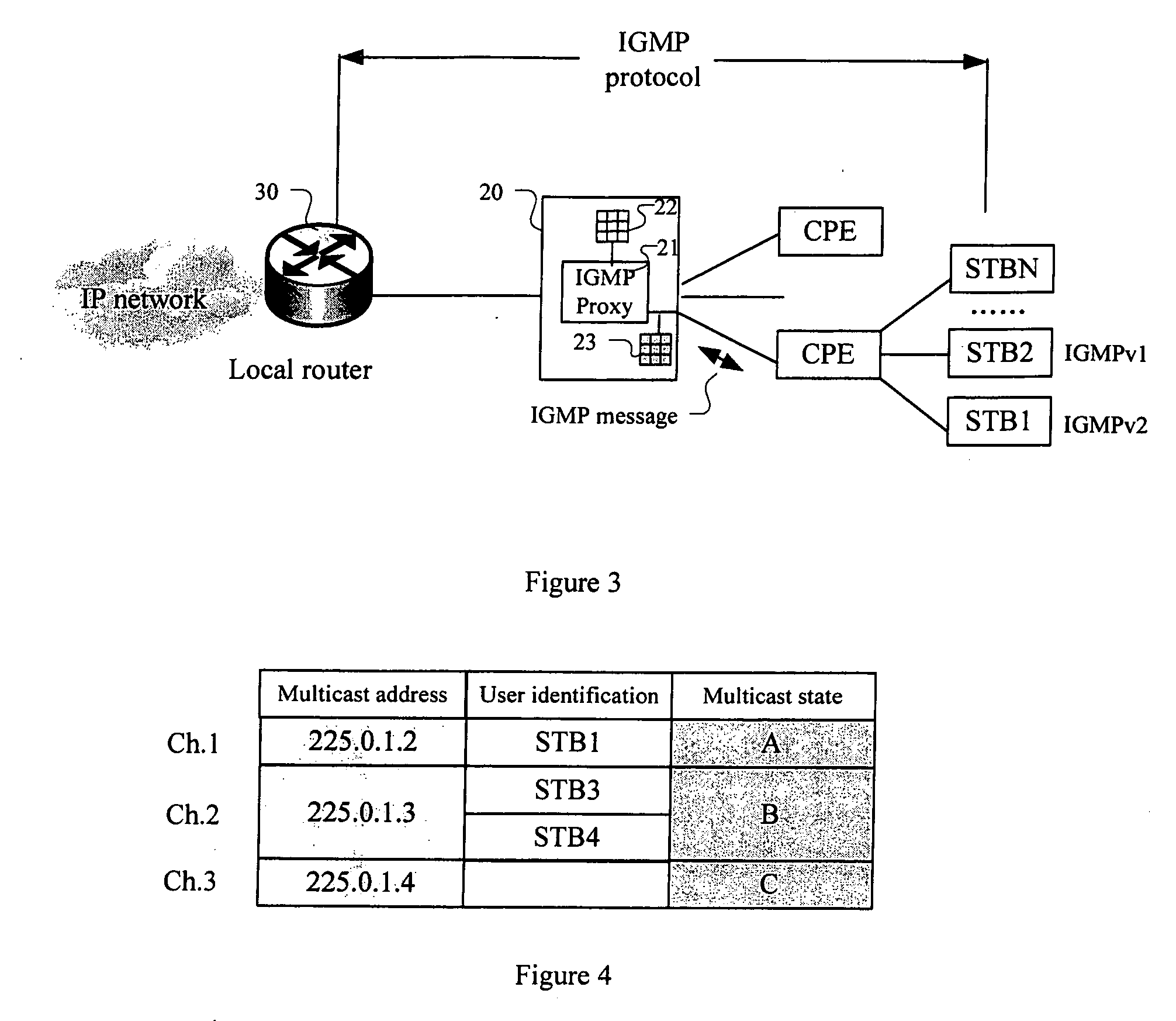

Method and apparatus for multicast management of user interface in a network access device

Active | US20070011350A1 | Reduces occupied memory resource; Increase speed; Data switching by path configuration; Multiple digital computer combinations; Protocol processing; Combined use

The present invention discloses a method and apparatus for multicast management of user interfaces in a network access device. The method comprises: setting up a user multicast information table for each user interface in the network access device; obtaining, by an IGMP protocol processing unit, user identification information from a user's IGMP message; retrieving and updating the user multicast information table according to the type of multicast message; and, when the multicast resources of the user interface are insufficient, checking the interface's user multicast information table and, if the table for a multicast group is empty, immediately terminating the multicast GSQ process and performing a fast leave directly, in conjunction with the multicast state entries. The present invention can greatly increase the speed and performance of multicast channel switching in the network access device.

Owner:RPX CORP

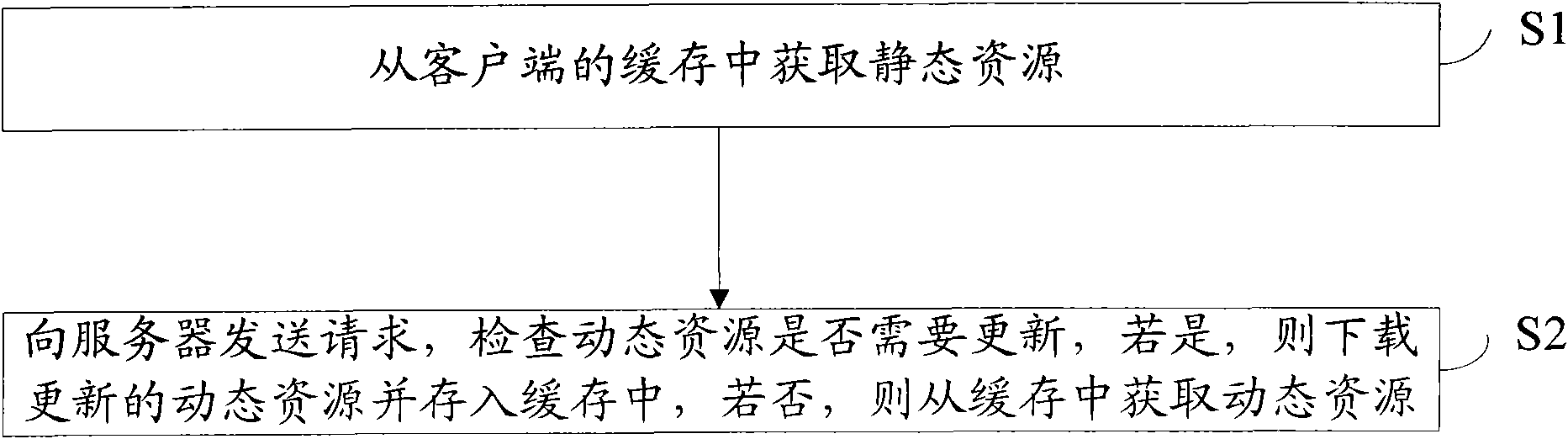

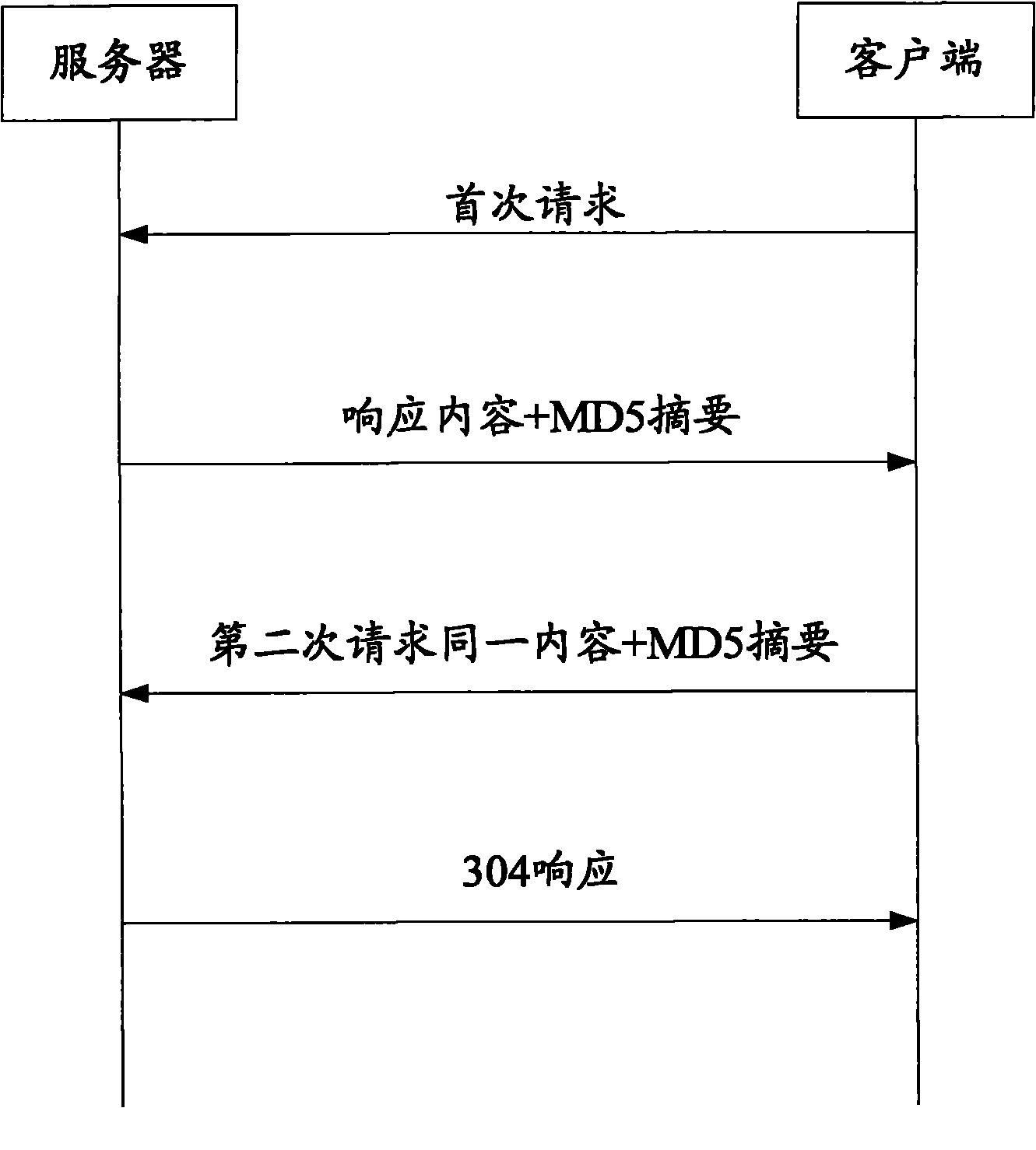

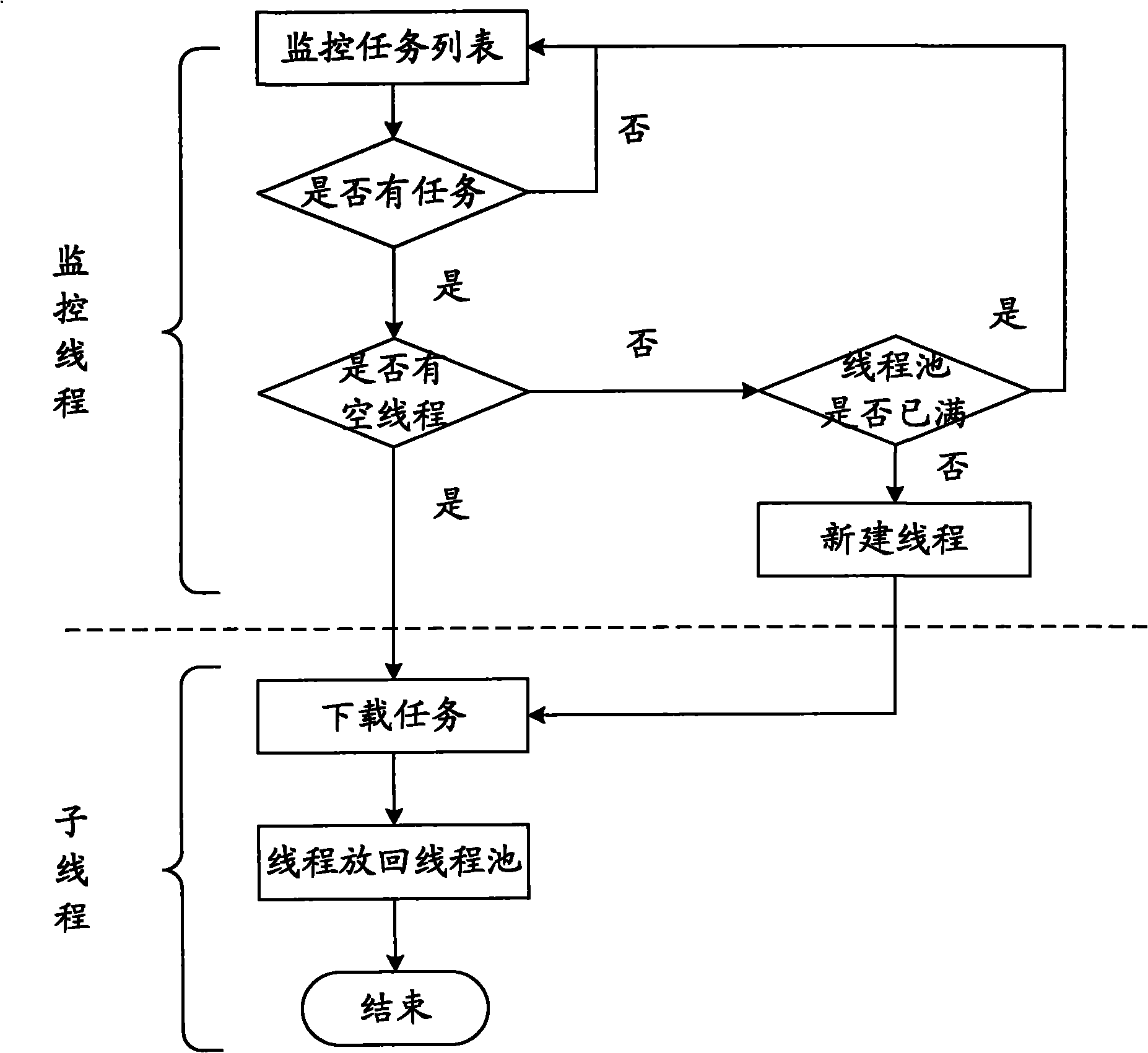

Method for rapidly displaying user interface of embedded type platform

Inactive | CN102081650A | Open fast; Reduce the number of requests; Special data processing applications; Traffic capacity; Dynamic resource

The invention discloses a method for rapidly displaying the user interface of an embedded platform, which divides user interface resources into static resources and dynamic resources. The method comprises the following steps: acquiring the static resources from the client's cache; sending a request to the server; and checking whether the dynamic resources need to be updated. If they do, the updated dynamic resources are downloaded and stored in the cache; otherwise, the dynamic resources are read from the cache. Through this strategy, the method minimizes the number of network requests and the time needed to open pages, addresses the shortage of cache resources caused by limited hardware in existing embedded environments, speeds up interface display, and reduces network traffic, thereby slowing the accumulation of cache resources.

Owner:上海网达软件股份有限公司
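
The static/dynamic split can be sketched as a small client-side cache in which static resources are fetched once and dynamic resources are re-downloaded only when the server reports a newer version. The version-number check, the `fetch_version`/`download` callbacks, and the class name are assumptions; the abstract does not say how the client decides that a dynamic resource needs updating.

```python
from typing import Callable


class UiResourceCache:
    """Client cache: static resources are reused, dynamic ones are version-checked."""

    def __init__(self, fetch_version: Callable[[str], int],
                 download: Callable[[str], bytes]) -> None:
        self.fetch_version = fetch_version  # lightweight "has it changed?" request
        self.download = download            # full resource download
        self.store: dict[str, tuple[int, bytes]] = {}

    def get_static(self, name: str) -> bytes:
        if name not in self.store:
            self.store[name] = (0, self.download(name))  # fetched once, then cached
        return self.store[name][1]

    def get_dynamic(self, name: str) -> bytes:
        latest = self.fetch_version(name)
        cached = self.store.get(name)
        if cached is None or cached[0] != latest:
            self.store[name] = (latest, self.download(name))  # update the cache
        return self.store[name][1]
```

A page open then costs at most one small version request per dynamic resource, plus a download only for resources that actually changed.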

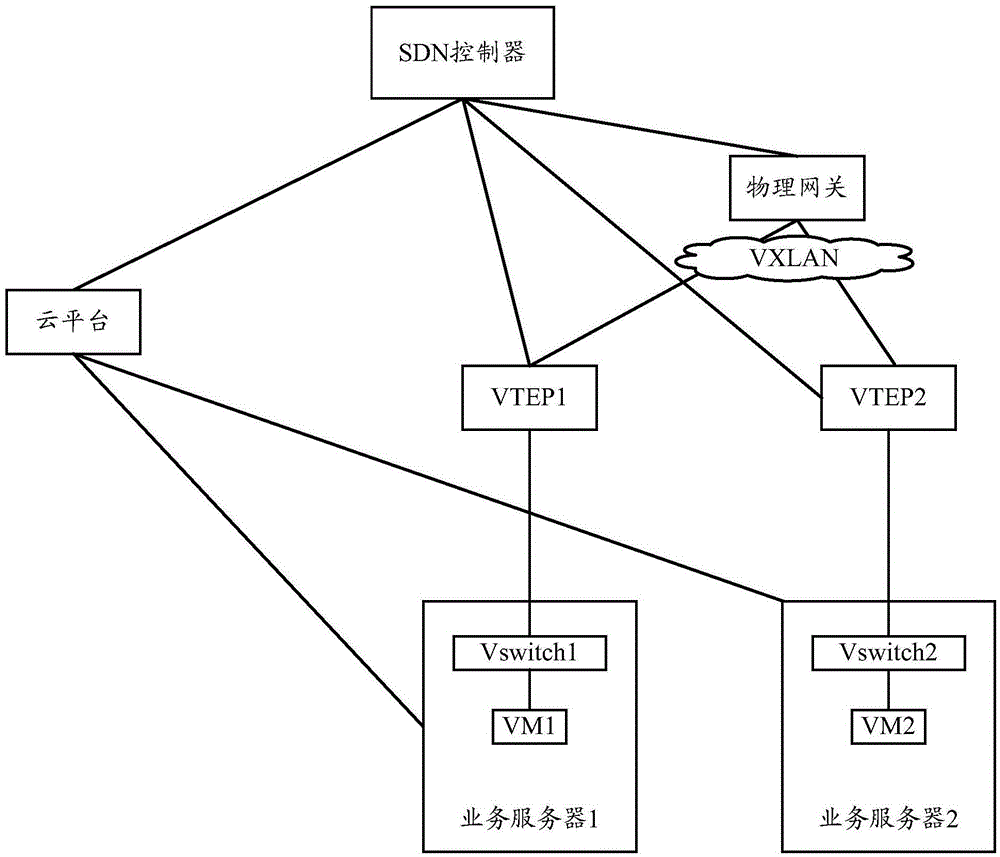

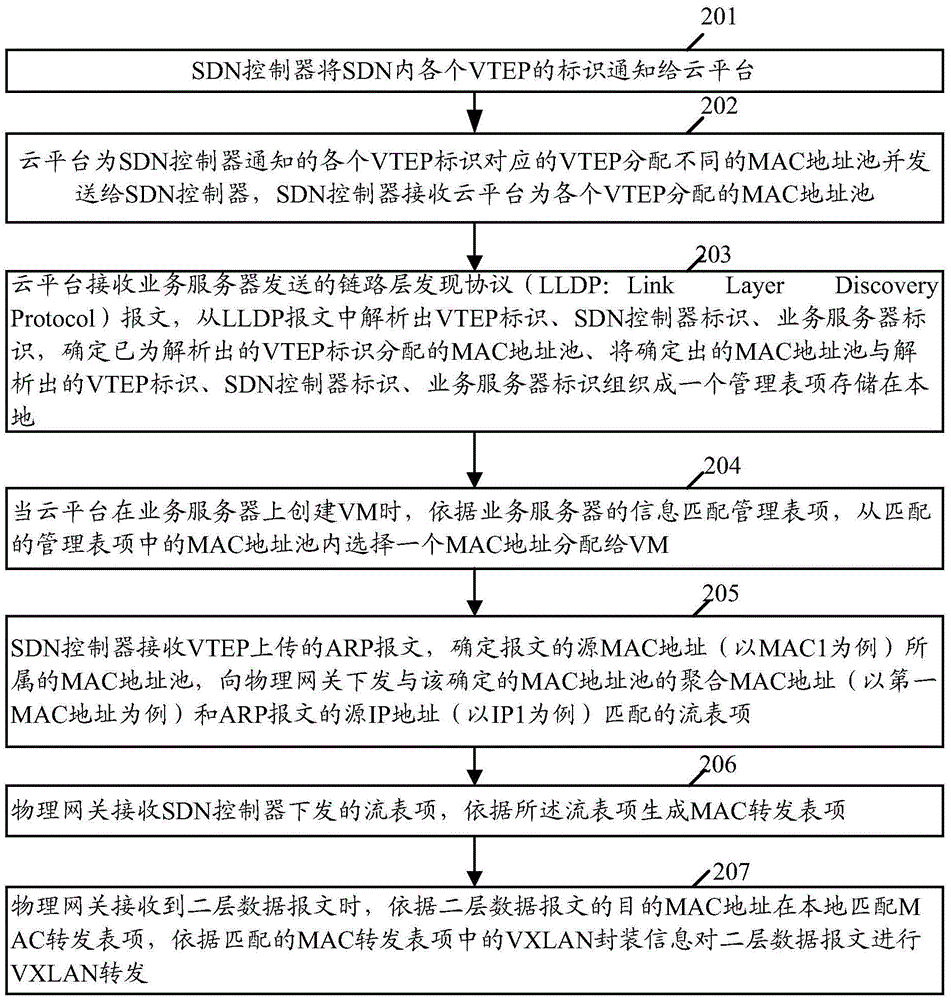

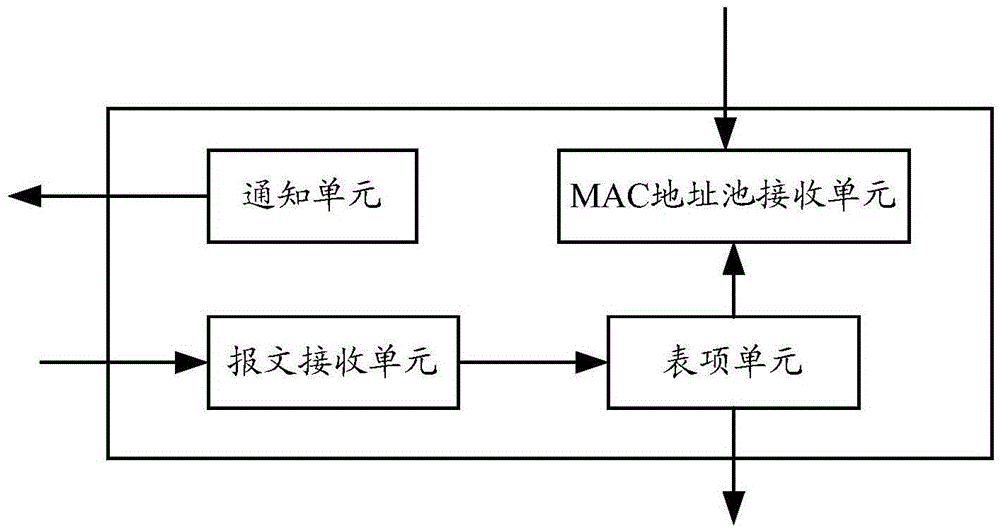

Message forwarding method and device applied to SDN

The invention provides a message forwarding method and device applied to an SDN. In this solution, an SDN controller issues flow entries to a physical gateway per VTEP rather than per VM, and the MAC address in each issued flow entry is the aggregated MAC address of the MAC address pool allocated to the VTEP rather than the real MAC address of a VM. When the physical gateway generates MAC forwarding table entries from flow entries, it generates a single MAC forwarding table entry from N flow entries (N > 1). As a result, only one MAC forwarding table entry for those N flow entries is stored in the physical gateway's hardware table entry resources, which saves those resources and overcomes their shortage.

Owner:NEW H3C TECH CO LTD
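
The table-saving idea can be illustrated by keying the gateway's forwarding table on the aggregated MAC prefix of each VTEP's address pool instead of on each VM's MAC address. This sketch assumes that each VTEP's pool is a contiguous, prefix-aligned block of MAC addresses identified by a 40-bit prefix; the function names and the prefix length are hypothetical.

```python
from typing import Optional

MAC_BITS = 48


def mac_to_int(mac: str) -> int:
    return int(mac.replace(":", ""), 16)


def build_table(flow_entries: list[tuple[str, str]],
                prefix_len: int = 40) -> dict[int, str]:
    """Collapse (VM MAC, VTEP) flow entries into one entry per aggregated MAC prefix."""
    shift = MAC_BITS - prefix_len
    table: dict[int, str] = {}
    for mac, vtep in flow_entries:
        # All VMs in one VTEP's pool share a prefix, so N entries map to one key.
        table[mac_to_int(mac) >> shift] = vtep
    return table


def lookup(table: dict[int, str], mac: str, prefix_len: int = 40) -> Optional[str]:
    """Return the VTEP whose MAC pool contains `mac`, or None if unknown."""
    return table.get(mac_to_int(mac) >> (MAC_BITS - prefix_len))
```

With this layout the table grows with the number of VTEPs rather than the number of VMs, which is the saving the abstract describes.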

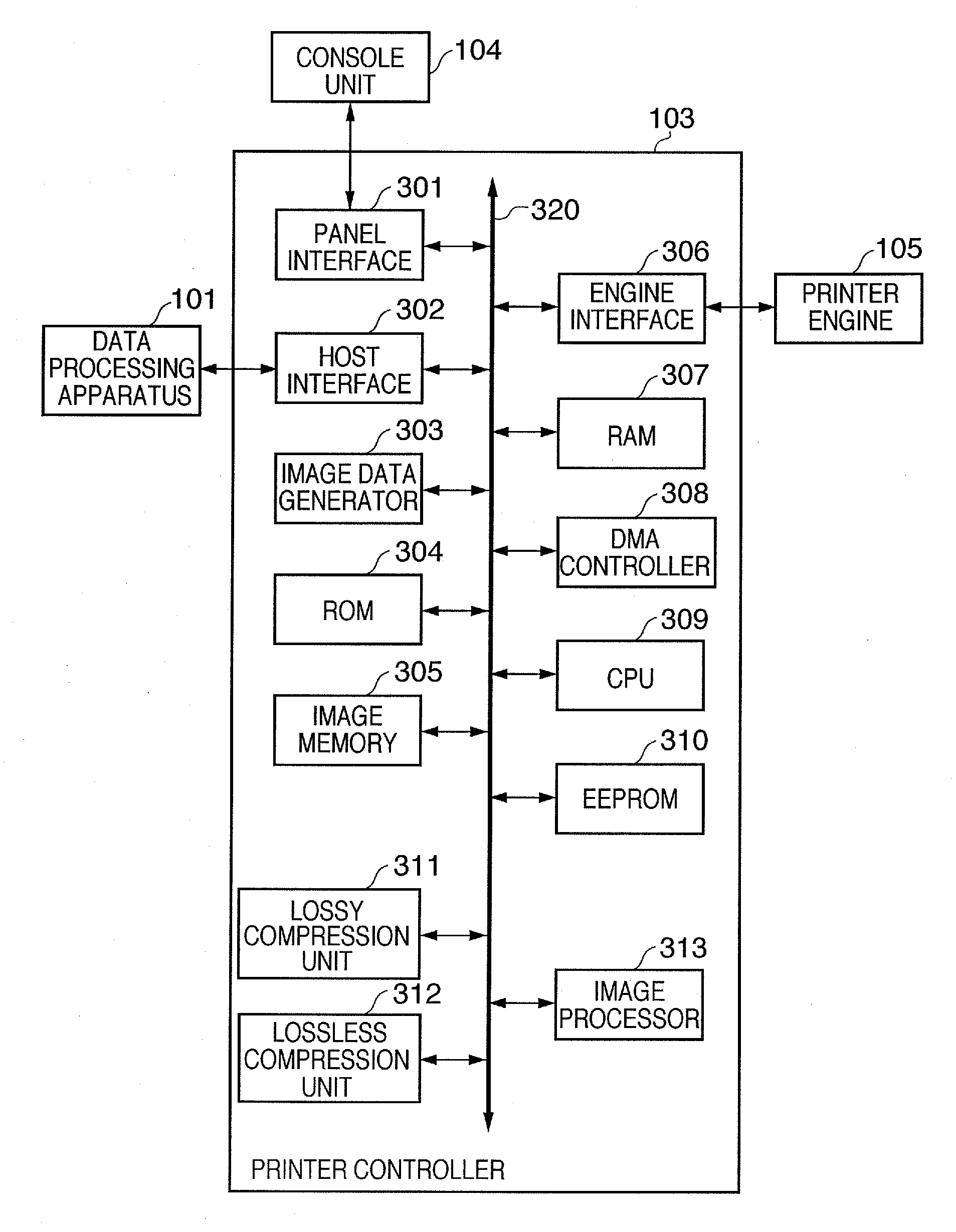

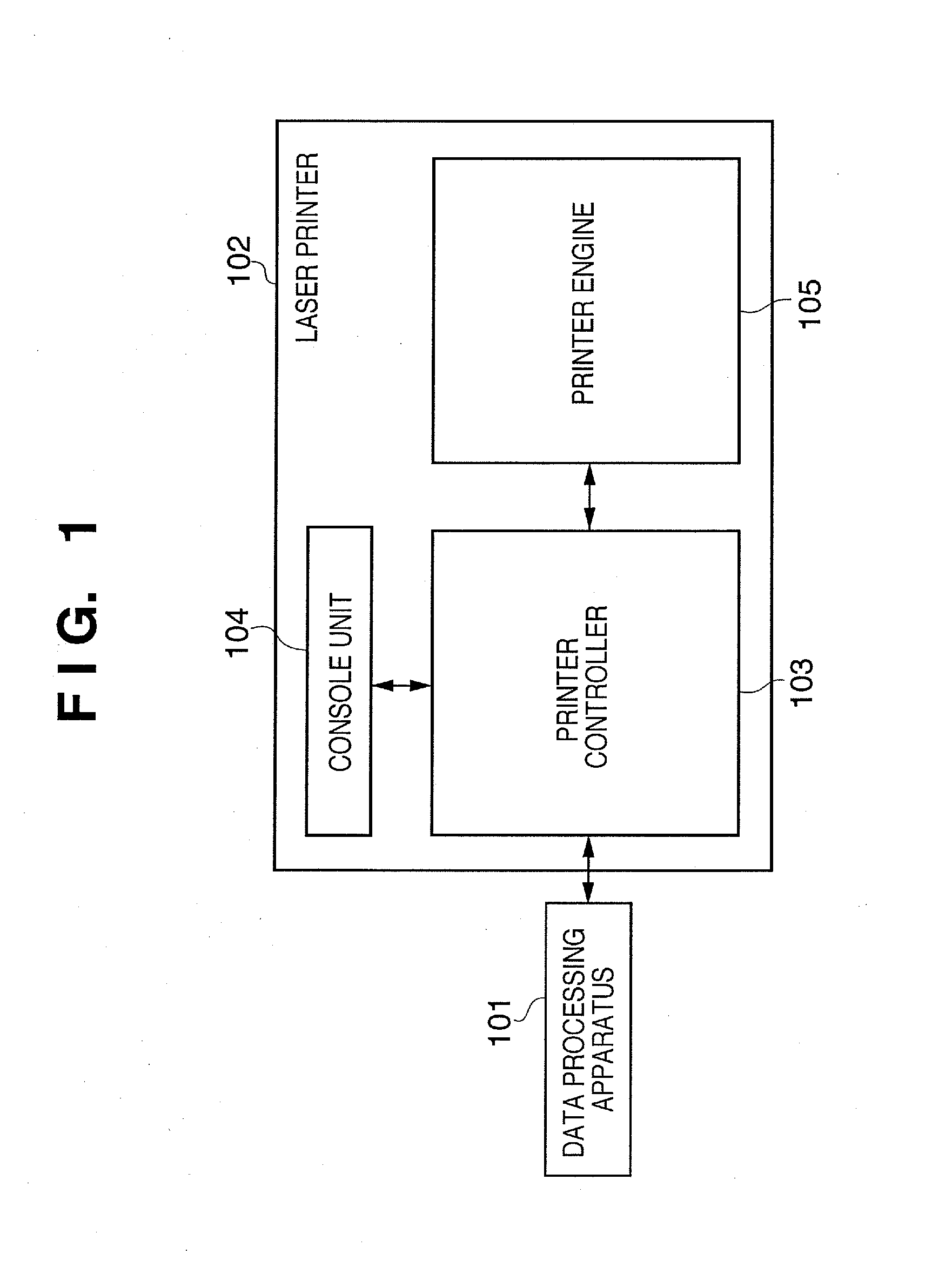

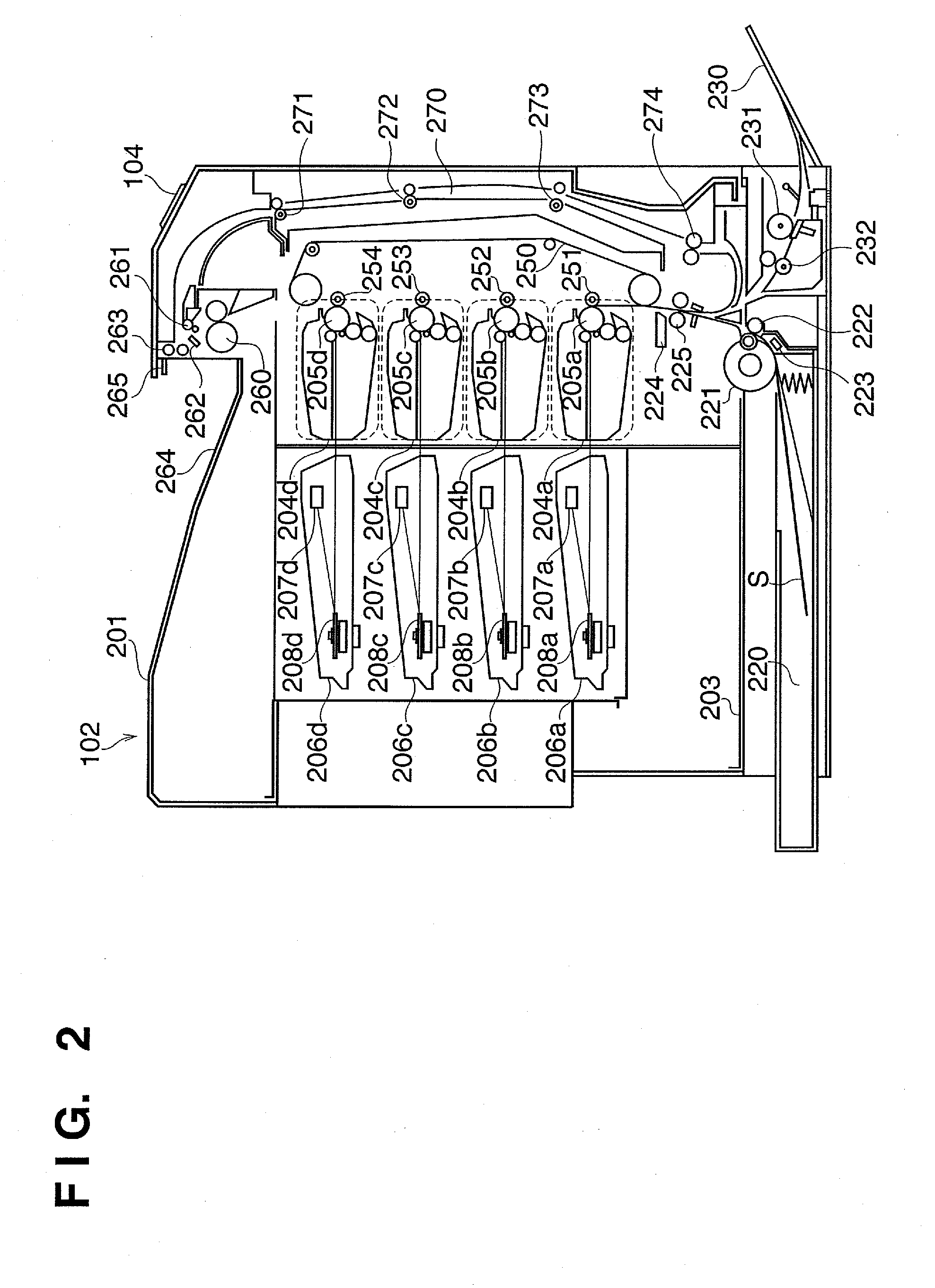

Print control apparatus and control method thereof and device driver

Inactive | US20080137135A1 | Eliminate the problem; Avoid inefficiency; Visual presentation using printers; Digital output to print units; Resource reallocation; Low resource

A job controller allocates a resource to a plurality of PDL analyzers and, in a case where an out-of-resource condition occurs in any of the PDL analyzers while the PDL analyzers are performing PDL data analysis in parallel, causes a PDL analyzer to interrupt the processing being performed in parallel with processing by the other PDL analyzer, releases a resource corresponding to the interrupted processing, and reallocates the released resource to the other PDL analyzer to allow the other PDL analyzer to continue processing.

Owner:CANON KK
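A rough Python sketch of the reallocation step, under stated assumptions: resources are counted in abstract units, the analyzer that keeps running is the one that hit the out-of-resource condition, and resuming the interrupted analyzer later is not shown. `PdlAnalyzer` and `JobController.on_out_of_resource` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PdlAnalyzer:
    name: str
    allocated: int            # resource units (e.g. memory blocks) currently held
    needed: int               # units required to finish the current analysis
    interrupted: bool = False


class JobController:
    def __init__(self, analyzers: List[PdlAnalyzer]) -> None:
        self.analyzers = analyzers

    def on_out_of_resource(self, starving: PdlAnalyzer) -> bool:
        """Interrupt one other running analyzer, release its resources, and hand
        them to `starving`. Returns True if `starving` can now continue."""
        for other in self.analyzers:
            if other is starving or other.interrupted:
                continue
            other.interrupted = True                        # pause its parallel processing
            released, other.allocated = other.allocated, 0  # release its resource
            starving.allocated += released                  # reallocate to the starving analyzer
            return starving.allocated >= starving.needed
        return False  # no other analyzer left to reclaim resources from
```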

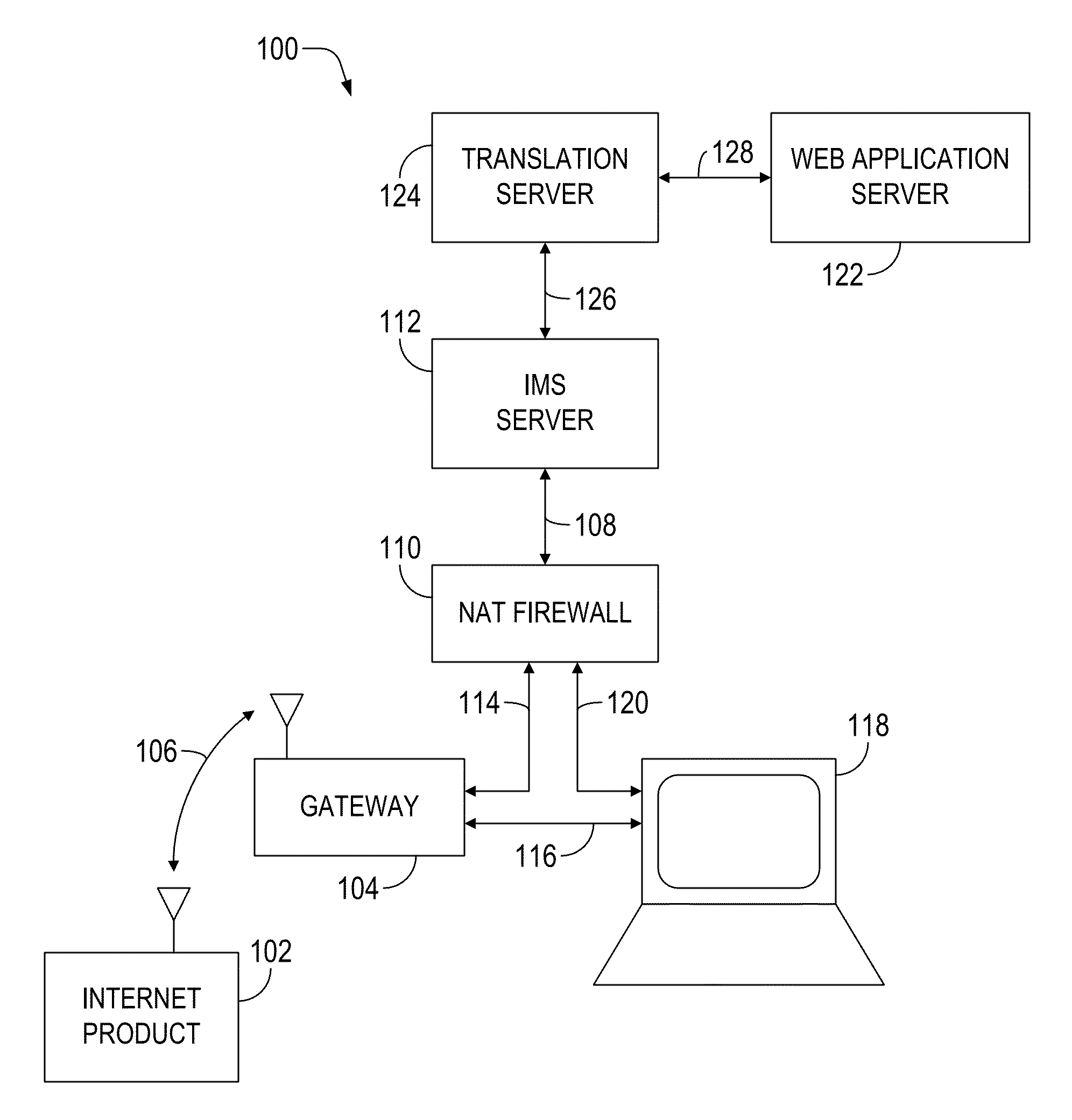

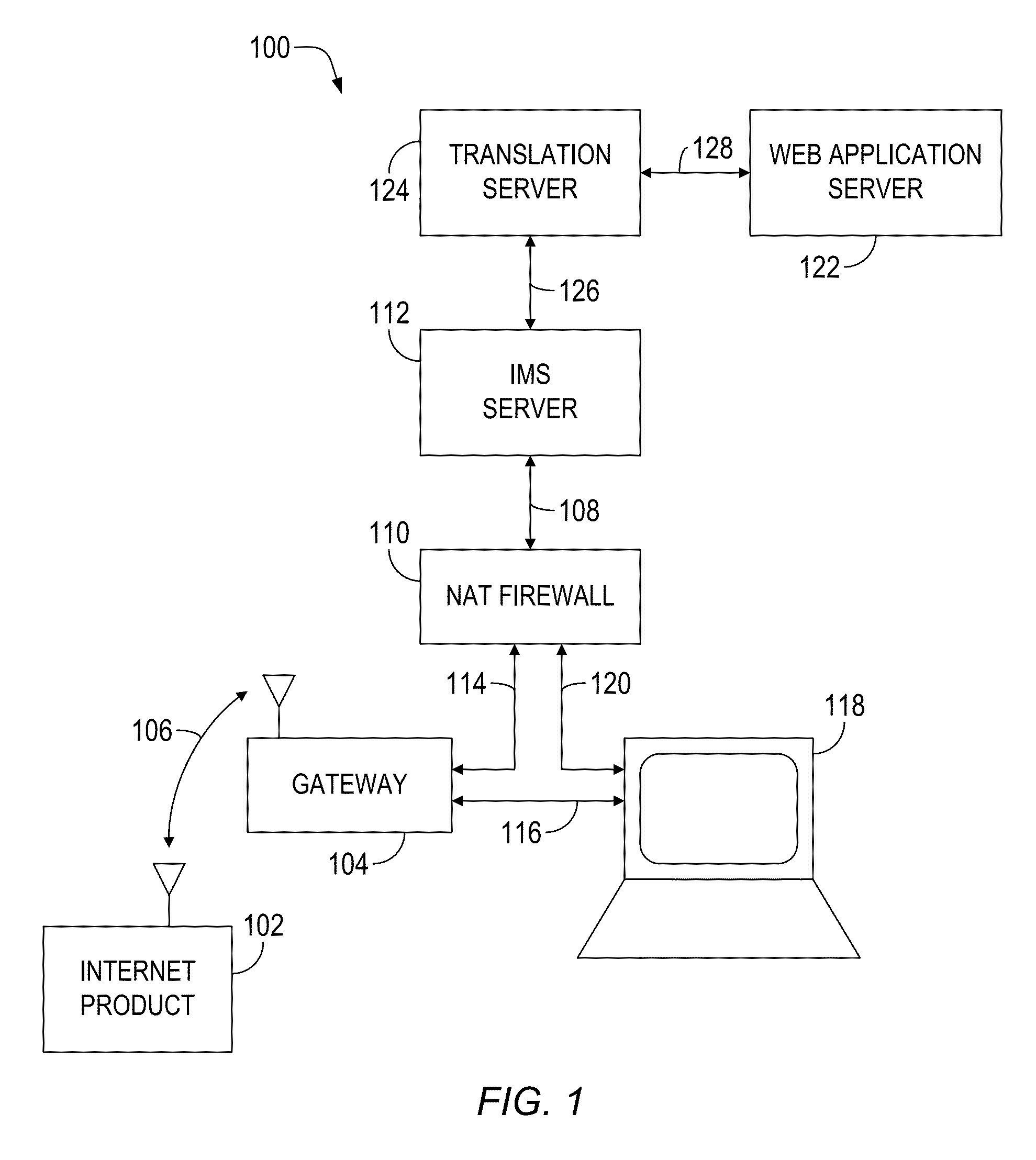

Wireless internet product system

Inactive | US20130185786A1 | Reduce system overhead; Reduce power consumption; Multiple digital computer combinations; Program control; Product system; Web service

Low-resource internet devices, such as consumer electronics products, connect to web services through a proxy method, so the connected device does not need to maintain the expensive and fragile web service interface itself. Instead, the device uses simple low-level protocols to communicate through a gateway. The gateway runs software that translates a low-level proprietary wireless protocol into a proprietary low-level internet protocol that can pass through a firewall to proxy servers. The proxy servers translate these low-level protocols and present an interface that makes the internet device appear to have a full web service interface, enabling communication between the internet devices and the web server.

Owner:ARRAYENT
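To make the proxy chain concrete, here is a Python sketch of the two translation hops. The byte layouts are invented for illustration (the abstract names no formats), JSON stands in for the "full web service interface" presented by the proxy servers, and the function names are hypothetical.

```python
import json
import struct

# Hypothetical wire formats; the patent does not specify any byte layout.
DEVICE_FRAME = struct.Struct(">BBh")      # device id, attribute id, signed 16-bit value
INTERNET_PACKET = struct.Struct(">IBBh")  # gateway id prepended to the device fields


def gateway_translate(frame: bytes, gateway_id: int) -> bytes:
    """Gateway side: repackage a low-level wireless frame for the internet link."""
    device, attribute, value = DEVICE_FRAME.unpack(frame)
    return INTERNET_PACKET.pack(gateway_id, device, attribute, value)


def proxy_to_web(packet: bytes) -> str:
    """Proxy-server side: expose the compact packet as a web-service style JSON document."""
    gateway_id, device, attribute, value = INTERNET_PACKET.unpack(packet)
    return json.dumps({"gateway": gateway_id, "device": device,
                       "attribute": attribute, "value": value})
```

The device itself only ever produces the 4-byte frame; the heavier web-facing representation exists only on the proxy server.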

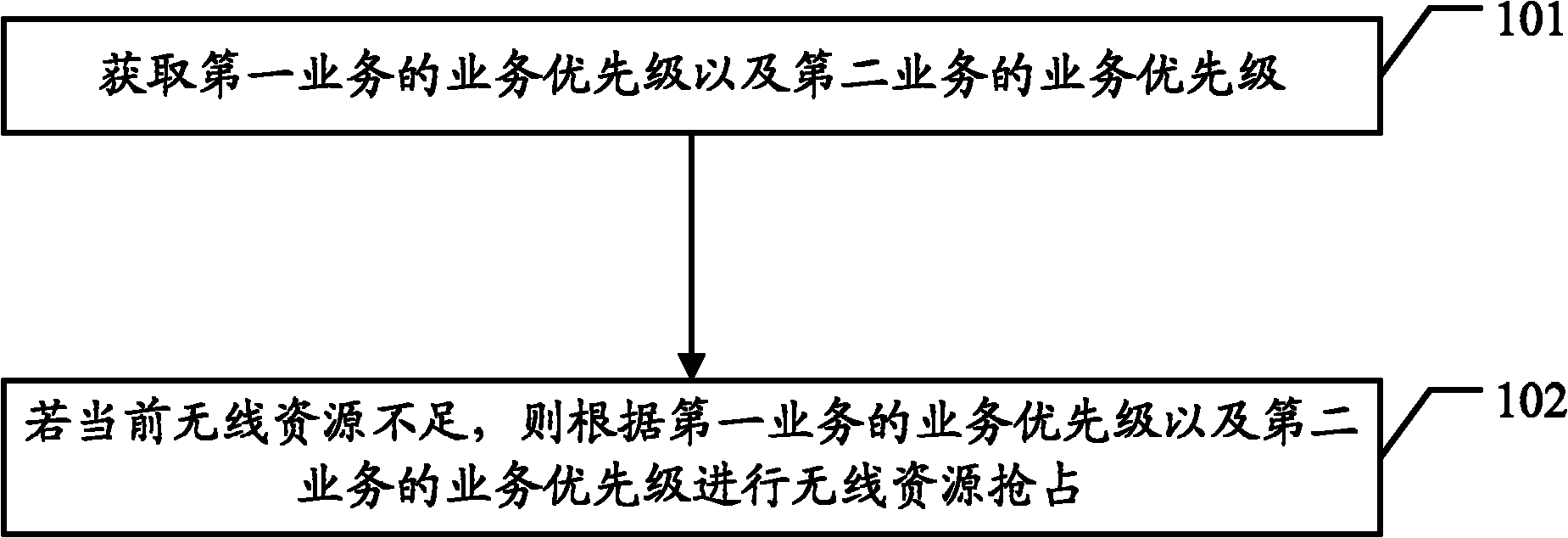

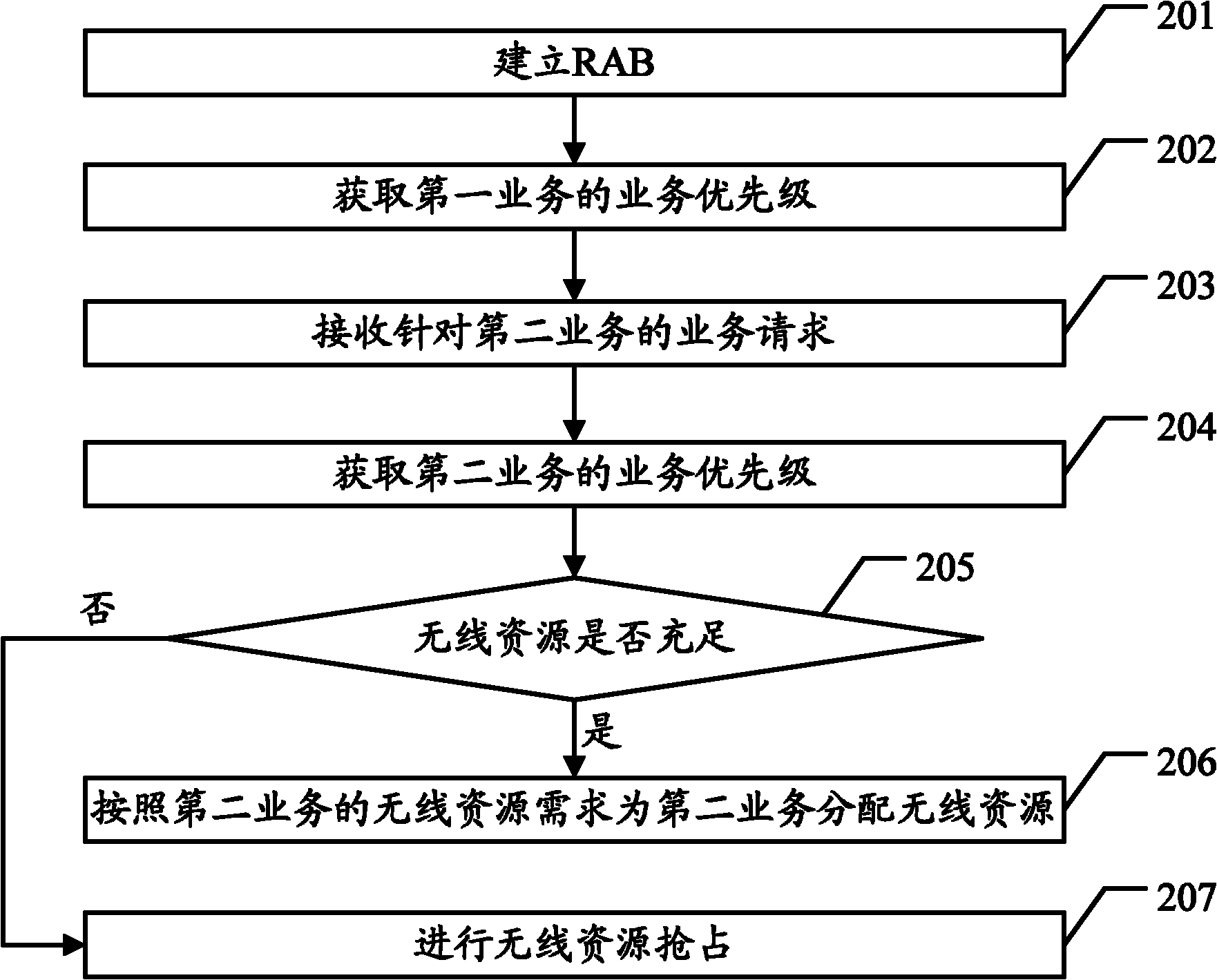

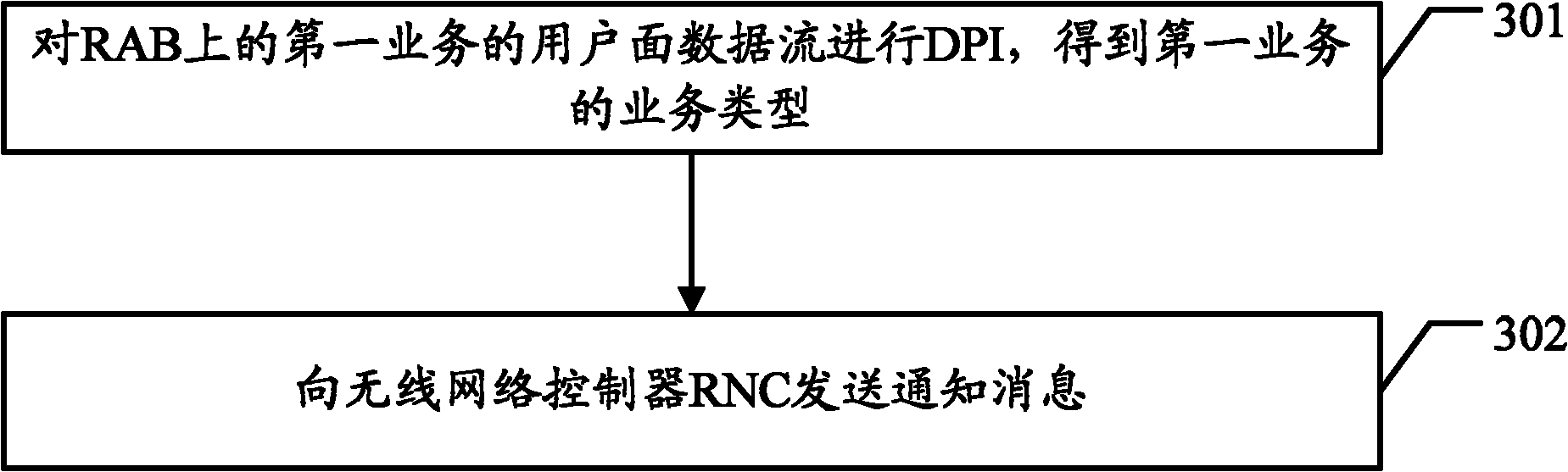

Resource scheduling method and radio network controller

Inactive | CN102056319A | Avoid occupying; Realize a reasonable distribution; Wireless communication; Radio Network Controller; Low resource

The embodiment of the invention discloses a resource scheduling method and a radio network controller for allocating radio resources reasonably. The method comprises the following steps: acquiring the service priority of a first service and the service priority of a second service; and, if the current radio resources are insufficient, preempting radio resources according to the service priorities of the first and second services. The embodiment of the invention also provides a radio network controller.

Owner:HUAWEI TECH CO LTD
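A minimal Python sketch of priority-based preemption. It assumes a larger number means higher priority, resources are counted in abstract units, and lower-priority services are preempted from the lowest priority upward; the patent only states that preemption follows the two services' priorities, so this ordering and the names `Service` and `RadioNetworkController` are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Service:
    name: str
    priority: int  # assumption: larger value = higher service priority
    demand: int    # radio resource units the service occupies


@dataclass
class RadioNetworkController:
    capacity: int
    active: List[Service] = field(default_factory=list)

    def free(self) -> int:
        return self.capacity - sum(s.demand for s in self.active)

    def admit(self, new: Service) -> Optional[List[Service]]:
        """Admit `new`; if resources are insufficient, preempt strictly lower-priority
        services (lowest first). Returns the preempted services, or None if rejected."""
        if self.free() >= new.demand:
            self.active.append(new)
            return []
        victims: List[Service] = []
        available = self.free()
        for s in sorted(self.active, key=lambda s: s.priority):
            if s.priority >= new.priority or available >= new.demand:
                break
            victims.append(s)
            available += s.demand
        if available < new.demand:
            return None  # reject without disturbing any running service
        victim_ids = {id(v) for v in victims}
        self.active = [s for s in self.active if id(s) not in victim_ids]
        self.active.append(new)
        return victims
```

Checking that enough resources can be reclaimed before preempting anything avoids tearing down lower-priority services for a request that would be rejected anyway.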

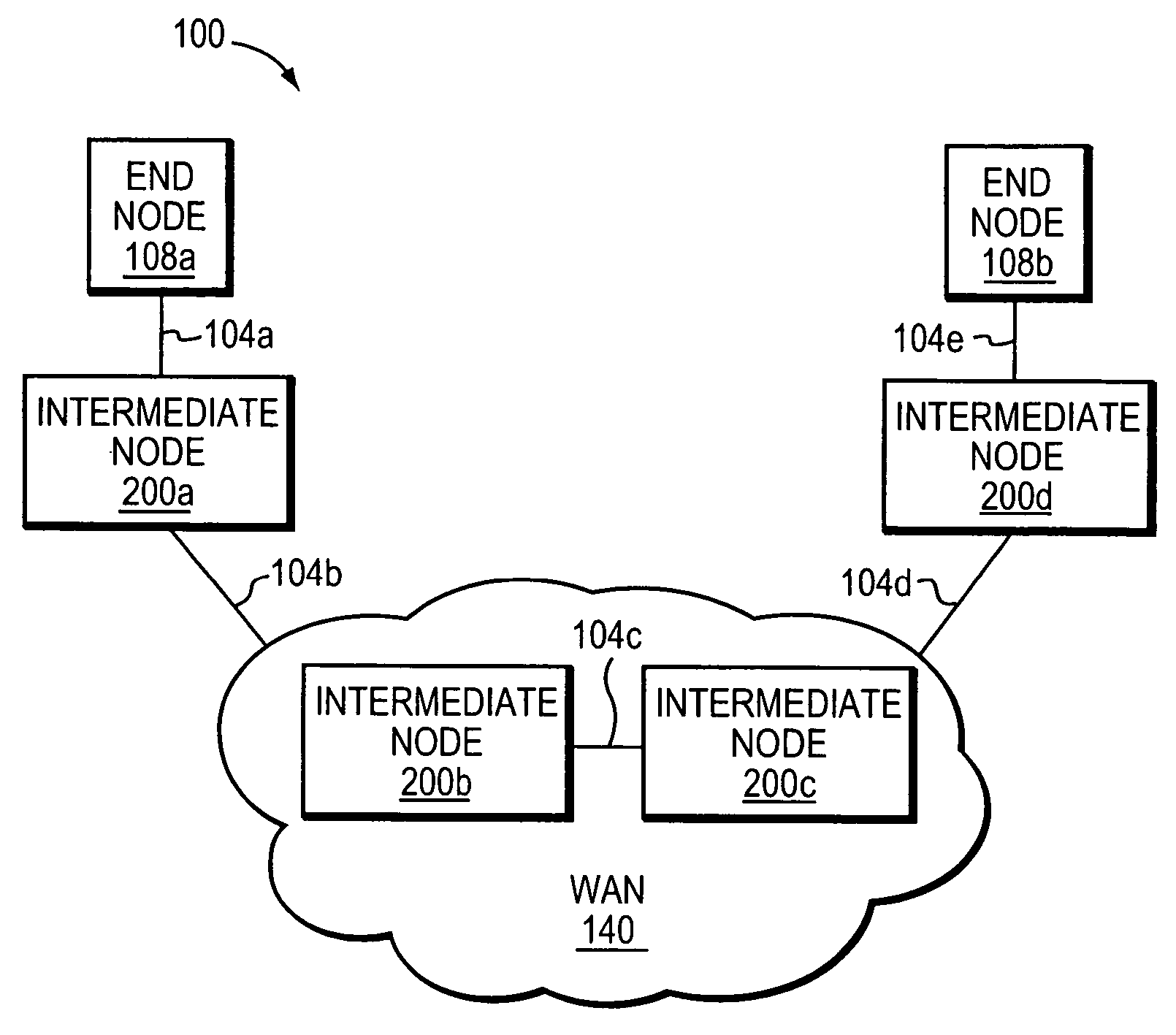

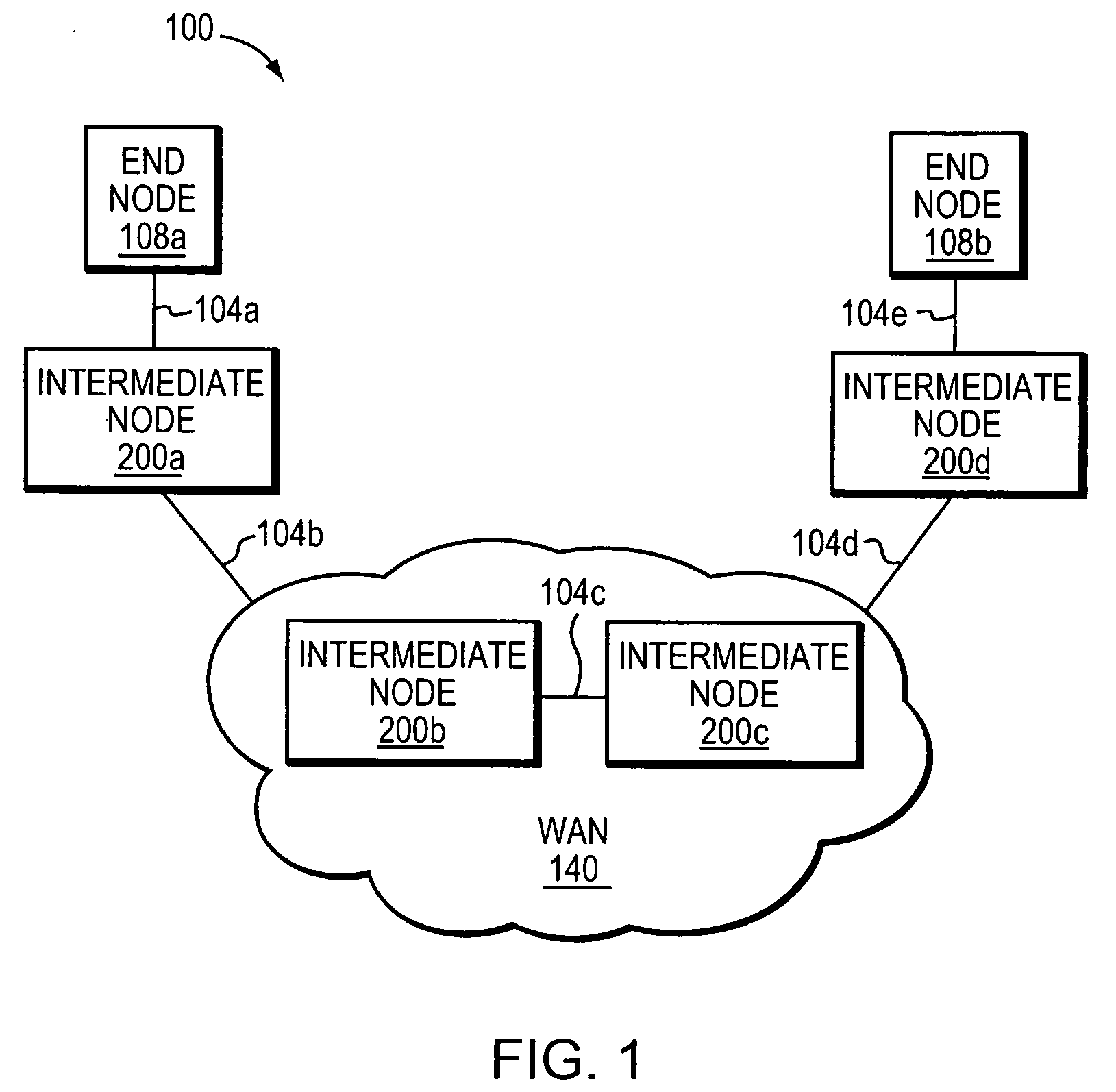

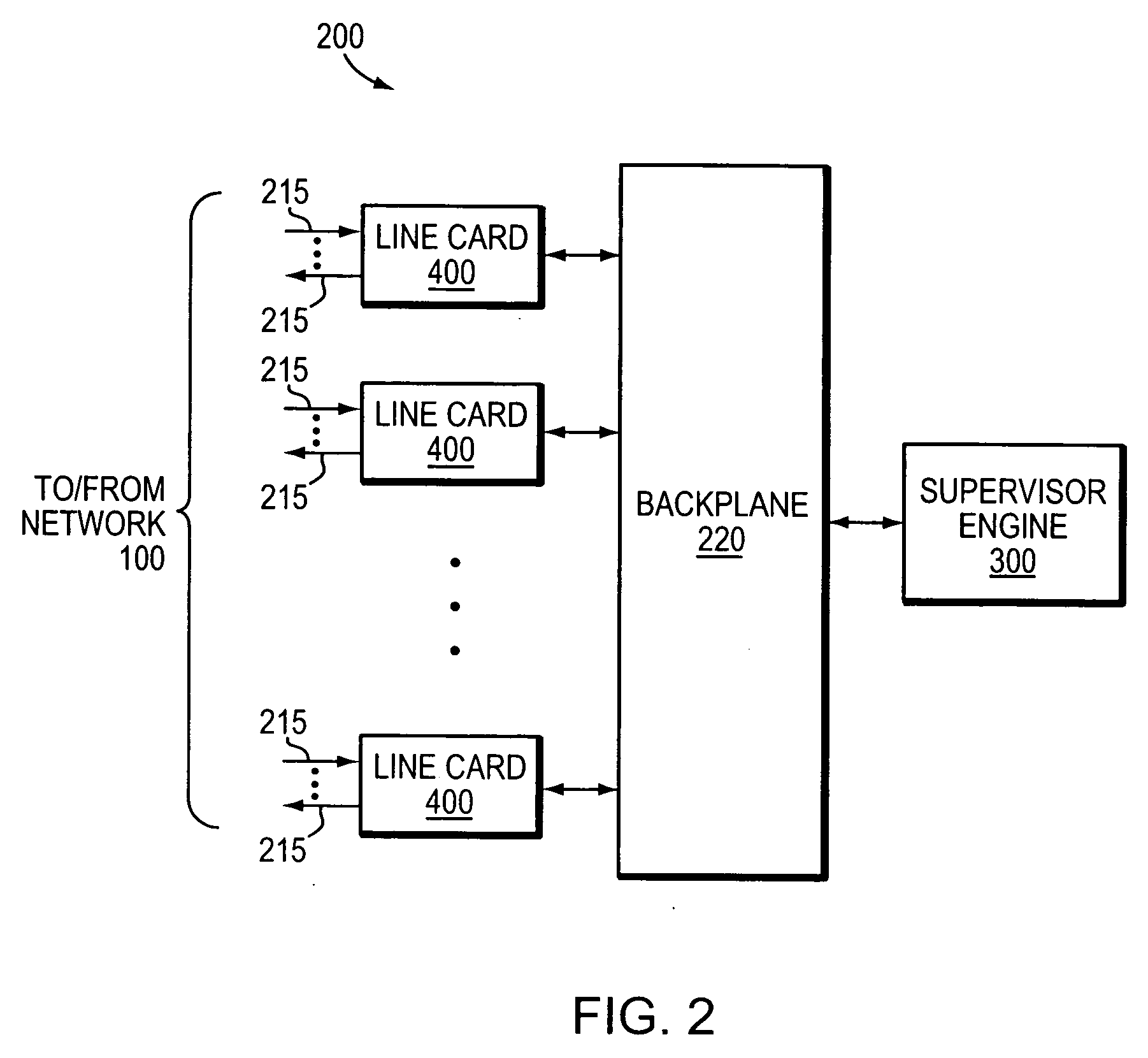

System and method for reporting out-of-resources (OOR) conditions in a data network

Active | US20060092952A1 | Avoiding unnecessary disruption to data flow; Reduce usage; Data switching by path configuration; Type-length-value; Low resource

A system and method are provided for advertising out-of-resources (OOR) conditions for entities such as nodes, line cards, and data links without using a maximum cost to indicate that an entity is “out-of-resources.” According to the technique, an entity's OOR condition is advertised in one or more type-length-value (TLV) objects contained in an advertisement message. The advertisement message is flooded to the nodes of a data network to inform them of the entity's OOR condition. Head-end nodes that process the advertisement message may use the information in the TLV object to determine a path for a new label switched path (LSP) that excludes the entity associated with the OOR condition.

Owner:CISCO TECH INC
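The two halves of the technique, TLV advertisement and OOR-aware path computation at the head end, can be sketched in Python. The TLV type code and 4-byte entity identifier are hypothetical (not IANA-assigned values), only node entities are modeled, and plain Dijkstra stands in for a full constrained shortest path first (CSPF) computation.

```python
import heapq
import struct
from typing import Dict, List, Optional, Set

OOR_TLV_TYPE = 0x8001  # hypothetical type code, not an IANA-assigned value


def encode_oor_tlv(entity_id: int) -> bytes:
    """Type (2 bytes) | Length (2 bytes) | Value: 4-byte entity id."""
    value = struct.pack(">I", entity_id)
    return struct.pack(">HH", OOR_TLV_TYPE, len(value)) + value


def decode_oor_entities(buf: bytes) -> Set[int]:
    """Walk a sequence of TLVs and collect entity ids from OOR TLVs, skipping others."""
    entities: Set[int] = set()
    offset = 0
    while offset + 4 <= len(buf):
        tlv_type, length = struct.unpack_from(">HH", buf, offset)
        if tlv_type == OOR_TLV_TYPE:
            (entity,) = struct.unpack_from(">I", buf, offset + 4)
            entities.add(entity)
        offset += 4 + length
    return entities


def path_avoiding(graph: Dict[int, Dict[int, int]], src: int, dst: int,
                  excluded: Set[int]) -> Optional[List[int]]:
    """Dijkstra shortest path that never enters a node advertised as OOR."""
    dist = {src: 0}
    prev: Dict[int, int] = {}
    heap = [(0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            path = [dst]
            while path[-1] != src:
                path.append(prev[path[-1]])
            return path[::-1]
        if d > dist[u]:
            continue  # stale heap entry
        for v, cost in graph.get(u, {}).items():
            if v in excluded:
                continue
            nd = d + cost
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    return None
```

Because the OOR state travels in its own TLV instead of inflating a link cost, the head end can skip the entity for new LSPs while the advertised metrics stay unchanged.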

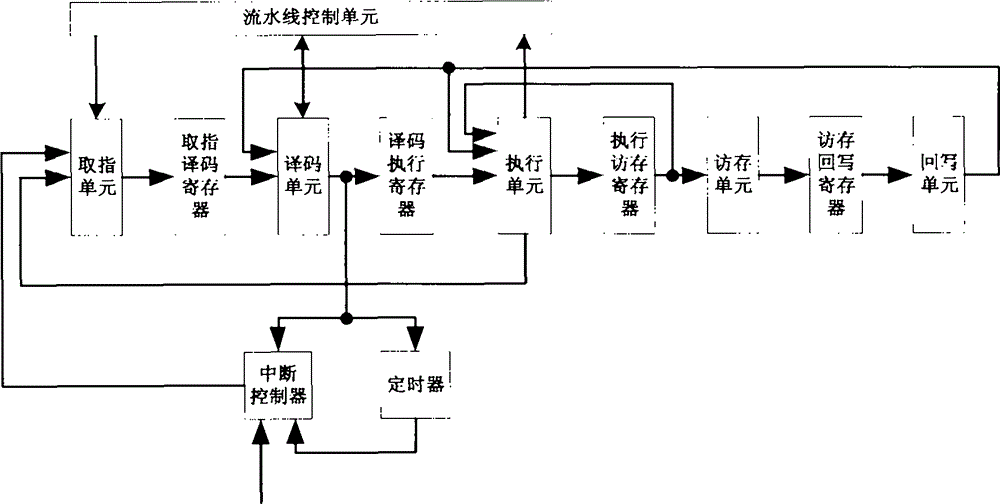

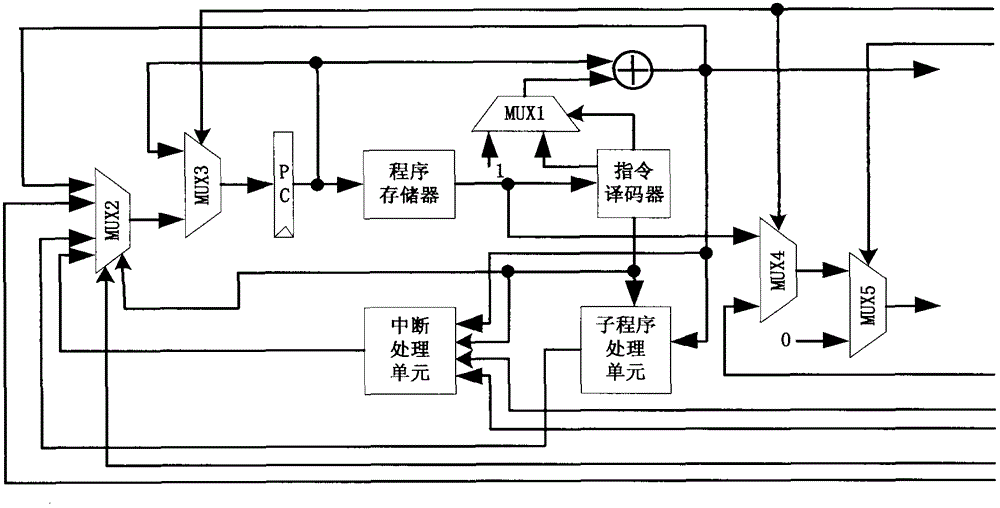

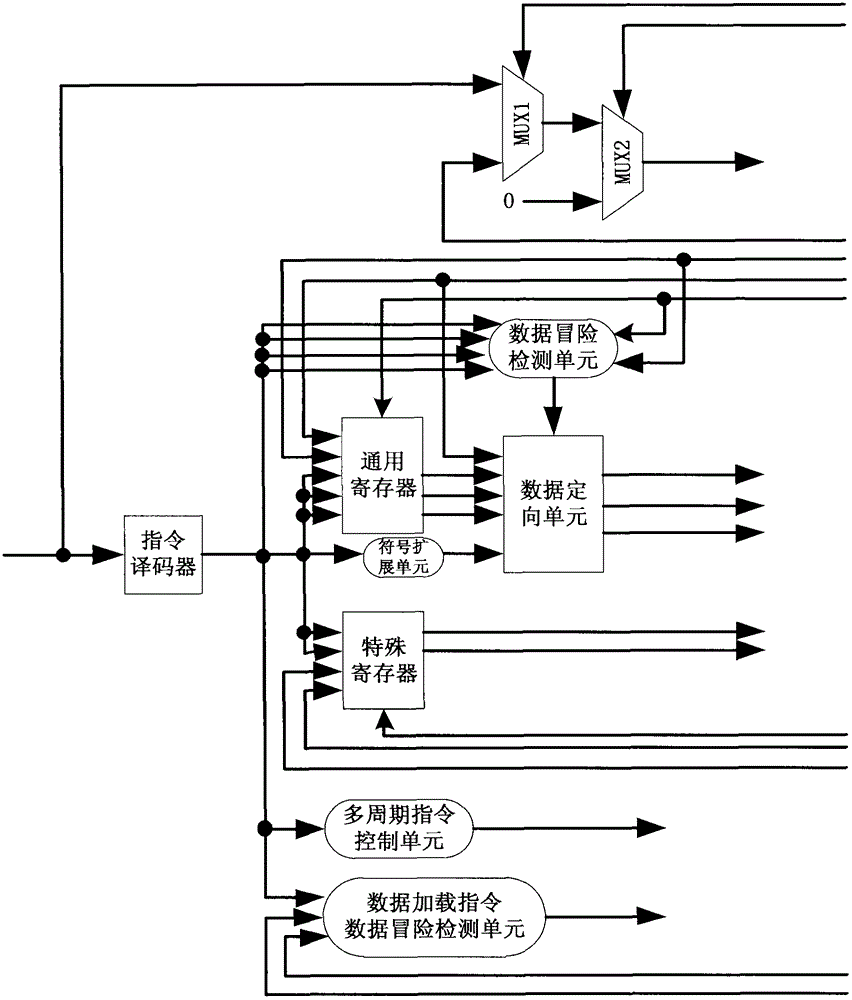

ASIP (application-specific instruction-set processor) based on extensible processor architecture and realizing method thereof

Inactive | CN103150146A | Overcoming complexity; Overcome resources; Concurrent instruction execution; Memory systems; Circuit complexity; Computer architecture

The invention discloses an ASIP based on an extensible processor architecture and a method of implementing it, which mainly addresses the high circuit complexity and large resource consumption of the prior art. For the optimized extensible processor architecture, the invention uses a five-stage pipeline, resolving data hazards with data forwarding and control hazards with a branch-not-taken strategy. The ASIP comprises an instruction fetch unit, a decode unit, an execution unit, a memory access unit, a write-back unit, a pipeline control unit, a timer, and an interrupt controller. The design takes full account of the characteristics of both pipelined processors and FPGAs (field-programmable gate arrays), partitions the pipeline structure sensibly, and makes maximal use of the FPGA's hardware resources. The resulting ASIP has a simple circuit design, low resource consumption, high processor performance, and low power consumption, and is well suited to large-scale parallel processing.

Owner:XIDIAN UNIV
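The hazard-handling choices named in the abstract can be illustrated with the textbook forwarding rule for a classic five-stage pipeline. This is a generic Python model, not the patent's circuit; it assumes register 0 is hard-wired to zero and that branches resolve in the EX stage unless another stage is given.

```python
def forward_source(rs: int,
                   ex_mem_rd: int, ex_mem_reg_write: bool,
                   mem_wb_rd: int, mem_wb_reg_write: bool) -> str:
    """Pick where the EX stage should read source register `rs` from.

    The newest result (EX/MEM) wins over the older one (MEM/WB); register 0 is
    assumed to be hard-wired to zero and is never forwarded.
    """
    if ex_mem_reg_write and ex_mem_rd != 0 and ex_mem_rd == rs:
        return "EX/MEM"
    if mem_wb_reg_write and mem_wb_rd != 0 and mem_wb_rd == rs:
        return "MEM/WB"
    return "REGFILE"


def not_taken_penalty(branch_taken: bool, resolve_stage: int = 3) -> int:
    """Wrong-path instructions flushed under predict-not-taken.

    Stages are numbered IF=1, ID=2, EX=3, MEM=4, WB=5. While the branch moves
    from IF to `resolve_stage`, one sequential instruction is fetched per cycle;
    those instructions are squashed only if the branch turns out to be taken.
    """
    return resolve_stage - 1 if branch_taken else 0
```

Under these assumptions a taken branch costs two bubbles and a not-taken branch costs none, which is why predict-not-taken is a cheap fit for a small FPGA core.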

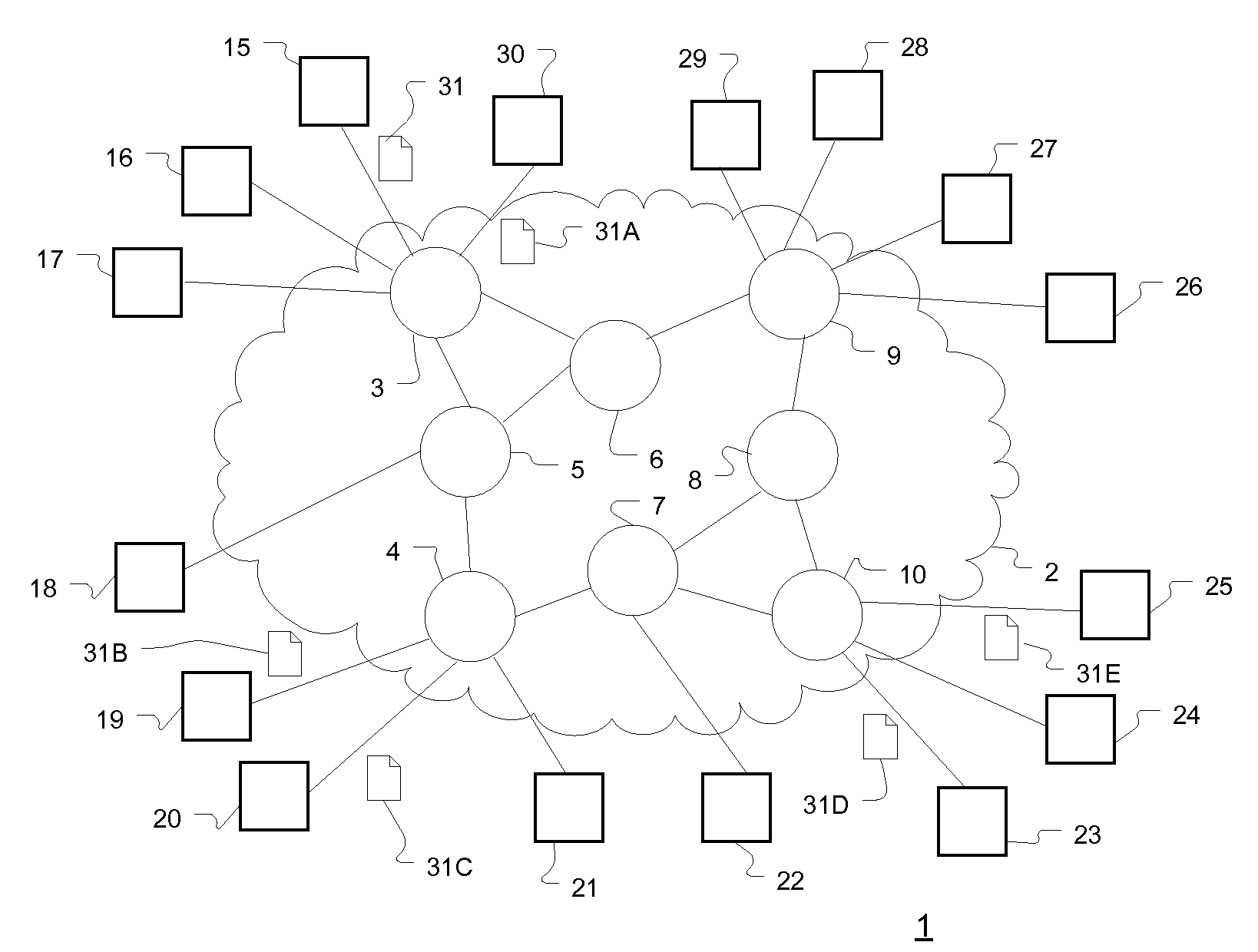

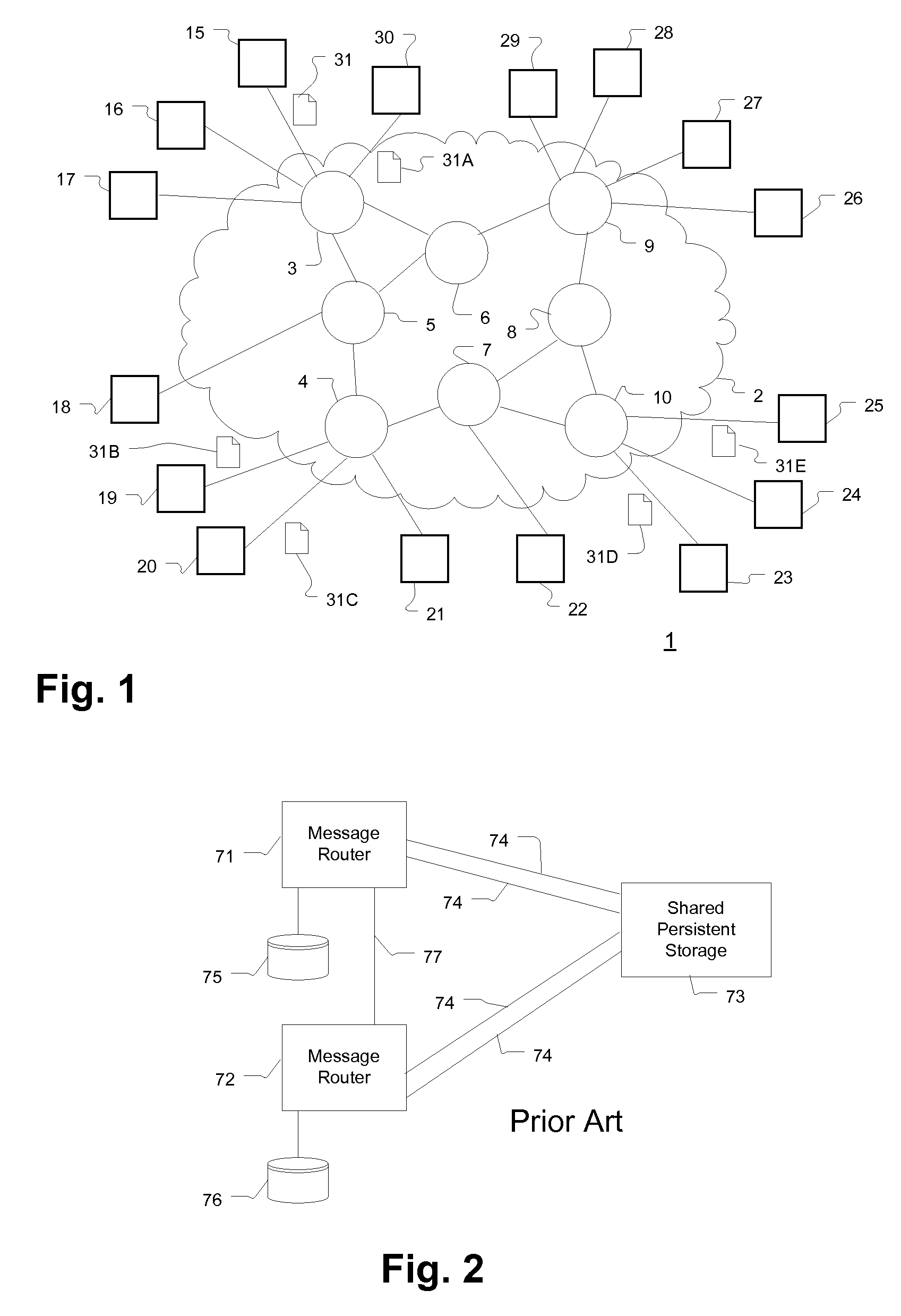

Low latency, high throughput data storage system

Active | US7716525B1 | Lower latency; Improve throughput; Error detection/correction; Data switching current supply; Computer hardware; Message delivery

A method of providing assured message delivery with low latency and high message throughput, in which a message is stored in non-volatile, low-latency memory together with its destination list and other metadata. The message is removed from this low-latency non-volatile storage only when an acknowledgement has been received from every destination indicating that the message was successfully received. If the message remains in that memory longer than a time threshold, or if memory resources are running low, the message, its destination list, and its other metadata are migrated to other persistent storage. The data storage engine can also be used for other high-throughput applications.

Owner:SOLACE CORP
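A Python sketch of the store's retention policy follows. It assumes "memory running low" means exceeding a fixed message count, that the current time is passed in explicitly, and that `spill` writes to the slower persistent store; `StoredMessage`, `AssuredStore`, and `spill` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Set


@dataclass
class StoredMessage:
    payload: bytes
    pending: Set[str]  # destinations that have not acknowledged yet (part of the metadata)
    stored_at: float   # time the message entered low-latency memory


class AssuredStore:
    def __init__(self, capacity: int, max_age: float,
                 spill: Callable[[int, StoredMessage], None]) -> None:
        self.fast: Dict[int, StoredMessage] = {}  # stands in for non-volatile low-latency memory
        self.capacity = capacity                  # assumption: max messages before memory is "low"
        self.max_age = max_age                    # time threshold before migration
        self.spill = spill                        # writes to slower persistent storage

    def store(self, msg_id: int, payload: bytes, destinations: Iterable[str], now: float) -> None:
        self.fast[msg_id] = StoredMessage(payload, set(destinations), now)
        self._maintain(now)

    def ack(self, msg_id: int, destination: str, now: float) -> None:
        msg = self.fast.get(msg_id)
        if msg is not None:
            msg.pending.discard(destination)
            if not msg.pending:  # every destination confirmed receipt
                del self.fast[msg_id]
        self._maintain(now)

    def _maintain(self, now: float) -> None:
        # Migrate messages that have stayed longer than the time threshold.
        expired = [m for m, msg in self.fast.items() if now - msg.stored_at > self.max_age]
        for m in expired:
            self.spill(m, self.fast.pop(m))
        # If memory is running low, migrate the oldest messages first.
        while len(self.fast) > self.capacity:
            oldest = min(self.fast, key=lambda m: self.fast[m].stored_at)
            self.spill(oldest, self.fast.pop(oldest))
```

Acknowledgements for messages that have already been migrated would have to be applied to the persistent store, which this sketch omits.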

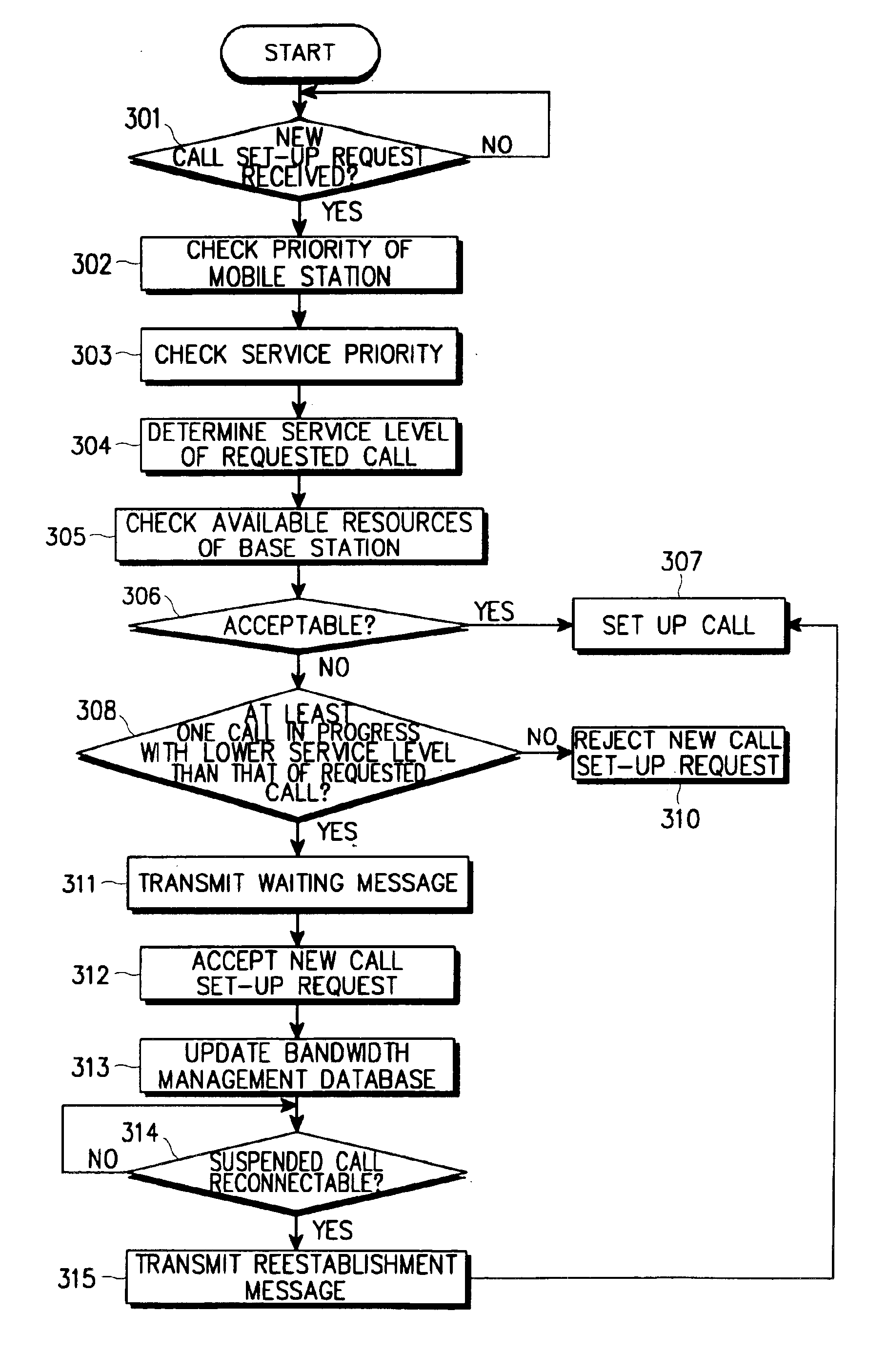

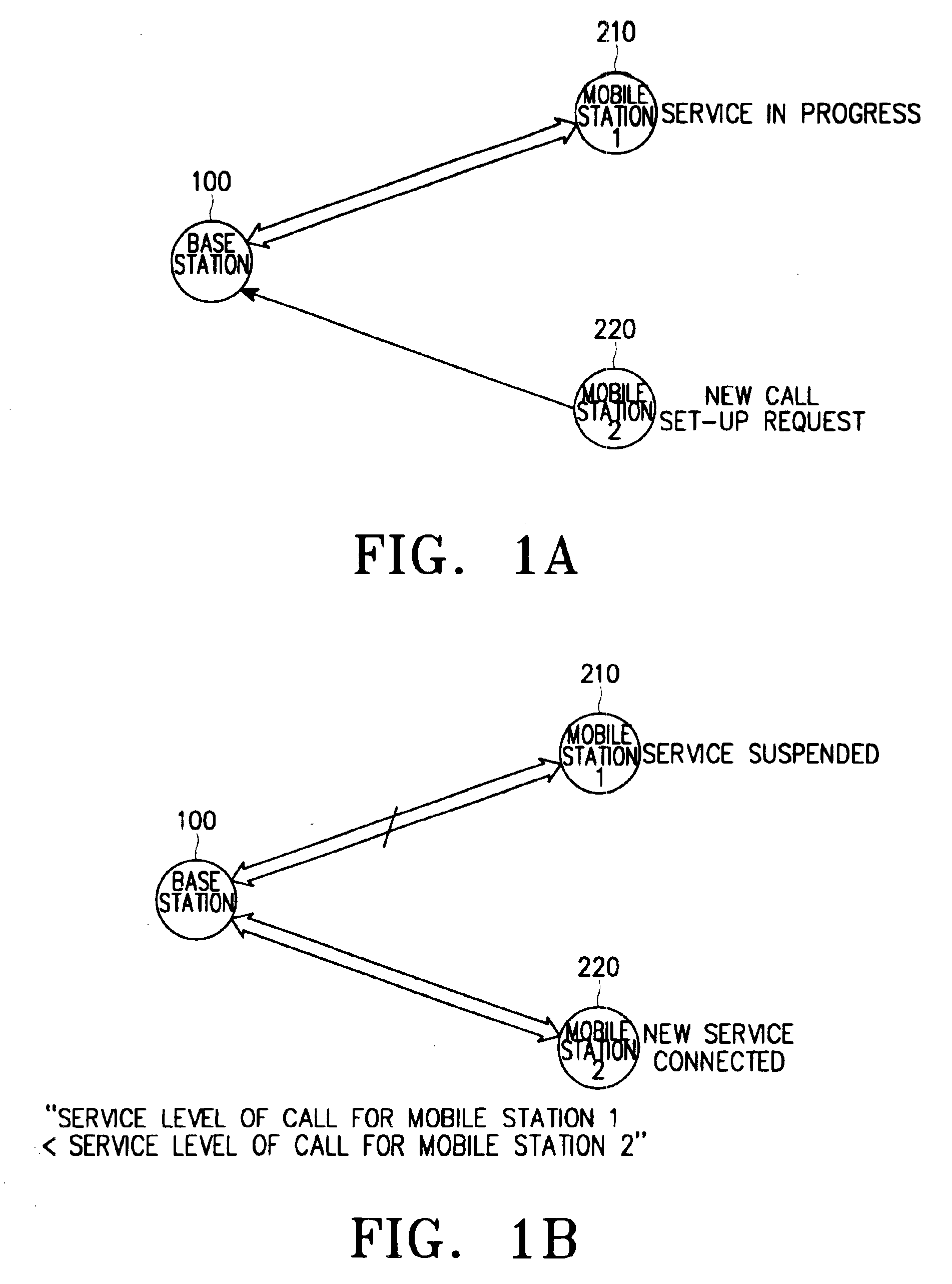

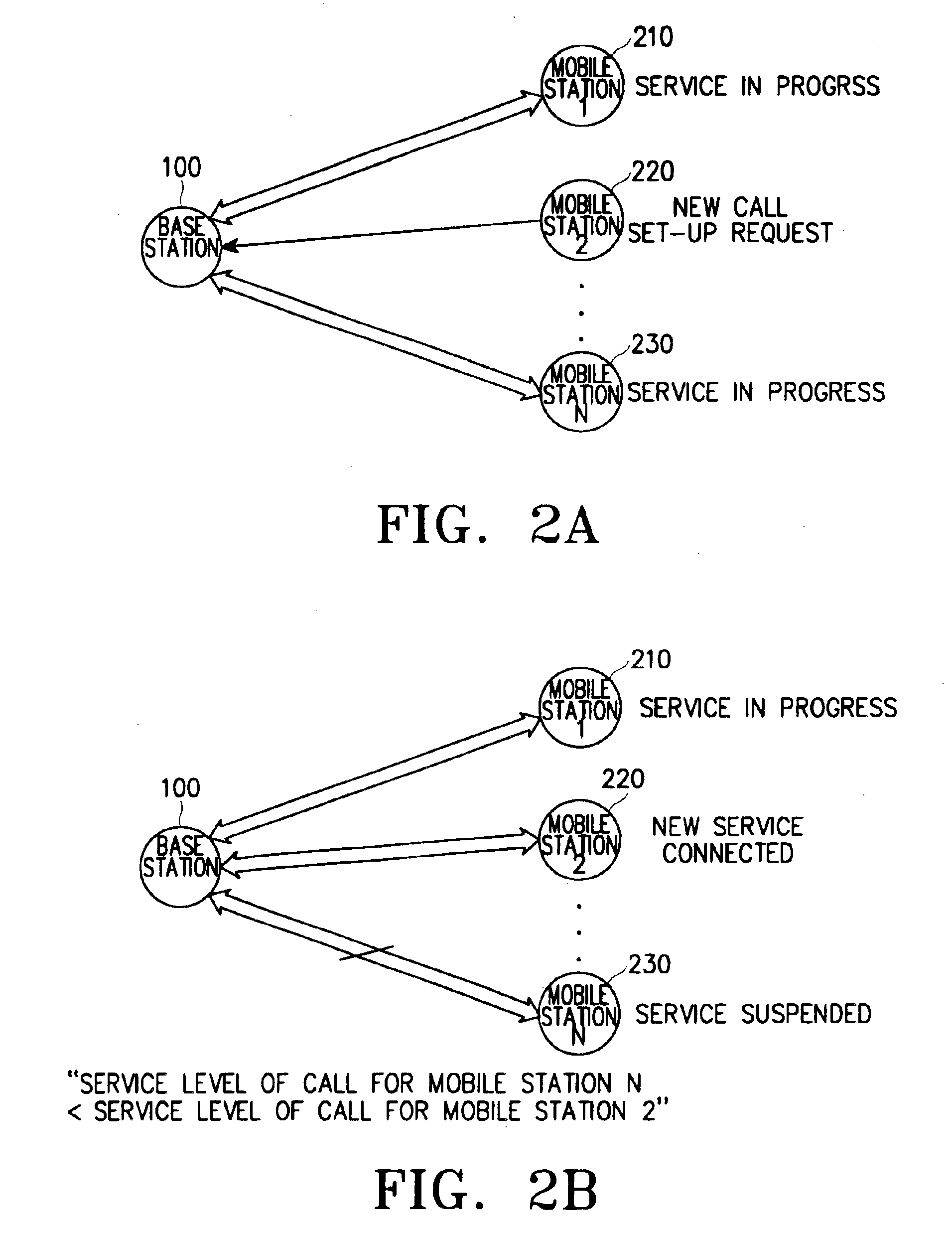

Call processing method and apparatus for effective QoS control in mobile communication system

Inactive | US6889048B1 | Effective control; Efficient management; Network traffic/resource management; Time-division multiplex; Quality of service; Quality control

A call processing method and apparatus for effective QoS (Quality of Service) control in a mobile communication system is provided. In one embodiment of the present invention, a base station checks its available resources upon receiving a call set-up request from a mobile station. If the resources are insufficient to satisfy the QoS of the requested call, the base station suspends an ongoing call that has a lower service level than the requested call. When resources later become available, the base station resumes the suspended call.

Owner:SAMSUNG ELECTRONICS CO LTD
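A minimal Python sketch of the suspend-and-resume behavior, assuming a larger `level` means a higher service level, bandwidth is counted in abstract units, the lowest-level calls are suspended first, and suspended calls are resumed highest level first; `Call` and `BaseStation` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Call:
    call_id: str
    level: int      # assumption: larger value = higher service level
    bandwidth: int  # resource units needed to meet the call's QoS


@dataclass
class BaseStation:
    capacity: int
    active: List[Call] = field(default_factory=list)
    suspended: List[Call] = field(default_factory=list)

    def available(self) -> int:
        return self.capacity - sum(c.bandwidth for c in self.active)

    def setup(self, new: Call) -> bool:
        """Handle a call set-up request, suspending lower-level calls if needed."""
        to_suspend: List[Call] = []
        freed = self.available()
        for c in sorted((c for c in self.active if c.level < new.level), key=lambda c: c.level):
            if freed >= new.bandwidth:
                break
            to_suspend.append(c)
            freed += c.bandwidth
        if freed < new.bandwidth:
            return False  # QoS cannot be met even after suspending; reject the request
        for c in to_suspend:
            self.active.remove(c)
            self.suspended.append(c)
        self.active.append(new)
        return True

    def release(self, call_id: str) -> None:
        """End a call, then resume suspended calls (highest level first) that now fit."""
        self.active = [c for c in self.active if c.call_id != call_id]
        for c in sorted(self.suspended, key=lambda c: c.level, reverse=True):
            if c.bandwidth <= self.available():
                self.suspended.remove(c)
                self.active.append(c)
```

Unlike outright preemption, a suspended call keeps its state in `suspended` and is resumed automatically once `release` frees enough resources.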

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com