8412 patent results for "Execution unit"

In computer engineering, an execution unit (also called a functional unit) is a part of the central processing unit (CPU) that performs the operations and calculations instructed by the computer program. It may have its own internal control sequence unit (not to be confused with the CPU's main control unit), some registers, and other internal units such as an arithmetic logic unit (ALU), address generation unit (AGU), floating-point unit (FPU), load-store unit (LSU), or branch execution unit (BEU), or smaller, more specific components.

Speculative execution and rollback

Active | US20130117541A1 | Resolve dependencies | Digital computer details | Memory systems | Speculative execution | Execution unit

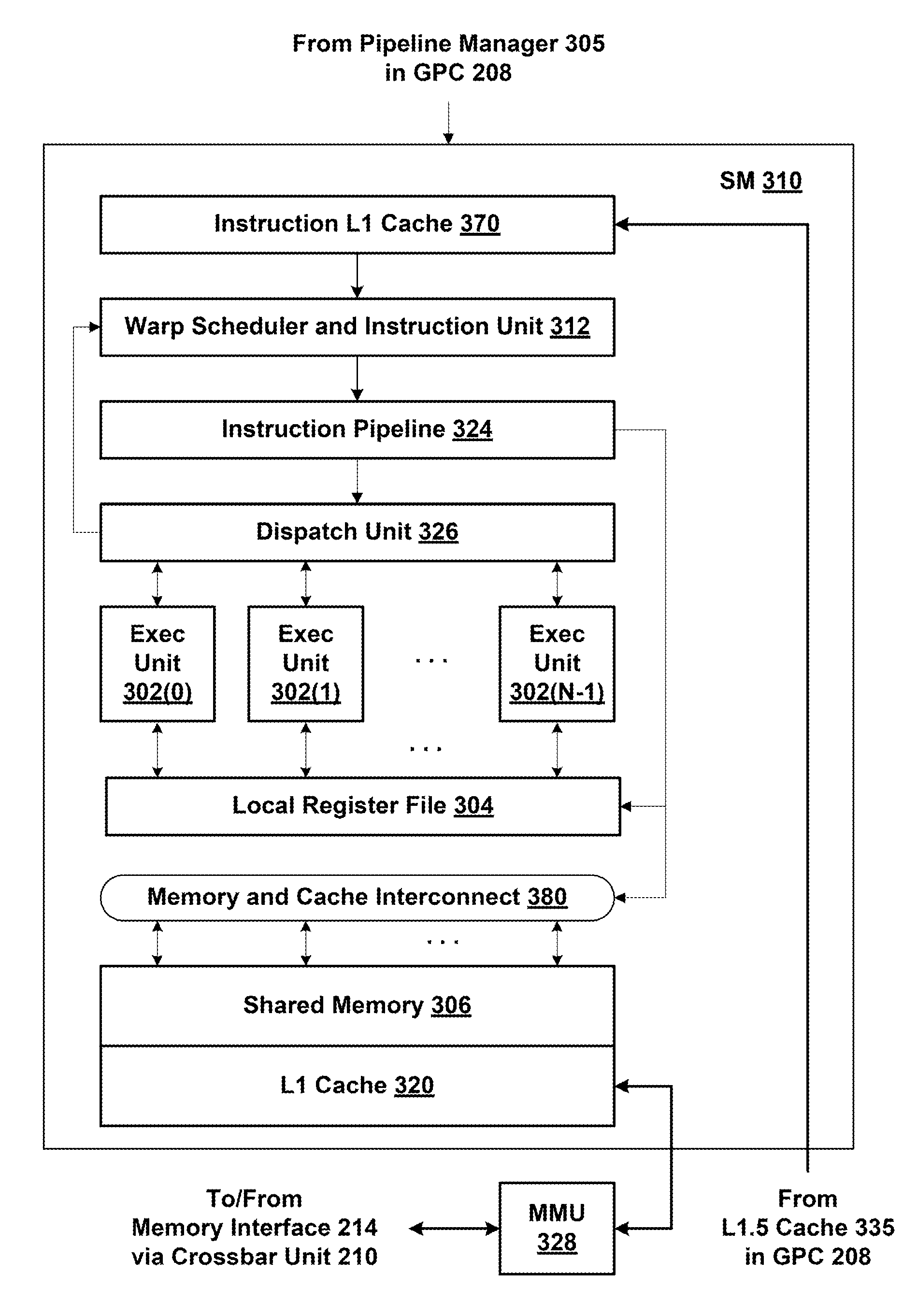

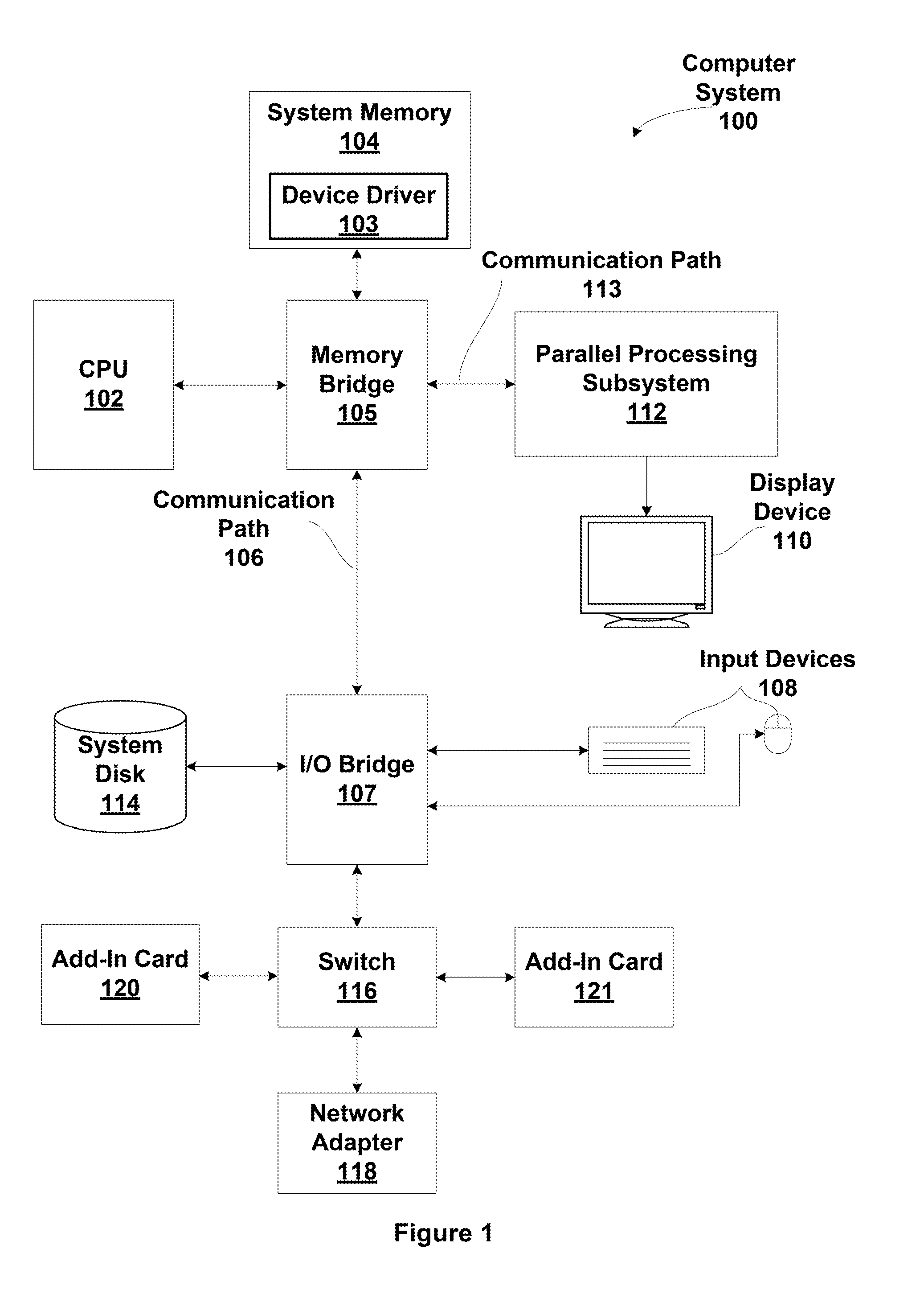

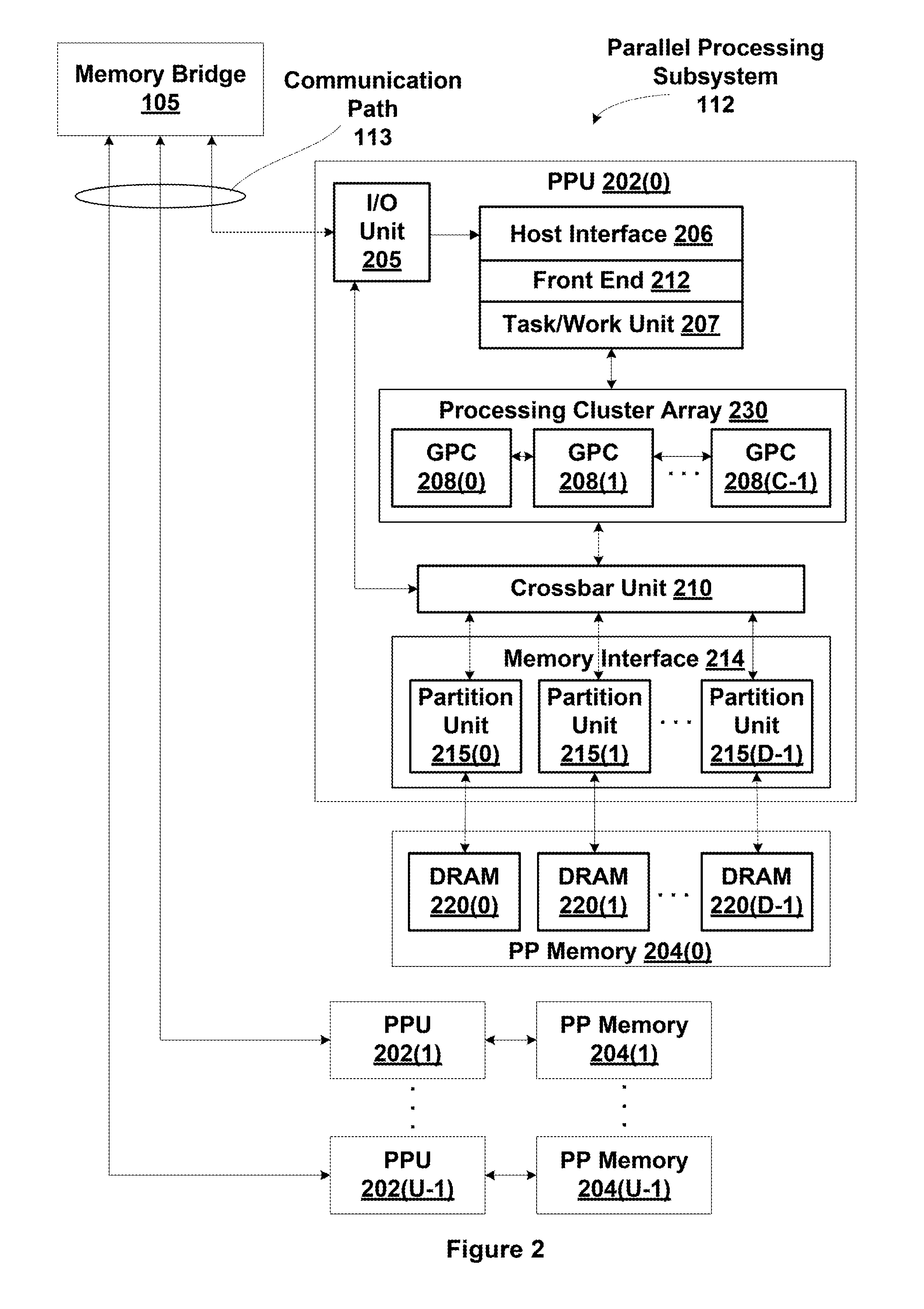

One embodiment of the present invention sets forth a technique for speculatively issuing instructions to allow a processing pipeline to continue to process some instructions during rollback of other instructions. A scheduler circuit issues instructions for execution on the assumption that, several cycles later, when the instructions reach the multithreaded execution units, dependencies between the instructions will be resolved, resources will be available, operand data will be available, and other conditions will not prevent execution of the instructions. When a rollback condition exists at the point of execution for an instruction for a particular thread group, the instruction is not dispatched to the multithreaded execution units. However, other instructions issued by the scheduler circuit for execution by different thread groups, and for which a rollback condition does not exist, are executed by the multithreaded execution units. The instruction incurring the rollback condition is reissued after the rollback condition no longer exists.

Owner:NVIDIA CORP
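
The issue-and-replay behaviour described in this abstract can be illustrated with a small simulation. This is only a sketch under stated assumptions: `simulate`, `programs`, and `ready_at` are hypothetical names, one instruction per thread group is considered per cycle, and a "rollback condition" is reduced to "the instruction is not ready before a given cycle". It is not the patented scheduler.

```python
from collections import deque

def simulate(programs, ready_at):
    """Toy model of speculative issue with rollback and reissue.

    programs: dict mapping a thread group name to its instructions in order.
    ready_at: dict mapping (group, instruction) to the first cycle at which
              no rollback condition exists (assumed to default to cycle 0).
    Returns a trace of (cycle, group, instruction, outcome) tuples.
    """
    queues = {group: deque(instrs) for group, instrs in programs.items()}
    trace = []
    cycle = 0
    while any(queues.values()):
        for group, queue in queues.items():
            if not queue:
                continue
            instr = queue[0]
            if cycle < ready_at.get((group, instr), 0):
                # Rollback condition: do not dispatch; the instruction stays
                # at the head of its group and is reissued on a later cycle.
                trace.append((cycle, group, instr, "rollback"))
            else:
                queue.popleft()
                trace.append((cycle, group, instr, "executed"))
        cycle += 1
    return trace

# Group g0 stalls on its load until cycle 2; group g1 keeps executing meanwhile.
example_trace = simulate(
    {"g0": ["ld", "add"], "g1": ["mul"]},
    {("g0", "ld"): 2},
)
```

The point of the sketch is that a rollback in one thread group does not stop another group from executing in the same cycle.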

Algorithm mapping, specialized instructions and architecture features for smart memory computing

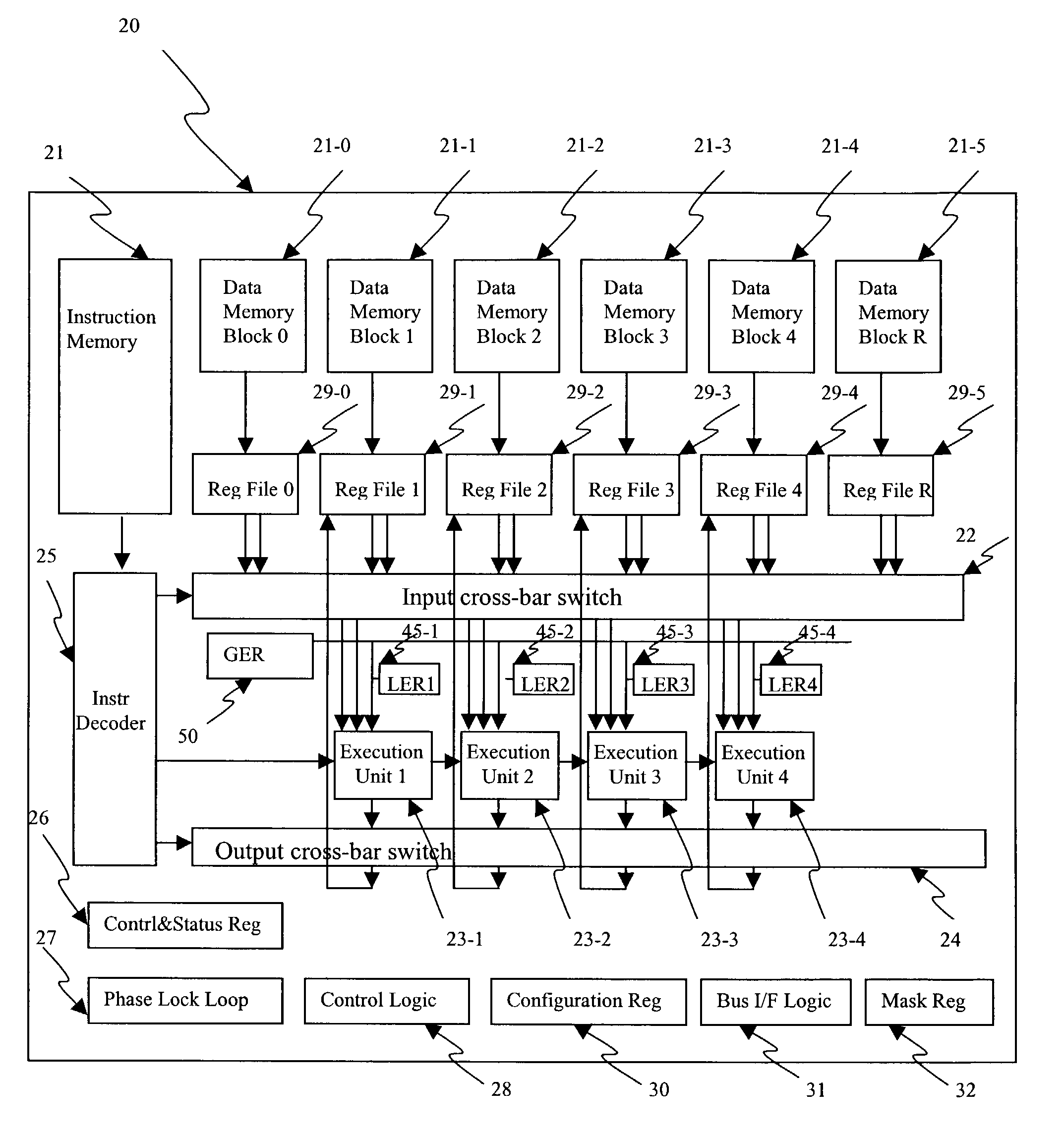

Inactive | US7546438B2 | Improve performance | Low cost | Multiplex system selection arrangements | Digital computer details | Smart memory | Execution unit

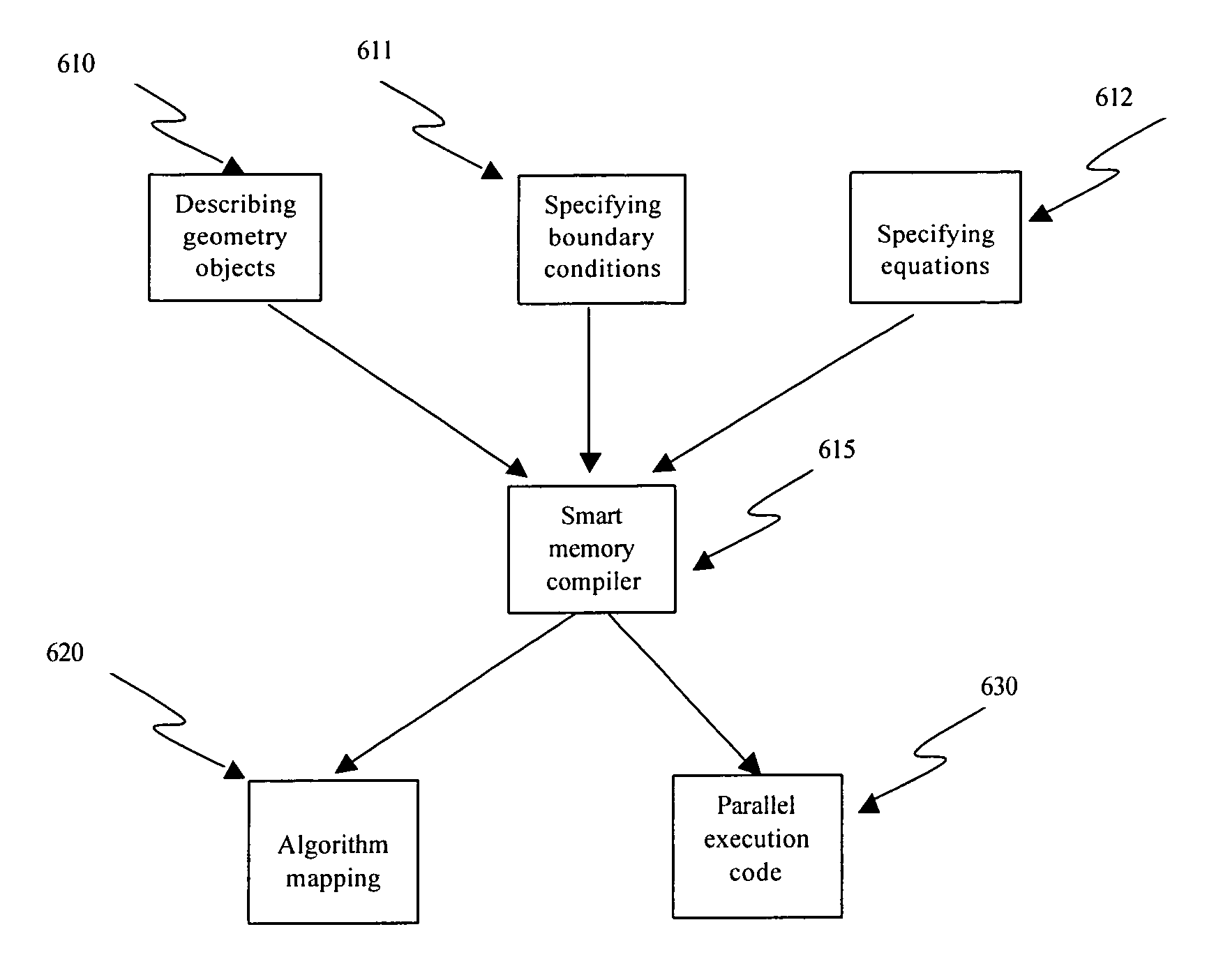

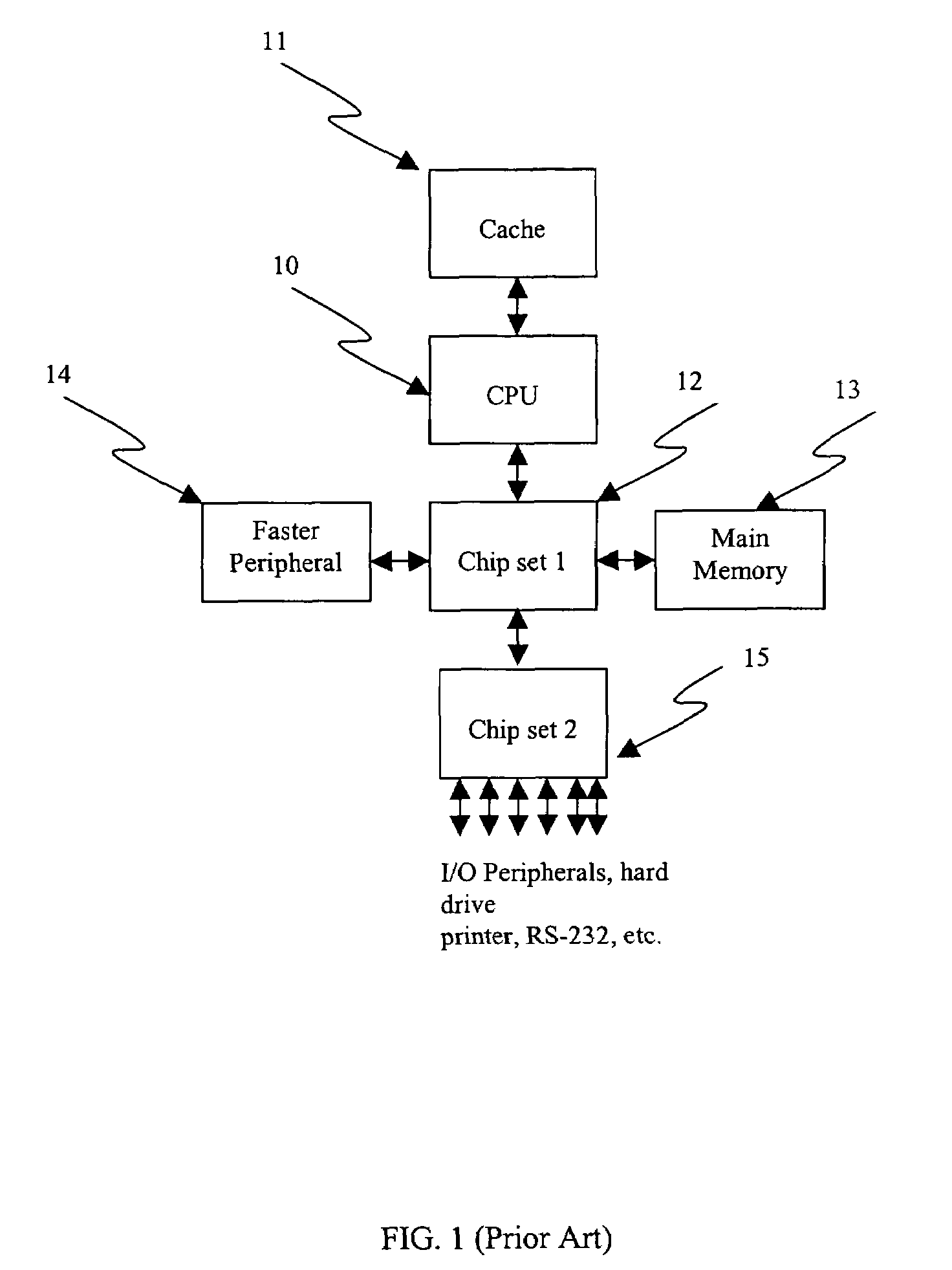

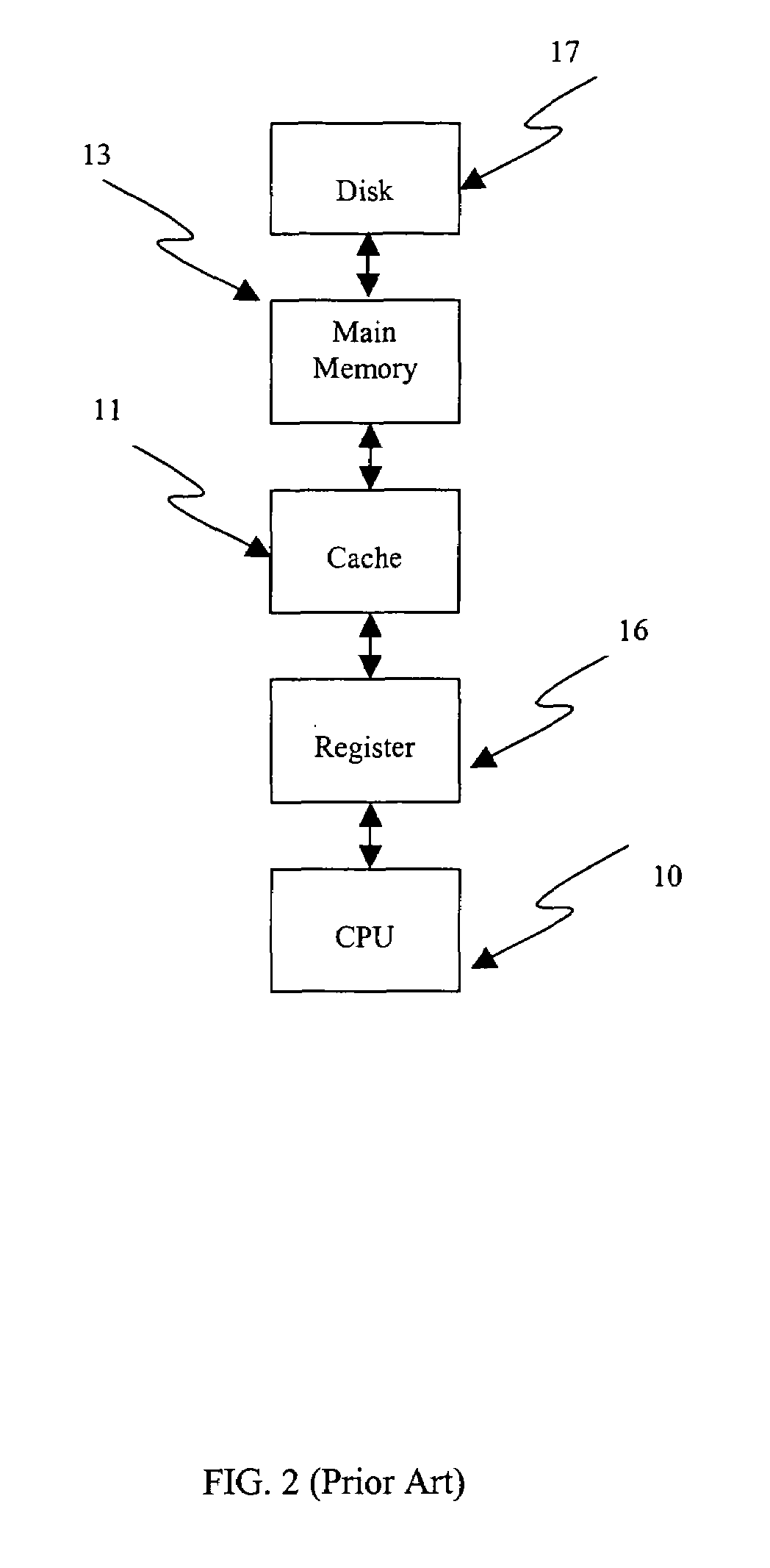

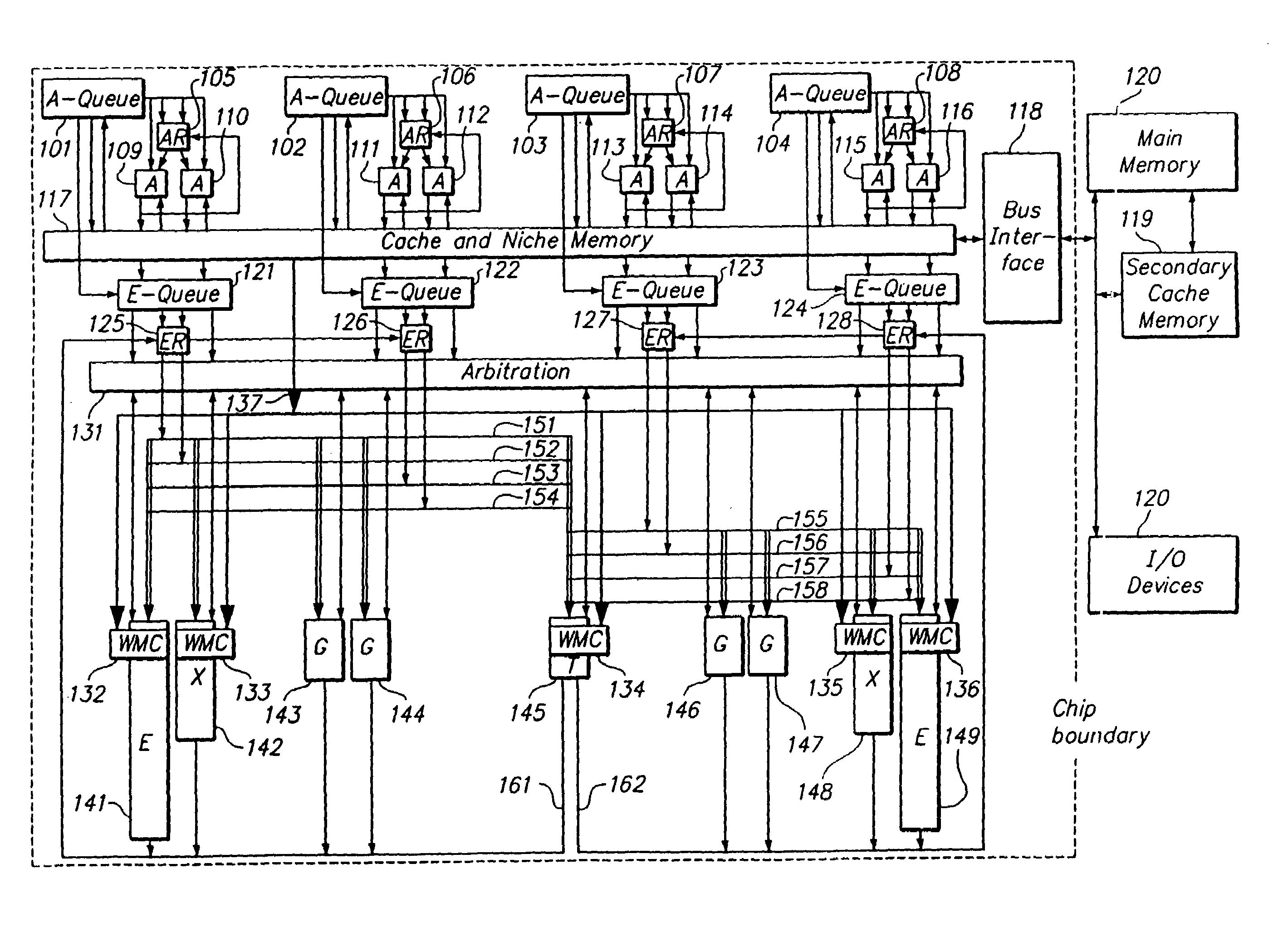

A smart memory computing system that uses smart memory for massive data storage as well as for massive parallel execution is disclosed. The data stored in the smart memory can be accessed just like the conventional main memory, but the smart memory also has many execution units to process data in situ. The smart memory computing system offers improved performance and reduced costs for those programs having massive data-level parallelism. This smart memory computing system is able to take advantage of data-level parallelism to improve execution speed by, for example, use of inventive aspects such as algorithm mapping, compiler techniques, architecture features, and specialized instruction sets.

Owner:STRIPE INC

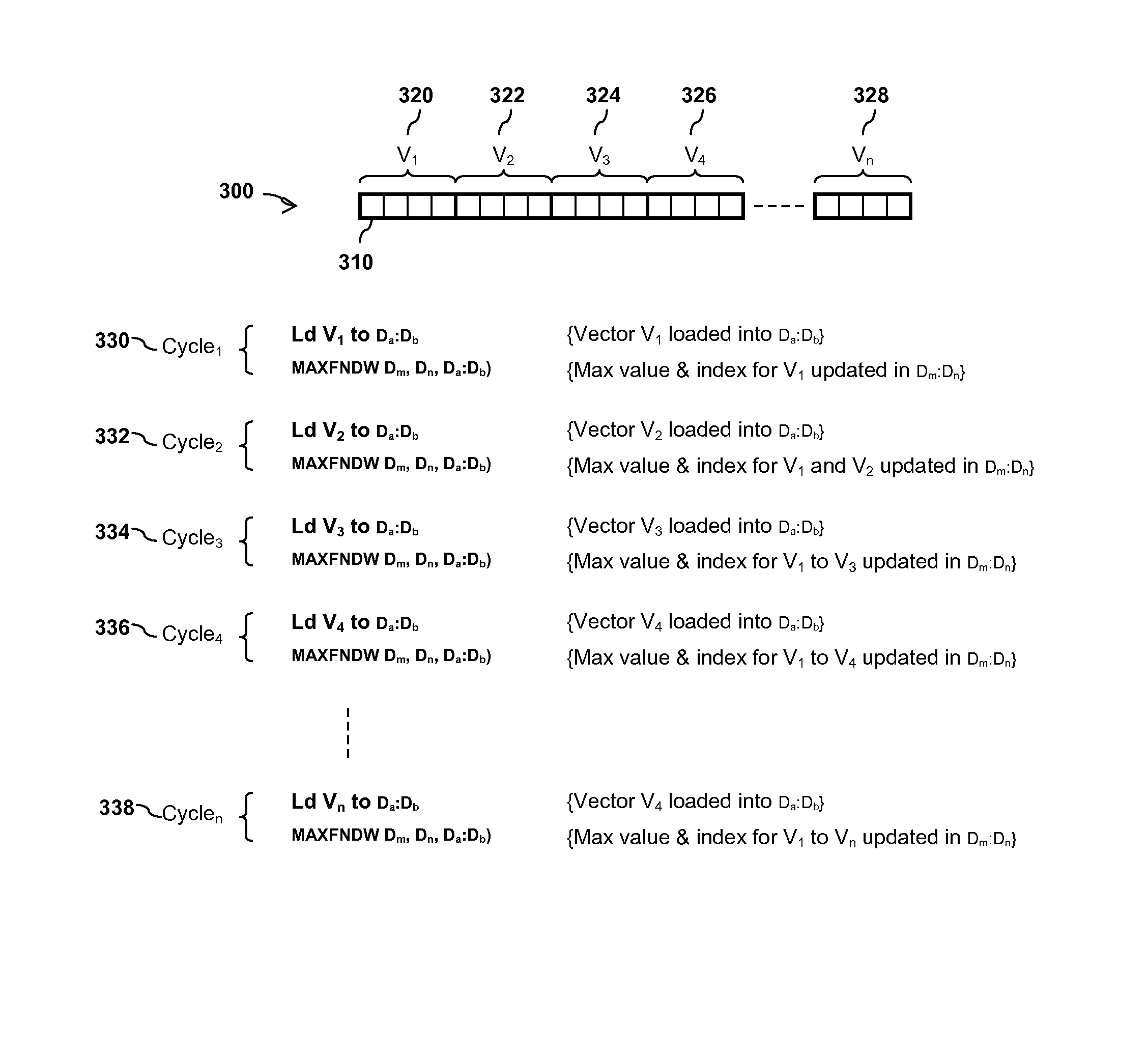

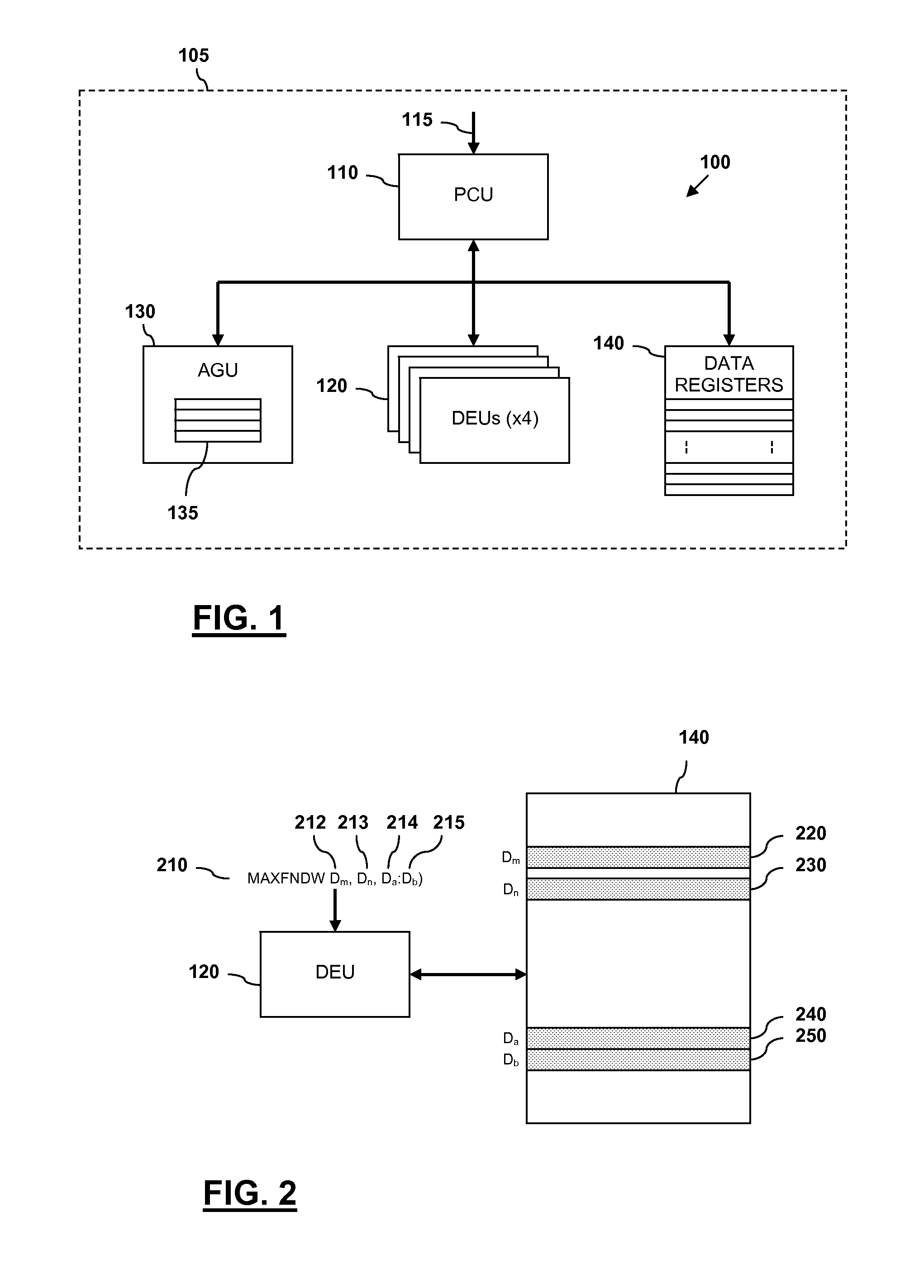

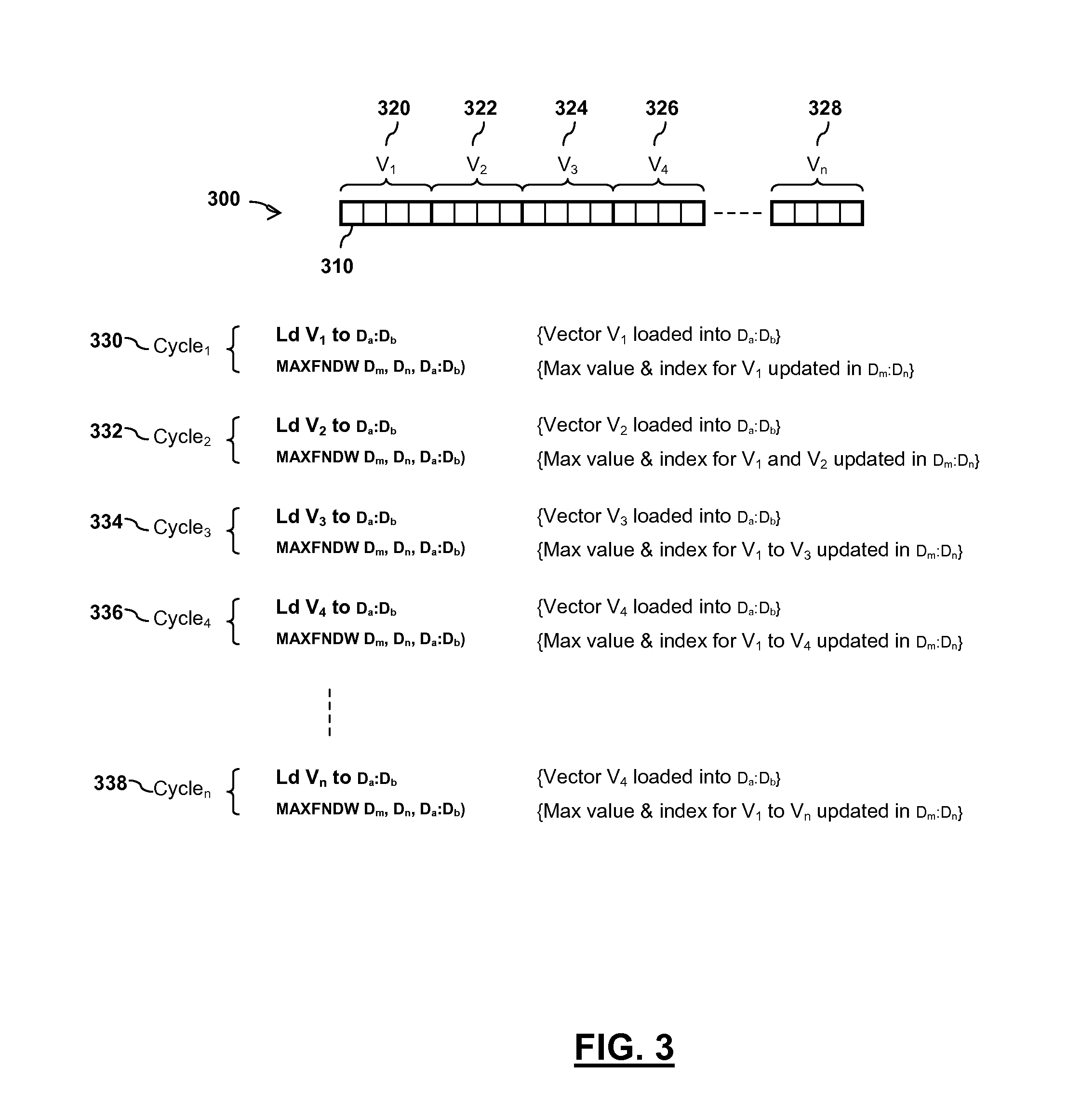

Integrated circuit device and method for determining an index of an extreme value within an array of values

Inactive | US9165023B2 | Digital data information retrieval | Digital data processing details | Processor register | Computer module

An integrated circuit device comprises at least one digital signal processor, DSP, module, the at least one DSP module comprising a plurality of data registers and at least one data execution unit, DEU, module arranged to execute operations on data stored within the data registers. The at least one DEU module is arranged to, in response to receiving an extreme value index instruction, compare a previous extreme value located within a first data register set of the DSP module with at least one input vector data value located within a second data register set of the DSP module, and determine an extreme value thereof. The at least one DEU module is further arranged to, if the determined extreme value comprises an input vector data value located within the second data register set, store the determined extreme value in the first data register set, determine an index value for the determined extreme value, and store the determined index value in the first data register set.

Owner:NXP USA INC
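
The compare-and-update step in this abstract resembles a running arg-max (or arg-min) over chunks of an array. The following is a minimal software sketch, assuming a hypothetical `ExtremeState` that stands in for the first data register set and a `width` parameter that stands in for the number of vector lanes; the actual instruction semantics are defined by the patent, not by this code.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass
class ExtremeState:
    """Hypothetical stand-in for the first register set: running extreme and its index."""
    value: float
    index: int

def extreme_index_step(state: ExtremeState, vector: Sequence[float],
                       base_index: int, find_max: bool = True) -> ExtremeState:
    """Model one 'extreme value index' step over one input vector.

    The previous extreme is compared with each input value; the state is only
    replaced when an input value is strictly more extreme, so the first
    occurrence of the extreme value keeps its index.
    """
    value, index = state.value, state.index
    for offset, candidate in enumerate(vector):
        better = candidate > value if find_max else candidate < value
        if better:
            value, index = candidate, base_index + offset
    return ExtremeState(value, index)

def arg_extreme(values: Sequence[float], width: int = 4,
                find_max: bool = True) -> ExtremeState:
    """Scan a non-empty array in chunks of `width` values, as a vector unit might."""
    state = ExtremeState(values[0], 0)
    for base in range(0, len(values), width):
        state = extreme_index_step(state, values[base:base + width], base, find_max)
    return state

largest = arg_extreme([3, 9, -2, 9, 7, 11, 0])
smallest = arg_extreme([3, 9, -2, 9, 7, 11, 0], find_max=False)
```

Keeping the value and its index together in one state object mirrors the abstract's choice to store both in the same register set.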

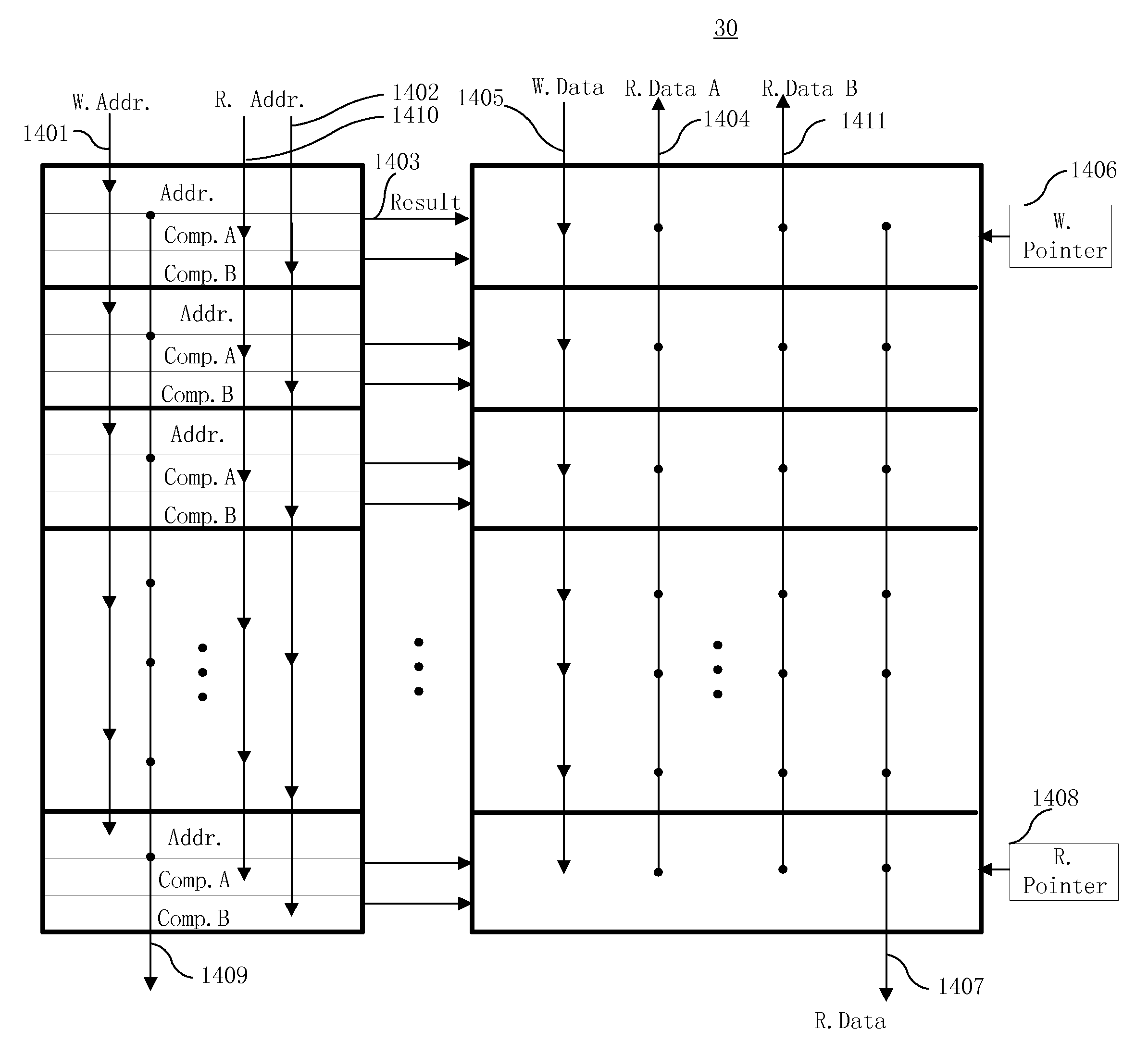

Processor-cache system and method

Active | US9047193B2 | Shorten the counting process | Efficient and uniform structure | Energy efficient ICT | Register arrangements | Address generation unit | Processor register

A digital system is provided. The digital system includes an execution unit, a level-zero (L0) memory, and an address generation unit. The execution unit is coupled to a data memory containing data to be used in operations of the execution unit. The L0 memory is coupled between the execution unit and the data memory and configured to receive a part of the data in the data memory. The address generation unit is configured to generate address information for addressing the L0 memory. Further, the L0 memory provides at least two operands of a single instruction from the part of the data to the execution unit directly, without loading the at least two operands into one or more registers, using the address information from the address generation unit.

Owner:SHANGHAI XINHAO MICROELECTRONICS

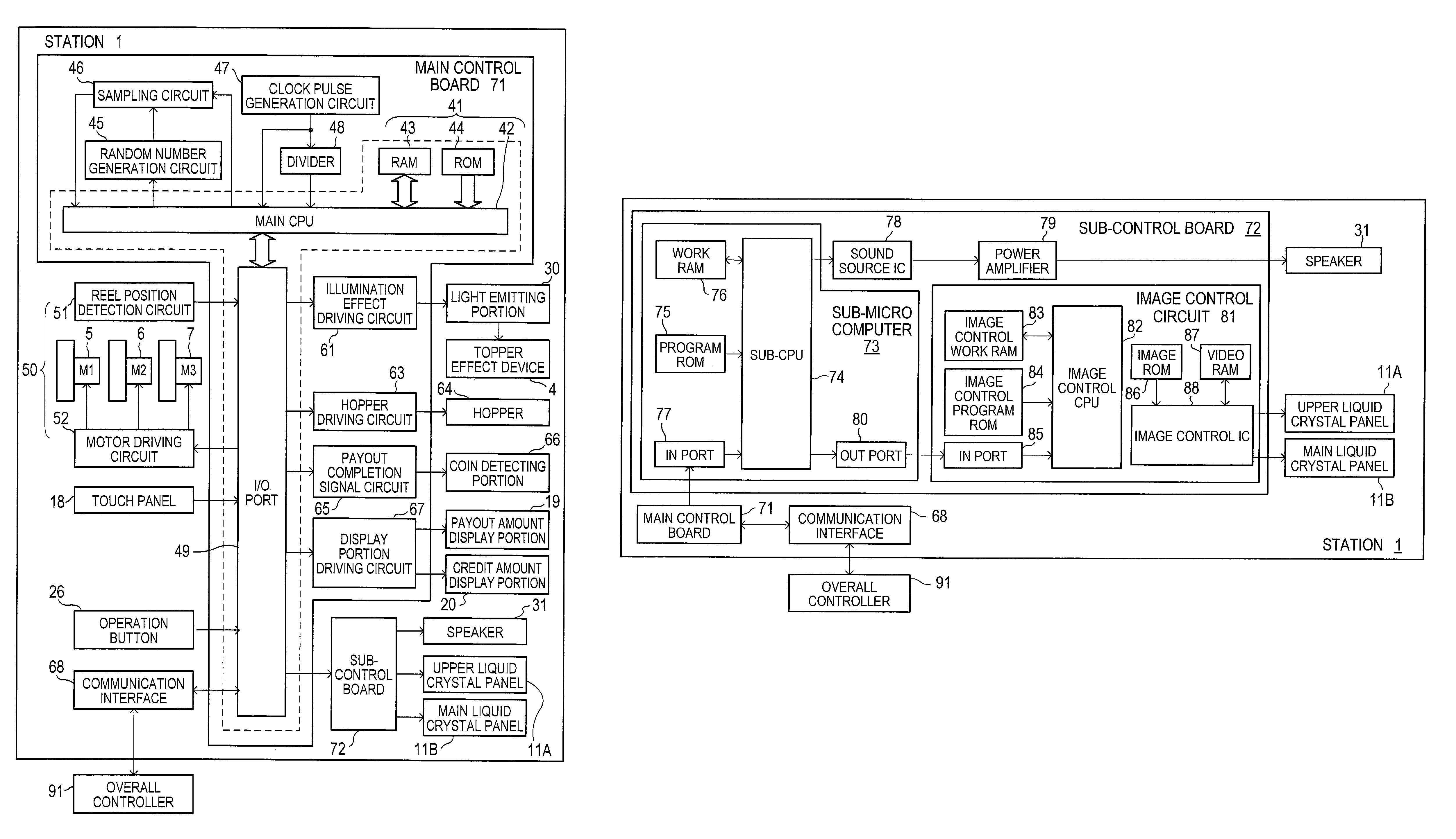

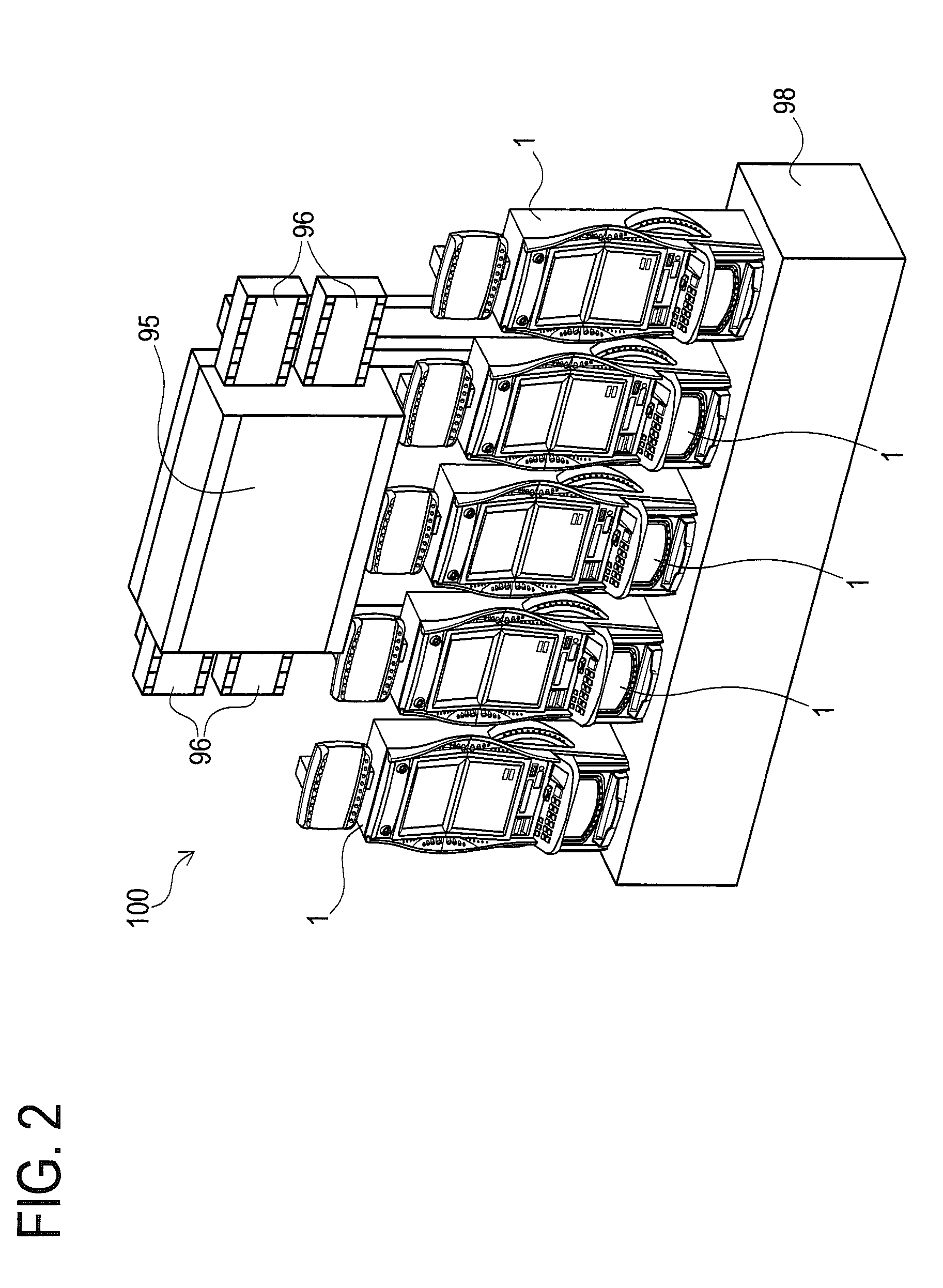

Gaming machine

Inactive | US8167699B2 | Apparatus for meter-controlled dispensing | Video games | Execution unit | Human–computer interaction

A gaming machine includes plural stations and a processor. Each station can determine a game result and execute a game independently. The processor accepts each station's entry to an event game when a predetermined condition is satisfied. An event game consists of a plural number of unit event games. When executing unit event games, the processor collects a game charge from a station whose entry to the event game has been accepted.

Owner:UNIVERSAL ENTERTAINMENT CORP

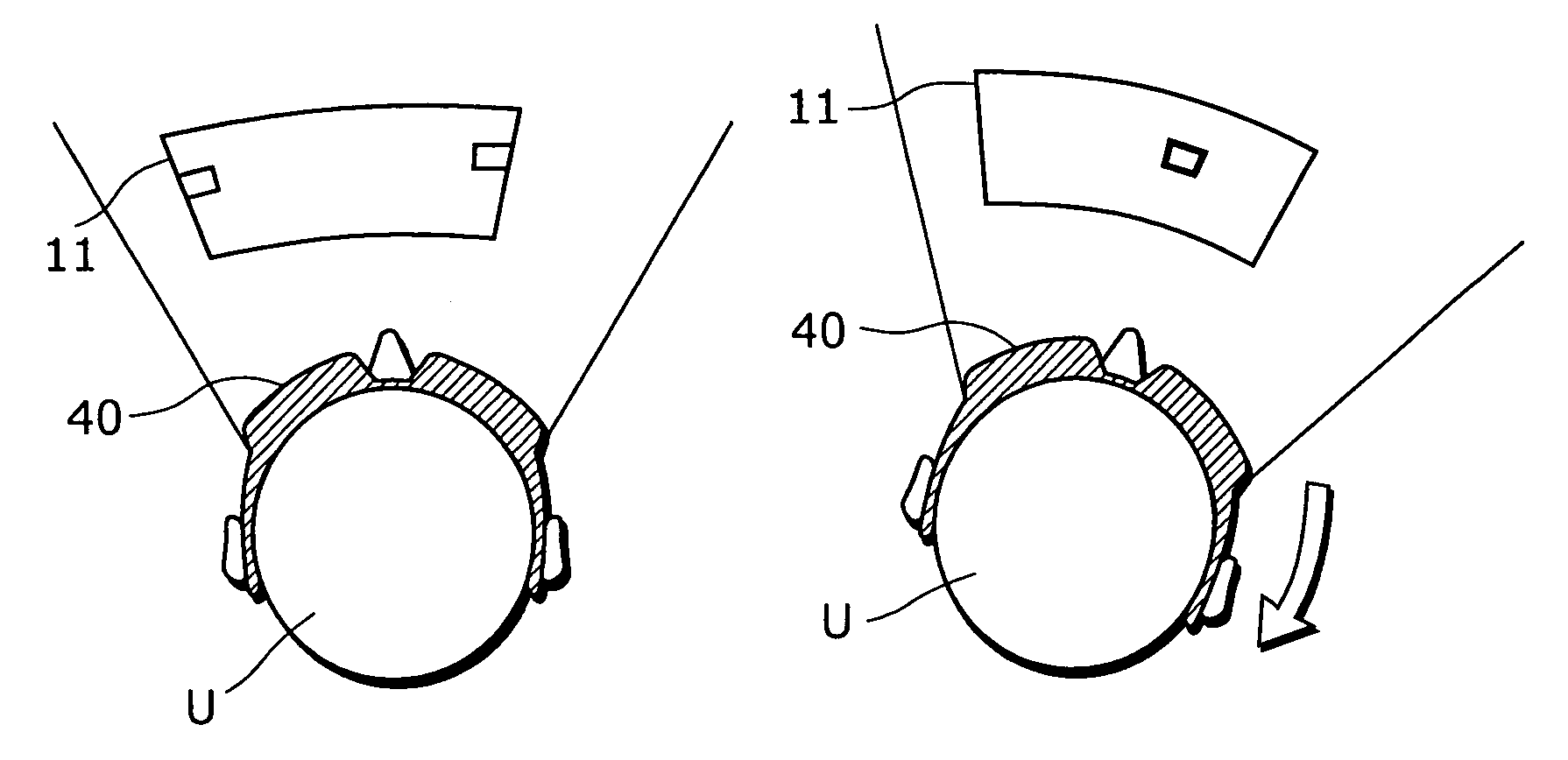

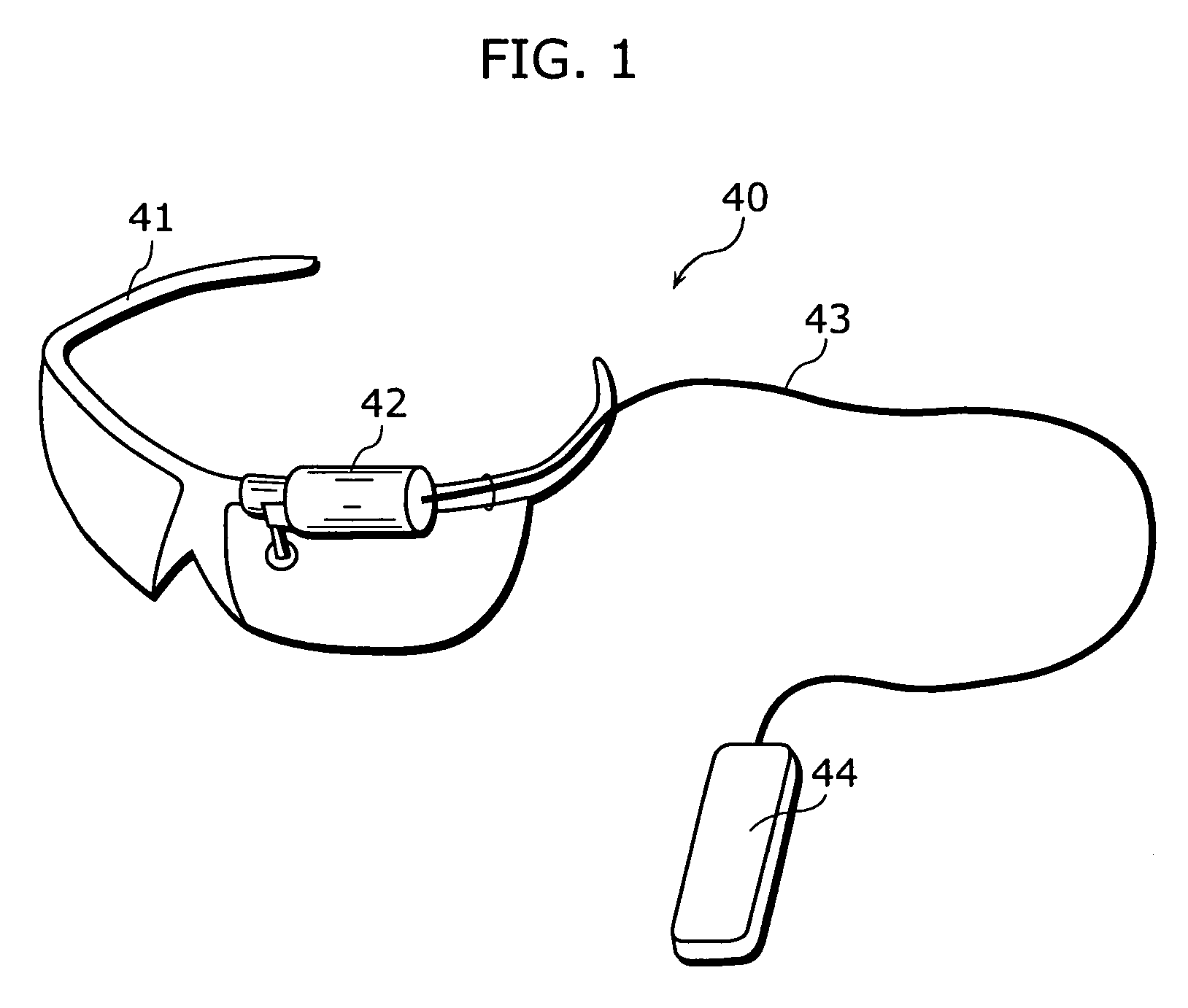

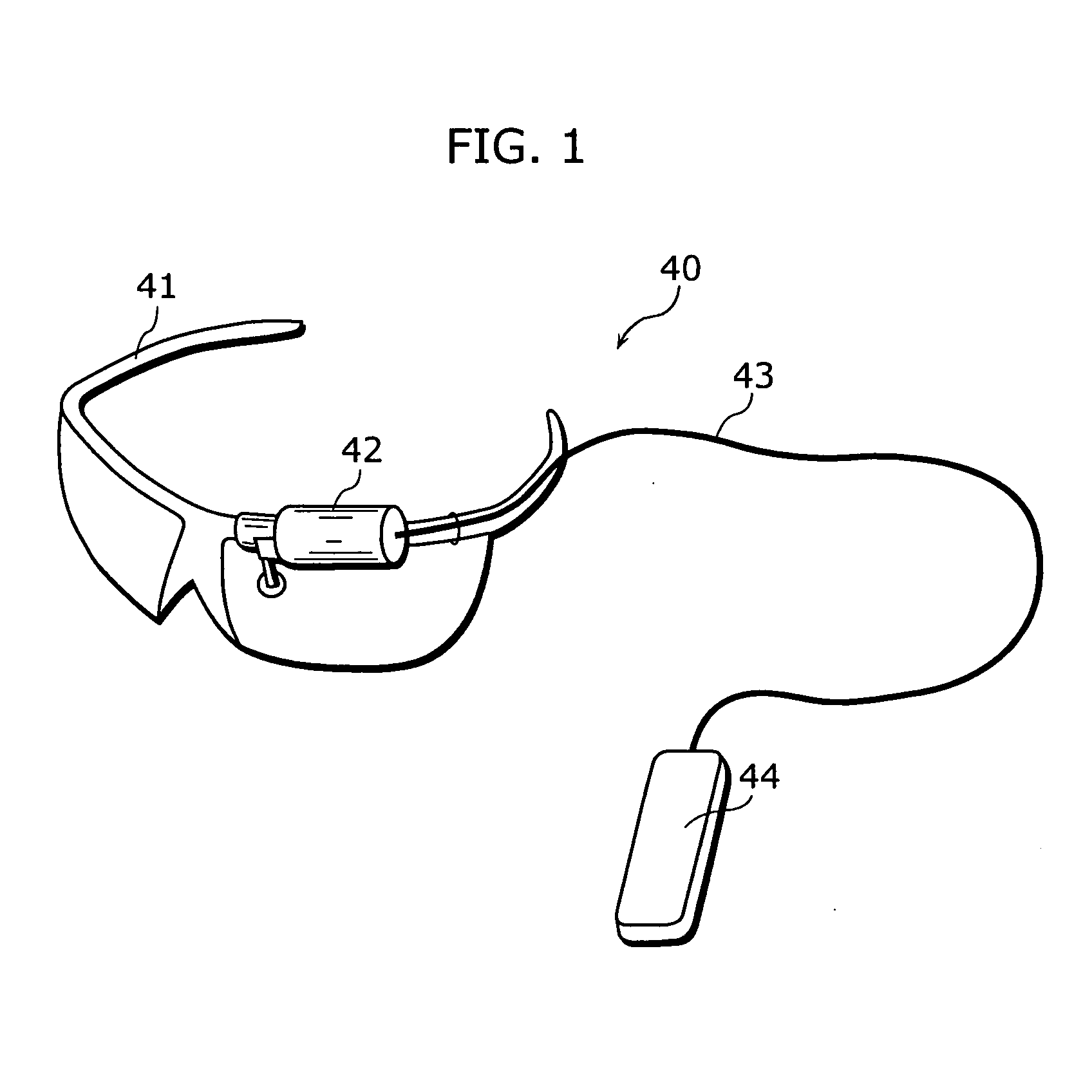

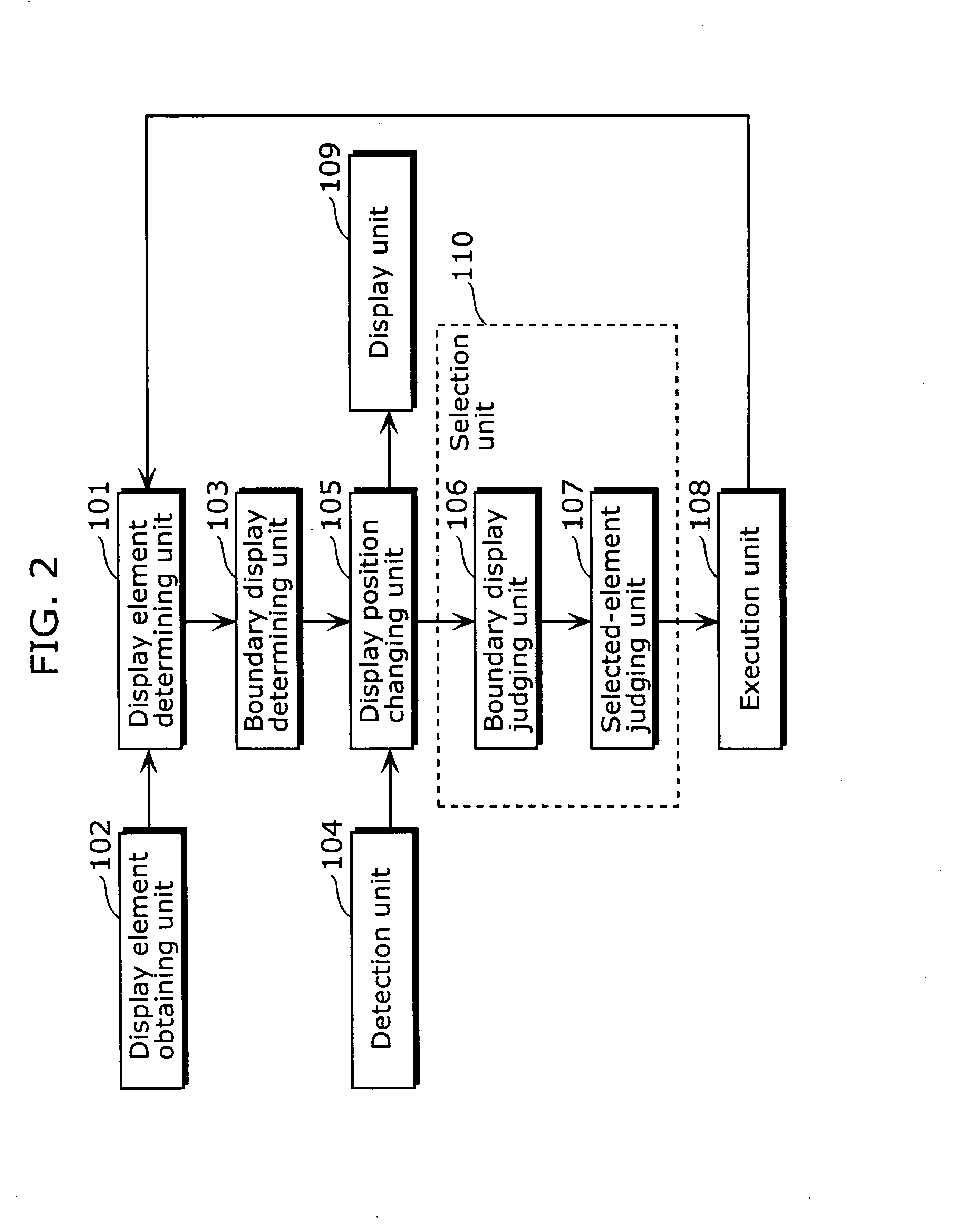

Display apparatus and method for hands free operation that selects a function when window is within field of view

Inactive | US7928926B2 | High-accuracy hands-free operation | High-accuracy and hands-free operation | Cathode-ray tube indicators | Optical elements | Computer hardware | Computer graphics (images)

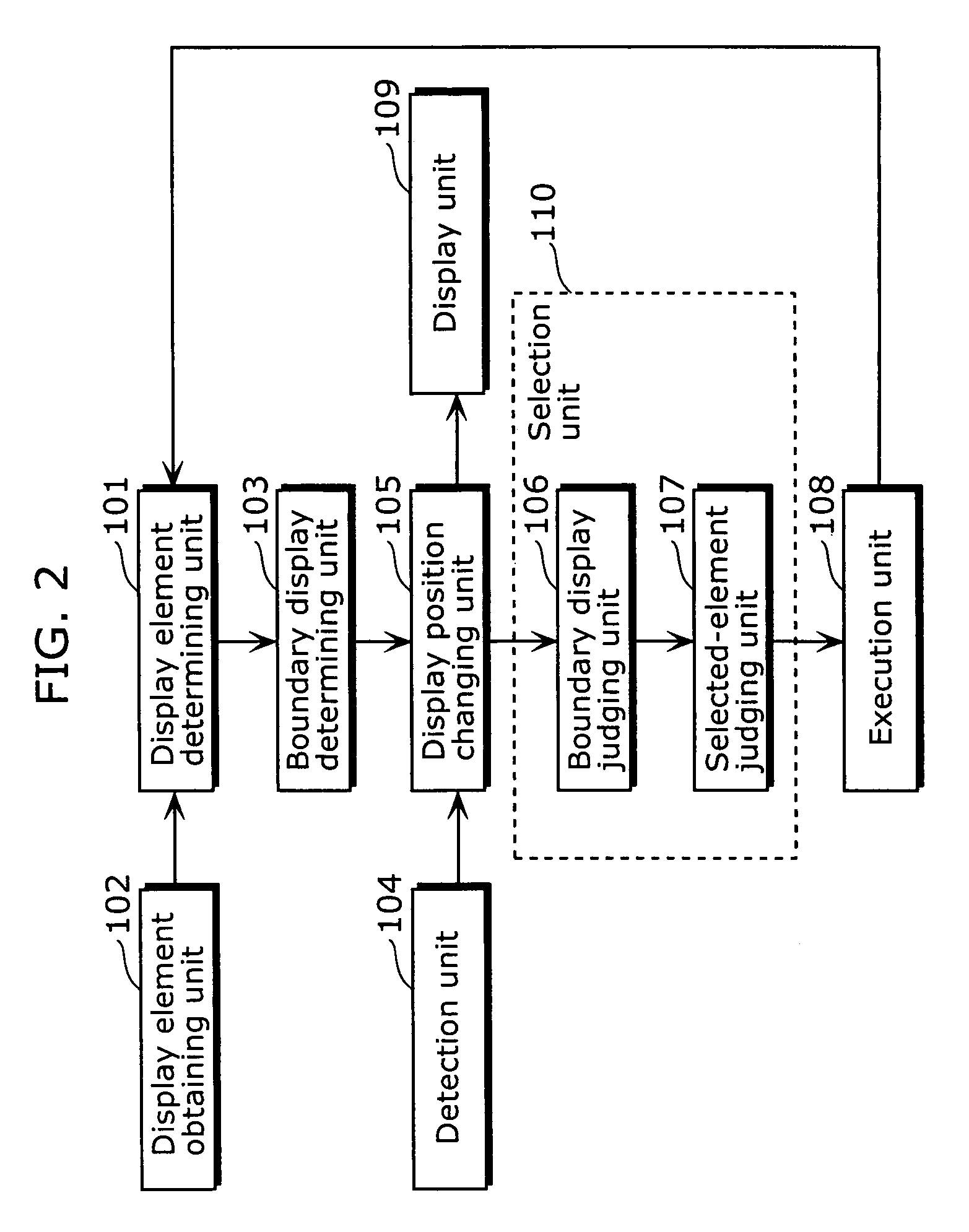

The present invention provides a display apparatus which enables high-accuracy, hands-free operation, while adopting a low-cost configuration. The display apparatus according to the present invention includes: an obtainment unit that obtains a display element which is information to be displayed to the user; a determining unit that determines a display position of the obtained display element, at which a portion of the obtained display element can not be seen by the user; a display unit that displays the display element at the determined display position; a detection unit that detects a direction of change when an orientation of the user's head is changed; a selection unit that selects the display element to be displayed in the detected direction of change; and an execution unit that executes a predetermined process related to the selected display element.

Owner:PANASONIC CORP

Application Execution Device and Application Execution Device Application Execution Method

Inactive | US20070271446A1 | High protection level | Digital computer details | Platform integrity maintenance | Execution unit | Operating system

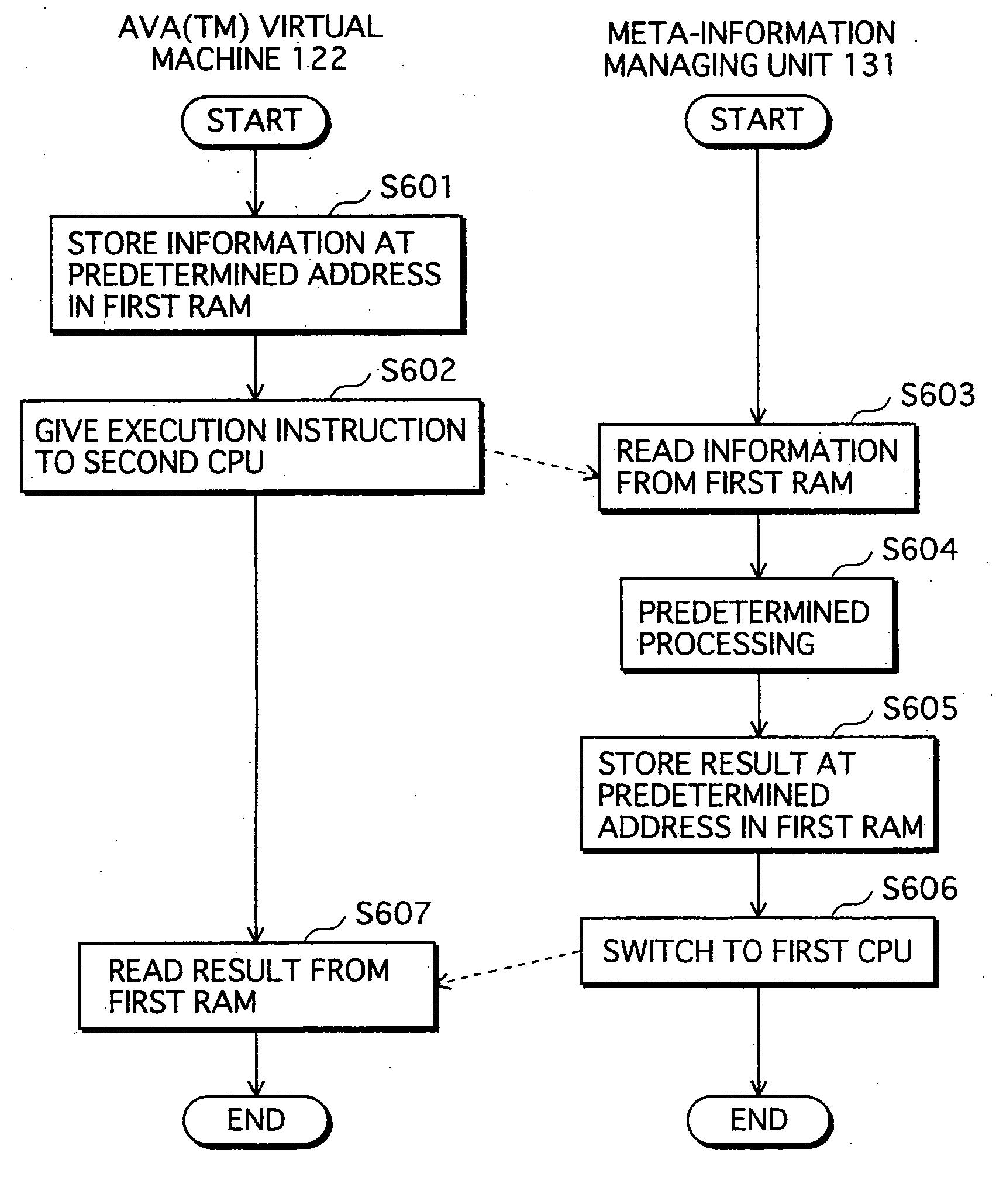

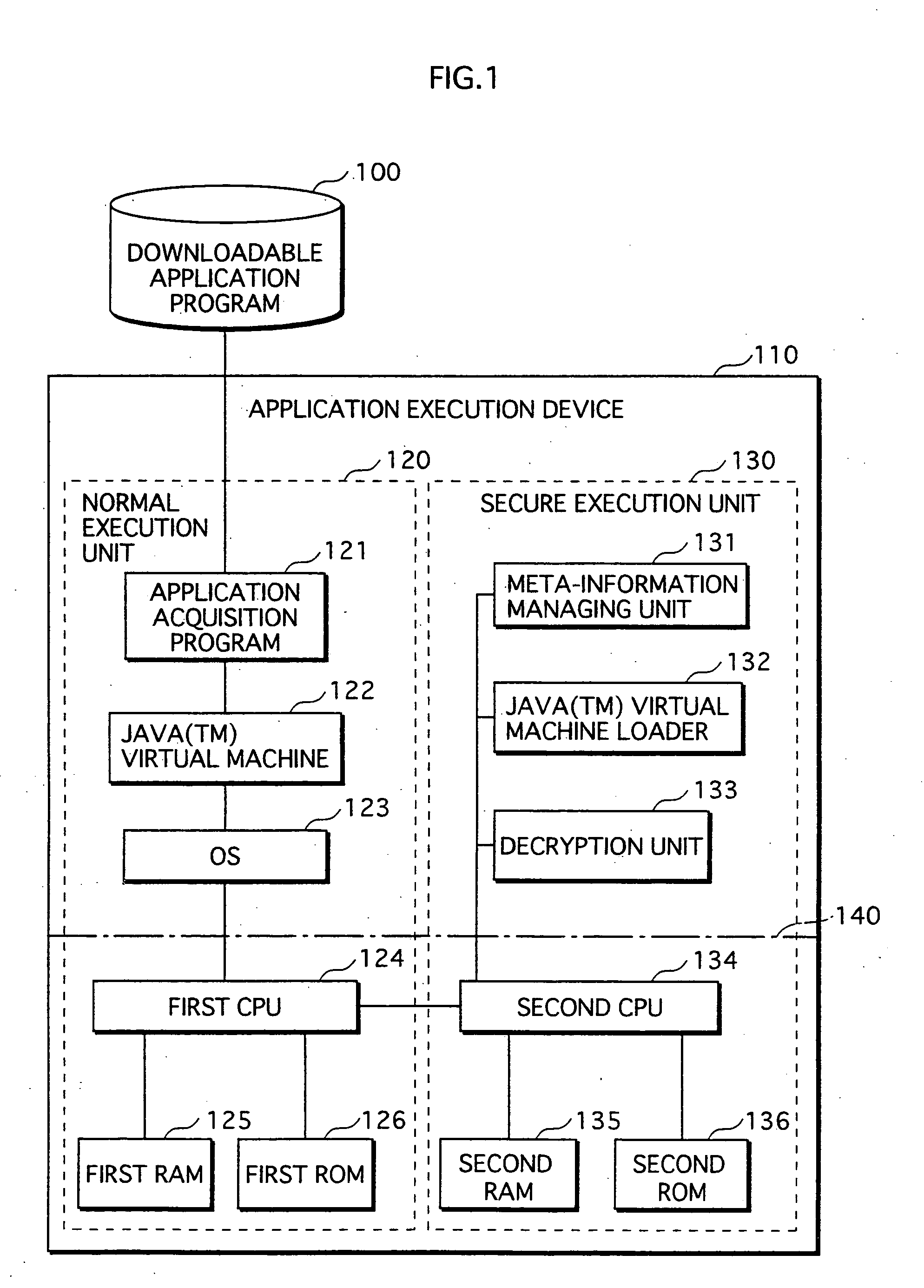

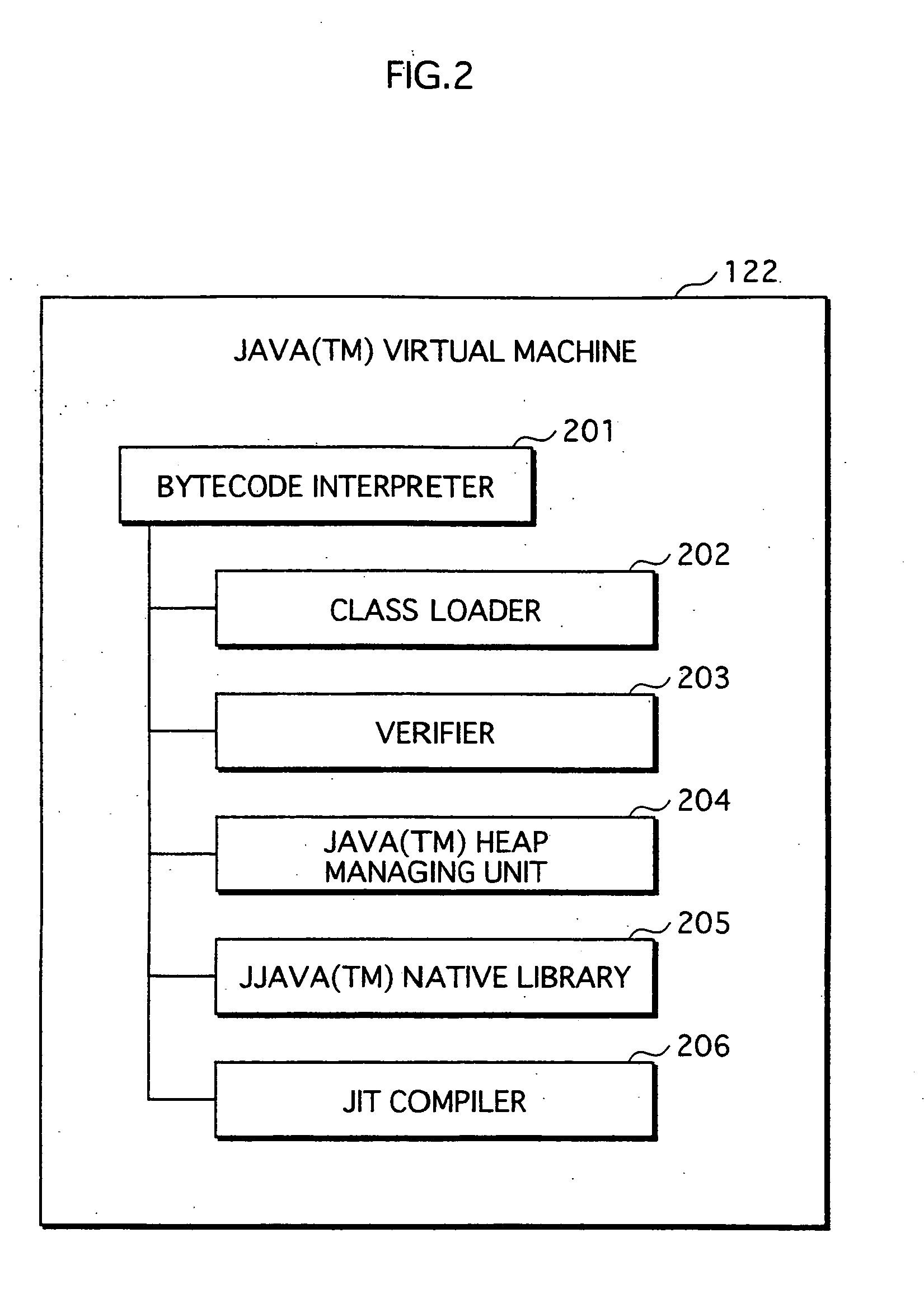

The conventional application protection technique complicates an application to make it difficult to analyze. However, with such a method, the complicated program can eventually be analyzed given enough time, no matter how high the degree of complication. It is also impossible to protect the application from unauthorized copying. The meta-information managing unit, which is executed in the secure execution unit, stores the meta-information of the application in an area that cannot be accessed by a debugger. When the normal execution unit requires the meta-information to execute the application, the result of a predetermined calculation using the meta-information is passed to the normal execution unit. In this way, the meta-information of the application can be kept secret.

Owner:PANASONIC CORP

System for automatically configuring features on a mobile telephone based on geographic location

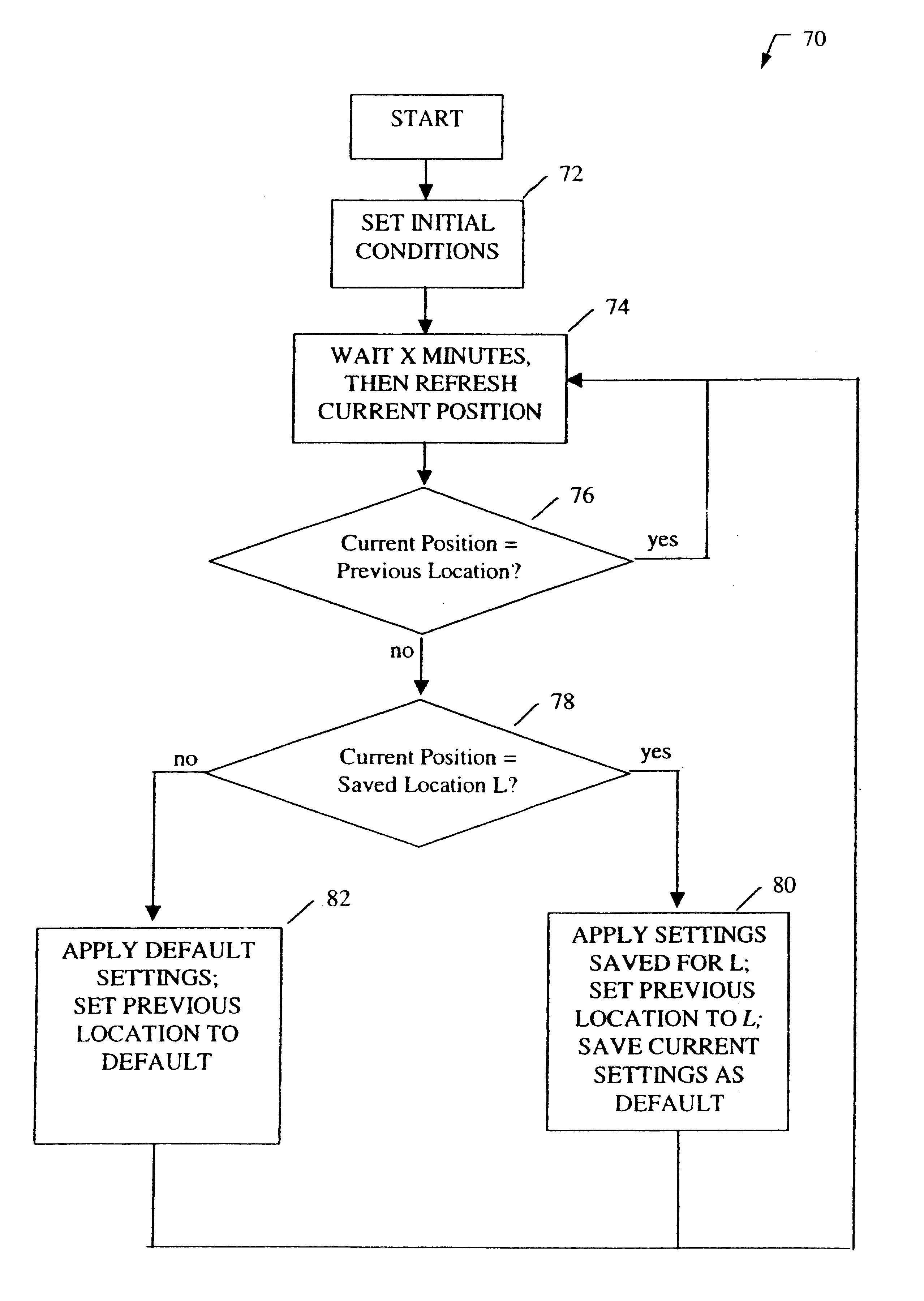

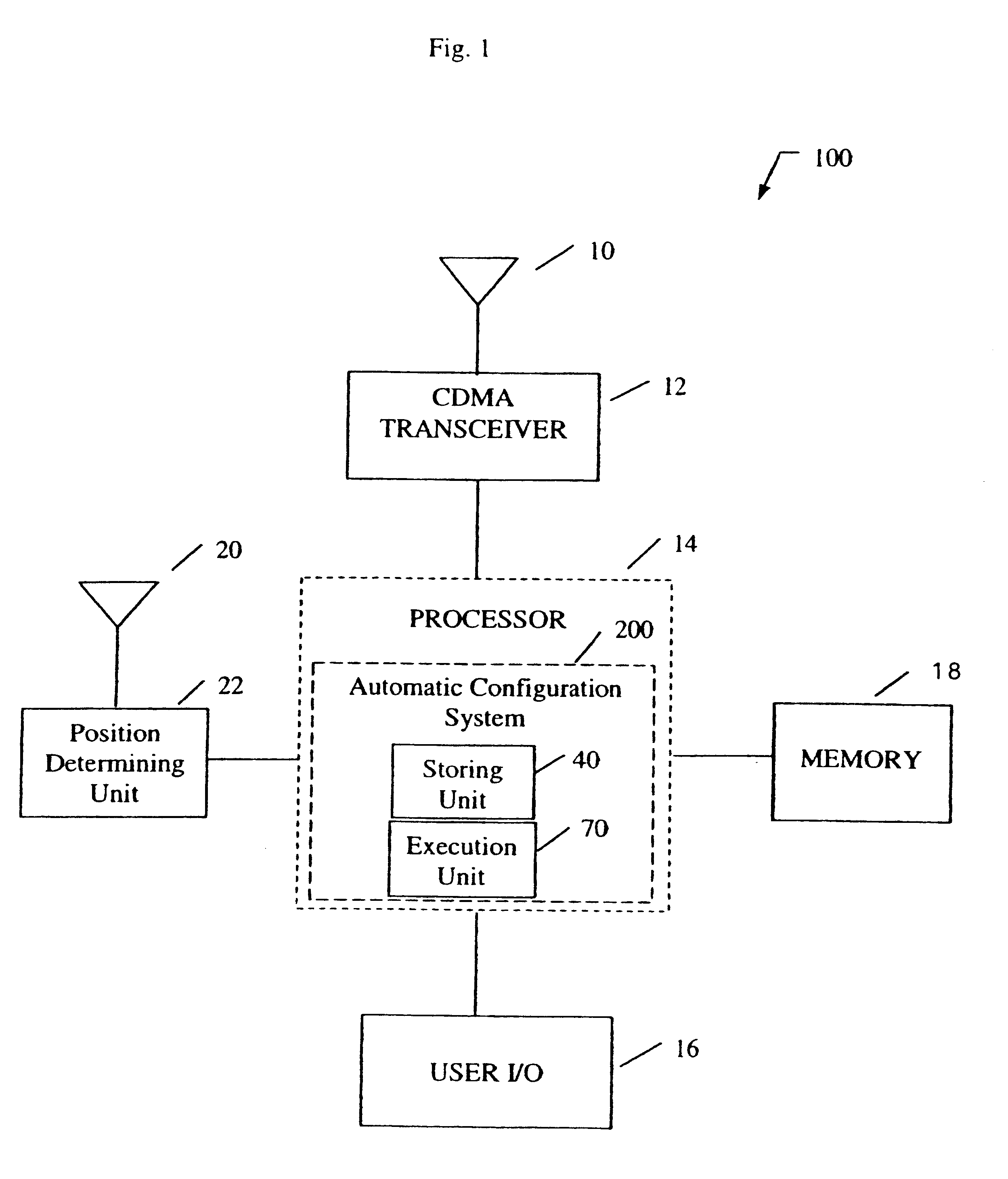

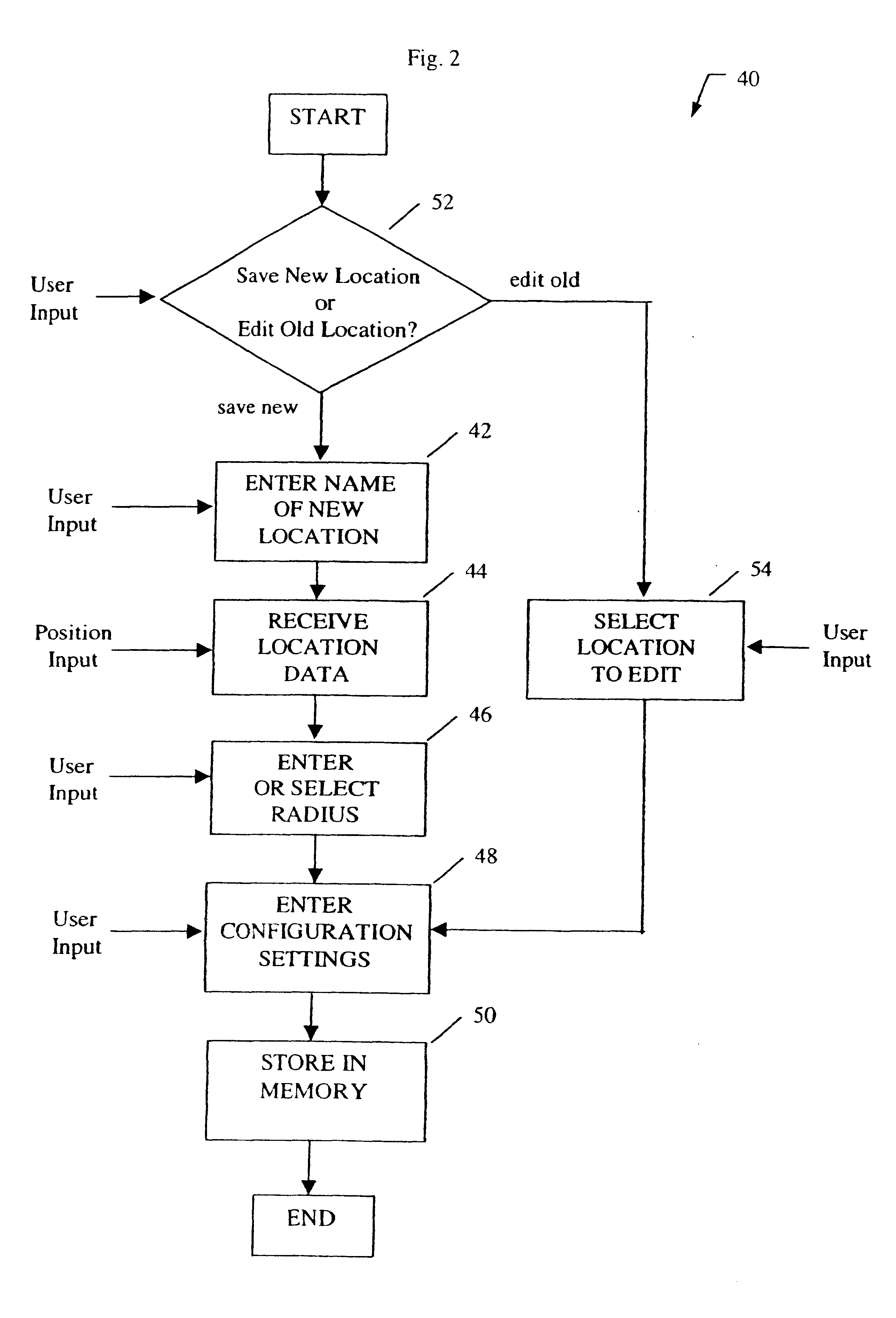

A system for automatically reconfiguring a mobile telephone based on geographic location. In the illustrative embodiment, the invention includes a storing unit (40) and an execution unit (70). The storing unit (40) allows the user to save a particular location and the desired configurations corresponding to that location. The execution unit (70) monitors the position of the telephone and, upon entering a saved location, executes the configurations corresponding to that location. The execution unit (70) also returns the configurations to their previous settings after the telephone has exited from the saved location.

Owner:QUALCOMM INC
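
The save, apply, and restore cycle in this abstract can be sketched as follows. This is an illustrative model only: the class names echo the abstract's storing unit and execution unit, but `SavedLocation`, the planar `x`/`y` coordinates, the circular `radius`, and the settings dictionary are all assumptions made for the example.

```python
import math
from dataclasses import dataclass

_MISSING = object()  # marks a setting that did not exist before entry

@dataclass
class SavedLocation:
    name: str
    x: float
    y: float
    radius: float
    settings: dict

class StoringUnit:
    """Keeps the locations the user saved and their desired configurations."""
    def __init__(self):
        self.locations = []

    def save(self, location: SavedLocation) -> None:
        self.locations.append(location)

class ExecutionUnit:
    """Applies a saved configuration on entry and restores the old one on exit."""
    def __init__(self, storing_unit: StoringUnit, phone_settings: dict):
        self.storing_unit = storing_unit
        self.settings = phone_settings
        self._active = None
        self._previous = {}

    def update_position(self, x: float, y: float) -> None:
        inside = next(
            (loc for loc in self.storing_unit.locations
             if math.hypot(x - loc.x, y - loc.y) <= loc.radius),
            None,
        )
        if inside is self._active:
            return  # still in the same region (or still outside all regions)
        if self._active is not None:
            self._restore()
        if inside is not None:
            self._previous = {k: self.settings.get(k, _MISSING) for k in inside.settings}
            self.settings.update(inside.settings)
        self._active = inside

    def _restore(self) -> None:
        for key, old in self._previous.items():
            if old is _MISSING:
                self.settings.pop(key, None)
            else:
                self.settings[key] = old
        self._previous = {}

phone = {"ringer": "loud", "vibrate": False}
store = StoringUnit()
store.save(SavedLocation("library", 0.0, 0.0, 10.0, {"ringer": "silent", "vibrate": True}))
unit = ExecutionUnit(store, phone)
```

Calling `unit.update_position(3, 4)` would switch the phone to the library settings, and a later call with a position outside the radius would restore the earlier values.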

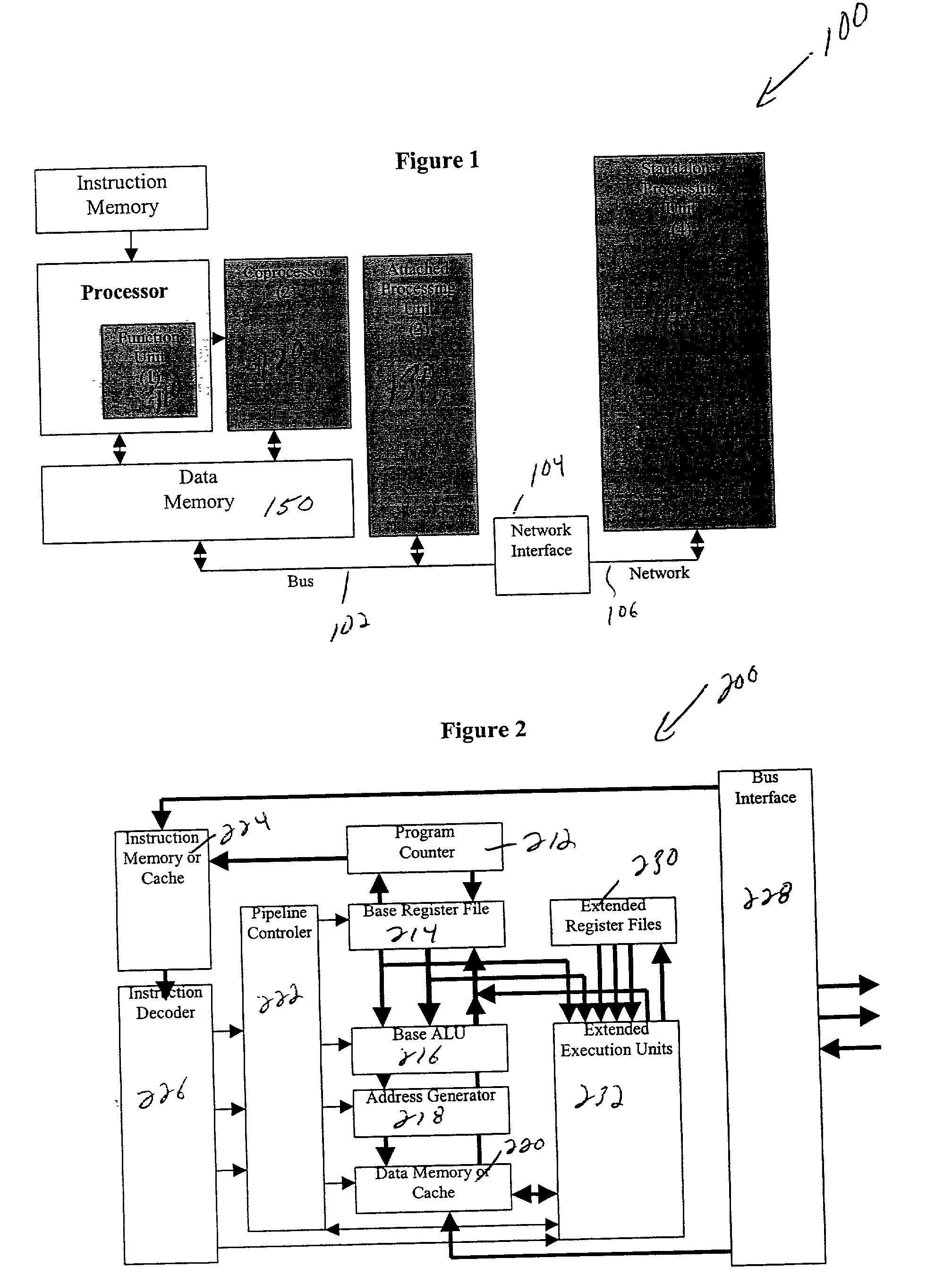

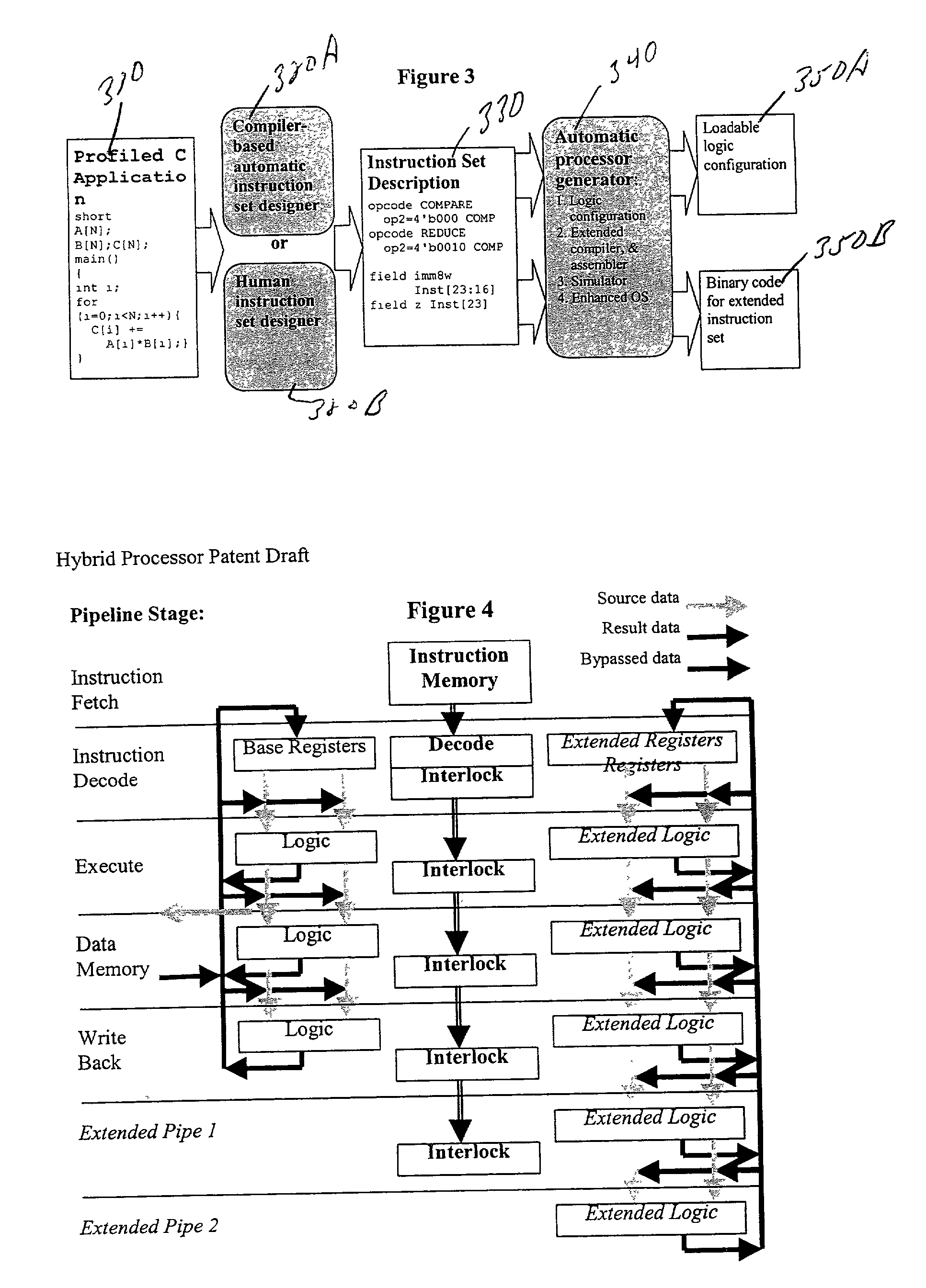

High-performance hybrid processor with configurable execution units

Inactive | US20050166038A1 | High bandwidth | Flexibility | Instruction analysis | Concurrent instruction execution | High bandwidth | Latency (engineering)

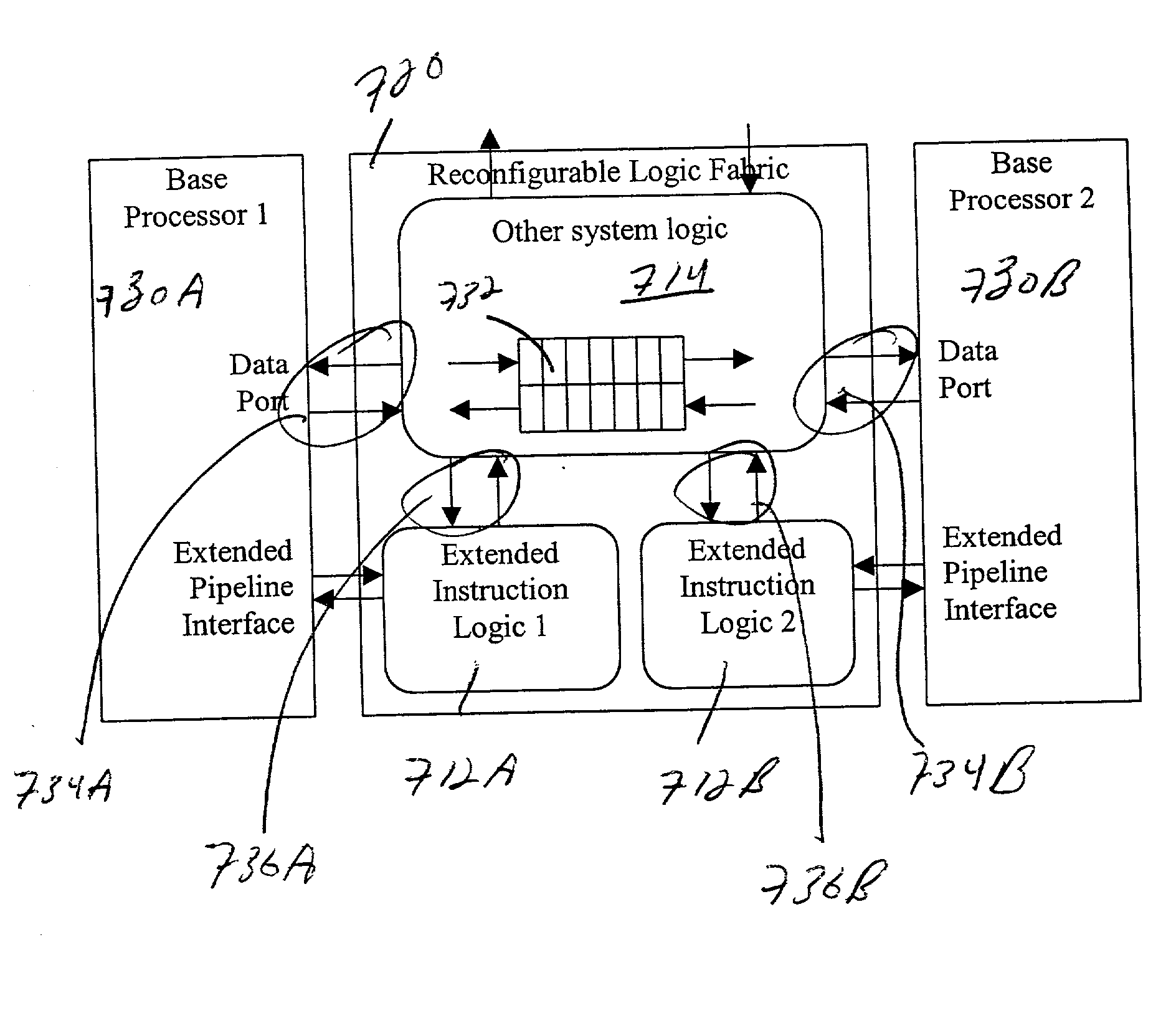

A new general method for building hybrid processors achieves higher performance in applications by allowing more powerful, tightly-coupled instruction set extensions to be implemented in reconfigurable logic. New instruction set configurations can be discovered and designed by automatic and semi-automatic methods. Improved reconfigurable execution units support deep pipelining, additional registers and register files, compound instructions with many source and destination registers, and wide data paths. New interface methods allow lower-latency, higher-bandwidth connections between hybrid processors and other logic.

Owner:TENSILICA

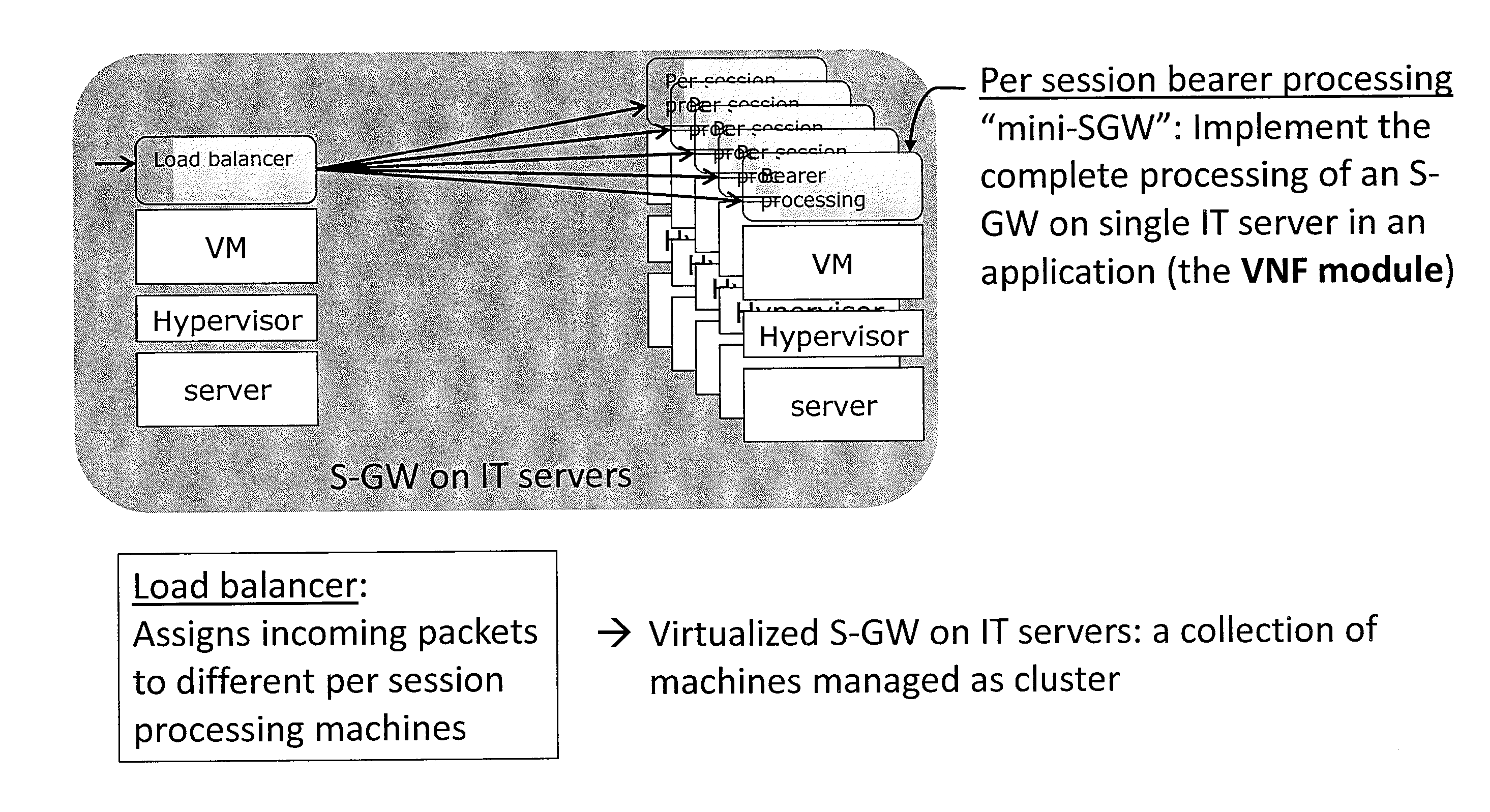

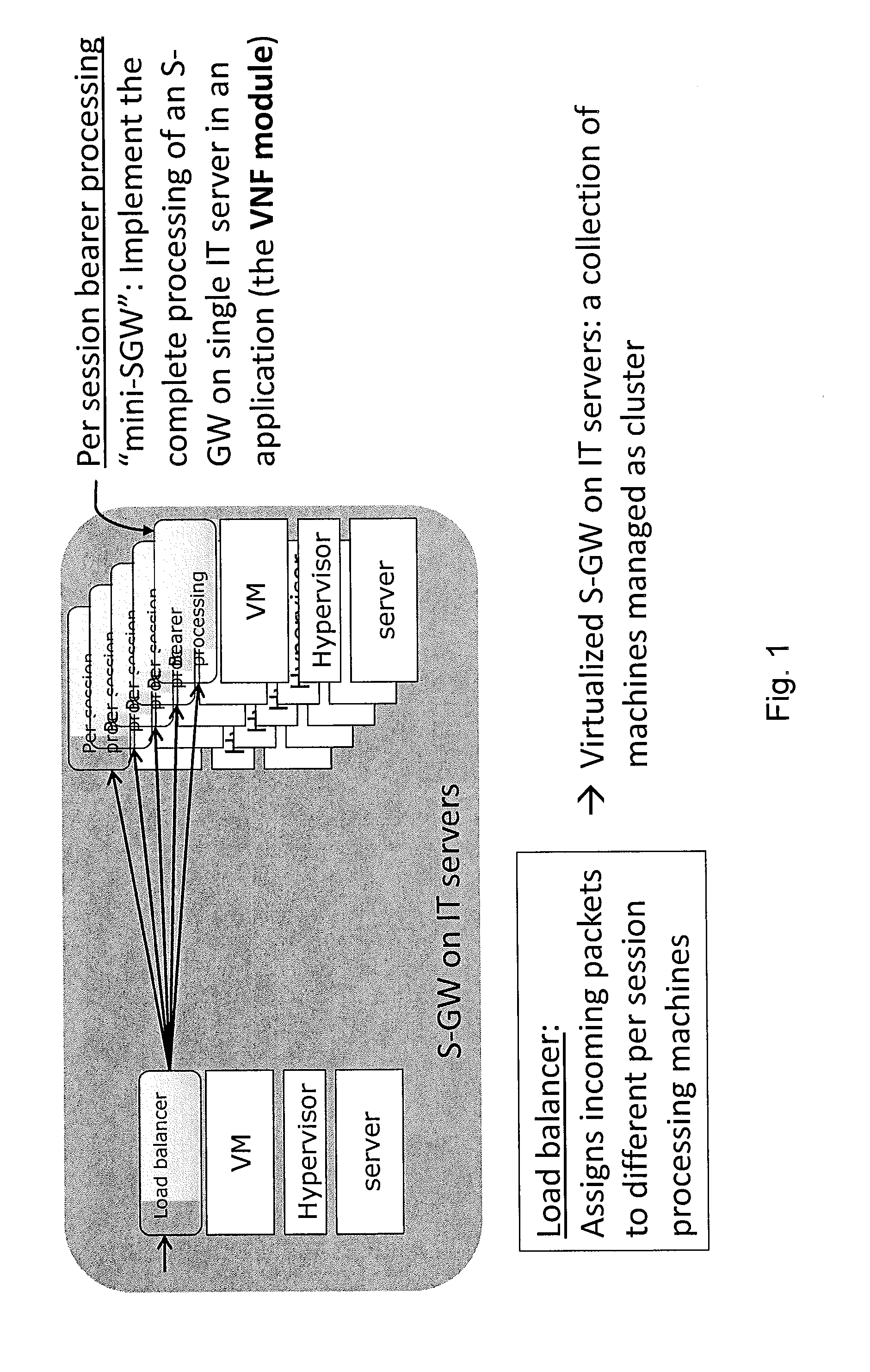

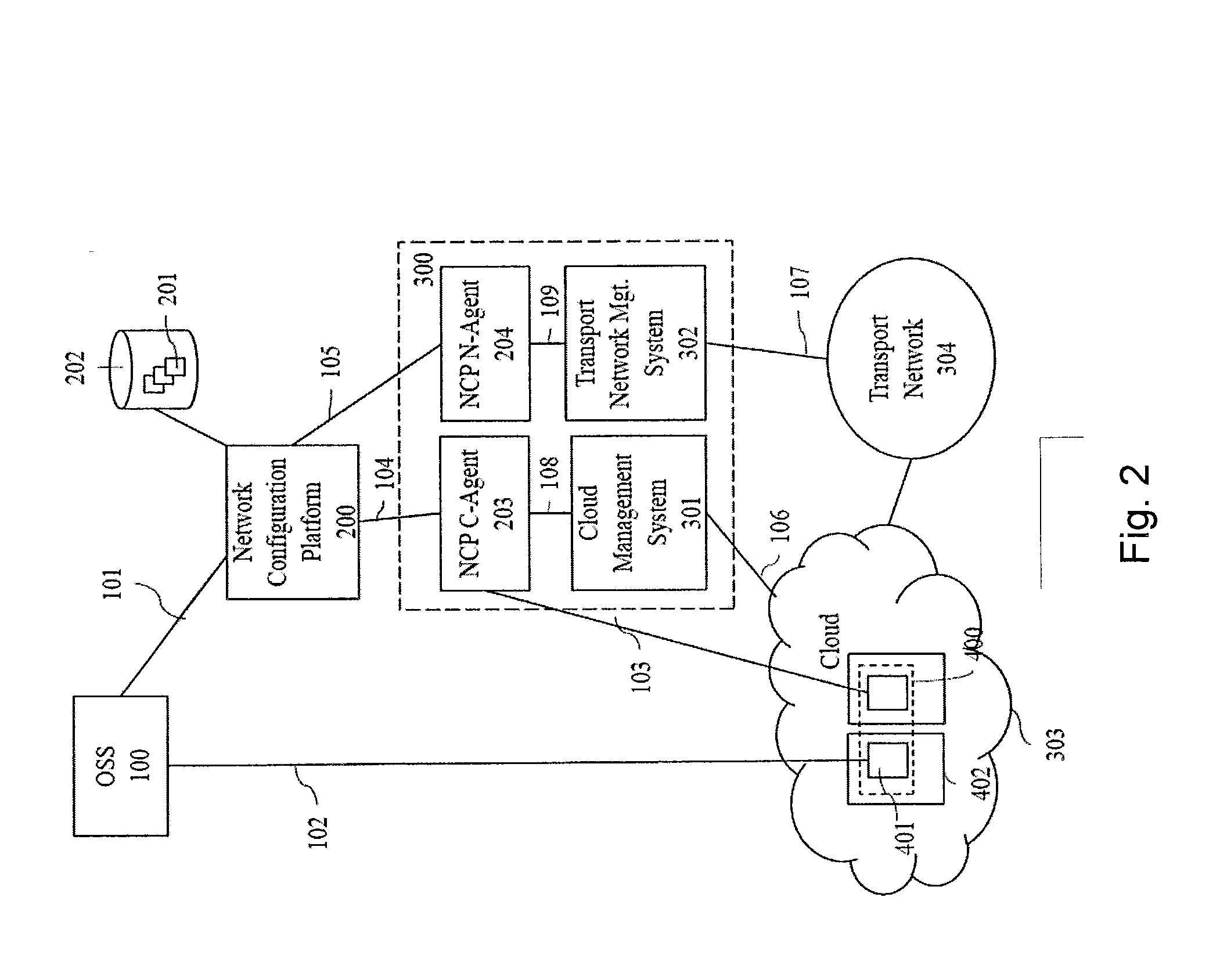

Method and apparatus for network virtualization

Active | US20150082308A1 | Avoid wasting resources | Flexible implementation | Data switching networks | Software simulation/interpretation/emulation | Execution unit | Network virtualization

A method for implementing an entity of a network by virtualizing said network entity and implementing it on one or more servers each acting as an execution unit for executing thereon one or more applications running and/or one or more virtual machines running on said execution unit, each of said application programs or virtual machines running on a server and implementing at least a part of the functionality of said network entity being called a virtual network function (VNF) module, wherein a plurality of said VNF modules together implement said network entity to thereby form a virtual network function (VNF), said method comprising the steps of: obtaining m key performance indicators (KPI) specifying the required overall performance of the VNF; obtaining n performance characteristics for available types of execution units; and determining one or more possible deployment plans based on the obtained m KPI and n performance characteristics, each deployment plan specifying the number and types of execution units, such that the joint performance of VNF modules running on these execution units achieves the required overall performance of the VNF.

Owner:NTT DOCOMO INC
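
The deployment-plan step in this abstract can be approximated by a brute-force search. The sketch below makes strong assumptions that are not in the source: every KPI is treated as additive across execution units, the search is capped at `max_per_type` units per type, and the names `deployment_plans`, `required`, and `unit_types` are hypothetical.

```python
from itertools import product
from typing import Dict, List

def deployment_plans(required: Dict[str, float],
                     unit_types: Dict[str, Dict[str, float]],
                     max_per_type: int = 3) -> List[Dict[str, int]]:
    """Enumerate unit counts per type whose summed performance meets every KPI.

    required:   KPI name -> required overall value for the VNF.
    unit_types: execution unit type -> {KPI name -> performance of one unit}.
    Returns feasible plans, ordered by total number of units (fewest first).
    """
    names = list(unit_types)
    plans = []
    for counts in product(range(max_per_type + 1), repeat=len(names)):
        joint = {
            kpi: sum(count * unit_types[name][kpi] for count, name in zip(counts, names))
            for kpi in required
        }
        if all(joint[kpi] >= required[kpi] for kpi in required):
            plans.append(dict(zip(names, counts)))
    return sorted(plans, key=lambda plan: sum(plan.values()))

plans = deployment_plans(
    required={"sessions": 100, "throughput": 50},
    unit_types={
        "small": {"sessions": 40, "throughput": 30},
        "large": {"sessions": 100, "throughput": 40},
    },
    max_per_type=2,
)
```

A real planner would likely use a non-additive performance model and a cost function; exhaustive enumeration is used here only because it makes the feasibility condition easy to read.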

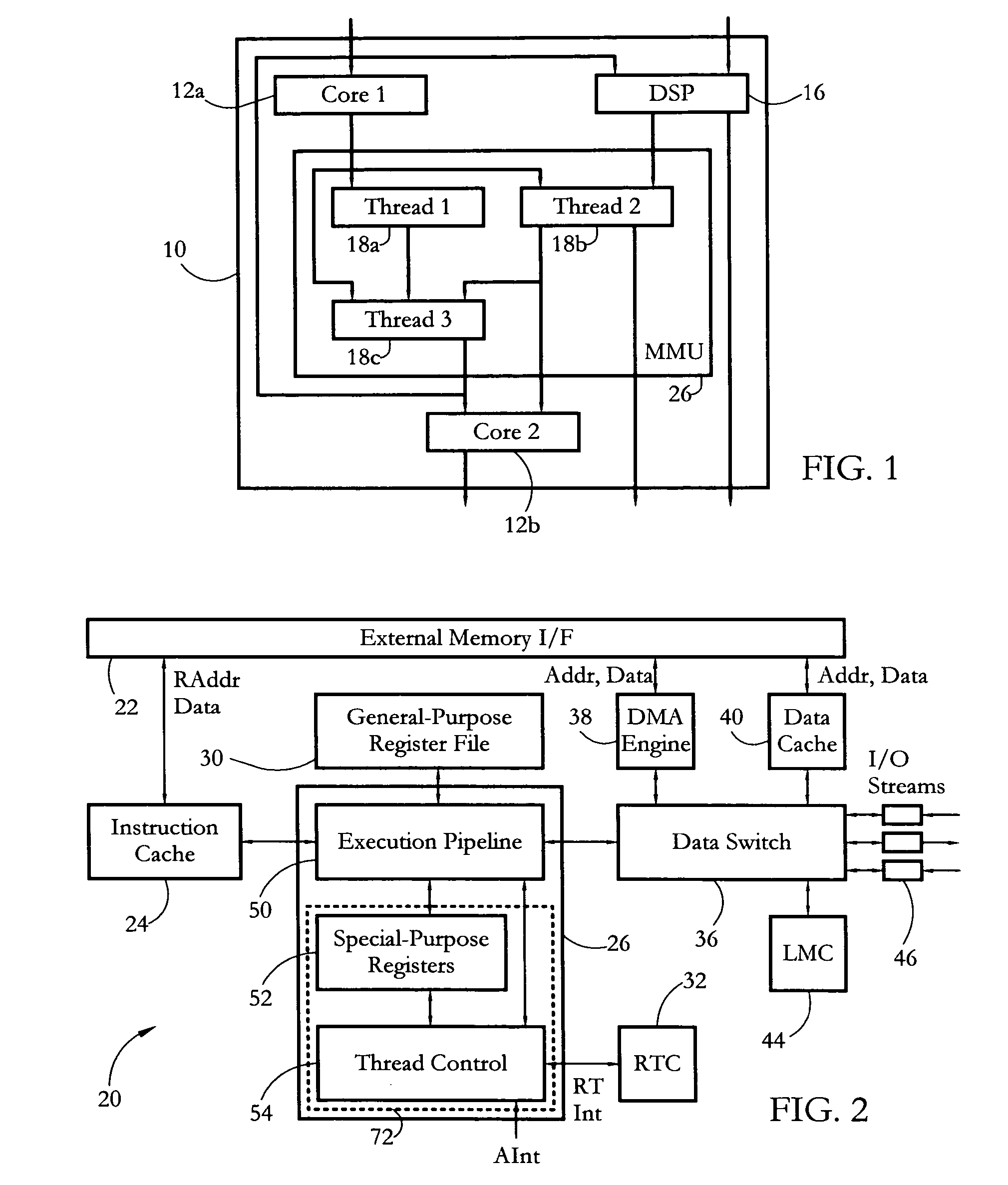

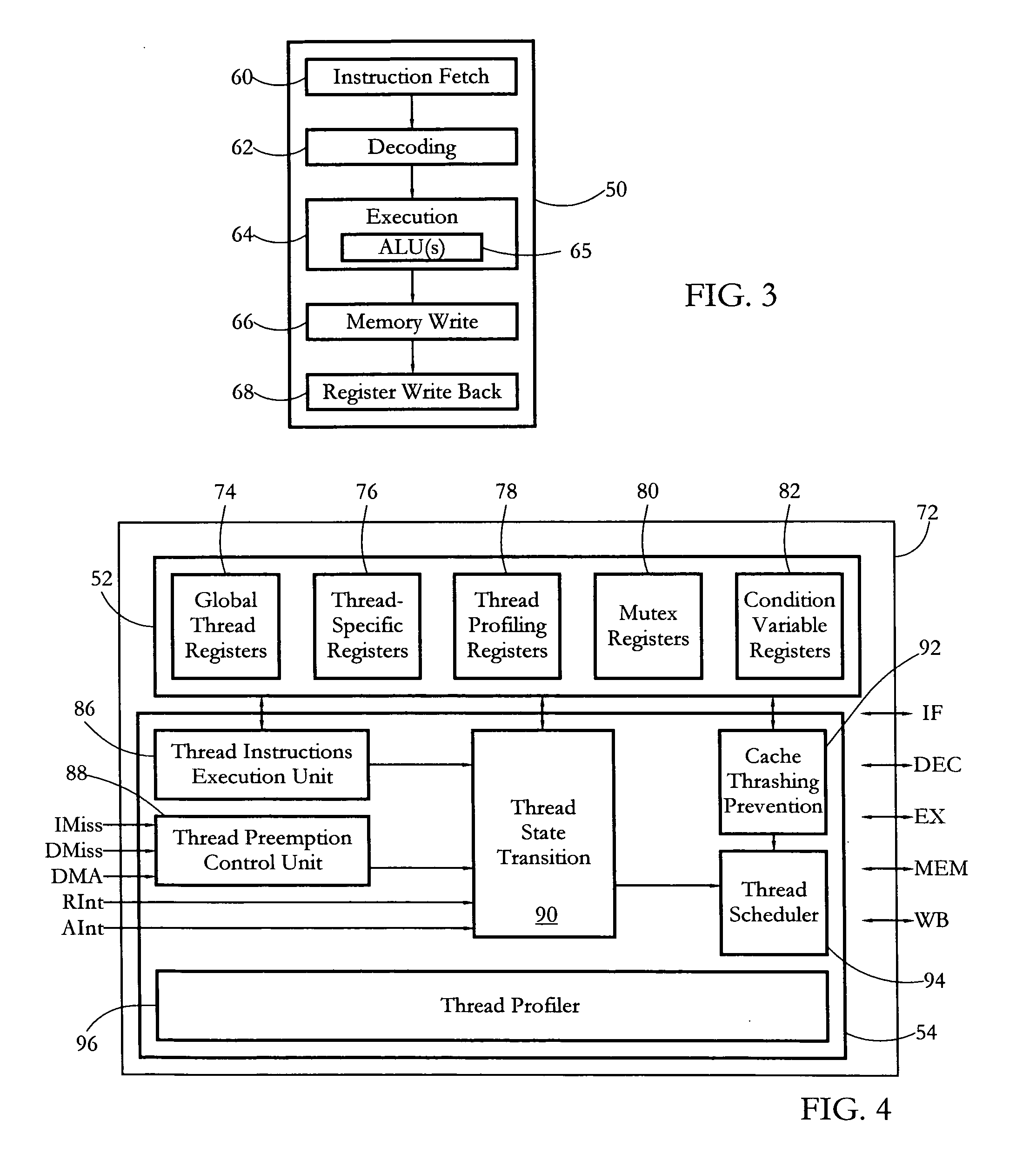

Hardware multithreading systems and methods

According to some embodiments, a multithreaded microcontroller includes a thread control unit comprising thread control hardware (logic) configured to perform a number of multithreading system calls essentially in real time, e.g. in one or a few clock cycles. System calls can include mutex lock, wait condition, and signal instructions. The thread controller includes a number of thread state, mutex, and condition variable registers used for executing the multithreading system calls. Threads can transition between several states including free, run, ready and wait. The wait state includes interrupt, condition, mutex, I-cache, and memory substates. A thread state transition controller controls thread states, while a thread instructions execution unit executes multithreading system calls and manages thread priorities to avoid priority inversion. A thread scheduler schedules threads according to their priorities. A hardware thread profiler including global, run and wait profiler registers is used to monitor thread performance to facilitate software development.

Owner:GEO SEMICONDUCTOR INC

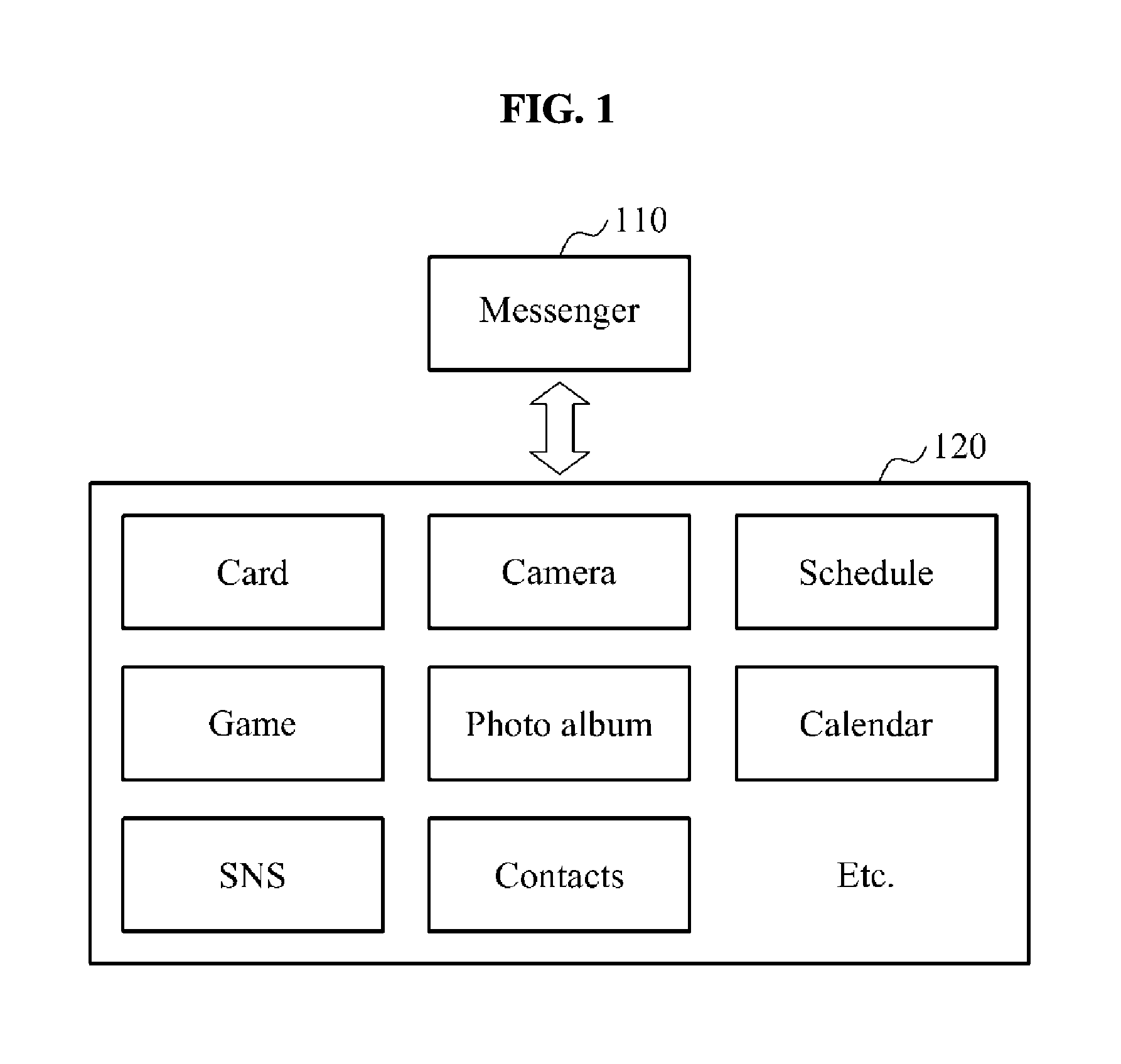

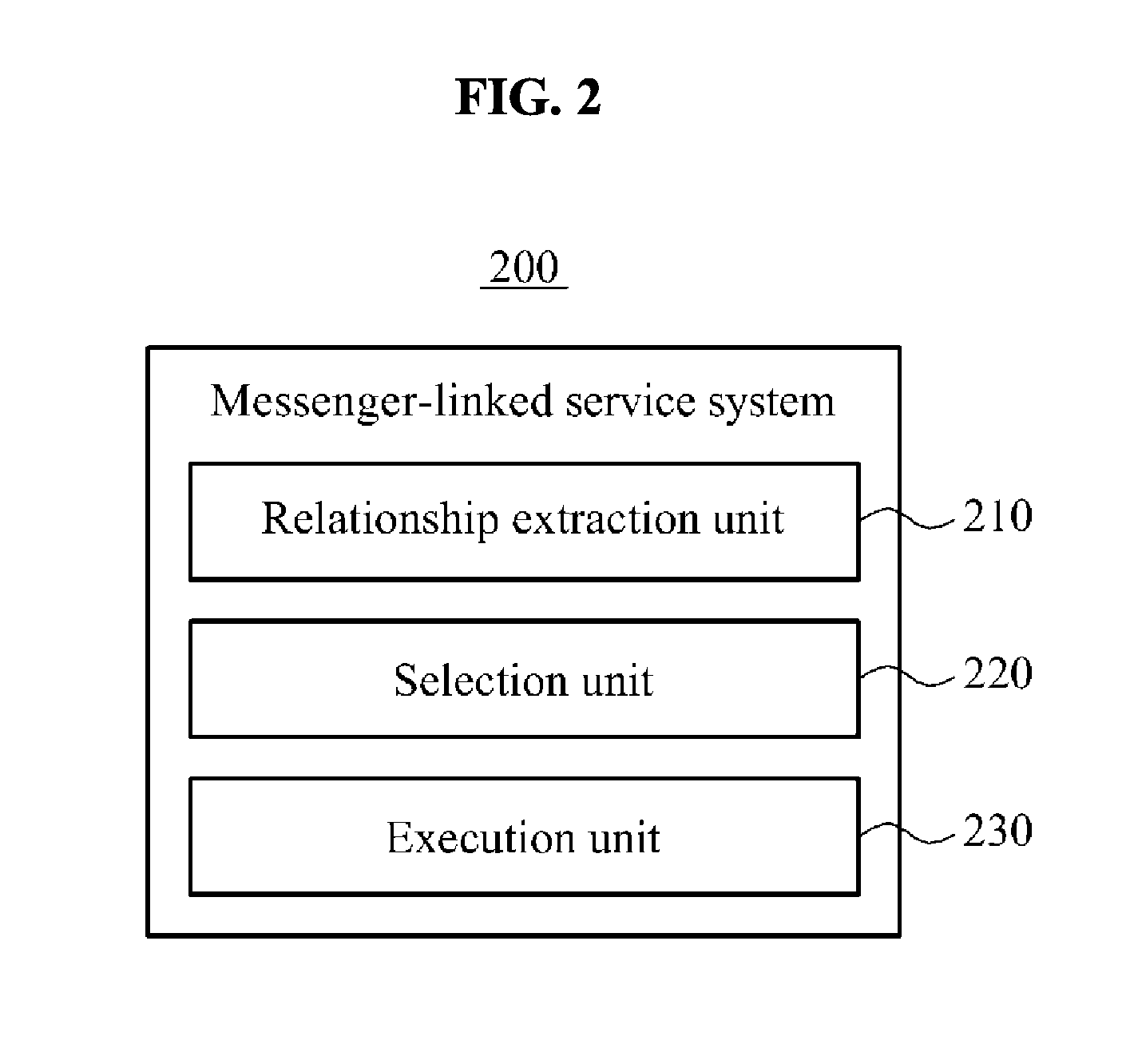

Messenger-linked service system and method using a social graph of a messenger platform

Inactive | US20130332543A1 | Reduce delivery | Data processing applications | Services signalling | Social graph | Execution unit

A messenger-linked service system and method using a social graph based on a human relationship of a messenger are provided. The messenger-linked service system may include a relationship extraction unit to extract a social graph of a friend relationship of the messenger, a selection unit to select data in the messenger-linked service, and an execution unit to either transmit or execute a sharing request of the selected data to the friend using the social graph.

Owner:LINE CORPORATION

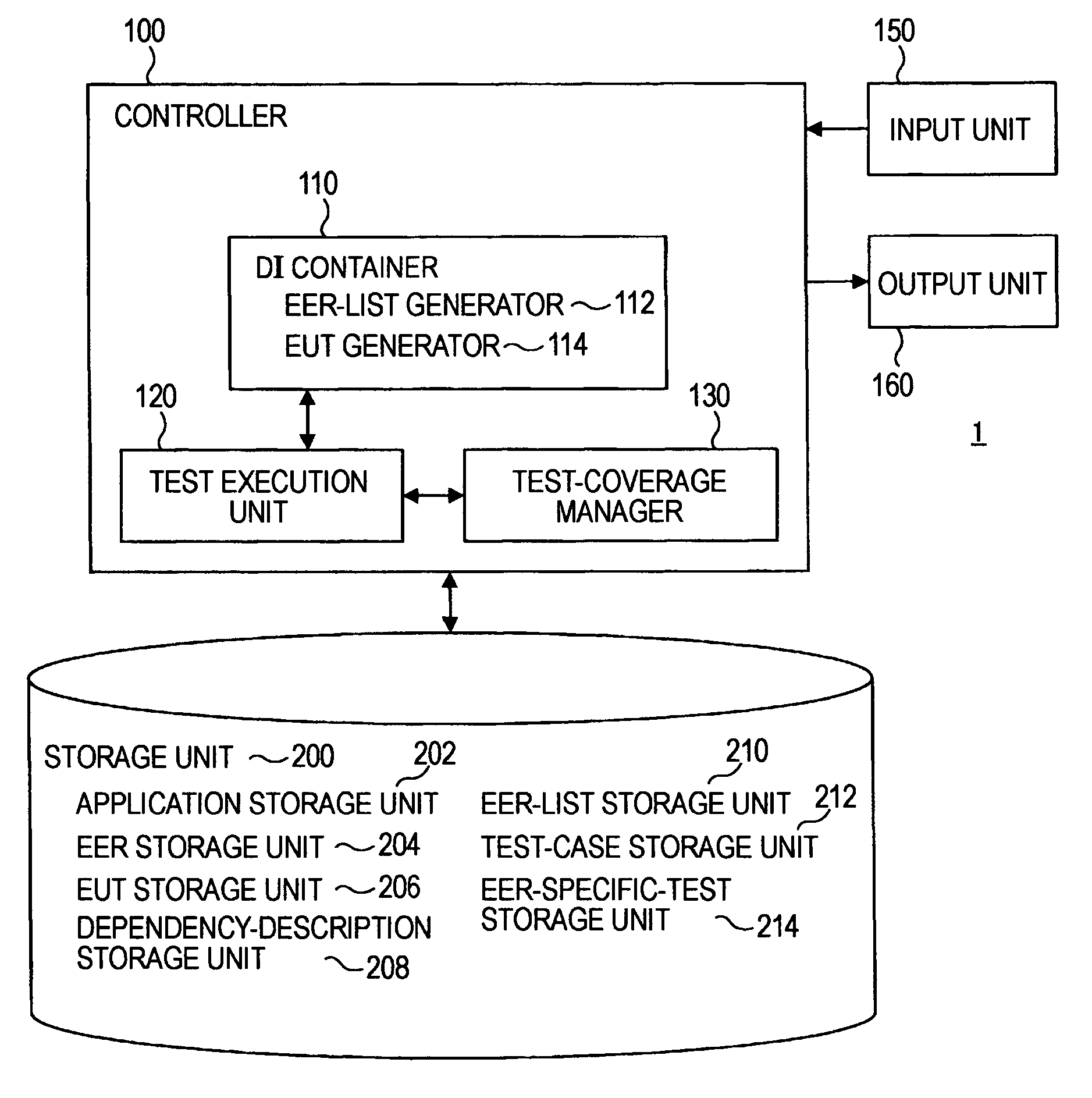

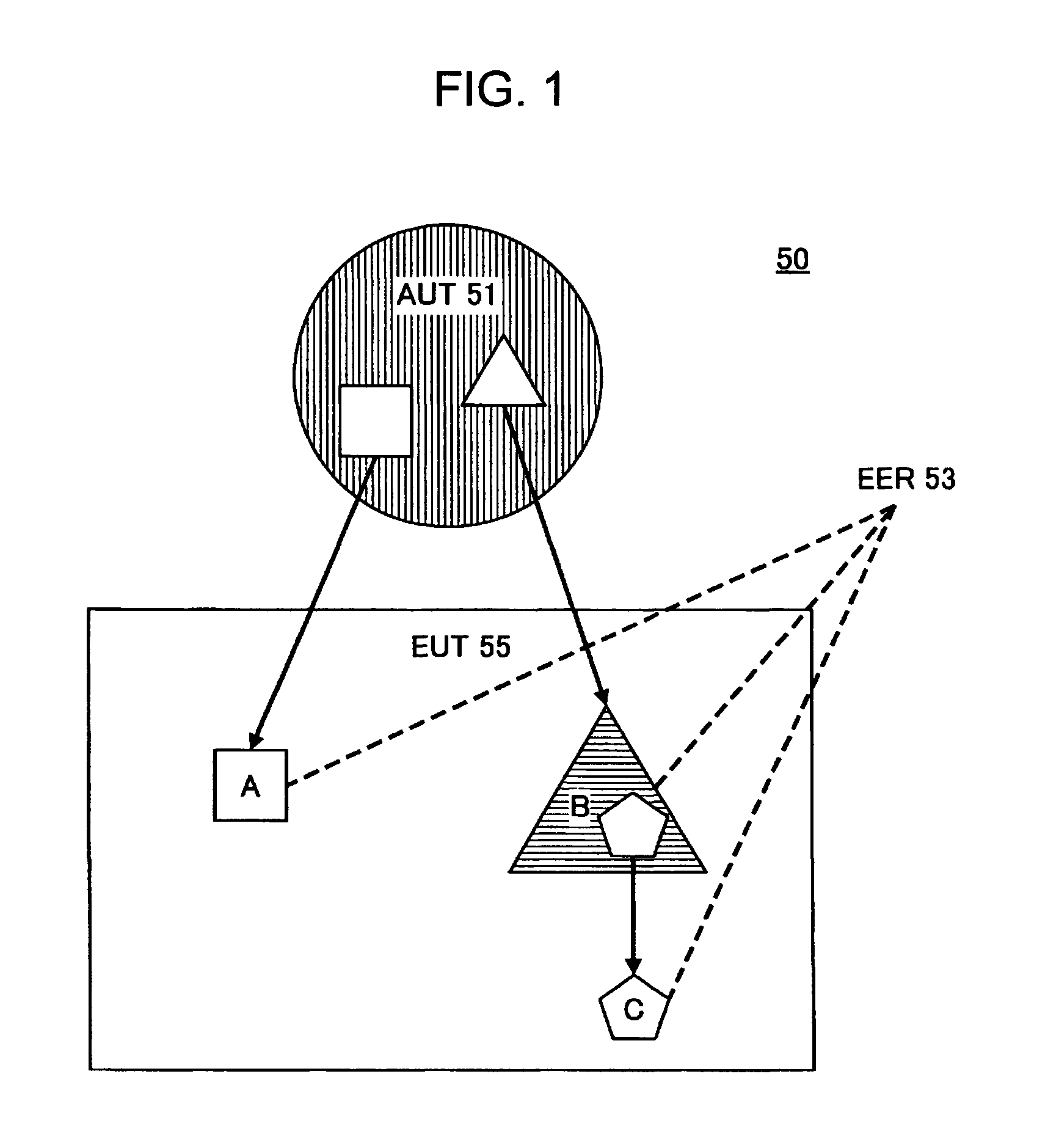

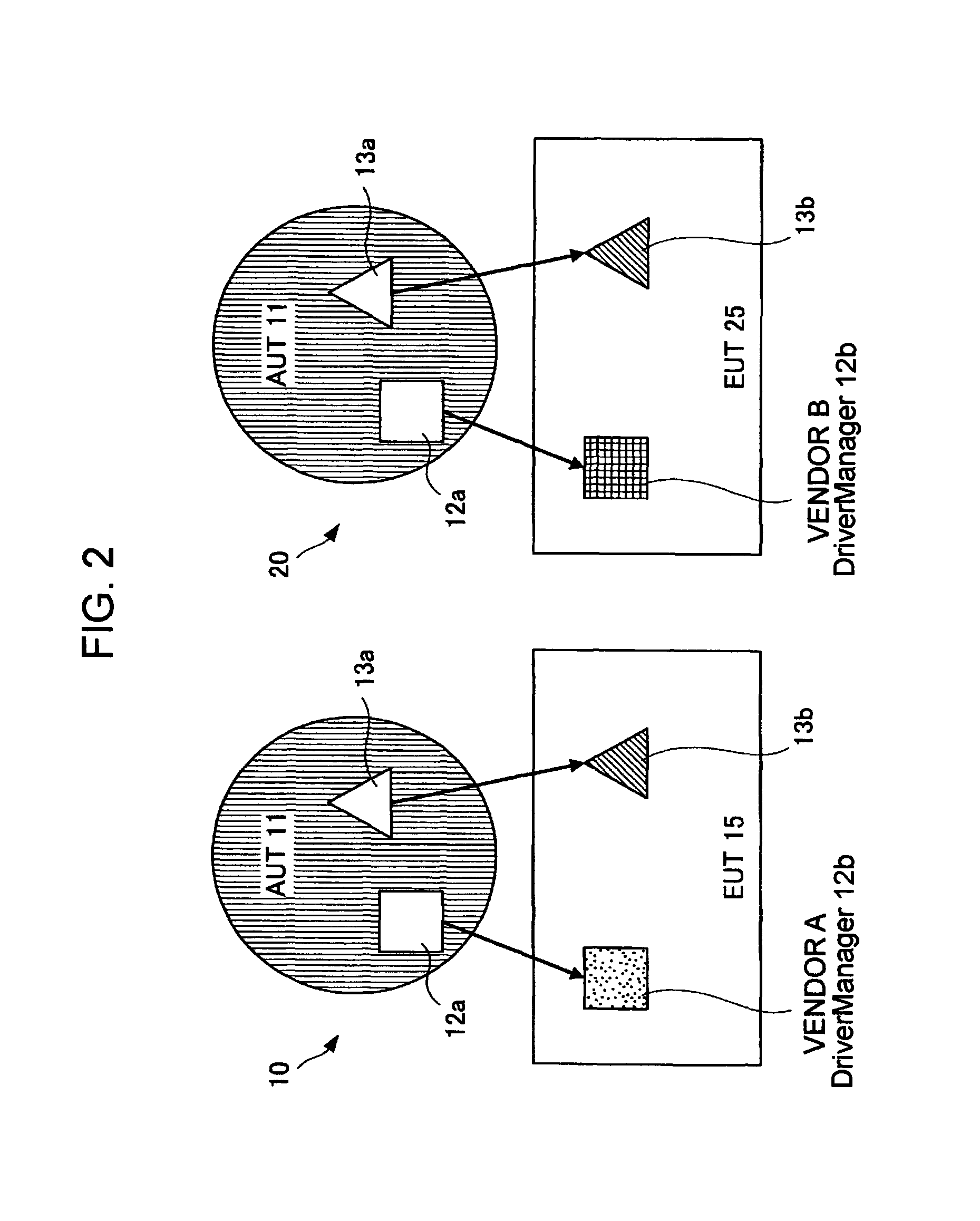

Program testing method and testing device

Inactive | US8214803B2 | Error detection/correction | Specific program execution arrangements | Dependency injection | Execution unit

A testing device for testing a system configured of an application and a set of execution-environment-dependent resources used by the application includes a DI container for injecting one resource set (EUT) into an application for which a test is executed via an application interface, the resource set being a candidate of dependency injection into the application, and a test execution unit that executes a test on the application with the one resource set having been injected therein. When another resource set that is different from the one resource set exists, the injection by the DI container and the test by the test execution unit are executed on the other resource set.

Owner:INT BUSINESS MASCH CORP
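
The inject-then-test loop in this abstract can be sketched in a few lines. Everything named here is hypothetical: `DIContainer` is a deliberately tiny stand-in for a real dependency injection container, `ReportApp` is an invented application, and the two resource sets exist only to show one passing and one failing configuration.

```python
from typing import Any, Callable, Dict

class DIContainer:
    """Minimal illustrative container: builds the application from one resource set."""
    def __init__(self, app_factory: Callable[..., Any]):
        self._app_factory = app_factory

    def inject(self, resources: Dict[str, Any]) -> Any:
        return self._app_factory(**resources)

def run_tests_for_all_resource_sets(container: DIContainer,
                                    resource_sets: Dict[str, Dict[str, Any]],
                                    test: Callable[[Any], bool]) -> Dict[str, bool]:
    """Inject each candidate resource set in turn and run the same test on it."""
    results = {}
    for name, resources in resource_sets.items():
        try:
            app = container.inject(resources)
            results[name] = bool(test(app))
        except Exception:
            # A resource set that makes the application fail counts as a failed test.
            results[name] = False
    return results

class ReportApp:
    """Hypothetical application that depends on a storage and a formatter resource."""
    def __init__(self, storage: Dict[str, str], formatter: Callable[[str], str]):
        self.storage = storage
        self.formatter = formatter

    def render(self, key: str) -> str:
        return self.formatter(self.storage[key])

results = run_tests_for_all_resource_sets(
    DIContainer(ReportApp),
    {
        "populated": {"storage": {"status": "ok"}, "formatter": str.upper},
        "empty": {"storage": {}, "formatter": str.upper},
    },
    test=lambda app: app.render("status") == "OK",
)
```

The same test function runs unchanged against every resource set, which is the property the abstract relies on.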

Adaptive computing ensemble microprocessor architecture

Active | US7389403B1 | Improve performance | Improve efficiency | Energy efficient ICT | General purpose stored program computer | Execution control | Power usage

An Adaptive Computing Ensemble (ACE) includes a plurality of flexible computation units as well as an execution controller to allocate the units to Computing Ensembles (CEs) and to assign threads to the CEs. The units may be any combination of ACE-enabled units, including instruction fetch and decode units, integer execution and pipeline control units, floating-point execution units, segmentation units, special-purpose units, reconfigurable units, and memory units. Some of the units may be replicated, e.g. there may be a plurality of integer execution and pipeline control units. Some of the units may be present in a plurality of implementations, varying by performance, power usage, or both. The execution controller dynamically alters the allocation of units to threads in response to changing performance and power consumption observed behaviors and requirements. The execution controller also dynamically alters performance and power characteristics of the ACE-enabled units, according to the observed behaviors and requirements.

Owner:ORACLE INT CORP

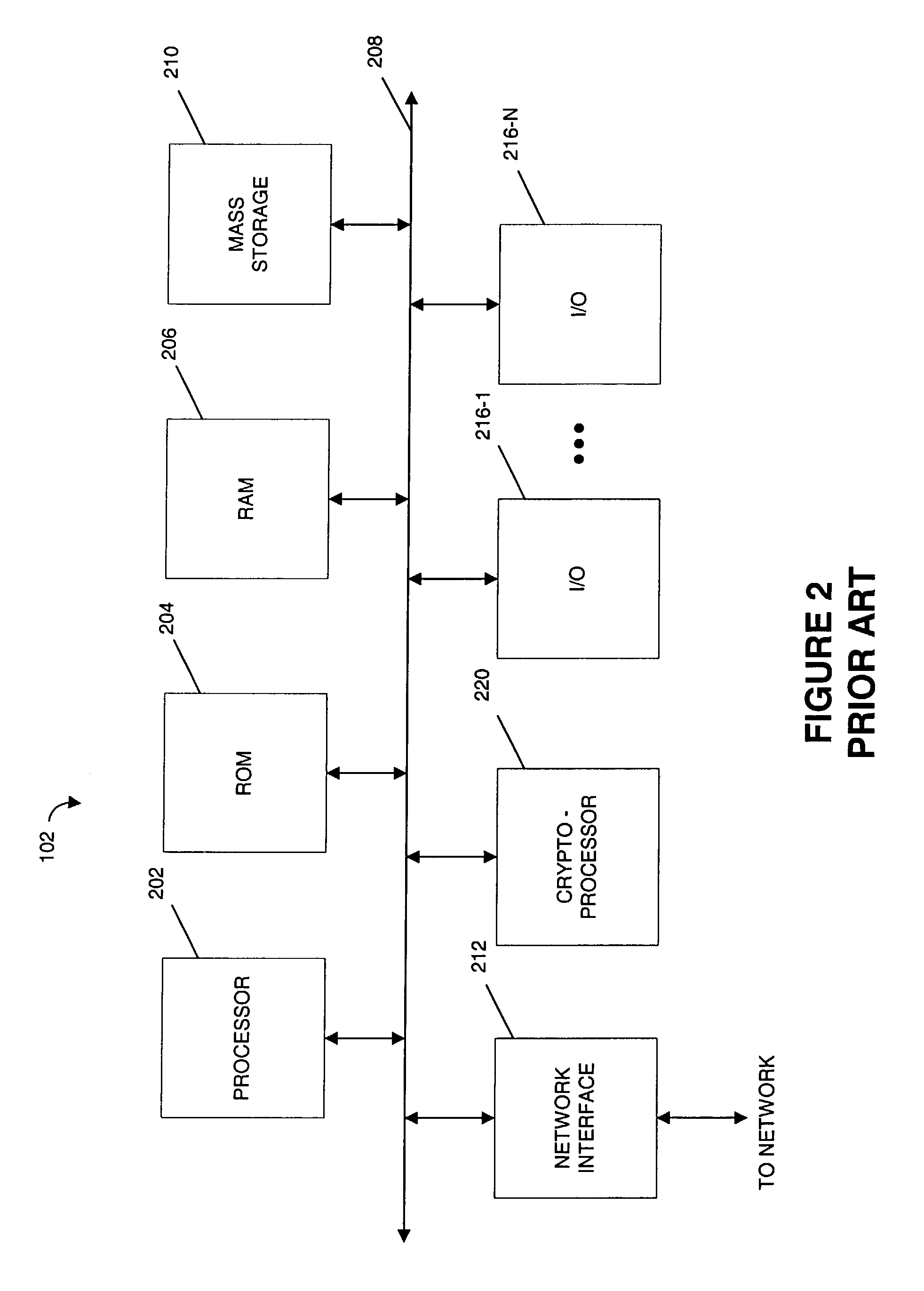

Stream processor with cryptographic co-processor

Inactive | US20030084309A1 | Energy efficient ICT | Static indicating devices | Processing core | Direct memory access

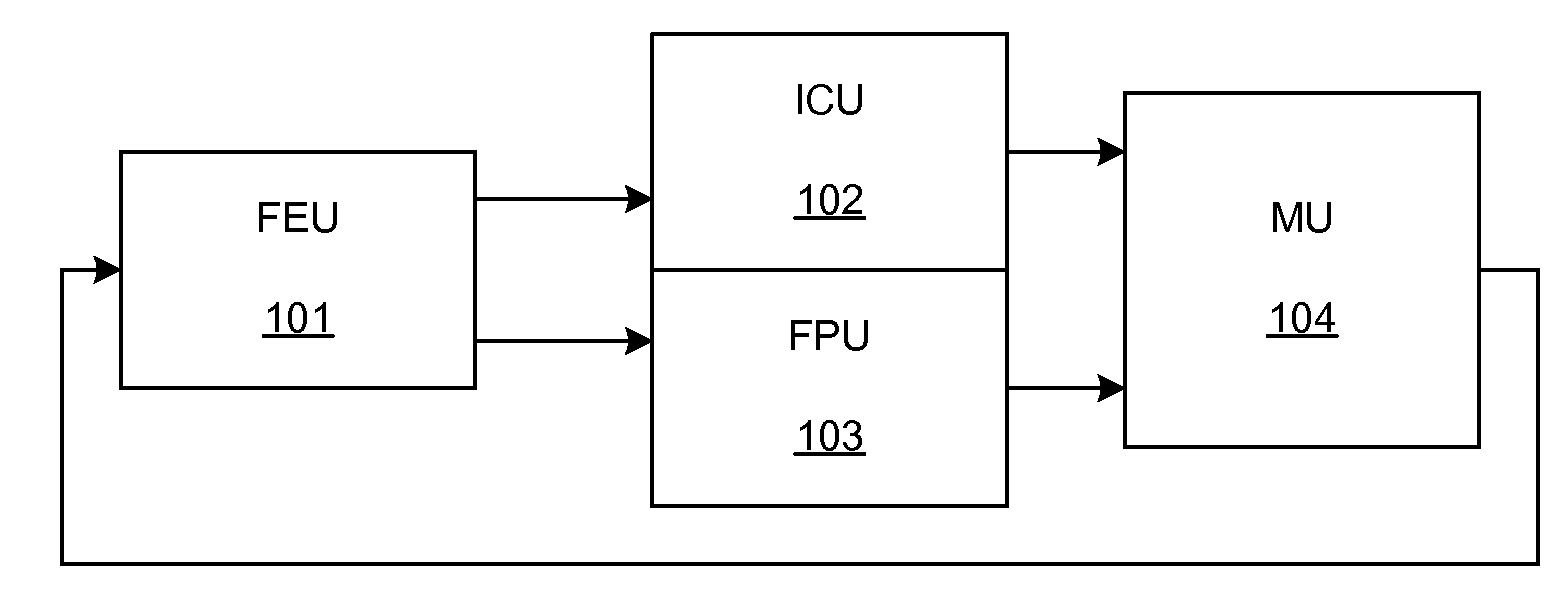

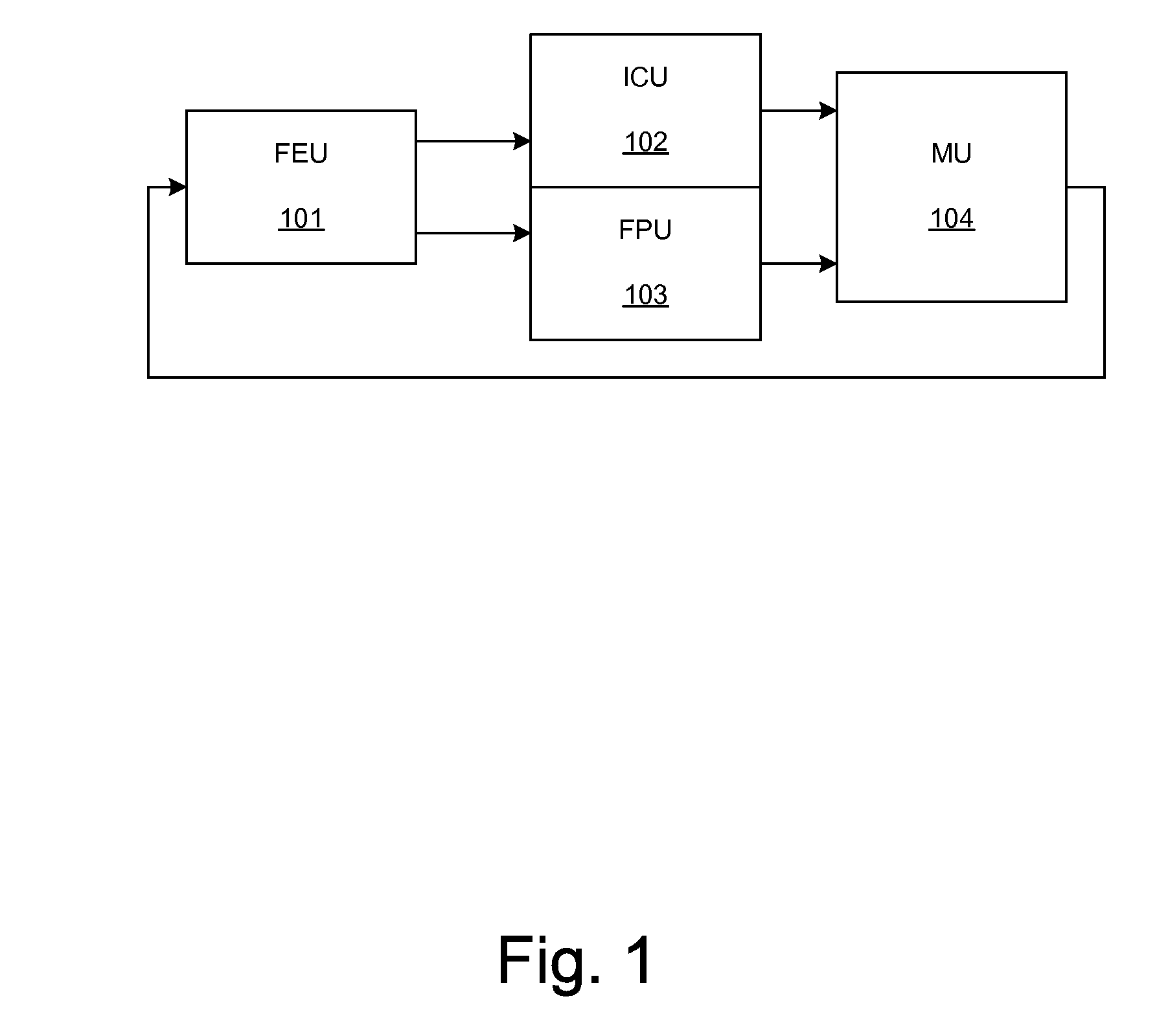

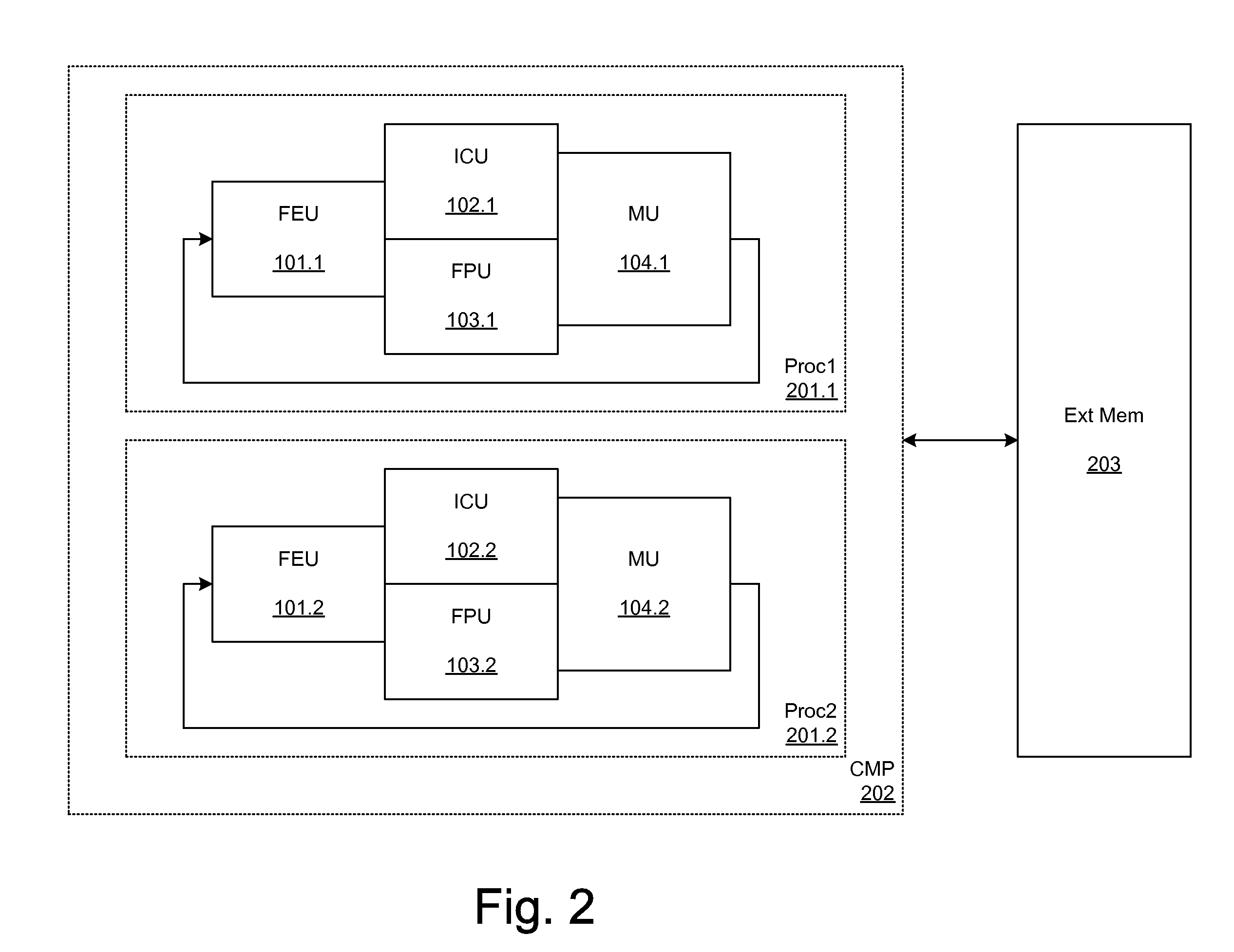

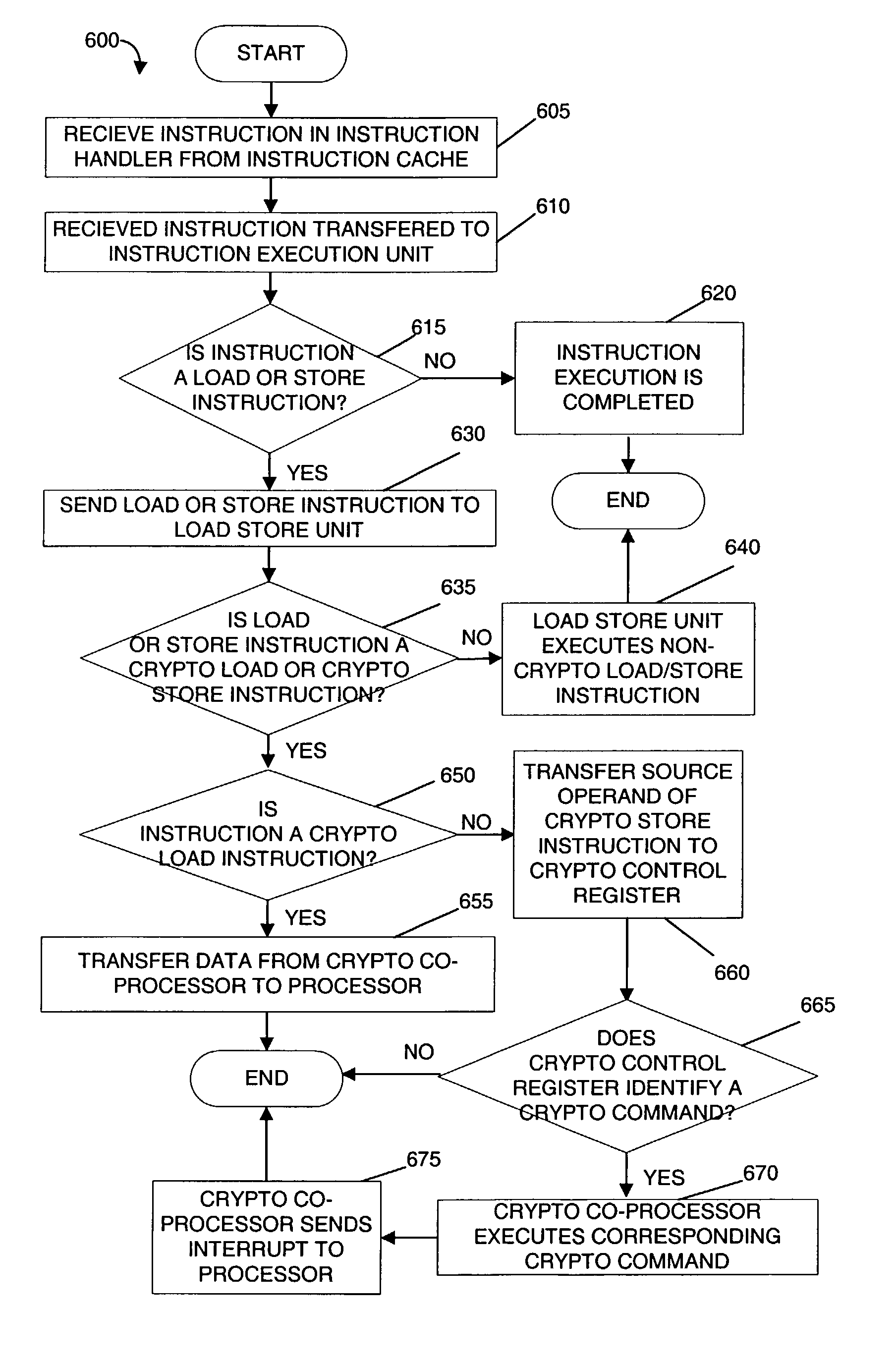

A microprocessor includes a first processing core, a first cryptographic co-processor, and an integer multiplier unit that is coupled to the first processing core and the first cryptographic co-processor. The first processing core includes an instruction decode unit, an instruction execution unit, and a load/store unit. The first cryptographic co-processor is located on a first die with the first processing core. The first cryptographic co-processor includes a cryptographic control register, a direct memory access engine that is coupled to the load/store unit in the first processing core, and a cryptographic memory.

Owner:SUN MICROSYSTEMS INC

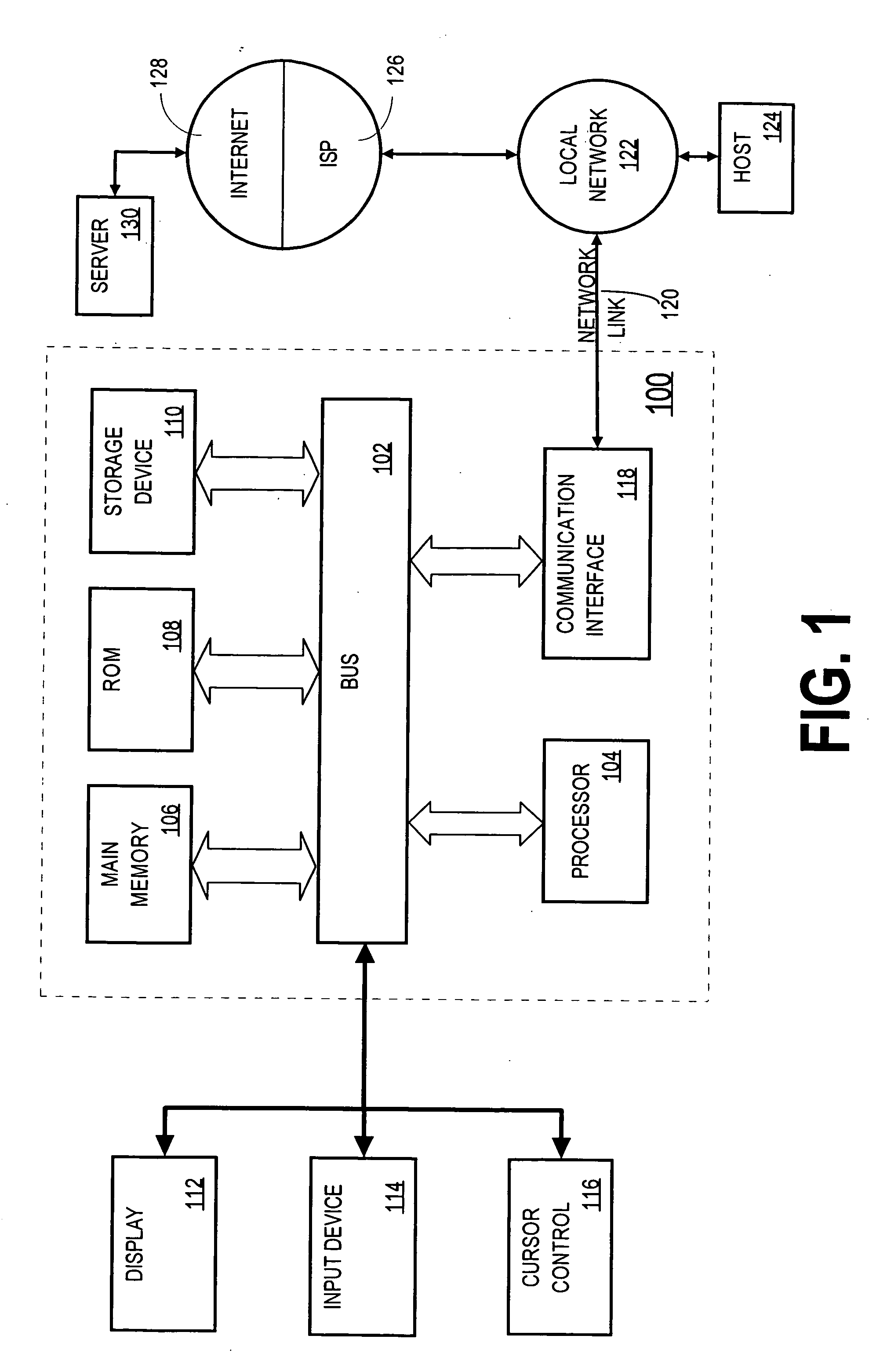

Using a virtual machine instance as the basic unit of user execution in a server environment

Inactive | US20050132368A1 | Data processing applications | Multiple digital computer combinations | Operational system | Execution unit

Techniques are provided for instantiating separate Java virtual machines for each session established by a server. Because each session has its own virtual machine, the Java programs executed by the server for each user connected to the server are insulated from the Java programs executed by the server for all other users connected to the server. The separate VM instances can be created and run, for example, in separate units of execution that are managed by the operating system of the platform on which the server is executing. For example, the separate VM instances may be executed either as separate processes, or using separate system threads. Because the units of execution used to run the separate VM instances are provided by the operating system, the operating system is able to ensure that the appropriate degree of insulation exists between the VM instances.

Owner:ORACLE INT CORP

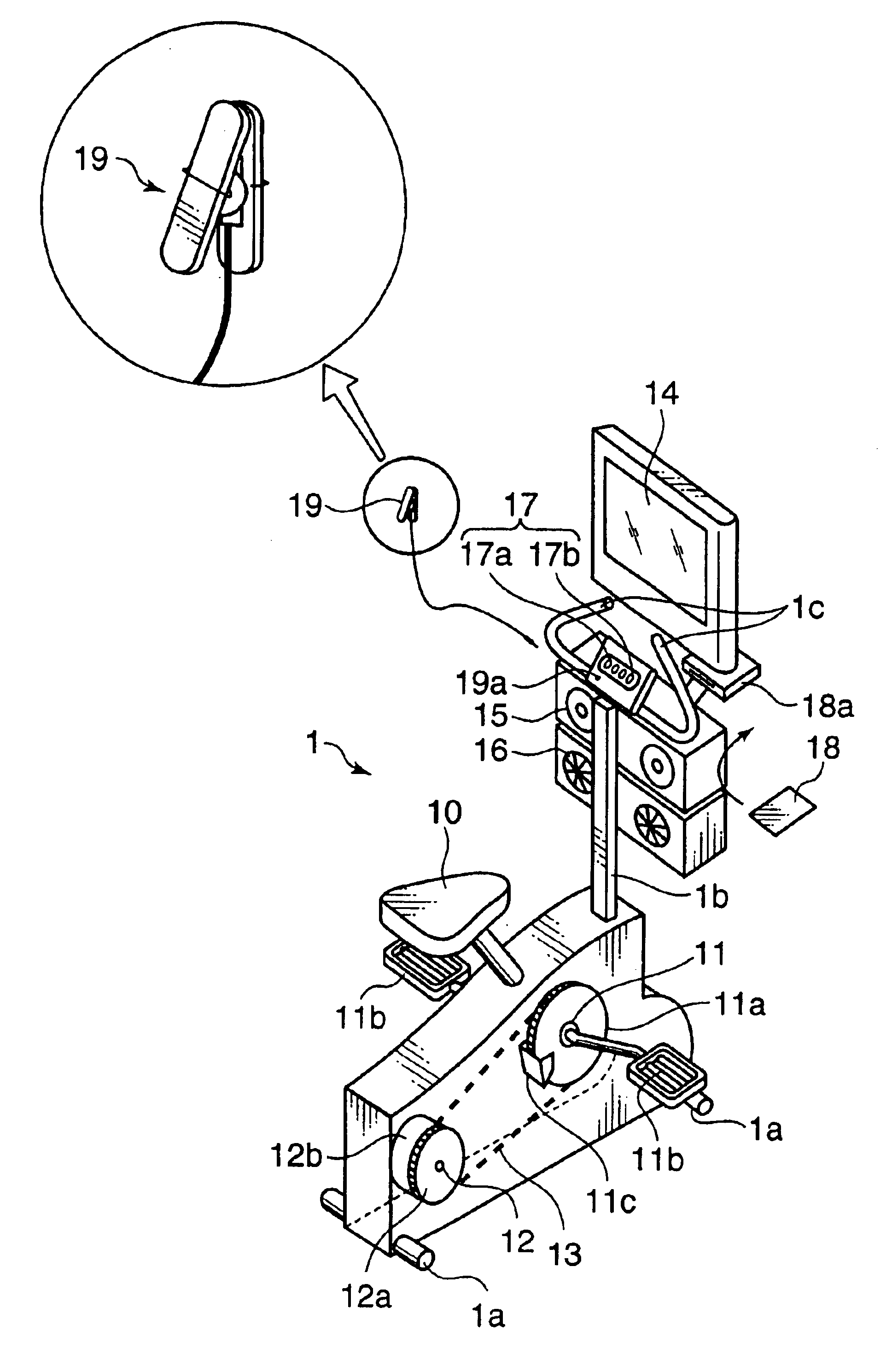

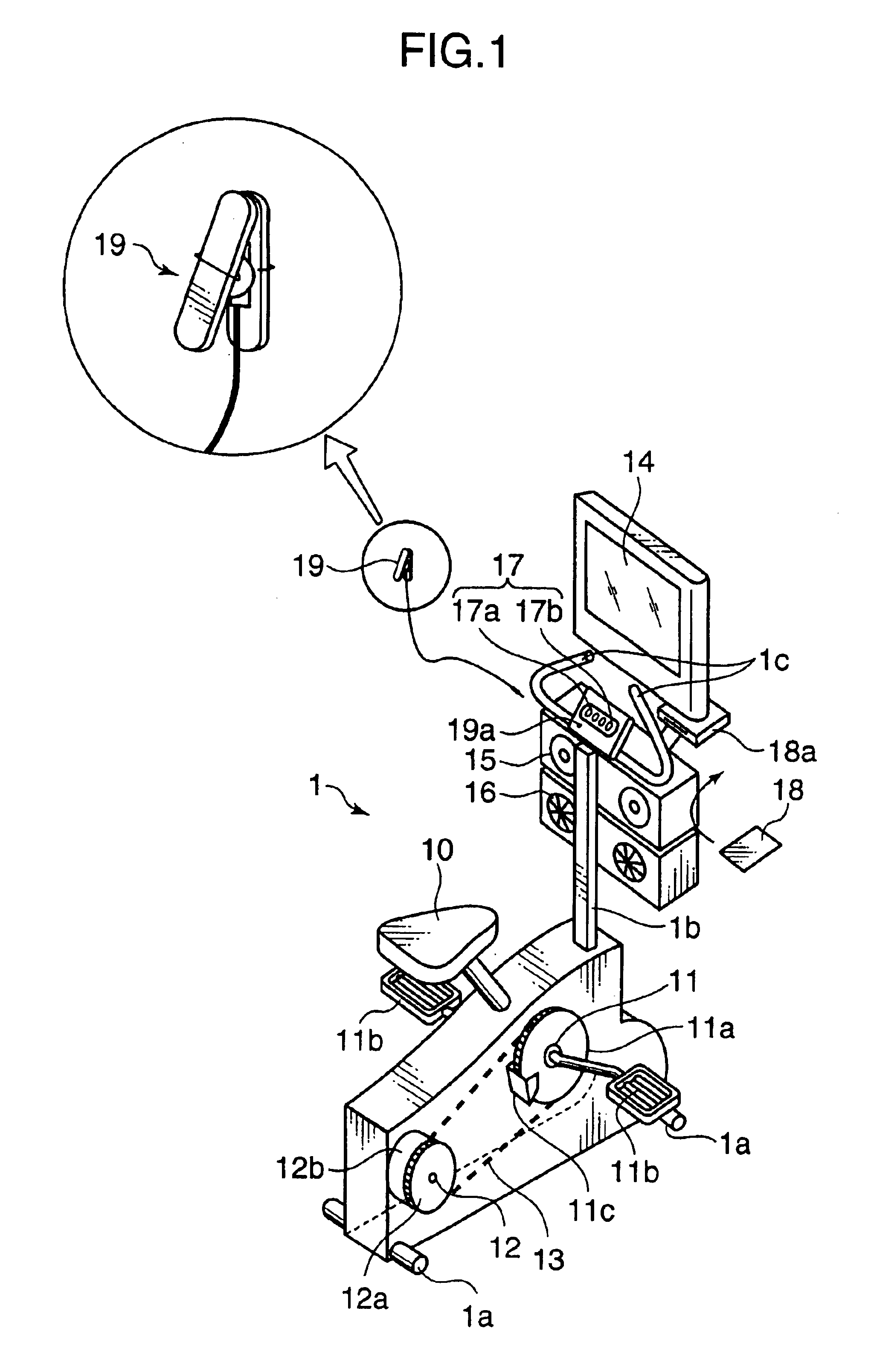

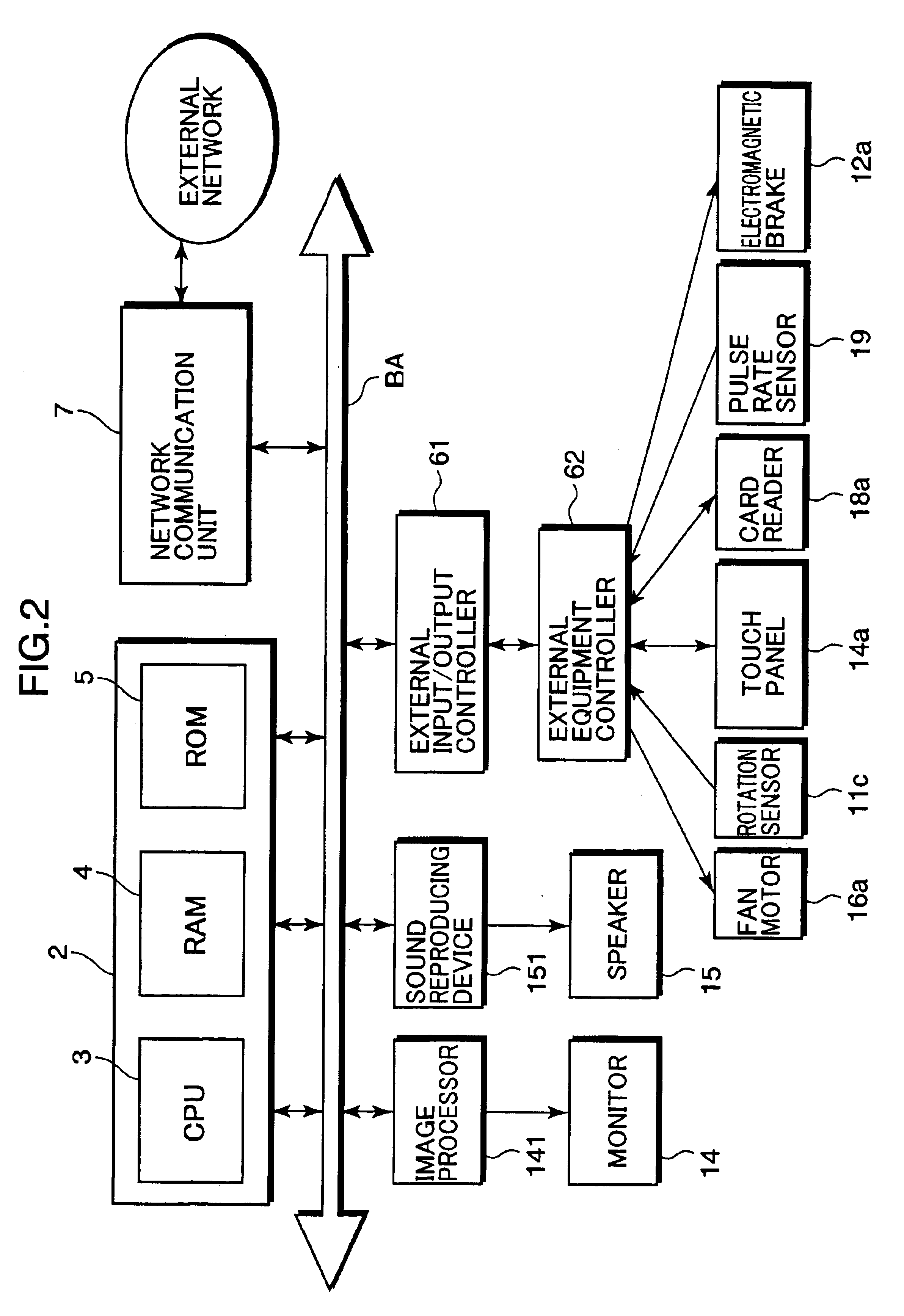

Exercise assisting method and apparatus implementing such method

Owner:KONAMI SPORTS & LIFE

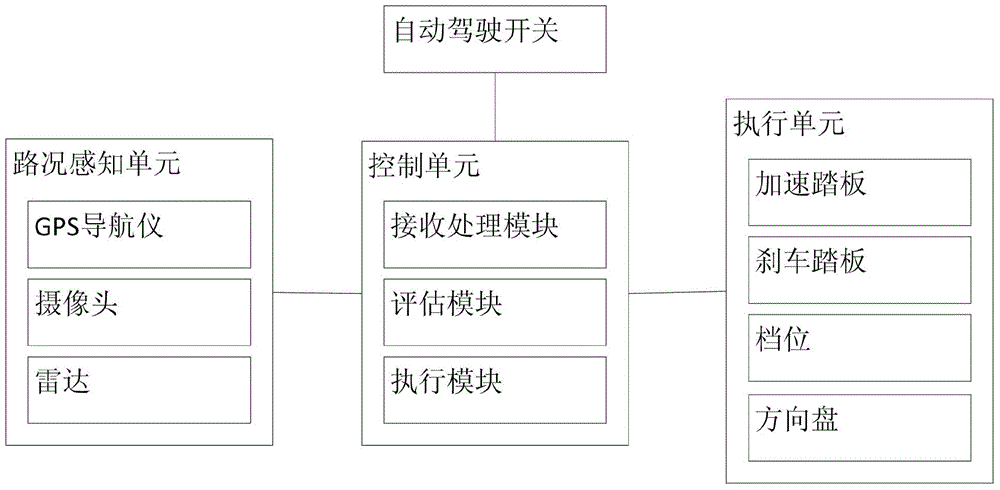

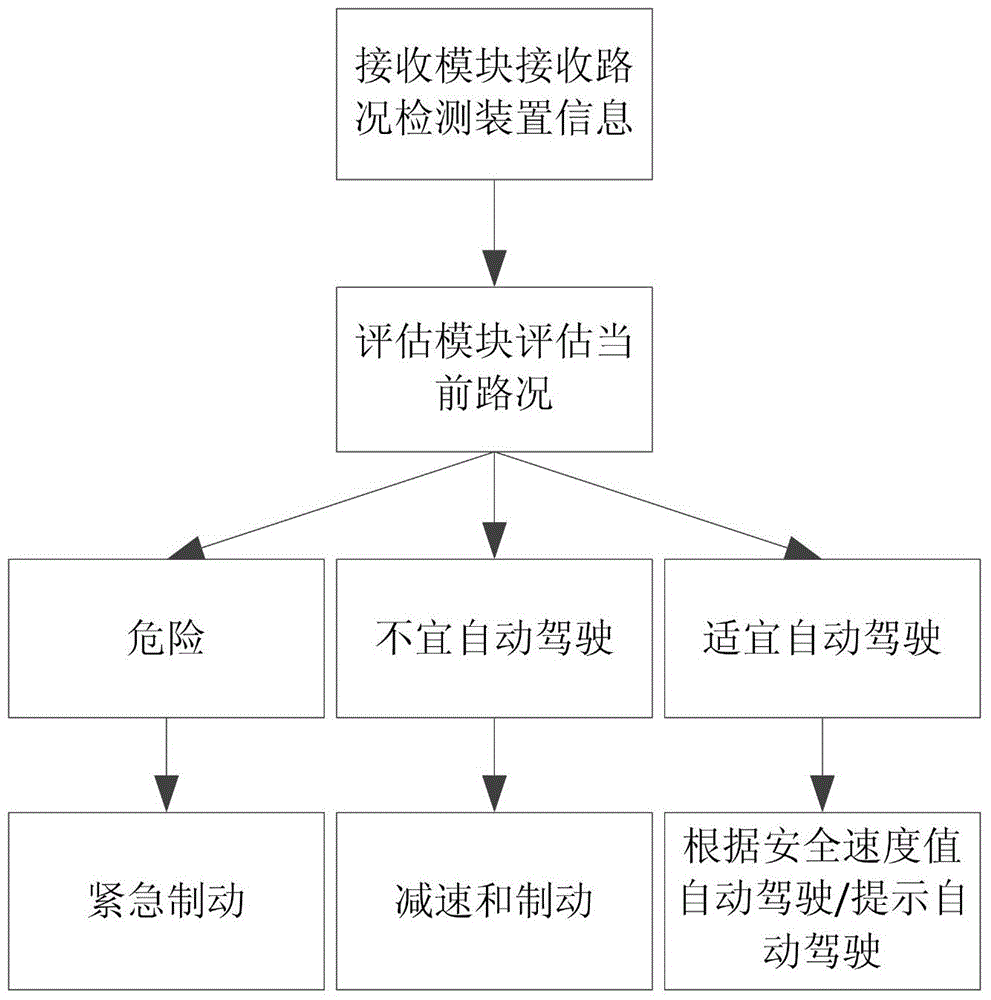

Intelligent driving system and control method thereof

Active | CN104477167A | Reduce workload | Easy to switch between | External condition input parameters | Steering wheel | Radar

The invention discloses an intelligent driving system and a control method of the intelligent driving system. The system comprises a road condition sensing unit, a control unit, an execution unit and an automatic driving switch. The road condition sensing unit comprises a GPS navigator, a camera and radar. The control unit comprises a receiving module, an assessment module and an execution module. The execution unit comprises an accelerator pedal, a brake pedal, gears and a steering wheel. The receiving module of the control unit receives the information from the road condition sensing system. The assessment module assesses the current road condition according to received information. If the current road condition is suitable for automatic driving, the value of automatic driving speed under which safety can be guaranteed is worked out, and the execution unit controls an execution component to execute corresponding operation according to the assessment result. According to the intelligent driving system and the control method of the intelligent driving system, the utility progress of the automatic driving technology is accelerated, the workload of a driver is reduced, the possibility of misoperation caused by manual driving is reduced, and driving safety is improved.

Owner:ZHEJIANG UNIV +1

Algorithm mapping, specialized instructions and architecture features for smart memory computing

Inactive | US6970988B1 | Improve performance | Low cost | Multiplex system selection arrangements | Program control | Smart memory | Execution unit

A smart memory computing system that uses smart memory for massive data storage as well as for massive parallel execution is disclosed. The data stored in the smart memory can be accessed just like the conventional main memory, but the smart memory also has many execution units to process data in situ. The smart memory computing system offers improved performance and reduced costs for those programs having massive data-level parallelism. This smart memory computing system is able to take advantage of data-level parallelism to improve execution speed by, for example, use of inventive aspects such as algorithm mapping, compiler techniques, architecture features, and specialized instruction sets.

Owner:STRIPE INC

Apparatus and method for efficient battery utilization in portable personal computers

Inactive | US6167524A | Extend battery life | Constant load on the battery during operation | Energy efficient ICT | Volume/mass flow measurement | Control power | Electrical battery

An apparatus and method controlling power consumption in portable personal computers by dynamically allocating power to the system logic. Expected total power consumption is calculated and compared to an optimum power efficiency value. The expected power consumption values for each execution unit are stored in a look-up table in actual or compressed form. If the expected total power consumption value exceeds the power efficiency value, selected execution units are made inactive. Conversely, if the power efficiency value exceeds the expected total power consumption value, execution unit functions are added in order to maintain a level current demand on the battery.

Owner:LENOVO (SINGAPORE) PTE LTD
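
The look-up-table comparison in this abstract can be sketched as a simple balancing routine. The power figures, unit names, and selection policy below are assumptions for illustration: the abstract does not say which units are chosen for deactivation, so this sketch drops the lowest-priority requested unit first and then adds idle units that still fit under the target.

```python
from typing import Dict, List

# Hypothetical look-up table: expected power per execution unit, in milliwatts.
POWER_TABLE_MW: Dict[str, int] = {"alu": 120, "fpu": 300, "lsu": 150, "simd": 400}

def expected_power(active: List[str], table: Dict[str, int]) -> int:
    """Expected total power consumption of the active execution units."""
    return sum(table[unit] for unit in active)

def balance_units(requested: List[str], idle_candidates: List[str],
                  target_mw: int, table: Dict[str, int]) -> List[str]:
    """Keep expected power close to, but not above, the target value.

    requested:       units wanted this interval, highest priority first (assumed).
    idle_candidates: units that may be switched on to level the load (assumed).
    """
    active = list(requested)
    # Over the target: make the lowest-priority units inactive.
    while active and expected_power(active, table) > target_mw:
        active.pop()
    # Under the target: add unit functions while they still fit.
    for unit in idle_candidates:
        if unit not in active and expected_power(active, table) + table[unit] <= target_mw:
            active.append(unit)
    return active

shed = balance_units(["alu", "lsu", "fpu", "simd"], [], 600, POWER_TABLE_MW)
filled = balance_units(["alu"], ["lsu", "fpu", "simd"], 600, POWER_TABLE_MW)
```

Both calls converge on the same set of active units, which reflects the abstract's goal of a level current demand on the battery.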

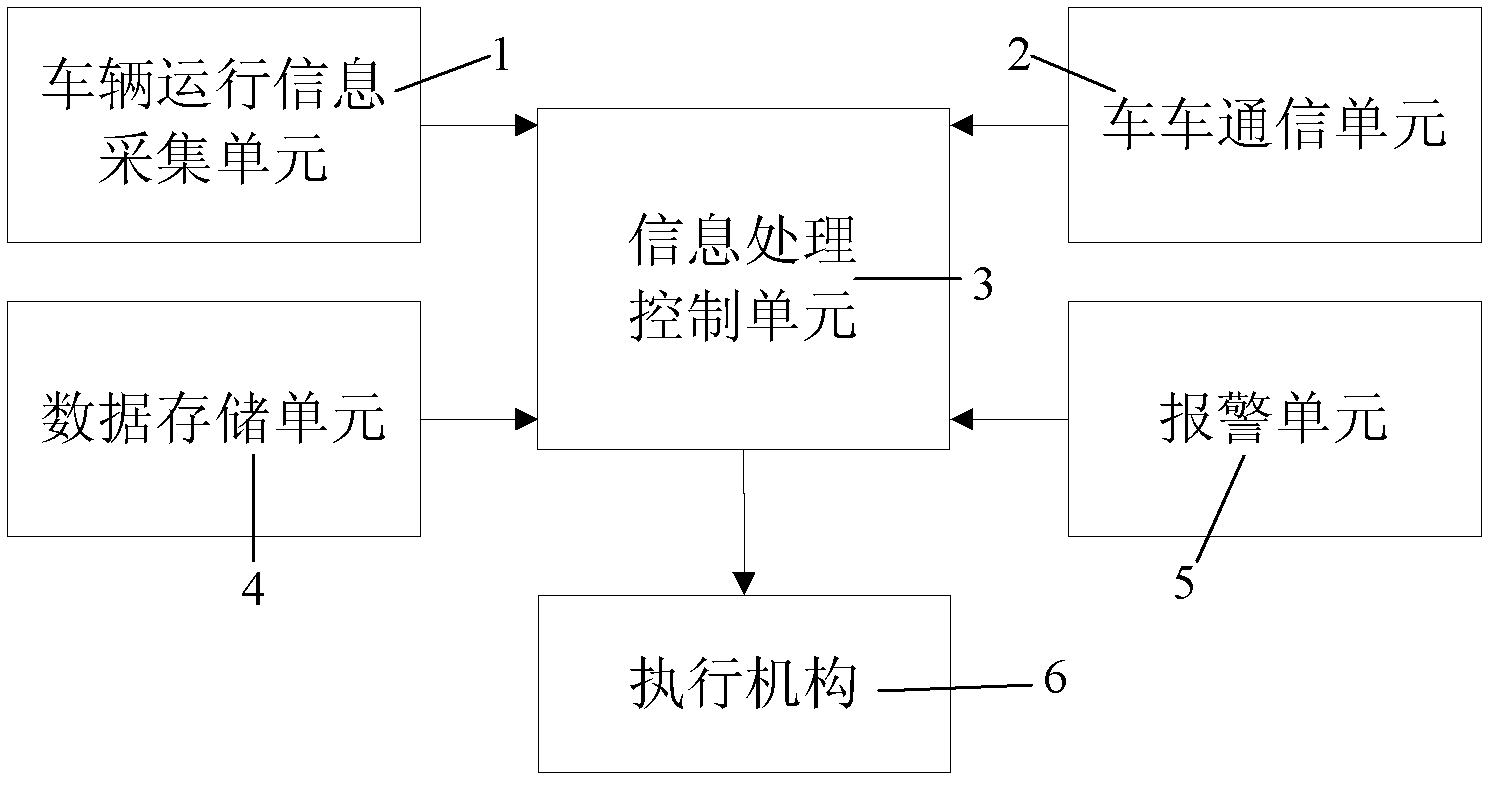

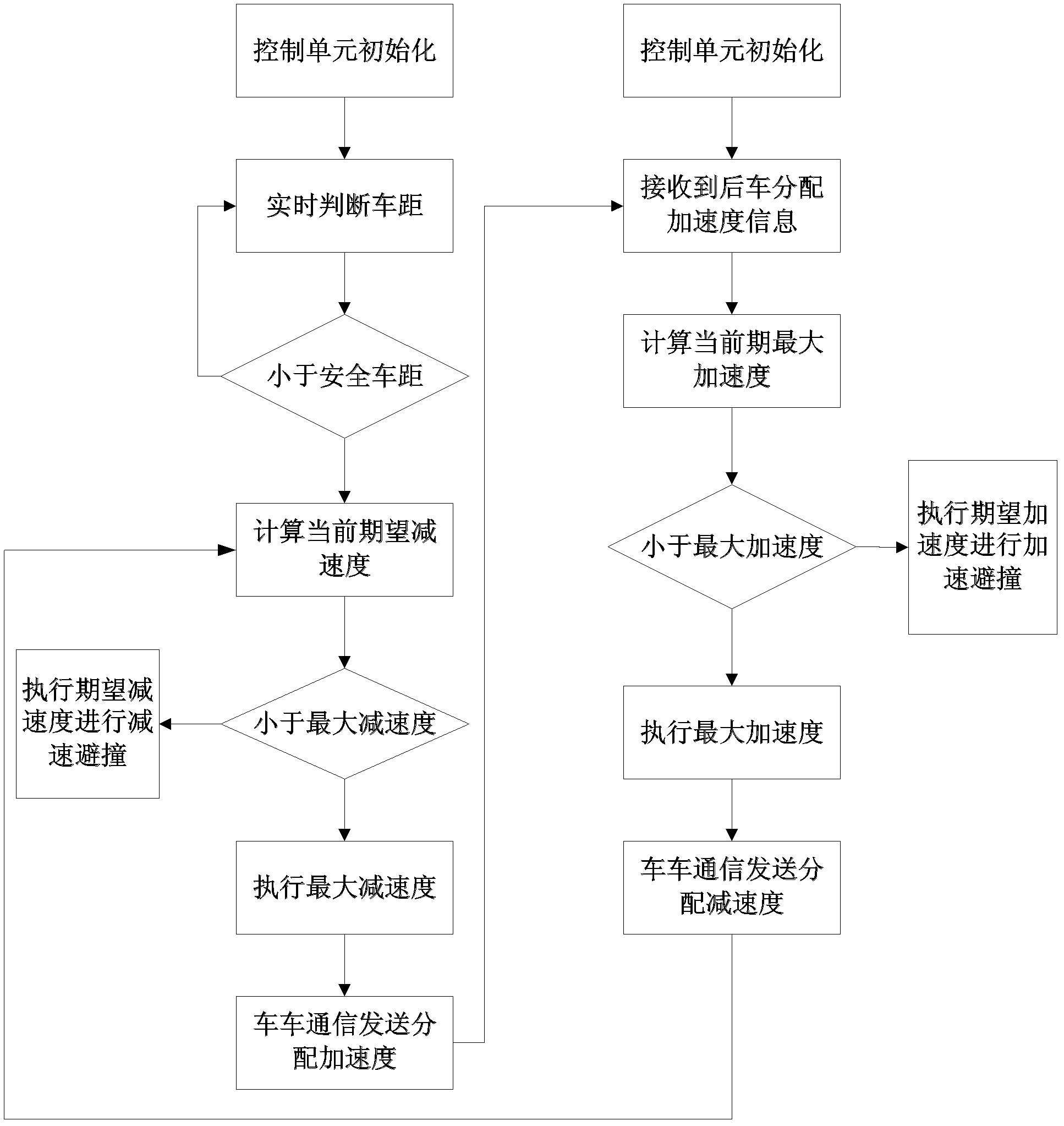

Cooperative anti-collision device based on vehicle-vehicle communication and anti-collision method

Active | CN102616235A | Ensure safety | Reduce collision | Anti-collision systems | Information processing | Time information

The invention provides a cooperative anti-collision device based on vehicle-vehicle communication, belonging to the technical field of intelligent transportation/automobile safety control. The device comprises a vehicle operation information acquisition unit, a vehicle-vehicle communication unit, an information processing control unit, a data storage unit, an alarm unit and an execution unit. The vehicle operation information acquisition unit comprises a wheel speed sensor and a ranging module. The invention enhances safety while the vehicle is running; however, each vehicle taking part in cooperative collision prevention must be fitted with a cooperative anti-collision device, and a vehicle acting alone can only perform the functions of a conventional anti-collision device. The vehicle-vehicle communication unit acquires the spacing, speed and similar information of surrounding vehicles in real time and exchanges information with them in real time. When the spacing is less than the safe spacing, the information processing control unit of the rear vehicle calculates the deceleration needed to avoid a collision by reducing speed. If the rear vehicle alone cannot prevent the collision, acceleration allocation information is sent to the front vehicle to notify it to accelerate for cooperative collision prevention.

Owner:BEIHANG UNIV

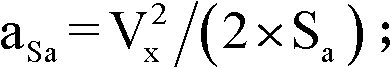

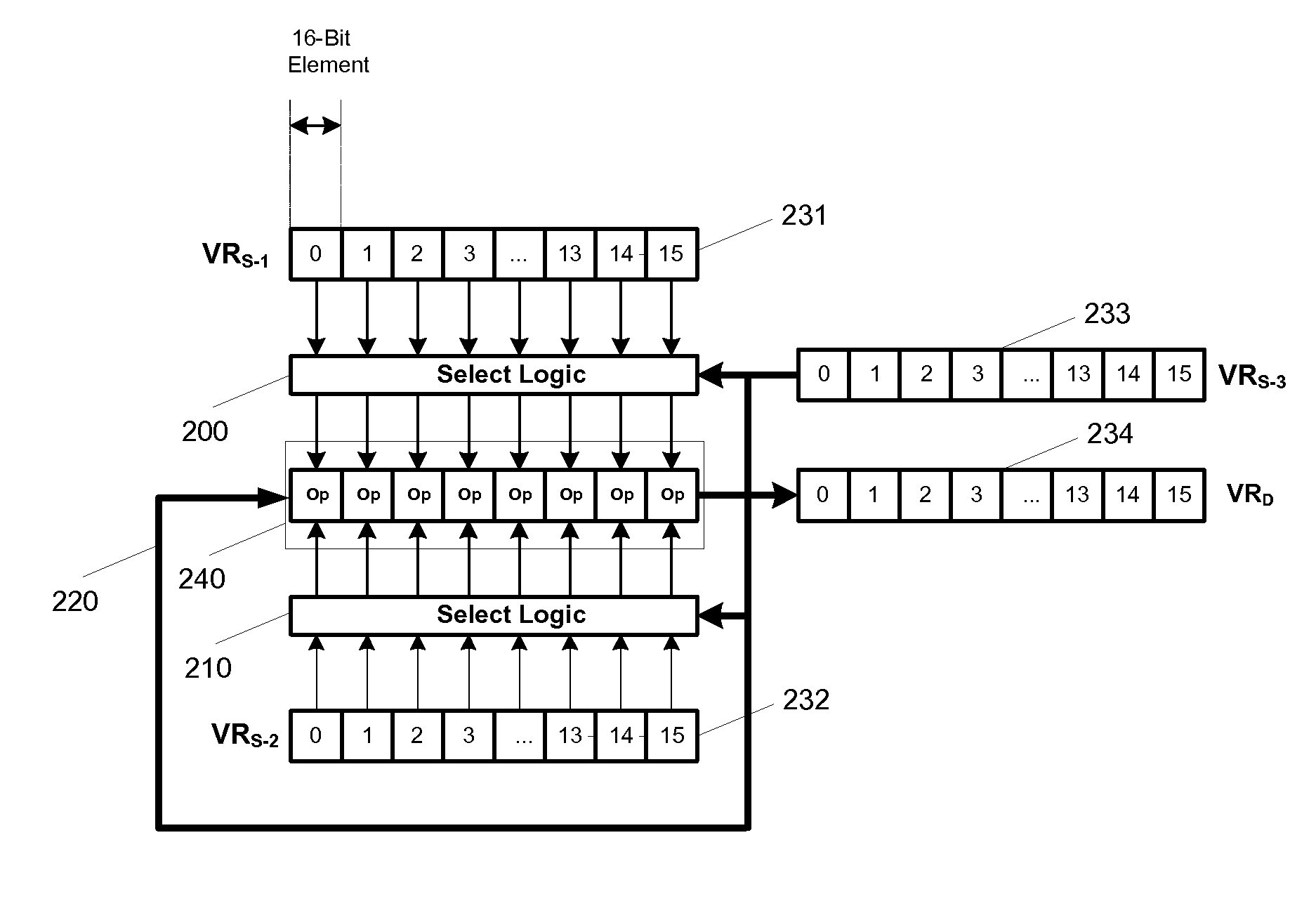

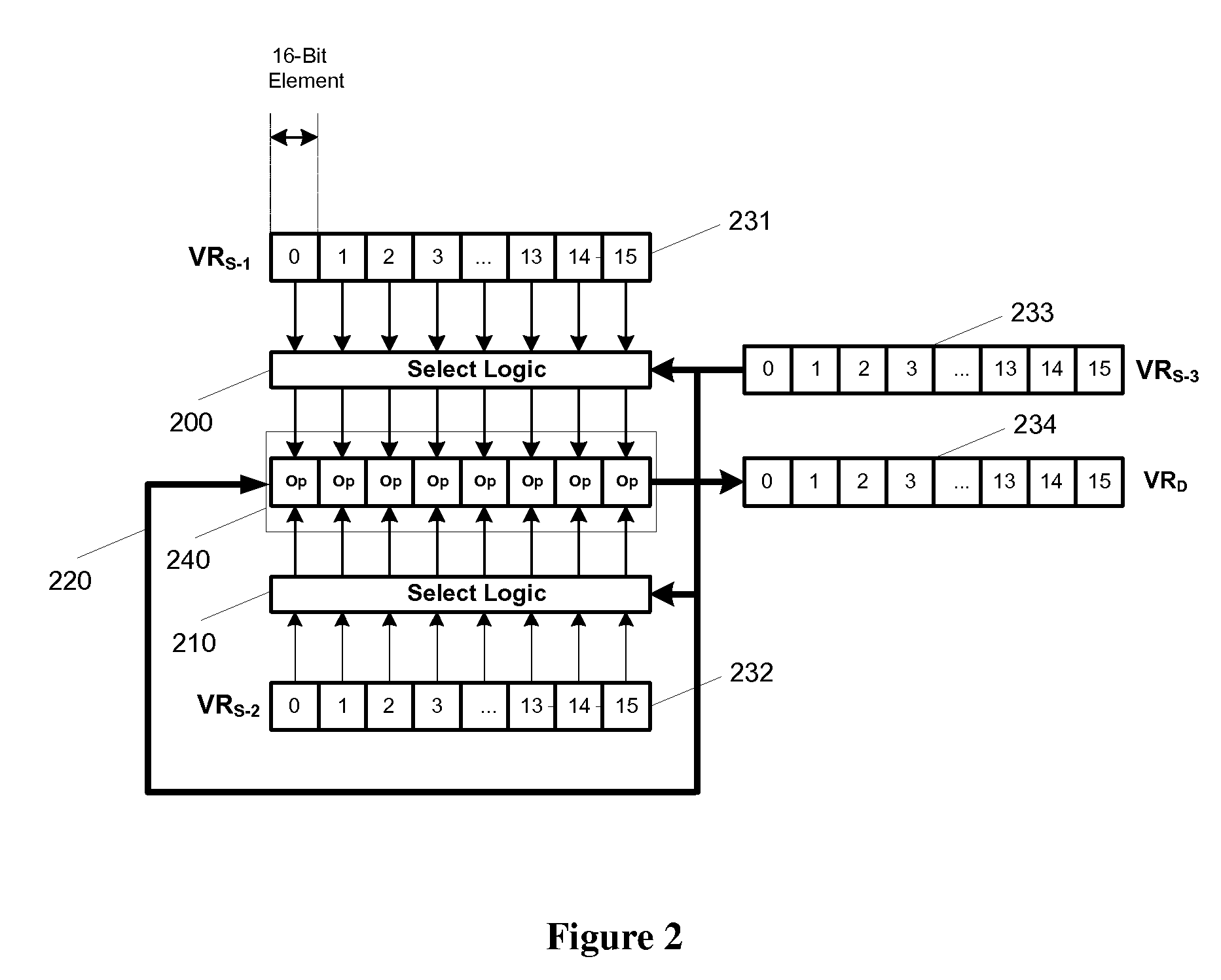

Flexible vector modes of operation for SIMD processor

Inactive | US20100274988A1 | General purpose stored program computer | Machine execution arrangements | Computer architecture | Vector element

In addition to the usual modes of SIMD processor operation, where corresponding elements of two source vector registers are used as input pairs to be operated upon by the execution unit, or where one element of a source vector register is broadcast for use across the elements of another source vector register, the new system provides several other modes of operation for the elements of one or two source vector registers. Improving upon the time-costly moving of elements for an operation such as DCT, the present invention defines a more general set of modes of vector operations. In one embodiment, these new modes of operation use a third vector register to define how each element of one or both source vector registers are mapped, in order to pair these mapped elements as inputs to a vector execution unit. Furthermore, the decision to write an individual vector element result to a destination vector register, for each individual element produced by the vector execution unit, may be selectively disabled, enabled, or made to depend upon a selectable condition flag or a mask bit.

Owner:MIMAR TIBET
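
The operating modes in this abstract (element-wise pairing, broadcast, mapped elements, and conditional write-back) can be imitated in plain Python. This sketch is a simplification: the abstract describes a third vector register that defines the mapping, whereas the hypothetical `vector_op` below takes two separate index lists, `map_a` and `map_b`, plus an optional `write_mask`.

```python
from typing import Callable, List, Optional, Sequence

def vector_op(op: Callable[[float, float], float],
              src_a: Sequence[float], src_b: Sequence[float],
              dest: Sequence[float],
              map_a: Optional[Sequence[int]] = None,
              map_b: Optional[Sequence[int]] = None,
              write_mask: Optional[Sequence[bool]] = None) -> List[float]:
    """Apply `op` lane by lane with optional element mapping and write masking.

    map_a / map_b: for each lane, which source element feeds that lane
                   (identity mapping when omitted).
    write_mask:    lanes whose mask is false keep the old destination value.
    """
    lanes = len(dest)
    map_a = range(lanes) if map_a is None else map_a
    map_b = range(lanes) if map_b is None else map_b
    result = list(dest)
    for lane in range(lanes):
        if write_mask is None or write_mask[lane]:
            result[lane] = op(src_a[map_a[lane]], src_b[map_b[lane]])
    return result

def add(x: float, y: float) -> float:
    return x + y

a = [1, 2, 3, 4]
b = [10, 20, 30, 40]

elementwise = vector_op(add, a, b, [0] * 4)
broadcast = vector_op(add, a, b, [0] * 4, map_b=[2, 2, 2, 2])
masked = vector_op(add, a, b, [-1] * 4, map_a=[3, 2, 1, 0],
                   write_mask=[True, False, True, False])
```

Expressing broadcast as a mapping in which every lane reads the same element shows why the abstract treats the usual modes as special cases of a more general one.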

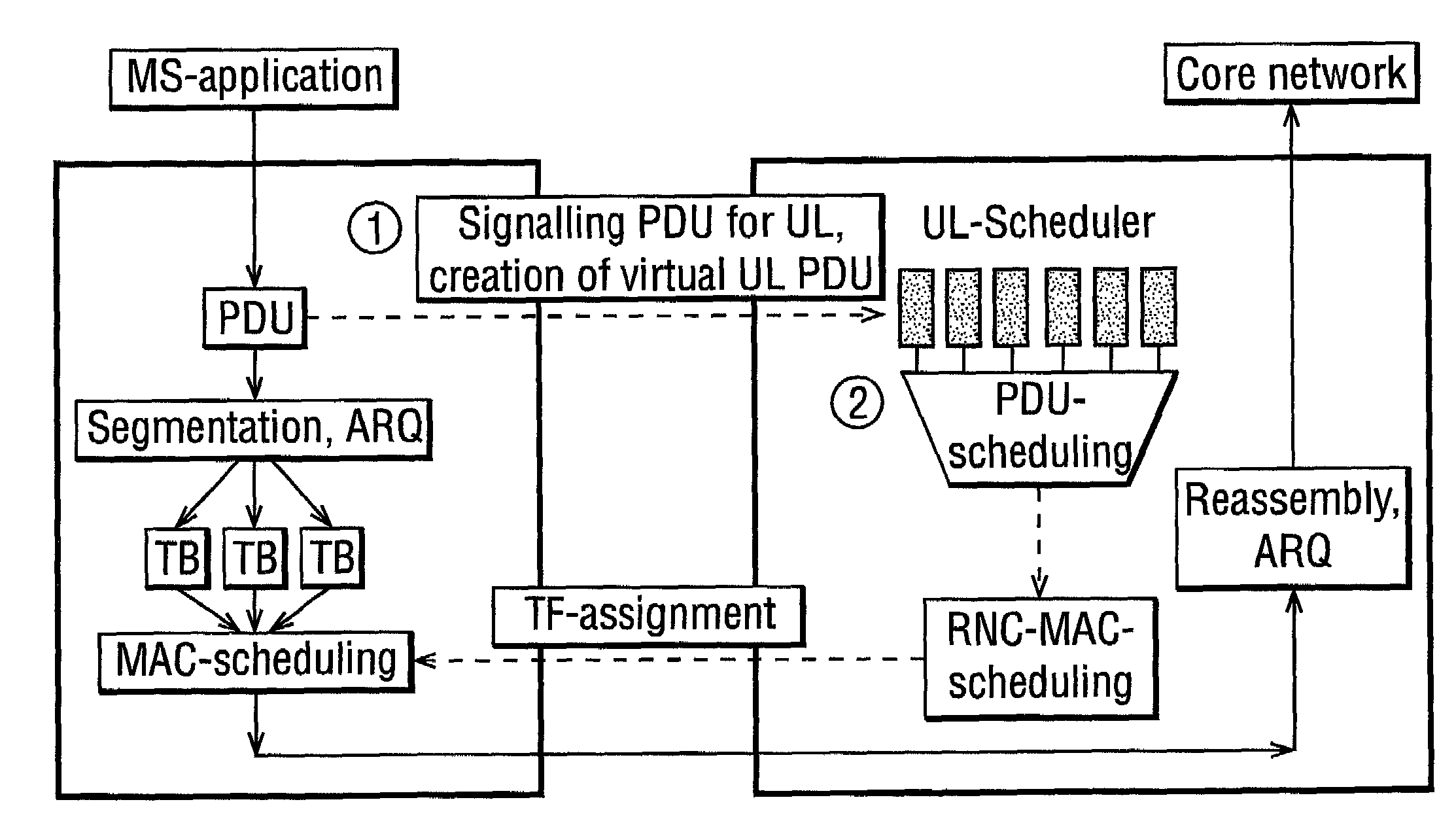

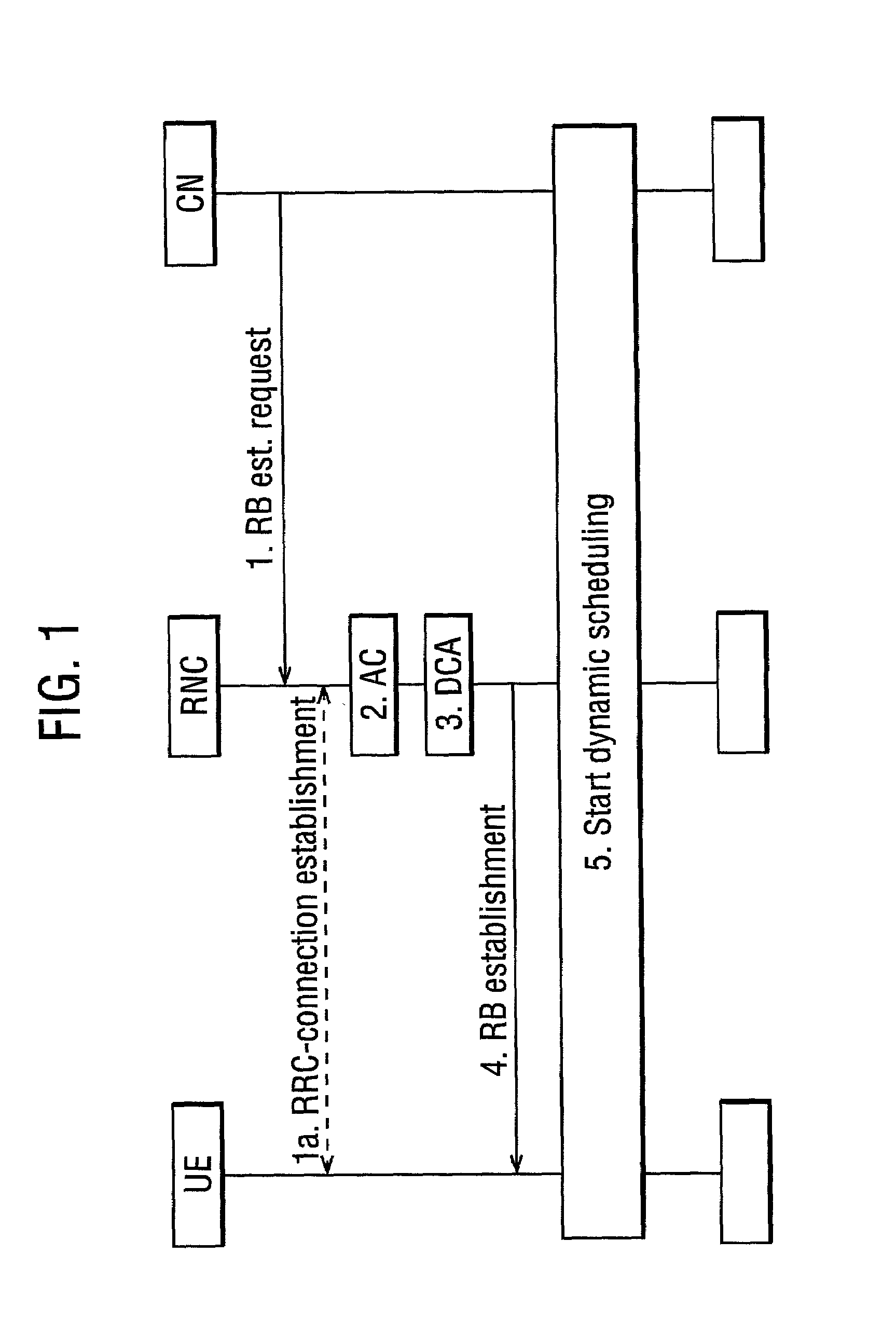

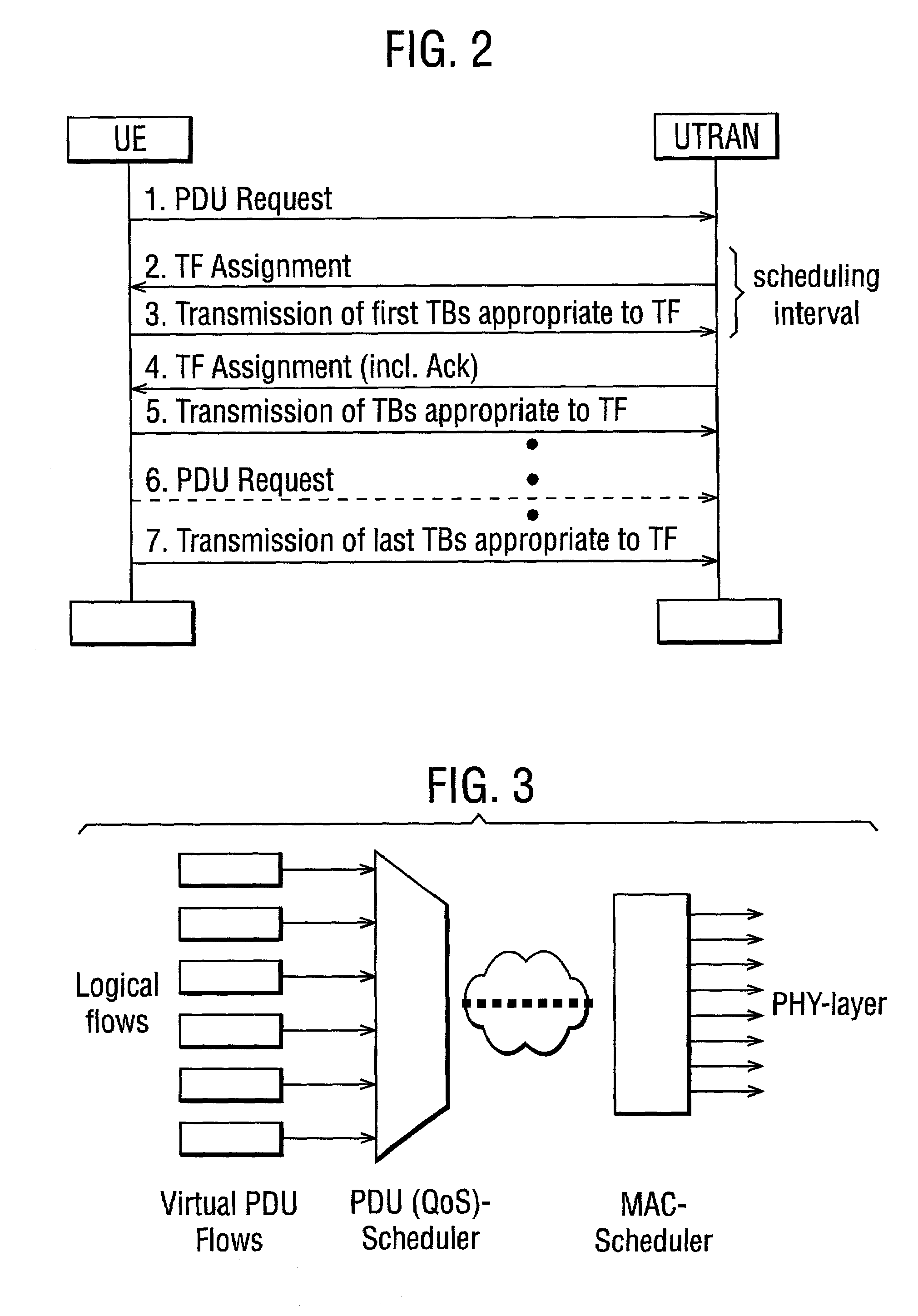

Method and system for UMTS packet transmission scheduling on uplink channels

Active | US7145895B2 | Error prevention/detection by using return channel | Network traffic/resource management | Quality of service | Data stream

An improved method for packet transmission scheduling and an improved packet transmission scheduling system in mobile telecommunication systems. Both the improved method and the system are especially adapted to be used for UMTS systems. A quality of service scheduling of multiple data flows in a mobile telecommunication system is proposed, wherein a priority order of protocol data units (PDU) of multiple data flows with regard to predefined flow's quality of service requirements is determined, a serving of the protocol data units (PDU) is performed by dynamically determining transport blocks (TB) to be transmitted by the physical layer (PHY-layer) with regard to the defined priority order and in dependence of allocated radio resource constraints, by assigning to each transport block (TB) a respective associated transport format (TF), and by creating transport block sets (TBS) with the determined transport blocks (TB) to be transmitted by the physical layer (PHY-layer) by using the respective associated transport format (TF) as assigned.

Owner:LUCENT TECH INC

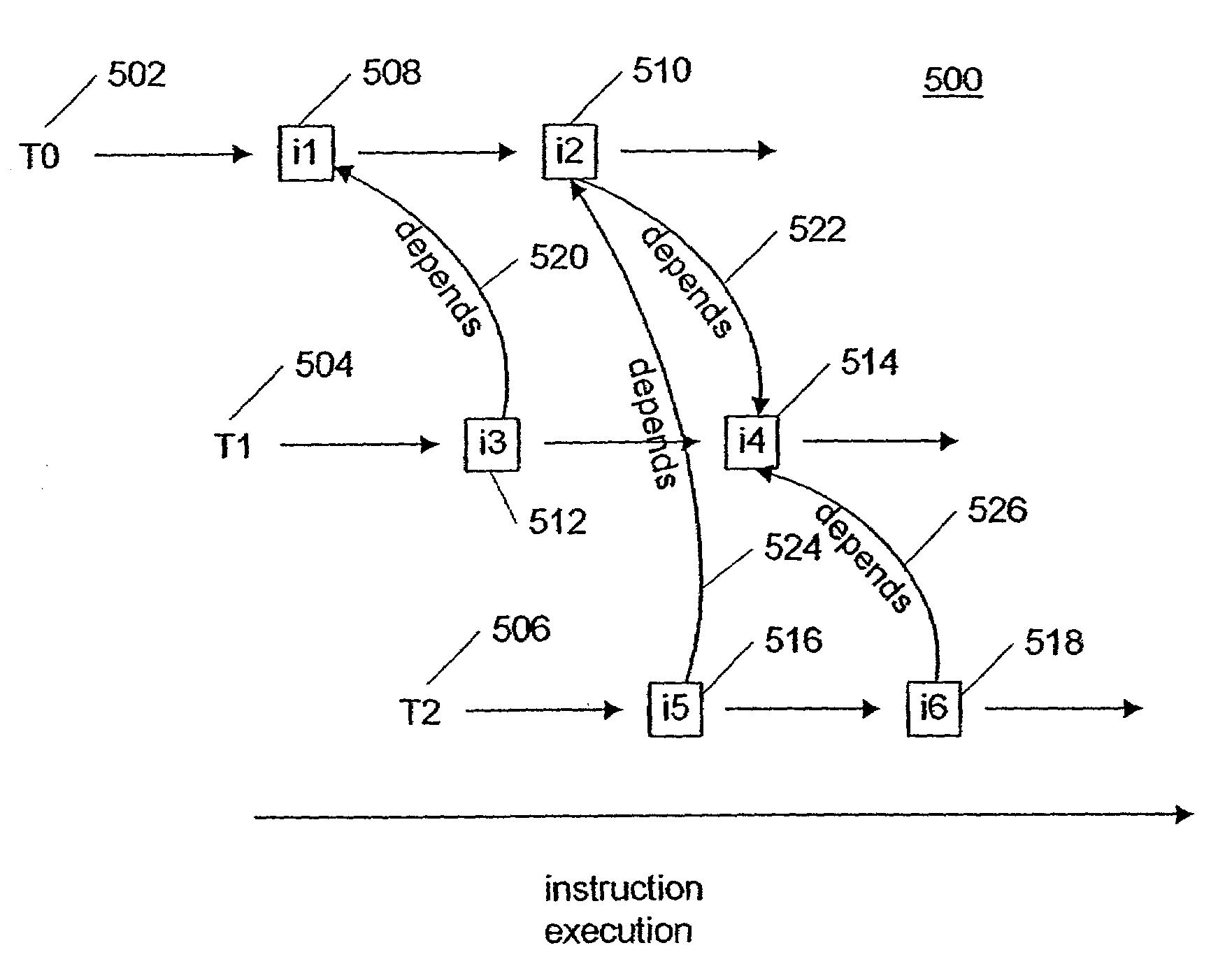

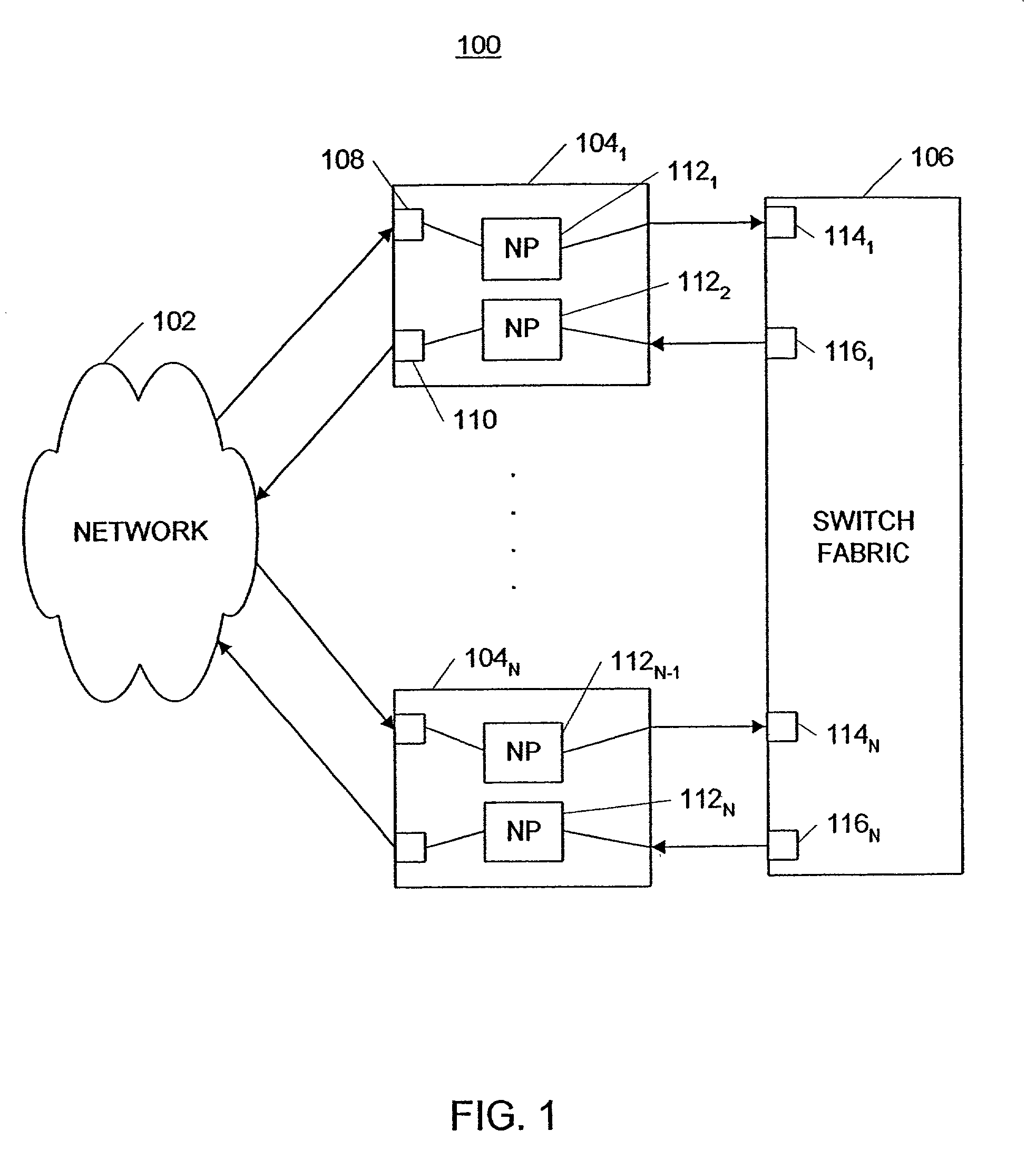

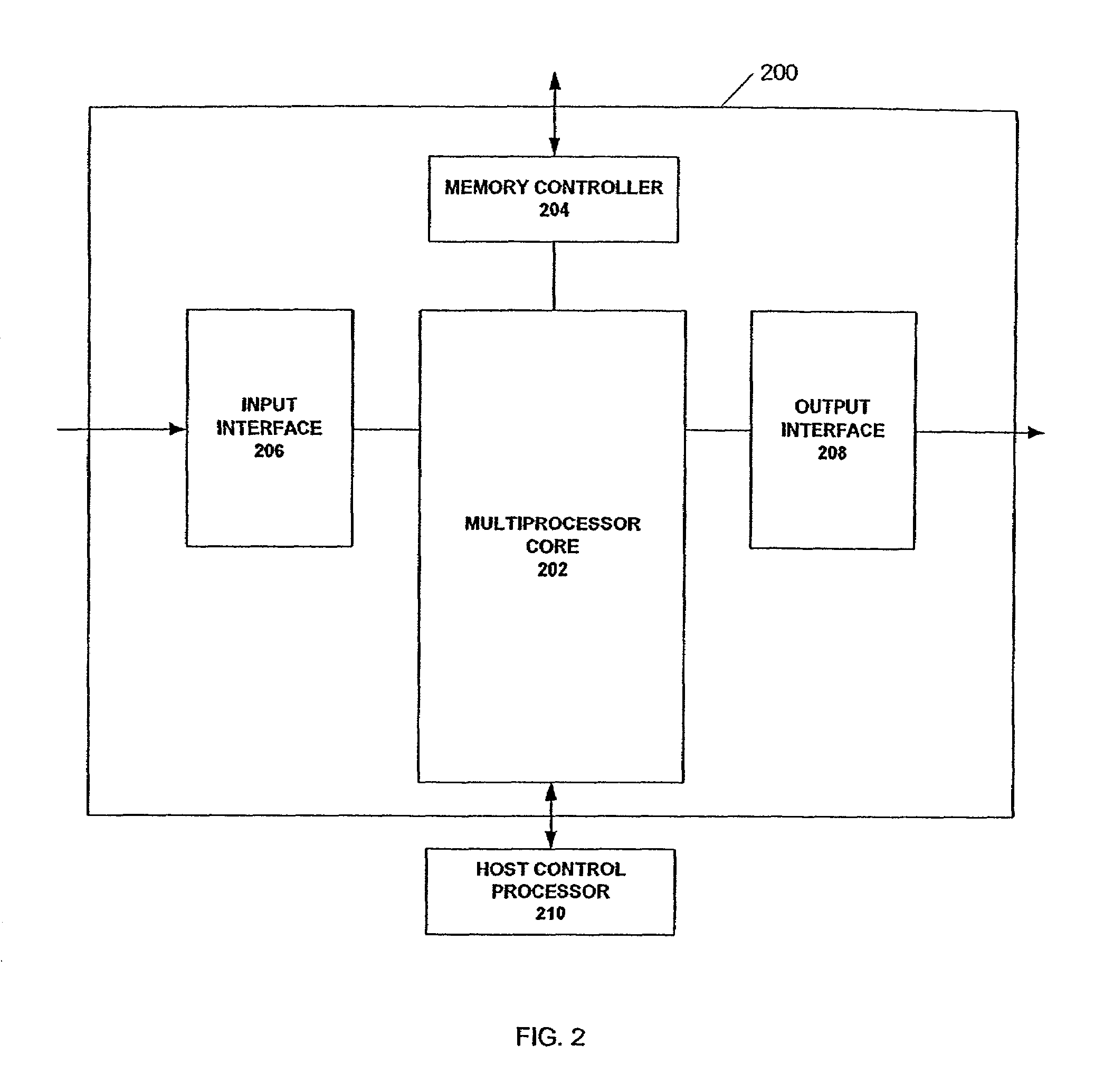

System and method for instruction-level parallelism in a programmable multiple network processor environment

Inactive | US6950927B1 | Digital computer details | Concurrent instruction execution | Instruction unit | Execution unit

A system and method process data elements with instruction-level parallelism. An instruction buffer holds a first instruction and a second instruction, the first instruction being associated with a first thread, and the second instruction being associated with a second thread. A dependency counter counts satisfaction of dependencies of instructions of the second thread on instructions of the first thread. An instruction control unit is coupled to the instruction buffer and the dependency counter; the instruction control unit increments and decrements the dependency counter according to dependency information included in instructions. An execution switch is coupled to the instruction control unit and the instruction buffer, and the execution switch routes instructions to instruction execution units.

Owner:UNITED STATES OF AMERICA +1
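
The dependency counter in this abstract can be loosely modelled as a counter that one thread increments and another decrements. The encoding below is an assumption made for the example, not the patent's instruction format: an instruction flagged `signals` satisfies one dependency when it executes, and an instruction flagged `waits` can only execute when the counter is positive.

```python
from collections import deque
from dataclasses import dataclass
from typing import List

@dataclass
class Instr:
    name: str
    signals: bool = False  # executing this satisfies one dependency (increment)
    waits: bool = False    # needs one satisfied dependency to execute (decrement)

def run(first_thread: List[Instr], second_thread: List[Instr]) -> List[str]:
    """Interleave two threads, stalling the second on unsatisfied dependencies."""
    counter = 0
    order = []
    first, second = deque(first_thread), deque(second_thread)
    while first or second:
        progressed = False
        # The second thread goes first each round so that its stall is visible.
        if second and (not second[0].waits or counter > 0):
            instr = second.popleft()
            if instr.waits:
                counter -= 1
            order.append(instr.name)
            progressed = True
        if first:
            instr = first.popleft()
            if instr.signals:
                counter += 1
            order.append(instr.name)
            progressed = True
        if not progressed:
            raise RuntimeError("second thread waits on a dependency that is never satisfied")
    return order

order = run(
    [Instr("p0"), Instr("p1", signals=True)],
    [Instr("c0"), Instr("c1", waits=True)],
)
```

In this run `c1` cannot execute until `p1` has incremented the counter, so it is the last instruction in `order`.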

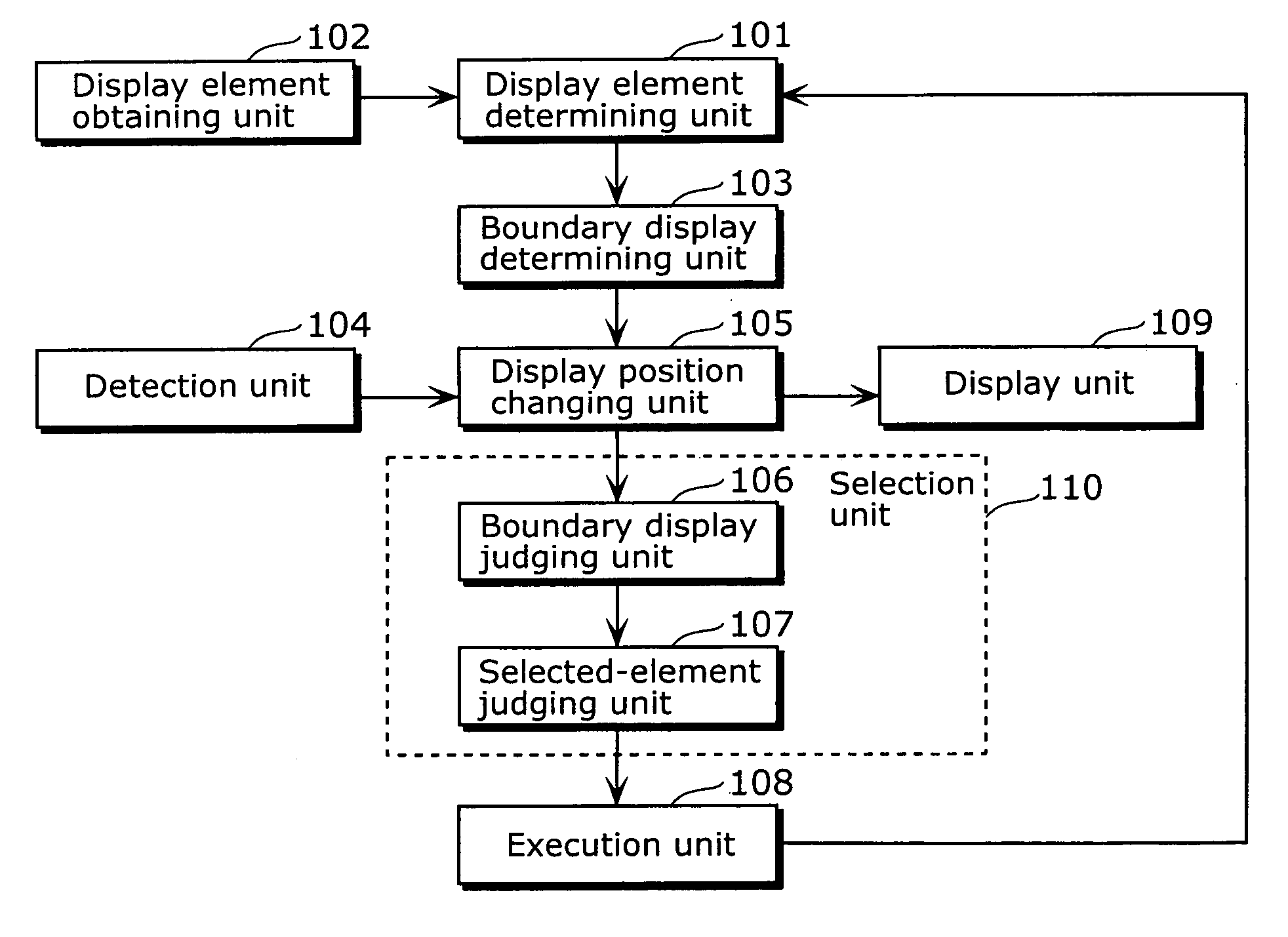

Display apparatus and control method thereof

Inactive | US20070296646A1 | Low accuracy in estimating | Increase hardware cost | Cathode-ray tube indicators | Optical elements | Computer hardware | Computer graphics (images)

The present invention provides a display apparatus which enables high-accuracy, hands-free operation, while adopting a low-cost configuration. The display apparatus according to the present invention includes: an obtainment unit that obtains a display element which is information to be displayed to the user; a determining unit that determines a display position of the obtained display element, at which a portion of the obtained display element can not be seen by the user; a display unit that displays the display element at the determined display position; a detection unit that detects a direction of change when an orientation of the user's head is changed; a selection unit that selects the display element to be displayed in the detected direction of change; and an execution unit that executes a predetermined process related to the selected display element.

Owner:PANASONIC CORP

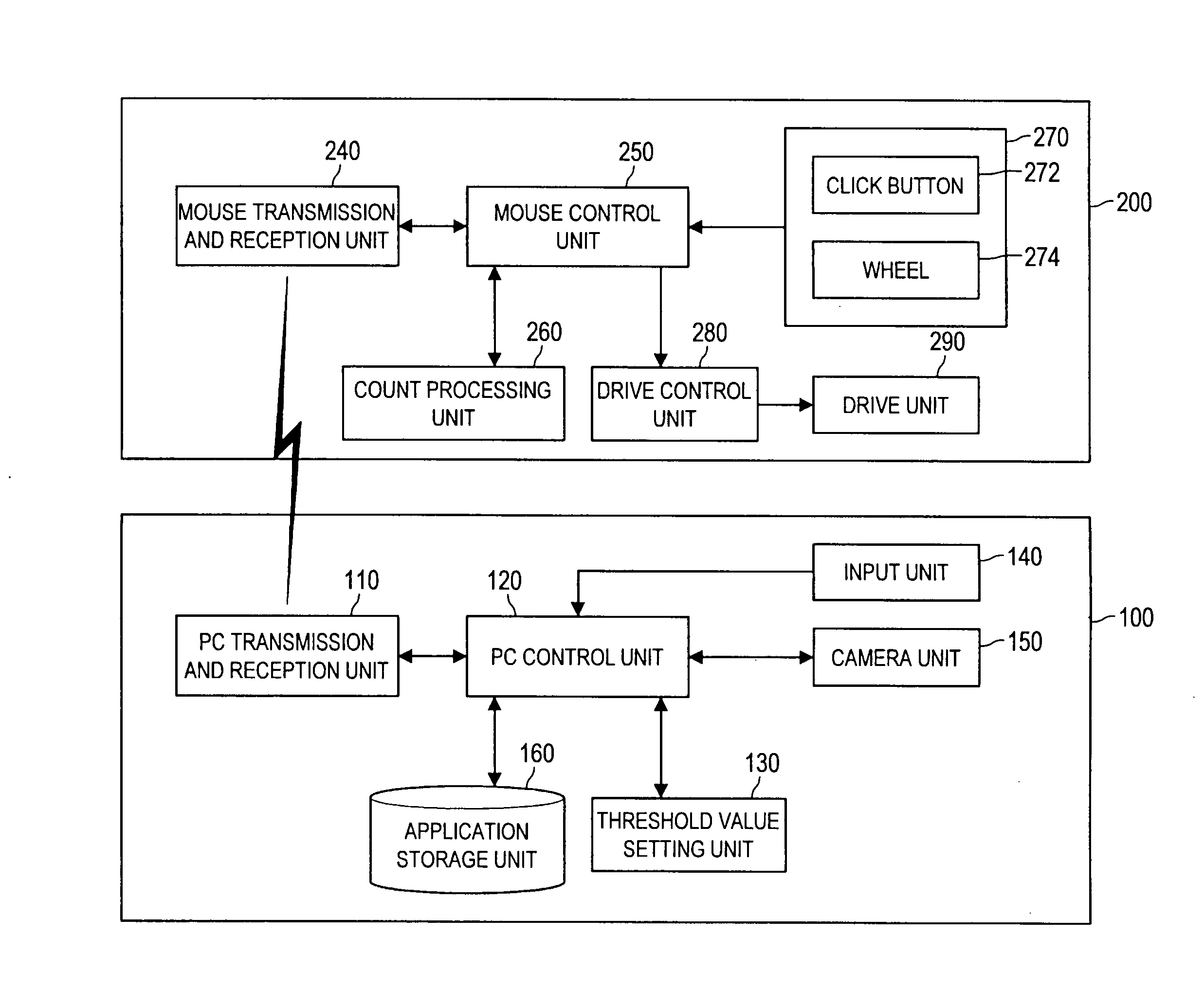

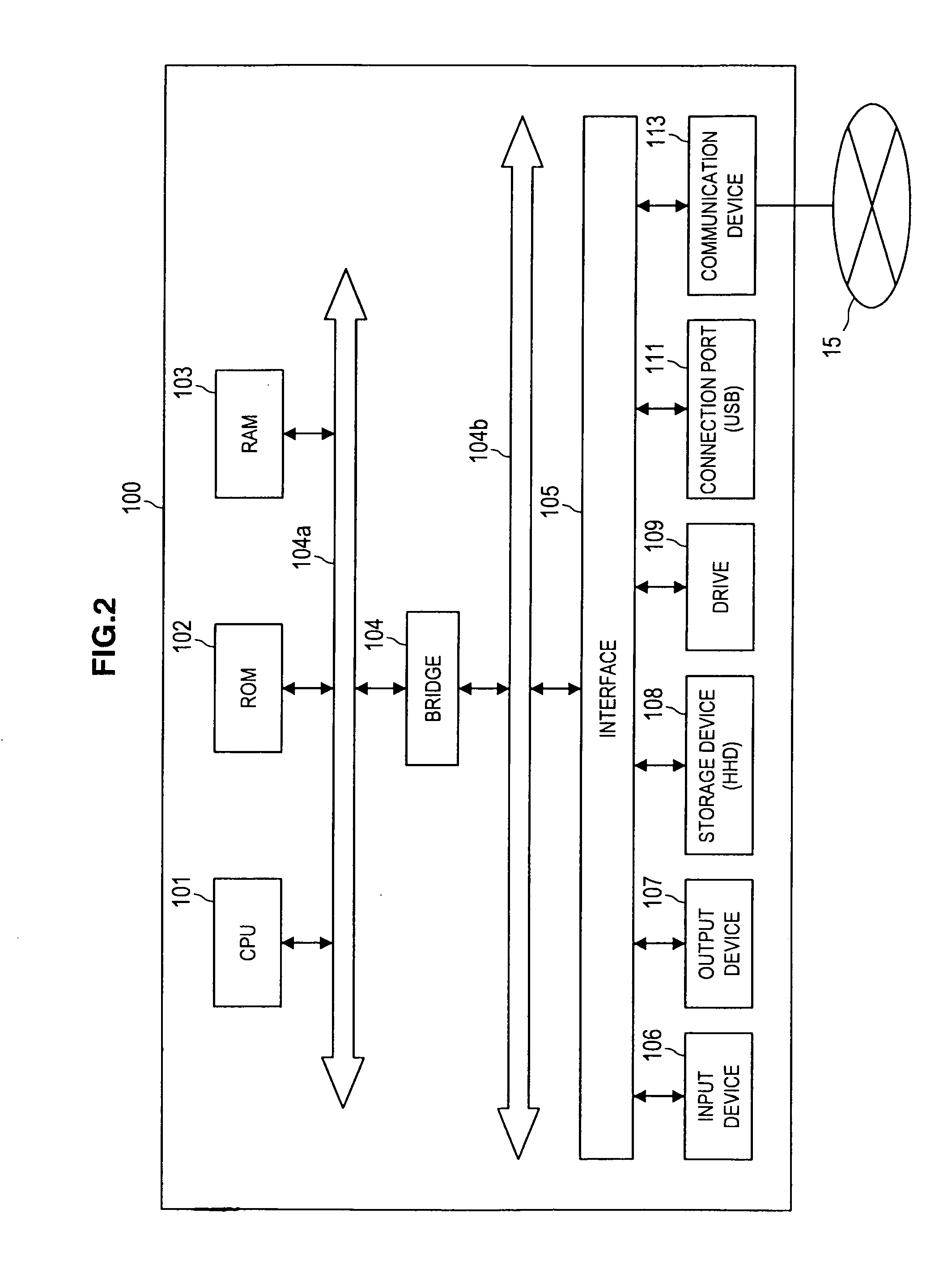

Control system, operation device and control method

Inactive | US20100274932A1 | Improve convenience | Multiprogramming arrangements | Input/output processes for data processing | Information processing | Value set

Owner:SONY CORP

Programmable processor with group floating-point operations

Inactive | US7216217B2 | Reduce in quantity | Improve performance | Instruction analysis | Memory addressing/allocation/relocation | Computer architecture | Engineering

A programmable processor that comprises a general purpose processor architecture, capable of operation independent of another host processor, having a virtual memory addressing unit, an instruction path and a data path; an external interface; a cache operable to retain data communicated between the external interface and the data path; at least one register file configurable to receive and store data from the data path and to communicate the stored data to the data path; and a multi-precision execution unit coupled to the data path. The multi-precision execution unit is configurable to dynamically partition data received from the data path to account for an elemental width of the data and is capable of performing group floating-point operations on multiple operands in partitioned fields of operand registers and returning catenated results. In other embodiments the multi-precision execution unit is additionally configurable to execute group integer and/or group data handling operations.

Owner:MICROUNITY

Mechanism for suppressing instruction replay in a processor

Owner:ADVANCED MICRO DEVICES INC

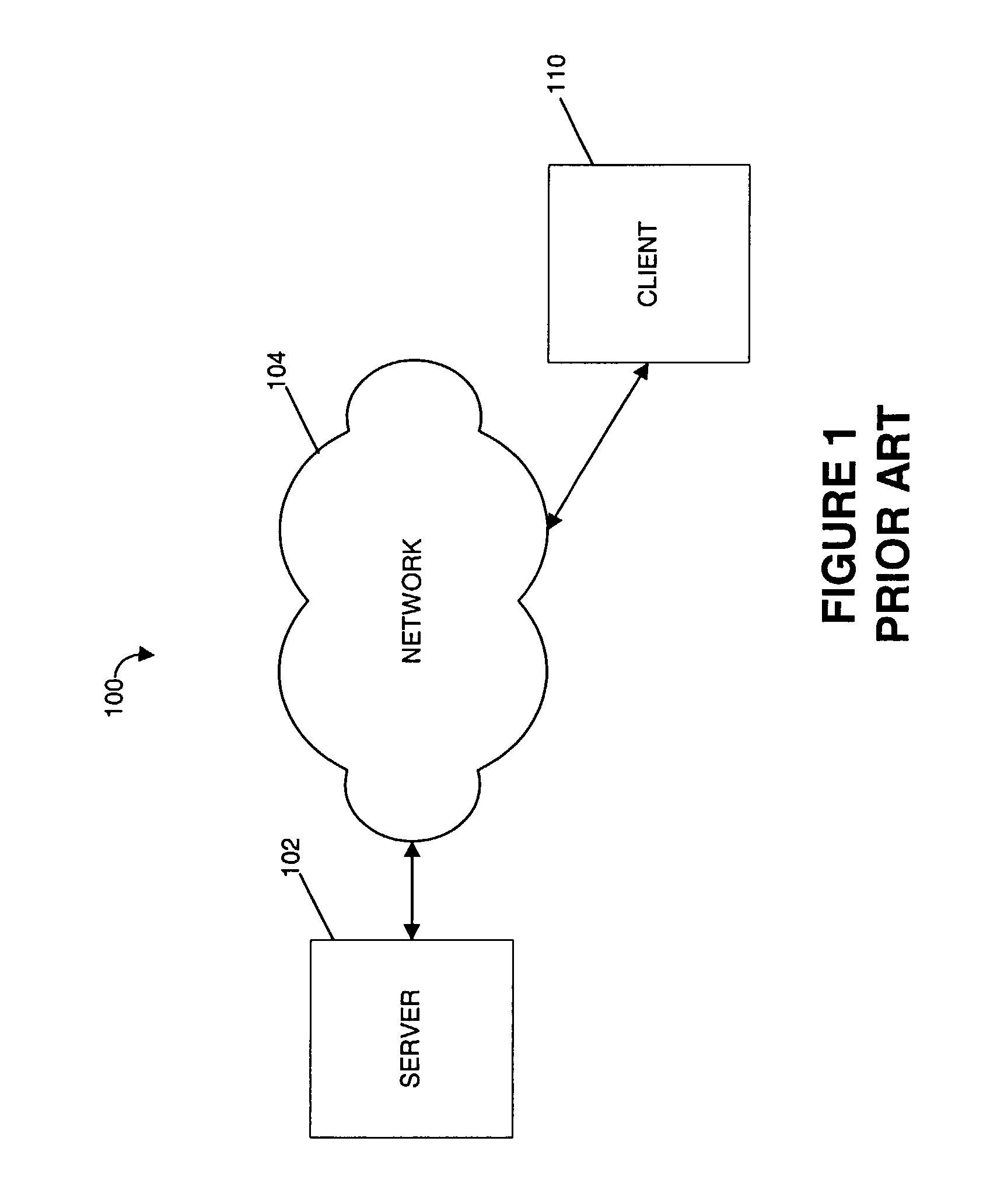

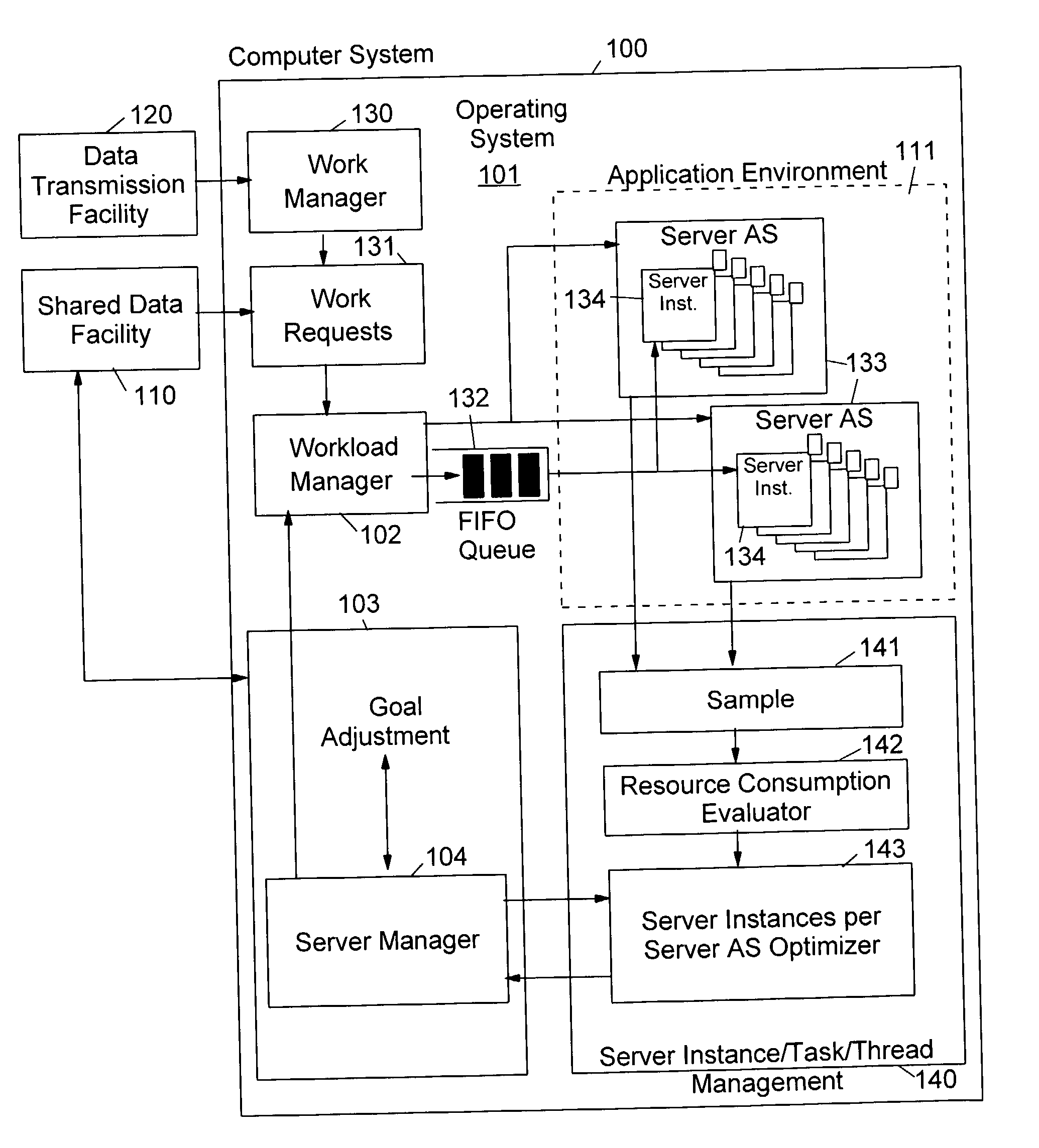

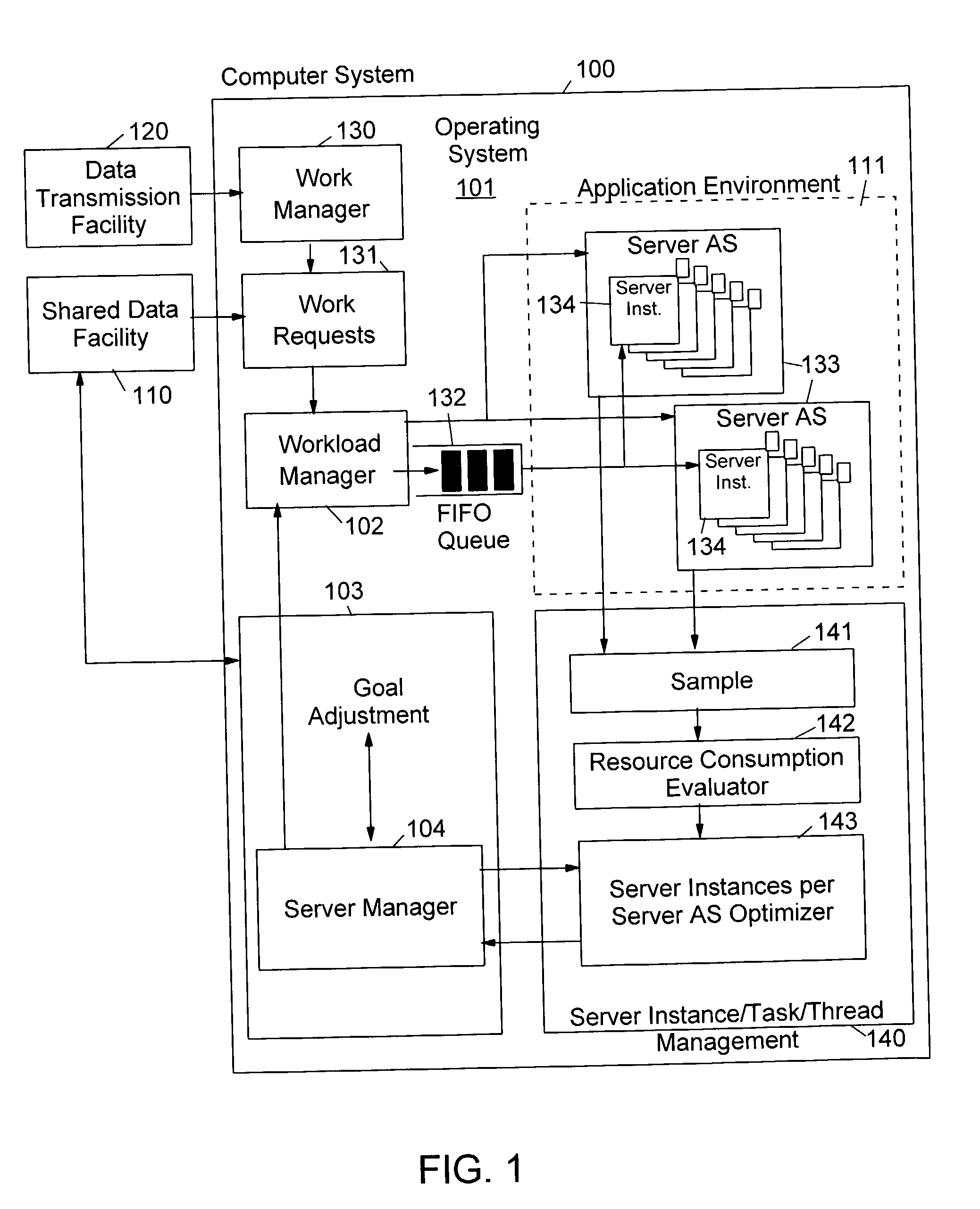

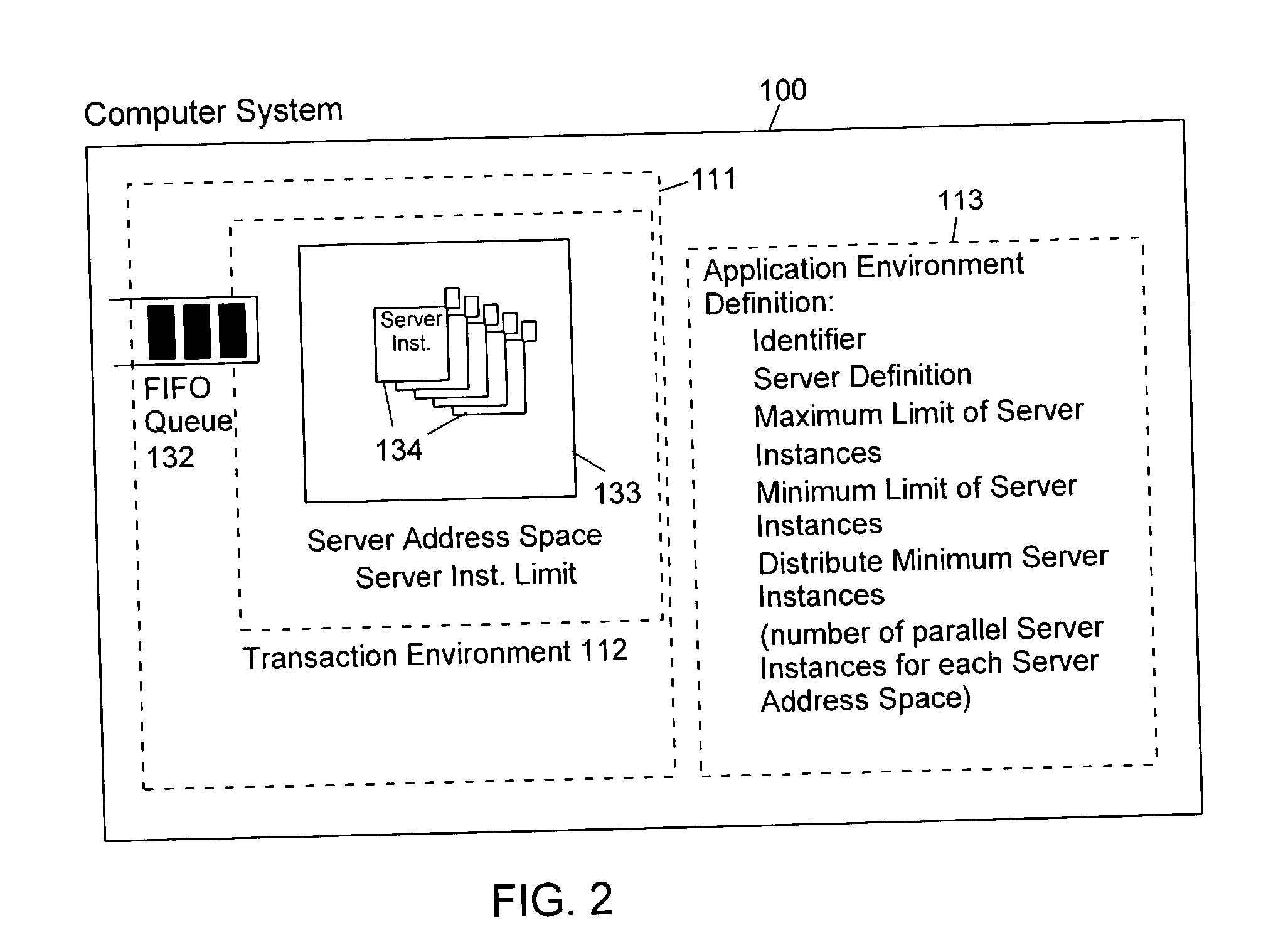

Method and apparatus for controlling the number of servers in a hierarchical resource environment

Inactive | US20030005028A1 | Improve overall utilization | Improve system performance | Resource allocation | Memory systems | Resource consumption | Computerized system

The invention relates to the control of servers which process client work requests in a computer system on the basis of resource consumption. Each server contains multiple server instances (also called "execution units") which execute different client work requests in parallel. A workload manager determines the total number of server containers and server instances in order to achieve the goals of the work requests. The number of server instances started in each server container depends on the resource consumption of the server instances in each container and on the resource constraints, service goals and service goal achievements of the work units to be executed. At predetermined intervals during the execution of the work units the server instances are sampled to check whether they are active or inactive. Dependent on the number of active server instances the number of server address spaces and server instances is repeatedly adjusted to achieve an improved utilization of the available virtual storage and an optimization of the system performance in the execution of the application programs.

Owner:IBM CORP

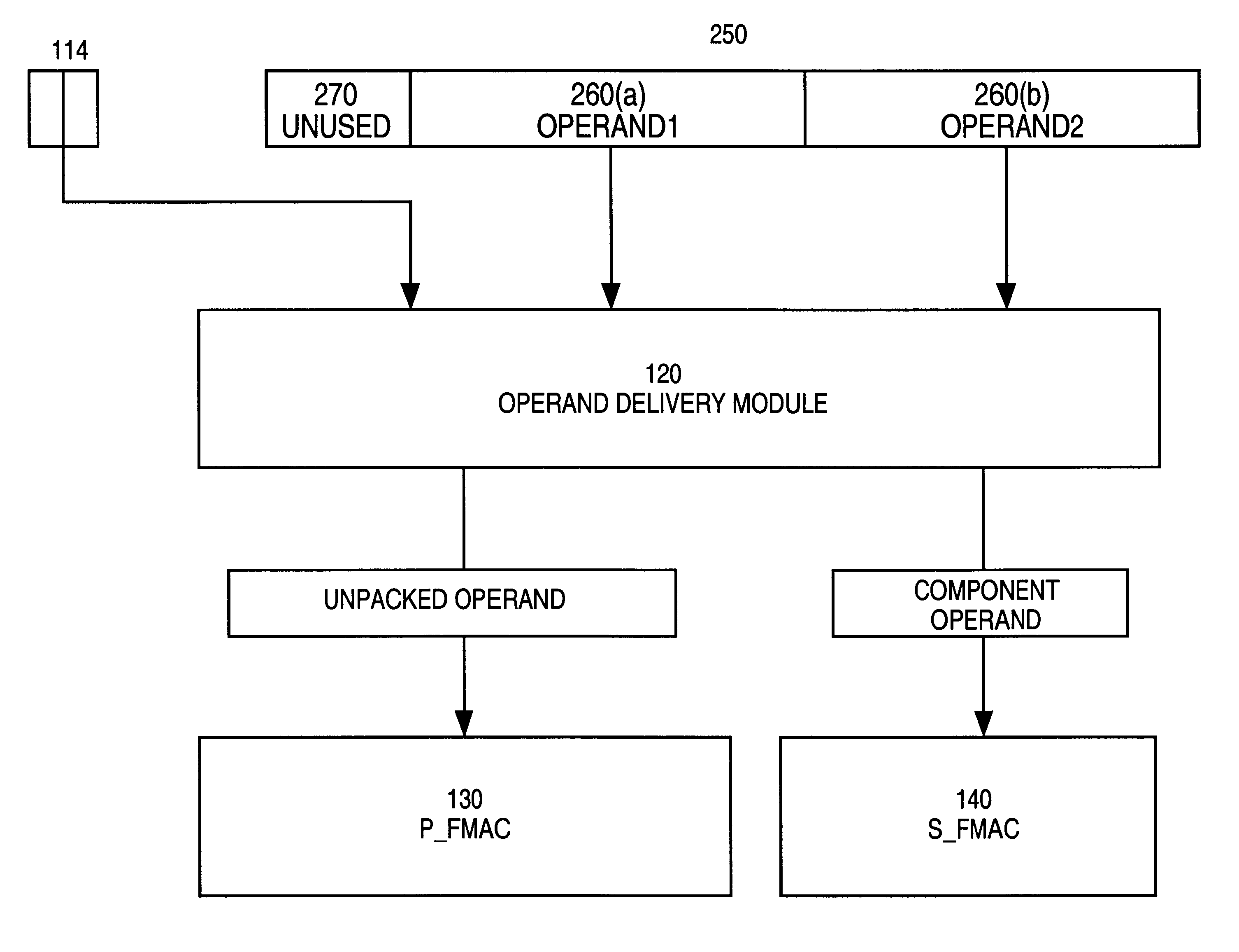

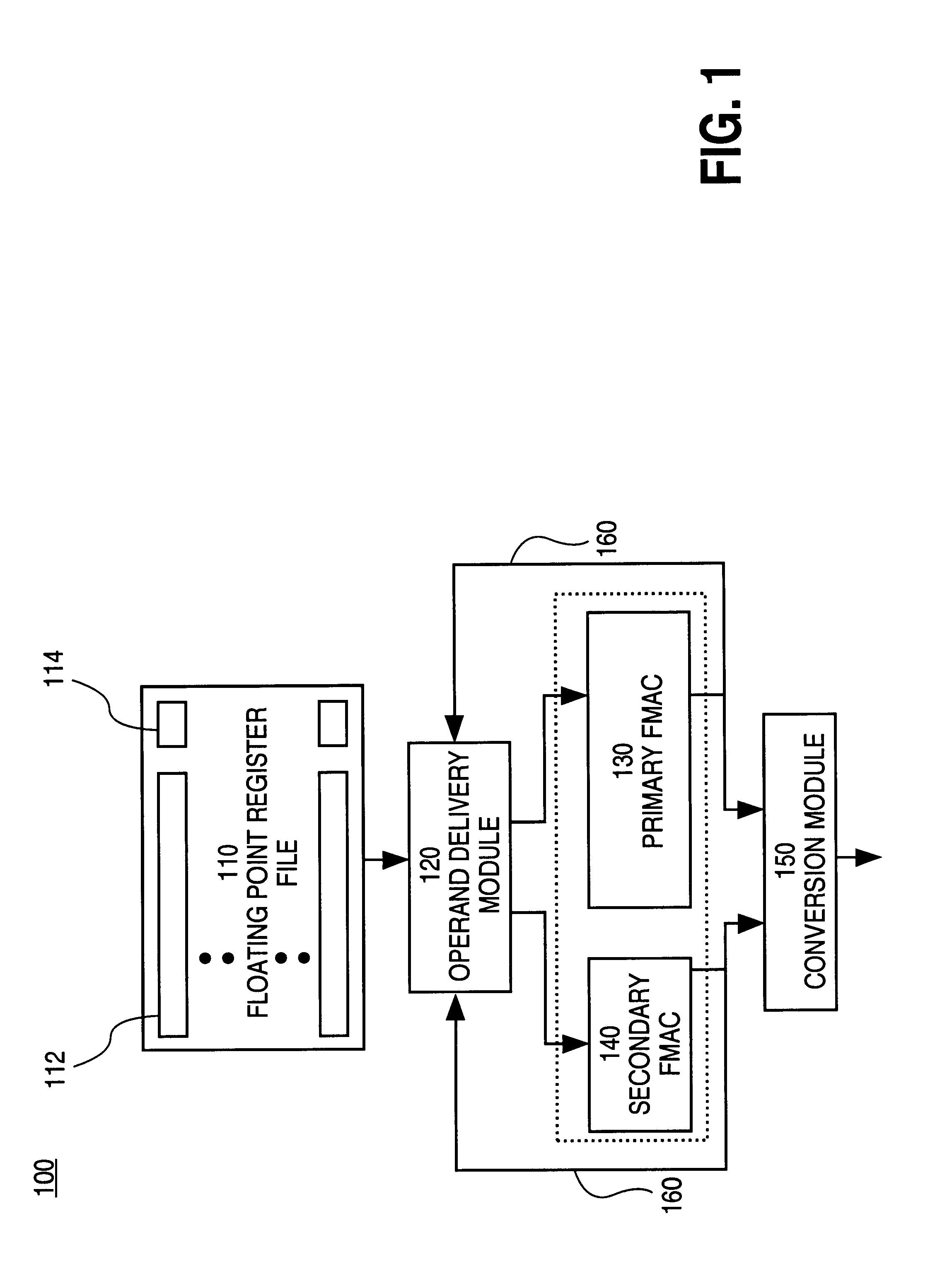

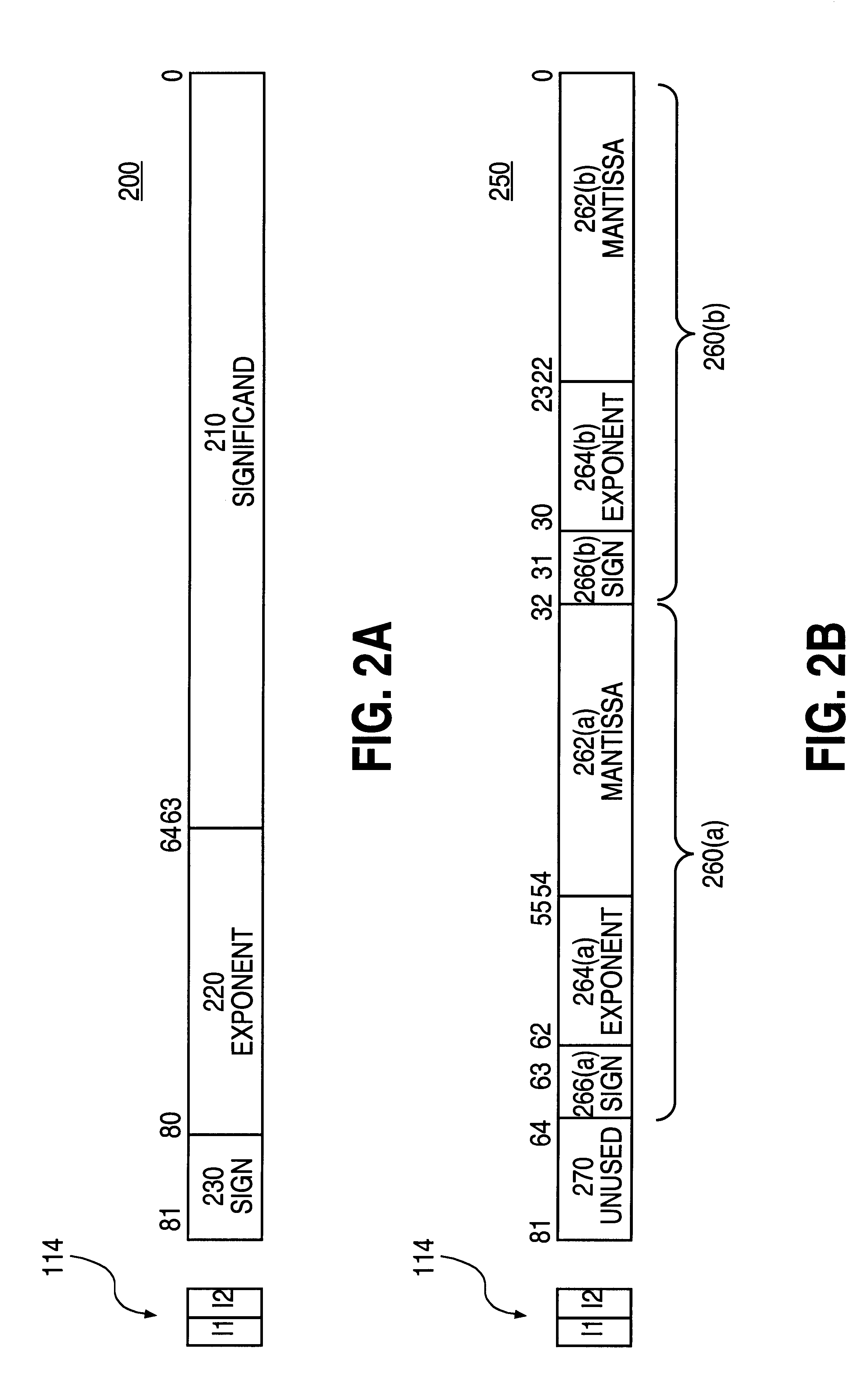

Scalar hardware for performing SIMD operations

Inactive | US6292886B1 | Digital computer details | Concurrent instruction execution | Processor register | Execution unit

A system for processing SIMD operands in a packed data format includes a scalar FMAC and a vector FMAC coupled to a register file through an operand delivery module. For vector operations, the operand delivery module bit steers a SIMD operand of the packed operand into an unpacked operand for processing by the first execution unit. Another SIMD operand is processed by the vector execution unit.

Owner:INTEL CORP

© 2025 PatSnap. All rights reserved.