258 results for "Instruction buffer" patented technology

Microprocessors

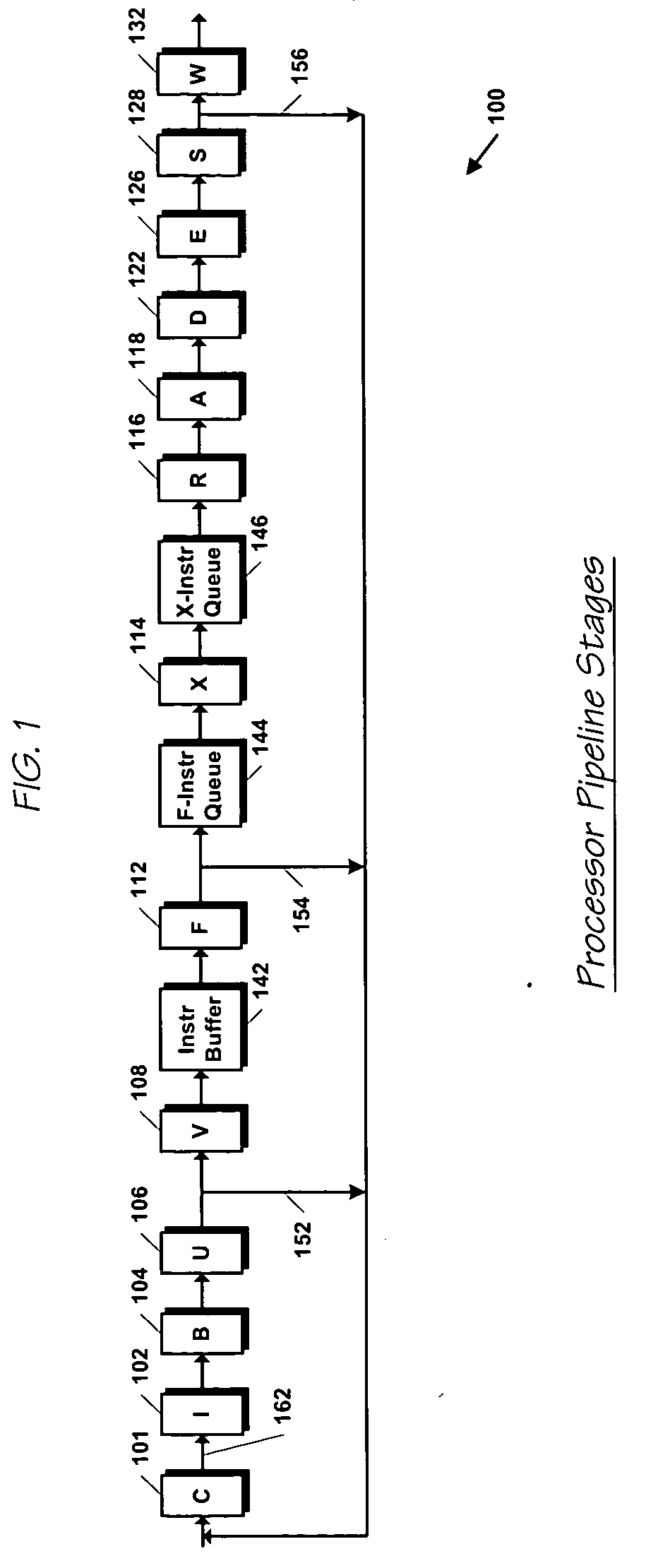

A processor (100) is provided that is a programmable fixed point digital signal processor (DSP) with variable instruction length, offering both high code density and easy programming. Architecture and instruction set are optimized for low power consumption and high efficiency execution of DSP algorithms, such as for wireless telephones, as well as pure control tasks. The processor includes an instruction buffer unit (106), a program flow control unit (108), an address / data flow unit (110), a data computation unit (112), and multiple interconnecting busses. Dual multiply-accumulate blocks improve processing performance. A memory interface unit (104) provides parallel access to data and instruction memories. The instruction buffer is operable to buffer single and compound instructions pending execution thereof. A decode mechanism is configured to decode instructions from the instruction buffer. The use of compound instructions enables effective use of the bandwidth available within the processor. A soft dual memory instruction can be compiled from separate first and second programmed memory instructions. Instructions can be conditionally executed or repeatedly executed. Bit field processing and various addressing modes, such as circular buffer addressing, further support execution of DSP algorithms. The processor includes a multistage execution pipeline with pipeline protection features. Various functional modules can be separately powered down to conserve power. The processor includes emulation and code debugging facilities with support for cache analysis.

Owner:TEXAS INSTR INC

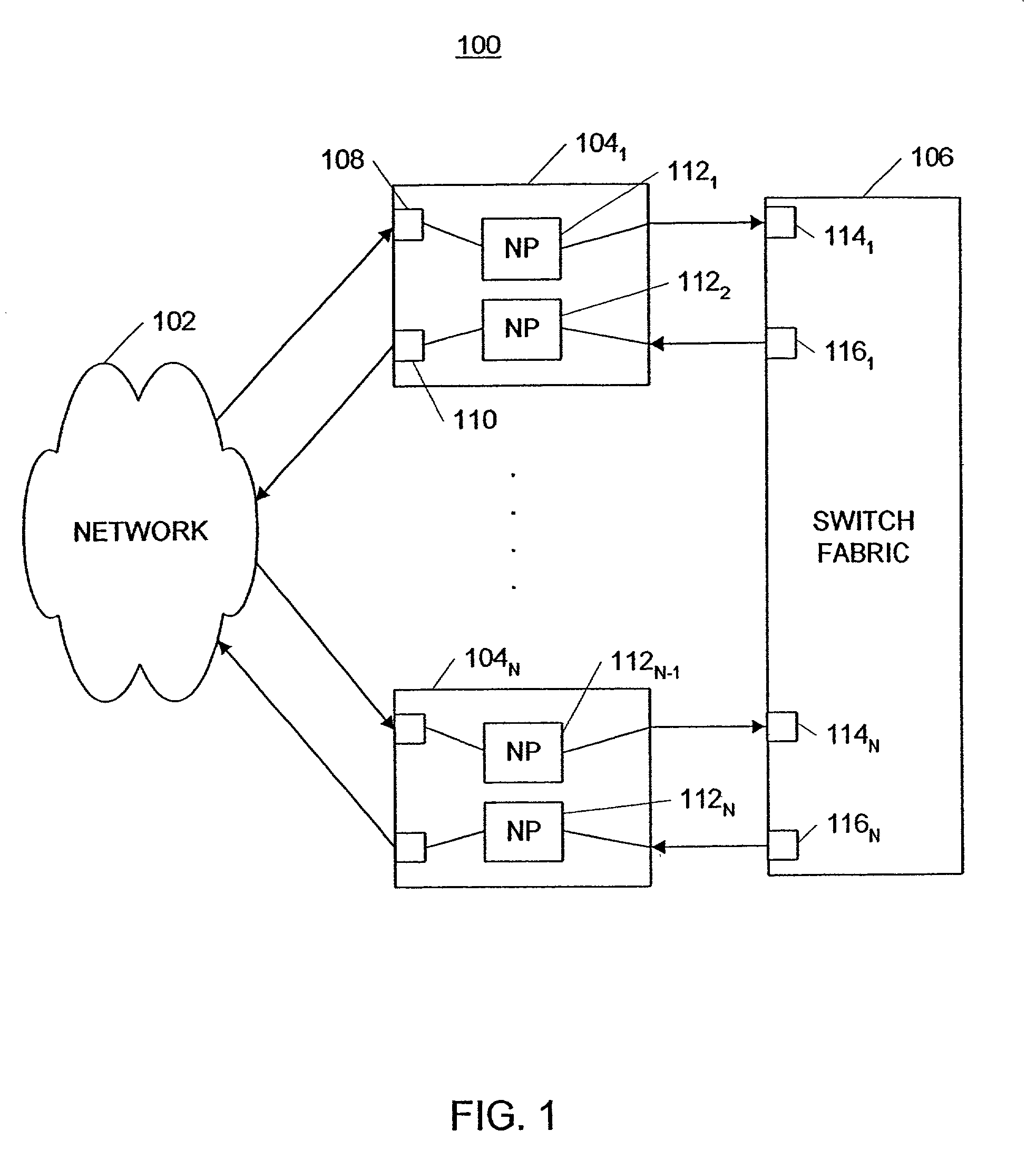

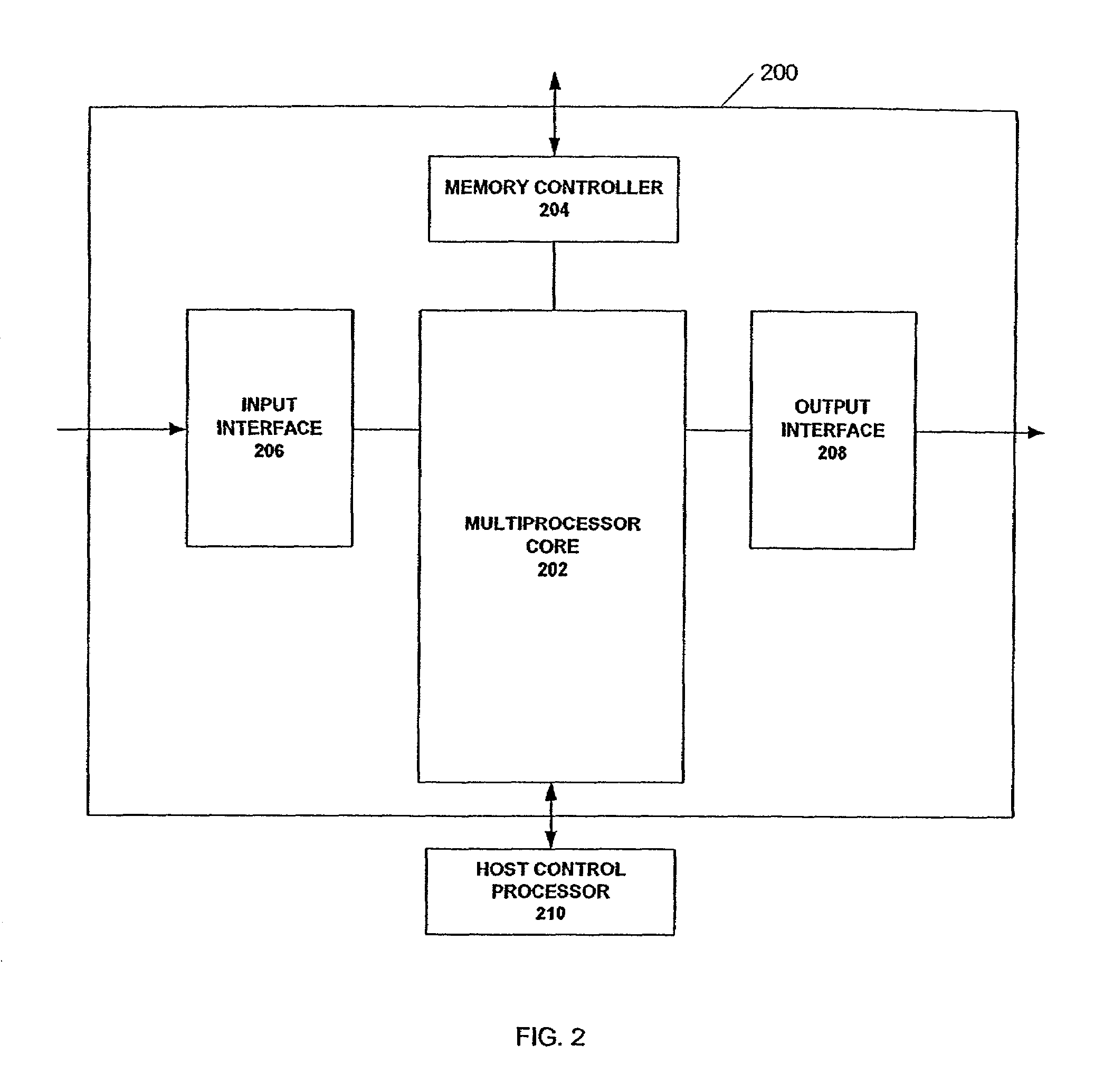

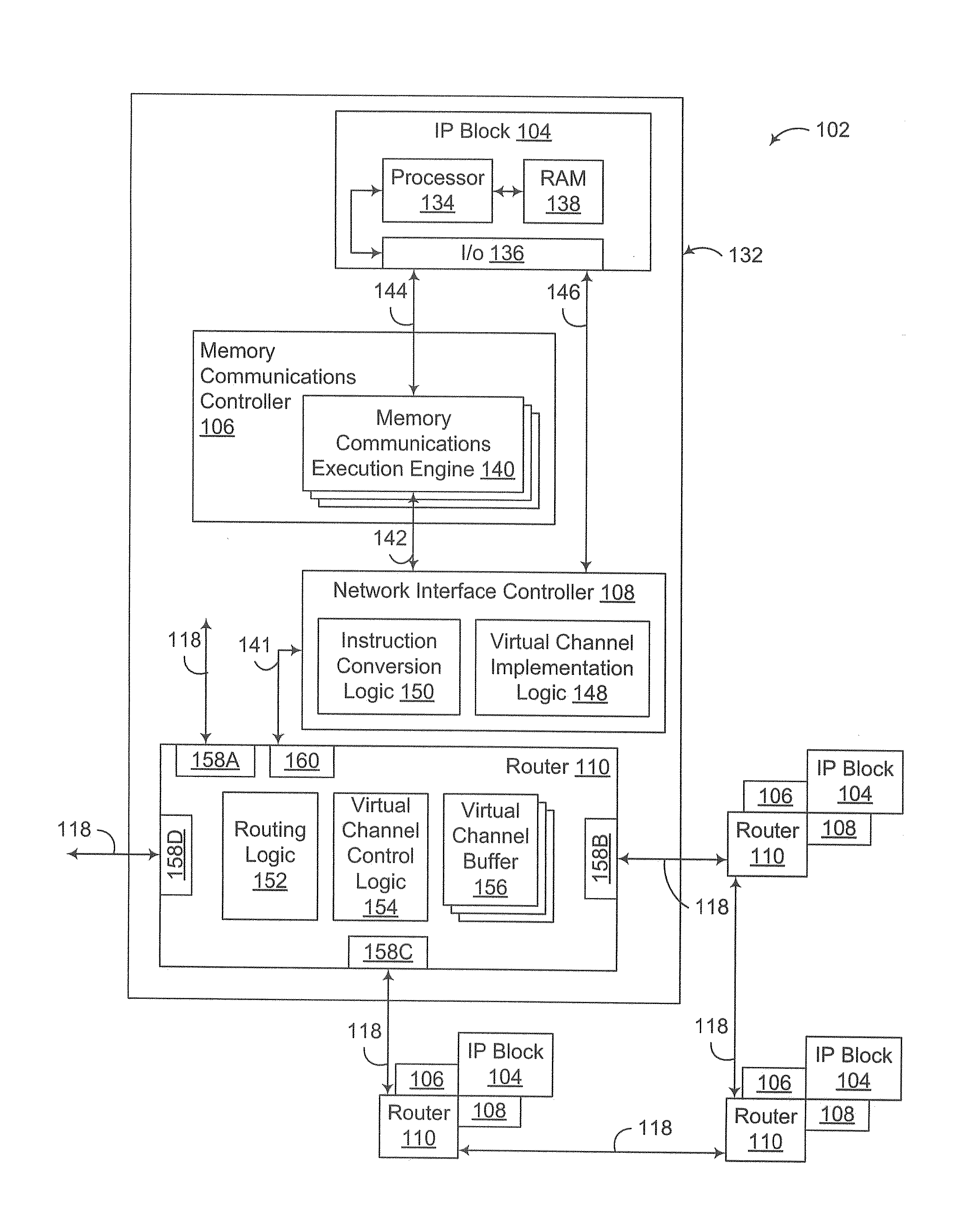

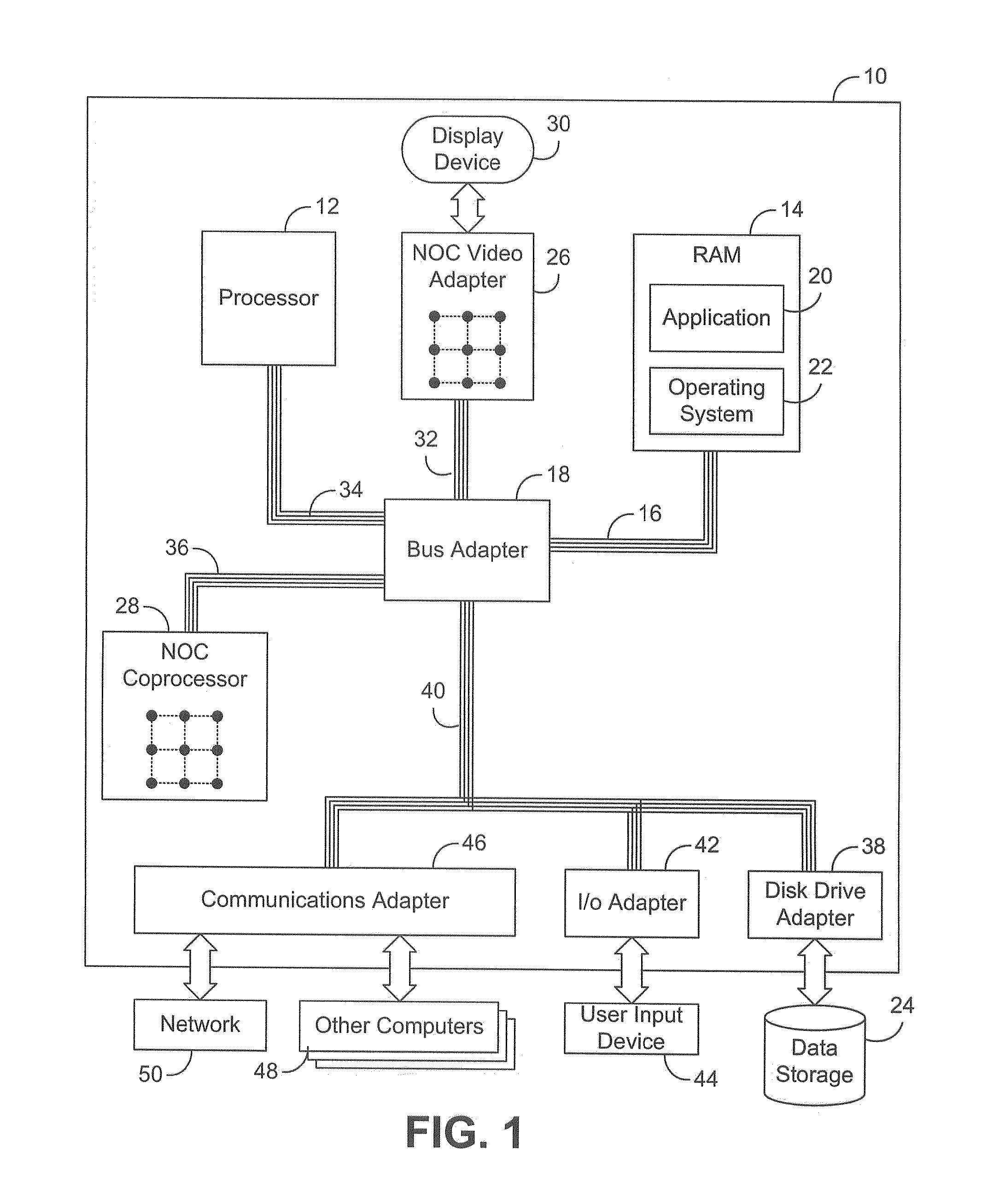

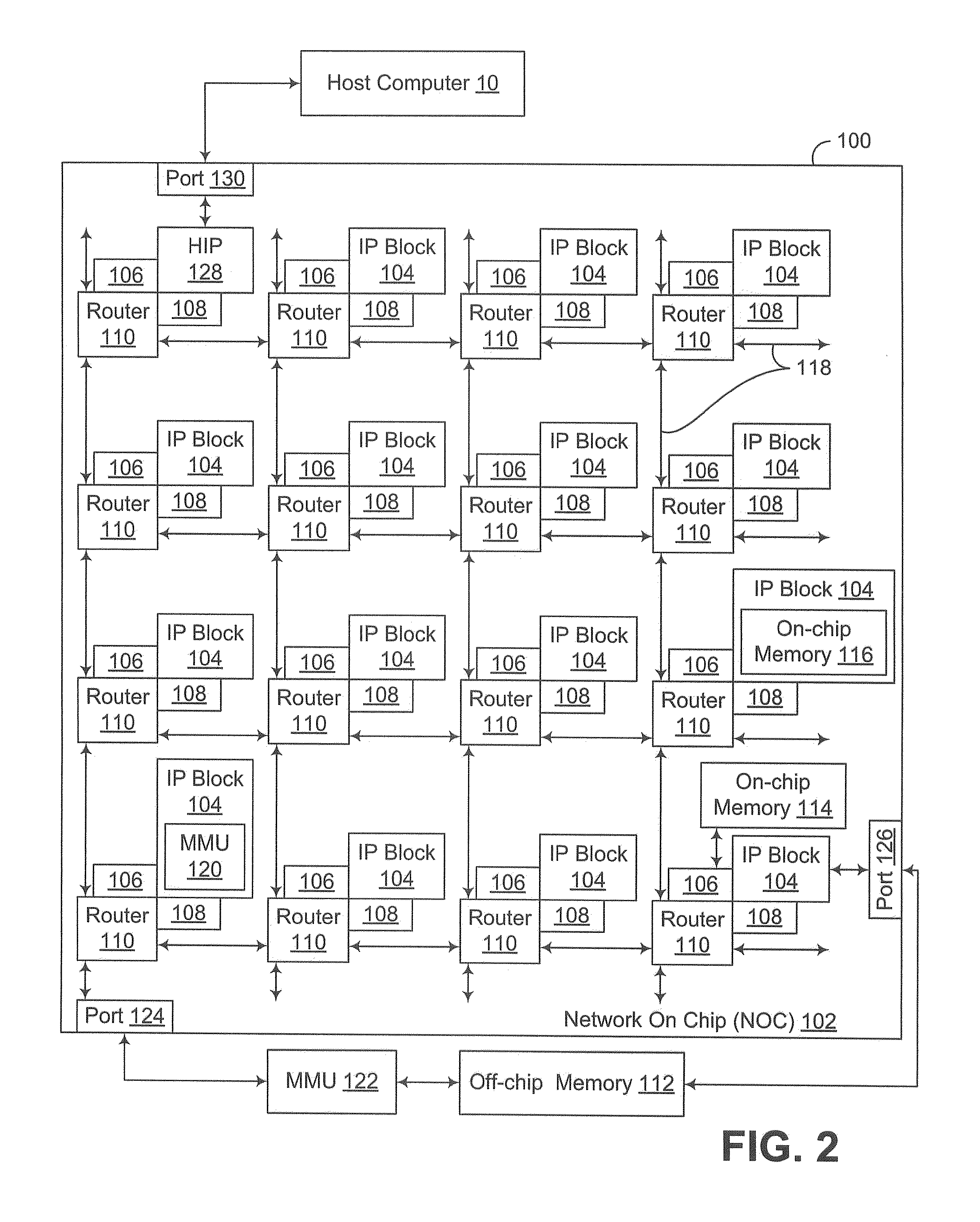

System and method for instruction-level parallelism in a programmable multiple network processor environment

Inactive | US6950927B1 | Digital computer details; Concurrent instruction execution; Instruction unit; Execution unit

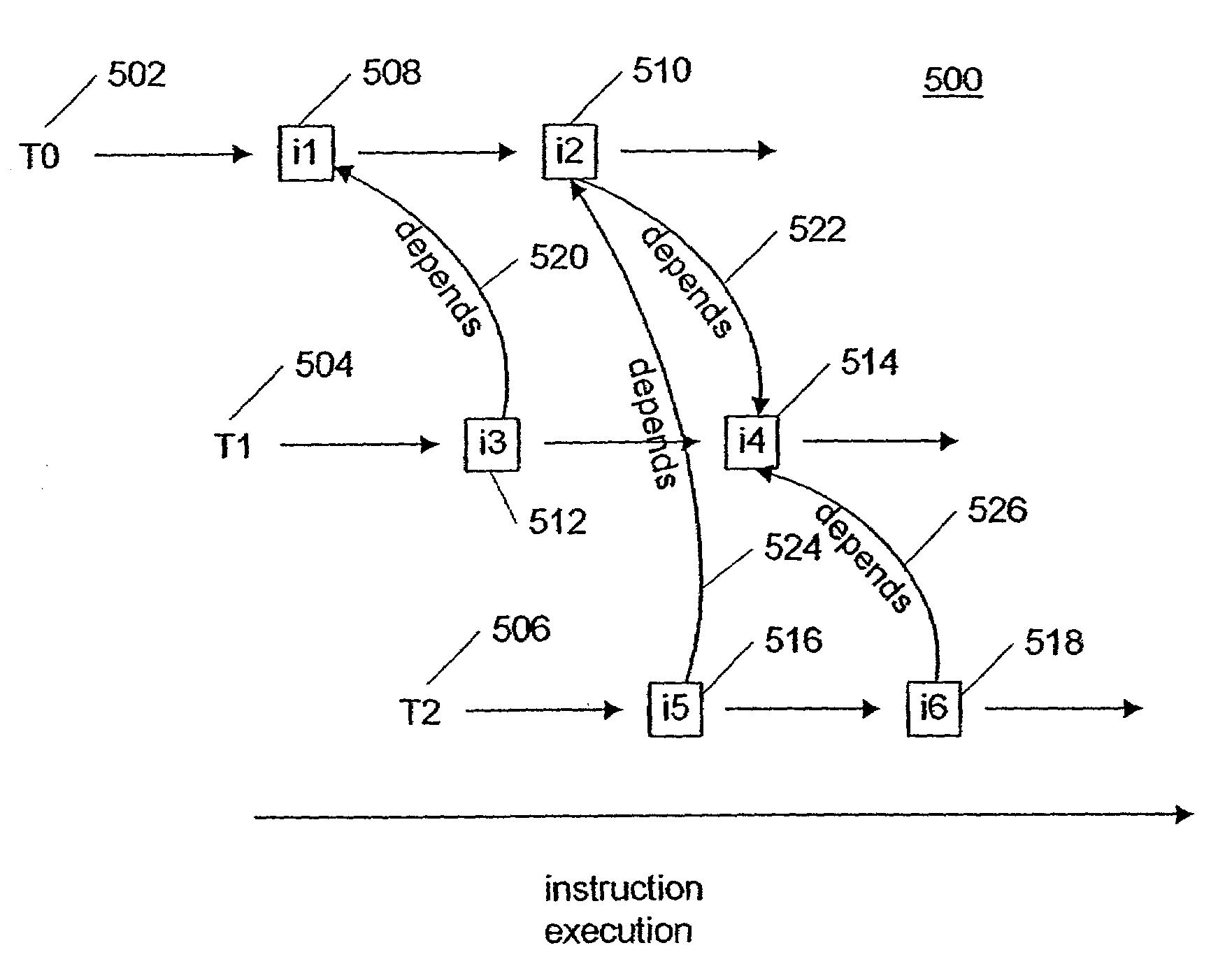

A system and method process data elements with instruction-level parallelism. An instruction buffer holds a first instruction and a second instruction, the first instruction being associated with a first thread and the second instruction with a second thread. A dependency counter counts the satisfaction of dependencies of instructions of the second thread on instructions of the first thread. An instruction control unit, coupled to the instruction buffer and the dependency counter, increments and decrements the dependency counter according to dependency information included in the instructions. An execution switch, coupled to the instruction control unit and the instruction buffer, routes instructions to instruction execution units.

Owner:UNITED STATES OF AMERICA +1
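
To make the dependency-counter idea concrete, here is a minimal Python sketch. It assumes a hypothetical encoding in which each instruction carries a `signals` flag (a first-thread producer that satisfies one dependency when issued) and a `waits` flag (a second-thread consumer that needs one satisfied dependency). The names `Instr` and `schedule` are illustrative, not taken from the patent, and the returned issue order stands in for the execution switch routing instructions to execution units.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Instr:
    name: str
    signals: bool = False  # hypothetical flag: a first-thread producer satisfies one dependency
    waits: bool = False    # hypothetical flag: a second-thread consumer needs one satisfied dependency


def schedule(first_thread, second_thread):
    """Issue instructions from two threads, holding consumers until the counter allows."""
    buffers = [deque(first_thread), deque(second_thread)]
    counter = 0  # the dependency counter shared by the two threads
    issued = []
    while any(buffers):
        progressed = False
        for buf in buffers:
            if not buf:
                continue
            instr = buf[0]
            if instr.waits and counter == 0:
                continue  # dependency not yet satisfied: leave it in the buffer
            buf.popleft()
            if instr.waits:
                counter -= 1  # consume one satisfied dependency
            if instr.signals:
                counter += 1  # record one newly satisfied dependency
            issued.append(instr.name)  # stands in for routing to an execution unit
            progressed = True
        if not progressed:
            raise RuntimeError("consumer waits on a dependency no producer will satisfy")
    return issued
```

With `schedule([Instr("a"), Instr("b", signals=True)], [Instr("x", waits=True), Instr("y")])`, instruction `x` is held until `b` has issued, giving the order `a, b, x, y`.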

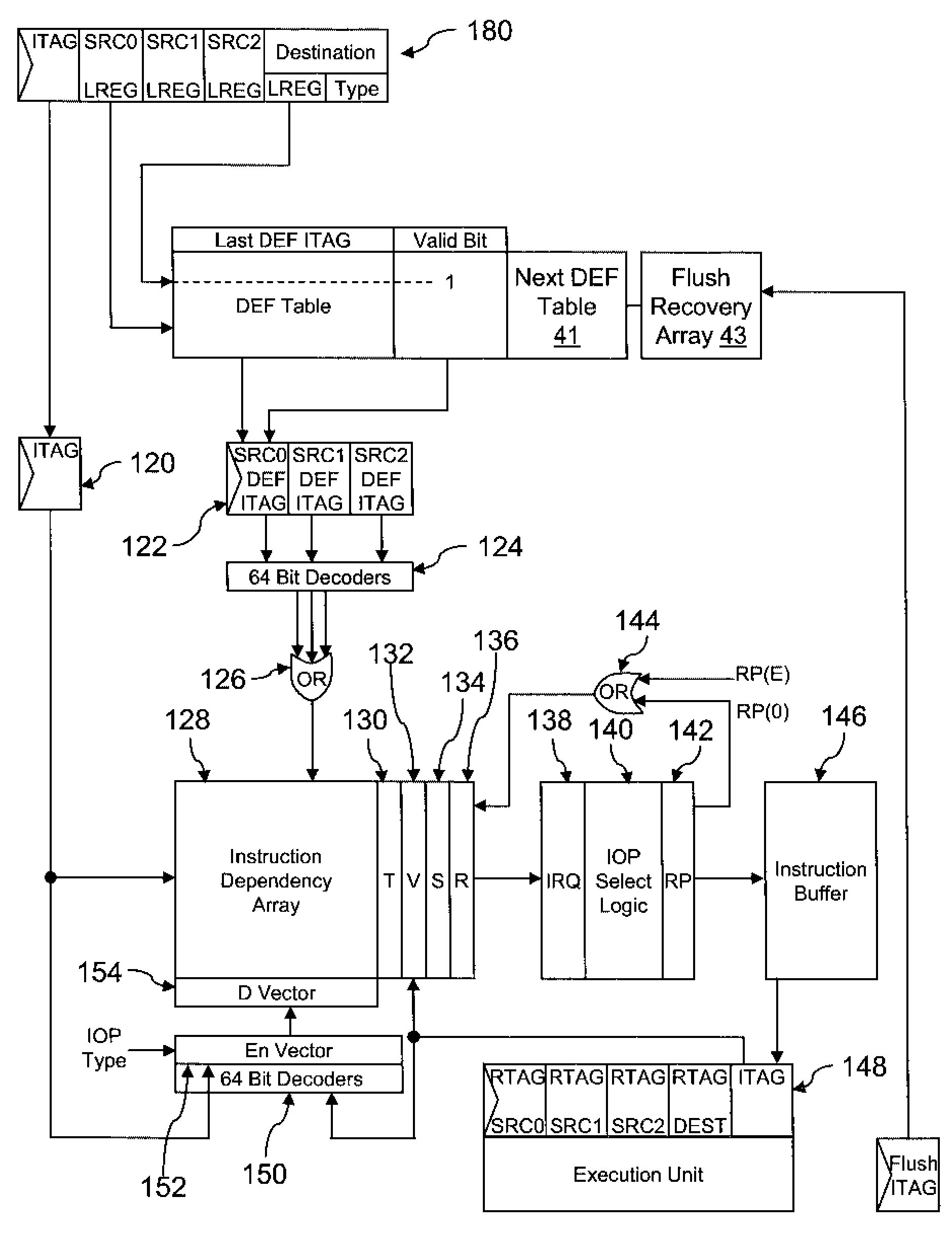

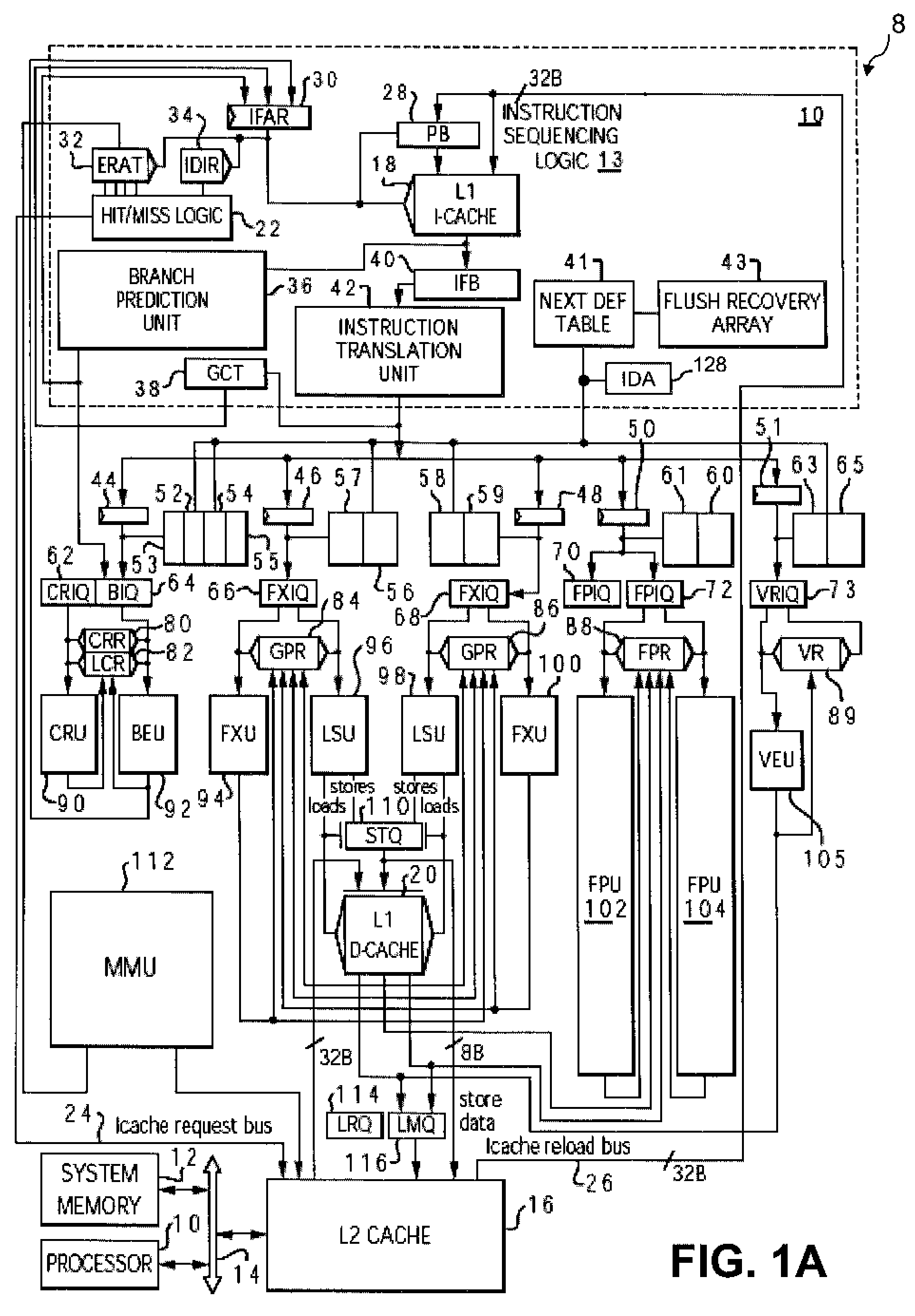

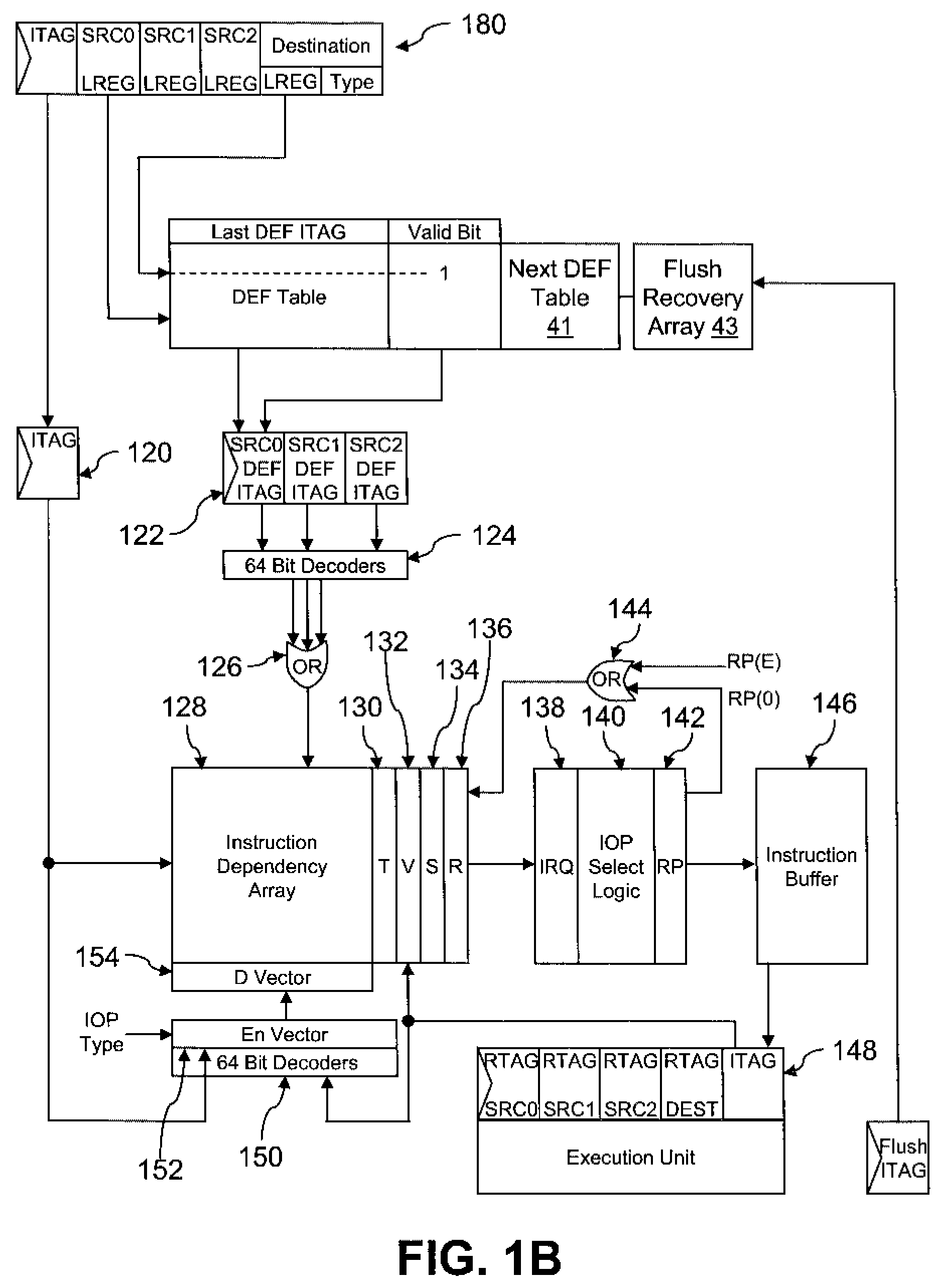

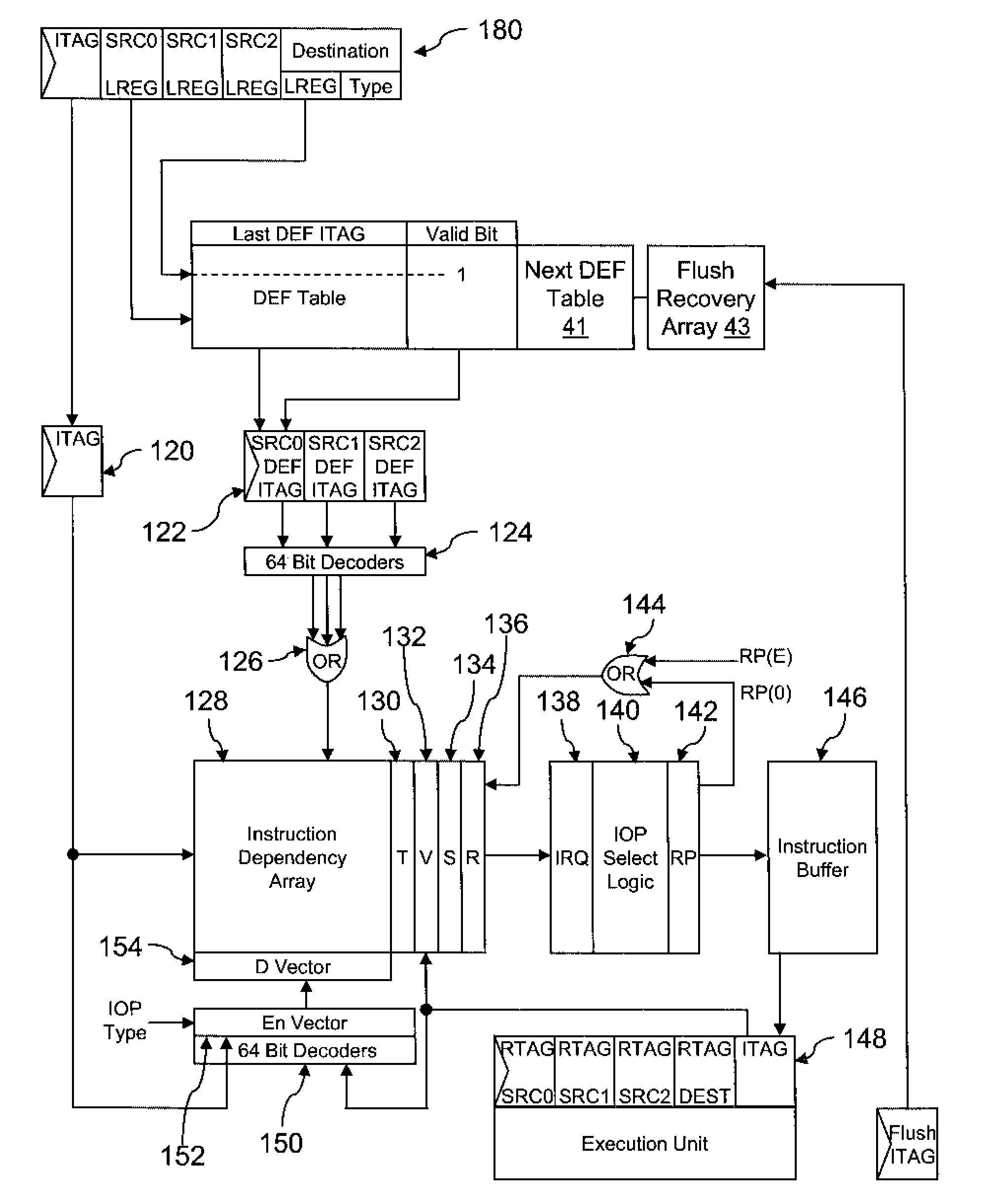

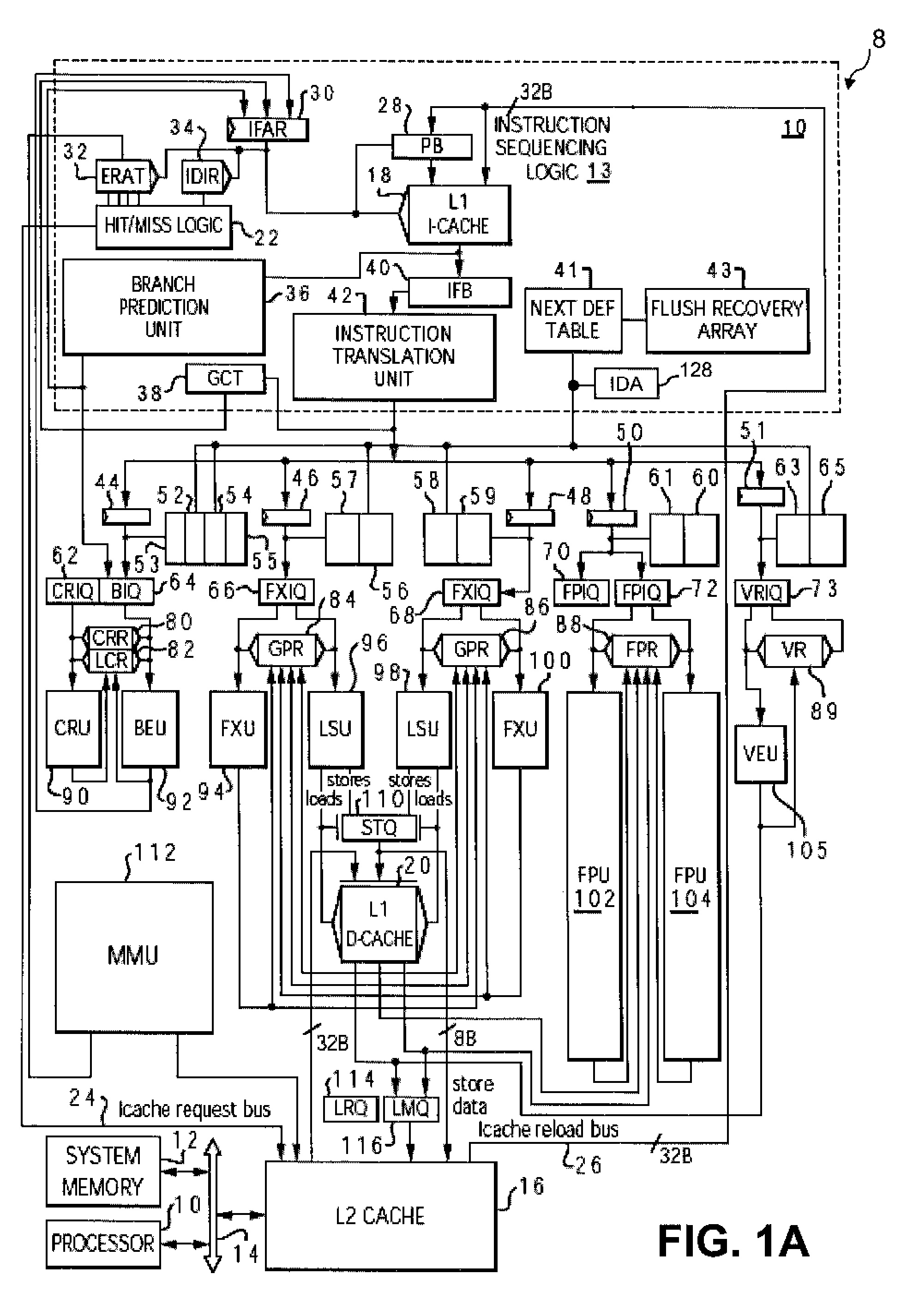

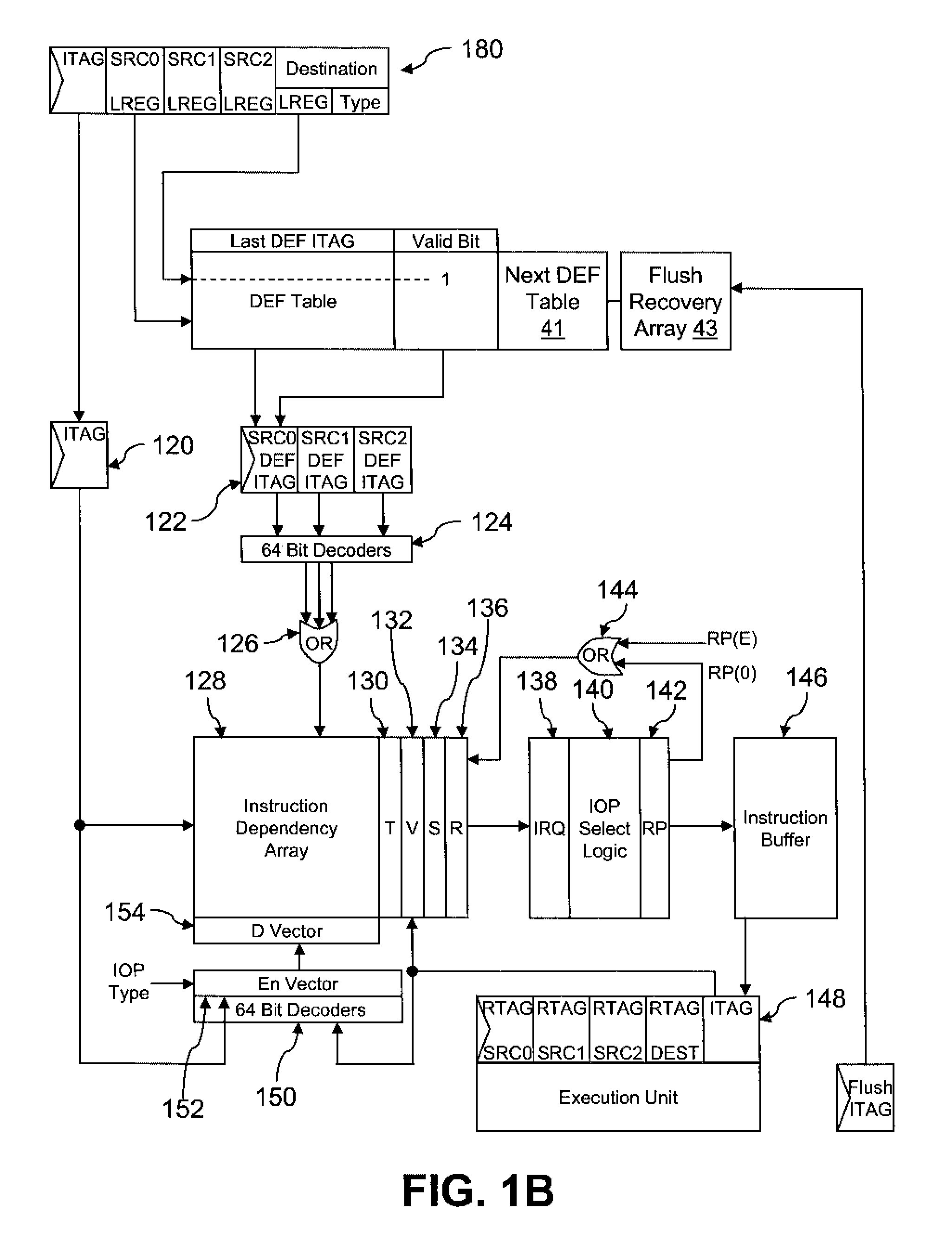

Method and system for tracking instruction dependency in an out-of-order processor

Inactive | US7711929B2 | Register arrangements; Digital computer details; Array data structure; Data preparation

A method of tracking instruction dependency in a processor issuing instructions speculatively includes recording in an instruction dependency array (IDA) an entry for each instruction that indicates data dependencies, if any, upon other active instructions. An output vector read out from the IDA indicates data readiness based upon which instructions have previously been selected for issue. The output vector is used to select and read out issue-ready instructions from an instruction buffer.

Owner:INT BUSINESS MASCH CORP
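
The IDA lookup can be pictured as bitmask arithmetic. The sketch below assumes one dependency row per instruction-buffer slot, with bit j of row i set when slot i depends on slot j. `ready_vector` and the oldest-first `pick_oldest` policy are hypothetical names and choices, not the patented circuit.

```python
def ready_vector(ida, valid_mask, selected_mask):
    """Return a bitmask of buffer slots that are ready to issue.

    ida[i] is the dependency row for slot i: bit j is set if slot i depends on slot j.
    valid_mask marks occupied slots; selected_mask marks slots already selected for issue.
    """
    ready = 0
    for slot, deps in enumerate(ida):
        occupied = (valid_mask >> slot) & 1
        already_selected = (selected_mask >> slot) & 1
        # A slot is ready once every slot it depends on has been selected for issue.
        if occupied and not already_selected and (deps & ~selected_mask) == 0:
            ready |= 1 << slot
    return ready


def pick_oldest(ready):
    """Pick the lowest-numbered ready slot (a hypothetical age order), or None."""
    if ready == 0:
        return None
    return (ready & -ready).bit_length() - 1  # index of the lowest set bit
```

For example, with rows `[0b0000, 0b0001, 0b0011, 0b0000]` and nothing selected yet, slots 0 and 3 are ready; once slot 0 is selected, slots 1 and 3 become ready while slot 2 still waits on slot 1.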

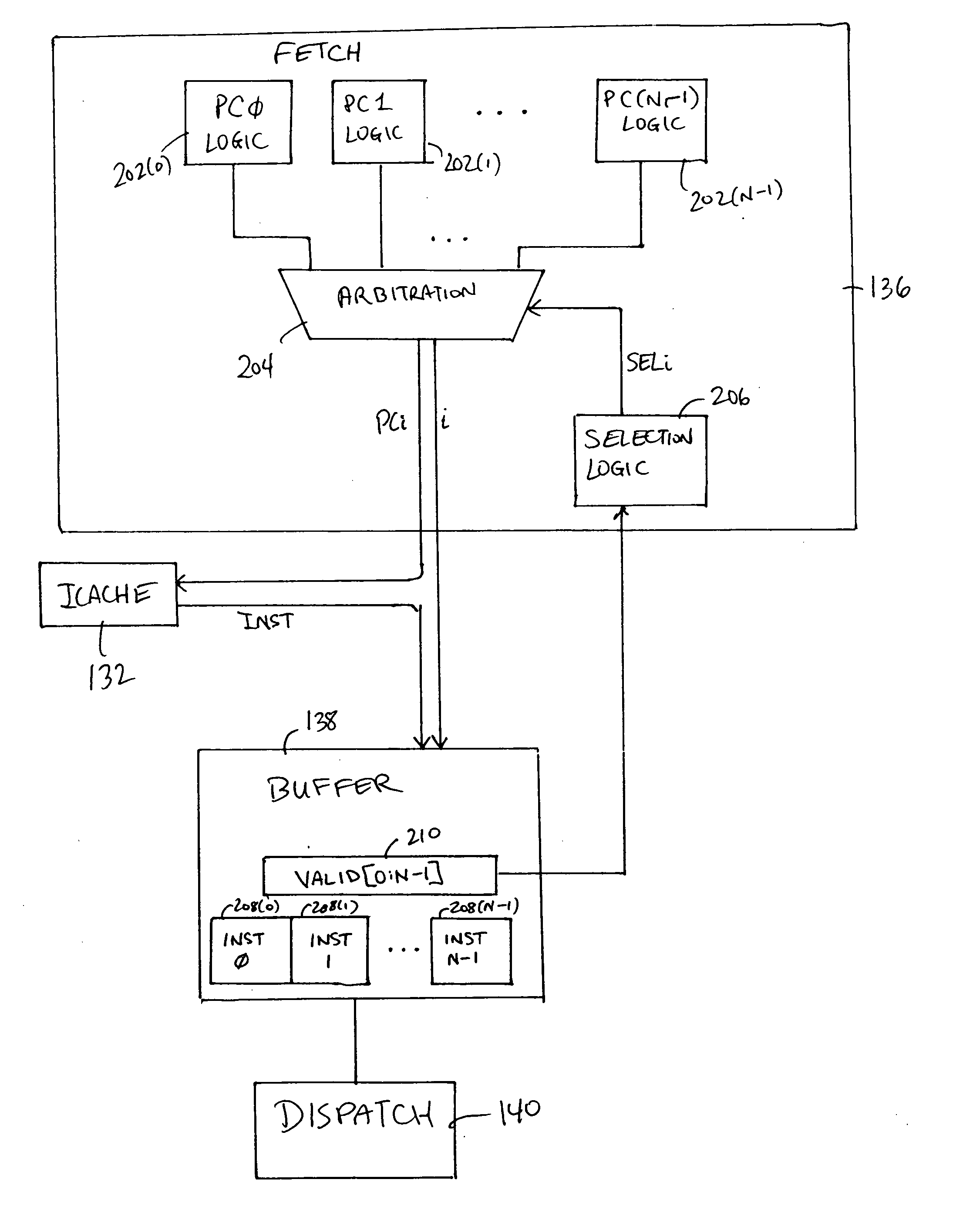

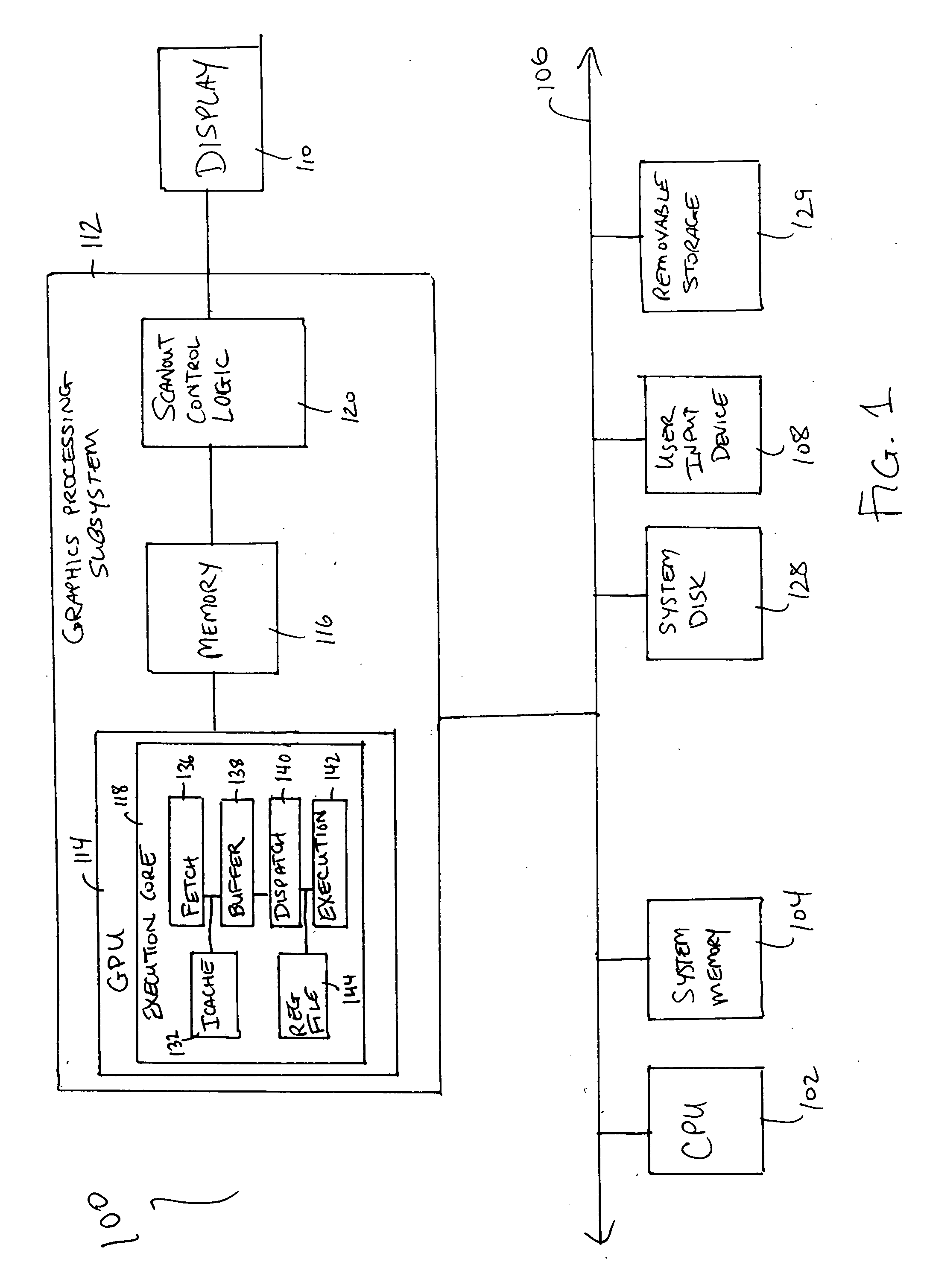

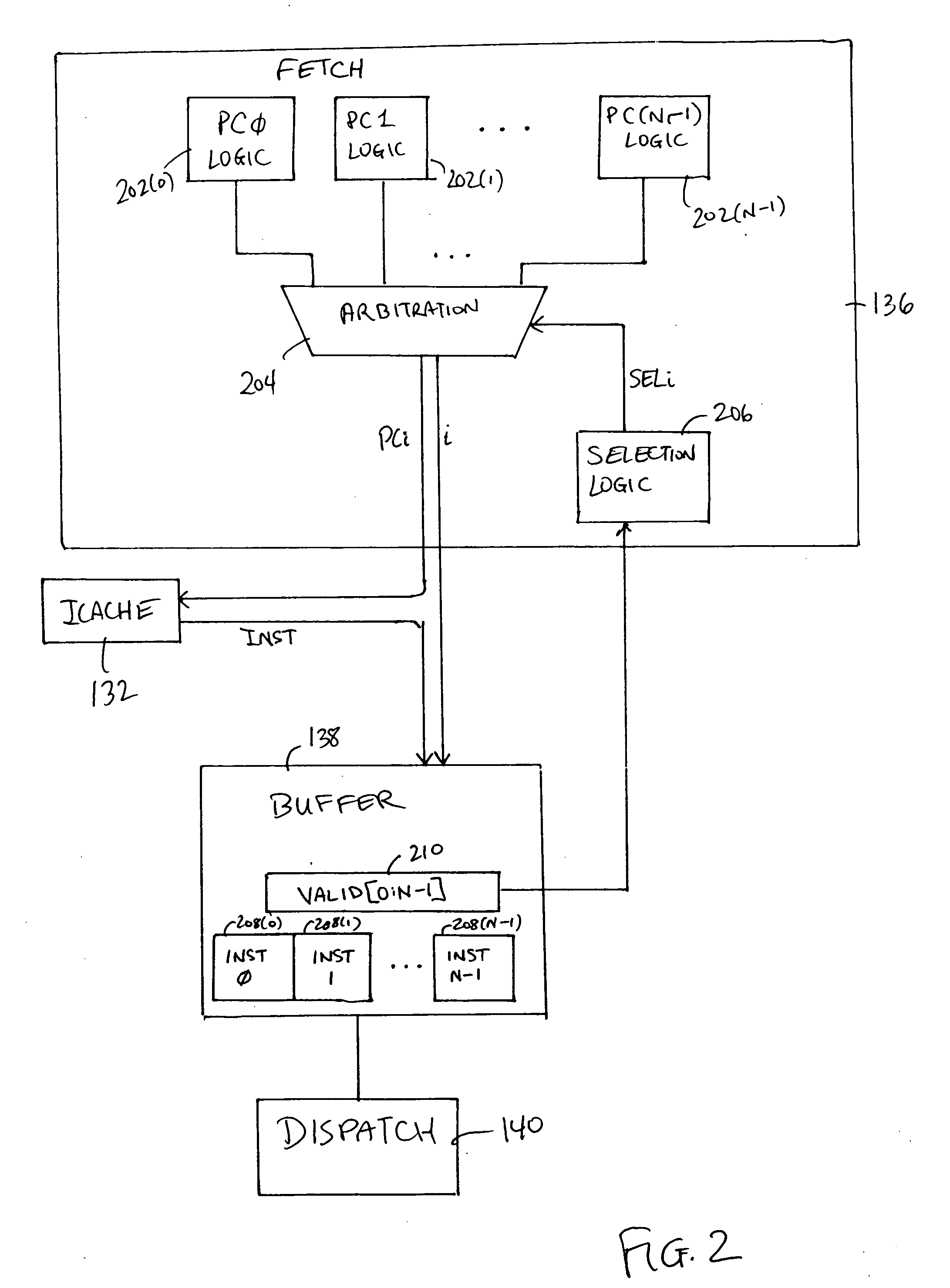

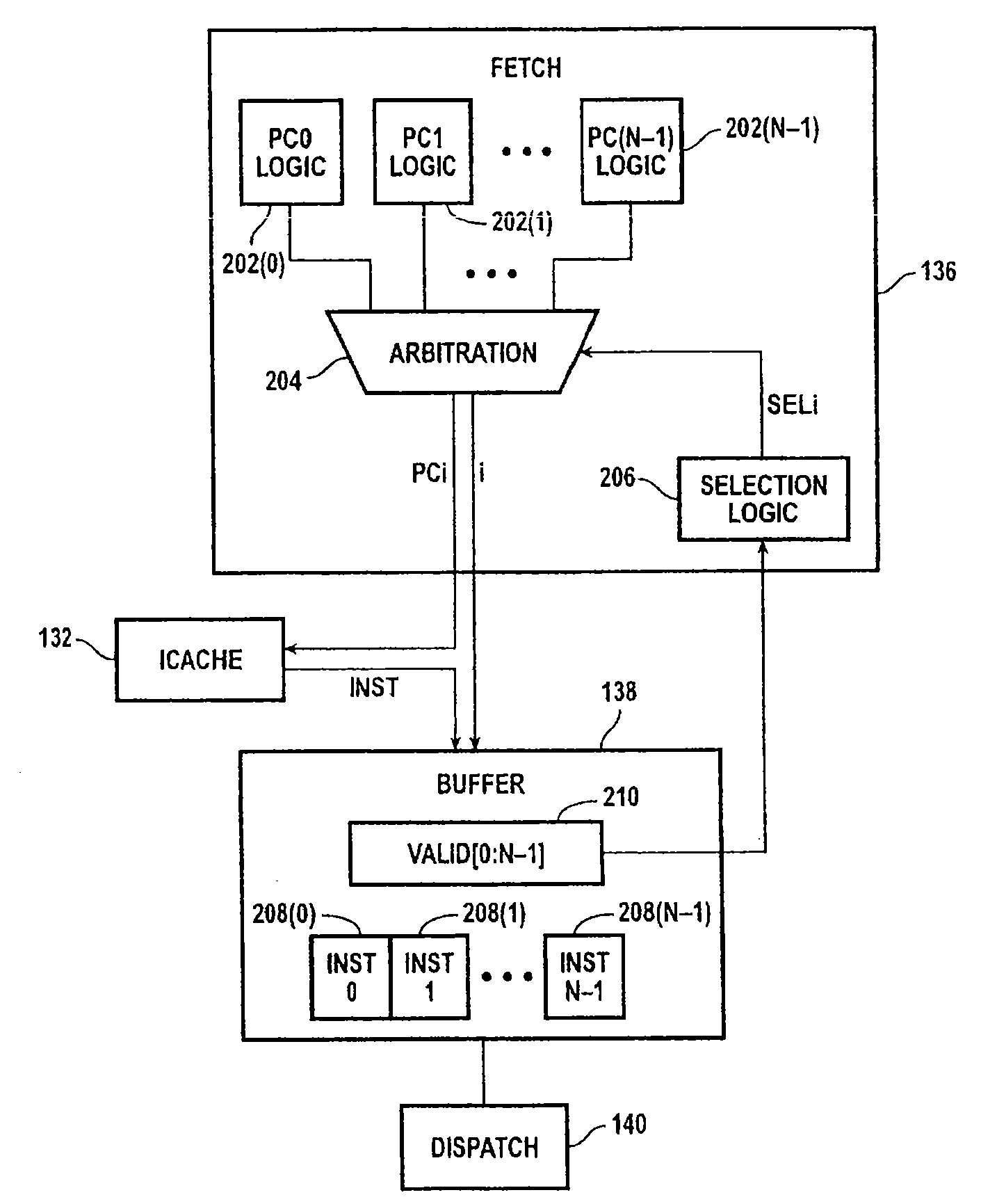

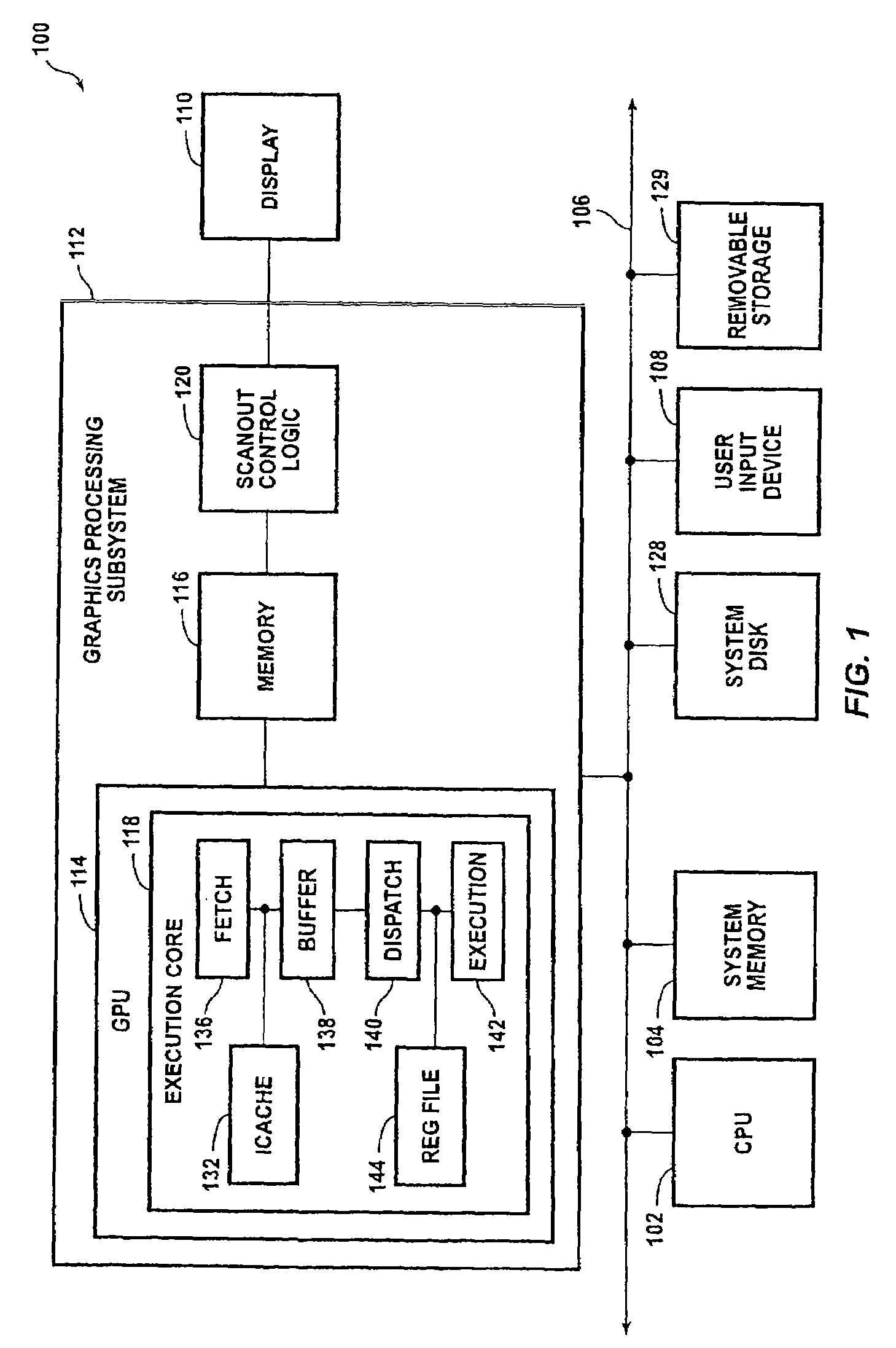

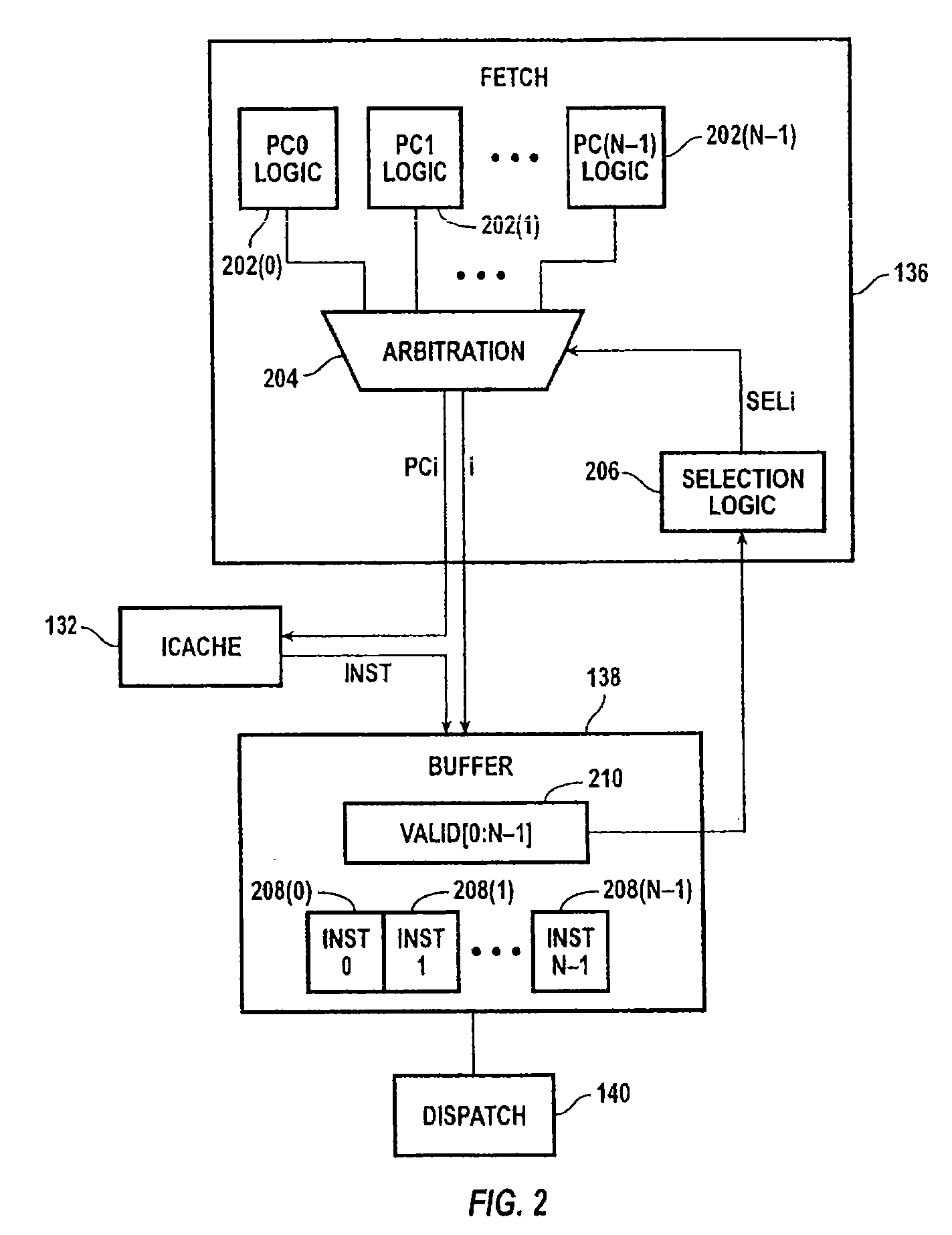

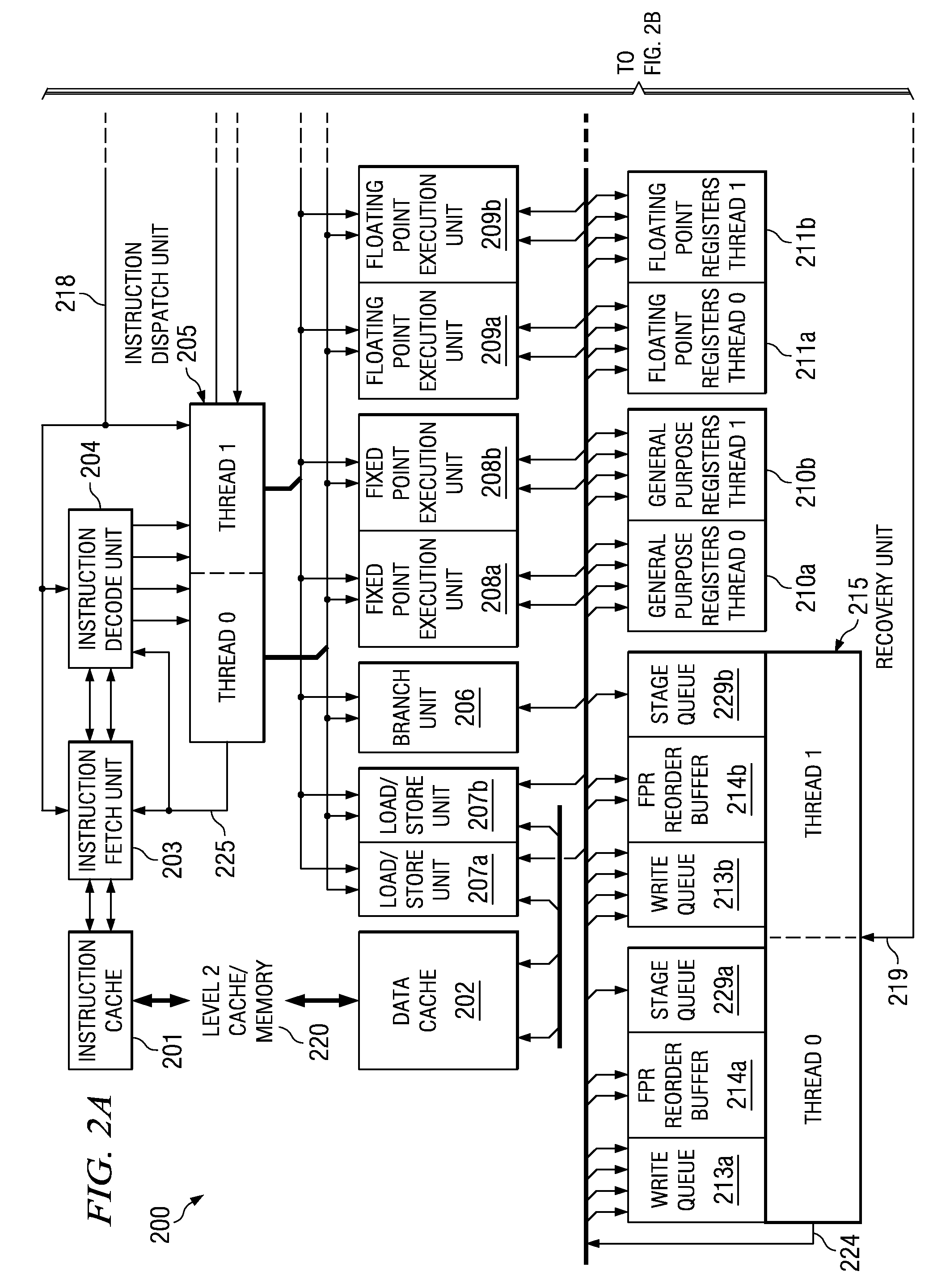

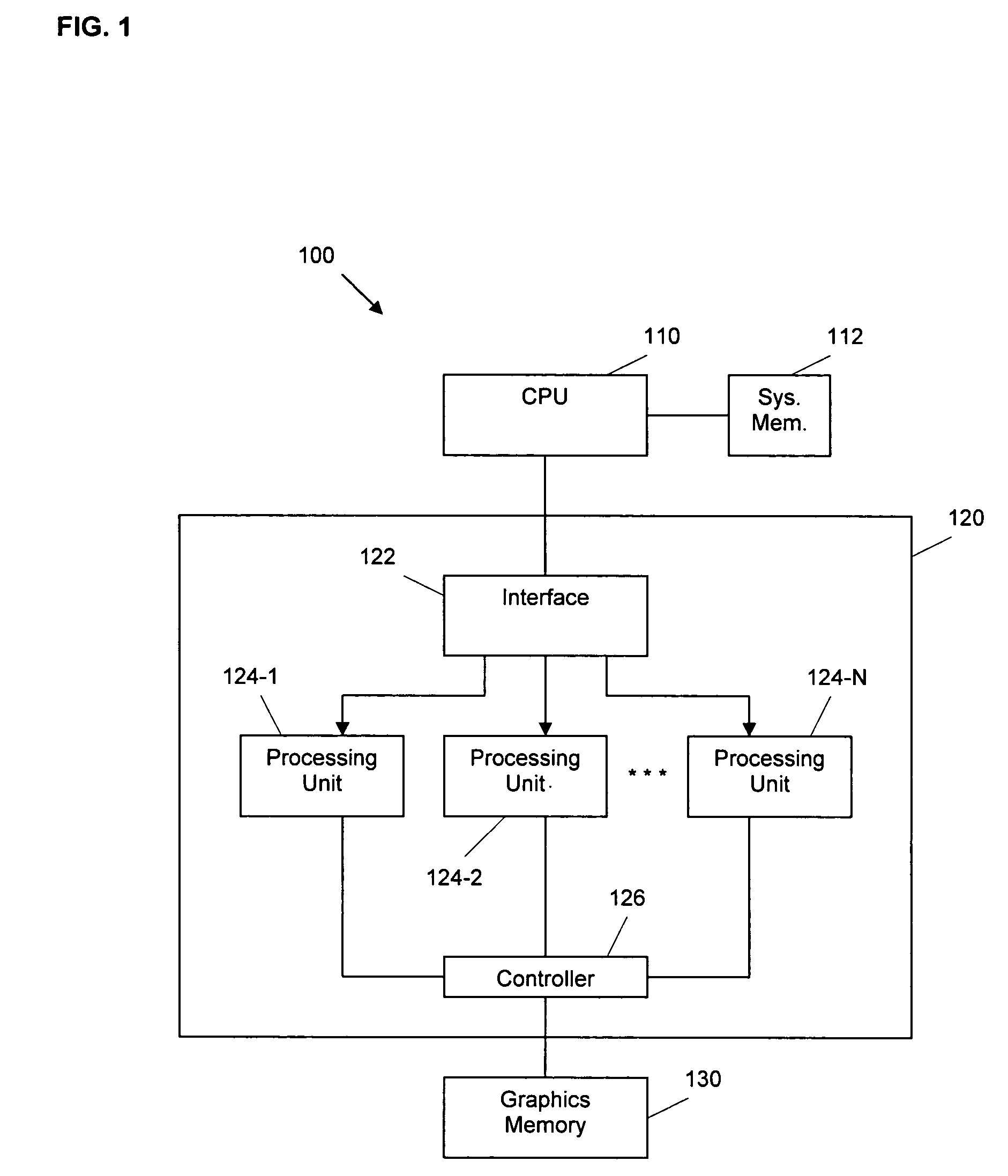

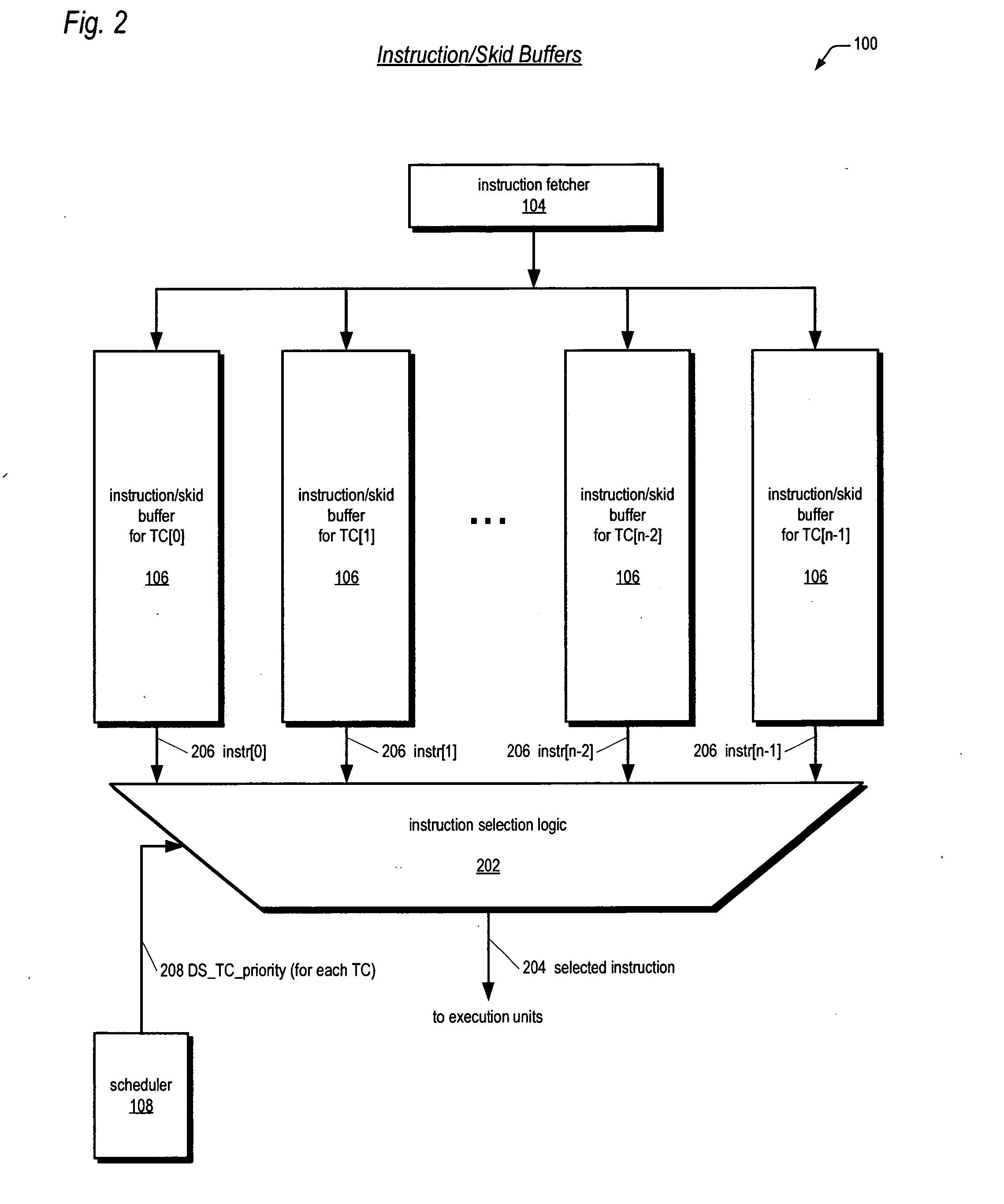

Across-thread out of order instruction dispatch in a multithreaded graphics processor

Active | US20050138328A1 | Digital computer details; Multiprogramming arrangements; Graphics; Instruction buffer

Instruction dispatch in a multithreaded microprocessor such as a graphics processor is not constrained by an order among the threads. Instructions are fetched into an instruction buffer that is configured to store an instruction from each of the threads. A dispatch circuit determines which instructions in the buffer are ready to execute and may issue any ready instruction for execution. An instruction from one thread may be issued prior to an instruction from another thread regardless of which instruction was fetched into the buffer first. Once an instruction from a particular thread has issued, the fetch circuit fills the available buffer location with the following instruction from that thread.

Owner:NVIDIA CORP
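
A rough Python model of the dispatch behavior described above: the buffer holds one instruction per thread, any ready instruction may issue regardless of fetch order, and the freed slot is refilled from the same thread. The `is_ready` callback is a hypothetical stand-in for whatever readiness checks the hardware performs, and issuing at most one instruction per cycle is an assumption made to keep the example short.

```python
from collections import deque


def dispatch(programs, is_ready, cycles):
    """Simulate an instruction buffer holding one instruction per thread.

    programs: list of per-thread instruction lists.
    is_ready(thread, instr, cycle): hypothetical readiness hook.
    Returns a log of (cycle, thread, instr) tuples.
    """
    pending = [deque(p) for p in programs]
    slots = [q.popleft() if q else None for q in pending]  # one buffered instruction per thread
    log = []
    for cycle in range(cycles):
        # Scan every thread's slot; the order in which threads were fetched plays no part.
        for thread, instr in enumerate(slots):
            if instr is not None and is_ready(thread, instr, cycle):
                log.append((cycle, thread, instr))
                # Refill the freed slot with the next instruction from the same thread.
                slots[thread] = pending[thread].popleft() if pending[thread] else None
                break  # issue at most one instruction per cycle
    return log
```

The scan starts at thread 0 every cycle, so this sketch uses a fixed priority among ready threads; a real arbiter would likely rotate or age-order that choice.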

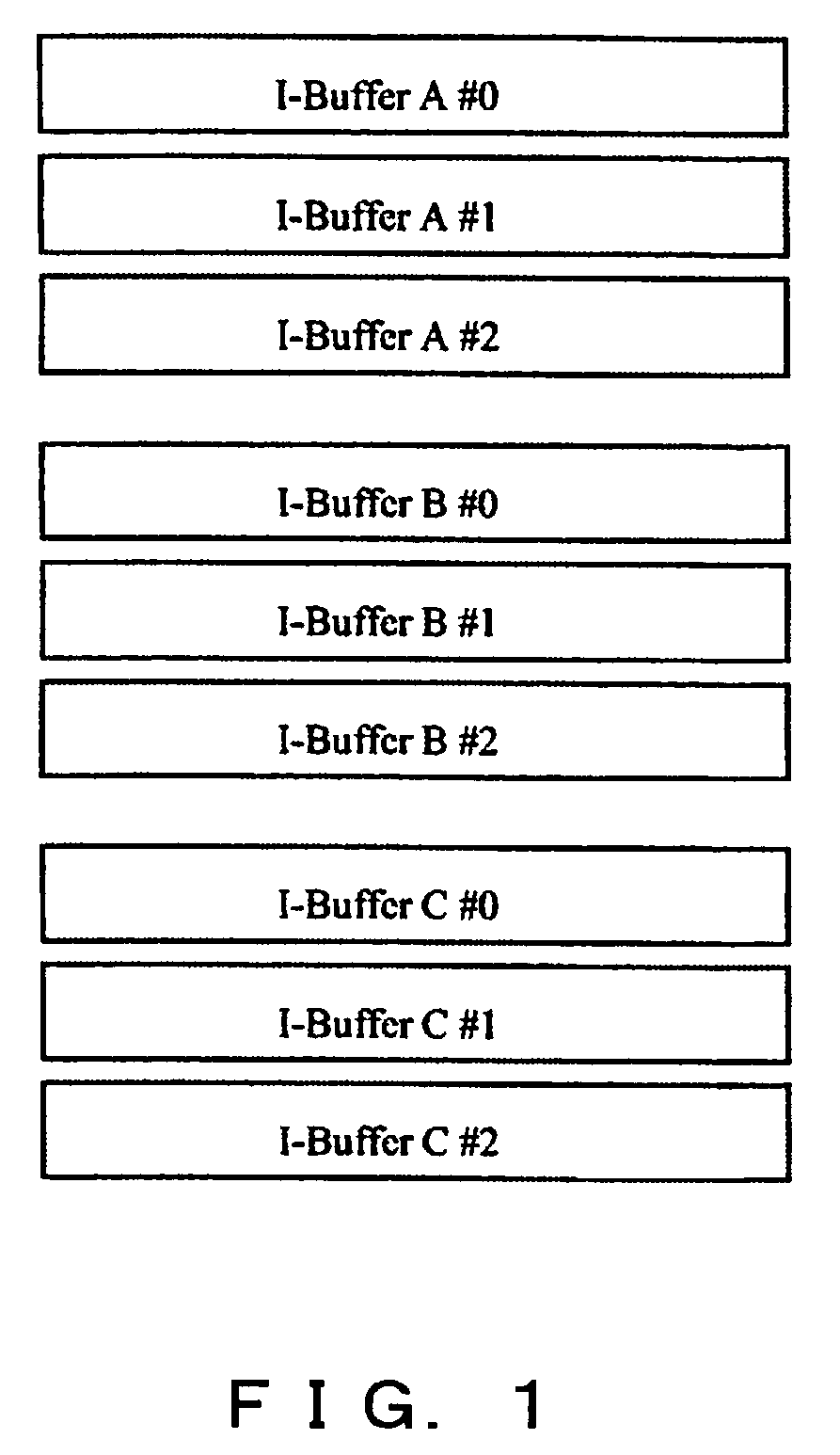

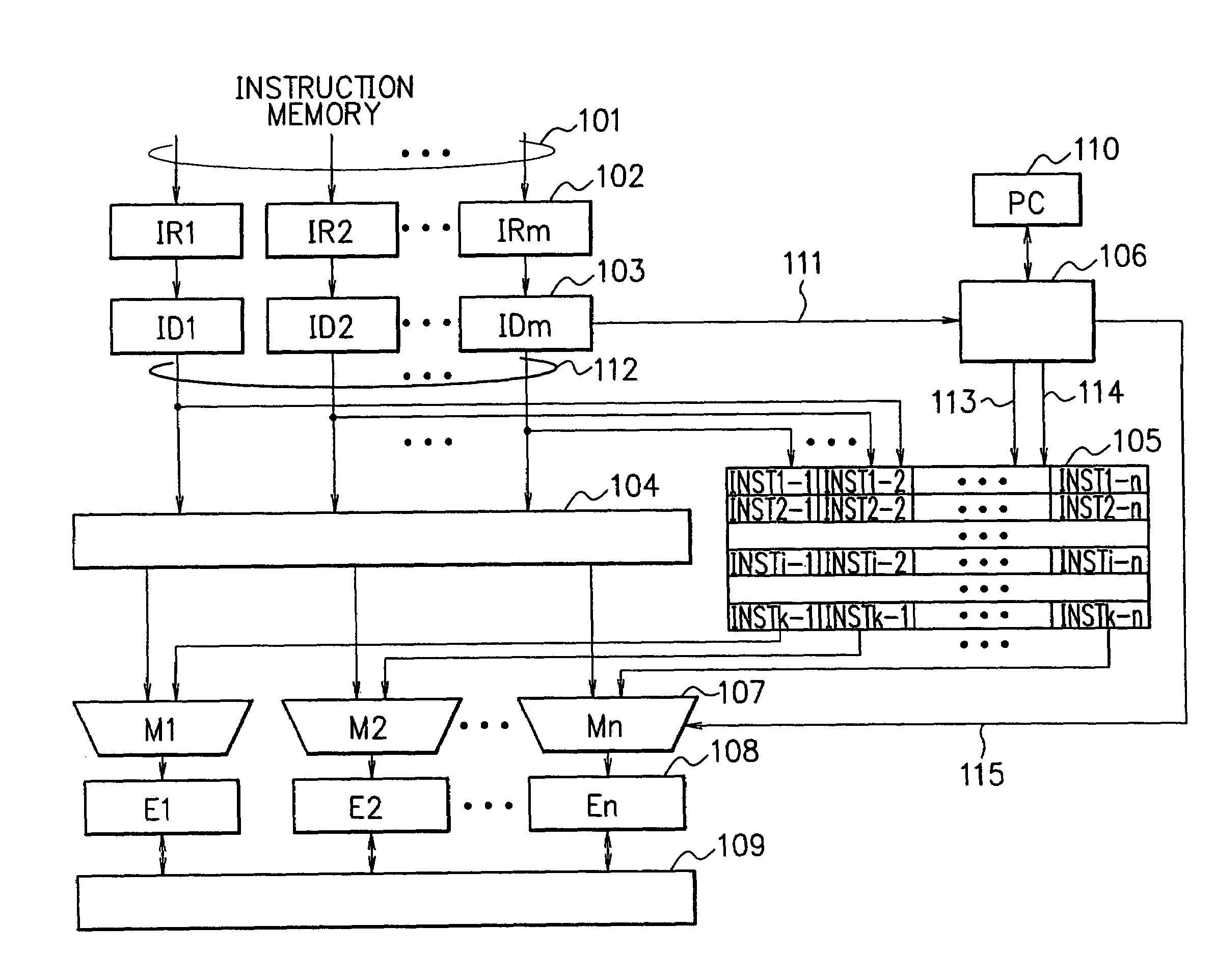

Vector crossing multithread processing method and vector crossing multithread microprocessor

Inactive | CN102156637A | Improve peak performance; Give full play to computing power; Multiprogramming arrangements; Concurrent instruction execution; Hardware structure; Thread scheduling

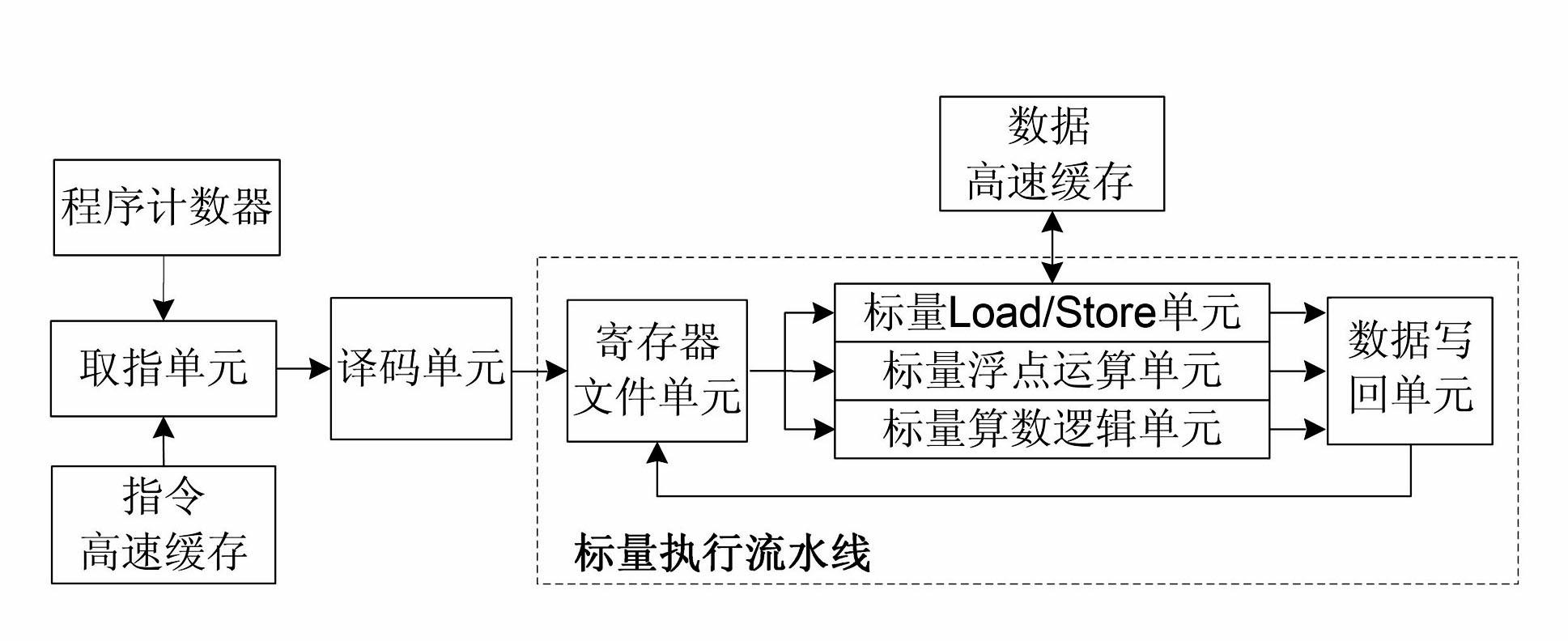

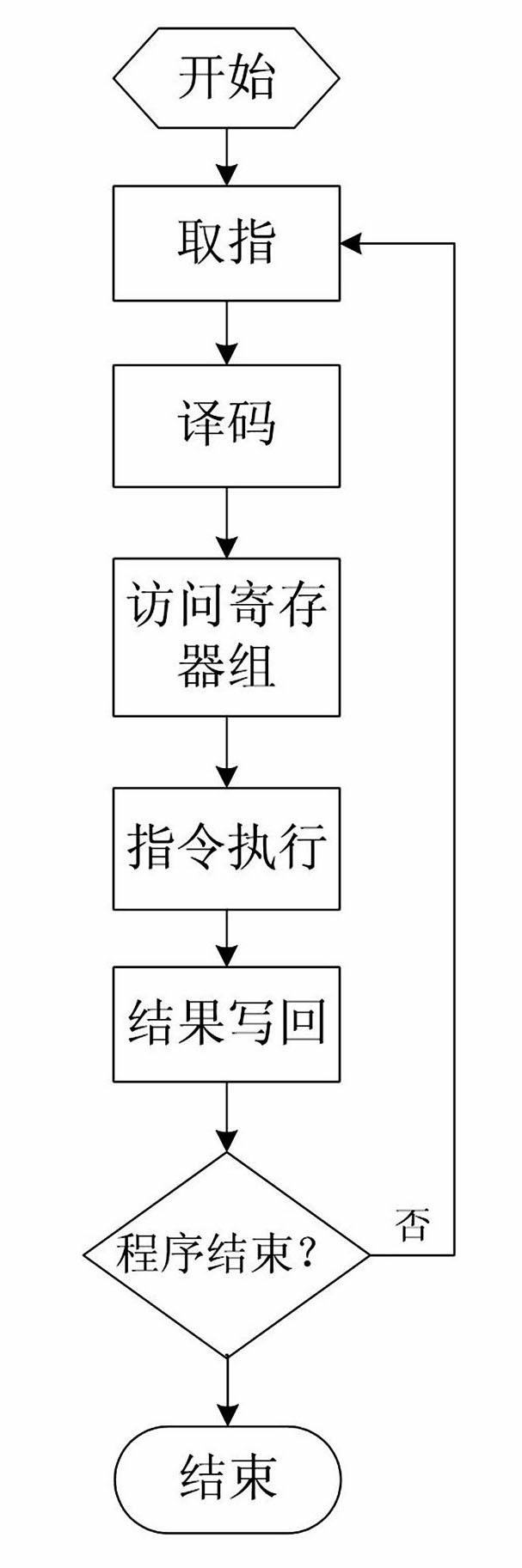

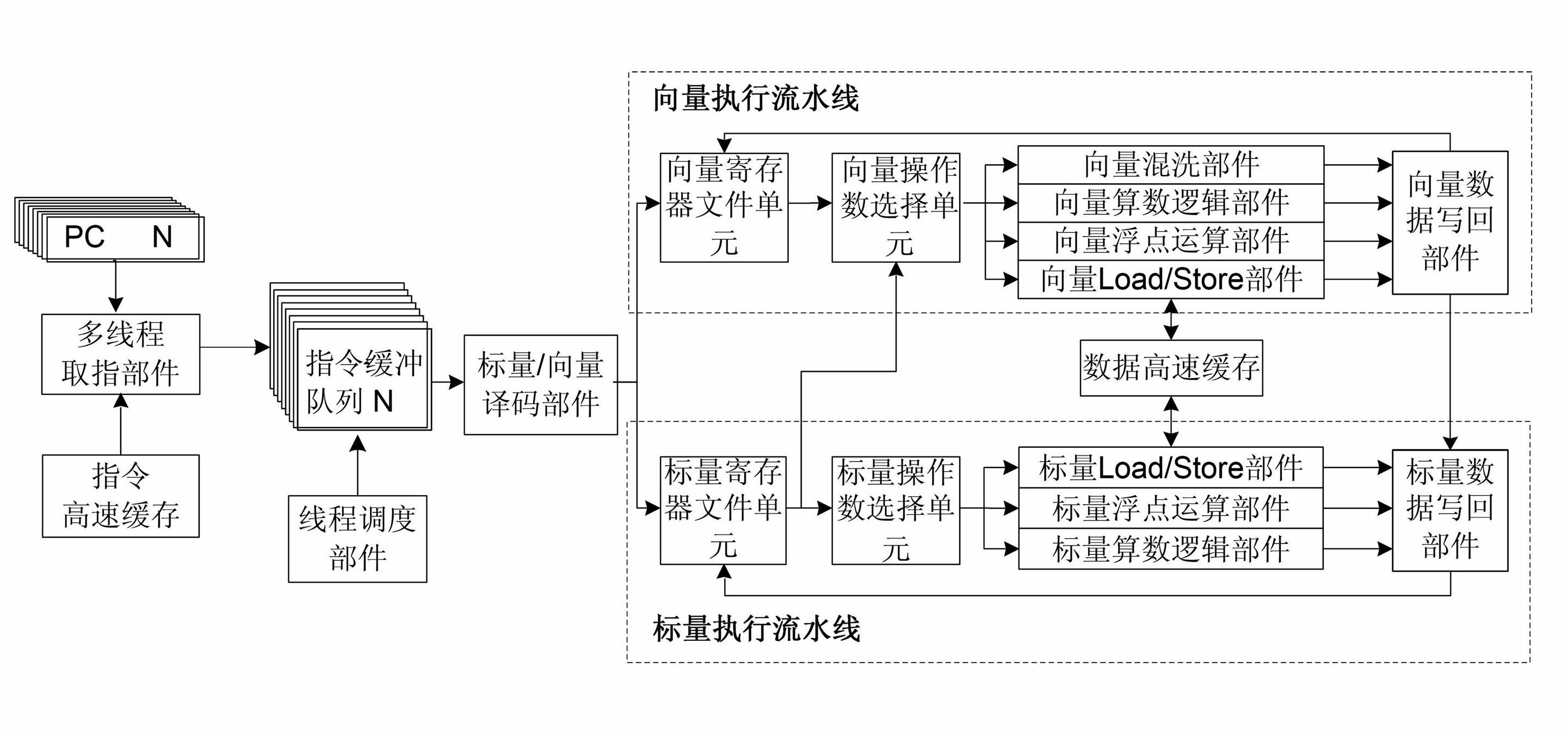

The invention discloses a vector crossing multithread processing method and a vector crossing multithread microprocessor. The processing method comprises the following steps: a multithread instruction fetch unit selects one of N vector threads, reads an instruction for it, and stores the instruction in that thread's instruction buffer array; a thread scheduling unit selects one of the N instruction buffer arrays and takes an instruction from it for decoding; and the decoded instruction is sent to a vector execution pipeline or a scalar execution pipeline for execution. The method can be implemented in hardware by the vector crossing multithread microprocessor. By combining vector processing with multithreading, the method and microprocessor offer a simple hardware structure, strong computing capability, and good compatibility and extensibility.

Owner:NAT UNIV OF DEFENSE TECH

Across-thread out of order instruction dispatch in a multithreaded graphics processor

Active | US7310722B2 | Digital computer details; Multiprogramming arrangements; Graphics; Scheduling instructions

Instruction dispatch in a multithreaded microprocessor such as a graphics processor is not constrained by an order among the threads. Instructions are fetched into an instruction buffer that is configured to store an instruction from each of the threads. A dispatch circuit determines which instructions in the buffer are ready to execute and may issue any ready instruction for execution. An instruction from one thread may be issued prior to an instruction from another thread regardless of which instruction was fetched into the buffer first. Once an instruction from a particular thread has issued, the fetch circuit fills the available buffer location with the following instruction from that thread.

Owner:NVIDIA CORP

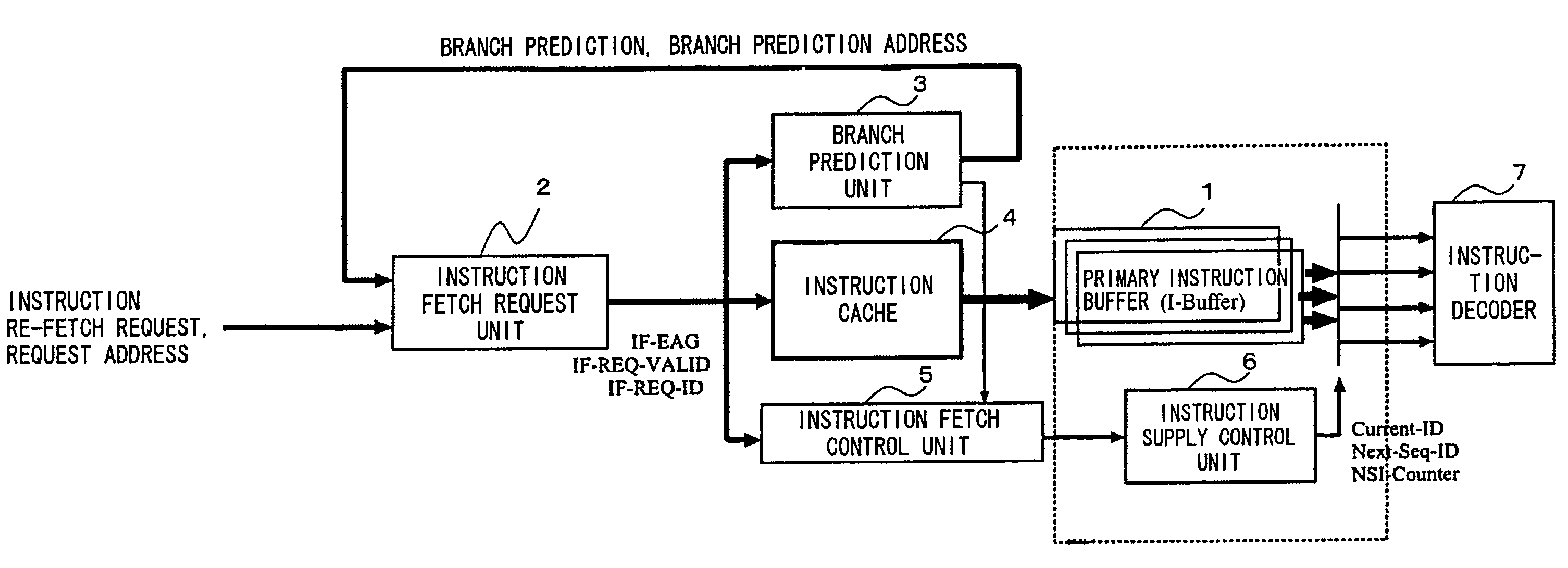

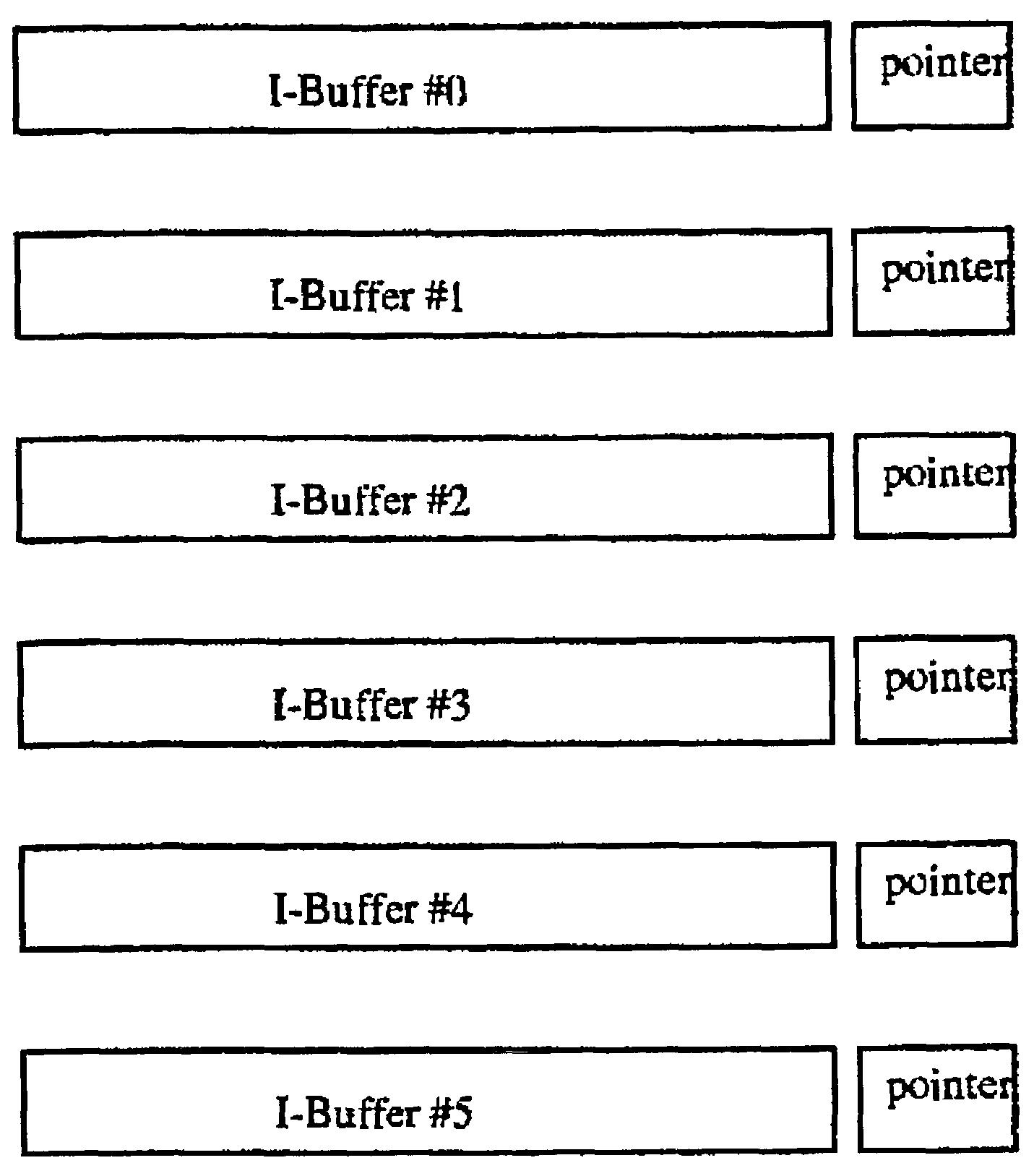

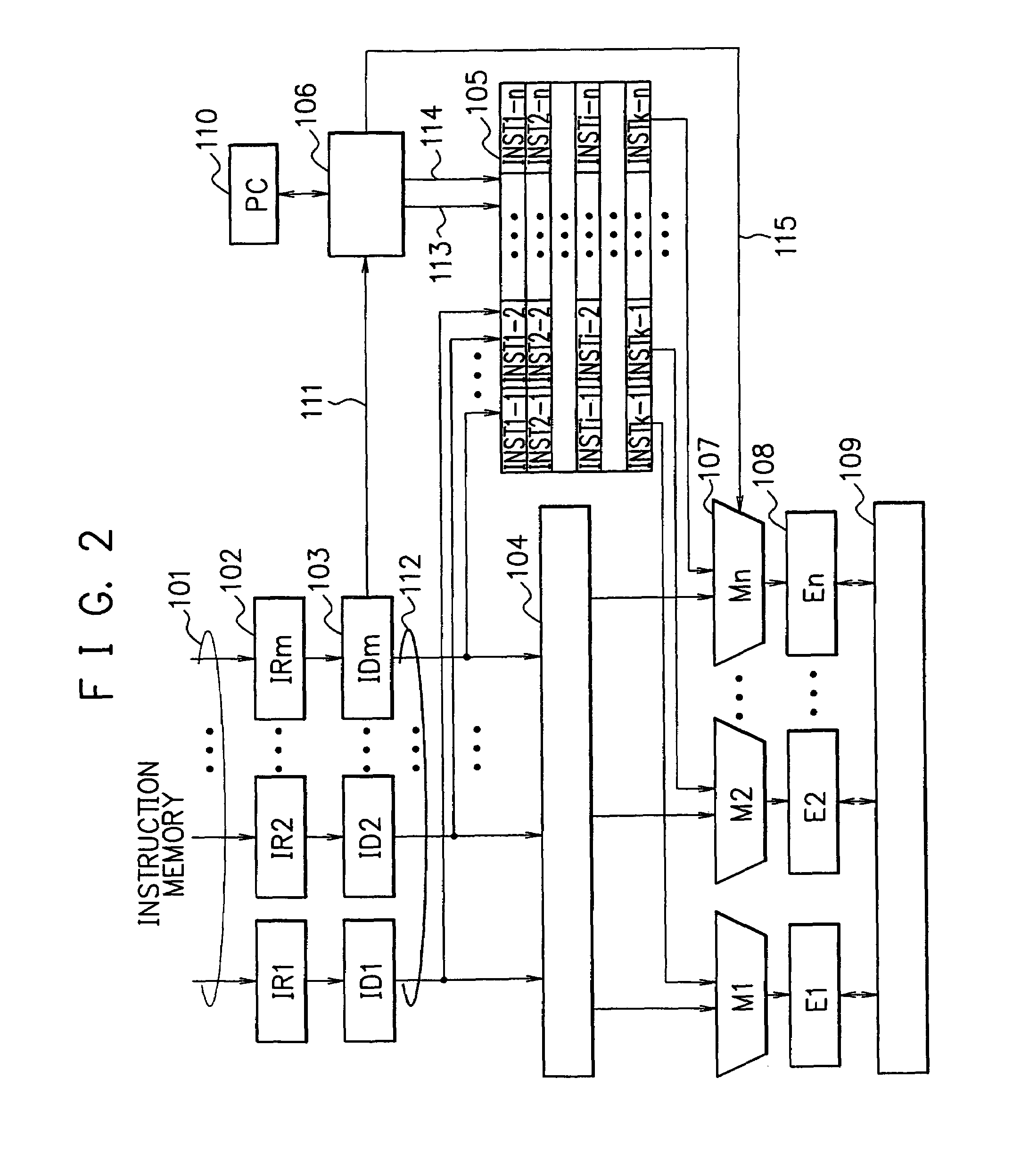

Instruction fetch control device and method thereof with dynamic configuration of instruction buffers

Inactive | US7783868B2 | Improve efficiency; Instruction compact; Digital computer details; Concurrent instruction execution; Parallel computing; Execution unit

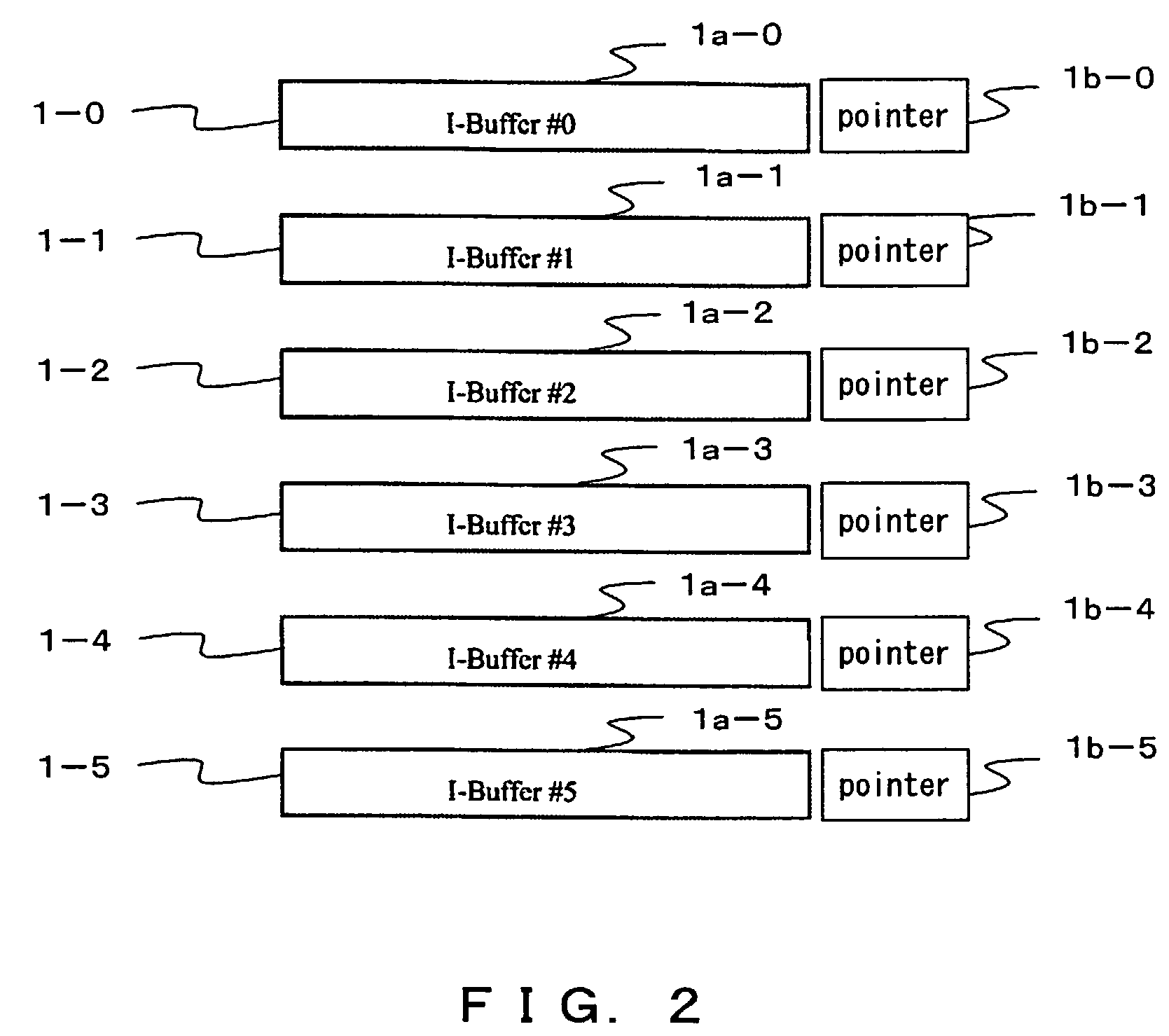

An instruction fetch control device supplies instructions to an instruction execution unit. The device comprises a plurality of instruction buffers that store instruction strings to be supplied to the instruction execution unit, and a designation unit that designates, for each of the instruction buffers, the instruction buffer storing the instruction string to be supplied next.

Owner:FUJITSU LTD
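
One way to picture the designation unit is as a per-buffer "next" pointer that the fetch logic follows. The sketch below is a model under stated assumptions: `buffers`, `next_buffer`, and `supply_order` are hypothetical names, and the `limit` argument exists only so the walk always terminates.

```python
def supply_order(buffers, next_buffer, start, limit):
    """Follow the designation chain and return instructions in supply order.

    buffers: dict mapping buffer id -> list of instructions held in that buffer.
    next_buffer: dict mapping buffer id -> id of the buffer to supply next (None ends the chain).
    limit: cap on the number of instructions returned, so a chain that loops still terminates.
    """
    out = []
    current = start
    while current is not None and len(out) < limit:
        for instr in buffers[current]:
            if len(out) == limit:
                break
            out.append(instr)
        current = next_buffer[current]  # the designation for this buffer picks the successor
    return out
```

Because any buffer can be designated as the successor, pointing a chain back at an earlier buffer makes this sketch replay that buffer's instructions, which is one way such a structure can hold a loop.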

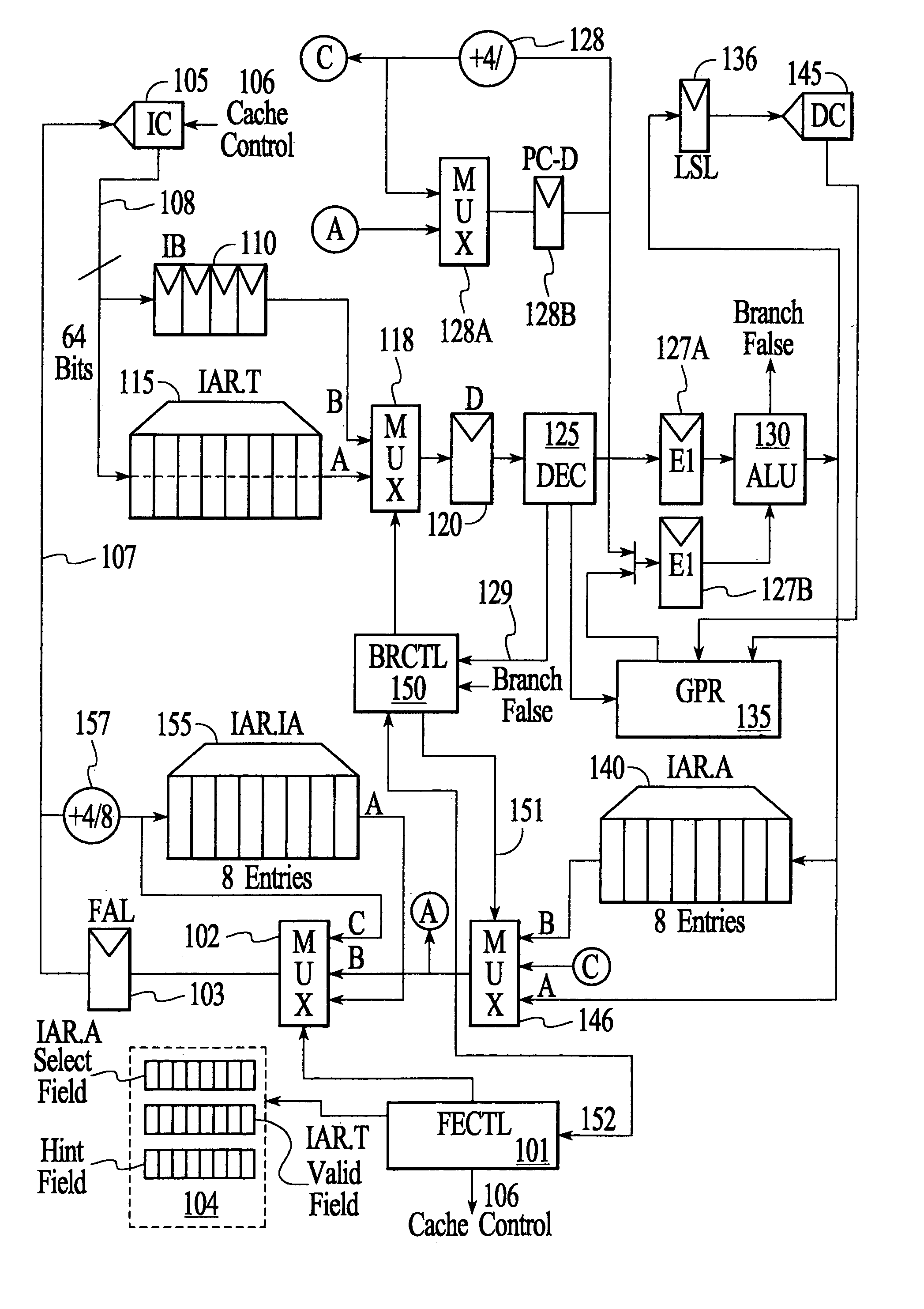

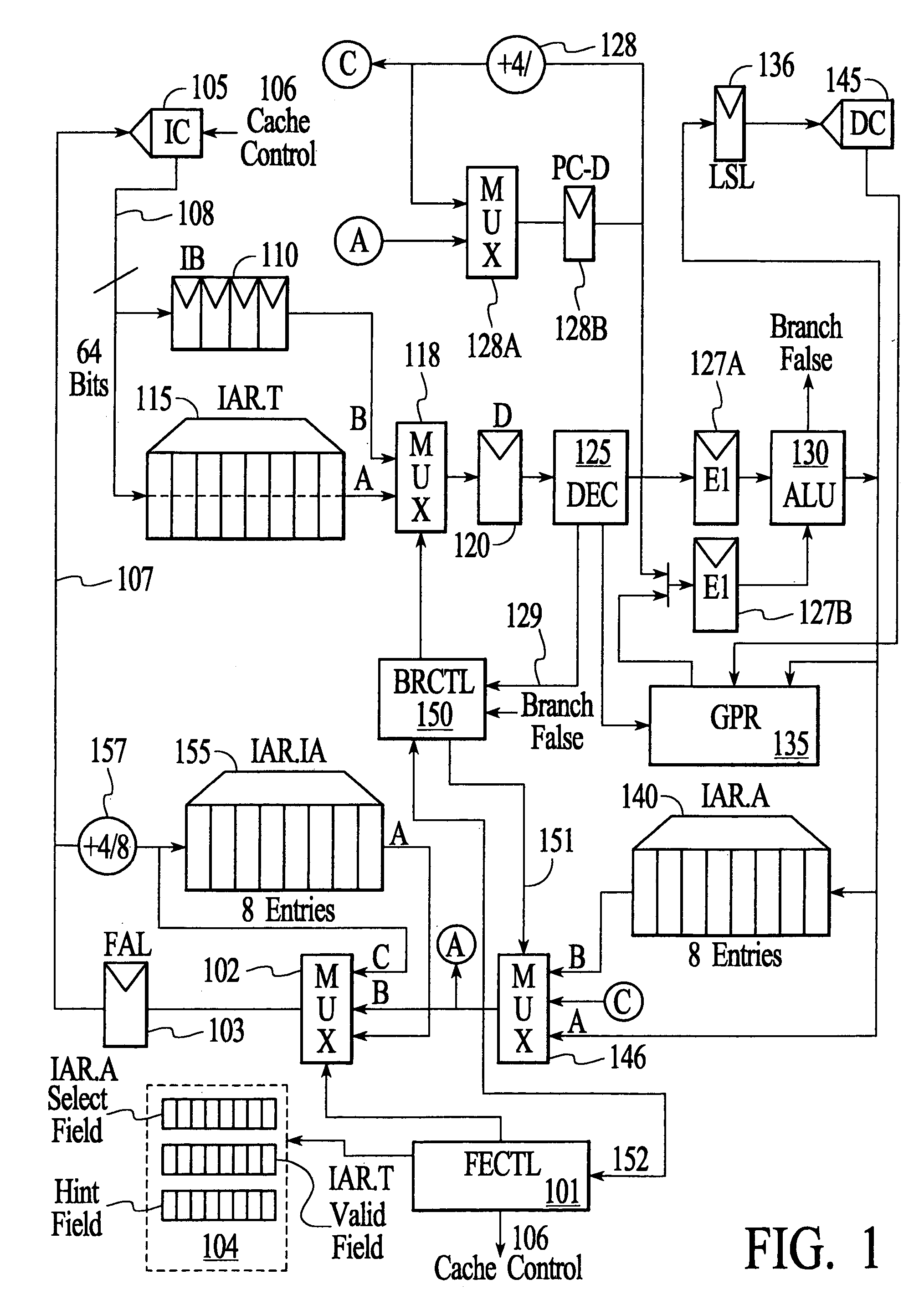

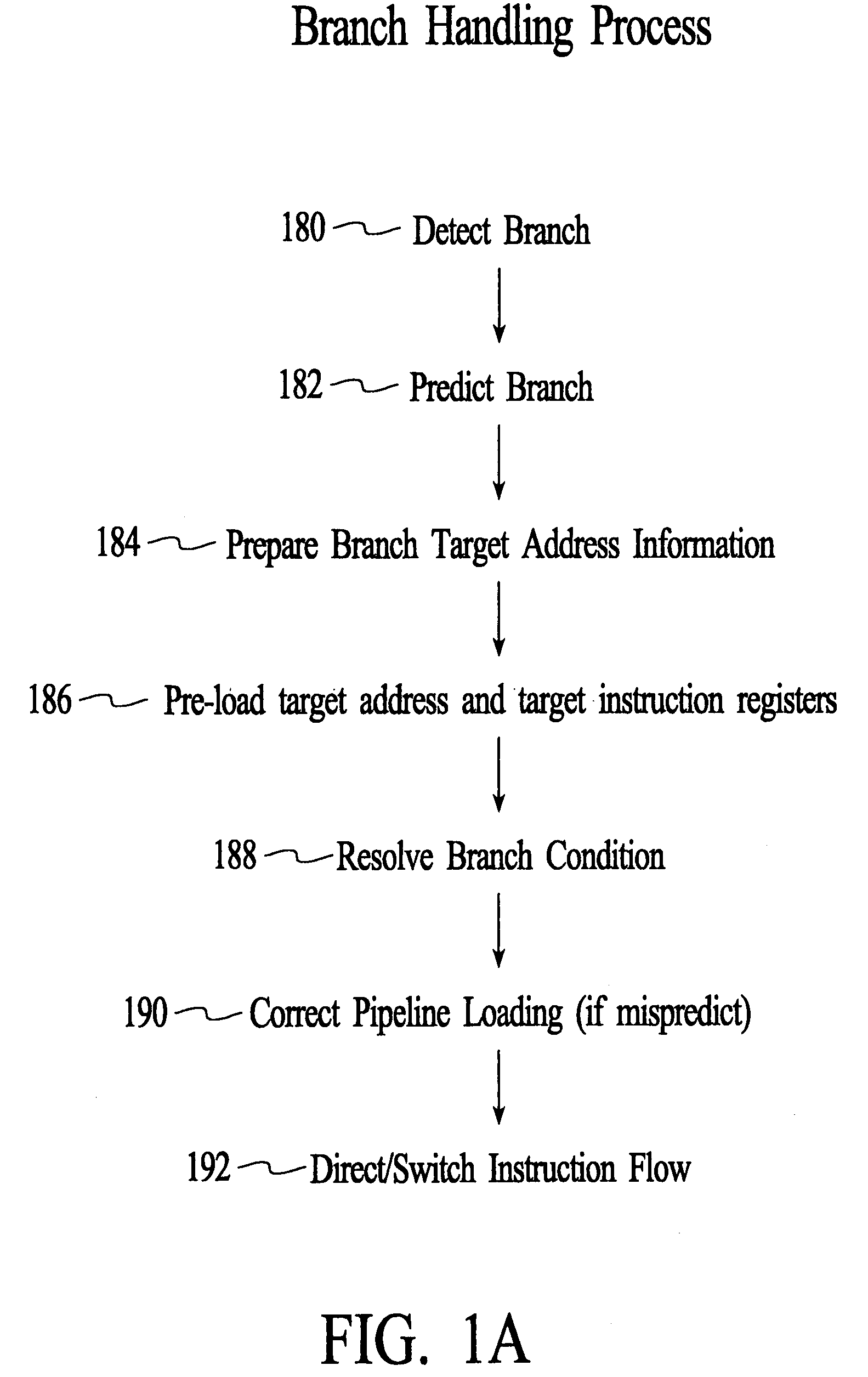

Method and apparatus for dynamically managing instruction buffer depths for non-predicted branches

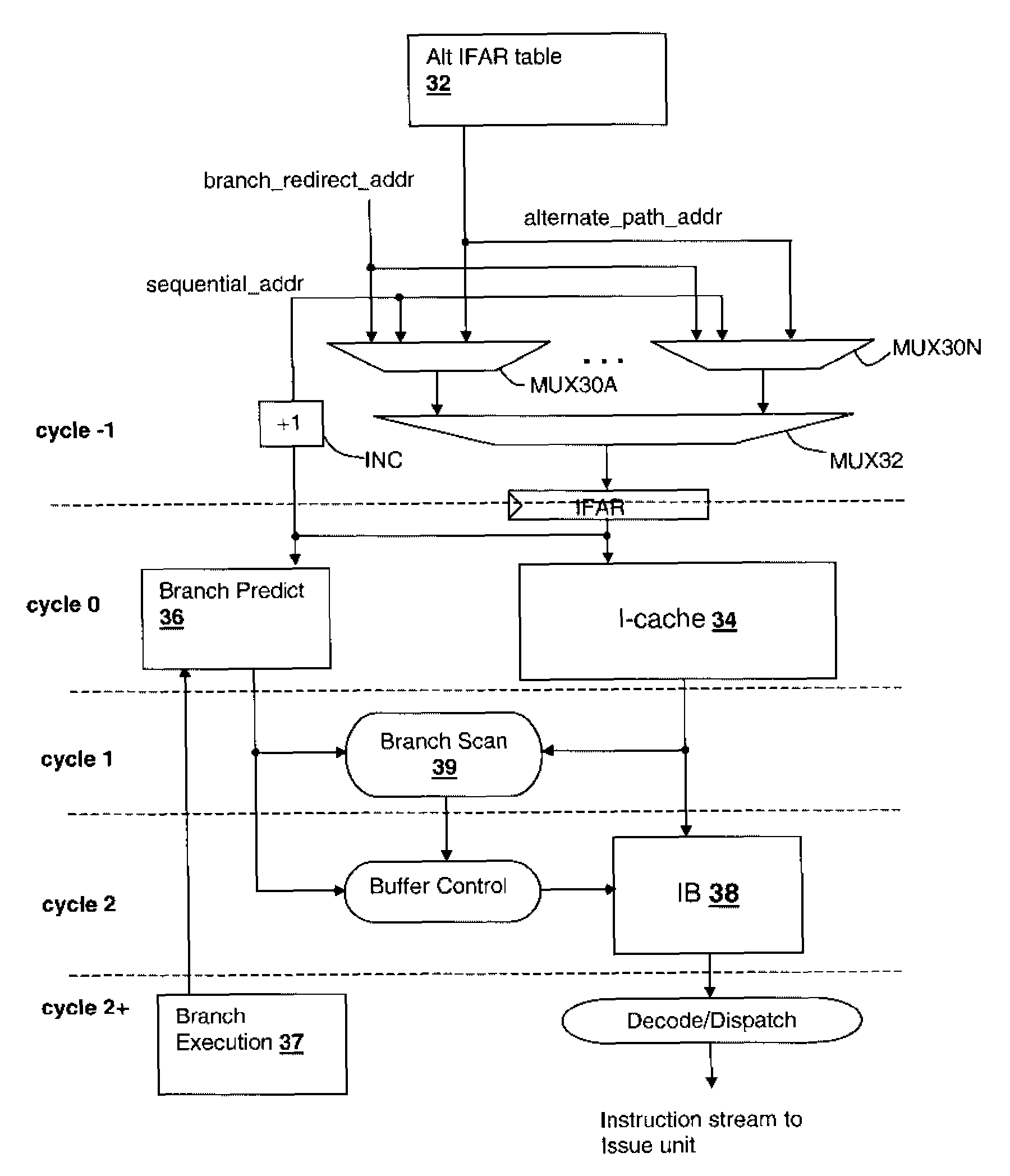

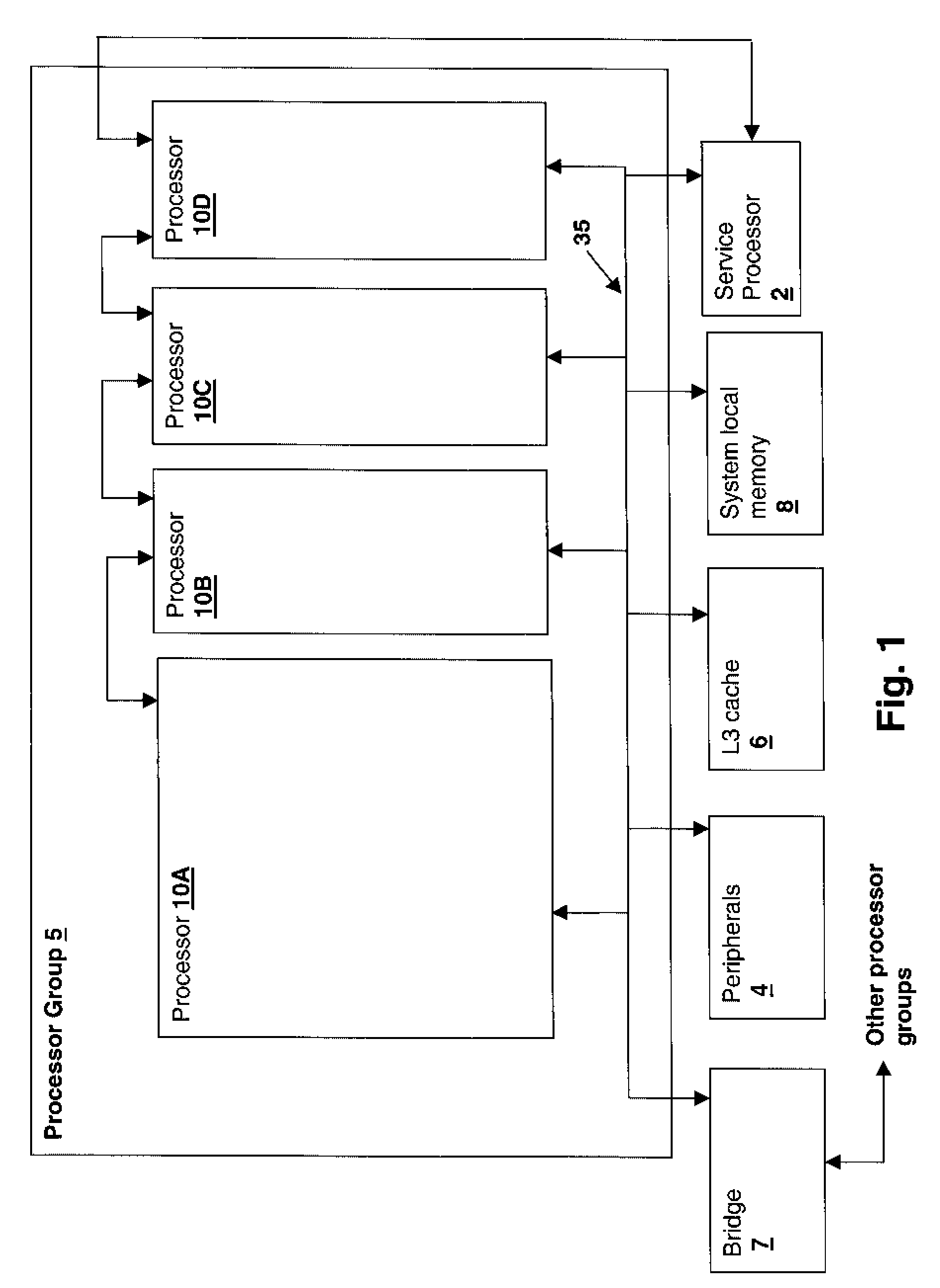

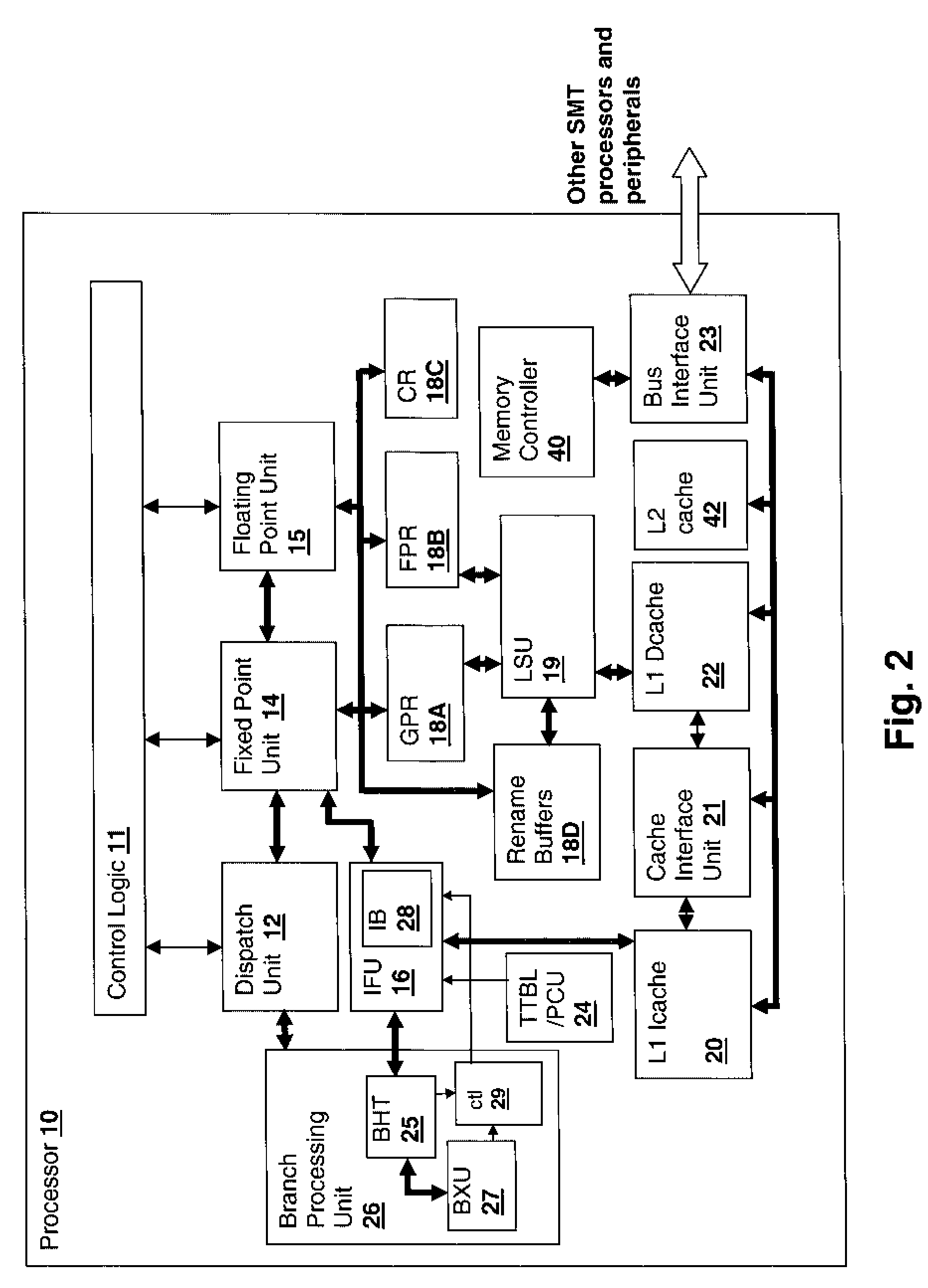

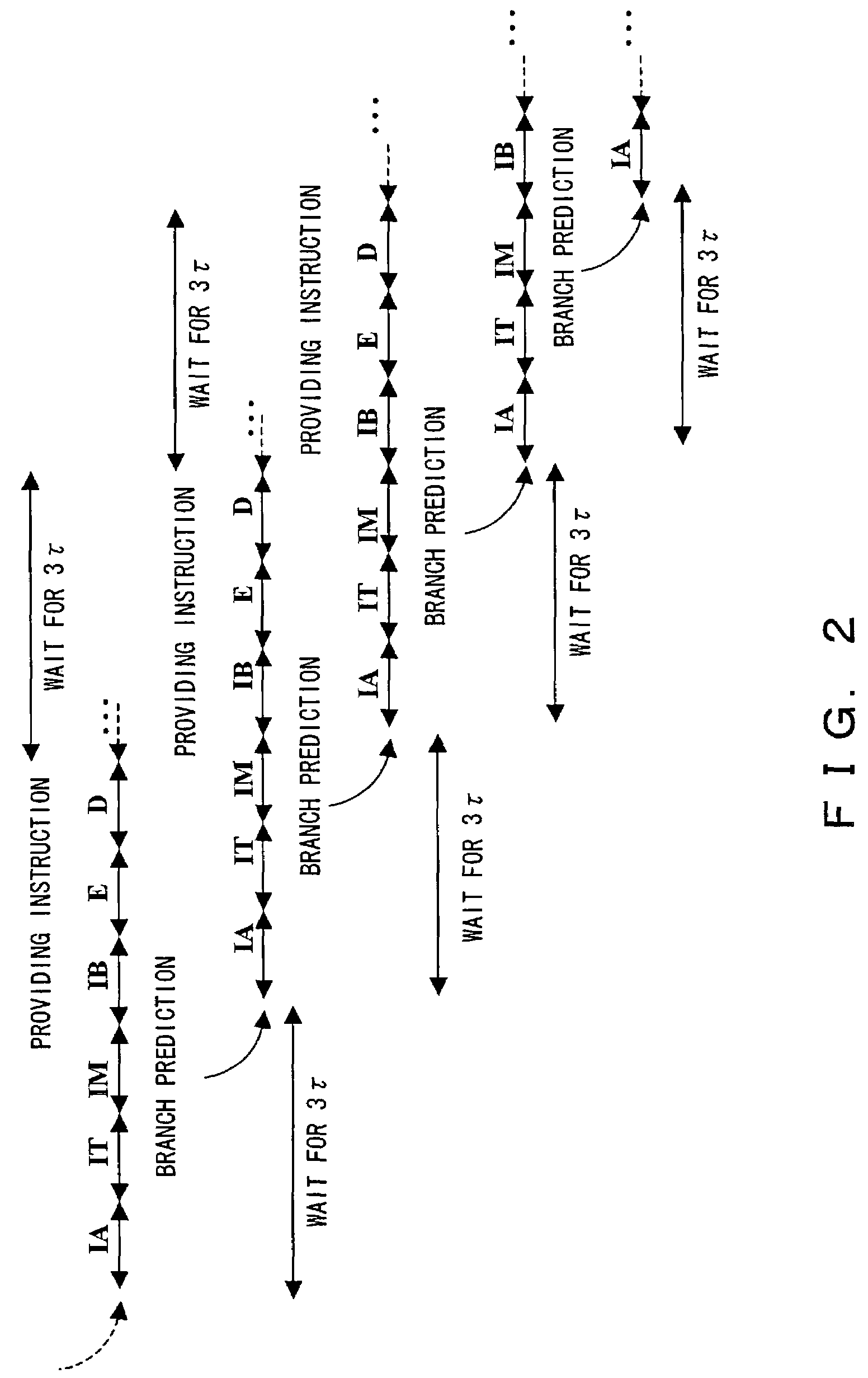

Inactive | US7779232B2 | Reduces resource and energy wasted; Digital computer details; Specific program execution arrangements; Processor register; Dynamic management

A method and apparatus for dynamically managing instruction buffer depths for non-predicted branches reduces the energy and resources wasted under low-confidence branch prediction conditions. A portion of the instruction buffer for an instruction thread is allocated for storing predicted branch instruction streams, and another portion, which may be zero-sized during high prediction confidence conditions, is allocated to the non-predicted branch instruction stream. The size of the buffers is adjusted dynamically according to an ongoing prediction confidence that measures how well branch prediction mechanisms are working for a given instruction thread. An alternate instruction fetch address table can be maintained and multiplexed with the main fetch address register for addressing the instruction cache, so that the instruction stream can be quickly shifted to the non-predicted path when a branch instruction is resolved to the non-predicted path.

Owner:INT BUSINESS MASCH CORP
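
The abstract does not give a formula for sizing the two portions, so the following Python sketch uses a hypothetical linear policy: `split_buffer`, `high_threshold`, and the half-buffer cap are all illustrative assumptions. It only shows the shape of the idea, with a zero-sized non-predicted portion at high confidence and a growing one as confidence falls.

```python
def split_buffer(total_entries, confidence, high_threshold=0.9):
    """Split one thread's instruction-buffer entries between predicted and non-predicted paths.

    confidence: hypothetical measure in [0, 1] of how well branch prediction is working.
    Returns (predicted_entries, non_predicted_entries).
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if confidence >= high_threshold:
        return total_entries, 0  # high confidence: the non-predicted portion is zero-sized
    # Hypothetical policy: grow the non-predicted share as confidence drops,
    # capped at half the buffer so the predicted path keeps at least half.
    non_predicted = min(total_entries // 2, round(total_entries * (1.0 - confidence)))
    return total_entries - non_predicted, non_predicted
```

With 16 entries, this policy gives `(16, 0)` at confidence 0.95, `(12, 4)` at 0.75, and `(8, 8)` once confidence drops low enough to hit the cap.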

Method and System for Tracking Instruction Dependency in an Out-of-Order Processor

Inactive | US20090063823A1 | Register arrangements; Digital computer details; Array data structure; Data preparation

A method of tracking instruction dependency in a processor issuing instructions speculatively includes recording in an instruction dependency array (IDA) an entry for each instruction that indicates data dependencies, if any, upon other active instructions. An output vector read out from the IDA indicates data readiness based upon which instructions have previously been selected for issue. The output vector is used to select and read out issue-ready instructions from an instruction buffer.

Owner:IBM CORP

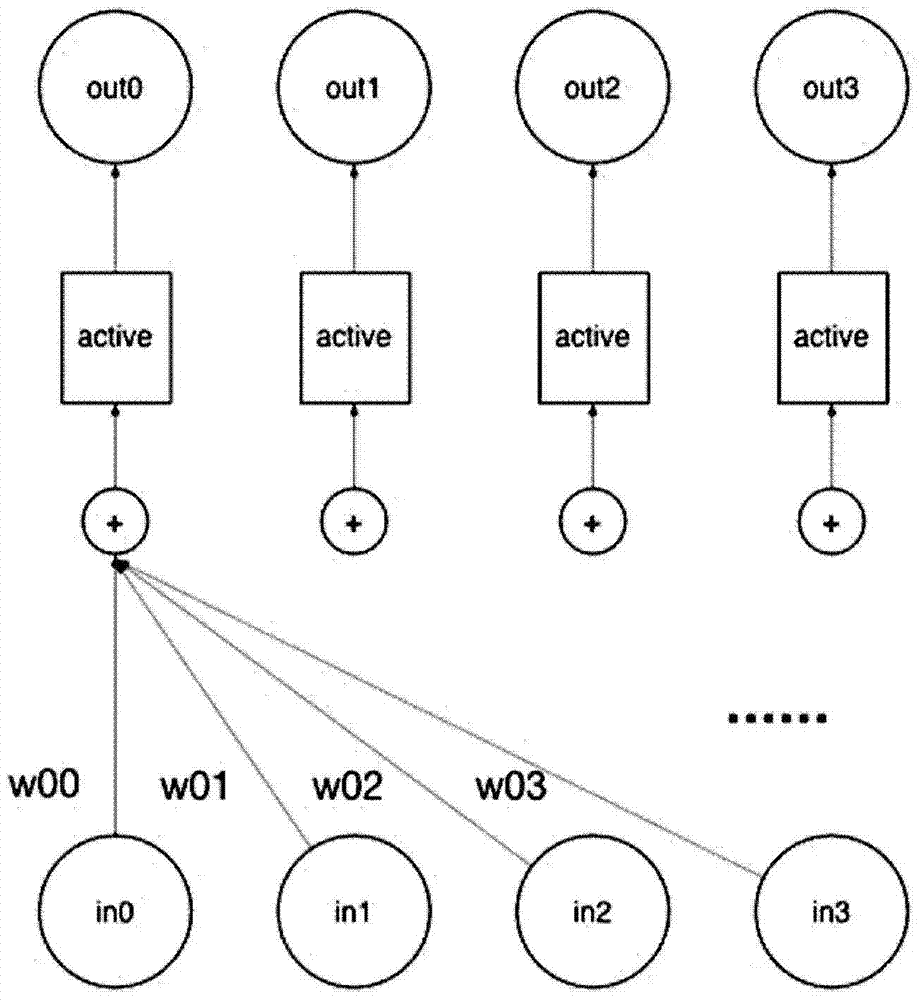

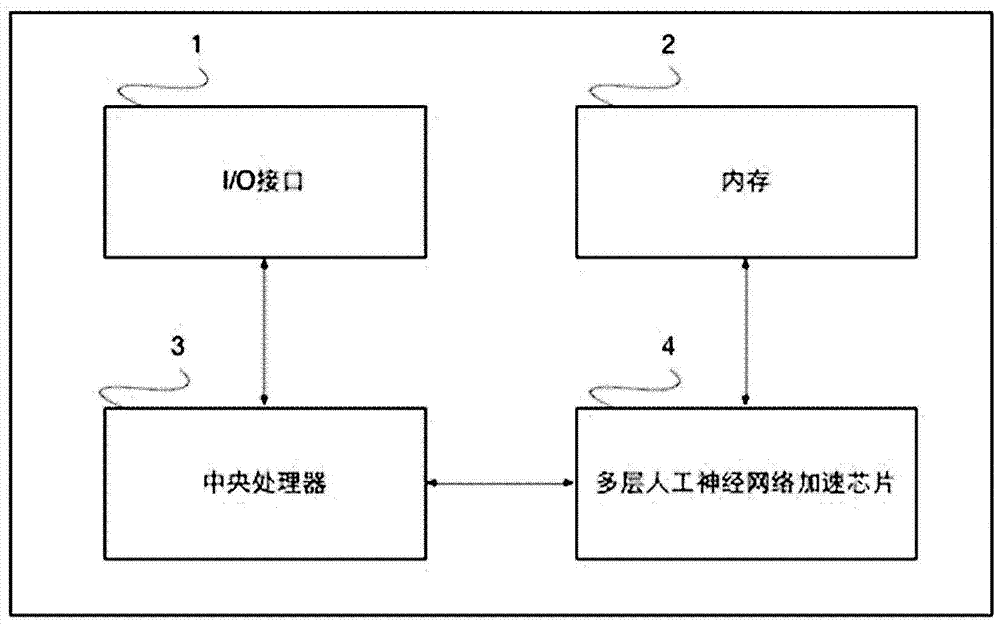

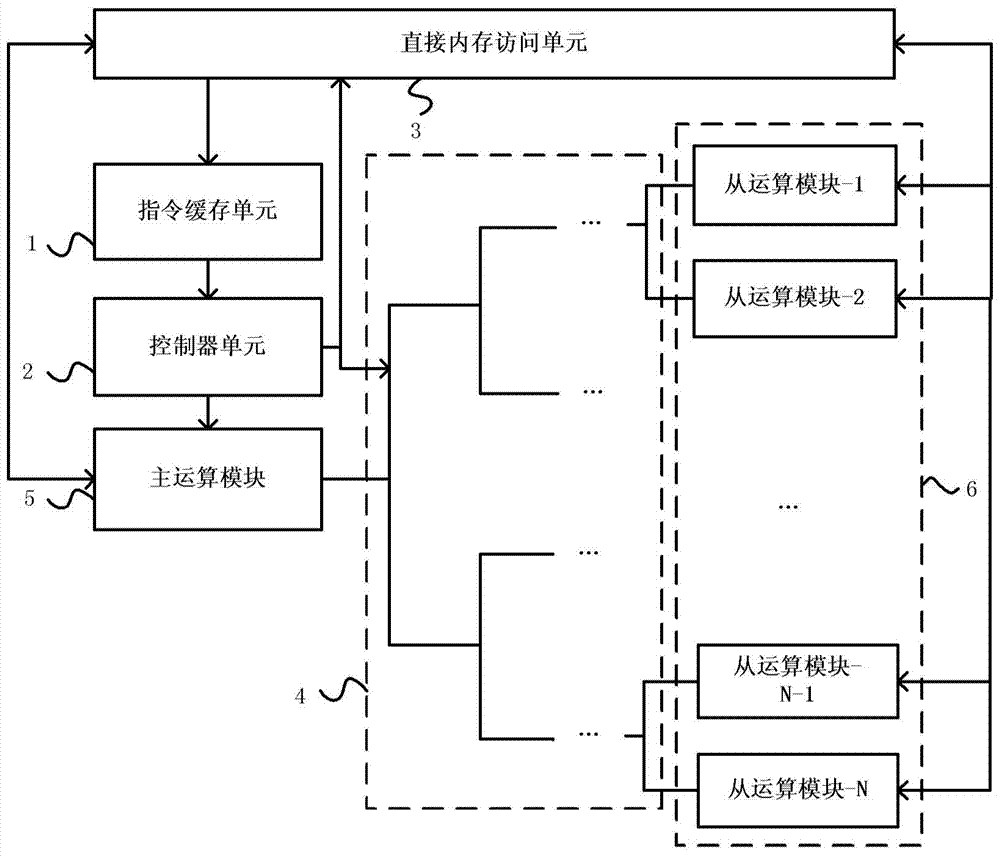

Artificial neural network compression coding device and artificial neural network compression coding method

Active | CN106991477A | Reduce model size; Fast data processing; Digital data processing details; Program control; Activation function; Neural network

An artificial neural network compression coding device comprises a memory interface unit, an instruction buffer memory, a controller unit and an operation unit, wherein the operation unit performs operations on data from the memory interface unit according to instructions from the controller unit. The operation unit mainly performs three steps: 1) multiplying input neurons by weight data; 2) summing the weighted output neurons from step 1 through an adder tree, or adding a bias to the output neurons to obtain biased output neurons; and 3) applying an activation function to obtain the final output neurons. The invention further provides an artificial neural network compression coding method. The device and method reduce the size of an artificial neural network model, increase its data processing speed, reduce power consumption, and improve resource utilization.

Owner:CAMBRICON TECH CO LTD
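
The three operation-unit steps map onto a short Python sketch: multiply input neurons by weights, reduce the products with a pairwise adder tree (optionally adding a bias), then apply an activation. The names `adder_tree`, `forward_layer`, and the `ACTIVATIONS` table are hypothetical, and the sketch does not model the compression coding that is the subject of the patent.

```python
import math

ACTIVATIONS = {
    "relu": lambda x: max(0.0, x),
    "sigmoid": lambda x: 1.0 / (1.0 + math.exp(-x)),
    "tanh": math.tanh,
}


def adder_tree(values):
    """Sum values by pairwise reduction, one tree level per loop iteration."""
    level = list(values)
    if not level:
        return 0.0
    while len(level) > 1:
        if len(level) % 2:
            level.append(0.0)  # pad odd levels so every adder has two inputs
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
    return level[0]


def forward_layer(inputs, weights, biases=None, activation="relu"):
    """weights[j] holds the weights feeding output neuron j."""
    outputs = []
    for j, row in enumerate(weights):
        products = [x * w for x, w in zip(inputs, row)]  # step 1: input neurons times weights
        total = adder_tree(products)                     # step 2: adder-tree sum
        if biases is not None:
            total += biases[j]                           # step 2 (optional): add a bias
        outputs.append(ACTIVATIONS[activation](total))   # step 3: activation function
    return outputs
```

For inputs `[1.0, 2.0, 3.0]`, weight rows `[0.5, -1.0, 2.0]` and `[1.0, 1.0, 1.0]`, and biases `[0.0, -10.0]`, the ReLU outputs are `[4.5, 0.0]`.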

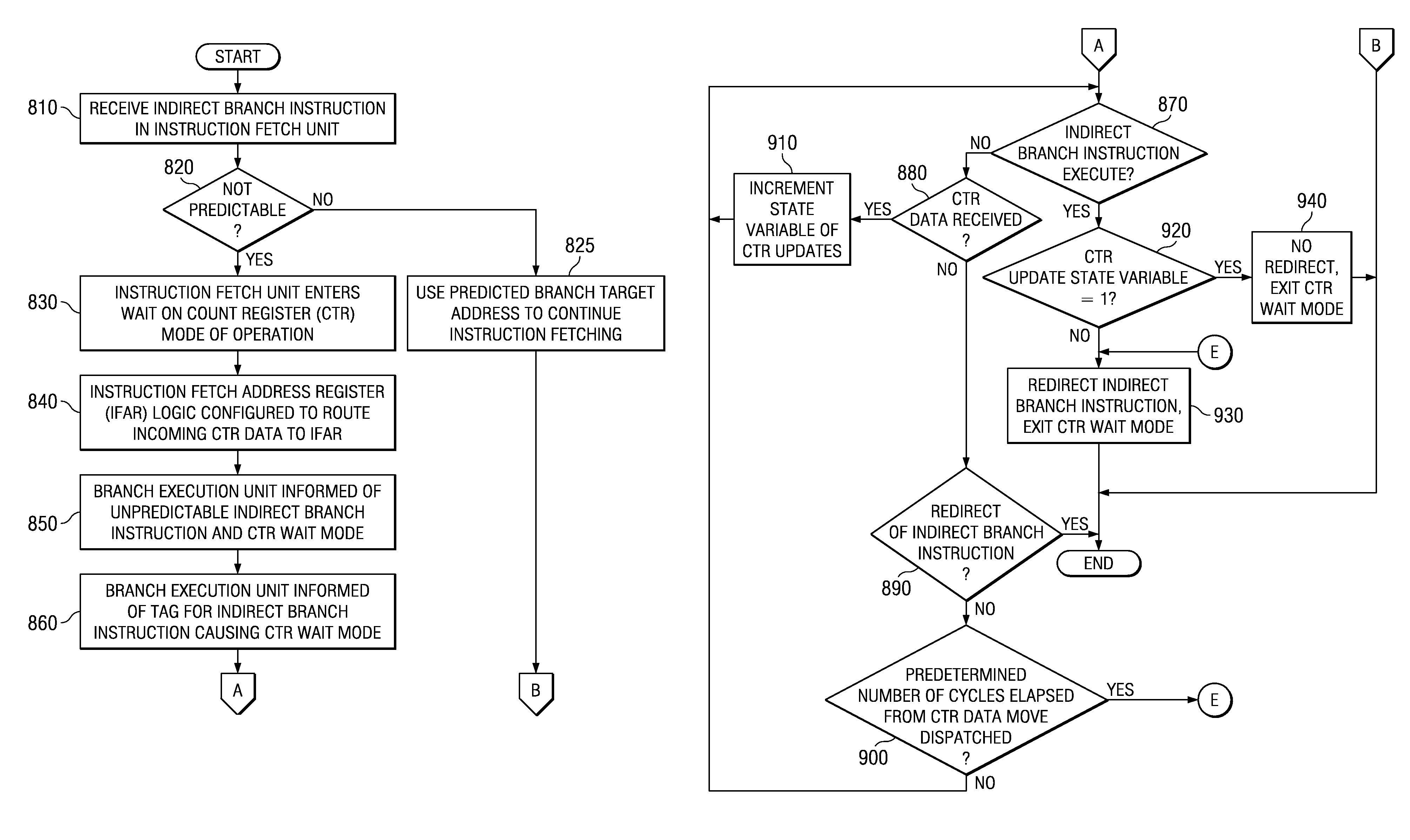

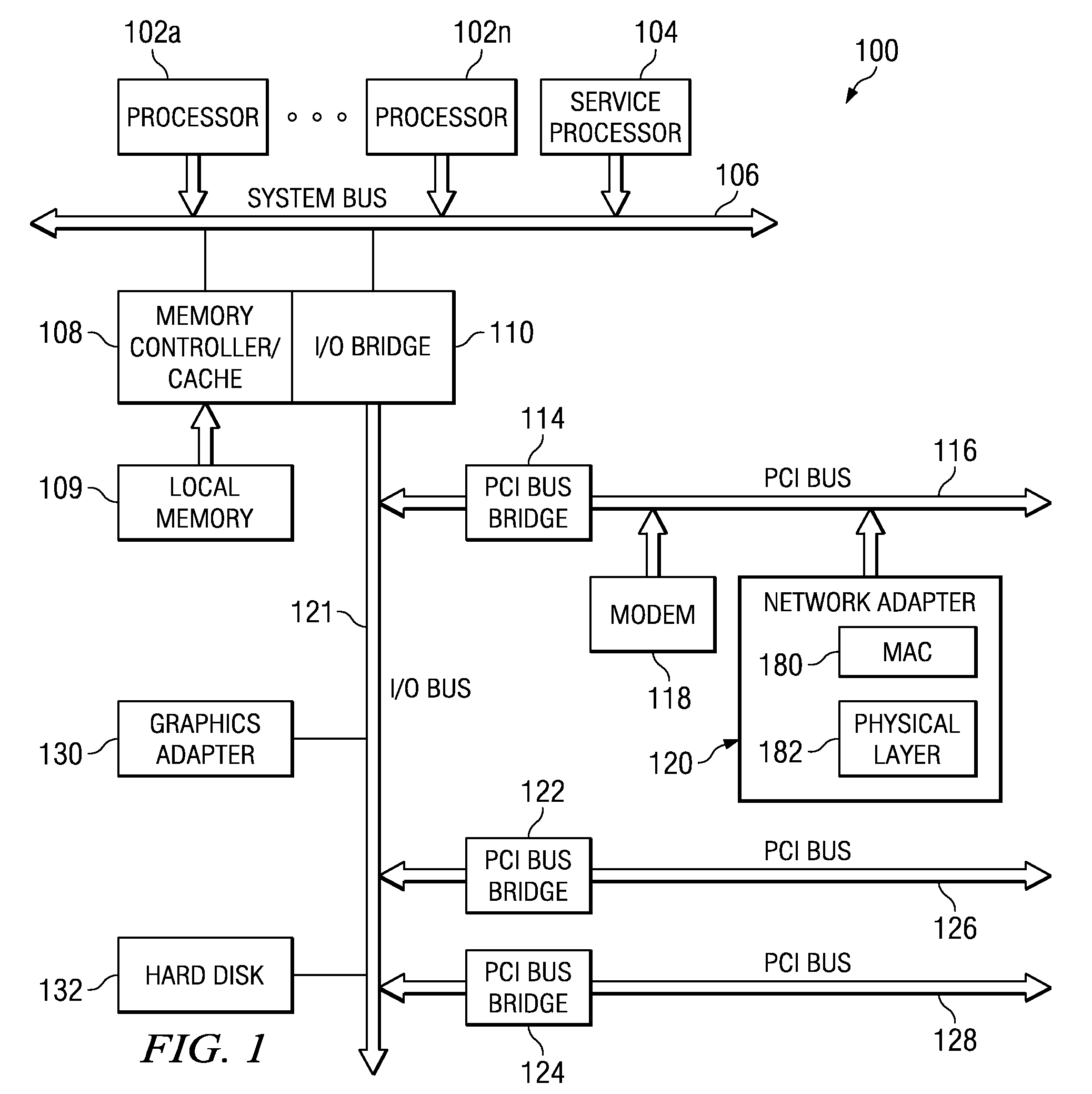

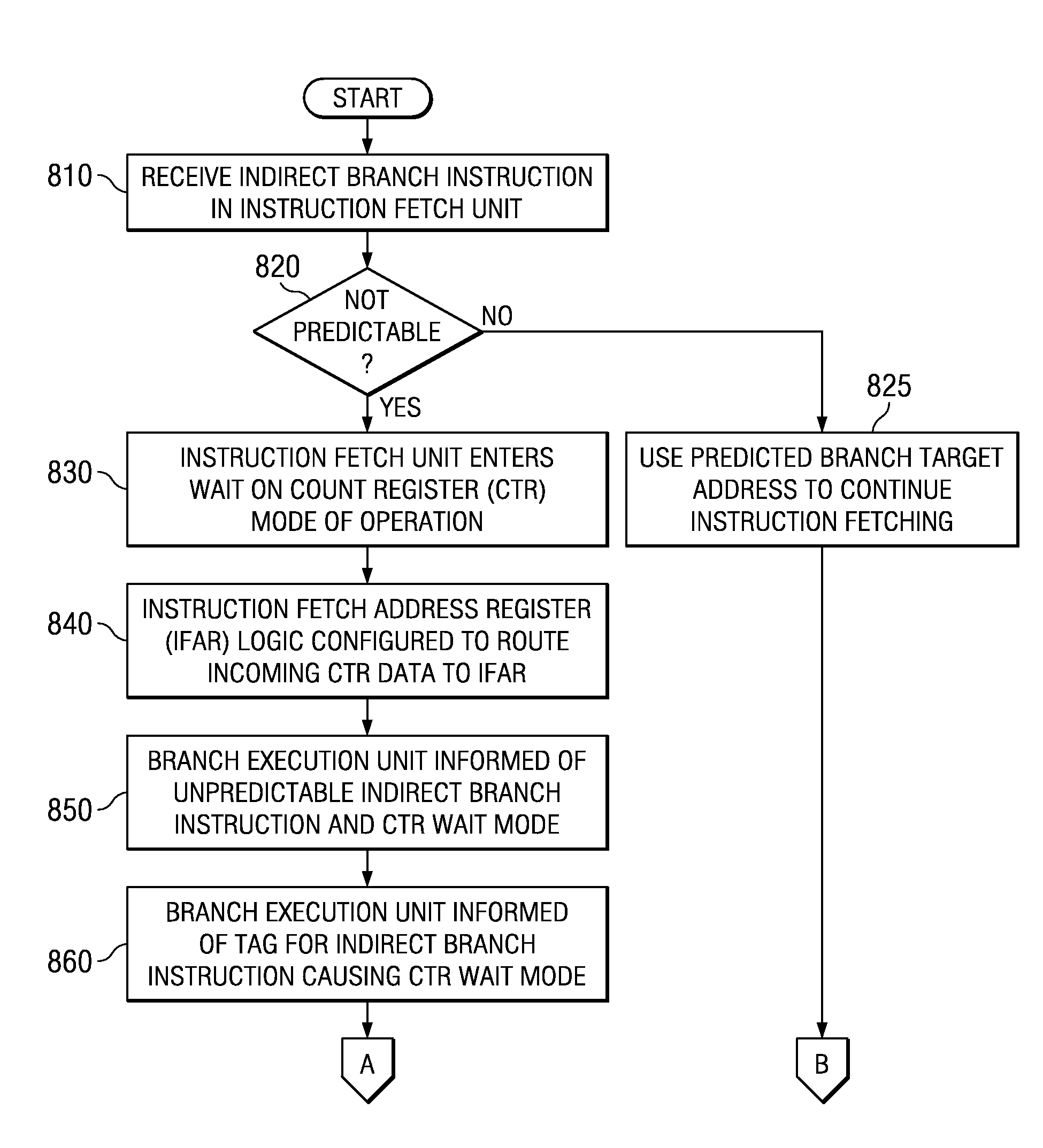

System and method for optimizing branch logic for handling hard to predict indirect branches

Active | US7809933B2 | Easy to handle; Improve processor performance; Digital computer details; Specific program execution arrangements; Parallel computing; Indirect branch

A system and method for optimizing the branch logic of a processor to improve handling of hard to predict indirect branches are provided. The system and method leverage the observation that there will generally be only one move to the count register (mtctr) instruction that will be executed while a branch on count register (bcctr) instruction has been fetched and not executed. With the mechanisms of the illustrative embodiments, fetch logic detects that it has encountered a bcctr instruction that is hard to predict and, in response to this detection, blocks the target fetch from entering the instruction buffer of the processor. At this point, the fetch logic has fetched all the instructions up to and including the bcctr instruction but no target instructions. When the next mtctr instruction is executed, the branch logic of the processor grabs the data and starts fetching using that target address. Since there are no other target instructions that were fetched, no flush is needed if that target address is the correct address, i.e. the branch prediction is correct.

Owner:INT BUSINESS MASCH CORP

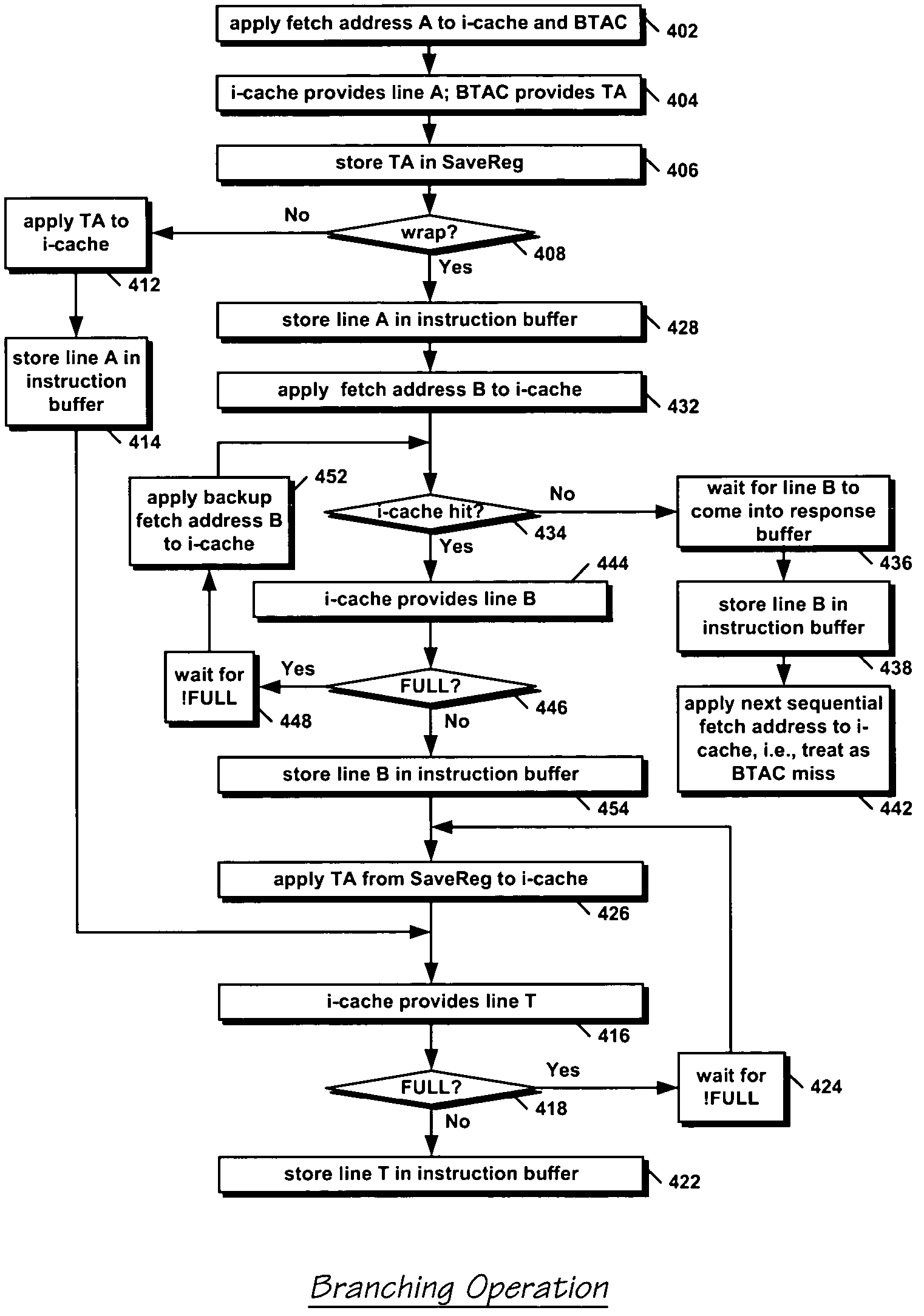

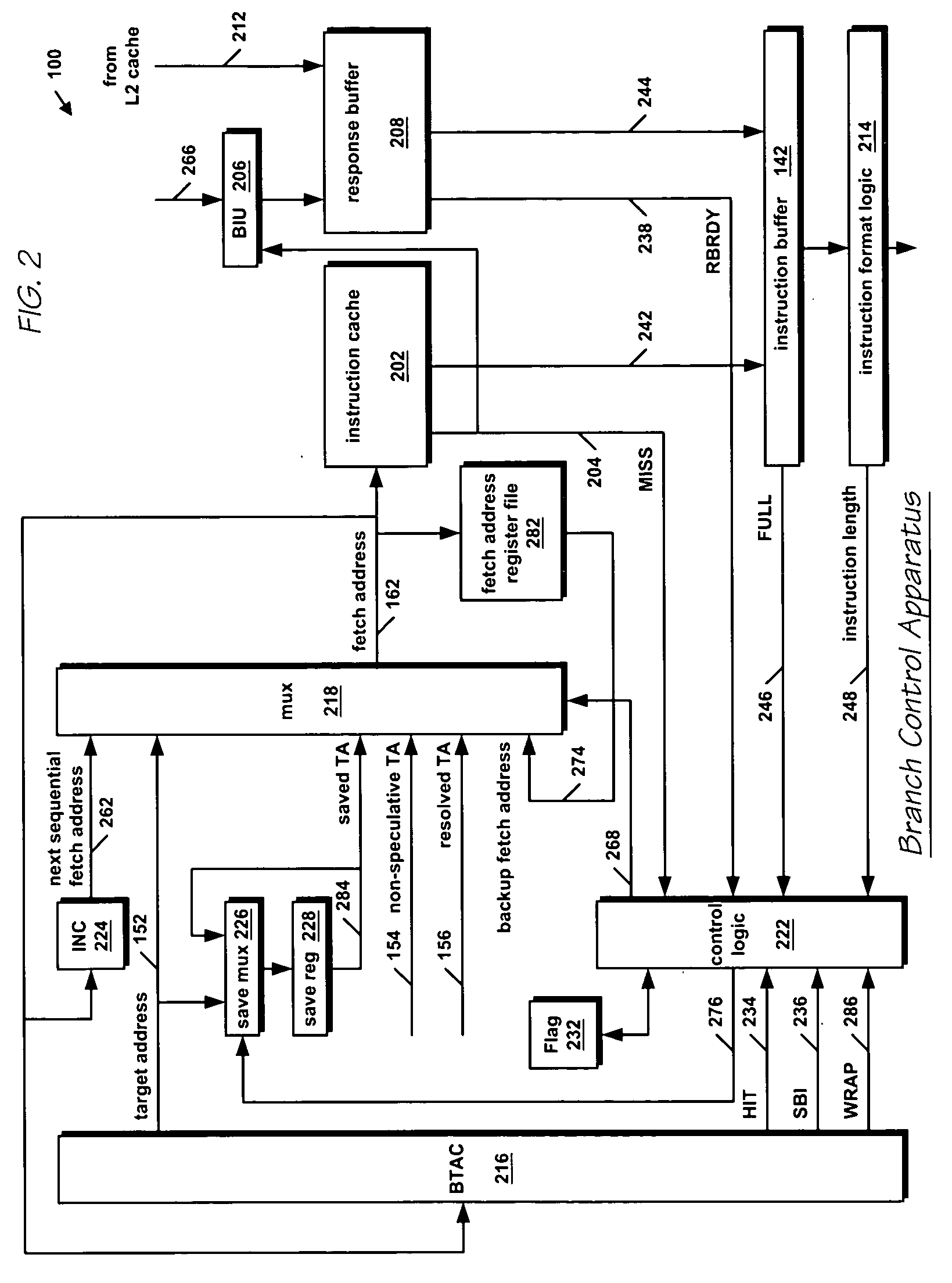

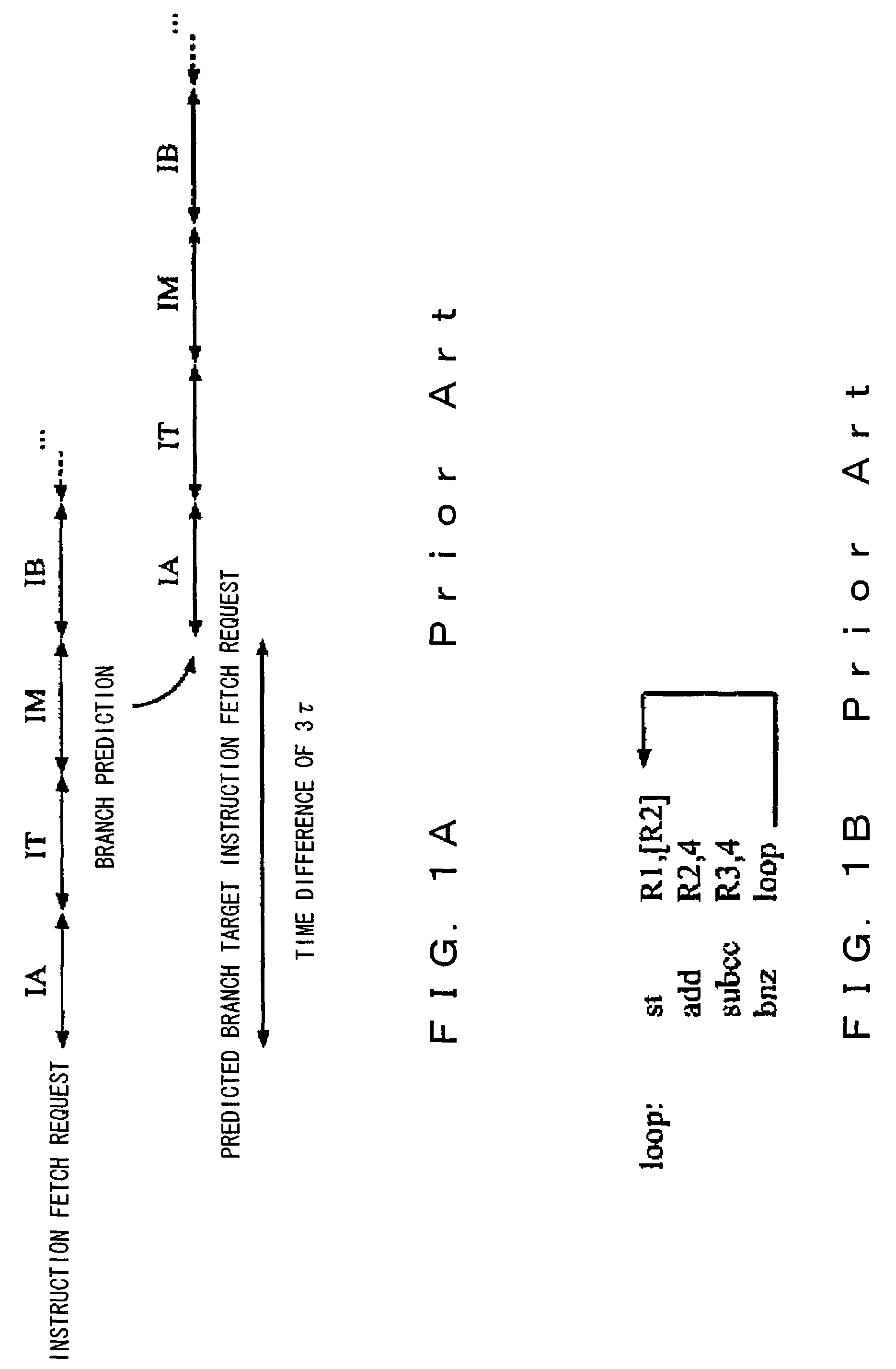

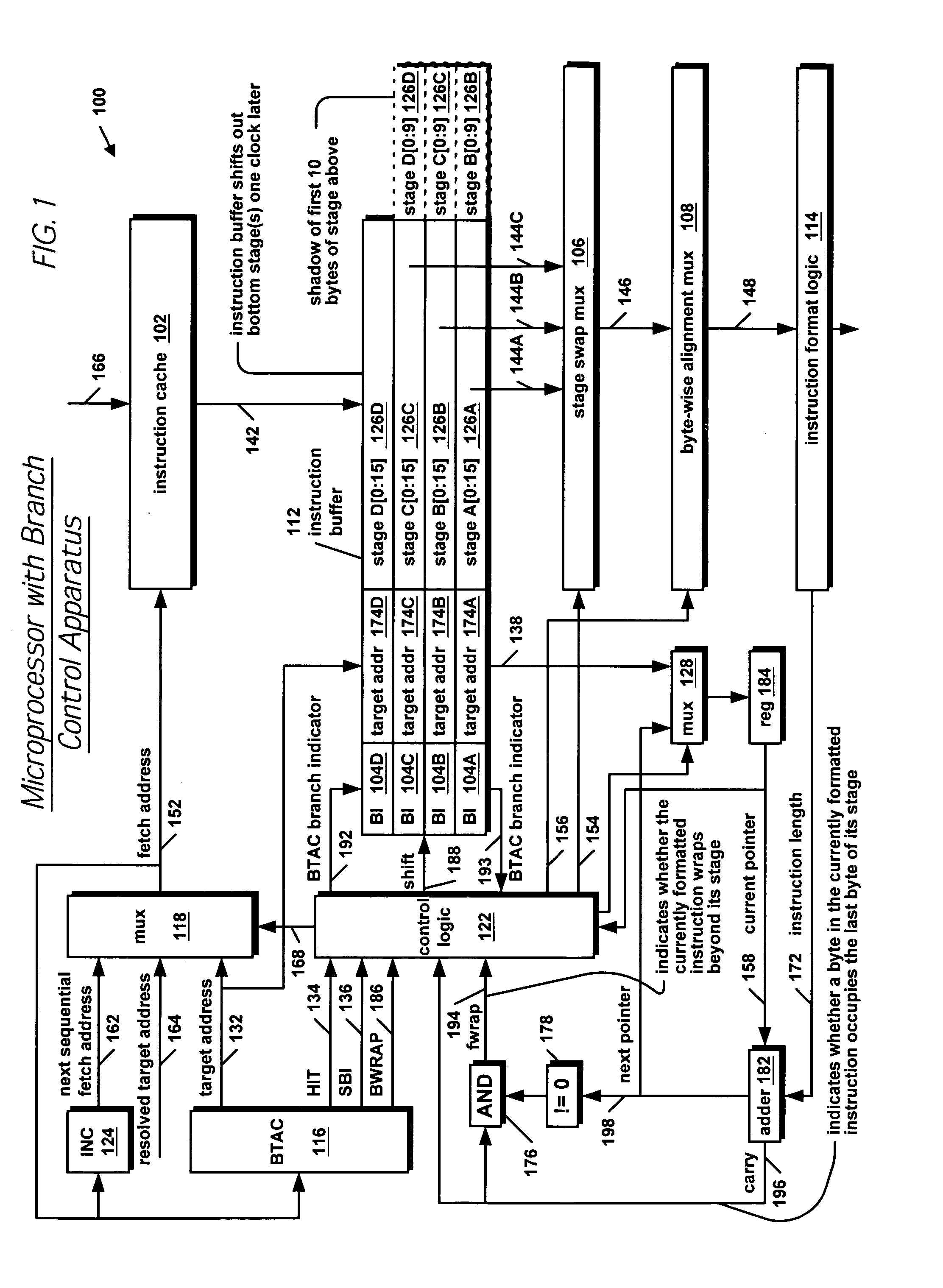

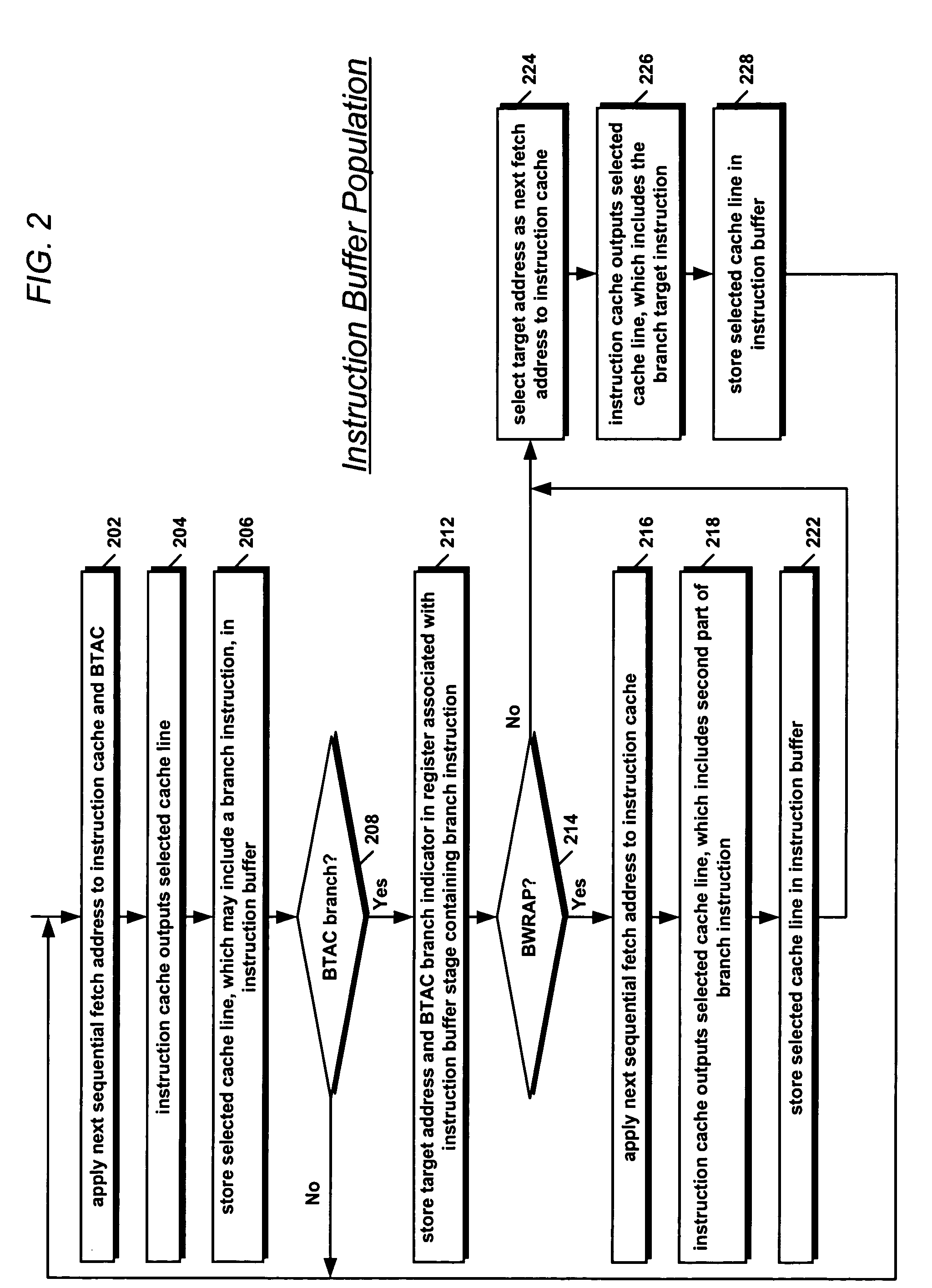

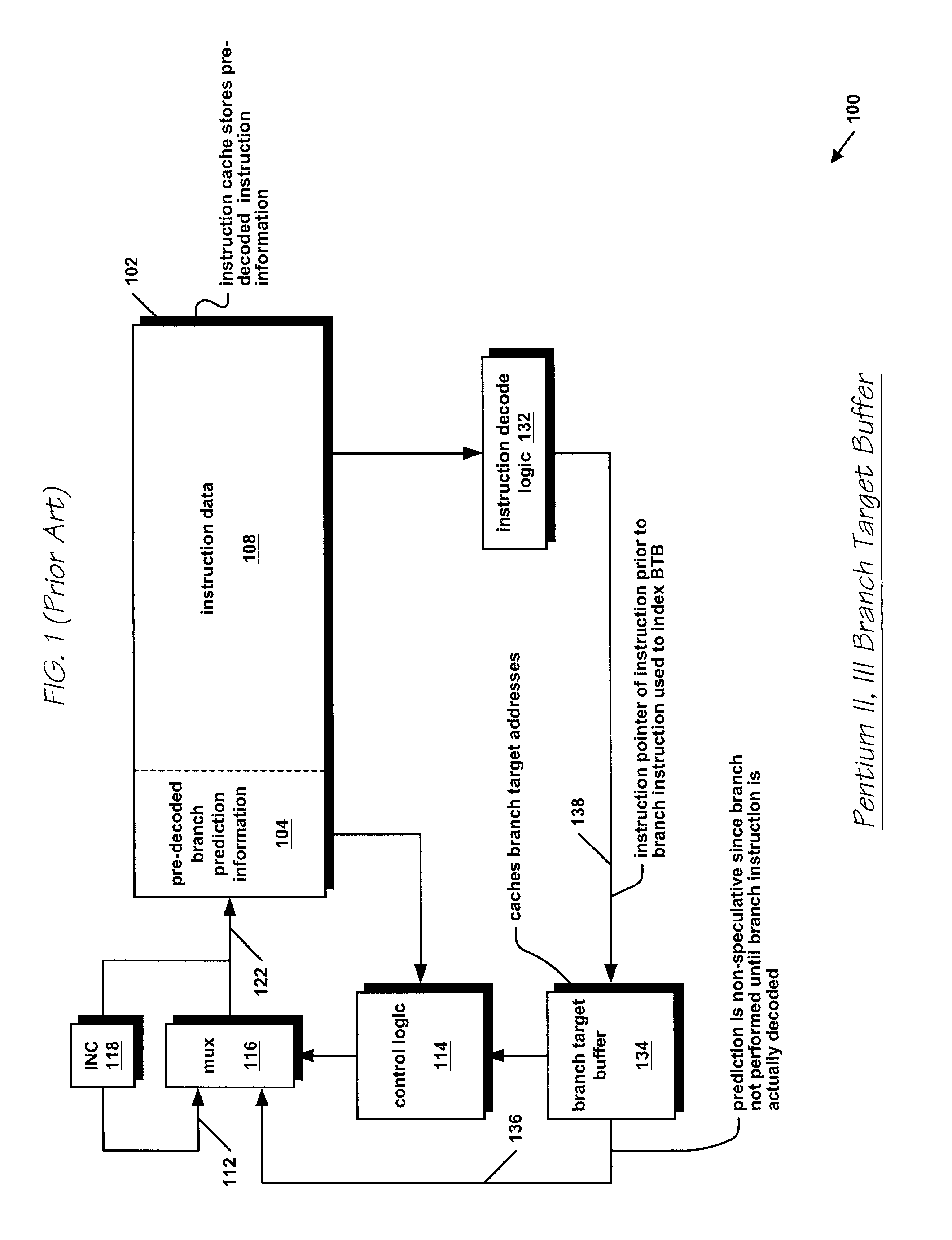

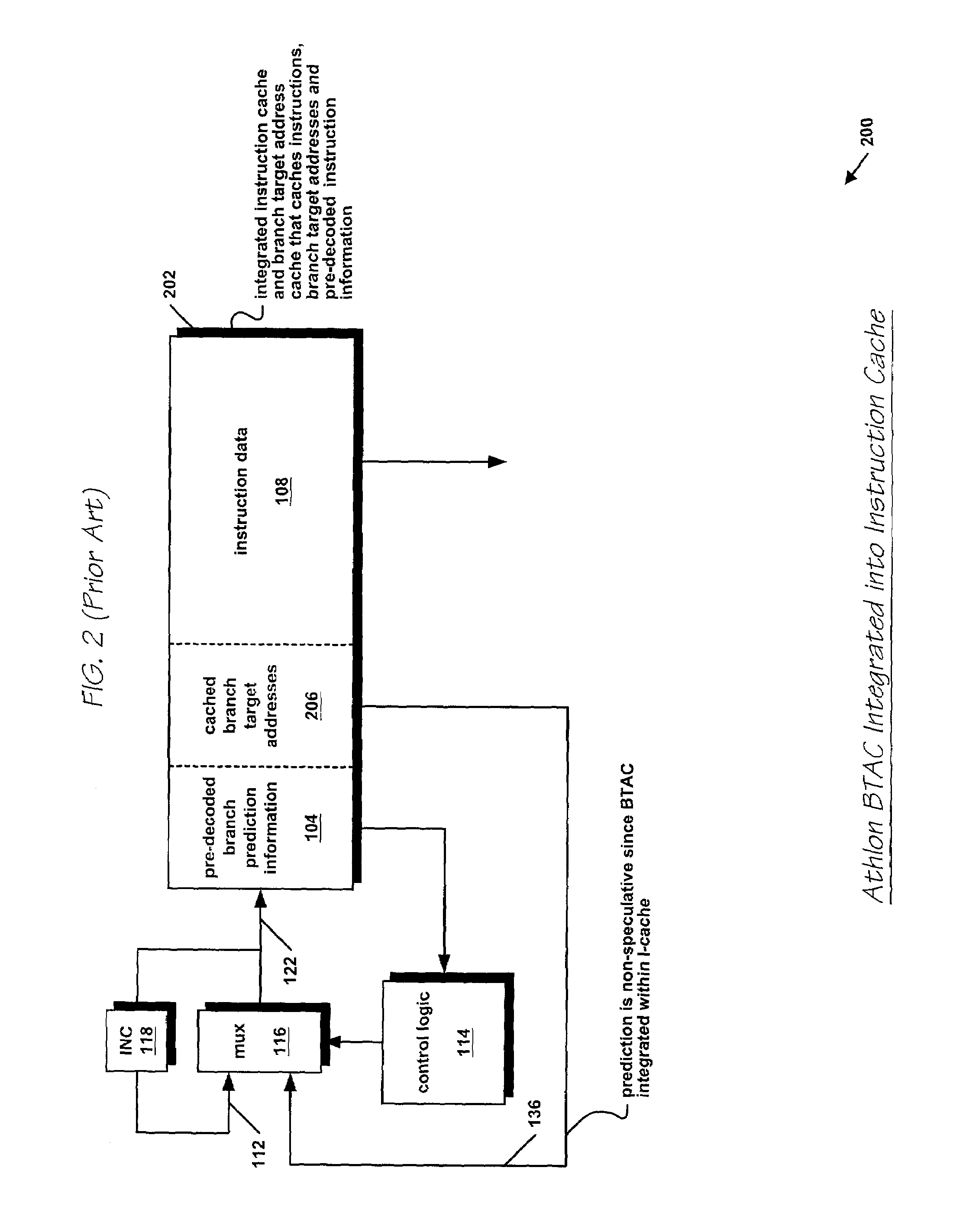

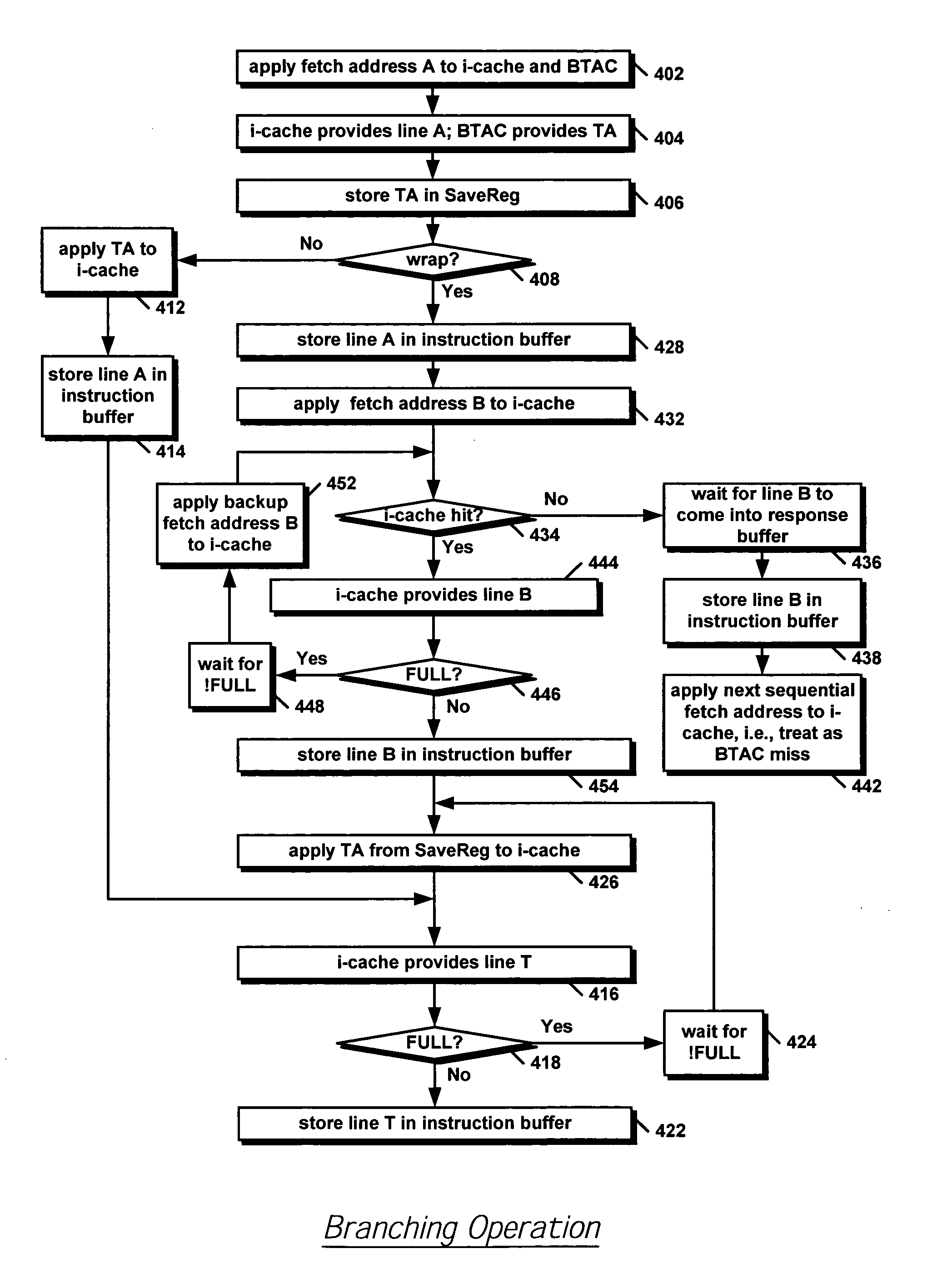

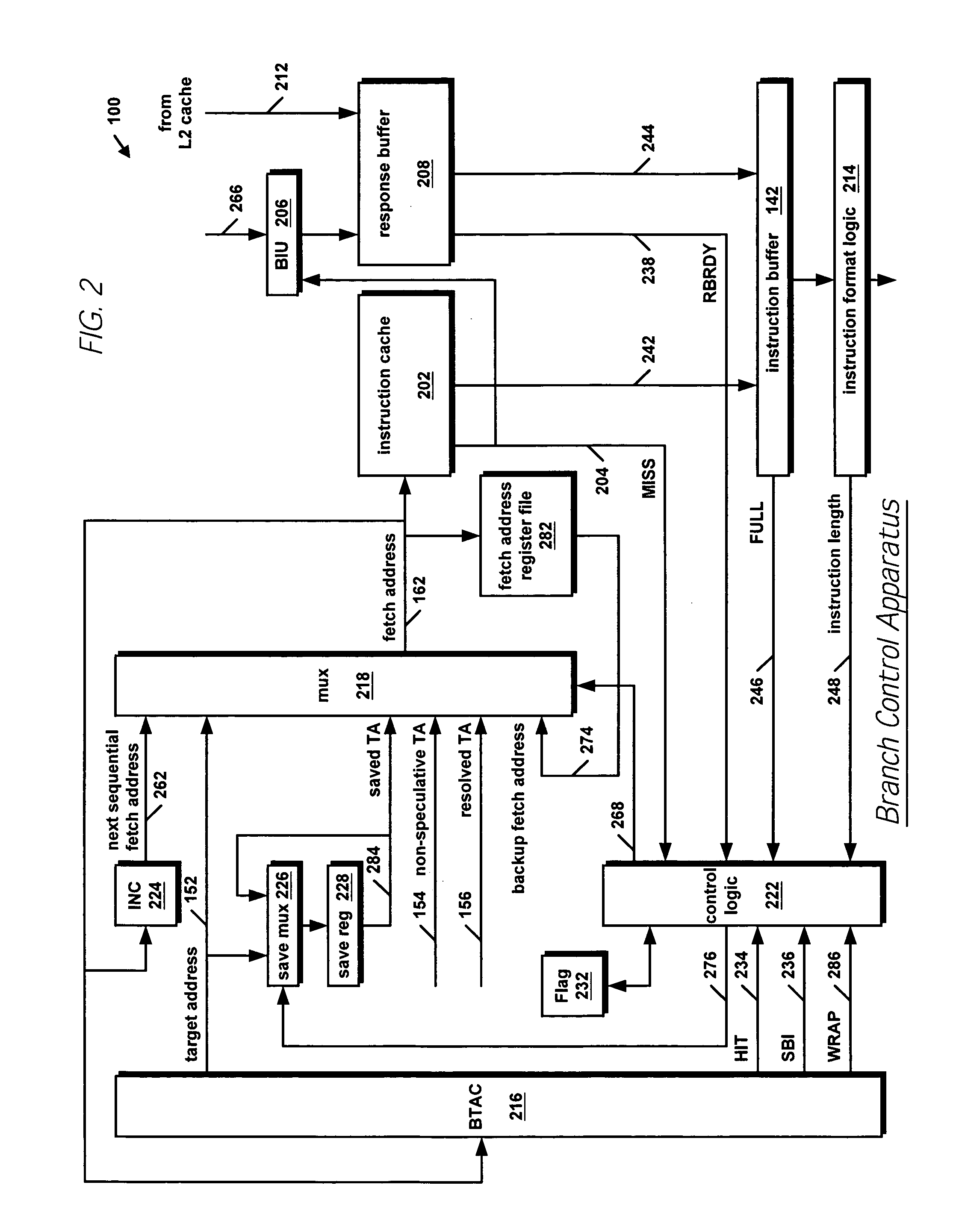

Apparatus and method for handling BTAC branches that wrap across instruction cache lines

Inactive | US20050198479A1 | Improves branch performance; Avoiding branch penalty; Instruction analysis; Runtime instruction translation; Processor register; Branch target address cache

A branch control apparatus in a microprocessor. The apparatus includes a branch target address cache (BTAC) that caches indications of whether a branch instruction wraps across two cache lines. When an instruction cache fetch address of a first cache line containing the first part of the branch instruction hits in the BTAC, the BTAC outputs a target address of the branch instruction and indicates the wrap condition. The target address is stored in a register. The next sequential fetch address selects a second cache line containing the second part of the branch instruction. After the two cache lines containing the branch instruction are fetched, the target address from the register is provided to the instruction cache in order to fetch a third cache line containing a target instruction of the branch. The three cache lines are stored in order in an instruction buffer for decoding.

Owner:IP FIRST

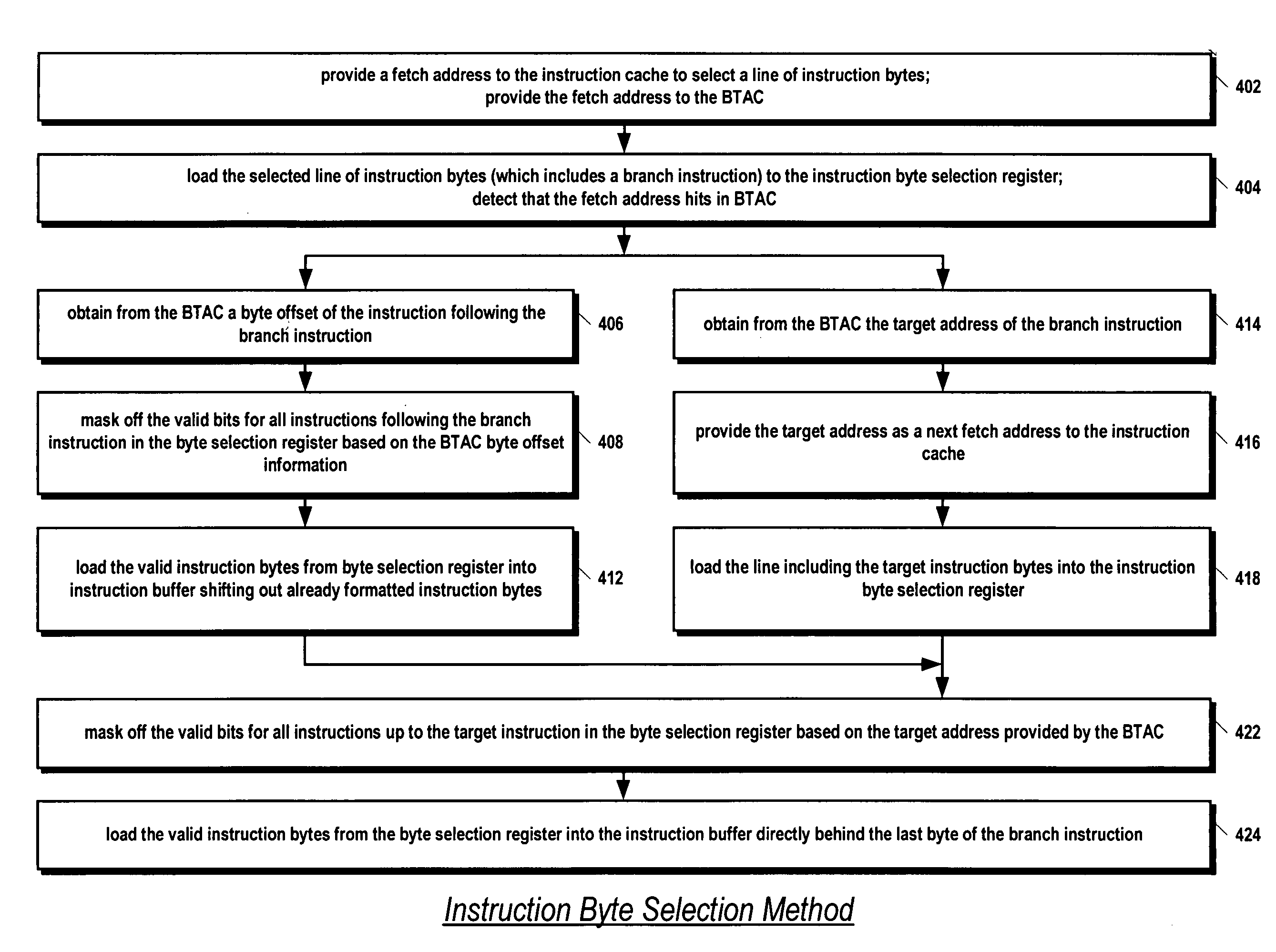

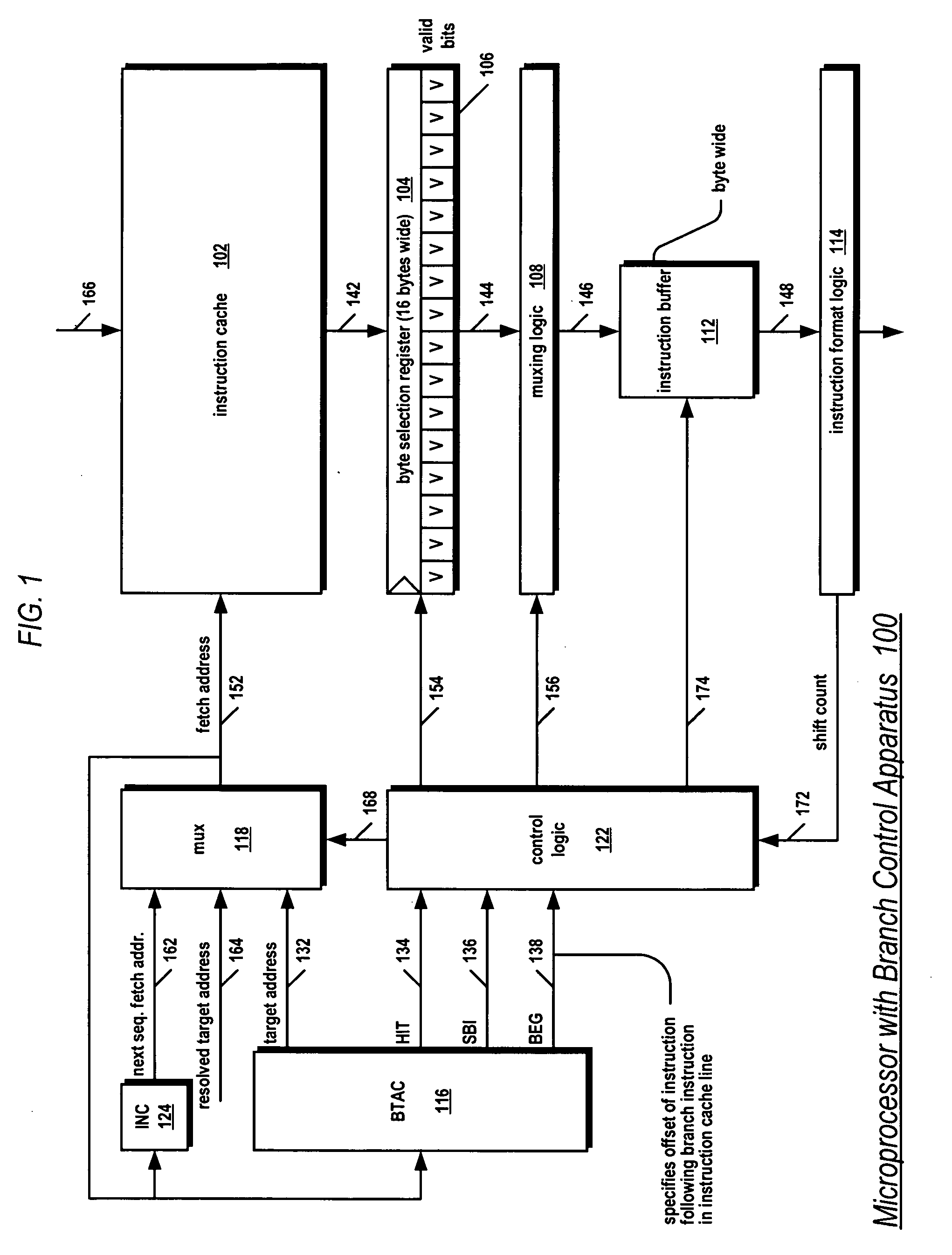

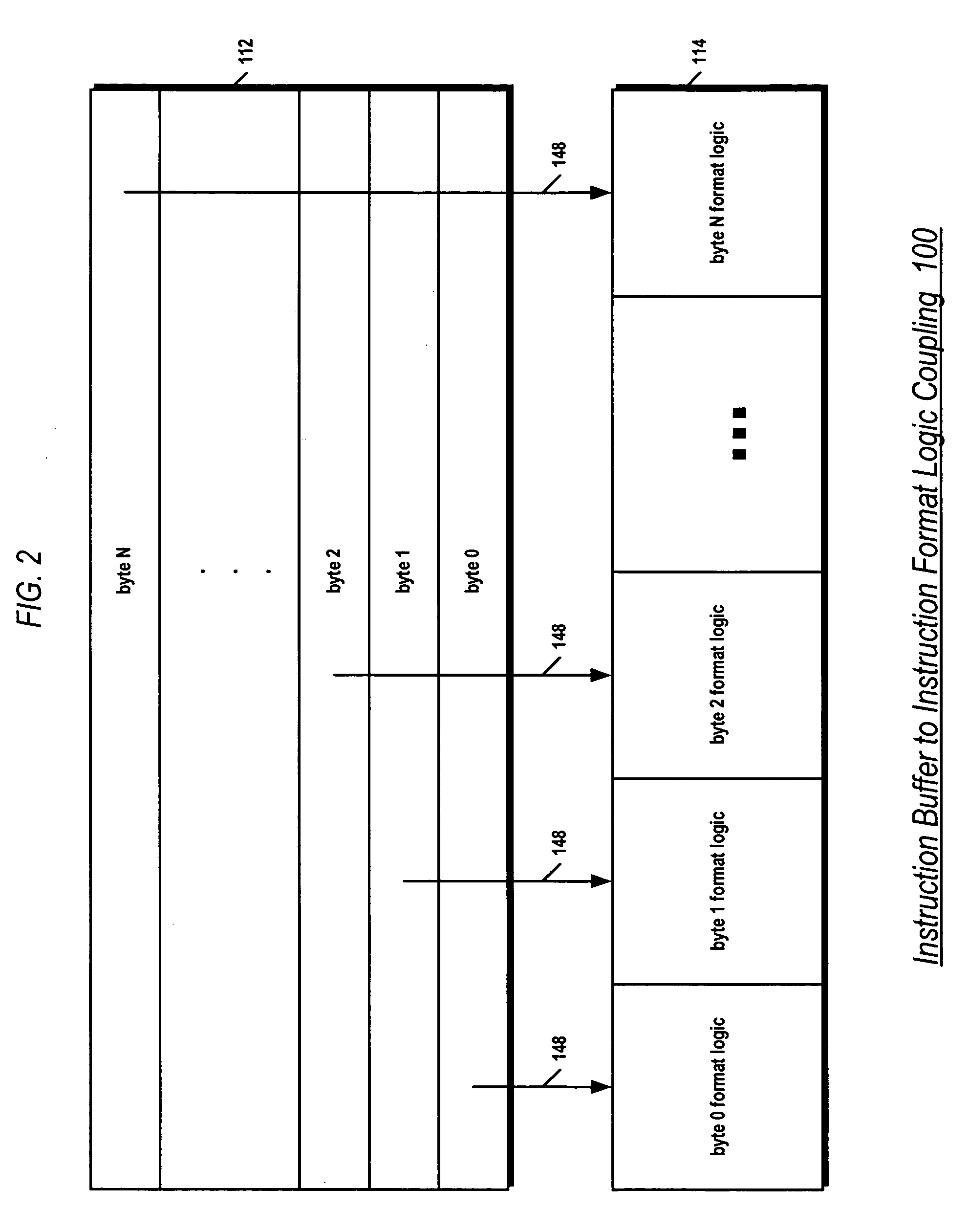

Apparatus and method for densely packing a branch instruction predicted by a branch target address cache and associated target instructions into a byte-wide instruction buffer

Inactive | US20050198481A1 | Alleviate Timing Constraints; Memory addressing/allocation/relocation; Digital computer details; Parallel computing; Branch target address cache

A branch control apparatus in a microprocessor. A register receives a first cache line containing a branch instruction from an instruction cache in response to a fetch address. The fetch address hits in a BTAC that provides a target address of the branch instruction. The BTAC also provides an offset of the instruction following the branch instruction. The instructions following the branch instruction are invalidated based on the offset. Muxing logic packs only the valid instructions into a byte-wide instruction buffer that is directly coupled to instruction format logic. The instruction cache provides a second cache line containing the target instructions to the register in response to the target address. The instructions preceding the target instructions are invalidated based on the lower bits of the target address. The muxing logic packs only the valid target instructions into the instruction buffer immediately adjacent to the branch instruction bytes.

Owner:IP FIRST
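
The invalidate-and-pack step can be sketched with byte slices. This Python example assumes a hypothetical 16-byte cache line and takes `next_offset` to be the BTAC-provided offset of the instruction following the branch; `pack_branch_and_target` is an illustrative name, and real muxing logic would steer fixed-width hardware lanes rather than slice Python `bytes`.

```python
def pack_branch_and_target(branch_line, next_offset, target_line, target_address, line_size=16):
    """Pack the valid branch-line bytes and valid target-line bytes into one byte buffer.

    next_offset: offset of the instruction following the branch; bytes from here on are invalid.
    target_address: its low-order bits locate the target within its cache line;
                    bytes before that position are invalid.
    """
    if len(branch_line) != line_size or len(target_line) != line_size:
        raise ValueError("cache lines must be line_size bytes long")
    valid_branch_bytes = branch_line[:next_offset]
    target_start = target_address % line_size
    # The valid target bytes land immediately after the branch instruction's bytes.
    return valid_branch_bytes + target_line[target_start:]
```

With a branch line whose following instruction starts at offset 6 and a target at address `0x1004`, the packed buffer holds bytes 0 to 5 of the branch line followed by bytes 4 to 15 of the target line.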

Instruction unit with instruction buffer pipeline bypass

Inactive | US20110320771A1 | Memory addressing/allocation/relocation; Digital computer details; Instruction unit; Method selection

A circuit arrangement and method selectively bypass an instruction buffer for selected instructions so that bypassed instructions can be dispatched without having to first pass through the instruction buffer. Thus, for example, in the case that an instruction buffer is partially or completely flushed as a result of an instruction redirect (e.g., due to a branch mispredict), instructions can be forwarded to subsequent stages in an instruction unit and / or to one or more execution units without the latency associated with passing through the instruction buffer.

Owner:IBM CORP

Apparatus for controlling instruction fetch reusing fetched instruction

Inactive | US7676650B2 | Reducing performance of information processing; Quickly executing instruction; Digital computer details; Concurrent instruction execution; Short loop; Parallel computing

When an instruction stored in a specific instruction buffer is the same as an instruction stored in another instruction buffer that logically follows it, the instruction buffer storing the instruction that logically and immediately precedes the repeated instruction is connected to the specific instruction buffer instead of to the other instruction buffer, skipping the instruction in the other instruction buffer. The instruction buffers thereby form a loop, so that a short loop can be executed within an instruction buffer system capable of arbitrarily connecting a plurality of instruction buffers.

Owner:FUJITSU LTD

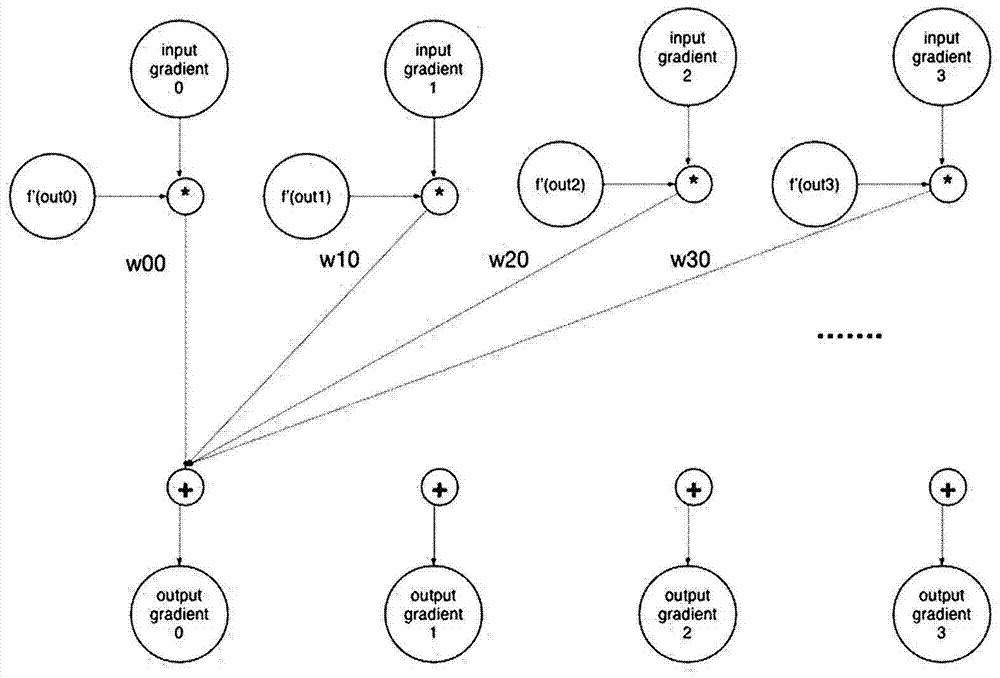

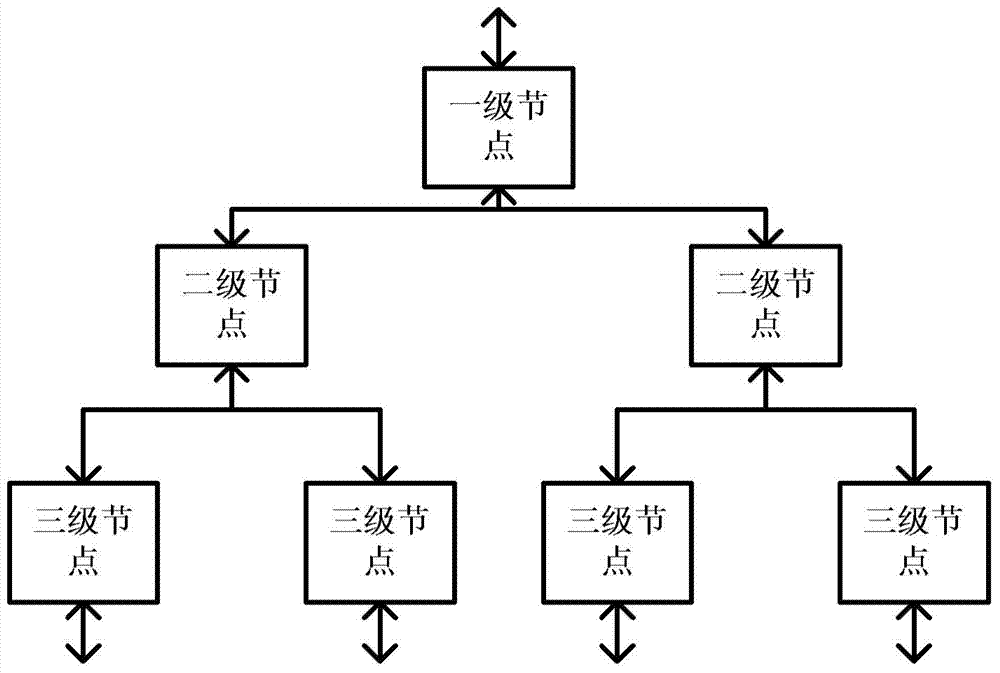

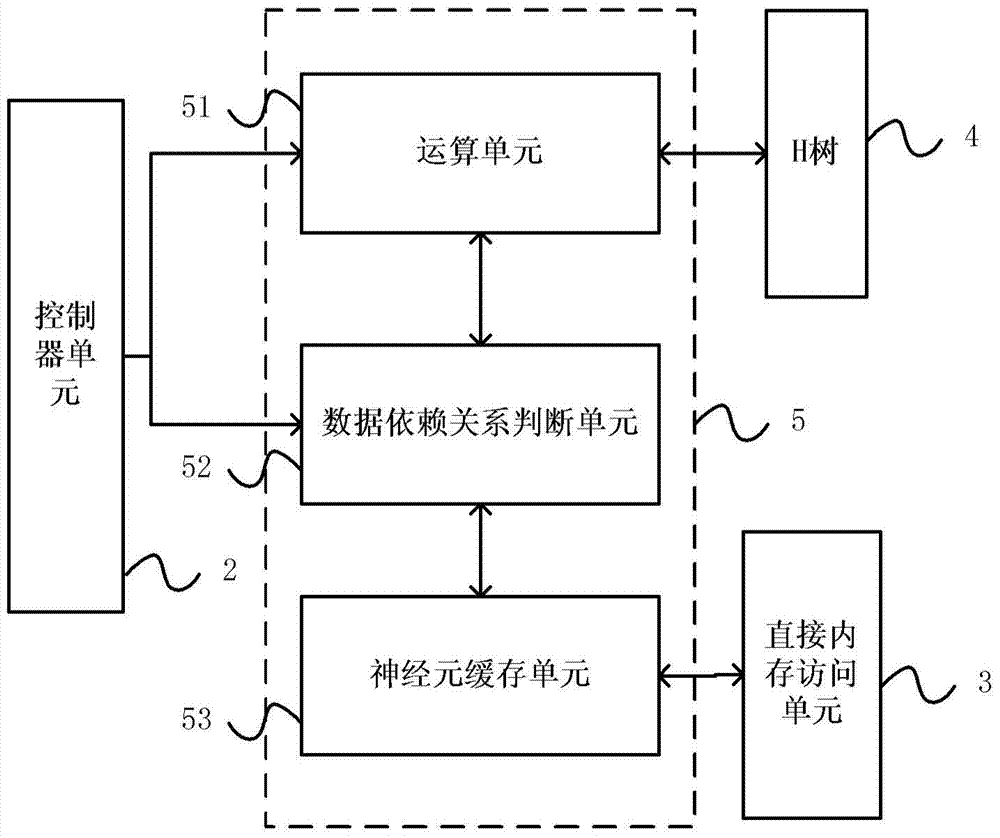

Device and method for executing reverse training of artificial neural network

The invention provides a device for executing reverse training of an artificial neural network. The device comprises an instruction buffer memory unit, a controller unit, a direct memory access unit, an H-tree module, a main operation module and a plurality of auxiliary operation modules. The device can perform reverse training of a multilayer artificial neural network. For each layer, a weighted sum of the input gradient vector is computed to obtain the layer's output gradient vector. Multiplying the output gradient vector by the derivative of the activation function from the forward operation gives the input gradient vector of the next layer. Multiplying the input gradient vector by the input neurons from the forward operation gives the weight gradient of the layer, and the layer's weights are then updated according to that gradient.

Owner:CAMBRICON TECH CO LTD
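
The per-layer arithmetic can be written out in plain Python. This sketch assumes that the "input gradient vector" is the gradient with respect to the layer's pre-activation outputs, uses a plain gradient-descent update with a hypothetical learning rate `lr`, and ignores the H-tree and multi-module partitioning; all function names are hypothetical.

```python
def backward_layer(weights, inputs, in_grad, lr):
    """One layer of the backward pass.

    weights[j][i]: weight from input neuron i to output neuron j.
    inputs: the layer's input neurons saved from the forward operation.
    in_grad: the layer's input gradient vector (one entry per output neuron).
    Returns (out_grad, updated_weights).
    """
    n_out, n_in = len(weights), len(inputs)
    # Output gradient: weighted sum of the input gradient through the weights (W^T g).
    out_grad = [sum(weights[j][i] * in_grad[j] for j in range(n_out)) for i in range(n_in)]
    # Weight gradient is in_grad[j] * inputs[i]; apply a gradient-descent update with it.
    updated = [[weights[j][i] - lr * in_grad[j] * inputs[i] for i in range(n_in)]
               for j in range(n_out)]
    return out_grad, updated


def next_layer_in_grad(out_grad, pre_activations, activation_derivative):
    """Scale the output gradient by f'(z) recorded during the forward operation."""
    return [g * activation_derivative(z) for g, z in zip(out_grad, pre_activations)]


def relu_derivative(z):
    return 1.0 if z > 0 else 0.0
```

For a 2x2 weight matrix `[[1, 2], [3, 4]]` and input gradient `[0.5, 1.0]`, the output gradient is `[3.5, 5.0]`, which `next_layer_in_grad` then masks by the previous layer's activation derivative.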

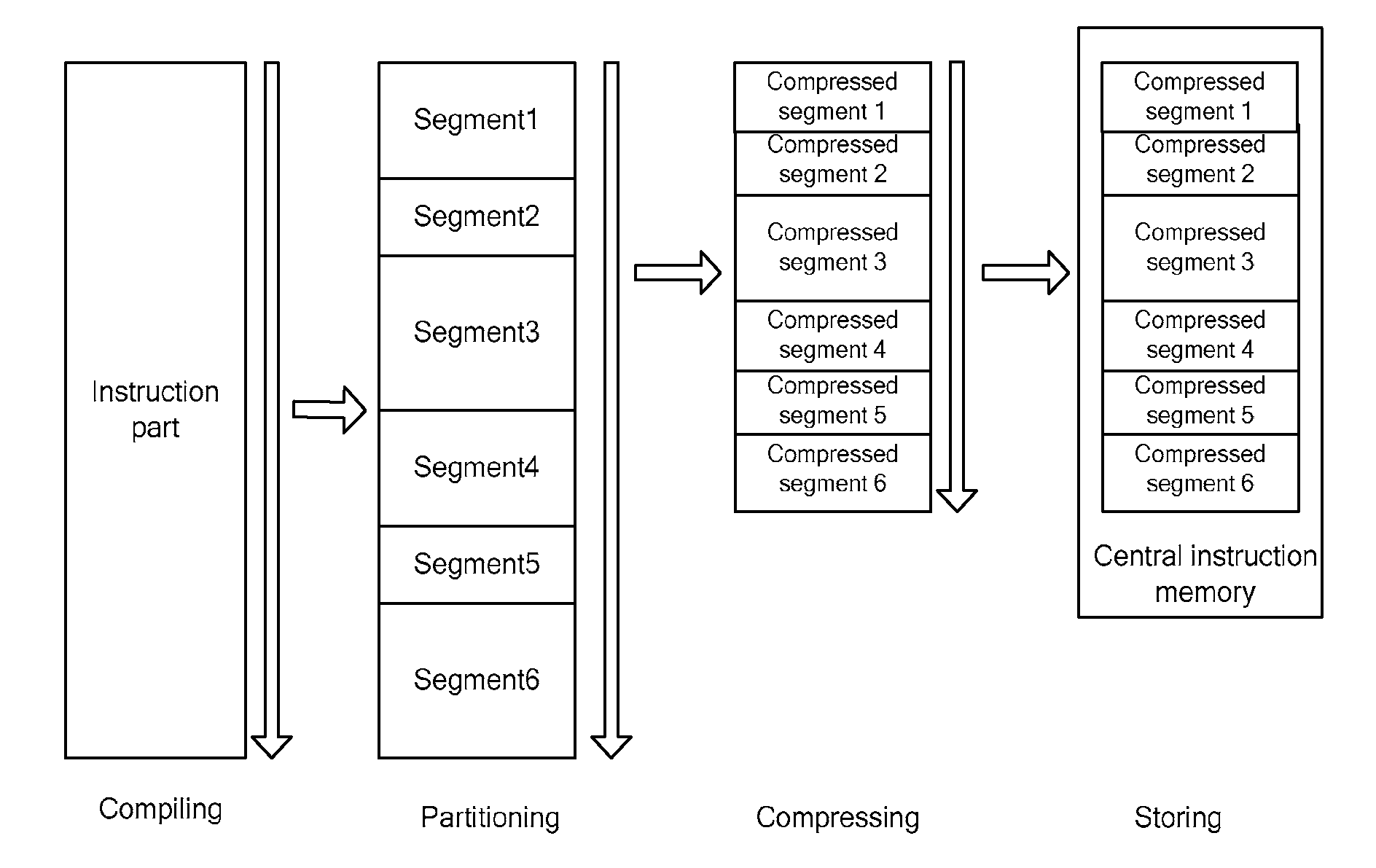

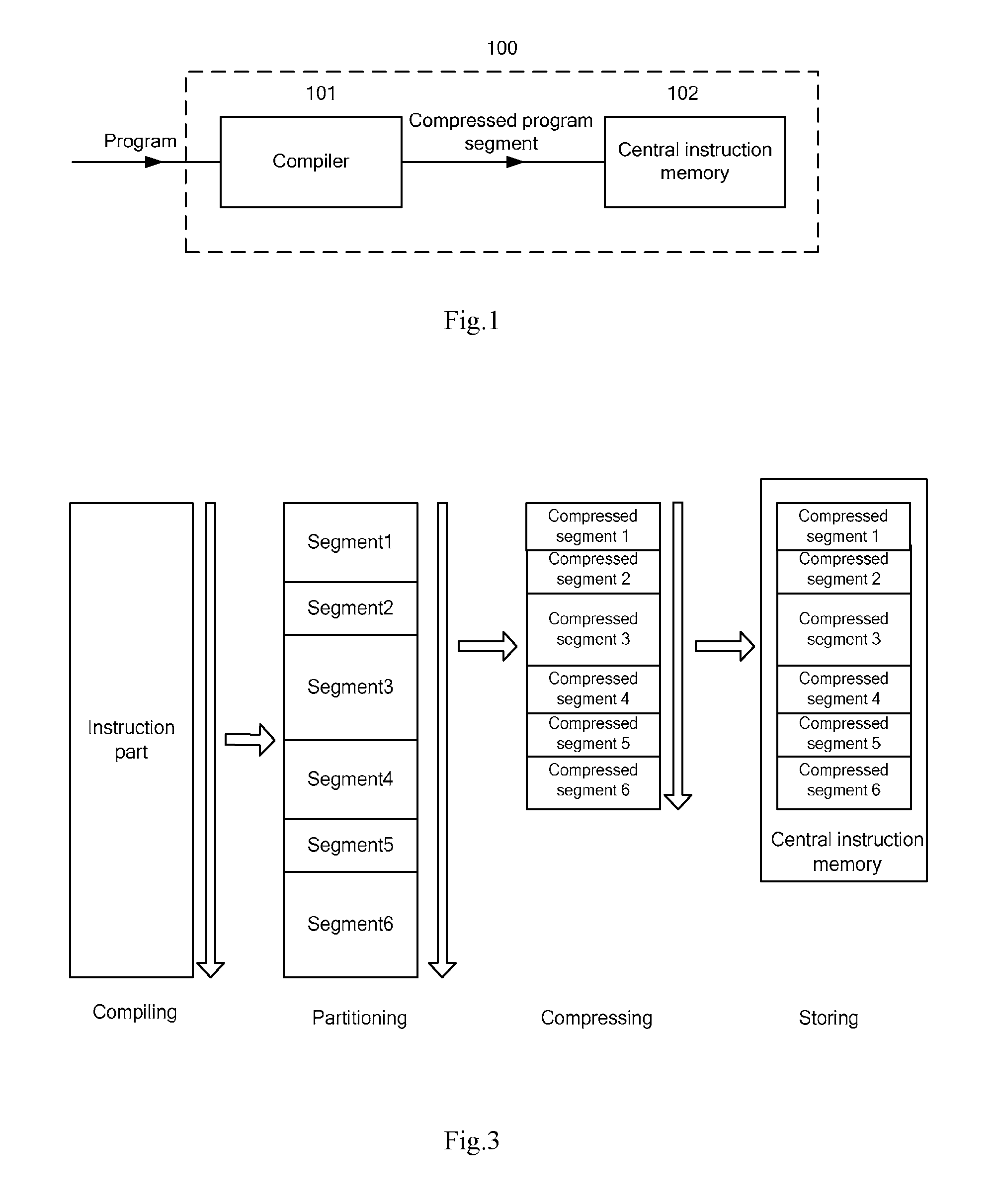

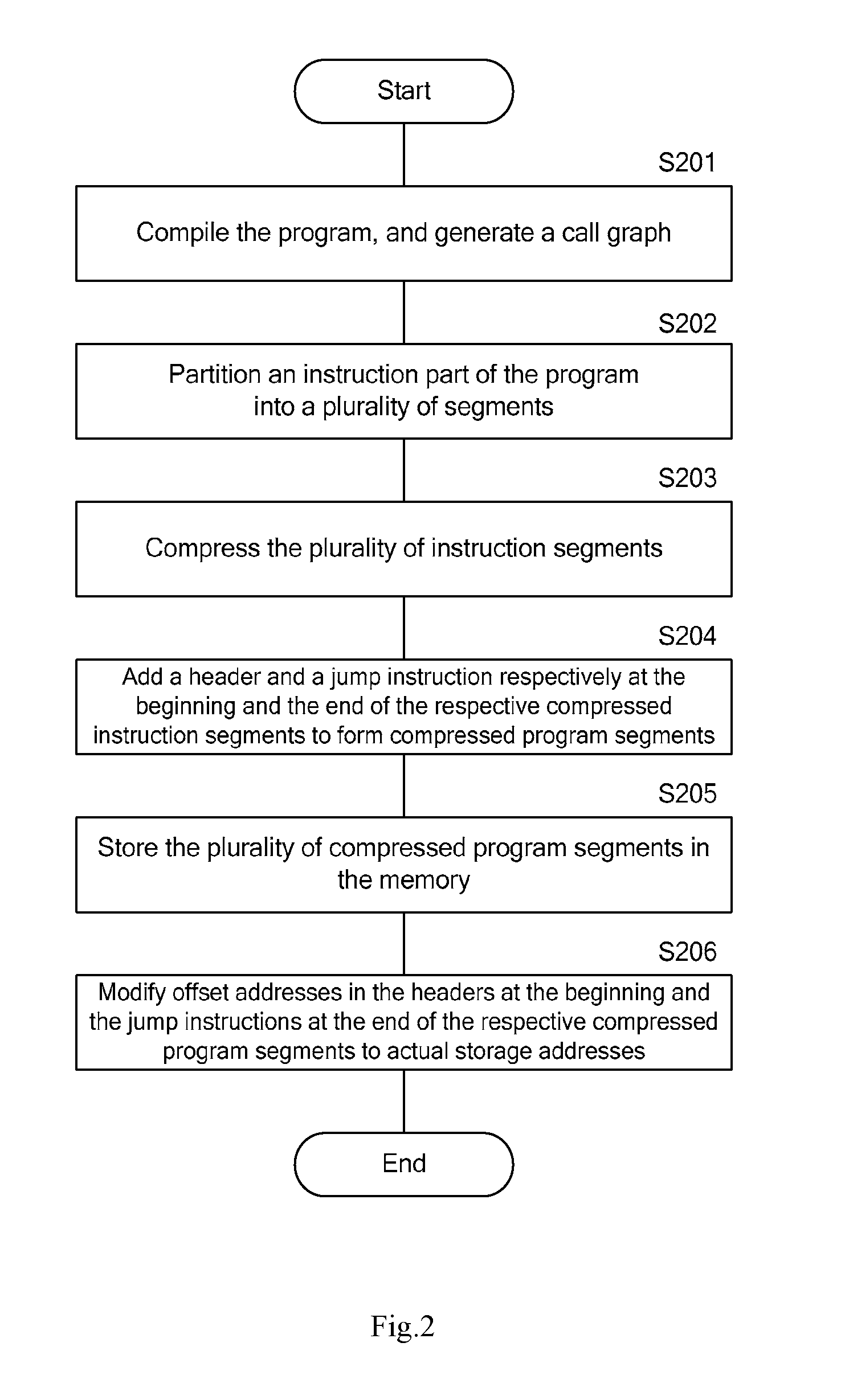

Overlay instruction accessing unit and overlay instruction accessing method

Active | US20090089507A1 | Reduce storage space; Improve security; Software engineering; Runtime instruction translation; Program segment; Parallel computing

The present invention provides an overlay instruction accessing unit and method, and a method and apparatus for compressing and storing a program. The overlay instruction accessing unit executes a program stored in a memory as a plurality of compressed program segments, and comprises: a buffer; a processing unit for issuing an instruction reading request, reading an instruction from the buffer, and executing the instruction; and a decompressing unit for reading a requested compressed instruction segment from the memory in response to the processing unit's instruction reading request, decompressing it, and storing the decompressed instruction segment in the buffer. While the processing unit is executing an instruction segment, the decompressing unit uses the storage address of the compressed program segment to be invoked, recorded in the header corresponding to that instruction segment, to read the corresponding compressed instruction segment from the memory, decompress it, and store it in the buffer for later use by the processing unit.

Owner:IBM CORP

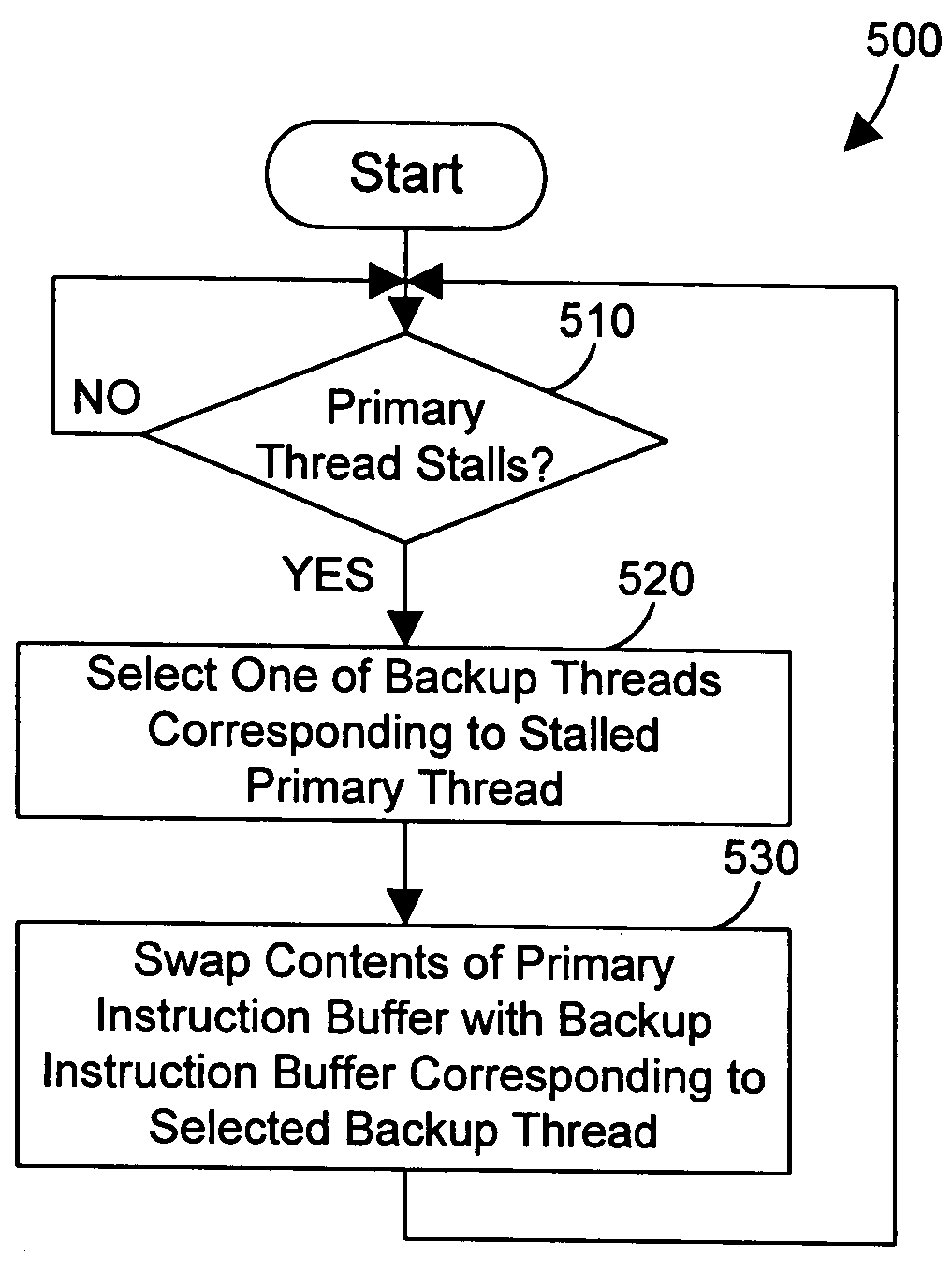

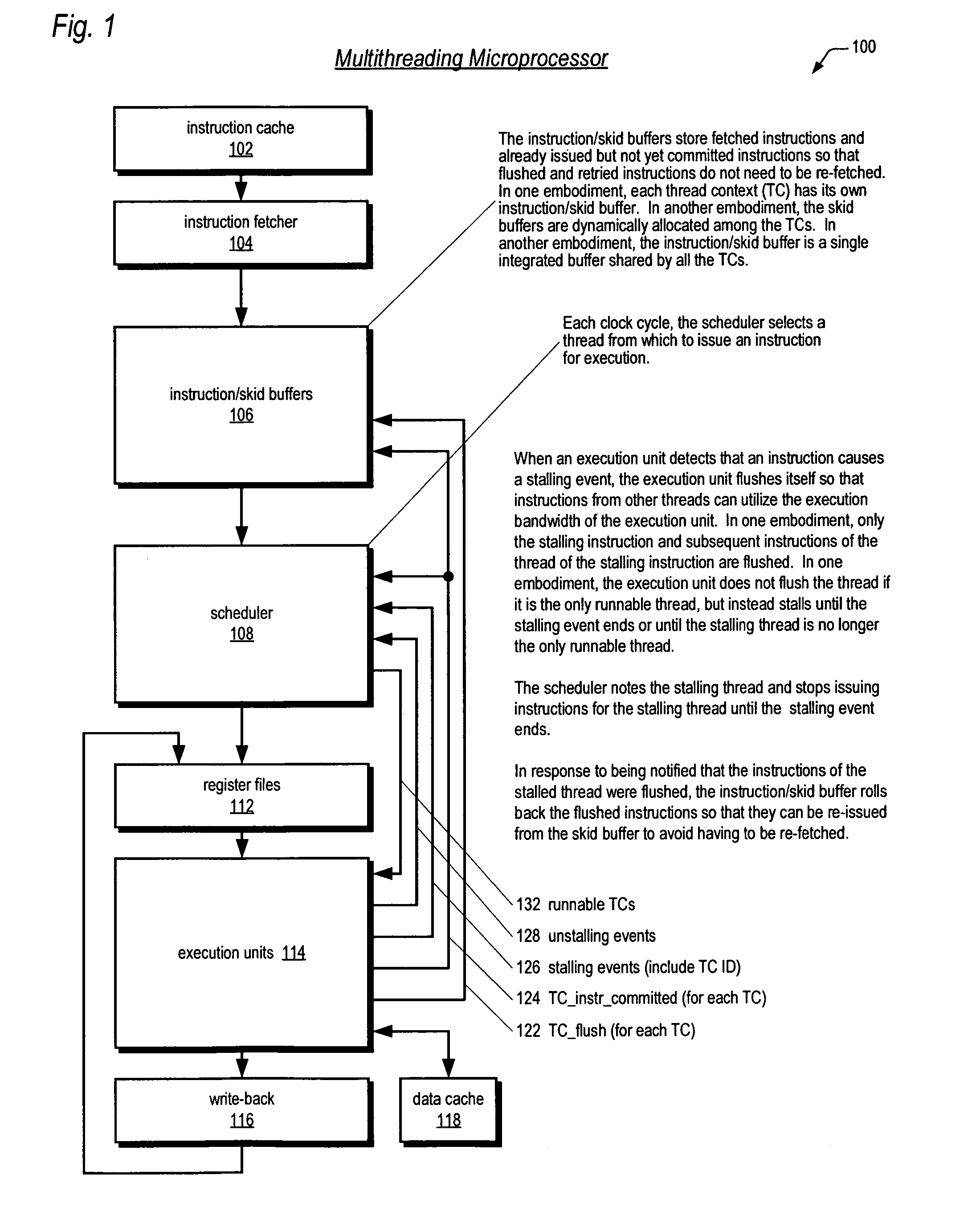

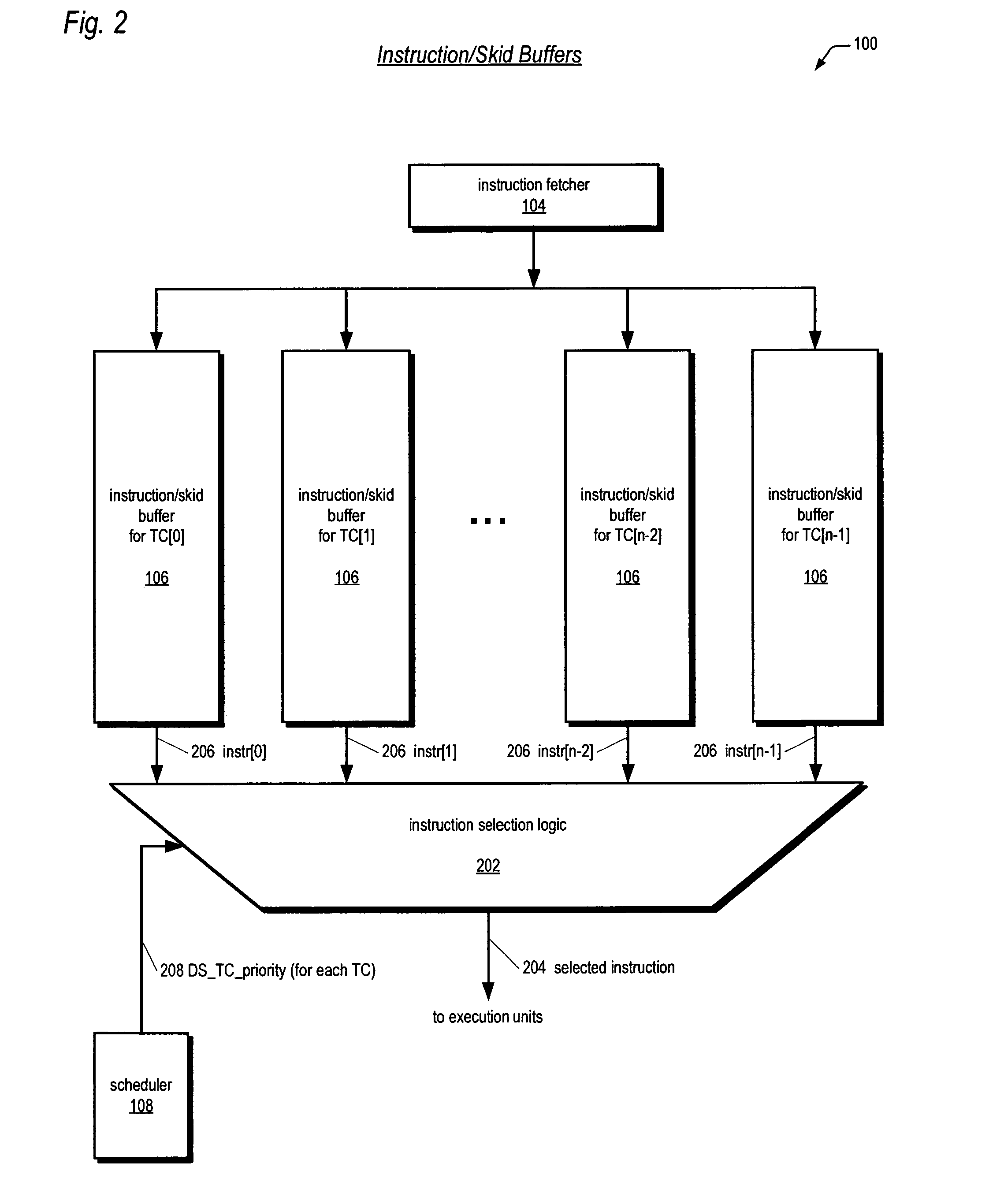

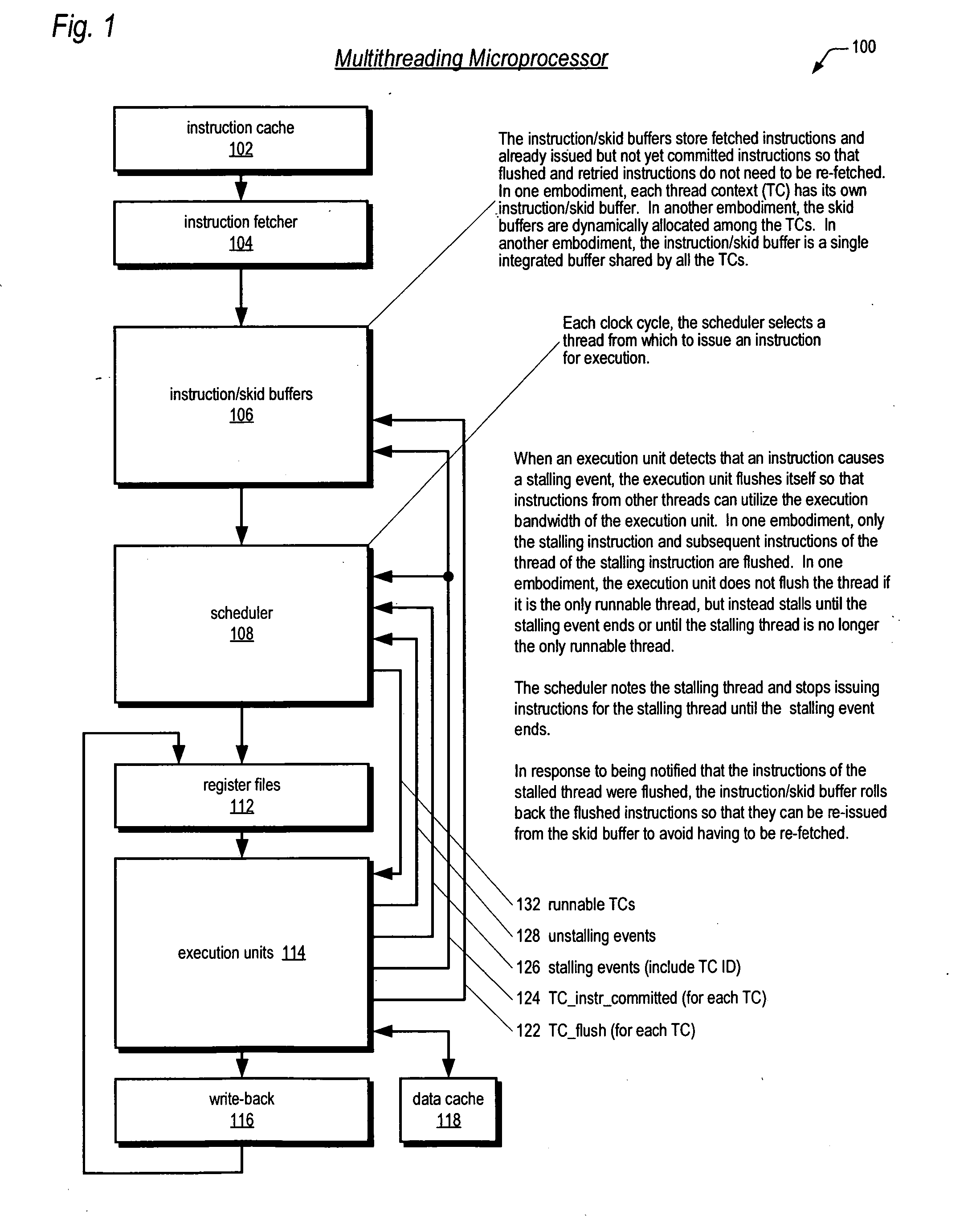

Multithreaded processor and method for switching threads

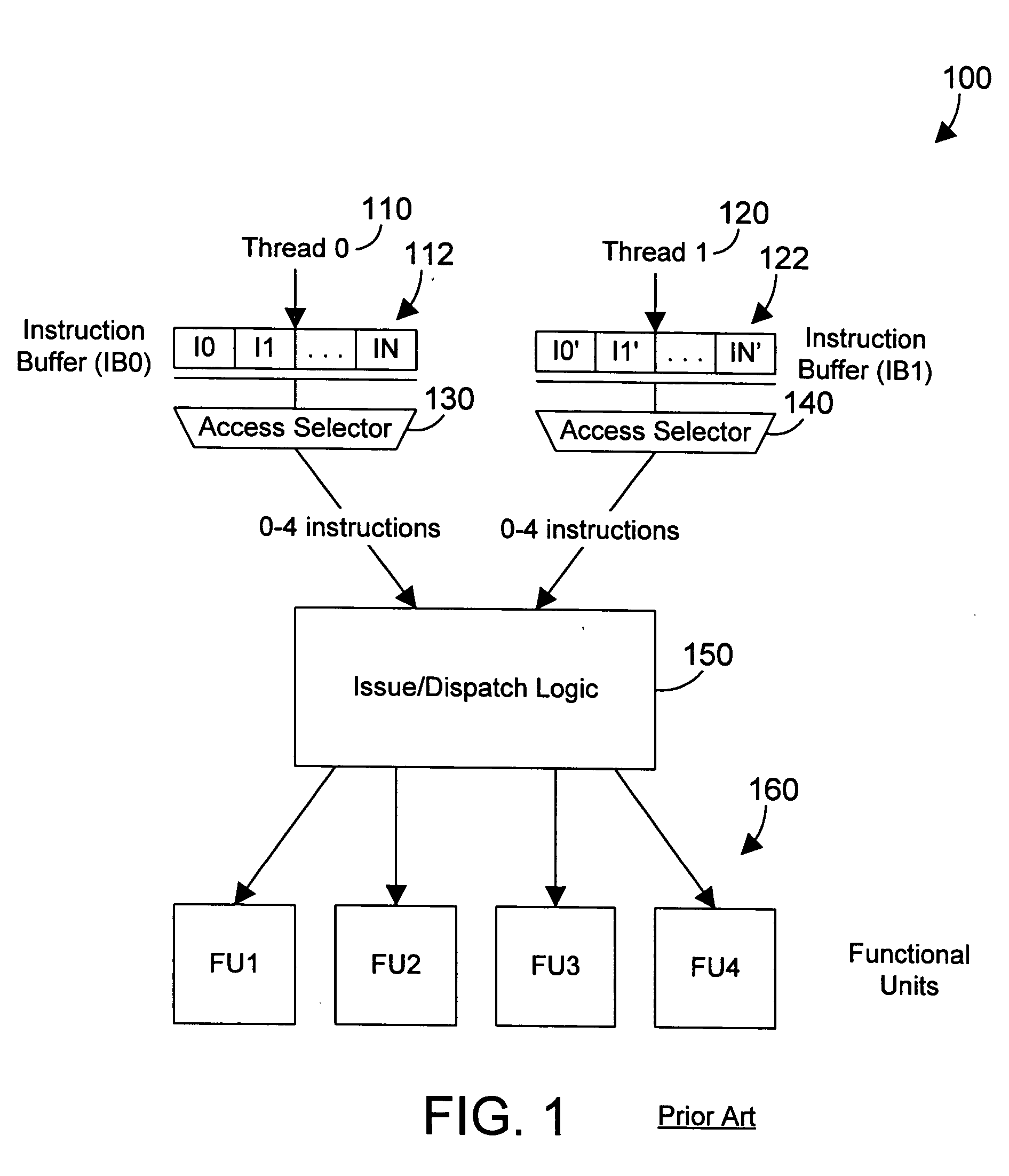

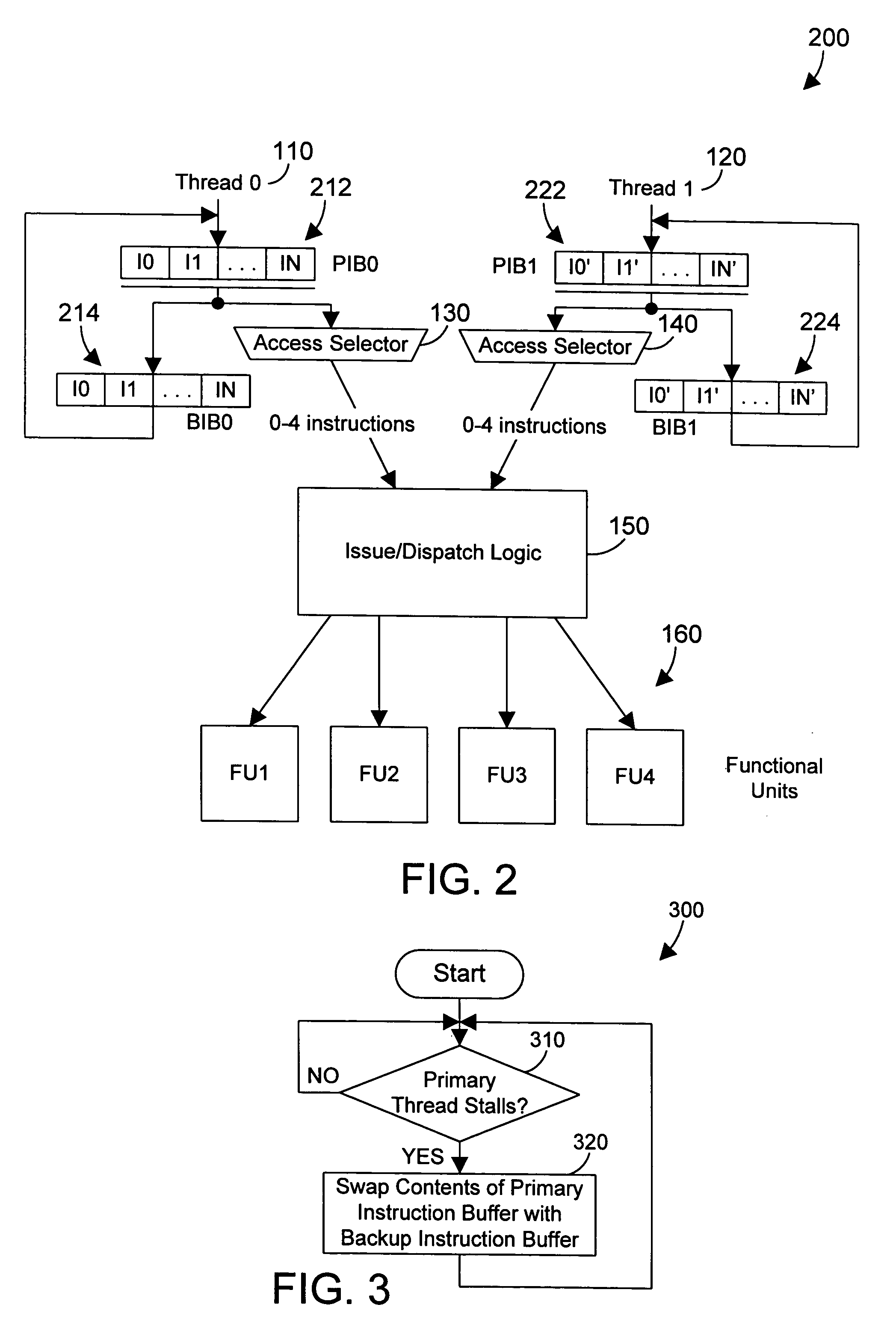

Inactive | US20050114856A1 | Improve throughput; Quick switch; Program initiation/switching; Digital computer details; Instruction pipeline; Instruction buffer

A processor includes primary threads of execution that may simultaneously issue instructions, and one or more backup threads. When a primary thread stalls, the contents of its instruction buffer may be switched with the instruction buffer for a backup thread, thereby allowing the backup thread to begin execution. This design allows two primary threads to issue simultaneously, which allows for overlap of instruction pipeline latencies. This design further allows a fast switch to a backup thread when a primary thread stalls, thereby providing significantly improved throughput in executing instructions by the processor.

Owner:IBM CORP

Method, system and computer program product for an implicit predicted return from a predicted subroutine

Inactive | US20090210661A1 | Digital computer details; Specific program execution arrangements; Parallel computing; Instruction buffer

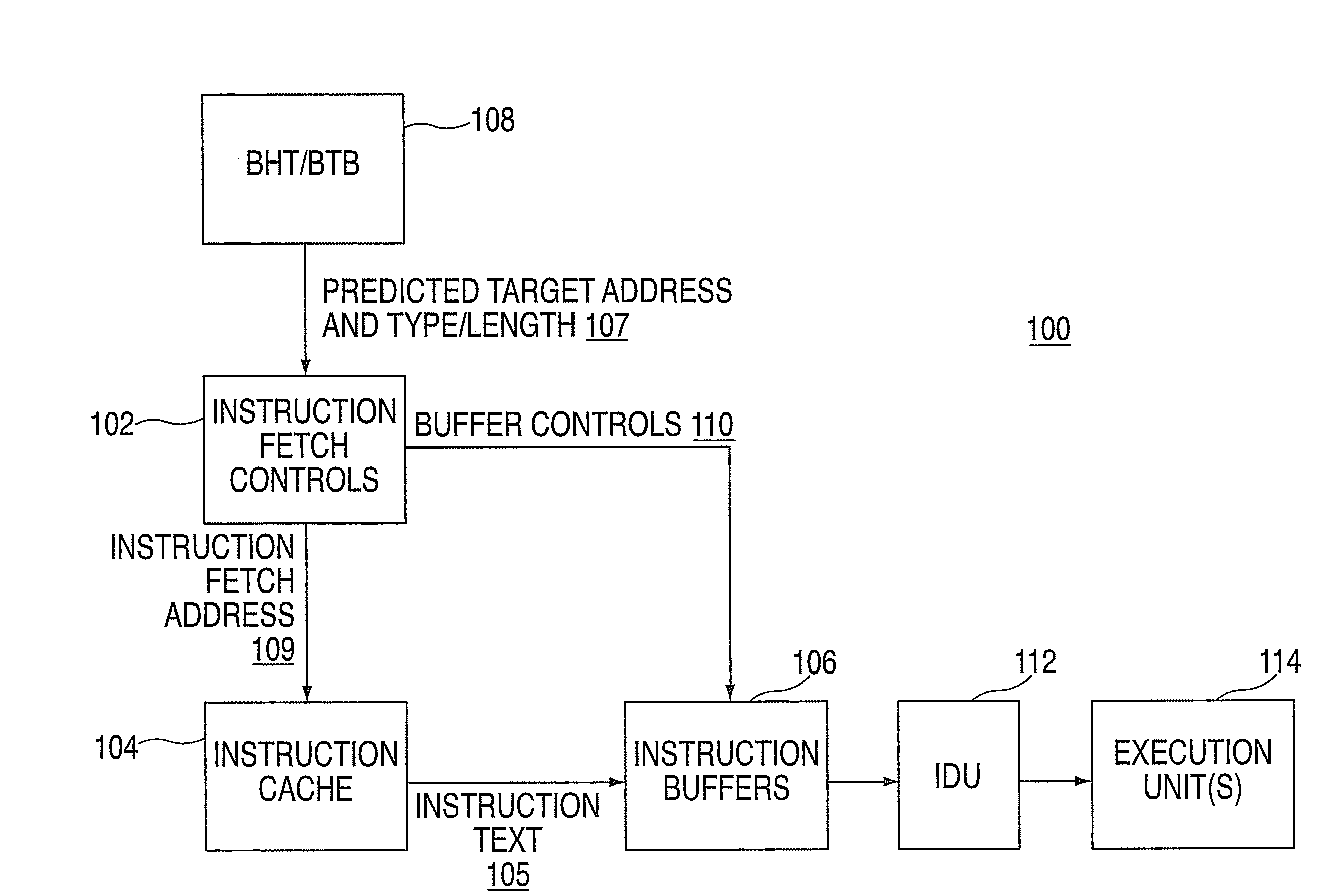

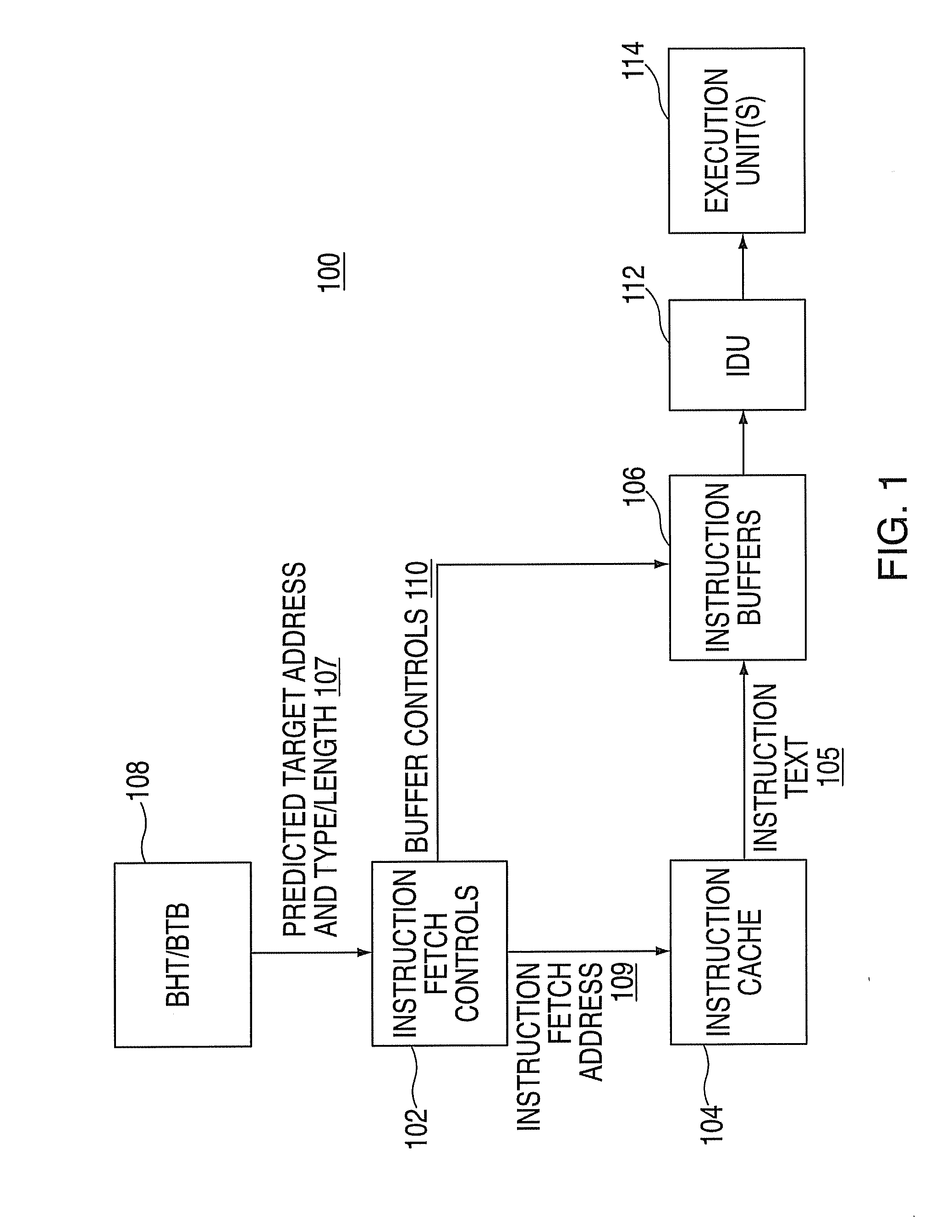

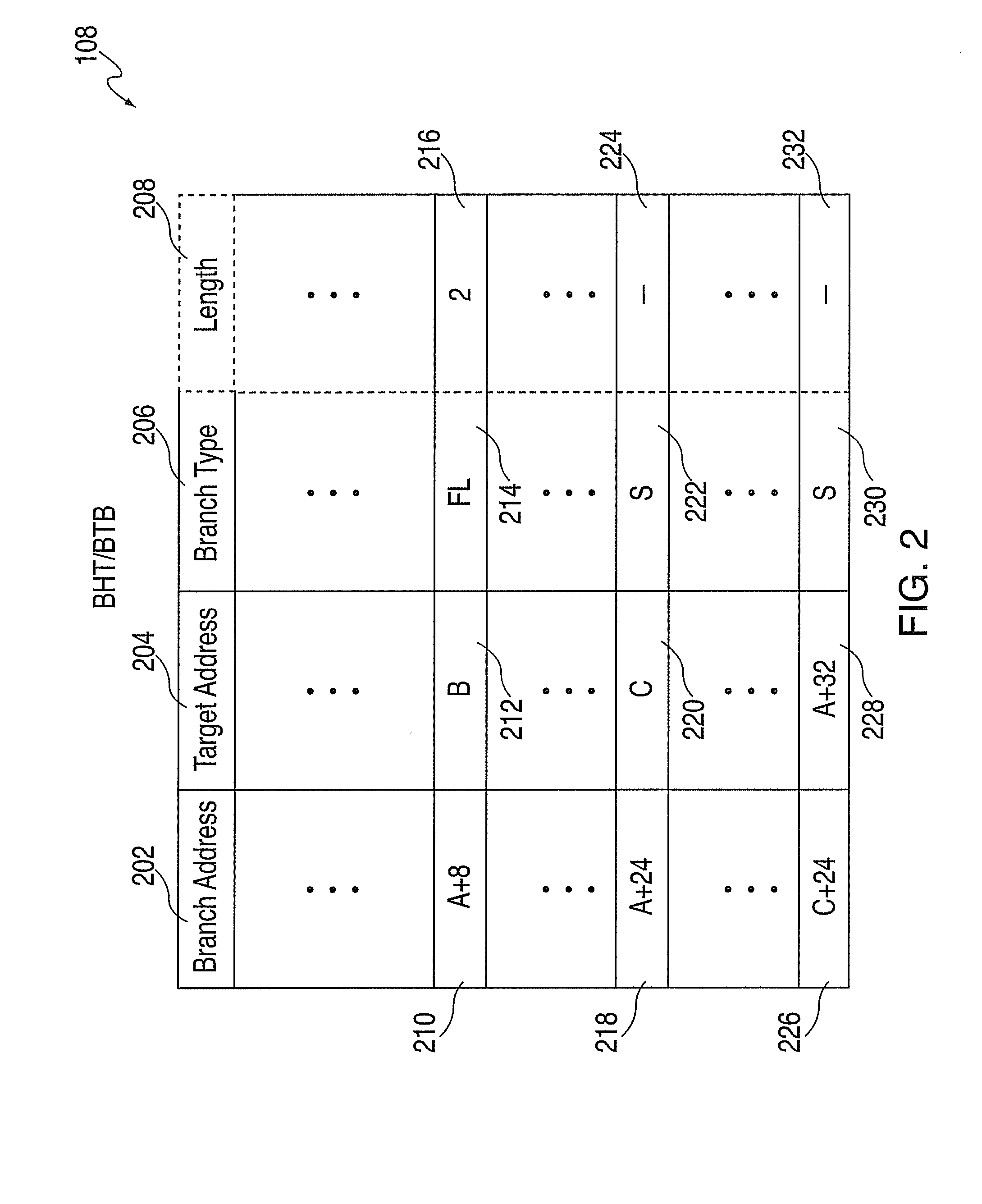

A method, system and computer program product for performing an implicit predicted return from a predicted subroutine are provided. The system includes a branch history table / branch target buffer (BHT / BTB) to hold branch information, including a target address of a predicted subroutine and a branch type. The system also includes instruction buffers, and instruction fetch controls to perform a method including fetching a branch instruction at a branch address and a return-point instruction. The method also includes receiving the target address and the branch type, and fetching a fixed number of instructions in response to the branch type. The method further includes referencing the return-point instruction within the instruction buffers such that the return-point instruction is available upon completing the fetching of the fixed number of instructions absent a re-fetch of the return-point instruction.

Owner:IBM CORP

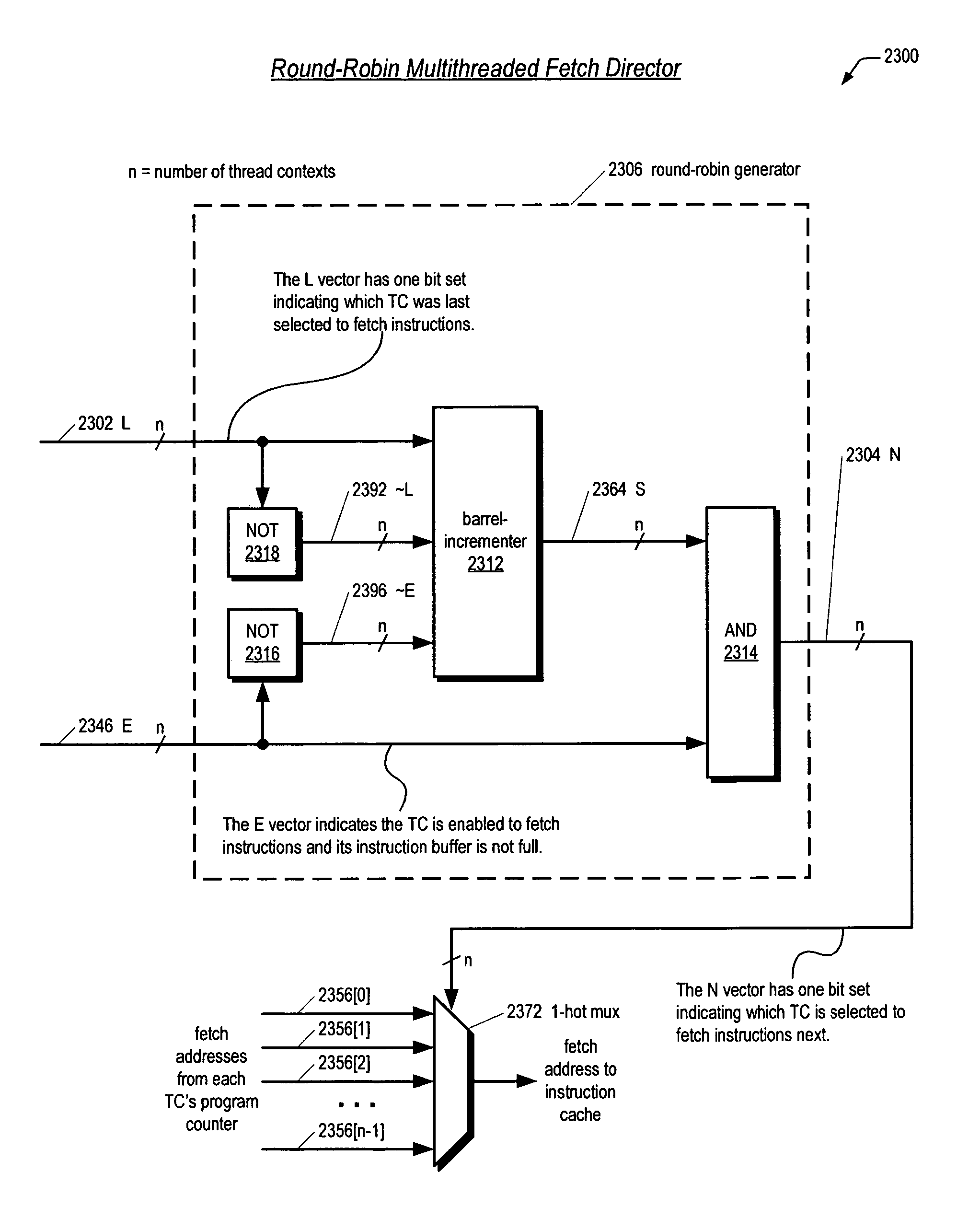

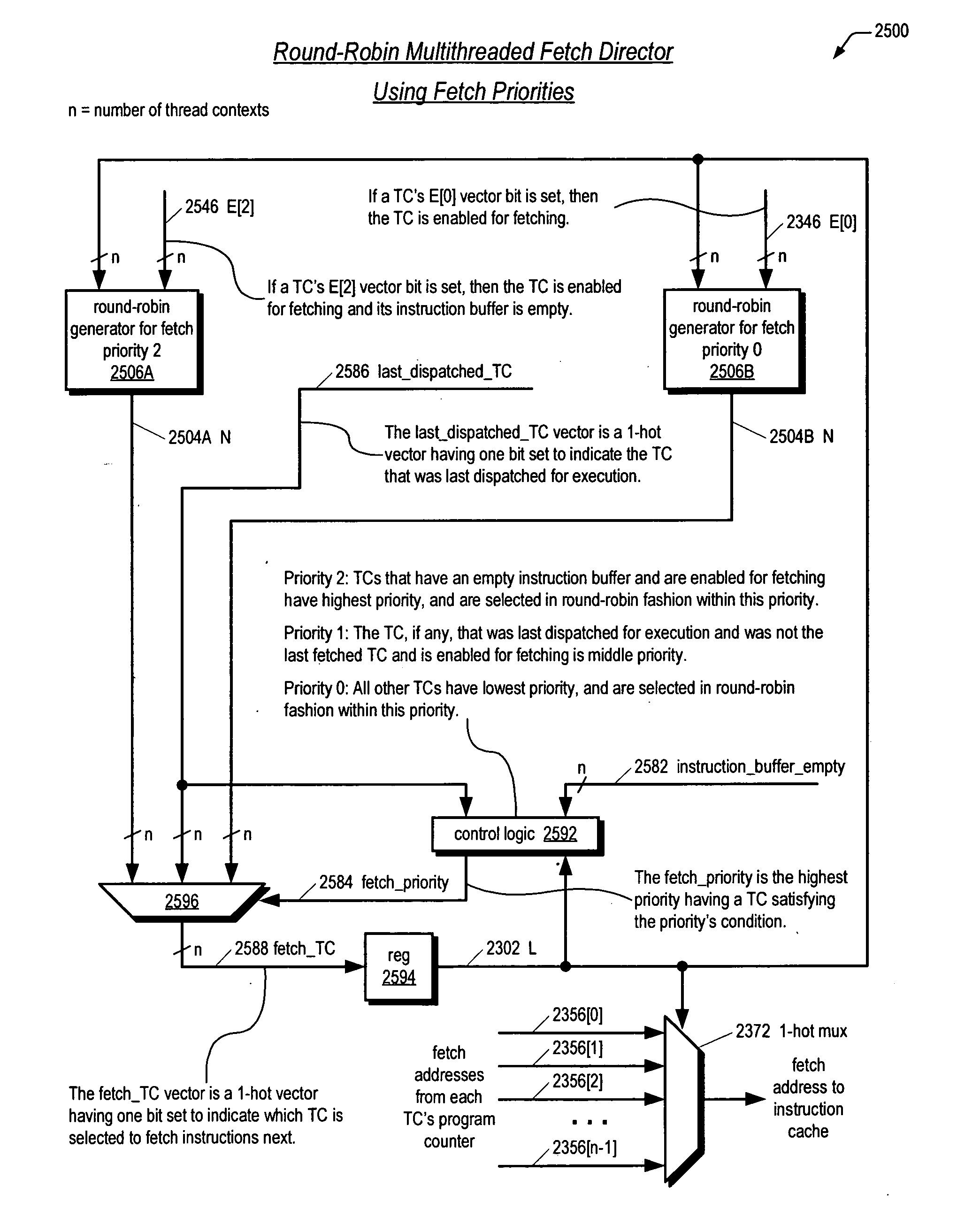

Fetch director employing barrel-incrementer-based round-robin apparatus for use in multithreading microprocessor

A fetch director in a multithreaded microprocessor that concurrently executes instructions of N threads is disclosed. The N threads request to fetch instructions from an instruction cache. In a given selection cycle, some of the threads may not be requesting to fetch instructions. The fetch director includes a circuit for selecting one of the threads in a round-robin fashion to provide its fetch address to the instruction cache. The circuit adds a first addend to a 1-bit left-rotated version of a second addend to generate a sum and a carry-out bit. The circuit includes the carry-out bit as a carry-in bit of the add to generate the sum. The sum is ANDed with the inverse of the first addend to generate a 1-hot vector indicating which of the threads is selected next. The first addend is an N-bit vector where each bit is false if the corresponding thread is requesting to fetch instructions from the instruction cache. The second addend is a 1-hot vector indicating the last selected thread. In one embodiment, threads with an empty instruction buffer are selected at highest priority, a last dispatched but not fetched thread at middle priority, and all other threads at lowest priority. The threads are selected round-robin within the highest and lowest priorities.

Owner:ARM FINANCE OVERSEAS LTD
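
The add-and-mask selection can be checked in software. This Python sketch follows the abstract above and emulates feeding the carry-out back in as the carry-in by adding 1 when the N-bit sum overflows (an end-around carry); `rotl1` and `next_thread` are illustrative names, and the three priority tiers of the embodiment are not modeled.

```python
def rotl1(x, n):
    """Rotate an n-bit value left by one bit."""
    mask = (1 << n) - 1
    return ((x << 1) | (x >> (n - 1))) & mask


def next_thread(requests, last_selected, n):
    """Round-robin pick using an add with end-around carry.

    requests: n-bit mask, bit i set if thread i is requesting a fetch.
    last_selected: 1-hot mask of the previously selected thread.
    Returns a 1-hot mask of the next requesting thread after last_selected, or 0 if none.
    """
    mask = (1 << n) - 1
    first = ~requests & mask  # first addend: bit is 1 when the thread is NOT requesting
    total = first + rotl1(last_selected, n)
    if total >> n:  # carry-out is fed back in as the carry-in
        total = (total & mask) + 1
    return total & ~first & mask  # AND with the inverse of the first addend
```

For example, with threads 1 and 3 requesting and thread 1 selected last, `next_thread(0b1010, 0b0010, 4)` returns `0b1000`, selecting thread 3. The carry ripples through the run of non-requesting threads and stops at the first requesting one, which is what makes the sum a round-robin search.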

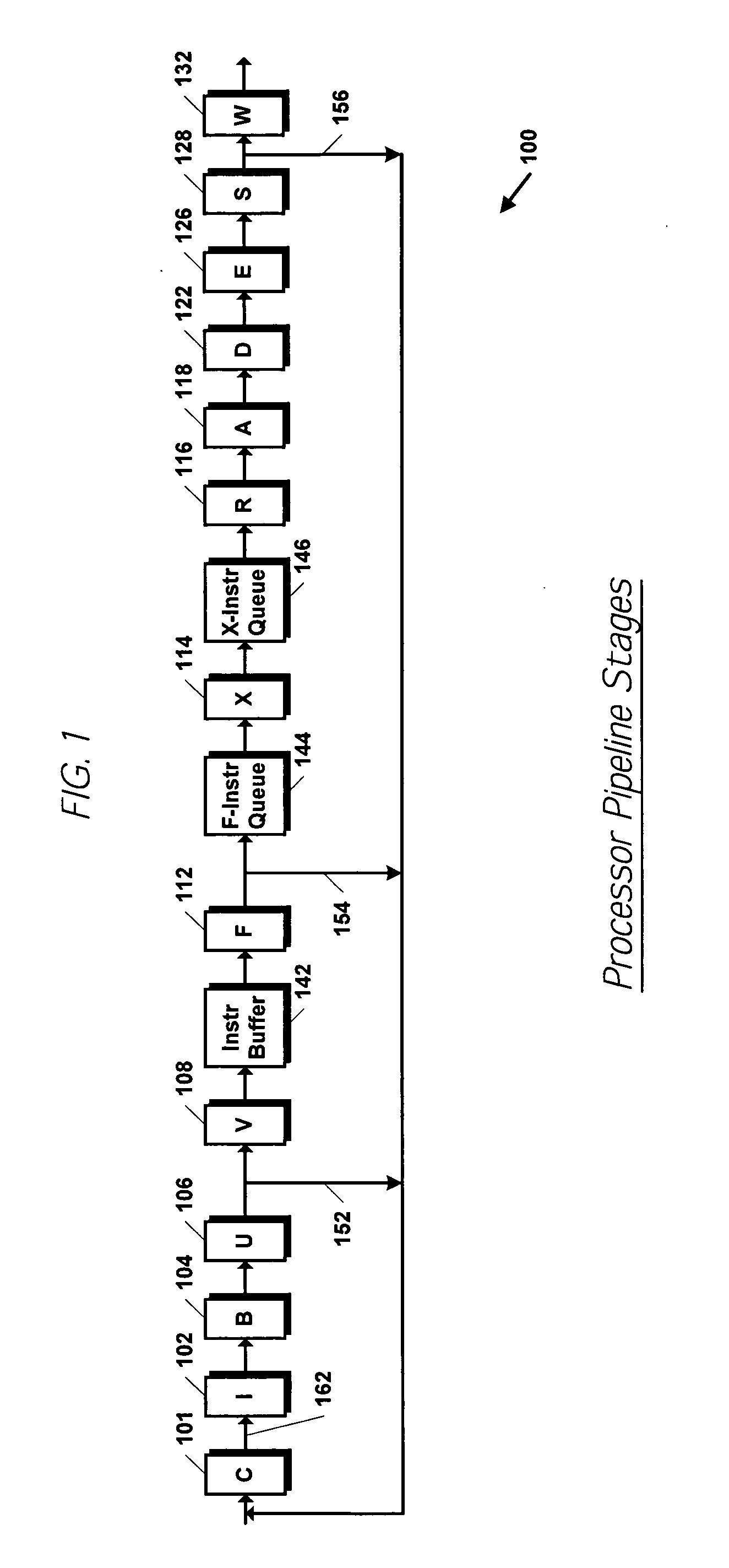

Apparatus and method for selectively accessing disparate instruction buffer stages based on branch target address cache hit and instruction stage wrap

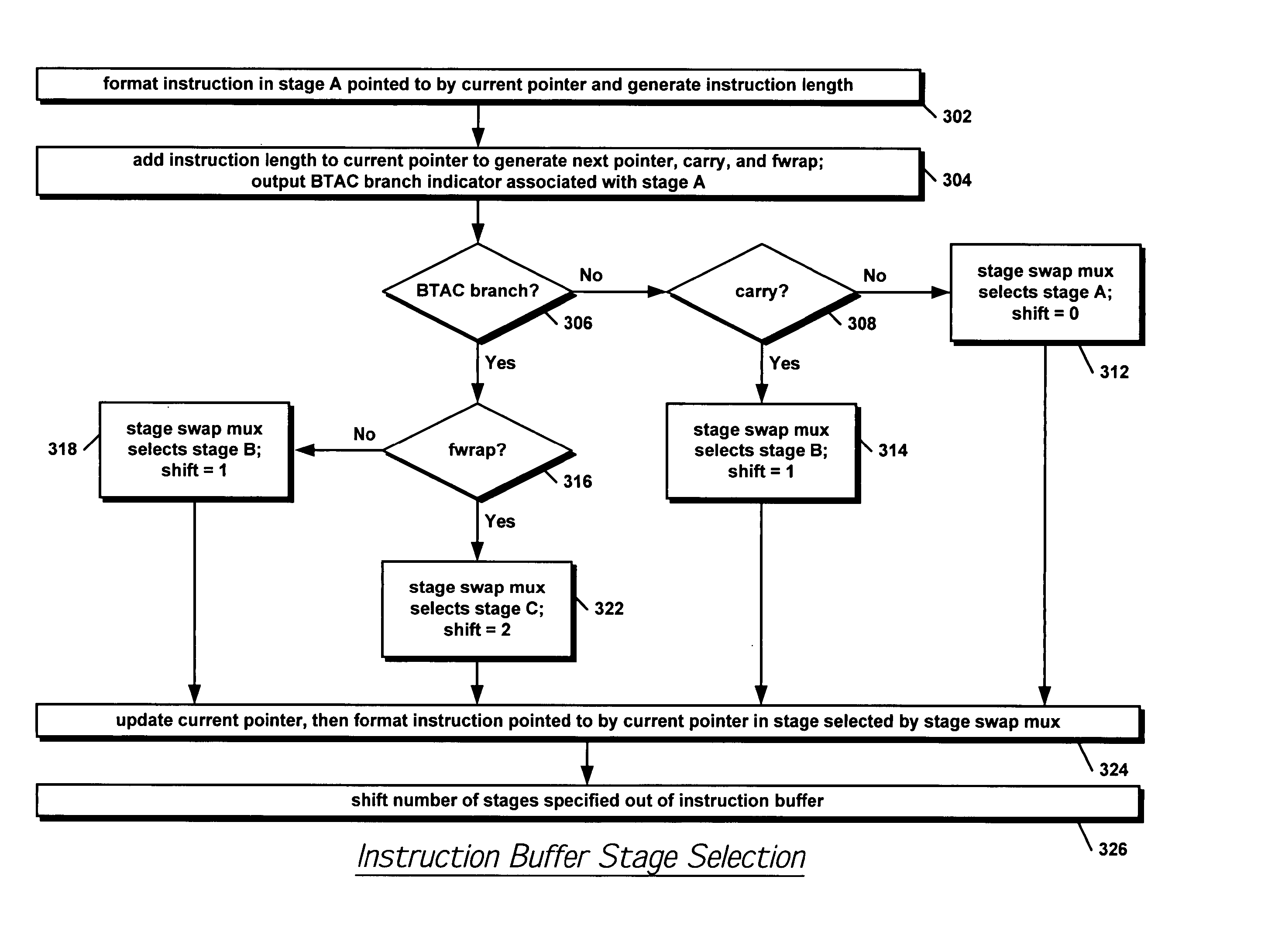

Inactive | US20050044343A1 | Increases the amount of cycle time available; Zero penalty branches; Instruction analysis; Memory addressing/allocation/relocation; Multiplexer; Three stage

A branch control apparatus in a microprocessor. The branch control apparatus includes an instruction buffer having a plurality of stages that buffer cache lines of instruction bytes received from an instruction cache. A multiplexer selects one of the bottom three stages in the instruction buffer to provide to instruction format logic. The multiplexer selects a stage based on a branch indicator, an instruction wrap indicator, and a carry indicator. The branch indicator indicates whether the processor previously branched to a target address provided by a branch target address cache. The branch indicator and target address are previously stored in association with the stage containing the branch instruction for which the target address is cached. The wrap indicator indicates whether the currently formatted instruction wraps across two cache lines. The carry indicator indicates whether the current instruction being formatted occupies the last byte of the currently formatted instruction buffer stage.

Owner:IP FIRST +1

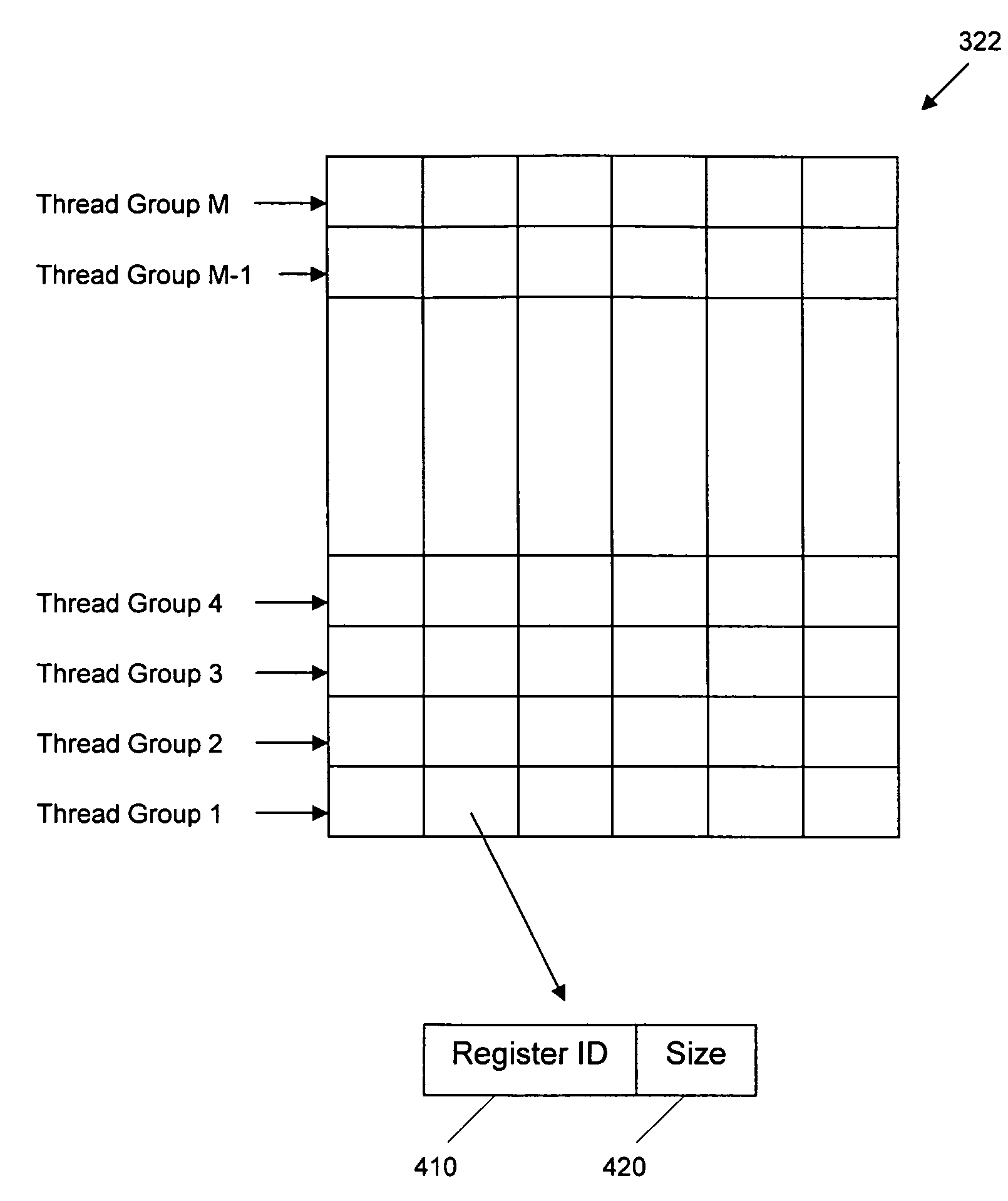

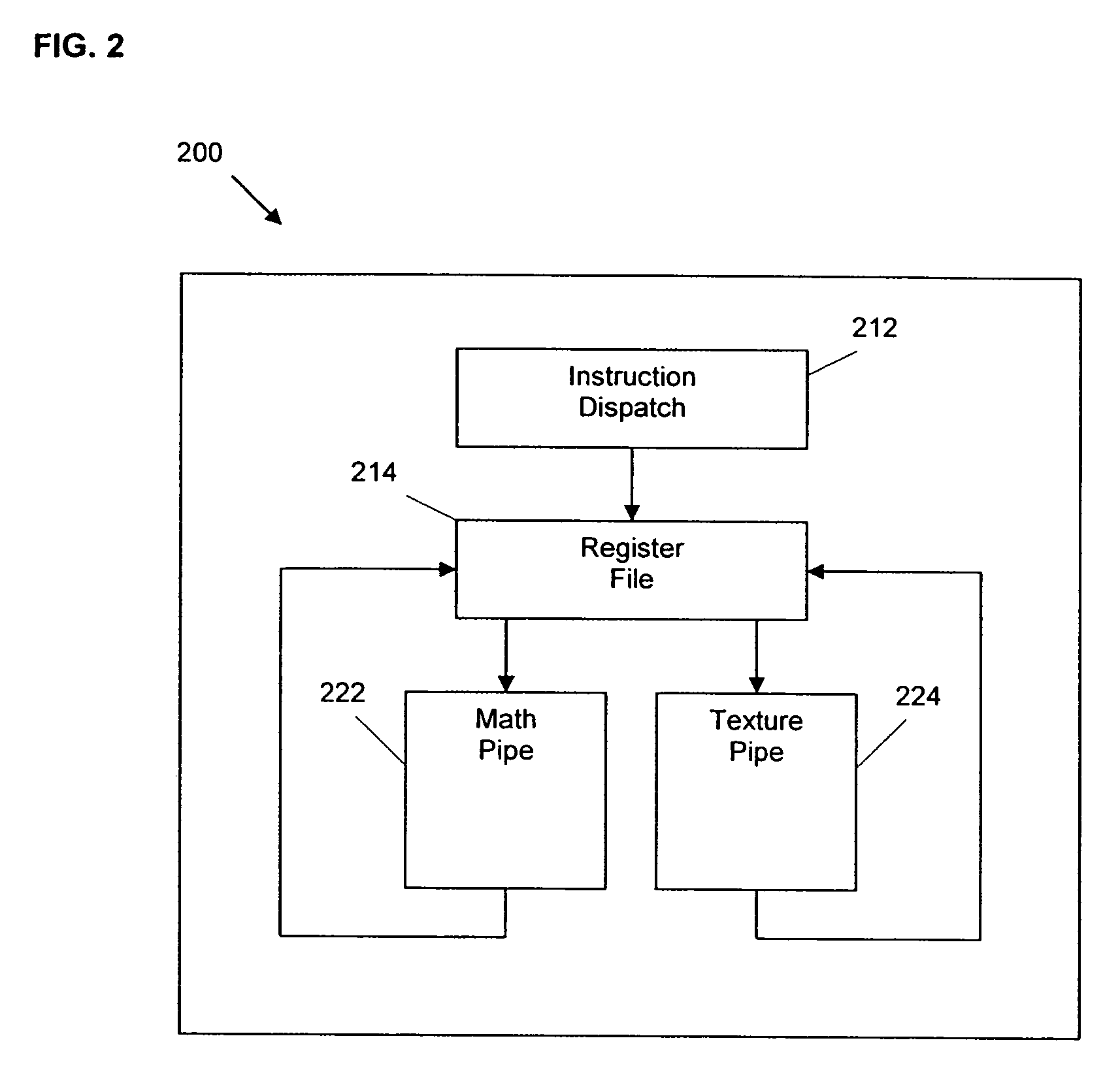

Tracking register usage during multithreaded processing using a scoreboard having separate memory regions and storing sequential register size indicators

Active | US7434032B1 | Addressing slow performance; Digital computer details; Memory systems; Processor register; Parallel computing

A scoreboard memory for a processing unit has separate memory regions allocated to each of the multiple threads to be processed. For each thread, the scoreboard memory stores register identifiers of registers that have pending writes. When an instruction is added to an instruction buffer, the register identifiers of the registers specified in the instruction are compared with the register identifiers stored in the scoreboard memory for that instruction's thread, and a multi-bit value representing the comparison result is generated. The multi-bit value is stored with the instruction in the instruction buffer and may be updated as instructions belonging to the same thread complete their execution. Before the instruction is issued for execution, this multi-bit value is checked. If this multi-bit value indicates that none of the registers specified in the instruction have pending writes, the instruction is allowed to issue for execution.

Owner:NVIDIA CORP
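
A behavioral Python sketch of the per-thread scoreboard follows. It assumes the multi-bit value has one bit per scoreboard entry in the instruction's thread region; `Scoreboard`, `BufferedInstr`, and the method names are hypothetical, and the sequential register size indicators from the title are omitted.

```python
from dataclasses import dataclass


@dataclass
class BufferedInstr:
    thread: int
    regs: tuple        # register identifiers named by the instruction
    dep_mask: int = 0  # bit i set: scoreboard entry i holds a pending write to one of regs


class Scoreboard:
    """Per-thread scoreboard regions holding register identifiers with pending writes."""

    def __init__(self, num_threads, entries_per_thread):
        self.regions = [[None] * entries_per_thread for _ in range(num_threads)]

    def add_pending_write(self, thread, reg):
        region = self.regions[thread]
        entry = region.index(None)  # ValueError if the region is full; hardware would stall
        region[entry] = reg
        return entry

    def compare(self, thread, regs):
        """Build the multi-bit value stored alongside a newly buffered instruction."""
        mask = 0
        for entry, pending in enumerate(self.regions[thread]):
            if pending is not None and pending in regs:
                mask |= 1 << entry
        return mask

    def write_completed(self, thread, entry, buffer):
        """Free an entry and clear its bit in buffered instructions of the same thread."""
        self.regions[thread][entry] = None
        for instr in buffer:
            if instr.thread == thread:
                instr.dep_mask &= ~(1 << entry)


def can_issue(instr):
    return instr.dep_mask == 0  # no named register has a pending write
```

Keeping a separate region per thread means a pending write to `r3` in one thread never blocks an instruction in another thread that also names `r3`.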

Fetch director employing barrel-incrementer-based round-robin apparatus for use in multithreading microprocessor

Active | US20060179276A1 | Digital computer details; Multiprogramming arrangements; Parallel computing; Instruction buffer

A fetch director in a multithreaded microprocessor that concurrently executes instructions of N threads is disclosed. The N threads request to fetch instructions from an instruction cache. In a given selection cycle, some of the threads may not be requesting to fetch instructions. The fetch director includes a circuit for selecting one of the threads in a round-robin fashion to provide its fetch address to the instruction cache. The circuit performs a 1-bit left-rotating increment of a first addend by a second addend to generate a sum, which is ANDed with the inverse of the first addend to generate a 1-hot vector indicating which of the threads is selected next. The first addend is an N-bit vector where each bit is false if the corresponding thread is requesting to fetch instructions from the instruction cache. The second addend is a 1-hot vector indicating the last selected thread. In one embodiment, threads with an empty instruction buffer are selected at highest priority, a last dispatched but not fetched thread at middle priority, and all other threads at lowest priority. The threads are selected round-robin within the highest and lowest priorities.

Owner:ARM FINANCE OVERSEAS LTD

Branch control memory

Inactive | US7159102B2 | Simplify the commissioning process; Improve latency handling; Digital computer details; Next instruction address formation; Parallel computing; Control memory

A branch control memory stores branch instructions that are adapted for optimizing the performance of programs run on electronic processors. Flexible instruction parameter fields permit a variety of new branch control and branch instruction implementations best suited to a particular computing environment. These instructions also have separate prediction bits, which are used to optimize the loading of target instruction buffers in advance of program execution, so that a pipeline within the processor achieves superior performance during actual program execution.

Owner:RENESAS ELECTRONICS CORP

System and Method for Optimizing Branch Logic for Handling Hard to Predict Indirect Branches

Active | US20080307210A1 | Improve processor performance; Improve performance; Digital computer details; Specific program execution arrangements; Parallel computing; Indirect branch

A system and method for optimizing the branch logic of a processor to improve handling of hard to predict indirect branches are provided. The system and method leverage the observation that there will generally be only one move to the count register (mtctr) instruction that will be executed while a branch on count register (bcctr) instruction has been fetched and not executed. With the mechanisms of the illustrative embodiments, fetch logic detects that it has encountered a bcctr instruction that is hard to predict and, in response to this detection, blocks the target fetch from entering the instruction buffer of the processor. At this point, the fetch logic has fetched all the instructions up to and including the bcctr instruction but no target instructions. When the next mtctr instruction is executed, the branch logic of the processor grabs the data and starts fetching using that target address. Since there are no other target instructions that were fetched, no flush is needed if that target address is the correct address, i.e. the branch prediction is correct.

Owner:IBM CORP

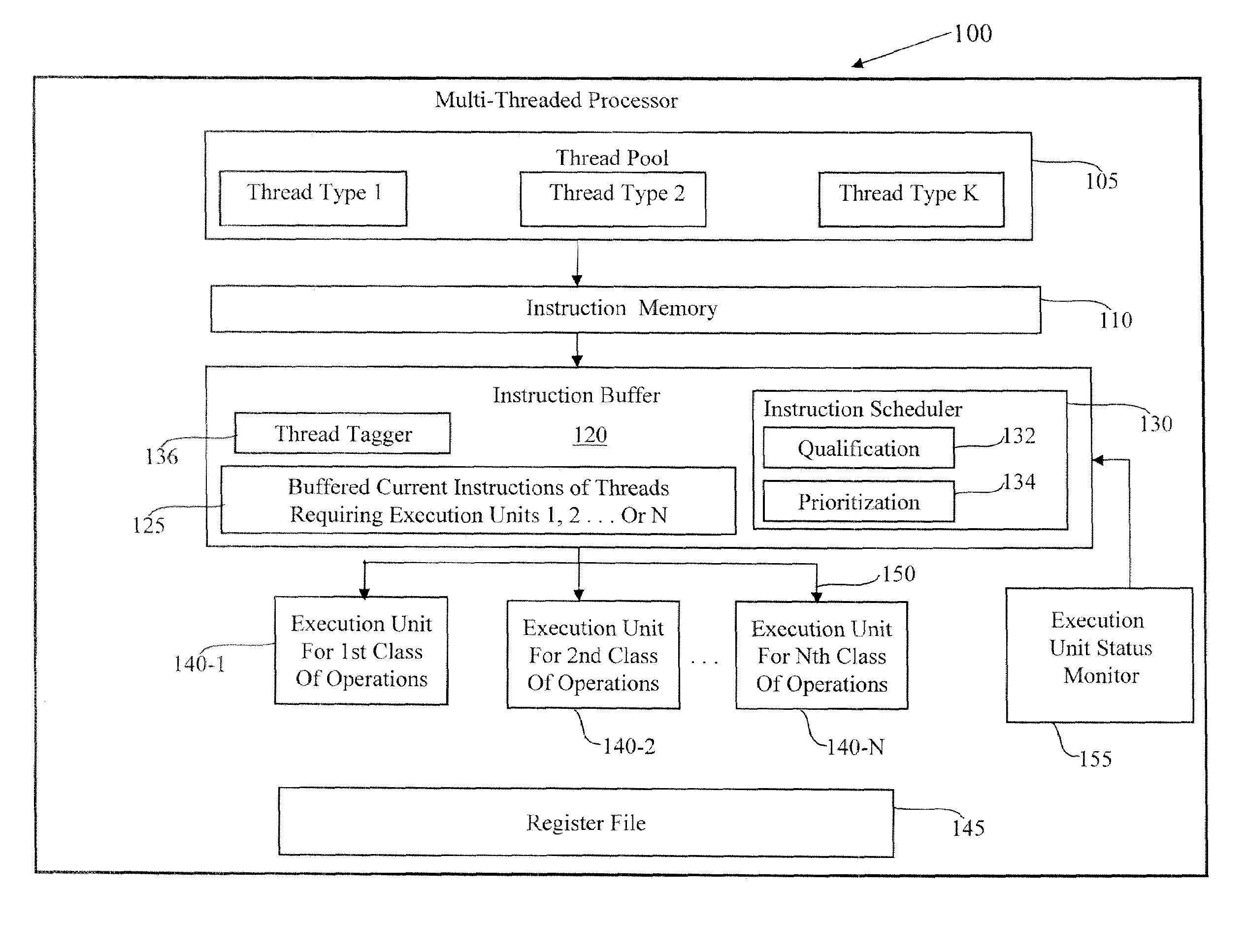

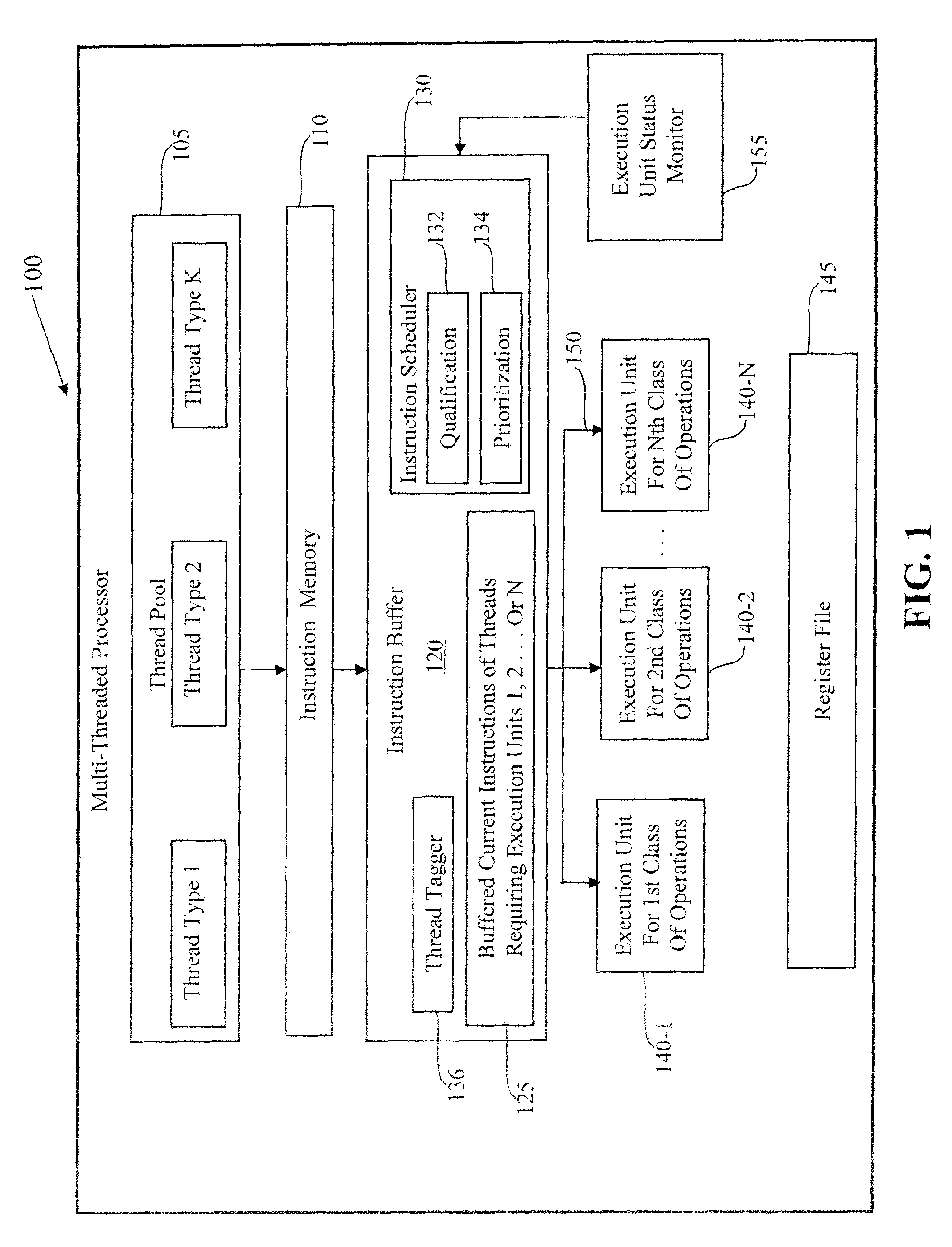

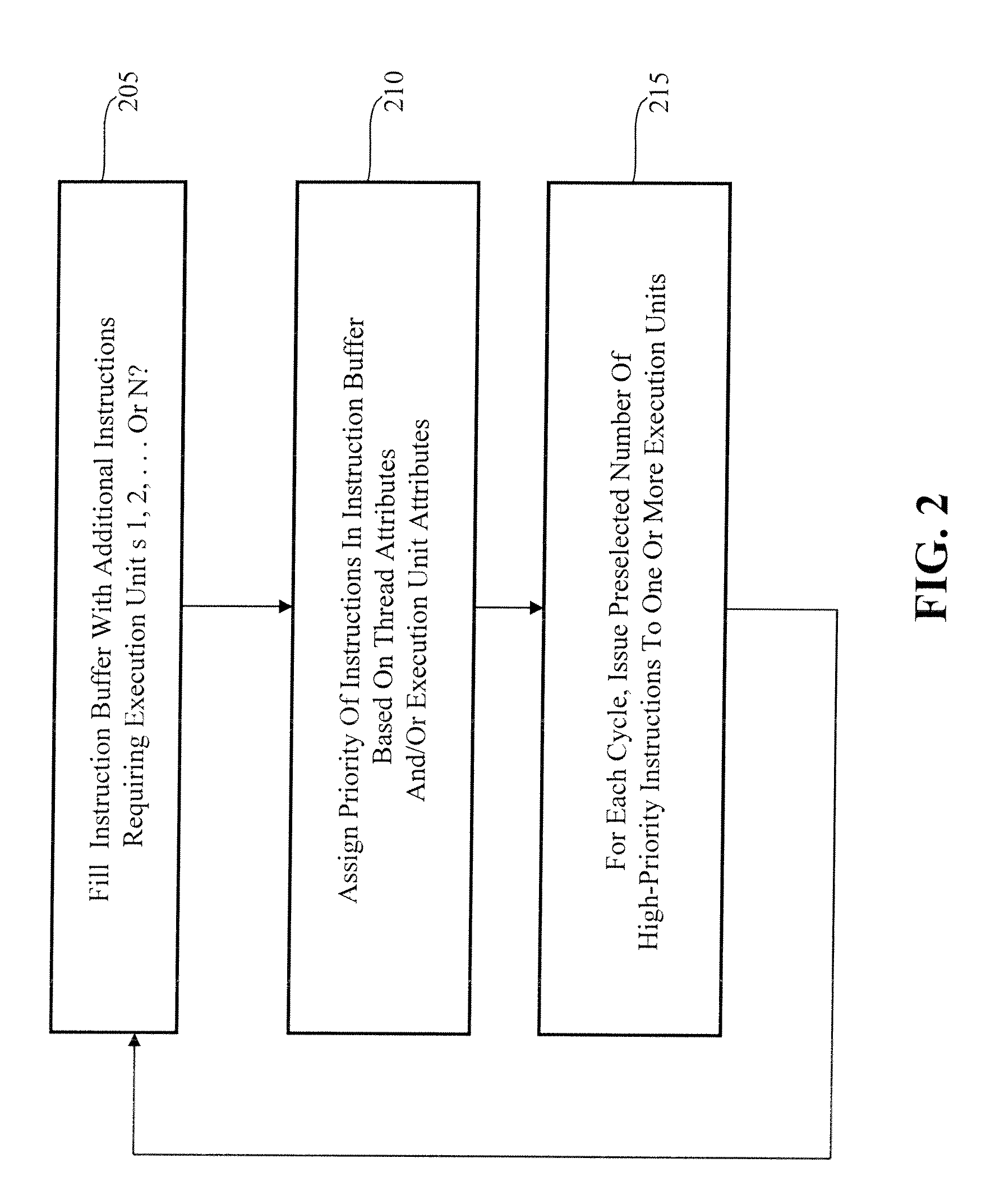

Prioritized issuing of operation dedicated execution unit tagged instructions from multiple different type threads performing different set of operations

A graphics processor buffers vertex threads and pixel threads. The different types of threads issue instructions corresponding to different sets of operations. A plurality of different types of execution units are provided, each type of execution unit servicing a different class of operations, such as an execution unit supporting texture operations, an execution unit supporting blending operations, and an execution unit supporting mathematical operations. Current instructions of the threads are buffered and prioritized in a common instruction buffer. A set of high-priority instructions is issued per cycle to the plurality of different types of execution units.

Owner:NVIDIA CORP

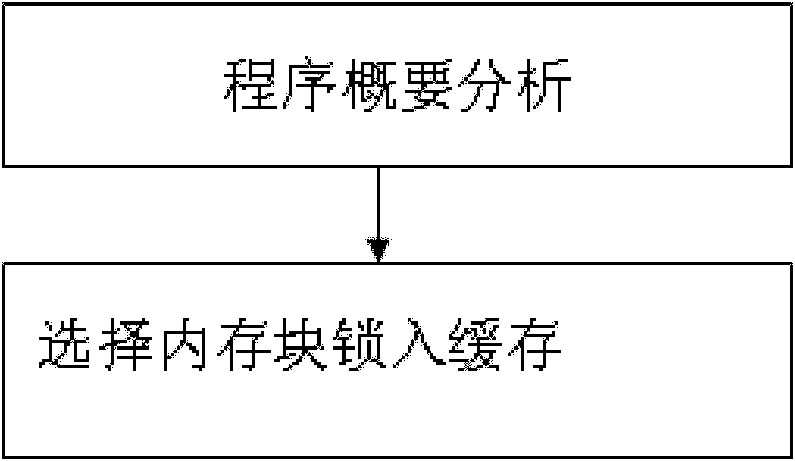

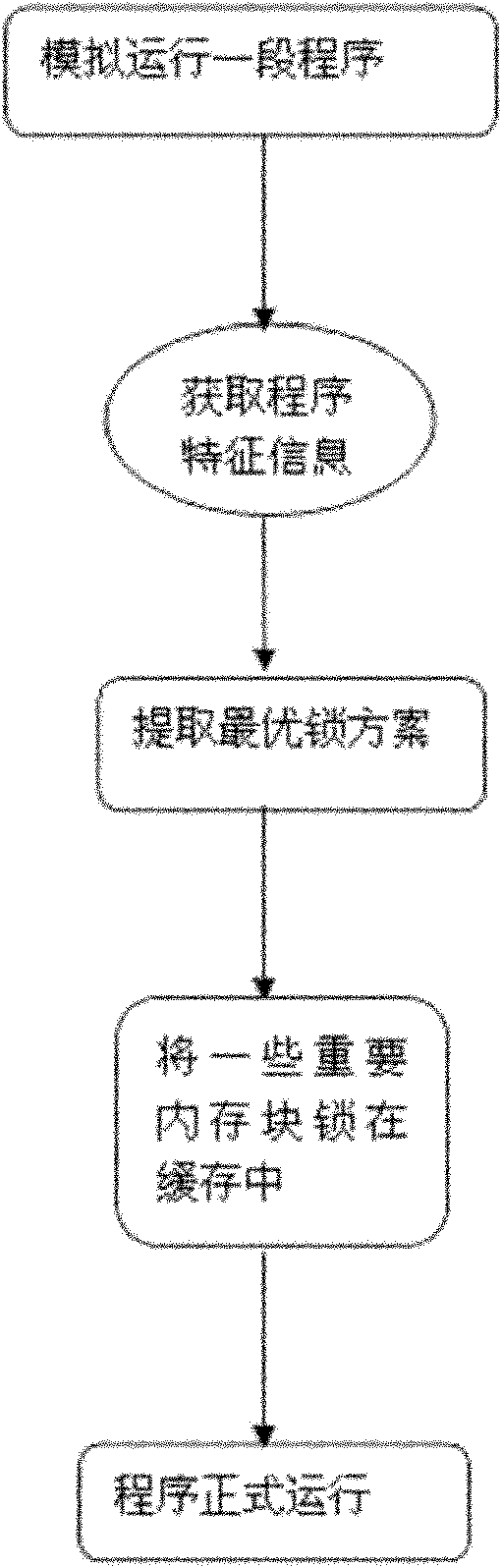

Method for realizing instruction buffer lock

Inactive | CN101989236A | High precision; Reduce access; Memory addressing/allocation/relocation; Concurrent instruction execution; Access time; Cache hit rate

The invention discloses a method for realizing instruction buffer locking, which comprises the following steps: 1) profiling the program: a section of the program is pre-run, and the memory block access sequence, reference counts, re-access intervals and hit counts are recorded; and 2) selecting memory blocks to be locked in the buffer: an access counter and a least recently used (LRU) counter are set for each memory block, weights N1 and N2 (with N1 + N2 = 1) are assigned to the access counter and the LRU counter, a score is computed for each memory block as N1 × access count + N2 × (LRU counter limit - LRU value), and memory blocks whose score is greater than a threshold M are locked in the buffer. A memory block locked in the instruction buffer can be replaced only after its lock is removed; according to the invention, this greatly improves the buffer hit rate, reduces accesses to lower-level storage, and reduces the average memory access latency.

Owner:ZHEJIANG UNIV
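
The block-selection rule in step 2 can be illustrated with a short Python sketch. The function names and the per-block `(access_count, lru_value)` tuples are hypothetical, and it assumes a lower LRU value means a more recently used block, so that `lru_limit - lru_value` rewards recency.

```python
def lock_score(access_count, lru_value, lru_limit, n1, n2):
    """Weighted score N1*access_count + N2*(lru_limit - lru_value)."""
    if abs(n1 + n2 - 1.0) > 1e-9:
        raise ValueError("weights must satisfy N1 + N2 = 1")
    return n1 * access_count + n2 * (lru_limit - lru_value)


def select_locked_blocks(blocks, lru_limit, n1, n2, threshold):
    """Return the ids of blocks whose score exceeds the threshold M.

    blocks maps a block id to a hypothetical (access_count, lru_value) pair
    gathered during the profiling pre-run.
    """
    return [
        block_id
        for block_id, (access_count, lru_value) in blocks.items()
        if lock_score(access_count, lru_value, lru_limit, n1, n2) > threshold
    ]
```

For example, with `lru_limit=7`, `n1=0.6`, `n2=0.4` and `threshold=6`, a block with 12 accesses and LRU value 1 scores 9.6 and is locked, while a block with 3 accesses and LRU value 6 scores 2.2 and is not.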

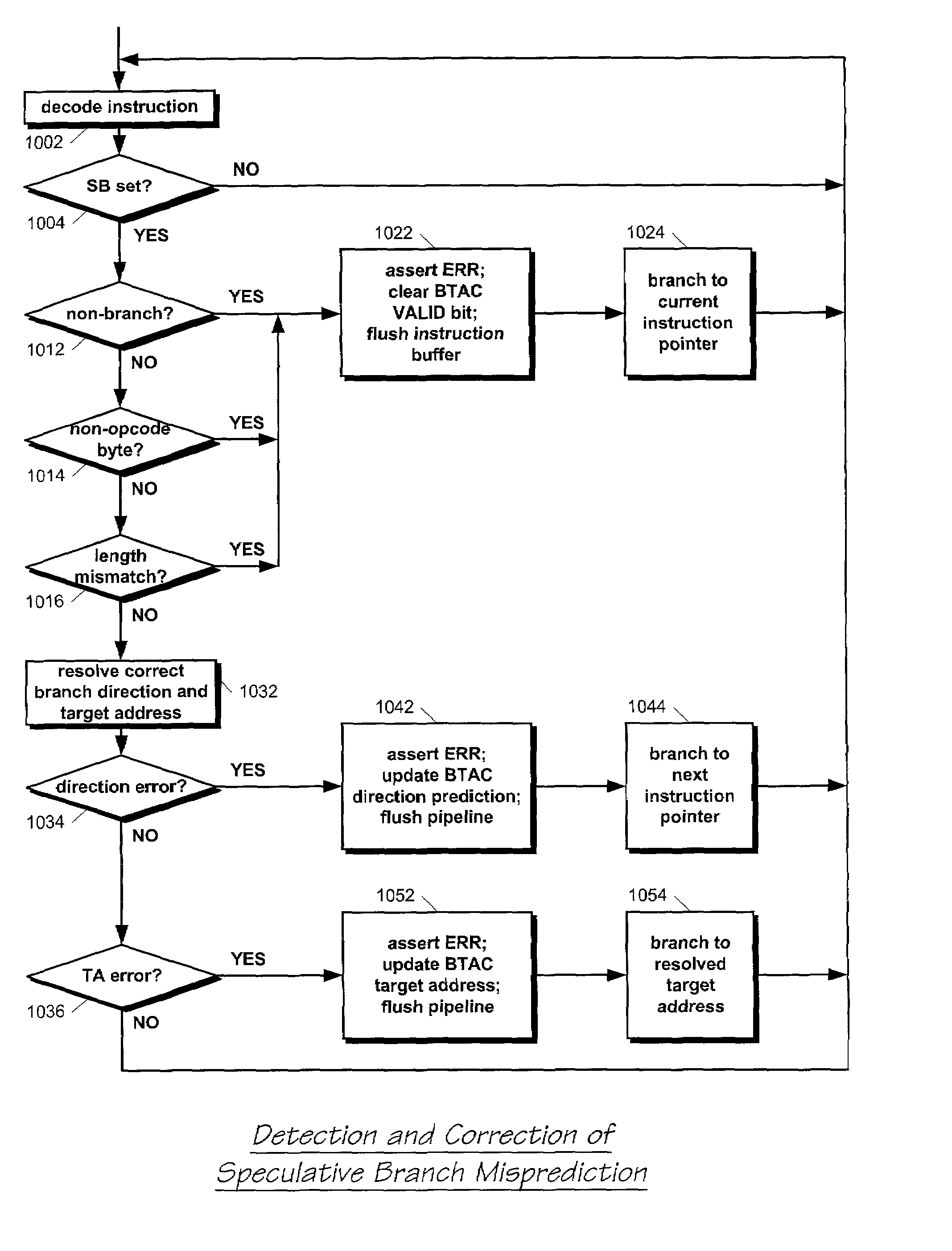

Microprocessor that detects erroneous speculative prediction of branch instruction opcode byte

Inactive | US7134005B2 | Easy to use | Improved processor cycle time | Digital computer details | Concurrent instruction execution | Parallel computing | Branch target address cache

A microprocessor caches in a branch target address cache (BTAC), for each of a plurality of previously executed branch instructions: a prediction of whether the branch instruction is present in a cache line of instruction bytes provided by an instruction cache in response to a fetch address and will be taken; a target address of the branch instruction; and the location of an opcode byte of the branch instruction within the cache line. In response to the fetch address, the instruction cache provides the cache line to an instruction buffer, and the BTAC provides the prediction, the target address, and the location. The microprocessor branches to the target address, and the byte in the cache line within the instruction buffer indicated by the location provided by the BTAC is marked. An instruction decoder formats the instruction bytes in the cache line. If the instruction decoder indicates that the marked byte is in a non-opcode location within one of the formatted instructions, the microprocessor has erroneously branched to the target address.

Owner:IP FIRST
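
A simplified Python model of the final check follows. The `Decoded` record and its `opcode_offset` field (the position of the opcode after any prefix bytes) are hypothetical stand-ins for the decoder's output; the sketch only tests whether the BTAC-marked byte lands on an opcode byte.

```python
from dataclasses import dataclass


@dataclass
class Decoded:
    start: int          # offset of the instruction's first byte in the line
    length: int         # total instruction length in bytes
    opcode_offset: int  # opcode position relative to start (after prefixes)


def branch_mispredicted(marked_byte, decoded):
    """True if the BTAC-marked byte falls on a non-opcode byte.

    Returns False when the marked byte lies inside an instruction at its
    opcode position, or when no formatted instruction in this line covers
    it (this sketch makes no determination in that case).
    """
    for ins in decoded:
        if ins.start <= marked_byte < ins.start + ins.length:
            return marked_byte != ins.start + ins.opcode_offset
    return False
```

With instructions at offsets 0 (2 bytes), 2 (3 bytes, one prefix byte), and 5, the opcode bytes sit at offsets 0, 3, and 5, so a mark on byte 4 or on the prefix byte 2 would flag an erroneous branch.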

Apparatus and method for handling BTAC branches that wrap across instruction cache lines

Inactive | US20060010310A1 | Improves branch performance | Avoiding branch penalty | Digital computer details | Specific program execution arrangements | Processor register | Branch target address cache

A branch control apparatus in a microprocessor. The apparatus includes a branch target address cache (BTAC) that caches indications of whether a branch instruction wraps across two cache lines. When an instruction cache fetch address of a first cache line containing the first part of the branch instruction hits in the BTAC, the BTAC outputs a target address of the branch instruction and indicates the wrap condition. The target address is stored in a register. The next sequential fetch address selects a second cache line containing the second part of the branch instruction. After the two cache lines containing the branch instruction are fetched, the target address from the register is provided to the instruction cache in order to fetch a third cache line containing a target instruction of the branch. The three cache lines are stored in order in an instruction buffer for decoding.

Owner:IP FIRST
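
The resulting fetch order can be modeled with cache lines as integer indices. This is an illustrative Python sketch, not the patented circuit: the `btac` dictionary mapping a fetch line to `(target_line, wraps)` is hypothetical, and the non-wrapping and miss cases are assumed to follow ordinary BTAC redirect and sequential-fetch behavior.

```python
def fetch_sequence(first_line, btac):
    """Return the order in which cache lines enter the instruction buffer.

    btac is a hypothetical dict: fetch line -> (target_line, wraps).
    """
    order = [first_line]
    entry = btac.get(first_line)
    if entry is None:
        # BTAC miss: assumed plain sequential fetch of the next line.
        order.append(first_line + 1)
        return order
    target, wraps = entry
    saved_target = target  # target address held in a register
    if wraps:
        # The branch spans two lines: fetch the line holding its second
        # part before redirecting to the target.
        order.append(first_line + 1)
    order.append(saved_target)  # third line: contains the target instruction
    return order
```

For a wrapping branch in line 10 targeting line 40, the buffer receives lines 10, 11, and 40 in that order, so the decoder sees the complete branch before the target instruction.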

Parallel computation processor, parallel computation control method and program thereof

Inactive | US7136989B2 | High-speed loop operation | Little overhead | General purpose stored program computer | Next instruction address formation | Parallel computing | Execution unit

A parallel computation processor capable of high-speed loop operation. When the instruction decoders decode the VLOOP instruction, which triggers loop operation, an instruction buffer starts storing normal instructions. Each time n normal instructions have been stored, the instruction buffer dispatches them to the execution units as one VLIW instruction, and the execution units execute those instructions concurrently. Once all instructions in the loop have been stored in the buffer and dispatched once as VLIW instructions, the loop is executed repeatedly.

Owner:NEC CORP
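
The buffering and replay behavior can be sketched in Python as follows. The function name is hypothetical, and padding a trailing partial bundle with `"nop"` slots is an assumption; the abstract does not say how a loop whose length is not a multiple of n is handled.

```python
def run_vloop(loop_body, n, iterations):
    """Return the VLIW bundles dispatched over all loop iterations.

    loop_body: normal instructions decoded after VLOOP (first pass only).
    n: number of instructions per VLIW bundle.
    iterations: total loop trip count (assumed >= 1).
    """
    buffer, bundles = [], []
    # First pass: store each decoded instruction; every time n have
    # accumulated, dispatch them together as one VLIW bundle.
    for ins in loop_body:
        buffer.append(ins)
        if len(buffer) % n == 0:
            bundles.append(tuple(buffer[-n:]))
    # Assumption: pad a trailing partial bundle with NOPs.
    remainder = len(buffer) % n
    if remainder:
        buffer.extend(["nop"] * (n - remainder))
        bundles.append(tuple(buffer[-n:]))
    first_pass = list(bundles)
    # Later iterations reuse the stored bundles without re-decoding.
    for _ in range(iterations - 1):
        bundles.extend(first_pass)
    return bundles
```

The point of the buffer is that decoding happens only on the first pass; every later iteration reuses bundles that are already formed.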

© 2025 PatSnap. All rights reserved.