767 results about "Delay" patented technology

Delay is an audio effect and an effects unit which records an input signal to an audio storage medium, and then plays it back after a period of time. The delayed signal may either be played back multiple times, or played back into the recording again, to create the sound of a repeating, decaying echo.
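The definition above can be sketched in a few lines. This is a minimal illustration, not taken from any of the listed patents: the function name `feedback_delay` and the parameters `delay_samples`, `feedback`, and `mix` are hypothetical, and the "audio storage medium" is assumed to be a simple circular buffer.

```python
def feedback_delay(samples, delay_samples, feedback=0.5, mix=0.5):
    """Apply a repeating, decaying echo to a list of float samples.

    All parameter names are illustrative assumptions:
    delay_samples is the delay length, feedback is the fraction of the
    delayed signal written back into the recording, and mix is the level
    of the delayed signal in the output.
    """
    if delay_samples <= 0:
        raise ValueError("delay_samples must be positive")
    buffer = [0.0] * delay_samples  # circular buffer standing in for the storage medium
    pos = 0
    out = []
    for x in samples:
        delayed = buffer[pos]                  # what was recorded delay_samples ago
        buffer[pos] = x + feedback * delayed   # play the echo back into the recording
        pos = (pos + 1) % delay_samples
        out.append(x + mix * delayed)
    return out
```

With `feedback` below 1.0 each repeat is quieter than the last, which gives the decaying echo described above; with `feedback=0.0` the input is repeated only once.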

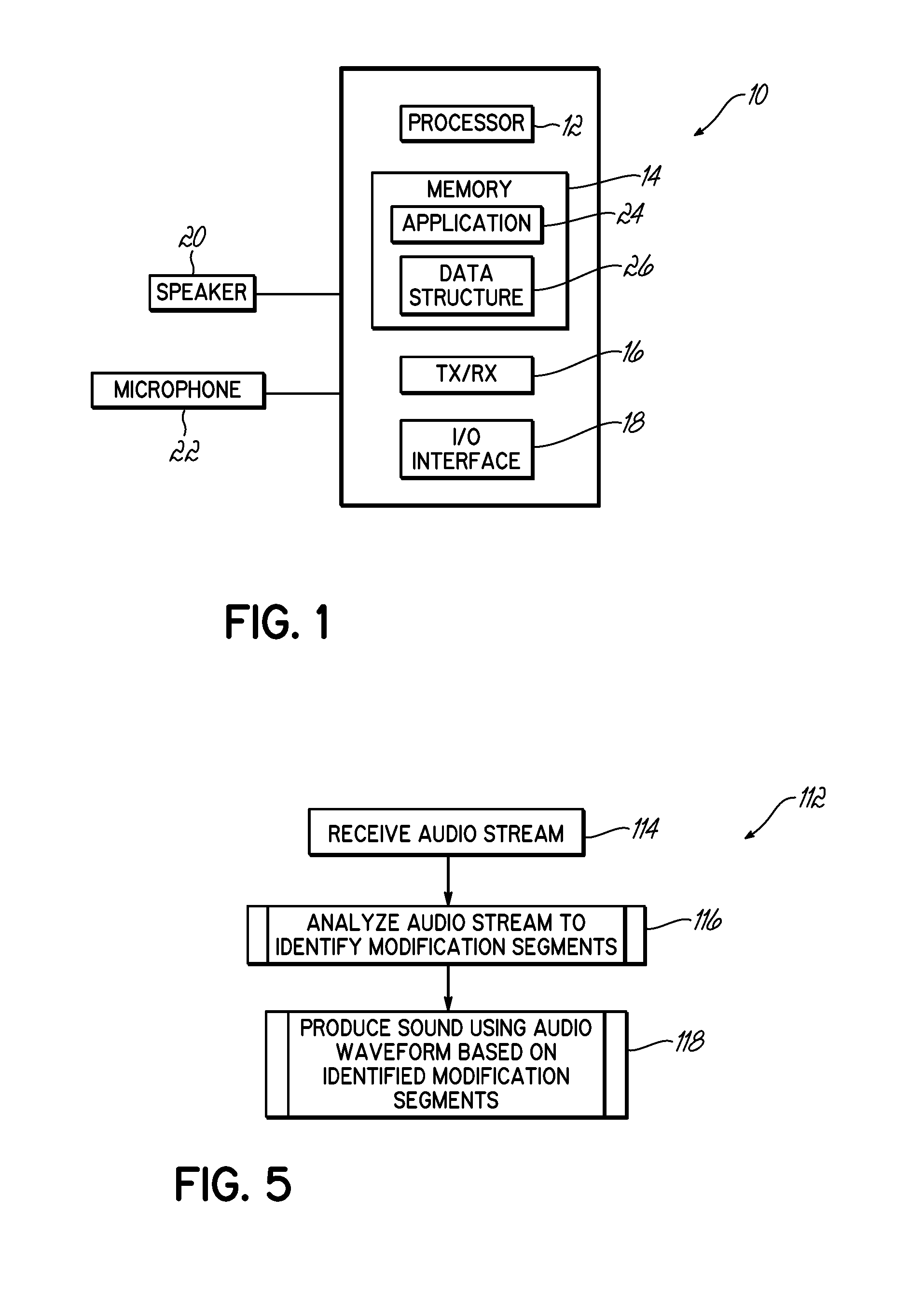

Method and system for mitigating delay in receiving audio stream during production of sound from audio stream

Active · US20140270196A1 · Reduce impact · Reduce delays · Microphones · Electrical transducers · CLARITY · Sound production

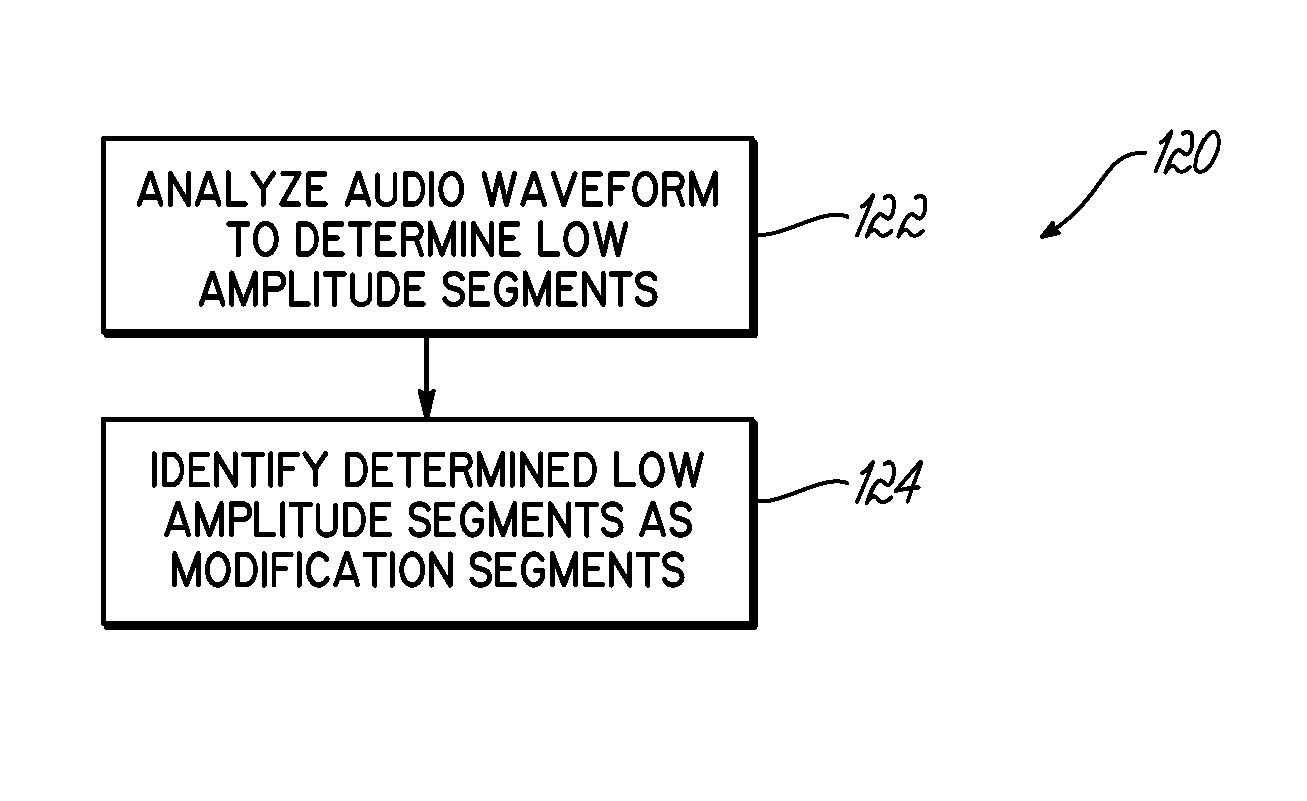

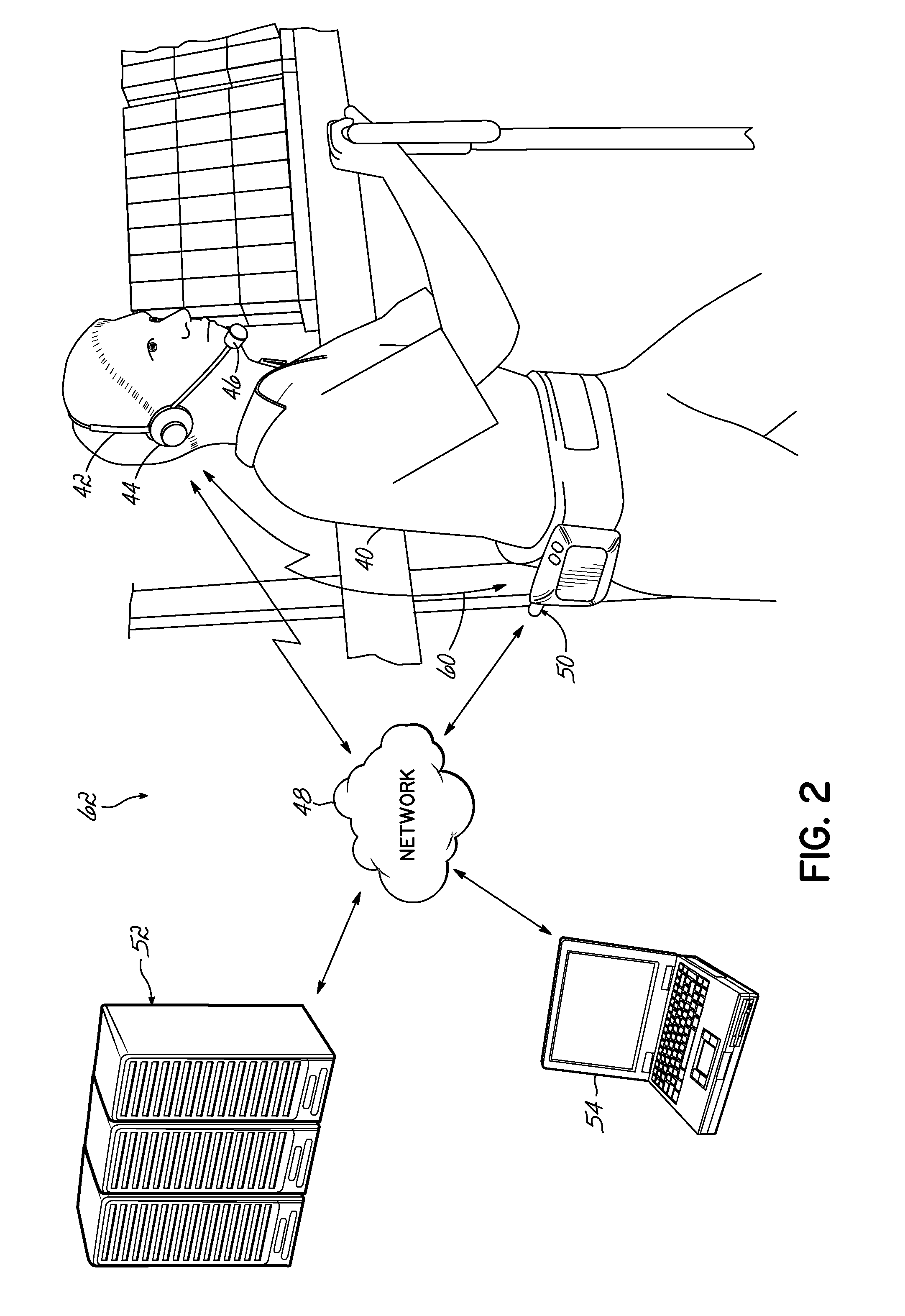

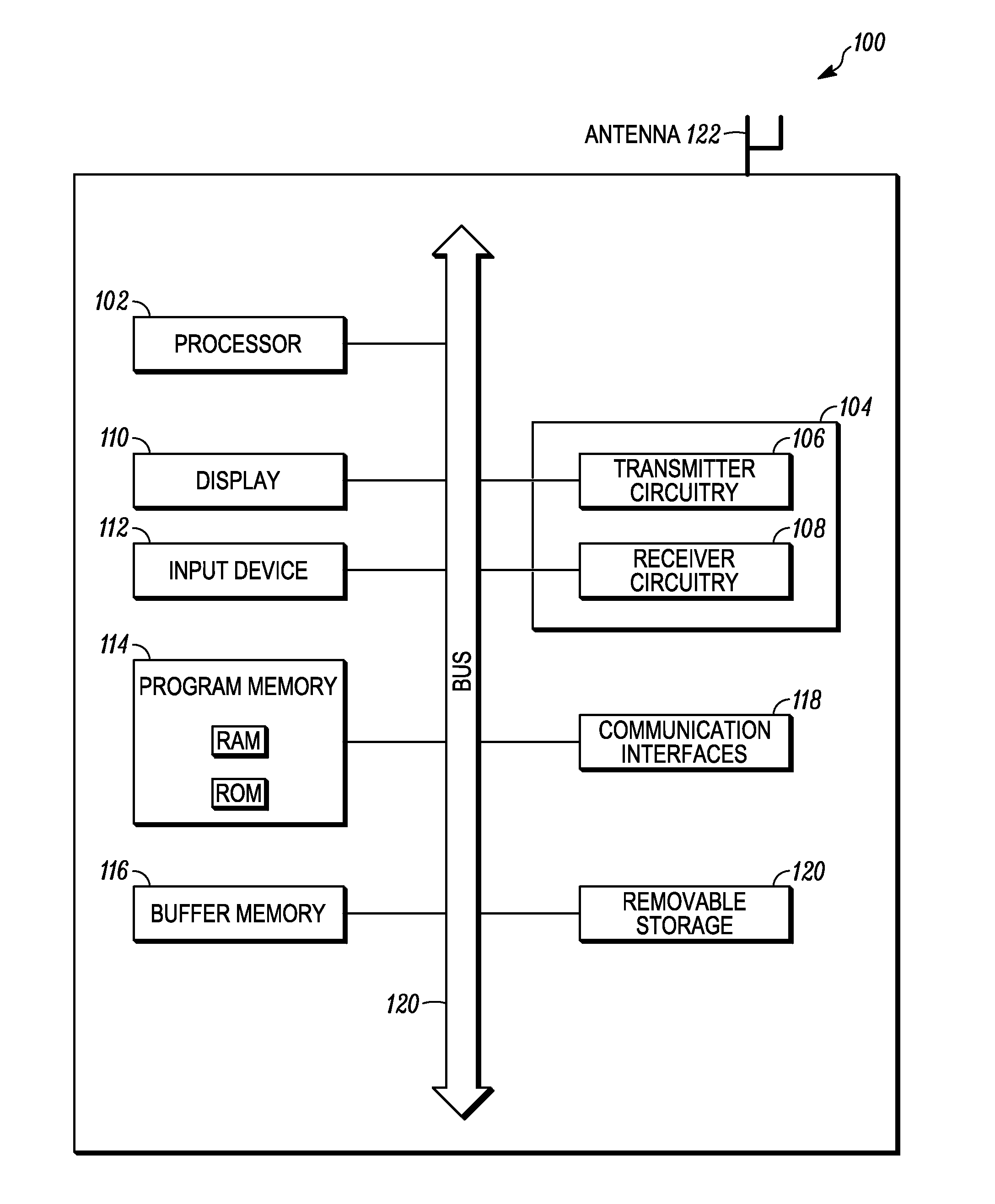

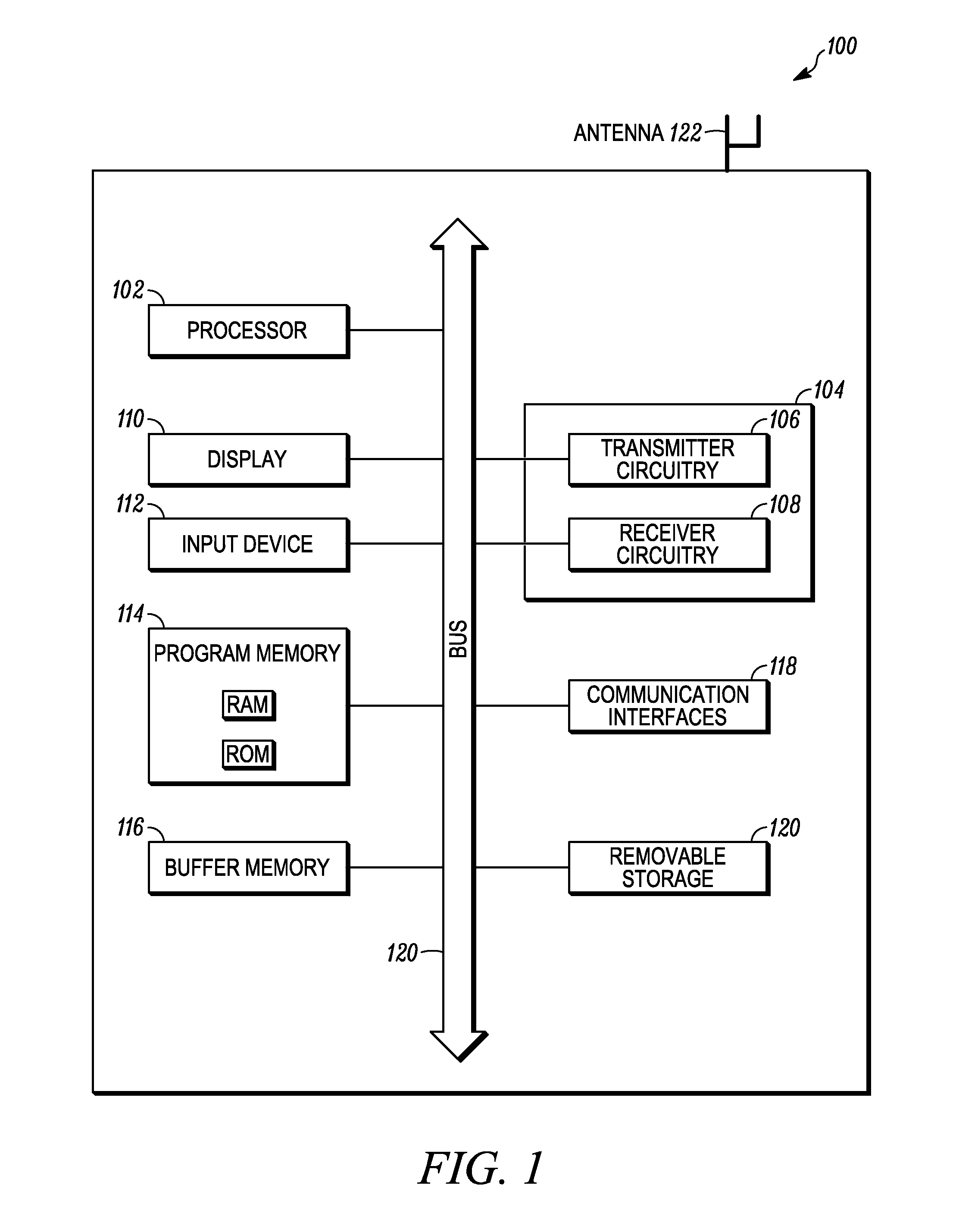

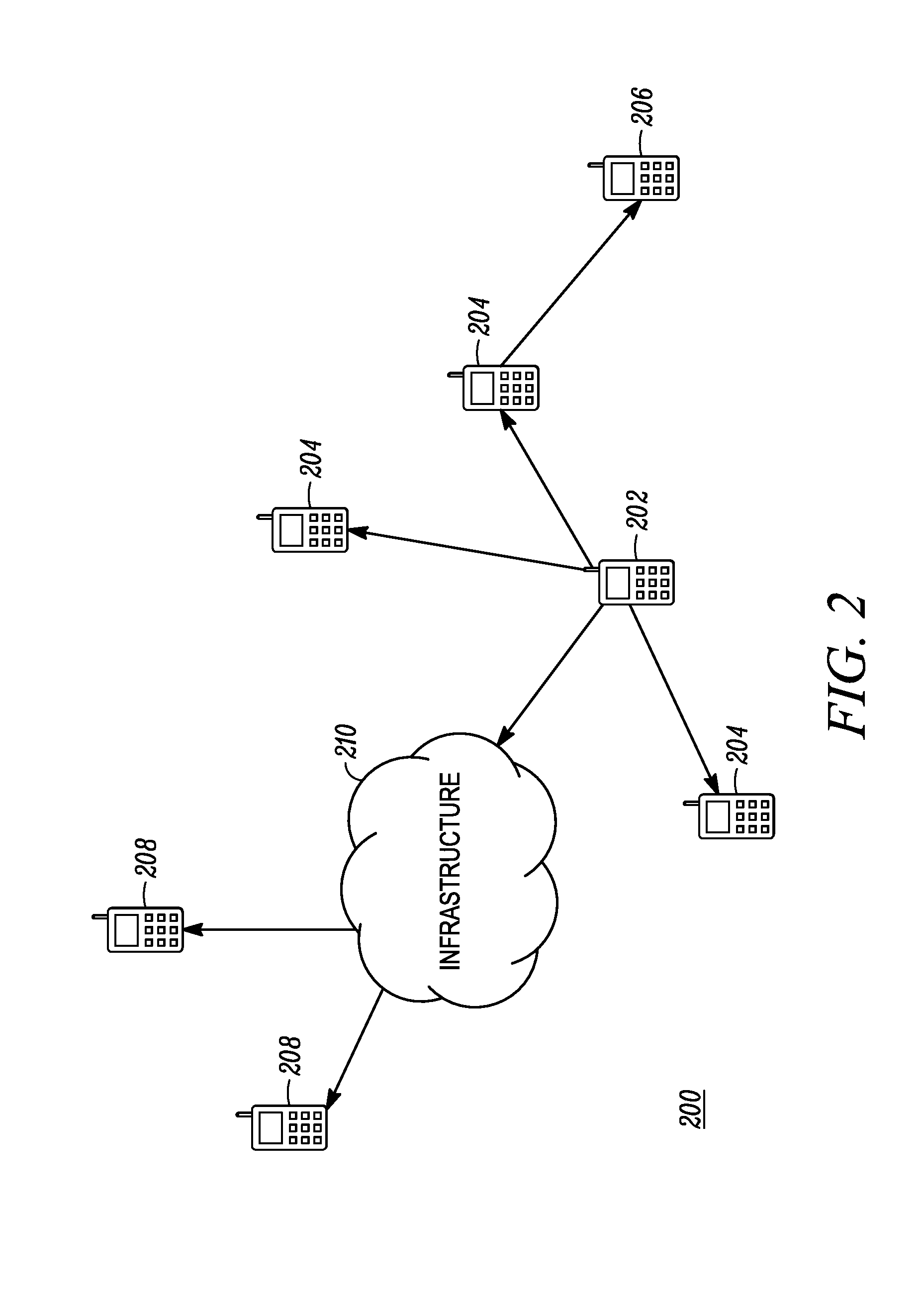

A communication component modifies production of an audio waveform at determined modification segments to thereby mitigate the effects of a delay in processing and / or receiving a subsequent audio waveform. The audio waveform and / or data associated with the audio waveform are analyzed to identify the modification segments based on characteristics of the audio waveform and / or data associated therewith. The modification segments show where the production of the audio waveform may be modified without substantially affecting the clarity of the sound or audio. In one embodiment, the invention modifies the sound production at the identified modification segments to extend production time and thereby mitigate the effects of delay in receiving and / or processing a subsequent audio waveform for production.

Owner:VOCOLLECT

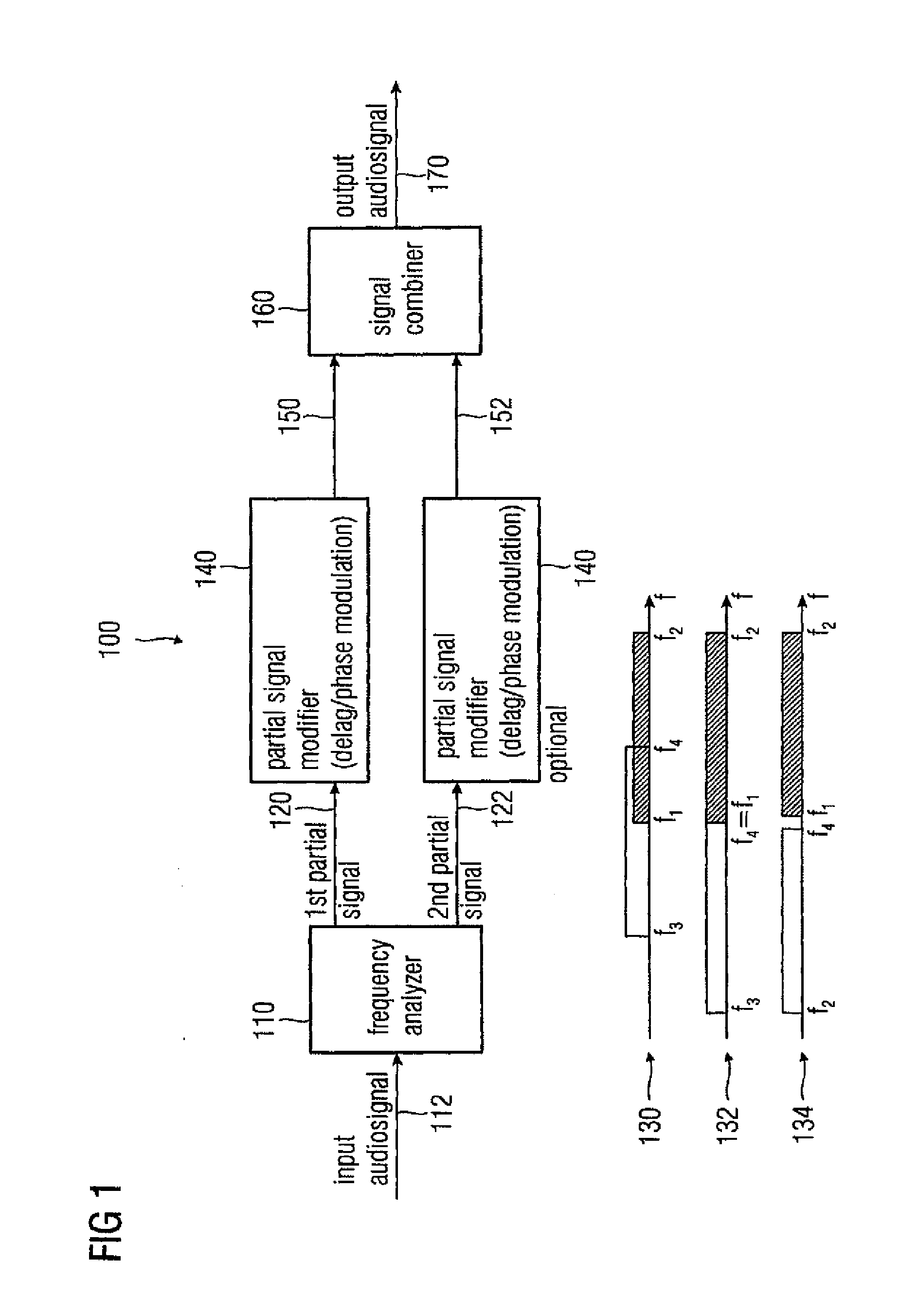

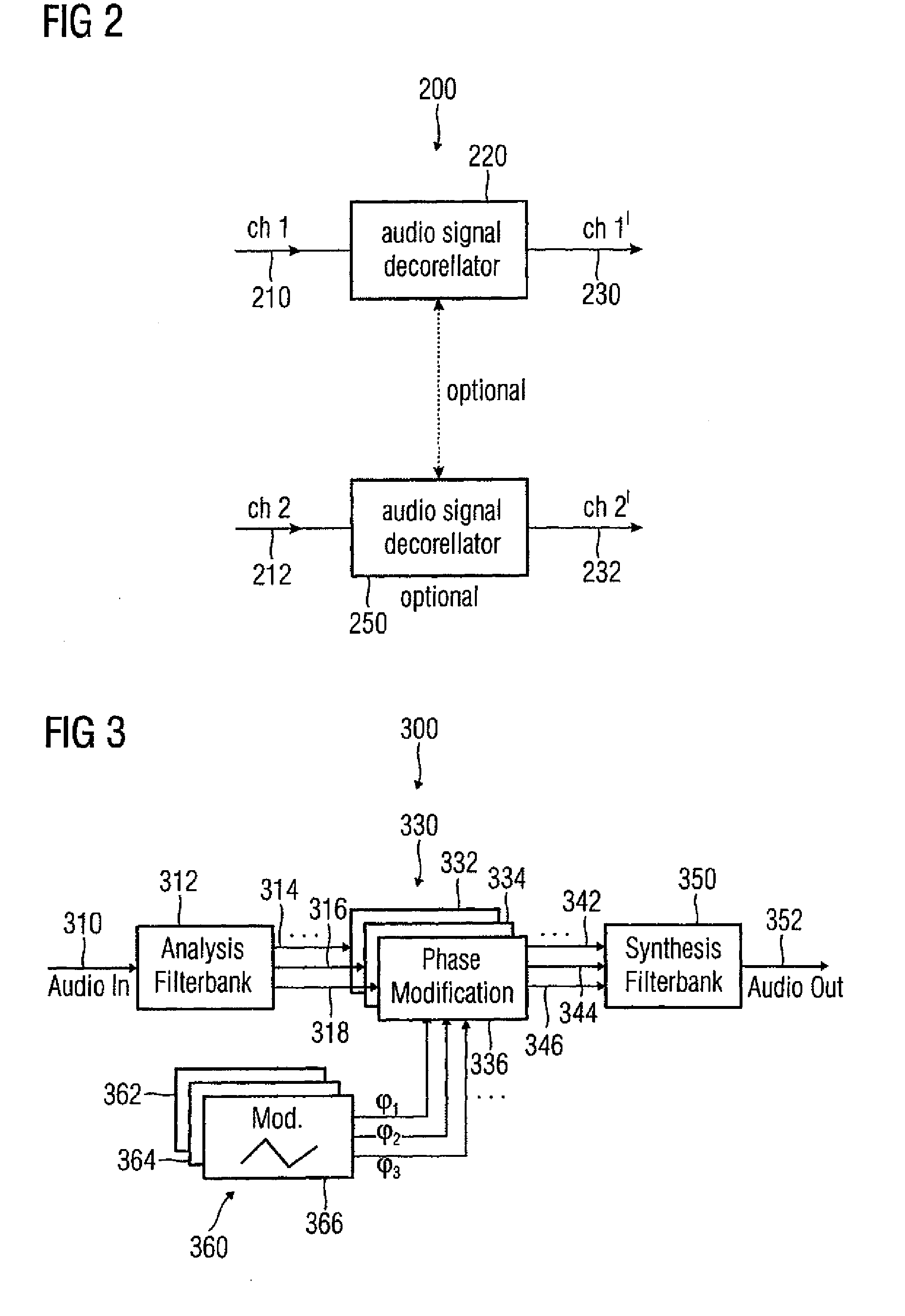

Audio signal decorrelator, multi channel audio signal processor, audio signal processor, method for deriving an output audio signal from an input audio signal and computer program

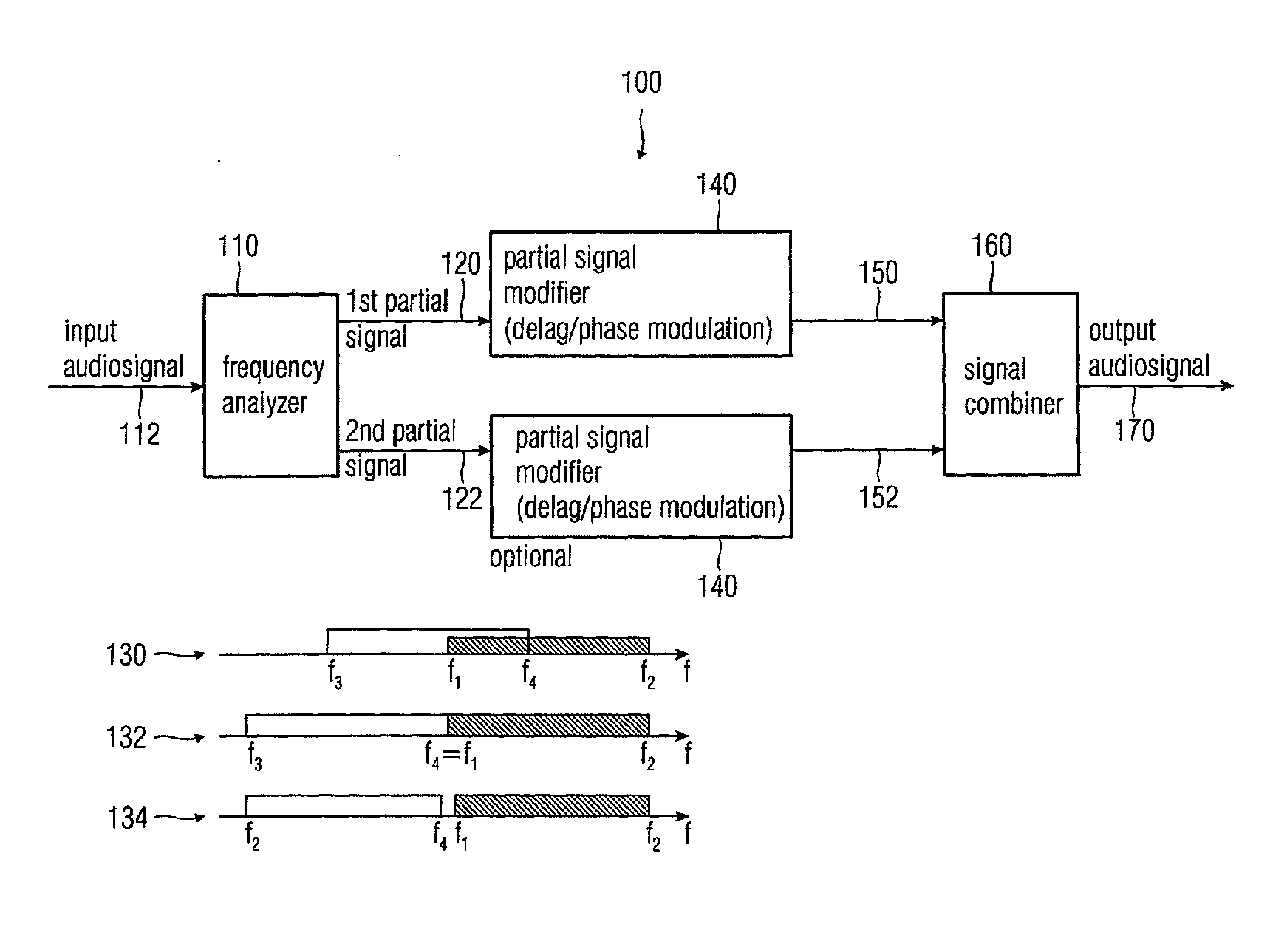

Active · US20090304198A1 · Simple design · Reducing and removing audio content · Two-way loud-speaking telephone systems · Speech analysis · VIT signals · Audio signal

An audio signal decorrelator for deriving an output audio signal from an input audio signal has a frequency analyzer for extracting from the input audio signal a first partial signal descriptive of an audio content in a first audio frequency range and a second partial signal descriptive of an audio content in a second audio frequency range having higher frequencies compared to the first audio frequency range. A partial signal modifier modifies the first and second partial signals, to obtain first and second processed partial signals, so that a modulation amplitude of a time variant phase shift or time variant delay applied to the first partial signal is higher than that applied to the second partial signal, or modifies only the first partial signal. A signal combiner combines the first and second processed partial signals, or combines the first processed partial signal and the second partial signal, to obtain an output audio signal.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

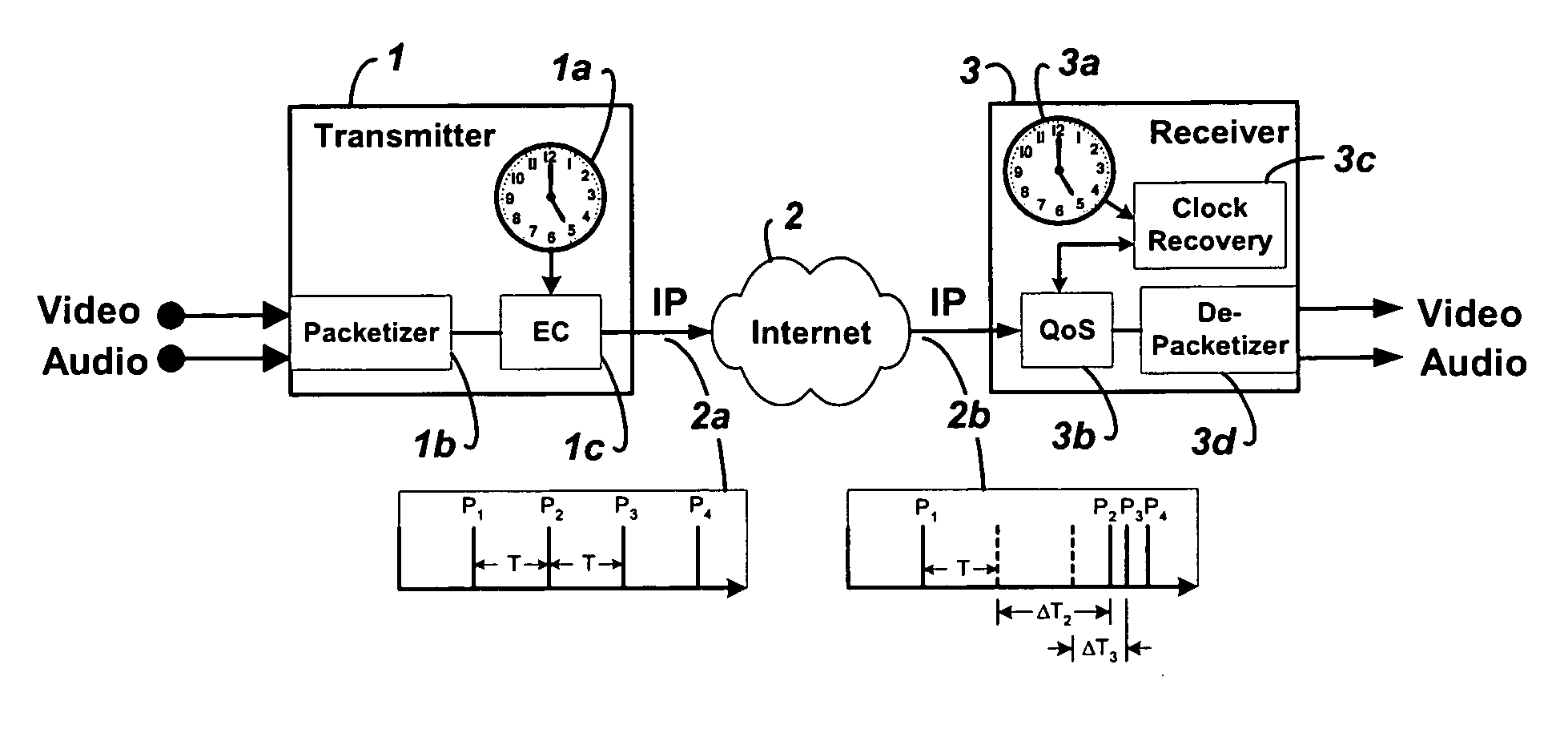

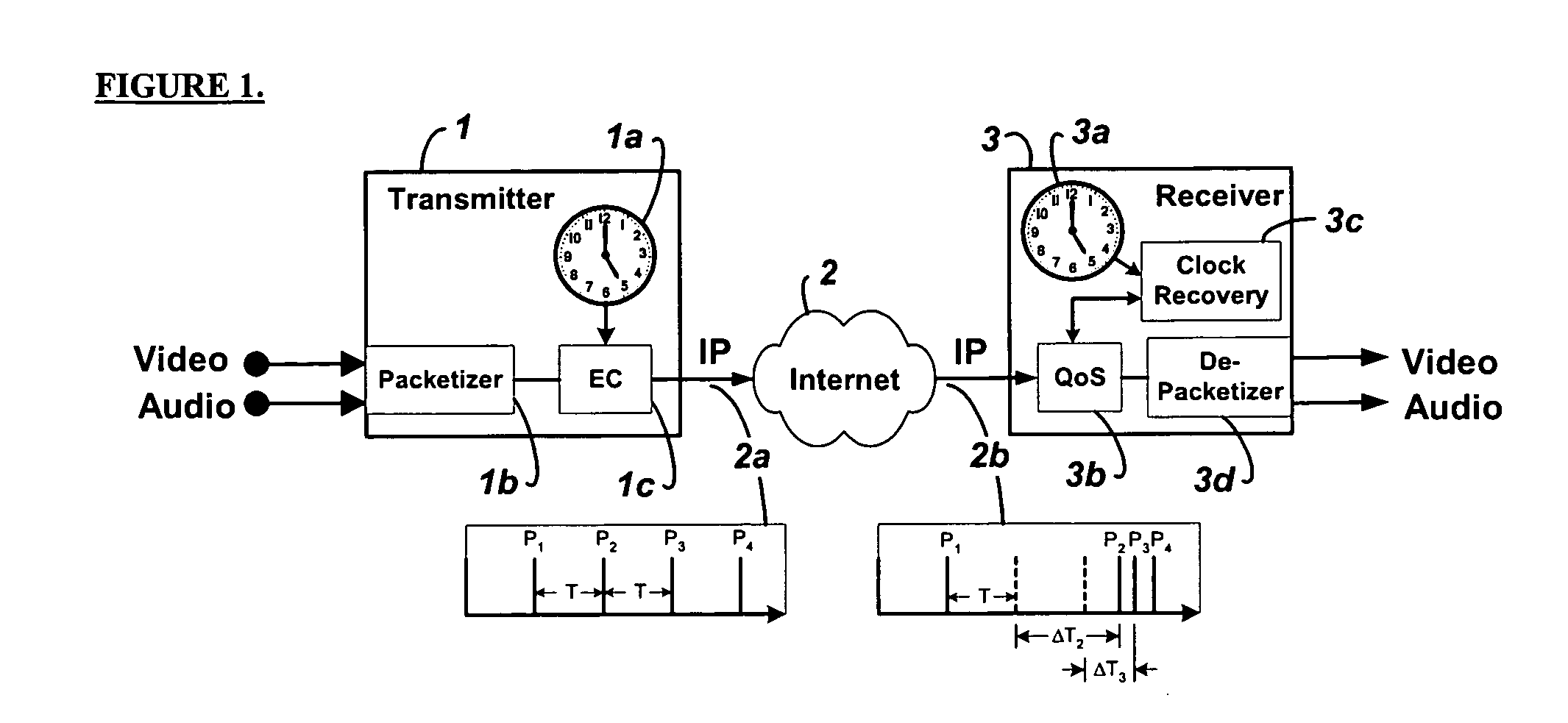

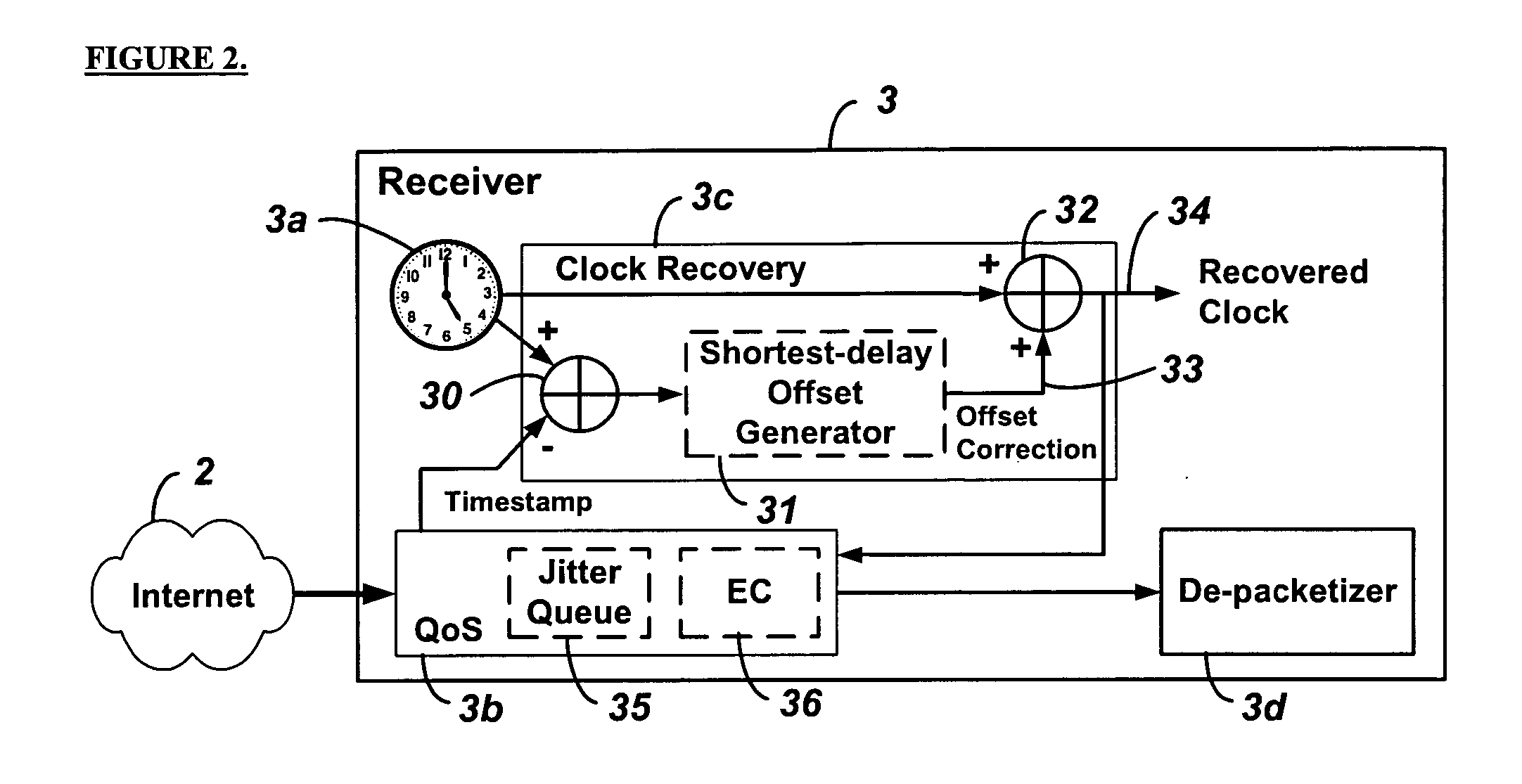

System and method for clock synchronization over packet-switched networks

Active · US20060013263A1 · Easy to replace · Improve abilities · Time-division multiplex · Transmission · Quality of service · The Internet

Embodiments of the invention enable the synchronization of clocks across packet switched networks, such as the Internet, sufficient to drive a jitter buffer and other quality-of-service related buffering. Packet time stamps referenced to a local clock create a phase offset signal. A shortest-delay offset generator uses a moving-window filter to select the samples of the phase offset signal having the shortest network propagation delay within the window. This shortest network propagation delay filter minimizes the effect of network jitter under the assumption that queuing delays account for most of the network jitter. The addition of this filtered phase offset signal to a free-running local clock creates a time reference that is synchronized to the remote clock at the source thus allowing for the transport of audio, video, and other time-sensitive real-time signals with minimal latency.
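The moving-window, shortest-delay selection described in this abstract can be illustrated with a sliding-window minimum. The sketch below assumes each phase offset sample is the local arrival time minus the packet time stamp, so that the smallest offset in a window corresponds to the packet with the least queuing delay. The function name and the `window` parameter are hypothetical, and the patent's actual filter may differ.

```python
from collections import deque


def shortest_delay_offsets(offsets, window):
    """Sliding-window minimum over phase offset samples.

    Assumption: offset = local arrival time - packet time stamp, so the
    minimum inside a window is the sample with the shortest network delay.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    out = []
    candidates = deque()  # indices whose offsets increase from left to right
    for i, value in enumerate(offsets):
        # Drop older candidates that can never be the minimum again.
        while candidates and offsets[candidates[-1]] >= value:
            candidates.pop()
        candidates.append(i)
        # Drop the front candidate once it falls out of the window.
        if candidates[0] <= i - window:
            candidates.popleft()
        out.append(offsets[candidates[0]])
    return out
```

The filtered offsets would then be added to a free-running local clock to form the synchronized time reference; that step is omitted here.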

Owner:QVIDIUM TECH

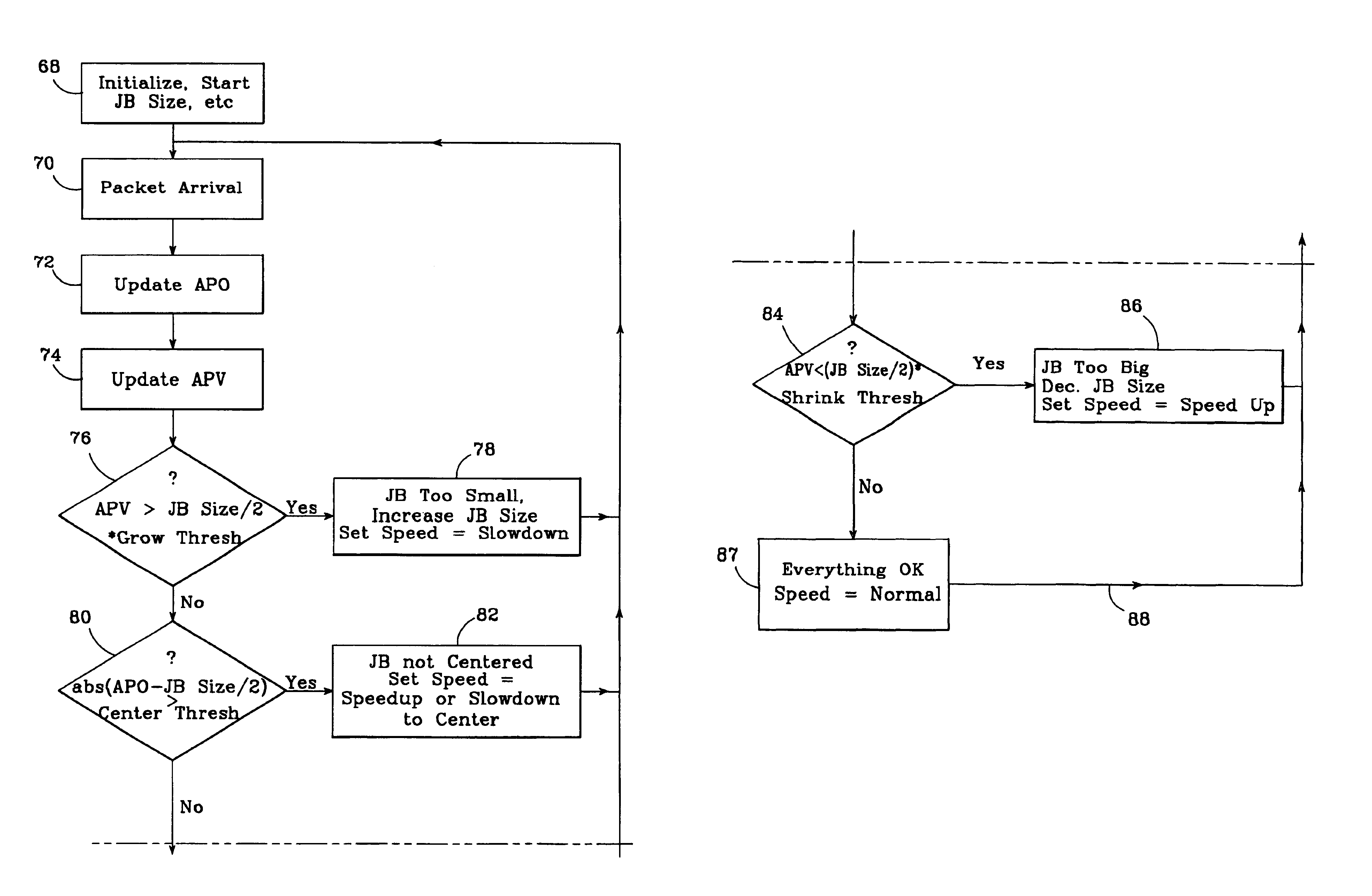

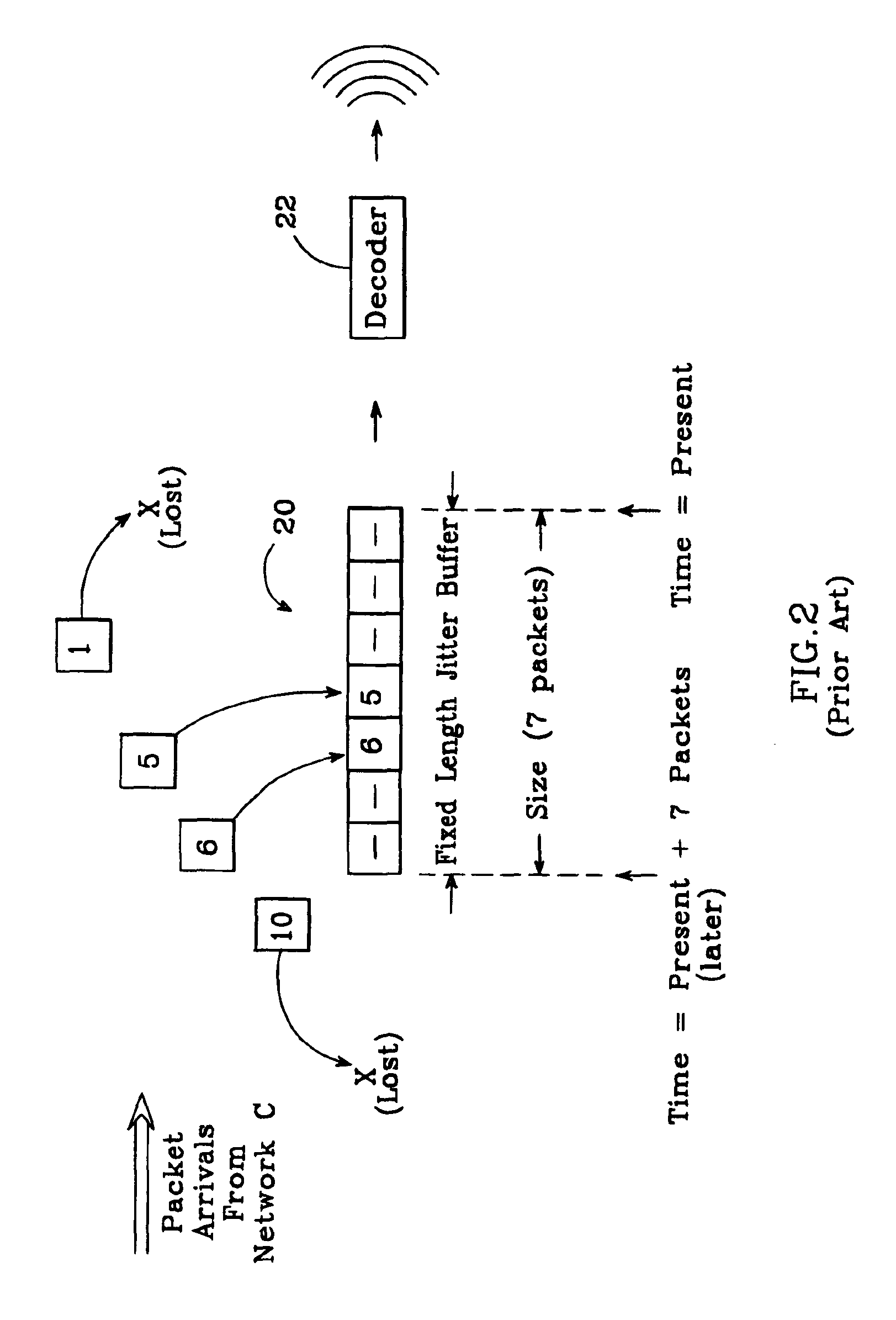

Adaptive jitter buffer for internet telephony

Inactive · US6862298B1 · Improve audio quality · To overcome the large delay · Error prevention · Frequency-division multiplex details · Packet arrival · Control signal

In an improved system for receiving digital voice signals from a data network, a jitter buffer manager monitors packet arrival times, determines a time varying transit delay variation parameter and adaptively controls jitter buffer size in response to the variation parameter. A speed control module responds to a control signal from the jitter buffer manager by modifying the rate of data consumption from the jitter buffer, to compensate for changes in buffer size, preferably in a manner which maintains audio output with acceptable, natural human speech characteristics. Preferably, the manager also calculates average packet delay and controls the speed control module to adaptively align the jitter buffer's center with the average packet delay time.

Owner:GOOGLE LLC

Method and apparatus for processing an input speech signal during presentation of an output audio signal

Inactive · US6937977B2 · Accurately established · Two-way loud-speaking telephone systems · Special service for subscribers · Start time · Audio signal

A start of an input speech signal is detected during presentation of an output audio signal and an input start time, relative to the output audio signal, is determined. The input start time is then provided for use in responding to the input speech signal. In another embodiment, the output audio signal has a corresponding identification. When the input speech signal is detected during presentation of the output audio signal, the identification of the output audio signal is provided for use in responding to the input speech signal. Information signals comprising data and / or control signals are provided in response to at least the contextual information provided, i.e., the input start time and / or the identification of the output audio signal. In this manner, the present invention accurately establishes a context of an input speech signal relative to an output audio signal regardless of the delay characteristics of the underlying communication system.

Owner:AUVO TECH

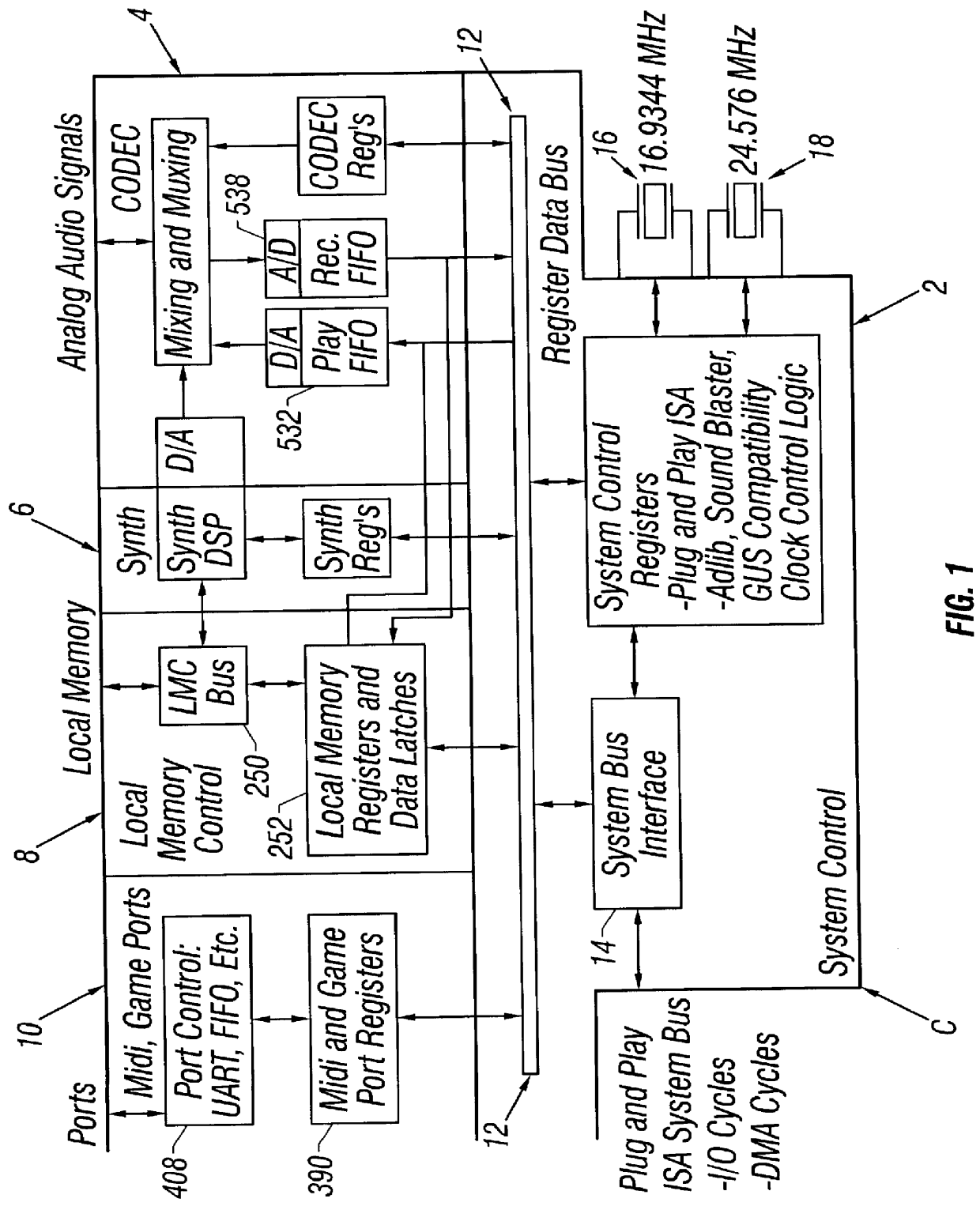

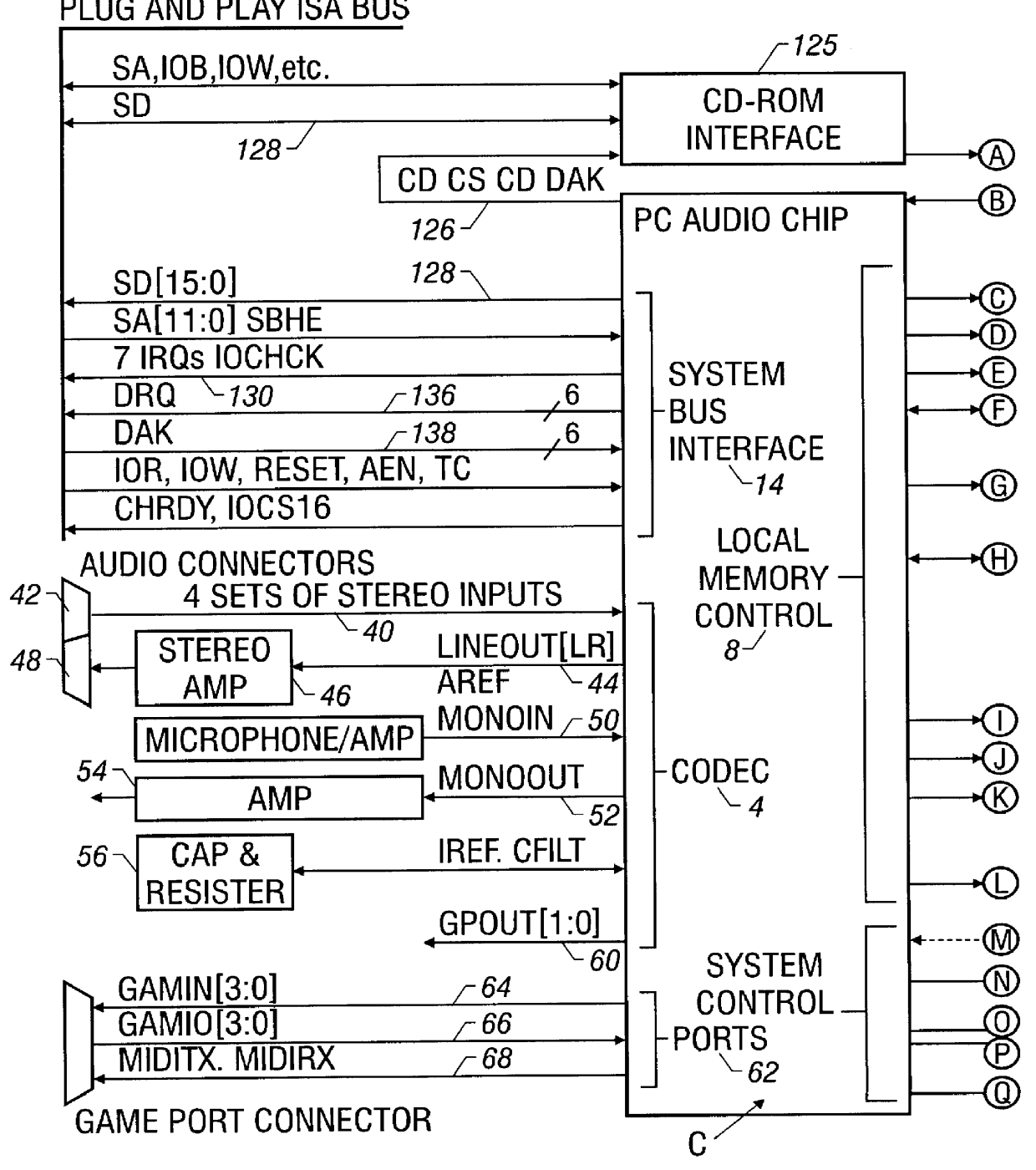

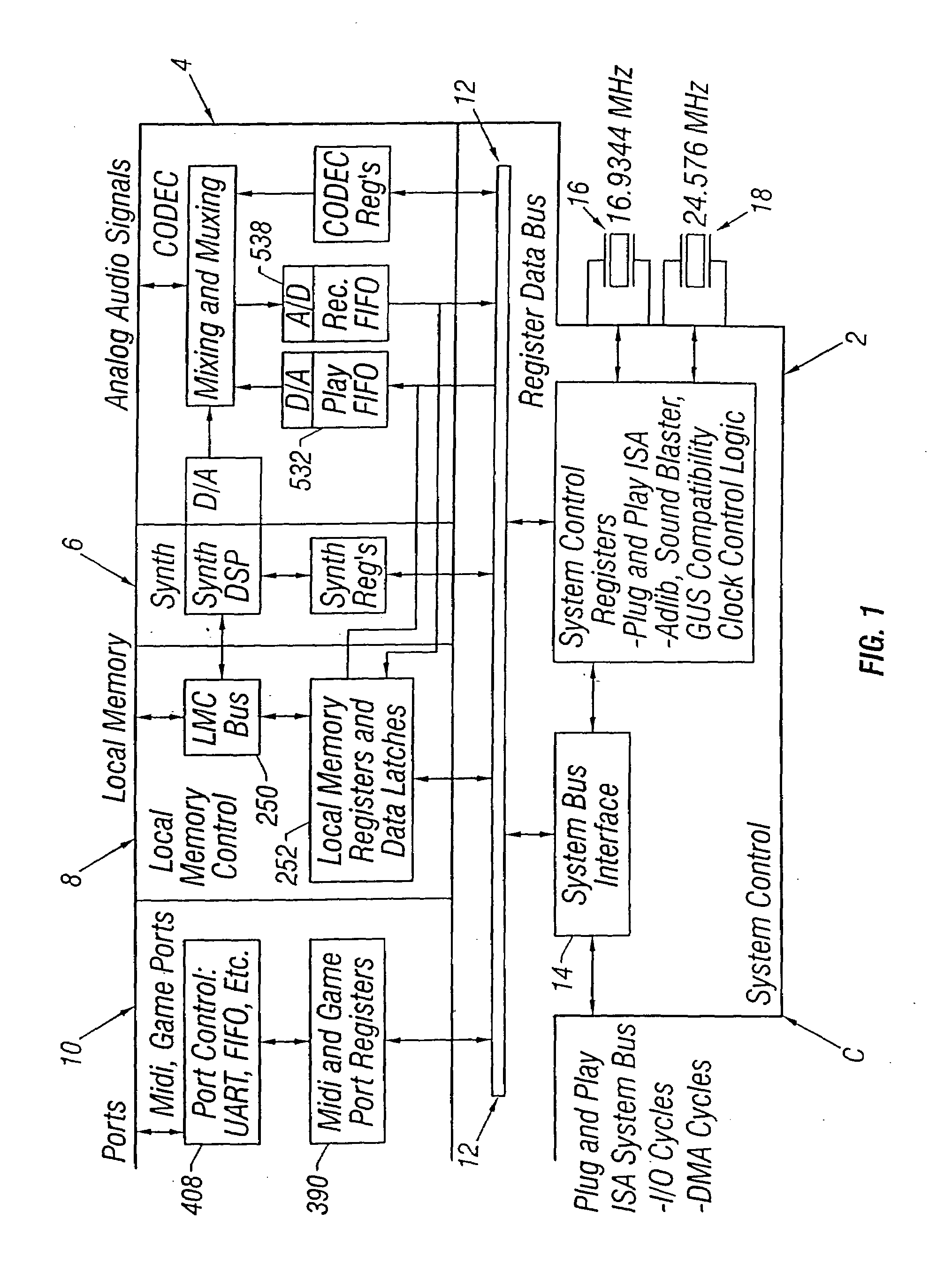

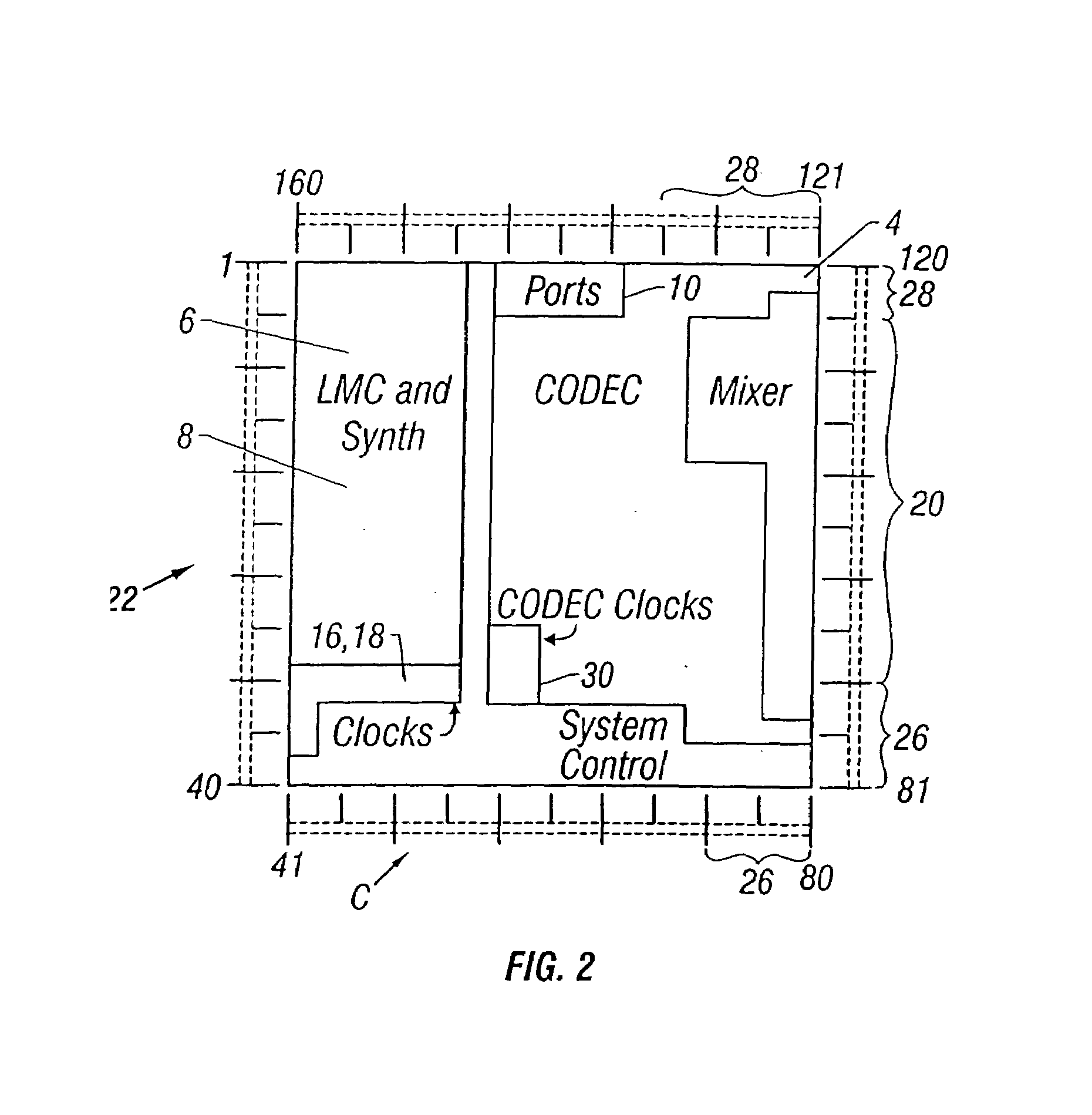

Digital wavetable audio synthesizer with delay-based effects processing

Inactive · US6047073A · Electrophonic musical instruments · Counting chain pulse counters · Audio synthesis · Noise

A digital wavetable audio synthesizer is described. The synthesizer can generate up to 32 high-quality audio digital signals or voices, including delay-based effects, at either a 44.1 KHz sample rate or at sample rates compatible with a prior art wavetable synthesizer. The synthesizer includes an address generator which has several modes of addressing wavetable data. The address generator's addressing rate controls the pitch of the synthesizer's output signal. The synthesizer performs a 10-bit interpolation, using the wavetable data addressed by the address generator, to interpolate additional data samples. When the address generator loops through a block of data, the signal path interpolates between the data at the end and start addresses of the block of data to prevent discontinuities in the generated signal. A synthesizer volume generator, which has several modes of controlling the volume, adds envelope, right offset, left offset, and effects volume to the data. The data can be placed in one of sixteen fixed stereo pan positions, or left and right offsets can be programmed to place the data anywhere in the stereo field. The left and right offset values can also be programmed to control the overall volume. Zipper noise is prevented by controlling the volume increment. A synthesizer LFO generator can add LFO variation to: (i) the wavetable data addressing rate, for creating a vibrato effect; and (ii) a voice's volume, for creating a tremolo effect. Generated data to be output from the synthesizer is stored in left and right accumulators. However, when creating delay-based effects, data is stored in one of several effects accumulators. This data is then written to a wavetable. The difference between the wavetable write and read addresses for this data provides a delay for echo and reverb effects. LFO variations added to the read address create chorus and flange effects. 
The volume of the delay-based effects data can be attenuated to provide volume decay for an echo effect. After the delay-based effects processing, the data can be provided with left and right offset volume components which determine how much of the effect is heard and its stereo position. The data is then stored in the left and right accumulators.

Owner:MICROSEMI SEMICON U S

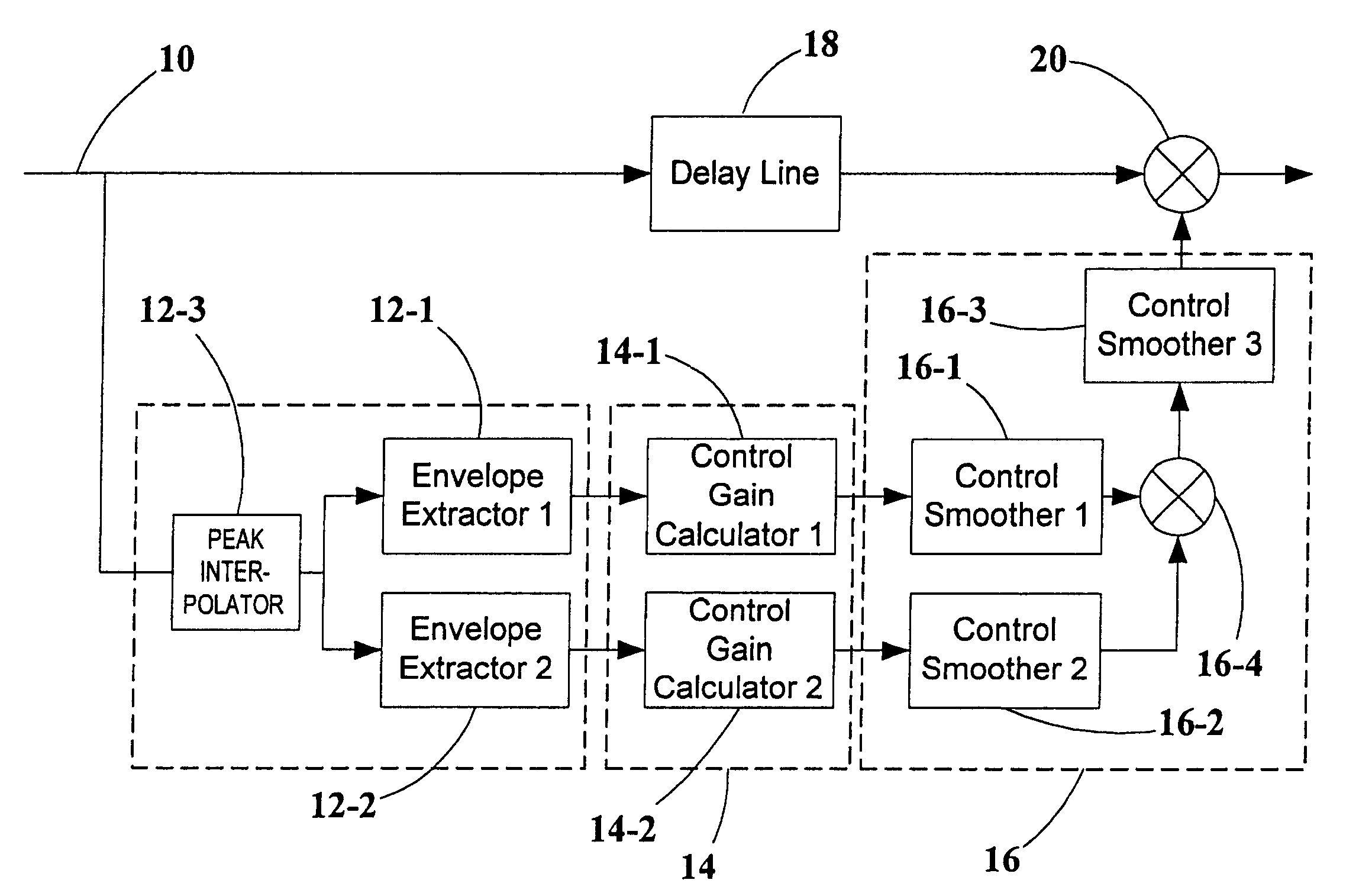

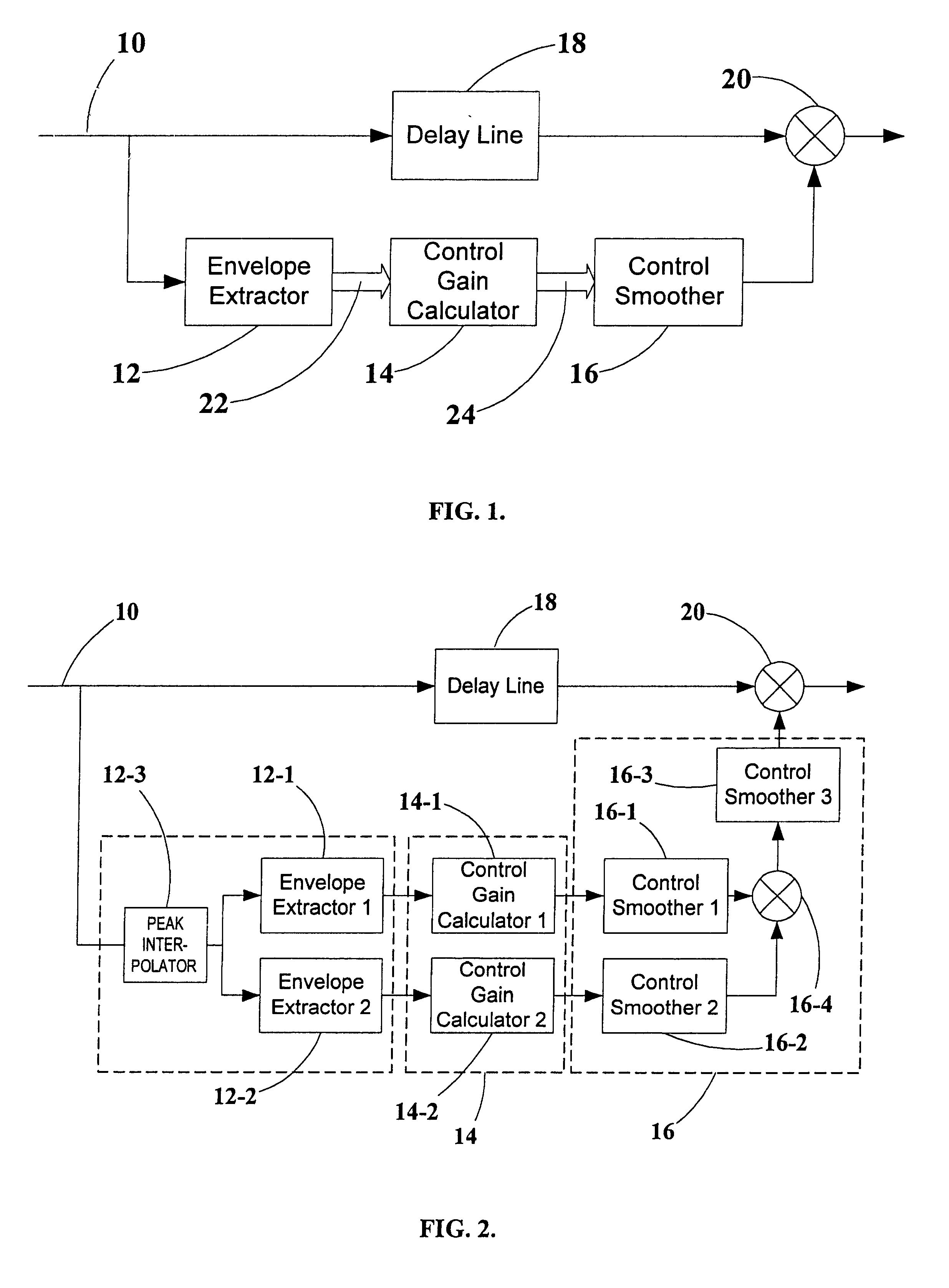

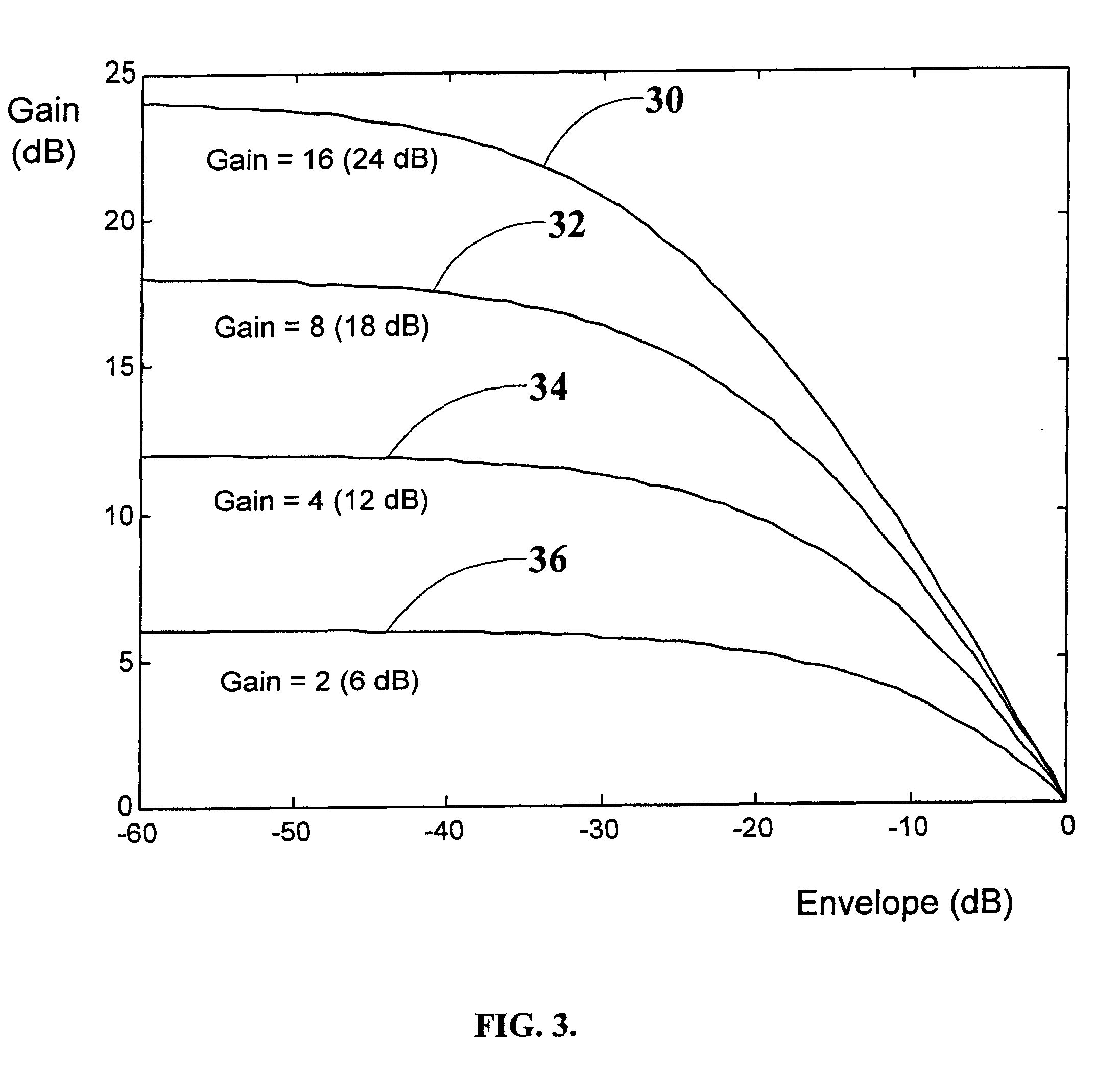

Dynamic range compressor-limiter and low-level expander with look-ahead for maximizing and stabilizing voice level in telecommunication applications

Inactive · US6535846B1 · Avoid excessive amplification · Maximizing and stabilizing voice level · Interconnection arrangements · Speech analysis · Telecommunication application · Speech recognition

A voice signal processing system has multiple parallel control paths, each of which addresses a different problem, such as the high peak-to-RMS signal ratios characteristic of speech, wide variations in RMS speech levels, and high background noise levels. Different families of input-output control curves are used simultaneously to achieve efficient peak limiting and dynamic range compression as well as low-level dynamic expansion to prevent excessive amplification of background noise. In addition, a delay in the audio path relative to the control path makes it possible to employ an effective look-ahead in the control path, with FIR filtering smoothing-matched to the look-ahead. Digital domain peak interpolators are used for estimating the peaks of the input signal in the continuous time domain.
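The look-ahead idea in this abstract, delaying the audio path so the control path can react to peaks before they reach the output, might be sketched as follows. This is a bare peak limiter only: the compression and expansion curves, the FIR smoothing, and the peak interpolators are omitted, and the names `lookahead_limiter`, `threshold`, and `lookahead` are illustrative assumptions.

```python
def lookahead_limiter(samples, threshold, lookahead):
    """Peak-limit a signal using a delayed audio path.

    The output is delayed by `lookahead` samples. The gain applied to each
    delayed sample is derived from the peak of that sample and the
    `lookahead` samples that follow it, so gain reduction starts before
    the peak arrives. No gain smoothing is applied in this sketch.
    """
    n = len(samples)
    out = []
    for i in range(n + lookahead):
        # Control path: examine the newest input up to index i.
        window = samples[max(0, i - lookahead):min(n, i + 1)]
        peak = max((abs(s) for s in window), default=0.0)
        gain = 1.0 if peak <= threshold else threshold / peak
        # Audio path: the sample that entered `lookahead` steps ago.
        j = i - lookahead
        delayed = samples[j] if j >= 0 else 0.0
        out.append(gain * delayed)
    return out
```

Because the gain is already reduced on the samples leading up to a peak, the limiter does not need an instantaneous attack; a practical design would smooth the gain over the look-ahead interval instead of switching it abruptly as done here.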

Owner:K S WAVES

Howling suppression using echo cancellation

Active · US20110110532A1 · Reduce and eliminate howling · Interconnection arrangements · Transmission noise suppression · Communications system · Engineering

A method for reducing howling in a communication system containing collocated mobile devices is presented. In a transmitter, an audio signal is received at a microphone. Acoustic feedback is removed from the audio signal and the resulting signal is encoded and transmitted either using direct or trunked mode operation to a receiver. The encoded signal is decoded at the transmitter, in addition to at the receiver, and fed back to an echo canceller with sufficient delay to account for substantially the entirety of a loop delay from encoding of the audio signal to reception of the acoustic feedback at the microphone to enable removal of the acoustic feedback. An estimate of the acoustic feedback is used to initially remove the acoustic feedback, the error being fed back to the processor to adaptively change the signal being subtracted from the audio signal to better reduce the acoustic feedback.

Owner:MOTOROLA SOLUTIONS INC

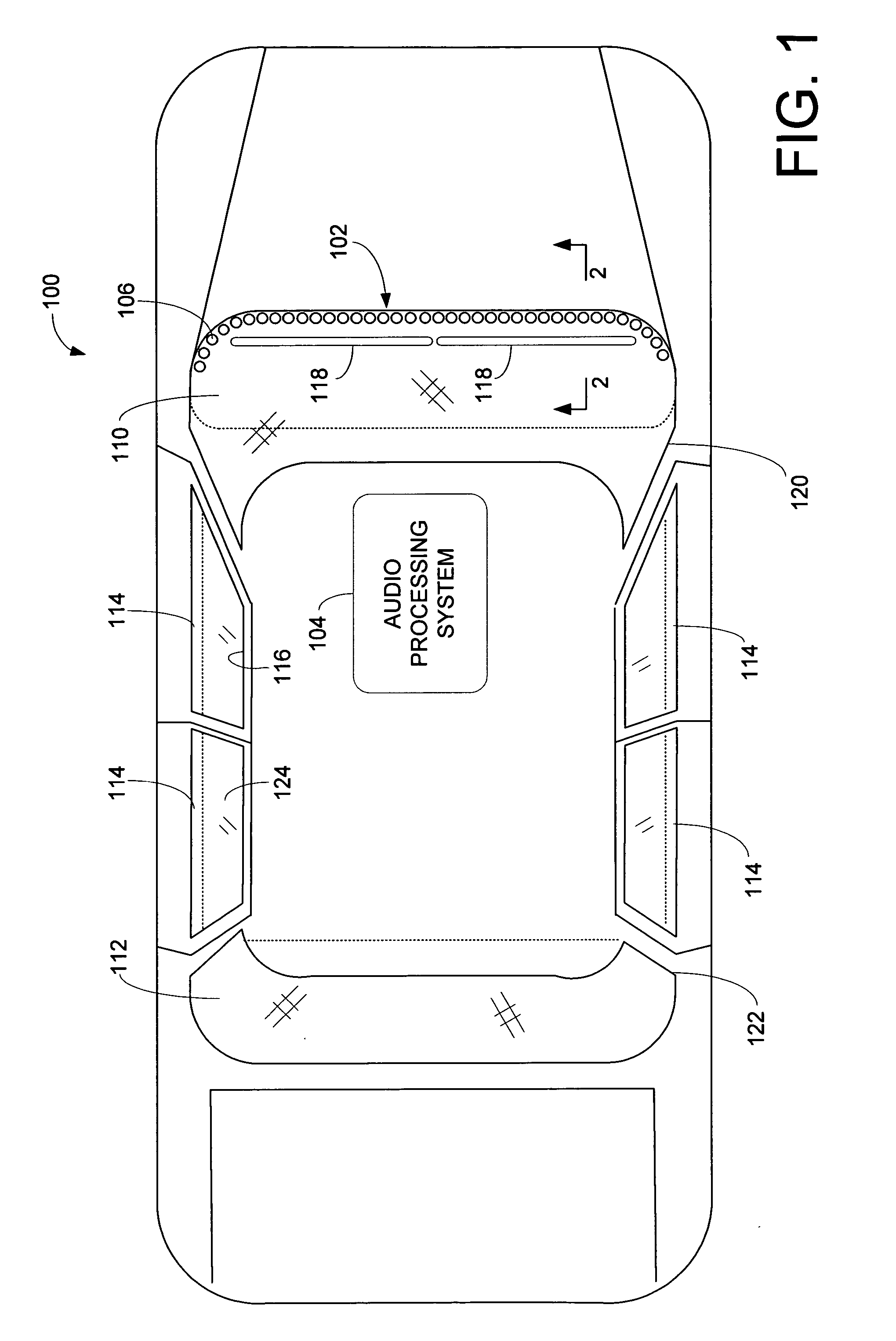

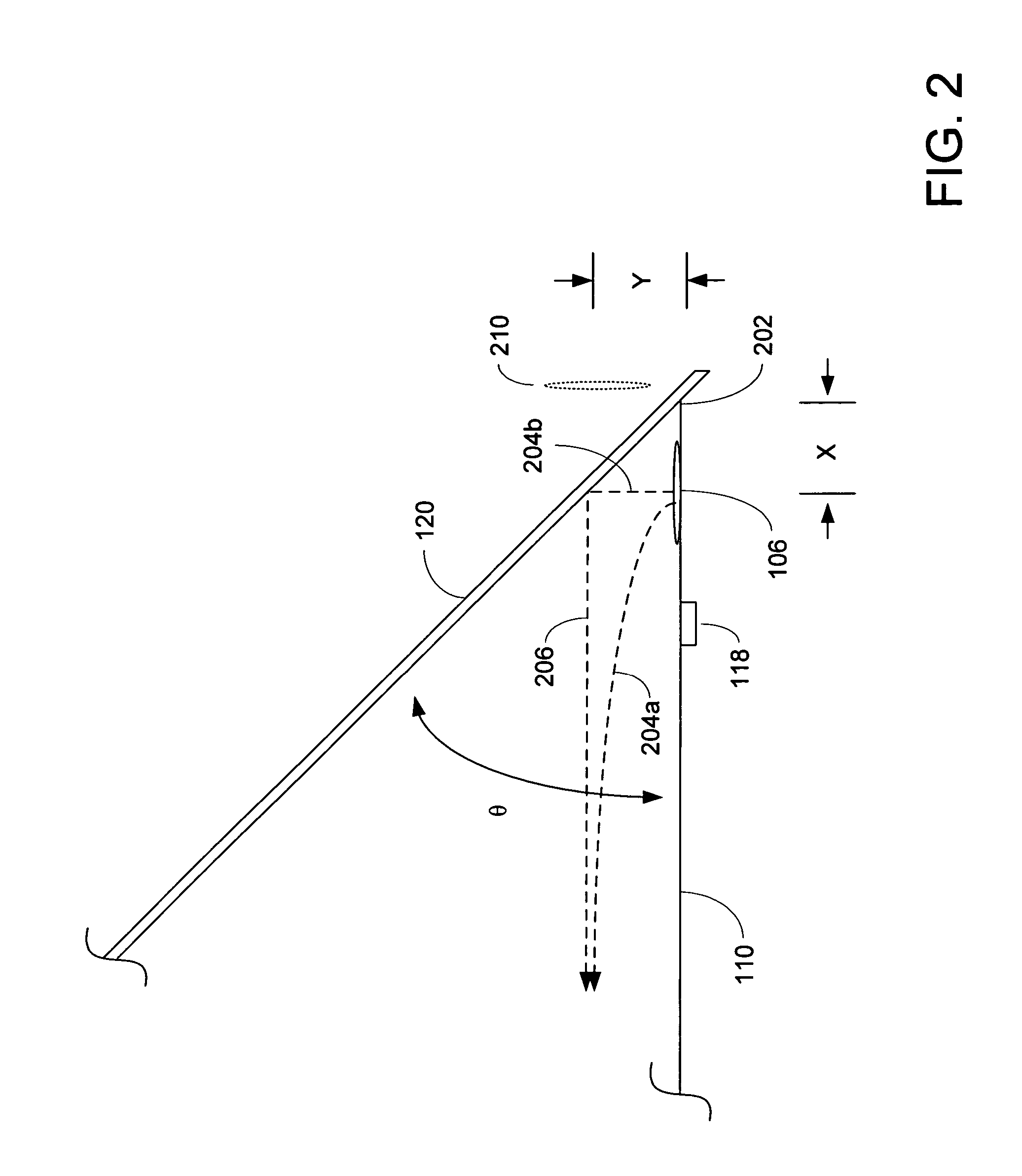

Vehicle loudspeaker array

Active · US20050259831A1 · Expand coverage · Improve imaging clarity · Transmission · Frequency/directions obtaining arrangements · Ultrasound attenuation · Dashboard

An audio processing system for a vehicle includes a plurality of loudspeakers positioned to form a single line array. The loudspeaker line array is positionable in a vehicle on a dashboard of the vehicle substantially at the convergence of the dashboard and a window of the vehicle. When the loudspeaker line array is driven by an audio signal, a vertically and horizontally focused and narrowed sound pattern is perceived by a listener in the vehicle. The sound pattern is the result of the constructive combination of the direct sound impulses and the reflected sound impulses produced by each loudspeaker in the array. Using delay, attenuation and phase adjustment of the audio signal, the sound pattern may be controlled, limited, and directed to one or more locations in the vehicle.

Owner:HARMAN INT IND INC

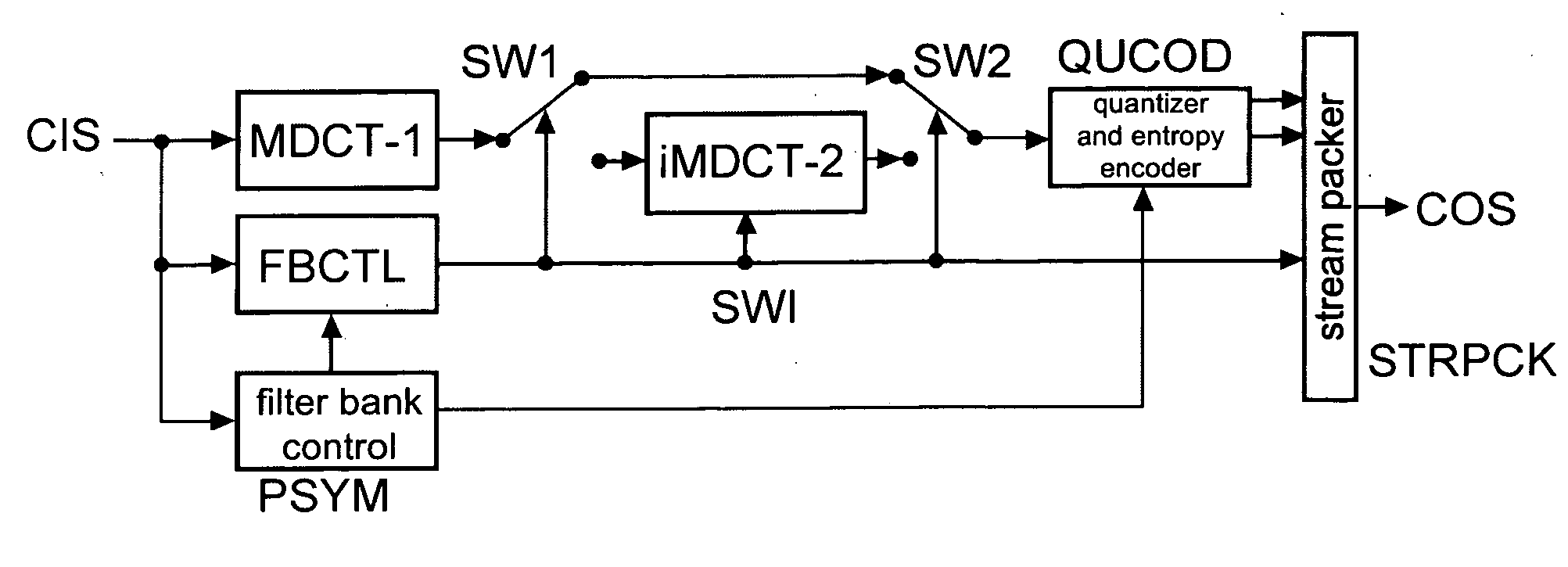

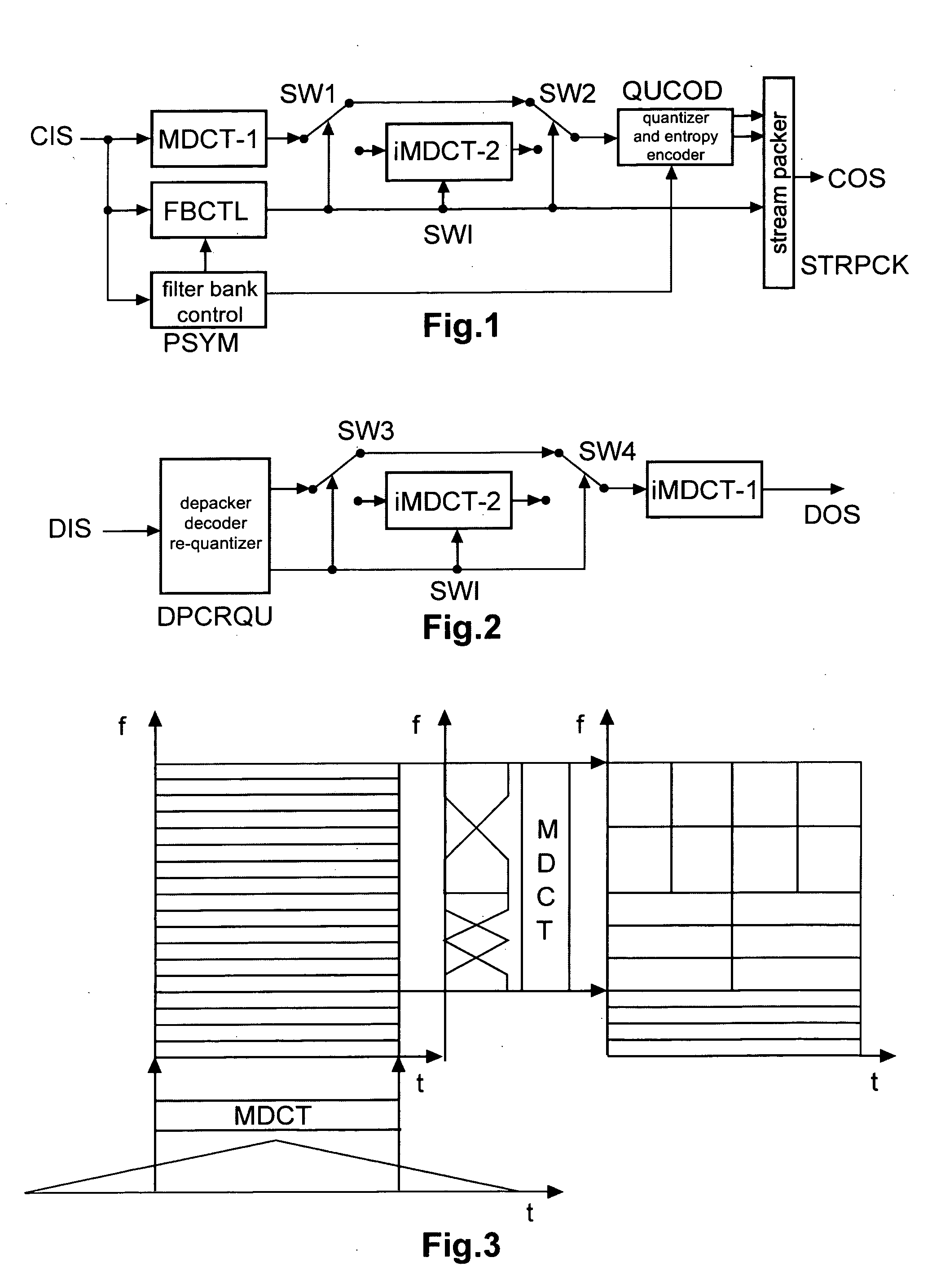

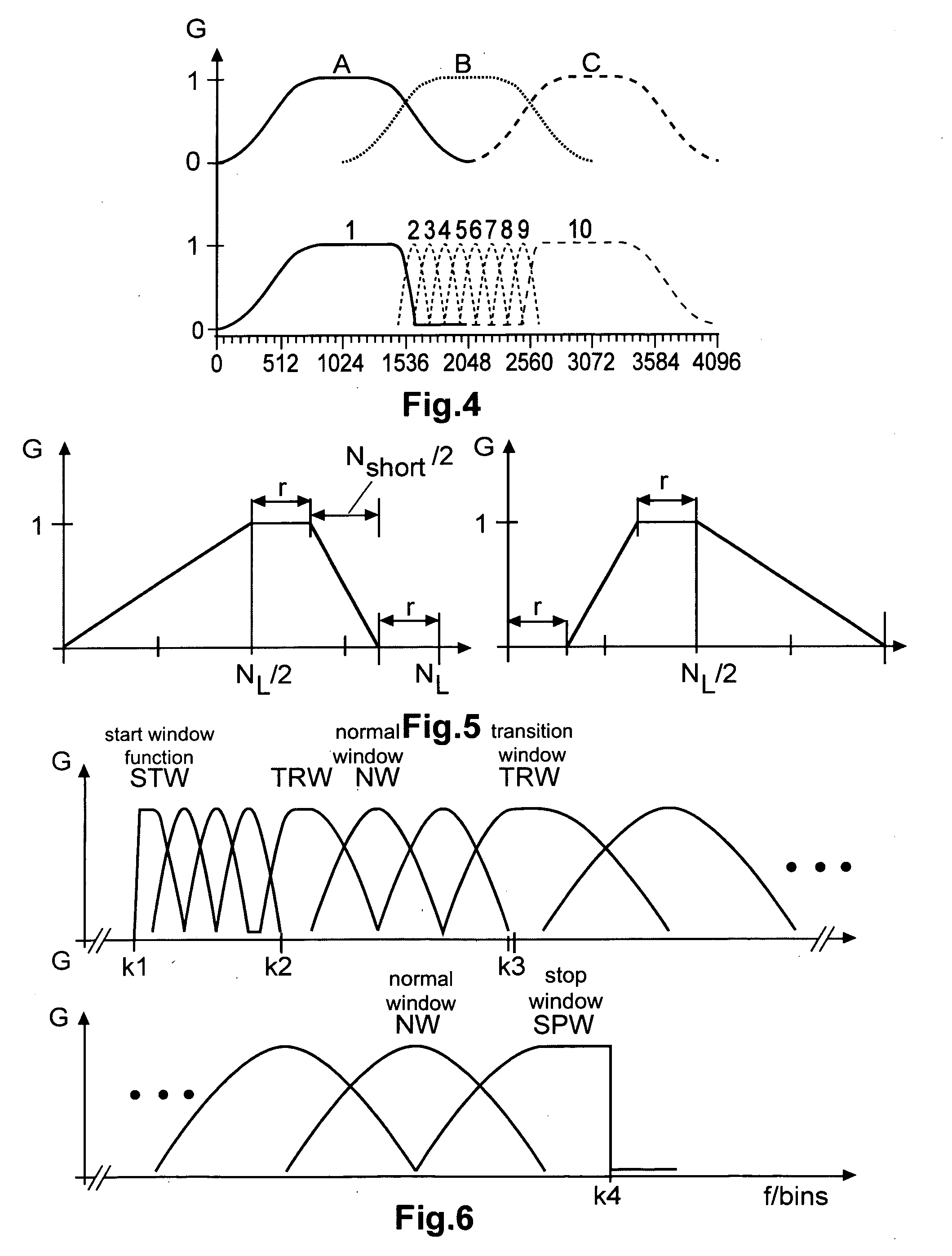

Method and apparatus for encoding and decoding an audio signal using adaptively switched temporal resolution in the spectral domain

Active · US20090012797A1 · Quality improvement · Reduce encoding delay · Speech synthesis · Temporal resolution · Frequency spectrum

Perceptual audio codecs make use of filter banks and MDCT in order to achieve a compact representation of the audio signal, by removing redundancy and irrelevancy from the original audio signal. During quasi-stationary parts of the audio signal a high frequency resolution of the filter bank is advantageous in order to achieve a high coding gain, but this high frequency resolution is coupled to a coarse temporal resolution that becomes a problem during transient signal parts by producing audible pre-echo effects. The invention achieves improved coding / decoding quality by applying on top of the output of a first filter bank a second non-uniform filter bank, i.e. a cascaded MDCT. The inventive codec uses switching to an additional extension filter bank (or multi-resolution filter bank) in order to re-group the time-frequency representation during transient or fast changing audio signal sections. By applying a corresponding switching control, pre-echo effects are avoided and a high coding gain and a low coding delay are achieved.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

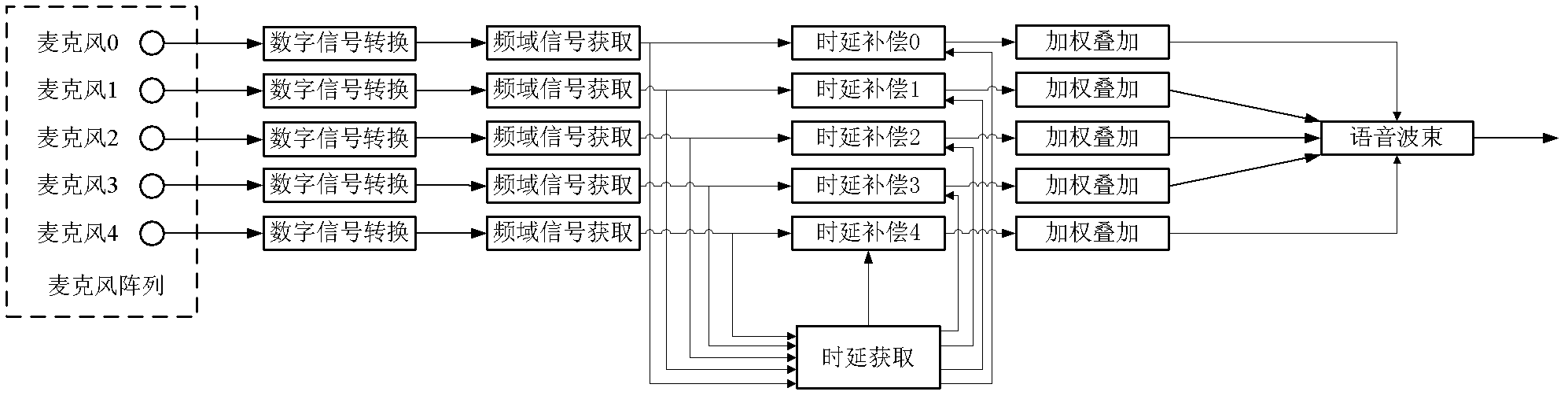

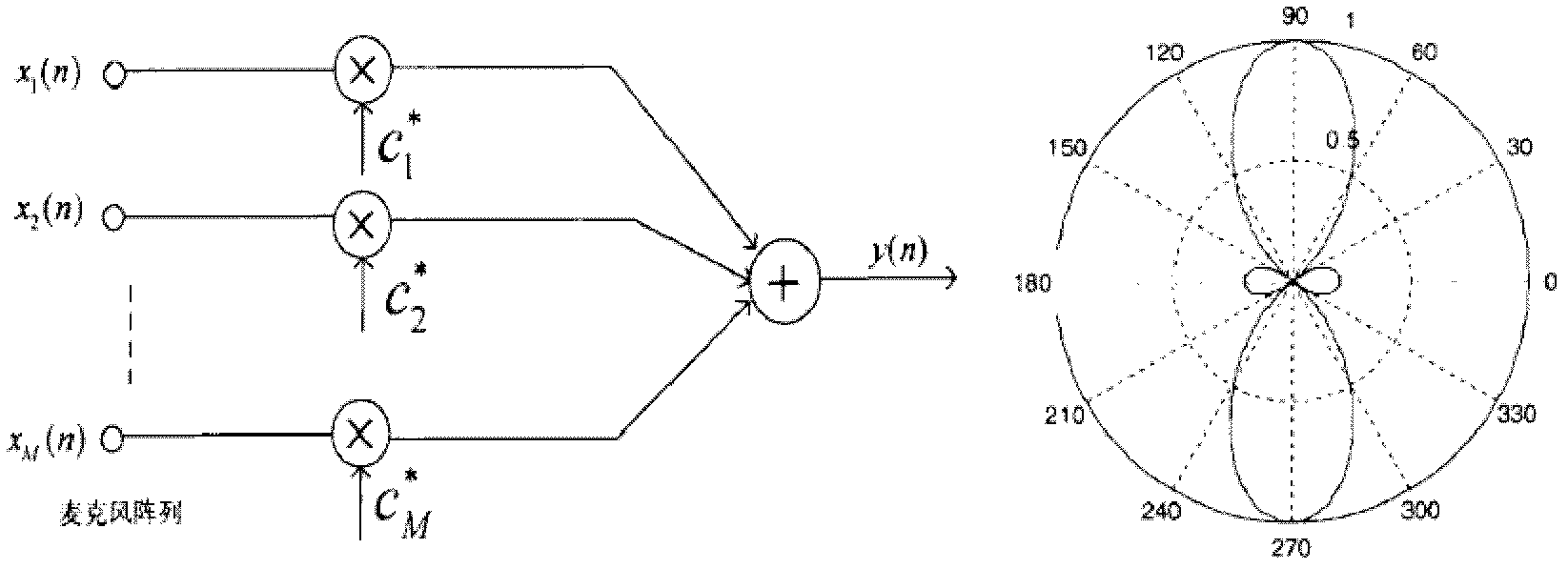

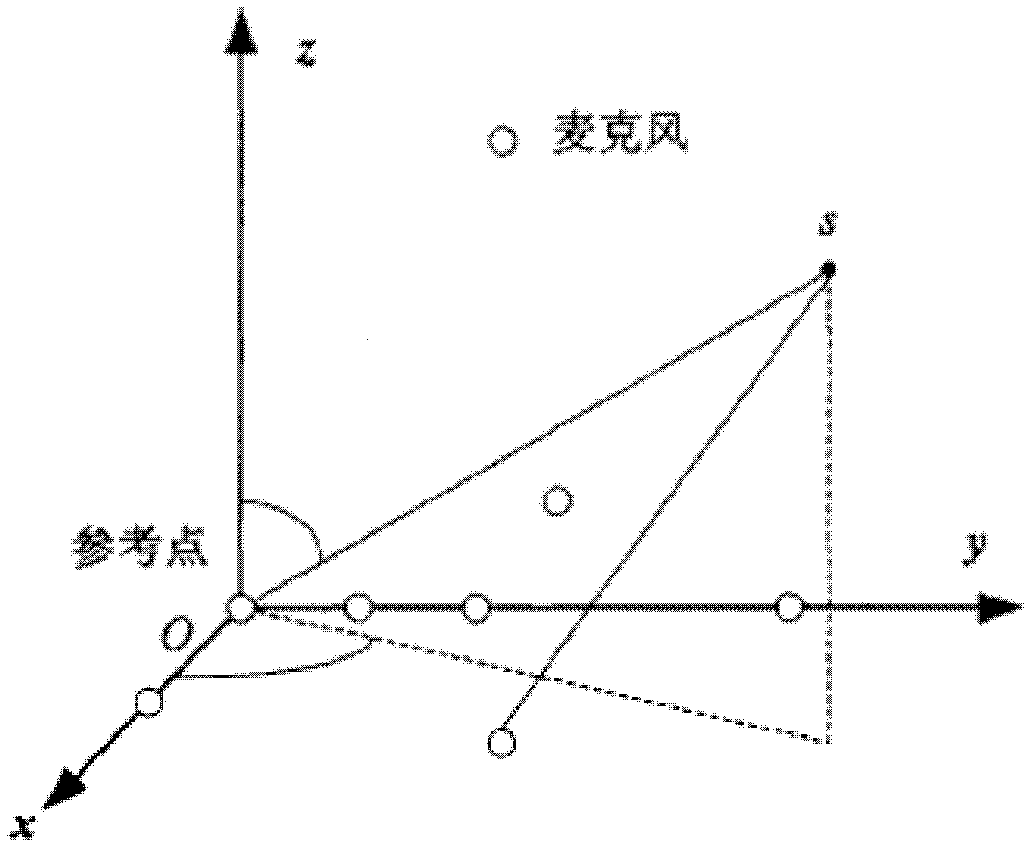

Microphone-array speech-beam forming method as well as speech-signal processing device and system

Inactive · CN102324237A · High positioning accuracy · Improve directivity · Speech analysis · Sound producing devices · Phase conversion · Sound sources

The embodiment of the invention discloses a microphone-array speech-beam forming method, which comprises a digital-signal converting step, a frequency-region signal-obtaining step, a time-delay obtaining step, a time-delay compensating step and a weighted stacking step, wherein time-delay estimation based on phase conversion is adopted particularly in the time-delay obtaining step, thereby a beam-forming signal pointed to a target sound-source spatial position after enhancement processing is obtained. Compared with the prior art, the positioning precision and the directivity of a three-dimensional space are enhanced, the long-distance sound-picking capability in a complicated acoustics environment is greatly enhanced, high-quality voice signals are obtained, and noise and other interferences are reduced. The embodiment of the invention also discloses a speech-signal processing device and a system.
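The time-delay obtaining, compensating, and weighted stacking steps can be illustrated with a small two-microphone sketch. It assumes that "time-delay estimation based on phase conversion" refers to generalized cross-correlation with phase transform (GCC-PHAT); that reading, the function names, the naive DFT, and the circular shifting are all simplifying assumptions rather than details from the patent.

```python
import cmath


def dft(x):
    """Naive O(n^2) discrete Fourier transform, adequate for short frames."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def gcc_phat_delay(ref, sig):
    """Estimate the delay of sig relative to ref in samples (positive: sig lags)."""
    n = len(ref)
    cross = []
    for xk, yk in zip(dft(ref), dft(sig)):
        c = yk * xk.conjugate()
        mag = abs(c)
        # Phase transform: keep only the phase of the cross-spectrum.
        cross.append(c / mag if mag > 1e-12 else 0j)
    # Inverse DFT of the whitened cross-spectrum; the peak marks the delay.
    corr = [sum(cross[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)).real
            for t in range(n)]
    peak = max(range(n), key=lambda t: corr[t])
    return peak if peak <= n // 2 else peak - n


def delay_and_sum(channels, delays, weights=None):
    """Advance each channel by its delay (circularly) and form a weighted sum."""
    n = len(channels[0])
    if weights is None:
        weights = [1.0 / len(channels)] * len(channels)
    out = [0.0] * n
    for channel, delay, weight in zip(channels, delays, weights):
        for t in range(n):
            out[t] += weight * channel[(t + delay) % n]
    return out
```

In use, one microphone would serve as the reference, `gcc_phat_delay` would be called once per remaining microphone, and the resulting delays would be passed to `delay_and_sum` so that sound from the target direction adds coherently.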

Owner:深圳市顺畅声学科技有限公司

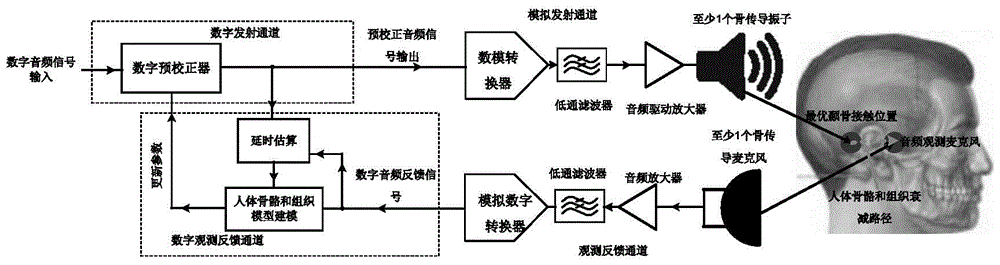

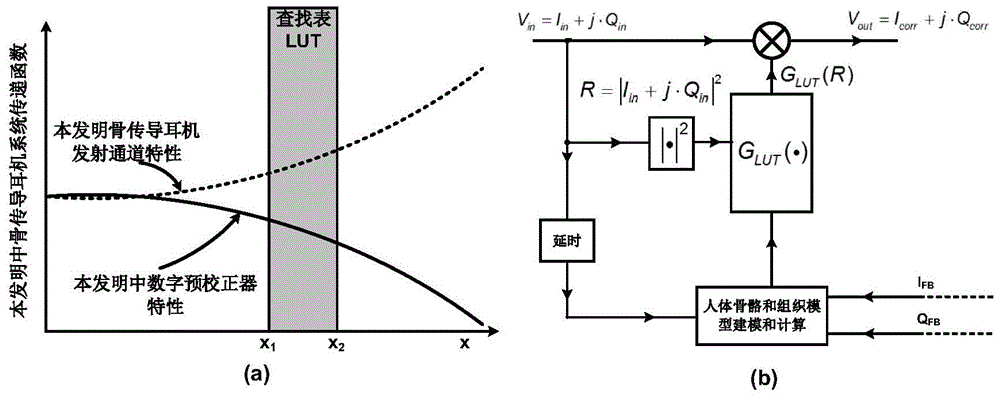

Bone conduction headset and audio processing method thereof

Inactive · CN105721973A · Simplify complexity · Reduce production process · Bone conduction transducer hearing devices · Earpiece/earphone attachments · Low-pass filter · Bone conduction hearing

The invention discloses an audio processing method for a bone conduction headset, the bone conduction headset and an audio playback device based on the bone conduction headset. The bone conduction headset comprises a human bone and tissue model modeling module, a digital pre-corrector, a delay estimation unit, a digital-to-analog converter, an analog-digital converter, a first low-pass filter, a second low-pass filter, an audio amplifier, an audio driving amplifier, at least one bone conduction microphone, and at least one bone conduction vibrator. In the method, human bone and tissue attenuation effect information of different users is detected in real time; a compensating transfer function is generated based on the attenuation effect information; and an input audio signal is subjected to digital pre-correction via the compensating transfer function, and then the processed input audio signal is transmitted in human bones and tissues. The method addresses the problem that the listening experience of a bone conduction headset differs between users because of differences in tissue thickness.

Owner:王泽玲

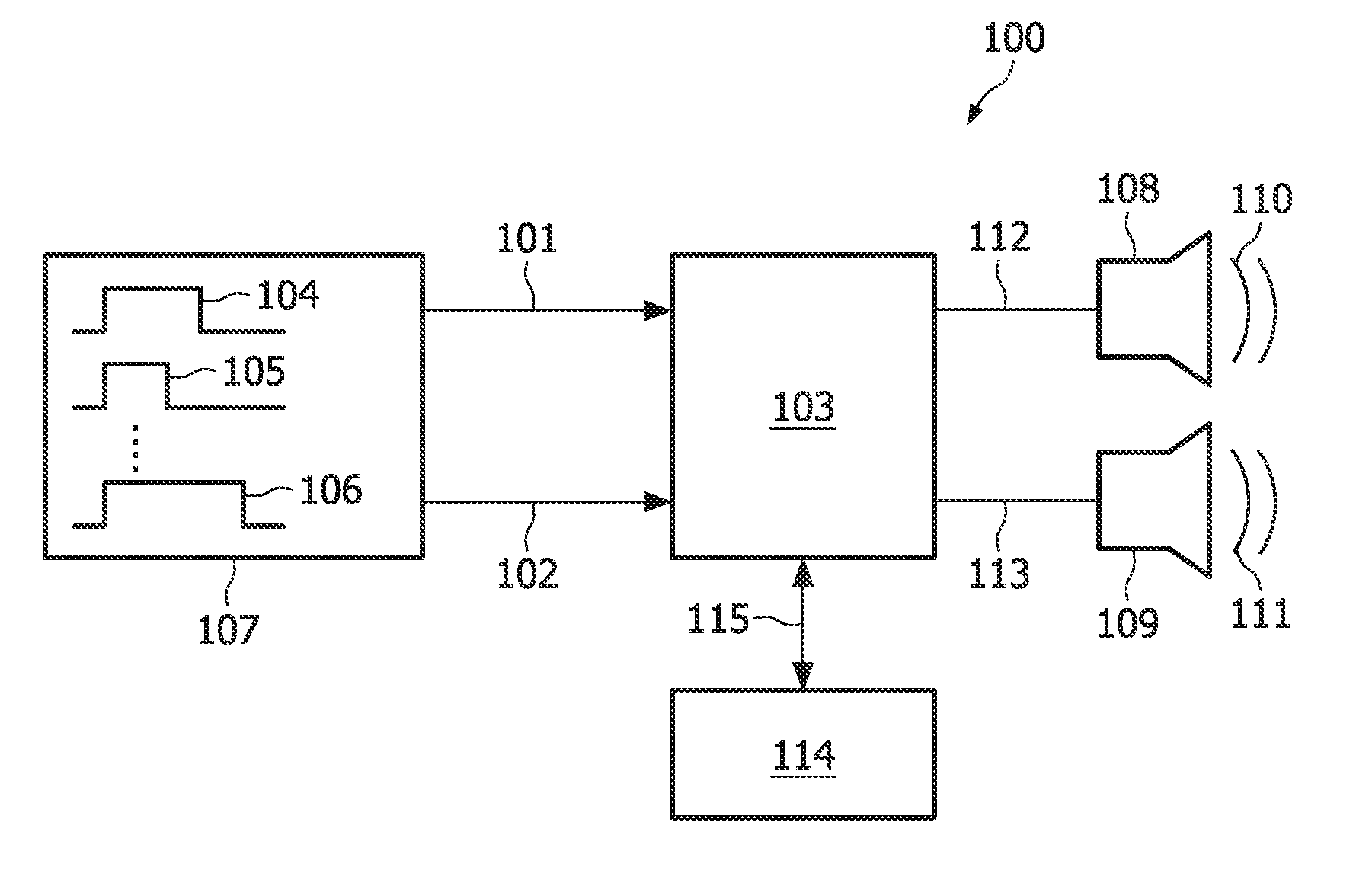

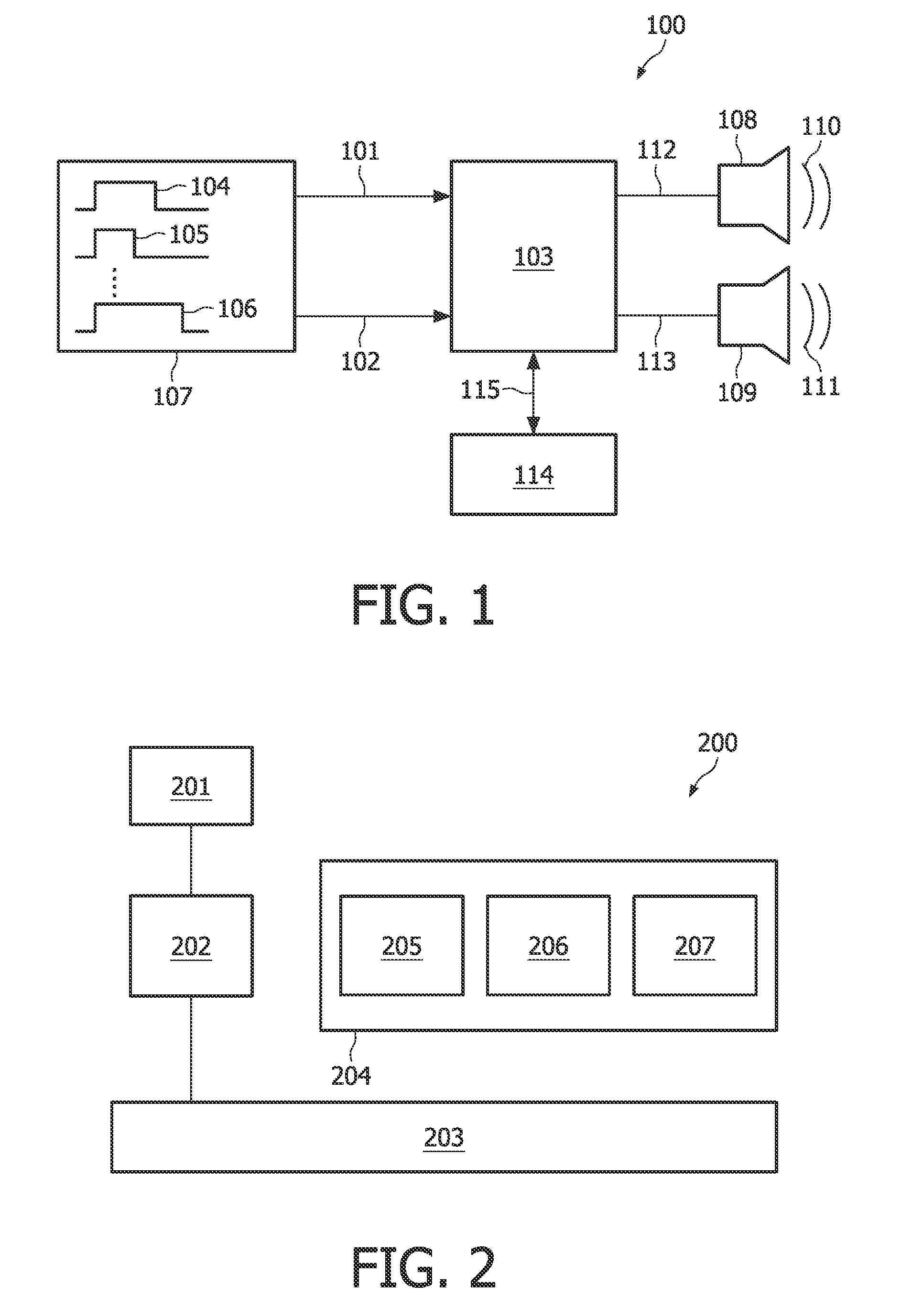

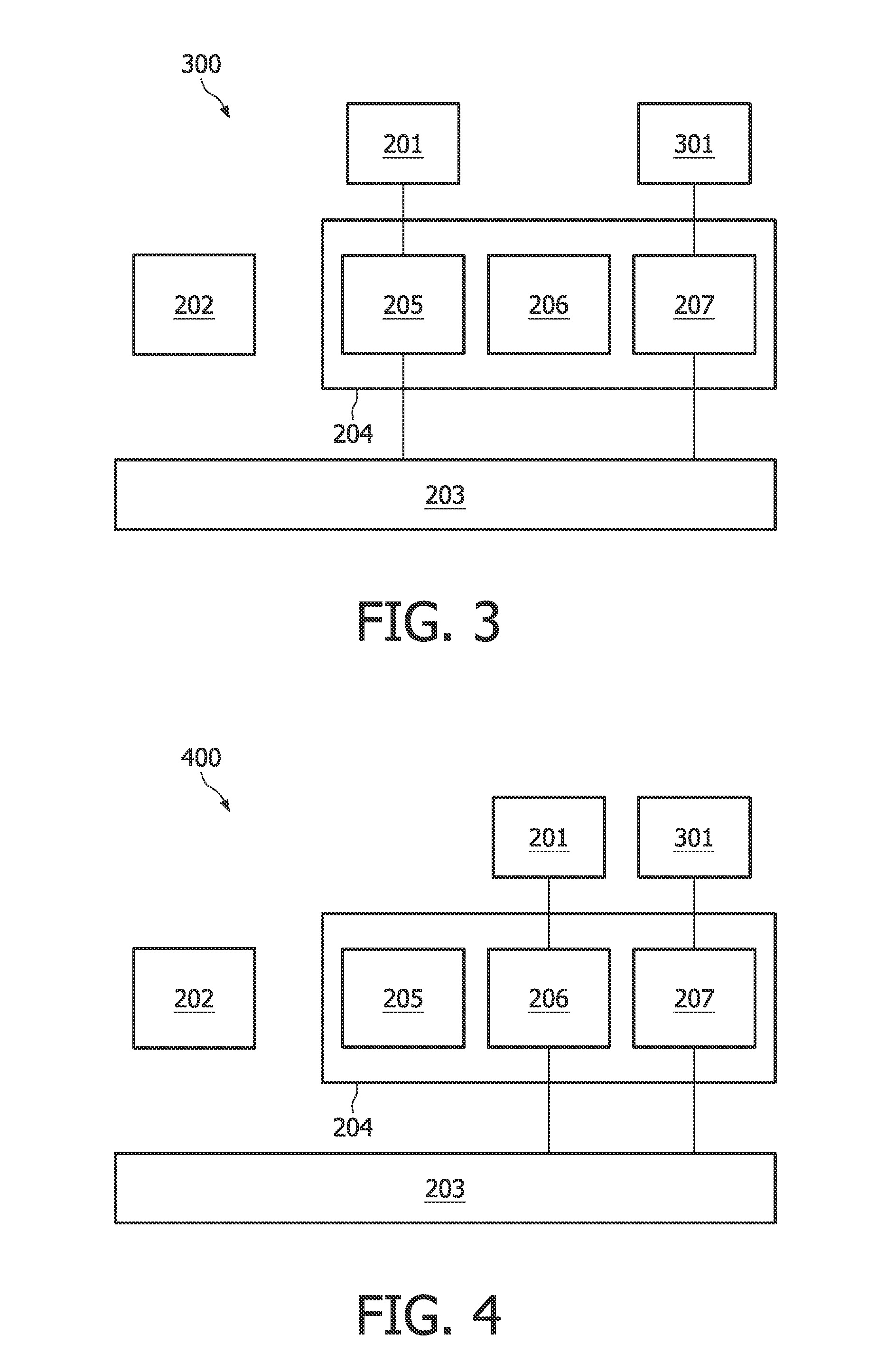

Device for and a method of processing audio data

Inactive · US20100215195A1 · Electronic editing digitised analogue information signals · Speech analysis · Computer hardware · Time delayed

According to an exemplary embodiment of the invention, a device (100) for processing audio data (101, 102) is provided, wherein the device (100) comprises a manipulation unit (103) (particularly a resampling unit) adapted for manipulating (particularly for resampling) selectively a transition portion of a first audio item (104) in a manner that a time-related audio property of the transition portion is modified (particularly, it is possible to simulate also the temporal delay effects of movement in a realistic manner).

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

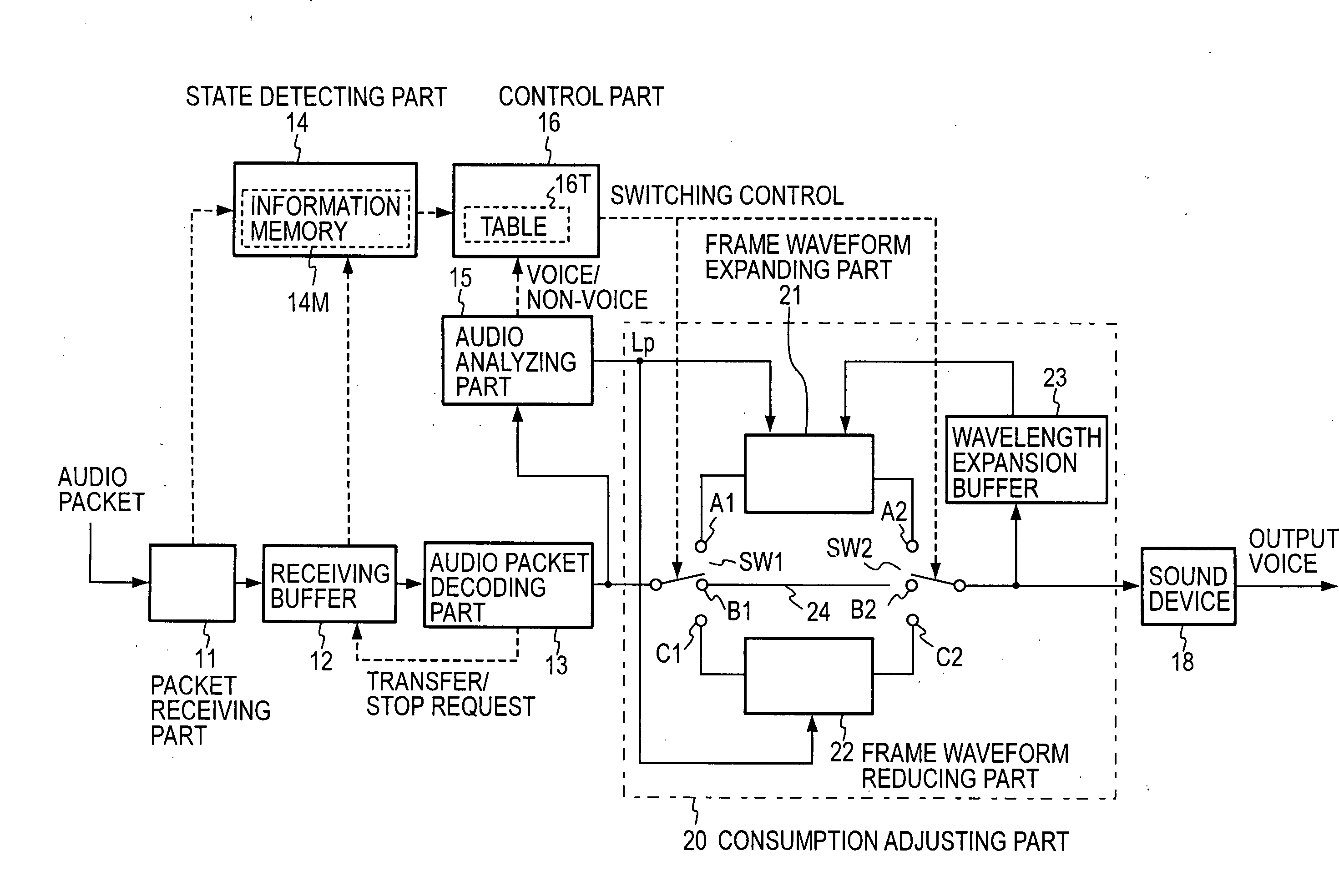

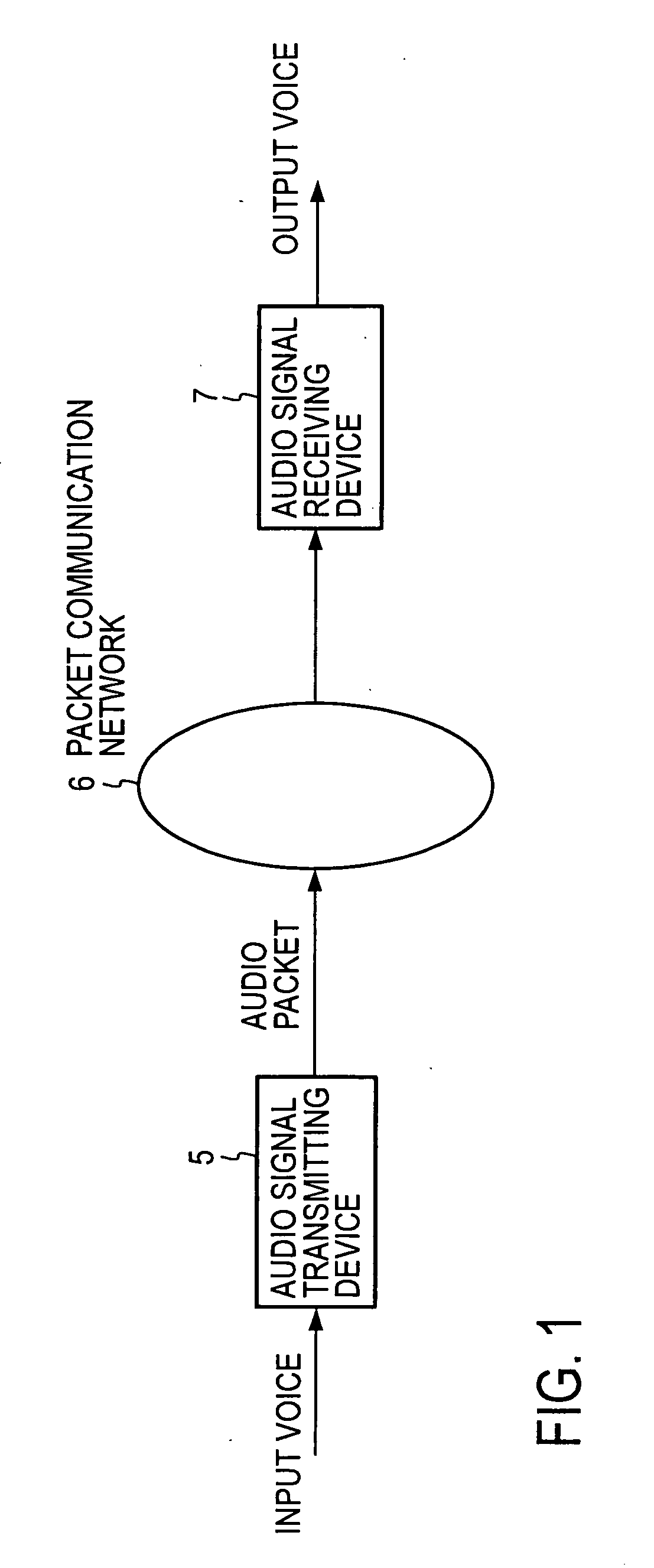

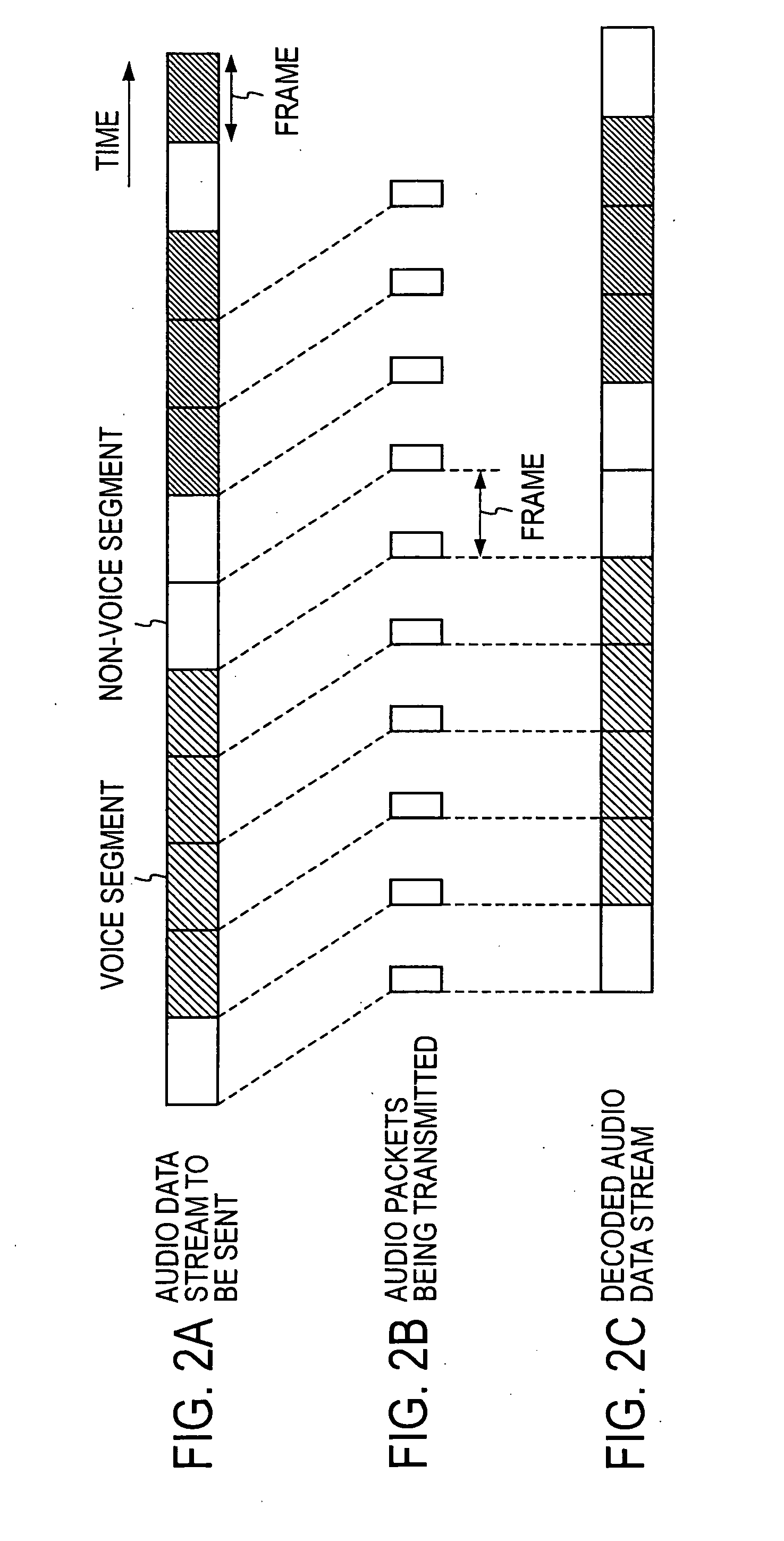

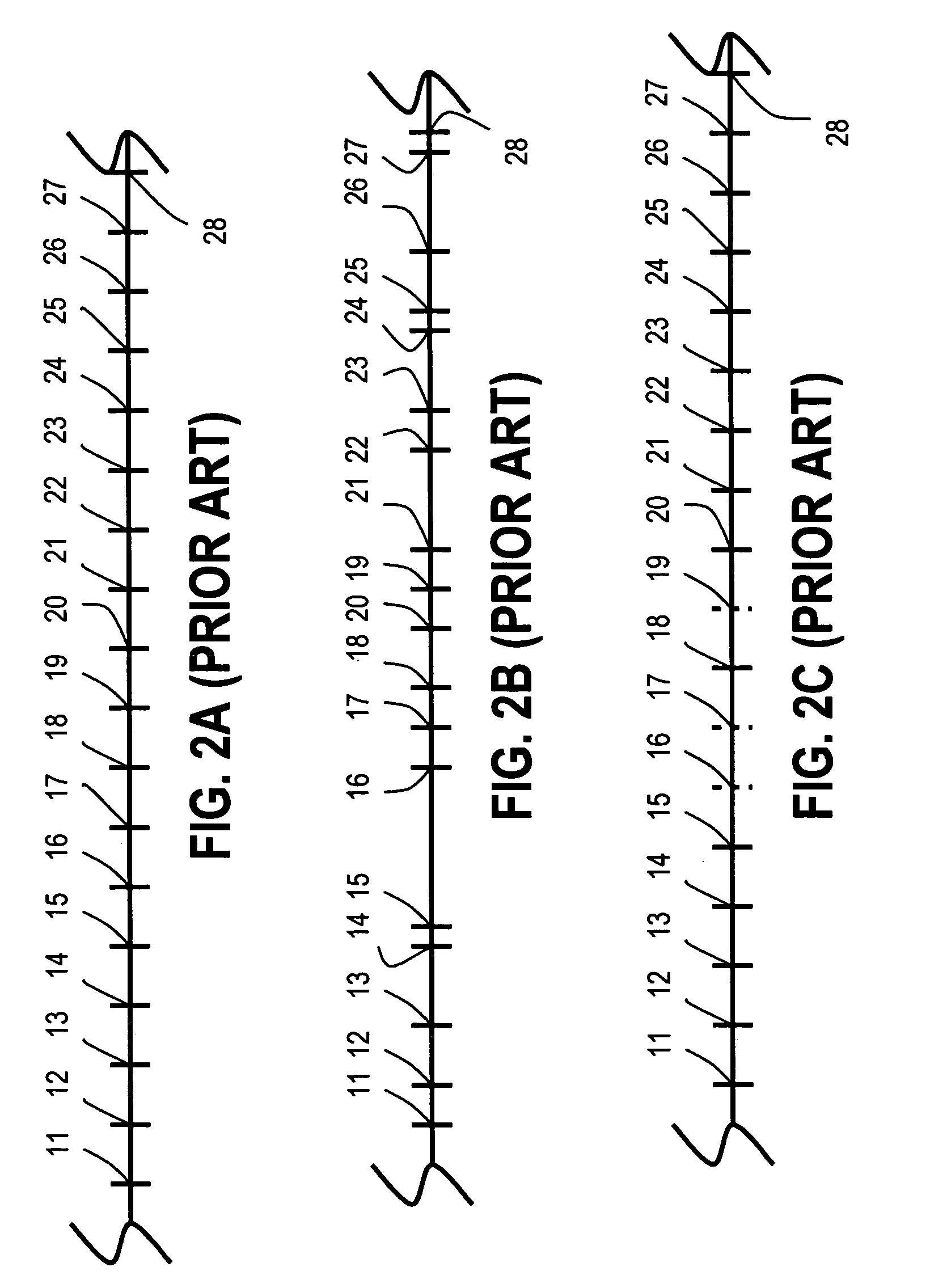

Sound packet reproducing method, sound packet reproducing apparatus, sound packet reproducing program, and recording medium

Inactive · US20070177620A1 · Communication Latency Minimized · Low cost · Speech analysis · Time-division multiplex · Data stream · Audio frequency

The present invention prevents a receiving buffer from becoming empty by: storing received packets in the receiving buffer; detecting the largest arrival delay jitter of the packets and the buffer level of the receiving buffer by a state detecting part; obtaining an optimum buffer level for the largest delay jitter using a predetermined table by a control part; determining, based on the detected buffer level and the optimum buffer level, the level of urgency about the need to adjust the buffer level; expanding or reducing the waveform of a decoded audio data stream of the current frame decoded from a packet read out of the receiving buffer by a consumption adjusting part to adjust the consumption of reproduction frames on the basis of the urgency level, the detected buffer level, and the optimum buffer level.

Owner:NIPPON TELEGRAPH & TELEPHONE CORP

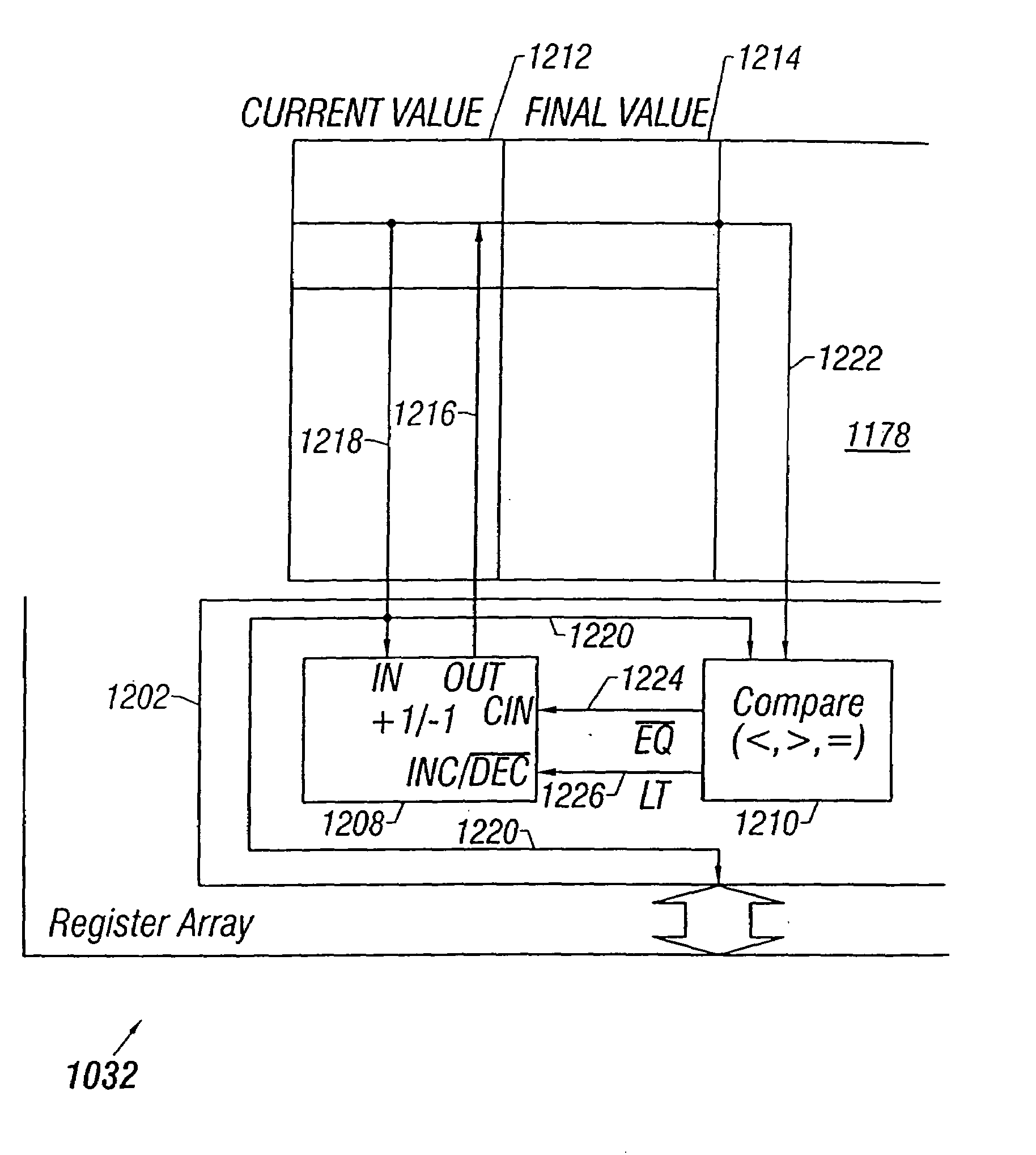

Wavetable audio synthesizer with left offset, right offset and effects volume control

Inactive · US7088835B1 · Avoid noise · Lower the volume · Electrophonic musical instruments · Gain control · Audio synthesis · Data placement

A digital wavetable audio synthesizer is described. A synthesizer volume generator, which has several modes of controlling the volume, adds envelope, right offset, left offset, and effects volume to the data. The data can be placed in one of sixteen fixed stereo pan positions, or left and right offsets can be programmed to place the data anywhere in the stereo field. The left and right offset values can also be programmed to control the overall volume. Zipper noise is prevented by controlling the volume increment. A synthesizer LFO generator can add LFO variation to: (i) the wavetable data addressing rate, for creating a vibrato effect; and (ii) a voice's volume, for creating a tremolo effect. Generated data to be output from the synthesizer is stored in left and right accumulators. However, when creating delay-based effects, data is stored in one of several effects accumulators. This data is then written to a wavetable. The difference between the wavetable write and read addresses for this data provides a delay for echo and reverb effects. LFO variations added to the read address create chorus and flange effects. The volume of the delay-based effects data can be attenuated to provide volume decay for an echo effect. After the delay-based effects processing, the data can be provided with left and right offset volume components which determine how much of the effect is heard and its stereo position. The data is then stored in the left and right accumulators.

Owner:MICROSEMI SEMICON U S

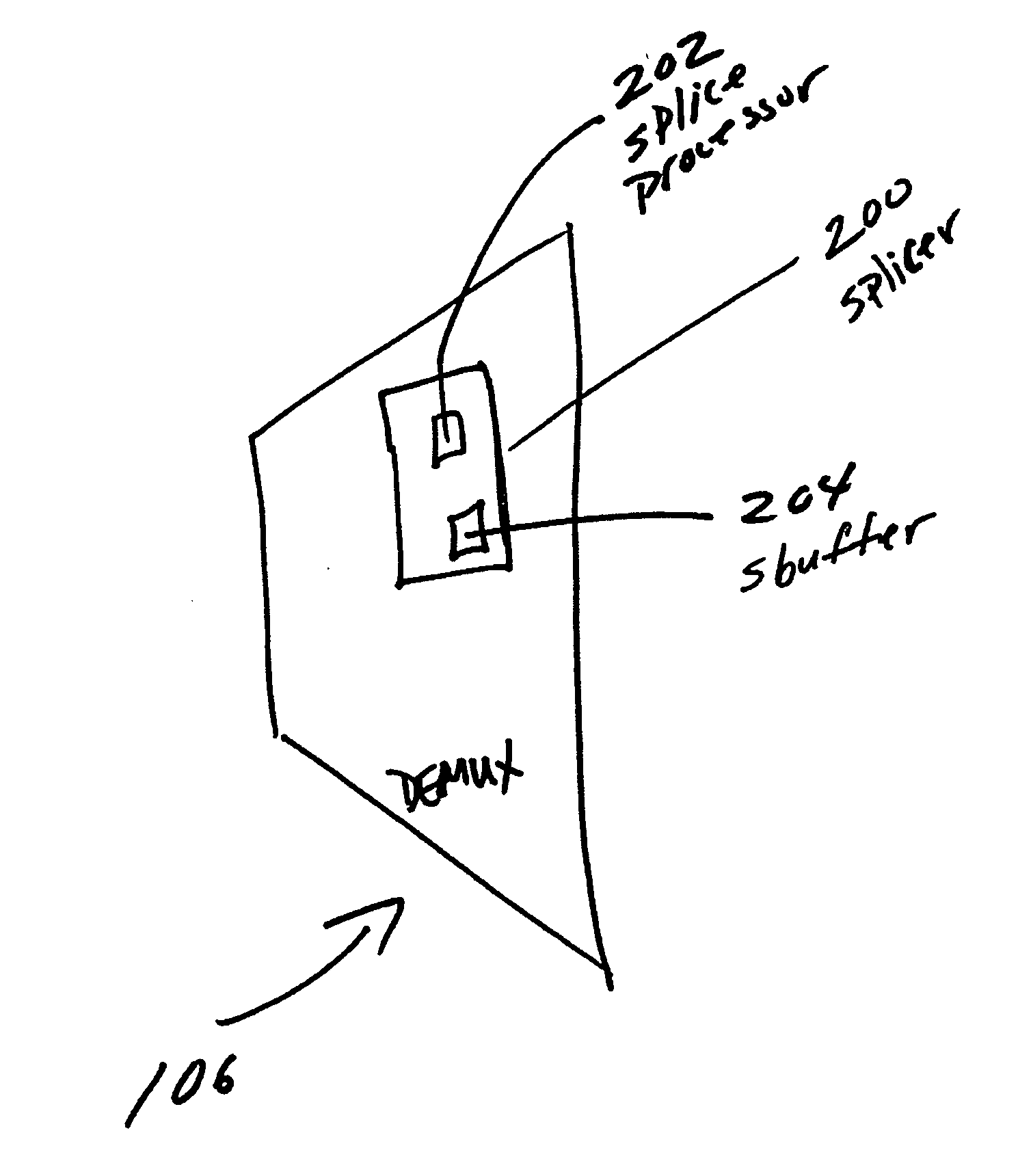

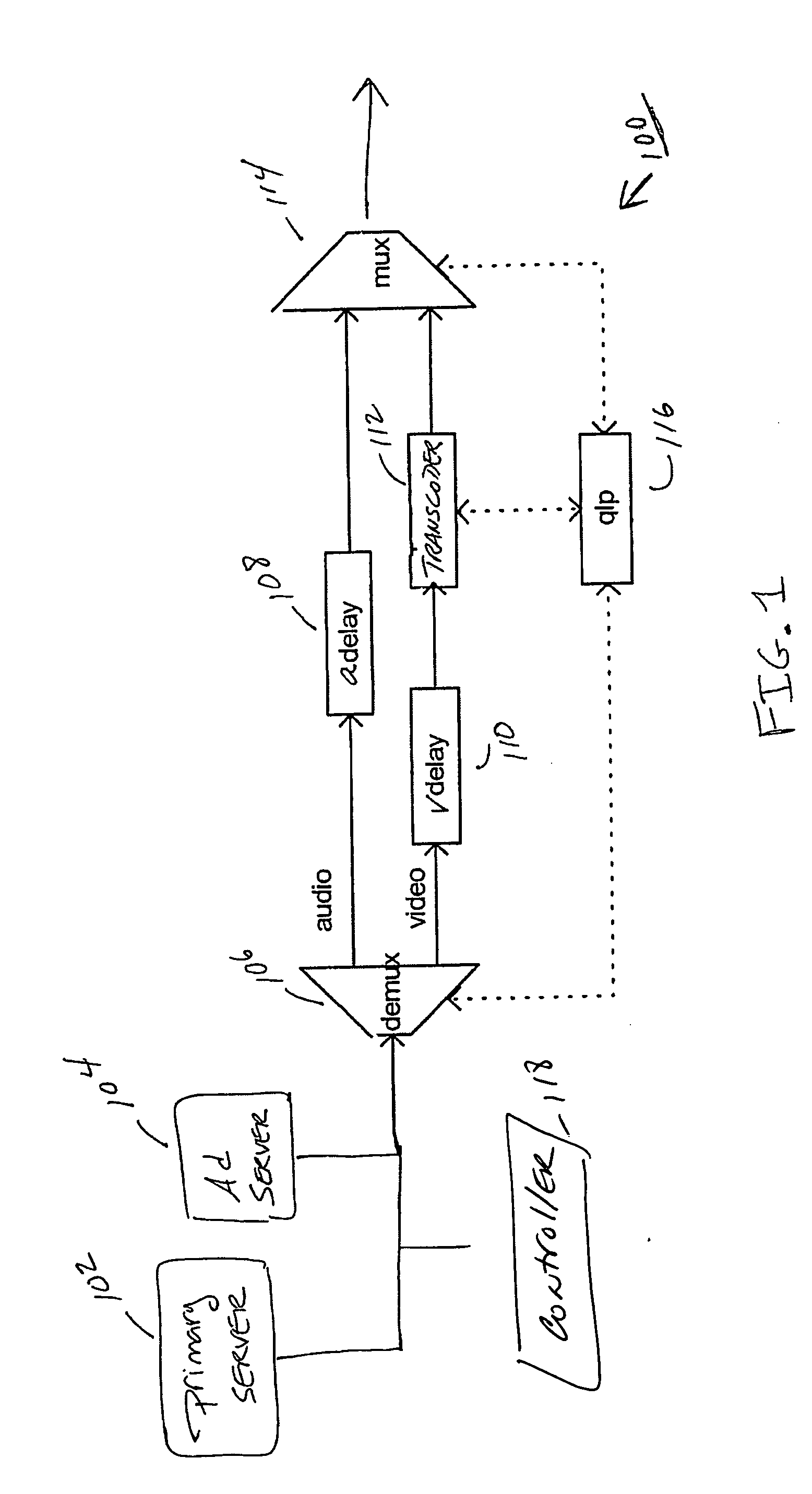

Audio splice process for digital Ad insertion

Inactive · US20050022253A1 · Avoid underflow · Prevent overflow · Pulse modulation television signal transmission · Color signal processing circuits · Computer hardware · Variable bit rate vbr

A system and method for audio splicing (insertion) of an Ad audio stream in the compressed domain, where variable early delivery of the Ad audio stream and variable bit rate are allowed, without creating audio distortion, glitches, or other digital artefacts or errors, in the resultant audio stream is disclosed. The present system and method provides for a splice delay buffer which delays the first five Ad audio frames until transmission of the last frame of the primary audio stream, but before the splice time. Subsequent Ad audio frames are delayed by a fixed amount, where the fixed amount is greater than the frame delay of the primary audio stream, to allow for ease of splice back to the primary audio stream.

Owner:GOOGLE TECH HLDG LLC

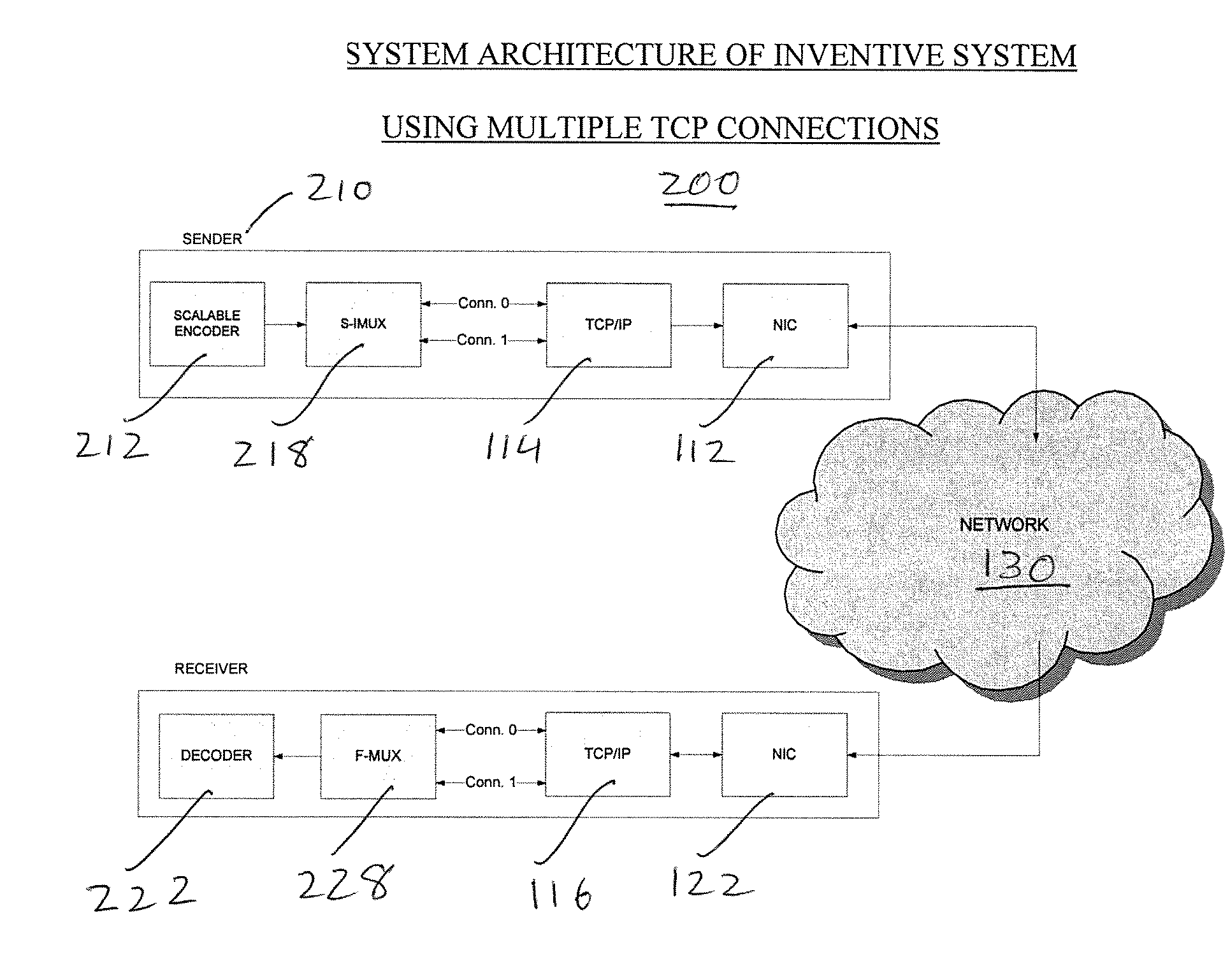

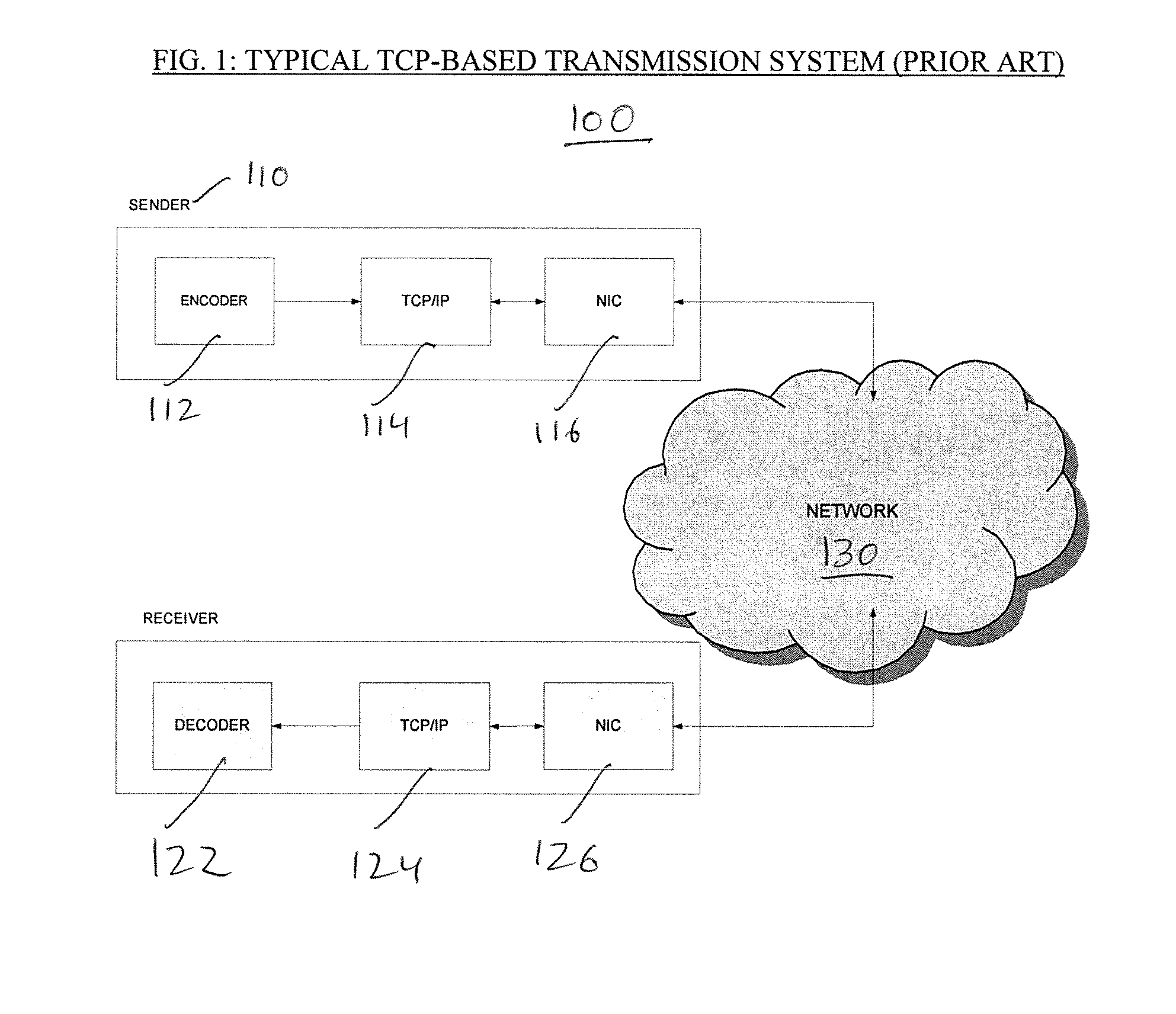

System and method for low-delay, interactive communication using multiple TCP connections and scalable coding

Inactive · US20080130658A1 · Low latency packet delivery · Overcome limitations · Data switching by path configuration · Latency (engineering) · Low delay

Systems and methods for communication of scalable-coded audiovisual signals over multiple TCP / IP connections are provided. The sender schedules and prioritizes transmission of individual scalable-coded data packets over the plurality of TCP connections according to their relative importance in the scalable coding structure for signal reconstruction quality and according to receiver feedback. Low-latency packet delivery over the multiple TCP / IP connections is maintained by avoiding transmission or retransmission of packets that are less important for reconstructed media quality.

Owner:VIDYO

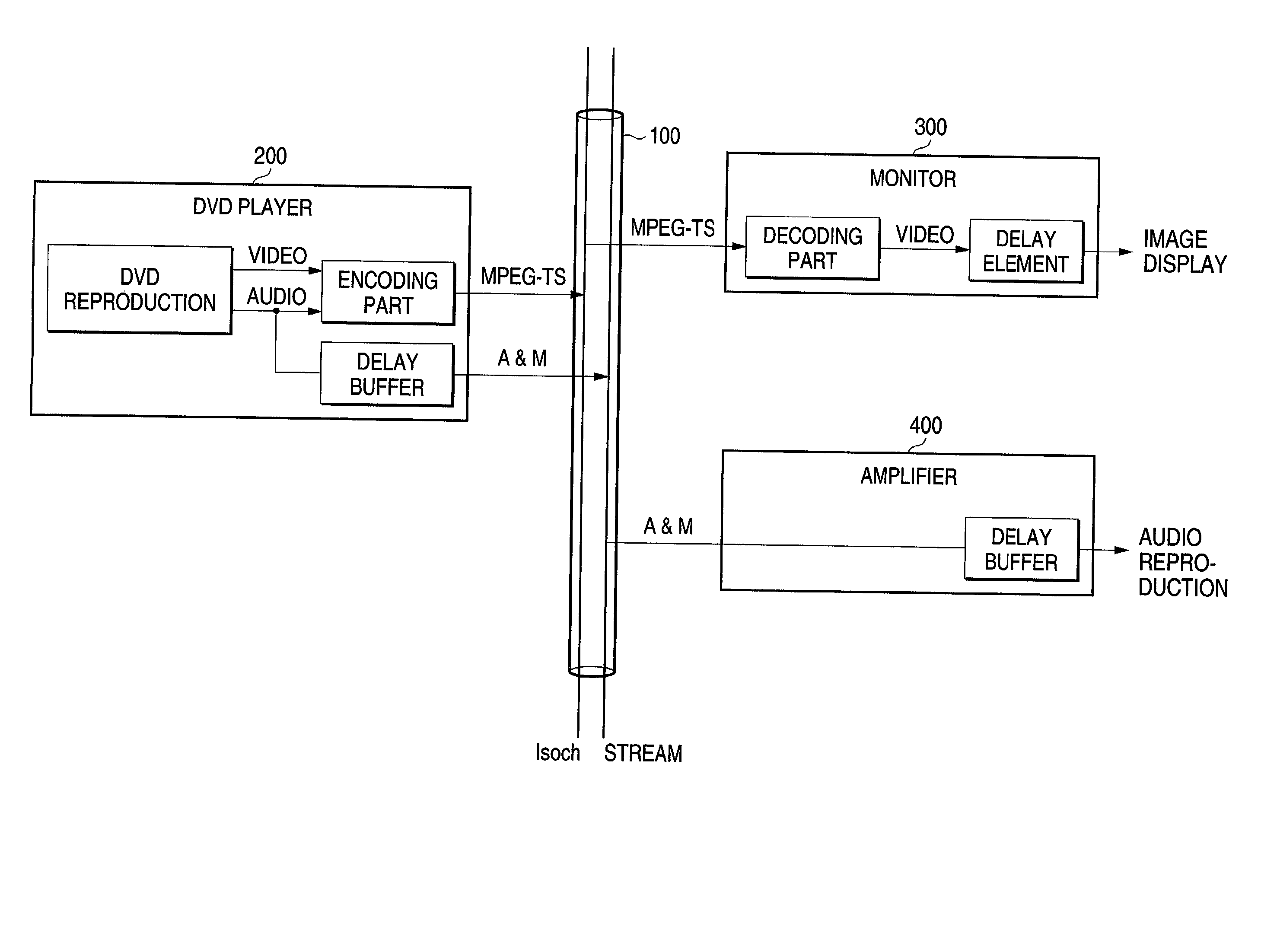

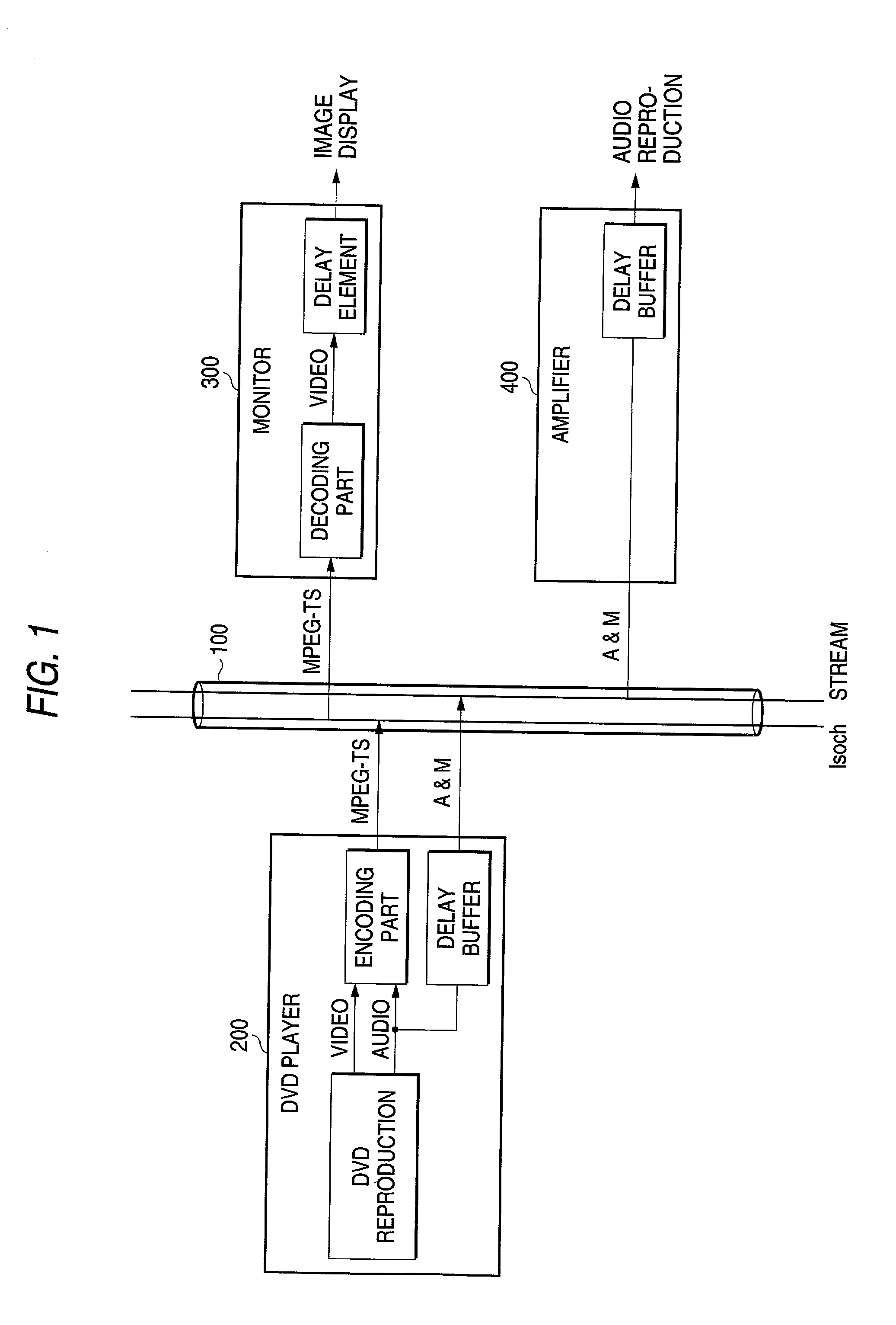

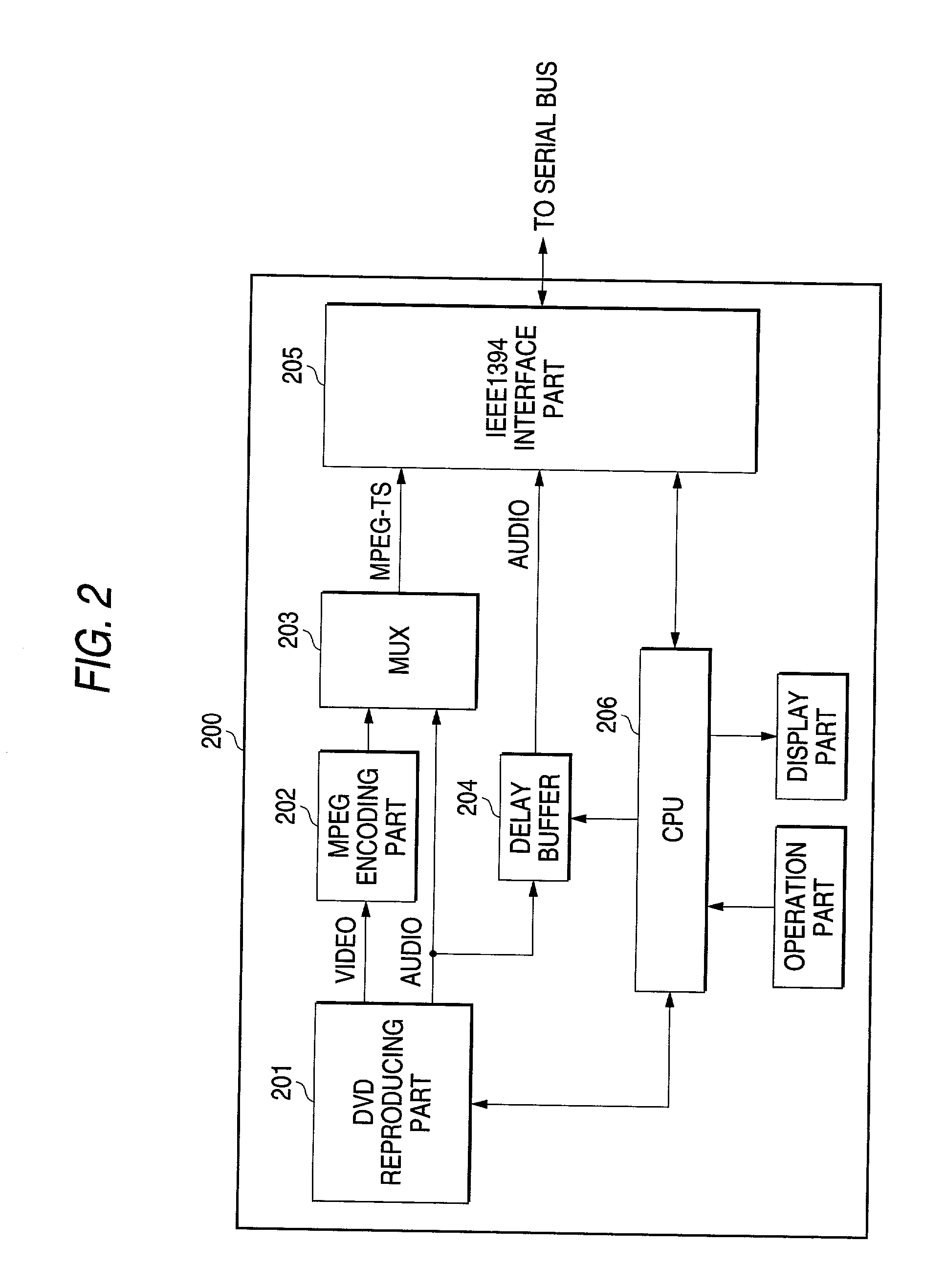

Video display apparatus, audio mixing apparatus, video-audio output apparatus and video-audio synchronizing method

Inactive · US20020174440A1 · Television system details · Pulse modulation television signal transmission · Audio power amplifier · Delay

In a video-audio synchronizing method for synchronizing video and audio reproduced respectively by a monitor 300 and an amplifier 400 connected to a serial bus 100 to which video from a DVD player 200 and audio associated with the video are supplied, the monitor 300 sends out delay time information caused by an intrinsic delay element for video display to the amplifier 400 through the serial bus 100 as a control command, and the amplifier 400 delays the audio by time necessary for synchronization between the video and the audio according to the control command and outputs the audio.

Owner:PIONEER CORP

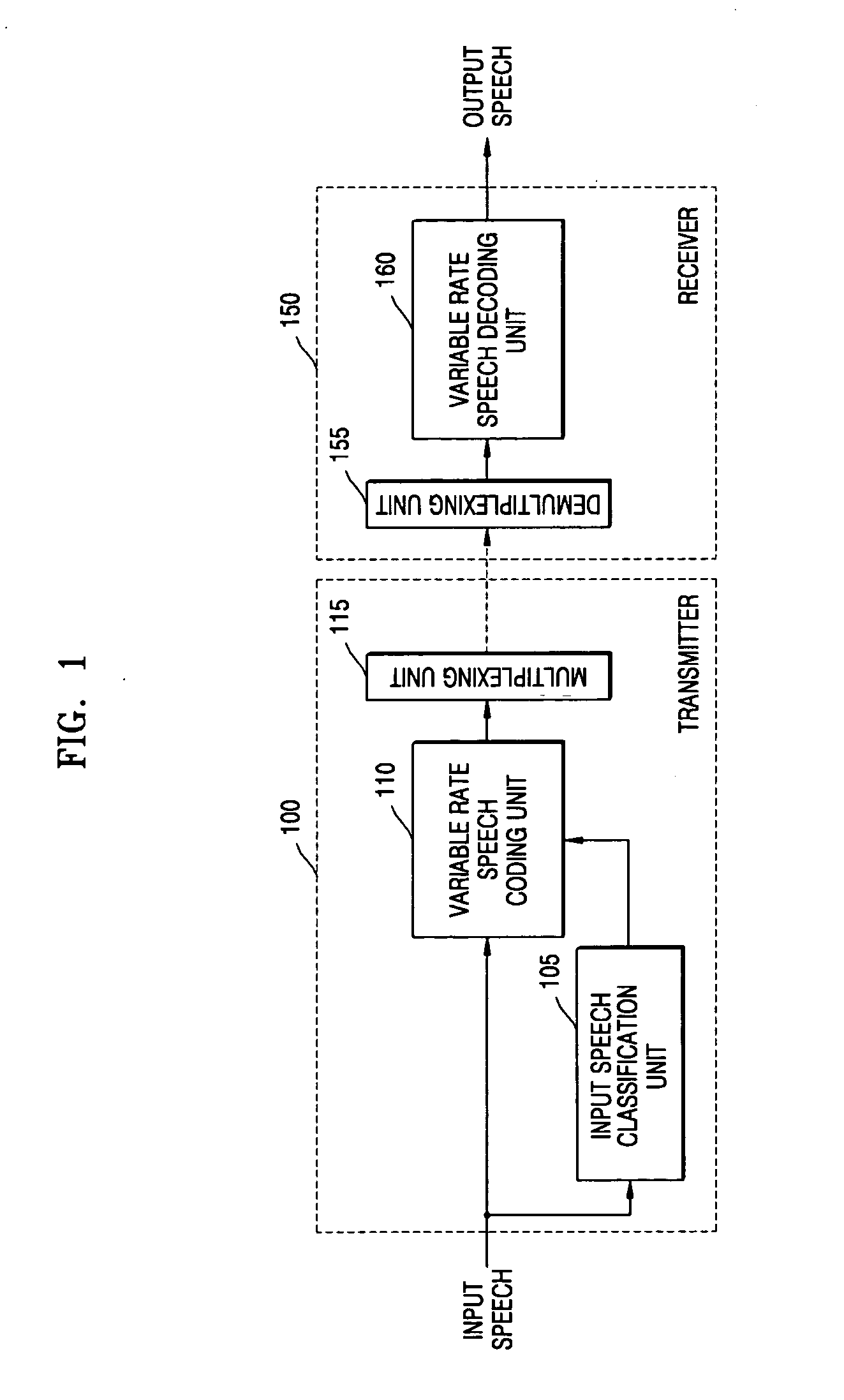

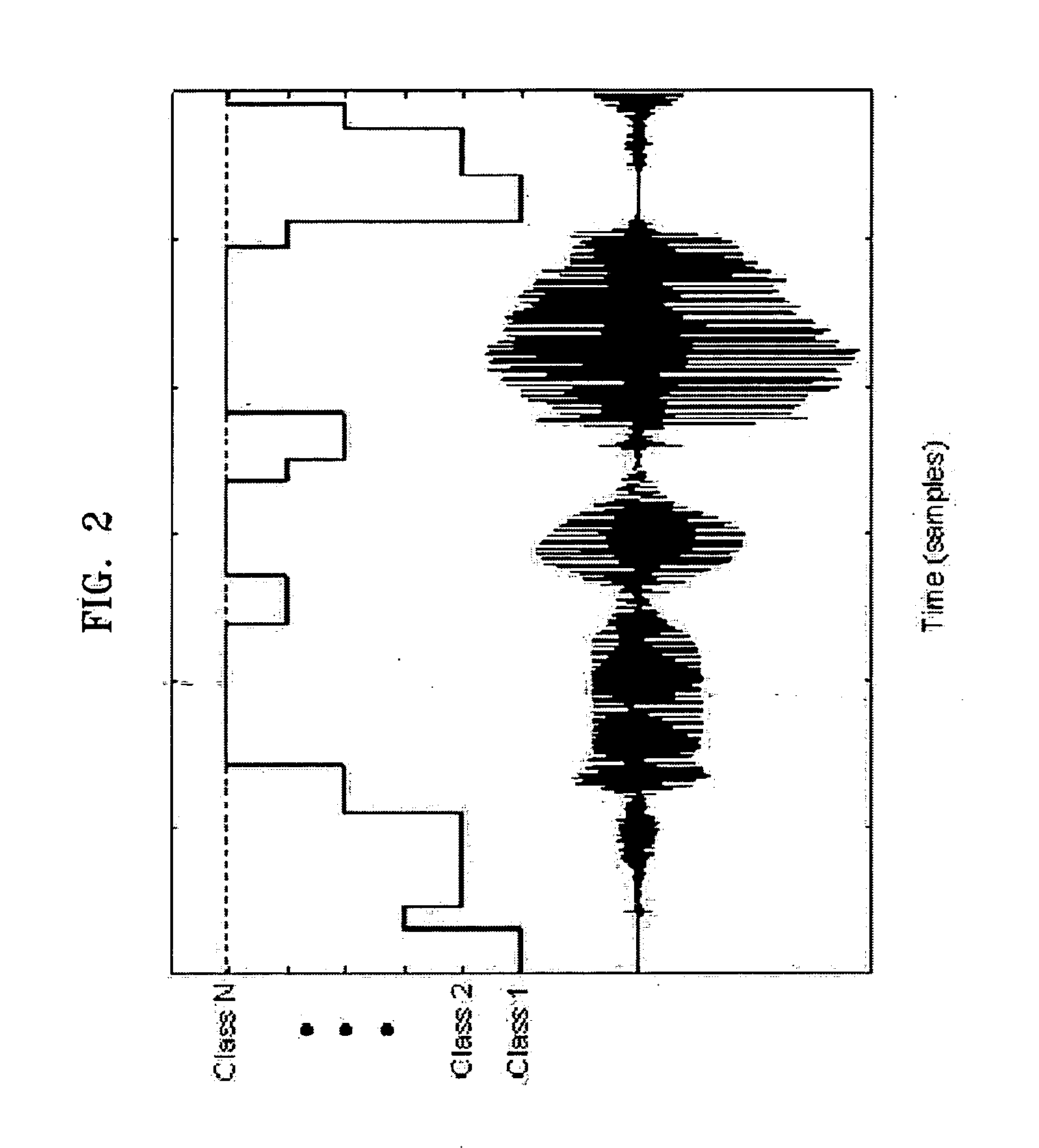

Variable-frame speech coding/decoding apparatus and method

Inactive · US20050143979A1 · Improve performance · Improve service quality · Speech analysis · Speech code · Network conditions

There is provided a speech coding / decoding apparatus and method, in which the input speech signals are classified into several classes in accordance with characteristics of the input speech signals and the input speech signals are coded using frame sizes, quantizer structures, and bit assignment methods corresponding to the determined classes, or in which the frame sizes can be adjusted in accordance with network conditions or codec type of a counter part. Therefore, by optimally adjusting the frame size, the quantizer structure, and the bit assignment method in accordance with the characteristics of input speech, it is possible to improve the performance of the speech coding apparatus, and by adjusting the frame size in accordance with the speech codec type of a counter part, it is also possible to reduce the total end-to-end delay.

Owner:ELECTRONICS & TELECOMM RES INST +1

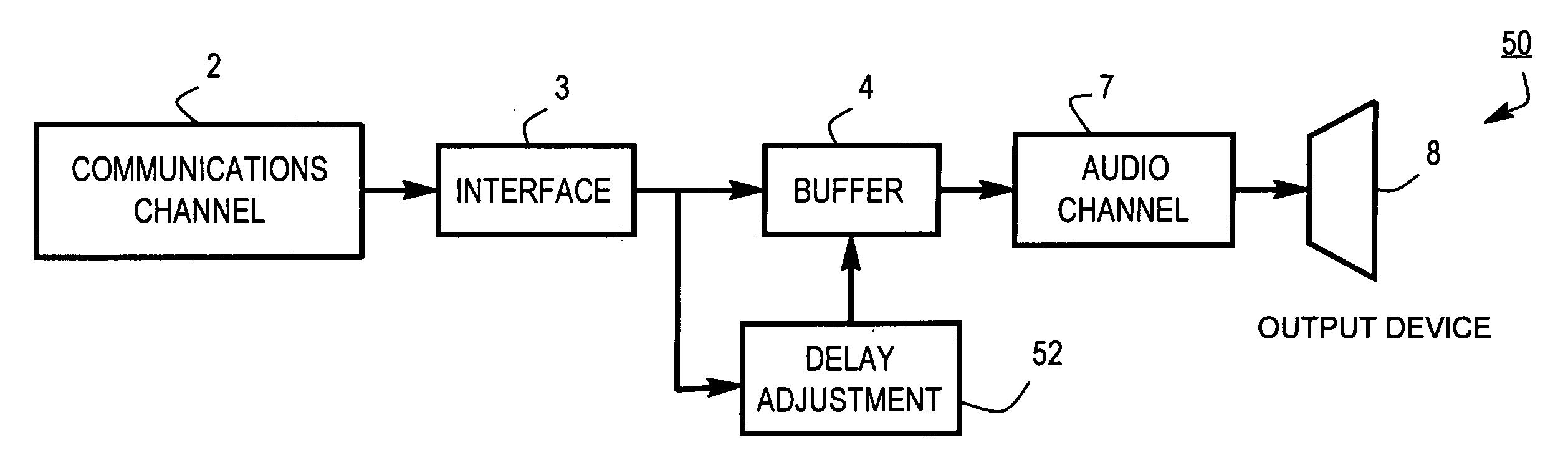

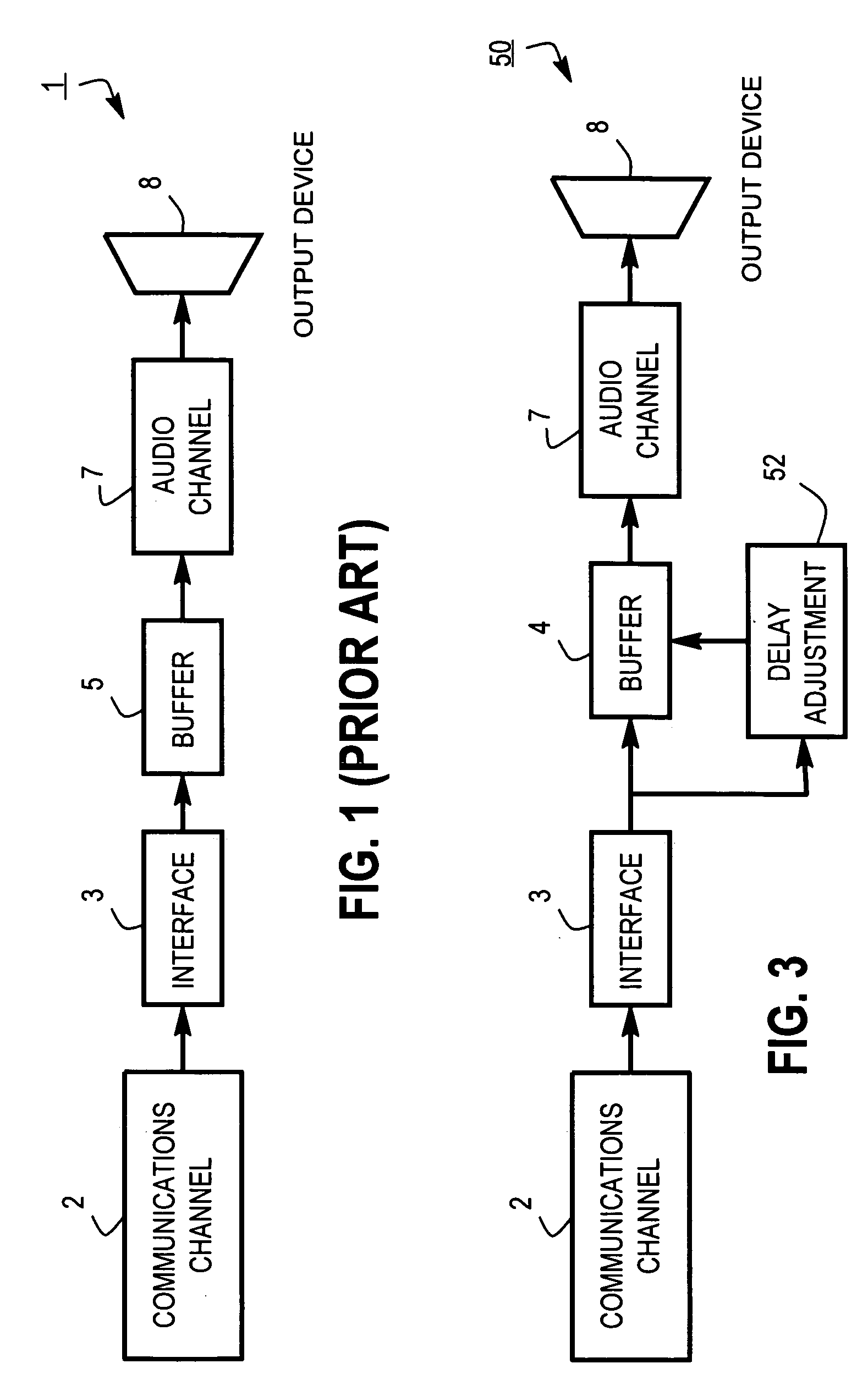

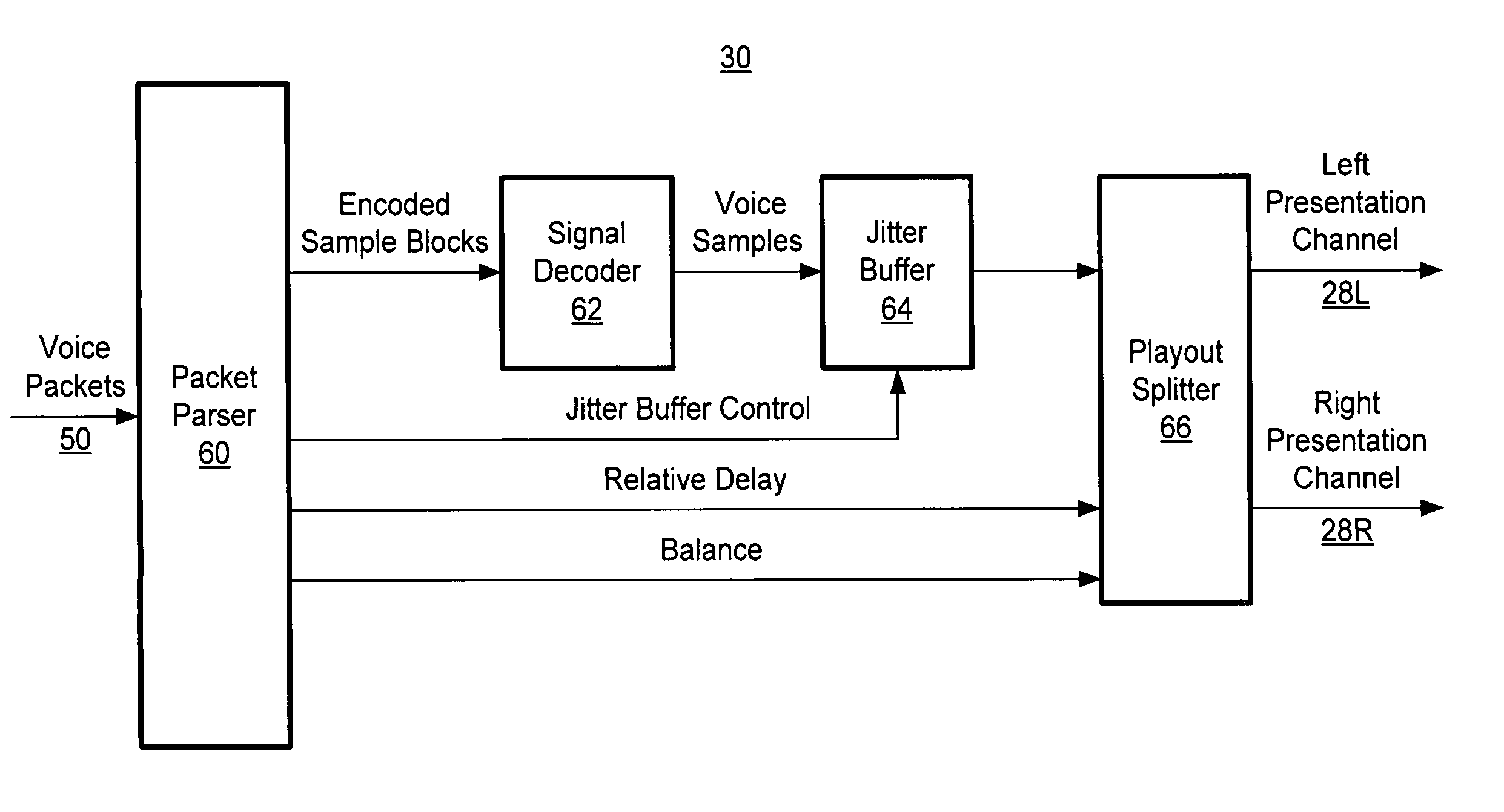

Audio receiver having adaptive buffer delay

Inactive · US20060092918A1 · Buffer delay be increase · Increasing and decreasing buffer delay · Error prevention · Transmission systems · Interval delay · Self adaptive

Generally speaking, there are provided systematic techniques for increasing and decreasing jitter buffer delay. The disclosed techniques typically utilize various combinations of: evaluating received data over a specified interval, increasing a recommended buffer delay if the interval delay exceeds a first threshold and decreasing the recommended buffer delay if the interval delay is less than a second threshold, causing the recommended buffer delay to decrease over time until an underflow condition is identified, and / or increasing the recommended buffer delay in response to identifying the underflow condition.
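One way to combine the rules listed in this abstract is sketched below. The thresholds, step sizes, and the function name `update_buffer_delay` are hypothetical values chosen for illustration; the abstract does not specify them, and it describes the rules as usable in various combinations rather than in this exact order.

```python
def update_buffer_delay(recommended_ms, interval_delay_ms, underflow,
                        high_ms=60.0, low_ms=20.0,
                        step_ms=10.0, decay_ms=1.0, min_ms=0.0):
    """Return the new recommended jitter buffer delay in milliseconds.

    All numeric defaults are illustrative assumptions:
    high_ms / low_ms are the first and second thresholds on the interval
    delay, step_ms is the adjustment step, and decay_ms is the slow
    decrease applied while no underflow is seen.
    """
    if underflow:
        # An underflow means the buffer was too shallow: back off.
        return recommended_ms + step_ms
    if interval_delay_ms > high_ms:
        return recommended_ms + step_ms
    if interval_delay_ms < low_ms:
        return max(min_ms, recommended_ms - step_ms)
    # Otherwise creep downward over time until an underflow is identified.
    return max(min_ms, recommended_ms - decay_ms)
```

Calling this once per evaluation interval yields a delay that shrinks gradually during quiet periods and grows again as soon as an underflow or a high interval delay is observed.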

Owner:PIVOT VOIP

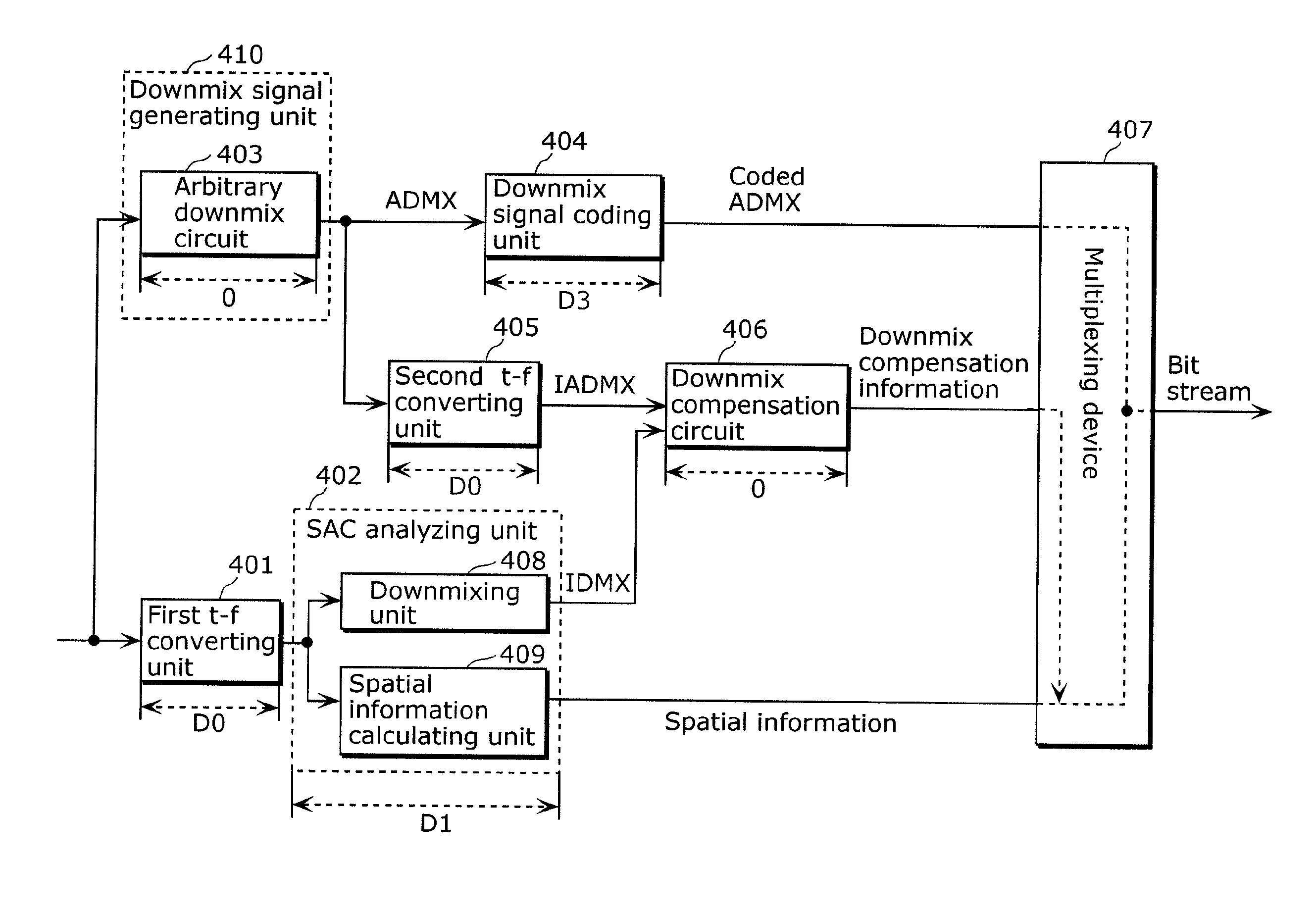

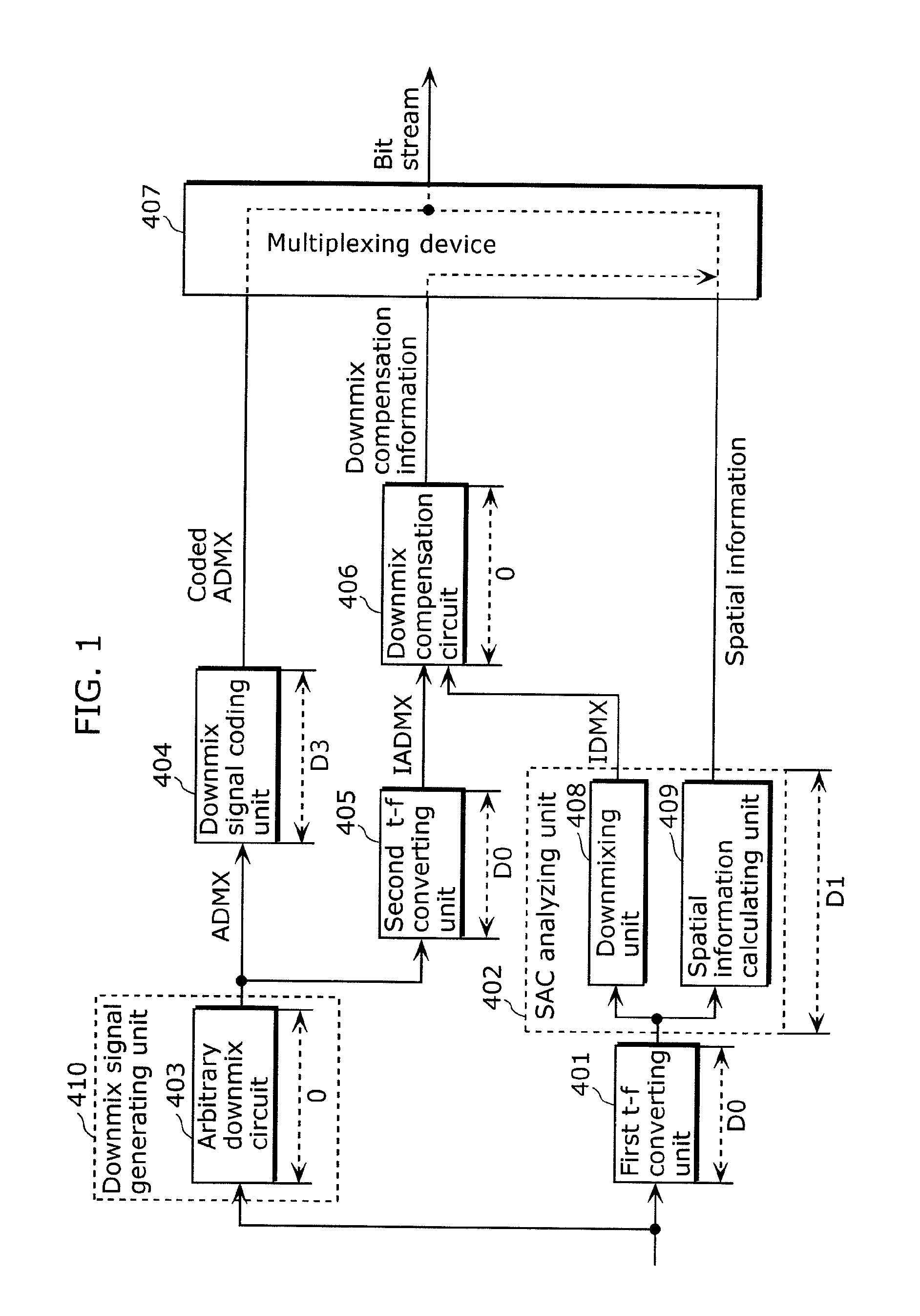

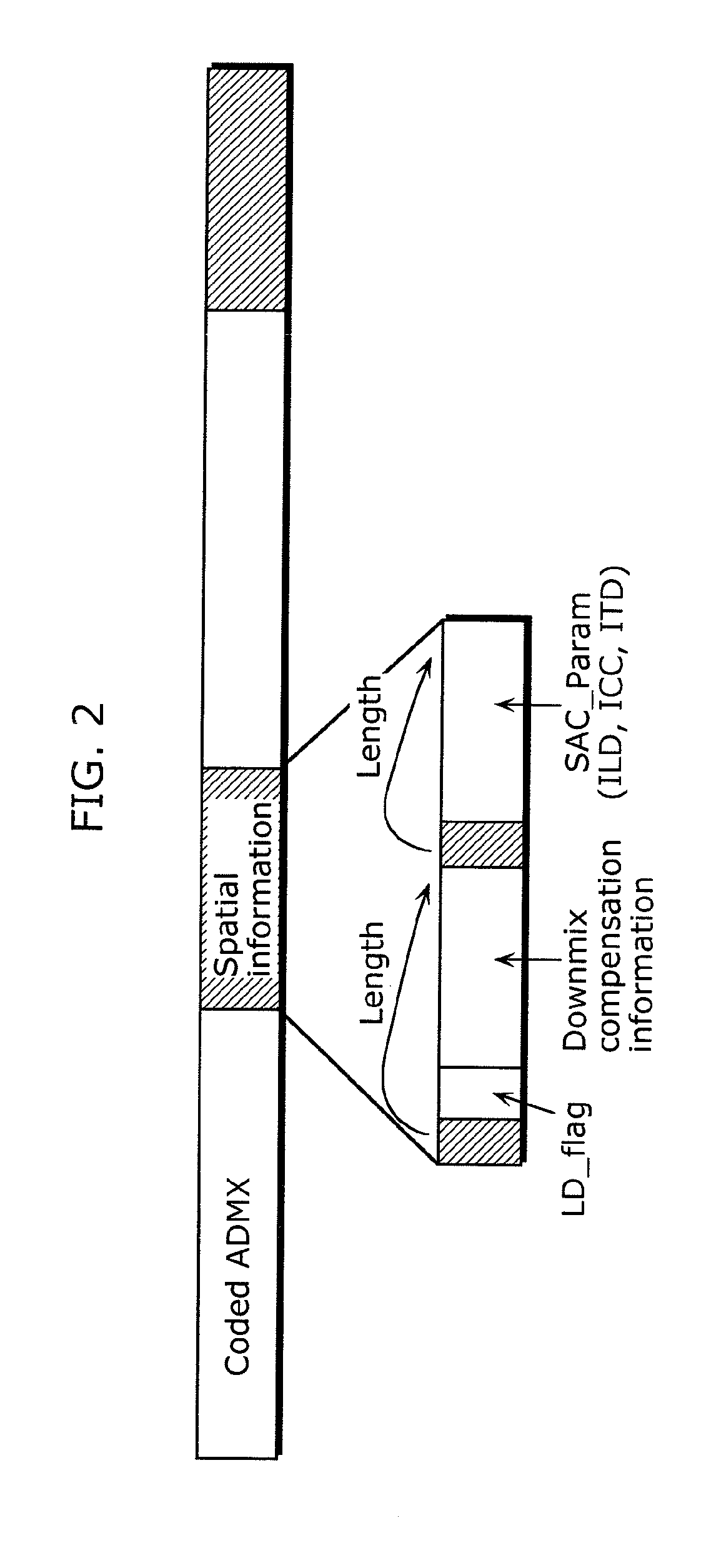

Audio coding apparatus, audio decoding apparatus, audio coding and decoding apparatus, and teleconferencing system

The delay in a multi-channel audio coding apparatus and a multi-channel audio decoding apparatus is reduced. The audio coding apparatus includes: a downmix signal generating unit (410) that generates, in a time domain, a first downmix signal that is one of a 1-channel audio signal and a 2-channel audio signal from an input multi-channel audio signal; a downmix signal coding unit (404) that codes the first downmix signal; a first t-f converting unit (401) that converts the input multi-channel audio signal into a multi-channel audio signal in a frequency domain; and a spatial information calculating unit (409) that generates spatial information for generating a multi-channel audio signal from a downmix signal.

Owner:PANASONIC CORP

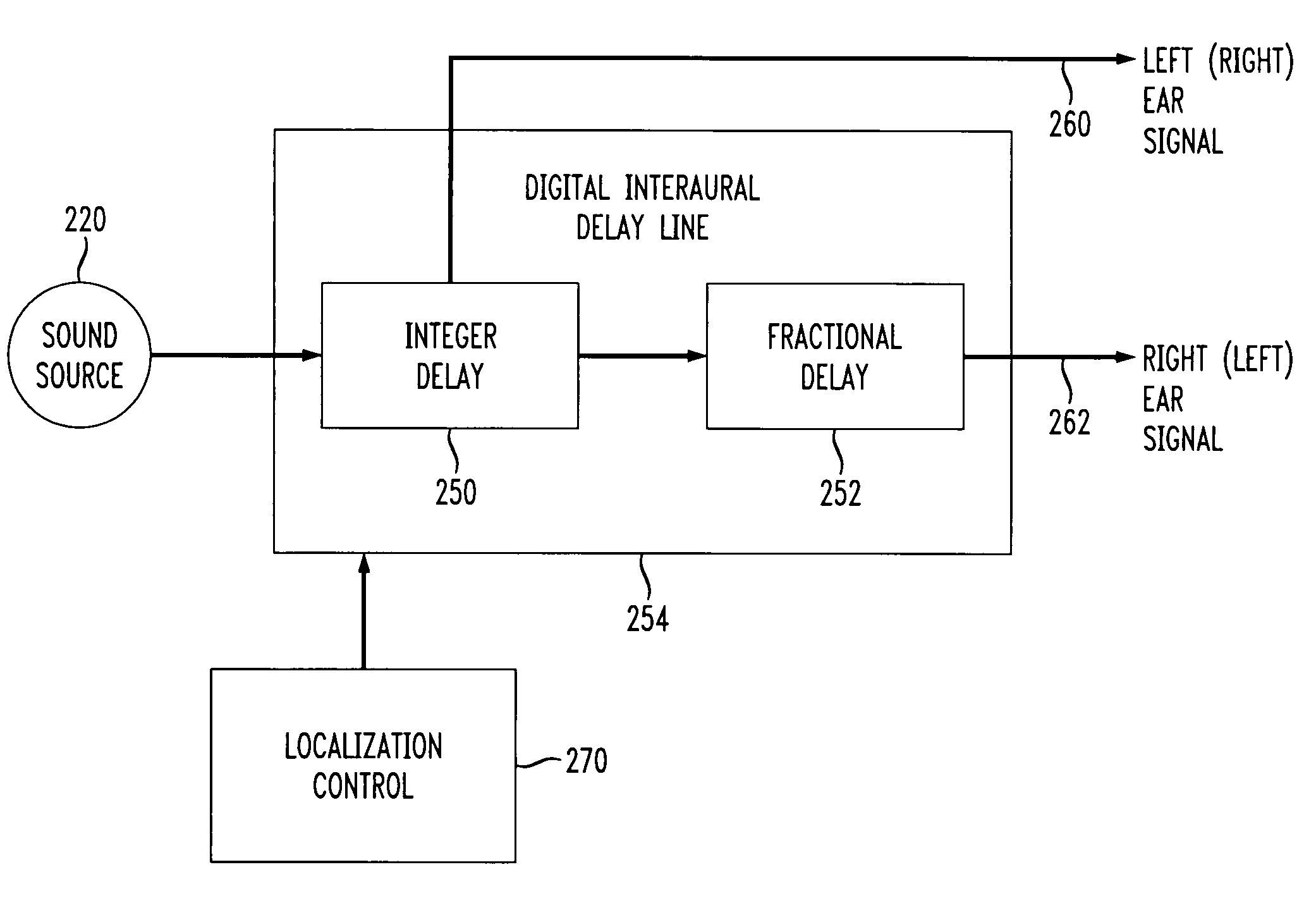

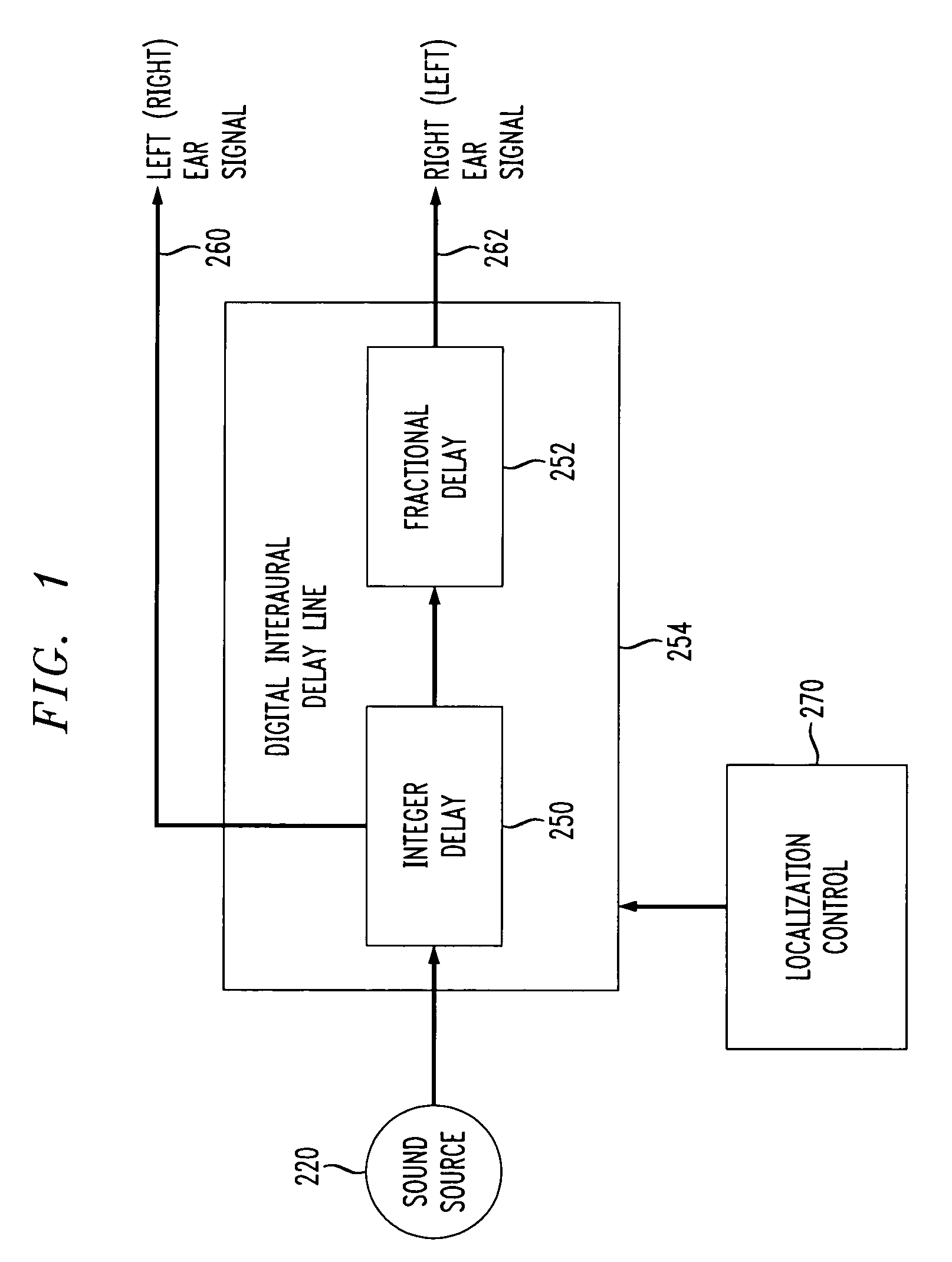

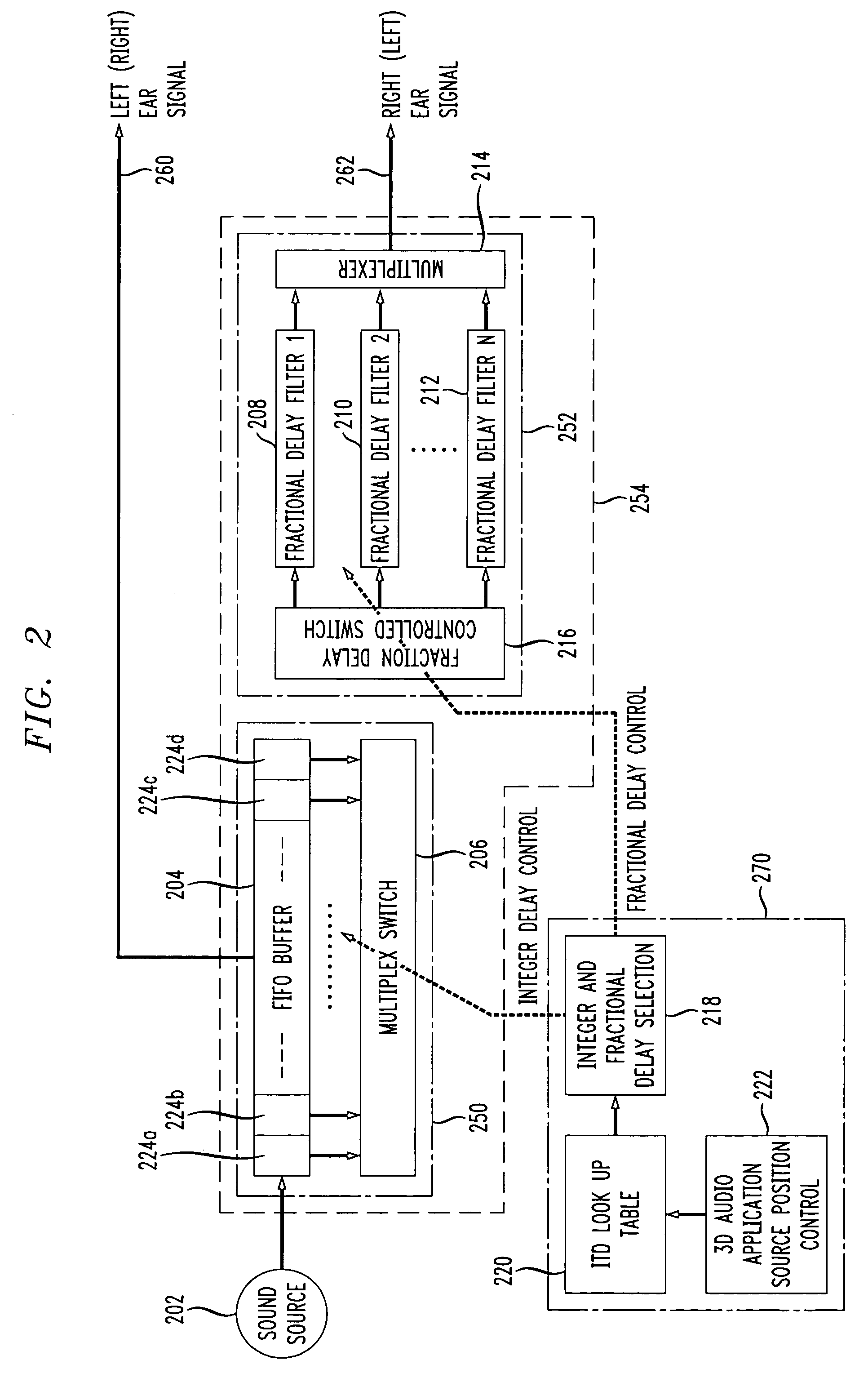

Method and apparatus for processing interaural time delay in 3D digital audio

Inactive · US7174229B1 · Stereophonic systems · Special data processing applications · Telecommunications · Time delays

A high quality digital 3D sound rendering is implemented using high resolution interaural time delays formed from two delay lines: a first delay line providing a rough estimate of the desired interaural time delay for a particular audio sample, and a second delay line in series with the first delay line providing a more finely resolved fractional delay. In the disclosed embodiment, the first delay module, i.e., the integer delay module, is formed from a first-in, first-out (FIFO) buffer with appropriate selection control of a desired sample as it passes through the FIFO buffer with each clock cycle based on the sampling rate. The second delay module (i.e., the fractional delay module) is formed from a plurality of polyphase (FIR) filters. The number of polyphase filters is determined based on the desired resolution of the interaural time delay.
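The two-stage delay described here, a coarse integer delay followed by a finer fractional delay, can be sketched as follows. Linear interpolation stands in for the bank of polyphase FIR filters as a simple two-tap substitute; it is not the filter design from the patent. The function names and the `num_phases` parameter (the number of fractional steps per sample) are assumptions.

```python
import math


def split_delay(itd_seconds, sample_rate, num_phases):
    """Split a time delay into whole samples and a fractional phase index."""
    total = itd_seconds * sample_rate
    integer = math.floor(total)
    phase = round((total - integer) * num_phases)
    if phase == num_phases:  # fraction rounded up to a full sample
        integer += 1
        phase = 0
    return integer, phase


def apply_delay(samples, integer, phase, num_phases):
    """Delay samples by integer + phase / num_phases samples.

    The integer part mimics reading from a FIFO; the fractional part uses
    a two-tap linear interpolator in place of a polyphase FIR filter.
    """
    frac = phase / num_phases
    out = []
    for n in range(len(samples)):
        a = samples[n - integer] if n - integer >= 0 else 0.0
        b = samples[n - integer - 1] if n - integer - 1 >= 0 else 0.0
        out.append((1.0 - frac) * a + frac * b)
    return out
```

A larger `num_phases` gives a finer resolution of the interaural time delay, which mirrors the abstract's statement that the number of polyphase filters is set by the desired resolution.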

Owner:LUCENT TECH INC

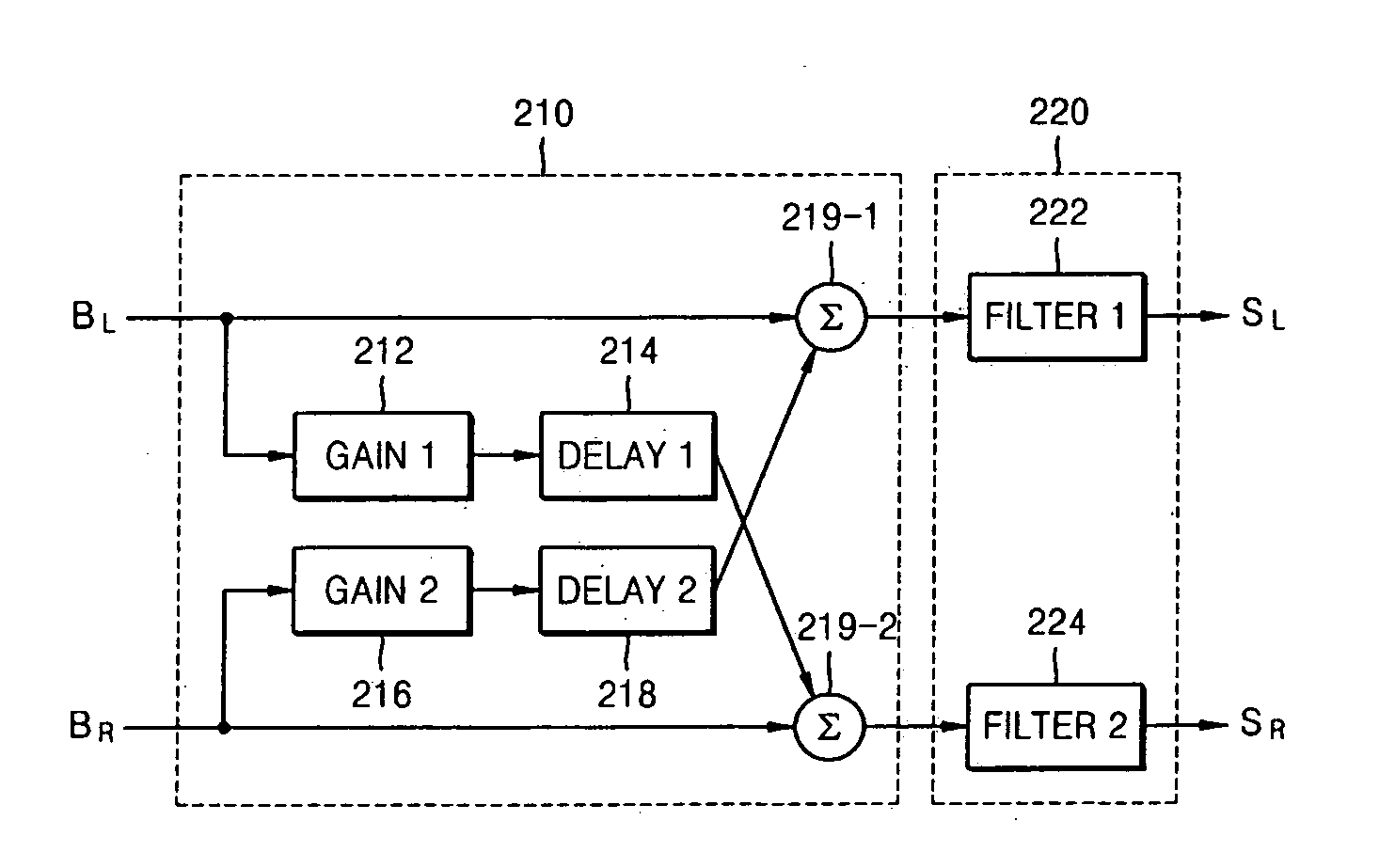

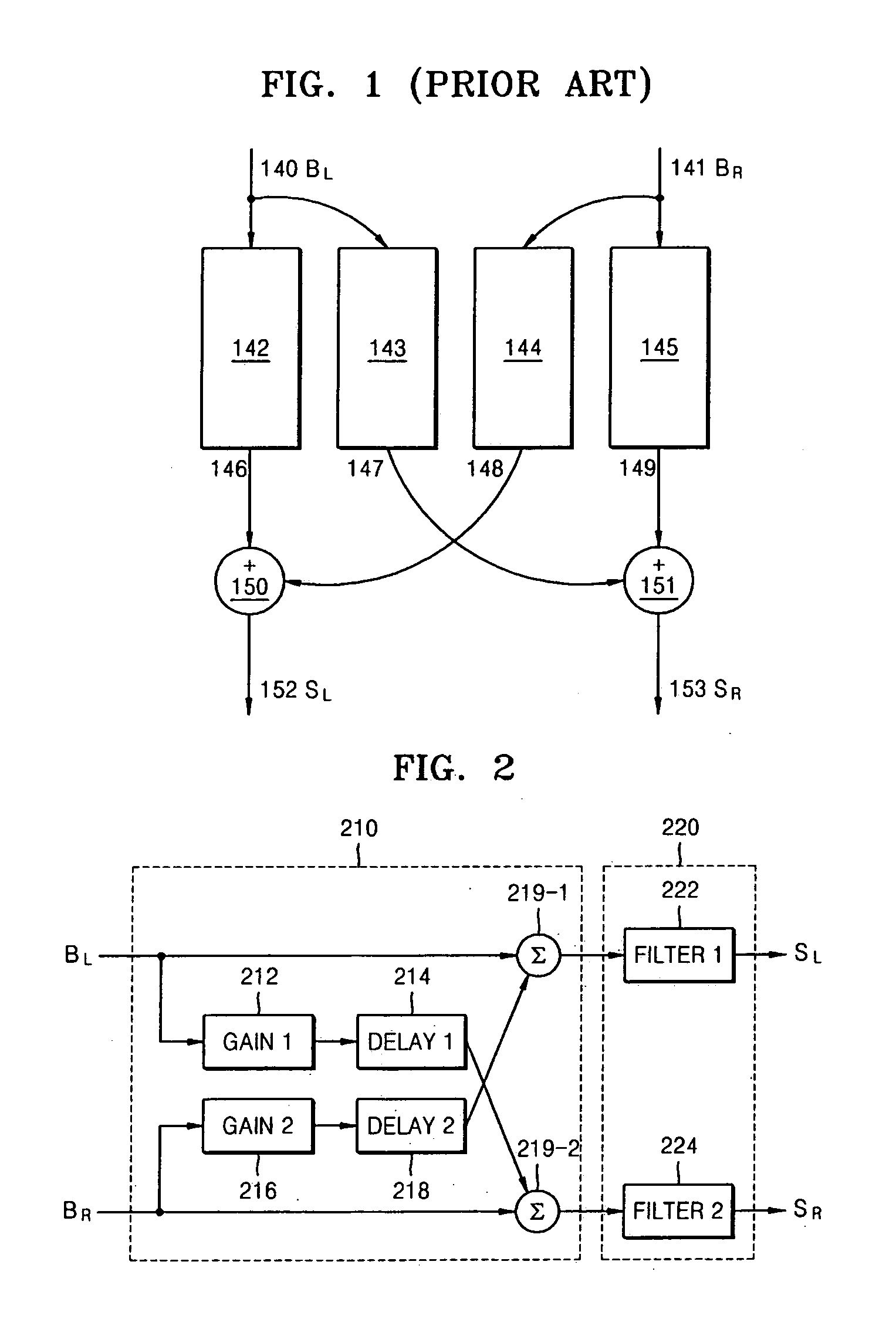

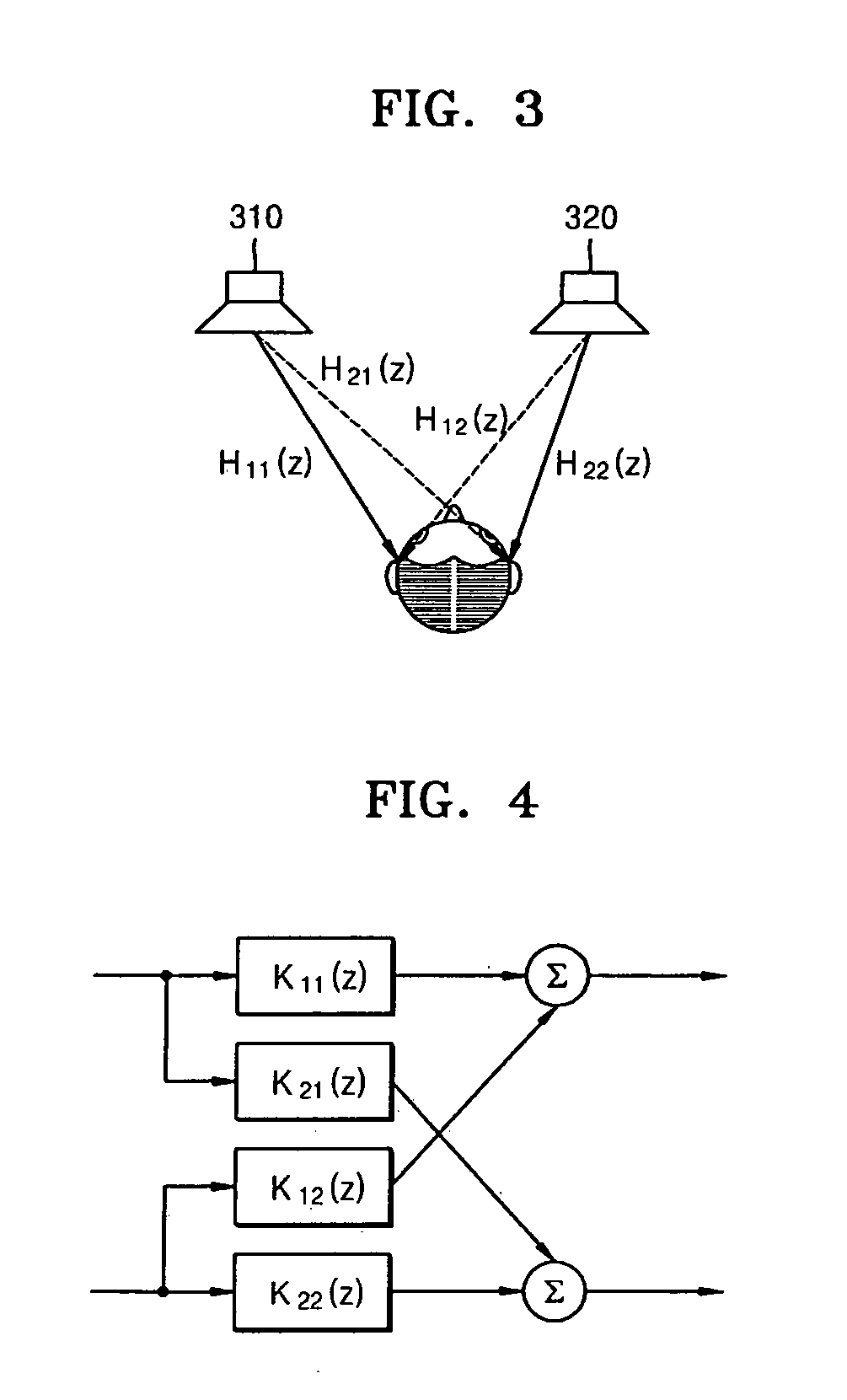

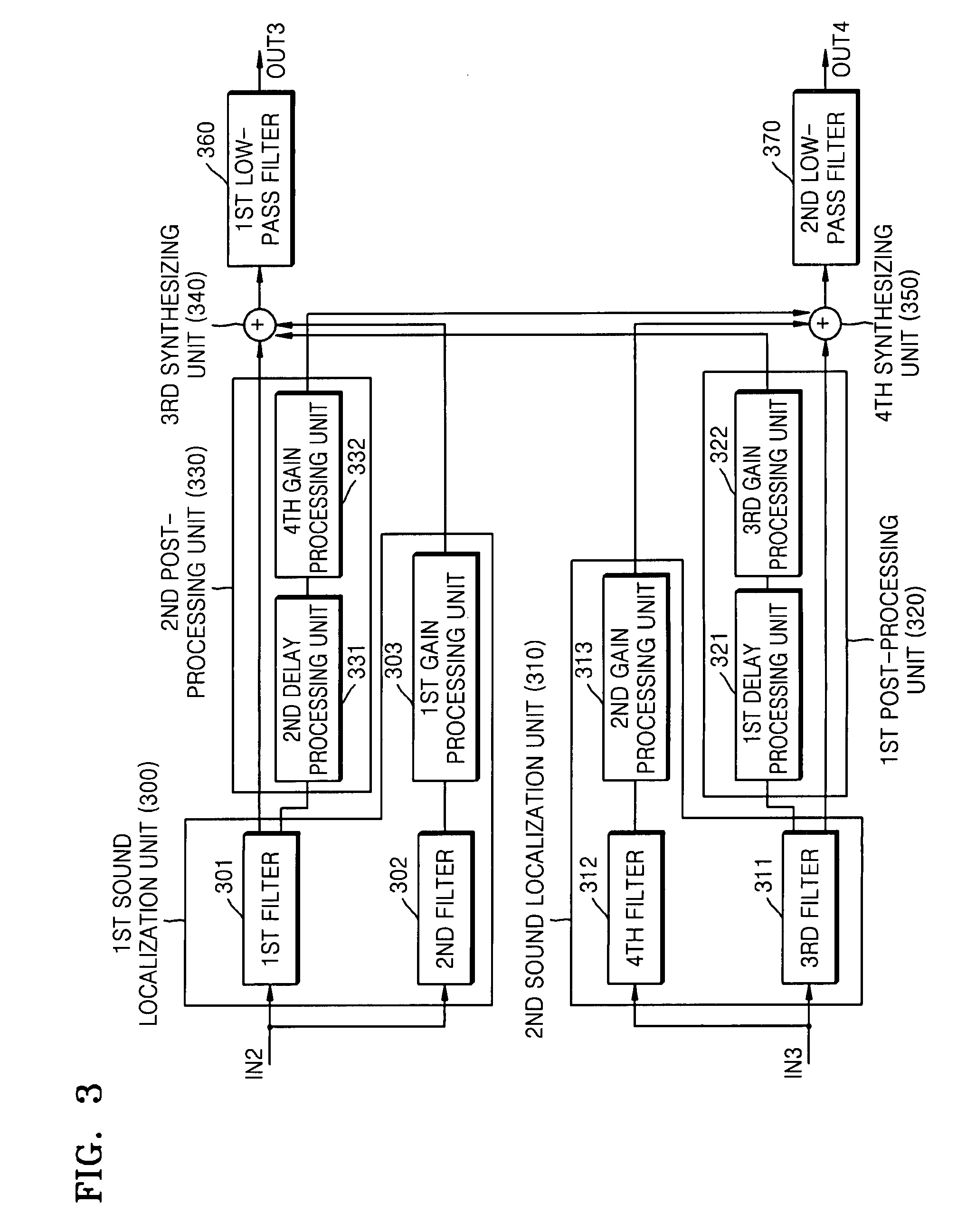

Apparatus and method to cancel crosstalk and stereo sound generation system using the same

Active · US20070076892A1 · Stereophonic circuit arrangements · Two-channel systems · Sound generation · Vocal tract

An apparatus and method for canceling crosstalk between 2-channel speakers and two ears of a listener in a stereo sound generation system. The crosstalk canceling apparatus includes a first signal processing unit to cross-mix first and second channel signals with gain and delay-adjusted first and second channel signals, a second signal processing unit to adjust frequency characteristics of the signals mixed in the first signal processing unit.

Owner:SAMSUNG ELECTRONICS CO LTD

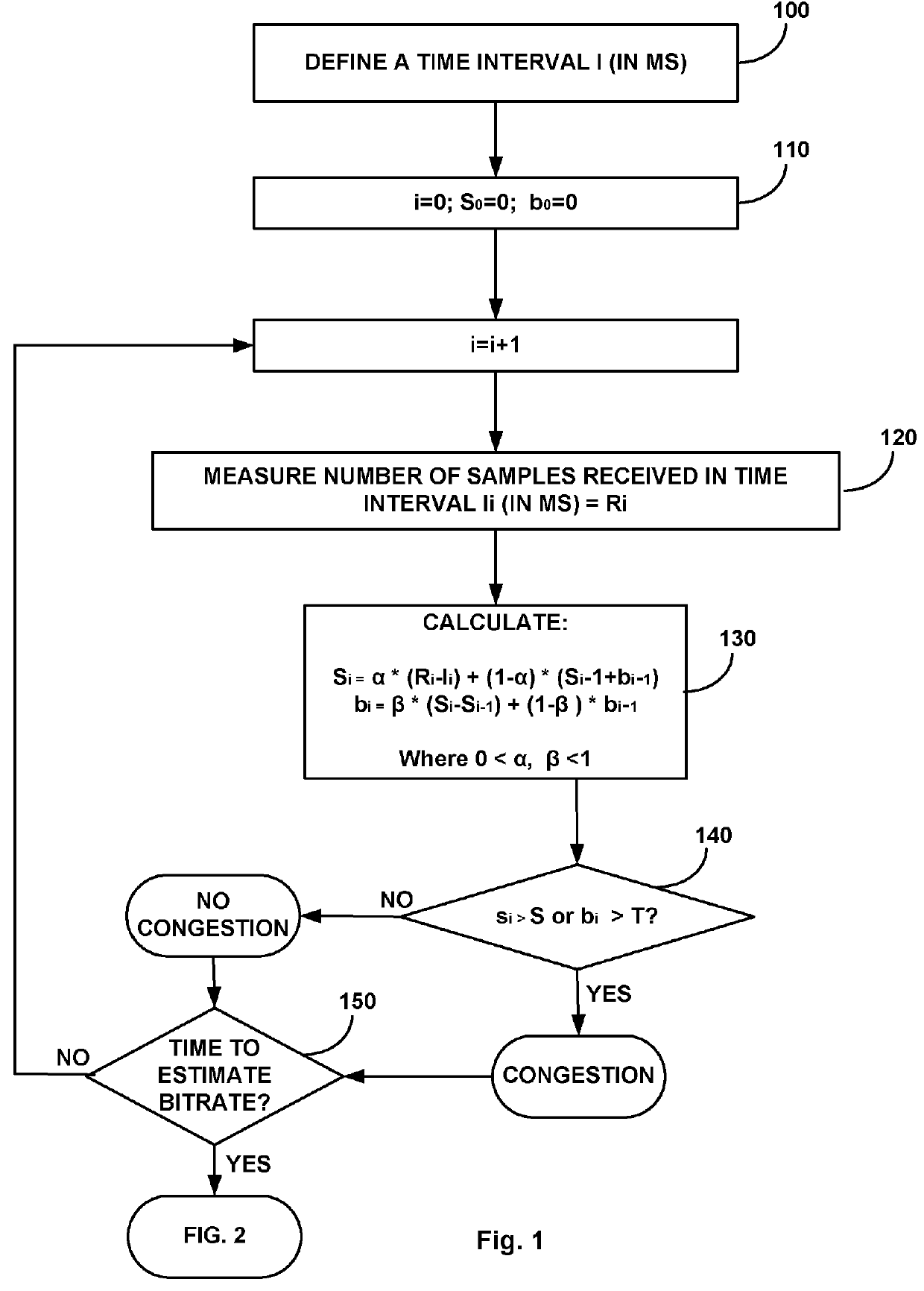

VOIP bandwidth management

A computerized method of optimizing audio quality in a voice stream between sender and receiver VoIP applications, comprising: defining by the receiver time intervals; determining by the receiver at the end of each time interval whether congestion exists, by calculating (i) one-way-delay and (ii) trend, using double-exponential smoothing; estimating by the receiver a bandwidth available to the sender based on said calculation; sending said estimated bandwidth by the receiver to the sender; and using by the sender said bandwidth estimate as maximum allowed send rate.
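Double-exponential smoothing of the one-way delay could look like the sketch below, which uses Holt's linear method to track a smoothed delay level and its trend. The congestion rule, the bandwidth back-off, and every constant (`alpha`, `beta`, `delay_margin`, `trend_limit`, `backoff`) are hypothetical; the abstract does not state how the two quantities are combined.

```python
def holt_smooth(delays, alpha=0.5, beta=0.5):
    """Double-exponential (Holt) smoothing; returns (level, trend)."""
    level, trend = delays[0], 0.0
    for x in delays[1:]:
        previous = level
        level = alpha * x + (1.0 - alpha) * (level + trend)
        trend = beta * (level - previous) + (1.0 - beta) * trend
    return level, trend


def congested(delays, base_delay, delay_margin=20.0, trend_limit=1.0):
    """Assumed rule: congestion means the smoothed delay is high and still rising."""
    level, trend = holt_smooth(delays)
    return level > base_delay + delay_margin and trend > trend_limit


def estimate_bandwidth(received_bps, is_congested, backoff=0.85):
    """Assumed rule: report a reduced rate to the sender when congested."""
    return received_bps * backoff if is_congested else received_bps
```

The receiver would run `congested` at the end of each interval over that interval's one-way delays and send the result of `estimate_bandwidth` back to the sender as its maximum send rate.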

Owner:VIBER MEDIA S A R L

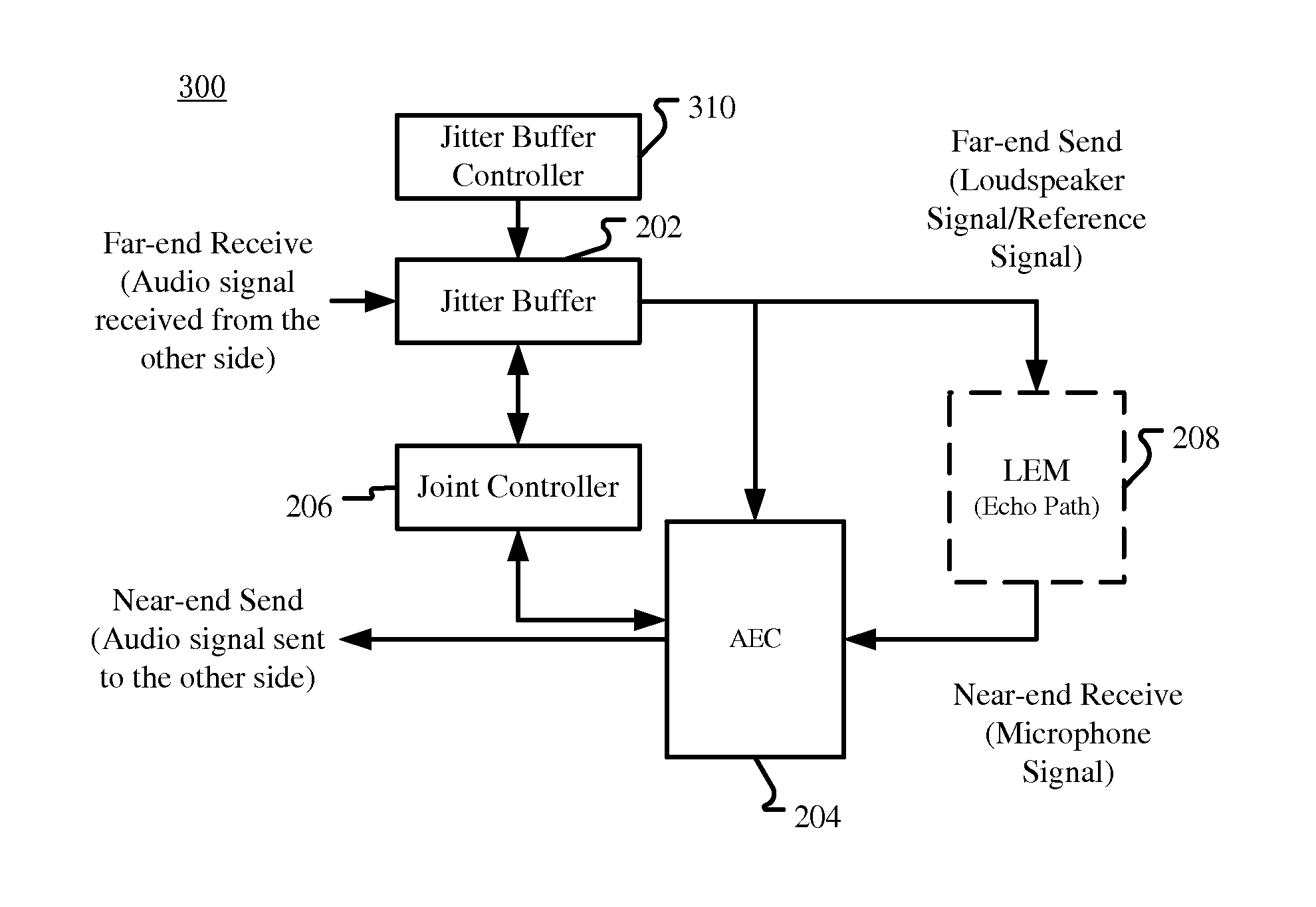

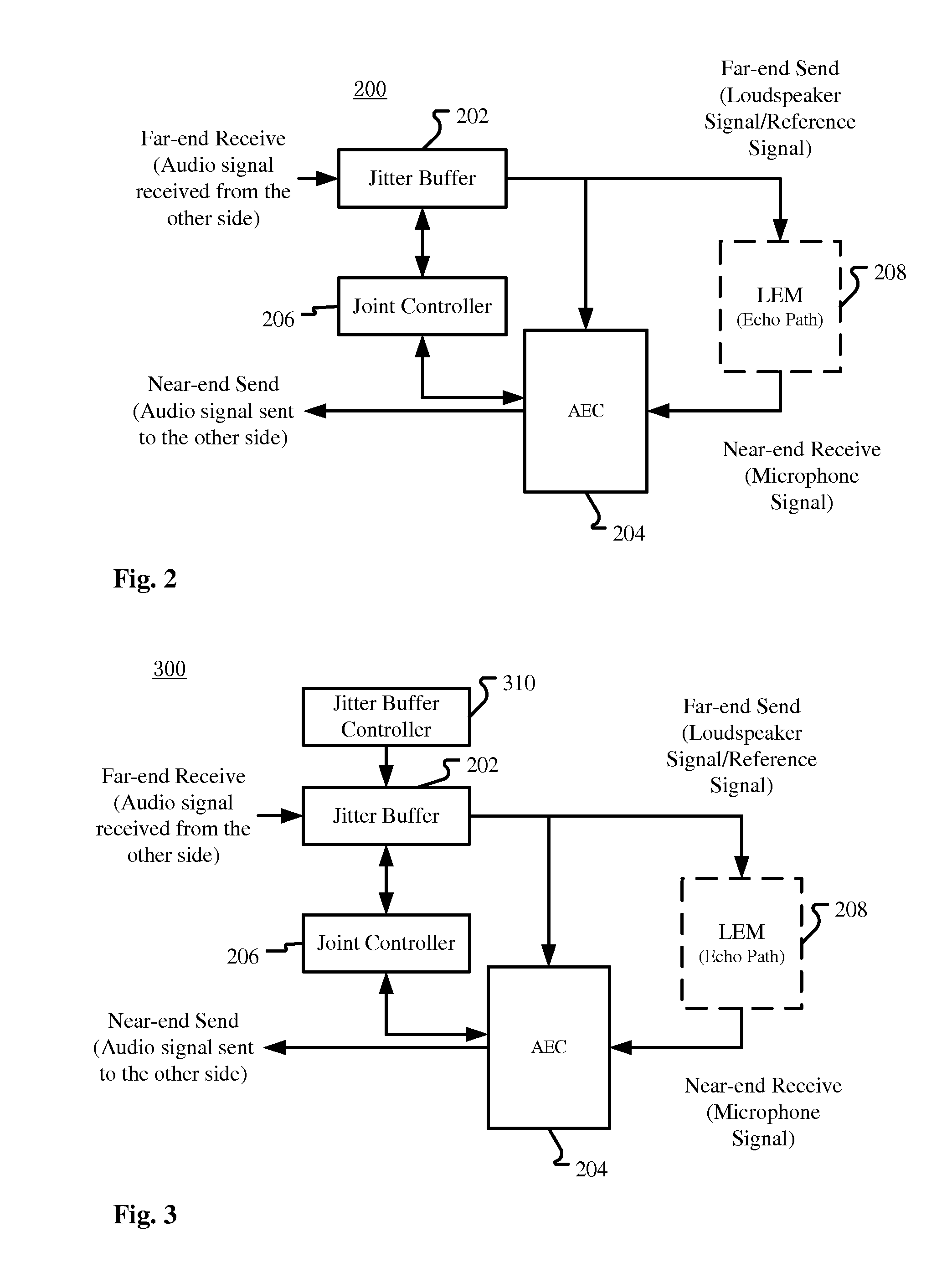

Method for Controlling Acoustic Echo Cancellation and Audio Processing Apparatus

Active · US20150332704A1 · Reducing delay jitter · Reduce latency jitter · Interconnection arrangements · Speech analysis · Microphone signal · Audio frequency

A method for controlling acoustic echo cancellation and an audio processing apparatus are described. In one embodiment, the audio processing apparatus includes an acoustic echo canceller for suppressing acoustic echo in a microphone signal, a jitter buffer for reducing delay jitter of a received signal, and a joint controller for controlling the acoustic echo canceller by referring to at least one future frame in the jitter buffer.

Owner:DOLBY LAB LICENSING CORP

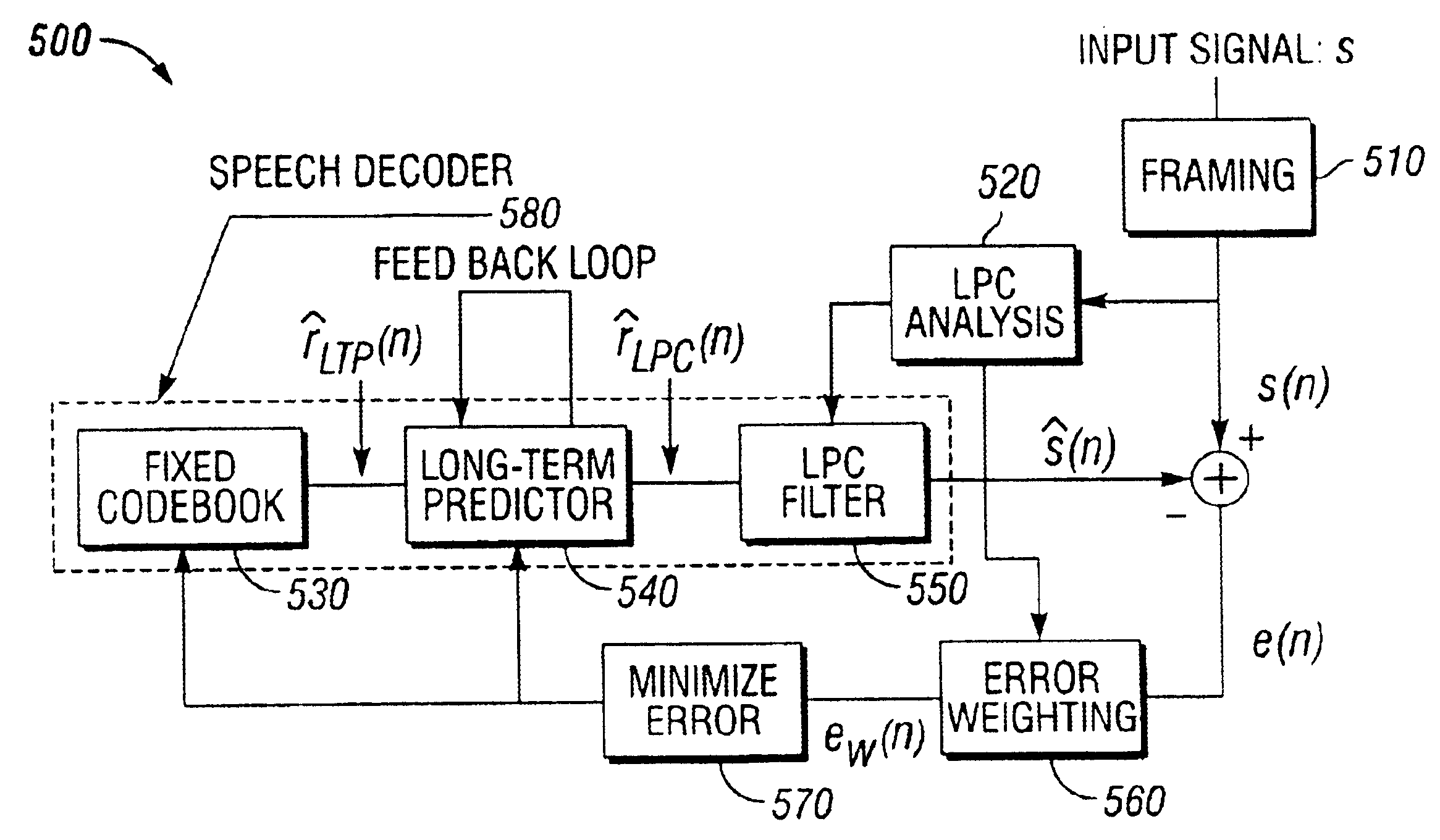

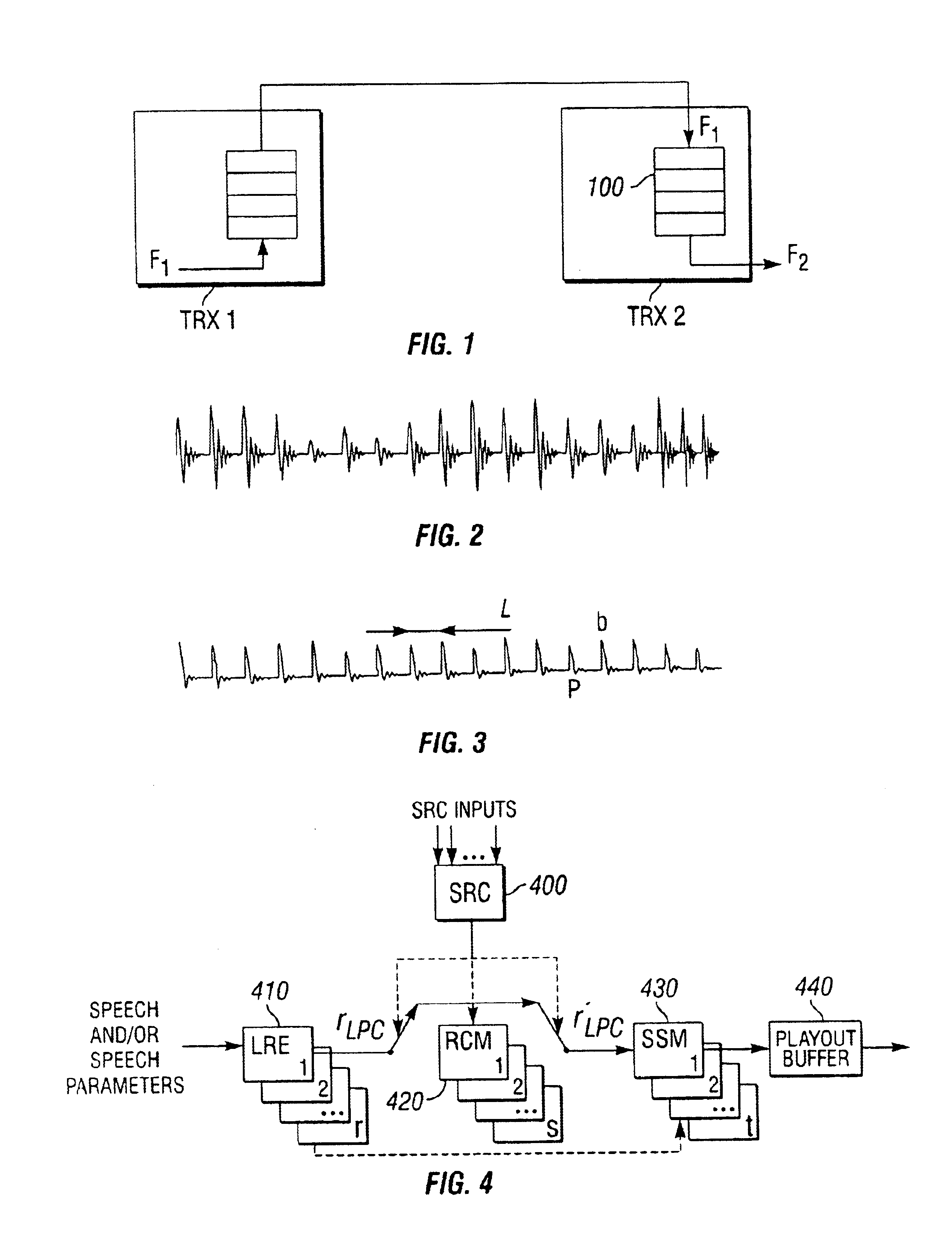

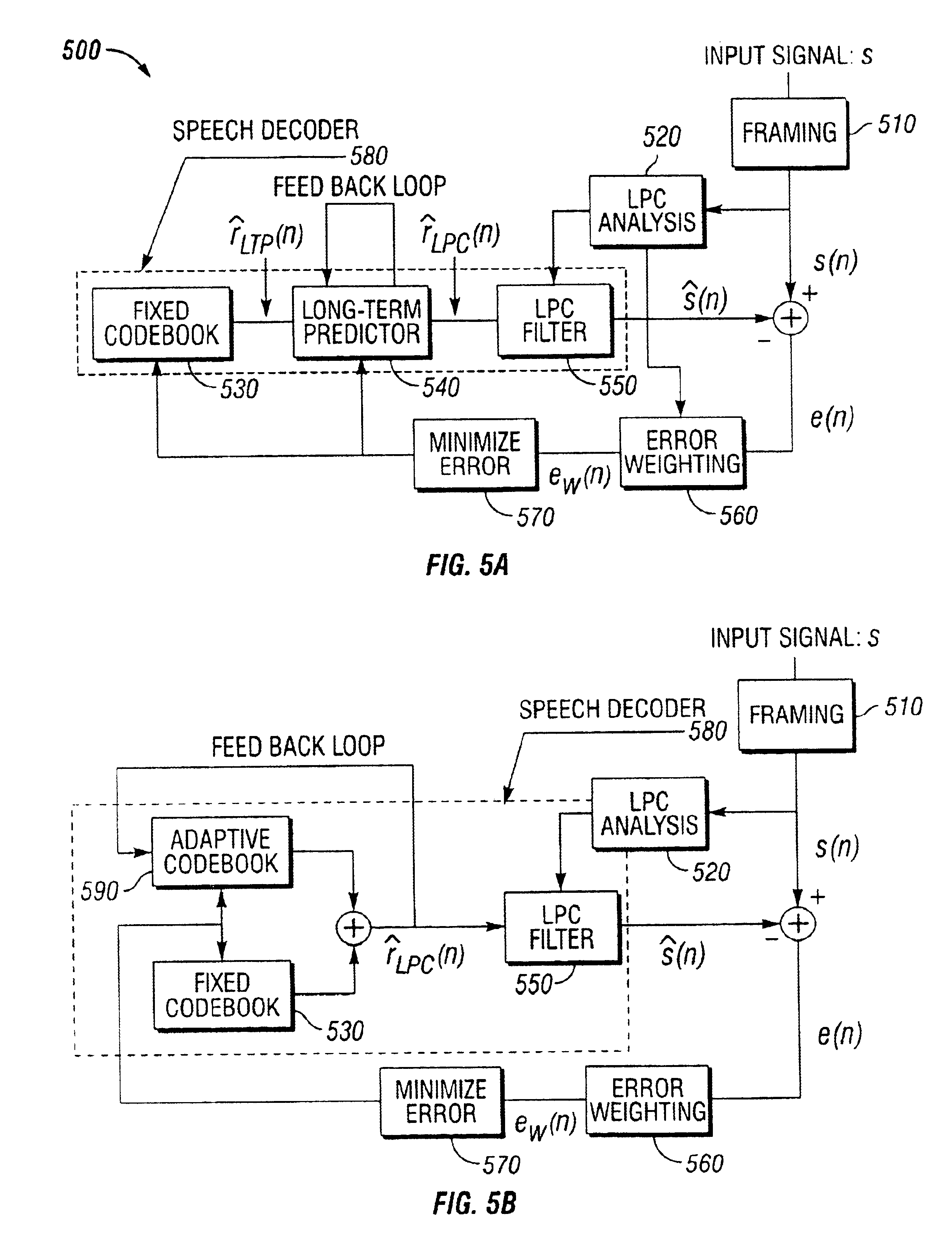

Method and apparatus in a telecommunications system

Inactive · US6873954B1 · Reducing audio artifact · Continuously controlled with only small audio artifacts · Time-division multiplex · Speech synthesis · Sample rate conversion · Speech synthesis

Audio artifacts caused by overrun or underrun in a playout buffer, which occur when the sampling rates at the sending and receiving sides differ, are reduced. An LPC-residual is modified on a sample-by-sample basis. The LPC-residual block, which includes N samples, is converted to a block comprising N+1 or N−1 samples. A sample rate controller decides whether samples should be added to or removed from the LPC-residual. The exact position at which to add or remove samples is either chosen arbitrarily or found by searching for low energy segments in the LPC-residual. A speech synthesiser module then reproduces the speech. By using the proposed sample rate conversion method the playout buffer can be continuously controlled. Furthermore, since the method works on a sample-by-sample basis the buffer can be kept to a minimum and hence no extra delay is introduced.
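Converting an N-sample residual block to N+1 or N−1 samples at a low-energy position can be sketched as follows. The search window length and the choice to add a sample by repeating its neighbour are assumptions made for illustration; the abstract only says the position may be found by searching for low energy segments.

```python
def resize_residual(block, add, window=4):
    """Return a copy of block with one sample added or removed.

    The edit is placed in the middle of the lowest-energy run of
    `window` consecutive samples. Adding repeats the sample at that
    position (an assumed strategy); removing drops it.
    """
    n = len(block)
    if n < window + 1:
        raise ValueError("block is too short for the search window")
    # Find the start of the window with the smallest sum of squares.
    best = min(range(n - window + 1),
               key=lambda i: sum(s * s for s in block[i:i + window]))
    pos = best + window // 2
    if add:
        return block[:pos] + [block[pos]] + block[pos:]
    return block[:pos] + block[pos + 1:]
```

Editing where the residual energy is lowest keeps the change small relative to the surrounding excitation, which is why the synthesized speech shows only minor artifacts.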

Owner:TELEFON AB LM ERICSSON (PUBL)

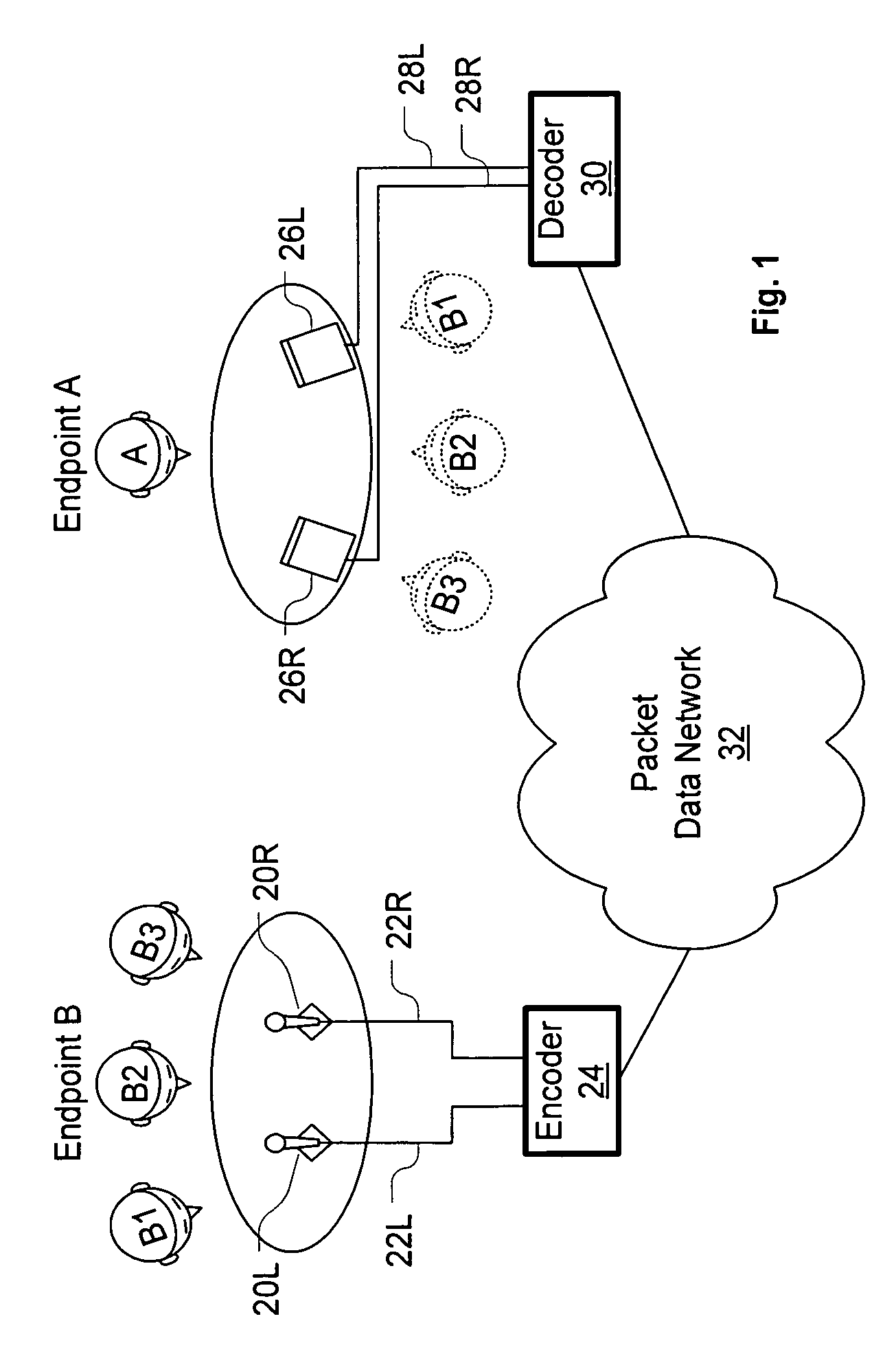

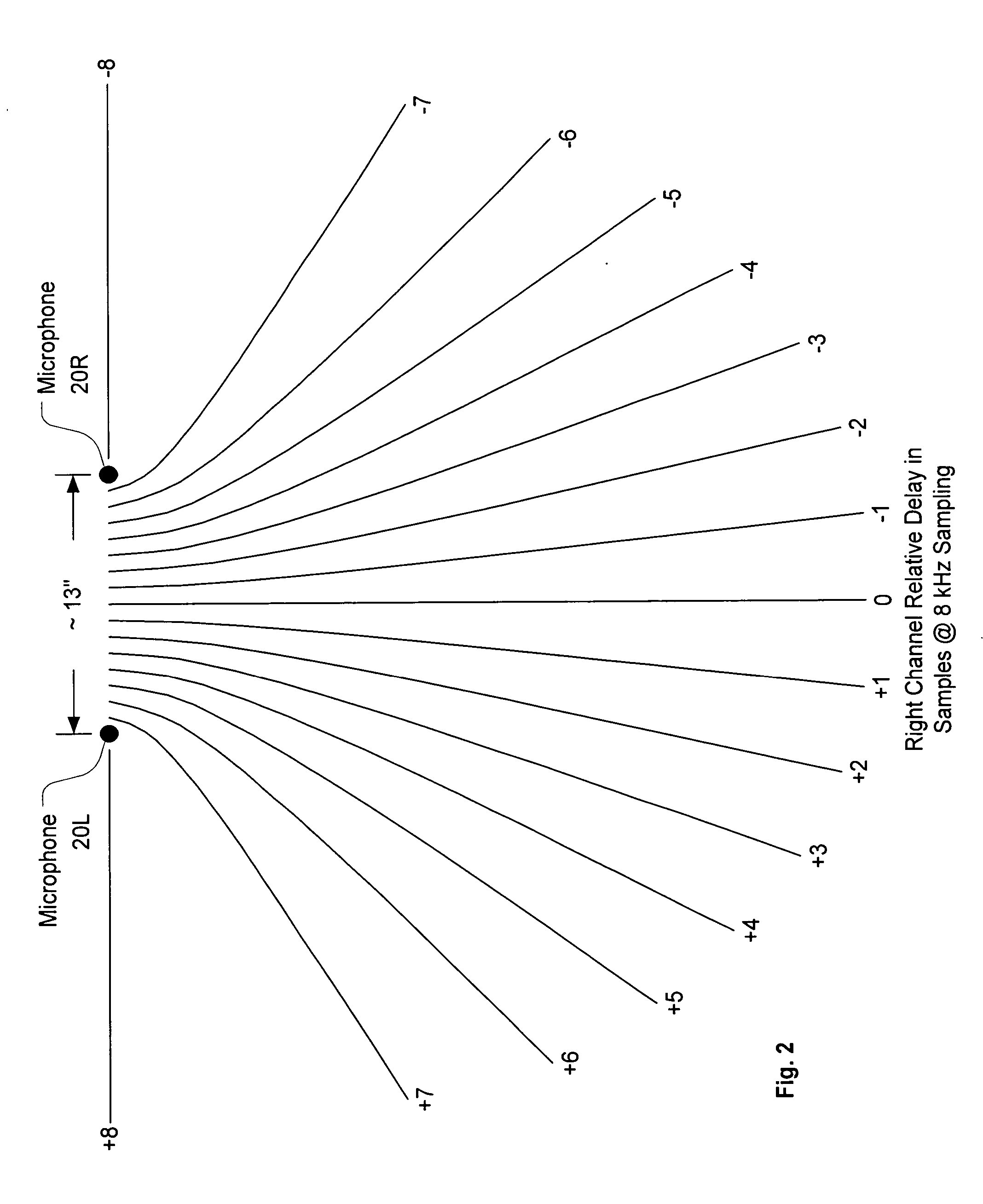

System and method for stereo conferencing over low-bandwidth links

Inactive · US20060023871A1 · High bandwidth · Satisfactory sound quality · Two-way loud-speaking telephone systems · Public address systems · Vocal tract · Computer science

Owner:CISCO TECH INC

Apparatus and method of out-of-head localization of sound image output from headphones

Inactive · US20080175396A1 · Reduction in tiredness · Reduce fatigue · Pseudo-stereo systems · Two-channel systems · Sound image · Headphones

An apparatus for and method of externalizing a sound image output to headphones are provided. The method of externalizing a sound image output to headphones includes: localizing the sound image of an input signal to a predetermined area in front of a listener; and signal-processing a left signal component and a right signal component of the input signal with different delay values and gain values, respectively. According to the method and apparatus, the sound image output to the headphones can be localized to a virtual sound stage in front of the listener, thereby reducing tiredness occurring when listening through headphones, and even when a sound source includes many monophonic components, the sound image can be externalized.

Owner:SAMSUNG ELECTRONICS CO LTD

Live broadcast data processing method and device, computer equipment and storage medium

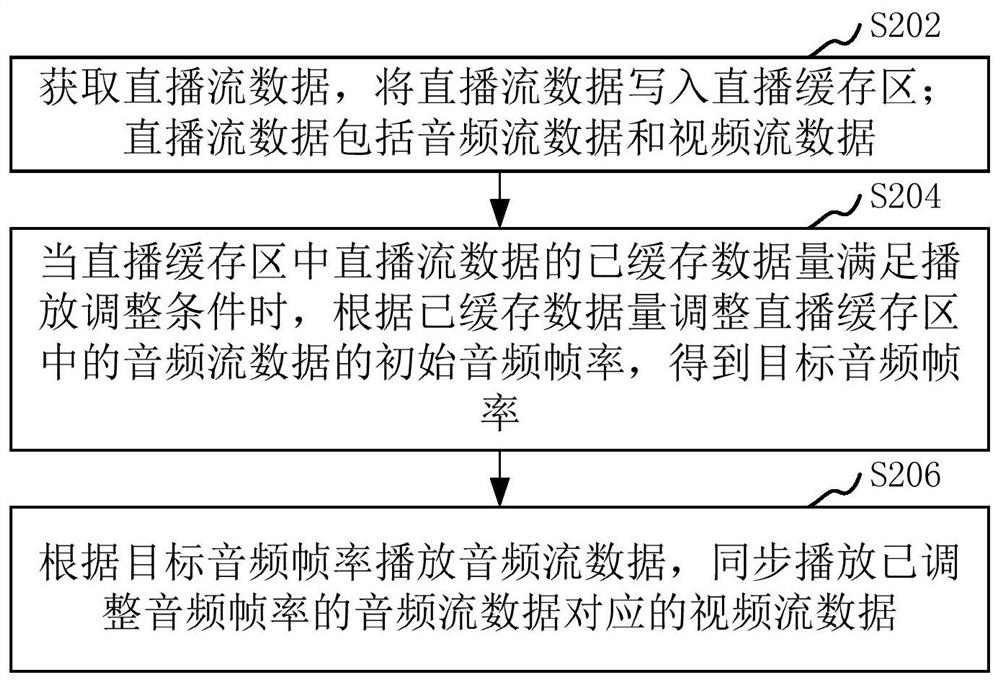

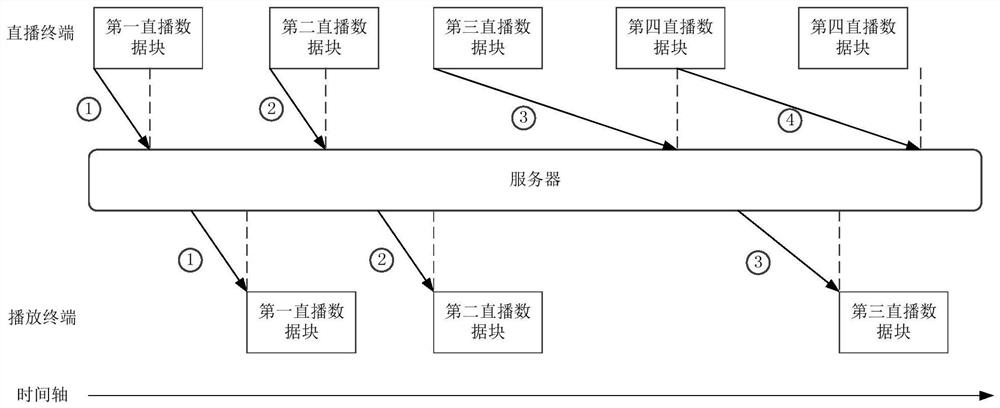

Active · CN111918093A · Reduce playback lag · Integrity guaranteed · Selective content distribution · Streaming data · Data synchronization

The invention relates to a live broadcast data processing method and device, computer equipment and a storage medium. The method comprises the following steps: acquiring live broadcast stream data, and writing the live broadcast stream data into a live broadcast cache region, wherein the live broadcast stream data comprise audio stream data and video stream data; when the cached data volume of the live broadcast stream data in the live broadcast cache region satisfies a playing adjustment condition, adjusting the initial audio frame rate of the audio stream data in the live broadcast cache region according to the cached data volume to obtain a target audio frame rate; playing the audio stream data according to the target audio frame rate; and synchronously playing the video stream data corresponding to the audio stream data of which the audio frame rate is adjusted. By adopting the method, the playing delay of the playing end can be reduced.
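The adjustment step can be illustrated with a simple rule that speeds up audio playback in proportion to how much data has accumulated in the cache. The condition (cached duration above a target), the proportional mapping, and the cap `max_ratio` are hypothetical; the abstract does not say how the target audio frame rate is computed.

```python
def target_audio_frame_rate(initial_rate, cached_ms, target_ms=1000.0, max_ratio=1.2):
    """Return the audio frame rate to play at, given the cached duration.

    Assumed rule: if the cache holds more than target_ms of data, play
    faster by the ratio cached_ms / target_ms, capped at max_ratio so the
    speed-up stays unobtrusive. Otherwise keep the initial rate.
    """
    if cached_ms <= target_ms:
        return initial_rate  # playing adjustment condition not met
    ratio = min(max_ratio, cached_ms / target_ms)
    return initial_rate * ratio
```

Video frames would then be presented against the adjusted audio timeline so that the two streams stay synchronized while the backlog drains.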

Owner:TENCENT TECH (SHENZHEN) CO LTD

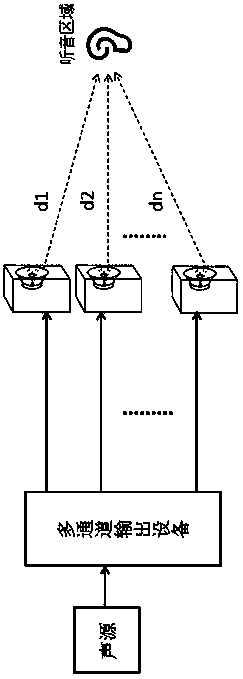

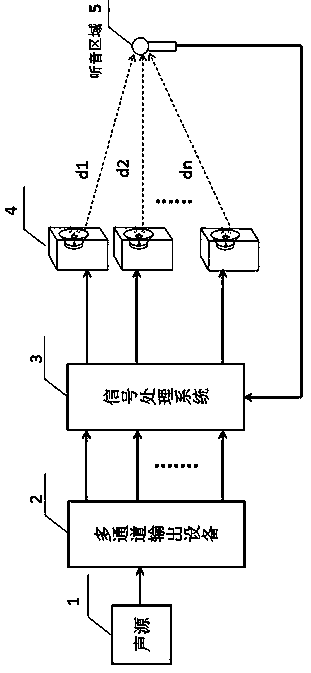

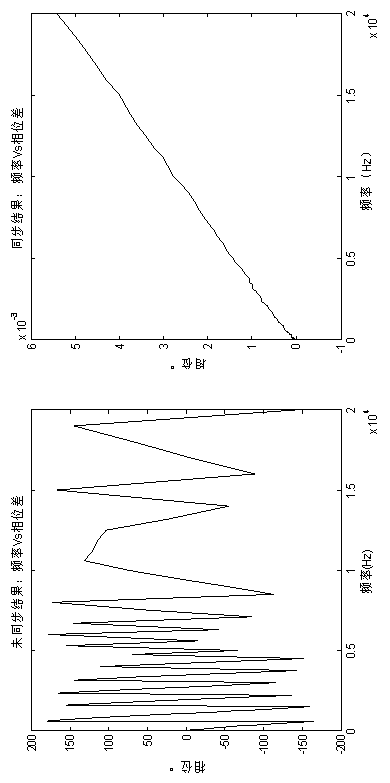

Method for compensating delay and frequency response characteristics of multi-output channel sound system

Active · CN103414980A · Phase coincidence · Consistent frequency response characteristics · Frequency/directions obtaining arrangements · Filter (signal processing) · Medium frequency

The invention discloses a method for compensating delay and frequency response characteristics of multi-output channel sound system and system implementation thereof. The method comprises the following steps that firstly, phase difference between channels of a multi-channel output device is measured, so that the situation that the multi-channel output device itself is in the state of synchronously outputting signals is ensured; then the delay of the signals which are output by the multi-channel output device and pass through a signal processing system, a loudspeaker box and a space transmission routine to reach a specific audition area is estimated through a delay estimation method, and delay compensation is conducted on the channels by comparing delay difference between the channels; finally, an FIR frequency response compensating filter is designed and achieved according to actually detected frequency response curves of a signal transmission routine, and the part above the low and medium frequency in the frequency response curves of the multiple channels is compensated into a straight line through the filter as far as possible. By means of the method, when sound waves transmitted out of each loudspeaker box reach the audition area, the phases of the sound waves are basically the same, and the frequency response characteristics of the channels are basically the same.
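The delay compensation step, comparing the estimated delays of the channels and aligning them, reduces to a small calculation once the per-channel delays are known. The sketch below assumes the delays have already been estimated and aligns every channel to the slowest one; the function name and the channel labels are illustrative, and the FIR frequency response compensation is not covered.

```python
def compensation_delays(arrival_delays_ms):
    """Return the extra delay to add to each channel, in milliseconds.

    arrival_delays_ms maps a channel name to its estimated delay from the
    output device to the listening area. Every channel is delayed to
    match the latest-arriving one, so the slowest channel gets 0.
    """
    latest = max(arrival_delays_ms.values())
    return {channel: latest - delay for channel, delay in arrival_delays_ms.items()}
```

For example, with estimated delays of 3.0 ms, 5.5 ms, and 4.0 ms, the first and third channels would be held back by 2.5 ms and 1.5 ms so that all three wavefronts reach the listening area together.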

Owner:ZHEJIANG ELECTRO ACOUSTIC R&D CENT CAS +1
