44216 results about "Loudspeaker" patented technology

A loudspeaker (or loud-speaker or speaker) is an electroacoustic transducer, a device that converts an electrical audio signal into a corresponding sound. The most widely used type of speaker in the 2010s is the dynamic speaker, invented in 1924 by Edward W. Kellogg and Chester W. Rice. The dynamic speaker operates on the same basic principle as a dynamic microphone, but in reverse, to produce sound from an electrical signal. Its voice coil is a coil of wire suspended in a circular gap between the poles of a permanent magnet. When an alternating-current electrical audio signal is applied to the voice coil, the magnetic field exerts a force on the current-carrying wire (the Lorentz force), driving the coil rapidly back and forth. A diaphragm (usually conically shaped) attached to the coil moves with it, pushing on the air to create sound waves. Besides this most common method, several alternative technologies can convert an electrical signal into sound. The signal from the sound source (e.g., a sound recording or a microphone) must be amplified with an audio power amplifier before it is sent to the speaker.
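The force that drives the voice coil can be illustrated with the standard relation for a current-carrying wire in a magnetic field, F = B · l · i. The Python sketch below is only an illustration: the function names and all numeric values (gap flux density, wire length, drive current, frequency) are assumptions chosen for the example, not figures from this page.

```python
import math


def voice_coil_force(flux_density_t: float, wire_length_m: float, current_a: float) -> float:
    """Force in newtons on the coil wire: F = B * l * i."""
    return flux_density_t * wire_length_m * current_a


def sine_current(peak_a: float, freq_hz: float, t_s: float) -> float:
    """Instantaneous current of a sinusoidal audio signal at time t."""
    return peak_a * math.sin(2 * math.pi * freq_hz * t_s)


# Assumed example values: 1.0 T gap field, 5 m of wire in the gap,
# 0.5 A peak current at 1 kHz.
B_FIELD, WIRE_LENGTH, PEAK_CURRENT, FREQ = 1.0, 5.0, 0.5, 1000.0

quarter_period = 1 / (4 * FREQ)        # current is at its positive peak here
three_quarters = 3 / (4 * FREQ)        # current is at its negative peak here

peak_force = voice_coil_force(B_FIELD, WIRE_LENGTH, sine_current(PEAK_CURRENT, FREQ, quarter_period))
reverse_force = voice_coil_force(B_FIELD, WIRE_LENGTH, sine_current(PEAK_CURRENT, FREQ, three_quarters))
```

With these assumed values the force peaks at about 2.5 N and changes sign every half cycle, which is what moves the diaphragm back and forth.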

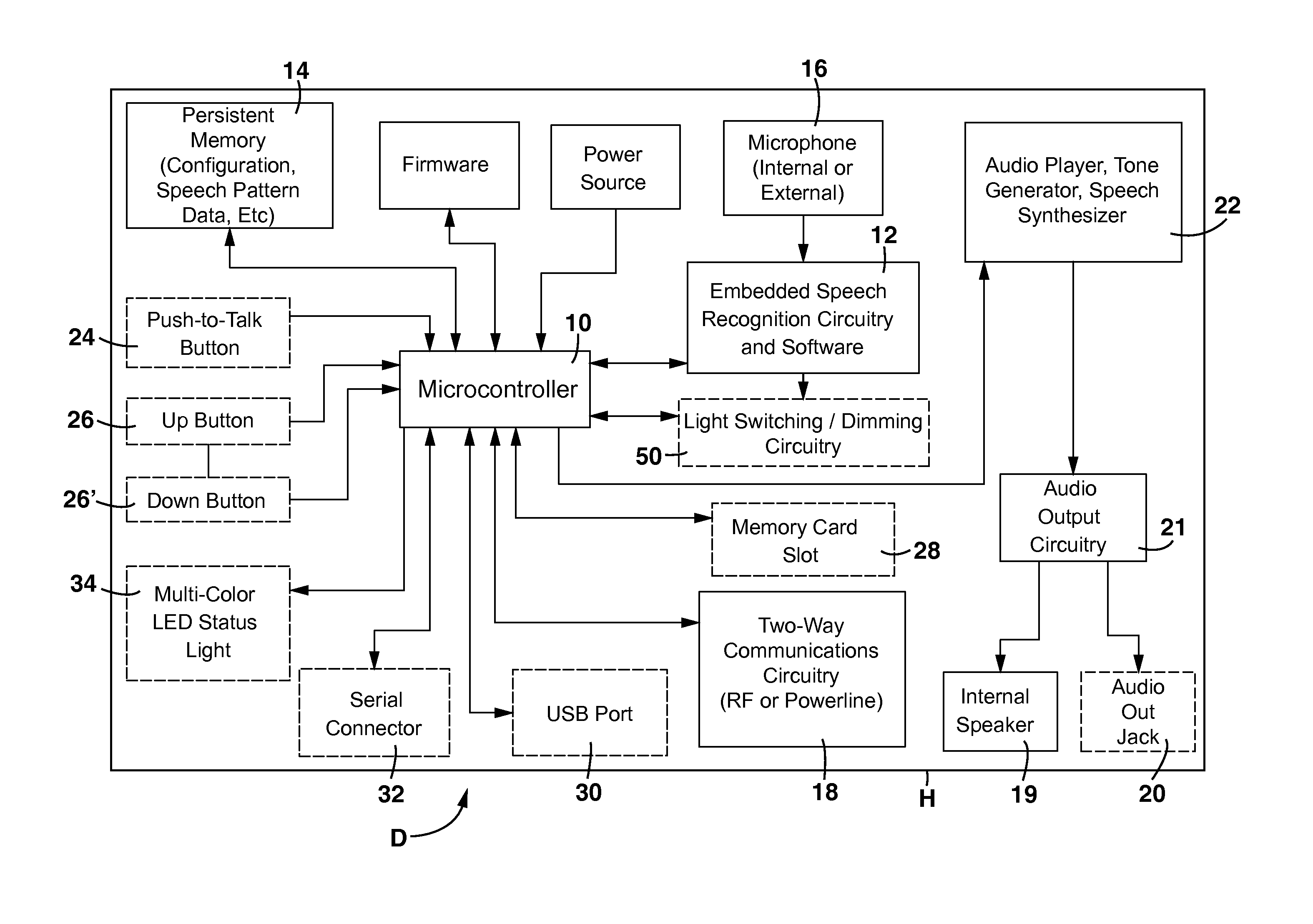

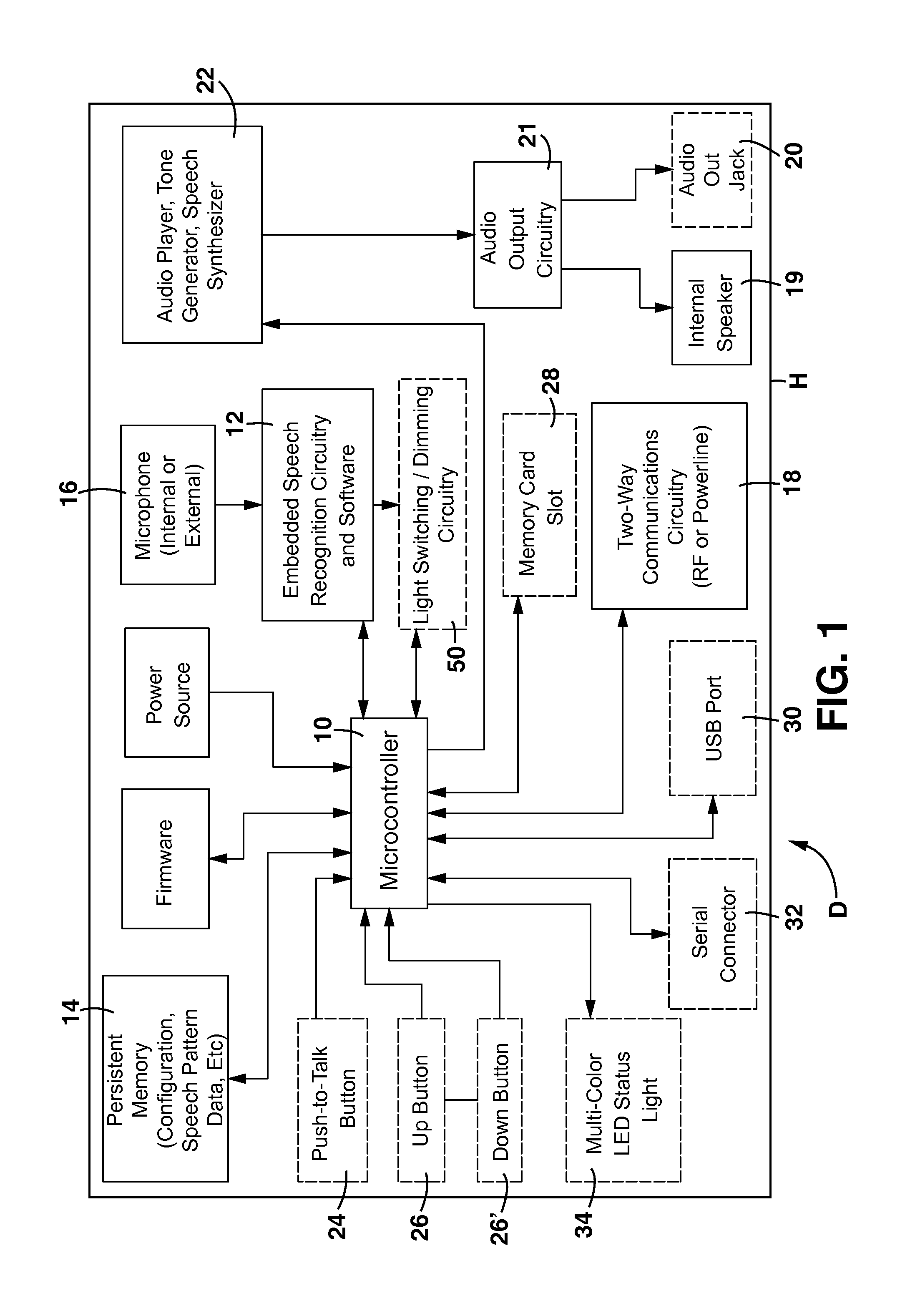

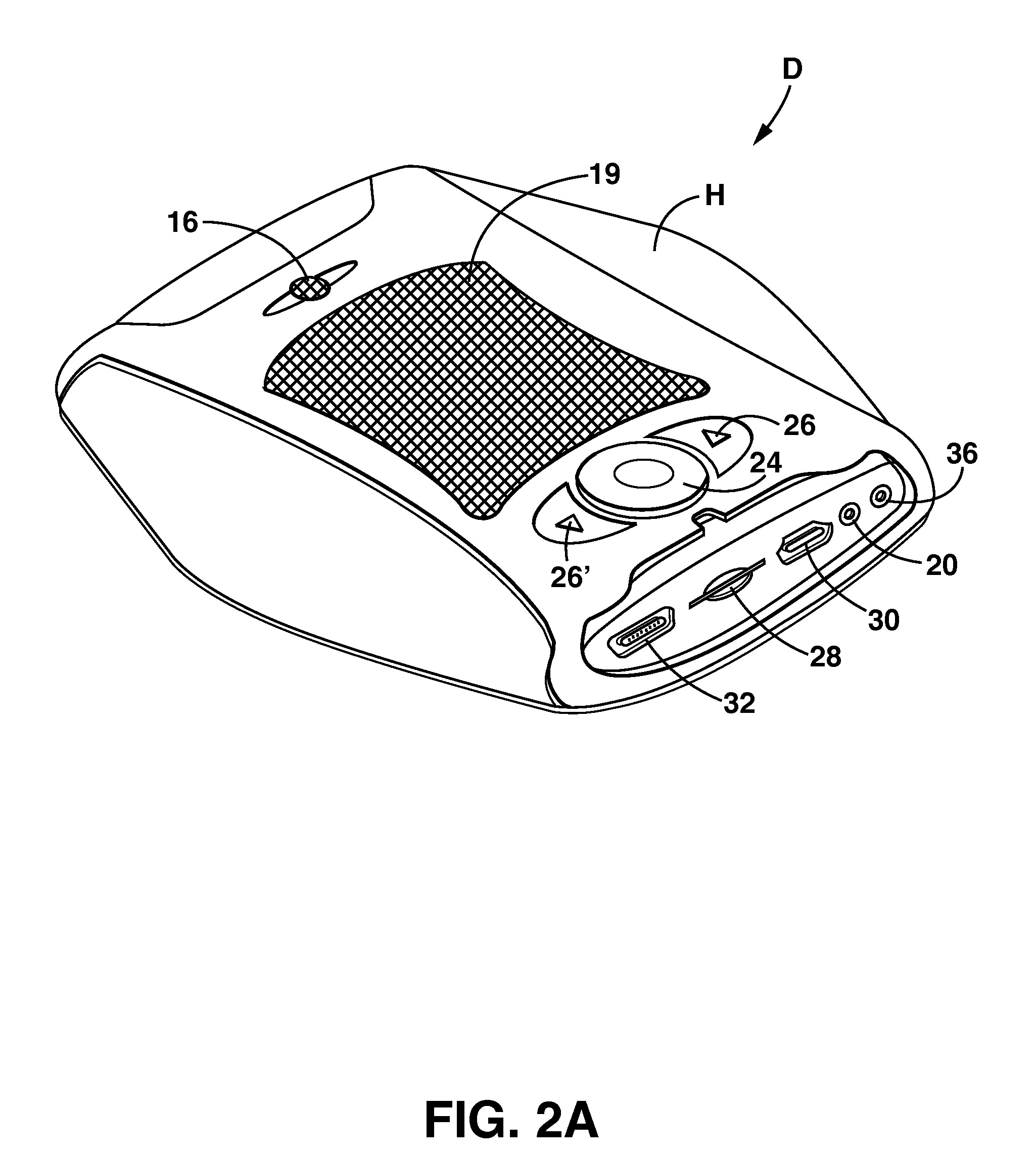

Interactive speech recognition device and system for hands-free building control

A self-contained wireless interactive speech recognition control device and system that integrates with automated systems and appliances to provide totally hands-free speech control capabilities for a given space. Preferably, each device comprises a programmable microcontroller having embedded speech recognition and audio output capabilities, a microphone, a speaker and a wireless communication system through which a plurality of devices can communicate with each other and with one or more system controllers or automated mechanisms. The device may be enclosed in a stand-alone housing or within a standard electrical wall box. Several devices may be installed in close proximity to one another to ensure hands-free coverage throughout the space. When two or more devices are triggered simultaneously by the same speech command, real time coordination ensures that only one device will respond to the command.

Owner:ROSENBERGER THEODORE ALFRED
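The abstract above says that when several devices are triggered by the same speech command, coordination ensures only one responds, but it does not say how. One possible approach, sketched here purely as an assumption, is for each device to share a recognition score and for every device to apply the same deterministic rule. `Detection`, `confidence`, and `elect_responder` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Detection:
    device_id: str
    confidence: float  # hypothetical recognition score, 0.0 to 1.0


def elect_responder(detections: List[Detection]) -> Optional[str]:
    """Return the id of the single device that should respond.

    Highest confidence wins; ties fall back to the lowest device_id so
    that every device running this rule reaches the same answer.
    """
    if not detections:
        return None
    best = min(detections, key=lambda d: (-d.confidence, d.device_id))
    return best.device_id


winner = elect_responder([Detection("kitchen", 0.8), Detection("hall", 0.9)])
```

Because the rule is deterministic, each device can evaluate it locally on the shared scores without a central controller.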

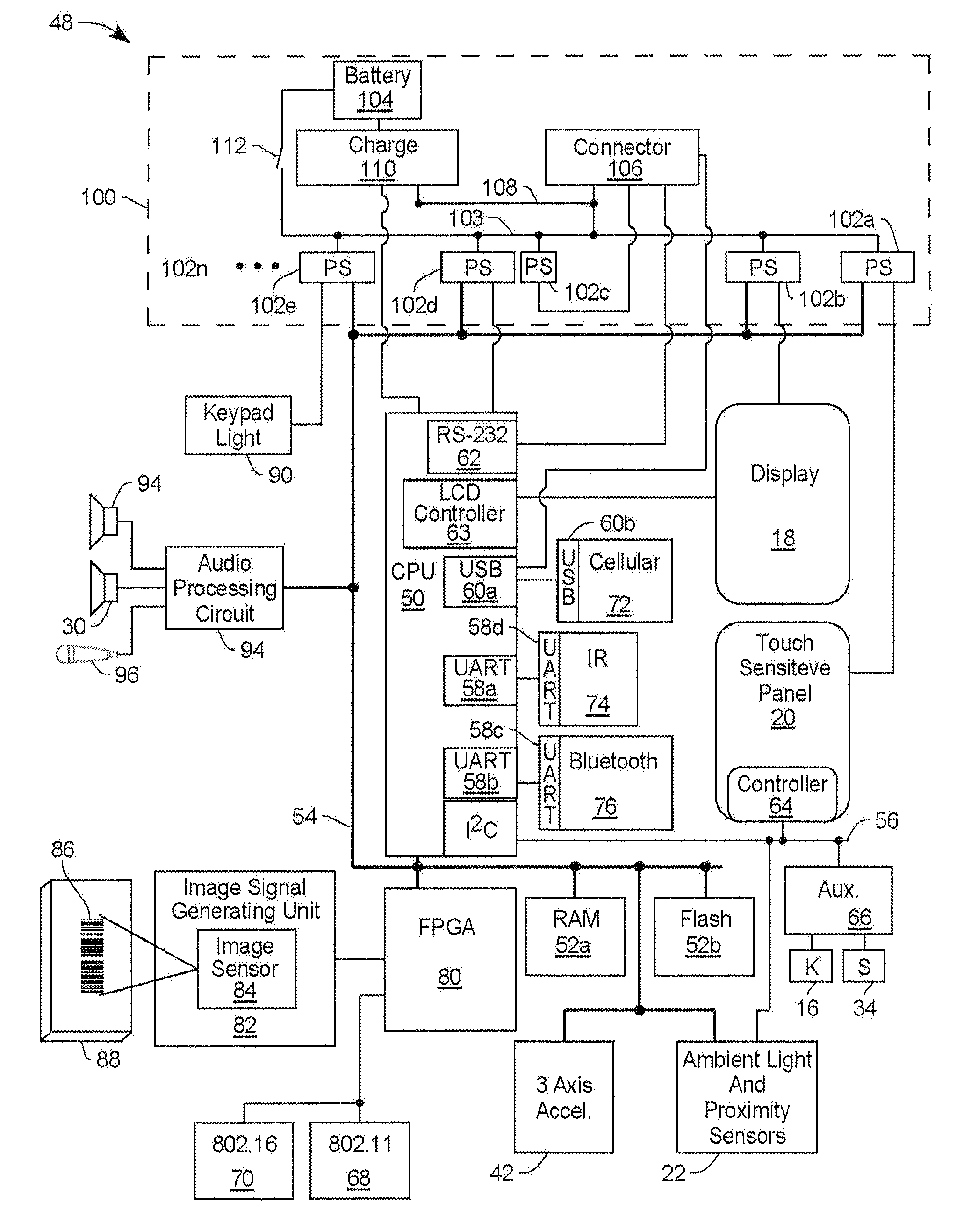

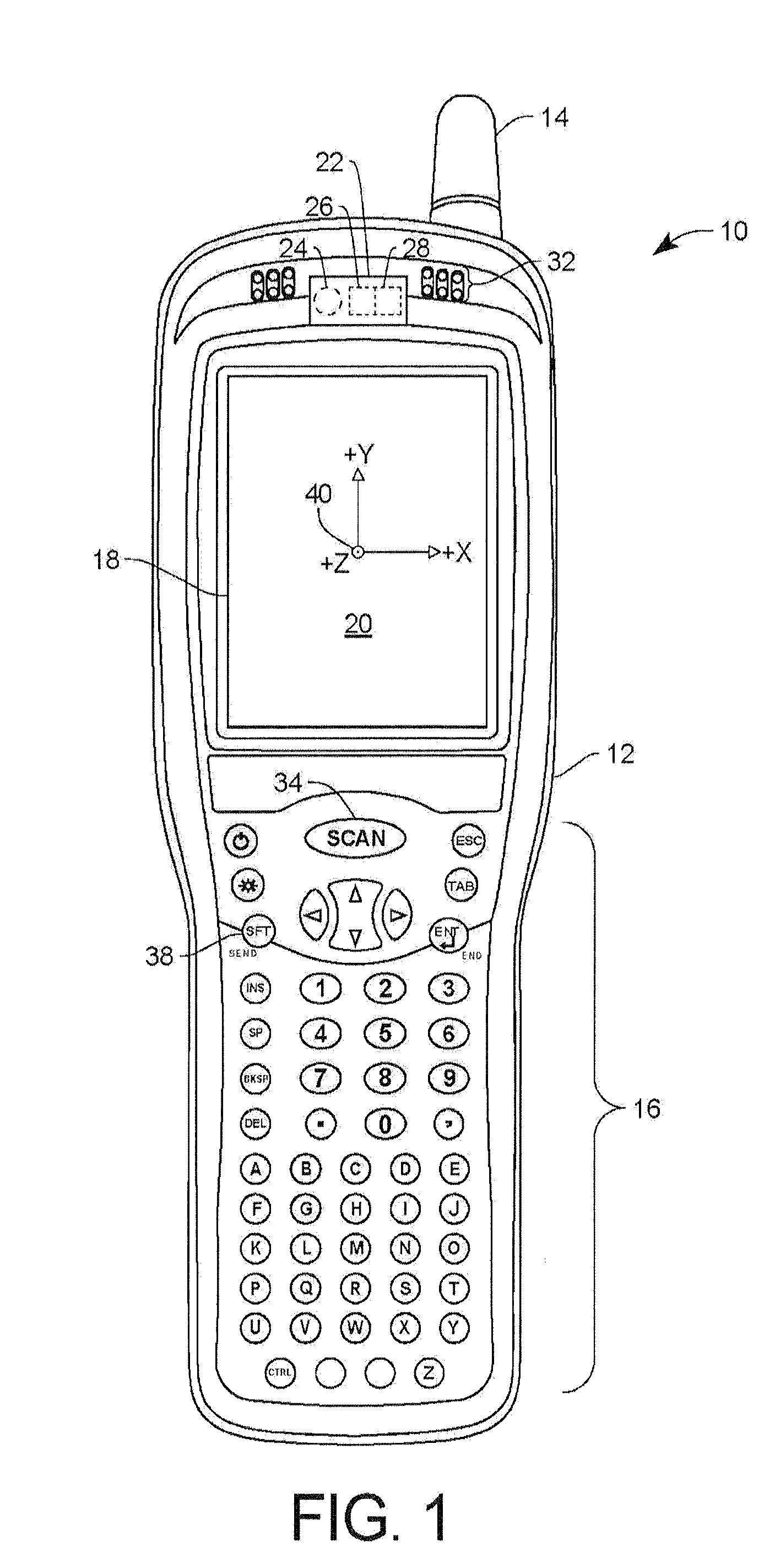

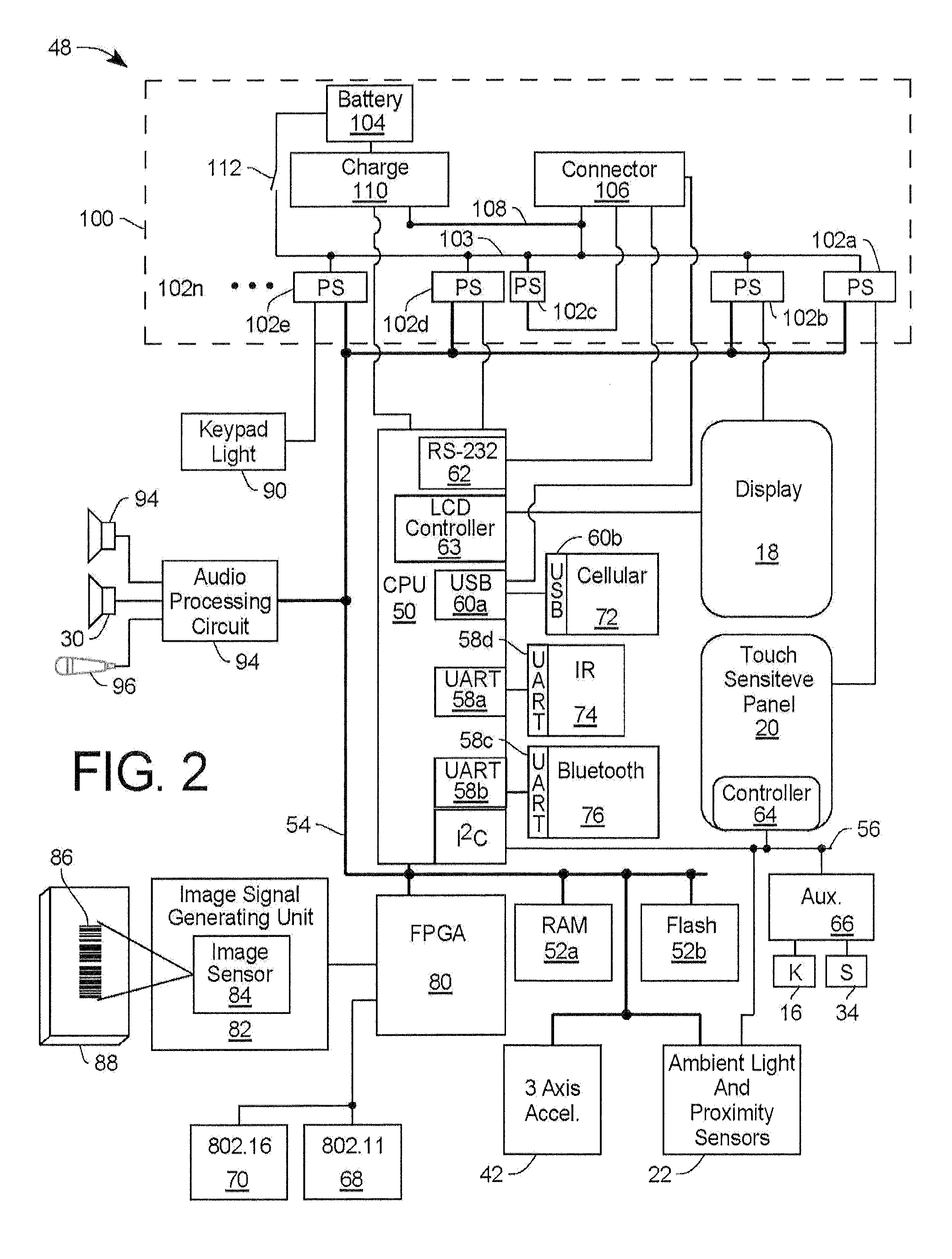

Power management scheme for portable data collection devices utilizing location and position sensors

A data collection device (DCD) is placed in a first low power mode after the DCD has been in a first predetermined position, and placed in a second low power mode after a first predetermined period of time. In another embodiment the DCD includes a wireless telephone, and a proximity sensor which detects when the DCD is close to a user's face, wherein the telephone is automatically put in a handset mode when the DCD is close to a user's face, and automatically put in a speakerphone mode when the DCD is not close to a user's face.

Owner:HAND HELD PRODS
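The proximity-based switching in the second embodiment can be sketched as a simple threshold decision. The 5 cm threshold and the names `AudioMode` and `select_audio_mode` are assumptions for illustration; the abstract does not specify them.

```python
from enum import Enum


class AudioMode(Enum):
    HANDSET = "handset"
    SPEAKERPHONE = "speakerphone"


def select_audio_mode(distance_cm: float, near_threshold_cm: float = 5.0) -> AudioMode:
    """Handset mode when the device is close to the face, speakerphone otherwise."""
    if distance_cm <= near_threshold_cm:
        return AudioMode.HANDSET
    return AudioMode.SPEAKERPHONE


mode_at_ear = select_audio_mode(2.0)
mode_on_desk = select_audio_mode(40.0)
```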

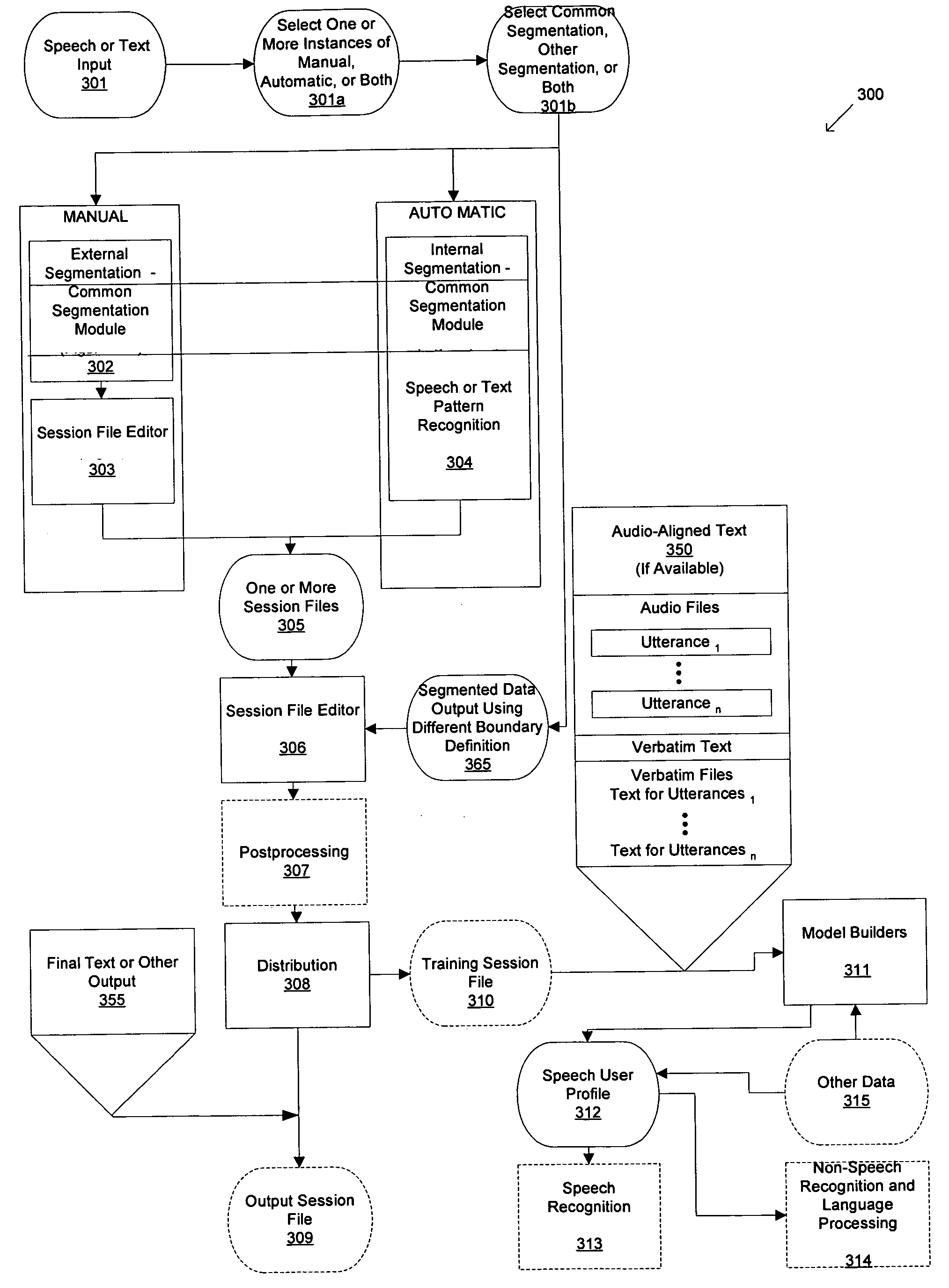

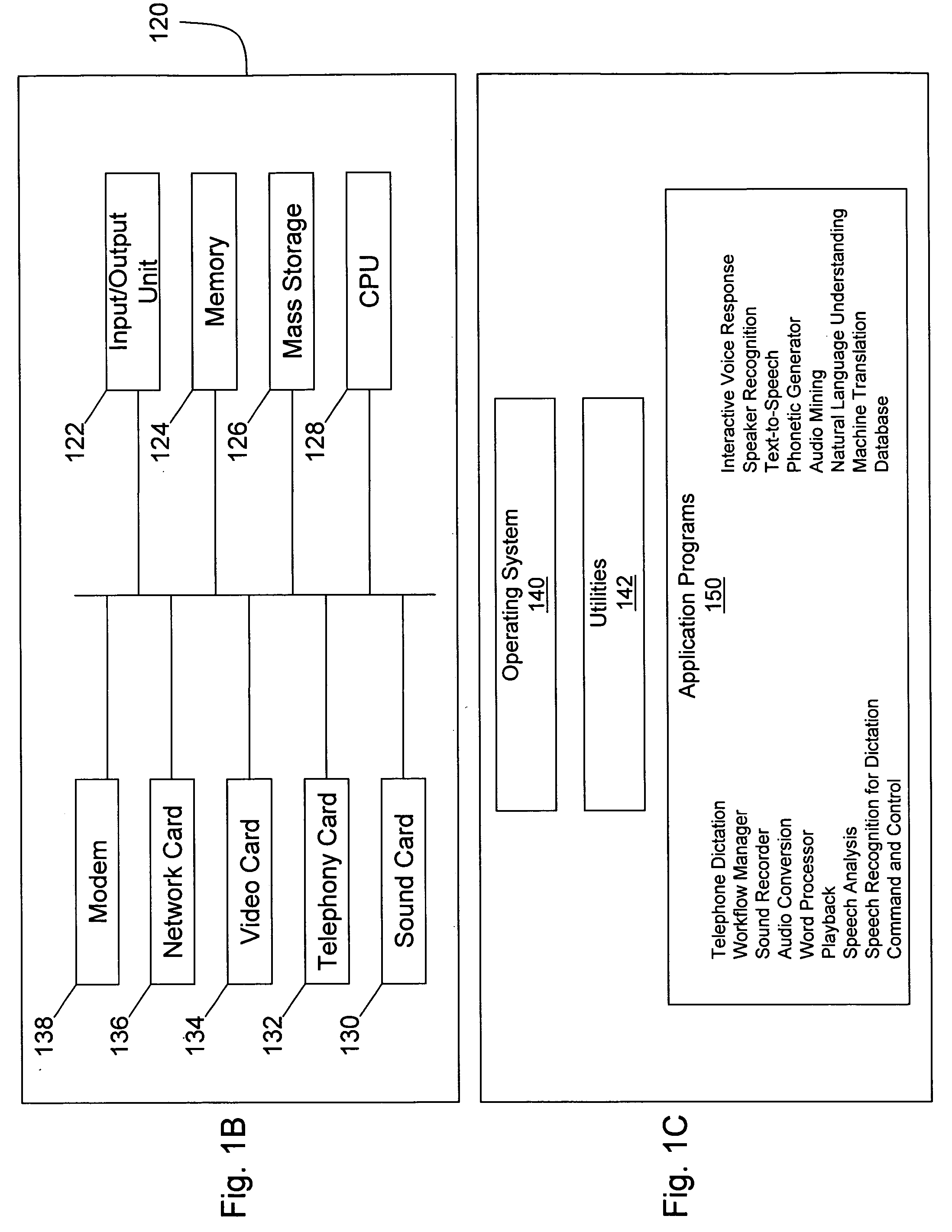

Synchronized pattern recognition source data processed by manual or automatic means for creation of shared speaker-dependent speech user profile

Inactive | US20060149558A1 | Avoids time-consuming generation | Maximize likelihood | Speech recognition | Graphics | Data segment

An apparatus for collecting data from a plurality of diverse data sources, the diverse data sources generating input data selected from the group including text, audio, and graphics, the diverse data sources selected from the group including real-time and recorded, human and mechanically-generated audio, single-speaker and multispeaker, the apparatus comprising: means for dividing the input data into one or more data segments, the dividing means acting separately on the input data from each of the plurality of diverse data sources, each of the data segments being associated with at least one respective data buffer such that each of the respective data buffers would have the same number of segments given the same data; means for selective processing of the data segments within each of the respective data buffers; and means for distributing at least one of the respective data buffers such that the collected data associated therewith may be used for further processing.

Owner:CUSTOM SPEECH USA

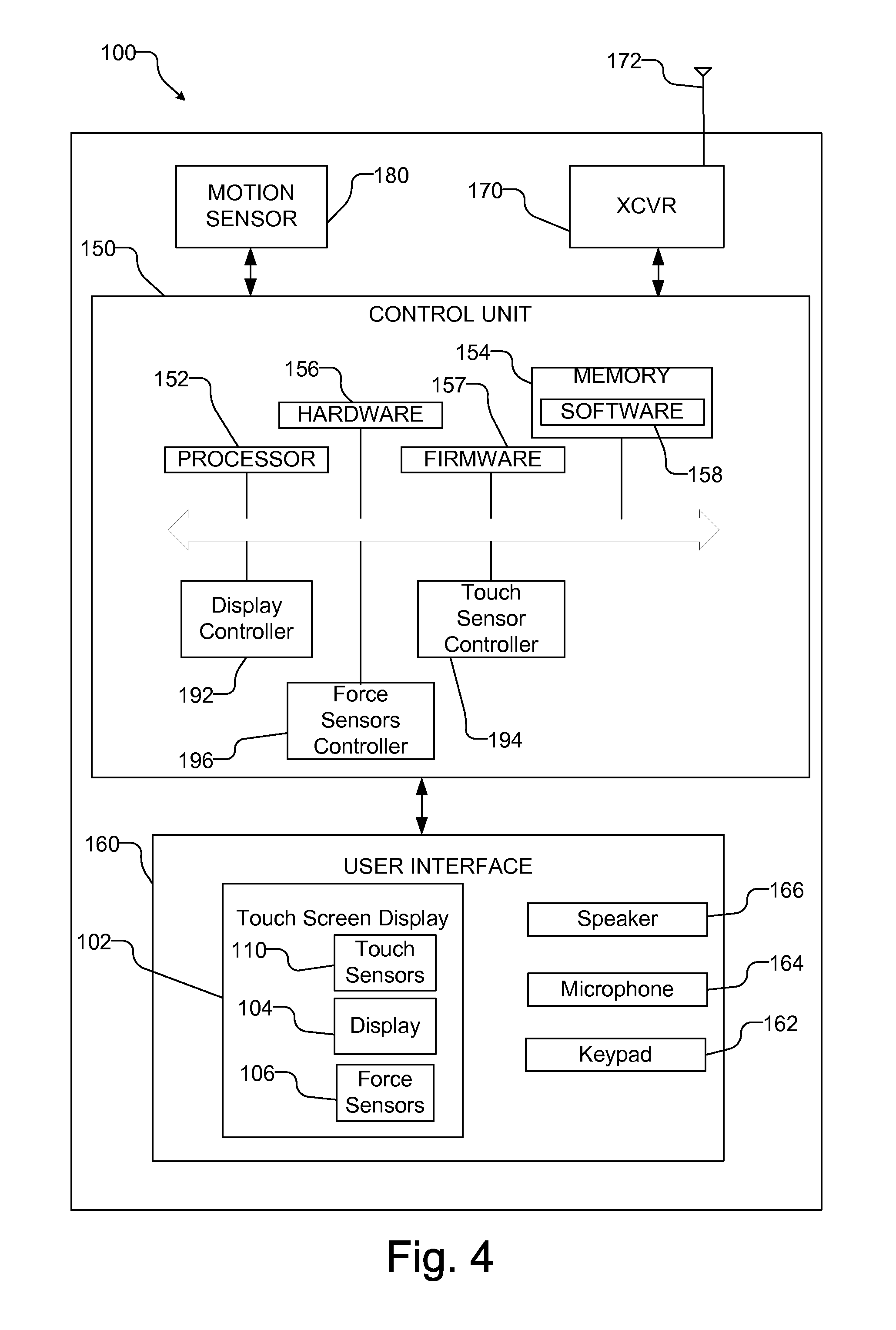

Force sensing touch screen

A computing device includes a touch screen display with a plurality of force sensors, each of which provides a signal in response to contact with the touch screen display. Using force signals from the plurality of force sensors, a characteristic of the contact is determined, such as the magnitude of the force, the centroid of force and the shear force. The characteristic of the contact is used to select a command which is processed to control the computing device. For example, the command may be related to manipulating data displayed on the touch screen display, e.g., by adjusting the scroll speed or the quantity of data selected in response to the magnitude of force, or related to an operation of an application on the computing device, such as selecting different focal ranges, producing an alarm, or adjusting the volume of a speaker in response to the magnitude of force.

Owner:QUALCOMM INC
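Two of the contact characteristics named in the abstract above, the magnitude of force and the centroid of force, can be computed from per-sensor readings as a force-weighted average. This is a minimal sketch under assumed conventions: sensors are given as `(x, y, force)` tuples, and the mapping from force to scroll speed (`max_force`, `max_speed`) is hypothetical.

```python
from typing import List, Optional, Tuple


def contact_characteristics(
    sensors: List[Tuple[float, float, float]]
) -> Tuple[float, Optional[Tuple[float, float]]]:
    """Return (total_force, centroid) from (x, y, force) sensor readings.

    The centroid is the force-weighted mean of the sensor positions;
    it is None when no force is applied.
    """
    total = sum(f for _, _, f in sensors)
    if total <= 0:
        return 0.0, None
    cx = sum(x * f for x, _, f in sensors) / total
    cy = sum(y * f for _, y, f in sensors) / total
    return total, (cx, cy)


def scroll_speed(total_force: float, max_force: float = 4.0, max_speed: float = 10.0) -> float:
    """Map force magnitude linearly onto a scroll speed, clamped to [0, max_speed]."""
    ratio = min(max(total_force / max_force, 0.0), 1.0)
    return ratio * max_speed


# Four corner sensors loaded equally: the centroid is the screen centre.
total, centroid = contact_characteristics(
    [(0.0, 0.0, 1.0), (10.0, 0.0, 1.0), (0.0, 10.0, 1.0), (10.0, 10.0, 1.0)]
)
```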

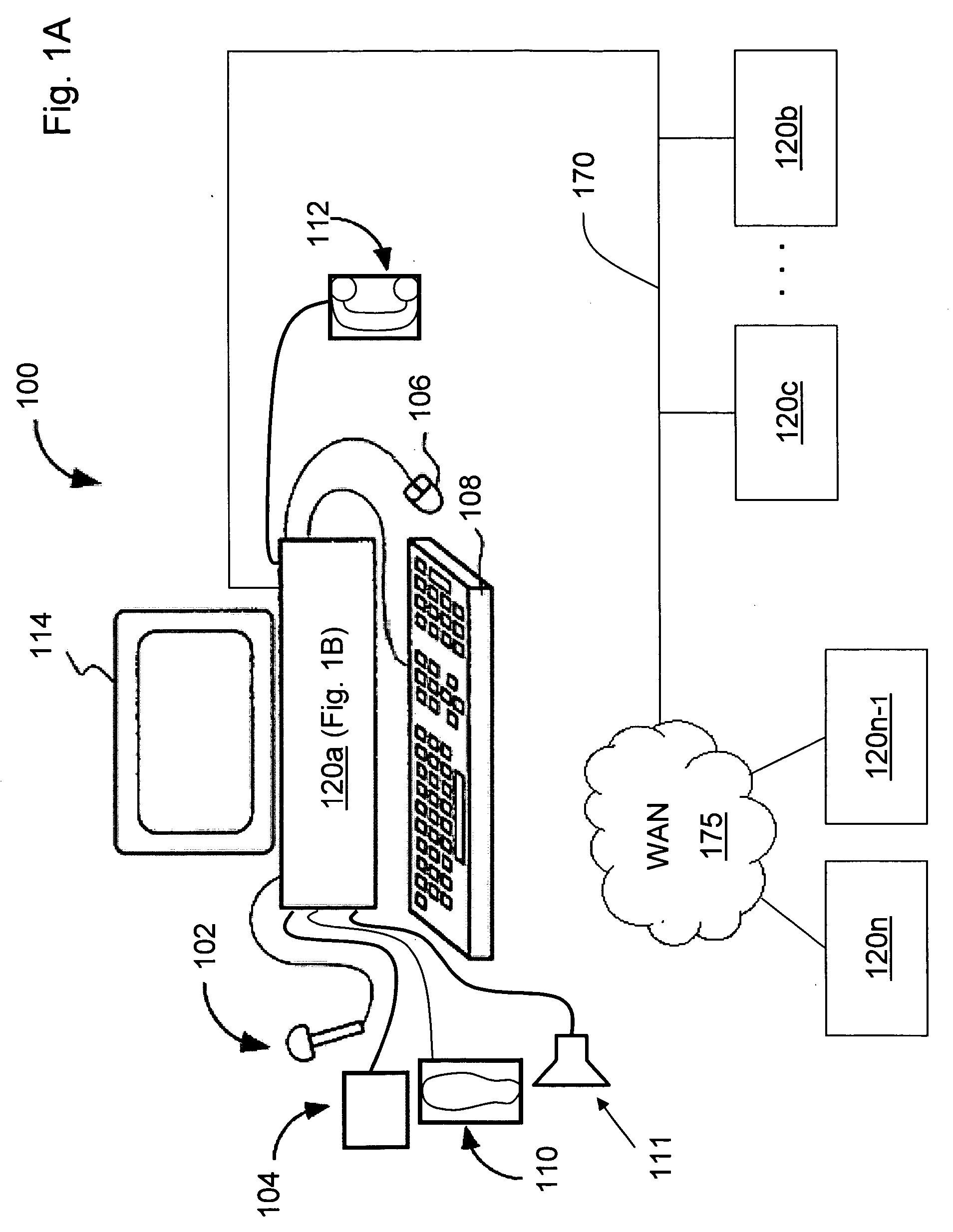

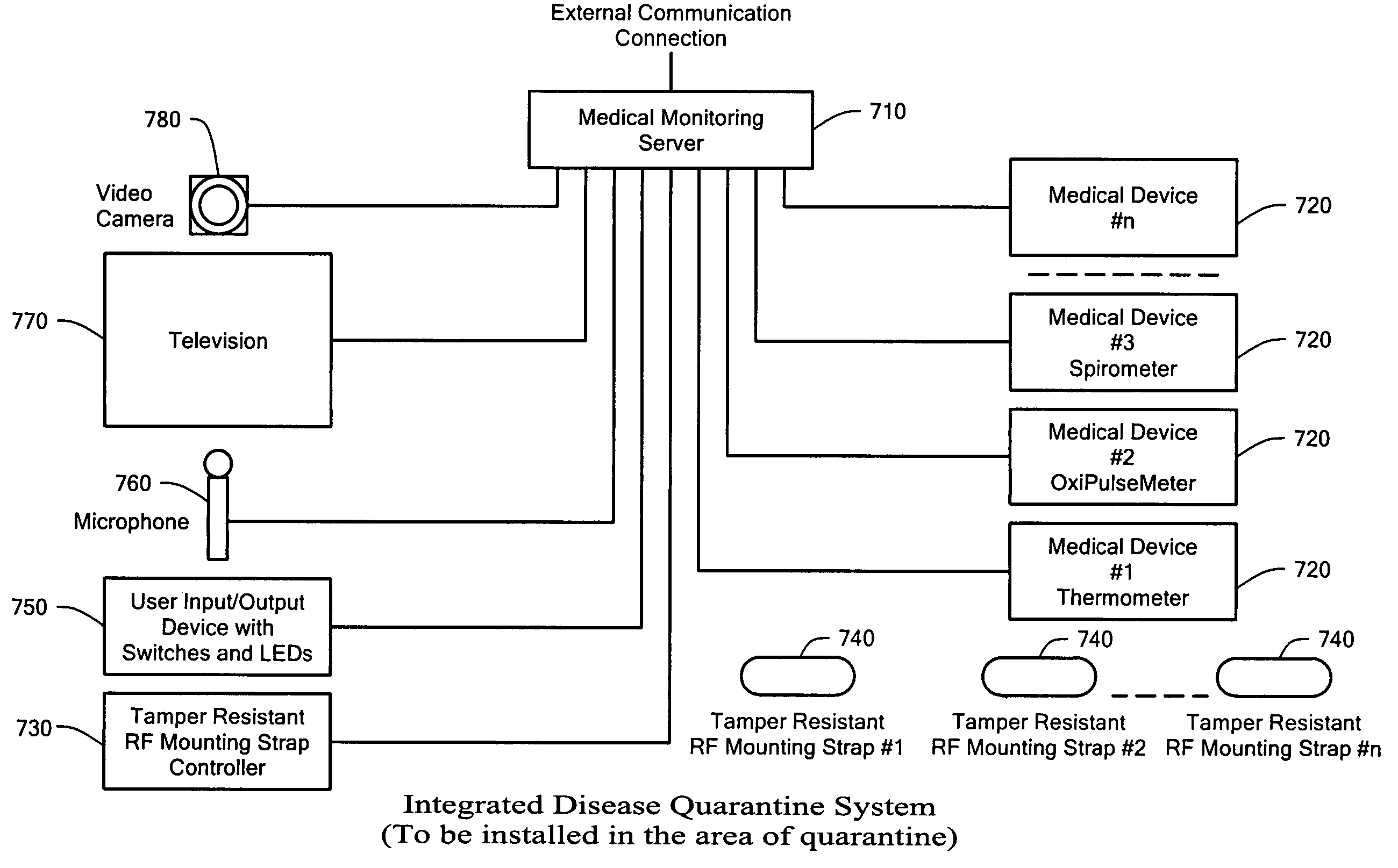

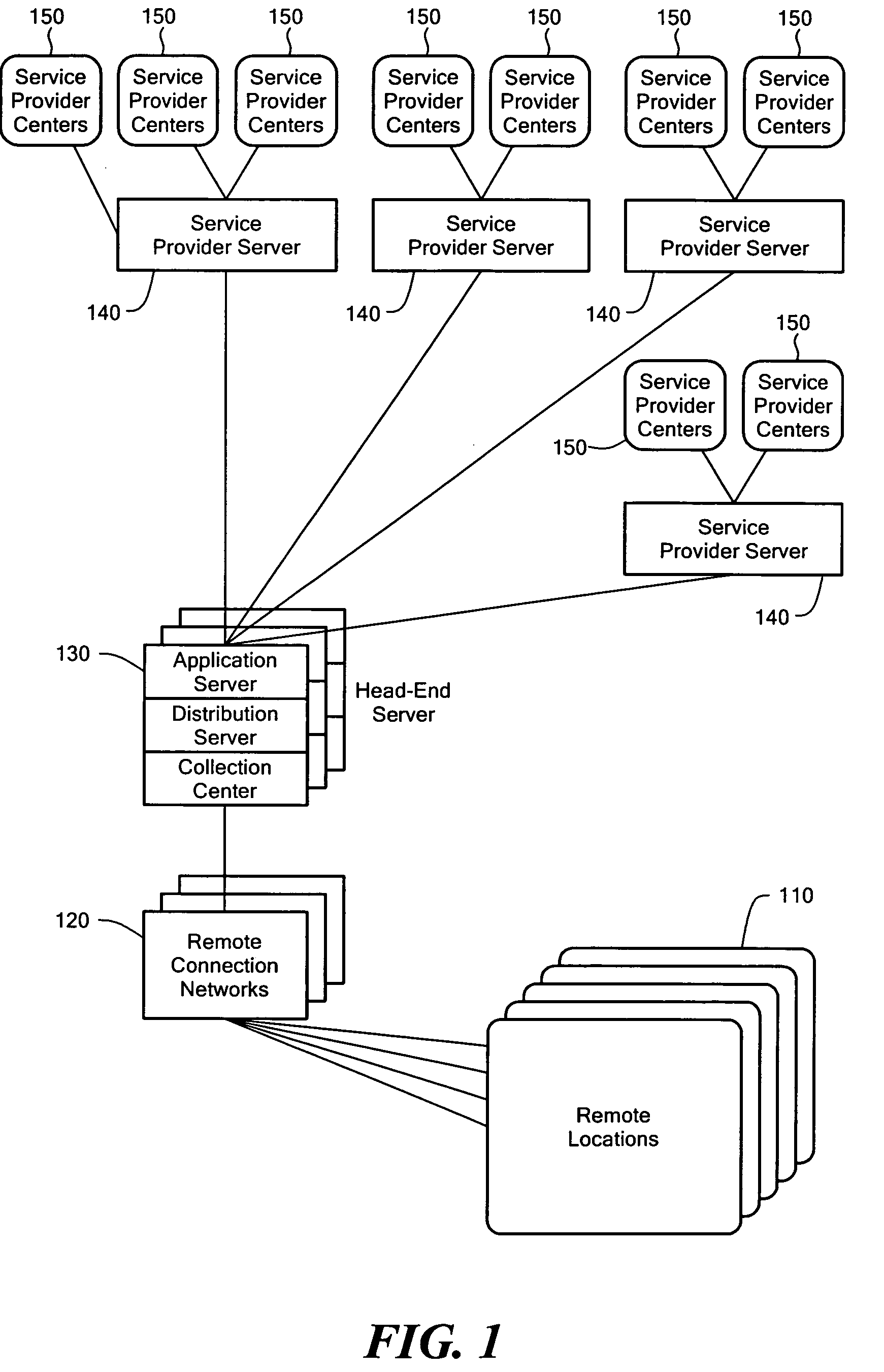

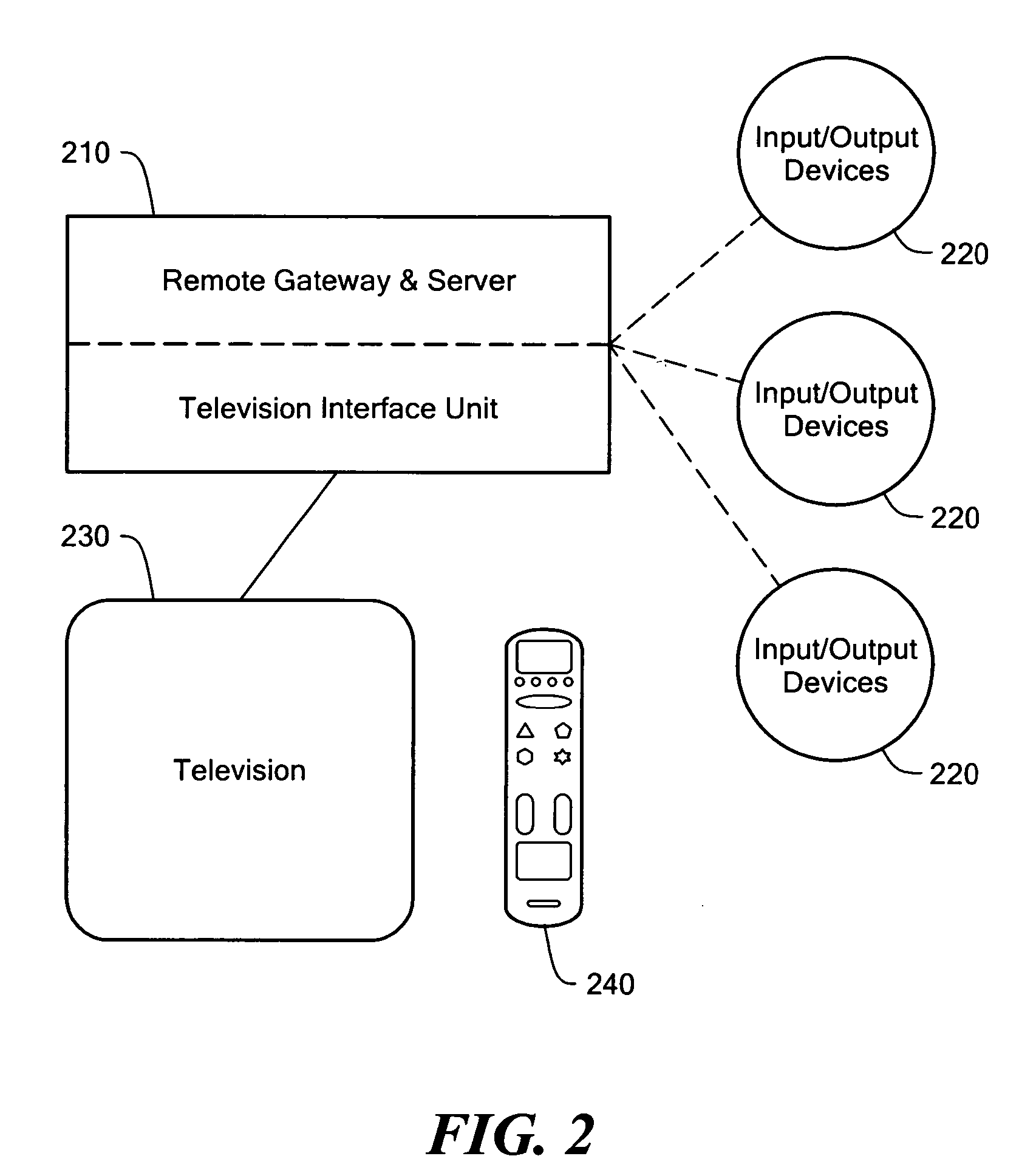

System, device, and method for remote monitoring and servicing

A system, device, and method for remote monitoring and servicing uses a gateway to collect user information, such as physiological, audio, video, and proximity information. The gateway processes the user information locally, and may send the user information or other information, such as alarms, to remote service providers through one or more head-end servers. The gateway also allows service provider information, such as audio and video information, to be conveyed from the service providers to the users. The gateway may output video information on a predetermined television channel, and may output audio information on the predetermined television channel or to a wireless remote controller with a built-in speaker. The gateway may receive audio information from the user via a wireless remote controller with a built-in microphone. The gateway provides for videoconferencing between the user and one or more remote service providers.

Owner:MATHUR MICHAEL

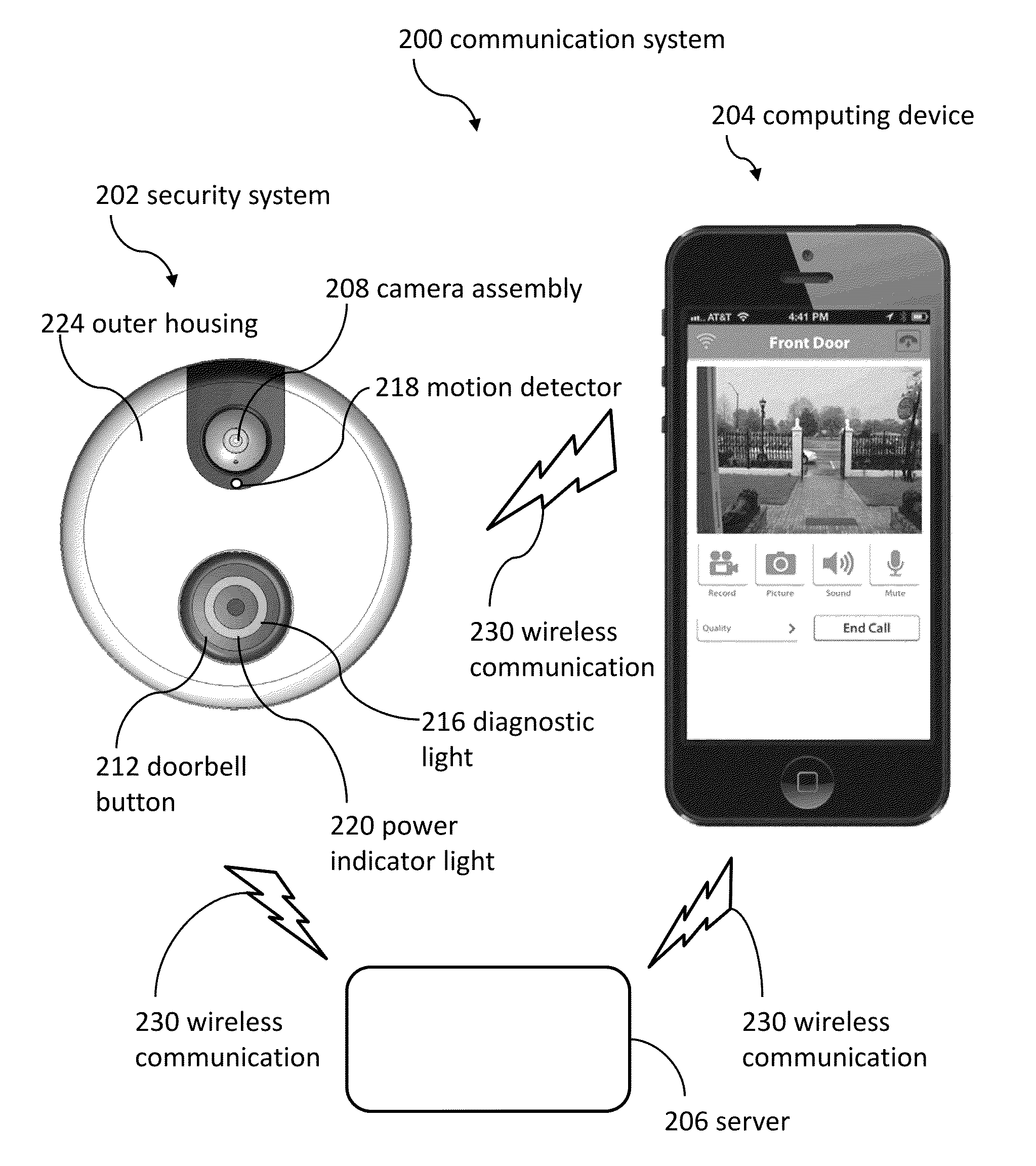

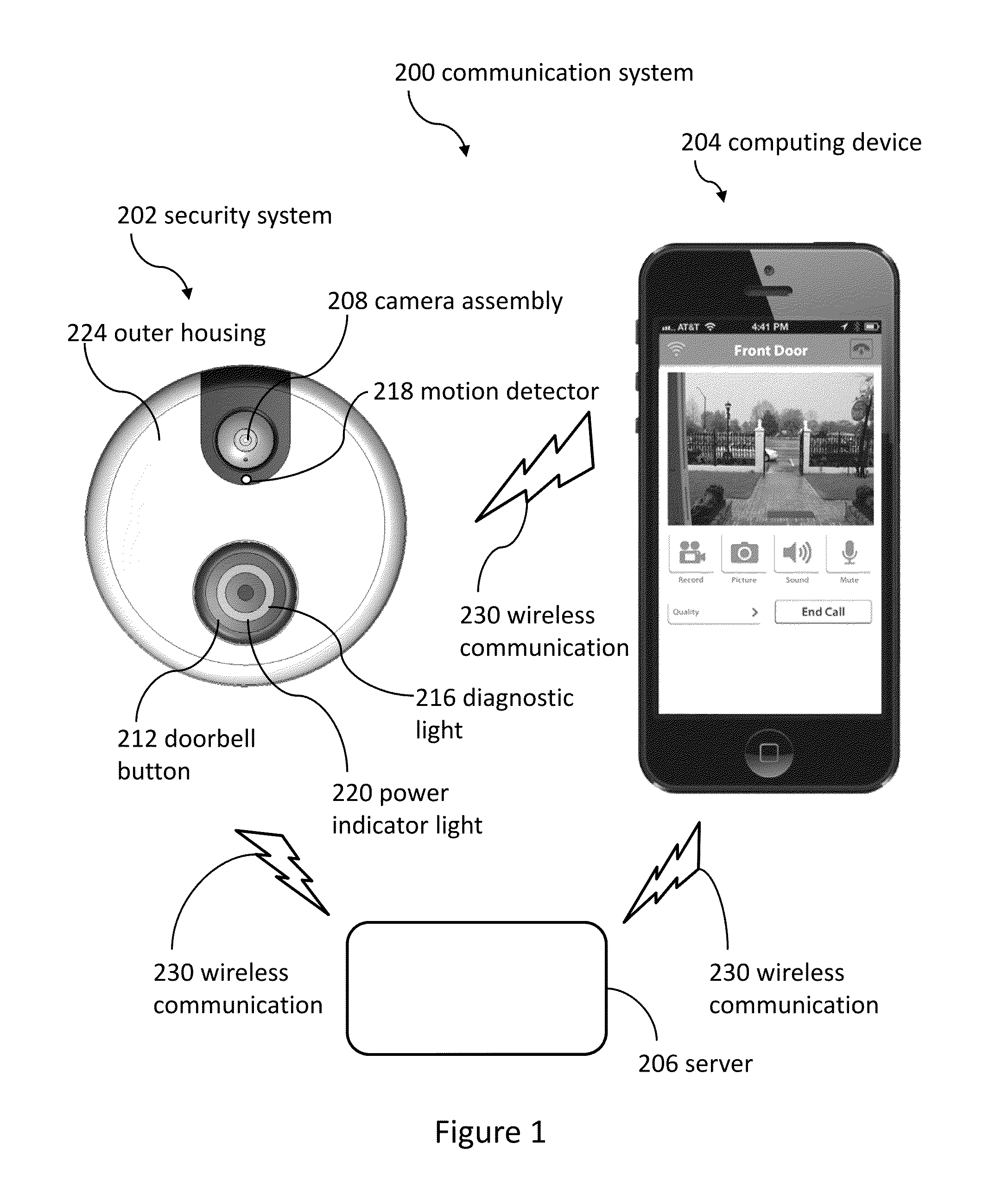

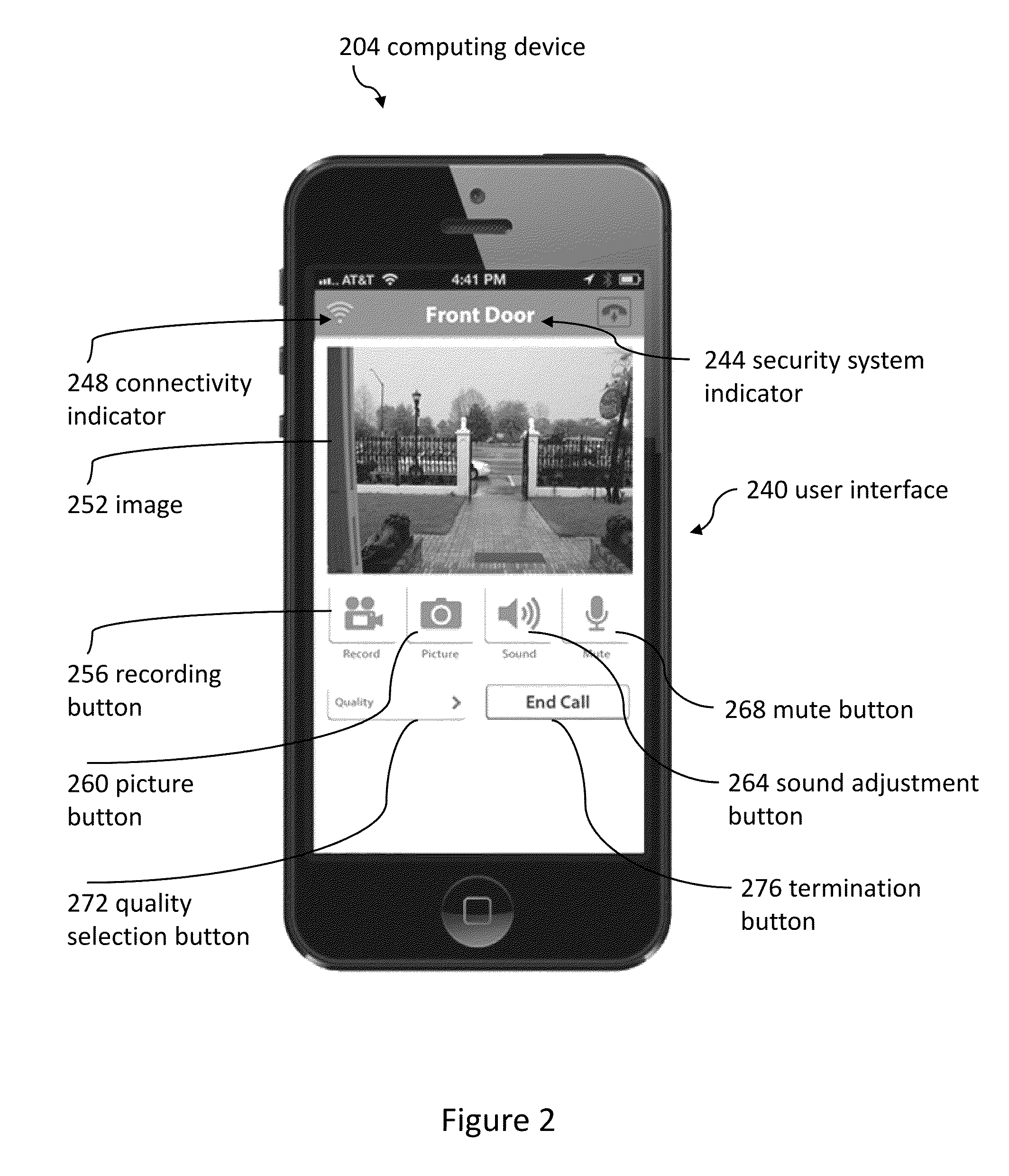

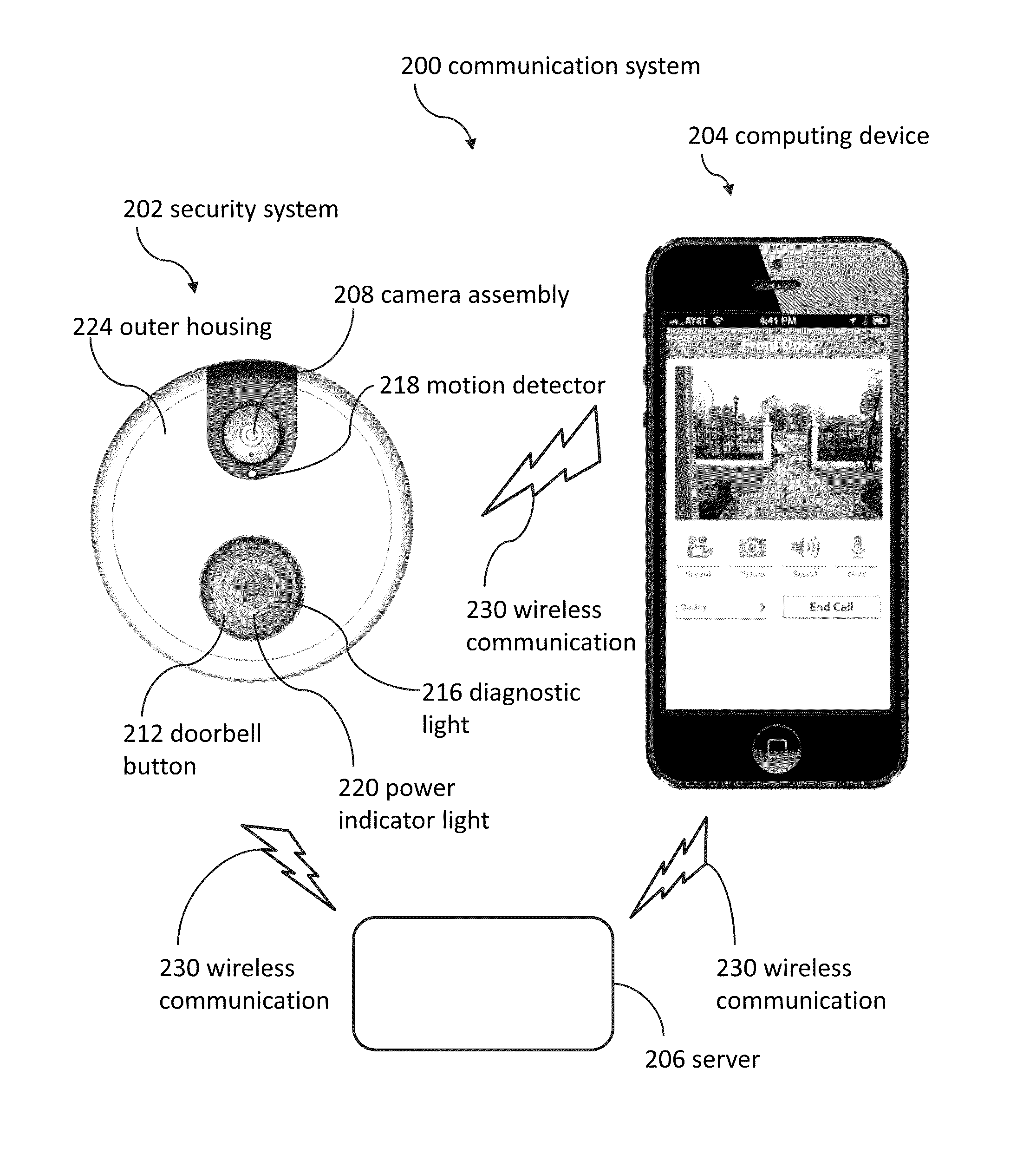

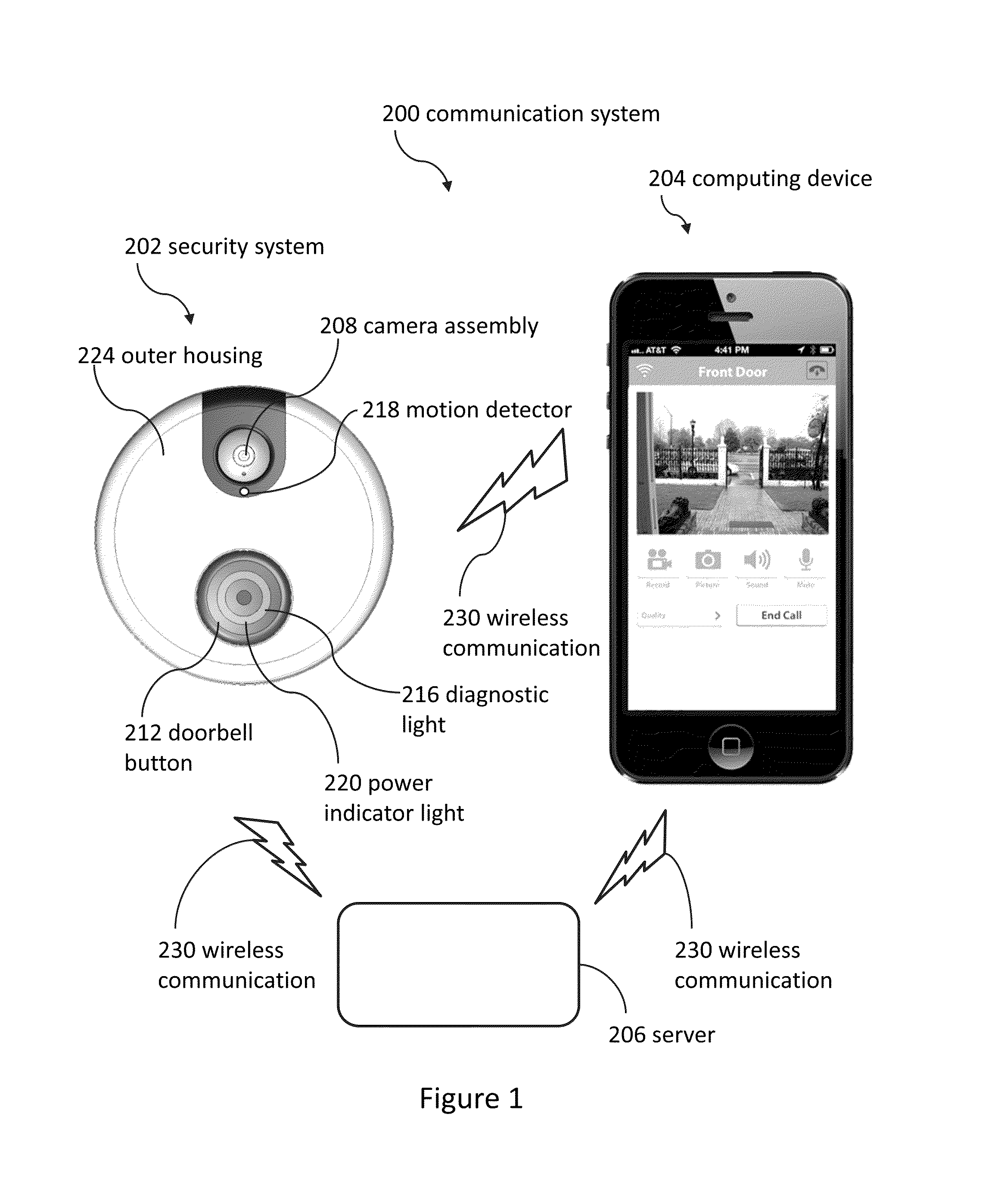

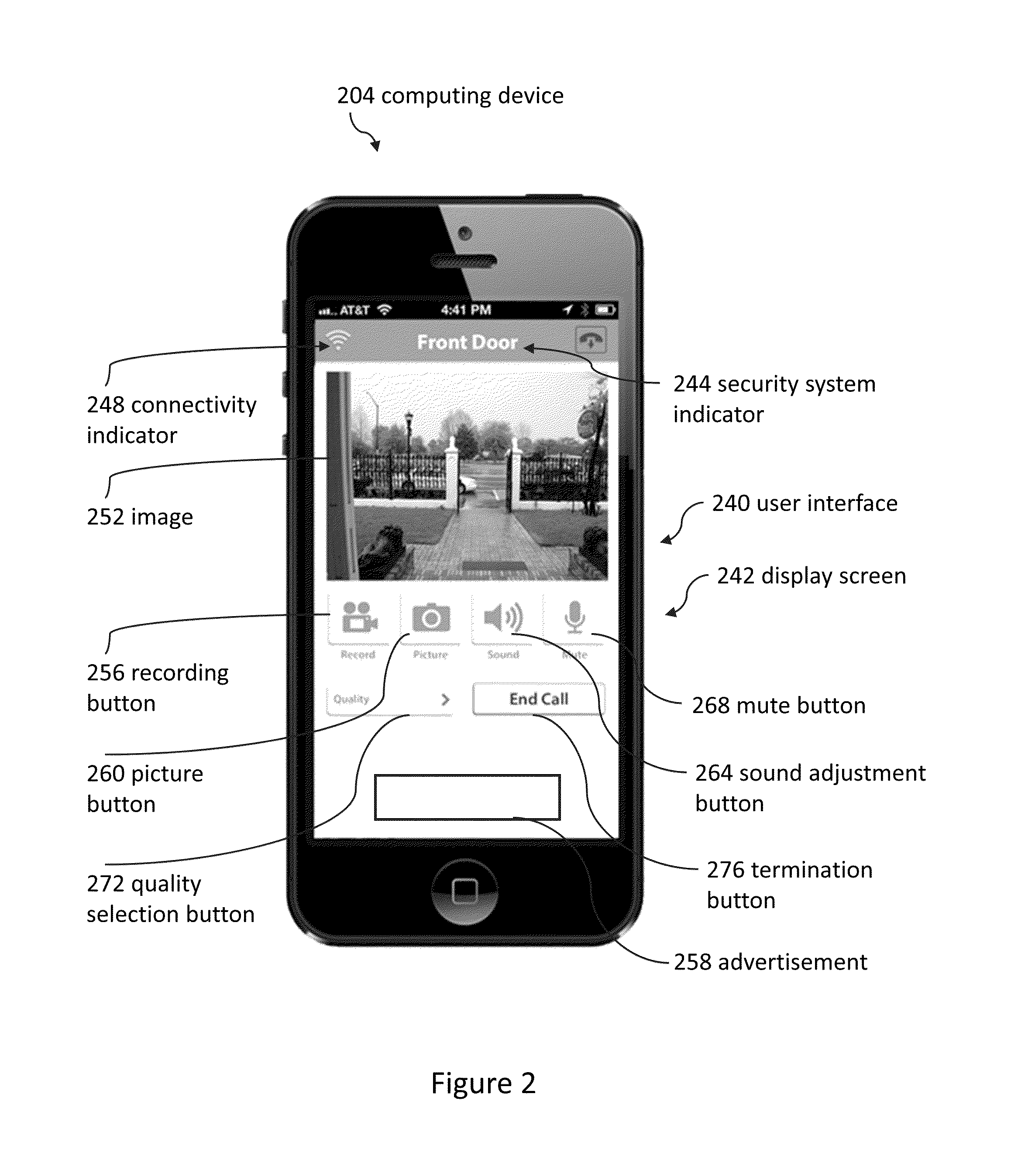

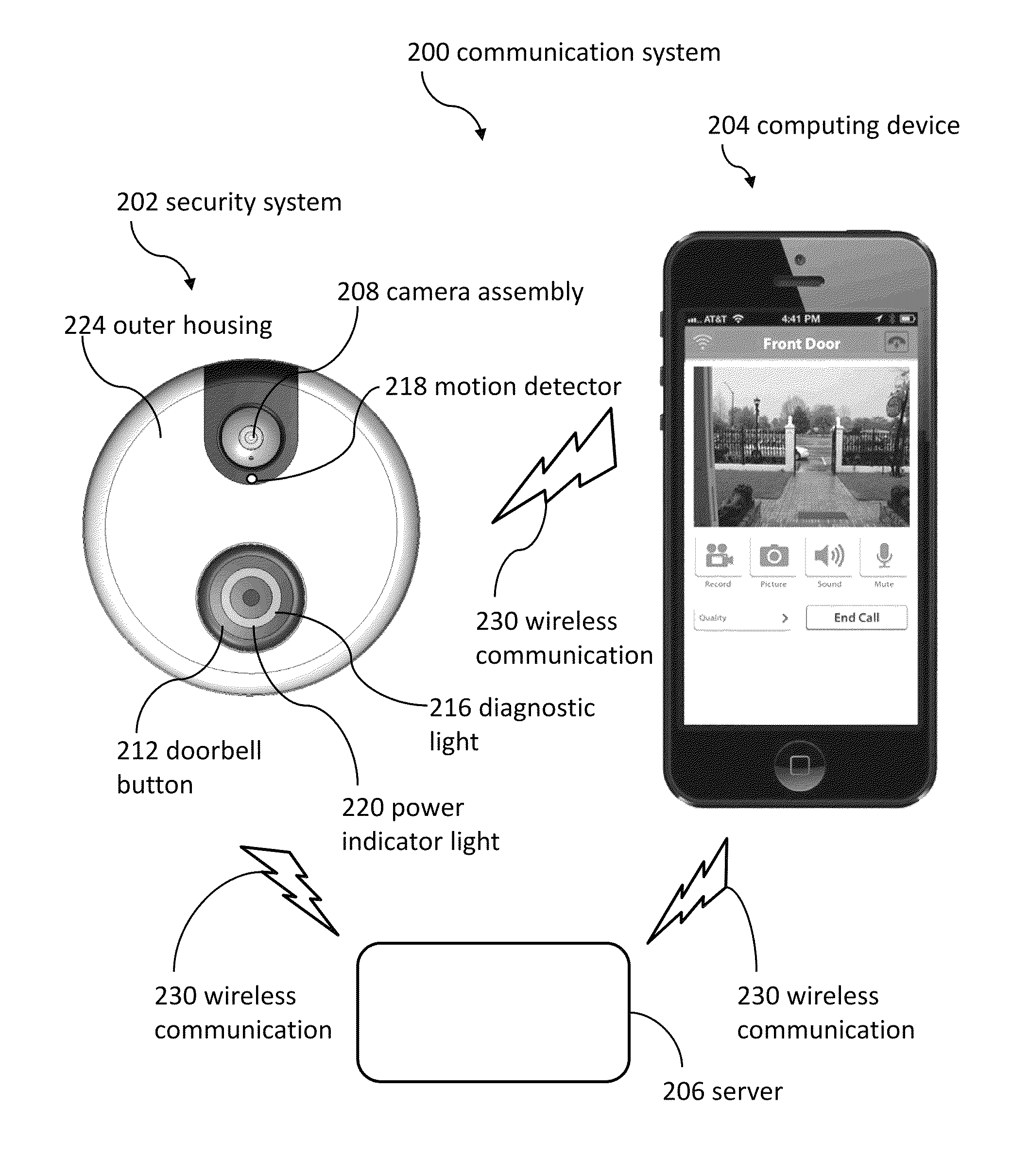

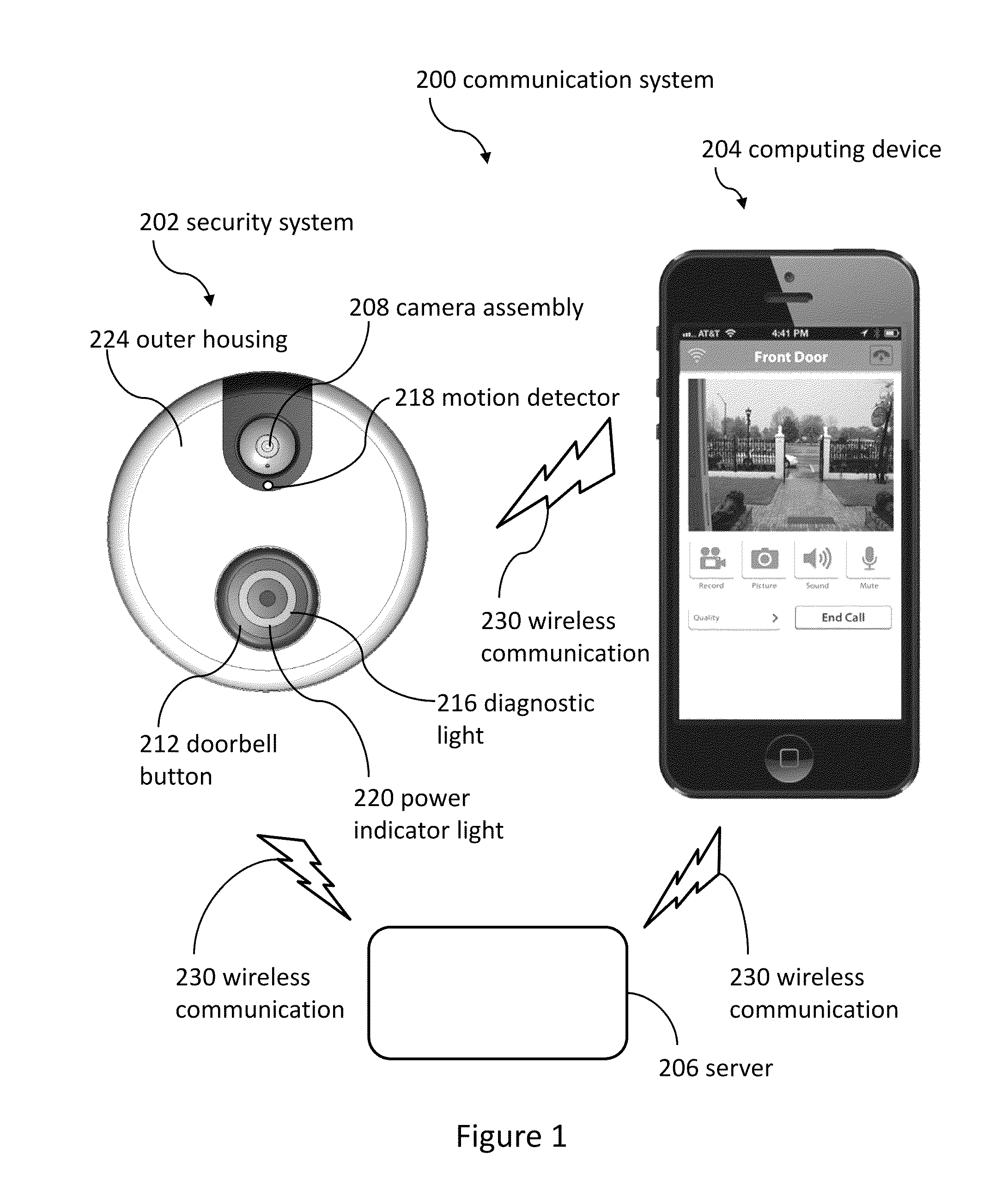

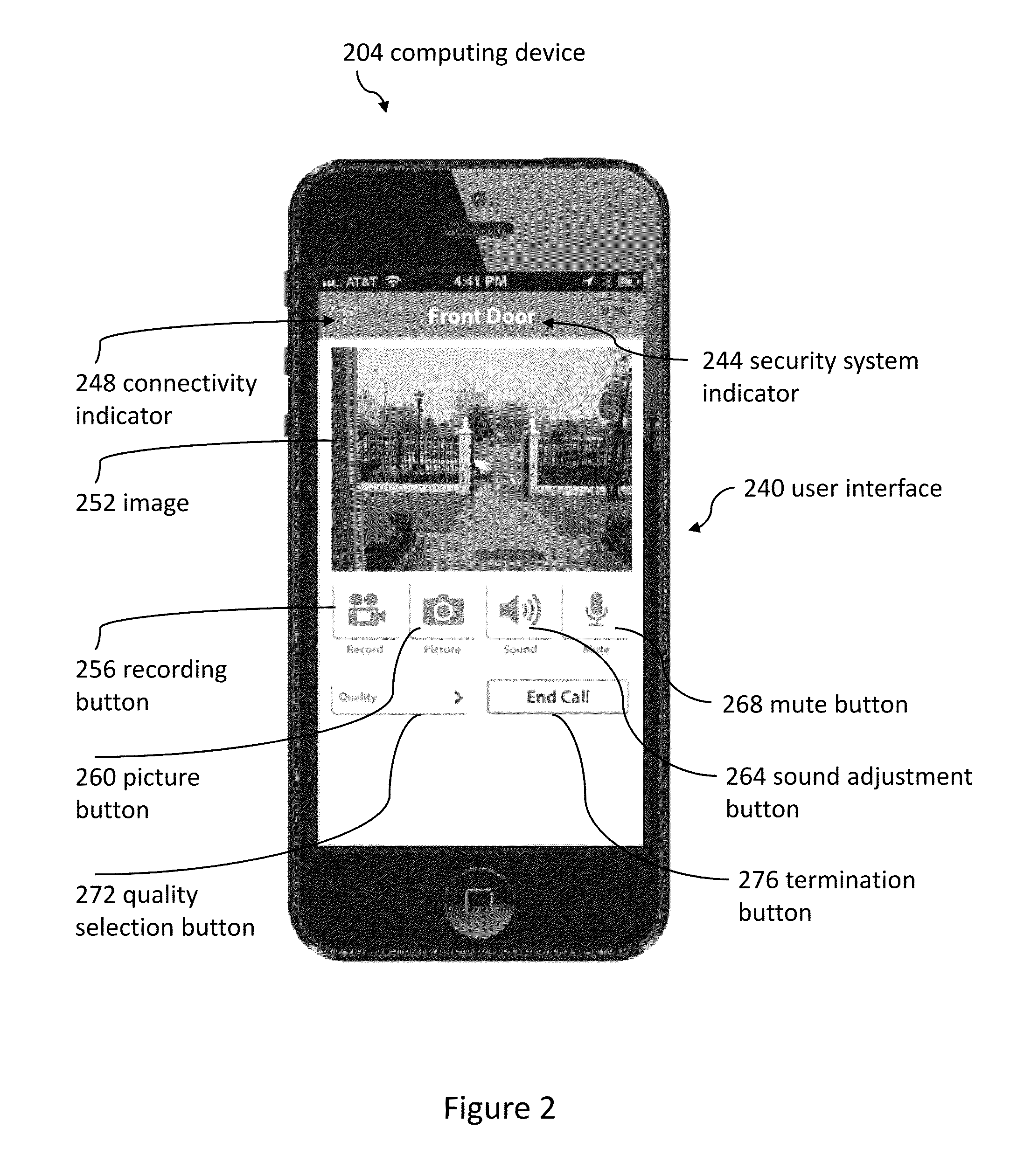

Doorbell communication systems and methods

Active | US8780201B1 | Reduce generation | High activity | Color television details | Closed circuit television systems | Doorbell | Electricity

Methods for using a doorbell that is configurable to wirelessly communicate with a remotely located computing device can include obtaining the doorbell that comprises a speaker, a microphone, and a camera. Methods can include entering a sleep mode in which the doorbell's wireless communication, the camera, and the microphone are disabled. Methods can include exiting the sleep mode and entering a standby mode in response to the doorbell detecting a first indication of a visitor. The standby mode can increase electrical activities of the doorbell's camera and microphone relative to the sleep mode. Methods can include entering an alert mode in response to detecting a second indication of the visitor. The doorbell can record an image using the camera during the alert mode. Wireless communication can be enabled during the alert mode to send an alert to the remotely located computing device.

Owner:SKYBELL TECH IP LLC
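The sleep, standby, and alert modes described in the abstract above form a small state machine. The sketch below models only the transitions and which components are enabled in each mode; the class and method names are hypothetical, and the "alert" is represented by a placeholder string rather than real camera or network calls.

```python
from enum import Enum, auto


class Mode(Enum):
    SLEEP = auto()
    STANDBY = auto()
    ALERT = auto()


class Doorbell:
    def __init__(self) -> None:
        self.mode = Mode.SLEEP
        self.sent_alerts = []  # placeholder for alerts sent to the remote device

    @property
    def camera_and_mic_enabled(self) -> bool:
        # Disabled in sleep mode, active in standby and alert modes.
        return self.mode is not Mode.SLEEP

    @property
    def wireless_enabled(self) -> bool:
        # Wireless communication is enabled only during alert mode.
        return self.mode is Mode.ALERT

    def on_first_indication(self) -> None:
        """First sign of a visitor: leave sleep mode, enter standby."""
        if self.mode is Mode.SLEEP:
            self.mode = Mode.STANDBY

    def on_second_indication(self) -> None:
        """Second sign of a visitor: enter alert mode, record and send."""
        if self.mode is Mode.STANDBY:
            self.mode = Mode.ALERT
            self.sent_alerts.append("visitor image")


bell = Doorbell()
bell.on_first_indication()
standby_state = (bell.mode, bell.camera_and_mic_enabled, bell.wireless_enabled)
bell.on_second_indication()
```

Keeping wireless off until alert mode reflects the power-saving intent stated in the abstract; what counts as a first or second indication is left open here.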

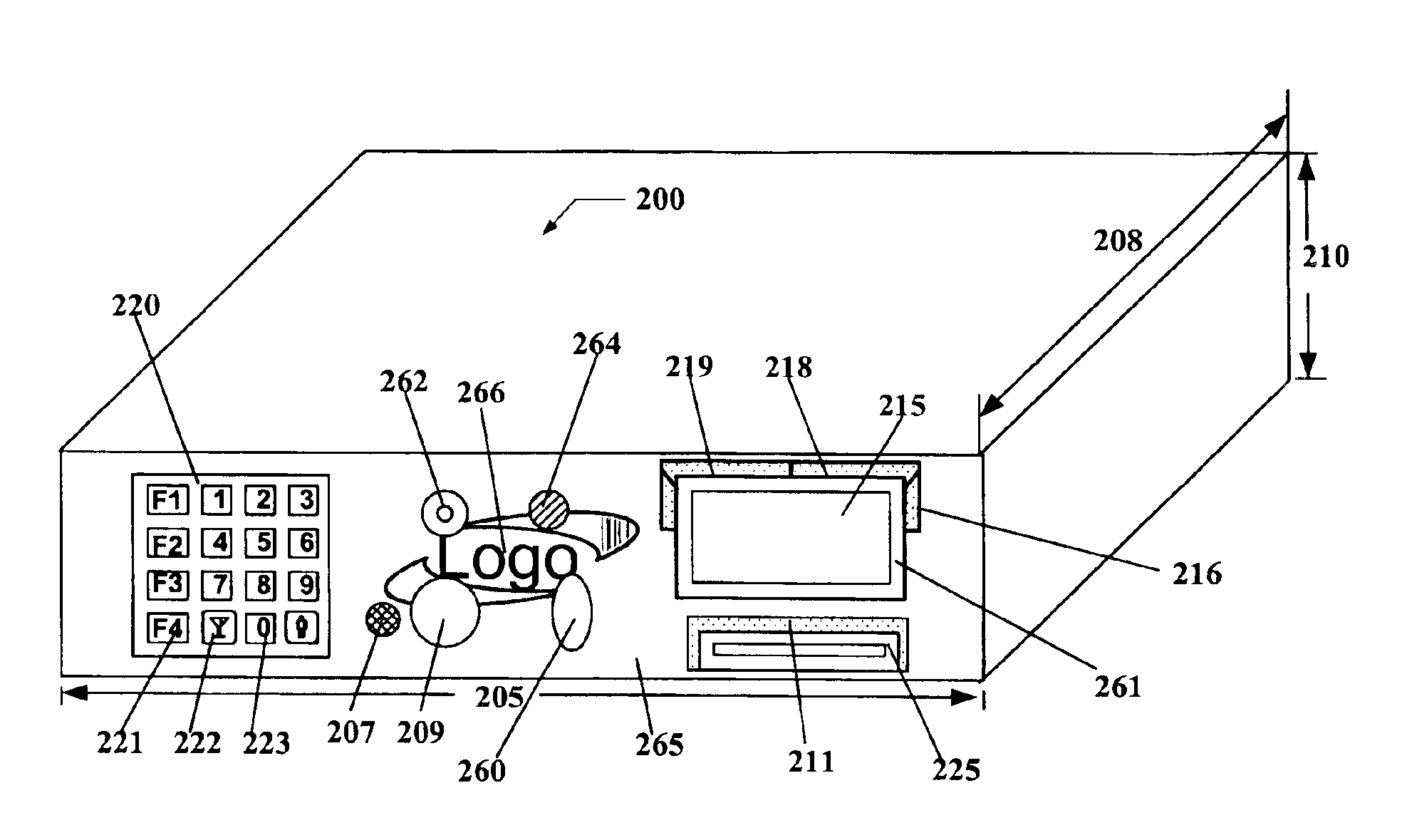

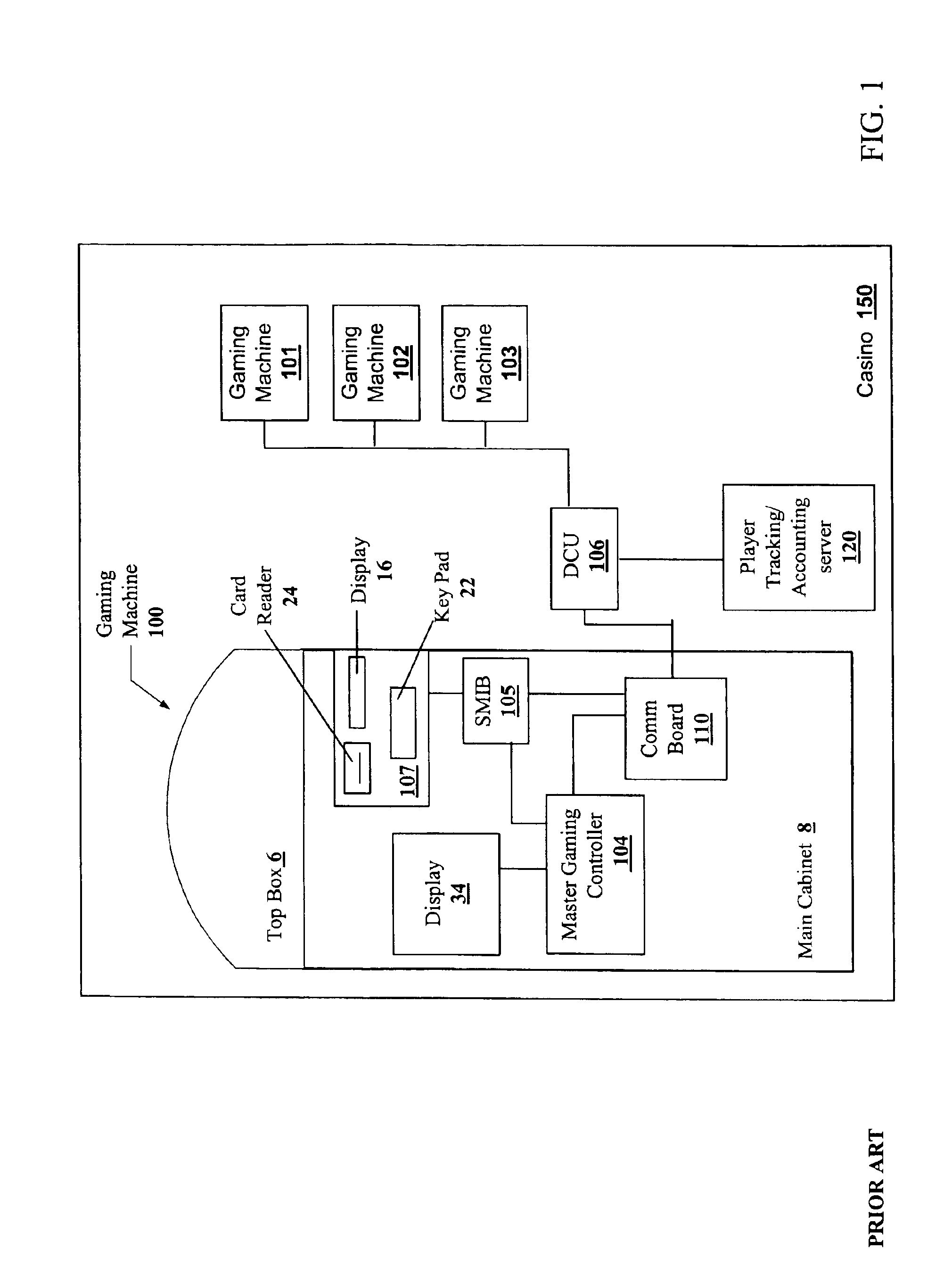

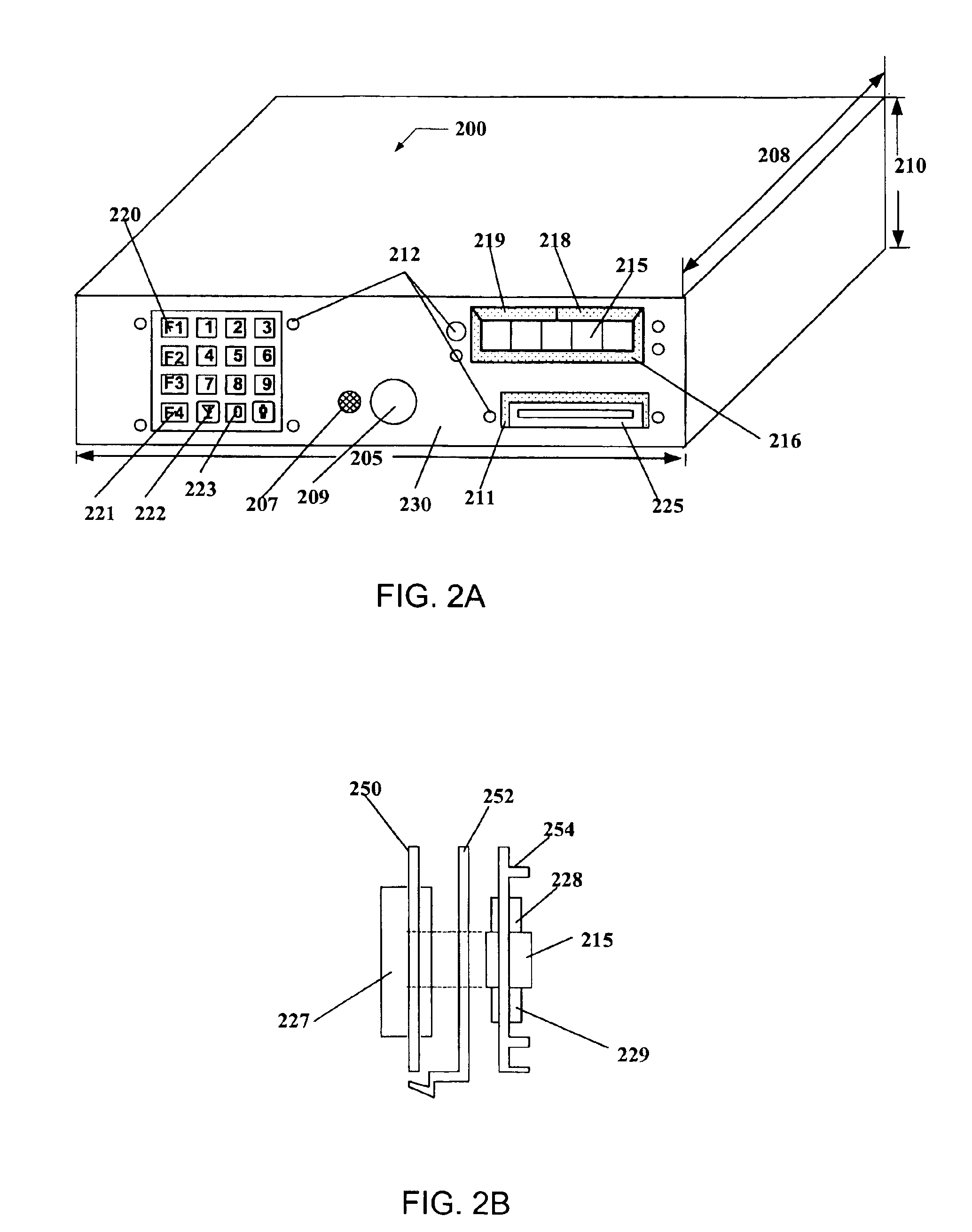

Player tracking communication mechanisms in a gaming machine

Inactive | US6908387B2 | Acutation objects | Apparatus for meter-controlled dispensing | Display device | Loudspeaker

A disclosed player tracking unit provides a display, one or more illumination devices adjacent to the display and a logic device designed to control illumination of the illumination devices in a manner that visually conveys gaming information to an individual viewing the devices. A speaker may also be provided on the player tracking unit to aurally convey gaming information such as voice messages designed to inform or instruct the player in some manner. The player tracking unit may include a wireless interface device designed or configured to allow player tracking information to be automatically downloaded from a portable wireless device carried by the player or player status information to be communicated to a casino service representative carrying a portable wireless device.

Owner:IGT

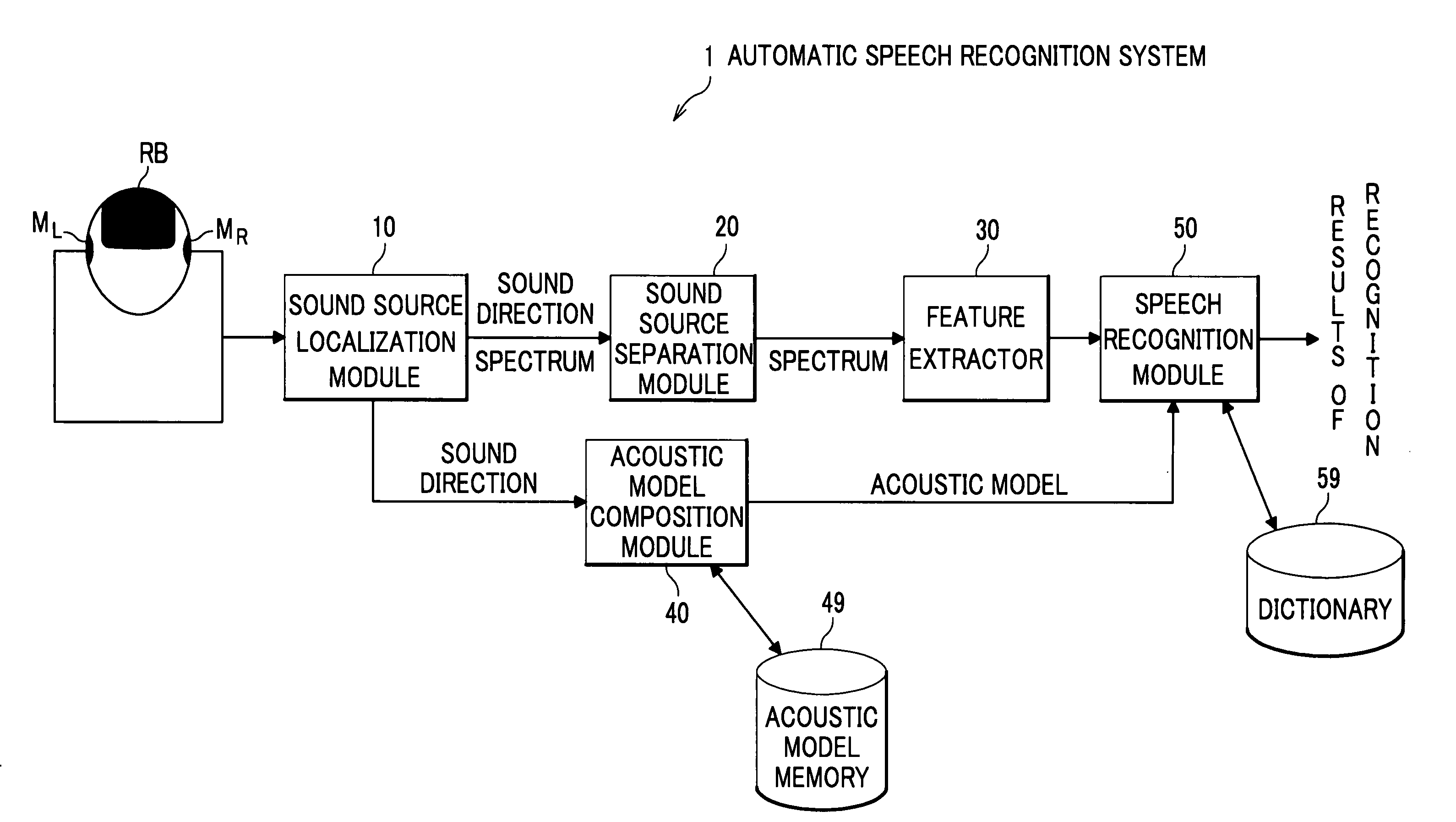

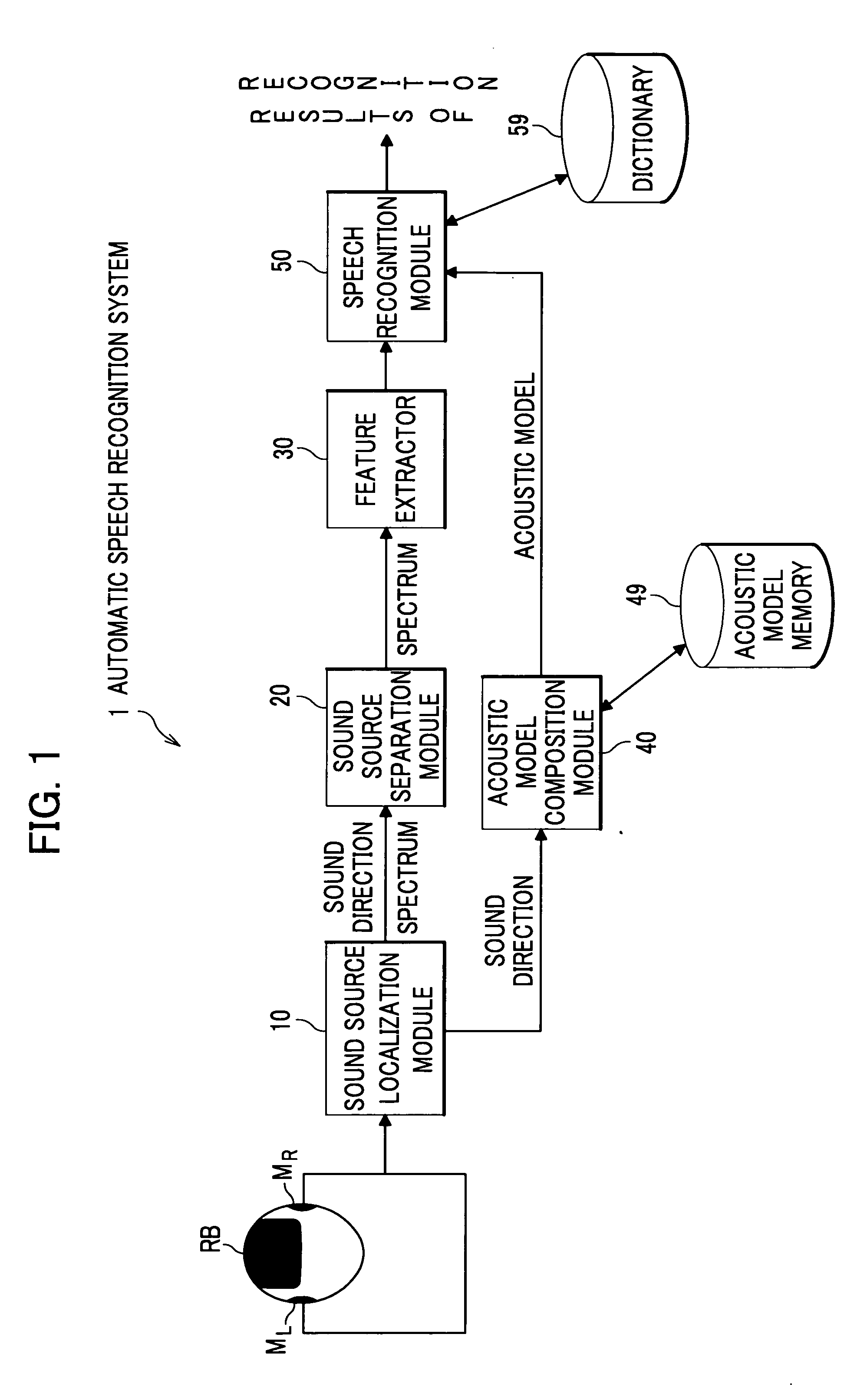

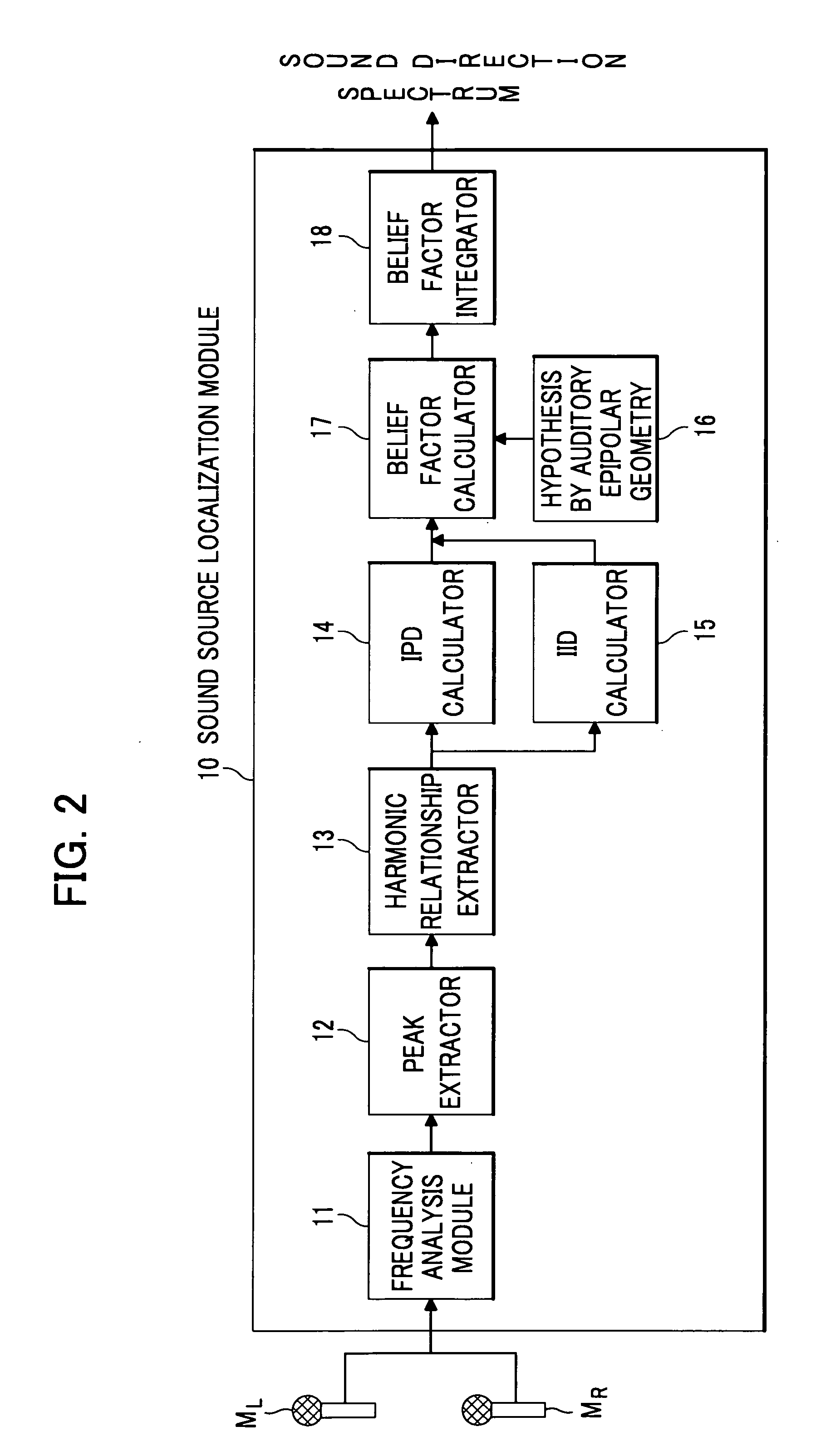

Automatic Speech Recognition System

Inactive | US20090018828A1 | Improve speech recognition rate | Improve accuracy | Speech recognition | Sound source separation | Feature extraction

An automatic speech recognition system includes: a sound source localization module for localizing a sound direction of a speaker based on the acoustic signals detected by the plurality of microphones; a sound source separation module for separating a speech signal of the speaker from the acoustic signals according to the sound direction; an acoustic model memory which stores direction-dependent acoustic models that are adjusted to a plurality of directions at intervals; an acoustic model composition module which composes an acoustic model adjusted to the sound direction, which is localized by the sound source localization module, based on the direction-dependent acoustic models, the acoustic model composition module storing the acoustic model in the acoustic model memory; and a speech recognition module which recognizes the features extracted by a feature extractor as character information using the acoustic model composed by the acoustic model composition module.

Owner:HONDA MOTOR CO LTD

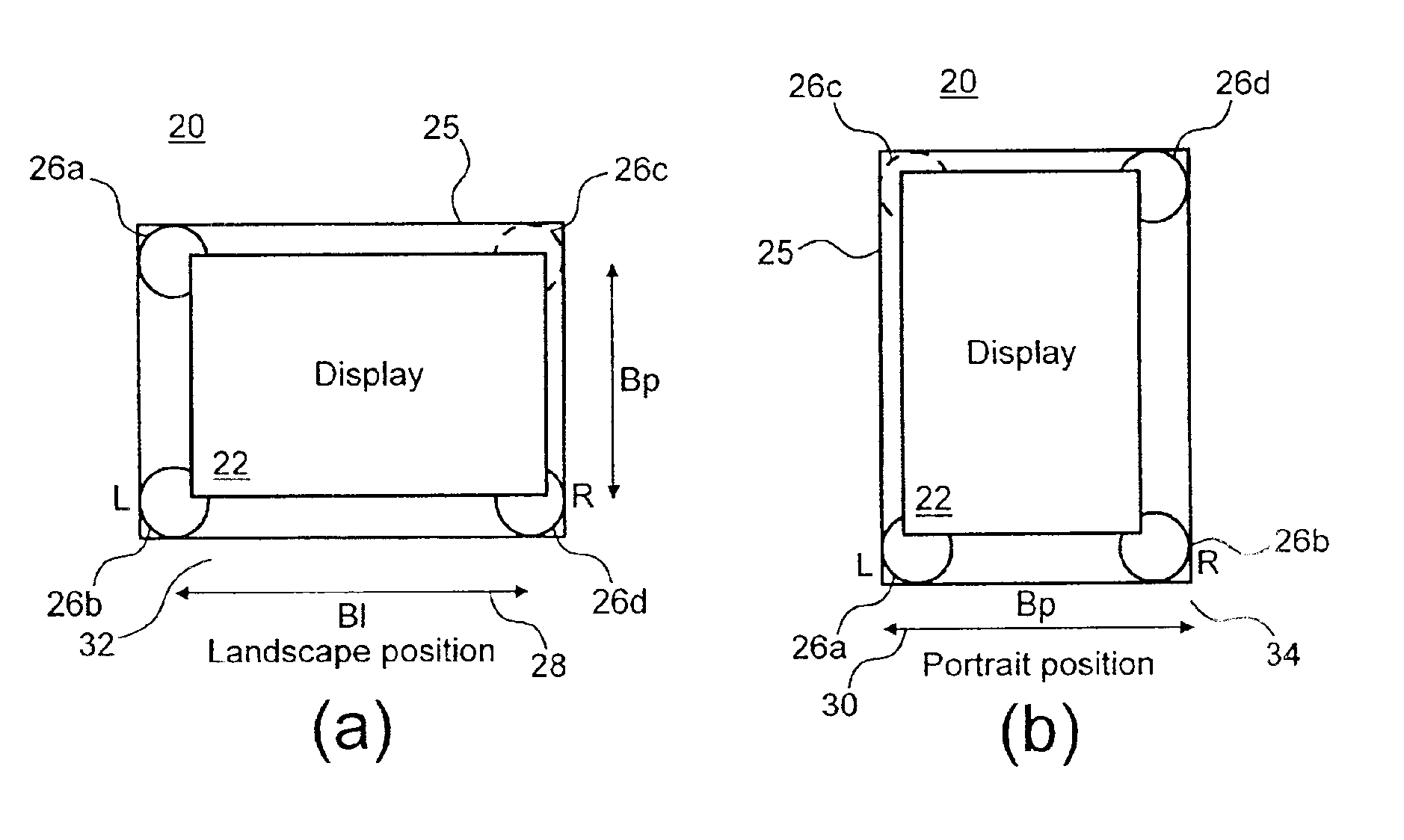

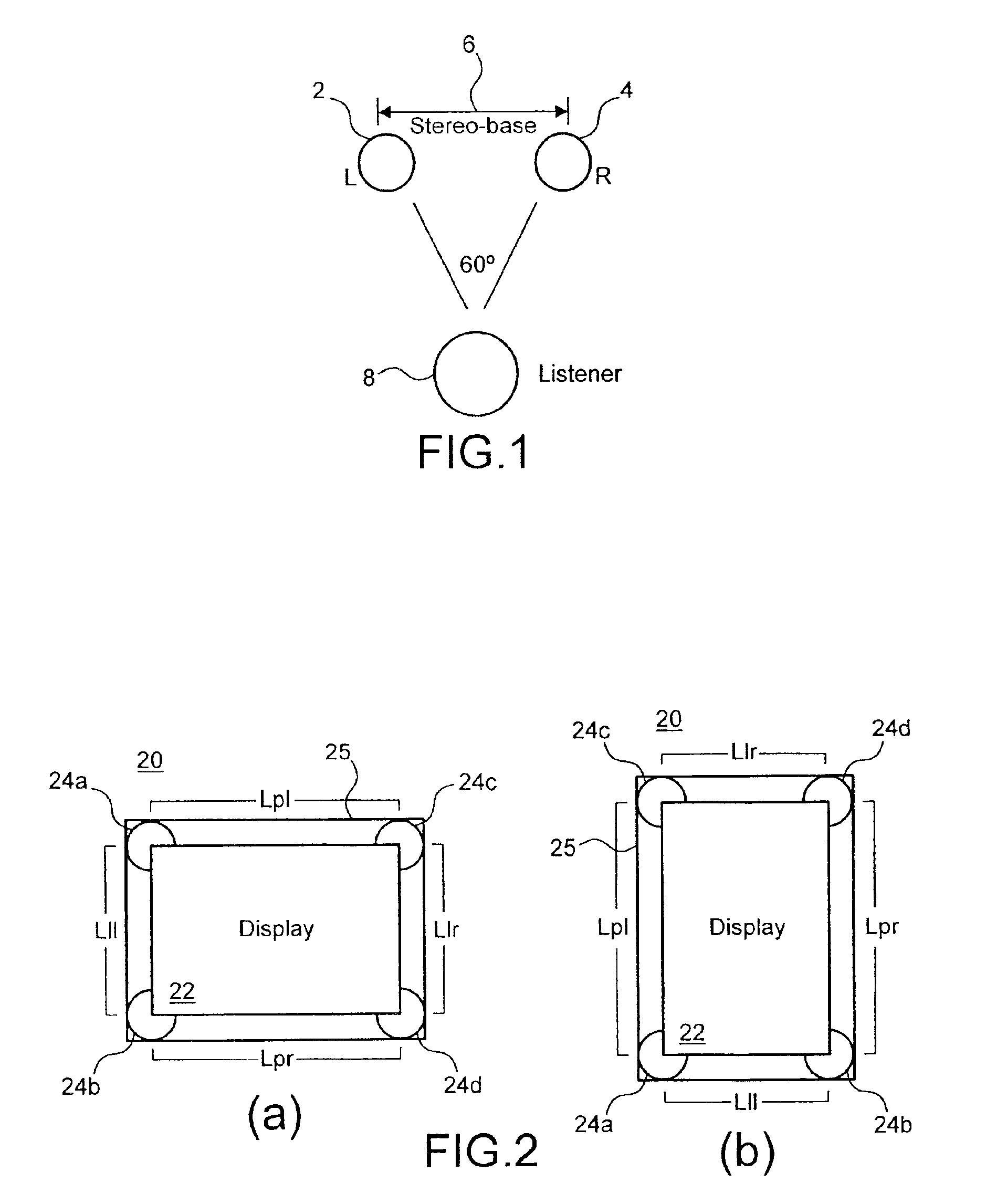

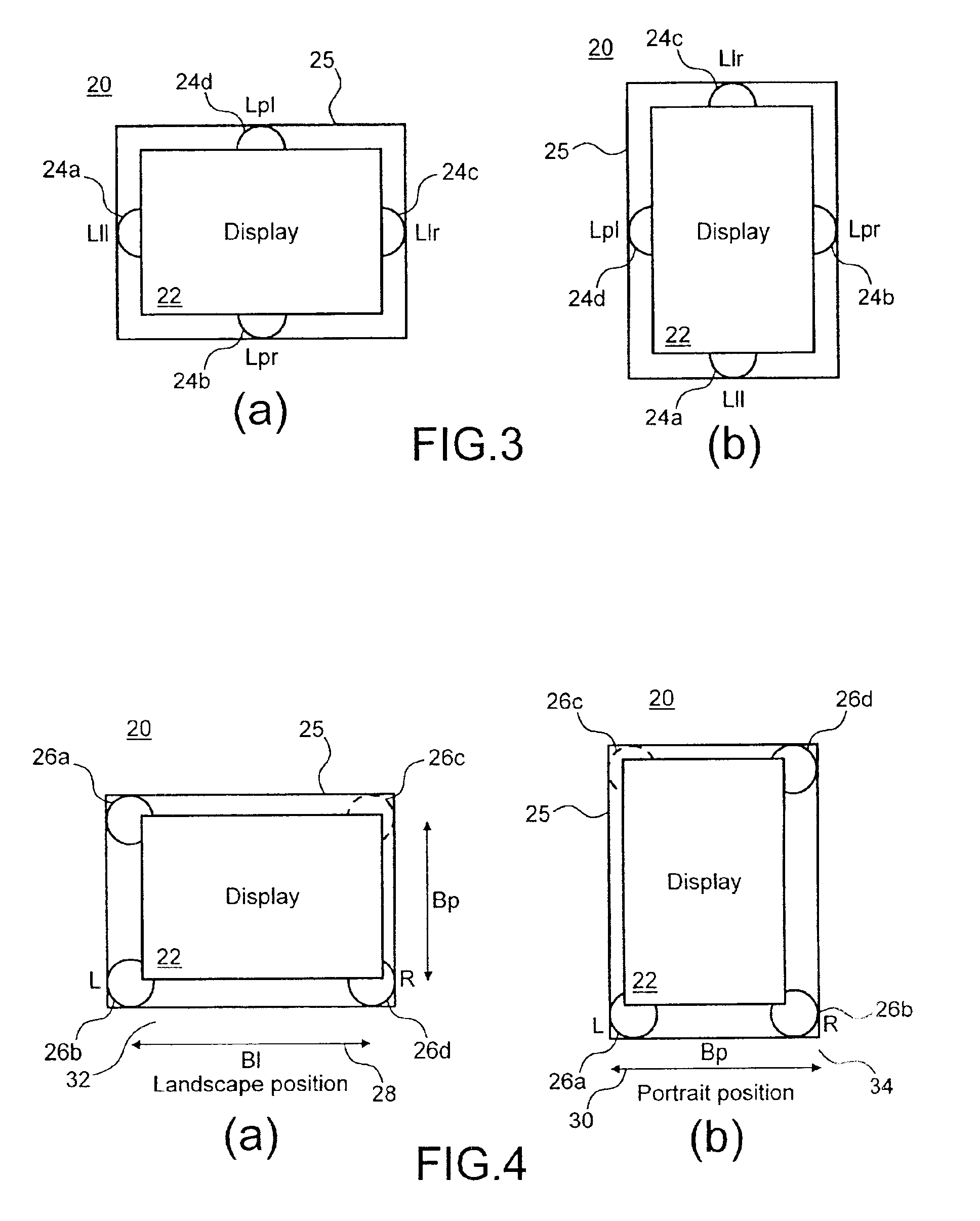

Stereophonic reproduction maintaining means and methods for operation in horizontal and vertical A/V appliance positions

Inactive | US6882335B2 | Improved audio listening | Enhance the image | Television system details | Devices with sensor | Display device | Engineering

A display apparatus including a display and an orientation-sensitive interface mechanism is disclosed. In an exemplary embodiment, the orientation-sensitive interface includes first and second loudspeaker pairs. The first loudspeaker pair includes first and second loudspeakers, and the second loudspeaker pair includes the second loudspeaker and a third loudspeaker. The first and second loudspeaker pairs are disposed along directions transverse to each other. The display apparatus comprises a switch which switches between the first loudspeaker pair and the second loudspeaker pair. By providing the respective loudspeaker pairs, and switching between them, it is possible to orient the display apparatus in transverse directions corresponding to respective loudspeaker pairs, yet maintain a substantially stereophonic reproduction for each orientation.

Owner:HTC CORP
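The pair switching described in the abstract above can be sketched as a routing table keyed by orientation, with the second loudspeaker shared between both pairs. Which pair serves which orientation, and which member of a pair carries the left channel, are assumptions made for this example; the names are hypothetical.

```python
from enum import Enum
from typing import Dict


class Orientation(Enum):
    HORIZONTAL = "horizontal"
    VERTICAL = "vertical"


# Assumed mapping: (left-channel speaker, right-channel speaker) per orientation.
SPEAKER_PAIRS = {
    Orientation.HORIZONTAL: ("first", "second"),
    Orientation.VERTICAL: ("second", "third"),
}


def route_stereo(orientation: Orientation, left: float, right: float) -> Dict[str, float]:
    """Send one stereo sample to the active pair and mute the unused loudspeaker."""
    left_speaker, right_speaker = SPEAKER_PAIRS[orientation]
    output = {"first": 0.0, "second": 0.0, "third": 0.0}
    output[left_speaker] = left
    output[right_speaker] = right
    return output


horizontal_out = route_stereo(Orientation.HORIZONTAL, 0.3, -0.2)
vertical_out = route_stereo(Orientation.VERTICAL, 0.3, -0.2)
```

Note that the shared second loudspeaker changes role between orientations, which is why only three loudspeakers are needed for two stereo pairs.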

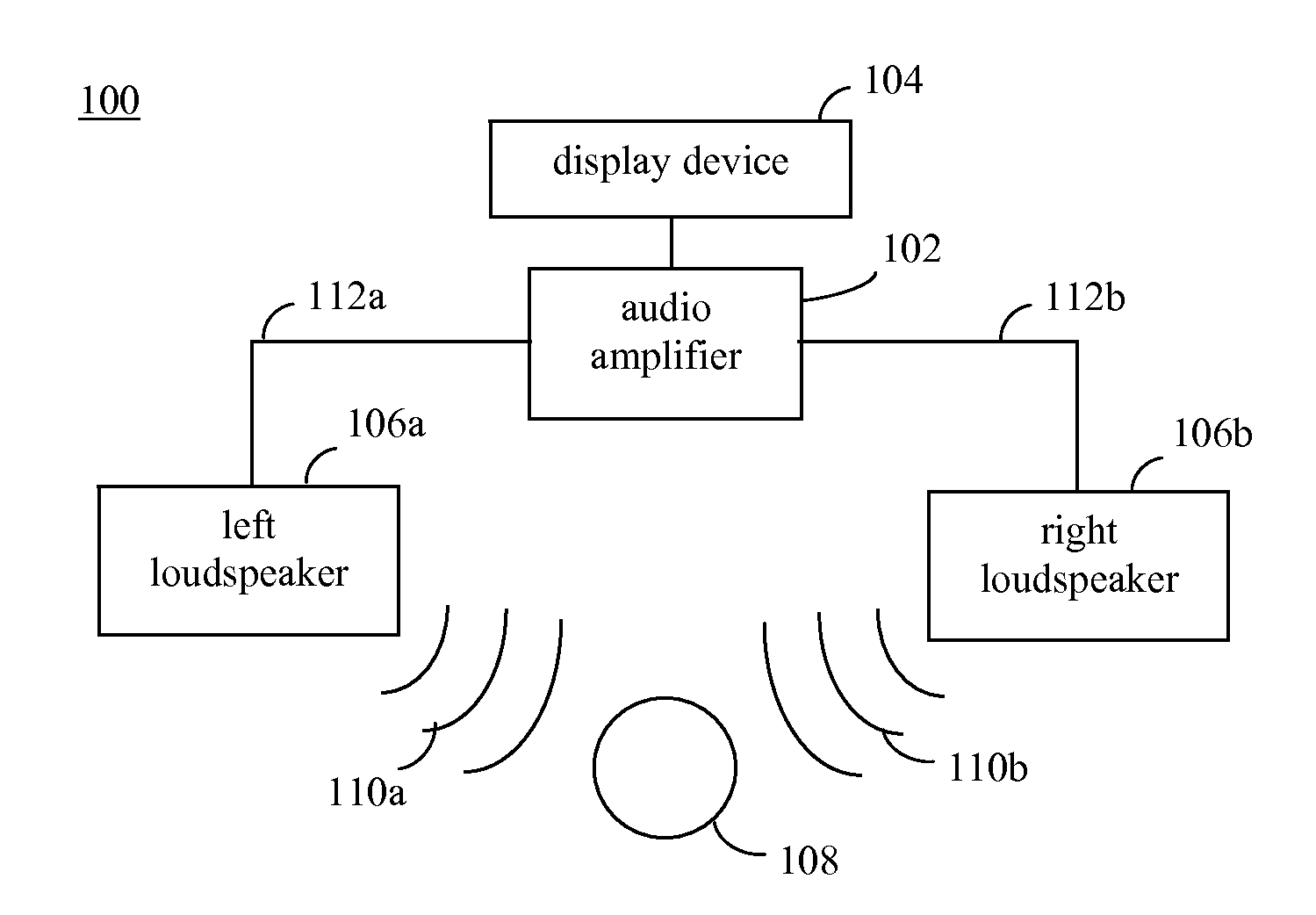

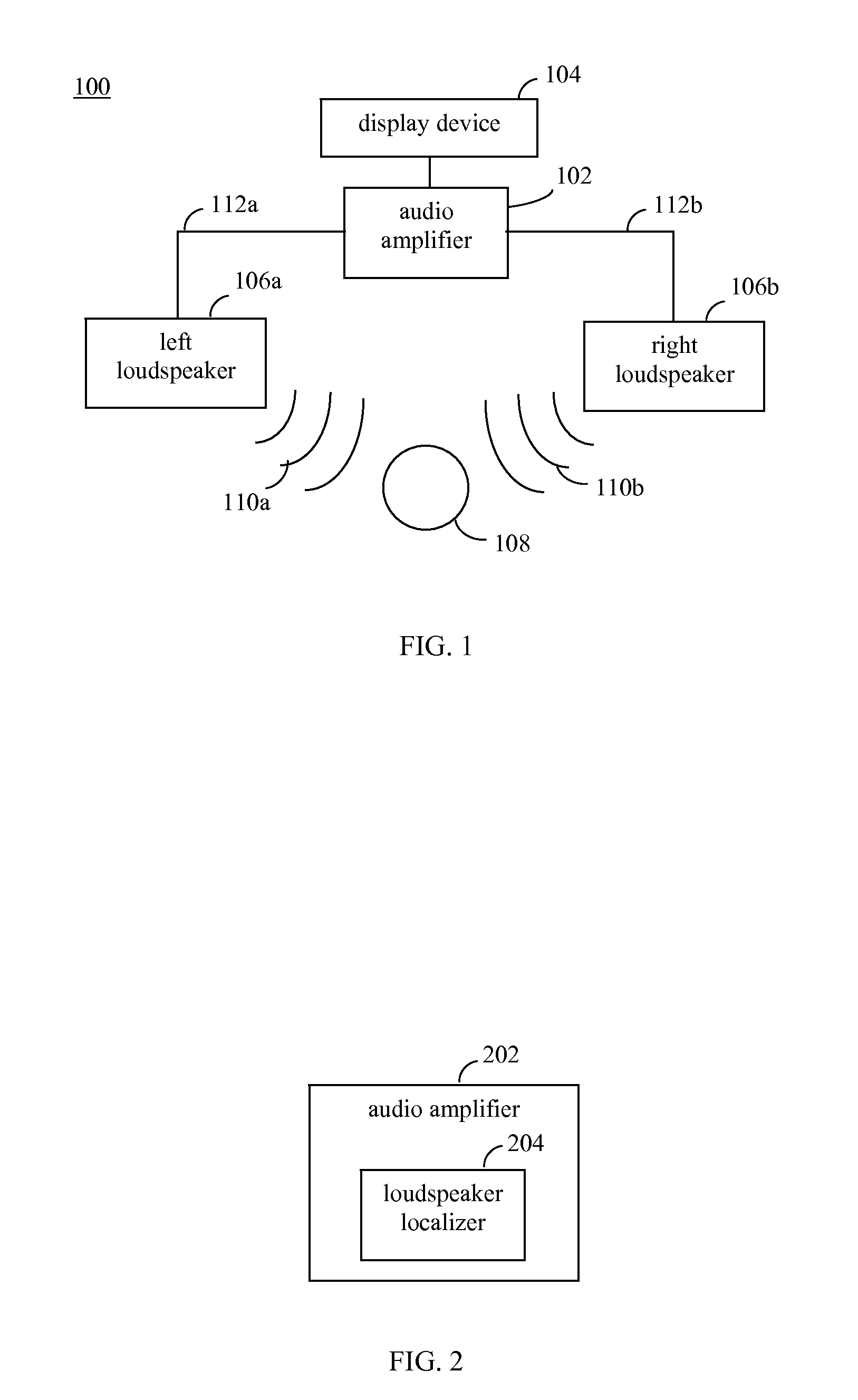

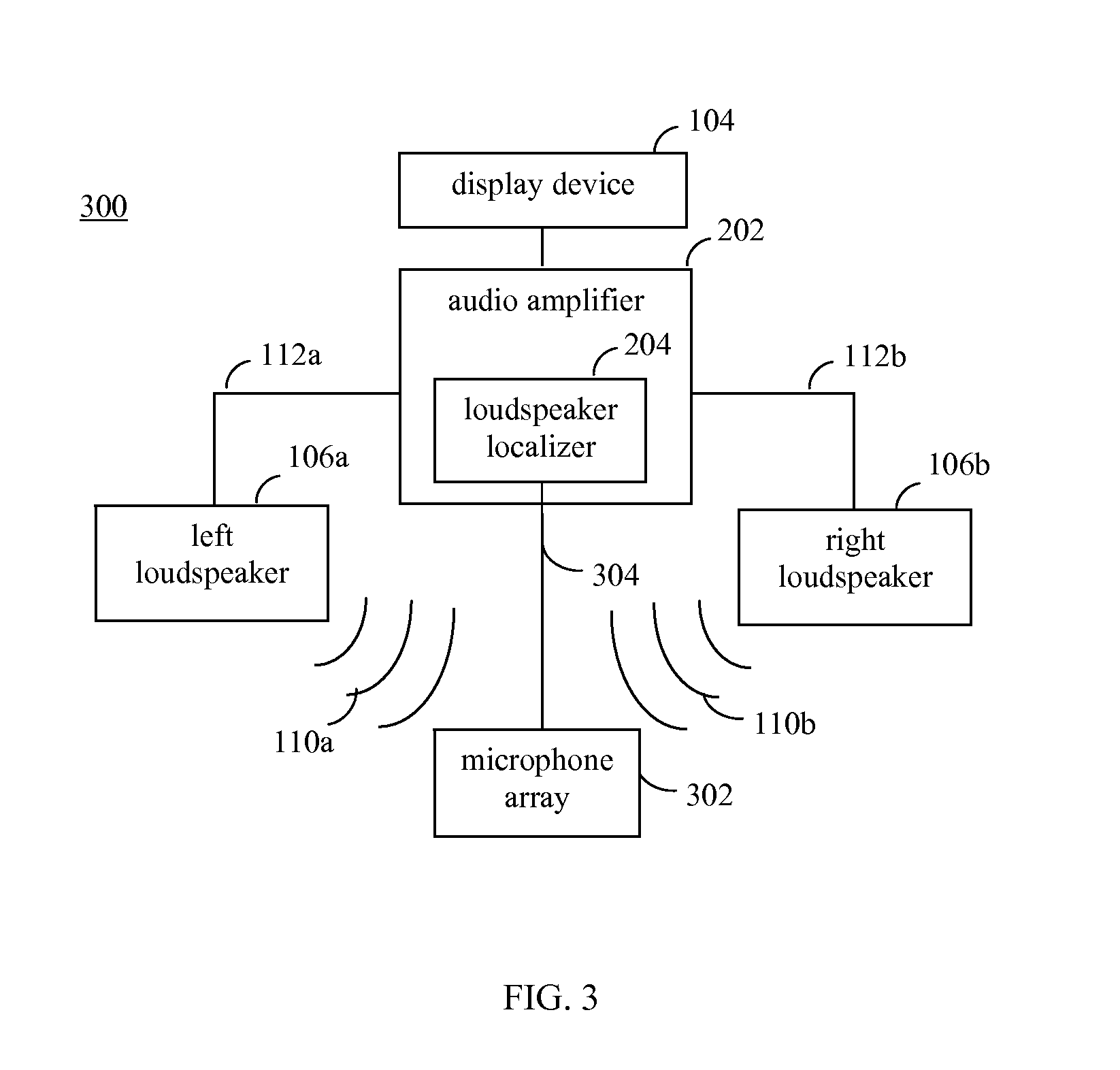

Loudspeaker localization techniques

Techniques for loudspeaker localization are provided. Sound is received from a loudspeaker at a plurality of microphone locations. A plurality of audio signals is generated based on the sound received at the plurality of microphone locations. Location information is generated that indicates a loudspeaker location for the loudspeaker based on the plurality of audio signals. Whether the generated location information matches a predetermined desired loudspeaker location for the loudspeaker is determined. A corrective action with regard to the loudspeaker is enabled to be performed if the generated location information is determined to not match the predetermined desired loudspeaker location for the loudspeaker.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
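As a simplified illustration of the check described in the abstract above, the sketch below estimates a loudspeaker's bearing from the arrival-time difference between two microphones and compares it with a desired bearing. Reducing "location" to a single angle, the far-field formula, the 5 degree tolerance, and all names are assumptions for this example, not the patented technique.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate value for air at room temperature


def bearing_from_delay(delay_s: float, mic_spacing_m: float) -> float:
    """Far-field bearing in degrees from the delay between two microphones.

    Uses sin(theta) = c * delay / spacing, clamped to the valid range.
    """
    ratio = SPEED_OF_SOUND_M_S * delay_s / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))


def needs_correction(estimated_deg: float, desired_deg: float, tolerance_deg: float = 5.0) -> bool:
    """True when the estimate does not match the desired loudspeaker location."""
    return abs(estimated_deg - desired_deg) > tolerance_deg


# A delay giving c * delay / spacing = 0.5 corresponds to a 30 degree bearing.
estimated = bearing_from_delay(0.1 / SPEED_OF_SOUND_M_S, 0.2)
flag = needs_correction(estimated, desired_deg=0.0)
```

When the flag is set, a system could prompt the user to move the loudspeaker or adjust processing; the abstract leaves the specific corrective action open.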

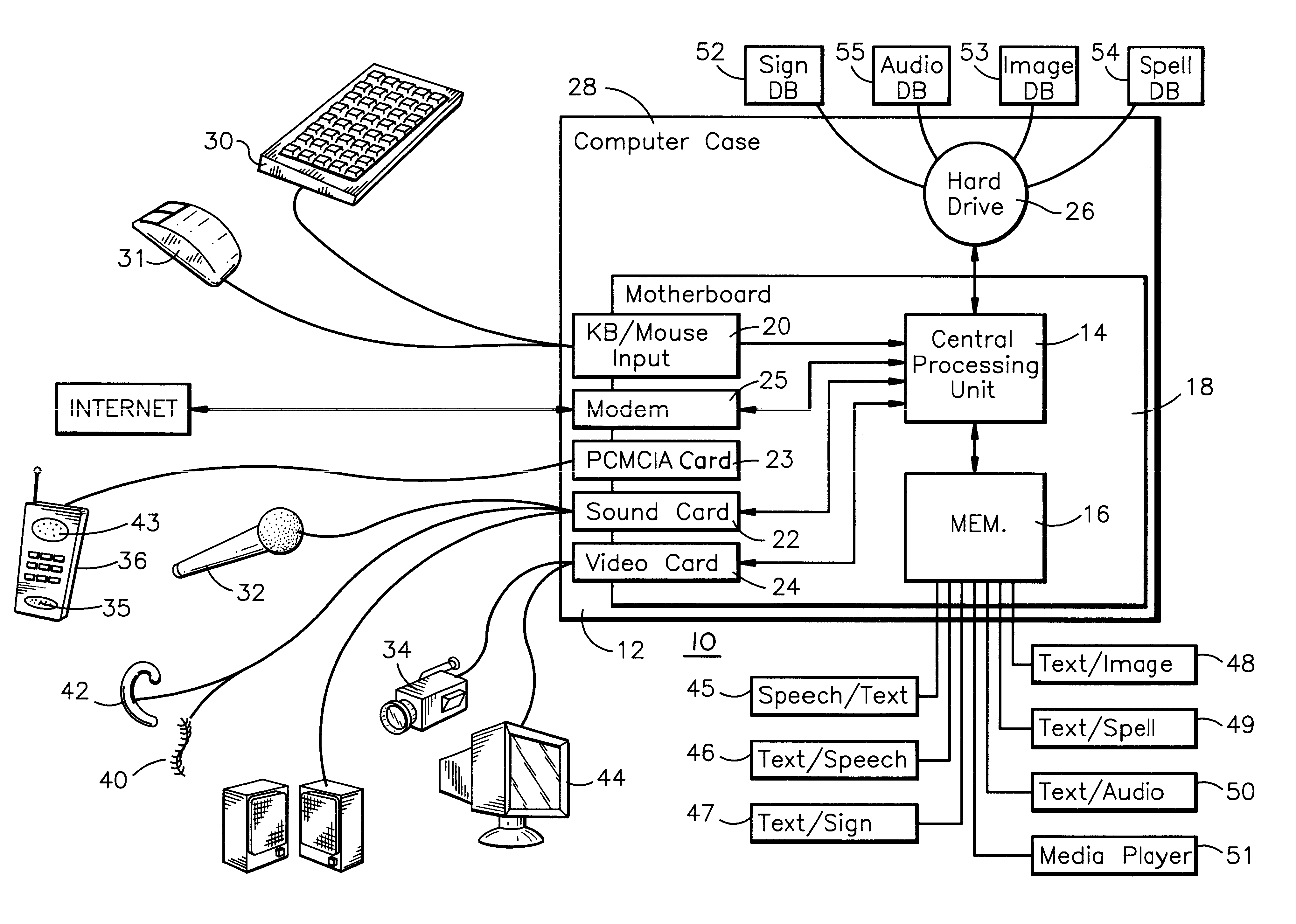

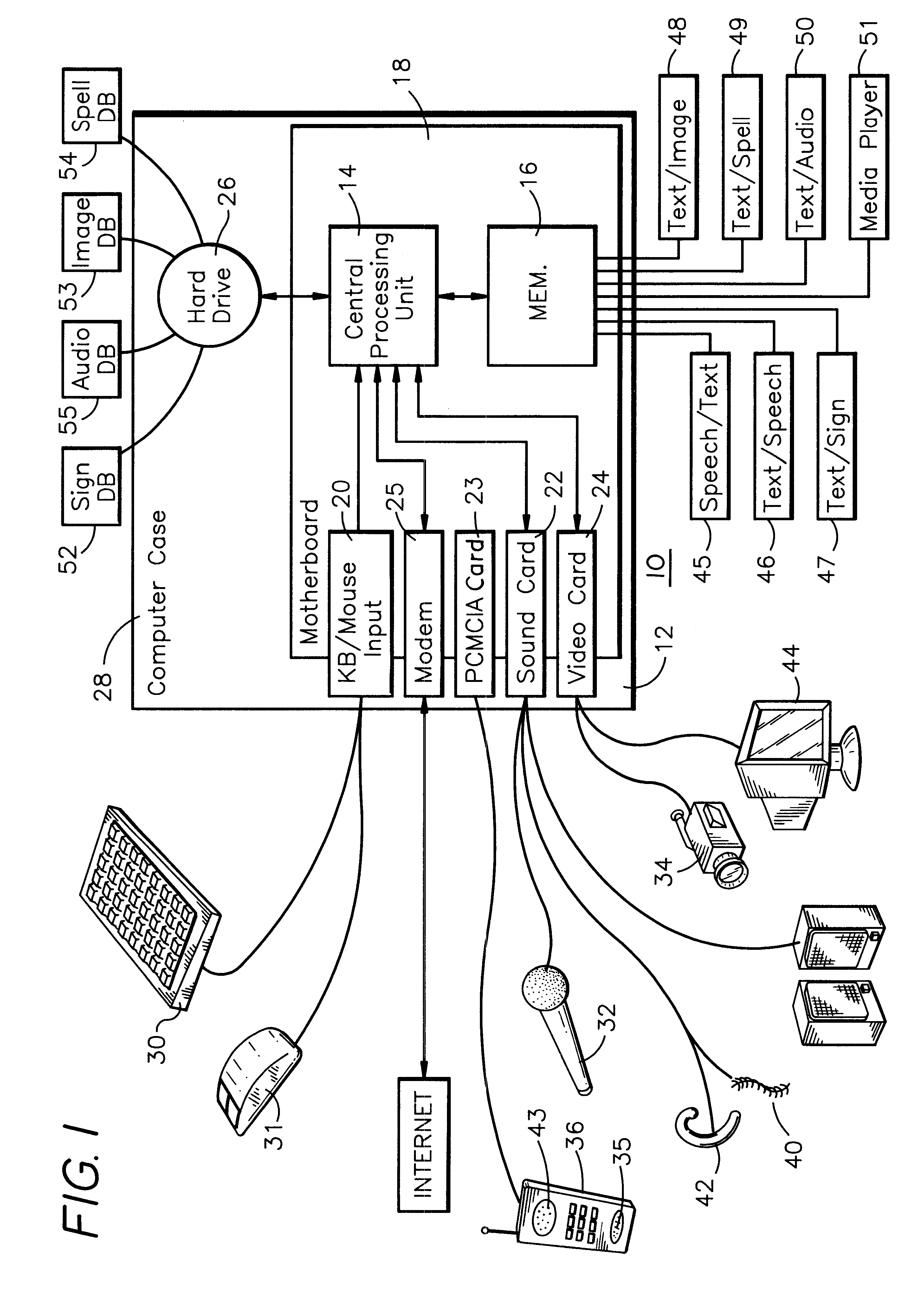

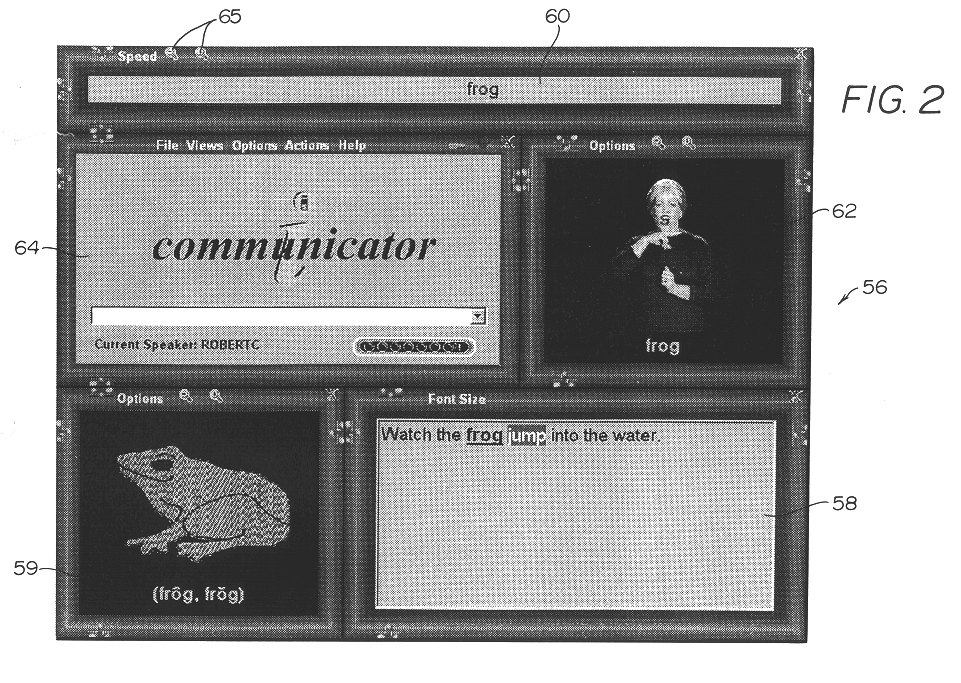

Electronic translator for assisting communications

Inactive | US6377925B1 | Hearing impaired stereophonic signal reproduction | Automatic exchanges | Data stream | Output device

An electronic translator translates input speech into multiple streams of data that are simultaneously delivered to the user, such as a hearing-impaired individual. Preferably, the data is delivered in audible, visual and text formats. These multiple data streams are delivered to the hearing-impaired individual in a synchronized fashion, thereby creating a cognitive response. Preferably, the system of the present invention converts the input speech to a text format, and then translates the text to any of three other forms, including sign language, animation and computer-generated speech. The sign language and animation translations are preferably implemented by using the medium of digital movies in which videos of a person signing words, phrases and finger-spelled words, and of animations corresponding to the words, are selectively accessed from databases and displayed. Additionally, the received speech is converted to computer-generated speech for input to various hearing enhancement devices used by the deaf or hearing-impaired, such as cochlear implants and hearing aids, or other output devices such as speakers. The data streams are synchronized utilizing a high-speed personal computer to facilitate sufficiently fast processing that the text, video signing and audible streams can be generated simultaneously in real time. Once synchronized, the data streams are presented to the subject concurrently in a method that allows the process of mental comprehension to occur. The electronic translator can also be interfaced to other communications devices, such as telephones. Preferably, the hearing-impaired person is also able to use the system's keyboard or mouse to converse or respond.

Owner:INTERACTIVE SOLUTIONS

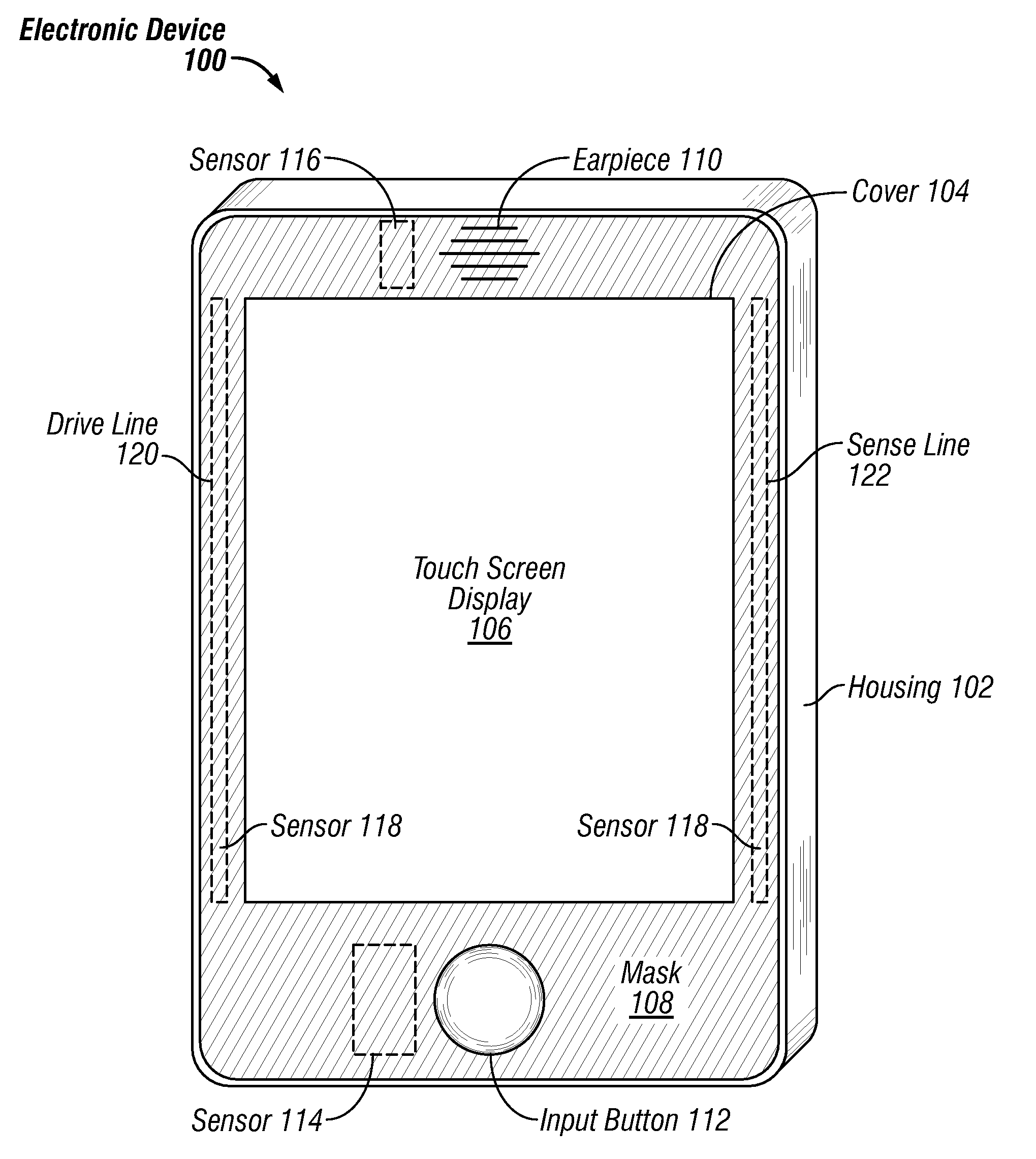

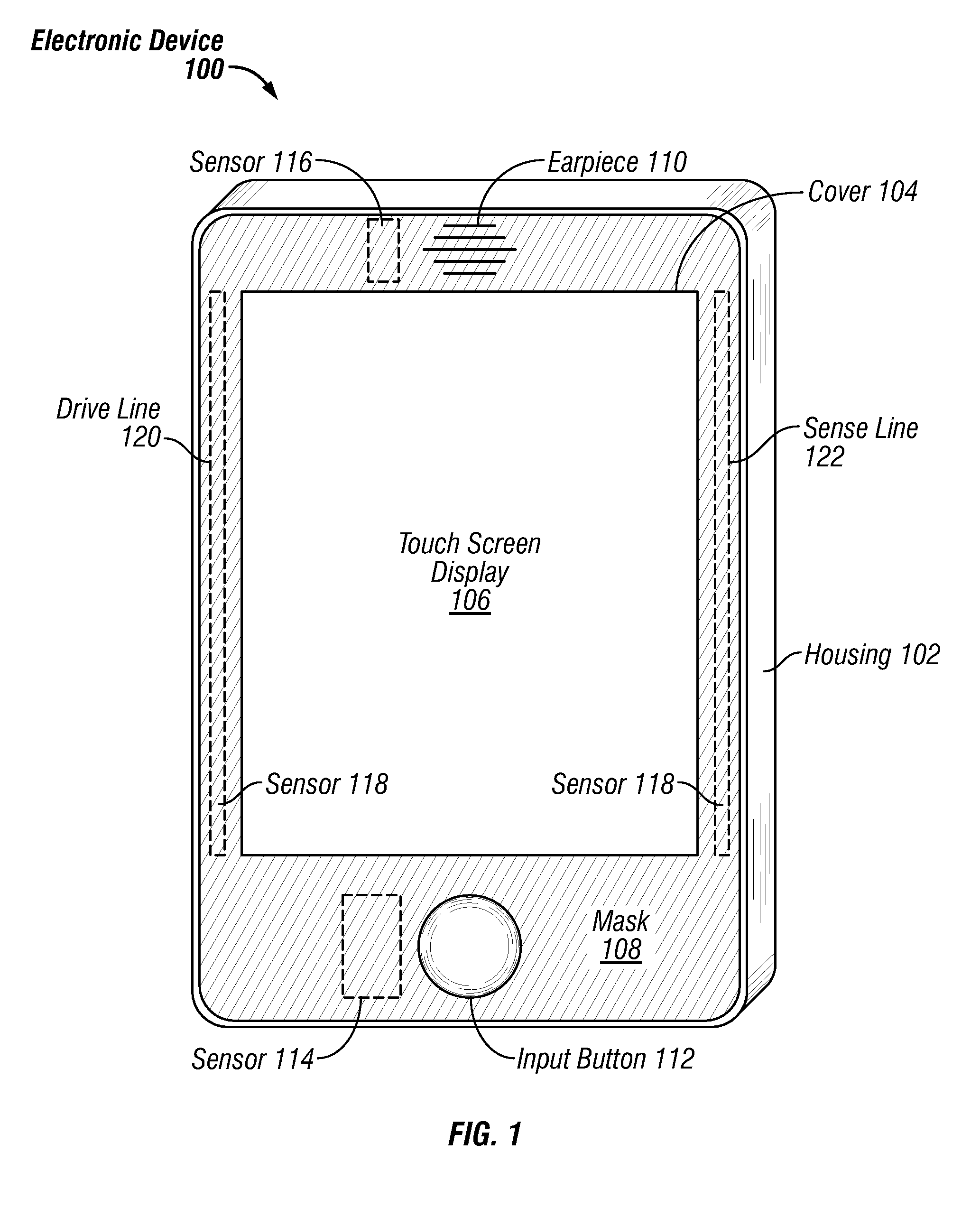

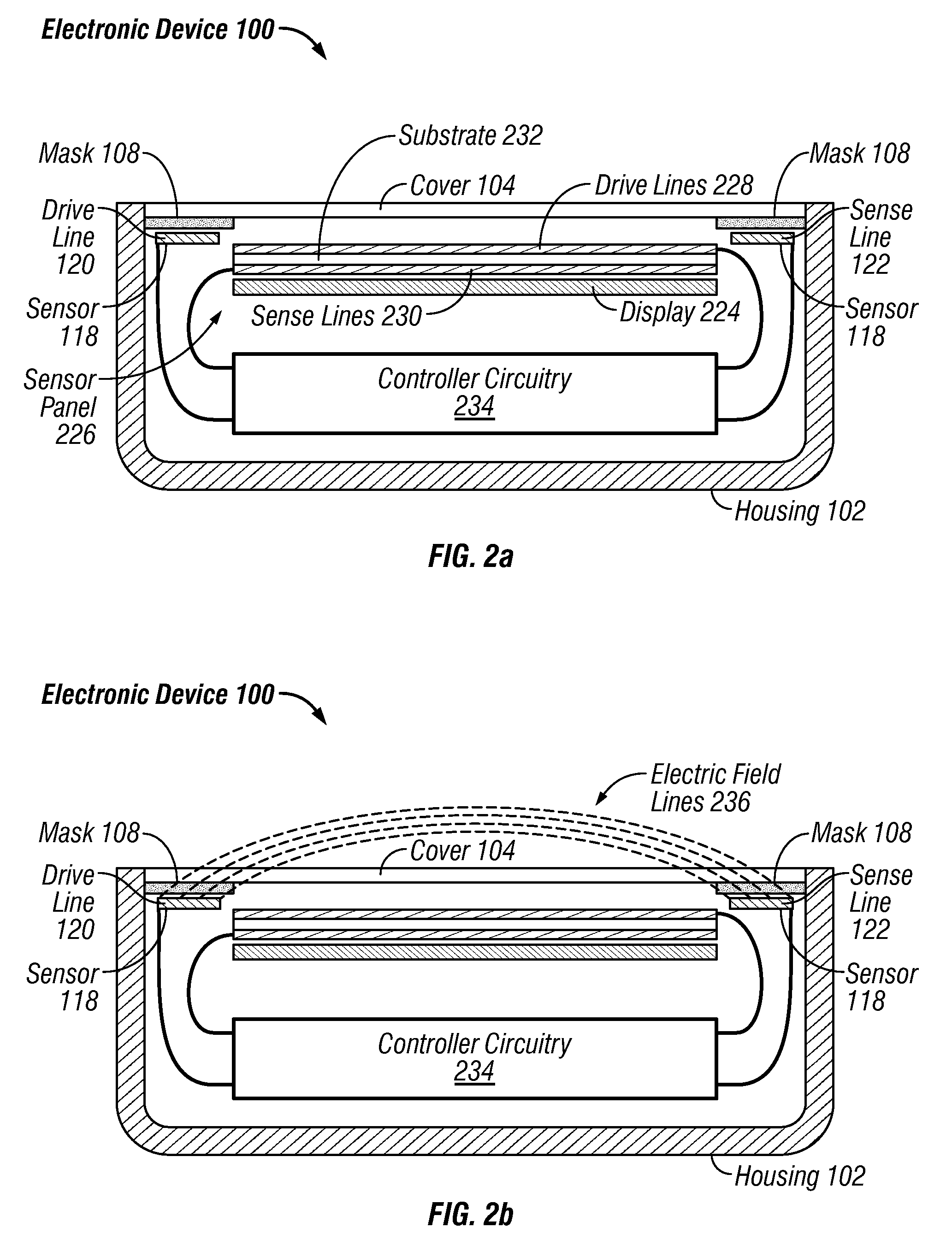

Capacitive sensor behind black mask

Active | US20100026656A1 | Enhance and provide additional functionality | Electronic switching | Input/output processes for data processing | Display device | Capacitive sensing

Devices having one or more sensors located outside a viewing area of a touch screen display are disclosed. The one or more sensors can be located behind an opaque mask area of the device, the opaque mask area extending between the sides of a housing of the device and the viewing area of the touch screen display. In addition, the sensors located behind the mask can be separate from a touch sensor panel used to detect objects on or near the touch screen display, and can be used to enhance or provide additional functionality to the device. For example, a device having a sensor located outside the viewing area can be used to detect objects in proximity to a functional component incorporated in the device, such as an earpiece (i.e., speaker for outputting sound). The sensor can also output a signal indicating a level of detection which may be interpreted by a controller of the device as a level of proximity of an object to the functional component. In addition, the controller can initiate a variety of actions related to the functional component based on the output signal, such as adjusting the volume of the earpiece.

Owner:APPLE INC

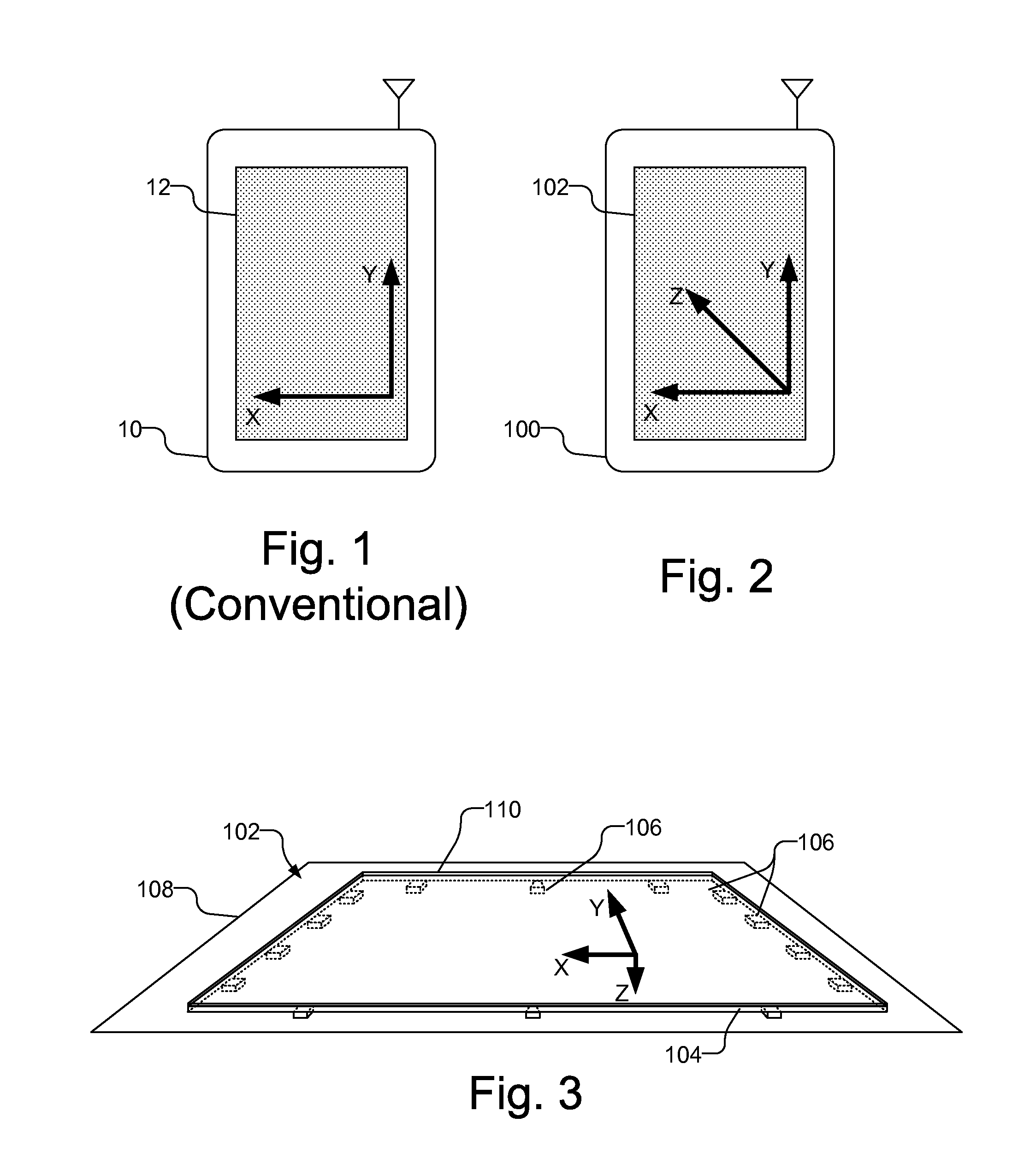

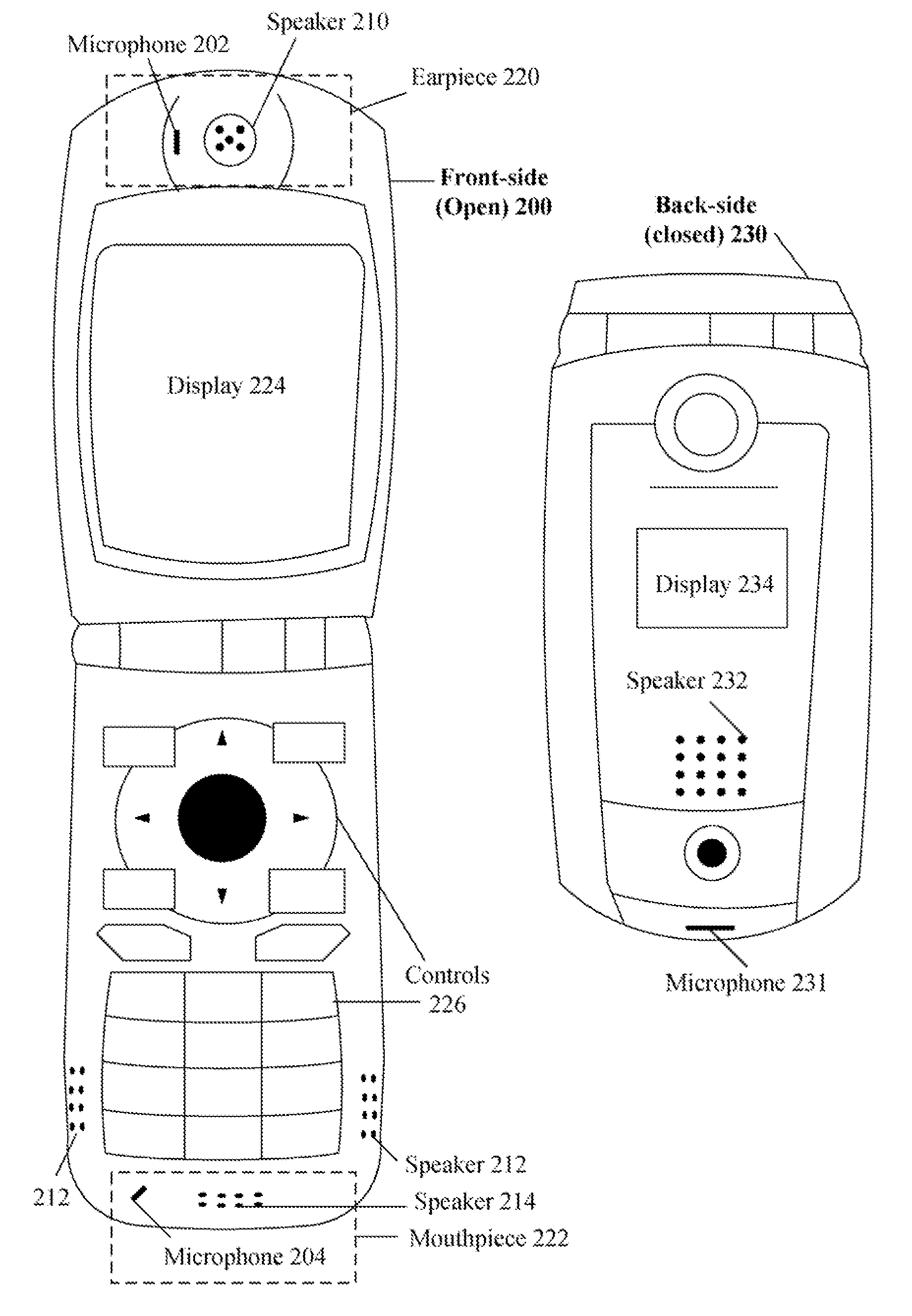

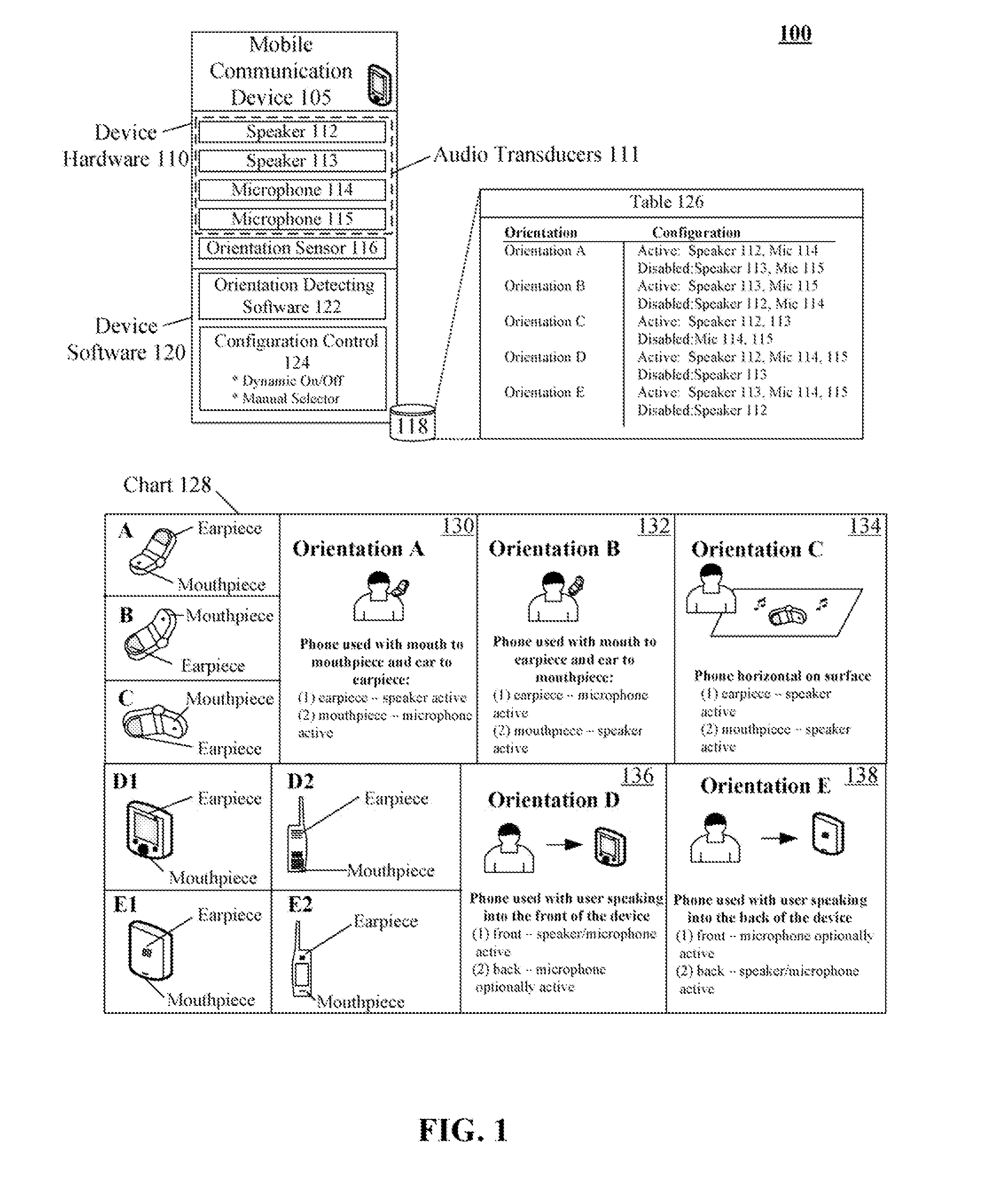

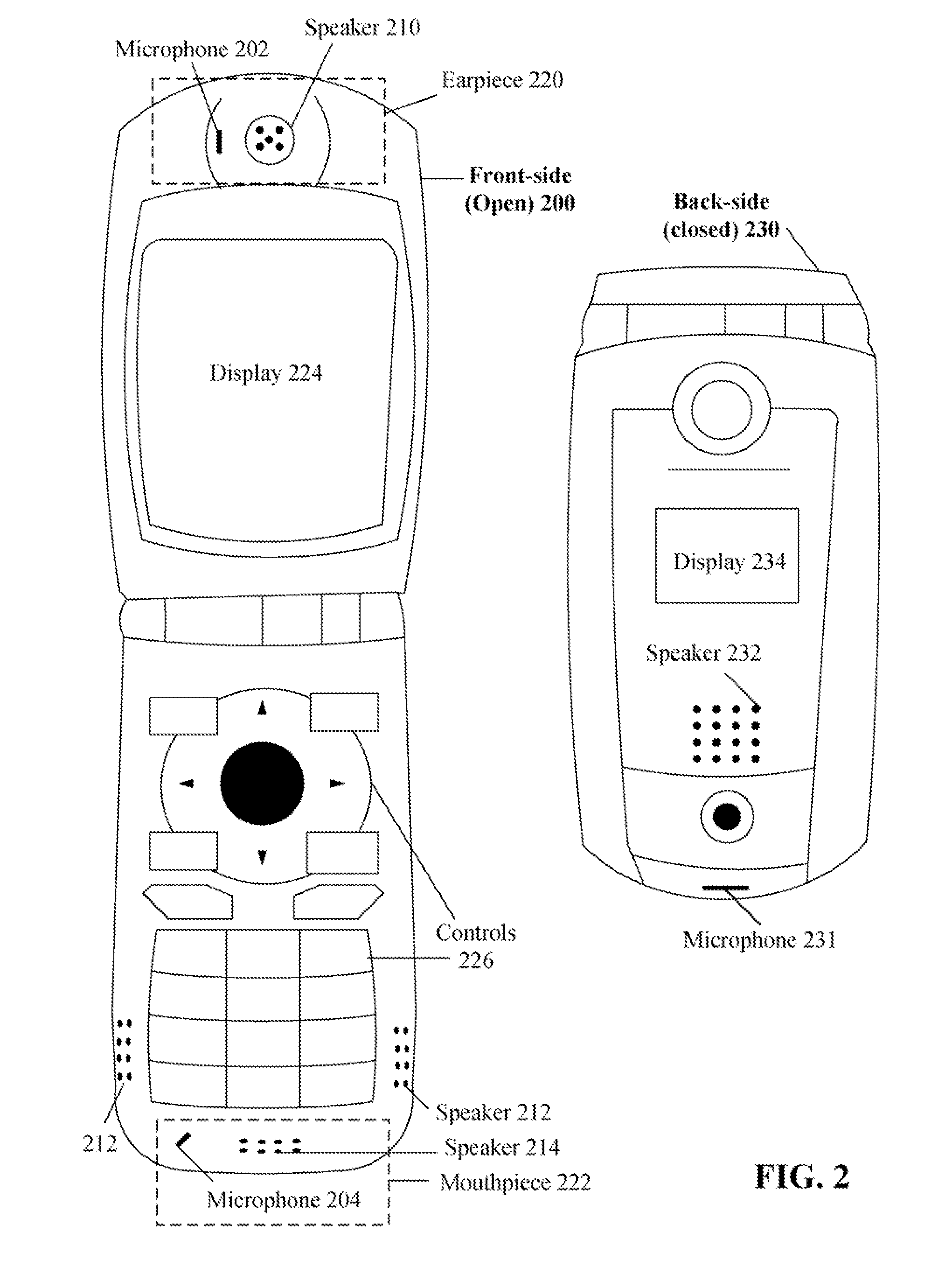

Automatic audio transducer adjustments based upon orientation of a mobile communication device

Inactive | US20080146289A1 | Save power | Extend battery life | Devices with sensor | Substation equipment | Accelerometer | Transducer

A solution for automatically activating different audio transducers of a mobile communication device based upon an orientation of the device. In the solution, a series of speaker/microphone assemblies can be positioned on the device, such as positioned near an earpiece and positioned near a mouthpiece. Different speaker/microphone assemblies can also be positioned on the front of the device and on the back of the device. The solution can automatically determine an orientation for the device, based upon a detected direction of a speech emitting source and/or based upon one or more sensors, such as a tilt sensor and an accelerometer. For example, when a device is in an upside down orientation, an earpiece microphone and a mouthpiece speaker can be activated. In another example, an otherwise deactivated rear facing speaker can be activated when the device is oriented in a rear facing orientation.

Owner:MOTOROLA INC

Doorbell communication systems and methods

Active | US8872915B1 | Consumes less power | Improve electrical activity | Power management | Color television details | Doorbell | Communications system

Methods can include using a doorbell to wirelessly communicate with a remotely located computing device. Doorbells can include a speaker, a microphone, a camera, and a button to sound a chime. A doorbell shipping mode can detect whether the doorbell is electrically coupled to an external power source. Methods can include entering a setup mode or a standby mode in response to detecting electricity from the external power source.

Owner:SKYBELL TECH IP LLC

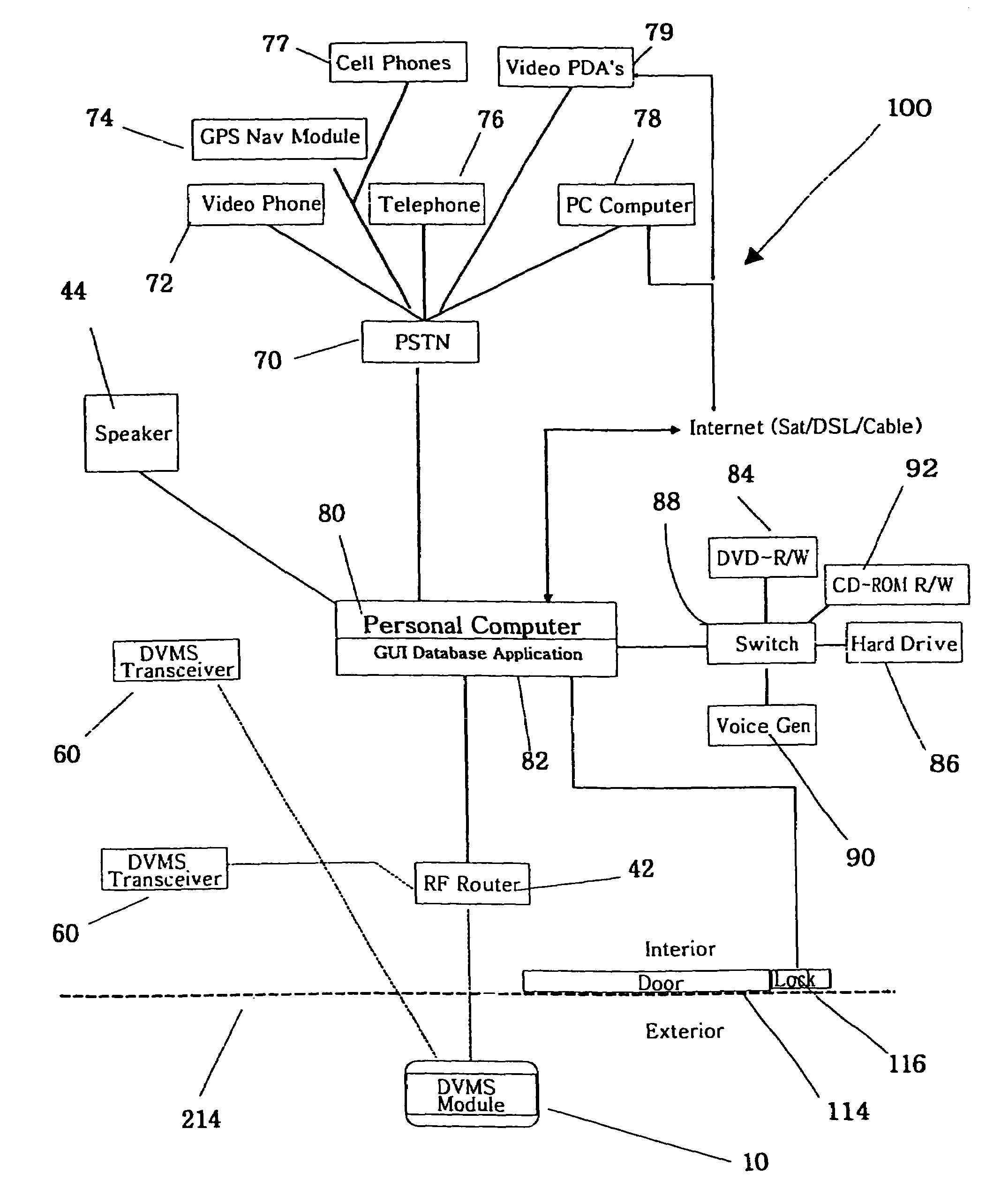

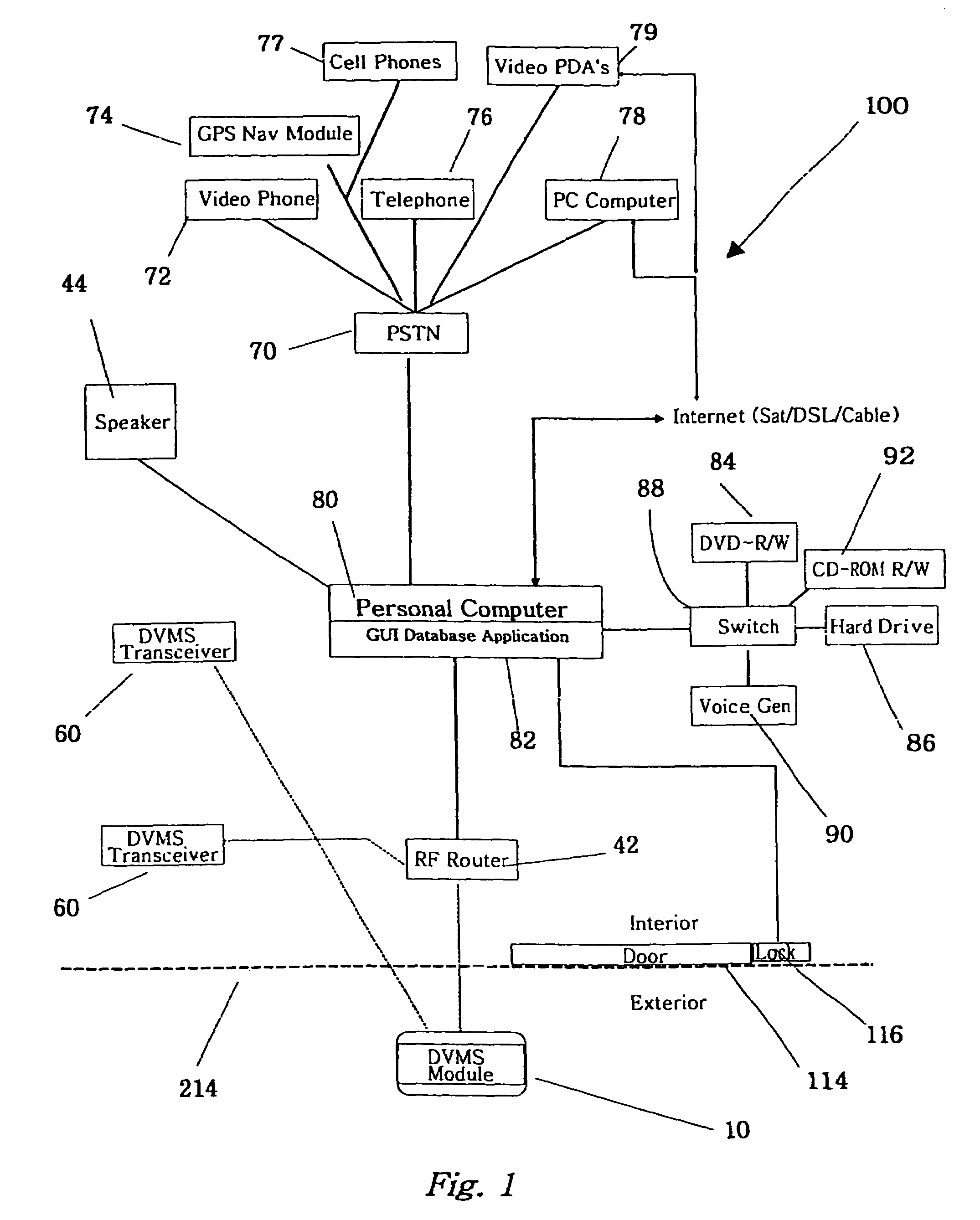

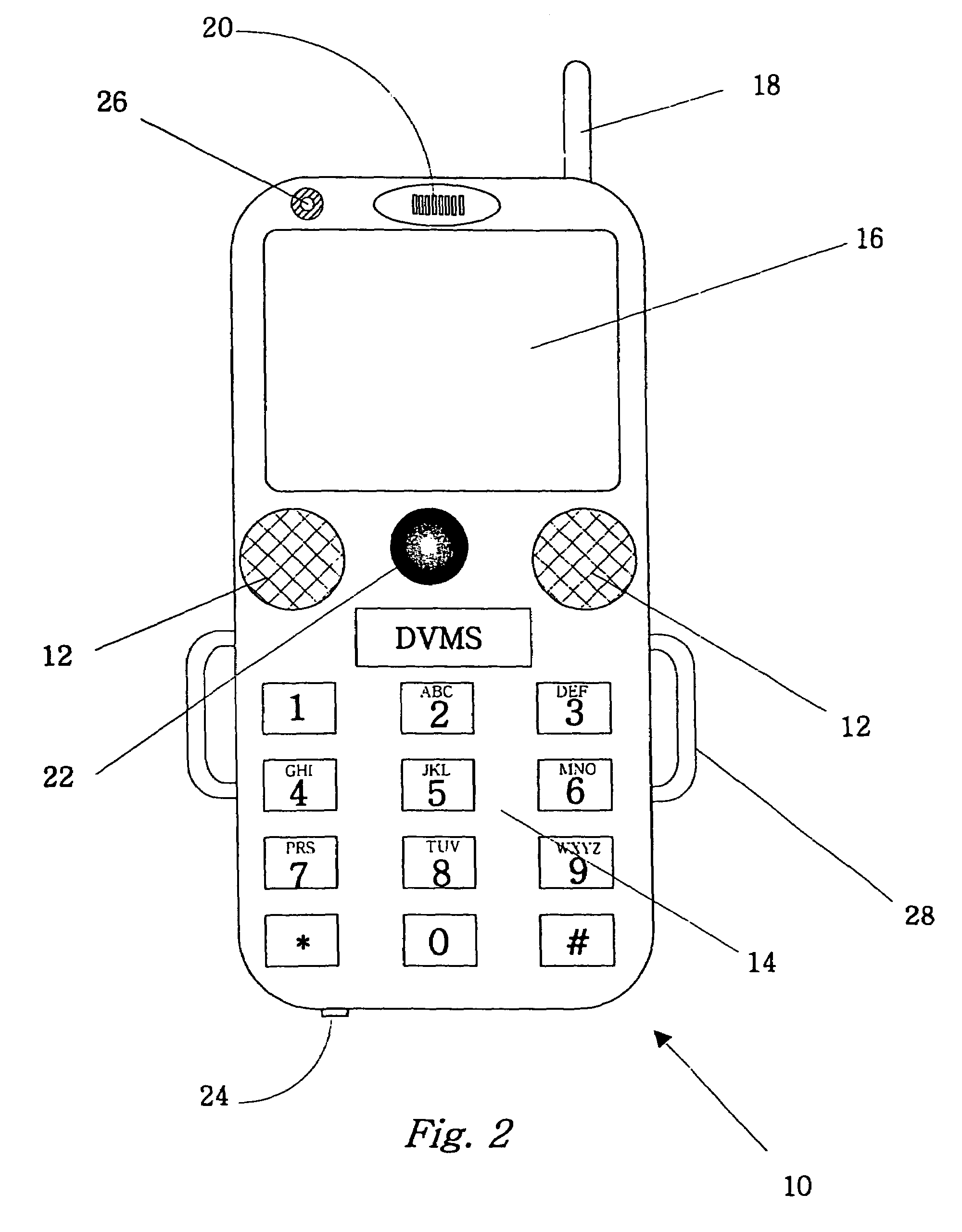

Automated audio video messaging and answering system

Active | US7193644B2 | Weaken energy | Reduce energy consumption | Telephonic communication | Two-way working systems | Proximity sensor | Database application

The invention is an audio-video communication and answering system that synergistically improves communication between an exterior and an interior of a business or residence and a remote location, enables messages to be stored and accessed from both locally and remotely, and enables viewing, listening, and recording from a remote location. The system's properties make it particularly suitable as a sophisticated door answering-messaging system. The system has a DVMS module on the exterior. The DVMS module has a proximity sensor, a video camera, a microphone, a speaker, an RF transmitter, and an RF receiver. The system also has a computerized controller with a graphic user interface DVMS database application. The computerized controller is in communication with a public switching telephone network, and an RF switching device. The RF switching device enables communication between the DVMS module and the computerized controller. The RF switching device can be in communication with other RF devices, such as a cell phone, PDA, or computer.

Owner:SB IP HLDG LLC

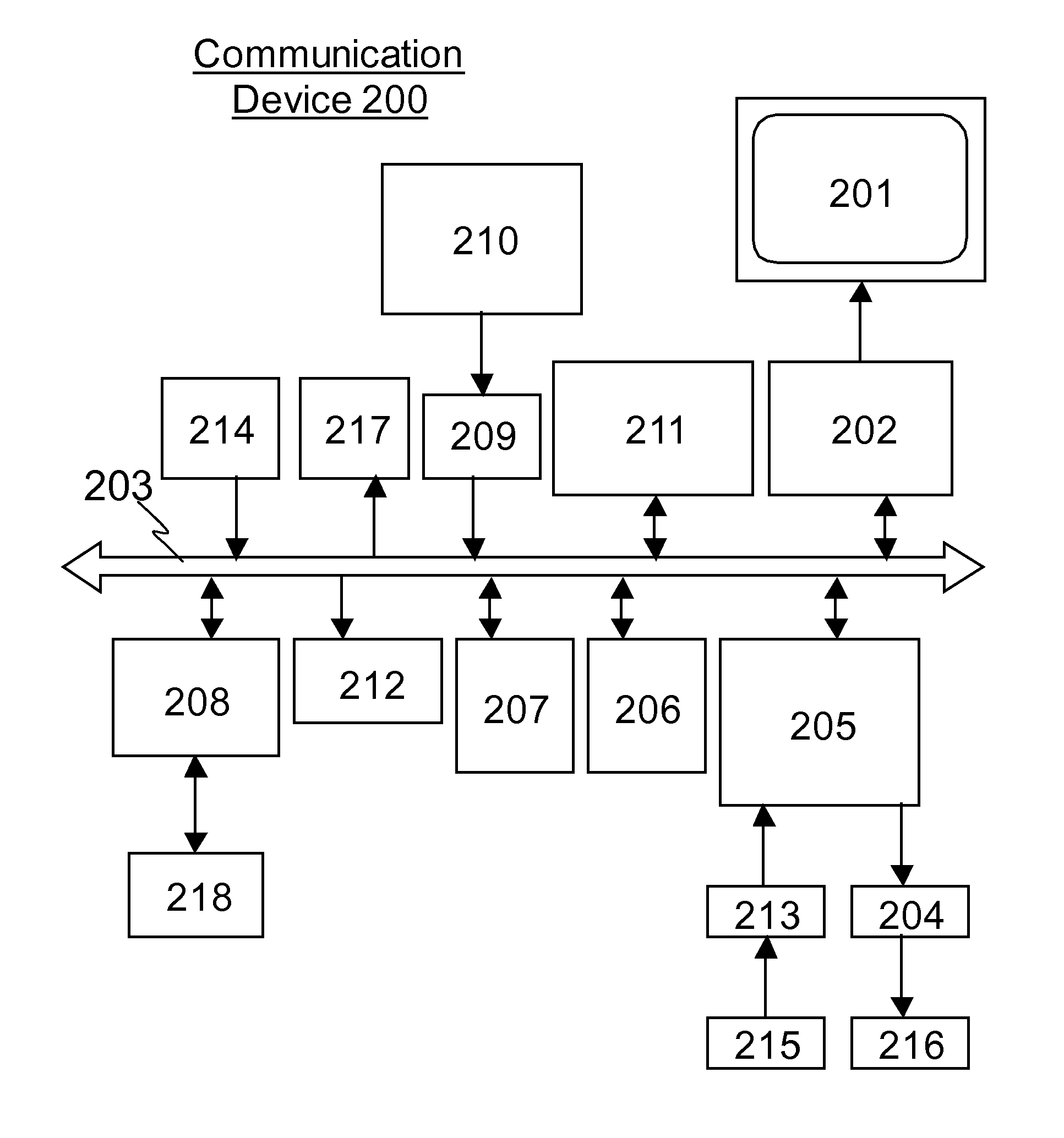

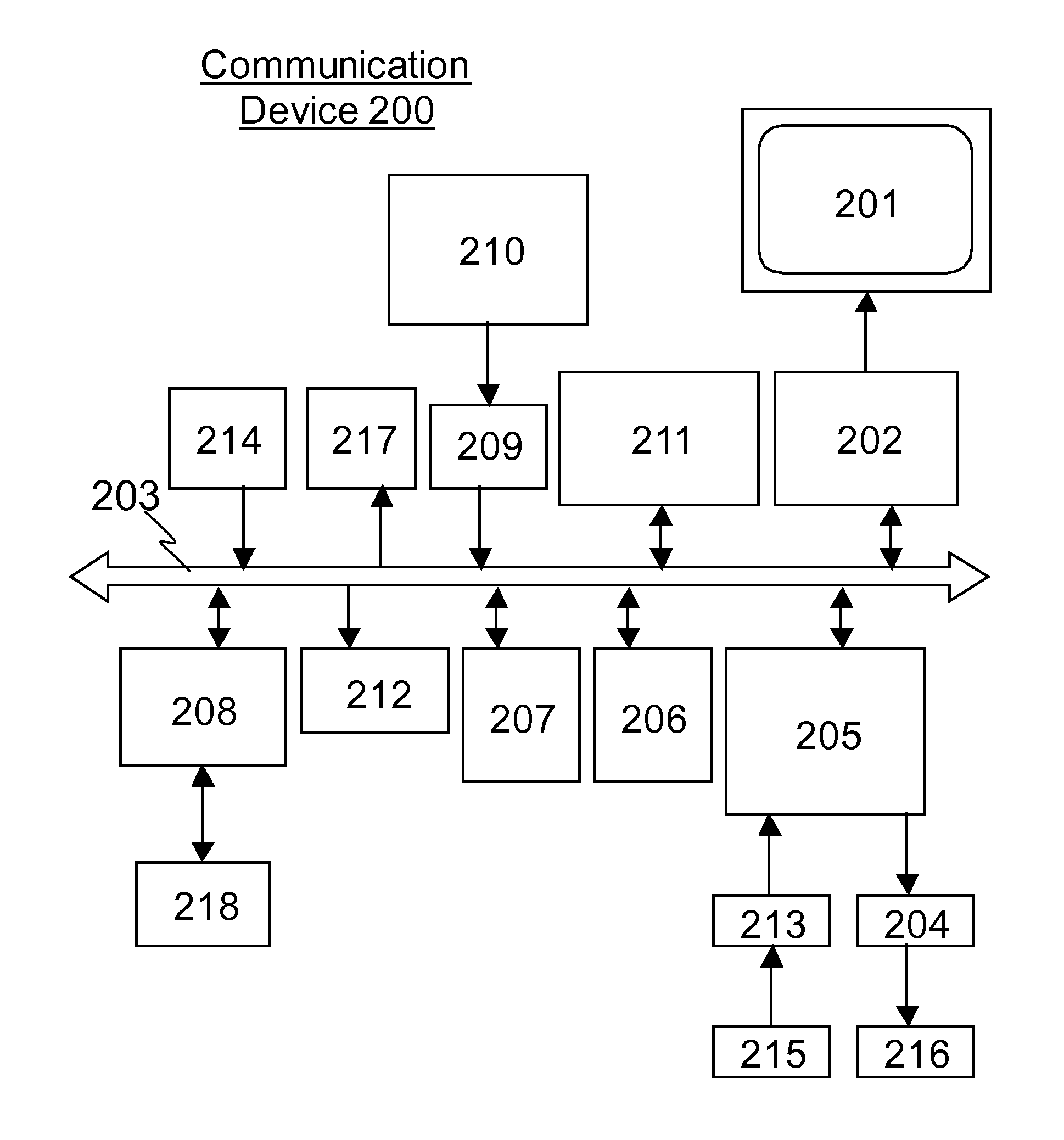

Communication device

Inactive | US8676273B1 | Provide mobility | More convenience | Devices with voice recognition | Automatic call-answering/message-recording/conversation-recording | Computer hardware | Ring mode

A communication device, wherein when the communication device is in a ringing mode, audio data is output from the speaker, and when the communication device is in a silent mode, audio data is converted to text data and the text data is output from the display in a visual fashion.

Owner:CORYDORAS TECH

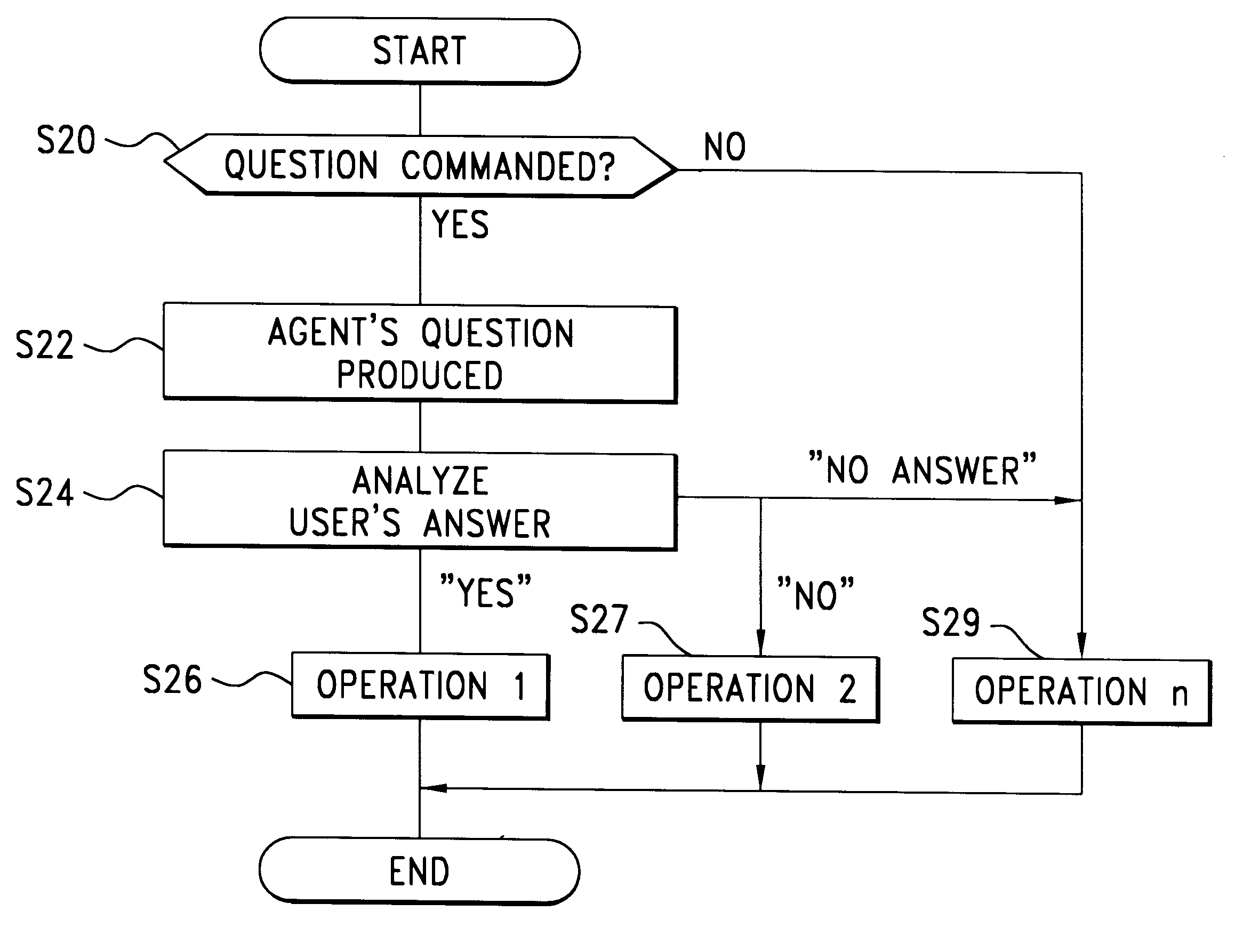

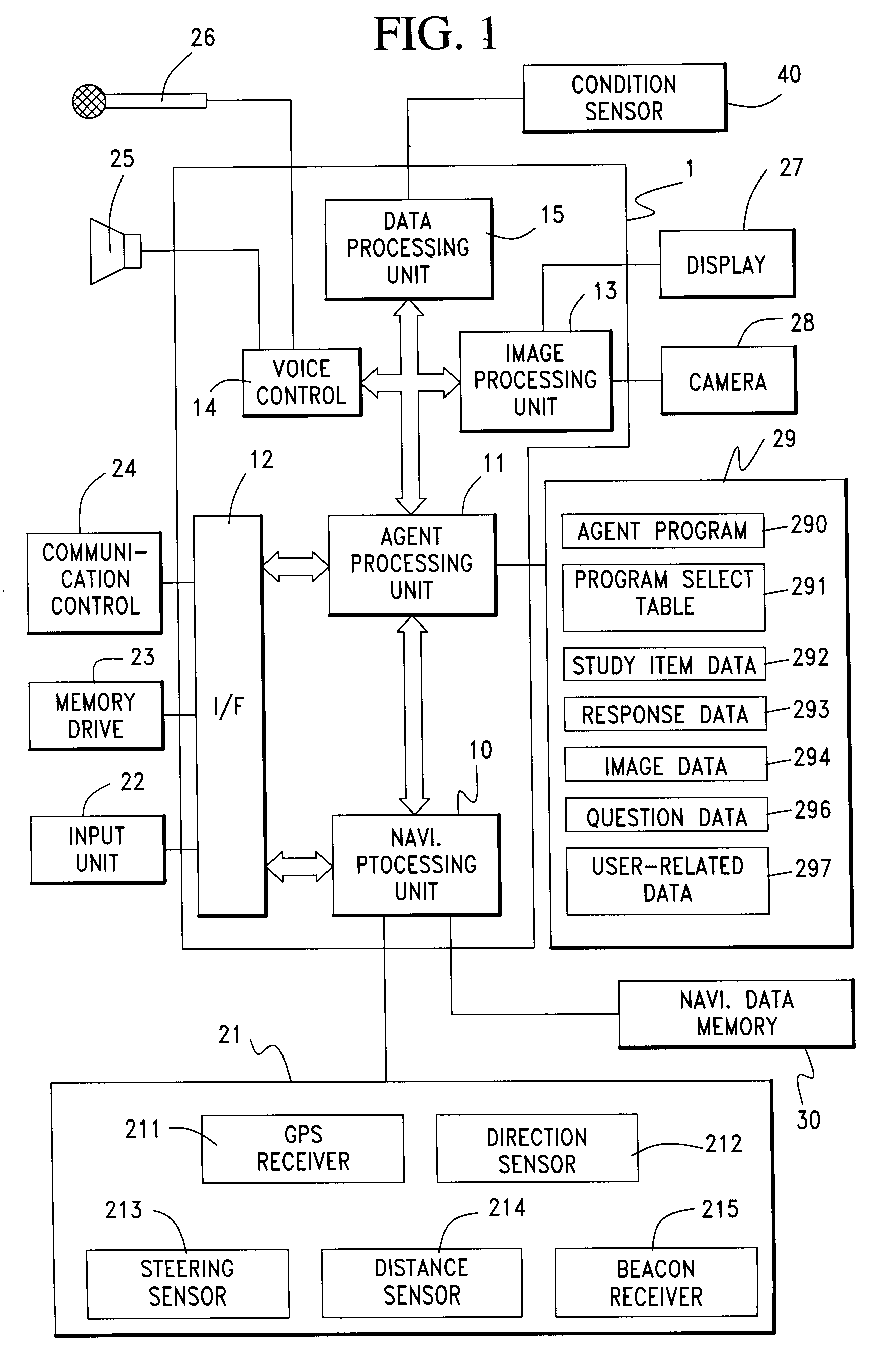

Interactive vehicle control system

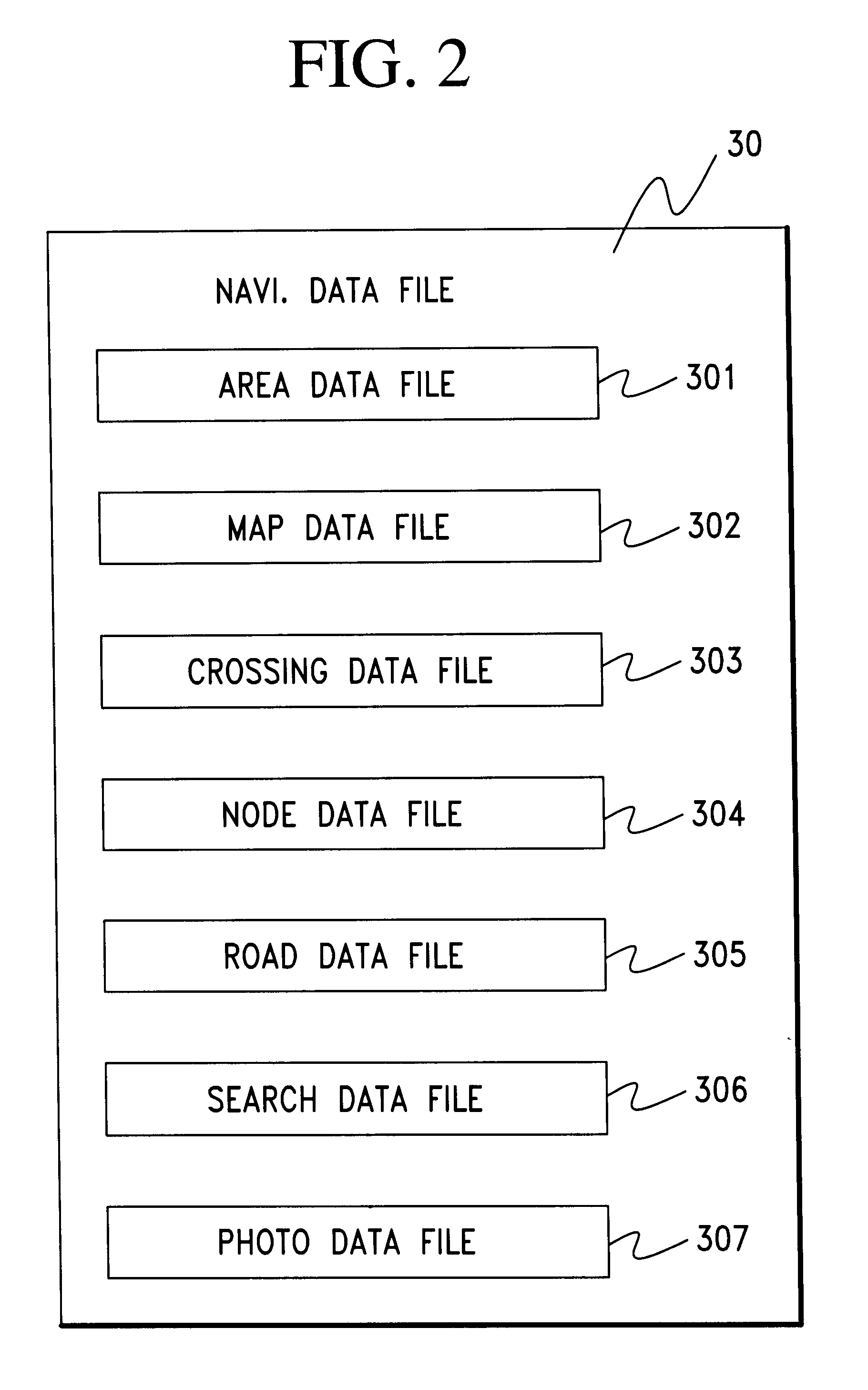

Inactive | US6351698B1 | Facilitate communication | Improve accuracy | Instruments for road network navigation | Arrangements for variable traffic instructions | Driver/operator | Display device

An interactive navigation system includes a navigation processing unit, a current position sensor, a speaker, a microphone and a display. When it is preliminarily inferred, based on receipt of a detection signal from the sensor, that a vehicle has probably been diverted from a drive route determined by the navigation processing unit, a machine voice question is produced through the speaker for confirmation of the inferred probability of diversion. A driver or user in the vehicle answers the question, which is input through the microphone and analyzed to be affirmative or negative, from which a final decision is made as to the vehicle diversion. In a preferred embodiment the question is spoken by a personified agent who appears on the display. The agent's activities are controlled by an agent processing unit. Communication between the agent and the user improves reliability and accuracy of inference of any vehicle condition which could not be determined perfectly by a sensor only.

Owner:EQUOS RES

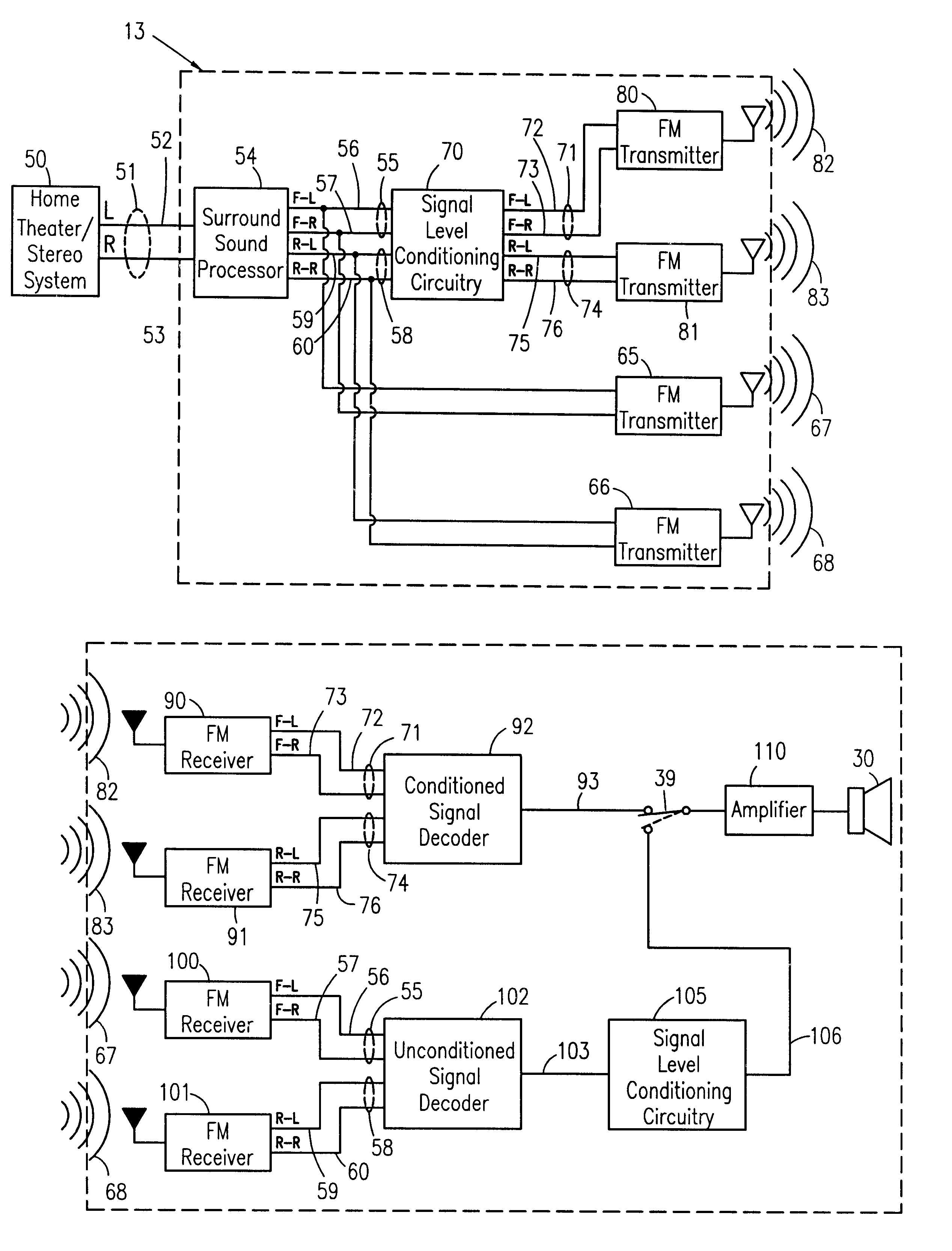

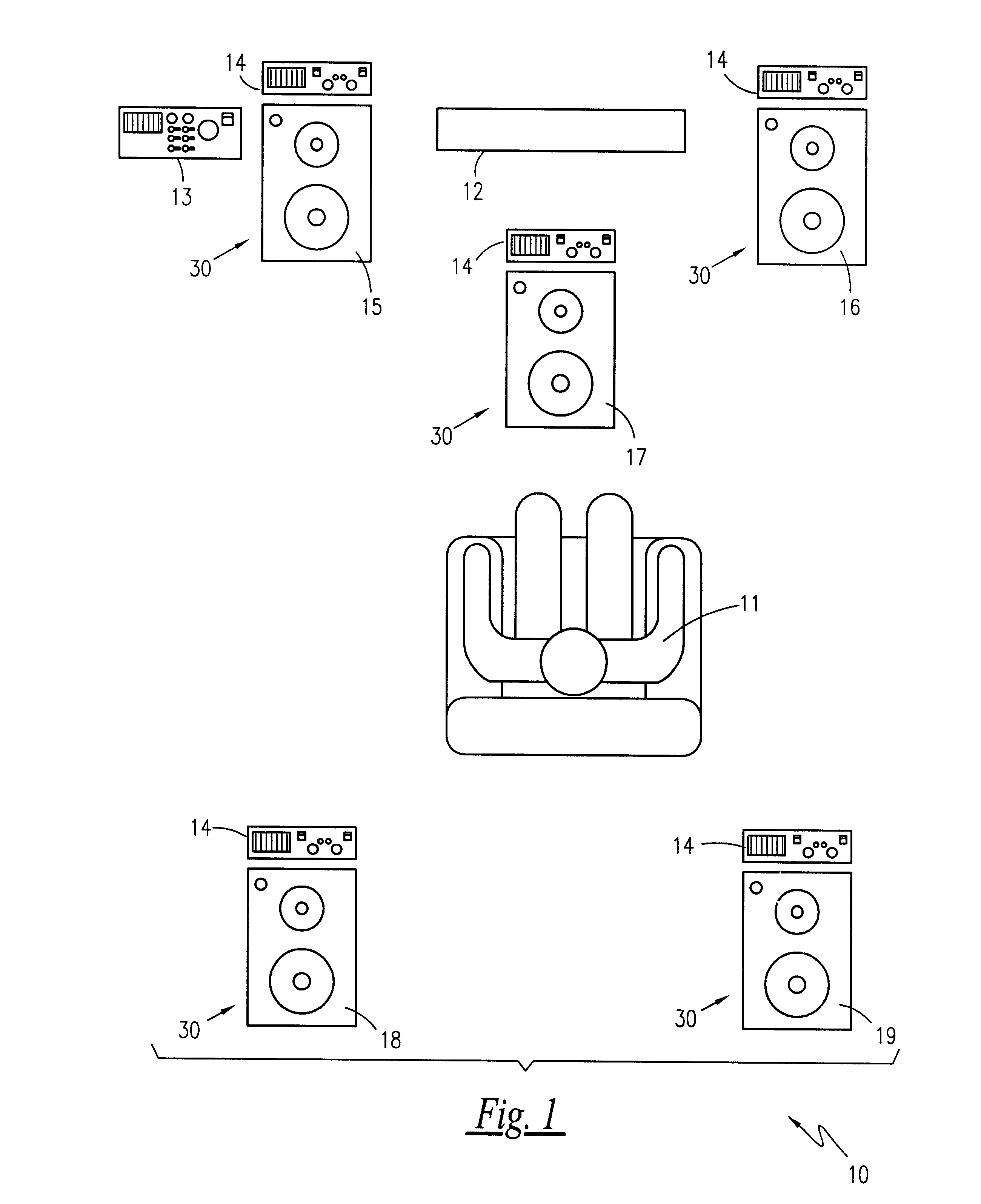

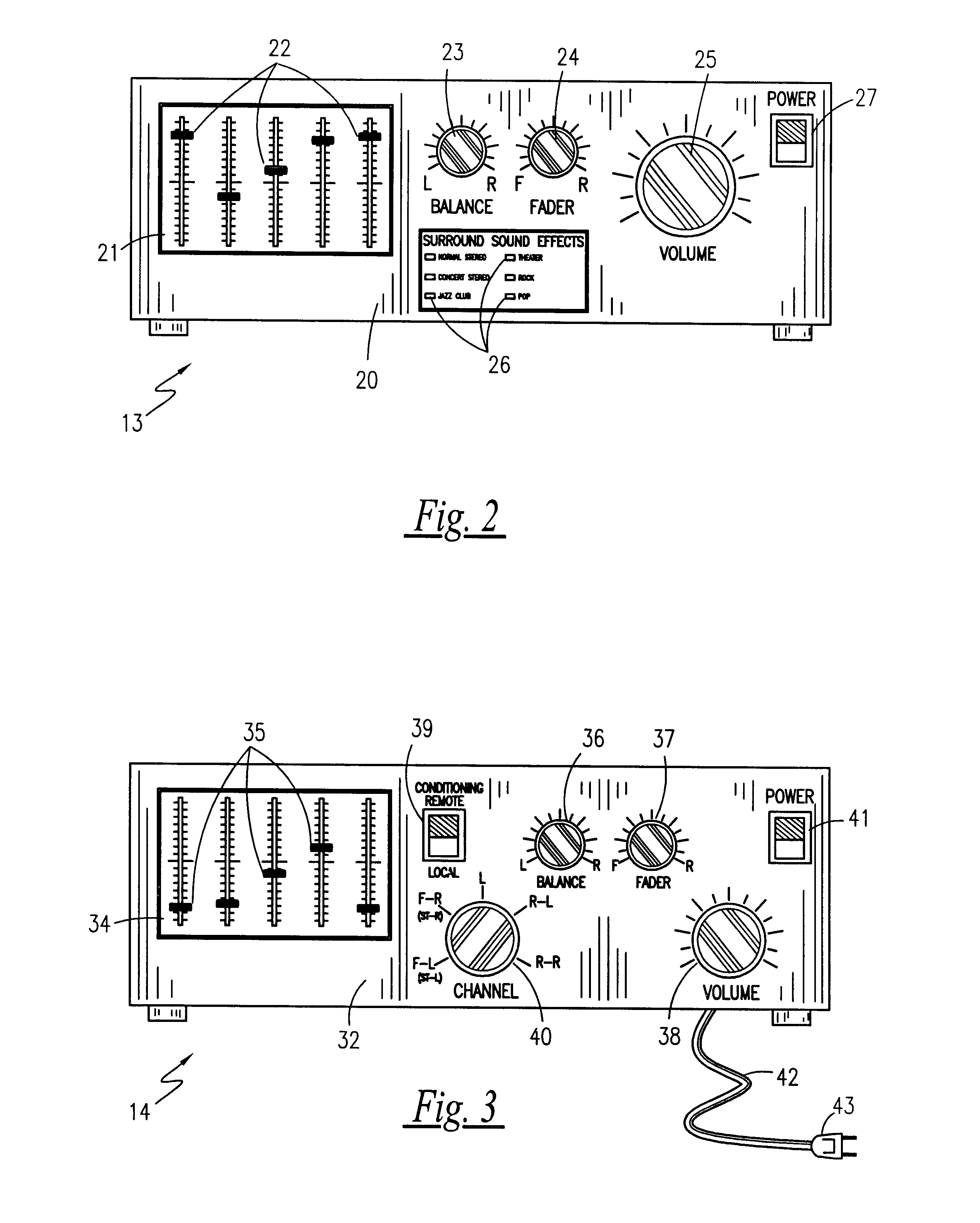

Wireless surround sound speaker system

Disclosed is a wireless surround sound speaker system wherein a transmitter broadcasts a variety of FM signals that correspond to the individual speaker channels commonly found in a surround sound system. Receivers, individually equipped with signal receiving, conditioning and amplification components, are configured to receive any one of the broadcast signals in a remote location and are used to drive a conventional loudspeaker in that location. Powered by wall socket or via DC battery packs, the receivers, used in conjunction with the transmitter, provide surround sound capabilities without the need for complex and difficult wiring.

Owner:ALLEN STEVEN W +1

Doorbell communication systems and methods

Active | US8823795B1 | High activity | Less power | Color television details | Closed circuit television systems | Doorbell | Entryway

Communication systems configured to monitor an entryway to a building can include a security system configured to wirelessly communicate with a remote computing device. The security system can include a doorbell that comprises a camera, a speaker, and a microphone.

Owner:SKYBELL TECH IP LLC

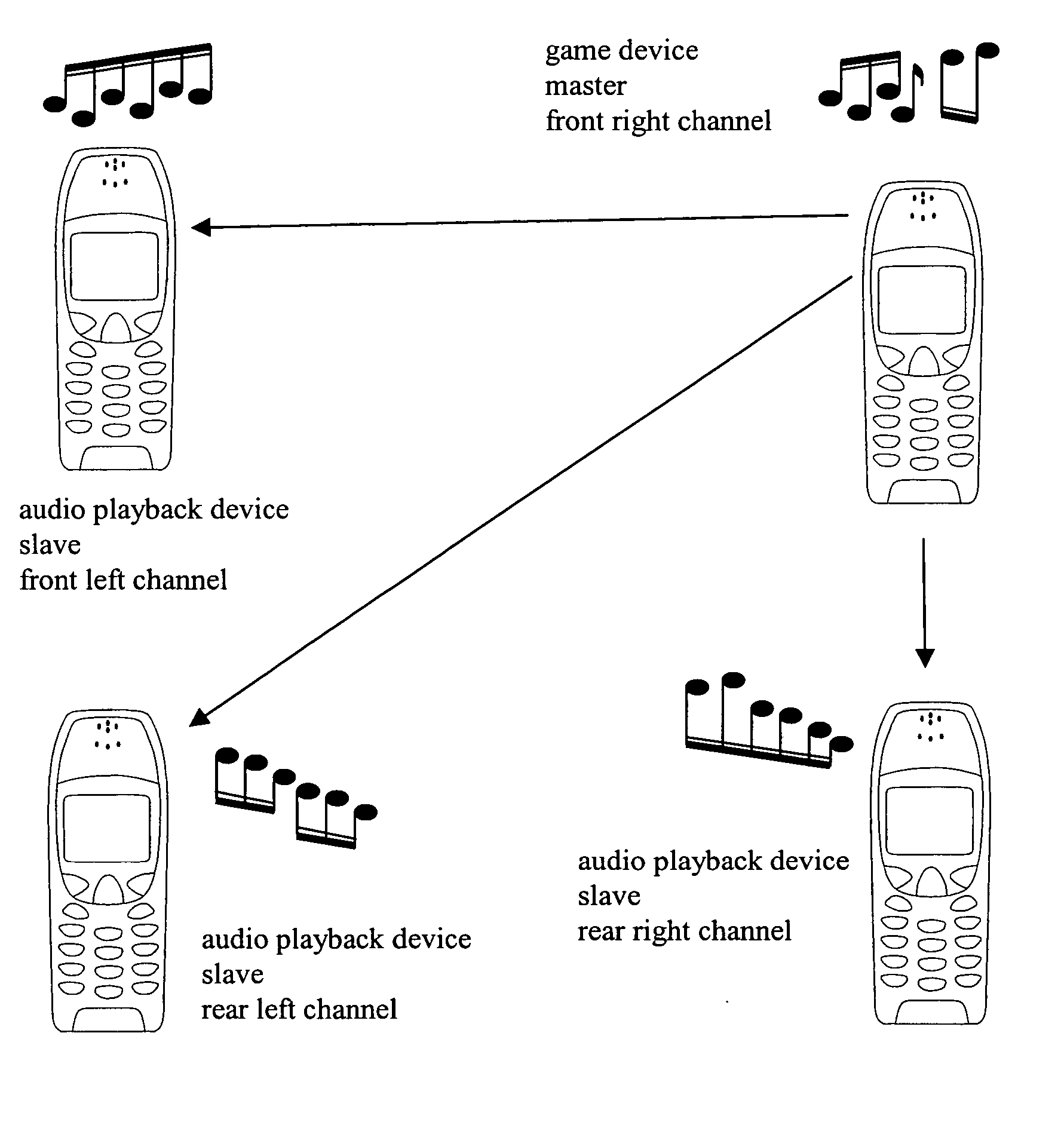

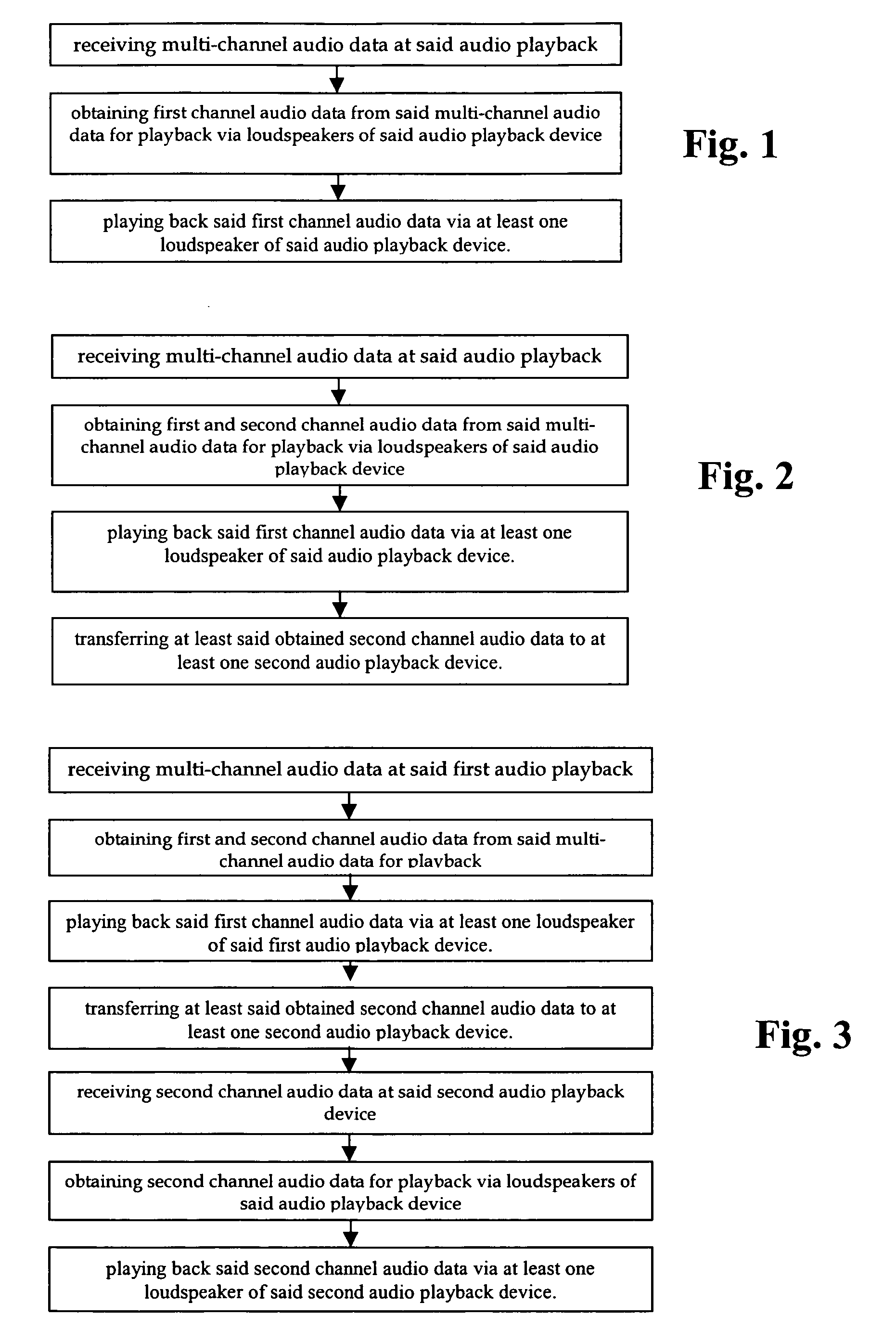

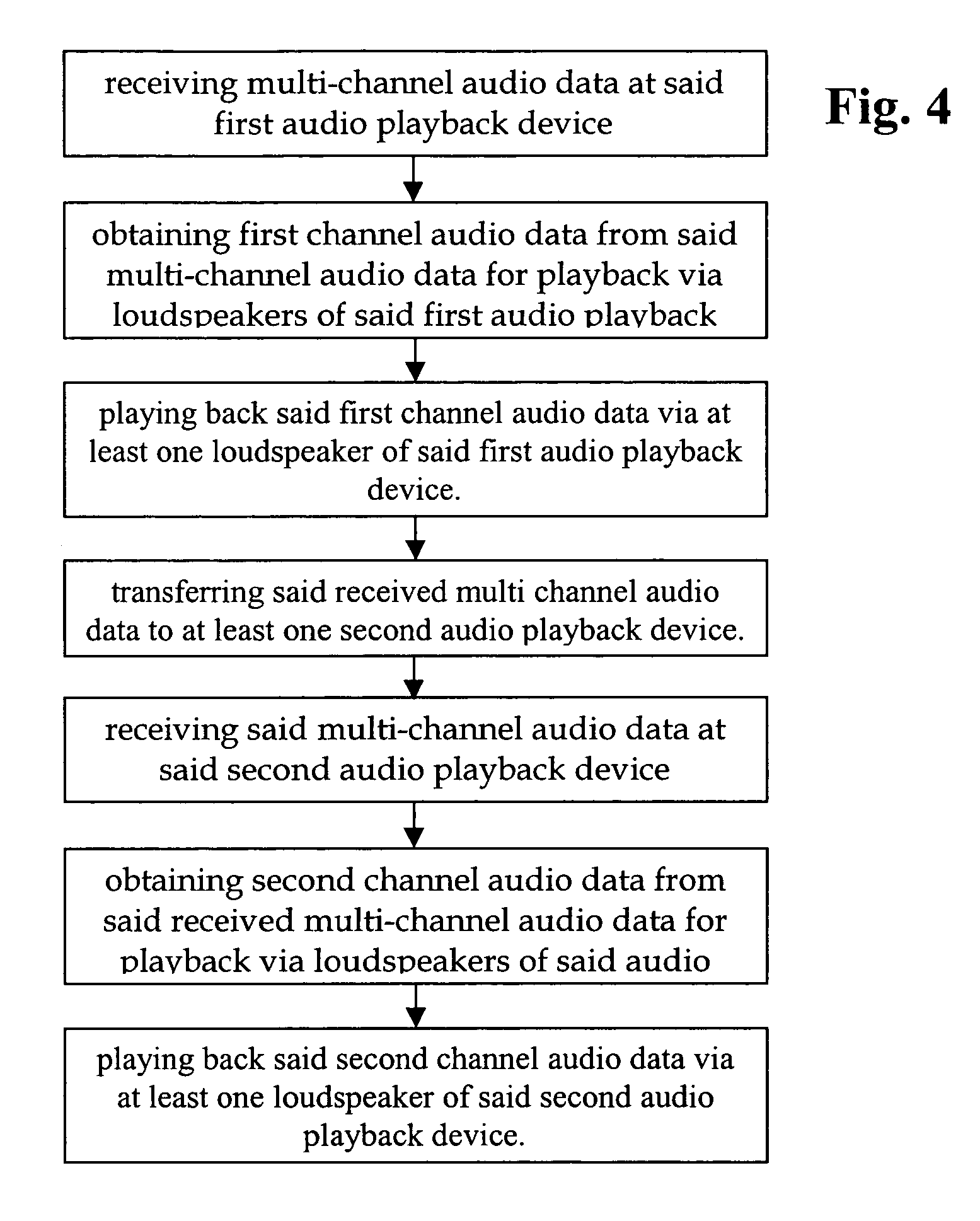

Audio playback device and method of its operation

Inactive | US20070087686A1 | Surround sound field/effect | Improved audio playback | Loudspeaker enclosure positioning | Stereophonic systems | Mobile device | Loudspeaker

The invention relates to mobile audio playback devices and methods of their operation for an enhanced music and sound experience on mobile devices. To provide stereo and surround sound, the present invention provides an audio playback device with a receiving means for receiving multi-channel audio data, obtaining means connected to the receiving means, for obtaining first channel audio data from the multi-channel audio data for playback on the audio playback device, and playback means having at least one loudspeaker, and being connected to the obtaining means for outputting the first channel audio data. The other, non-selected audio channels or all received audio data may be transferred to other terminals for playback or may be discarded.

Owner:NOKIA SOLUTIONS & NETWORKS OY

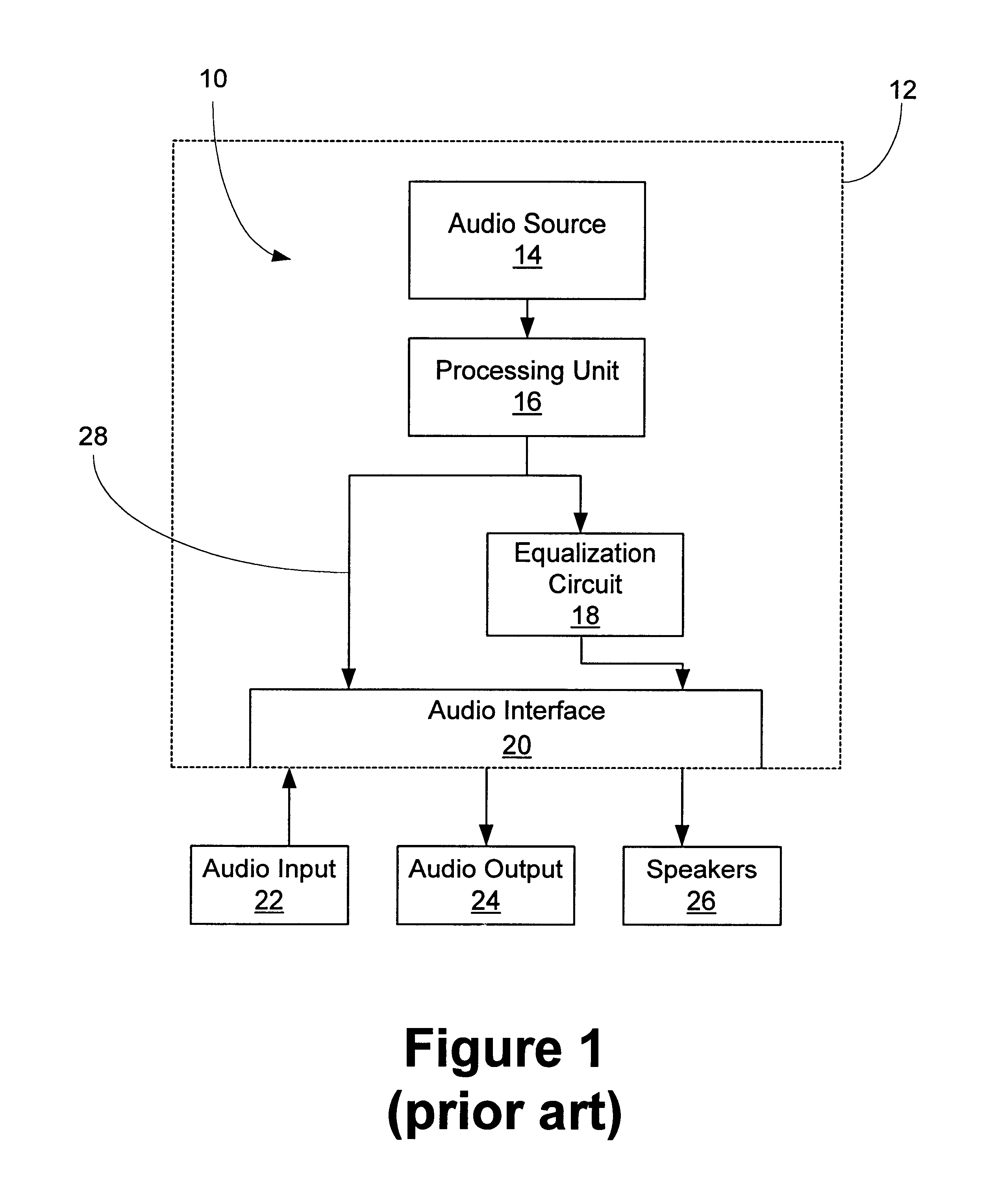

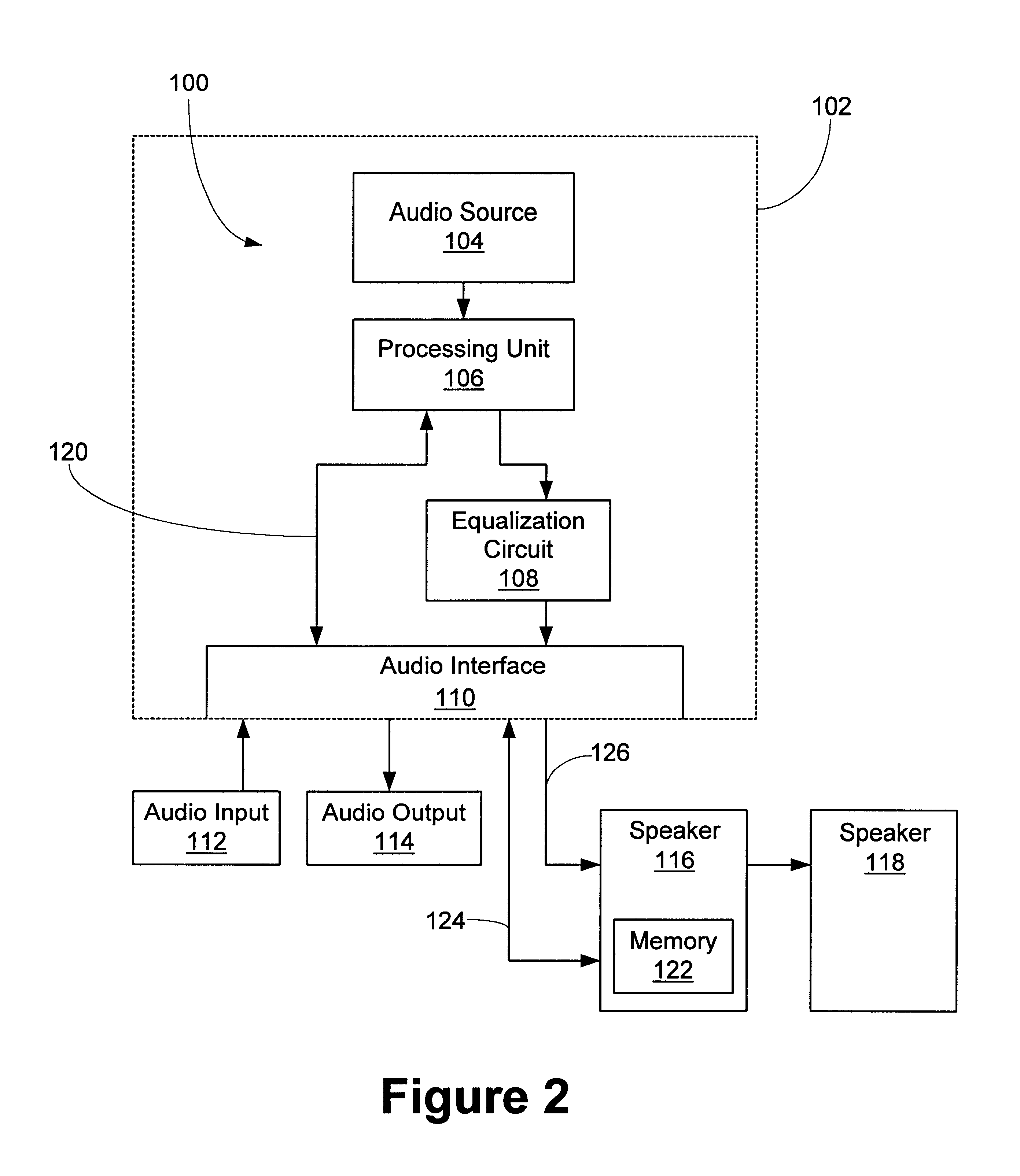

Plug and play compatible speakers

Inactive | US6859538B1 | Stereophonic circuit arrangements | Frequency response correction | Transducer | Loudspeaker

A speaker includes at least one transducer and at least one memory device. The at least one transducer is adapted to receive an audio signal. The at least one memory device is adapted to store data related to the speaker. A method includes reading data from a memory device of at least one speaker. An audio signal is provided from an audio system.

Owner:HEWLETT PACKARD DEV CO LP

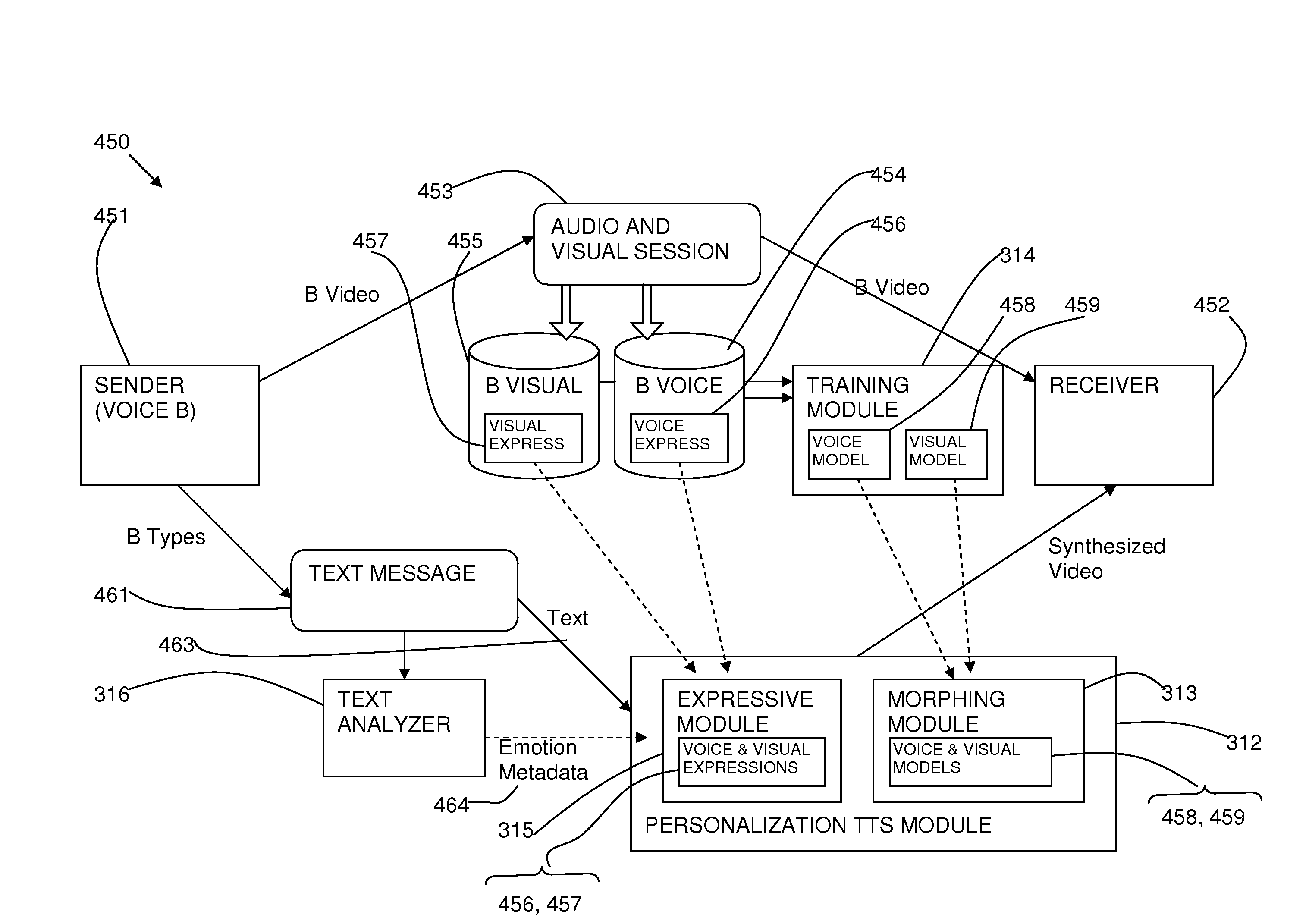

Method and system for text-to-speech synthesis with personalized voice

A method and system are provided for text-to-speech synthesis with personalized voice. The method includes receiving an incidental audio input (403) of speech in the form of an audio communication from an input speaker (401) and generating a voice dataset (404) for the input speaker (401). The method includes receiving a text input (411) at the same device as the audio input (403) and synthesizing (312) the text from the text input (411) to synthesized speech including using the voice dataset (404) to personalize the synthesized speech to sound like the input speaker (401). In addition, the method includes analyzing (316) the text for expression and adding the expression (315) to the synthesized speech. The audio communication may be part of a video communication (453) and the audio input (403) may have an associated visual input (455) of an image of the input speaker. The synthesis from text may include providing a synthesized image personalized to look like the image of the input speaker with expressions added from the visual input (455).

Owner:CERENCE OPERATING CO

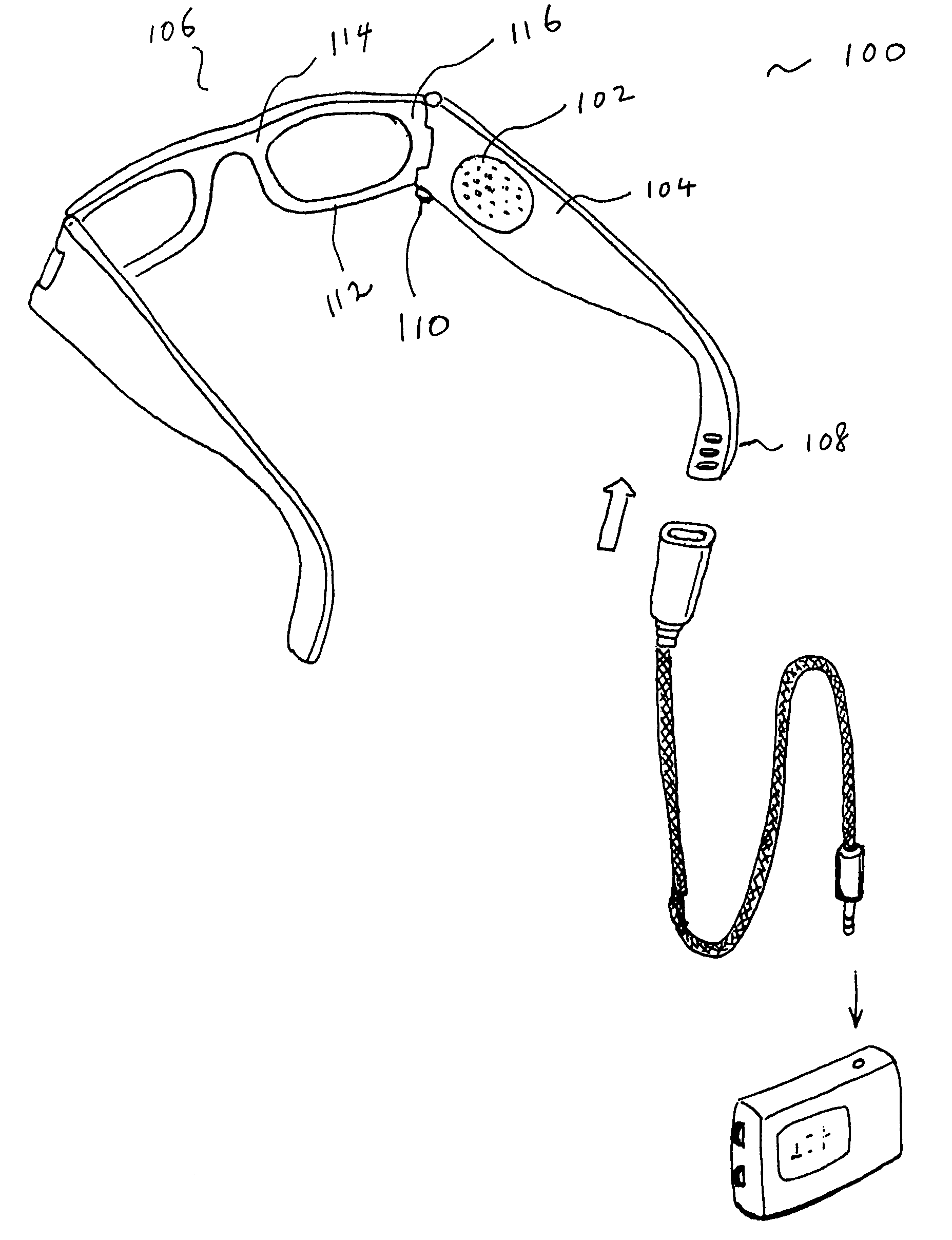

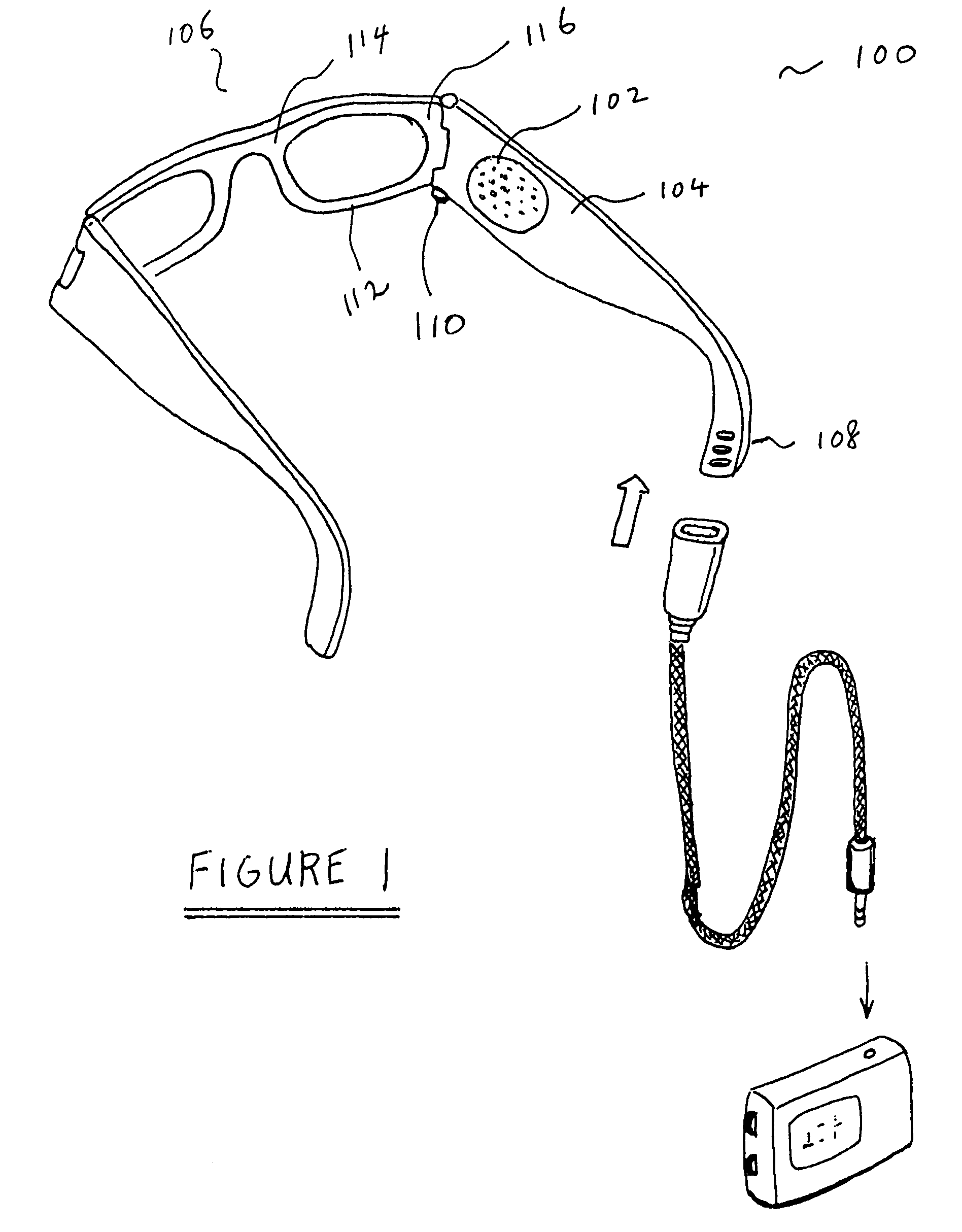

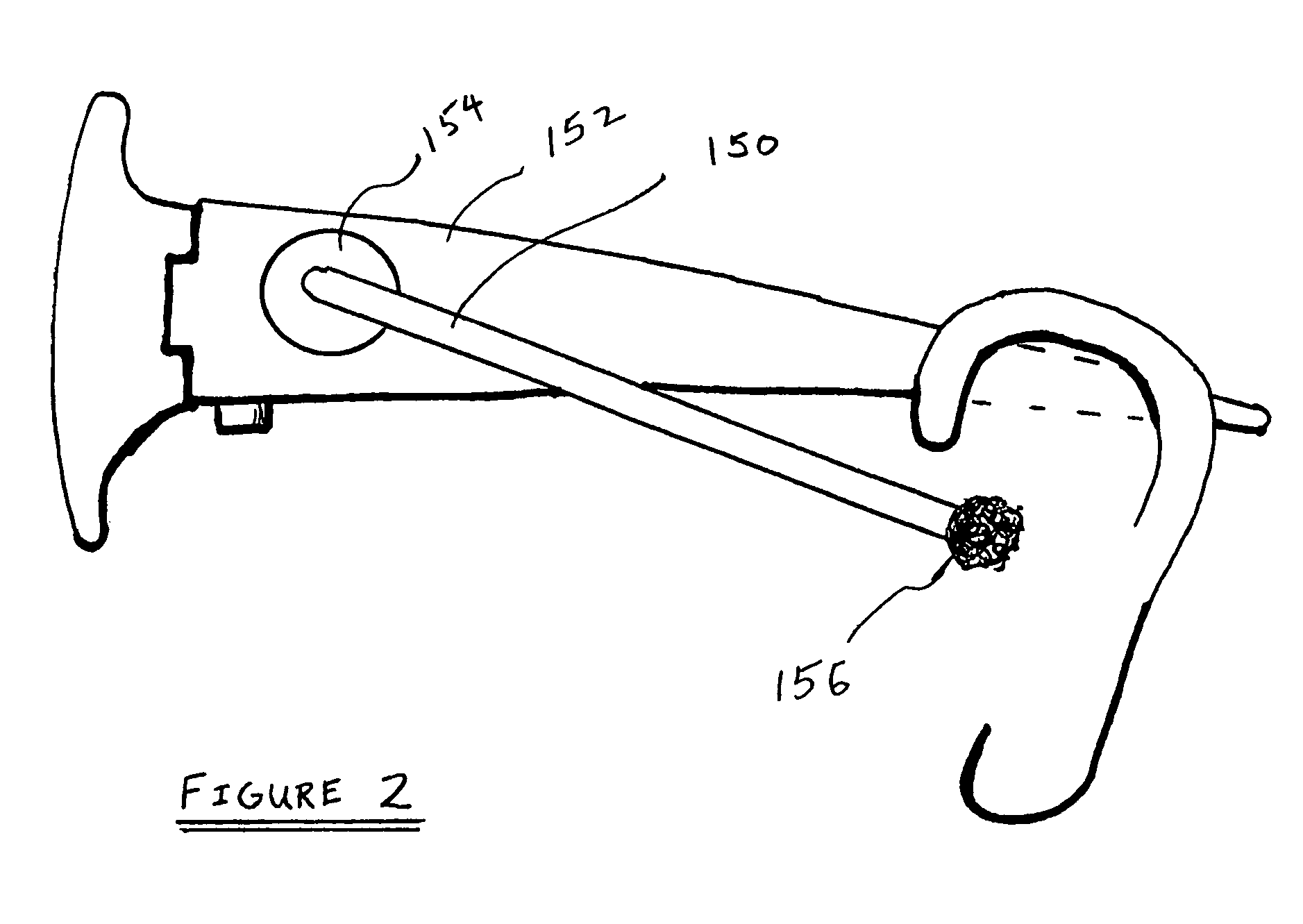

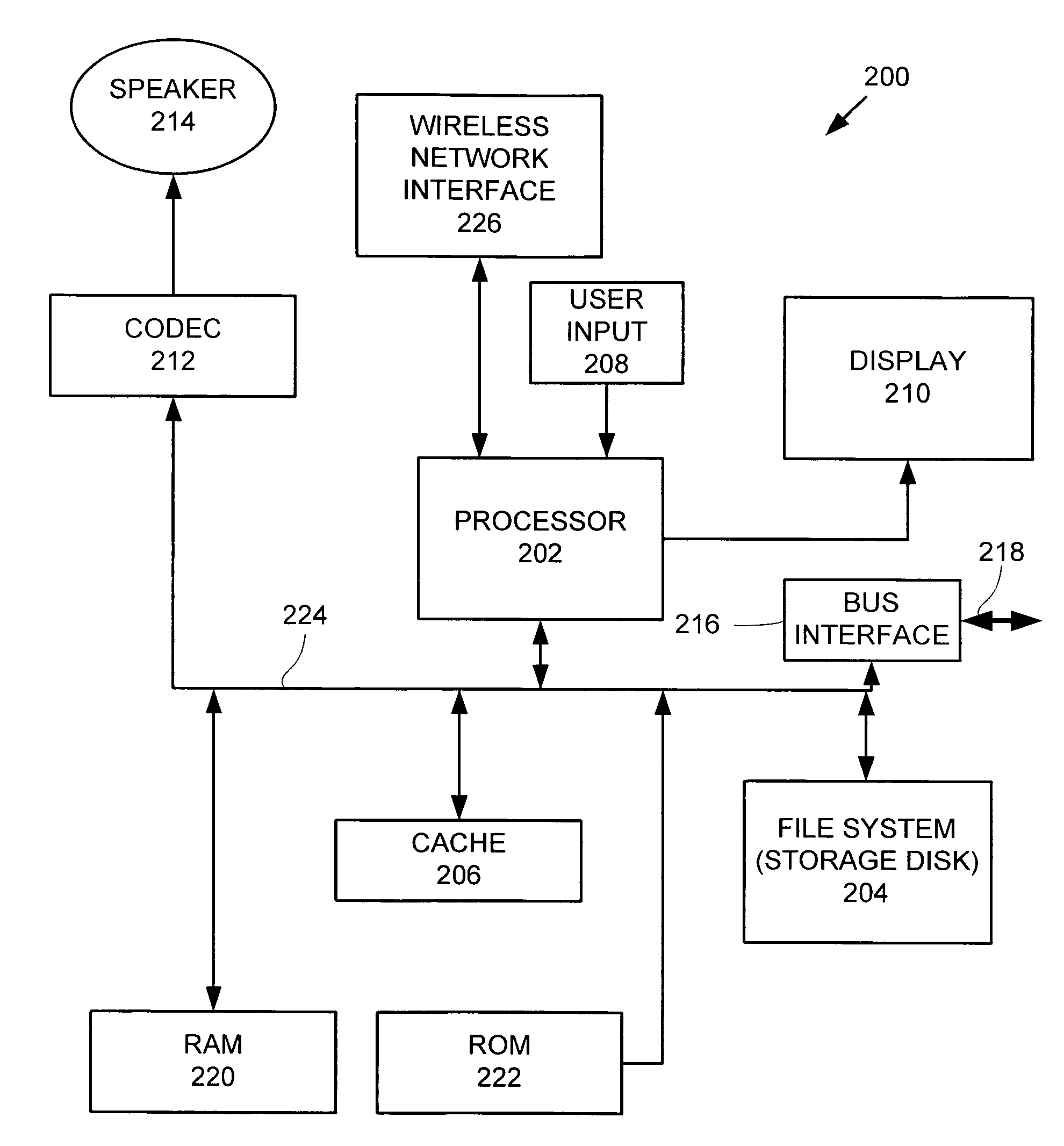

Eyeglasses with electrical components

Active | US7500747B2 | Easy to operate | Minimize amount | Non-optical adjuncts | Non-optical parts | Uses eyeglasses | Electricity

A pair of glasses with one or more electrical components partially or fully embedded in the glasses is disclosed. In one embodiment, a pair of glasses includes a speaker and an electrical connector, both at least partially embedded in the glasses, with the speaker and the connector electrically coupled together by an electrical conductor. In another embodiment, a pair of glasses includes a storage medium and an electrical connector. In yet another embodiment, a pair of glasses includes a speaker, a coder/decoder, a processor and a storage medium. The glasses can serve as a multimedia asset player. In a further embodiment, some of the electrical components are in a base tethered to a pair of glasses. Instead of just receiving signals, in one embodiment, a pair of glasses also has a microphone and a wireless transceiver. In another embodiment, a pair of glasses includes a preference indicator that allows a user to indicate the user's preference regarding, for example, what is being output by the glasses. In yet another embodiment, there can be one or more control knobs on the glasses. In a further embodiment, a pair of glasses includes a camera and electrical components for wireless connection. In yet a further embodiment, a pair of glasses includes a sensor.

Owner:INGENIOSPEC

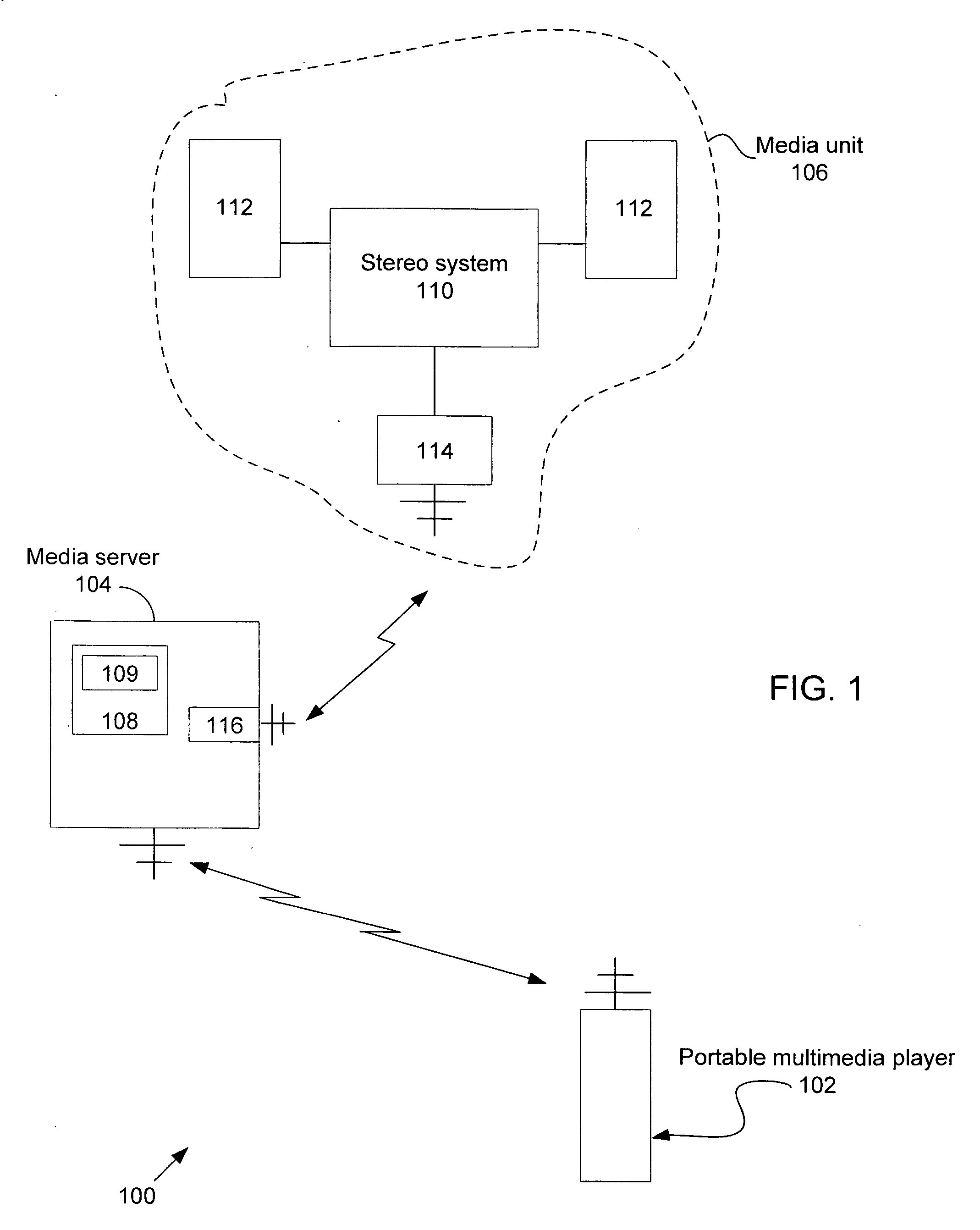

Portable media player as a low power remote control and method thereof

Active | US20070169115A1 | Multiple digital computer combinations | Program loading/initiating | Remote control | Media server

A portable multimedia player is used to wirelessly access and control a media server that is streaming digital media by way of a wireless interface to a media unit, such as a stereo/speakers in the case of streaming digital audio. In one embodiment, the portable multimedia player is wirelessly synchronized to a selected one(s) of a number of digital media files stored on the media server in such a way that only the digital media file metadata (song title, author, etc.) associated with the selected digital media file(s) is transferred from the media server and stored in the portable media player.

Owner:APPLE INC

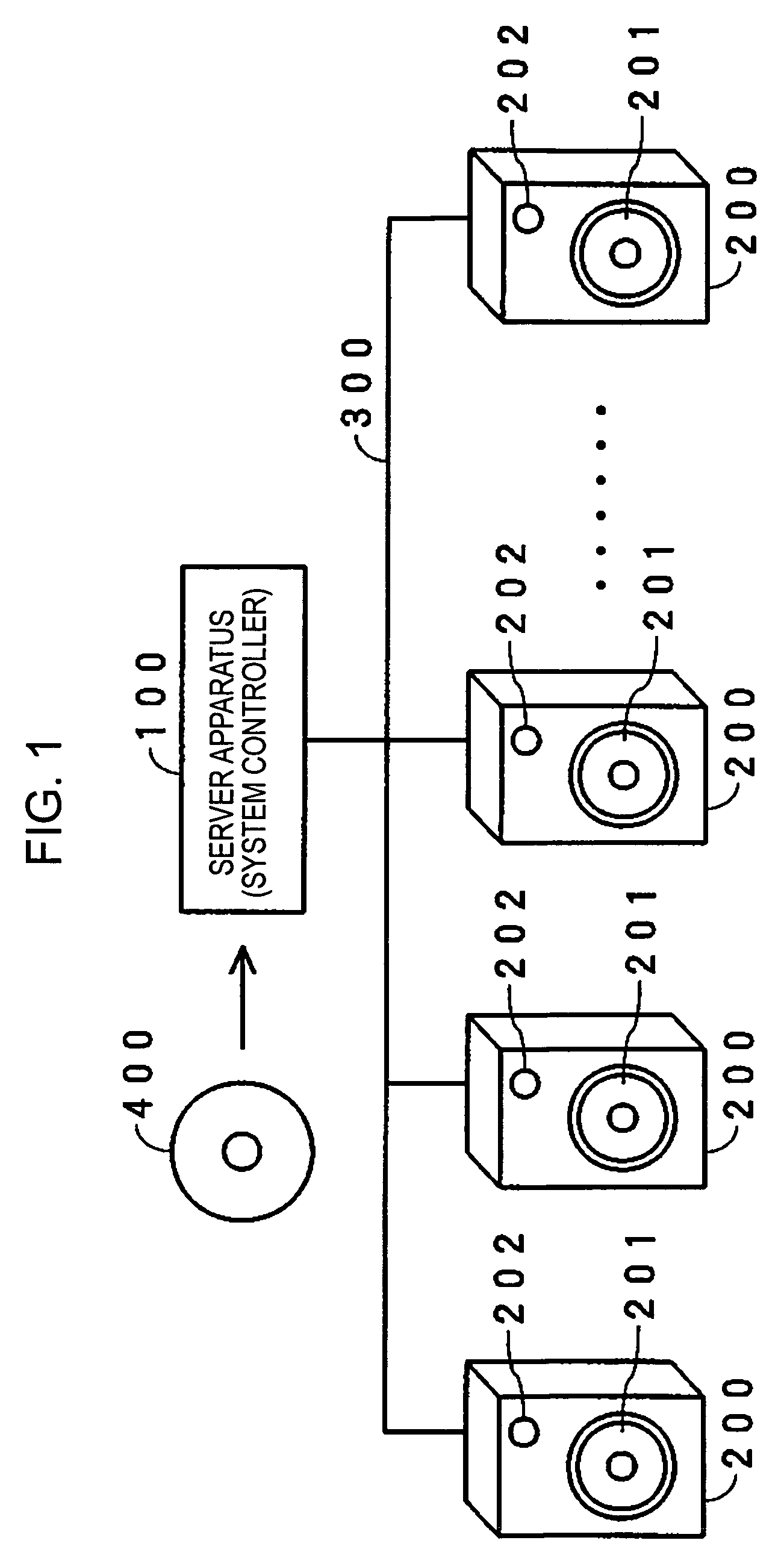

Distributed wireless speaker system with automatic configuration determination when new speakers are added

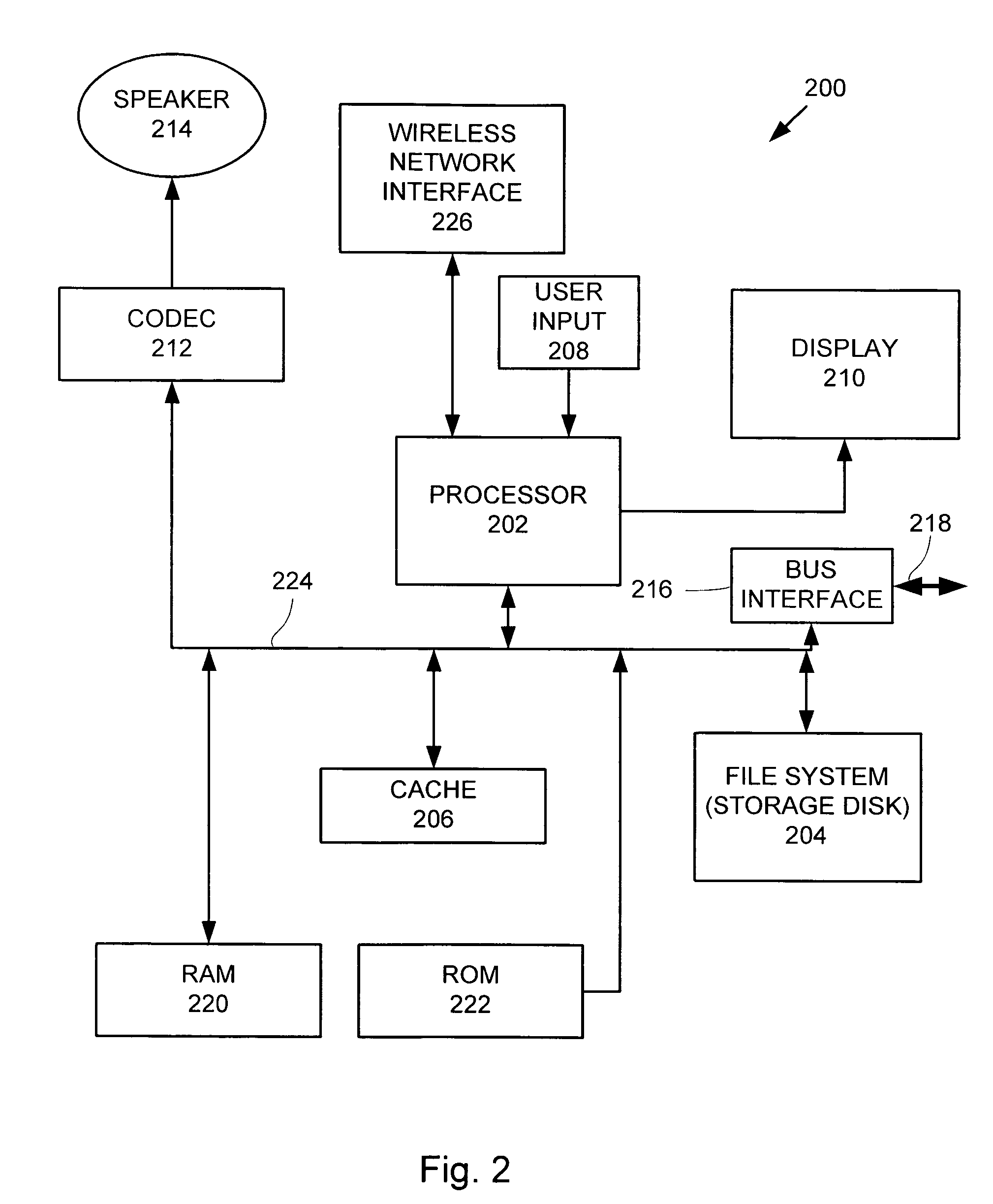

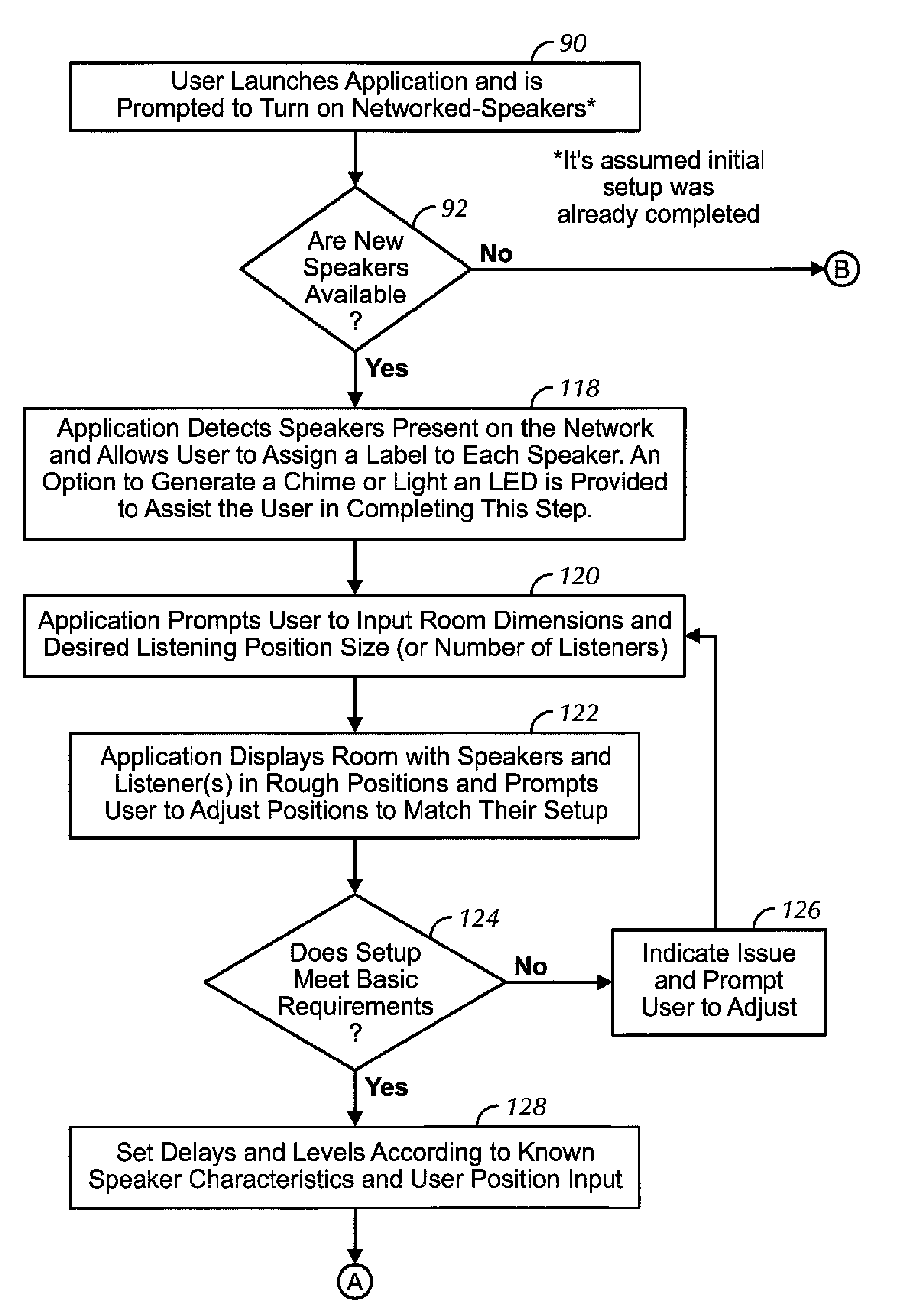

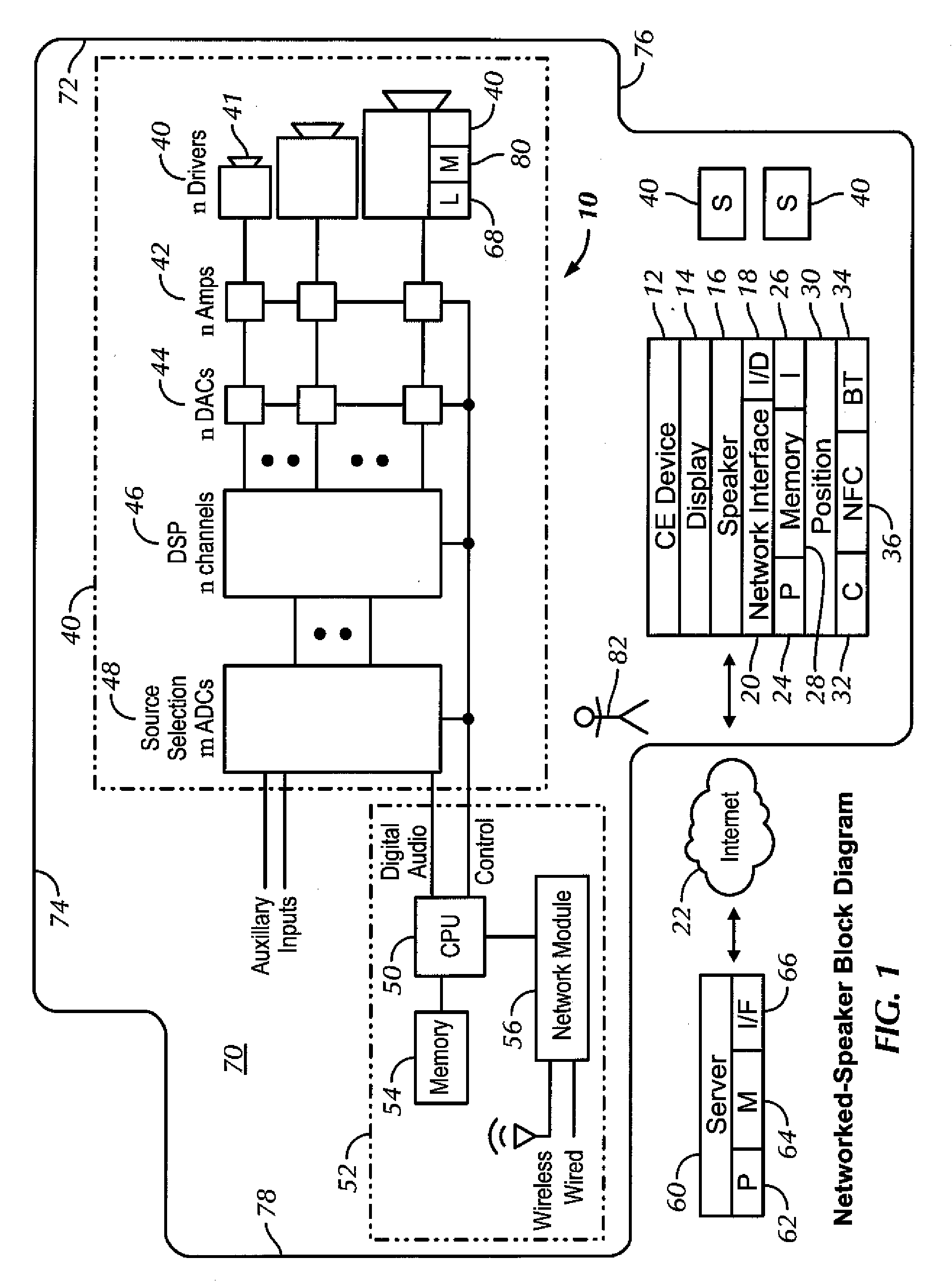

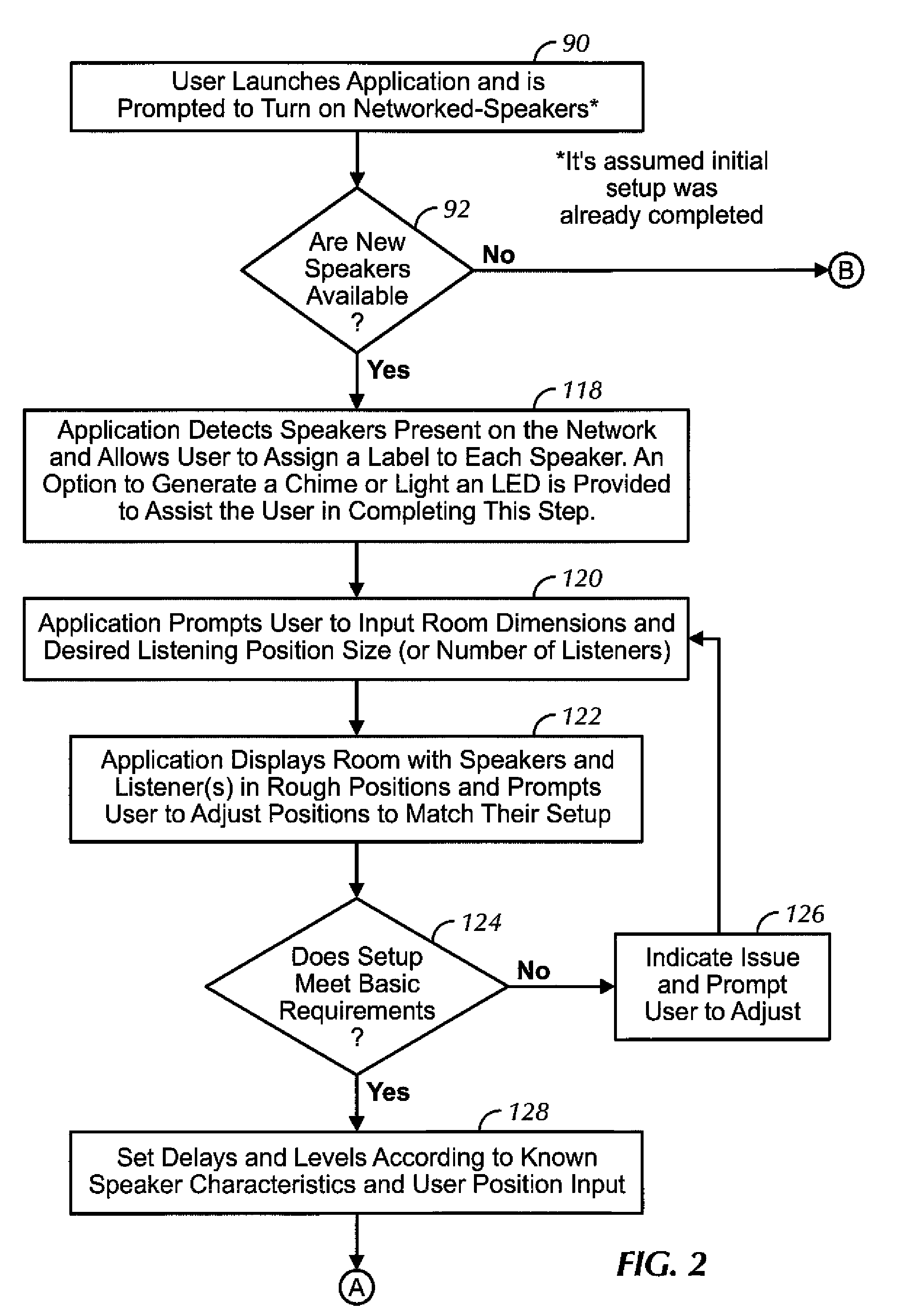

Active | US9288597B2 | Facilitate easier setup | Increase experience | Transducer details | Public address systems | Auto-configuration | User input

Owner:SONY CORP

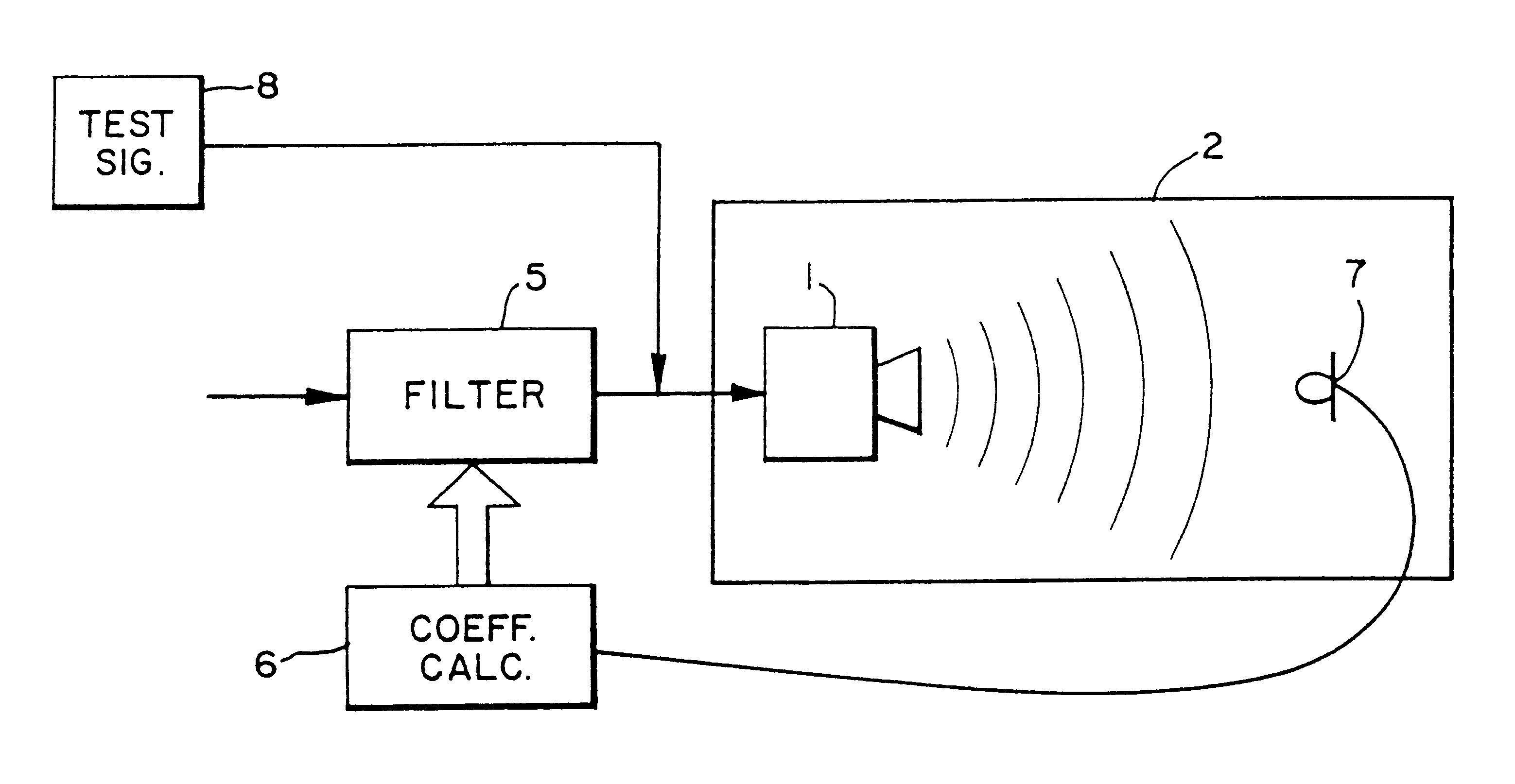

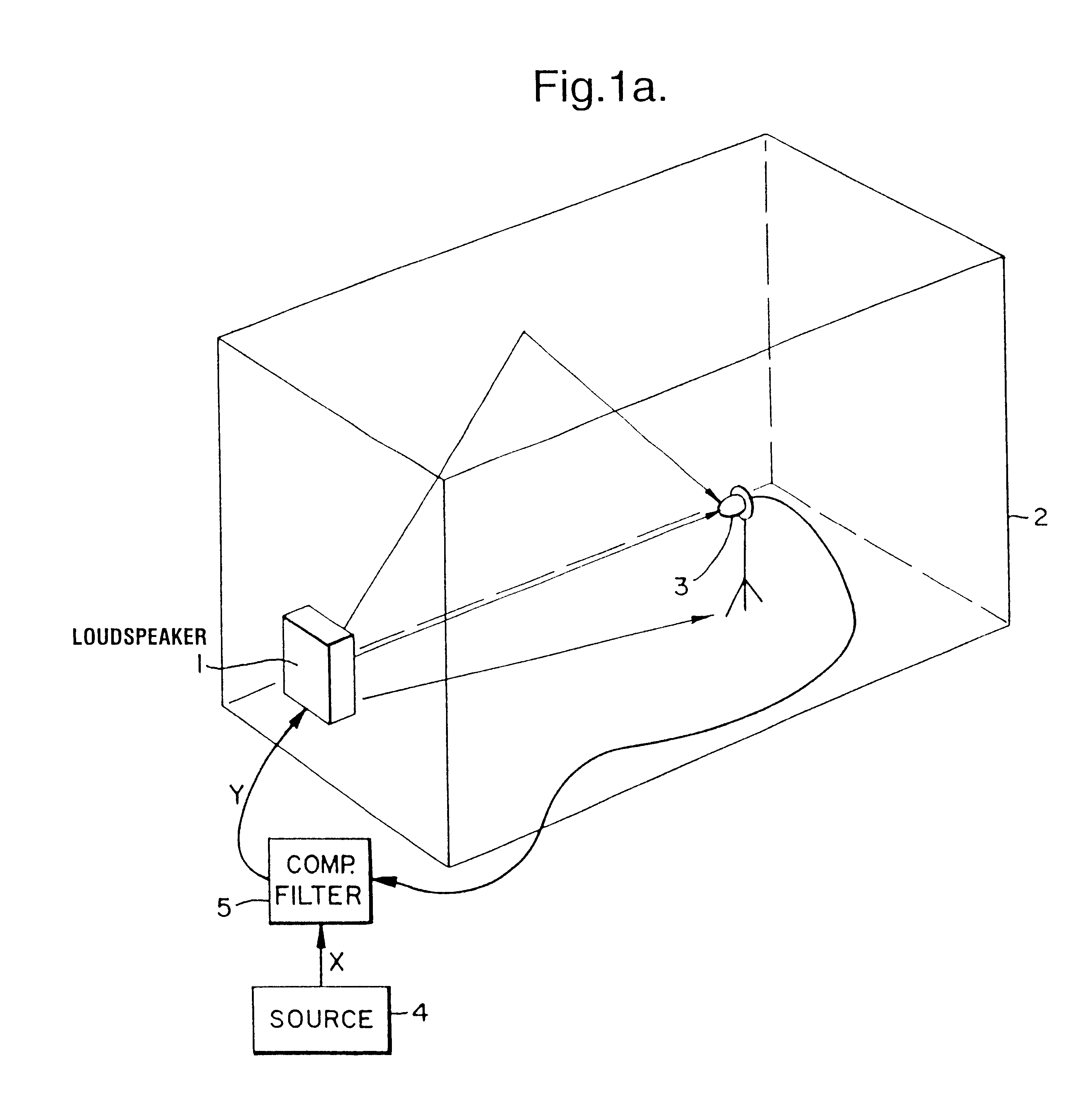

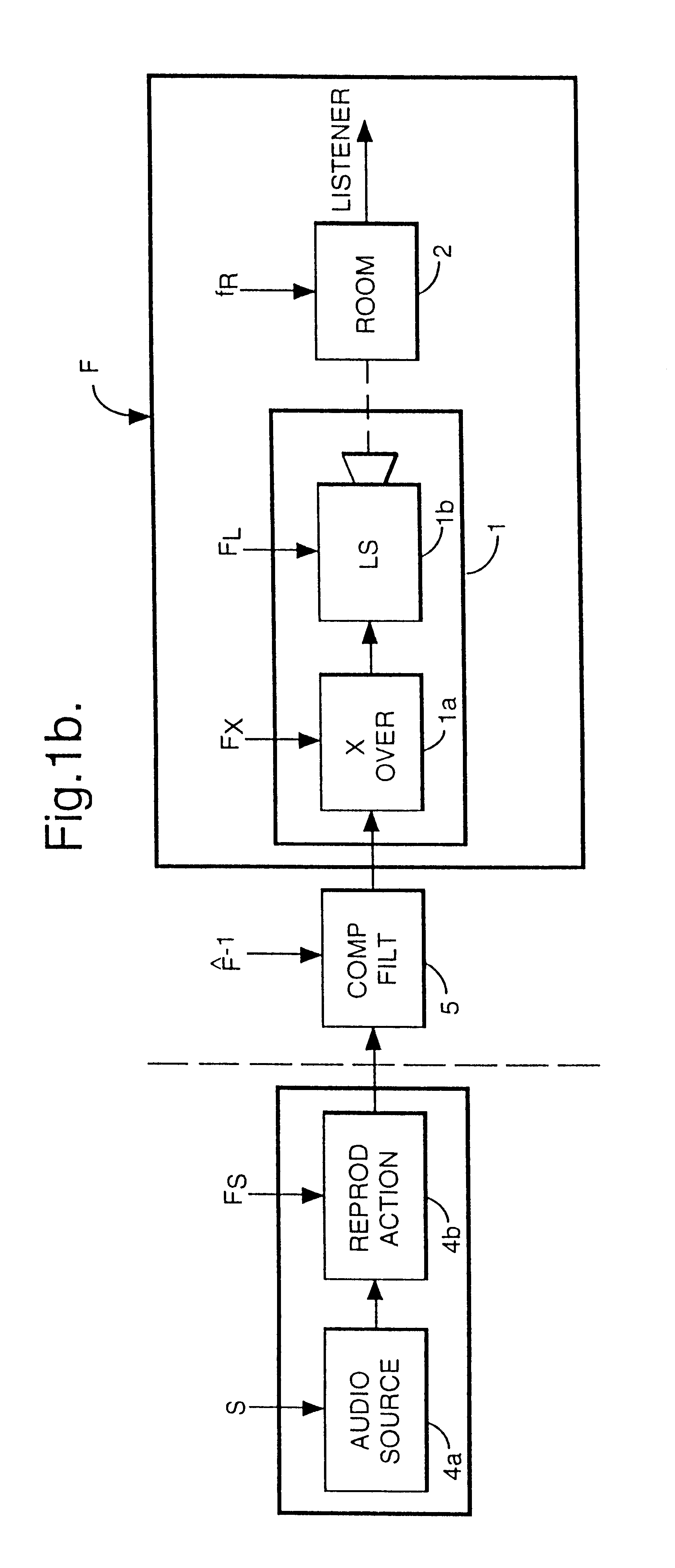

Compensating filters

Inactive | US6760451B1 | Eliminate phase distortion | Adaptive network | Automatic tone/bandwidth control | Digital signal processing | Amplitude response

A prefilter (5) for an audio system comprising a loudspeaker (1) in a room (2), which corrects both amplitude and phase errors due to the loudspeaker (1) by a linear phase correction filter response and corrects the amplitude response of the room (2) whilst introducing the minimum possible amount of extra phase distortion by employing a minimum phase correction filter stage. A test signal generator (8) generates a signal comprising a periodic frequency sweep with a greater phase repetition period than the frequency repetition period. A microphone (7) positioned at various points in the room (2) measures the audio signal processed by the room (2) and loudspeaker (1), and a coefficient calculator (6) (e.g. a digital signal processor device) derives the signal response of the room and thereby a requisite minimum phase correction to be cascaded with the linear phase correction already calculated for the loudspeaker (1). Filter (5) may comprise the same digital signal processor as the coefficient calculator (6). Applications in high fidelity audio reproduction, and in car stereo reproduction.

Owner:CRAVEN PETER GRAHAM +1

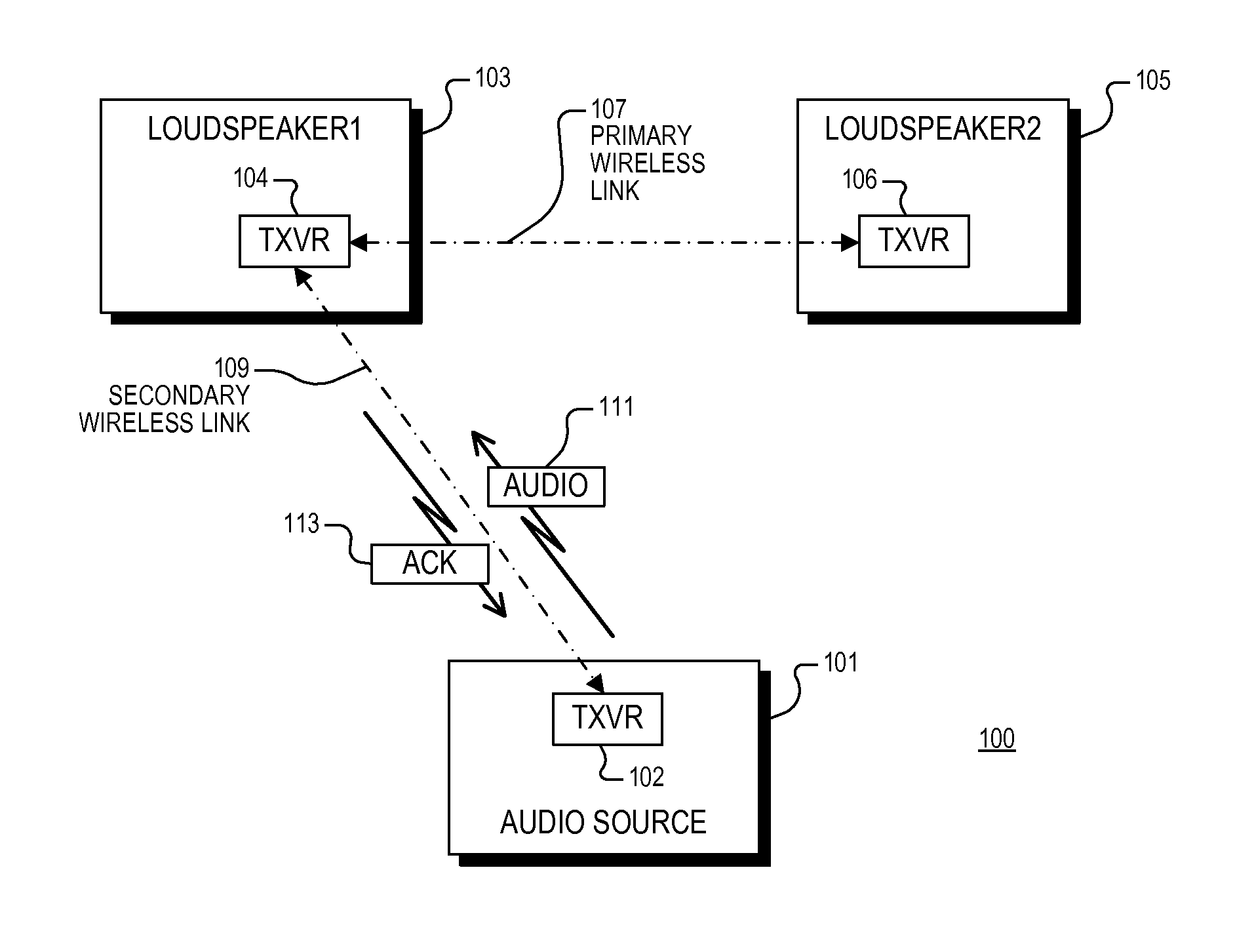

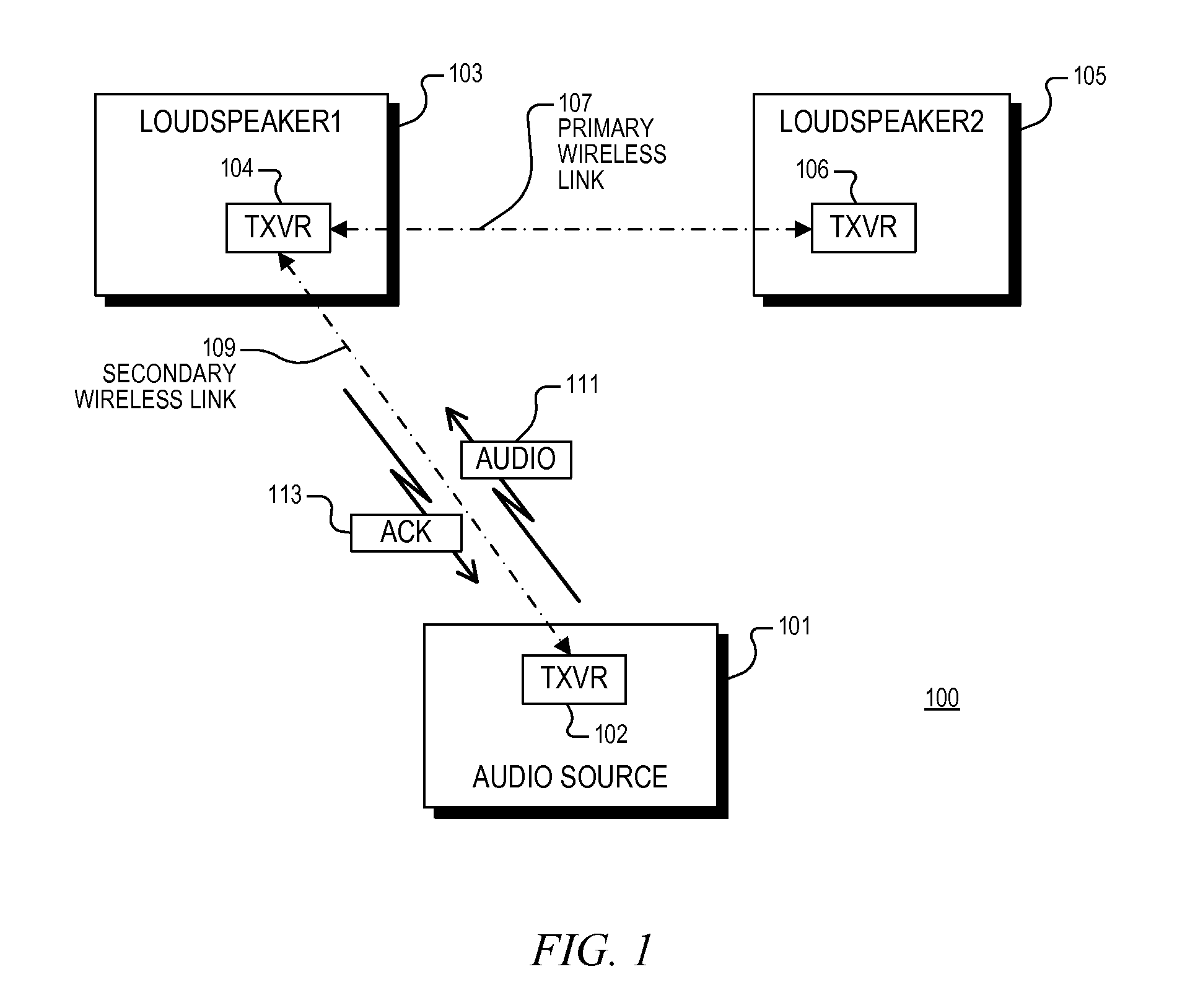

Un-tethered wireless stereo speaker system

Active | US20120058727A1 | Error prevention/detection by using return channel | Service provisioning | Transceiver | Wireless transceiver

A wireless speaker system configured to receive stereo audio information wirelessly transmitted by an audio source including first and second loudspeakers. The first loudspeaker establishes a bidirectional secondary wireless link with the audio source for receiving and acknowledging receipt of the stereo audio information. The first and second loudspeakers communicate with each other via a primary wireless link, and the first and second loudspeakers are configured to extract first and second audio channels, respectively, from the stereo audio information. A wireless audio system including an audio source and first and second loudspeakers, each having a wireless transceiver. The first and second loudspeakers communicate via a primary wireless link. The audio source communicates audio information to the first loudspeaker via a secondary wireless link which is configured according to a standard wireless protocol. The first loudspeaker is configured to acknowledge successful reception of audio information via the secondary wireless link.

Owner:APPLE INC

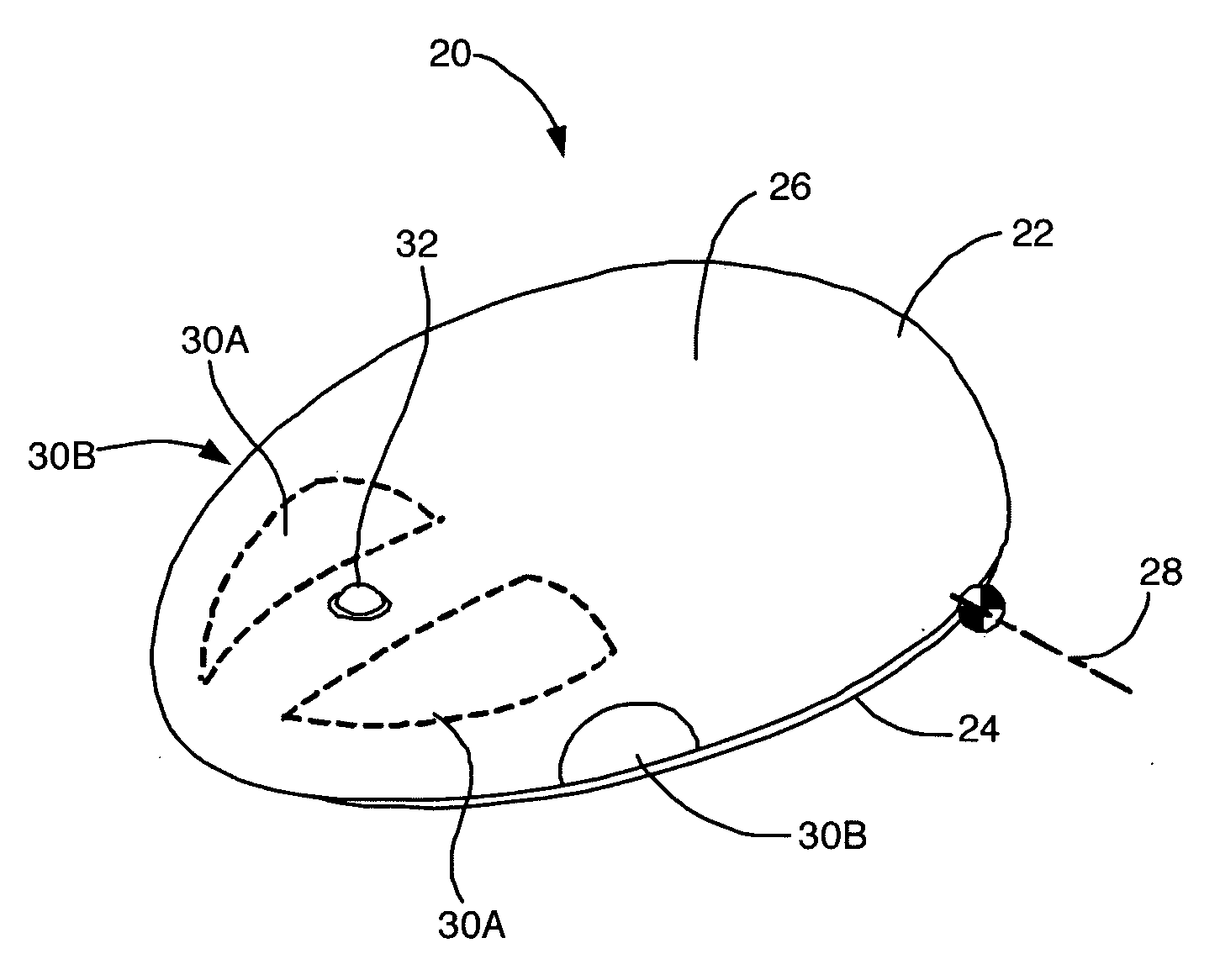

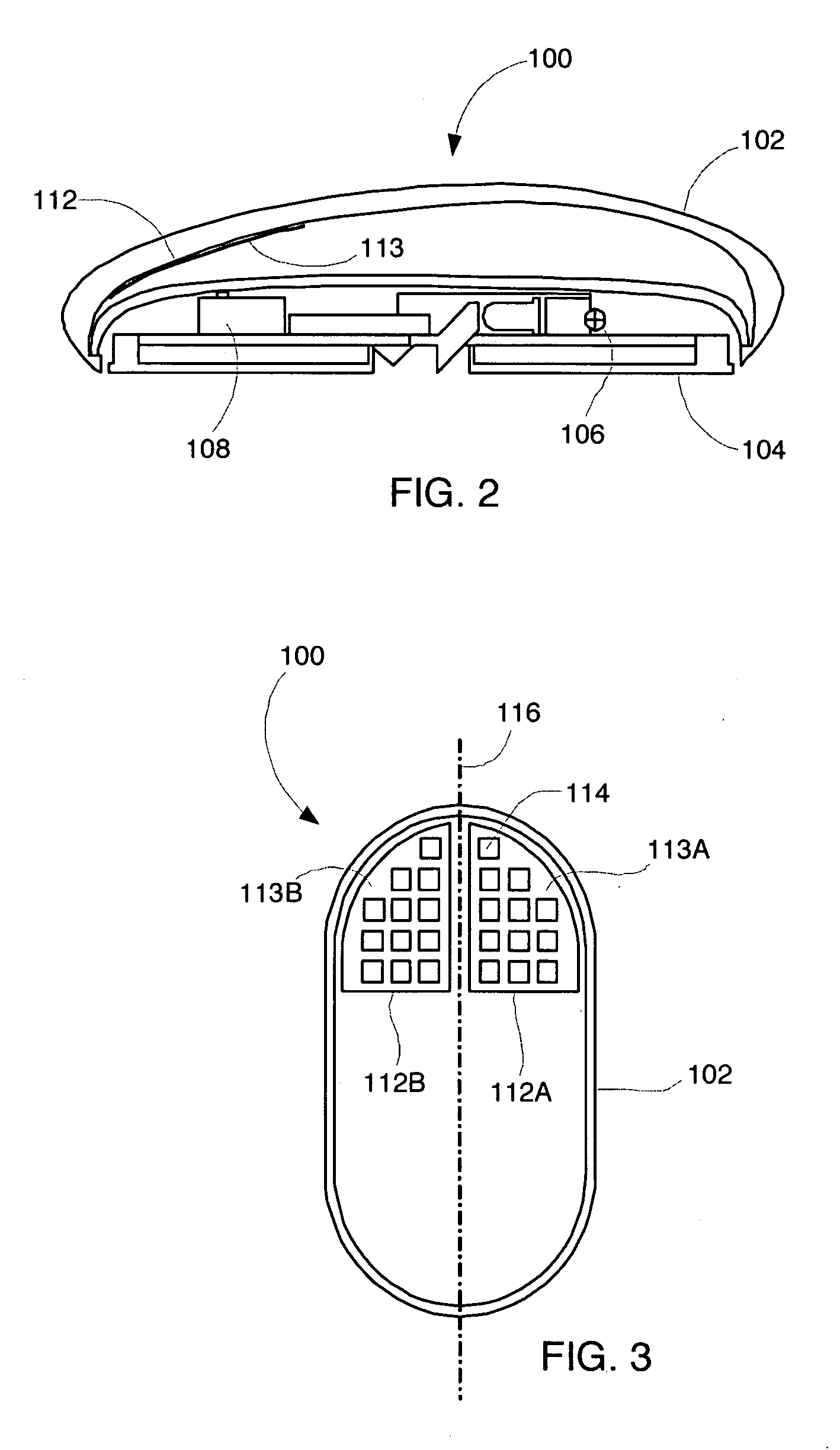

Mouse with improved input mechanisms

Active | US20060274042A1 | Cathode-ray tube indicators | Input/output processes for data processing | Touch Senses | Loudspeaker

A mouse having improved input methods and mechanisms is disclosed. The mouse is configured with touch sensing areas capable of generating input signals. The touch sensing areas may for example be used to differentiate between left and right clicks in a single button mouse. The mouse may further be configured with force sensing areas capable of generating input signals. The force sensing areas may for example be positioned on the sides of the mouse so that squeezing the mouse generates input signals. The mouse may further be configured with a jog ball capable of generating input signals. The mouse may additionally be configured with a speaker for providing audio feedback when the various input devices are activated by a user.

Owner:APPLE INC

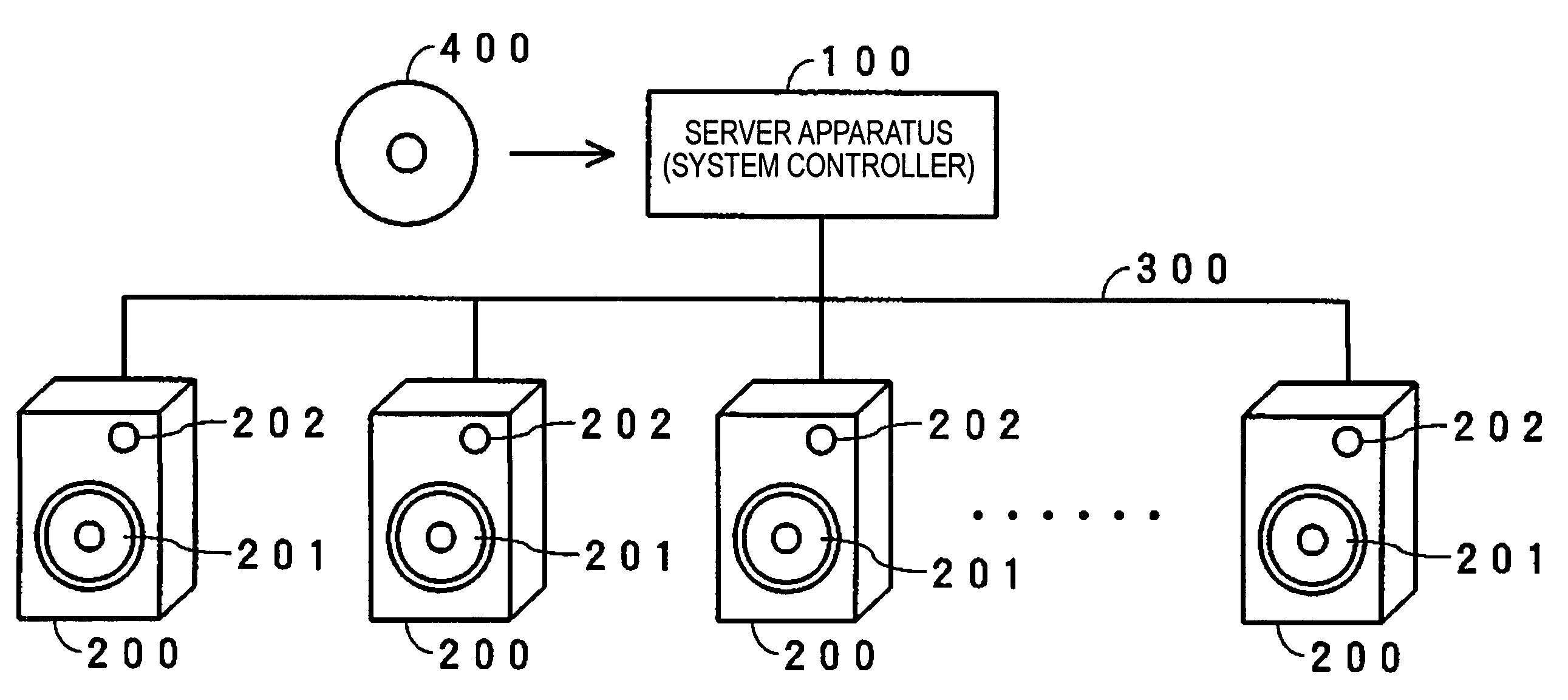

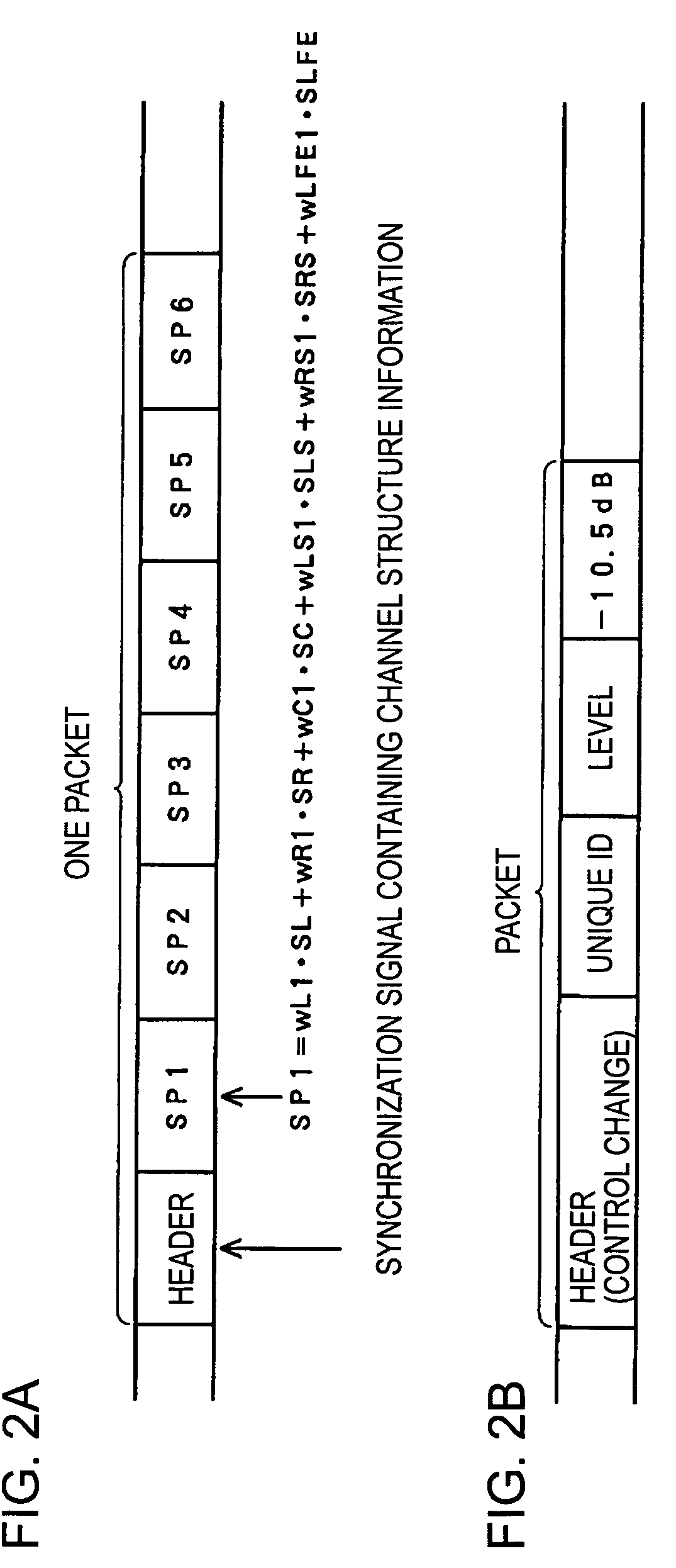

Multi-speaker audio system and automatic control method

Inactive | US7676044B2 | Loudspeaker enclosure positioning | Pseudo-stereo systems | Automatic control | Loudspeaker

A sound produced at the location of a listener is captured by a microphone in each of a plurality of speaker devices. A server apparatus receives an audio signal of the captured sound from all speaker devices, and calculates a distance difference between the distance of the location of the listener to the speaker device closest to the listener and the distance of the listener to each of the plurality of speaker devices. When one of the speaker devices emits a sound, the server apparatus receives an audio signal of the sound captured by and transmitted from each of the other speaker devices. The server apparatus calculates a speaker-to-speaker distance between the speaker device that has emitted the sound and each of the other speaker devices. The server apparatus calculates a layout configuration of the plurality of speaker devices based on the distance difference and the speaker-to-speaker distance.

Owner:SONY CORP
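The two distance quantities named in the abstract above can be derived from sound arrival times, as in the following sketch. It assumes that all speaker devices share a common clock, that the speed of sound is about 343 m/s, and that arrival times have already been extracted from the captured audio; the function names are hypothetical, and the final layout calculation is not shown.

```python
from typing import Dict

SPEED_OF_SOUND_M_S = 343.0  # approximate value for air at room temperature


def distance_differences(arrival_times_s: Dict[str, float]) -> Dict[str, float]:
    """Extra distance from the listener to each speaker, relative to the closest one.

    The speaker that hears the listener's sound first is the closest, so its
    difference is zero; later arrivals correspond to longer paths.
    """
    earliest = min(arrival_times_s.values())
    return {
        name: SPEED_OF_SOUND_M_S * (t - earliest)
        for name, t in arrival_times_s.items()
    }


def speaker_to_speaker_distance(emit_time_s: float, capture_time_s: float) -> float:
    """Distance between an emitting speaker and a capturing speaker."""
    return SPEED_OF_SOUND_M_S * (capture_time_s - emit_time_s)


# The listener's sound reaches "front_left" first and "rear_right" 10 ms later.
diffs = distance_differences({"front_left": 0.010, "rear_right": 0.020})
spacing = speaker_to_speaker_distance(emit_time_s=0.0, capture_time_s=0.005)
```

Only differences are available for the listener because the moment the listener's sound was produced is unknown, whereas a speaker's own emission time is known, so absolute speaker-to-speaker distances can be computed.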

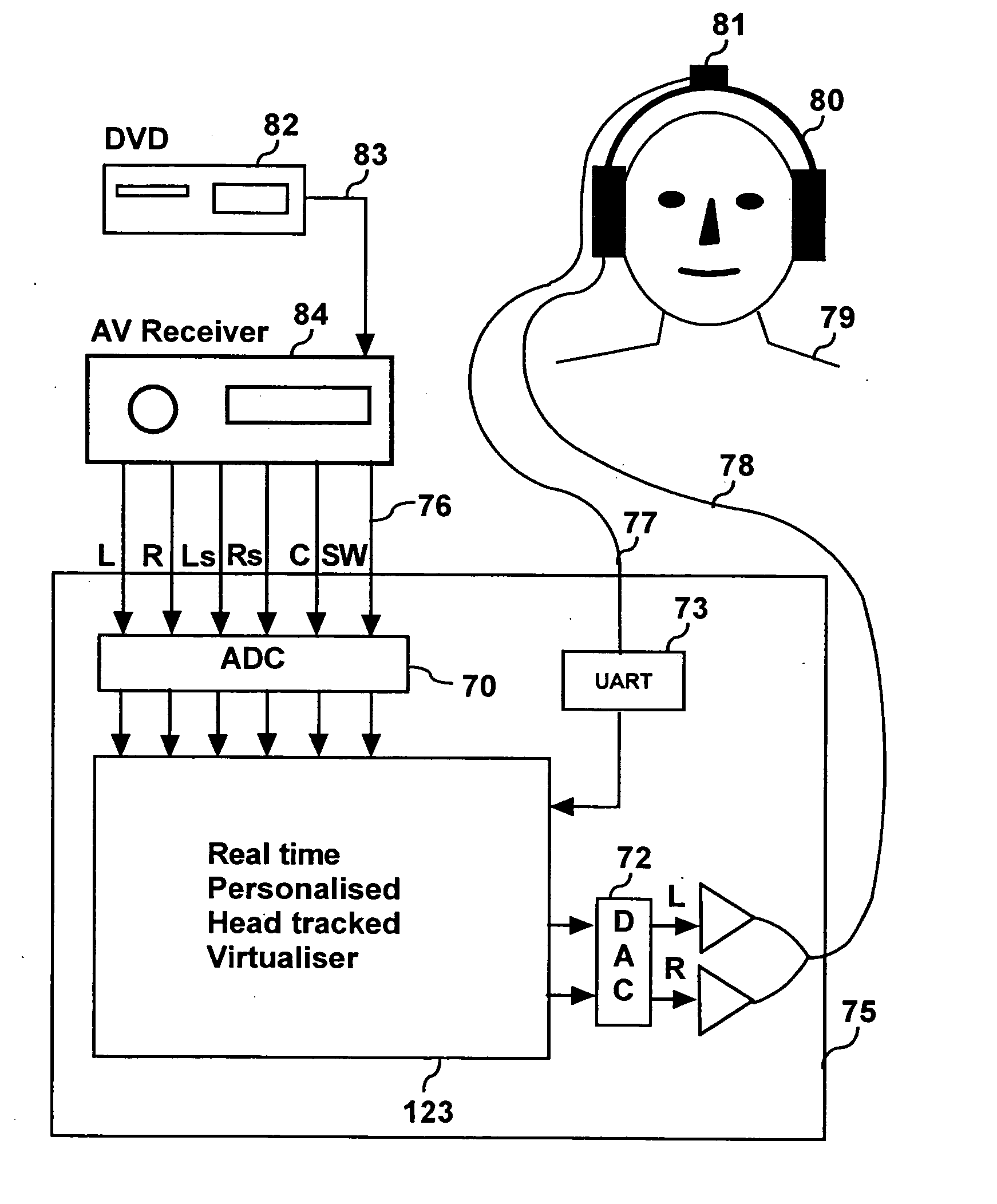

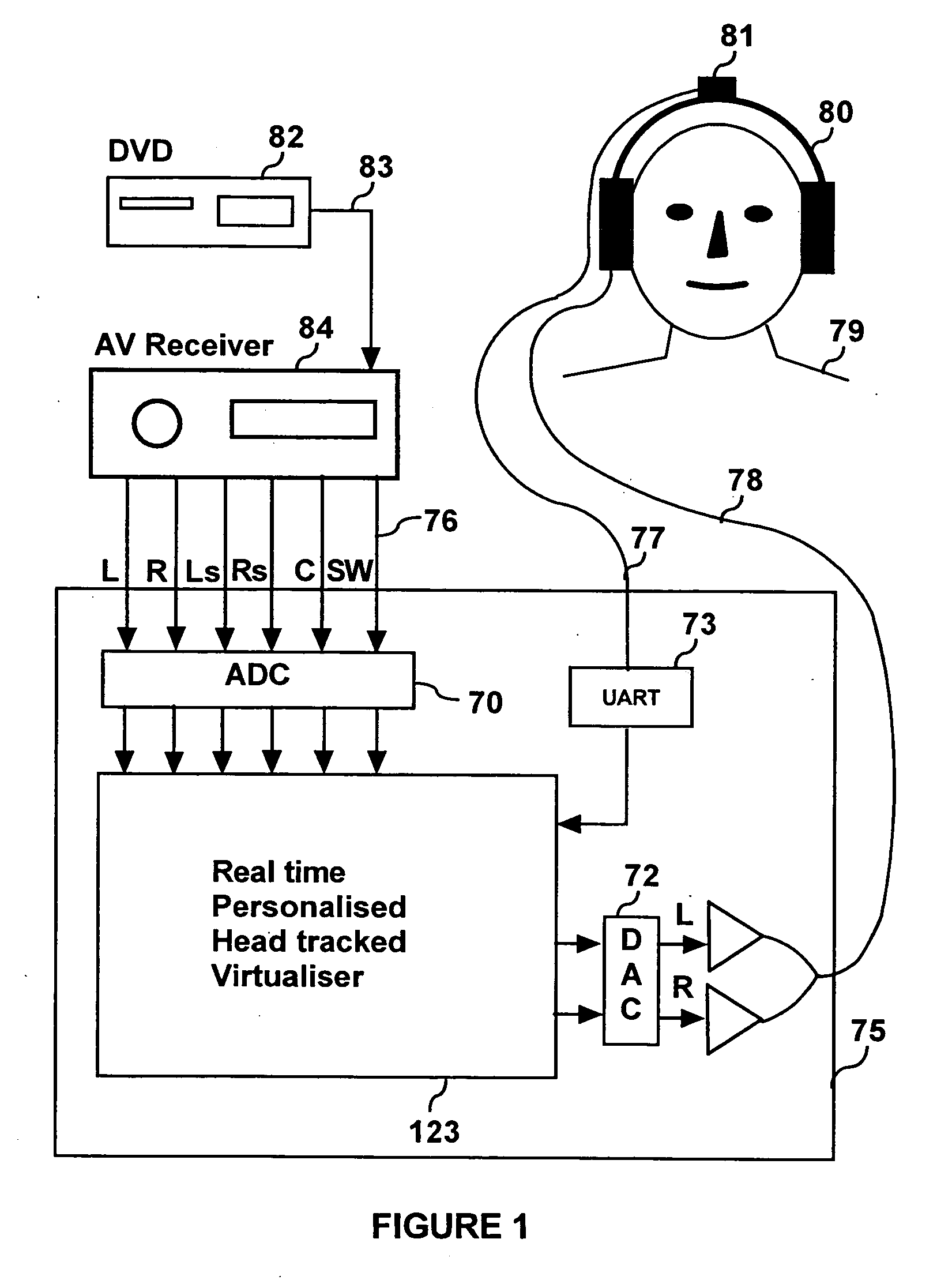

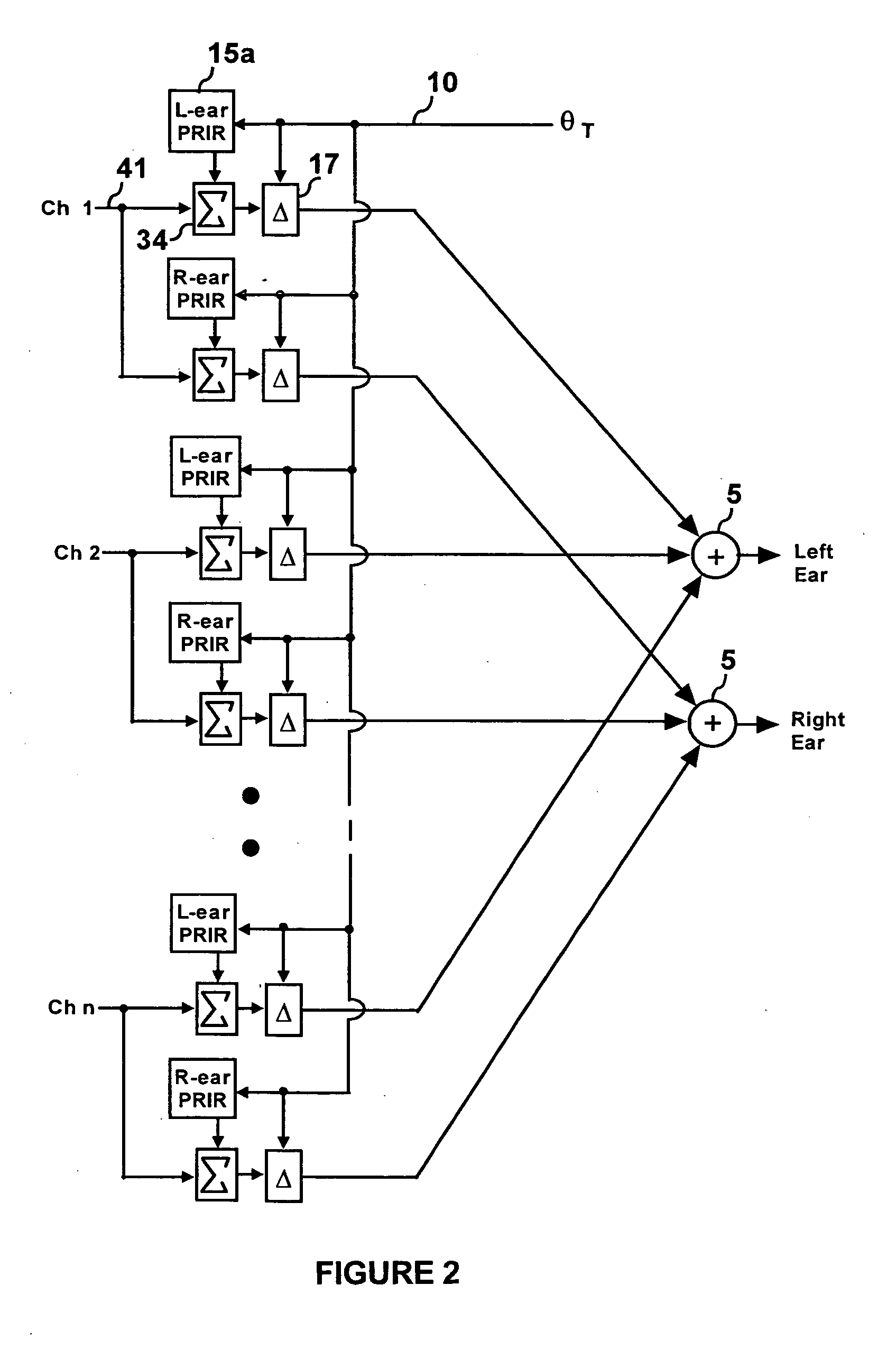

Personalized headphone virtualization

Active | US20060045294A1 | Improve accuracy | Sound quality | Headphones for stereophonic communication | Earpiece/earphone attachments | Virtualization | Personalization

A listener can experience the sound of virtual loudspeakers over headphones with a level of realism that is difficult to distinguish from the real loudspeaker experience. Sets of personalized room impulse responses (PRIRs) are acquired for the loudspeaker sound sources over a limited number of listener head positions. The PRIRs are then used to transform an audio signal for the loudspeakers into a virtualized output for the headphones. Basing the transformation on the listener's head position, the system can adjust the transformation so that the virtual loudspeakers appear not to move as the listener moves the head.

Owner:SMYTH RES

© 2025 PatSnap. All rights reserved.