Patents

Literature

Hiro is an intelligent assistant for R&D personnel, combined with Patent DNA, to facilitate innovative research.

1488 results about "Acoustic model" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

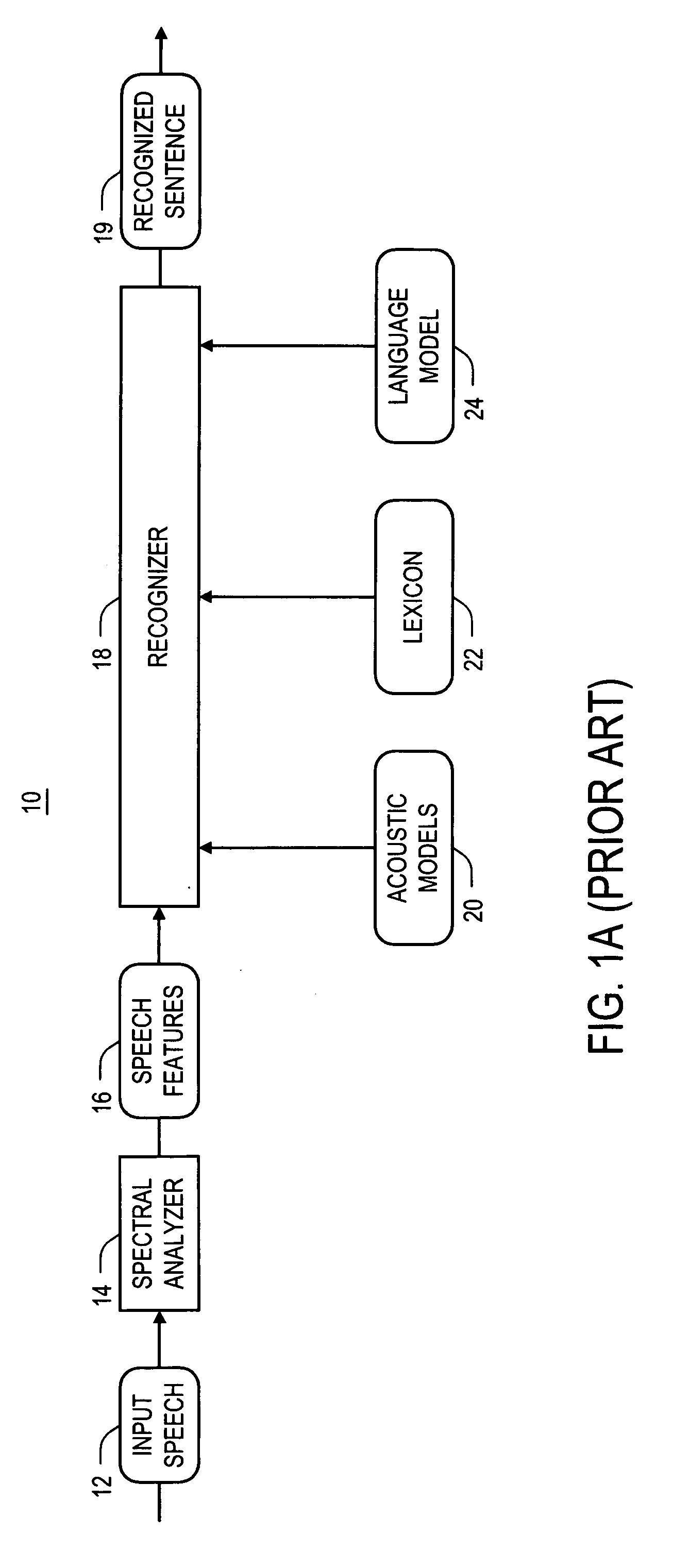

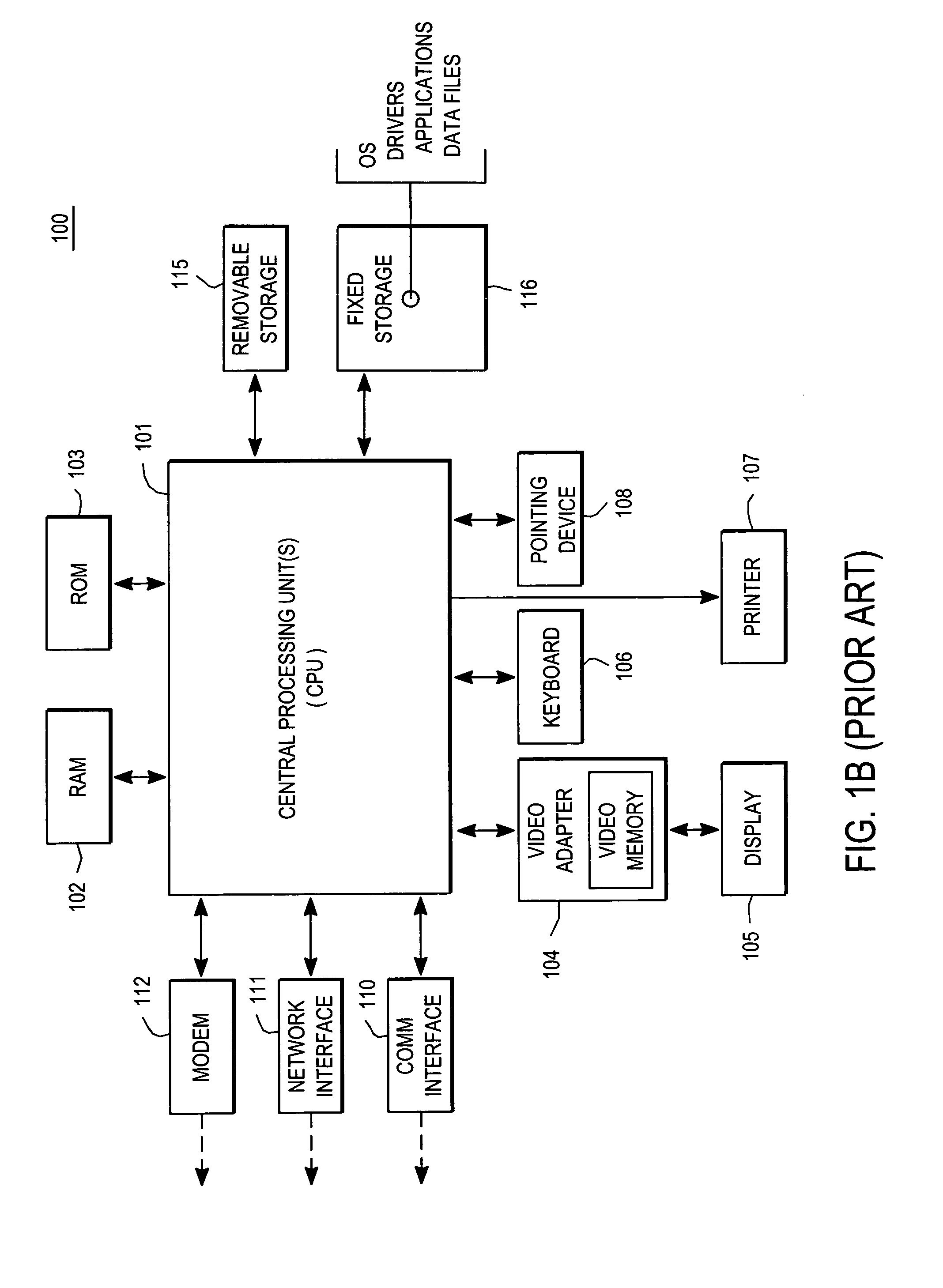

An acoustic model is used in automatic speech recognition to represent the relationship between an audio signal and the phonemes or other linguistic units that make up speech. The model is learned from a set of audio recordings and their corresponding transcripts. It is created by taking audio recordings of speech, and their text transcriptions, and using software to create statistical representations of the sounds that make up each word.

Automatic Speech Recognition System

InactiveUS20090018828A1Improve speech recognition rateImprove accuracySpeech recognitionSound source separationFeature extraction

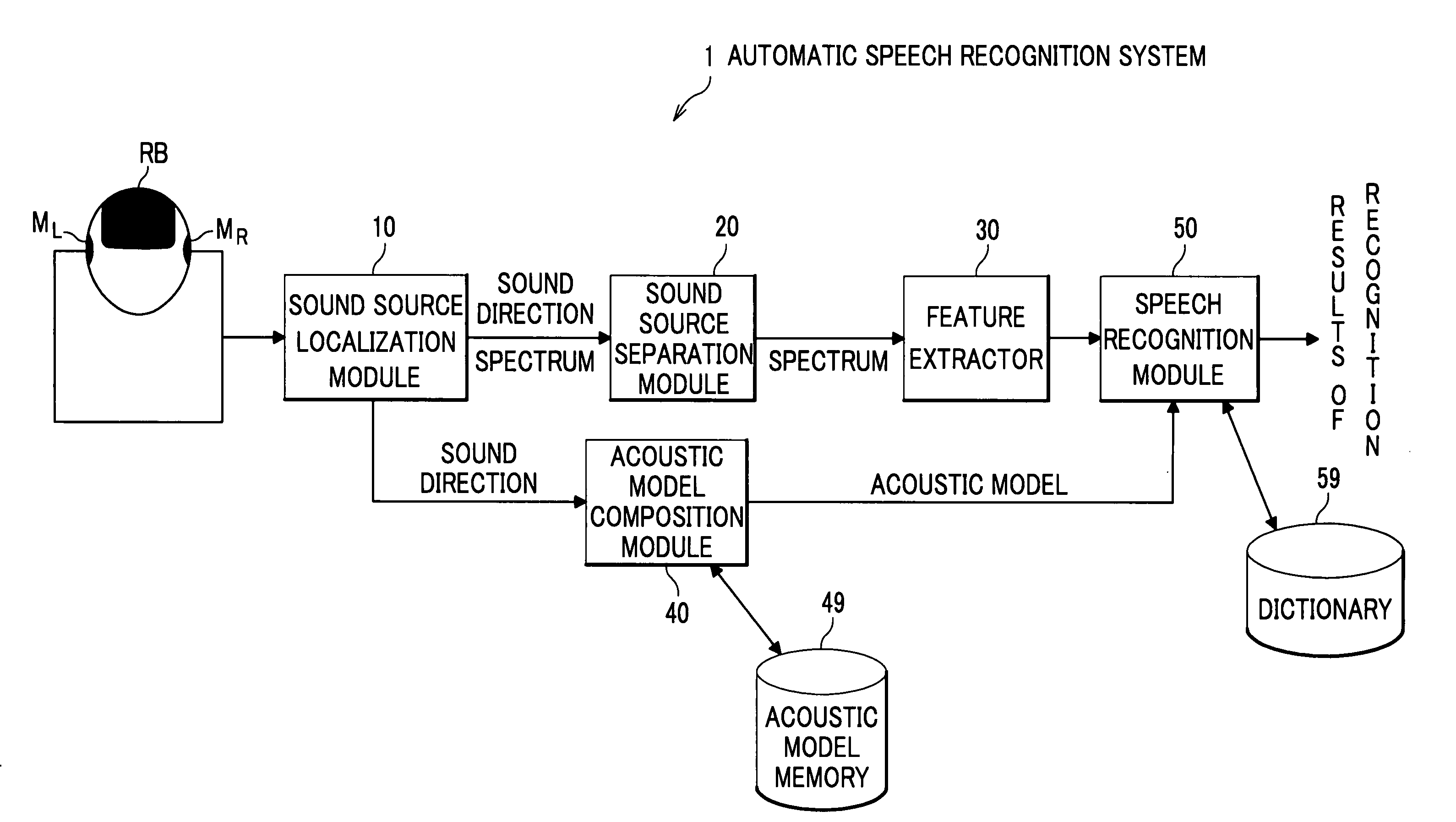

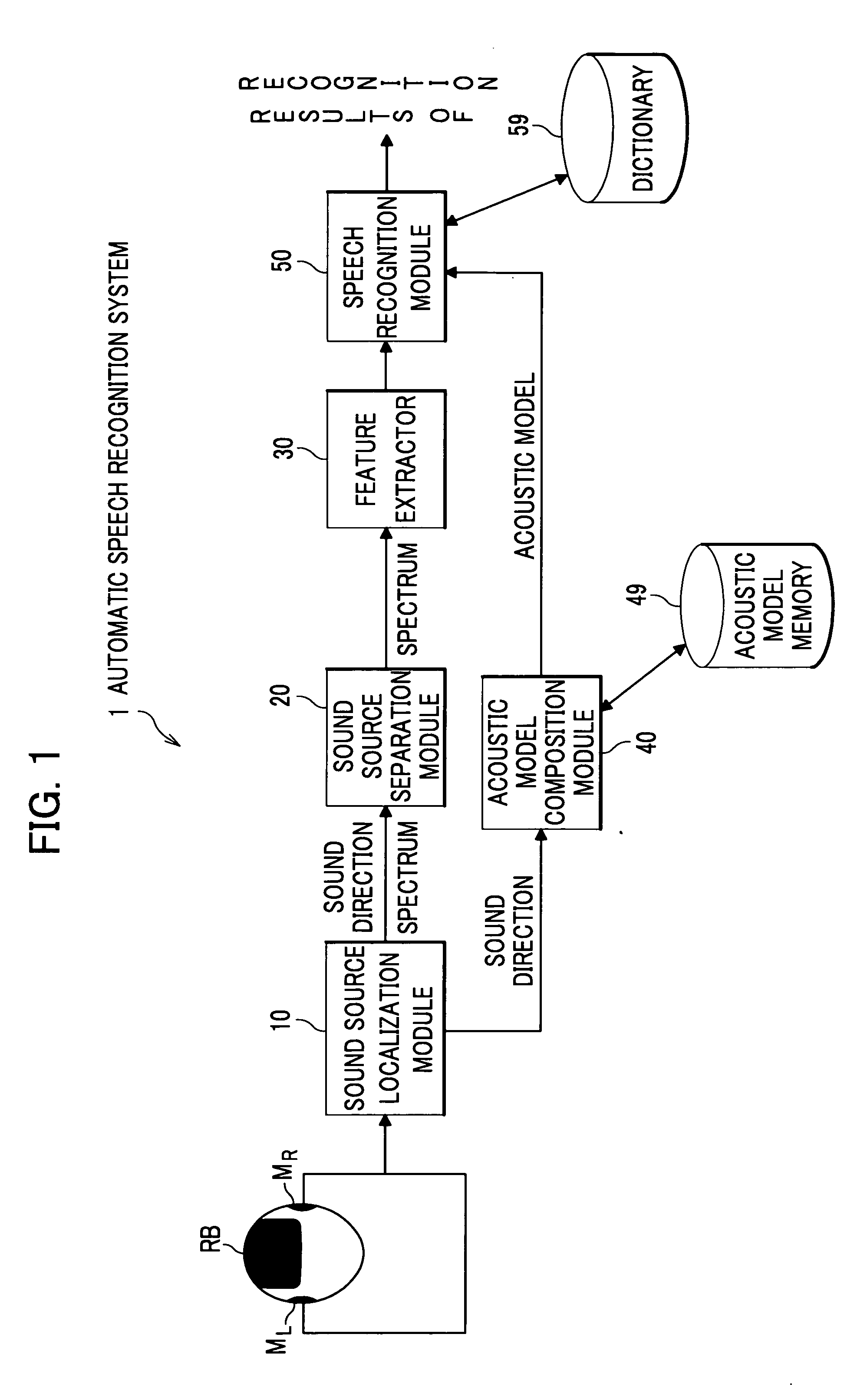

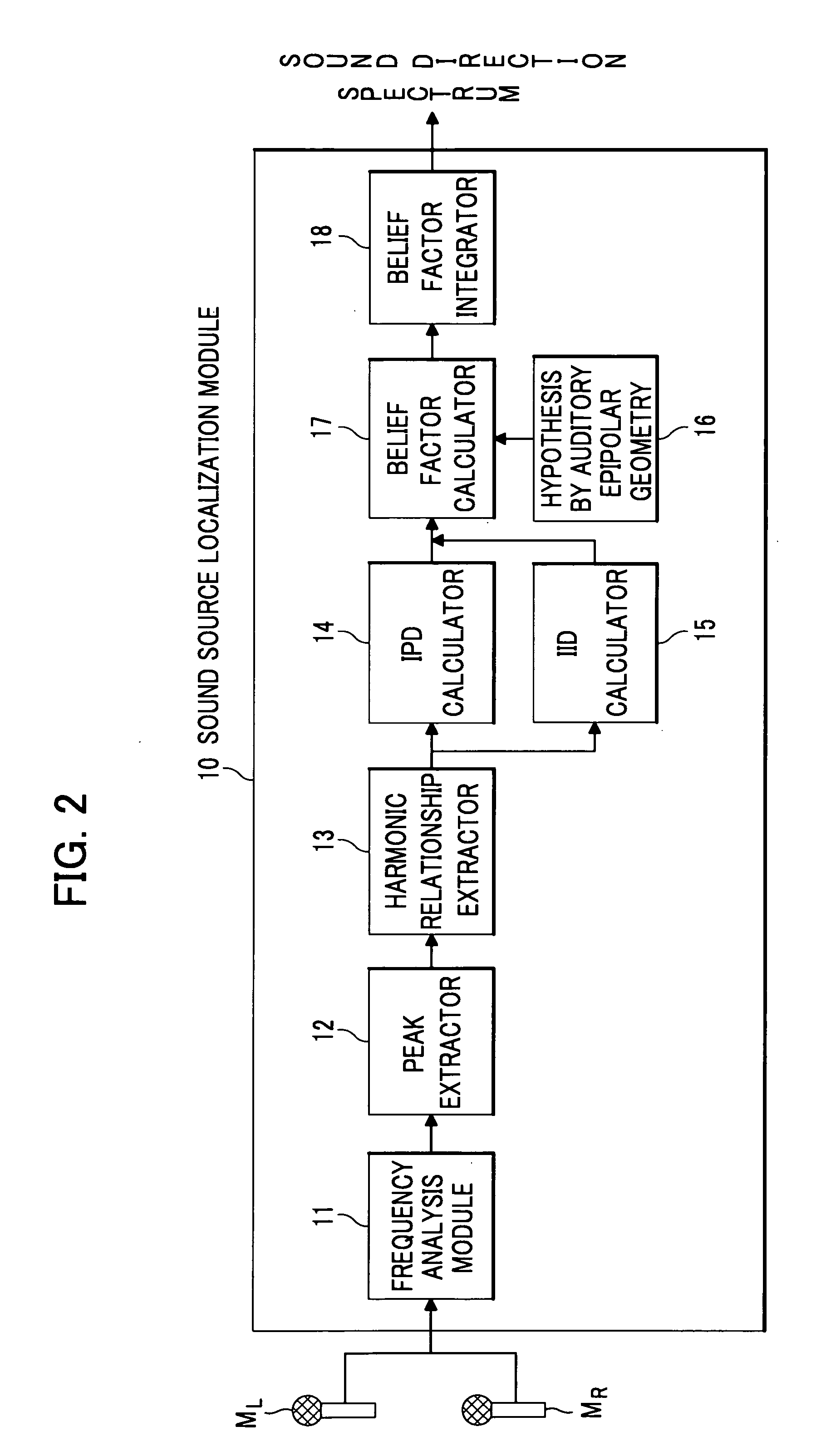

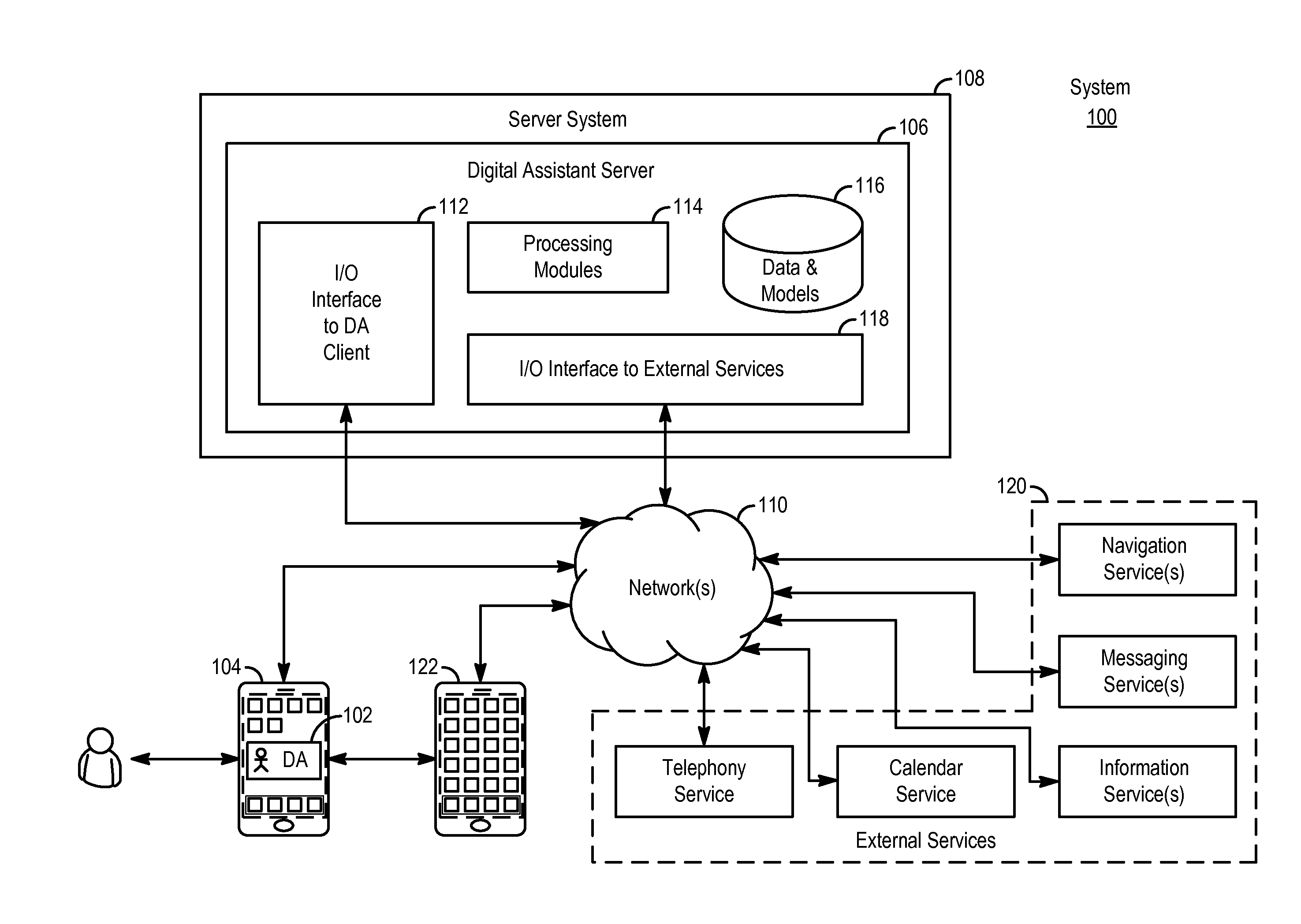

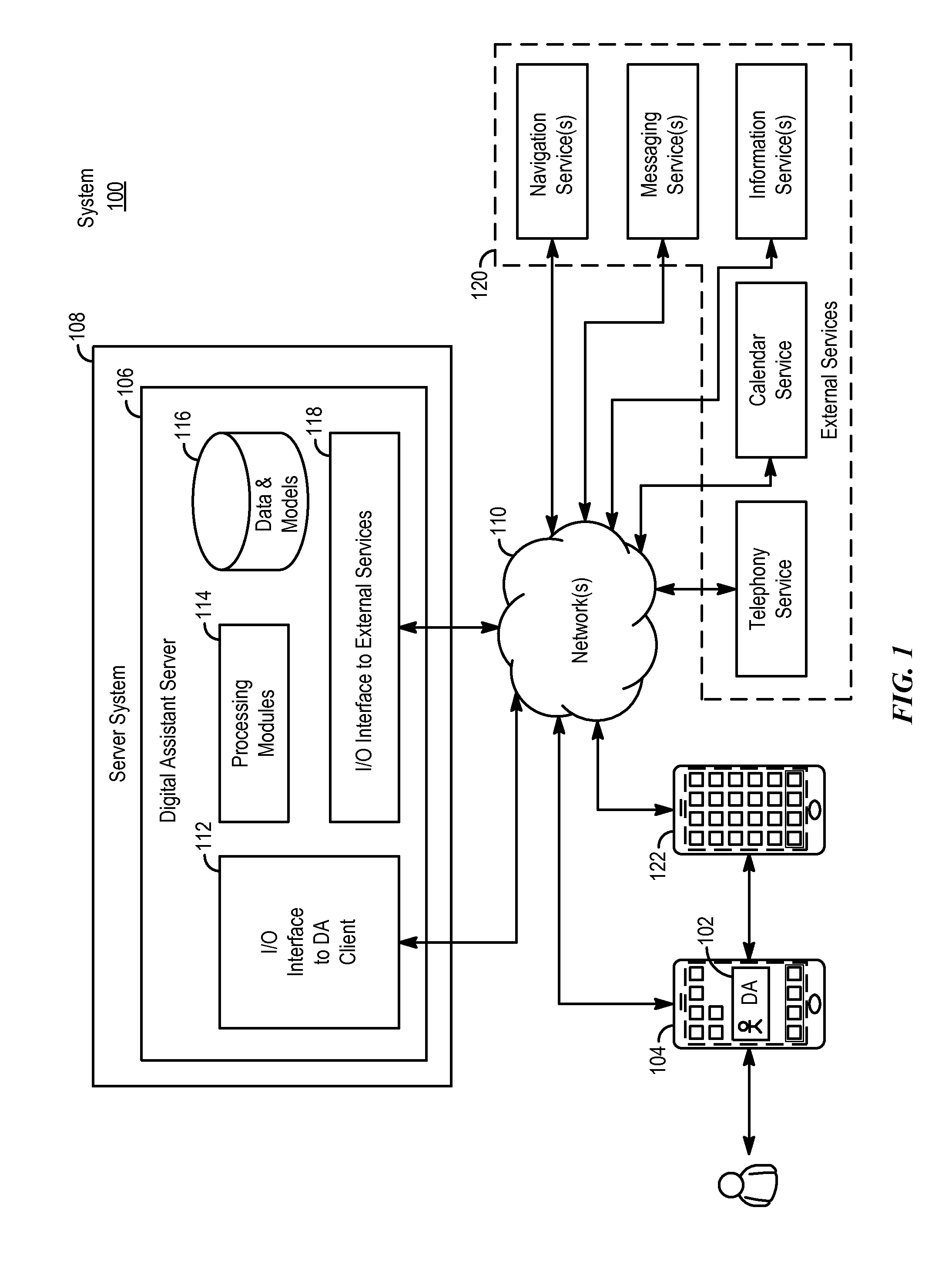

An automatic speech recognition system includes: a sound source localization module for localizing a sound direction of a speaker based on the acoustic signals detected by the plurality of microphones; a sound source separation module for separating a speech signal of the speaker from the acoustic signals according to the sound direction; an acoustic model memory which stores direction-dependent acoustic models that are adjusted to a plurality of directions at intervals; an acoustic model composition module which composes an acoustic model adjusted to the sound direction, which is localized by the sound source localization module, based on the direction-dependent acoustic models, the acoustic model composition module storing the acoustic model in the acoustic model memory; and a speech recognition module which recognizes the features extracted by a feature extractor as character information using the acoustic model composed by the acoustic model composition module.

Owner:HONDA MOTOR CO LTD

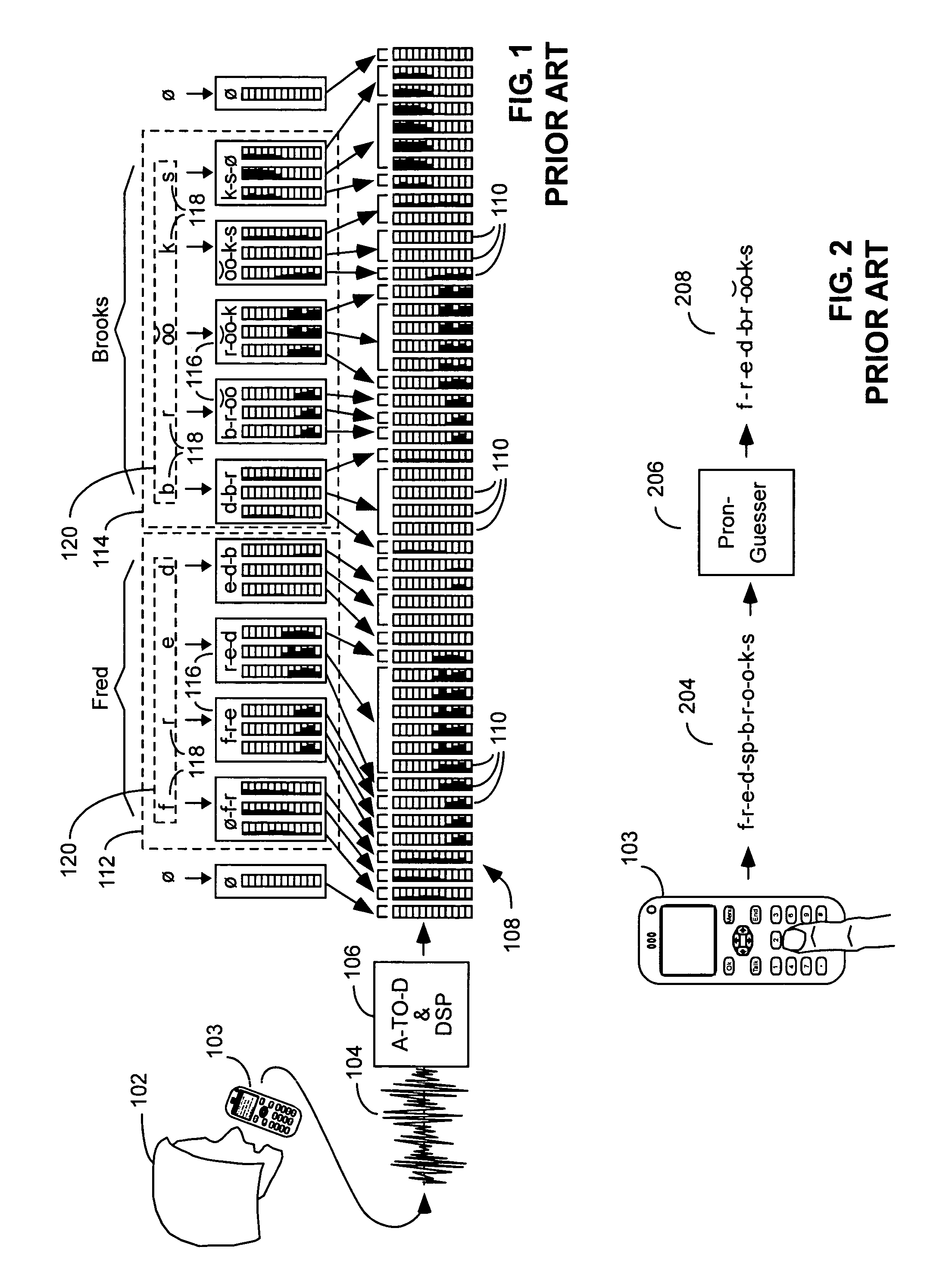

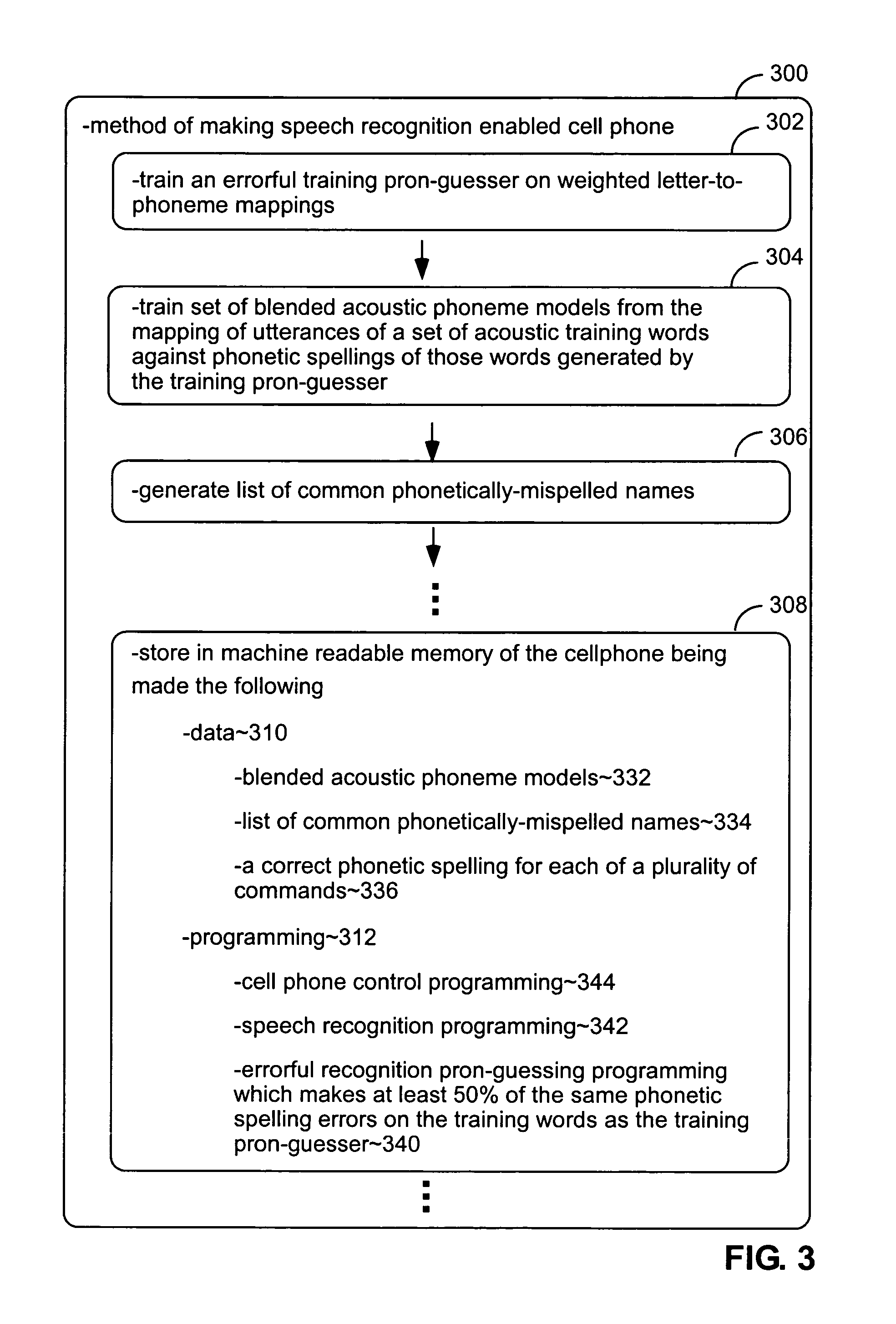

Training and using pronunciation guessers in speech recognition

InactiveUS7467087B1Reduce frequencyReliable phonetic spellingSpeech recognitionSpeech synthesisWord modelSpeech identification

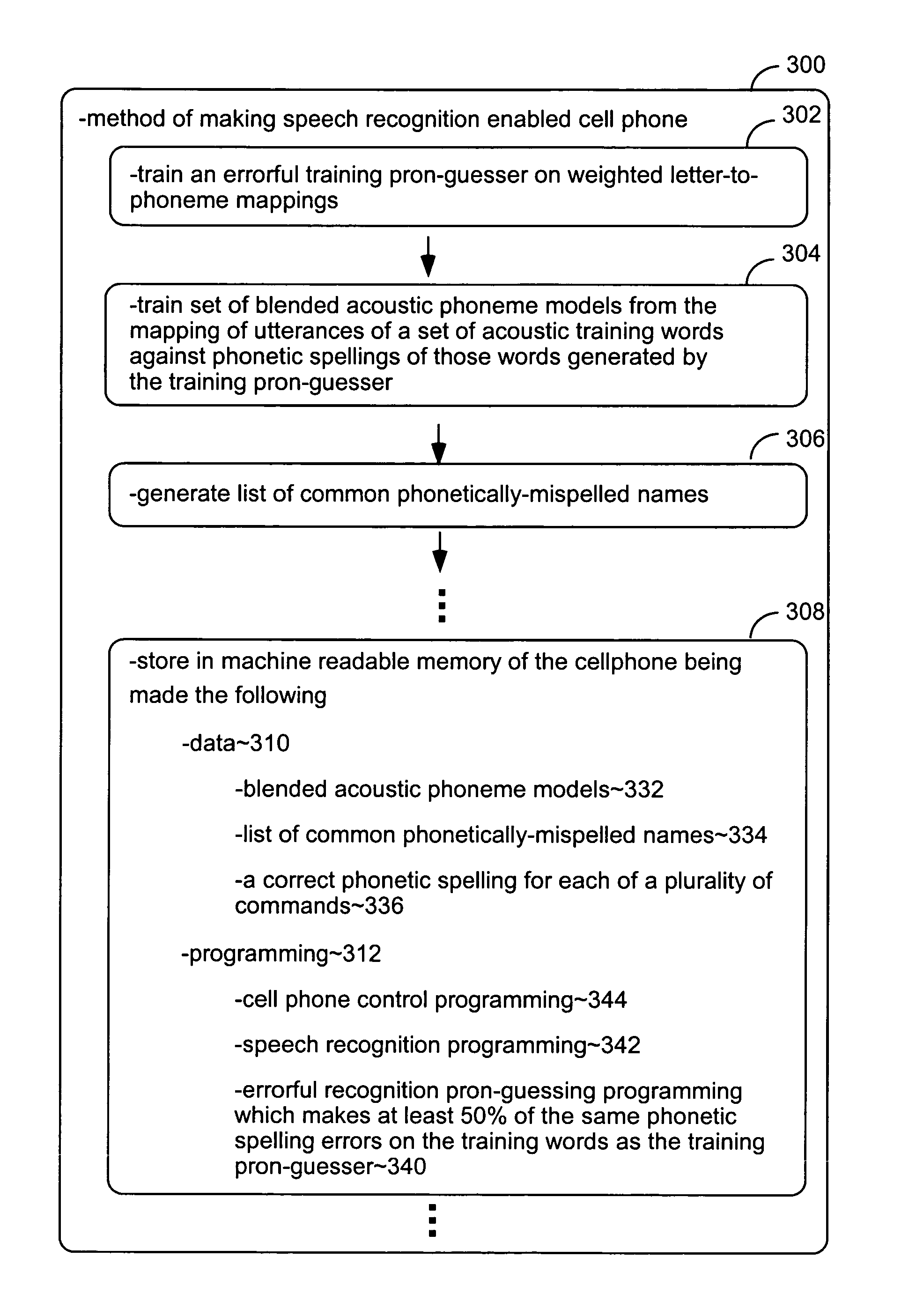

The error rate of a pronunciation guesser that guesses the phonetic spelling of words used in speech recognition is improved by causing its training to weigh letter-to-phoneme mappings used as data in such training as a function of the frequency of the words in which such mappings occur. Preferably the ratio of the weight to word frequency increases as word frequencies decreases. Acoustic phoneme models for use in speech recognition with phonetic spellings generated by a pronunciation guesser that makes errors are trained against word models whose phonetic spellings have been generated by a pronunciation guesser that makes similar errors. As a result, the acoustic models represent blends of phoneme sounds that reflect the spelling errors made by the pronunciation guessers. Speech recognition enabled systems are made by storing in them both a pronunciation guesser and a corresponding set of such blended acoustic models.

Owner:CERENCE OPERATING CO

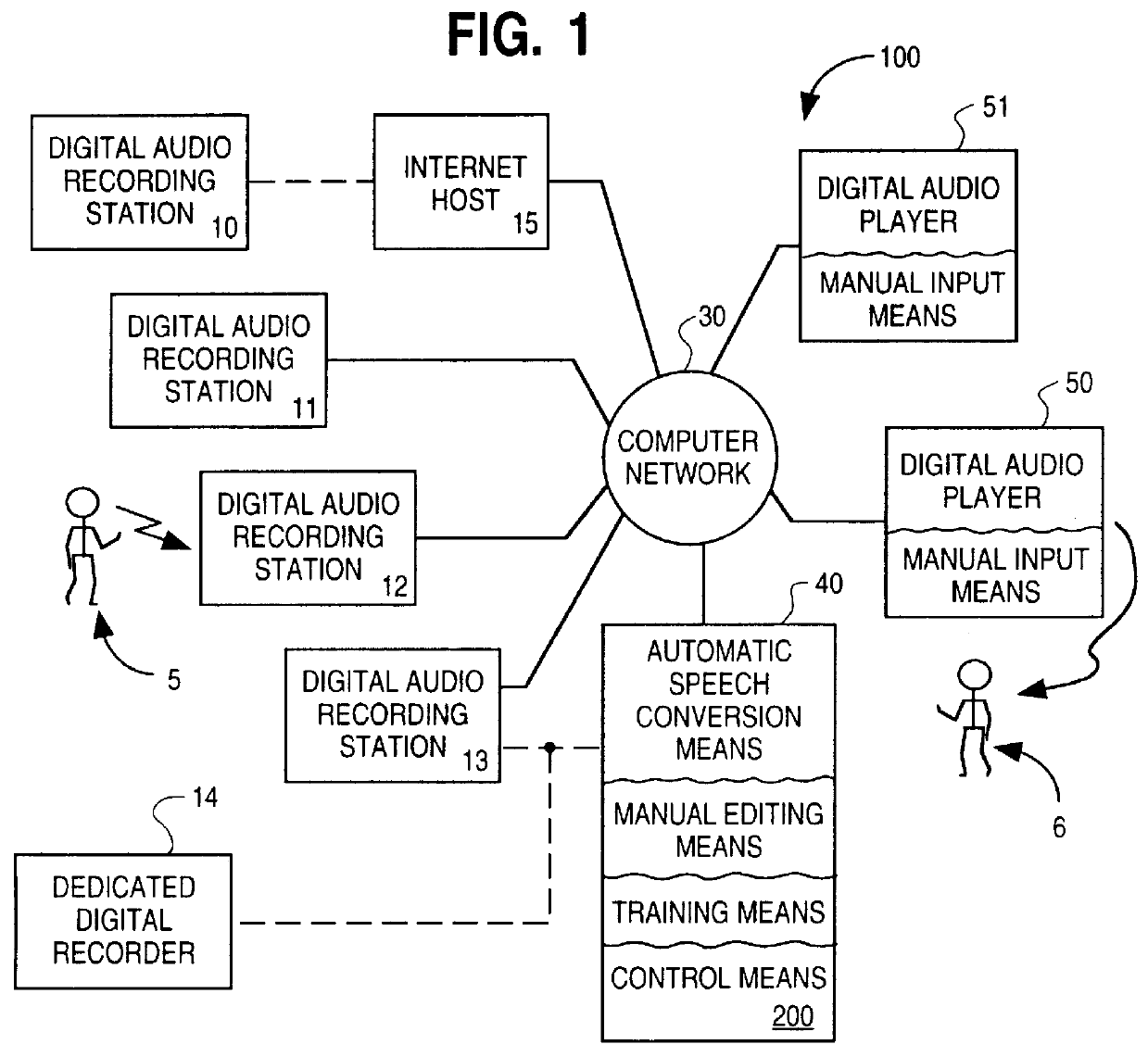

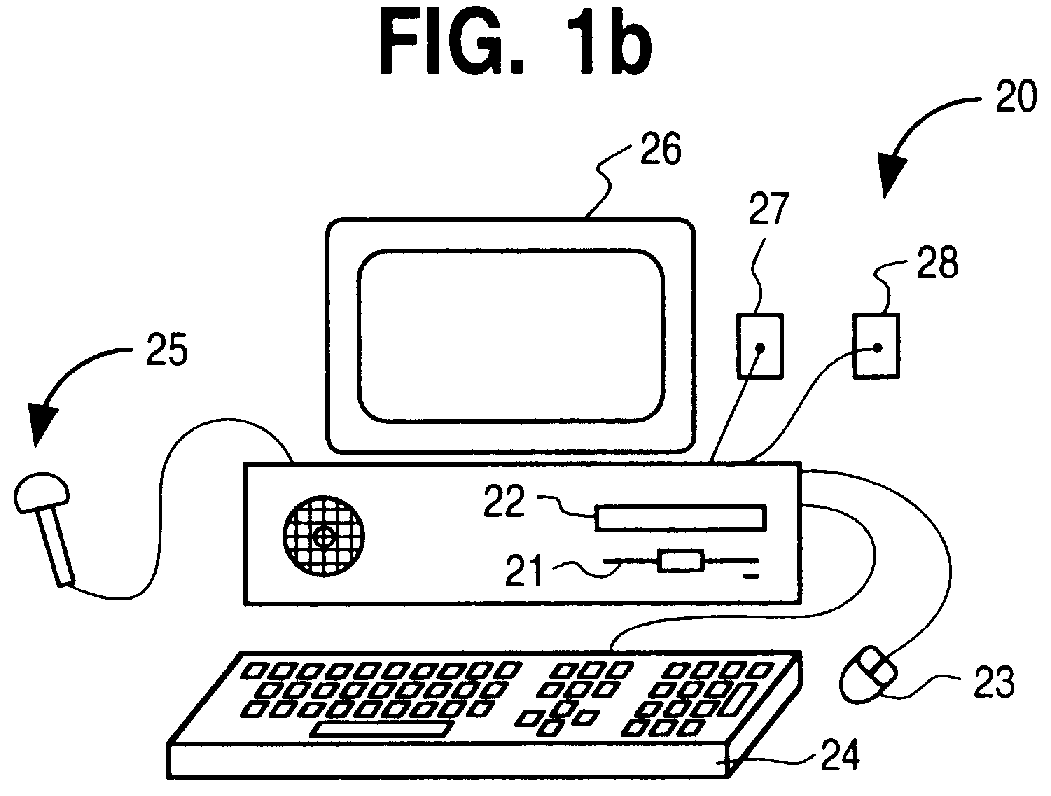

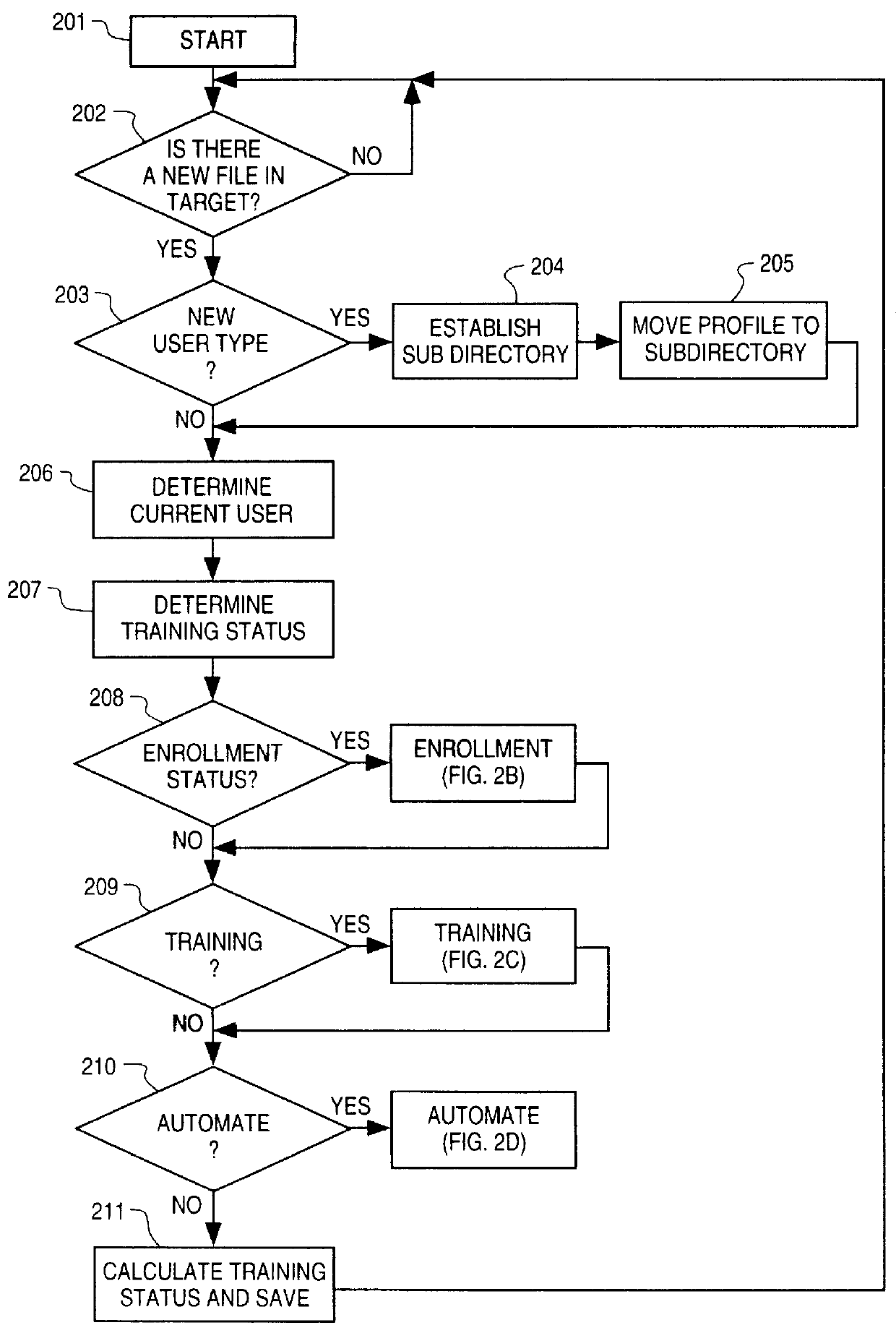

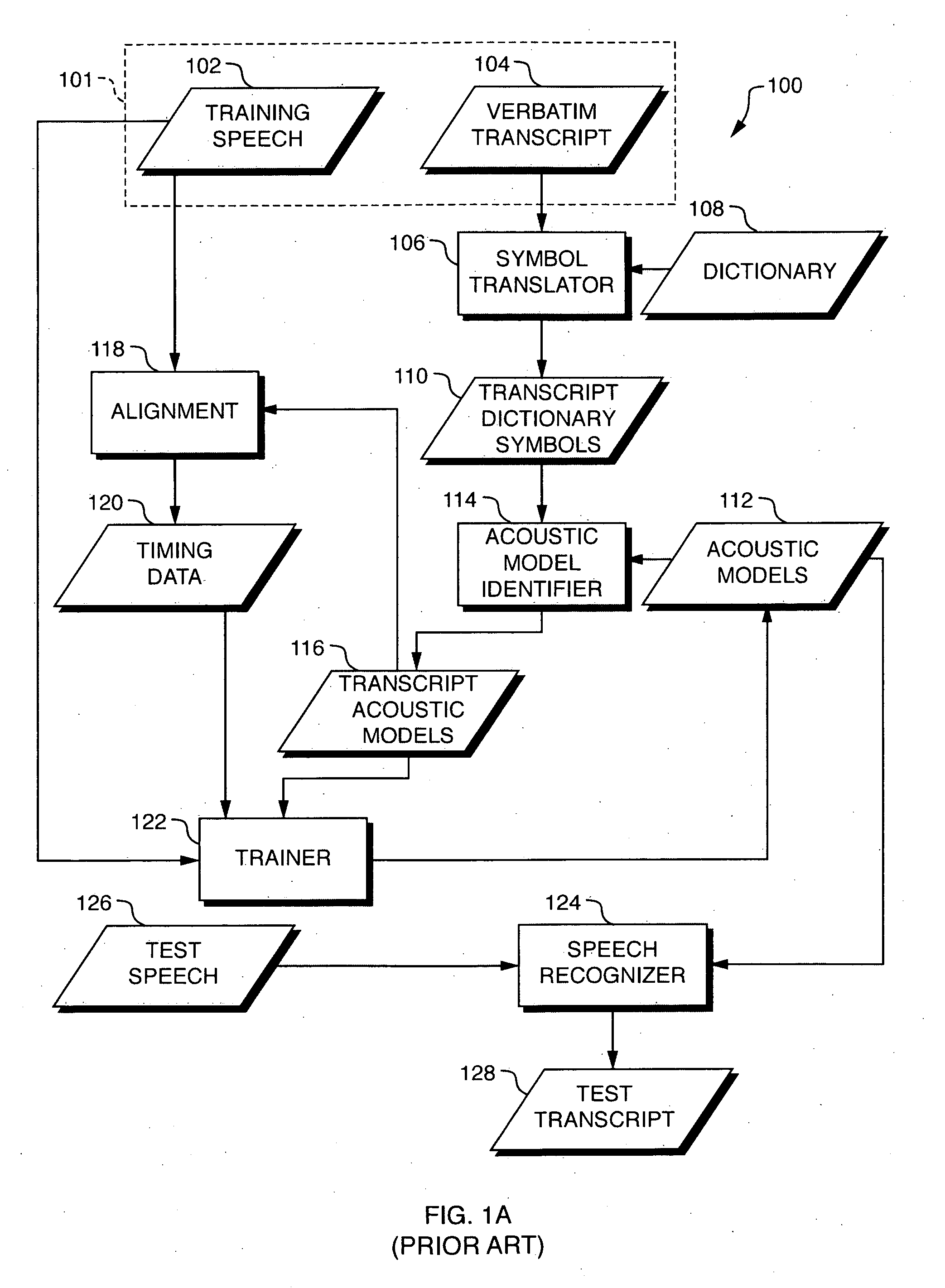

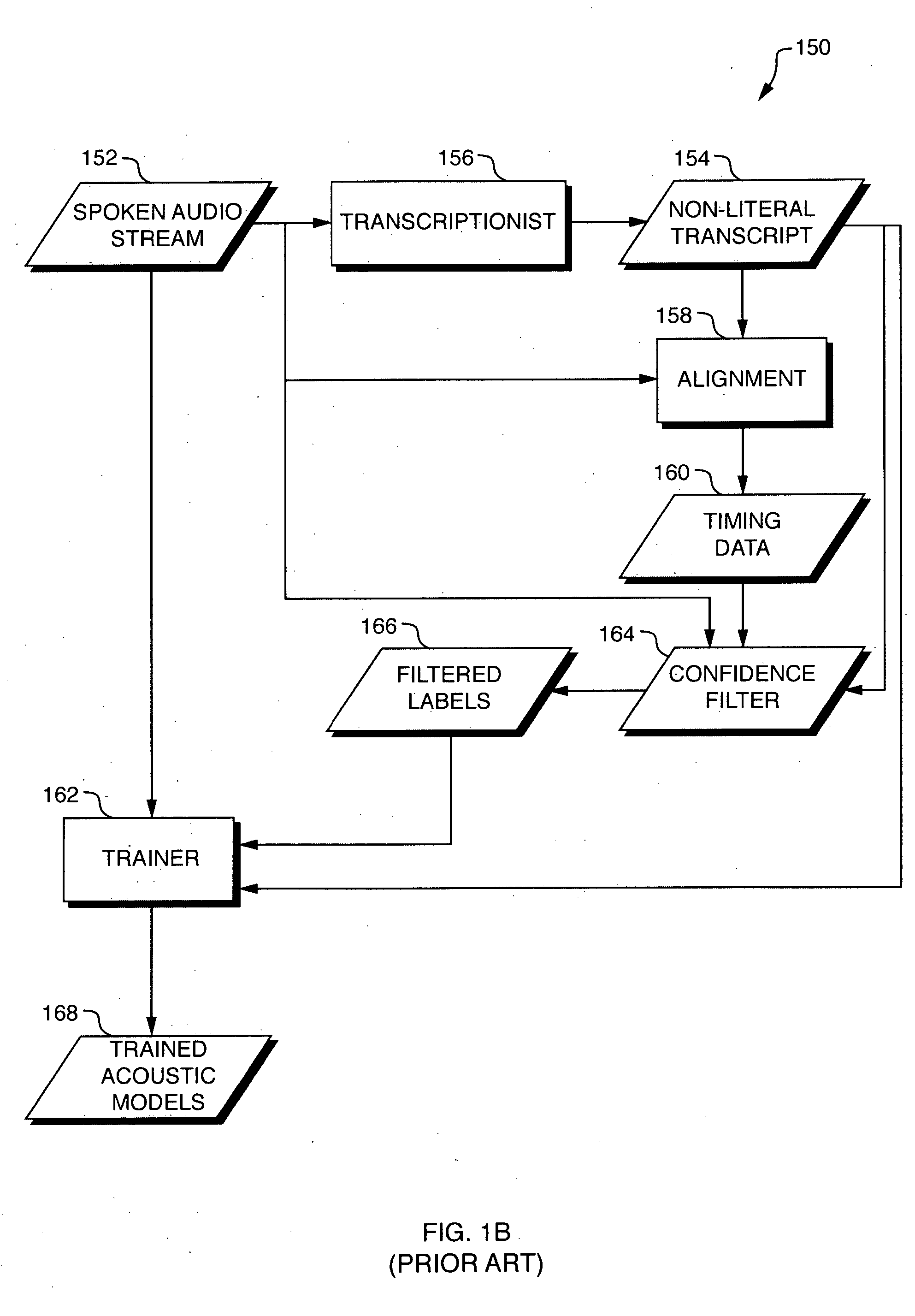

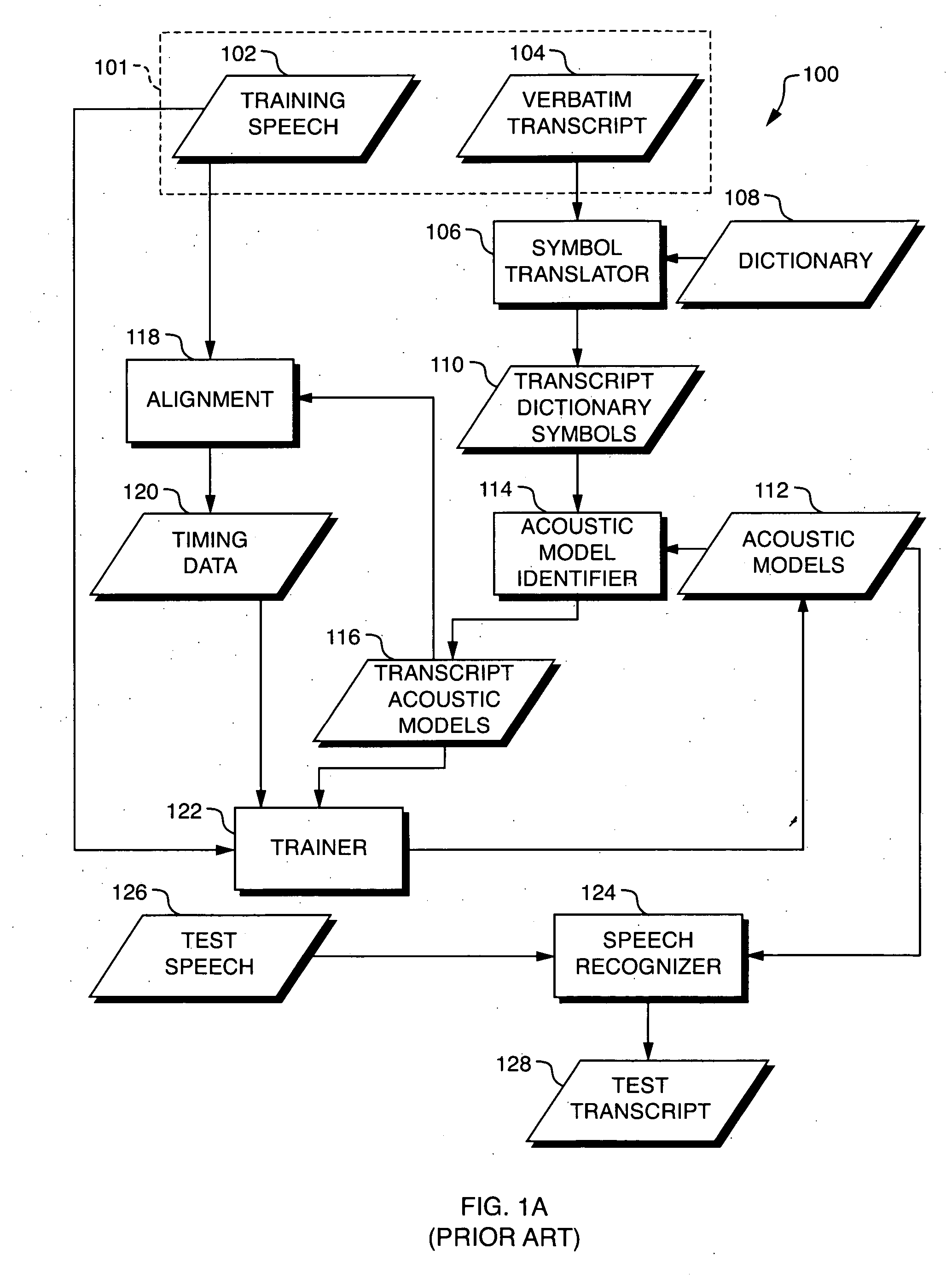

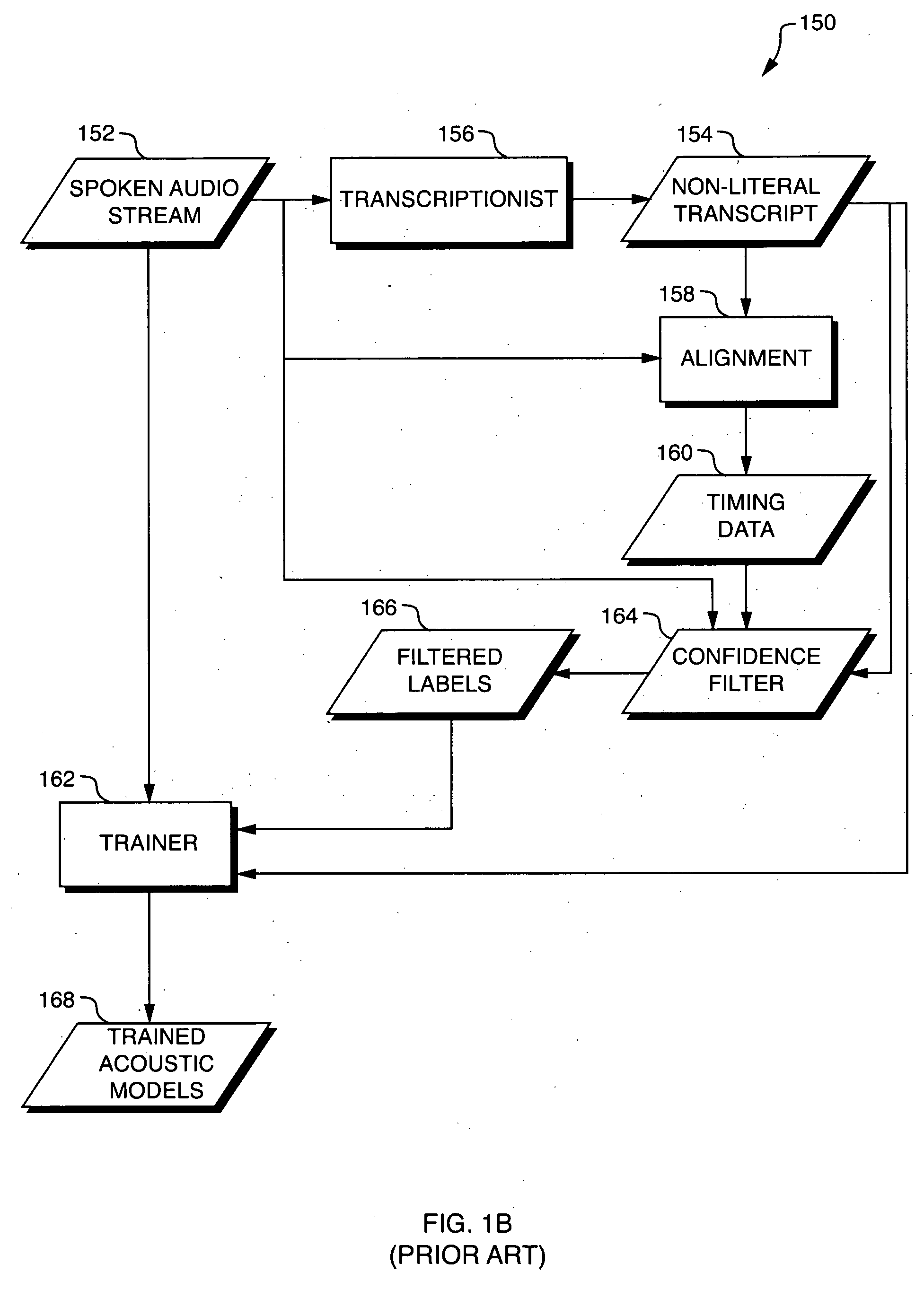

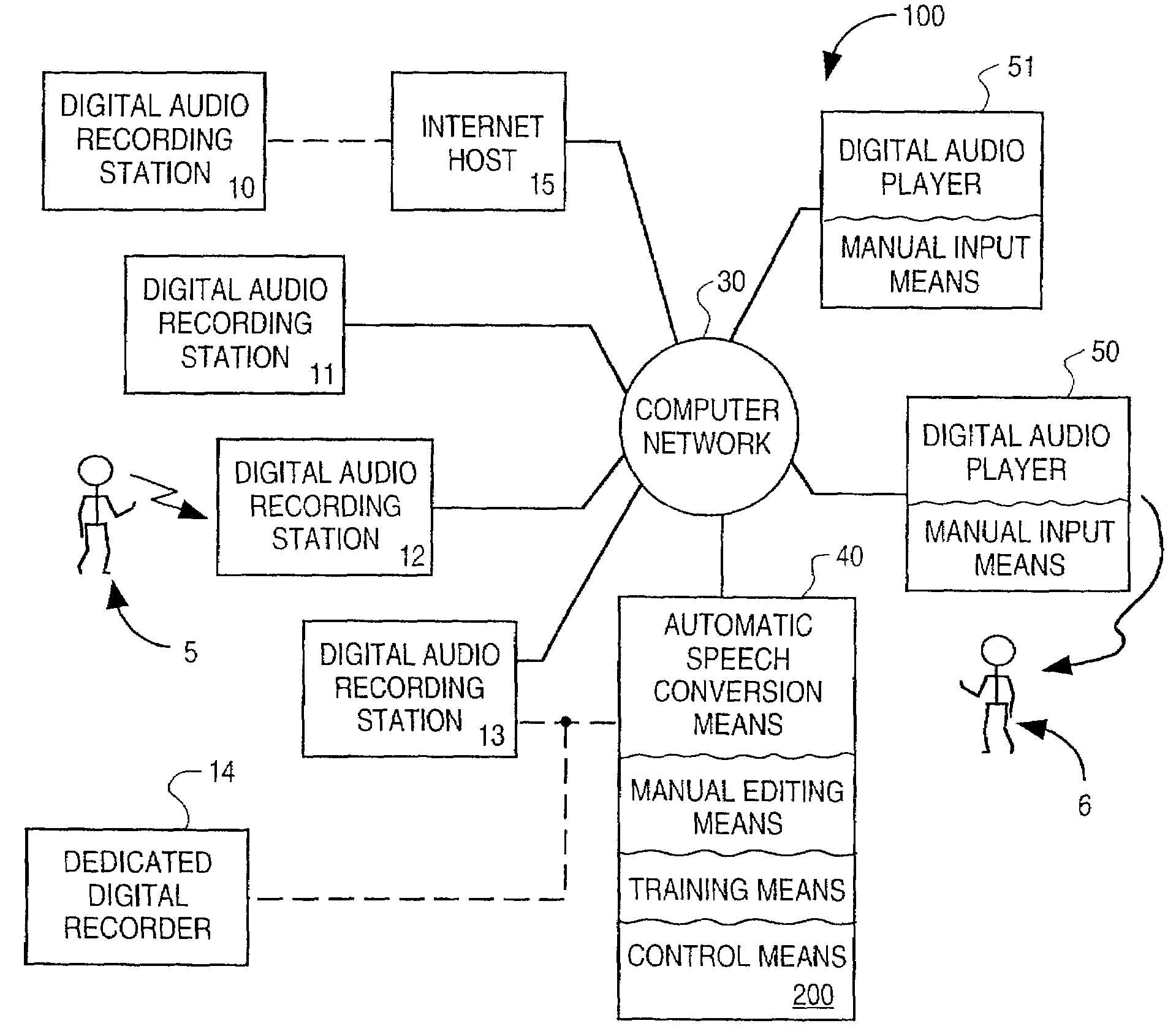

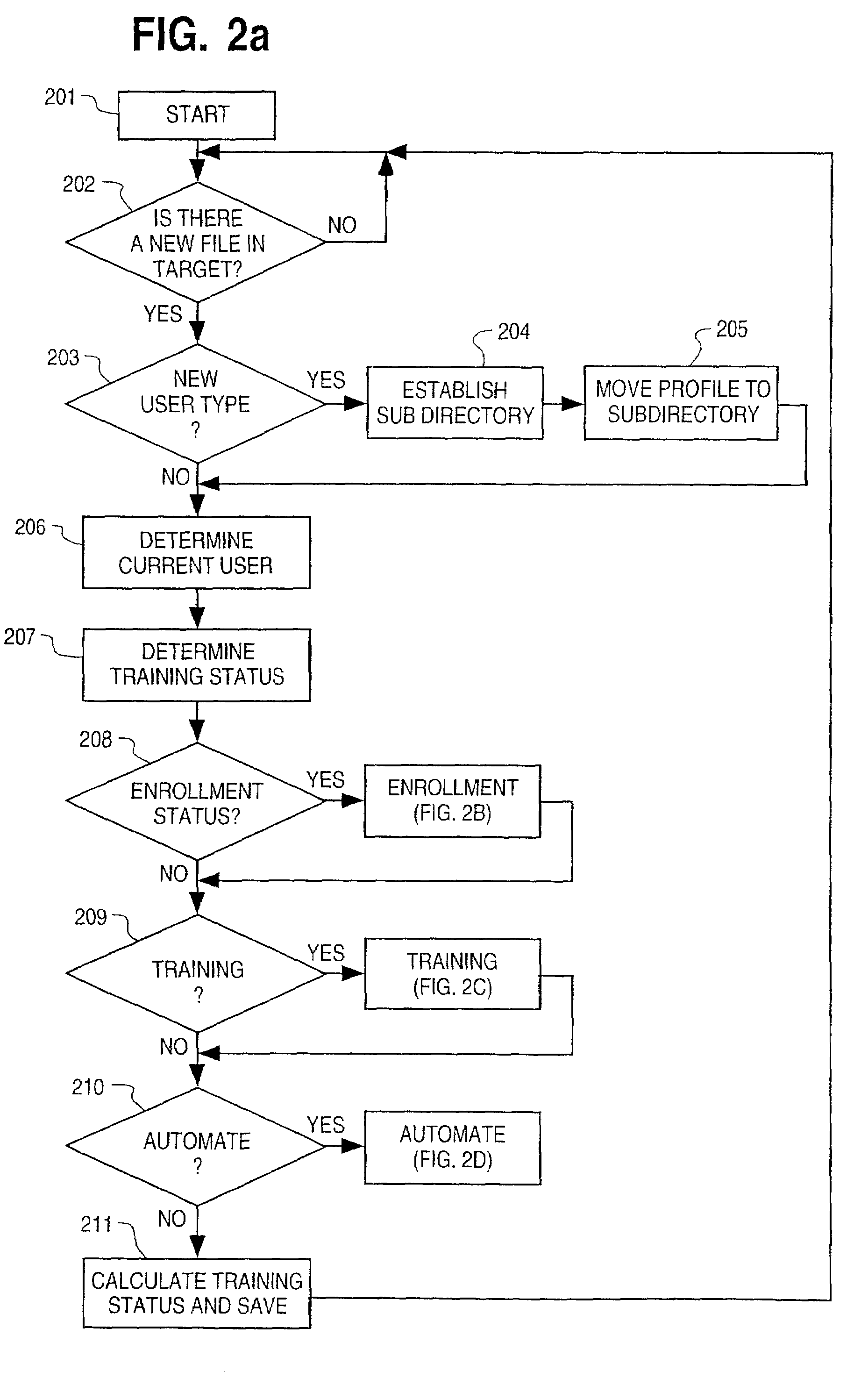

System and method for automating transcription services

InactiveUS6122614AMinimize the numberSimple meansSpeech recognitionSpeech synthesisAcoustic modelSpeech identification

A system for substantially automating transcription services for multiple voice users including a manual transcription station, a speech recognition program and a routing program. The system establishes a profile for each of the voice users containing a training status which is selected from the group of enrollment, training, automated and stop automation. When the system receives a voice dictation file from a current voice user based on the training status the system routes the voice dictation file to a manual transcription station and the speech recognition program. A human transcriptionist creates transcribed files for each received voice dictation files. The speech recognition program automatically creates a written text for each received voice dictation file if the training status of the current user is training or automated. A verbatim file is manually established if the training status of the current user is enrollment or training and the speech recognition program is trained with an acoustic model for the current user using the verbatim file and the voice dictation file if the training status of the current user is enrollment or training. The transcribed file is returned to the current user if the training status of the current user is enrollment or training or the written text is returned if the training status of the current user is automated. An apparatus and method is also disclosed for simplifying the manual establishment of the verbatim file. A method for substantially automating transcription services is also disclosed.

Owner:CUSTOM SPEECH USA +1

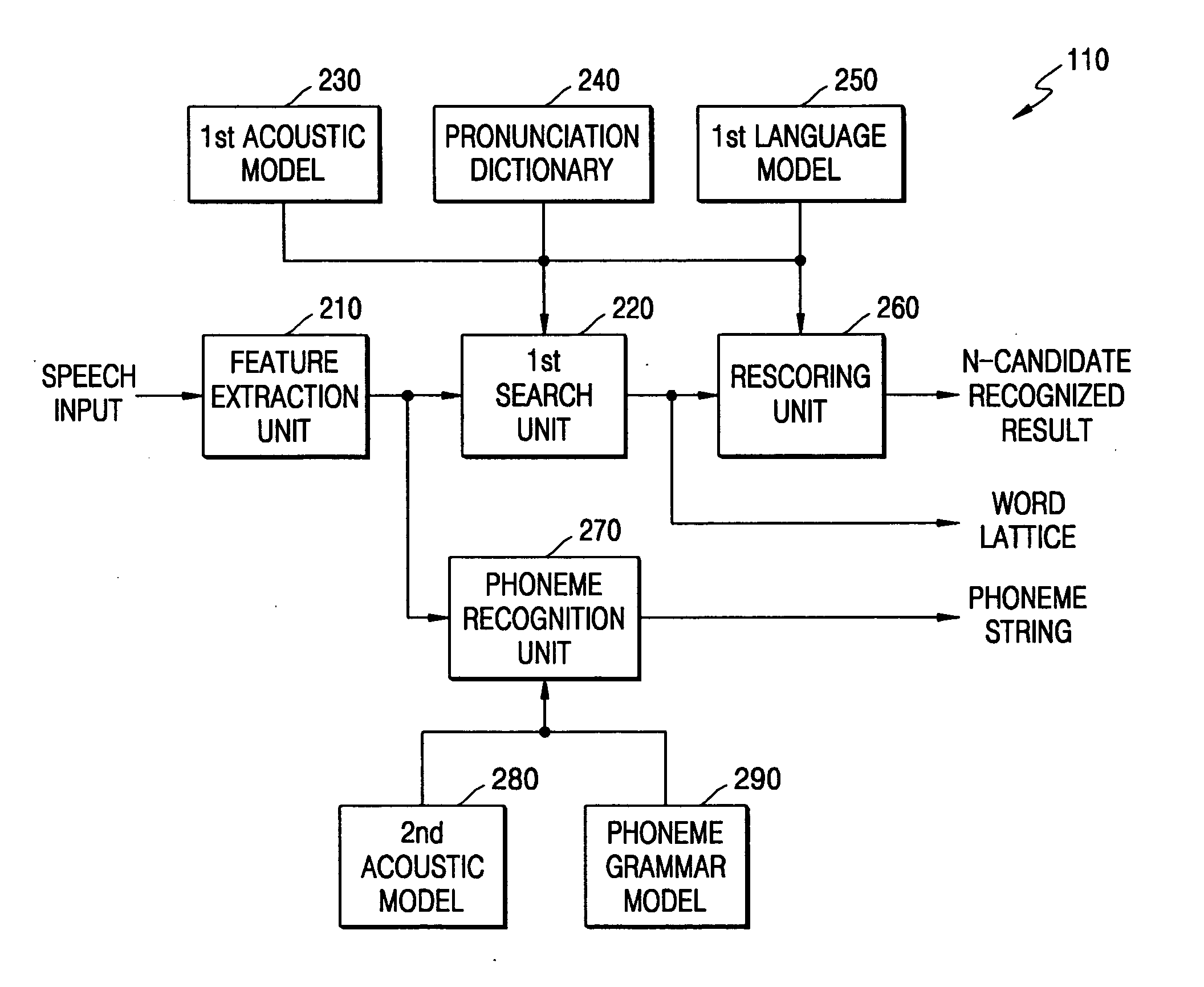

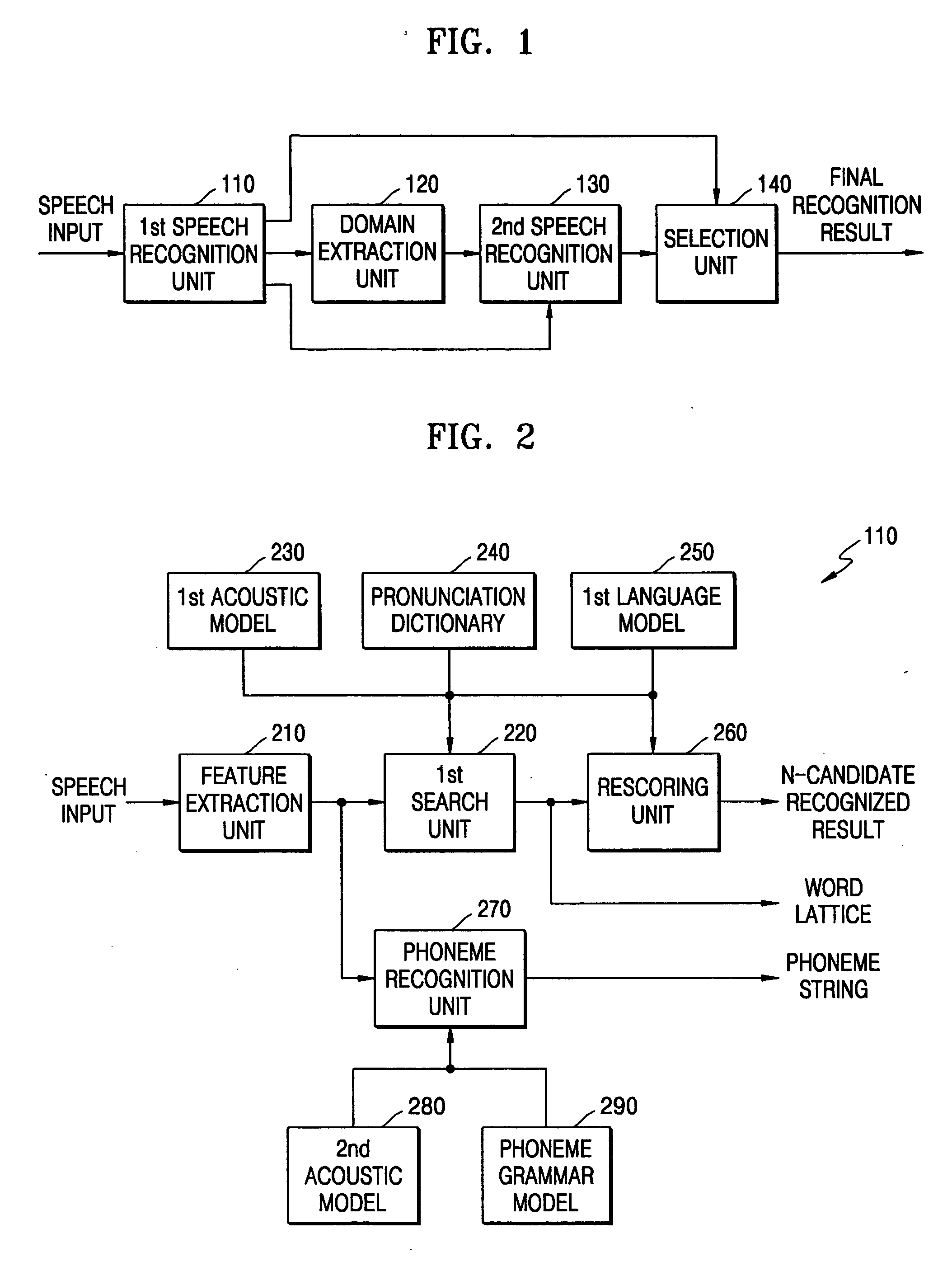

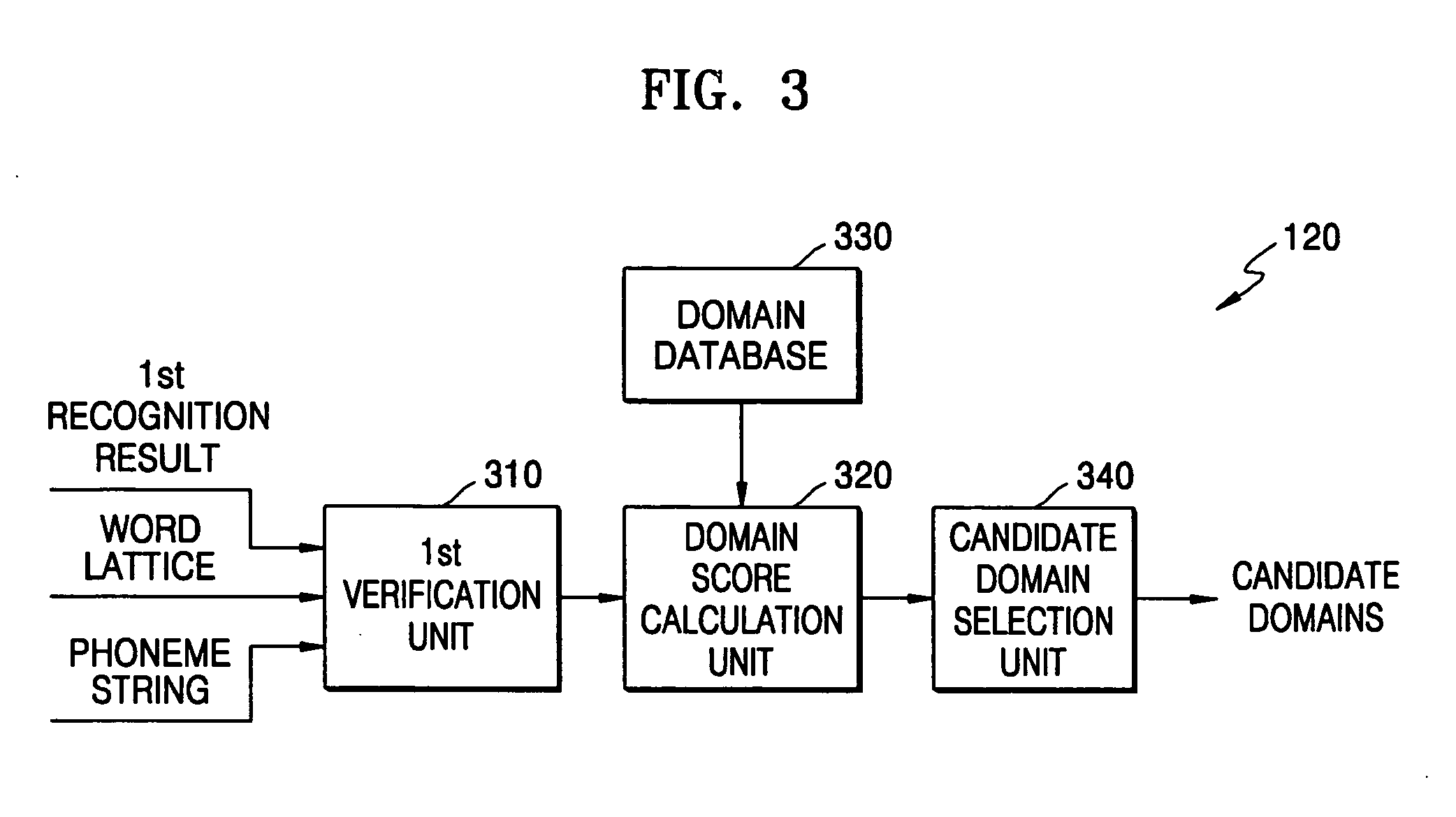

Domain-based dialog speech recognition method and apparatus

A domain-based speech recognition method and apparatus, the method including: performing speech recognition by using a first language model and generating a first recognition result including a plurality of first recognition sentences; selecting a plurality of candidate domains, by using a word included in each of the first recognition sentences and having a confidence score equal to or higher than a predetermined threshold, as a domain keyword; performing speech recognition with the first recognition result, by using an acoustic model specific to each of the candidate domains and a second language model and generating a plurality of second recognition sentences; and selecting at least one or more final recognition sentence from the first recognition sentences and the second recognition sentences. According to this method and apparatus, the effect of a domain extraction error by misrecognition of a word on selection of a final recognition result can be minimized.

Owner:SAMSUNG ELECTRONICS CO LTD

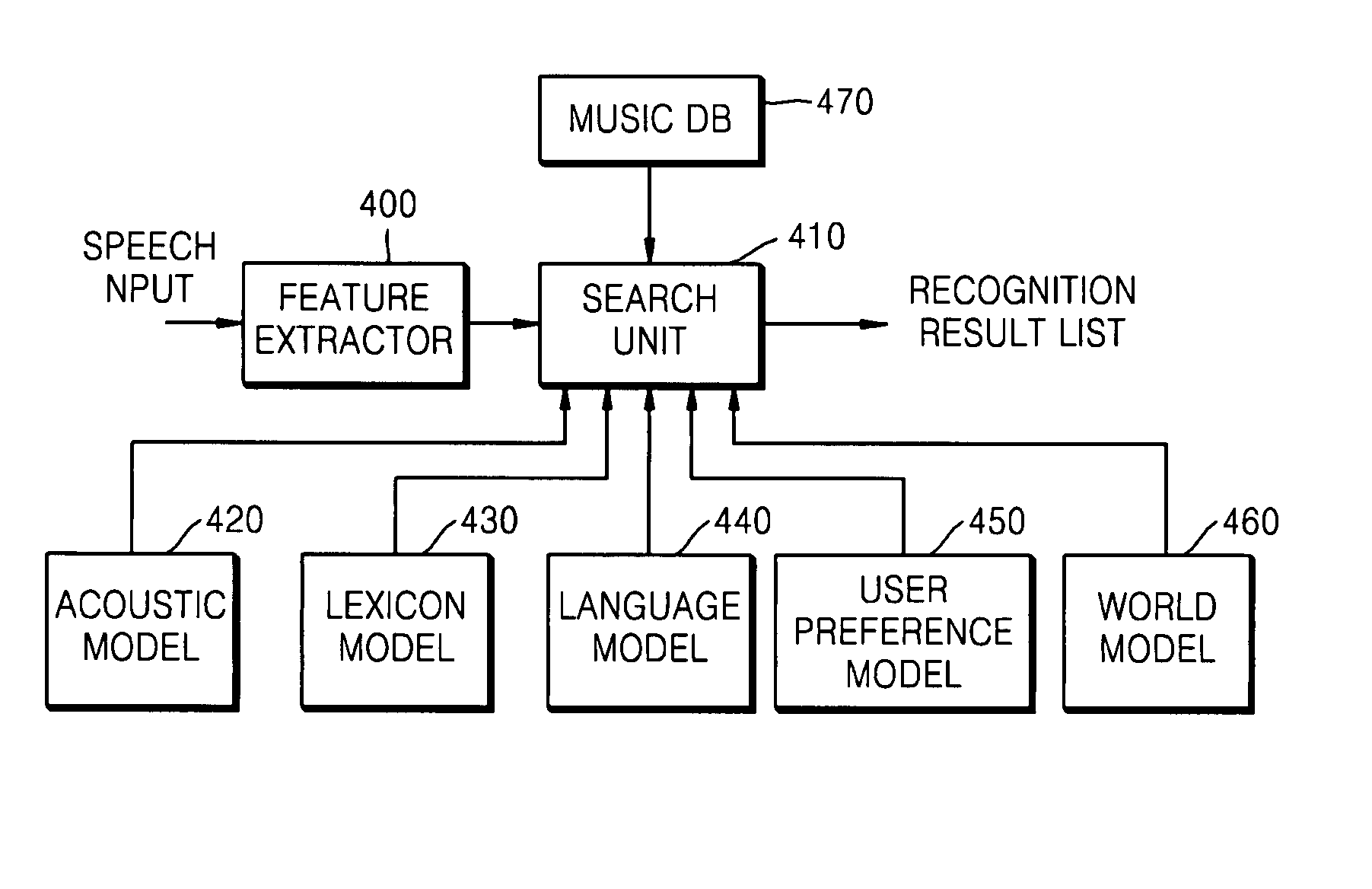

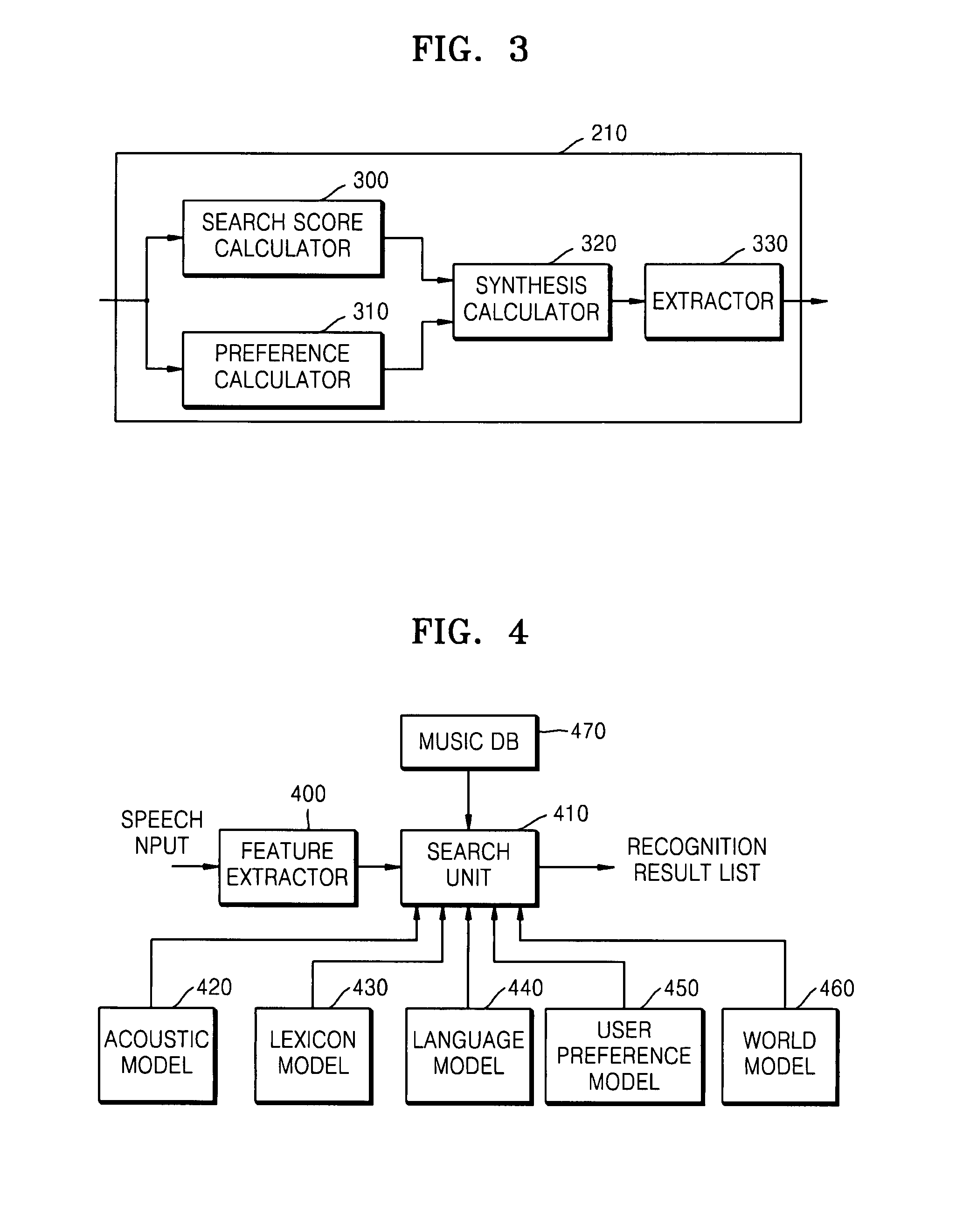

Method and apparatus for searching for music based on speech recognition

Provided is a method and apparatus for searching music based on speech recognition. By calculating search scores with respect to a speech input using an acoustic model, calculating preferences in music using a user preference model, reflecting the preferences in the search scores, and extracting a music list according to the search scores in which the preferences are reflected, a personal expression of a search result using speech recognition can be achieved, and an error or imperfection of a speech recognition result can be compensated for.

Owner:SAMSUNG ELECTRONICS CO LTD

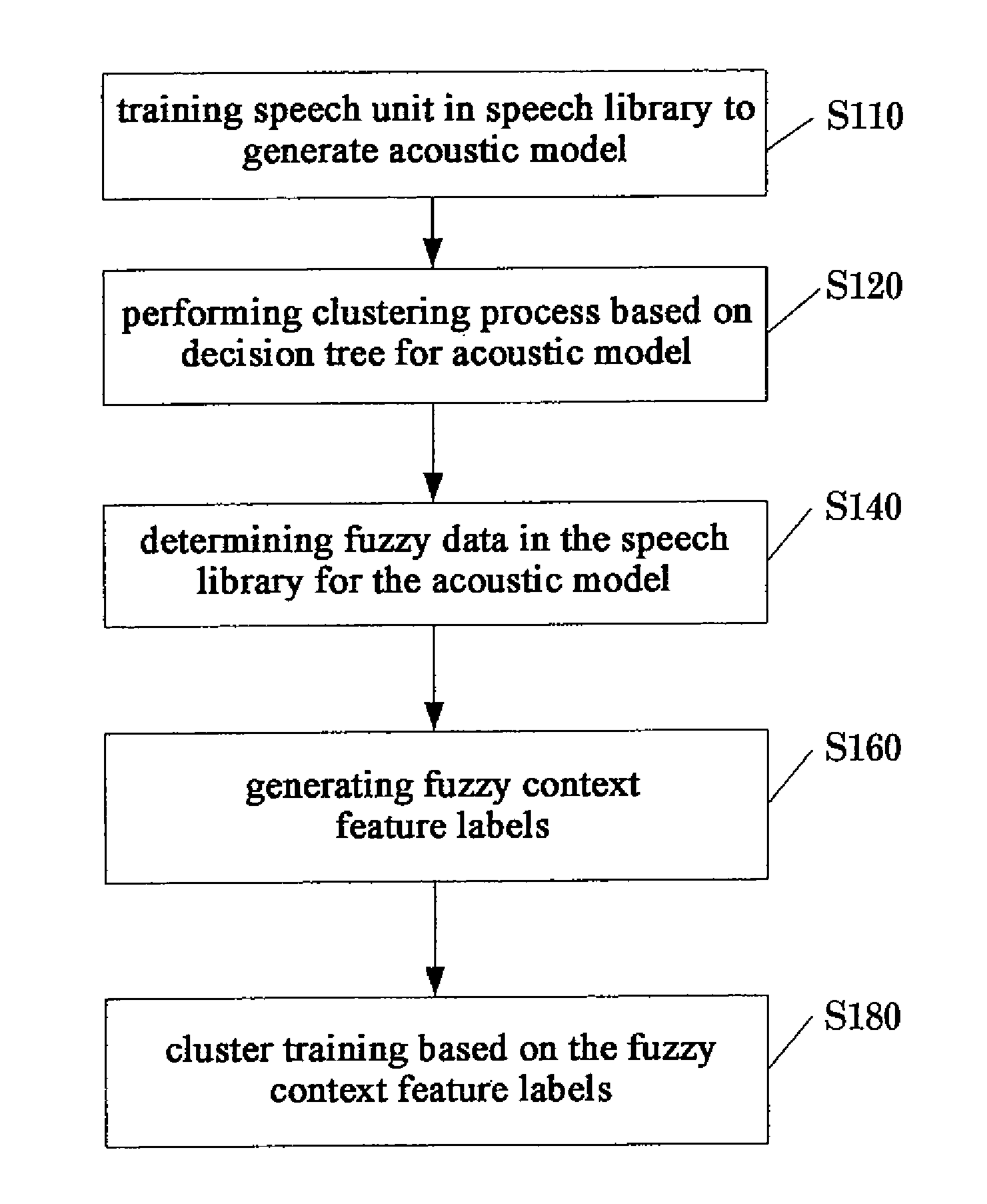

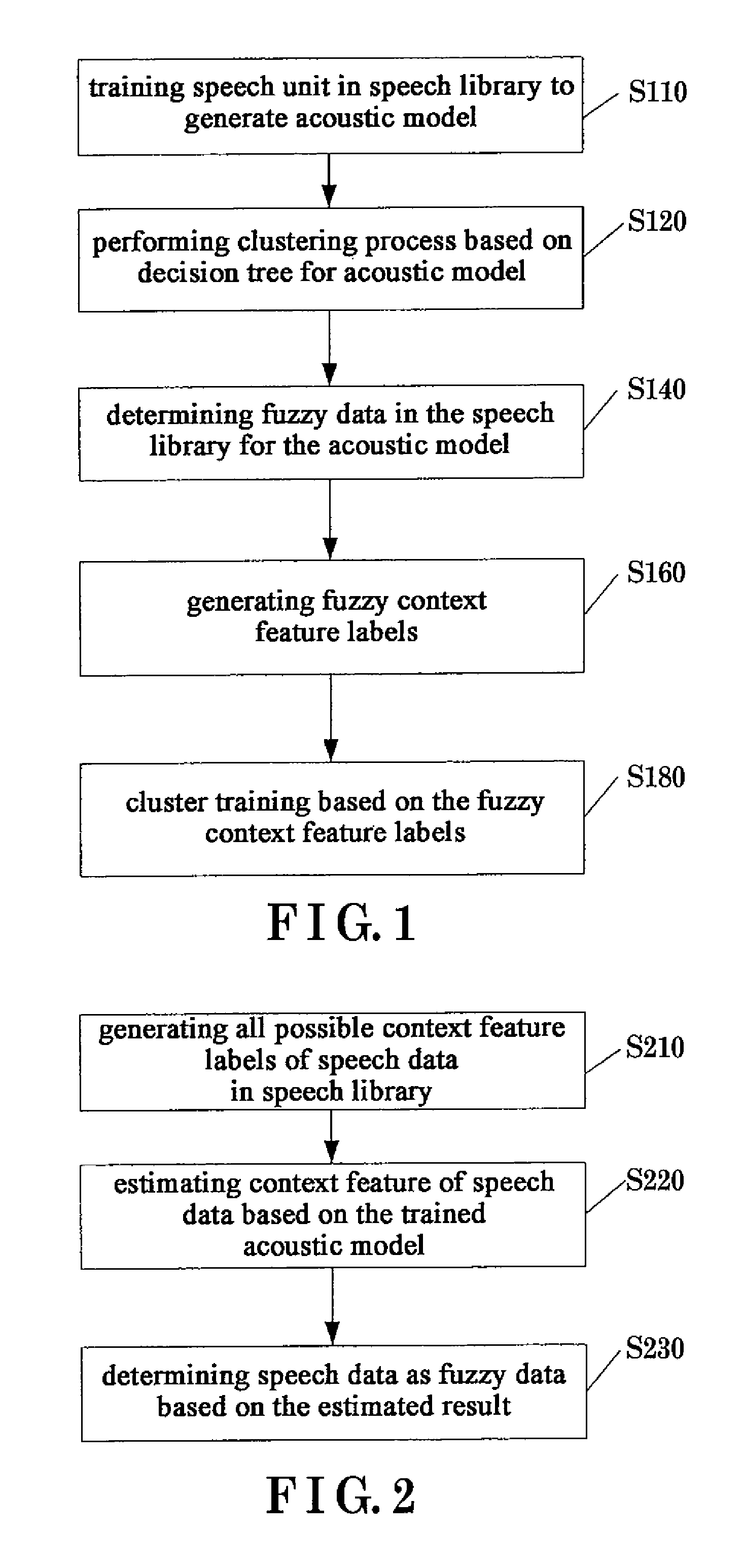

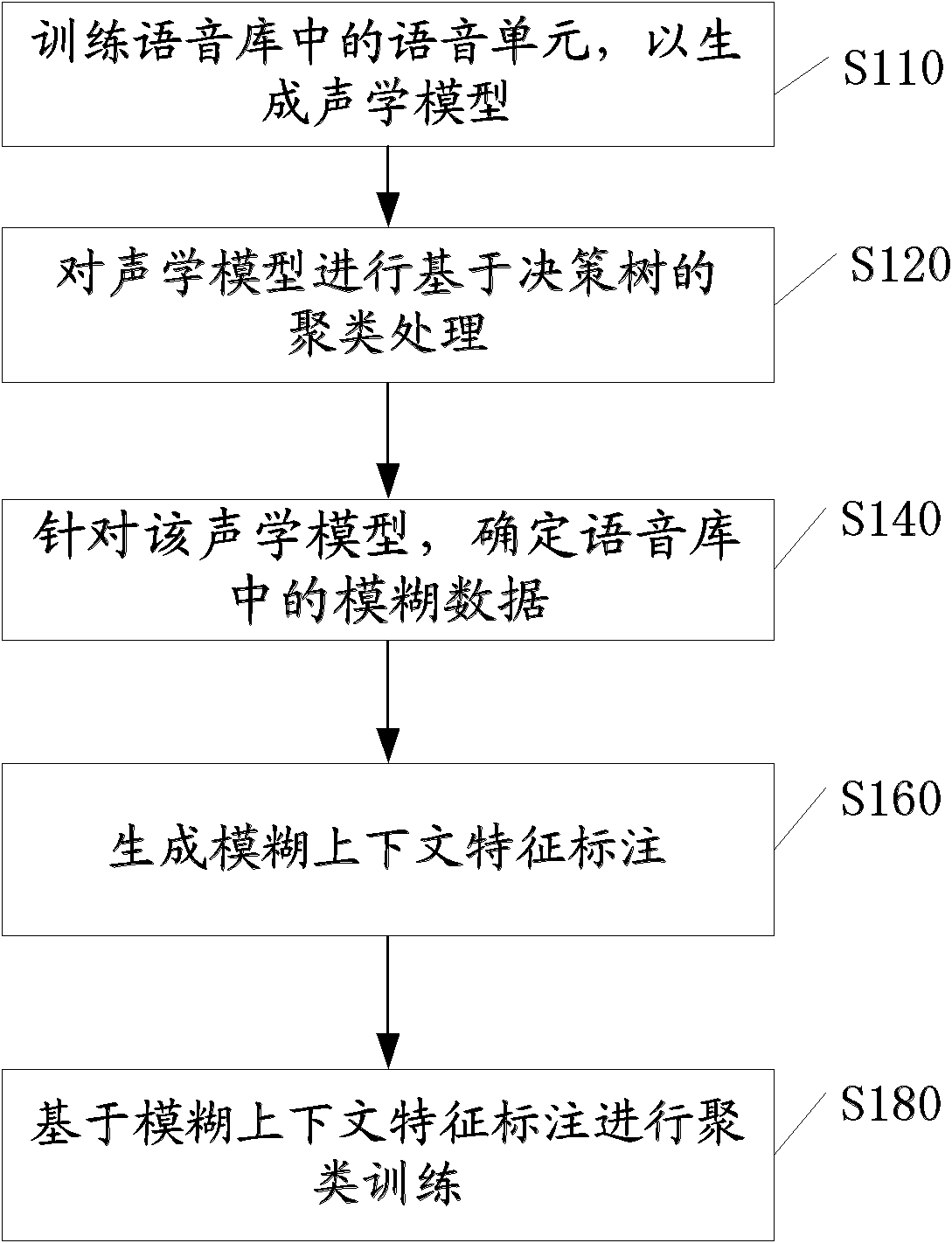

Method, apparatus for synthesizing speech and acoustic model training method for speech synthesis

According to one embodiment, a method, apparatus for synthesizing speech, and a method for training acoustic model used in speech synthesis is provided. The method for synthesizing speech may include determining data generated by text analysis as fuzzy heteronym data, performing fuzzy heteronym prediction on the fuzzy heteronym data to output a plurality of candidate pronunciations of the fuzzy heteronym data and probabilities thereof, generating fuzzy context feature labels based on the plurality of candidate pronunciations and probabilities thereof, determining model parameters for the fuzzy context feature labels based on acoustic model with fuzzy decision tree, generating speech parameters from the model parameters, and synthesizing the speech parameters via synthesizer as speech.

Owner:KK TOSHIBA

Correction of matching results for speech recognition

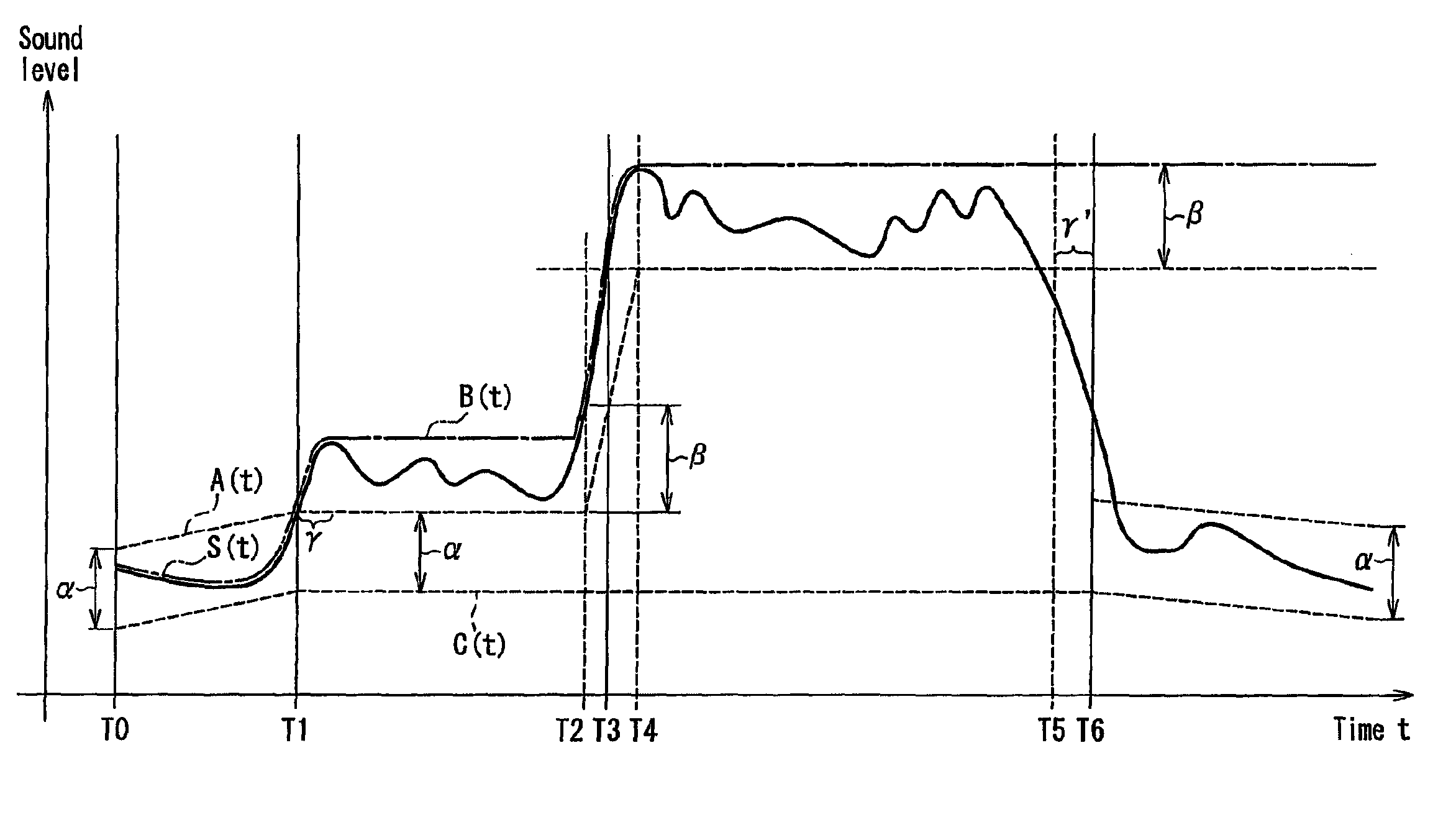

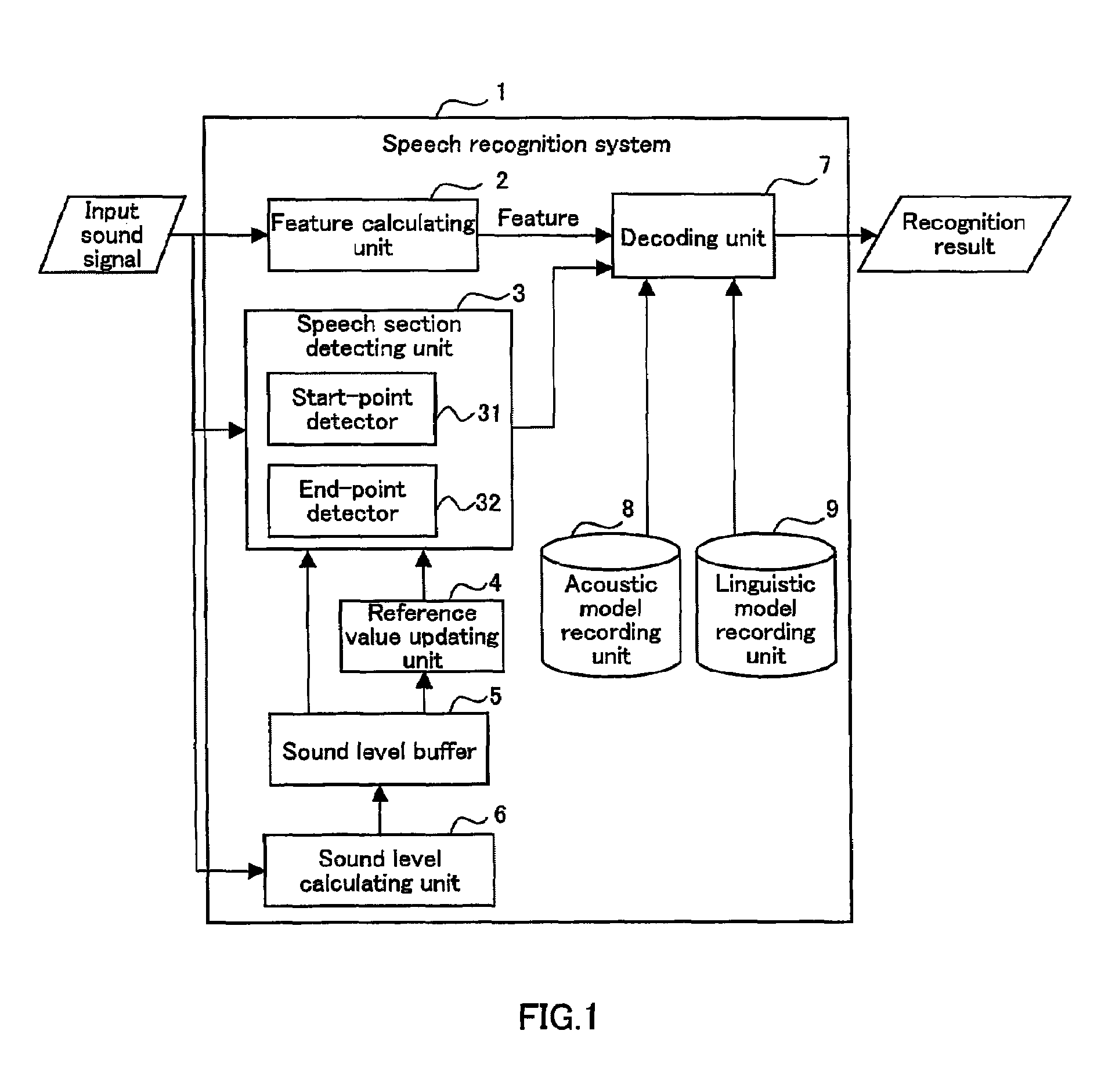

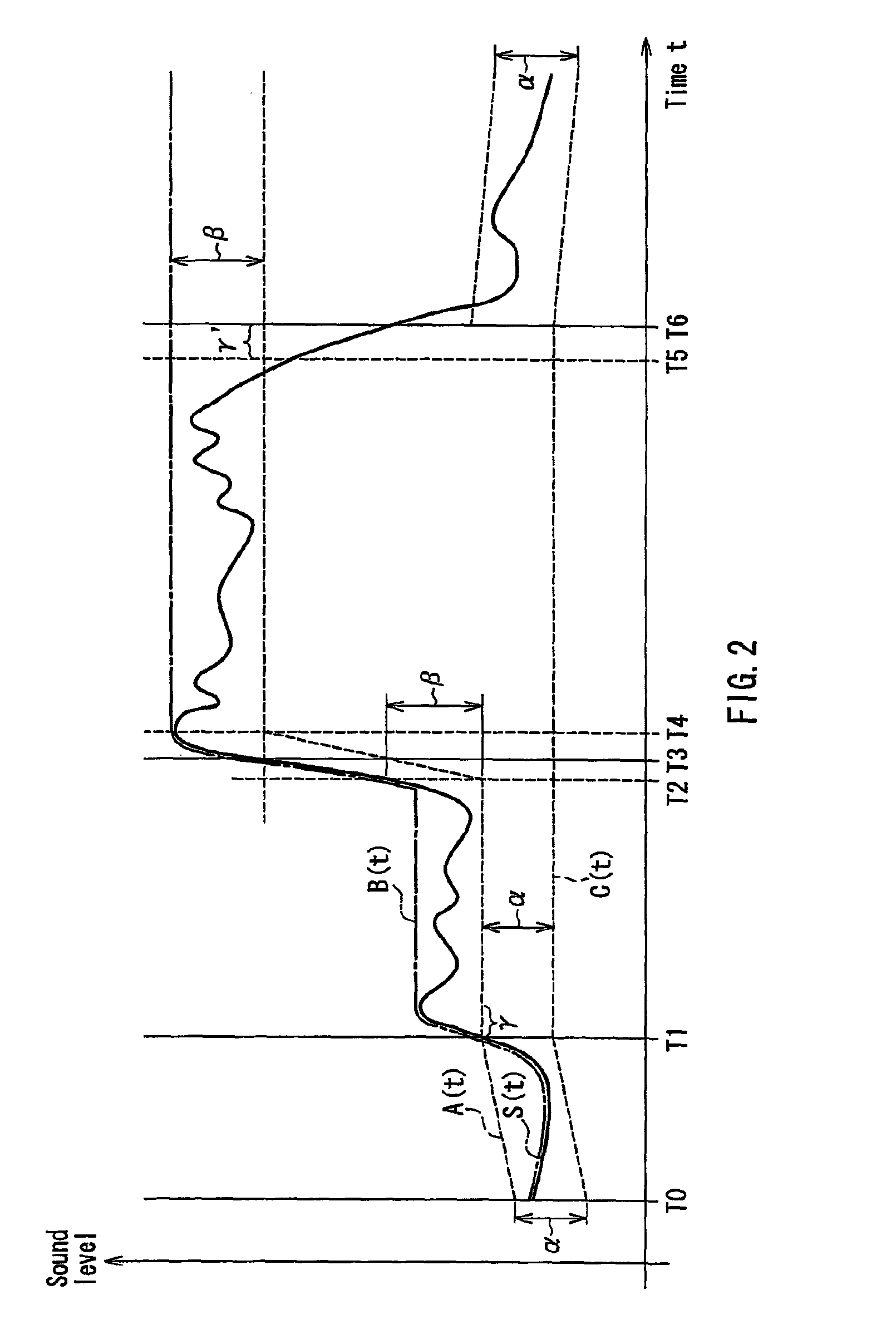

A speech recognition system includes the following: a feature calculating unit; a sound level calculating unit that calculates an input sound level in each frame; a decoding unit that matches the feature of each frame with an acoustic model and a linguistic model, and outputs a recognized word sequence; a start-point detector that determines a start frame of a speech section based on a reference value; an end-point detector that determines an end frame of the speech section based on a reference value; and a reference value updating unit that updates the reference value in accordance with variations in the input sound level. The start-point detector updates the start frame every time the reference value is updated. The decoding unit starts matching before being notified of the end frame and corrects the matching results every time it is notified of the start frame. The speech recognition system can suppress a delay in response time while performing speech recognition based on a proper speech section.

Owner:FUJITSU LTD

Message recognition using shared language model

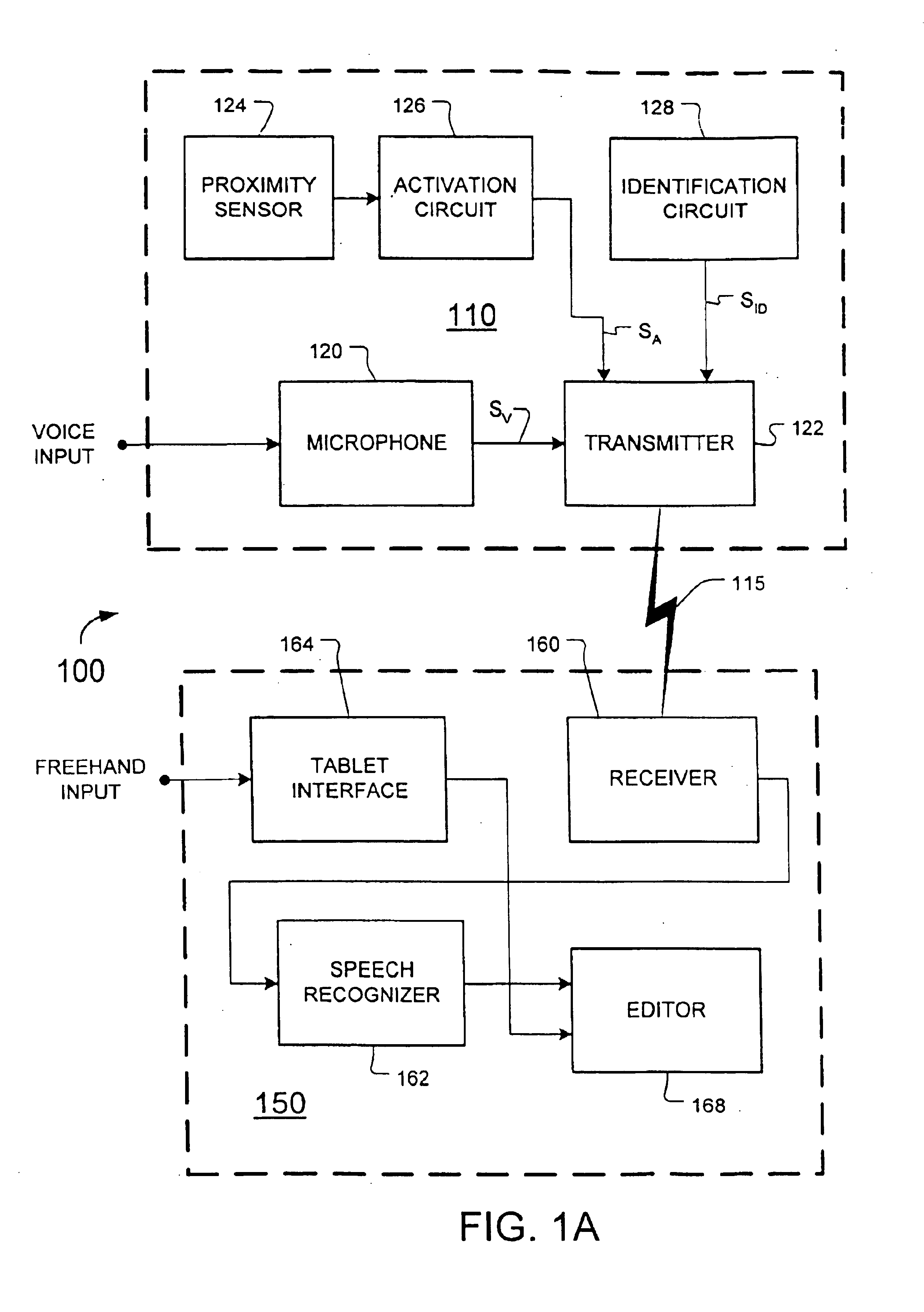

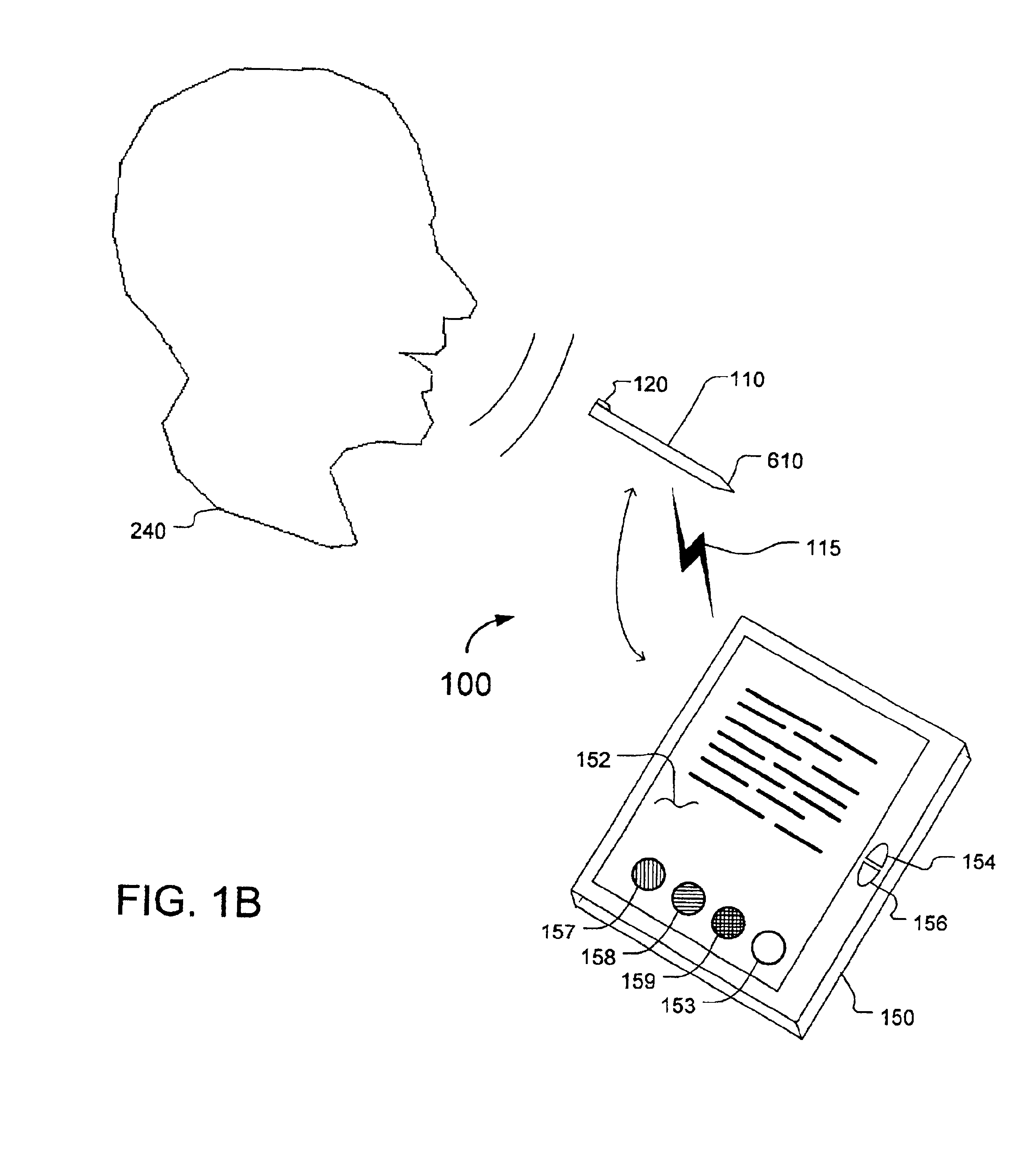

InactiveUS6904405B2Speech recognitionInput/output processes for data processingHandwritingAcoustic model

Certain disclosed methods and systems perform multiple different types of message recognition using a shared language model. Message recognition of a first type is performed responsive to a first type of message input (e.g., speech), to provide text data in accordance with both the shared language model and a first model specific to the first type of message recognition (e.g., an acoustic model). Message recognition of a second type is performed responsive to a second type of message input (e.g., handwriting), to provide text data in accordance with both the shared language model and a second model specific to the second type of message recognition (e.g., a model that determines basic units of handwriting conveyed by freehand input). Accuracy of both such message recognizers can be improved by user correction of misrecognition by either one of them. Numerous other methods and systems are also disclosed.

Owner:BUFFALO PATENTS LLC

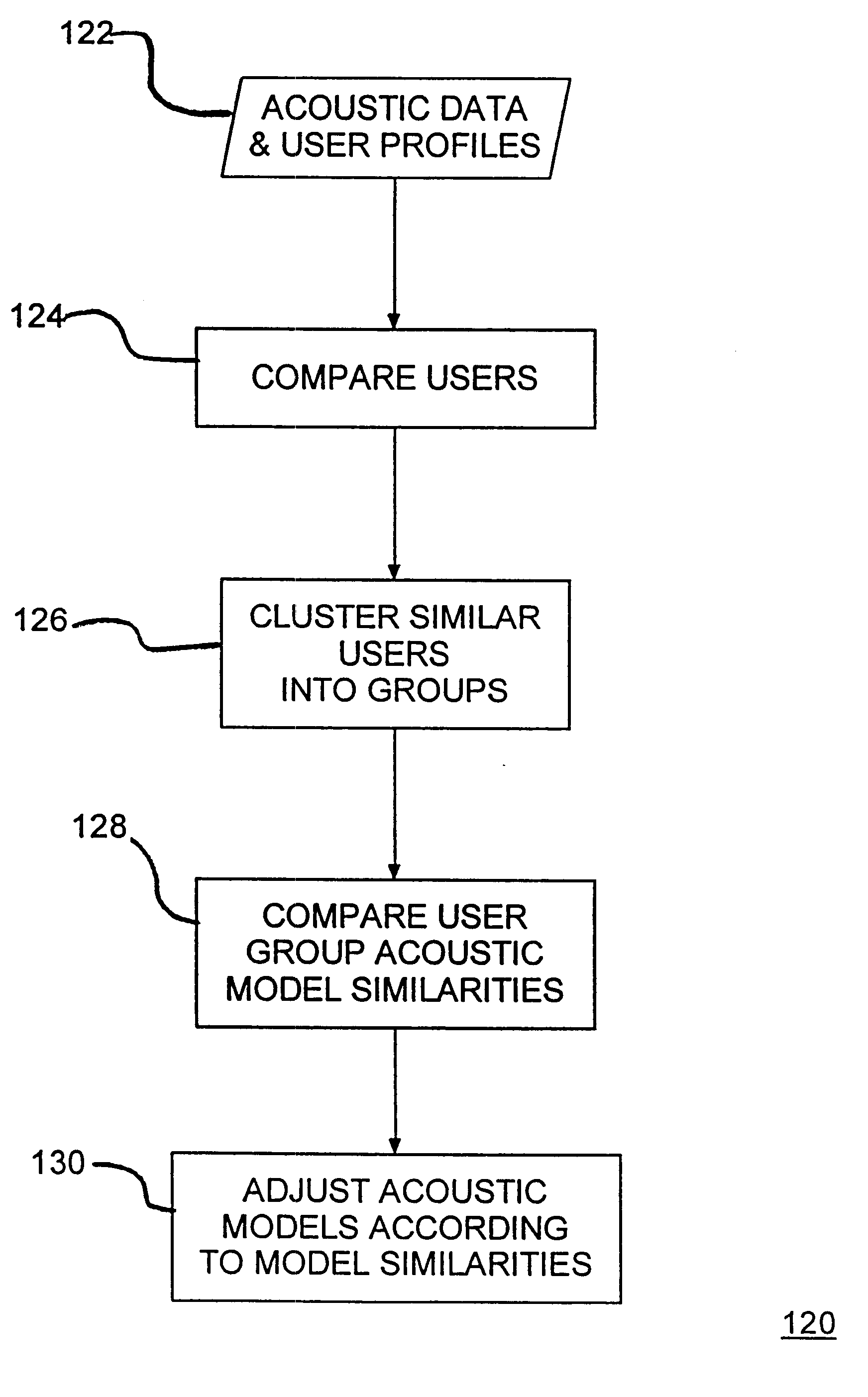

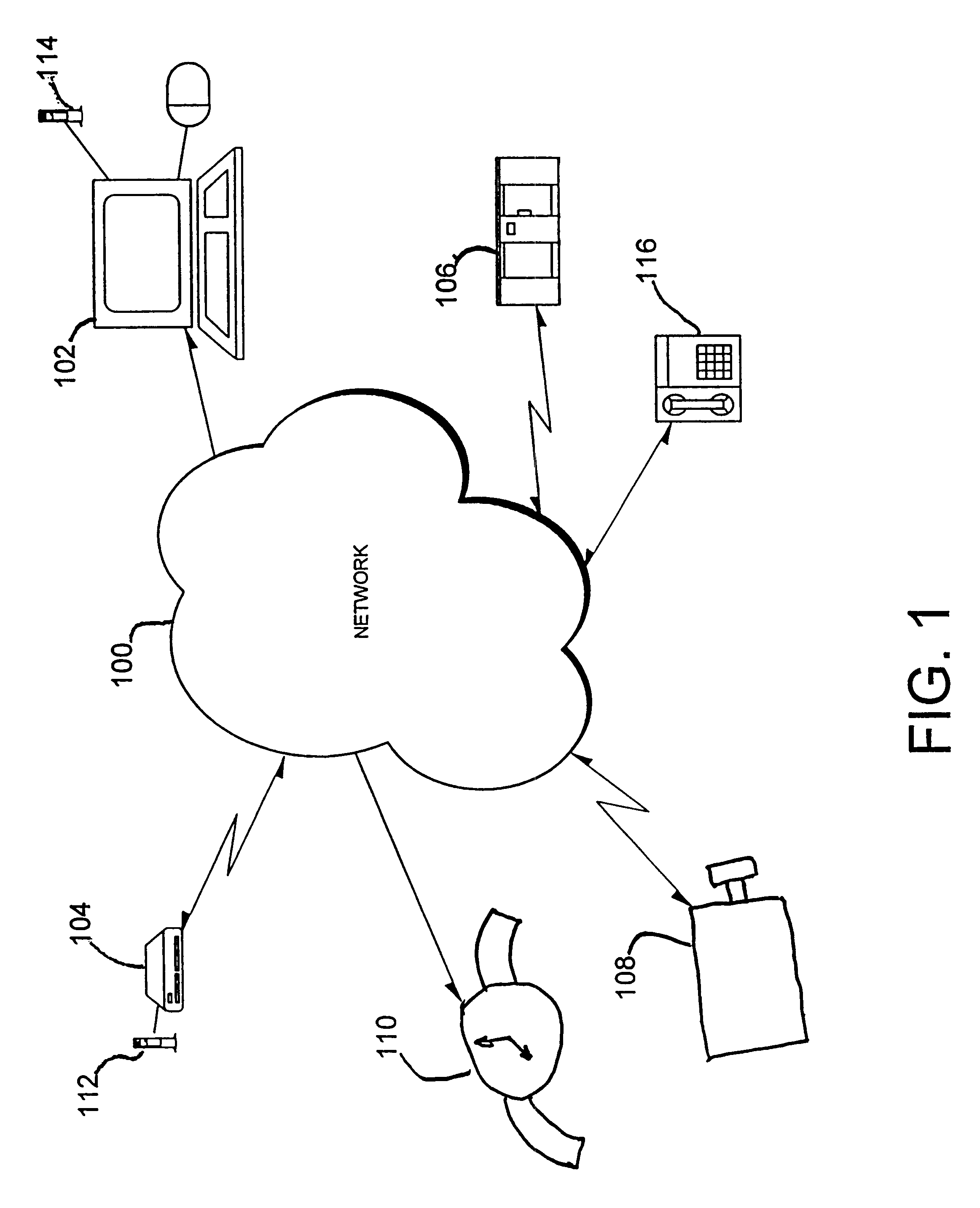

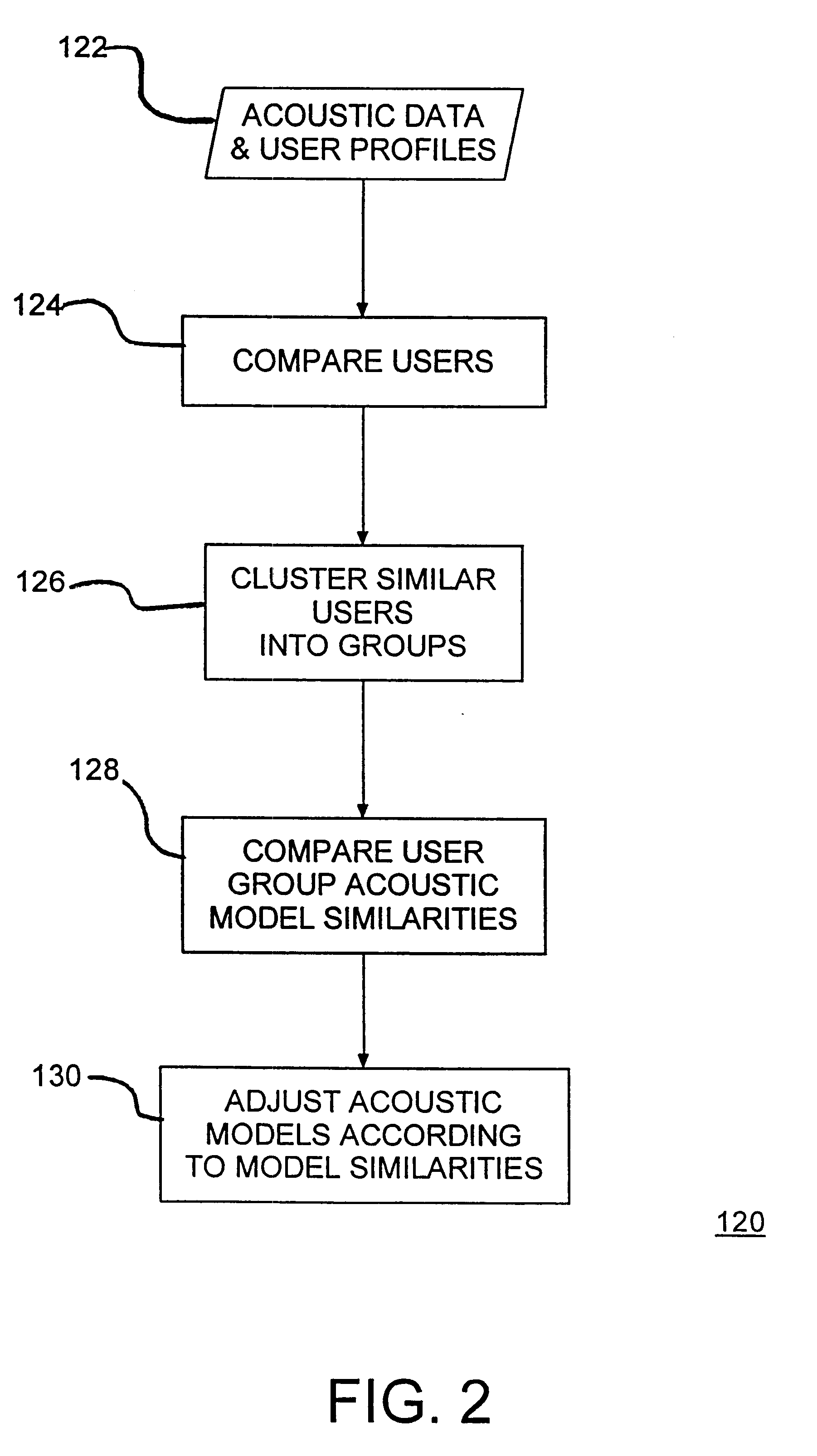

Speaker model adaptation via network of similar users

A speech recognition system, method and program product for recognizing speech input from computer users connected together over a network of computers. Speech recognition computer users on the network are clustered into classes of similar users according their similarities, including characteristics nationality, profession, sex, age, etc. Each computer in the speech recognition network includes at least one user based acoustic model trained for a particular user. The acoustic models include an acoustic model domain, with similar acoustic models being clustered according to an identified domain. User characteristics are collected from databases over the network and from users using the speech recognition system and then, distributed over the network during or after user activities. Existing acoustic models are modified in response to user production activities. As recognition progresses, similar language models among similar users are identified on the network. Update information, including information about user activities and user acoustic model data, is transmitted over the network and identified similar language models are updated. Acoustic models improve for users that are connected over the network as similar users use their respective speech recognition system.

Owner:NUANCE COMM INC

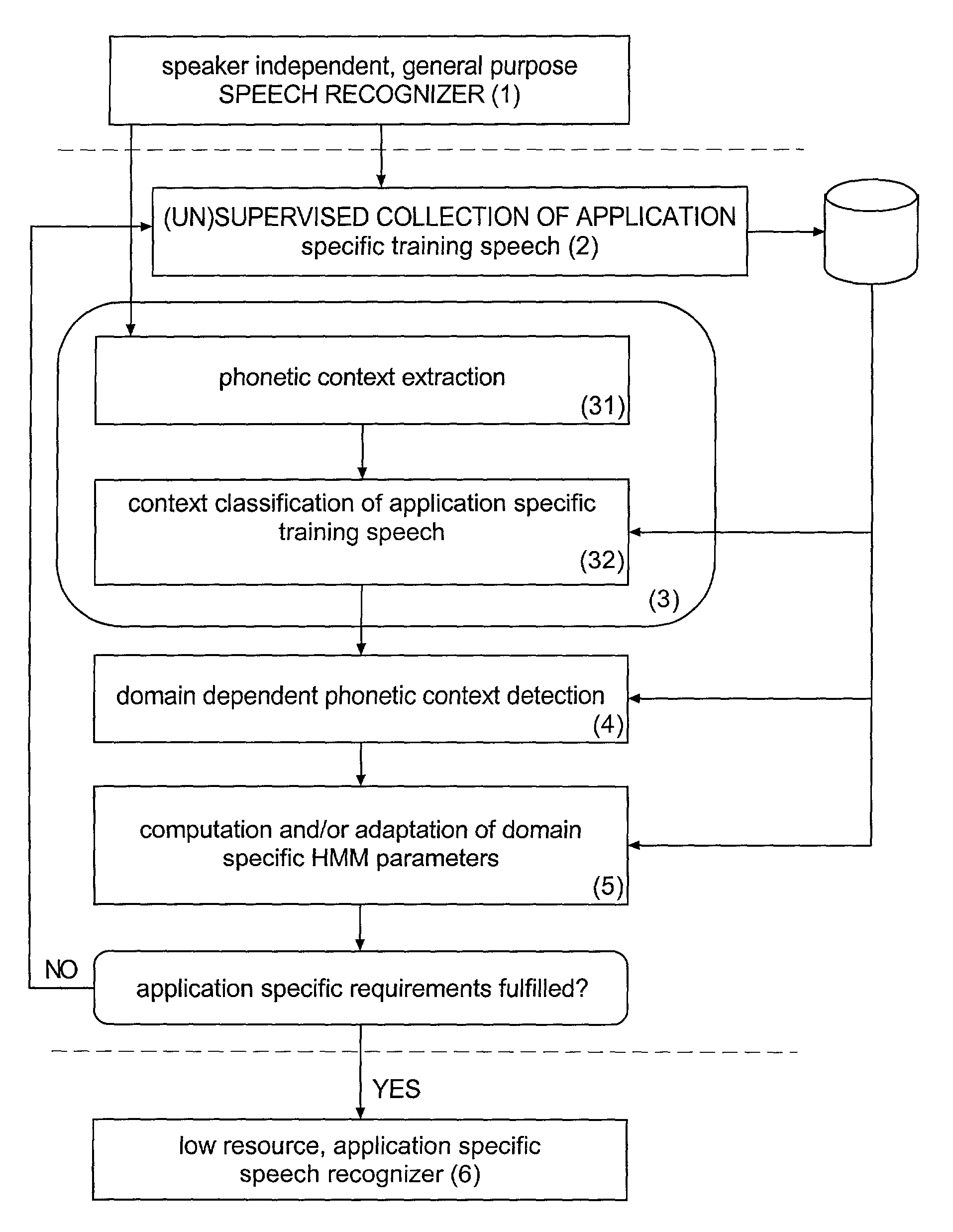

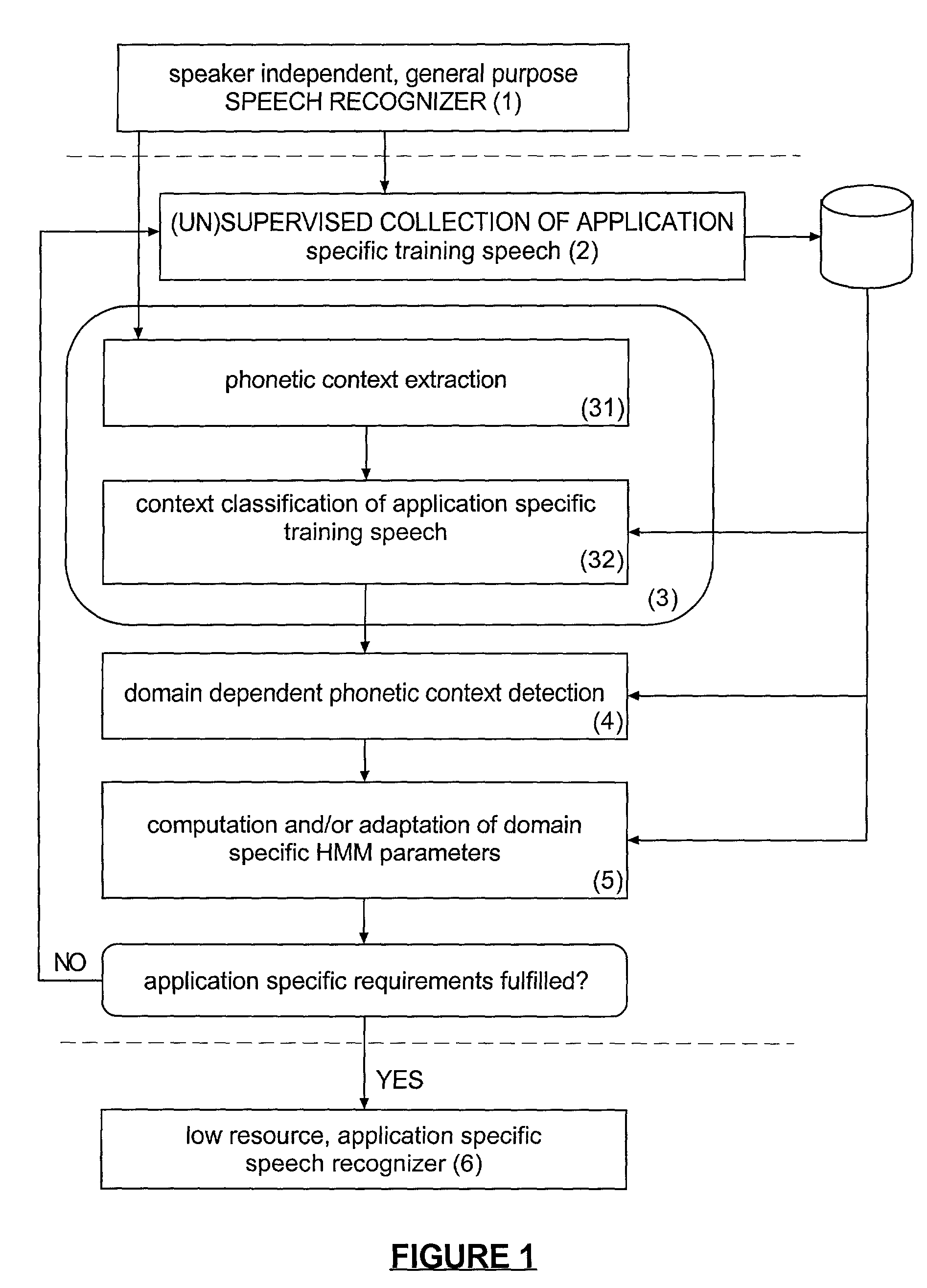

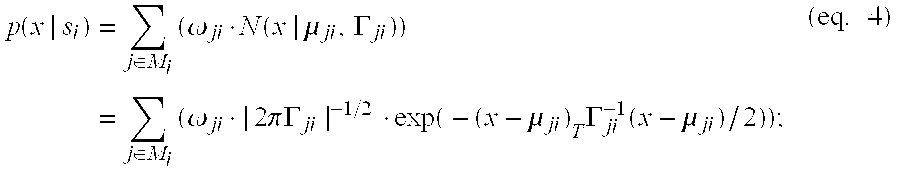

Method and apparatus for phonetic context adaptation for improved speech recognition

The present invention provides a computerized method and apparatus for automatically generating from a first speech recognizer a second speech recognizer which can be adapted to a specific domain. The first speech recognizer can include a first acoustic model with a first decision network and corresponding first phonetic contexts. The first acoustic model can be used as a starting point for the adaptation process. A second acoustic model with a second decision network and corresponding second phonetic contexts for the second speech recognizer can be generated by re-estimating the first decision network and the corresponding first phonetic contexts based on domain-specific training data.

Owner:NUANCE COMM INC

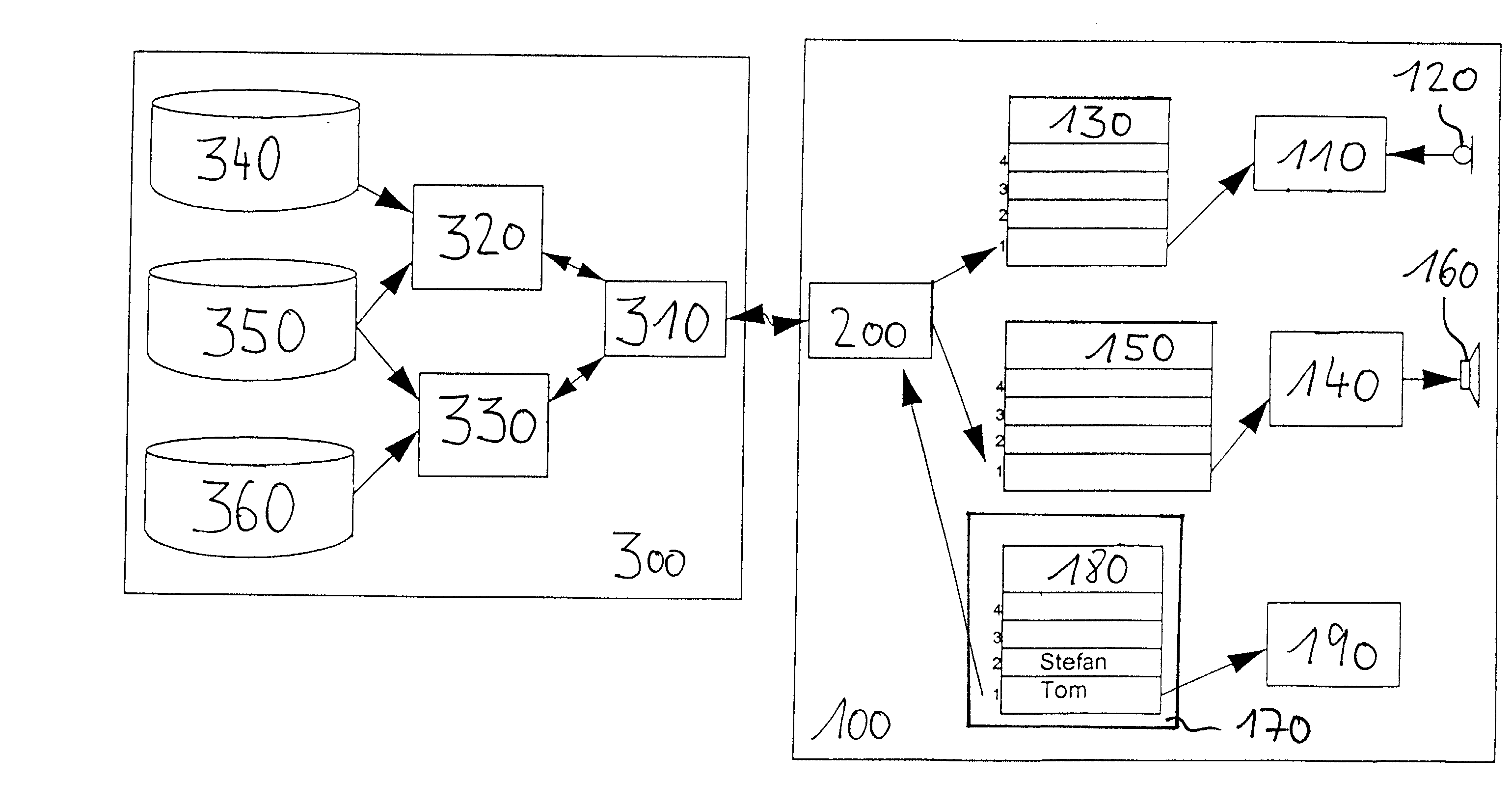

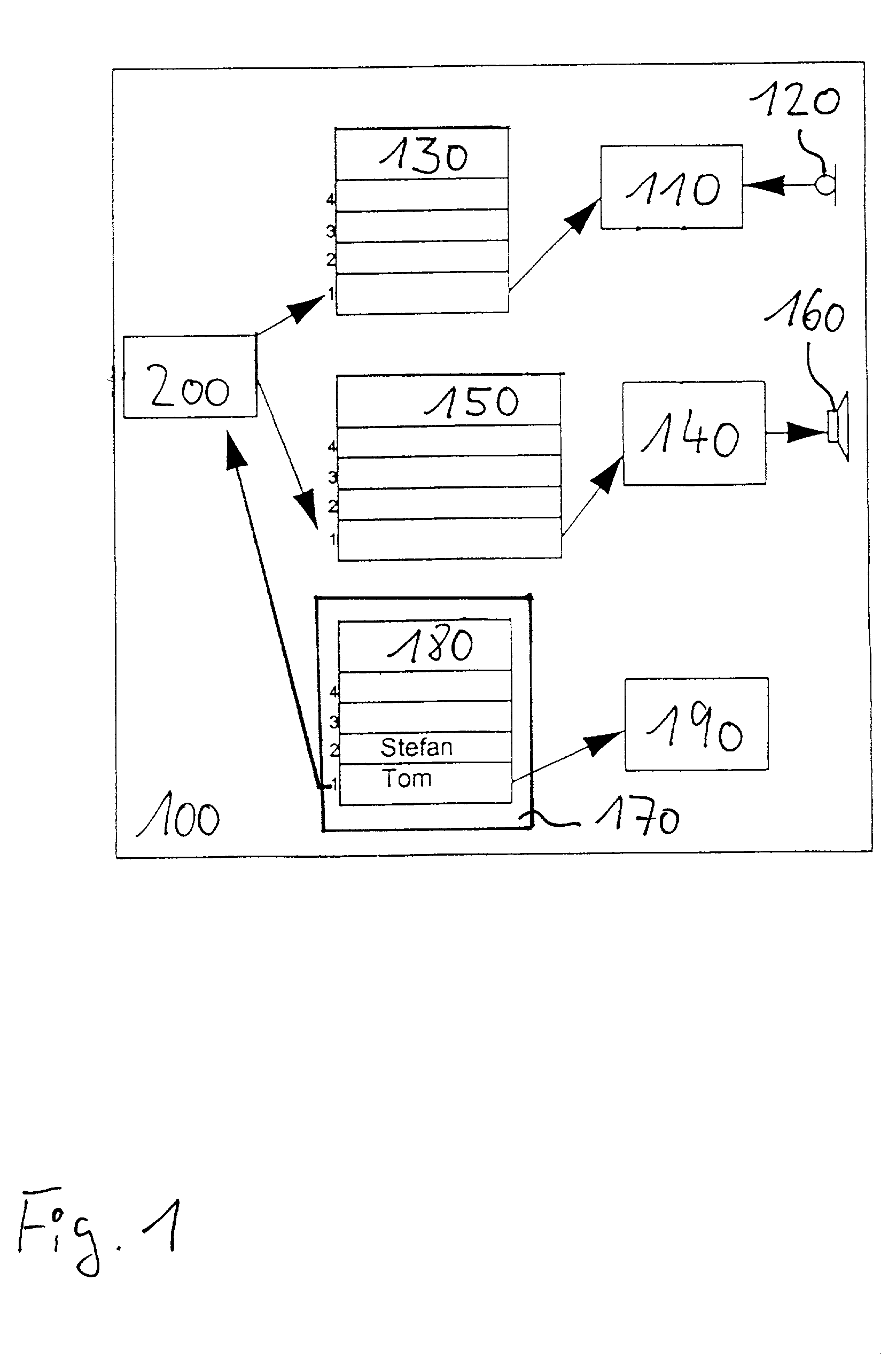

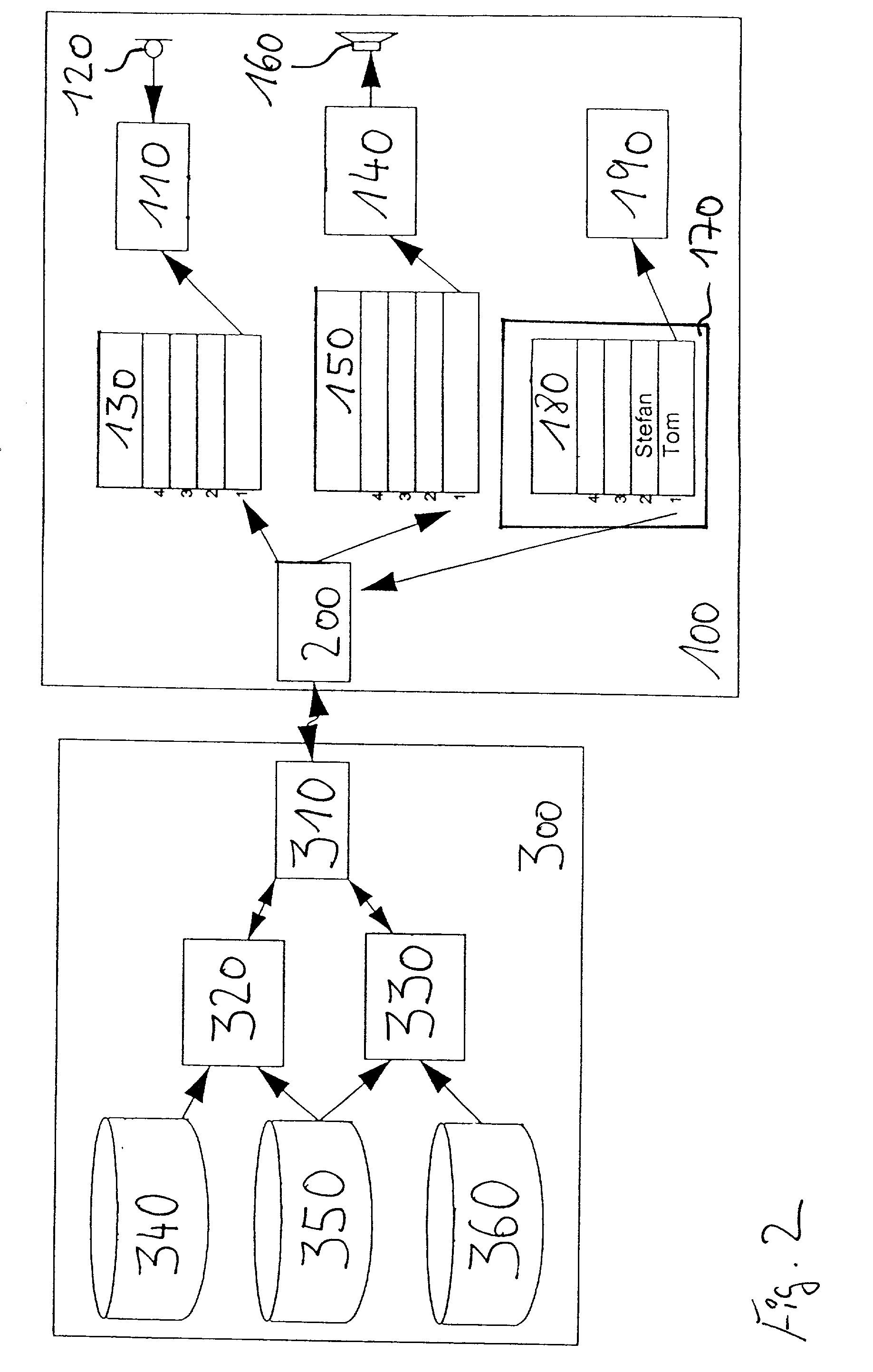

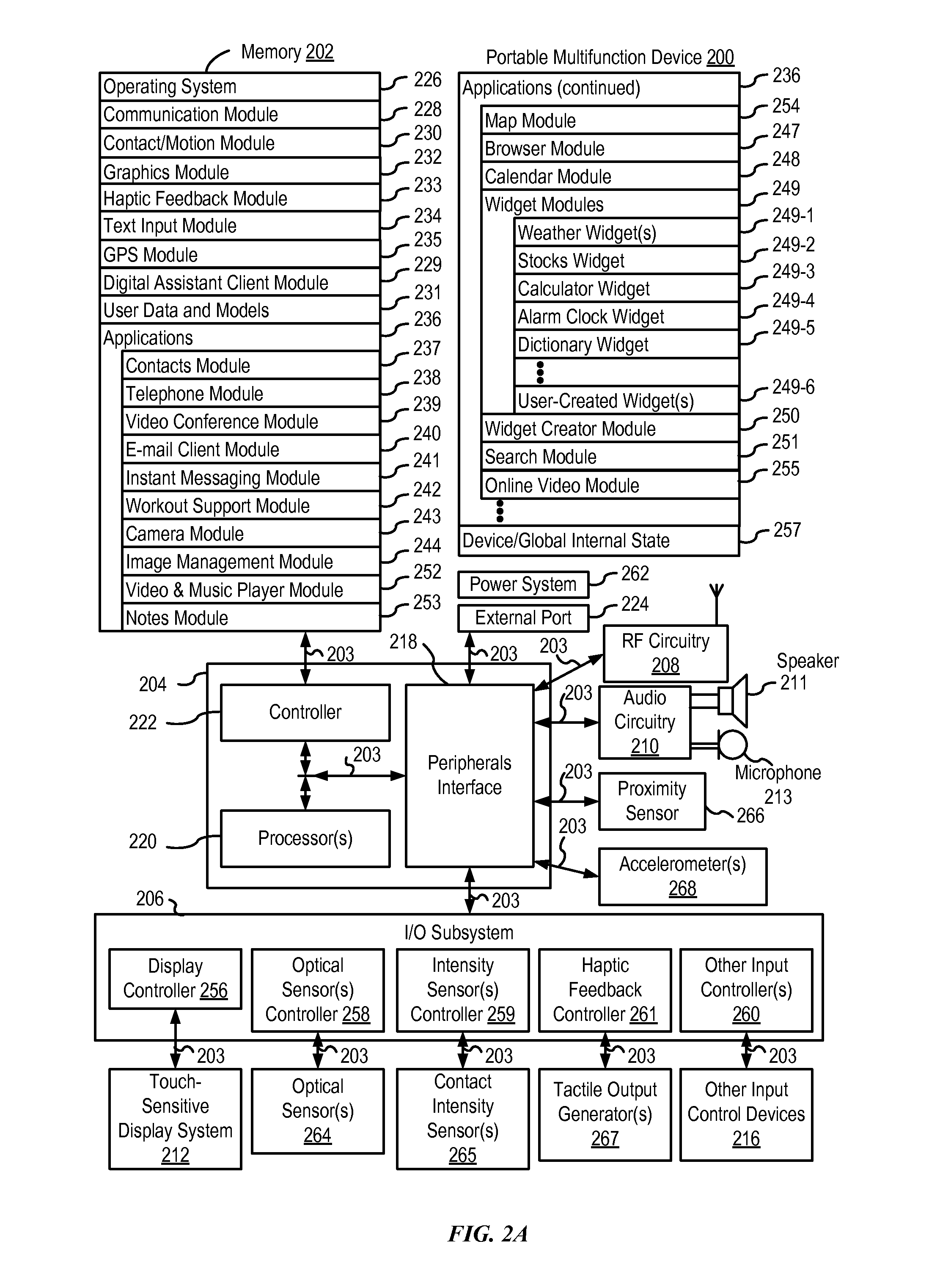

Mobile terminal controllable by spoken utterances

InactiveUS20020091511A1Reduce complexityLow costSubstation equipmentSpeech recognitionAcoustic modelAutomatic speech

A mobile terminal (100) which is controllable by spoken utterances like proper names or command words is described. The mobile terminal (100) comprises an interface (200) for receiving from a network server (300) acoustic models for automatic speech recognition and an automatic speech recognizer (110) for recognizing the spoken utterances based on the received acoustic models. The invention further relates to a network server (300) for mobile terminals (100) which are controllable by spoken utterances and to a method for obtaining acoustic models for a mobile terminal (100) controllable by spoken utterances.

Owner:TELEFON AB LM ERICSSON (PUBL)

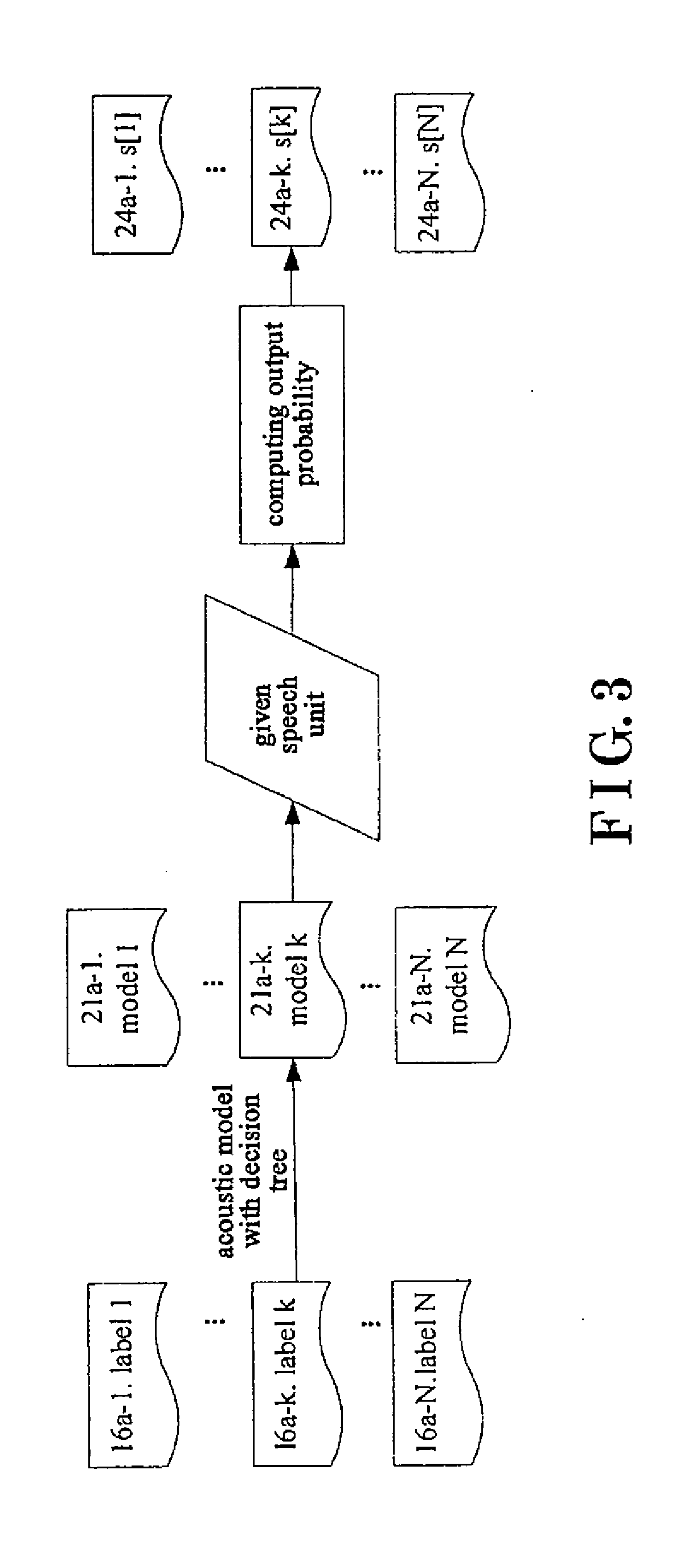

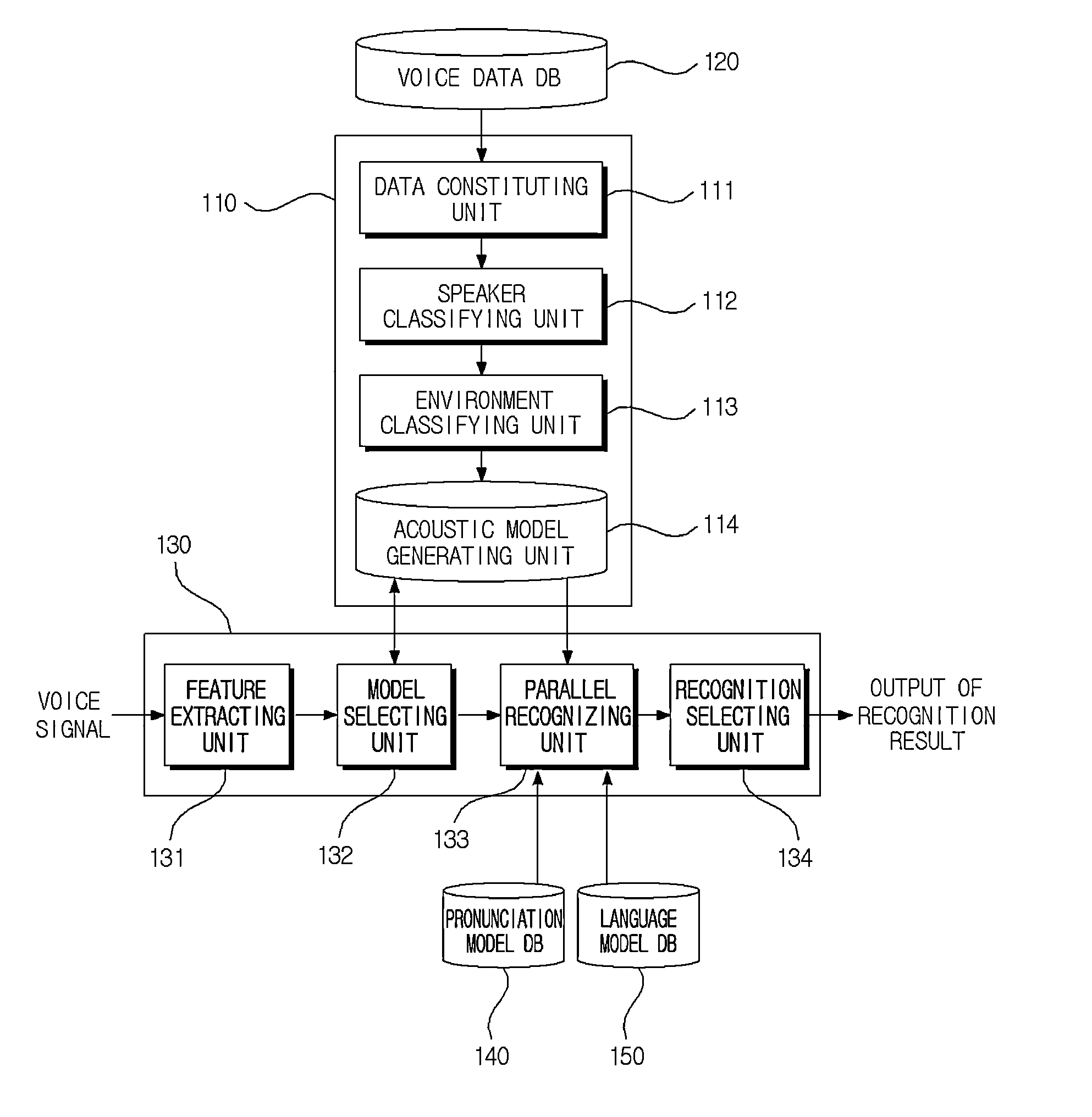

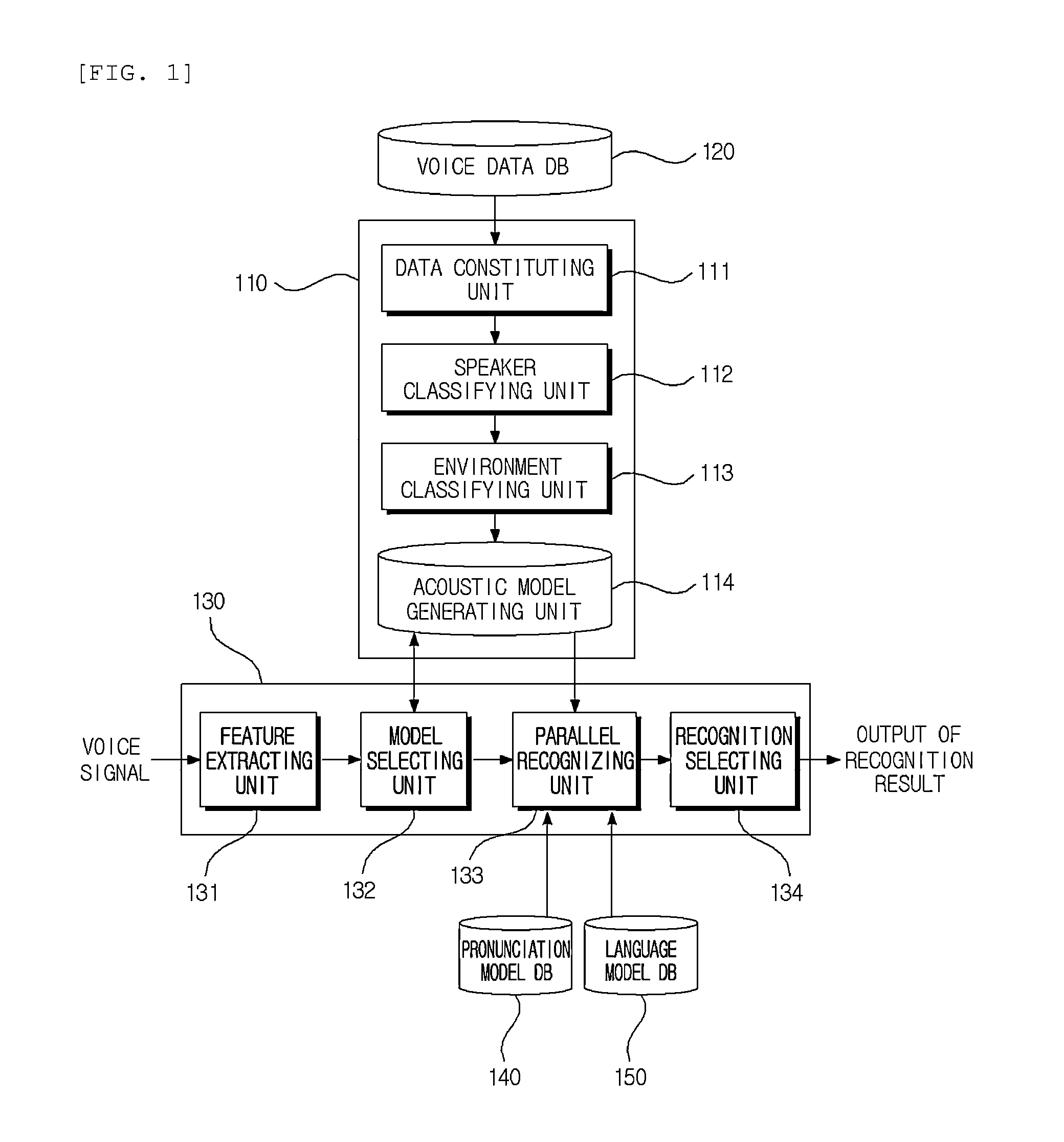

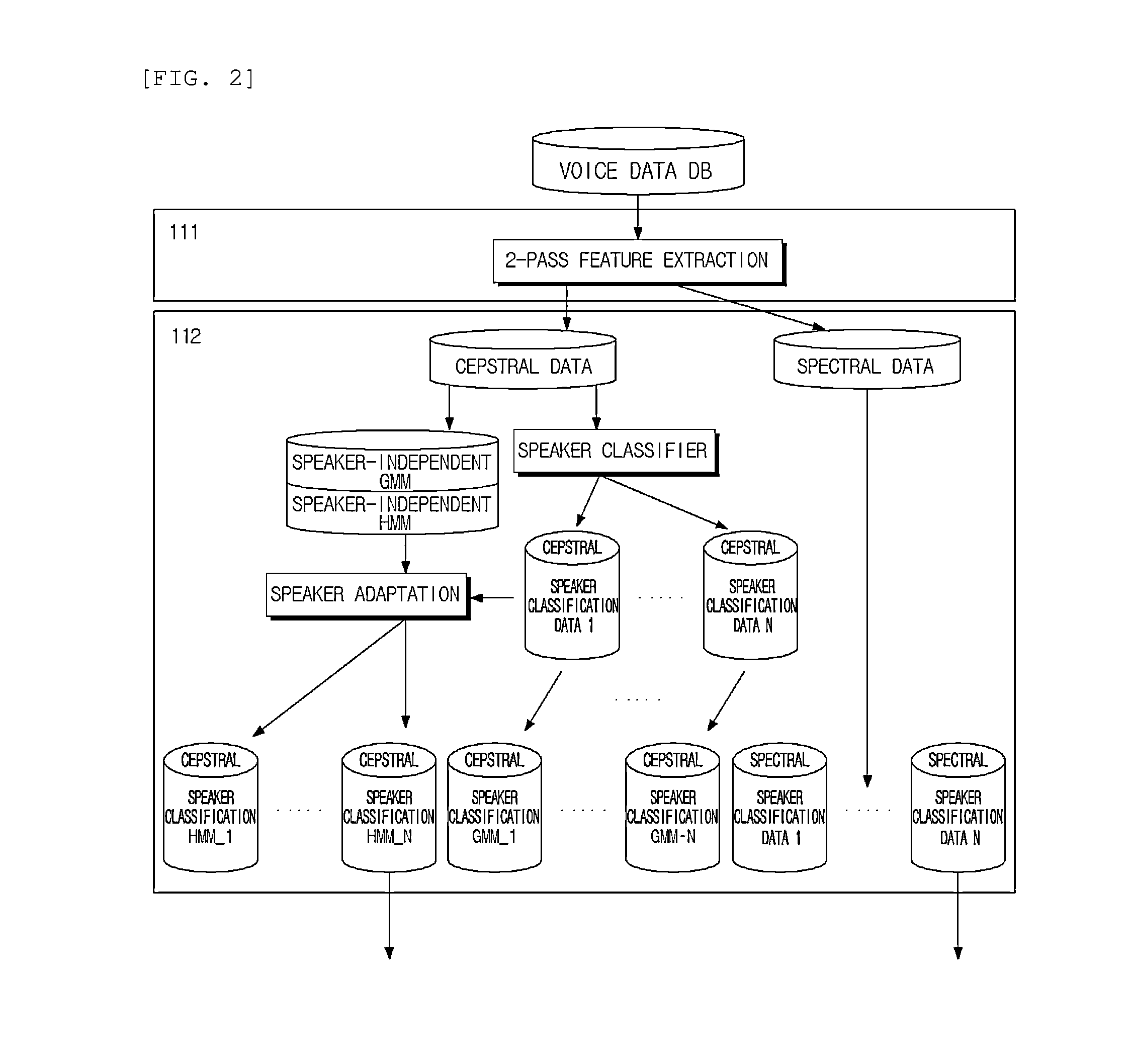

Apparatus for speech recognition using multiple acoustic model and method thereof

Disclosed are an apparatus for recognizing voice using multiple acoustic models according to the present invention and a method thereof. An apparatus for recognizing voice using multiple acoustic models includes a voice data database (DB) configured to store voice data collected in various noise environments; a model generating means configured to perform classification for each speaker and environment based on the collected voice data, and to generate an acoustic model of a binary tree structure as the classification result; and a voice recognizing means configured to extract feature data of voice data when the voice data is received from a user, to select multiple models from the generated acoustic model based on the extracted feature data, to parallel recognize the voice data based on the selected multiple models, and to output a word string corresponding to the voice data as the recognition result.

Owner:ELECTRONICS & TELECOMM RES INST

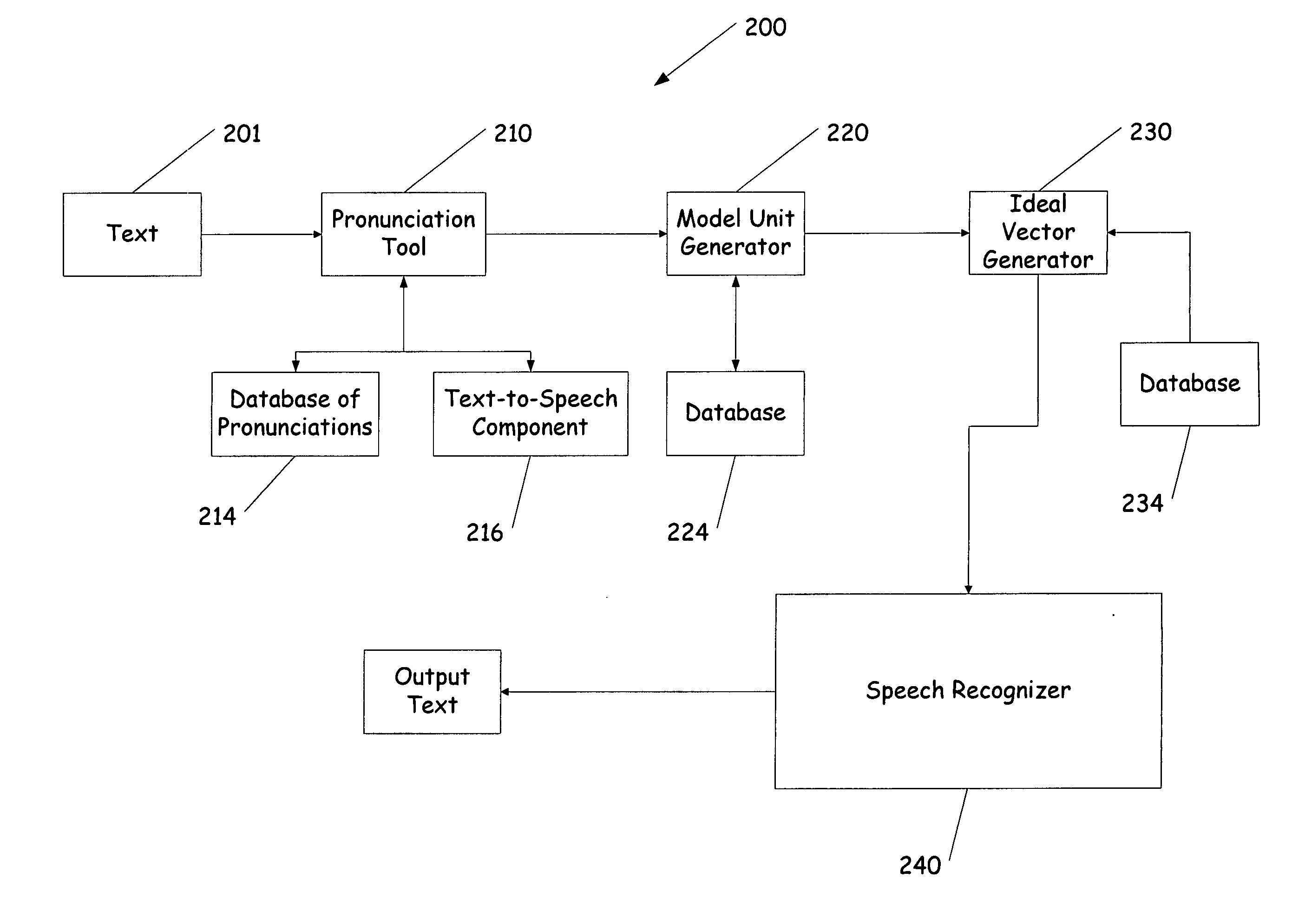

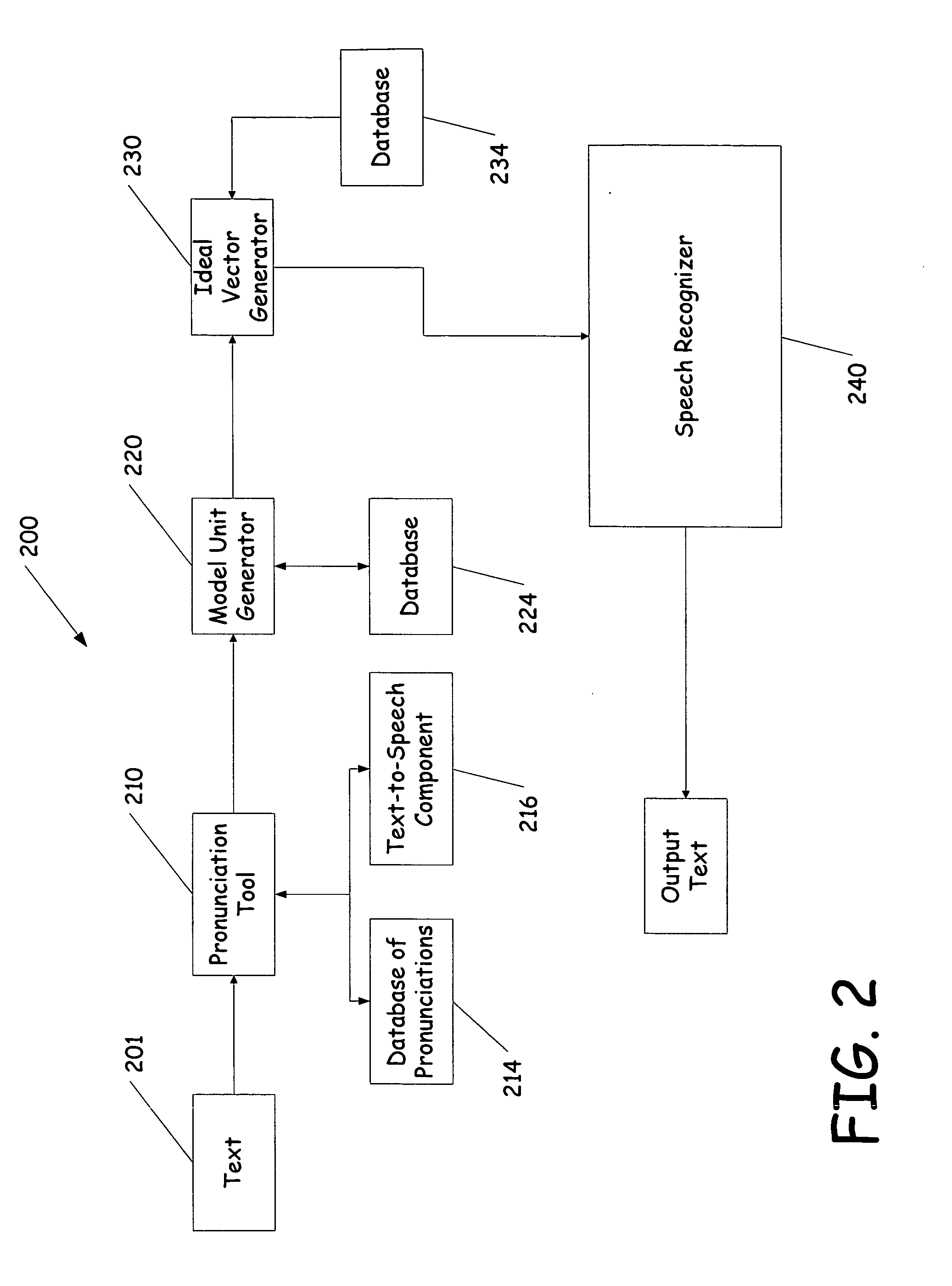

Testing and tuning of automatic speech recognition systems using synthetic inputs generated from its acoustic models

InactiveUS20060085187A1Errors in predictingAvoids acoustic mismatchesSpeech recognitionSpeech synthesisFeature vectorModel selection

A system and method of testing and tuning a speech recognition system by providing pronunciations to the speech recognizer. First a text document is provided to the system and converted into a sequence of phonemes representative of the words in the text. The phonemes are then converted to model units, such as Hidden Markov Models. From the models a probability is obtained for each model or state, and feature vectors are determined. The feature vector matching the most probable vector for each state is selected for each model. These ideal feature vectors are provided to the speech recognizer, and processed. The end result is compared with the original text, and modifications to the system can be made based on the output text.

Owner:MICROSOFT TECH LICENSING LLC

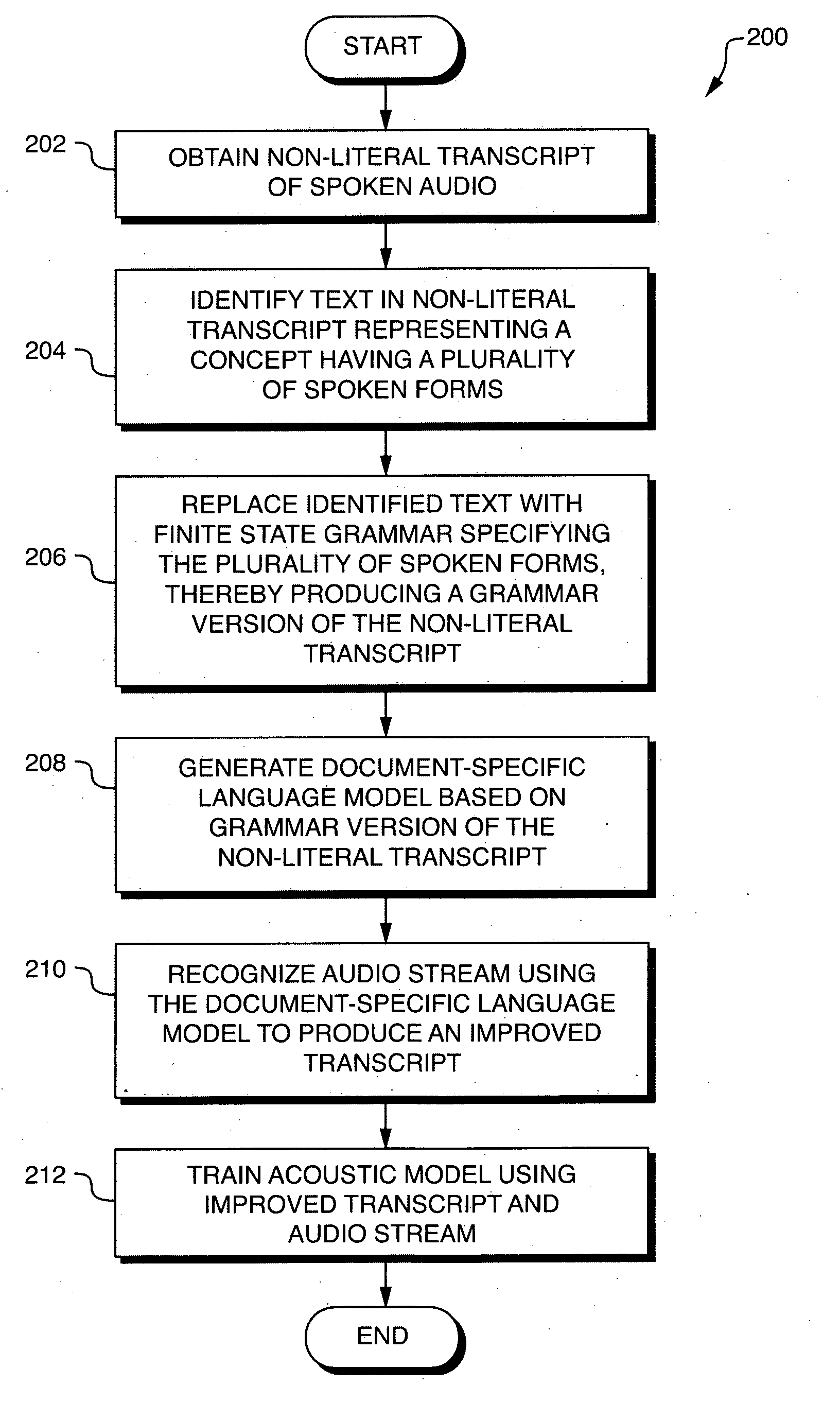

Discriminative training of document transcription system

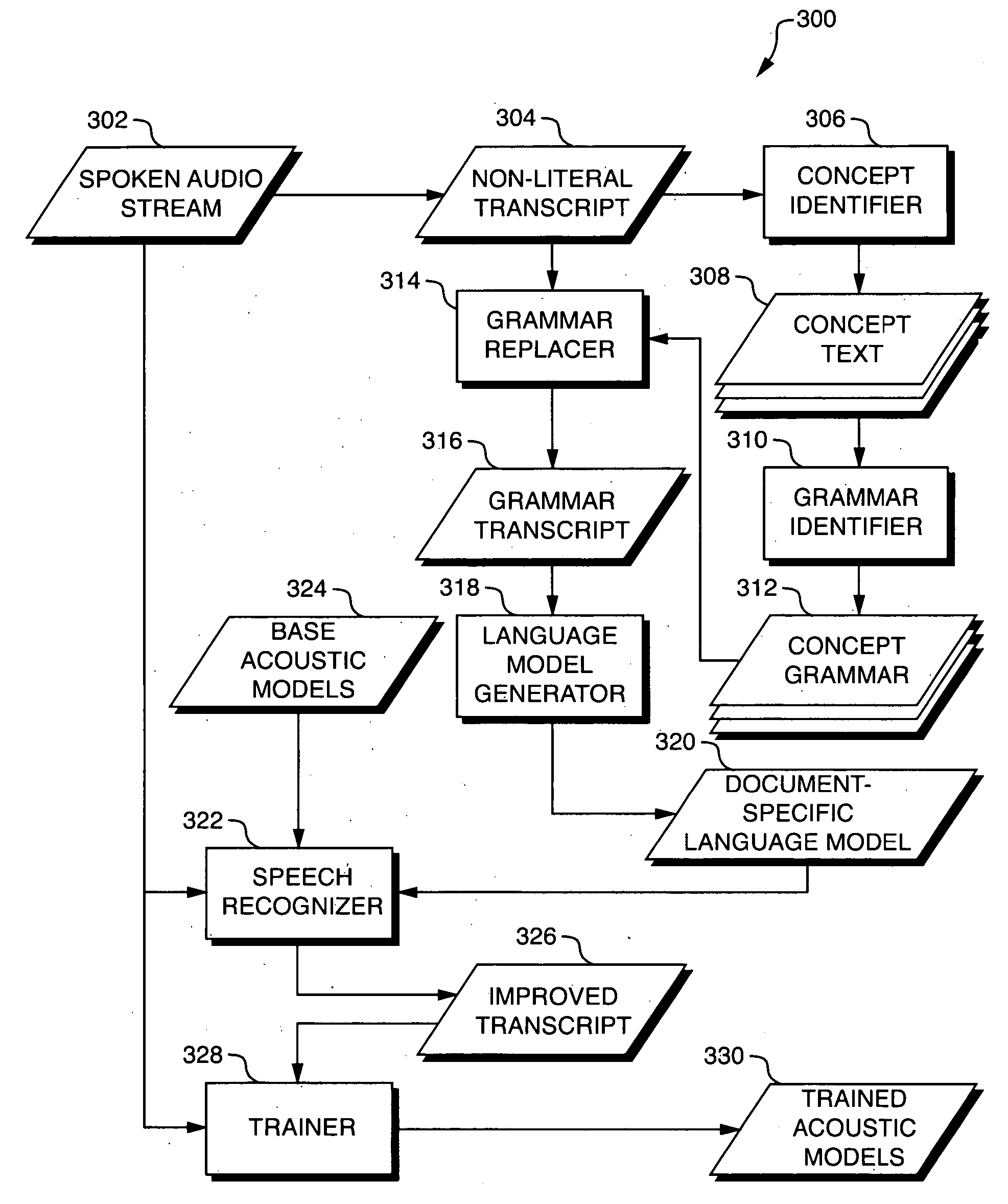

ActiveUS20060074656A1Accurate representationAcoustic modelNatural language translationSpeech recognitionSpoken languageAcoustic model

A system is provided for training an acoustic model for use in speech recognition. In particular, such a system may be used to perform training based on a spoken audio stream and a non-literal transcript of the spoken audio stream. Such a system may identify text in the non-literal transcript which represents concepts having multiple spoken forms. The system may attempt to identify the actual spoken form in the audio stream which produced the corresponding text in the non-literal transcript, and thereby produce a revised transcript which more accurately represents the spoken audio stream. The revised, and more accurate, transcript may be used to train the acoustic model using discriminative training techniques, thereby producing a better acoustic model than that which would be produced using conventional techniques, which perform training based directly on the original non-literal transcript.

Owner:3M INNOVATIVE PROPERTIES CO

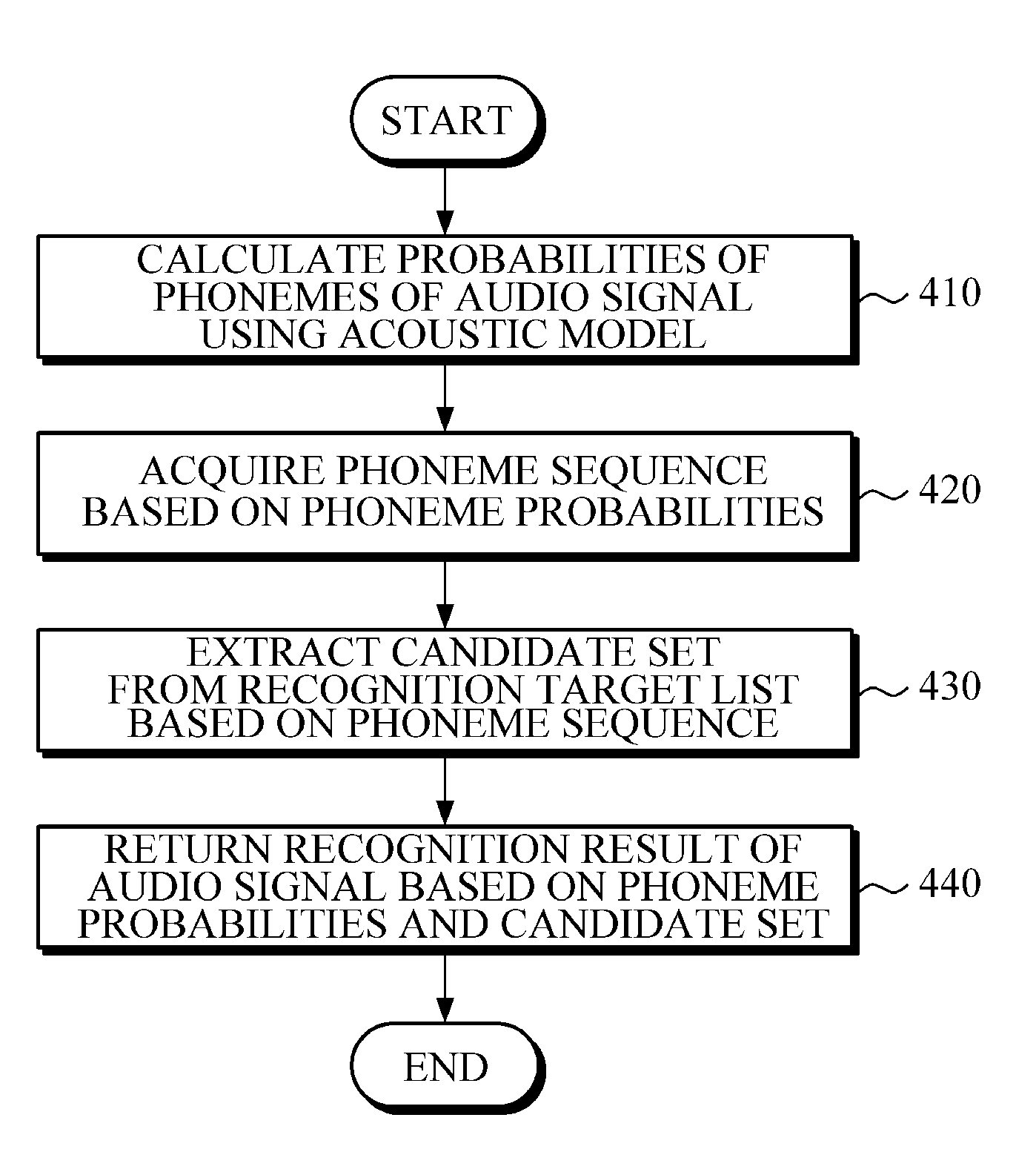

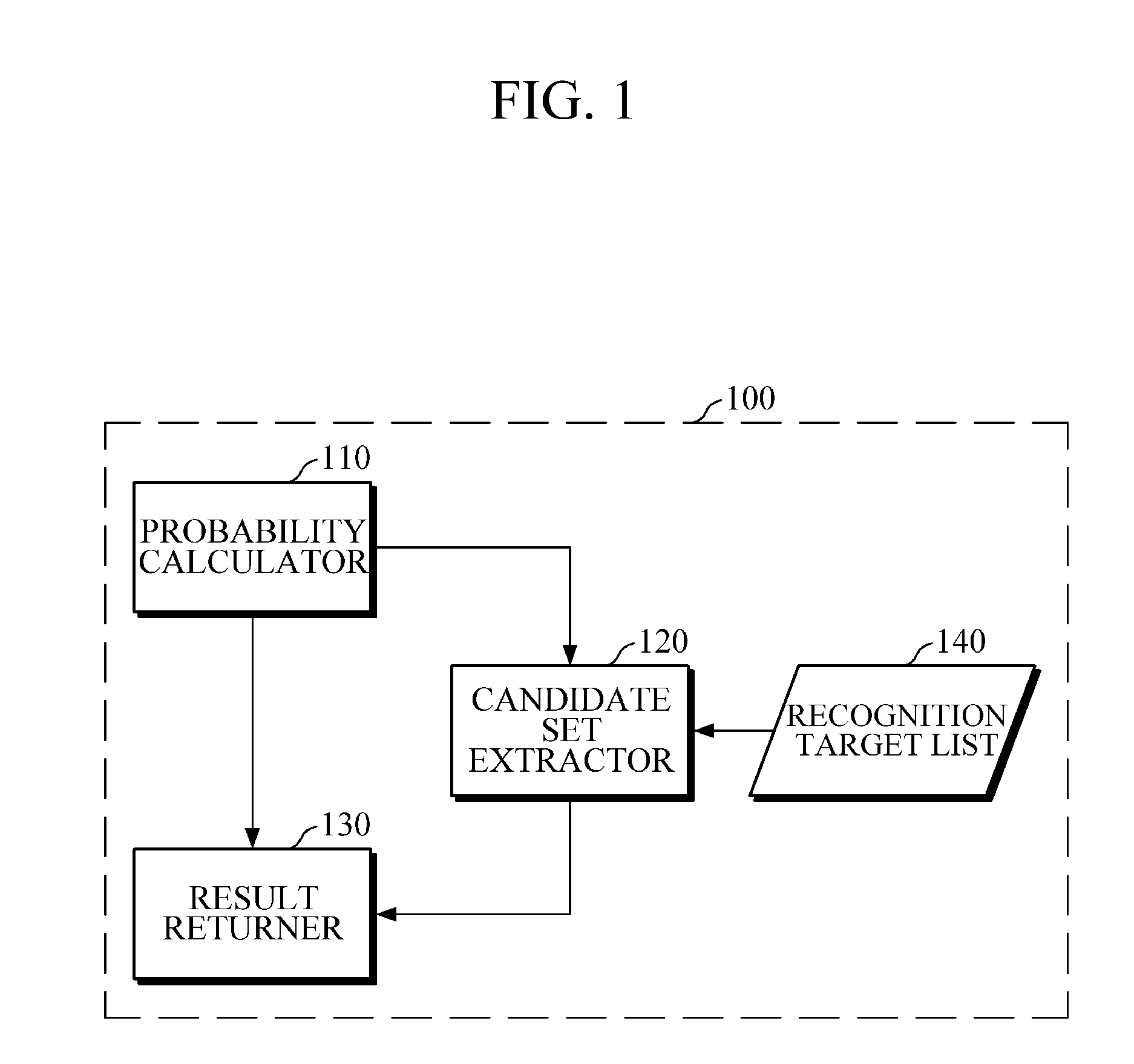

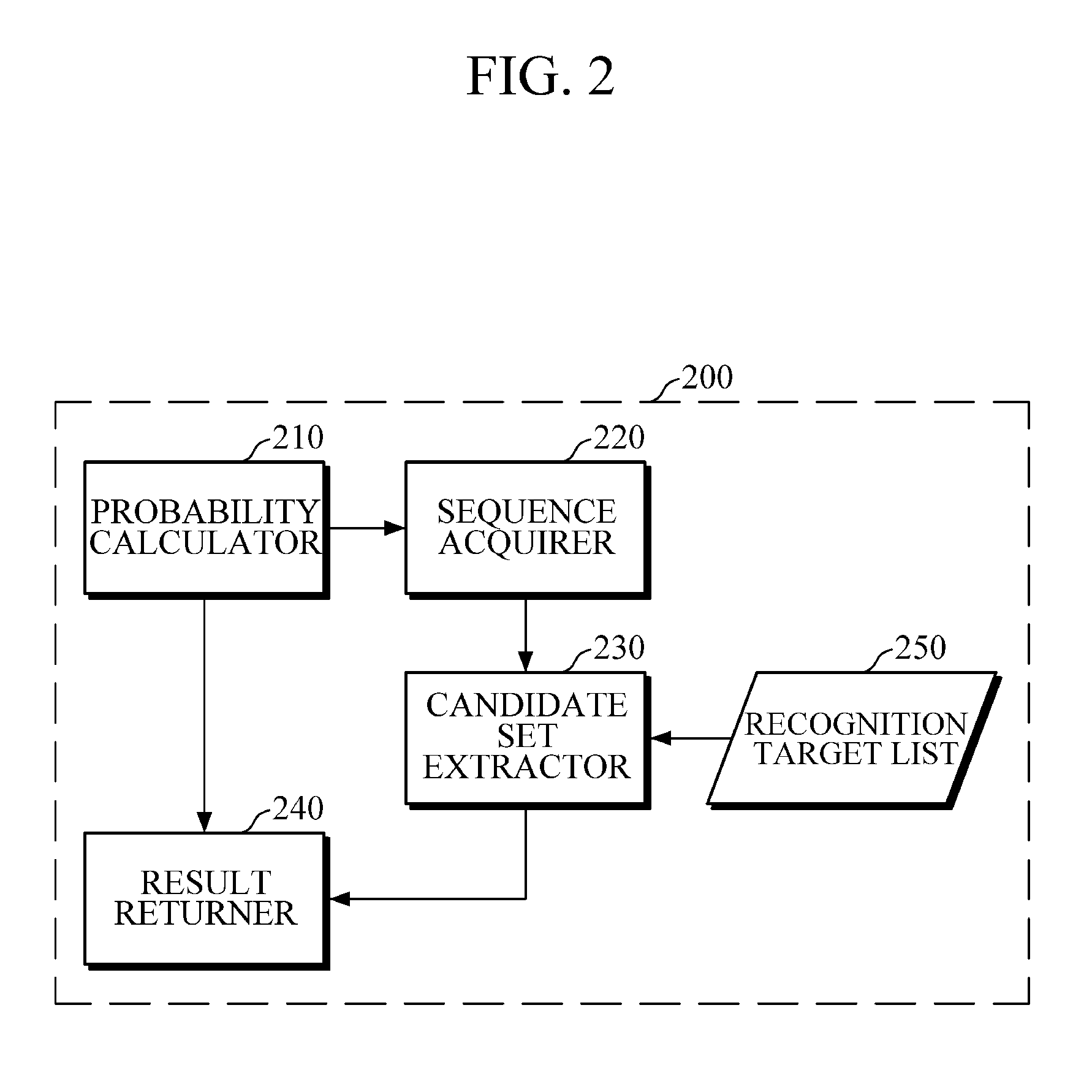

Speech recognition apparatus, speech recognition method, and electronic device

A speech recognition apparatus includes a probability calculator configured to calculate phoneme probabilities of an audio signal using an acoustic model; a candidate set extractor configured to extract a candidate set from a recognition target list; and a result returner configured to return a recognition result of the audio signal based on the calculated phoneme probabilities and the extracted candidate set.

Owner:SAMSUNG ELECTRONICS CO LTD

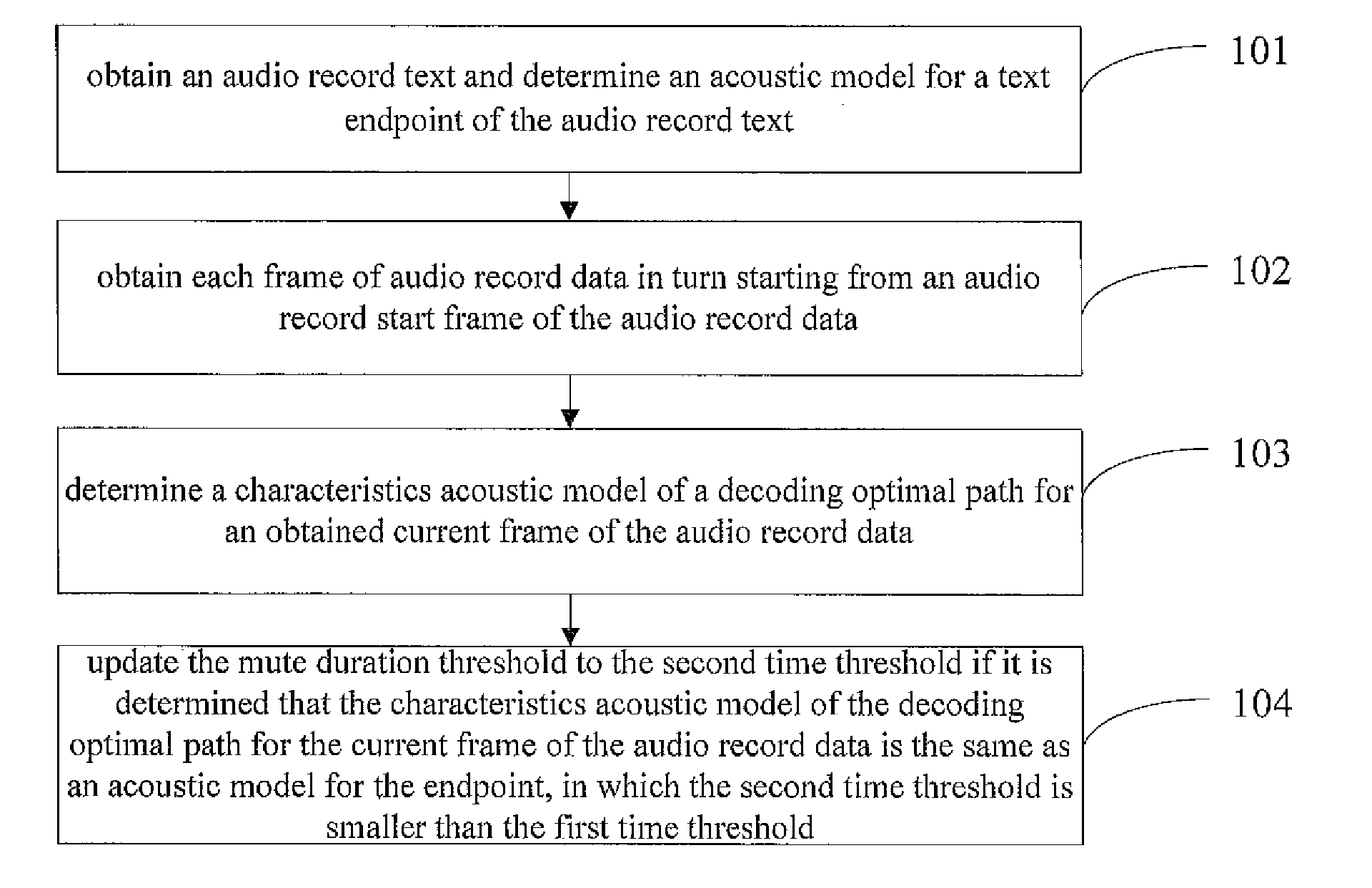

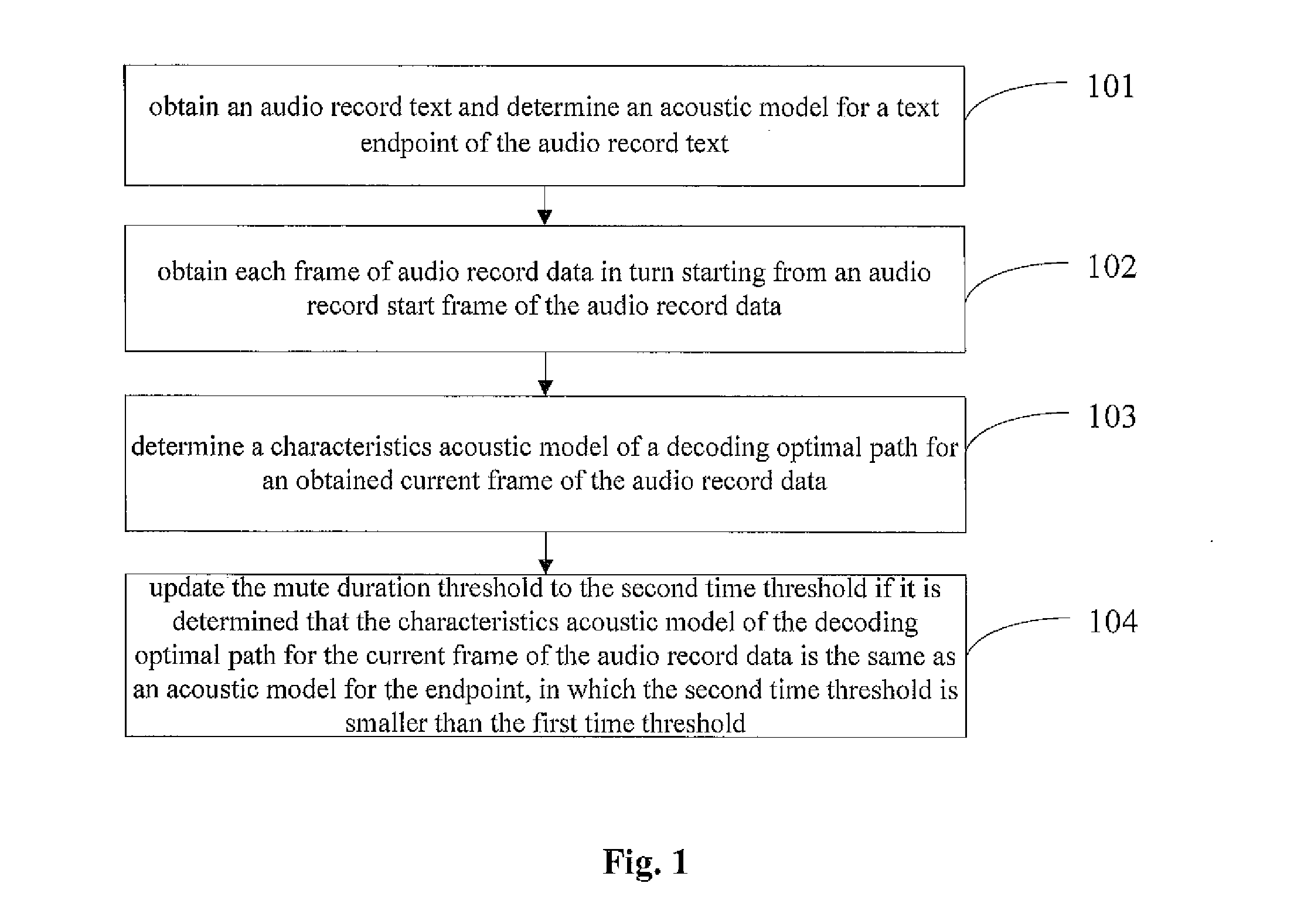

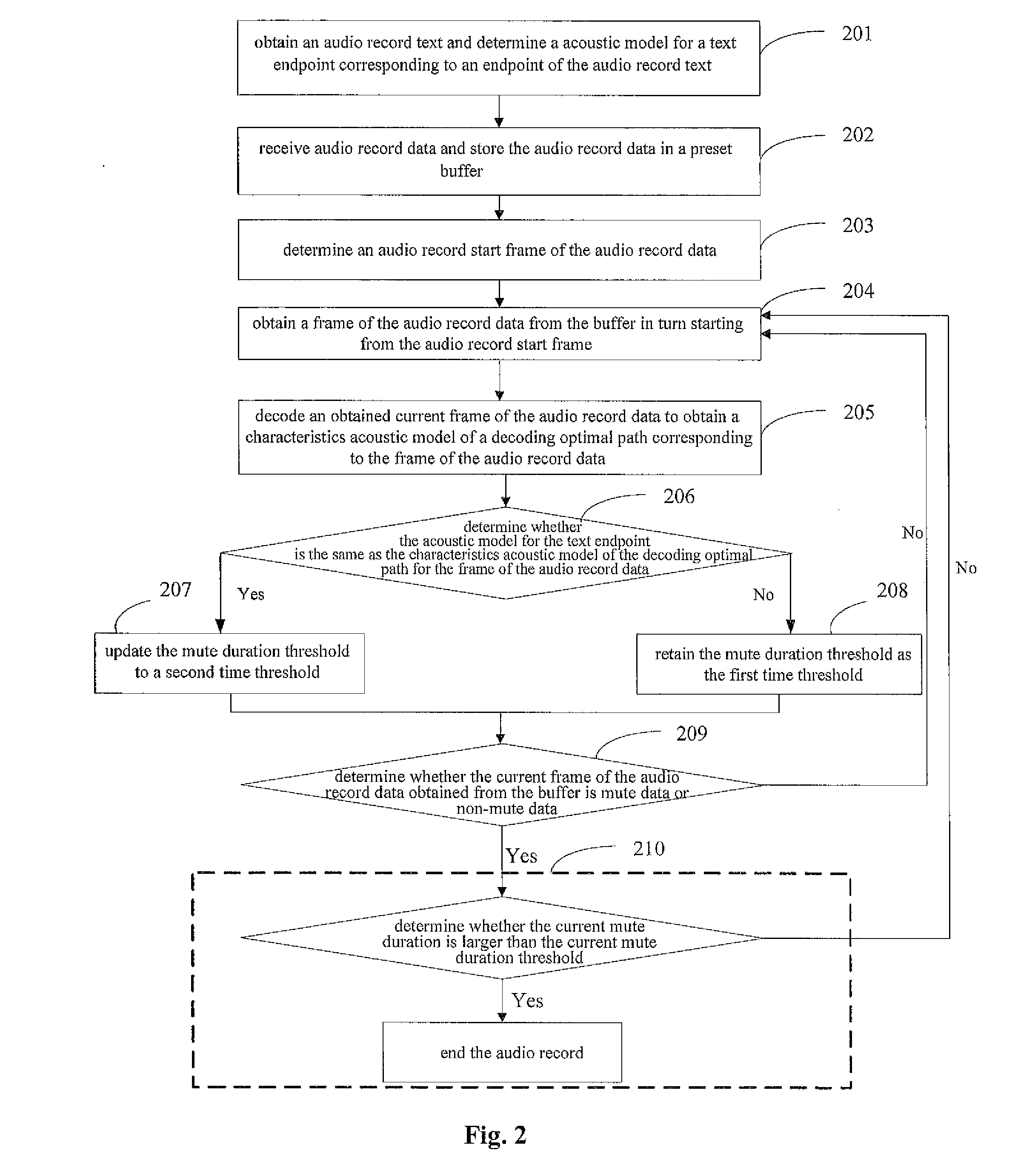

Method and System For Endpoint Automatic Detection of Audio Record

ActiveUS20130197911A1Improve efficiencyShorten the construction periodSpeech recognitionAcoustic modelAudio frequency

A method and system for endpoint automatic detection of audio record is provided. The method comprises the following steps: acquiring a audio record text and affirming the text endpoint acoustic model for the audio record text; starting acquiring the audio record data of each frame in turn from the audio record start frame in the audio record data; affirming the characteristics acoustic model of the decoding optimal path for the acquired current frame of the audio record data; comparing the characteristics acoustic model of the decoding optimal path acquired from the current frame of the audio record data with the endpoint acoustic model to determine if they are the same; if yes, updating a mute duration threshold with a second time threshold, wherein the second time threshold is less than a first time threshold. This method can improve the recognizing efficiency of the audio record endpoint.

Owner:IFLYTEK CO LTD

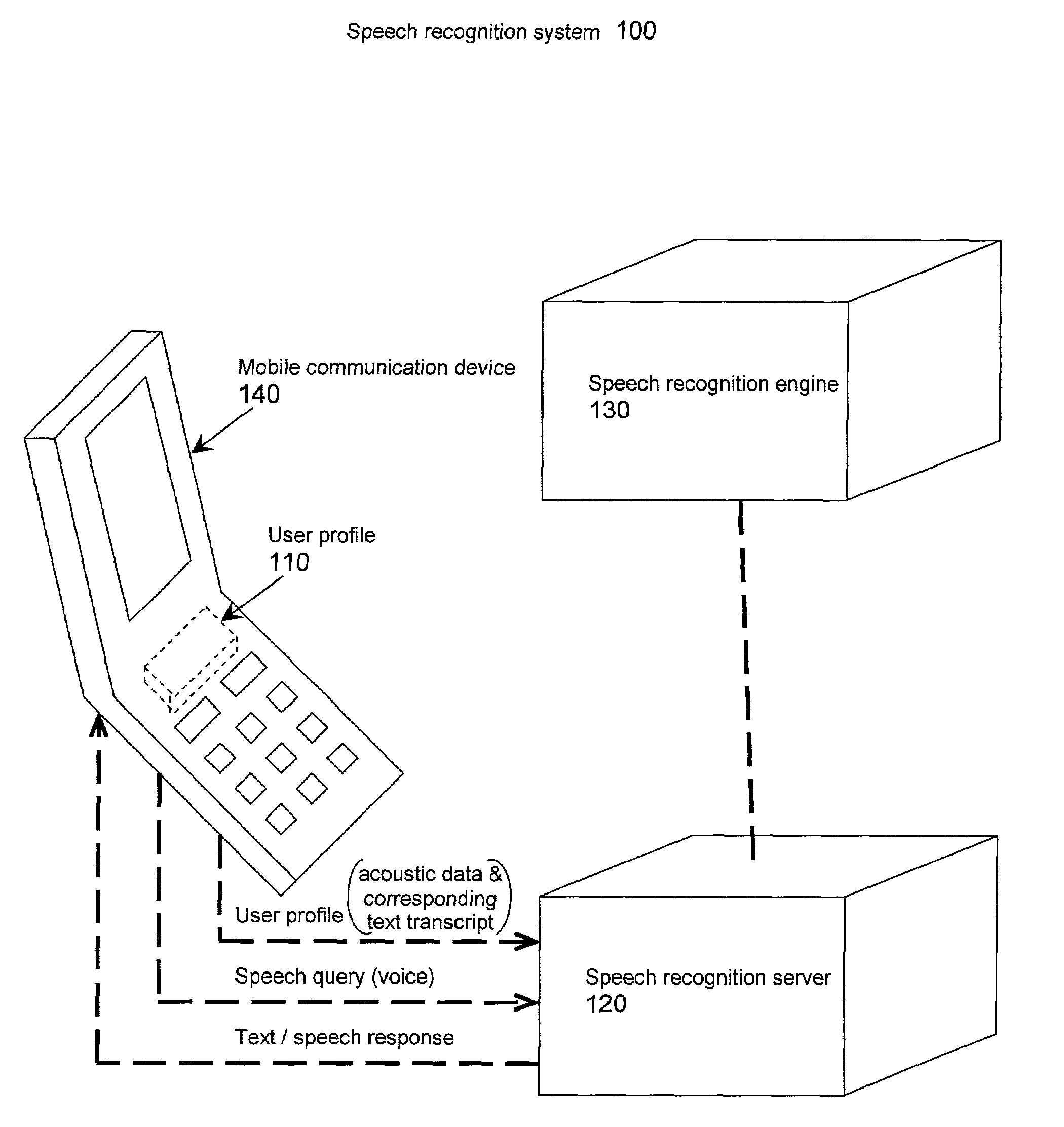

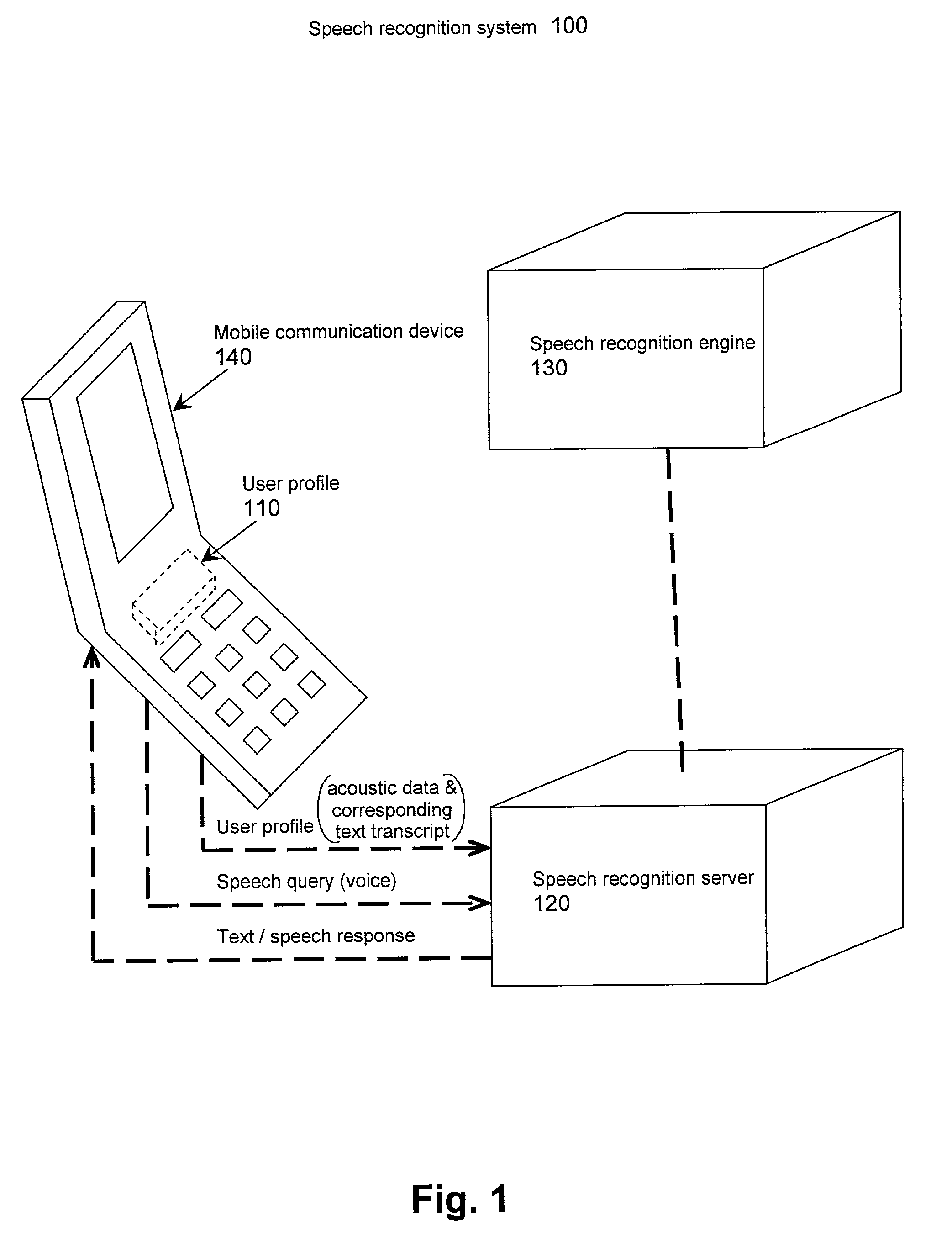

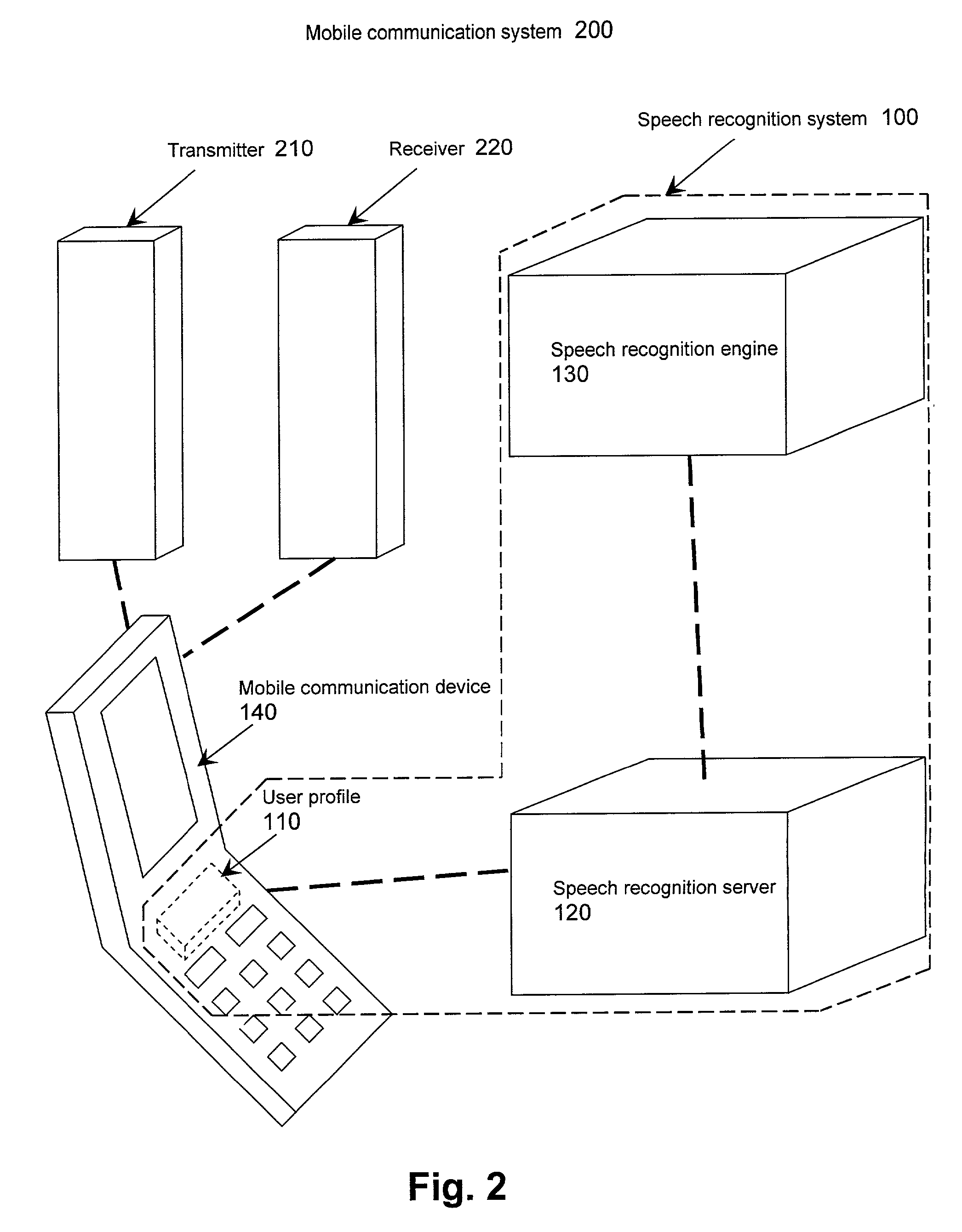

Method and apparatus to improve accuracy of mobile speech-enabled services

ActiveUS7174298B2Two-way loud-speaking telephone systemsAutomatic call-answering/message-recording/conversation-recordingSpeech identificationAcoustic model

A speech recognition system includes a user profile to store acoustic data and a corresponding text transcript. A speech recognition (“SR”) server downloads the acoustic data and the corresponding text transcript that are stored in the user profile. A speech recognition engine is included to adapt an acoustic model based on the acoustic data.

Owner:INTEL CORP

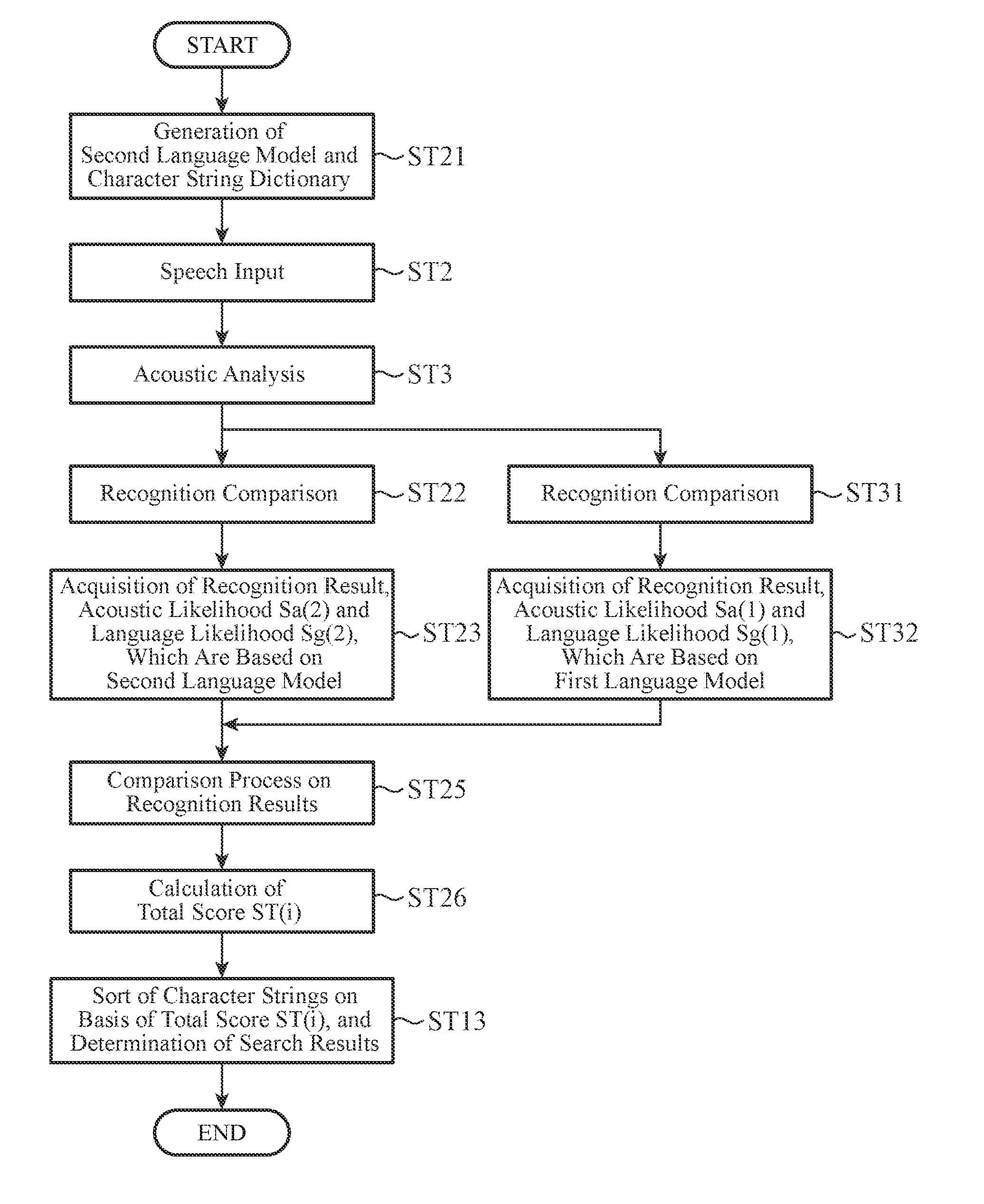

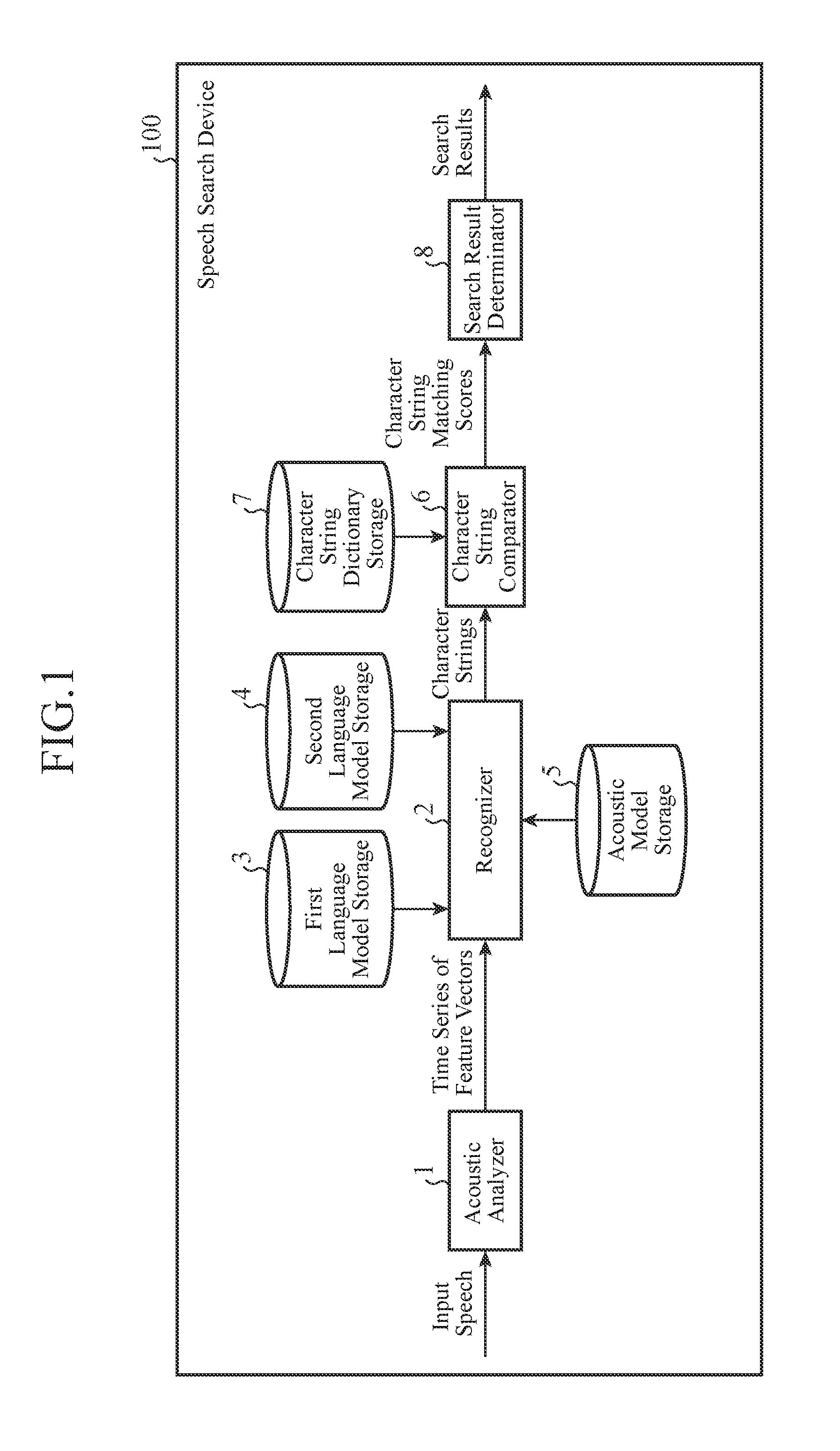

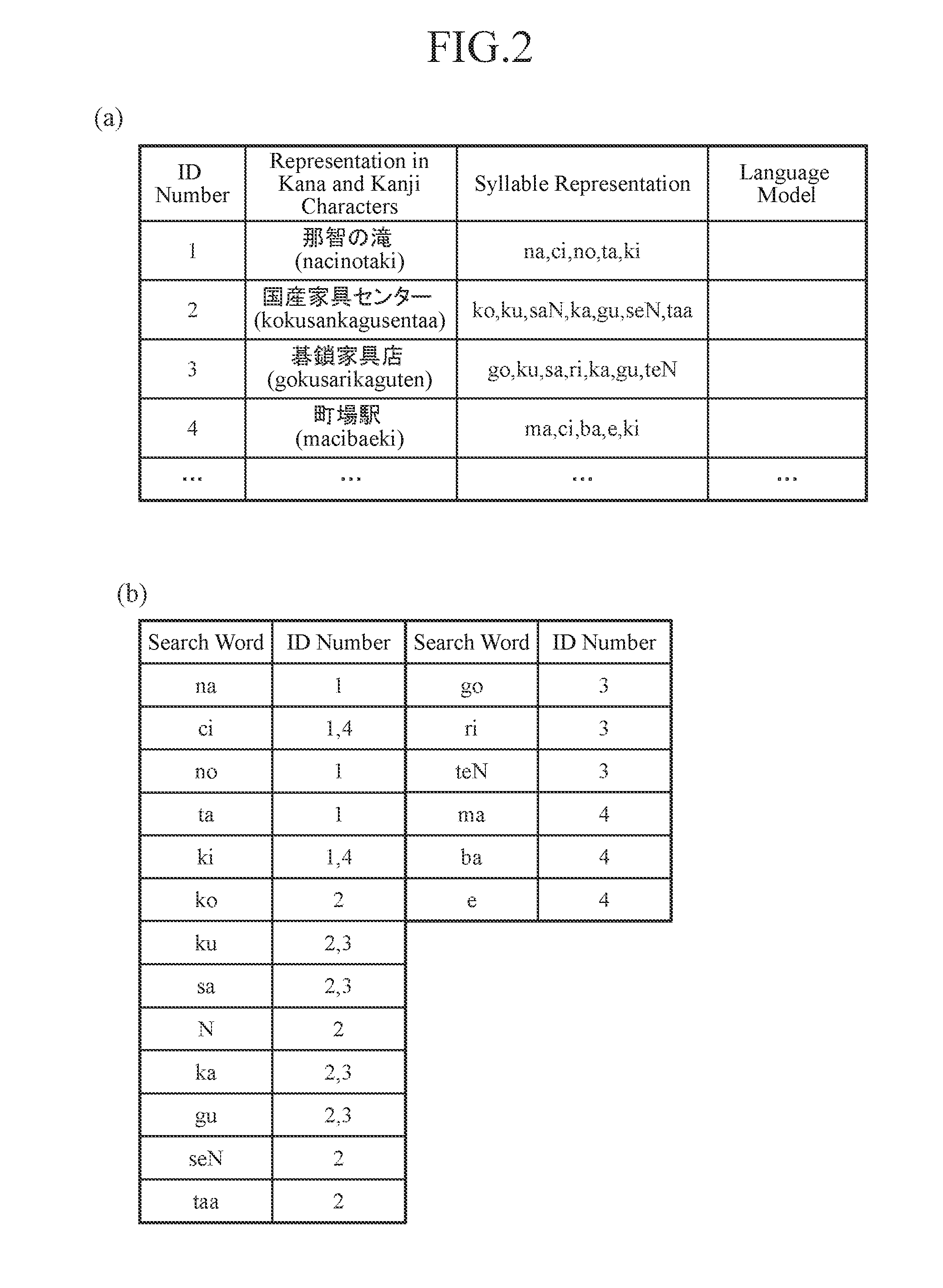

Speech search device and speech search method

InactiveUS20160336007A1Improve search accuracyDigital data information retrievalNatural language data processingLearning dataAcoustic model

Disclosed is a speech search device including a recognizer 2 that refers to an acoustic model and language models having different learning data and performs voice recognition on an input speech, to acquire a recognized character string for each language model, a character string comparator 6 that compares the recognized character string for each language models with the character strings of search target words stored in a character string dictionary, and calculates a character string matching score showing the degree of matching of the recognized character string with respect to each of the character strings of the search target words, to acquire both a character string having the highest character string matching score and this character string matching score for each recognized character strings, and a search result determinator 8 that refers to the acquired score and outputs one or more search target words in descending order of the scores.

Owner:MITSUBISHI ELECTRIC CORP

Automatic accent detection

Systems and processes for automatic accent detection are provided. In accordance with one example, a method includes, at an electronic device with one or more processors and memory, receiving a user input, determining a first similarity between a representation of the user input and a first acoustic model of a plurality of acoustic models, and determining a second similarity between the representation of the user input and a second acoustic model of the plurality of acoustic models. The method further includes determining whether the first similarity is greater than the second similarity. In accordance with a determination that the first similarity is greater than the second similarity, the first acoustic model may be selected; and in accordance with a determination that the first similarity is not greater than the second similarity, the second acoustic model may be selected.

Owner:APPLE INC

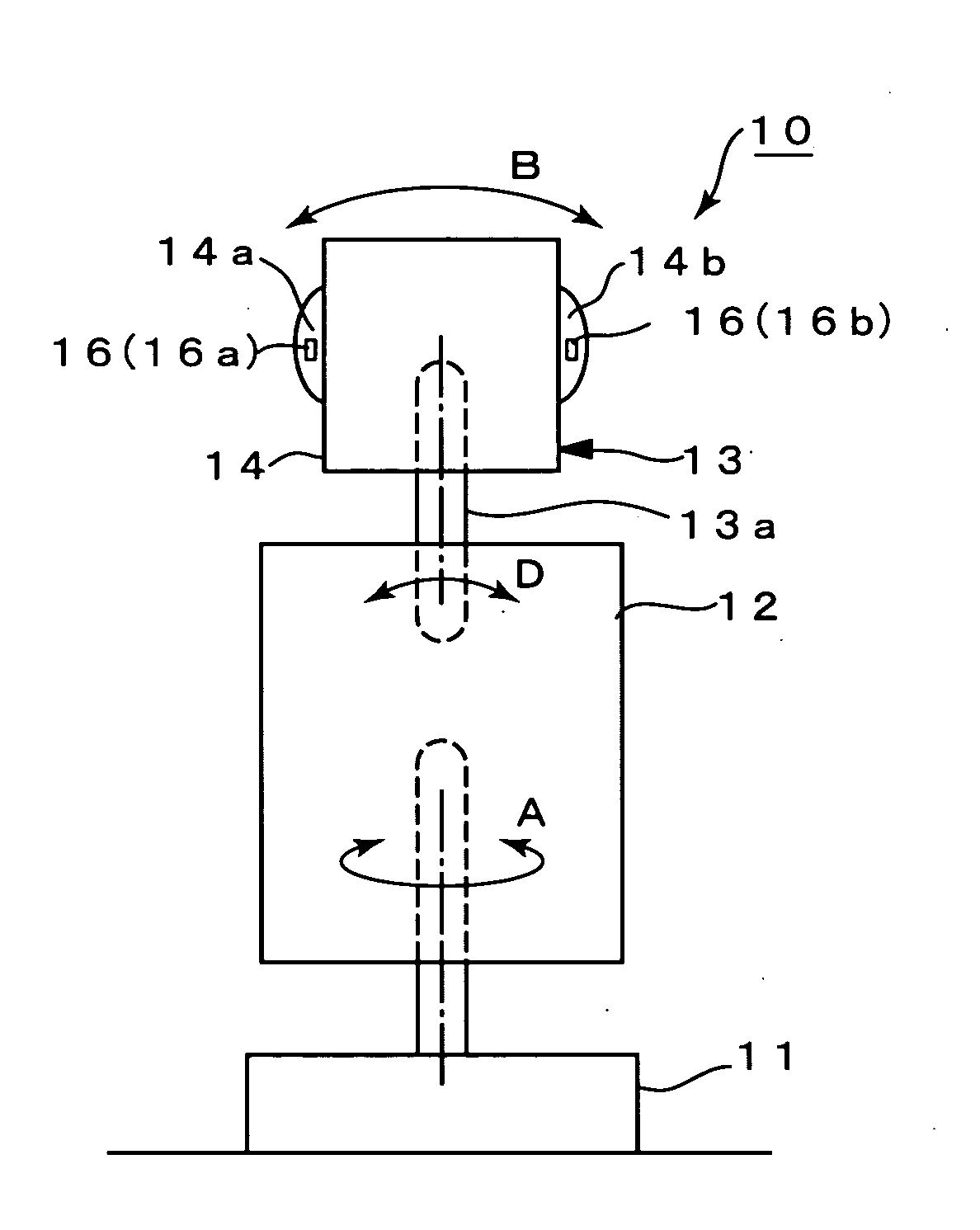

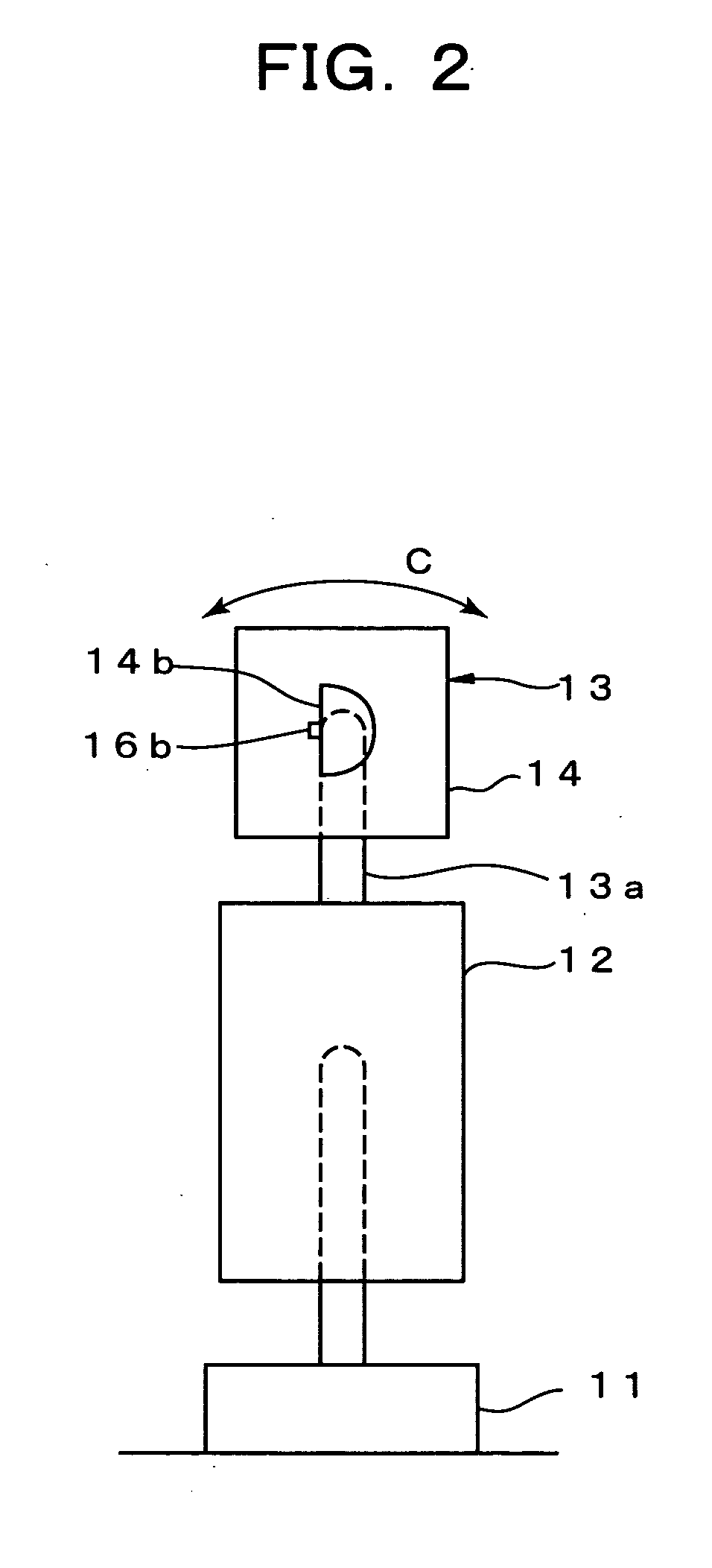

Robotics visual and auditory system

InactiveUS20090030552A1Accurate collectionAccurately localizeProgramme controlComputer controlSound source separationPhase difference

It is a robotics visual and auditory system provided with an auditory module (20), a face module (30), a stereo module (37), a motor control module (40), and an association module (50) to control these respective modules. The auditory module (20) collects sub-bands having interaural phase difference (IPD) or interaural intensity difference (IID) within a predetermined range by an active direction pass filter (23a) having a pass range which, according to auditory characteristics, becomes minimum in the frontal direction, and larger as the angle becomes wider to the left and right, based on an accurate sound source directional information from the association module (50), and conducts sound source separation by restructuring a wave shape of a sound source, conducts speech recognition of separated sound signals from respective sound sources using a plurality of acoustic models (27d), integrates speech recognition results from each acoustic model by a selector, and judges the most reliable speech recognition result among the speech recognition results.

Owner:JAPAN SCI & TECH CORP

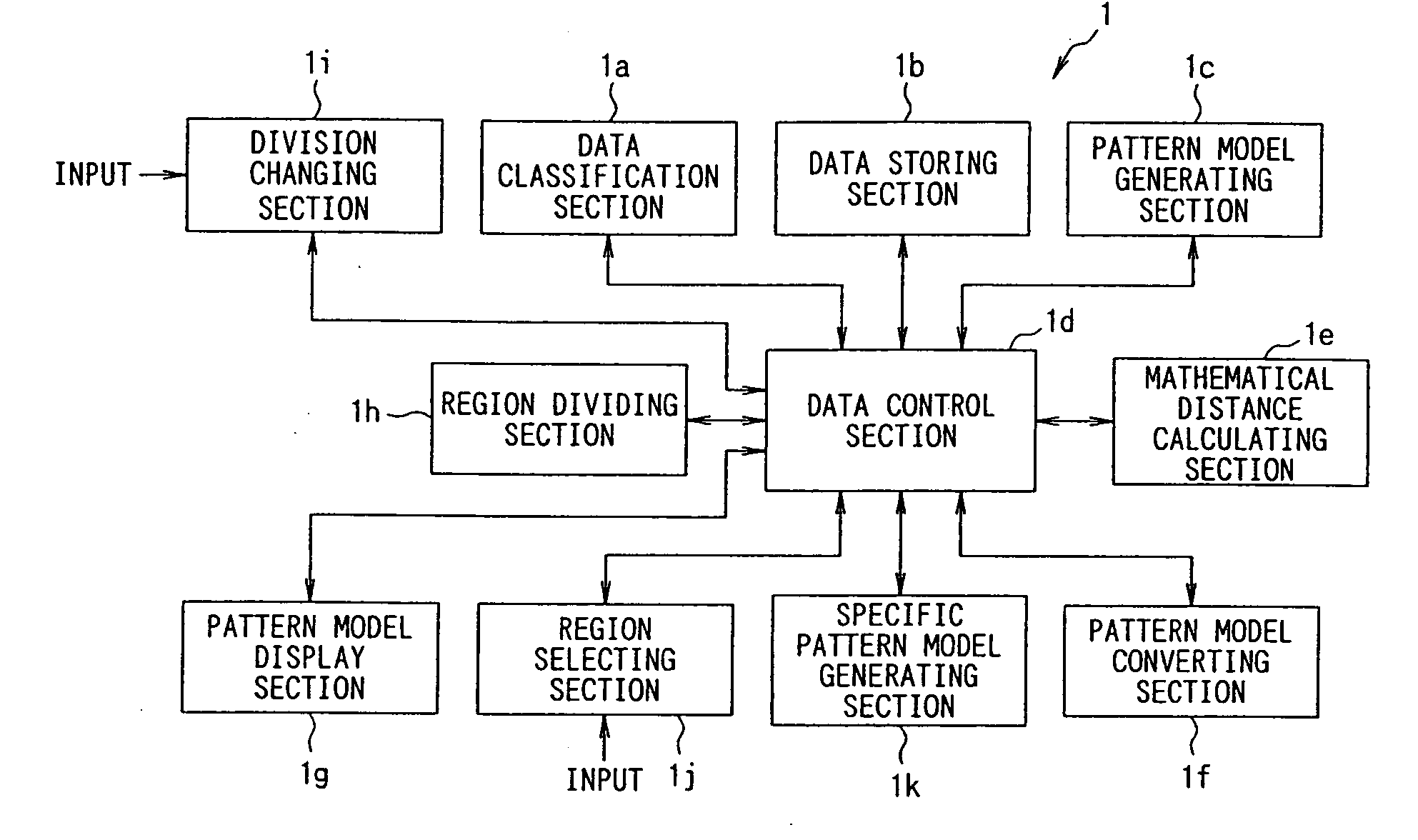

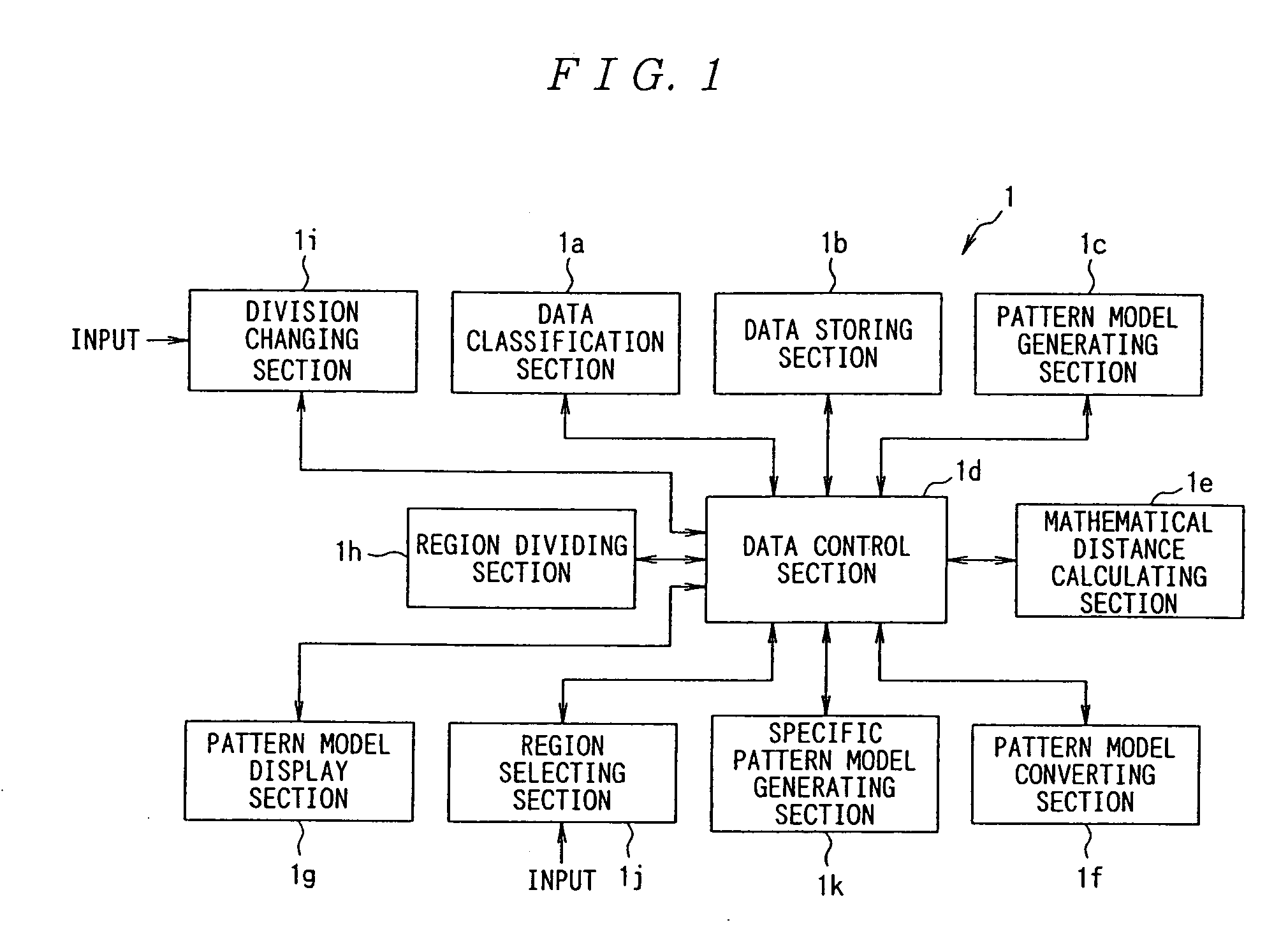

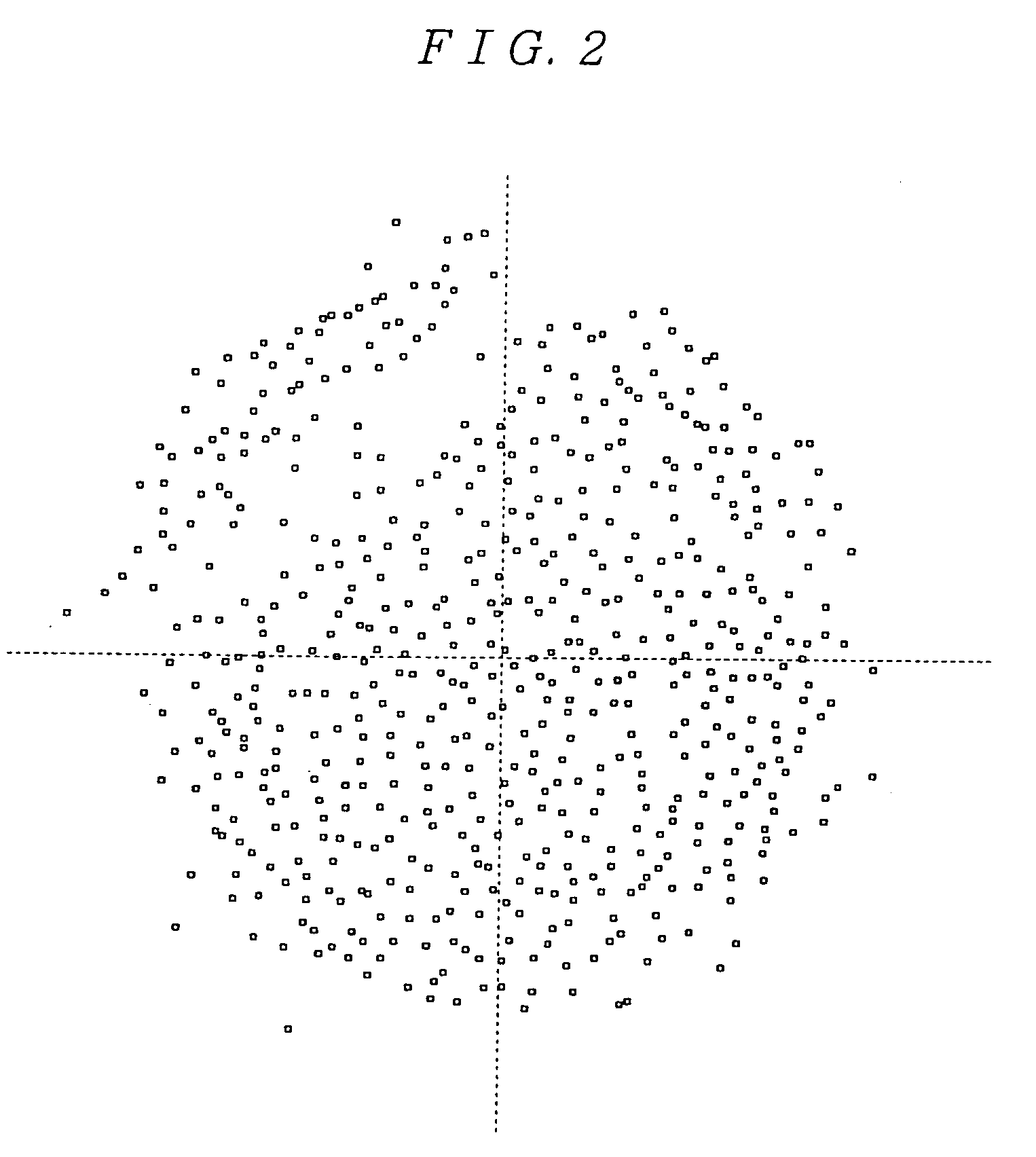

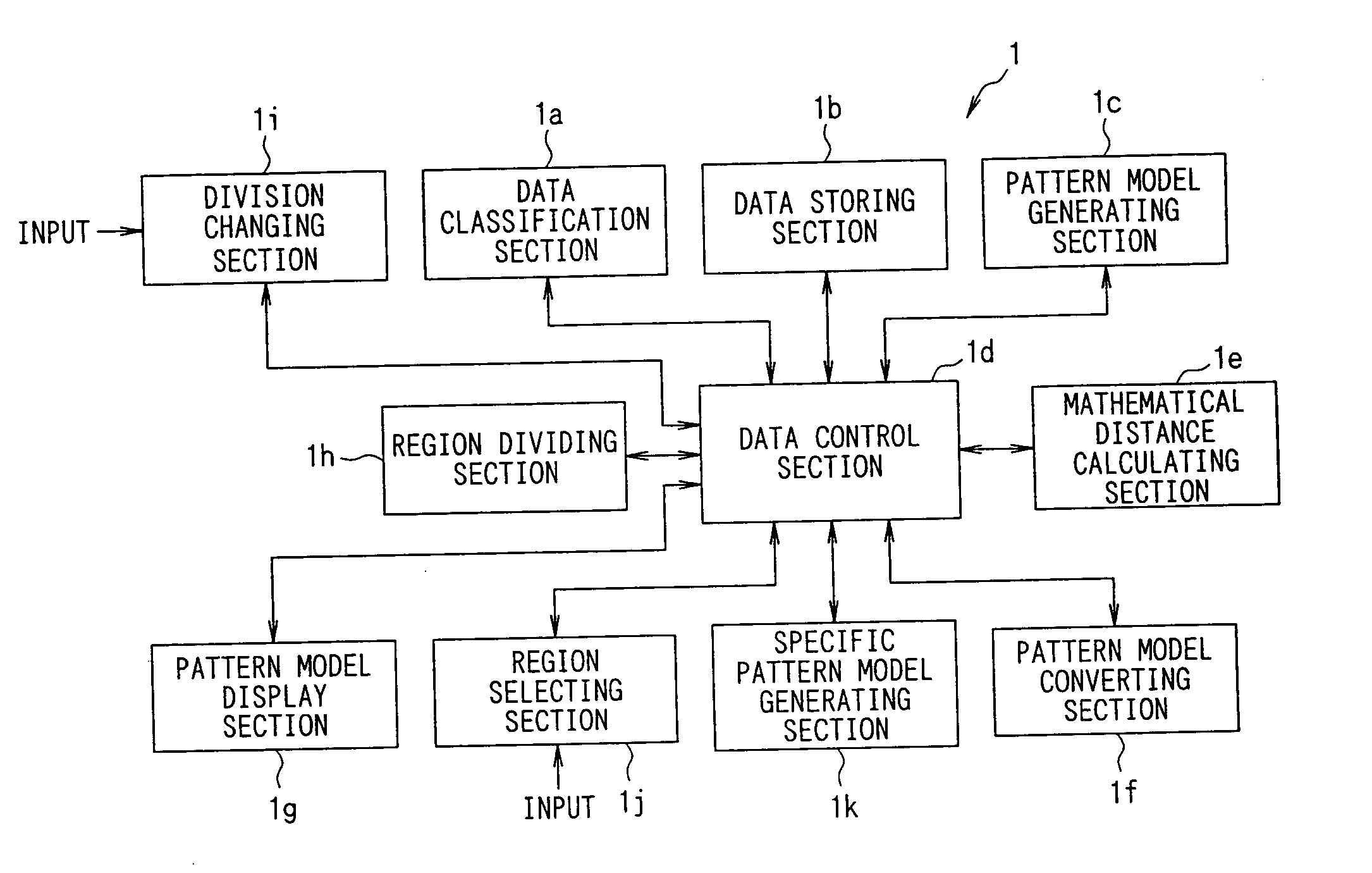

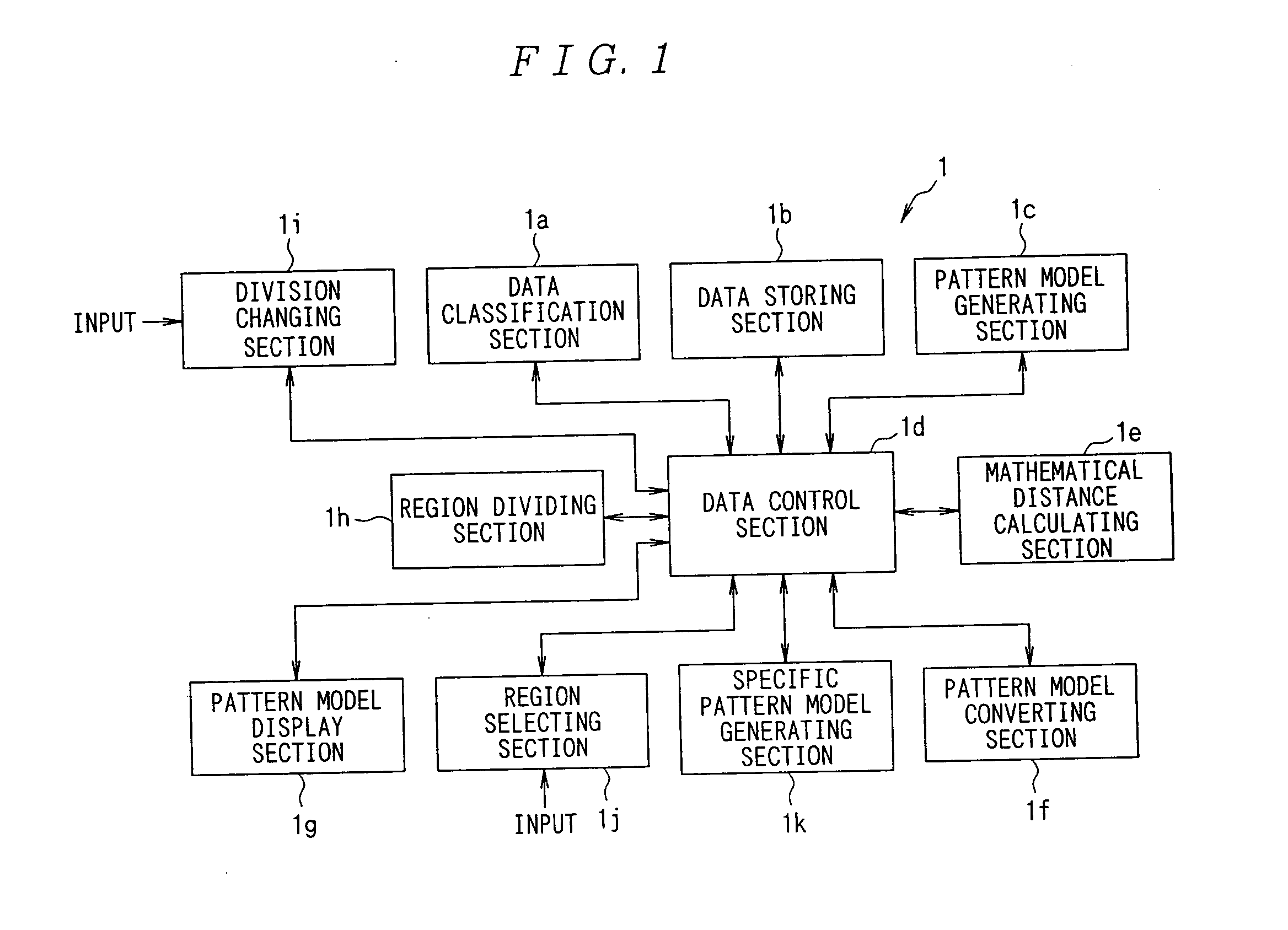

Data Process unit and data process unit control program

InactiveUS20090138263A1Maintain relationshipProcess is performedCharacter and pattern recognitionSpeech recognitionData controlAcoustic model

To provide a data process unit and data process unit control program which are suitable for generating acoustic models for unspecified speakers taking distribution of diversifying feature parameters into consideration under such specific conditions as the type of speaker, speech lexicons, speech styles, and speech environment and which are suitable for providing acoustic models intended for unspecified speakers and adapted to speech of a specific person.A data process unit 1 comprises a data classification section 1a, data storing section 1b, pattern model generating section 1c, data control section 1d, mathematical distance calculating section 1e, pattern model converting section 1f, pattern model display section 1g, region dividing section 1h, division changing section 1i, region selecting section 1j, and specific pattern model generating section 1k.

Owner:ASAHI KASEI KK

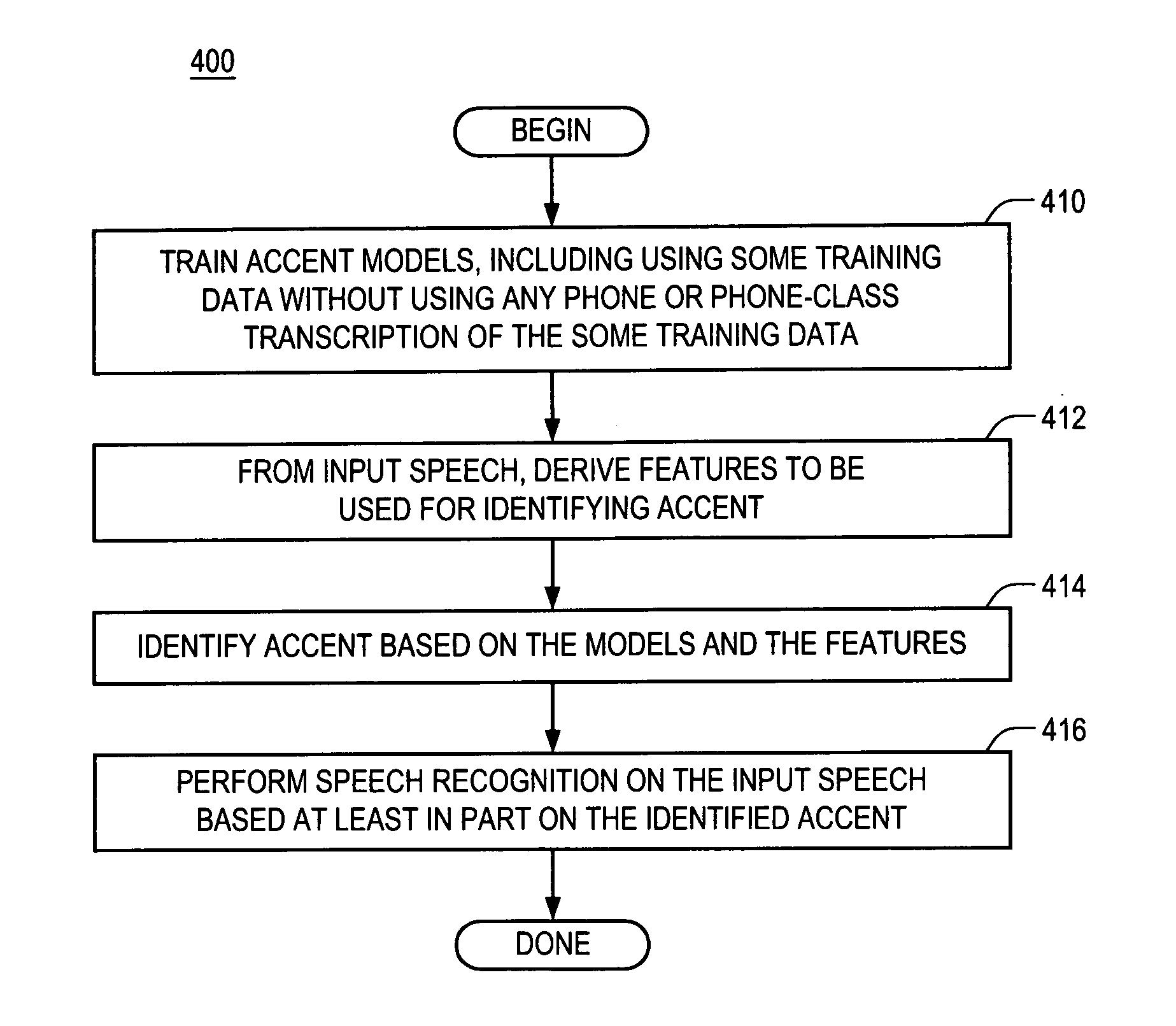

System and methods for accent classification and adaptation

Speech is processed that may be colored by speech accent. A method for recognizing speech includes maintaining a model of speech accent that is established based on training speech data, wherein the training speech data includes at least a first set of training speech data, and wherein establishing the model of speech accent includes not using any phone or phone-class transcription of the first set of training speech data. Related systems are also presented. A system for recognizing speech includes an accent identification module that is configured to identify accent of the speech to be recognized; and a recognizer that is configured to use models to recognize the speech to be recognized, wherein the models include at least an acoustic model that has been adapted for the identified accent using training speech data of a language, other than primary language of the speech to be recognized, that is associated with the identified accent. Related methods are also presented.

Owner:NUSUARA TECH

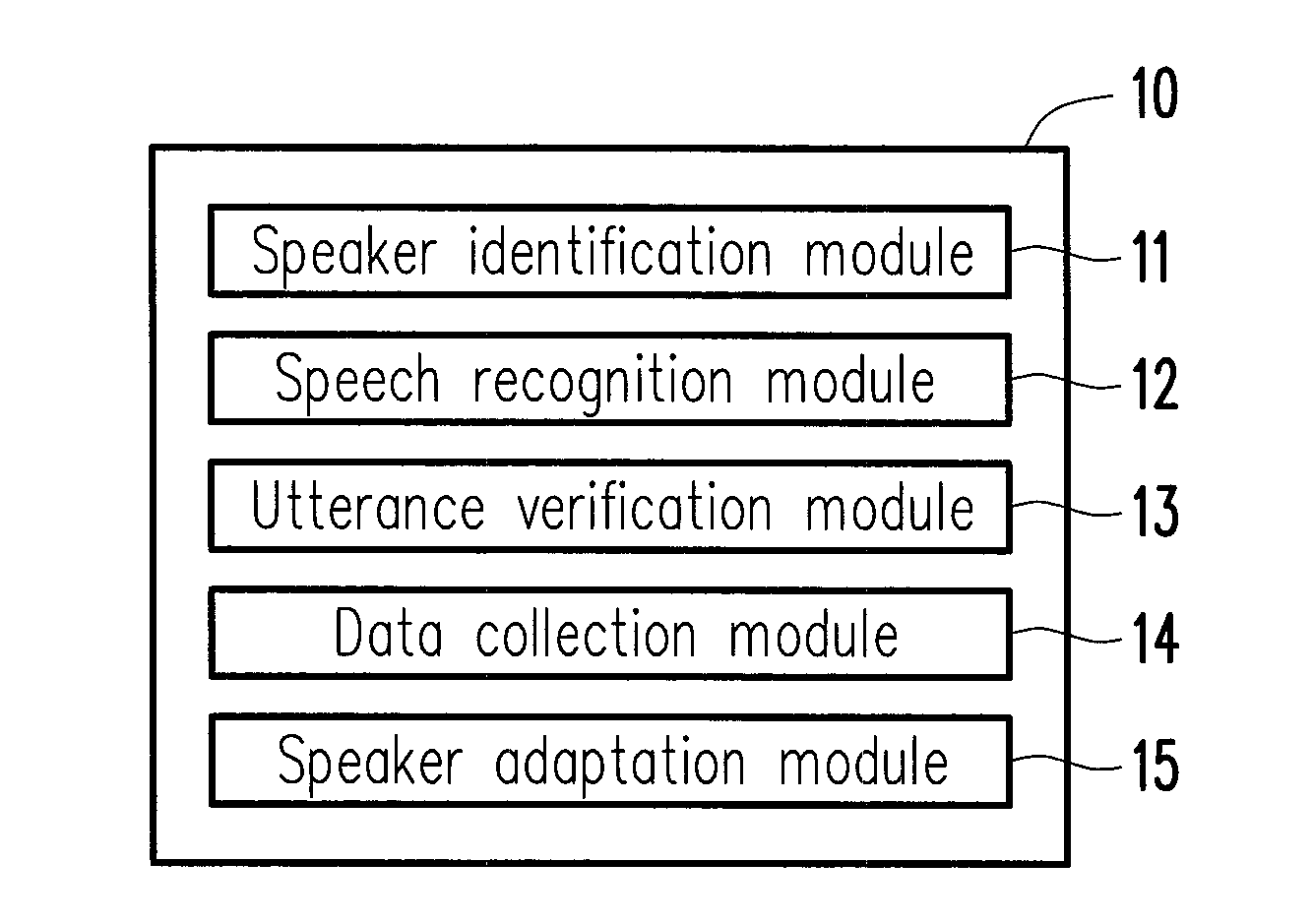

Method and system for speech recognition

InactiveUS20130311184A1Improve speech recognition accuracyImprove accuracySpeech recognitionSpeech identificationAcoustic model

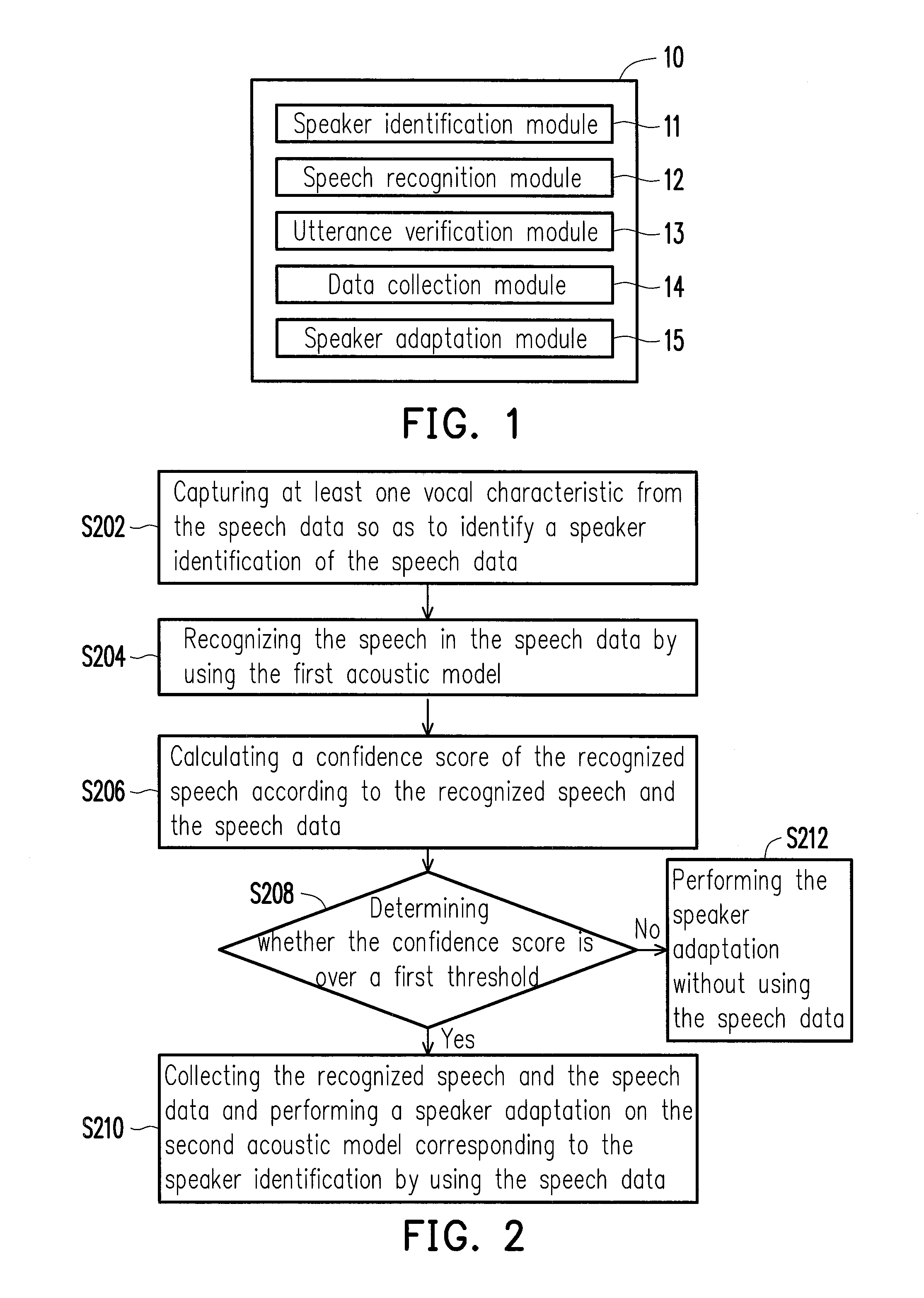

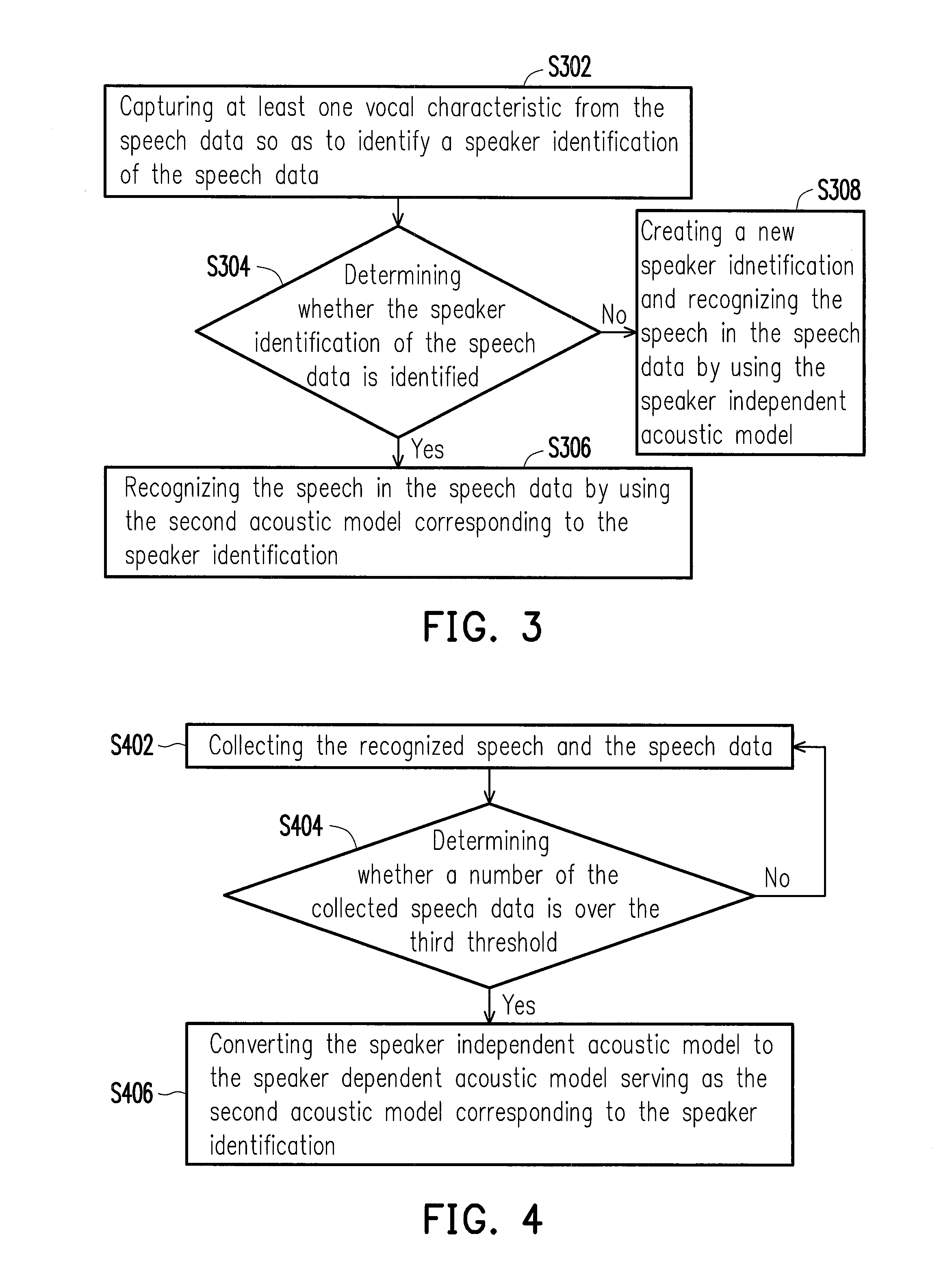

A method and a system for speech recognition are provided. In the method, vocal characteristics are captured from speech data and used to identify a speaker identification of the speech data. Next, a first acoustic model is used to recognize a speech in the speech data. According to the recognized speech and the speech data, a confidence score of the speech recognition is calculated and it is determined whether the confidence score is over a threshold. If the confidence score is over the threshold, the recognized speech and the speech data are collected, and the collected speech data is used for performing a speaker adaptation on a second acoustic model corresponding to the speaker identification.

Owner:ASUSTEK COMPUTER INC

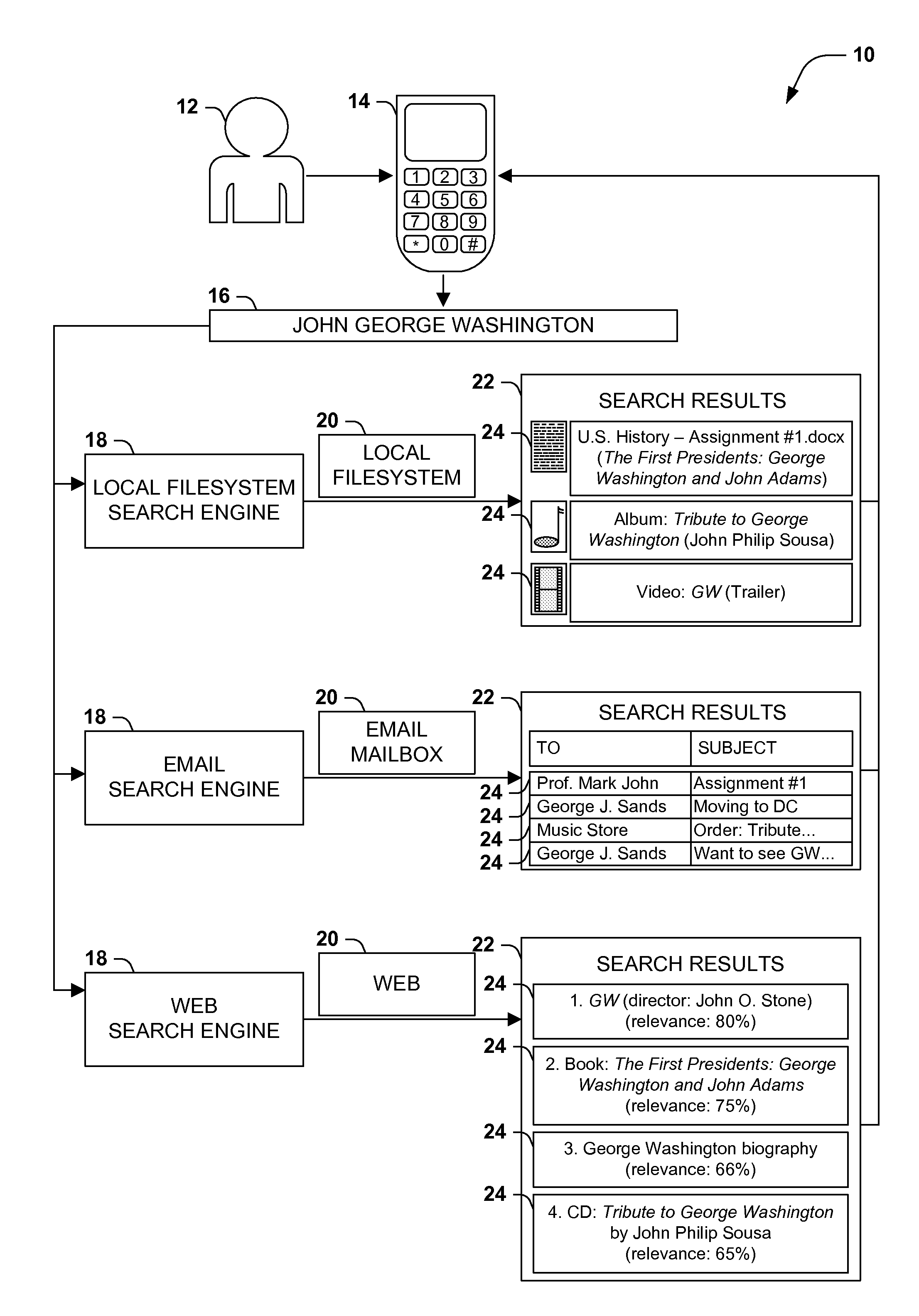

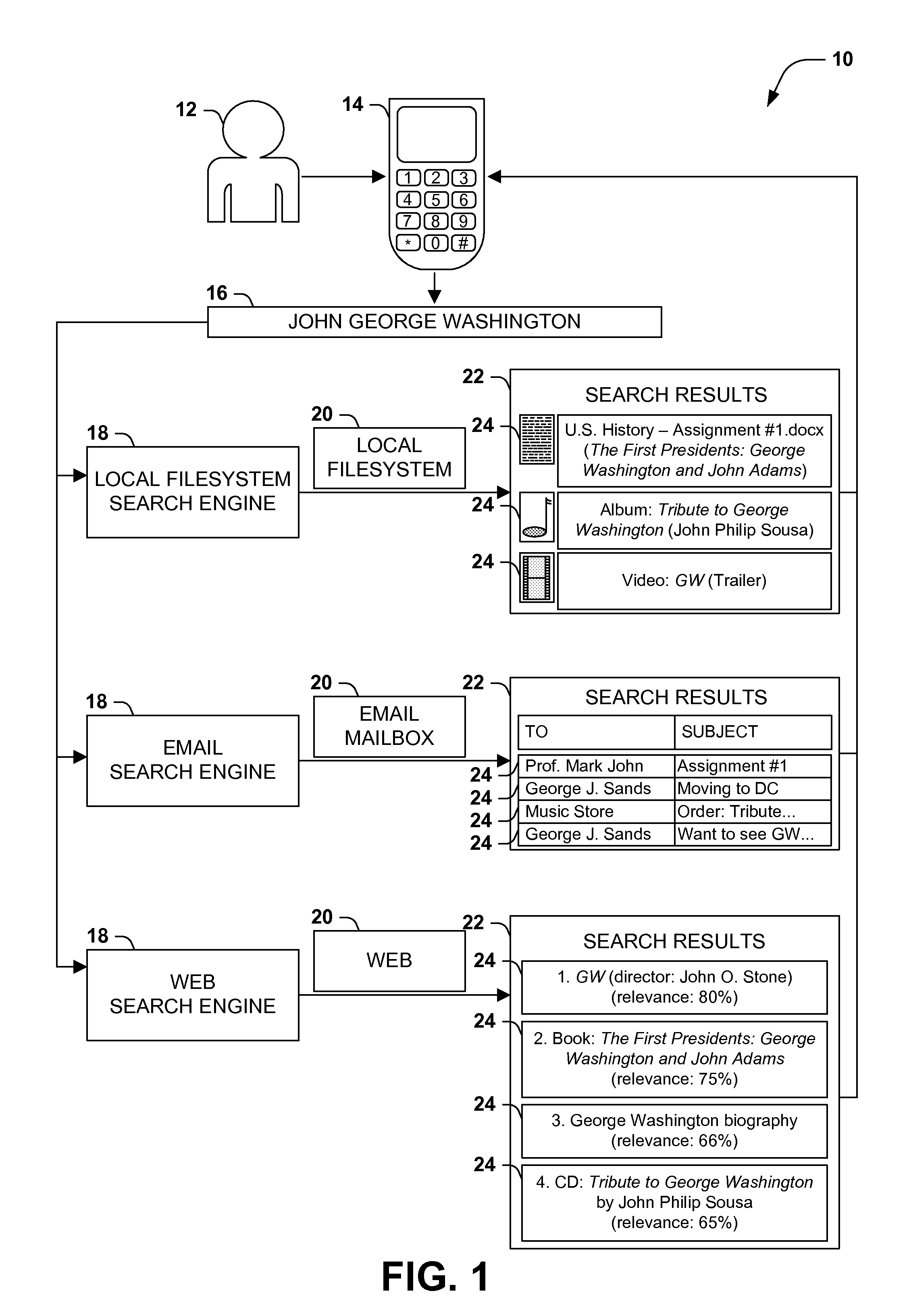

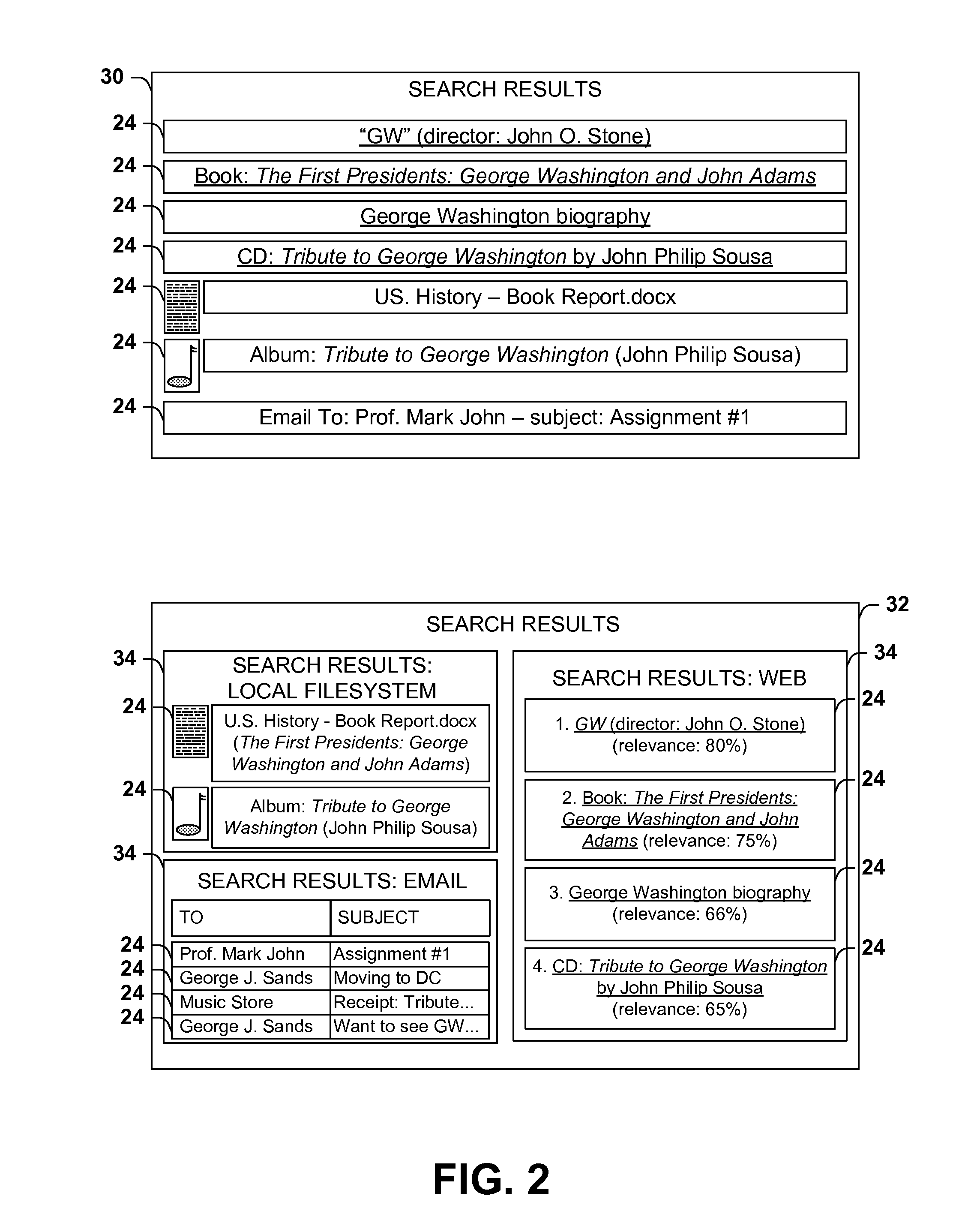

Presenting search results according to query domains

ActiveUS20100312782A1Convenient reviewOrganize effectivelyDigital data processing detailsSpeech recognitionData setDisplay device

A query may be applied against search engines that respectively return a set of search results relating to various items discovered in the searched data sets. However, presenting numerous and varied search results may be difficult on mobile devices with small displays and limited computational resources. Instead, search results may be associated with search domains representing various information types (e.g., contacts, public figures, places, projects, movies, music, and books) and presented by grouping search results with associated query domains, e.g., in a tabbed user interface. The query may be received through an input device associated with a particular input domain, and may be transitioned to the query domain of a particular search engine (e.g., by recognizing phonemes of a voice query using an acoustic model; matching phonemes with query terms according to a pronunciation model; and generating a recognition result according to a vocabulary of an n-gram language model.)

Owner:MICROSOFT TECH LICENSING LLC

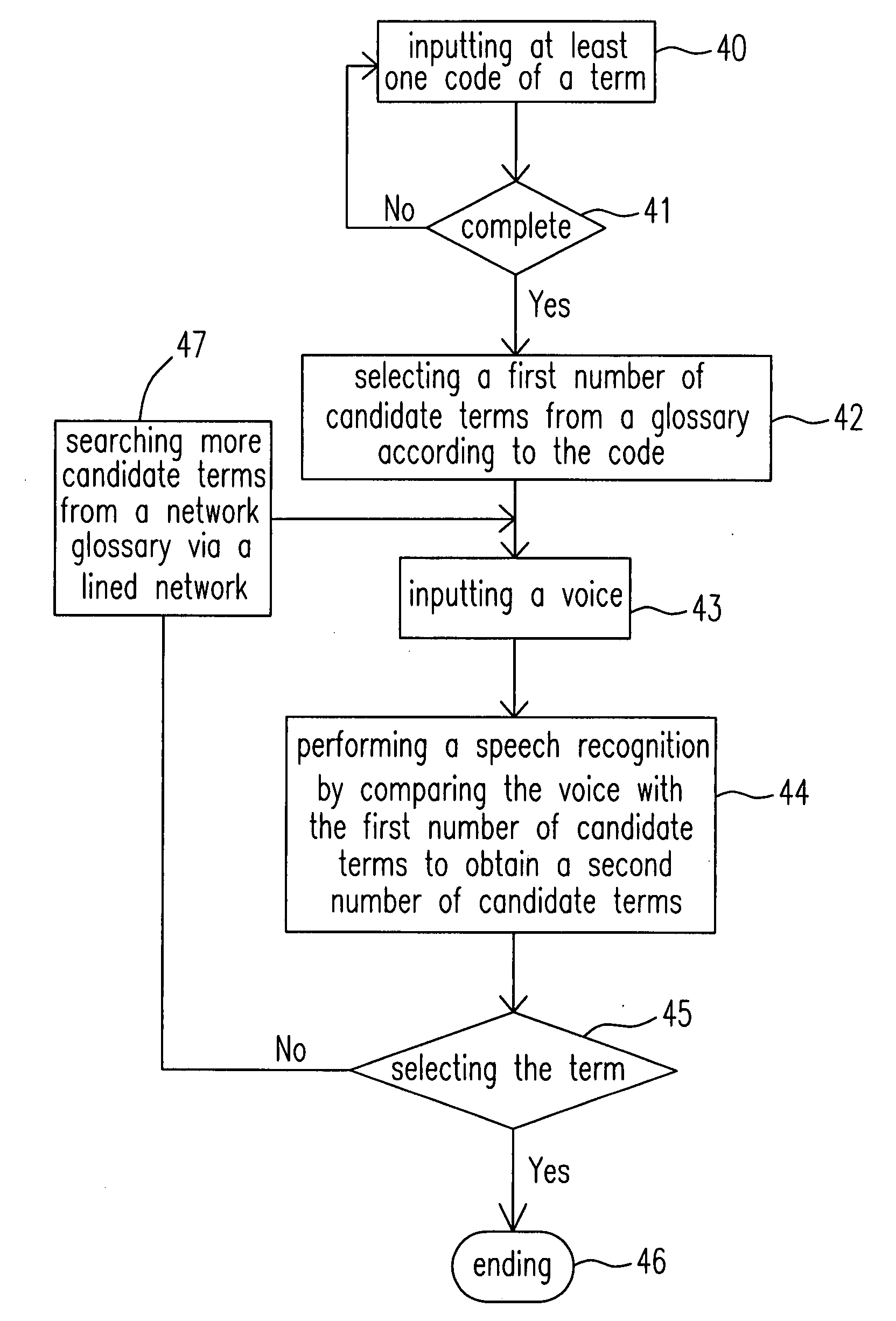

Input system for mobile search and method therefor

InactiveUS20080281582A1Decrease keying numberThe process is convenient and fastDigital data processing detailsNatural language data processingMobile searchProcess module

An input system for mobile search and a method therefor are provided. The input system includes an input module receiving a code input for a specific term and a voice input corresponding thereto, a database including a glossary and an acoustic model, wherein the glossary includes a plurality of terms and a sequence list, and each of the terms has a search weight based on an order of the sequence list, a process module selecting a first number of candidate terms from the glossary according to the code input by using an input algorithm and obtaining a second number of candidate terms by using a speech recognition algorithm to compare the voice input with the first number of candidate terms via the acoustic model, wherein the second number of candidate terms are listed in a particular order based on their respective search weights, and an output module showing the second number of candidate terms in the particular order for selecting the specific term therefrom.

Owner:DELTA ELECTRONICS INC

Document transcription system training

ActiveUS20060041427A1Accurate representationBetter acoustic modelSpeech recognitionSpoken languageAcoustic model

A system is provided for training an acoustic model for use in speech recognition. In particular, such a system may be used to perform training based on a spoken audio stream and a non-literal transcript of the spoken audio stream. Such a system may identify text in the non-literal transcript which represents concepts having multiple spoken forms. The system may attempt to identify the actual spoken form in the audio stream which produced the corresponding text in the non-literal transcript, and thereby produce a revised transcript which more accurately represents the spoken audio stream. The revised, and more accurate, transcript may be used to train the acoustic model, thereby producing a better acoustic model than that which would be produced using conventional techniques, which perform training based directly on the original non-literal transcript.

Owner:3M INNOVATIVE PROPERTIES CO

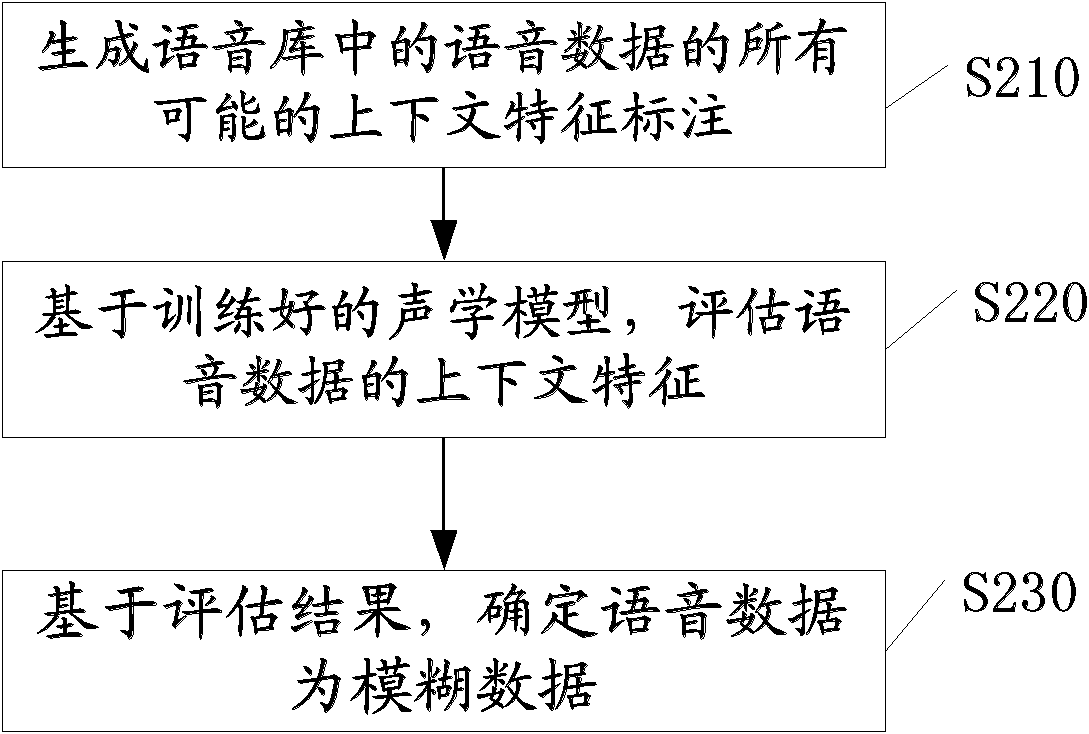

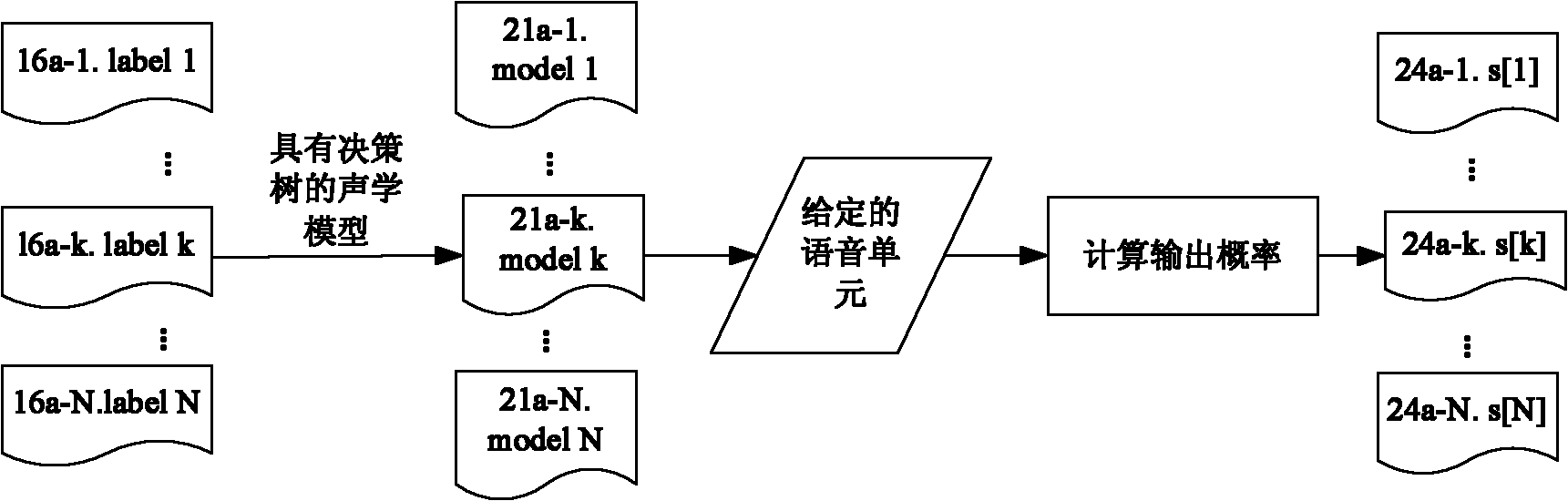

Method and equipment for voice synthesis and method for training acoustic model used in voice synthesis

The invention relates to a method and equipment for voice synthesis and a method for training an acoustic model used in voice synthesis. The method for voice synthesis includes the steps as follows: confirming that data generated by text analysis is fuzzy polyphone data; and performing fuzzy polyphone prediction for the fuzzy polyphone data, so as to output a plurality of candidate pronunciations and the probability thereof; generating the fuzzy context characteristic tagging based on the candidate pronunciations and the probability thereof; based on the acoustical model provided with a fuzzy decision tree, confirming model parameters direct at the fuzzy context characteristic tagging; generating voice parameters based on the model parameters; and synthesizing voice through the voice parameters. As per the method and equipment provided by the embodiment of the invention, the fuzzy treatment can be performed for polyphone words difficult for prediction in a Chinese text, so as to improve the synthesis quality of Chinese polyphones.

Owner:KK TOSHIBA

Data process unit and data process unit control program

InactiveUS20050075875A1Maintain relationshipProcess is performedCharacter and pattern recognitionSpeech recognitionData controlAcoustic model

To provide a data process unit and data process unit control program which are suitable for generating acoustic models for unspecified speakers taking distribution of diversifying feature parameters into consideration under such specific conditions as the type of speaker, speech lexicons, speech styles, and speech environment and which are suitable for providing acoustic models intended for unspecified speakers and adapted to speech of a specific person. A data process unit 1 comprises a data classification section 1a, data storing section 1b, pattern model generating section 1c, data control section 1d, mathematical distance calculating section 1e, pattern model converting section 1f, pattern model display section 1g, region dividing section 1h, division changing section 1i, region selecting section 1j, and specific pattern model generating section 1k.

Owner:ASAHI KASEI KK

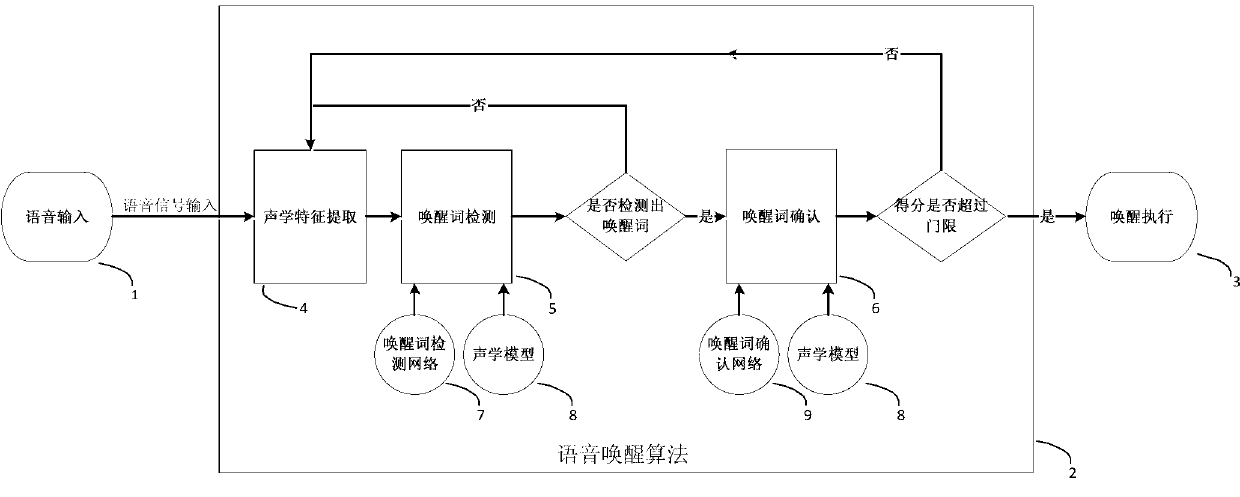

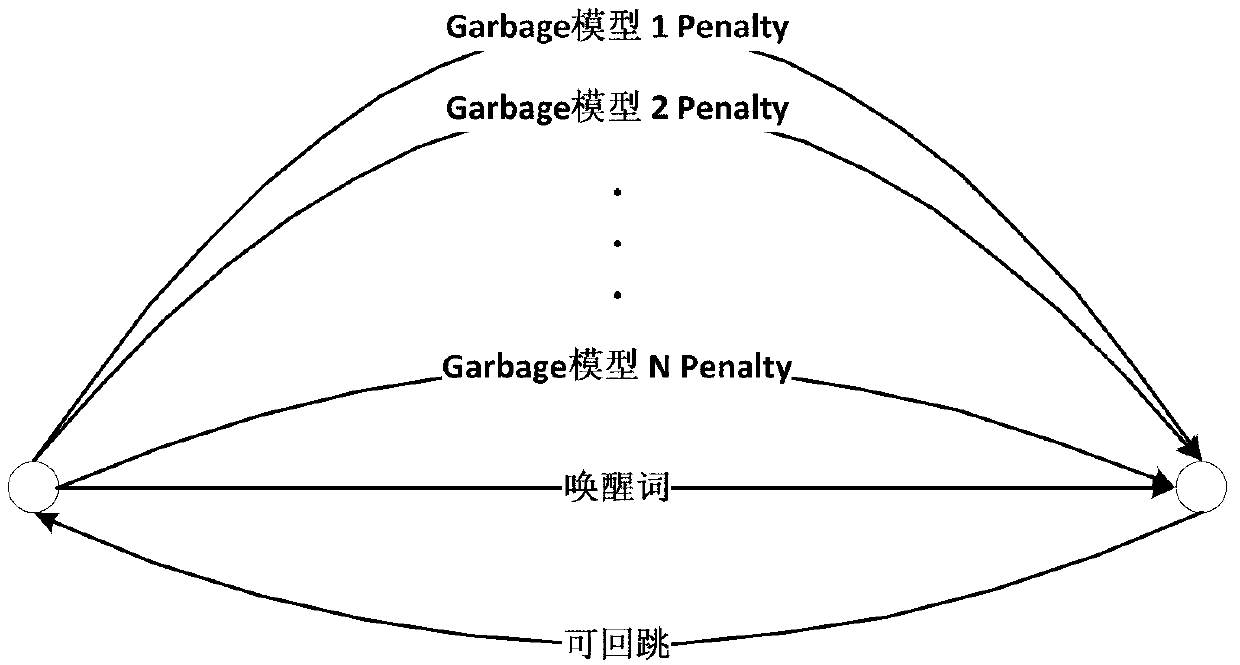

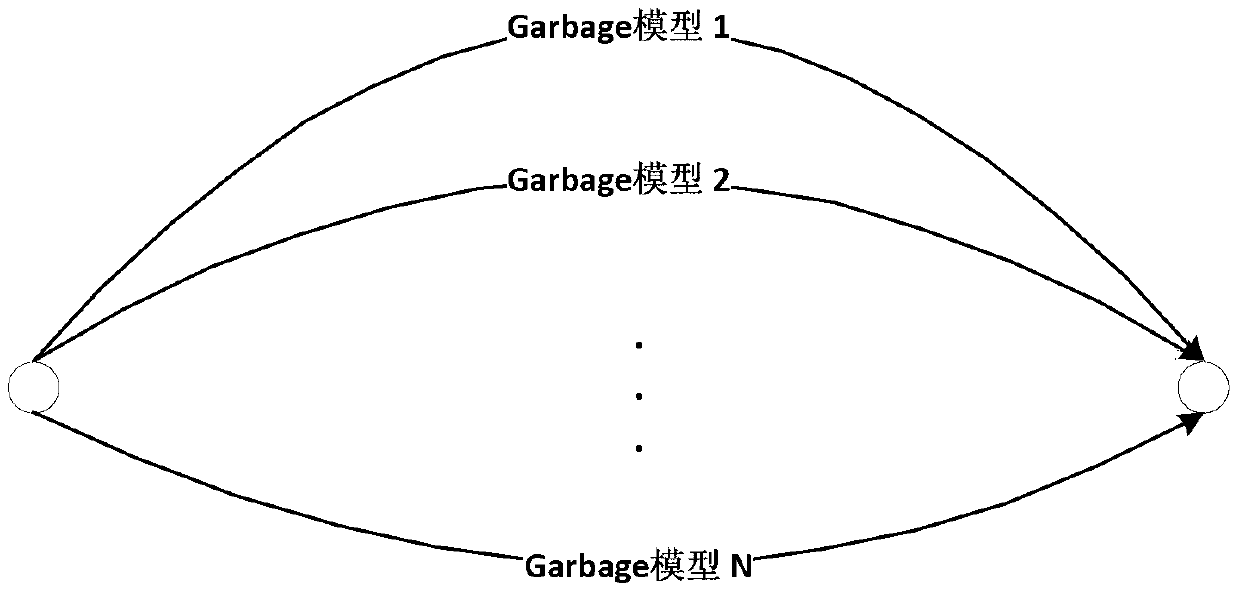

Implementation method and application of voice awakening module

ActiveCN102999161AVoice wake-up effect is goodQuick realization of voice wake-up functionInput/output for user-computer interactionSound input/outputFeature extractionAcoustic model

The invention discloses an implementation method and application of a voice awakening module. The implementation method comprises the following steps of: voice input (1), voice awakening algorithm (2) and awakening actuation (3), wherein the voice awakening algorithm (2) is implemented through the following main steps of: acoustic feature extraction (4), awakening word detection (5), awakening word confirmation (6), construction of an awakening word detection network (7), training of an acoustic model (8) and construction of an awakening word confirming network (9) and the like. The invention has the advantages that even under a noisy environment, no matter whether the music is played, the voice awakening function can be started by the voice awakening word, and the recognition awakening effect is good; and the implementation method can be planted onto an ARM or DSP universal process for operation and is applied in the fields related to vehicle mounting and household appliances.

Owner:IFLYTEK SOUTH CHINA ARTIFICIAL INTELLIGENCE RES INST GUANGZHOU CO LTD

System and method for automating transcription services

A system for substantially automating transcription services for multiple users (10, 11, 12) including a manual transcription station (50), speech recognition program (40) and a routing program (200). A uniquely identified voice dictation file is generated from a user and—based on the training status—routes the voice dictation file to a manual transcription station and speech recognition program. A human transcriptionist creates transcribed files for each voice dictation file. The speech recognition program creates written text for each dictation file if the training status is training or automated. If the training status of the current user is enrollment or training, a verbatim file is manually established and the speech recognition program is trained with an acoustic model using the verbatim and voice dictation files. The transcribed file is returned to the user if the training status is enrollment or training or written text is returned if the status is automated.

Owner:CUSTOM SPEECH USA

Features

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

Why Patsnap Eureka

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Social media

Patsnap Eureka Blog

Learn More Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com