1185 results for "Spoken language" patented technology

A spoken language is a language produced by articulate sounds, as opposed to a written language. Many languages have no written form and so are only spoken. An oral language or vocal language is a language produced with the vocal tract, as opposed to a sign language, which is produced with the hands and face. The term "spoken language" is sometimes used to mean only vocal languages, especially by linguists, making all three terms synonyms by excluding sign languages. Others refer to sign language as "spoken", especially in contrast to written transcriptions of signs.

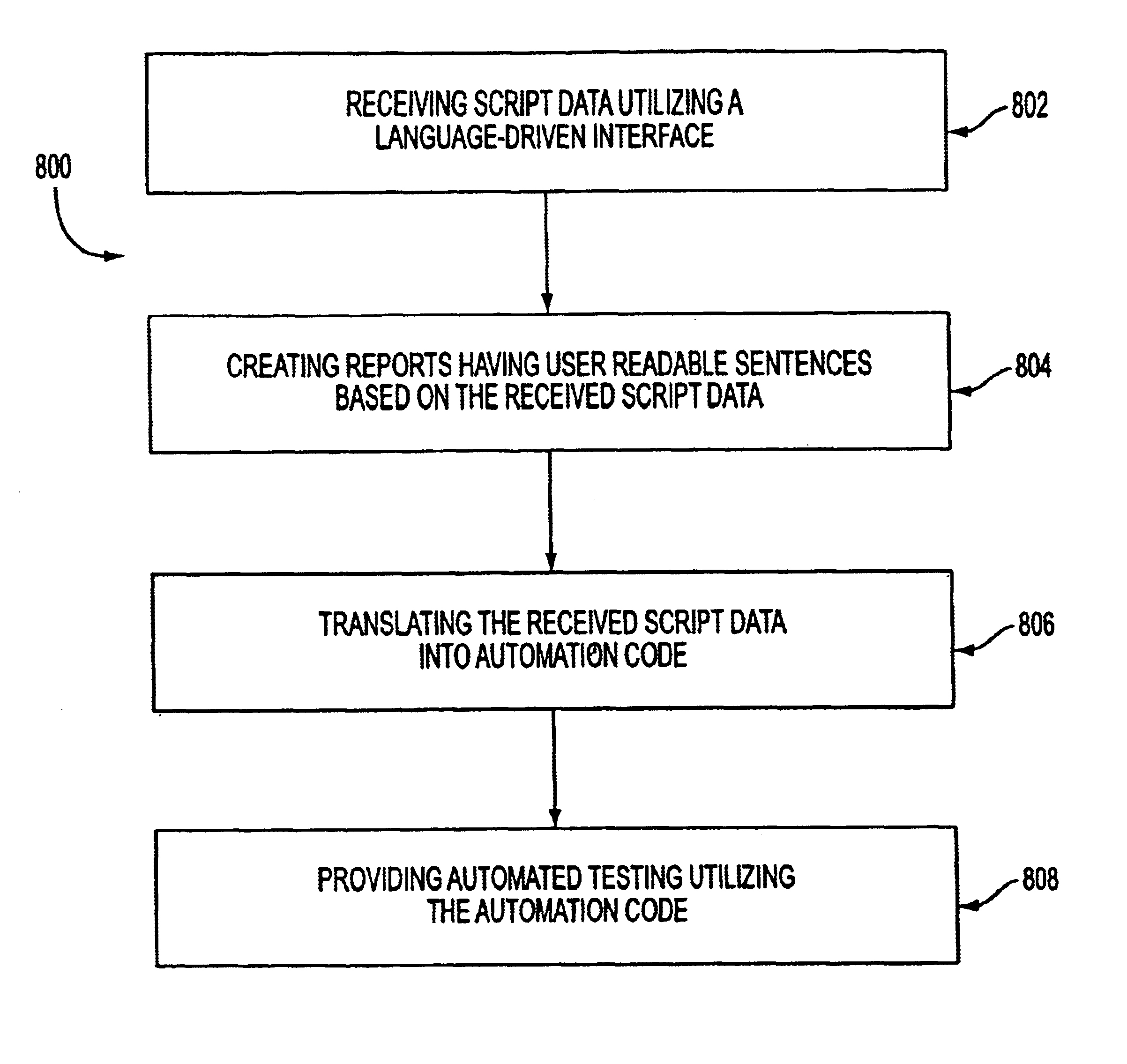

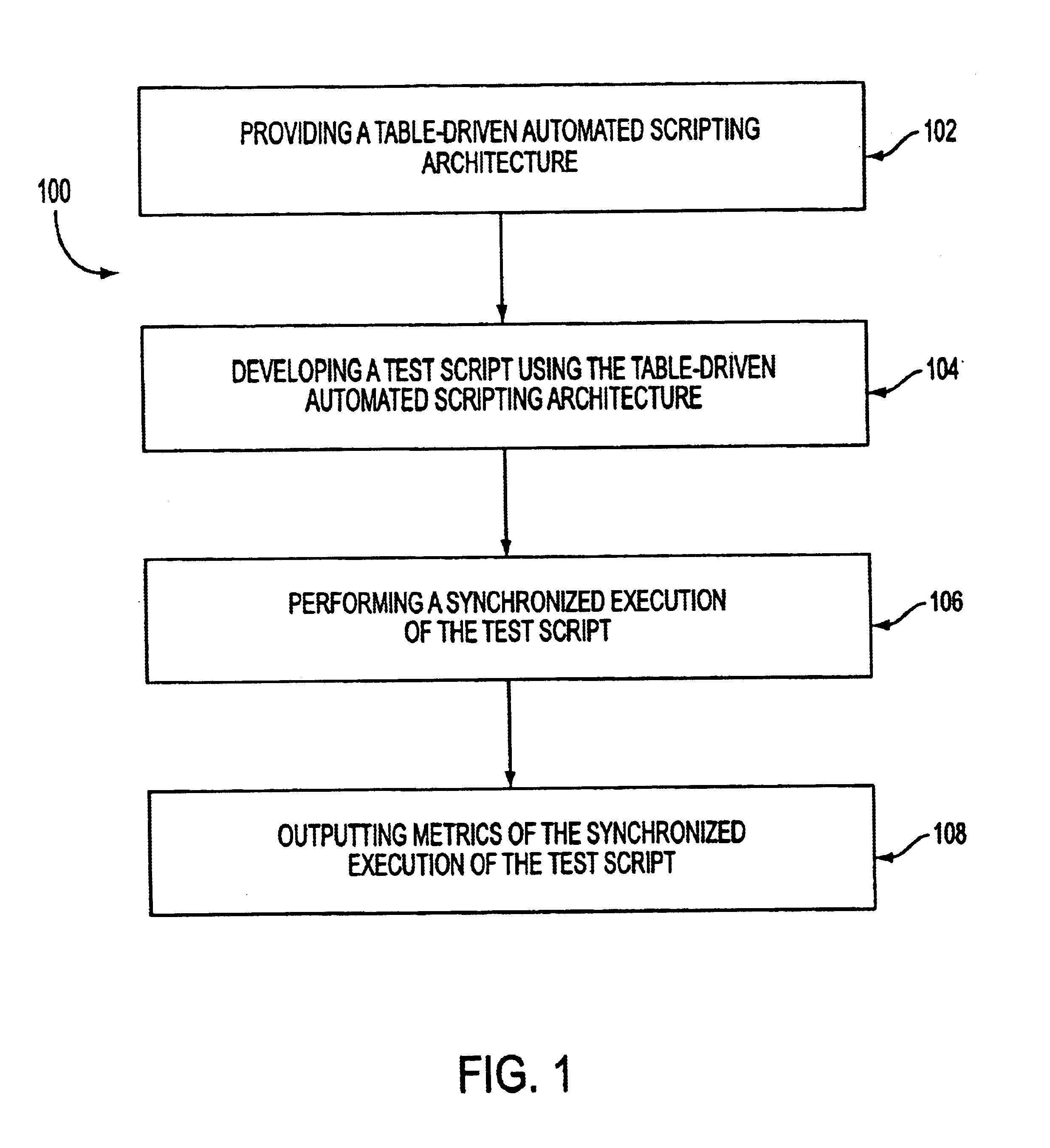

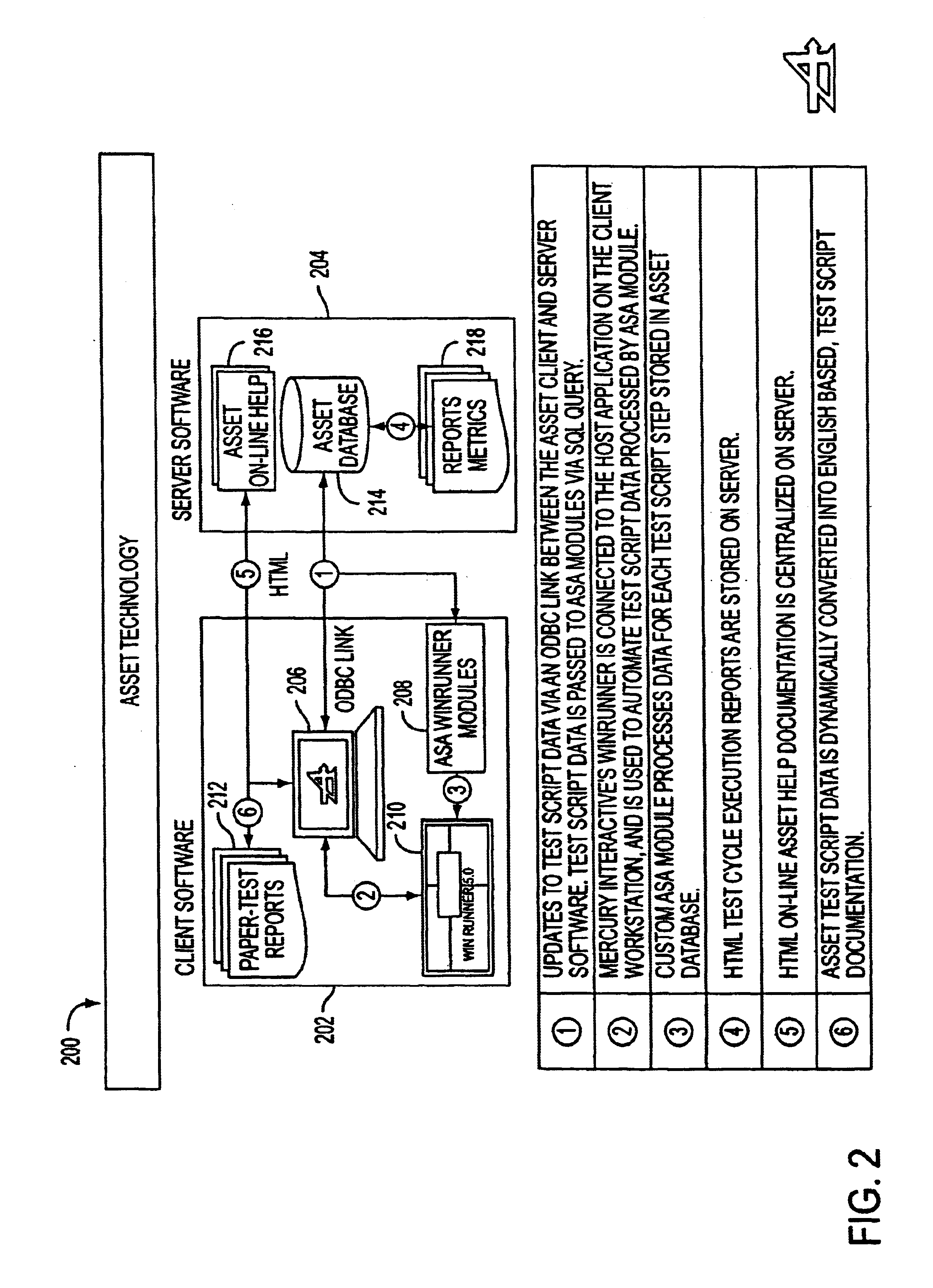

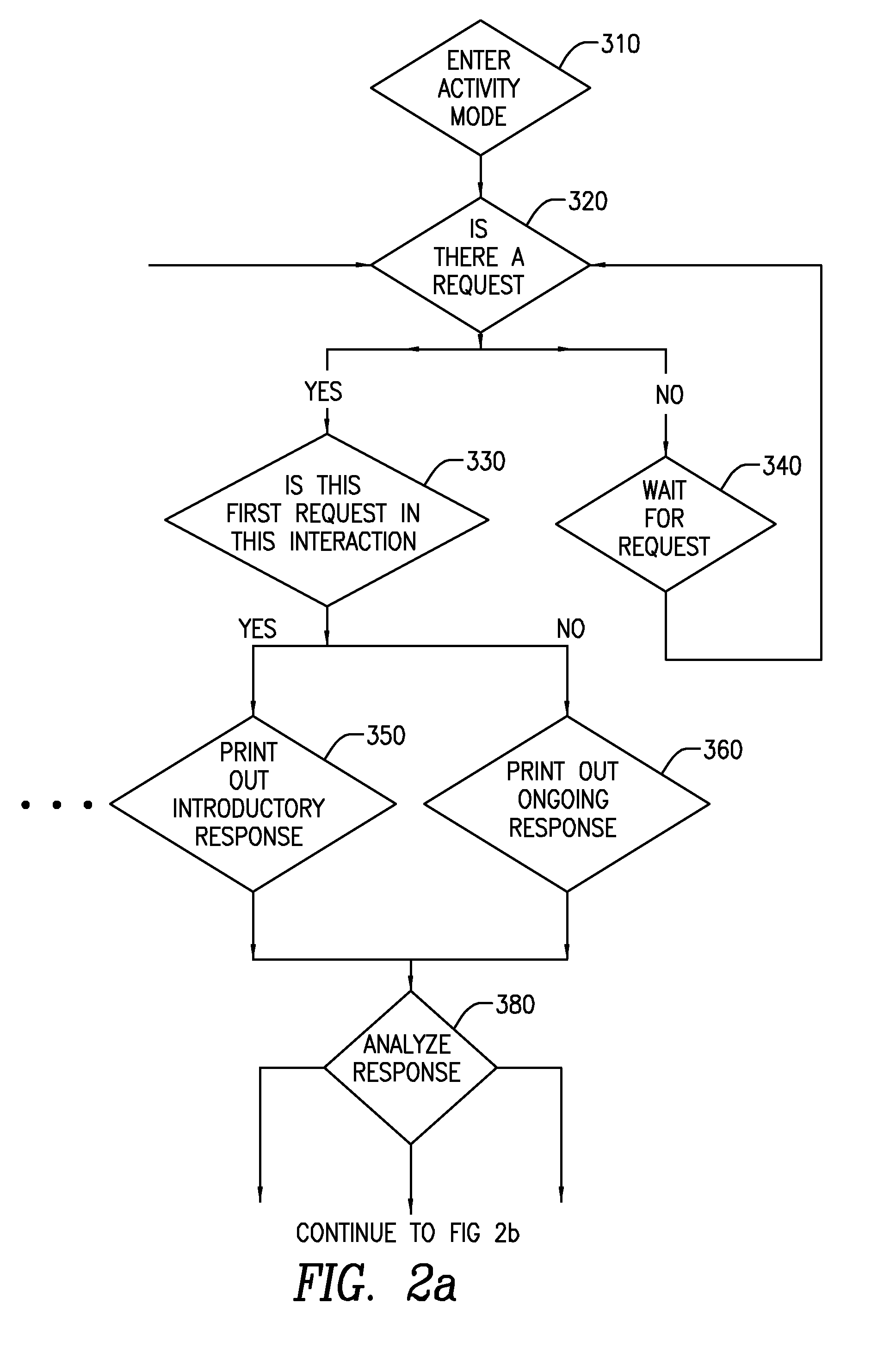

Language-driven interface for an automated testing framework

Inactive · US6907546B1 · Software testing/debugging, Specific program execution arrangements, Crowds, Automatic testing

To test the functionality of a computer system, automated testing may use an automation testing tool that emulates user interactions. A database may store words, each having a colloquial meaning that is understood by the general population. For each word, the database may store associated computer instructions that, when executed, cause a computer to perform the function related to the word's meaning. During testing, the tool may receive such a word, query the database for it, and obtain the associated set of computer instructions. The automated testing tool may then perform the function corresponding to the colloquial meaning of the word. The words stored in the database may be in English or another language.

Owner:ACCENTURE GLOBAL SERVICES LTD
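
The word-to-instructions lookup described above resembles keyword-driven testing. The sketch below is a minimal illustration under stated assumptions: the keywords `login`/`logout`, the helpers `click` and `type_text`, and the dictionary-based "database" are all hypothetical stand-ins, not the patented tool.

```python
from typing import Callable, Dict, List

# A step is any callable that acts on the (simulated) application state.
Action = Callable[[dict], None]


def click(target: str) -> Action:
    """Hypothetical emulated user action: click a control."""
    def run(state: dict) -> None:
        state.setdefault("log", []).append(f"click {target}")
    return run


def type_text(target: str, text: str) -> Action:
    """Hypothetical emulated user action: type text into a field."""
    def run(state: dict) -> None:
        state.setdefault("fields", {})[target] = text
        state.setdefault("log", []).append(f"type {target}")
    return run


# Hypothetical keyword table: each everyday word maps to the instructions
# that carry out its meaning in the application under test.
KEYWORDS: Dict[str, List[Action]] = {
    "login": [type_text("user", "alice"), type_text("password", "secret"), click("submit")],
    "logout": [click("menu"), click("sign out")],
}


def run_step(word: str, state: dict) -> None:
    """Look up a colloquial word and execute its stored instructions in order."""
    actions = KEYWORDS.get(word.strip().lower())
    if actions is None:
        raise KeyError(f"no instructions stored for {word!r}")
    for action in actions:
        action(state)
```

A test script then becomes a list of plain words, for example `for w in ["login", "logout"]: run_step(w, state)`.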

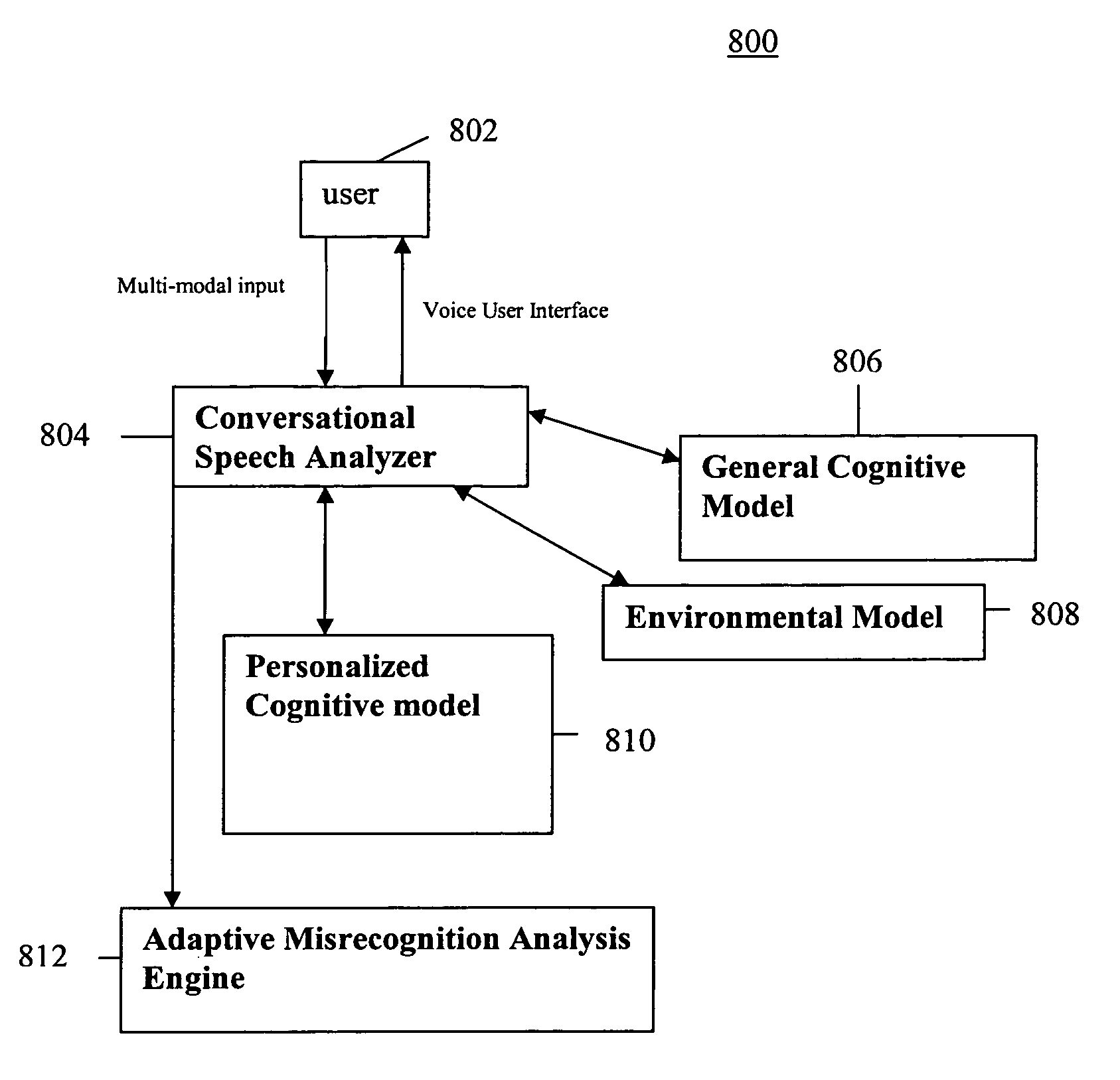

System and method of supporting adaptive misrecognition in conversational speech

Active · US20070038436A1 · Improve maximization, High bandwidth, Natural language data processing, Speech recognition, Personalization, Spoken language

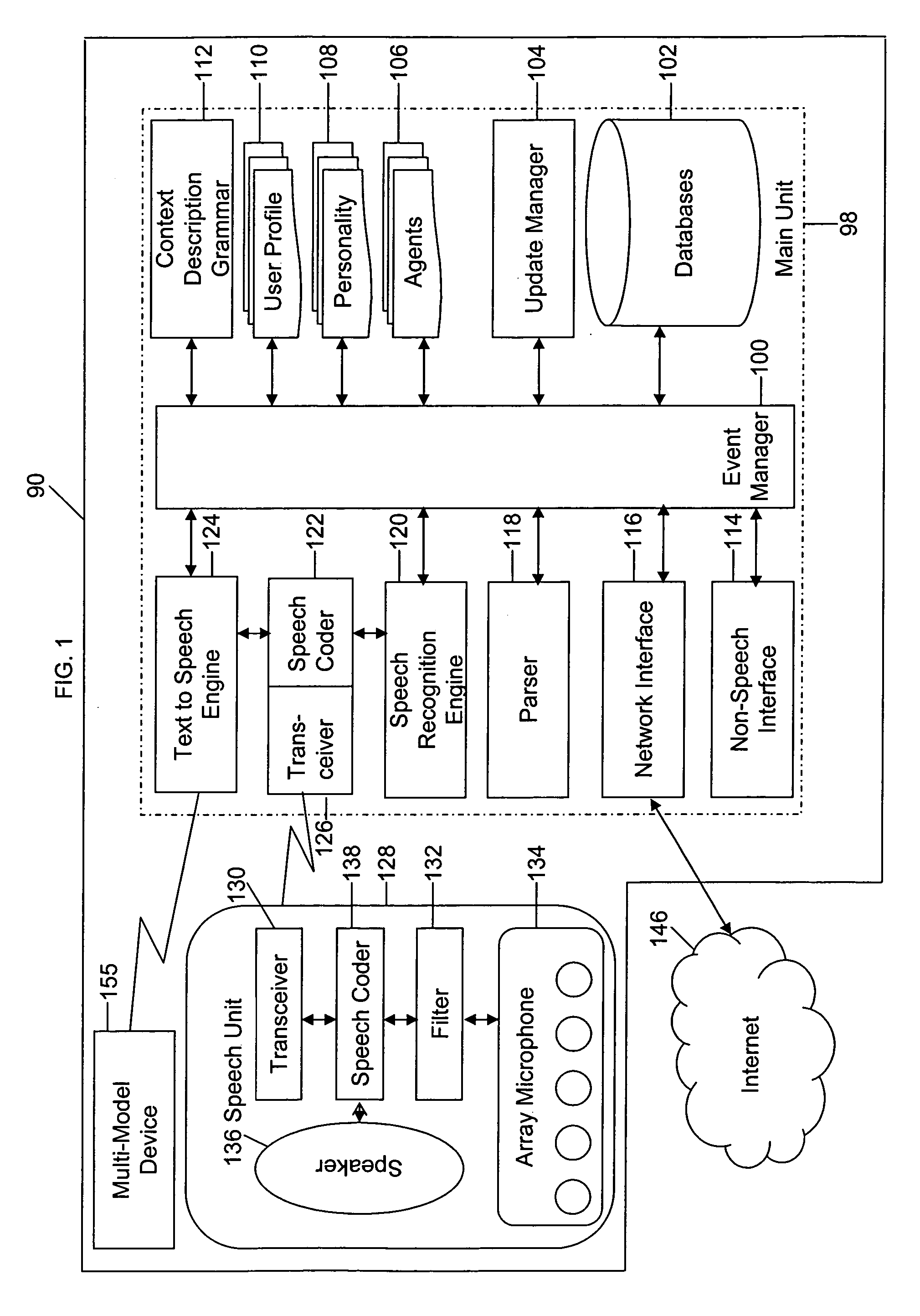

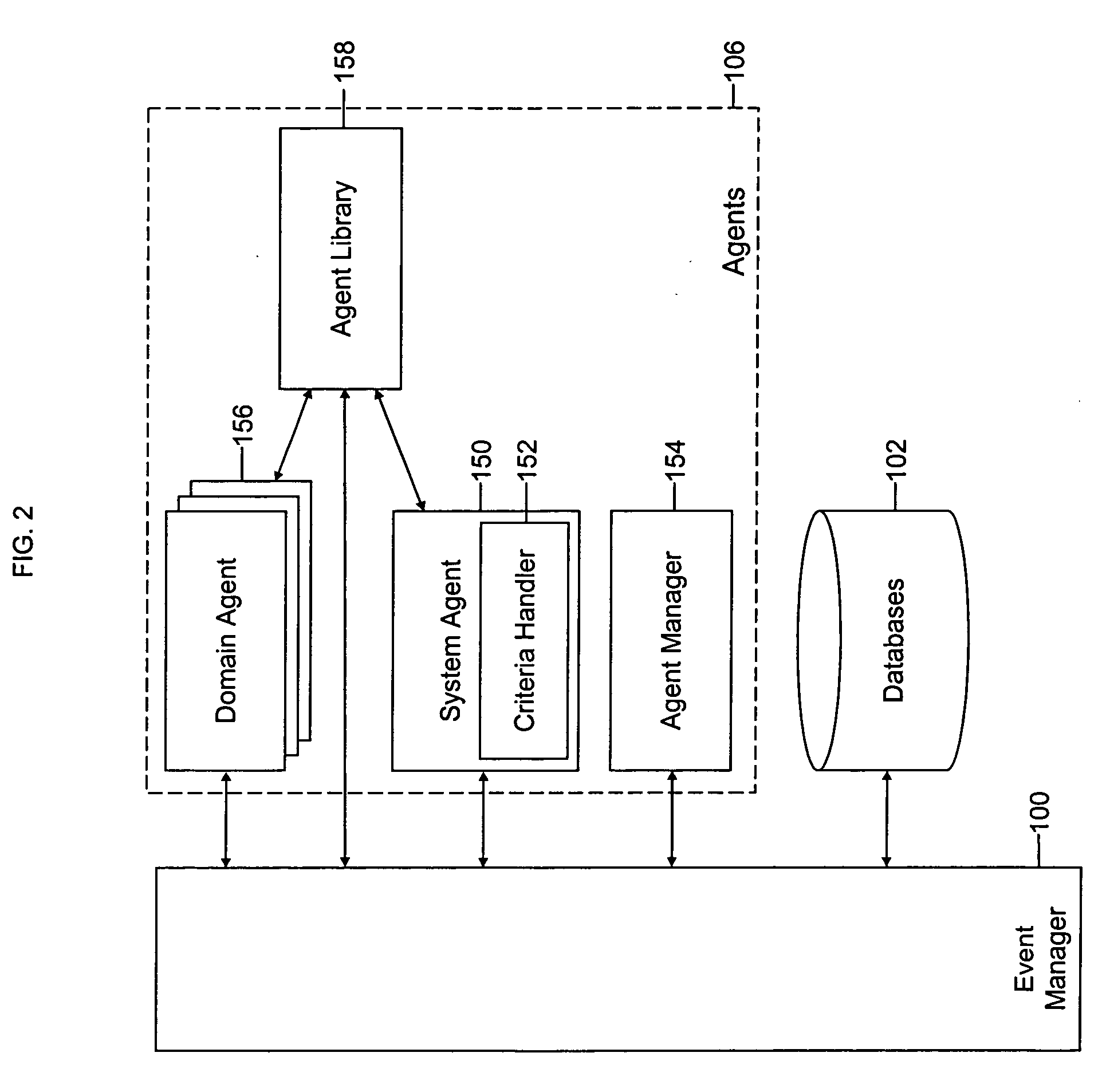

A system and method are provided for receiving speech and/or non-speech communications of natural language questions and/or commands and executing those questions and/or commands. The invention provides a conversational human-machine interface that includes a conversational speech analyzer, a general cognitive model, an environmental model, and a personalized cognitive model to determine context and domain knowledge and to invoke prior information when interpreting a spoken utterance or a received non-spoken message. The system and method create, store, and use extensive personal profile information for each user, thereby improving the reliability of determining the context of the speech or non-speech communication and of presenting the expected results for a particular question or command.

Owner:DIALECT LLC

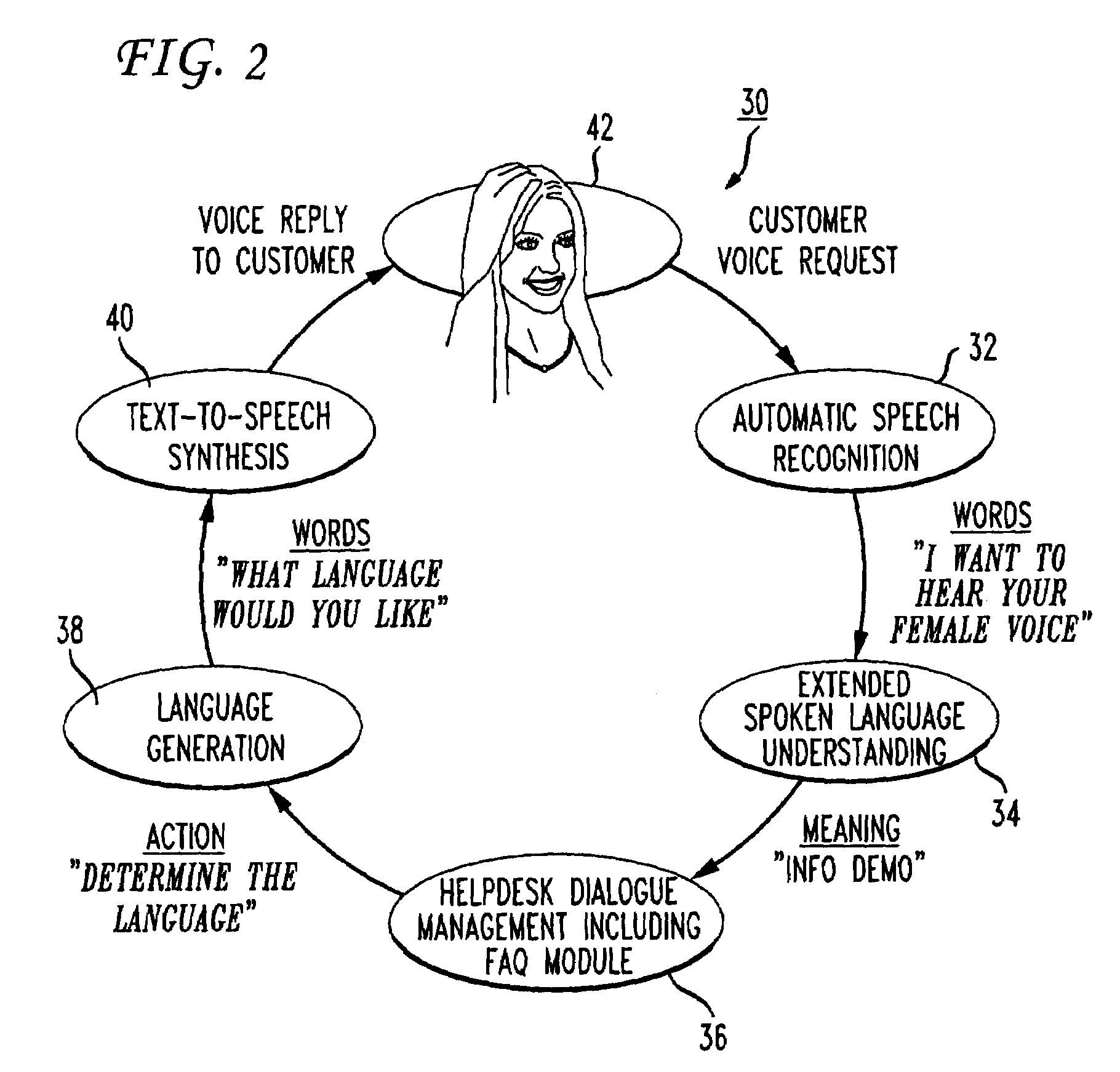

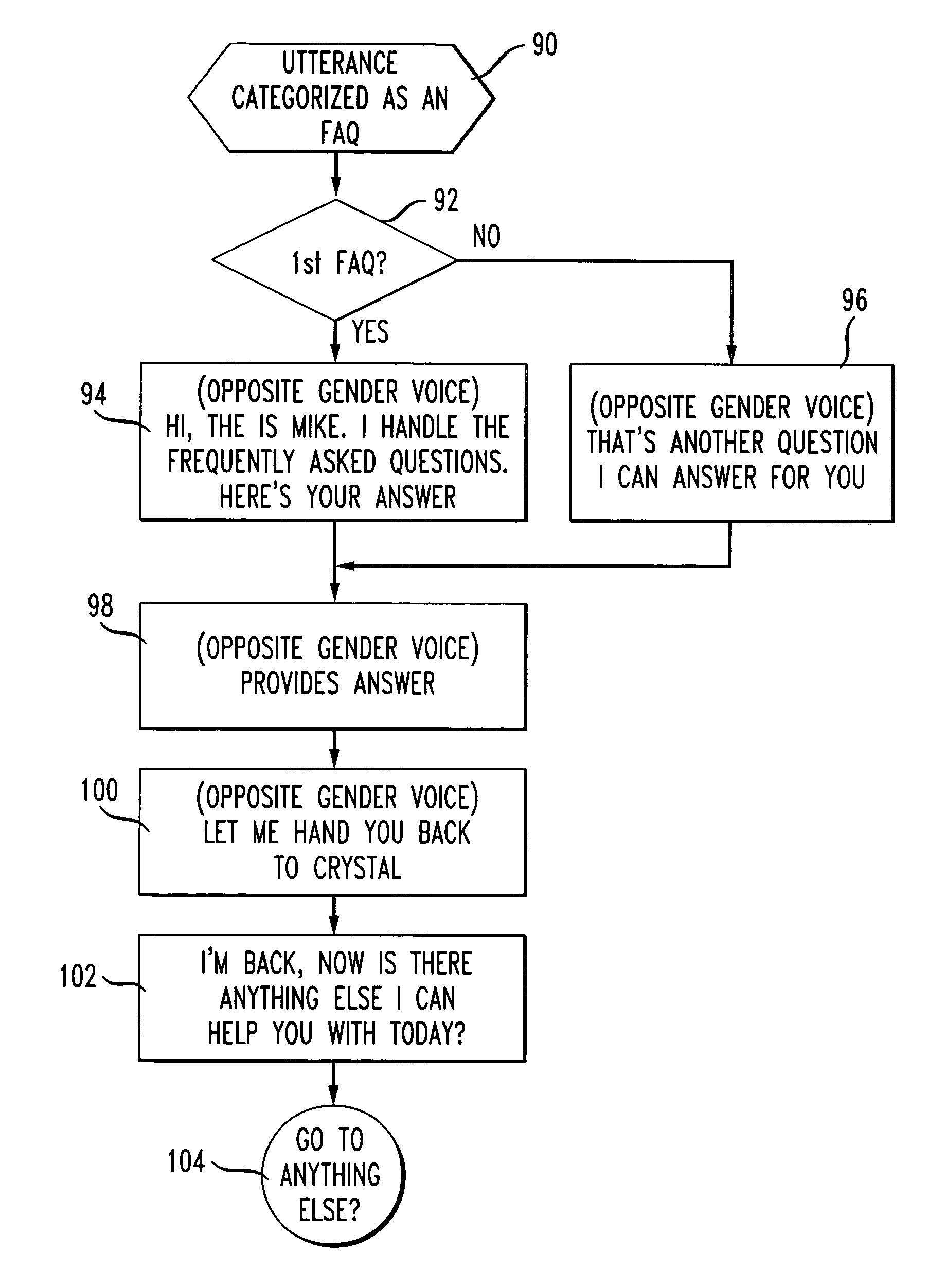

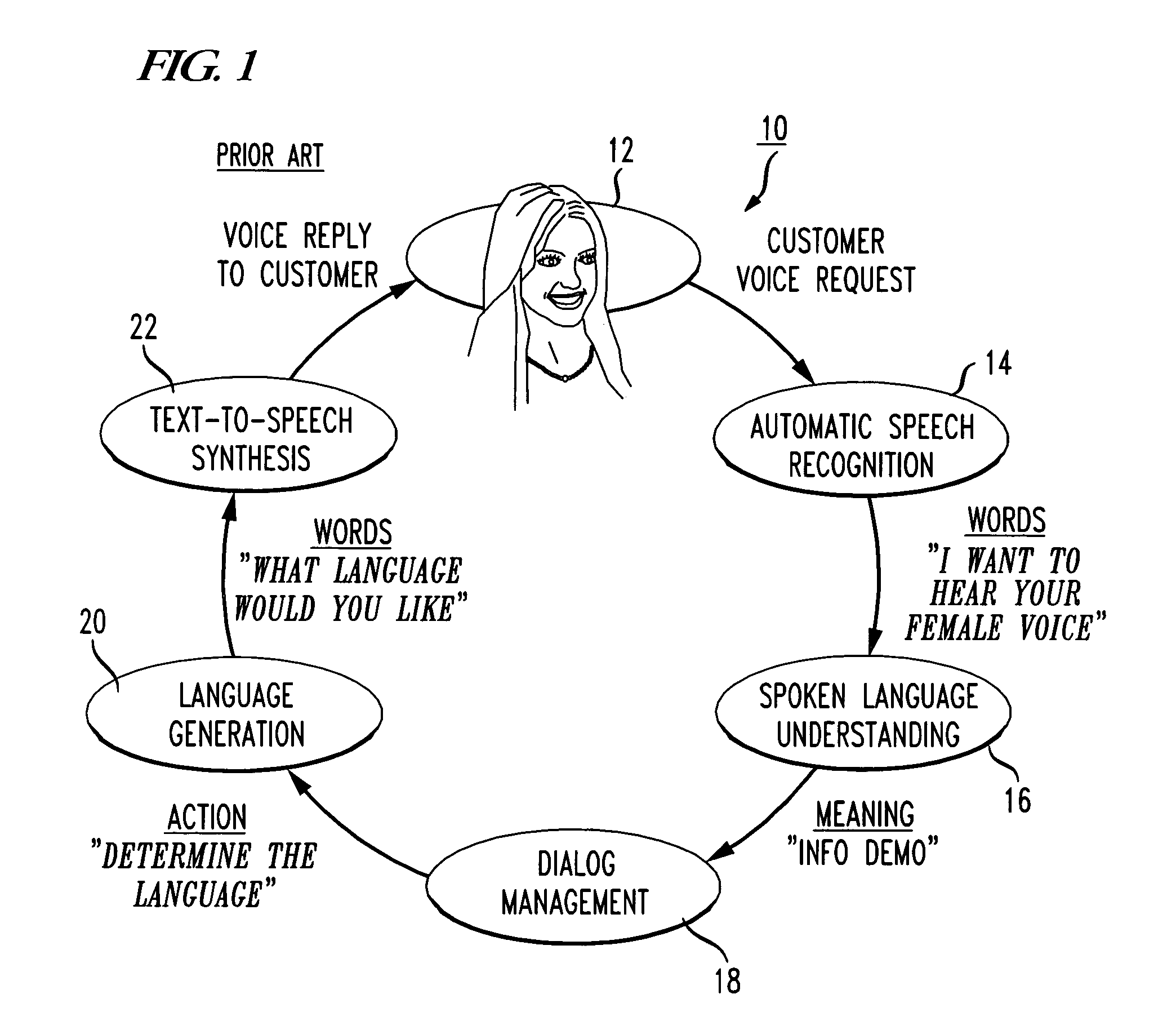

System for handling frequently asked questions in a natural language dialog service

Inactive · US7197460B1 · Improve customer relationship, Efficient manner, Speech recognition, Input/output processes for data processing, Spoken language, Dialog management

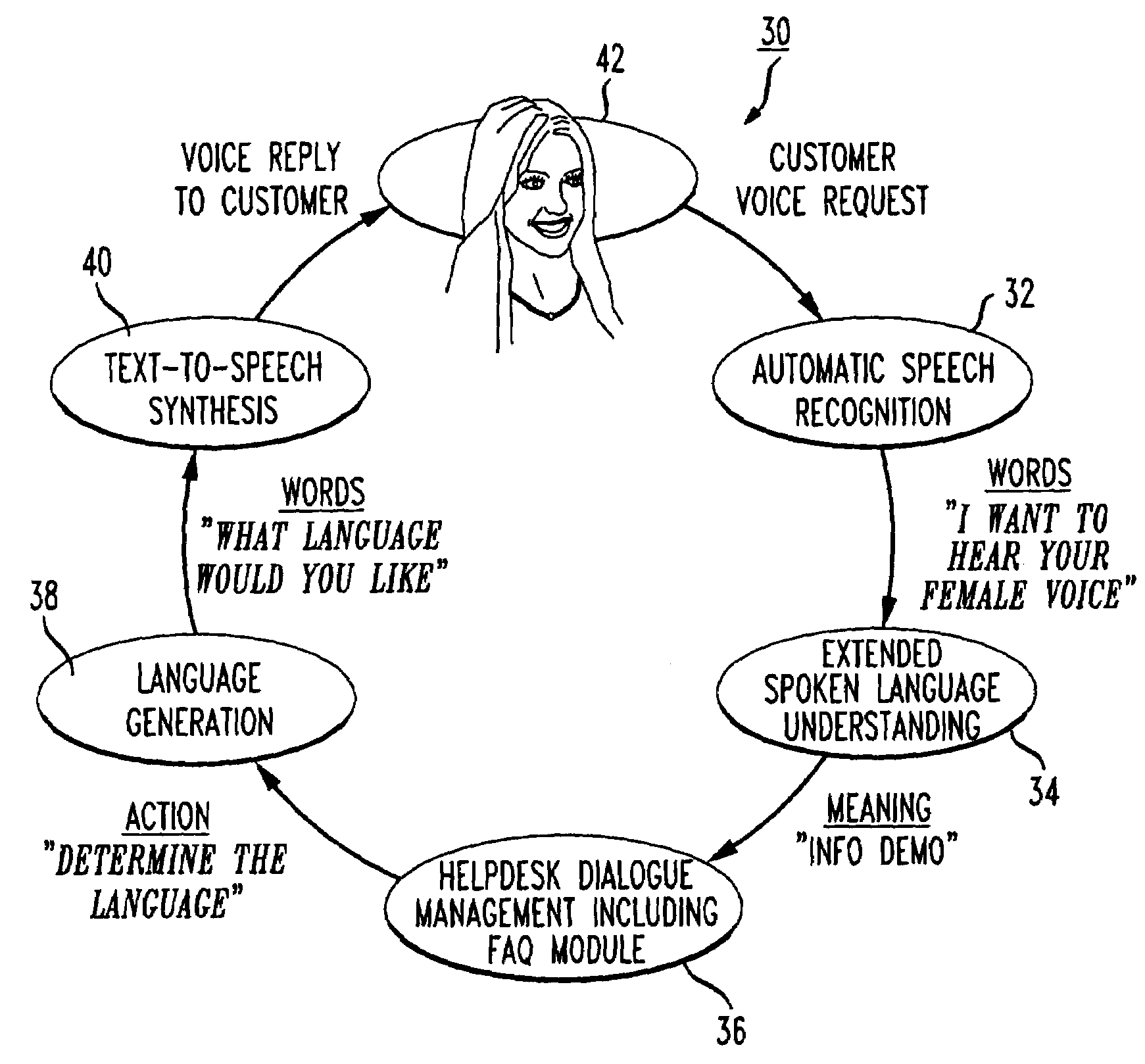

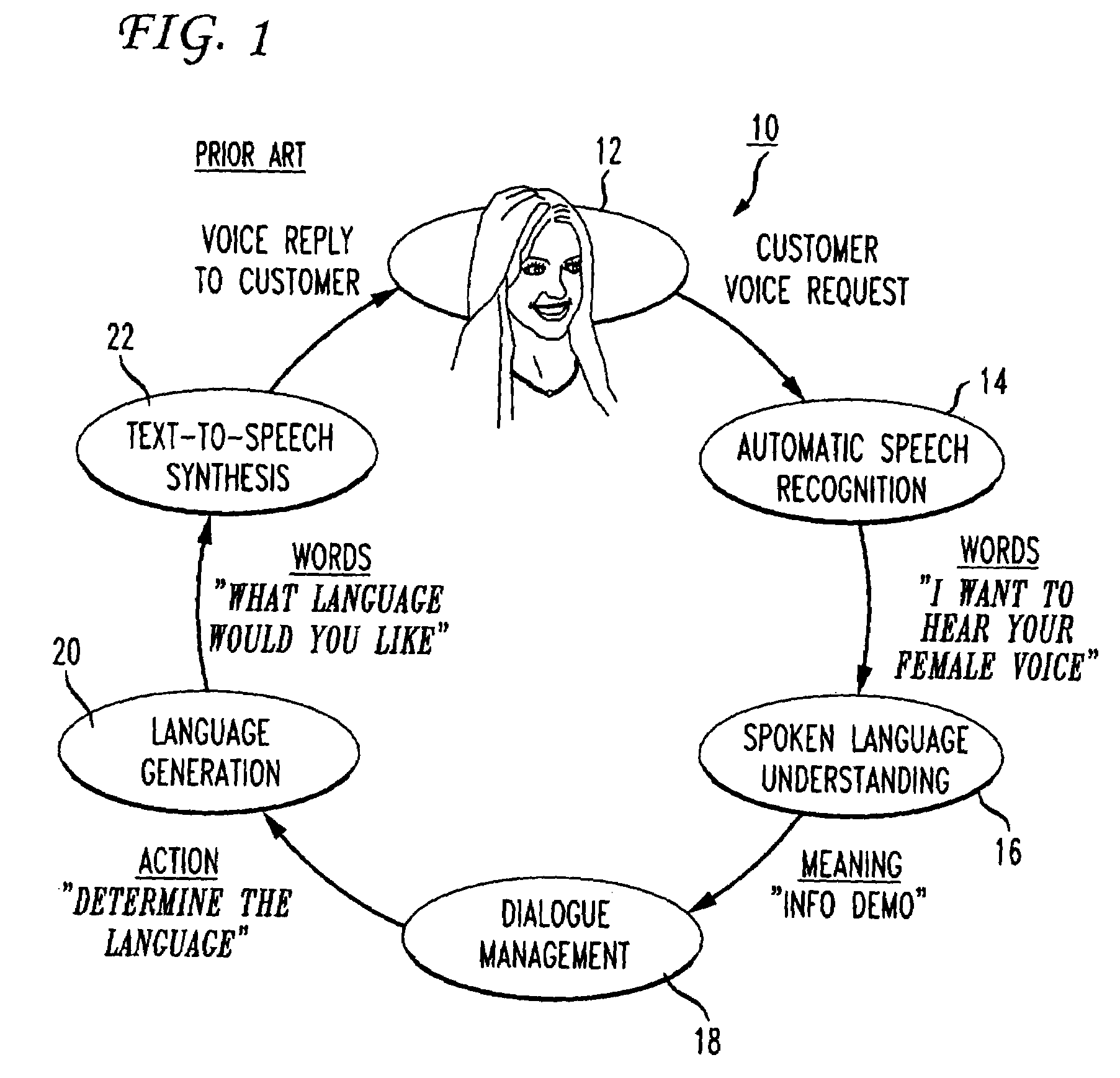

A voice-enabled help desk service is disclosed. The service comprises an automatic speech recognition module for recognizing speech from a user, a spoken language understanding module for understanding the output from the automatic speech recognition module, a dialog management module for generating a response to speech from the user, a natural voices text-to-speech synthesis module for synthesizing speech to generate the response to the user, and a frequently asked questions module. The frequently asked questions module handles frequently asked questions from the user by changing voices and providing predetermined prompts to answer the frequently asked question.

Owner:NUANCE COMM INC

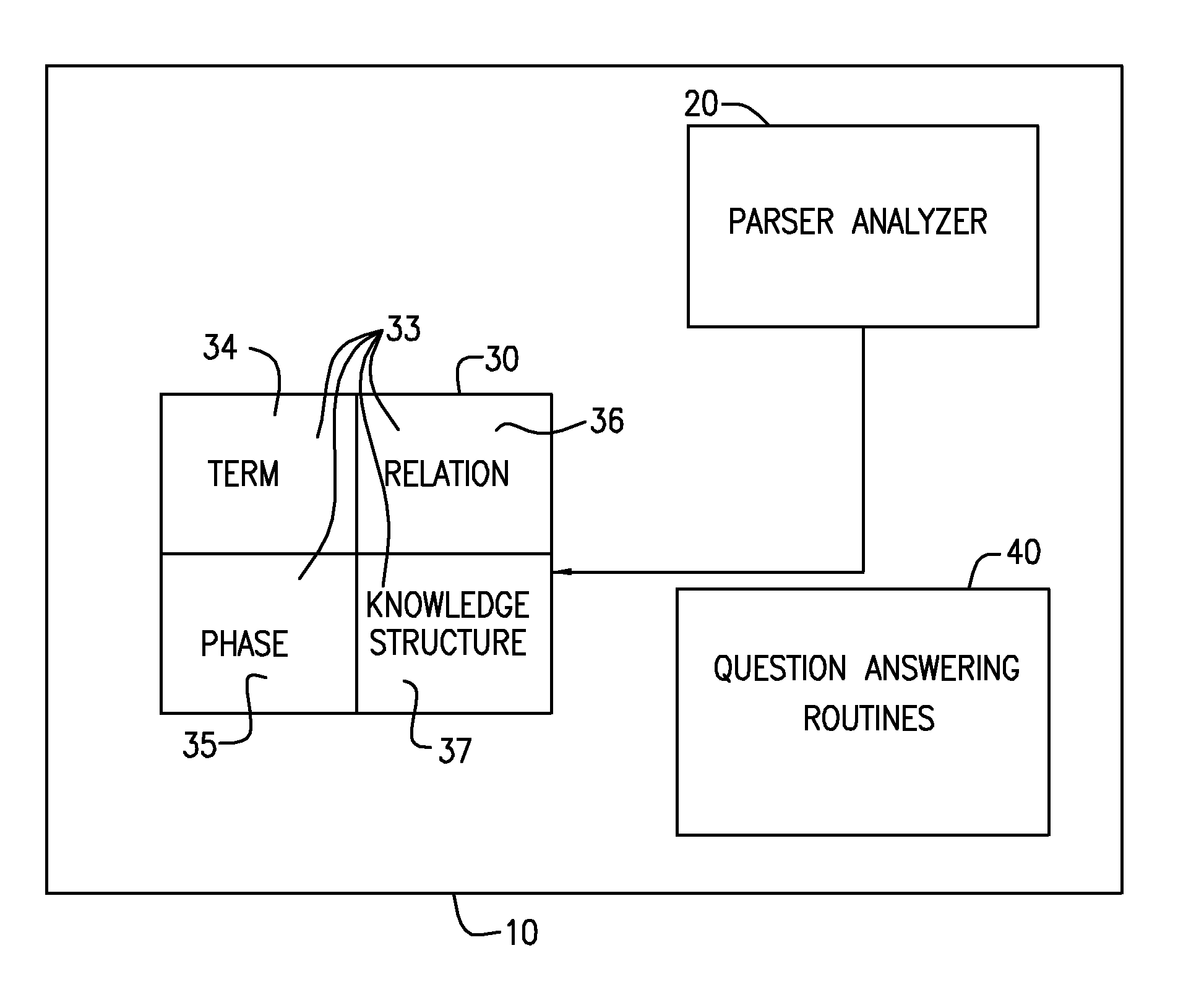

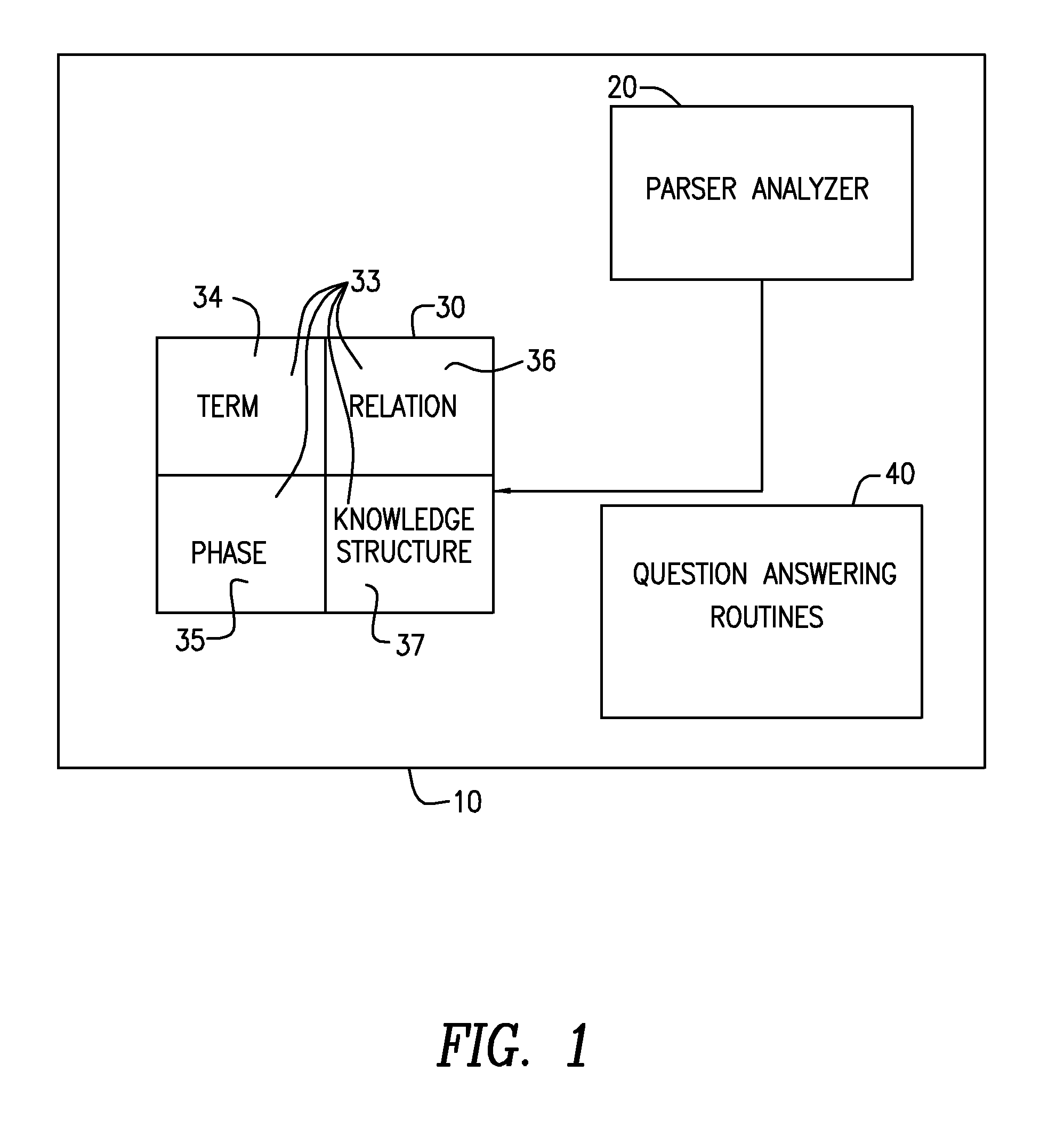

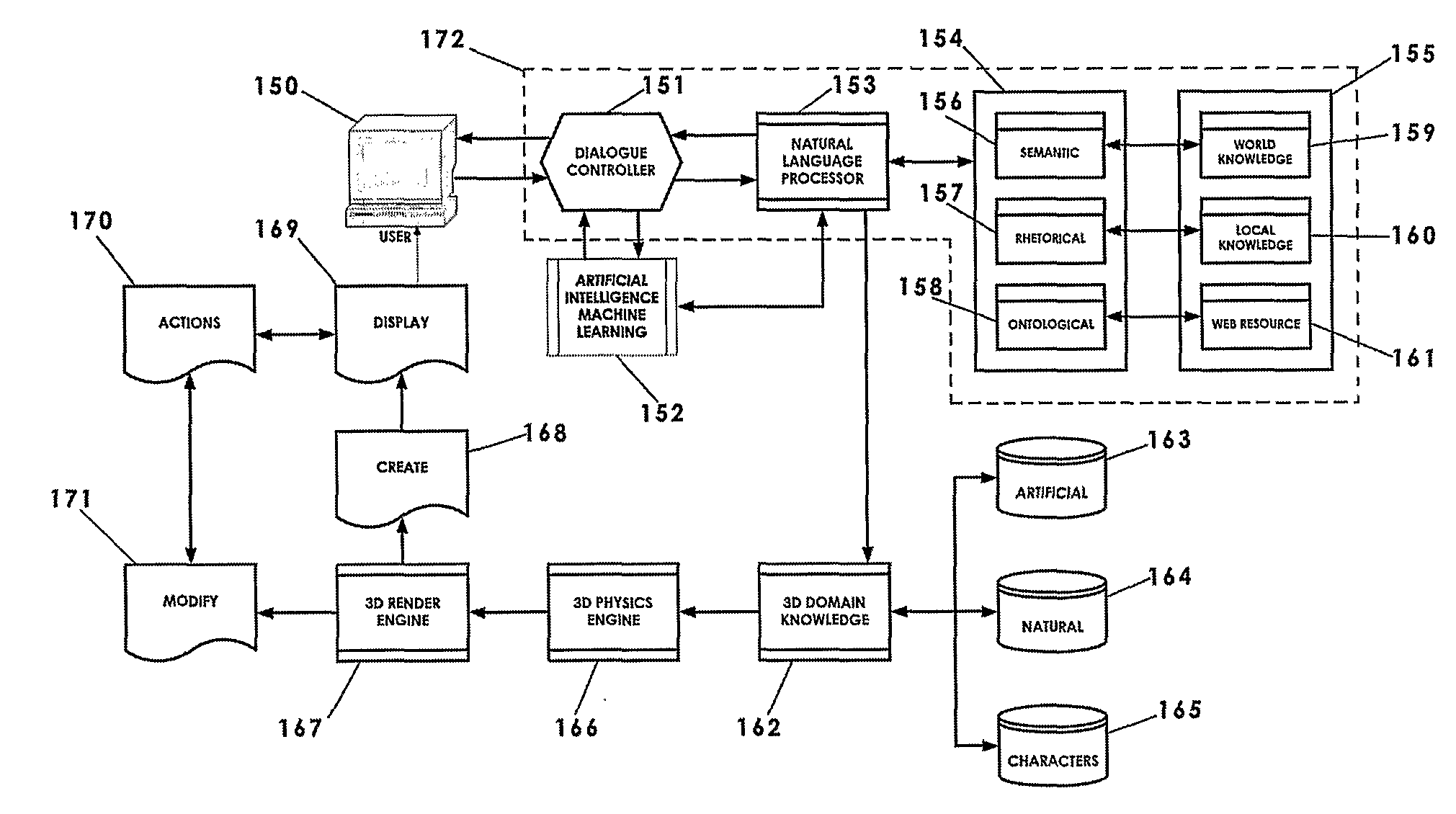

Intelligent home automation

Inactive · US20100332235A1 · Electric signal transmission systems, Multiple keys/algorithms usage, Spoken language, The Internet

An intelligent home automation system answers questions from a user in the home who speaks in “natural language”. The system is connected to, and may carry out the user's commands to control, any circuit, object, or system in the home. The system can answer questions by accessing the Internet. Using a transducer that “hears” human pulses, the system may be able to identify, announce, and keep track of anyone entering or staying in the home or participating in a conversation, including announcing their identity in advance. The system may interrupt a conversation to carry out specific commands and resume the conversation afterward. The system may have extensible memory structures for terms, phrases, relations, and knowledge; question-answering routines; and a parser analyzer that uses transformational grammar and a modified three-hypothesis analysis. The parser analyzer can remain dormant unless spoken to. The system has emergency modes for prioritizing commands.

Owner:DAVID ABRAHAM BEN

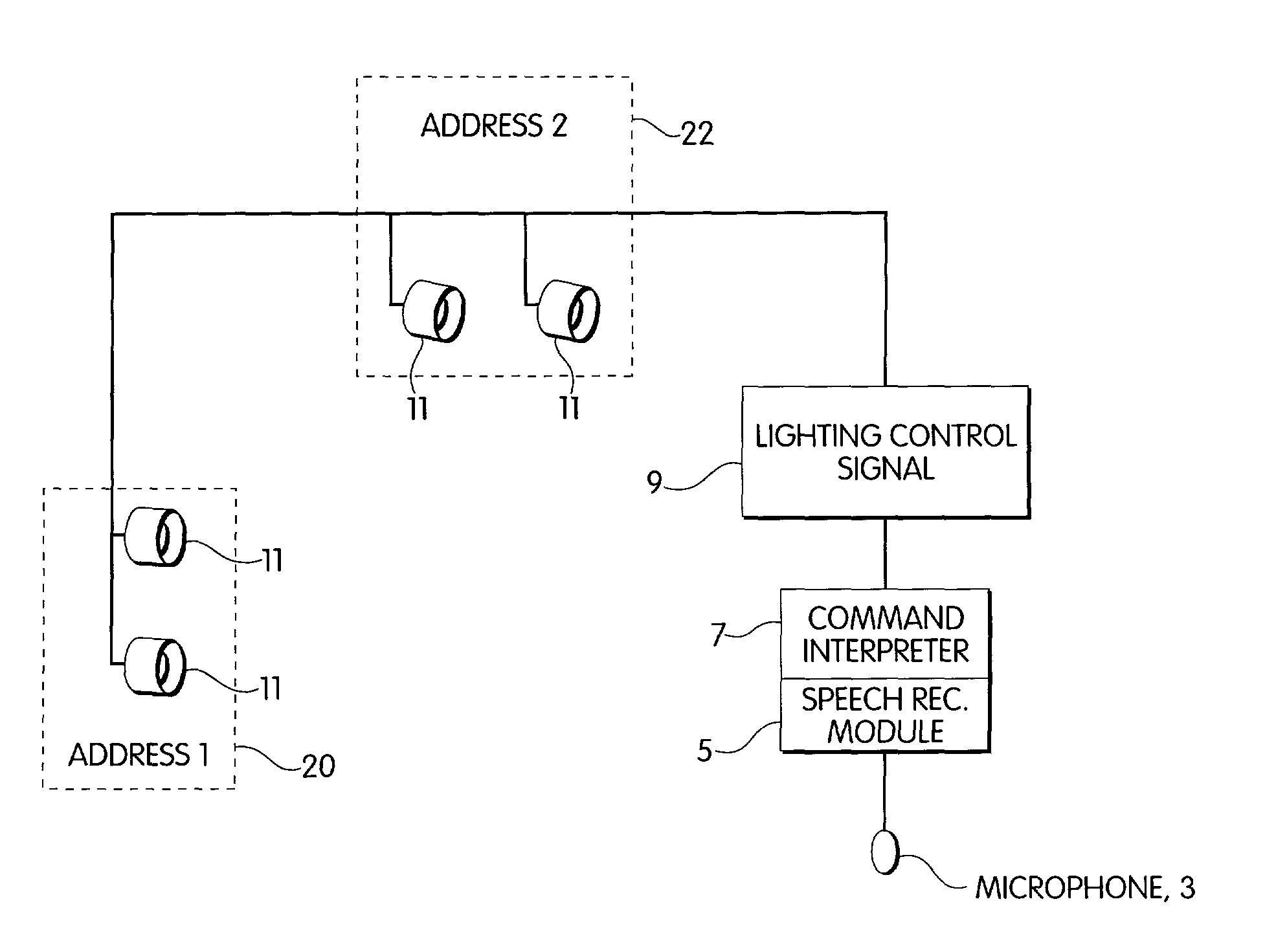

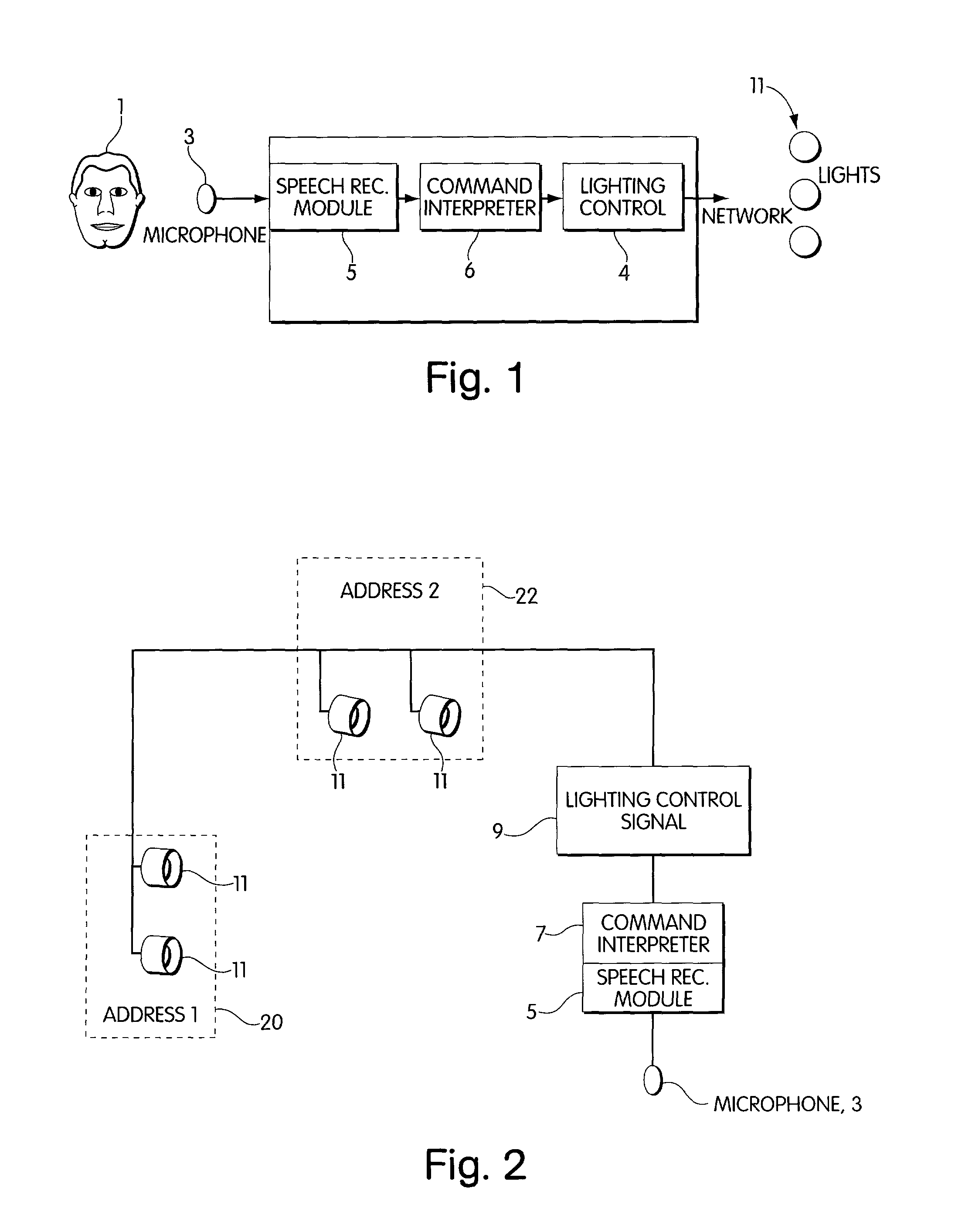

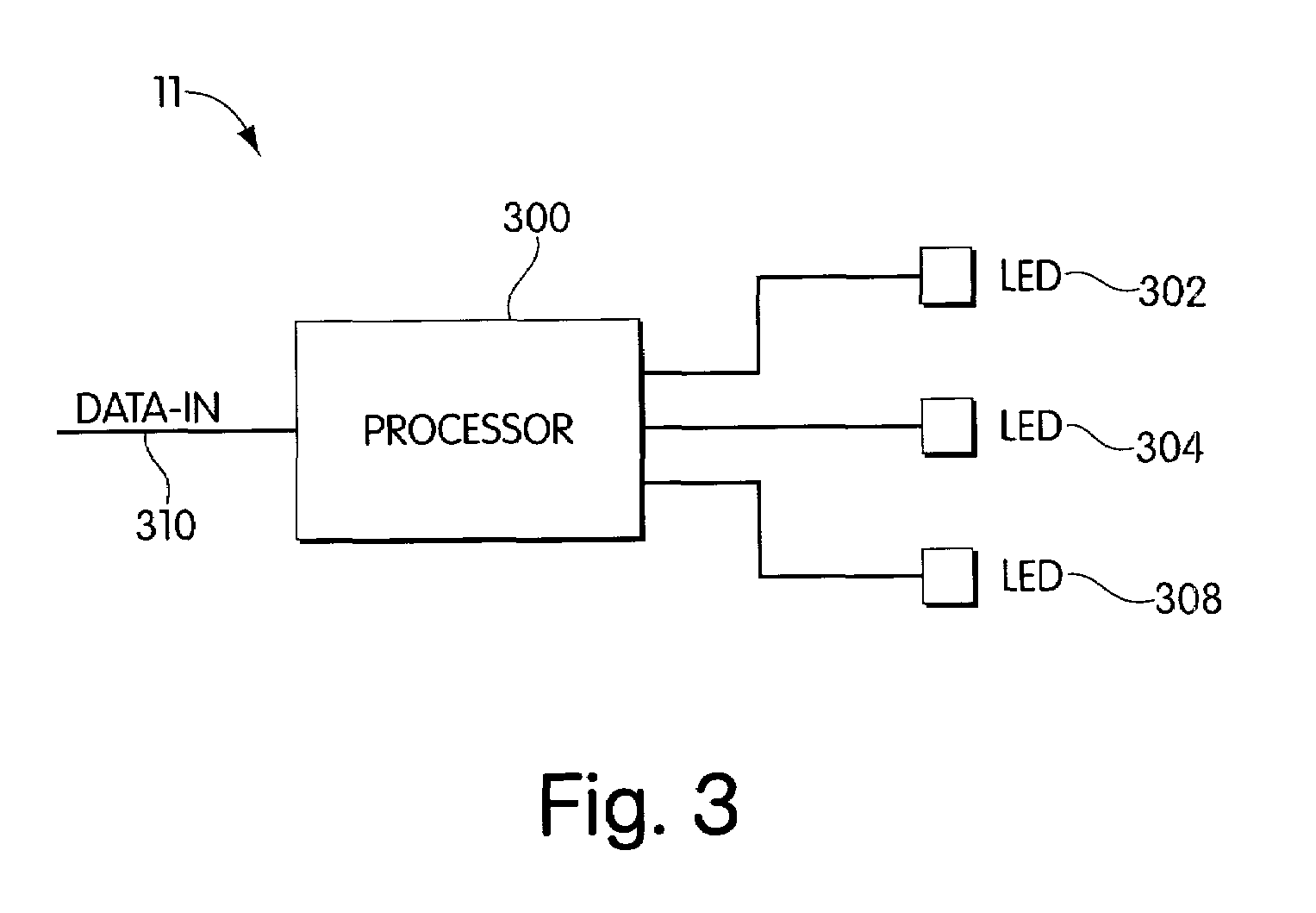

Lighting control using speech recognition

A system and method for controlling color-based lighting through voice control or speech recognition, as well as a syntax for use with such a system. In this approach, the spoken voice (in any language) can be used to control lighting effects more naturally, without having to learn the many manipulations required by some complex controller interfaces. A simple control language, based on spoken words consisting of commands and values, is constructed and used to provide a common base for lighting and system control.

Owner:PHILIPS LIGHTING NORTH AMERICA CORPORATION
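
A command-and-value control language of the kind described above can be sketched as a tiny parser over recognized words. Everything here is an assumption for illustration: the vocabulary (`on`, `off`, `color`, `brightness`), the color and number word tables, and the `(command, value)` output format are hypothetical and not taken from the patent's syntax.

```python
from typing import List, Tuple, Union

# Hypothetical vocabulary: commands that take a value and commands that do not.
COLOR_WORDS = {"red": (255, 0, 0), "green": (0, 255, 0), "blue": (0, 0, 255), "white": (255, 255, 255)}
NUMBER_WORDS = {"zero": 0, "ten": 10, "twenty": 20, "fifty": 50, "hundred": 100}
VALUE_COMMANDS = {"color", "brightness"}
BARE_COMMANDS = {"on", "off"}

Value = Union[None, int, Tuple[int, int, int]]


def parse(words: List[str]) -> List[Tuple[str, Value]]:
    """Turn a recognized word sequence into (command, value) pairs."""
    commands: List[Tuple[str, Value]] = []
    i = 0
    while i < len(words):
        word = words[i].lower()
        if word in BARE_COMMANDS:
            commands.append((word, None))
            i += 1
        elif word in VALUE_COMMANDS and i + 1 < len(words):
            # A value command consumes the following word as its value.
            value_word = words[i + 1].lower()
            table = COLOR_WORDS if word == "color" else NUMBER_WORDS
            if value_word not in table:
                raise ValueError(f"bad value {value_word!r} for {word!r}")
            commands.append((word, table[value_word]))
            i += 2
        else:
            raise ValueError(f"unexpected word {word!r}")
    return commands
```

For example, the utterance "on color blue brightness fifty" parses to `[("on", None), ("color", (0, 0, 255)), ("brightness", 50)]`, which a lighting controller could then apply in order.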

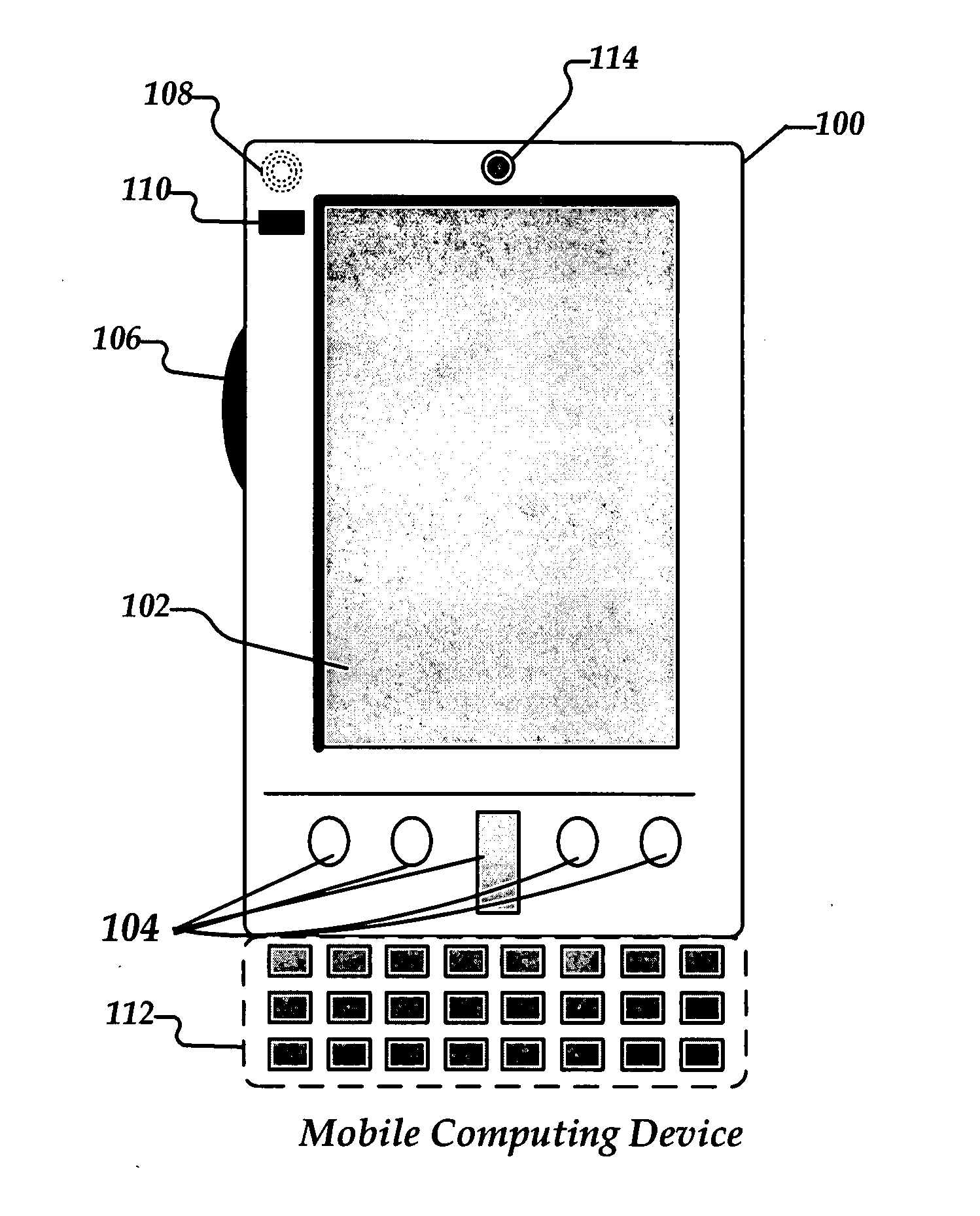

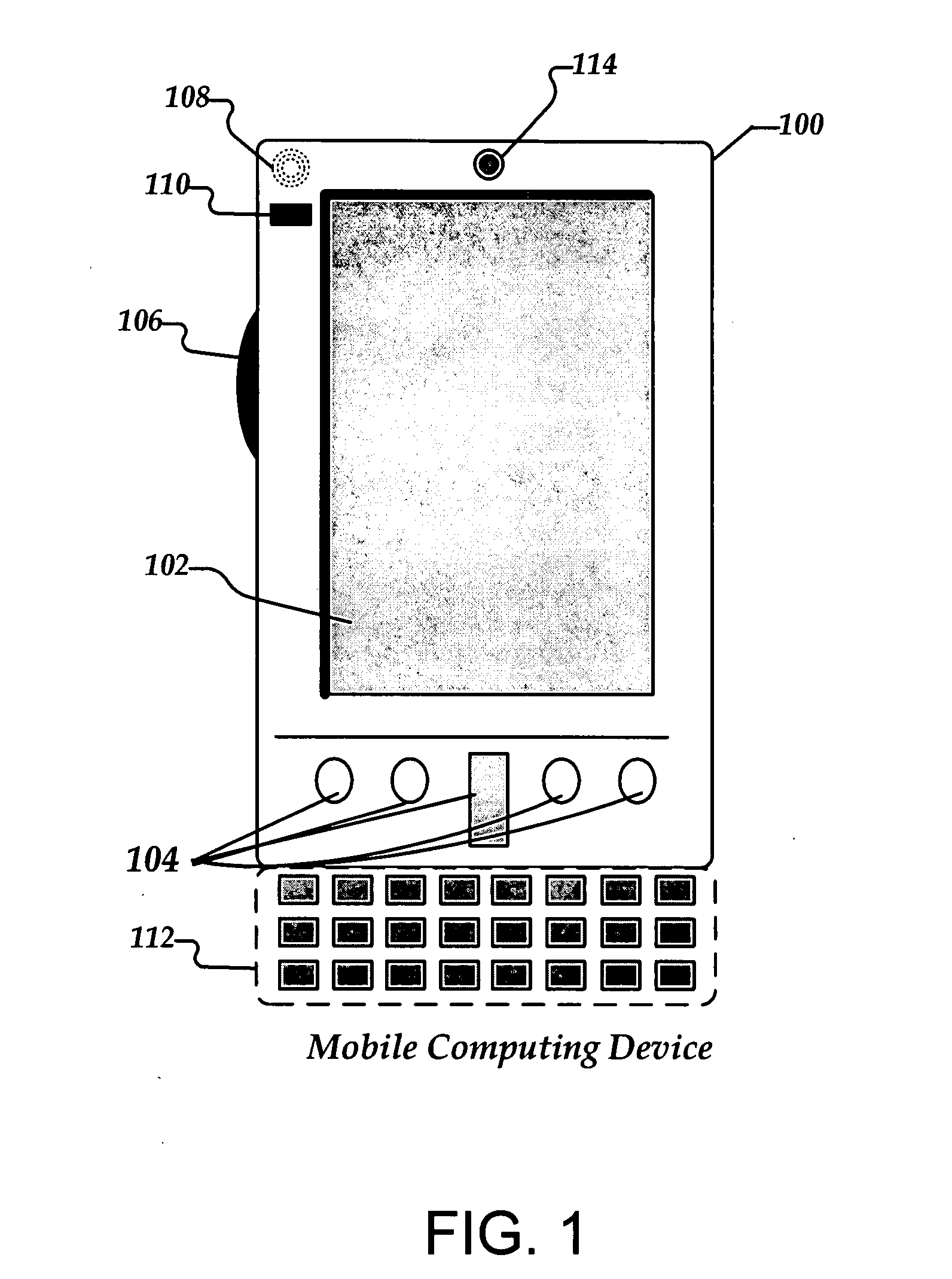

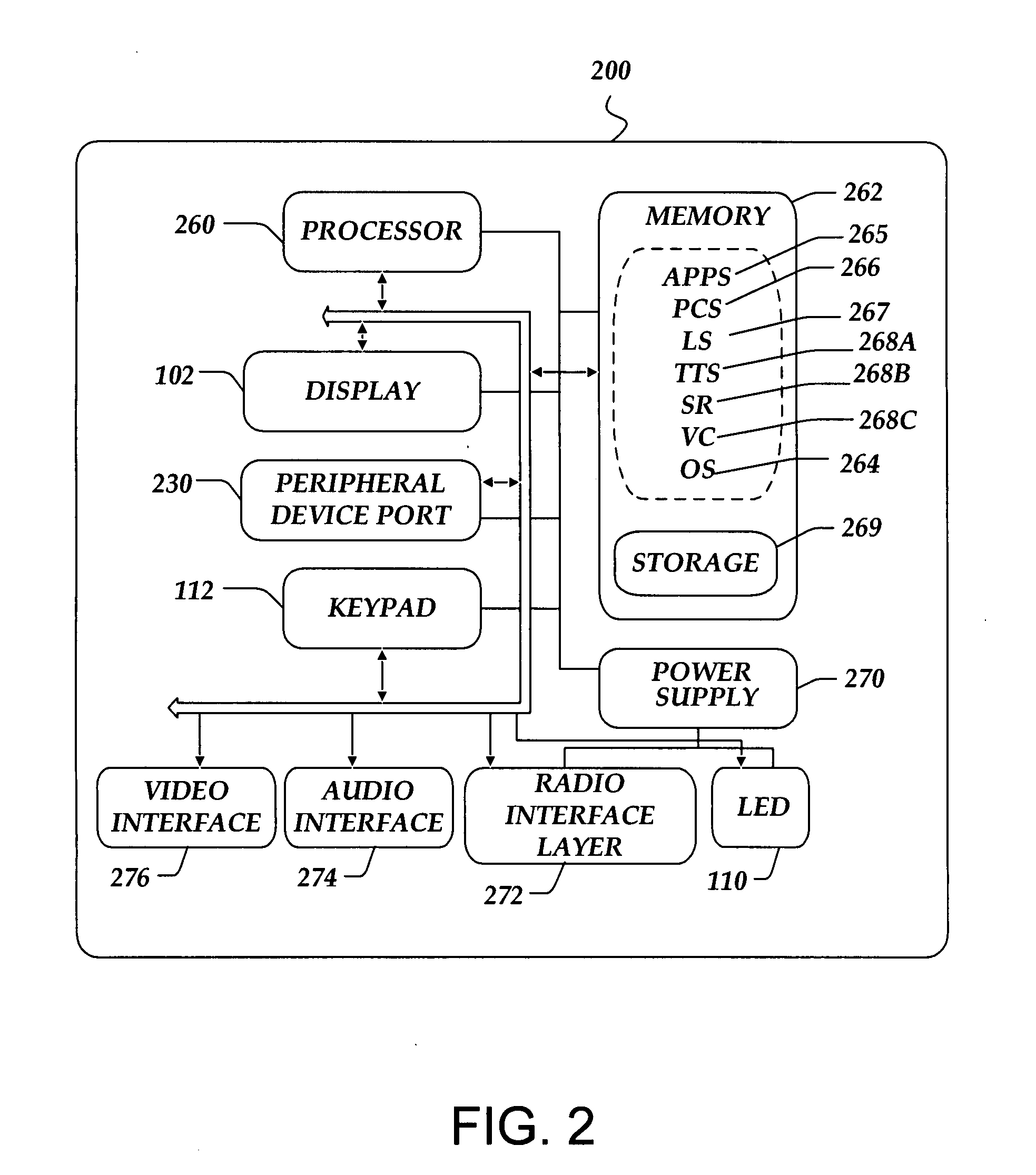

Method for Transforming Language Into a Visual Form

Inactive · US20090058860A1 · Easy to understand, Natural language analysis, 2D-image generation, Computer Aided Design, Spoken language

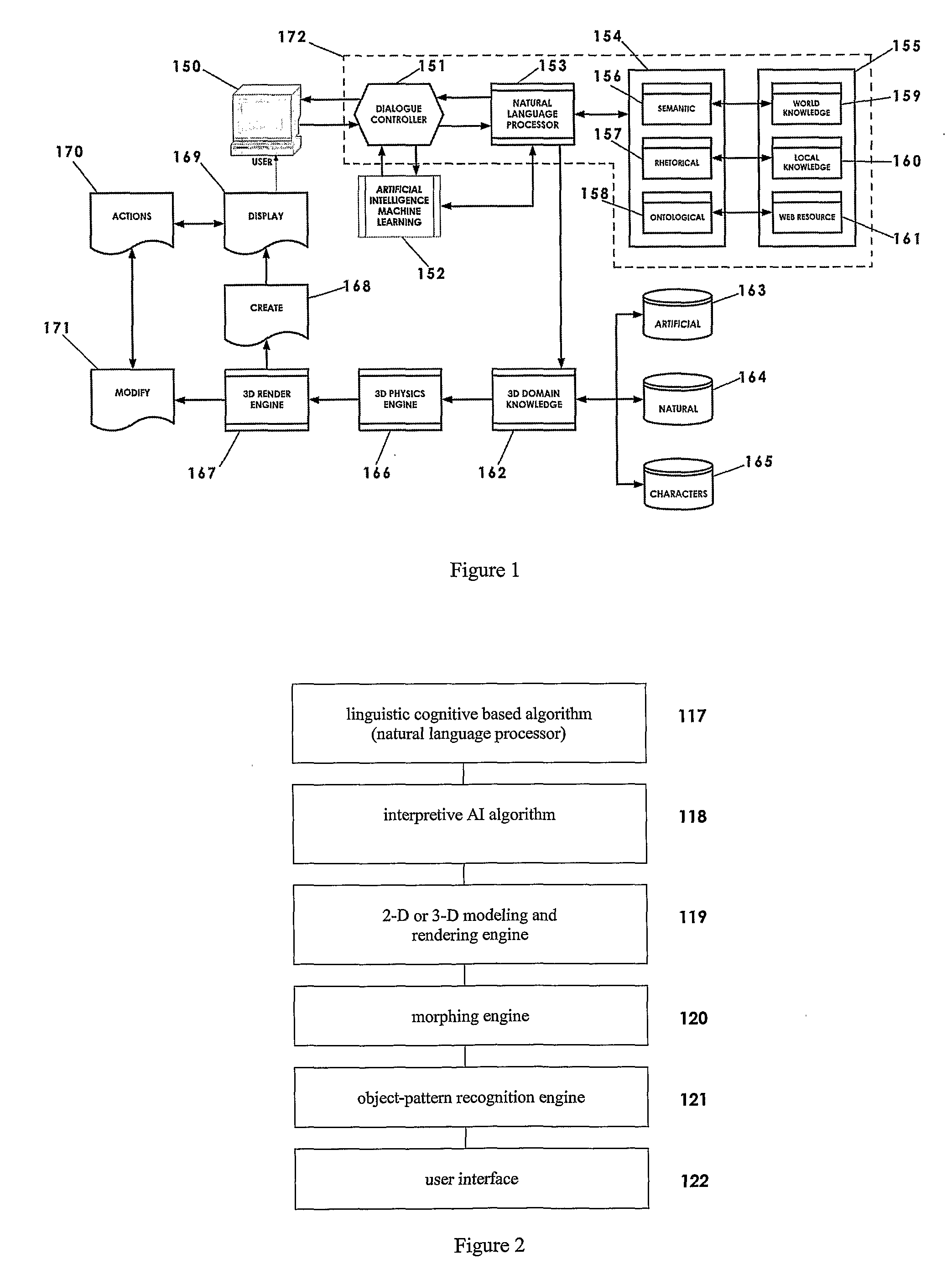

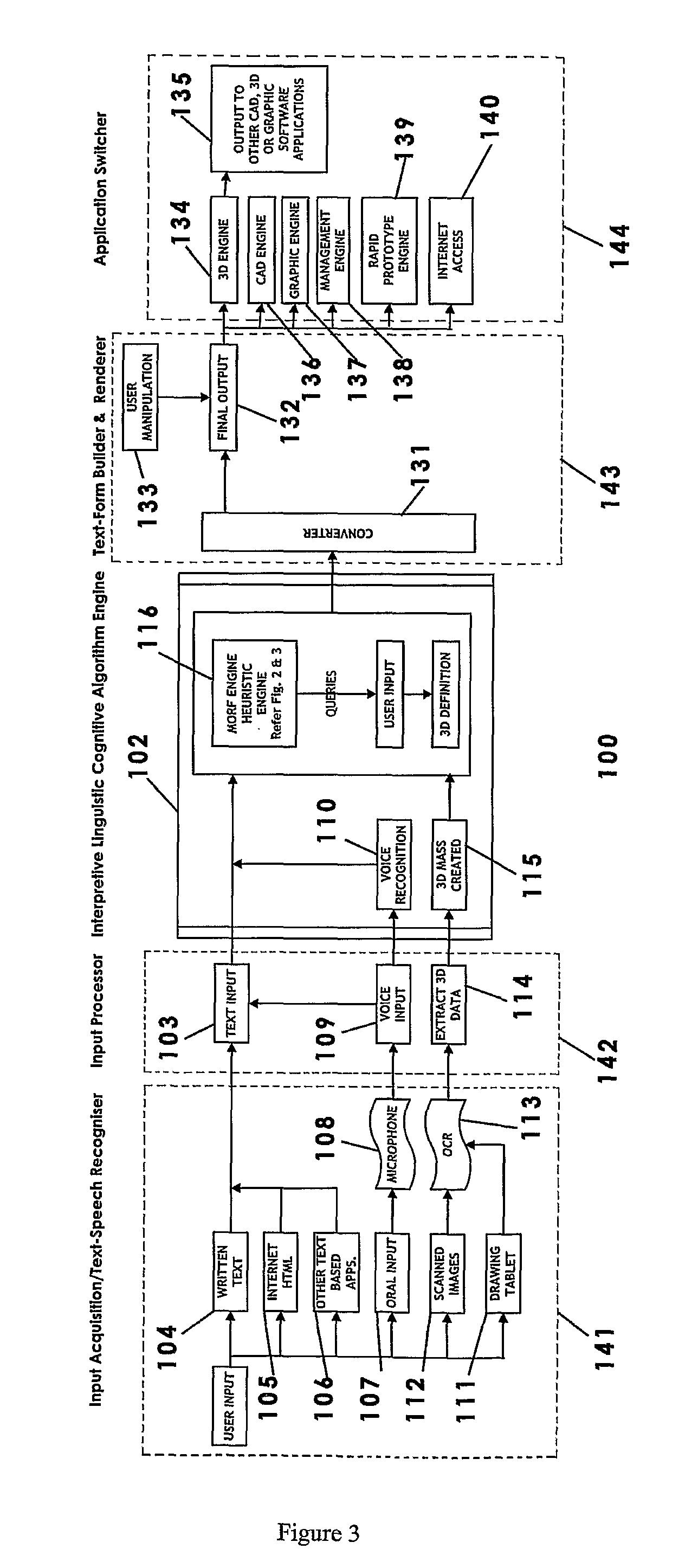

A computer-aided design system (100) includes a computer system (102) and a text input device (103) that may be provided with text elements from a keyboard (104). A user may also provide oral input (107) to the text input device (103) or to voice recognition software with built-in artificial intelligence algorithms (110), which can convert spoken language into text elements. The computer system (102) includes an interaction design heuristic engine (116) that understands and translates text and language into a visual form for display to the end user.

Owner:MOR F DYNAMICS

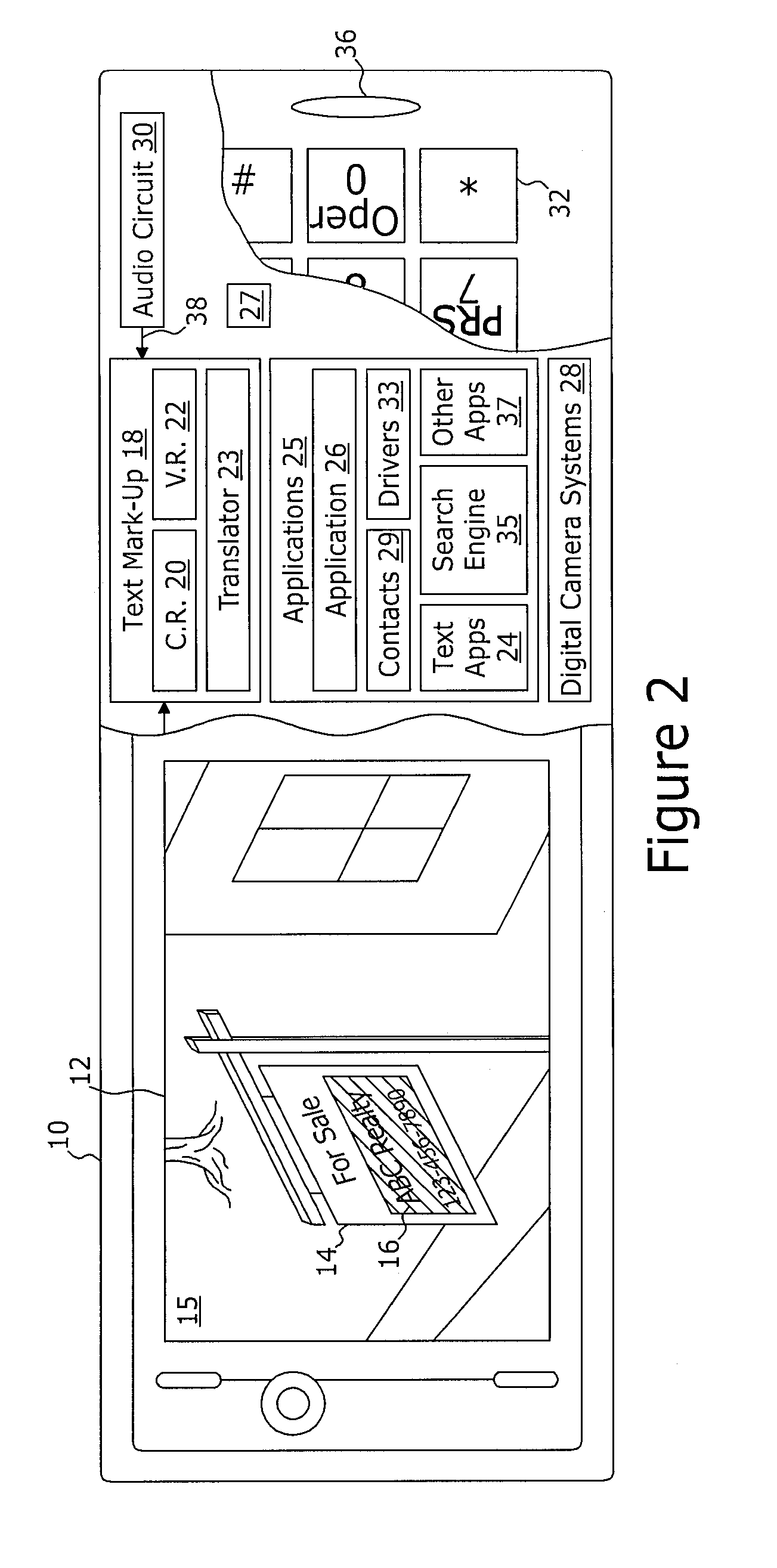

System and method for input of text to an application operating on a device

Inactive · US20090112572A1 · Natural language data processing, Speech recognition, Spoken language, Application software

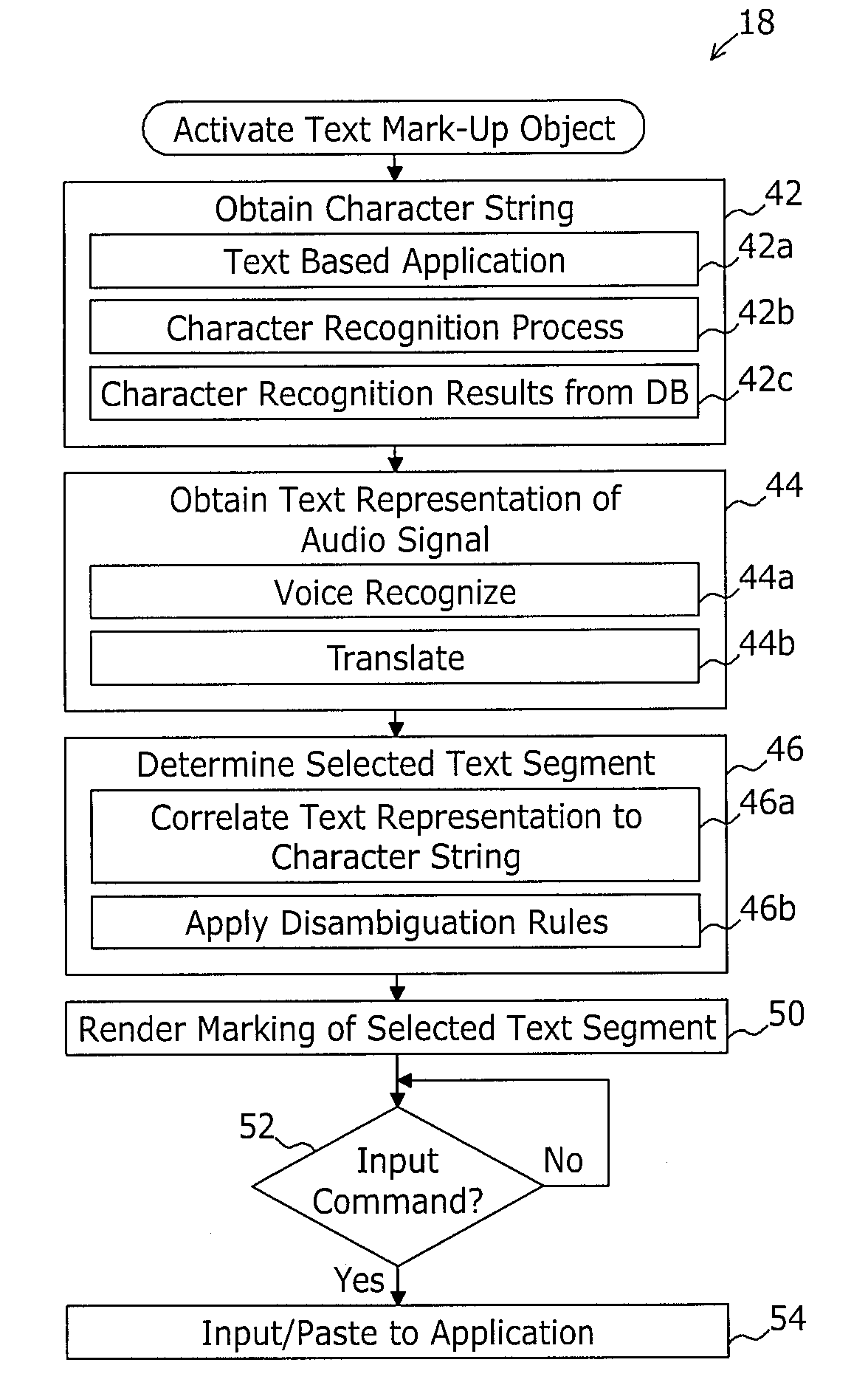

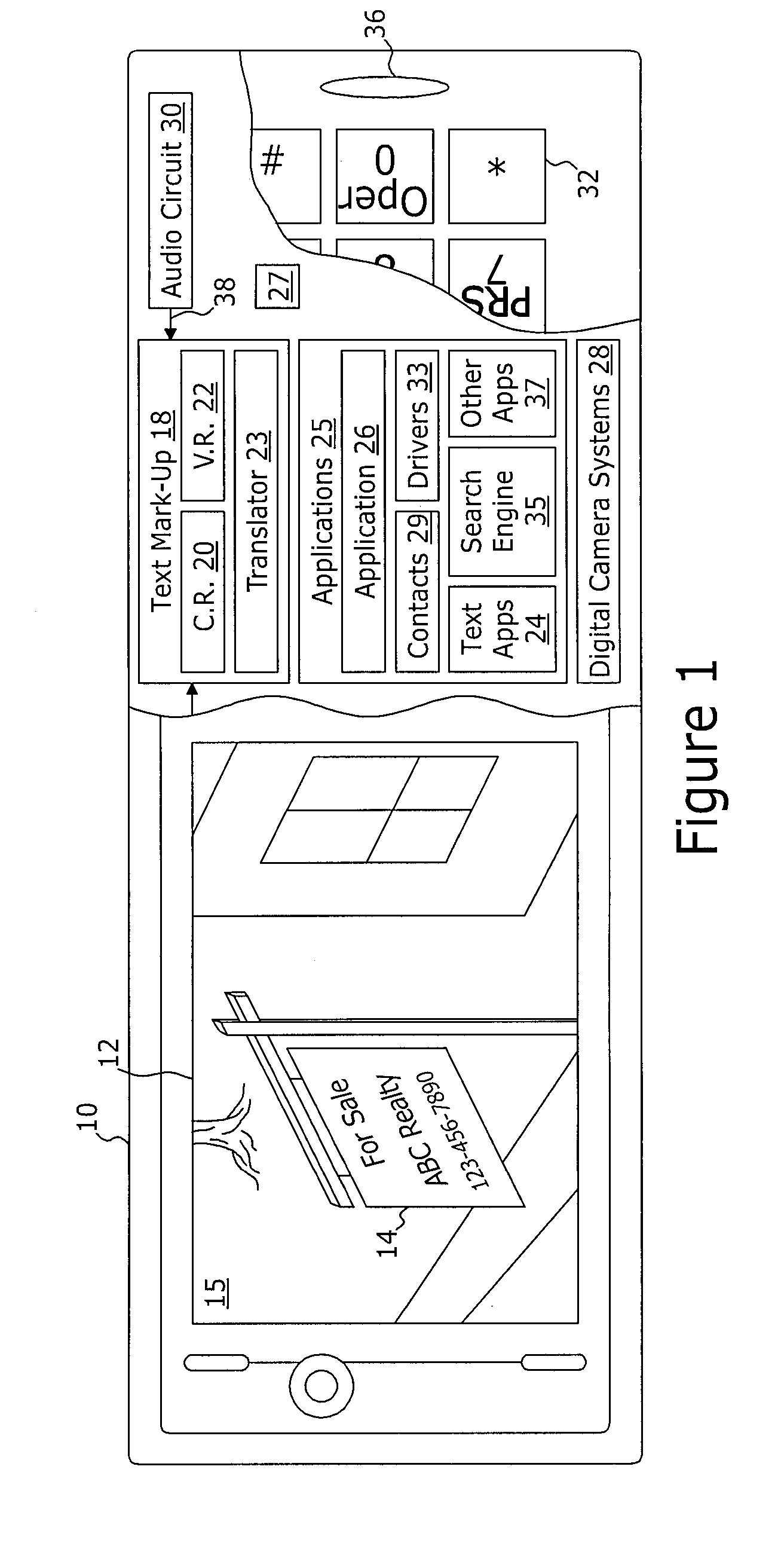

A device comprises a display screen and an audio circuit for generating an audio signal representing spoken words uttered by the user. A processor executes a first application, a second application, and a text mark-up object. The first application may render a depiction of text on the display screen. The text mark-up object may: i) receive at least a portion of the audio signal representing the spoken words uttered by the user; ii) perform speech recognition to generate a text representation of those spoken words; iii) determine a selected text segment; and iv) perform an input function to input the selected text segment to the second application. The selected text segment may be text that corresponds both to a portion of the depiction of text on the display screen and to the text representation of the spoken words uttered by the user.

Owner:SONY ERICSSON MOBILE COMM AB
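
Step iii) above, finding text that appears both on screen and in the recognition result, can be sketched as an in-order, case-insensitive word match. This is an illustrative assumption: the function name `select_segment` and the regex-based matching are hypothetical, and a real device would likely need fuzzier alignment to tolerate recognition errors.

```python
import re
from typing import Optional


def select_segment(displayed: str, recognized: str) -> Optional[str]:
    """Return the on-screen text that matches the recognized words, or None.

    Words must appear in the same order; case is ignored and any run of
    non-word characters (spaces, punctuation) may separate them.
    """
    words = recognized.split()
    if not words:
        return None
    # \b anchors keep "he" from matching inside "the" or "weather".
    pattern = r"\b" + r"\W+".join(re.escape(w) for w in words) + r"\b"
    match = re.search(pattern, displayed, flags=re.IGNORECASE)
    return match.group(0) if match else None
```

Returning the displayed span (rather than the recognized text) preserves the on-screen spelling and punctuation, so `select_segment("Lunch at noon, then call", "noon then")` yields `"noon, then"`, which would then be passed to the second application.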

Pronunciation correction of text-to-speech systems between different spoken languages

Pronunciation correction for text-to-speech (TTS) systems and speech recognition (SR) systems between different languages is provided. If a word requiring pronunciation by a target-language TTS or SR system is from the same language as the target language but is not found in the target language's lexicon, a letter-to-speech (LTS) rule set of the target language is used to generate an LTS output for the word, for use by the TTS or SR system configured for the target language. If the word is from a different language than the target language, the phonemes that make up the word in its native language are mapped to phonemes of the target language. The TTS or SR system configured for the target language uses this phoneme mapping to generate or recognize an audible form of the word in the target language.

Owner:MICROSOFT TECH LICENSING LLC
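
The three-way decision above (lexicon hit, LTS fallback for an unknown same-language word, phoneme mapping for a foreign word) can be sketched as follows. All data is a toy assumption: the lexicons, the one-letter-per-phoneme `LTS_RULES`, and the German-to-English `PHONEME_MAP` are hypothetical, and real LTS rules are context-dependent rather than per-letter.

```python
from typing import Dict, List, Tuple

# Toy data (all hypothetical): an English target lexicon, per-letter
# letter-to-speech rules, a German source lexicon, and a German-to-English
# phoneme map.
TARGET_LEXICON: Dict[str, List[str]] = {"hello": ["HH", "AH", "L", "OW"]}
LTS_RULES: Dict[str, List[str]] = {"b": ["B"], "g": ["G"], "l": ["L"], "o": ["AA"]}
NATIVE_LEXICONS: Dict[str, Dict[str, List[str]]] = {"de": {"bach": ["b", "a", "x"]}}
PHONEME_MAP: Dict[Tuple[str, str], Dict[str, str]] = {("de", "en"): {"b": "B", "a": "AA", "x": "K"}}


def letter_to_speech(word: str) -> List[str]:
    """Fallback: apply per-letter rules, skipping letters with no rule."""
    phonemes: List[str] = []
    for letter in word:
        phonemes.extend(LTS_RULES.get(letter, []))
    return phonemes


def pronounce(word: str, word_lang: str, target_lang: str = "en") -> List[str]:
    word = word.lower()
    if word_lang == target_lang:
        # Same language: use the lexicon entry, else fall back to LTS rules.
        if word in TARGET_LEXICON:
            return TARGET_LEXICON[word]
        return letter_to_speech(word)
    # Foreign word: take its native pronunciation and map each phoneme.
    native = NATIVE_LEXICONS[word_lang][word]
    mapping = PHONEME_MAP[(word_lang, target_lang)]
    return [mapping[p] for p in native]
```

With this data, `pronounce("Bach", "de")` maps the German velar fricative to the closest English phoneme and returns `["B", "AA", "K"]`.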

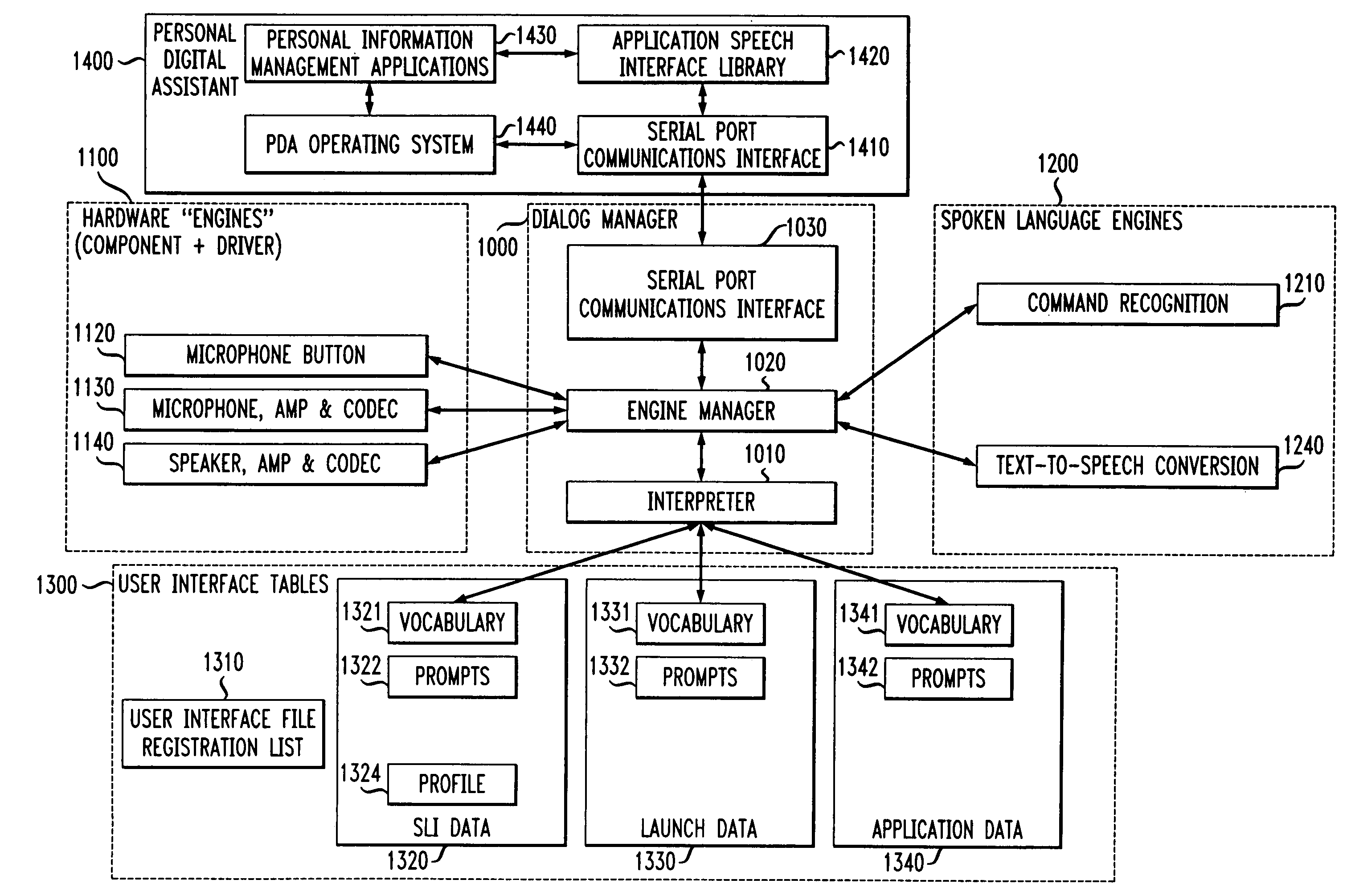

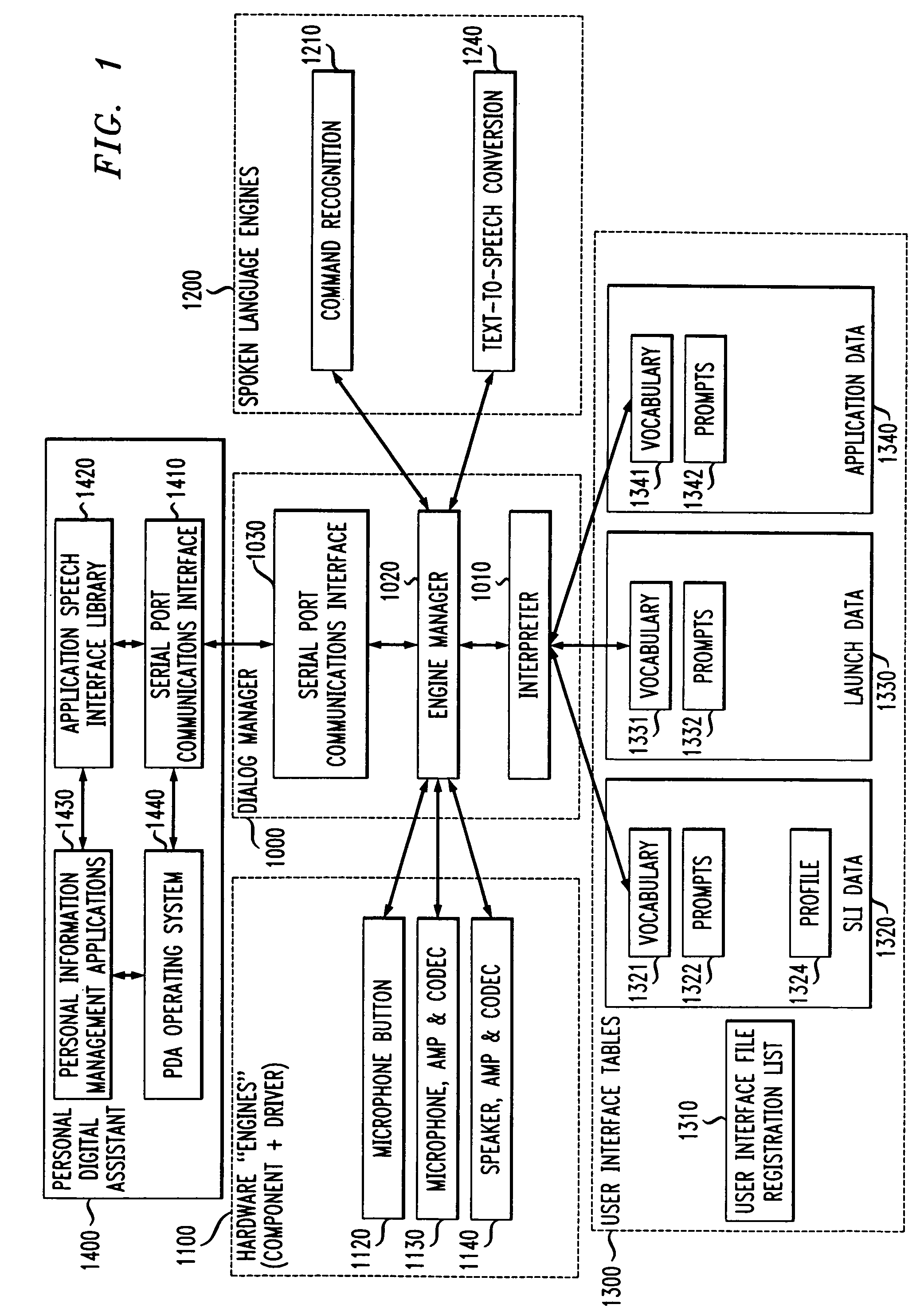

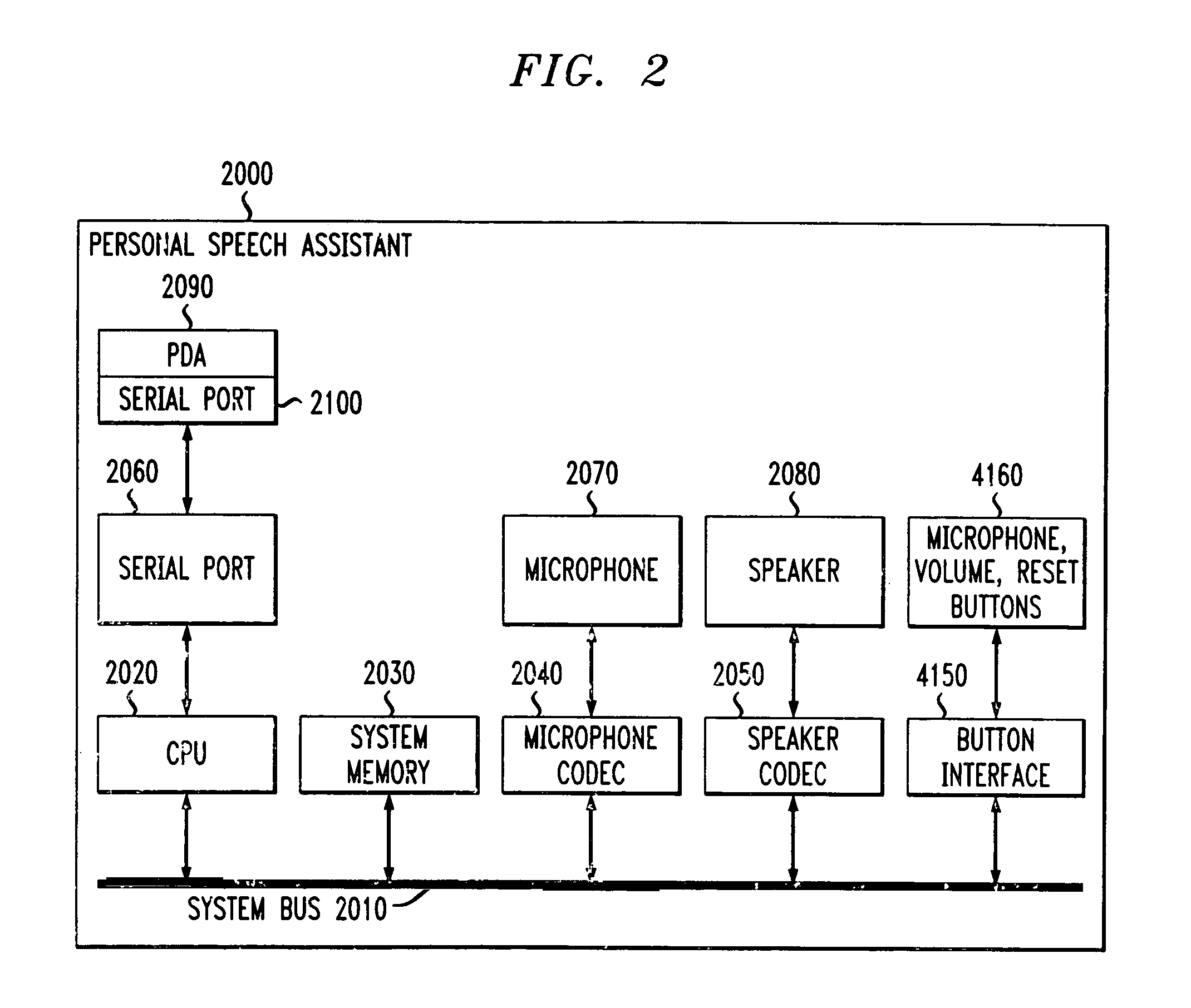

Methods and apparatus for contingent transfer and execution of spoken language interfaces

A method for managing spoken language interface data structures and collections of user interface service engines in the spoken language dialog manager of a personal speech assistant. Using these methods, interfaces designed as part of applications may be added to or removed from the set of interfaces used by a dialog manager. Interface service engines that are required by new applications but not already present in the dialog manager may be made available to those new applications and to subsequently added applications.

Owner:NUANCE COMM INC

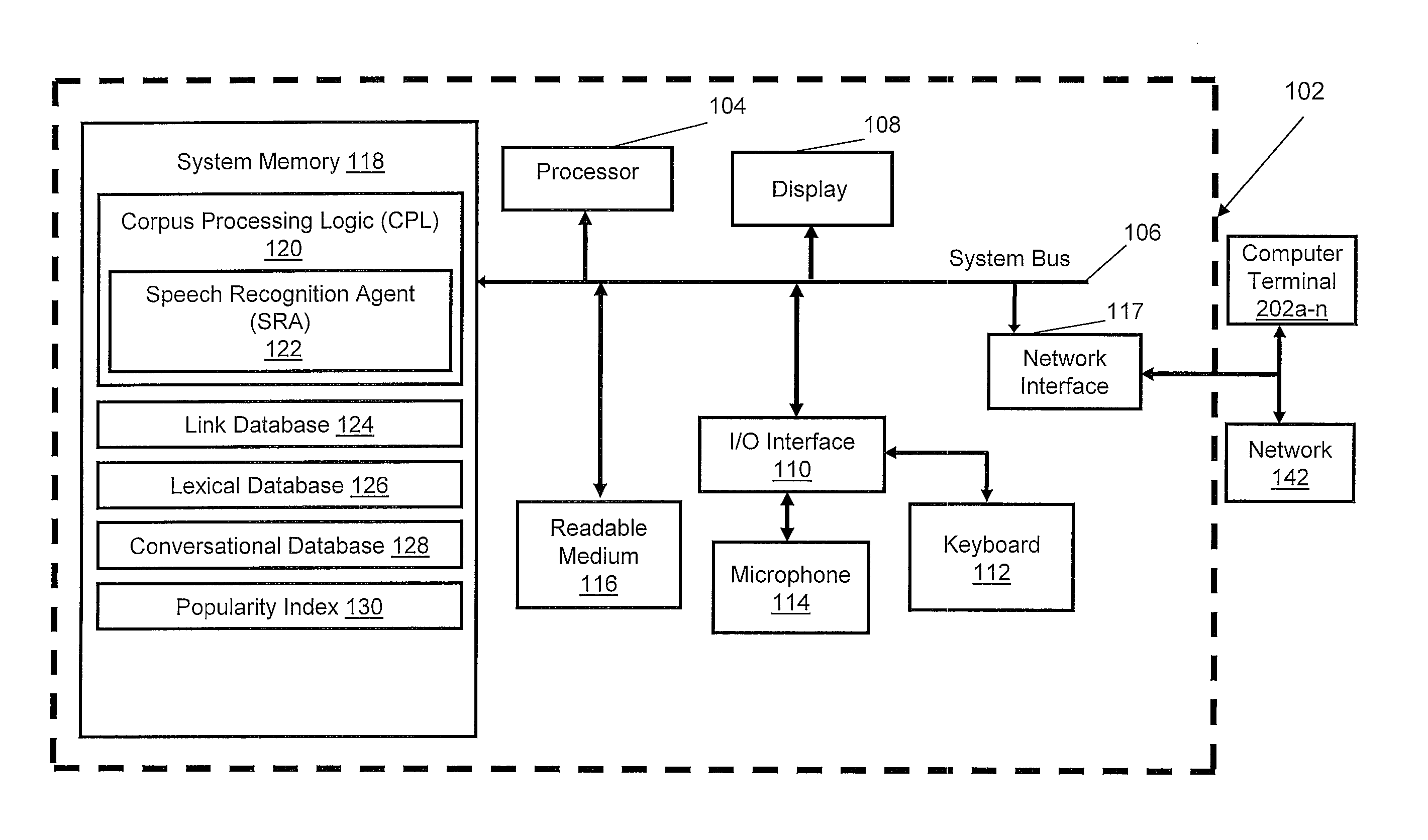

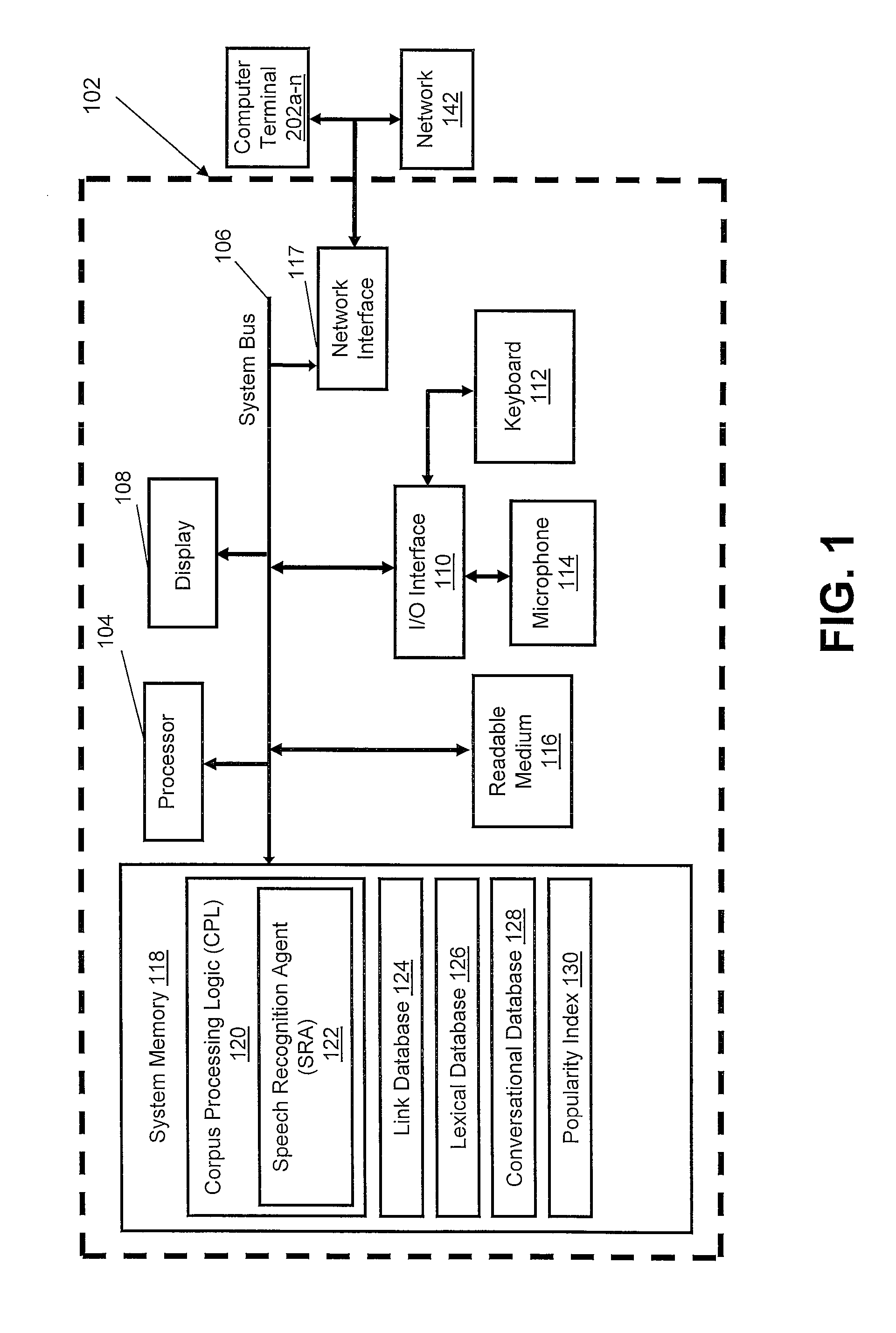

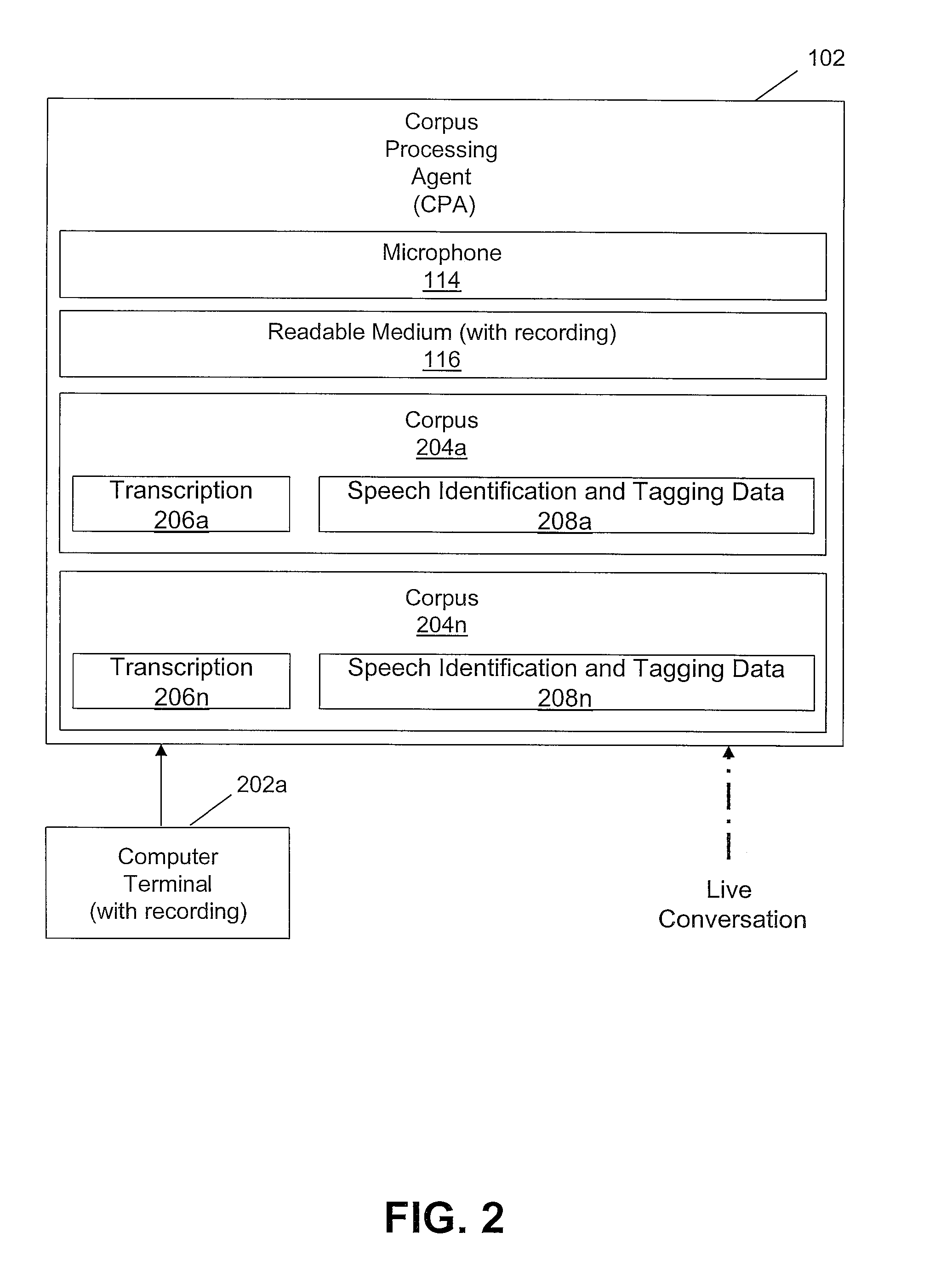

Data processing system for autonomously building speech identification and tagging data

Active · US20090306979A1 · Natural language data processing, Speech recognition, Spoken language, Speech identification

A method, system, and computer program product for autonomously transcribing and building tagging data of a conversation. A corpus processing agent monitors a conversation and utilizes a speech recognition agent to identify the spoken languages, speakers, and emotional patterns of speakers of the conversation. While monitoring the conversation, the corpus processing agent determines emotional patterns by monitoring voice modulation of the speakers and evaluating the context of the conversation. When the conversation is complete, the corpus processing agent determines synonyms and paraphrases of spoken words and phrases of the conversation taking into consideration any localized dialect of the speakers. Additionally, metadata of the conversation is created and stored in a link database, for comparison with other processed conversations. A corpus, a transcription of the conversation containing metadata links, is then created. The corpus processing agent also determines the frequency of spoken keywords and phrases and compiles a popularity index.

Owner:NUANCE COMM INC

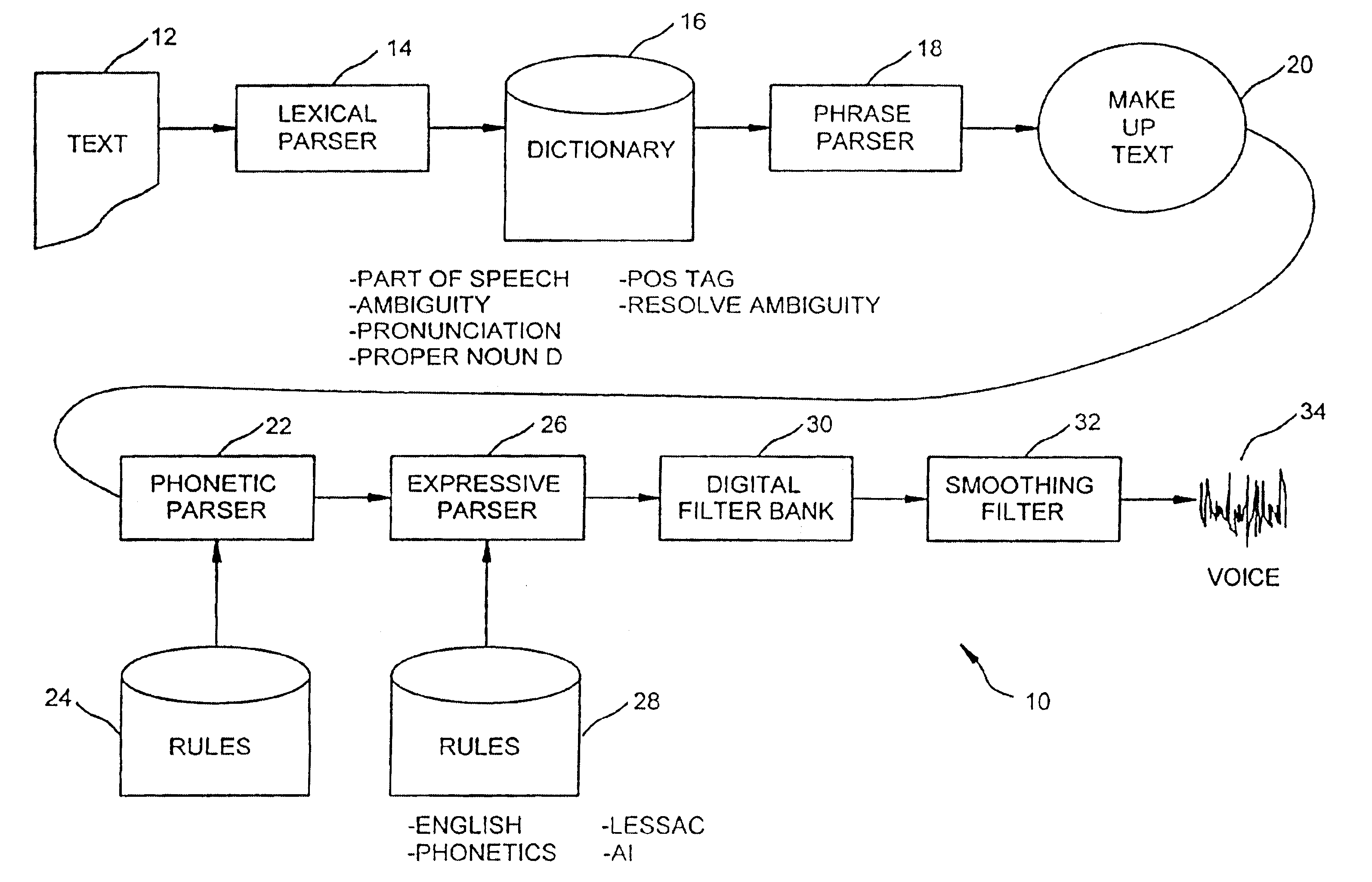

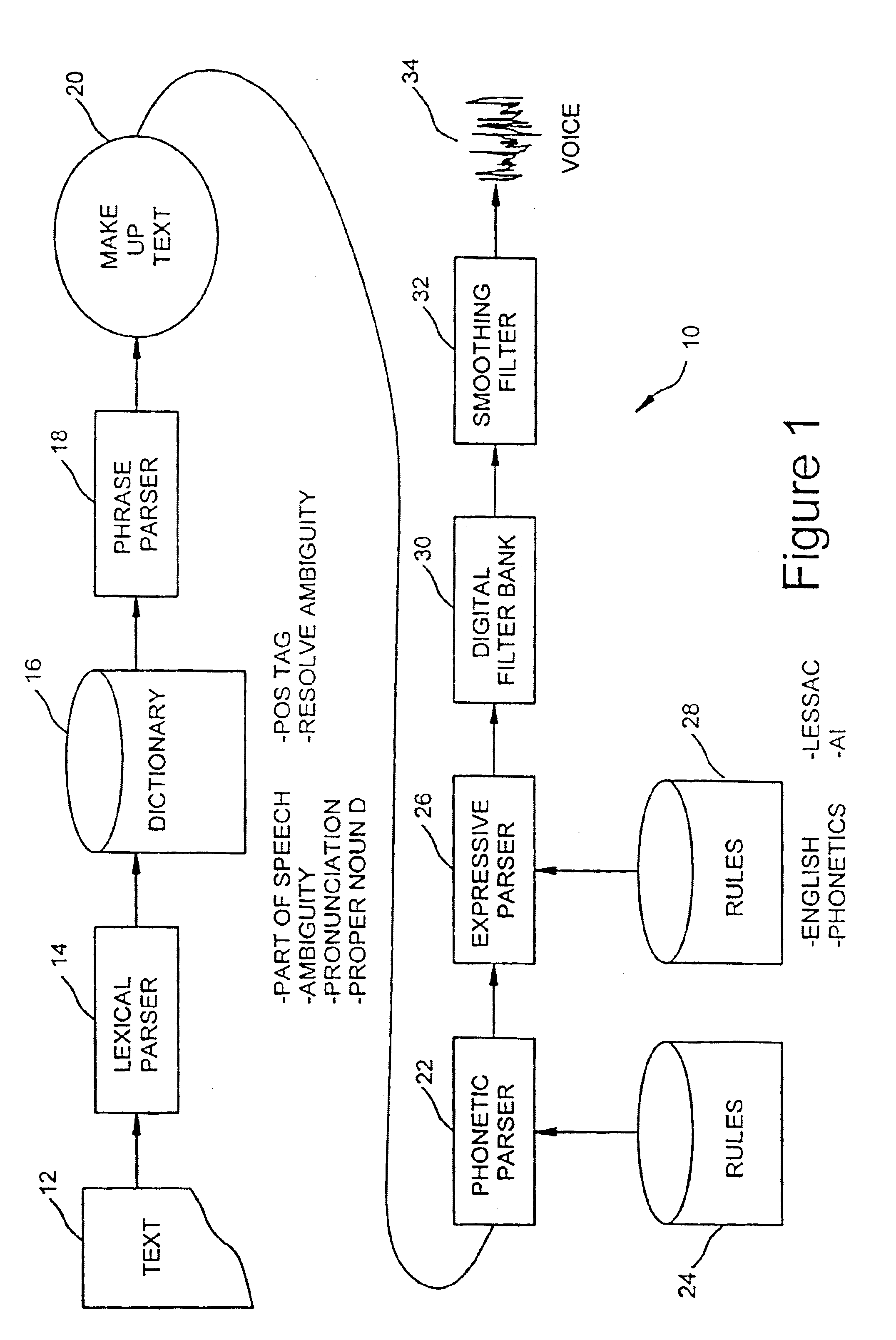

Text to speech

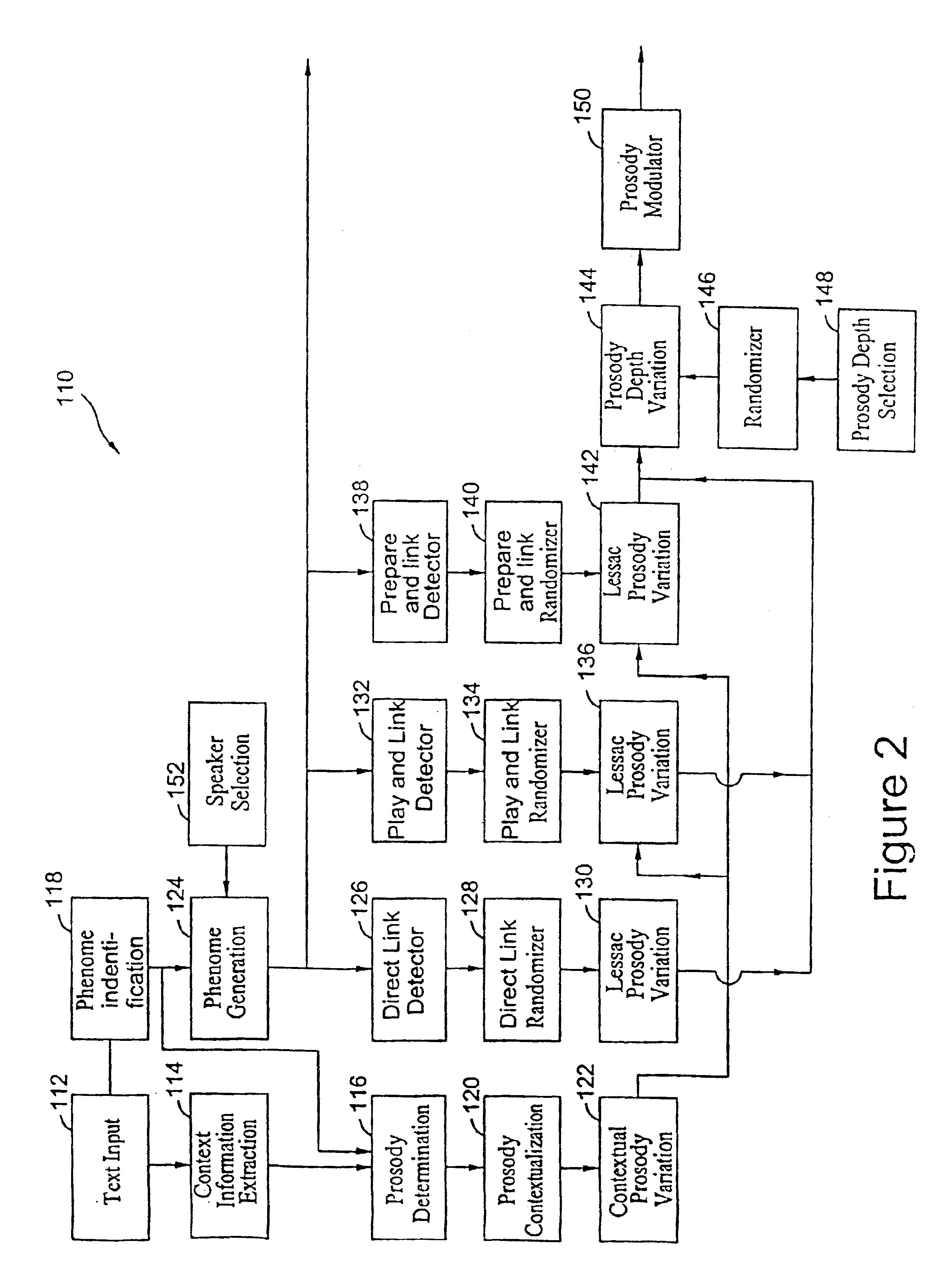

Inactive · US6865533B2 · Avoiding conditioned pitfall, Small size, Speech recognition, Electrical appliances, Spoken language, Speech sound

A preferred embodiment of a method for converting text to speech using a computing device having a memory is disclosed. The inventive method comprises examining a text to be spoken to an audience for a specific communications purpose and then marking up the text according to a phonetic markup system, such as the Lessac System pronunciation rule notations. A set of rules based on speech principles, such as Lessac principles, is used to control a speech generator. Such rules are of the type normally implemented in prior-art text-to-speech engines, and they control the operation of the software and the characteristics of the speech generated by a computer using the software. A computer is used to speak the marked-up text expressively. This step is repeated using alternative pronunciations of the selected style of expression, in which the tonal, structural, and consonant energies each have a different balance in the speech, and the results are also played to trained speech practitioners who listen to the speech generated by the computer. The computer-generated speech is then evaluated for consistency with style criteria and/or expressiveness. An audience is then assembled, and the computer-generated speech is played back to it. Audience comprehension of the computer-generated speech is evaluated and correlated with a particular implemented rule or rules, and the rules that resulted in relatively high audience comprehension are selected.

Owner:LESSAC TECH INC

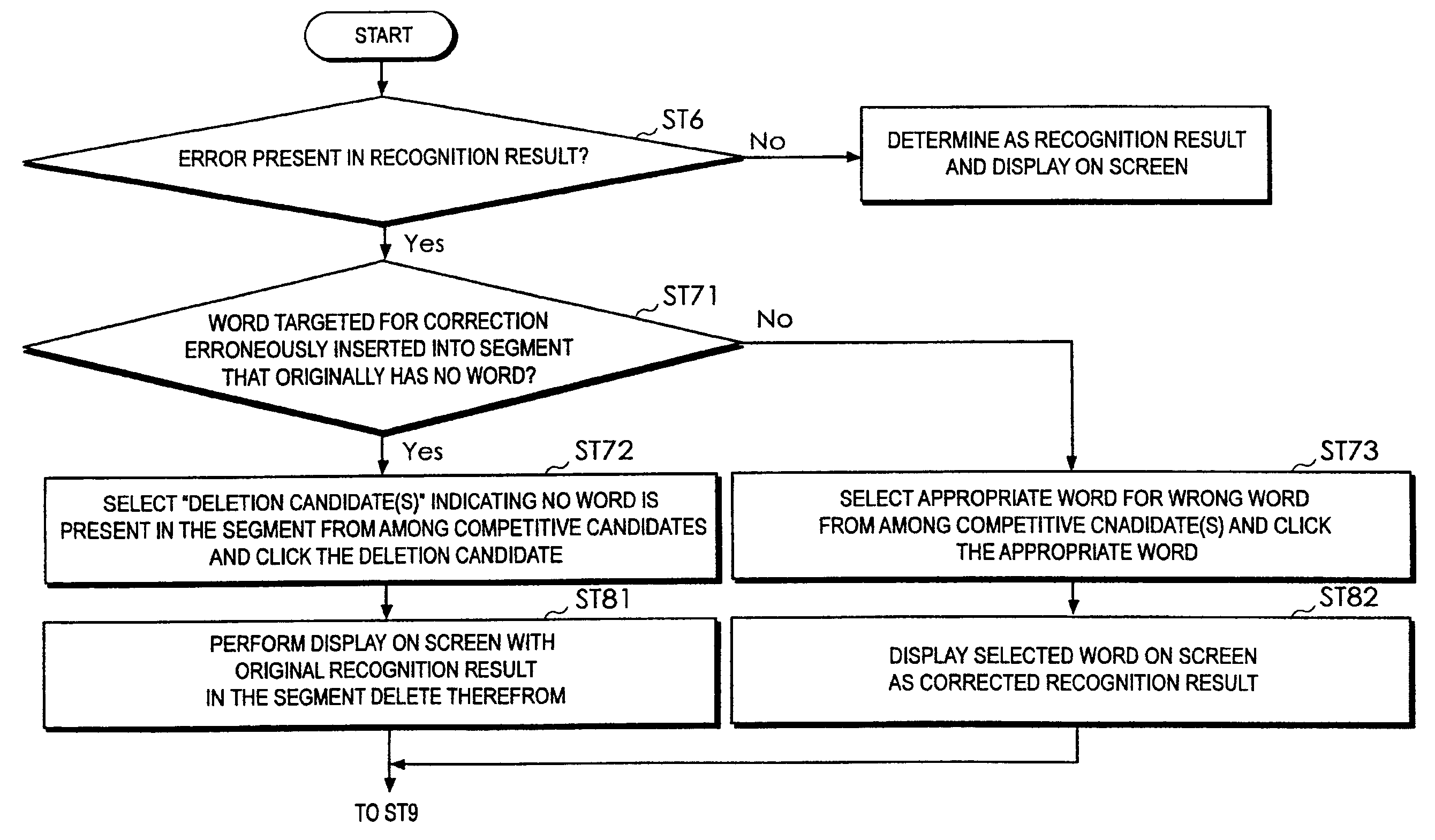

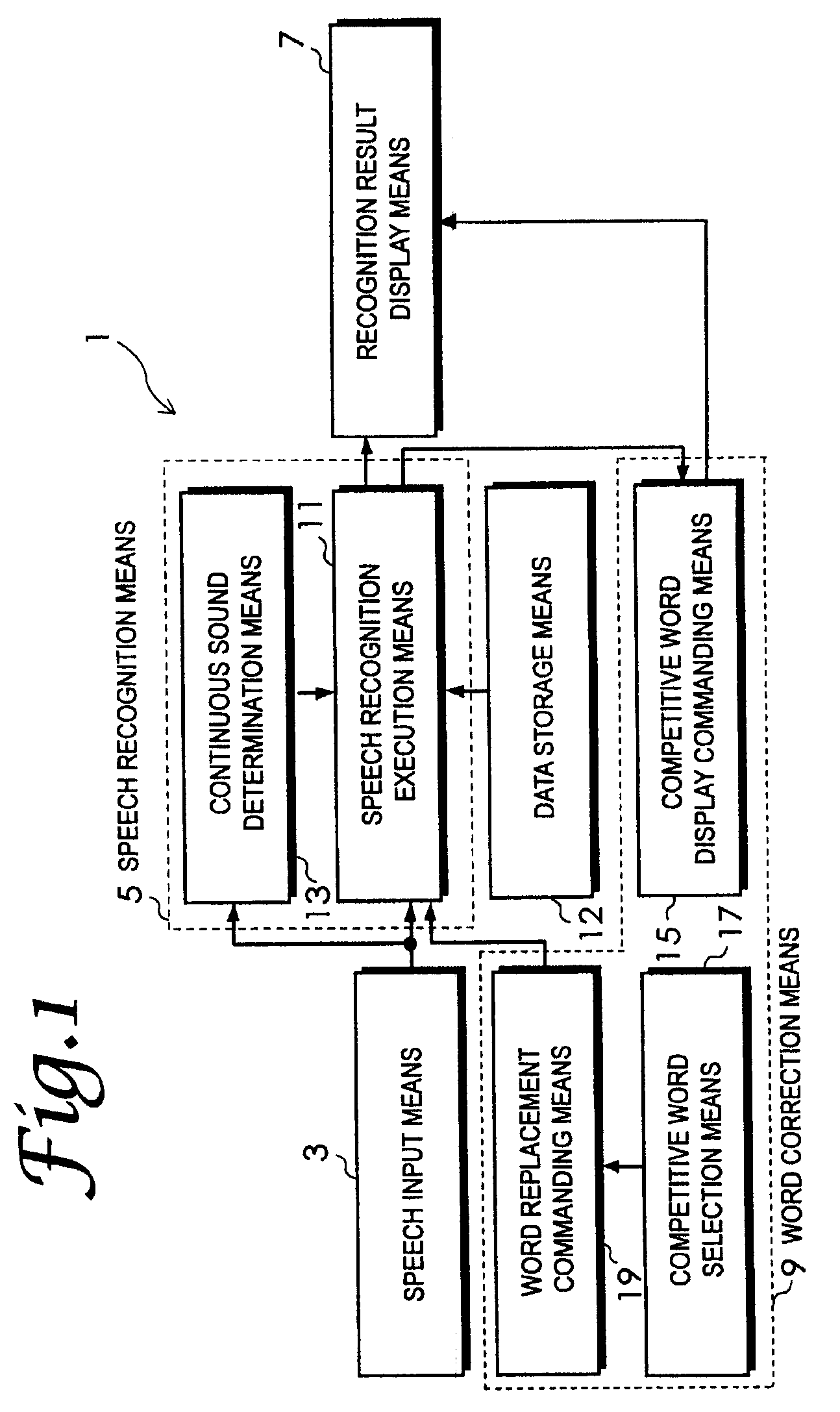

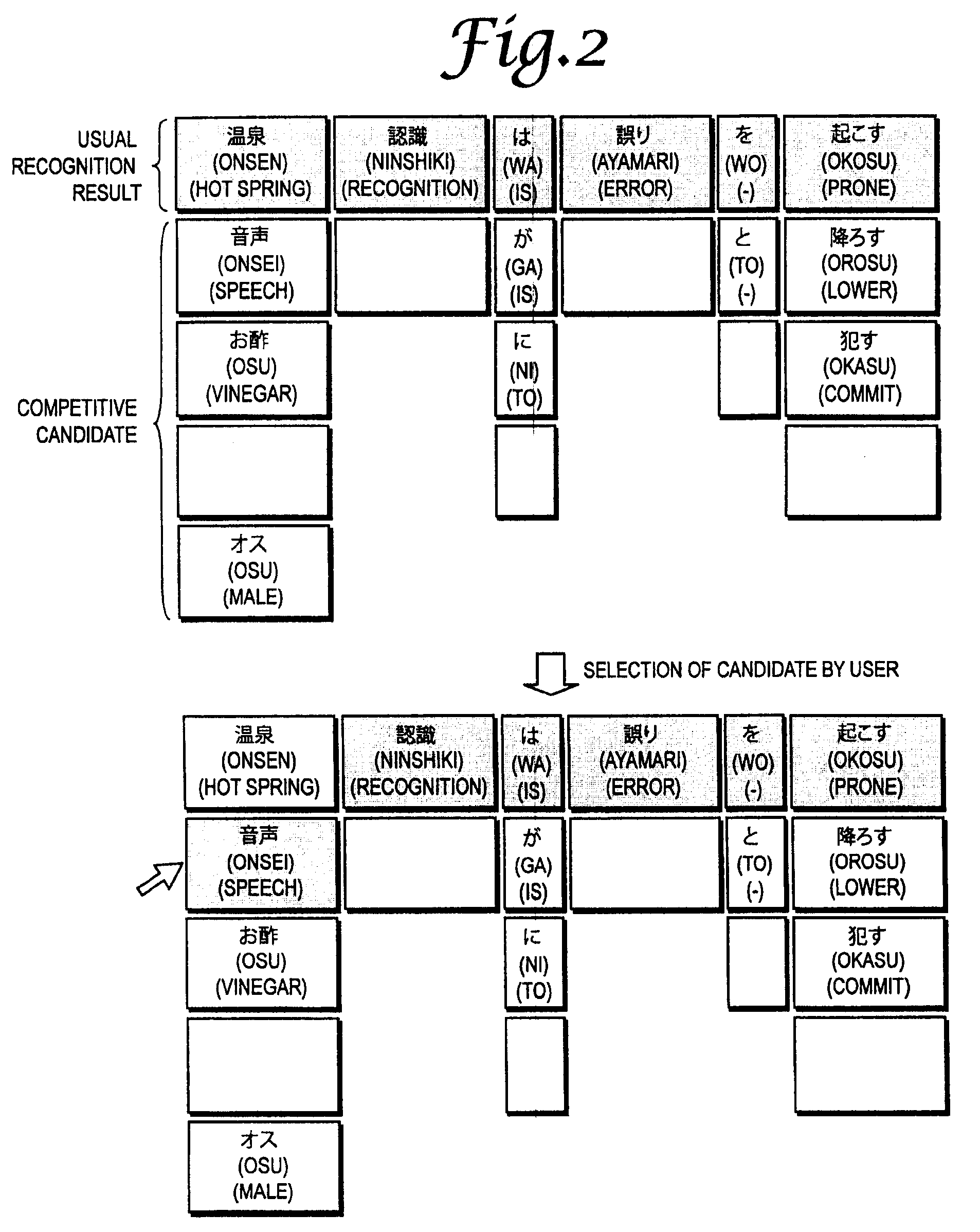

System, method, and program for correcting misrecognized spoken words by selecting appropriate correction word from one or more competitive words

A speech recognition system is provided in which a user can more efficiently and easily correct a recognition error resulting from speech recognition. The system compares multiple inputted words with multiple stored words and determines a most-competitive word candidate. The system selects one or more competitive words whose competitive probabilities are close to that of the most-competitive word candidate and displays them adjacent to the most-competitive word candidate. The system selects an appropriate correction word from the one or more competitive words and replaces the corresponding most-competitive word candidate with the correction word.

Owner:NAT INST OF ADVANCED IND SCI & TECH
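
Selecting competitive words "close to" the top candidate can be sketched with a fixed probability margin. The margin of 0.2, the `limit` cap, and the function names are assumptions made for illustration; the abstract does not say how closeness is measured or how the competitive probabilities are computed.

```python
from typing import List, Tuple


def competitive_candidates(
    scored: List[Tuple[str, float]], margin: float = 0.2, limit: int = 5
) -> Tuple[str, List[str]]:
    """Return the most-competitive word and rivals whose probability is within `margin` of it."""
    if not scored:
        raise ValueError("no candidates")
    ranked = sorted(scored, key=lambda wp: wp[1], reverse=True)
    best_word, best_p = ranked[0]
    # Only rivals close to the winner are worth showing next to it.
    rivals = [w for w, p in ranked[1:] if best_p - p <= margin]
    return best_word, rivals[:limit]


def apply_correction(words: List[str], index: int, correction: str) -> List[str]:
    """Replace the word at `index` with the user's chosen correction."""
    fixed = list(words)
    fixed[index] = correction
    return fixed
```

With candidates `speech` (0.5), `beach` (0.35), and `peach` (0.1), only `beach` is displayed as a rival, which keeps the correction list short.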

Electronic book player with audio synchronization

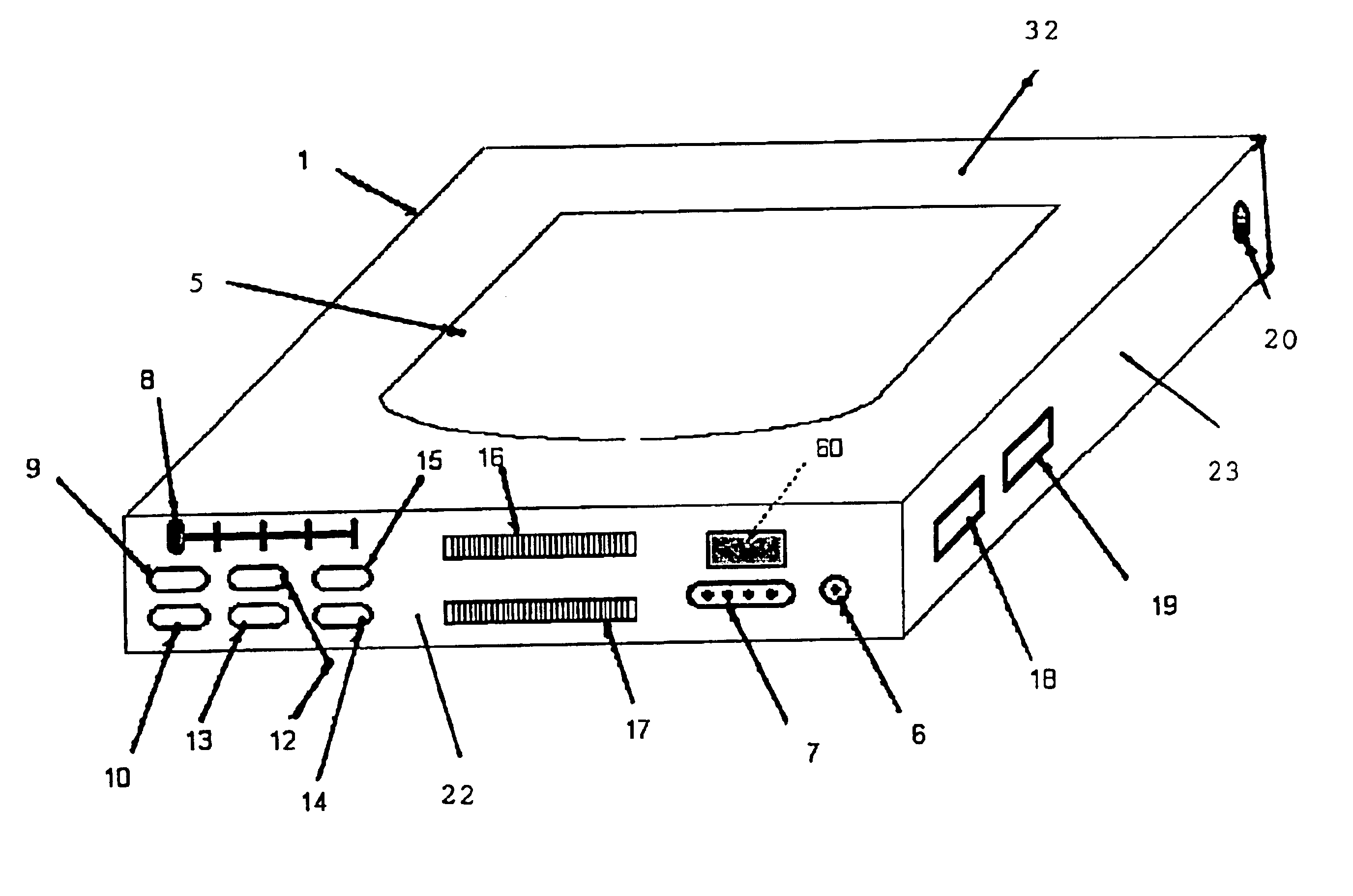

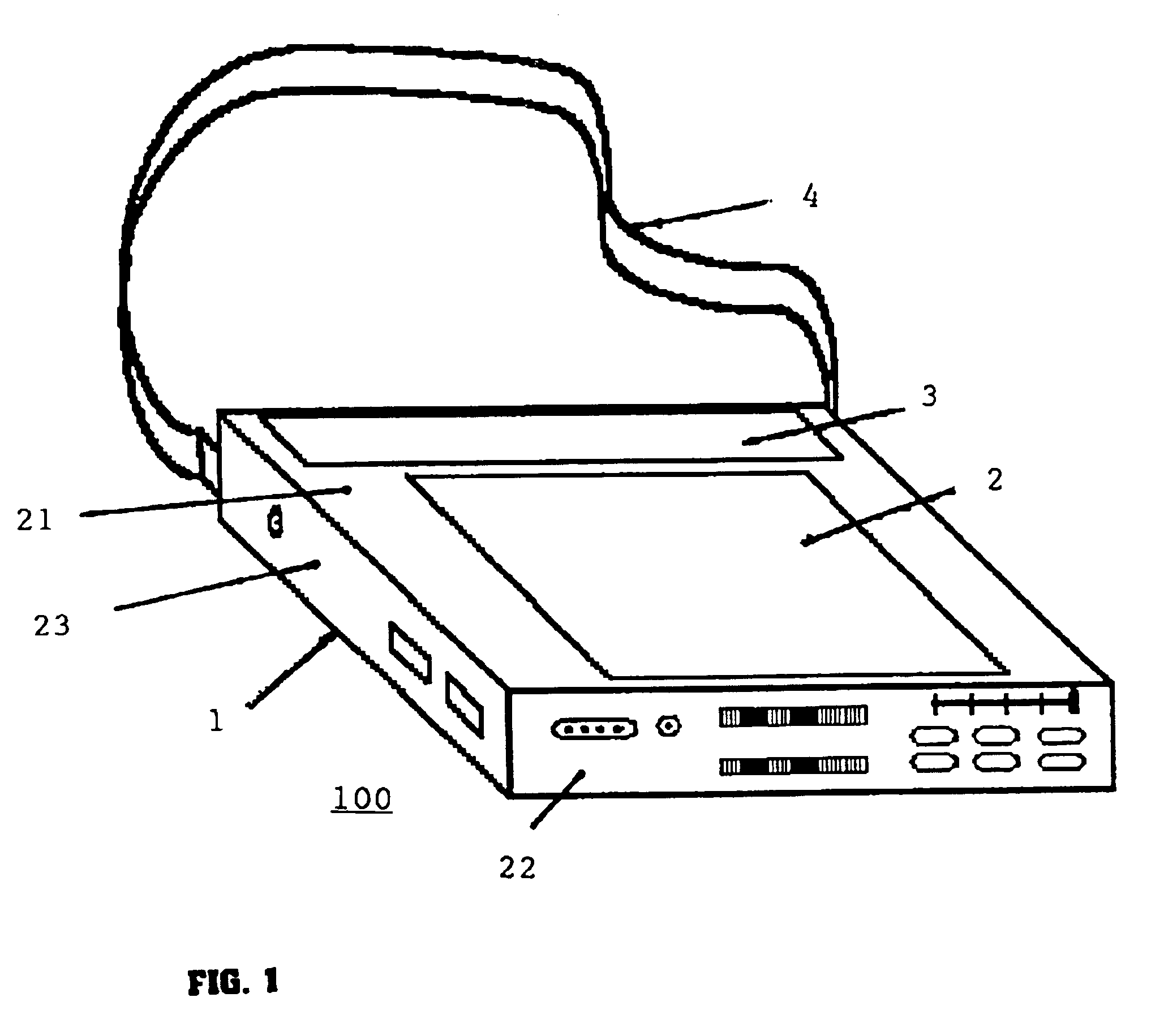

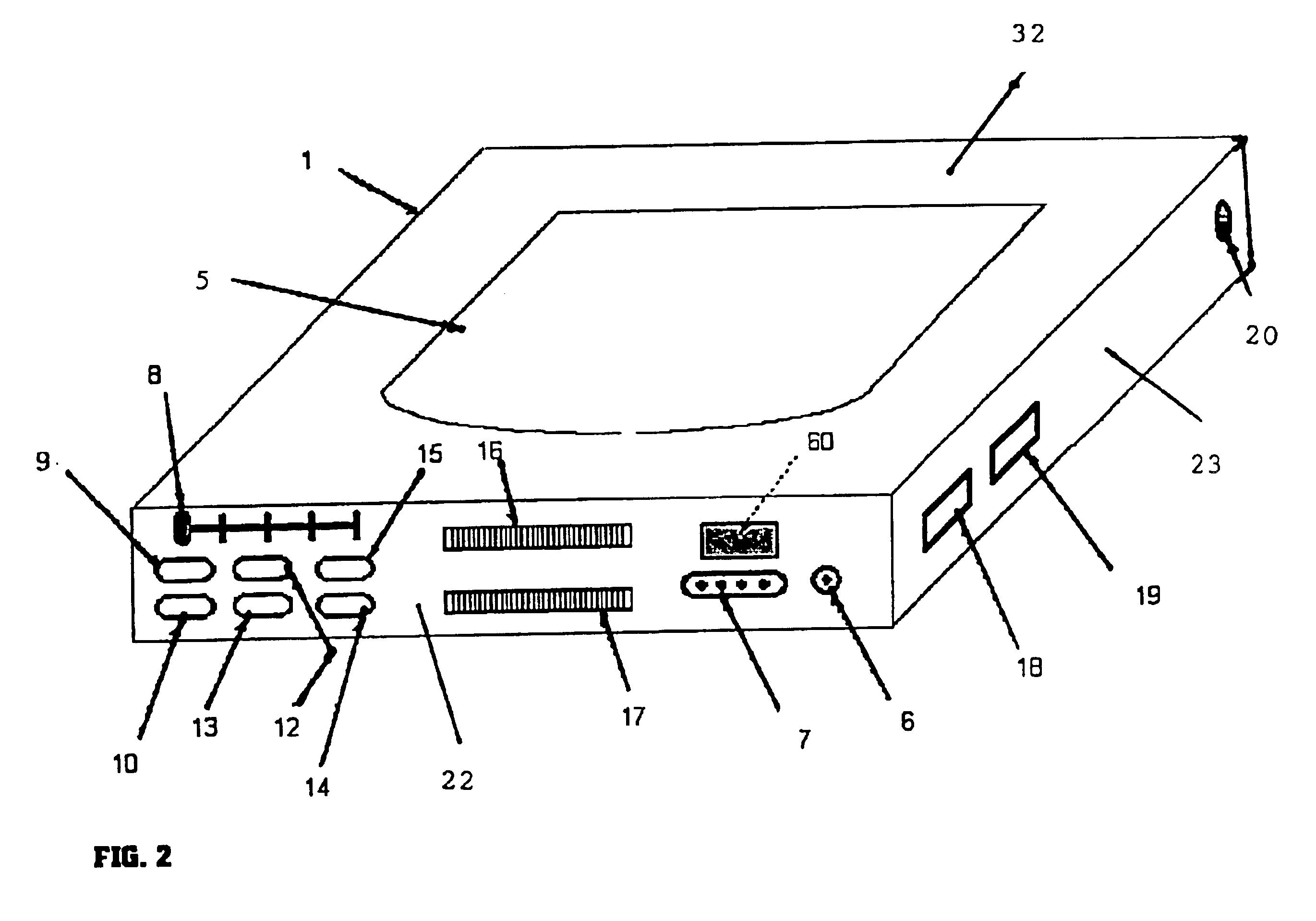

Inactive · US6933928B1 · Assisted reading, Enabling visually-impaired persons access, Digital computer details, Cathode-ray tube indicators, Data synchronization, Spoken language

This paperless book is portable and uses removable ROM devices to provide the visual and aural information it displays. The book can be read in a visual mode, as print; in an aural mode, as the spoken words corresponding to the print; or in both modes. The display mode can be conveniently switched between visual and aural, for example by a commuter who uses the book in aural mode while driving and switches to visual mode while riding on a train. In addition, the visual display may be adjusted to change its appearance, for example for persons with reduced visual acuity. Movies may also be viewed using the paperless book. Finally, portions of the text may be enhanced by additional visual images, music, or other sounds.

Owner:MIND FUSION LLC

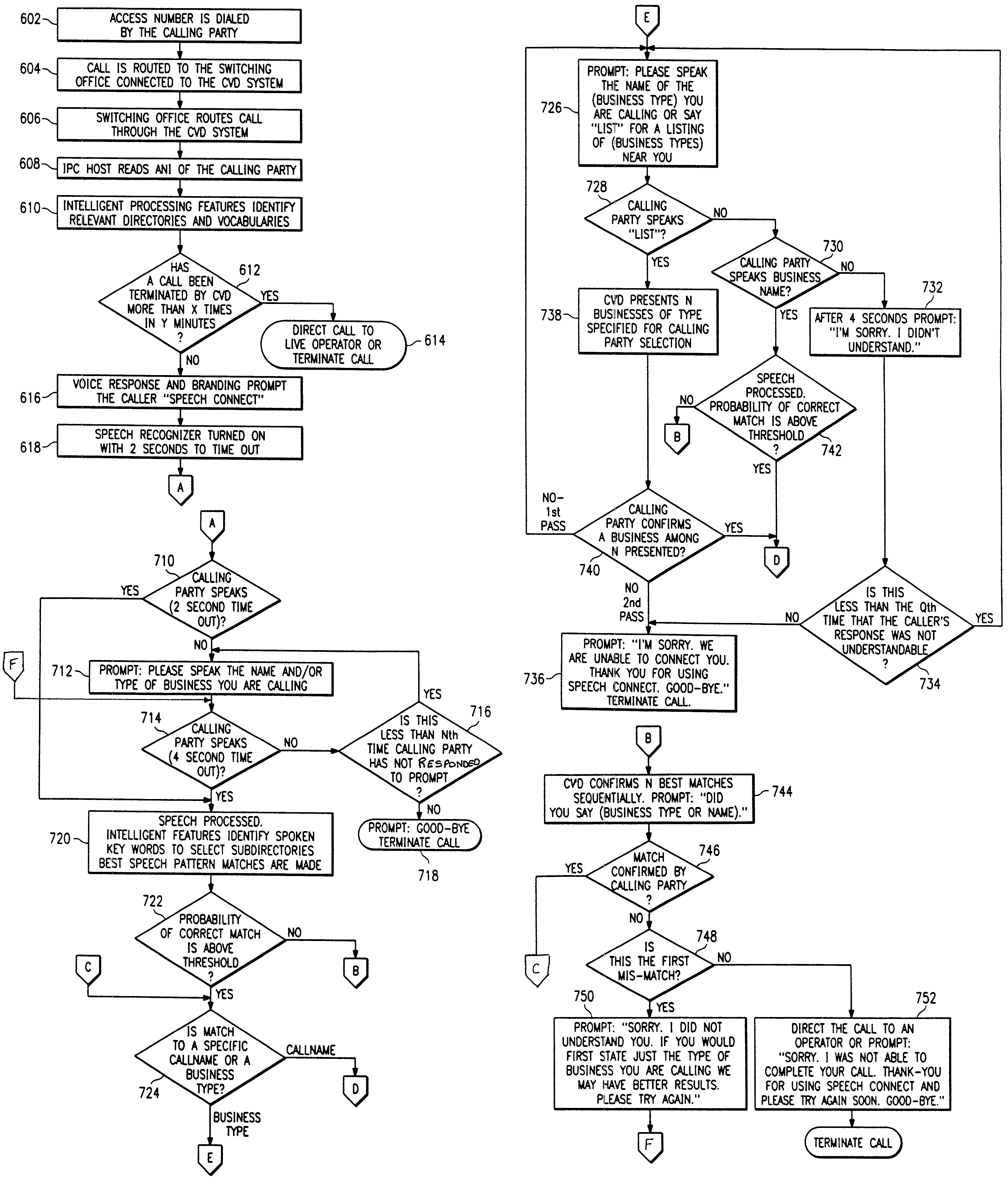

Voice-activated call placement systems and methods

Inactive · US7127046B1 · Easily reachable, Easy to adapt, Intelligent networks, Automatic call-answering/message-recording/conversation-recording, Spoken language, Service control

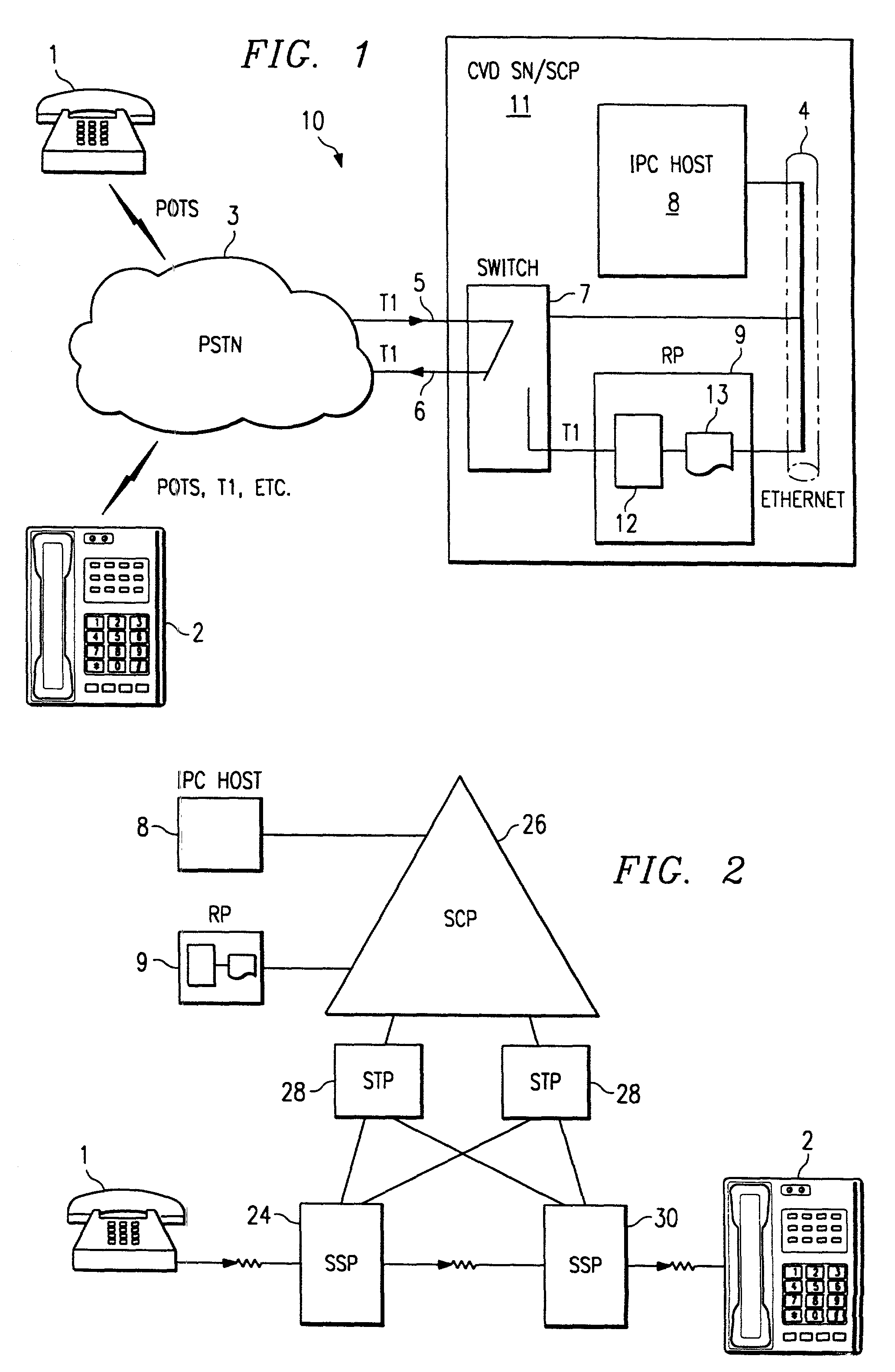

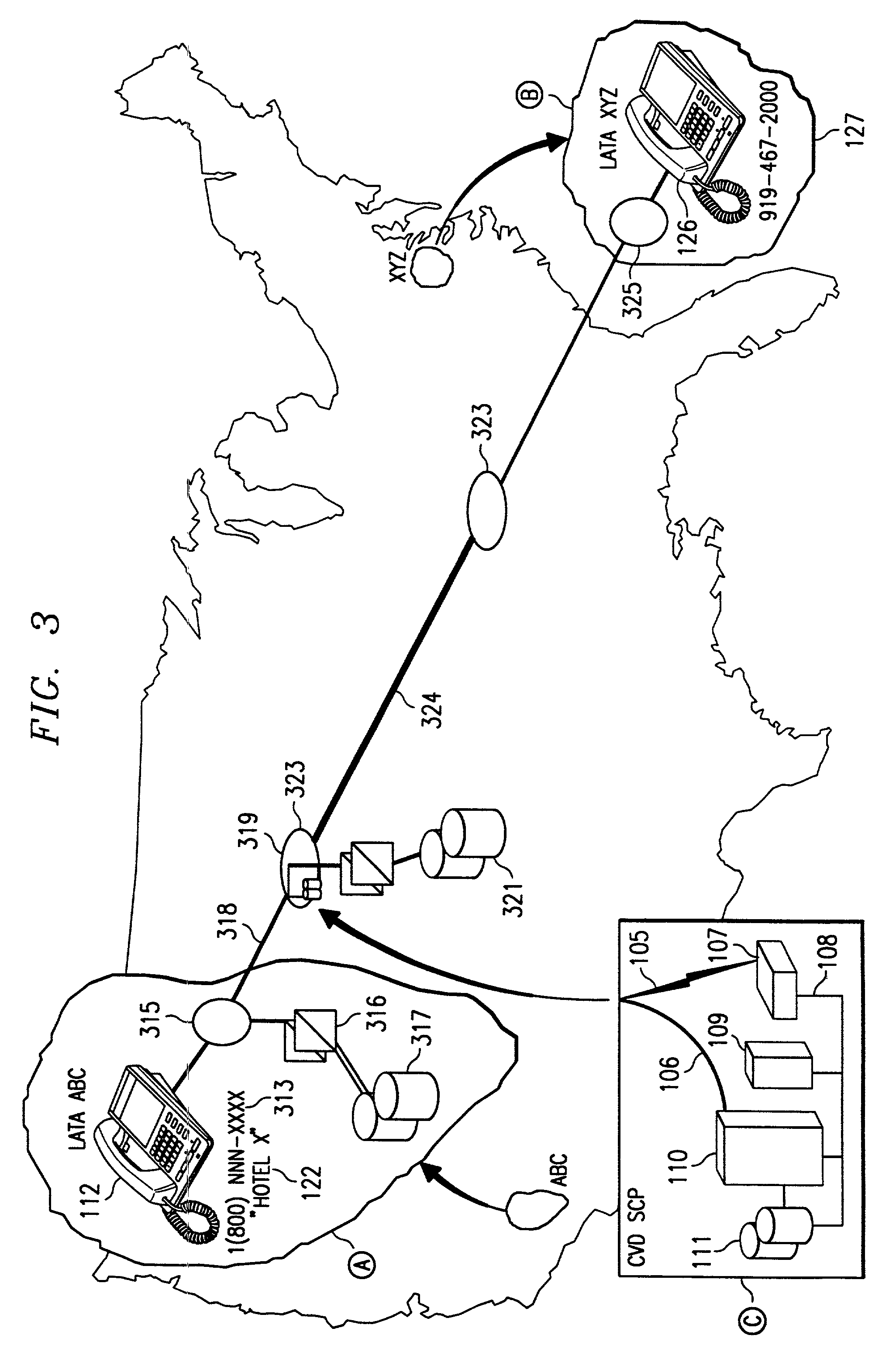

System and method for deriving call routing information utilizing a network control data base system and voice recognition for matching spoken word sound patterns to routing numbers needed to set up calls. Public access is provided to a common database via a common access number or code. Terminating parties sponsor the call and originating parties need not be pre-subscribed to use the service. The common access number is used to initiate or trigger the service. The system advantageously operates under the direction of a service control point, which combines technologies for switching, interactive voice response, and voice recognition with the data base to automate the processes of assisting callers in making calls for which they do not know the phone number. Usage information is gathered on completed calls to each terminating party for billing. Three alternative deployments in the U.S. telephone network are described, and vary based on the location of the service control points or intelligent processors and the degree of intelligence within the network.

Owner:GOOGLE LLC

System and method for transacting an automated patient communications session

Inactive · US20050171411A1 · Facilitates gathering and storage and analysis, Burden to of minimized, Medical communication, Data processing applications, Spoken language, Patient characteristics

A system and method for transacting an automated patient communications session is described. A patient's health condition is monitored by regularly collecting physiological measures through an implantable medical device. A patient communications session is activated through a patient communications interface, which includes an implantable microphone and an implantable speaker, in response to a patient-provided activation code. The patient's identity is authenticated based on pre-defined, uniquely identifying patient characteristics. Spoken patient information is received through the implantable microphone, and verbal system information is played through the implantable speaker. The patient communications session is terminated by closing the patient communications interface. The physiological measures and the spoken patient information are then sent.

Owner:CARDIAC INTELLIGENCE

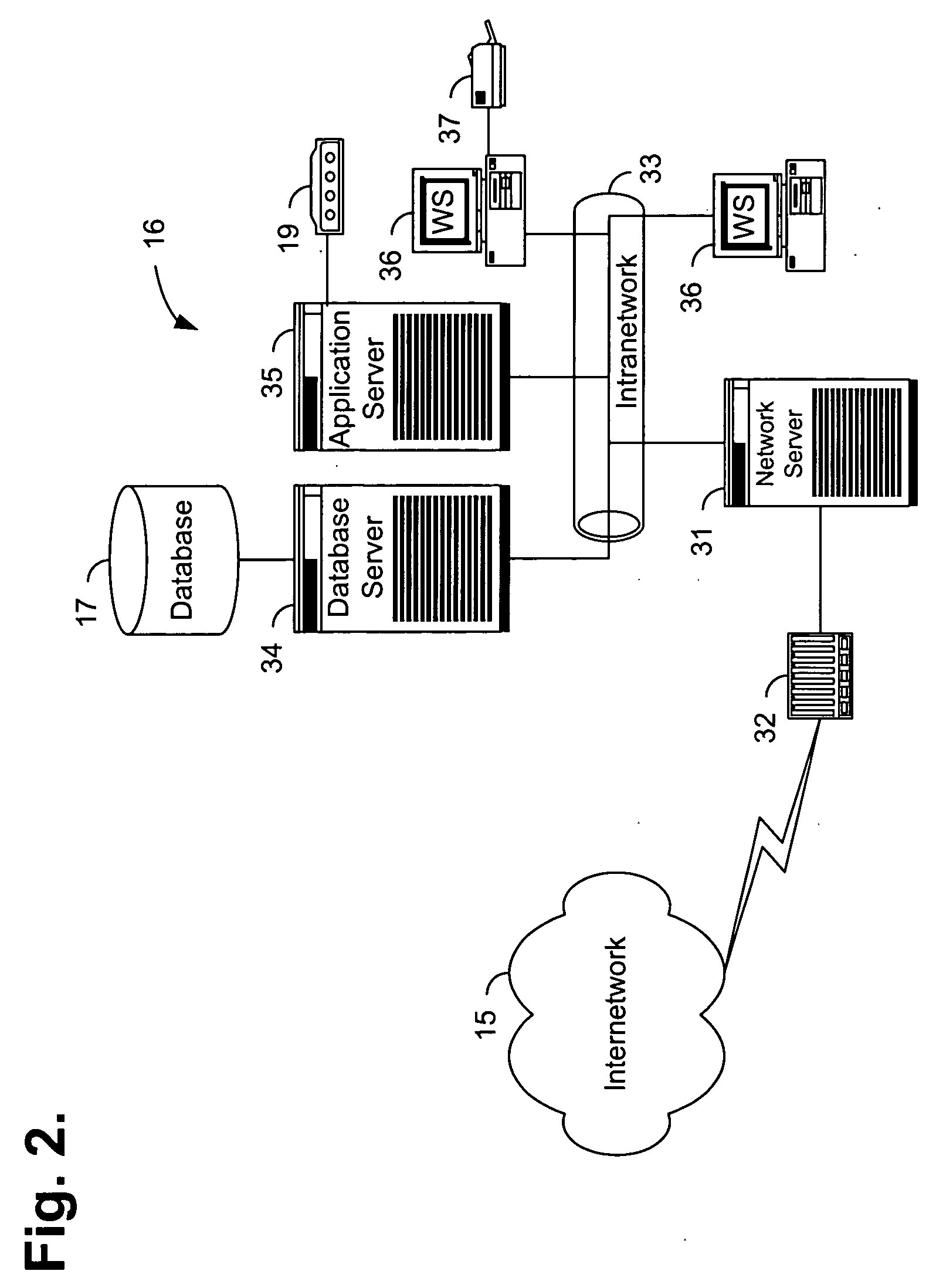

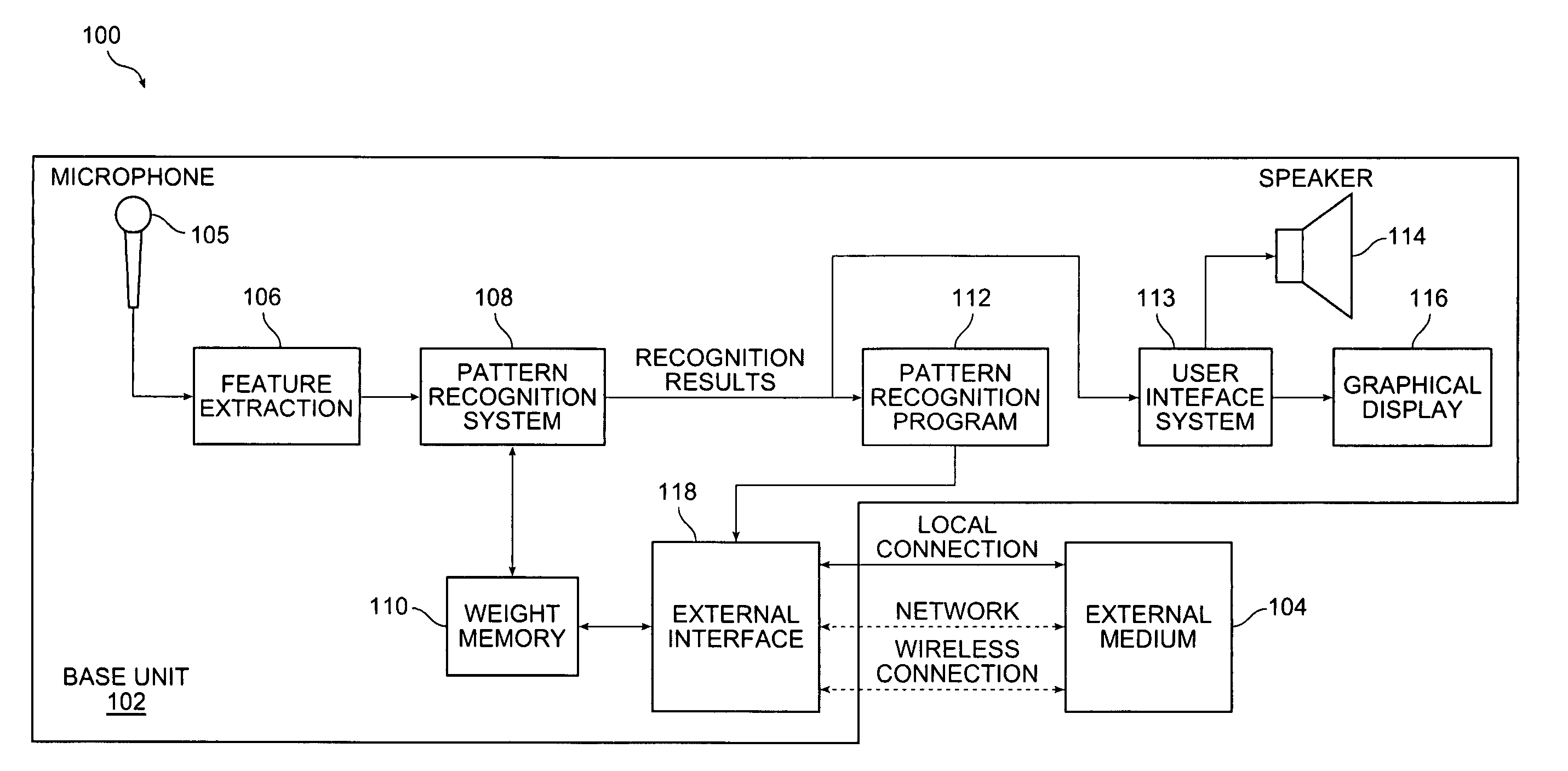

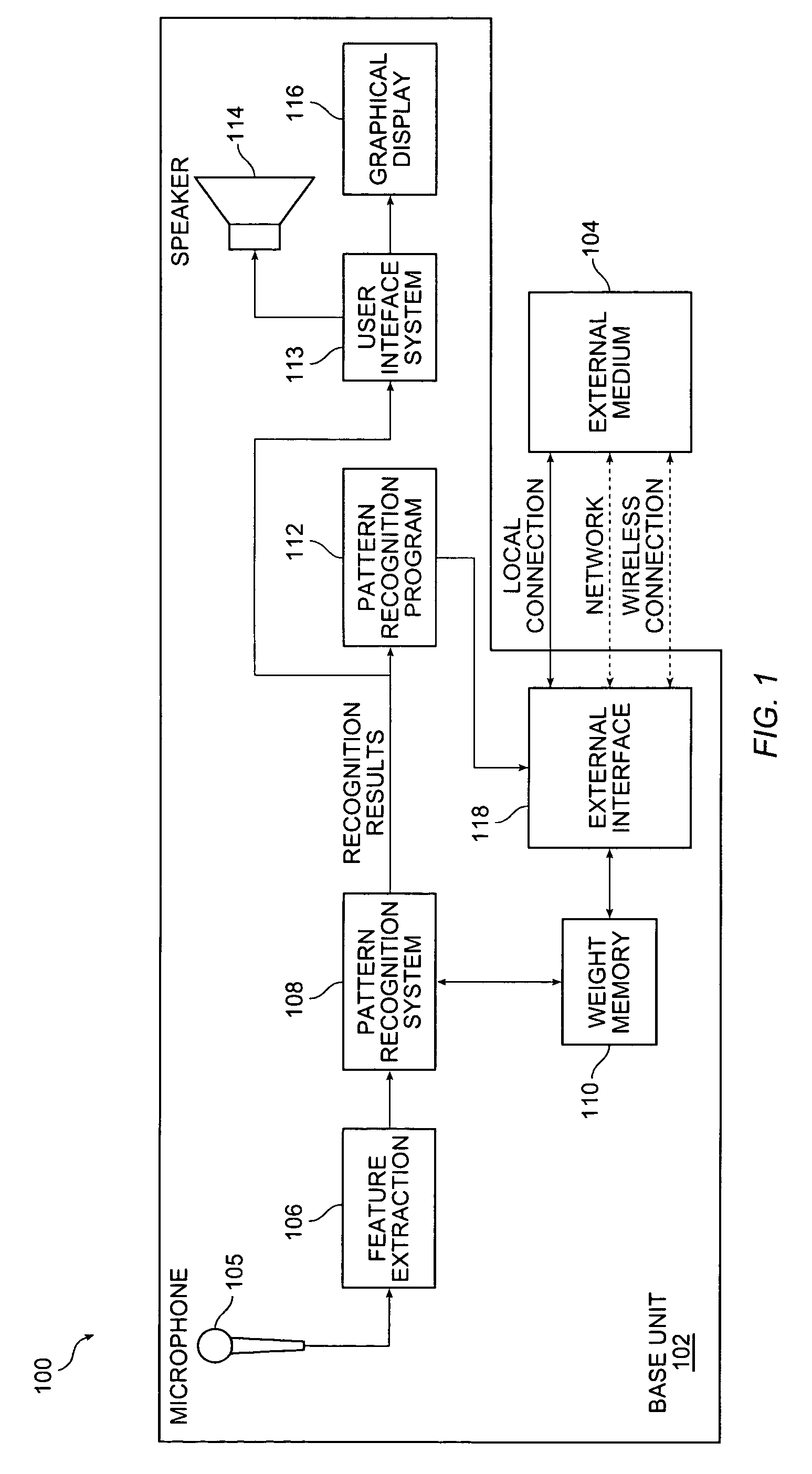

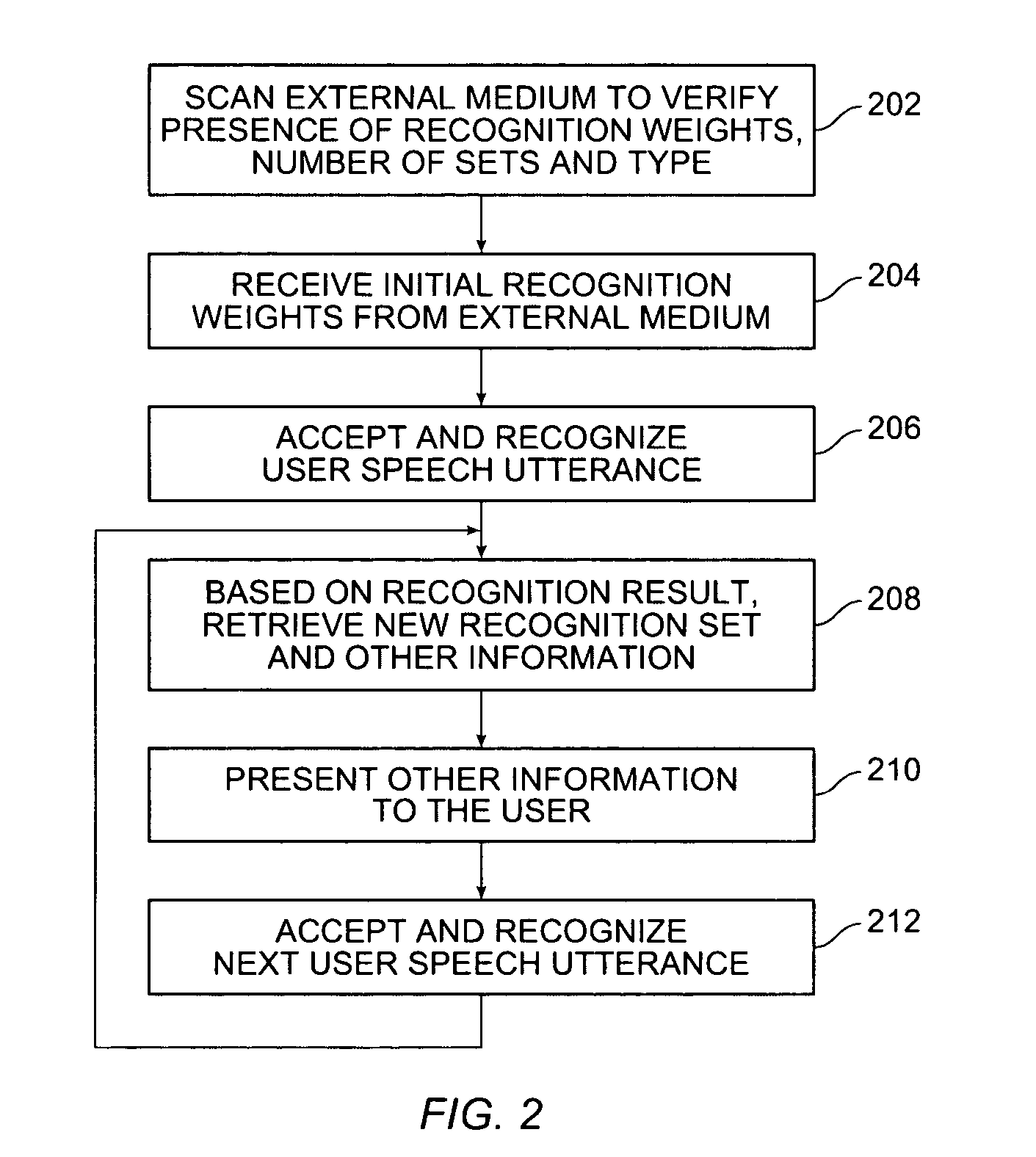

Method of performing speech recognition across a network

Embodiments of the present invention include a method of performing speech recognition across a network. In one embodiment, the method includes providing, from a server to a first computer, sets of data to recognize spoken utterances from corresponding limited sets of candidate utterances, and supplying different sets of said data from the server to the first computer to recognize different spoken utterances from corresponding limited sets of candidate utterances at different times in response to different user interactions.

Owner:SENSORY

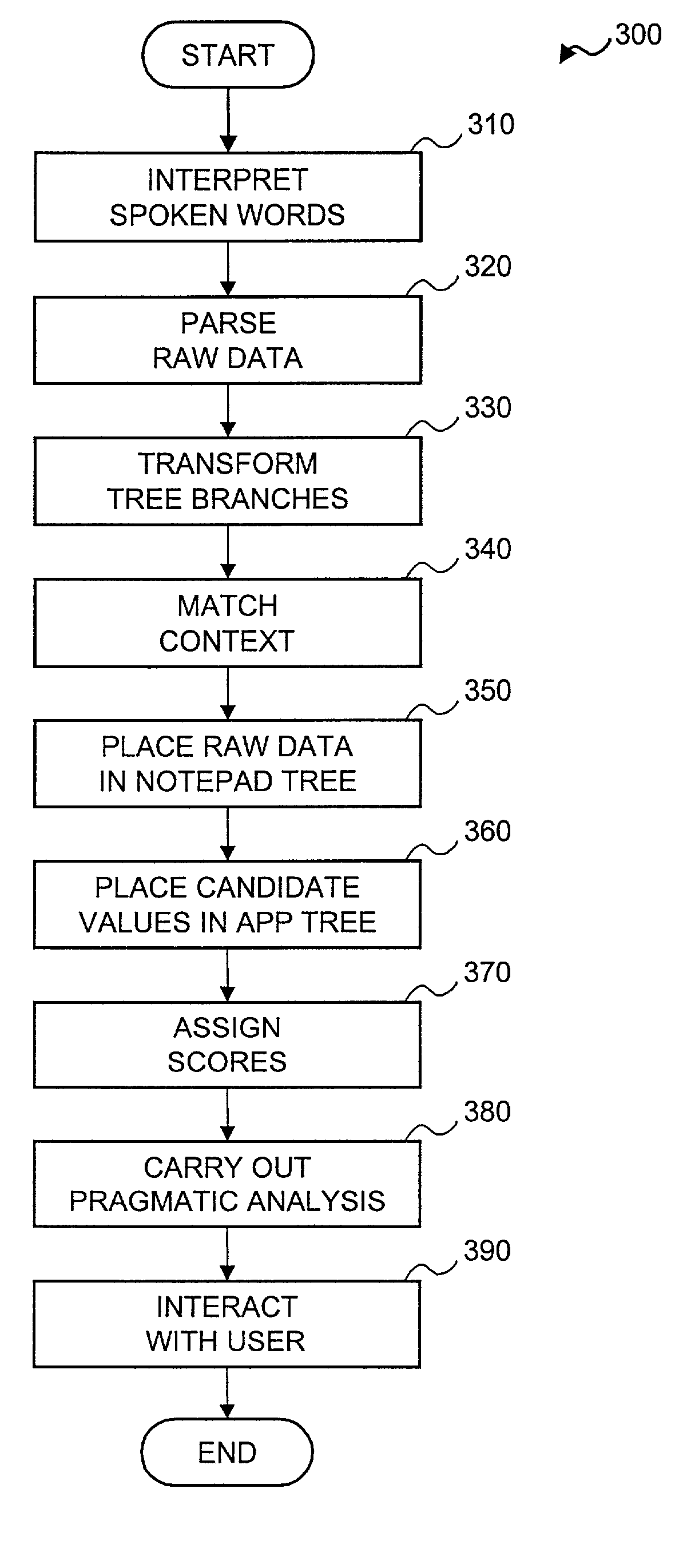

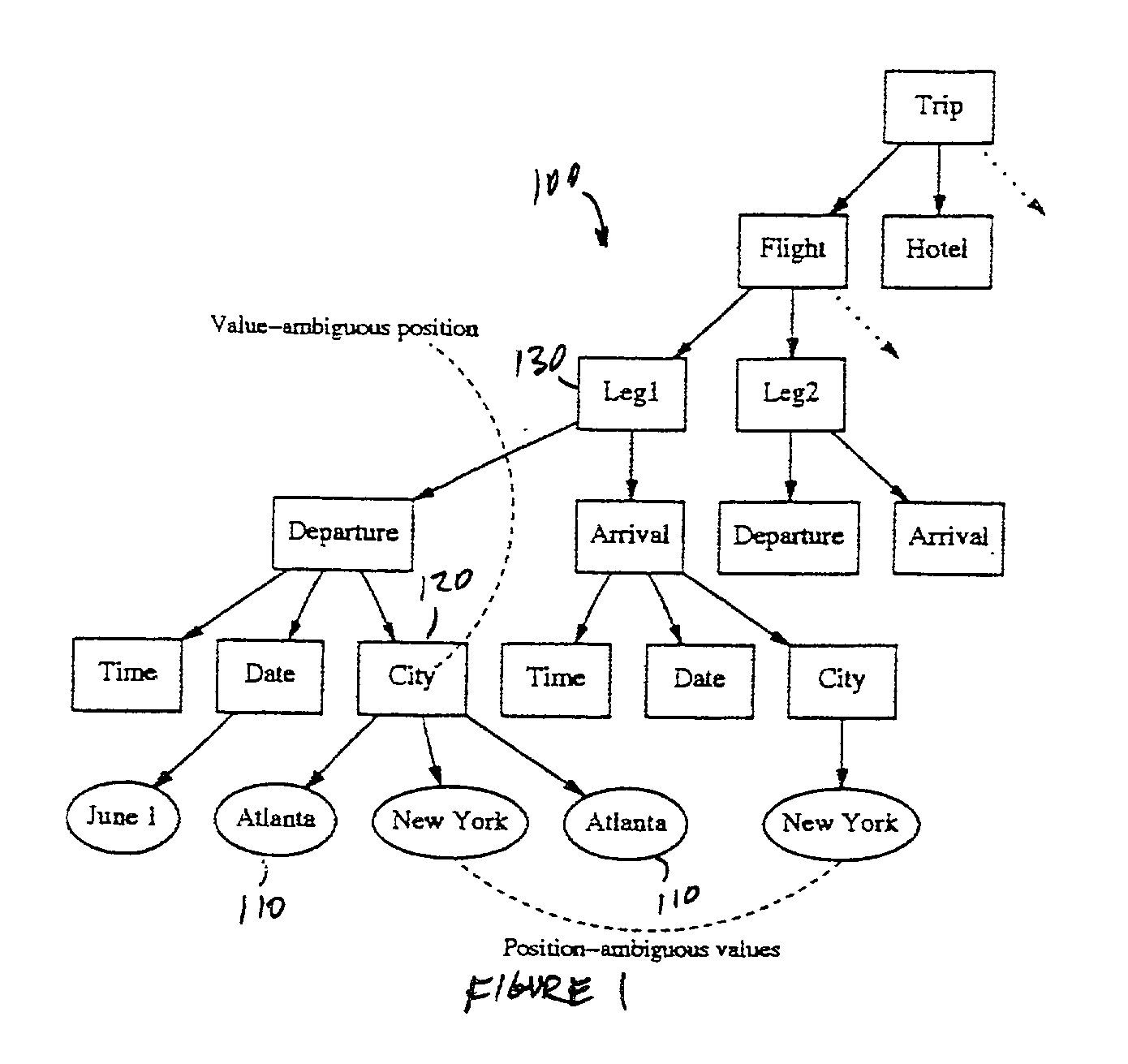

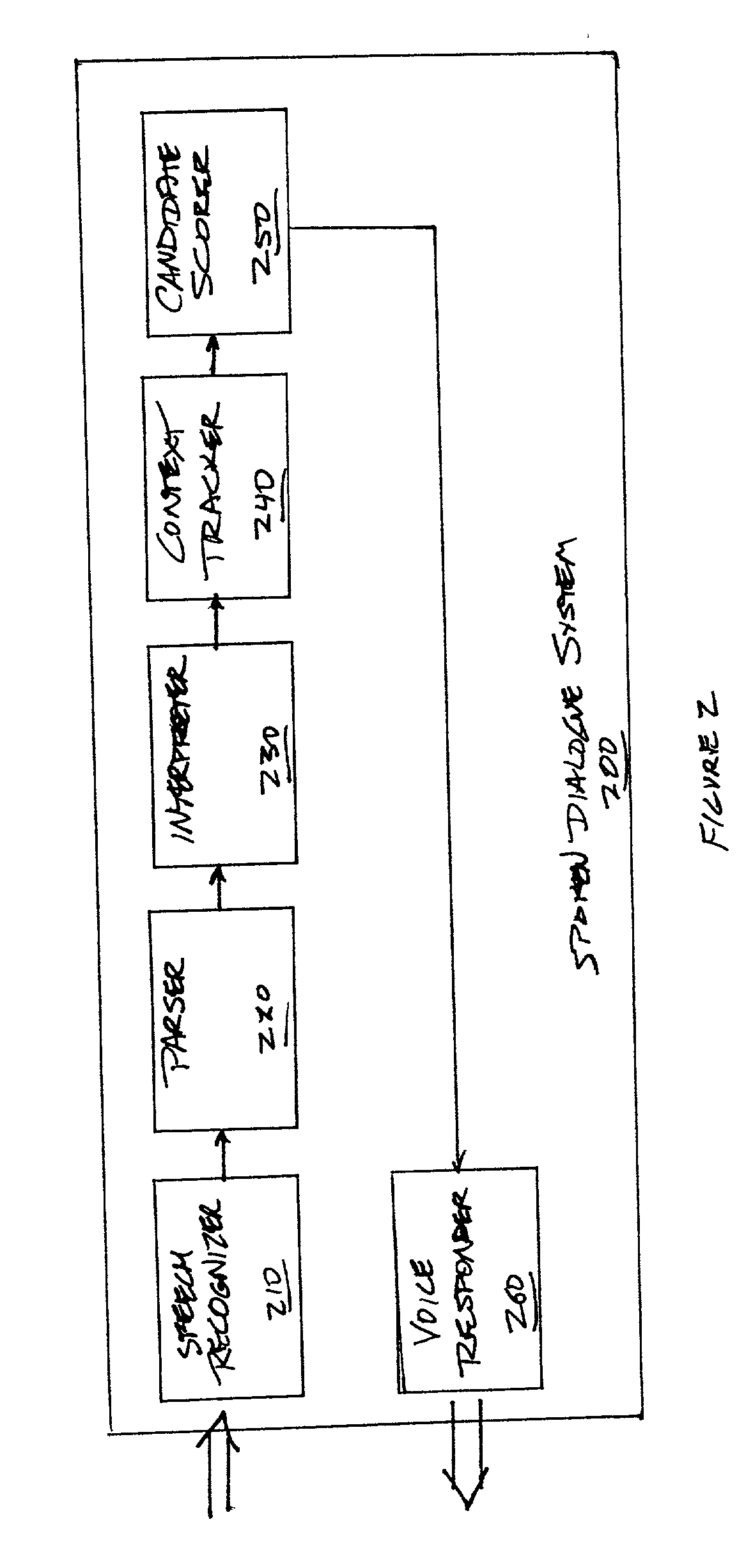

System and method for representing and resolving ambiguity in spoken dialogue systems

Inactive · US20030233230A1 · Efficient ambiguity, Enhanced interaction, Speech recognition, Spoken language, Ambiguity

A system for, and method of, representing and resolving ambiguity in natural language text and a spoken dialogue system incorporating the system for representing and resolving ambiguity or the method. In one embodiment, the system for representing and resolving ambiguity includes: (1) a context tracker that places the natural language text in context to yield candidate attribute-value (AV) pairs and (2) a candidate scorer, associated with the context tracker, that adjusts a confidence associated with each candidate AV pair based on system intent.

Owner:LUCENT TECH INC
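
The context tracker and intent-based candidate scorer described above can be sketched for a toy flight domain. The slot names `depart_city`/`arrive_city`, the flat prior of 0.5, and the additive boost of 0.3 are hypothetical choices for illustration; the abstract does not specify how confidences are adjusted.

```python
from dataclasses import dataclass, replace
from typing import List, Set


@dataclass(frozen=True)
class Candidate:
    attribute: str
    value: str
    confidence: float


def track_context(text: str, cities: Set[str]) -> List[Candidate]:
    """Toy context tracker: each known city could fill either city slot."""
    tokens = [w.strip(".,?!") for w in text.lower().split()]
    return [
        Candidate(attr, city, 0.5)
        for city in tokens if city in cities
        for attr in ("depart_city", "arrive_city")
    ]


def score(candidates: List[Candidate], system_intent: str, boost: float = 0.3) -> List[Candidate]:
    """Raise confidence of pairs for the slot the system just asked about."""
    rescored = [
        replace(c, confidence=min(1.0, c.confidence + boost)) if c.attribute == system_intent else c
        for c in candidates
    ]
    return sorted(rescored, key=lambda c: c.confidence, reverse=True)
```

If the system has just asked "Where are you flying to?", scoring with `system_intent="arrive_city"` ranks `arrive_city=boston` above `depart_city=boston` for the reply "to Boston", which resolves the ambiguity without another prompt.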

Voice-enabled dialog system

Active · US7869998B1 · Improve customer relationship, Efficient manner, Speech recognition, Special data processing applications, Spoken language, Dialog management

A voice-enabled help desk service is disclosed. The service comprises an automatic speech recognition module for recognizing speech from a user, a spoken language understanding module for understanding the output from the automatic speech recognition module, a dialog management module for generating a response to speech from the user, a natural voices text-to-speech synthesis module for synthesizing speech to generate the response to the user, and a frequently asked questions module. The frequently asked questions module handles frequently asked questions from the user by changing voices and providing predetermined prompts to answer the frequently asked question.

Owner:NUANCE COMM INC

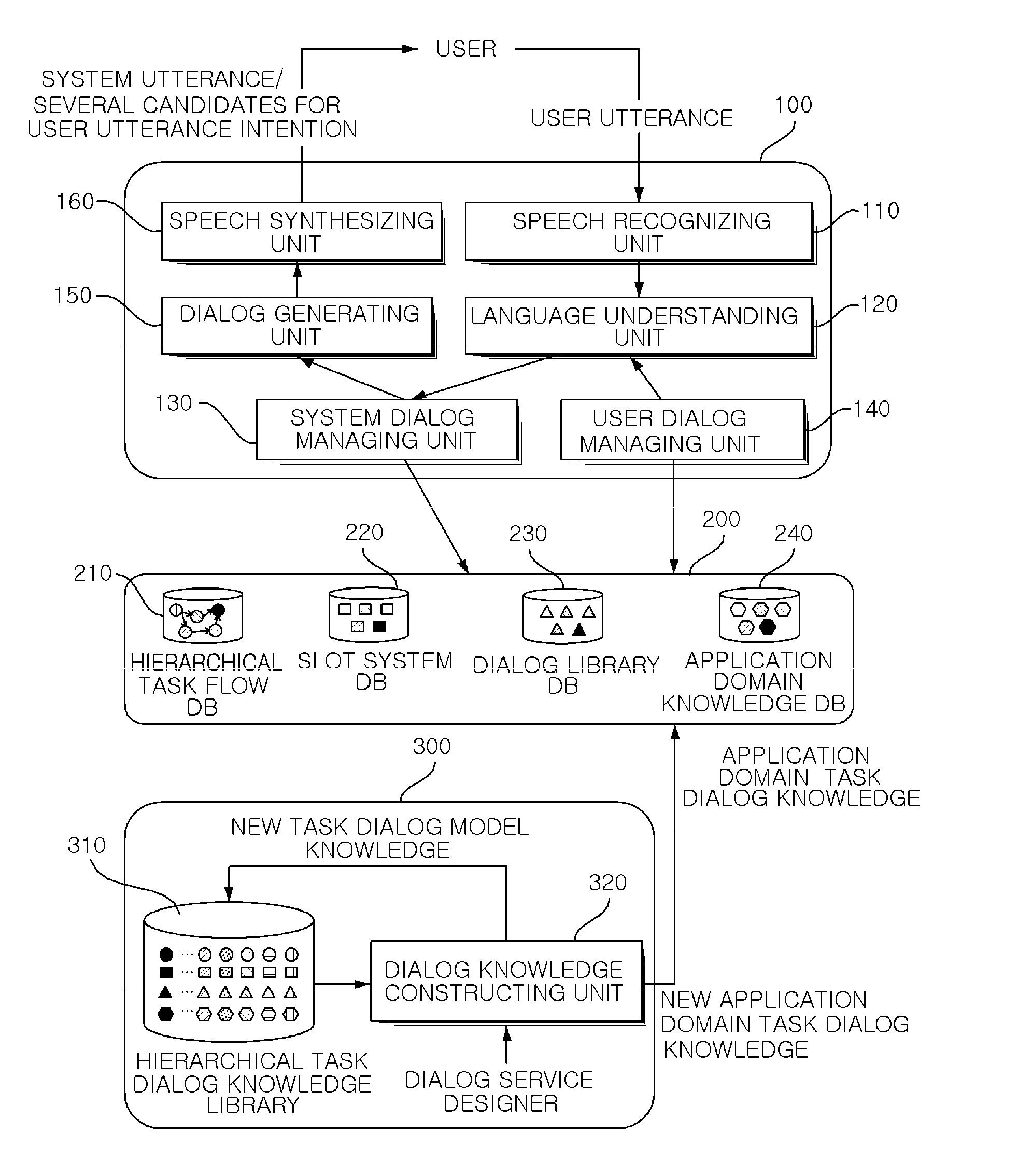

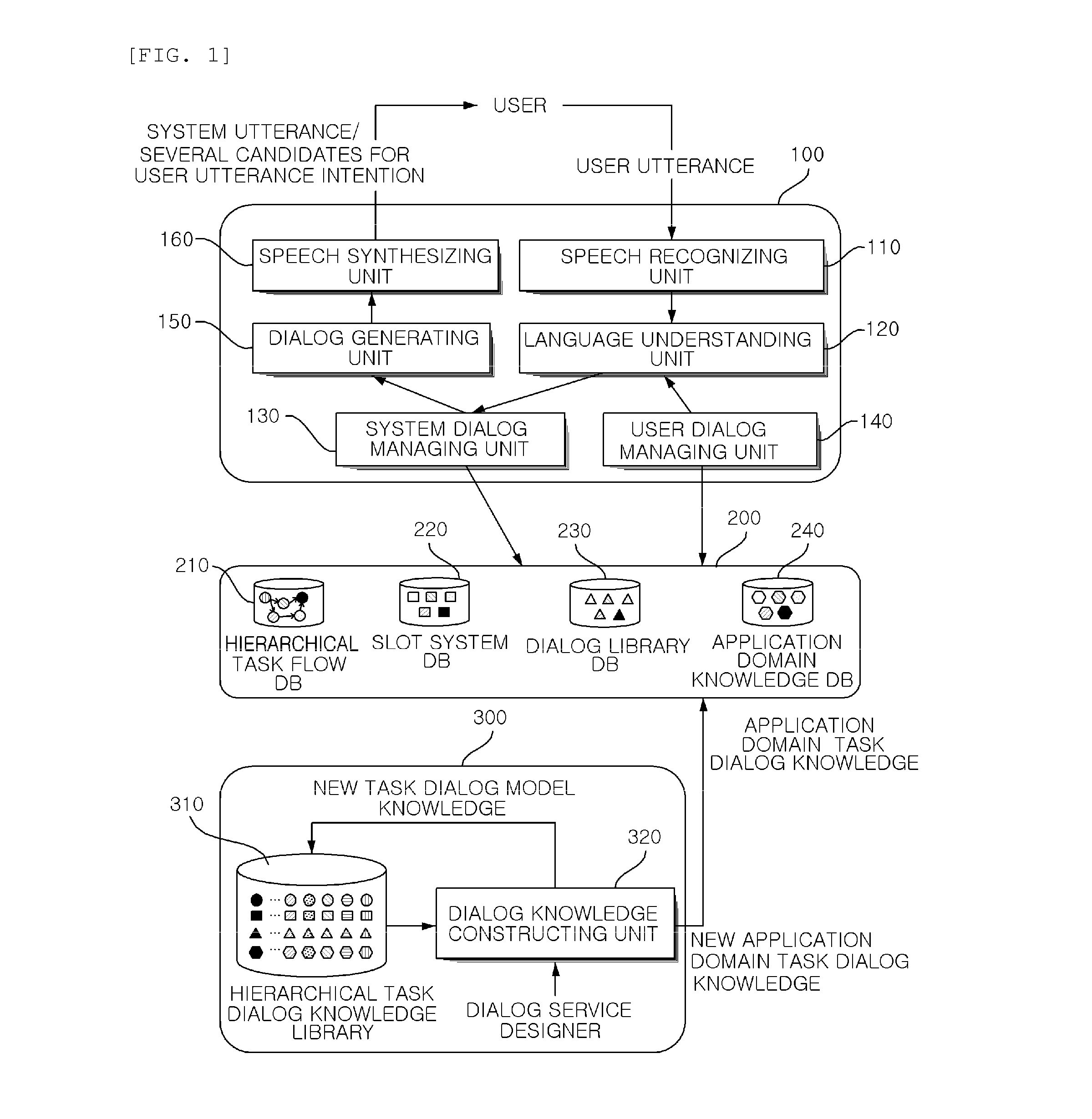

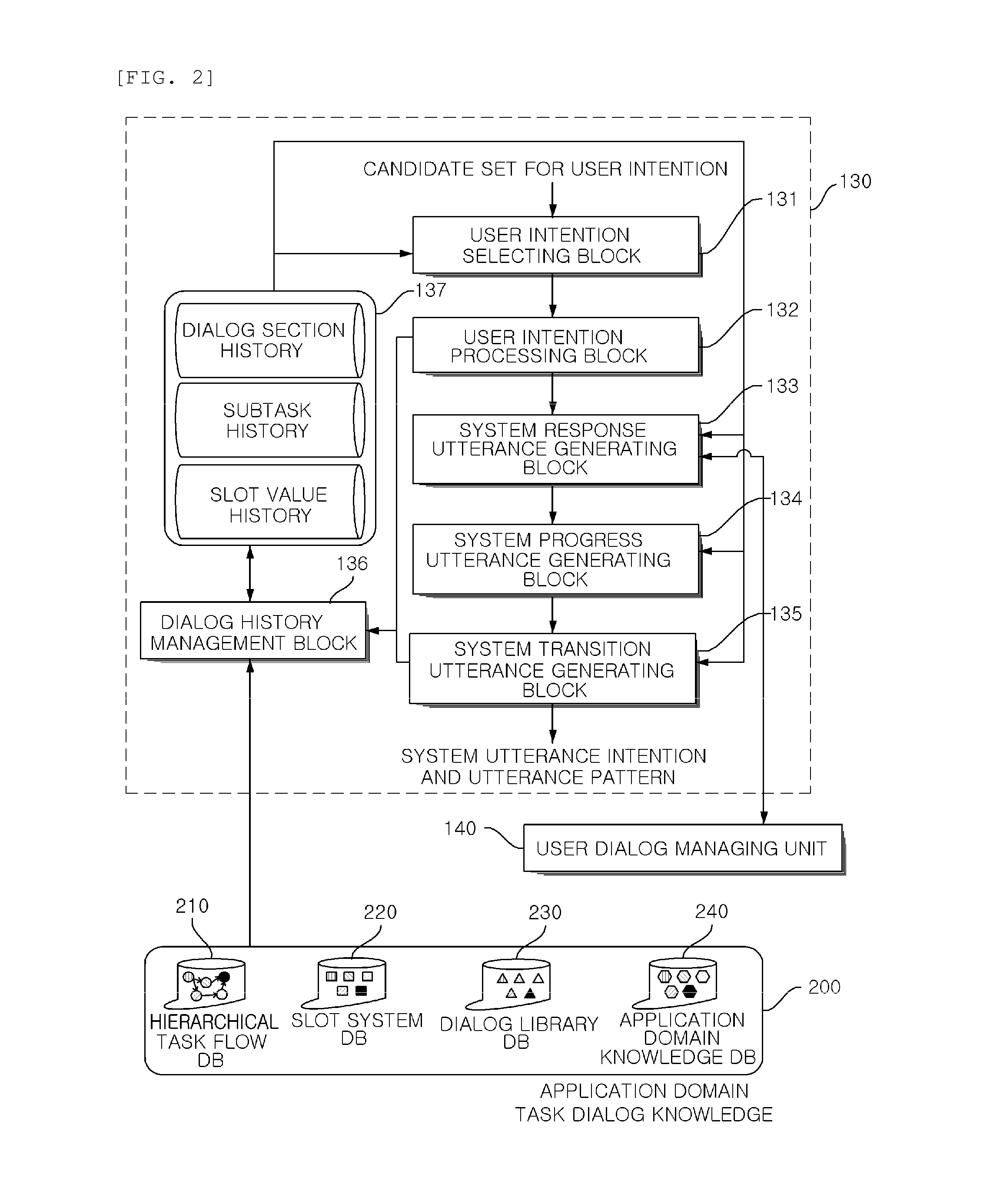

Spoken dialog system based on dual dialog management using hierarchical dialog task library

Active · US20140136212A1 · Reduce difficulty, Effective dialog flow, Automatic exchanges, Speech recognition, Spoken language, Dialog management

Owner:ELECTRONICS & TELECOMM RES INST

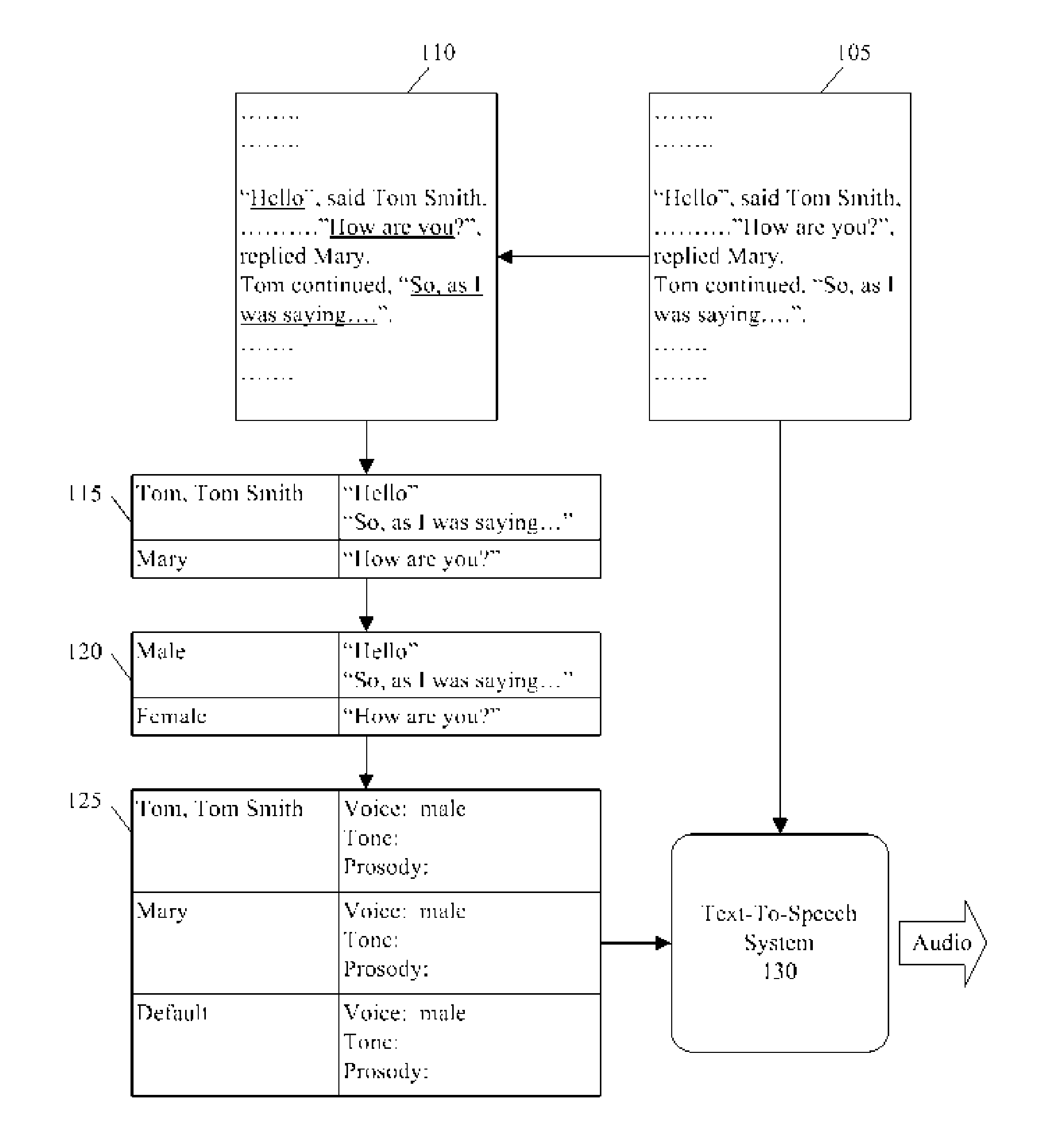

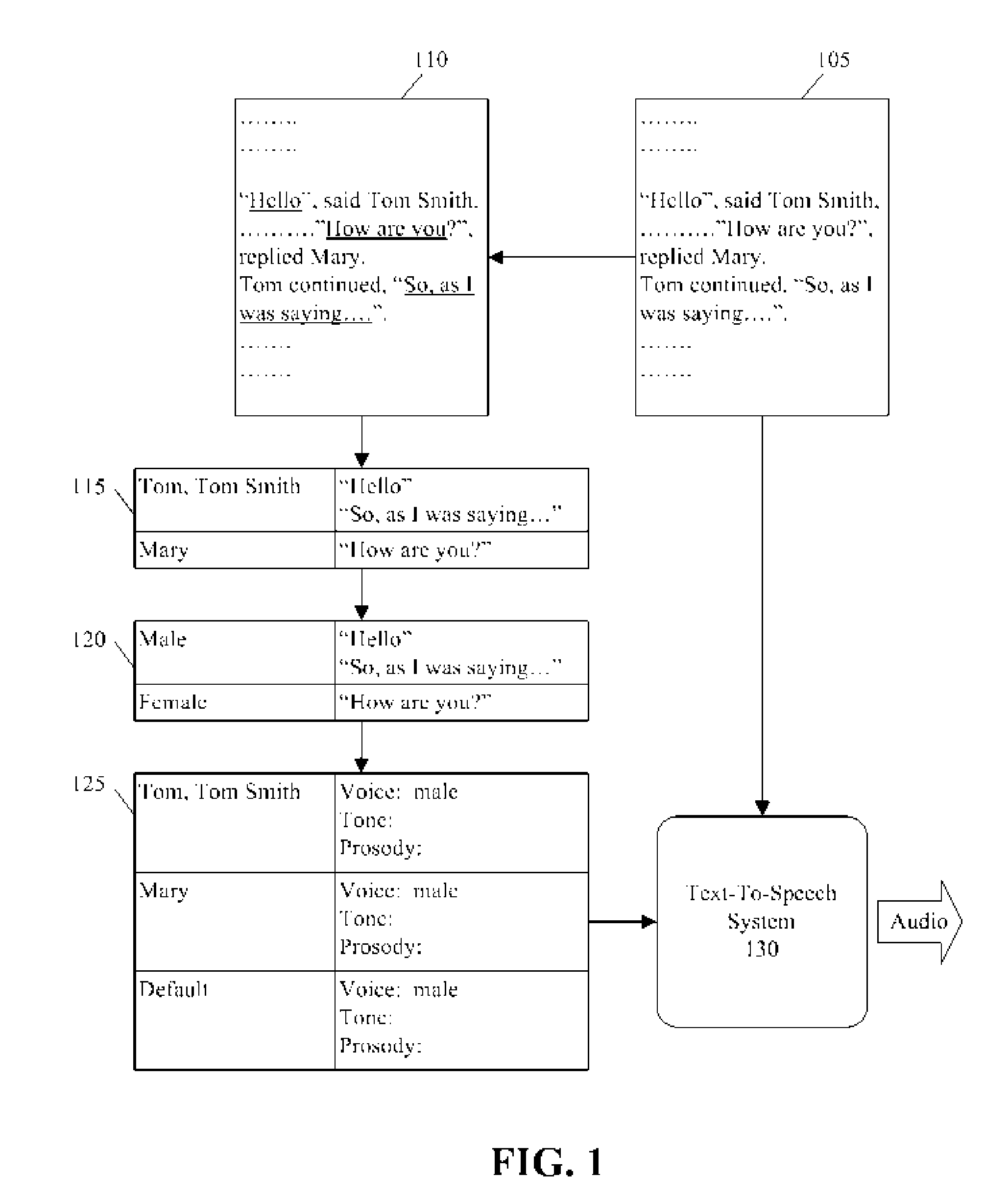

Dynamically Changing Voice Attributes During Speech Synthesis Based upon Parameter Differentiation for Dialog Contexts

Active · US20070118378A1 · Special data processing applications, Speech synthesis, Spoken language

A method of speech synthesis can include automatically identifying spoken passages within a text source and converting the text source to speech by applying different voice configurations to different portions of text within the text source according to whether each portion of text was identified as a spoken passage. The method further can include identifying the speaker and / or the gender of the speaker and applying different voice configurations according to the speaker identity and / or speaker gender.

Owner:CERENCE OPERATING CO
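
A minimal way to "identify spoken passages within a text source" is to treat double-quoted spans as dialog. The sketch below assumes that heuristic and uses hypothetical voice names (`narrator_voice`, `dialog_voice`); it does not attempt the speaker or gender identification mentioned above.

```python
import re
from typing import List, Tuple

# Assumed heuristic: a spoken passage is any text inside straight double quotes.
QUOTE = re.compile(r'"([^"]+)"')


def segment(text: str) -> List[Tuple[str, bool]]:
    """Split text into (portion, is_spoken_passage) pieces."""
    pieces: List[Tuple[str, bool]] = []
    pos = 0
    for m in QUOTE.finditer(text):
        if m.start() > pos:
            pieces.append((text[pos:m.start()], False))  # narration before the quote
        pieces.append((m.group(1), True))                # the quoted passage itself
        pos = m.end()
    if pos < len(text):
        pieces.append((text[pos:], False))               # trailing narration
    return pieces


def assign_voices(text: str, narrator: str = "narrator_voice",
                  dialog: str = "dialog_voice") -> List[Tuple[str, str]]:
    """Pair each portion with a (hypothetical) voice configuration name."""
    return [(portion, dialog if spoken else narrator) for portion, spoken in segment(text)]
```

Each `(portion, voice)` pair would then be sent to the synthesizer with the corresponding voice settings.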

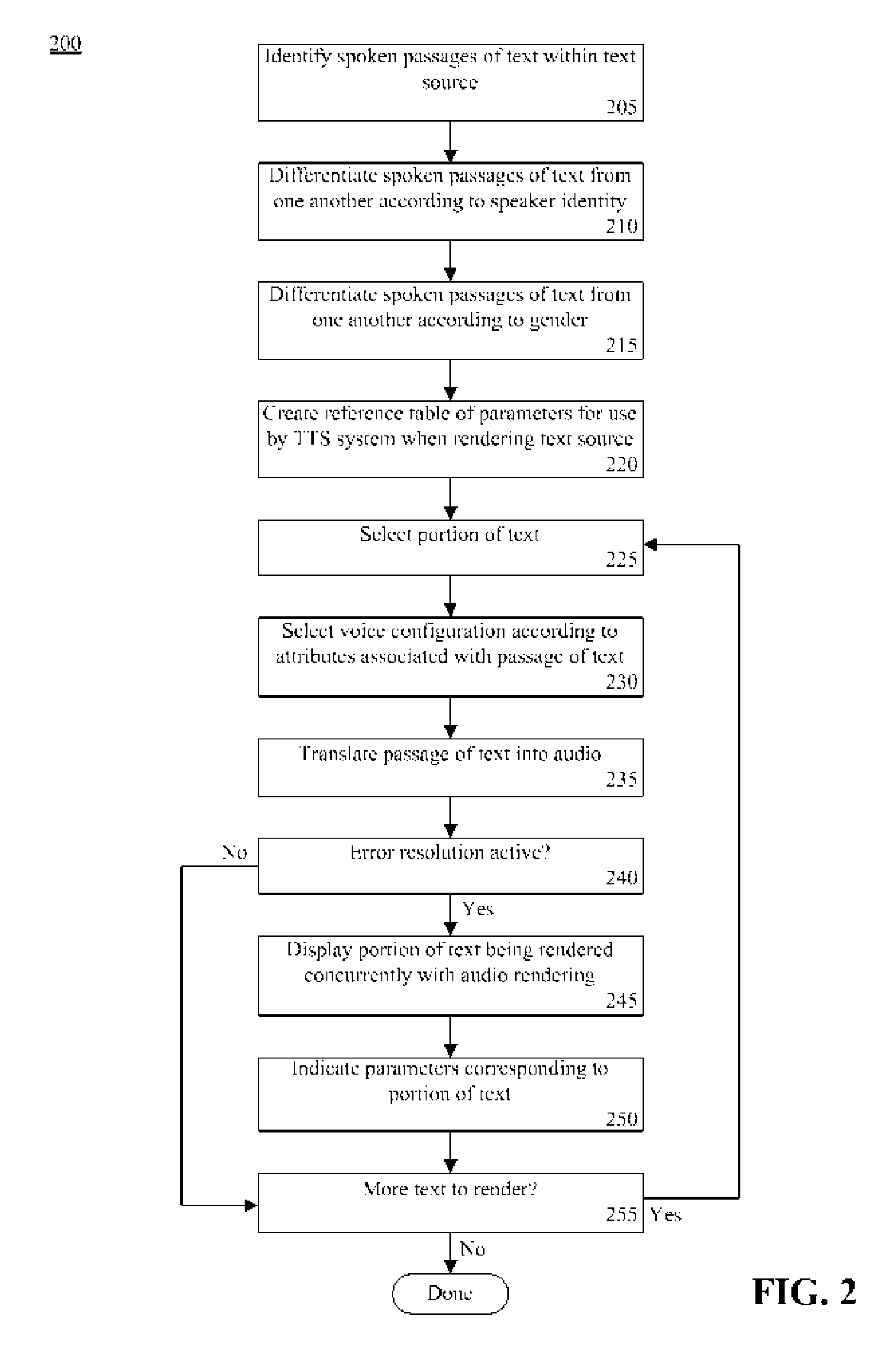

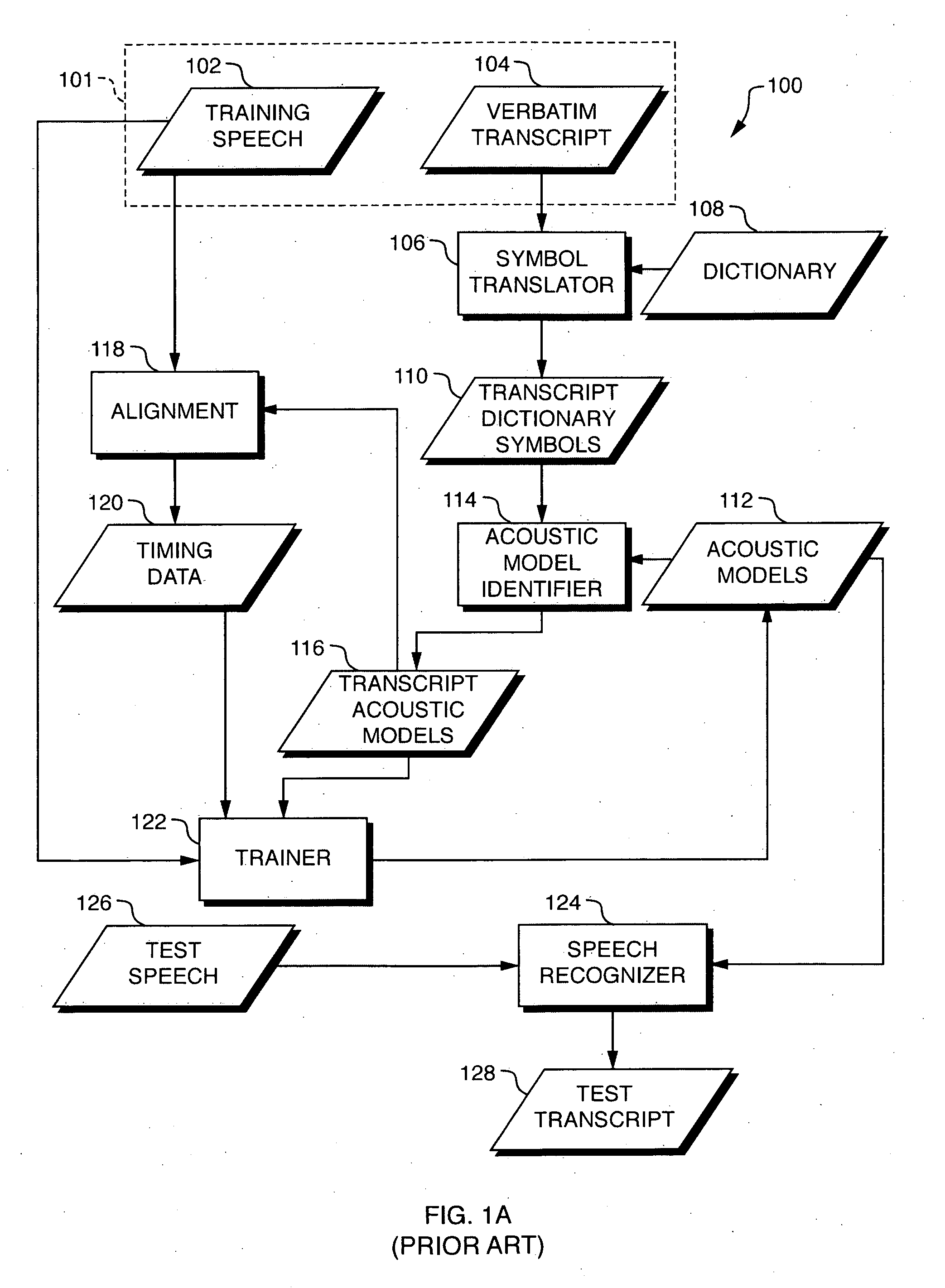

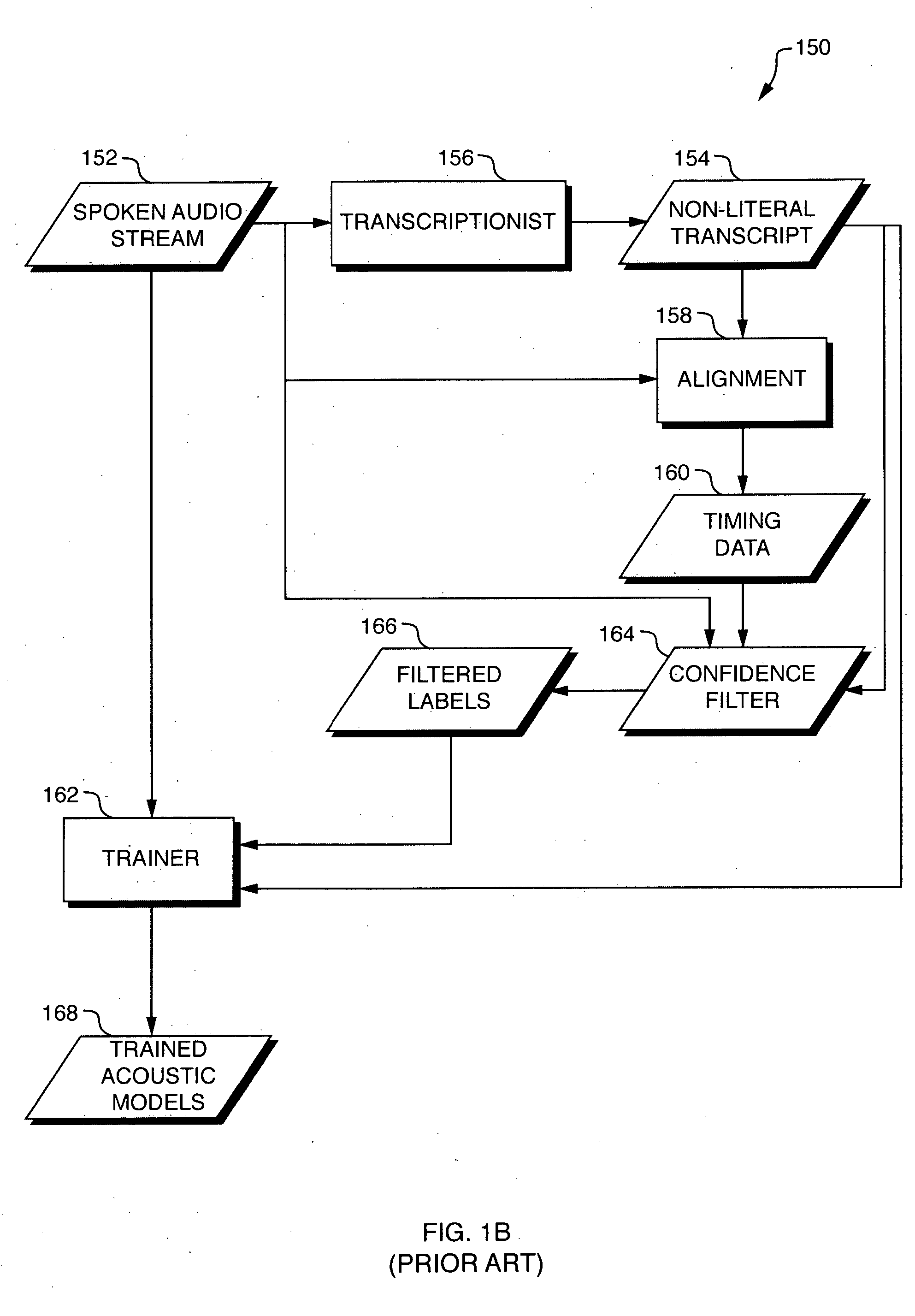

Discriminative training of document transcription system

Active · US20060074656A1 · Accurate representation, Acoustic model, Natural language translation, Speech recognition, Spoken language

A system is provided for training an acoustic model for use in speech recognition. In particular, such a system may be used to perform training based on a spoken audio stream and a non-literal transcript of the spoken audio stream. Such a system may identify text in the non-literal transcript which represents concepts having multiple spoken forms. The system may attempt to identify the actual spoken form in the audio stream which produced the corresponding text in the non-literal transcript, and thereby produce a revised transcript which more accurately represents the spoken audio stream. The revised, and more accurate, transcript may be used to train the acoustic model using discriminative training techniques, thereby producing a better acoustic model than that which would be produced using conventional techniques, which perform training based directly on the original non-literal transcript.

Owner:3M INNOVATIVE PROPERTIES CO

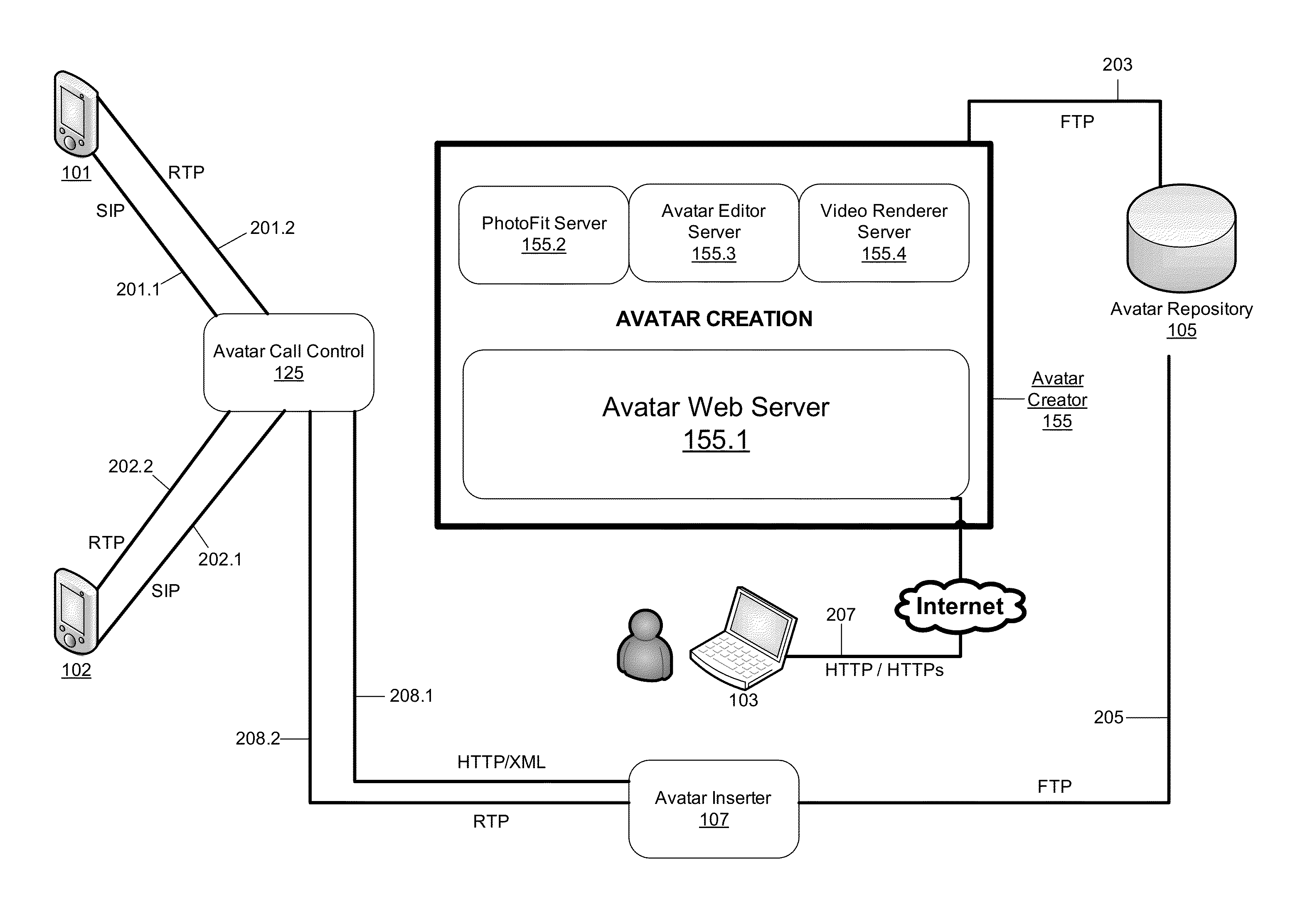

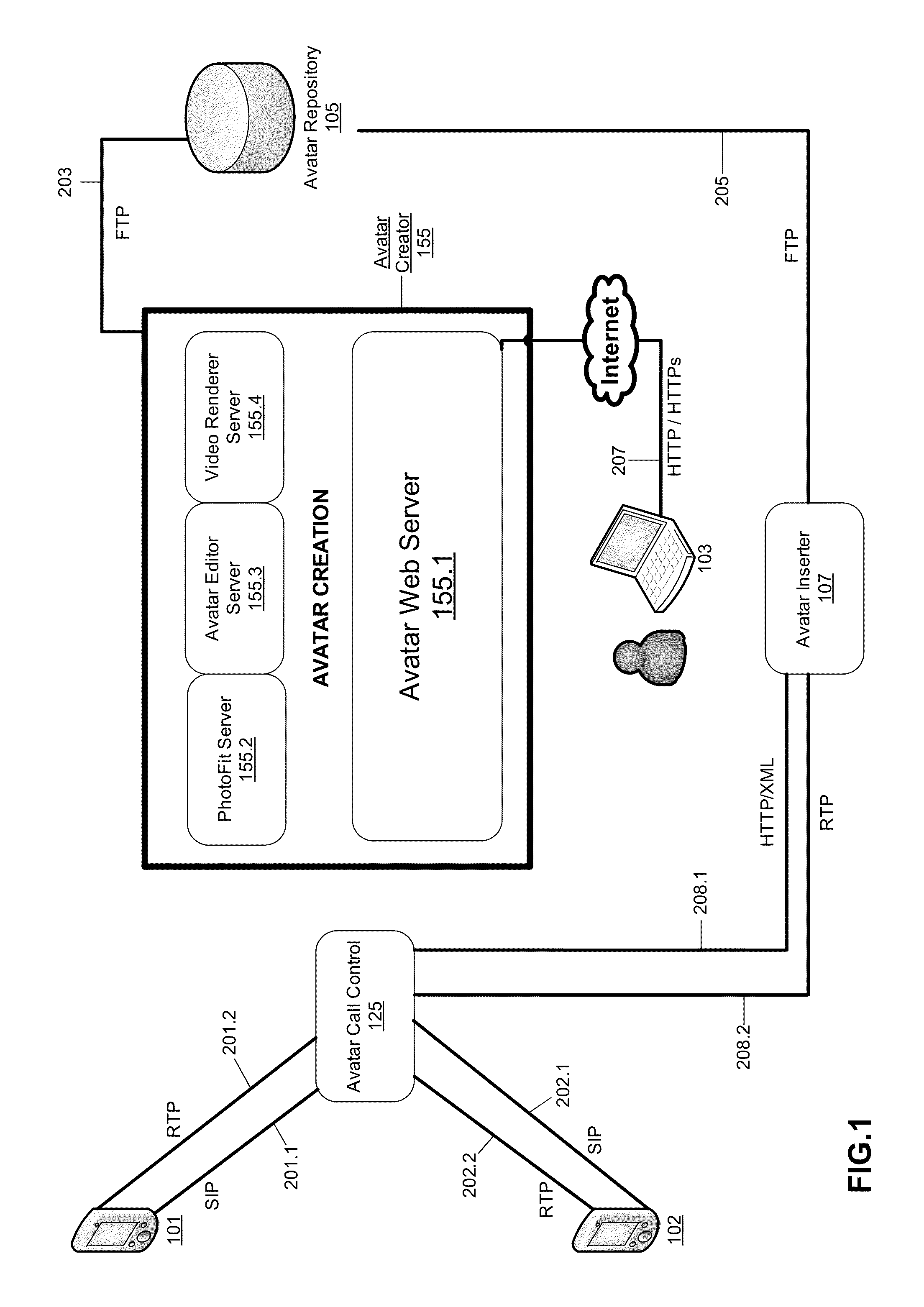

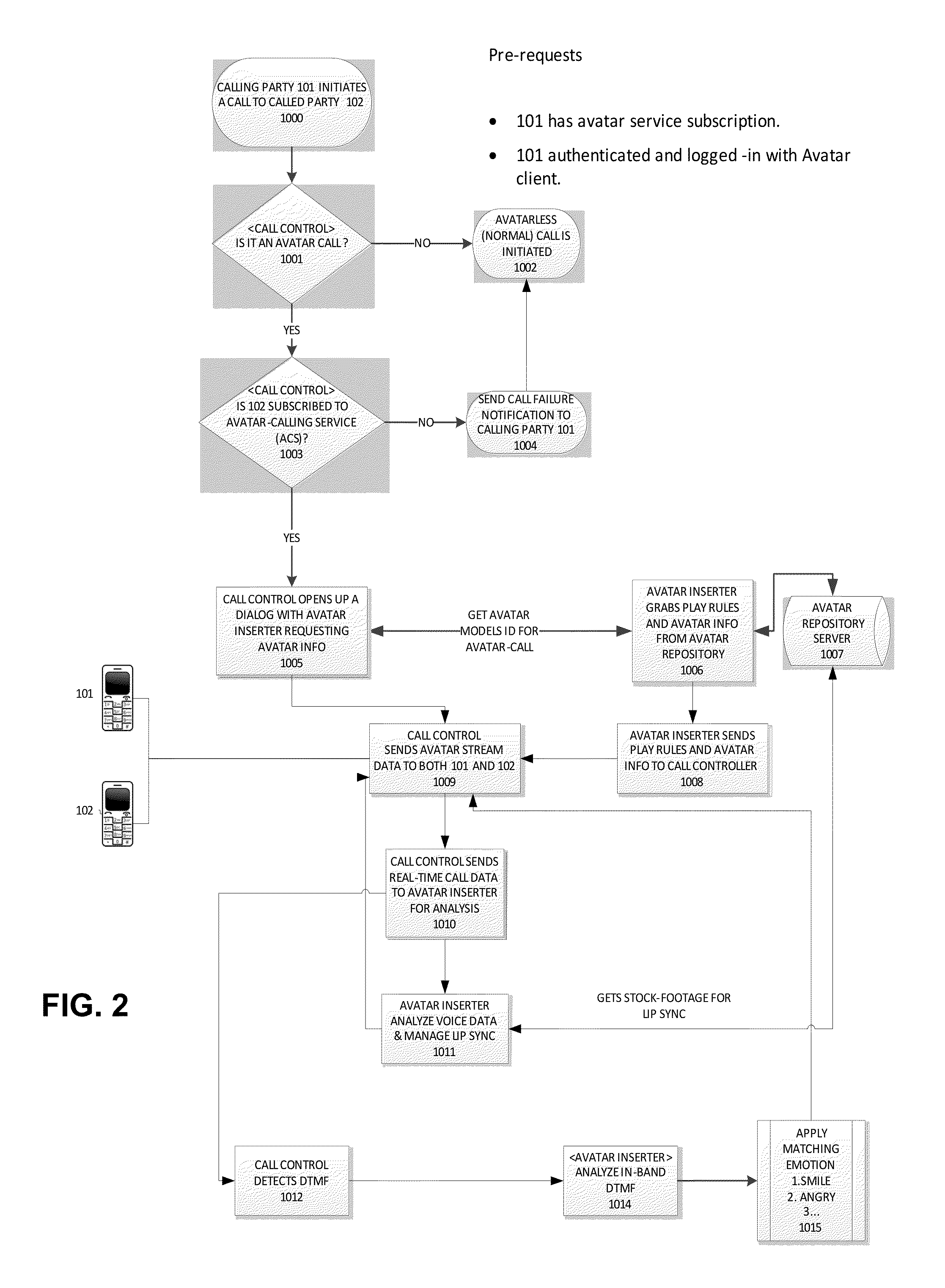

Interactive avatars for telecommunication systems

Active · US9402057B2 · Television conference systems, Special service for subscribers, Spoken language, Human–computer interaction

Owner:ARGELA YAZILIM & BILISIM TEKNOLOJILERI SAN & TIC A S

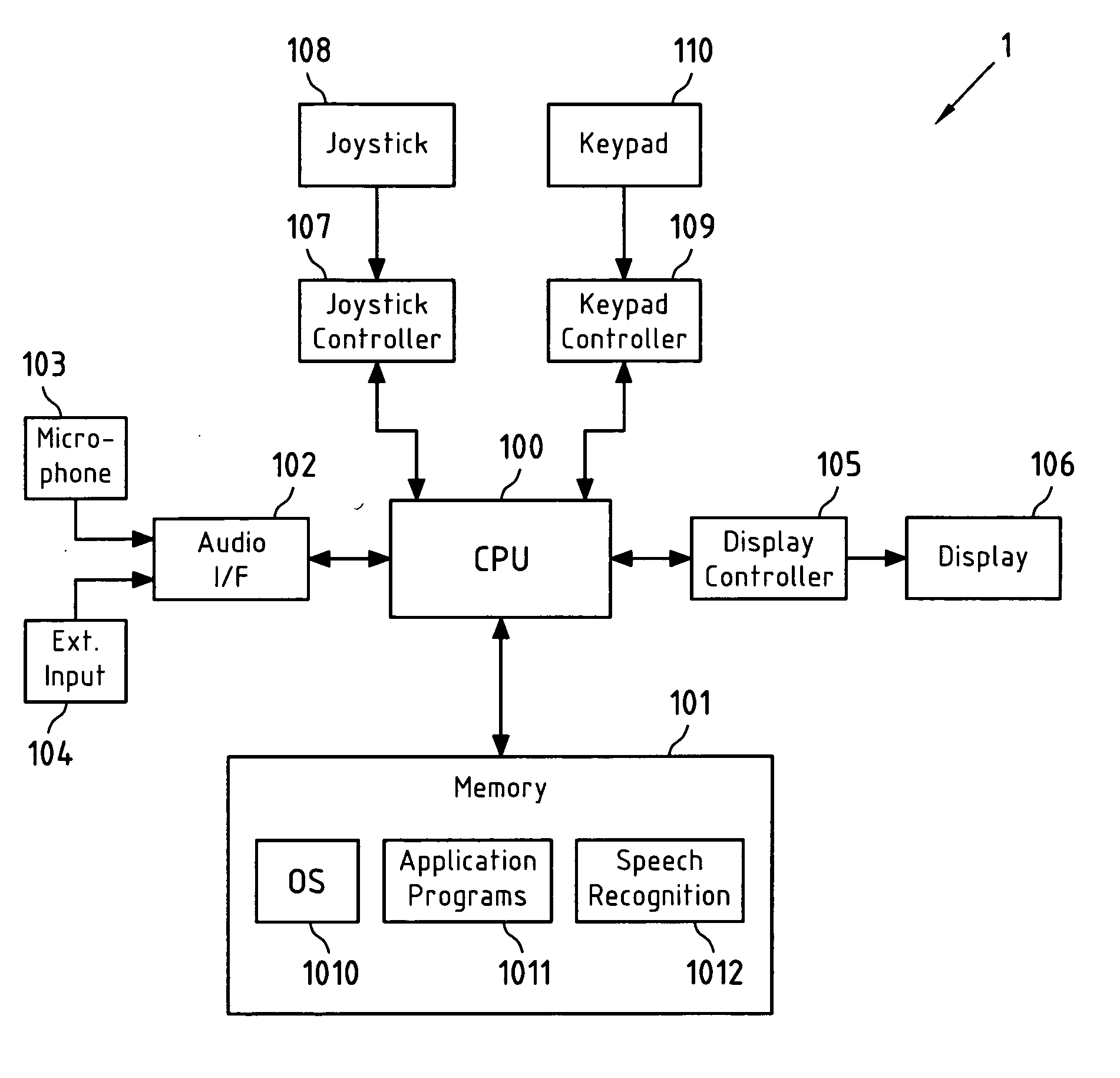

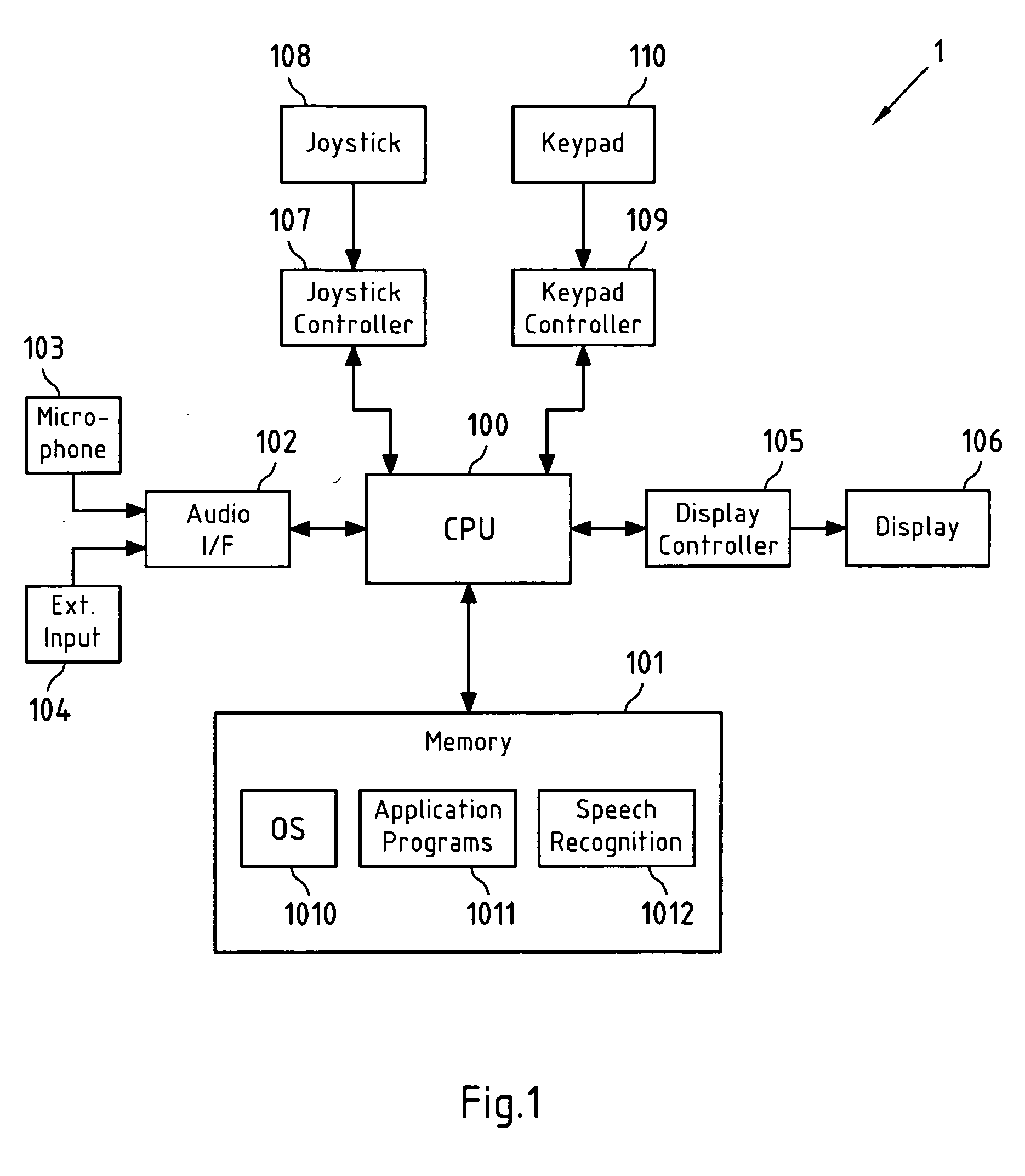

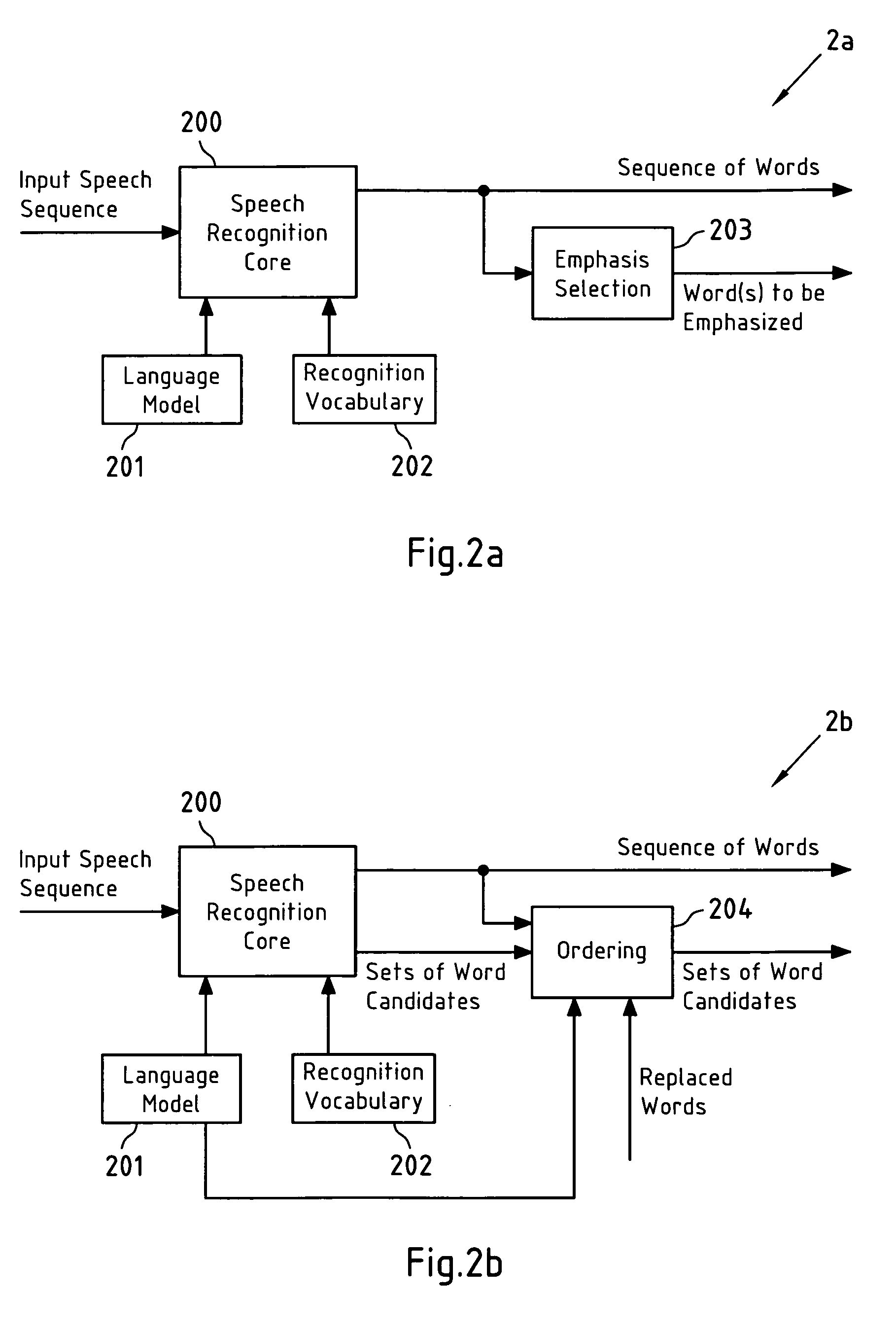

Error correction for speech recognition systems

Words in a sequence of words obtained from speech recognition of an input speech sequence are presented to a user, and at least one of the words in the sequence is replaced if the user has selected it for correction. Words with a low recognition confidence value are emphasized; alternative word candidates for the at least one selected word are ordered according to an ordering criterion; after a word is replaced, the order of alternative word candidates for neighboring words in the sequence is updated; the replacement word is derived from a spoken representation of the at least one selected word by speech recognition with a limited vocabulary; and the word that replaces the at least one selected word is derived from a spoken and spelled representation of that word.

Owner:NOKIA CORP
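
Two of the steps above, emphasizing low-confidence words and re-ordering a neighbor's alternatives after a replacement, can be sketched as follows. The 0.6 threshold, the asterisk emphasis, and the bigram table used as the ordering criterion are all assumptions; the abstract does not name a specific criterion.

```python
from typing import Dict, List, Tuple

Word = Tuple[str, float]  # (recognized word, recognition confidence)


def emphasize(words: List[Word], threshold: float = 0.6) -> str:
    """Render the sentence, wrapping low-confidence words in asterisks."""
    return " ".join(f"*{w}*" if c < threshold else w for w, c in words)


def reorder_neighbor(prev_word: str, candidates: List[str],
                     bigram: Dict[Tuple[str, str], float]) -> List[str]:
    """Re-rank alternatives for the next word given the (corrected) previous word.

    Assumed ordering criterion: a bigram score; unseen pairs score 0.
    """
    return sorted(candidates, key=lambda c: bigram.get((prev_word, c), 0.0), reverse=True)
```

For instance, once the user corrects a word to "ice", the next word's alternatives `["beach", "cream"]` are re-ranked to `["cream", "beach"]` if the bigram table favors "ice cream".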

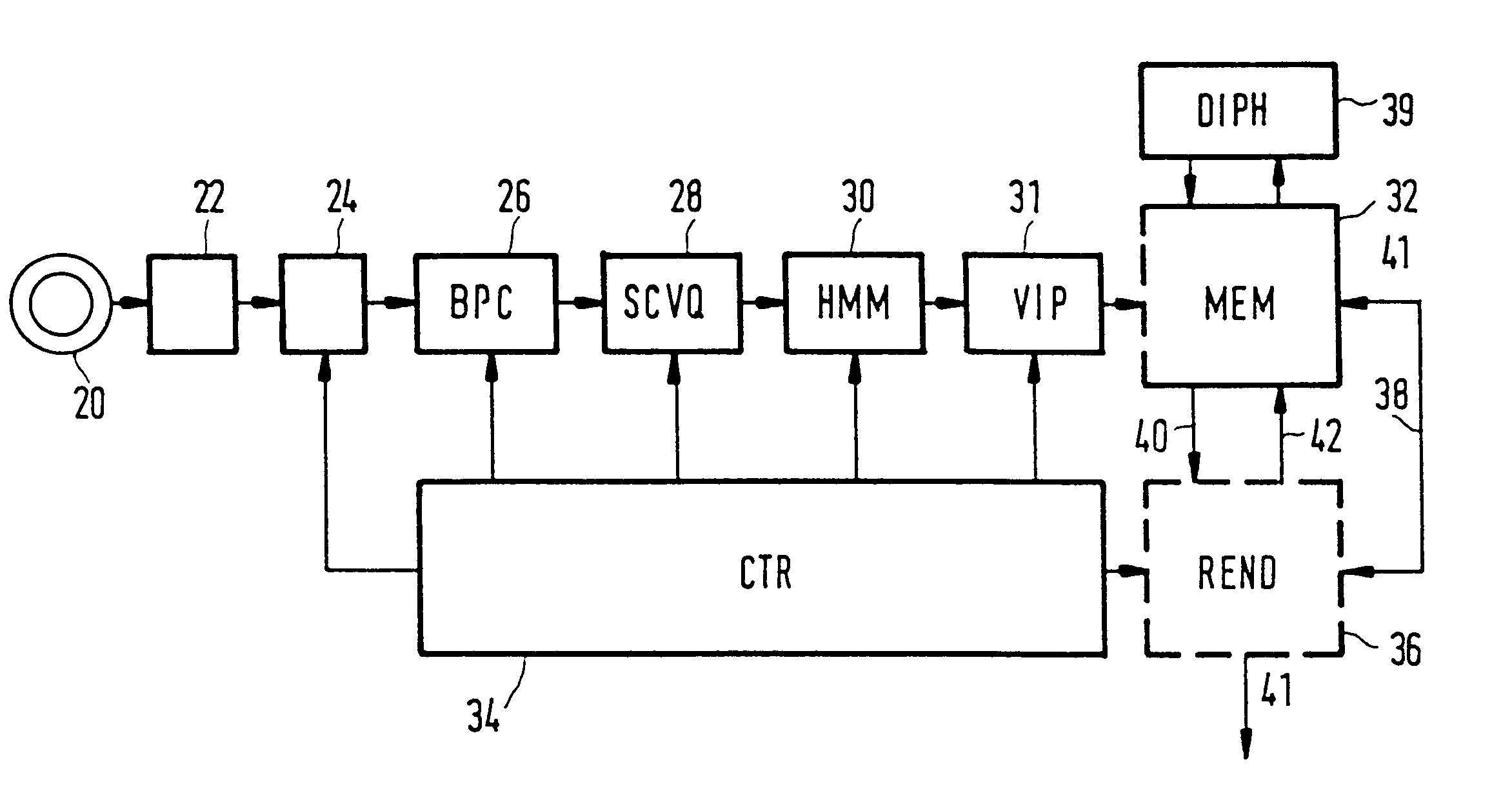

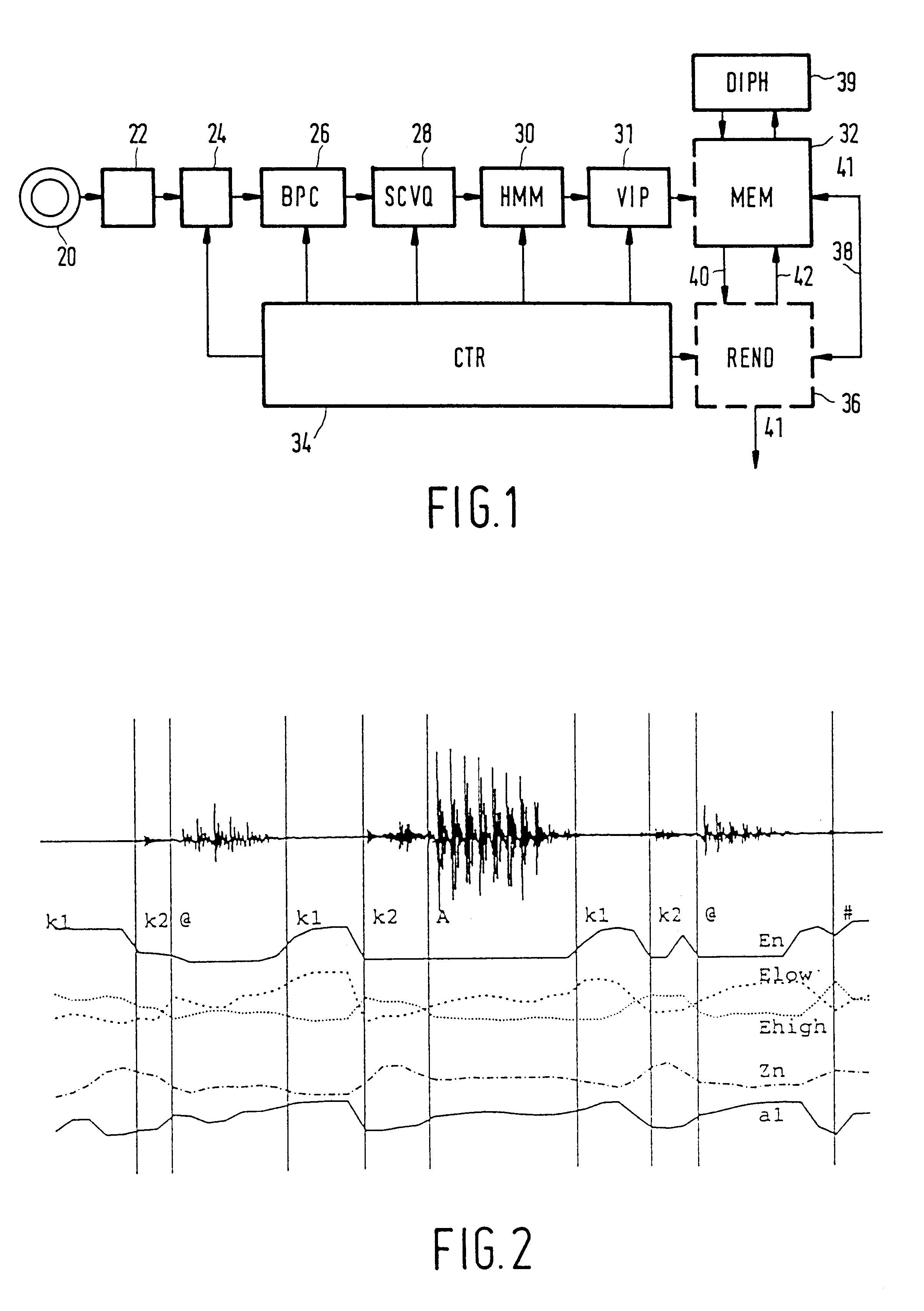

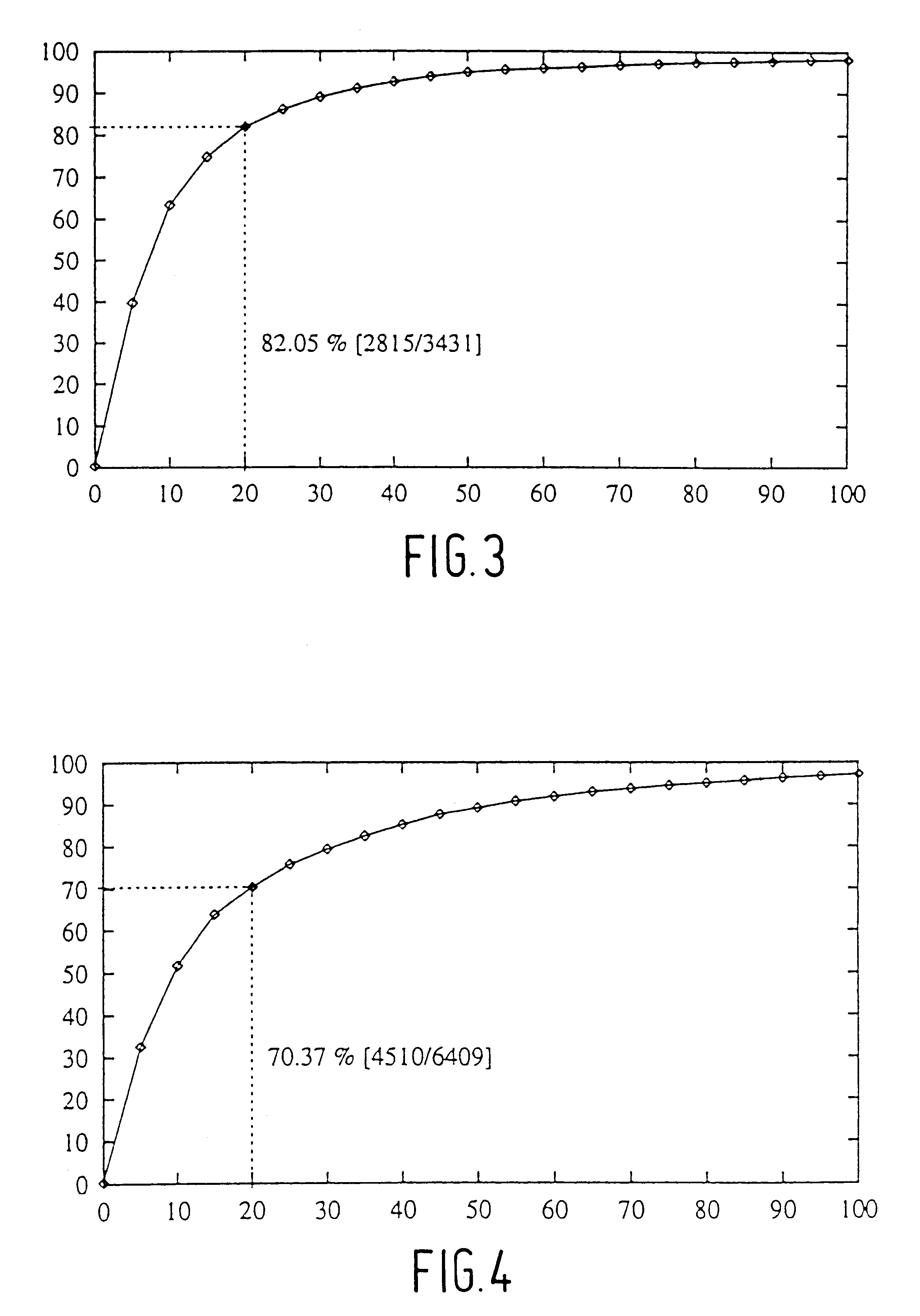

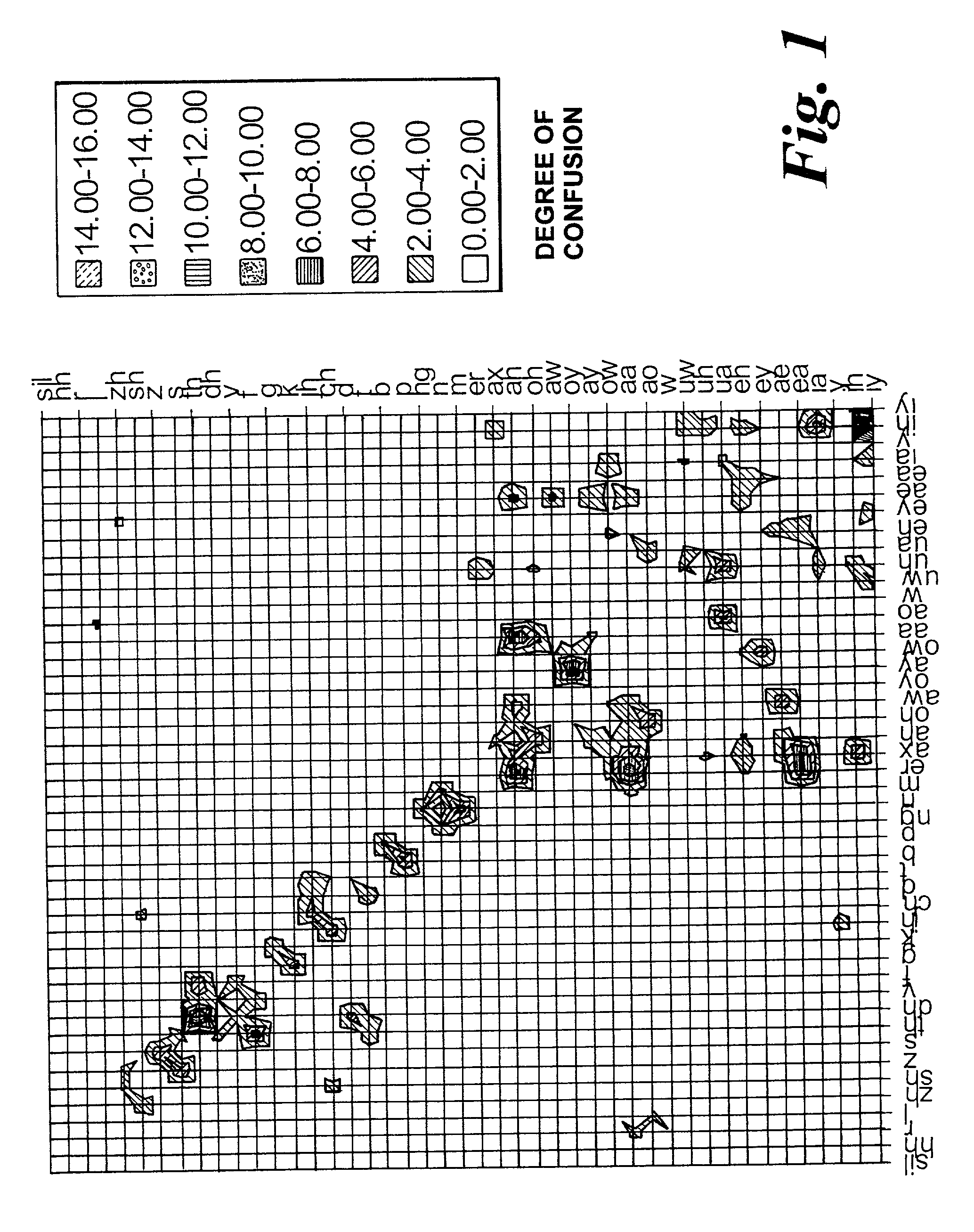

Method and apparatus for automatic speech segmentation into phoneme-like units for use in speech processing applications, and based on segmentation into broad phonetic classes, sequence-constrained vector quantization and hidden-markov-models

Inactive · US6208967B1 · Straightforward and inexpensive, Digital computer details, Biological models, Automatic speech segmentation, Spoken language

For machine segmentation of speech, utterances from a database of known spoken words are first classified and segmented into three broad phonetic classes (BPC): voiced, unvoiced, and silence. Next, using the preliminary segmentation positions as anchor points, sequence-constrained vector quantization is used to segment further into phoneme-like units. Finally, the segmented phonemes are fine-tuned with hidden Markov modeling, and after training, a diphone set is composed for further use.

Owner:U S PHILIPS CORP
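
The first stage above, splitting speech into the three broad phonetic classes, can be approximated with the common short-time energy and zero-crossing-rate heuristic. This is a swapped-in textbook technique, not the patent's classifier: the frame length of 160 samples and the thresholds are assumptions, and the vector-quantization and HMM refinement stages are not shown.

```python
from typing import List, Tuple


def frame_class(frame: List[float], energy_floor: float = 1e-3, zcr_split: float = 0.3) -> str:
    """Classify one frame as silence, voiced, or unvoiced (heuristic thresholds)."""
    energy = sum(x * x for x in frame) / len(frame)
    if energy < energy_floor:
        return "silence"
    # Zero-crossing rate: fraction of adjacent sample pairs that change sign.
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    zcr = crossings / max(1, len(frame) - 1)
    # Noise-like (fricative) sounds cross zero often; voiced sounds rarely.
    return "unvoiced" if zcr > zcr_split else "voiced"


def segment_bpc(samples: List[float], frame_len: int = 160) -> List[Tuple[int, int, str]]:
    """Label fixed-size frames and merge runs into (start, end, class) segments."""
    segments: List[Tuple[int, int, str]] = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        label = frame_class(samples[start:start + frame_len])
        if segments and segments[-1][2] == label:
            segments[-1] = (segments[-1][0], start + frame_len, label)
        else:
            segments.append((start, start + frame_len, label))
    return segments
```

The segment boundaries returned here play the role of the preliminary anchor points that the later, finer segmentation stages would refine.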

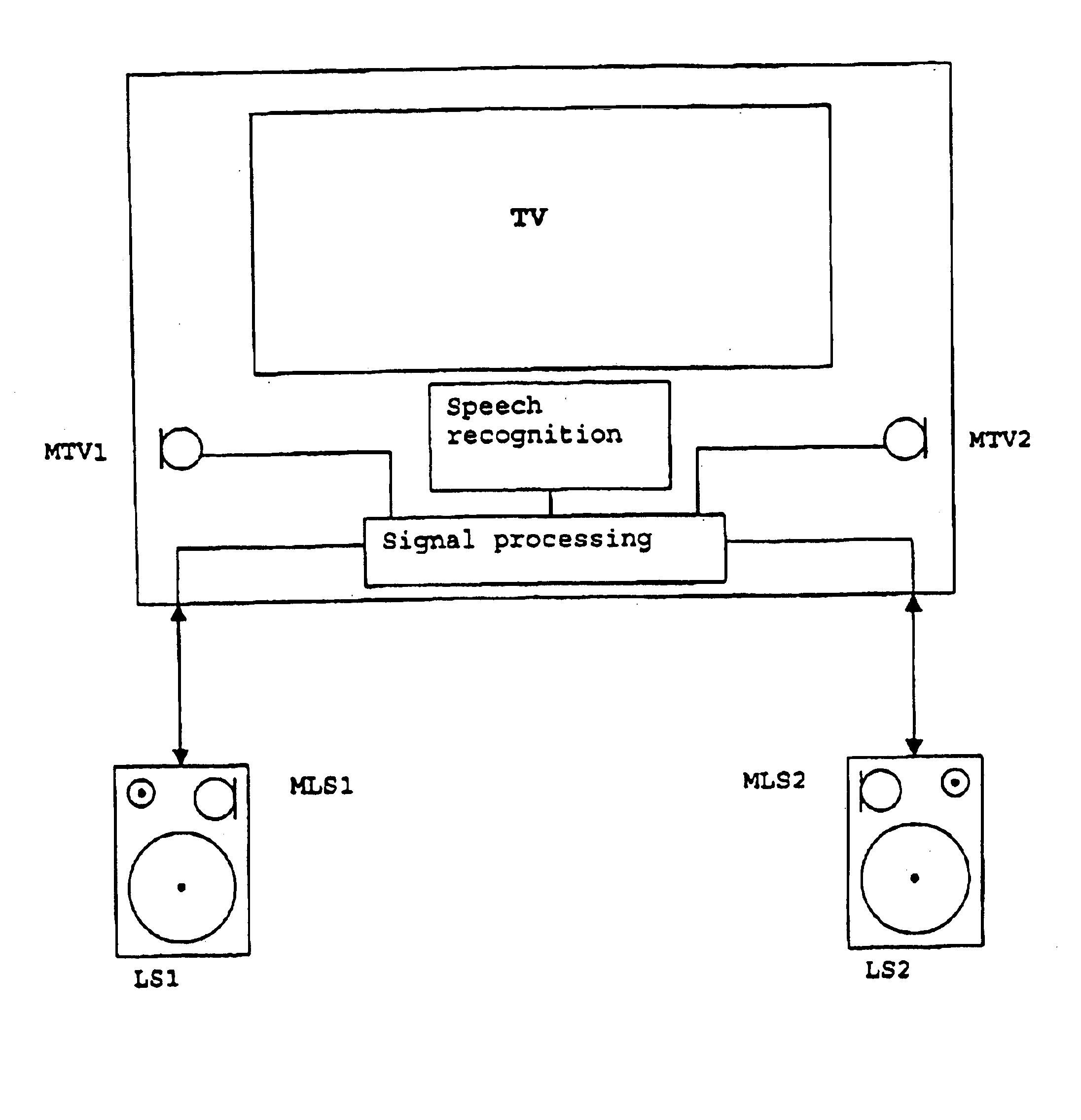

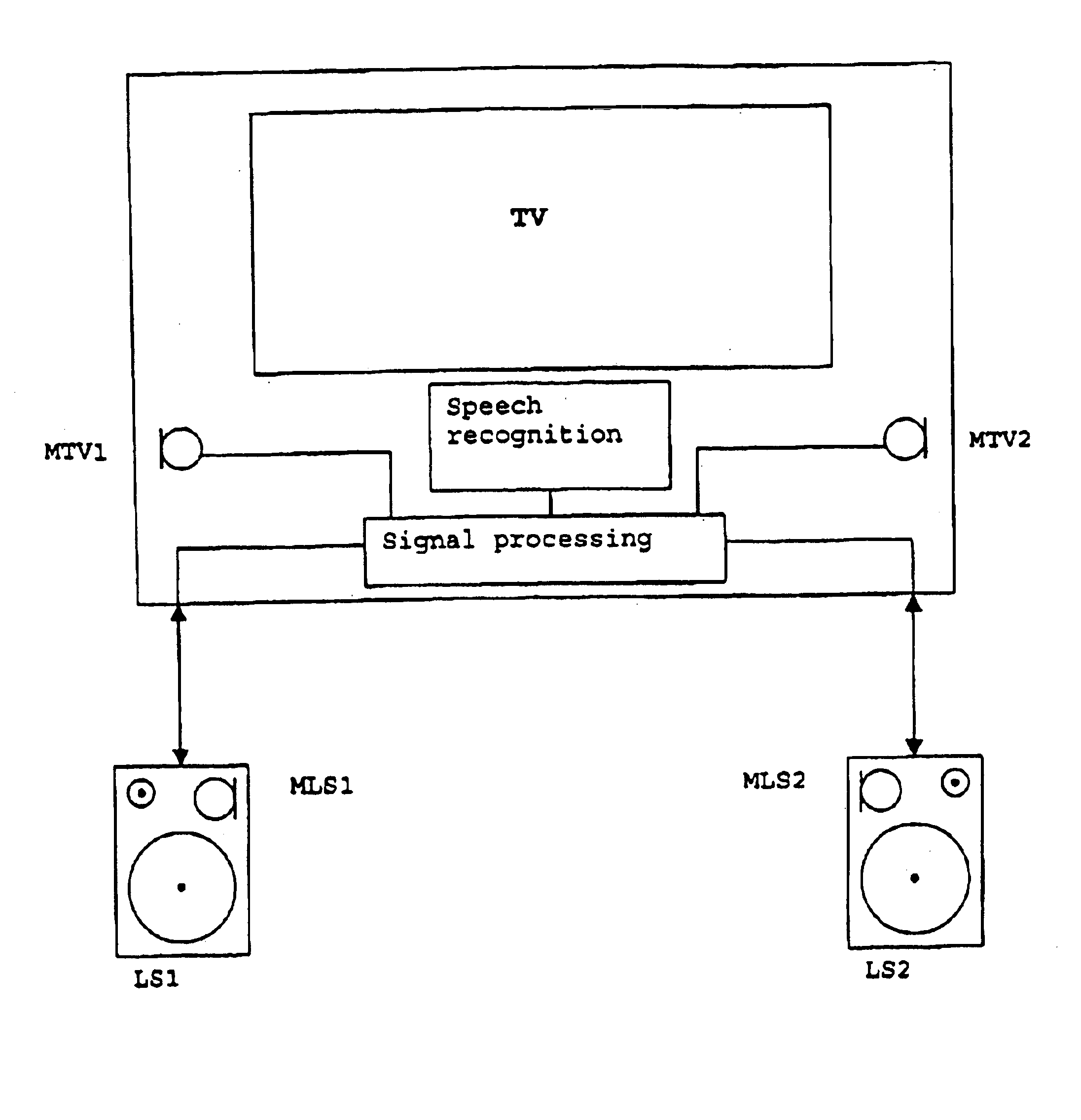

Voice control system with a microphone array

Inactive · US6868045B1 · Sufficient interference immunity, Beacon systems using ultrasonic/sonic/infrasonic waves, Electric signal transmission systems, IEEE 1394, VIT signals

Voice control systems are used in diverse technical fields. In this case, the spoken words are detected by one or more microphones and then fed to a speech recognition system. In order to enable voice control even from a relatively great distance, the voice signal must be separated from interfering background signals. This can be effected by spatial separation using microphone arrays comprising two or more microphones. In this case, it is advantageous for the individual microphones of the microphone array to be distributed spatially over the greatest possible distance. In an individual consumer electronics appliance, however, the distances between the individual microphones are limited on account of the dimensions of the appliance. Therefore, the voice control system according to the invention comprises a microphone array having a plurality of microphones which are distributed between different appliances, in which case the signals generated by the microphones can be transmitted to the central speech recognition unit, advantageously via a bidirectional network based on an IEEE 1394 bus.

Owner:INTERDIGITAL CE PATENT HLDG

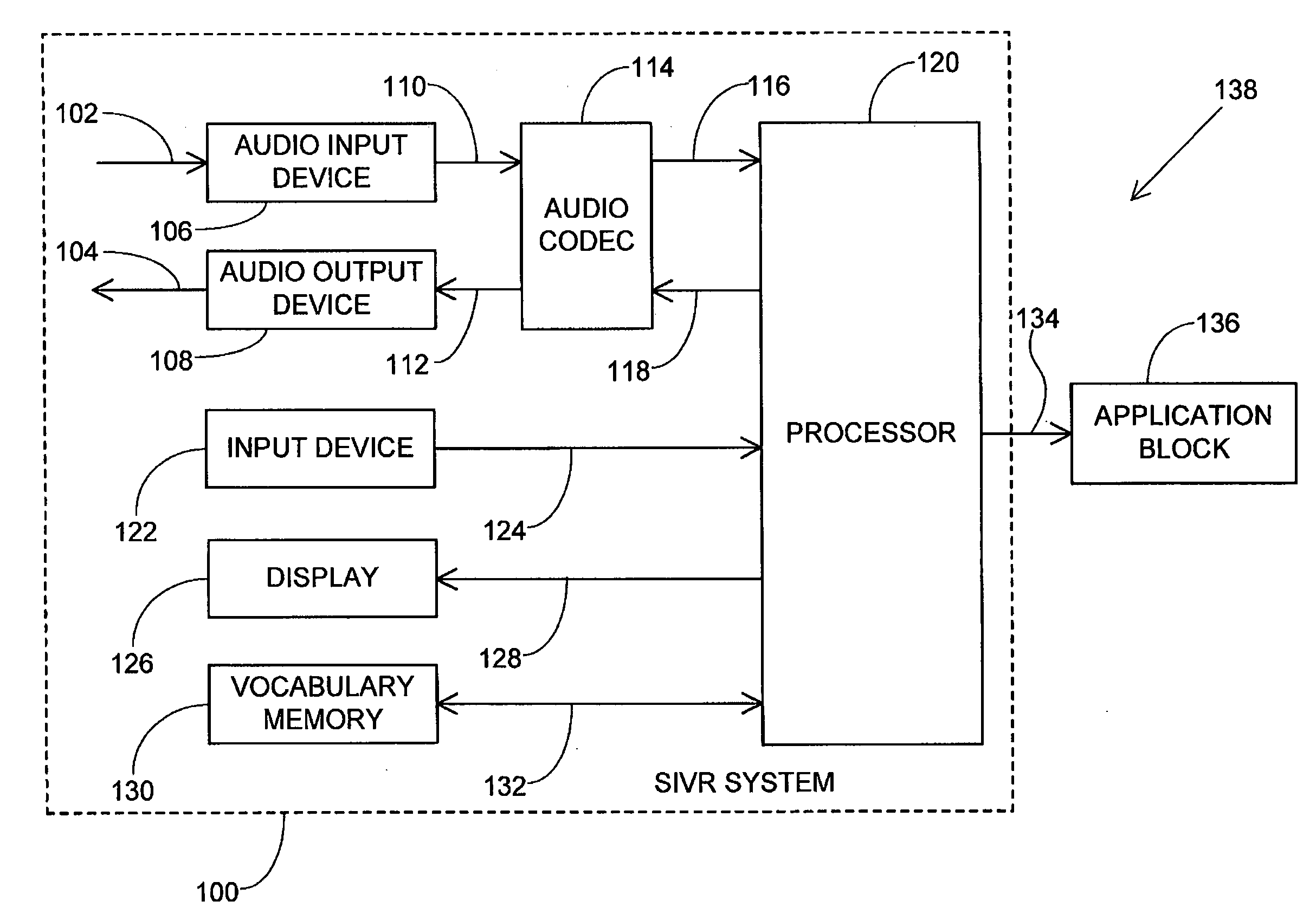

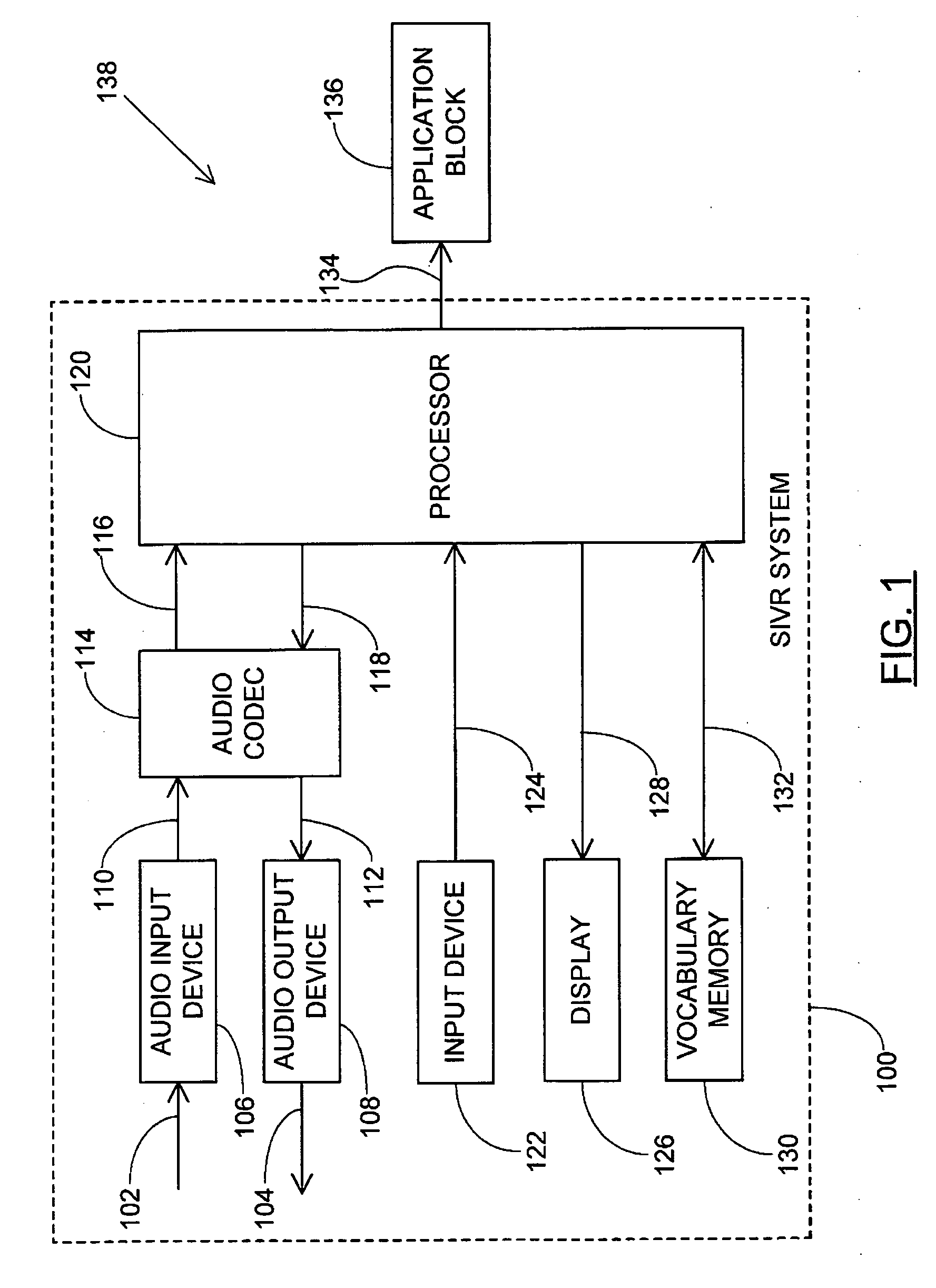

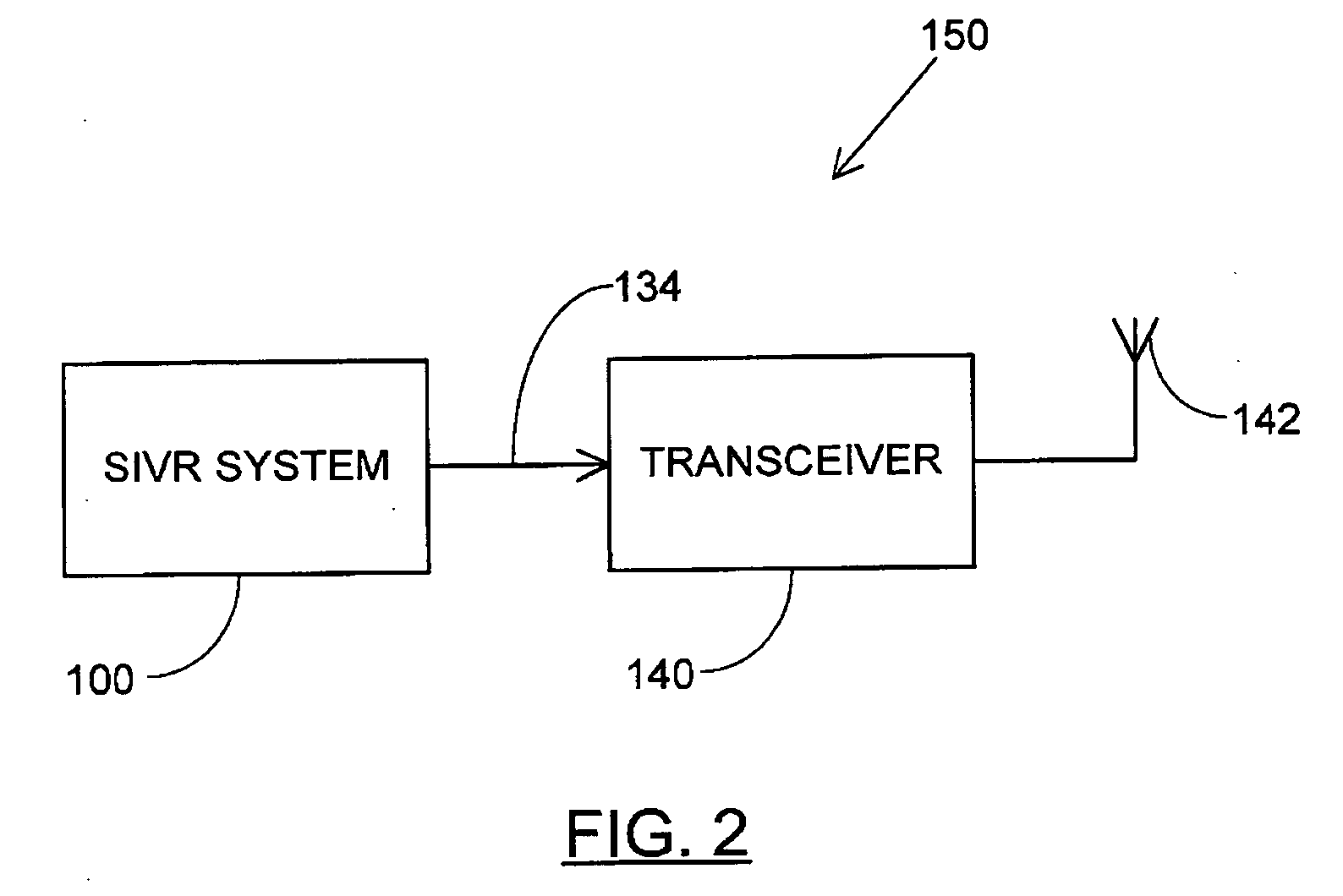

Apparatus and method for synthesized audible response to an utterance in speaker-independent voice recognition

When a speaker-independent voice-recognition (SIVR) system recognizes a spoken utterance that matches a phonetic representation of a speech element belonging to a predefined vocabulary, it may play a synthesized speech fragment as a means for the user to verify that the utterance was correctly recognized. When a speech element in the vocabulary has more than one possible pronunciation, the system may select the one most closely matching the user's utterance, and play a synthesized speech fragment corresponding to that particular representation.

Owner:MARVELL WORLD TRADE LTD
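
Choosing "the pronunciation most closely matching the user's utterance" can be sketched as a minimum edit distance between phoneme sequences. The Levenshtein distance and the ARPAbet-style phoneme strings below are assumptions for illustration; an SIVR system would more likely compare acoustic scores.

```python
from typing import List, Sequence


def edit_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Levenshtein distance between two phoneme sequences (two-row DP)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (x != y)))  # substitution (0 if equal)
        prev = cur
    return prev[-1]


def closest_pronunciation(heard: Sequence[str], variants: List[List[str]]) -> List[str]:
    """Pick the stored pronunciation variant nearest to the recognized phonemes."""
    return min(variants, key=lambda v: edit_distance(heard, v))
```

The selected variant is the one whose synthesized fragment would be played back, so a user who says "to-MAH-to" hears that variant confirmed rather than "to-MAY-to".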

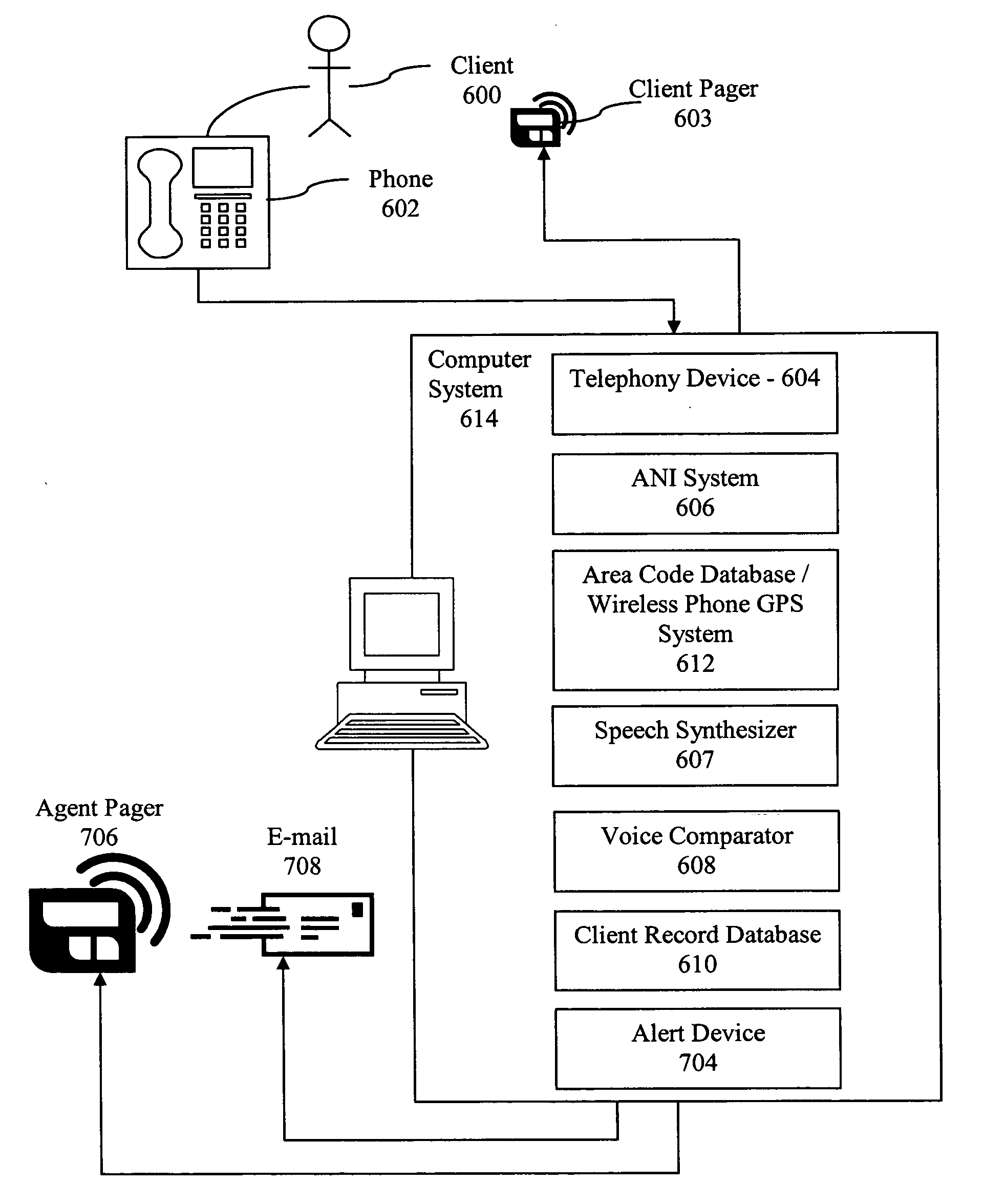

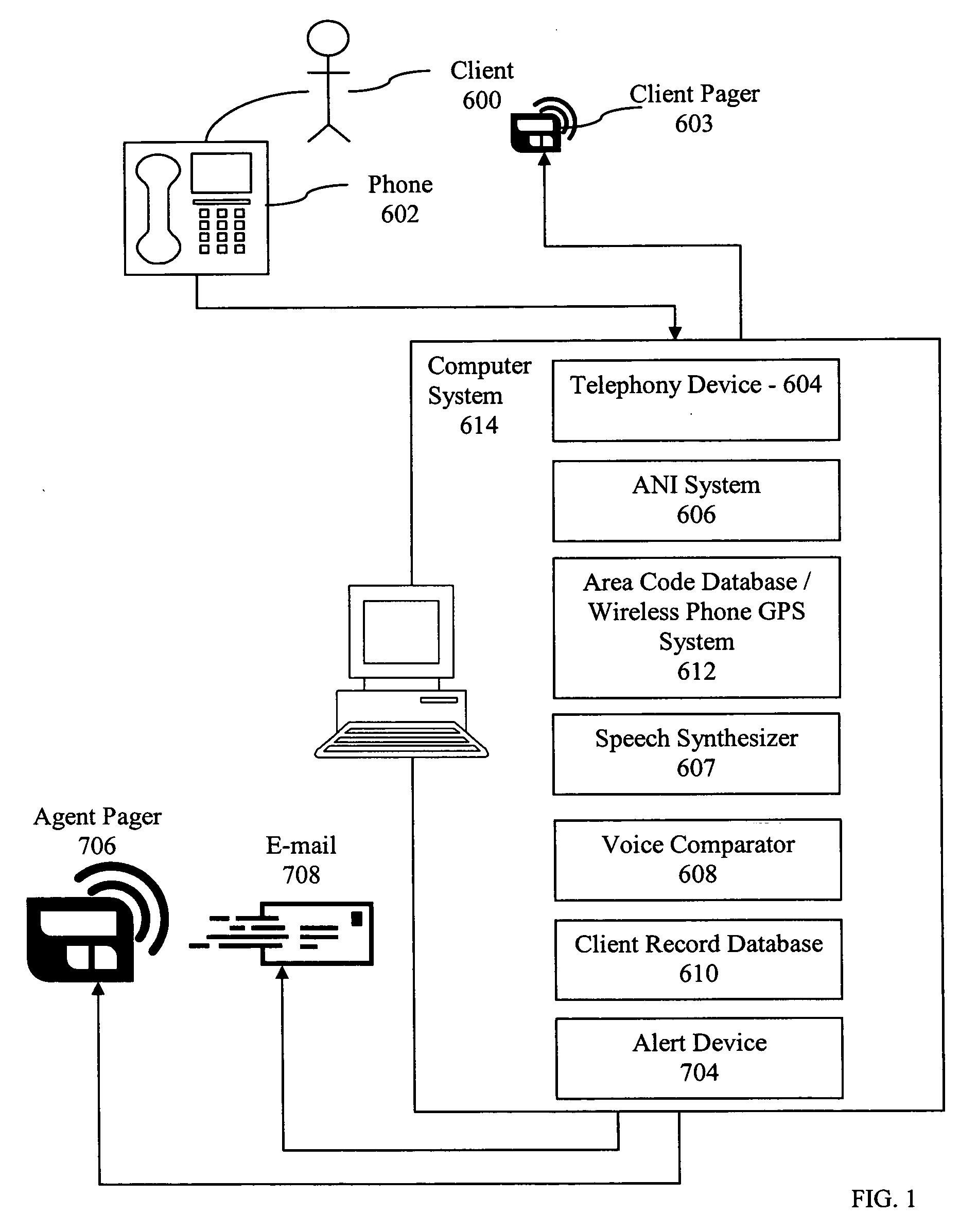

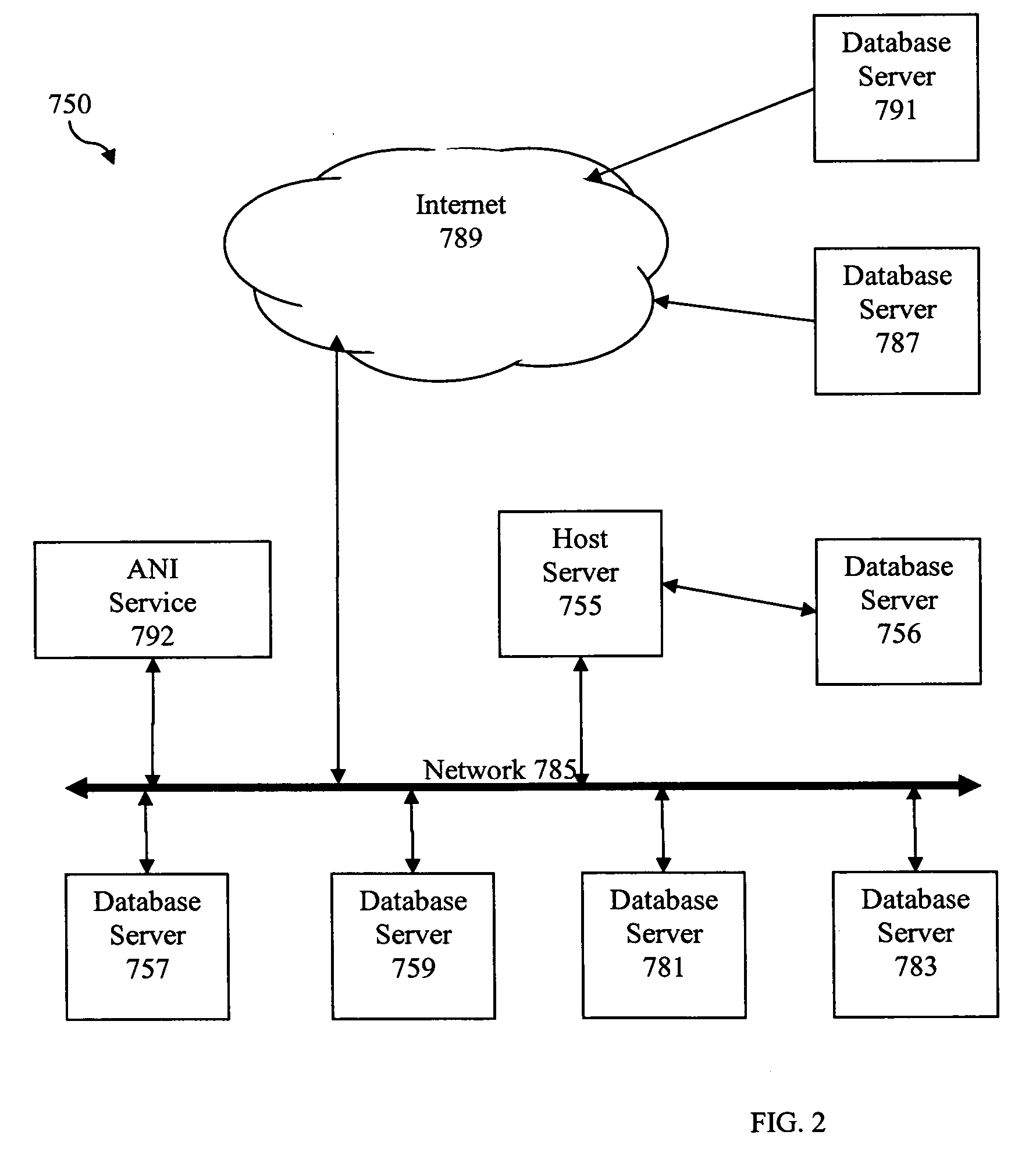

System and method for immigration tracking and intelligence

Inactive · US20060020459A1 · Anti-collision systems, Automatic call-answering/message-recording/conversation-recording, Spoken language, Verification system

A client identity verification system and method includes receiving a spoken voice sample from a client, identifying a match for the client, verifying client information, changing parameters in a conditional database, and communicating alerts based on the results. The method also includes matching the received spoken voice sample against a stored voiceprint and a voiceprint template of random phrases, and then verifying the client's phone number.

Owner:CARTER JOHN A +2

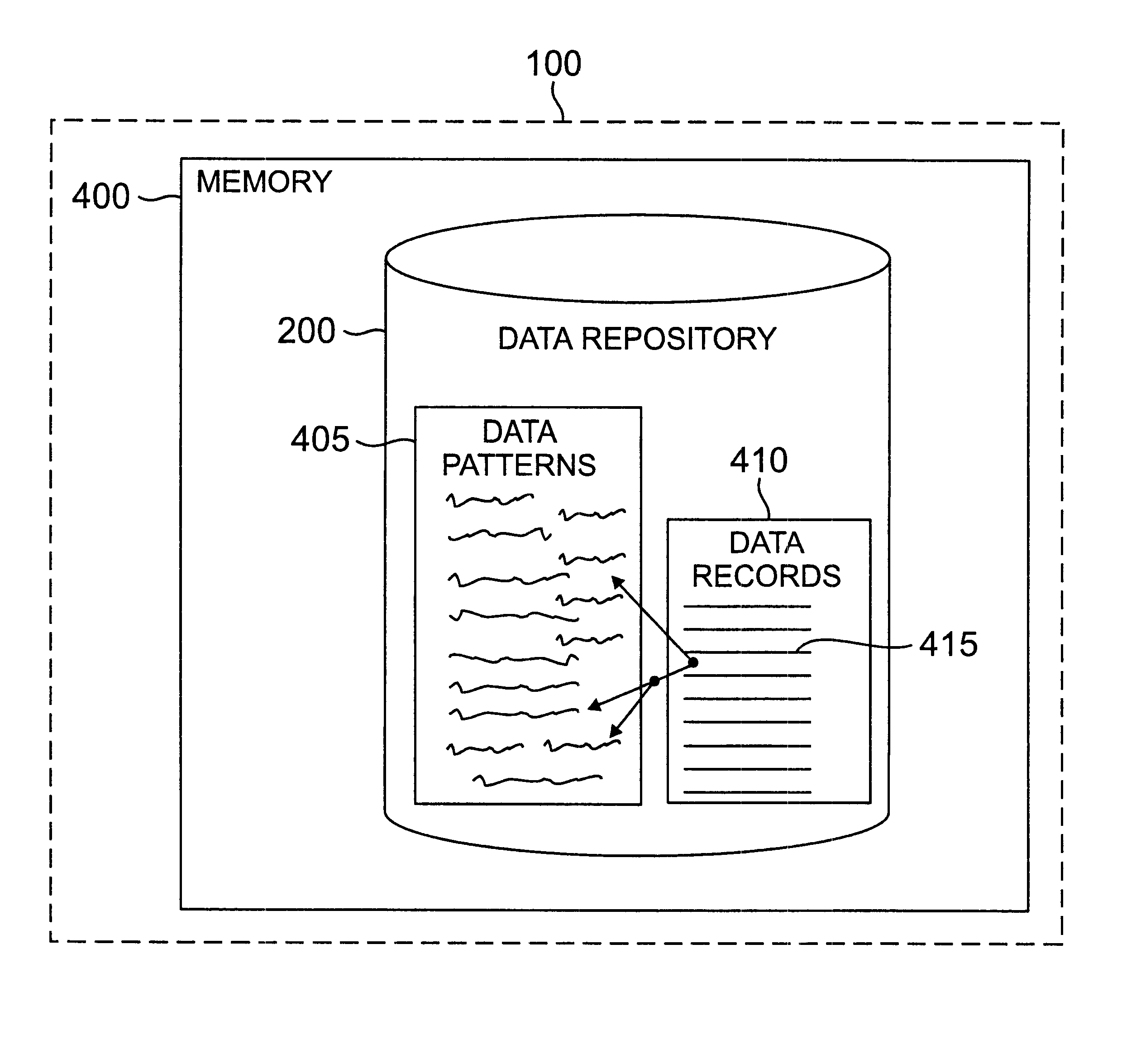

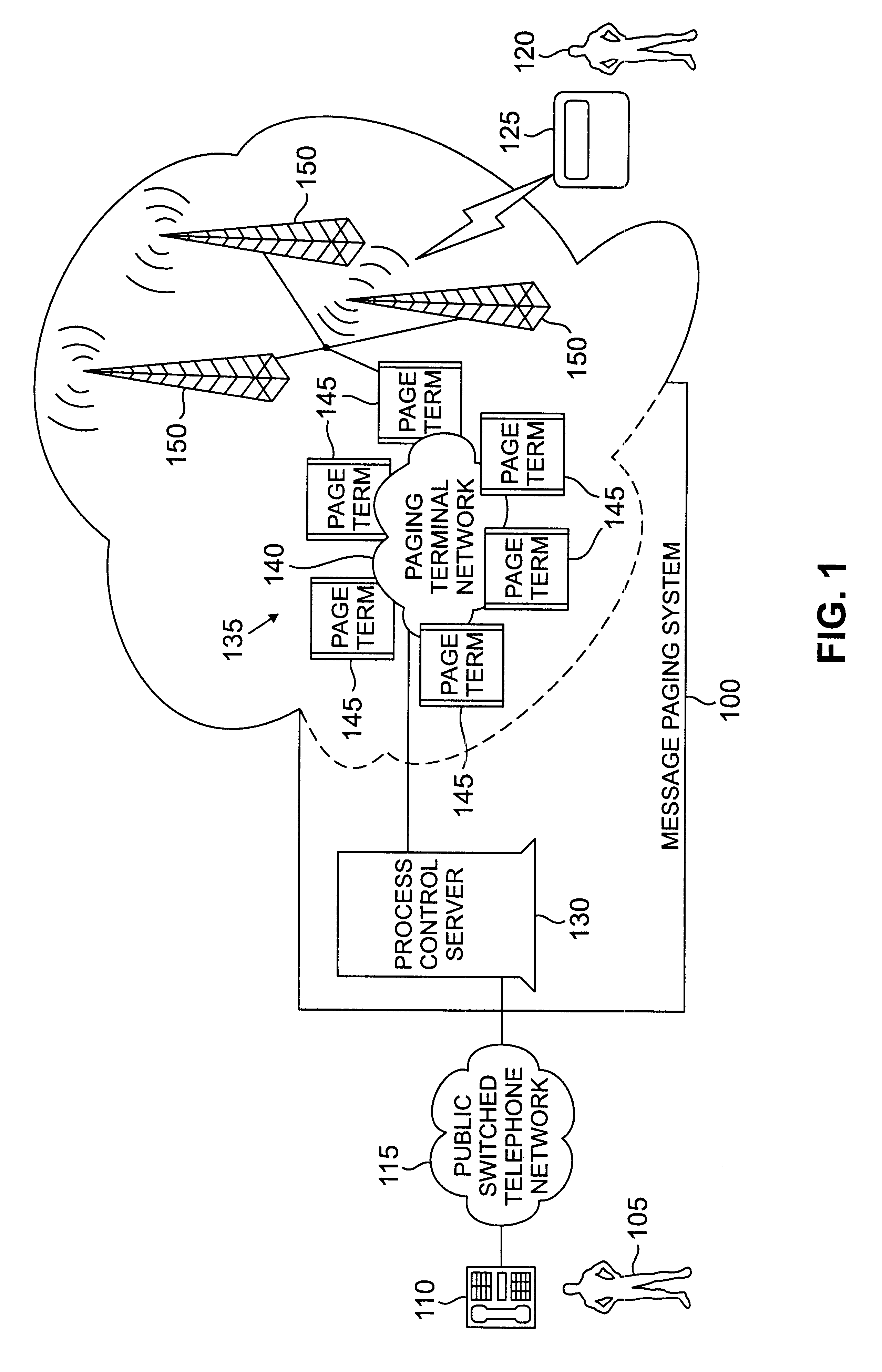

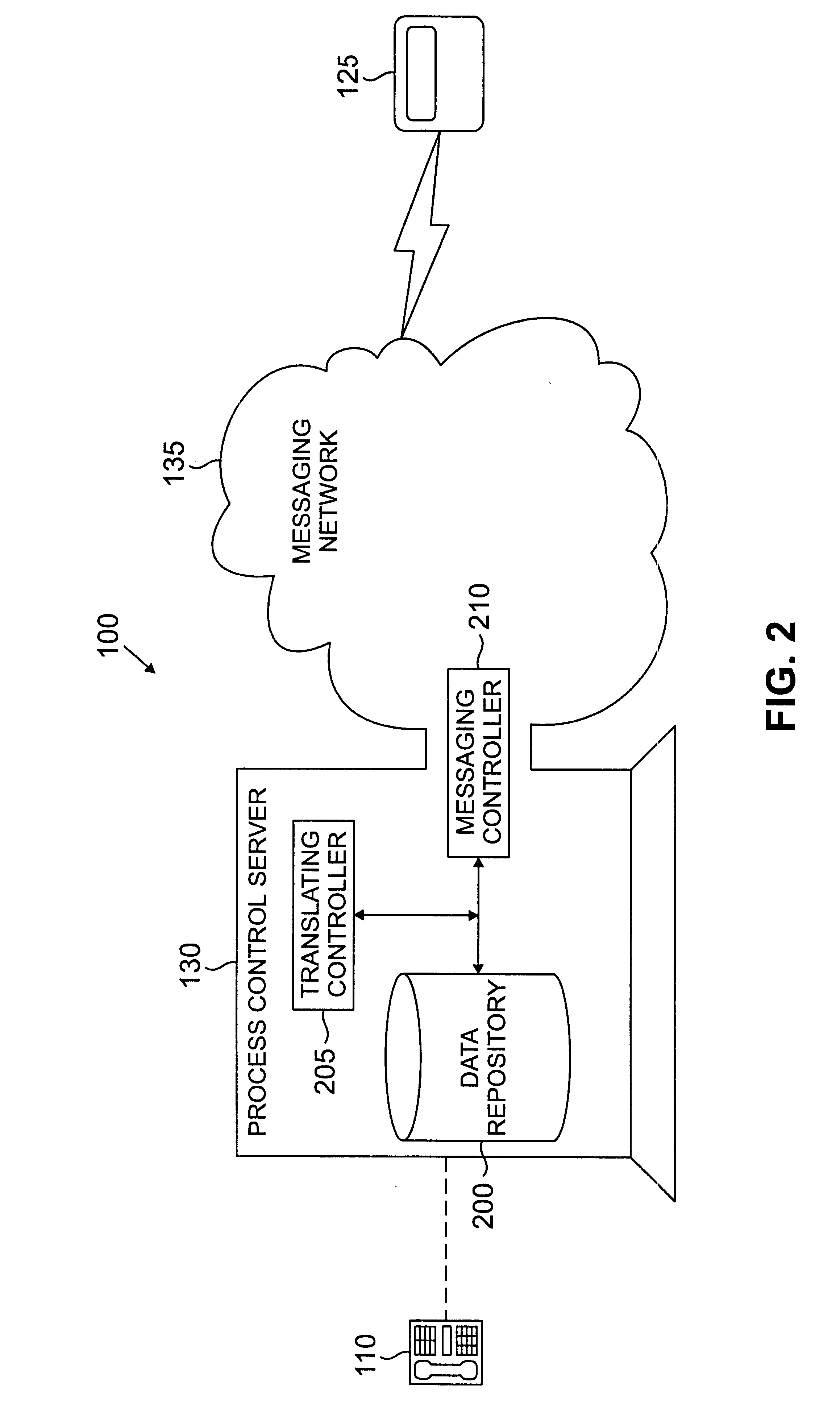

Controller for use with communications systems for converting a voice message to a text message

Inactive · US6687339B2 · Special service for subscribers, Automatic call-answering/message-recording/conversation-recording, Communications system, Spoken language

The principles of the present invention introduce non-realtime messaging systems (and controllers for use therewith) that are capable of converting received oral messages from callers into at least substantially equivalent text messages for transmission to subscribers thereof. This may be accomplished by processing the received oral messages using data patterns representing oral phrases specific to non-realtime messaging systems. An exemplary messaging system includes each of a messaging controller, a data repository and a translating controller. The messaging controller is capable of receiving oral messages from callers and transmitting text messages to communications devices associated with subscribers of the non-realtime messaging system. The data repository is capable of storing data patterns that represent oral phrases specific to the non-realtime messaging system. The translating controller, which is associated with the messaging controller and data repository, is operable to process the received oral messages using the stored data patterns and to generate at least substantially equivalent text messages in response thereto.

Owner:USA MOBILITY WIRELESS INC

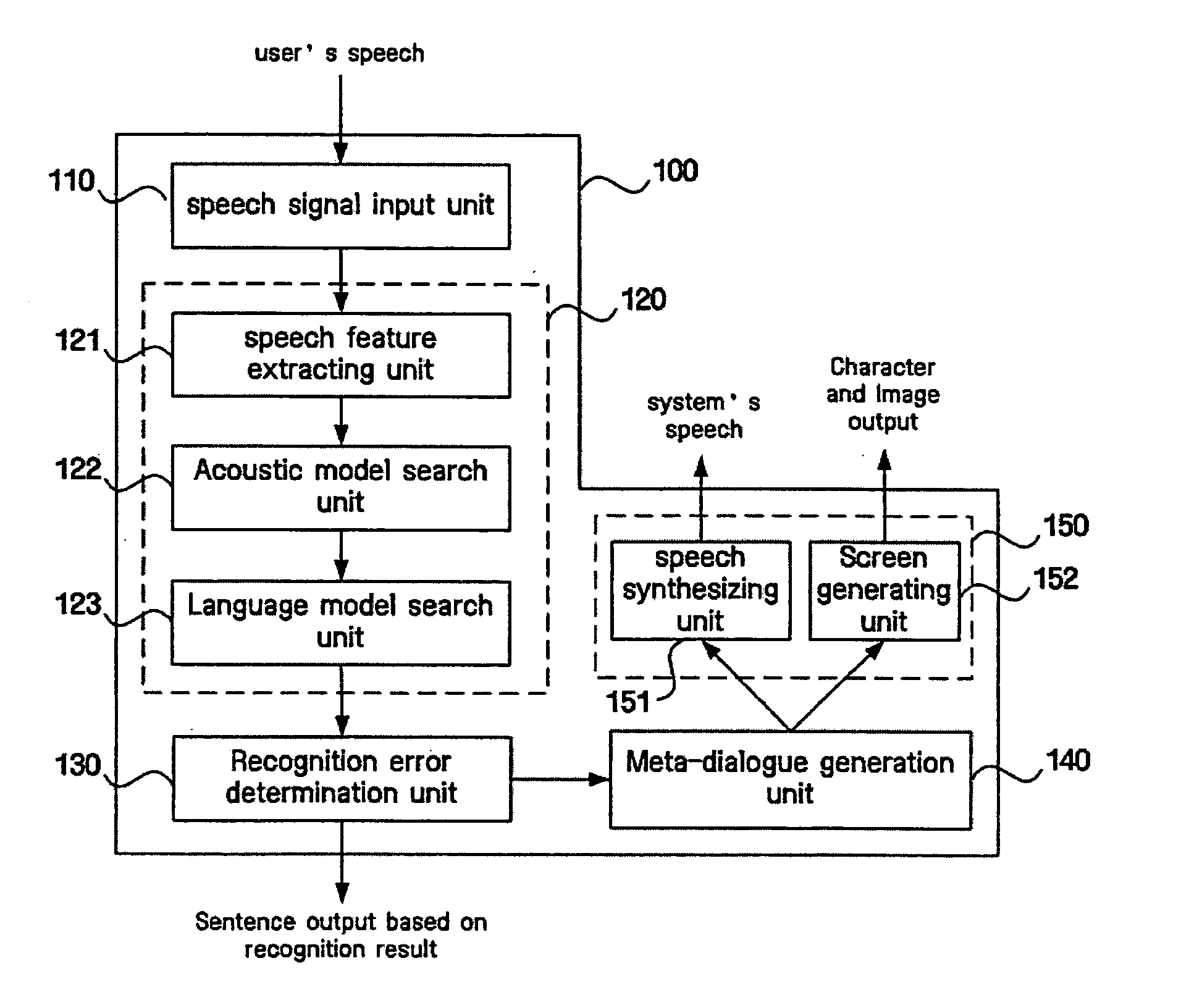

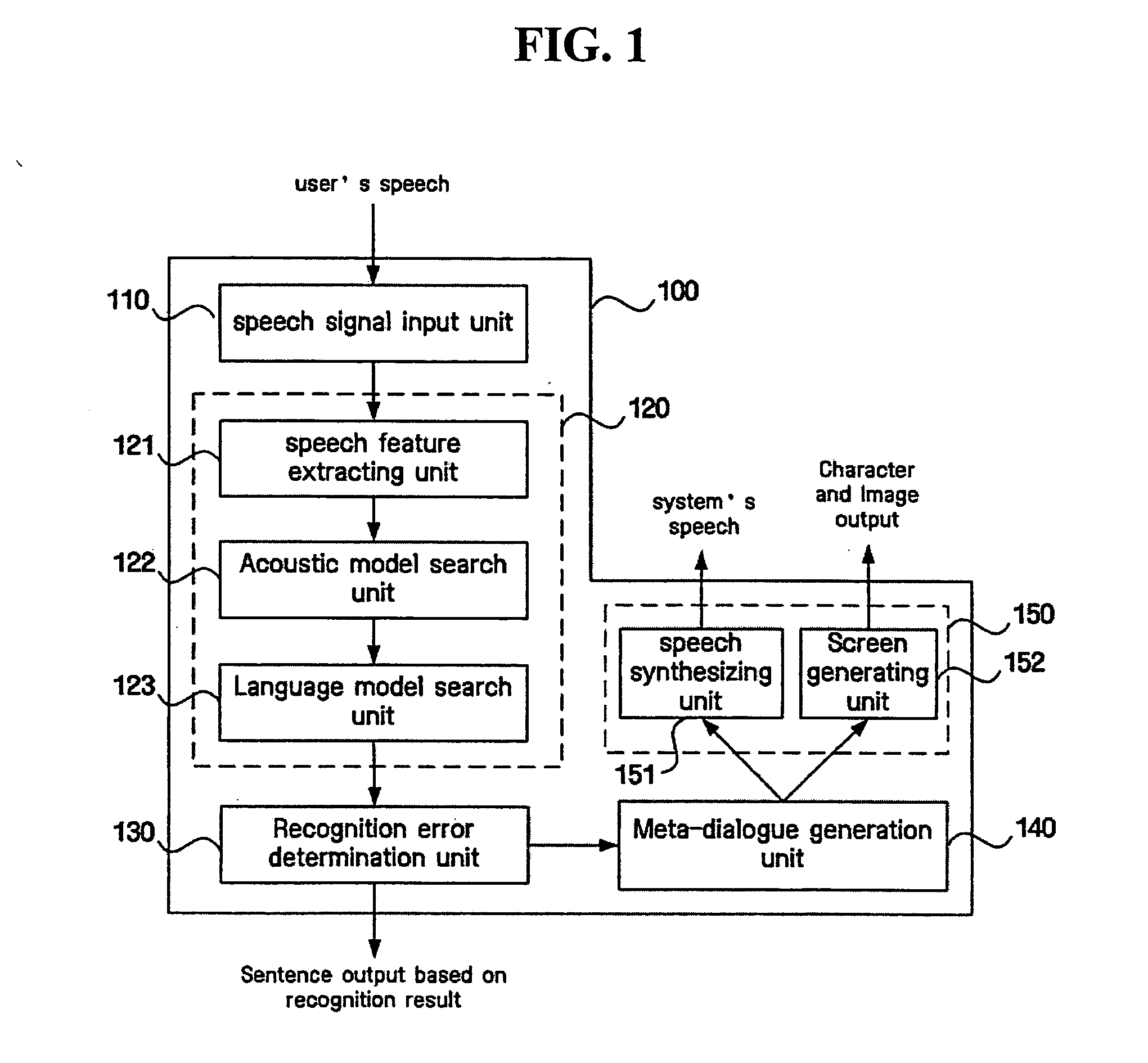

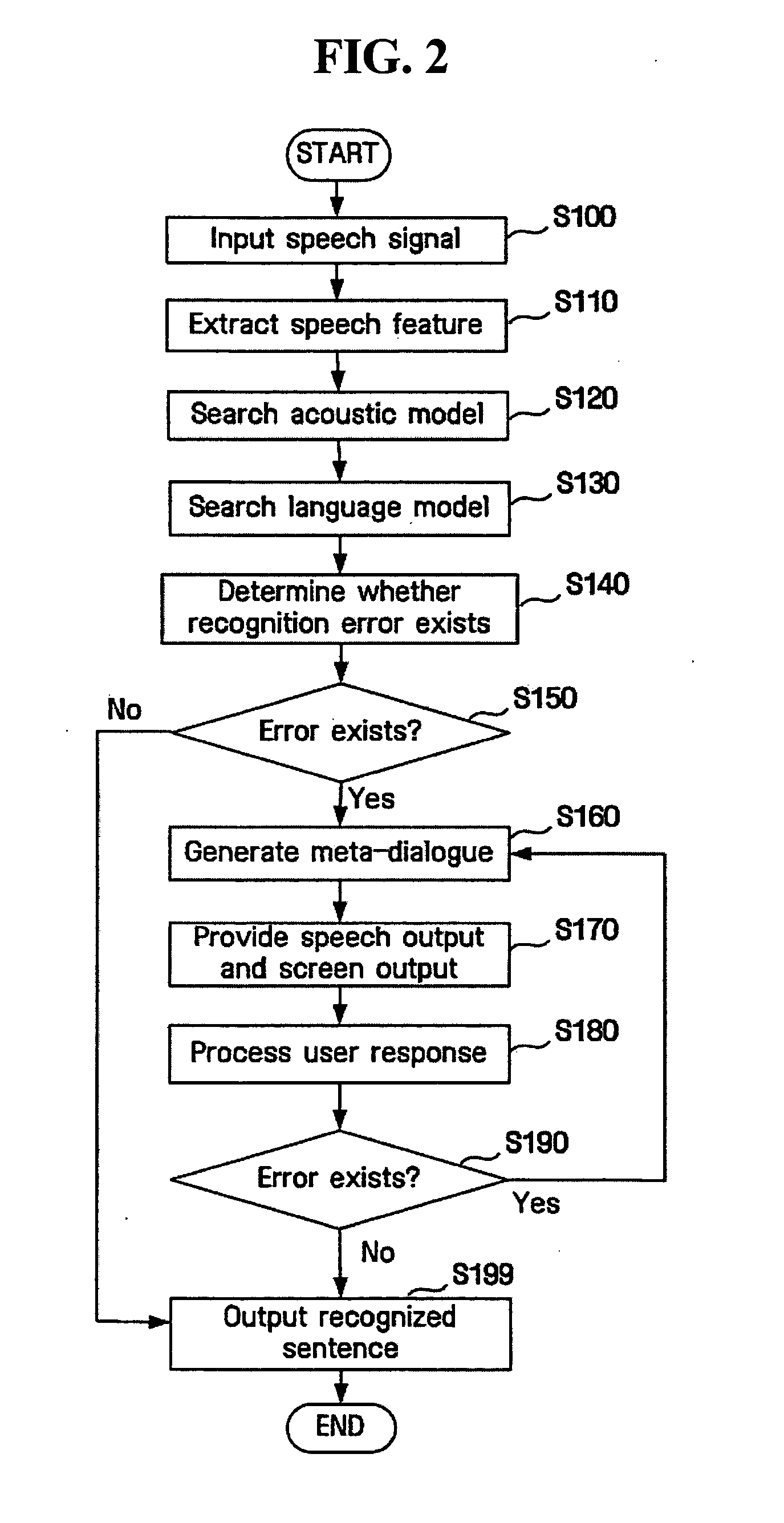

Method and apparatus handling speech recognition errors in spoken dialogue systems

Active · US20050033574A1 · Reliably resolving, Effective recovery, Speech recognition, Dialog system, Spoken language

In order to handle portions of a recognized sentence having an error, a speaker or user is questioned about contents associated with the portions, and according to a user's answer a result is obtained. A speech recognition unit extracts a speech feature of a speech signal inputted from a user and finds a phoneme nearest to the speech feature to recognize a word. A recognition error determination unit finds a sentence confidence based on a confidence of the recognized word, performs examination of a semantic structure of a recognized sentence, and determines whether or not an error exists in the recognized sentence which is subject to speech recognition according to a predetermined criterion based on both the sentence confidence and a result of examining the semantic structure of the recognized sentence. Further, a meta-dialogue generation unit generates a question asking the user for additional information based on a content of a portion where the error exists and a type of the error.

Owner:SAMSUNG ELECTRONICS CO LTD
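
The error-determination and meta-dialogue steps above can be sketched by combining a sentence confidence with a simple semantic-structure check. The assumptions are loud here: sentence confidence is taken as the mean word confidence, the "semantic structure" check is reduced to required slots in a parsed frame, and the 0.7 threshold and question templates are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Word = Tuple[str, float]  # (recognized word, word confidence)


def sentence_confidence(words: List[Word]) -> float:
    """Assumed combination rule: the mean of the word confidences."""
    return sum(c for _, c in words) / len(words)


def detect_error(words: List[Word], frame: Dict[str, str], required: List[str],
                 threshold: float = 0.7) -> Optional[Tuple[str, str]]:
    """Return (error_type, faulty_portion), or None if the sentence is accepted."""
    # Semantic check: every required slot must be filled by the parser.
    missing = [slot for slot in required if slot not in frame]
    if missing:
        return ("missing_slot", missing[0])
    # Confidence check: blame the weakest word when the sentence is unreliable.
    if sentence_confidence(words) < threshold:
        weakest = min(words, key=lambda wc: wc[1])[0]
        return ("low_confidence", weakest)
    return None


def meta_question(error: Tuple[str, str]) -> str:
    """Build a clarification question from the error type and the faulty portion."""
    kind, detail = error
    if kind == "missing_slot":
        return f"Could you tell me the {detail}?"
    return f"Did you say '{detail}'?"
```

Tailoring the question to the error type lets the dialog ask only about the doubtful portion instead of asking the user to repeat the whole sentence.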

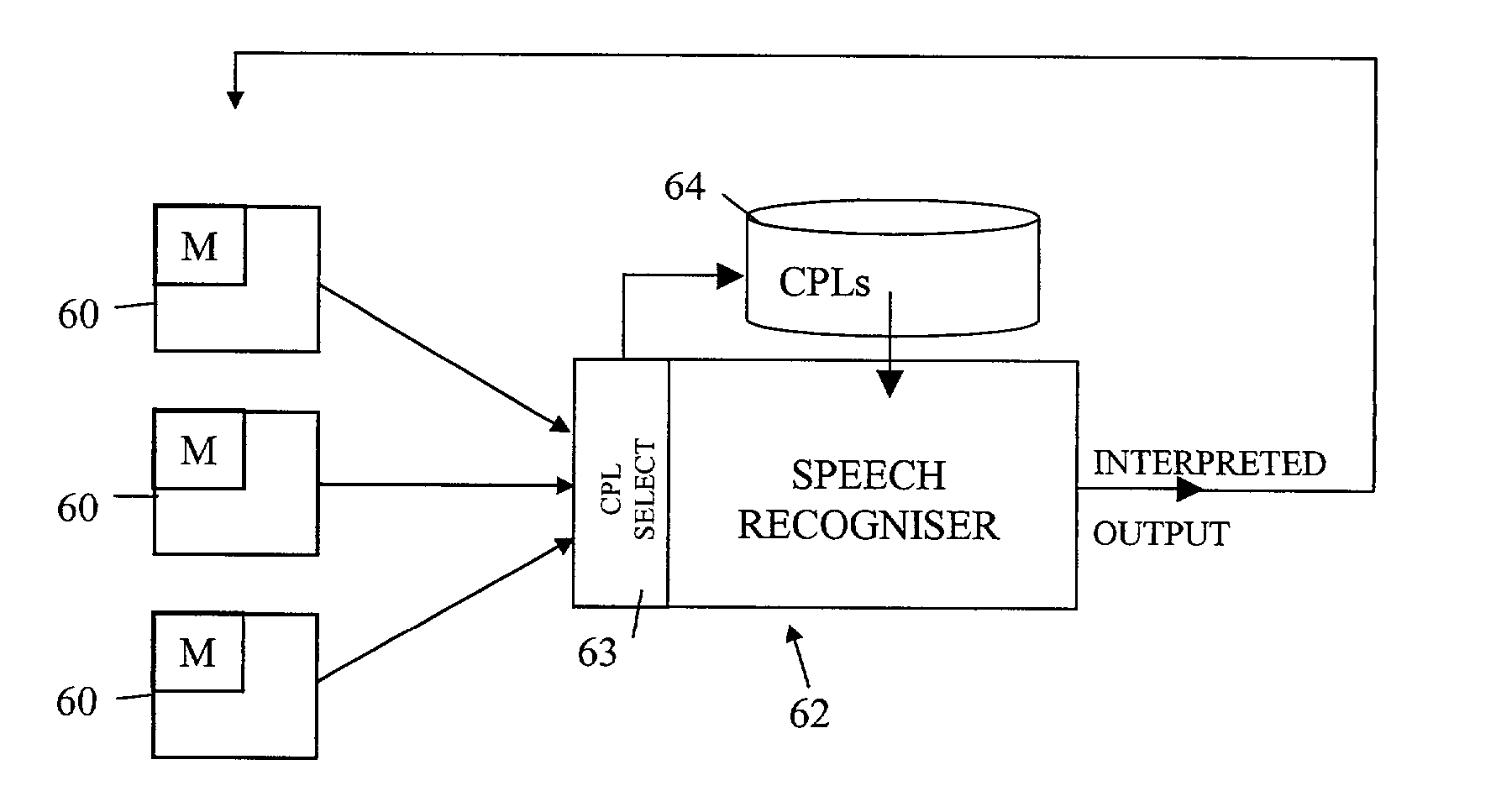

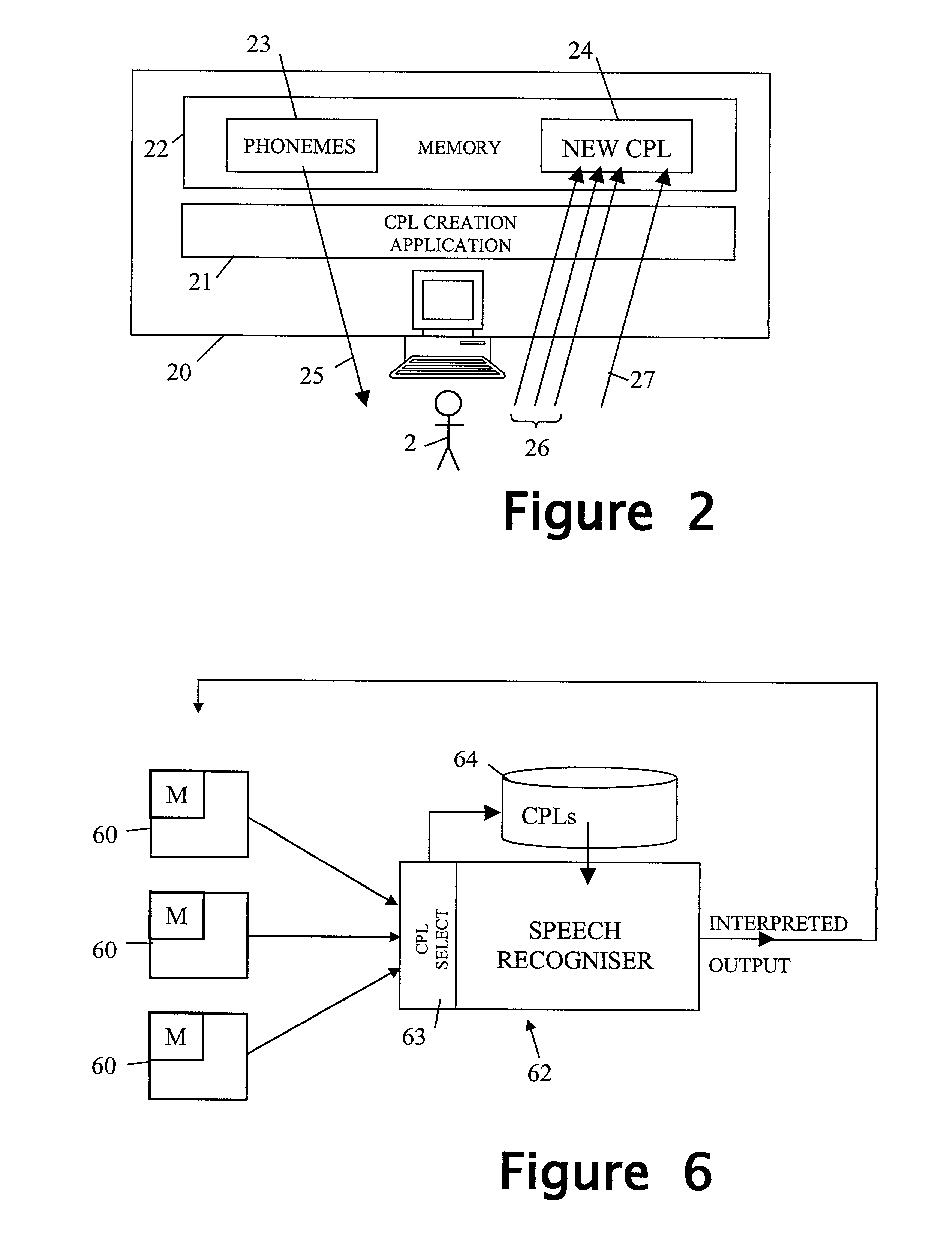

Artificial language

Inactive · US20020128840A1 · Reduce risk, Reduce risk of confusion, Speech recognition, Speech synthesis, Spoken language, Speech identification

Owner:HEWLETT PACKARD DEV CO LP

© 2025 PatSnap. All rights reserved.