181 results about "Conversational speech" patented technology

Conversational speech, also known as informal speech, is the register speakers typically use with friends and relatives, in daily conversation, and in personal letters. It also covers informal text messages and other types of written communication.

Conversational computing via conversational virtual machine

Inactive · US7137126B1 · Limitation for transfer, Reduce degradation, Interconnection arrangements, Resource allocation, Conversational speech, Application software

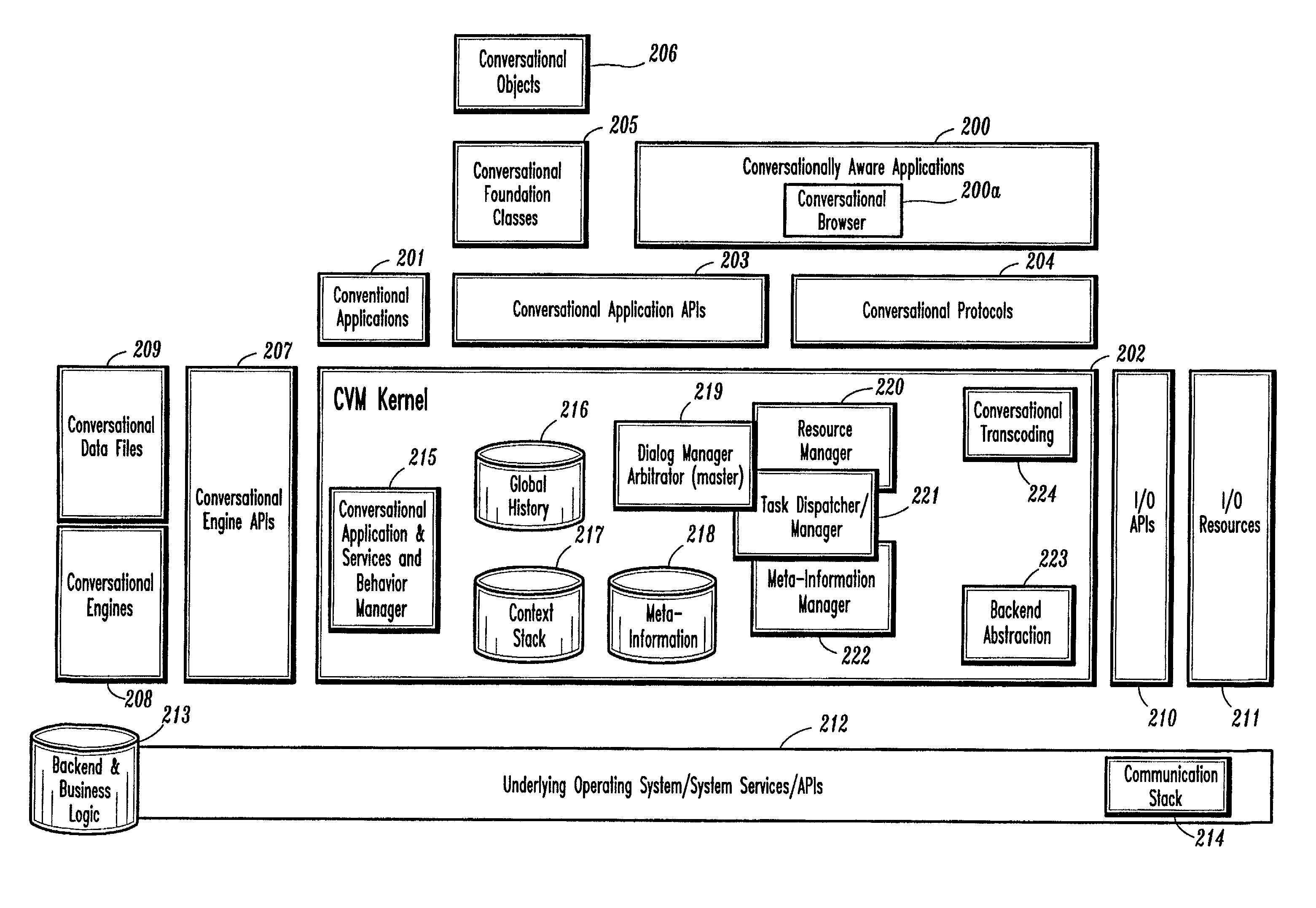

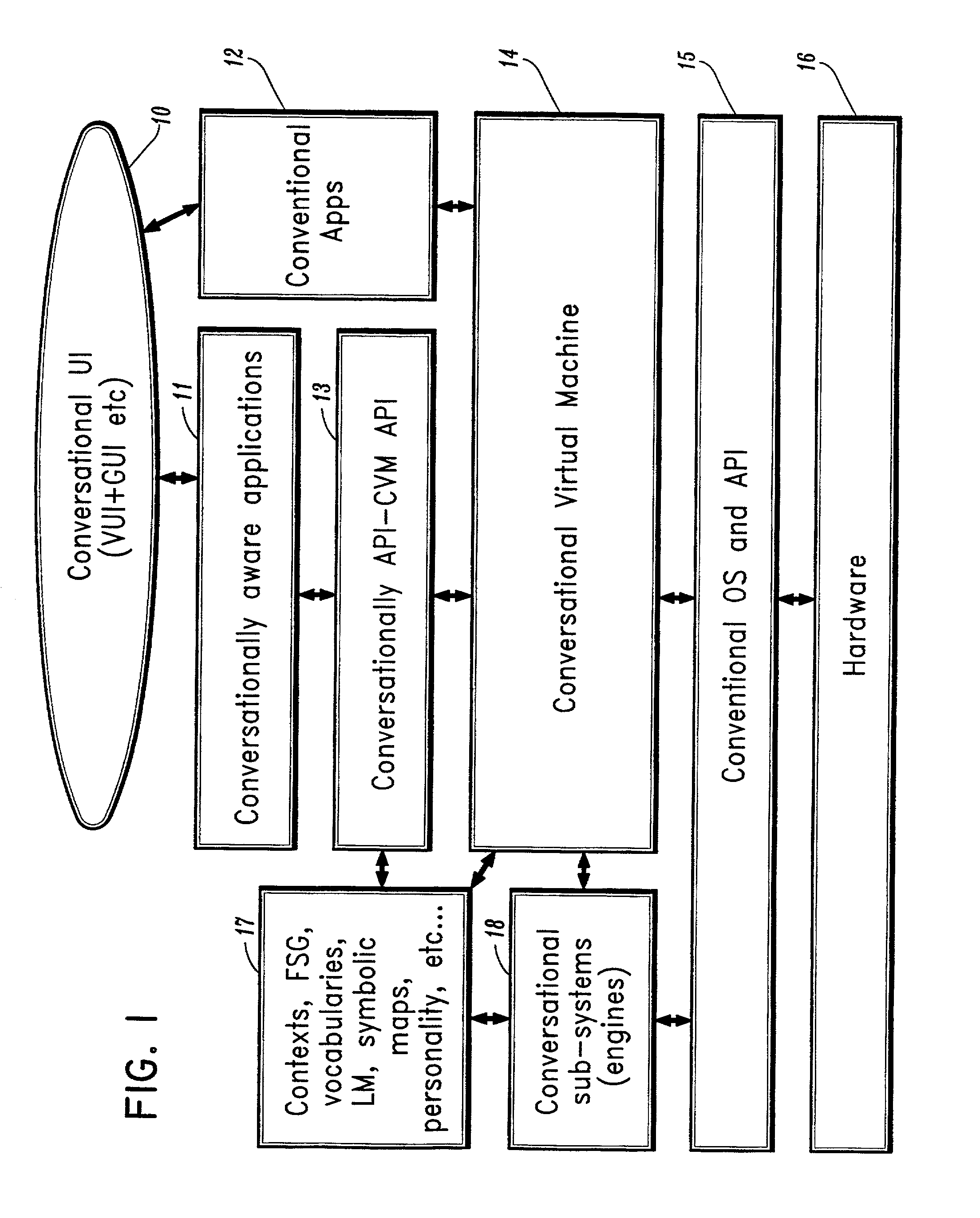

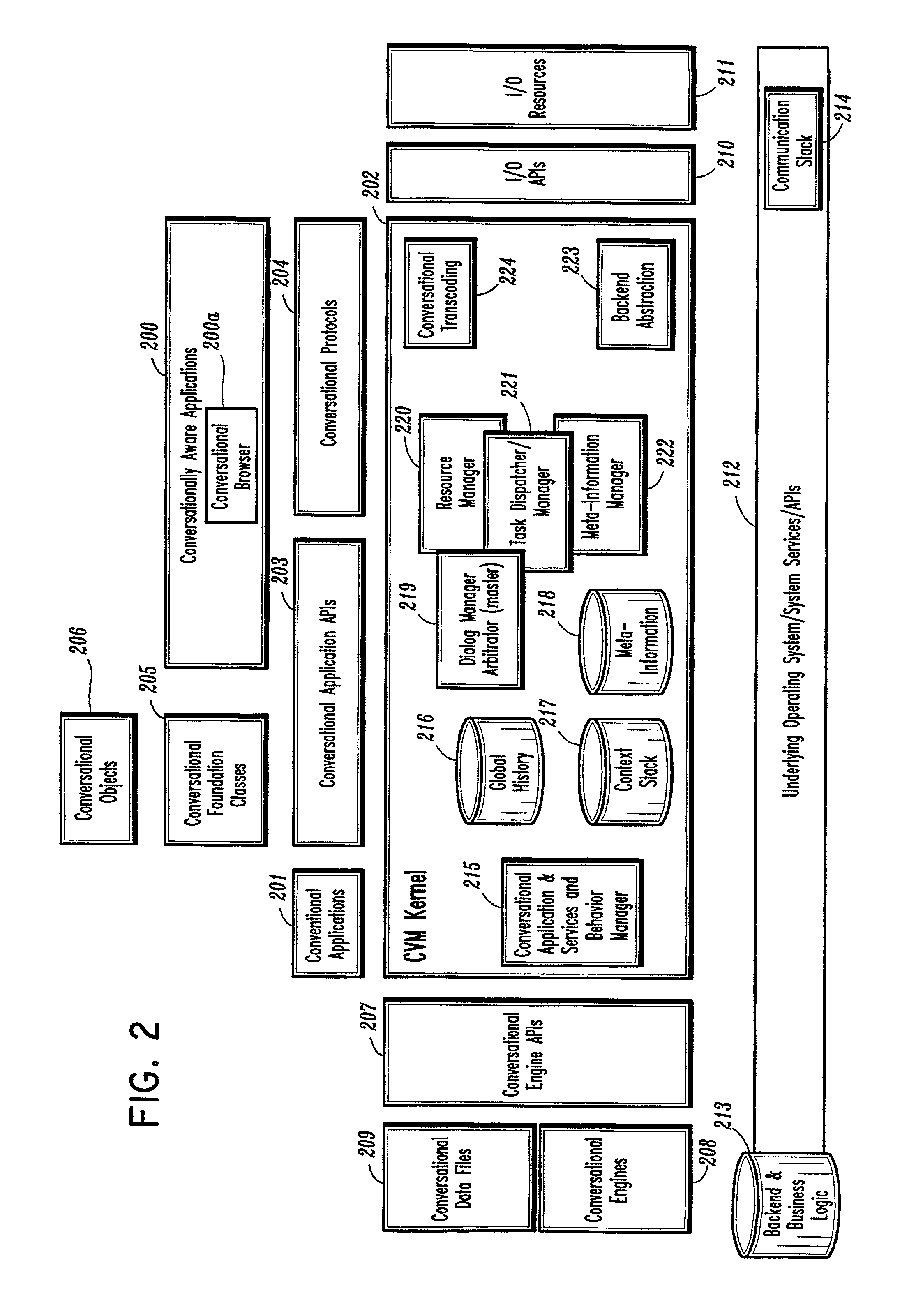

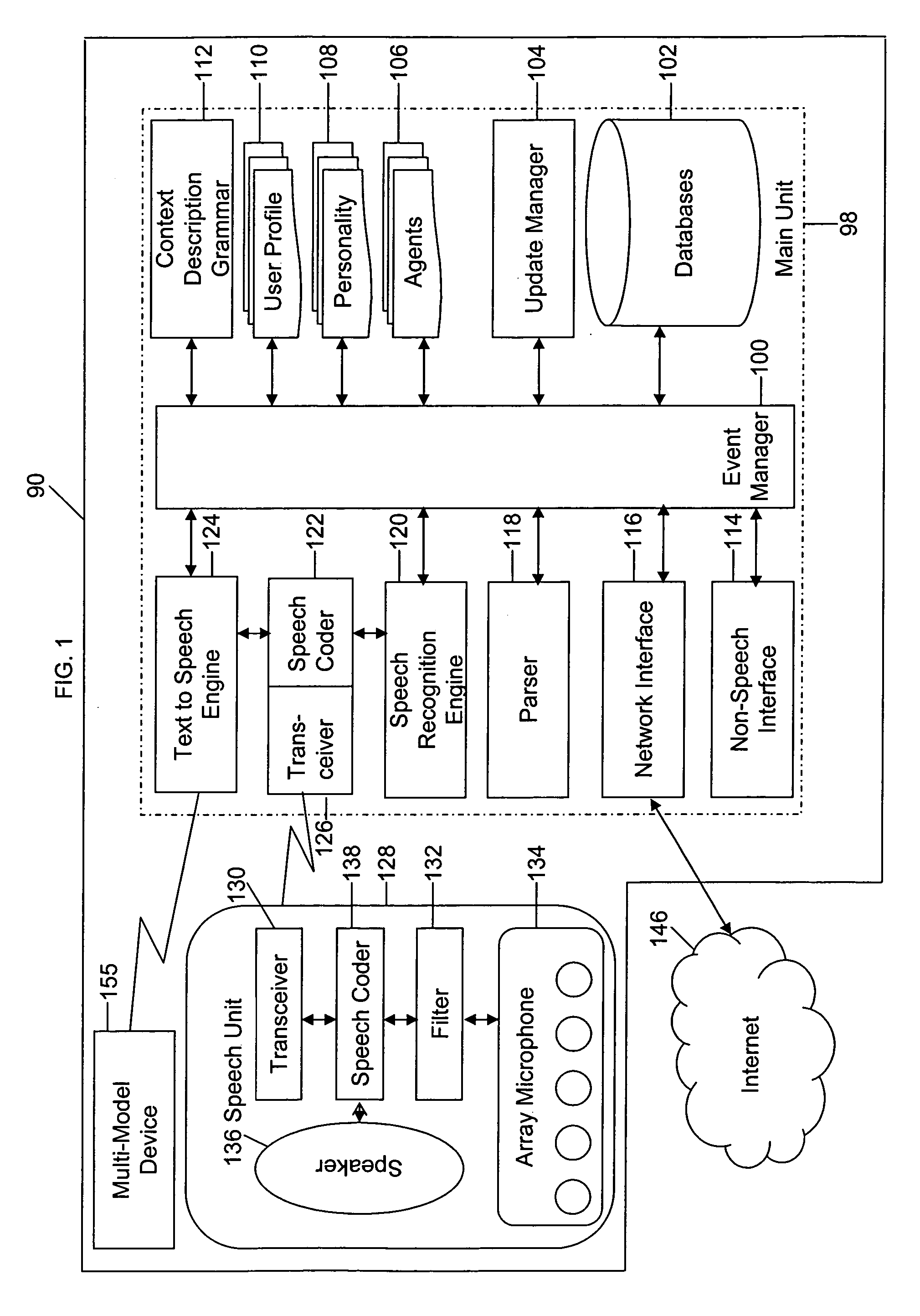

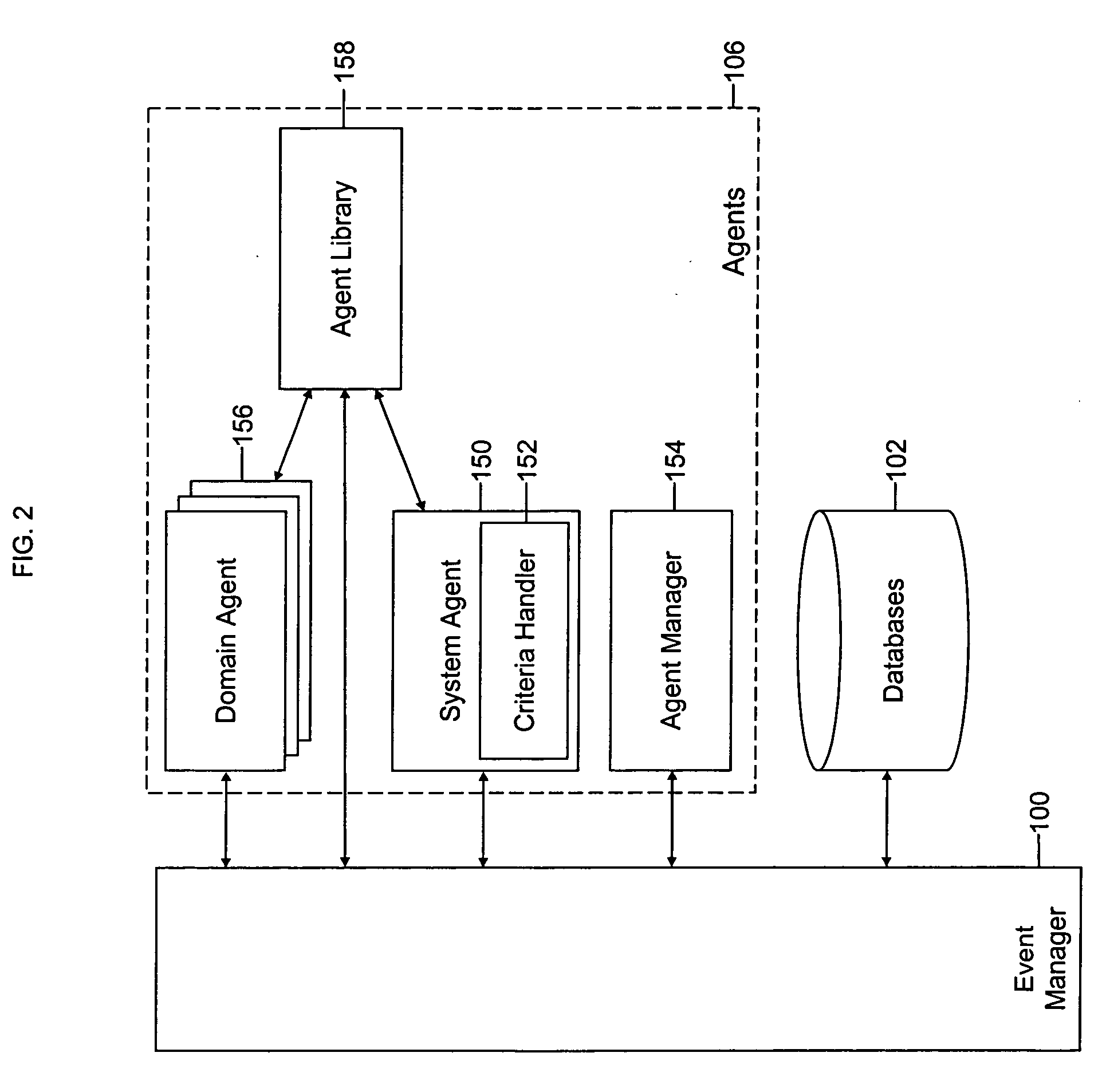

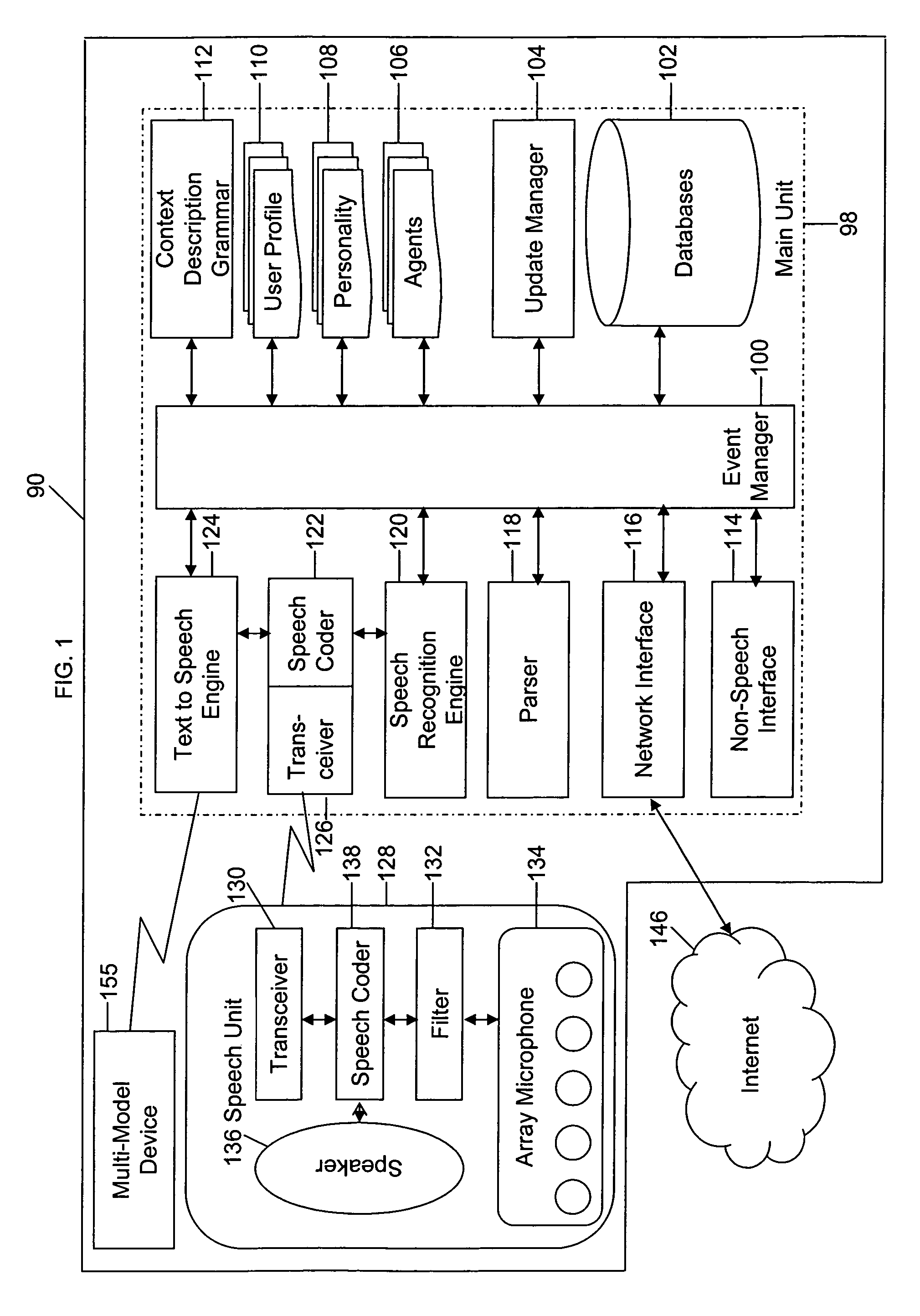

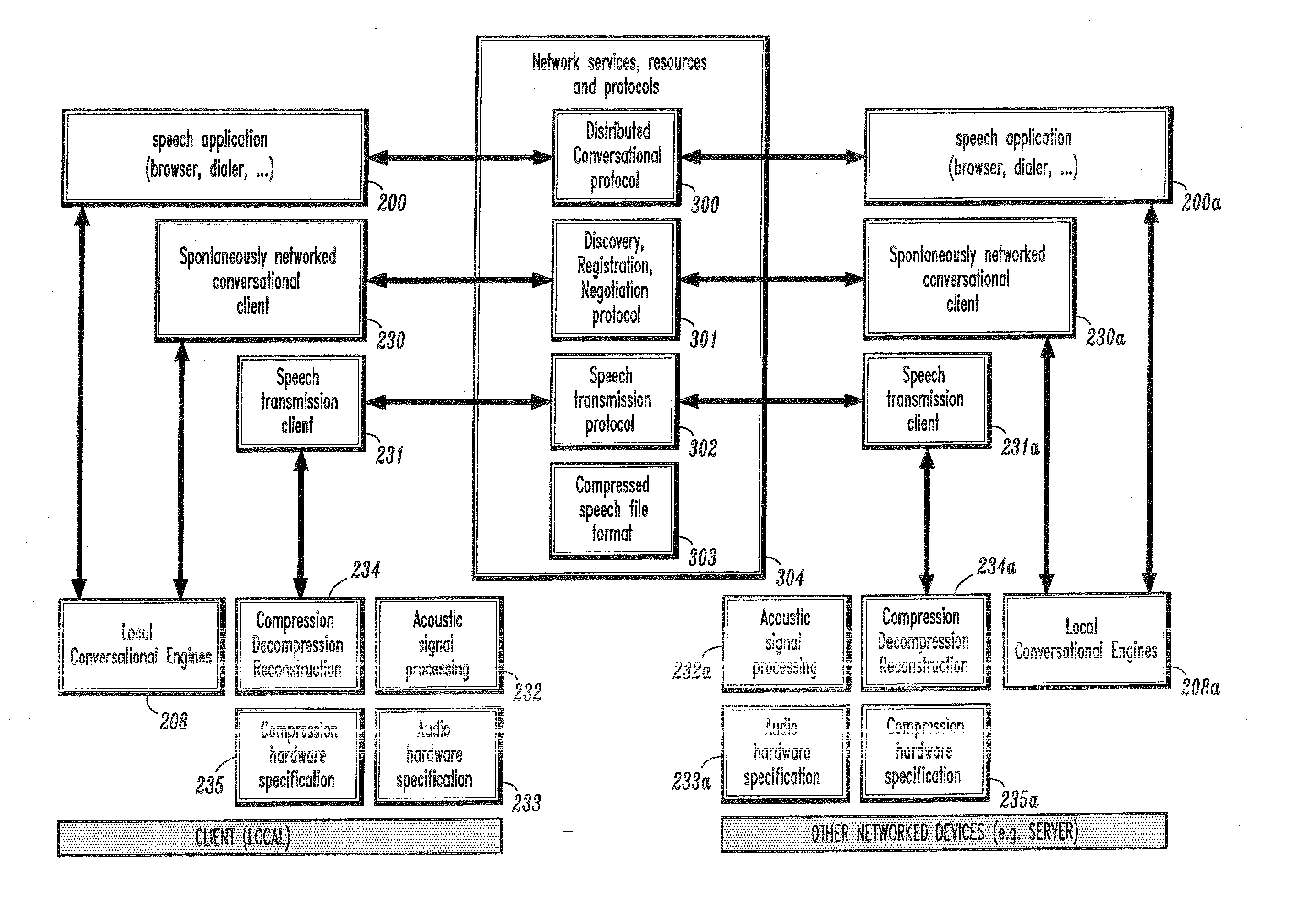

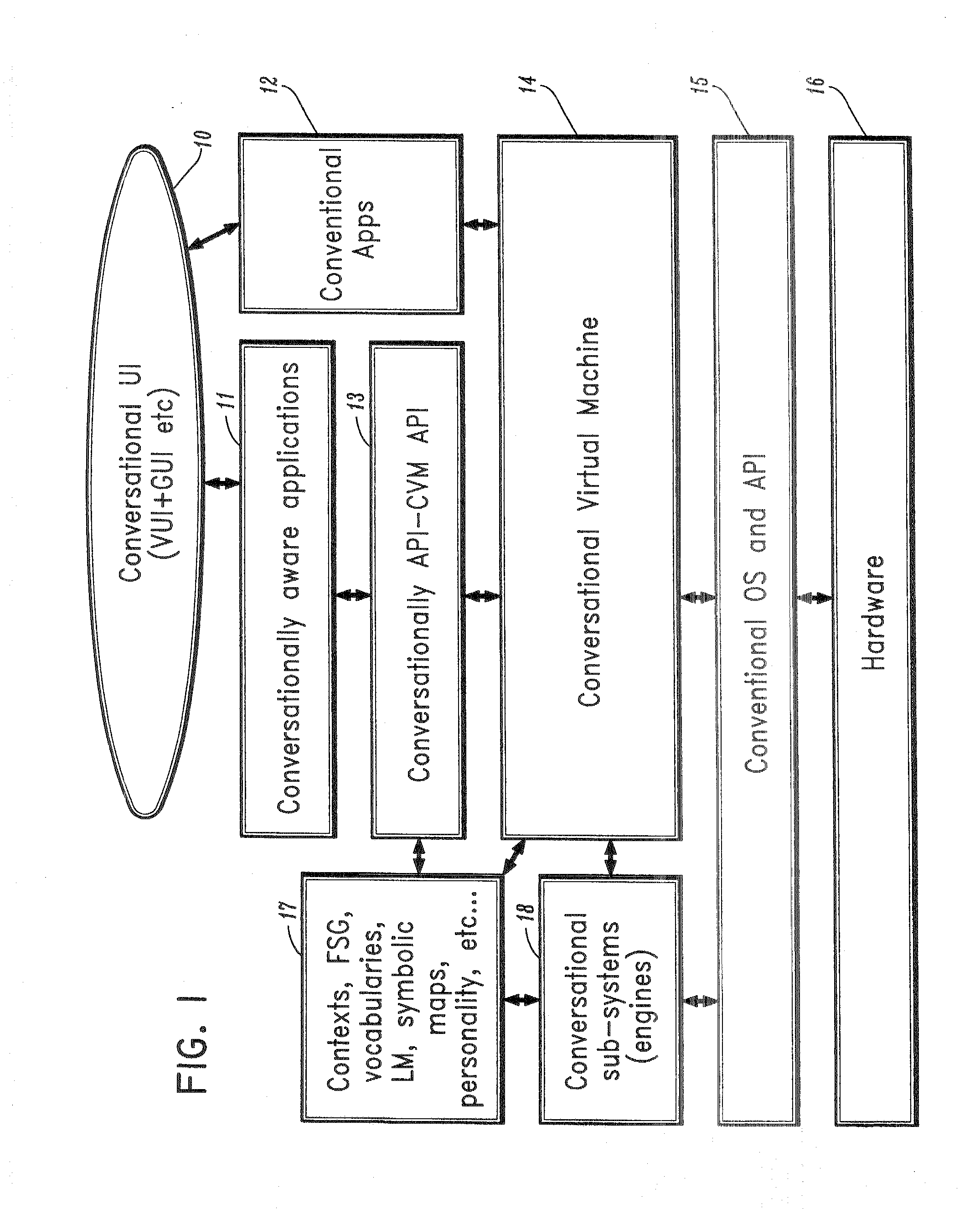

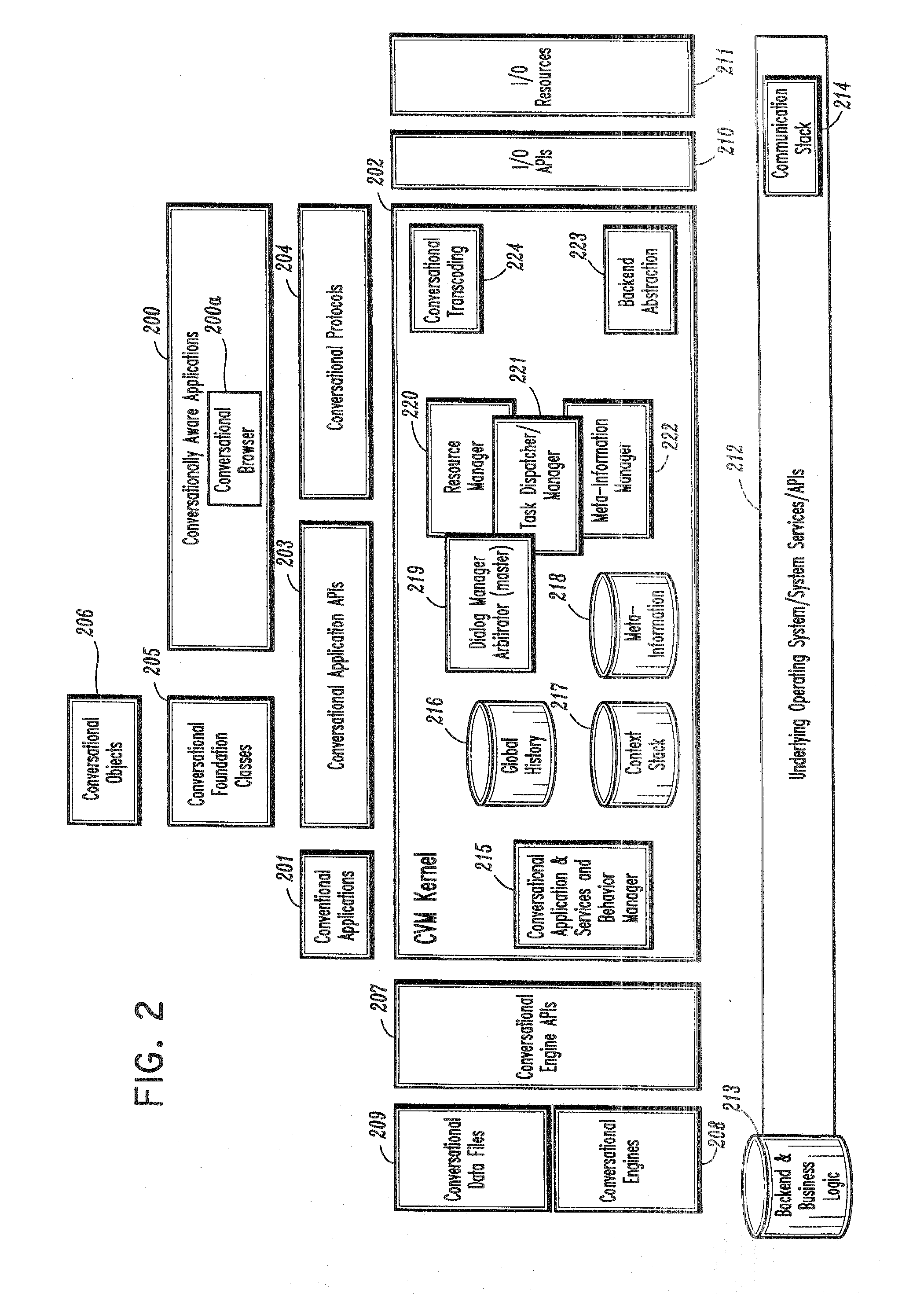

A conversational computing system provides a universal coordinated multi-modal conversational user interface (CUI) (10) across a plurality of conversationally aware applications (11) (i.e., applications that “speak” conversational protocols) and conventional applications (12). The conversationally aware applications (11) communicate with a conversational kernel (14) via conversational application APIs (13). The conversational kernel (14) controls the dialog across applications and devices (local and networked) on the basis of their registered conversational capabilities and requirements, and provides a unified conversational user interface and conversational services and behaviors. The conversational computing system may be built on top of a conventional operating system and APIs (15) and conventional device hardware (16). The conversational kernel (14) handles all I/O processing and controls conversational engines (18). The conversational kernel (14) converts voice requests into queries, and converts outputs and results into spoken messages, using conversational engines (18) and conversational arguments (17). The conversational application API (13) conveys all the information the conversational kernel (14) needs to transform queries into application calls and, conversely, to convert output into speech, appropriately sorted before being provided to the user.

Owner:UNILOC 2017 LLC

System and method of supporting adaptive misrecognition in conversational speech

Active · US20070038436A1 · Improve maximization, High bandwidth, Natural language data processing, Speech recognition, Personalization, Spoken language

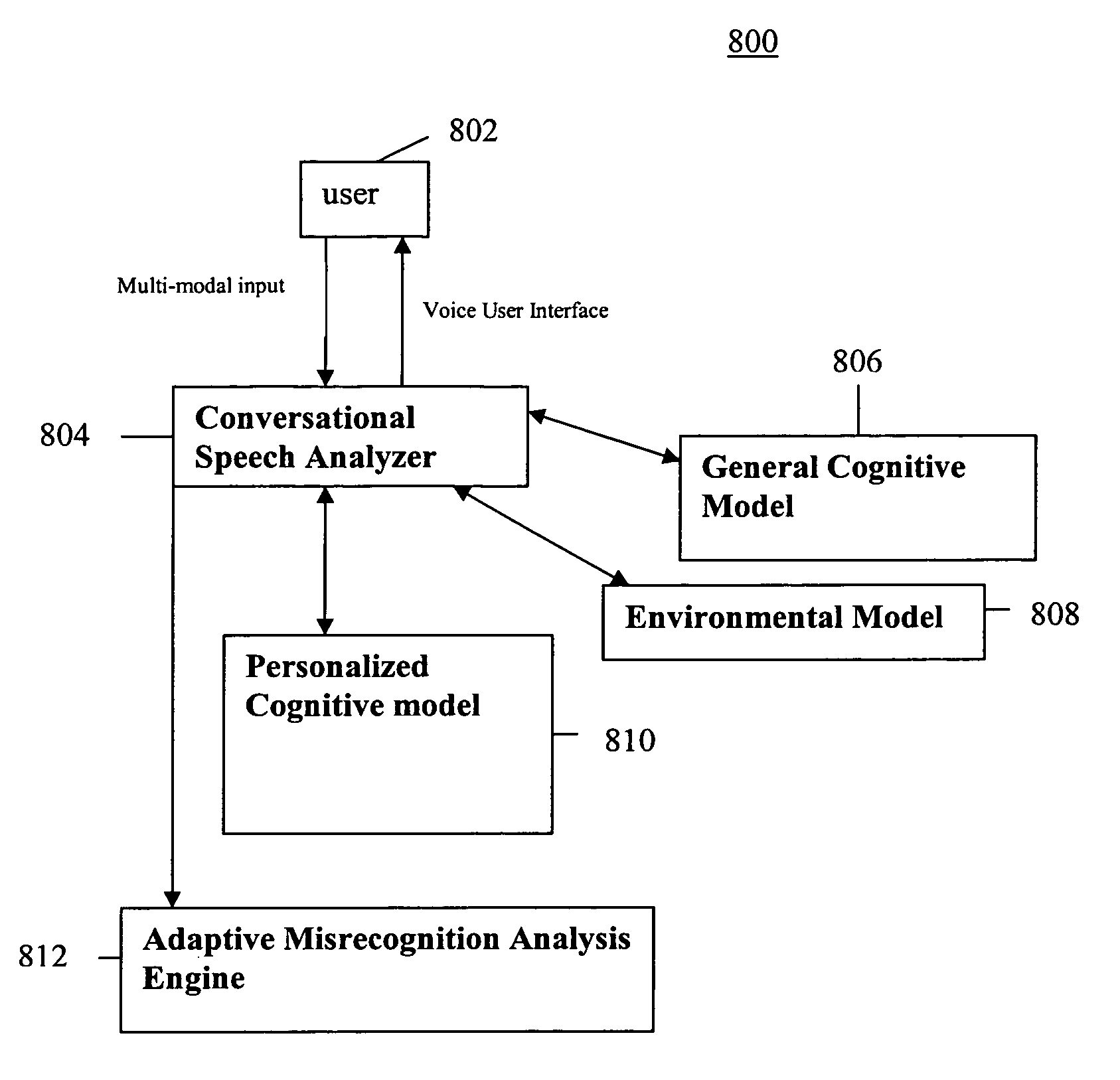

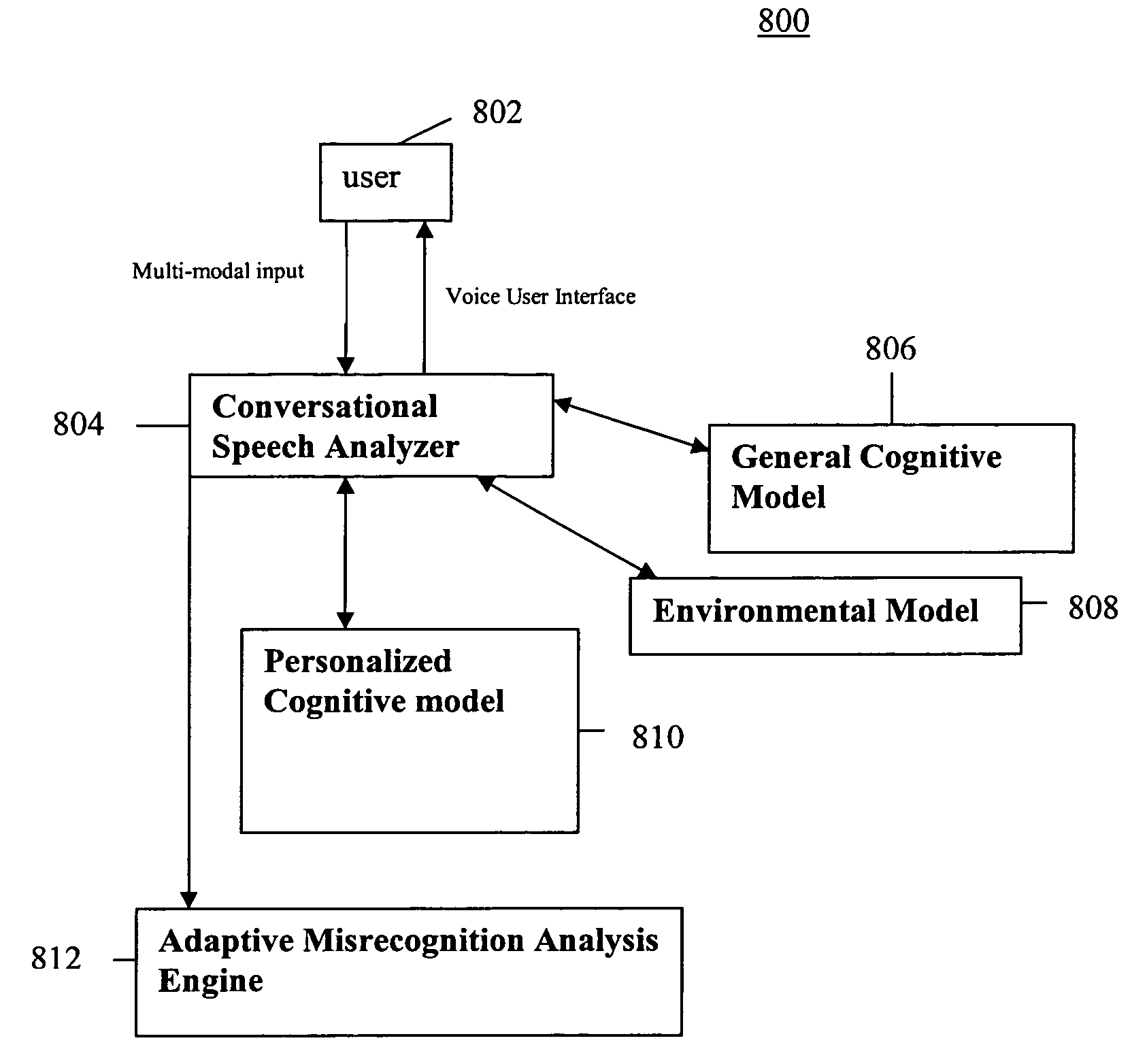

A system and method are provided for receiving speech and/or non-speech communications of natural language questions and/or commands and executing the questions and/or commands. The invention provides a conversational human-machine interface that includes a conversational speech analyzer, a general cognitive model, an environmental model, and a personalized cognitive model to determine context and domain knowledge and to invoke prior information to interpret a spoken utterance or a received non-spoken message. The system and method create, store, and use extensive personal profile information for each user, thereby improving the reliability of determining the context of the speech or non-speech communication and presenting the expected results for a particular question or command.

Owner:DIALECT LLC

System and method of supporting adaptive misrecognition in conversational speech

Active · US7620549B2 · Promotes feeling of natural, Significant to use, Natural language data processing, Speech recognition, Personalization, Human–machine interface

A system and method are provided for receiving speech and/or non-speech communications of natural language questions and/or commands and executing the questions and/or commands. The invention provides a conversational human-machine interface that includes a conversational speech analyzer, a general cognitive model, an environmental model, and a personalized cognitive model to determine context and domain knowledge and to invoke prior information to interpret a spoken utterance or a received non-spoken message. The system and method create, store, and use extensive personal profile information for each user, thereby improving the reliability of determining the context of the speech or non-speech communication and presenting the expected results for a particular question or command.

Owner:DIALECT LLC

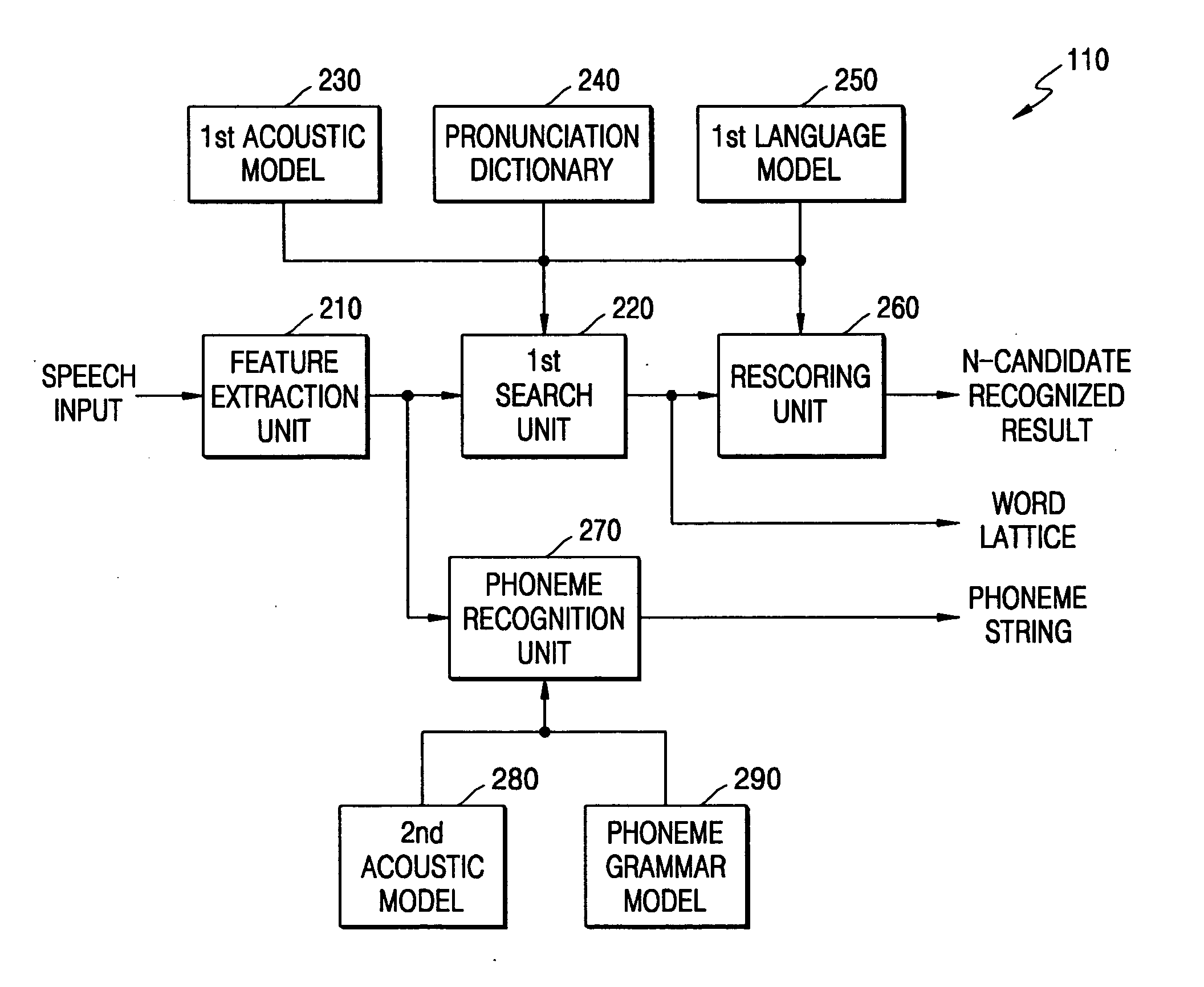

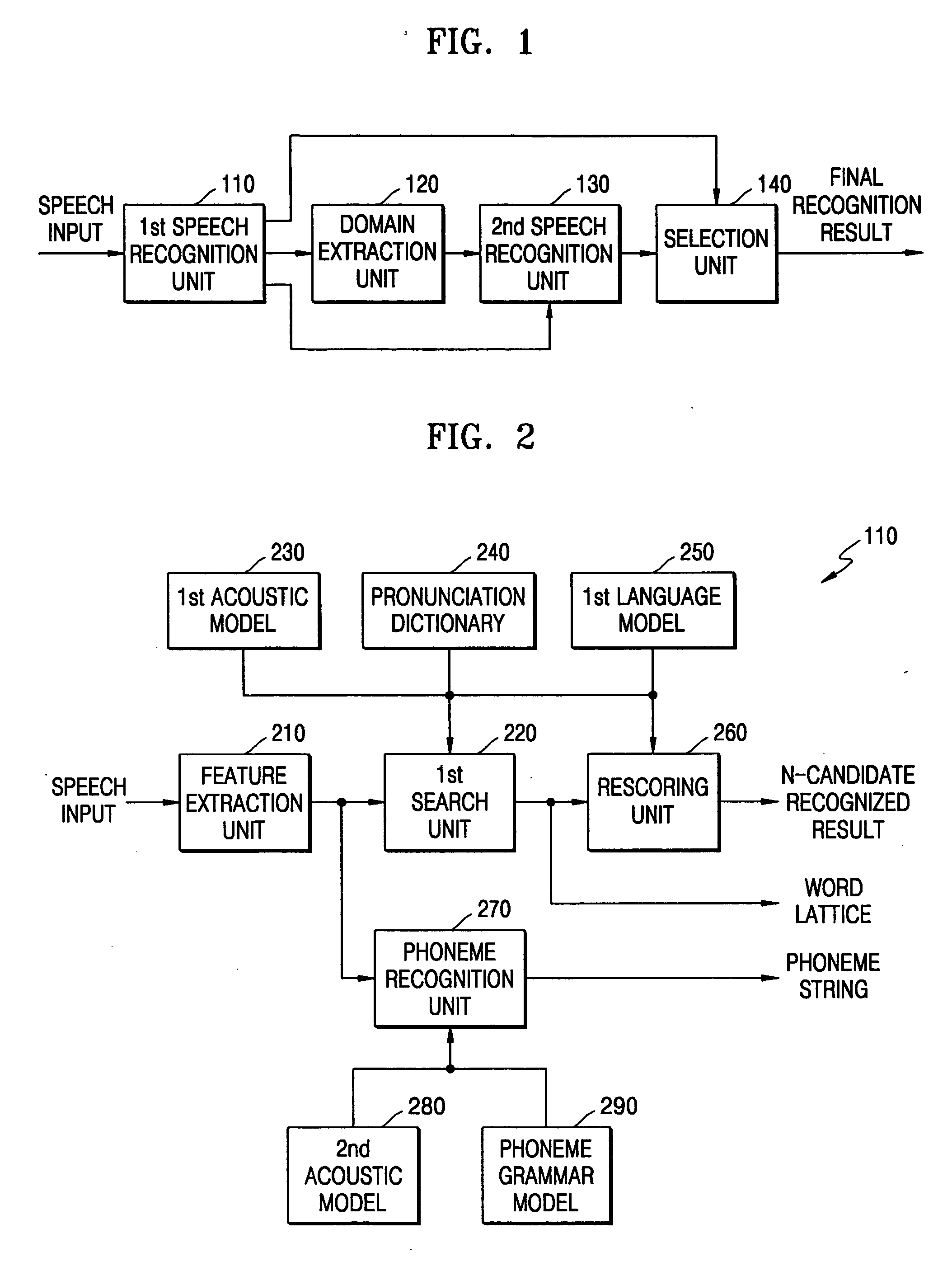

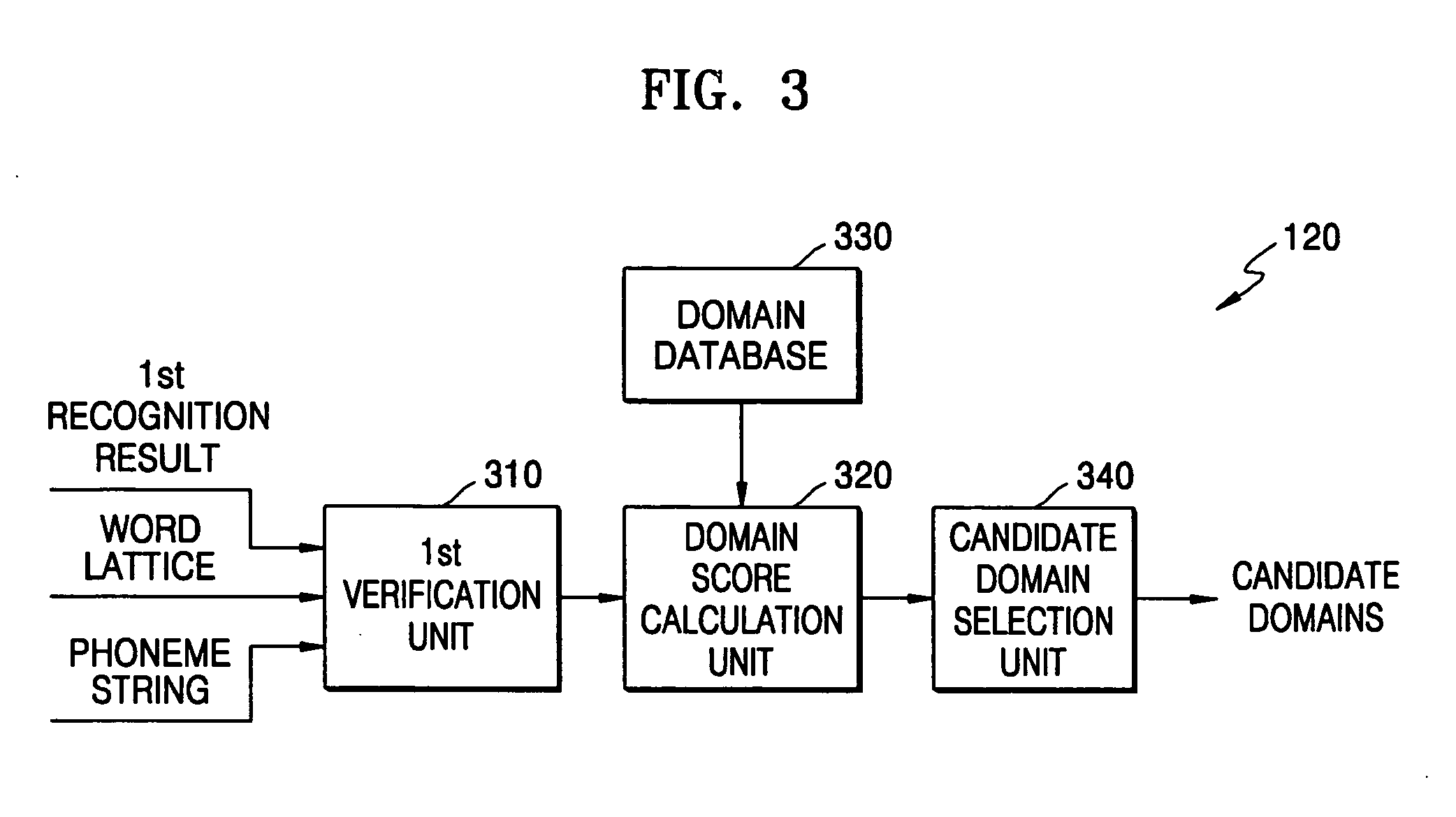

Domain-based dialog speech recognition method and apparatus

A domain-based speech recognition method and apparatus are provided. The method includes: performing speech recognition using a first language model and generating a first recognition result including a plurality of first recognition sentences; selecting a plurality of candidate domains, using as a domain keyword each word in the first recognition sentences whose confidence score is equal to or higher than a predetermined threshold; performing speech recognition on the first recognition result using an acoustic model specific to each of the candidate domains and a second language model, and generating a plurality of second recognition sentences; and selecting at least one final recognition sentence from the first recognition sentences and the second recognition sentences. With this method and apparatus, the effect that a domain extraction error caused by a misrecognized word has on the selection of the final recognition result can be minimized.

Owner:SAMSUNG ELECTRONICS CO LTD
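
The two-pass selection described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the patented implementation: the `Hypothesis` type, the `keyword_domains` table mapping keywords to domains, and the `rescore` callback (a hypothetical stand-in for re-recognition with a domain-specific acoustic model and the second language model) are all invented for the example, and the final sentence is chosen simply by highest score.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Hypothesis:
    words: list[tuple[str, float]]  # (word, confidence score) pairs
    score: float                    # overall recognition score


def candidate_domains(first_pass: list[Hypothesis],
                      keyword_domains: dict[str, set[str]],
                      threshold: float) -> set[str]:
    """Collect domains of keywords recognized with confidence >= threshold."""
    domains: set[str] = set()
    for hyp in first_pass:
        for word, confidence in hyp.words:
            if confidence >= threshold and word in keyword_domains:
                domains |= keyword_domains[word]
    return domains


def recognize(first_pass: list[Hypothesis],
              keyword_domains: dict[str, set[str]],
              threshold: float,
              rescore: Callable[[list[Hypothesis], str], list[Hypothesis]]) -> Hypothesis:
    """Run a second pass per candidate domain, then pick the best of both passes."""
    second_pass: list[Hypothesis] = []
    for domain in sorted(candidate_domains(first_pass, keyword_domains, threshold)):
        # Hypothetical hook: re-recognize the first result with this domain's models.
        second_pass.extend(rescore(first_pass, domain))
    # First-pass sentences stay in the pool, so a wrong domain cannot remove them.
    return max(first_pass + second_pass, key=lambda h: h.score)
```

Keeping the first-pass sentences in the final pool is one simple way to reflect the abstract's point that a domain extraction error should have limited effect on the final result.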

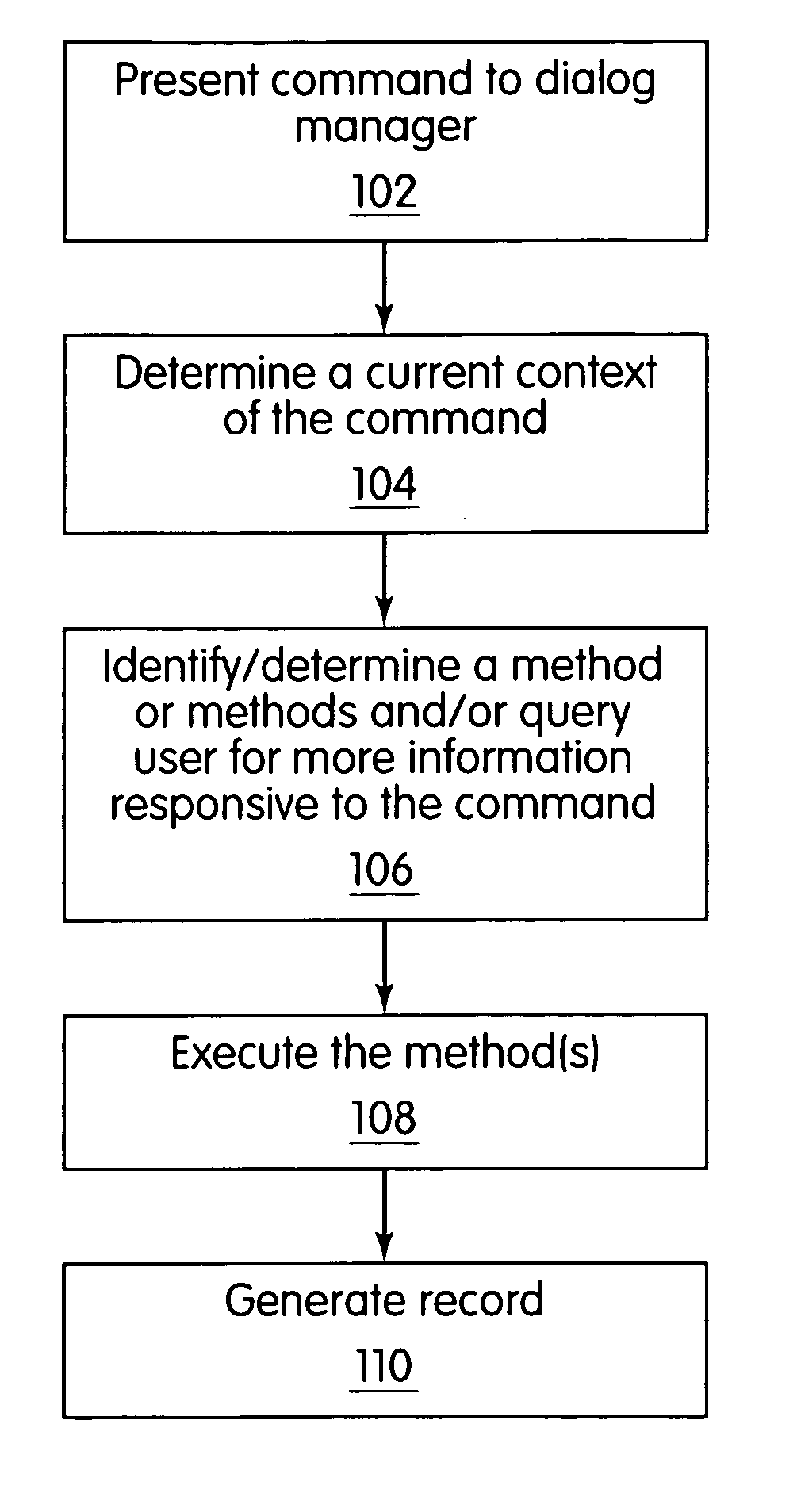

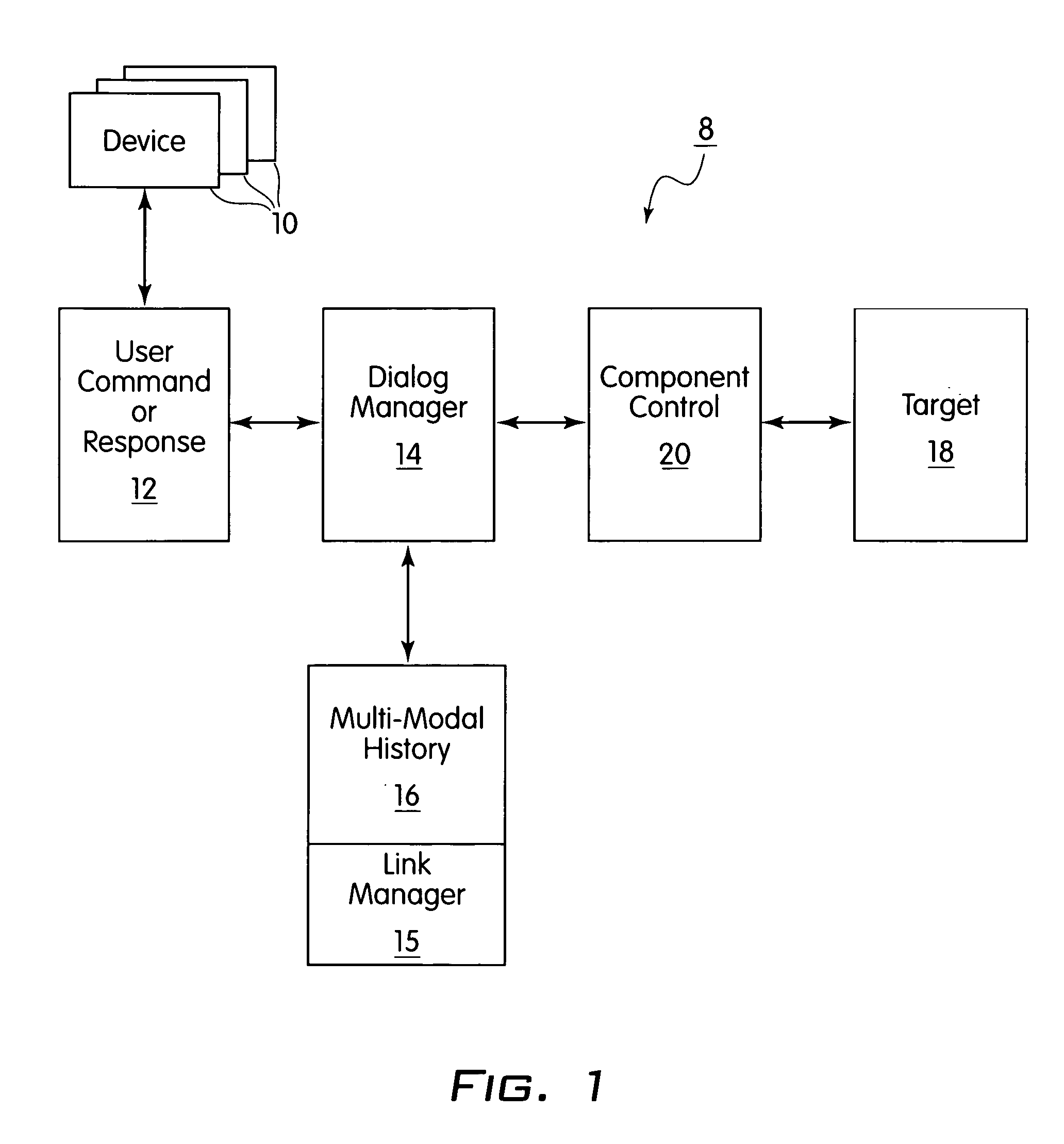

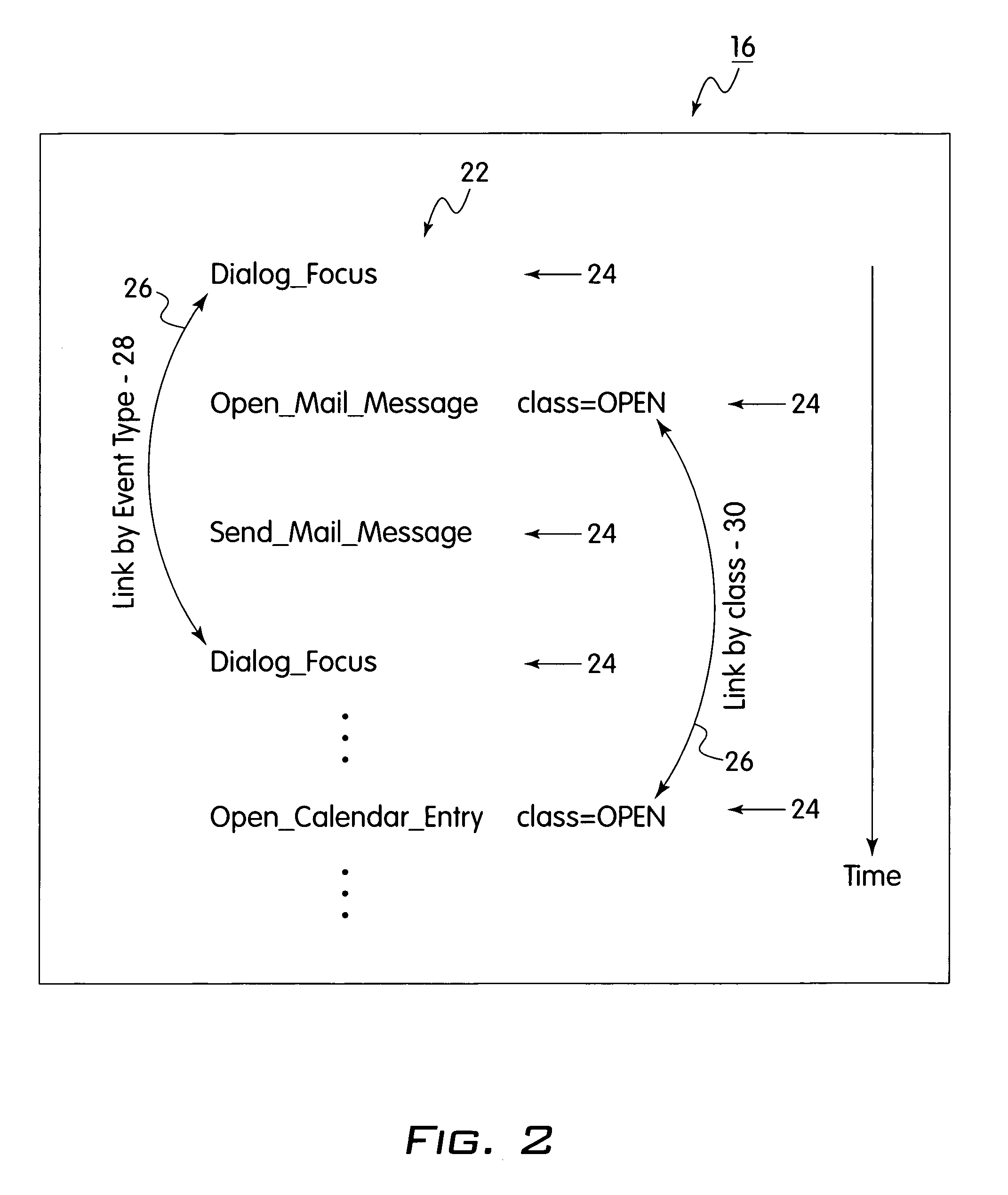

Method for determining and maintaining dialog focus in a conversational speech system

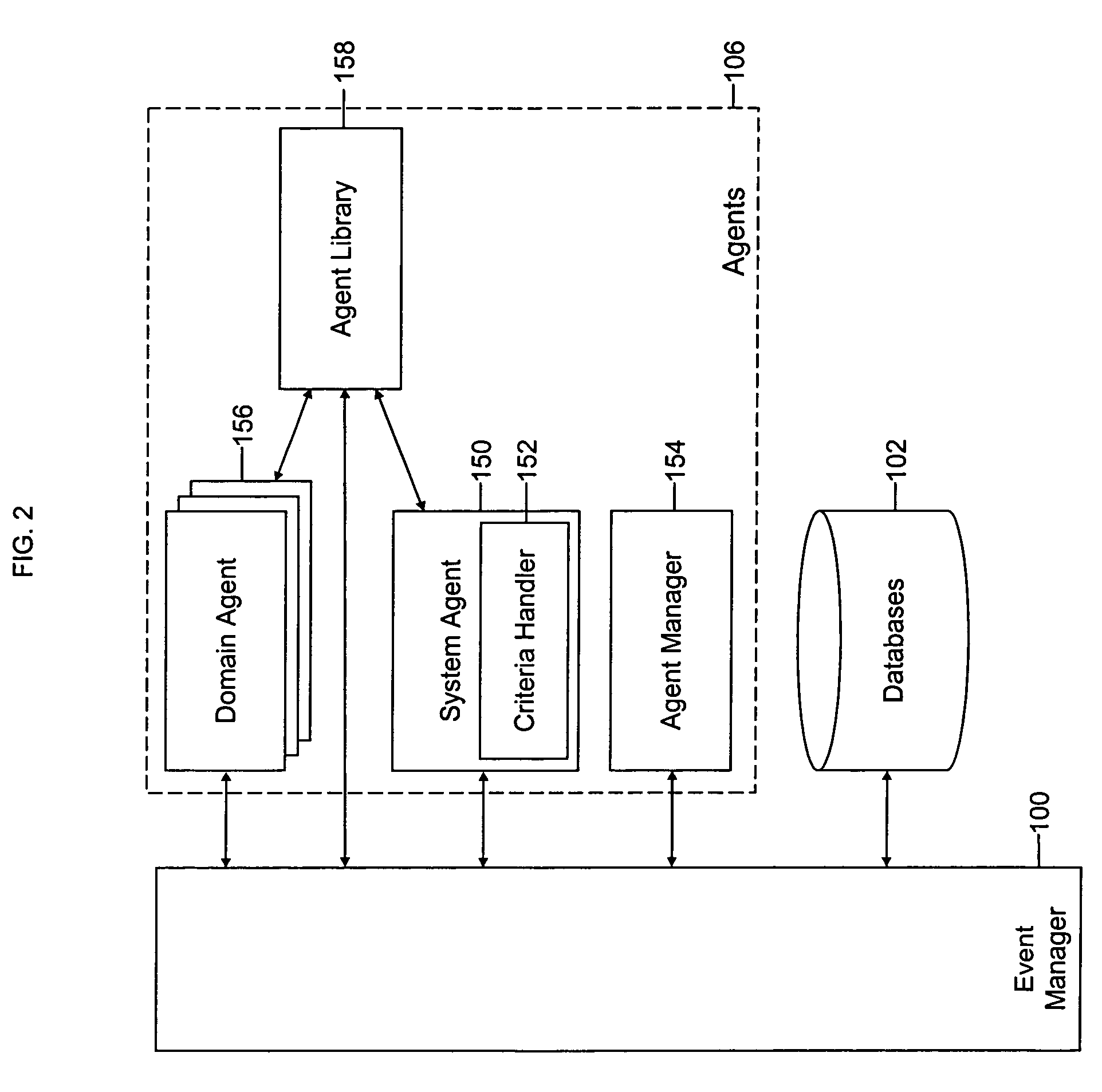

A system and method of the present invention for determining and maintaining dialog focus in a conversational speech system includes presenting a command associated with an application to a dialog manager. The application associated with the command is unknown to the dialog manager at the time it is made. The dialog manager determines a current context of the command by reviewing a multi-modal history of events. At least one method is determined responsive to the command based on the current context. The at least one method is executed responsive to the command associated with the application.

Owner:NUANCE COMM INC
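
One way to picture the focus-resolution step is a dialog manager that walks its event history backwards until it finds an application able to handle the command. The sketch below is a hypothetical simplification: the `Event` and `DialogManager` names, the most-recent-first policy, and the per-application method registry are assumptions, not details taken from the patent.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class Event:
    application: str  # application that produced or received the event
    modality: str     # e.g. "speech", "gui", "keyboard"


class DialogManager:
    """Toy dialog manager that resolves commands from a multi-modal history."""

    def __init__(self, history_size: int = 50) -> None:
        self.history: deque[Event] = deque(maxlen=history_size)
        self.methods: dict[str, dict[str, Callable[[], str]]] = {}

    def register(self, application: str, command: str,
                 method: Callable[[], str]) -> None:
        self.methods.setdefault(application, {})[command] = method

    def record(self, event: Event) -> None:
        self.history.append(event)

    def handle(self, command: str) -> str:
        # The command does not name its application, so search the history
        # from newest to oldest; the first application that can handle the
        # command is taken as the current dialog focus.
        for event in reversed(self.history):
            method = self.methods.get(event.application, {}).get(command)
            if method is not None:
                self.record(Event(event.application, "speech"))  # keep focus
                return method()
        raise LookupError(f"no application in focus can handle {command!r}")
```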

Conversational computing via conversational virtual machine

Inactive · US20070043574A1 · Limitation for transfer, Reduce degradation, Interconnection arrangements, Interprogram communication, Conversational speech, Application software

A conversational computing system provides a universal coordinated multi-modal conversational user interface (CUI) (10) across a plurality of conversationally aware applications (11) (i.e., applications that “speak” conversational protocols) and conventional applications (12). The conversationally aware applications (11) communicate with a conversational kernel (14) via conversational application APIs (13). The conversational kernel (14) controls the dialog across applications and devices (local and networked) on the basis of their registered conversational capabilities and requirements, and provides a unified conversational user interface and conversational services and behaviors. The conversational computing system may be built on top of a conventional operating system and APIs (15) and conventional device hardware (16). The conversational kernel (14) handles all I/O processing and controls conversational engines (18). The conversational kernel (14) converts voice requests into queries, and converts outputs and results into spoken messages, using conversational engines (18) and conversational arguments (17). The conversational application API (13) conveys all the information the conversational kernel (14) needs to transform queries into application calls and, conversely, to convert output into speech, appropriately sorted before being provided to the user.

Owner:NUANCE COMM INC

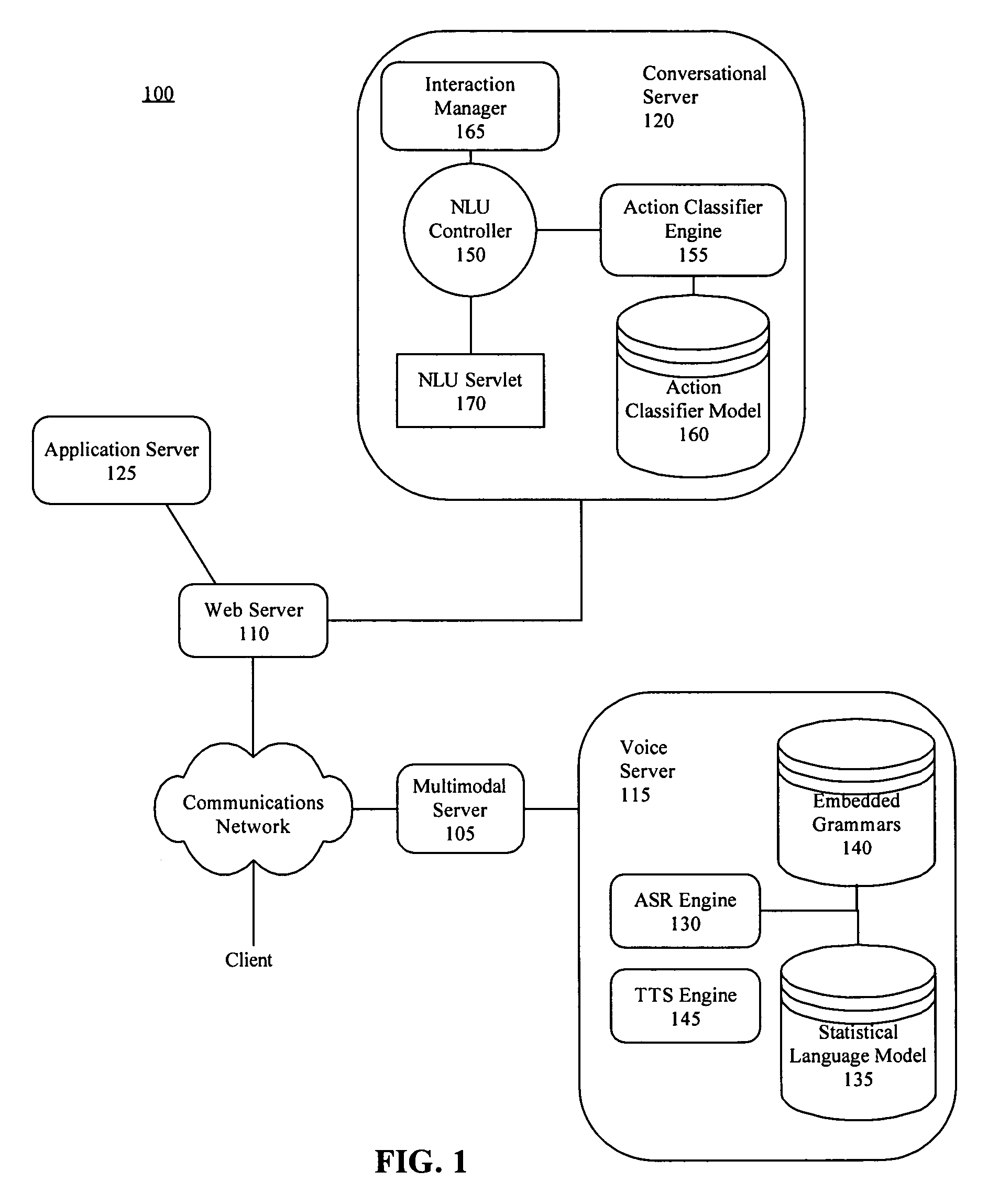

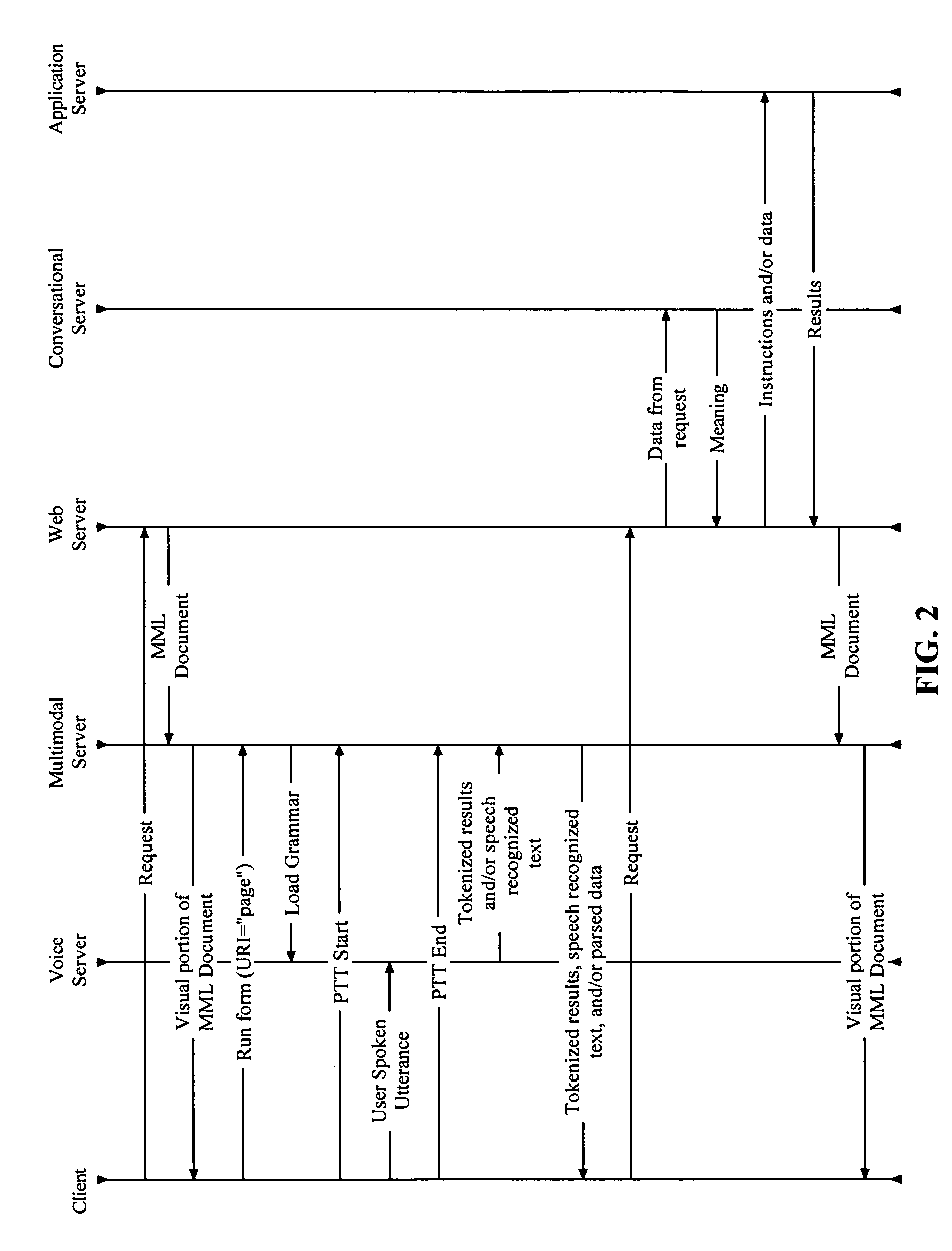

Integrating conversational speech into Web browsers

Inactive · US20060235694A1 · Web data retrieval, Automatic exchanges, Natural language understanding, Web browser

A method of integrating conversational speech into a multimodal, Web-based processing model can include speech recognizing a user spoken utterance directed to a voice-enabled field of a multimodal markup language document presented within a browser. A statistical grammar can be used to determine a recognition result. The method further can include providing the recognition result to the browser, receiving, within a natural language understanding (NLU) system, the recognition result from the browser, and semantically processing the recognition result to determine a meaning. Accordingly, a next programmatic action to be performed can be selected according to the meaning.

Owner:NUANCE COMM INC

Smart phone customer service system based on speech analysis

Inactive · CN103811009A · Realize objective management, Easy to handle, Speech analysis, Special service for subscribers, Opinion analysis, Conversational speech

The invention provides a smart phone customer service system based on speech analysis. First, the conversation speech produced when a client calls a human customer service agent is recorded in real time and checked for validity. Next, the client's relevant personal information is extracted, voiceprint recognition is performed on the client's speech within the conversation, and after verification the result is recorded as the client's identity for this consultation or complaint. Meanwhile, speech content recognition converts the conversation speech into a written record, on which text opinion analysis is performed, and speech emotion analysis is performed on the conversation speech to record emotion data. The effect of the current consultation or complaint is analyzed by combining the text opinion analysis result with the emotion data, and the final customer service grade is obtained by combining these analysis results with a traditional grading evaluation, which serves as feedback assessment. Compared with traditional customer service systems, this smart customer service system can effectively improve service quality for clients and achieve objective management of customer service evaluation.

Owner:EAST CHINA UNIV OF SCI & TECH

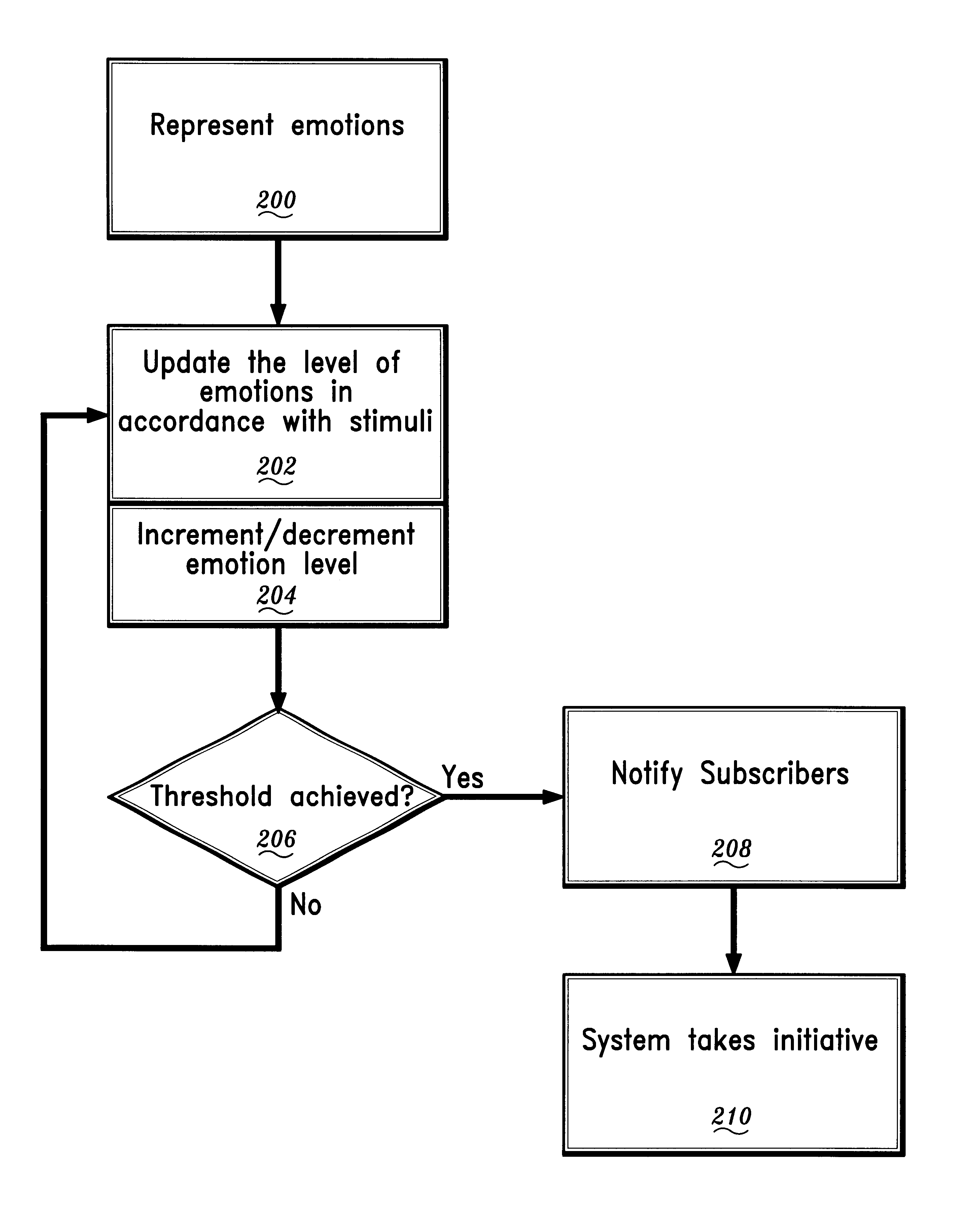

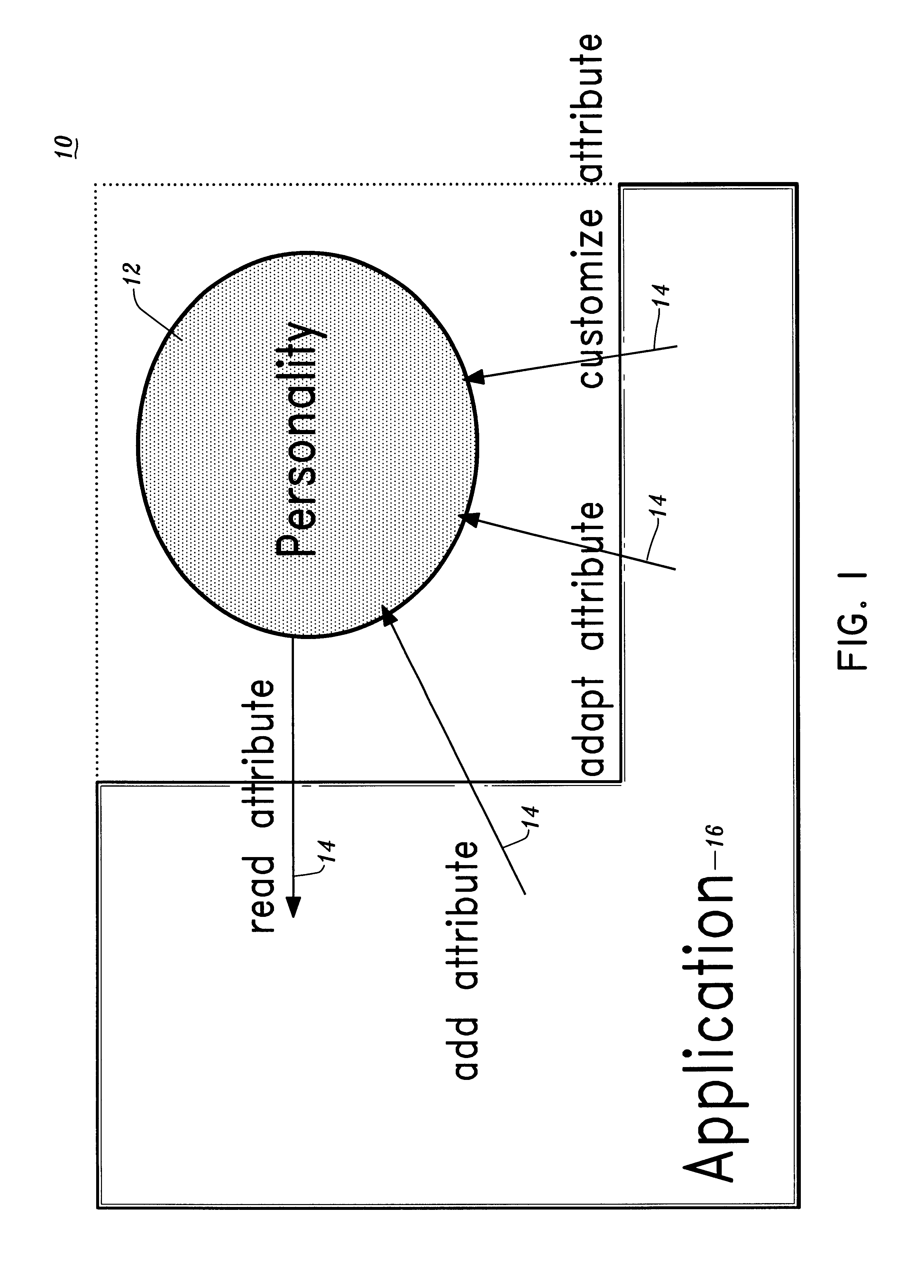

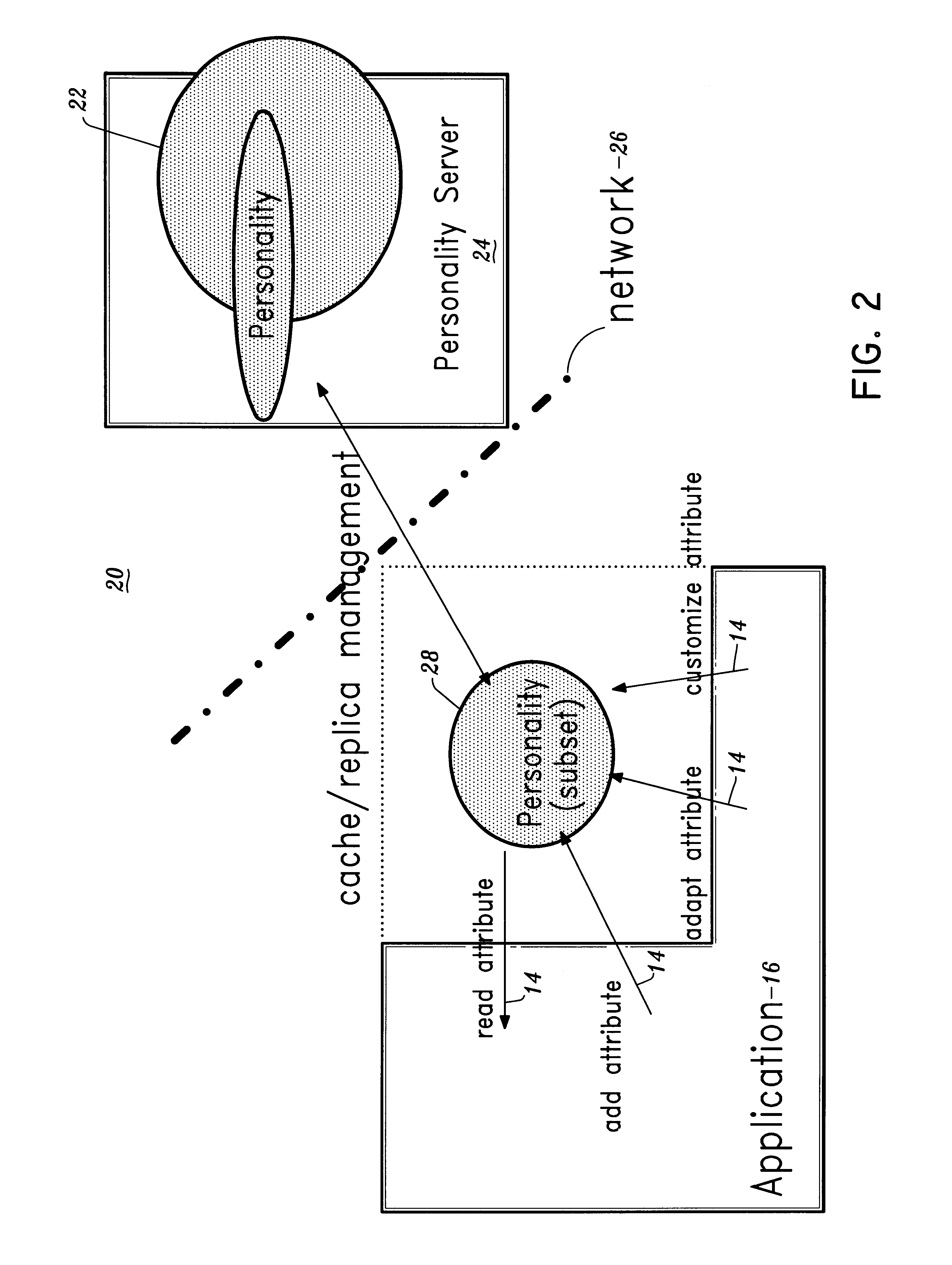

Adaptive emotion and initiative generator for conversational systems

A method in accordance with the present invention, which may be implemented by a machine-readable program storage device tangibly embodying a program of instructions executable by the machine to perform steps for providing emotions for a conversational system, includes representing each of a plurality of emotions as an entity. The level of each emotion is updated in response to user stimuli, internal stimuli, or both. When an emotion reaches its threshold level, the system reacts to the user and internal stimuli by notifying the components subscribed to that emotion to take appropriate action.

Owner:UNILOC 2017 LLC
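
The threshold-and-subscriber mechanism reads naturally as an observer pattern. In this minimal sketch, the `Emotion` class, the additive update rule, and resetting the level after notification are all assumptions made for illustration; the abstract does not specify how levels change or decay.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Emotion:
    """One emotion represented as an entity with a level and a threshold."""
    name: str
    threshold: float
    level: float = 0.0
    subscribers: list[Callable[[str, float], None]] = field(default_factory=list)

    def subscribe(self, callback: Callable[[str, float], None]) -> None:
        """Register a component to be told when this emotion fires."""
        self.subscribers.append(callback)

    def update(self, user_stimulus: float = 0.0,
               internal_stimulus: float = 0.0) -> bool:
        """Raise the level from either or both stimuli; notify at threshold."""
        self.level += user_stimulus + internal_stimulus  # assumed additive rule
        if self.level >= self.threshold:
            for notify in self.subscribers:
                notify(self.name, self.level)
            self.level = 0.0  # assumption: reset after the system reacts
            return True
        return False
```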

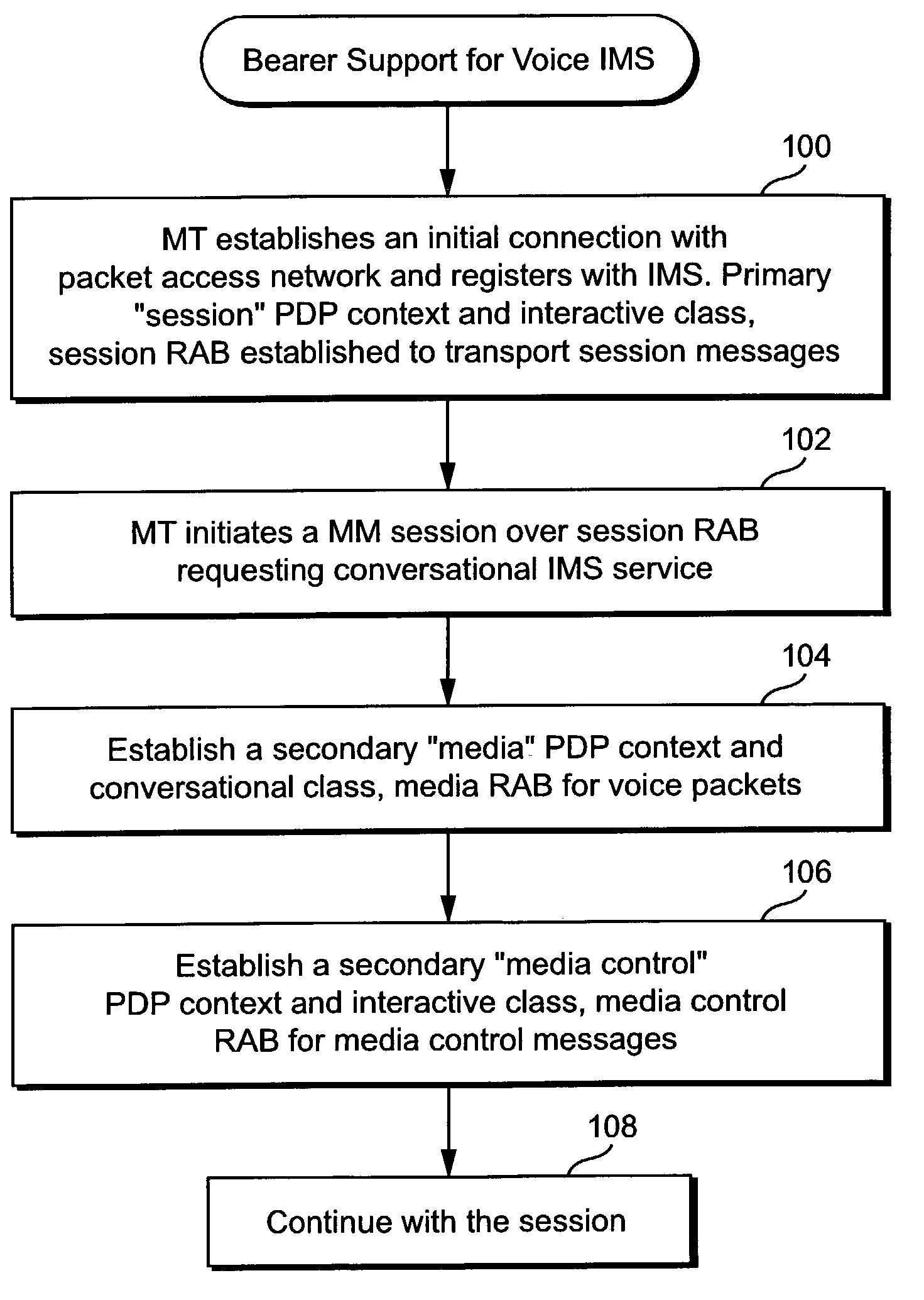

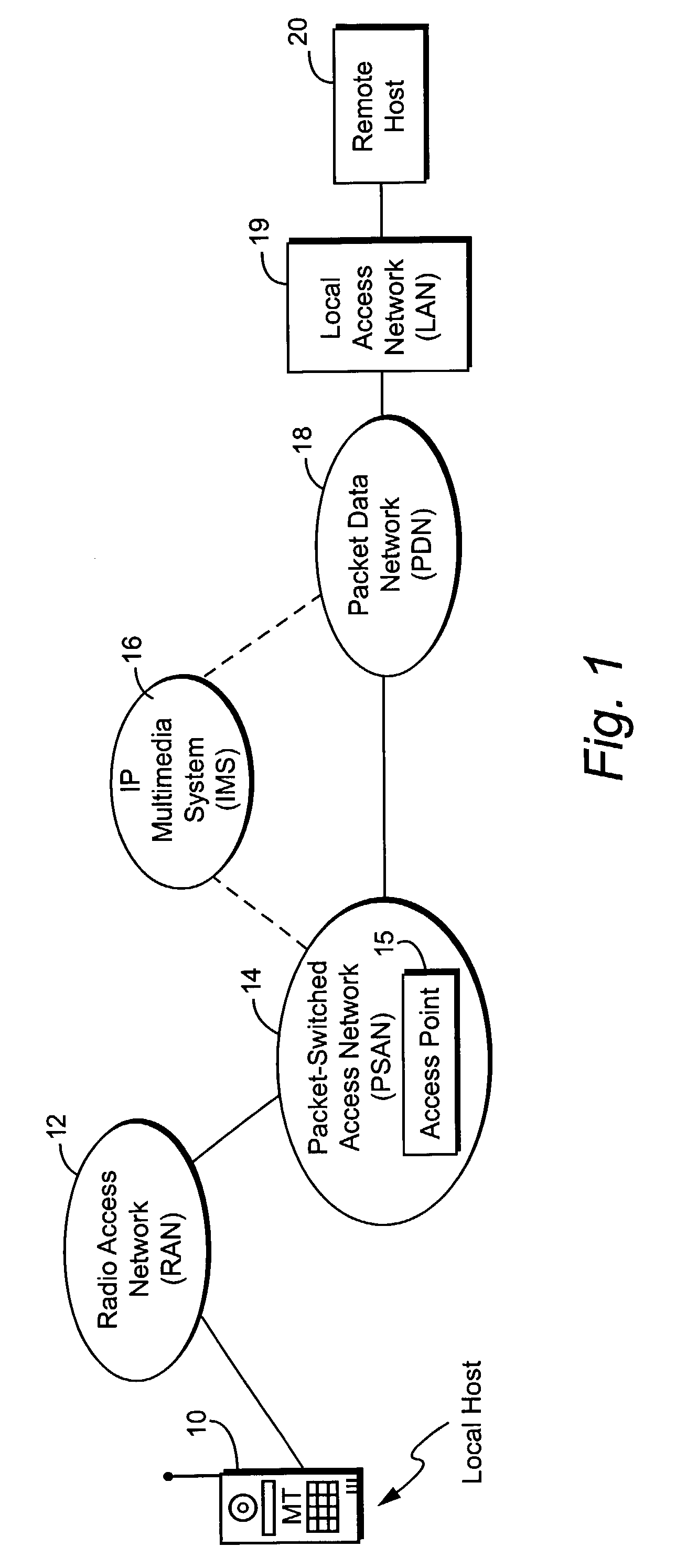

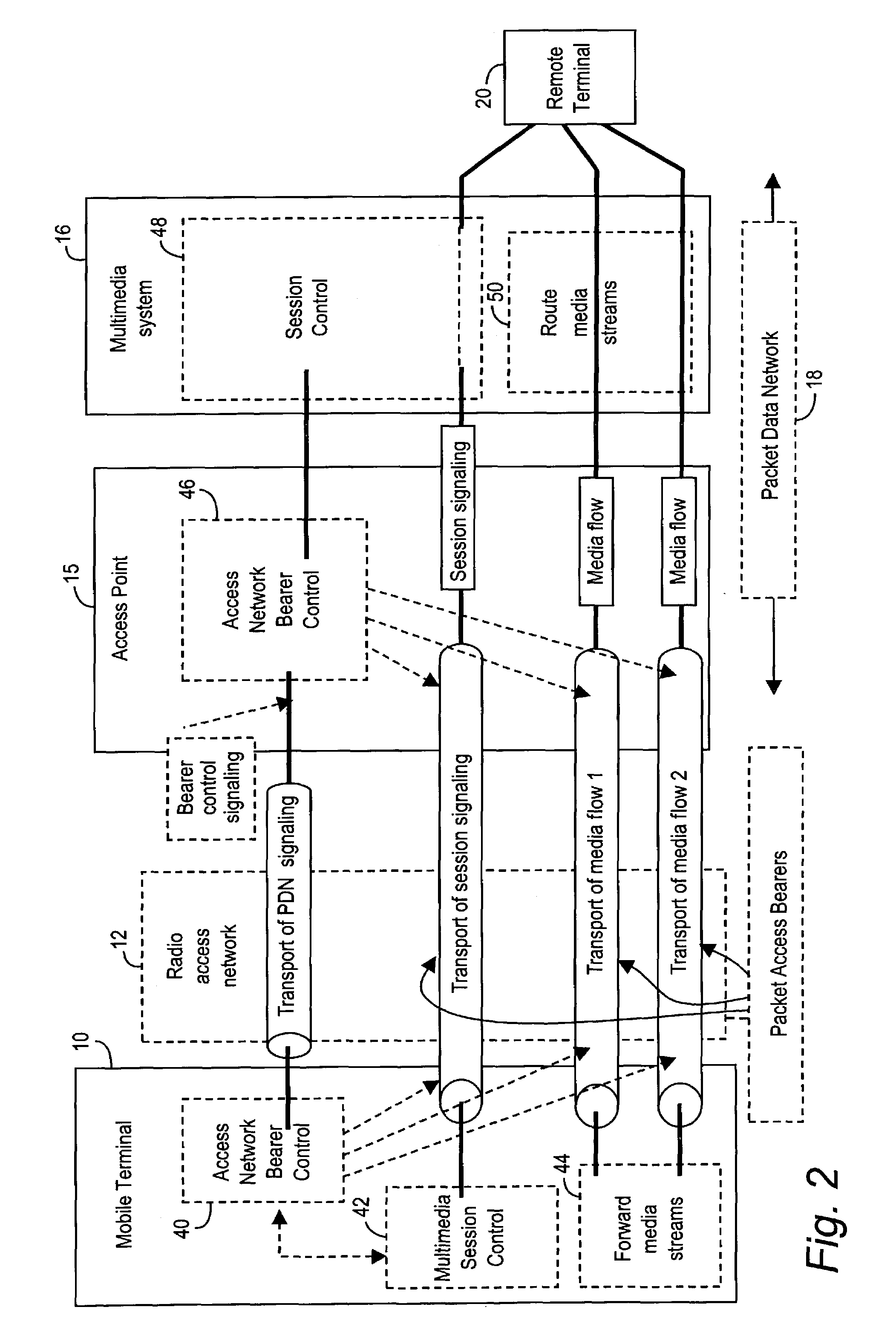

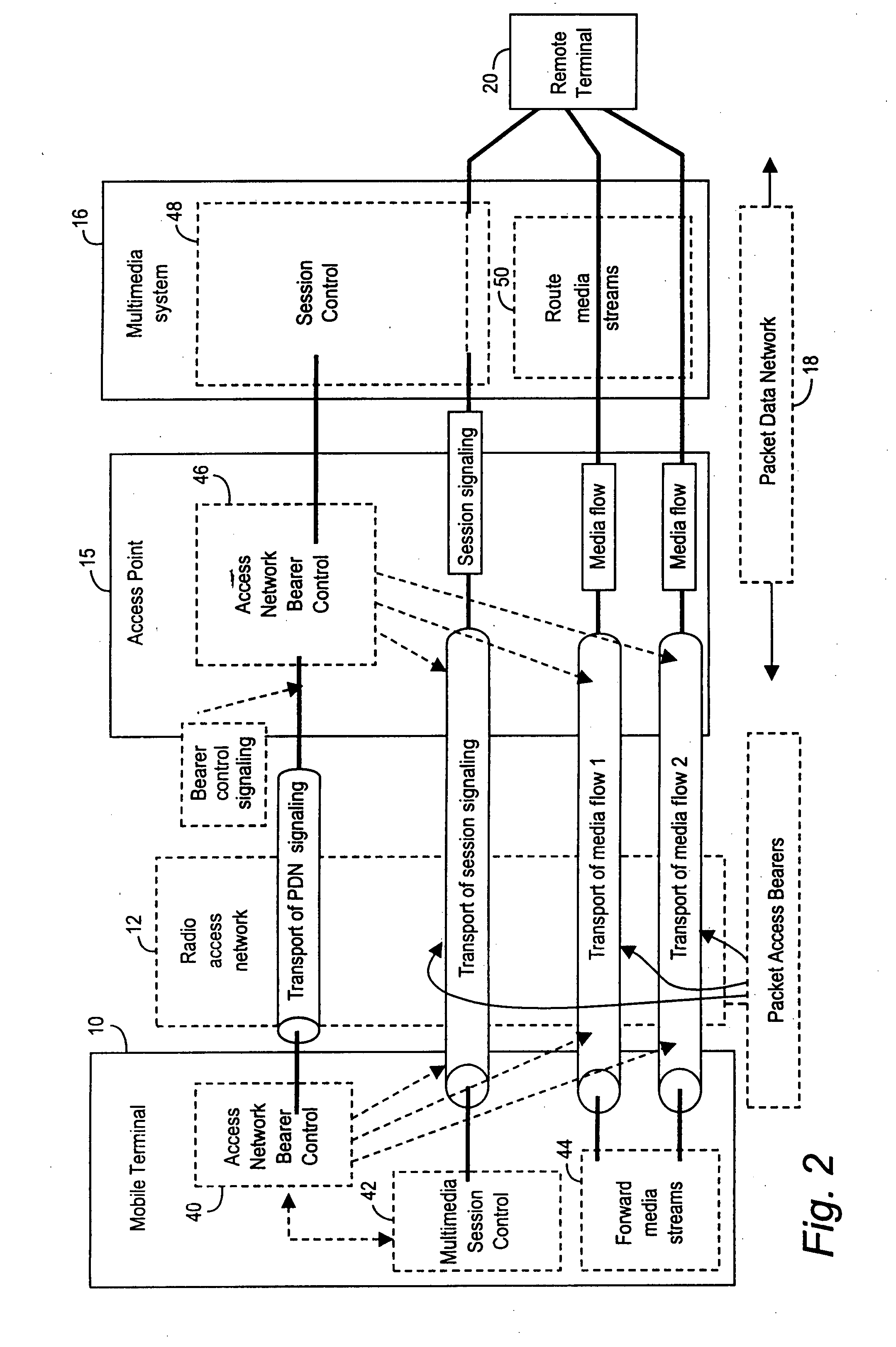

Packet-based conversational service for a multimedia session in a mobile communications system

Active · US7609673B2 · Network traffic/resource management, Connection management, Media controls, Quality of service

An important objective for third generation mobile communications systems is to provide IP services, and a very important part of these services will be the conversational voice. The conversational voice service includes three distinct information flows: session control, the voice media, and the media control messages. In a preferred embodiment, based on the specific characteristics for each information flow, each information flow is allocated its own bearer with a Quality of Service (QoS) tailored to its particular characteristics. In a second embodiment, the session control and media control messages are supported with a single bearer with a QoS that suits both types of control messages. Both embodiments provide an IP conversational voice service with increased radio resource efficiency and QoS.

Owner:TELEFON AB LM ERICSSON (PUBL)
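
The two bearer arrangements can be summarized as a small allocation function. The flow names and the mapping of each flow to a 3GPP traffic class (`conversational`, `interactive`) are hypothetical choices for illustration; the abstract only says that each bearer's QoS is tailored to its flow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bearer:
    flows: tuple[str, ...]  # information flows carried on this bearer
    traffic_class: str      # QoS class chosen for those flows


# Hypothetical QoS class per information flow.
FLOW_QOS = {
    "session_control": "interactive",
    "voice_media": "conversational",
    "media_control": "interactive",
}


def allocate_bearers(shared_control: bool = False) -> list[Bearer]:
    """Preferred embodiment: one bearer per flow; second: shared control bearer."""
    if not shared_control:
        return [Bearer((flow,), qos) for flow, qos in FLOW_QOS.items()]
    return [
        Bearer(("voice_media",), FLOW_QOS["voice_media"]),
        # Session control and media control messages share one bearer whose
        # QoS suits both types of control messages.
        Bearer(("session_control", "media_control"), "interactive"),
    ]
```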

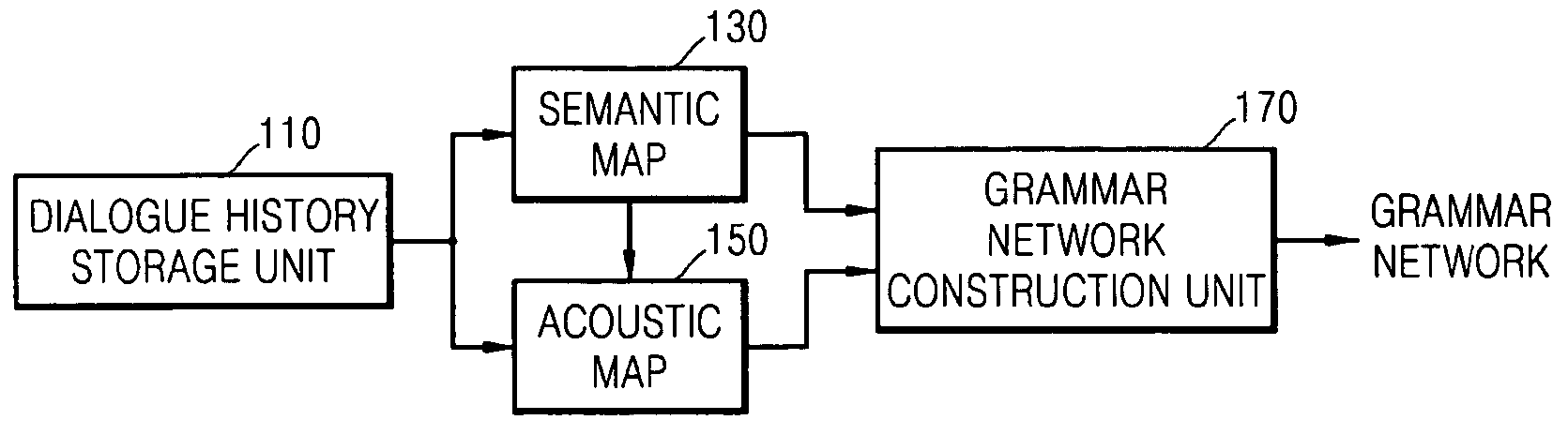

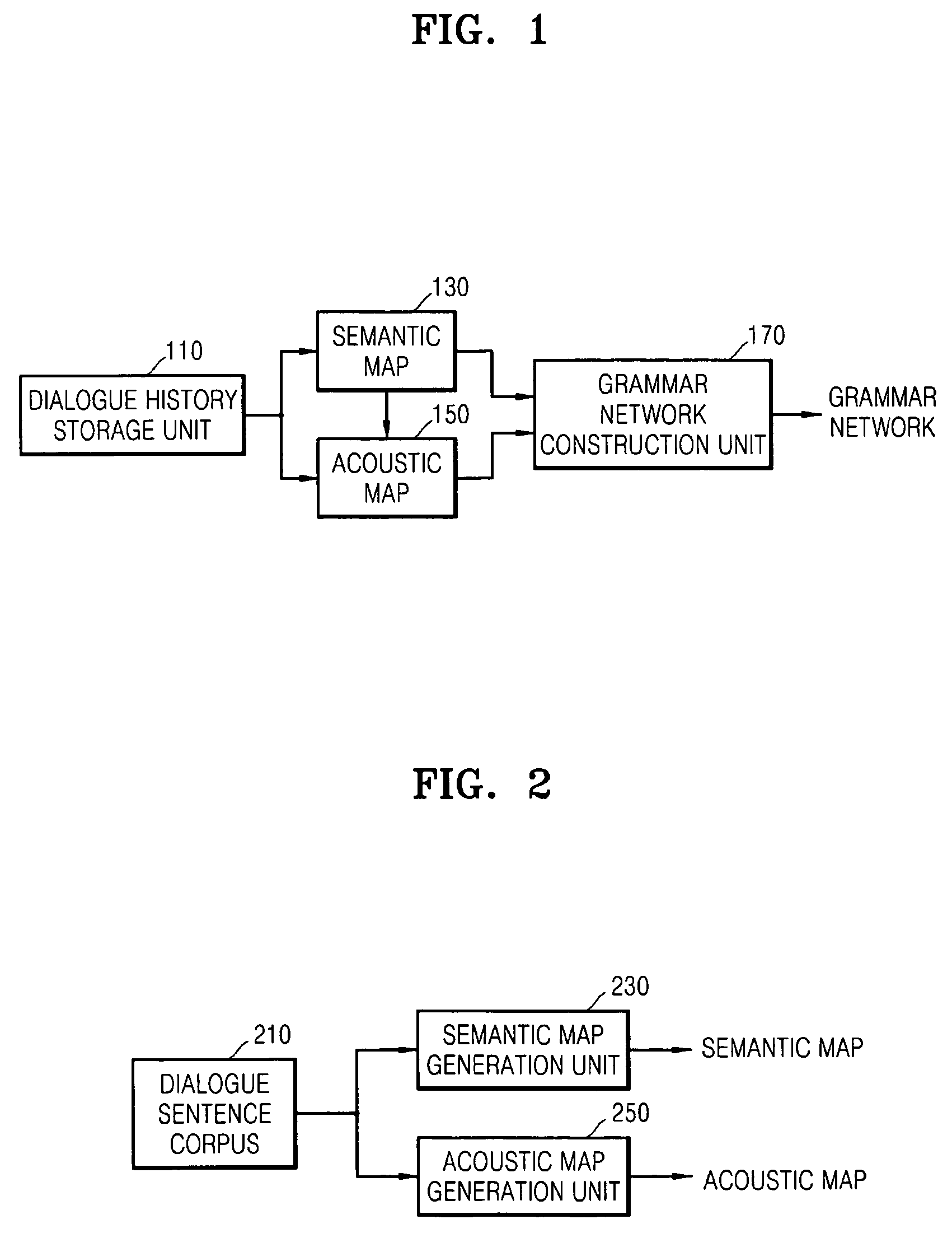

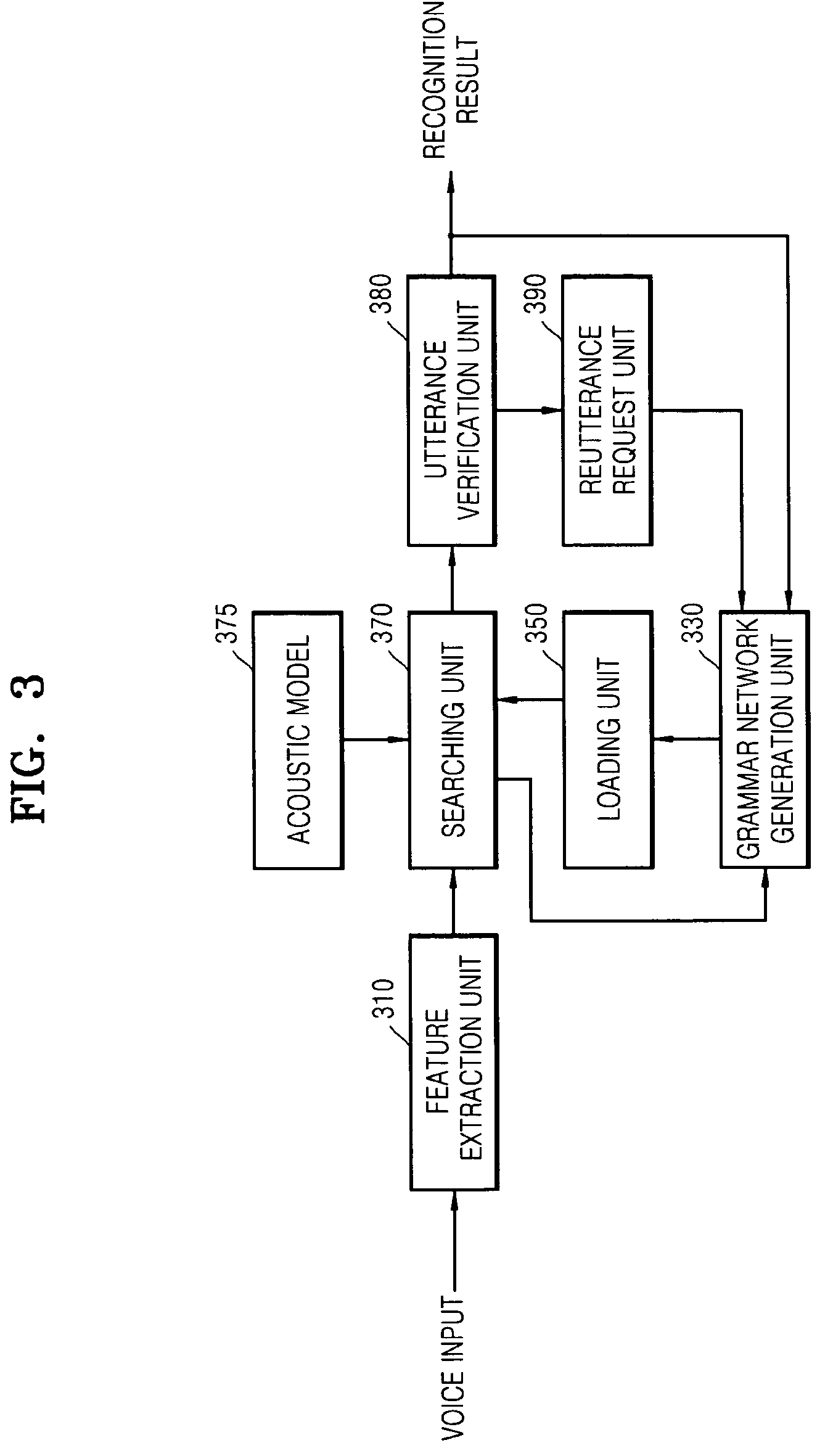

Apparatus, method, and medium for generating grammar network for use in speech recognition and dialogue speech recognition

A method, apparatus, and medium for generating a grammar network for use in speech recognition, and a method, apparatus, and medium for dialogue speech recognition employing the same, are provided. The apparatus for generating a grammar network for speech recognition includes: a dialogue history storage unit storing a dialogue history between a system and a user; a semantic map, formed by clustering the words of each dialogue sentence in a dialogue sentence corpus according to semantic correlation, that generates a first candidate group of semantically correlated words extracted for each word of a dialogue sentence provided from the dialogue history storage unit; a sound map, formed by clustering the words of each dialogue sentence in the dialogue sentence corpus according to acoustic similarity, that generates a second candidate group of acoustically similar words extracted for each word of the dialogue sentence provided from the dialogue history storage unit and for each word of the first candidate group; and a grammar network construction unit constructing a grammar network by combining the first candidate group and the second candidate group.

Owner:SAMSUNG ELECTRONICS CO LTD
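
A simplified version of the candidate-group construction is sketched below. It assumes the semantic map and sound map have already been built by clustering and are given as plain word-to-neighbors dictionaries, and it represents the grammar network as one set of allowed words per sentence position; both representations are assumptions, since the abstract does not describe the network's data structure.

```python
def build_grammar_network(sentence: list[str],
                          semantic_map: dict[str, set[str]],
                          sound_map: dict[str, set[str]]) -> list[set[str]]:
    """Return, for each word position, the set of words the grammar allows."""
    network: list[set[str]] = []
    for word in sentence:
        # First candidate group: words semantically correlated with this word.
        first = semantic_map.get(word, set())
        # Second candidate group: words acoustically similar to this word
        # and to every word of the first group (copied, not mutated).
        second = set(sound_map.get(word, set()))
        for candidate in first:
            second |= sound_map.get(candidate, set())
        # Combine the original word with both candidate groups.
        network.append({word} | first | second)
    return network
```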

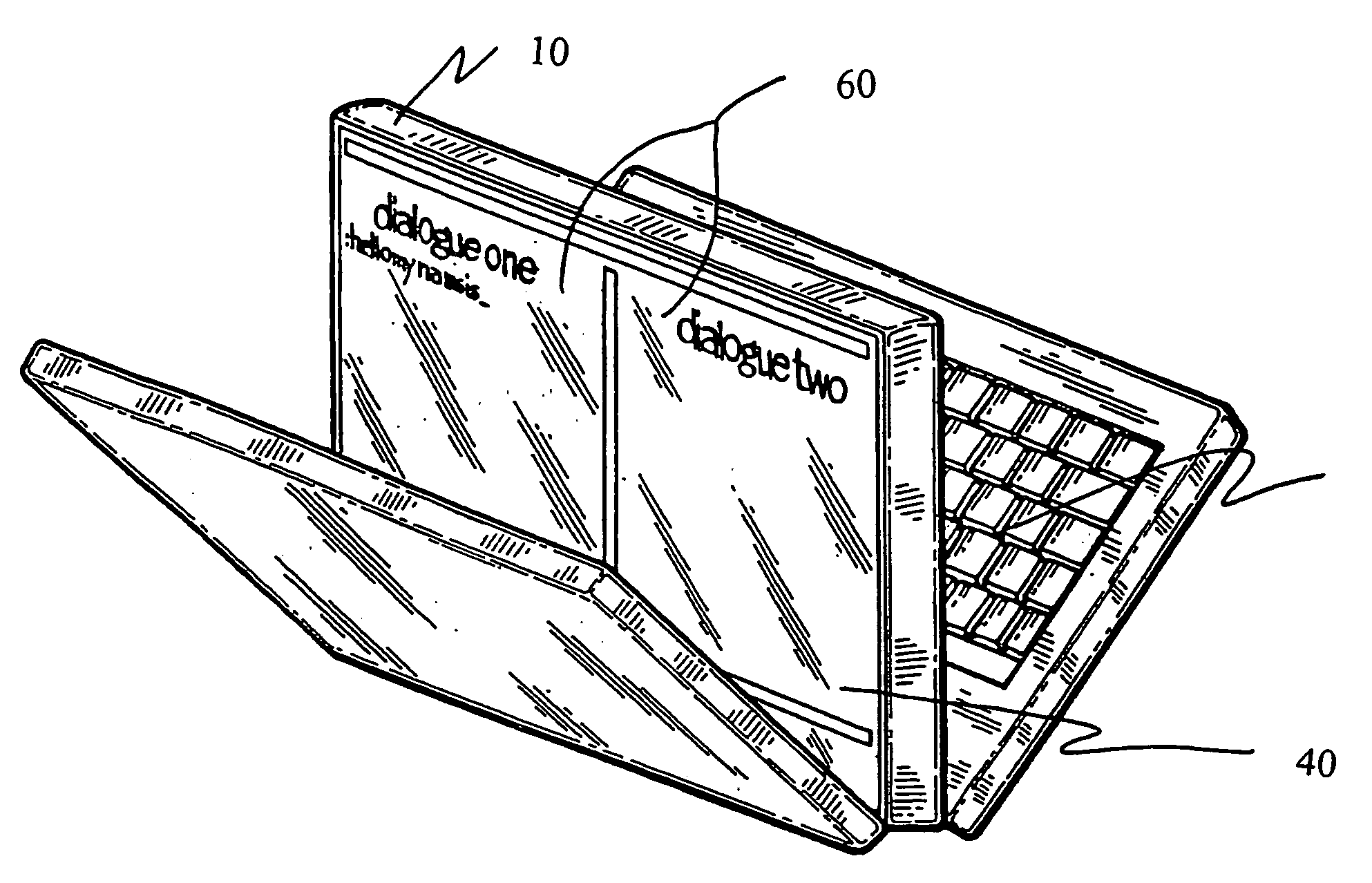

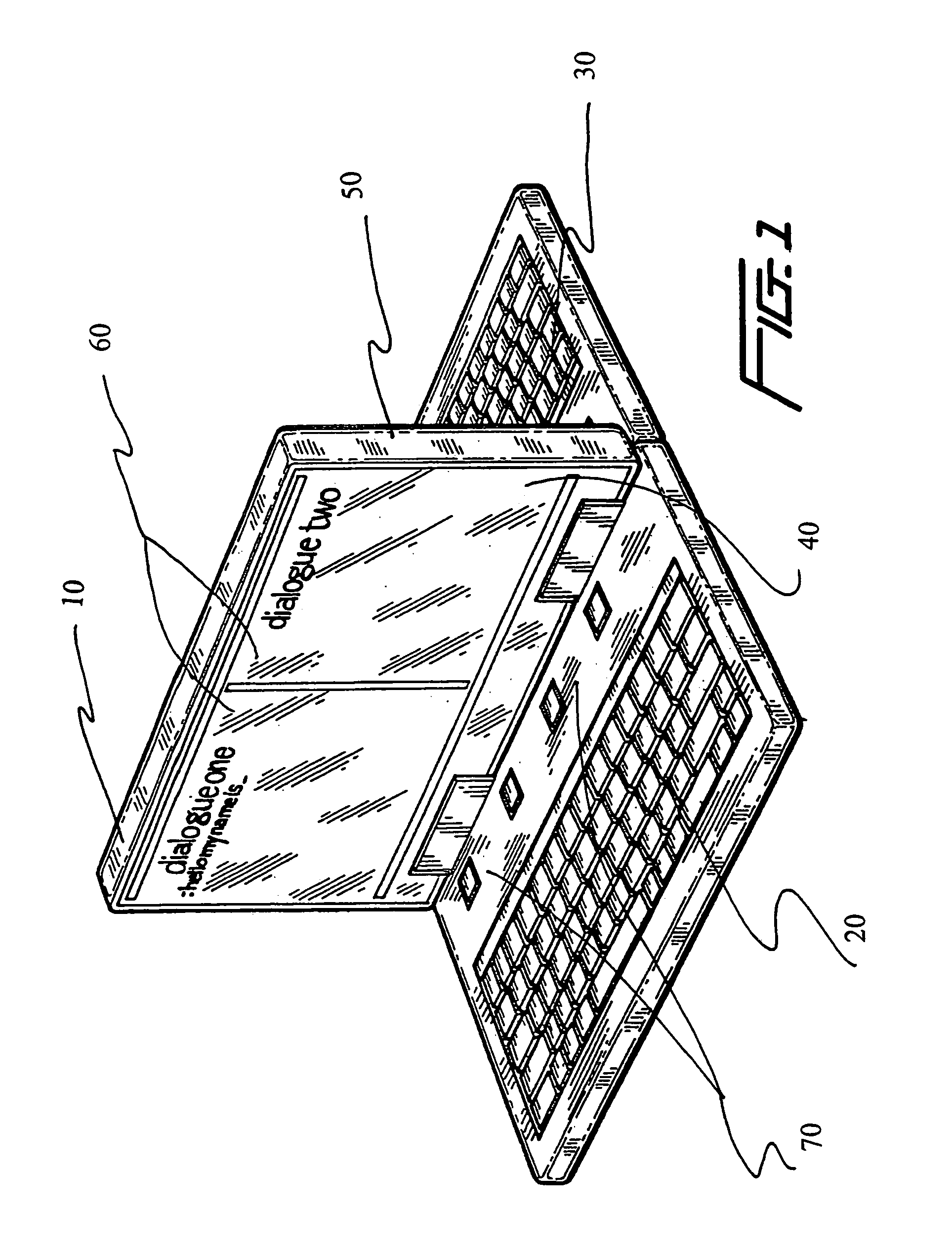

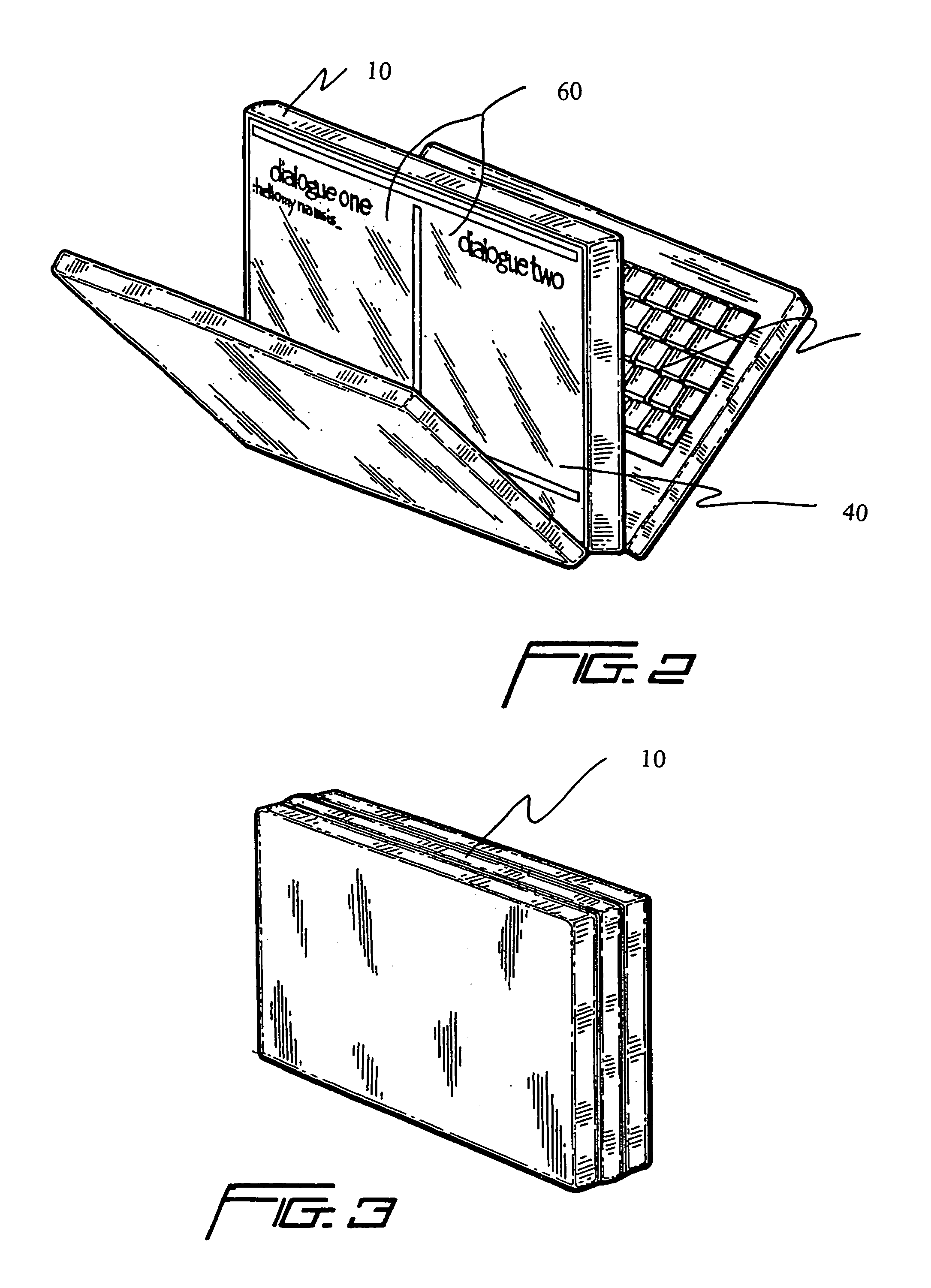

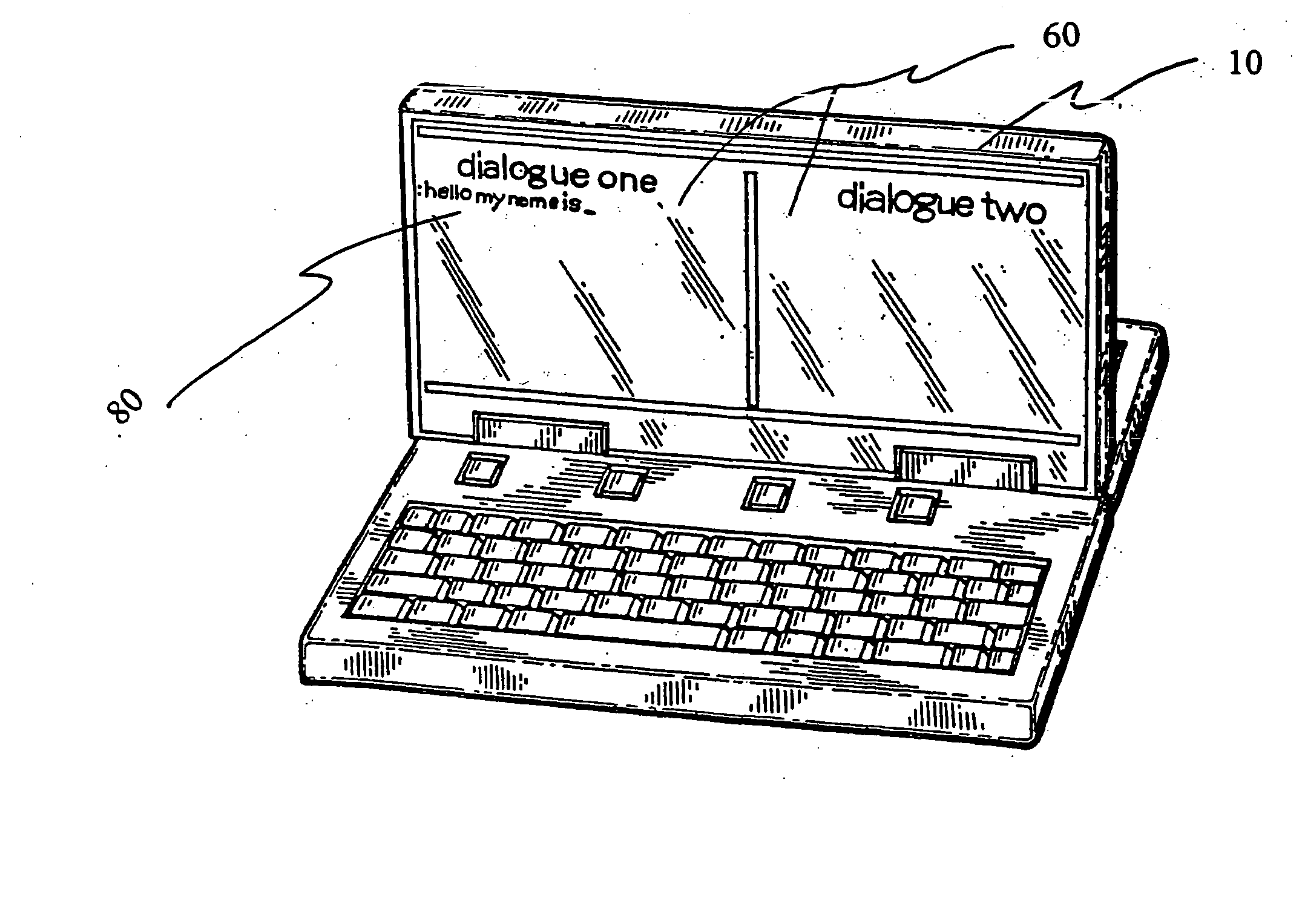

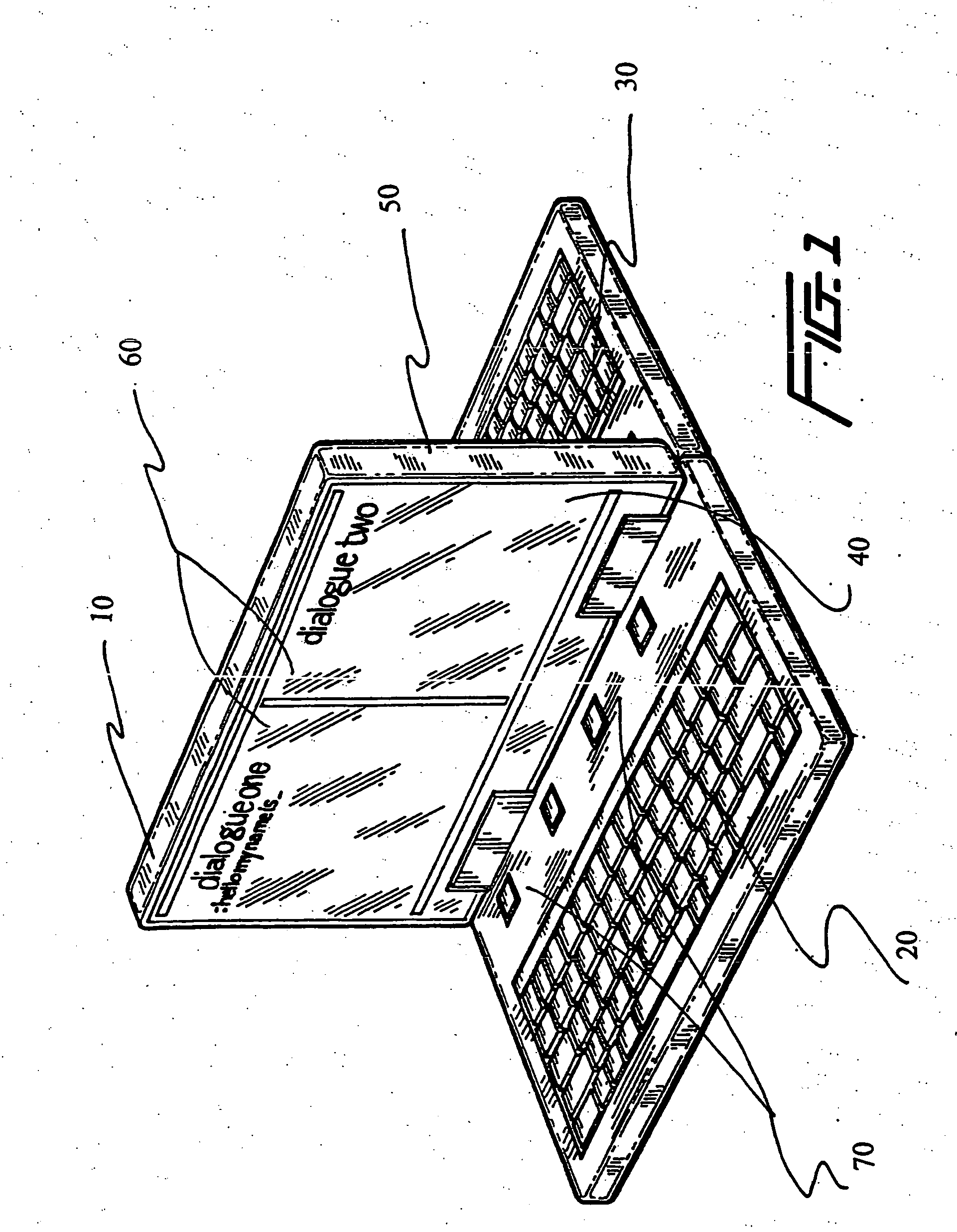

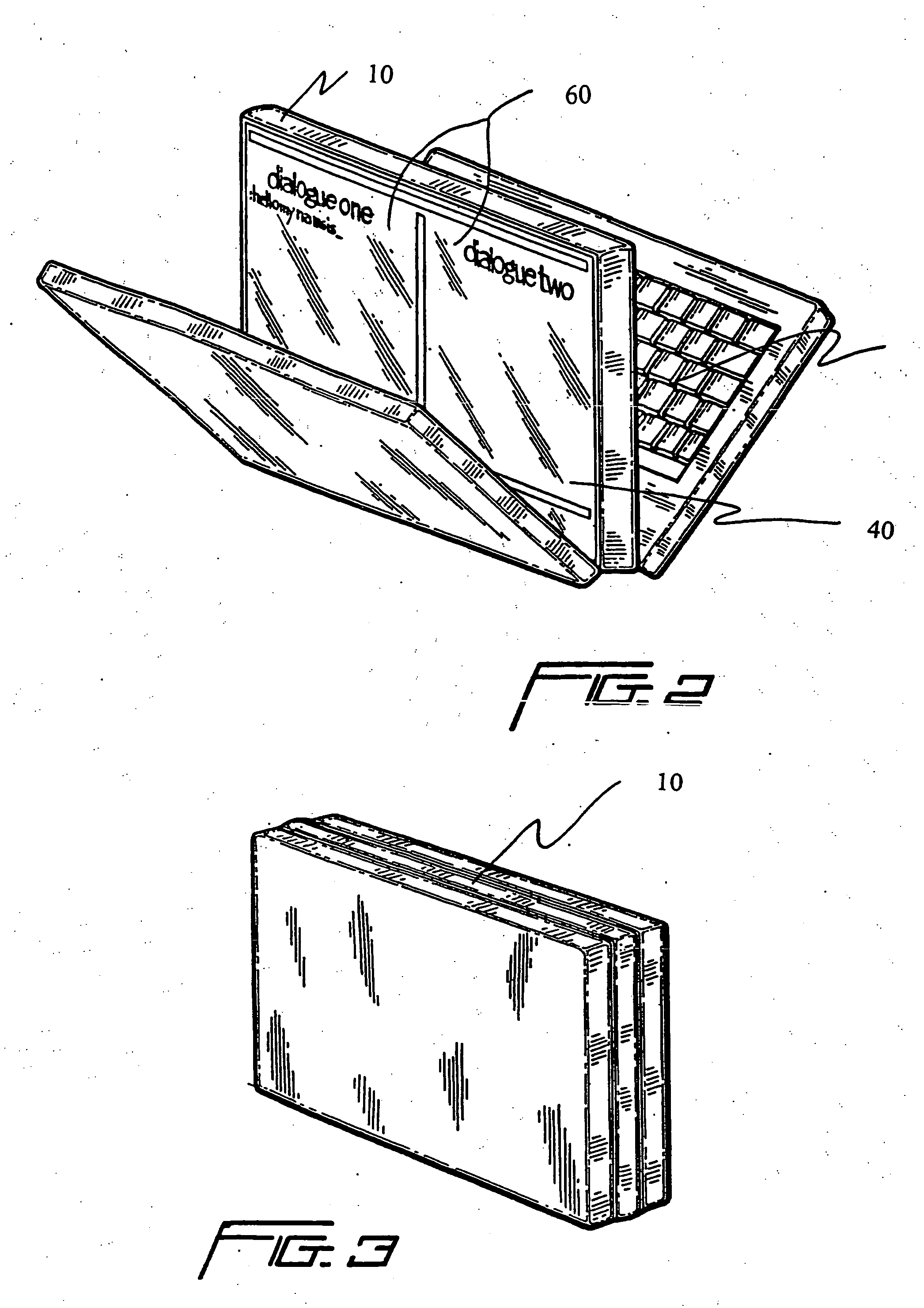

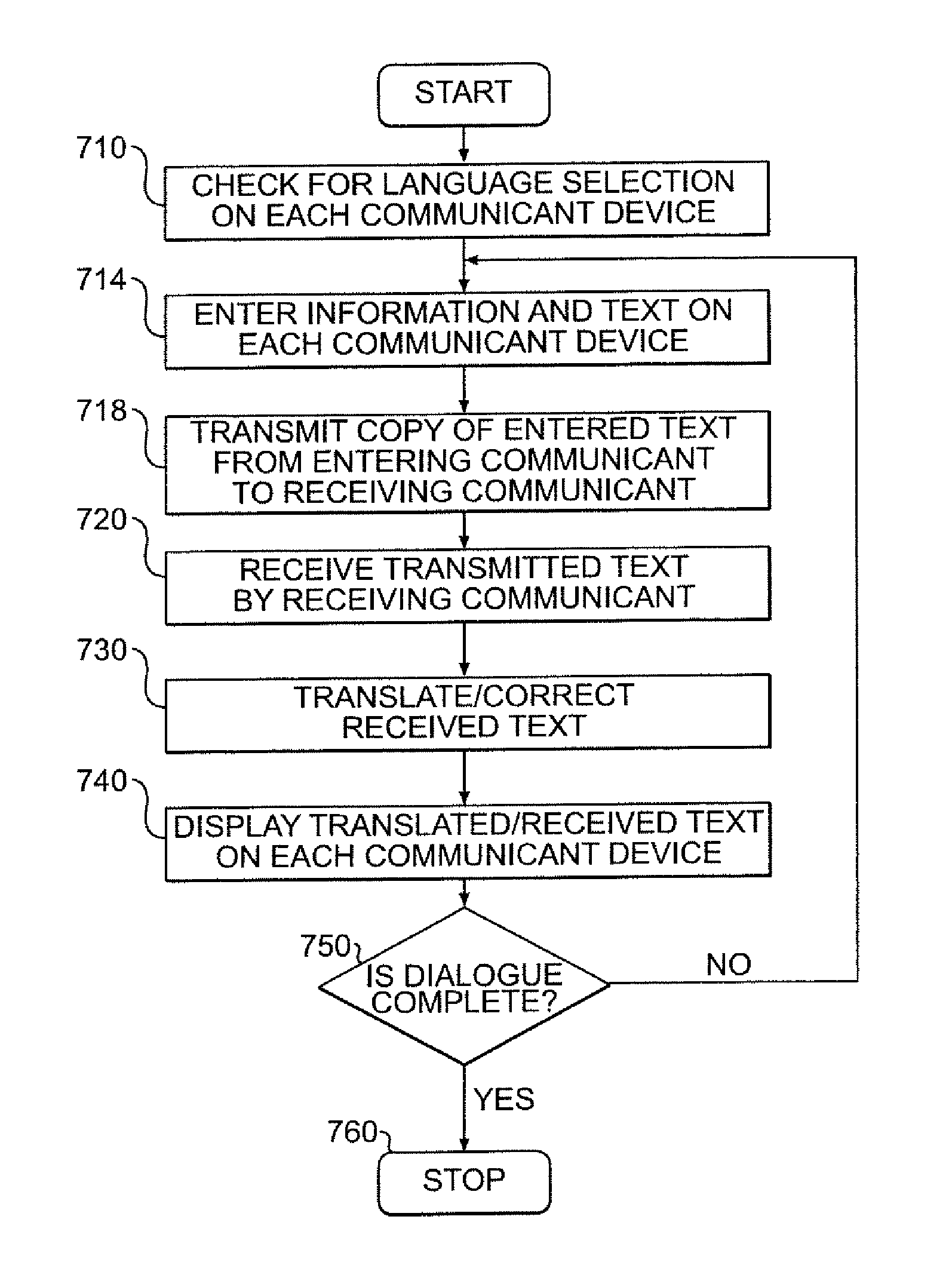

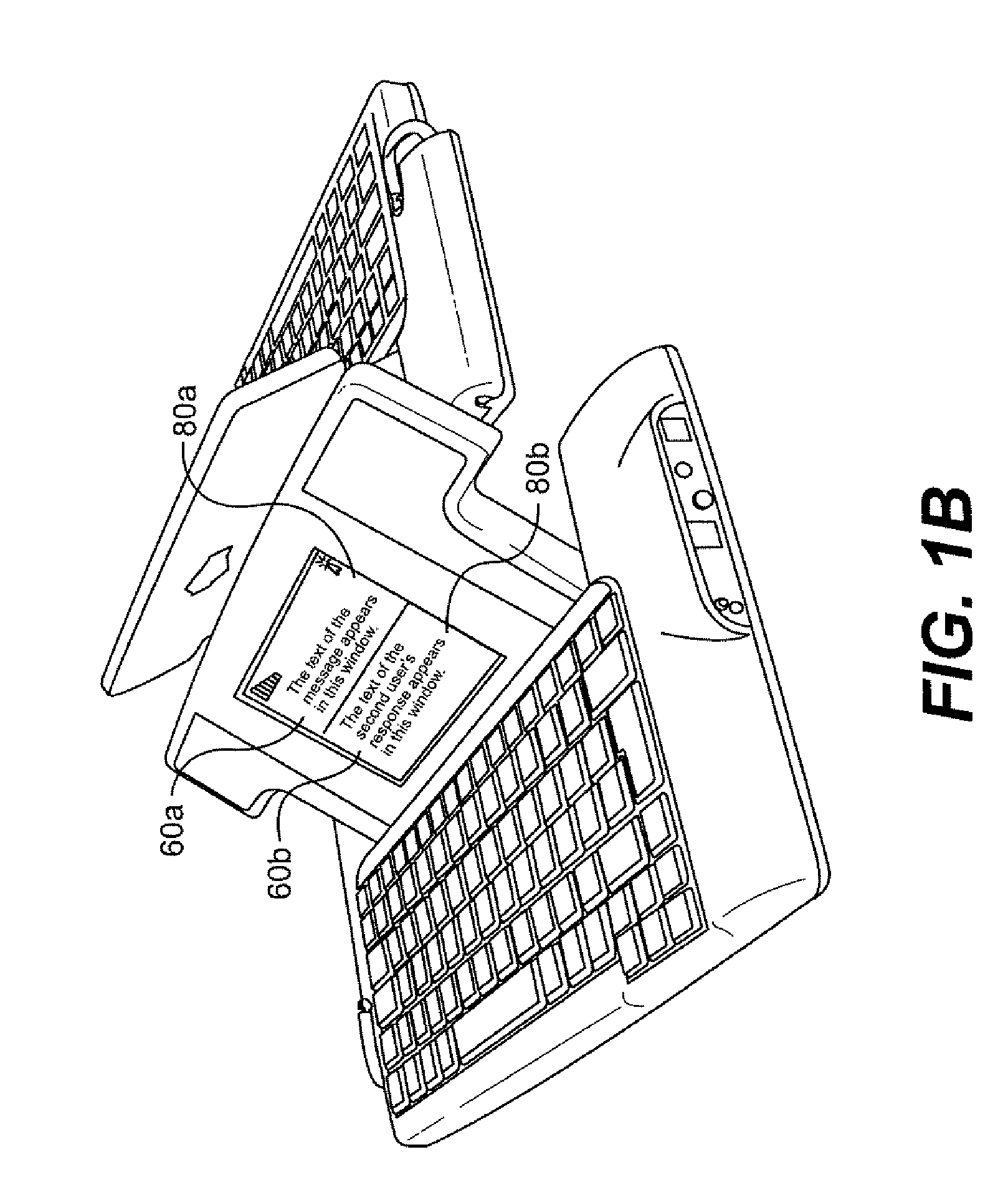

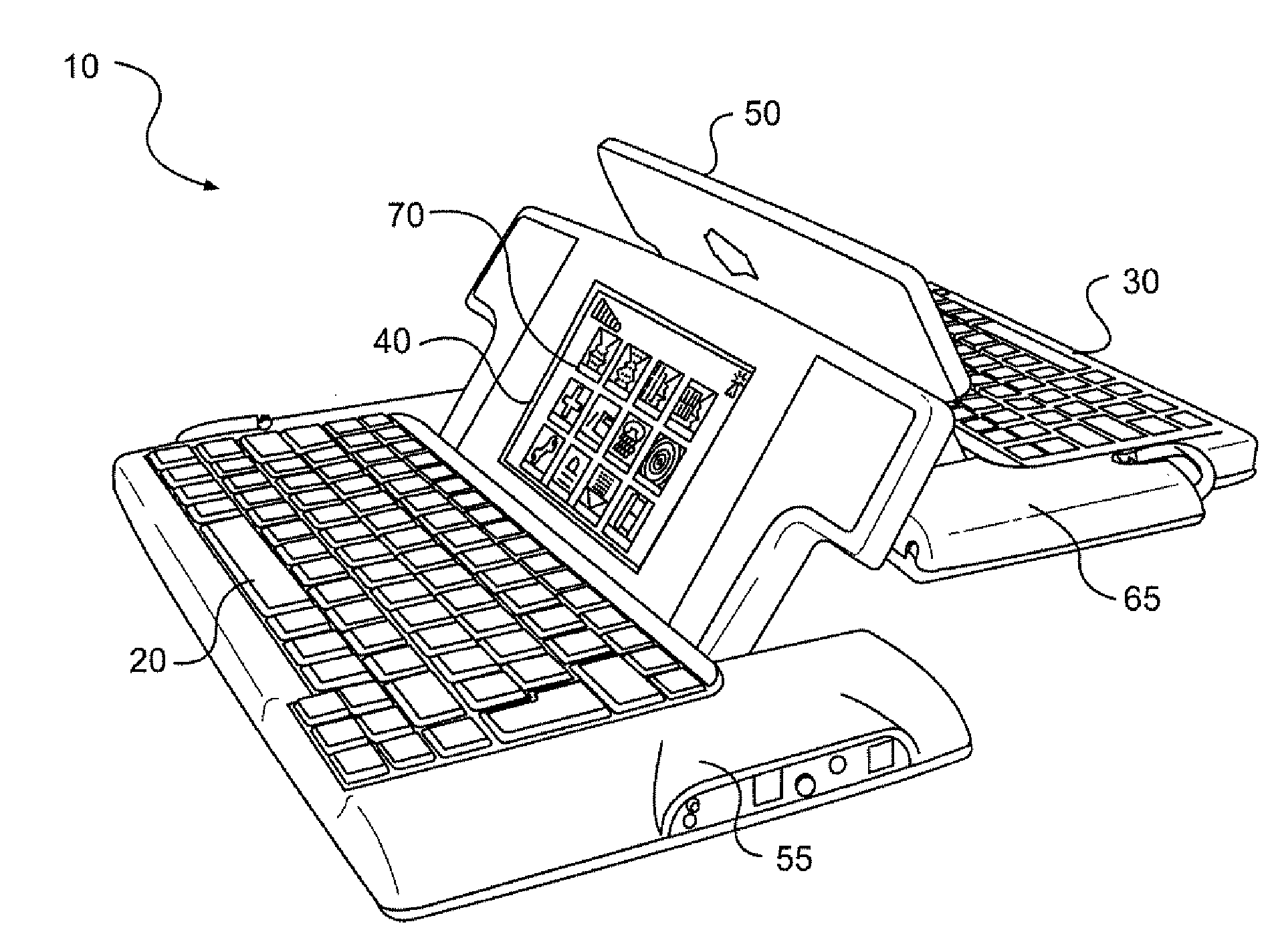

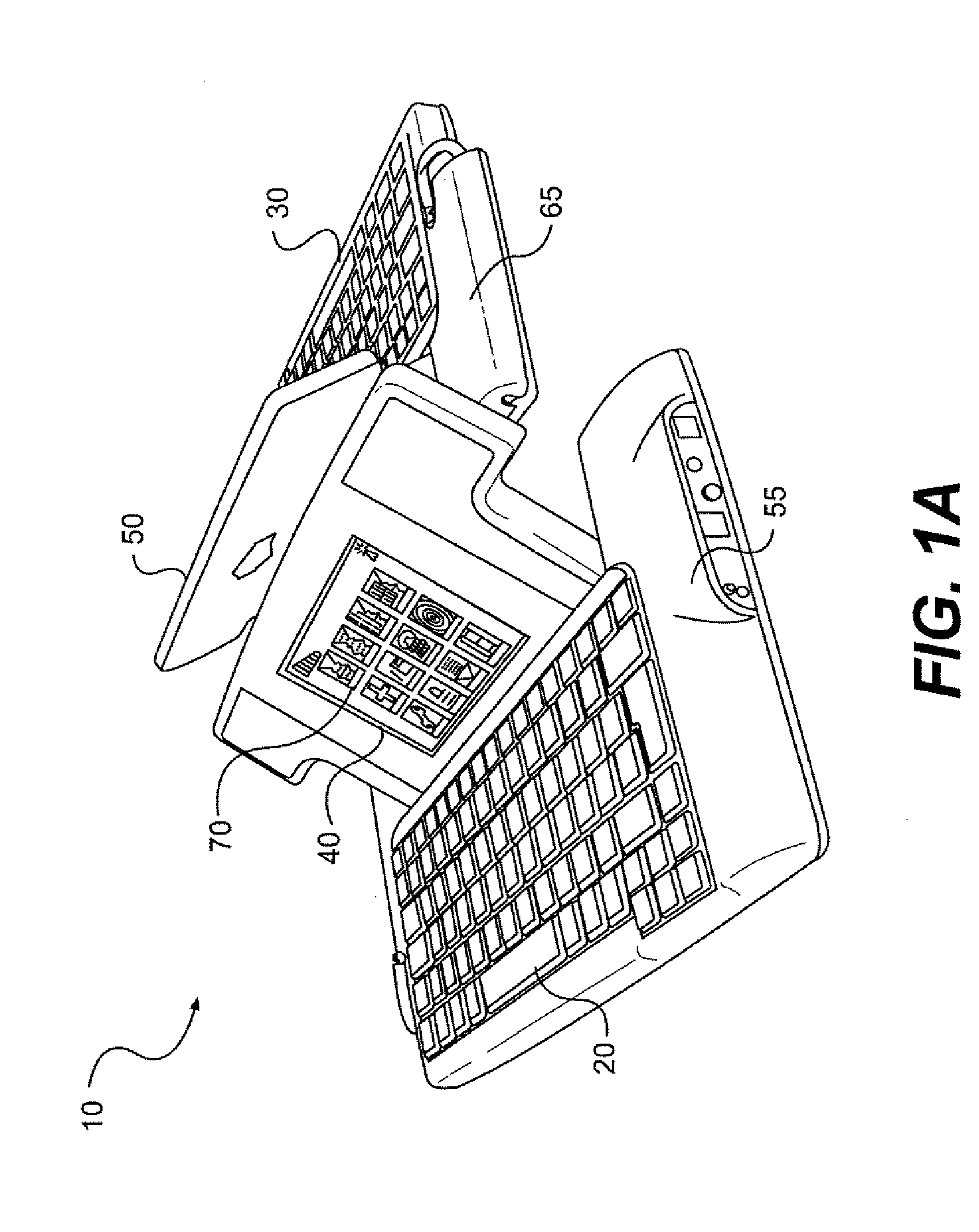

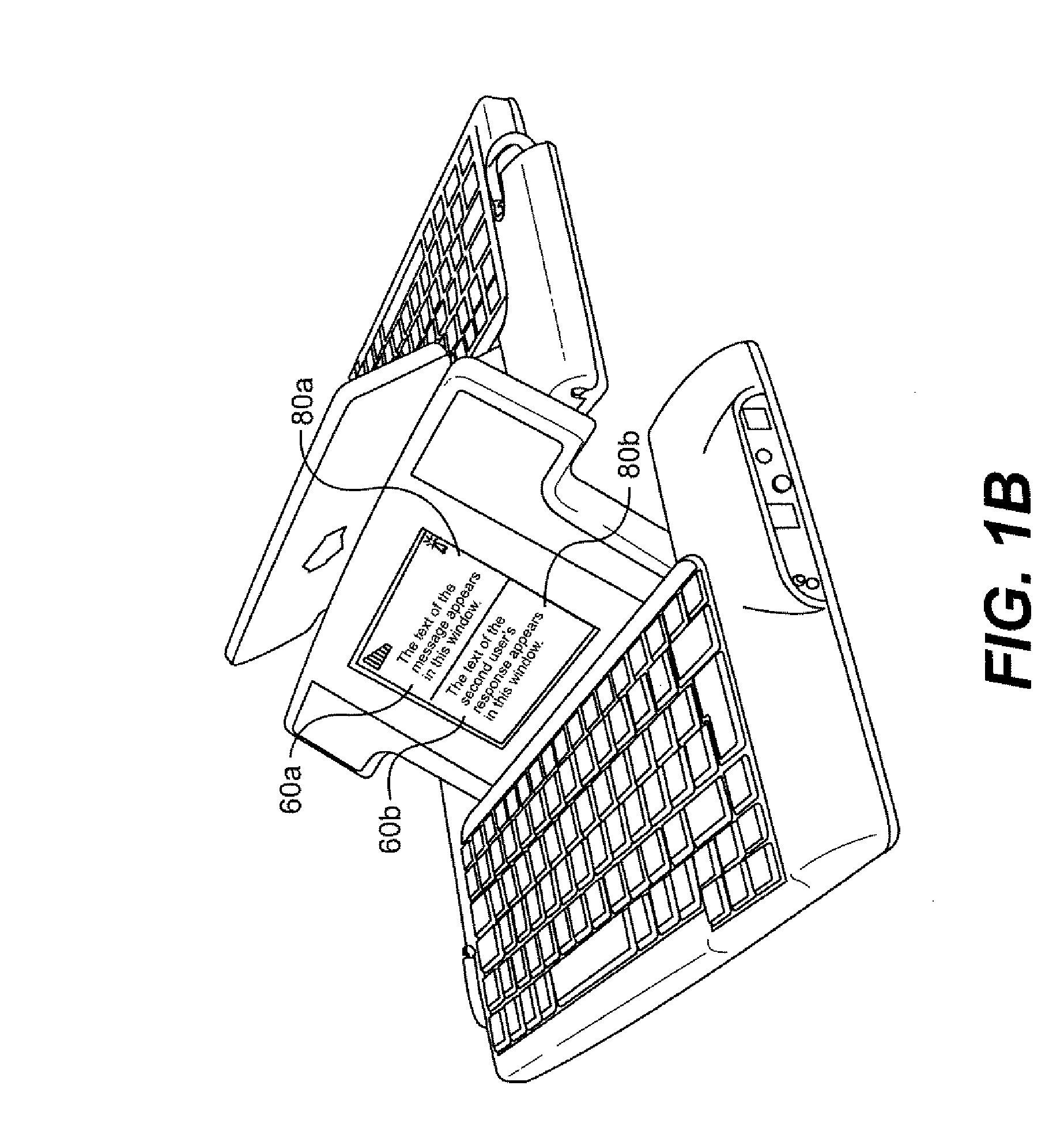

Interactive conversational speech communicator method and system

Inactive · US6993474B2 · Change the way of being, Breaking down barriers in communication, Input/output for user-computer interaction, Natural language translation, Data translation, Conversational speech

The invention relates to a compact and portable interactive system for person-to-person communication in a typed language format between individuals experiencing language barriers, such as the hearing impaired and the language impaired. According to the present invention, the sComm system includes a custom laptop configuration having a display screen and one keyboard on each side of the laptop, and data translation means for translating the original typed data from a first language to a second language. The display screen further has a split configuration, i.e., a double screen, either top/bottom or left/right, depicting chat boxes, each dedicated to one user. The sComm system supports multilingual text-based conversations: using existing translation technology, a user can translate the typed text into other languages including, but not limited to, English, Spanish, Chinese, German, and French. As such, one chat box can display text in a first language while the other chat box displays the same text in a second language.

Owner:CURRY DAVID G +1

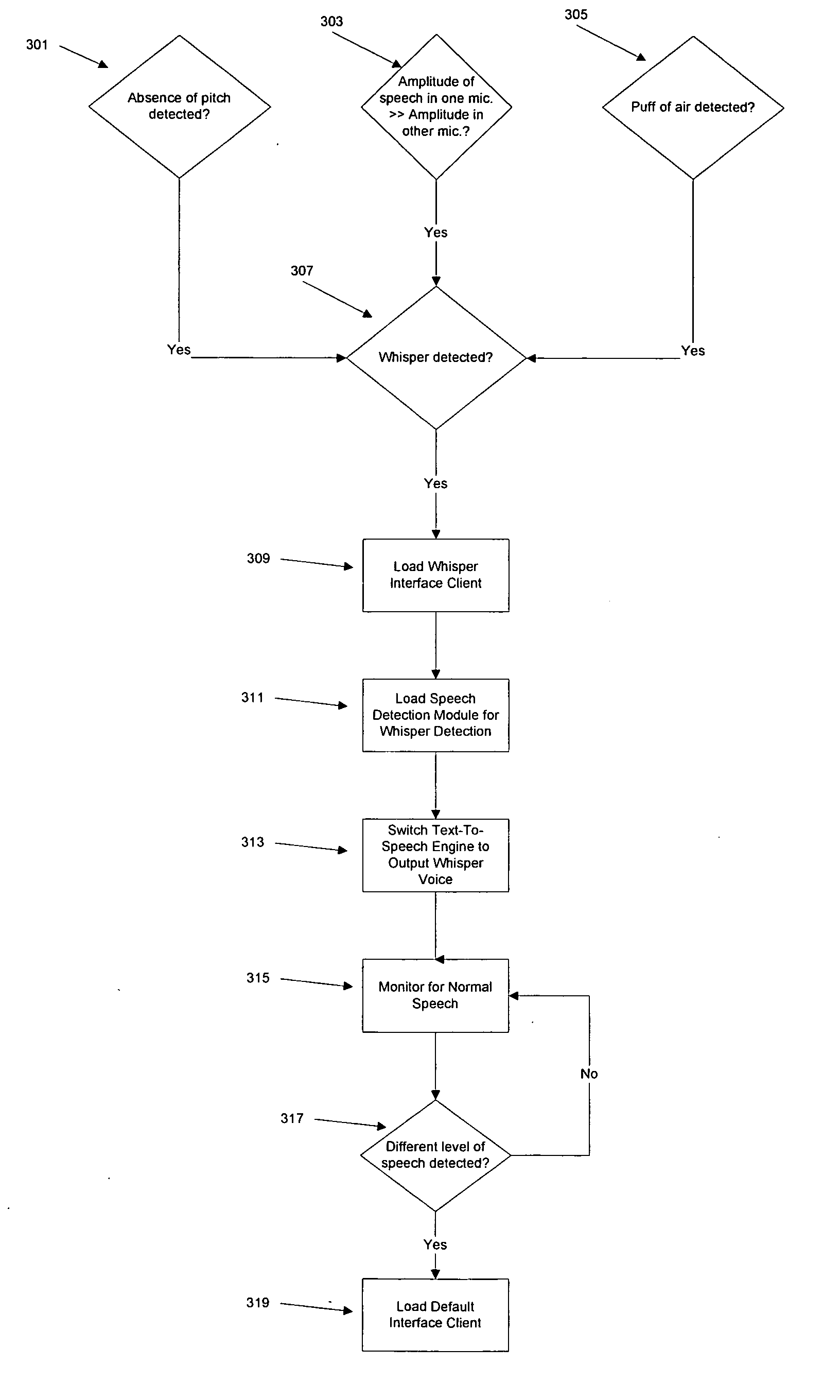

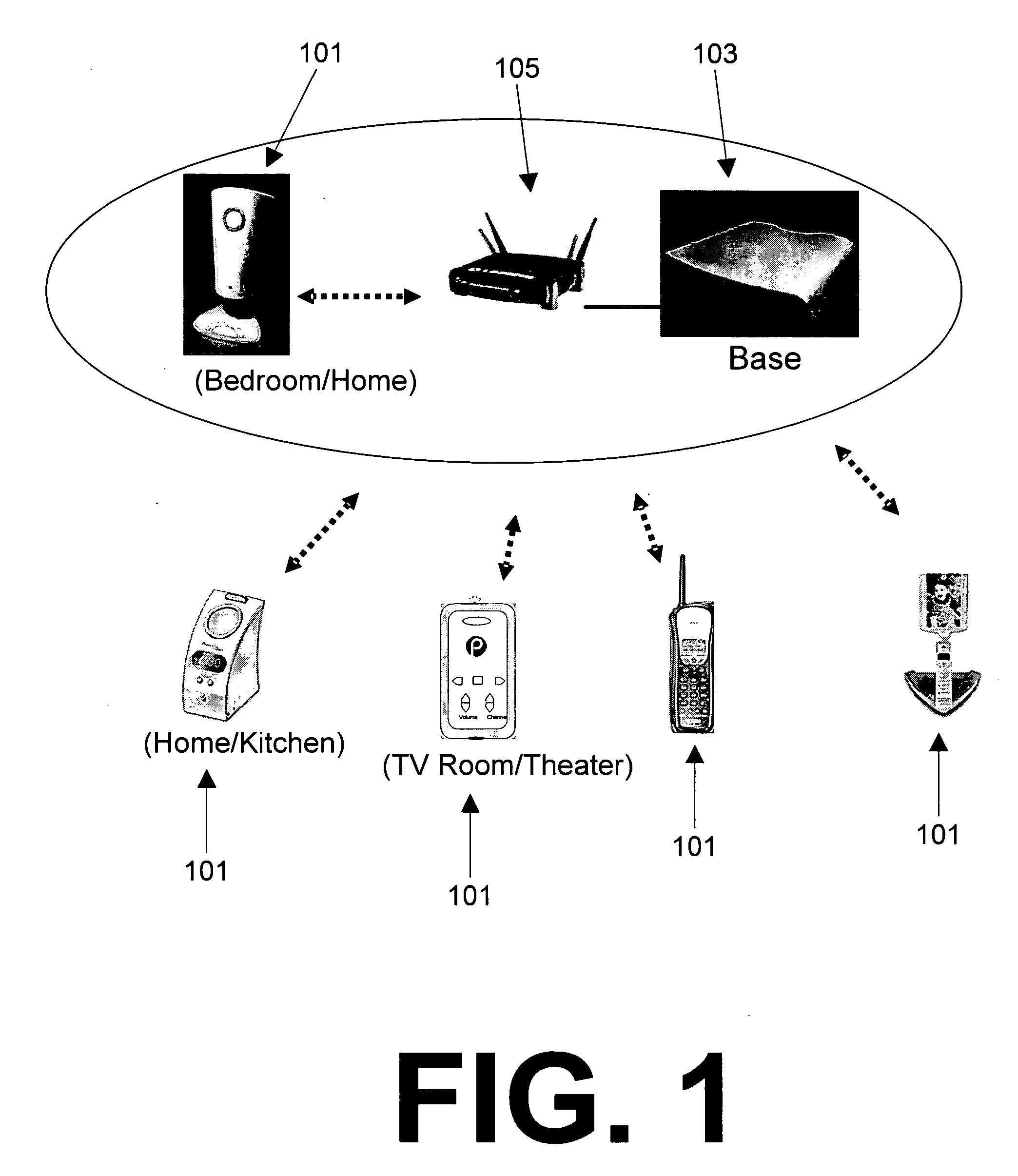

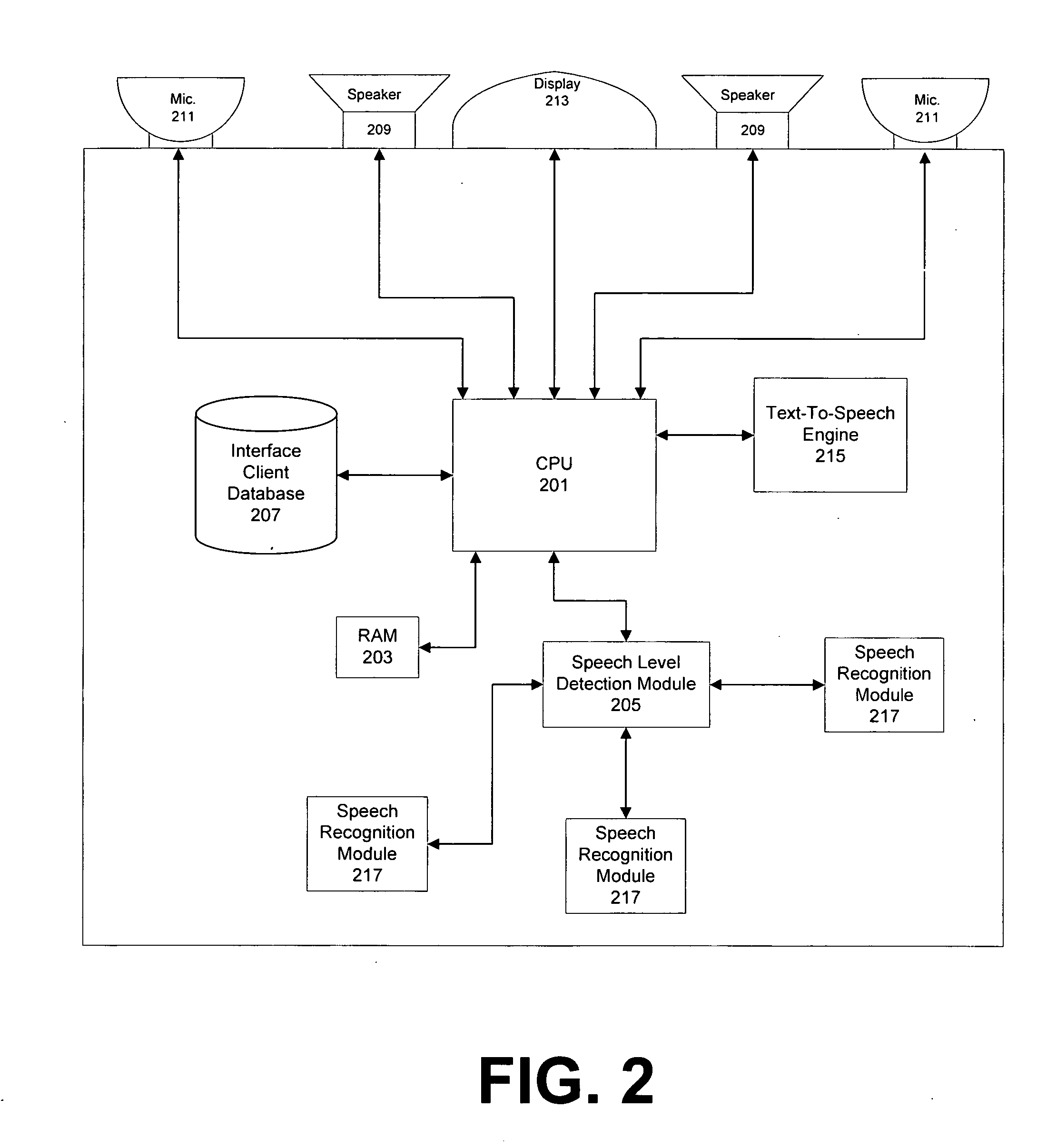

System and method for increasing recognition accuracy and modifying the behavior of a device in response to the detection of different levels of speech

Inactive · US20060085183A1 · Low volume decrease, Improve recognition accuracy, Speech recognition, Whispered voice, Recognition algorithm

The present invention discloses a system and method for controlling the response of a device after a whisper, shout, or conversational speech has been detected. In the preferred embodiment, the system modifies its speech recognition module to detect a whisper, shout, or conversational speech (which have different characteristics) and switches the recognition algorithm model and its speech and dialog output accordingly. For example, upon detecting a whisper, the device may change its dialog output to a quieter, whispered voice; when the device detects a shout, it may talk back at a higher volume. The device may also make greater use of visual displays in response to different levels of speech.

Owner:JAIN YOGENDRA
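
The level-dependent behavior can be approximated with a loudness classifier. This sketch is an assumption-laden simplification: it uses RMS level alone, although the abstract notes that whispers, shouts, and conversational speech differ in several characteristics, and the dBFS thresholds and the `RESPONSE_STYLE` table (including its model names) are hypothetical values.

```python
import math

# Hypothetical thresholds in dBFS; a real device would calibrate these.
WHISPER_MAX_DBFS = -40.0
SHOUT_MIN_DBFS = -10.0

# Hypothetical recognition model and output behavior per detected level.
RESPONSE_STYLE = {
    "whisper": {"model": "whisper-am", "voice": "whispered", "volume": 0.2},
    "conversational": {"model": "default-am", "voice": "normal", "volume": 0.6},
    "shout": {"model": "shout-am", "voice": "normal", "volume": 1.0},
}


def rms_dbfs(samples: list[float]) -> float:
    """RMS level in dB relative to full scale, for samples in [-1.0, 1.0]."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


def classify_level(samples: list[float]) -> str:
    """Label a speech chunk as whisper, conversational, or shout by loudness."""
    level = rms_dbfs(samples)
    if level < WHISPER_MAX_DBFS:
        return "whisper"
    if level > SHOUT_MIN_DBFS:
        return "shout"
    return "conversational"
```

A caller would look up `RESPONSE_STYLE[classify_level(chunk)]` to switch the recognition model and set the reply's voice and volume.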

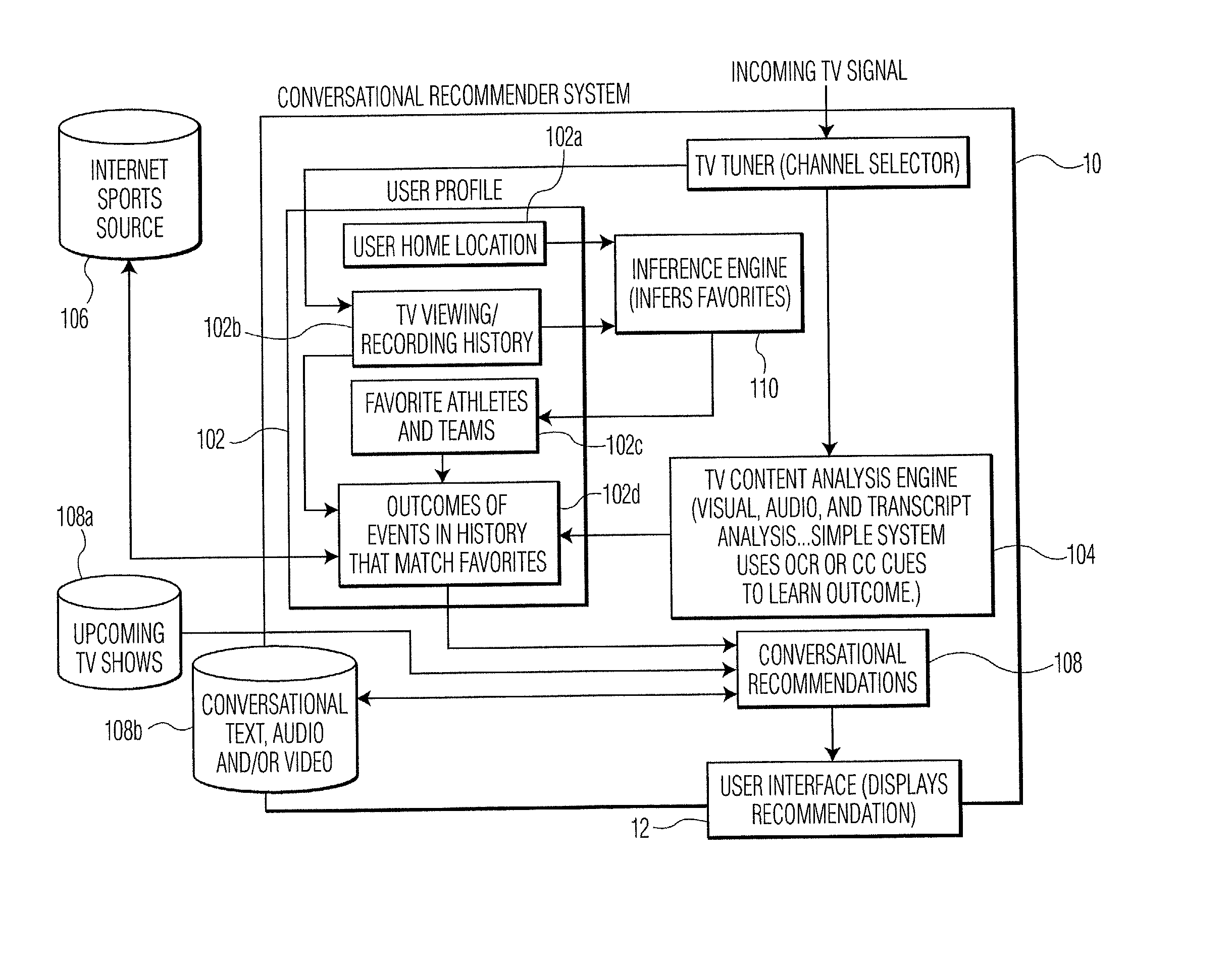

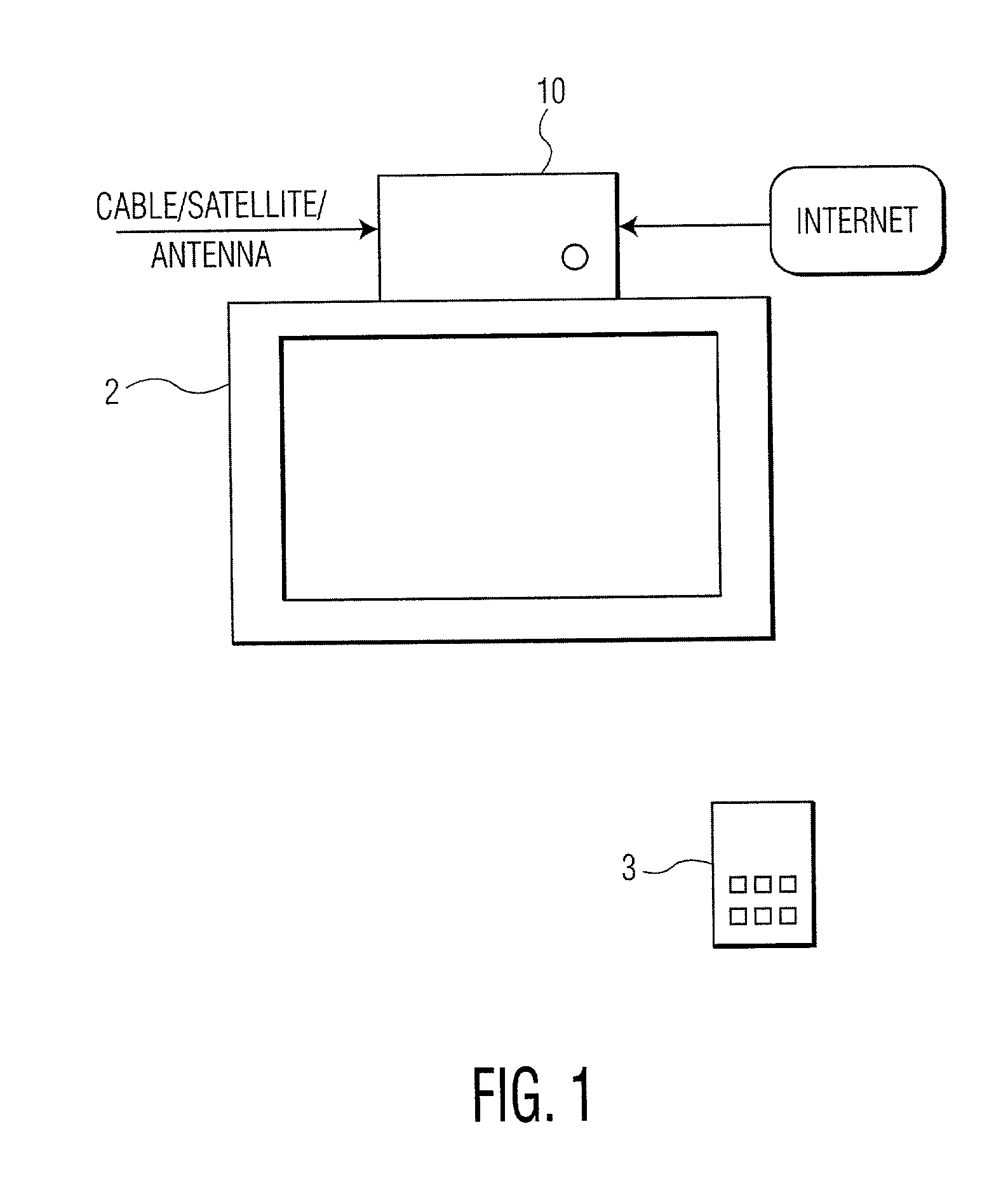

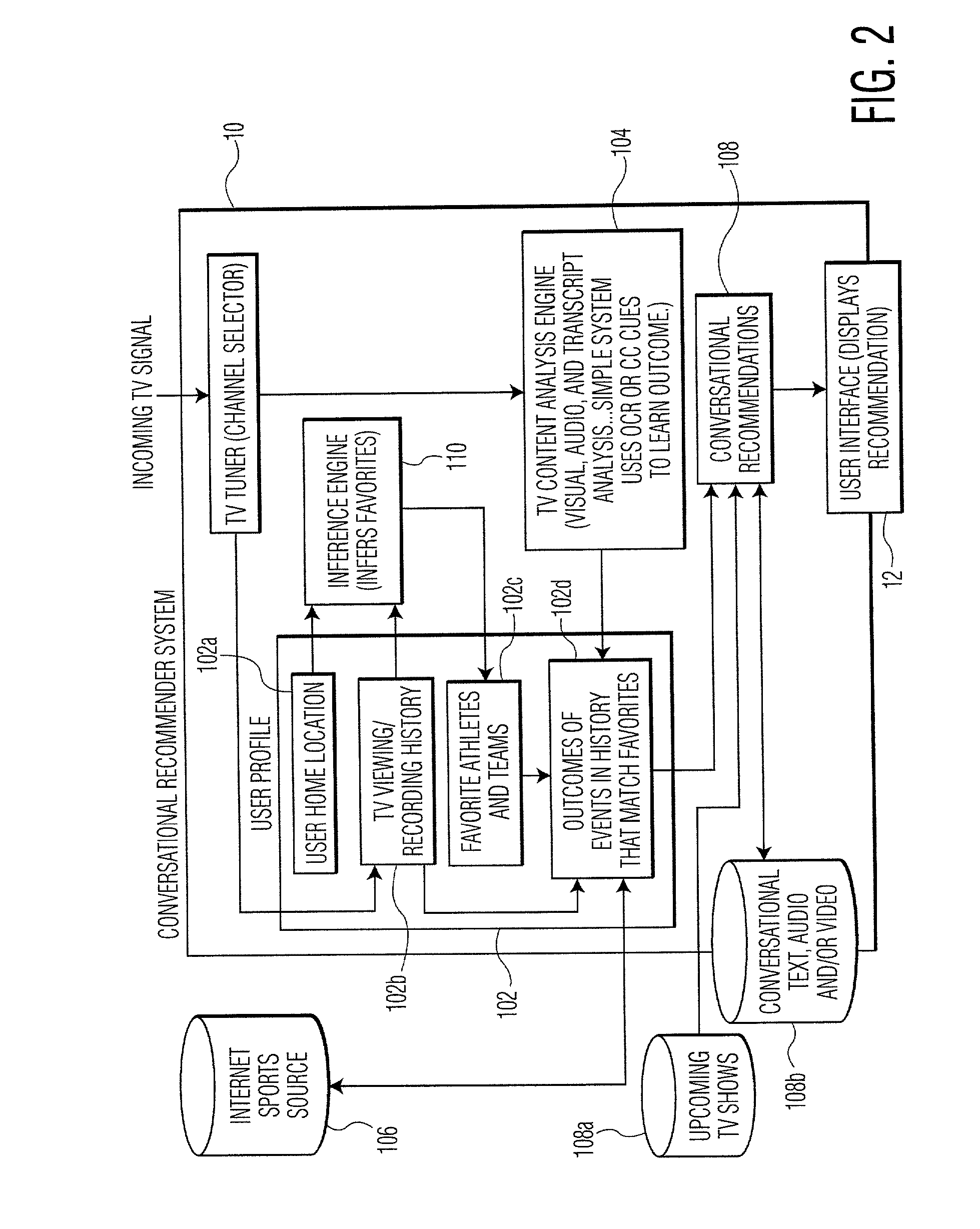

Conversational content recommender

Inactive · US20030208755A1 · Television system details, Analogue secrecy/subscription systems, Conversational speech, Television programming

Disclosed is a method and system for providing conversational recommendations while viewing television programs. Accordingly, the present invention monitors incoming television signals to identify a particular sports team liked by a viewer according to a past viewing history. Then, at least one of predetermined conversational recommendations is retrieved when the sports team liked by the viewer is detected. The conversational recommendation is presented to the viewer in a conversational tone in view of the past performance by the sports team.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

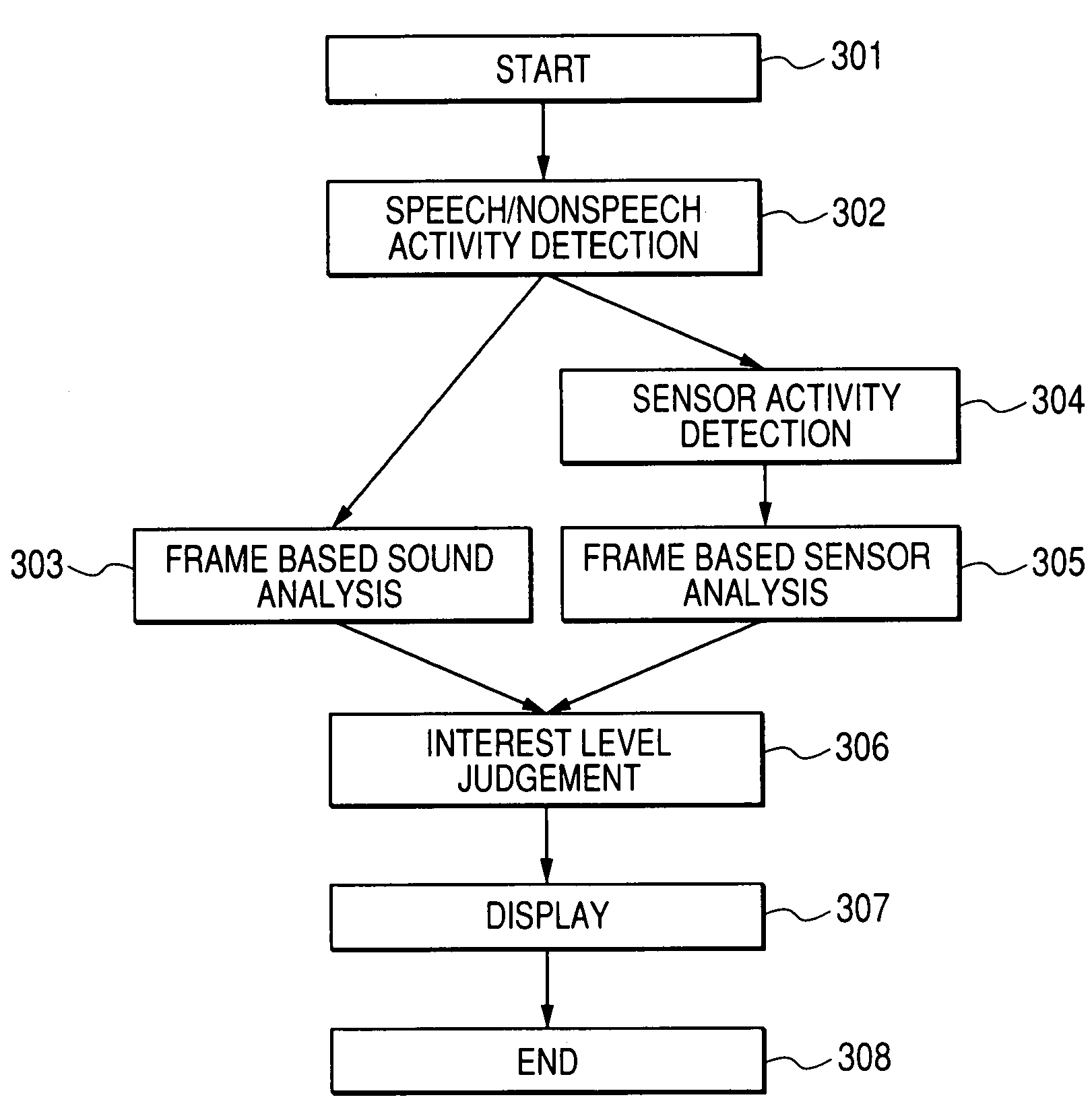

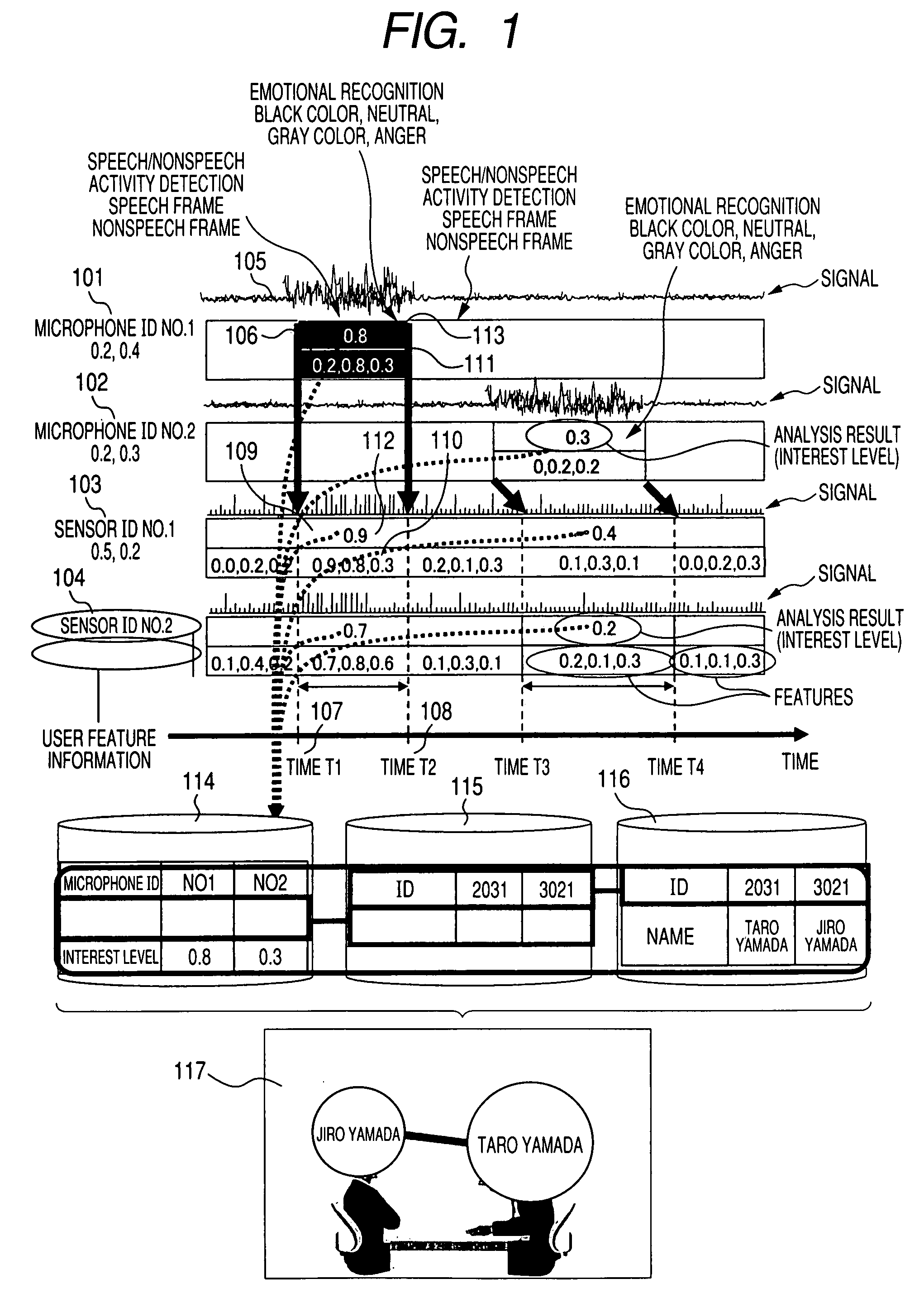

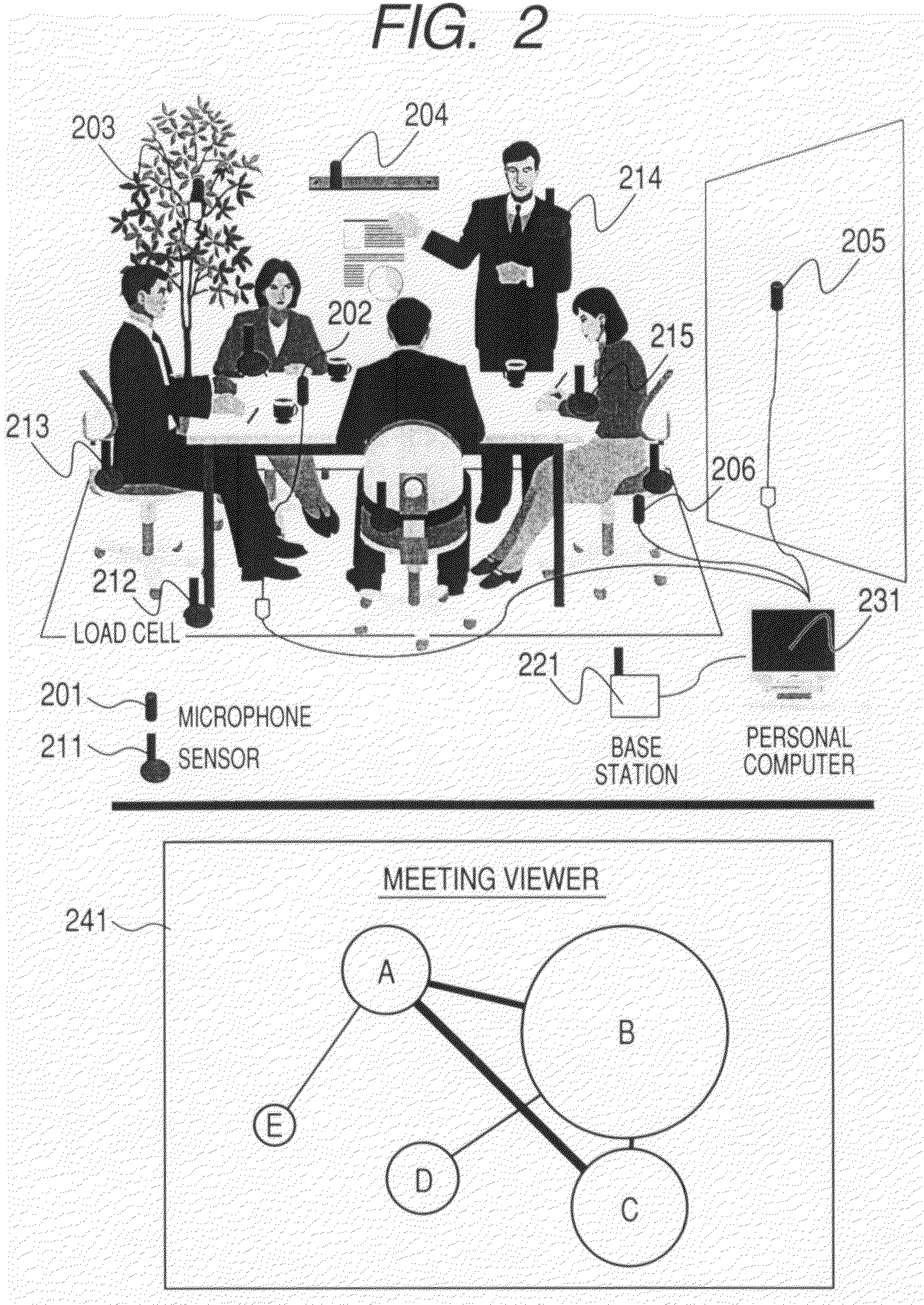

Conversational speech analysis method, and conversational speech analyzer

Active · US20070192103A1 · Assesses level of involvement, Speech recognition, Conversational speech, Analysis method

The invention provides a conversational speech analyzer that analyzes whether utterances in a meeting are of interest or concern to the audience. Frames are calculated using sound signals obtained from a microphone and a sensor, and the sensor signals are cut out for each frame. By calculating the correlation between the sensor signals for each frame, an interest level representing the audience's concern with the utterances is calculated, and the meeting is analyzed.

Owner:HITACHI LTD
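
The per-frame correlation step might look like the following. The sketch assumes frames arrive as `(start, end)` sample ranges already derived from the sound signals, that each audience member contributes one sensor signal, and that the interest level is the mean pairwise Pearson correlation; the abstract does not name the correlation measure or say how multiple sensors are combined.

```python
import math
from itertools import combinations


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation of two equal-length signals (0.0 if either is flat)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # assumption: a flat signal shows no shared reaction
    return cov / math.sqrt(var_x * var_y)


def interest_level(sensors: list[list[float]], frame: tuple[int, int]) -> float:
    """Mean pairwise correlation of all sensor signals inside one frame."""
    start, end = frame
    cut = [signal[start:end] for signal in sensors]  # cut out signals per frame
    pairs = list(combinations(cut, 2))               # needs at least two sensors
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)
```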

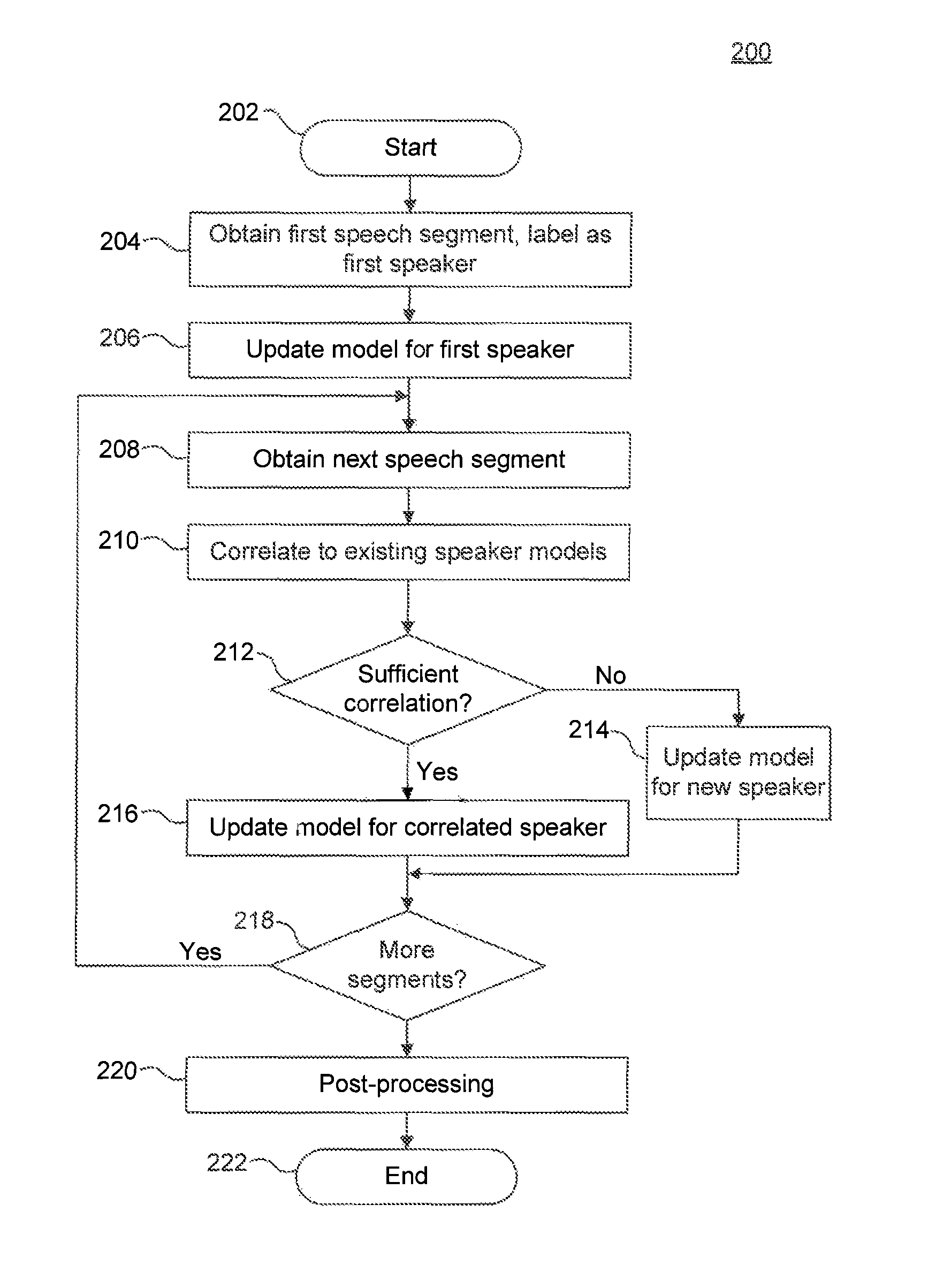

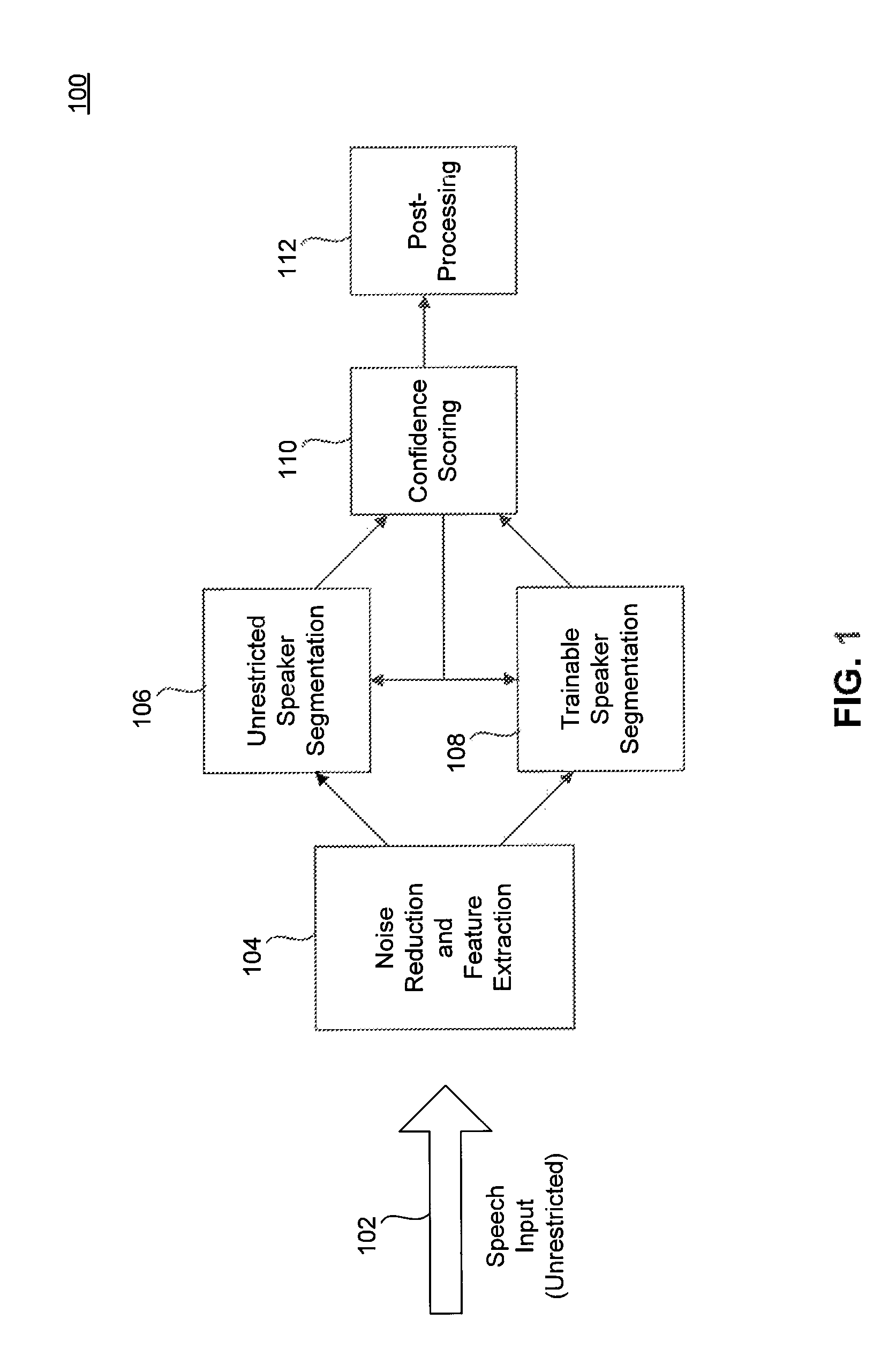

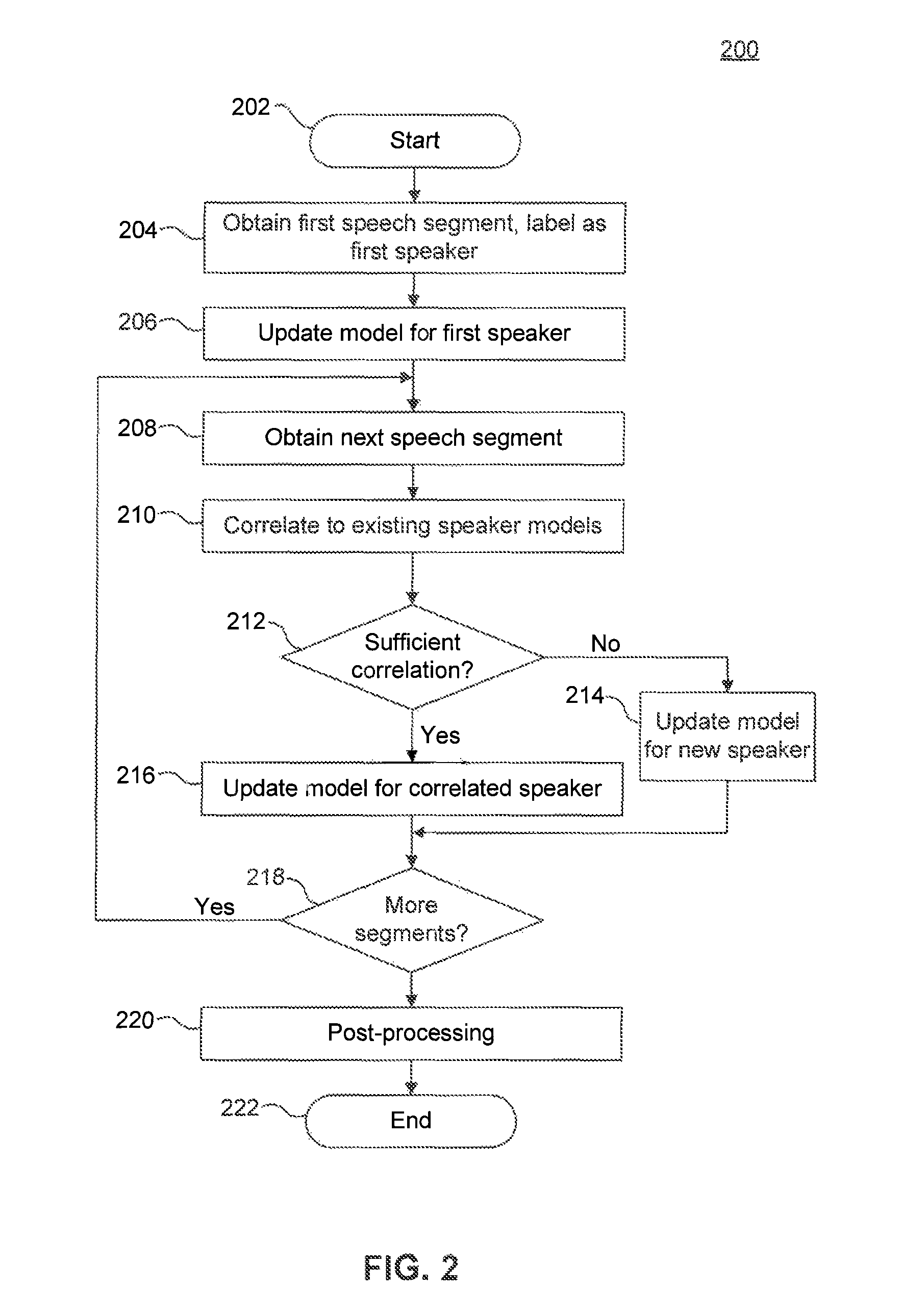

Speaker segmentation in noisy conversational speech

System and methods for robust multiple speaker segmentation in noisy conversational speech are presented. Robust voice activity detection is applied to detect temporal speech events. In order to get robust speech features and detect speech events in a noisy environment, a noise reduction algorithm is applied, using noise tracking. After noise reduction and voice activity detection, the incoming audio / speech is initially labeled as speech segments or silence segments. With no prior knowledge of the number of speakers, the system identifies one reliable speech segment near the beginning of the conversational speech and extracts speech features with a short latency, then learns a statistical model from the selected speech segment. This initial statistical model is used to identify the succeeding speech segments in a conversation. The statistical model is also continuously adapted and expanded with newly identified speech segments that match well to the model. The speech segments with low likelihoods are labeled with a second speaker ID, and a statistical model is learned from them. At the same time, these two trained speaker models are also updated / adapted once a reliable speech segment is identified. If a speech segment does not match well to the two speaker models, the speech segment is temporarily labeled as an outlier or as originating from a third speaker. This procedure is then applied recursively as needed when there are more than two speakers in a conversation.

Owner:FRIDAY HARBOR LLC
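
The incremental labeling loop can be sketched as online clustering. This is a deliberately reduced version: each segment is assumed to arrive as a fixed-length feature vector after noise reduction and voice activity detection, each speaker model is a running mean scored with an unnormalized Gaussian log-likelihood of fixed variance, and an unmatched segment always starts a new speaker rather than being held as a temporary outlier.

```python
from dataclasses import dataclass


@dataclass
class SpeakerModel:
    mean: list[float]
    count: int = 1

    def log_likelihood(self, x: list[float], variance: float) -> float:
        # Unnormalized Gaussian log-likelihood with a shared, fixed variance.
        return -0.5 * sum((a - m) ** 2 for a, m in zip(x, self.mean)) / variance

    def adapt(self, x: list[float]) -> None:
        # Incremental mean update with a newly matched segment.
        self.count += 1
        self.mean = [m + (a - m) / self.count for a, m in zip(x, self.mean)]


def segment_speakers(segments: list[list[float]], threshold: float,
                     variance: float = 1.0) -> list[int]:
    """Assign a speaker ID to each segment, adding models as needed."""
    models: list[SpeakerModel] = []
    labels: list[int] = []
    for x in segments:
        scores = [m.log_likelihood(x, variance) for m in models]
        if scores and max(scores) >= threshold:
            best = scores.index(max(scores))
            models[best].adapt(x)  # adapt the matching model
        else:
            # No existing model fits: start a new speaker from this segment.
            models.append(SpeakerModel(list(x)))
            best = len(models) - 1
        labels.append(best)
    return labels
```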

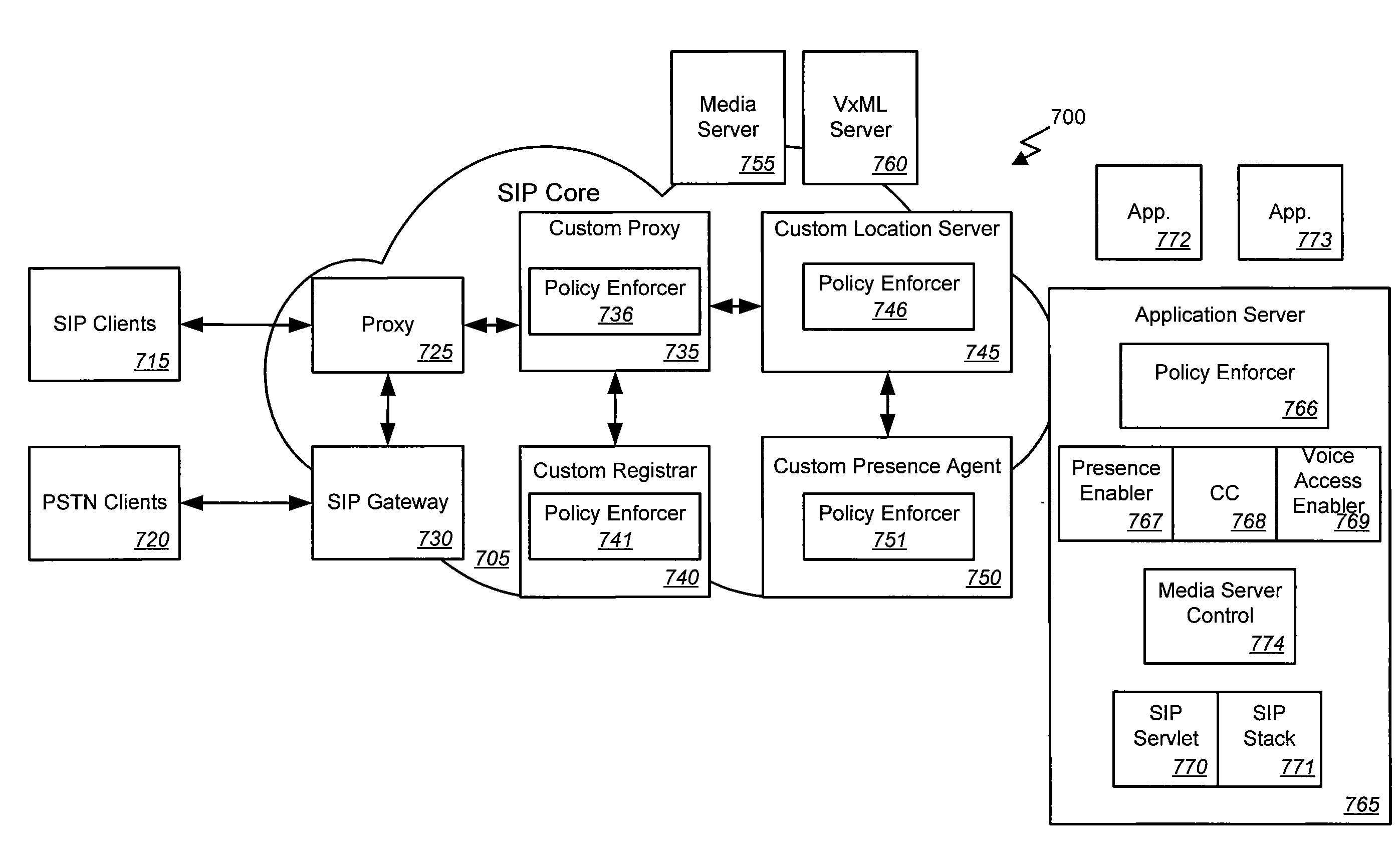

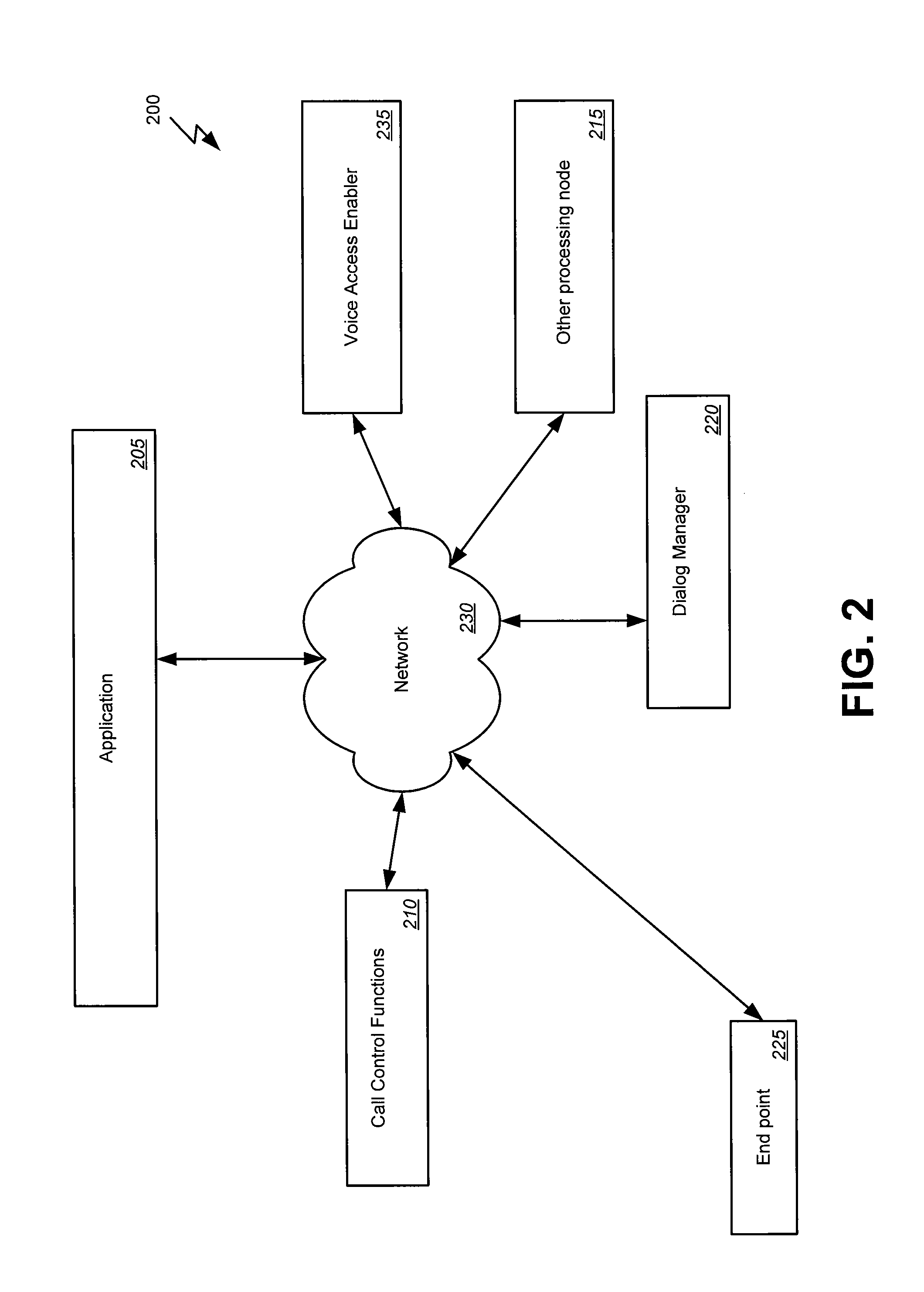

Factoring out dialog control and call control

Active · US20080235380A1 · Multiple digital computer combinations, Transmission, Session control, Conversational speech

Systems, methods, and machine-readable media are disclosed for providing session control and media exchange control that can include and combine, for example, call control and voice access concepts such as dialog (voice dialog, prompts, and DTMF) or web/GUI elements. In one embodiment, a method of controlling a media session can comprise establishing a call via a signaling protocol, maintaining control of the call, and passing control of aspects of the call other than call control to a separate media processing module. The media processing module can comprise, for example, a dialog manager. In some implementations, a voice access enabler provides an abstract interface for accessing functions of the dialog controller.

Owner:ORACLE INT CORP

Interactive conversational speech communicator method and system

Inactive · US20060206309A1 · Change the way of being, Breaking down barriers in communication, Natural language translation, Special data processing applications, Data translation, Conversational speech

The invention relates to a compact and portable interactive system for person-to-person communication in a typed language format between individuals experiencing language barriers, such as the hearing impaired and the language impaired. According to the present invention, the sComm system includes a custom laptop configuration having a display screen and one keyboard on each side of the laptop, and data translation means for translating the original typed data from a first language to a second language. The display screen further has a split configuration, i.e., a double screen, either top/bottom or left/right, depicting chat boxes, each dedicated to one user. The sComm system supports multilingual text-based conversations: using existing translation technology, a user can translate the typed text into other languages including, but not limited to, English, Spanish, Chinese, German, and French. As such, one chat box can display text in a first language while the other chat box displays the same text in a second language.

Owner:CURRY DAVID G +1

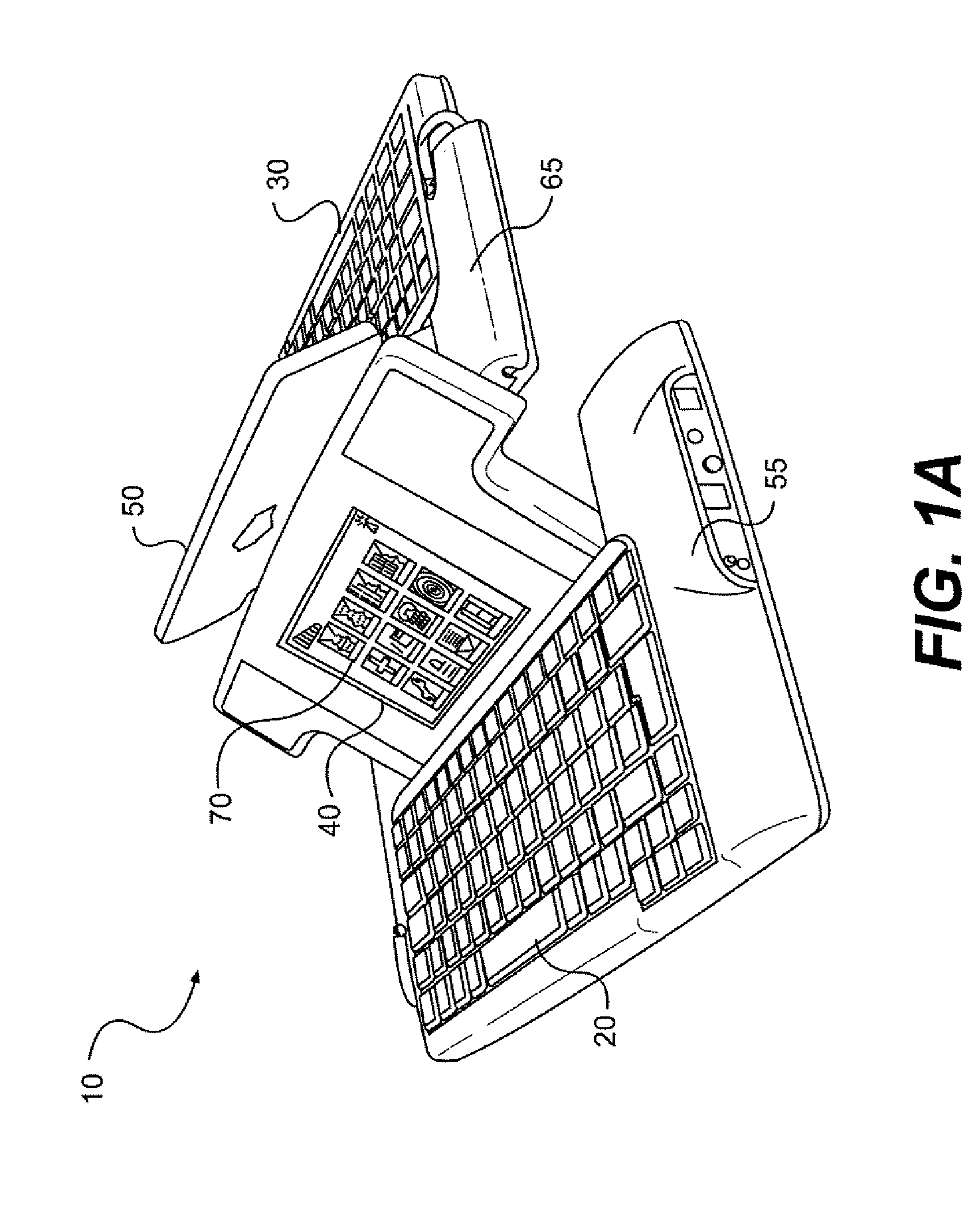

Interactive conversational speech communicator method and system

Active · US8275602B2 · Breaking down barriers in communication, Effective simulation, Speech recognition, Electrical appliances, Communications system, Data translation

The compact and portable interactive system allows person-to-person communication in a typed language format between individuals experiencing language barriers such as the hearing impaired and the language impaired. The communication system includes a custom configuration having a display screen and a keyboard, a data translation module for translating the original data from a first language type to a second language type. The display screen shows a split configuration with multiple dialogue boxes to facilitate simultaneous display of each user's input. The system supports multilingual text-based conversations as well as conversion from audio-to-text and text-to-audio conversations. Translation from one communication format to a second communication format is performed as messages are transmitted between users.

Owner:SCOMM

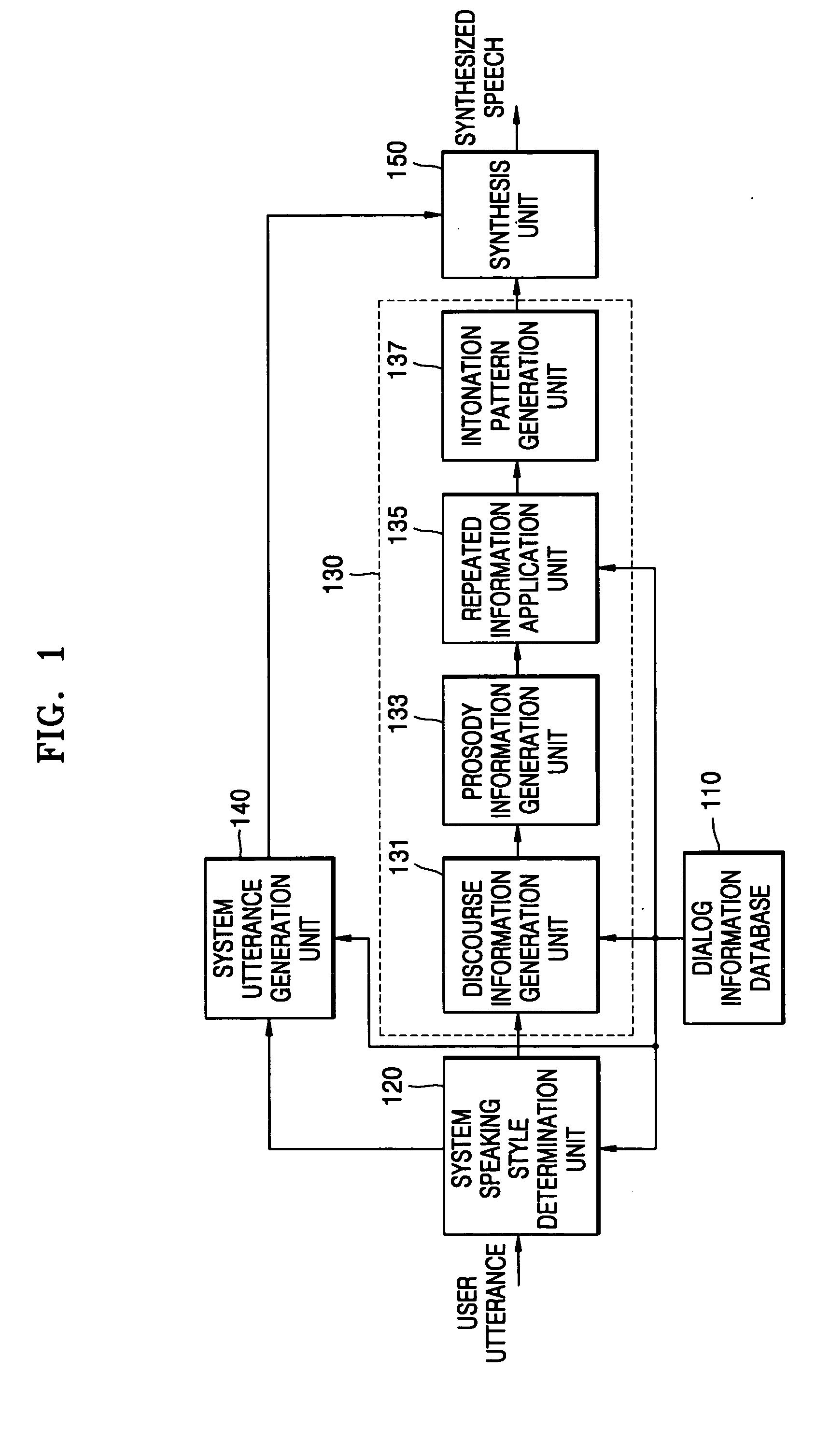

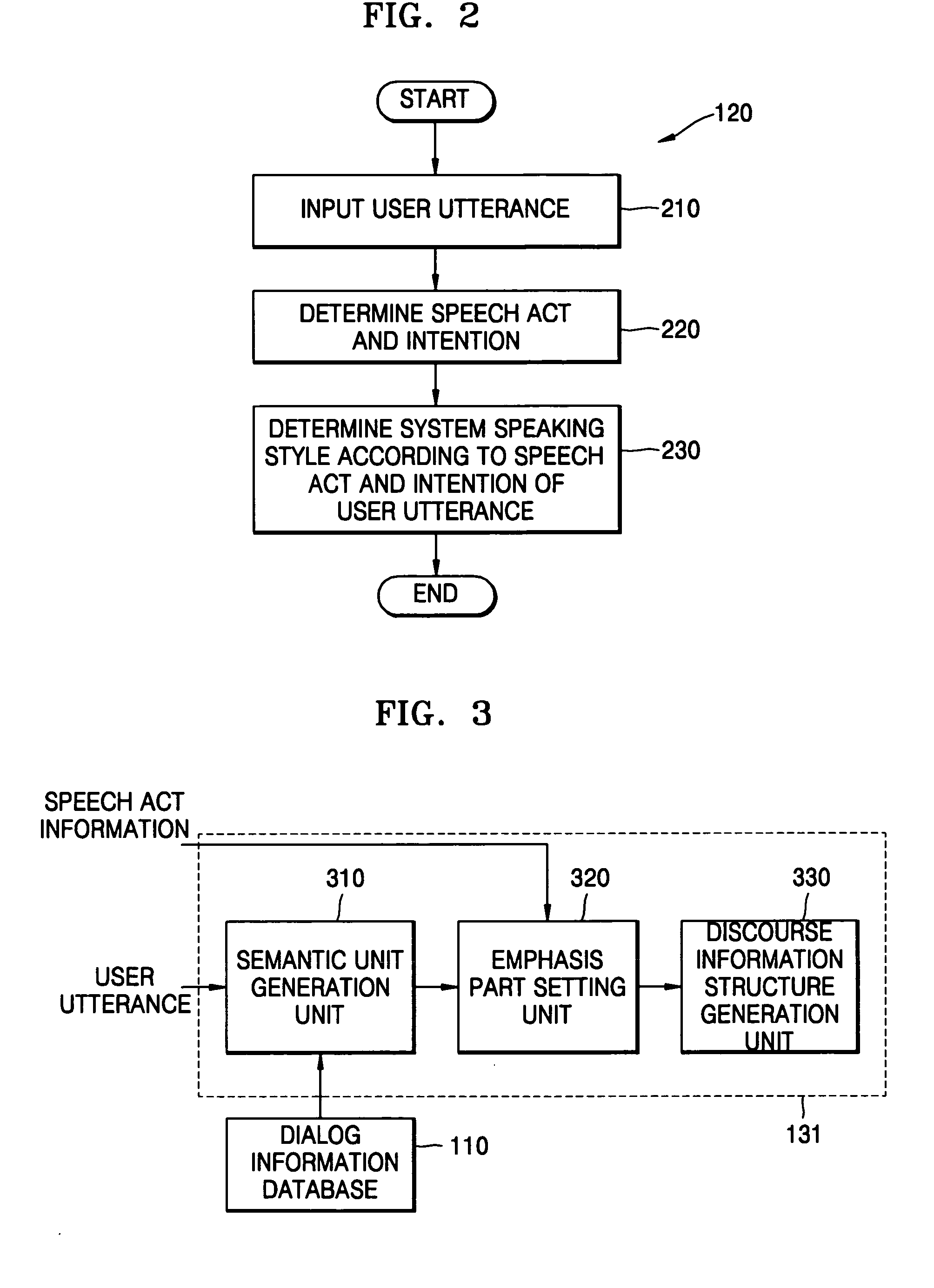

Method and apparatus for generating dialog prosody structure, and speech synthesis method and system employing the same

Inactive · US20050261905A1 · Natural language data processing, Speech recognition, Conversational speech, Speaking style

A dialog prosody structure generating method and apparatus, and a speech synthesis method and system employing the dialog prosody structure generation method and apparatus, are provided. The speech synthesis method using the dialog prosody structure generation method includes: determining a system speaking style based on a user utterance; if the system speaking style is dialog speech, generating dialog prosody information by reflecting discourse information between a user and a system; and synthesizing a system utterance based on the generated dialog prosody information.

Owner:SAMSUNG ELECTRONICS CO LTD

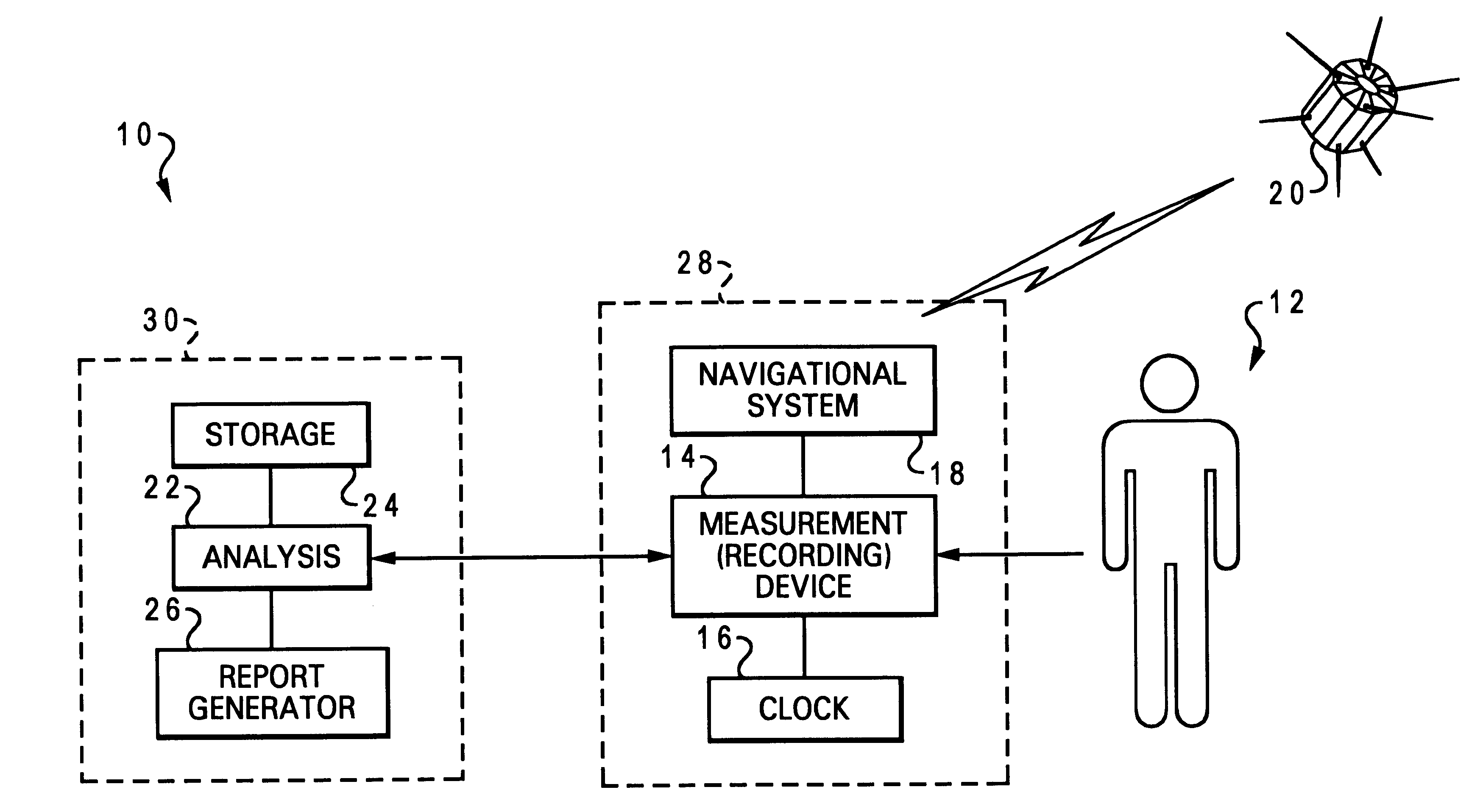

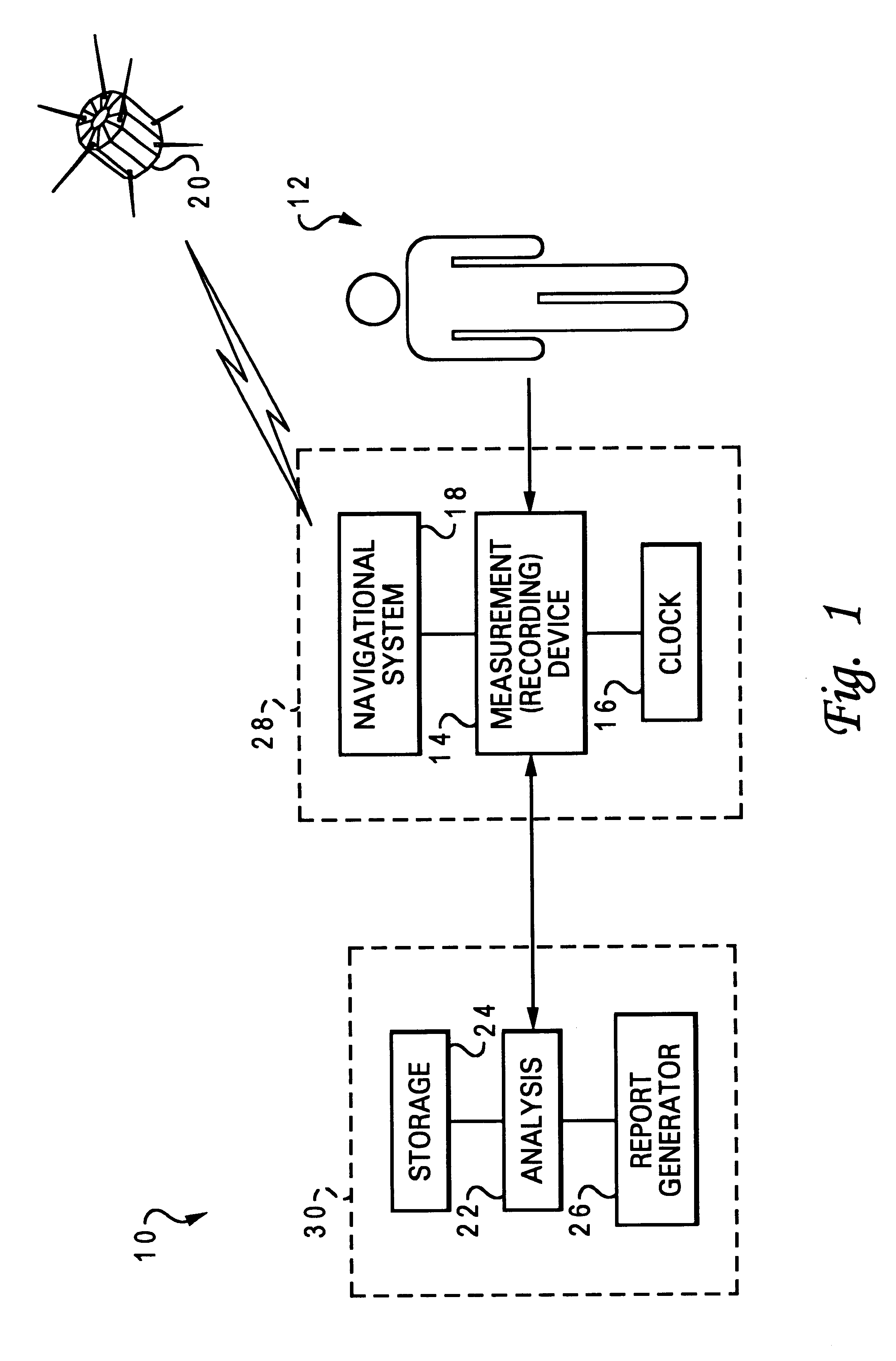

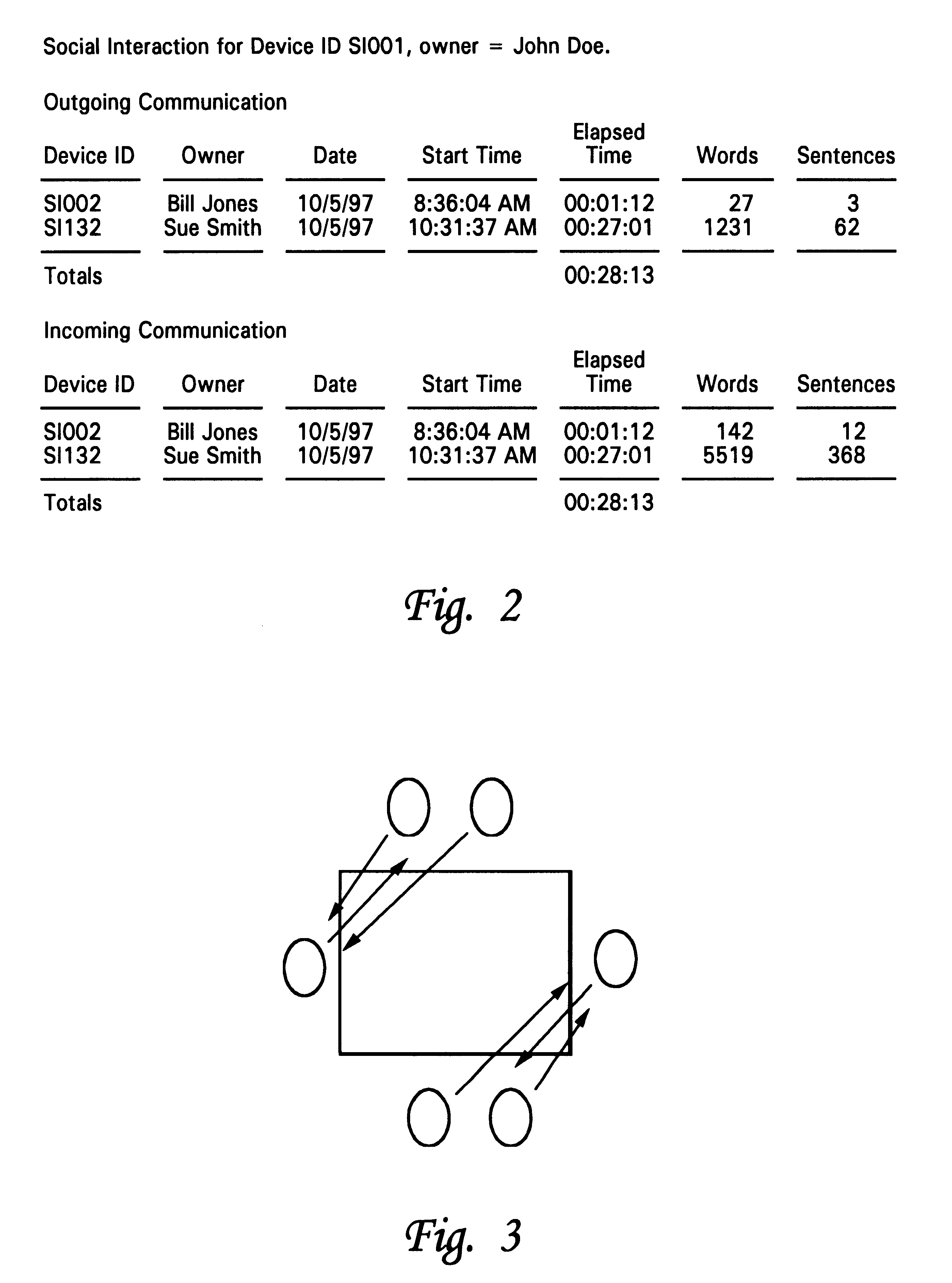

Measurement and validation of interaction and communication

Inactive · US6230121B1 · Easy to adapt, Road vehicles traffic control, Speech analysis, Measurement device, Unique identifier

A method of monitoring an individual's interactions records a value of an interaction parameter of the individual (such as conversational speech) using a measurement device, stores the value of the interaction parameter with an associated geographic coordinate, and generates a report including the value of the interaction parameter and the associated geographic coordinate. The report can further include a timeframe associated with the particular value of the interaction parameter. The global positioning system (GPS) can be used to provide the geographic data. The directional orientation (attitude) of the individual may further be measured and used to facilitate selection of one or more other subjects as recipients of the communication (i.e., when the individual is facing one or more of the subjects). Analysis and report generation are accomplished at a remote facility that supports multiple measurement devices, so each device sends a unique identifier that distinguishes the particular measurement device.

Owner:NUANCE COMM INC

Interactive conversational speech communicator method and system

Active · US20080109208A1 · Breaking down barriers in communication, Effective simulation, Speech recognition, Electrical appliances, Speech disorder, Communications system

The compact and portable interactive system allows person-to-person communication in a typed language format between individuals experiencing language barriers such as the hearing impaired and the language impaired. The communication system includes a custom configuration having a display screen and a keyboard, a data translation module for translating the original data from a first language type to a second language type. The display screen shows a split configuration with multiple dialogue boxes to facilitate simultaneous display of each user's input. The system supports multilingual text-based conversations as well as conversion from audio-to-text and text-to-audio conversations. Translation from one communication format to a second communication format is performed as messages are transmitted between users.

Owner:SCOMM

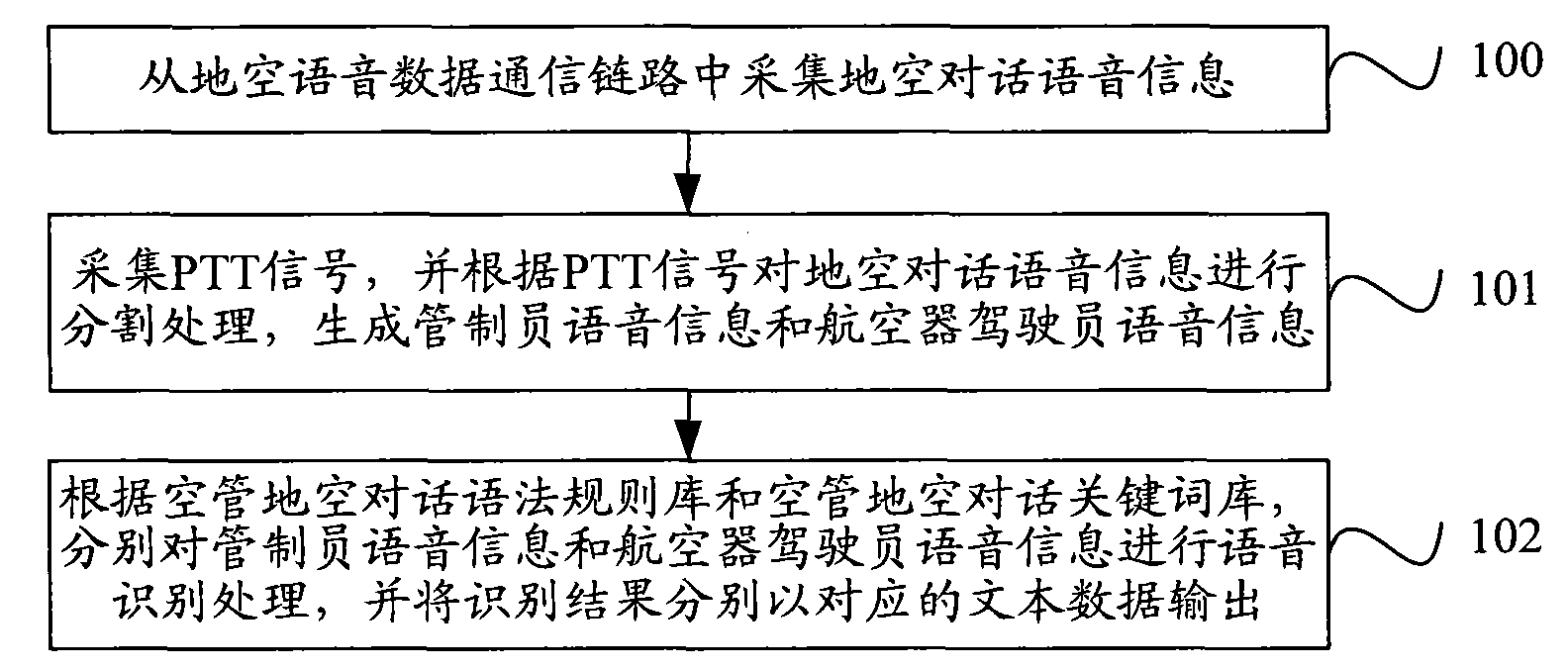

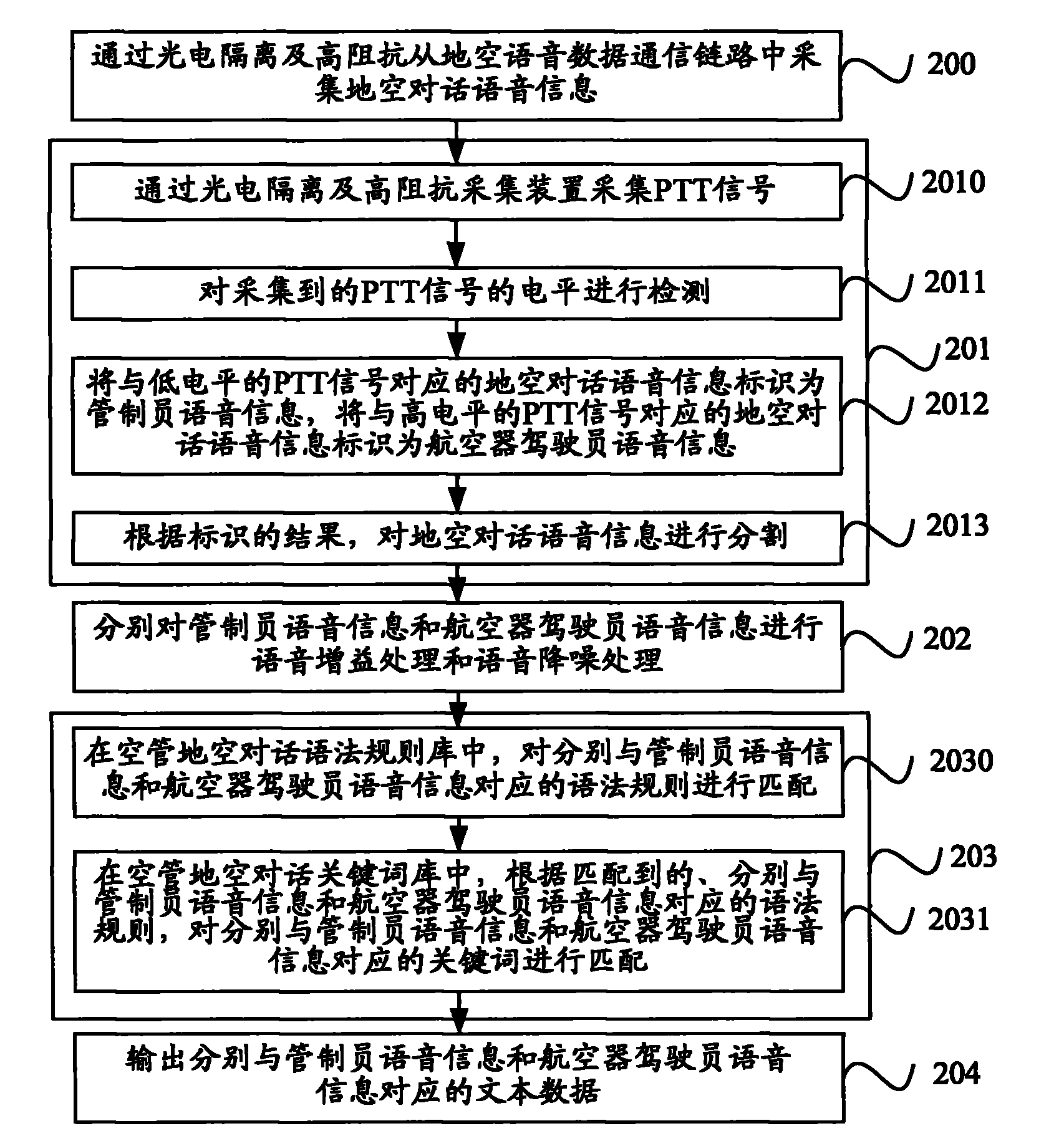

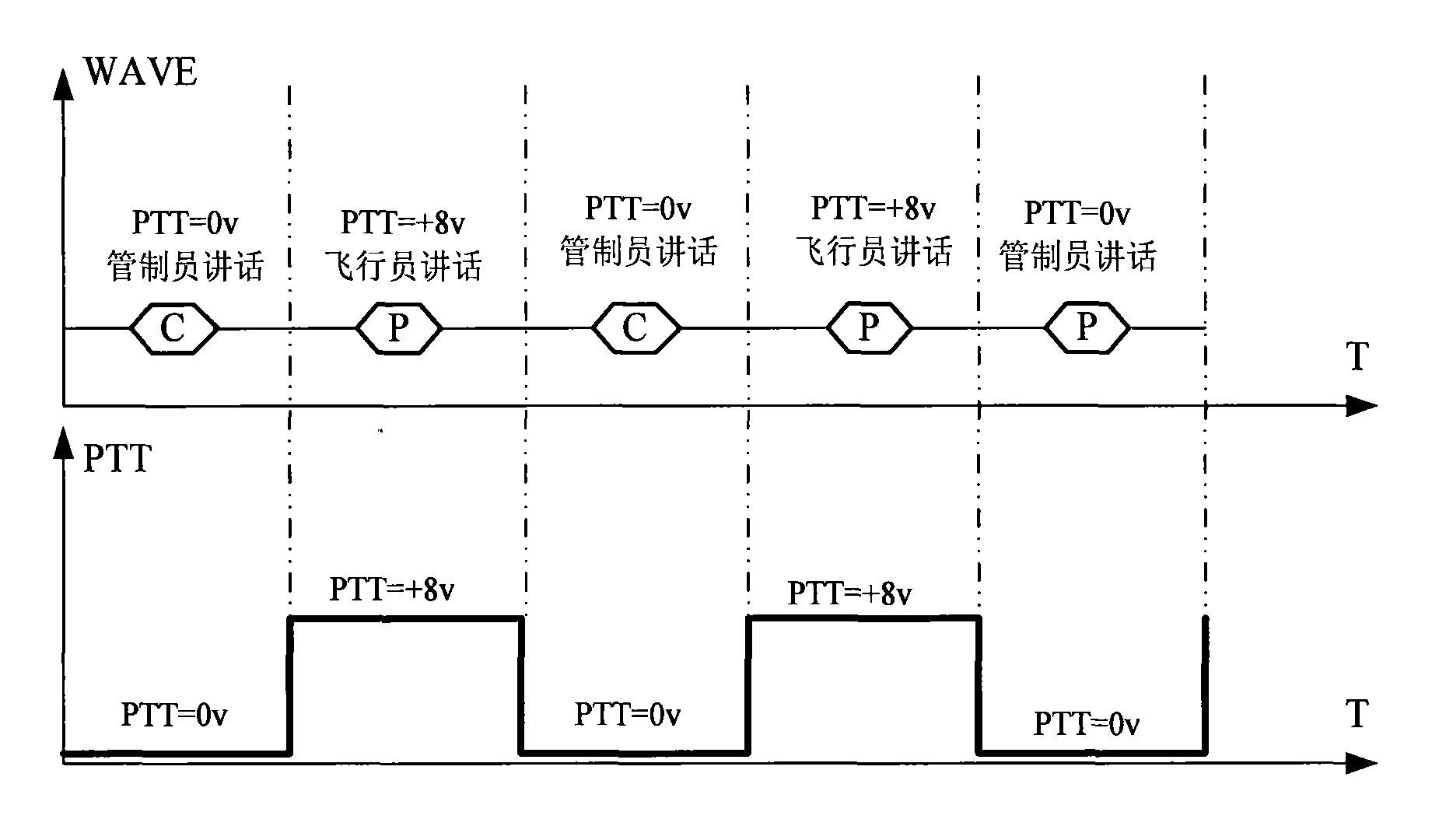

Voice recognition method and voice recognition device in air traffic control system

Inactive · CN101916565A · Reduce error rate, Accurate identification, Speech recognition, Stations for two-party-line systems, Aviation, Driver/operator

The invention provides a voice recognition method and a voice recognition device for an air traffic control system. The method comprises the following steps: collecting ground-to-air dialogue voice information from a ground-to-air voice communication link; collecting a push-to-talk (PTT) signal and segmenting the ground-to-air dialogue voice information according to the PTT signal to generate air traffic controller voice information and aircraft pilot voice information; and performing voice recognition on the controller voice information and the pilot voice information according to, respectively, a preset air traffic control ground-to-air dialogue grammar rule bank and a preset air traffic control ground-to-air dialogue keyword bank, and outputting the recognition results as corresponding text. By using grammar-rule and keyword recognition, the invention provides accurate voice recognition in air traffic control systems despite their high application complexity, further improving the automation level of air traffic control and the safety and reliability of navigation in China; the voice recognition device can also be applied in various complex application scenarios in the aviation field.

Owner:Beijing Hua'an Tiancheng Technology Co., Ltd. (北京华安天诚科技有限公司)
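
PTT-based segmentation can be sketched as splitting the recording into runs of constant PTT state. The sketch assumes the PTT signal is sampled at the audio rate and is asserted while the ground controller transmits, so speech heard with PTT released is attributed to the pilot; the energy threshold used to drop silent runs is a hypothetical value.

```python
from itertools import groupby


def split_by_ptt(samples: list[float], ptt: list[int],
                 min_energy: float = 1e-4) -> list[tuple[str, list[float]]]:
    """Split a ground-to-air recording into controller and pilot segments.

    Assumption: ptt[i] == 1 while the ground controller keys the transmitter,
    so audio captured while it is 0 is attributed to the aircraft side.
    """
    segments: list[tuple[str, list[float]]] = []
    index = 0
    for keyed, run in groupby(ptt):  # runs of identical PTT state
        length = len(list(run))
        chunk = samples[index:index + length]
        index += length
        energy = sum(s * s for s in chunk) / max(len(chunk), 1)
        if energy < min_energy:
            continue  # silence on the link: nothing to recognize
        segments.append(("controller" if keyed else "pilot", chunk))
    return segments
```

Each labeled segment would then go to the recognizer configured with the rule bank for that speaker role.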

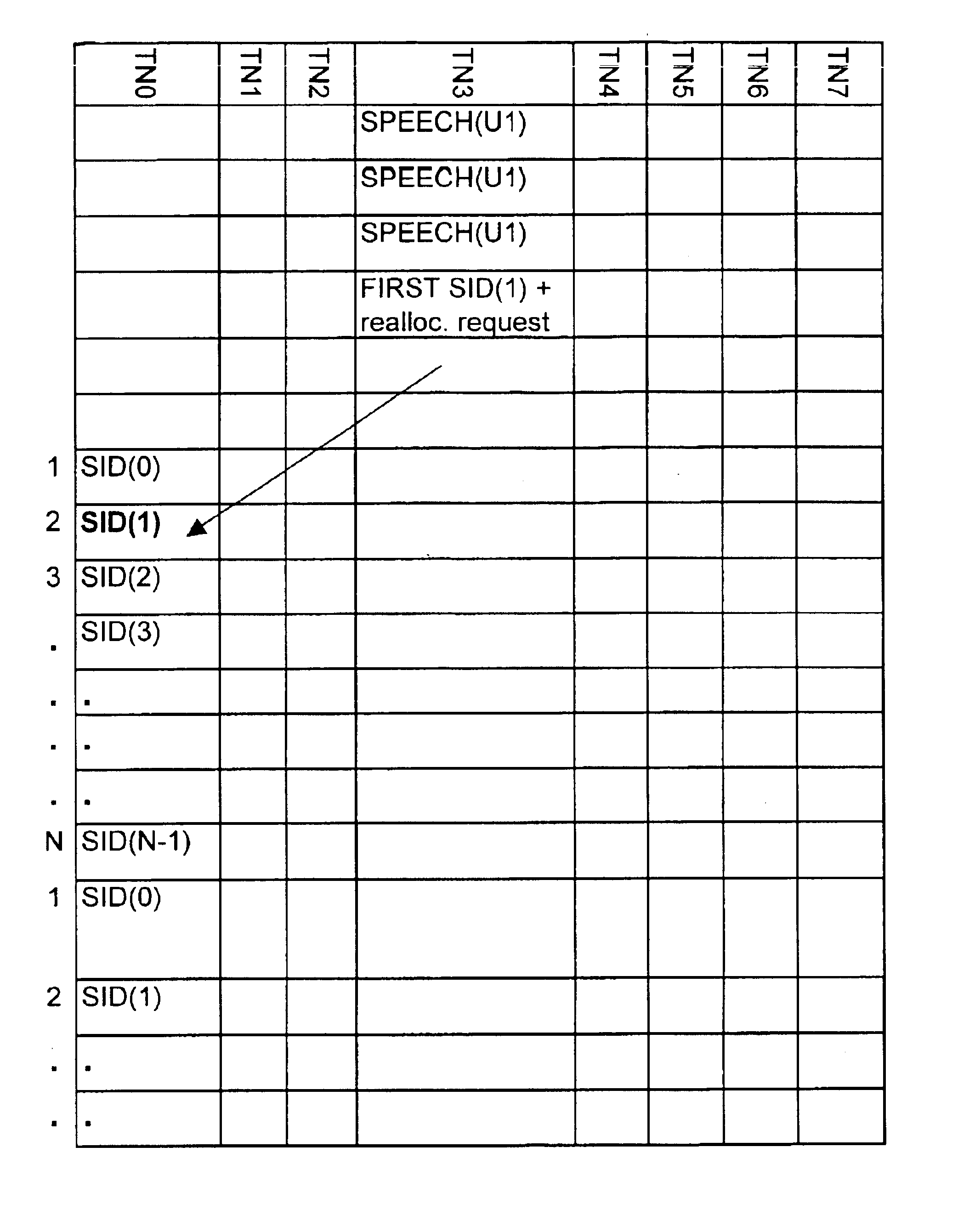

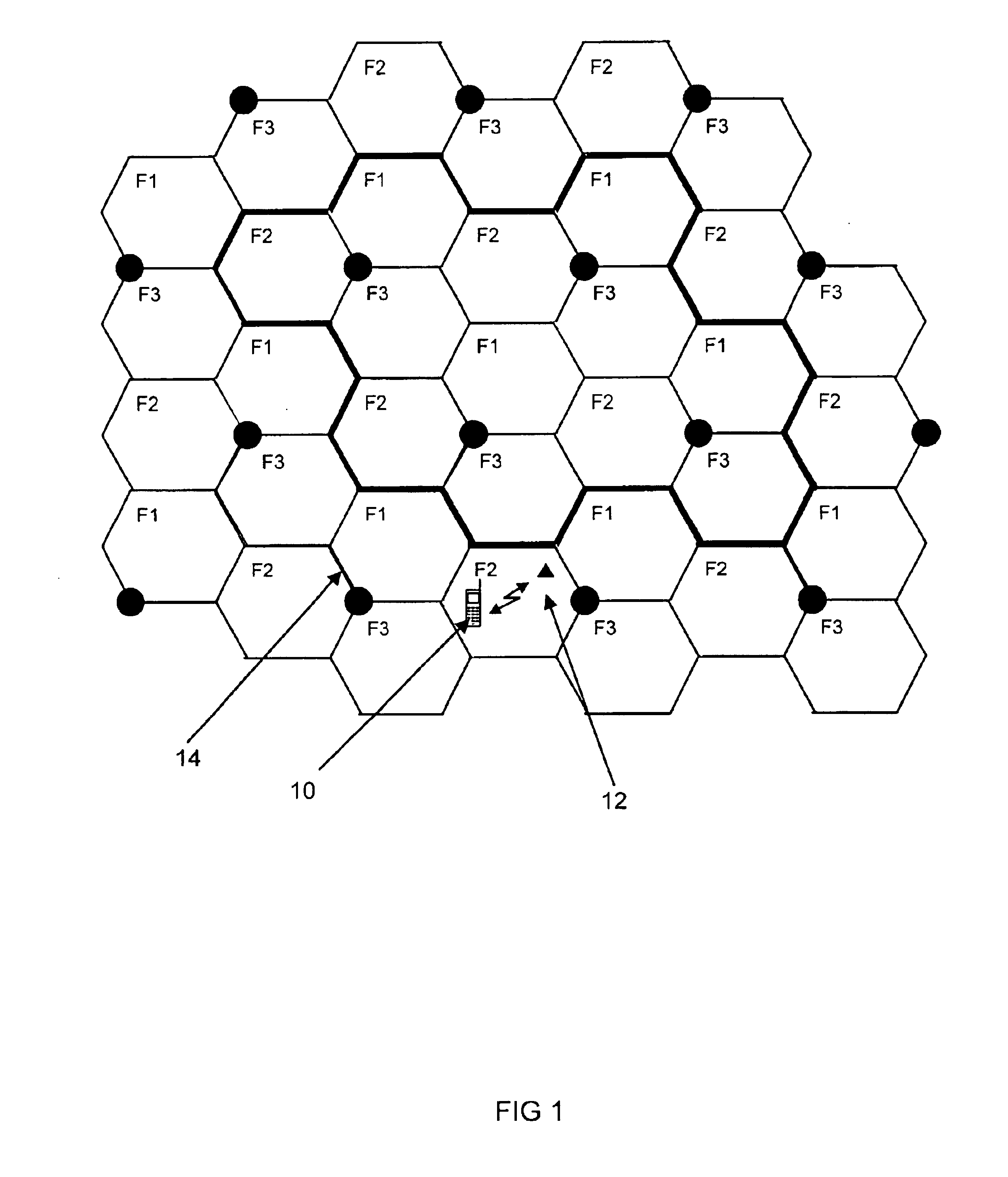

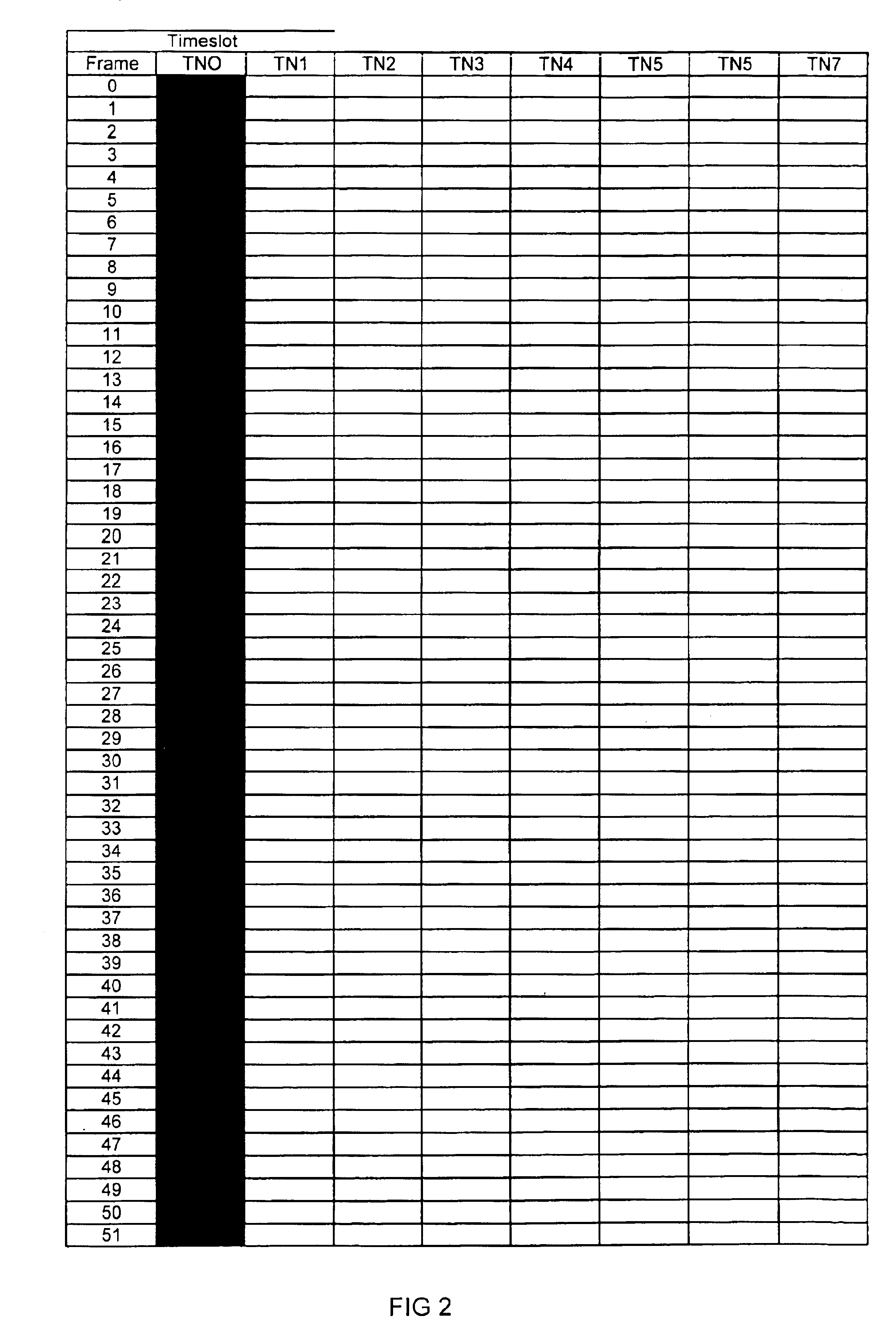

Method and apparatus for sustaining conversational services in a packet switched radio access network

In a packet switched system used for speech communication, a user entering a silent period starts to transmit silence descriptor (SID) information. According to the invention, a speech user is not allocated the same resources for SID transmission as for speech communication. Instead, upon entering a silent period, the user is reallocated to a SID communication channel shared among a number of different users in silent mode. The resources used for speech can then be used more efficiently by other speech users instead of being occupied by SID transmissions alone. In an alternative embodiment of the invention, when a mobile station is in silent mode, it receives and transmits associated signaling information on the same transmission resources as those used for the SID transmissions.

Owner:TELEFON AB LM ERICSSON (PUBL)
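
The channel reallocation can be modeled as moving a user between a pool of dedicated speech channels and one shared SID channel. The `RadioResourceManager` class and its channel numbering are hypothetical; a real radio access network would also handle signaling, timing, and admission control that this sketch ignores.

```python
class RadioResourceManager:
    """Toy model: dedicated speech channels plus one shared SID channel."""

    def __init__(self, speech_channels: int) -> None:
        self.free = list(range(speech_channels))  # unallocated speech channels
        self.speech: dict[str, int] = {}          # user -> dedicated channel
        self.sid_users: set[str] = set()          # users on the shared SID channel

    def start_talking(self, user: str) -> int:
        """Give a user a dedicated speech channel (reusing one if held)."""
        if user not in self.speech:
            if not self.free:
                raise RuntimeError("no speech channel available")
            self.speech[user] = self.free.pop(0)
        self.sid_users.discard(user)  # leave the shared SID channel
        return self.speech[user]

    def enter_silence(self, user: str) -> None:
        """Release the dedicated channel; SID frames use the shared channel."""
        self.free.append(self.speech.pop(user))
        self.sid_users.add(user)
```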

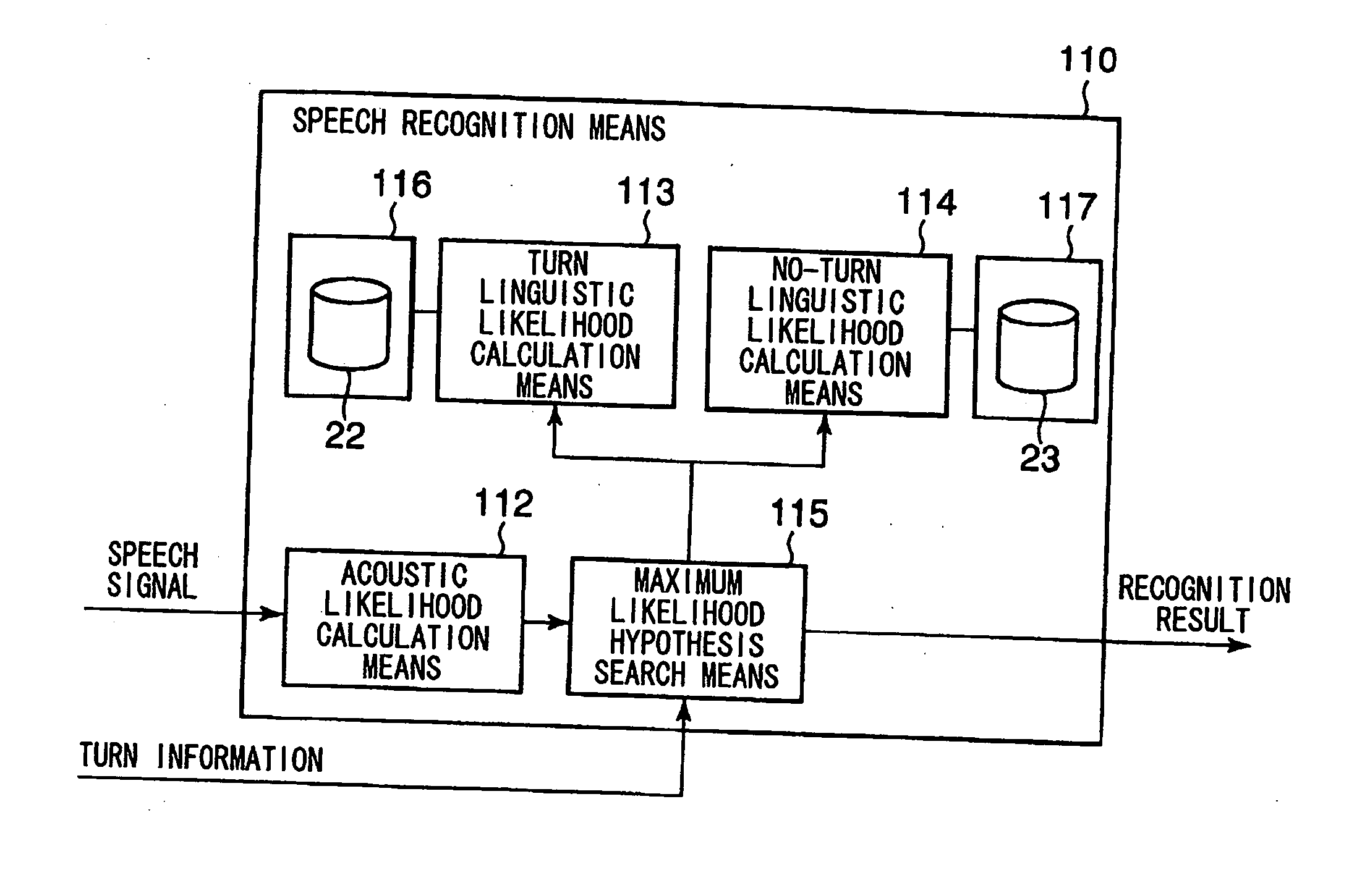

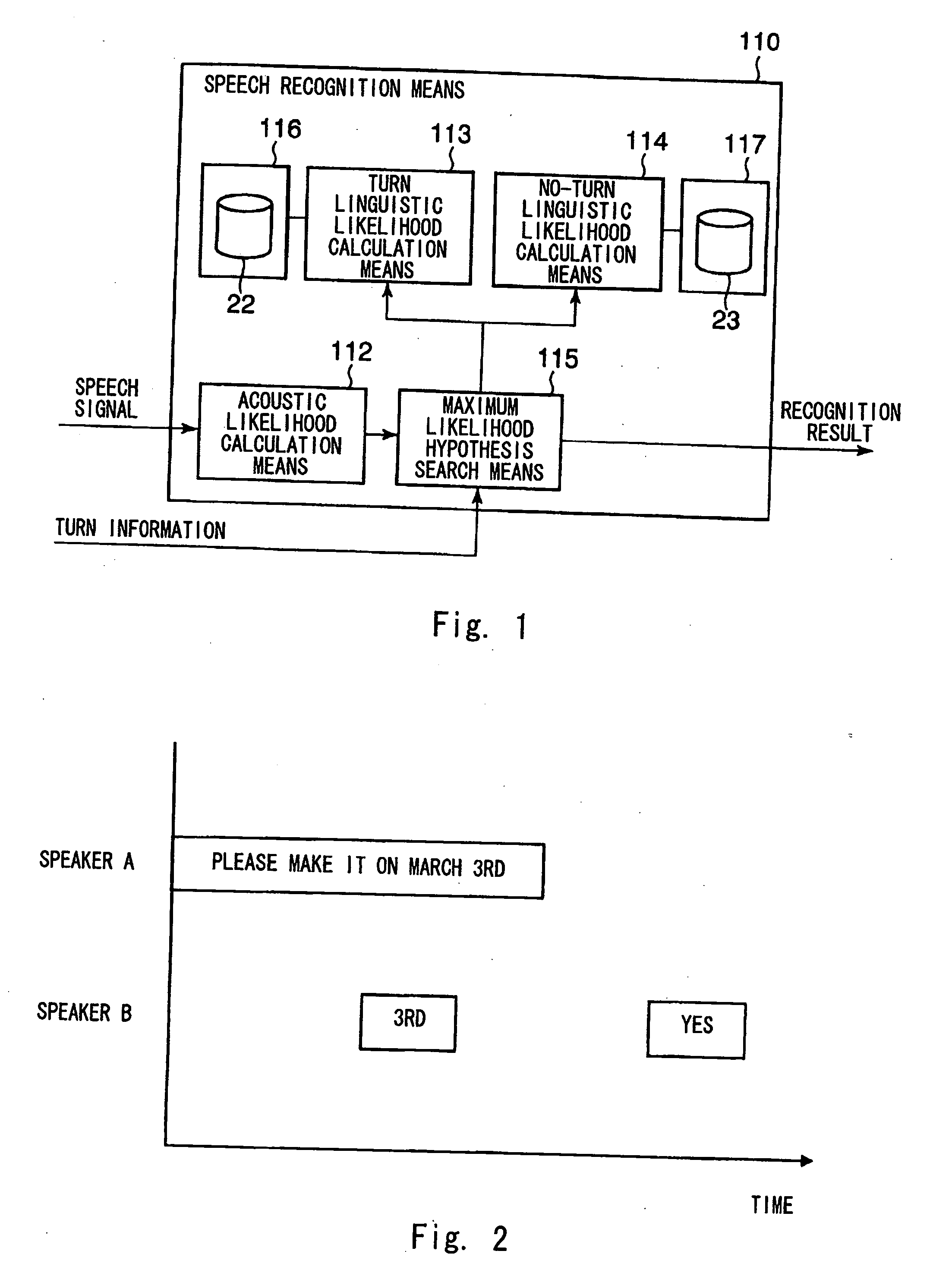

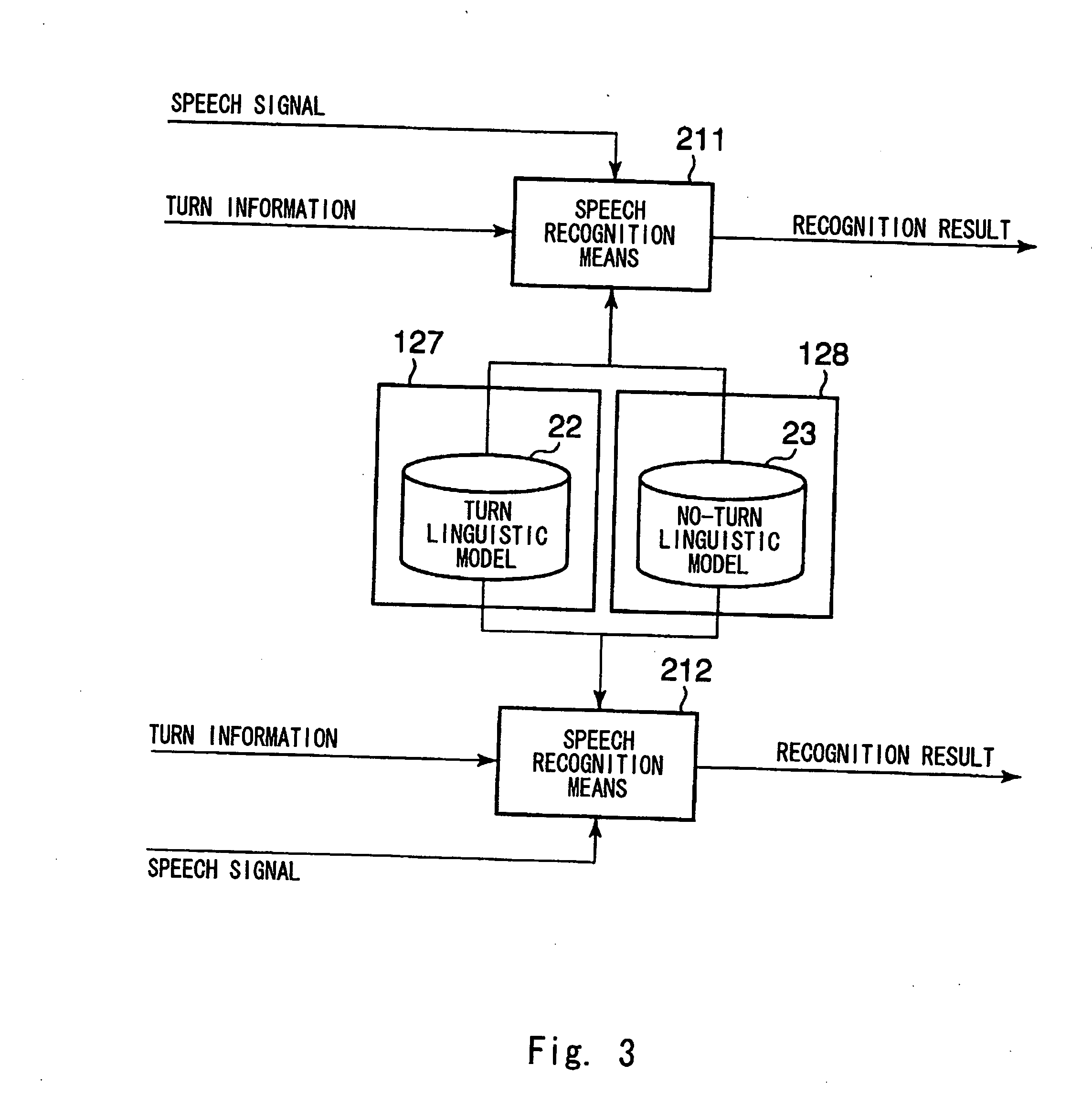

Dialogue speech recognition system, dialogue speech recognition method, and recording medium for storing dialogue speech recognition program

Active · US20110131042A1 · Improve speech recognition accuracy, Speech recognition, Speech identification, Conversational speech

Disclosed is a dialogue speech recognition system that can expand the scope of applications by employing a universal dialogue structure as the condition for speech recognition of dialogue speech between persons. An acoustic likelihood computation means (701) provides a likelihood that a speech signal input from a given phoneme sequence will occur. A linguistic likelihood computation means (702) provides a likelihood that a given word sequence will occur. A maximum likelihood candidate search means (703) uses the likelihoods provided by the acoustic likelihood computation means and the linguistic likelihood computation means to provide a word sequence with the maximum likelihood of occurring from a speech signal. Further, the linguistic likelihood computation means (702) provides different linguistic likelihoods when the speaker who generated the acoustic signal input to the speech recognition means does and does not have the turn to speak.

Owner:NEC CORP
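
For a fixed candidate list, the maximum likelihood search with turn-dependent language models reduces to an argmax over summed log-likelihoods. In this sketch the candidate list, the acoustic score table, and the two language-model callables are hypothetical inputs; a real decoder would search a lattice rather than enumerate candidates.

```python
from typing import Callable, Mapping, Sequence

Words = tuple[str, ...]


def best_word_sequence(candidates: Sequence[Words],
                       acoustic_logp: Mapping[Words, float],
                       lm_with_turn: Callable[[Words], float],
                       lm_without_turn: Callable[[Words], float],
                       has_turn: bool) -> Words:
    """Pick the candidate maximizing acoustic + linguistic log-likelihood.

    The linguistic score comes from a different model depending on whether
    the speaker currently holds the turn to speak.
    """
    language_model = lm_with_turn if has_turn else lm_without_turn
    return max(candidates,
               key=lambda words: acoustic_logp[words] + language_model(words))
```

Using a separate language model for the non-turn-holder lets short responses such as backchannels score higher when the speaker does not hold the turn.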

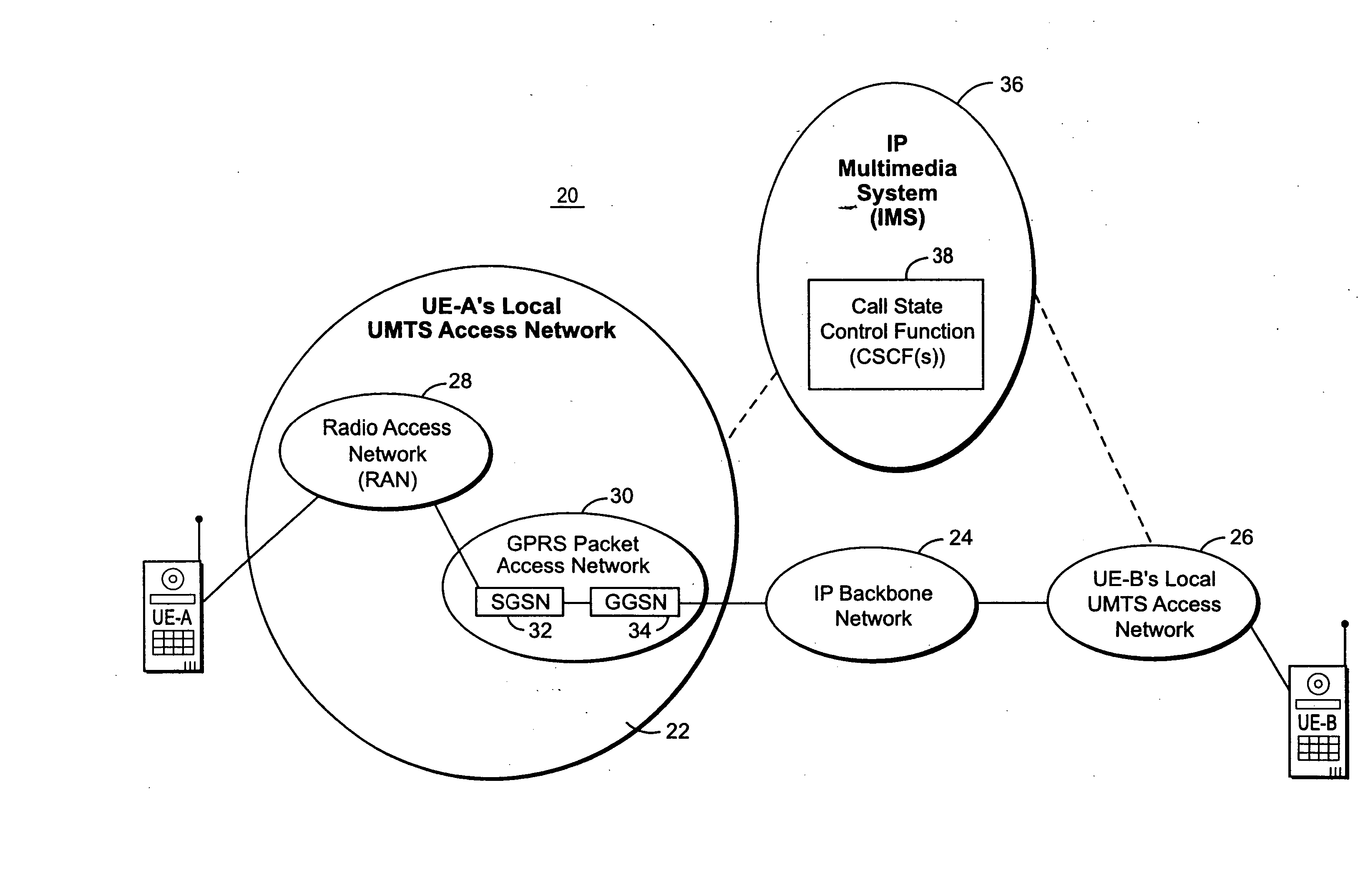

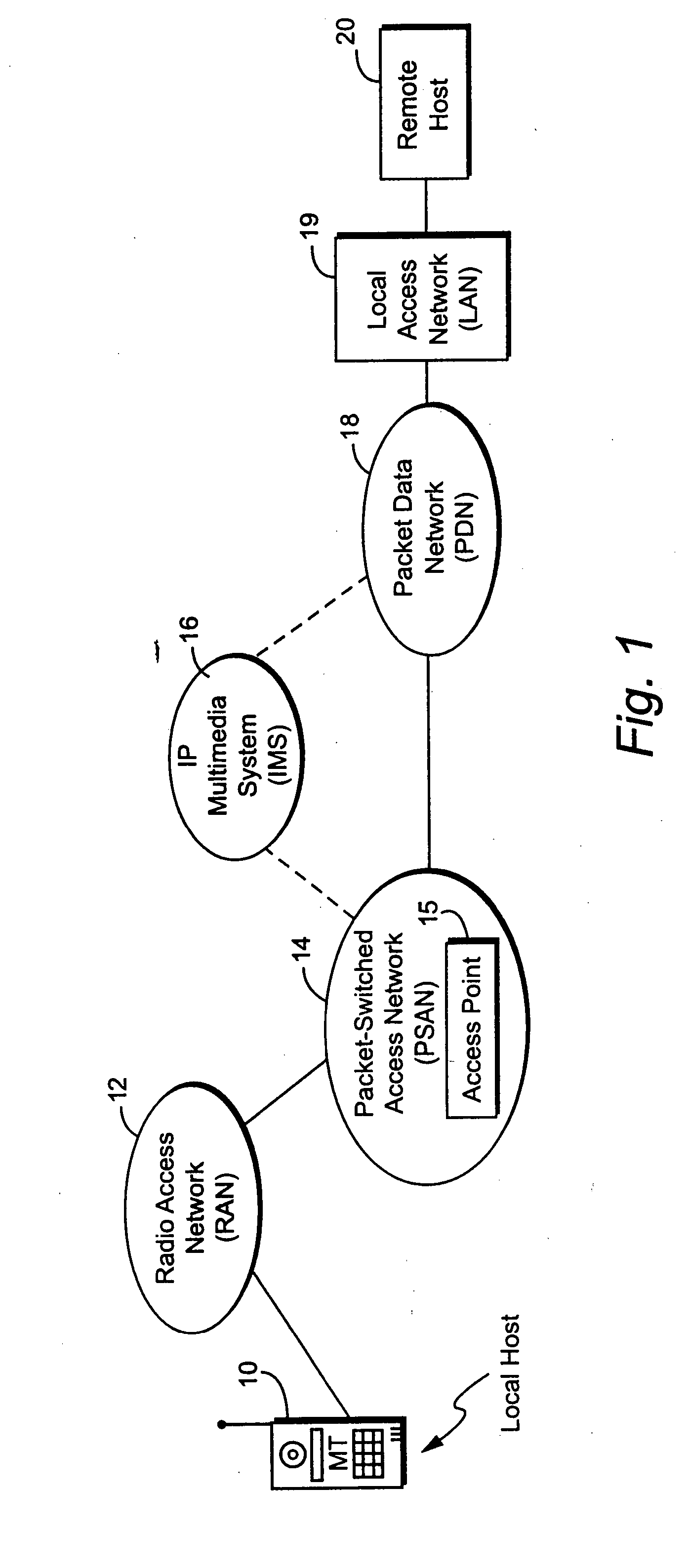

Packet-based conversational service for a multimedia session in a mobile communications system

Inactive · US20070237134A1 · Quality improvement, Effective distribution, Network traffic/resource management, Connection management, Media controls, Quality of service

An important objective for third generation mobile communications systems is to provide IP services, and a very important part of these services will be the conversational voice. The conversational voice service includes three distinct information flows: session control, the voice media, and the media control messages. In a preferred embodiment, based on the specific characteristics for each information flow, each information flow is allocated its own bearer with a Quality of Service (QoS) tailored to its particular characteristics. In a second embodiment, the session control and media control messages are supported with a single bearer with a QoS that suits both types of control messages. Both embodiments provide an IP conversational voice service with increased radio resource efficiency and QoS.

Owner:TELEFON AB LM ERICSSON (PUBL)

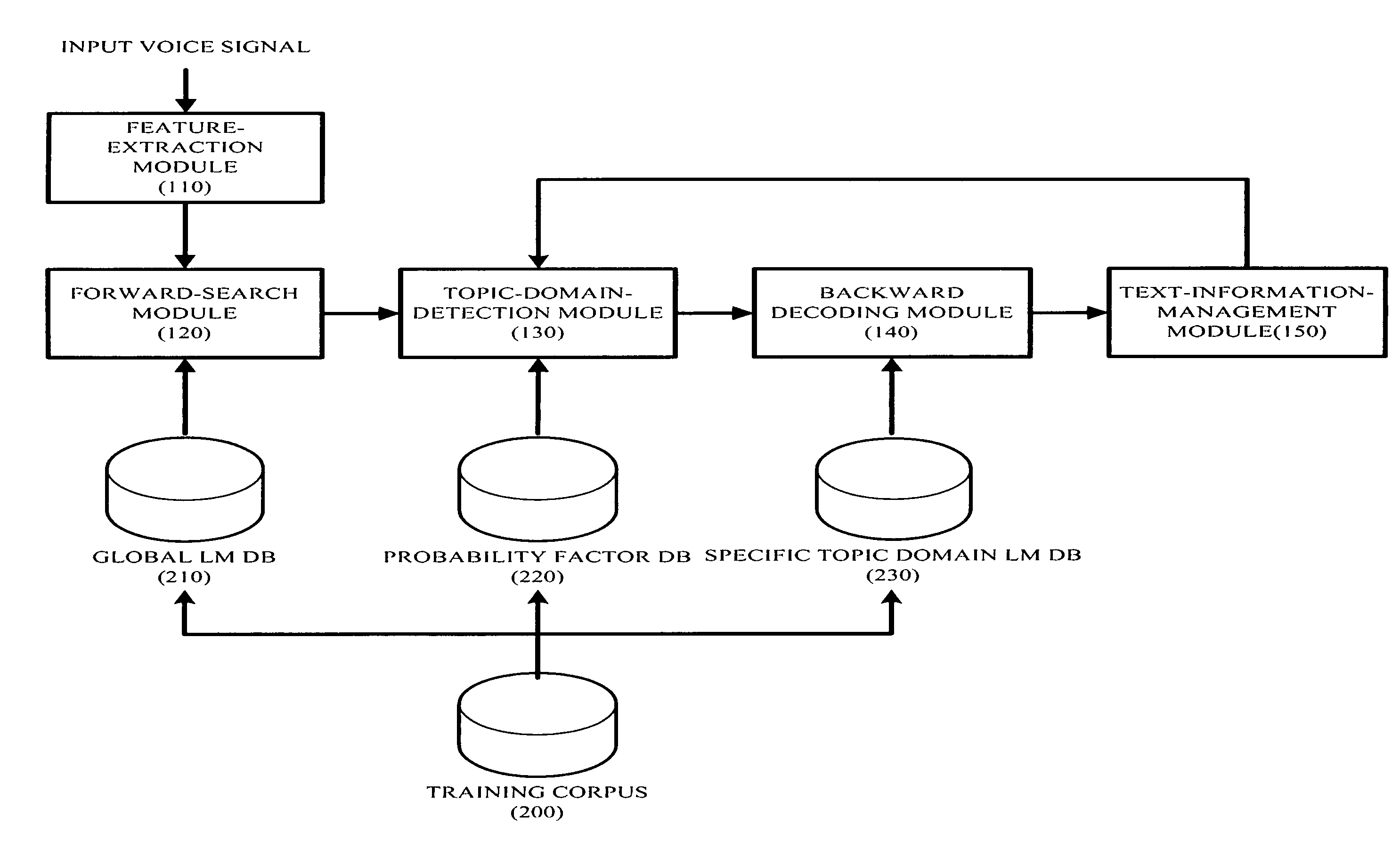

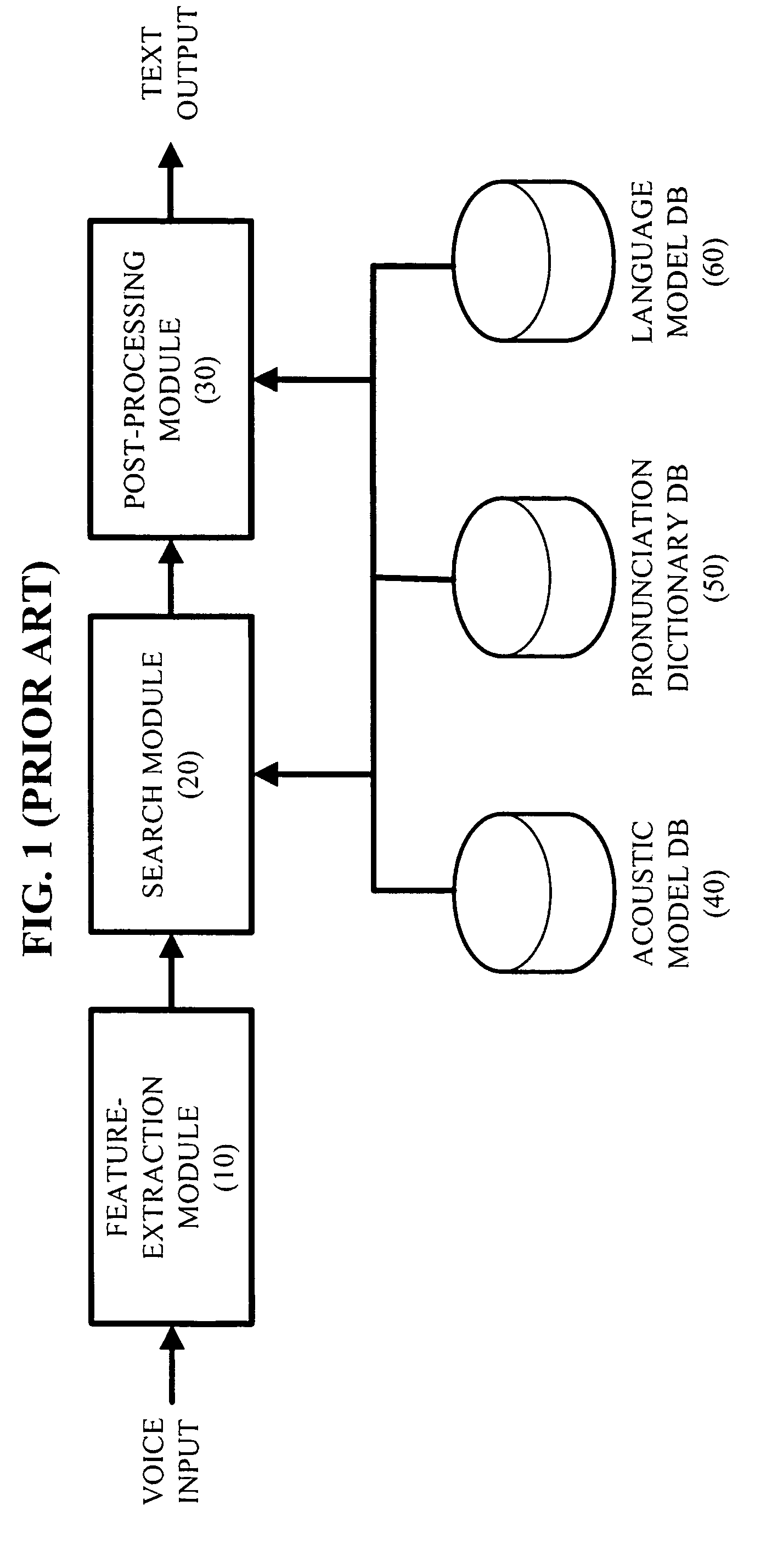

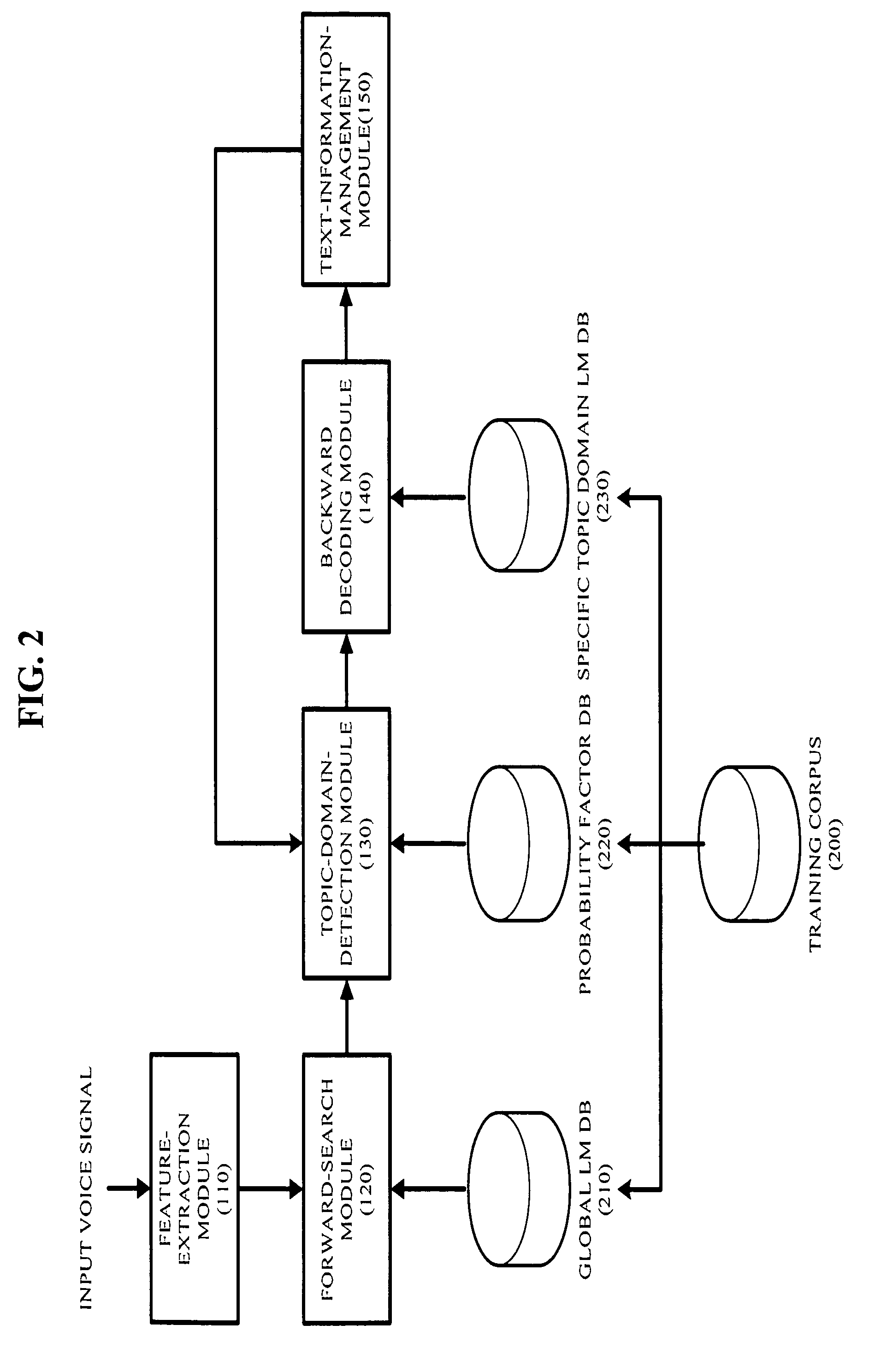

Apparatus, method, and medium for dialogue speech recognition using topic domain detection

Inactive | US8301450B2 | Ensure high efficiency and accuracy | Speech recognition | Special data processing applications | Feature vector | Acoustic model

An apparatus, method, and medium for dialogue speech recognition using topic domain detection are disclosed. The apparatus includes three modules. A forward search module performs a forward search to create a word lattice corresponding to a feature vector extracted from an input voice signal, with reference to a previously established global language model database, pronunciation dictionary database, and acoustic model database. A topic domain detection module uses the word lattice produced by the forward search to infer a topic from the meanings of the vocabulary it contains, and thereby detects a topic domain. A backward decoding module performs backward decoding for the detected topic domain with reference to a previously established topic-specific language model database, and outputs the speech recognition result for the input voice signal as text. This improves the accuracy and efficiency of recognizing dialogue sentences.
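A toy version of the three-module pipeline follows, in Python. To keep it short, the word lattice is approximated by an N-best list of hypotheses from the forward pass, and topic detection is a simple keyword vote. Backward decoding is simplified to rescoring with a topic-specific unigram model. The keyword sets, log-probabilities and function names are hypothetical and do not describe Samsung's implementation.

```python
from collections import Counter
from typing import Dict, List, Optional, Set, Tuple

# (word sequence, score from the forward pass with the global models)
Hypothesis = Tuple[List[str], float]

# Hypothetical topic keyword sets and topic-specific unigram log-probabilities.
TOPIC_KEYWORDS: Dict[str, Set[str]] = {
    "weather": {"rain", "sunny", "forecast", "temperature"},
    "music": {"play", "song", "album", "volume"},
}
TOPIC_LM: Dict[str, Dict[str, float]] = {
    "weather": {"will": -2.0, "it": -1.5, "rain": -1.0, "reign": -6.0, "tomorrow": -2.0},
    "music": {"play": -1.0, "song": -1.5, "album": -2.0},
}


def detect_topic(lattice: List[Hypothesis]) -> Optional[str]:
    """Infer a topic by counting topic keywords across all lattice hypotheses."""
    votes: Counter = Counter()
    for words, _ in lattice:
        for w in words:
            for topic, keywords in TOPIC_KEYWORDS.items():
                if w in keywords:
                    votes[topic] += 1
    return votes.most_common(1)[0][0] if votes else None


def backward_decode(lattice: List[Hypothesis], topic: Optional[str],
                    floor: float = -8.0) -> str:
    """Rescore hypotheses with the topic LM and return the best one as text.

    With no detected topic, every word gets the floor score, so hypotheses of
    equal length are ranked by their forward-pass score alone.
    """
    lm = TOPIC_LM[topic] if topic in TOPIC_LM else {}

    def score(h: Hypothesis) -> float:
        words, forward_score = h
        return forward_score + sum(lm.get(w, floor) for w in words)

    return " ".join(max(lattice, key=score)[0])


# Hypothetical forward-pass output: the global models slightly prefer "reign".
lattice = [(["will", "it", "reign", "tomorrow"], -10.0),
           (["will", "it", "rain", "tomorrow"], -10.5)]
topic = detect_topic(lattice)            # "weather"
print(backward_decode(lattice, topic))   # will it rain tomorrow
```

The example shows why the second pass helps: the forward pass ranks the wrong homophone first, and the topic-specific model corrects it once the topic is known.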

Owner:SAMSUNG ELECTRONICS CO LTD

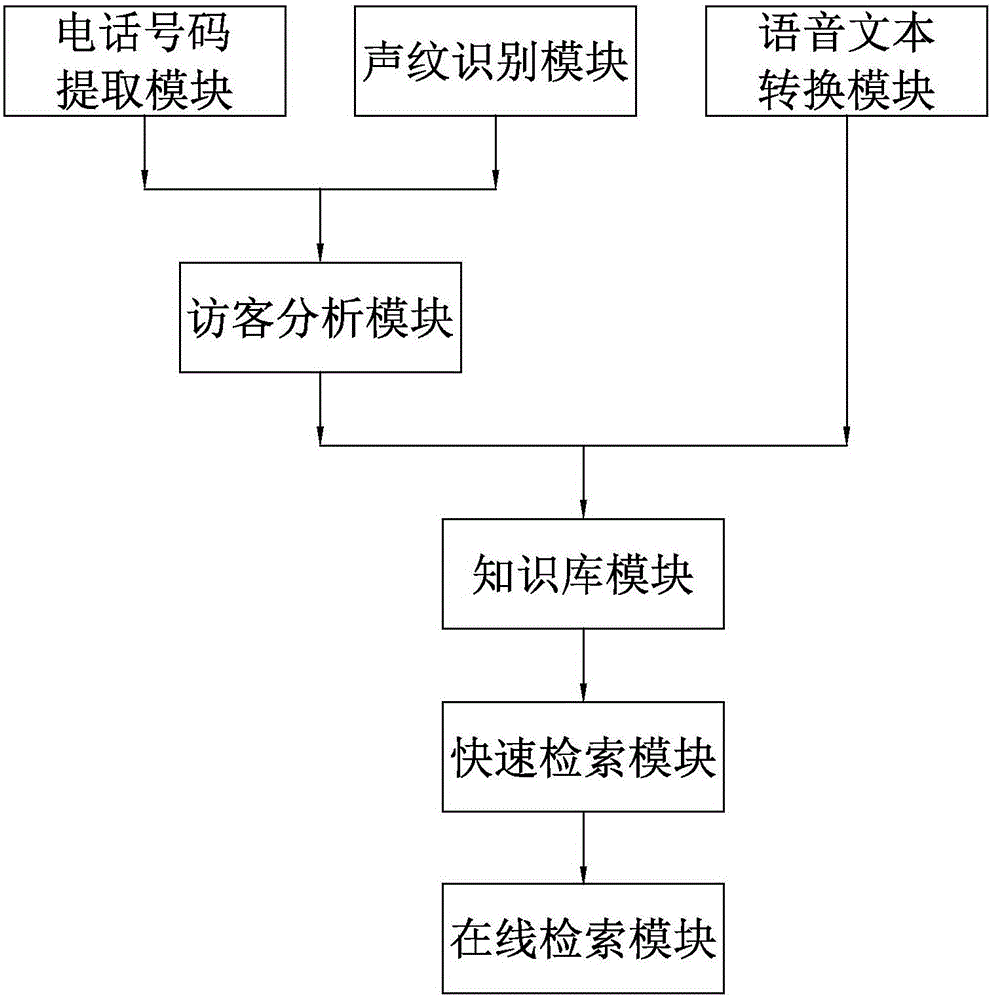

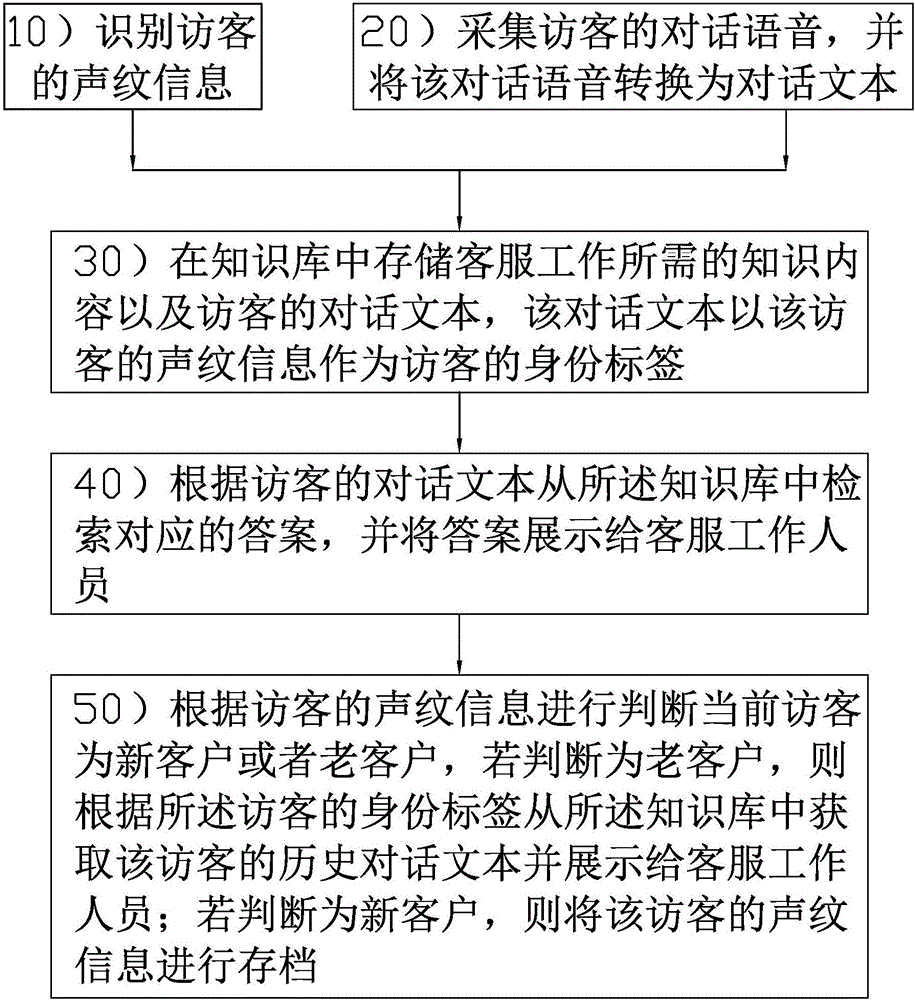

Artificial telephone customer service auxiliary system and method

Inactive | CN106683678A | Reduce waiting time | Low cost | Special service for subscribers | Speech recognition | Knowledge content | Conversational speech

The invention discloses an artificial telephone customer service auxiliary system and method. The method includes the following steps: acquiring the visitor's conversation voice, converting it to conversation text, and saving both the knowledge content required for customer service and the visitor's conversation text, with the visitor's voiceprint serving as the visitor's identity label for that text; searching a knowledge base for a corresponding answer based on the visitor's conversation text and presenting the answer to the customer service worker; and, based on the visitor's voiceprint, determining whether the current visitor is a new customer or a frequent customer, so that different strategies can be applied to different types of customer service workers. The system and method greatly reduce the skill required of customer service workers: enterprises do not need to train them for long periods, and workers do not need to memorize large amounts of product information. The system and method also increase working efficiency, save costs, and make the work less difficult.
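The two lookups in this method can be sketched in Python: matching a visitor's voiceprint against stored ones, and retrieving a knowledge-base answer from the transcript. The sketch assumes voiceprints are already available as fixed-length embedding vectors; how they are computed is not shown. It uses cosine similarity with a hypothetical threshold of 0.8, and it substitutes simple word-overlap retrieval for whatever search the patent uses. All class names, function names and example data are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two voiceprint embeddings."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


class VisitorRegistry:
    """Stores voiceprints and uses them as identity labels for transcripts."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold  # hypothetical match threshold
        self.voiceprints: Dict[str, List[float]] = {}
        self.transcripts: Dict[str, List[str]] = {}

    def identify(self, voiceprint: List[float]) -> Tuple[str, bool]:
        """Return (visitor_id, is_new); enrol the voiceprint if no match is found."""
        best_id, best_sim = None, -1.0
        for vid, stored in self.voiceprints.items():
            sim = cosine(voiceprint, stored)
            if sim > best_sim:
                best_id, best_sim = vid, sim
        if best_id is not None and best_sim >= self.threshold:
            return best_id, False
        new_id = f"visitor-{len(self.voiceprints) + 1}"
        self.voiceprints[new_id] = list(voiceprint)
        self.transcripts[new_id] = []
        return new_id, True

    def save_text(self, visitor_id: str, text: str) -> None:
        """File conversation text under the visitor's voiceprint-based ID."""
        self.transcripts[visitor_id].append(text)


def suggest_answer(text: str, knowledge_base: Dict[str, str]) -> Optional[str]:
    """Return the answer whose stored question shares the most words with text."""
    words = set(text.lower().split())
    best_q, best_overlap = None, 0
    for question in knowledge_base:
        overlap = len(words & set(question.lower().split()))
        if overlap > best_overlap:
            best_q, best_overlap = question, overlap
    return knowledge_base[best_q] if best_q is not None else None


# Hypothetical usage with toy 3-dimensional voiceprints.
kb = {"how do i reset my password": "Use the password reset link on the login page.",
      "what are your opening hours": "We are open 9:00 to 18:00 on weekdays."}
registry = VisitorRegistry()
vid, is_new = registry.identify([0.9, 0.1, 0.3])   # ('visitor-1', True)
registry.save_text(vid, "I forgot how to reset the password")
print(registry.identify([0.88, 0.12, 0.31]))        # ('visitor-1', False)
print(suggest_answer("I forgot how to reset the password", kb))
```

A returning visitor is recognized when the new voiceprint is close enough to a stored one. Otherwise the visitor is enrolled as new, which is the branch point the abstract uses to choose a service strategy.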

Owner:XIAMEN KUAISHANGTONG TECH CORP LTD

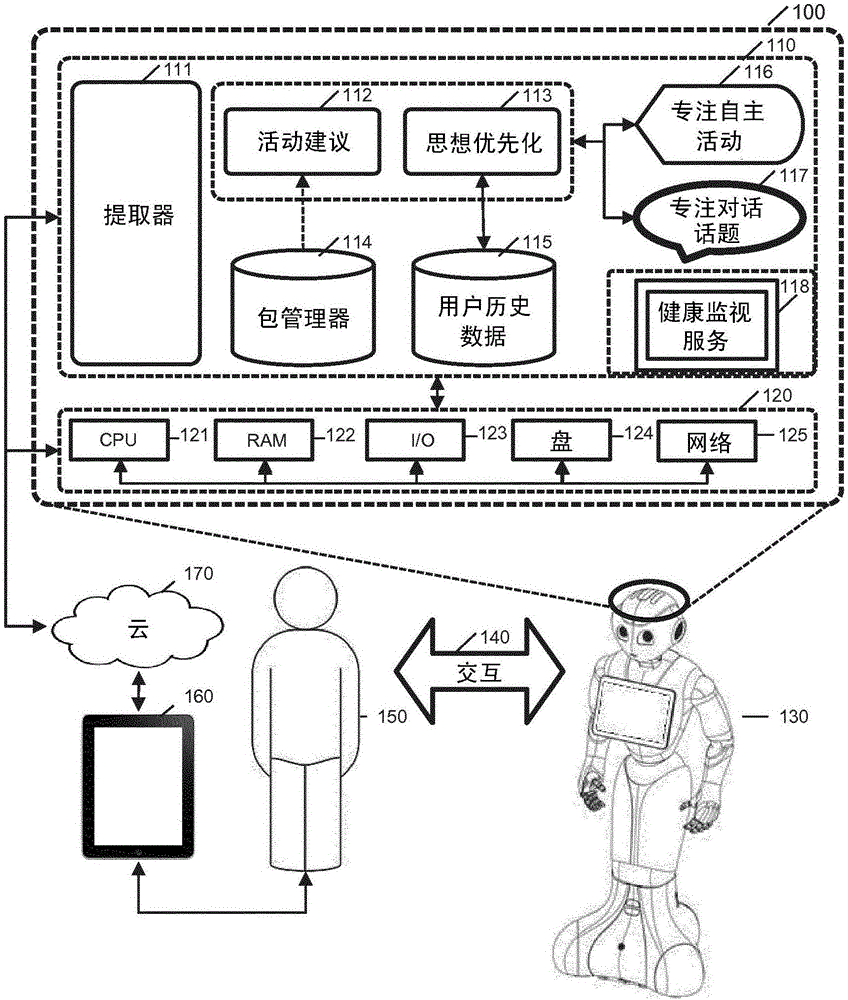

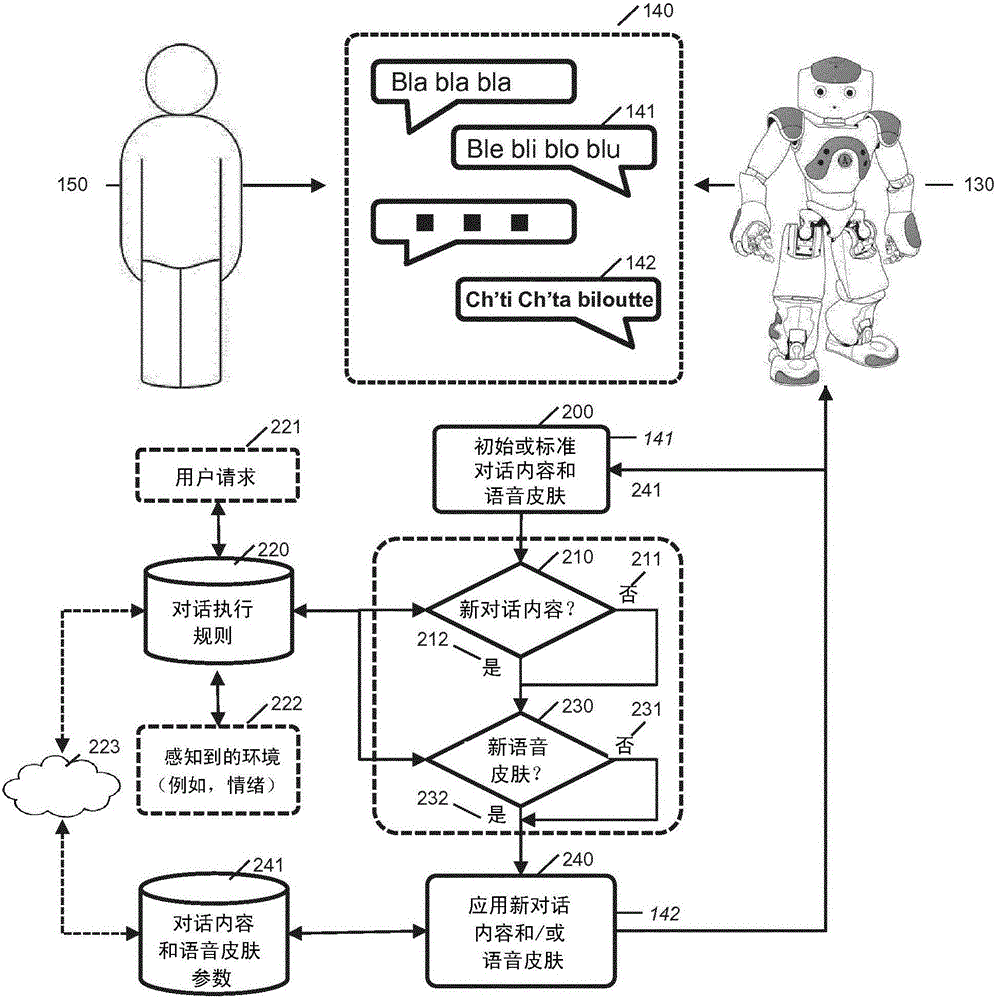

Methods and systems of handling a dialog with a robot

Inactive | CN106663219A | Programme-controlled manipulator | Artificial life | Conversational speech | Speech sound

Owner:SOFTBANK ROBOTICS EURO

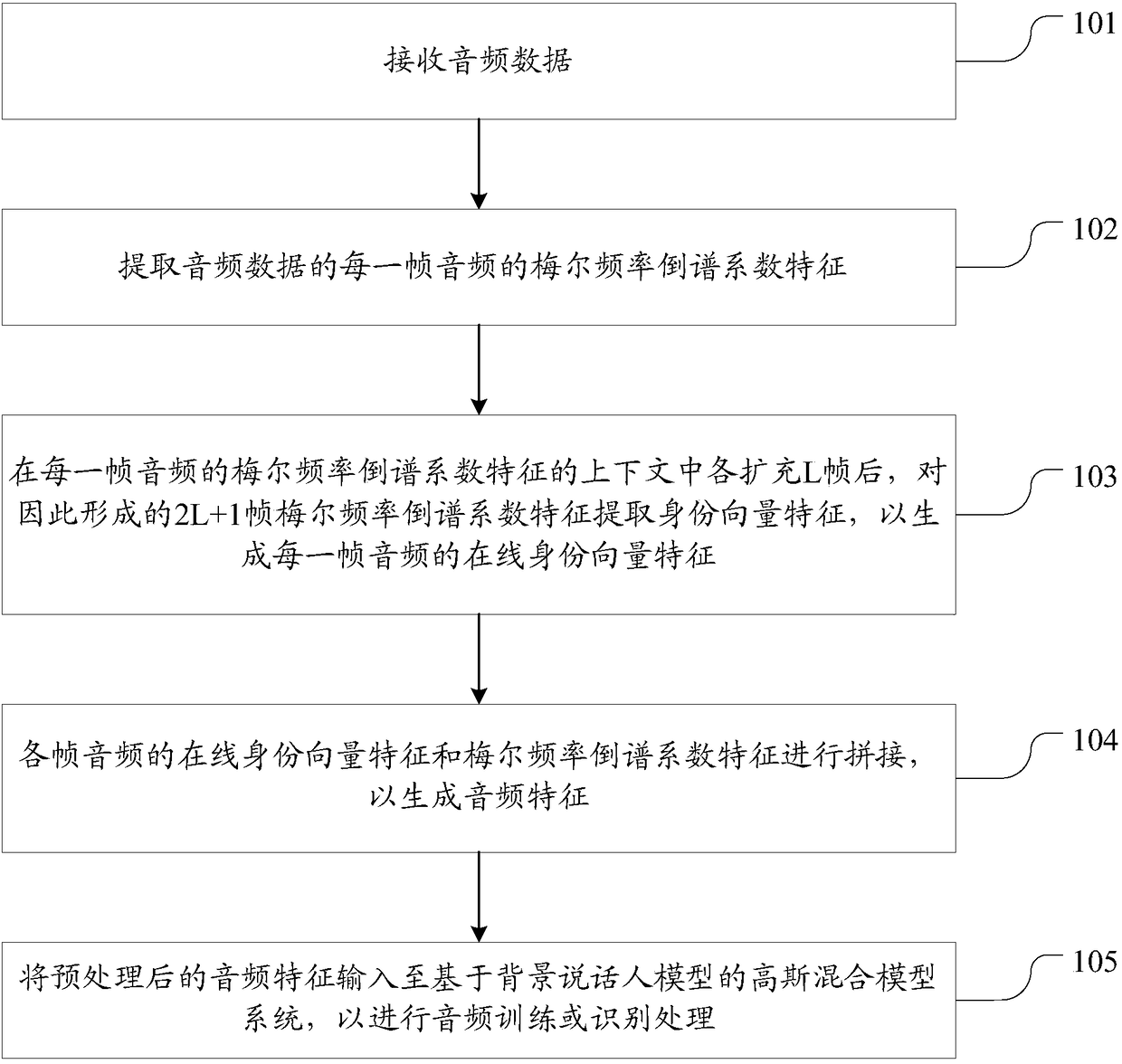

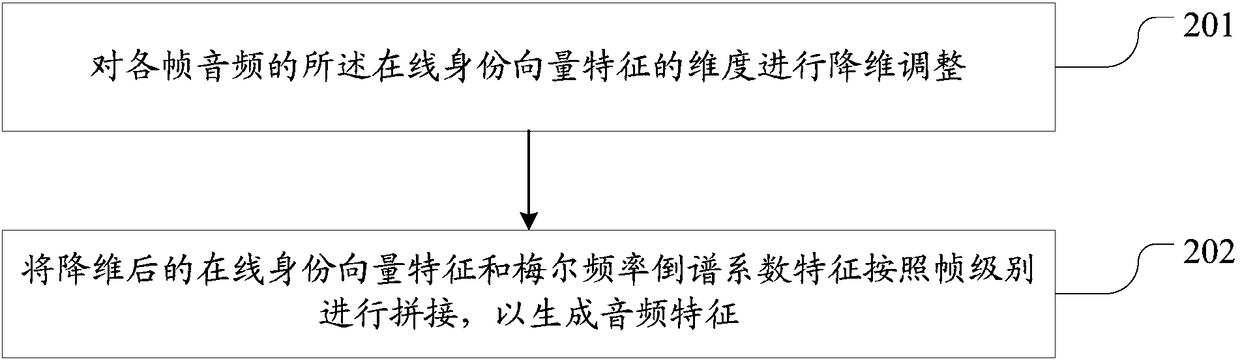

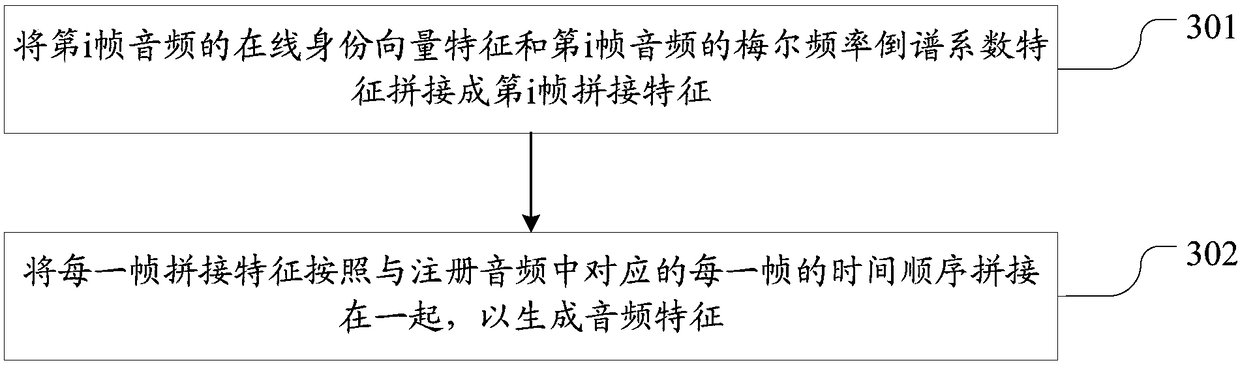

Audio training or recognizing method for intelligent dialogue voice platform and electronic equipment

The invention discloses an audio training or recognition method and system for an intelligent dialogue voice platform, and electronic equipment. The method comprises the following steps: receiving audio data; extracting identity vector (i-vector) features from the audio data and preprocessing them, where the preprocessing comprises extracting Mel Frequency Cepstral Coefficients (MFCCs) for each frame of the audio data, expanding each frame's MFCC features with L frames of context on each side, and extracting i-vector features from the resulting 2L+1 frames of MFCC features to generate an online i-vector feature for each audio frame; splicing each frame's online i-vector features with its MFCC features at the frame level to generate the audio features; and inputting the preprocessed audio features into a Gaussian mixture model system based on a background speaker model to carry out audio training or recognition. The identity of the speaker and the content of the speech can be matched at the same time, and the recognition rate is higher.
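The frame-level feature construction in this preprocessing step can be sketched with NumPy: L-frame context expansion to a 2L+1 window, then splicing a per-frame vector onto each MFCC frame. Real online i-vector extraction needs a trained background model and total-variability matrix. The `toy_ivector` function below is therefore a hypothetical stand-in (a random projection of the window mean), used only to show the array shapes. Edge padding at utterance boundaries is also an assumption. The spliced features would then go to the Gaussian mixture model system, which is not shown.

```python
import numpy as np


def expand_context(mfcc: np.ndarray, L: int) -> np.ndarray:
    """Give each frame L neighbours on each side: (T, D) -> (T, 2L+1, D).

    Boundary frames are padded by repeating the first/last frame (an assumption).
    """
    T = mfcc.shape[0]
    padded = np.pad(mfcc, ((L, L), (0, 0)), mode="edge")
    return np.stack([padded[t:t + 2 * L + 1] for t in range(T)])


def toy_ivector(window: np.ndarray, proj: np.ndarray) -> np.ndarray:
    """HYPOTHETICAL stand-in for online i-vector extraction over one window."""
    return proj @ window.mean(axis=0)


def splice_features(mfcc: np.ndarray, L: int, proj: np.ndarray) -> np.ndarray:
    """Concatenate each frame's MFCCs with its per-frame vector: (T, D + K)."""
    windows = expand_context(mfcc, L)
    ivecs = np.stack([toy_ivector(w, proj) for w in windows])
    return np.concatenate([mfcc, ivecs], axis=1)


rng = np.random.default_rng(0)
mfcc = rng.standard_normal((100, 13))  # 100 frames of 13-dim MFCCs (synthetic)
proj = rng.standard_normal((10, 13))   # hypothetical 10-dim "i-vector" projection
features = splice_features(mfcc, L=5, proj=proj)
print(features.shape)                  # (100, 23)
```

With L = 5, each window spans 11 frames centred on the current frame. Splicing keeps the original 13 MFCC dimensions in front of the appended speaker-level vector.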

Owner:AISPEECH CO LTD

© 2025 PatSnap. All rights reserved.