
38 patent results for "Speaking style"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

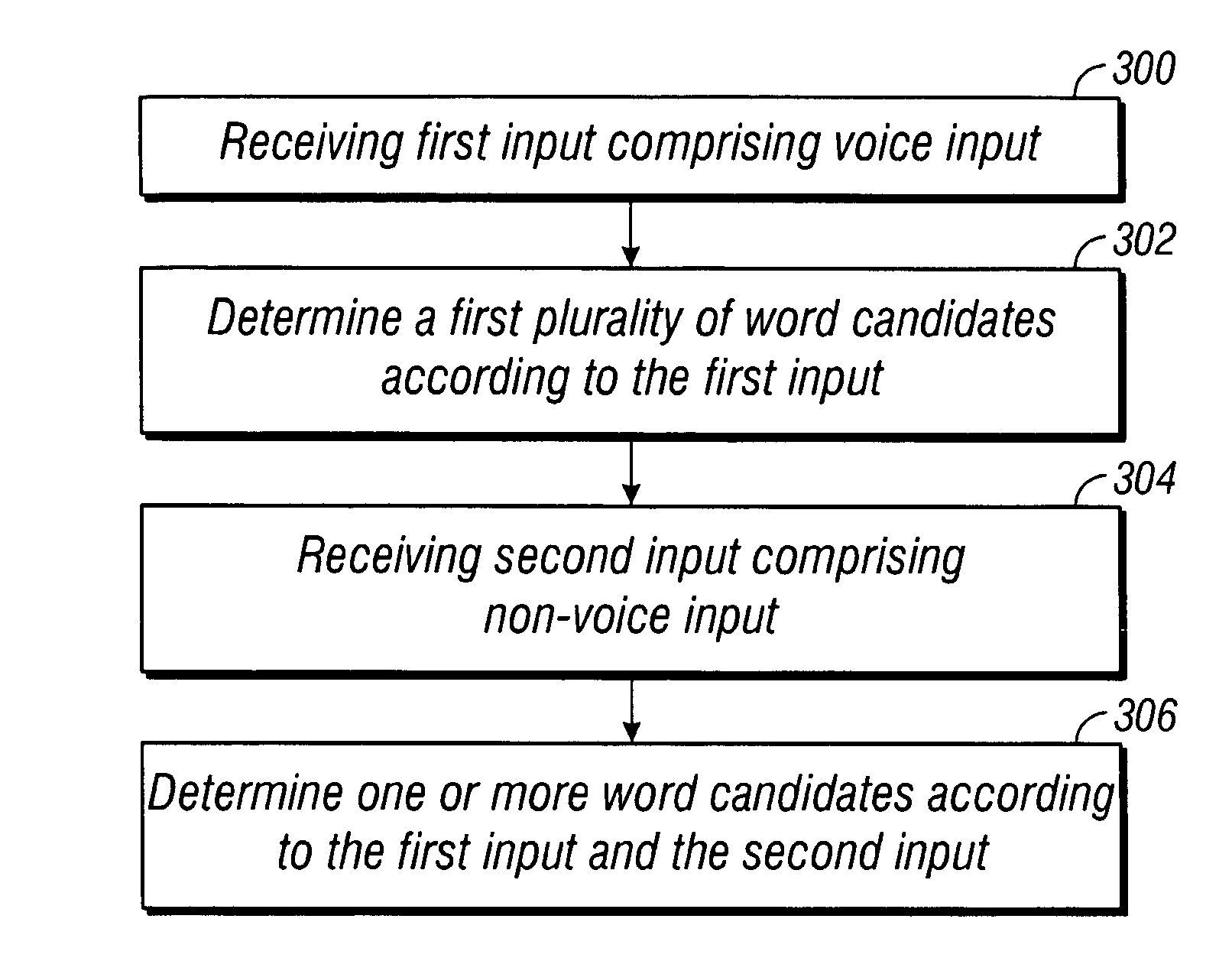

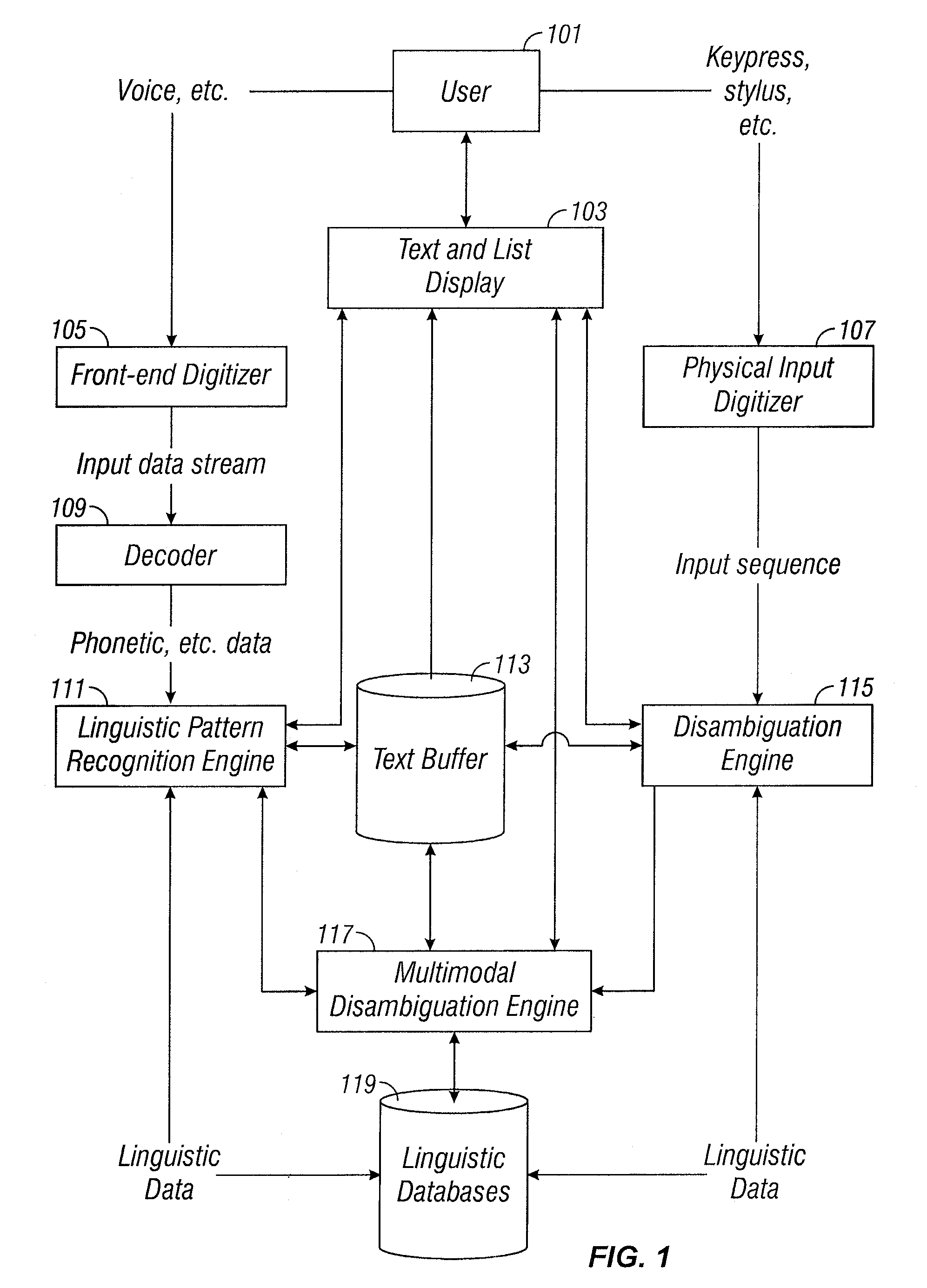

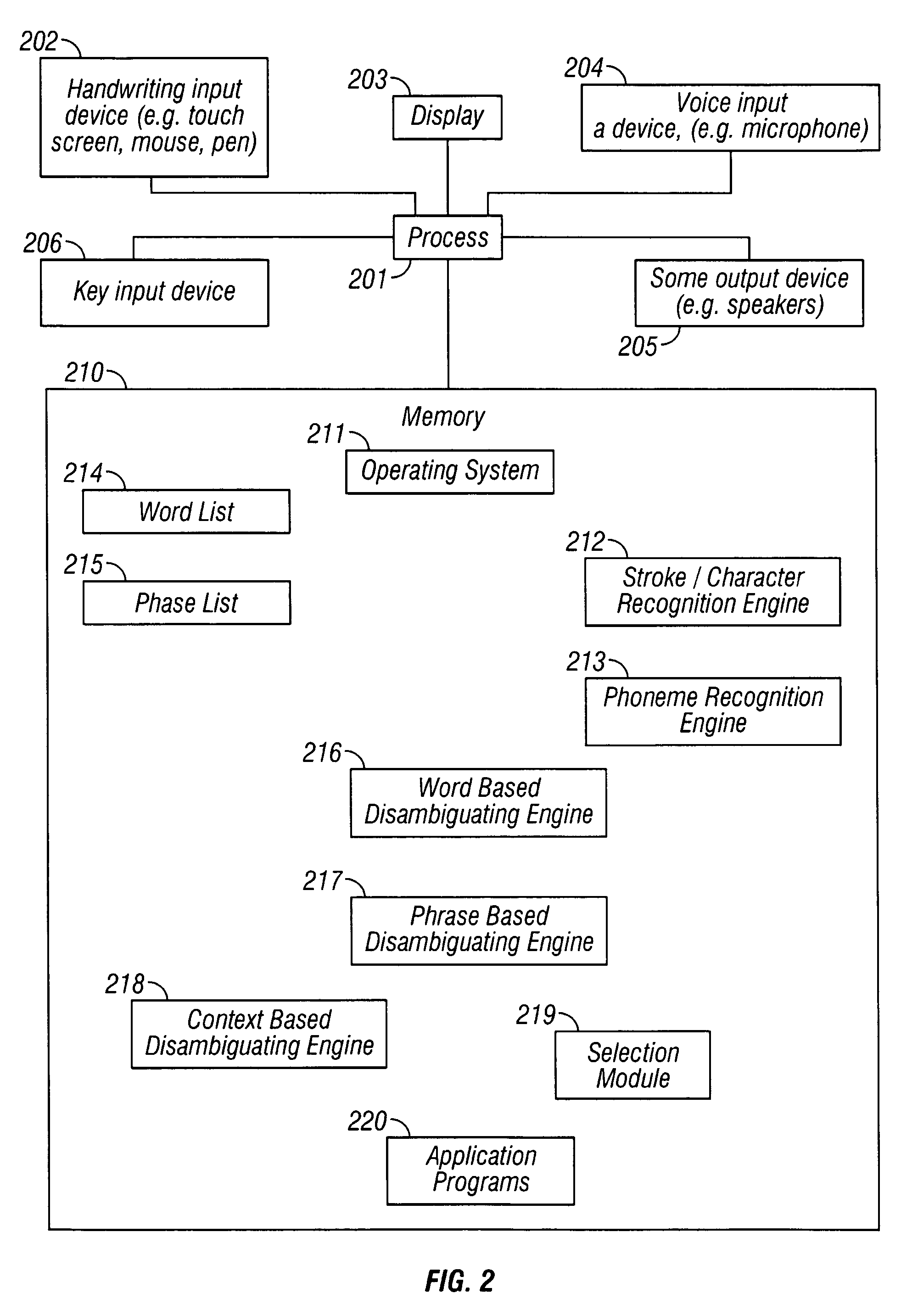

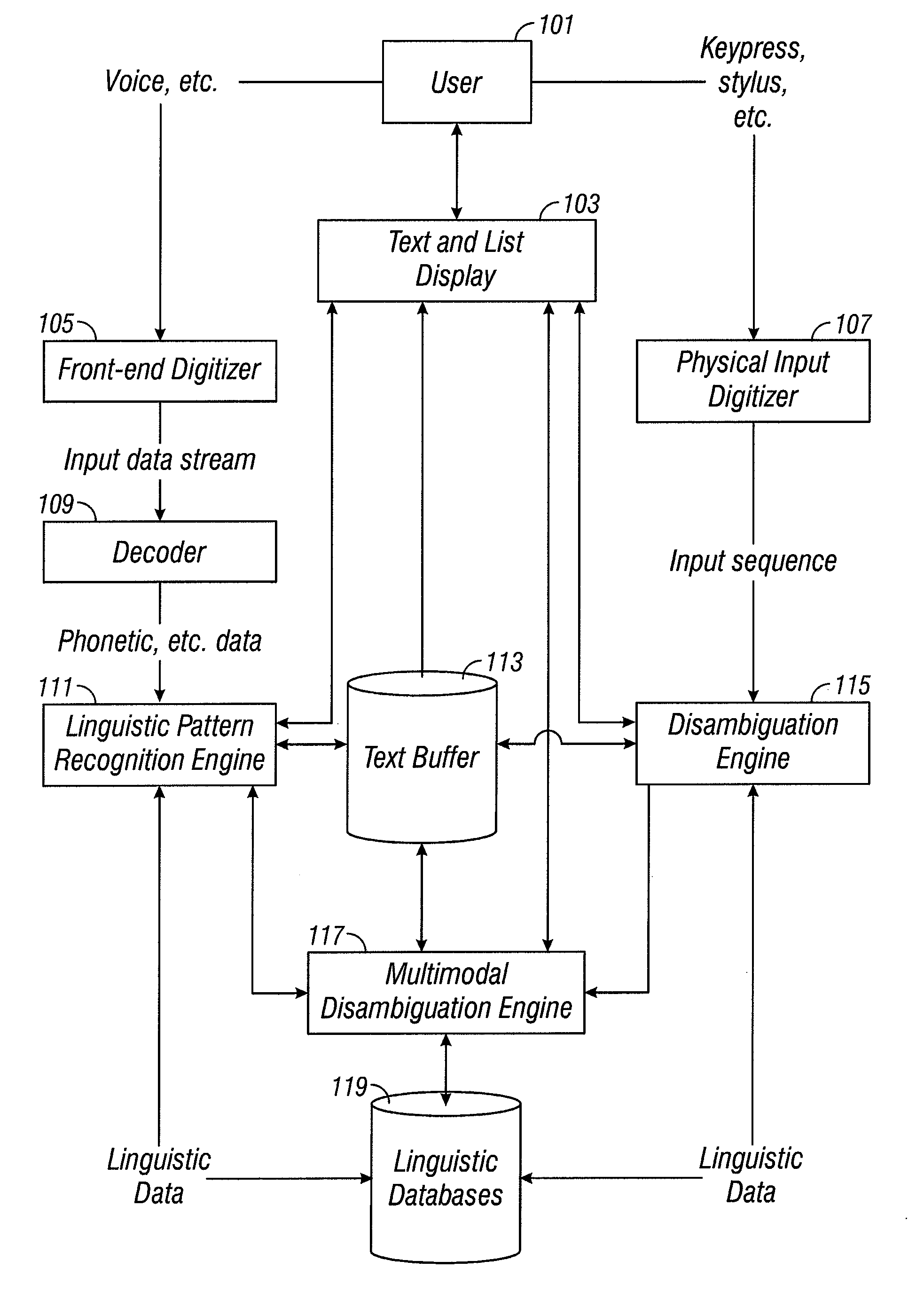

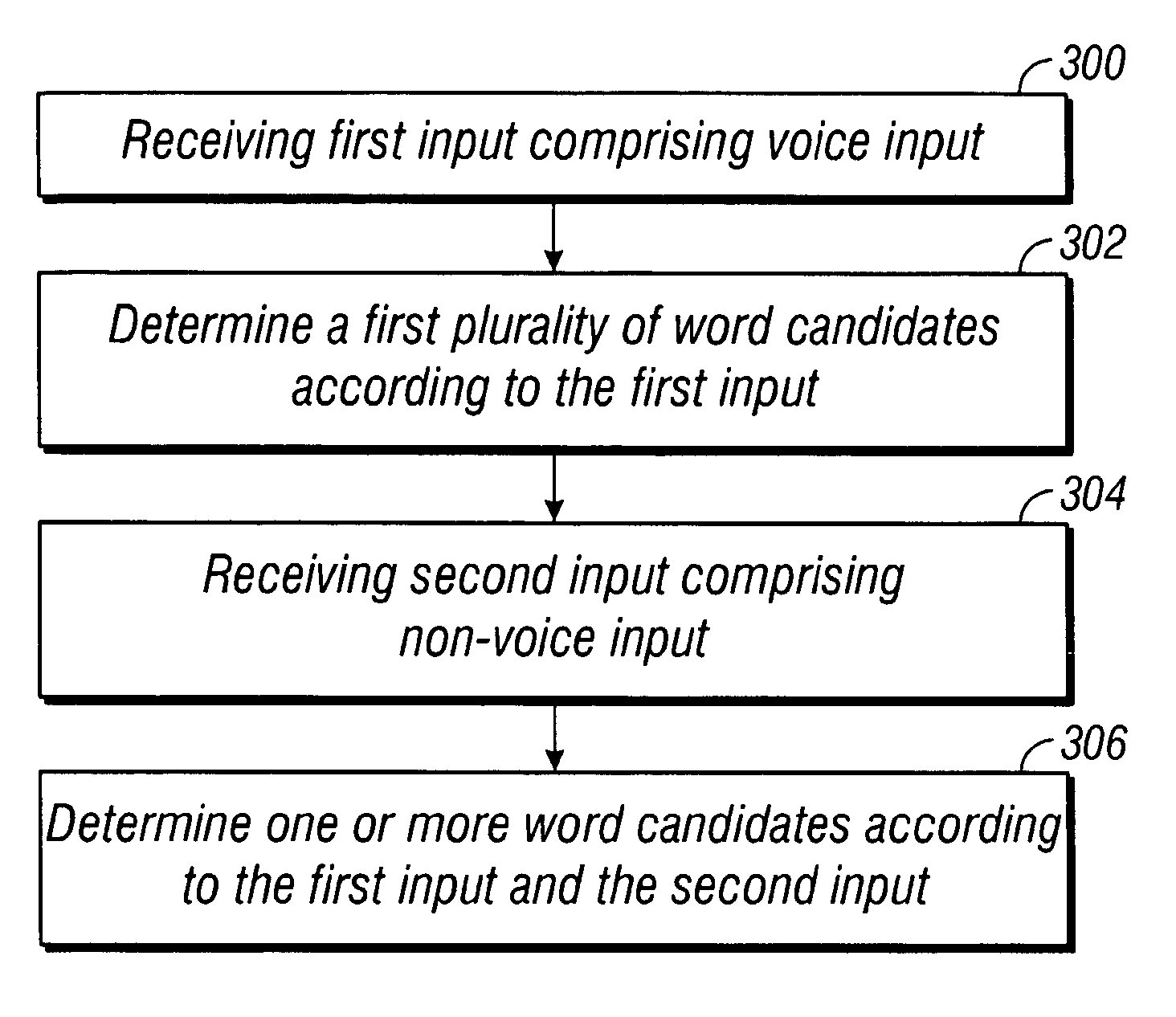

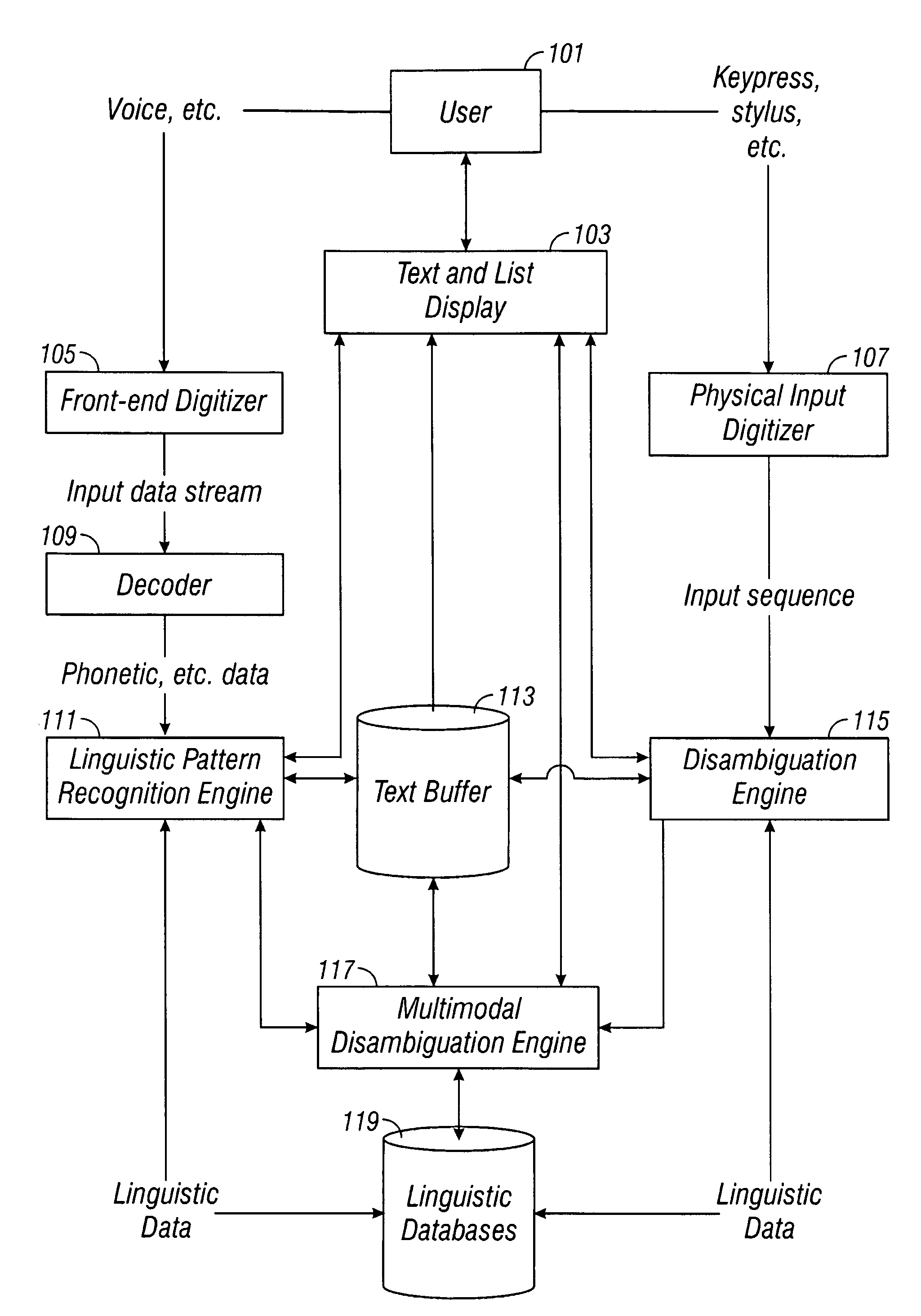

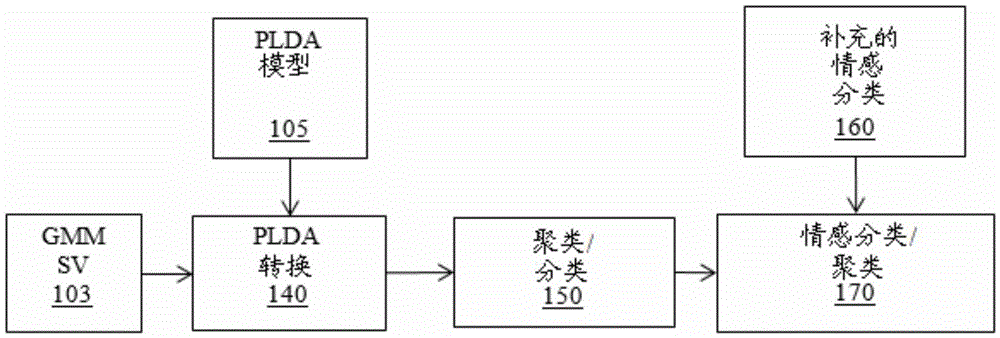

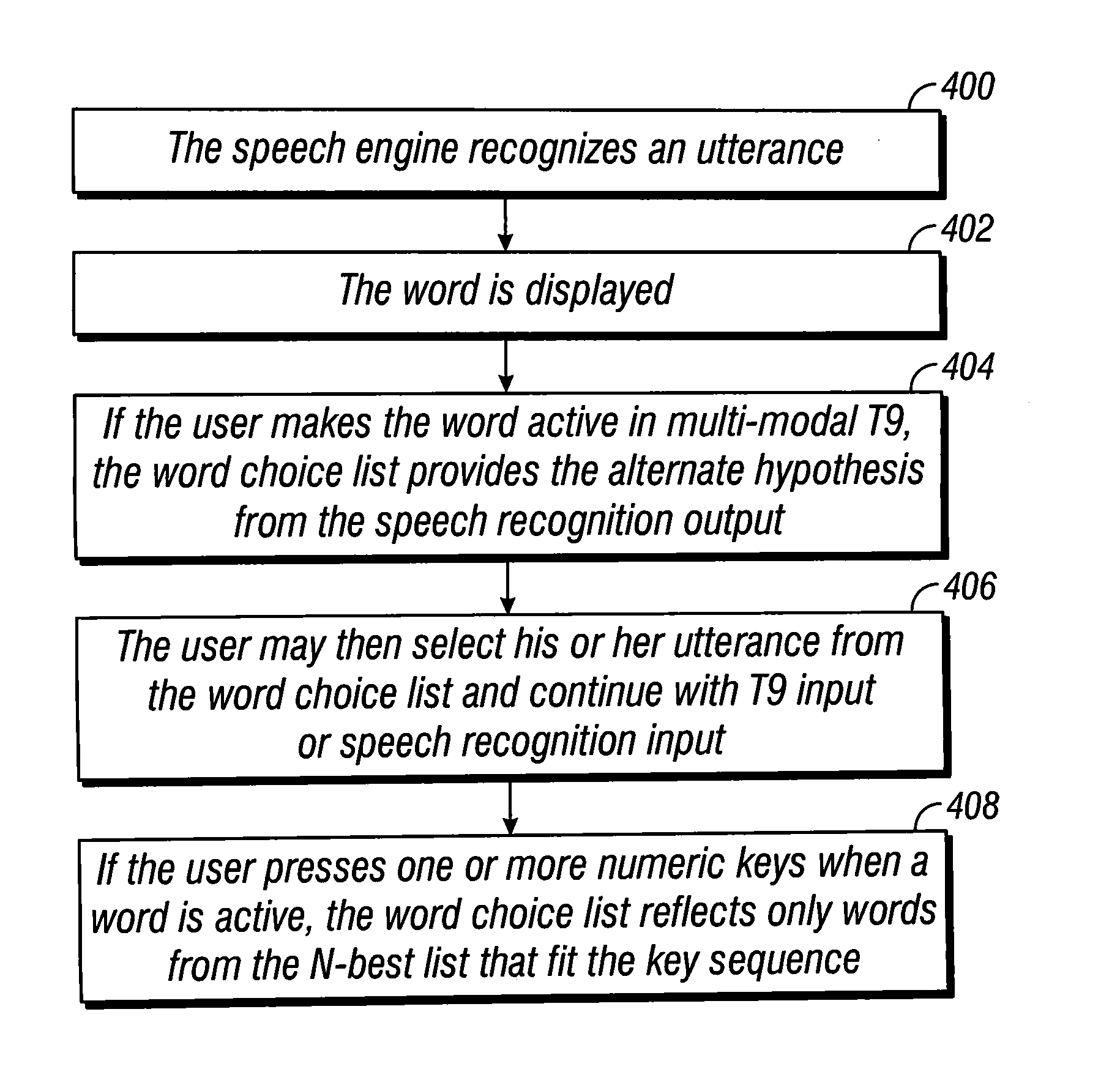

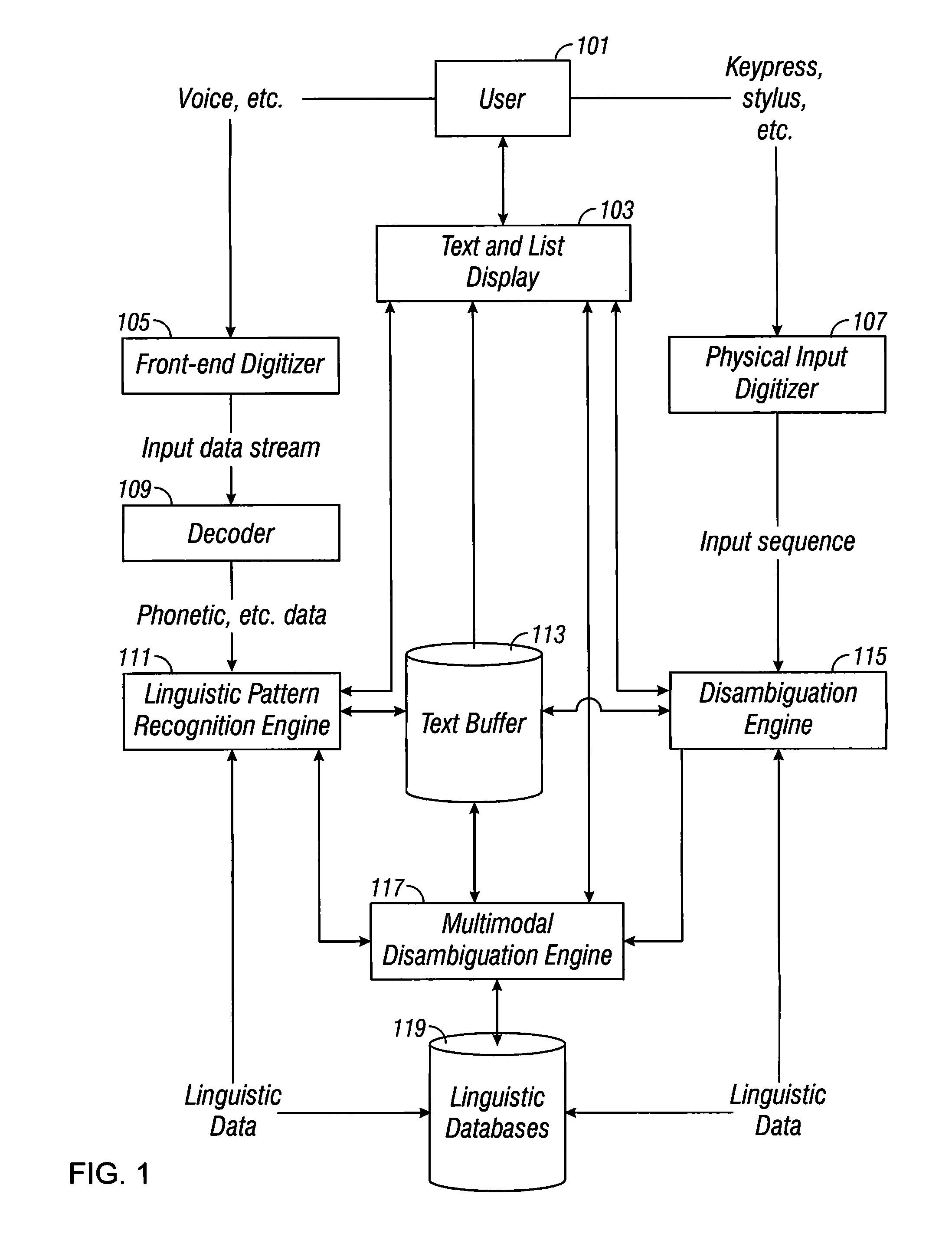

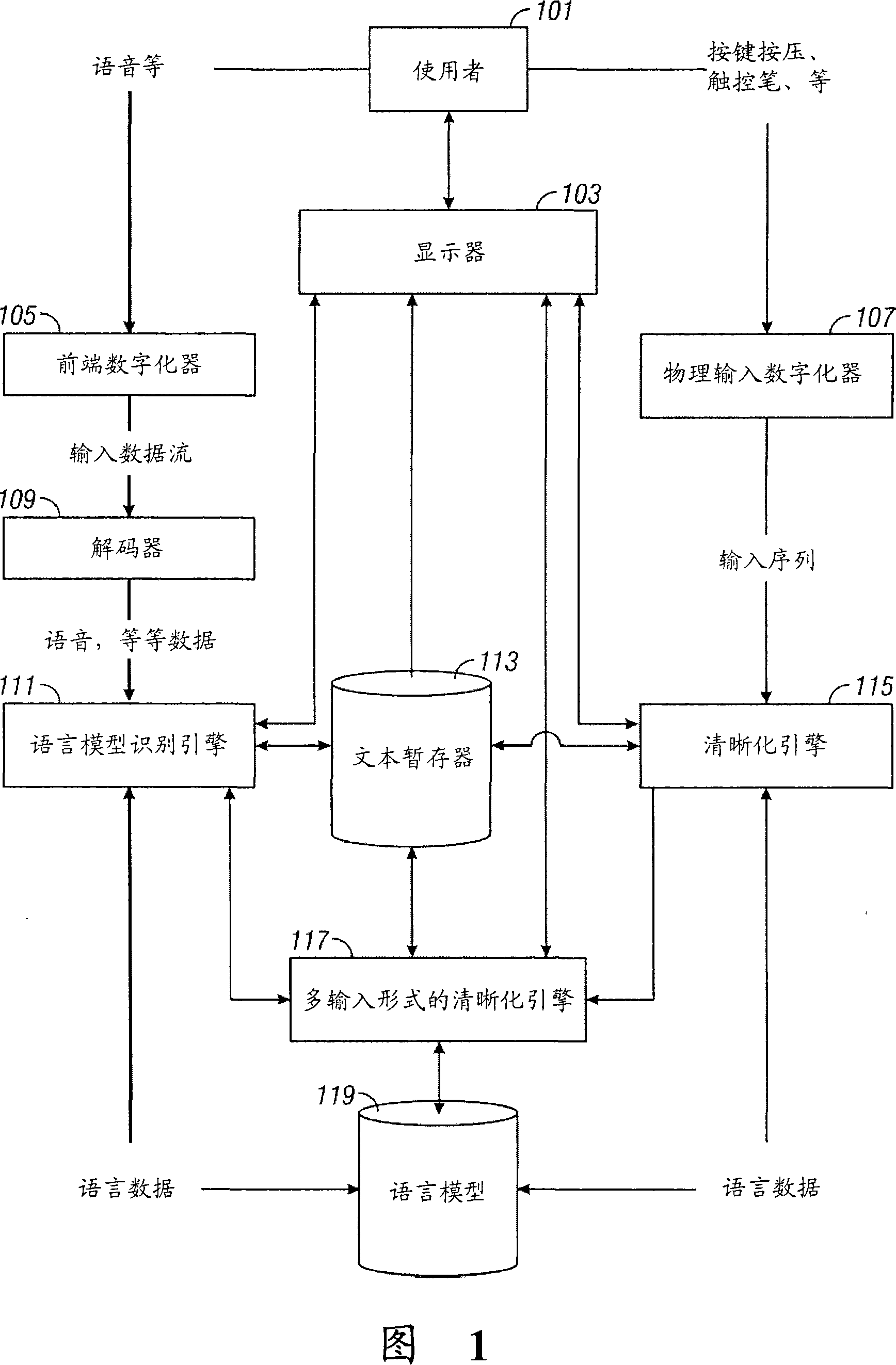

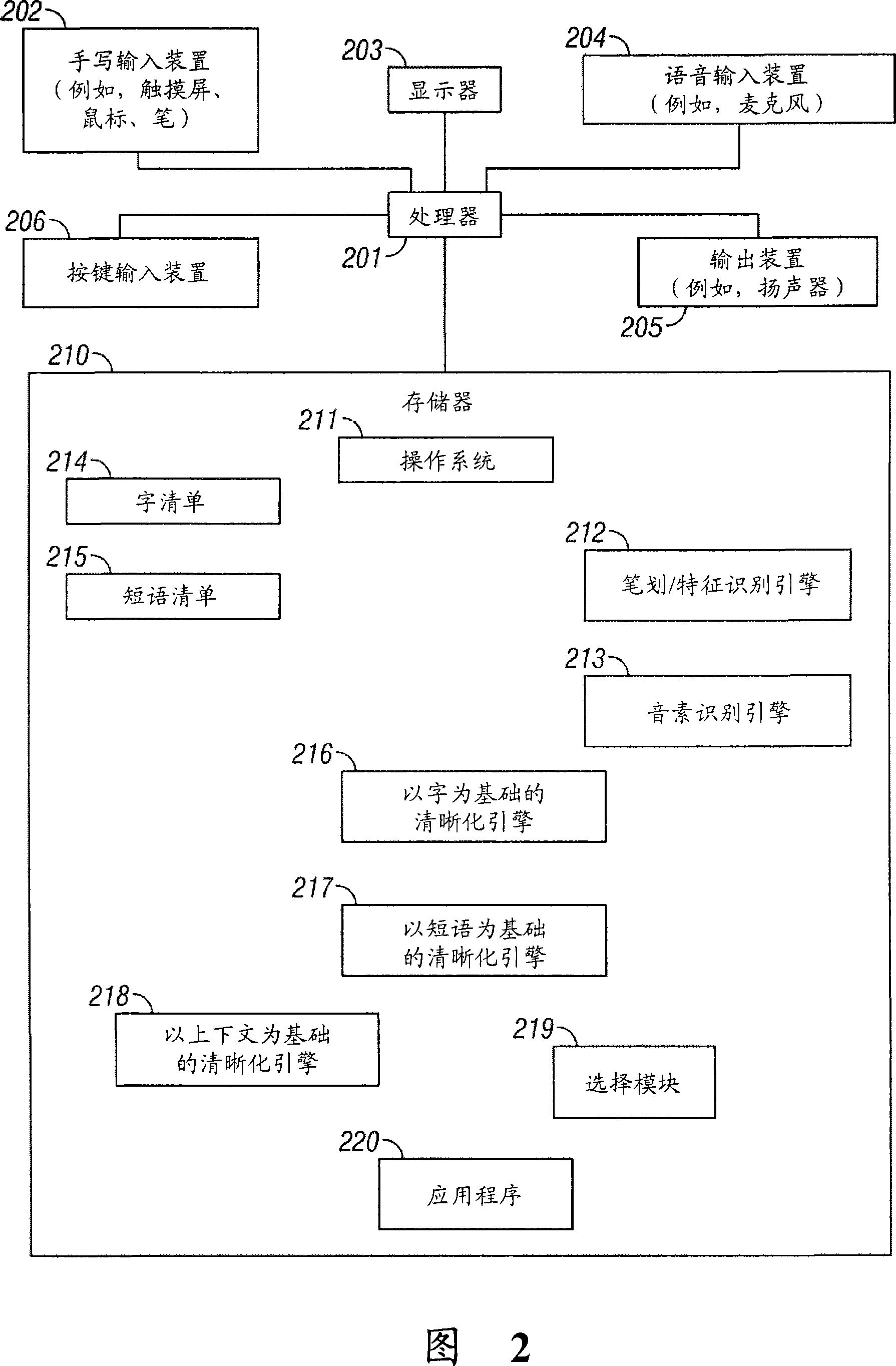

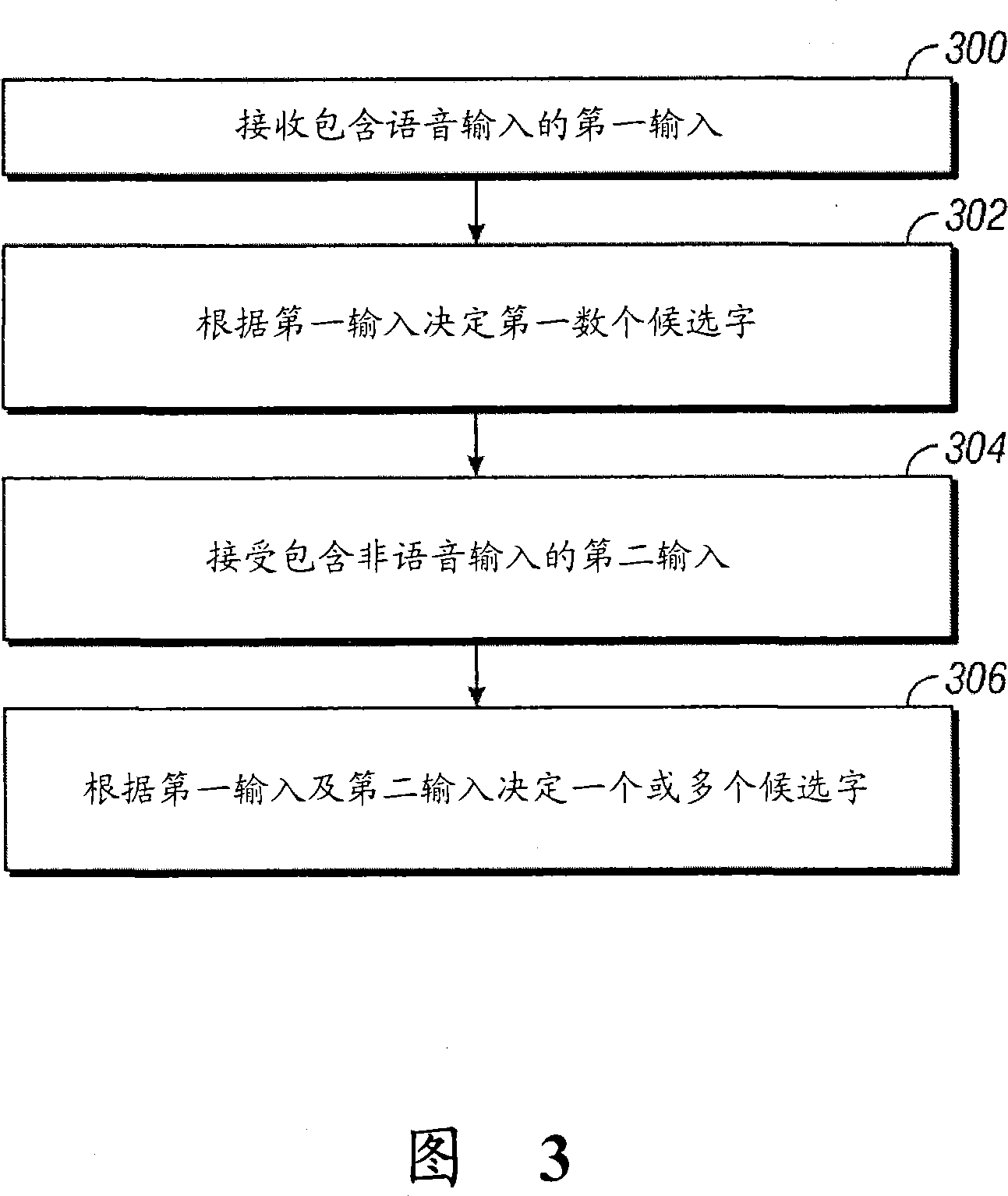

Multimodal disambiguation of speech recognition

Inactive · US7881936B2 · Efficient and accurate text input · Low accuracy · Cathode-ray tube indicators · Speech recognition · Environmental noise · Text entry

The present invention provides a speech recognition system combined with one or more alternate input modalities to ensure efficient and accurate text input. The speech recognition system achieves less than perfect accuracy due to limited processing power, environmental noise, and / or natural variations in speaking style. The alternate input modalities use disambiguation or recognition engines to compensate for reduced keyboards, sloppy input, and / or natural variations in writing style. The ambiguity remaining in the speech recognition process is mostly orthogonal to the ambiguity inherent in the alternate input modality, such that the combination of the two modalities resolves the recognition errors efficiently and accurately. The invention is especially well suited for mobile devices with limited space for keyboards or touch-screen input.

Owner:TEGIC COMM
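The claim that the two ambiguities are mostly orthogonal can be illustrated with a small sketch. It is not the patented method: the function names, the N-best list, and its scores are made up for illustration, and the second modality is assumed to be a reduced phone keypad with the usual letter groups. Words that sound alike rarely share a key sequence, so filtering the recognizer's hypotheses by the keys pressed removes most acoustic confusions.

```python
# Assumption: the alternate modality is a 12-key phone keypad (letters on keys 2-9).
KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
LETTER_TO_KEY = {ch: key for key, letters in KEYPAD.items() for ch in letters}


def key_sequence(word: str) -> str:
    """Map a word to the digit sequence that would type it on the keypad."""
    return "".join(LETTER_TO_KEY[ch] for ch in word.lower())


def disambiguate(speech_nbest, keys):
    """Keep speech hypotheses consistent with the key presses, best score first."""
    matches = [(word, score) for word, score in speech_nbest if key_sequence(word) == keys]
    return [word for word, _ in sorted(matches, key=lambda p: p[1], reverse=True)]


# Hypothetical recognizer output: acoustically confusable words with made-up scores.
nbest = [("neat", 0.40), ("meet", 0.35), ("beat", 0.25)]
print(disambiguate(nbest, "6338"))
```

Here the recognizer's top hypothesis is wrong, but only one hypothesis matches the keys the user pressed, so the combination settles on a single word.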

Multimodal disambiguation of speech recognition

Inactive · US8095364B2 · Resolves the recognition errors efficiently and accurately · Speech recognition · Environmental noise · Ambiguity

The present invention provides a speech recognition system combined with one or more alternate input modalities to ensure efficient and accurate text input. The speech recognition system achieves less than perfect accuracy due to limited processing power, environmental noise, and / or natural variations in speaking style. The alternate input modalities use disambiguation or recognition engines to compensate for reduced keyboards, sloppy input, and / or natural variations in writing style. The ambiguity remaining in the speech recognition process is mostly orthogonal to the ambiguity inherent in the alternate input modality, such that the combination of the two modalities resolves the recognition errors efficiently and accurately. The invention is especially well suited for mobile devices with limited space for keyboards or touch-screen input.

Owner:CERENCE OPERATING CO

Multimodal disambiguation of speech recognition

Inactive · US20050283364A1 · Efficient and accurate text input · Low accuracy · Cathode-ray tube indicators · Speech recognition · Environmental noise · Diagnostic Radiology Modality

The present invention provides a speech recognition system combined with one or more alternate input modalities to ensure efficient and accurate text input. The speech recognition system achieves less than perfect accuracy due to limited processing power, environmental noise, and / or natural variations in speaking style. The alternate input modalities use disambiguation or recognition engines to compensate for reduced keyboards, sloppy input, and / or natural variations in writing style. The ambiguity remaining in the speech recognition process is mostly orthogonal to the ambiguity inherent in the alternate input modality, such that the combination of the two modalities resolves the recognition errors efficiently and accurately. The invention is especially well suited for mobile devices with limited space for keyboards or touch-screen input.

Owner:TEGIC COMM

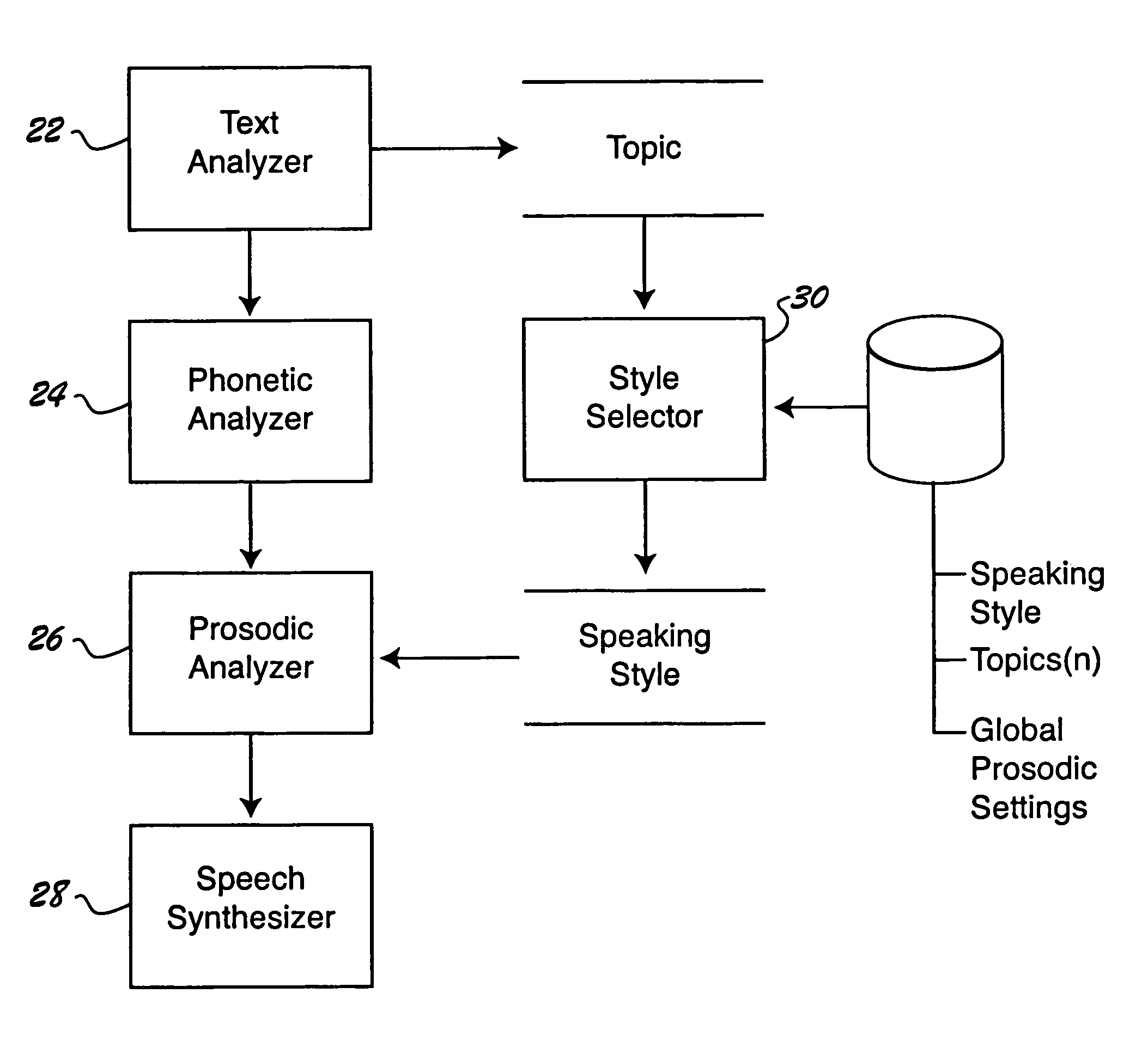

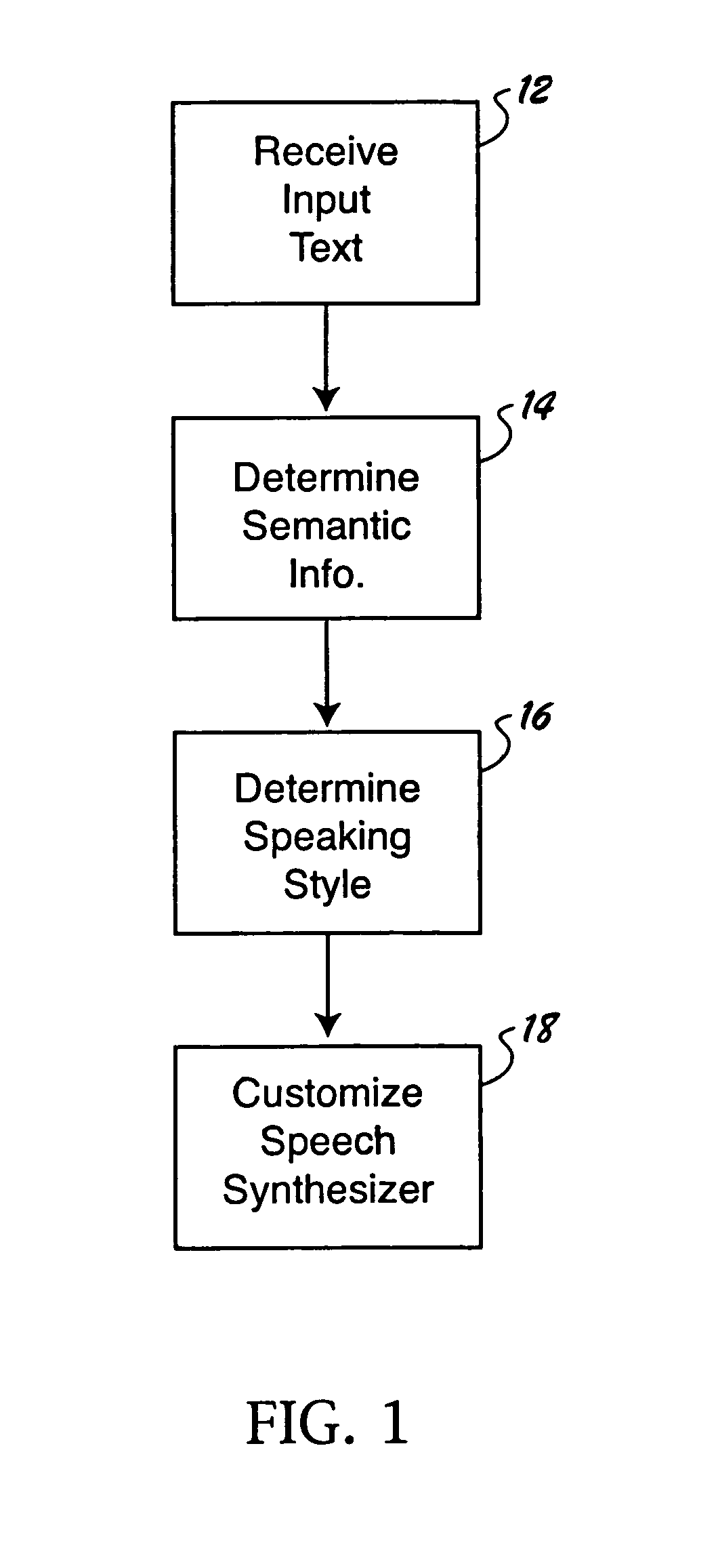

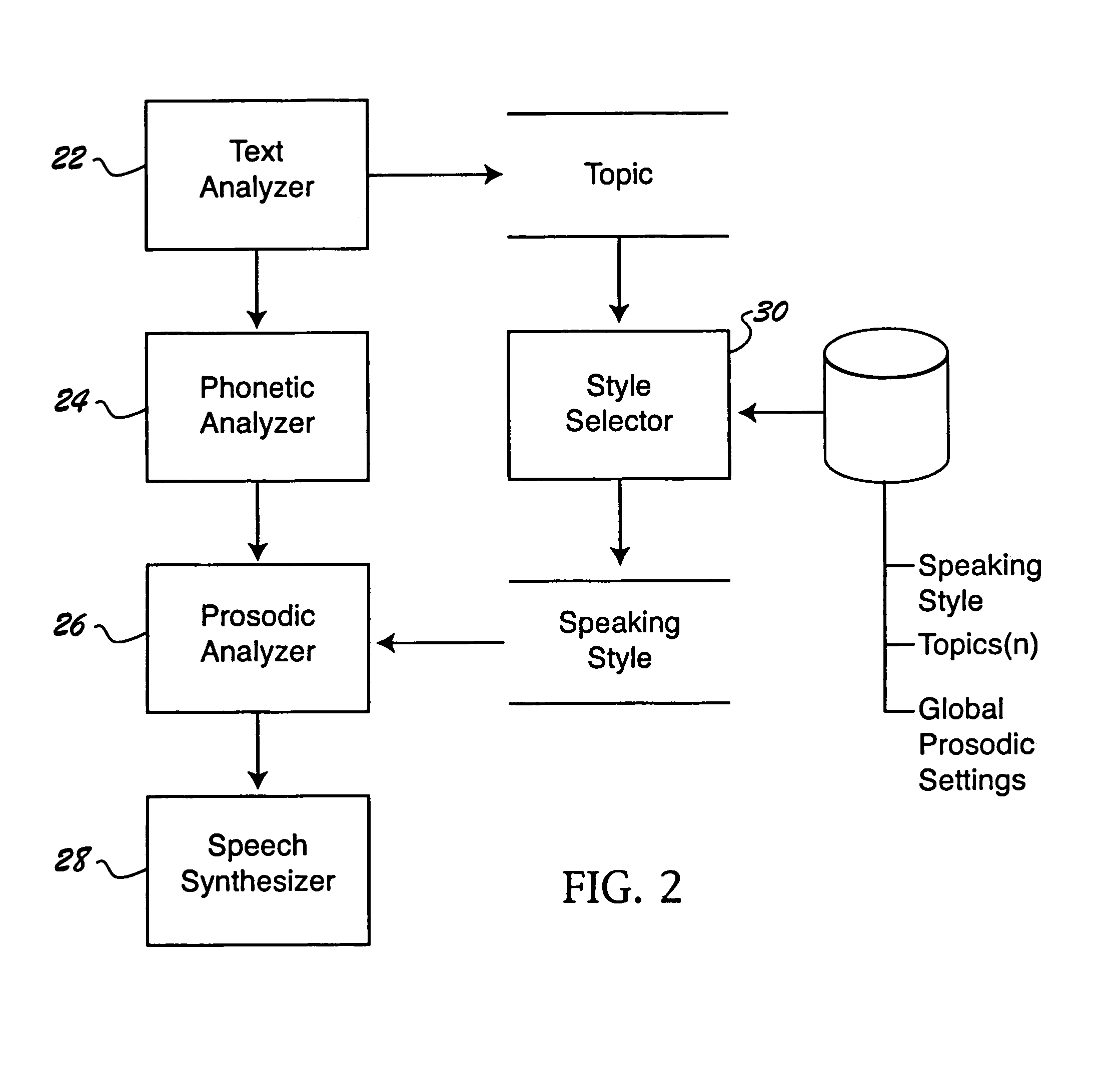

Customizing the speaking style of a speech synthesizer based on semantic analysis

A method is provided for customizing the speaking style of a speech synthesizer. The method includes: receiving input text; determining semantic information for the input text; determining a speaking style for rendering the input text based on the semantic information; and customizing the audible speech output of the speech synthesizer based on the identified speaking style.

Owner:SOVEREIGN PEAK VENTURES LLC
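The four steps in this abstract (receive text, derive semantic information, pick a style, customize the output) might be sketched as follows. This is a toy under stated assumptions: keyword matching stands in for real semantic analysis, and the topics, keywords, `Style` fields, and multiplier values are all hypothetical rather than taken from the patent.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Style:
    name: str
    rate: float   # speaking-rate multiplier a synthesizer could apply
    pitch: float  # pitch multiplier a synthesizer could apply


# Hypothetical topic keywords and style settings, chosen only for illustration.
TOPIC_KEYWORDS = {
    "alert": {"warning", "danger", "evacuate", "immediately"},
    "story": {"once", "upon", "dragon", "princess"},
}
TOPIC_STYLE = {
    "alert": Style("urgent", rate=1.2, pitch=1.1),
    "story": Style("expressive", rate=0.9, pitch=1.05),
    "neutral": Style("neutral", rate=1.0, pitch=1.0),
}


def semantic_topic(text: str) -> str:
    """Return the topic with the most keyword hits, or 'neutral' if none match."""
    tokens = set(re.findall(r"[a-z']+", text.lower()))
    hits = {topic: len(tokens & words) for topic, words in TOPIC_KEYWORDS.items()}
    best = max(hits, key=hits.get)
    return best if hits[best] > 0 else "neutral"


def choose_style(text: str) -> Style:
    """Pick the speaking style used to render the text."""
    return TOPIC_STYLE[semantic_topic(text)]


print(choose_style("Warning: evacuate the building immediately."))
```

The returned `Style` would then be handed to whatever synthesizer is in use; that final rendering step is outside this sketch.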

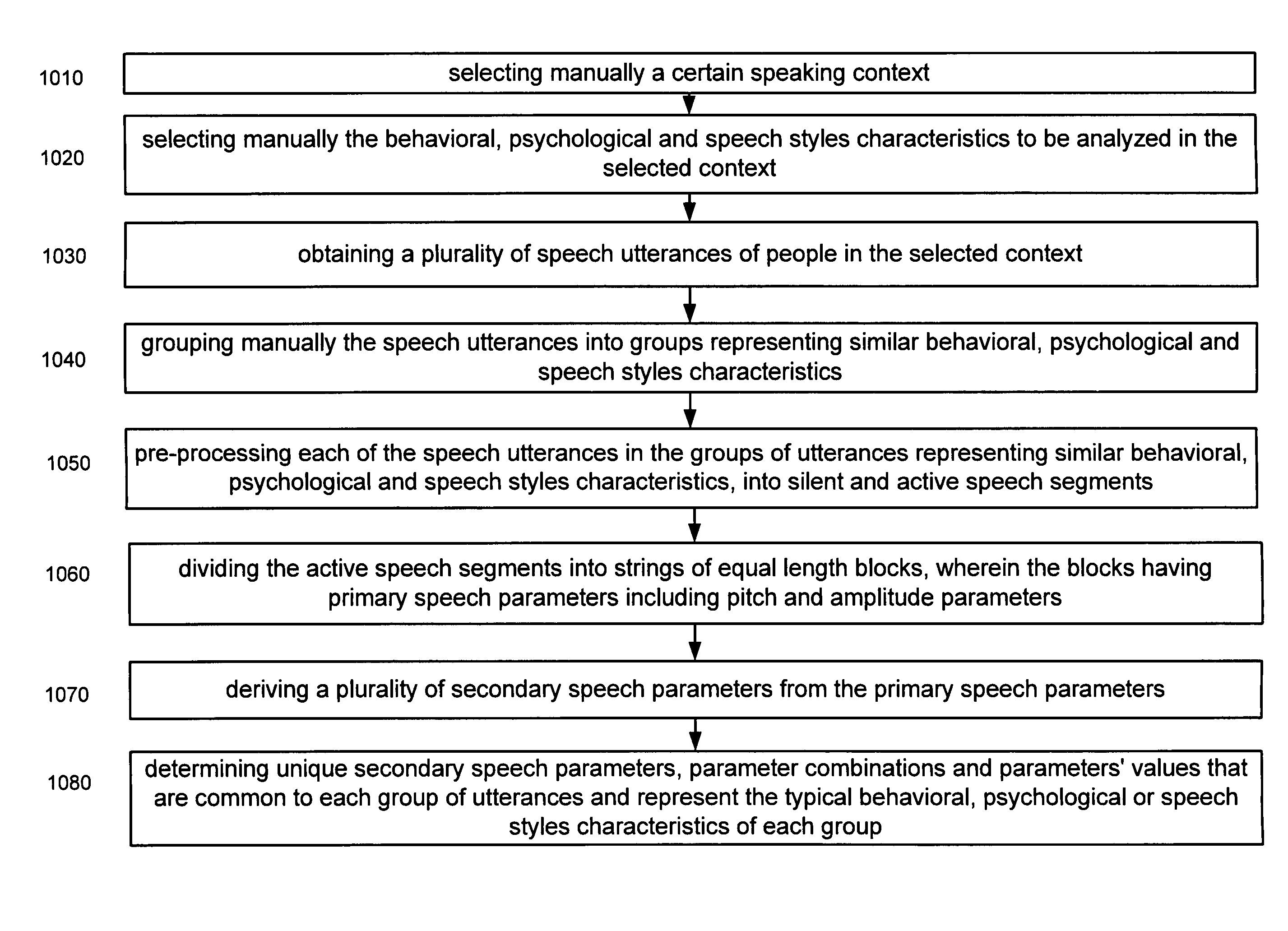

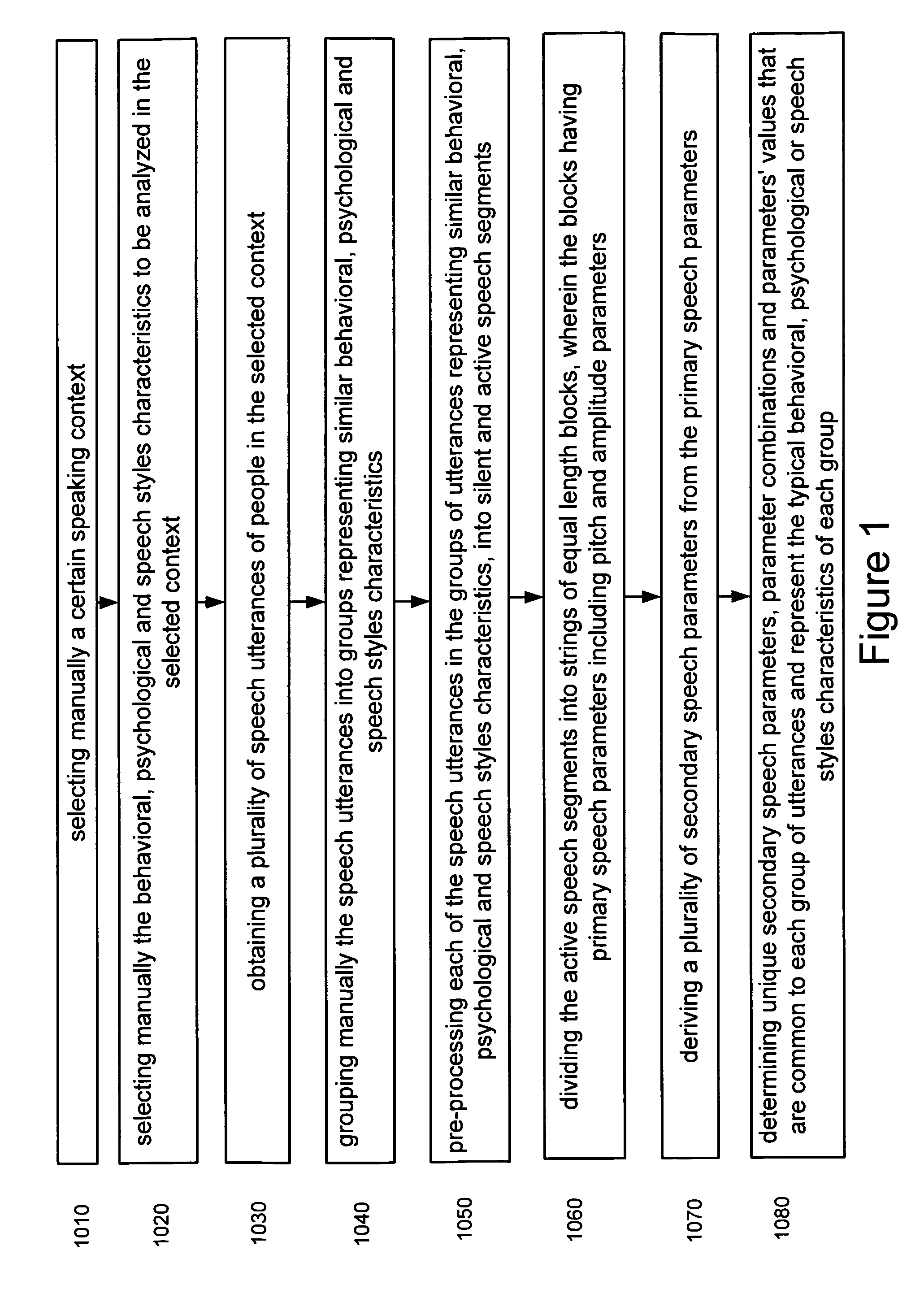

Speaker characterization through speech analysis

A computer implemented method, data processing system, apparatus and computer program product for determining current behavioral, psychological and speech styles characteristics of a speaker in a given situation and context, through analysis of current speech utterances of the speaker. The analysis calculates different prosodic parameters of the speech utterances, consisting of unique secondary derivatives of the primary pitch and amplitude speech parameters, and compares these parameters with pre-obtained reference speech data, indicative of various behavioral, psychological and speech styles characteristics. The method includes the formation of the classification speech parameters reference database, as well as the analysis of the speaker's speech utterances in order to determine the current behavioral, psychological and speech styles characteristics of the speaker in the given situation.

Owner:VOICESENSE LTD
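A rough sketch of the two stages described here, deriving secondary parameters from pitch and amplitude tracks and then comparing them with reference data, is given below. The abstract does not say which derived parameters are used, so the four features, the reference values, and the nearest-neighbour comparison are assumptions made for illustration.

```python
import math
from statistics import mean, pstdev


def prosodic_features(pitch, amplitude):
    """Derive illustrative secondary parameters from per-frame pitch and amplitude."""
    pitch_delta = [b - a for a, b in zip(pitch, pitch[1:])]
    amp_delta = [b - a for a, b in zip(amplitude, amplitude[1:])]
    return (
        pstdev(pitch),                       # pitch variability
        mean(abs(d) for d in pitch_delta),   # mean frame-to-frame pitch change
        pstdev(amplitude),                   # loudness variability
        mean(abs(d) for d in amp_delta),     # mean frame-to-frame loudness change
    )


def classify(features, references):
    """Return the reference label whose feature vector is nearest (Euclidean)."""
    return min(references, key=lambda label: math.dist(features, references[label]))


# Hypothetical reference database: label -> feature vector in the order above.
REFERENCES = {
    "calm": (5.0, 2.0, 0.05, 0.02),
    "agitated": (40.0, 25.0, 0.30, 0.20),
}

pitch_hz = [120.0, 180.0, 110.0, 190.0, 115.0]
amplitude = [0.20, 0.80, 0.30, 0.90, 0.25]
print(classify(prosodic_features(pitch_hz, amplitude), REFERENCES))
```

In practice the features would need to be scaled to comparable ranges before measuring distance; the sketch skips that step.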

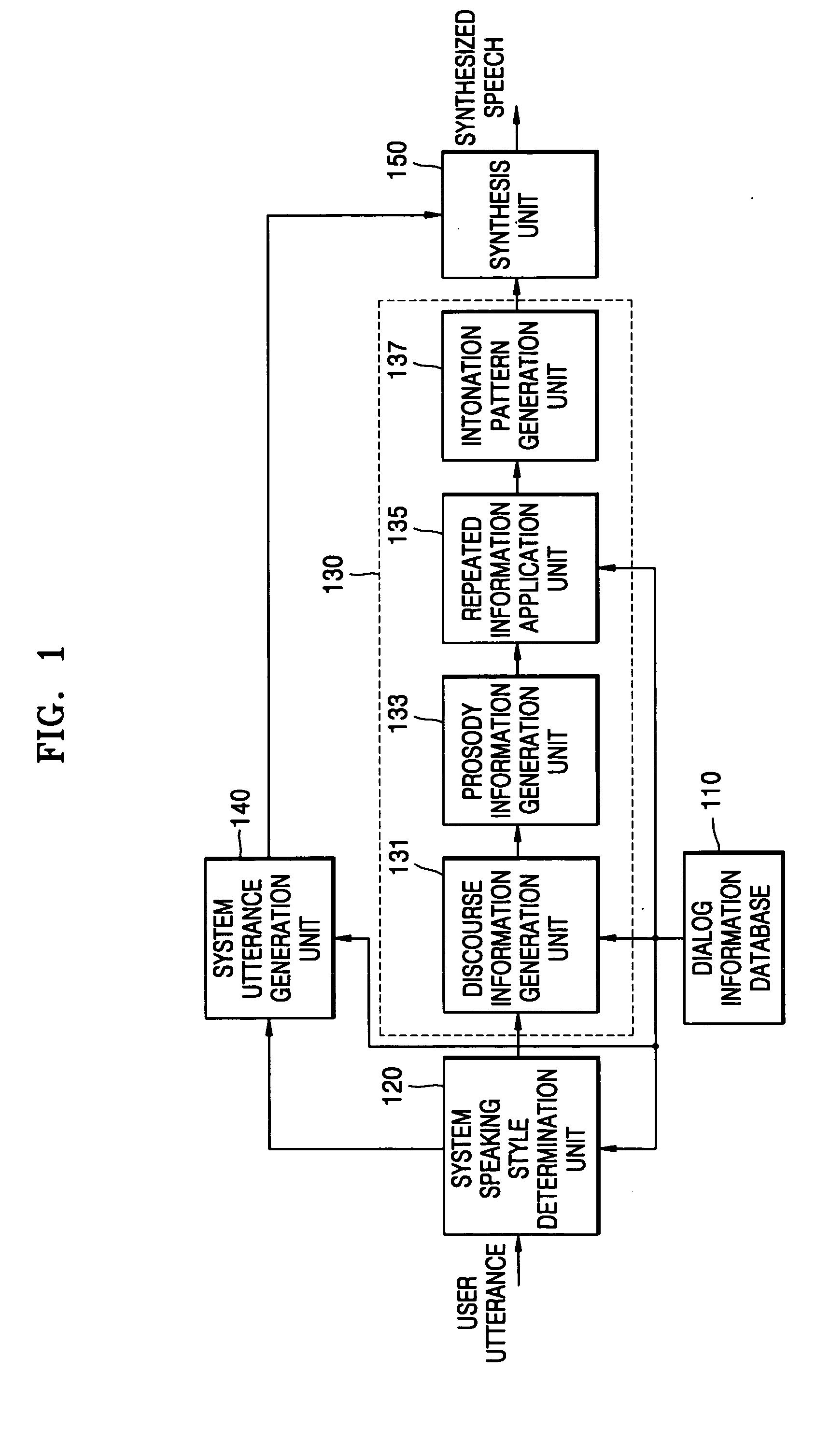

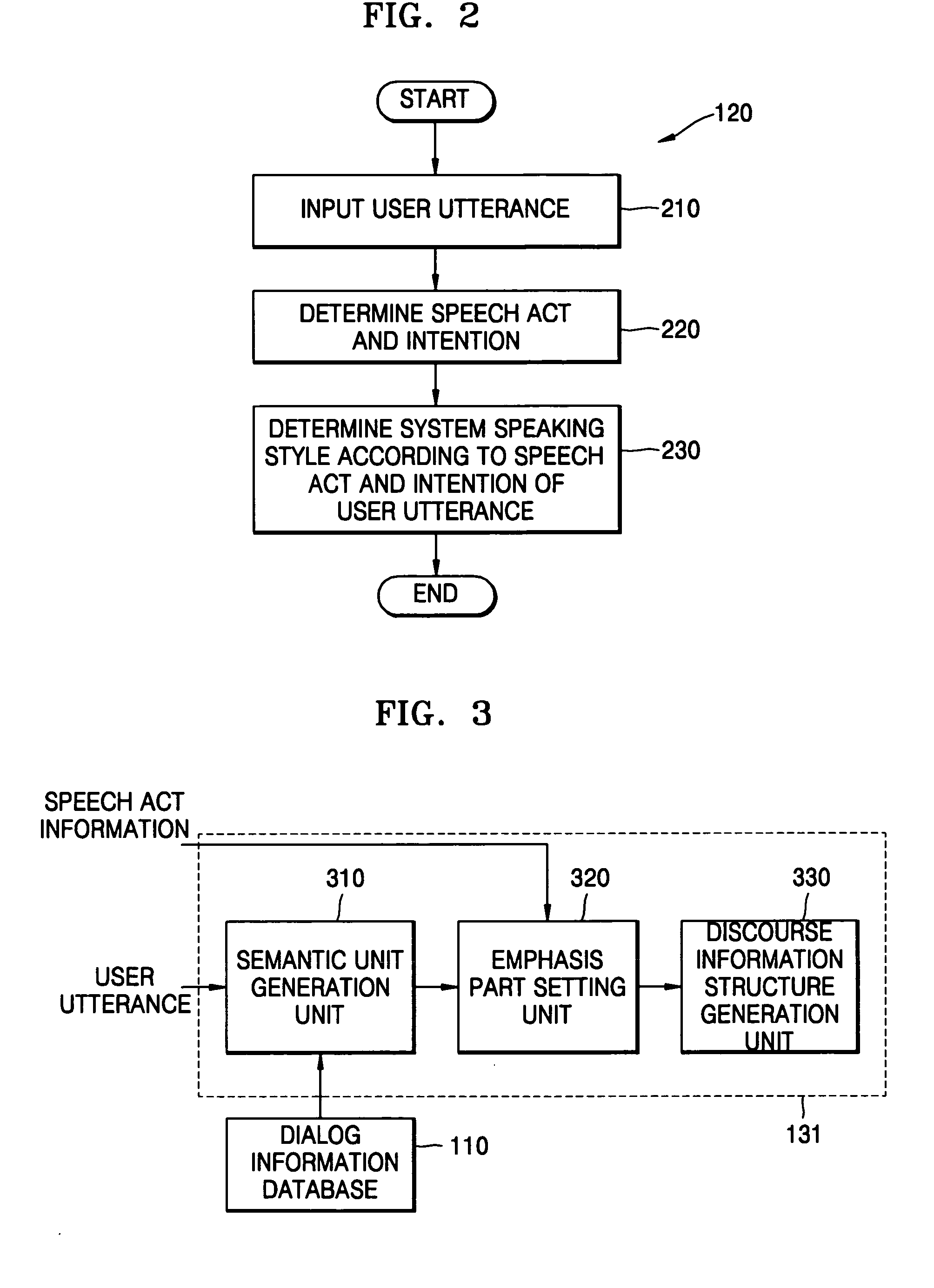

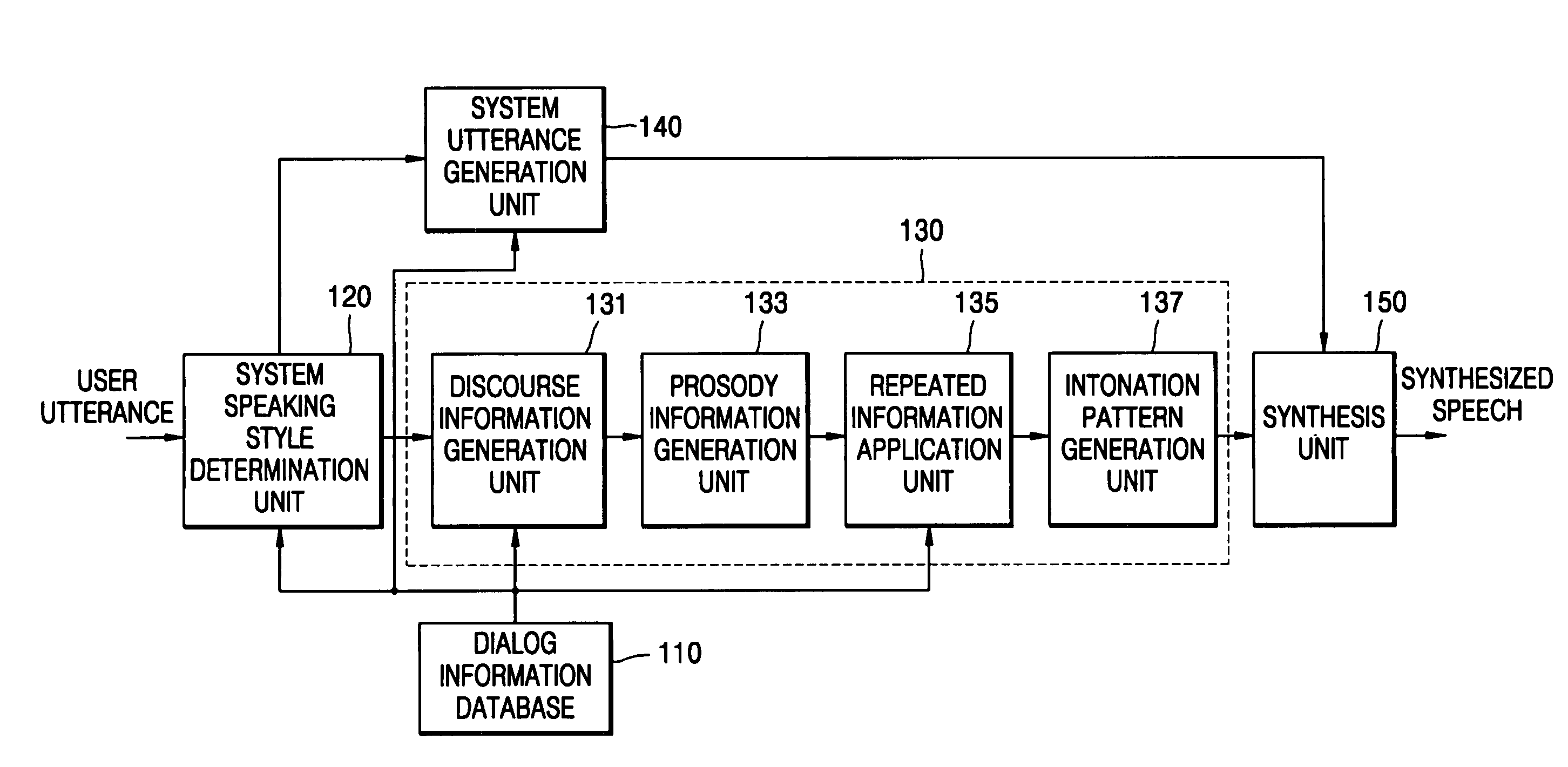

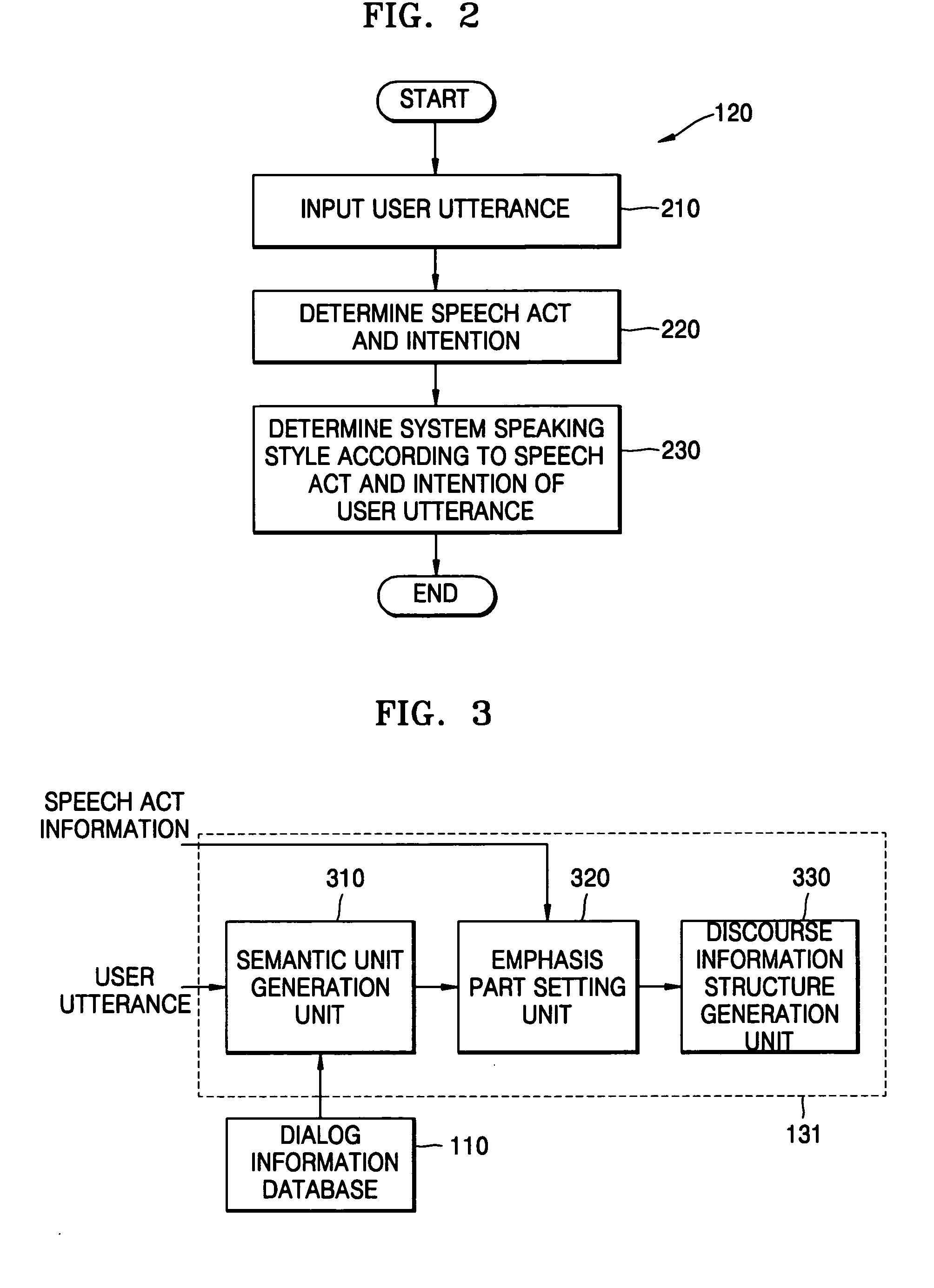

Method and apparatus for generating dialog prosody structure, and speech synthesis method and system employing the same

Inactive · US20050261905A1 · Natural language data processing · Speech recognition · Conversational speech · Speaking style

A dialog prosody structure generating method and apparatus, and a speech synthesis method and system employing the dialog prosody structure generation method and apparatus, are provided. The speech synthesis method using the dialog prosody structure generation method includes: determining a system speaking style based on a user utterance; if the system speaking style is dialog speech, generating dialog prosody information by reflecting discourse information between a user and a system; and synthesizing a system utterance based on the generated dialog prosody information.

Owner:SAMSUNG ELECTRONICS CO LTD

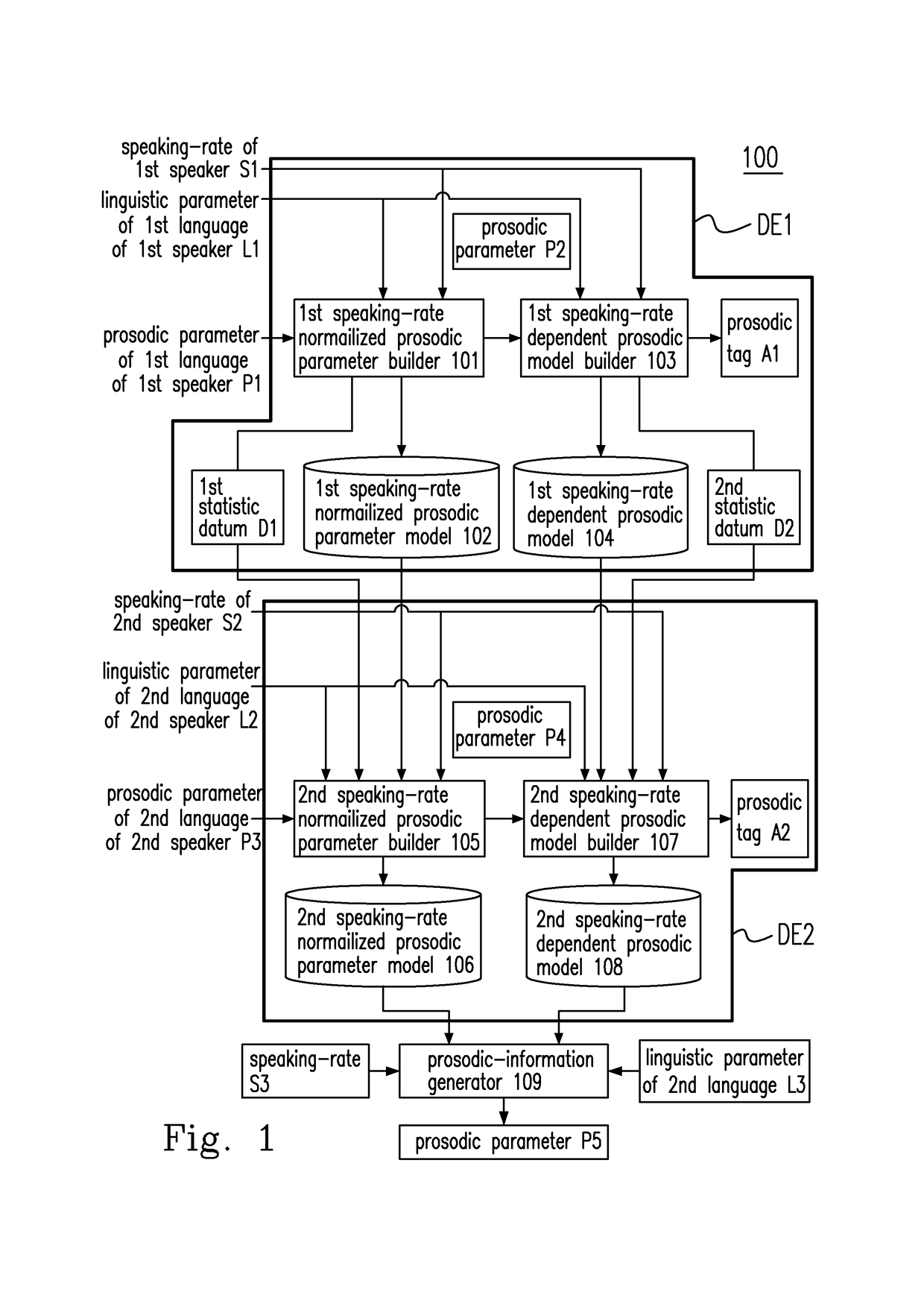

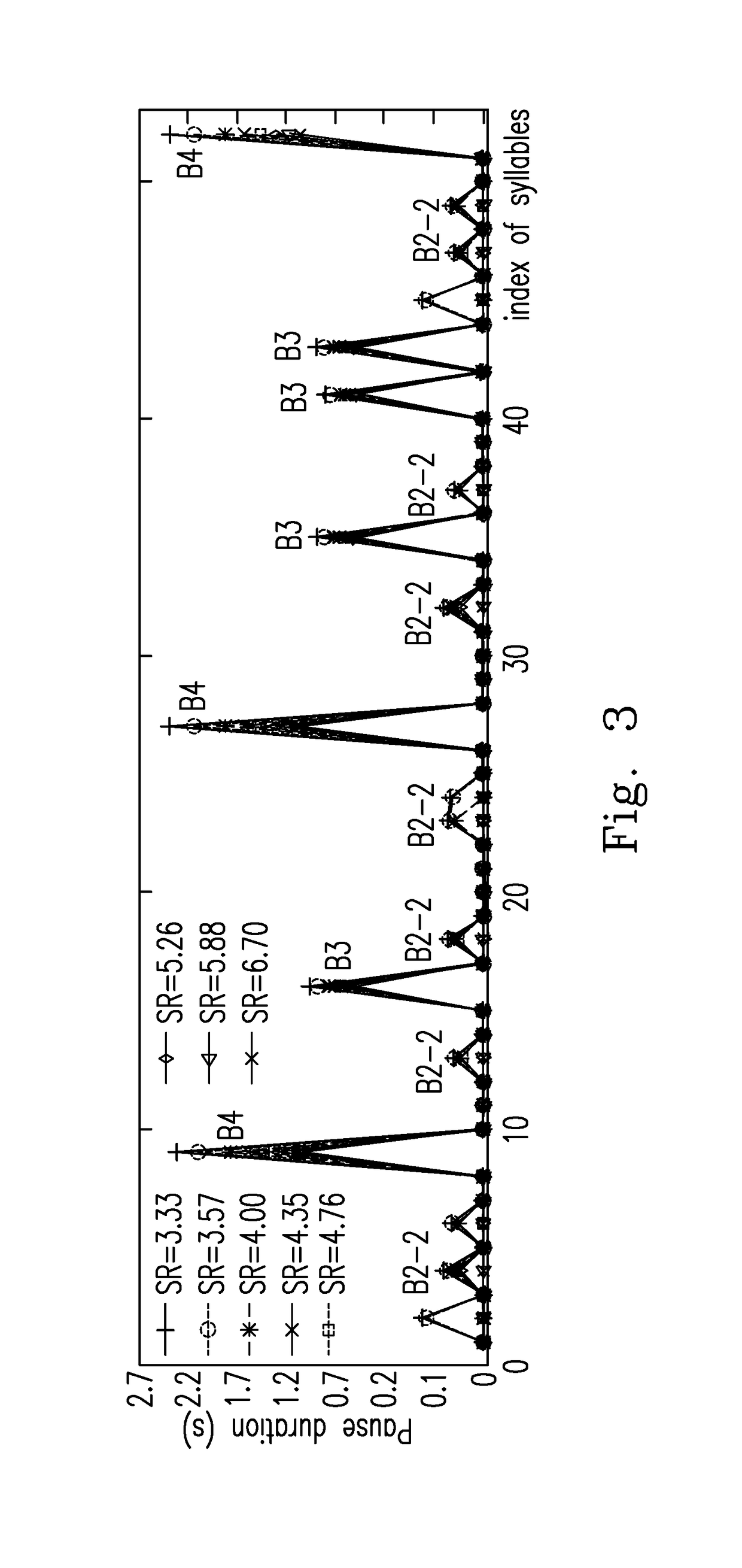

Speaking-rate normalized prosodic parameter builder, speaking-rate dependent prosodic model builder, speaking-rate controlled prosodic-information generation device and prosodic-information generation method able to learn different languages and mimic various speakers' speaking styles

A speaking-rate dependent prosodic model builder and a related method are disclosed. The proposed builder includes a first input terminal for receiving a first information of a first language spoken by a first speaker, a second input terminal for receiving a second information of a second language spoken by a second speaker and a functional information unit having a function, wherein the function includes a first plurality of parameters simultaneously relevant to the first language and the second language or a plurality of sub-parameters in a second plurality of parameters relevant to the second language alone, and the functional information unit under a maximum a posteriori condition and based on the first information, the second information and the first plurality of parameters or the plurality of sub-parameters produces speaking-rate dependent reference information and constructs a speaking-rate dependent prosodic model of the second language.

Owner:NATIONAL TAIPEI UNIVERSITY

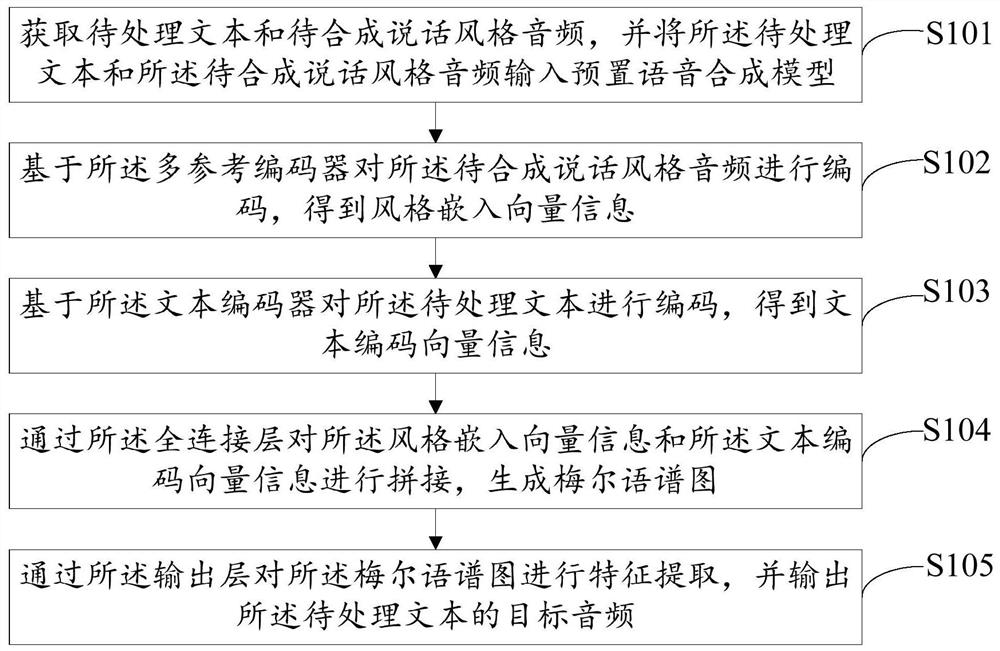

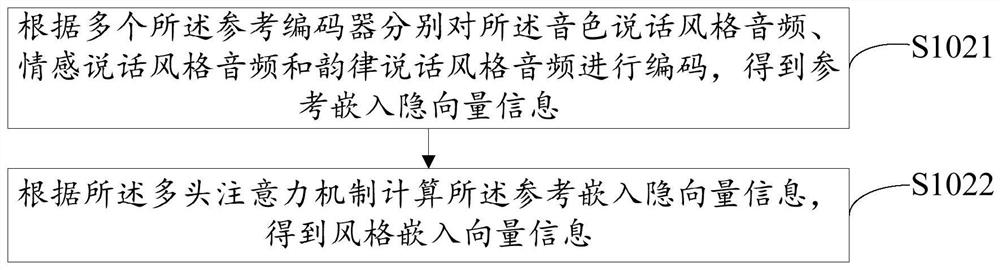

Speech synthesis method and device, equipment and storage medium

Pending · CN112786009A · Solve the technical problem of very single emotional expression · Speech synthesis · Feature extraction · Synthesis methods

The invention relates to the technical field of artificial intelligence and discloses a speech synthesis method and device, computer equipment, and a computer-readable storage medium. The method comprises: obtaining a to-be-processed text and a reference audio in the speech style to be synthesized, and inputting both into a preset speech synthesis model; encoding the style reference audio with a multi-reference encoder to obtain style embedding vector information; encoding the to-be-processed text with a text encoder to obtain text encoding vector information; splicing the style embedding vector information and the text encoding vector information through a fully connected layer to generate a mel spectrogram; and performing feature extraction on the mel spectrogram through an output layer and outputting the target audio for the to-be-processed text. This allows the speaking style of the synthesized voice to be controlled and voices with richer emotional expression to be synthesized.

Owner:PING AN TECH (SHENZHEN) CO LTD
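The splicing step, in which a style embedding is combined with the text encoding and passed through a fully connected layer, can be sketched in plain Python. The dimensions, weights, and function names below are hypothetical; a real model would learn the weights and use a tensor library, and the encoders themselves are not shown.

```python
def linear(x, weights, bias):
    """Fully connected layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def splice_and_project(text_encoding, style_embedding, weights, bias):
    """Append the same style vector to every text frame, then project each frame."""
    return [linear(frame + style_embedding, weights, bias) for frame in text_encoding]


# Toy sizes: 2 text frames of dimension 2, a style vector of dimension 1,
# and 2 output bins per frame standing in for spectrogram channels.
text_encoding = [[1.0, 2.0], [3.0, 4.0]]
style_embedding = [10.0]
weights = [[1.0, 0.0, 0.0],
           [0.0, 1.0, 1.0]]
bias = [0.0, 0.5]

print(splice_and_project(text_encoding, style_embedding, weights, bias))
```

Because the style vector is appended to every frame, changing it shifts the whole output, which is how a single reference clip can steer the style of the entire utterance in this kind of design.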

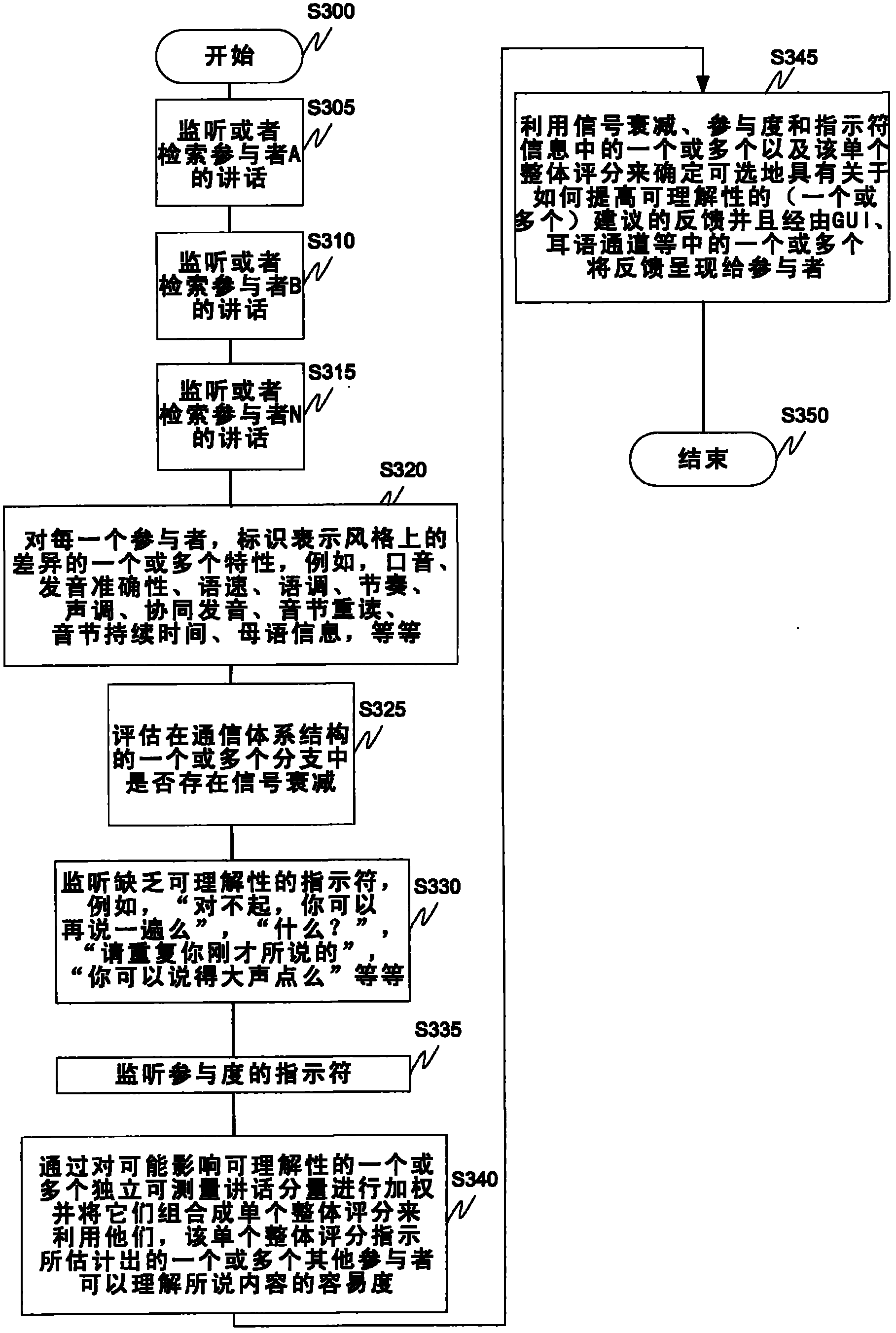

Estimating a Listener's Ability To Understand a Speaker, Based on Comparisons of Their Styles of Speech

An automated telecommunication system adjunct is described that "listens" to one or more participants' styles of speech and identifies specific characteristics that represent differences in their styles, notably the accent, but also one or more of pronunciation accuracy, speed, pitch, cadence, intonation, co-articulation, syllable emphasis, and syllable duration. It utilizes, for example, a mathematical model in which the independent measurable components of speech that can affect understandability by that specific listener are weighted appropriately and then combined into a single overall score that indicates the estimated ease with which the listener can understand what is being said, and it presents real-time feedback to speakers based on the score. In addition, the system can provide recommendations to the speaker as to how to improve understandability.

Owner:AVAYA INC
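One way to read the weighted-combination model is as a weighted average of per-component style differences. The sketch below assumes each component has already been measured and normalized to the range 0 to 1 for both speaker and listener; the component subset, the weights, and the function names are illustrative, since the abstract only says the components are "weighted appropriately".

```python
# Hypothetical weights per speech component (accent weighted most heavily).
WEIGHTS = {"accent": 0.4, "speed": 0.2, "pitch": 0.1, "cadence": 0.1, "intonation": 0.2}


def understandability(speaker, listener, weights=WEIGHTS):
    """Score in [0, 1]; 1.0 means the two styles match on every component."""
    total = sum(weights.values())
    penalty = sum(w * abs(speaker[k] - listener[k]) for k, w in weights.items())
    return 1.0 - penalty / total


def biggest_gap(speaker, listener, weights=WEIGHTS):
    """Component contributing most to the penalty, as a basis for feedback."""
    return max(weights, key=lambda k: weights[k] * abs(speaker[k] - listener[k]))


speaker = {"accent": 0.5, "speed": 0.5, "pitch": 0.5, "cadence": 0.5, "intonation": 0.5}
listener = {"accent": 1.0, "speed": 0.5, "pitch": 0.5, "cadence": 0.5, "intonation": 0.5}

print(round(understandability(speaker, listener), 3), biggest_gap(speaker, listener))
```

The component returned by `biggest_gap` is the natural place to aim a recommendation, for example asking the speaker to slow down when `speed` dominates the penalty.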

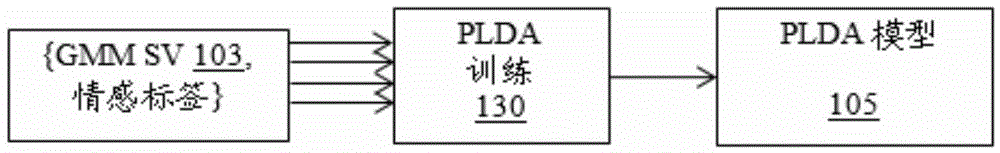

Emotional speech processing

The invention relates to emotional speech processing. A method for emotion or speaking style recognition and / or clustering comprises receiving one or more speech samples, generating a set of training data by extracting one or more acoustic features from every frame of the one or more speech samples, and generating a model from the set of training data, wherein the model identifies emotion or speaking style dependent information in the set of training data. The method may further comprise receiving one or more test speech samples, generating a set of test data by extracting one or more acoustic features from every frame of the one or more test speech samples, transforming the set of test data using the model to better represent emotion / speaking style dependent information, and using the transformed data for clustering and / or classification to discover speech with similar emotion or speaking style. It is emphasized that this abstract is provided to comply with the rules requiring an abstract that will allow a searcher or other reader to quickly ascertain the subject matter of the technical disclosure. It is submitted with the understanding that it will not be used to interpret or limit the scope or meaning of the claims.

Owner:SONY COMPUTER ENTERTAINMENT INC
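The first stage of this method, extracting acoustic features from every frame of each sample, might look like the sketch below. The abstract does not name the features, so short-time energy and zero-crossing rate are used here purely as stand-ins; the frame size, hop, and function names are likewise assumptions. Model fitting and clustering are not shown.

```python
def split_frames(signal, size, hop):
    """Cut a signal into fixed-size frames, dropping any incomplete tail."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, hop)]


def frame_features(frame):
    """Return (short-time energy, zero-crossing rate) for one frame."""
    energy = sum(x * x for x in frame) / len(frame)
    crossings = sum((a < 0) != (b < 0) for a, b in zip(frame, frame[1:]))
    return (energy, crossings / (len(frame) - 1))


def training_data(samples, size=4, hop=4):
    """One feature tuple per frame, pooled over all speech samples."""
    return [frame_features(f) for s in samples for f in split_frames(s, size, hop)]


# A toy "recording": a rapidly alternating segment followed by silence.
sample = [1.0, -1.0, 1.0, -1.0, 0.0, 0.0, 0.0, 0.0]
print(training_data([sample]))
```

Each tuple becomes one row of the training set from which a model would then be estimated.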

Multimodal disambiguation of speech recognition

Inactive · US20120143607A1 · Efficient and accurate text input · Low accuracy · Speech recognition · Environmental noise · Ambiguity

The present invention provides a speech recognition system combined with one or more alternate input modalities to ensure efficient and accurate text input. The speech recognition system achieves less than perfect accuracy due to limited processing power, environmental noise, and / or natural variations in speaking style. The alternate input modalities use disambiguation or recognition engines to compensate for reduced keyboards, sloppy input, and / or natural variations in writing style. The ambiguity remaining in the speech recognition process is mostly orthogonal to the ambiguity inherent in the alternate input modality, such that the combination of the two modalities resolves the recognition errors efficiently and accurately. The invention is especially well suited for mobile devices with limited space for keyboards or touch-screen input.

Owner:CERENCE OPERATING CO

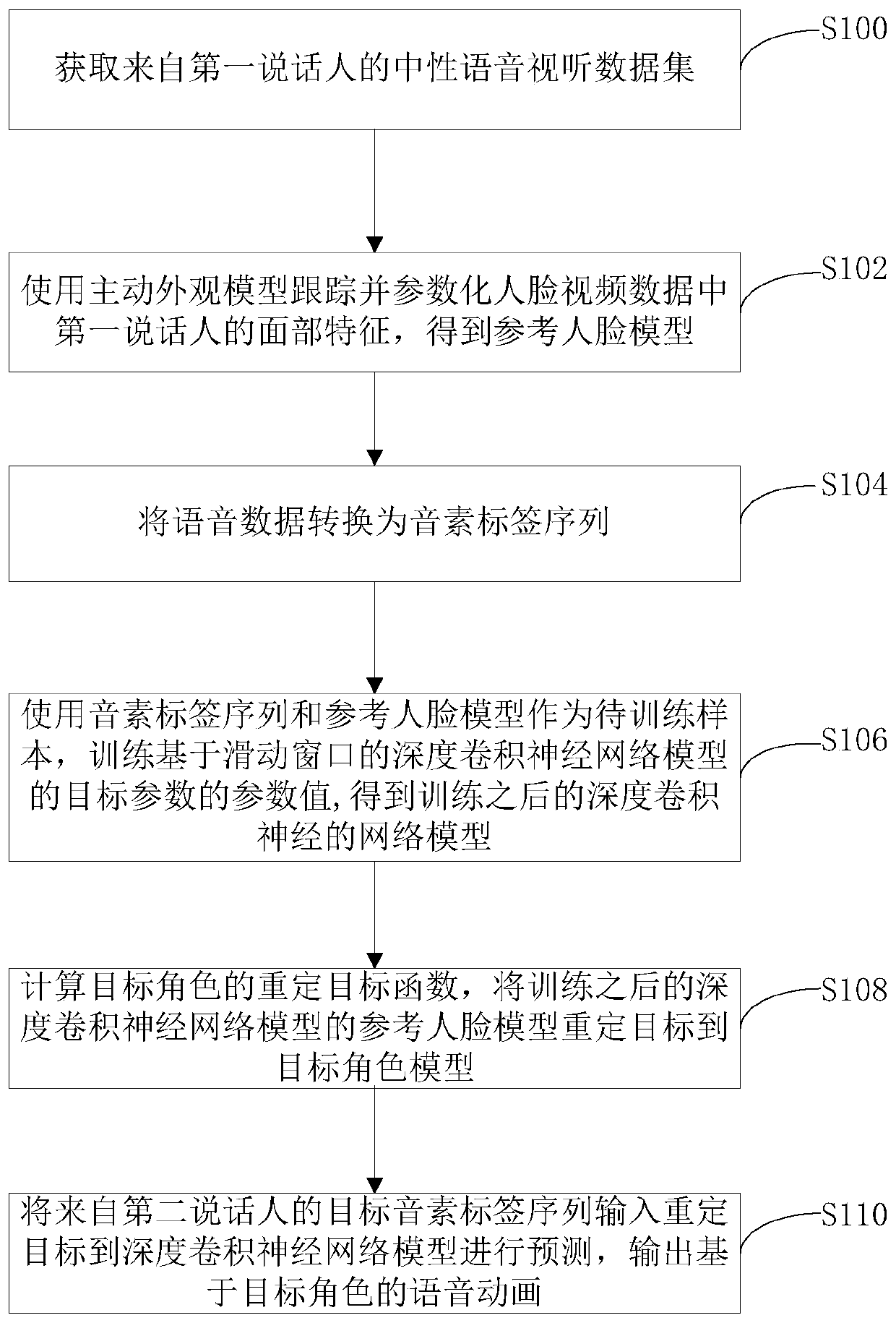

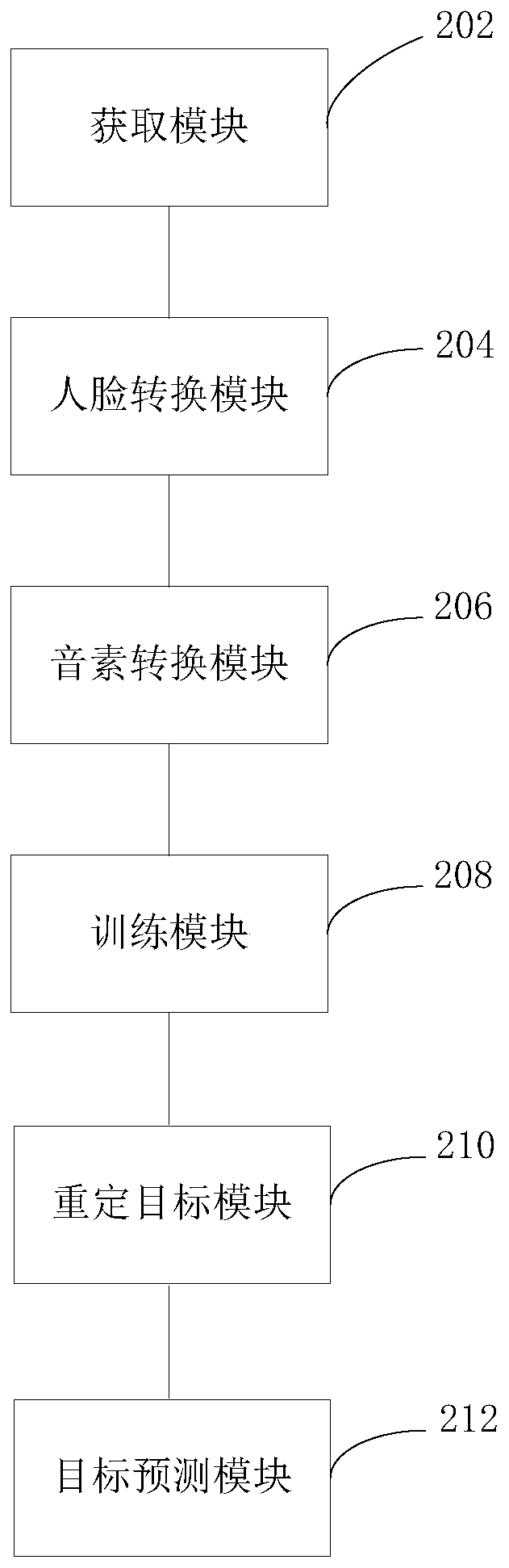

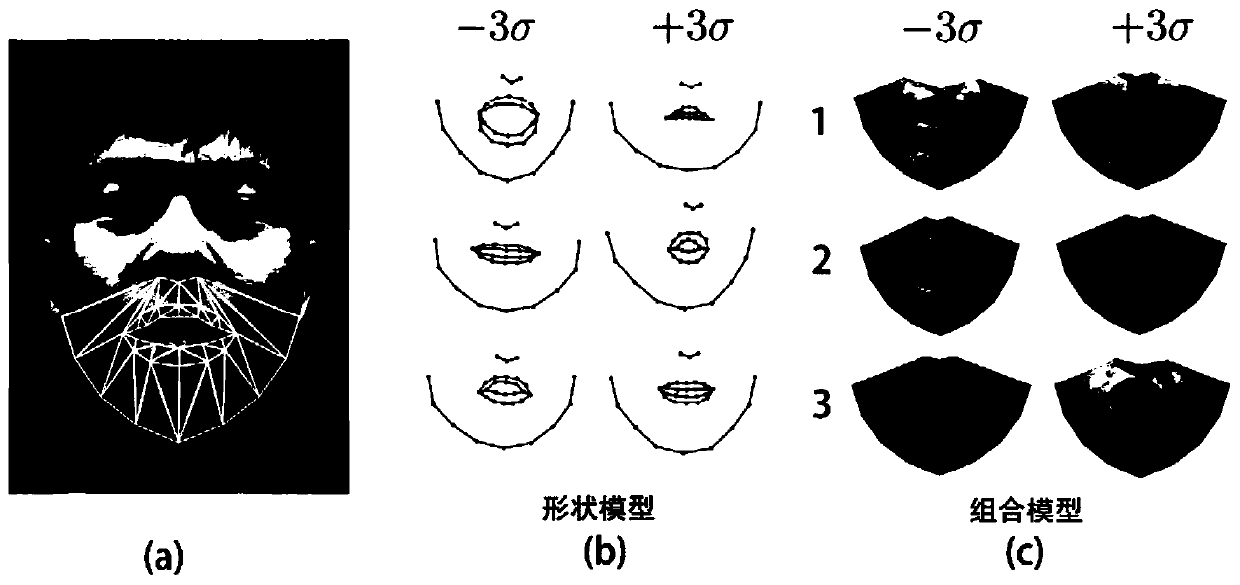

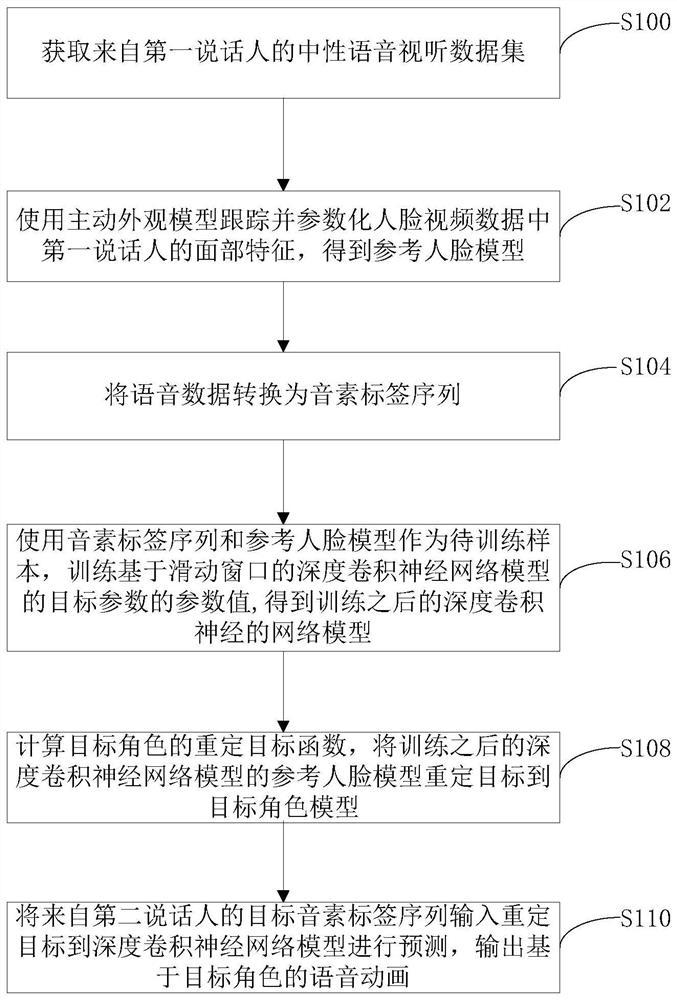

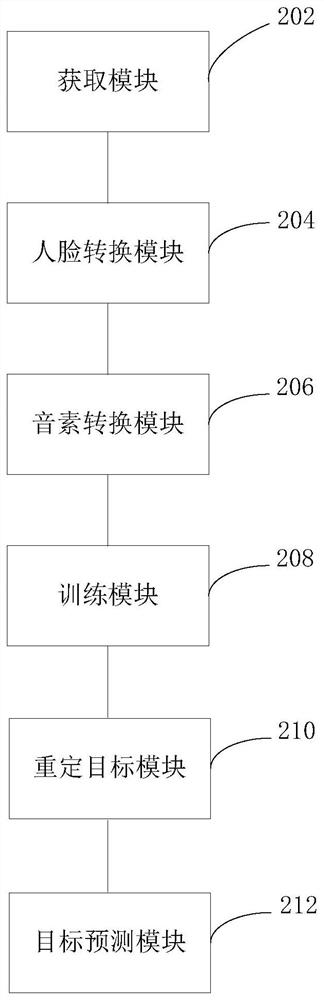

Method and system for driving human face animation through real-time voice

The invention discloses a method and a system for driving human face animation by real-time voice. The method comprises the following steps: acquiring a neutral voice audio-visual data set from a first speaker; tracking and parameterizing face video data by using an active appearance model; converting the voice data into a phoneme label sequence; training a deep convolutional neural network model based on a sliding window; retargeting the reference face model to the target character model; and inputting the target phoneme label sequence from the second speaker into the deep convolutional neural network model of the target character model for prediction. The system provided by the invention comprises an acquisition module, a face conversion module, a phoneme conversion module, a training module, a retargeting module and a target prediction module. The method and the system solve the problems that existing voice animation methods depend on a specific speaker and a specific speaking style and cannot retarget the generated animation to an arbitrary facial rig.

Owner:北京中科深智科技有限公司

Video dubbing method and device based on voice synthesis, computer equipment and medium

Active · CN111031386A · Improve efficiency · Quality improvement · Selective content distribution · Speech synthesis · Personalization · Engineering

The invention discloses a video dubbing method and device based on voice synthesis, computer equipment and a storage medium, and belongs to the technical fields of video technology and artificial intelligence. According to the technical scheme of the embodiment of the invention, voice feature information of different dubbing voices is acquired, and speech synthesis is performed based on the speech feature information to simulate different dubbing timbres and speaking styles. A first dubbing audio with the specified dubbing timbre and speaking style can therefore be generated according to the character information set by a user and combined with the video, so that video dubbing based on the personalized requirements of the user is achieved and video production efficiency and quality are greatly improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD

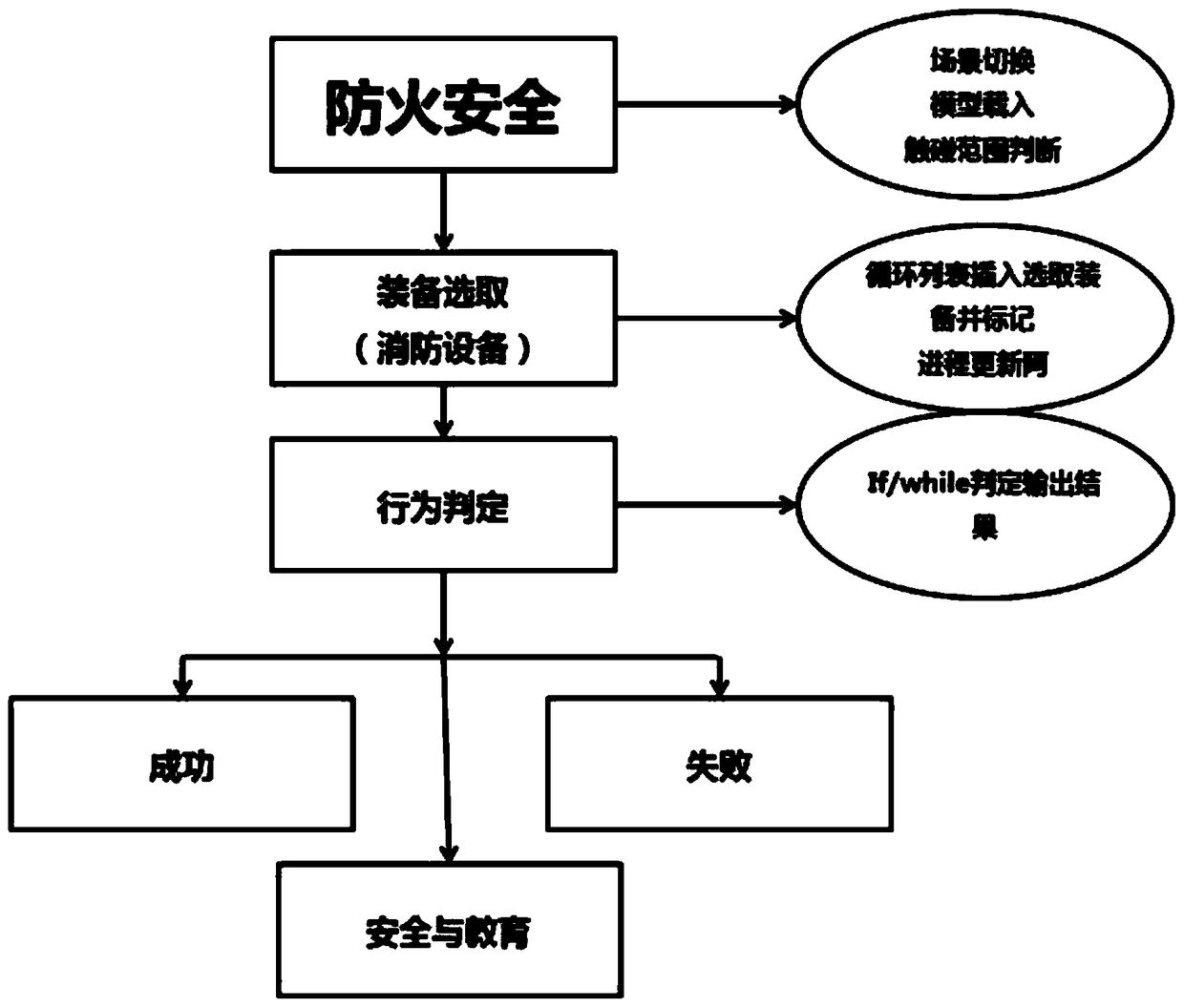

VR-based integrated safety training system

Inactive · CN108694872A · Raise security awareness · Proficiency in safe operation skills · Input/output for user-computer interaction · Cosmonautic condition simulations · Simulation · Speaking style

The present invention provides a VR-based integrated safety training system and relates to the technical field of VR. The system comprises a VR helmet and its matching handle, both connected to a main control computer. The main control computer is characterized in that a high-altitude safety simulation module, a fire safety simulation module and an electric safety simulation module are disposed in it and are modeled with Max, Maya or ZBrush; texture maps are created with Substance Designer; and content is created with the professional engine Unreal Engine 4. The system transforms traditional lecture-style education into experience-style education and simulates accidents at construction sites in VR, so that construction workers can personally experience the harm caused by operating violations, strengthen their safety awareness, and master operating skills. In engineering management and regulation, the system can deliver results immediately.

Owner:厦门风云科技股份有限公司

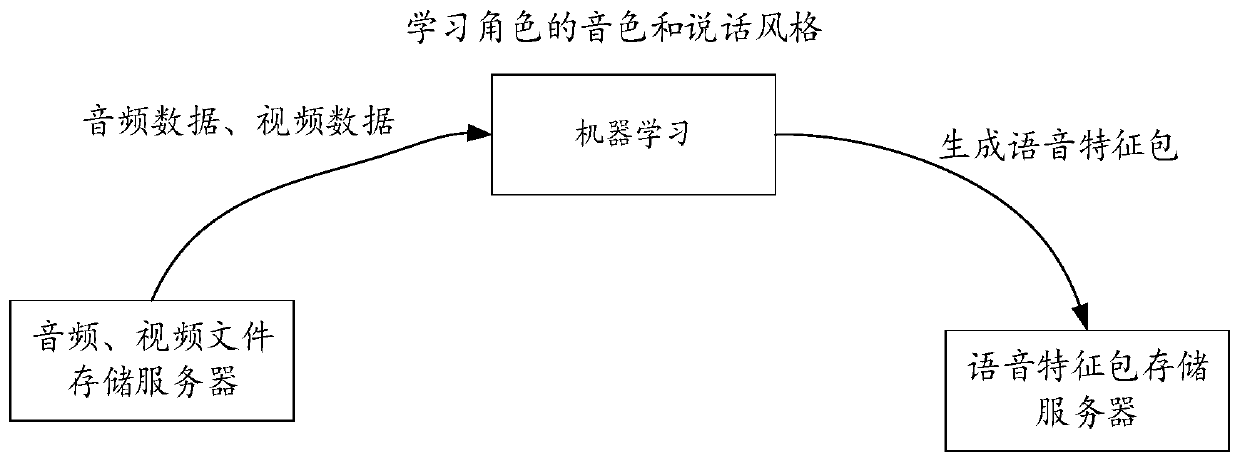

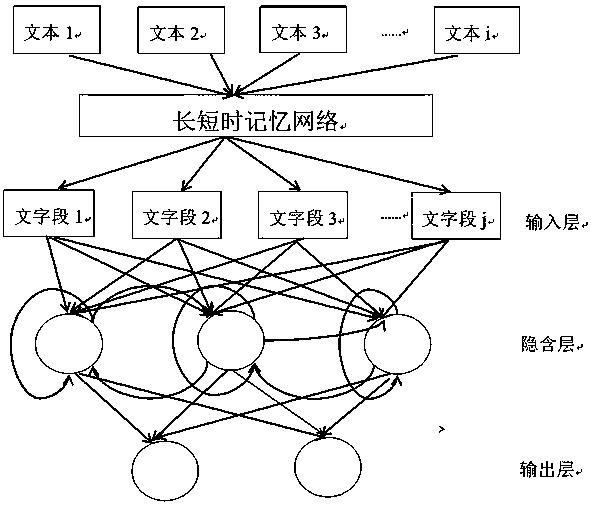

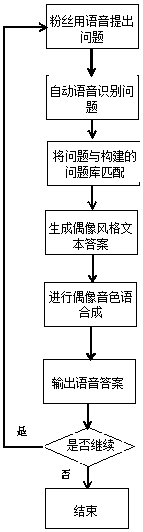

Method for constructing voice idol

Inactive · CN108172209A · Satisfy the psychology of worship · Meet the purpose of communication · Speech recognition · Speech synthesis · Speaking style · Speech technology

The invention relates to a method for constructing a voice idol. The method uses voice technologies such as voice recognition and emotional voice synthesis, together with deep learning, to realize a voice idol that responds by voice to the questions of "fans" in the same or a similar style and timbre as the idol. The method comprises the following steps: a, collecting a large amount of text material about the idol; b, for the text material collected in step a, using an LSTM neural network to convert large sections of text into character vectors; c, taking the result of step b as the input of an RNN training model so as to train a style learning model; d, through training on a large amount of data, learning the idol's speaking style; e, collecting a large number of voice files of the idol; f, for the voice files collected in step e, using a bidirectional long short-term memory prosody hierarchy model to obtain an emotional voice synthesis model; and g, using the result of embodiment 3 as the text input for voice synthesis and using the emotional voice synthesis model from step f to synthesize the voice.

Owner:SHANGHAI UNIV

Method and apparatus for generating dialog prosody structure, and speech synthesis method and system employing the same

Inactive · US8234118B2 · Natural language data processing · Speech recognition · Conversational speech · Speaking style

A dialog prosody structure generating method and apparatus, and a speech synthesis method and system employing the dialog prosody structure generation method and apparatus, are provided. The speech synthesis method using the dialog prosody structure generation method includes: determining a system speaking style based on a user utterance; if the system speaking style is dialog speech, generating dialog prosody information by reflecting discourse information between a user and a system; and synthesizing a system utterance based on the generated dialog prosody information.

Owner:SAMSUNG ELECTRONICS CO LTD

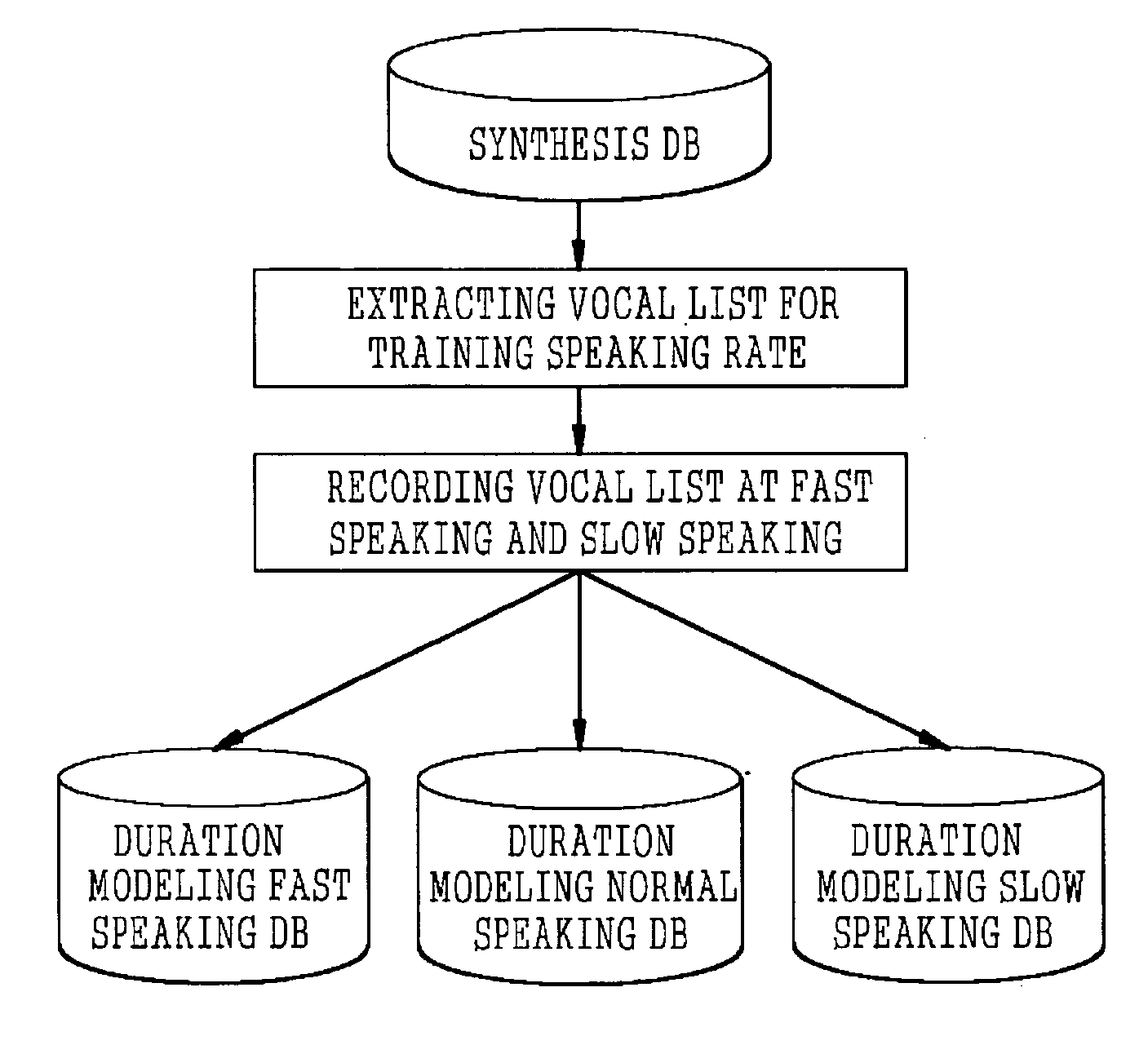

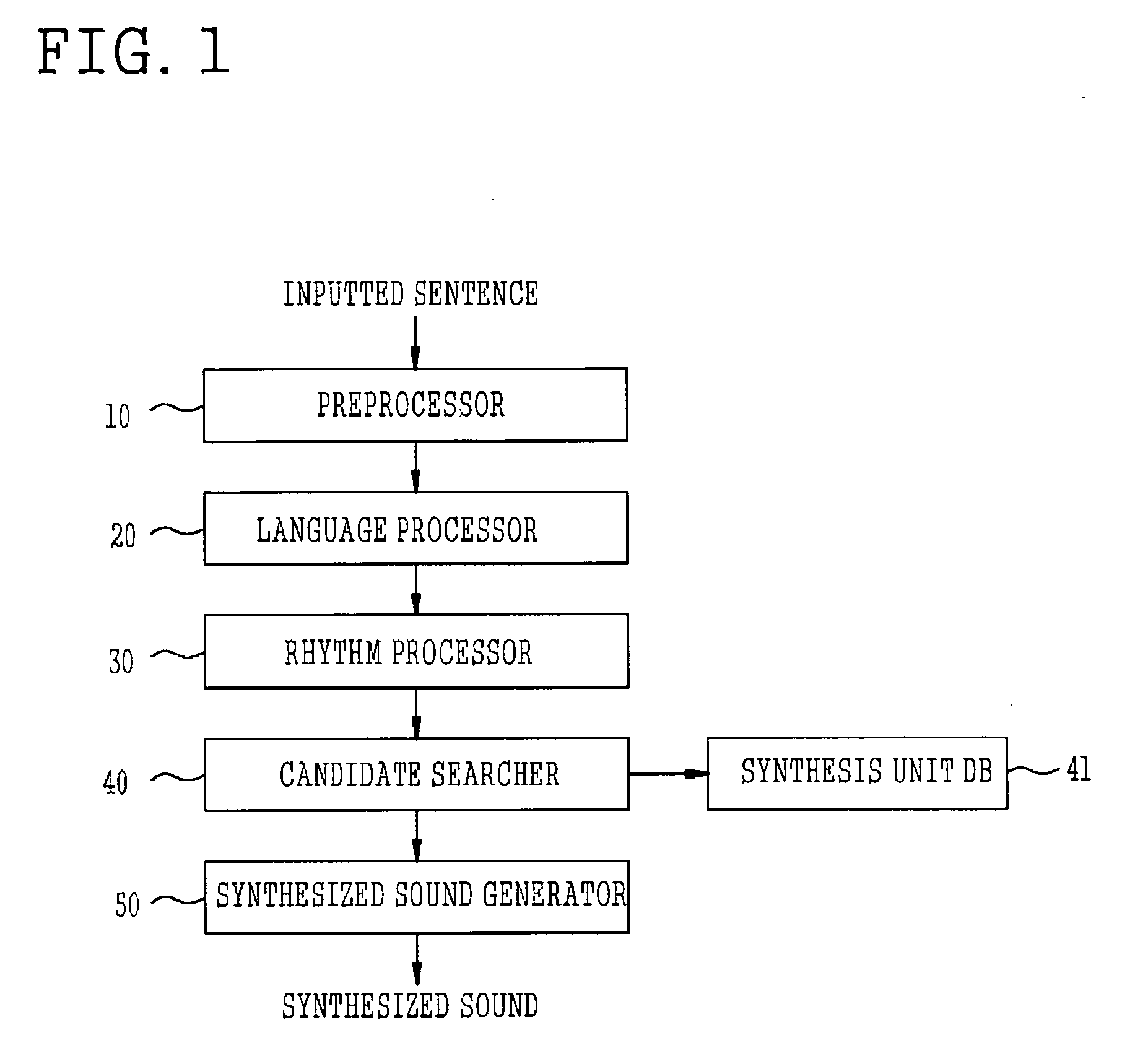

Method of speaking rate conversion in text-to-speech system

A method of a speaking rate conversion in a text-to-speech system is provided. The method includes: a first step of extracting a vocal list from a synthesis DB (database), voicing the extracted vocal list in each speaking style constituted of fast speaking, normal speaking, and slow speaking, and building a probability distribution of a synthesis unit-based duration; a second step of searching for an optimal synthesis unit candidate row using a viterbi search, correspondingly to a requested synthesis, and creating a target duration parameter of a synthesis unit; and a third step of again obtaining an optimal synthesis unit candidate row using the duration parameter of the optimal synthesis unit candidate row, and generating a synthesized sound.

Owner:ELECTRONICS & TELECOMM RES INST
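The interplay between style-dependent duration statistics and a Viterbi search over synthesis unit candidates can be sketched as below. This shows a single search pass only, not the patent's full three-step procedure; the duration statistics, the candidate tuples `(unit, duration_ms, pitch_hz)`, and both cost functions are hypothetical.

```python
# Hypothetical per-style duration statistics: unit -> (mean ms, std ms).
DURATION_STATS = {
    "fast": {"k": (50.0, 10.0), "a": (80.0, 20.0)},
    "slow": {"k": (90.0, 10.0), "a": (140.0, 20.0)},
}


def target_cost(candidate, style):
    """Squared z-score of the candidate's duration under the requested style."""
    unit, duration, _pitch = candidate
    mean, std = DURATION_STATS[style][unit]
    return ((duration - mean) / std) ** 2


def join_cost(prev, cur):
    """Penalize pitch jumps at the boundary between adjacent units."""
    return abs(prev[2] - cur[2]) / 10.0


def viterbi(candidate_rows, style):
    """Return the lowest-cost sequence with one candidate chosen per row."""
    # Each entry is (accumulated cost, path so far) ending in one candidate.
    best = [(target_cost(c, style), [c]) for c in candidate_rows[0]]
    for row in candidate_rows[1:]:
        step = []
        for c in row:
            cost, path = min(
                ((pc + join_cost(pp[-1], c), pp) for pc, pp in best),
                key=lambda item: item[0],
            )
            step.append((cost + target_cost(c, style), path + [c]))
        best = step
    return min(best, key=lambda item: item[0])[1]


rows = [
    [("k", 50.0, 100.0), ("k", 90.0, 105.0)],
    [("a", 80.0, 102.0), ("a", 140.0, 104.0)],
]
for style in ("fast", "slow"):
    print(style, [duration for _, duration, _ in viterbi(rows, style)])
```

Switching the requested style changes only the duration statistics, yet the search picks different units, which is the effect the method relies on for speaking rate conversion.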

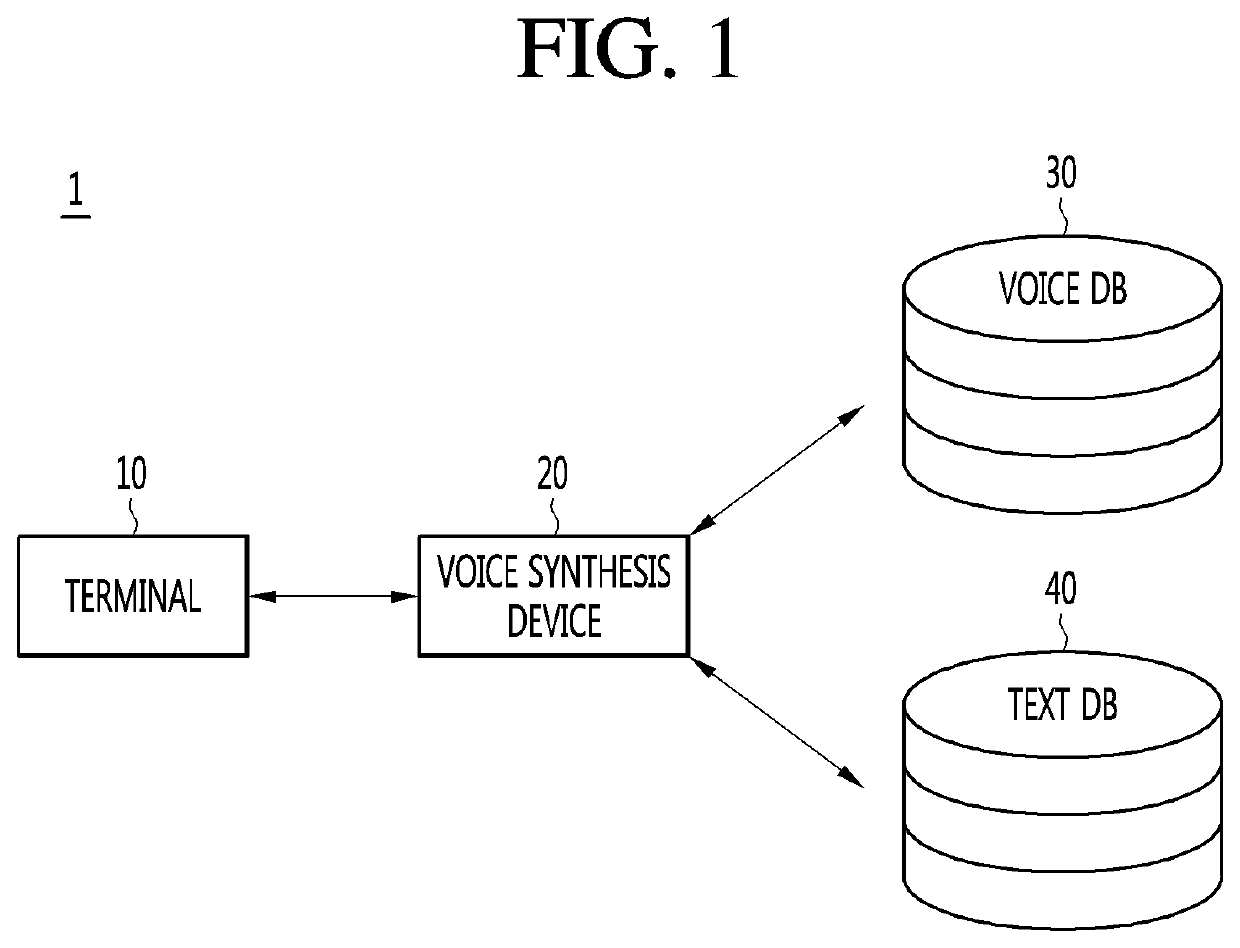

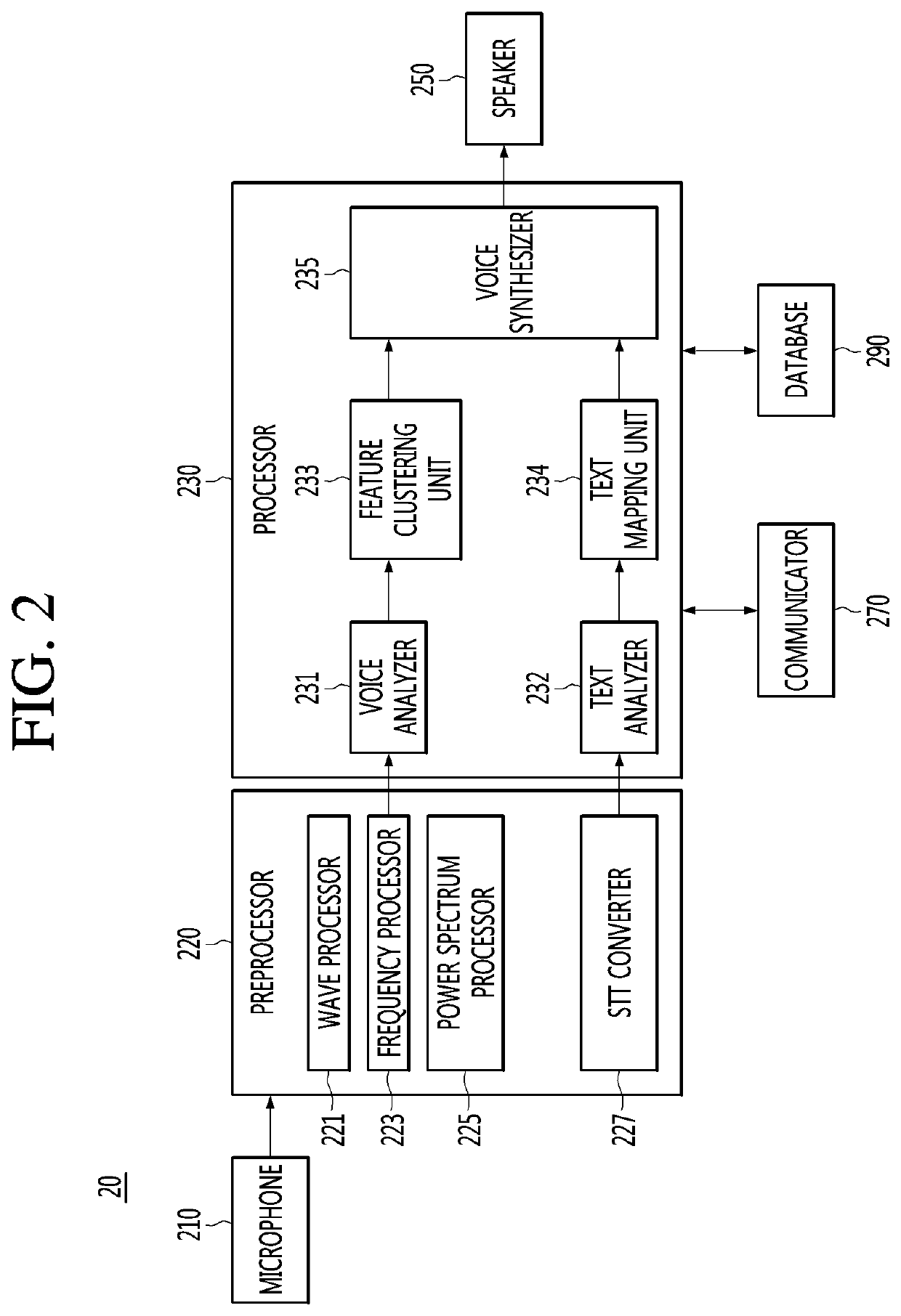

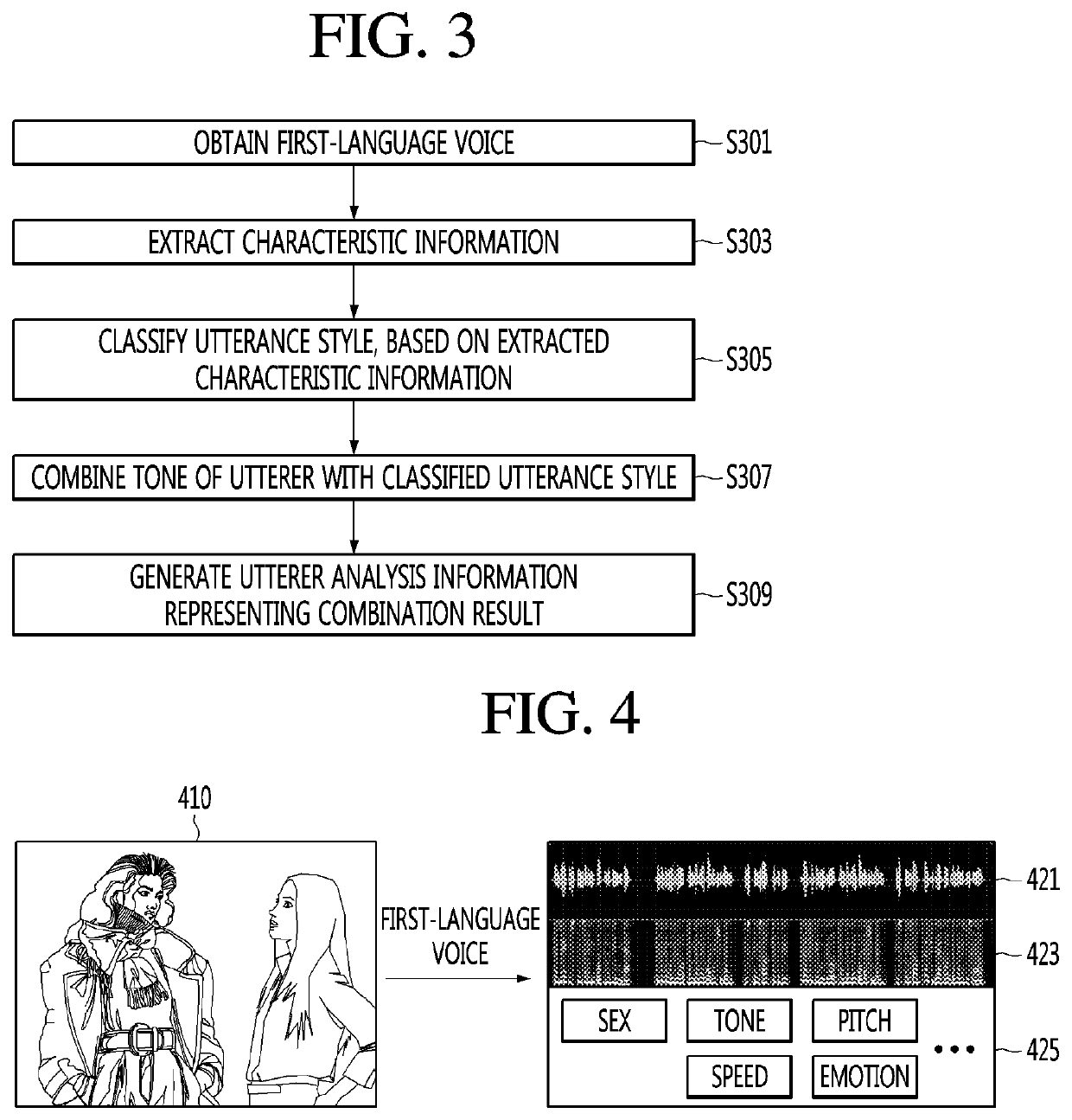

Voice synthesis device

Disclosed is a voice synthesis device. The voice synthesis device includes a database configured to store a voice and a text corresponding to the voice and a processor configured to extract characteristic information and a tone of a first-language voice stored in the database, classify an utterance style of an utterer on basis of the extracted characteristic information, generate utterer analysis information including the utterance style and the tone, translate a text corresponding to the first-language voice into a second language, and synthesize the text, translated into the second language, in a second-language voice by using the utterer analysis information.

Owner:LG ELECTRONICS INC

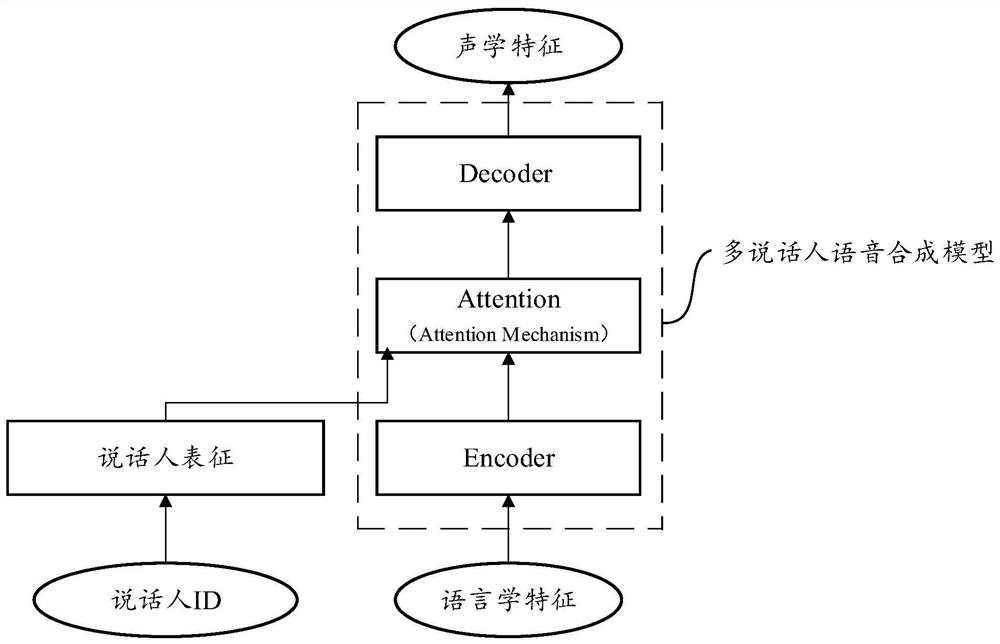

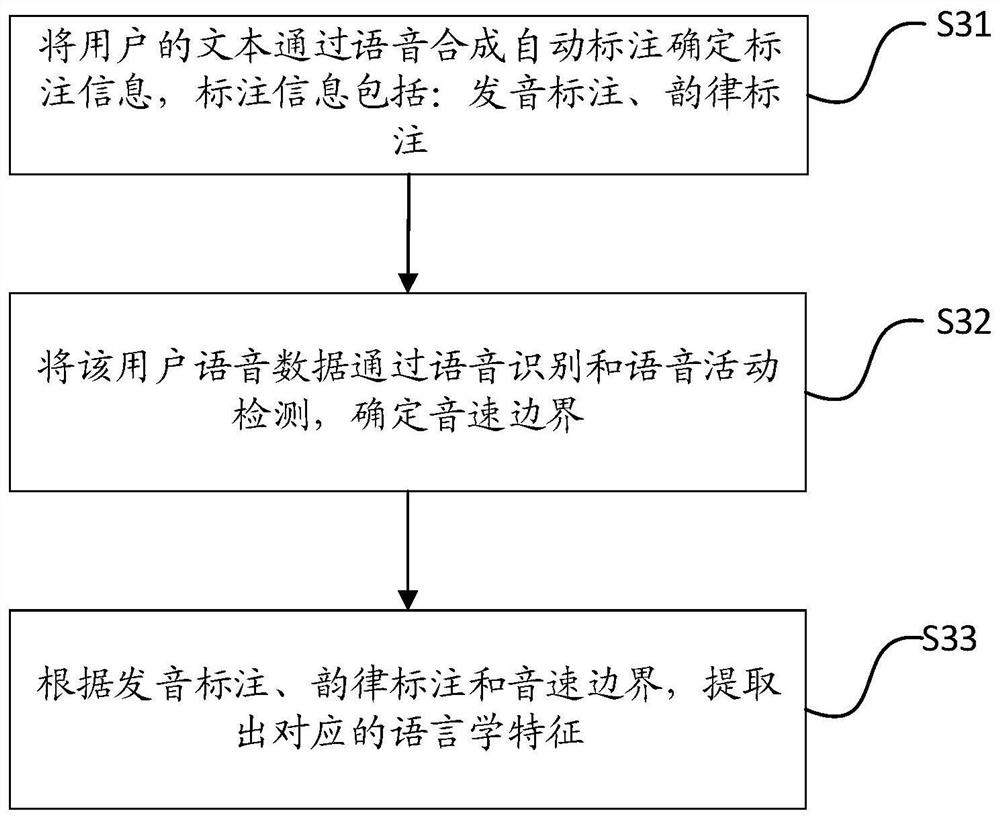

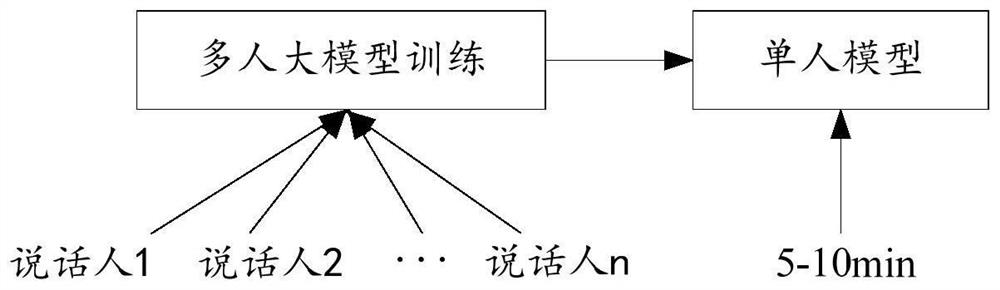

Personalized speech synthesis model creation, speech synthesis and test method and device thereof

Pending · CN112885326A · Improve experience · Easy to learn · Speech recognition · Speech synthesis · Synthesis methods · Speaking style

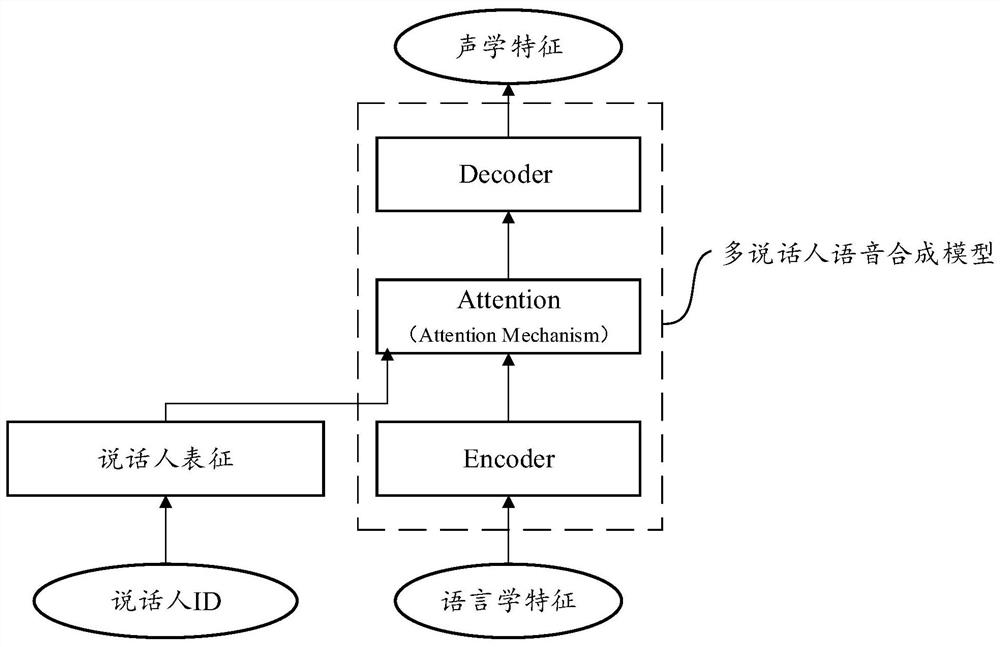

The invention discloses a personalized speech synthesis model creation method, a speech synthesis method and a device thereof. The method comprises the following steps: selecting similar speakers belonging to the same category as a user from a plurality of speakers of a multi-speaker speech synthesis model; and training the multi-speaker speech synthesis model according to the training data of the user and the selected similar speakers to obtain a personalized speech synthesis model of the user. The voice of the specific speaking style of the target speaker can be synthesized, and the user experience is improved.

Owner:ALIBABA GRP HLDG LTD
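Selecting speakers that resemble the user could be sketched as a nearest-neighbour lookup over speaker embeddings. The abstract speaks of speakers "belonging to the same category" without saying how categories are formed, so cosine similarity over made-up embedding vectors is used here as a stand-in; the names and the cutoff `k` are assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def similar_speakers(user_vec, speaker_vecs, k=2):
    """Names of the k speakers whose embeddings are closest to the user's."""
    ranked = sorted(
        speaker_vecs,
        key=lambda name: cosine(user_vec, speaker_vecs[name]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical speaker embeddings from a multi-speaker model.
speakers = {"spk_a": [1.0, 0.0], "spk_b": [0.9, 0.1], "spk_c": [0.0, 1.0]}
user = [1.0, 0.05]
print(similar_speakers(user, speakers))
```

The data of the selected speakers would then be pooled with the user's own recordings for fine-tuning, which helps when the user has supplied only a few utterances.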

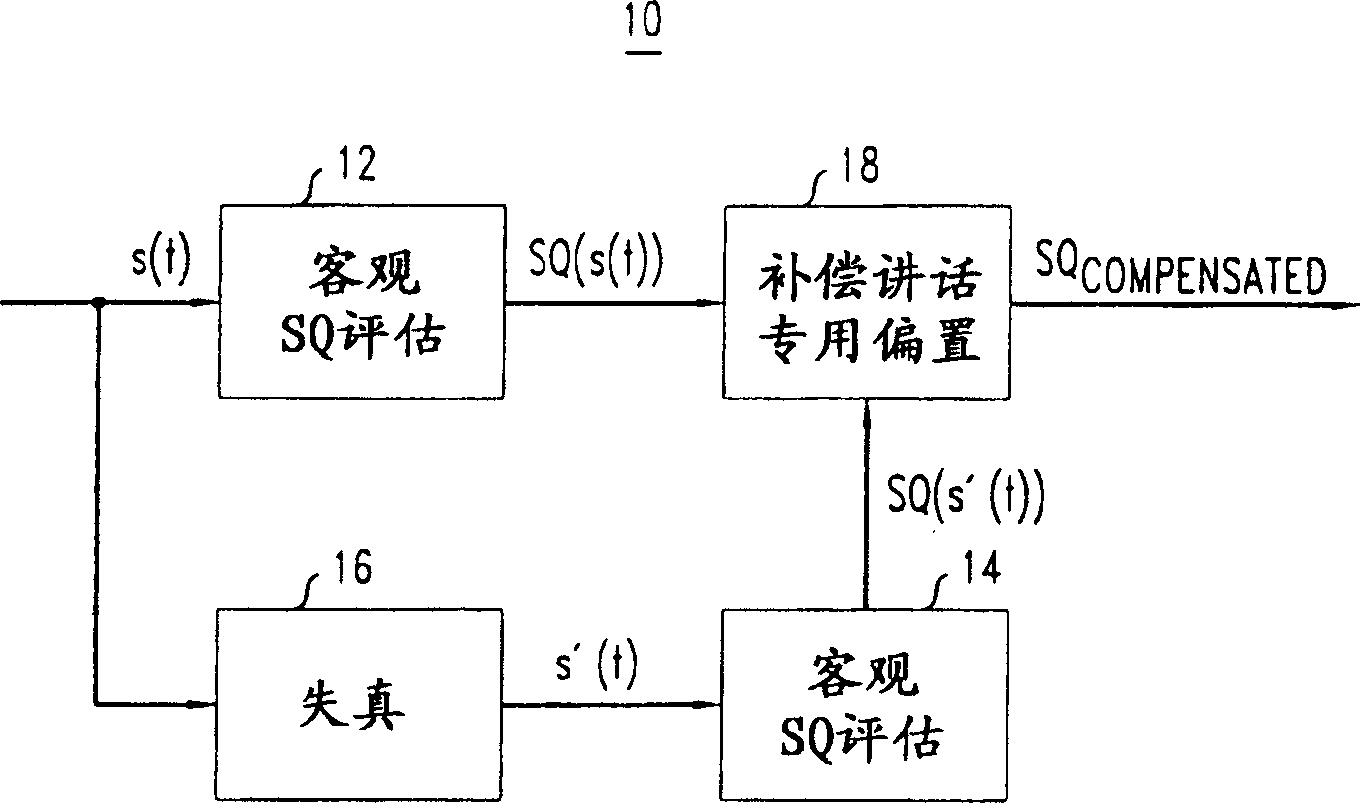

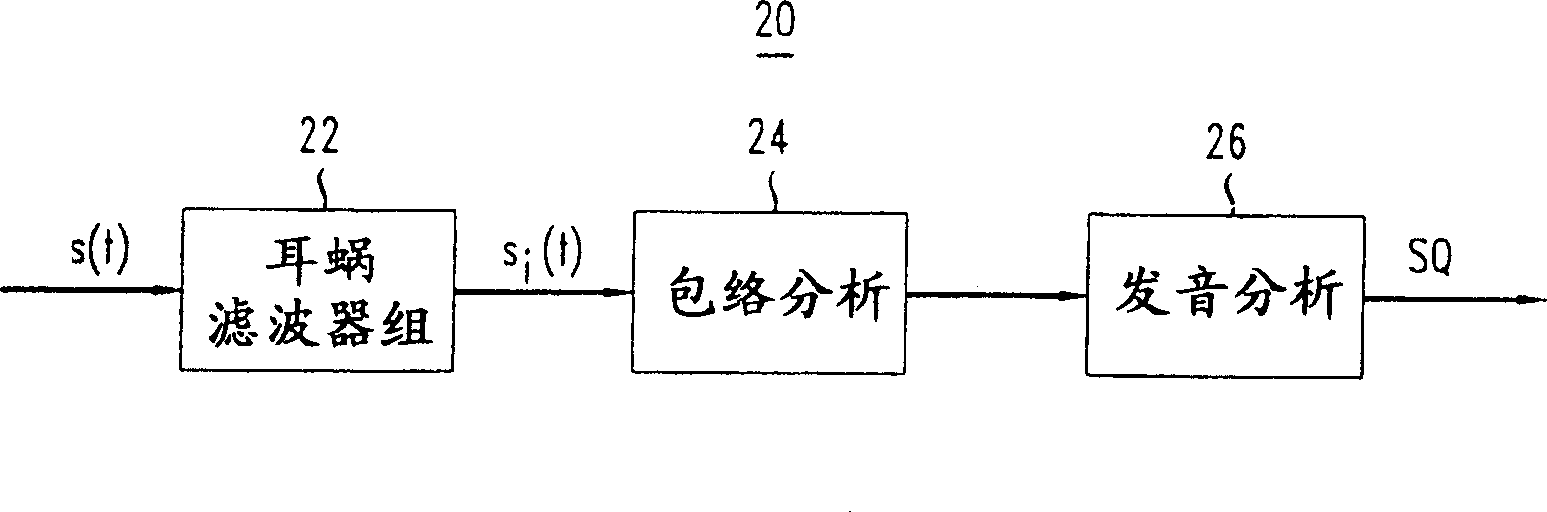

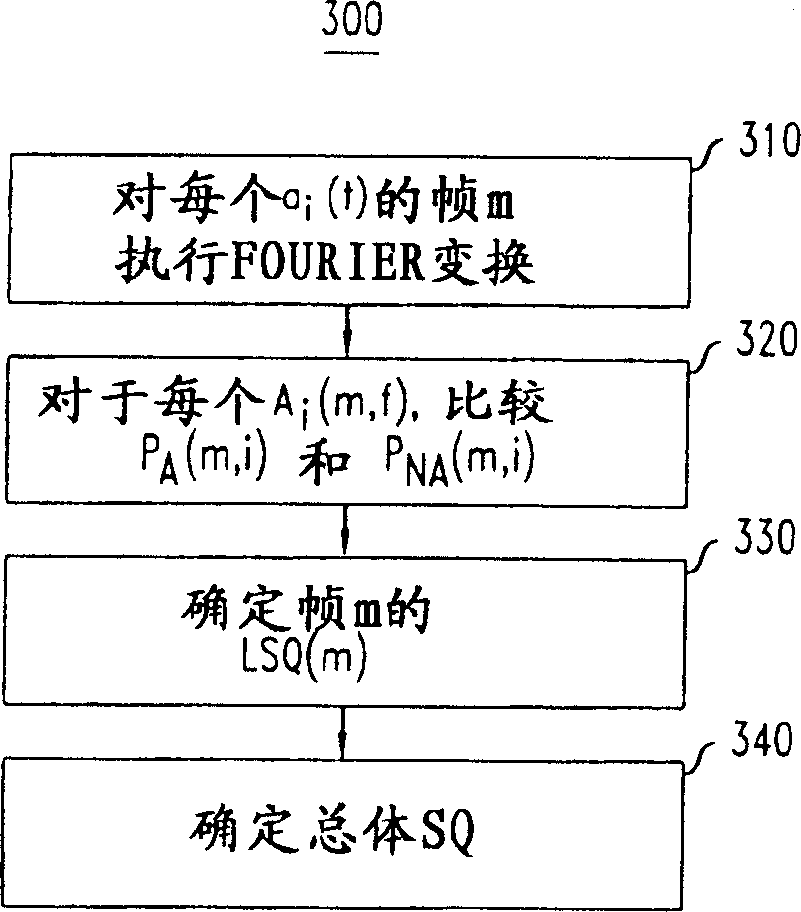

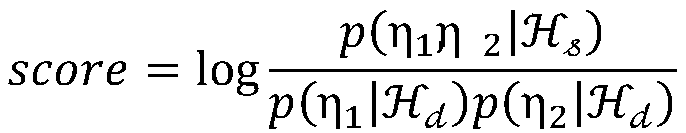

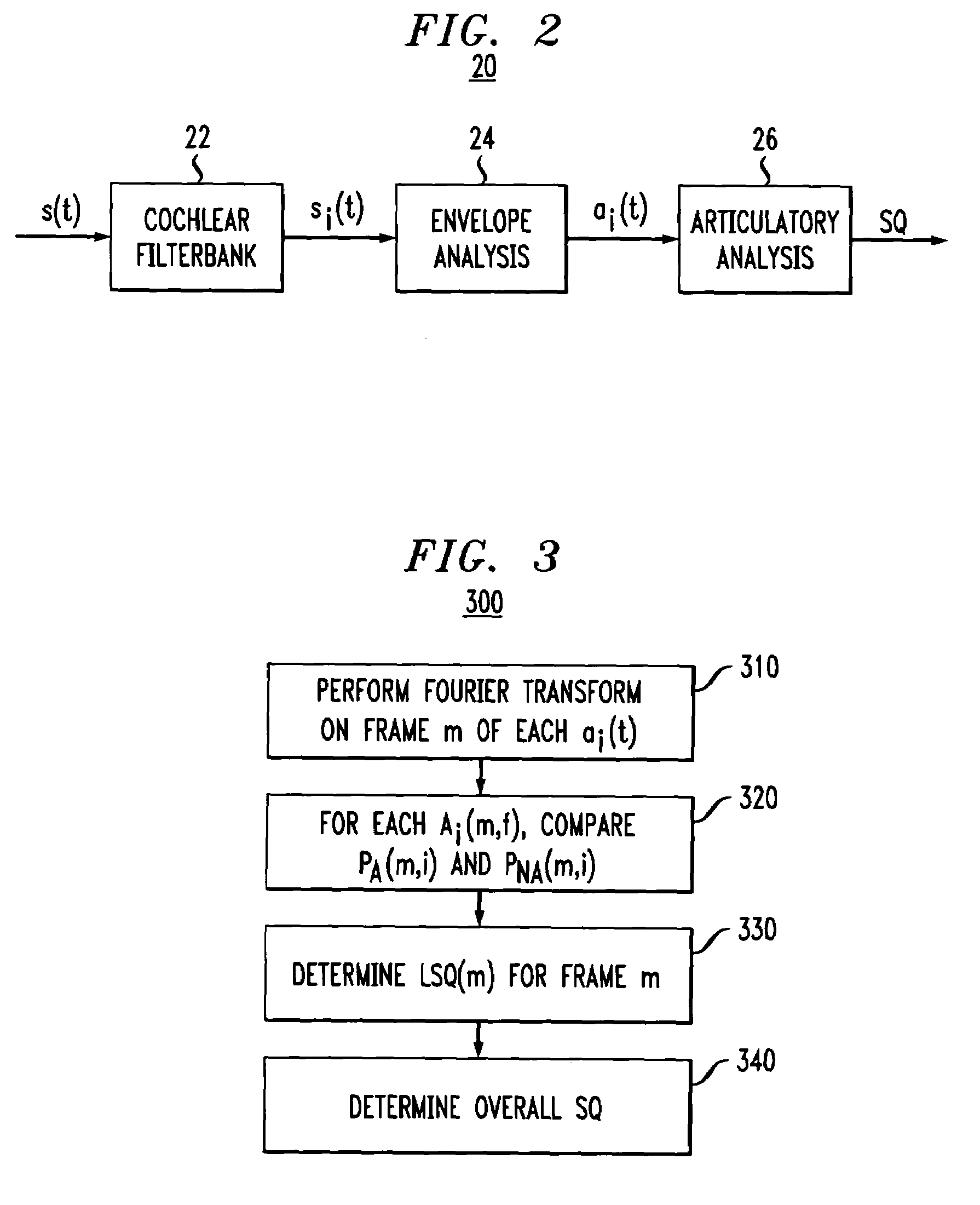

Compensation for utterance dependent articulation for speech quality assessment

A method for objective speech quality assessment that accounts for phonetic contents, speaking styles or individual speaker differences by distorting speech signals under speech quality assessment. By using a distorted version of a speech signal, it is possible to compensate for different phonetic contents, different individual speakers and different speaking styles when assessing speech quality. The amount of degradation in the objective speech quality assessment by distorting the speech signal is maintained similarly for different speech signals, especially when the amount of distortion of the distorted version of speech signal is severe. Objective speech quality assessment for the distorted speech signal and the original undistorted speech signal are compared to obtain a speech quality assessment compensated for utterance dependent articulation.

Owner:LUCENT TECH INC

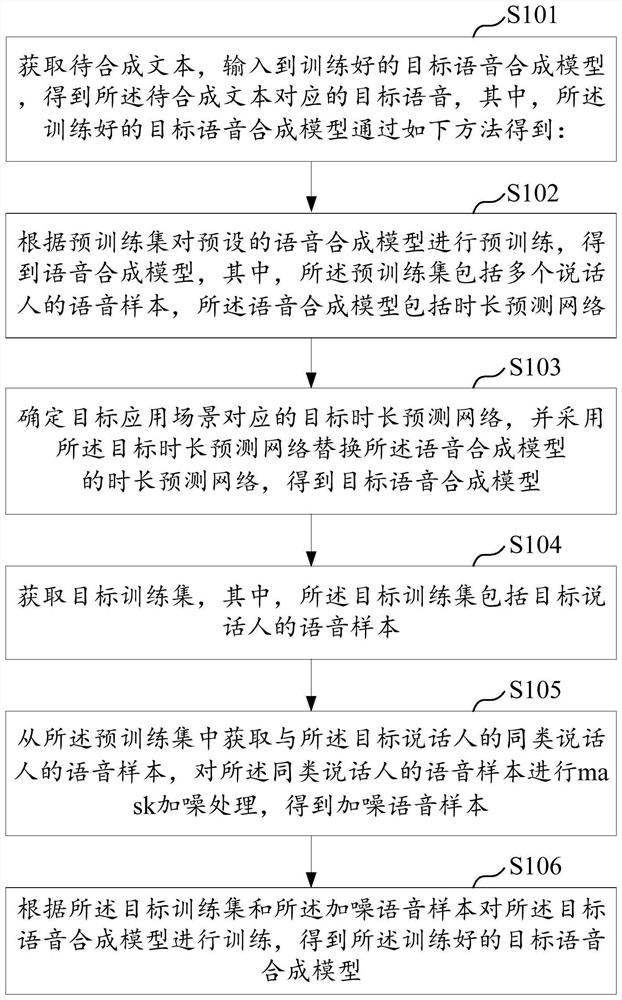

Speech synthesis method, model training method, equipment and storage medium

The invention provides a speech synthesis method, a model training method, equipment and a storage medium. The method comprises: obtaining a to-be-synthesized text, inputting it into a trained target speech synthesis model, and obtaining a target speech corresponding to the text. The trained target speech synthesis model is obtained as follows: a preset speech synthesis model is pre-trained on a pre-training set to obtain a speech synthesis model; the duration prediction network of the speech synthesis model is replaced with a target duration prediction network corresponding to the target application scene to obtain a target speech synthesis model; a target training set comprising voice samples of a target speaker is obtained; voice samples of speakers in the same category as the target speaker are selected from the pre-training set and mask noise is added to them to obtain noise-added voice samples; and the target speech synthesis model is trained on the target training set and the noise-added voice samples. According to the invention, high-quality, natural and smooth speech that better matches the speaking style of a specific speaker can be synthesized.

Owner:UNIV OF SCI & TECH OF CHINA +1

Multimodal disambiguation of speech recognition

The present invention provides a speech recognition system combined with one or more alternate input modalities to ensure efficient and accurate text input. The speech recognition system achieves less than perfect accuracy due to limited processing power, environmental noise, and / or natural variations in speaking style. The alternate input modalities use disambiguation or recognition engines to compensate for reduced keyboards, sloppy input, and / or natural variations in writing style. The ambiguity remaining in the speech recognition process is mostly orthogonal to the ambiguity inherent in the alternate input modality, such that the combination of the two modalities resolves the recognition errors efficiently and accurately. The invention is especially well suited for mobile devices with limited space for keyboards or touch-screen input.

Owner:AOL LLC A DELAWARE LLC

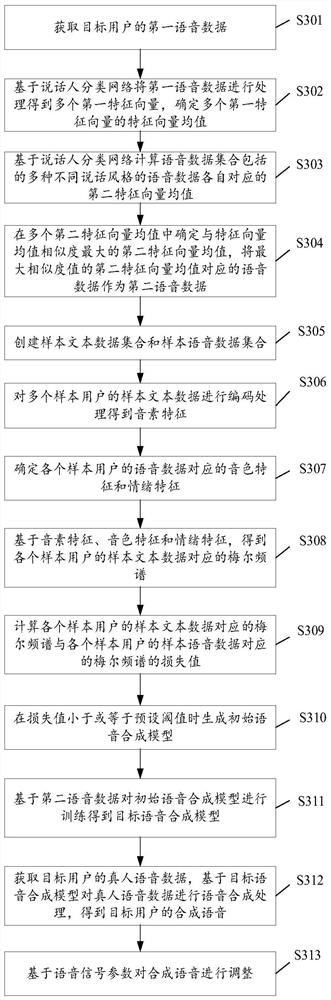

Voice synthesis model training method and device, storage medium and electronic equipment

Pending · CN112309365A · Improve training efficiency · Speech recognition · Speech synthesis · Speaking style · Speech synthesis

The embodiment of the invention discloses a voice synthesis model training method. The method comprises the following steps: obtaining the first voice data of a target user, determining the second voice data with the maximum similarity with the first voice data in a voice data set based on a speaker classification network, and training an initial speech synthesis model based on the second speech data to obtain a target speech synthesis model. When a new target user is trained for the speech synthesis model, speech data most similar to the speaking style of the target user is found in an existing speech data set to train the initial speech synthesis model to obtain the target speech synthesis model, the initial speech synthesis model is a multi-person speech synthesis model, and the training efficiency of the multi-person speech synthesis model is improved.

Owner:BEIJING DA MI TECH CO LTD

Retrieval type personalized conversation method and system

Pending · CN113901188A · Personalized information is appropriate · Personalized information · Digital data information retrieval · Character and pattern recognition · Personalization · Feature vector

The invention discloses a retrieval type personalized conversation method and a system applying the method through a method in the field of artificial intelligence processing. The method comprises the following steps: constructing a personalized style matching module to obtain a style matching feature vector gS (q, r, H); designing a user portrait matching module depending on the current question, and obtaining a user portrait matching vector gP (q, r, H) to measure the consistency between a user portrait and an answer candidate; and finally, combining the style matching feature vector gS (q, r, H) and the user portrait matching vector gP (q, r, H) through a fusion module to calculate a final matching score, and returning a candidate reply with the highest matching score. According to the method, modeling is carried out on personalization of the user from the conversation history of the user, the mutual relation between the speaking style of the user and the preference of the user is mined, the source of the user personalized information learned by the model can be distinguished, and finally the two parts of user personalized information are fused to sort the candidate replies, so that the most suitable personalized reply is obtained.

Owner:RENMIN UNIVERSITY OF CHINA
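The final fusion step, combining the style matching vector gS(q, r, H) and the user portrait matching vector gP(q, r, H) into one score and returning the best candidate, might be sketched as follows. The abstract does not specify the fusion function, so a single linear layer with a sigmoid is assumed; the vectors, weights, and candidate replies are invented for illustration.

```python
import math


def fuse(g_s, g_p, weights, bias=0.0):
    """Combine the two matching vectors into a score in (0, 1)."""
    z = sum(w * x for w, x in zip(weights, g_s + g_p)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def best_reply(candidates, weights):
    """Return the candidate reply with the highest fused matching score."""
    return max(candidates, key=lambda c: fuse(c[1], c[2], weights))[0]


# Each candidate: (reply text, style matching vector gS, portrait matching vector gP).
candidates = [
    ("Sure, sounds great!", [0.9, 0.8], [0.7]),
    ("Affirmative.", [0.1, 0.2], [0.9]),
]
weights = [1.0, 1.0, 1.0]  # hypothetical; a trained model would learn these
print(best_reply(candidates, weights))
```

Keeping the two vectors separate until this point is what lets the model attribute a high score to either the user's speaking style or the user's preferences.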

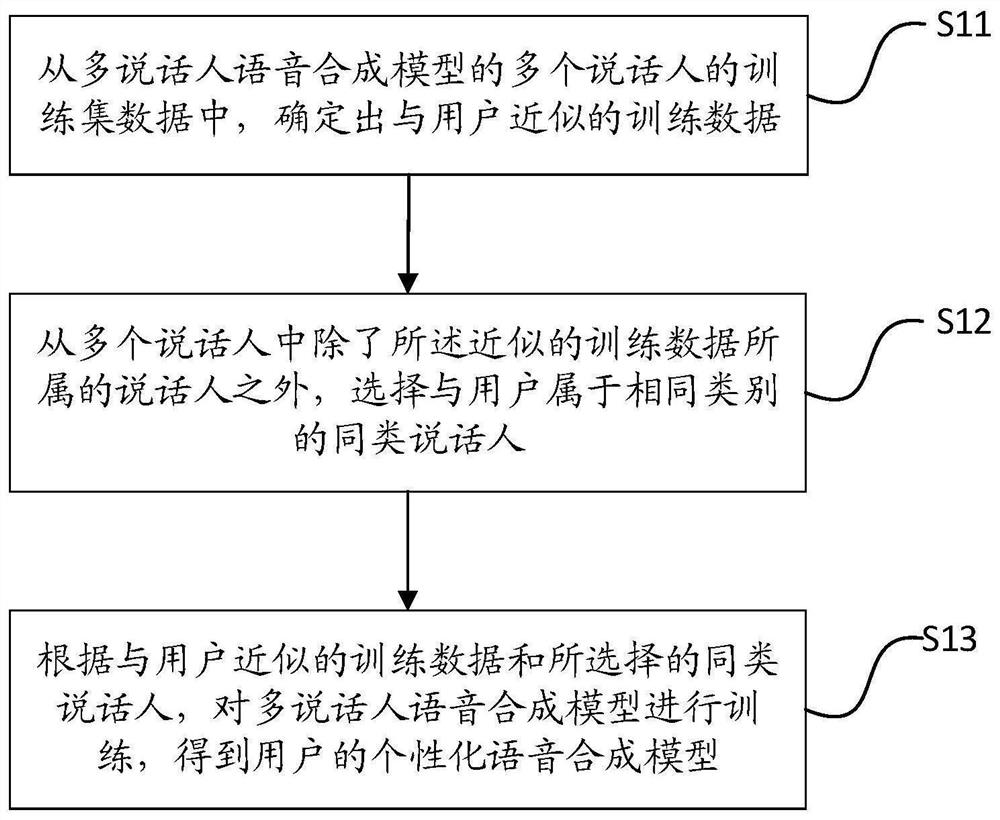

Personalized speech synthesis model construction method and device, speech synthesis method and device, and personalized speech synthesis model test method and device

Pending · CN112863476A · Improve experience · Easy to learn · Speech recognition · Speech synthesis · Personalization · Synthesis methods

The invention discloses a personalized speech synthesis model construction method and device, a speech synthesis method and device, and a personalized speech synthesis model test method and device. The construction method of the personalized speech synthesis model comprises the following steps: determining training data similar to a user from training set data of a plurality of speakers of a multi-speaker speech synthesis model; selecting similar speakers belonging to the same category as the user from the plurality of speakers except the speaker to which the approximate training data belongs; and training the multi-speaker speech synthesis model according to training data similar to the user and the selected similar speakers to obtain a personalized speech synthesis model of the user. The voice of the specific speaking style of the user can be synthesized, and the user experience is improved.

Owner:ALIBABA GRP HLDG LTD

Speaking-rate normalized prosodic parameter builder, speaking-rate dependent prosodic model builder, speaking-rate controlled prosodic-information generation device and prosodic-information generation method able to learn different languages and mimic various speakers' speaking styles

A speaking-rate dependent prosodic model builder and a related method are disclosed. The proposed builder includes a first input terminal for receiving a first information of a first language spoken by a first speaker, a second input terminal for receiving a second information of a second language spoken by a second speaker and a functional information unit having a function, wherein the function includes a first plurality of parameters simultaneously relevant to the first language and the second language or a plurality of sub-parameters in a second plurality of parameters relevant to the second language alone, and the functional information unit under a maximum a posteriori condition and based on the first information, the second information and the first plurality of parameters or the plurality of sub-parameters produces speaking-rate dependent reference information and constructs a speaking-rate dependent prosodic model of the second language.

Owner:NATIONAL TAIPEI UNIVERSITY

A real-time voice-driven face animation method and system

The invention discloses a method and system for real-time voice-driven facial animation. The method comprises: acquiring a neutral voice audio-visual data set from a first speaker; using an active appearance model to track and parameterize human face video data; converting the voice data into phoneme label sequences; training a sliding-window-based deep convolutional neural network model; retargeting the reference face model to a target character model; and inputting the target phoneme label sequence from a second speaker into the deep convolutional neural network model of the target character model for prediction. The system provided by the invention includes an acquisition module, a face conversion module, a phoneme conversion module, a training module, a retargeting module and a target prediction module. The method and system solve the problem that existing voice animation methods depend on a specific speaker and speaking style and cannot retarget the generated animation to an arbitrary facial rig.

Owner:北京中科深智科技有限公司

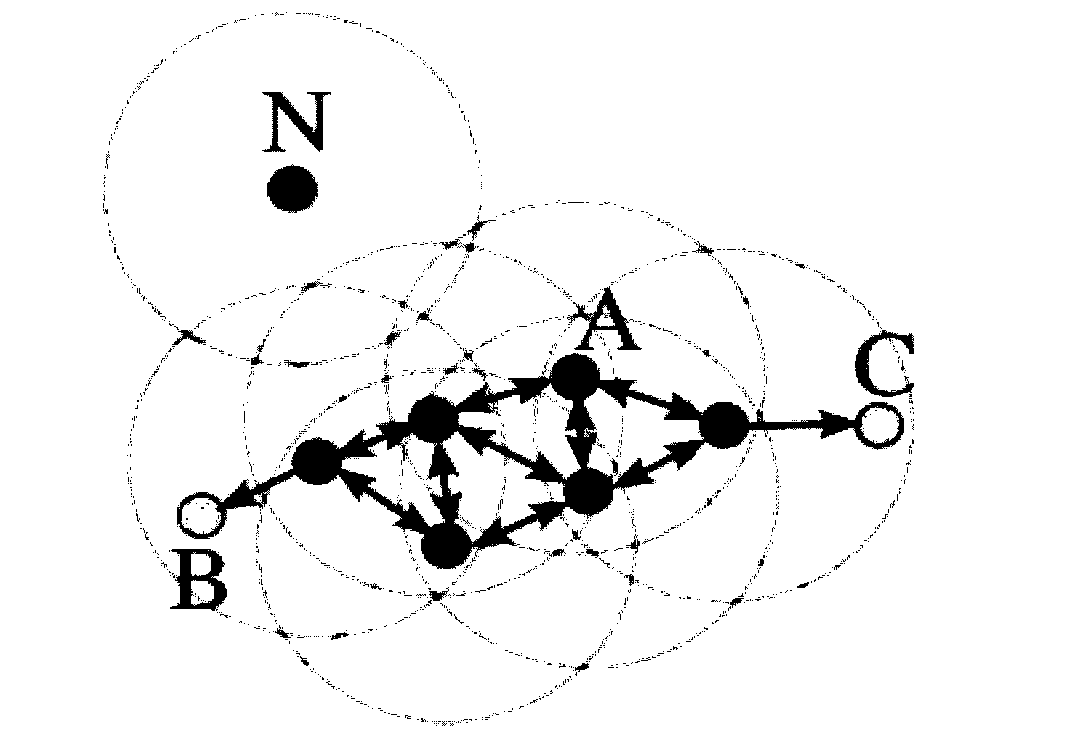

Multi-mode-based conference spokesman identity non-inductive confirmation method

Pending · CN110807370A · No human intervention required · Improve meeting · Semantic analysis · Speech analysis · Cluster algorithm · Engineering

The invention provides a multimodal method for confirming the identity of a conference speaker without the speaker being aware of it. Based on a conference that uses the image, voice and text modalities, the identity of the speaker is confirmed by recognizing the speaker's facial expression, voice and speaking style. The method specifically comprises an expression recognition method based on a deep learning model, a voice recognition method based on an artificial intelligence algorithm, and a method for recognizing the spoken content with a text clustering algorithm. The whole process is automatic and requires no manual intervention: the artificial intelligence models confirm the identity of the speaker unobtrusively, meeting and office efficiency is greatly improved, and accuracy is high.

Owner:南京星耀智能科技有限公司

Compensation for utterance dependent articulation for speech quality assessment

Active · US7308403B2 · Different in content · Substation equipment · Speech recognition · Speaking style · Quality assessment

A method for objective speech quality assessment that accounts for phonetic contents, speaking styles or individual speaker differences by distorting speech signals under speech quality assessment. By using a distorted version of a speech signal, it is possible to compensate for different phonetic contents, different individual speakers and different speaking styles when assessing speech quality. The amount of degradation in the objective speech quality assessment by distorting the speech signal is maintained similarly for different speech signals, especially when the amount of distortion of the distorted version of speech signal is severe. Objective speech quality assessment for the distorted speech signal and the original undistorted speech signal are compared to obtain a speech quality assessment compensated for utterance dependent articulation.

Owner:SOUND VIEW INNOVATIONS

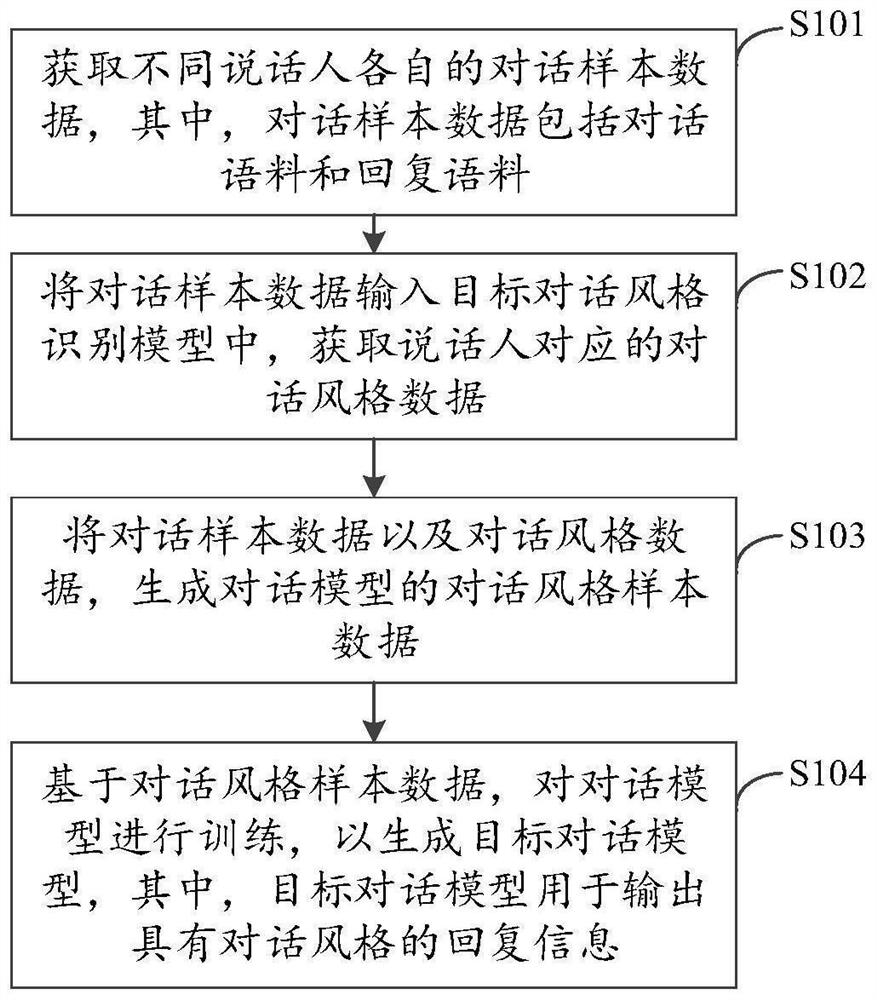

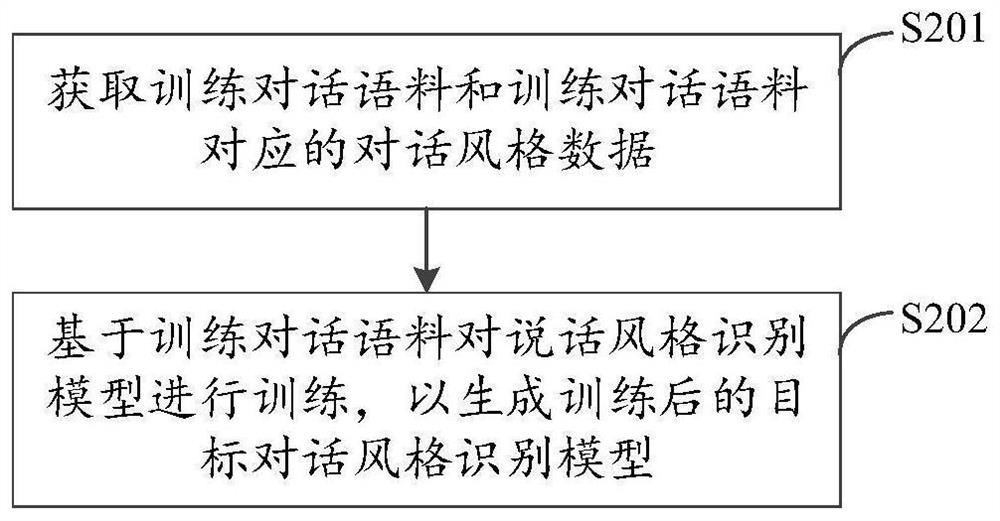

Training method of dialogue model, dialogue method and device of dialogue robot and electronic equipment

Pending · CN114490967A · Easy to understand · Character and pattern recognition · Speech recognition · Data pack · Natural language understanding

The invention provides a training method for a dialogue model, a dialogue method and device for a dialogue robot, and electronic equipment, and relates to fields of artificial intelligence such as natural language understanding and deep learning. The method comprises: acquiring dialogue sample data of different speakers, the dialogue sample data comprising dialogue corpora and reply corpora; inputting the dialogue sample data into a target dialogue style recognition model to obtain dialogue style data corresponding to each speaker; generating dialogue style sample data for a dialogue model from the dialogue sample data and the dialogue style data; and training the dialogue model on the dialogue style sample data to generate a target dialogue model, which is used to output replies with a dialogue style. With this method and device, the target dialogue model can reply in an accurate and flexible speaking style, improving the user experience in man-machine dialogue.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD


© 2025 PatSnap. All rights reserved.