31,144 results for "Deep learning" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

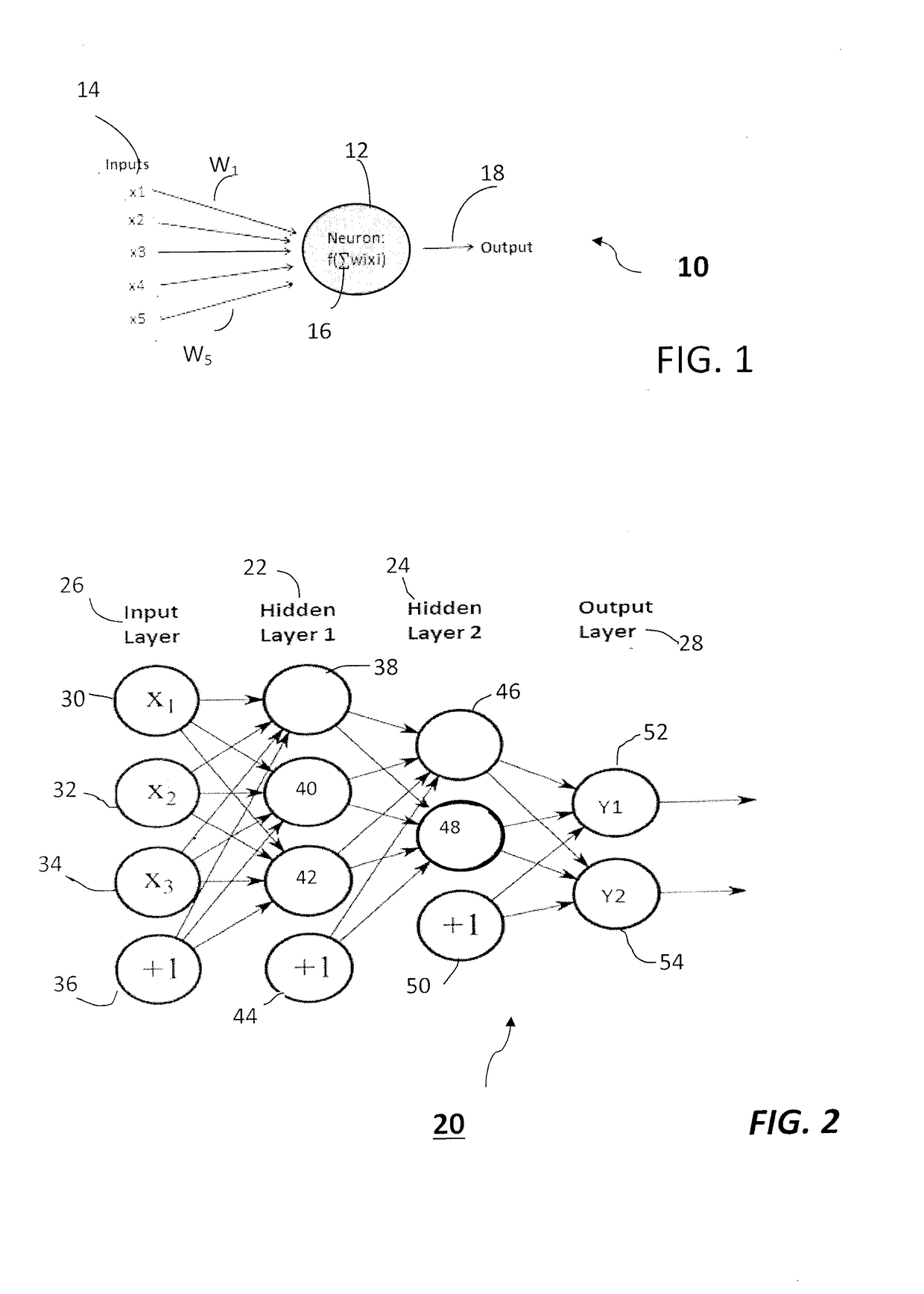

Deep learning (also known as deep structured learning or hierarchical learning) is part of a broader family of machine learning methods based on artificial neural networks. Learning can be supervised, semi-supervised or unsupervised.

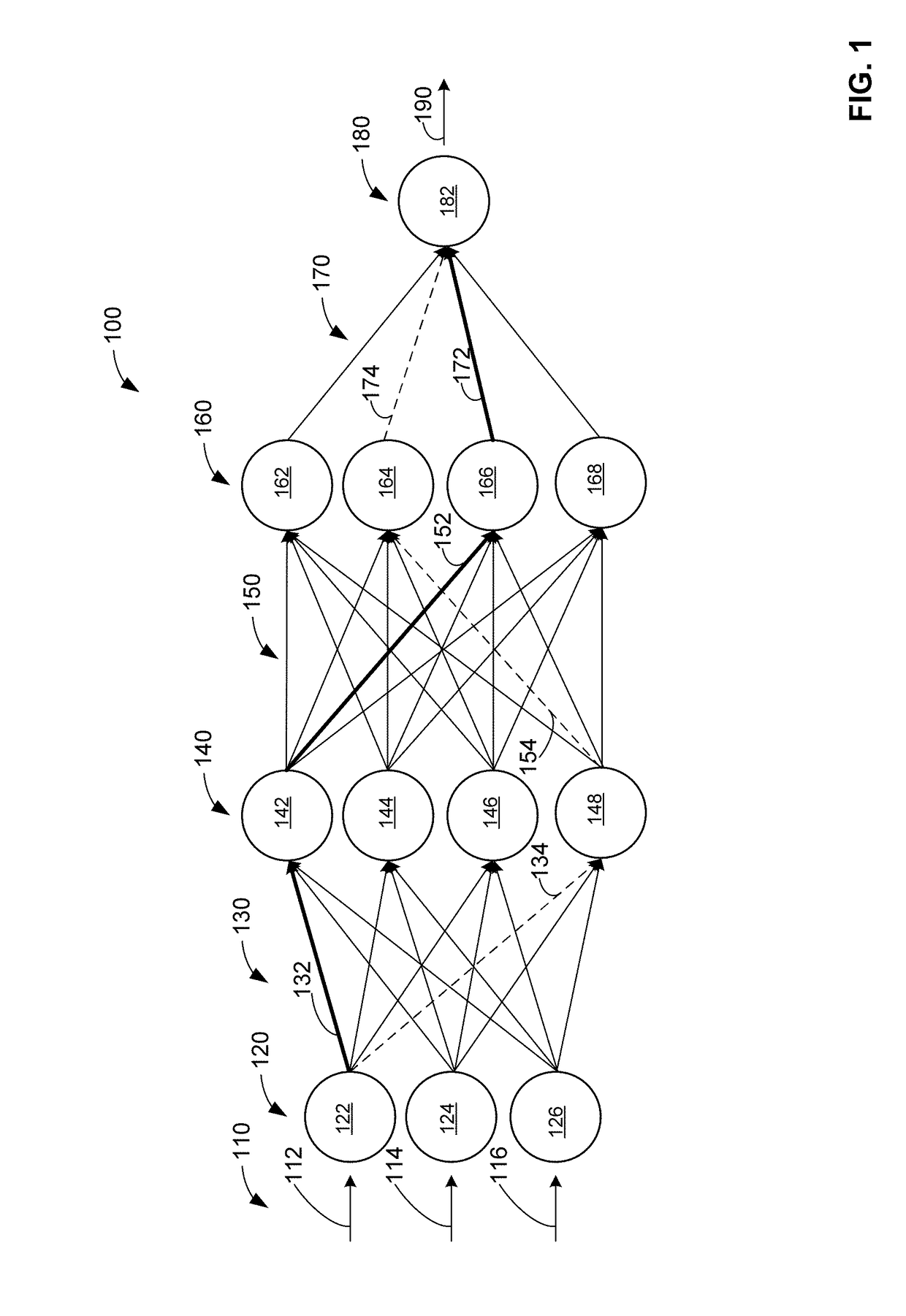

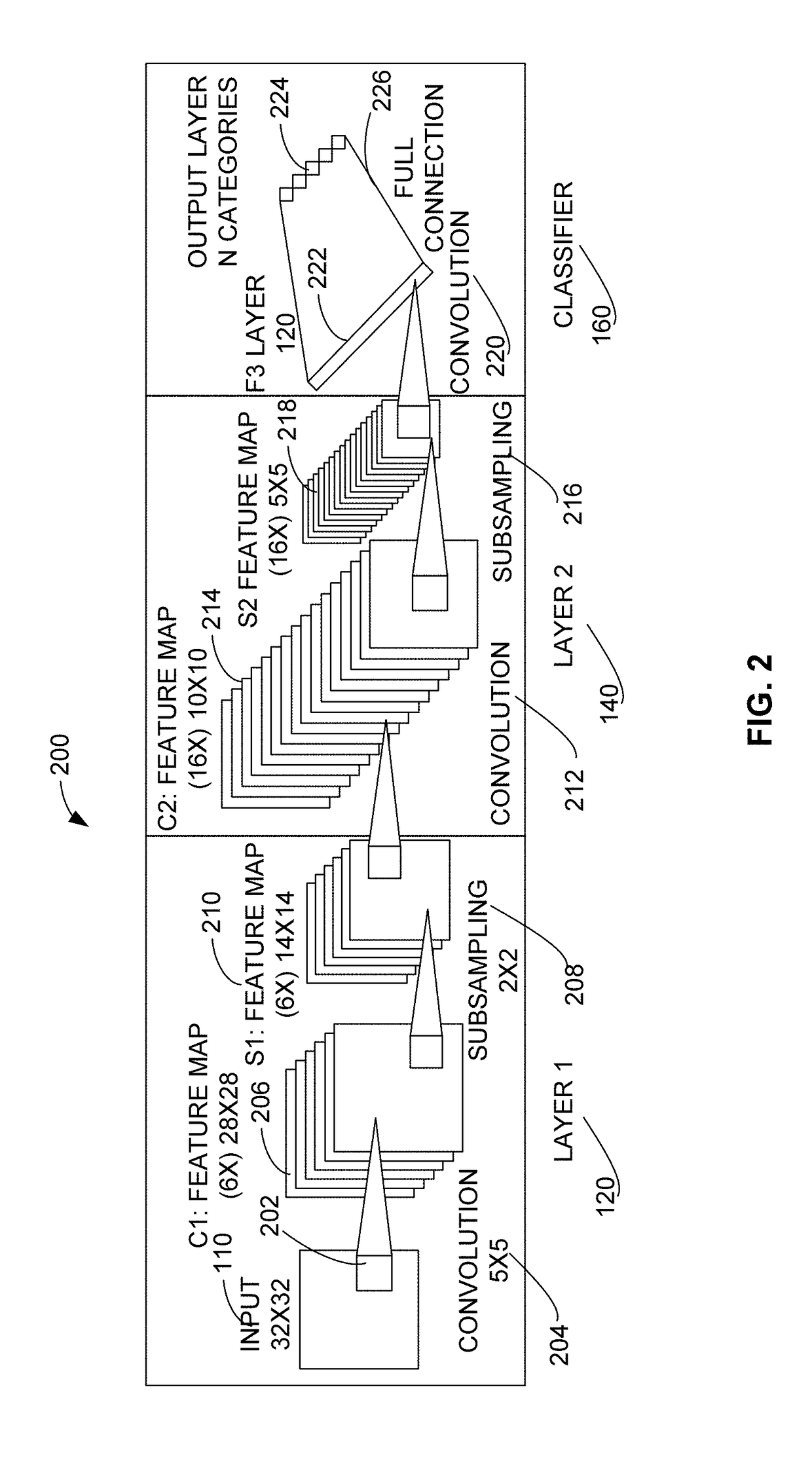

Deep Learning Neural Network Classifier Using Non-volatile Memory Array

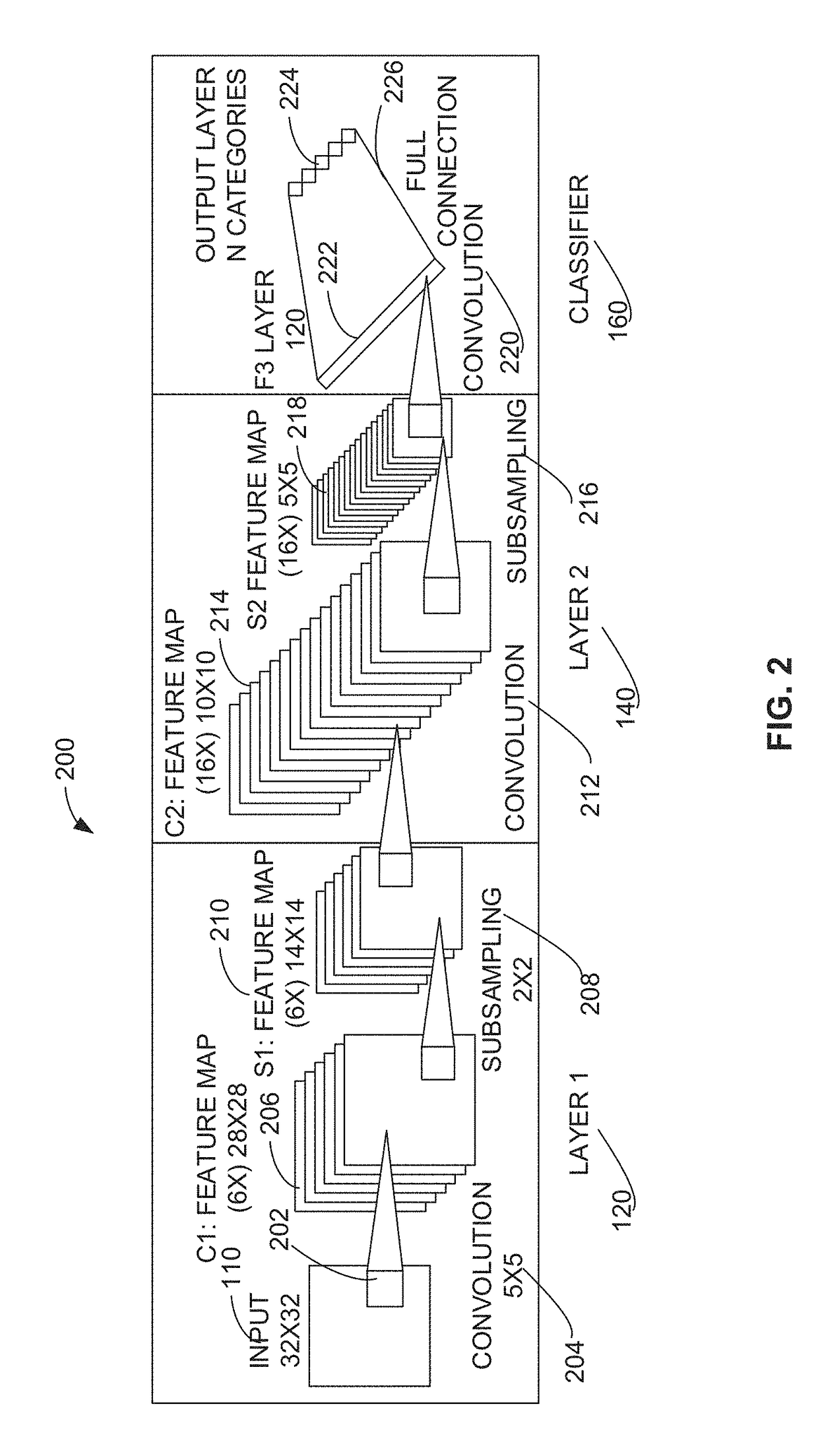

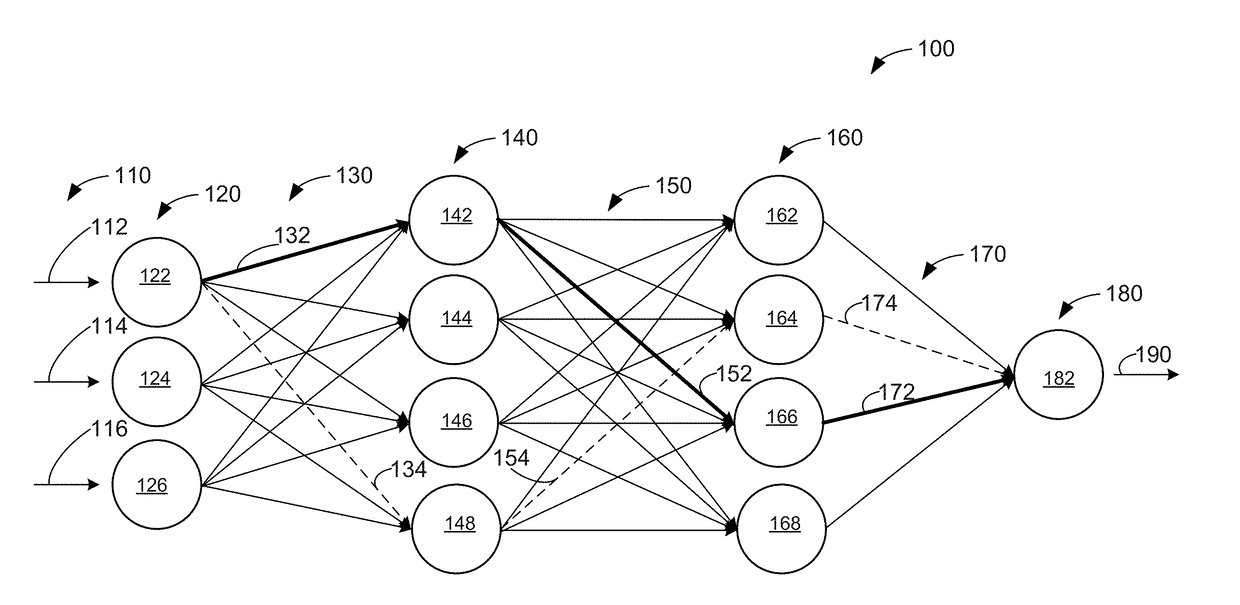

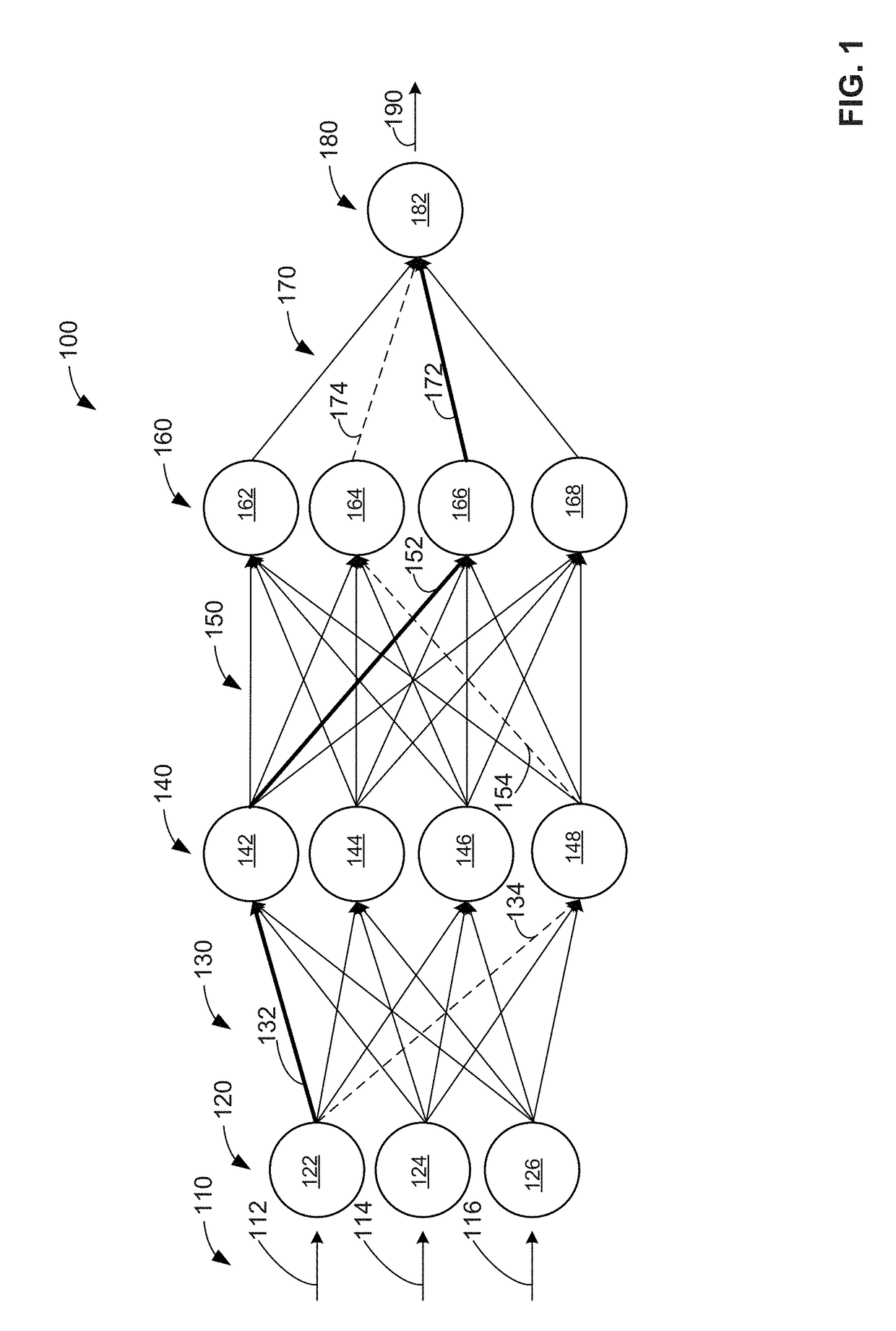

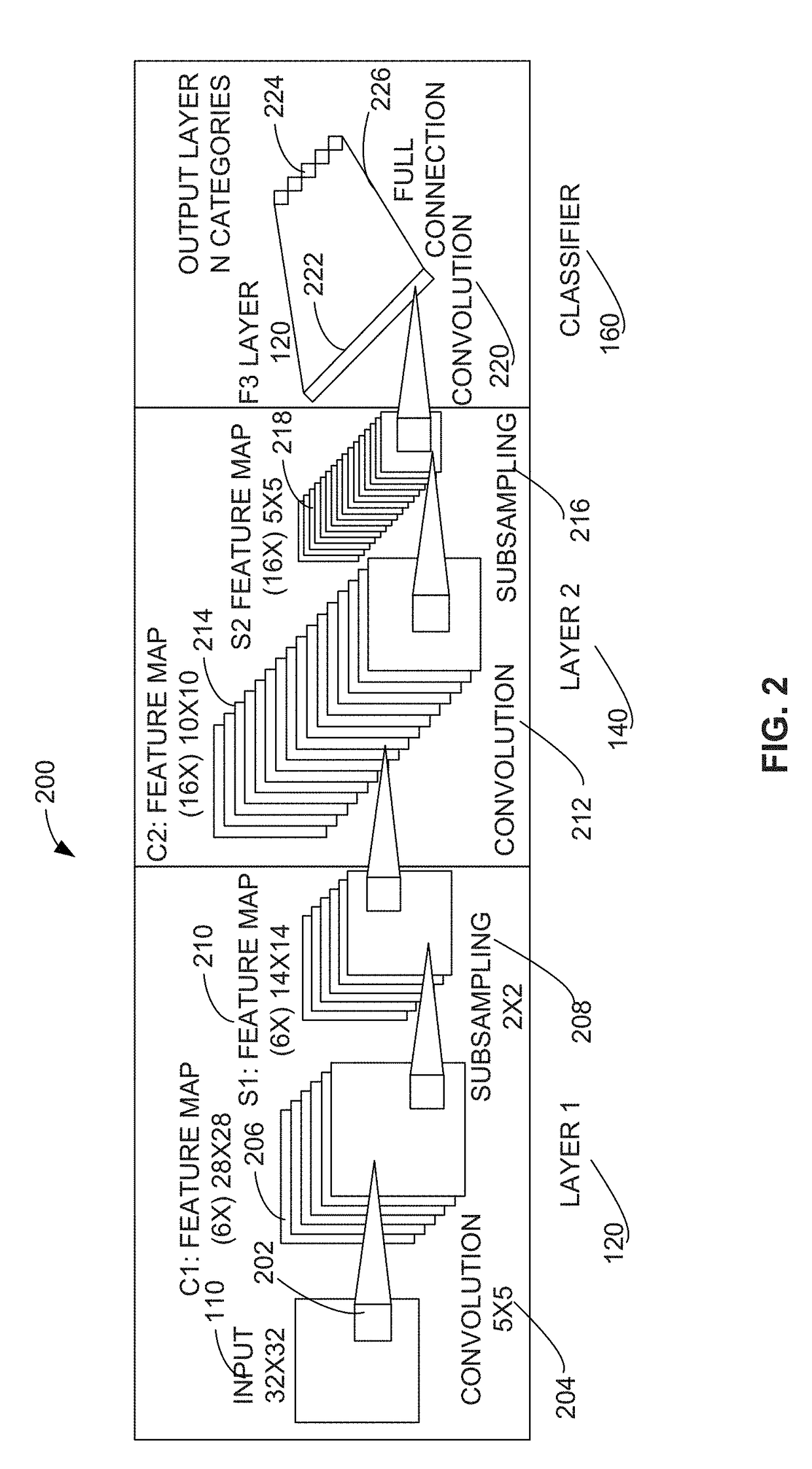

Active · US20170337466A1 · Input/output to record carriers; Read-only memories; Synapse; Neural network classifier

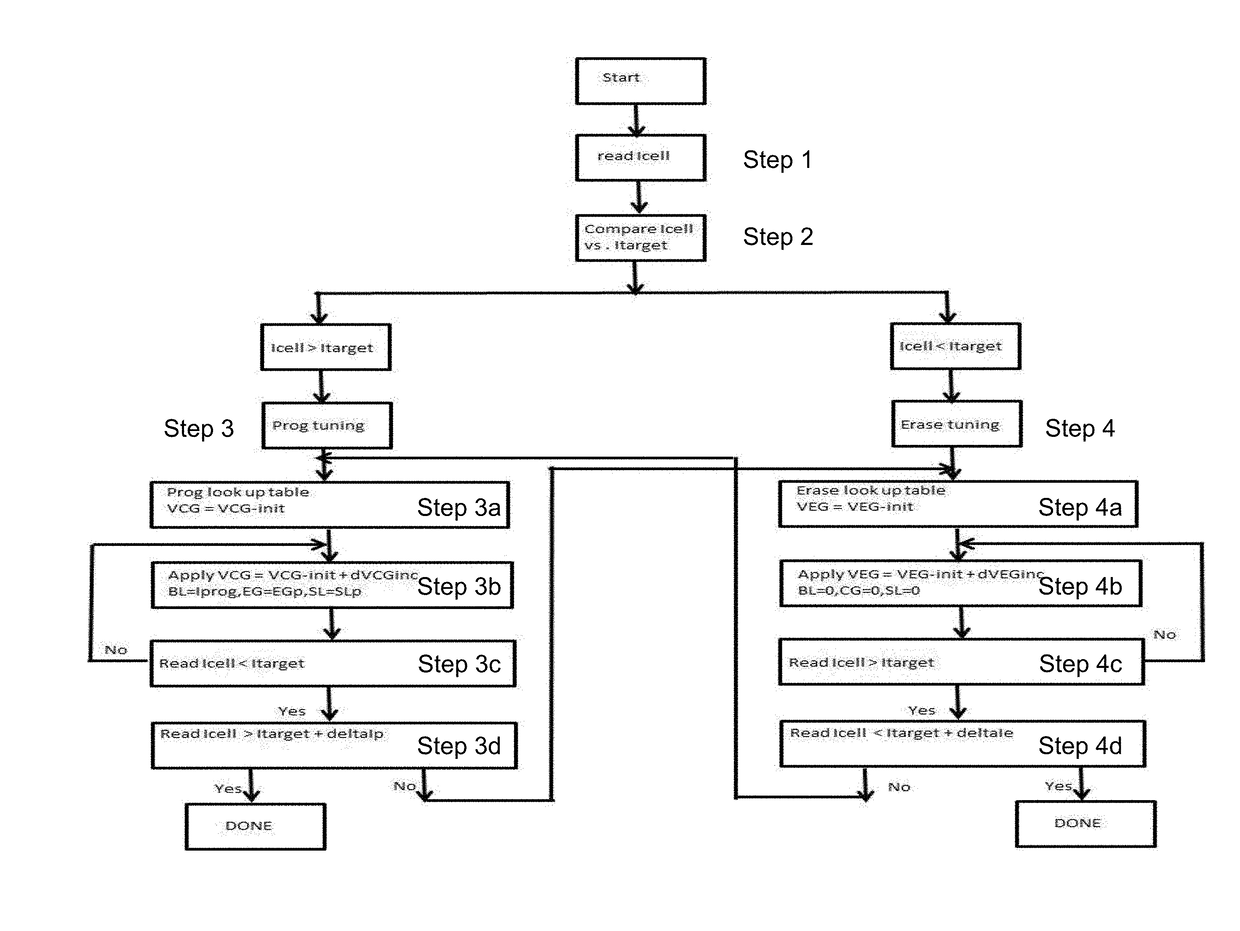

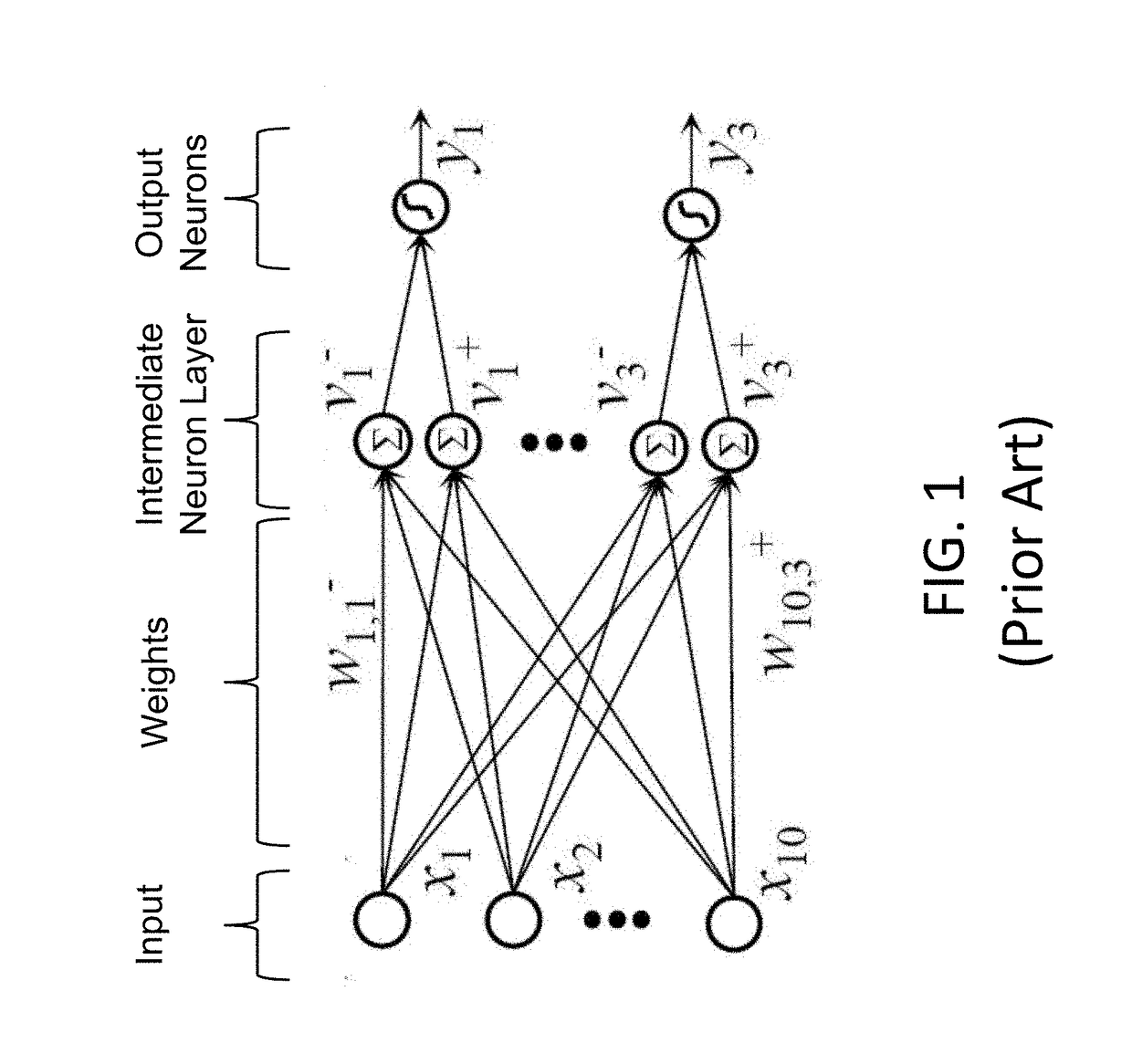

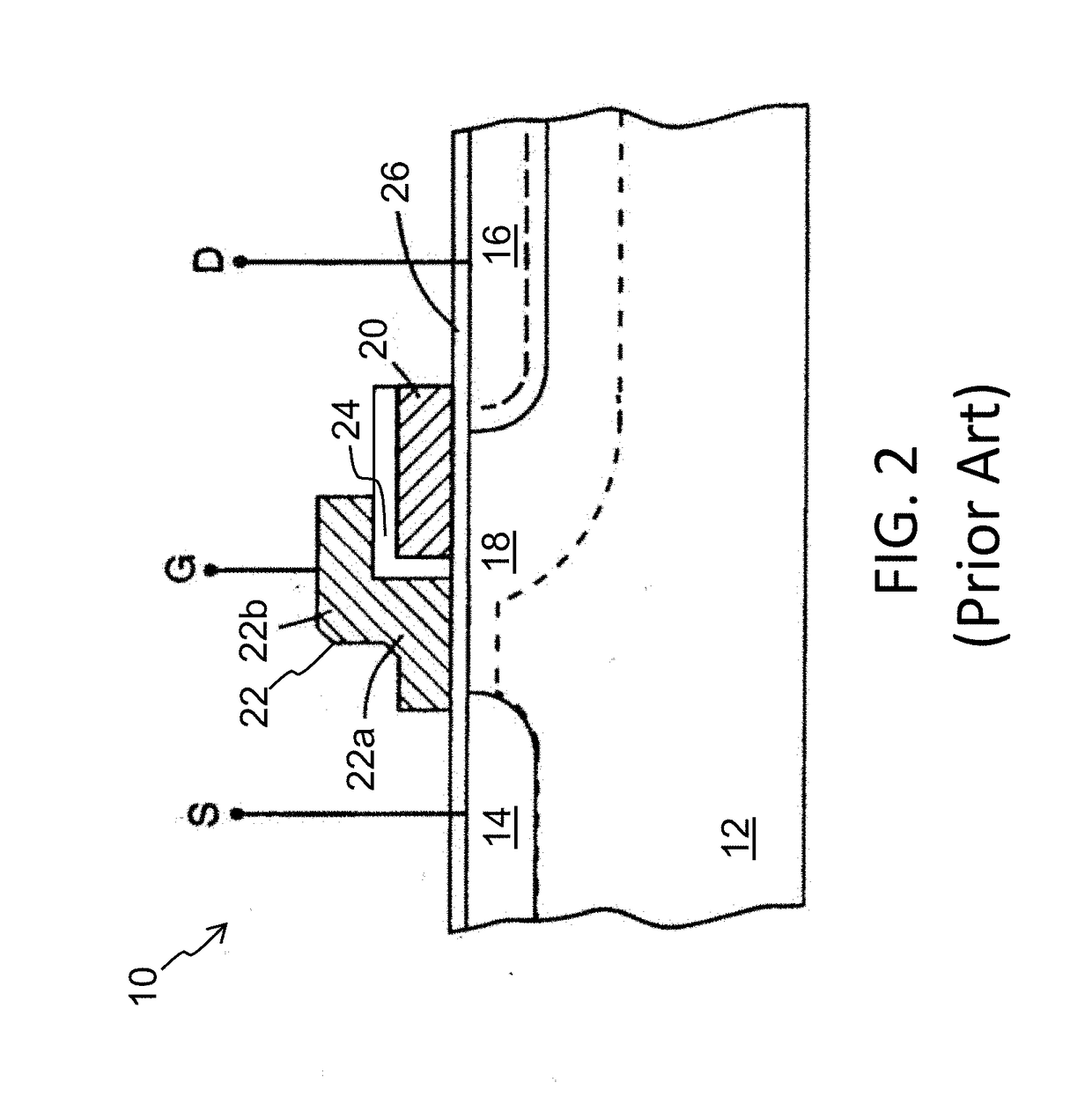

An artificial neural network device that utilizes one or more non-volatile memory arrays as the synapses. The synapses are configured to receive inputs and to generate therefrom outputs. Neurons are configured to receive the outputs. The synapses include a plurality of memory cells, wherein each of the memory cells includes spaced apart source and drain regions formed in a semiconductor substrate with a channel region extending there between, a floating gate disposed over and insulated from a first portion of the channel region and a non-floating gate disposed over and insulated from a second portion of the channel region. Each of the plurality of memory cells is configured to store a weight value corresponding to a number of electrons on the floating gate. The plurality of memory cells are configured to multiply the inputs by the stored weight values to generate the outputs.

Owner:RGT UNIV OF CALIFORNIA +1
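
As a functional sketch of the classifier above (not of the cell physics), the synapse array's job of multiplying inputs by stored weights can be written as a vector-matrix product. This assumes, as is typical for such arrays but not stated in the abstract, that the products on each output line are summed before reaching a neuron. The `quantize` helper, its 16 levels and its [-1, 1] range are hypothetical stand-ins for storing a weight as a discrete amount of floating-gate charge, and `relu` is an assumed neuron activation.

```python
from typing import List


def quantize(w: float, levels: int = 16, w_max: float = 1.0) -> float:
    """Snap a weight to one of `levels` evenly spaced values in [-w_max, w_max].

    Hypothetical stand-in for storing a weight as a discrete charge level on a
    floating gate; the number of levels and the range are assumptions.
    """
    if levels < 2:
        raise ValueError("need at least two levels")
    step = 2 * w_max / (levels - 1)
    clipped = max(-w_max, min(w_max, w))
    return round((clipped + w_max) / step) * step - w_max


def synapse_array(inputs: List[float], weights: List[List[float]]) -> List[float]:
    """Multiply each input by its stored weights and sum per output line.

    weights[i][j] is the cell joining input line i to output (neuron) j.
    """
    outputs = [0.0] * len(weights[0])
    for x, row in zip(inputs, weights):
        for j, w in enumerate(row):
            outputs[j] += x * w
    return outputs


def relu(values: List[float]) -> List[float]:
    """Simple neuron activation applied to the summed synapse outputs."""
    return [max(0.0, v) for v in values]


# Usage: weights are quantized before being "programmed" into the array.
raw = [[0.2, -0.4], [0.6, 0.8]]
stored = [[quantize(w) for w in row] for row in raw]
print(relu(synapse_array([1.0, 0.5], stored)))
```

Quantizing before the multiply reflects the limited weight precision of analog memory cells, so in this sketch the outputs can differ slightly from full-precision arithmetic.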

Systems and methods for analyzing remote sensing imagery

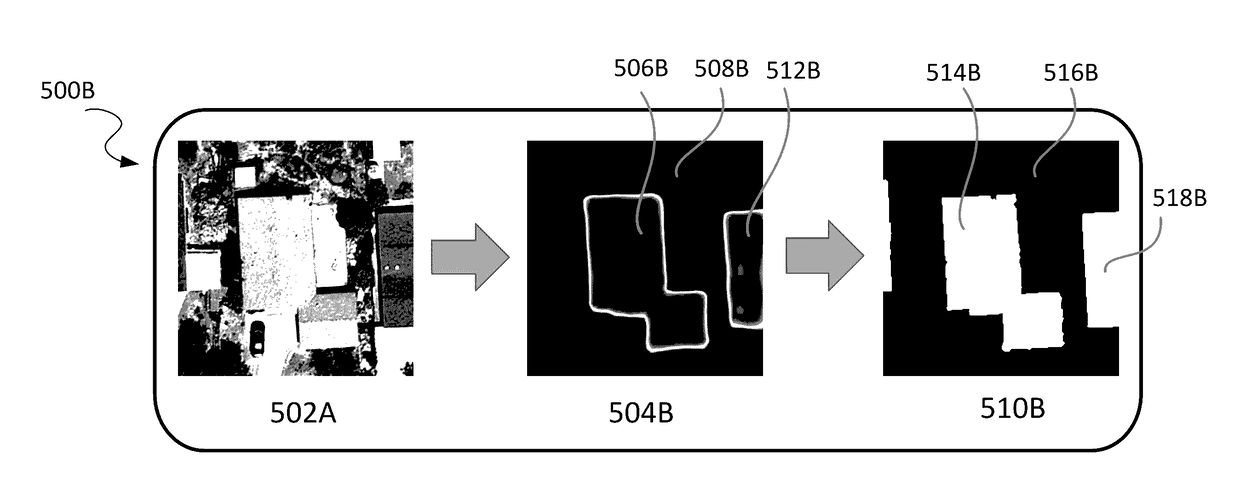

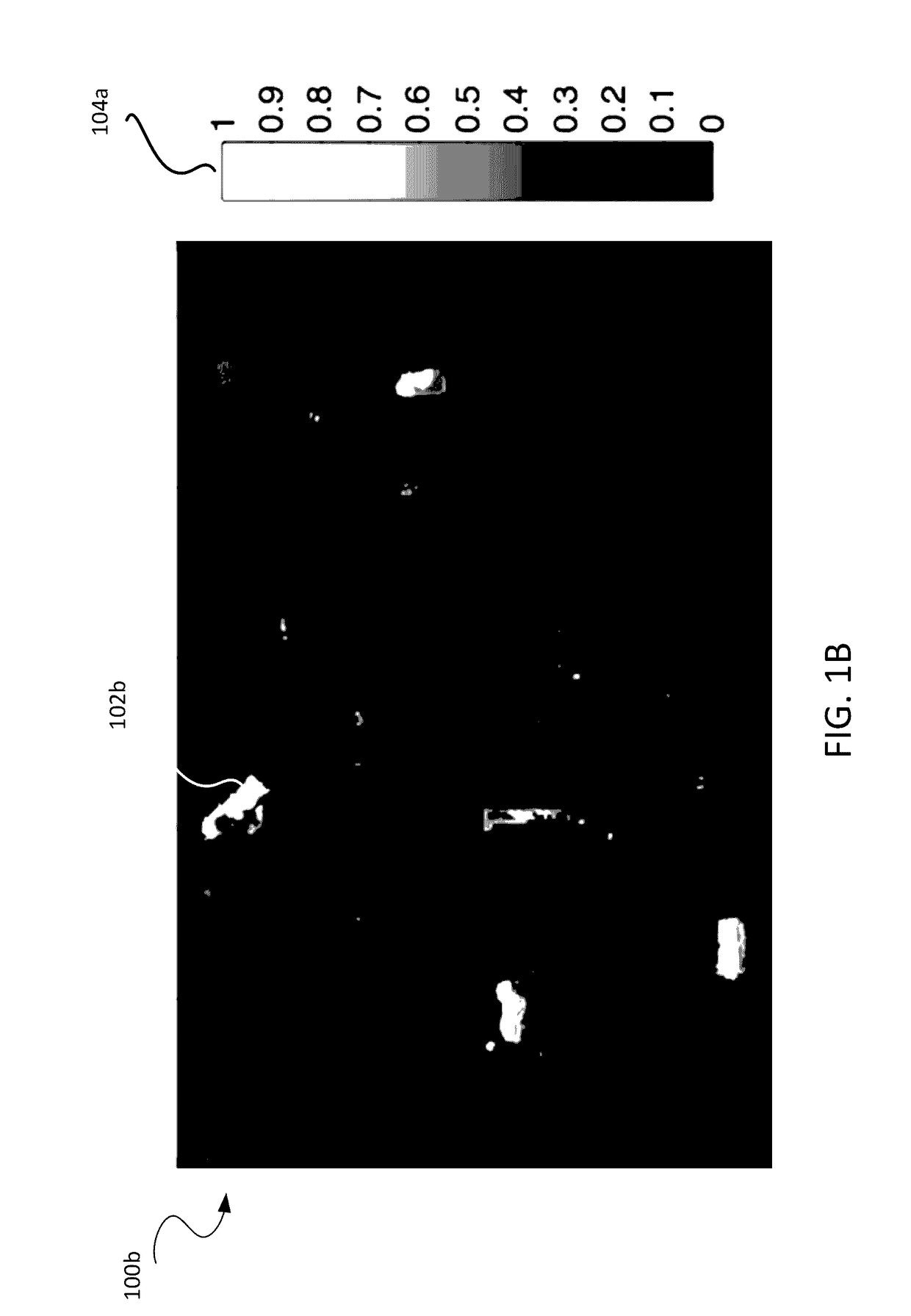

Active · US20170076438A1 · Image enhancement; Television system details; Transformation parameter; Remote sensing

Disclosed systems and methods relate to remote sensing, deep learning, and object detection. Some embodiments relate to machine learning for object detection, which includes, for example, identifying the class of a pixel in a target image and generating a label image based on a parameter set. Other embodiments relate to machine learning for geometry extraction, which includes, for example, determining heights of one or more regions in a target image and determining a geometric object property in a target image. Yet other embodiments relate to machine learning for alignment, which includes, for example, aligning images via direct or indirect estimation of transformation parameters.

Owner:CAPE ANALYTICS INC

Hardware system design improvement using deep learning algorithms

Methods and apparatus for deep learning-based system design improvement are provided. An example system design engine apparatus includes a deep learning network (DLN) model associated with each component of a target system to be emulated, each DLN model to be trained using known input and known output, wherein the known input and known output simulate input and output of the associated component of the target system, and wherein each DLN model is connected as each associated component to be emulated is connected in the target system to form a digital model of the target system. The example apparatus also includes a model processor to simulate behavior of the target system and/or each component of the target system to be emulated using the digital model to generate a recommendation regarding a configuration of a component of the target system and/or a structure of the component of the target system.

Owner:GENERAL ELECTRIC CO

Deep learning medical systems and methods for image reconstruction and quality evaluation

Methods and apparatus to automatically generate an image quality metric for an image are provided. An example method includes automatically processing a first medical image using a deployed learning network model to generate an image quality metric for the first medical image, the deployed learning network model generated from a digital learning and improvement factory including a training network, wherein the training network is tuned using a set of labeled reference medical images of a plurality of image types, and wherein a label associated with each of the labeled reference medical images indicates a central tendency metric associated with image quality of the image. The example method includes computing the image quality metric associated with the first medical image using the deployed learning network model by leveraging labels and associated central tendency metrics to determine the associated image quality metric for the first medical image.

Owner:GENERAL ELECTRIC CO

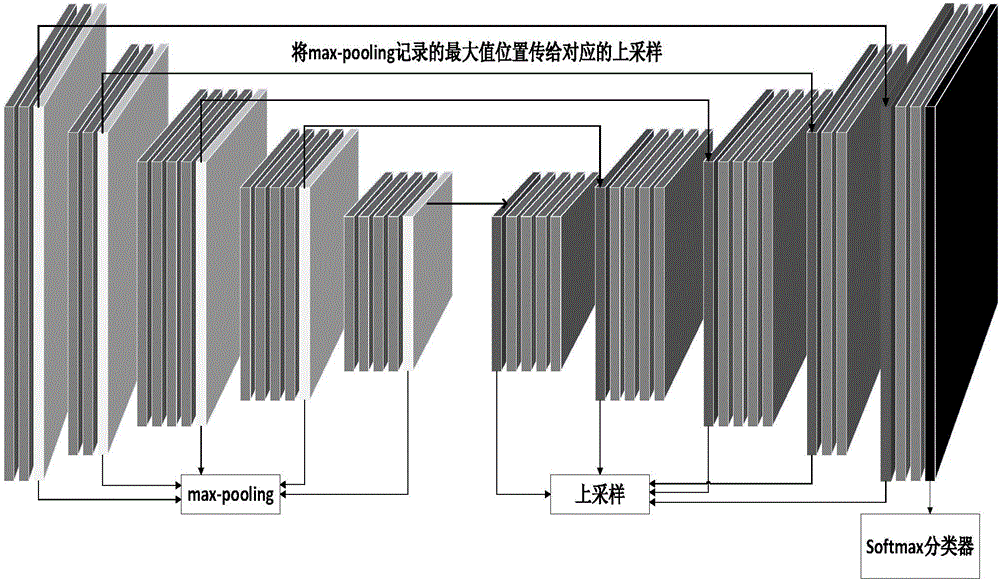

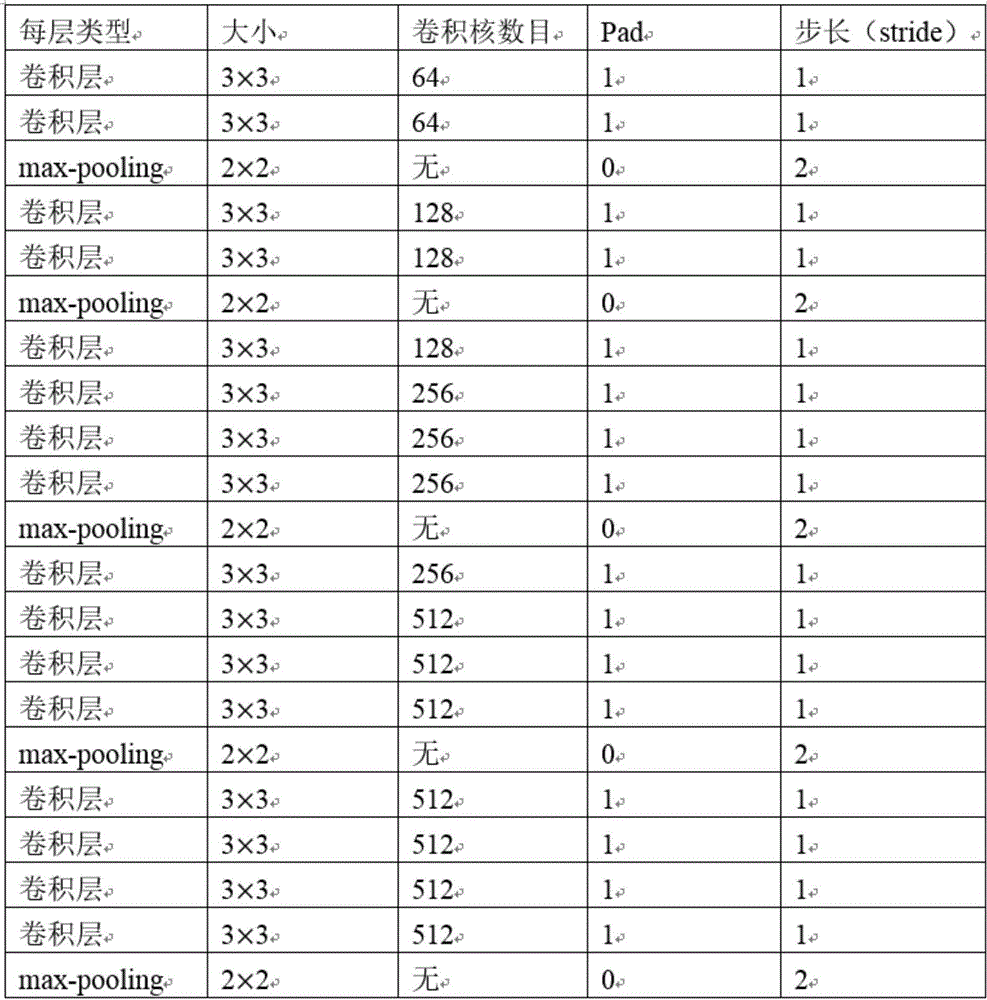

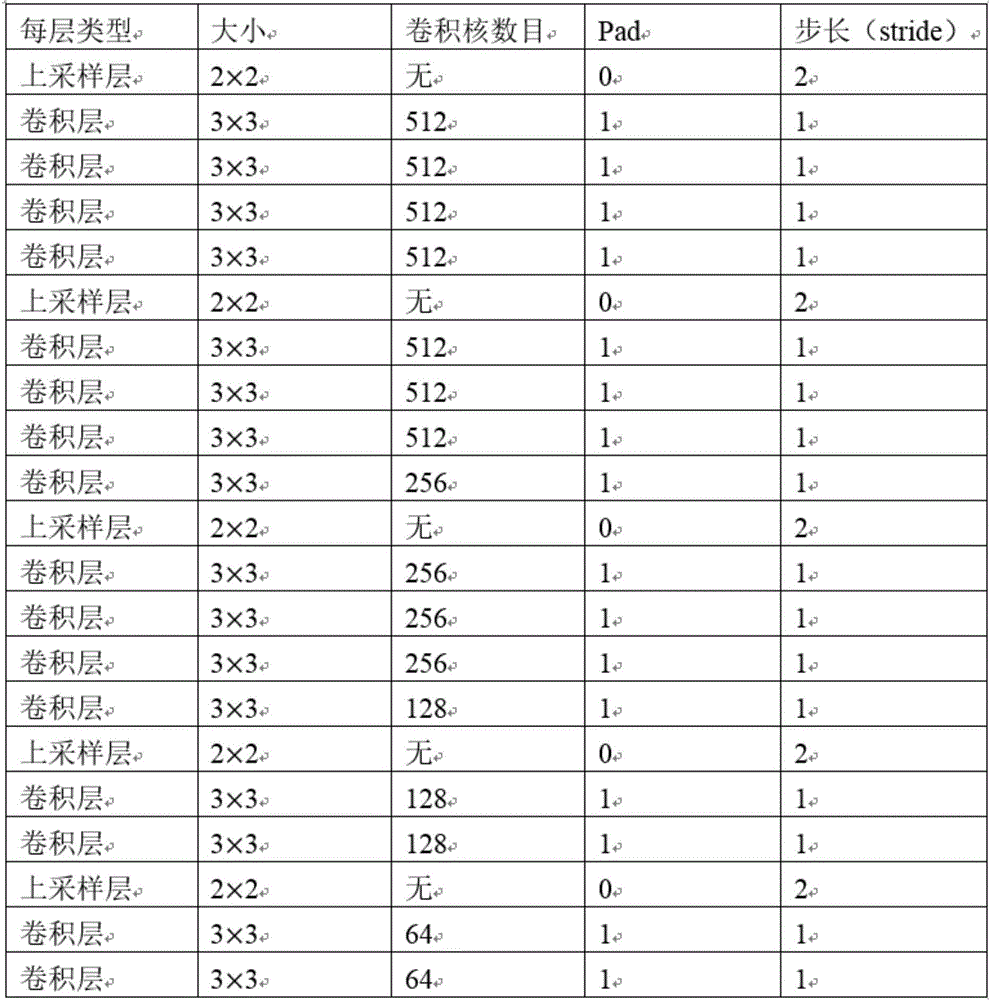

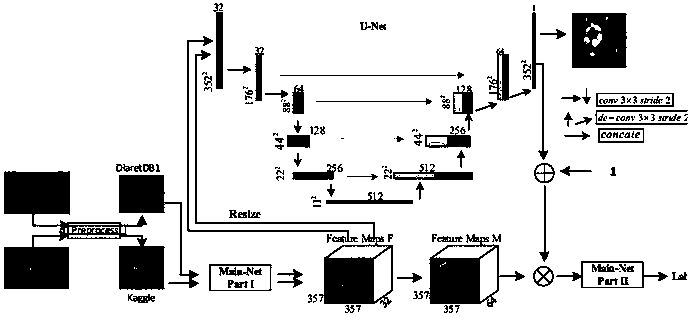

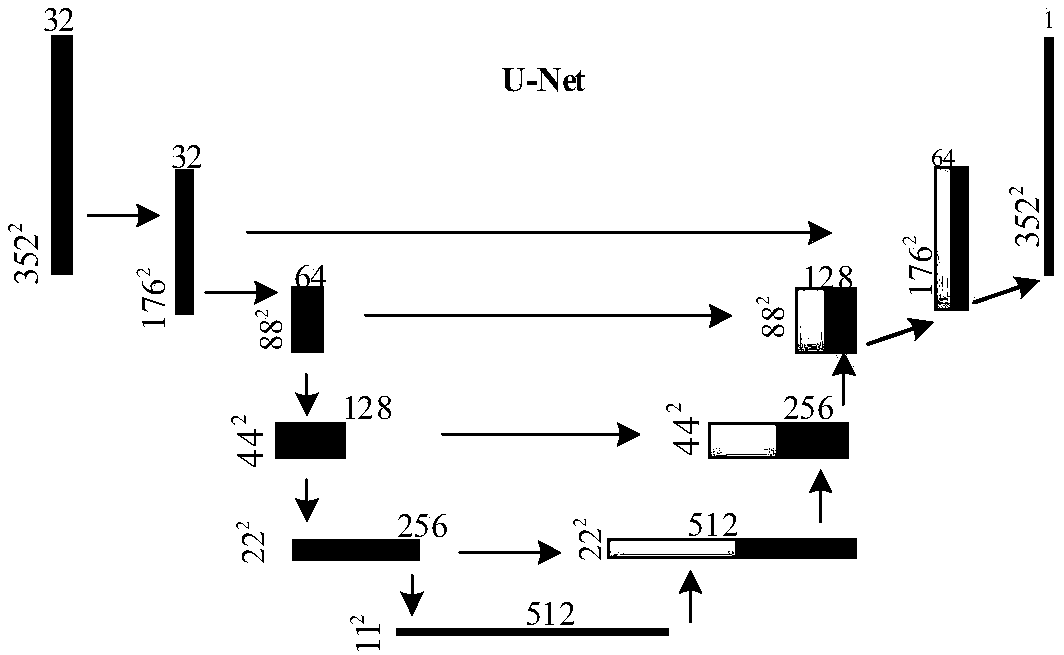

Fundus image retinal vessel segmentation method and system based on deep learning

Active · CN106408562A · Easy to classify; Improve accuracy; Image enhancement; Image analysis; Segmentation system; Blood vessel

The invention discloses a deep-learning-based method and system for segmenting retinal vessels in fundus images. The method comprises: augmenting the training set; enhancing the images; training a convolutional neural network (CNN) on the training set and segmenting the image with the resulting CNN segmentation model to obtain a first segmentation result; taking the output of the last convolutional layer of the CNN as features and using them to train a random forest classifier, which classifies pixels to obtain a second segmentation result; and fusing the two segmentation results into the final segmented image. Compared with traditional vessel segmentation methods, the method uses a deep CNN for feature extraction, so the extracted features are richer and segmentation is both more accurate and more efficient.

Owner:SOUTH CHINA UNIV OF TECH
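
The fusion step in the retinal vessel entry above is not specified in the abstract. A minimal sketch, assuming both models output a per-pixel vessel probability: the two maps are combined by a weighted average (`alpha`) and thresholded into a binary mask. The averaging rule, `alpha = 0.5` and the 0.5 threshold are illustrative assumptions, not the patent's method.

```python
from typing import List

Grid = List[List[float]]


def fuse_segmentations(p_cnn: Grid, p_rf: Grid, alpha: float = 0.5,
                       threshold: float = 0.5) -> List[List[int]]:
    """Fuse two per-pixel vessel-probability maps into one binary mask.

    alpha weights the CNN map and (1 - alpha) the random-forest map; a pixel
    is labelled vessel (1) if the fused probability reaches the threshold.
    """
    if len(p_cnn) != len(p_rf) or any(len(a) != len(b) for a, b in zip(p_cnn, p_rf)):
        raise ValueError("probability maps must have the same shape")
    mask = []
    for row_a, row_b in zip(p_cnn, p_rf):
        mask.append([1 if alpha * a + (1 - alpha) * b >= threshold else 0
                     for a, b in zip(row_a, row_b)])
    return mask


cnn_map = [[0.9, 0.2], [0.6, 0.1]]
rf_map = [[0.7, 0.4], [0.3, 0.2]]
print(fuse_segmentations(cnn_map, rf_map))
```

Raising `alpha` trusts the CNN more; a learned fusion rule would replace the fixed weight.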

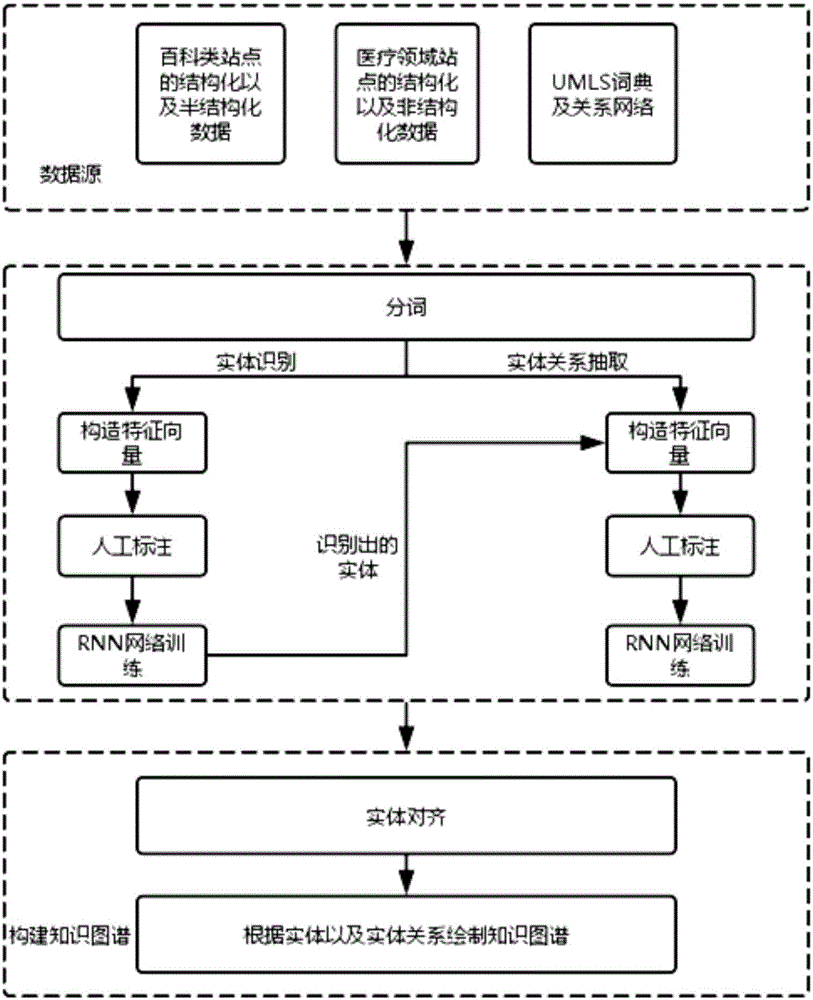

Chinese medical knowledge atlas construction method based on deep learning

Active · CN106776711A · Easy to handle; Relationship accurate and comprehensive; Web data indexing; Semantic analysis; Knowledge unit; Healthcare associated

The invention relates to knowledge graph ("knowledge atlas") technology and provides a deep-learning-based method for constructing a Chinese medical knowledge graph. The method comprises the following steps: obtaining medical-domain data from a data source; segmenting the unstructured data into words with a word segmentation tool and using an RNN (recurrent neural network) to perform a sequence labeling task that identifies healthcare-related entities, thereby extracting knowledge units; constructing feature vectors for the entities and again using the RNN for sequence labeling to identify the relationships among the knowledge units; and performing entity alignment, then building the knowledge graph from the extracted entities and the relationships between them. Using a recurrent neural network both to extract the knowledge units and to identify the relationships among them makes the method well suited to processing unstructured data. The method also proposes features tailored to the healthcare domain for training the network; compared with general-purpose features, these represent medical entities better, so the relationships among the extracted knowledge units are more accurate and complete.

Owner:ZHEJIANG UNIV
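
The construction steps in the Chinese medical knowledge graph entry end in two data operations: turning per-token sequence labels into entities, and assembling (head, relation, tail) triples into a graph. A minimal sketch of both, assuming the RNN emits BIO tags (`B-TYPE`, `I-TYPE`, `O`), a labeling scheme the abstract does not specify; the entity types and the `treated_by` relation are hypothetical. Tokens are joined without spaces, as suits Chinese text.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def decode_bio(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Turn per-token BIO tags into (entity_text, entity_type) pairs."""
    entities: List[Tuple[str, str]] = []
    current: List[str] = []
    current_type = ""
    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != current_type):
            # A new entity starts; a stray I- tag is treated like B-.
            if current:
                entities.append(("".join(current), current_type))
            current, current_type = [token], tag[2:]
        elif tag.startswith("I-"):
            current.append(token)
        else:  # "O" closes any open entity
            if current:
                entities.append(("".join(current), current_type))
            current, current_type = [], ""
    if current:
        entities.append(("".join(current), current_type))
    return entities


def build_graph(triples: List[Tuple[str, str, str]]) -> Dict[str, Set[Tuple[str, str]]]:
    """Store (head, relation, tail) triples as an adjacency map keyed by head."""
    graph: Dict[str, Set[Tuple[str, str]]] = defaultdict(set)
    for head, relation, tail in triples:
        graph[head].add((relation, tail))
    return dict(graph)


tokens = ["糖", "尿", "病", "用", "胰", "岛", "素"]
tags = ["B-DISEASE", "I-DISEASE", "I-DISEASE", "O", "B-DRUG", "I-DRUG", "I-DRUG"]
print(decode_bio(tokens, tags))
print(build_graph([("糖尿病", "treated_by", "胰岛素")]))
```

Entity alignment (merging synonyms before the triples are stored) would sit between these two functions and is not shown.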

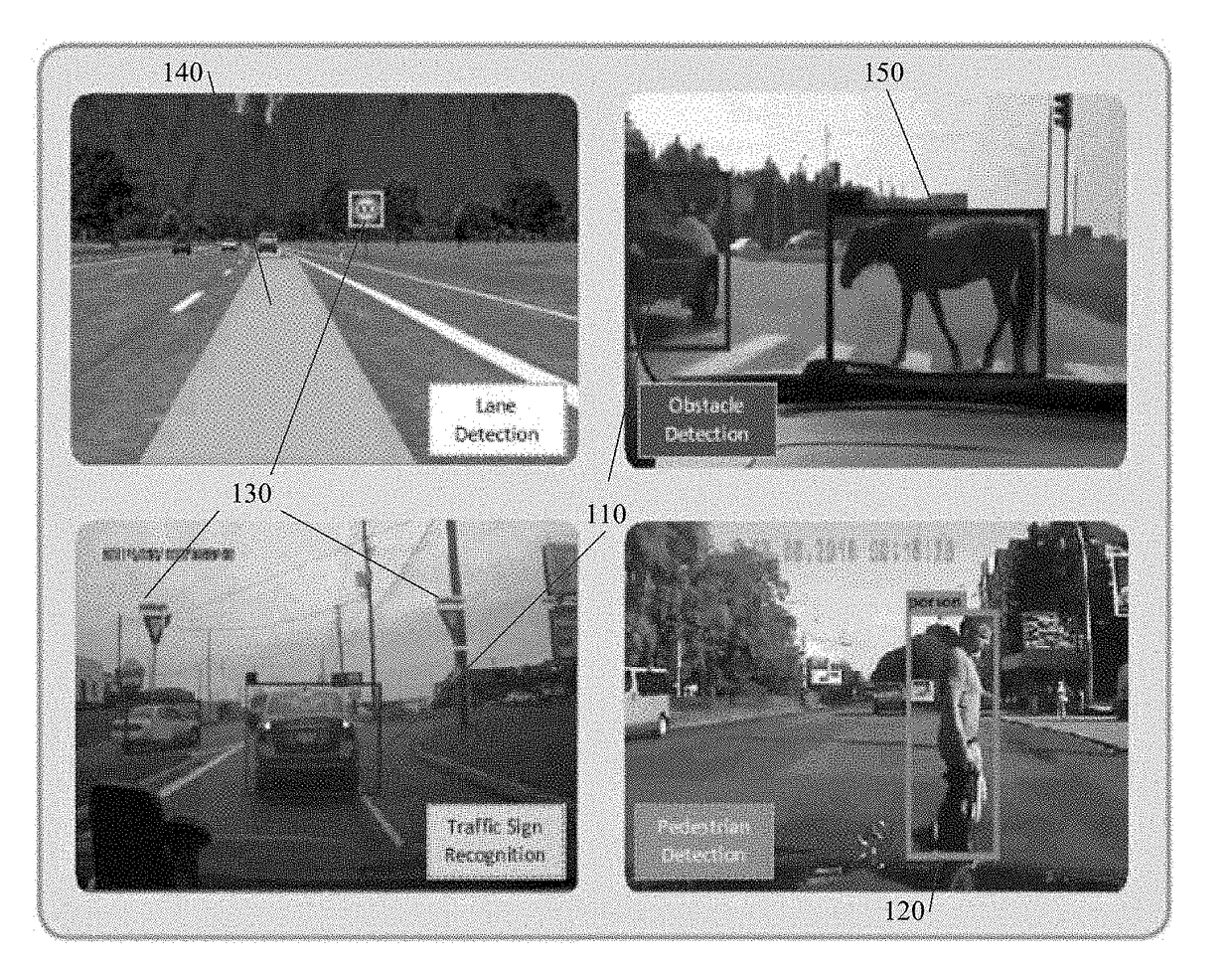

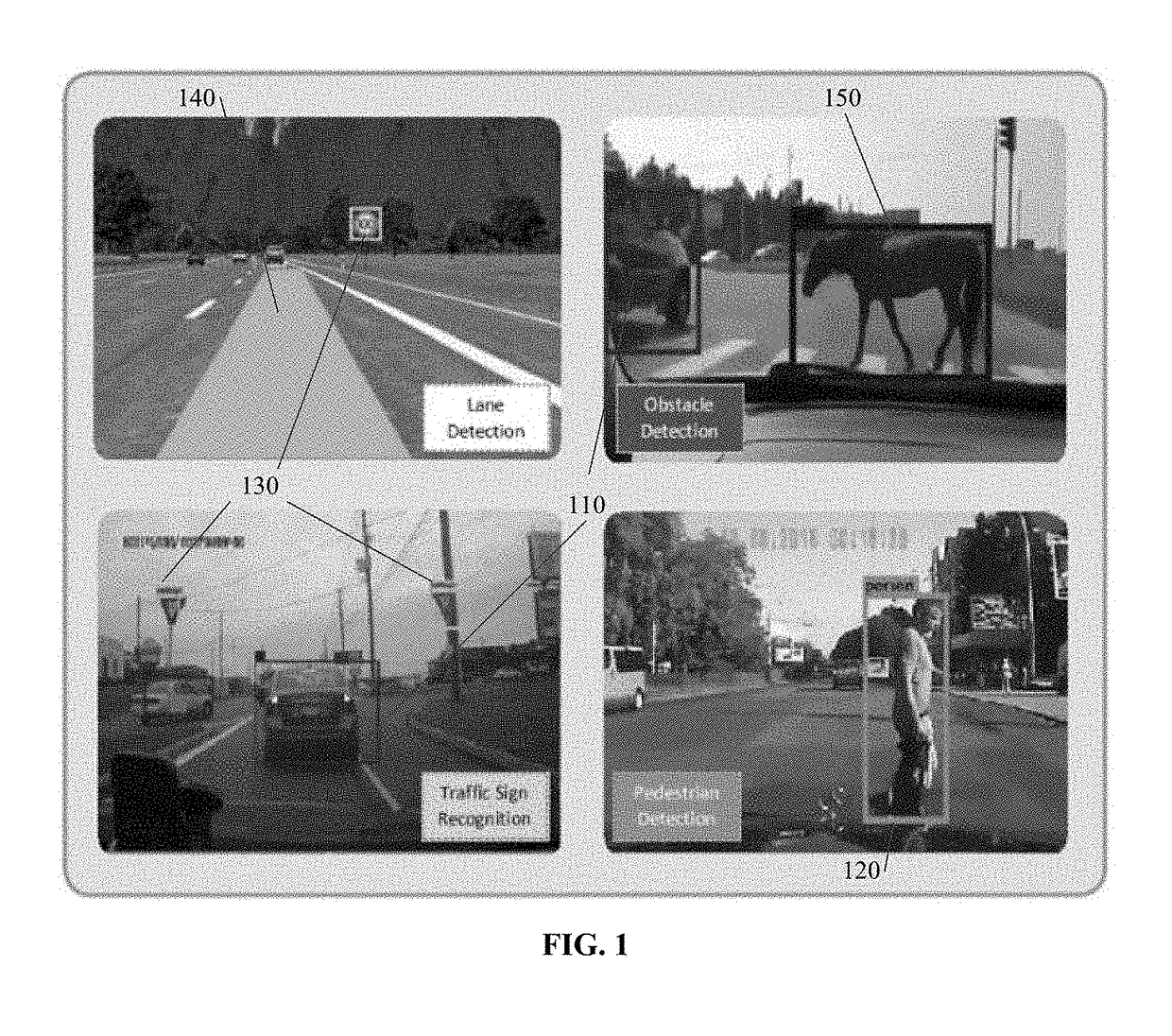

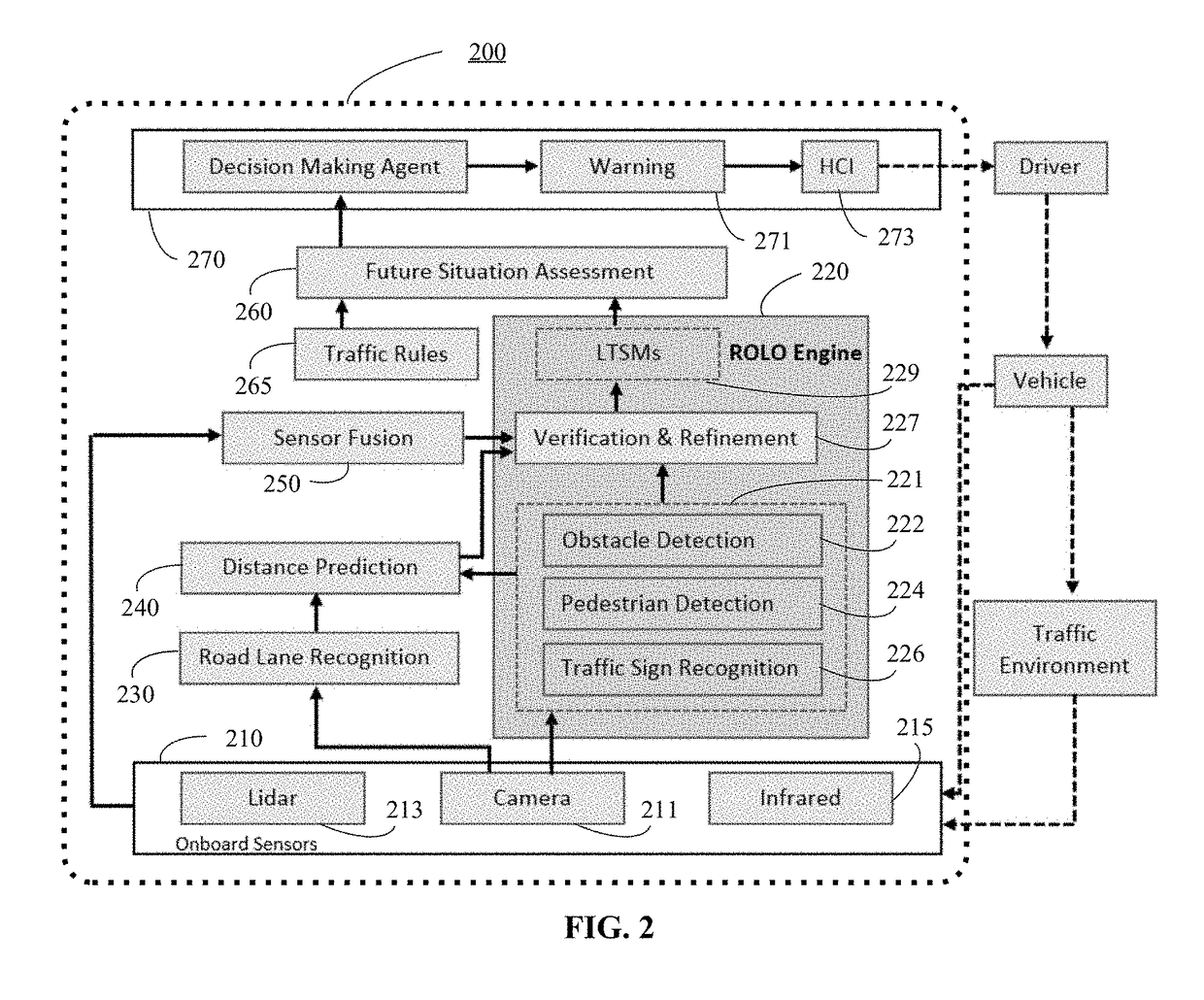

Method and system for vision-centric deep-learning-based road situation analysis

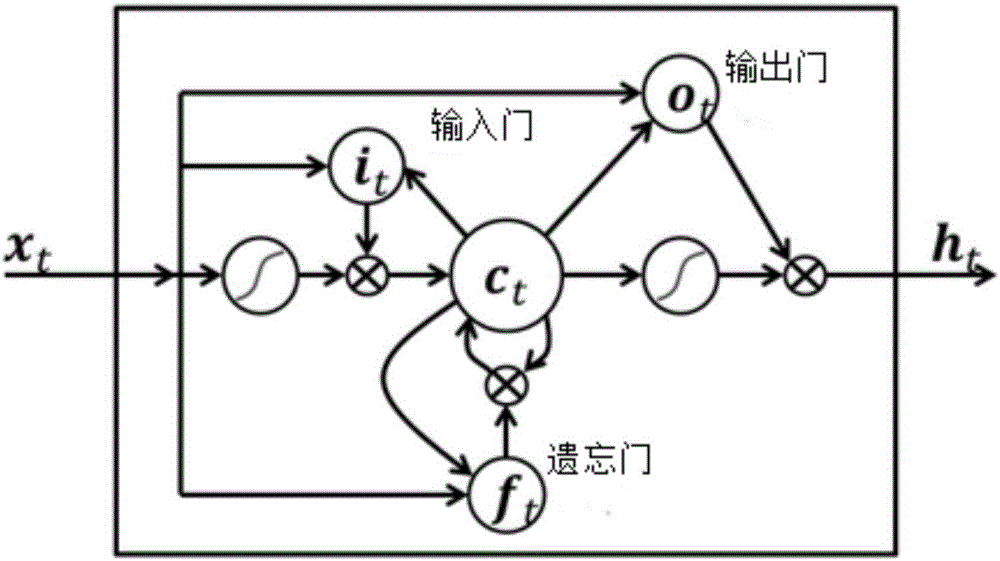

In accordance with various embodiments of the disclosed subject matter, a method and a system for vision-centric deep-learning-based road situation analysis are provided. The method can include: receiving real-time traffic environment visual input from a camera; determining, using a ROLO engine, at least one initial region of interest from the real-time traffic environment visual input by using a CNN training method; verifying the at least one initial region of interest to determine if a detected object in the at least one initial region of interest is a candidate object to be tracked; using LSTMs to track the detected object based on the real-time traffic environment visual input, and predicting a future status of the detected object by using the CNN training method; and determining if a warning signal is to be presented to a driver of a vehicle based on the predicted future status of the detected object.

Owner:TCL CORPORATION

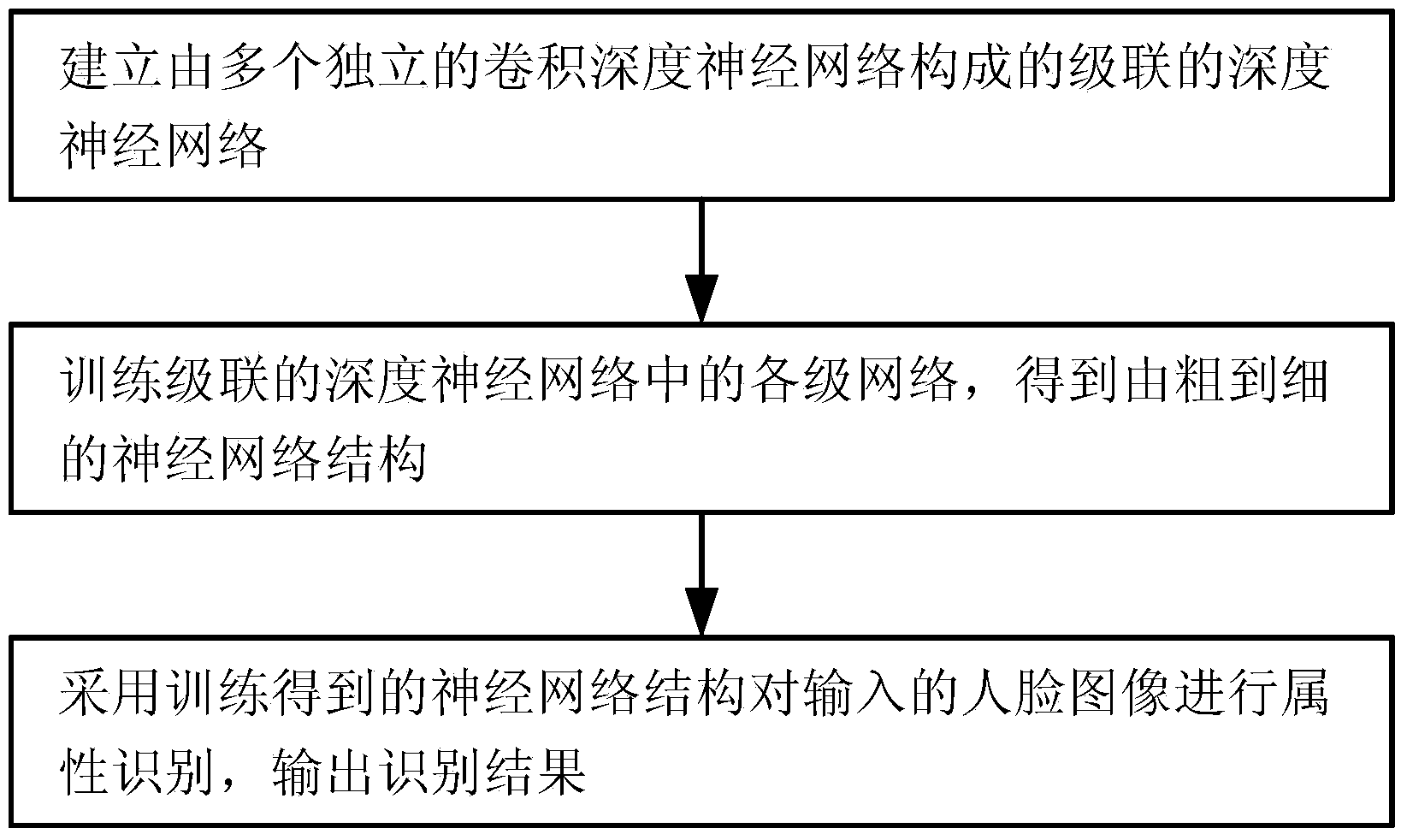

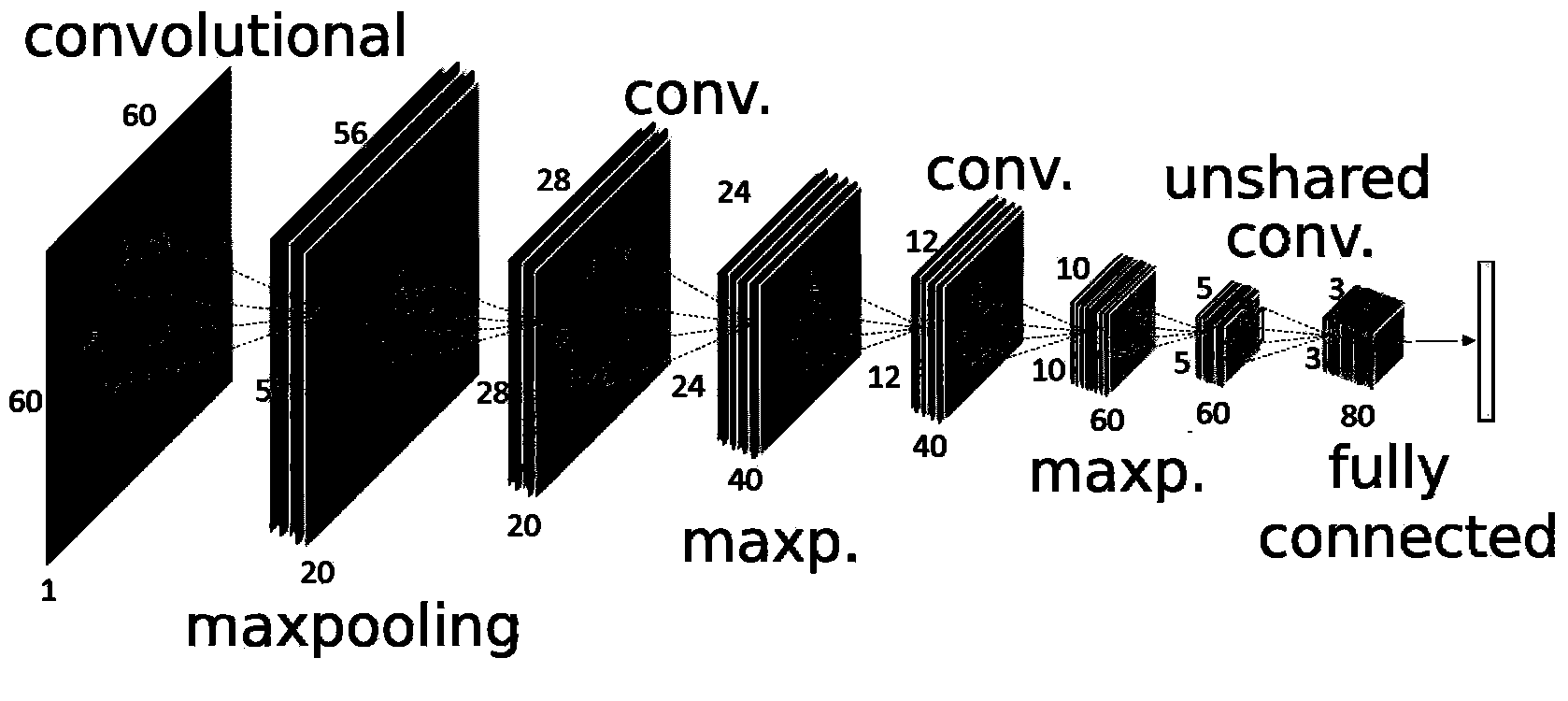

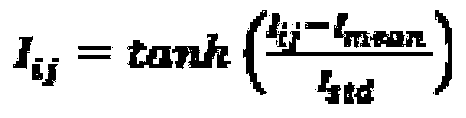

Cascaded depth neural network-based face attribute recognition method

Active · CN103824054A · High speed; Improve accuracy; Character and pattern recognition; Neural learning methods; Pattern recognition; Network structure

The invention relates to a face attribute recognition method based on a cascaded deep neural network. The method includes the following steps: 1) constructing a cascaded deep neural network composed of several independent deep convolutional neural networks; 2) training the levels of the cascade one at a time on a large amount of face image data, with the output of each earlier level used as the input to the next, yielding a coarse-to-fine network structure; and 3) using this coarse-to-fine structure to recognize the attributes of an input face image and output the final recognition results. Because the method adopts a cascade built on deep learning, training can be accelerated; and because processing runs from coarse to fine, each level improves the final network's performance by using information from the levels above it, so both the speed and the accuracy of face attribute recognition are effectively improved.

Owner:BEIJING KUANGSHI TECH

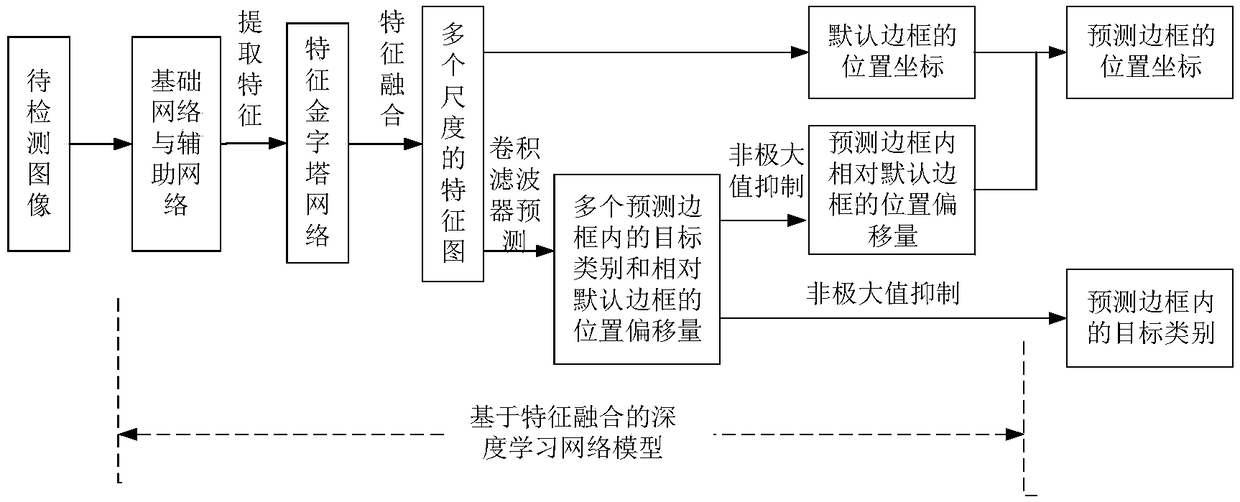

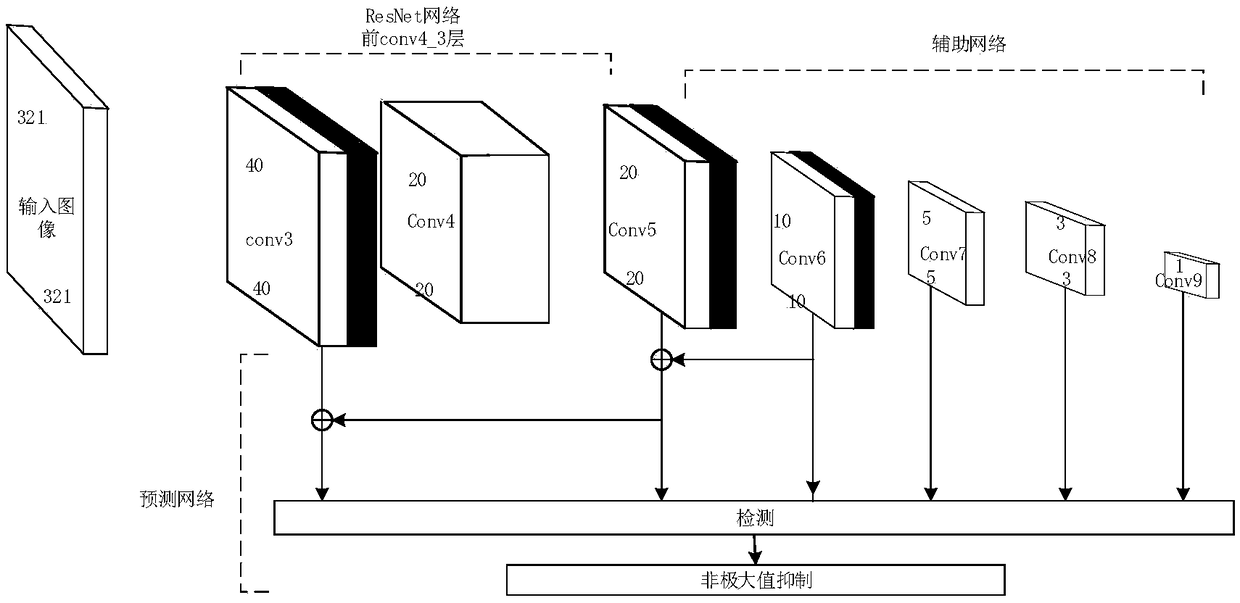

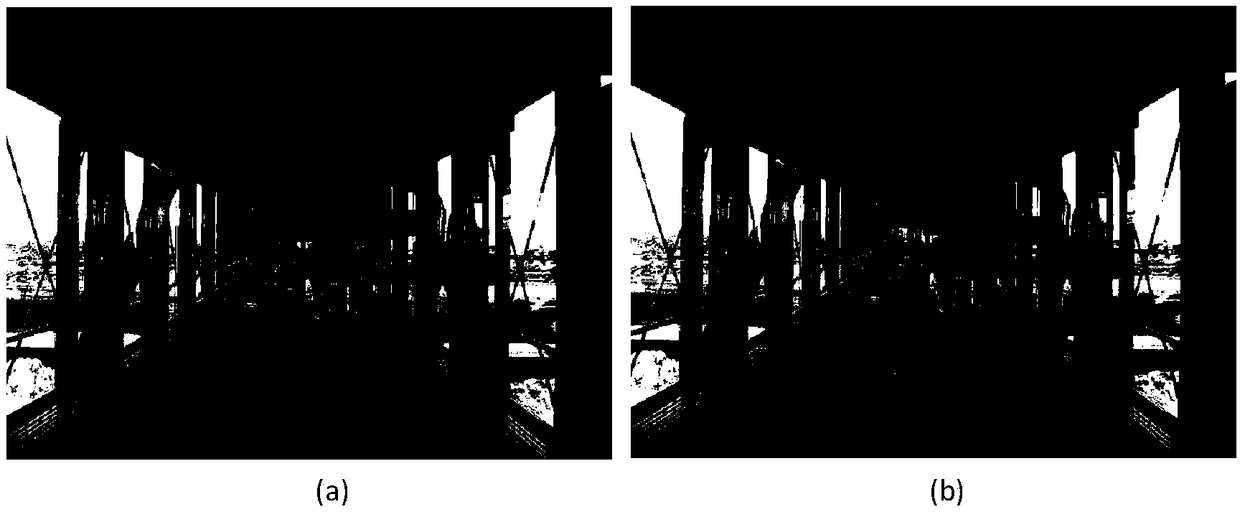

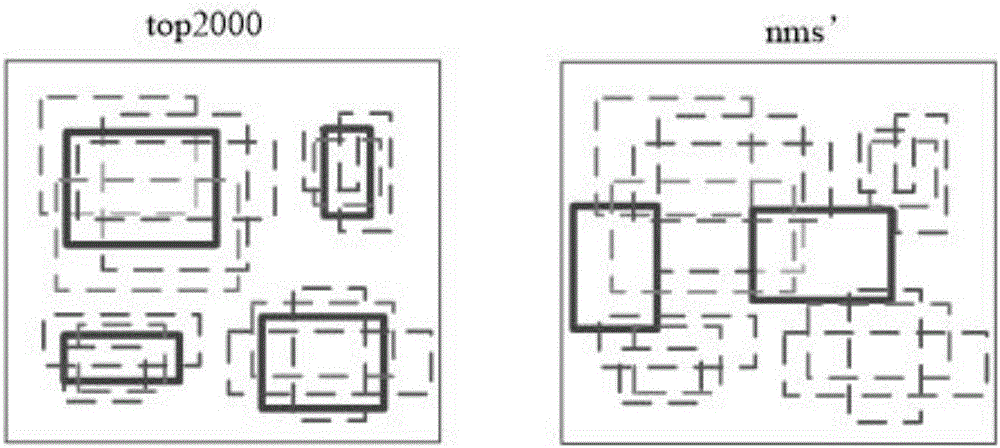

Small target detection method based on feature fusion and depth learning

Inactive · CN109344821A · Scaling; Rich information features; Character and pattern recognition; Network model; Feature fusion

The invention discloses a small target detection method based on feature fusion and deep learning, which addresses the poor detection accuracy and real-time performance for small targets. The implementation is as follows: high-resolution feature maps are extracted with the deeper, better-performing ResNet-101 network model; five successively smaller, low-resolution feature maps are extracted from auxiliary convolutional layers to extend the range of feature-map scales; and multi-scale feature maps are obtained through a feature pyramid network, within which deconvolution fuses the feature-map information of high-level semantic layers with that of shallow layers. Targets are predicted from the fused feature maps at different scales, and non-maximum suppression is applied to the scores of the multiple predicted bounding boxes and categories to obtain the box position and category of each final target. The invention maintains high small-target detection accuracy while meeting real-time requirements, detects small targets in images quickly and accurately, and can be used for real-time detection of targets in aerial images taken by unmanned aerial vehicles.

Owner:XIDIAN UNIV
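
The final step of the small-target detection entry, non-maximum suppression (NMS), is a standard post-processing algorithm: keep the highest-scoring box, discard the remaining boxes that overlap it by more than an intersection-over-union (IoU) threshold, and repeat. The sketch below is plain greedy NMS for a single category (run it once per category for multi-class output); the 0.5 threshold is an assumption, and the patent may use a different variant.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of the kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
print(nms(boxes, [0.9, 0.8, 0.7]))
```

Here the second box overlaps the first with IoU 81/119, so it is suppressed, while the distant third box survives.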

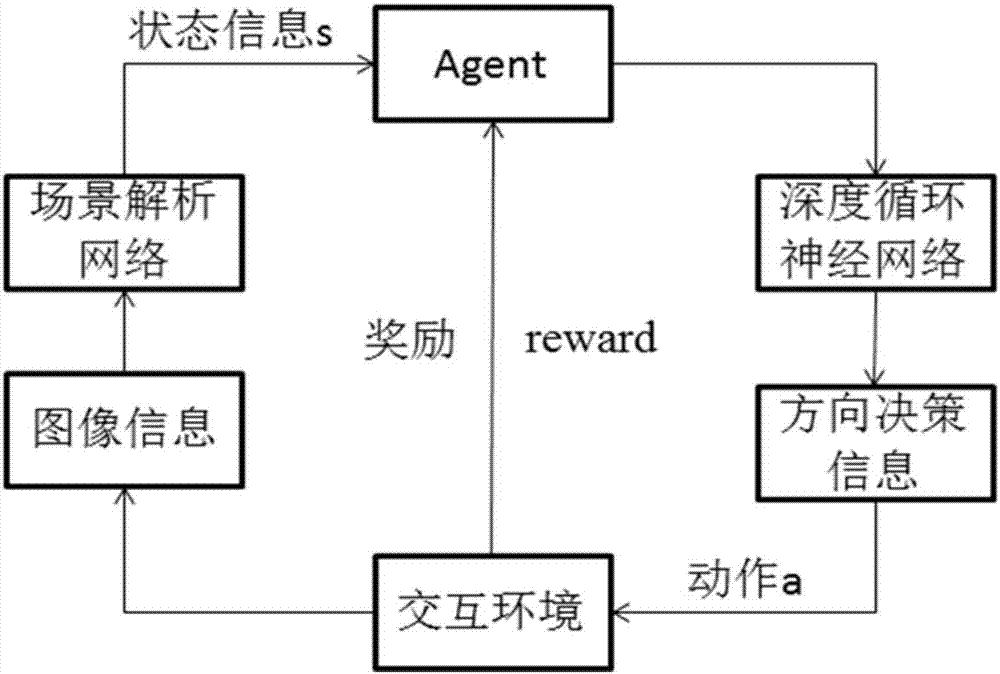

Deep and reinforcement learning-based real-time online path planning method of

Active · CN106970615A · Reasonable method design; Accurate path planning; Position/course control in two dimensions; Planning approach; Study methods

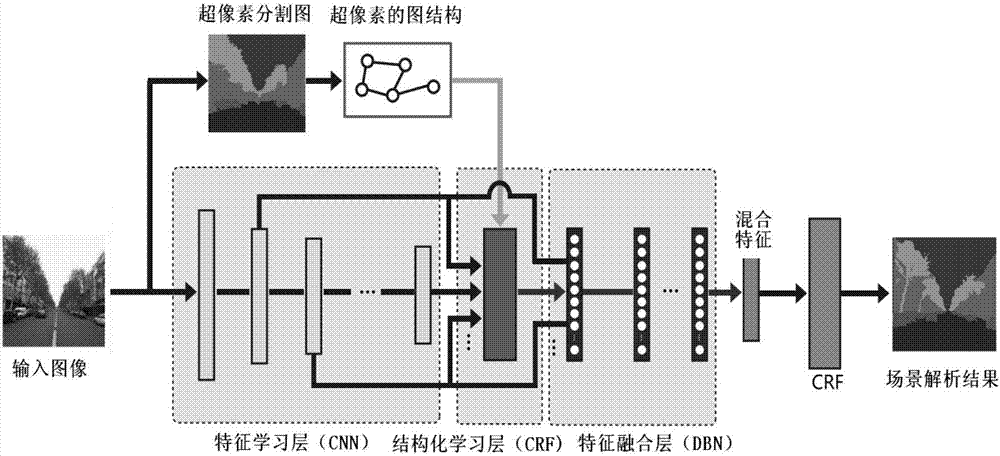

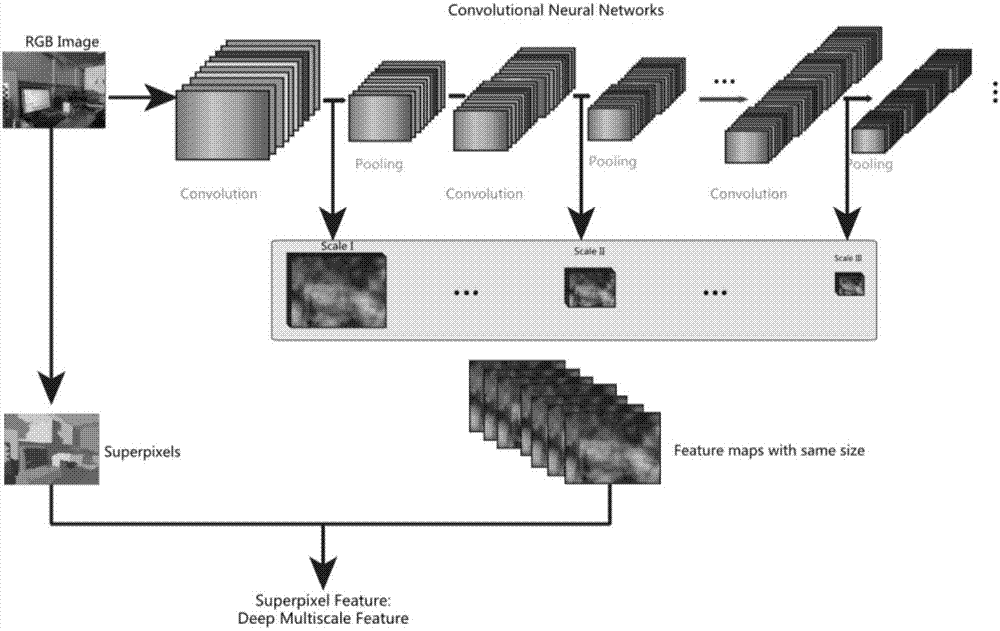

The present invention provides a real-time online path planning method based on deep and reinforcement learning. A deep learning method extracts high-level semantic information from images, and a reinforcement learning method performs end-to-end, real-time path planning in scenes of the environment. During training, image information collected in the environment is fed into a scene parsing network as the current state to obtain a parsing result; this result is input into a purpose-designed deep recurrent neural network, and training yields the agent's decision at each step in a given scene, from which an optimal complete path is obtained. In actual use, images collected by a camera are input into the trained deep reinforcement learning network to obtain the direction in which the agent should move. The method makes the fullest use of the acquired image information while remaining robust and depending only weakly on the environment, and achieves real-time path planning for moving through a scene.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

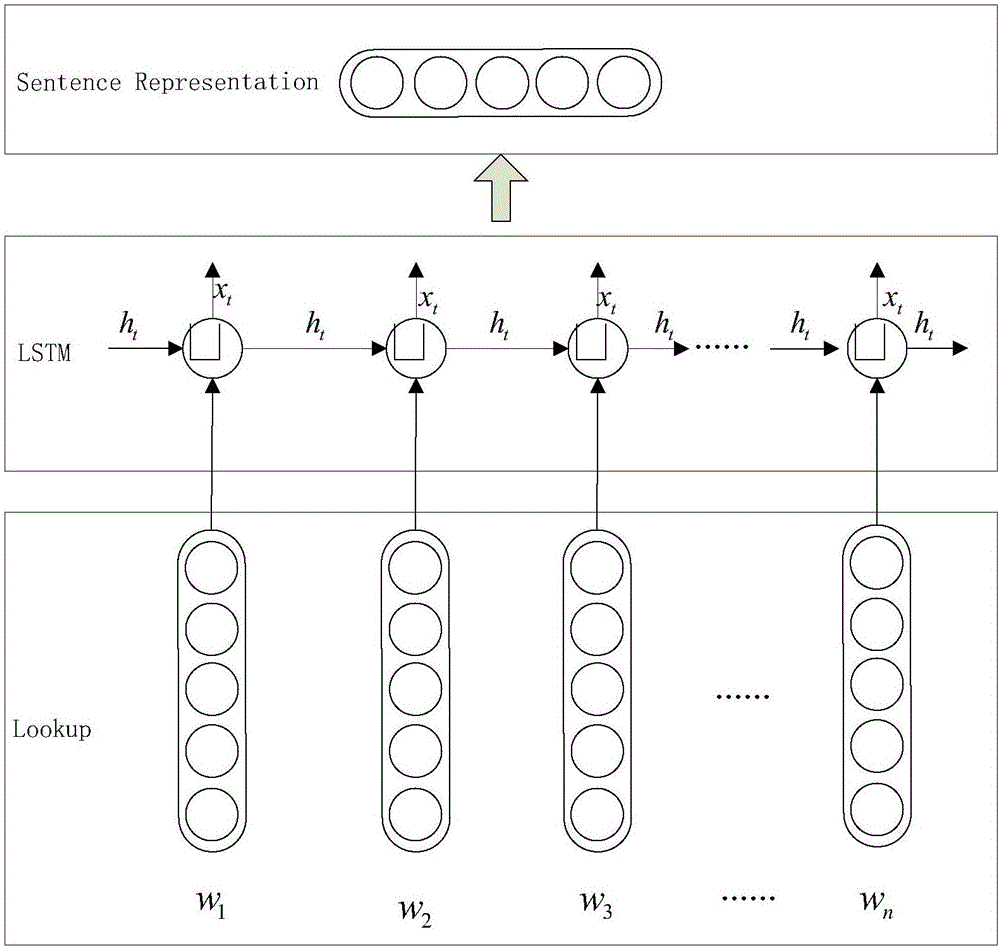

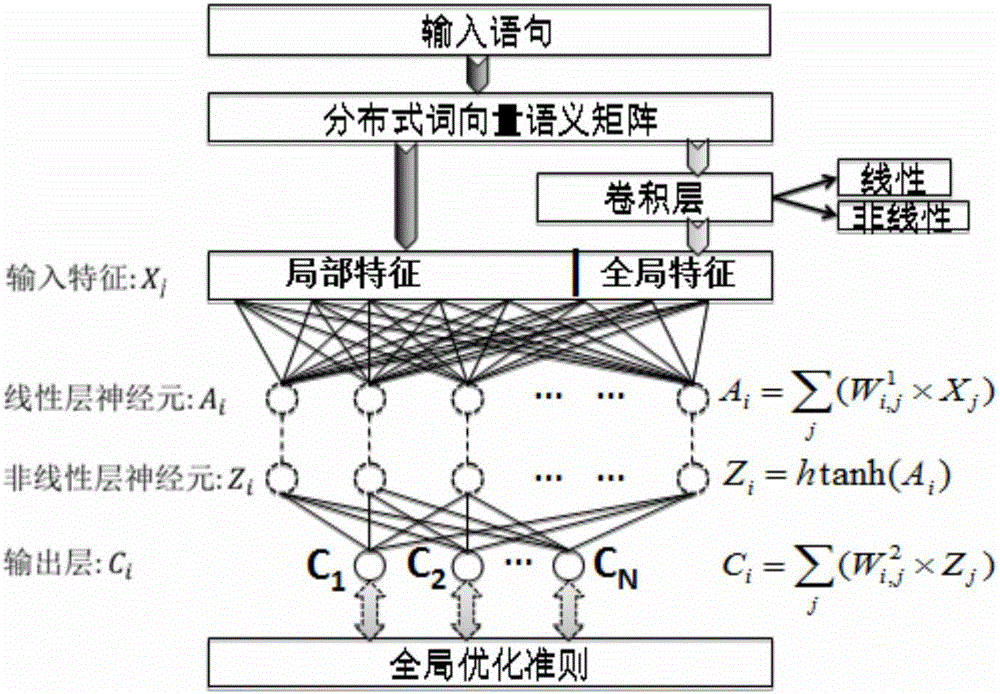

Text emotion classification method based on the joint deep learning model

Inactive · CN106599933A · Improve classification performance; Great photo effect; Character and pattern recognition; Special data processing applications; Support vector machine; Curse of dimensionality

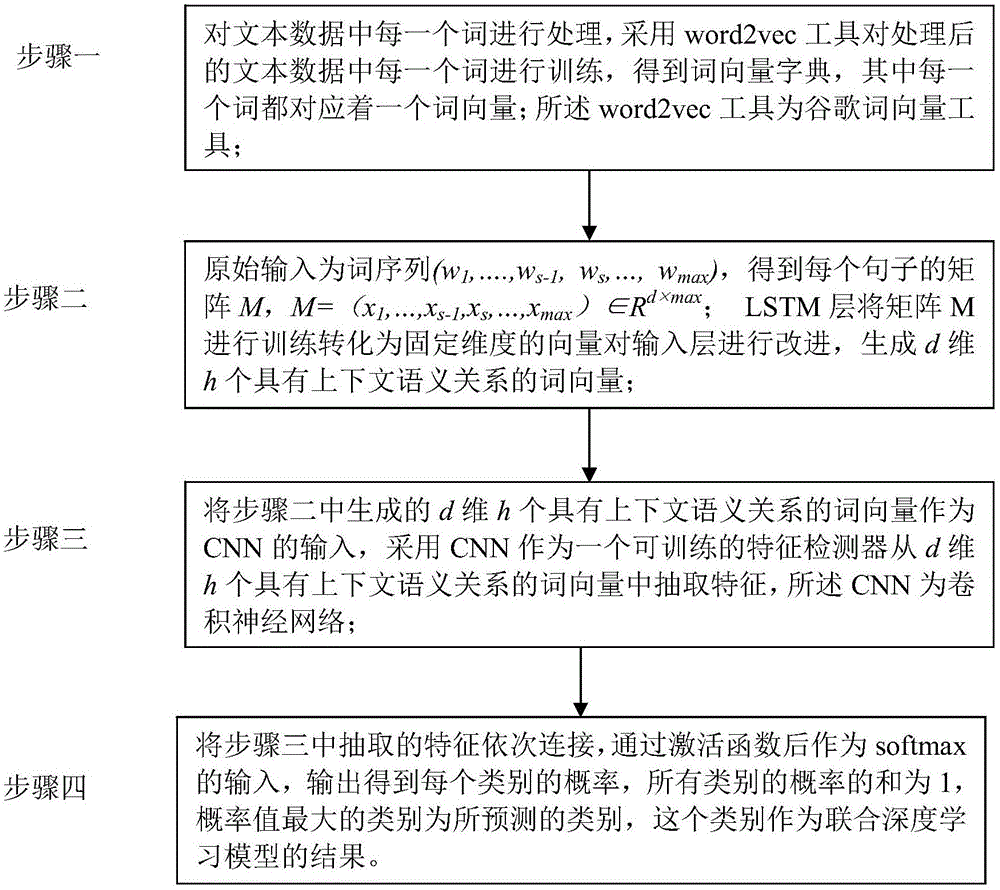

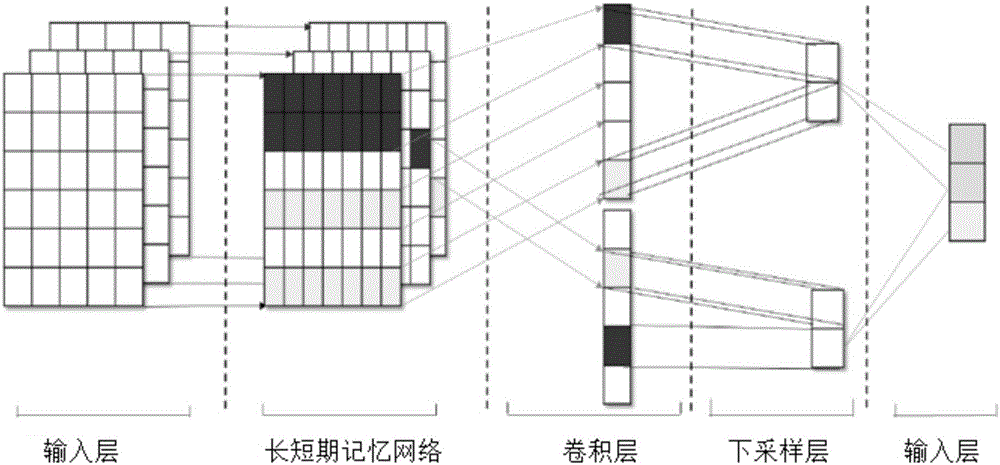

The invention provides a text emotion classification method based on a joint deep learning model. It is designed to overcome the curse of dimensionality and the data sparsity suffered by existing support vector machines and other shallow classification methods. The method comprises: 1) preprocessing each word in the text data and training the word2vec tool on the processed words to obtain a word-vector dictionary; 2) obtaining the matrix M of each sentence and passing M through an LSTM layer to convert it into fixed-dimension vectors, which improves the input layer and produces h word vectors of dimension d that carry contextual semantic relations; 3) using a CNN as a trainable feature detector to extract features from these h d-dimensional word vectors; and 4) concatenating the extracted features in order and outputting the probability of each class, where the class with the highest probability is the predicted class. The invention applies to the field of natural language processing.

Owner:HARBIN INST OF TECH
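
Step 4 of the emotion classification entry outputs a probability per class and picks the maximum. A common way to obtain such probabilities is a softmax over one raw score (logit) per class; this sketch assumes that output layer, which the abstract does not name, and the class labels are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Convert raw class scores to probabilities.

    Subtracting the maximum first avoids overflow in math.exp without
    changing the result.
    """
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits: List[float], labels: List[str]) -> Tuple[str, Dict[str, float]]:
    """Return the most probable label and the full probability table."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], dict(zip(labels, probs))


label, table = predict([2.0, 0.5, -1.0], ["positive", "neutral", "negative"])
print(label, table)
```

Because softmax is monotonic, the argmax of the probabilities equals the argmax of the raw scores; the probabilities matter when a confidence value is reported.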

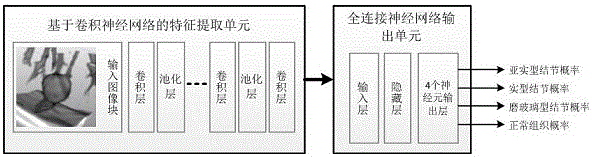

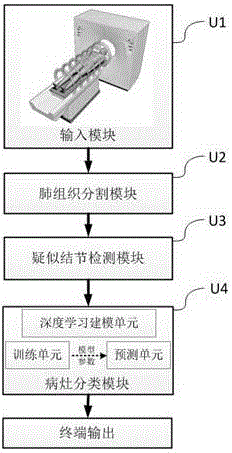

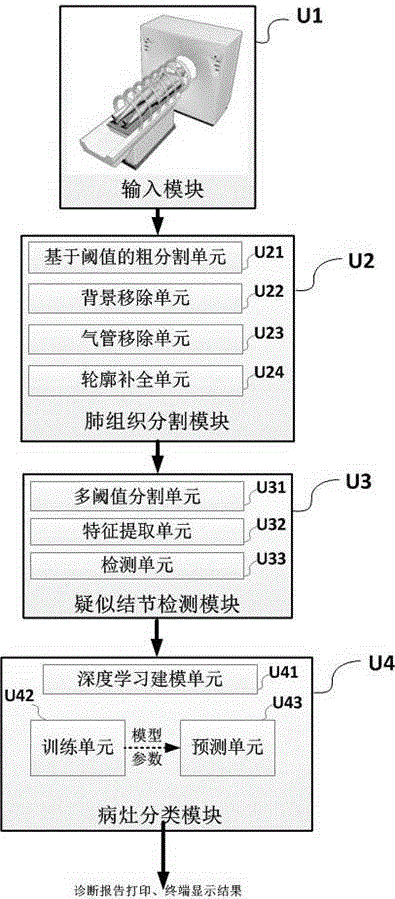

Automatic detection system for pulmonary nodule in chest CT (Computed Tomography) image

Active · CN106780460A · Avoid nodules; Implement classification; Image enhancement; Image analysis; Pulmonary nodule; Prediction probability

The invention relates to a system for automatically detecting pulmonary nodules in chest CT (computed tomography) images. It addresses three problems of existing computer-aided software: heavy computation, inaccurate prediction, and a narrow range of predicted types. The system acquires a CT image; segments the lung tissue; detects suspected nodular lesion areas in the lung tissue; classifies the lesions with a deep-learning lesion classification model; and outputs image annotations and a diagnostic report. The system achieves a high nodule detection rate with a relatively low false positive rate, and gives accurate localization and quantitative and qualitative results for each nodular lesion together with its predicted probability. It realizes true end-to-end screening for nodular lesions (from the CT scanner to the doctor), meets doctors' requirements for accuracy and usability, and has broad market prospects.

Owner:杭州健培科技有限公司

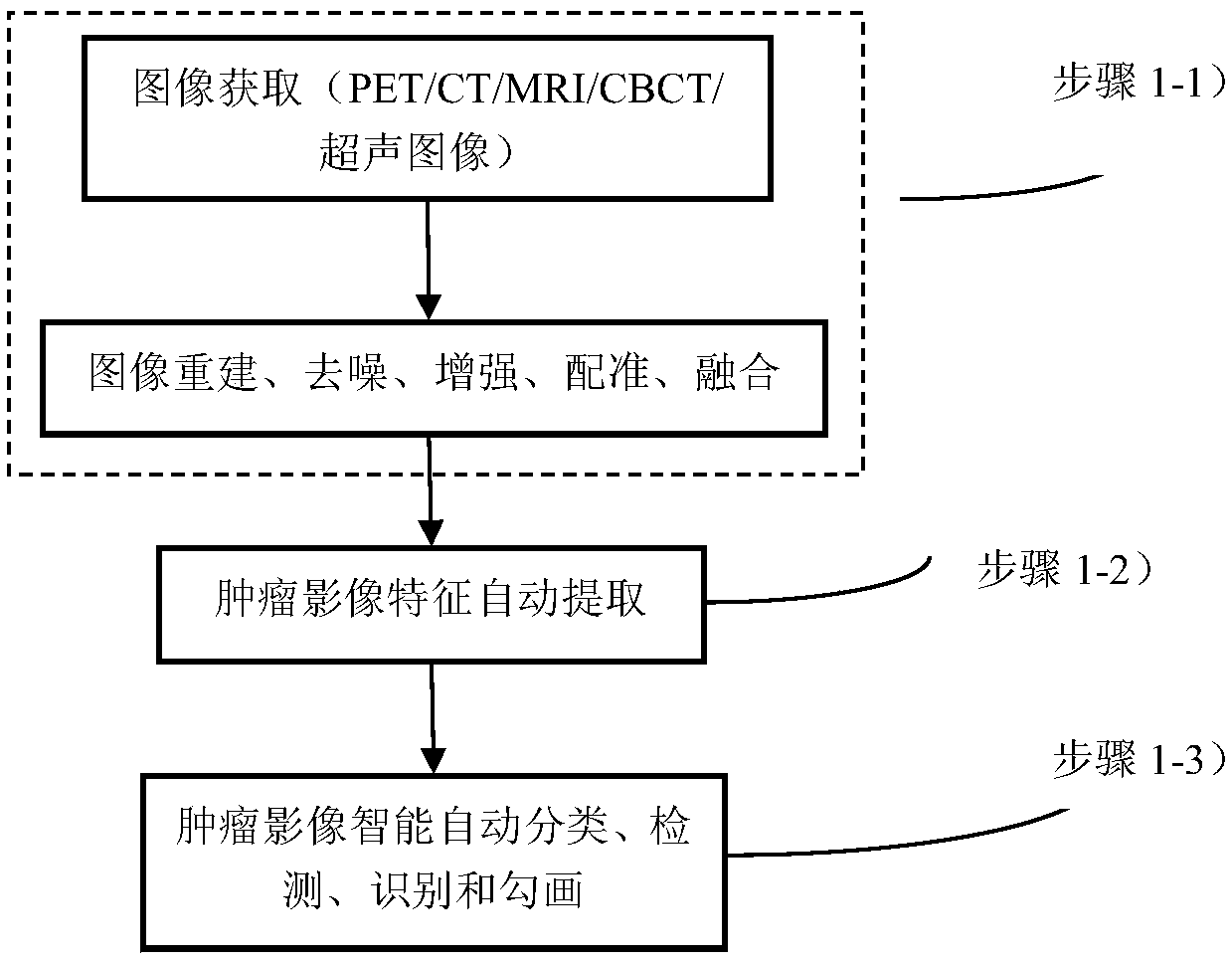

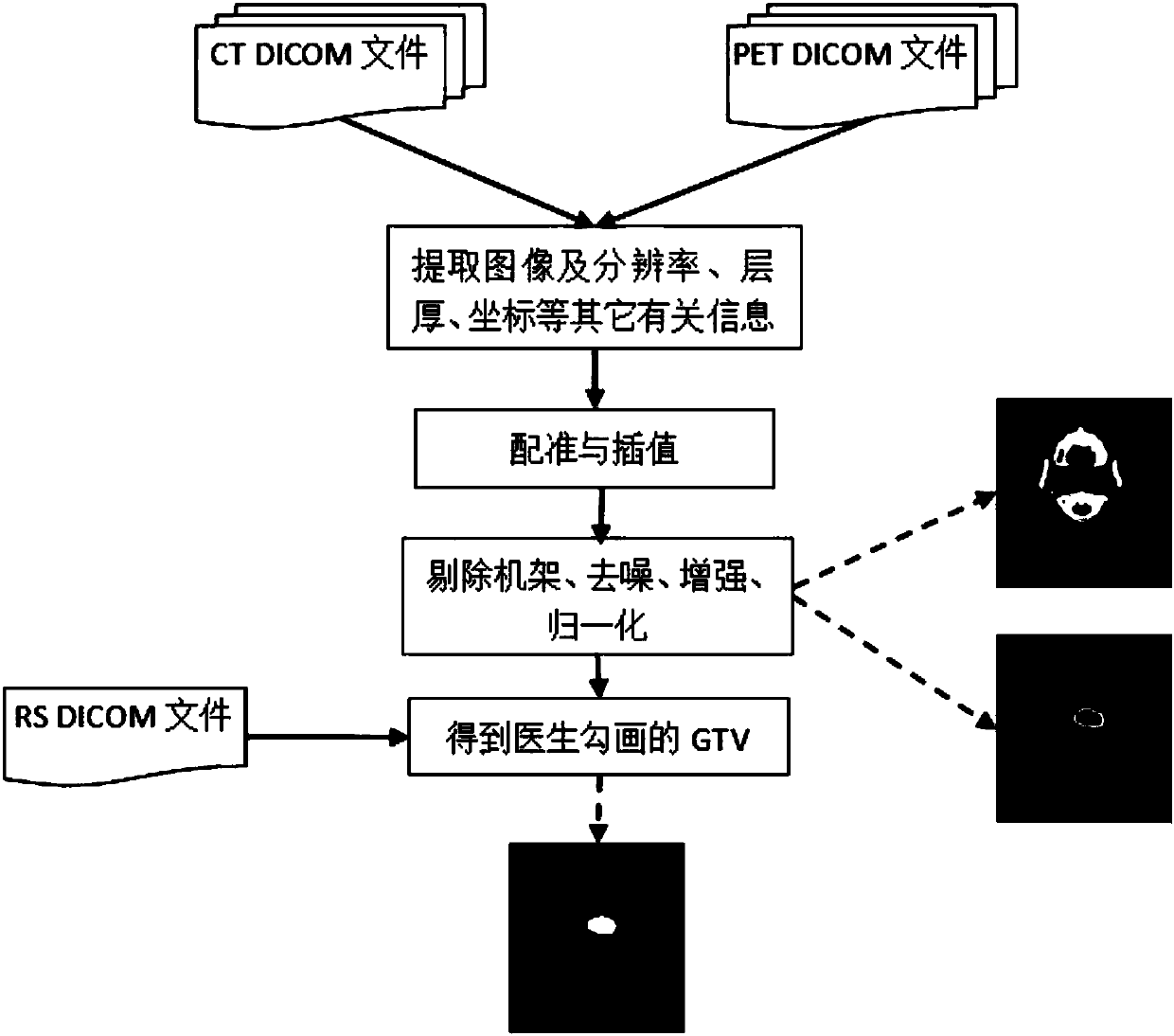

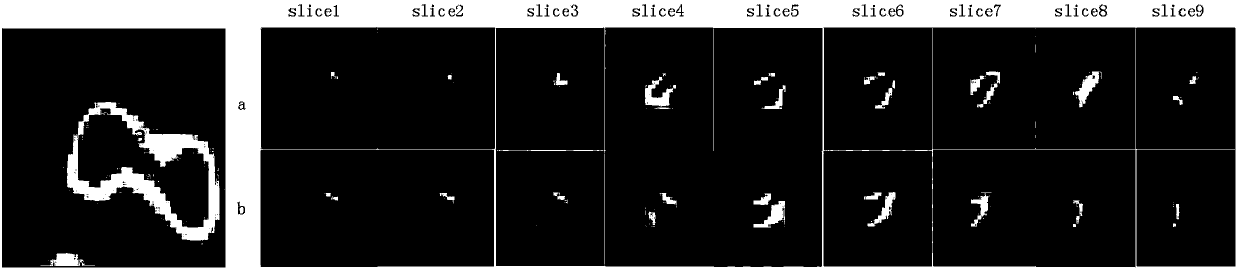

Intelligent and automatic delineation method for gross tumor volume and organs at risk

Inactive · CN107403201A · Smart sketch; Automatically sketch; Image enhancement; Image analysis; Sonification; Mathematical Graph

The invention relates to an intelligent, automatic method for delineating the gross tumor volume and organs at risk, comprising the following steps: (1) preprocessing multi-modal tumor images, including reconstruction, denoising, enhancement, registration and fusion; (2) automatically extracting tumor image features, i.e. automatically extracting one or more items of tumor radiomics (texture feature spectrum) information from multi-modal tumor medical image data such as CT, CBCT, MRI, PET and/or ultrasound; and (3) intelligently and automatically delineating the gross tumor volume and organs at risk using deep learning, machine learning, artificial intelligence, region growing, graph theory (random walk), geometric level sets and/or statistical methods. The gross tumor volume (GTV) and organs at risk (OAR) can be delineated with high precision.

Owner:强深智能医疗科技(昆山)有限公司
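
Region growing, one of the techniques listed in step (3) of the delineation entry, is simple enough to sketch: starting from a seed pixel, absorb 4-connected neighbours whose intensity lies within a tolerance of the seed's. The connectivity, the seed-referenced tolerance and the 2D grid input are assumptions for illustration; the patent combines region growing with other methods it does not detail.

```python
from collections import deque
from typing import List, Set, Tuple


def region_grow(image: List[List[float]], seed: Tuple[int, int],
                tolerance: float) -> Set[Tuple[int, int]]:
    """Return the pixels 4-connected to `seed` whose intensity is within
    `tolerance` of the seed intensity, found by breadth-first search."""
    rows, cols = len(image), len(image[0])
    r0, c0 = seed
    ref = image[r0][c0]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region
                    and abs(image[nr][nc] - ref) <= tolerance):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


img = [[10, 11, 50],
       [12, 10, 52],
       [90, 13, 51]]
print(sorted(region_grow(img, (0, 0), 5)))
```

Comparing against the seed intensity keeps the region from drifting; comparing against the running region mean is a common alternative.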

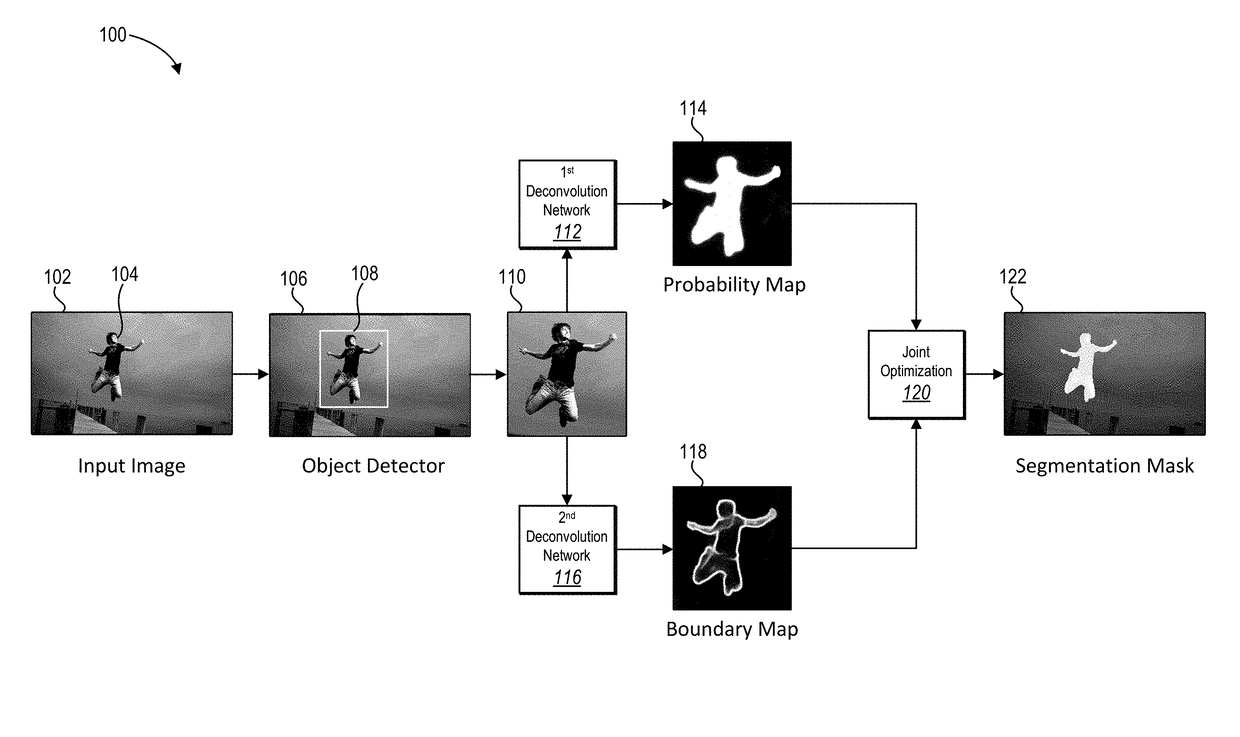

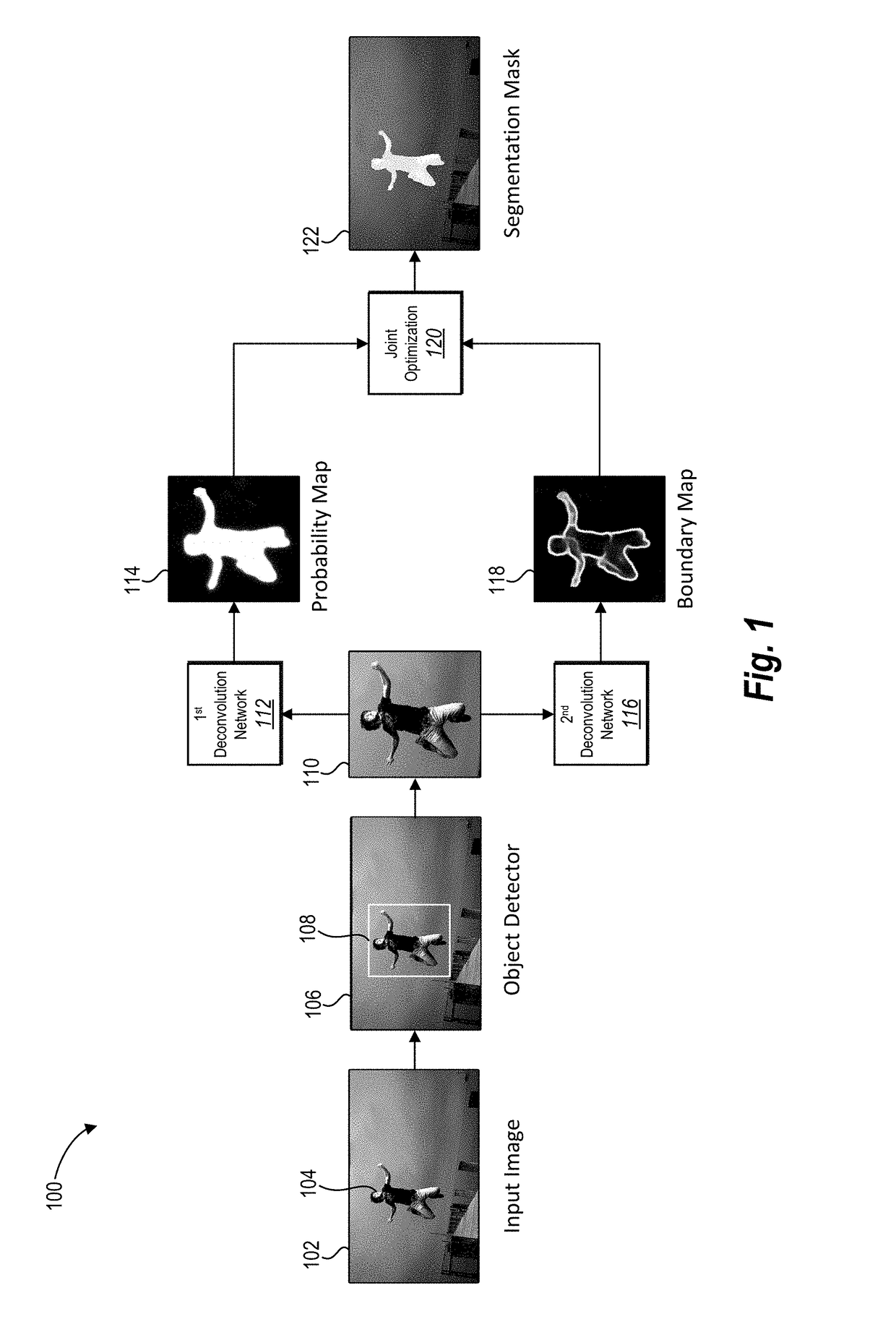

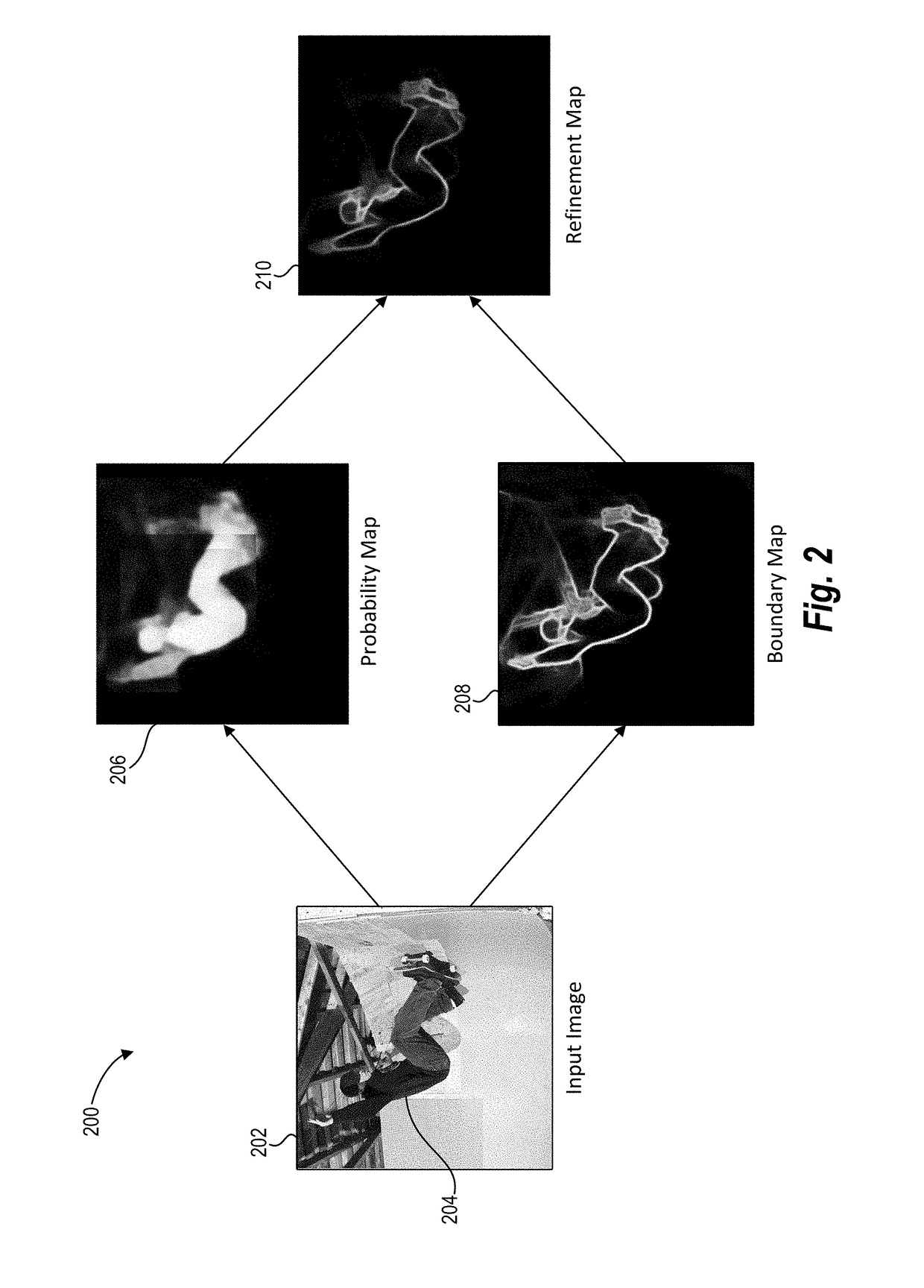

Utilizing deep learning for boundary-aware image segmentation

Active · US20170287137A1 · Accurate segmentation; Accurate identification; Image enhancement; Mathematical models; Image segmentation; Computer vision

Systems and methods are disclosed for segmenting a digital image to identify an object portrayed in the digital image from background pixels in the digital image. In particular, in one or more embodiments, the disclosed systems and methods use a first neural network and a second neural network to generate image information used to generate a segmentation mask that corresponds to the object portrayed in the digital image. Specifically, in one or more embodiments, the disclosed systems and methods optimize a fit between a mask boundary of the segmentation mask to edges of the object portrayed in the digital image to accurately segment the object within the digital image.

Owner:ADOBE INC

Synthesizing training data for broad area geospatial object detection

A system for broad area geospatial object recognition, identification, classification, location and quantification, comprising an image manipulation module to create synthetically-generated images to imitate and augment an existing quantity of orthorectified geospatial images; together with a deep learning module and a convolutional neural network serving as an image analysis module, to analyze a large corpus of orthorectified geospatial images, identify and demarcate a searched object of interest from within the corpus, locate and quantify the identified or classified objects from the corpus of geospatial imagery available to the system. The system reports results in a requestor's preferred format.

Owner:MAXAR INTELLIGENCE INC

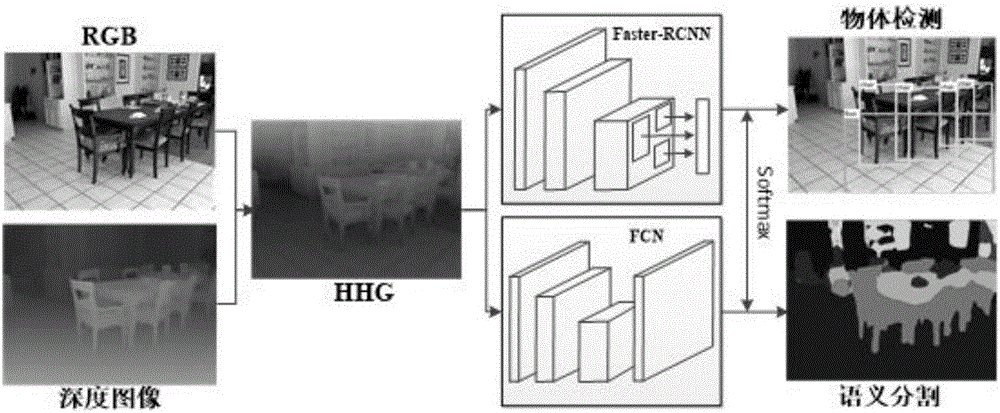

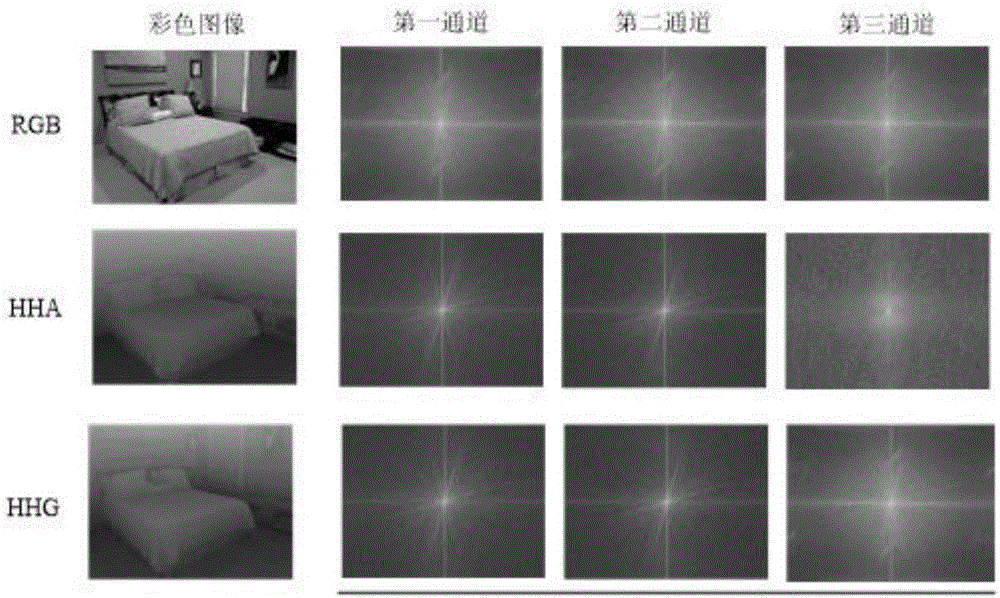

RGB-D image object detection and semantic segmentation method based on deep convolution network

Active · CN106709568A · Character and pattern recognition; Neural architectures; Machine vision; Visual perception

The invention discloses an RGB-D image object detection and semantic segmentation method based on a deep convolutional network, belonging to the fields of deep learning and machine vision. In the technical scheme, Faster R-CNN replaces the original, slower R-CNN: Faster R-CNN runs on the GPU, extracts features quickly, generates region proposals within the network itself, and is trained end to end. An FCN (fully convolutional network) performs the RGB-D semantic segmentation: it uses the GPU and the deep convolutional network to extract deep image features quickly, uses deconvolution to fuse the deep and shallow convolutional features of the image, and thereby integrates the local semantic information of the image into its global semantic information.

Owner:深圳市小枫科技有限公司
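
The FCN step in the RGB-D entry fuses upsampled deep features with shallow ones. In this sketch the learned deconvolution (transposed convolution) is replaced by fixed nearest-neighbour 2x upsampling, a deliberate simplification, and fusion is element-wise addition as in FCN-style skip connections; single-channel 2D lists stand in for real feature tensors.

```python
from typing import List

Grid = List[List[float]]


def upsample2x(fmap: Grid) -> Grid:
    """Nearest-neighbour 2x upsampling: each value becomes a 2x2 block.

    A fixed stand-in for a learned deconvolution (transposed convolution).
    """
    out: Grid = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def fuse_skip(deep: Grid, shallow: Grid) -> Grid:
    """Upsample the coarse deep map and add it element-wise to the shallow map."""
    up = upsample2x(deep)
    if len(up) != len(shallow) or len(up[0]) != len(shallow[0]):
        raise ValueError("shallow map must be exactly twice the deep map's size")
    return [[a + b for a, b in zip(ru, rs)] for ru, rs in zip(up, shallow)]


deep = [[1.0, 2.0]]  # coarse, semantically strong
shallow = [[0.5, 0.0, 0.0, 0.5], [0.0, 0.5, 0.5, 0.0]]  # fine, detailed
print(fuse_skip(deep, shallow))
```

A learned deconvolution would let the network choose its own interpolation weights; the fixed version only shows how the coarse and fine maps are aligned and combined.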

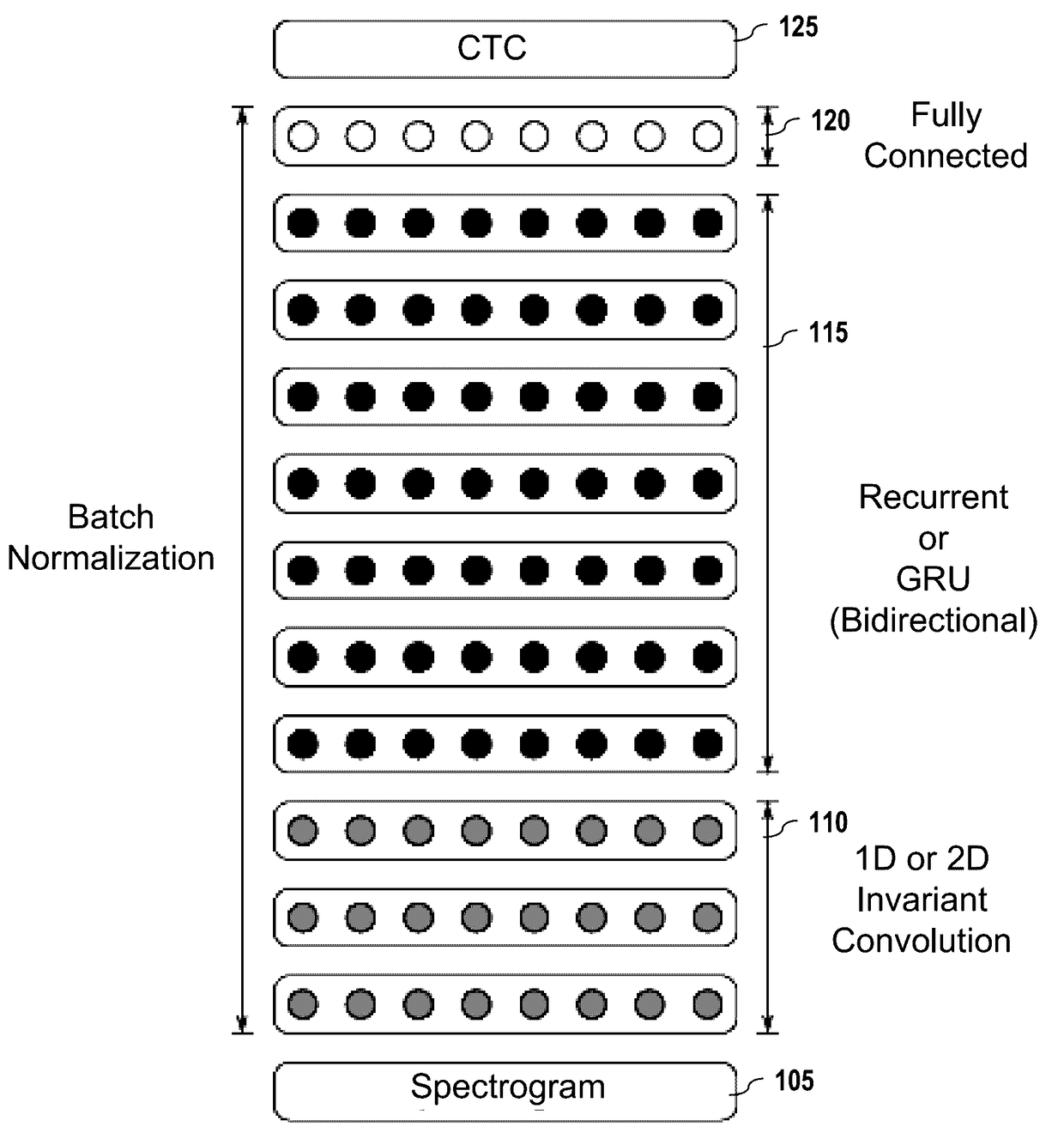

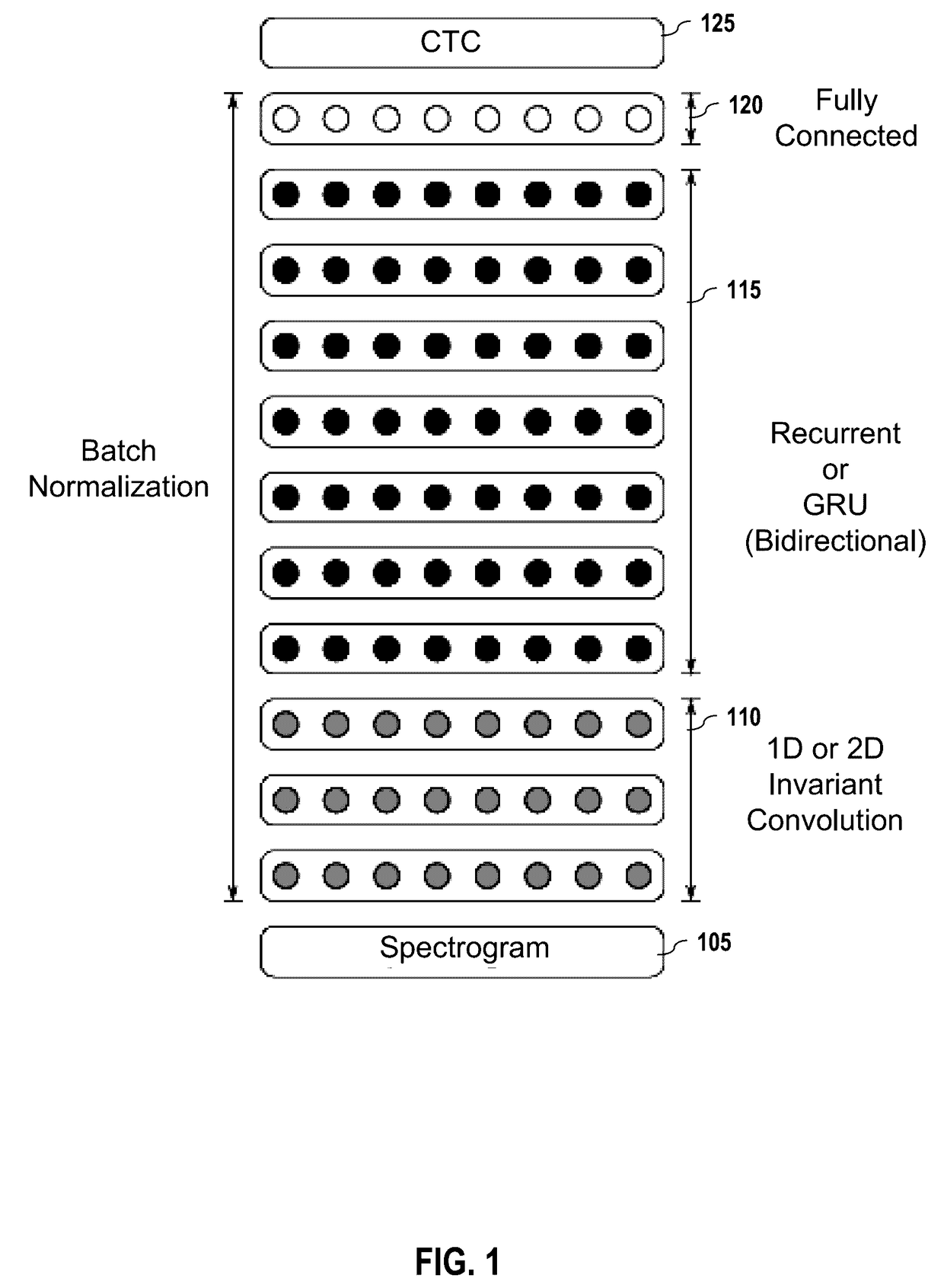

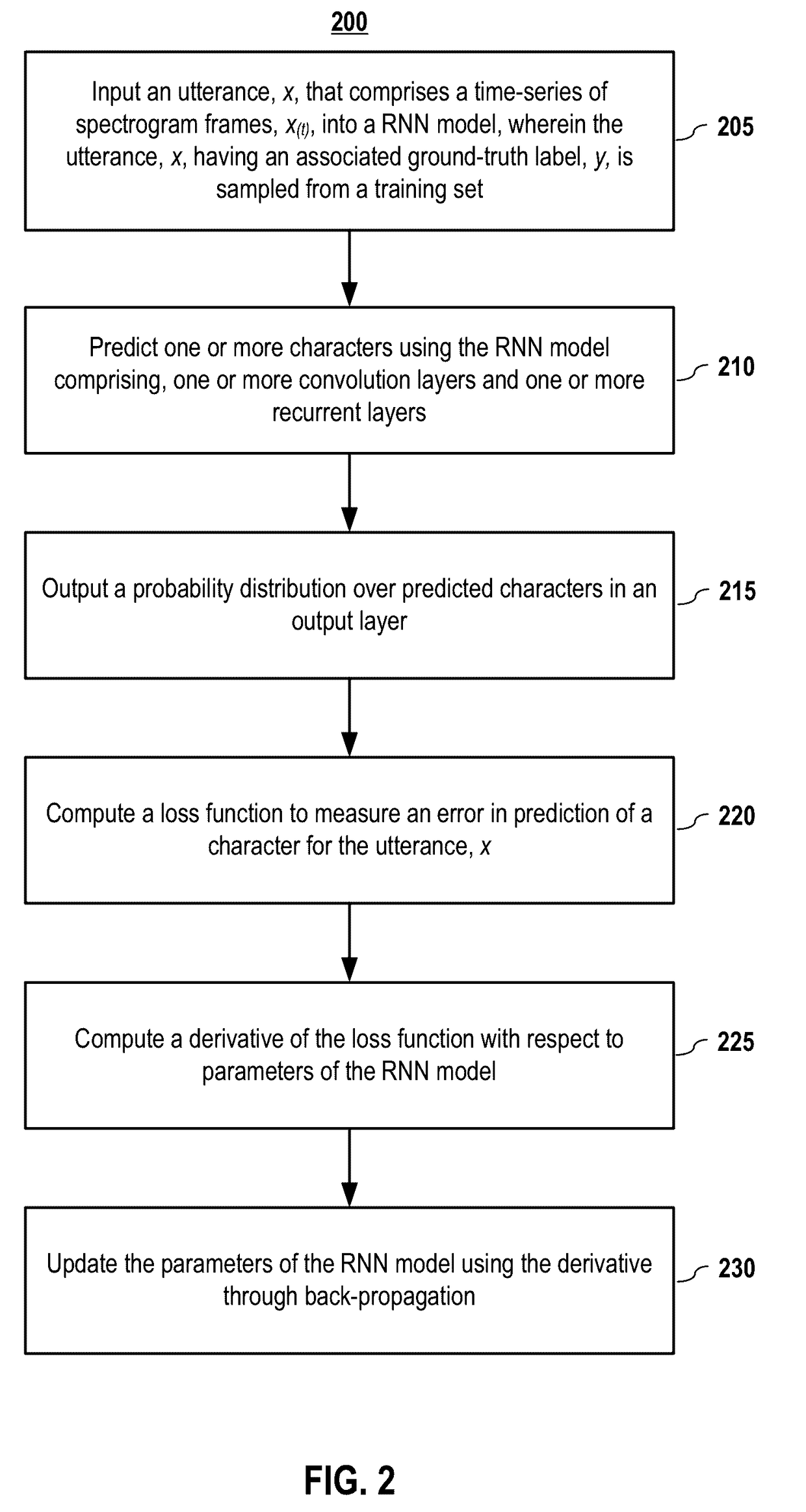

Deployed end-to-end speech recognition

Embodiments of end-to-end deep learning systems and methods are disclosed to recognize speech of vastly different languages, such as English or Mandarin Chinese. In embodiments, the entire pipelines of hand-engineered components are replaced with neural networks, and the end-to-end learning allows handling a diverse variety of speech including noisy environments, accents, and different languages. Using a trained embodiment and an embodiment of a batch dispatch technique with GPUs in a data center, an end-to-end deep learning system can be inexpensively deployed in an online setting, delivering low latency when serving users at scale.

Owner:BAIDU USA LLC
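
The speech recognition entry credits a batch dispatch technique with keeping latency low on data-center GPUs but gives no policy. A minimal discrete-event sketch, assuming one GPU, a fixed per-batch service time, and a rule of taking every already-arrived request (up to `max_batch`) whenever the GPU goes idle; all names and parameters are hypothetical.

```python
from collections import deque
from typing import Deque, List


def dispatch_batches(arrivals: List[float], service_time: float,
                     max_batch: int) -> List[List[int]]:
    """Simulate a single GPU that, whenever idle, runs every request that has
    already arrived (up to max_batch) as one batch.

    arrivals: request arrival times in seconds, sorted ascending.
    service_time: assumed fixed time to process one batch, whatever its size.
    Returns the request indices in each batch, in dispatch order.
    """
    pending: Deque[int] = deque()
    batches: List[List[int]] = []
    next_req, clock, n = 0, 0.0, len(arrivals)
    while next_req < n or pending:
        # Enqueue everything that has arrived by the current time.
        while next_req < n and arrivals[next_req] <= clock:
            pending.append(next_req)
            next_req += 1
        if not pending:
            clock = arrivals[next_req]  # GPU idles until the next arrival
            continue
        batch = [pending.popleft() for _ in range(min(max_batch, len(pending)))]
        batches.append(batch)
        clock += service_time
    return batches


print(dispatch_batches([0.0, 0.01, 0.02, 0.03, 0.5], service_time=0.1, max_batch=2))
```

In this model, requests that arrive while a batch is running are grouped into the next one, trading a short queueing delay for fewer, fuller GPU passes.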

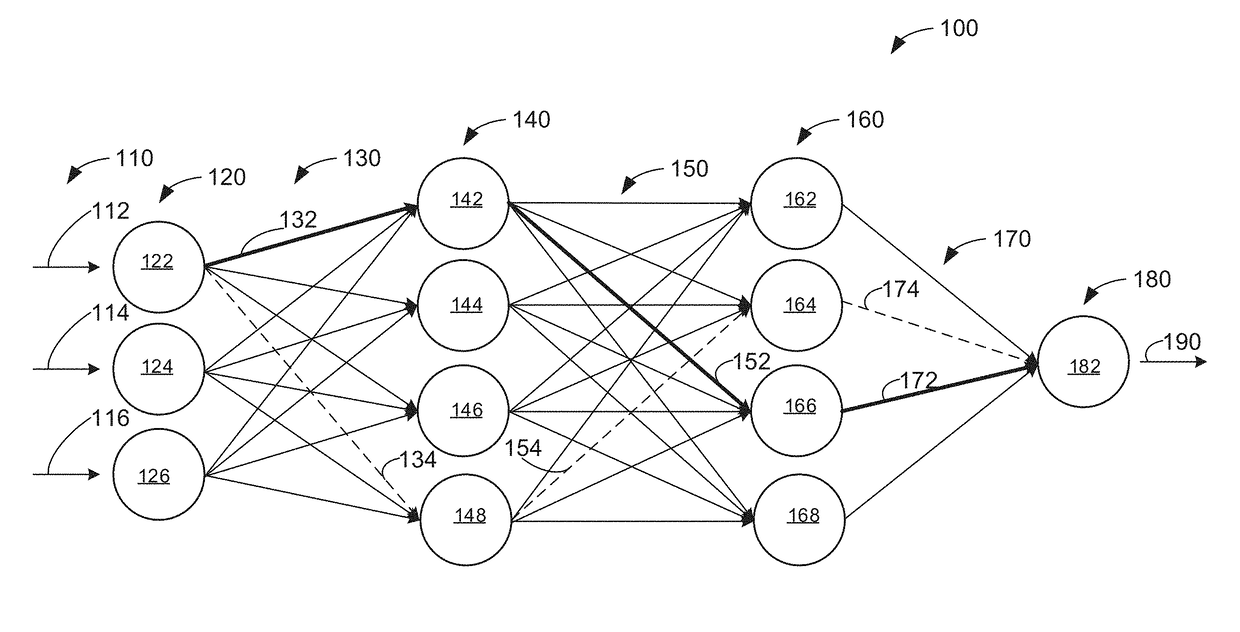

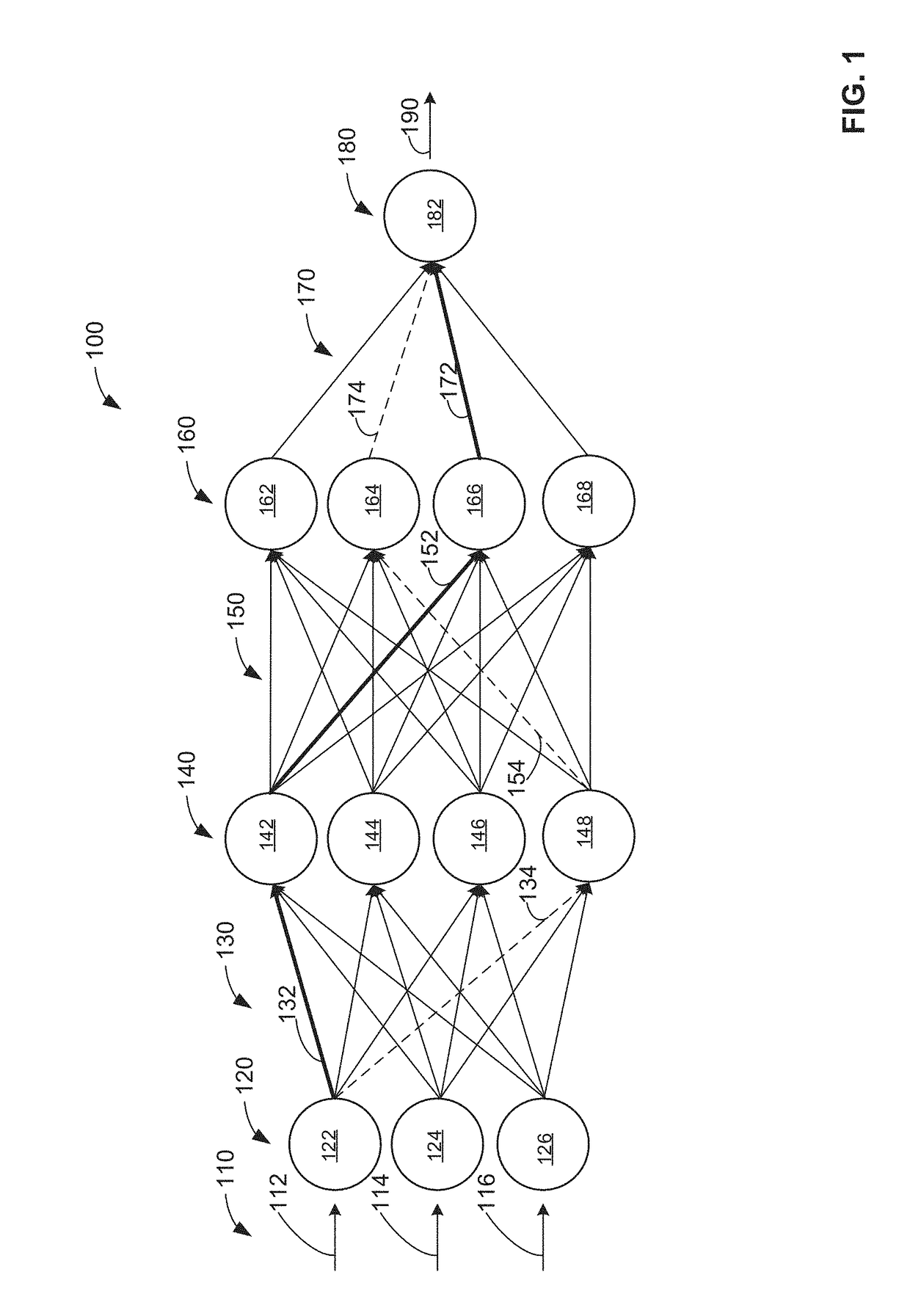

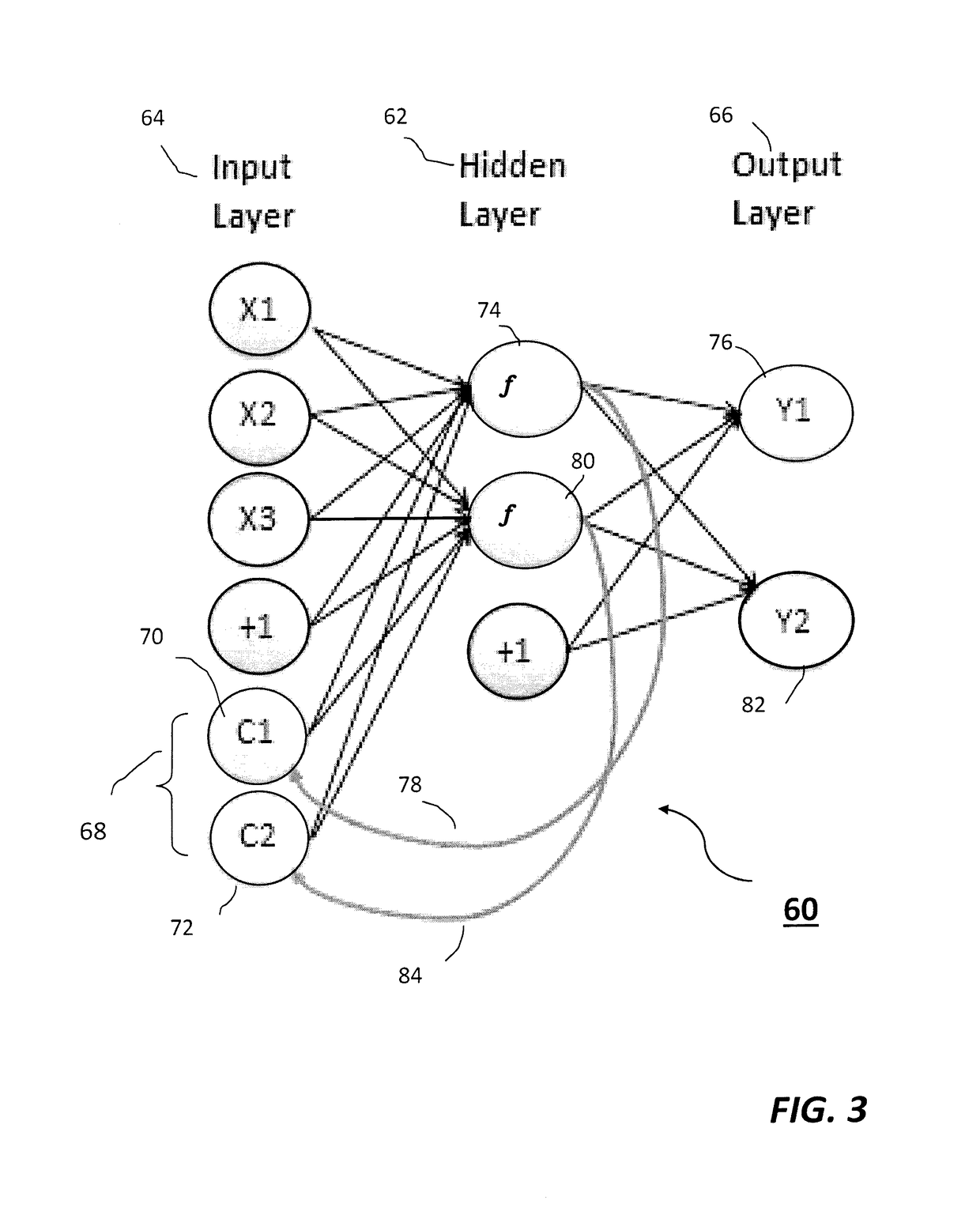

System and method for predicting power plant operational parameters utilizing artificial neural network deep learning methodologies

A system and method of predicting future power plant operations is based upon an artificial neural network model including one or more hidden layers. The artificial neural network is developed (and trained) to build a model that is able to predict future time series values of a specific power plant operation parameter based on prior values. By accurately predicting the future values of the time series, power plant personnel are able to schedule future events in a cost-efficient, timely manner. The scheduled events may include providing an inventory of replacement parts, determining a proper number of turbines required to meet a predicted demand, determining the best time to perform maintenance on a turbine, etc. The inclusion of one or more hidden layers in the neural network model creates a prediction that is able to follow trends in the time series data, without overfitting.

Owner:SIEMENS AG
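
The power plant entry frames prediction as learning future values of a time series from prior ones. Independent of the network itself, that framing needs the series turned into (past window, future value) training pairs and, for multi-step forecasts, a loop that feeds predictions back in. A sketch of both, assuming a fixed window length; `model` is any one-step predictor, standing in for the trained hidden-layer network.

```python
from typing import Callable, List, Sequence, Tuple


def make_windows(series: Sequence[float], window: int,
                 horizon: int = 1) -> List[Tuple[List[float], float]]:
    """Build supervised pairs: `window` past values -> the value `horizon` steps later."""
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        past = list(series[start:start + window])
        target = series[start + window + horizon - 1]
        pairs.append((past, target))
    return pairs


def forecast(history: Sequence[float], model: Callable[[List[float]], float],
             window: int, steps: int) -> List[float]:
    """Roll a one-step model forward, feeding each prediction back as input."""
    buf = list(history[-window:])
    preds = []
    for _ in range(steps):
        y = model(buf)
        preds.append(y)
        buf = buf[1:] + [y]
    return preds


print(make_windows([1, 2, 3, 4, 5], window=3))
```

Feeding predictions back compounds errors over long horizons, which is one reason a model that follows trends without overfitting matters for scheduling decisions.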

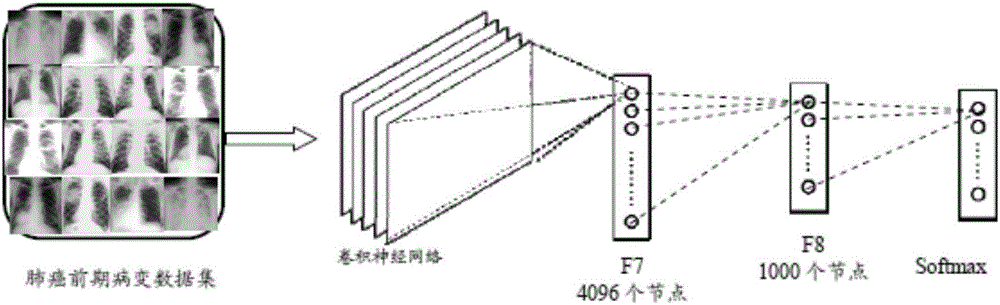

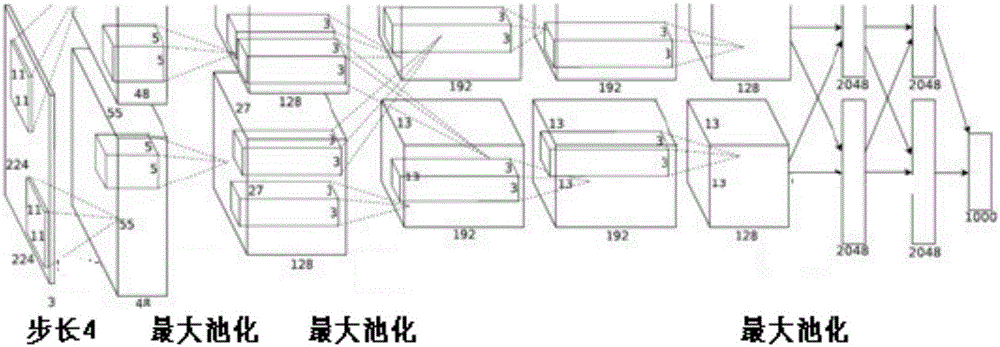

Deep convolutional neural network-based lung cancer preventing self-service health cloud service system

Active · CN106372390A · Improve informatization; Increase health awareness; Special data processing applications; Nerve network; Suspected lung cancer

Owner:汤一平

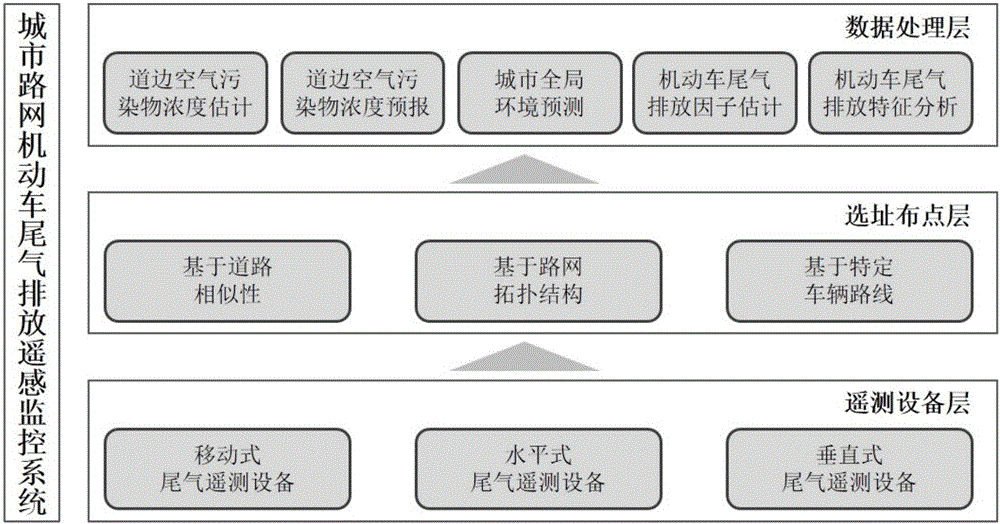

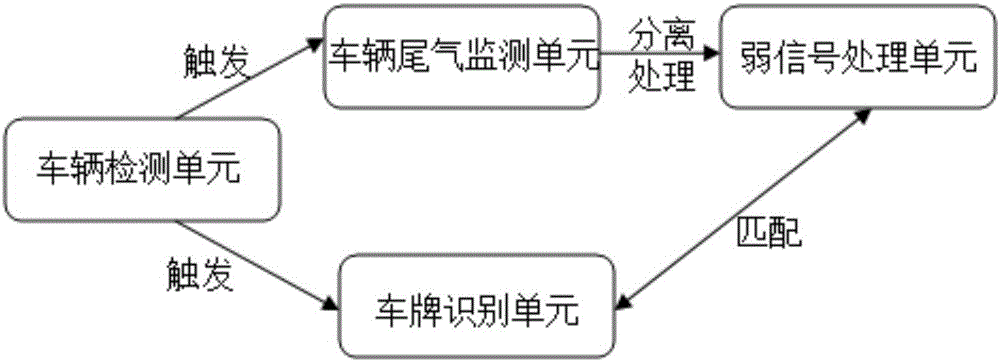

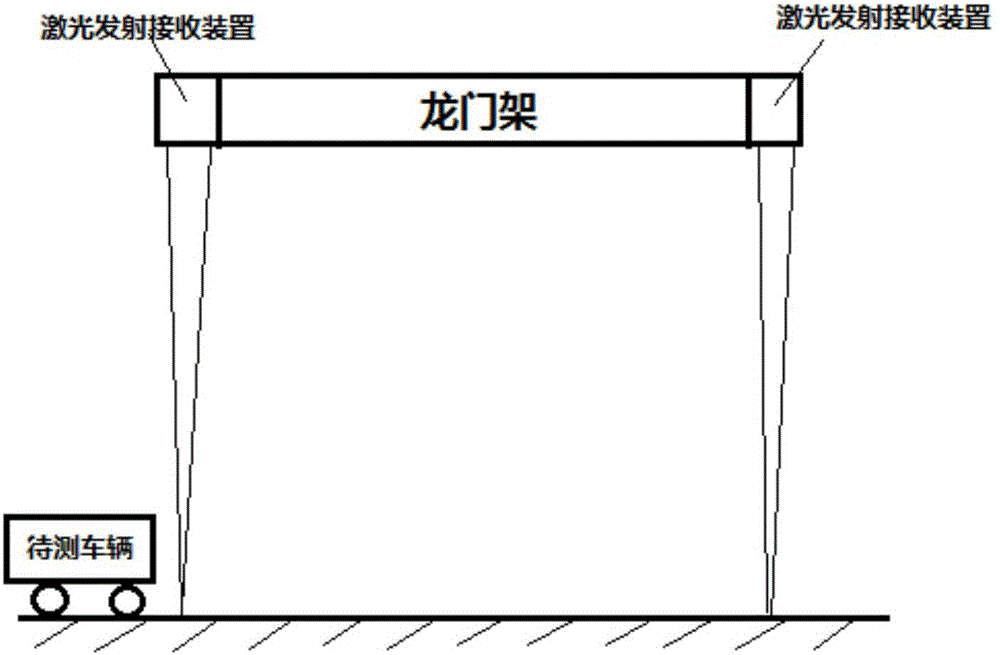

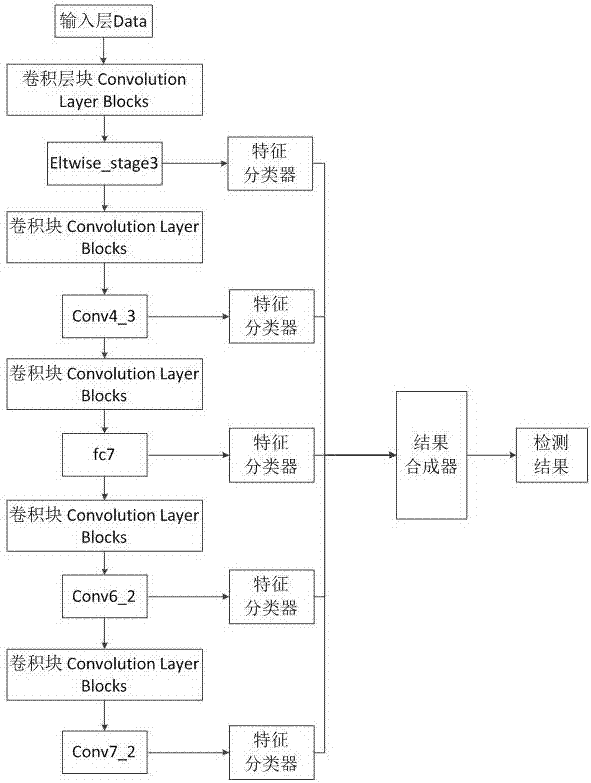

Remote sensing monitoring system for automotive exhaust emission of urban road network

Active · CN106845371A · Networking; Realize intelligence; Data processing applications; Measurement devices; Measurement device; Engineering

The invention discloses a remote sensing monitoring system for automotive exhaust emission of an urban road network. The system is mainly composed of a remote measurement device layer, a site selection and position arrangement layer, and a data processing layer. Through mobile, horizontal and vertical exhaust remote measurement devices, real-time data of the automotive exhaust emission in running is obtained; by adopting an advanced site selection and position arrangement method, the remote measurement devices are scientifically networked; and in combination with external data of weather, traffic, geographic information and the like, the real-time remote measurement data of the automotive exhaust emission is subjected to intelligent analysis and data mining by adopting big data processing and analysis technologies such as deep learning and the like, and key indexes and statistical data with optimal identification performance are obtained, so that effective support is provided for government departments to make related decisions.

Owner:UNIV OF SCI & TECH OF CHINA

Face recognition method, device, system and apparatus based on convolutional neural network

Active · CN106951867A · Increased non-linear capabilities; Nonlinear Capability Leap; Character and pattern recognition; Neural architectures; Face detection; Imaging condition

The invention discloses a face recognition method, device, system and apparatus based on a convolutional neural network (CNN). The method comprises the following steps: S1, face detection, using a multilayer CNN feature architecture; S2, key point localization, obtaining the positions of the facial key points with reference-frame regression networks connected in cascade, as in deep learning; S3, preprocessing, obtaining a face image of fixed size; S4, feature extraction, obtaining a representative feature vector with a feature extraction model; and S5, feature comparison, judging similarity against a threshold or giving the face recognition result by distance ranking. The method adds a multilayer CNN feature combination to the traditional single-layer CNN feature architecture to cope with different imaging conditions, uses a deep convolutional neural network algorithm to train, on massive image datasets, a face detection network that is robust in surveillance environments, reduces the false detection rate, and speeds up detection response.

Owner:南京擎声网络科技有限公司
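
Step S5 of the face recognition entry compares feature vectors either against a threshold (verification) or by ranking (identification). A minimal sketch, assuming cosine similarity as the comparison measure and a hypothetical threshold of 0.6; in practice the threshold would be tuned on validation data, and the gallery names are made up.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def verify(a: Sequence[float], b: Sequence[float], threshold: float = 0.6) -> bool:
    """1:1 verification: same person if the similarity reaches the threshold."""
    return cosine_similarity(a, b) >= threshold


def identify(probe: Sequence[float], gallery: Dict[str, Sequence[float]],
             threshold: float = 0.6) -> Tuple[Optional[str], List[Tuple[str, float]]]:
    """1:N identification: rank gallery identities by similarity to the probe.

    Returns the best match if it clears the threshold (else None) and the ranking.
    """
    ranked = sorted(((name, cosine_similarity(probe, feat)) for name, feat in gallery.items()),
                    key=lambda t: t[1], reverse=True)
    best = ranked[0][0] if ranked and ranked[0][1] >= threshold else None
    return best, ranked


gallery = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
print(identify([0.9, 0.1, 0.0], gallery))
```

Ranking by cosine similarity is equivalent to ranking by Euclidean distance when the feature vectors are L2-normalized.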

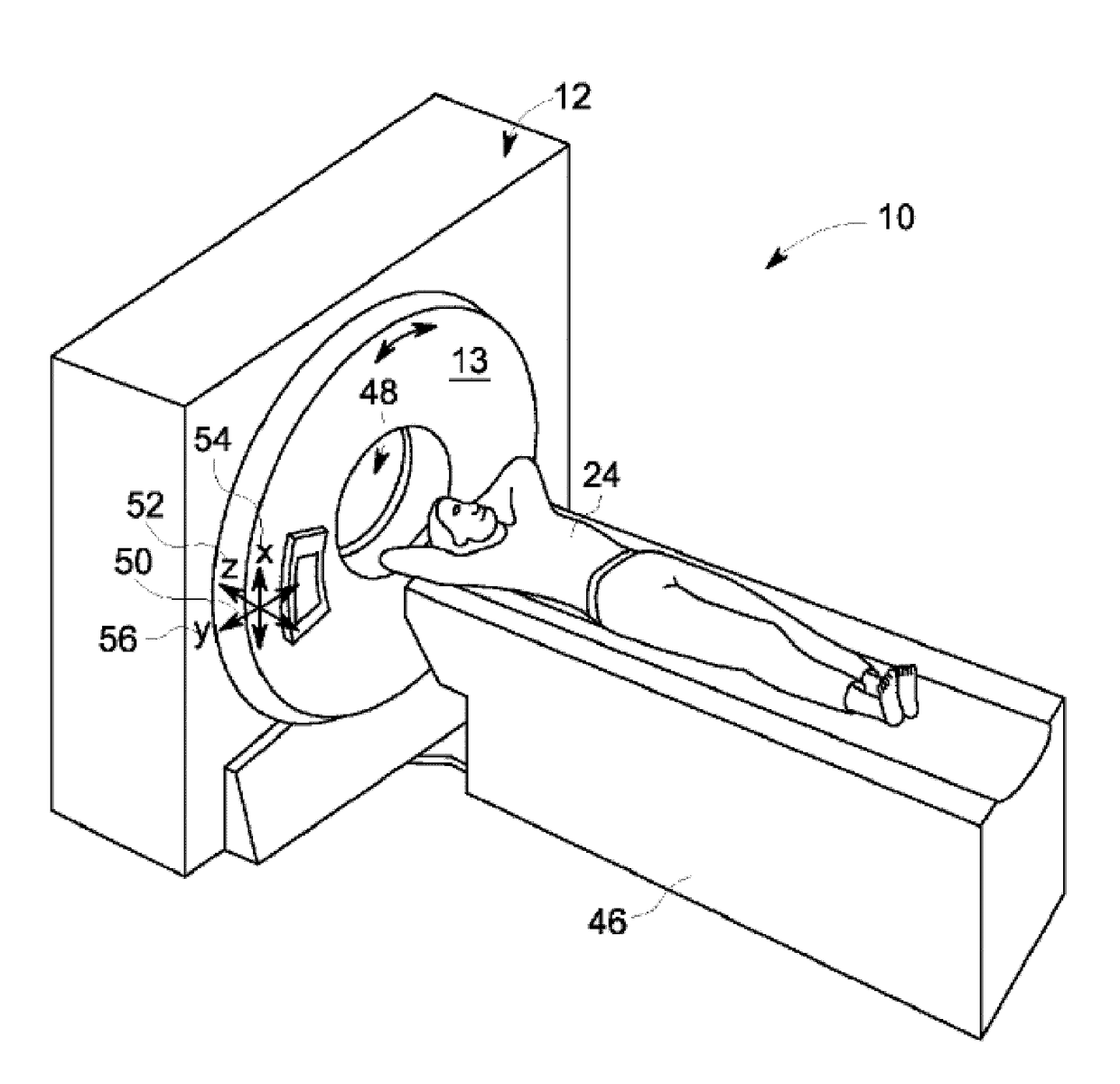

Deep learning medical systems and methods for image acquisition

Methods and apparatus for improved deep learning for image acquisition are provided. An imaging system configuration apparatus includes a training learning device including a first processor to implement a first deep learning network (DLN) to learn a first set of imaging system configuration parameters based on a first set of inputs from a plurality of prior image acquisitions to configure at least one imaging system for image acquisition, the training learning device to receive and process feedback including operational data from the plurality of image acquisitions by the at least one imaging system. The example apparatus includes a deployed learning device including a second processor to implement a second DLN, the second DLN generated from the first DLN of the training learning device, the deployed learning device configured to provide a second imaging system configuration parameter to the imaging system in response to receiving a second input for image acquisition.

Owner:GENERAL ELECTRIC CO

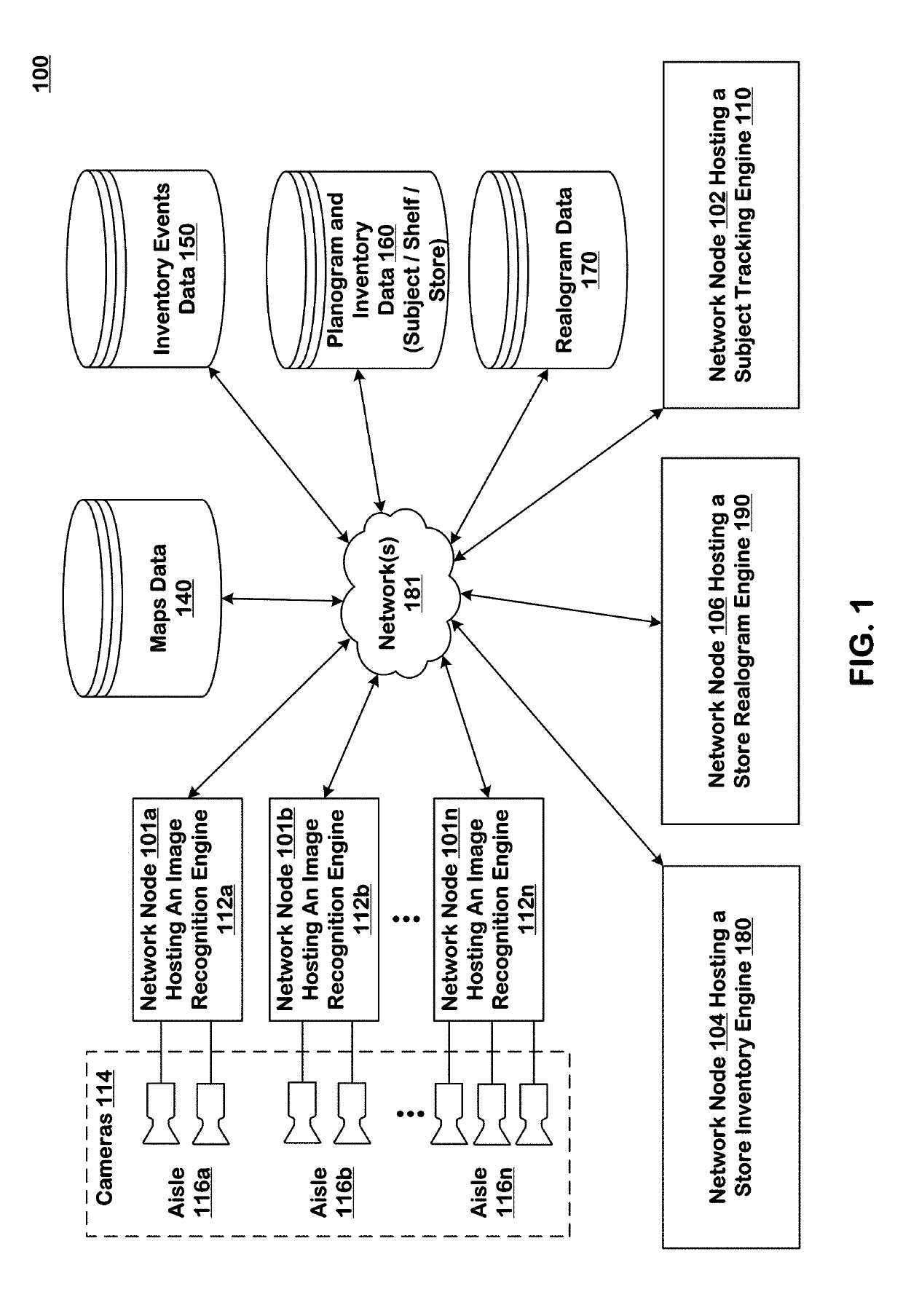

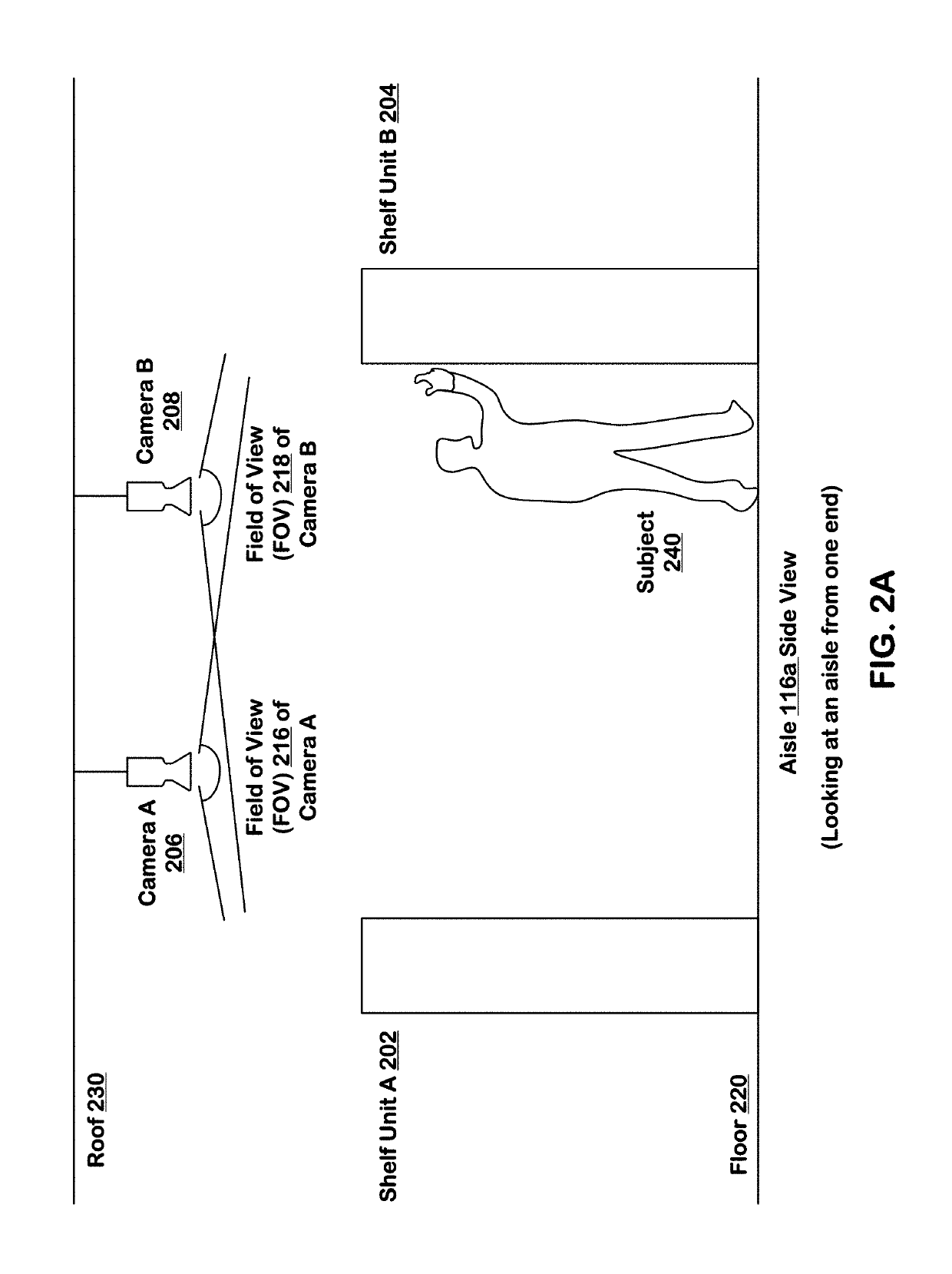

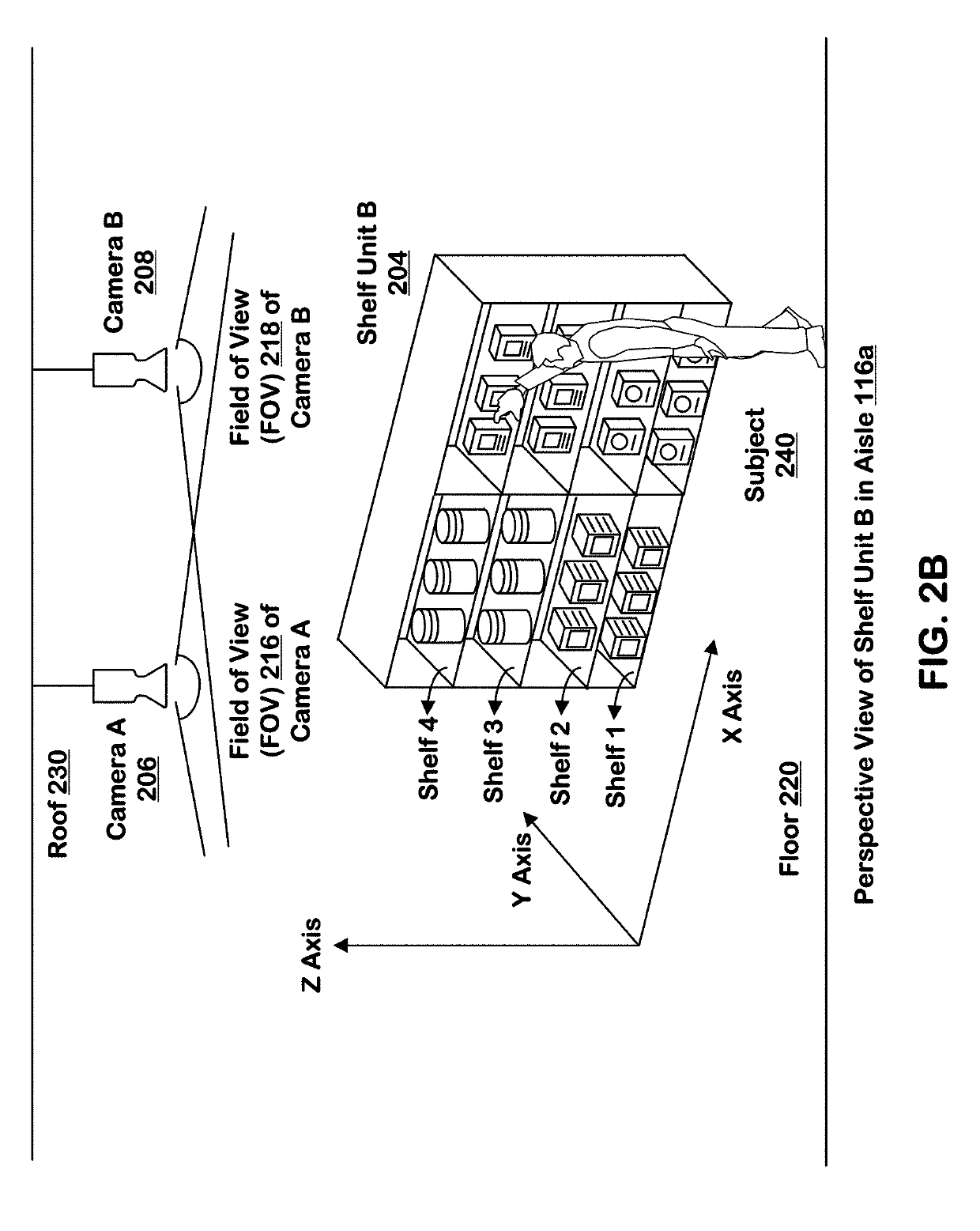

Realtime inventory location management using deep learning

Active · US20190156277A1 · Improve reliability; Image enhancement; Mathematical models; Field of view; Deep learning

Systems and techniques are provided for tracking locations of inventory items in an area of real space including inventory display structures. A plurality of cameras are disposed above the inventory display structures. The cameras in the plurality of cameras produce respective sequences of images in corresponding fields of view in the real space. A memory stores a map of the area of real space identifying inventory locations on inventory display structures. The system is coupled to a plurality of cameras and uses the sequences of images produced by at least two cameras in the plurality of cameras to find a location of an inventory event in three dimensions in the area of real space. The system matches the location of the inventory event with an inventory location.

Owner:STANDARD COGNITION CORP
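
The inventory entry locates an event in three dimensions from at least two cameras, which amounts to triangulation, and then matches it to a mapped inventory location. A sketch, assuming each camera's detection has already been converted (via calibration, not shown) into a 3D ray with an origin and a direction: the midpoint of the shortest segment between the two rays is taken as the event position, and the nearest entry in a hypothetical shelf map is returned. The midpoint method is one common choice; the patent does not say which it uses.

```python
import math
from typing import Dict, Tuple

Vec = Tuple[float, float, float]


def _dot(u: Vec, v: Vec) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def triangulate_midpoint(p1: Vec, d1: Vec, p2: Vec, d2: Vec) -> Vec:
    """Midpoint of the shortest segment between rays p1 + t*d1 and p2 + s*d2."""
    w0 = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; cannot triangulate")
    t = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    s = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    q1 = tuple(p + t * v for p, v in zip(p1, d1))
    q2 = tuple(p + s * v for p, v in zip(p2, d2))
    return tuple((x + y) / 2 for x, y in zip(q1, q2))


def match_inventory_location(event: Vec, locations: Dict[str, Vec]) -> str:
    """Return the mapped inventory location nearest to the 3D event position."""
    return min(locations, key=lambda name: math.dist(event, locations[name]))


# Two overhead cameras both looking toward a hand at (1, 1, 1).
event = triangulate_midpoint((0.0, 0.0, 3.0), (1.0, 1.0, -2.0),
                             (2.0, 0.0, 3.0), (-1.0, 1.0, -2.0))
shelves = {"shelf_A_slot_1": (1.0, 1.2, 1.0), "shelf_B_slot_4": (4.0, 0.0, 1.5)}
print(event, match_inventory_location(event, shelves))
```

With noisy detections the rays rarely intersect exactly, which is why the midpoint of their closest approach is used rather than an exact intersection.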

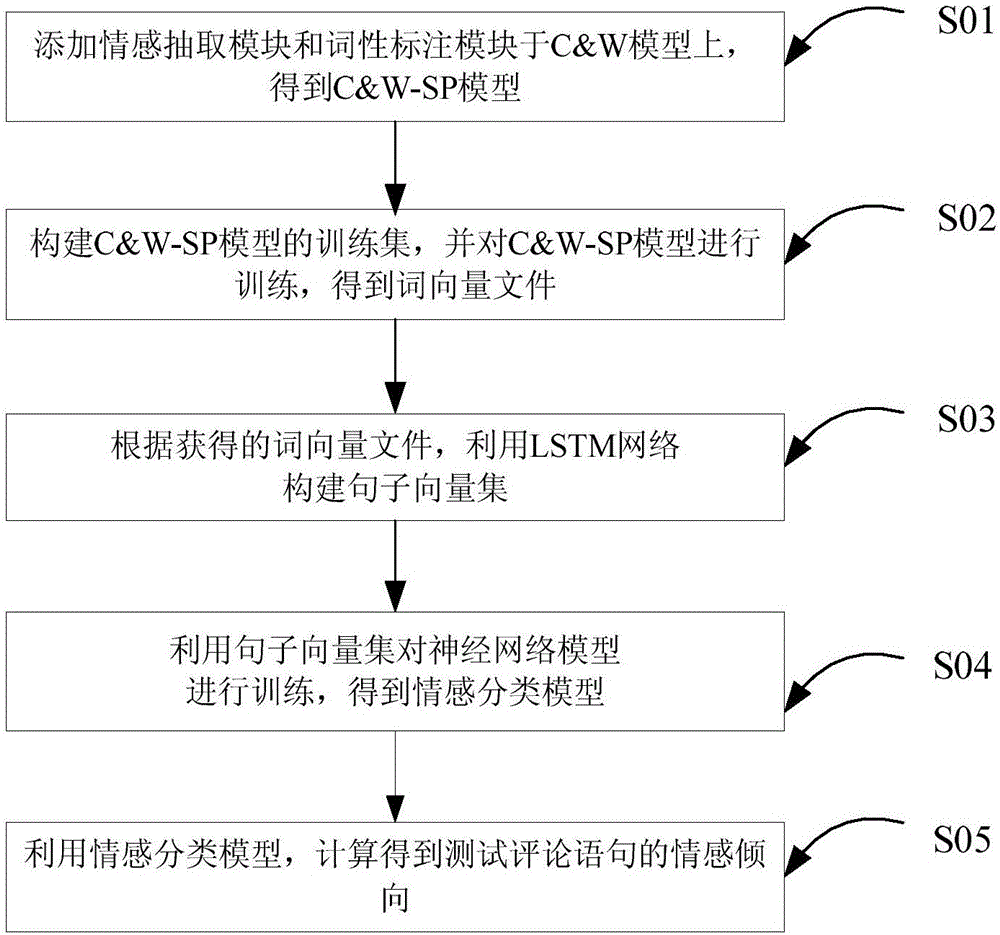

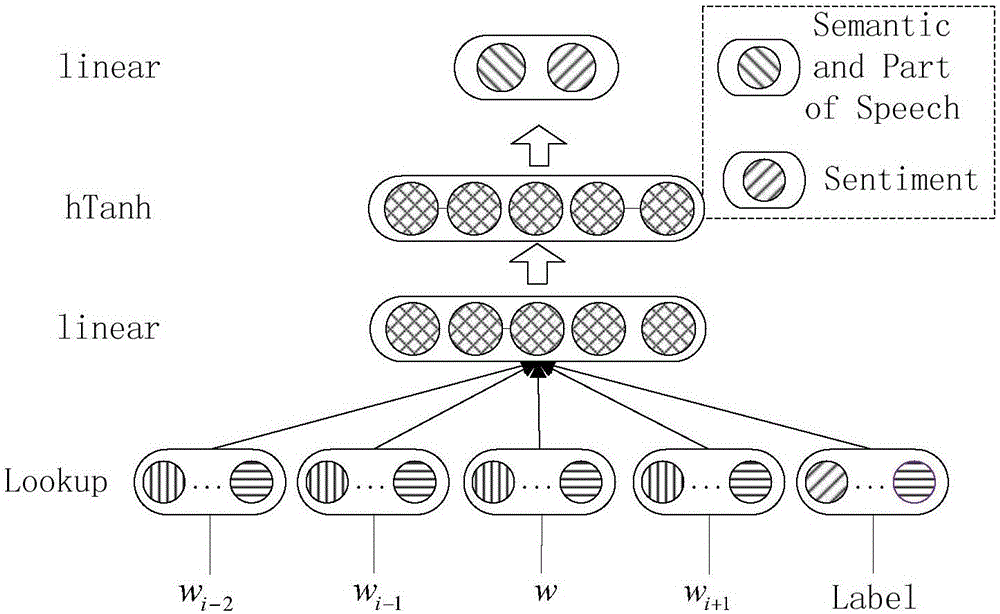

Subjective text emotion analysis method based on deep learning

Active · CN106776581A · Improve accuracy; Improve attributes; Semantic analysis; Special data processing applications; Emotion classification; Analysis method

The invention discloses a deep-learning-based method for analyzing emotion in subjective text. The method includes the following steps: 1) a C&W-SP model is built on the C&W model; each sentence is annotated with its emotion label and the part-of-speech labels of its words to build a training set for the C&W-SP model; the C&W-SP model is trained on this set to obtain a word vector for each word in the training set, and these form a word-vector file; 2) a set of sentence vectors is built with an LSTM model from the word-vector file; 3) a neural network model is trained on the sentence-vector set to obtain an emotion classification model; and 4) the comment sentences under test are preprocessed, their sentence vectors are input into the emotion classification model, and the emotional tendency of the comment is computed. Because emotion-tendency and part-of-speech information are added to the word representations, the accuracy of emotion analysis is improved.

Owner:ZHEJIANG GONGSHANG UNIVERSITY

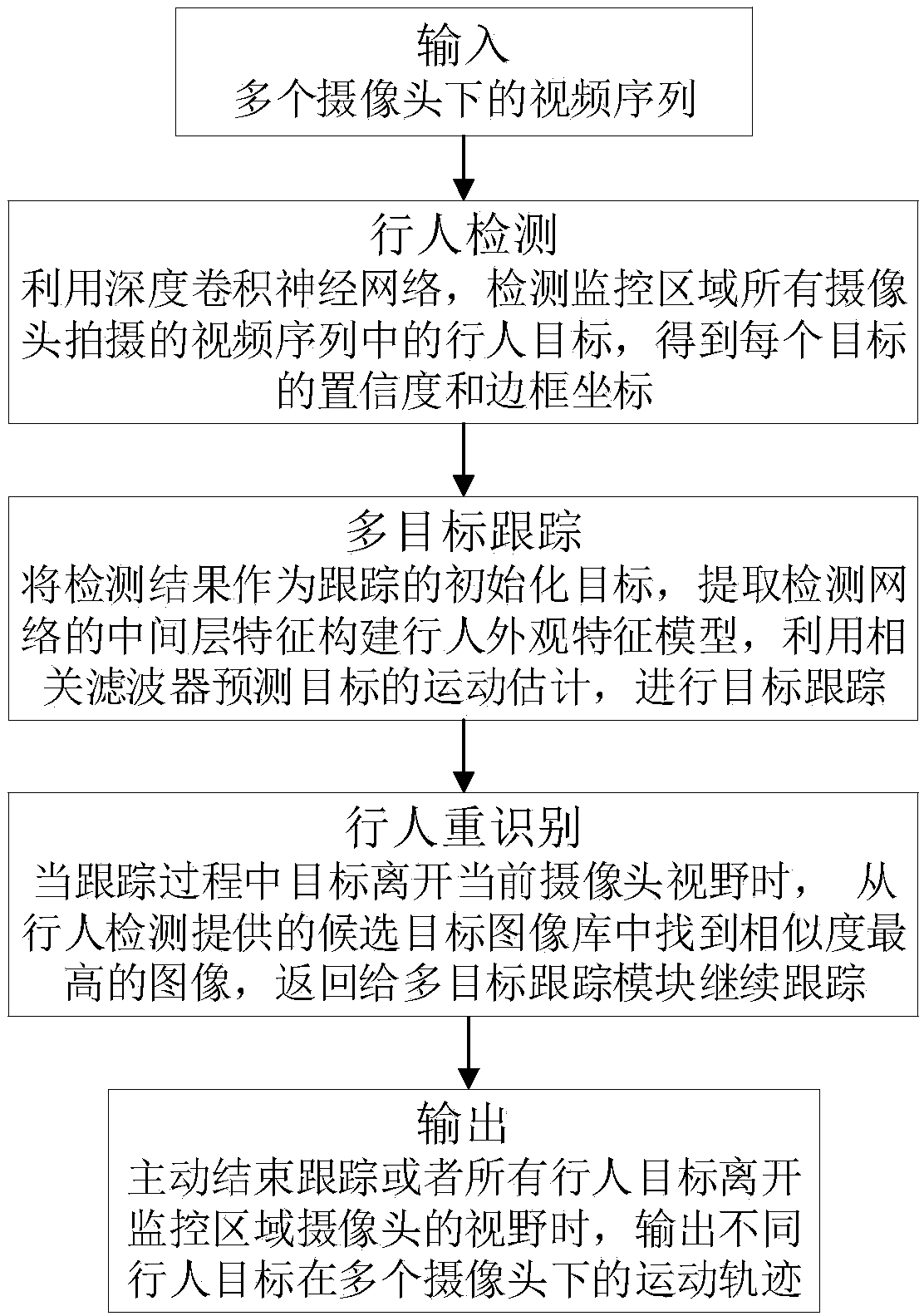

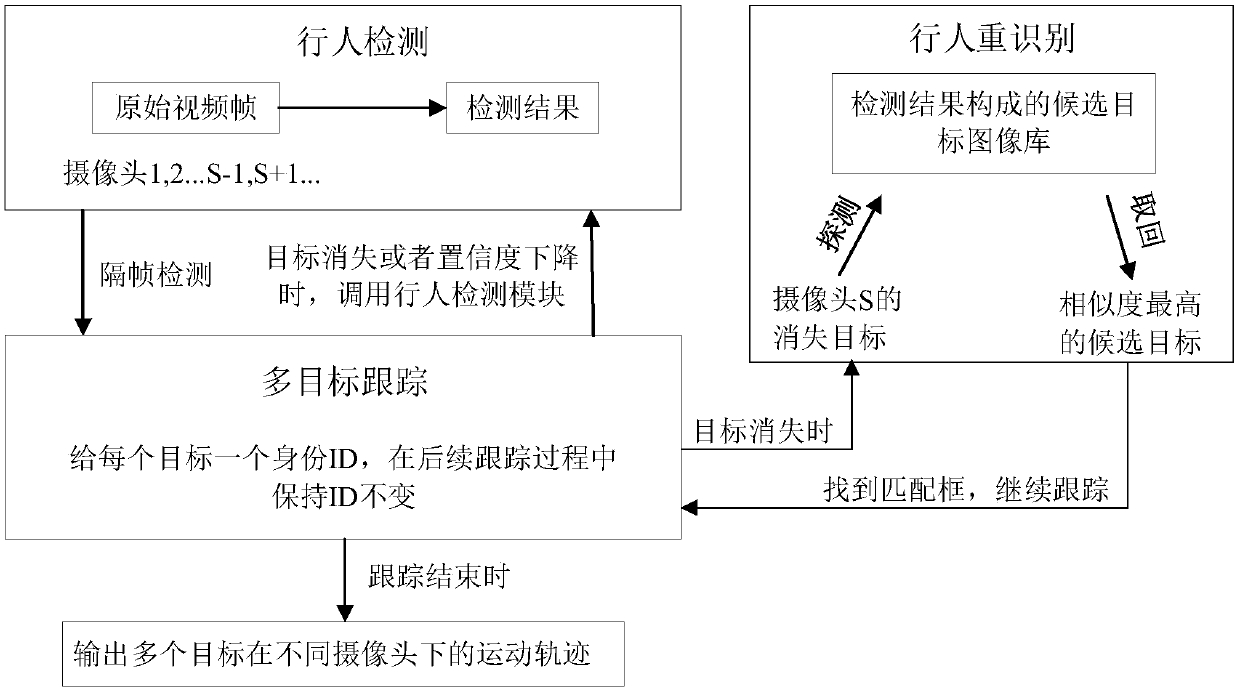

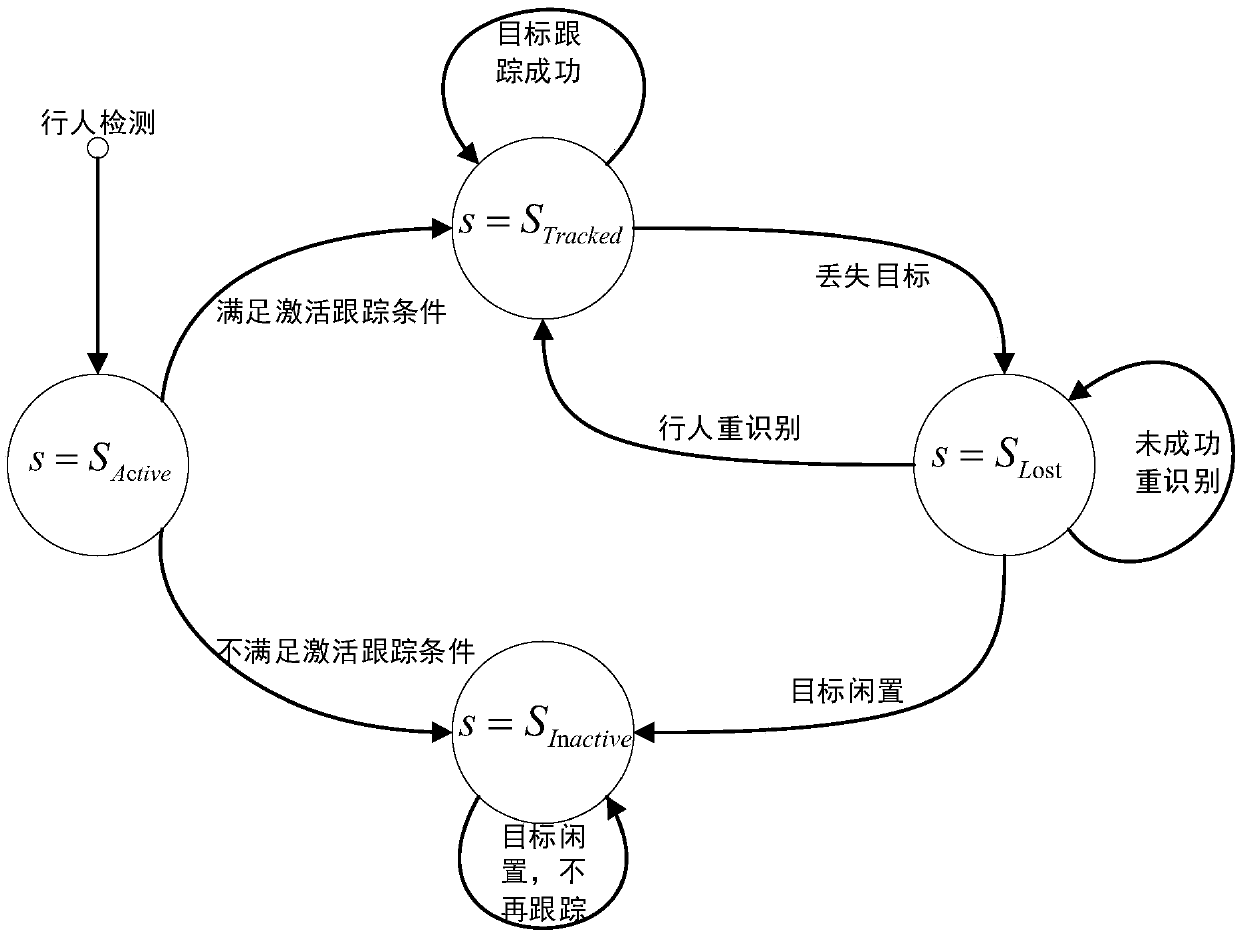

Cross-camera pedestrian detection tracking method based on depth learning

Active · CN108875588A · Overcome occlusion; Overcome lighting changes; Character and pattern recognition; Neural architectures; Multi target tracking; Recognition algorithm

The invention discloses a cross-camera pedestrian detection and tracking method based on deep learning, comprising the following steps: training a pedestrian detection network and using it to detect pedestrians in an input surveillance video sequence; initializing tracking targets from the target boxes produced by pedestrian detection, extracting shallow and deep features of the region corresponding to each candidate box in the pedestrian detection network, and performing tracking; when a target disappears, performing pedestrian re-identification, that is, once the disappearance is detected, finding among the candidate images produced by the pedestrian detection network the images that best match the lost target and continuing to track it; and, when tracking ends, outputting the motion trajectories of the pedestrian targets across the multiple cameras. The extracted features are robust to illumination variations and viewing-angle variations. Moreover, both the tracking and the pedestrian re-identification stages use features extracted from the pedestrian detection network, so pedestrian detection, multi-target tracking and pedestrian re-identification are tightly integrated, and accurate cross-camera pedestrian detection and tracking is achieved over large scenes.

Owner:WUHAN UNIV

Attention mechanism-based in-depth learning diabetic retinopathy classification method

Active · CN108021916A · Enhanced feature information; Improving Lesion Diagnosis Results; Image enhancement; Image analysis; Nerve network; Diabetes retinopathy

The invention discloses an attention-mechanism-based deep learning method for classifying diabetic retinopathy, comprising the following steps: a series of fundus images is selected as the original data samples and normalized; the preprocessed samples are cropped and divided into a training set and a test set; the parameters of a main neural network are initialized and fine-tuned, and the training images are input into the main network for training, generating a feature map; the parameters of the main network are then fixed, the training images are used to train an attention network, and the lesion-candidate-region maps it outputs are normalized to obtain an attention map; multiplying the attention map by the feature map yields the attention mechanism, whose result is input into the main neural network, which is trained again on the training images; finally, a diabetic retinopathy grading model is obtained. By introducing an attention mechanism trained on a dataset of diabetic retinopathy regions, the method enhances the feature information of the retinopathy regions while preserving the original network's features.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
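The "normalize the attention map, then multiply it by the feature map" step can be illustrated with plain lists. A minimal sketch, assuming min-max normalisation to [0, 1] and a single-channel attention map broadcast over every feature channel; the abstract does not specify the normalisation, and the optional `residual` form `F * (1 + A)` is a common variant included here as an assumption for keeping the original features.

```python
from typing import List

Matrix = List[List[float]]


def normalize_attention(raw: Matrix) -> Matrix:
    """Min-max normalise a 2-D attention map to [0, 1] (assumed scheme)."""
    flat = [v for row in raw for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A flat map carries no spatial preference: leave features unchanged.
        return [[1.0 for _ in row] for row in raw]
    return [[(v - lo) / (hi - lo) for v in row] for row in raw]


def apply_attention(feature_map: List[Matrix], attention: Matrix,
                    residual: bool = False) -> List[Matrix]:
    """Multiply every channel of a C x H x W feature map element-wise by the
    H x W attention map. With residual=True, compute F * (1 + A) instead,
    which boosts attended regions without zeroing the rest."""
    out = []
    for channel in feature_map:
        out.append([
            [f * (1.0 + a) if residual else f * a for f, a in zip(f_row, a_row)]
            for f_row, a_row in zip(channel, attention)
        ])
    return out
```

In a real model these would be tensor operations in a deep learning framework; the list version only makes the per-element arithmetic explicit.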

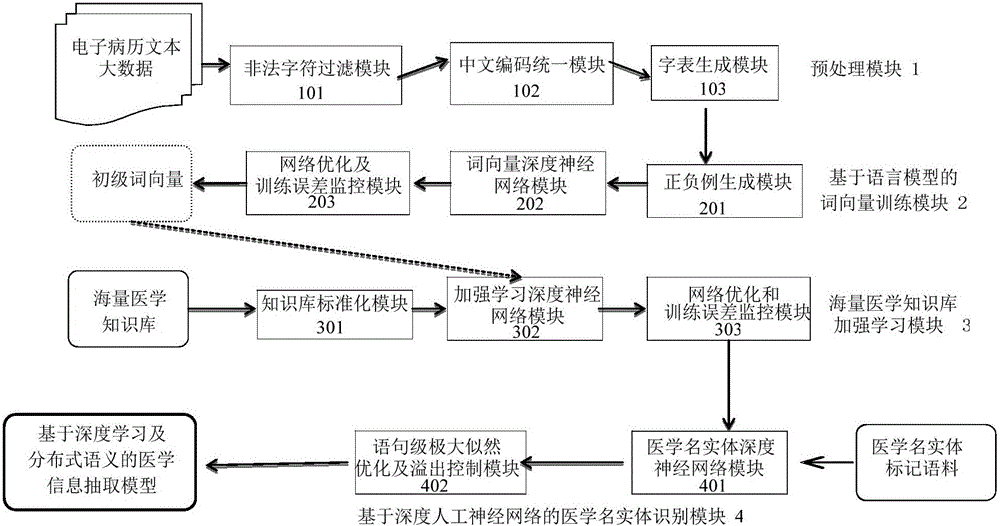

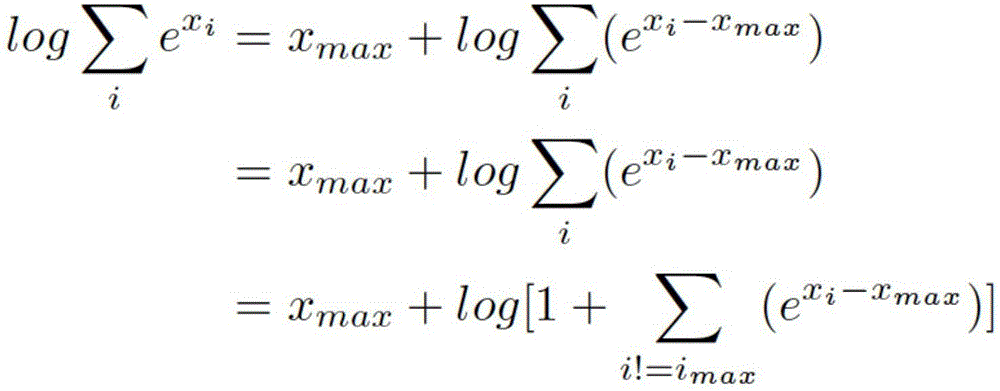

Medical information extraction system and method based on deep learning and distributed semantic features

Active | CN105894088A | Avoid floating point overflow problems; High precision; Neural learning methods; Neural network; Learning methods

The invention discloses a medical information extraction system and method based on deep learning and distributed semantic features. The system consists of a preprocessing module, a language-model-based word vector training module, a reinforcement learning module built on a massive medical knowledge base, and a medical term entity recognition module based on a deep artificial neural network. Using a deep learning method, the probability generated by a language model is taken as the optimization objective, and initial word vectors are trained on large-scale medical text data; a second deep artificial neural network is then trained on the basis of the massive medical knowledge base, and the knowledge base is incorporated into the feature learning process of deep learning through deep reinforcement learning, yielding distributed semantic features for the medical domain; finally, Chinese medical term entities are recognized with a deep learning method based on an optimized sentence-level maximum likelihood probability. Because the word vectors are generated from large amounts of unlabeled corpus data, the tedious feature selection and tuning otherwise required in medical natural language processing can be avoided.

Owner:Shenzhou Medical Technology Co., Ltd. (神州医疗科技股份有限公司) +1
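The abstract names a "sentence-level maximum likelihood" criterion for entity recognition but gives no details. One common reading of that phrase is a linear-chain model: a network produces per-token tag scores, a transition matrix scores adjacent tag pairs, and the best tag sequence for the whole sentence is found by Viterbi decoding. The sketch below shows only that decoding step, under that assumption; the emission scores, transition values and BIO-style tag set are hypothetical stand-ins for what the patented network would produce.

```python
from typing import List, Sequence, Tuple


def viterbi(emissions: Sequence[Sequence[float]],
            transitions: Sequence[Sequence[float]]) -> Tuple[List[int], float]:
    """Return the highest-scoring tag sequence and its score.

    emissions[t][k]   : score of tag k at token t (e.g. from a neural network)
    transitions[i][j] : score of moving from tag i to tag j
    """
    n_tags = len(transitions)
    score = list(emissions[0])          # best score ending in each tag so far
    backpointers: List[List[int]] = []
    for emit in emissions[1:]:
        new_score, pointers = [], []
        for cur in range(n_tags):
            # Best previous tag to arrive at `cur` from.
            prev = max(range(n_tags), key=lambda p: score[p] + transitions[p][cur])
            new_score.append(score[prev] + transitions[prev][cur] + emit[cur])
            pointers.append(prev)
        score = new_score
        backpointers.append(pointers)
    best = max(range(n_tags), key=lambda t: score[t])
    path = [best]
    for pointers in reversed(backpointers):   # follow pointers back to token 0
        path.append(pointers[path[-1]])
    path.reverse()
    return path, score[best]


TAGS = ["O", "B-DISEASE", "I-DISEASE"]        # hypothetical tag set
TRANSITIONS = [[0.0, 0.0, -10.0],             # O -> I is heavily penalised
               [0.0, 0.0, 0.0],
               [0.0, 0.0, 0.0]]
```

Decoding the whole sentence jointly is what lets the transition scores rule out invalid sequences such as an `I-` tag directly after `O`, which independent per-token classification cannot do.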

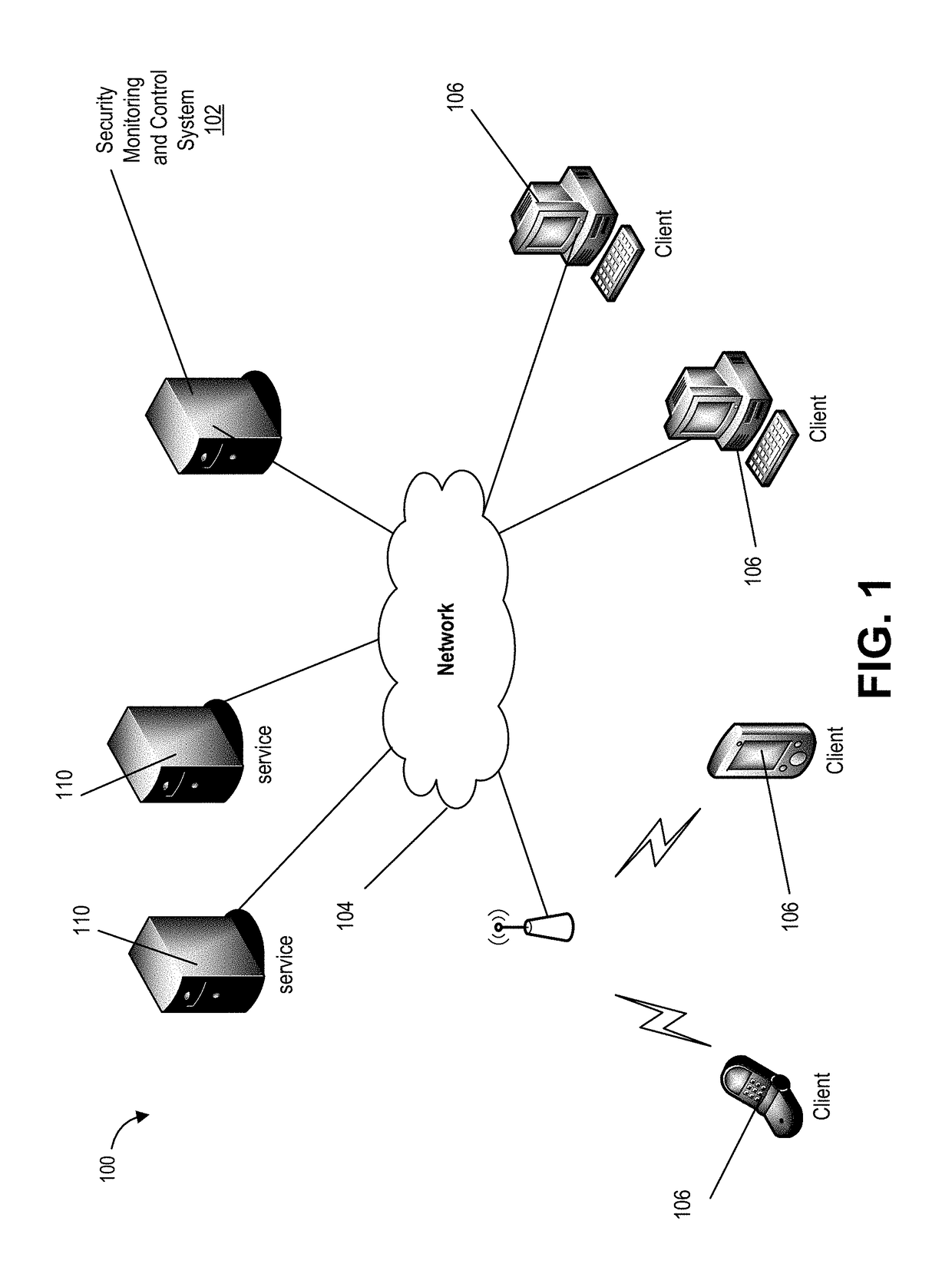

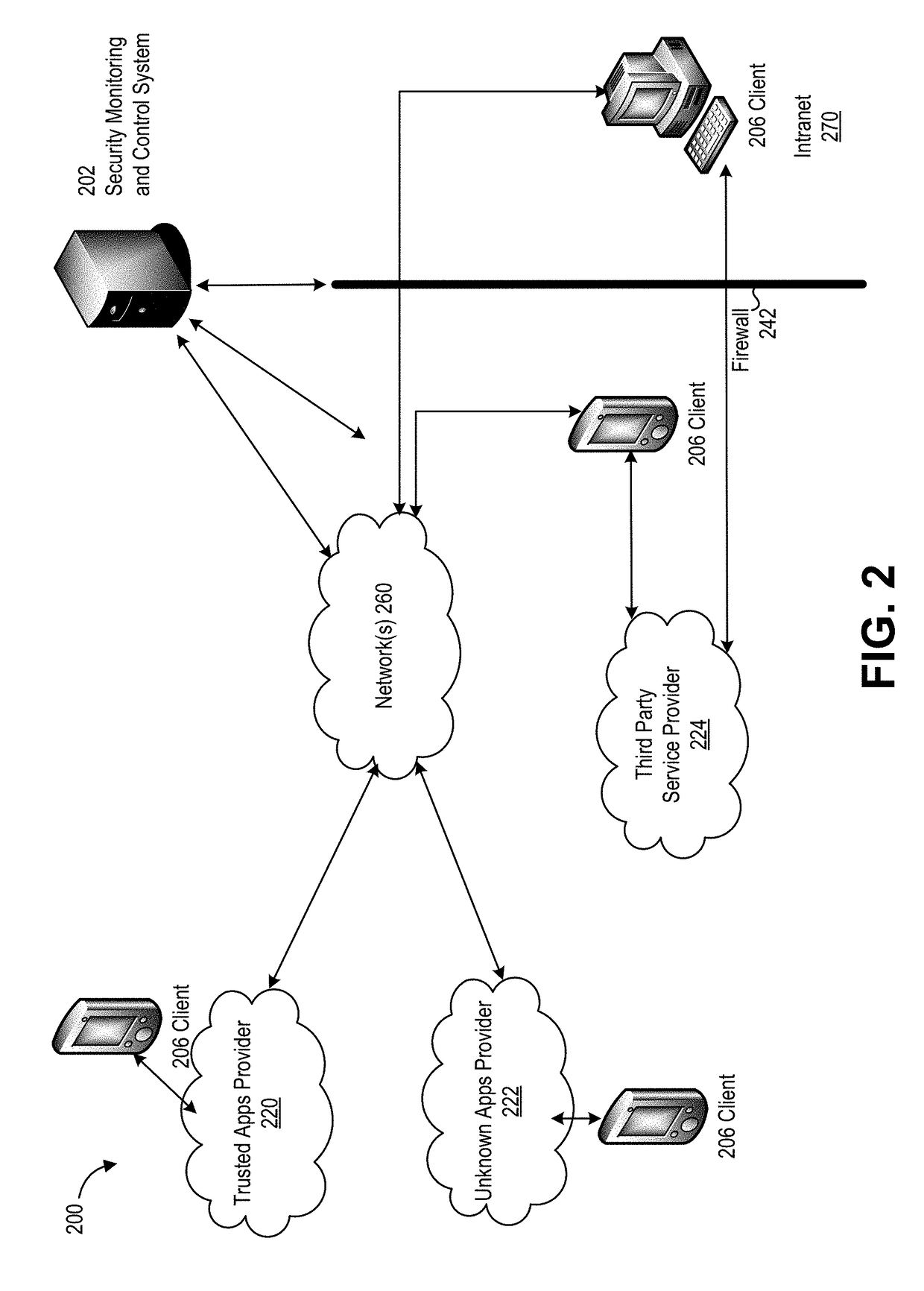

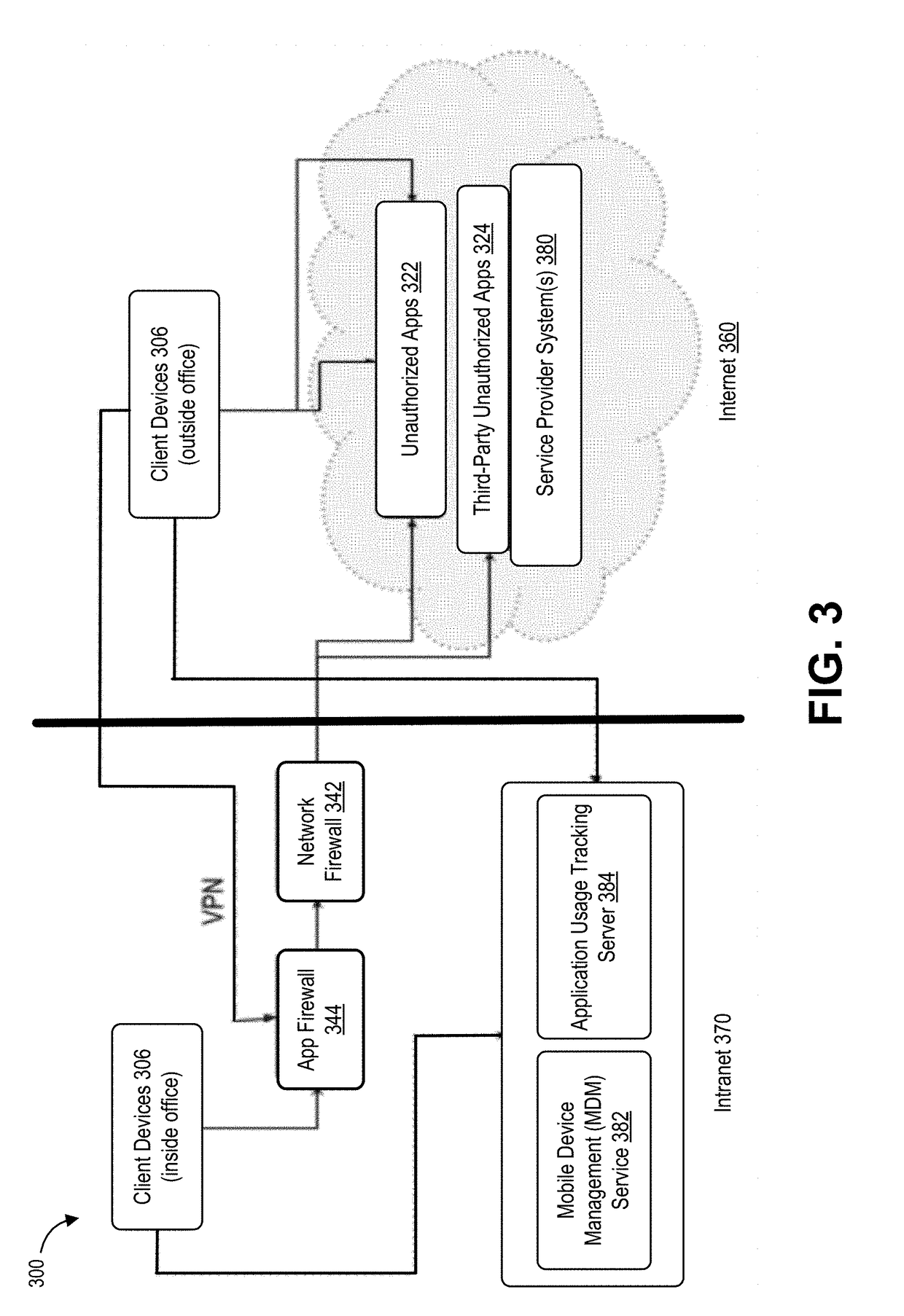

Cloud based security monitoring using unsupervised pattern recognition and deep learning

Provided are systems and methods for a cloud security system that learns patterns of user behavior and uses the patterns to detect anomalous behavior in a network. Techniques discussed herein include obtaining activity data from a service provider system. The activity data describes actions performed during use of a cloud service over a period of time. A pattern corresponding to a series of actions performed over a subset of that time can be identified and added to a model associated with the cloud service. The model represents usage of the cloud service by one or more users. Additional activity data can then be obtained from the service provider system. Using the model, a set of actions in the additional activity data that do not correspond to the model can be identified. The set of actions, together with an indicator identifying it as anomalous, can then be output.

Owner:ORACLE INT CORP
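The learn-patterns-then-flag-deviations loop described above can be shown with a deliberately simple stand-in. A minimal sketch, assuming a "pattern" is a run of `n` consecutive actions (an n-gram) seen at least `min_count` times in historical activity; the patent's unsupervised pattern recognition and deep learning are not reproduced here, and all names and parameters are hypothetical.

```python
from collections import Counter
from typing import List, Sequence, Set, Tuple

Pattern = Tuple[str, ...]


def ngrams(actions: Sequence[str], n: int) -> List[Pattern]:
    """All runs of n consecutive actions."""
    return [tuple(actions[i:i + n]) for i in range(len(actions) - n + 1)]


def learn_patterns(activity: Sequence[str], n: int = 2,
                   min_count: int = 2) -> Set[Pattern]:
    """Build the usage model: action sequences that recur often enough."""
    counts = Counter(ngrams(activity, n))
    return {pattern for pattern, count in counts.items() if count >= min_count}


def find_anomalies(activity: Sequence[str], model: Set[Pattern],
                   n: int = 2) -> List[Tuple[int, Pattern]]:
    """Return (position, action sequence) pairs that do not fit the model."""
    return [(i, gram) for i, gram in enumerate(ngrams(activity, n))
            if gram not in model]
```

For example, a model learned from repeated `login, read, logout` sessions flags both transitions around an unseen `delete_all` action in later activity.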

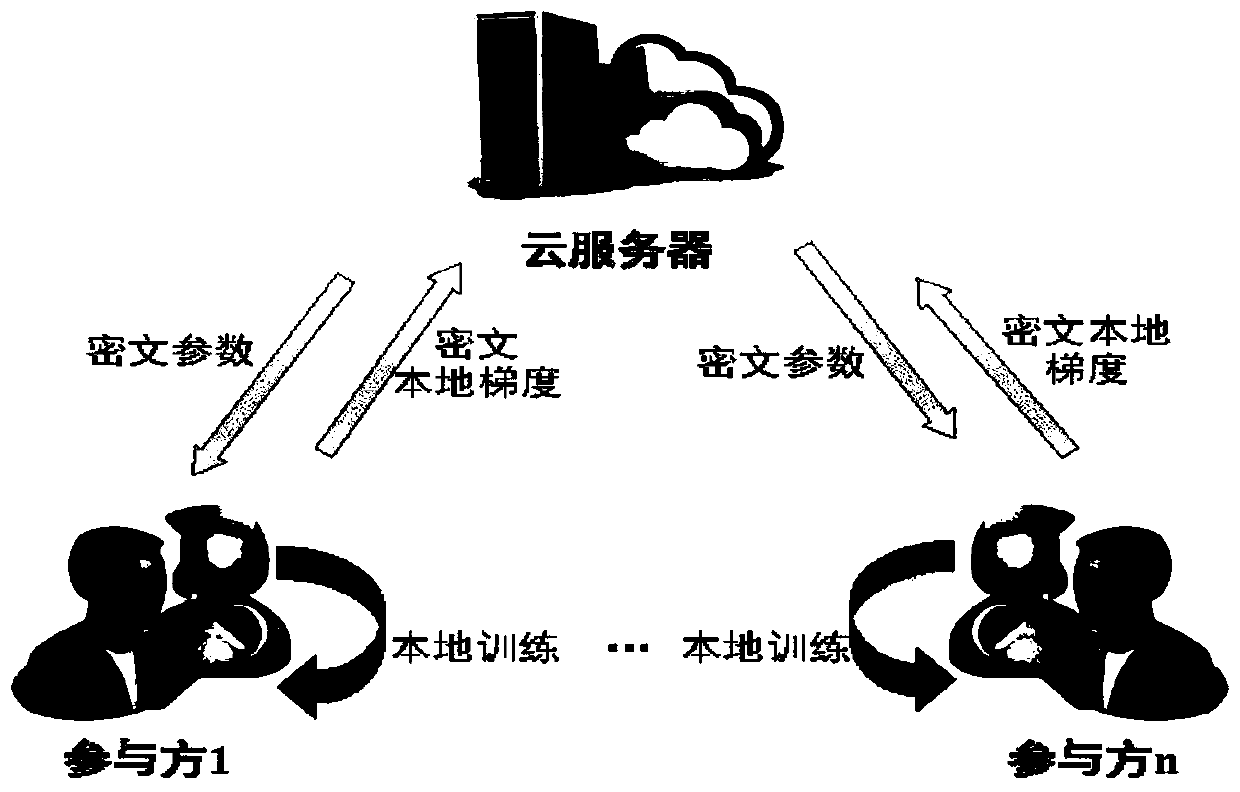

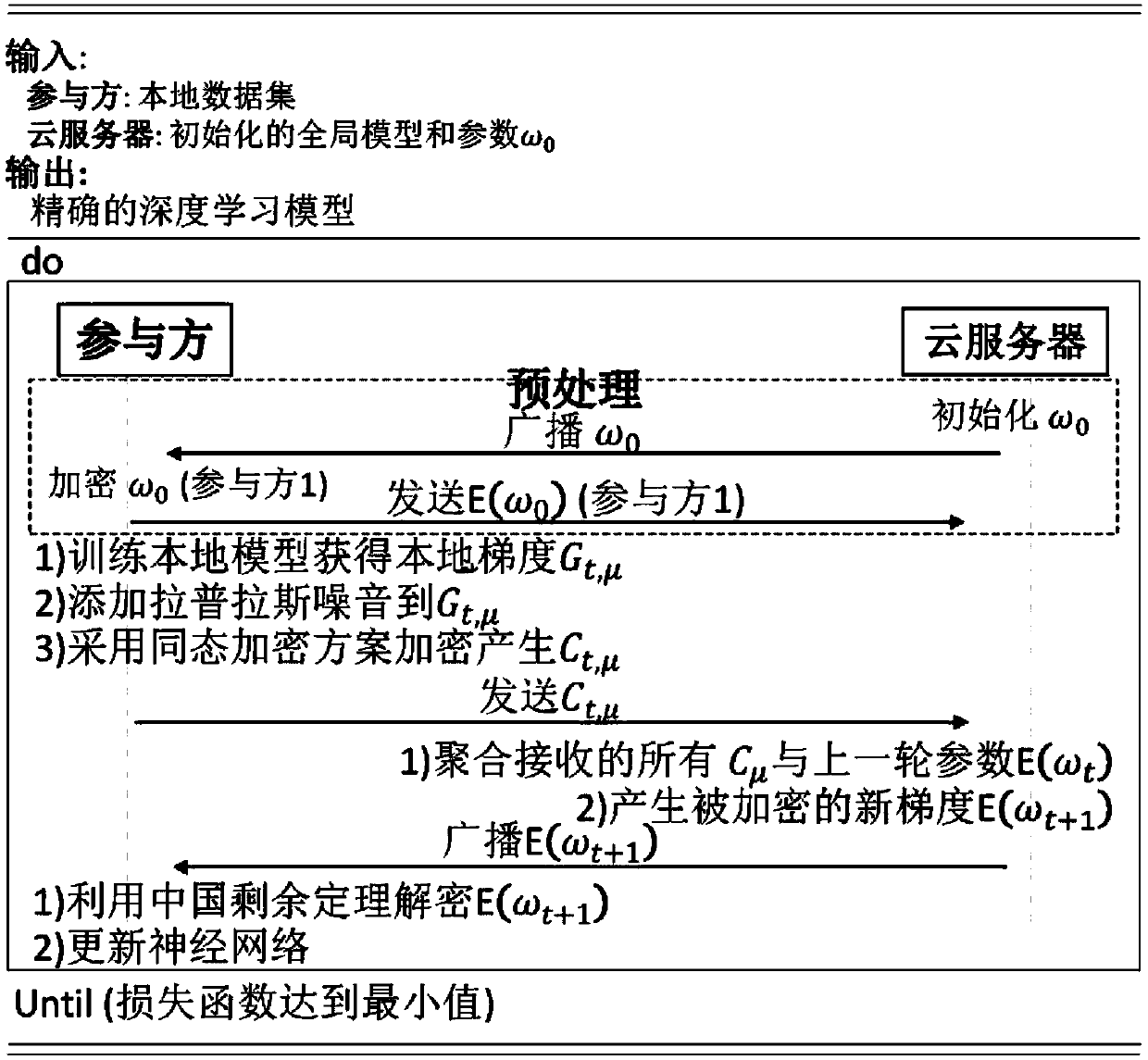

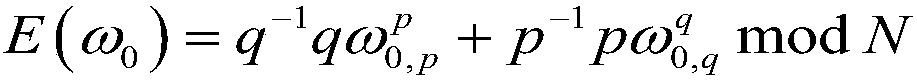

A combined deep learning training method based on a privacy protection technology

Active | CN109684855A | Avoid getting; Safe and efficient deep learning training method; Digital data protection; Communication with homomorphic encryption; Pattern recognition; Data set

The invention belongs to the technical field of artificial intelligence and relates to an efficient combined deep learning training method based on privacy protection technology. In the invention, each participant first trains a local model on its private data set to obtain a local gradient, perturbs the local gradient with Laplace noise, encrypts it, and sends the encrypted local gradient to a cloud server. The cloud server aggregates all the received local gradients with the ciphertext parameters of the previous round and broadcasts the resulting ciphertext parameters. Finally, each participant decrypts the received ciphertext parameters and updates its local model for subsequent training. By combining a homomorphic encryption scheme with differential privacy, the method provides safe and efficient deep learning training that preserves the accuracy of the trained model while preventing the server from inferring model parameters or private training data, and preventing internal attackers from obtaining private information.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
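The abstract does not name the homomorphic scheme. A minimal sketch, assuming an additively homomorphic scheme (toy Paillier with deliberately tiny, insecure keys), fixed-point encoding of float gradients, and a single key pair shared by all participants while the server sees only ciphertexts. It omits the "ciphertext parameters of the previous round" and key distribution; every name and parameter here is hypothetical.

```python
import math
import random
import secrets
from typing import List

# Toy Paillier key from two Mersenne primes (2^31 - 1, 2^61 - 1). INSECURE: illustration only.
P, Q = 2_147_483_647, 2_305_843_009_213_693_951
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)  # valid shortcut because the generator is g = N + 1
SCALE = 10**6         # fixed-point scale for encoding float gradients


def encrypt(m: int) -> int:
    """Paillier encryption with g = N + 1; negative values are encoded mod N."""
    r = secrets.randbelow(N - 1) + 1
    while math.gcd(r, N) != 1:
        r = secrets.randbelow(N - 1) + 1
    return (1 + (m % N) * N) * pow(r, N, N2) % N2


def decrypt(c: int) -> int:
    m = (pow(c, LAM, N2) - 1) // N * MU % N
    return m - N if m > N // 2 else m  # map back to a signed integer


def laplace_noise(scale: float) -> float:
    """Laplace(0, scale) sample as the difference of two exponential draws.
    For epsilon-differential privacy, scale = sensitivity / epsilon."""
    if scale == 0:
        return 0.0
    return random.expovariate(1 / scale) - random.expovariate(1 / scale)


def client_update(gradient: List[float], noise_scale: float) -> List[int]:
    """Participant: perturb the local gradient, then encrypt it element-wise."""
    noisy = [g + laplace_noise(noise_scale) for g in gradient]
    return [encrypt(round(x * SCALE)) for x in noisy]


def server_aggregate(encrypted: List[List[int]]) -> List[int]:
    """Server: multiplying Paillier ciphertexts adds the hidden plaintexts."""
    total = encrypted[0]
    for update in encrypted[1:]:
        total = [a * b % N2 for a, b in zip(total, update)]
    return total


def client_decrypt_mean(aggregate: List[int], num_clients: int) -> List[float]:
    """Participant: decrypt the summed gradient and average it."""
    return [decrypt(c) / SCALE / num_clients for c in aggregate]
```

Because the scheme is additive, the server can sum updates without ever decrypting them, and the Laplace noise added before encryption limits what even the decrypted aggregate reveals about any single participant.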

Automatic driving system based on reinforcement learning and multi-sensor fusion

Active | CN108196535A | Precise positioning; Accurate understanding; Position/course control in two dimensions; Learning network; Process information

The invention discloses an automatic driving system based on reinforcement learning and multi-sensor fusion. The system comprises a perception system, a control system and an execution system. The perception system efficiently processes data from a lidar, a camera and a GPS navigator through a deep learning network, so as to identify and understand, in real time, the vehicles, pedestrians, lane lines, traffic signs and signal lights around the moving vehicle. Using reinforcement learning, the lidar data and panoramic images are matched and fused to build a real-time three-dimensional street-view map and determine the drivable area, and the GPS navigator is combined with this map to provide real-time navigation. The control system uses a reinforcement learning network to process the information collected by the perception system and predicts the behavior of the people, vehicles and objects around the vehicle; it then pairs the vehicle body state data with recorded driver actions to select the currently optimal action, which the execution system carries out. In the invention, lidar data and video are fused to identify the drivable area and to plan an optimal path to the destination.

Owner:Tsinghua University Suzhou Automotive Research Institute, Wujiang (清华大学苏州汽车研究院(吴江))
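The abstract does not say which reinforcement learning algorithm the control system uses to select "the currently optimal action". As a hypothetical illustration of that idea only, the sketch below trains tabular Q-learning on a toy lane-keeping task: the state is a discretised lateral offset from the lane centre, the actions steer left, keep lane or steer right, and the reward penalises distance from the centre. The states, rewards and hyperparameters are all assumptions; a real system would learn a network over perception features rather than a lookup table.

```python
import random
from typing import Dict, Tuple

ACTIONS = (-1, 0, 1)      # steer left, keep lane, steer right (hypothetical)
STATES = range(-2, 3)     # discretised lateral offset from lane centre


def step(state: int, action: int) -> Tuple[int, float]:
    """Toy dynamics: move one cell, clamp to the road, penalise offset."""
    nxt = max(-2, min(2, state + action))
    return nxt, -abs(nxt)


def train(episodes: int = 2000, steps: int = 10, alpha: float = 0.5,
          gamma: float = 0.9, epsilon: float = 0.3,
          seed: int = 0) -> Dict[Tuple[int, int], float]:
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        s = rng.choice(STATES)
        for _ in range(steps):
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)                       # explore
            else:
                a = max(ACTIONS, key=lambda act: q[(s, act)])  # exploit
            nxt, reward = step(s, a)
            best_next = max(q[(nxt, act)] for act in ACTIONS)
            # Move Q(s, a) toward the one-step bootstrapped target.
            q[(s, a)] += alpha * (reward + gamma * best_next - q[(s, a)])
            s = nxt
    return q


def best_action(q: Dict[Tuple[int, int], float], state: int) -> int:
    """Greedy action selection from the learned Q-table."""
    return max(ACTIONS, key=lambda act: q[(state, act)])
```

After training, the greedy policy steers back toward the lane centre from either side and holds position once centred.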

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com