4,624 results for "Video sequence" patented technology

Monocular tracking of 3D human motion with a coordinated mixture of factor analyzers

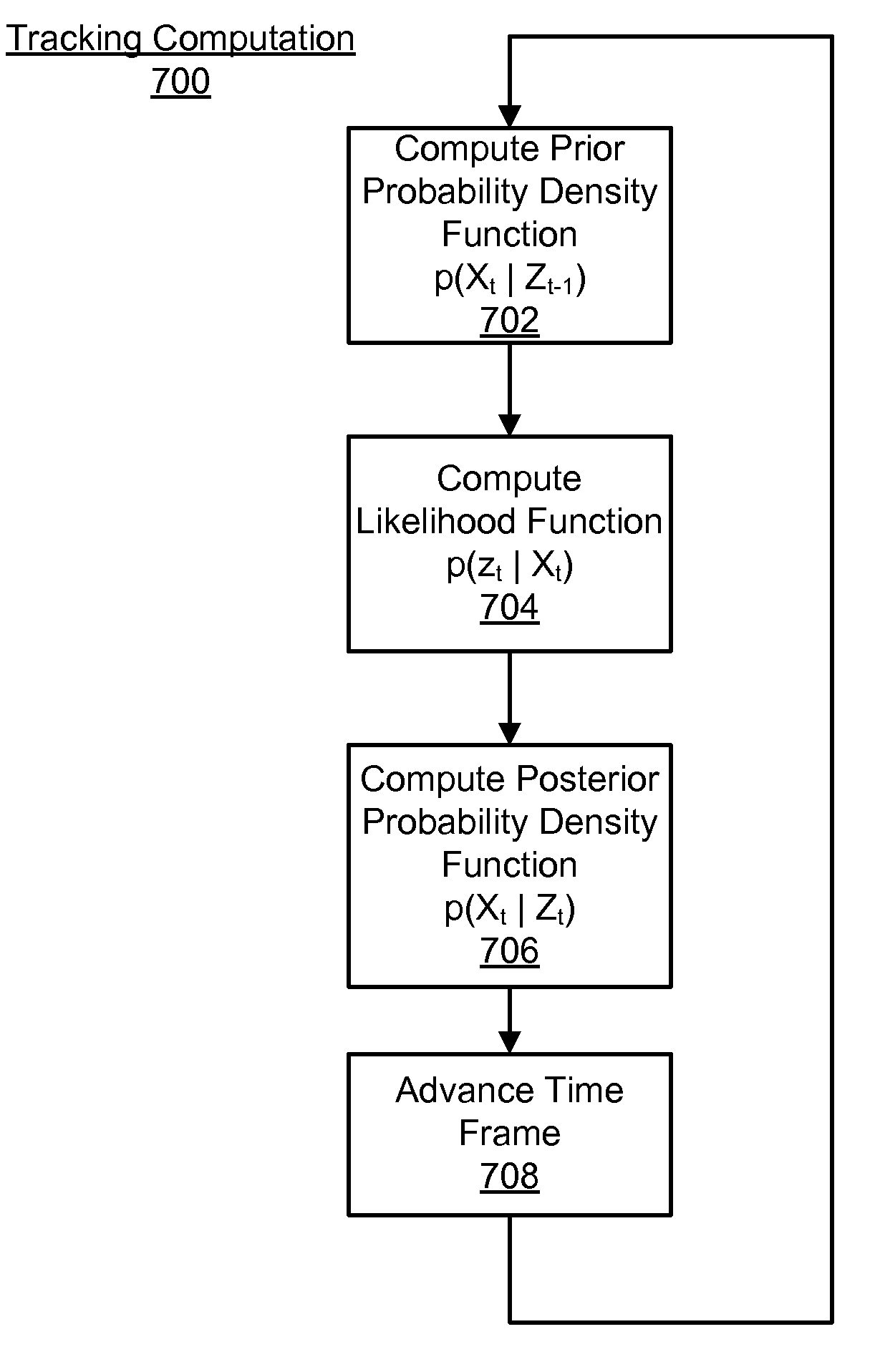

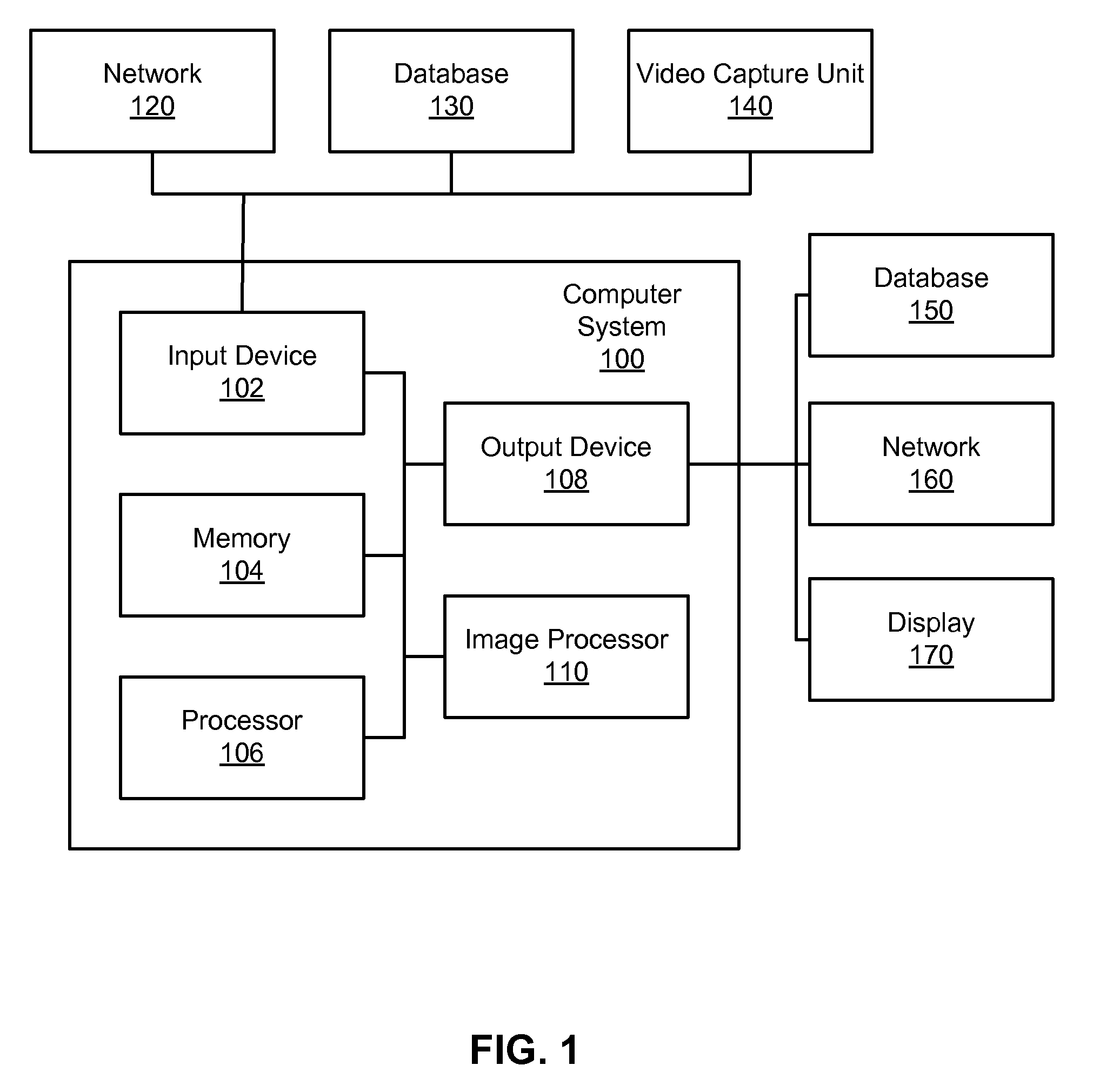

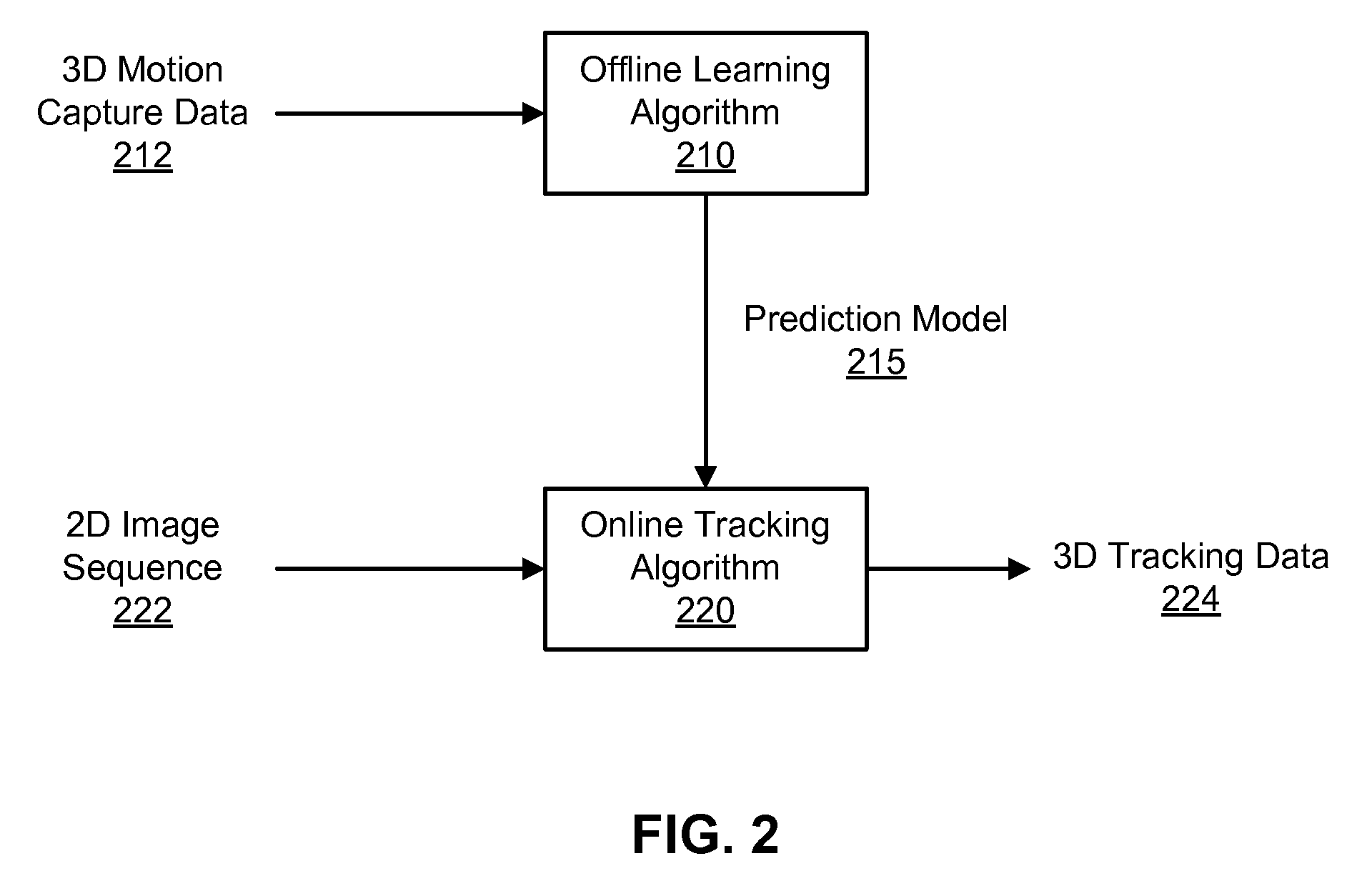

Active | US7450736B2 | Efficiently and accurately tracking; Small size; Image analysis; Character and pattern recognition; Offline learning; Multiple hypothesis tracking

Owner:HONDA MOTOR CO LTD

Methods and systems for converting 2D motion pictures for stereoscopic 3D exhibition

Active | US20090116732A1 | Improve image quality; Improve visual quality; Picture reproducers using cathode ray tubes; Picture reproducers with optical-mechanical scanning; Imaging quality; 3d image

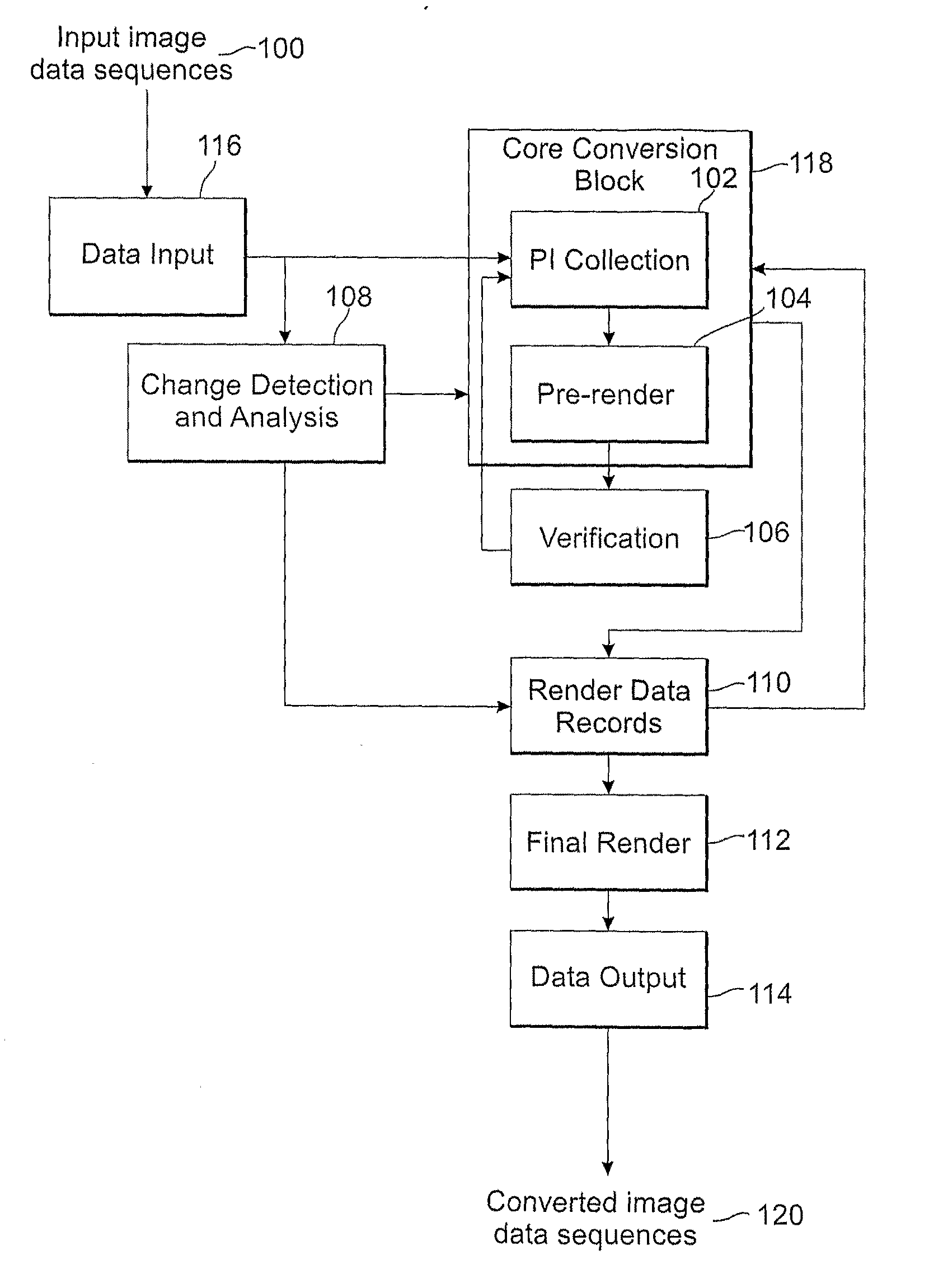

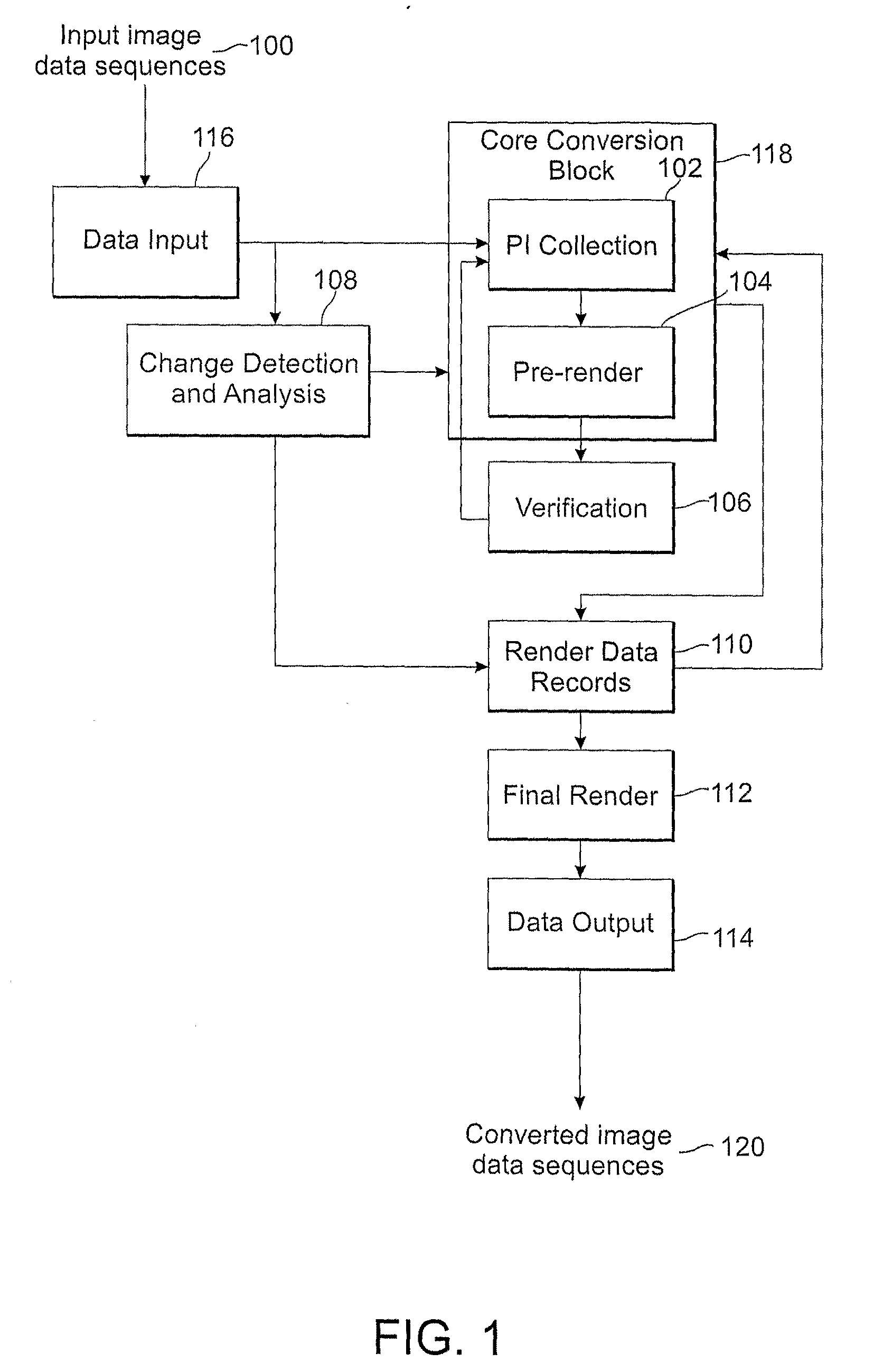

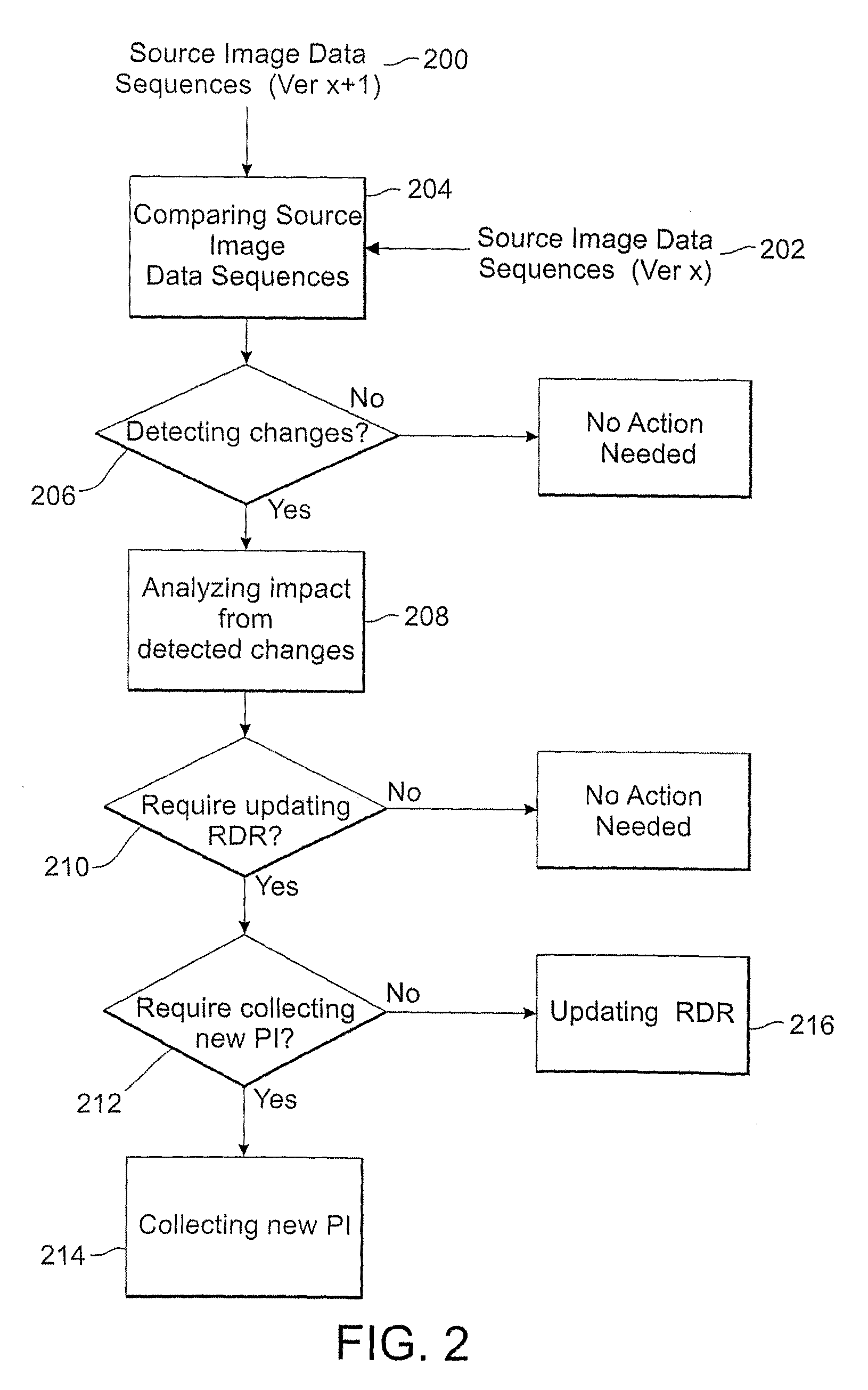

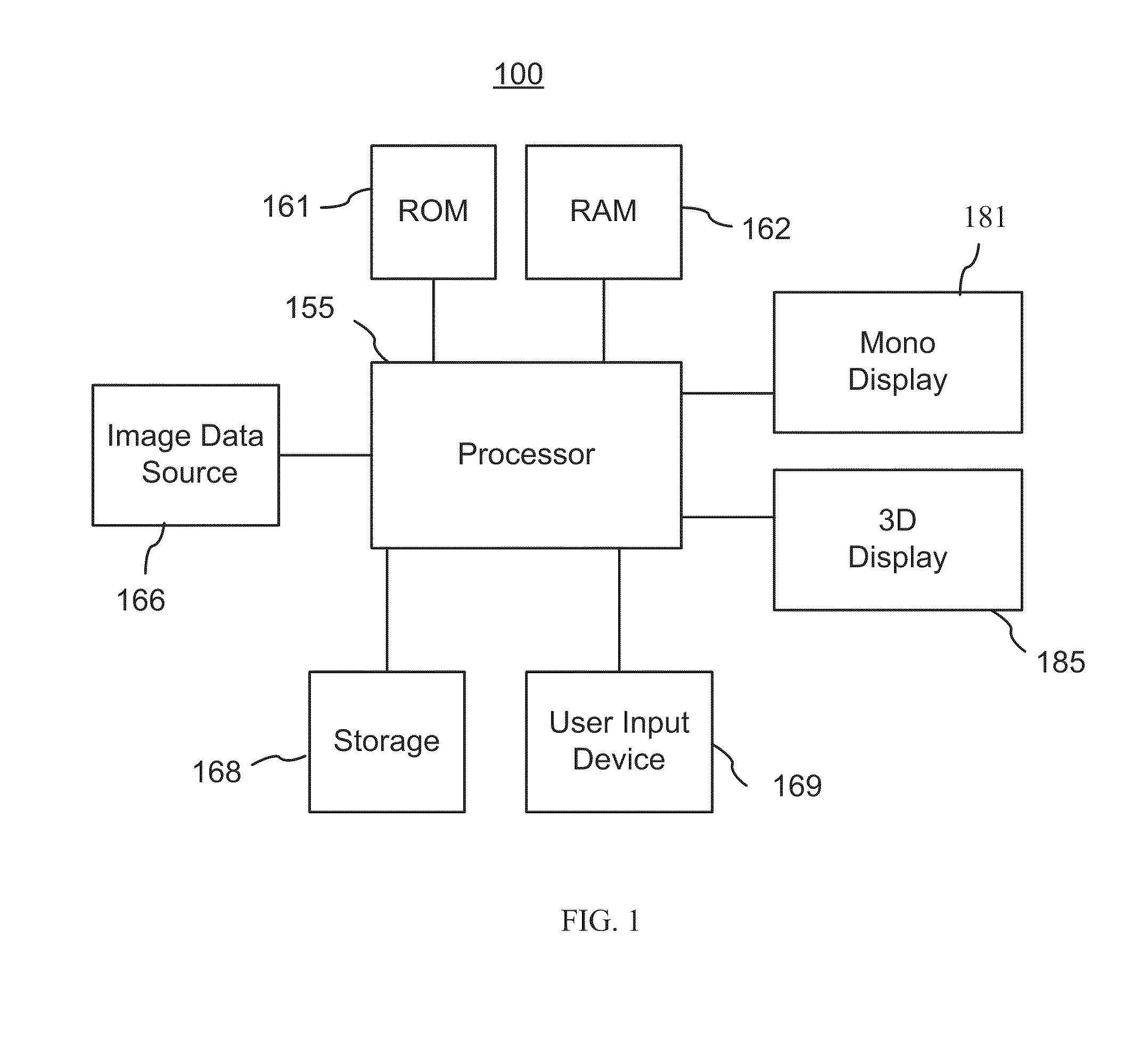

The present invention discloses methods of digitally converting 2D motion pictures or any other 2D image sequences to stereoscopic 3D image data for 3D exhibition. In one embodiment, various types of image data cues can be collected from 2D source images by various methods and then used for producing two distinct stereoscopic 3D views. Embodiments of the disclosed methods can be implemented within a highly efficient system comprising both software and computing hardware. The architectural model of some embodiments of the system is equally applicable to a wide range of conversion, re-mastering and visual enhancement applications for motion pictures and other image sequences, including converting a 2D motion picture or a 2D image sequence to 3D, re-mastering a motion picture or a video sequence to a different frame rate, enhancing the quality of a motion picture or other image sequences, or other conversions that facilitate further improvement in visual image quality within a projector to produce the enhanced images.

Owner:IMAX CORP

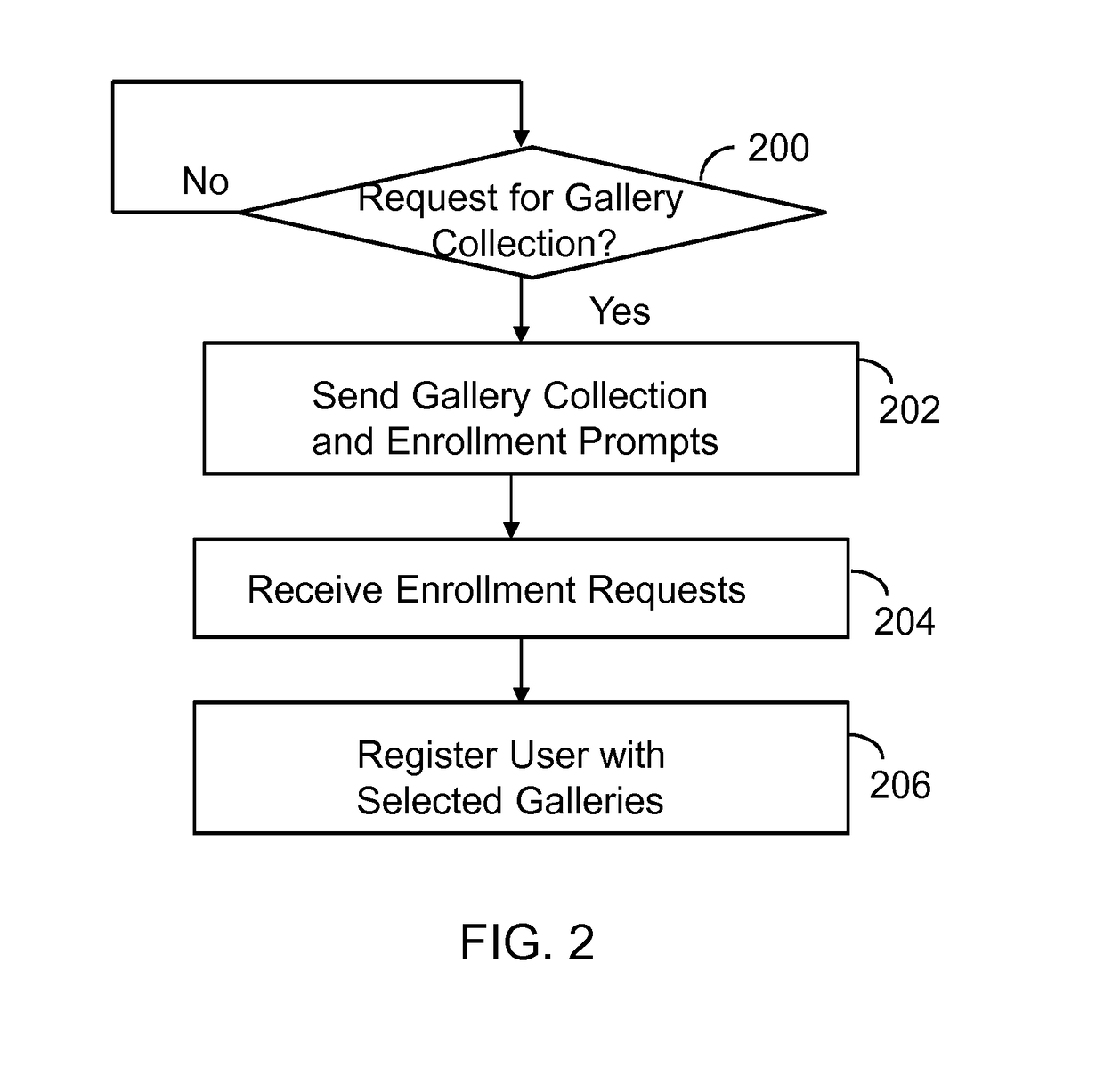

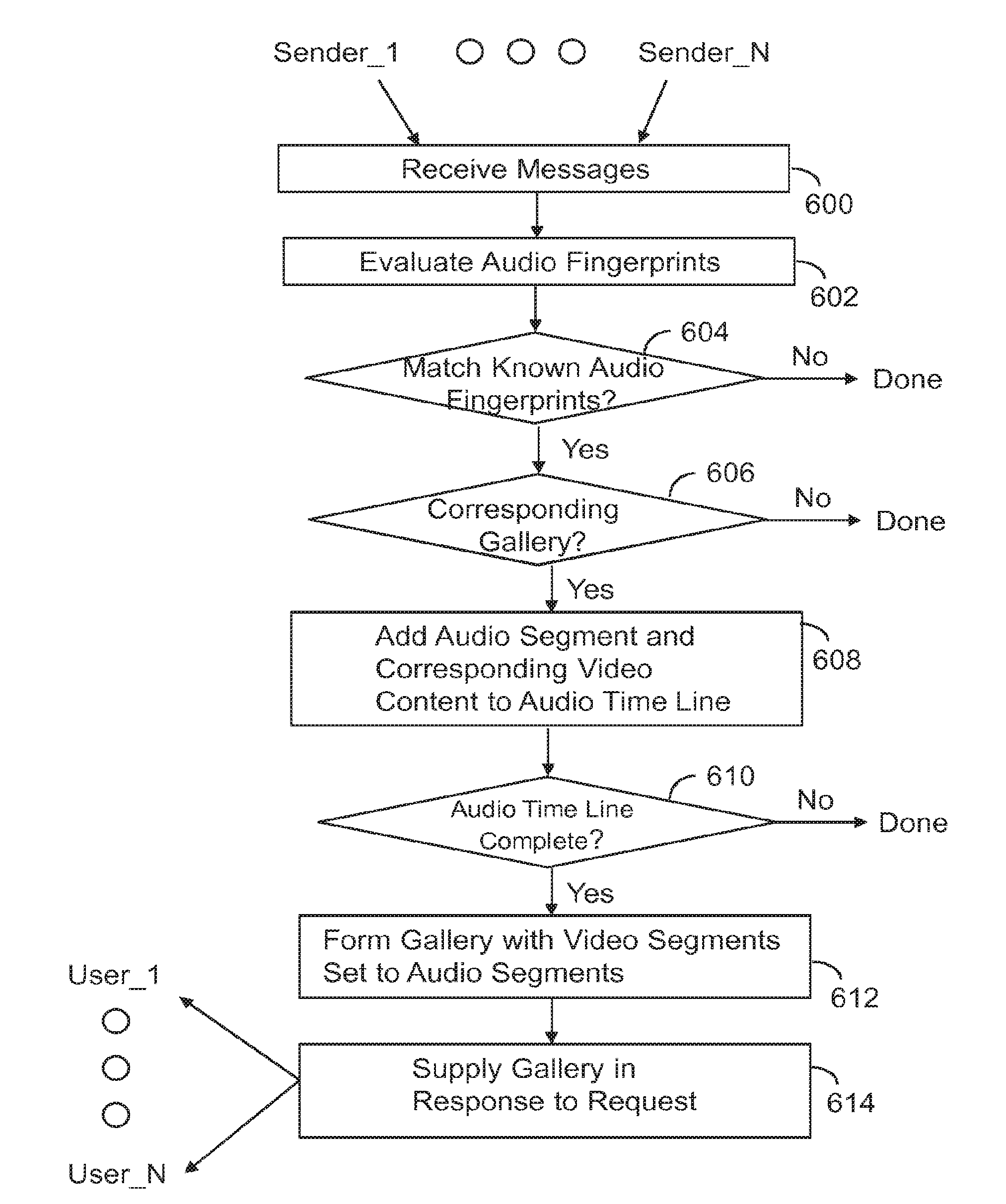

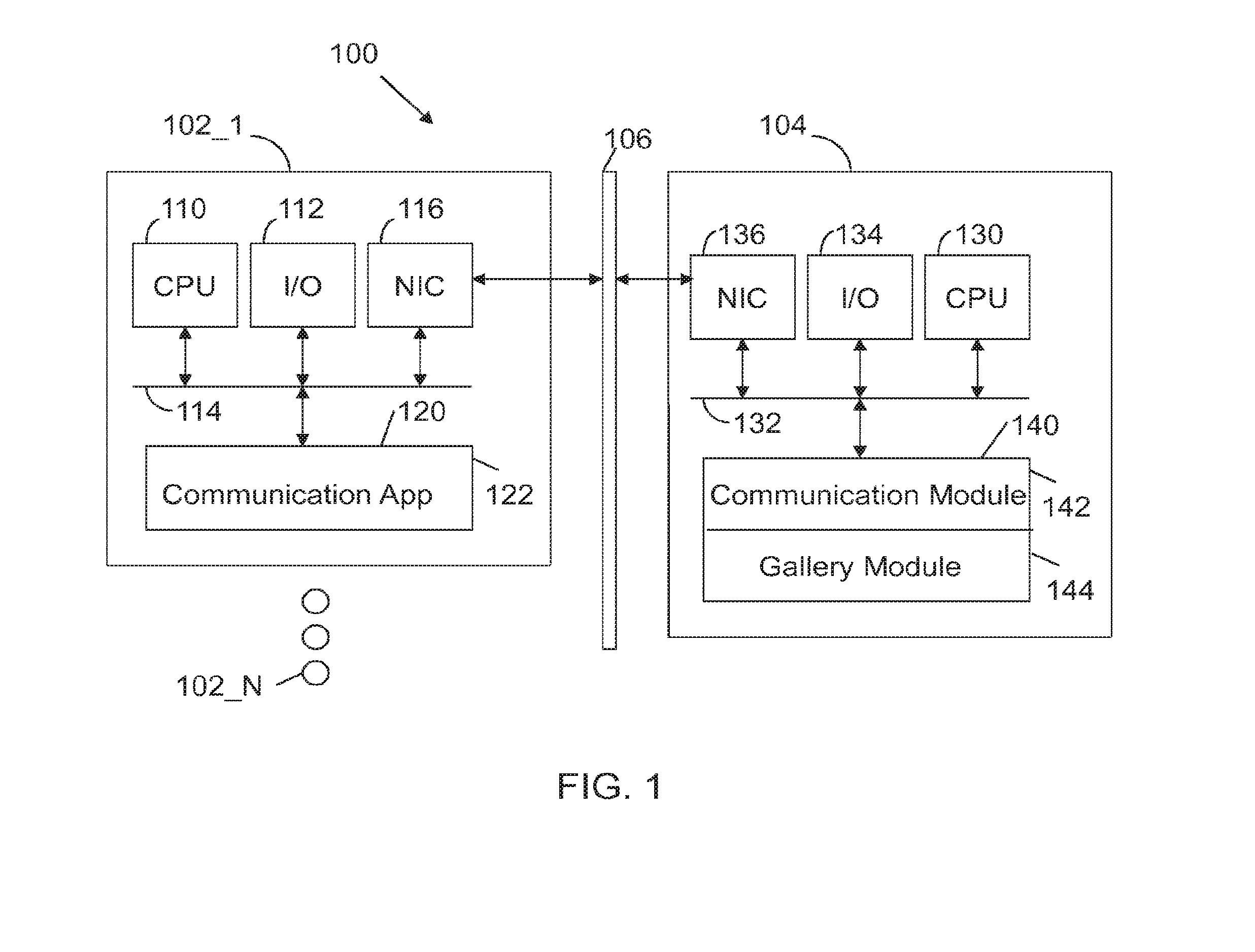

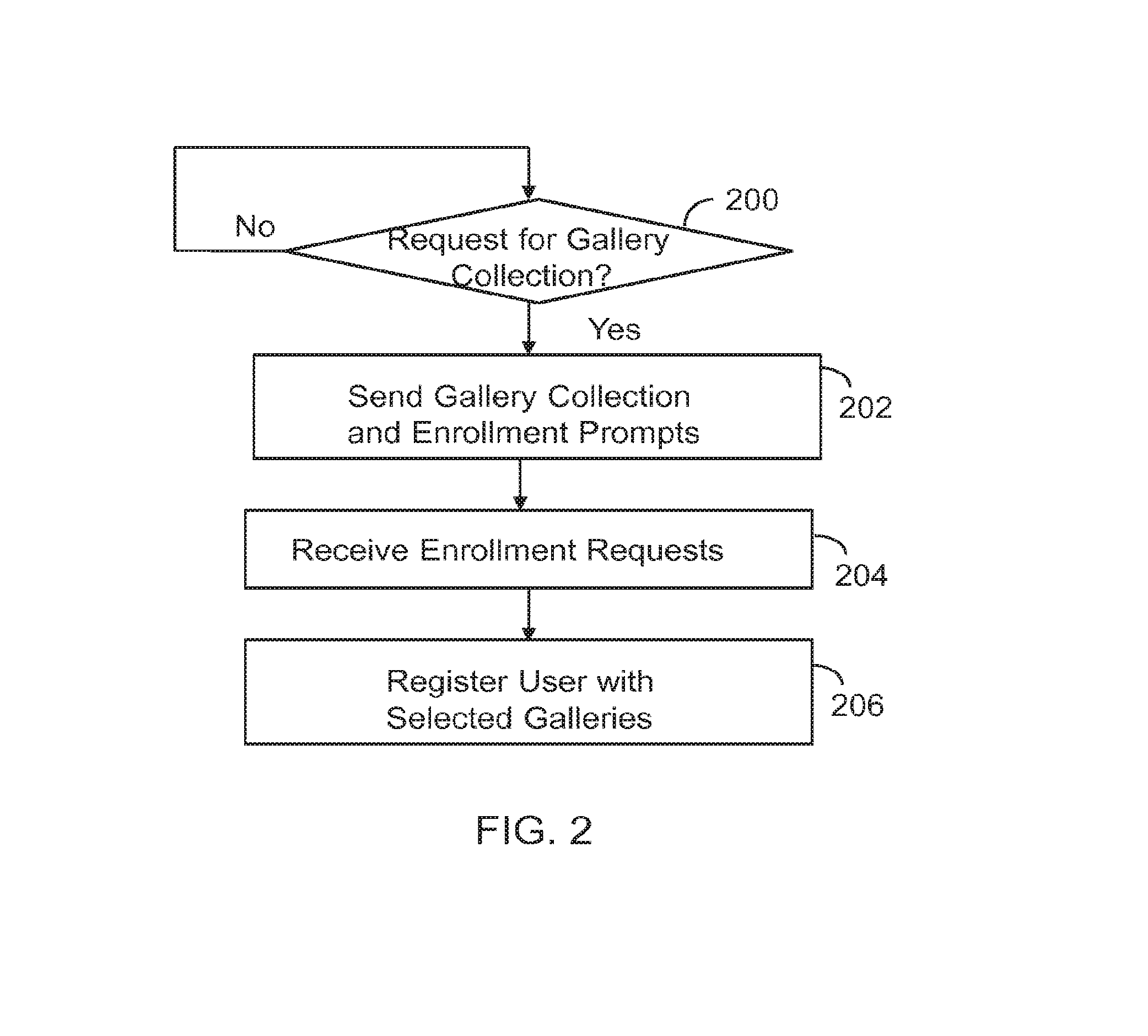

Gallery of videos set to an audio time line

Active | US9854219B2 | Electronic editing digitised analogue information signals; Record information storage; Video sequence; Time line

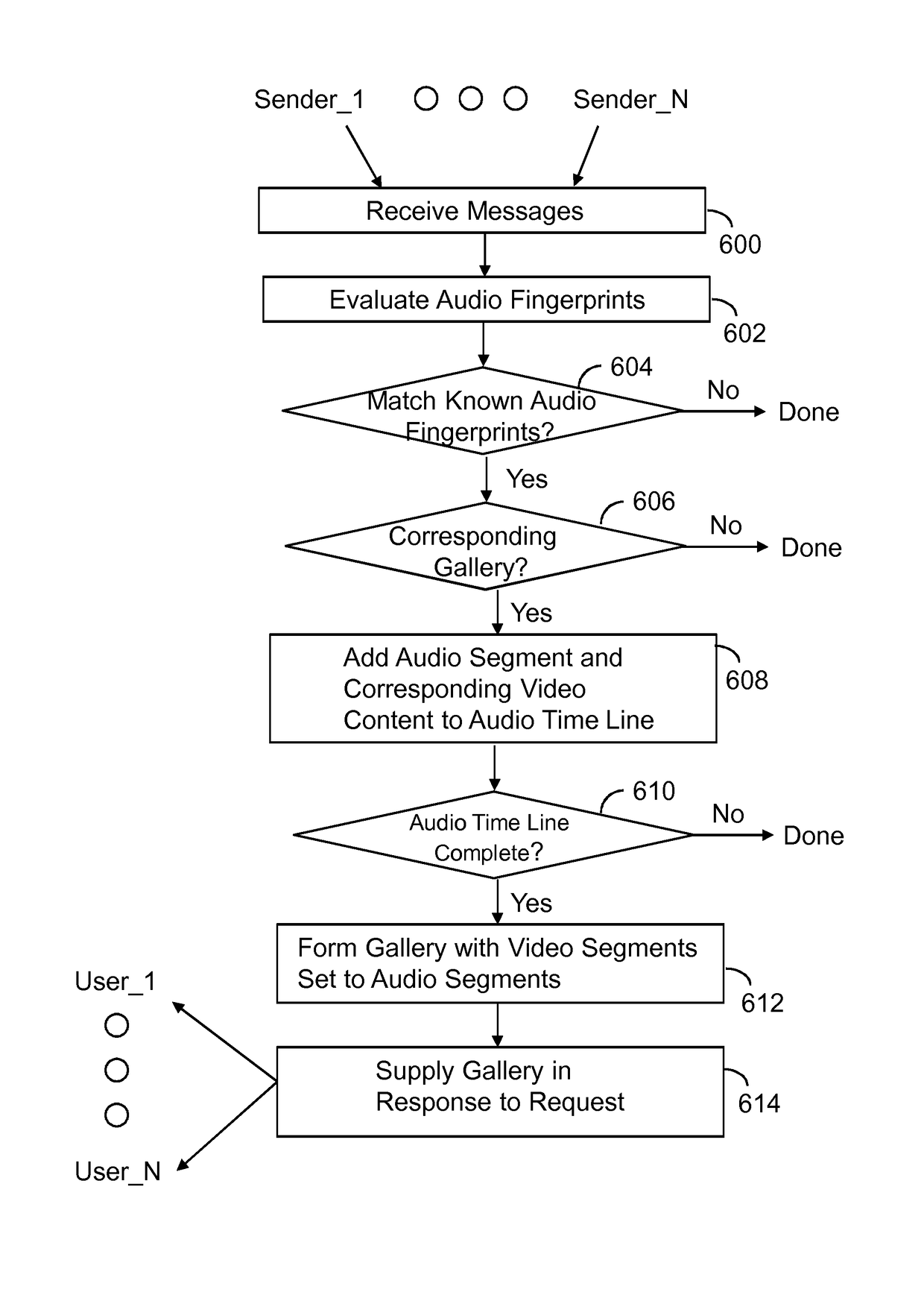

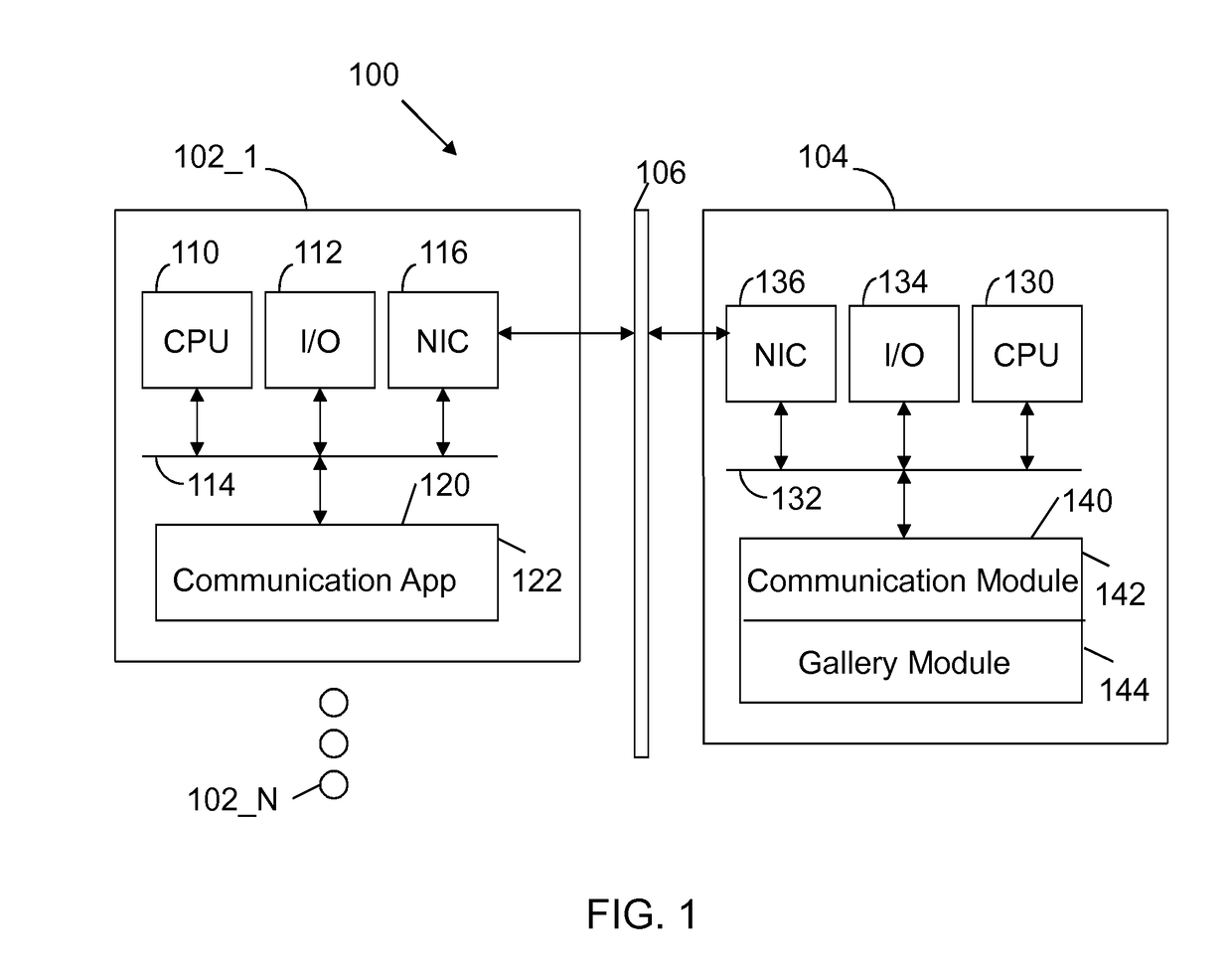

A machine includes a processor and a memory connected to the processor. The memory stores instructions executed by the processor to receive a message with audio content and video content. Audio fingerprints within the audio content are evaluated. The audio fingerprints are matched to known audio fingerprints to establish matched audio fingerprints. A determination is made whether the matched audio fingerprints correspond to a designated gallery constructed to receive a sequence of videos set to an audio time line. The matched audio fingerprints and corresponding video content are added to the audio time line. The operations are repeated until the audio time line is populated with corresponding video content to form a completed gallery with video segments set to audio segments that constitute a complete audio time line. The completed gallery is supplied in response to a request.

Owner:SNAP INC
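The timeline-filling loop in this abstract can be pictured with a small sketch. It is an illustration under simplifying assumptions, not the patented implementation: audio fingerprints are modeled as plain strings matched by exact lookup (real fingerprint matching is approximate), and the function and field names are hypothetical.

```python
from typing import Dict, List, Optional


def fill_timeline(
    slots: List[str],                # known fingerprints of the gallery, in audio-time order
    messages: List[Dict[str, str]],  # each message: {"fingerprint": ..., "video": ...}
) -> Optional[List[str]]:
    """Return the completed gallery (one video per audio segment), or None if incomplete."""
    timeline: Dict[str, Optional[str]] = {fp: None for fp in slots}
    for msg in messages:
        fp = msg["fingerprint"]
        # Only fingerprints that belong to the designated gallery are used;
        # in this sketch the first video received for a segment fills it.
        if fp in timeline and timeline[fp] is None:
            timeline[fp] = msg["video"]
        # Stop as soon as every audio segment has a matching video.
        if all(v is not None for v in timeline.values()):
            return [timeline[s] for s in slots]
    return None
```

Taking the first video received for each segment is an arbitrary policy chosen for the sketch; the abstract does not say how competing videos for the same segment are selected.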

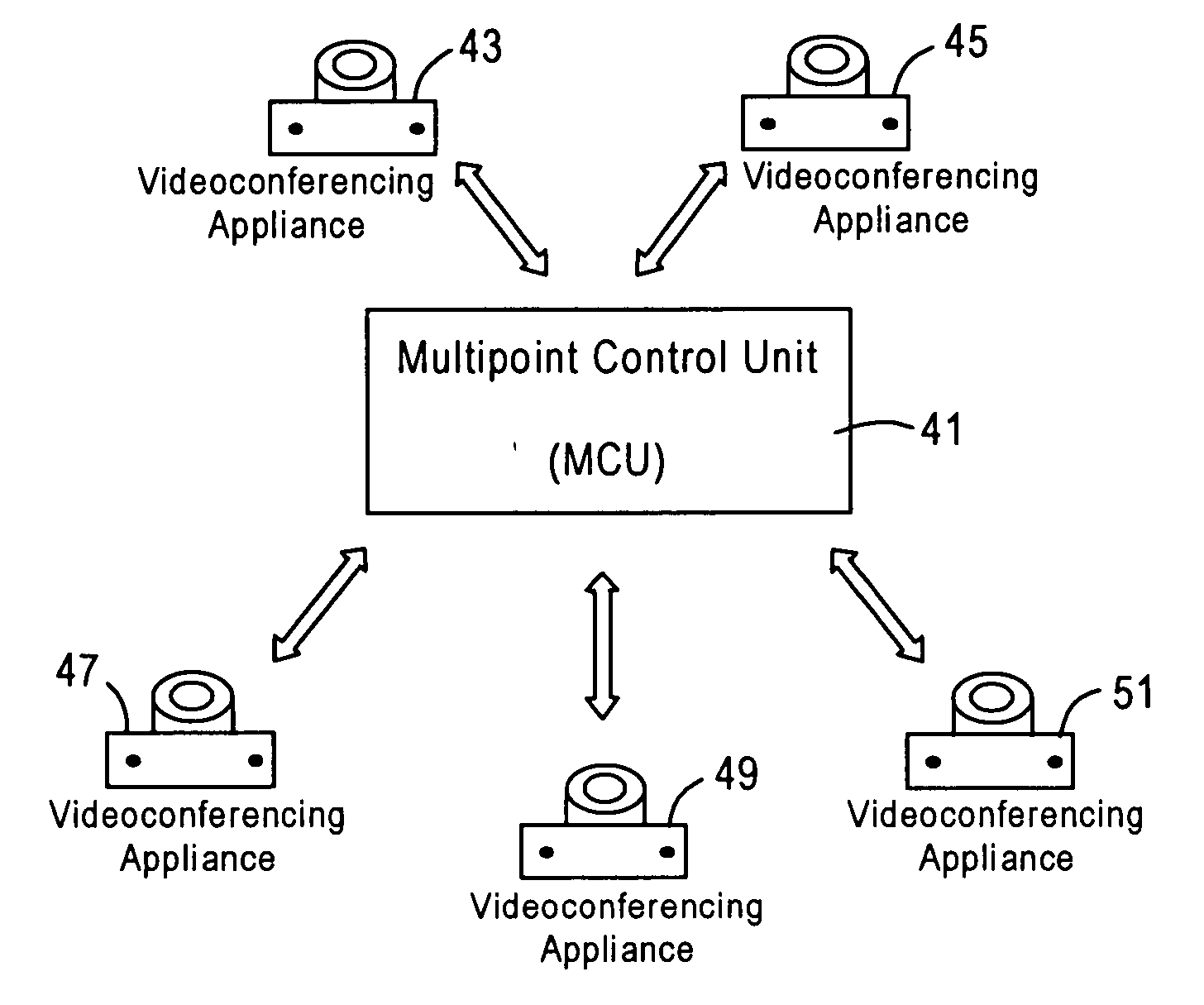

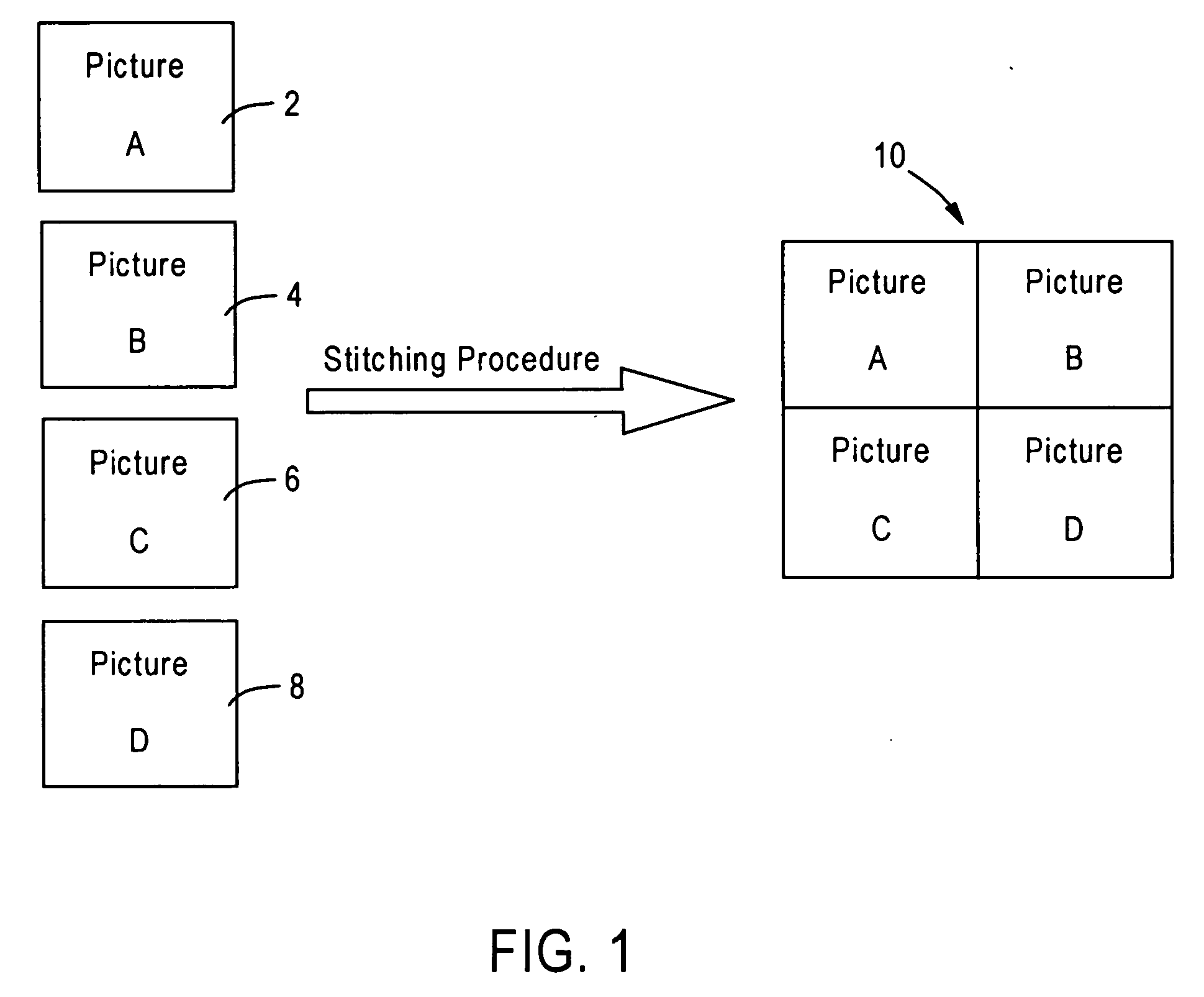

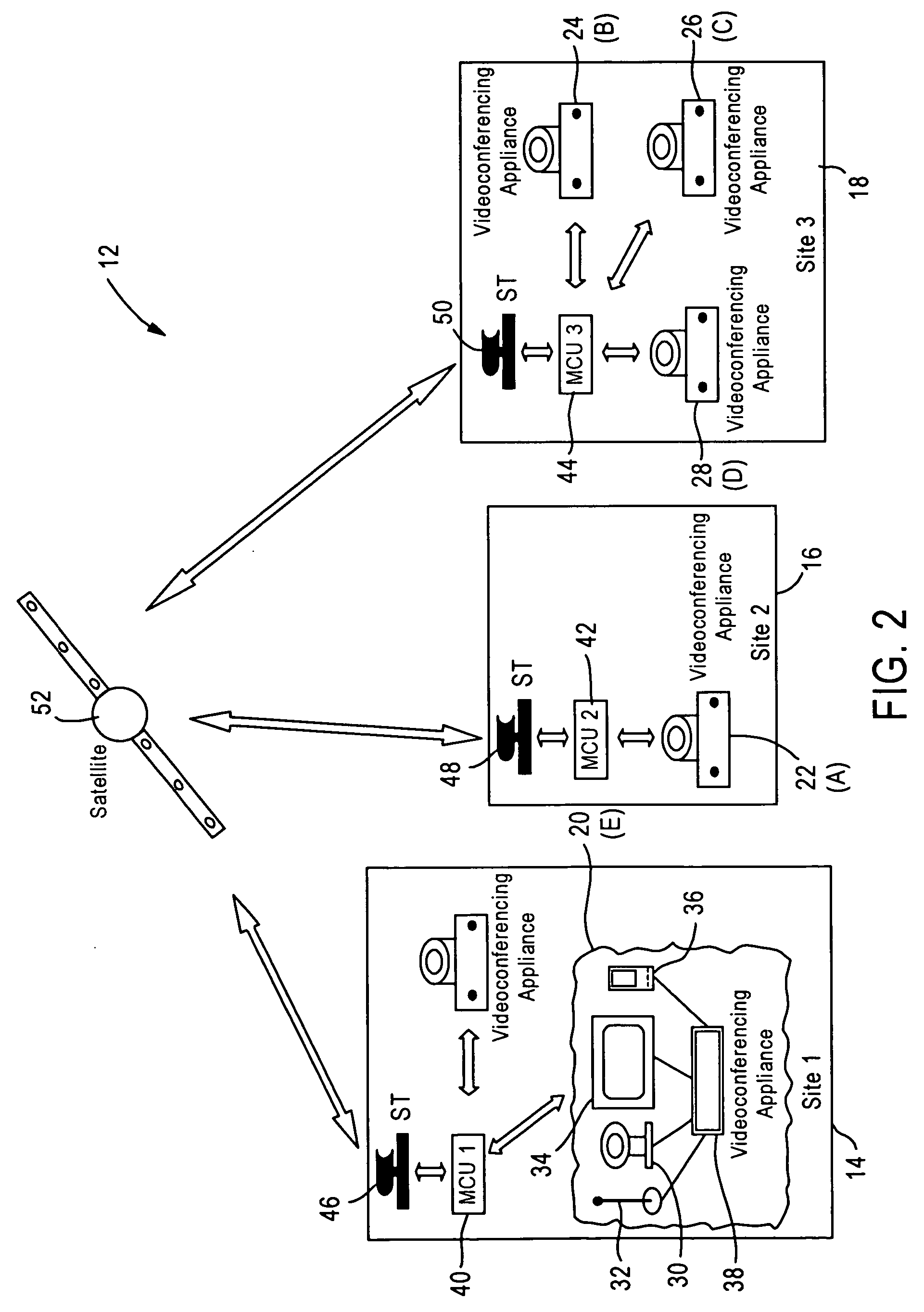

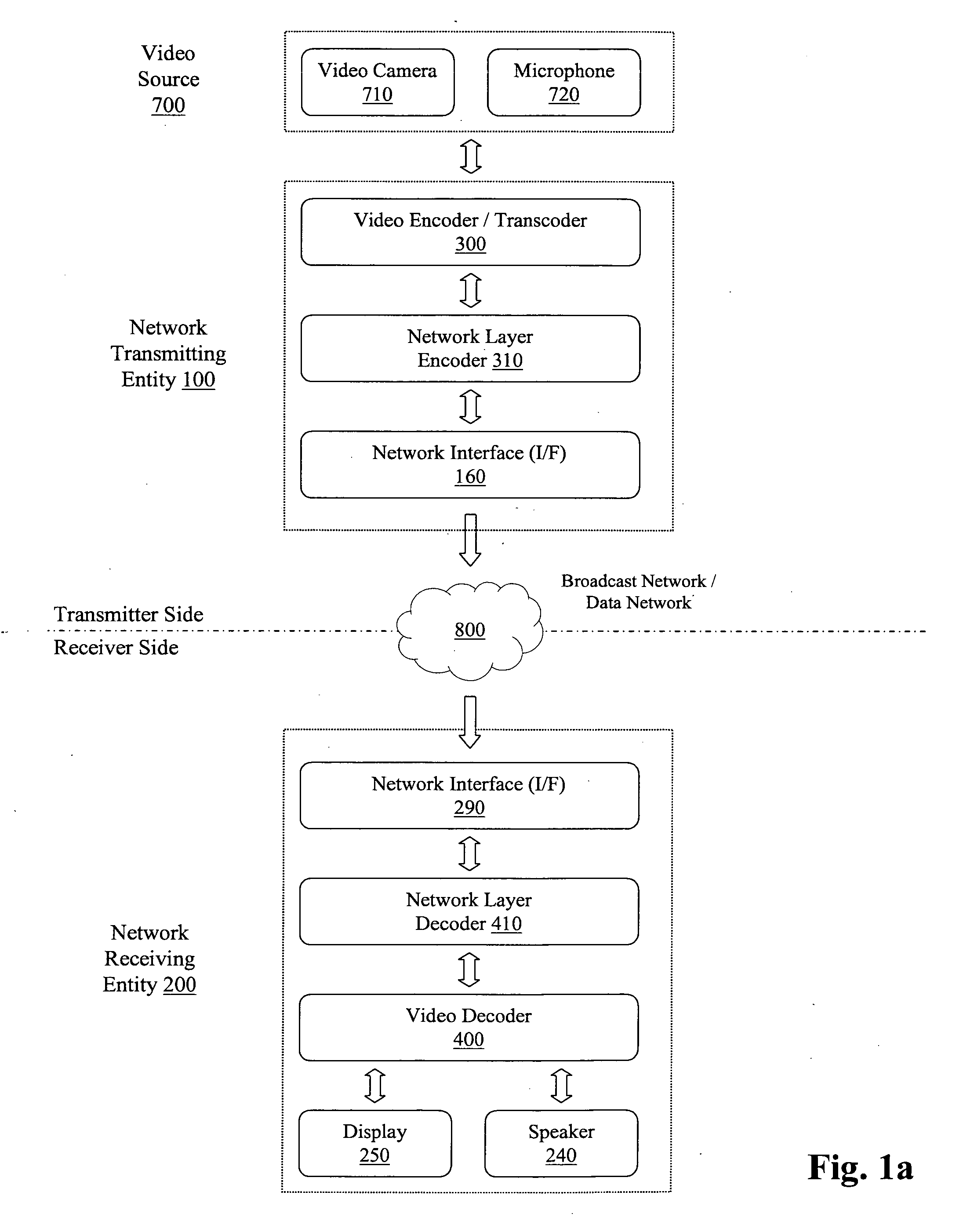

Stitching of video for continuous presence multipoint video conferencing

Inactive | US20050008240A1 | Avoid mistakes; Television system details; Television conference systems; Video bitstream; Video sequence

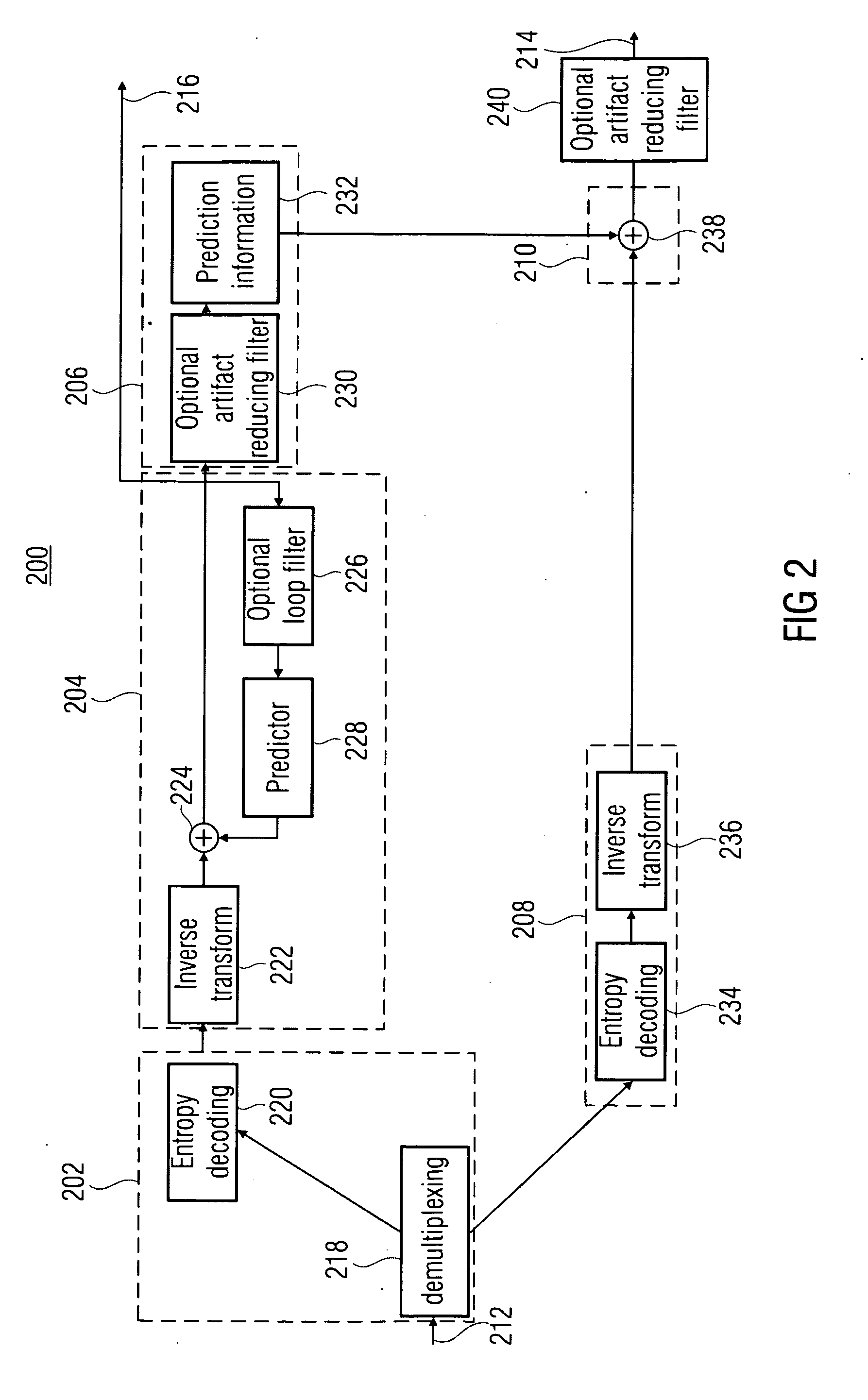

A drift-free hybrid method of performing video stitching is provided. The method includes decoding a plurality of video bitstreams and storing prediction information. The decoded bitstreams form video images, which are spatially composed into a combined image. The combined images form the frames of an ideal stitched video sequence. The method uses the prediction information in conjunction with previously generated frames to predict pixel blocks in the next frame. A stitched predicted block in the next frame is subtracted from the corresponding block in the corresponding frame of the ideal stitched sequence to create a stitched raw residual block. The raw residual block is forward transformed, quantized, entropy encoded, and added to the stitched video bitstream along with the prediction information. The quantized residual is also dequantized and inverse transformed to create a stitched decoded residual block. This residual block is added to the predicted block to generate the stitched reconstructed block in the next frame of the sequence.

Owner:HUGHES NETWORK SYST
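A minimal sketch of the drift-free residual loop described above, assuming a uniform scalar quantizer with step `qstep` and treating the transform and entropy coding as identity (both are simplifications; the function names are hypothetical). What it shows is that the encoder reconstructs each block from the dequantized residual, exactly as a decoder would, so later predictions are formed from the same data on both sides.

```python
from typing import List, Tuple


def quantize(residual: List[float], qstep: int) -> List[int]:
    # Uniform scalar quantizer (an assumption; the abstract does not specify one).
    return [round(r / qstep) for r in residual]


def dequantize(levels: List[int], qstep: int) -> List[int]:
    return [lv * qstep for lv in levels]


def code_block(target: List[int], predicted: List[int], qstep: int = 4) -> Tuple[List[int], List[int]]:
    """Code one stitched block against its prediction and reconstruct it.

    target: pixels of the corresponding block in the ideal stitched frame
    predicted: stitched predicted block formed from the stored prediction info
    Returns (quantized levels to be entropy coded, reconstructed block).
    """
    # Raw residual = ideal block minus stitched predicted block.
    raw_residual = [t - p for t, p in zip(target, predicted)]
    levels = quantize(raw_residual, qstep)
    # Decoder-side reconstruction: dequantize, then add the prediction back.
    decoded_residual = dequantize(levels, qstep)
    reconstructed = [p + d for p, d in zip(predicted, decoded_residual)]
    return levels, reconstructed
```

For example, `code_block([100, 110, 90, 98], [98, 98, 98, 98], qstep=5)` yields levels `[0, 2, -2, 0]` and the reconstruction `[98, 108, 88, 98]`, each pixel within half a quantizer step of the target.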

Gallery of videos set to an audio time line

Active | US20160180887A1 | Television system details; Electronic editing digitised analogue information signals; Video sequence; Time line

A machine includes a processor and a memory connected to the processor. The memory stores instructions executed by the processor to receive a message with audio content and video content. Audio fingerprints within the audio content are evaluated. The audio fingerprints are matched to known audio fingerprints to establish matched audio fingerprints. A determination is made whether the matched audio fingerprints correspond to a designated gallery constructed to receive a sequence of videos set to an audio time line. The matched audio fingerprints and corresponding video content are added to the audio time line. The operations are repeated until the audio time line is populated with corresponding video content to form a completed gallery with video segments set to audio segments that constitute a complete audio time line. The completed gallery is supplied in response to a request.

Owner:SNAP INC

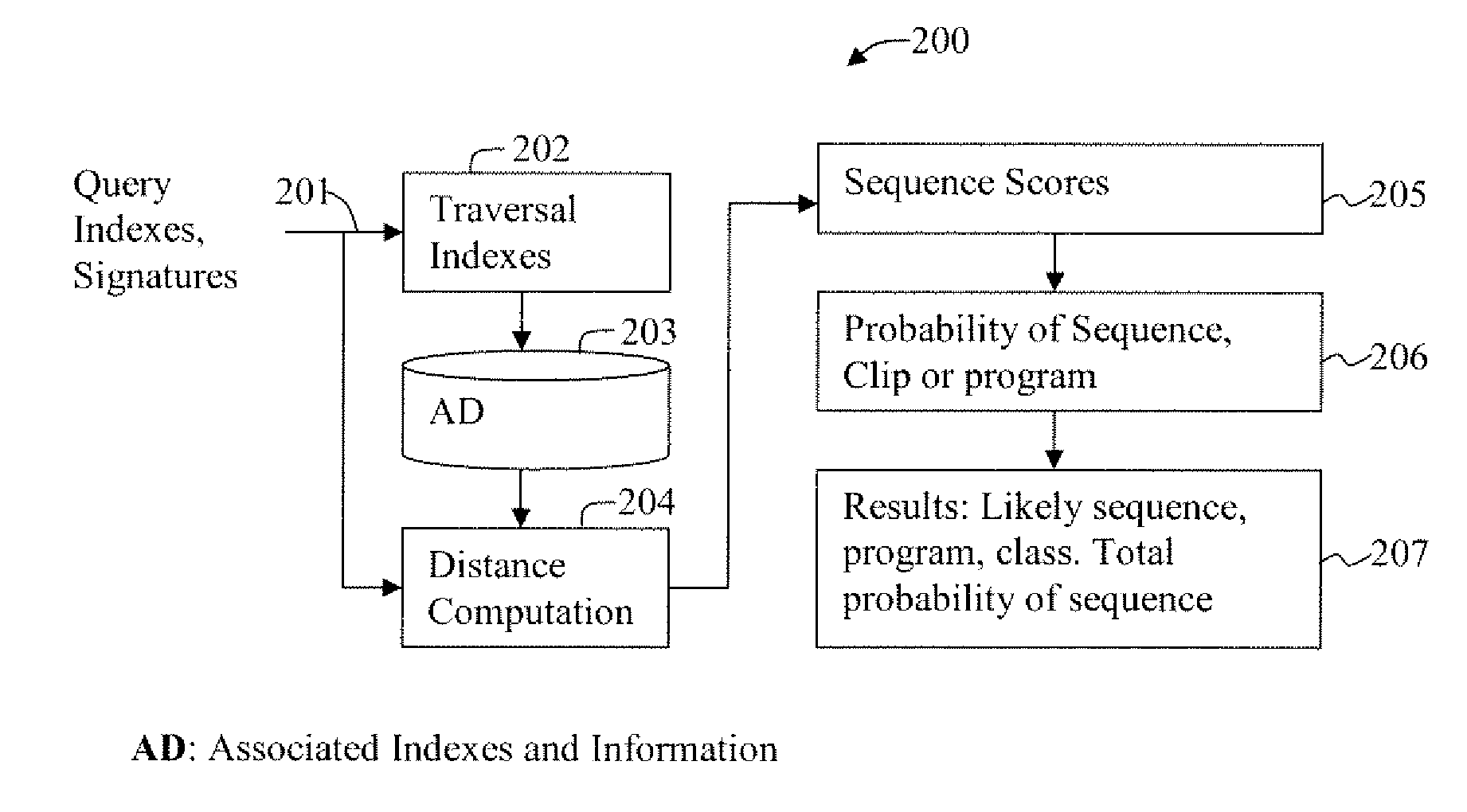

Method and Apparatus for Multi-Dimensional Content Search and Video Identification

Active | US20080313140A1 | Improve accuracy; Confidence; Image enhancement; Video data indexing; Frame sequence; Video sequence

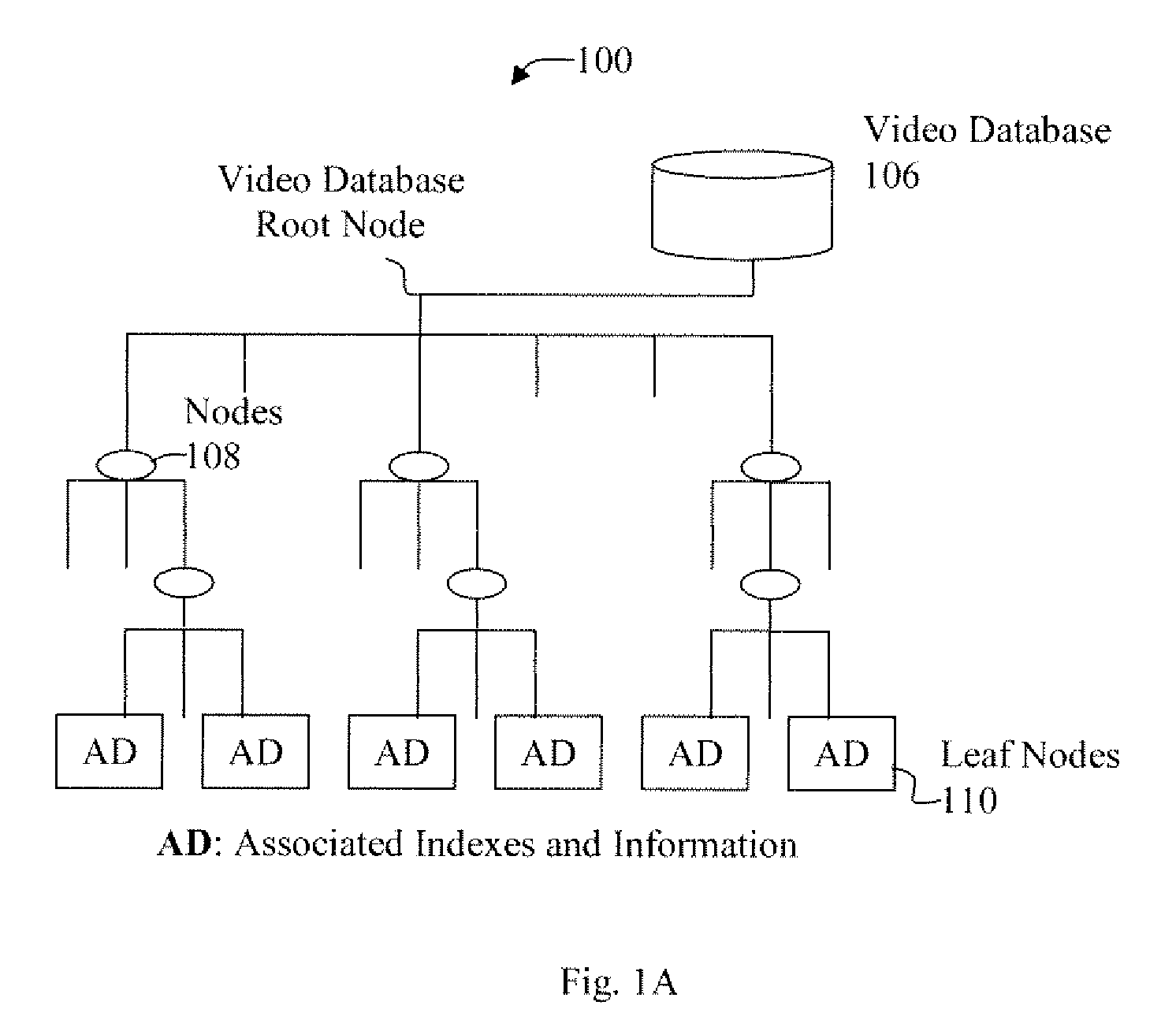

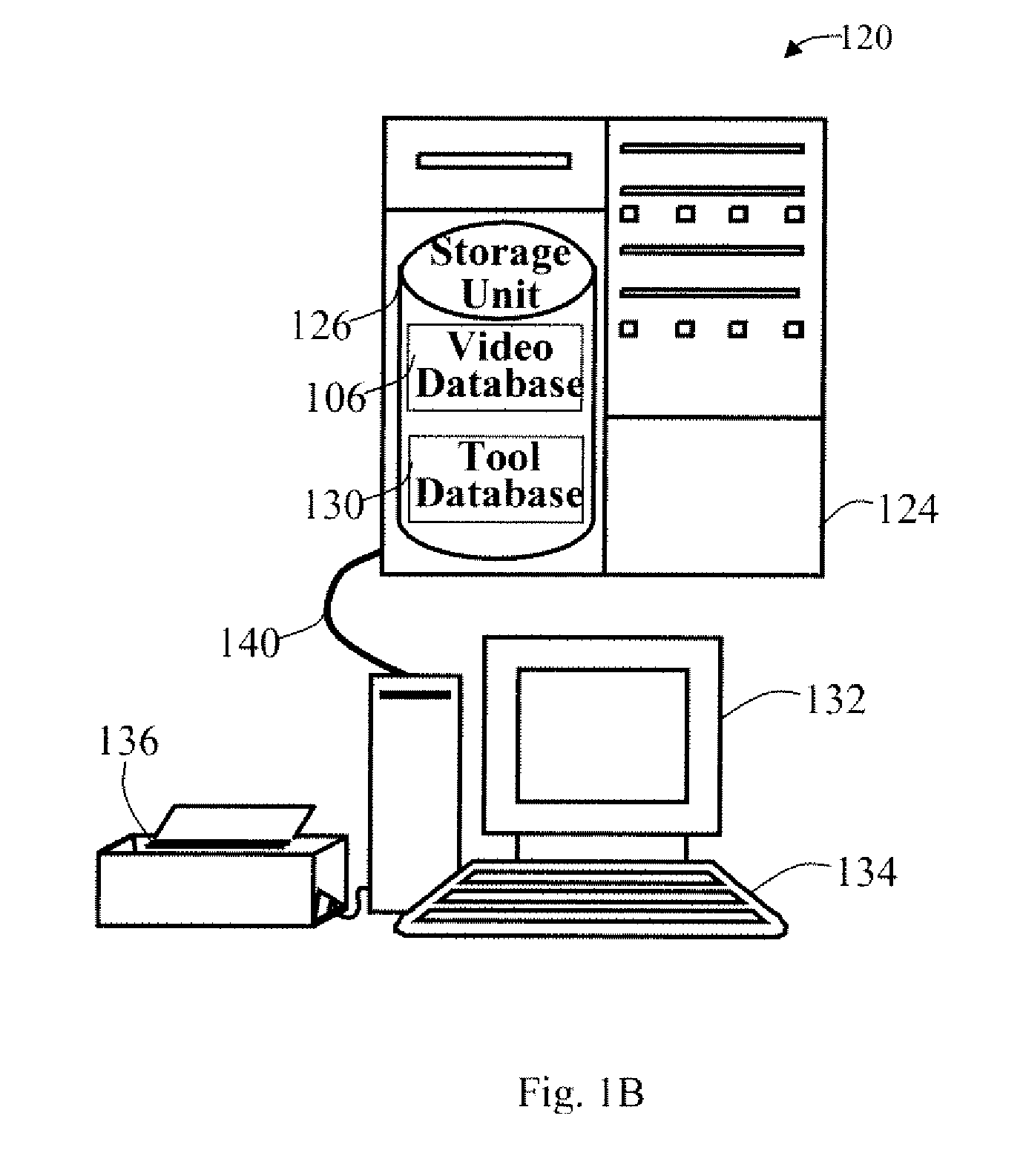

A multi-dimensional database, indexes on it, and operations on it are described, including video search applications and other similar sequence or structure searches. Traversal indexes utilize highly discriminative information about images and video sequences or about object shapes. Global and local signatures around keypoints are used for compact and robust retrieval and to capture the discriminative information content of images or video sequences of interest. For other objects or structures, relevant signatures of the pattern or structure are used for the traversal indexes. Traversal indexes are stored in leaf nodes along with distance measures and the occurrence of similar images in the database. During a sequence query, correlation scores are calculated for single frames, frame sequences, and video clips, or for other objects or structures.

Owner:ROKU INCORPORATED

Method and apparatus for automated video activity analysis

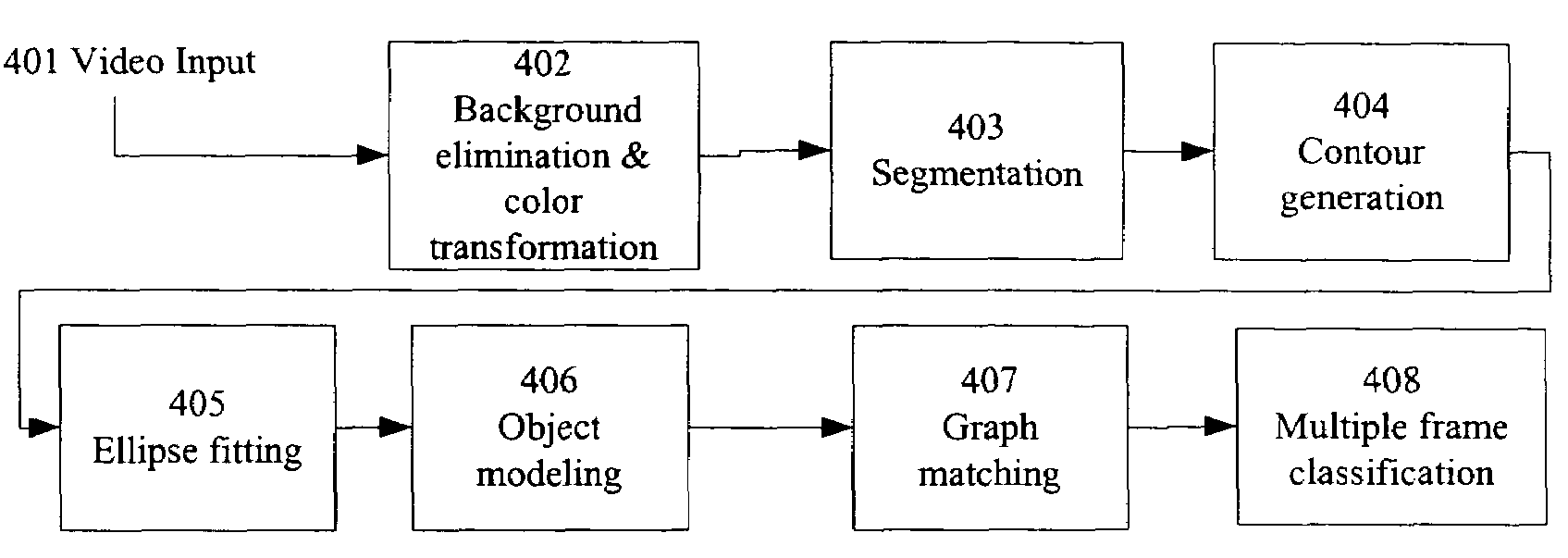

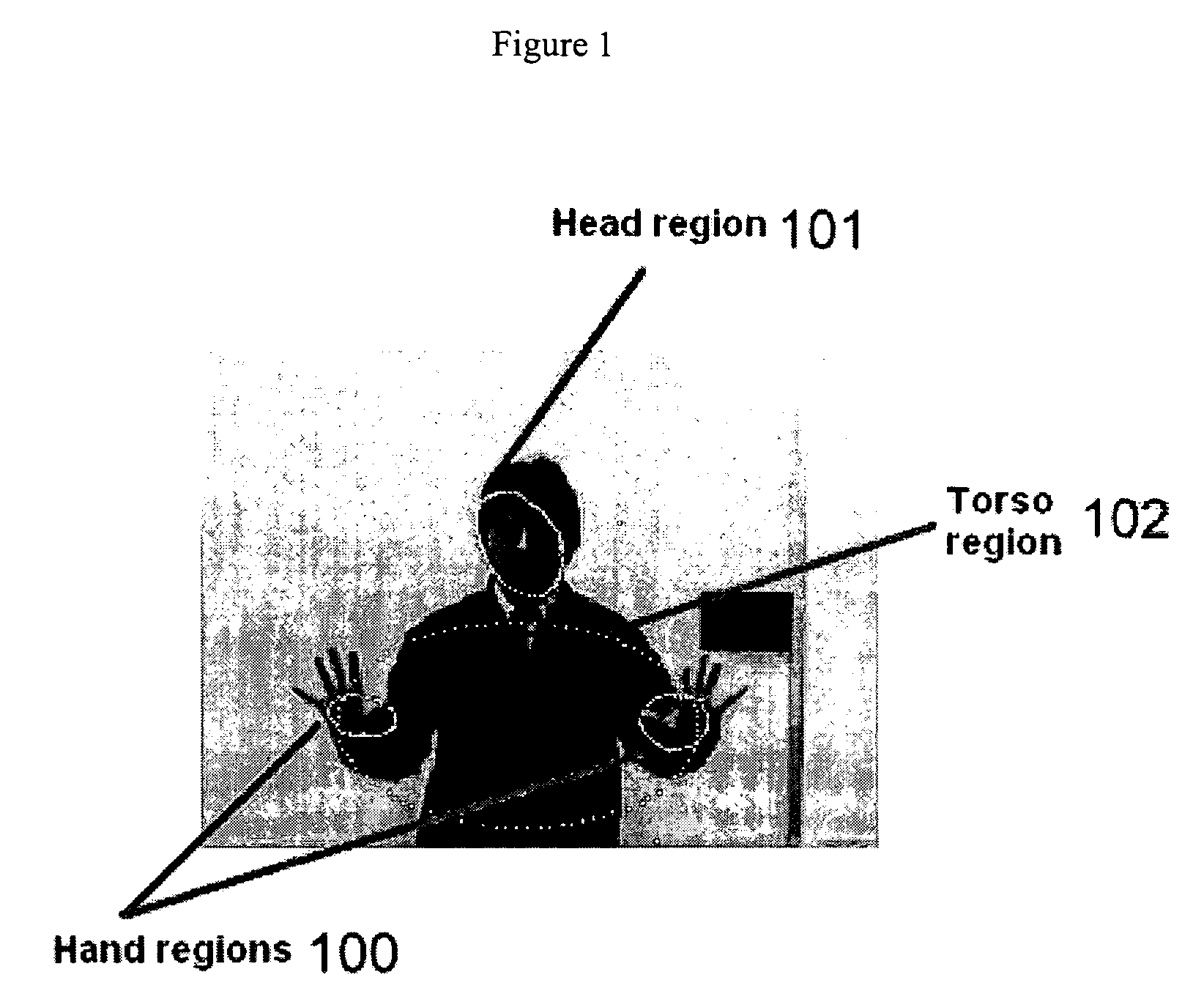

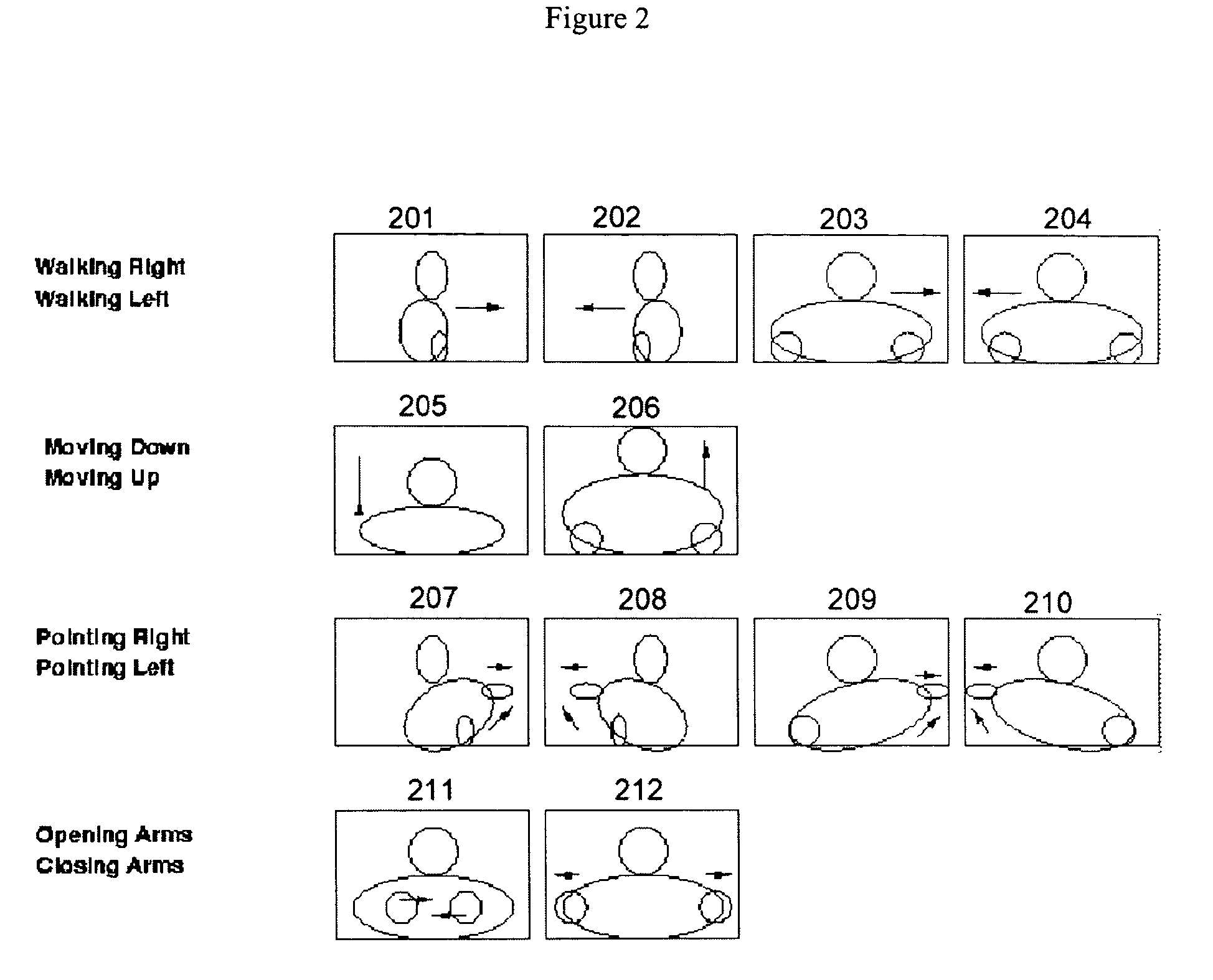

Inactive | US7200266B2 | Improving object extraction; Reduce dependence; Biometric pattern recognition; Burglar alarm; Human body; Public place

The invention is a new method and apparatus that can be used to detect, recognize, and analyze people or other objects at security checkpoints, public places, parking lots, or similar environments under surveillance, to detect the presence of certain objects of interest (e.g., people), and to identify their activities in real time for security and other purposes. The system can detect a wide range of activities for different applications. The method detects any new object introduced into a known environment and then classifies the object regions into human body parts or other non-rigid and rigid objects. By comparing the detected objects with the graphs from a database in the system, the methodology is able to identify object parts and to decide on the presence of the object of interest (human, bag, dog, etc.) in video sequences. The system tracks the movement of different object parts in order to combine them at a later stage into high-level semantics. For example, the motion pattern of each human body part is compared to the motion patterns of known activities. The recognized movements of the body parts are combined by a classifier to recognize the overall activity of the human body.

Owner:PRINCETON UNIV

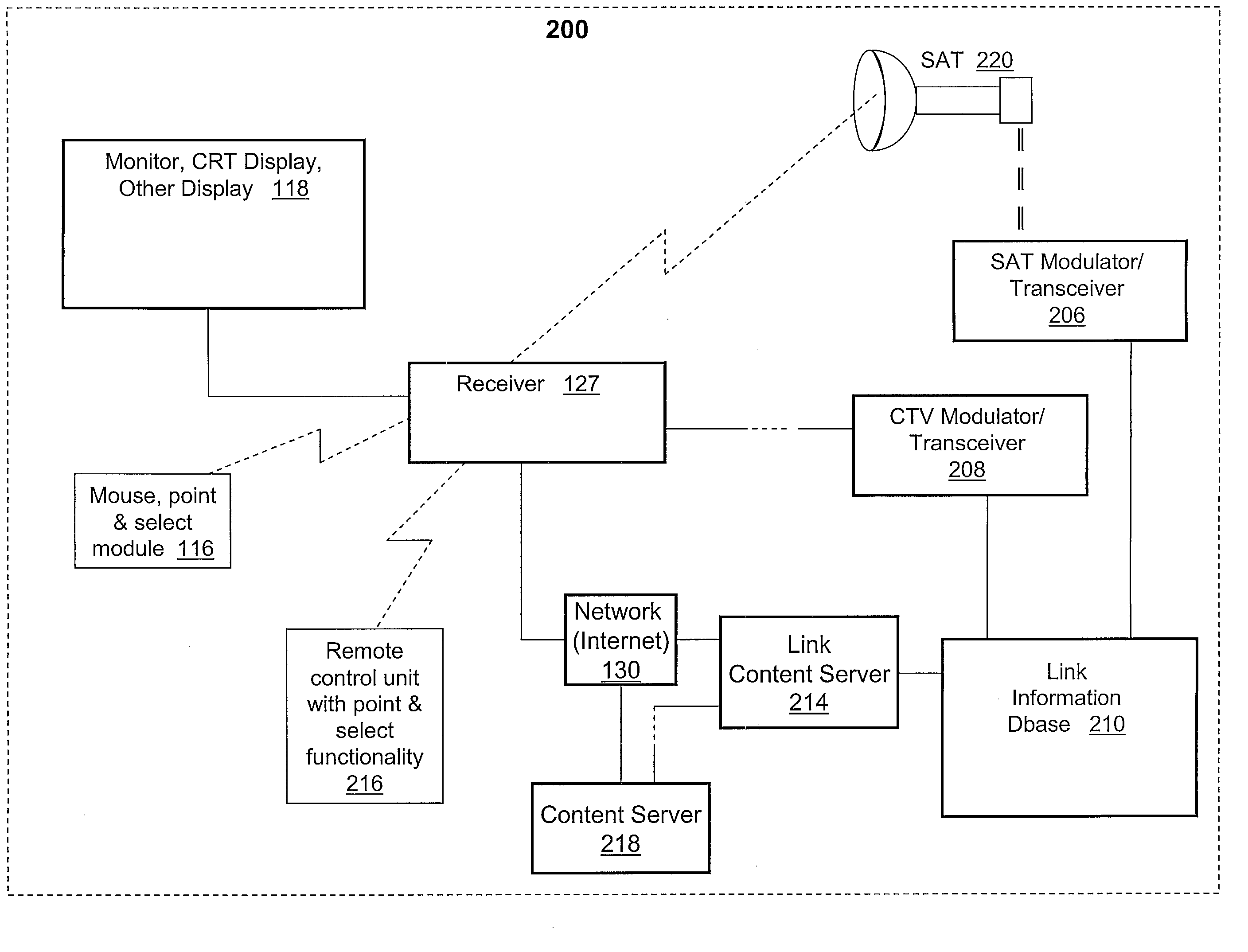

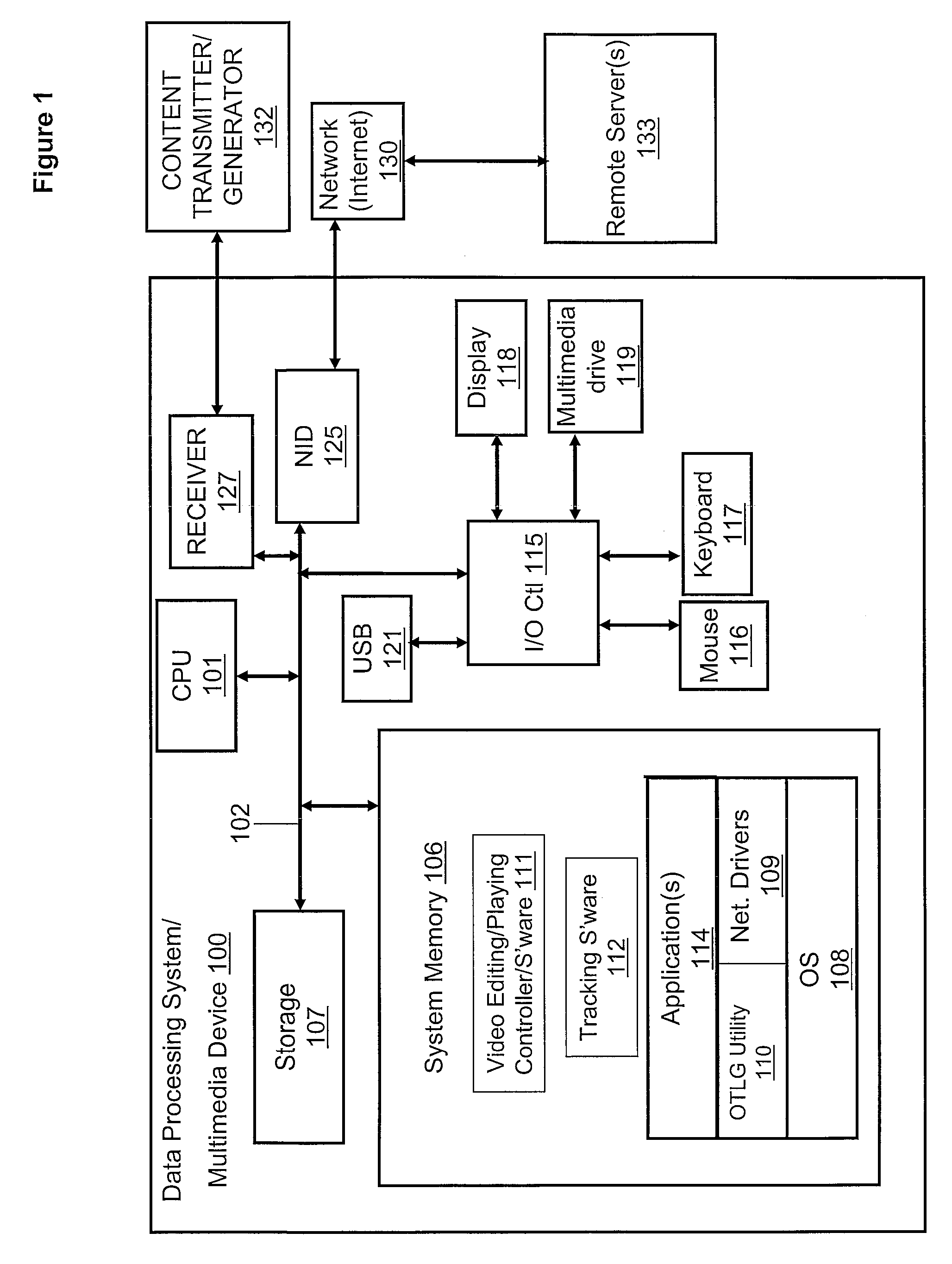

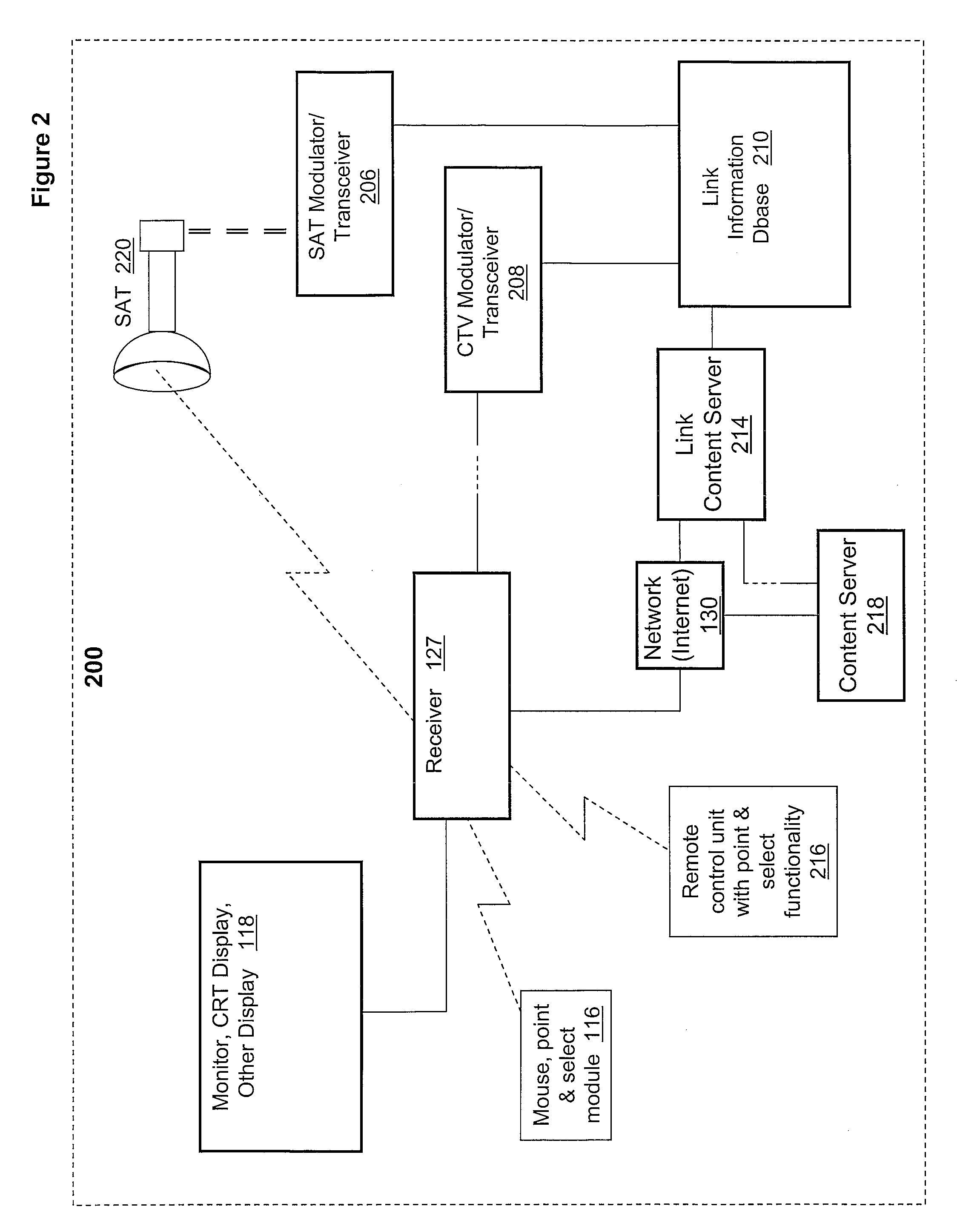

Generating synchronized interactive link maps linking tracked video objects to other multimedia content in real-time

Inactive | US20090083815A1 | Facilitate product advertising; Great product awareness; Two-way working systems; Selective content distribution; Pattern recognition; Computer graphics (images)

The method, system, and computer program product generate online interactive maps linking tracked objects (in a live or pre-recorded video sequence) to multimedia content in real time. Specifically, an object tracking and link generation (OTLG) utility allows a user to access multimedia content by clicking on moving (or still) objects within the frames of a video (or image) sequence. The OTLG utility identifies and stores one or more clear images of one or more objects to be tracked and initiates a mechanism to track the identified objects over a sequence of video or image frames. The OTLG utility uses the results of the tracking mechanism to generate, for each video frame, an interactive map frame with interactive links placed at locations corresponding to the objects' tracked locations in that video frame.

Owner:MCMASTER ORLANDO +1

Method and graphical user interface for modifying depth maps

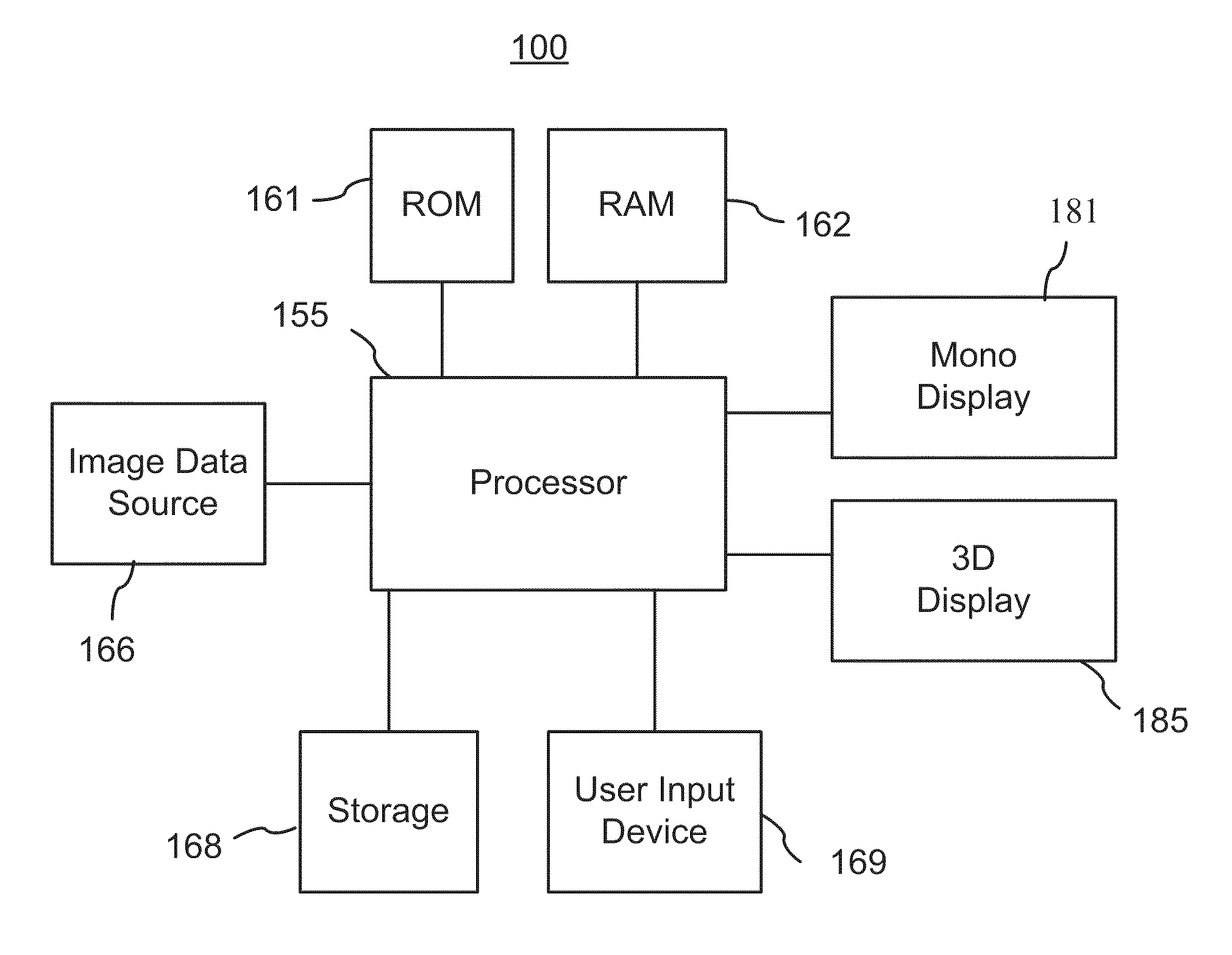

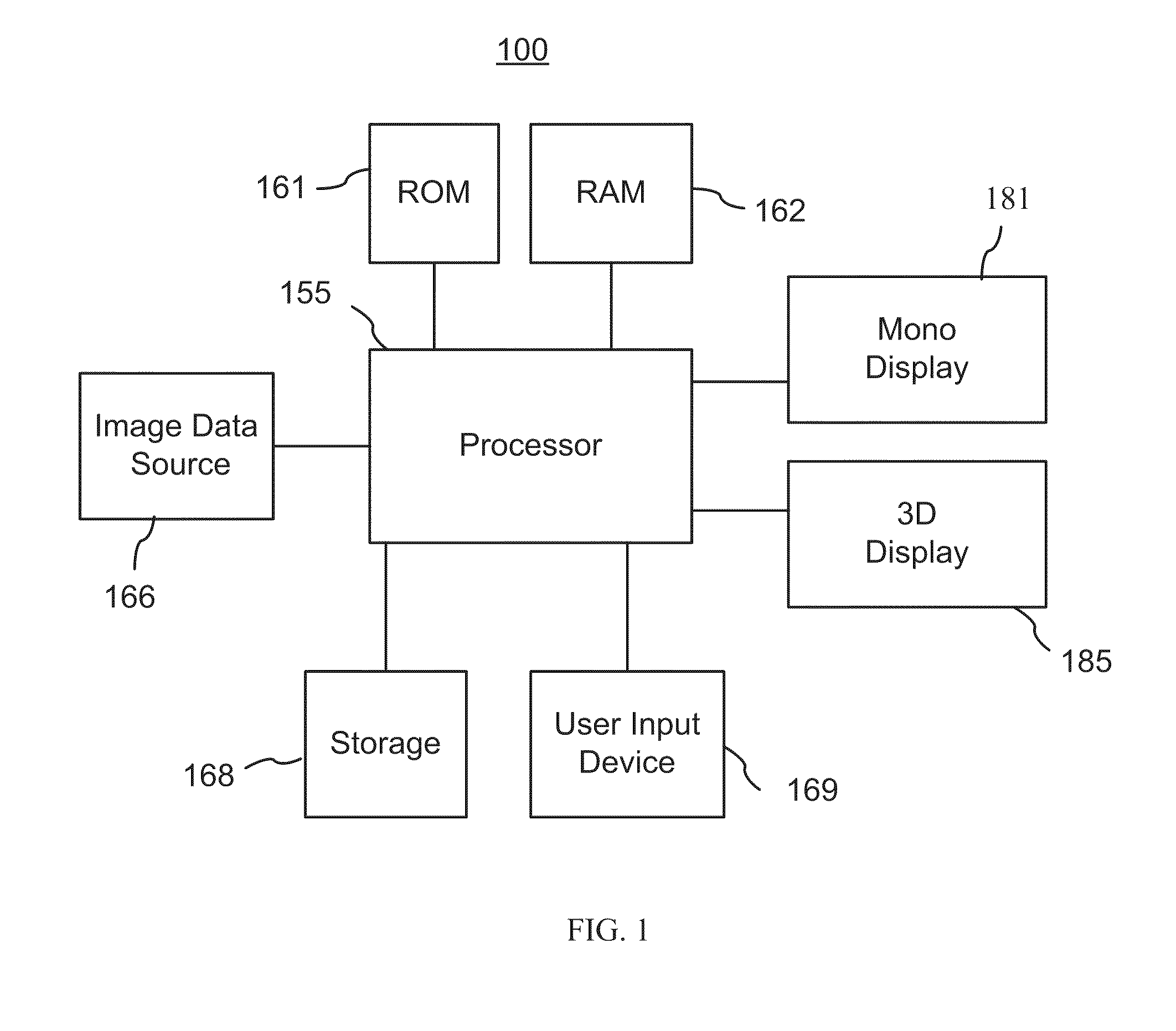

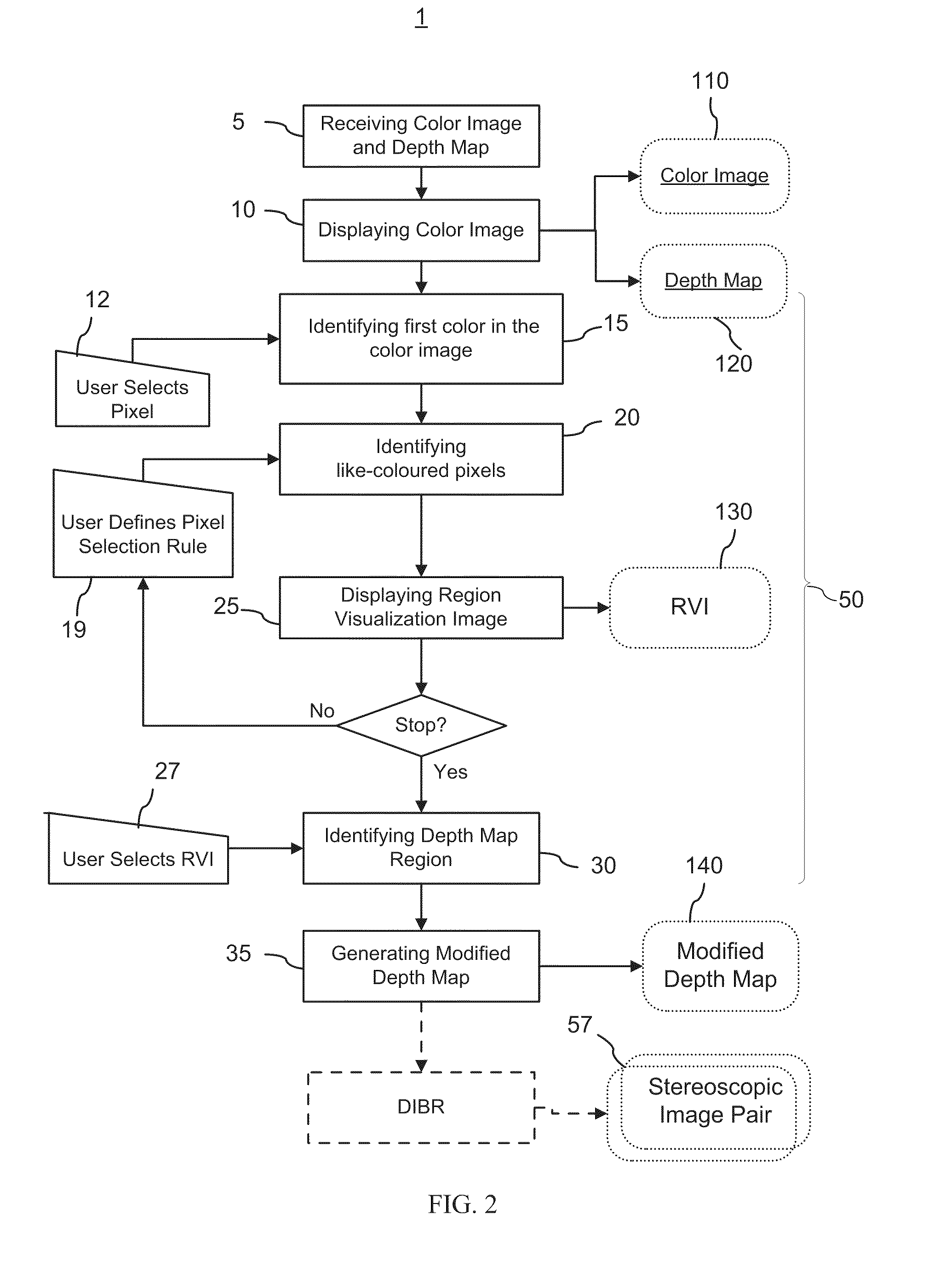

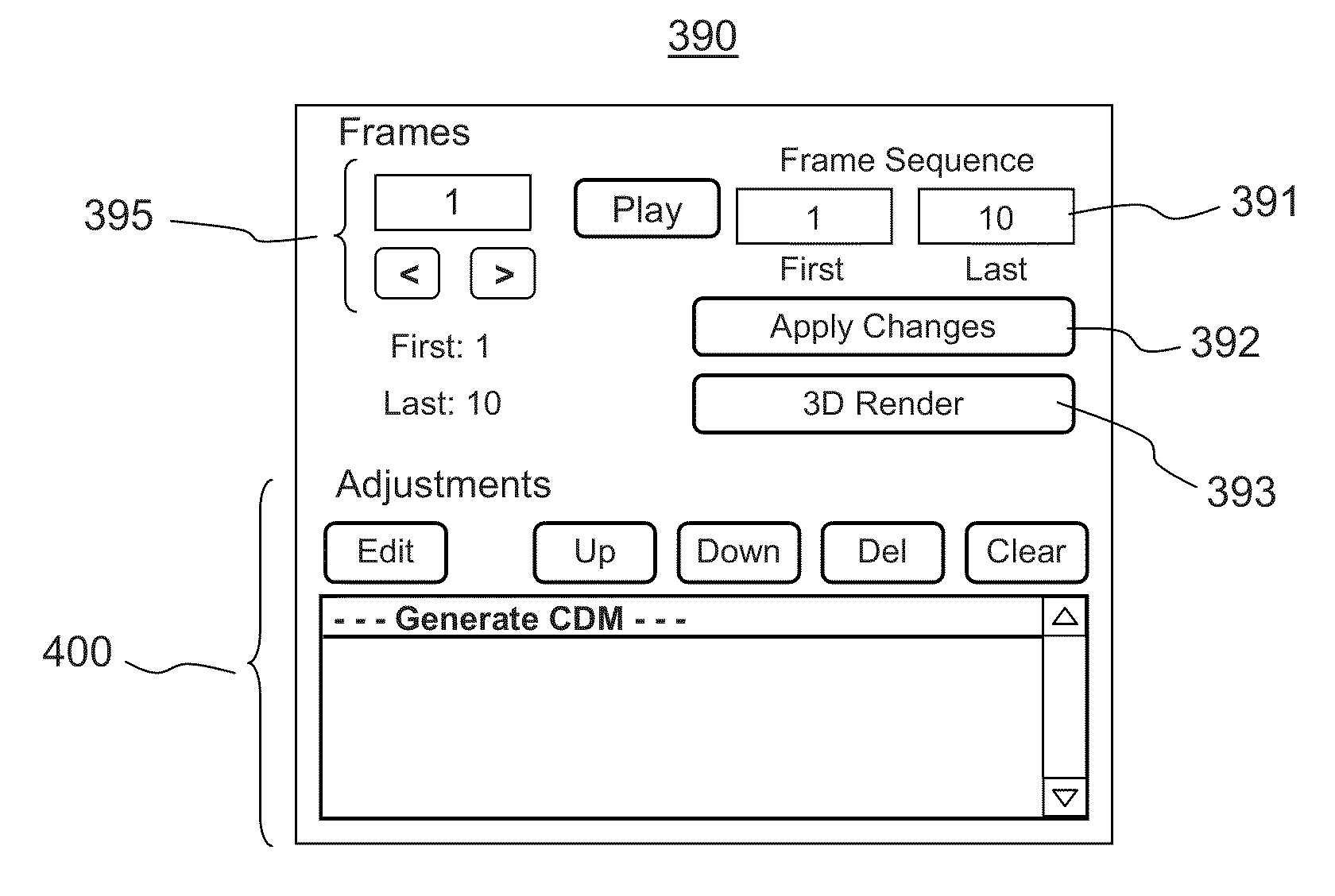

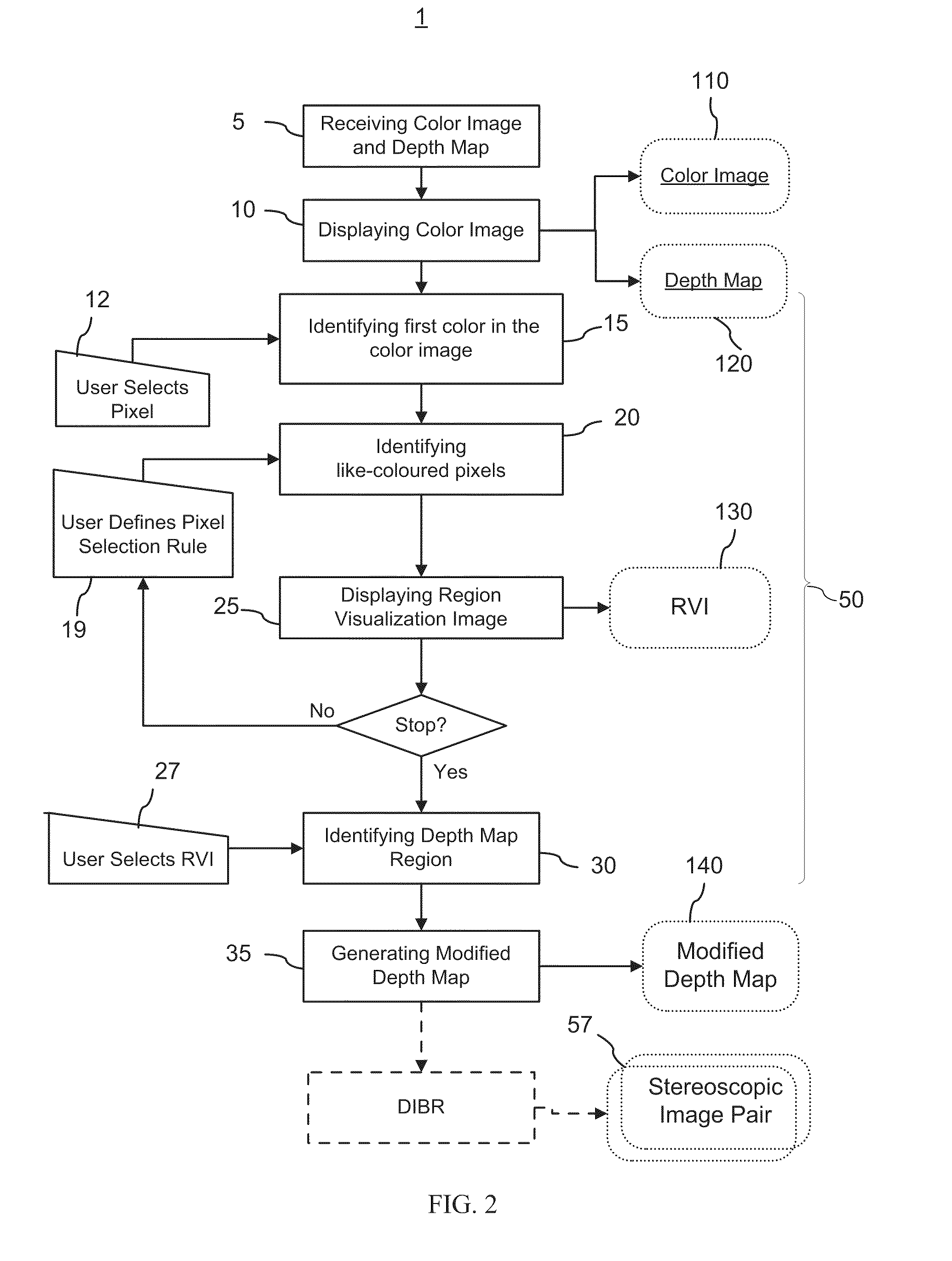

The invention relates to a method and a graphical user interface for modifying a depth map for a digital monoscopic color image. The method includes interactively selecting a region of the depth map based on the color of a target region in the color image, and modifying depth values in the selected region of the depth map using a depth modification rule. The color-based pixel selection rules for the depth map and the depth modification rule, selected based on one color image from a video sequence, may be saved and applied to automatically modify the depth maps of other color images from the same sequence.

Owner:HER MAJESTY THE QUEEN & RIGHT OF CANADA REPRESENTED BY THE MIN OF IND THROUGH THE COMM RES CENT
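A rough sketch of color-based region selection followed by a depth modification rule, assuming 8-bit RGB pixels, a per-channel color tolerance as the selection rule, and a caller-supplied function as the depth rule (all hypothetical; the patented GUI and rules may differ). Because the selection parameters and the rule are plain values, they can be saved and reapplied to other frames of the same sequence, as the abstract describes.

```python
from typing import Callable, List, Tuple

RGB = Tuple[int, int, int]


def select_by_color(image: List[List[RGB]], target: RGB, tolerance: int) -> List[Tuple[int, int]]:
    """Return (row, col) of pixels whose color is within `tolerance` of `target` on every channel."""
    return [
        (r, c)
        for r, row in enumerate(image)
        for c, px in enumerate(row)
        if all(abs(p - t) <= tolerance for p, t in zip(px, target))
    ]


def modify_depth(depth: List[List[int]], selection: List[Tuple[int, int]],
                 rule: Callable[[int], int]) -> List[List[int]]:
    """Apply the depth modification rule to the selected pixels; returns a new depth map."""
    out = [row[:] for row in depth]
    for r, c in selection:
        out[r][c] = rule(out[r][c])
    return out


# Hypothetical saved settings that could be reused on other frames of the sequence.
saved_target, saved_tolerance = (200, 10, 10), 15
push_forward = lambda d: min(255, d + 20)  # hypothetical rule: shift selected depths by +20
```

Usage: `modify_depth(depth, select_by_color(image, saved_target, saved_tolerance), push_forward)` applies the same saved selection and rule to any frame's image and depth map.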

System and method for creating trick play video streams from a compressed normal play video bitstream

Inactive | US6445738B1 | Generate efficiently; Reduced storage and data transfer data bandwidth requirement; Television system details; Pulse modulation television signal transmission; Video bitstream; Video sequence

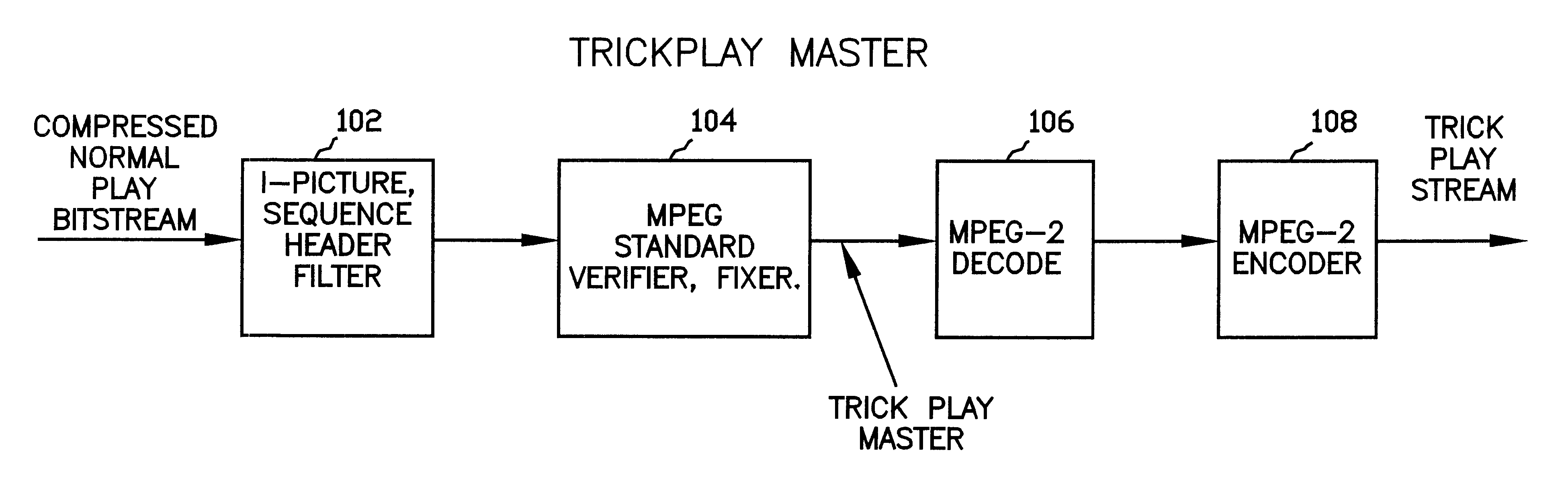

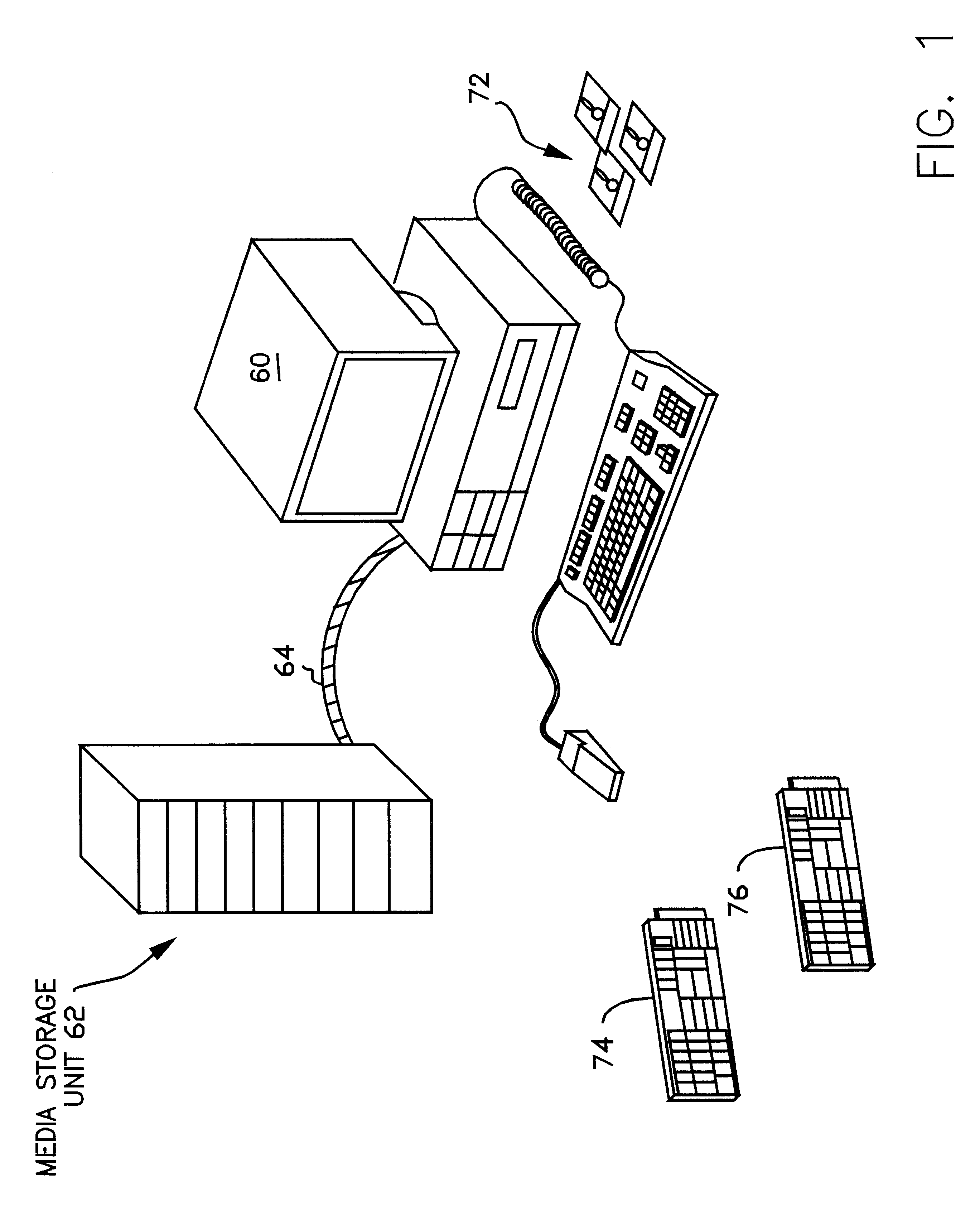

A system and method for generating trick play video streams, such as fast forward and fast reverse video streams, from an MPEG-compressed normal play bitstream. The system receives a compressed normal play bitstream and filters it by extracting and saving only portions of the bitstream. The system preferably extracts I-frames and sequence headers, including all weighting matrices, from the MPEG bitstream and stores this information in a new file. The system then assembles or collates the filtered data into the proper order to generate a single assembled bitstream. The system also ensures that the weighting matrices properly correspond to the respective I-frames. This produces a bitstream composed of a plurality of sequence headers and I-frames. This assembled bitstream is MPEG-2 decoded to produce a new video sequence that comprises only one out of every X pictures of the original, uncompressed normal play bitstream. This output picture stream is then re-encoded with the MPEG parameters desired for the trick play stream, producing a trick play stream that is a valid MPEG-encoded stream but includes only one of every X frames. The present invention thus generates compressed trick play video streams with reduced storage and data transfer bandwidth requirements.

Owner:OPEN TV INC +1
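The filtering and collation step can be sketched on a toy model of the bitstream as a list of `(kind, payload)` units, where `"SEQ"` marks a sequence header (carrying its weighting matrices) and `"I"`, `"P"`, `"B"` mark picture types. This model and the function name are hypothetical; real MPEG parsing works on start codes. The sketch re-emits the header in force before each kept I-frame, which is one simple way to keep weighting matrices and I-frames matched.

```python
from typing import List, Optional, Tuple

Unit = Tuple[str, str]  # (kind, payload); kinds "SEQ", "I", "P", "B" in this toy model


def filter_trick_play(units: List[Unit]) -> List[Unit]:
    """Keep only I-frames, each preceded by the sequence header that applies to it."""
    out: List[Unit] = []
    current_header: Optional[Unit] = None
    for kind, payload in units:
        if kind == "SEQ":
            # Remember the latest header, since it carries the active weighting matrices.
            current_header = (kind, payload)
        elif kind == "I":
            if current_header is not None:
                out.append(current_header)  # re-emit so matrices match this I-frame
            out.append((kind, payload))
        # P- and B-frames are dropped: they depend on frames not kept in the stream.
    return out
```

The result is a stream of sequence headers and I-frames, which in the abstract is then decoded and re-encoded with trick play parameters.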

Method, device, and system for multiplexing of video streams

Inactive | US20080037656A1 | Television system details; Picture reproducers using cathode ray tubes; Multiplexing; Digital video

A method and device are disclosed for statistically interleaving at least two digital video sequences, each comprising a plurality of coded pictures to be reproduced by a decoder, wherein the two digital video sequences form a single video stream and are to be reproduced successively in time. The method comprises: statistically multiplexing a first digital video sequence with a second digital video sequence, wherein the pictures of the second digital video sequence are associated with timing information that prevents a decoder from reproducing them; transmitting the digital video sequences to the decoder in an interleaved manner in accordance with the result of the statistical multiplexing; and, once the second digital video sequence is to be reproduced by the decoder receiving the stream, transmitting dummy pictures that are coded to refer to one or more pictures of the second video sequence transmitted to the decoder in advance.

Owner:CORE WIRELESS LICENSING R L

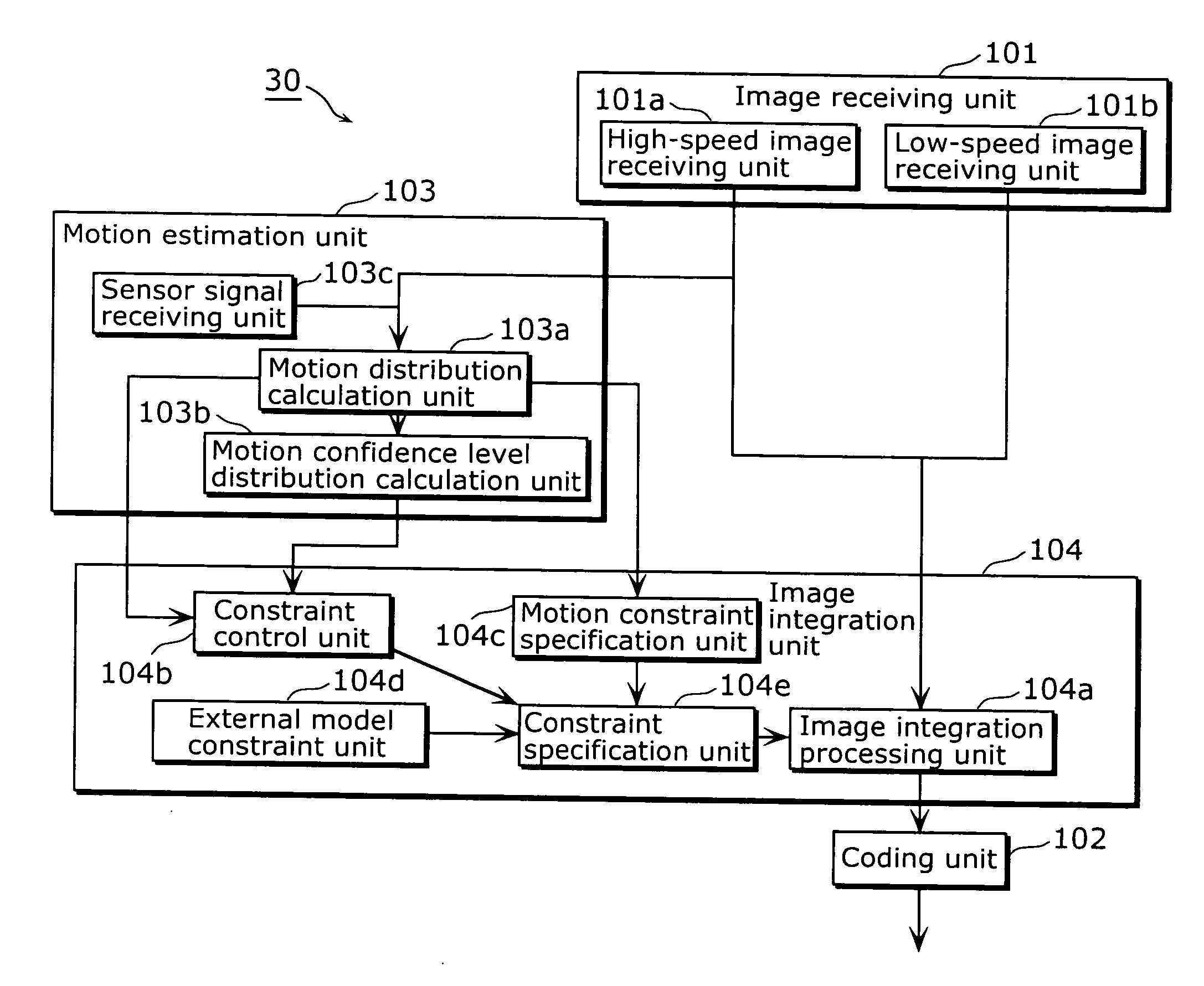

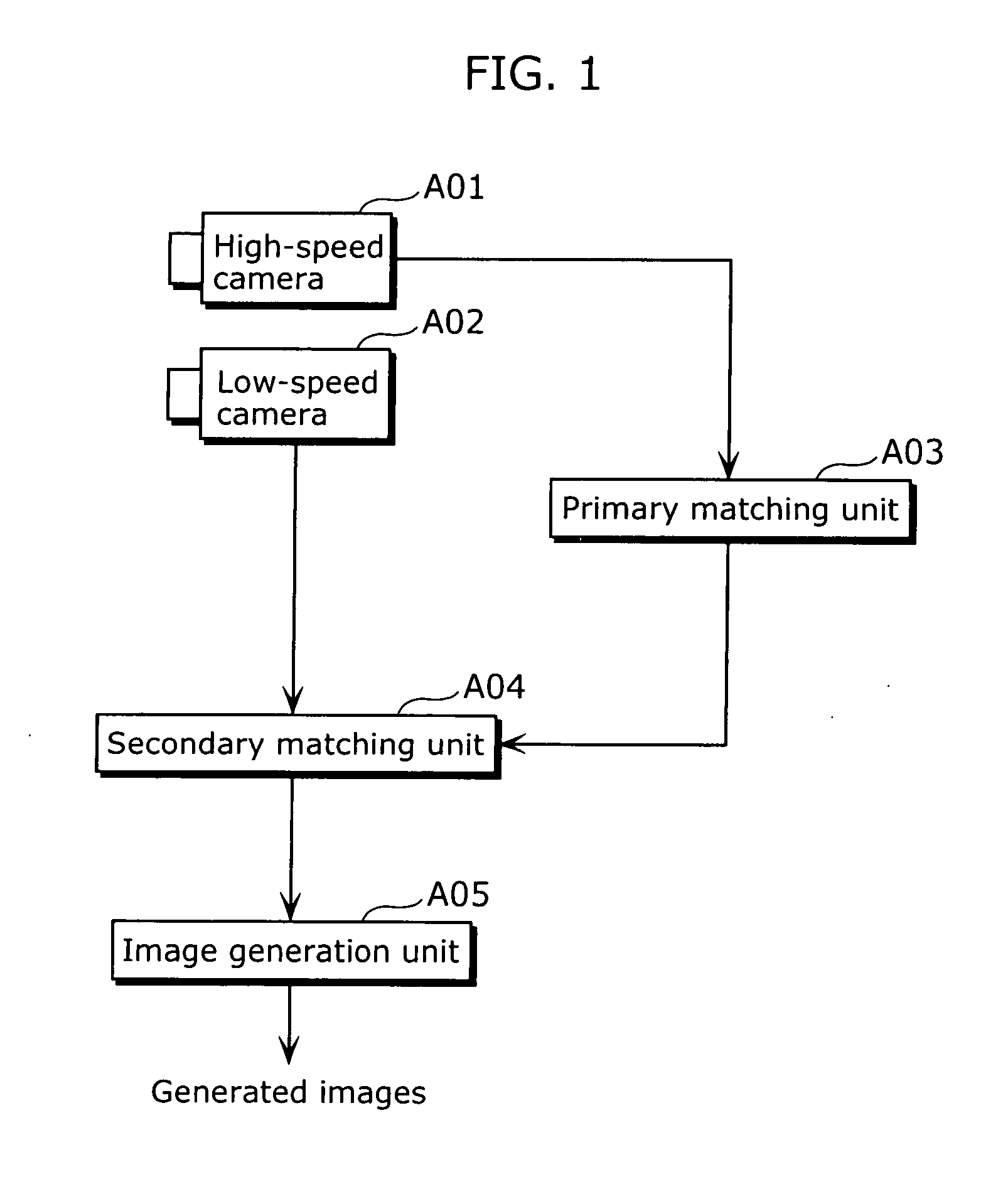

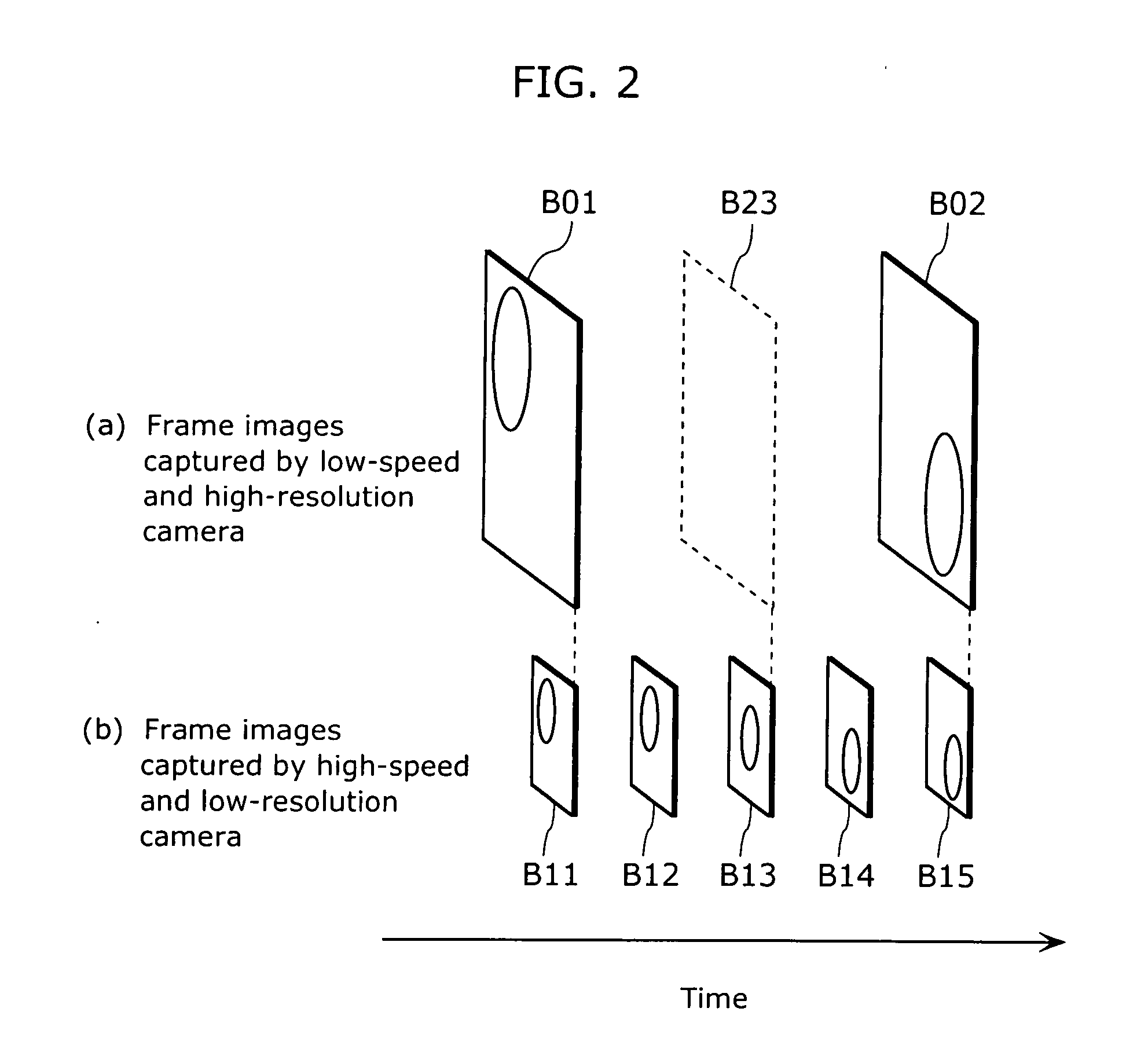

Image generation apparatus and image generation method

Inactive | US20070189386A1 | Maintain good properties; Reliable generation; Television system details; Image analysis; Computer graphics (images); Image resolution

The image generation apparatus includes: an image receiving unit which receives a first video sequence including frames having a first resolution and a second video sequence including frames having a second resolution which is higher than the first resolution, each frame of the first video sequence being obtained with a first exposure time, and each frame of the second video sequence being obtained with a second exposure time which is longer than the first exposure time; and an image integration unit which generates, from the first video sequence and the second video sequence, a new video sequence including frames having a resolution which is equal to or higher than the second resolution, at a frame rate which is equal to or higher than a frame rate of the first video sequence, by reducing a difference between a value of each frame of the second video sequence and a sum of values of frames of the new video sequence which are included within an exposure period of the frame of the second video sequence.

Owner:SOVEREIGN PEAK VENTURES LLC
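One ingredient of the integration step, the exposure constraint, can be shown per pixel. Assuming the long-exposure value should equal the sum of the co-located pixels of the new frames inside its exposure window, the least-squares correction that satisfies this exactly spreads the mismatch evenly across those frames. This is a simplified sketch with a hypothetical function name; the patented apparatus may combine the constraint with other terms (for example, fidelity to the low-resolution sequence or smoothness).

```python
from typing import List


def enforce_exposure_sum(initial: List[float], long_exposure_value: float) -> List[float]:
    """Adjust one pixel across the new frames inside a single long-exposure window.

    initial: initial estimates, one per new high-frame-rate frame in the window
        (e.g. upsampled from the low-resolution sequence; an assumption here)
    long_exposure_value: the co-located pixel of the long-exposure, high-resolution frame
    Returns the values closest to `initial` (in least squares) whose sum equals the target.
    """
    n = len(initial)
    gap = long_exposure_value - sum(initial)
    # Minimizing sum((v_i - initial_i)^2) subject to sum(v_i) == target
    # gives the same correction gap / n for every frame.
    return [v + gap / n for v in initial]
```

For example, `enforce_exposure_sum([10, 20, 30, 40], 120)` returns `[15.0, 25.0, 35.0, 45.0]`, which sums to 120.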

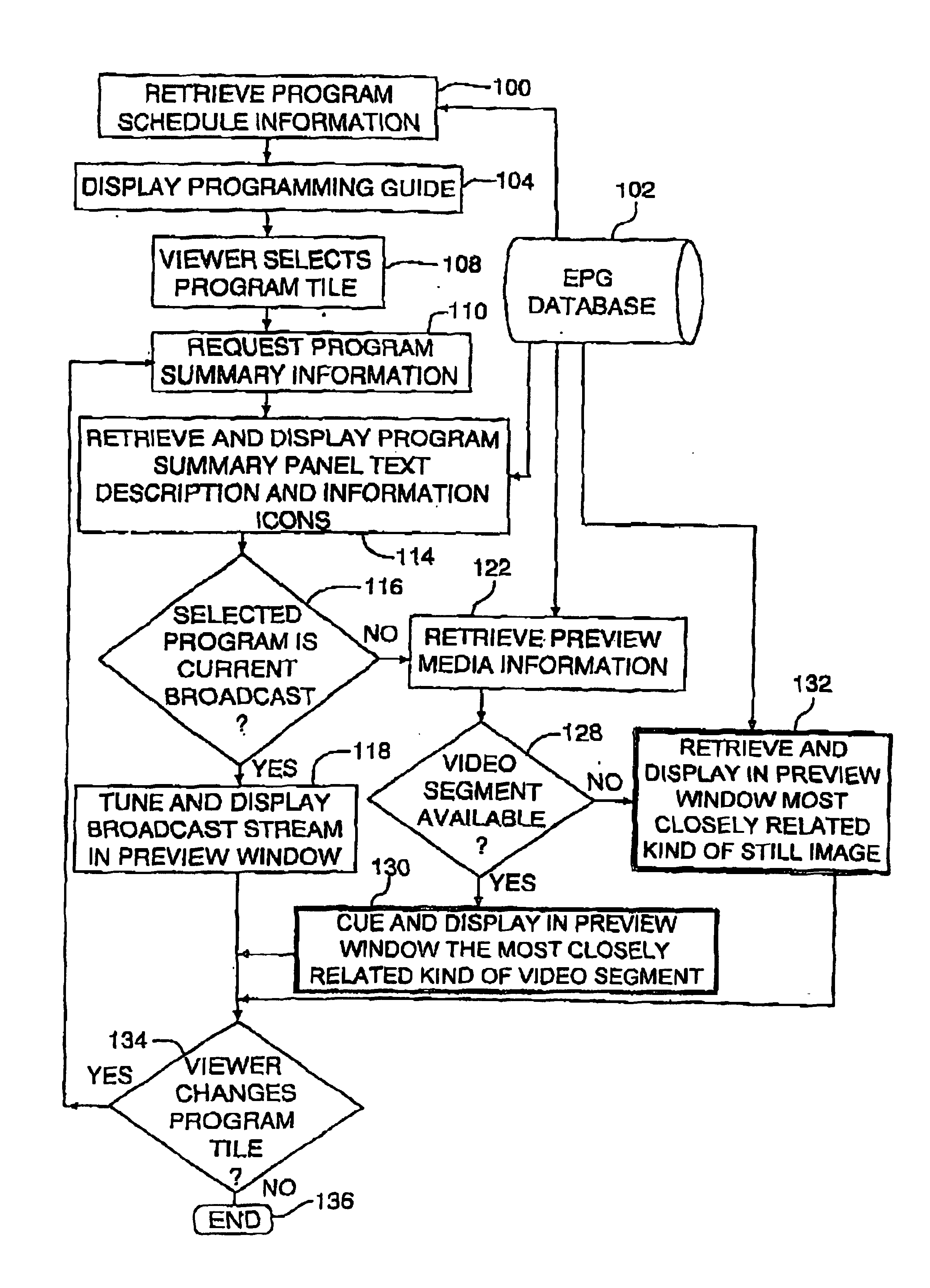

Interactive program summary panel

Inactive | US6868551B1 | Television system details; Color television details; Television system; Interactive television

The present invention includes a method of displaying for a viewer summary information relating to programming available on an interactive television or televideo system. In a preferred embodiment, the method includes obtaining a user selection indication corresponding to a scheduled program selected by the viewer from a programming guide. Based on the user selection indication, the interactive television system accesses summary information relating specifically to the program selected by the viewer. The summary information preferably includes a text description of the programming and display imagery relating to the programming. The display imagery may include a multi-frame video sequence of or relating to the programming or a still video image.

Owner:MICROSOFT TECH LICENSING LLC

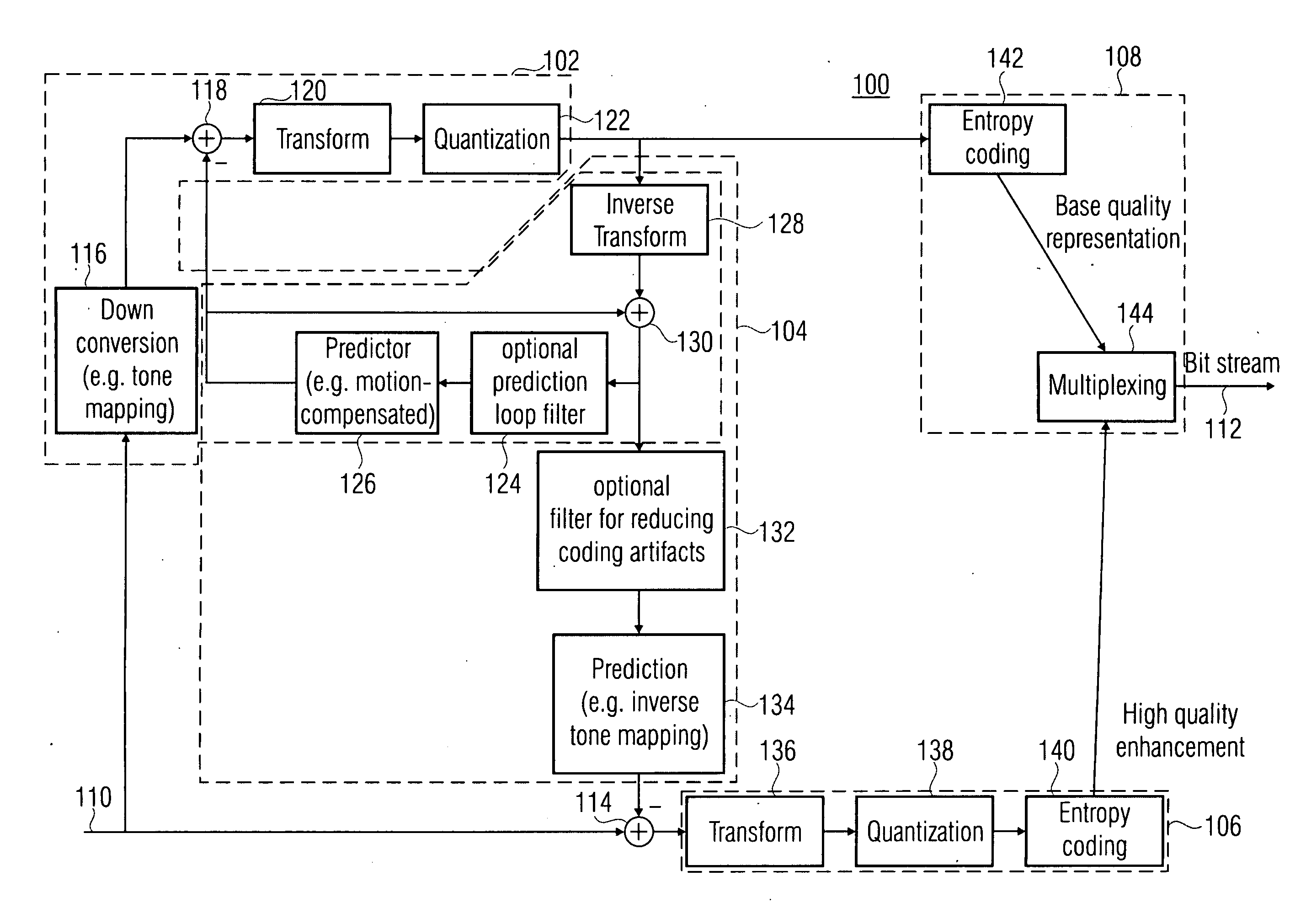

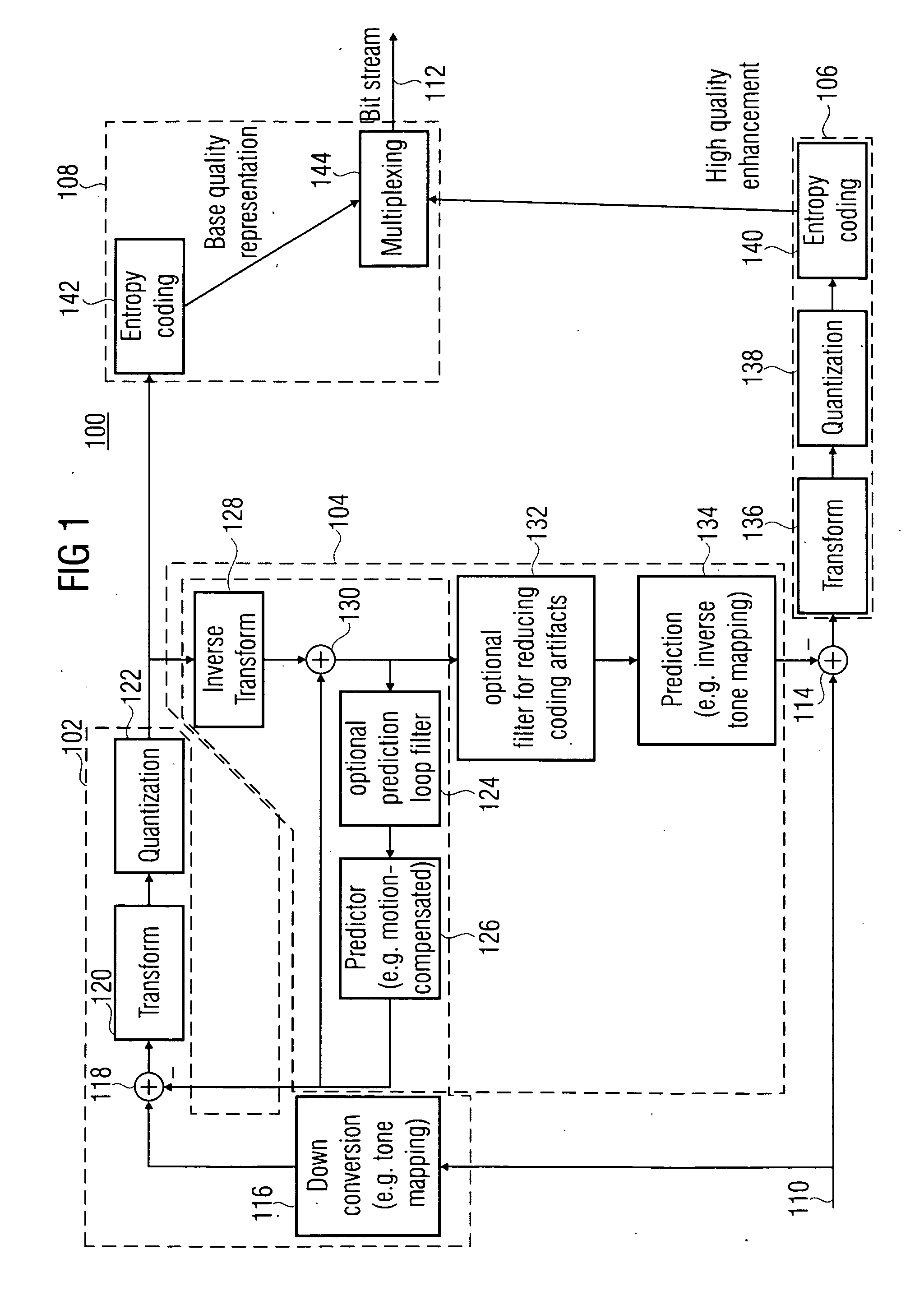

Quality scalable coding

Active | US20100020866A1 | Effective way; High bit-depth; Color television with pulse code modulation; Color television with bandwidth reduction; Pattern recognition; Data stream

A more efficient way of addressing different bit-depths, or different bit-depths and chroma sampling format requirements is achieved by using a low bit-depth and / or low-chroma resolution representation for providing a respective base layer data stream representing this low bit-depth and / or low-chroma resolution representation as well as for providing a higher bit-depth and / or higher chroma resolution representation so that a respective prediction residual may be encoded in order to obtain a higher bit-depth and / or higher chroma resolution representation. By this measure, an encoder is enabled to store a base-quality representation of a picture or a video sequence, which can be decoded by any legacy decoder or video decoder, together with an enhancement signal for higher bit-depth and / or reduced chroma sub-sampling, which may be ignored by legacy decoders or video decoders.

Owner:GE VIDEO COMPRESSION LLC
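A minimal sketch of bit-depth scalability, assuming a 10-bit source, an 8-bit base layer obtained by a right shift, and inter-layer prediction by a left shift. This is one simple choice for illustration; the patent may use other mappings between bit depths, and the function names are hypothetical. A legacy decoder would use only `base`; an enhanced decoder adds `residual` back.

```python
from typing import List, Tuple


def split_bit_depth(samples: List[int], high_bits: int = 10, base_bits: int = 8) -> Tuple[List[int], List[int]]:
    shift = high_bits - base_bits
    base = [s >> shift for s in samples]                     # legacy-decodable low bit-depth layer
    prediction = [b << shift for b in base]                  # inter-layer prediction of the high bit-depth signal
    residual = [s - p for s, p in zip(samples, prediction)]  # enhancement (prediction residual)
    return base, residual


def reconstruct(base: List[int], residual: List[int], high_bits: int = 10, base_bits: int = 8) -> List[int]:
    shift = high_bits - base_bits
    return [(b << shift) + r for b, r in zip(base, residual)]
```

For a 10-bit sample of 1023, the base layer carries 255 and the residual carries 3.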

Video camera providing a composite video sequence

Active | US20130235224A1 | Inhibitory content; Television system details; Color television details; Digital video; Computer graphics (images)

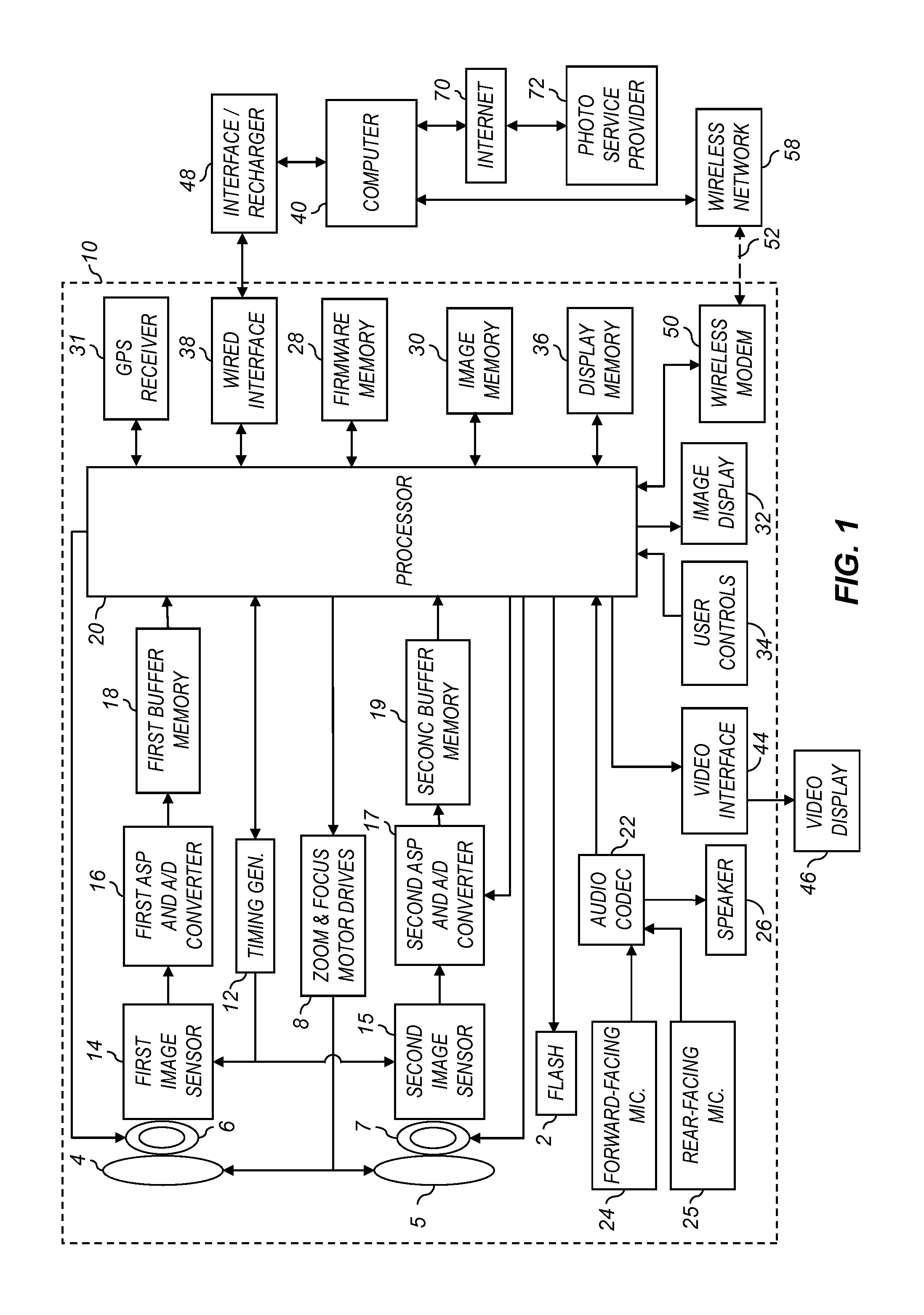

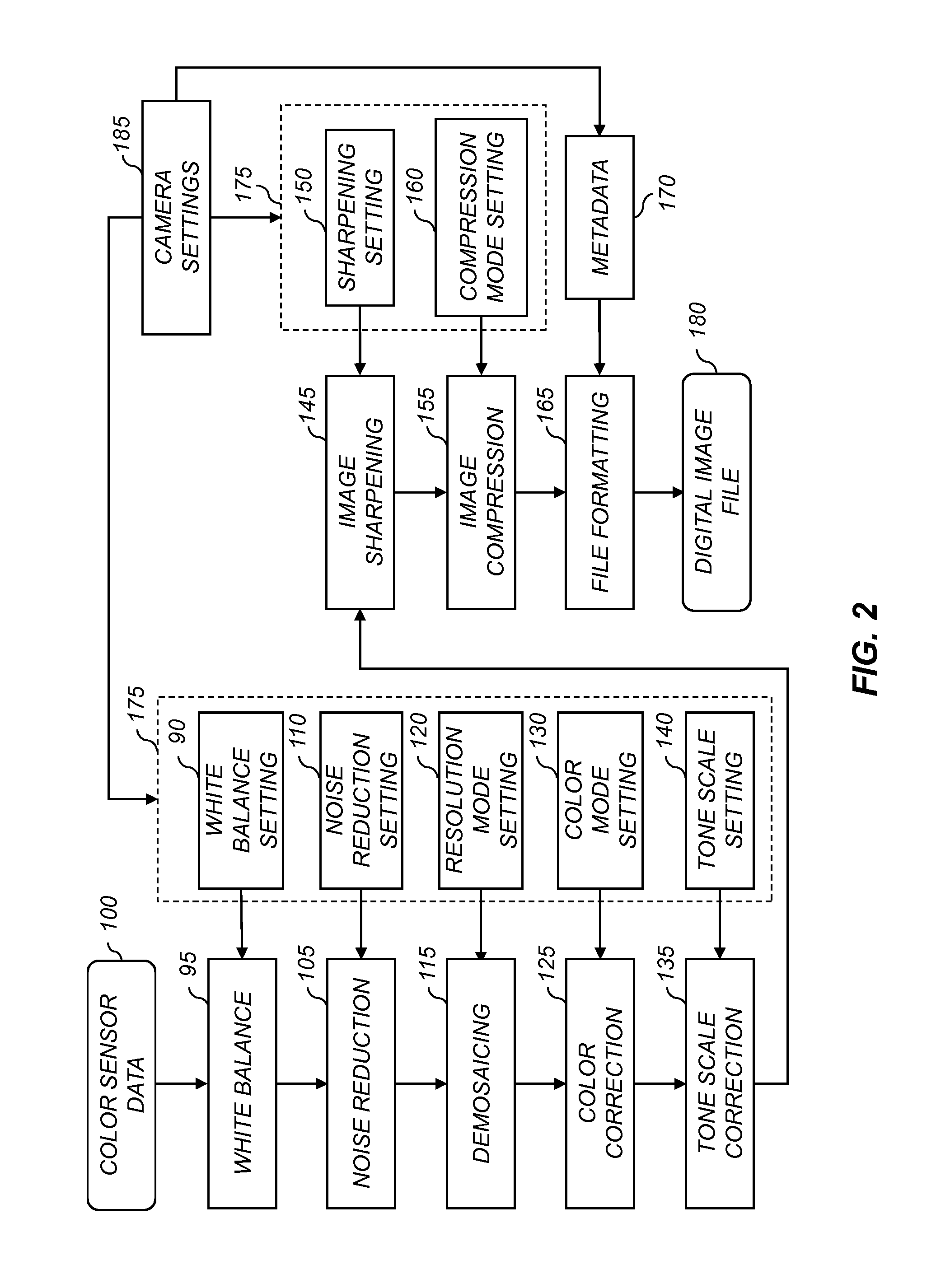

A digital camera system including a first video capture unit for capturing a first digital video sequence of a scene and a second video capture unit that simultaneously captures a second digital video sequence that includes the photographer. A data processor automatically analyzes the first digital video sequence to determine a low-interest spatial image region. A facial video sequence including the photographer's face is extracted from the second digital video sequence and inserted into the low-interest spatial image region in the first digital video sequence to form the composite video sequence.

Owner:APPLE INC

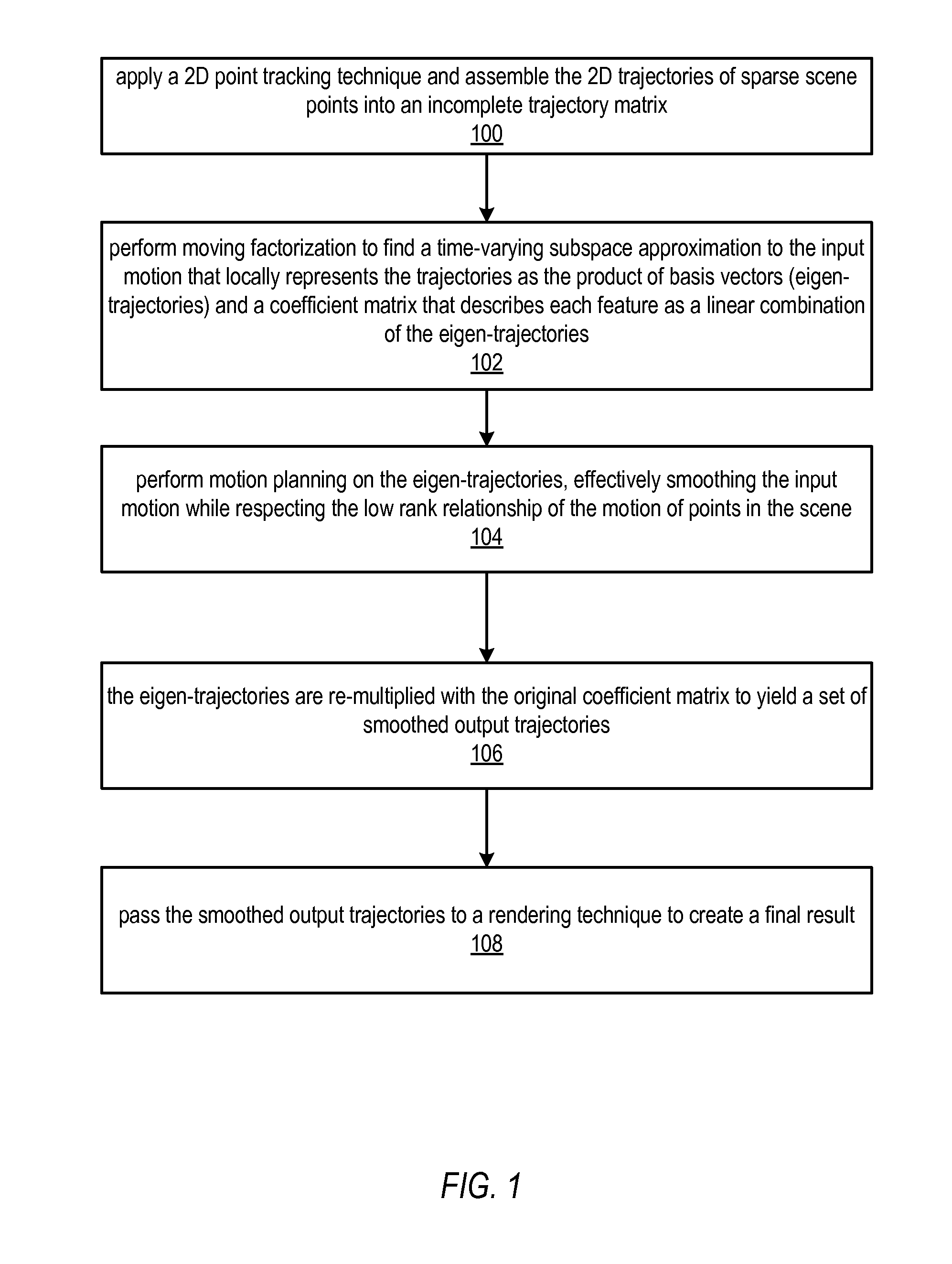

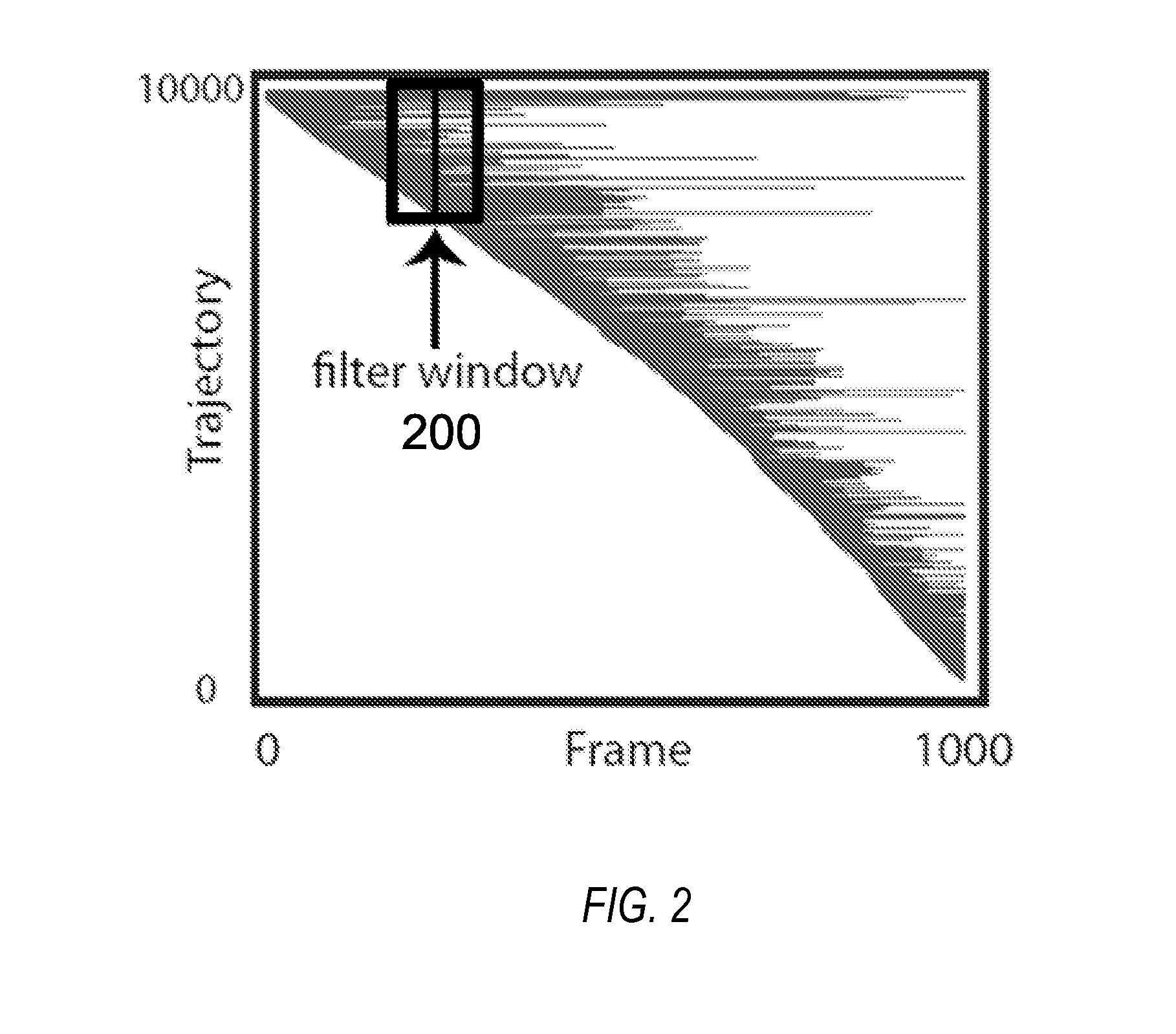

Methods and Apparatus for Video Completion

Methods, apparatus, and computer-readable storage media for video completion that may be applied to restore missing content, for example holes or border regions, in video sequences. A video completion technique applies a subspace constraint technique that finds and tracks feature points in the video, which are used to form a model of the camera motion and to predict locations of background scene points in frames where the background is occluded. Another frame where those points were visible is found, and that frame is warped using the predicted points. A content-preserving warp technique may be used. Image consistency constraints may be applied to modify the warp so that it fills the hole seamlessly. A compositing technique is applied to composite the warped image into the hole. This process may be repeated until the missing content is filled on all frames.

Owner:ADOBE INC

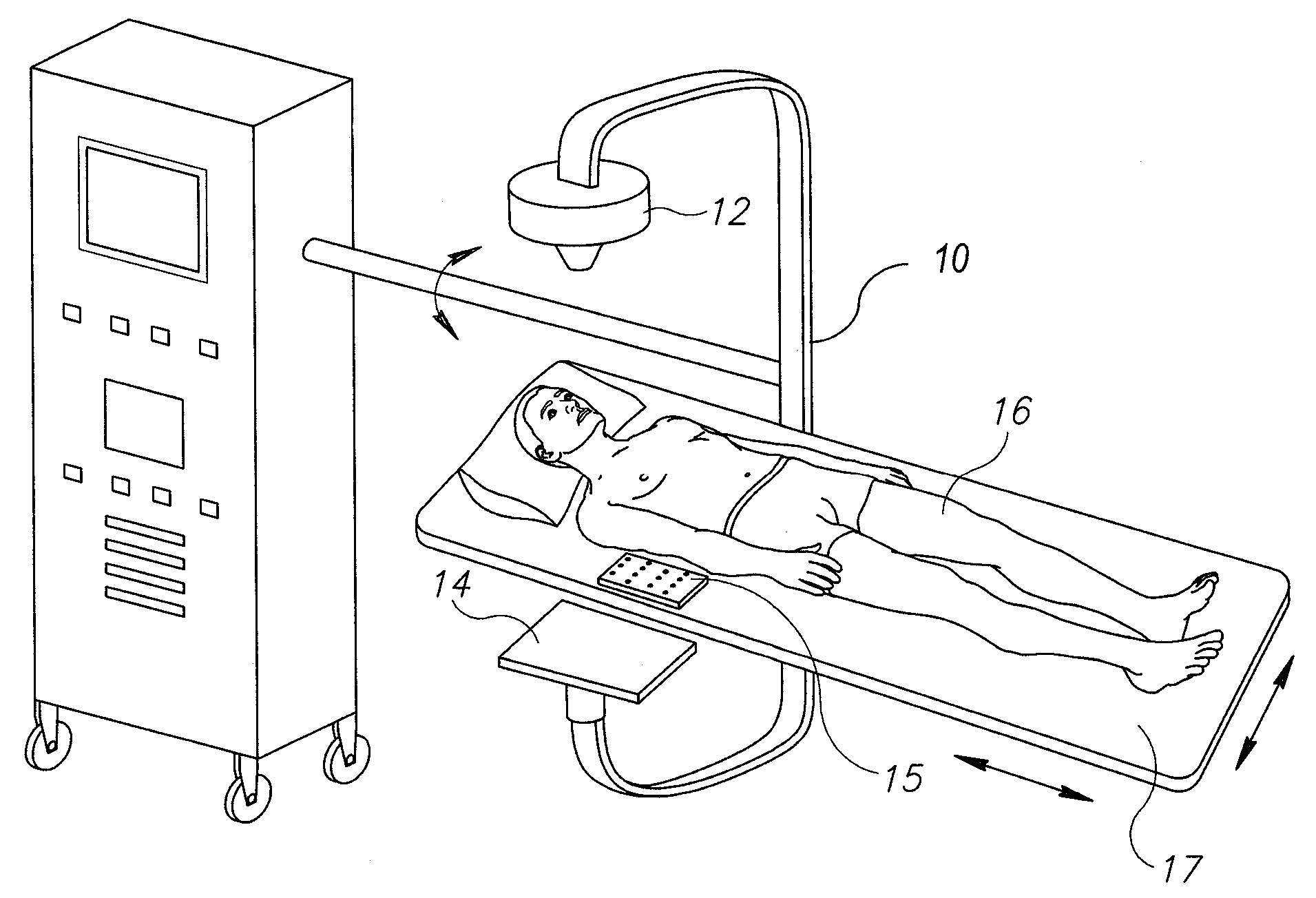

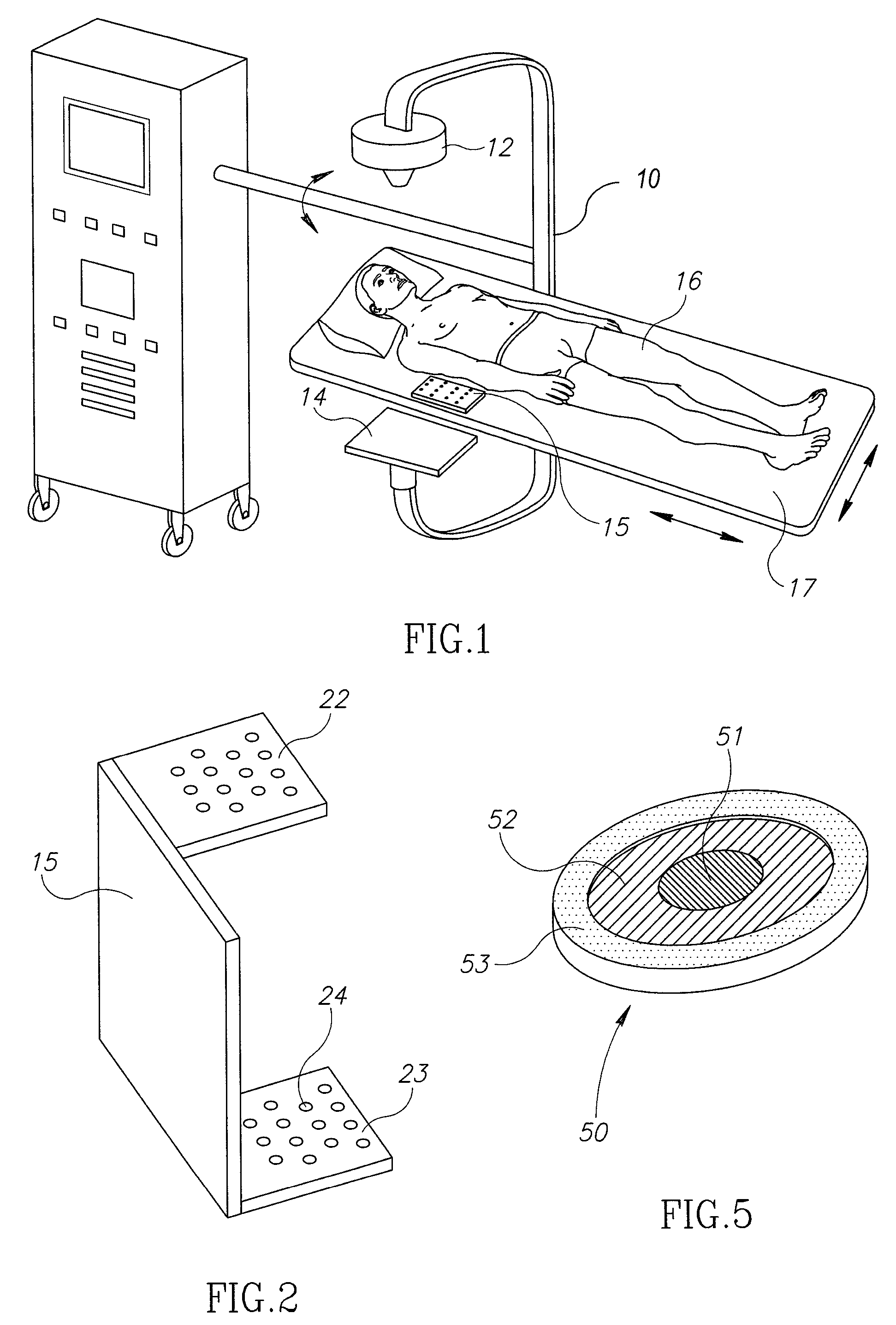

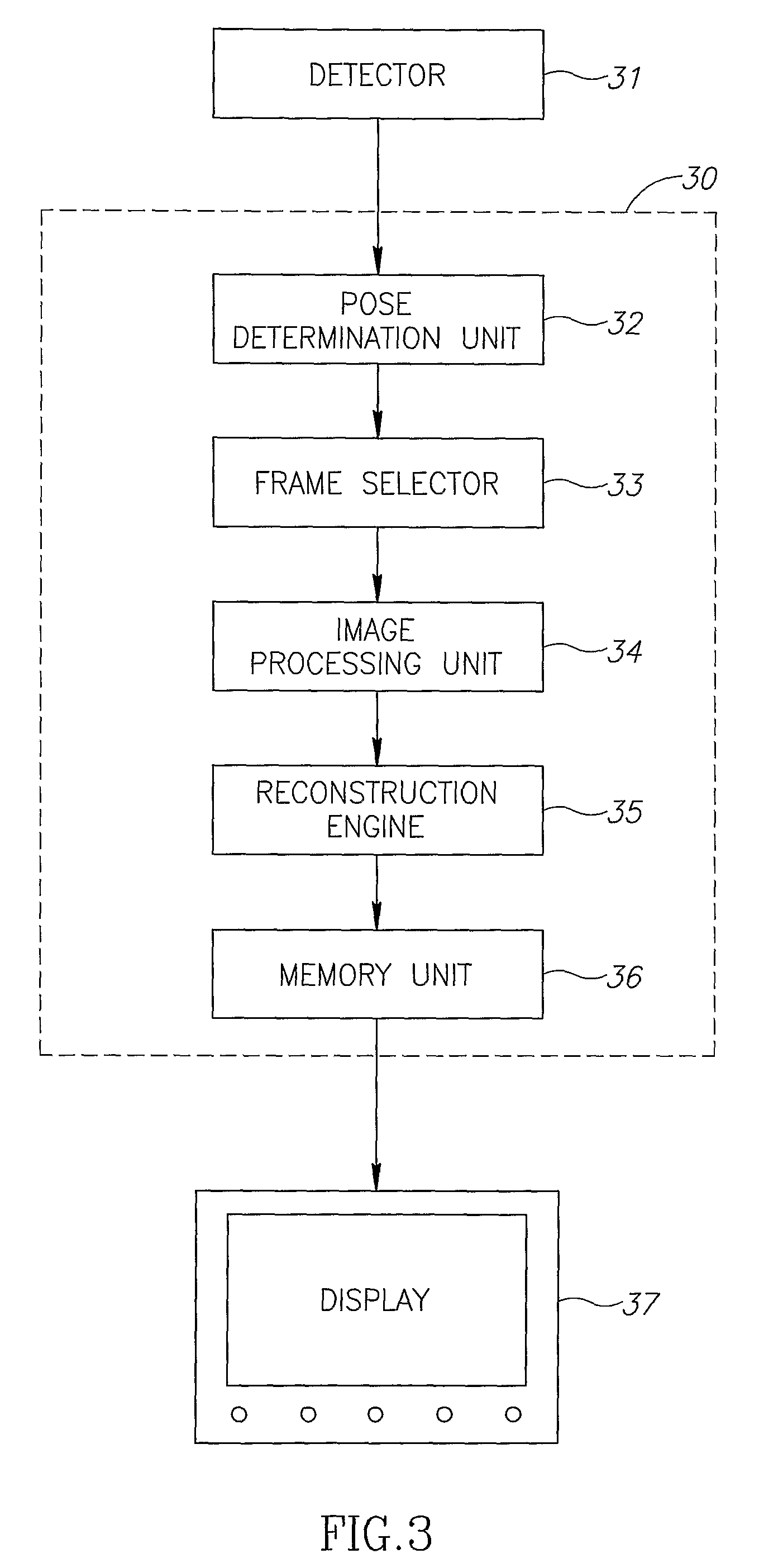

C-arm computerized tomography system

Active | US9044190B2 | Reducing effect of motion of C-arm; Character and pattern recognition; X-ray apparatus; Data set; Video sequence

Owner:MAZOR ROBOTICS

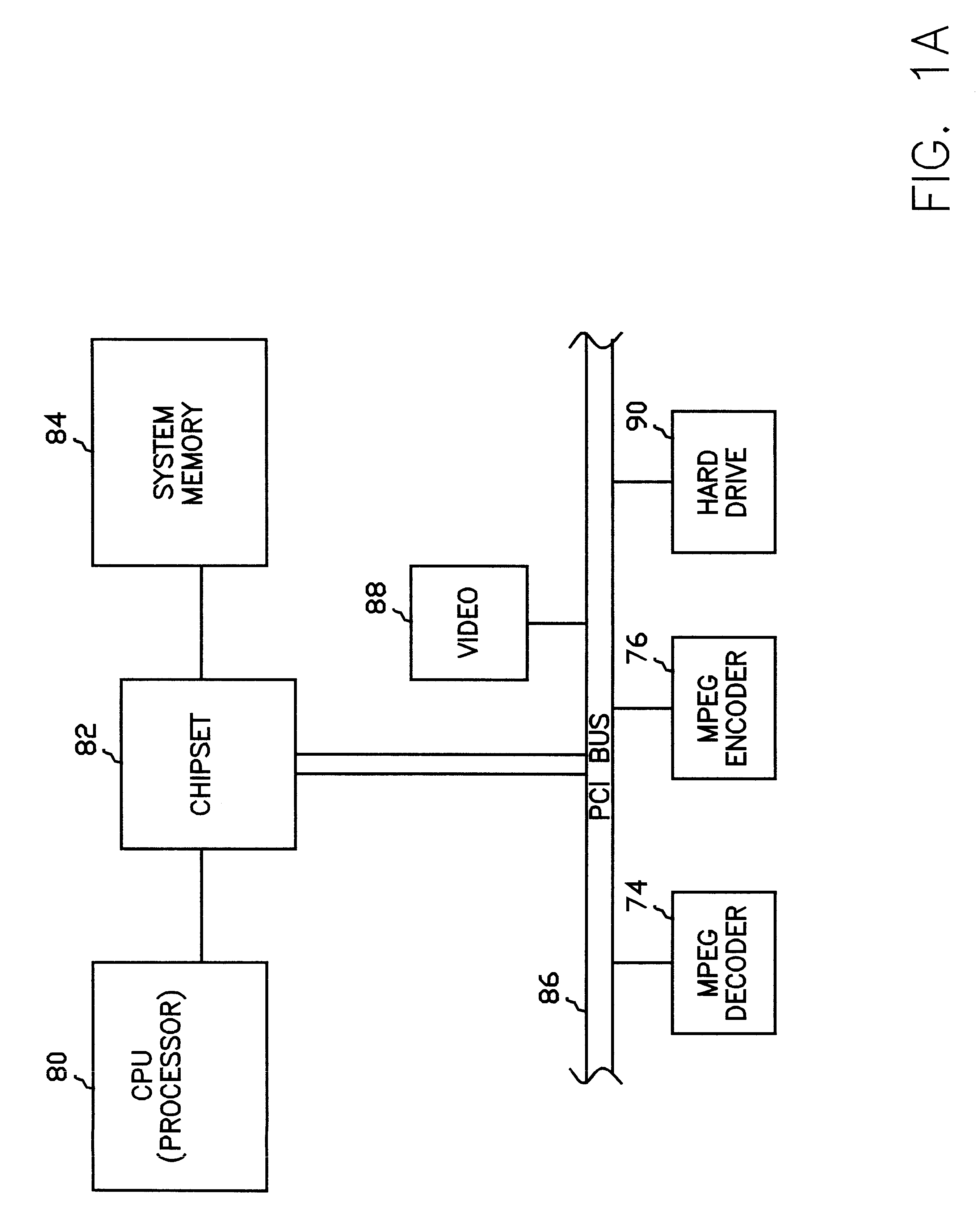

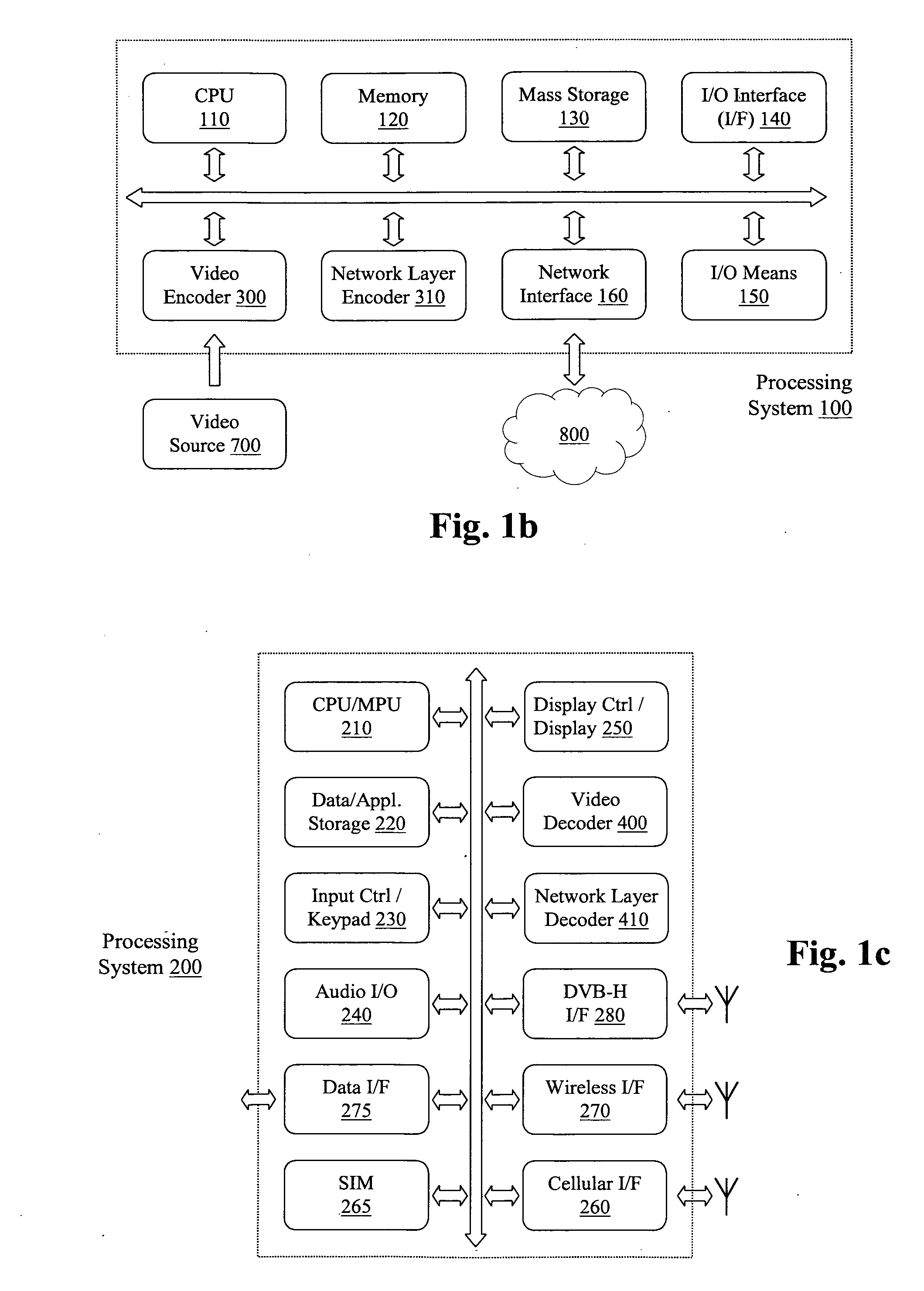

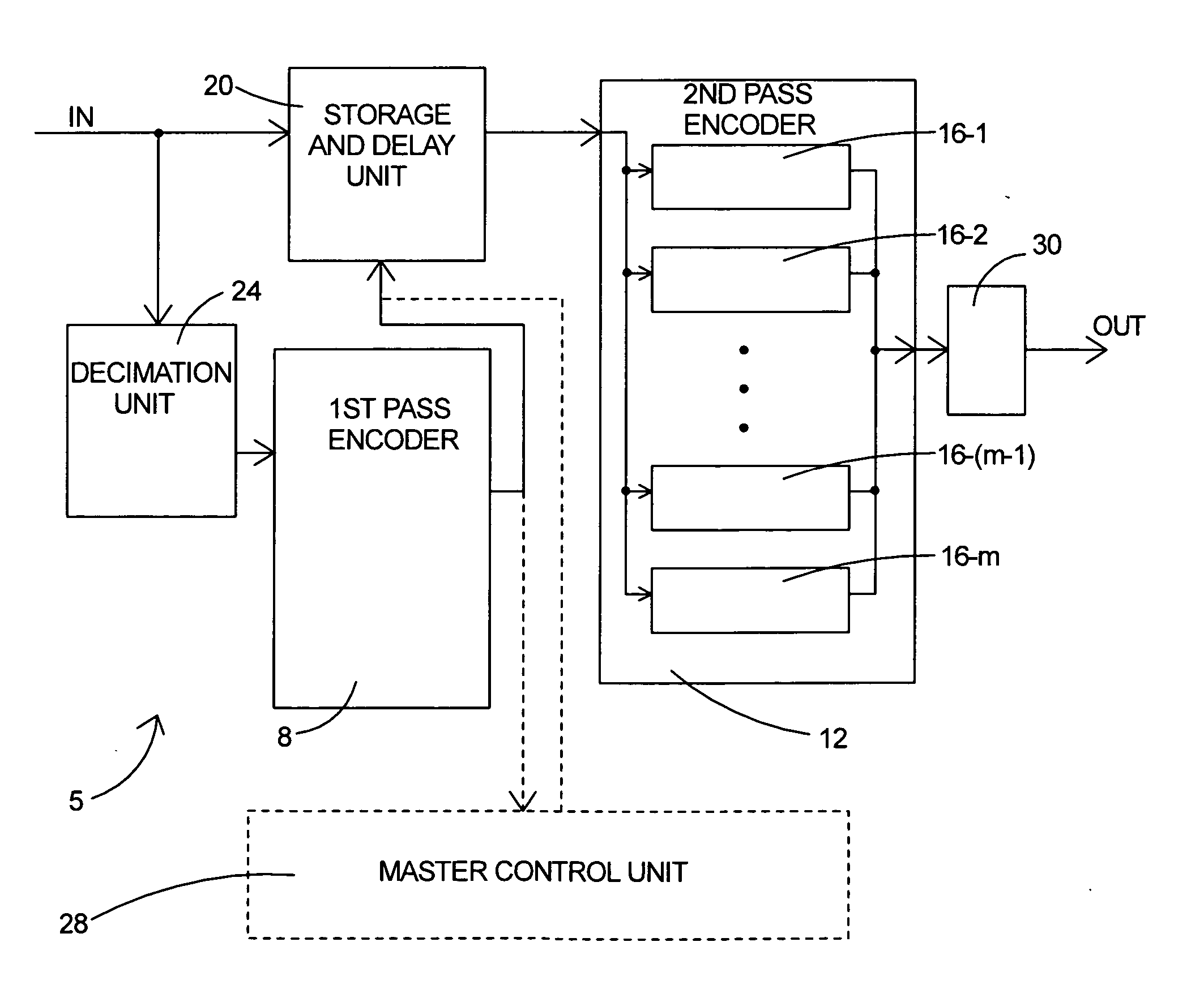

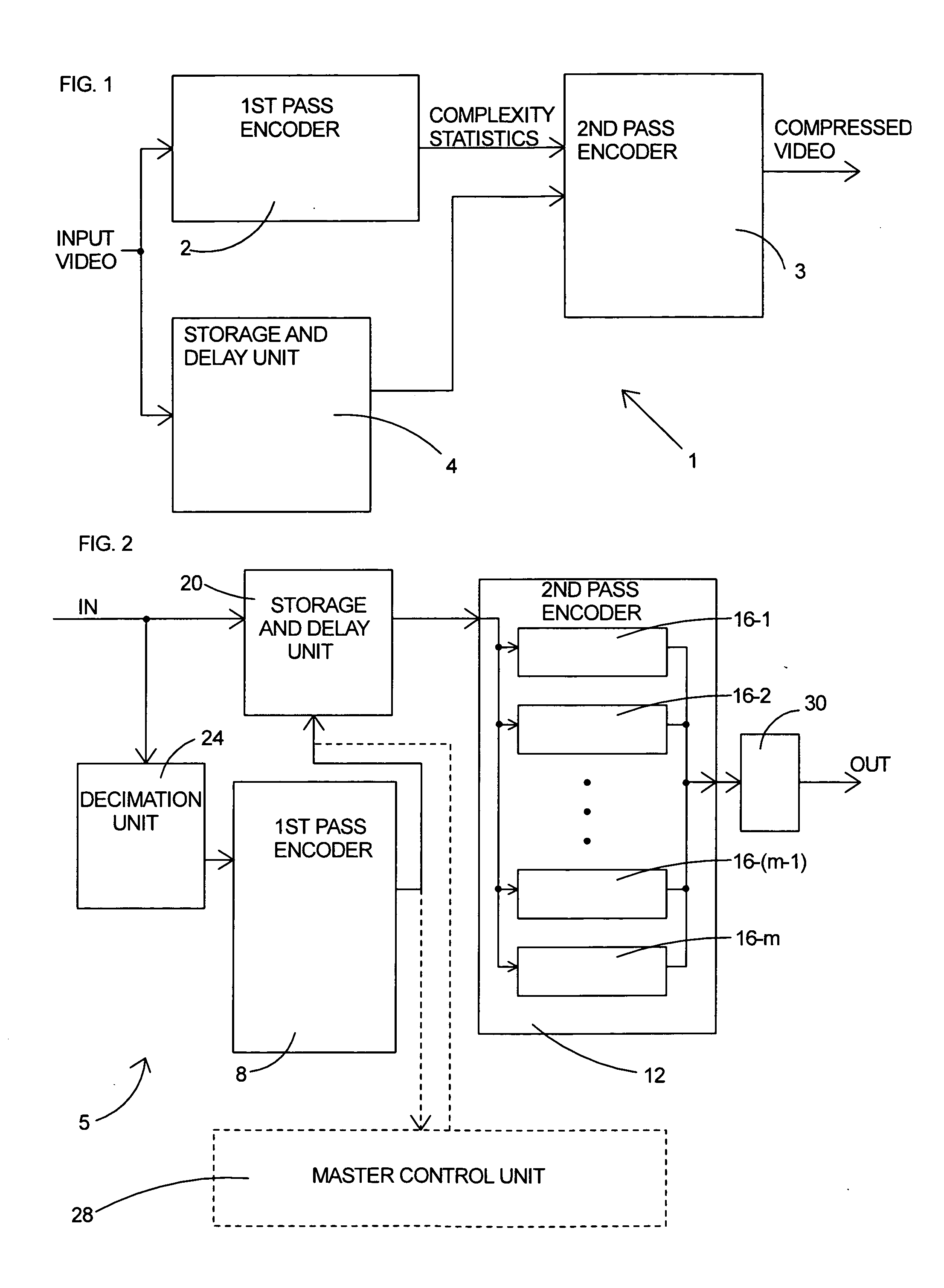

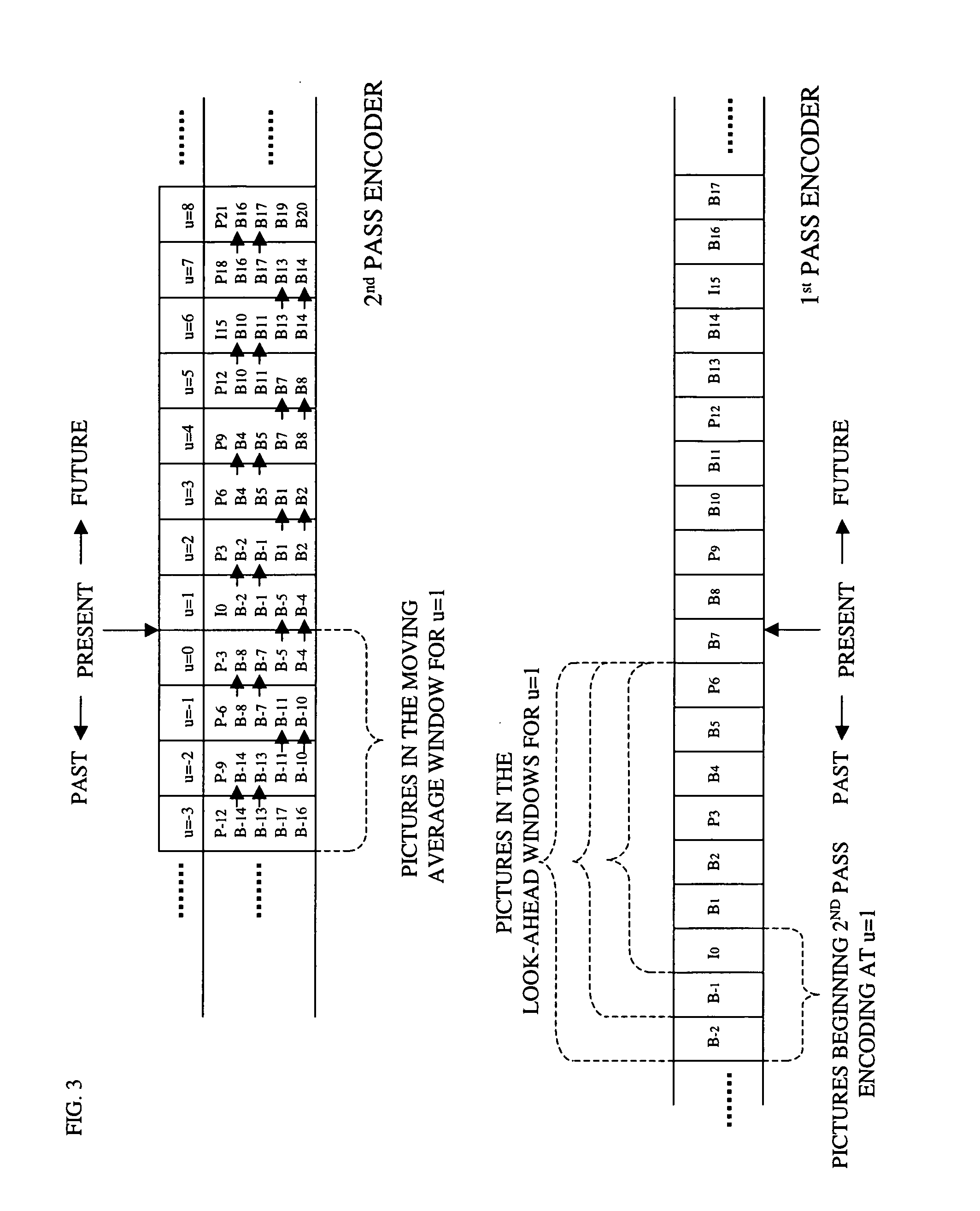

Parallel rate control for digital video encoder with multi-processor architecture and picture-based look-ahead window

Active | US20060126728A1 | Prevent underflow; Increase in size; Color television with pulse code modulation; Color television with bandwidth reduction; Digital video; Multi processor

A method of operating a multi-processor video encoder by determining a target size corresponding to a preferred number of bits to be used when creating an encoded version of a picture in a group of sequential pictures making up a video sequence. The method includes calculating a first degree of fullness of a coded picture buffer at a first time, operating on the first degree of fullness to return an estimated second degree of fullness of the coded picture buffer at a second time, and operating on the second degree of fullness to return an initial target size for the picture. The first time corresponds to the most recent time an accurate degree of fullness of the coded picture buffer can be calculated, and the second time occurs after the first time.

Owner:BISON PATENT LICENSING LLC
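The two "operating on" steps can be sketched with a simple leaky-bucket model of the coded picture buffer. Every modeling choice here is a hypothetical assumption, not taken from the patent: the channel delivers `bit_rate / frame_rate` bits per picture period, pictures still being encoded on other processors are counted at their target sizes because their real sizes are not yet known, and the initial target is a proportional correction around the nominal bits per picture, capped by the projected fullness to avoid underflow.

```python
from typing import List


def estimate_fullness(f1: float, bit_rate: float, frame_rate: float,
                      in_flight_targets: List[float]) -> float:
    """Project coded picture buffer fullness (bits) from the first time to the second time.

    f1: fullness at the last time it could be computed exactly
    in_flight_targets: target sizes of pictures encoded in parallel in between,
        used as stand-ins for their still-unknown actual sizes (assumption)
    """
    bits_per_interval = bit_rate / frame_rate
    f2 = f1
    for size in in_flight_targets:
        f2 += bits_per_interval  # channel adds bits for one picture period
        f2 -= size               # decoder removes one coded picture
    return f2


def initial_target(f2: float, bit_rate: float, frame_rate: float,
                   buffer_size: float, gain: float = 0.1) -> float:
    """Map projected fullness to an initial target size (hypothetical proportional rule)."""
    nominal = bit_rate / frame_rate
    # Spend more bits when the buffer is more than half full, fewer when it is emptier.
    target = nominal + gain * (f2 - buffer_size / 2)
    # Never plan a picture larger than the projected buffer contents (underflow guard).
    return max(0.0, min(target, f2))
```

With 1 Mbit/s at 25 pictures/s, a starting fullness of 400,000 bits, and two in-flight pictures targeted at 50,000 and 30,000 bits, the projected fullness stays at 400,000 bits and, for a 1,000,000-bit buffer, the initial target comes out at 30,000 bits.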

Smart Video Surveillance System Ensuring Privacy

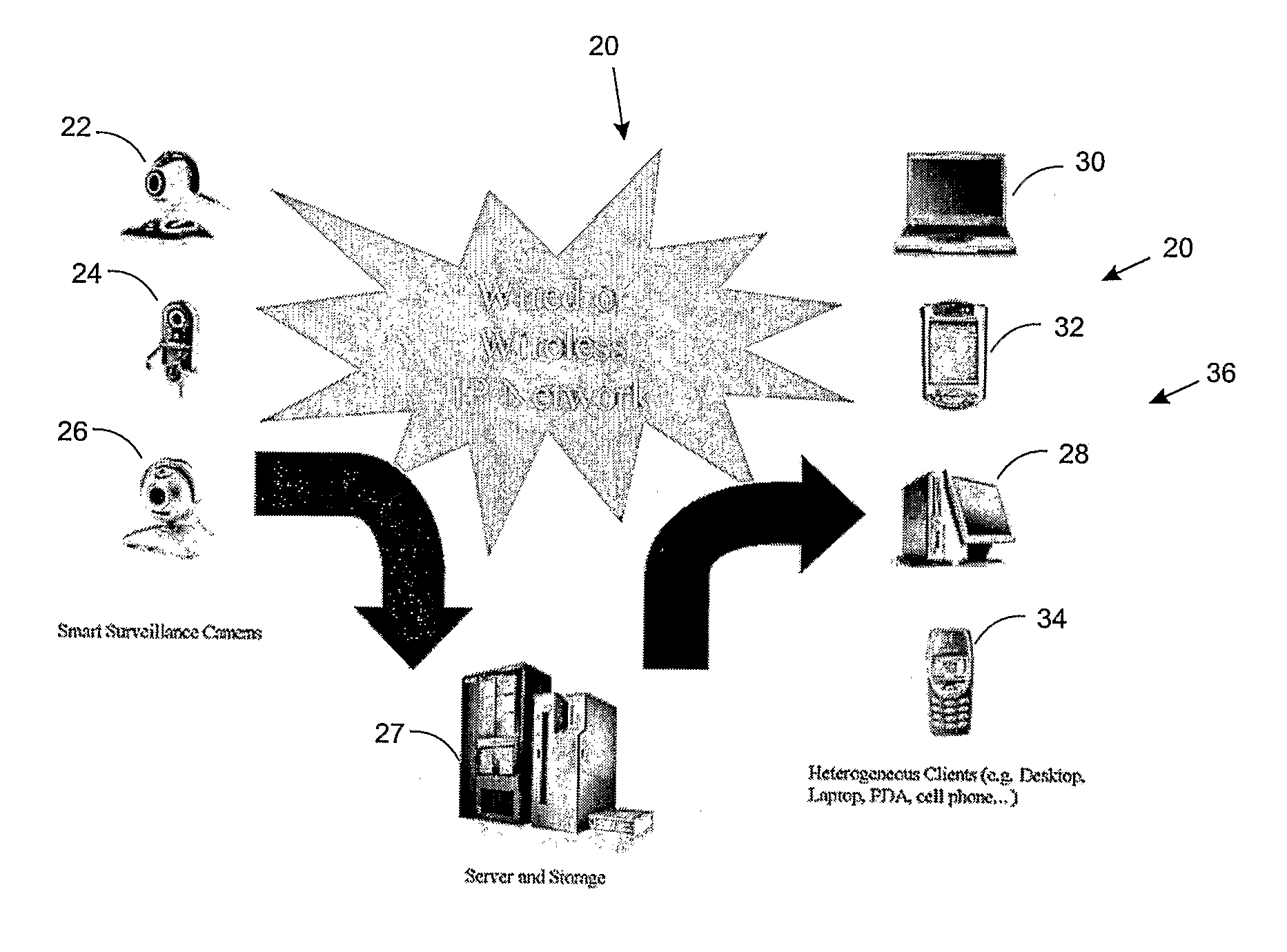

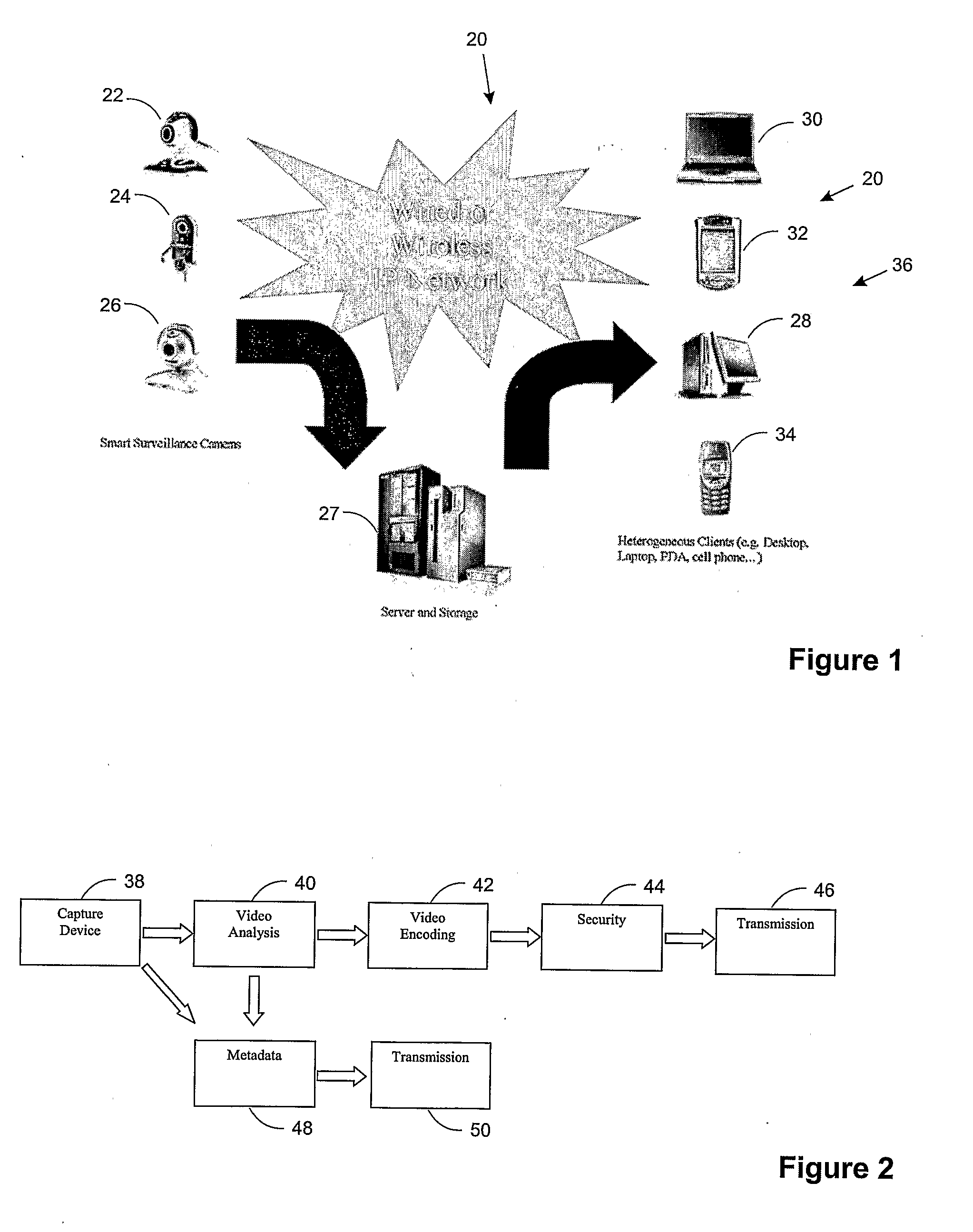

Inactive | US20070296817A1 | Closed circuit television systems; Burglar alarm; Computer hardware; Video monitoring

This invention describes a video surveillance system composed of three key components: (1) smart camera(s), (2) server(s), and (3) client(s), connected through IP networks in wired or wireless configurations. The system has been designed to protect the privacy of people and goods under surveillance. Smart cameras are based on JPEG 2000 compression, where an analysis module allows efficient use of security tools for scrambling and event detection. The analysis is also used to provide better quality in regions of interest in the scene. Compressed video streams leaving the camera(s) are scrambled and signed for privacy and data integrity verification using JPSEC-compliant methods. The same bitstream is also protected using JPWL-compliant methods for robustness to transmission errors. The operation of the smart camera is optimized to provide the best compromise between the perceived visual quality of the decoded video and power consumption. The smart camera(s) can be wireless in both power and communication connections. The server(s) receive, store, manage, and dispatch the video sequences over wired and wireless channels to a variety of clients and users with different device capabilities, channel characteristics, and preferences. Use of seamless scalable coding of video sequences eliminates any need for transcoding at any point in the system.

Owner:EMITALL SURVEILLANCE

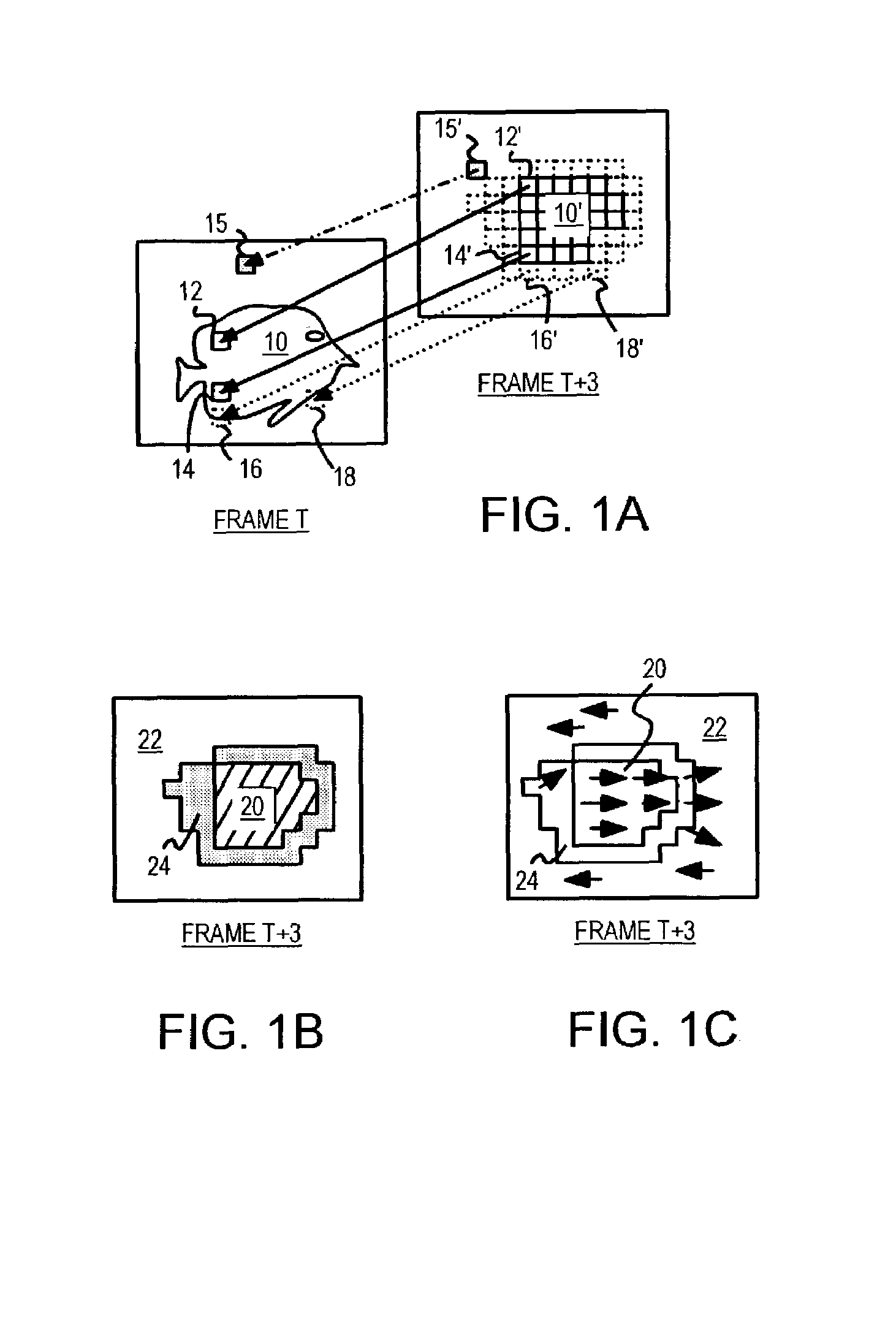

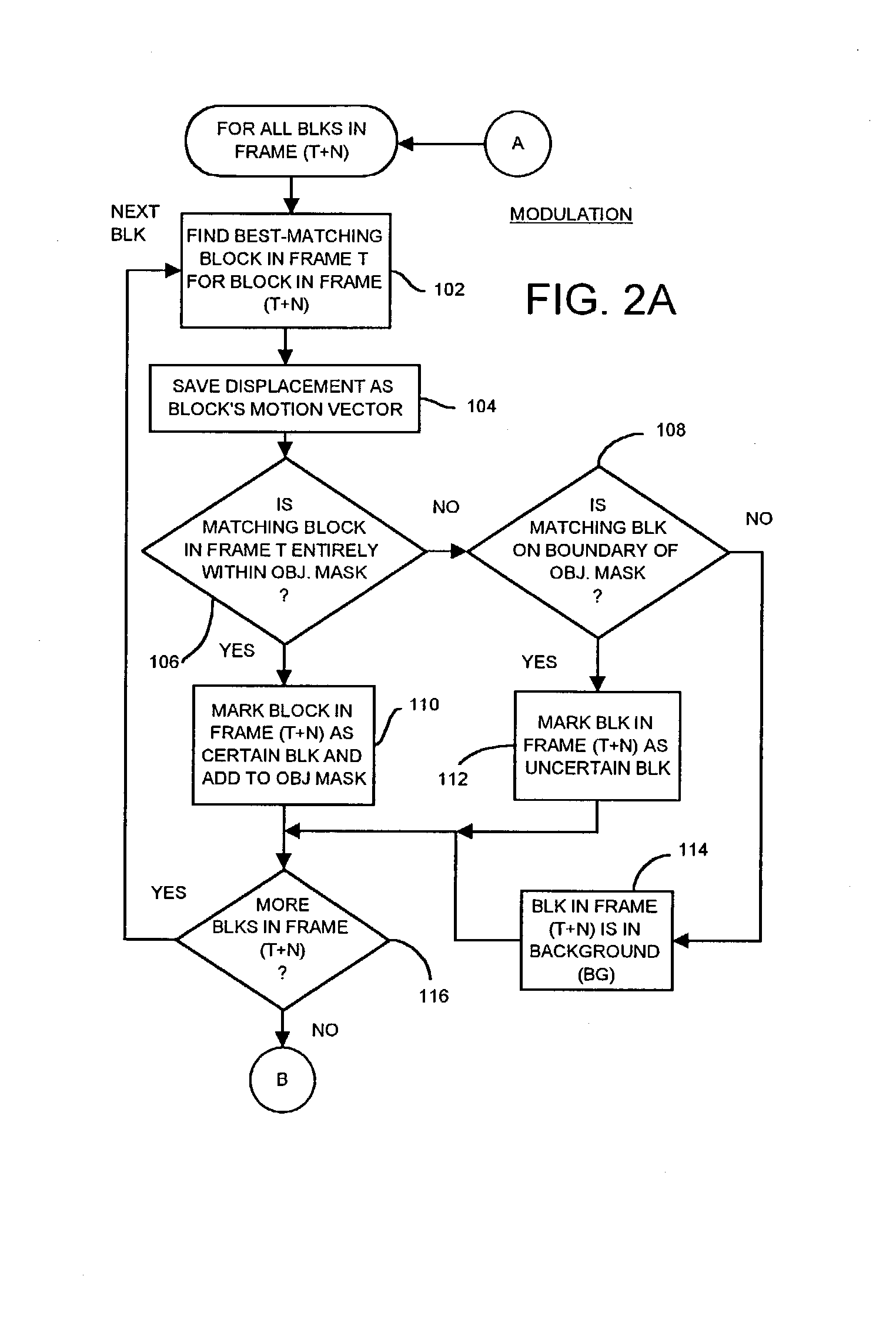

Occlusion/disocclusion detection using K-means clustering near object boundary with comparison of average motion of clusters to object and background motions

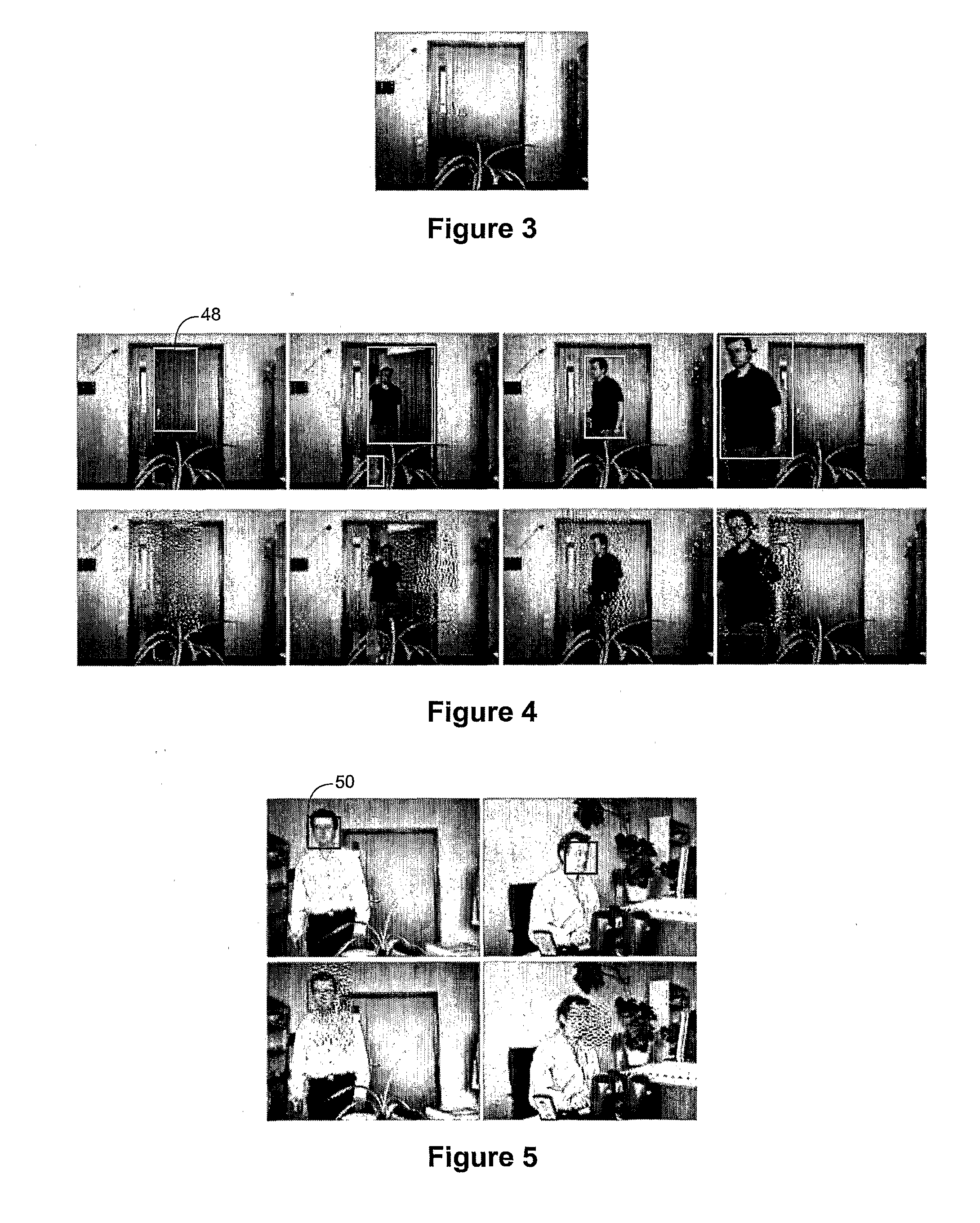

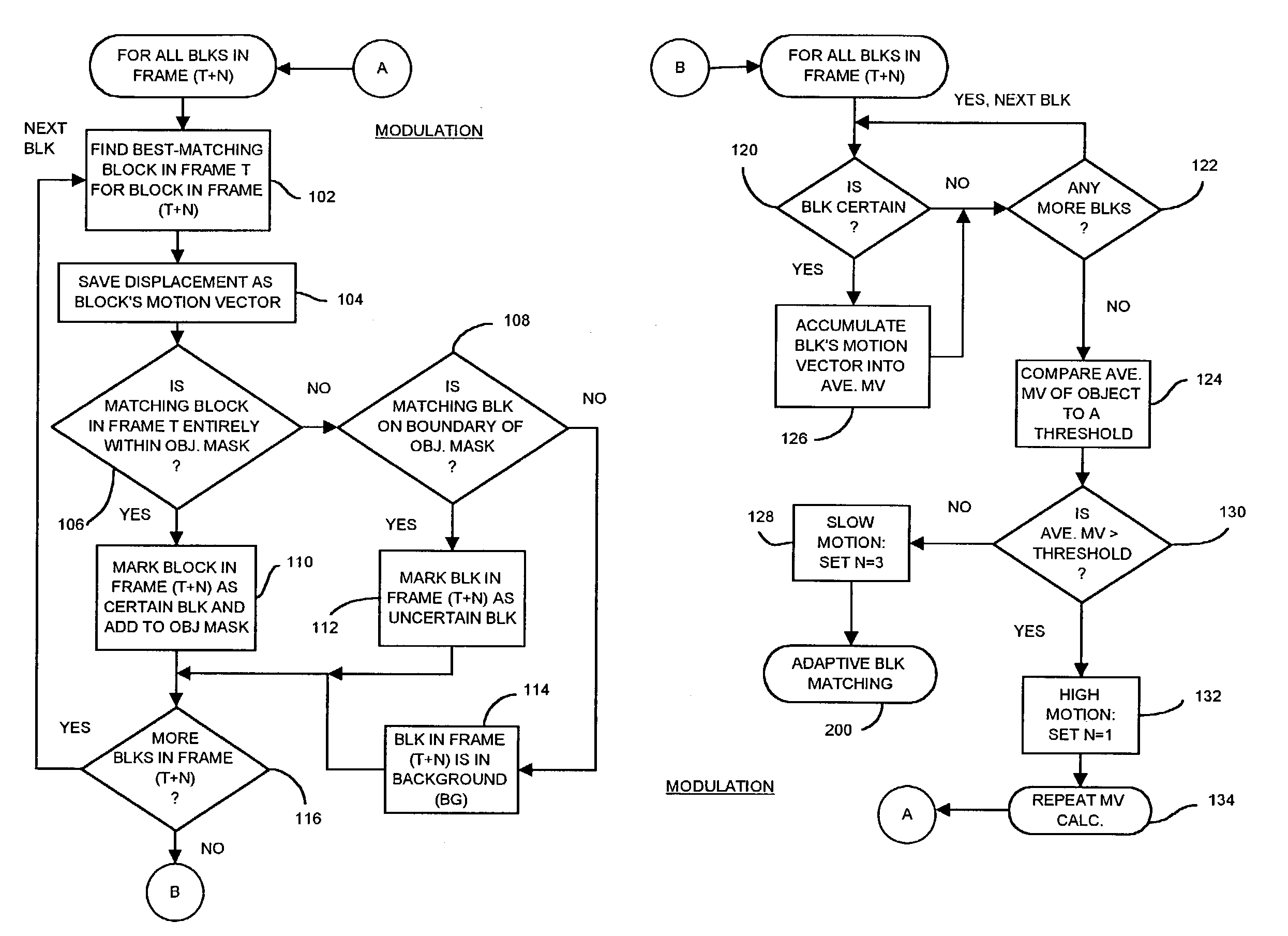

Active | US7142600B1 | Reduce variance; Accurate representation; Color television with pulse code modulation; Image analysis; Motion vector; Video sequence

An object in a video sequence is tracked by object masks generated for frames in the sequence. Macroblocks are motion compensated to predict the new object mask. Large differences between the next frame and the current frame detect suspect regions that may be obscured in the next frame. The motion vectors in the object are clustered using a K-means algorithm. The cluster centroid motion vectors are compared to an average motion vector of each suspect region. When the motion differences are small, the suspect region is considered part of the object and removed from the object mask as an occlusion. Large differences between the prior frame and the current frame detect suspected newly-uncovered regions. The average motion vector of each suspect region is compared to cluster centroid motion vectors. When the motion differences are small, the suspect region is added to the object mask as a disocclusion.

Owner:INTELLECTUAL VENTURES I LLC
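The clustering and comparison steps can be sketched with a plain k-means over 2D motion vectors. The initialization (first `k` vectors), iteration count, Euclidean distance, and threshold are assumptions made for illustration, and the helper names are hypothetical. The final test only reports whether a suspect region's average motion is close to some object cluster centroid; per the abstract, that result then decides whether the region is removed from the mask (occlusion) or added to it (disocclusion).

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


def kmeans(vectors: List[Vec], k: int, iterations: int = 10) -> List[Vec]:
    """Cluster object motion vectors; returns the k centroid motion vectors."""
    centroids = [tuple(v) for v in vectors[:k]]  # deterministic init (assumption)
    for _ in range(iterations):
        clusters: List[List[Vec]] = [[] for _ in range(k)]
        for v in vectors:
            nearest = min(range(k), key=lambda i: math.dist(v, centroids[i]))
            clusters[nearest].append(v)
        # Move each centroid to the mean of its cluster; keep it if the cluster is empty.
        centroids = [
            (sum(x for x, _ in c) / len(c), sum(y for _, y in c) / len(c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids


def matches_object_motion(region_vectors: List[Vec], centroids: List[Vec],
                          threshold: float = 1.0) -> bool:
    """True if the region's average motion vector is close to some cluster centroid."""
    n = len(region_vectors)
    avg = (sum(x for x, _ in region_vectors) / n, sum(y for _, y in region_vectors) / n)
    return min(math.dist(avg, c) for c in centroids) < threshold
```

Clustering matters because an object's parts may move differently (for example, a torso and a swinging arm), so comparing against several centroids is more tolerant than comparing against one average object motion.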

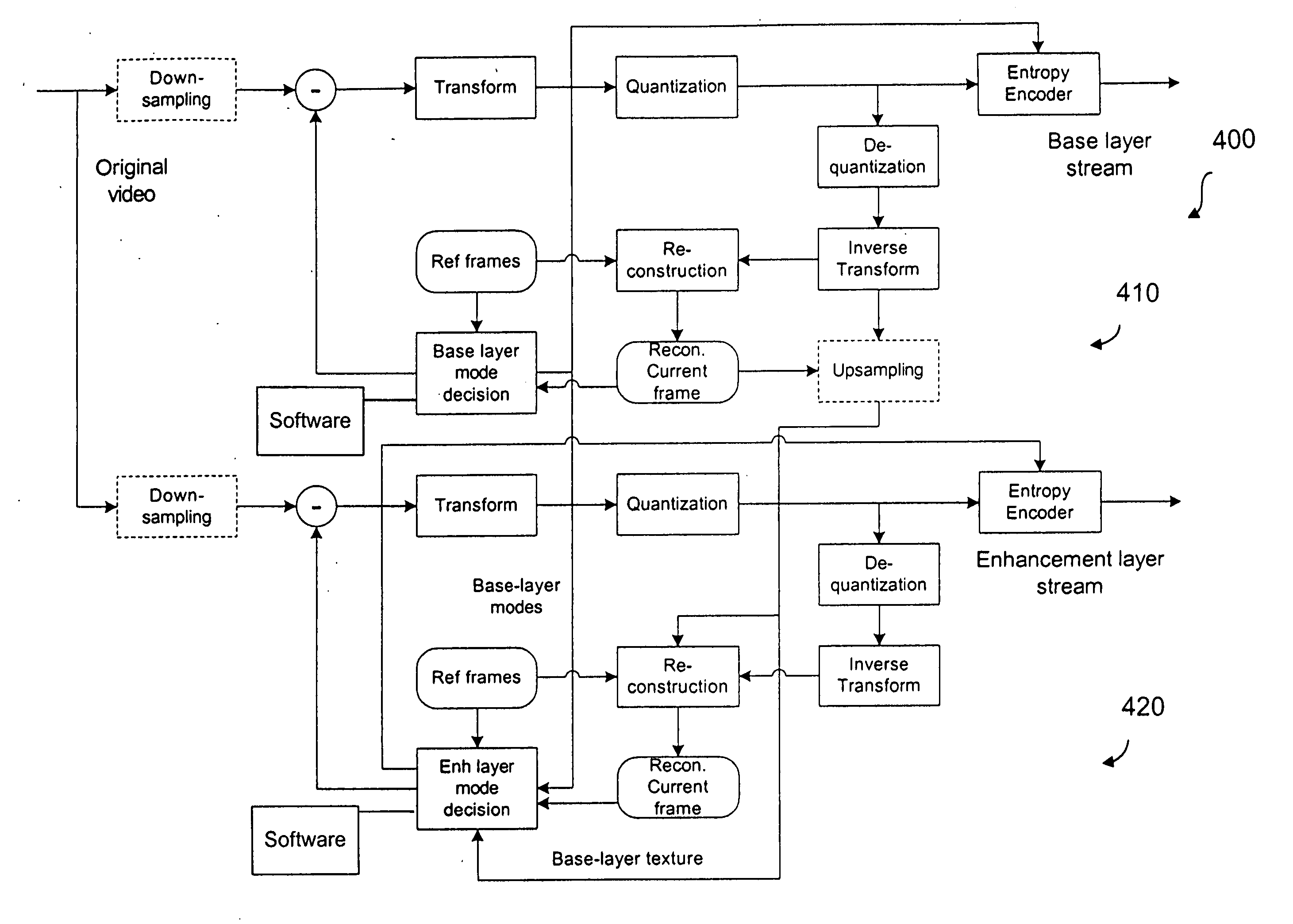

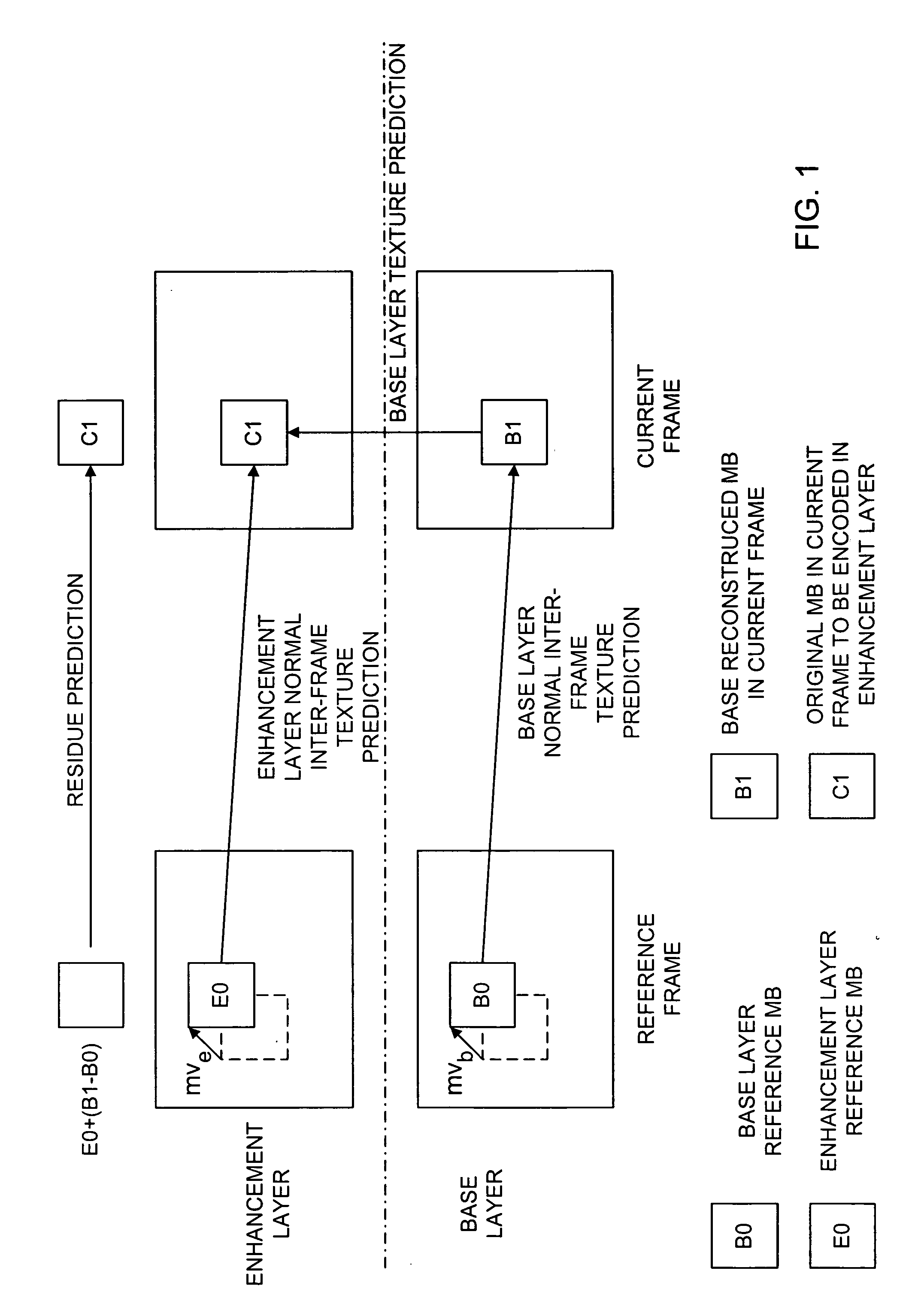

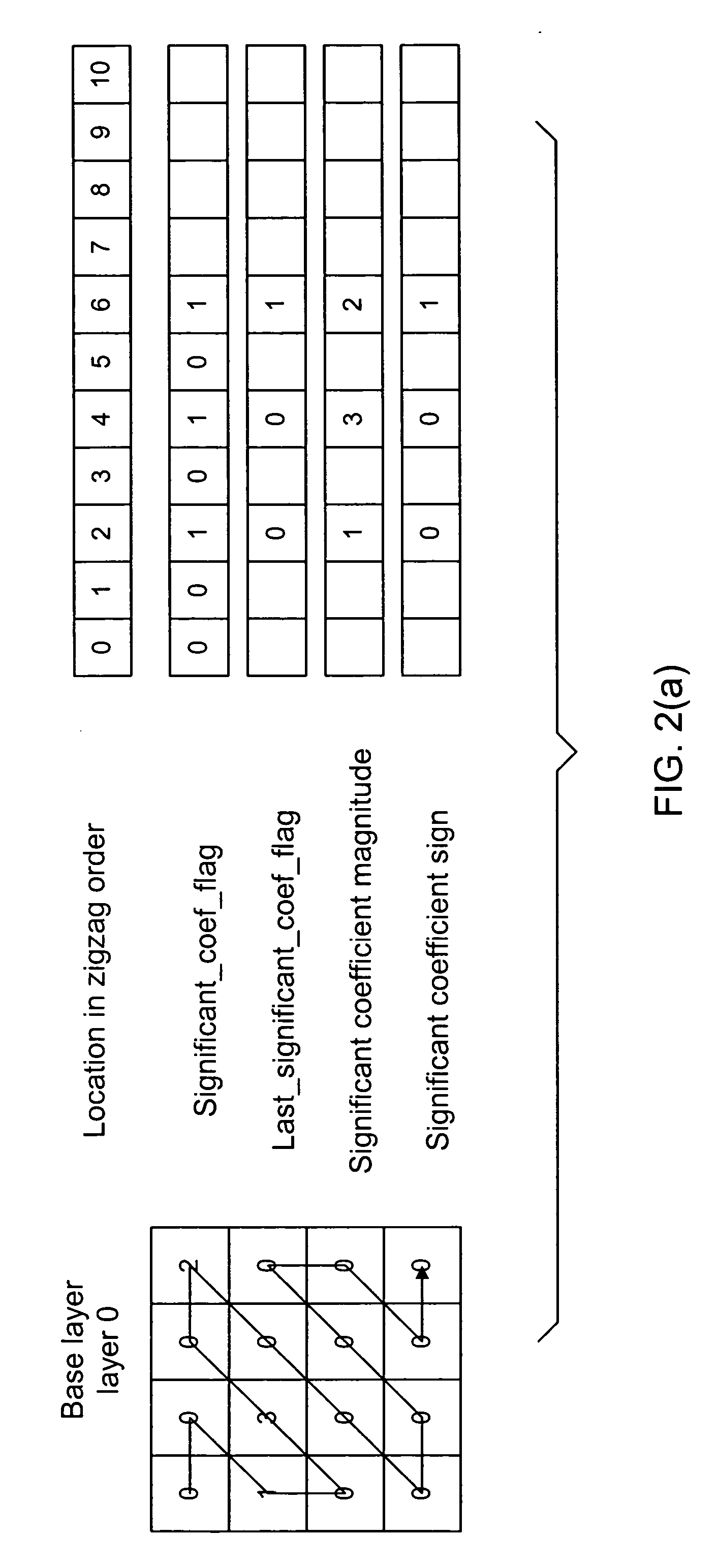

Inter-layer coefficient coding for scalable video coding

Inactive | US20060153294A1 | Pixel predictor; Improve the overall coefficient; Color television with pulse code modulation; Color television with bandwidth reduction; Inter layer; Computer architecture

A scalable video coding method and apparatus for coding a video sequence, wherein each coefficient in the enhancement layer is classified as belonging to a significance pass when the corresponding coefficient in the base layer is zero, and as belonging to a refinement pass when the corresponding coefficient in the base layer is non-zero. For coefficients classified as belonging to the significance pass, an indication is coded of whether the coefficient is zero or non-zero, and, if the coefficient is non-zero, an indication of its sign is coded. A last_significant_coeff_flag is used to indicate that coding of the remaining coefficients in the scanning order can be skipped. For coefficients classified as belonging to the refinement pass, a value that refines the magnitude of the corresponding base-layer coefficient is coded, and, if the coefficient is non-zero, a sign bit may be coded.

Owner:NOKIA CORP
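The pass classification and the significance-pass symbols can be sketched as follows. The symbol tuples `(is_nonzero, sign, is_last)` are a hypothetical representation rather than the actual bitstream syntax, and entropy coding is omitted. The `is_last` value plays the role of `last_significant_coeff_flag`: once it is set, the remaining significance-pass coefficients in scan order are not coded.

```python
from typing import List, Optional, Tuple

Coeff = Tuple[int, int]  # (scan position, enhancement-layer value)


def classify_passes(base_coeffs: List[int], enh_coeffs: List[int]) -> Tuple[List[Coeff], List[Coeff]]:
    """Split enhancement coefficients by whether the co-located base coefficient is zero."""
    significance: List[Coeff] = []
    refinement: List[Coeff] = []
    for pos, (b, e) in enumerate(zip(base_coeffs, enh_coeffs)):
        (significance if b == 0 else refinement).append((pos, e))
    return significance, refinement


def significance_symbols(significance: List[Coeff]) -> List[Tuple[int, Optional[int], Optional[bool]]]:
    """Emit (is_nonzero, sign, is_last) per coefficient, stopping after the last non-zero one."""
    last = max((i for i, (_, e) in enumerate(significance) if e != 0), default=-1)
    symbols = []
    for i, (_, e) in enumerate(significance[: last + 1]):
        sign = None if e == 0 else (0 if e > 0 else 1)  # sign coded only for non-zero values
        is_last = (i == last) if e != 0 else None        # flag sent after each non-zero value
        symbols.append((int(e != 0), sign, is_last))
    return symbols
```

For base coefficients `[5, 0, 0, 3, 0, 0]` and enhancement coefficients `[1, 0, -2, 1, 0, 0]`, positions 0 and 3 go to the refinement pass, and the significance pass codes only two symbols before the last-coefficient flag ends it.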

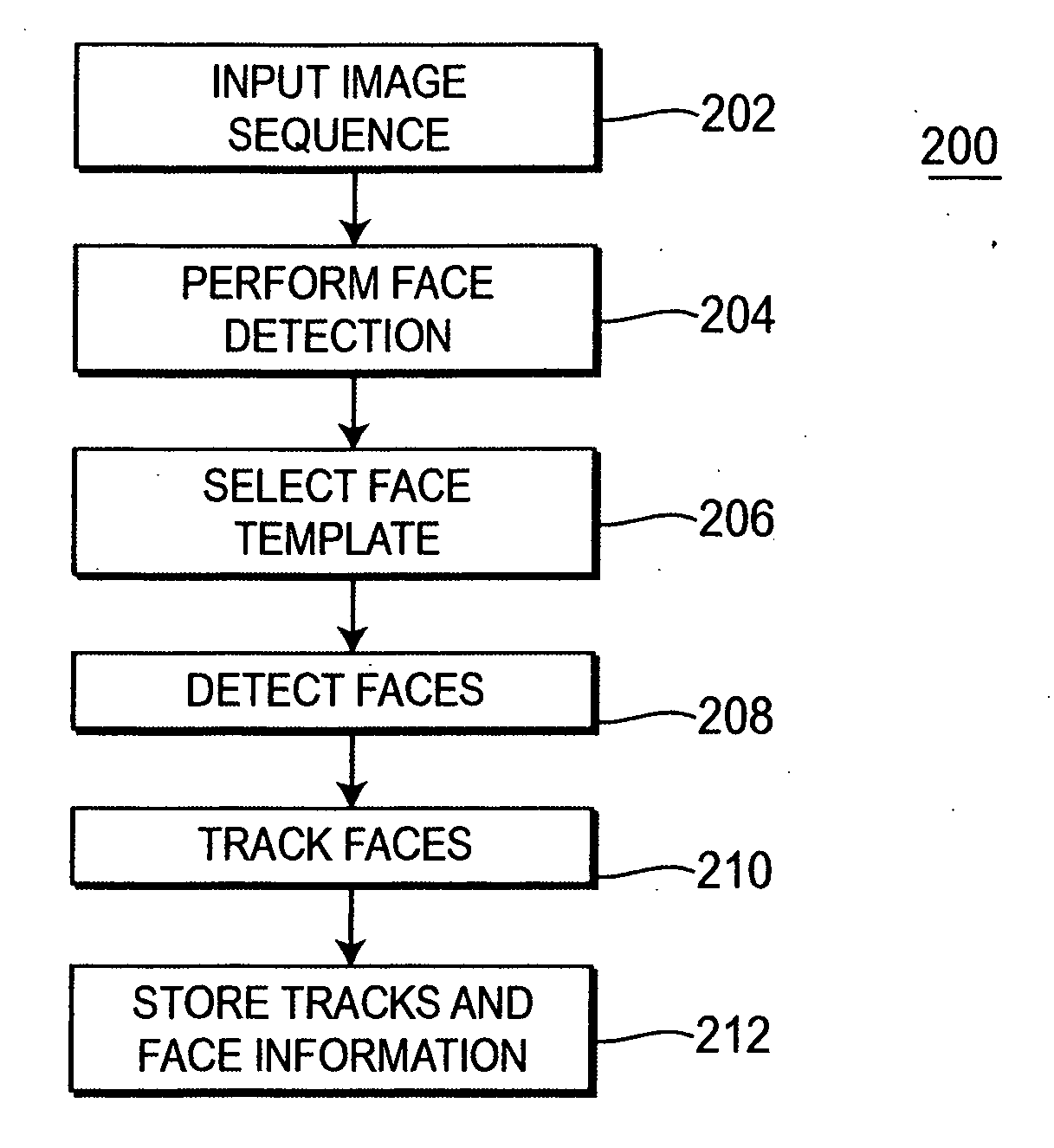

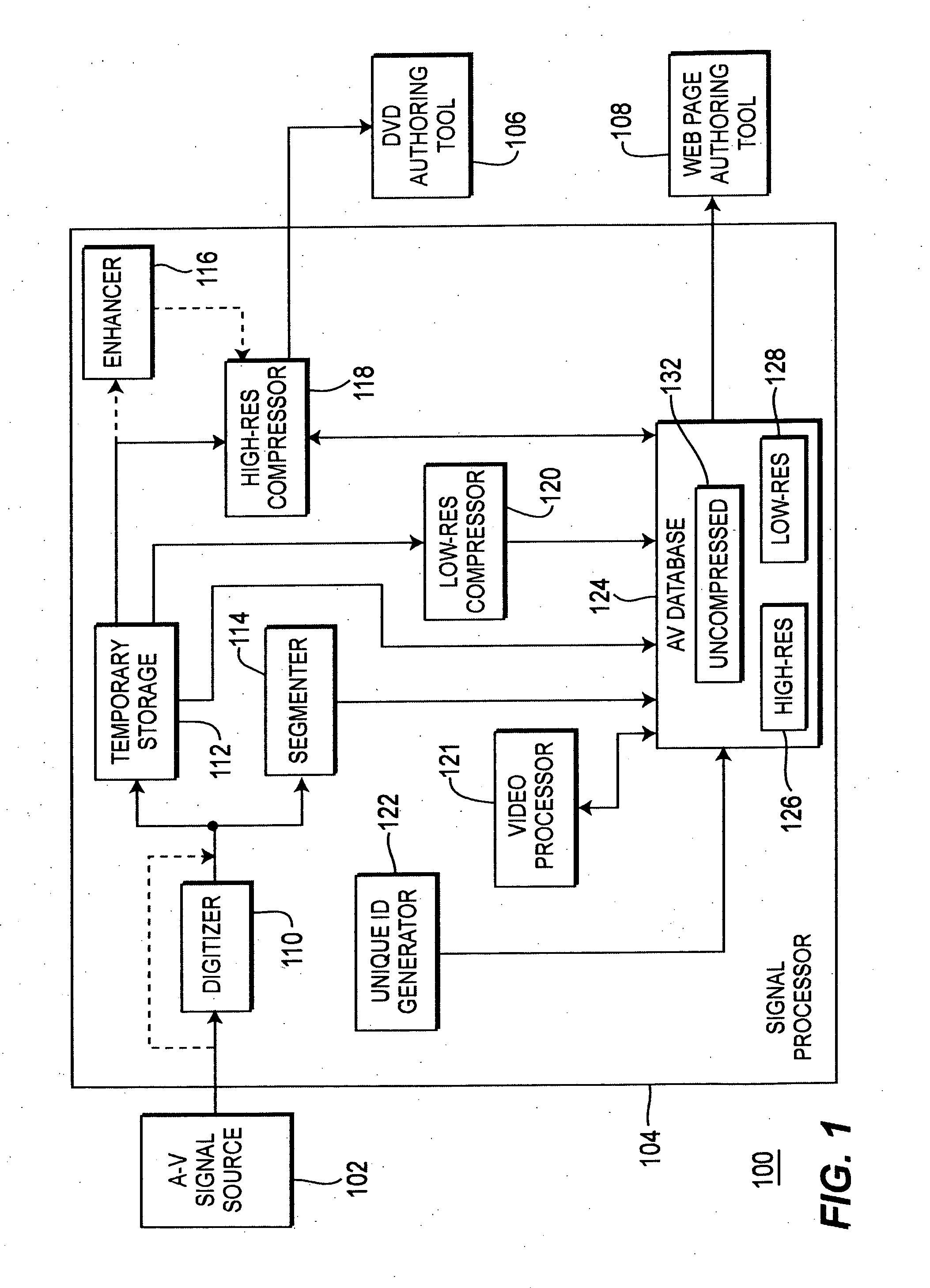

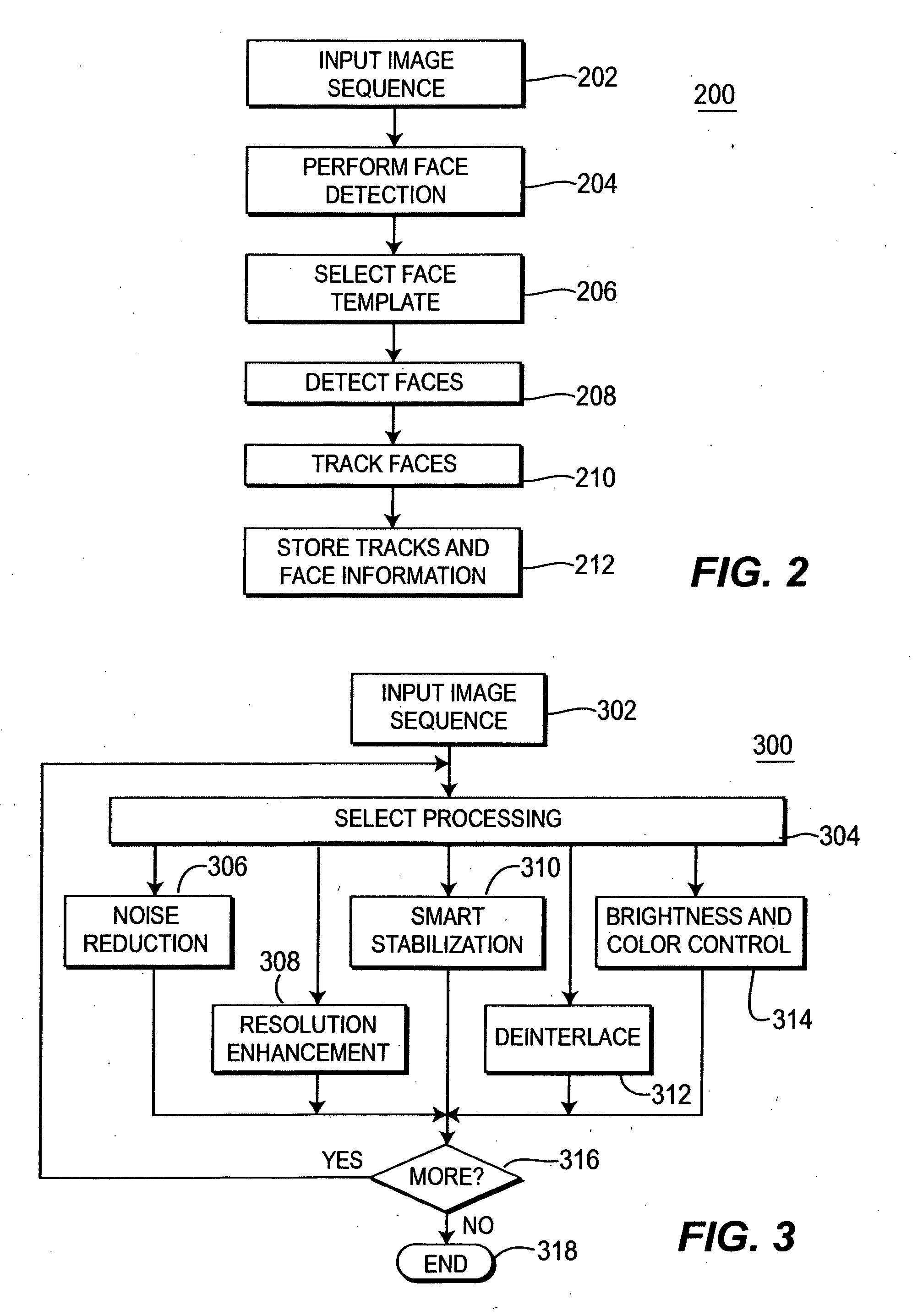

Method and apparatus for enhancing and indexing video and audio signals

Inactive | US20060008152A1 | Enhanced digital signal; High resolution; Television system details; Record information storage; Pattern recognition; Face detection

A method and apparatus for processing a video sequence is disclosed. The apparatus may include a video processor for detecting and tracking at least one identifiable face in a video sequence. The method may include performing face detection of at least one identifiable face, selecting a face template including face features used to represent the at least one identifiable face, processing the video sequence to detect faces similar to the at least one identifiable face, and tracking the at least one identifiable face in the video sequence.

Owner:KUMAR RAKESH +2

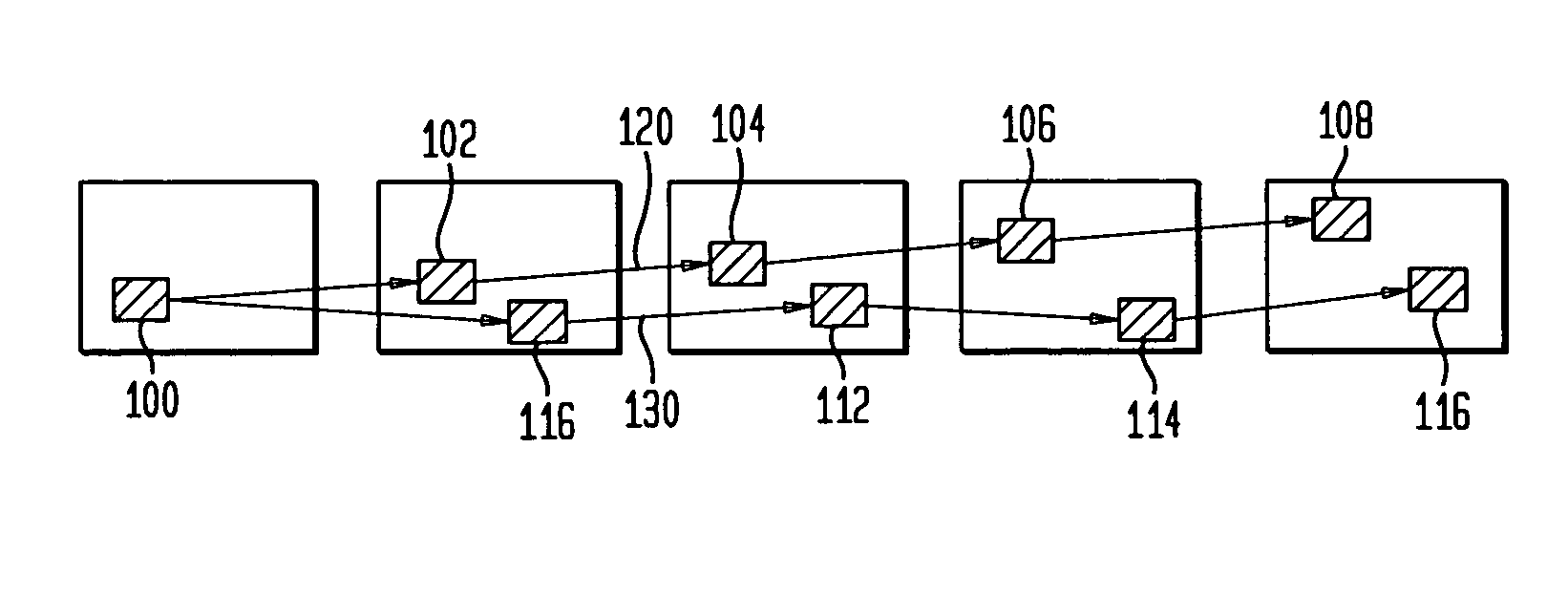

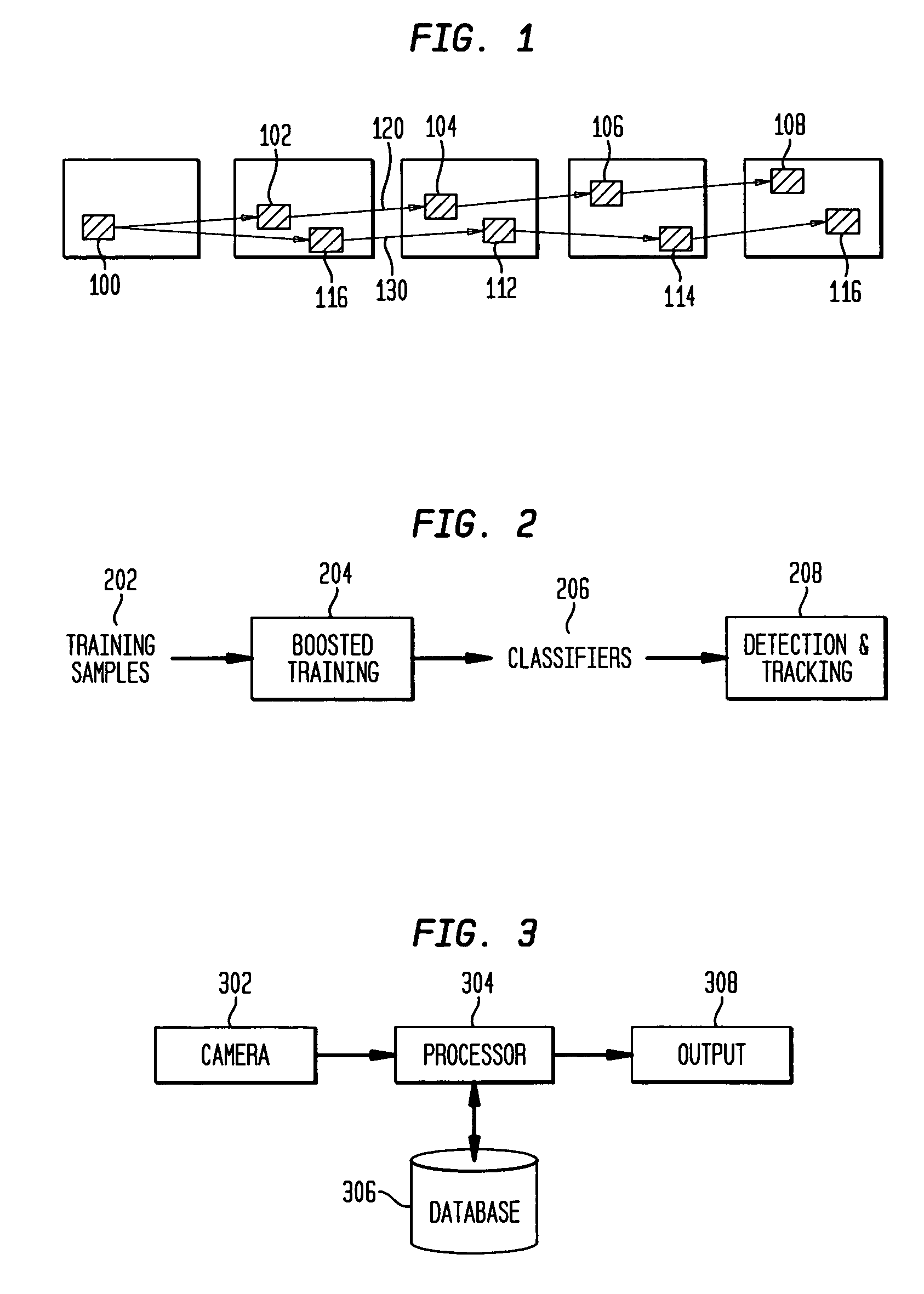

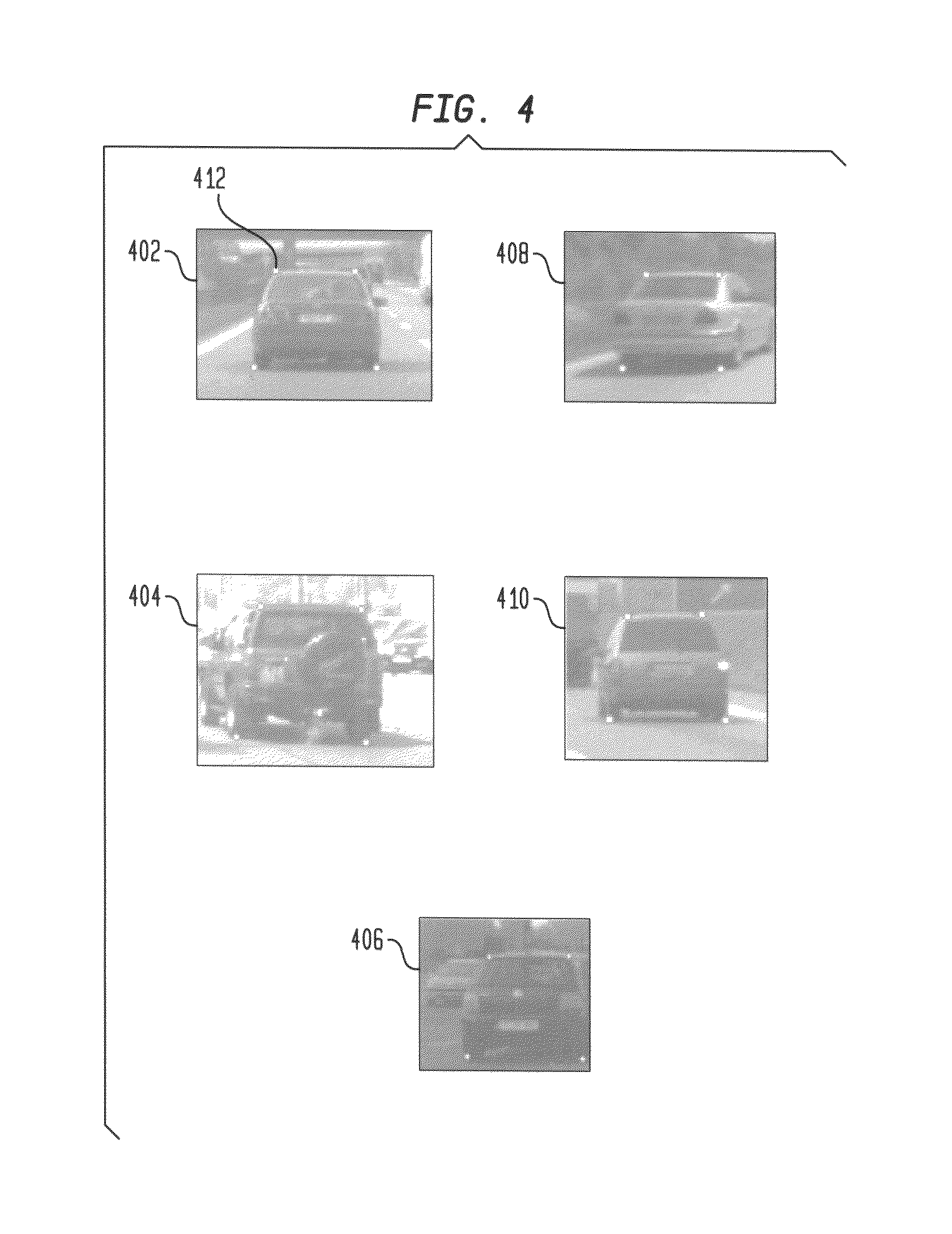

System and method for vehicle detection and tracking

A system and method for detecting and tracking an object is disclosed. A camera captures a video sequence comprised of a plurality of image frames. A processor receives the video sequence and analyzes each image frame to determine if an object is detected. The processor applies one or more classifiers to an object in each image frame and computes a confidence score based on the application of the one or more classifiers to the object. A database stores the one or more classifiers and vehicle training samples. A display displays the video sequence.

Owner:CONTINENTAL AUTOMOTIVE GMBH

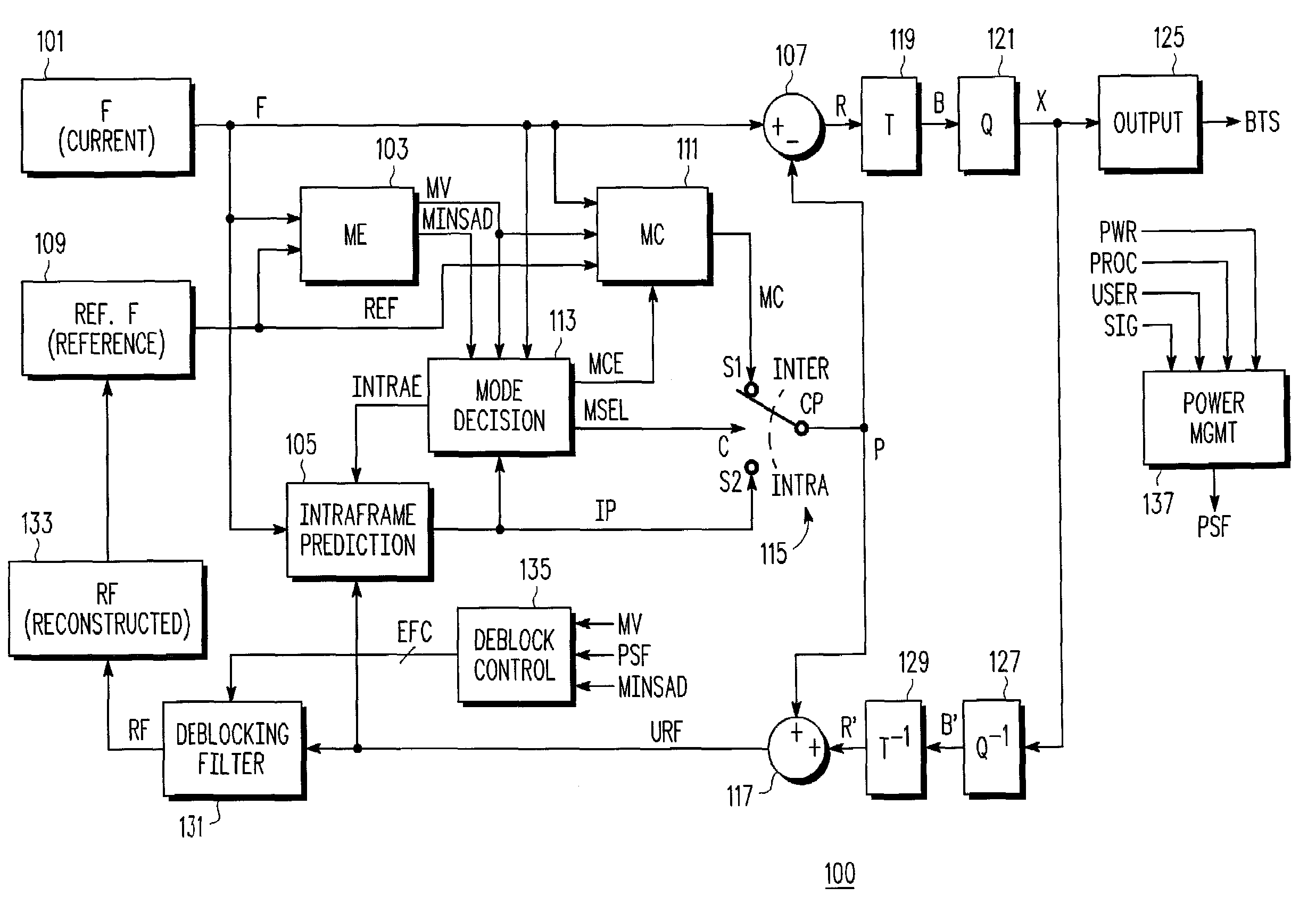

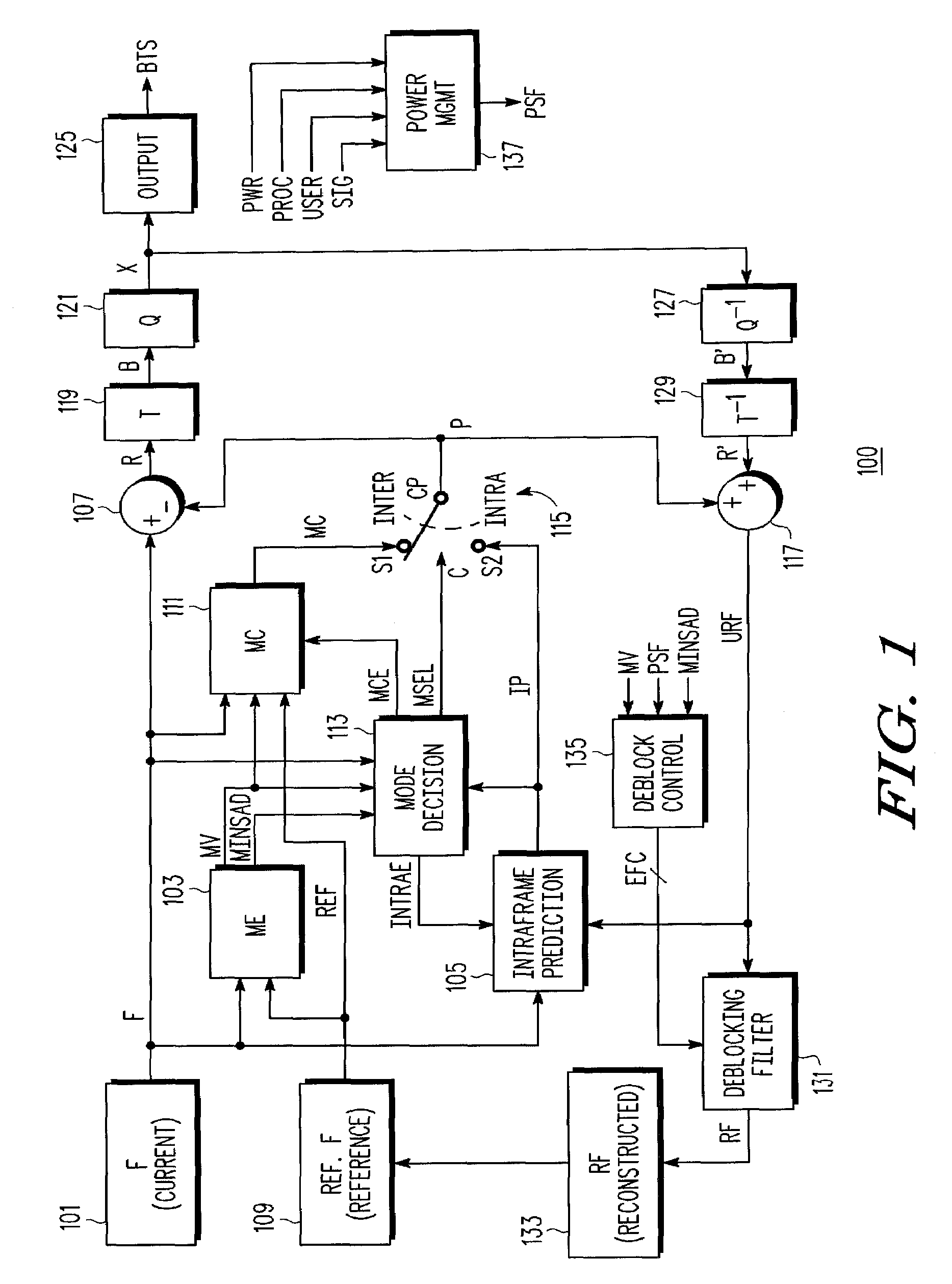

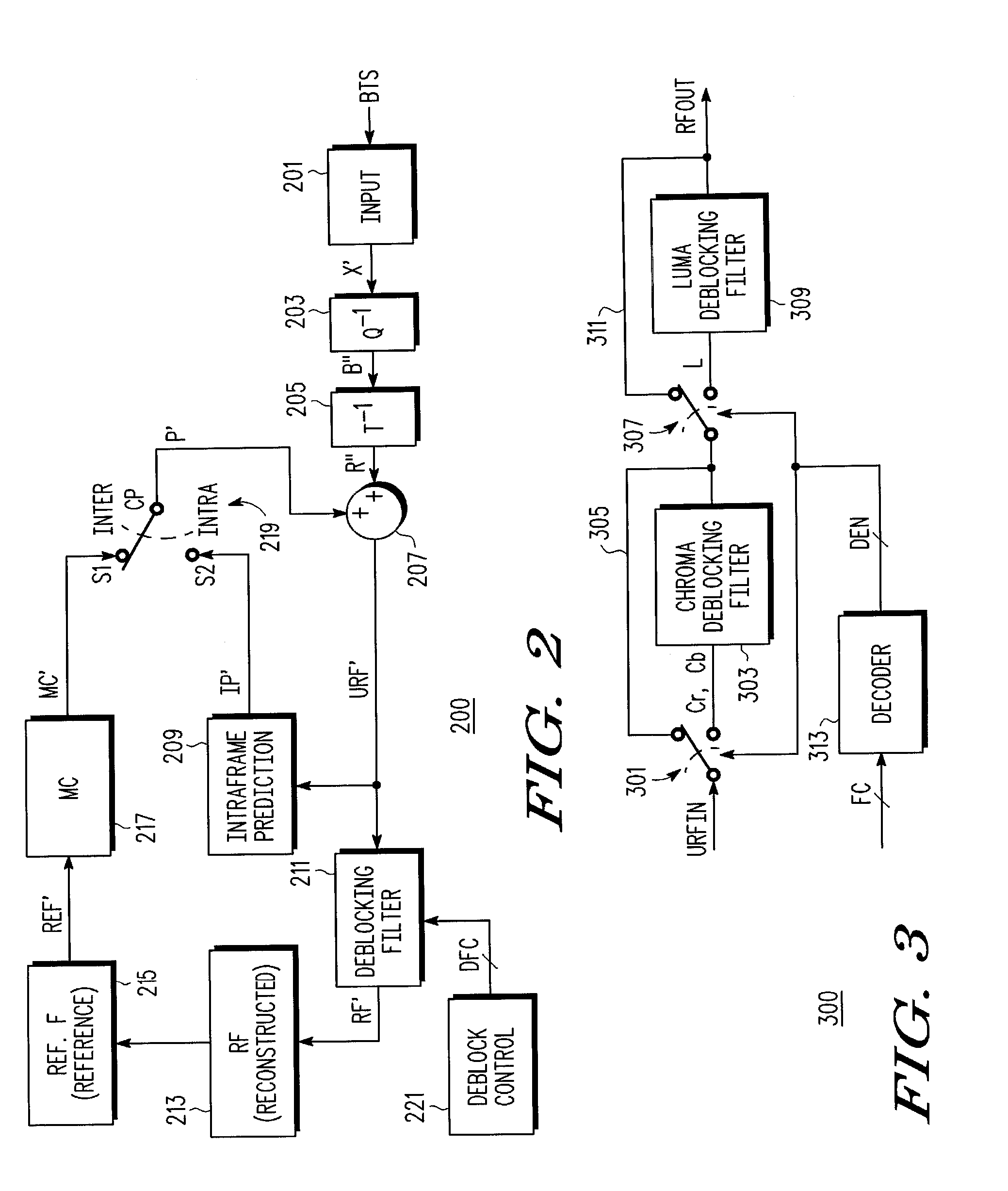

System and method of determining deblocking control flag of scalable video system for indicating presentation of deblocking parameters for multiple layers

Active | US20080137753A1 | Color television with pulse code modulation; Pulse modulation television signal transmission; Video sequence; Control circuit

A method of generating a video sequence including setting a state of a deblocking control flag in a frame header of a frame to indicate that a deblocking parameter is presented for some but not all layers. A method of processing a received video sequence including determining a state of a deblocking control flag of a frame header and retrieving a deblocking parameter for some but not all layers. A scalable video system including a deblocking control circuit which sets a state of a deblocking control flag in a frame header to indicate that a deblocking parameter is presented for some but not all layers. A scalable video system including a deblocking control circuit which determines the state of a deblocking control flag in a frame header of a received video sequence and which retrieves a deblocking parameter for some but not all layers of the frame.

Owner:NXP USA INC

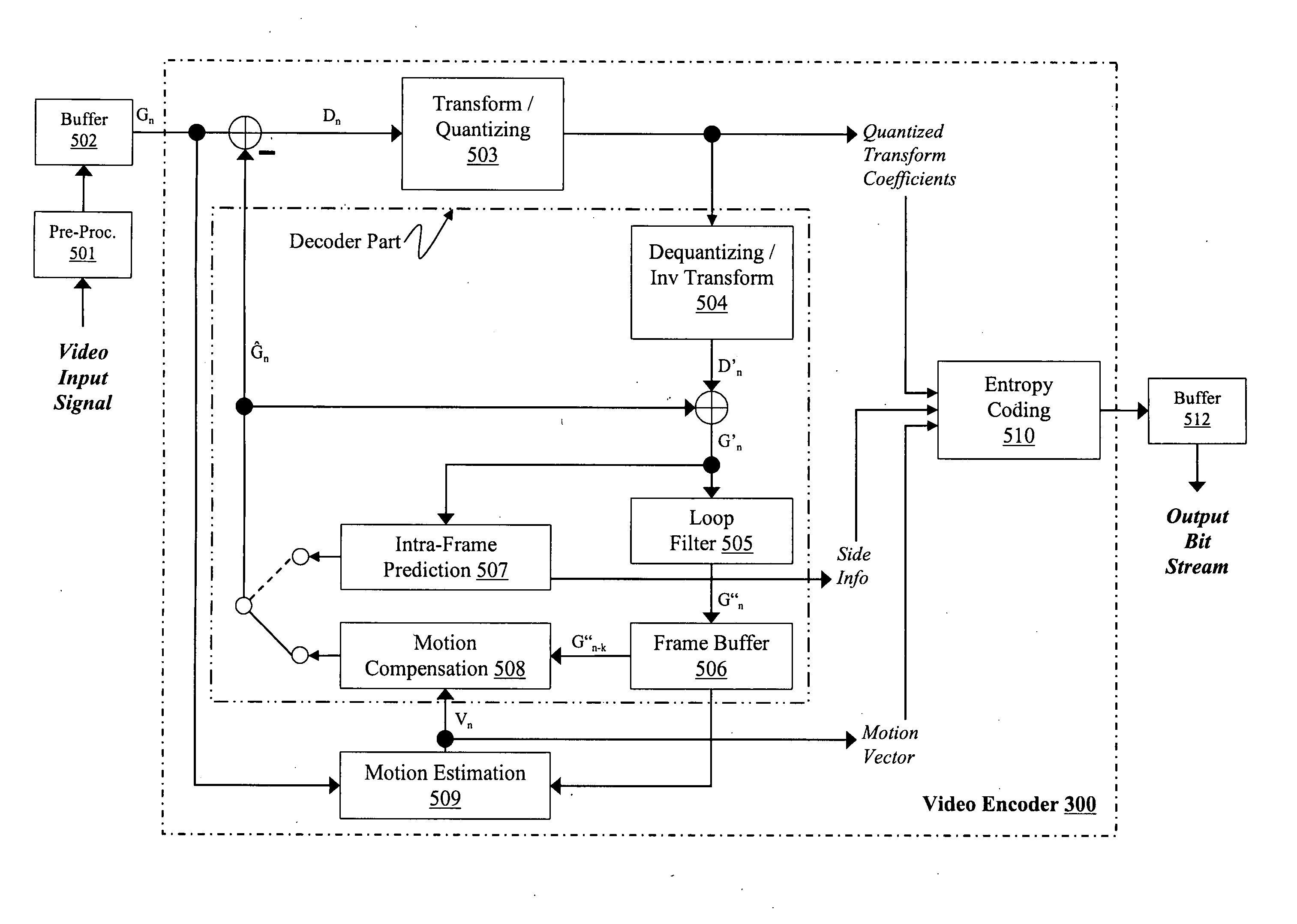

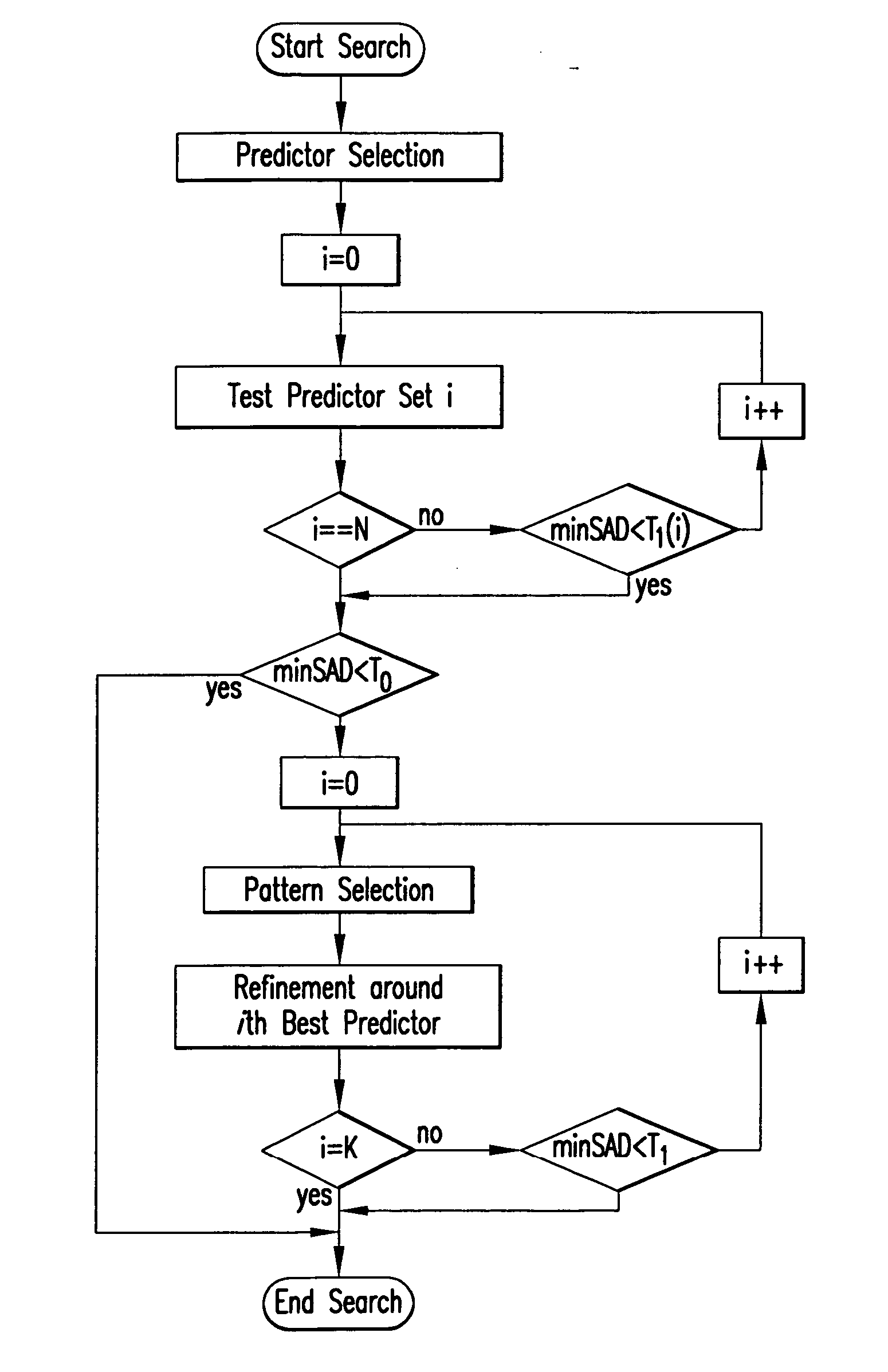

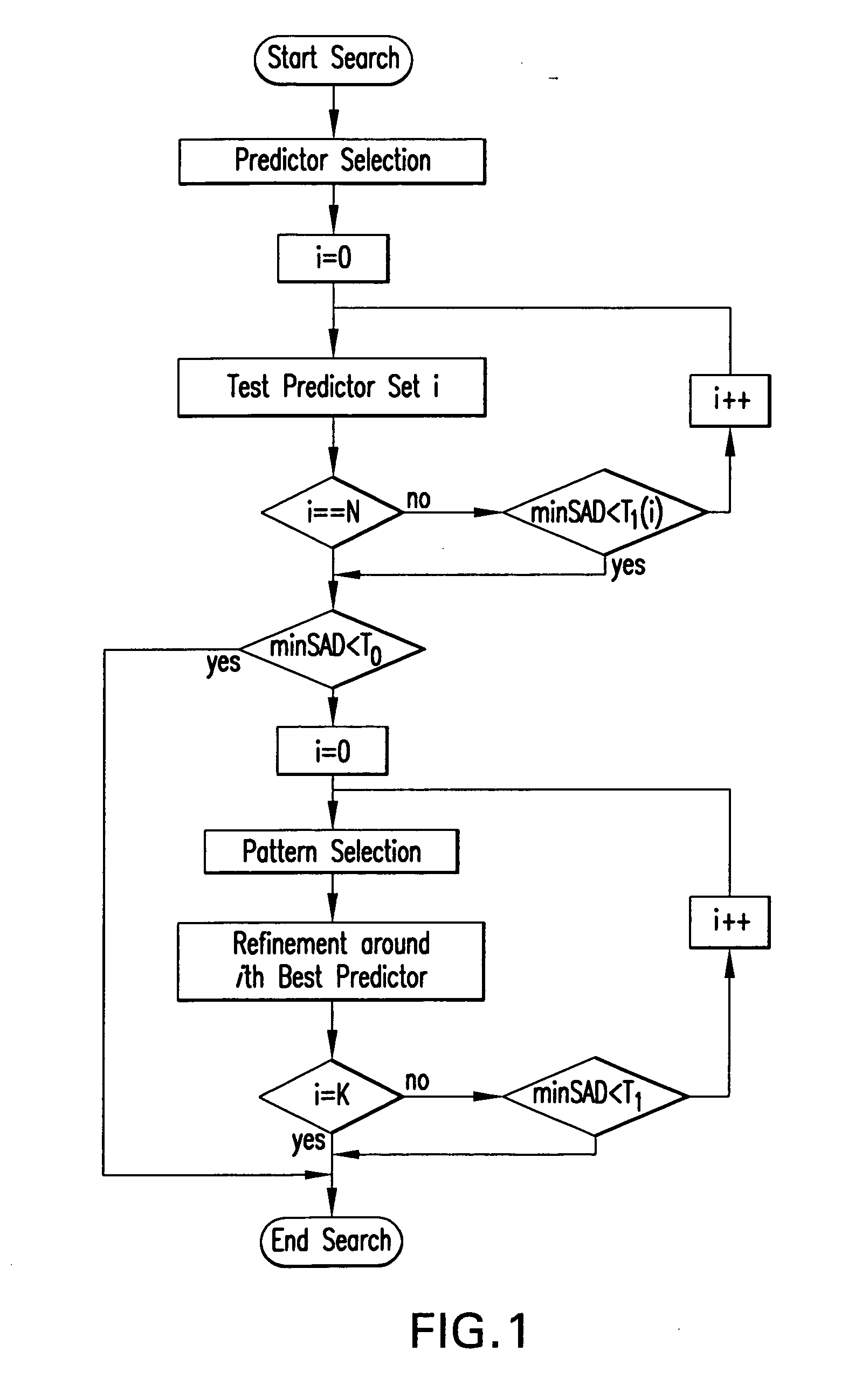

Device and method for fast block-matching motion estimation in video encoders

Inactive | US20060245497A1 | Reduce complexity; Quick estimate; Color television with pulse code modulation; Color television with bandwidth reduction; Motion vector; Video sequence

Motion estimation is the science of predicting the current frame in a video sequence from the past frame (or frames) by slicing it into rectangular blocks of pixels and matching these to similar blocks in the past. The displacement in the spatial position of a block in the current frame with respect to the past frame is called the motion vector. This method of temporally decorrelating the video sequence by finding the best-matching blocks in past reference frames, motion estimation, makes up about 80% or more of the computation in a video encoder. That is, it is enormously expensive, and efficient methods are in high demand. Thus the field of motion estimation within video coding is rich in the breadth and diversity of approaches that have been put forward. Yet it is often the simplest methods that are the most effective, and so it is in this case. While it is well known that a full search over all possible positions within a fixed window is optimal in terms of performance, it is generally computationally prohibitive. In this patent disclosure, we define an efficient new method of searching only a very sparse subset of possible displacement positions (or motion vectors) among all possible ones, to see whether a good enough match can be found, and terminating early. This sparse subset of motion vectors is preselected, using a priori knowledge and extensive testing on video sequences, so that these “predictors” for the motion vector are unusually effective. The art of this method lies in the preselection of excellent sparse subsets of vectors, the smart thresholds for acceptance or rejection, and even the order of testing prior to a decision.

Owner:FASTVDO
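A sketch of predictor-based block matching with early termination, using the sum of absolute differences (SAD) as the matching cost. The candidate list, its order, and the acceptance threshold are hypothetical placeholders; the disclosure's point is that these are preselected offline, and their actual values are not given in the abstract. Frames are lists of rows of pixel values.

```python
from typing import List, Optional, Sequence, Tuple

Frame = List[List[int]]


def sad(cur: Frame, ref: Frame, bx: int, by: int, dx: int, dy: int, size: int) -> int:
    """Sum of absolute differences between a current block and a displaced reference block."""
    total = 0
    for y in range(size):
        for x in range(size):
            total += abs(cur[by + y][bx + x] - ref[by + y + dy][bx + x + dx])
    return total


def predictive_search(cur: Frame, ref: Frame, bx: int, by: int, size: int,
                      candidates: Sequence[Tuple[int, int]],
                      accept_threshold: int) -> Tuple[Optional[Tuple[int, int]], float]:
    """Test a sparse, preselected set of motion vectors in order; stop at the first good match."""
    best_mv: Optional[Tuple[int, int]] = None
    best_cost = float("inf")
    h, w = len(ref), len(ref[0])
    for dx, dy in candidates:
        # Skip candidates whose reference block would fall outside the frame.
        if bx + dx < 0 or by + dy < 0 or bx + dx + size > w or by + dy + size > h:
            continue
        cost = sad(cur, ref, bx, by, dx, dy, size)
        if cost < best_cost:
            best_mv, best_cost = (dx, dy), cost
        if cost <= accept_threshold:
            break  # good enough: terminate early instead of searching further
    return best_mv, best_cost
```

If no candidate reaches the threshold, the best of the tested predictors is returned; a real encoder might then fall back to a wider search, which this sketch does not do.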

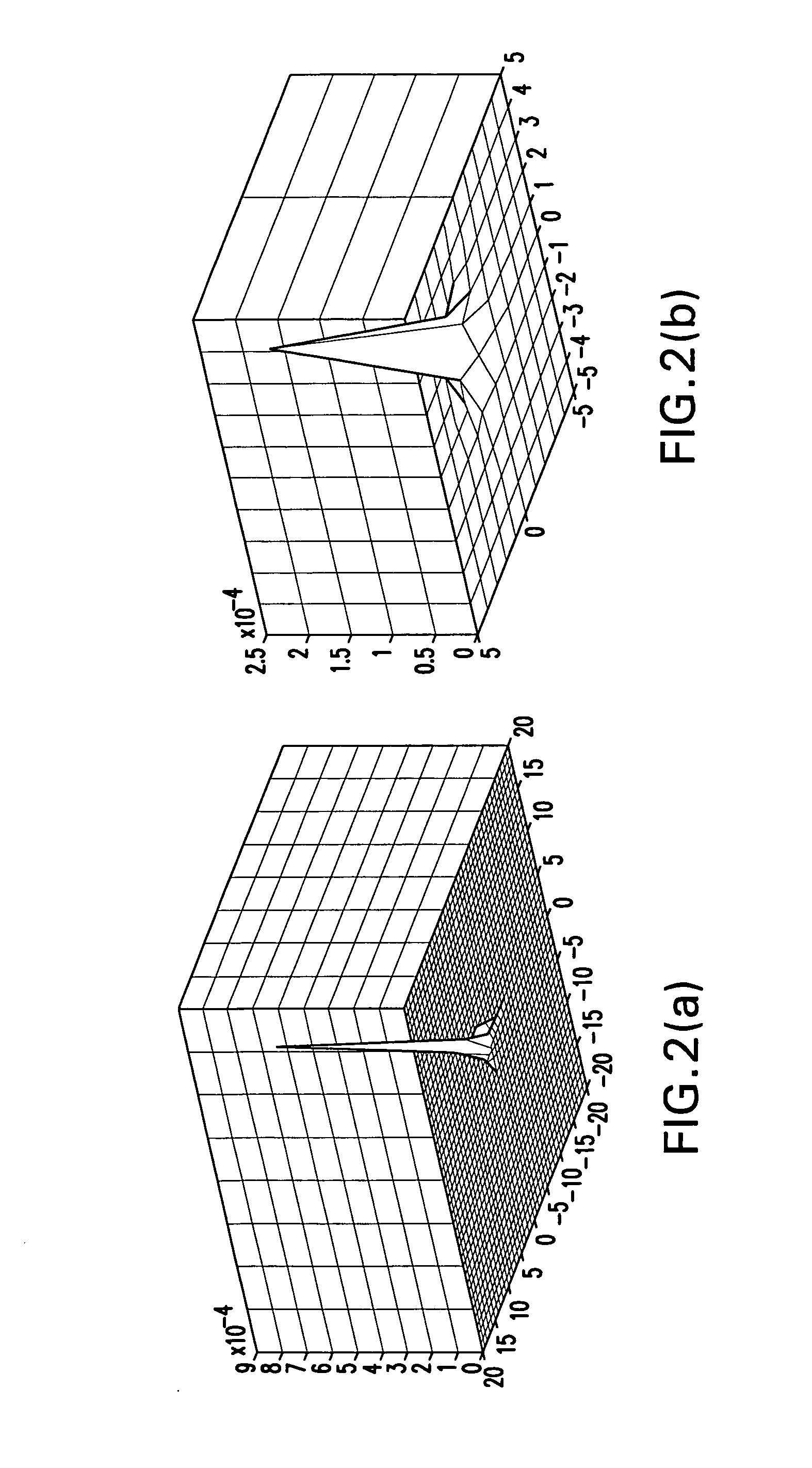

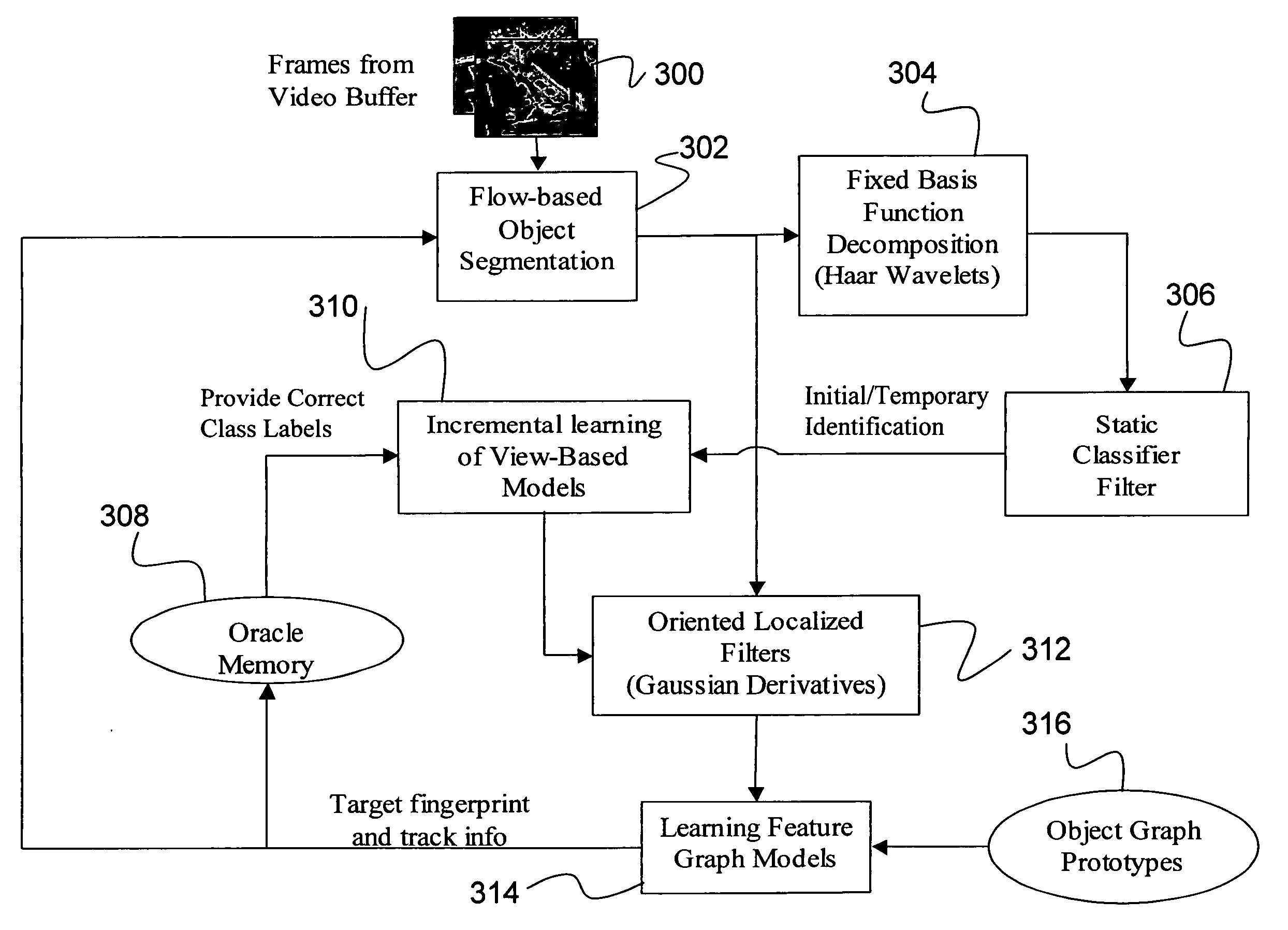

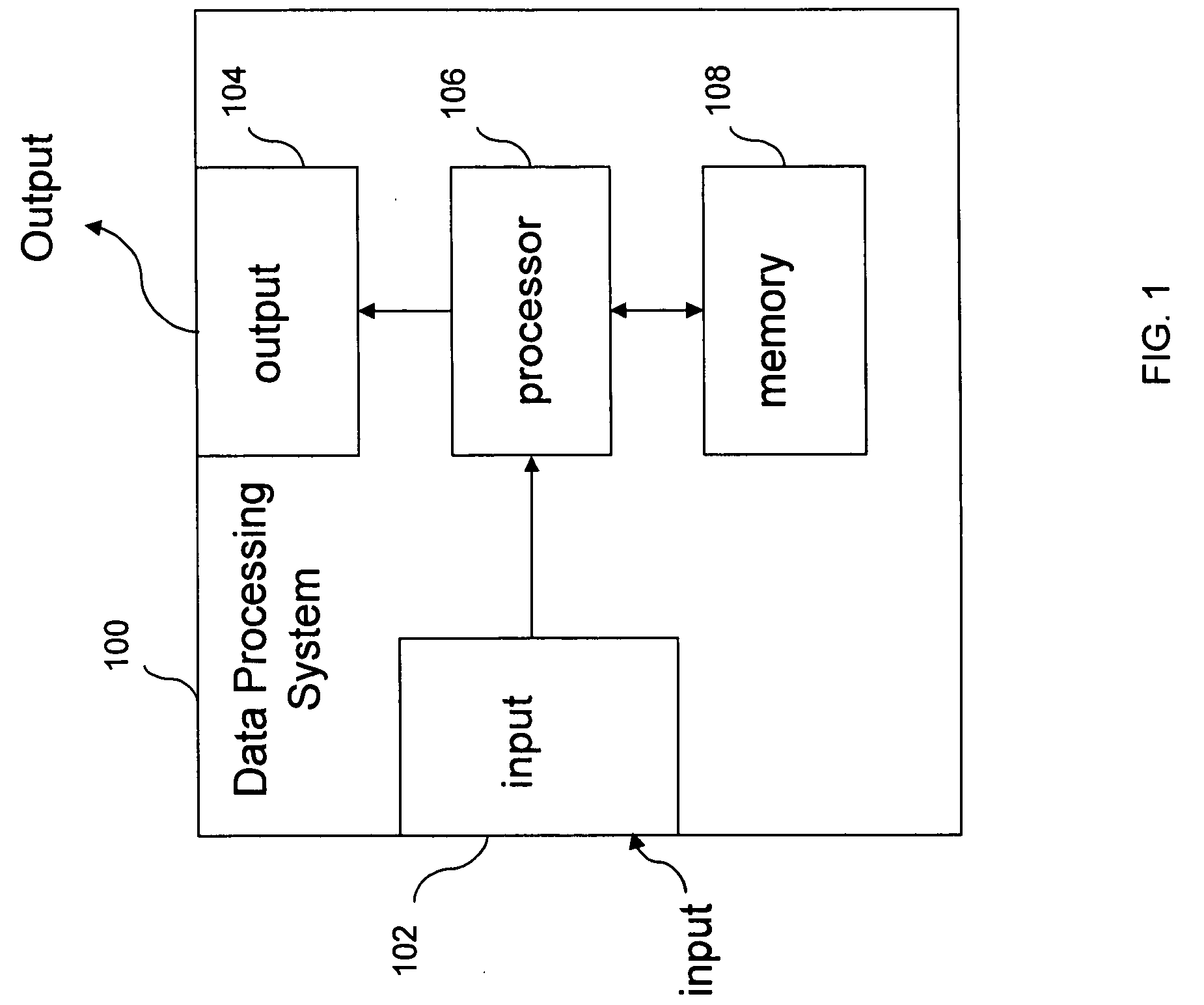

Active learning system for object fingerprinting

Described is an active learning system for fingerprinting an object identified in an image frame. The active learning system comprises a flow-based object segmentation module for segmenting a potential object candidate from a video sequence, a fixed-basis function decomposition module using Haar wavelets to extract a relevant feature set from the potential object candidate, a static classifier for initial classification of the potential object candidate, an incremental learning module for predicting a general class of the potential object candidate, an oriented localized filter module to extract features from the potential object candidate, and a learning-feature graph-fingerprinting module configured to receive the features and build a fingerprint of the object for tracking the object.

Owner:HRL LAB

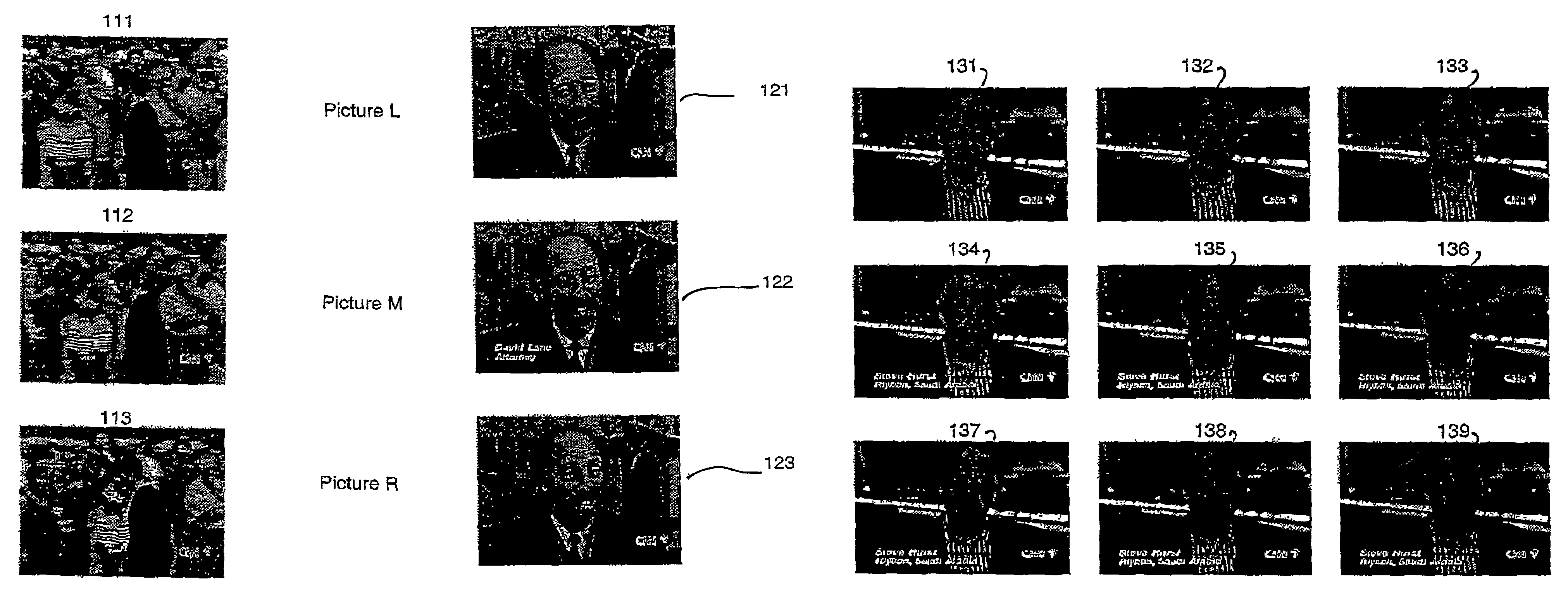

Method of selecting key-frames from a video sequence

Inactive | US7184100B1 | Reducing online storage requirement; Reduce search time; Television system details; Color signal processing circuits; Graphics; Video sequence

A method of selecting key-frames (230) from a video sequence (210, 215) by comparing each frame in the video sequence with respect to its preceding and subsequent key-frames for redundancy where the comparison involves region and motion analysis. The video sequence is optionally pre-processed to detect graphic overlay. The key-frame set is optionally post-processed (250) to optimize the resulting set for face or other object recognition.

Owner:ANXIN MATE HLDG
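A deliberately simplified sketch of redundancy-based key-frame selection: frames are flat lists of pixel values, and a frame becomes a key-frame when its mean absolute difference from the preceding key-frame exceeds a threshold. The patented method also compares against the subsequent key-frame and uses region and motion analysis, which this sketch omits; the function names and threshold are hypothetical.

```python
from typing import List


def mean_abs_diff(a: List[float], b: List[float]) -> float:
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def select_key_frames(frames: List[List[float]], threshold: float) -> List[int]:
    """Greedy selection: keep a frame only if it is not redundant with the last key-frame."""
    if not frames:
        return []
    keys = [0]  # the first frame always starts the key-frame set
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[keys[-1]]) > threshold:
            keys.append(i)
    return keys
```

For example, with frames `[0,0,0,0]`, `[1,0,0,1]`, `[10,10,10,10]`, `[11,10,10,10]`, `[0,0,0,0]` and a threshold of 2, the selected key-frames are indices 0, 2, and 4.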

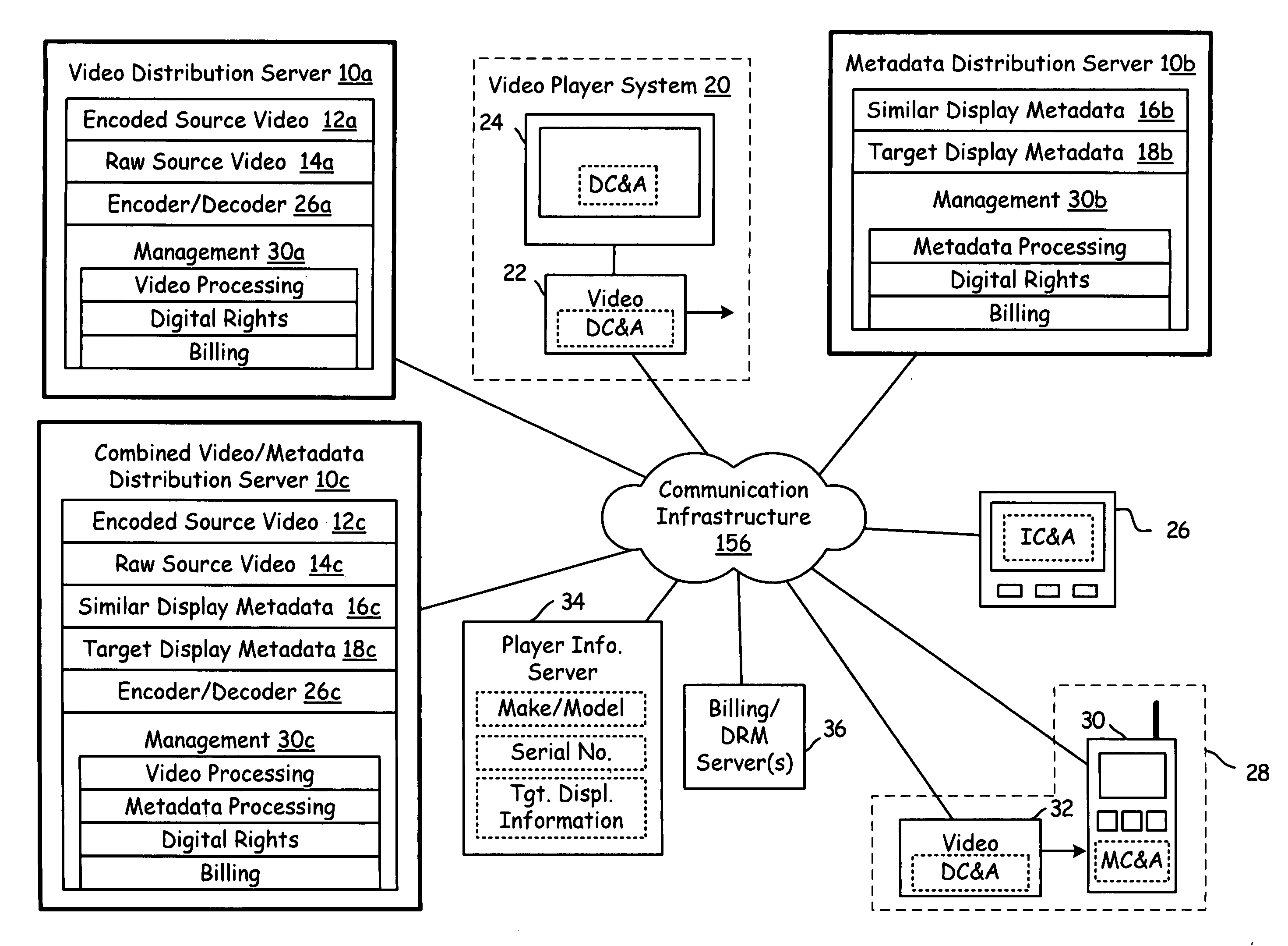

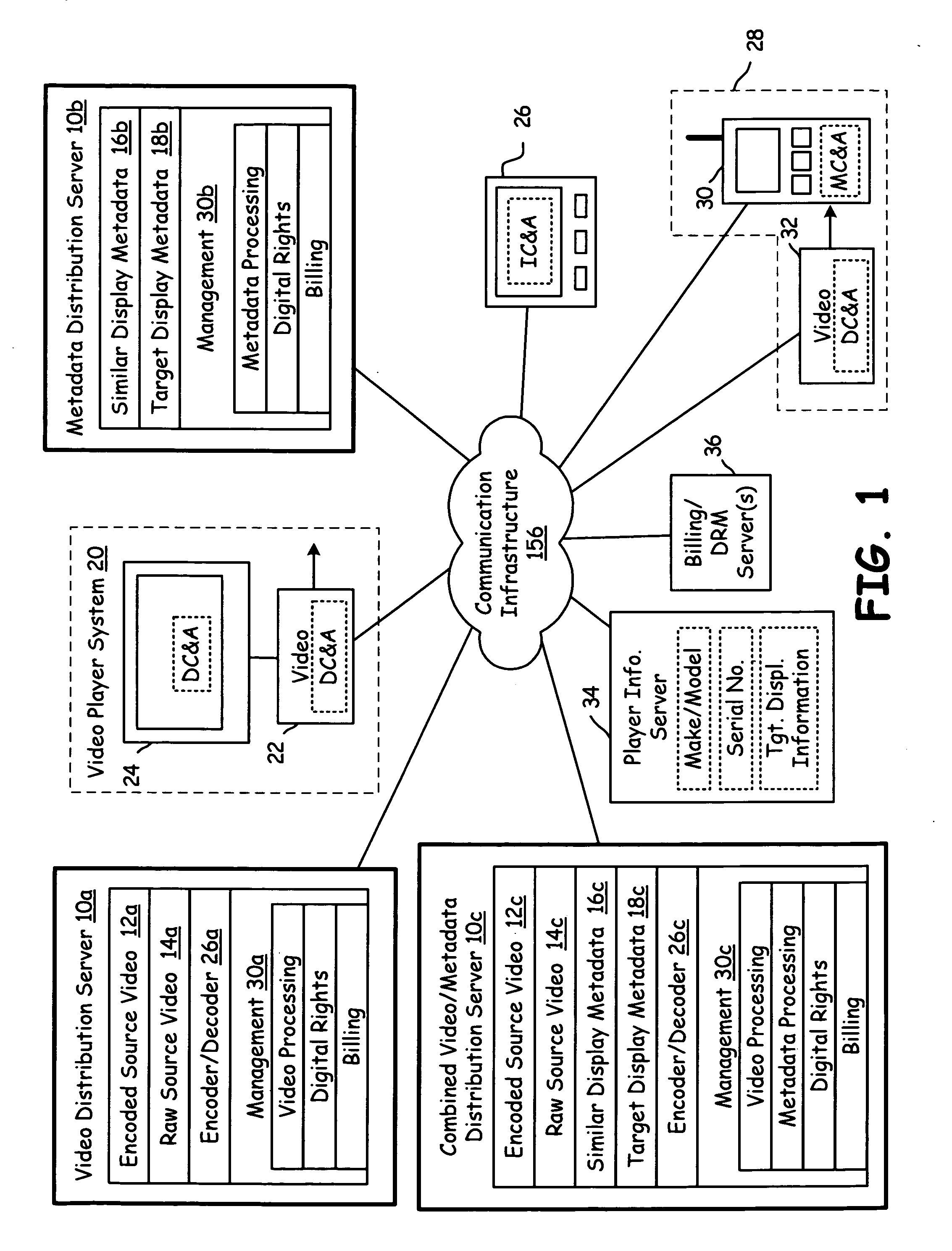

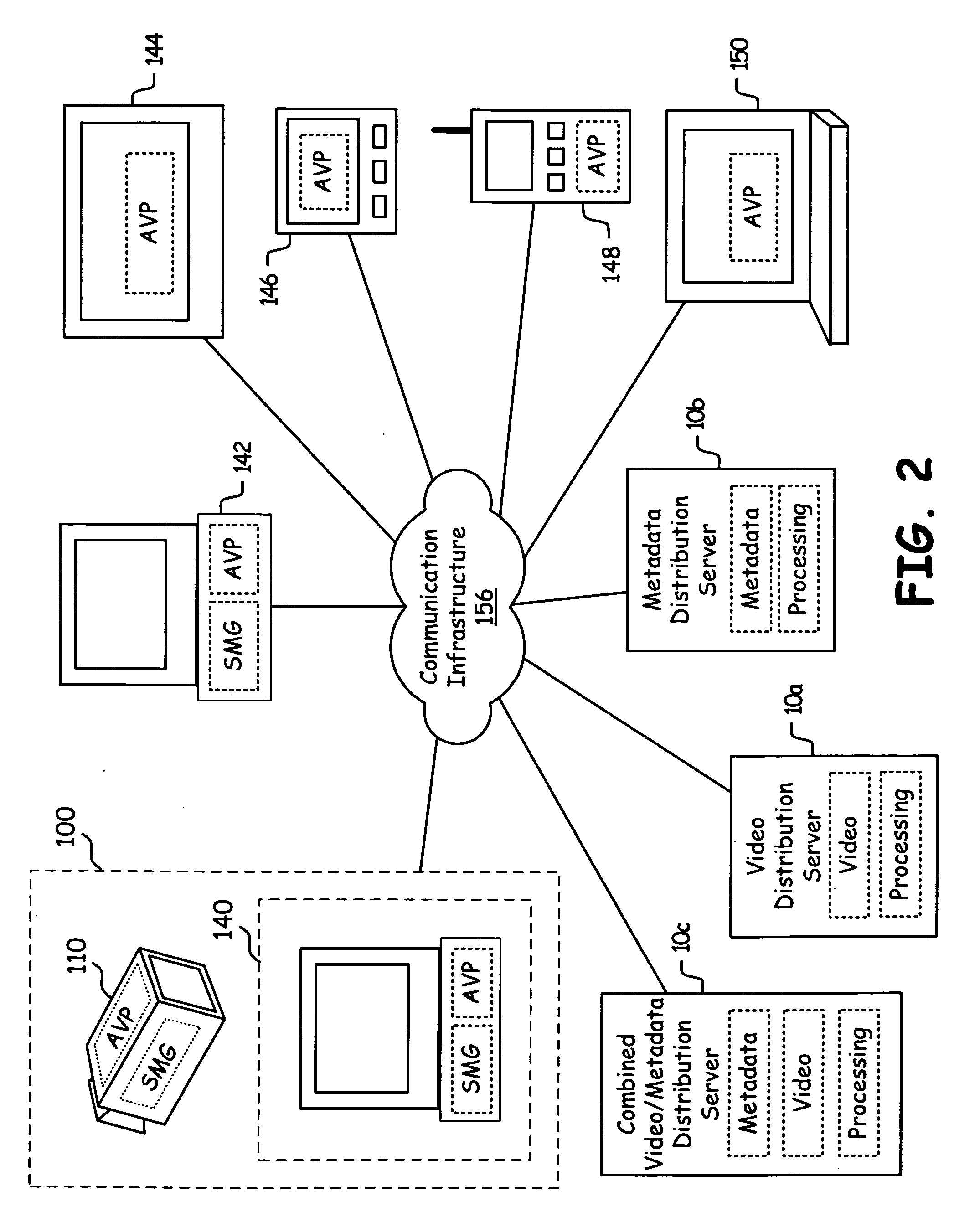

Sub-frame metadata distribution server

Inactive | US20080007651A1 | Picture reproducers using cathode ray tubes; Picture reproducers with optical-mechanical scanning; Communication interface; Computer graphics (images)

A distribution server includes a communication interface, storage, and processing circuitry. The processing circuitry retrieves a full screen sequence of video and sub-frame metadata relating to the full screen sequence of video. The processing circuitry sub-frame processes the sequence of full screen video using the sub-frame metadata to produce a plurality of sub-frames of video. The processing circuitry assembles the plurality of sub-frames of video to produce an output sequence for a client system. The distribution server may also receive, store, and distribute the sub-frame metadata and / or the video for subsequent use by a video processing system.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

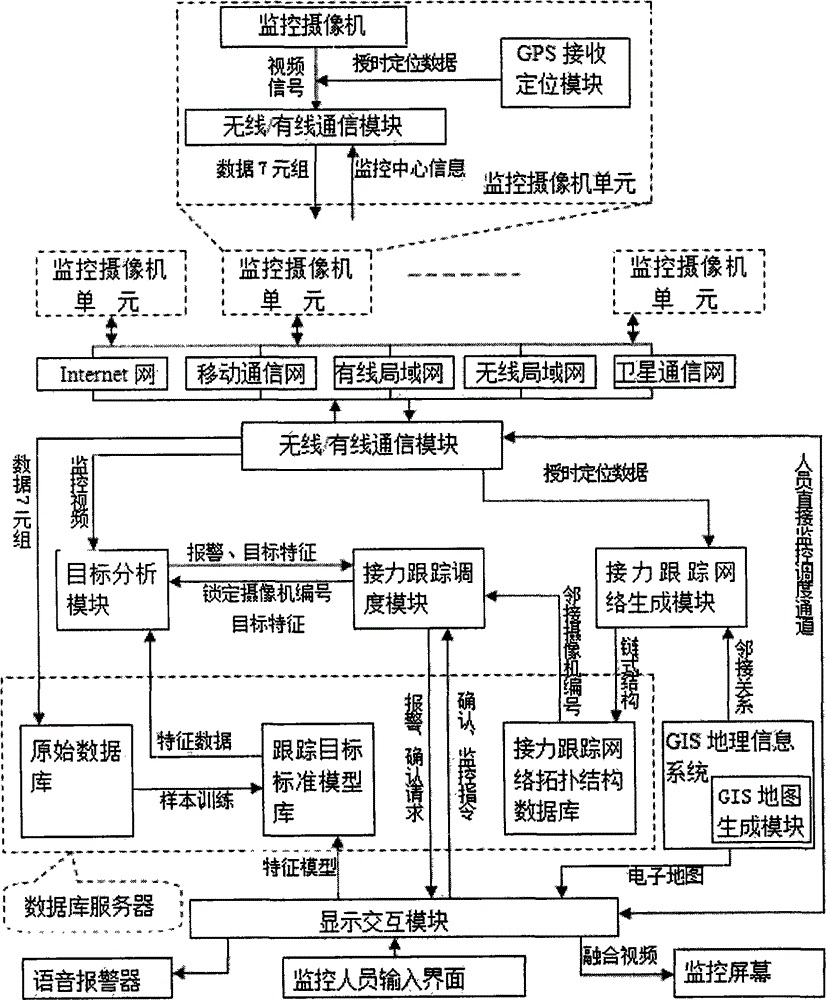

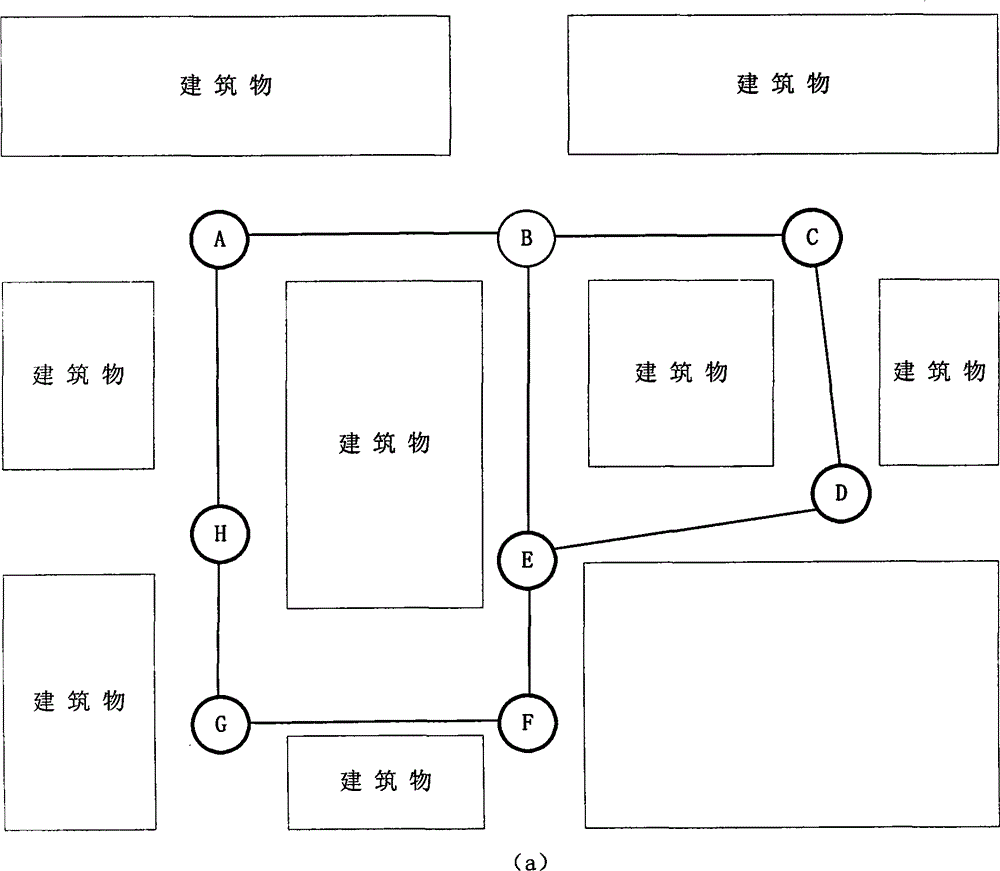

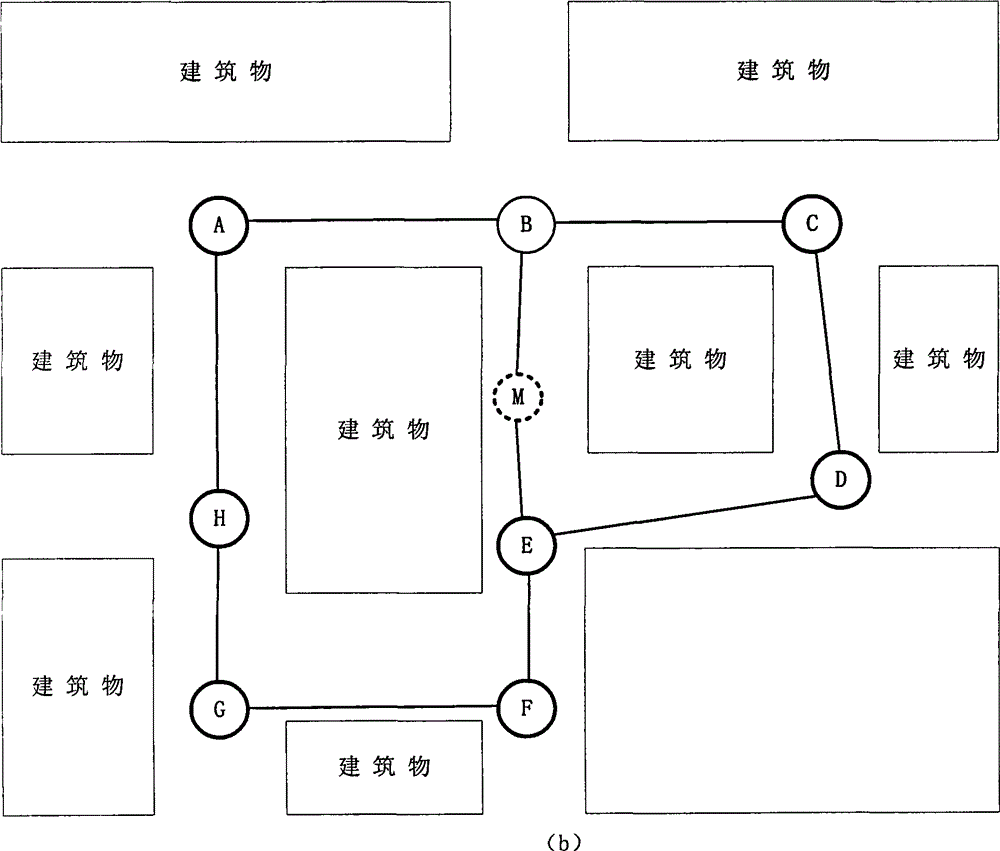

Intelligent visual sensor network moving target relay tracking system based on GPS (global positioning system) and GIS (geographic information system)

Inactive | CN102724482A | Realize manual judgment; Realize intelligent monitoring relay tracking; Television system details; Instruments for road network navigation; Video monitoring; Video sequence

The invention discloses an intelligent visual sensor network moving-target relay tracking system based on GPS (global positioning system) and GIS (geographic information system). The system comprises a plurality of camera monitoring units. Each unit comprises a surveillance camera, with a GPS receiving and positioning module and a wired/wireless communication module embedded in or attached to the unit; each unit acquires video sequences in its monitoring area, and its wired/wireless communication module transmits the video sequences to a monitoring center over a wired or wireless digital communication network. The monitoring center comprises a wired/wireless communication module, a target analysis module, a relay tracking scheduling module, a relay tracking network generation module, a database server, an interactive display module, and the GIS. The system can autonomously discover, locate in real time, and relay-track moving suspicious targets, display the targets' surveillance videos in an integrated manner on an electronic map, and support multiple functions such as emergency response coordination, video monitoring, and dispatch and command.

Owner:XIDIAN UNIV

© 2025 PatSnap. All rights reserved.