Patents

Literature

Hiro is an intelligent assistant for R&D personnel, combined with Patent DNA, to facilitate innovative research.

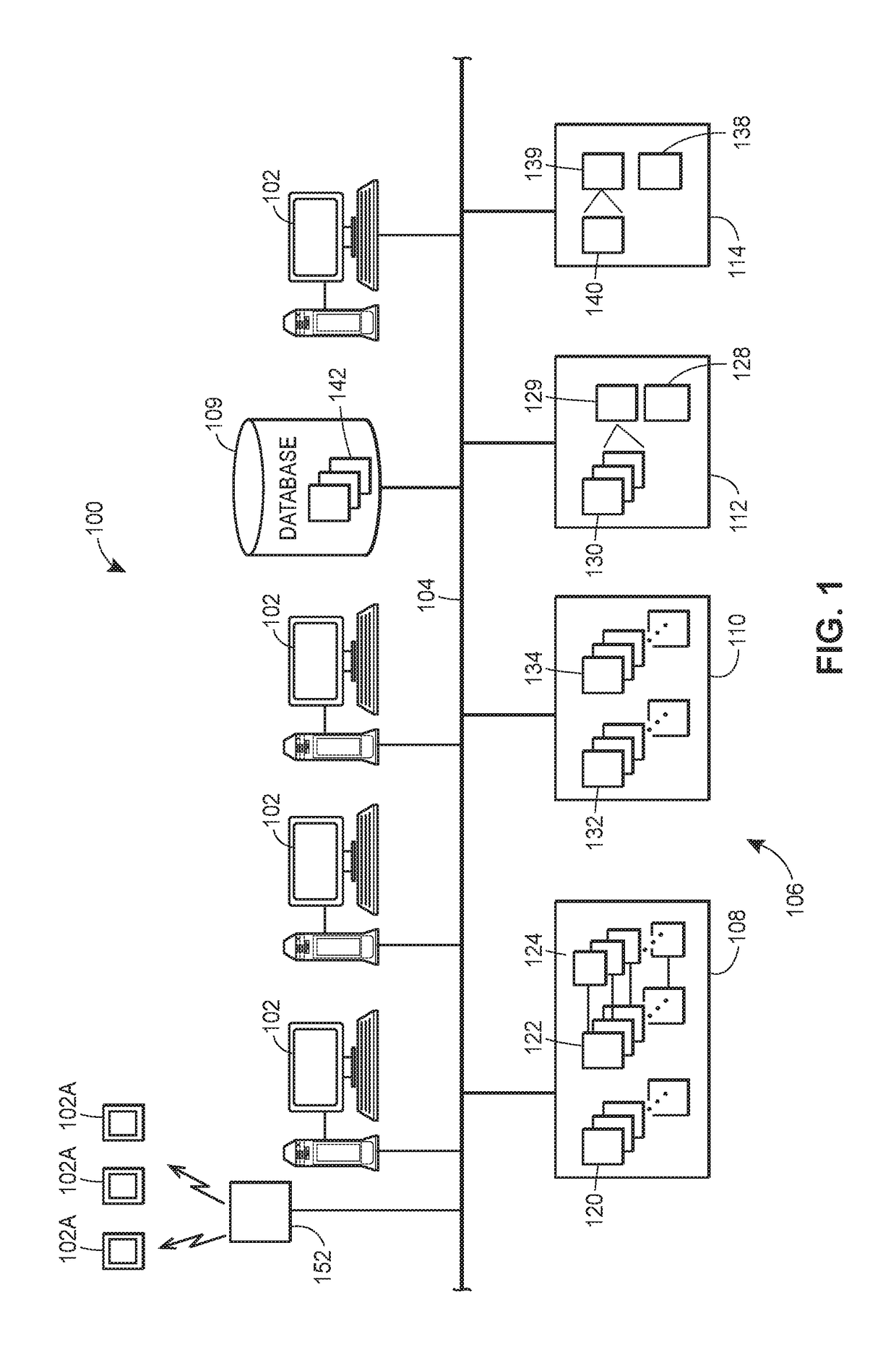

1071 results for "Composite video" patented technology

Composite video is an analog video signal format that carries standard-definition video, typically at 480i or 576i resolution, as a single channel. All of the video information is encoded on that one channel, unlike the higher-quality S-Video (two channels) and the even higher-quality component video (three or more channels). In all of these formats, audio is carried on a separate connection.

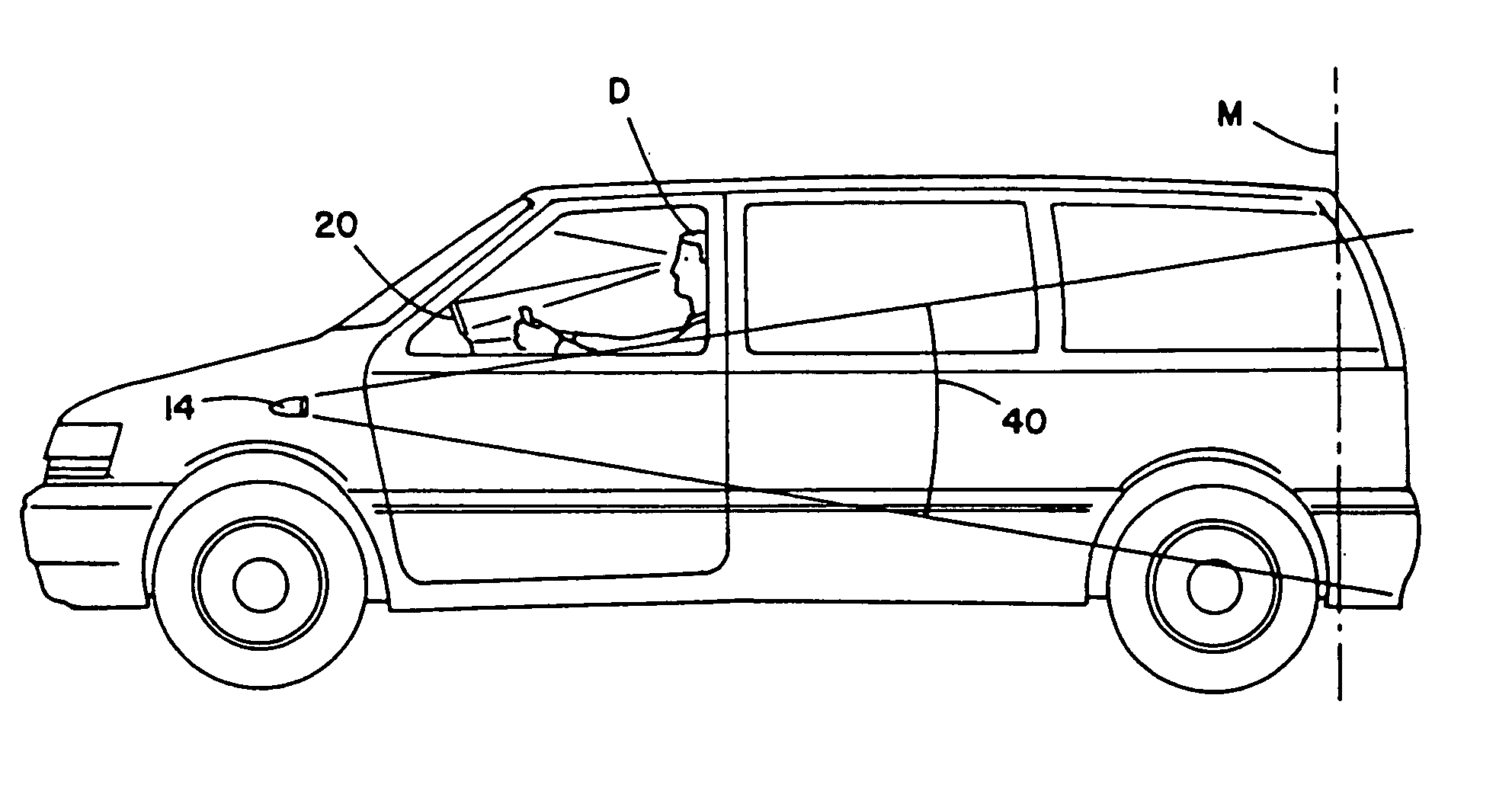

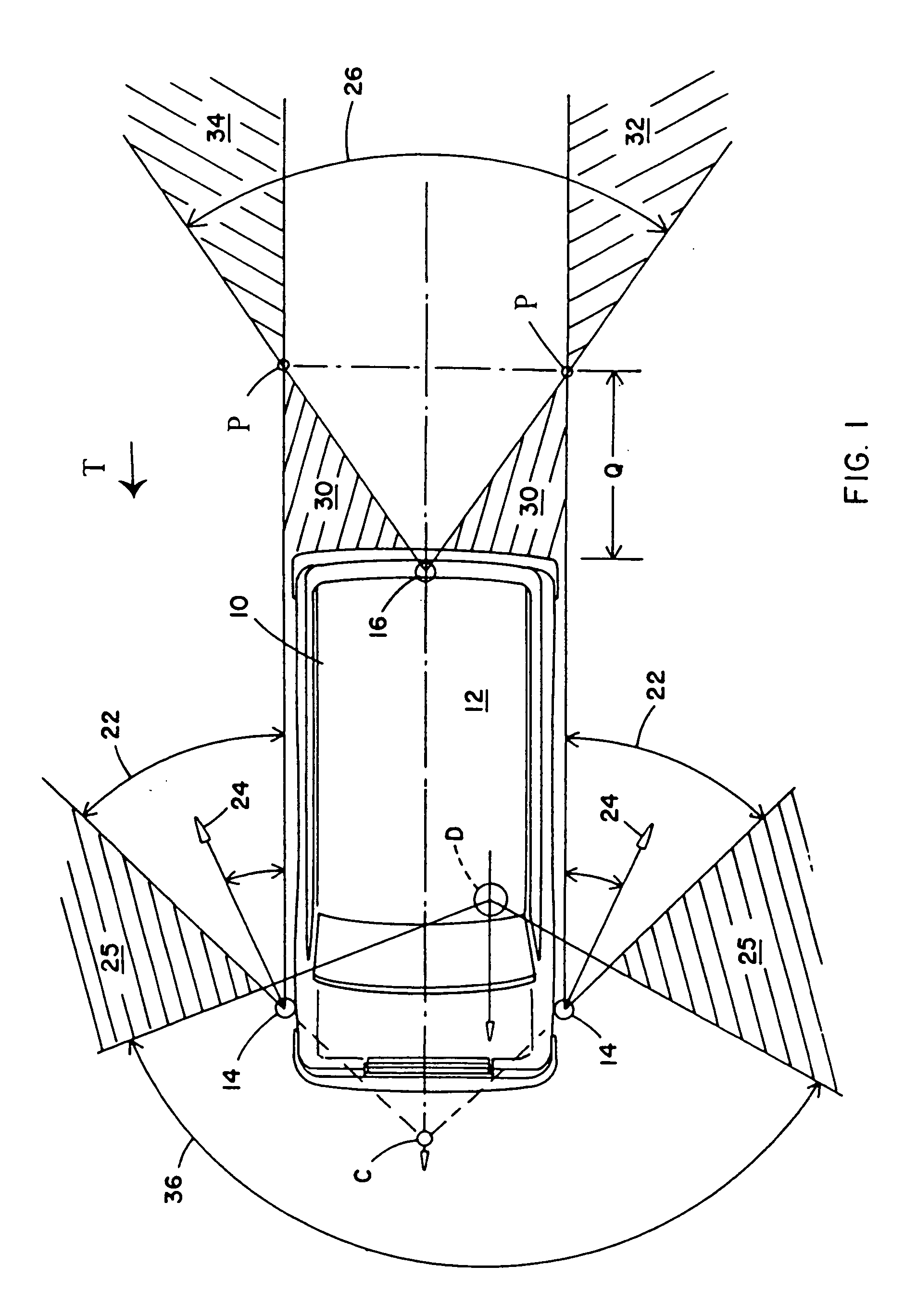

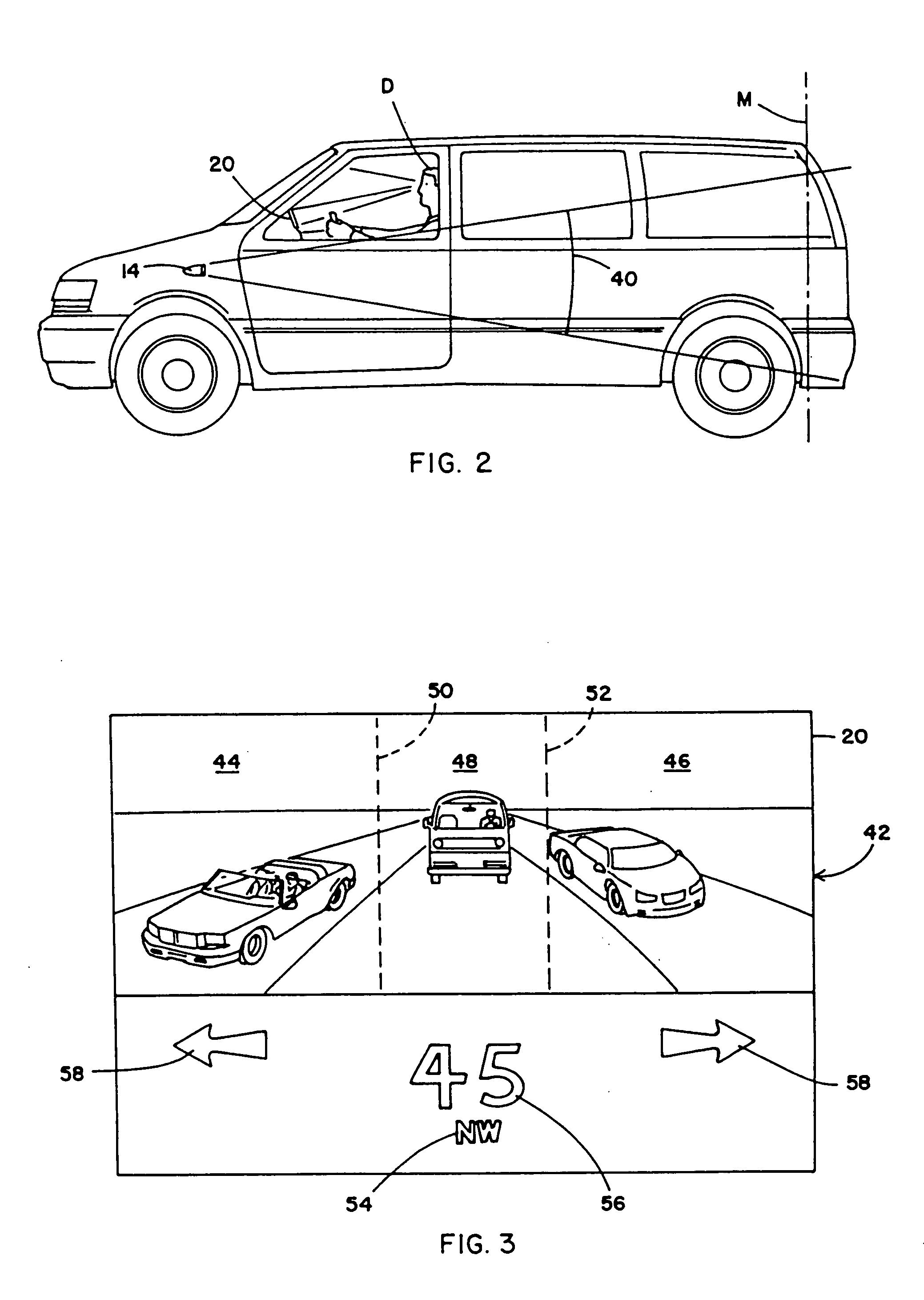

Vehicular vision system

Inactive | US6891563B2 | Tags: Easy to explain, Remove distortion, Color television details, Closed circuit television systems, Visual perception, Computer science

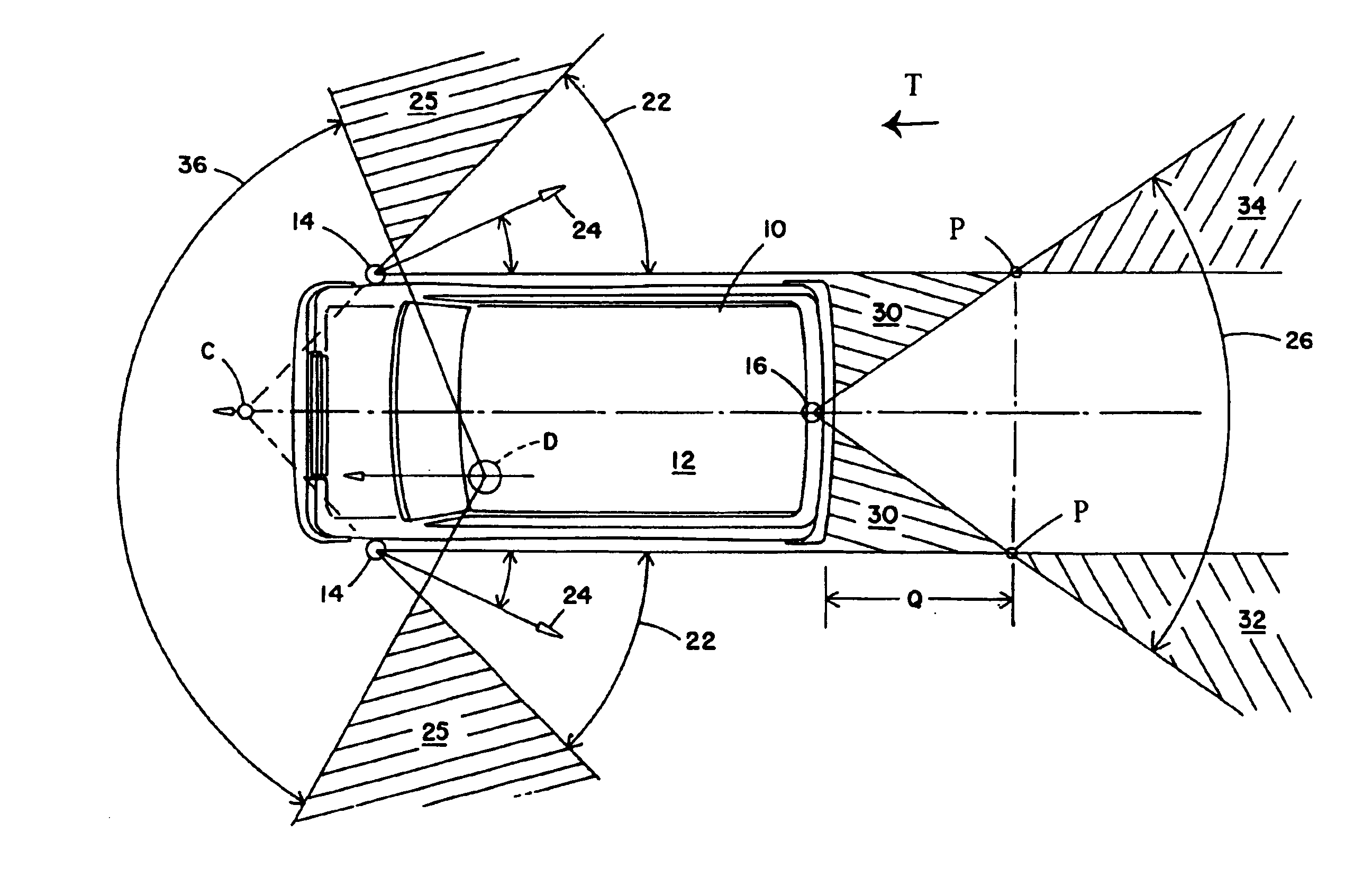

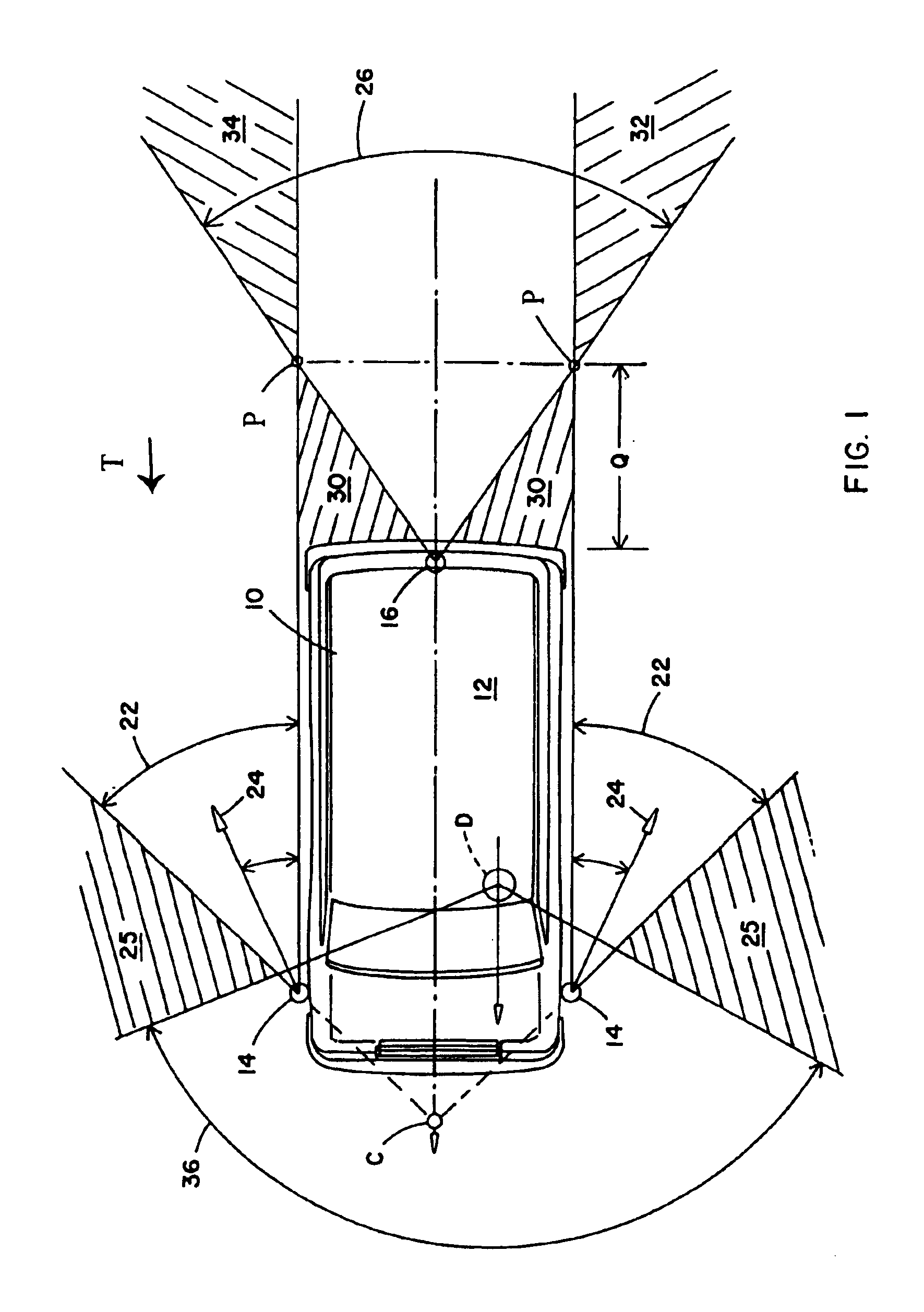

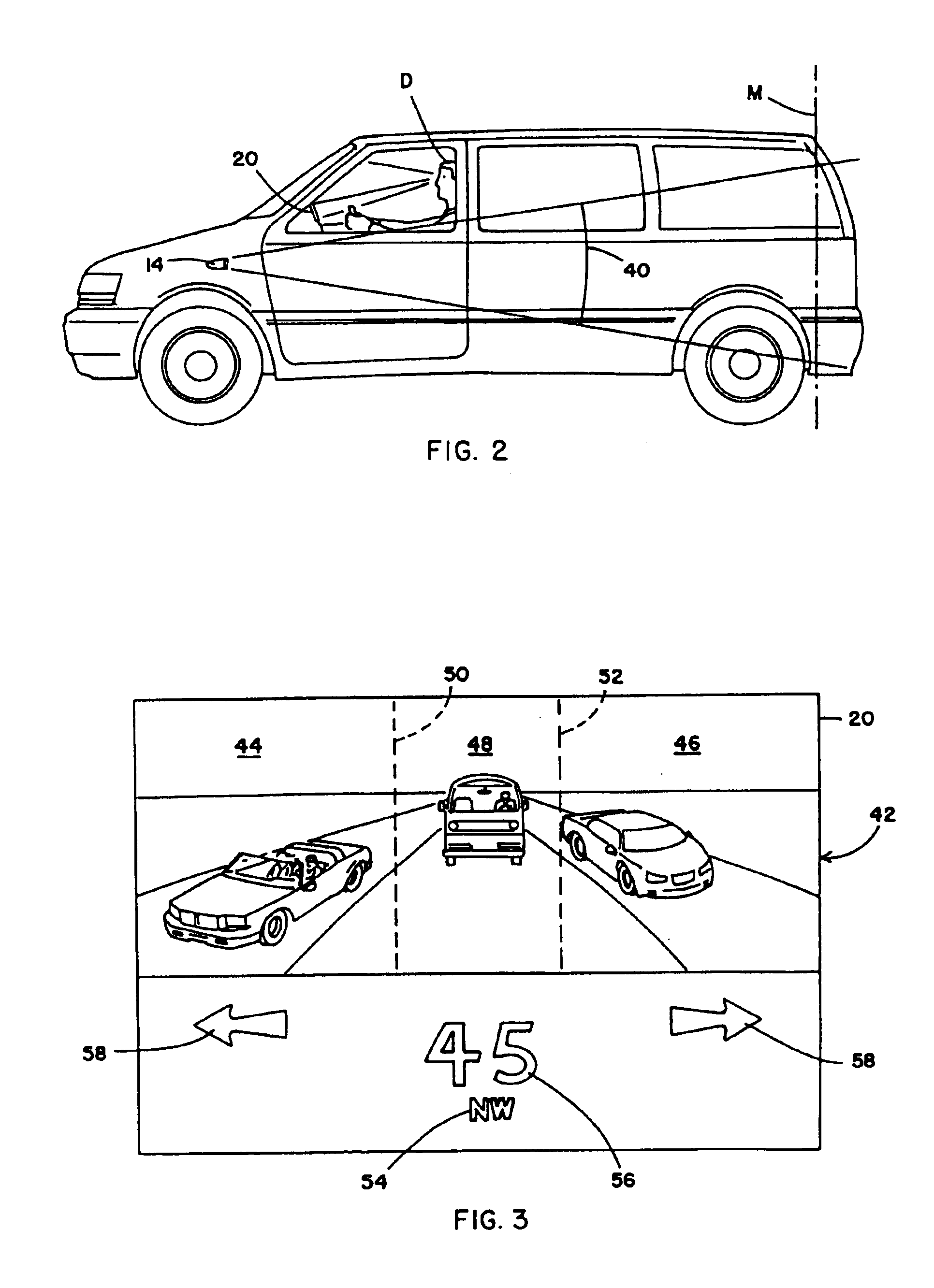

A vehicle vision system includes a vehicle having first and second spatially separated image capture sensors. The first image capture device has a first field of view having a first field of view portion at least partially overlapping a field of view portion of a second field of view of the second image capture device. A control receives a first image input from the first image capture sensor and a second image input from the second image capture sensor and generates a composite image synthesized from the first image input and the second image input. A display system displays the composite image.

Owner: DONNELLY CORP

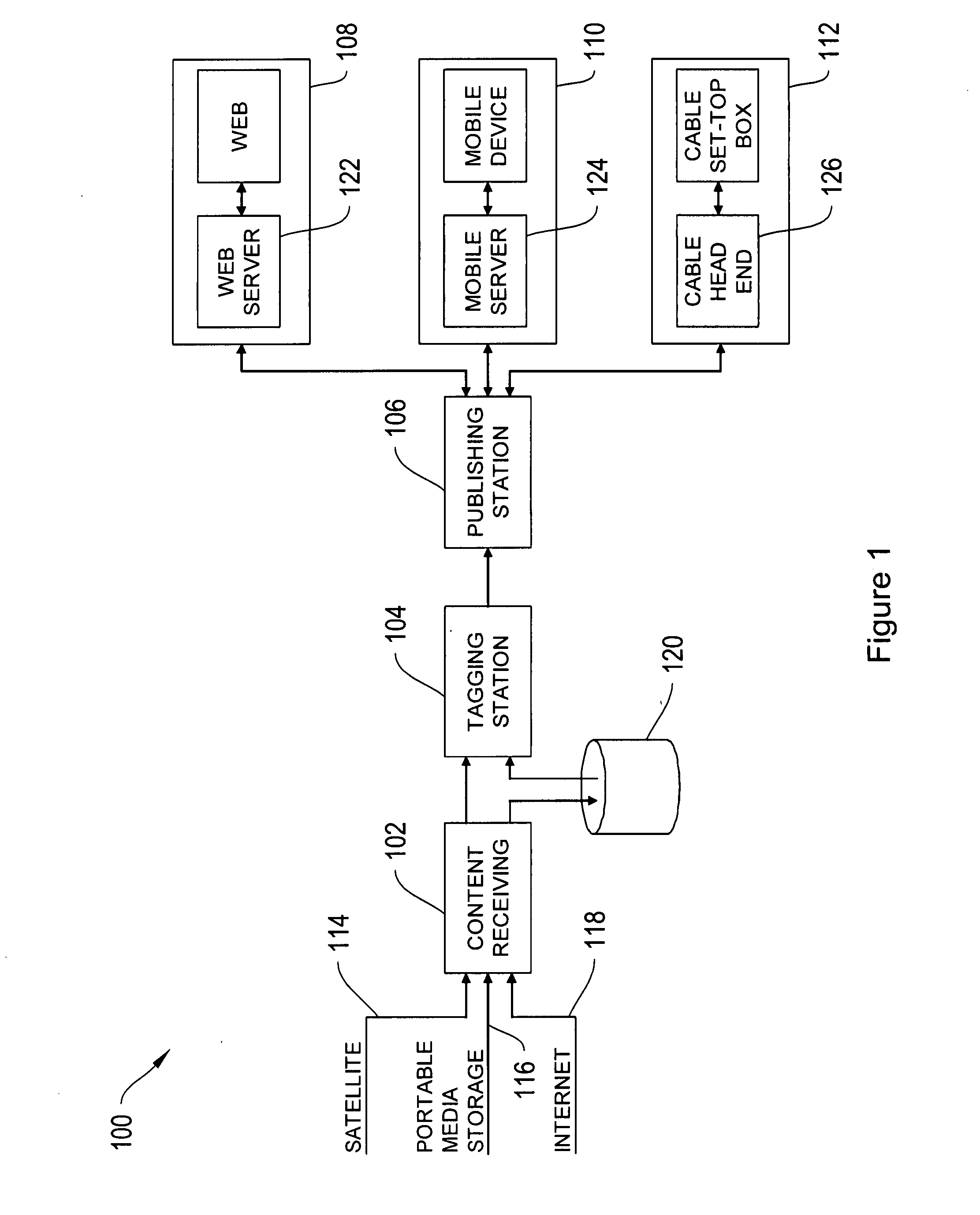

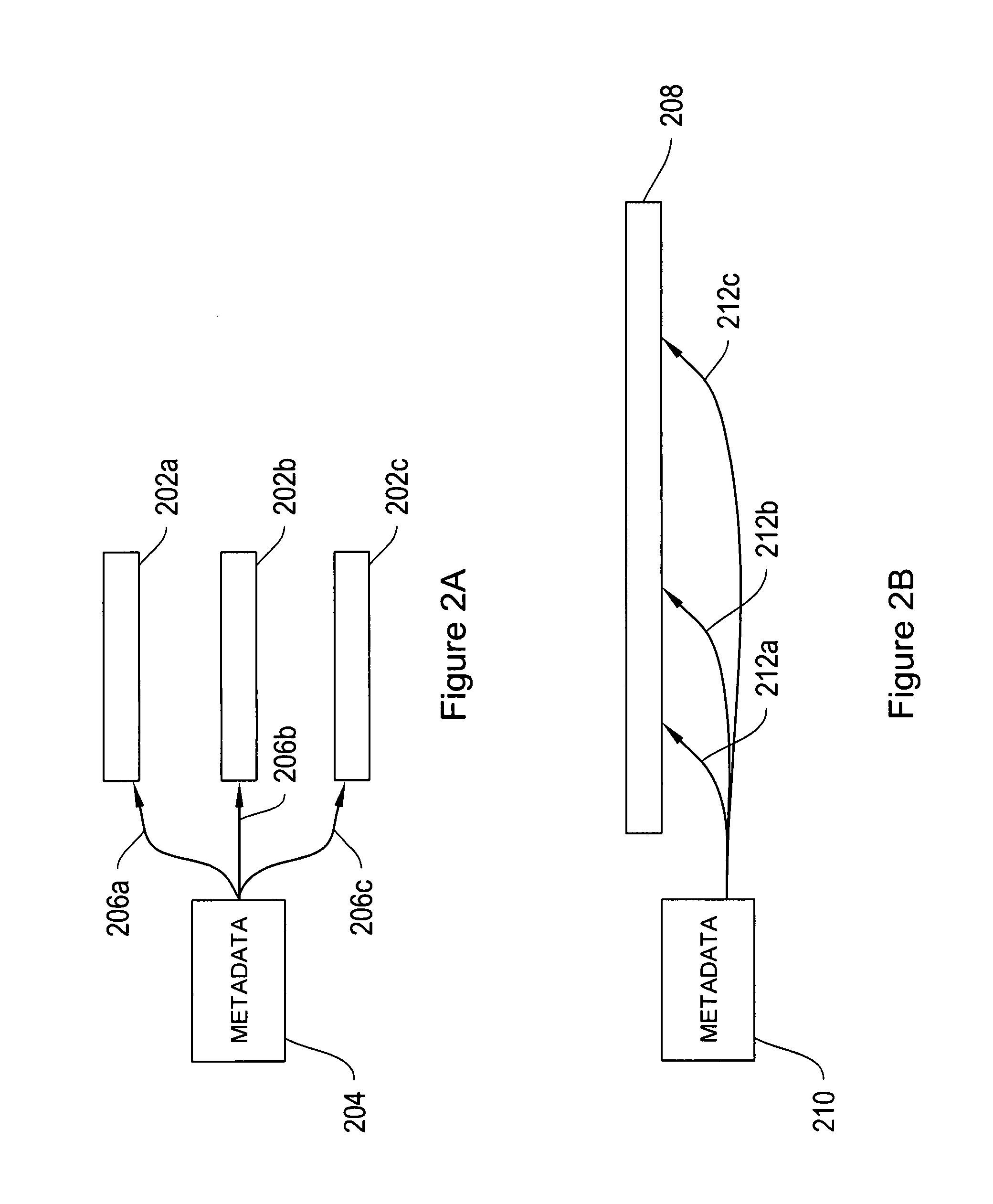

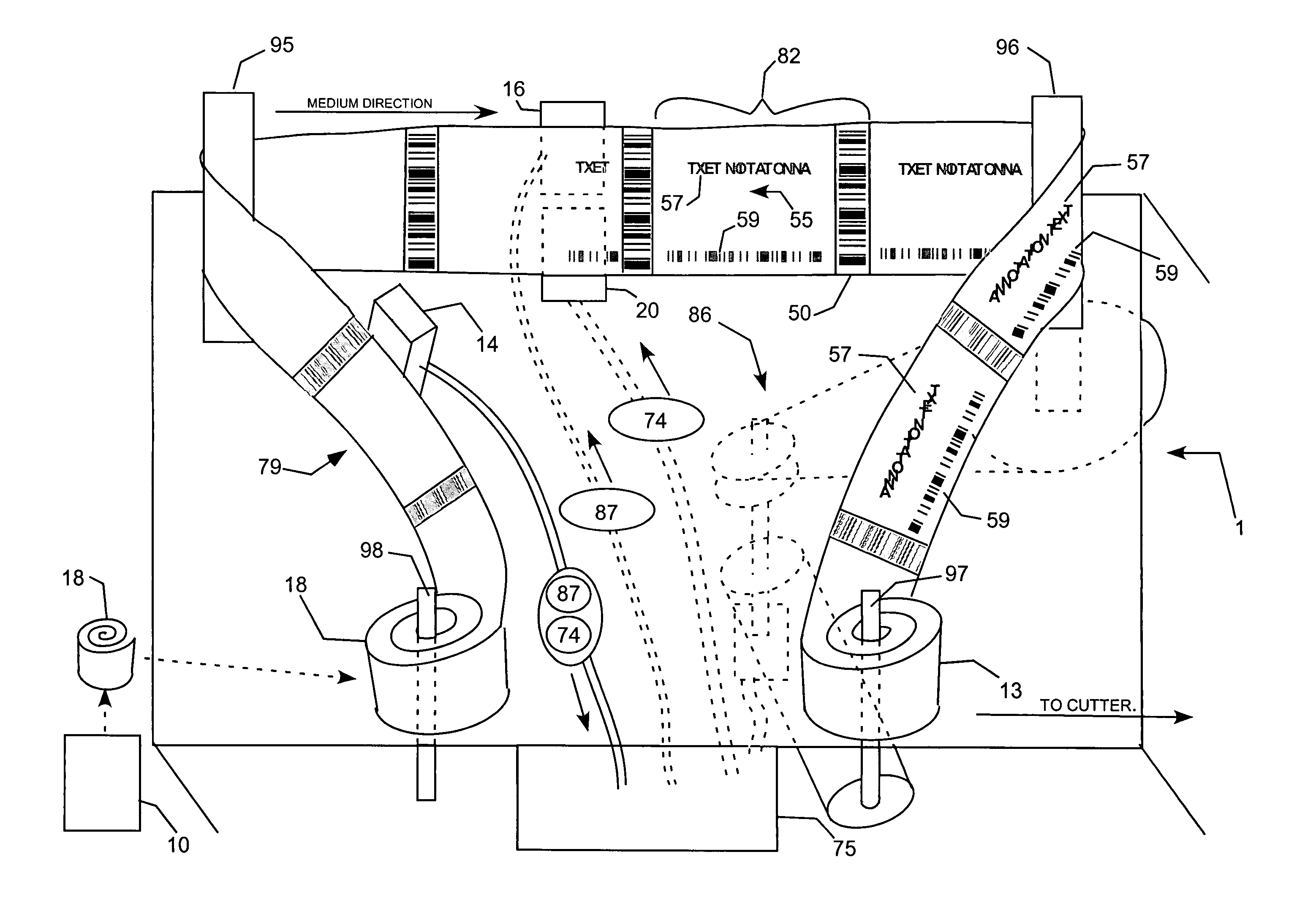

Methods and systems for generating and delivering navigatable composite videos

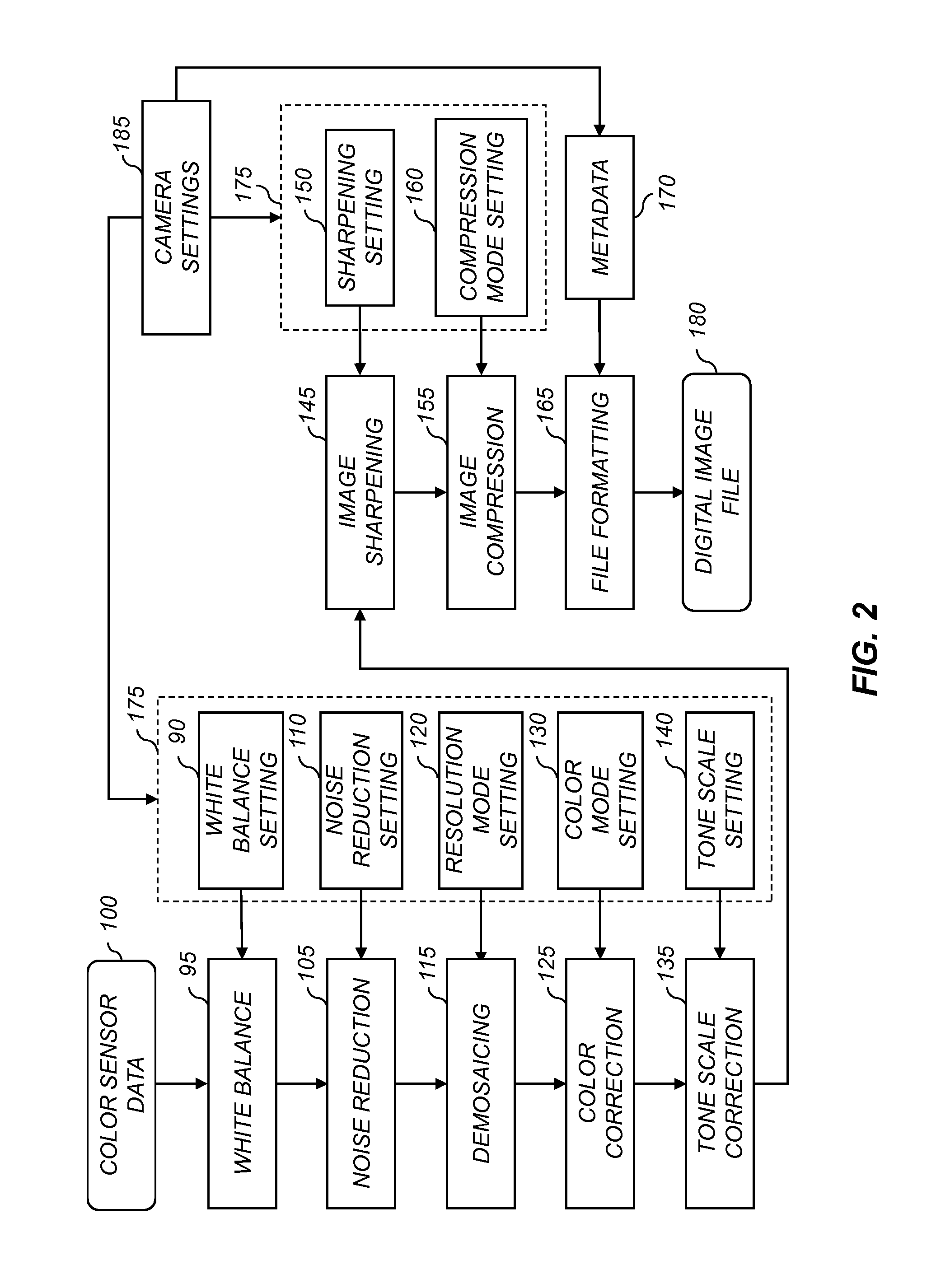

Inactive | US20080036917A1 | Tags: Easy to navigate, Facilitates broad distribution, Television system details, Color signal processing circuits, Computer graphics (images), Metadata

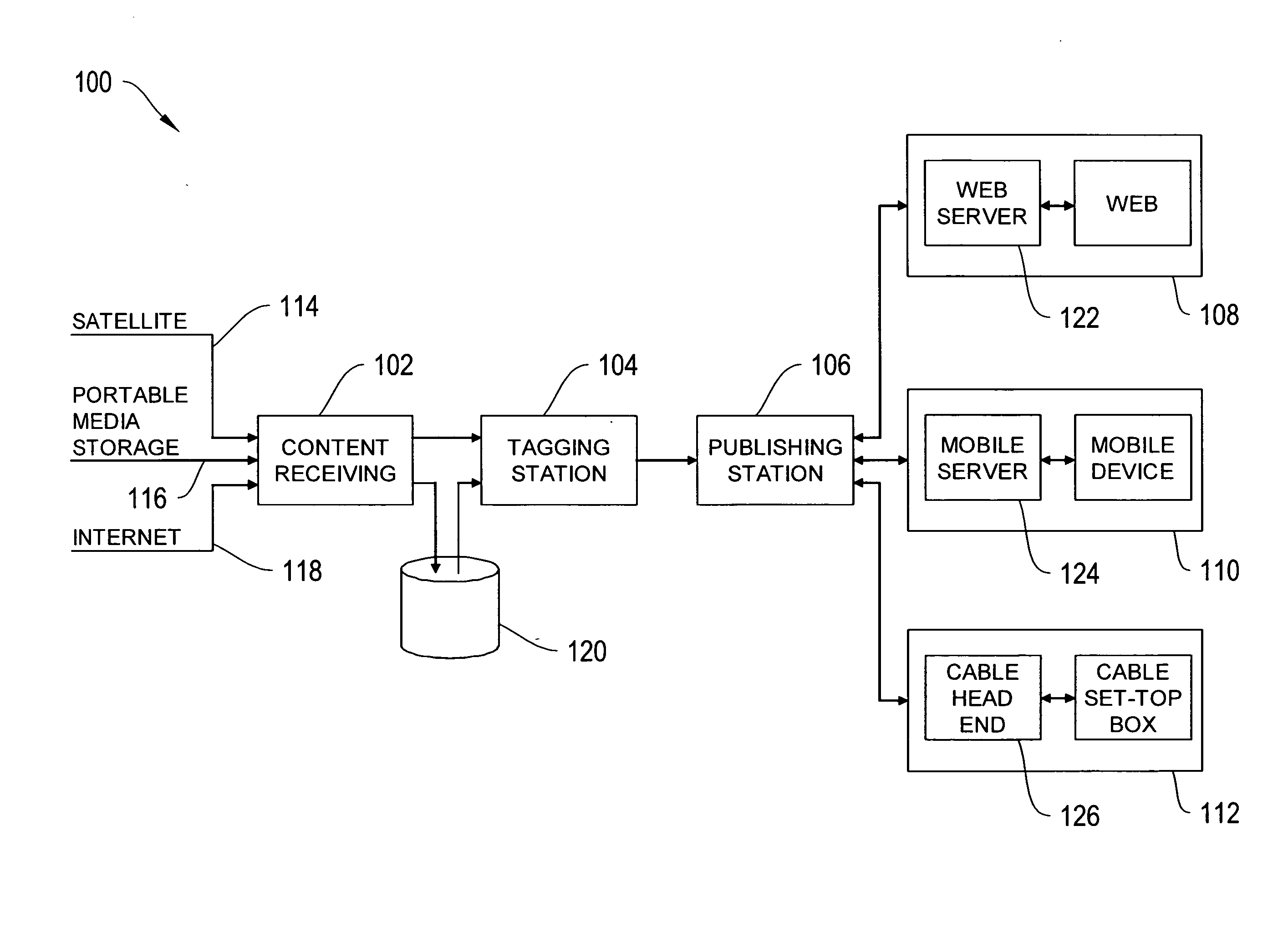

Systems and methods for generating a composite video having a plurality of video assets are provided. Systems may include storage having a plurality of video assets, a metadata generator, and a composite video generator. The metadata generator processes respective ones of the video assets to generate a metadata track representative of information that is descriptive of the content of the video asset. The composite video generator receives a plurality of metadata tracks and, in response, processes the associated video assets and the metadata tracks to generate a composite video asset having video data from the video assets. The composite video asset may have a metadata list panel that presents information representative of the metadata information as video data appearing within the composite video asset. The metadata list panel visually presents the sequence of video assets in the composite video asset.

Owner: TIVO INC

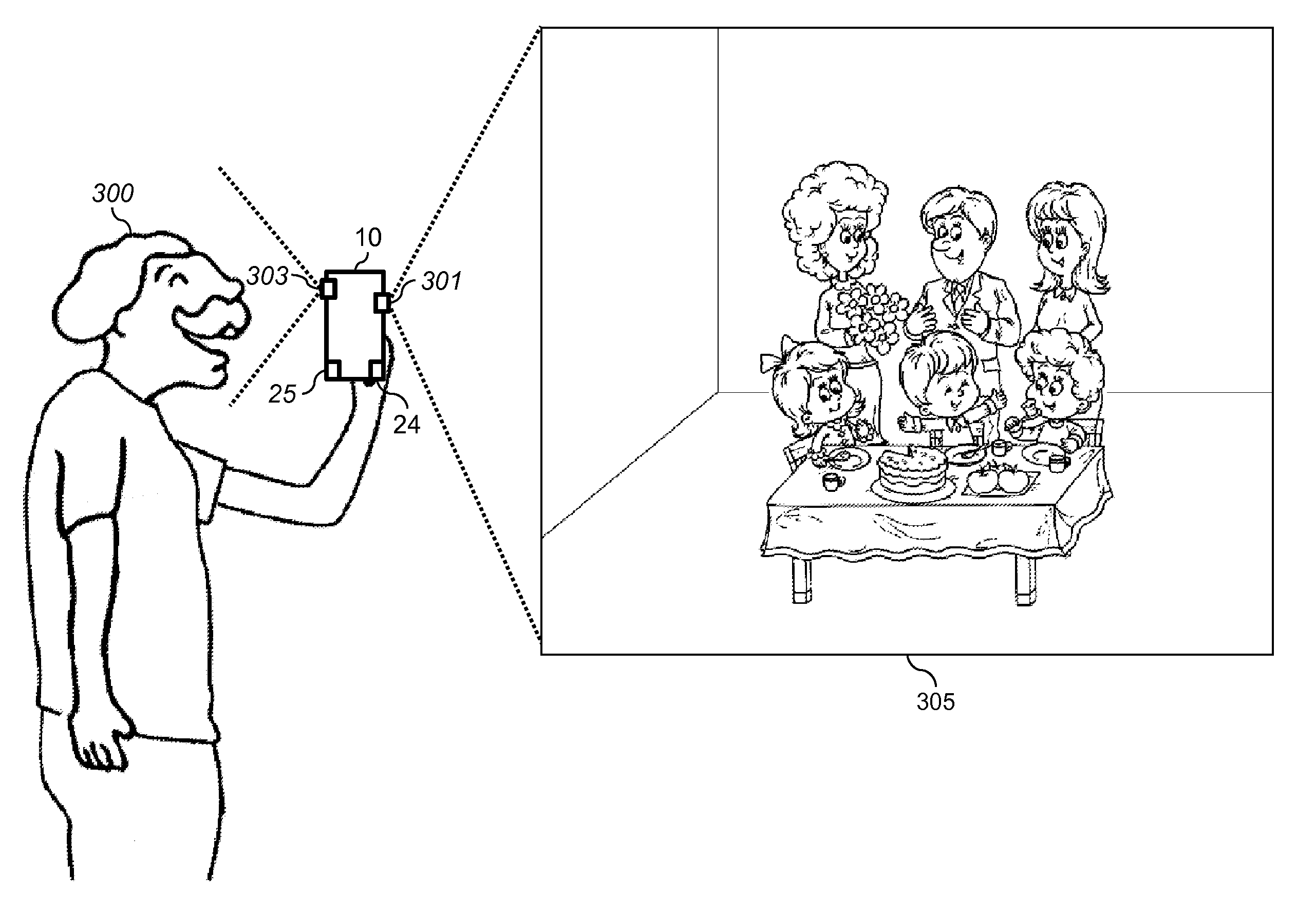

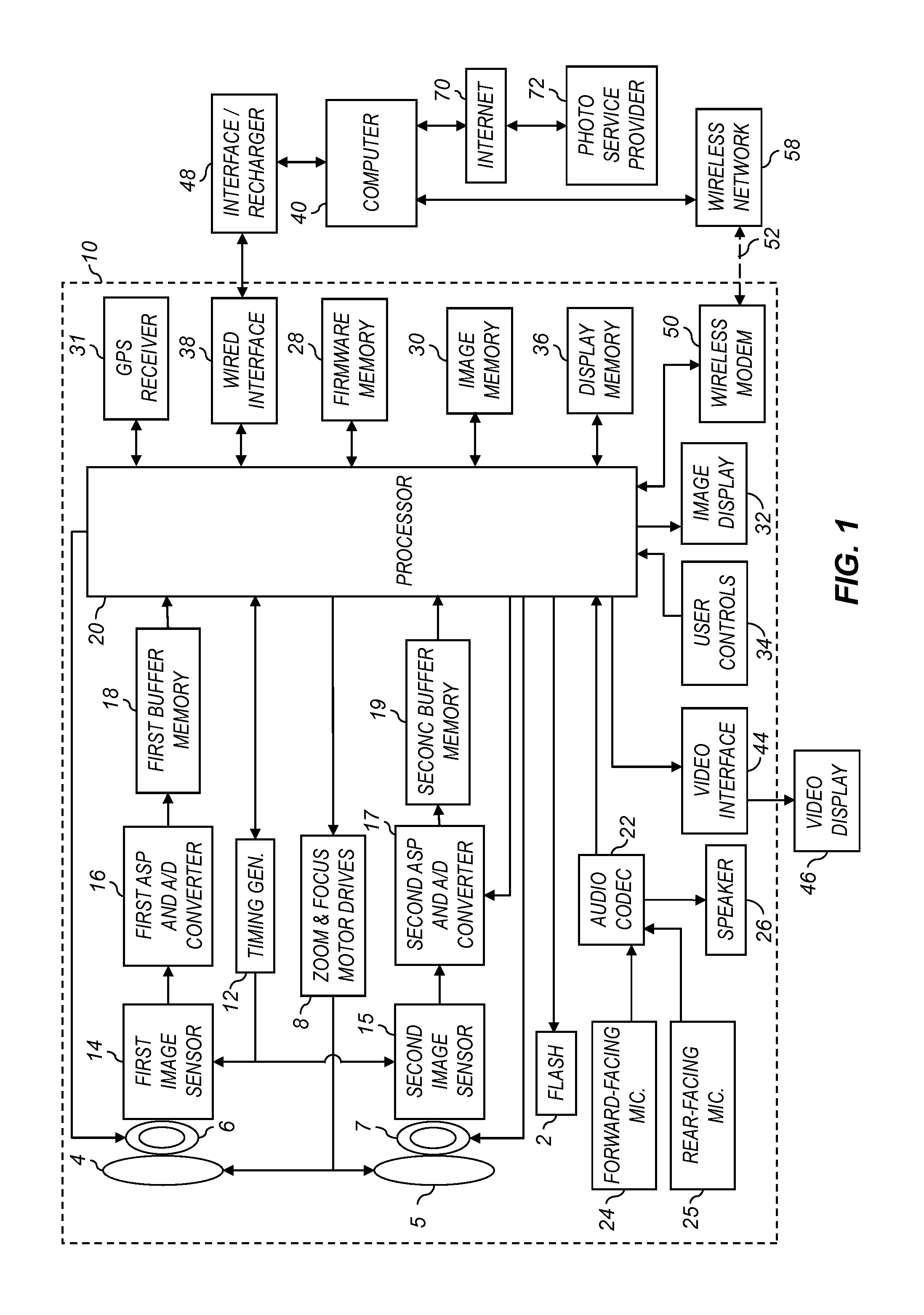

Video camera providing a composite video sequence

Active | US20130235224A1 | Tags: Inhibitory content, Television system details, Color television details, Digital video, Computer graphics (images)

A digital camera system includes a first video capture unit for capturing a first digital video sequence of a scene and a second video capture unit that simultaneously captures a second digital video sequence that includes the photographer. A data processor automatically analyzes the first digital video sequence to determine a low-interest spatial image region. A facial video sequence including the photographer's face is extracted from the second digital video sequence and inserted into the low-interest spatial image region in the first digital video sequence to form the composite video sequence.

Owner: APPLE INC

Vehicular vision system

Inactive | US20050200700A1 | Tags: Easy to explain, Remove distortion, Color television details, Closed circuit television systems, Visual perception, Field of view

A vehicle vision system includes a vehicle having first and second spatially separated image capture sensors. The first image capture device has a first field of view having a first field of view portion at least partially overlapping a field of view portion of a second field of view of the second image capture device. A control receives a first image input from the first image capture sensor and a second image input from the second image capture sensor and generates a composite image synthesized from the first image input and the second image input. A display system displays the composite image.

Owner: DONNELLY CORP

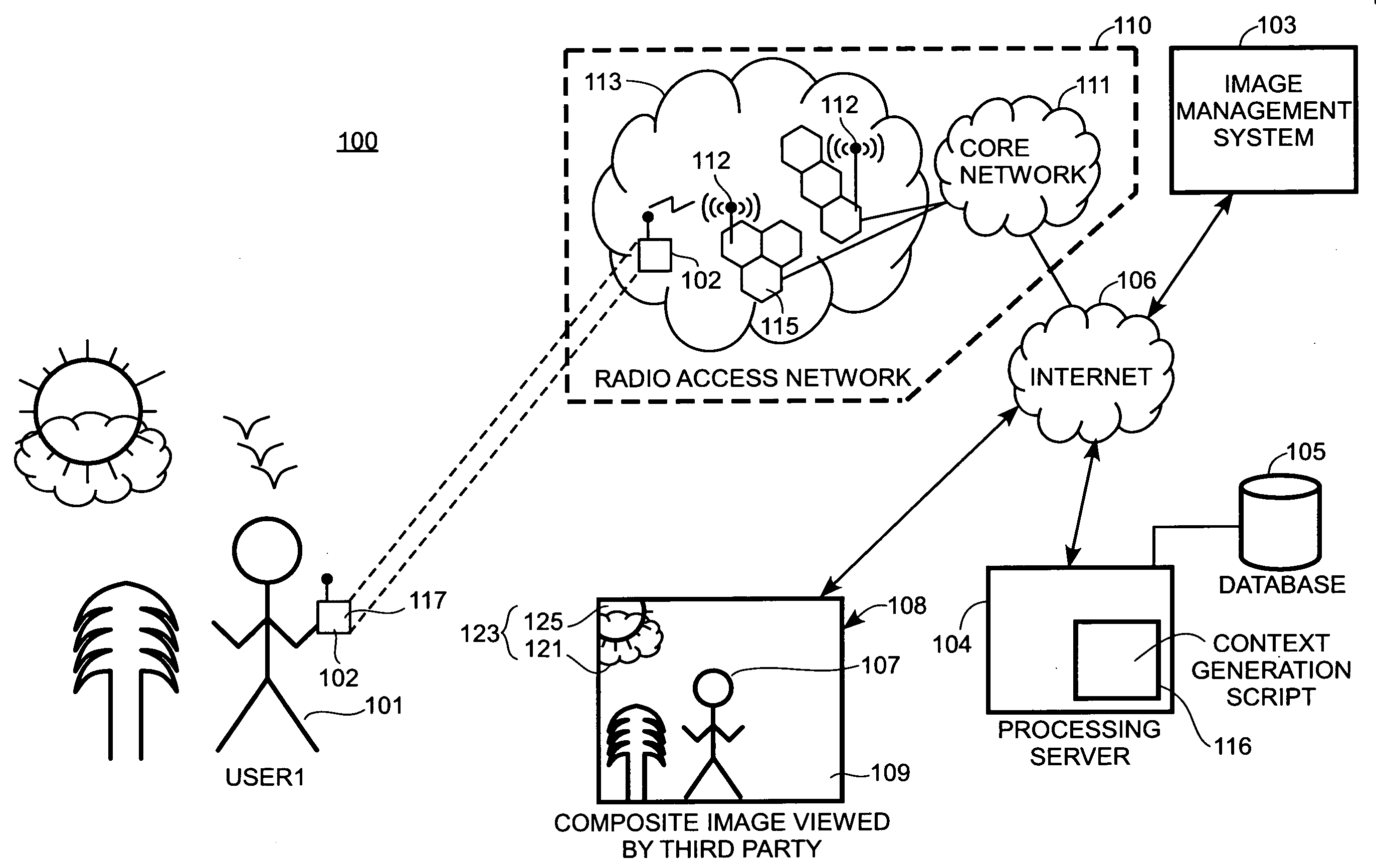

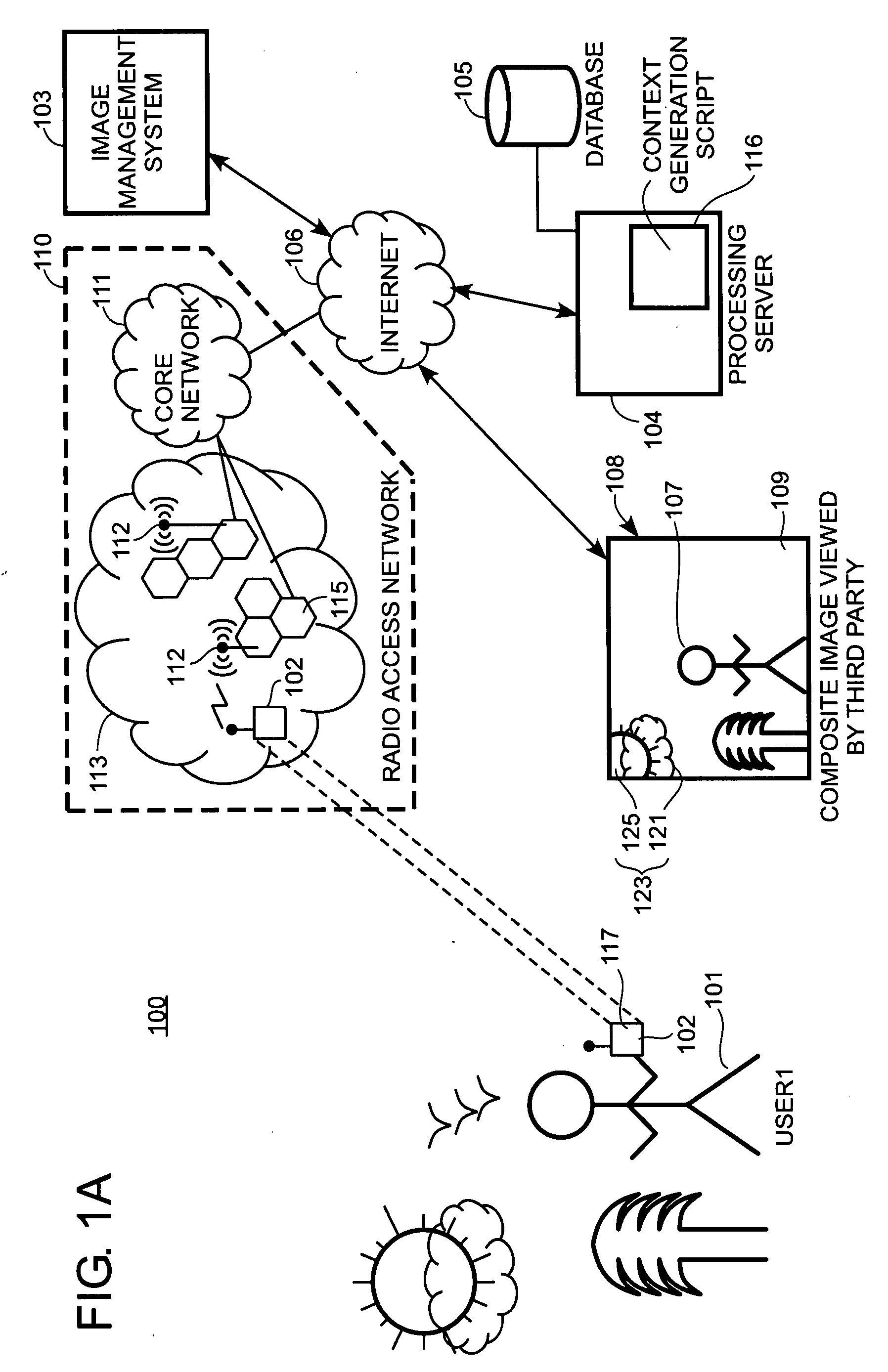

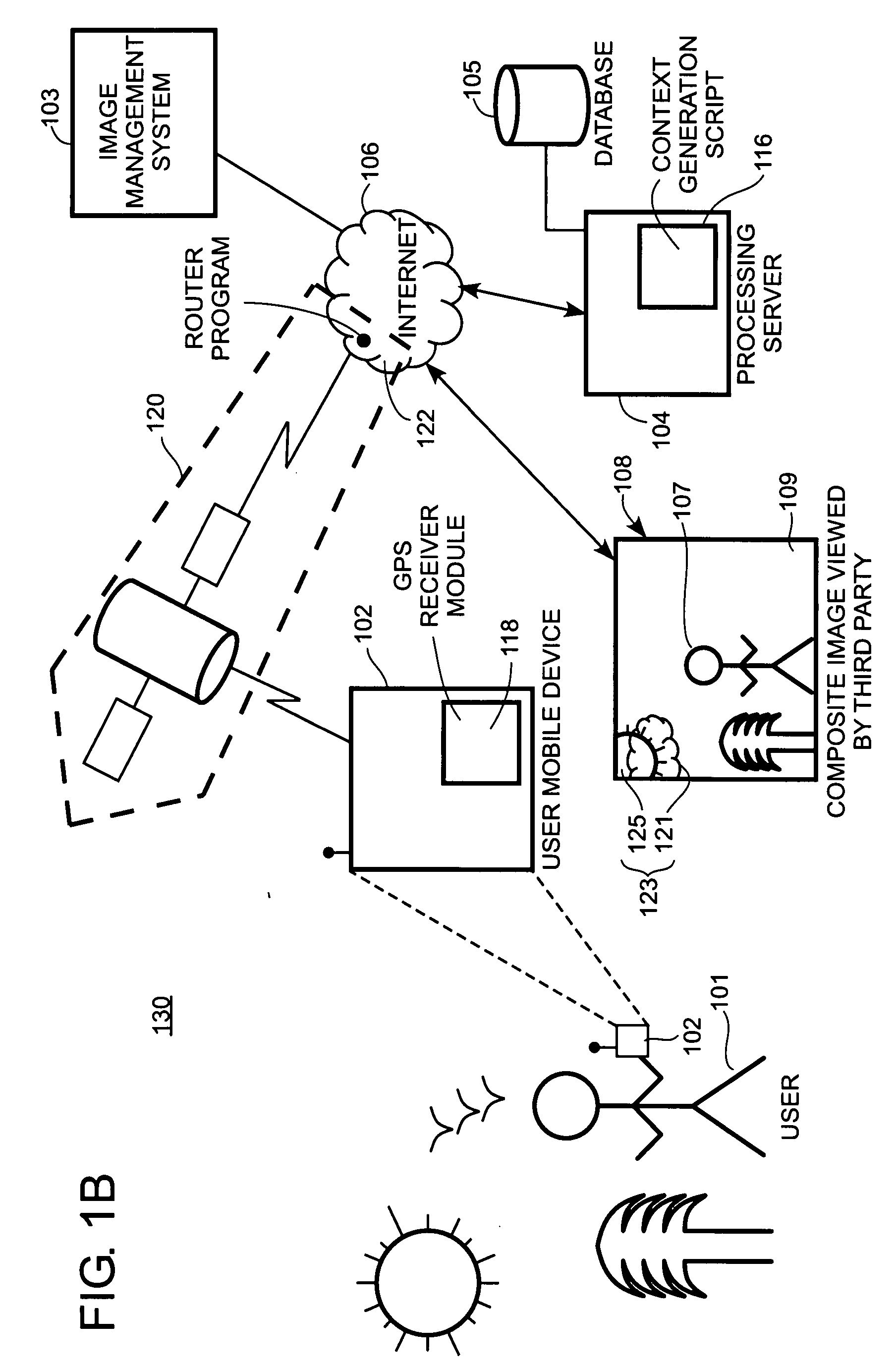

Context avatar

Methods and systems for generating information about a physical context of a user are provided. These methods and systems provide the capability to render a context avatar associated with the user as a composite image that can be broadcast in virtual environments to provide information about the physical context of the user. The composite image can be automatically updated without user intervention to include, among other things, a virtual person image of the user and a background image defined by encoded image data associated with the current geographic location of the user.

Owner: STARBOARD VALUE INTERMEDIATE FUND LP AS COLLATERAL AGENT

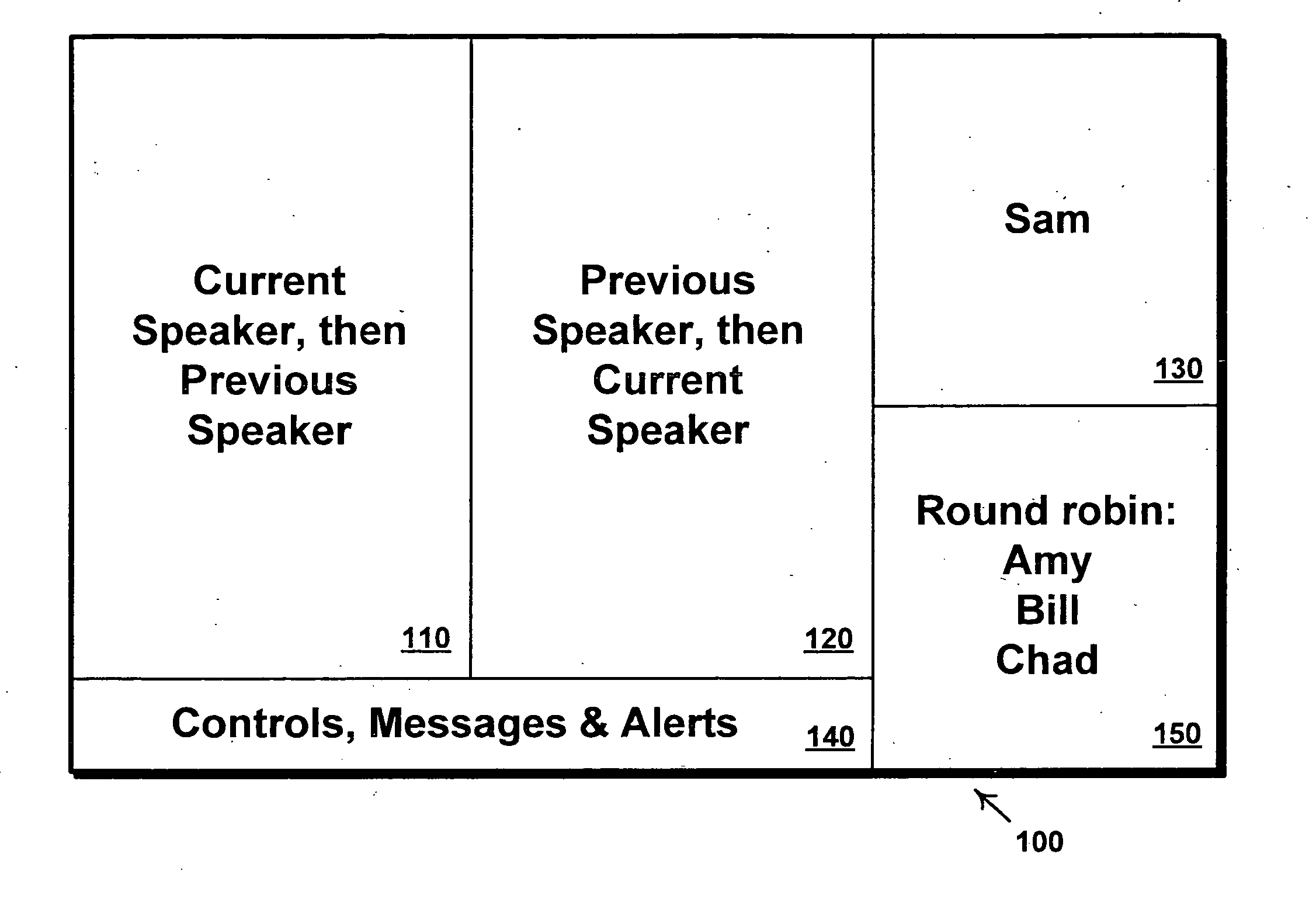

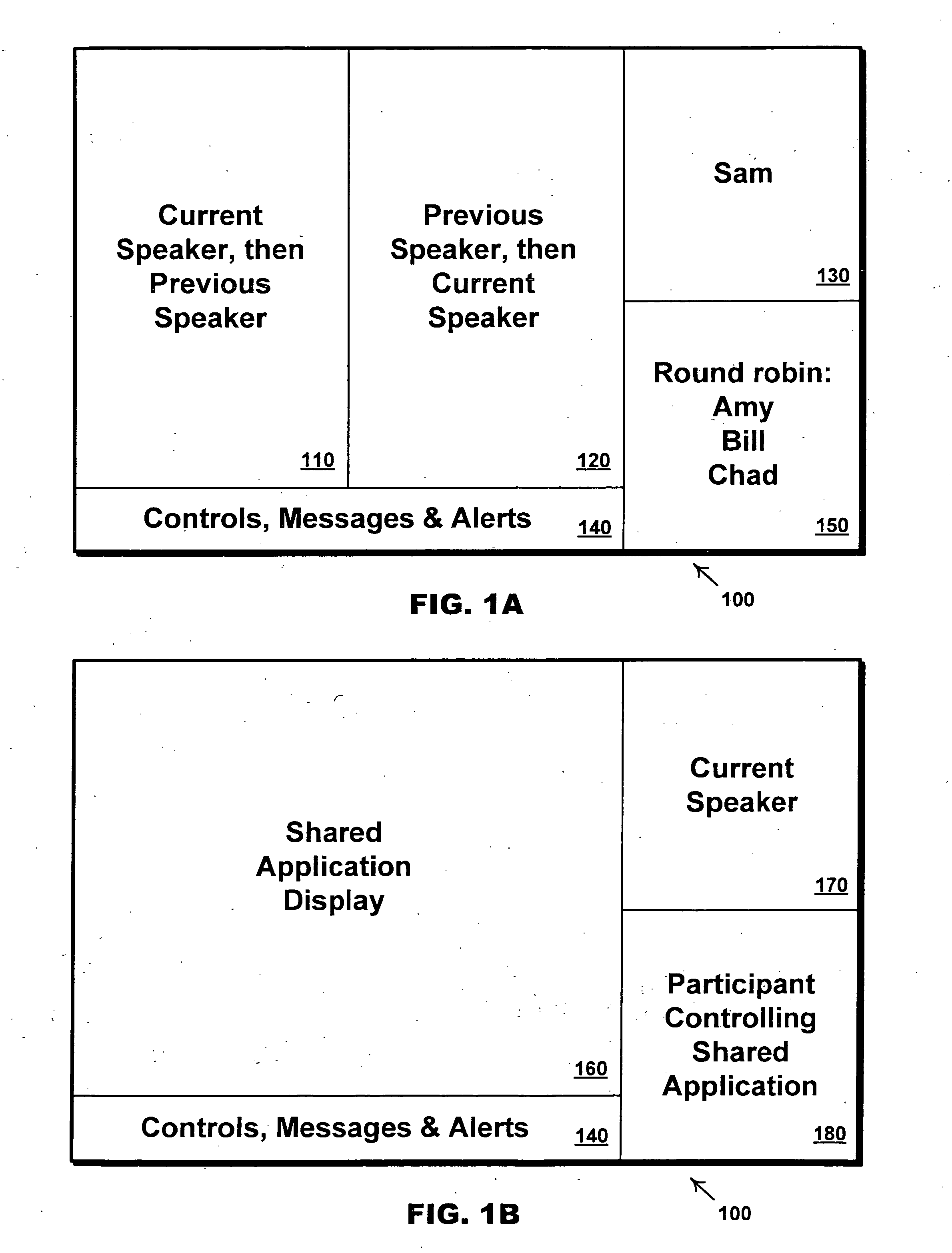

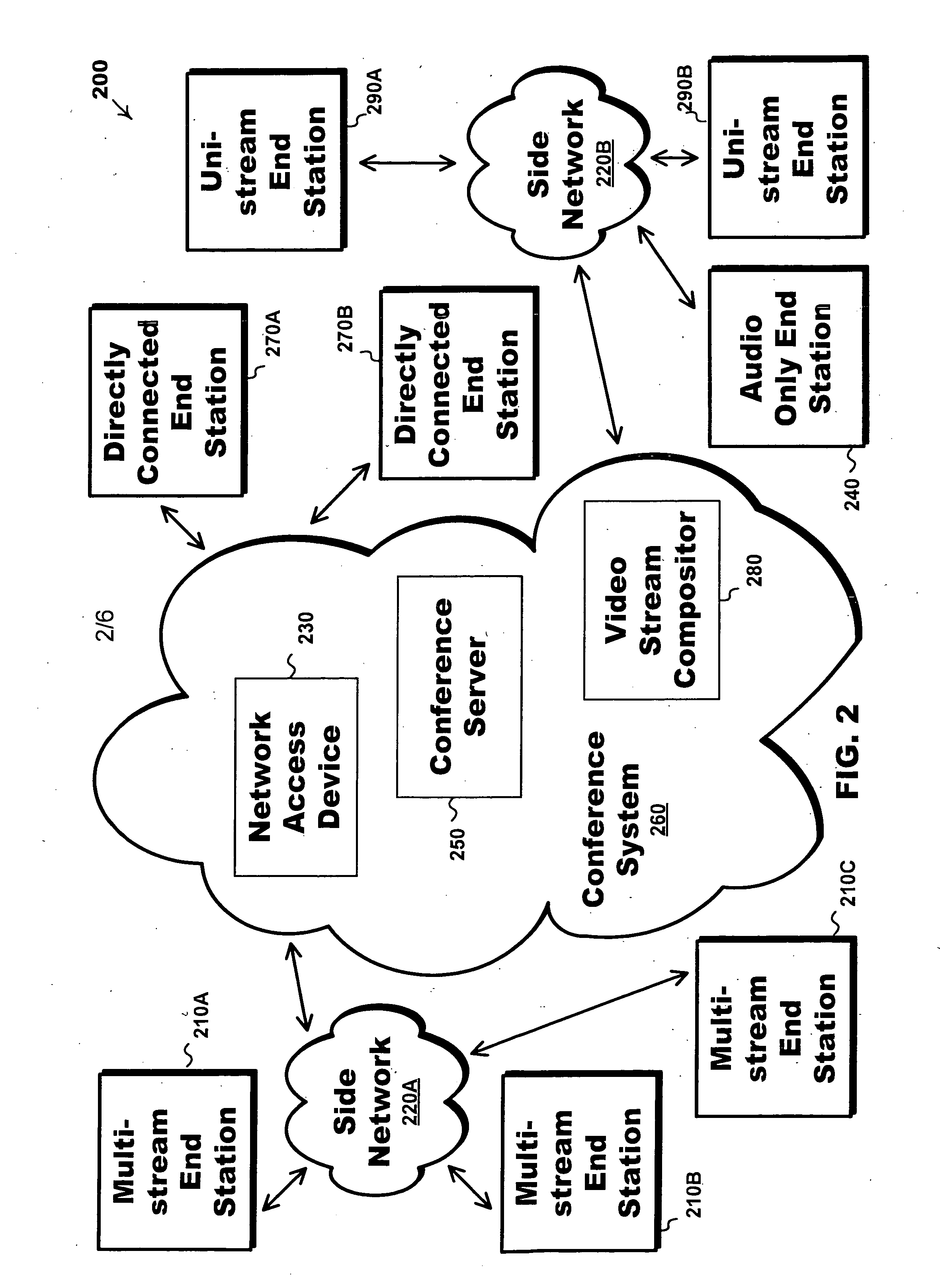

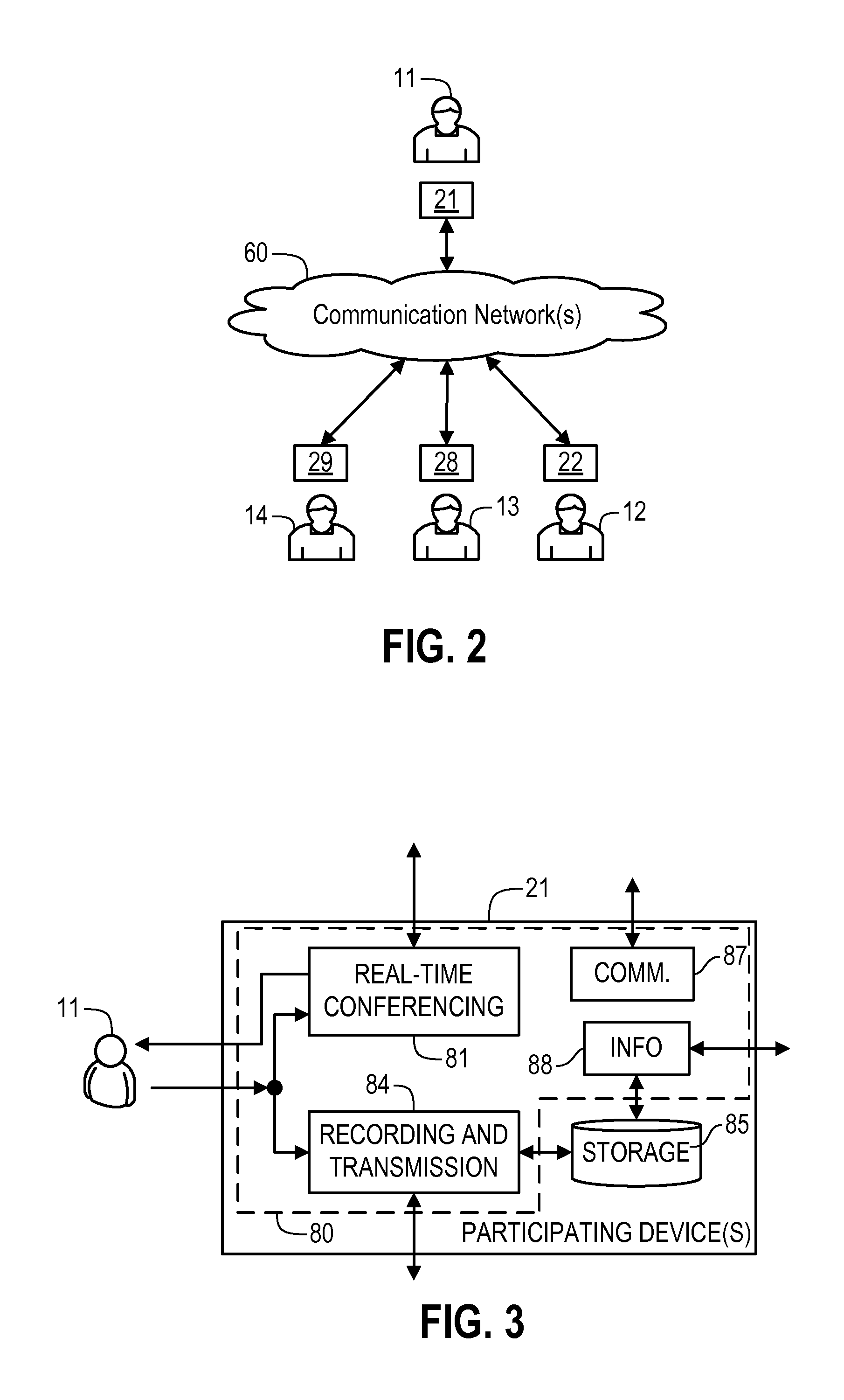

Dynamically switched and static multiple video streams for a multimedia conference

An end station for a videoconference/multimedia conference is disclosed, where the end station requests, receives, and displays multiple video streams. Call control messages request video streams with specified video policies. A static policy specifies a constant source video stream, e.g., a participant. A dynamic policy dynamically maps various source streams to a requested stream and shows, for example, the current speaker or a round robin of participants. A network access device, e.g., a media switch or a video composition system, mediates between the multi-stream end station and the core conference system. Multi-stream endpoints therefore need not handle the complexity of directly receiving video under a potentially wide variety of call control protocols, formats, and bit rates. Multi-stream endpoints decentralize the compositing of video streams, which increases functional flexibility and reduces the need for centralized equipment.

Owner: CISCO TECH INC
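The static and dynamic video policies in the Cisco abstract above can be illustrated with a small resolver that maps each requested stream to a source participant. This is a minimal sketch, not the patented end station: the policy names `"static"`, `"speaker"`, and `"round_robin"`, the `tick` counter, and the `resolve_streams` function are all hypothetical, and a real system would learn about speaker changes through call control or audio analysis rather than as a function argument.

```python
def resolve_streams(policies, participants, current_speaker, tick):
    """Map each requested stream to the participant whose video it should carry.

    policies: one (kind, arg) pair per requested stream, where kind is
      "static" (arg names a fixed participant), "speaker", or "round_robin".
    participants: all conference participants, in rotation order.
    current_speaker: participant currently detected as speaking.
    tick: counter advanced periodically to rotate round-robin views.
    """
    sources = []
    for kind, arg in policies:
        if kind == "static":
            sources.append(arg)  # constant source stream
        elif kind == "speaker":
            sources.append(current_speaker)  # follows whoever is talking
        elif kind == "round_robin":
            sources.append(participants[tick % len(participants)])
        else:
            raise ValueError(f"unknown policy: {kind}")
    return sources


# Three requested streams: a fixed view of alice, the active speaker, and a rotation.
print(resolve_streams([("static", "alice"), ("speaker", None), ("round_robin", None)],
                      ["alice", "bob", "carol"], current_speaker="bob", tick=4))
# ['alice', 'bob', 'bob']
```

Keeping the policy resolution separate from the video decoding mirrors the abstract's point that the endpoint only states what it wants, while a network device does the mapping.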

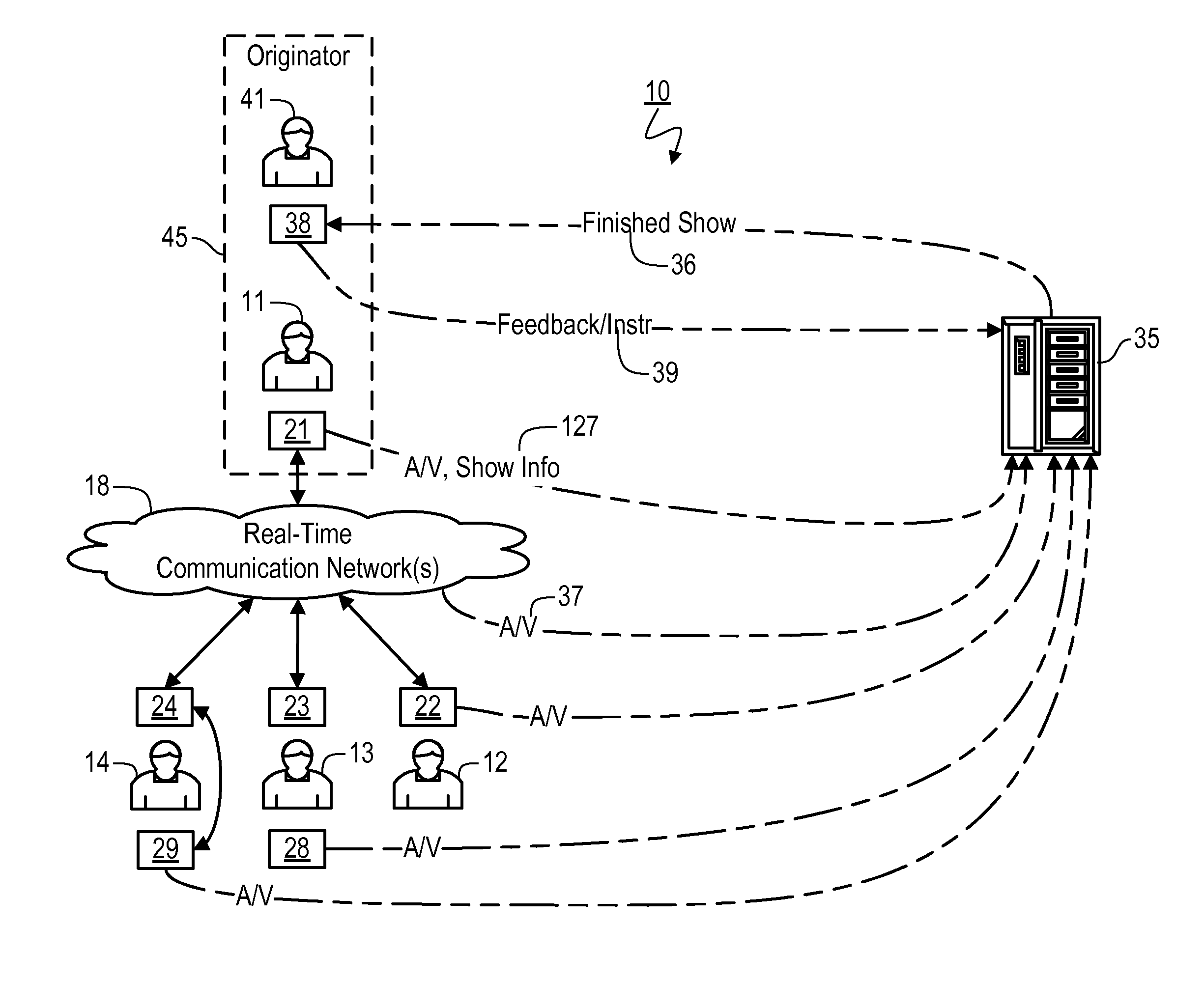

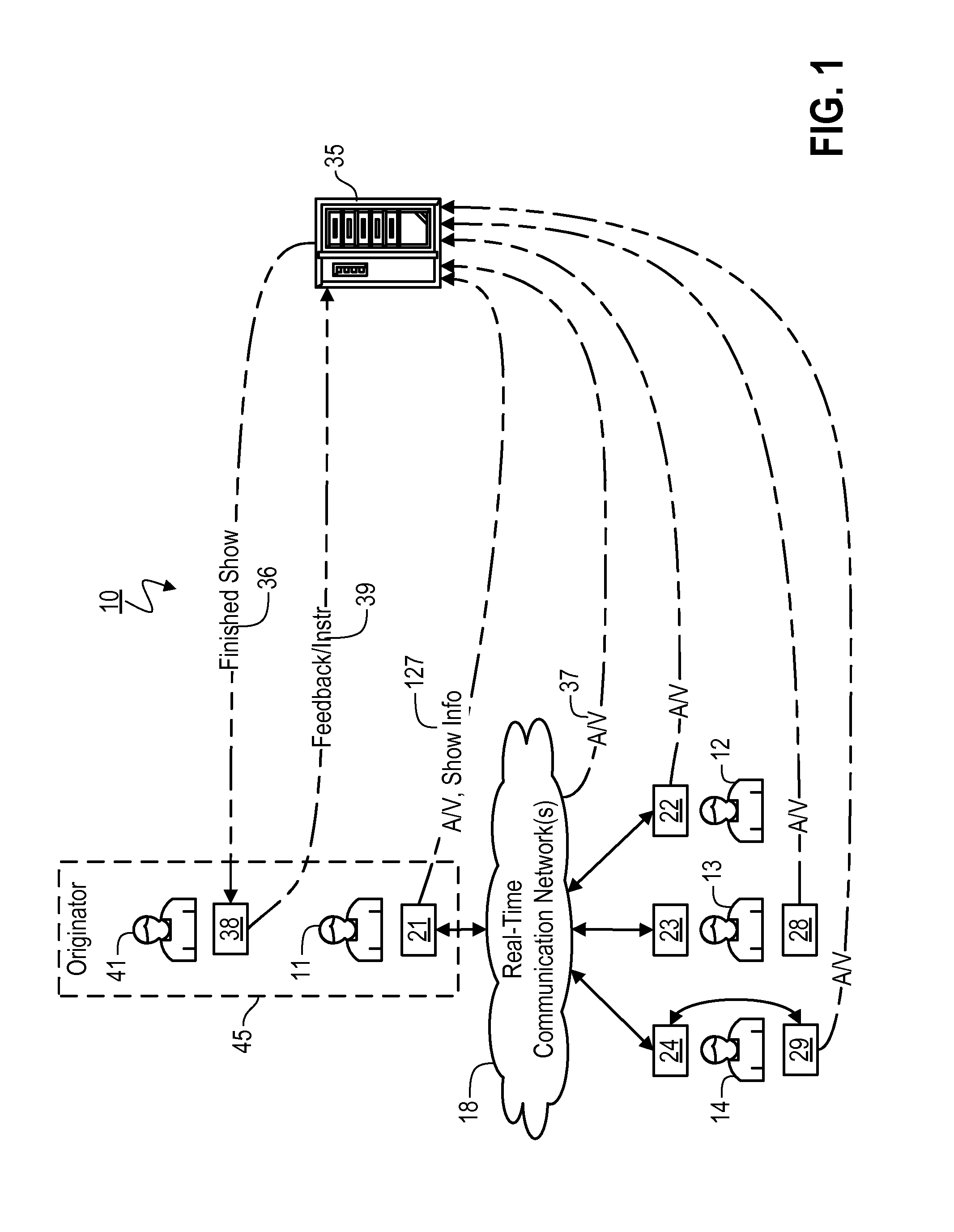

Generation of Composited Video Programming

Active | US20130216206A1 | Tags: Television system details, Electronic editing digitised analogue information signals, User interface, Composite video

A system for creating video programming is provided. The system includes: a processor-based production server that has access to a library of existing styles for wrapping around video, each of such styles including a specific arrangement of design elements; a processor-based contributor device configured to accept input of video content and electronically transfer such video content to the production server via a network; and a processor-based administrator device, providing a user interface that permits a user to: (i) design new styles and modify the existing styles, (ii) specify rules as to when particular styles are to apply, and (iii) electronically communicate the new and modified styles and the rules to the production server. The production server applies the specified rules in relation to user-specific information in order to select a style and applies the selected style to generate a completed show.

Owner: VUMANITY MEDIA

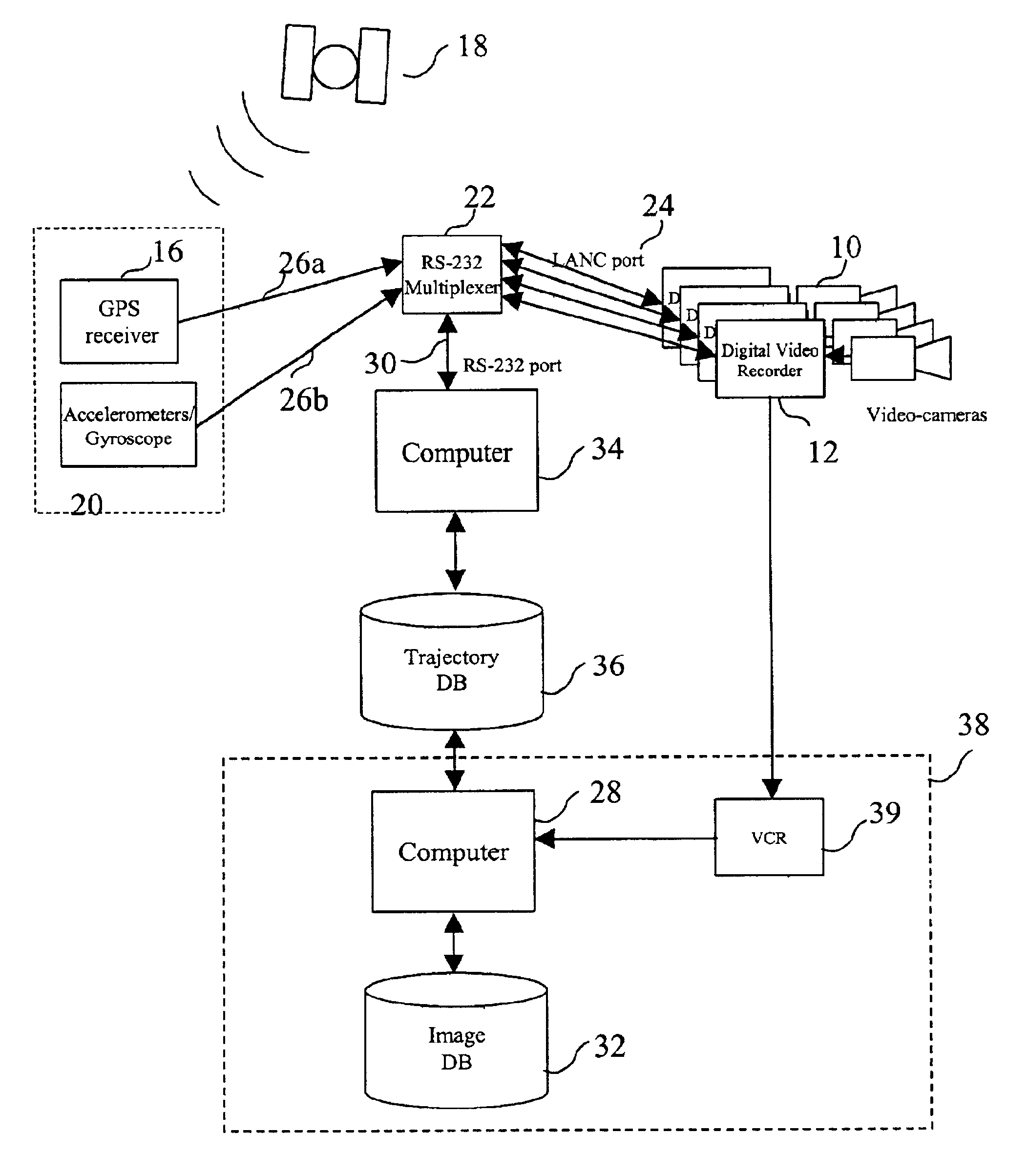

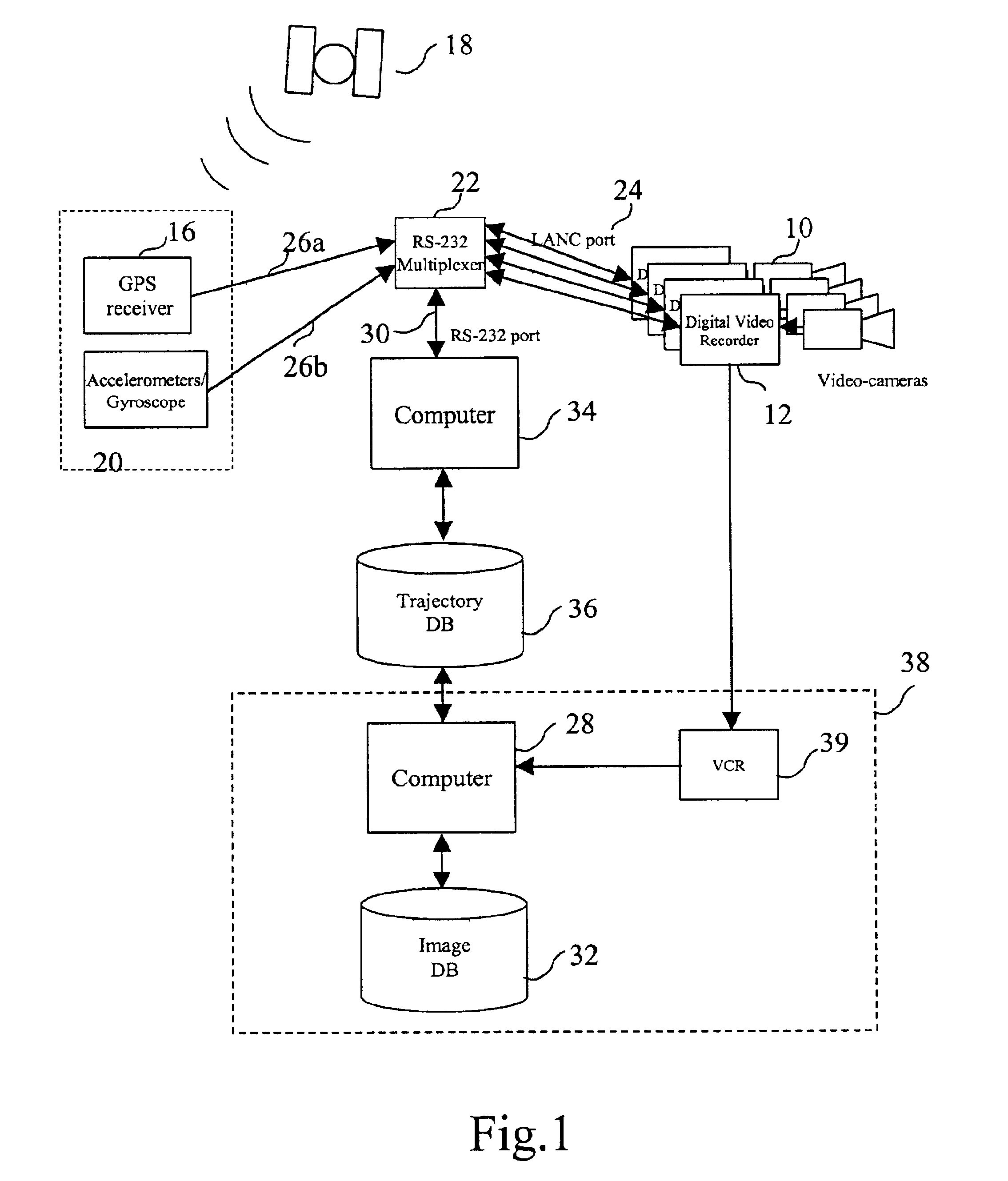

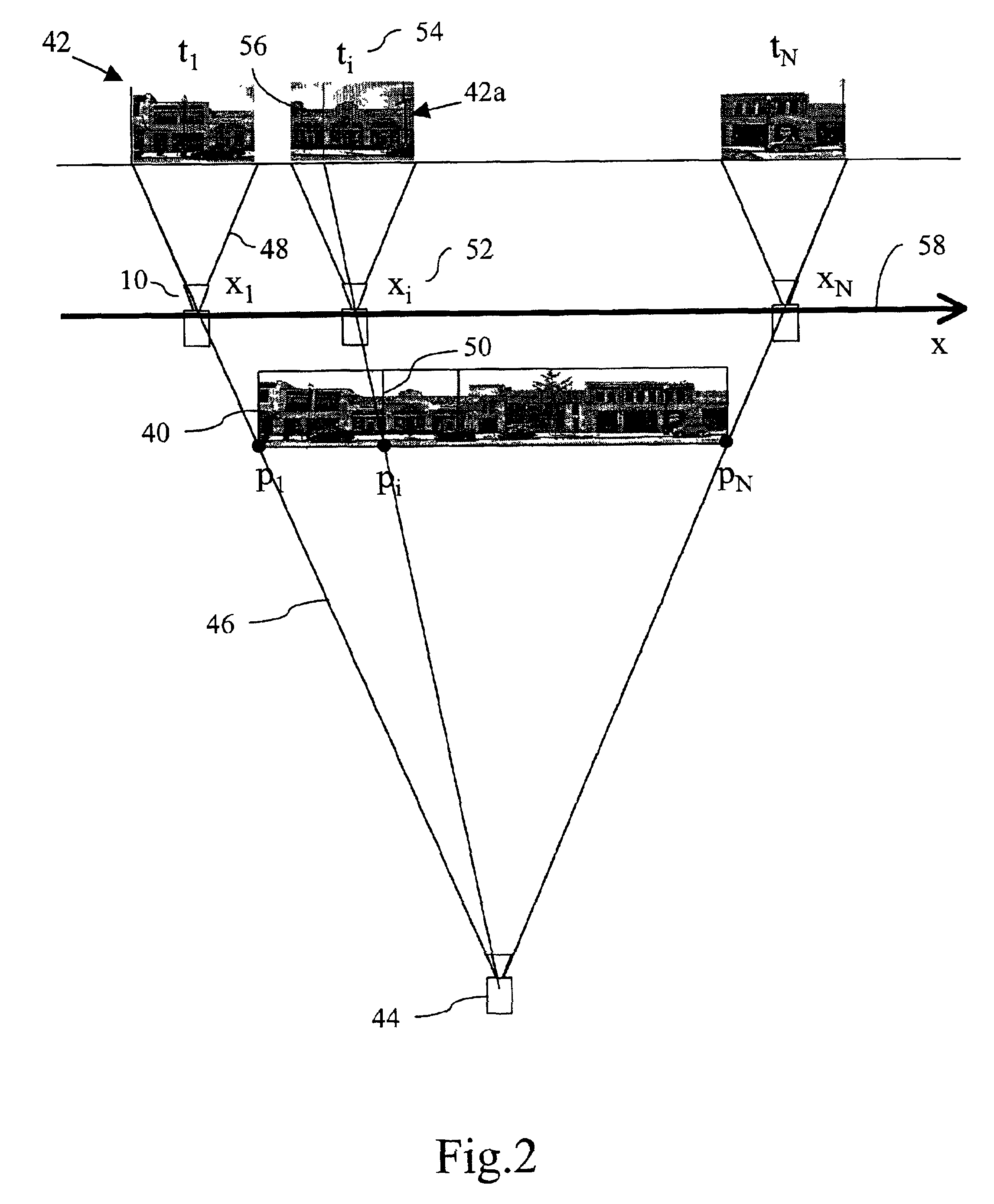

System and method for creating, storing, and utilizing composite images of a geographic location

Inactive | US6895126B2 | Tags: Television system details, Instruments for road network navigation, GPS receiver, Geolocation

A system and method synthesize images of a locale to generate a composite image that provides a panoramic view of the locale. A video camera moves along a street recording images of objects along the street. A GPS receiver and inertial navigation system provide the position of the camera as the images are being recorded. The images are indexed with the position data provided by the GPS receiver and inertial navigation system. The composite image is created on a column-by-column basis by determining which of the acquired images contains the desired pixel column, extracting the pixels associated with the column, and stacking the columns side by side. The composite images are stored in an image database and associated with a street name and number range of the street being depicted in the image. The image database covers a substantial amount of a geographic area, allowing a user to visually navigate the area from a user terminal.

Owner: VEDERI
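The column-by-column construction in the VEDERI abstract above can be sketched as follows, assuming each frame is tagged with a single camera position along the street and that street distance maps linearly onto image columns around the frame centre. The `Frame` record, `build_composite`, and the `metres_per_column` scale are hypothetical simplifications; the sketch chooses the frame whose camera position is nearest the target rather than reproducing the patent's exact selection rule.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    position: float  # camera position along the street, in metres (e.g. from GPS/INS)
    columns: list    # pixel columns of the image, left to right


def build_composite(frames, targets, metres_per_column):
    """Build a panorama by stacking one pixel column per target street position."""
    composite = []
    for target in targets:
        # Use the frame recorded closest to the target position.
        frame = min(frames, key=lambda f: abs(f.position - target))
        centre = len(frame.columns) // 2
        # Assumed linear mapping from street offset to column offset.
        index = centre + round((target - frame.position) / metres_per_column)
        index = max(0, min(len(frame.columns) - 1, index))  # stay inside the image
        composite.append(frame.columns[index])
    return composite
```

Because each output column comes from whichever frame saw that spot most directly, the panorama avoids stretching any single frame across the whole street.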

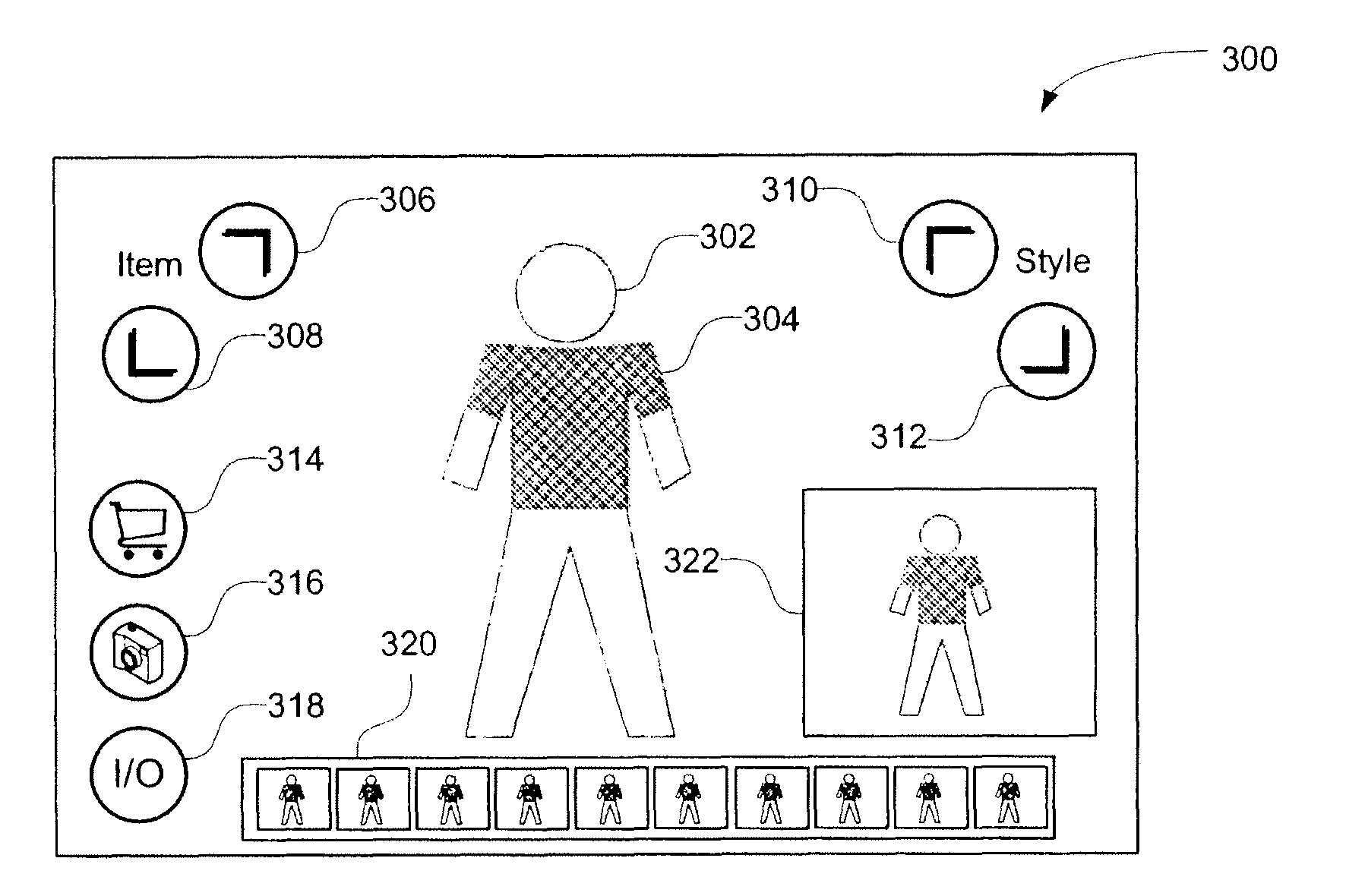

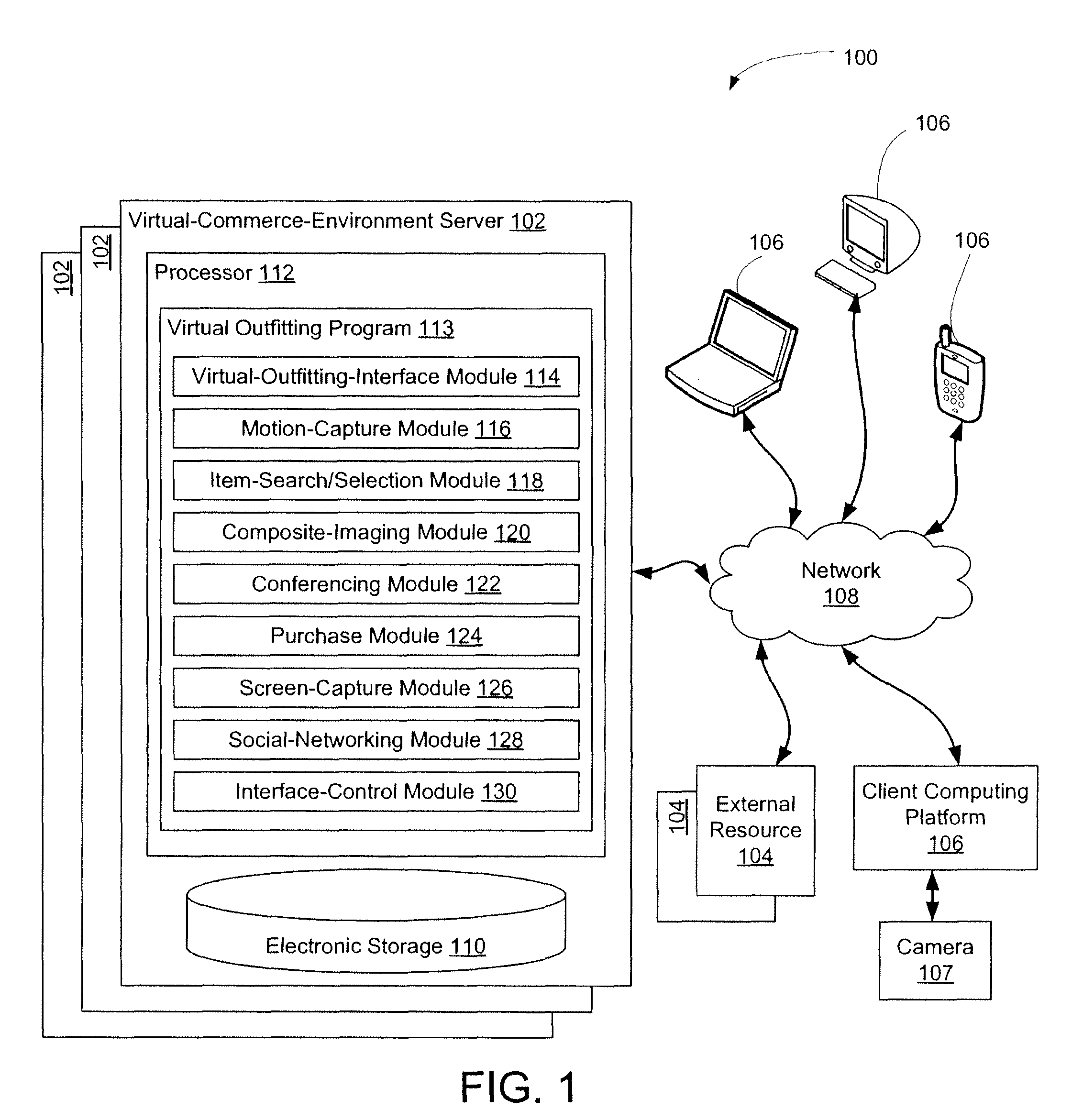

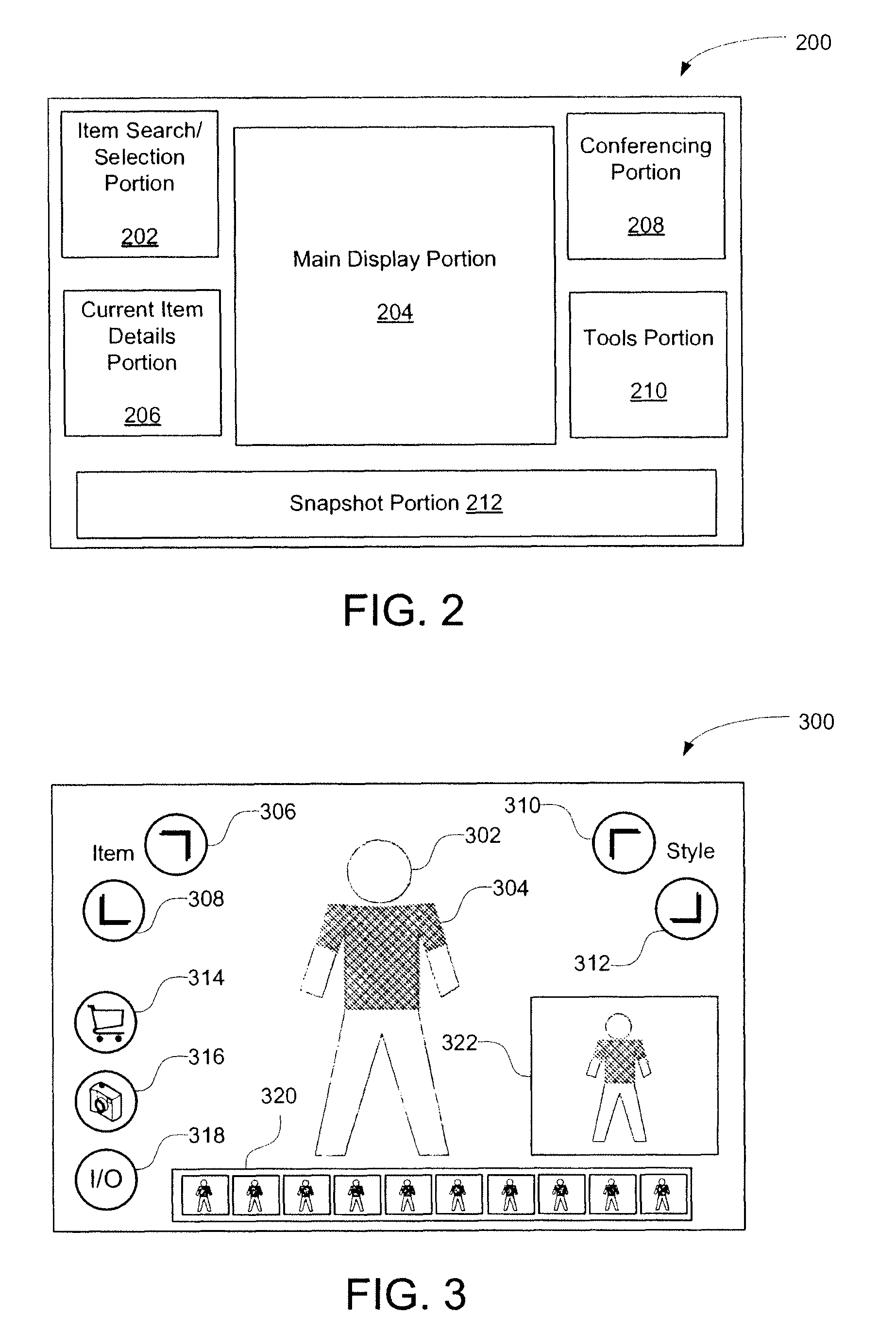

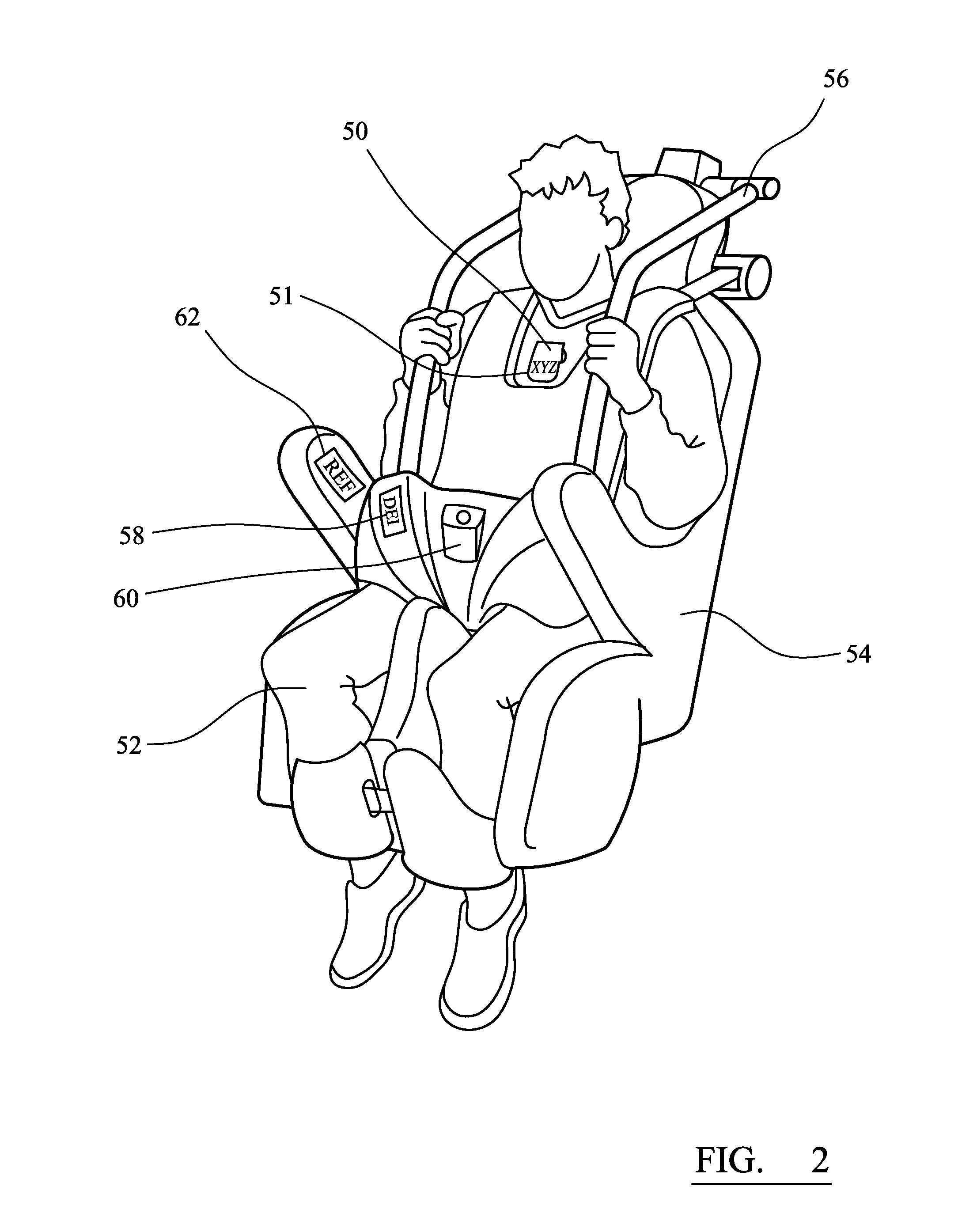

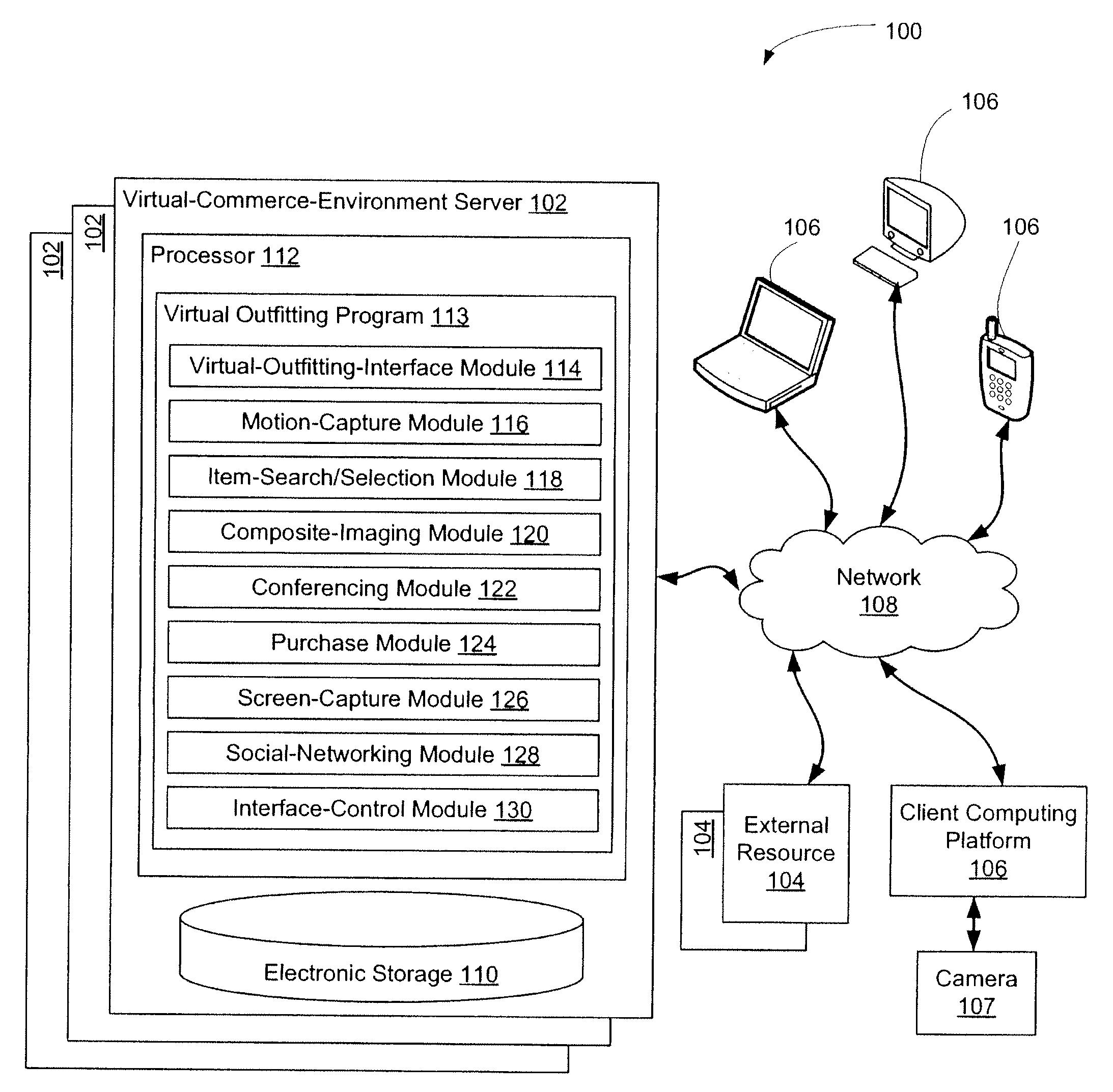

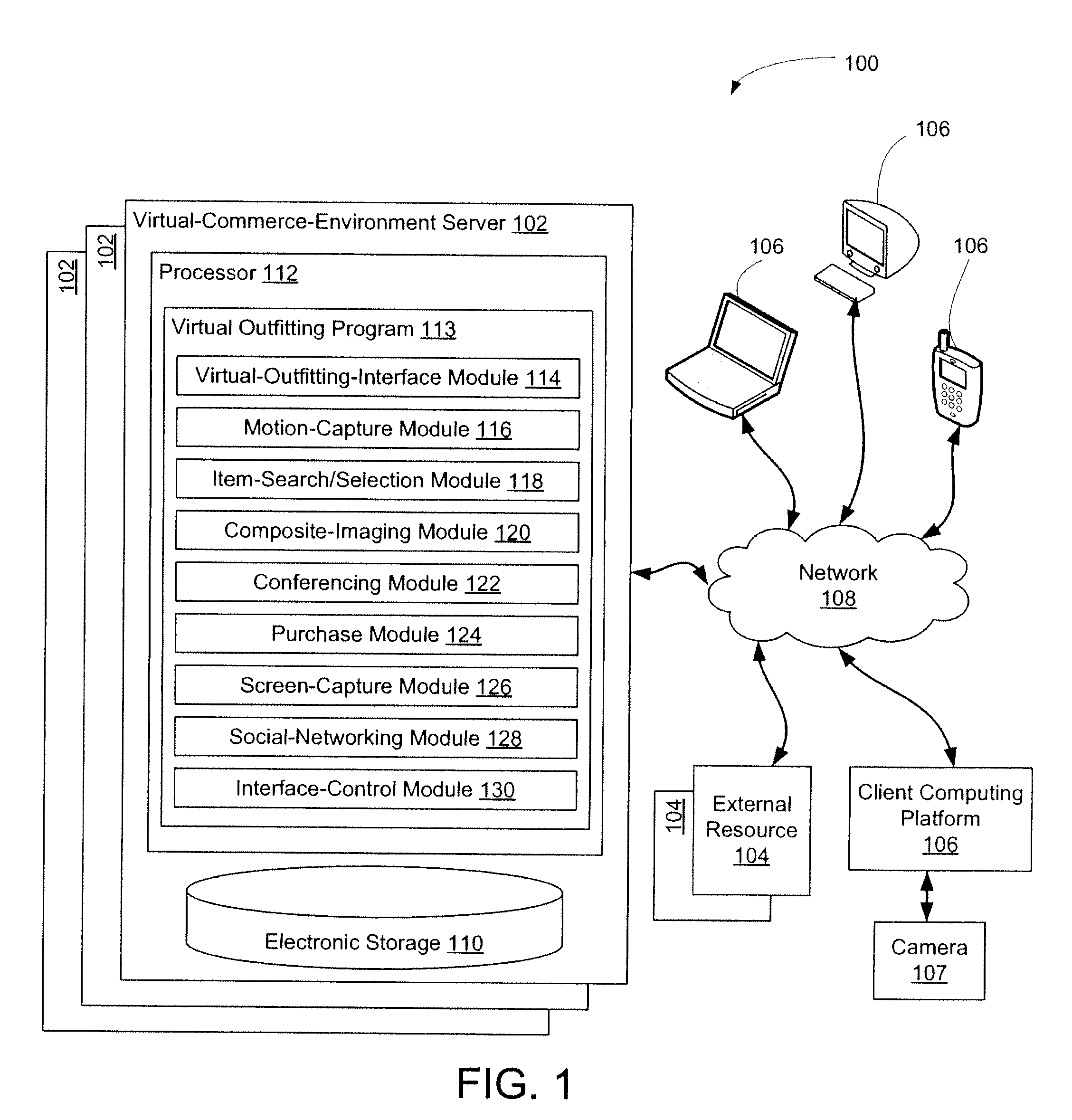

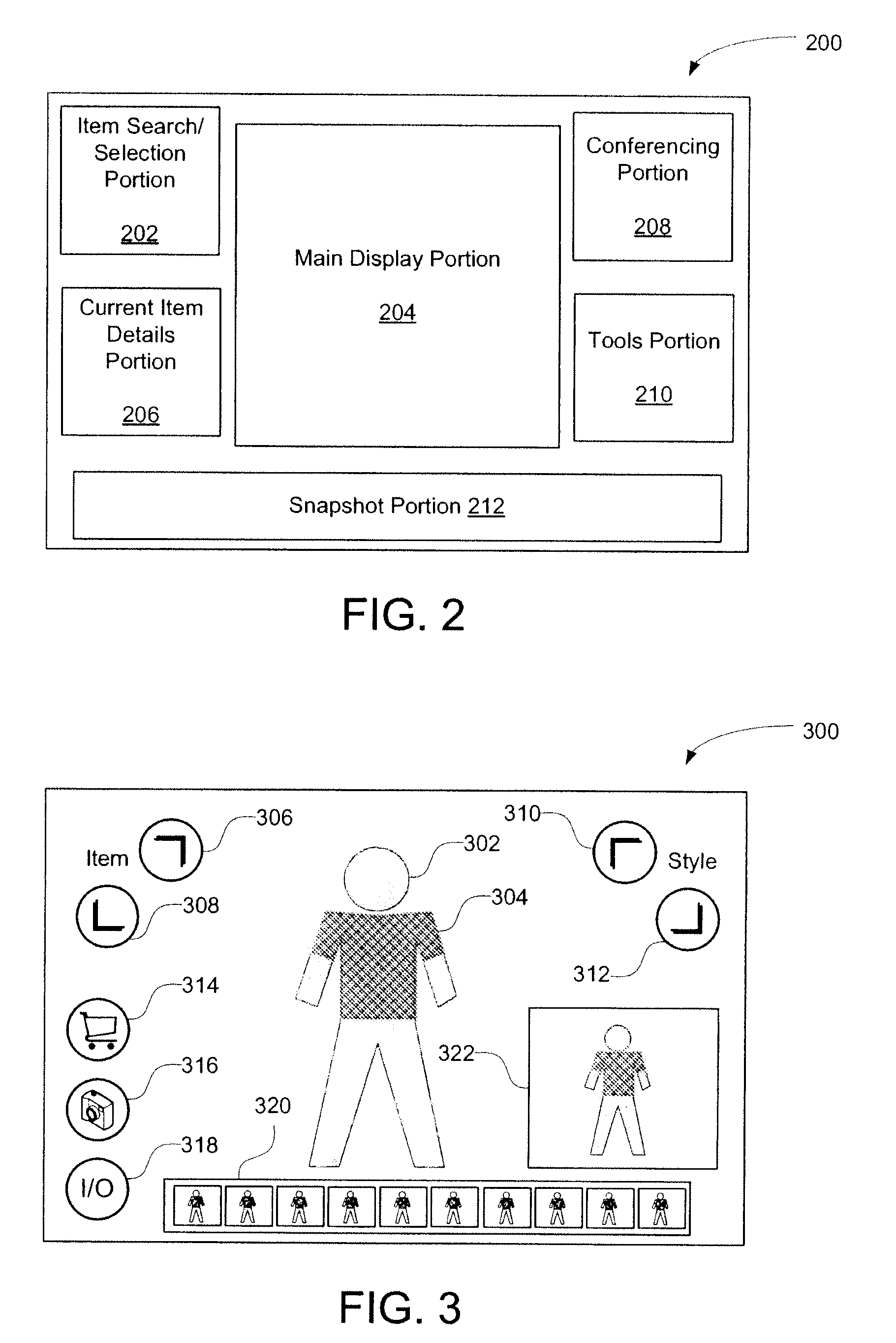

Providing a simulation of wearing items such as garments and/or accessories

Active | US8275590B2 | Tags: Good looking, Improve overall sense, Complete banking machines, Cathode-ray tube indicators, Composite video, Multimedia

A user may simulate wearing real-wearable items, such as virtual garments and accessories. A virtual-outfitting interface may be provided for presentation to the user. An item-search / selection portion within the virtual-outfitting interface may be provided. The item-search / selection portion may depict one or more virtual-wearable items corresponding to one or more real-wearable items. The user may be allowed to select at least one virtual-wearable item from the item-search / selection portion. A main display portion within the virtual-outfitting interface may be provided. The main display portion may include a composite video feed that incorporates a video feed of the user and the selected at least one virtual-wearable item such that the user appears to be wearing the selected at least one virtual-wearable item in the main display portion.

Owner: ZUGARA

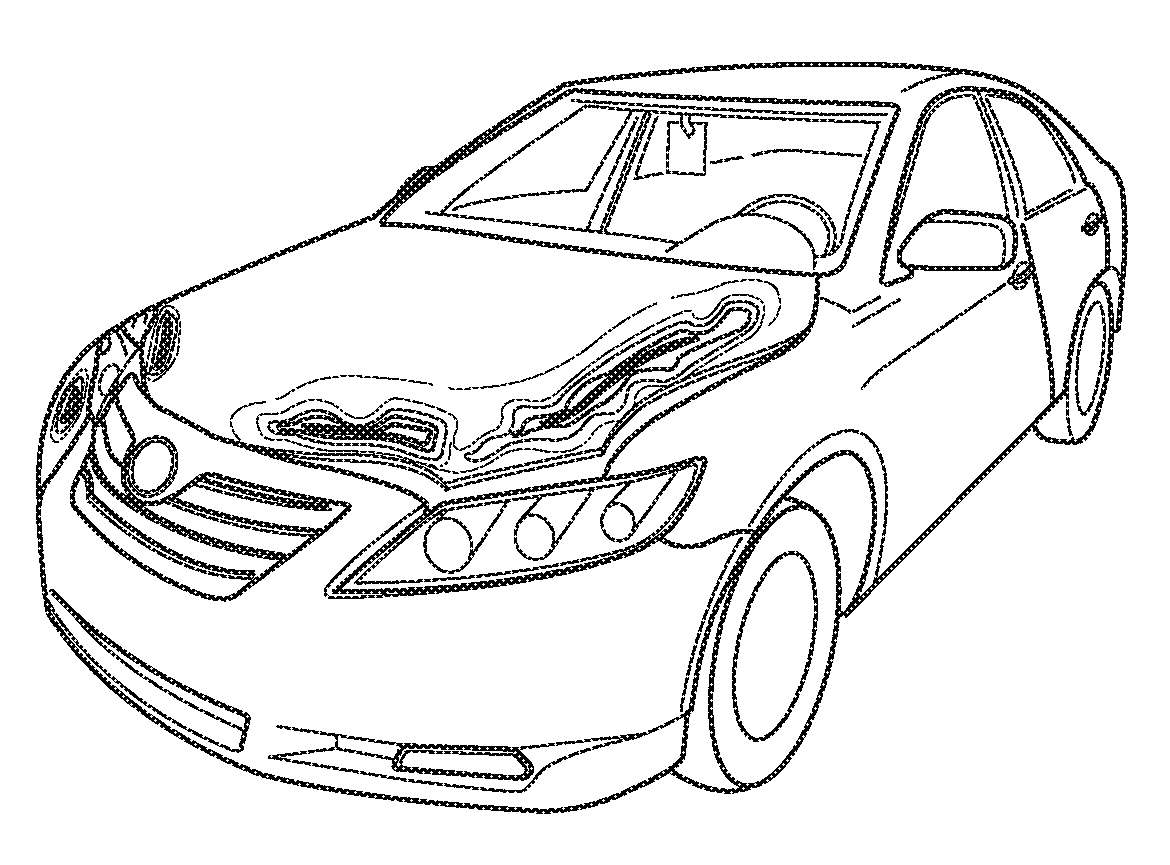

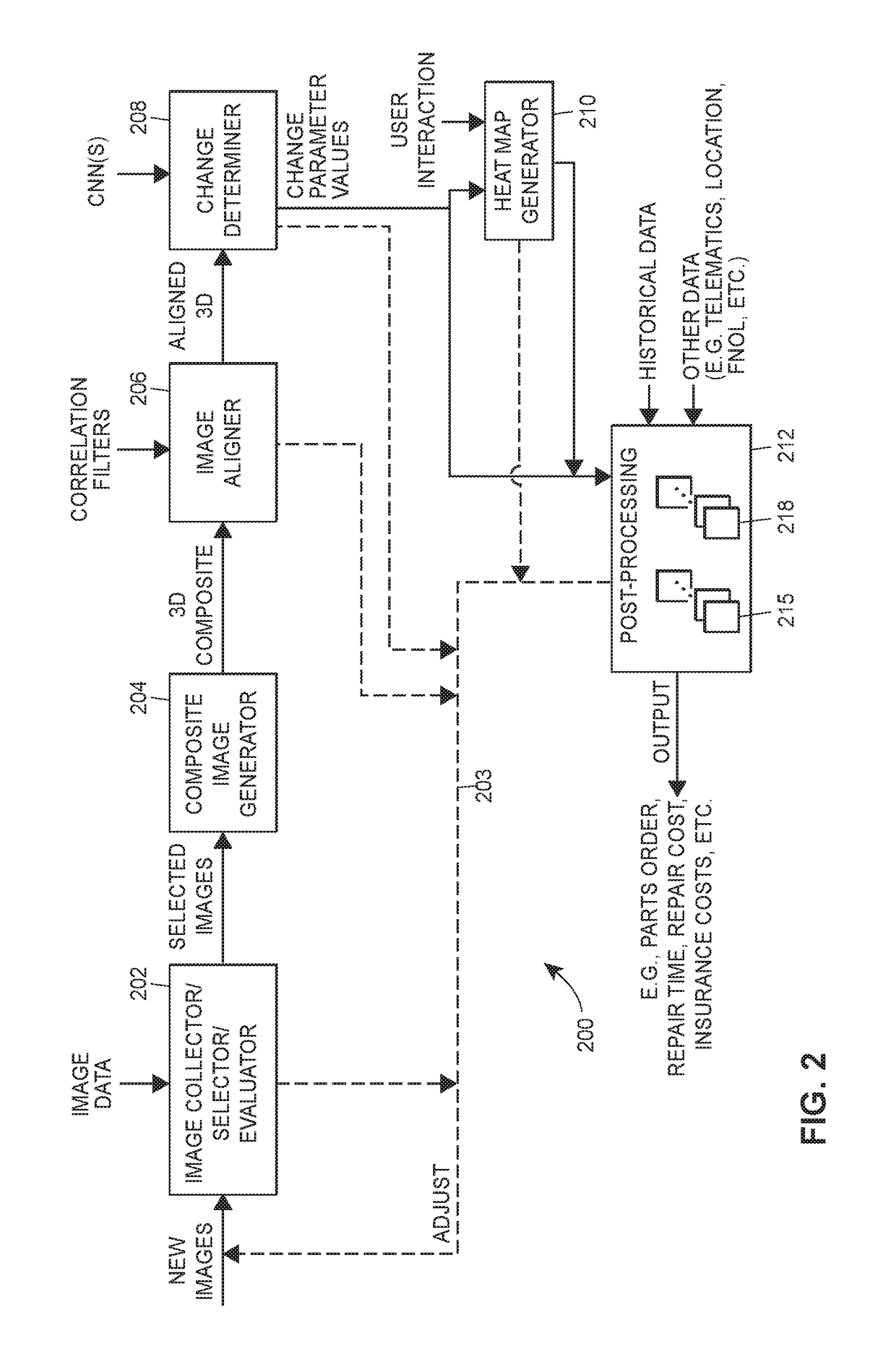

Heat map of vehicle damage

An image processing system and/or method obtains source images in which a damaged vehicle is represented, and performs image processing techniques to determine, predict, estimate, and/or detect damage that has occurred at various locations on the vehicle. The image processing techniques may include generating a composite image of the damaged vehicle, aligning and/or isolating the image, applying convolutional neural network techniques to the image to generate damage parameter values, where each value corresponds to damage in a particular location of the vehicle, and/or other techniques. Based on the damage values, the image processing system/method generates and displays a heat map for the vehicle, where each color and/or color gradation corresponds to respective damage at a respective location on the vehicle. The heat map may be manipulatable by the user, and may include user controls for displaying additional information corresponding to the damage at a particular location on the vehicle.

Owner: CCC INFORMATION SERVICES

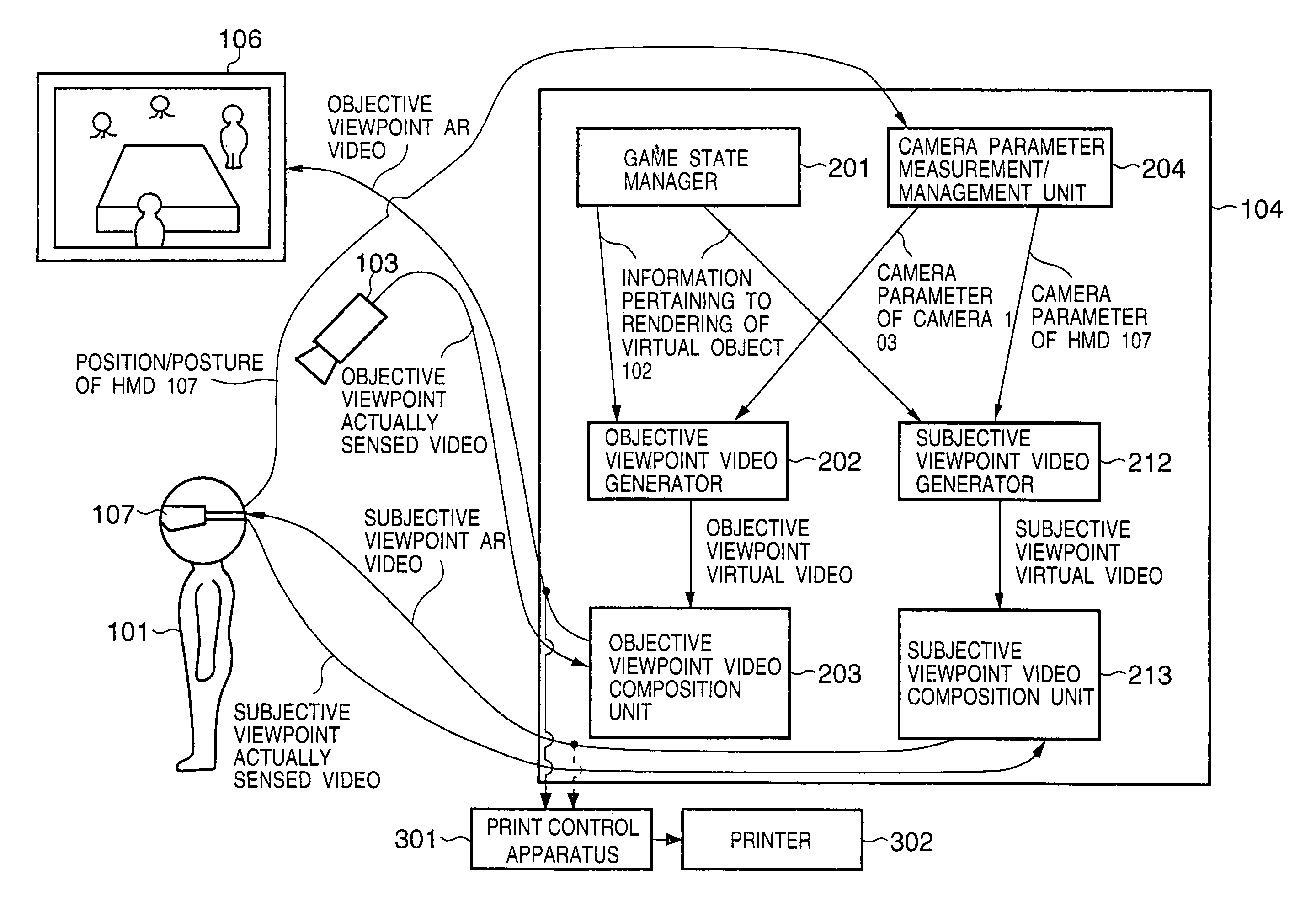

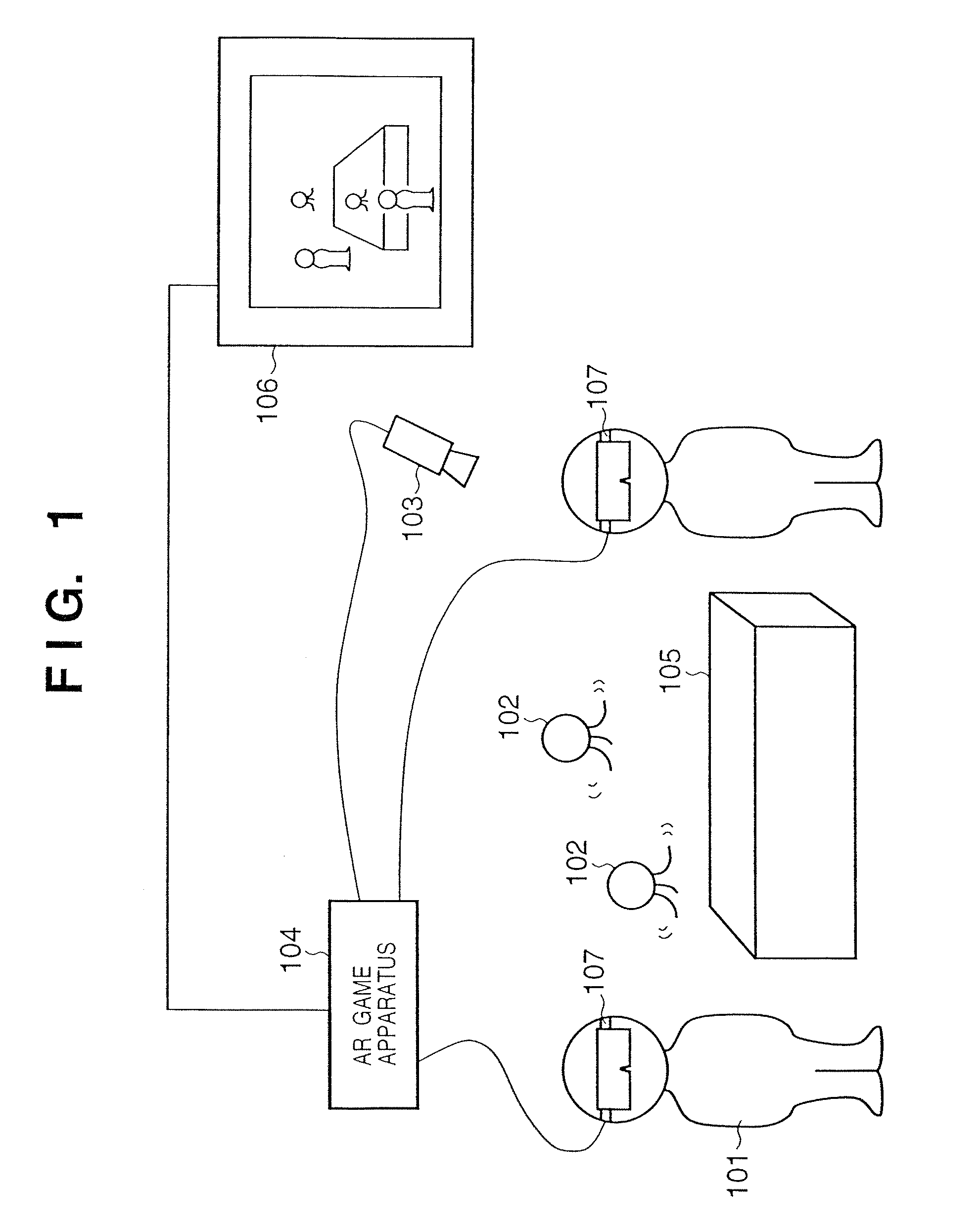

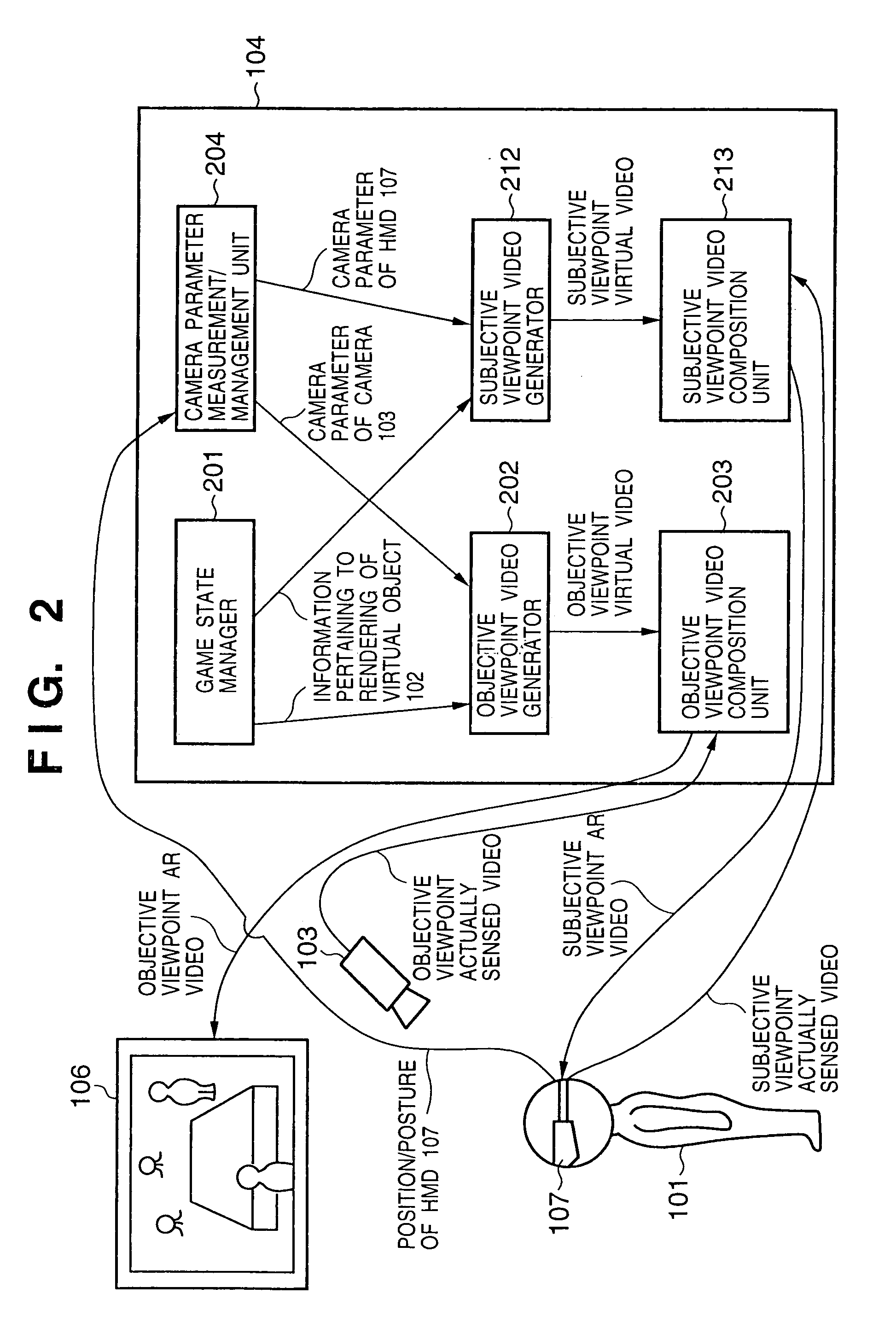

Augmented reality presentation apparatus and method, and storage medium

Inactive | US7289130B1 | Tags: Television system details, Cathode-ray tube indicators, Viewpoints, Computer graphics (images)

A game state manager (201) manages the state of an AR game (information that pertains to rendering of each virtual object (102), the score of a player (101), the AR game round count, and the like). An objective viewpoint video generator (202) generates a video of each virtual object (102) viewed from a camera (103). An objective viewpoint video composition unit (203) generates a composite video of the video of the virtual object (102) and an actually sensed video, and outputs it to a display (106). A subjective viewpoint video generator (212) generates a video of the virtual object (102) viewed from an HMD (107). A subjective viewpoint video composition unit (213) generates a composite video of the video of the virtual object (102) and an actually sensed video, and outputs it to the HMD (107).

Owner: CANON KK

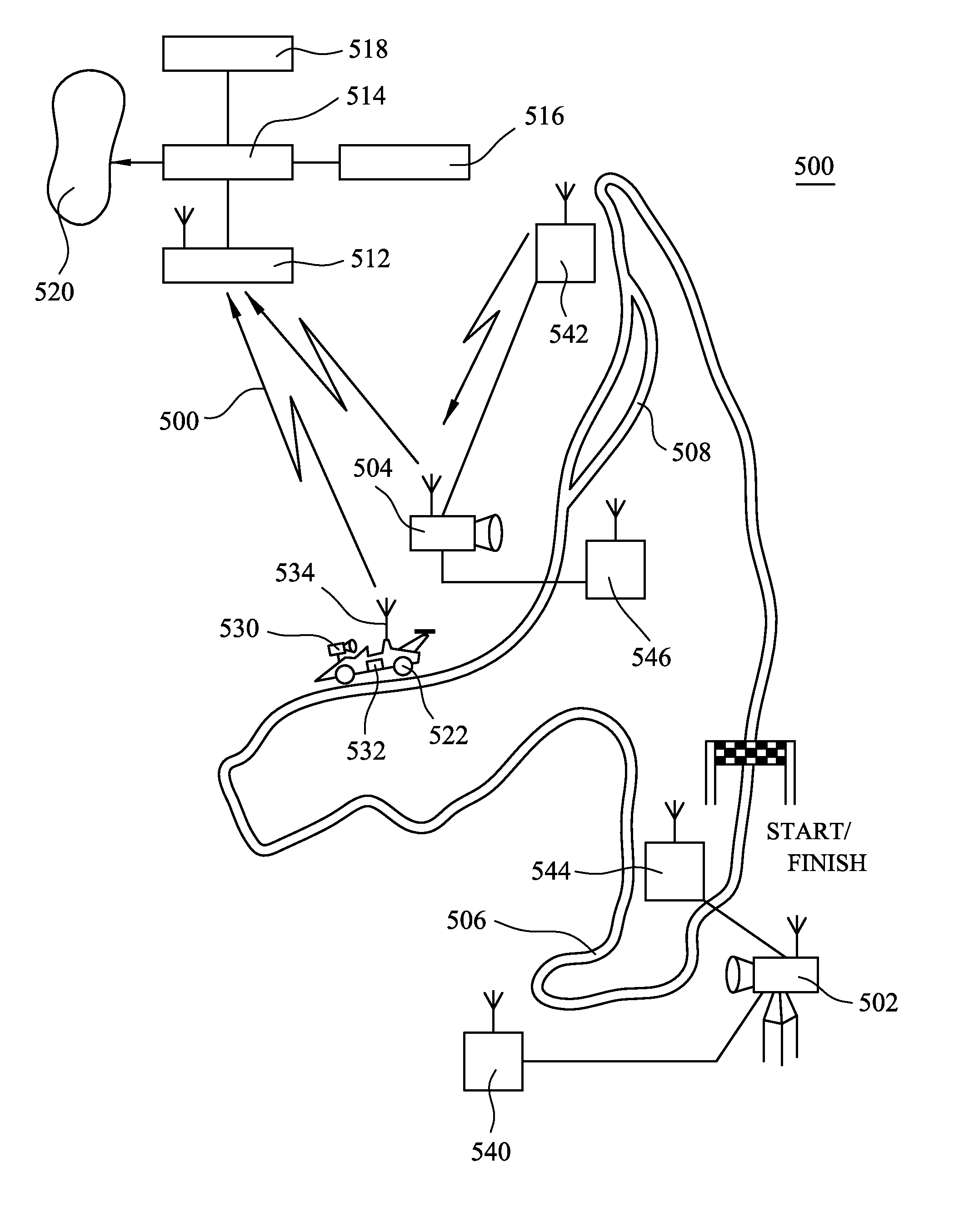

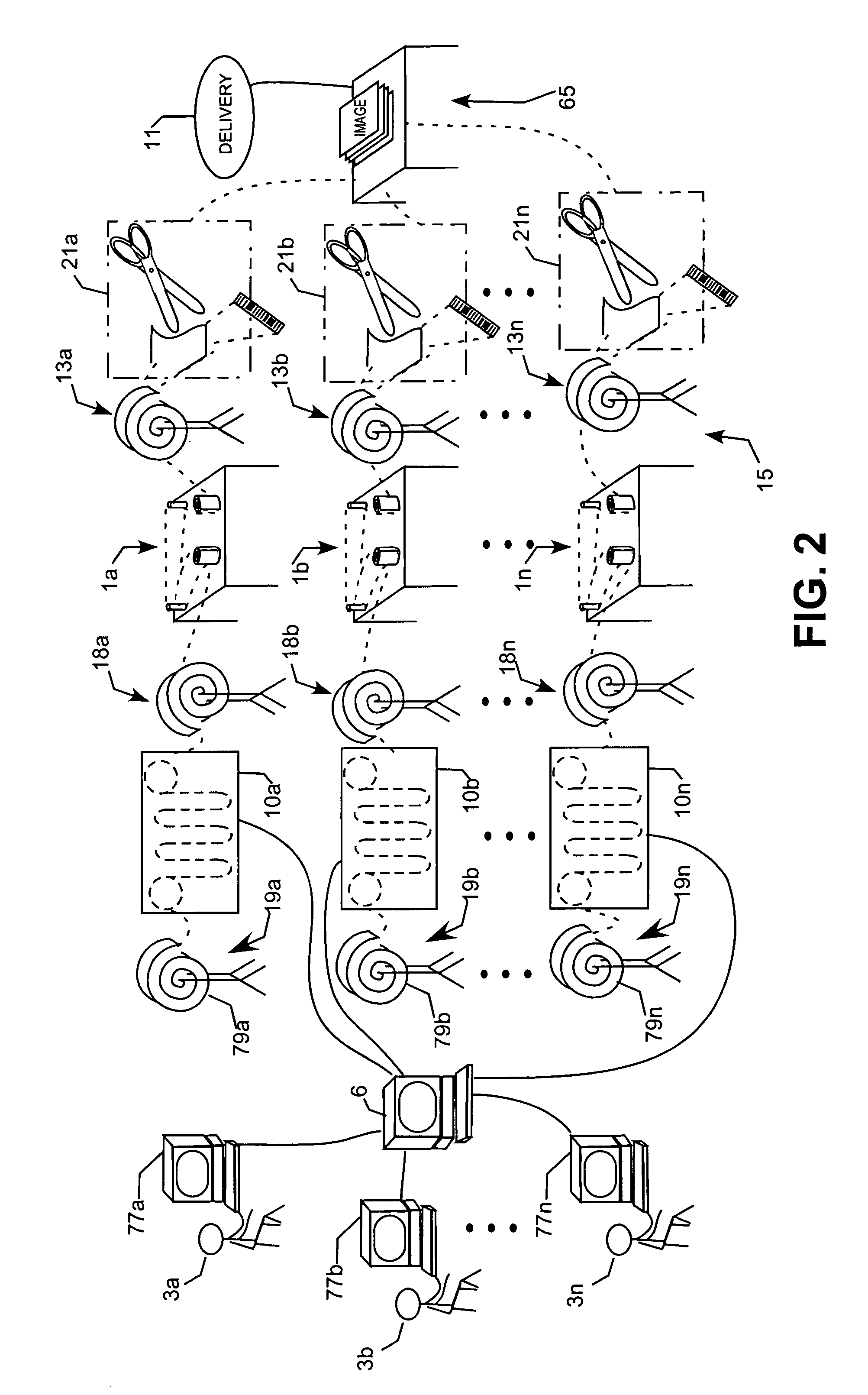

Video acquisition and compilation system and method of assembling and distributing a composite video

Inactive | US20120162436A1 | Tags: Loss of time, Television system details, Registering/indicating time of events, Computer graphics (images), Image capture

FIG. 7 shows a camera system (700) that operates to time-stamp video content captured from multiple cameras (740) relative to a recorded and time-synchronized location of a portable tracking unit (722). The position of the cameras (740) is known to the system. Based on the time and position data for each uniquely identifiable tracking unit, an editing suite (770) automatically compiles a composite video made up of time-spliced video segments from the various cameras. Video or still images captured by the cameras (740) are cross-referenced against the client address stored in database (760) and related to the assigned, uniquely identifiable tracking unit (722). A server (750) is arranged to use the client address to send reminder messages, which may include selected images taken from the composite video. Alternatively, a client (720) can use the client address to access the database and view the composite video. In the event that the client (720) wants to receive a fair copy of the composite video, the server (750) is arranged to process the request and send the composite video to the client. Streaming of multiple video feeds from different cameras that each encode synchronized time allows cross-referencing of stored client-specific data and, ultimately, the assembly of the resultant composite video that reflects a timely succession of events having direct relevance to the client (720).

Owner: E PLATE
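The time-spliced compilation described in the E PLATE abstract above can be approximated as follows: for each time-stamped fix from a tracking unit, pick the camera nearest to the fix, and merge runs of fixes that use the same camera into one segment. This is a sketch under assumptions (2-D positions, straight-line distance as the camera-selection rule, and a hand-over at the first fix that switches camera); `compile_segments` and its data layout are hypothetical.

```python
def compile_segments(track, cameras):
    """Return (camera_id, start, end) segments for a composite video.

    track: (timestamp, x, y) fixes from one tracking unit, sorted by time.
    cameras: dict mapping camera_id -> (x, y) known camera position.
    """
    segments = []
    for t, x, y in track:
        # Nearest camera by squared Euclidean distance.
        cam = min(cameras, key=lambda c: (cameras[c][0] - x) ** 2 + (cameras[c][1] - y) ** 2)
        if segments and segments[-1][0] == cam:
            segments[-1][2] = t  # same camera: extend the current segment
        else:
            if segments:
                segments[-1][2] = t  # hand over to the new camera at time t
            segments.append([cam, t, t])
    return [tuple(s) for s in segments]
```

The resulting list is an edit decision list: splicing each camera's footage between its start and end times yields one continuous composite that follows the tracked client.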

Providing a simulation of wearing items such as garments and/or accessories

Active | US20110040539A1 | Tags: Improve overall sense, Good looking, Multiple digital computer combinations, Payment architecture, Composite video, Multimedia

A user may simulate wearing real-wearable items, such as virtual garments and accessories. A virtual-outfitting interface may be provided for presentation to the user. An item-search / selection portion within the virtual-outfitting interface may be provided. The item-search / selection portion may depict one or more virtual-wearable items corresponding to one or more real-wearable items. The user may be allowed to select at least one virtual-wearable item from the item-search / selection portion. A main display portion within the virtual-outfitting interface may be provided. The main display portion may include a composite video feed that incorporates a video feed of the user and the selected at least one virtual-wearable item such that the user appears to be wearing the selected at least one virtual-wearable item in the main display portion.

Owner: ZUGARA

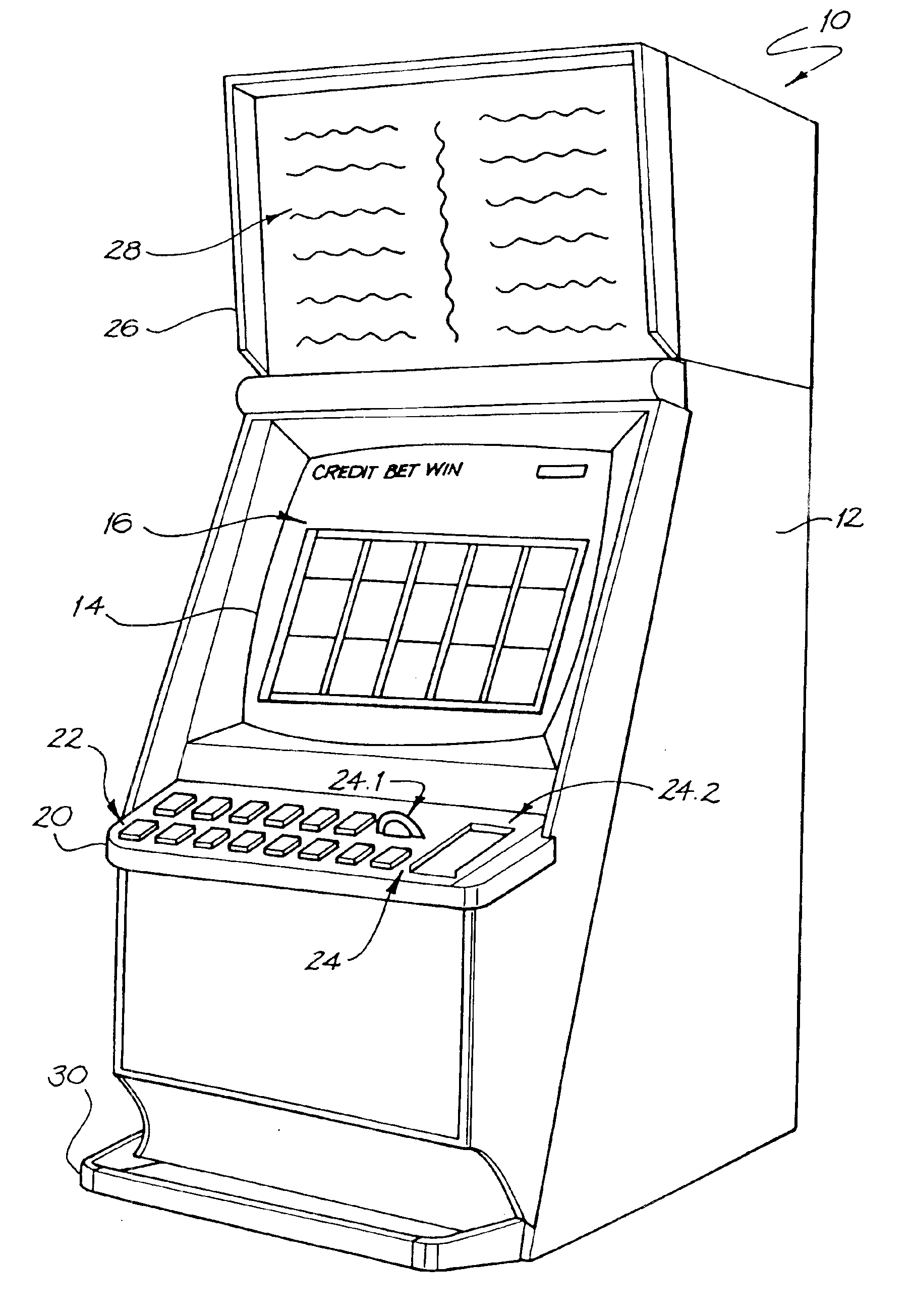

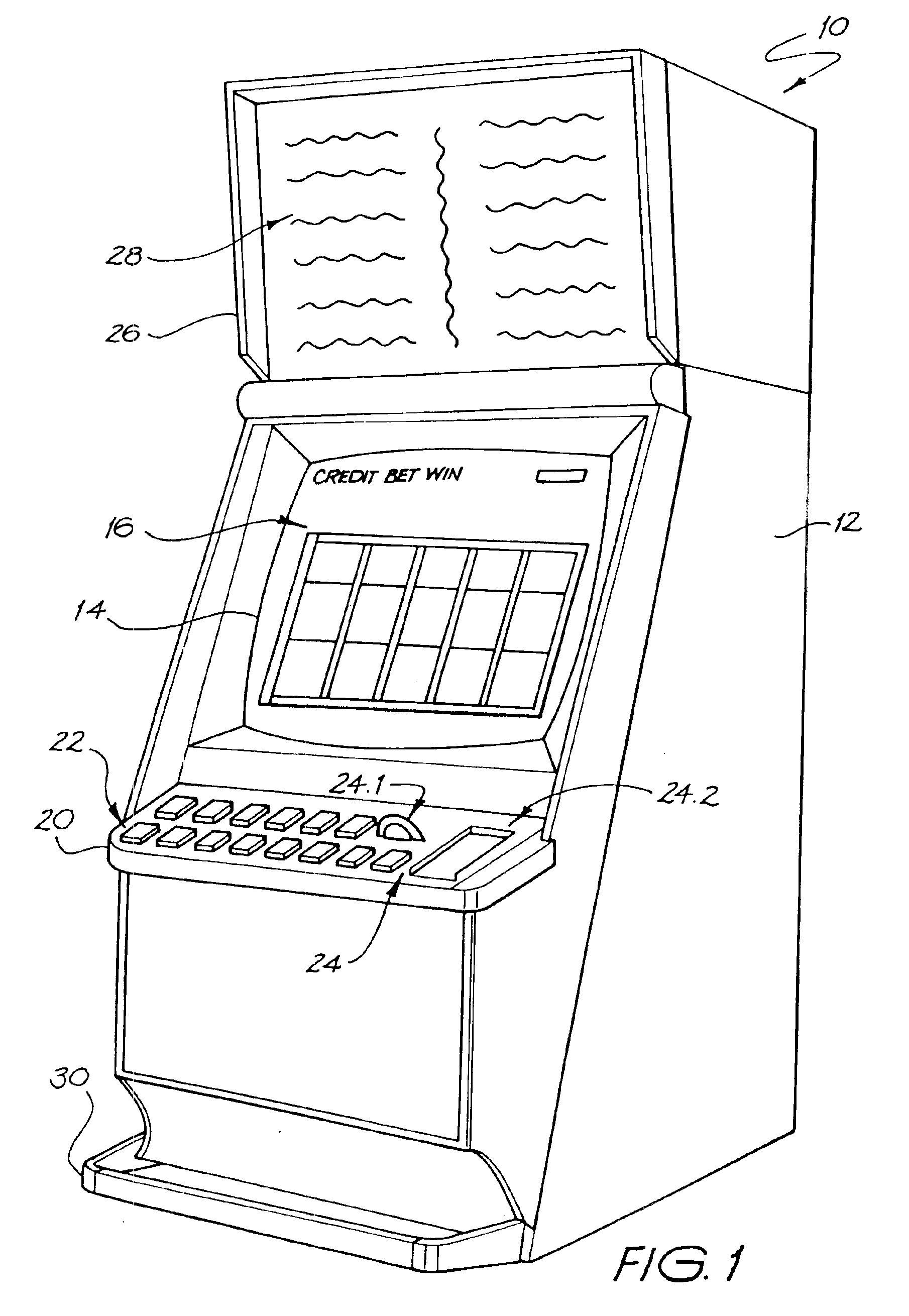

Gaming graphics

Inactive | US6866585B2 | Tags: High quality image composition, Quality improvement, Apparatus for meter-controlled dispensing, Video games, Graphics, Game play

A gaming machine graphics package includes a storage device for storing data relating to non-varying parts of an image, the non-varying parts of the image being independent of an outcome of a game played on the gaming machine. An image generator generates simulated three-dimensional additional parts of the image, the additional parts being dependent on the game outcome. A compositor merges the non-varying parts of the image and the additional parts of the image to provide to the player a composite image relating to the game outcome.

Owner: ARISTOCRAT TECH AUSTRALIA PTY LTD

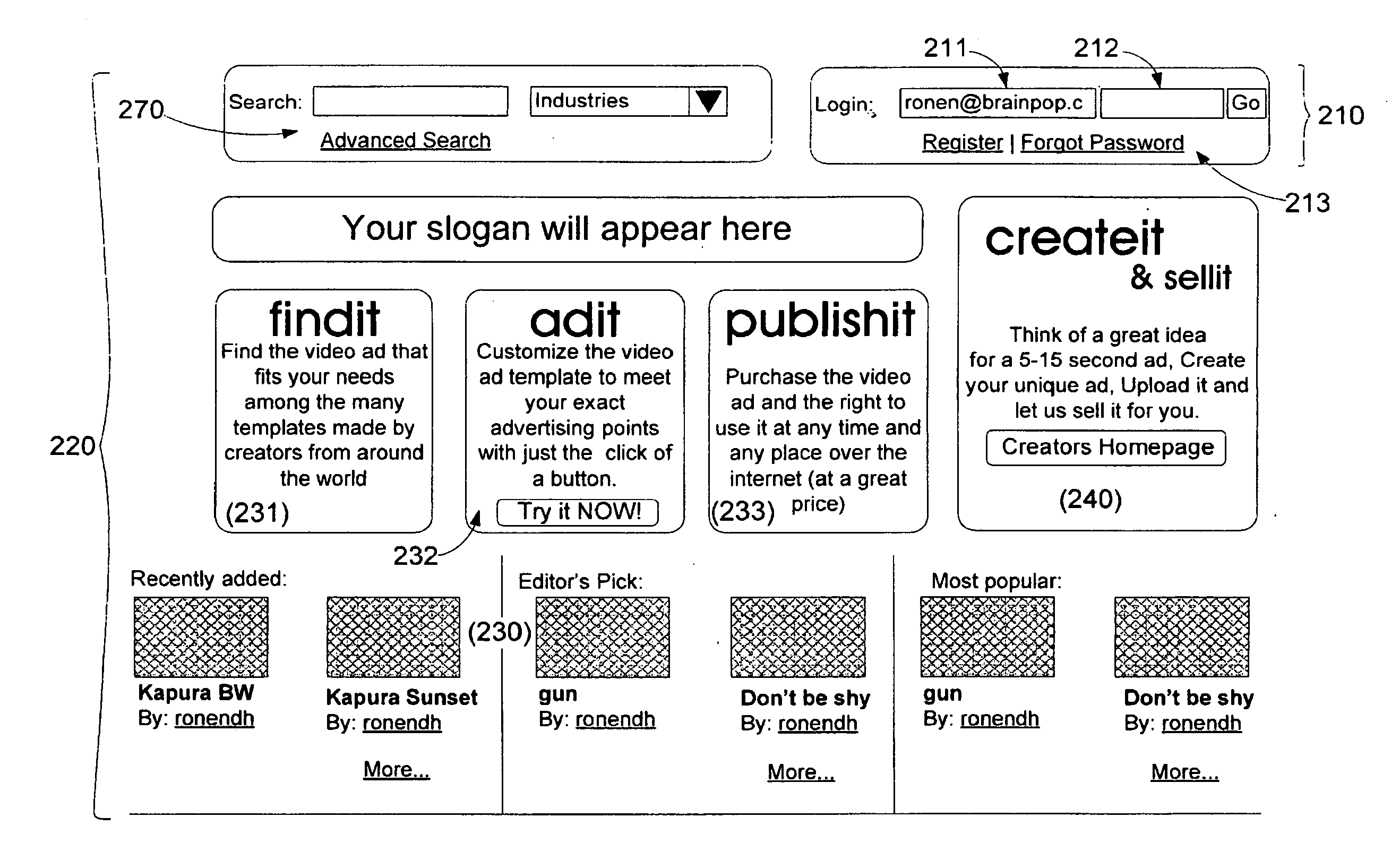

System and method for dynamic generation of video content

Active | US20100023863A1 | Tags: Full creative flexibility, Competitive price, Advertisements, Electronic editing digitised analogue information signals, Web site, E-commerce

An automated system and method for dynamically generating a composite video clip by a computer. In some embodiments of the invention, a template may be provided including at least one digital media asset and one or more placeholders, each placeholder associated with a respective dynamic content object and a respective layout. The system and method may extract from one or more websites, for example, e-commerce sites, data items corresponding to each of the dynamic content objects, and generate a composite video clip including the media asset, wherein the dynamic content objects are replaced with the respective extracted data and presented in association with the media asset based on their respective layouts.

Owner: ADITALL LLC
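The template-and-placeholder scheme in the ADITALL abstract above can be sketched as a step that pairs each placeholder's layout with the data item extracted for it. The `Placeholder` fields (overlay position, start time, duration) and the `render_overlays` output format are assumptions made for illustration; the web extraction and the actual video rendering are left out.

```python
from dataclasses import dataclass


@dataclass
class Placeholder:
    name: str          # dynamic content object, e.g. "price" (hypothetical)
    x: int             # layout: overlay position in pixels
    y: int
    start_s: float     # layout: when the overlay appears in the clip
    duration_s: float  # layout: how long it stays on screen


def render_overlays(placeholders, extracted):
    """Pair each placeholder's layout with its extracted value.

    extracted: dict mapping placeholder name -> data scraped from a website.
    Placeholders with no extracted data are skipped.
    """
    overlays = []
    for p in placeholders:
        if p.name not in extracted:
            continue
        overlays.append({
            "text": str(extracted[p.name]),
            "position": (p.x, p.y),
            "start": p.start_s,
            "end": p.start_s + p.duration_s,
        })
    return overlays
```

A renderer would then draw each overlay on top of the template's media asset between its `start` and `end` times.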

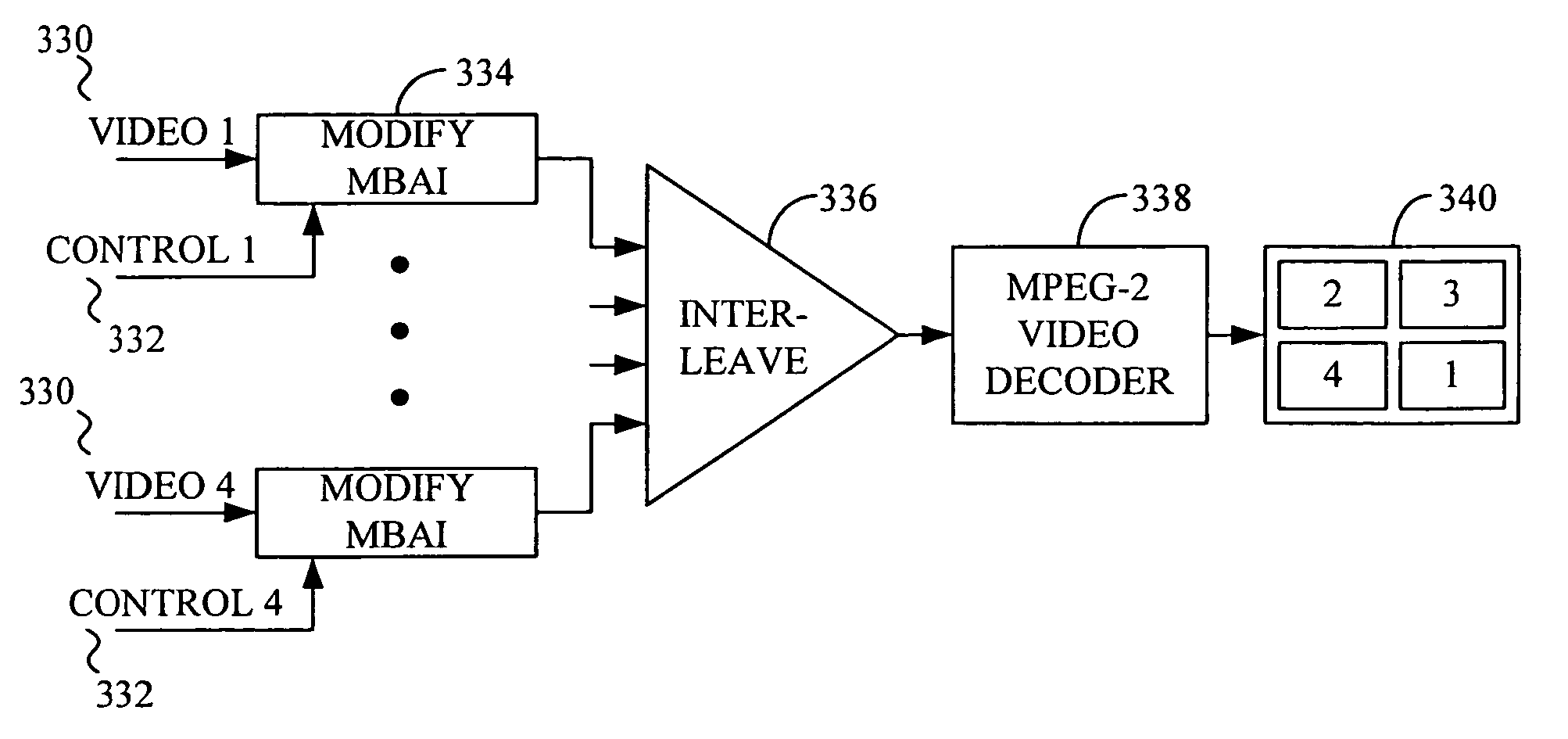

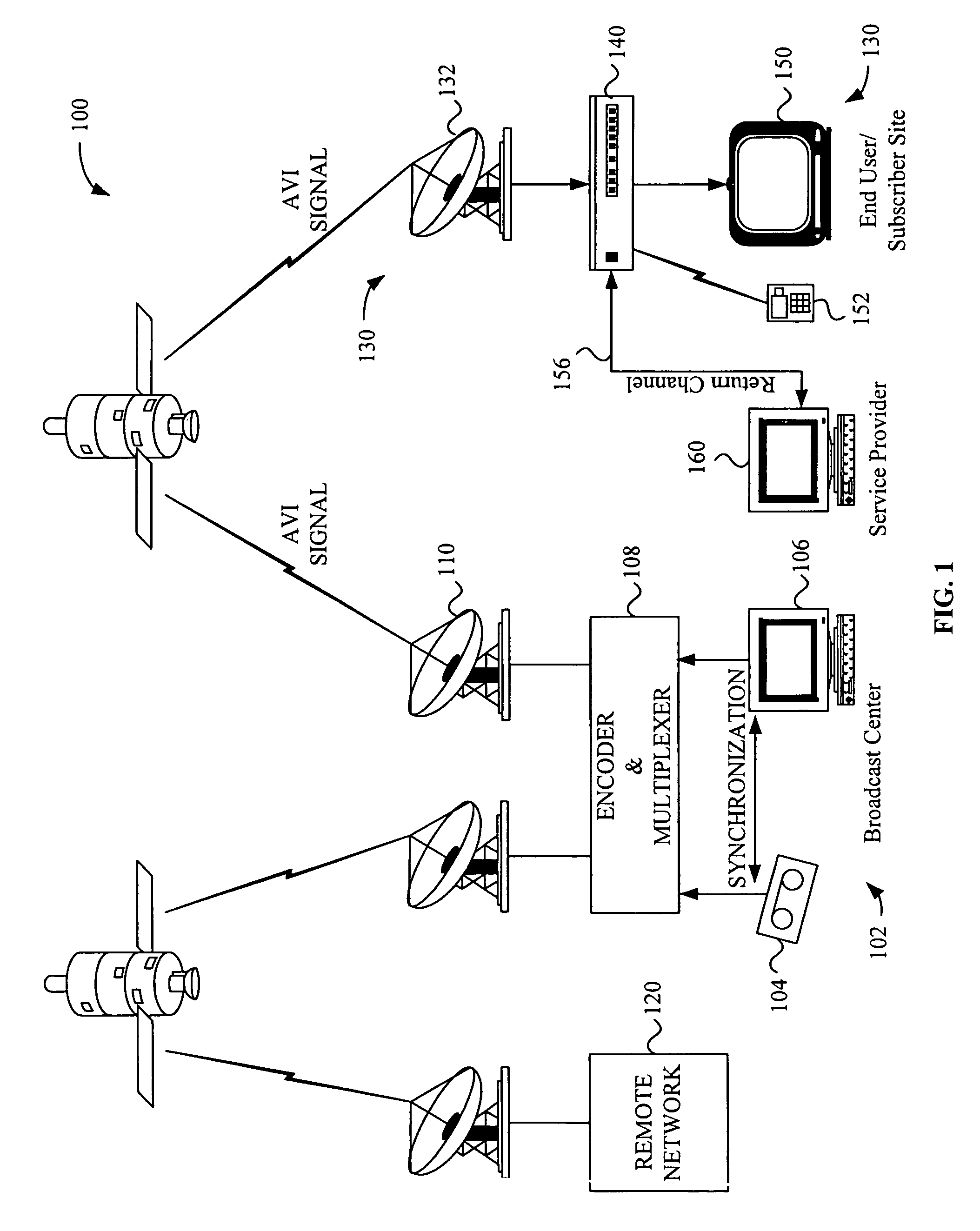

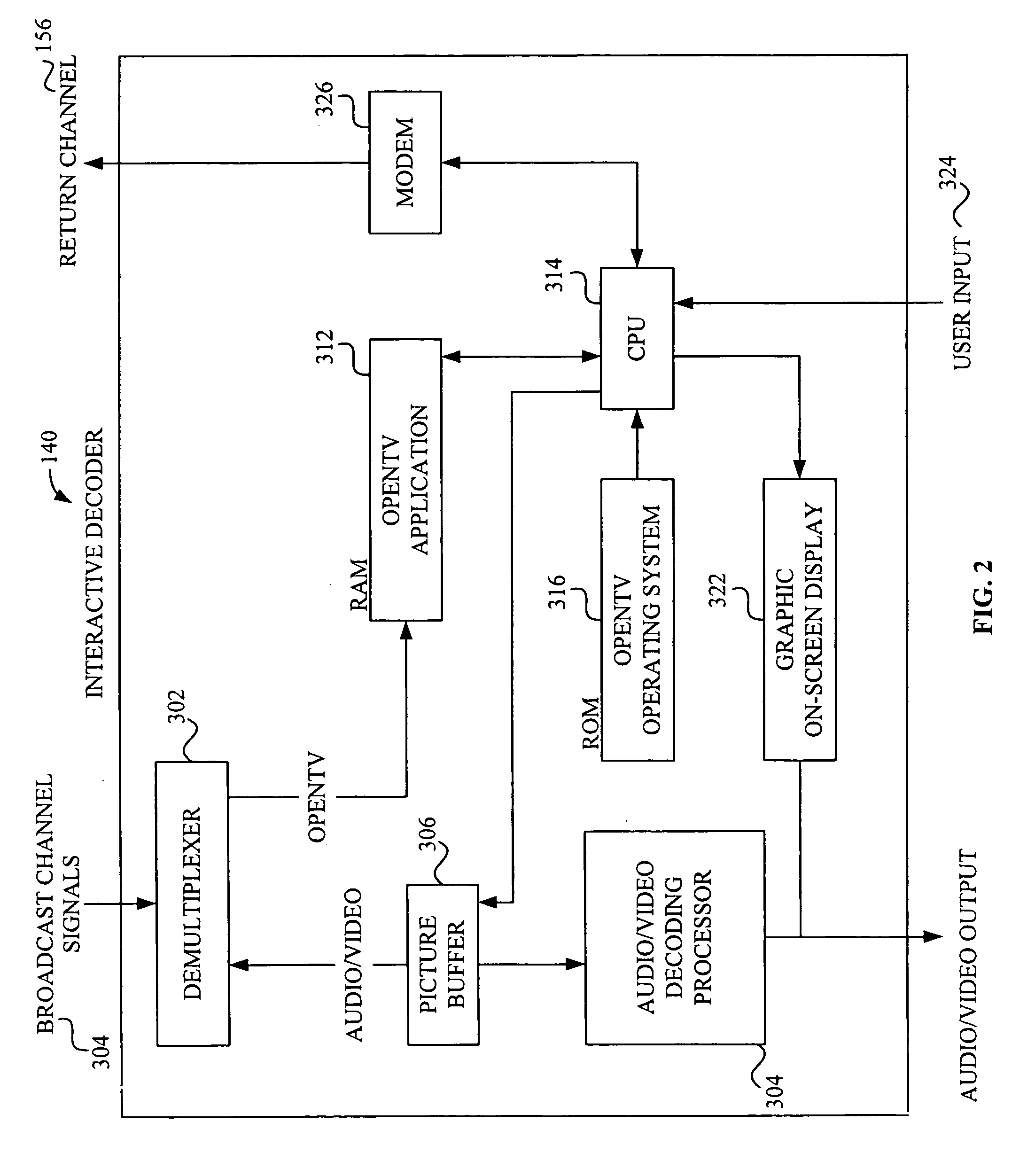

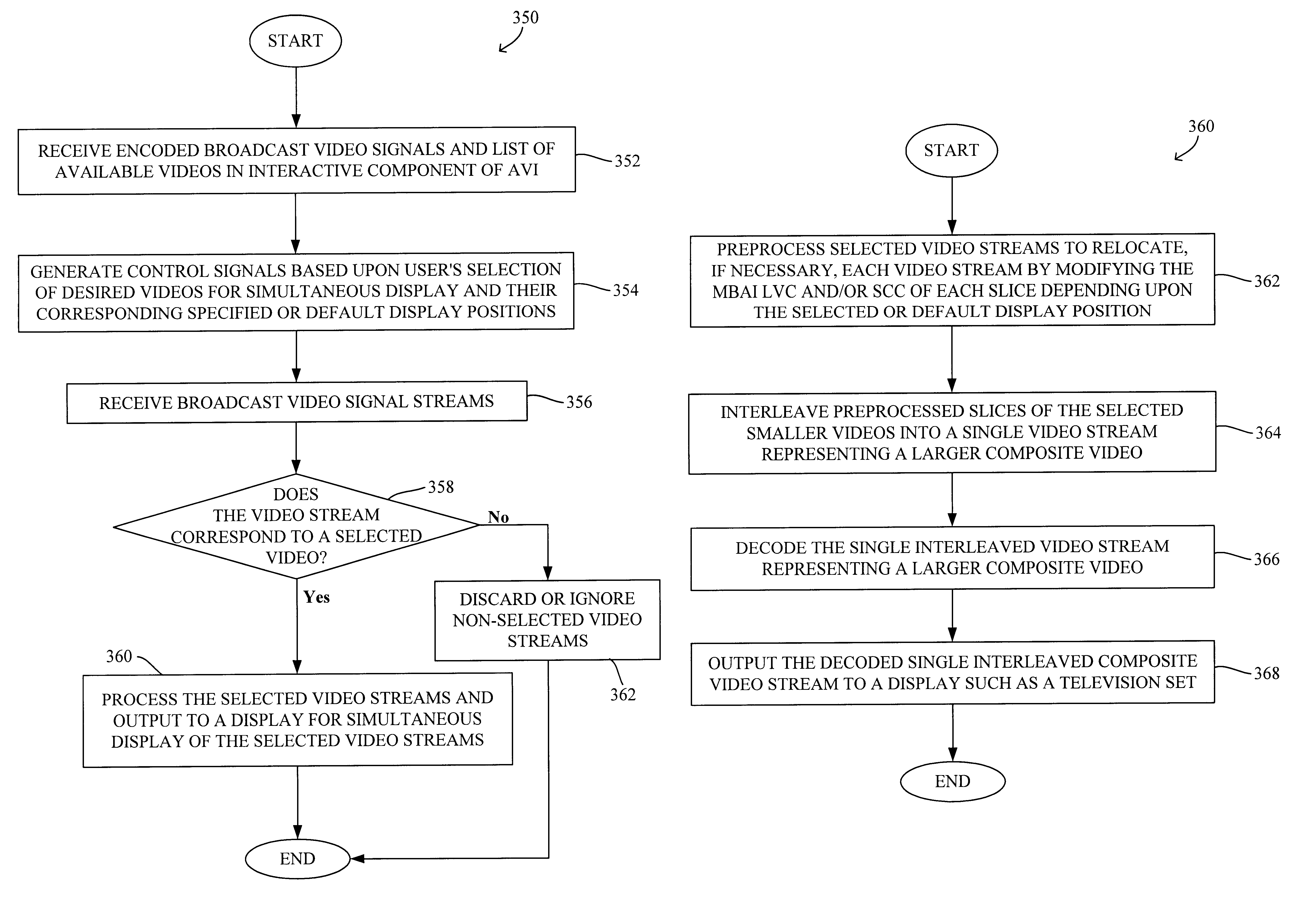

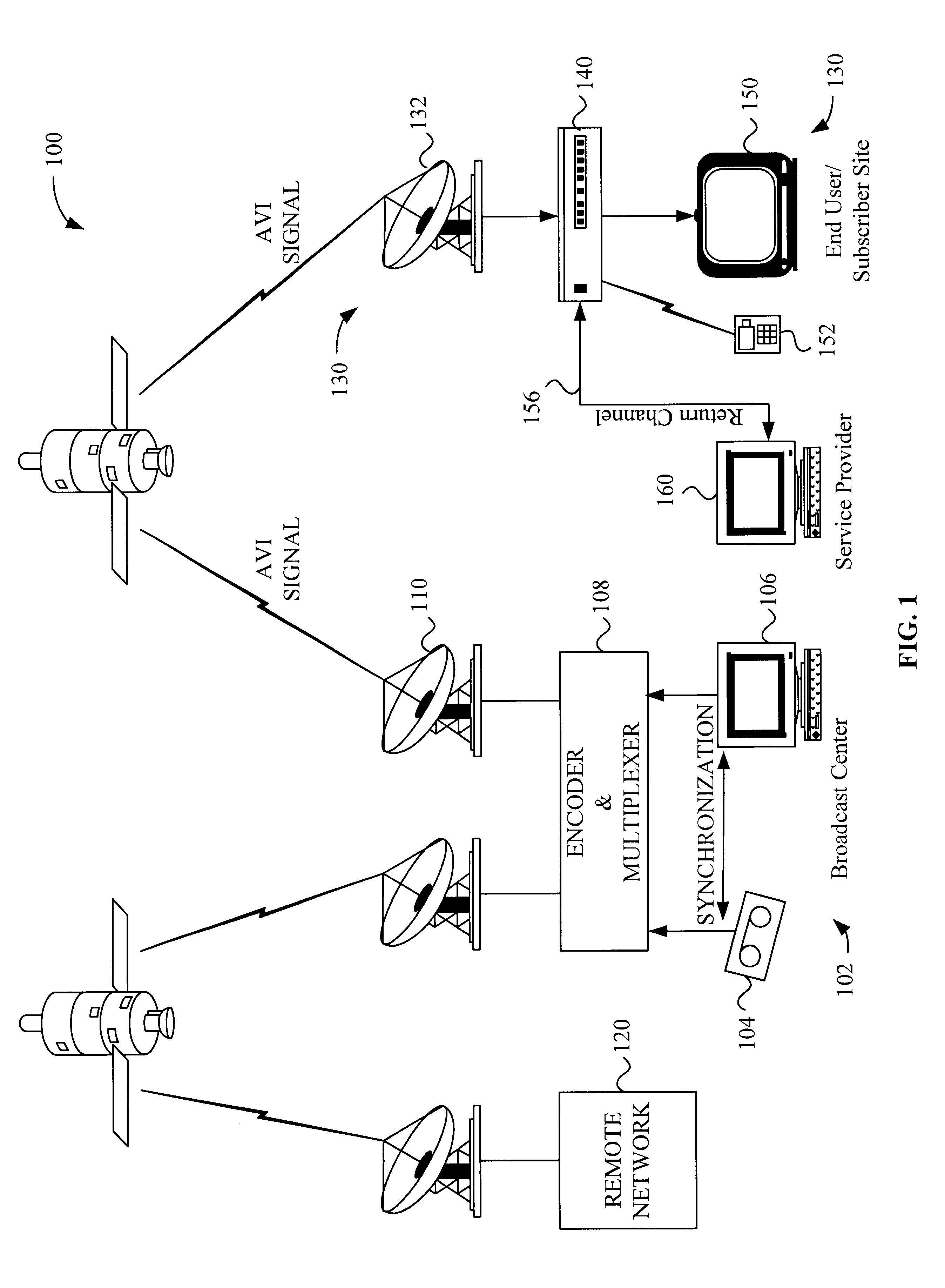

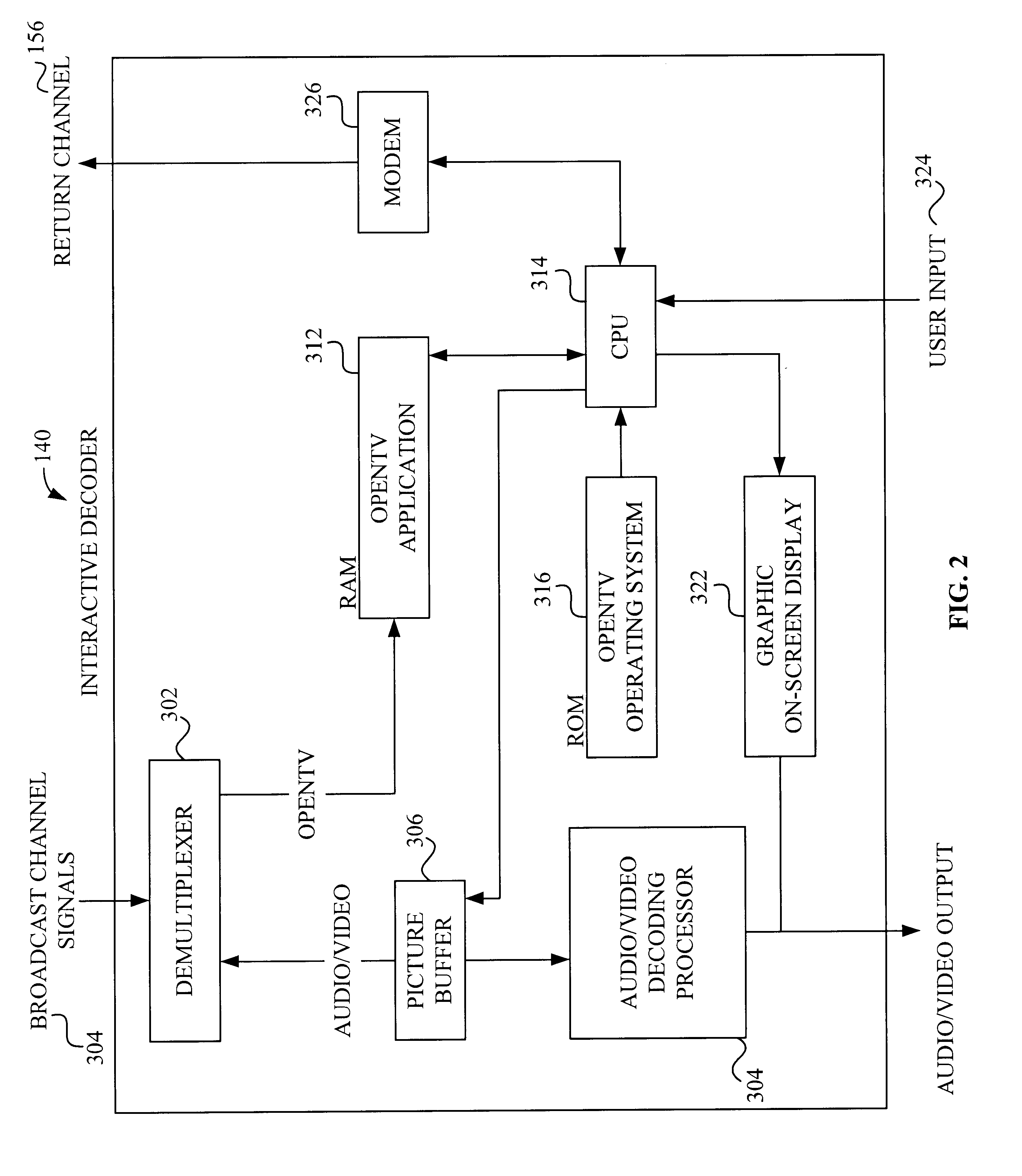

Interactive television system and method for simultaneous transmission and rendering of multiple MPEG-encoded video streams

Inactive | US6931660B1 | Tags: Television system details, Pulse modulation television signal transmission, Television system, Digital video

A system and method for the simultaneous transmission and rendition of multiple MPEG-encoded digital video signal streams in an interactive television application are disclosed. Simultaneous transmission and rendition of multiple MPEG-encoded digital video signal streams in an interactive television application generally comprises determining a value for a display position code corresponding to a display position of each slice of each of the MPEG-encoded video streams, modifying the value of the display position code of each slice of each of the MPEG-encoded video streams as necessary, and interleaving each slice of each of the MPEG-encoded video streams as modified into a single composite video stream. The modifying preferably maintains bit-alignment of the display position code within a byte. The MPEG-encoded video streams are optionally MPEG-1 or MPEG-2 encoded video streams, and the display position code is optionally a macroblock address increment variable-length codeword and/or at least a byte of a slice start code.

Owner: OPEN TV INC

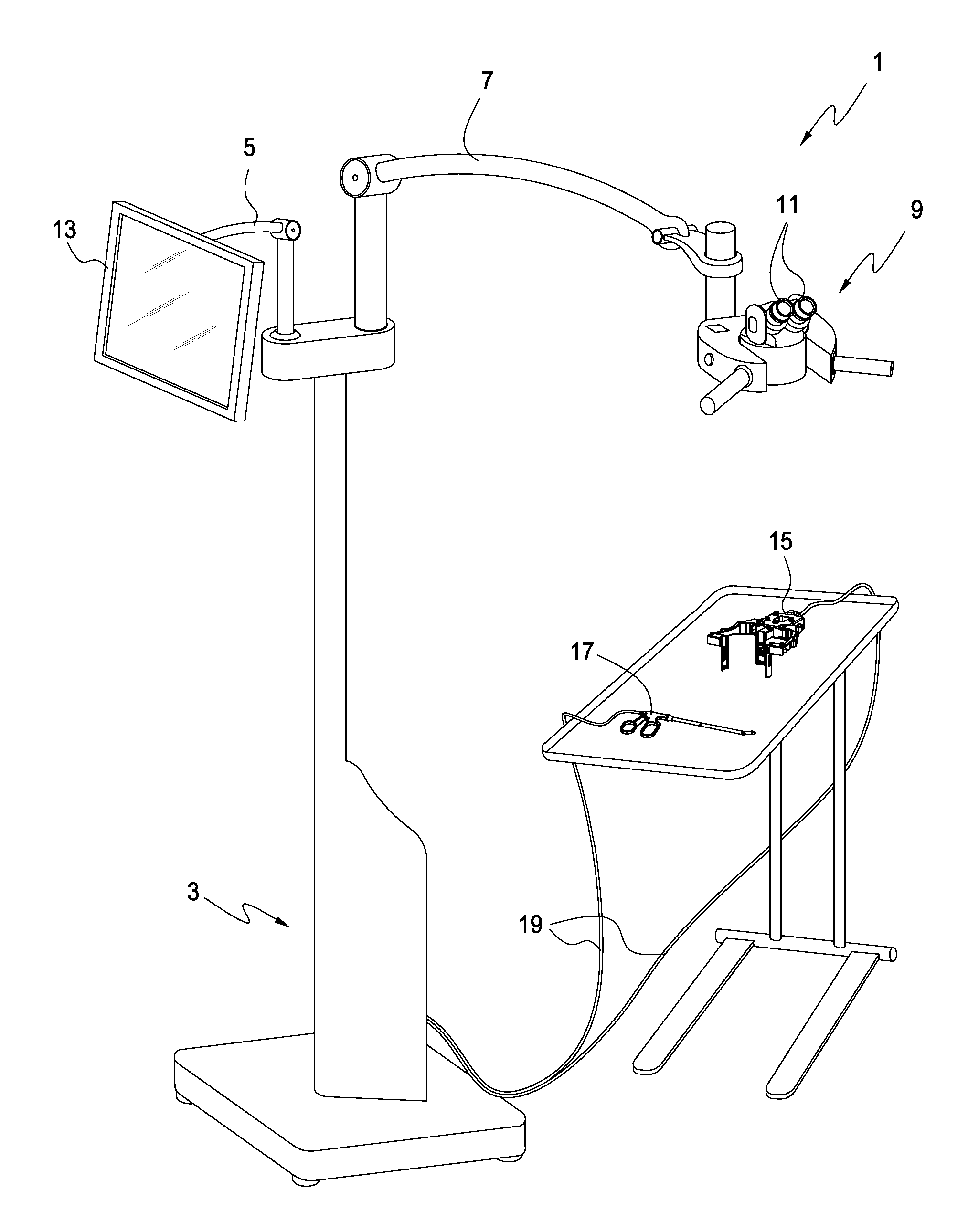

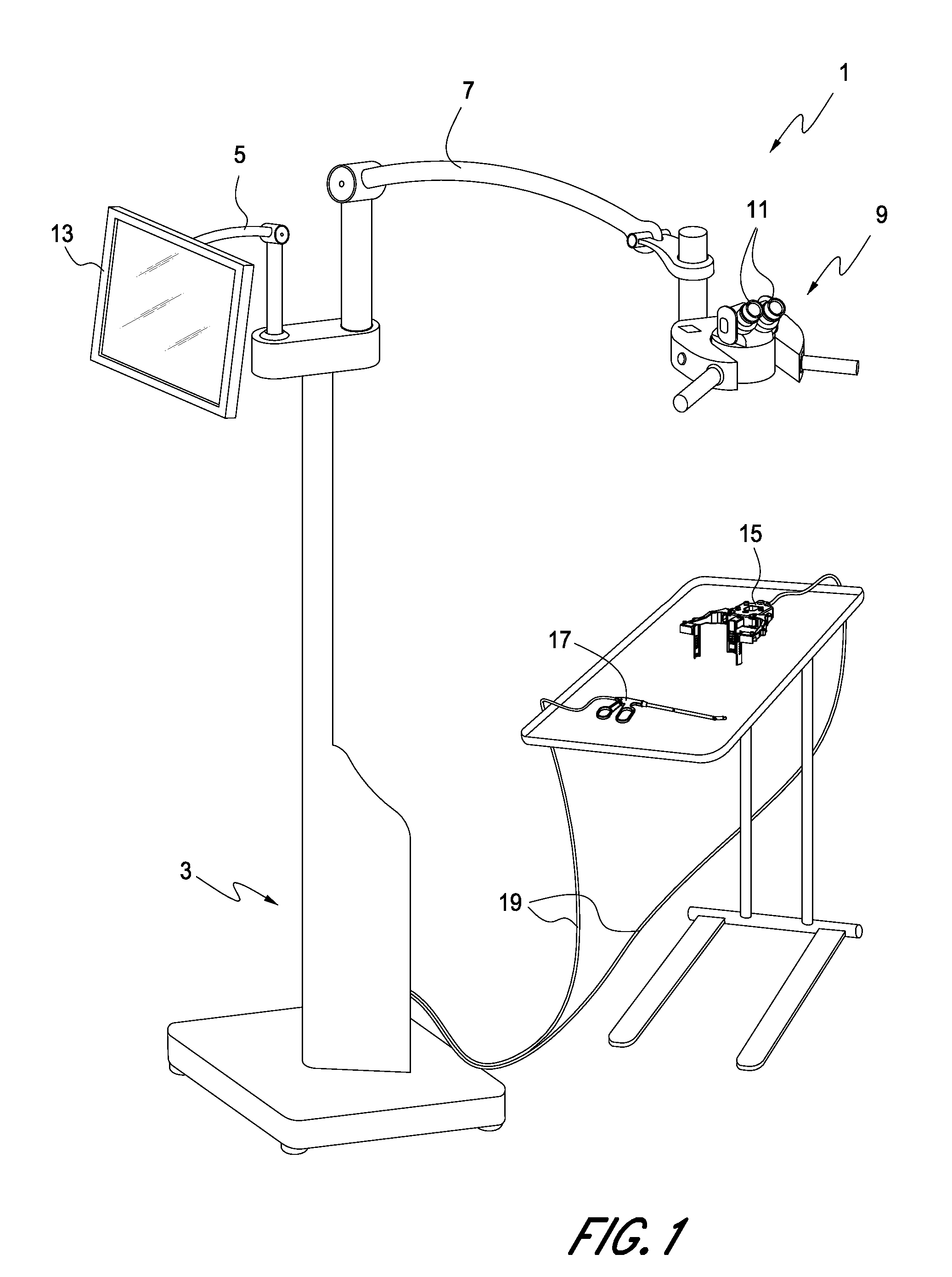

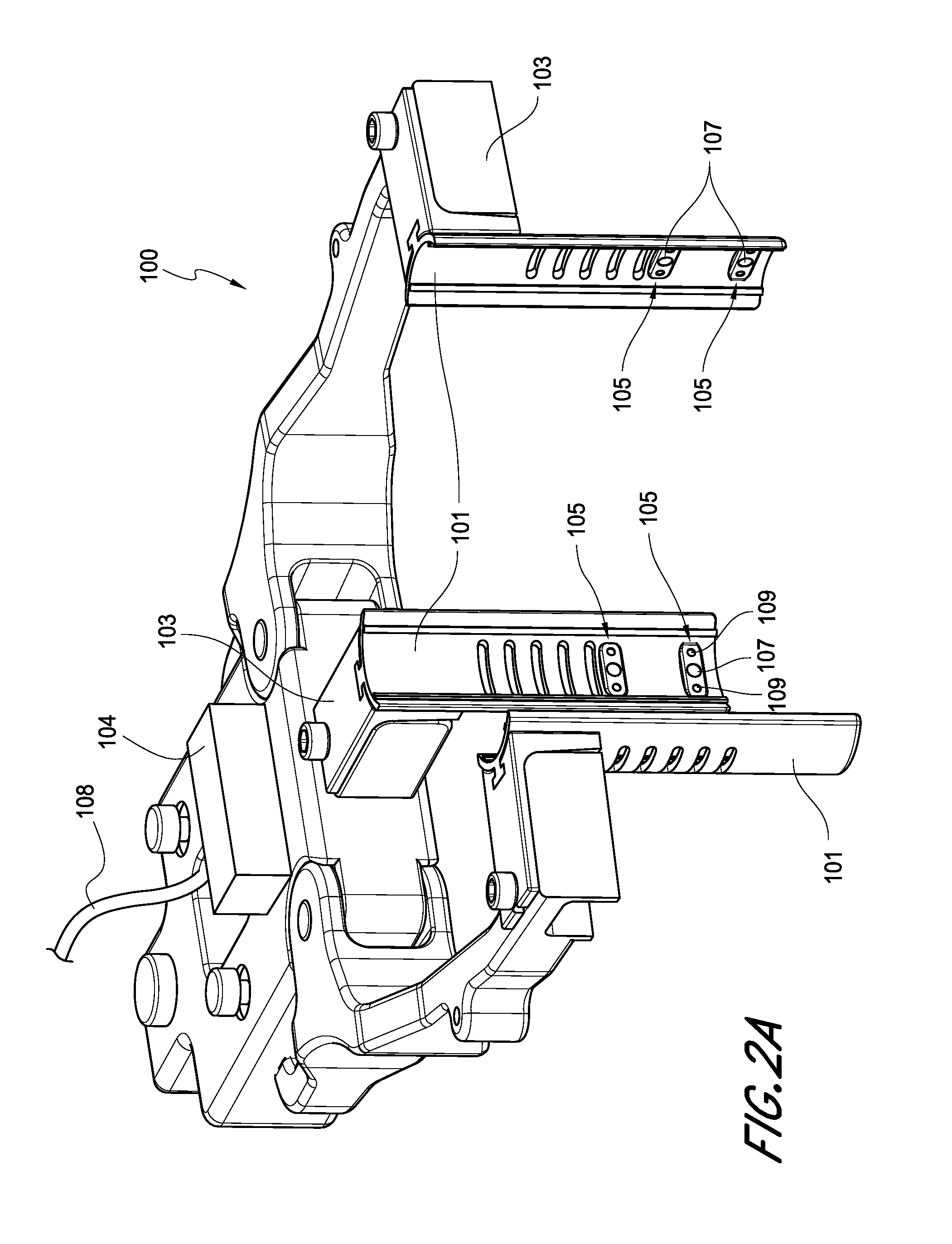

Optical assembly providing a surgical microscope view for a surgical visualization system

Active | US20140005555A1 | Tags: Different stiffness, Medical imaging, Surgical furniture, Surgical operation, Surgical microscope

A surgical device includes a plurality of cameras integrated therein. The view of each of the plurality of cameras can be integrated together to provide a composite image. A surgical tool that includes an integrated camera may be used in conjunction with the surgical device. The image produced by the camera integrated with the surgical tool may be associated with the composite image generated by the plurality of cameras integrated in the surgical device. The position and orientation of the cameras and/or the surgical tool can be tracked, and the surgical tool can be rendered as transparent on the composite image. A surgical device may be powered by a hydraulic system, thereby reducing electromagnetic interference with tracking devices.

Owner: CAMPLEX

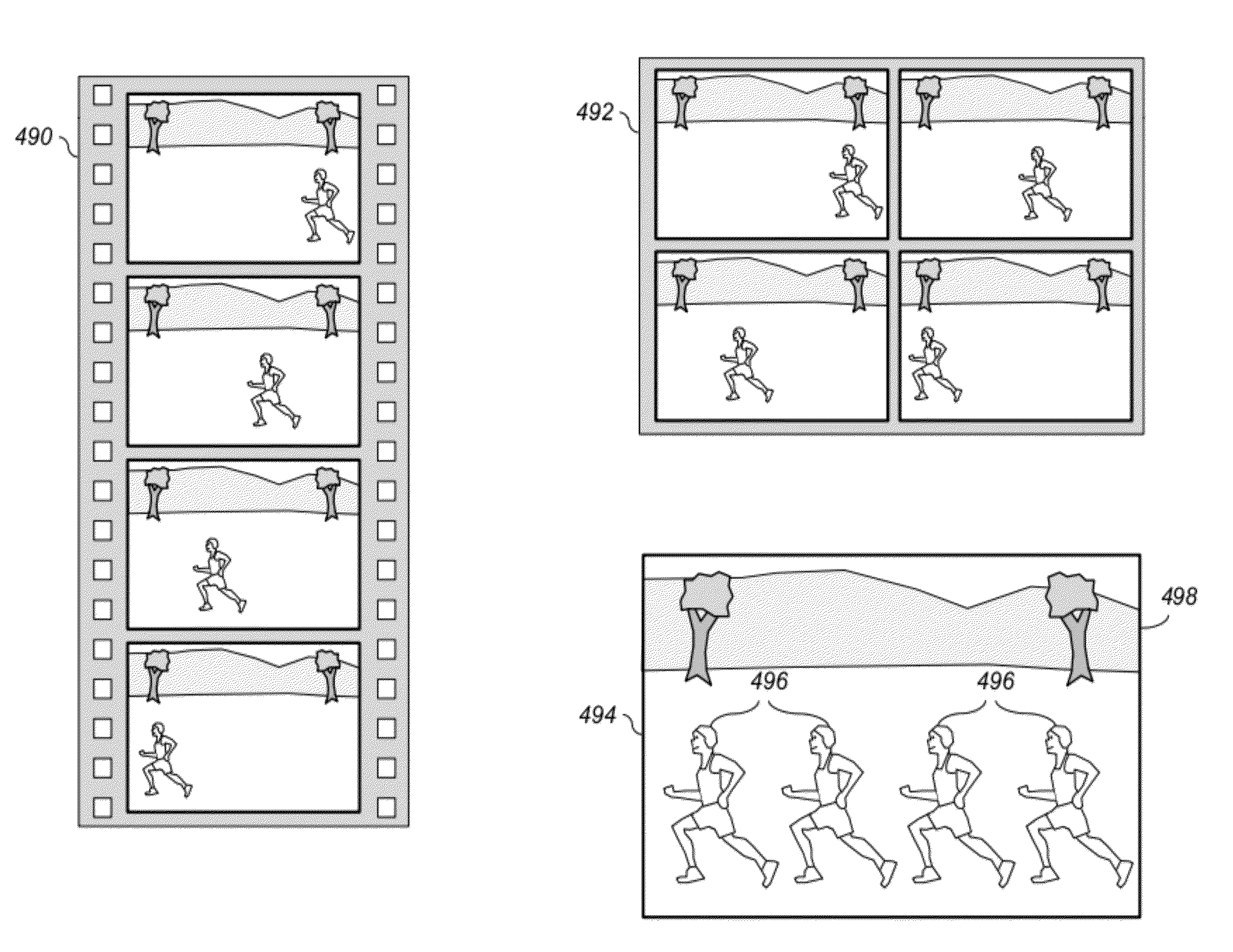

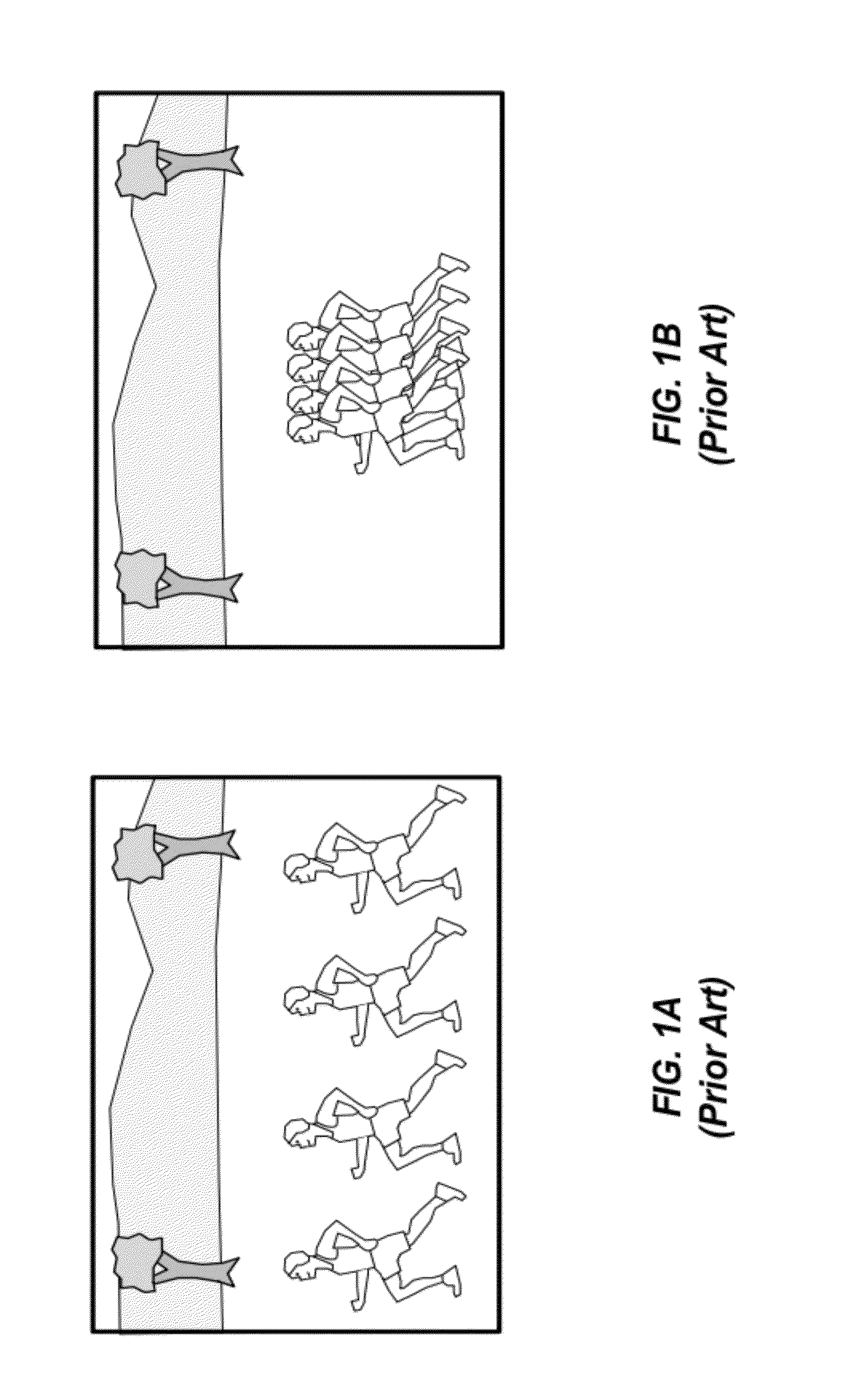

Composite image formed from an image sequence

Inactive | US20120243802A1 | Tags: Optimize space, Rate can be determined, Television system details, Character and pattern recognition, Data treatment, Digital image

A method for forming a composite image from a sequence of digital images, comprising: receiving a sequence of digital images of a scene, each digital image being captured at a different time, wherein the scene includes a moving object; using a data processor to automatically analyze two or more of the digital images in the sequence of digital images to determine a rate of motion for the moving object; determining a frame rate responsive to the rate of motion for the moving object; selecting a subset of the digital images from the sequence of digital images corresponding to the determined frame rate; and forming the composite image by combining the selected subset of digital images from the sequence of digital images.

Owner: INTELLECTUAL VENTURES FUND 83 LLC
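The frame-selection method claimed above can be sketched with one-dimensional object positions: estimate the object's speed, derive a frame rate at which the object moves about `spacing_px` pixels between selected frames, pick frames at that rate, and combine them. The speed estimate from the first and last frames (assuming roughly constant motion), the `spacing_px` parameter, and the per-pixel-maximum blend (which suits a bright object on a dark background) are all assumptions, not details from the patent.

```python
def select_frames(positions, timestamps, spacing_px):
    """Choose a frame rate from the object's motion and pick frames at that rate.

    positions: object x-coordinate in pixels for each frame (e.g. from tracking).
    timestamps: capture time of each frame in seconds, increasing.
    Returns (frame_rate_hz, selected_indices).
    """
    elapsed = timestamps[-1] - timestamps[0]
    speed = abs(positions[-1] - positions[0]) / elapsed  # pixels per second
    if speed == 0:
        return 0.0, [0]  # static object: one frame is enough
    frame_rate = speed / spacing_px  # object moves ~spacing_px between picks
    interval = 1.0 / frame_rate
    selected = [0]
    next_time = timestamps[0] + interval
    for i in range(1, len(timestamps)):
        if timestamps[i] >= next_time - 1e-9:  # tolerance for float timestamps
            selected.append(i)
            next_time = timestamps[i] + interval
    return frame_rate, selected


def combine(frames):
    """Combine equally sized flat frames with a per-pixel maximum."""
    return [max(px) for px in zip(*frames)]
```

Tying the frame rate to the measured motion keeps successive copies of the object evenly spaced in the composite, whether the object moves quickly or slowly.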

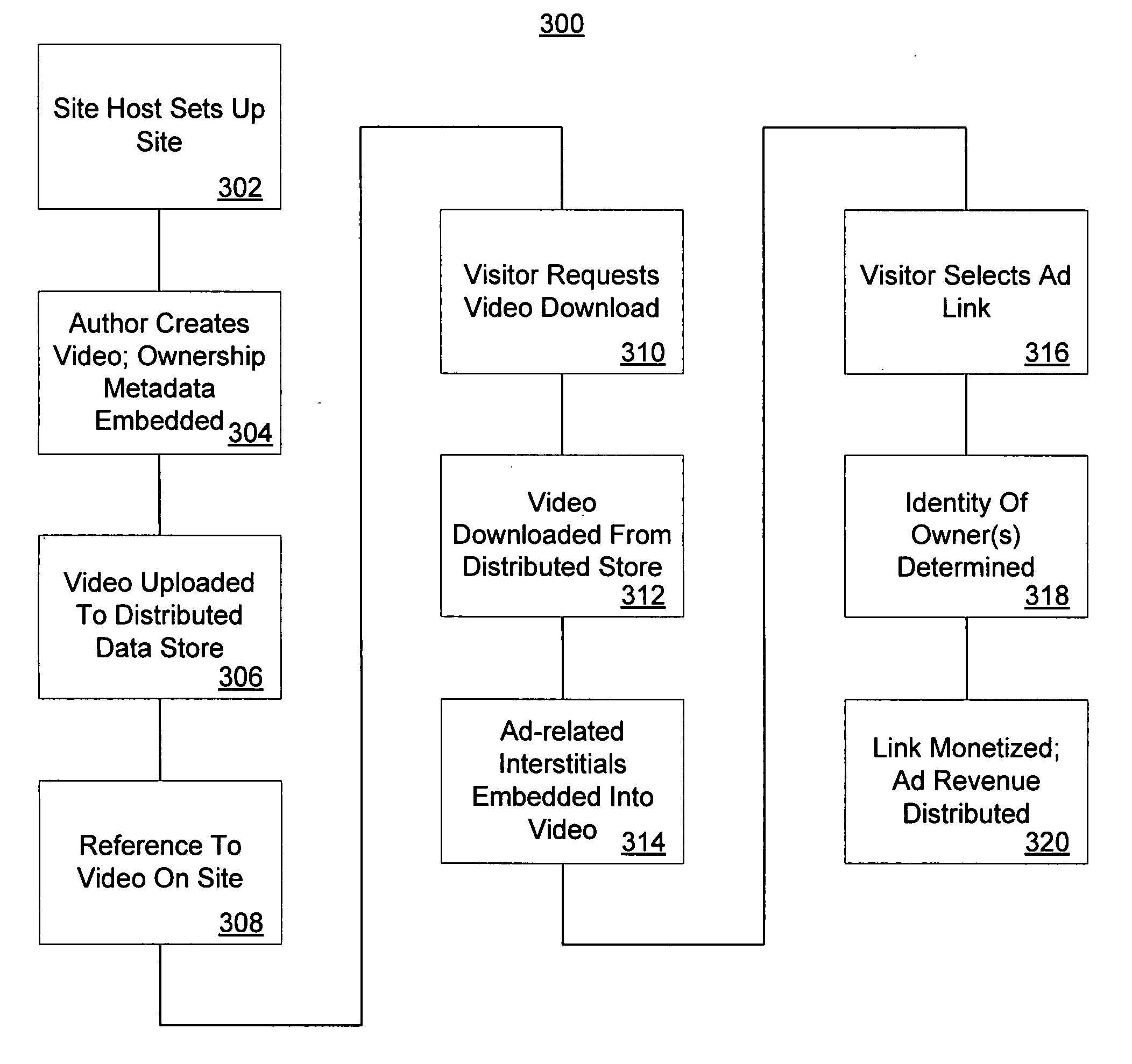

Collaborative video via distributed storage and blogging

Inactive | US20060294571A1 | Tags: Television system details, Analogue secrecy/subscription systems, Composite media, Subject matter

Systems and methods for sharing video or media content on a distributed network are disclosed. End users may be induced through a variety of ways to allocate some amount of unused disk space and otherwise idle bandwidth for storing media produced in a blogging or web-publishing context. For example, when a user creates a blog, the user may link certain video or media files to it. Such video may be kept in a distributed store across a variety of peer machines. If a visitor visits the blog, the visitor may see contextual ads based upon the subject matter associated with the blog, and the blog owner may receive a share of the advertising revenue generated. A visitor may download the video and combine it with new content. Thus, composite media files may be created from sections taken from any number of previously created video clips, each of which may be associated with a different owner. The composite media files may include pointers to any or all of the original owners, as well as the respective length of each included section. The composite video may then be added to a different blog. Anyone visiting the new blog may also see contextual ads, with pro-rated portions of the generated ad revenue flowing proportionally to each of the original media owners.

Owner: MICROSOFT TECH LICENSING LLC
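The pro-rated revenue split in the collaborative-video abstract above reduces to simple arithmetic: each original owner's share is proportional to the total length of that owner's sections in the composite file. The sketch below assumes whole-second section lengths and integer cents, and hands leftover rounding cents to the owners with the largest remainders (the largest-remainder method) so the shares add up exactly; `prorate_revenue` is a hypothetical helper.

```python
def prorate_revenue(revenue_cents, sections):
    """Split ad revenue among original owners in proportion to section length.

    sections: (owner, length_seconds) for each section included in the
    composite file; lengths are whole seconds so the arithmetic stays exact.
    """
    totals = {}
    for owner, length in sections:
        totals[owner] = totals.get(owner, 0) + length
    total_length = sum(totals.values())

    shares, remainders = {}, []
    for owner, length in totals.items():
        share, rem = divmod(revenue_cents * length, total_length)
        shares[owner] = share
        remainders.append((rem, owner))

    # Distribute cents lost to rounding, largest remainder first.
    leftover = revenue_cents - sum(shares.values())
    for _, owner in sorted(remainders, reverse=True)[:leftover]:
        shares[owner] += 1
    return shares
```

For example, 1,000 cents split over 50 seconds from one owner and 10 seconds from another gives 833 and 167 cents.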

Interactive television system and method for simultaneous transmission and rendering of multiple encoded video streams

Inactive | US6539545B1 | Tags: Television system details, Pulse modulation television signal transmission, Television system, Digital video

A system and method for the simultaneous transmission and rendition of multiple encoded digital video signal streams in an interactive television application are disclosed. Simultaneous transmission and rendition of multiple encoded digital video signal streams in an interactive television application generally comprises modifying at least one of the multiple encoded video streams broadcast from a broadcast center to reposition the at least one of the multiple encoded video streams for display, interleaving the modified multiple encoded video streams, comprising the at least one modified encoded video stream, into a single composite interleaved video stream, and outputting the single composite video stream. The method may further comprise receiving the multiple encoded video streams from a broadcast center. Each of the multiple encoded video streams is preferably encoded using MPEG-1 or MPEG-2 compression technology.

Owner: OPEN TV INC

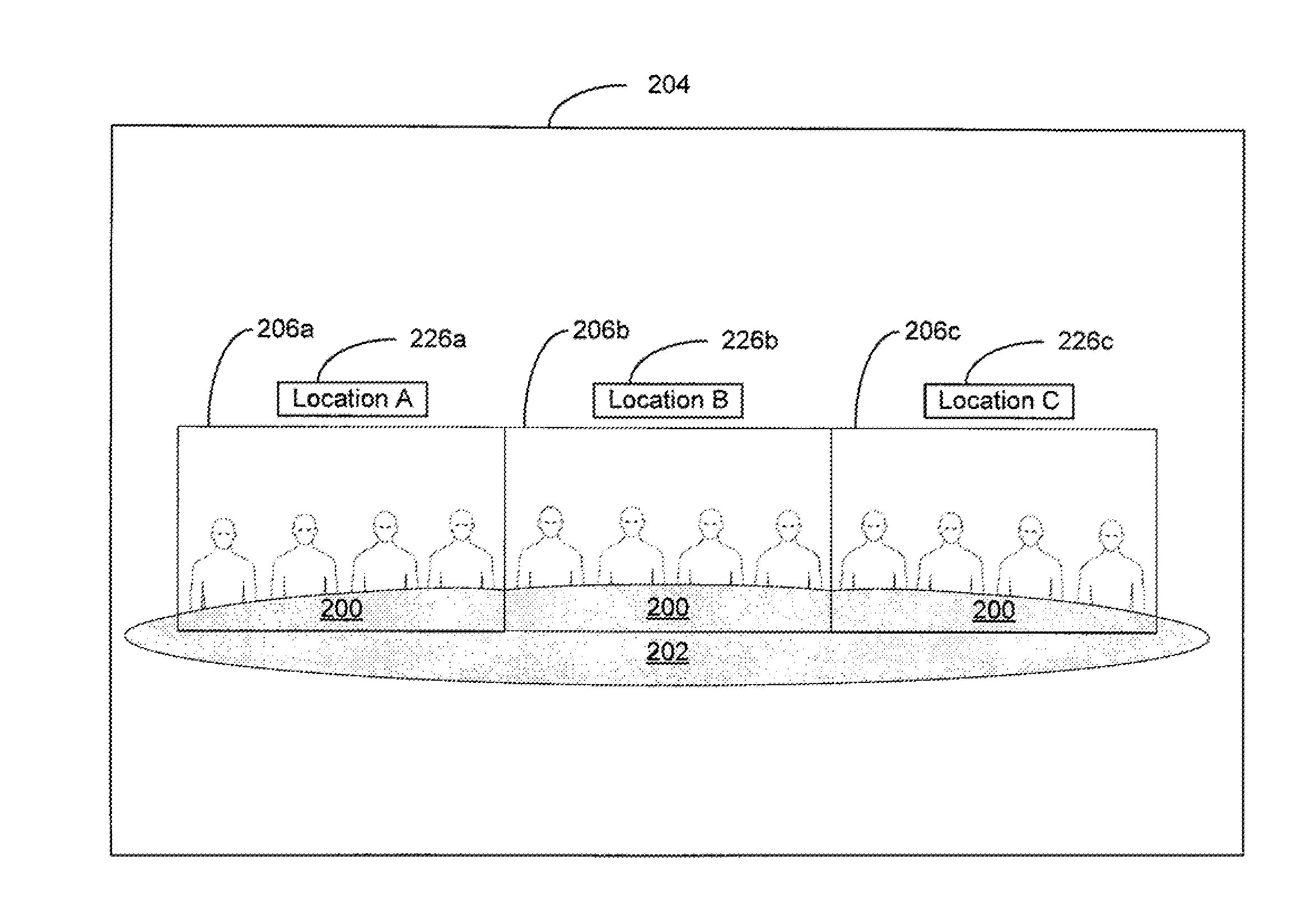

Compositing Video Streams

Active | US20110025819A1 | Tags: Color signal processing circuits, Television conference systems, Computer graphics (images), Background image

Methods and apparatus for compositing multiple video streams onto a background image having at least one object while keeping at least one of a proper perspective, order, and substantial alignment to the object of the multiple video streams based on the rules of a common layout. The background is defined as a meeting space that fills in gaps between the multiple video streams with appropriate structure of the object. The background creates the context of the meeting space.

Owner: HEWLETT PACKARD DEV CO LP

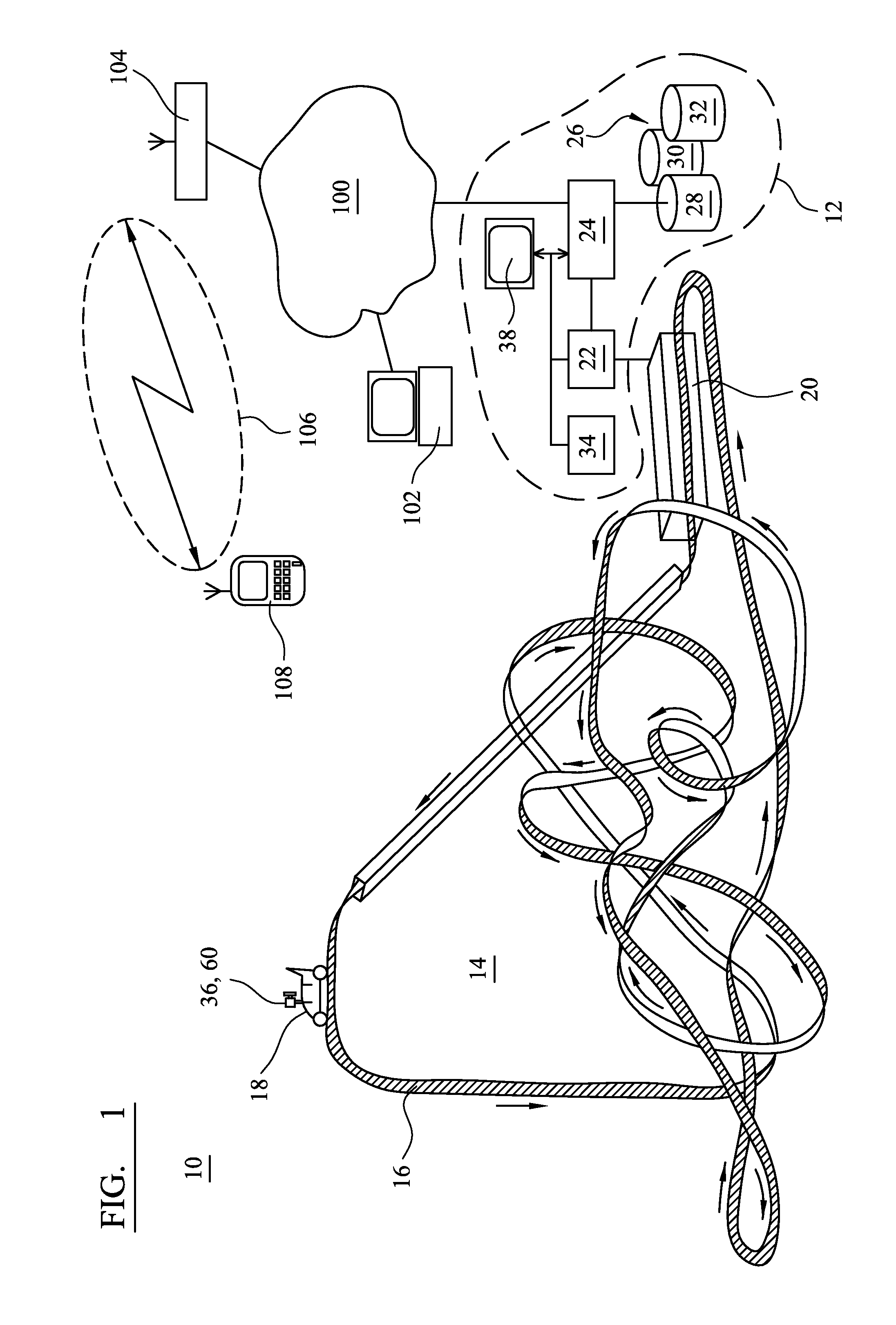

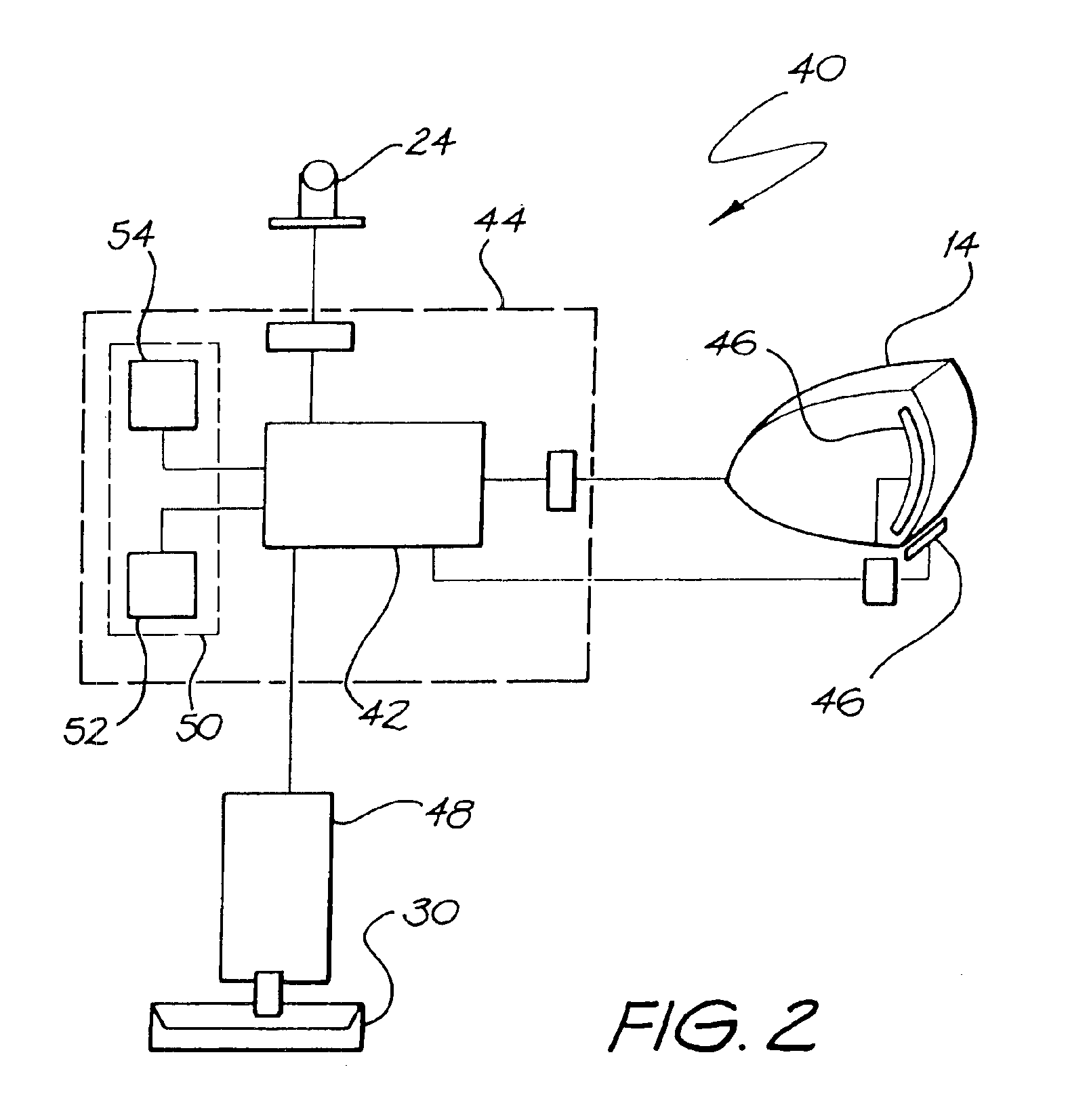

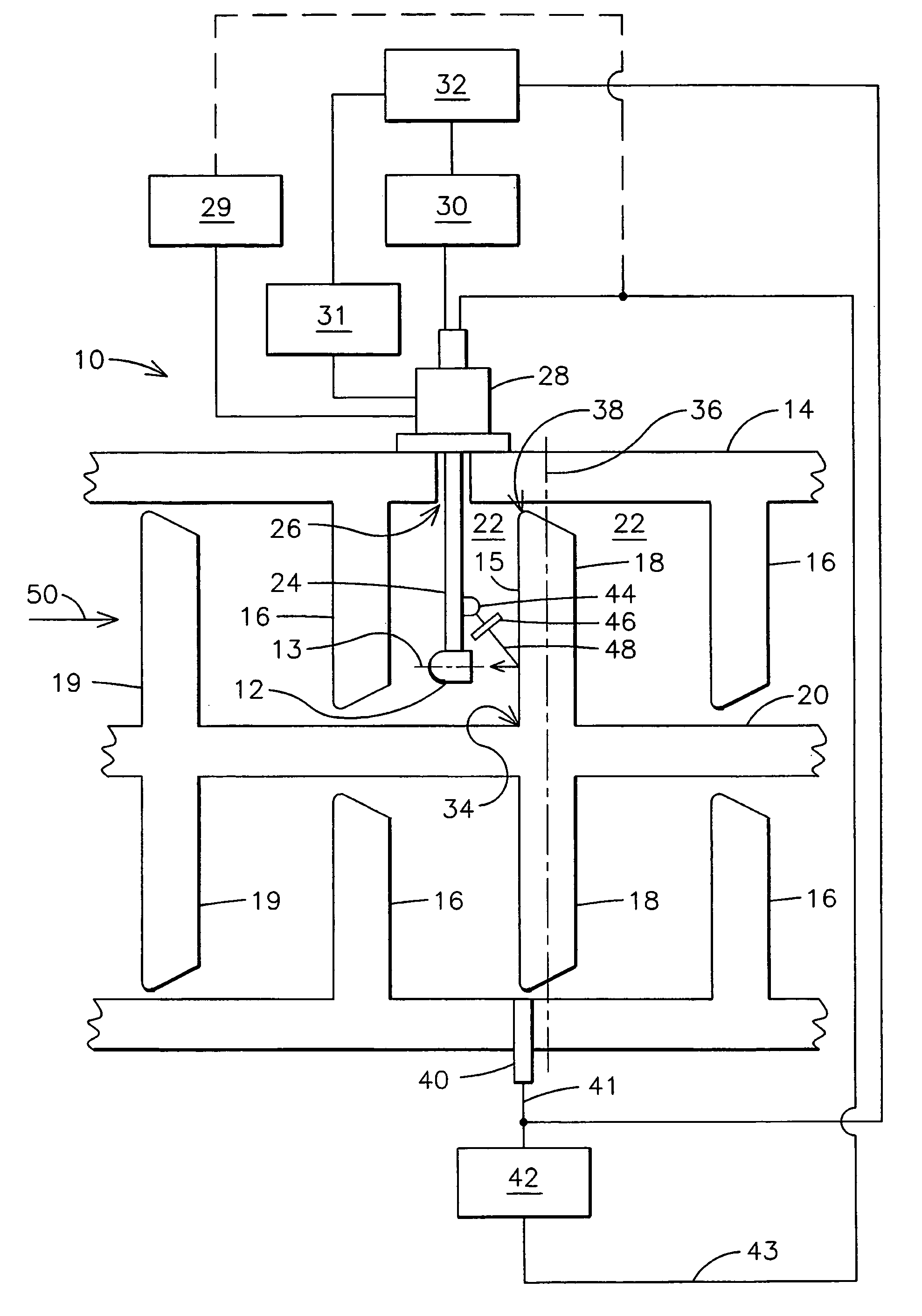

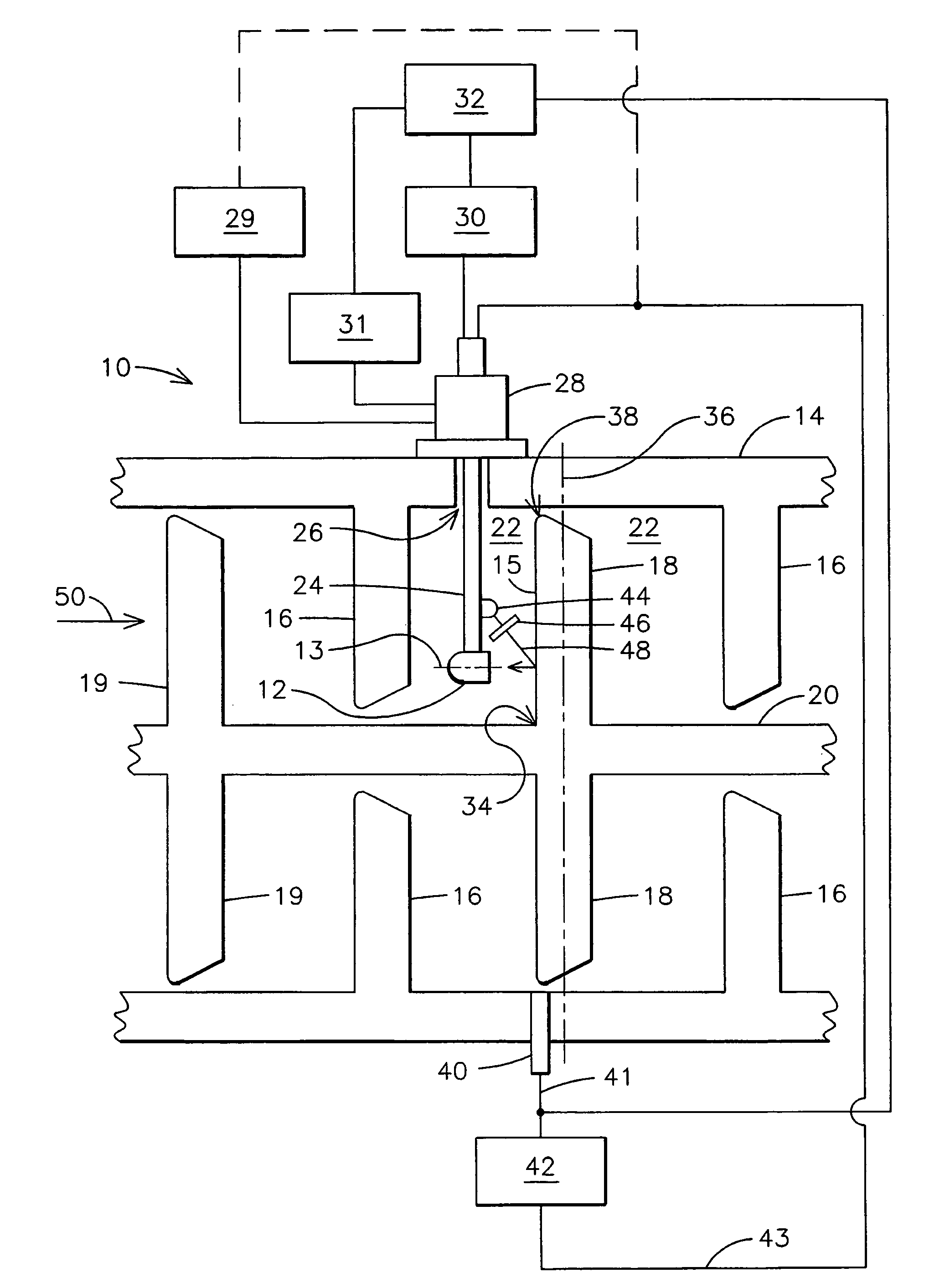

In situ combustion turbine engine airfoil inspection

A system (10) for imaging a combustion turbine engine airfoil includes a camera (12) and a positioner (24). The positioner may be controlled to dispose the camera within an inner turbine casing of the engine at a first position for acquiring a first image. The camera may then be moved to a second position for acquiring a second image. A storage device (30) stores the first and second images, and a processor (32) accesses the storage device to generate a composite image from the first and second images. For use when the airfoil is rotating, the system may also include a sensor (40) for generating a position signal (41) responsive to a detected angular position of an airfoil. The system may further include a trigger device (42), responsive to the position signal, for triggering the camera to acquire an image when the airfoil is proximate the camera.

Owner: SIEMENS ENERGY INC
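The position-triggered capture in the airfoil-inspection abstract above can be sketched as an angular check, assuming the position signal reports the rotor angle, the airfoils are evenly spaced around the rotor, and the camera sits at a fixed angle. `airfoil_at_camera` and the `window_deg` tolerance are hypothetical; the wrap-around arithmetic keeps the comparison correct near 0°/360°.

```python
def airfoil_at_camera(rotor_angle_deg, n_airfoils, camera_angle_deg, window_deg=0.5):
    """Return the index of the airfoil within window_deg of the camera, else None.

    Airfoil k is assumed to sit at rotor_angle + k * (360 / n_airfoils).
    A non-None result is the cue to trigger the camera.
    """
    pitch = 360.0 / n_airfoils
    for k in range(n_airfoils):
        airfoil = (rotor_angle_deg + k * pitch) % 360.0
        # Smallest signed angular difference, folded into [0, 180].
        diff = abs((airfoil - camera_angle_deg + 180.0) % 360.0 - 180.0)
        if diff <= window_deg:
            return k
    return None
```

Checking every airfoil against the camera angle on each position update is cheap for a few dozen blades; a hardware trigger would do the same comparison in logic.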

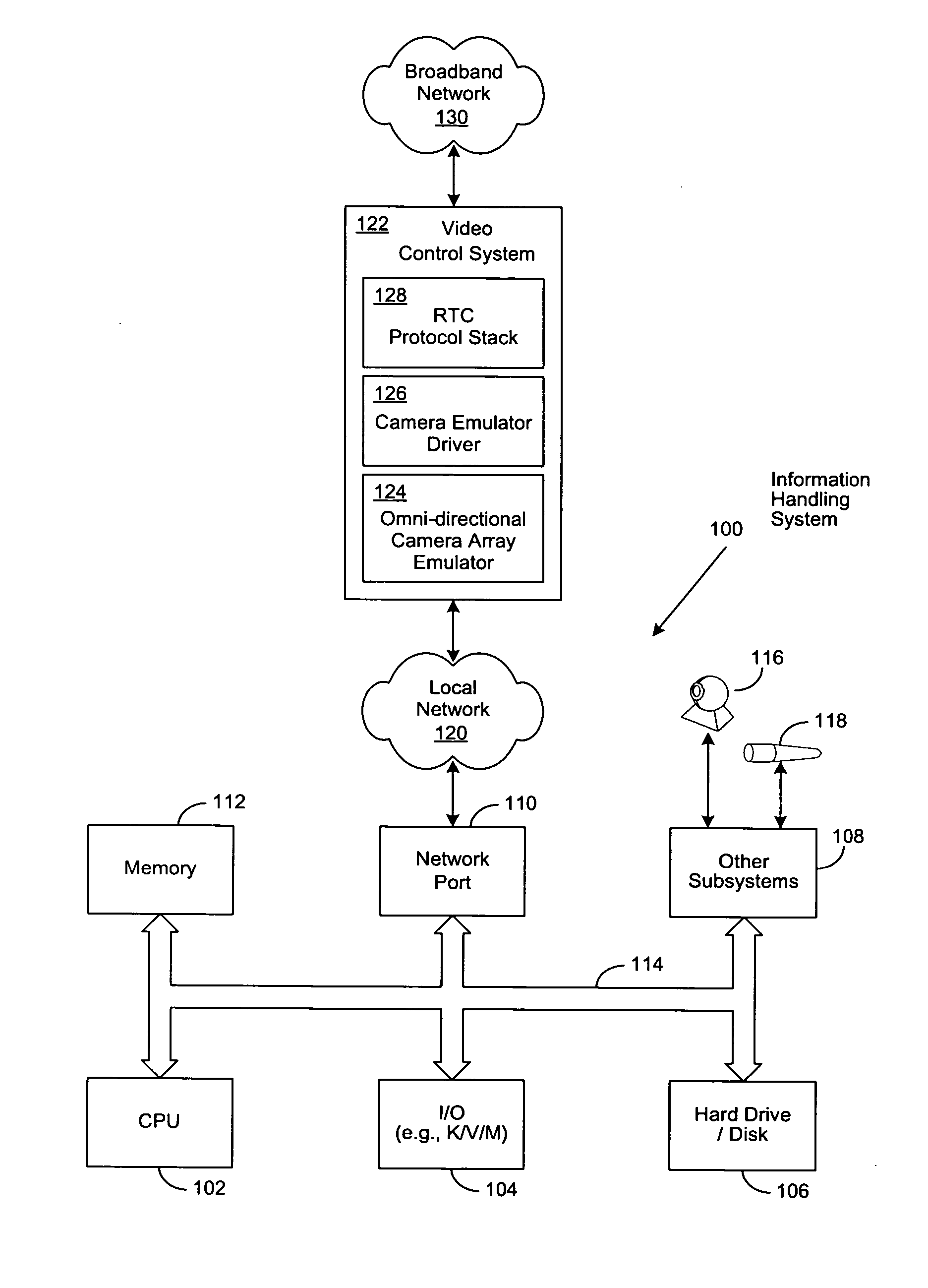

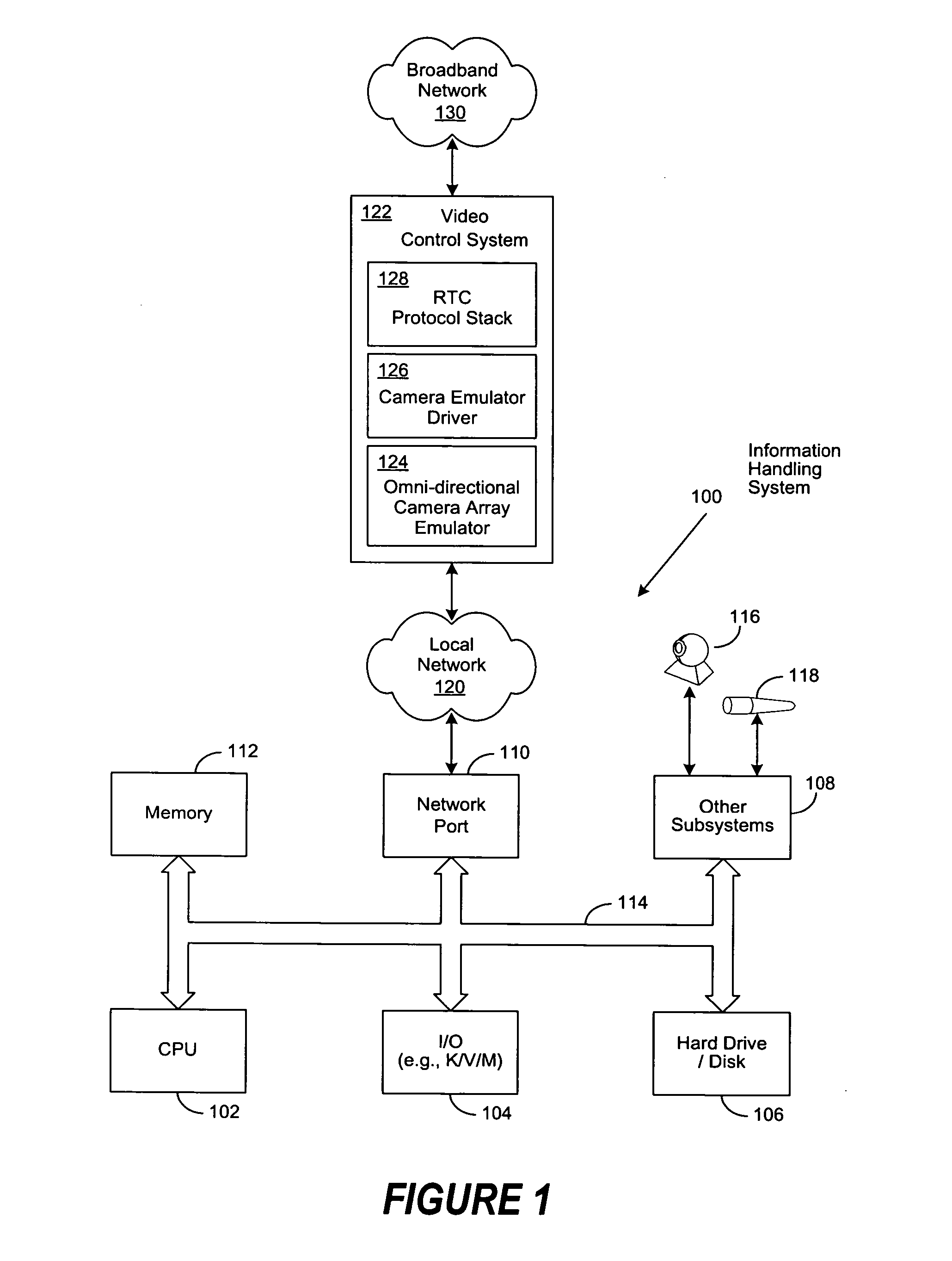

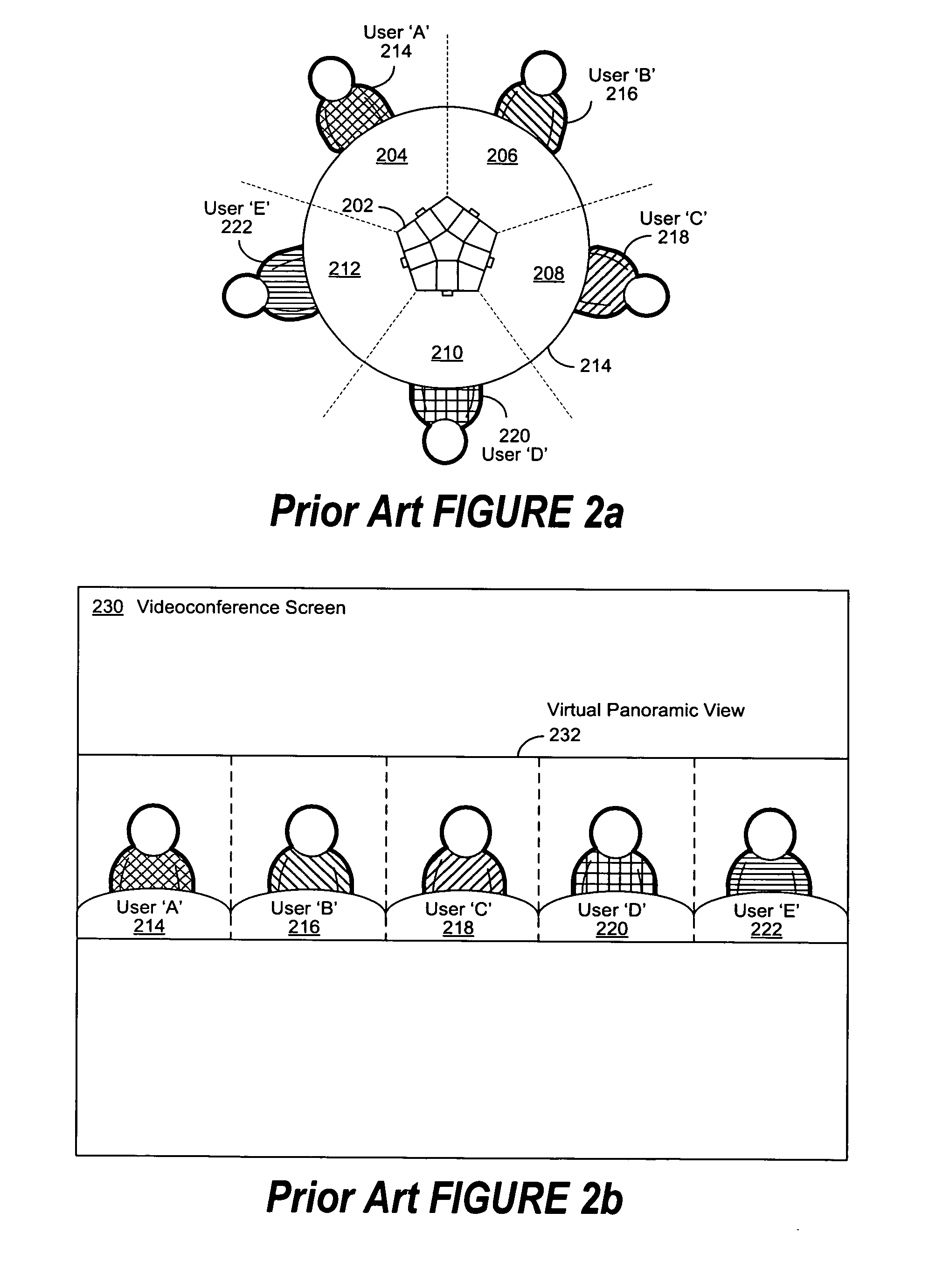

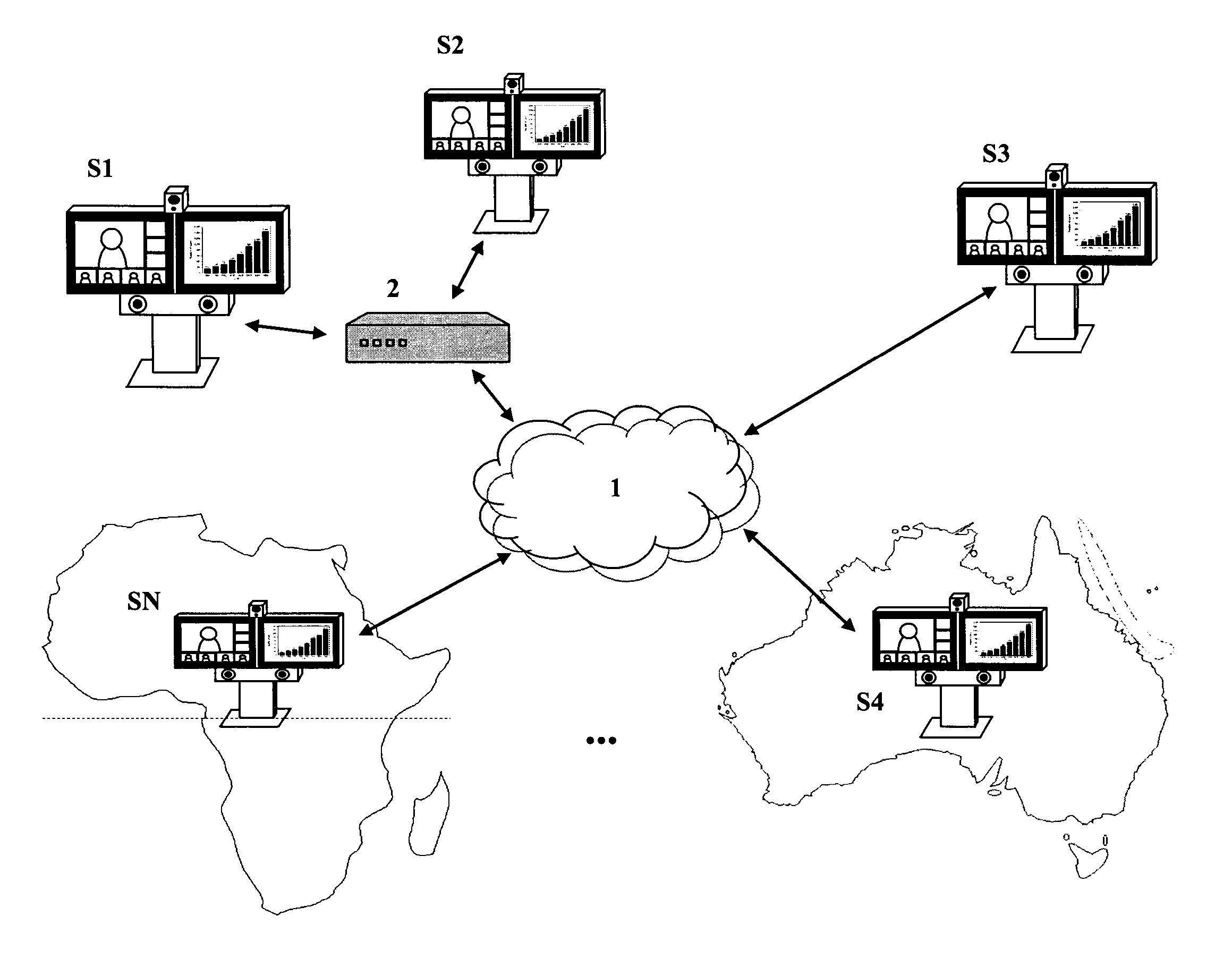

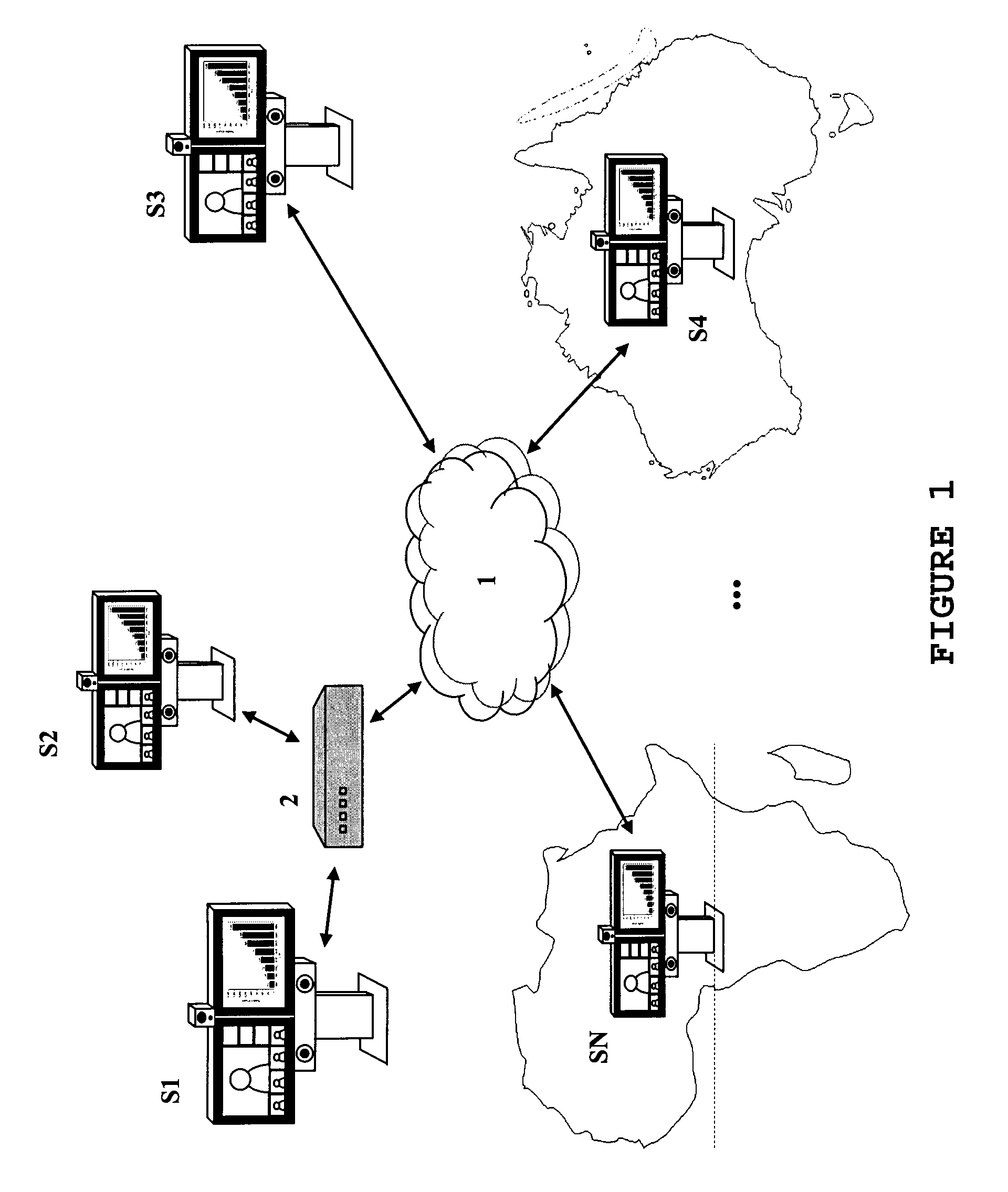

Virtual ring camera

Active | US20070263076A1 | Tags: Improved composite video view, Television conference systems, Two-way working systems, Information processing, The Internet

A system and method for a virtual omni-directional camera array, comprising a video control system (VCS) coupled to two or more co-located portable or stationary information processing systems, each enabled with a video camera and microphone, to provide a composite video view to remote videoconference participants. Audio streams are captured and selectively mixed to produce a virtual array microphone, which serves as a cue to selectively switch or combine the video streams from individual cameras. The VCS selects and controls predetermined subsets of video and audio streams from the co-located video camera and microphone-enabled computers to create a composite video view, which is then conveyed to one or more similarly enabled remote computers over a broadband network (e.g., the Internet). Manual overrides allow participants or a videoconference operator to select predetermined video streams as the primary video view of the videoconference.

Owner: DELL PROD LP

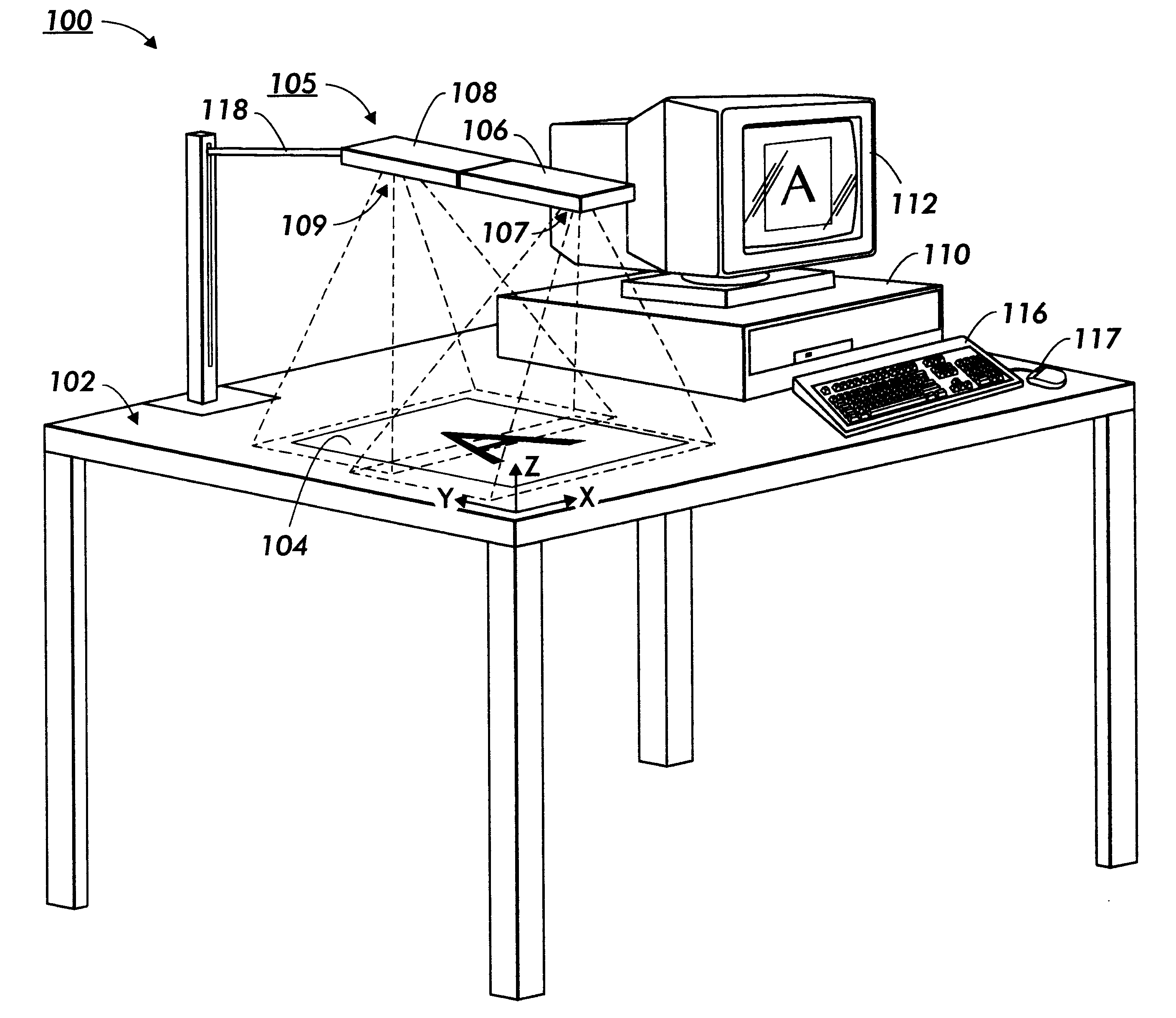

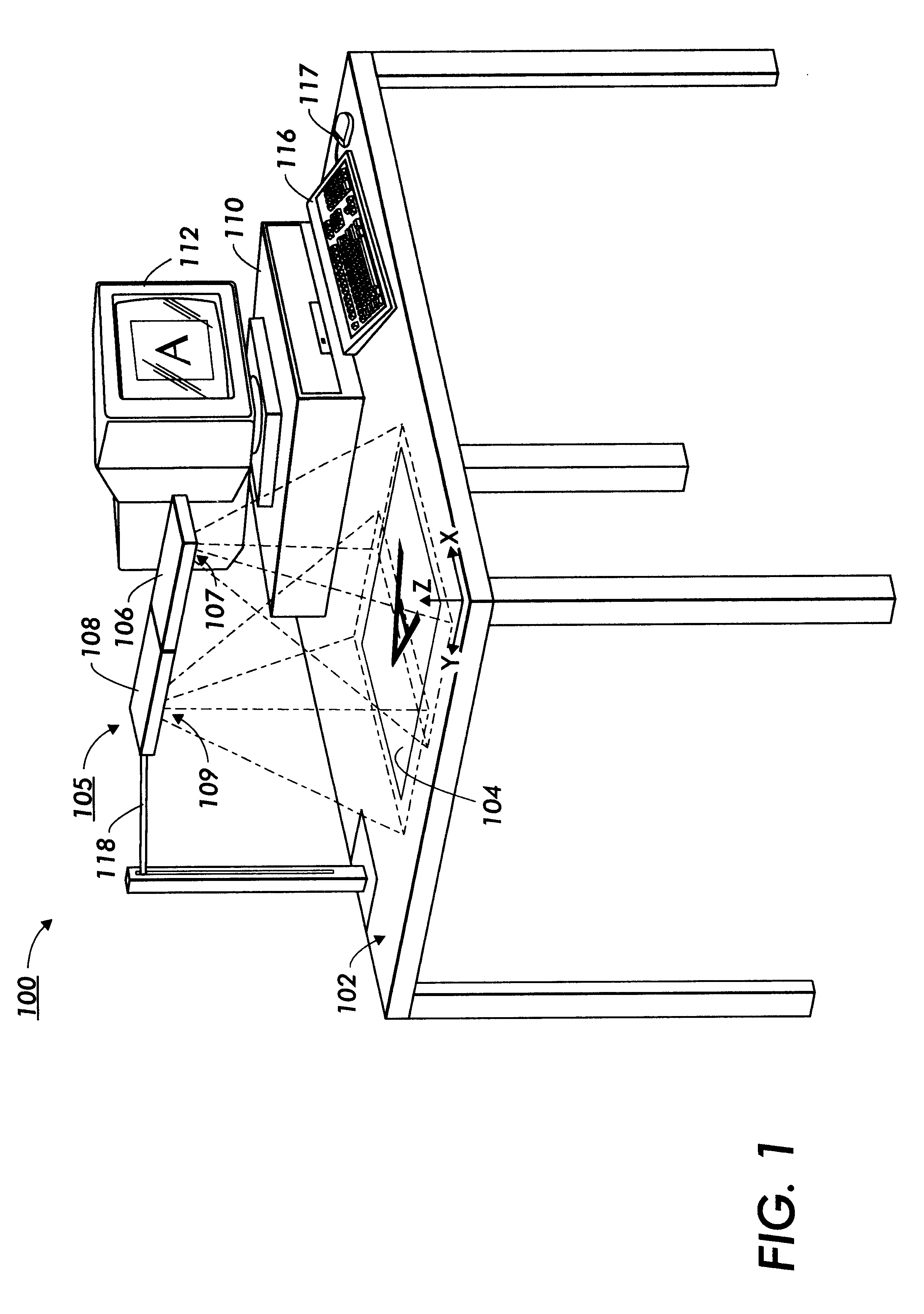

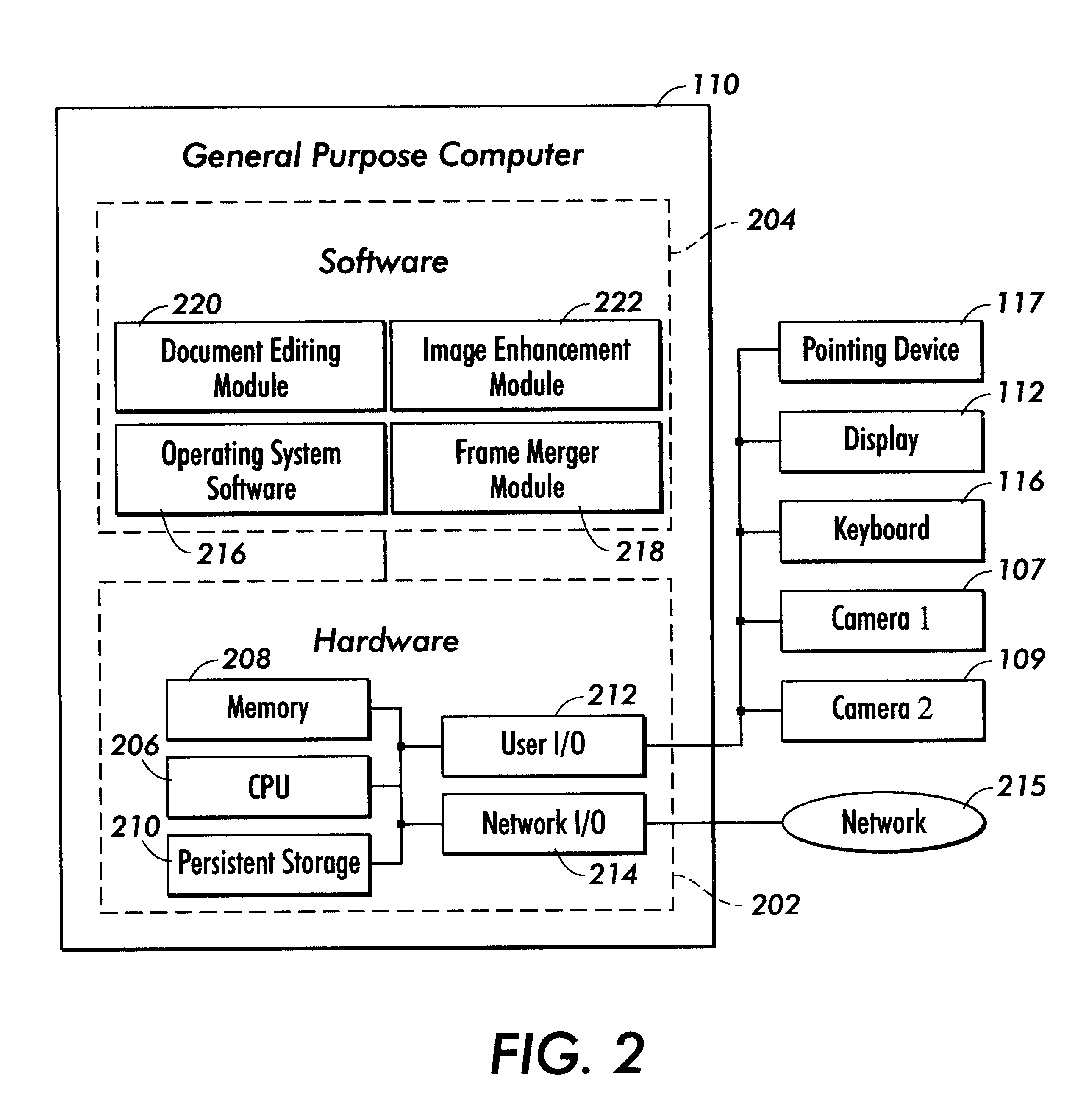

Dual video camera system for scanning hardcopy documents

Inactive | US6493469B1 | Tags: Character and pattern recognition, Two-way working systems, Varying thickness, Documentation

A face-up document scanning apparatus stitches views from multiple video cameras together to form a composite image. The document scanning apparatus includes an image acquisition system and a frame merger module. The image acquisition system, which is mounted over the surface of a desk on which a hardcopy document is placed, has two video cameras for simultaneously recording two overlapping images of different portions of the hardcopy document. Because the recorded images overlap, the document scanning apparatus can accommodate hardcopy documents of varying thickness. Once the overlapping images are recorded by the image acquisition system, the frame merger module assembles a composite image by identifying the region of overlap between the overlapping images. The composite image is subsequently transmitted for display on a standalone device or as part of a video conferencing system.

Owner: XEROX CORP
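The overlap search described in the XEROX abstract above can be sketched by trying each candidate overlap width and keeping the one where the two images agree best (smallest mean absolute difference). This assumes the cameras are offset only horizontally, with equal image heights and no rotation or scale change; `find_overlap` and `stitch` are hypothetical helpers working on lists of grey-value rows.

```python
def find_overlap(left, right, min_overlap=1):
    """Return the overlap width (in columns) that best aligns two images.

    left, right: images as lists of rows of grey values, same height.
    Compares the last w columns of `left` with the first w columns of `right`
    and returns the w with the smallest mean absolute difference.
    """
    width = min(len(left[0]), len(right[0]))
    best_w, best_err = min_overlap, float("inf")
    for w in range(min_overlap, width + 1):
        diff = sum(abs(a - b)
                   for lrow, rrow in zip(left, right)
                   for a, b in zip(lrow[-w:], rrow[:w]))
        err = diff / (w * len(left))
        if err < best_err:
            best_w, best_err = w, err
    return best_w


def stitch(left, right, overlap):
    """Join the images, keeping the left camera's pixels in the overlap region."""
    return [lrow + rrow[overlap:] for lrow, rrow in zip(left, right)]
```

Normalising by the overlap area keeps wide and narrow candidates comparable; a production system would also search vertical offsets and use a more robust similarity measure.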

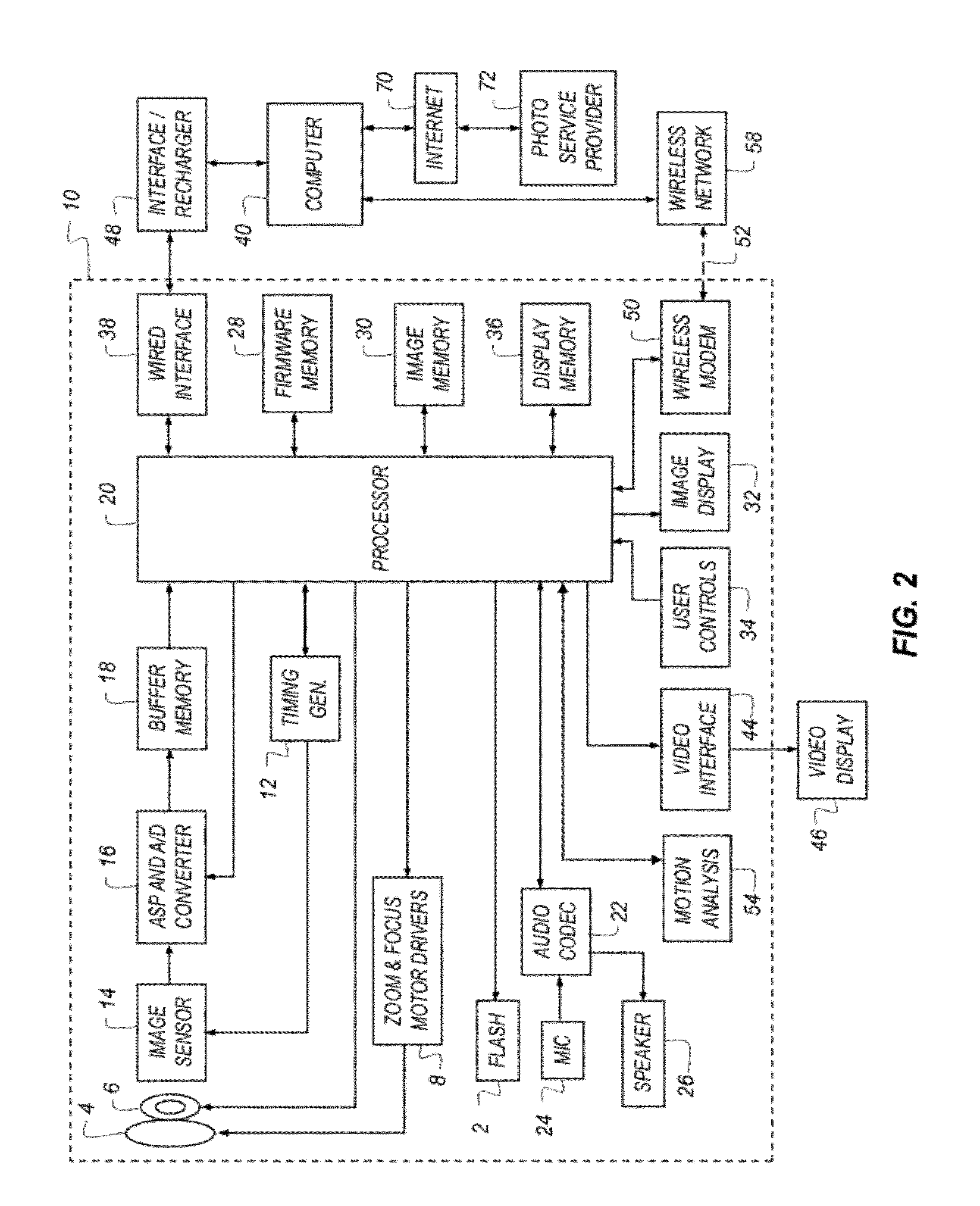

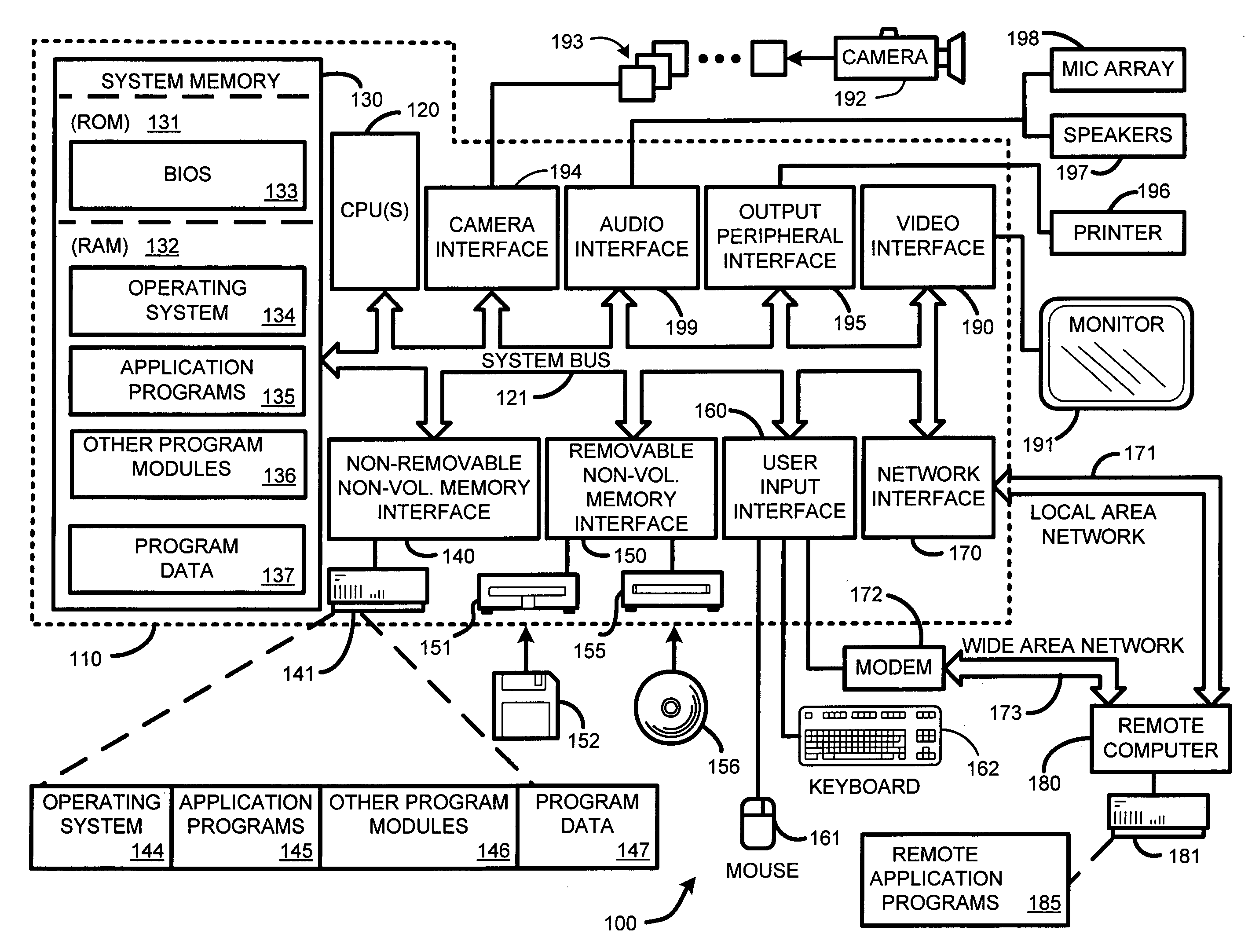

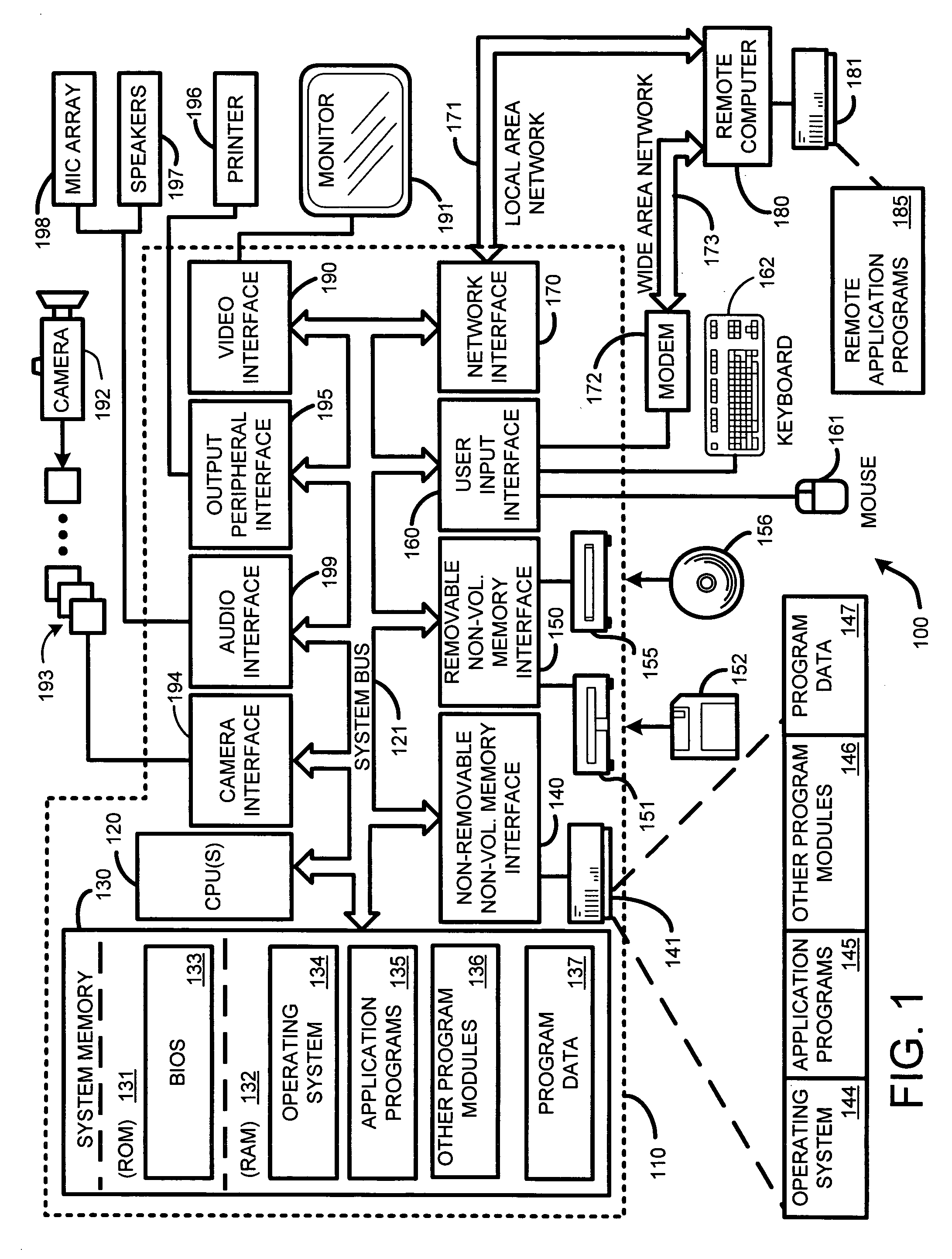

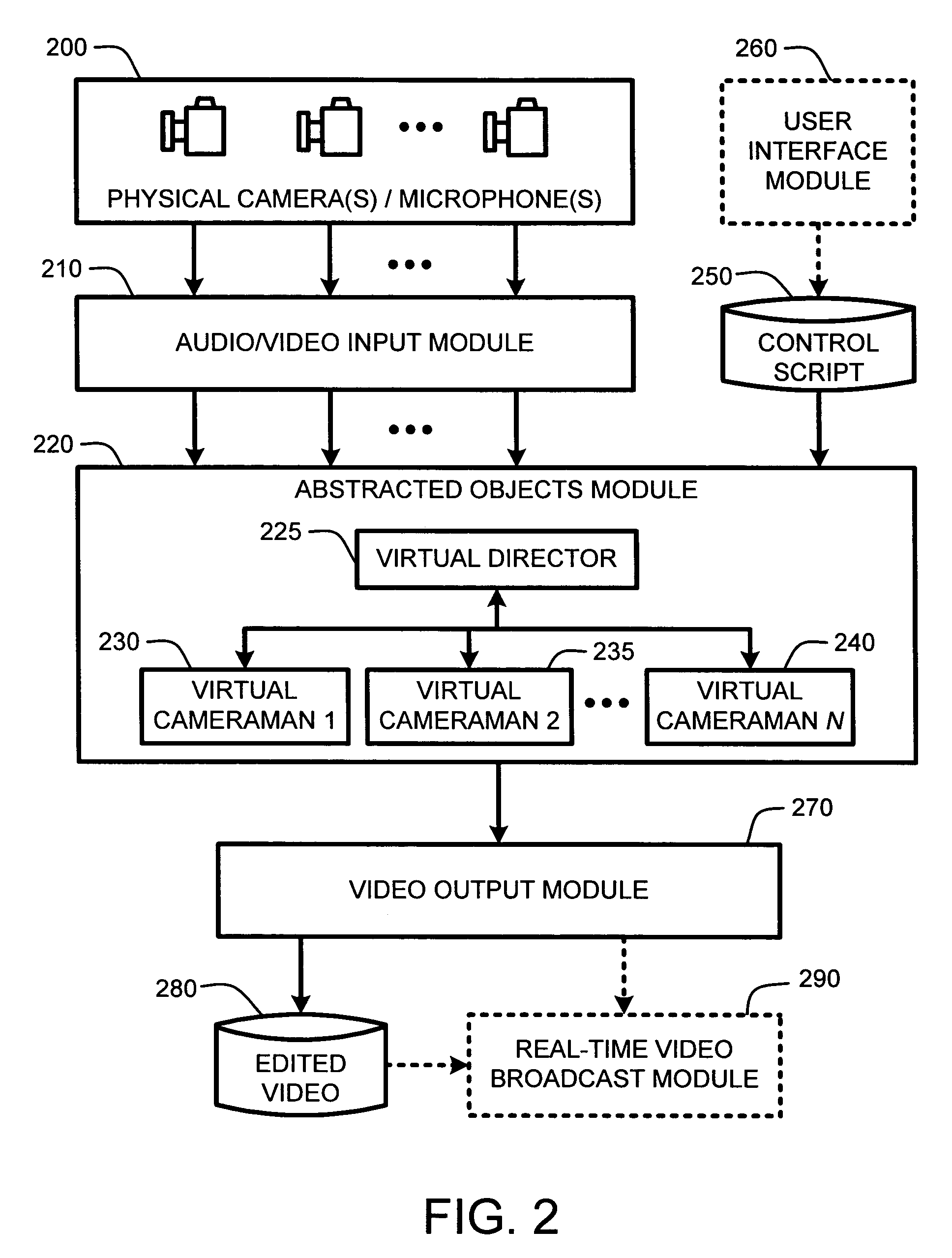

Portable solution for automatic camera management

Inactive | US20060005136A1 | Tags: Fast response time, High resolution, Television system details, Interconnection arrangements, Camera control, Virtual studio

A “virtual video studio”, as described herein, provides a highly portable real-time capability to automatically capture, record, and edit a plurality of video streams of a presentation, such as, for example, a speech, lecture, seminar, classroom instruction, talk-show, teleconference, etc., along with any accompanying exhibits, such as a corresponding slide presentation, using a suite of one or more unmanned cameras controlled by a set of videography rules. The resulting video output may then either be stored for later use, or broadcast in real-time to a remote audience. This real-time capability is achieved by using an abstraction of “virtual cameramen” and physical cameras in combination with a scriptable interface to the aforementioned videography rules for capturing and editing the recorded video to create a composite video of the presentation in real-time under the control of a “virtual director.”

Owner: MICROSOFT TECH LICENSING LLC

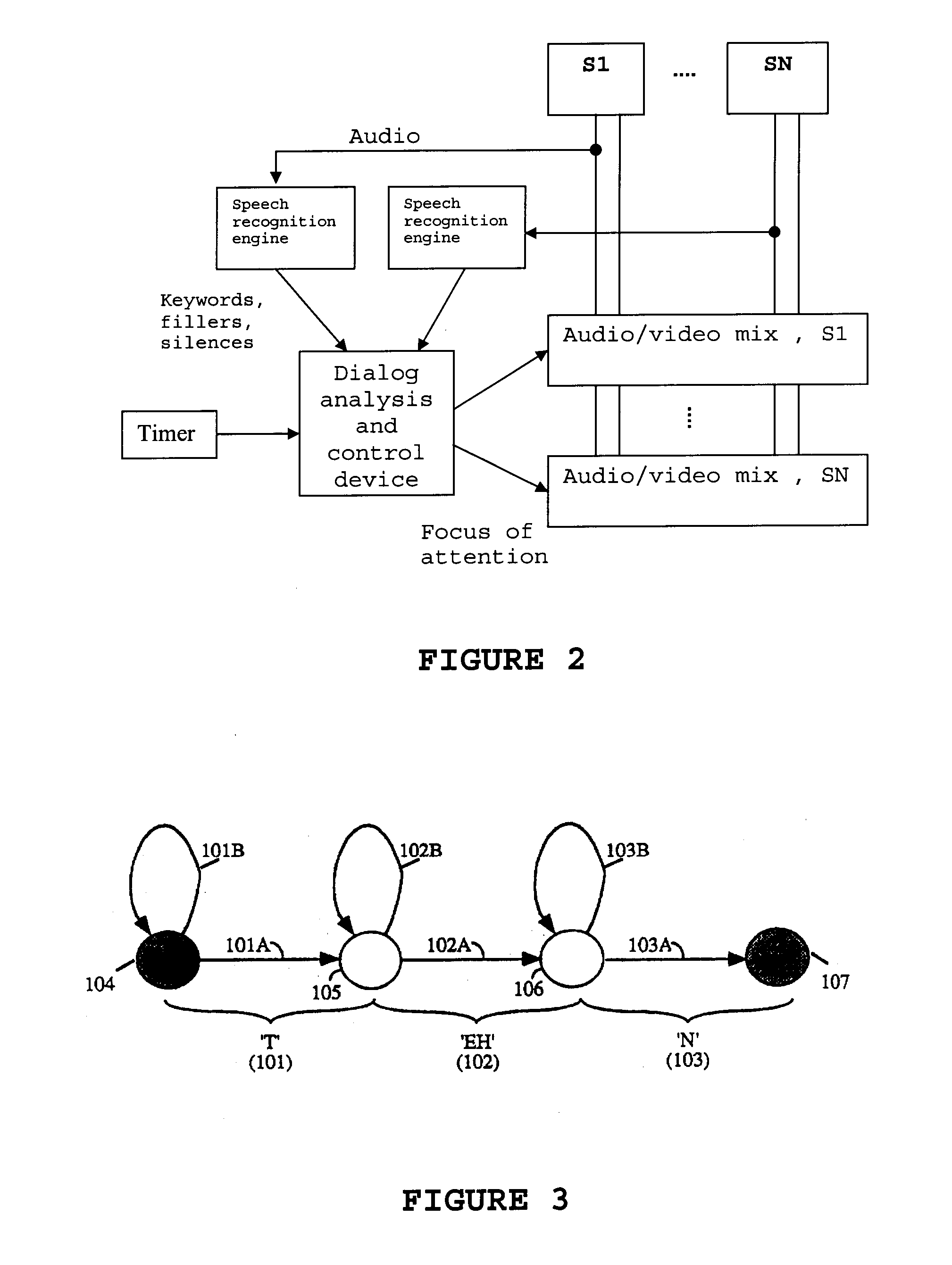

Method and apparatus for video conferencing having dynamic layout based on keyword detection

The present invention provides a method and system for conferencing, including the steps of: connecting at least two sites to a conference; receiving at least two video signals and two audio signals from the connected sites; consecutively analyzing the audio data from the at least two connected sites by converting at least part of the audio data to acoustical features and extracting keywords and speech parameters from the acoustical features using speech recognition; comparing the extracted keywords to predefined words; deciding, based on the speech parameters, whether the extracted predefined keywords are to be considered a call for attention; defining an image layout based on that decision; processing the received video signals to provide a composite video signal according to the defined image layout; and transmitting the composite video signal to at least one of the at least two connected sites.

Owner: TANDBERG TELECOM AS

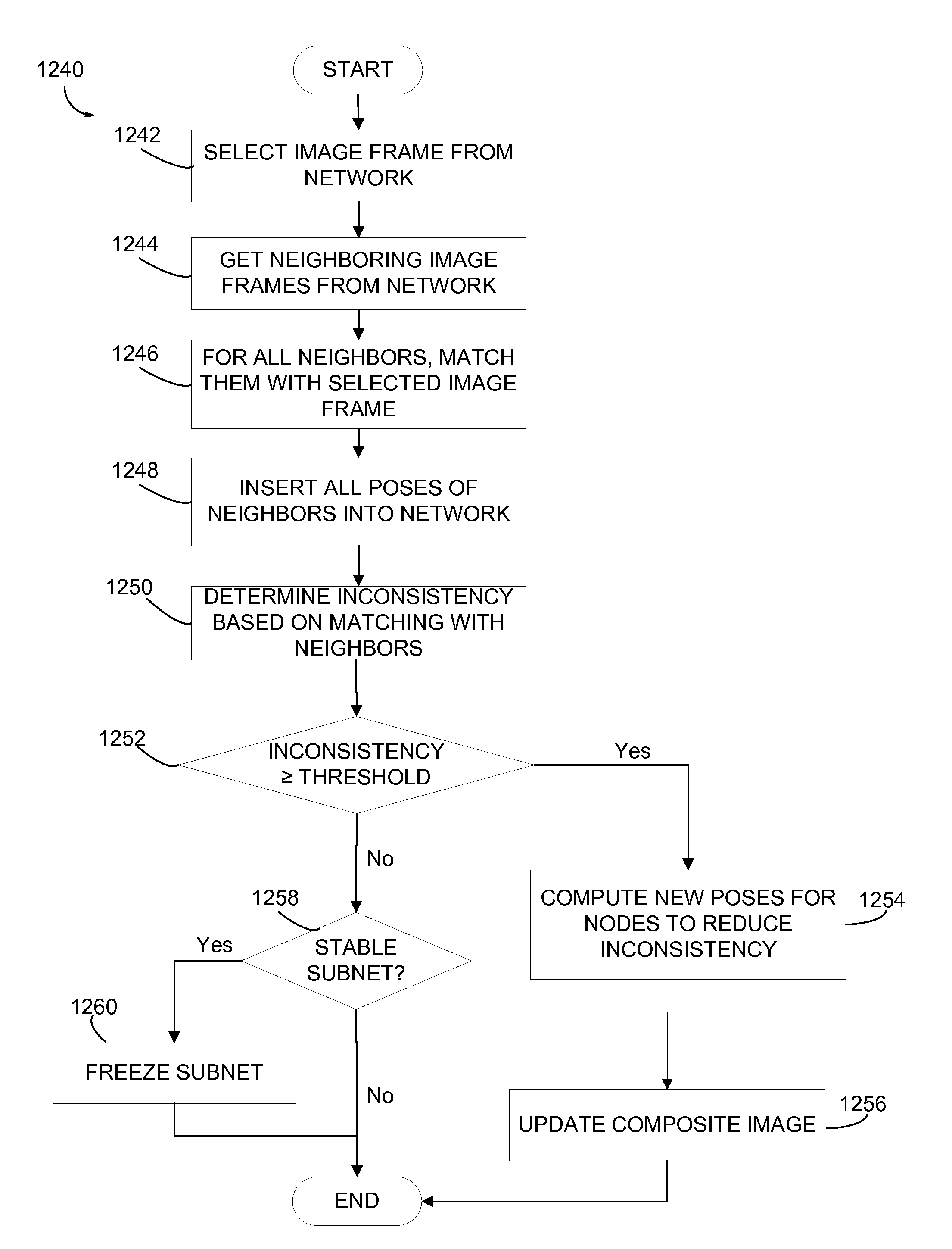

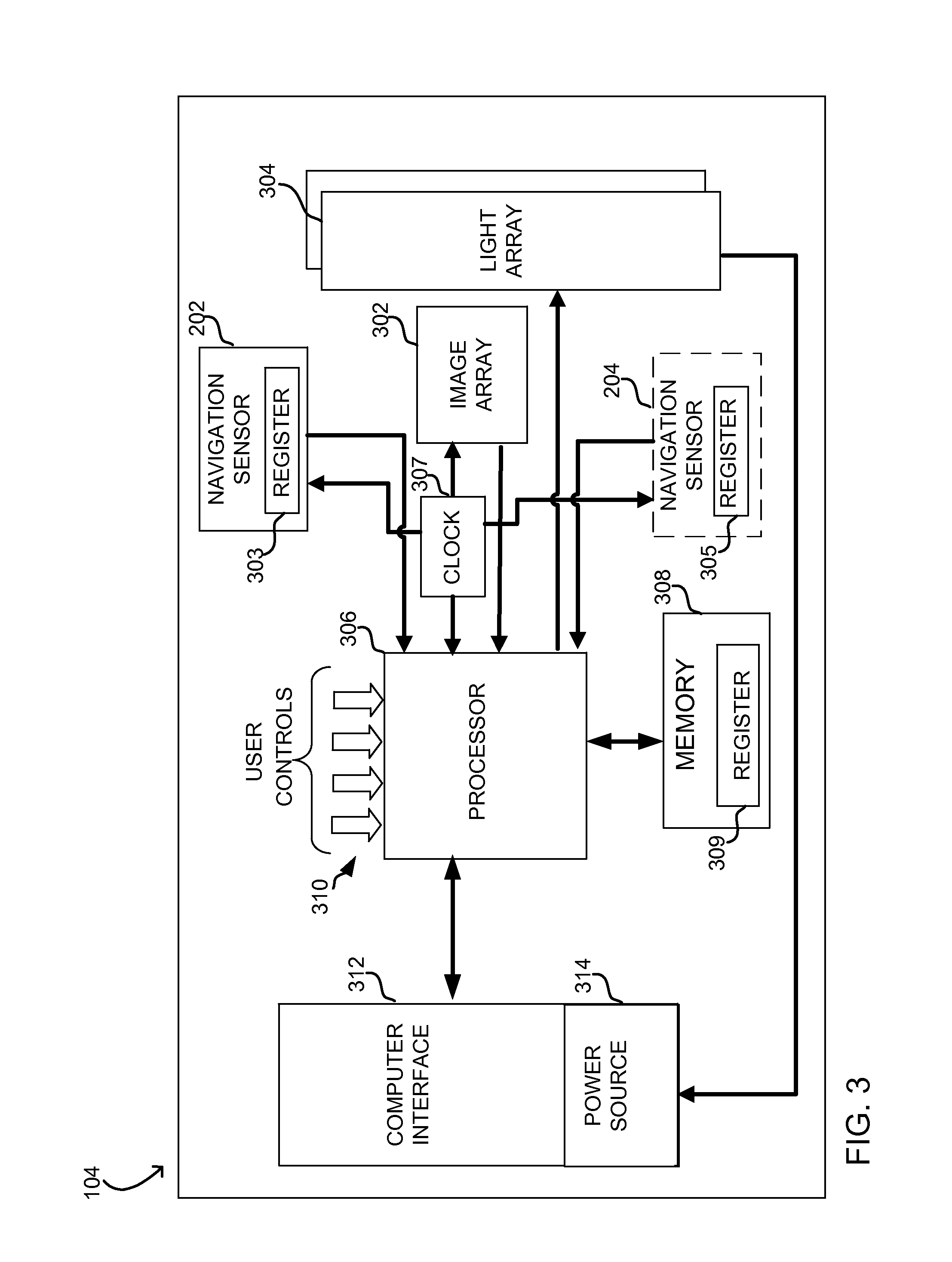

Image processing for handheld scanner

Active | US20100295868A1 | Tags: Reduce metric of inconsistency, Reduce the metric of inconsistency, Image analysis, Character and pattern recognition, Imaging processing, Marine navigation

A computer peripheral that may operate as a scanner. The scanner captures image frames as it is moved across an object. The image frames are formed into a composite image based on computations in two processes. In a first process, fast track processing determines a coarse position of each of the image frames based on a relative position between each successive image frame and the respective preceding image frame, determined by matching overlapping portions of the image frames. In a second process, fine position adjustments are computed to reduce inconsistencies that arise from determining positions of image frames based on relative positions to multiple prior image frames. The peripheral may also act as a mouse and may be configured with one or more navigation sensors that can be used to reduce the processing time required to match a successive image frame to a preceding image frame.

Owner: MAGIC LEAP

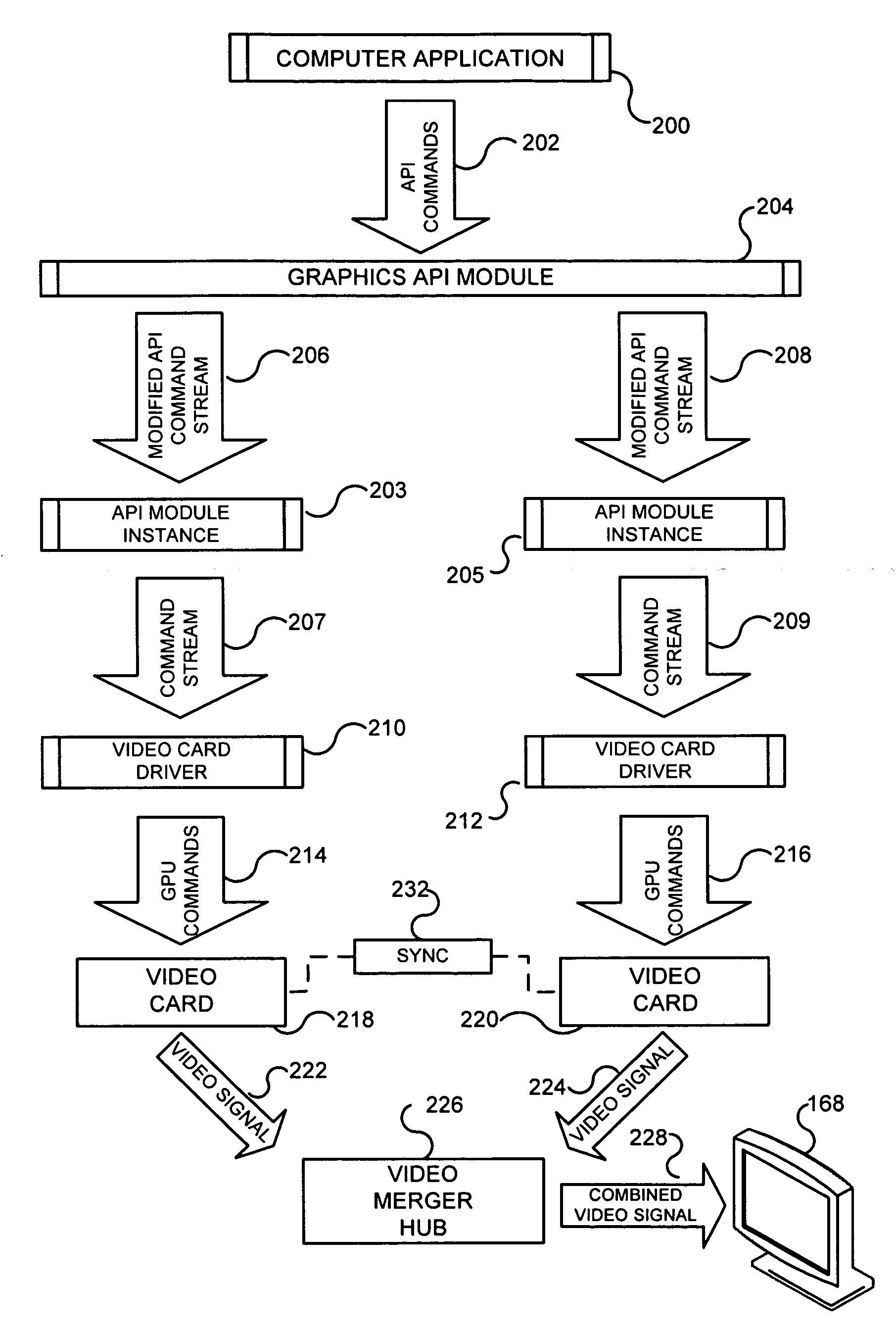

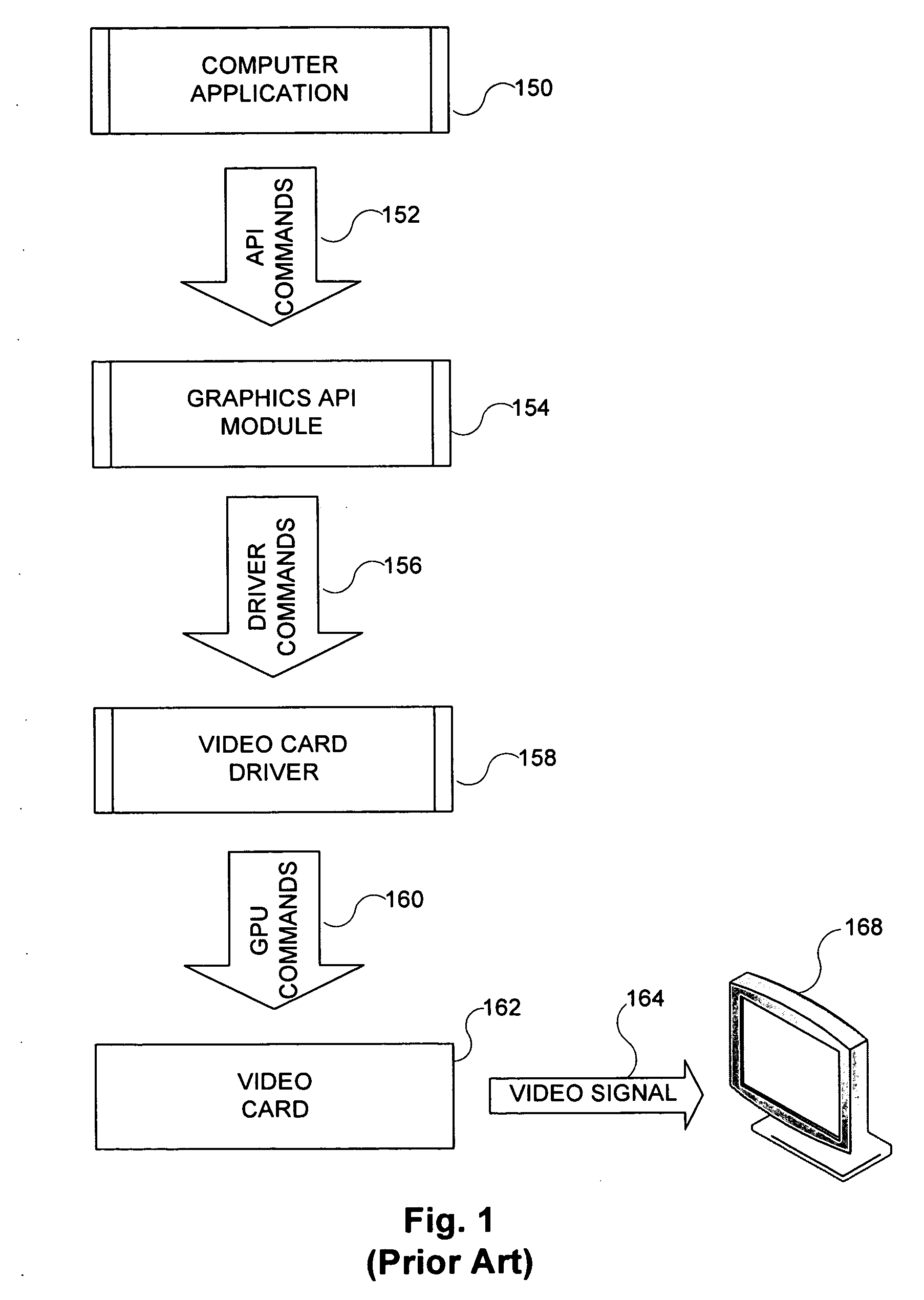

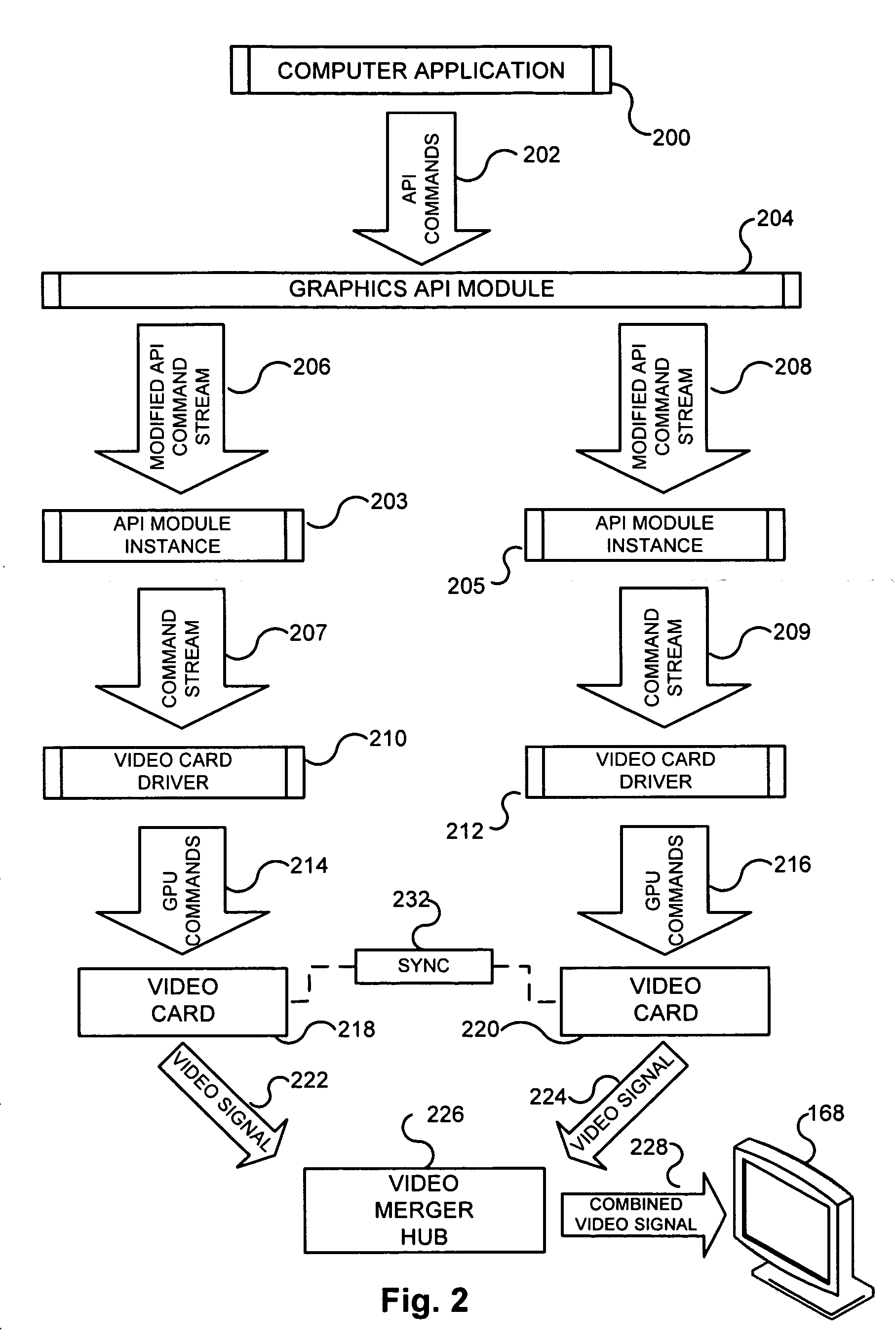

Multiple parallel processor computer graphics system

Inactive | US20080211816A1 | Tags: Improve throughput, Minimal amount of processing, Cathode-ray tube indicators, Multiple digital computer combinations, Graphics, Time segment

An accelerated graphics processing subsystem combines the processing power of multiple graphics processing units (GPUs) or video cards. Video processing by the multiple video cards is organized by time division, such that each video card is responsible for video data processing during a different time period. For example, two video cards may take turns, with the first video card controlling a display for a certain time period and the second video card sequentially assuming video processing duties for a subsequent period. In this way, while one video card is managing the display in one time period, the second video card is processing video data for the next time period, thereby allowing extensive processing of the video data before the start of the next time period. The present invention may further incorporate load balancing, such that the duration of the processing time periods for each of the video cards is dynamically modified to maximize composite video processing.

Owner: ALIENWARE LABS
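The time-division scheme in the ALIENWARE abstract above, with two or more cards taking turns, can be sketched as a round-robin assignment of time periods plus a load-balancing step that resizes each card's period. The proportional rebalancing rule (each card gets a share of the fixed cycle proportional to its measured processing time) is an assumption for illustration, not the patent's algorithm, and `assign_periods` and `rebalance` are hypothetical names.

```python
def assign_periods(n_periods, n_cards=2):
    """Round-robin time division: card k handles periods k, k + n, k + 2n, ..."""
    return [p % n_cards for p in range(n_periods)]


def rebalance(period_ms, busy_ms):
    """Resize each card's period in proportion to the time it actually needed.

    period_ms: current period length for each card.
    busy_ms: measured processing time for each card in its last period.
    The total cycle length (sum of periods) is preserved.
    """
    cycle = sum(period_ms)
    total_busy = sum(busy_ms)
    return [cycle * b / total_busy for b in busy_ms]
```

For example, two 16 ms periods with measured loads of 12 ms and 4 ms become 24 ms and 8 ms, giving the busier card more time before its next turn at the display.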

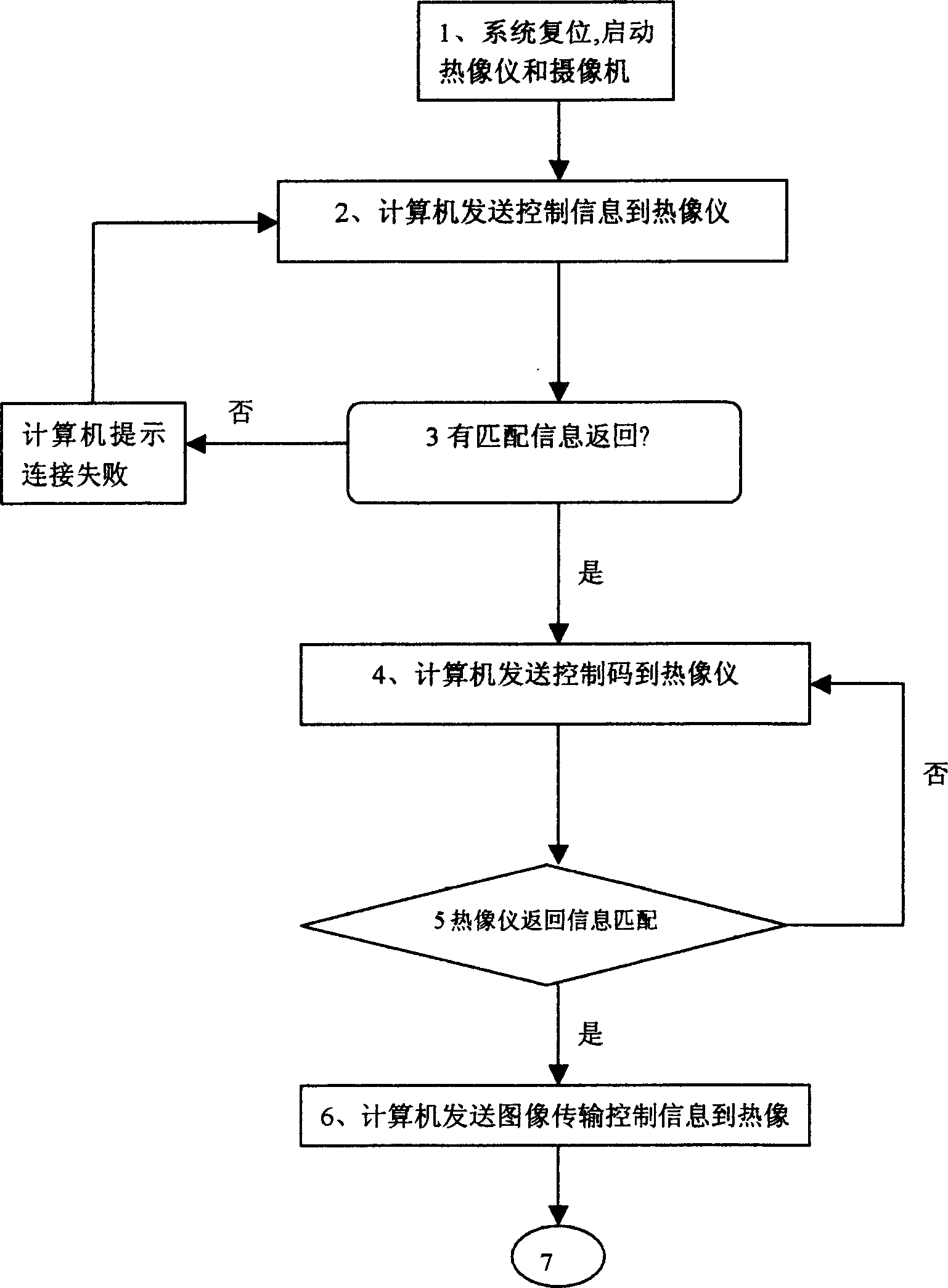

Real time display control device for composite video of infrared thermal imaging image and visible light image

The invention is a real-time video display control device for infrared thermal images and visible-light images. The device includes a computer, an infrared thermal imager, and a visible-light camera whose field of view can be matched to that of the imager. A video capture card is installed in an interface slot of the computer, and the infrared imager and the visible-light camera are fixed on the same platform. The computer stores control, temperature-measurement, image-processing, and display program modules. It can remotely control the platform, the infrared imager, and the visible-light camera so that they rotate synchronously, with the fields of view of the imager and the camera matched, so that the thermal image and the visible-light image coincide in the coordinates of the computer monitor. The invention can capture, store, display, and play back the thermal and visible-light images in real time, and can also display the temperature value and position coordinates of an object, combining the advantages of infrared imaging and visible-light imaging.

Owner: GUANGZHOU KEII ELECTRO OPTICS TECH

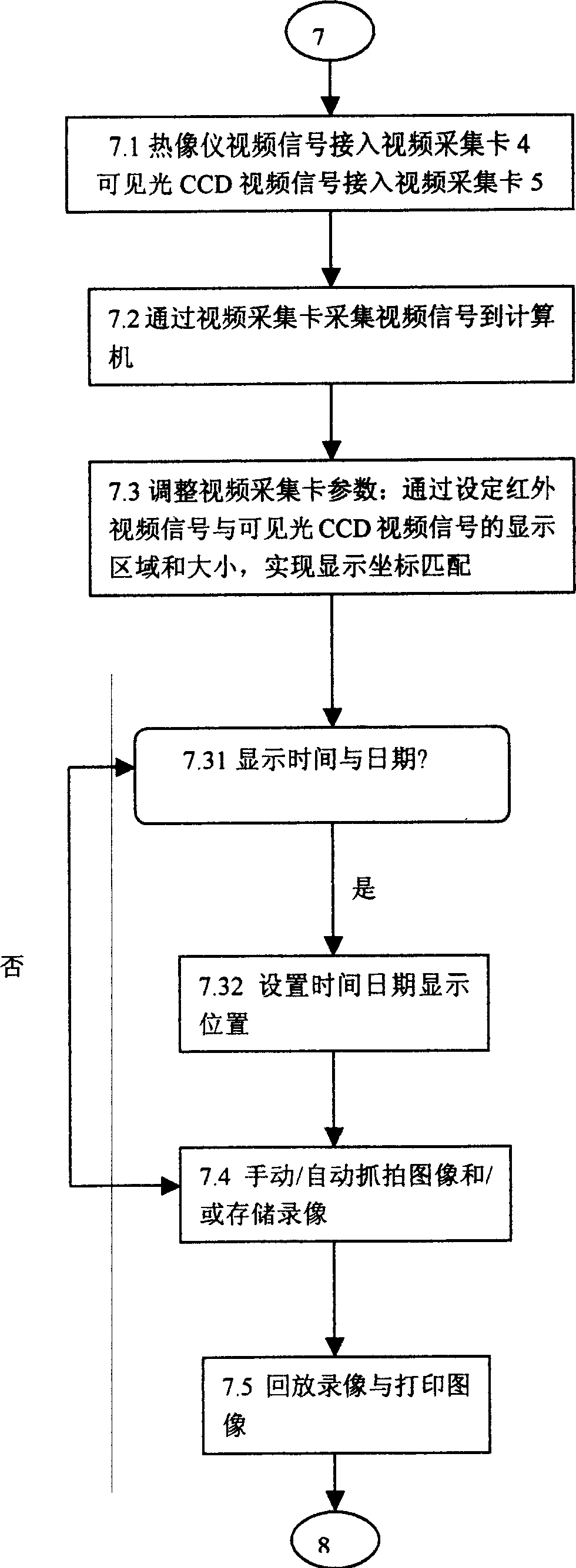

Method and system for processing an annotated digital photograph using a composite image

Inactive | US7092116B2 | Tags: Limited access, Improve image quality, Visual presentation, Electric digital data processing, Computer graphics (images), Barcode

An annotated digital photograph processing system that forms a plurality of composite image files from a plurality of corresponding digital images, annotation information and process control information, and prints them as a plurality of composite images on a roll of photographic medium. Each composite image file may be generated by a server computer, which also receives the components thereof, the file comprising the image and a first symbol. The first symbol includes process control information, and may optionally include annotation information. A roll containing the composite images is processed by a novel printing apparatus that comprises a scanner and inkjet print-heads connected to a control computer, to print the annotation and a second symbol, which includes process control information, directly behind the image to which they correspond after the destructive effects of photo-processing chemicals occur. Then, the first barcode is severed from the medium for each printed image.

Owner: CALAWAY DOUGLAS
