26,302 patent results for "Convolutional neural network"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

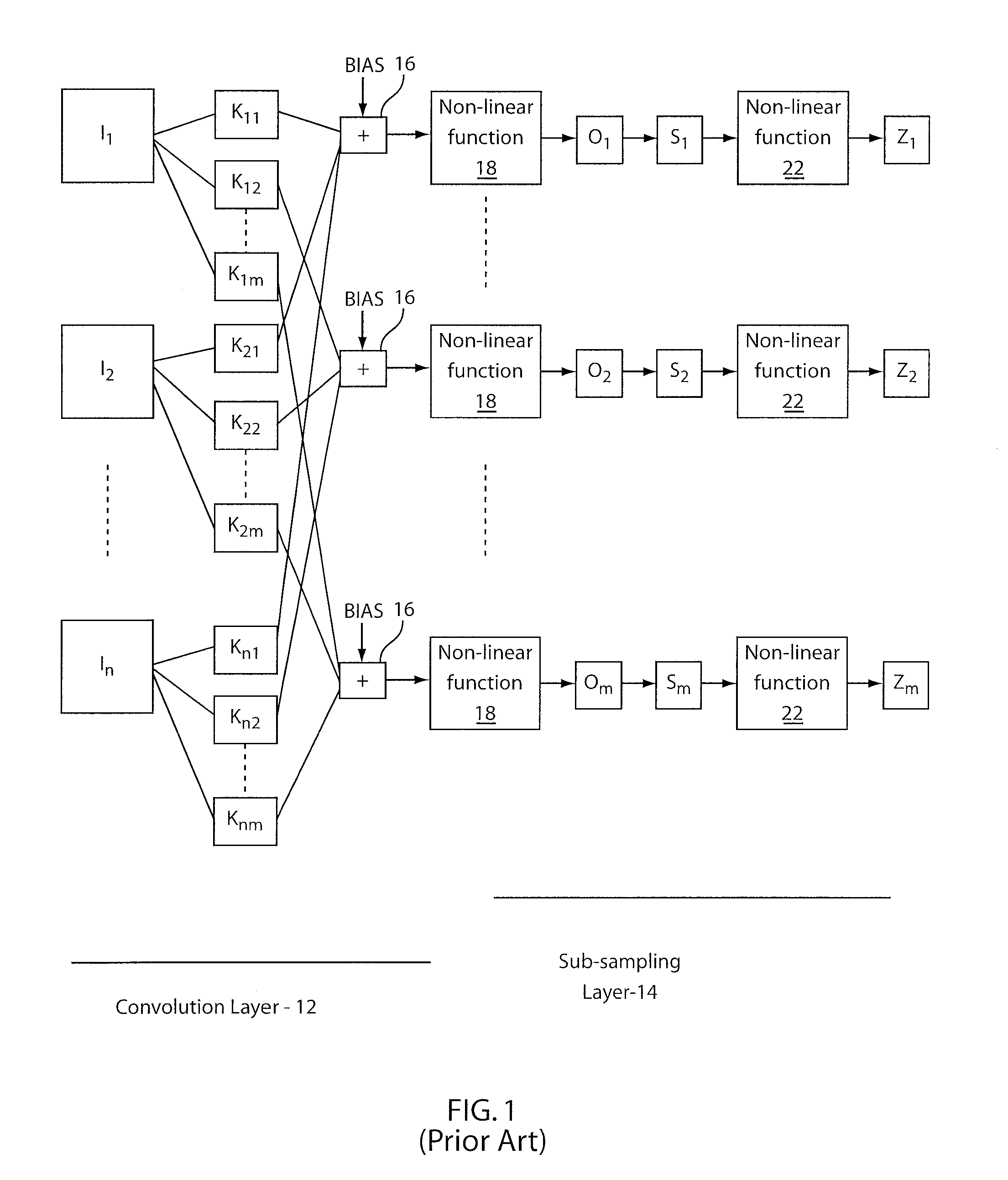

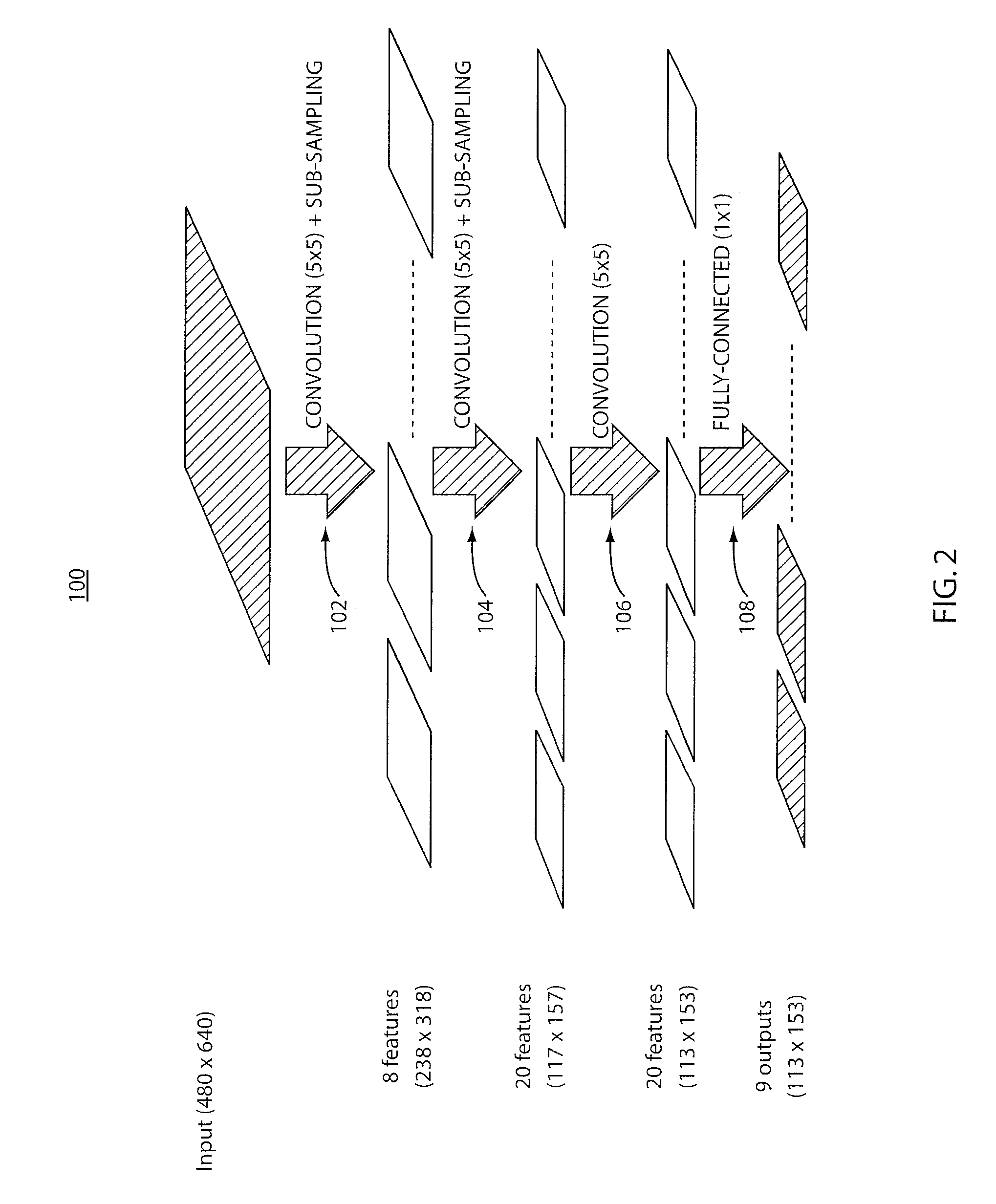

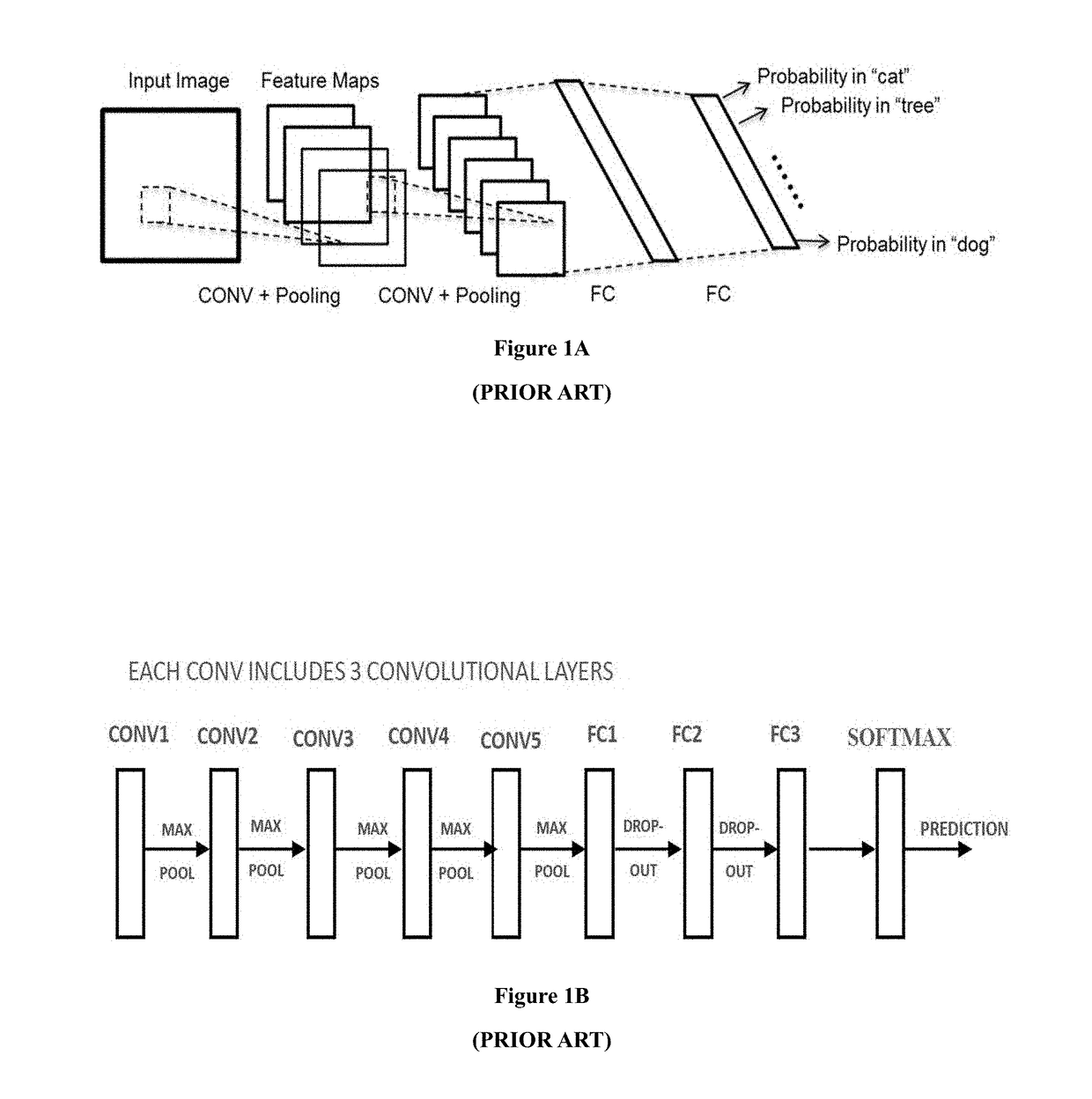

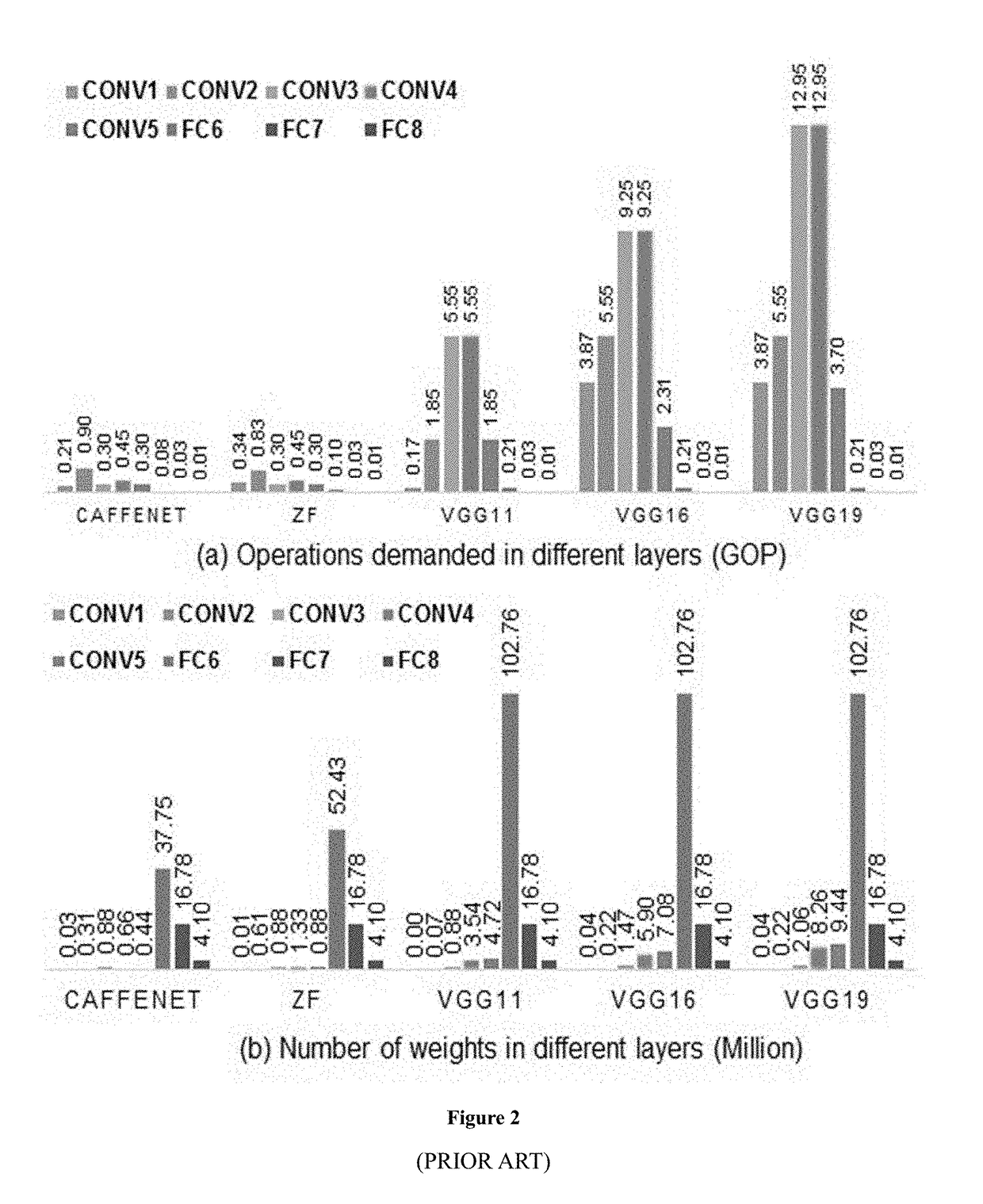

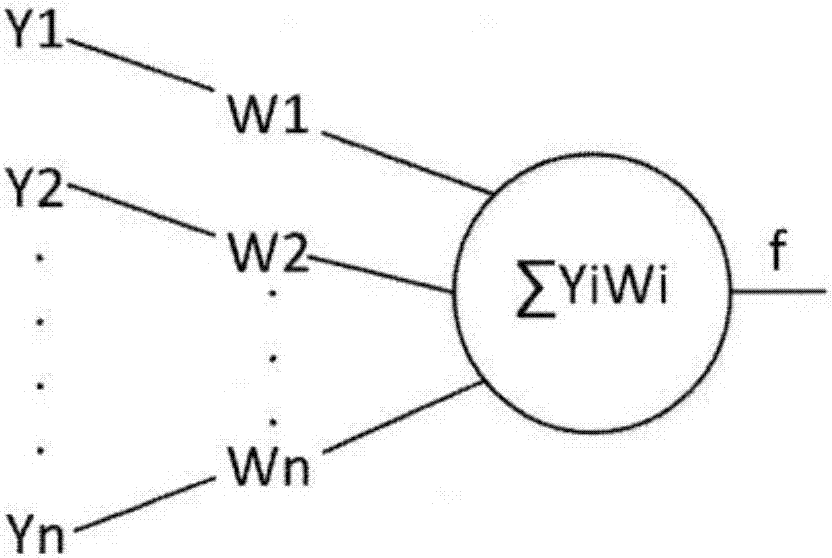

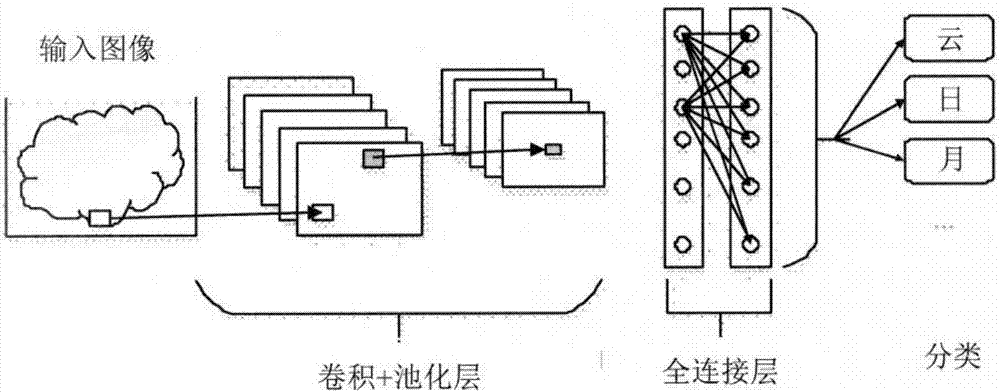

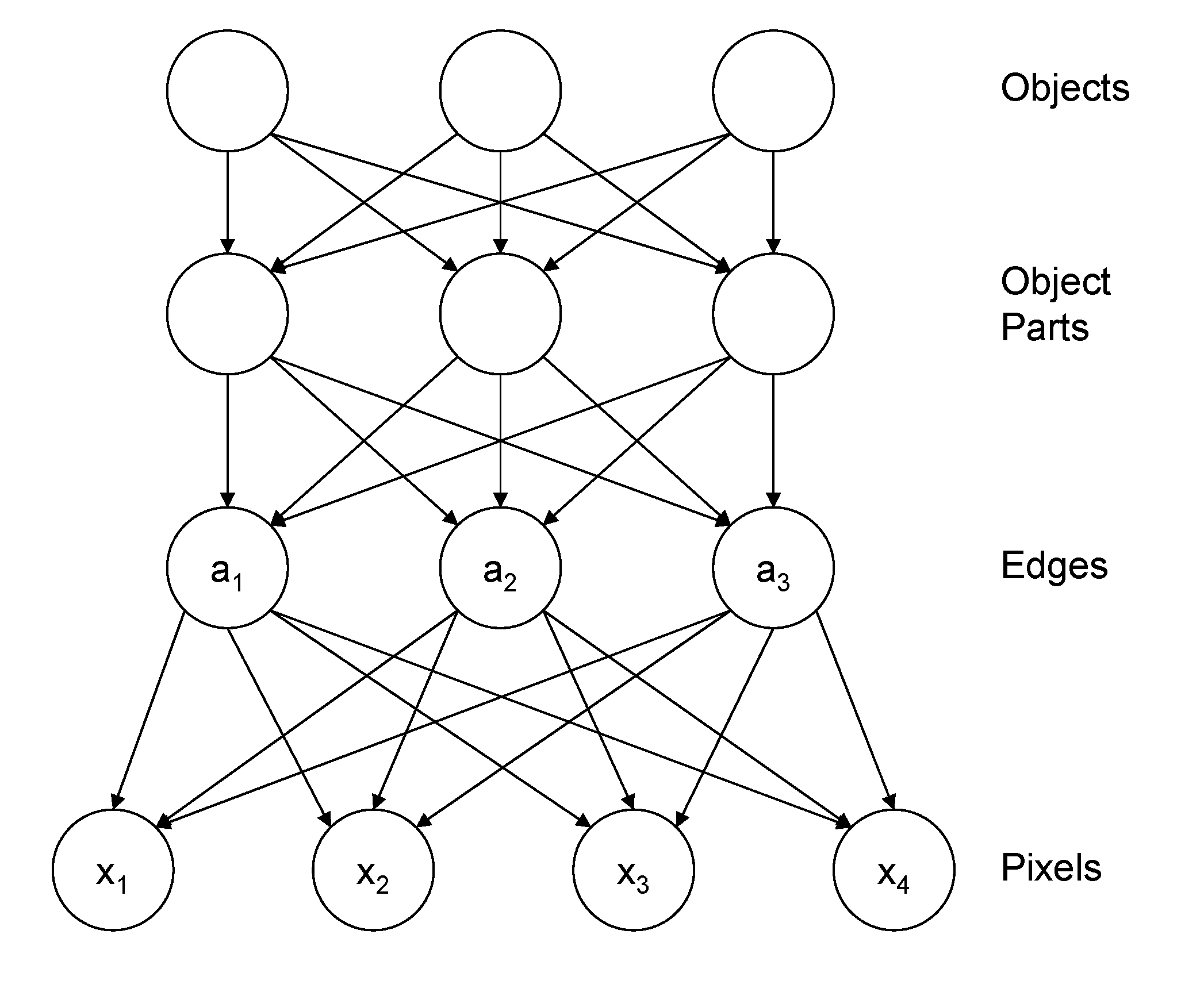

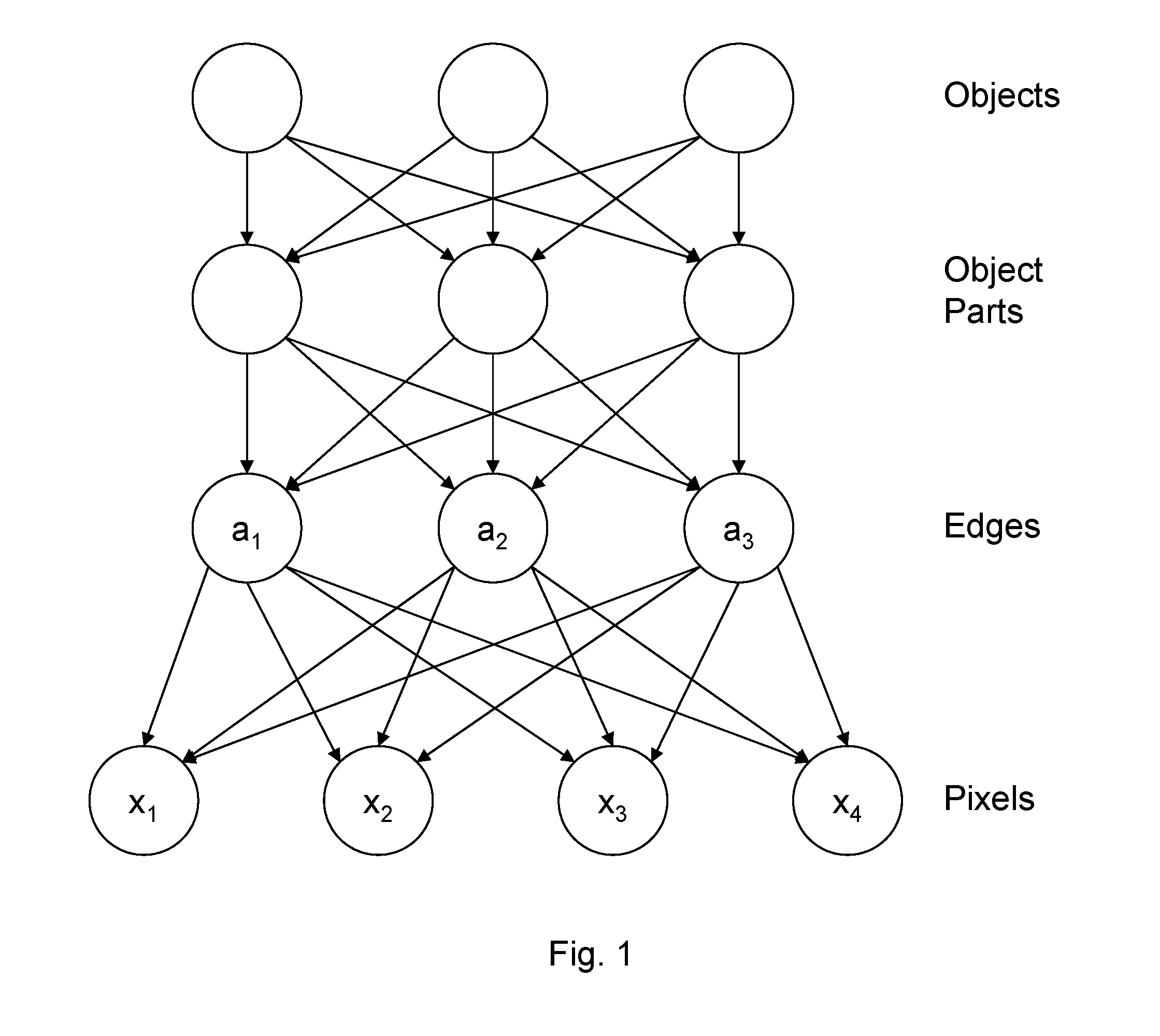

In deep learning, a convolutional neural network (CNN, or ConvNet) is a class of deep neural networks most commonly applied to analyzing visual imagery. CNNs are regularized versions of multilayer perceptrons. Multilayer perceptrons are usually fully connected networks: each neuron in one layer is connected to all neurons in the next layer. This full connectivity makes them prone to overfitting. Typical regularization methods add a penalty on the magnitude of the weights to the loss function. CNNs take a different approach: they exploit the hierarchical pattern in data and assemble more complex patterns from smaller and simpler ones, connecting each neuron only to a small local region of the previous layer and sharing the same weights across positions. Therefore, on the scale of connectedness and complexity, CNNs sit at the lower extreme.
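As a rough illustration of this difference in connectedness, the sketch below (plain Python; all function names are illustrative, not from any library) computes a 2-D convolution the way CNN layers usually do, as a cross-correlation that reuses one small kernel at every position. It then compares the parameter count of a fully connected layer with that of a 3x3 convolutional layer on the same input.

```python
def conv2d_valid(image, kernel):
    """Slide one shared kernel over a 2-D input (cross-correlation, stride 1, no padding)."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Local connectivity: each output reads only a kh x kw patch.
            # Weight sharing: the same kernel is reused at every (r, c).
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def conv_params(kh, kw, c_in, c_out):
    """Weights plus biases of a convolutional layer (independent of input size)."""
    return kh * kw * c_in * c_out + c_out


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[0, 1],
          [2, 0]]
print(conv2d_valid(image, kernel))               # [[10.0, 13.0], [19.0, 22.0]]
# Layers from a 32x32x3 input to a 32x32x16 output (the conv layer would use padding):
print(dense_params(32 * 32 * 3, 32 * 32 * 16))   # 50348032
print(conv_params(3, 3, 3, 16))                  # 448
```

The convolutional layer's 448 parameters stay the same for any image size, while the fully connected layer grows with the product of input and output sizes.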

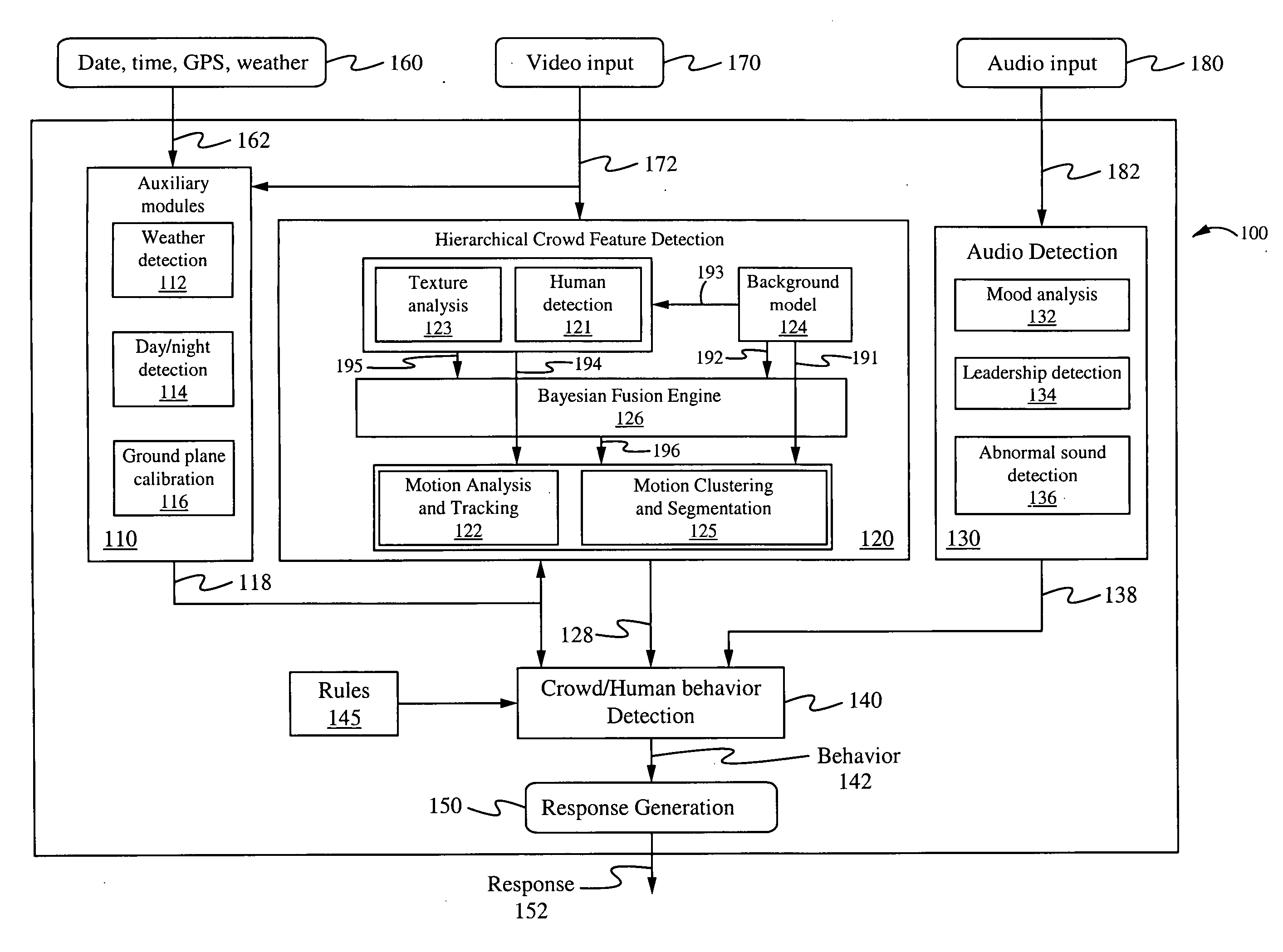

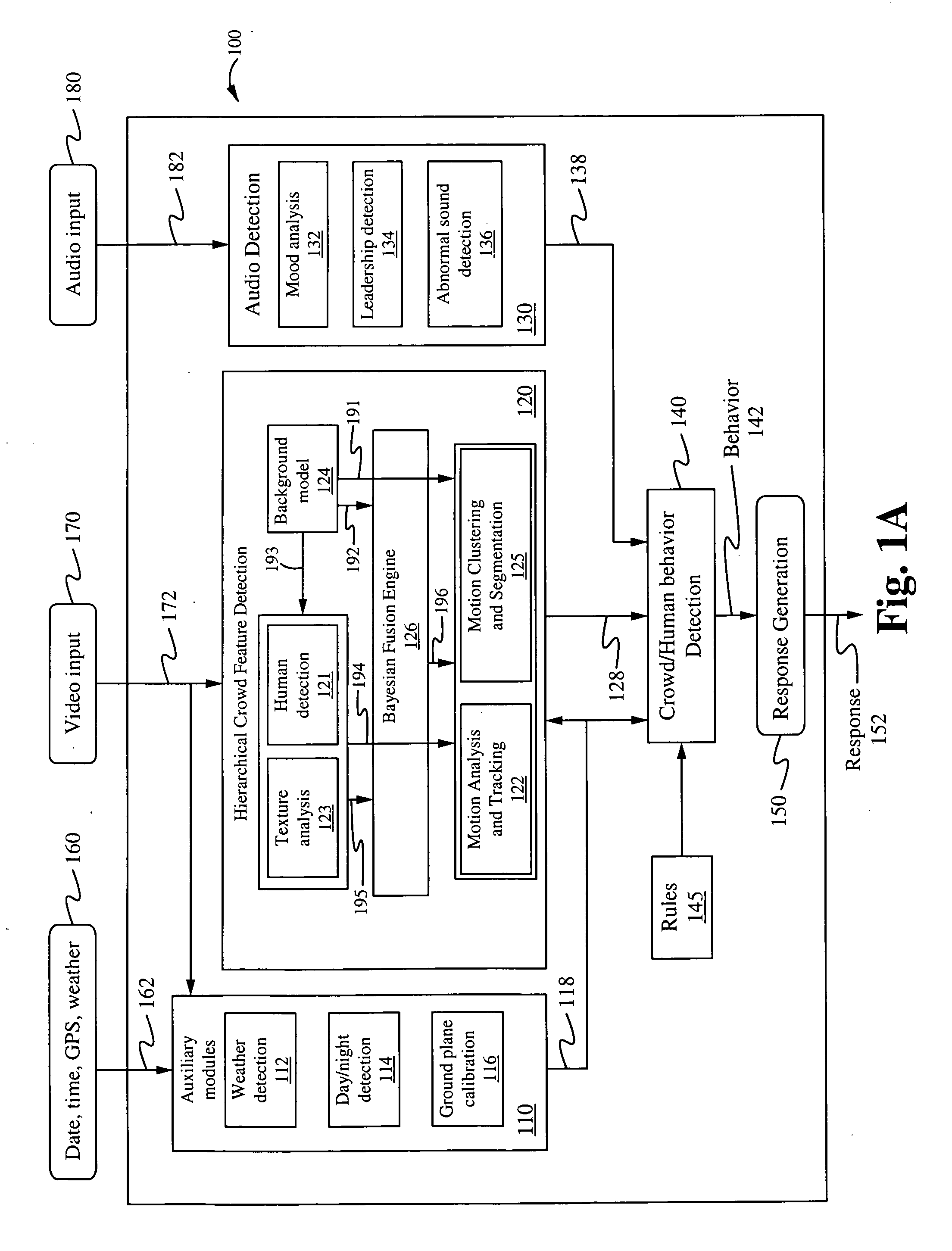

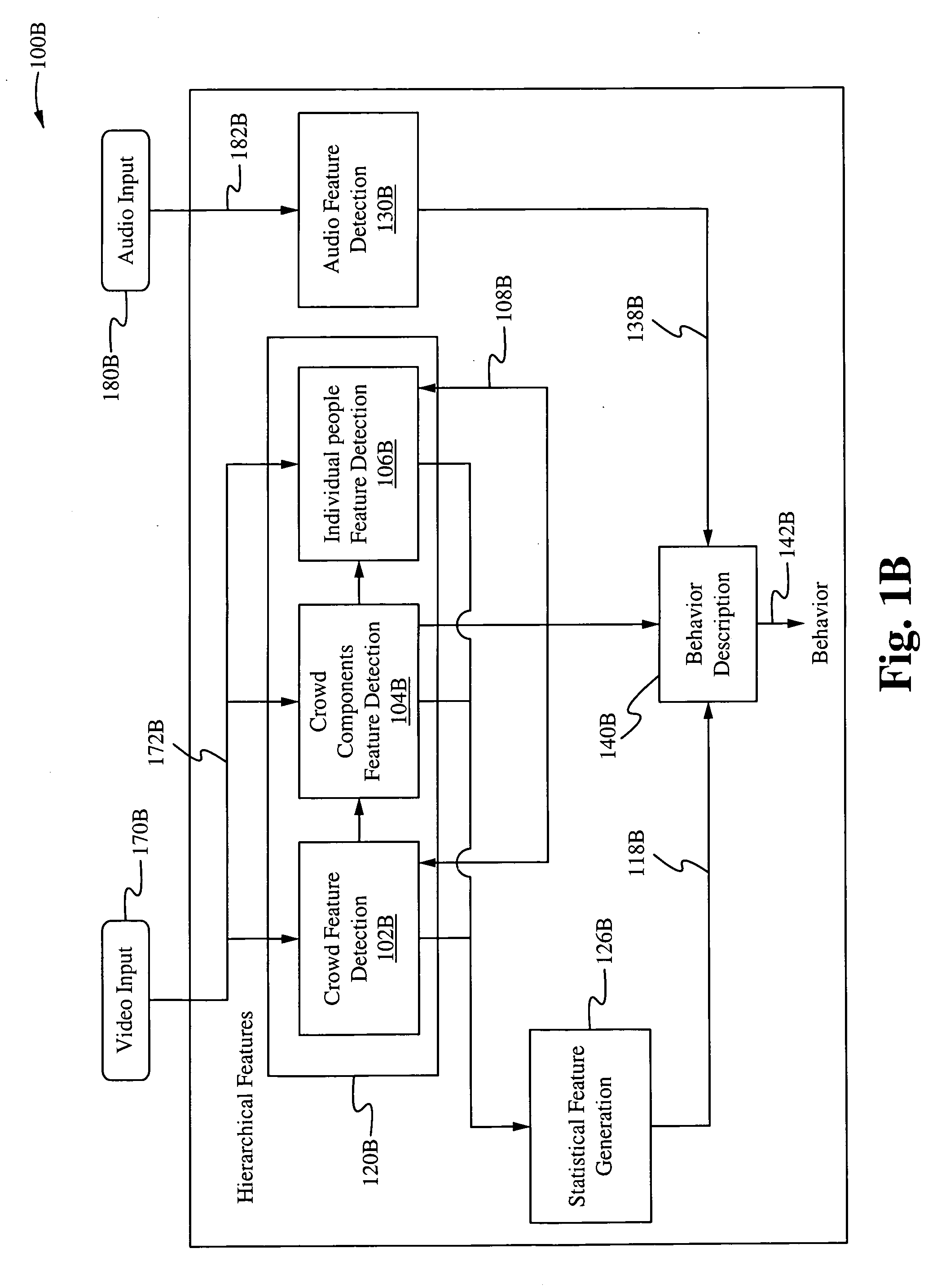

Method of and system for hierarchical human/crowd behavior detection

Active | US20090222388A1 | Improved crowd feature, Add feature, Kernel methods, Digital computer details, AdaBoost, Crowds

The present invention is directed to a computer-automated method of selectively identifying a user-specified behavior of a crowd. The method comprises receiving video data, and optionally audio data and sensor data. The video data contains images of a crowd. The video data is processed to extract hierarchical human and crowd features, and the detected crowd features are processed to detect a selectable crowd behavior. The behavior to be detected is specified by a configurable behavior rule. Human detection is provided by a hybrid human detector algorithm, which can include AdaBoost or a convolutional neural network. Crowd features are detected using textual analysis techniques. The configurable crowd behavior to be detected can be defined in a crowd behavioral language.

Owner:AXIS

Vehicle type recognition method based on rapid R-CNN deep neural network

Active | CN106250812A | Scalable, Quick Subclass Identification, Character and pattern recognition, Neural learning methods, Category recognition, Nerve network

The invention discloses a vehicle type recognition method based on a rapid R-CNN deep neural network, which mainly comprises unsupervised deep learning, a multilayer CNN (Convolutional Neural Network), a region proposal network, network sharing, and a softmax classifier. The method implements a true end-to-end framework for vehicle detection and recognition using a single rapid R-CNN network, and can perform fast vehicle sub-category recognition with high accuracy and robustness despite the diversity of vehicle shapes, illumination conditions, backgrounds, and the like.

Owner:汤一平 (Tang Yiping)

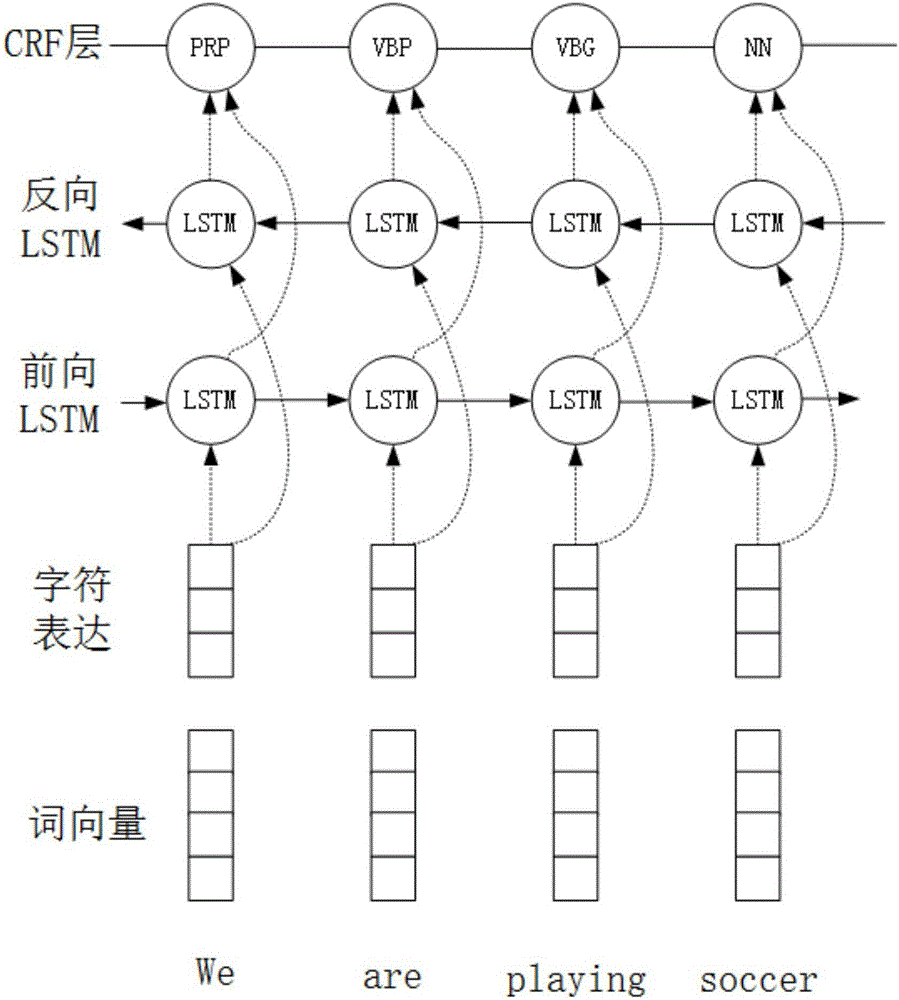

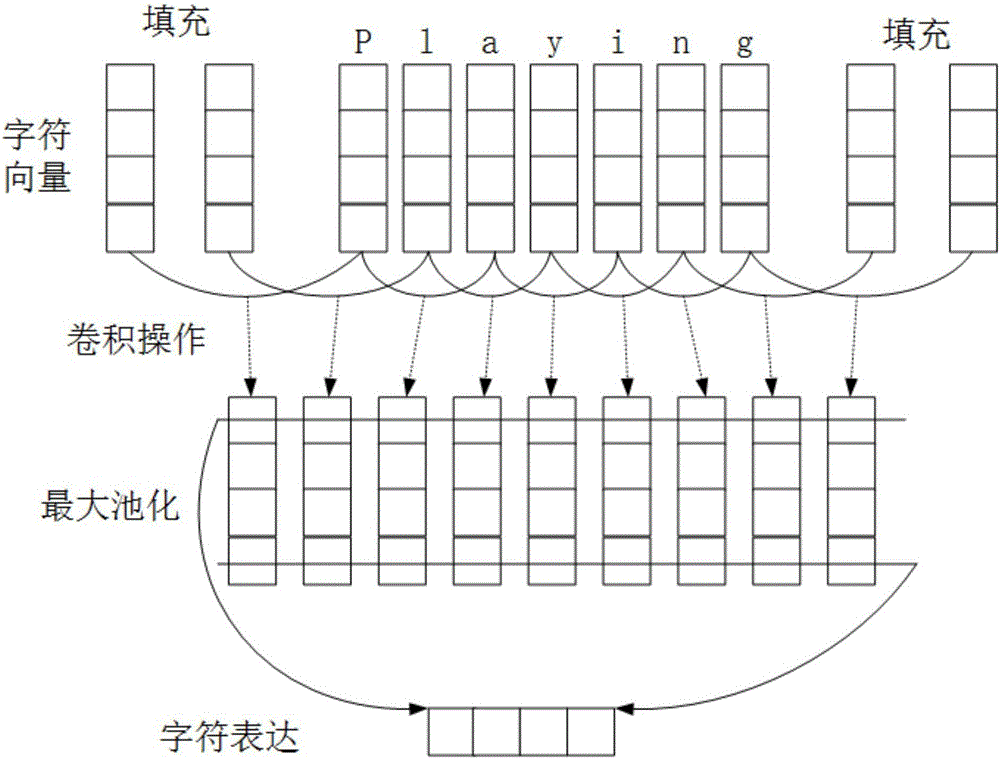

Text named entity recognition method based on Bi-LSTM, CNN and CRF

Inactive | CN106569998A | Solving the Named Entity Labeling Problem, Natural language data processing, Neural learning methods, Conditional random field, Nerve network

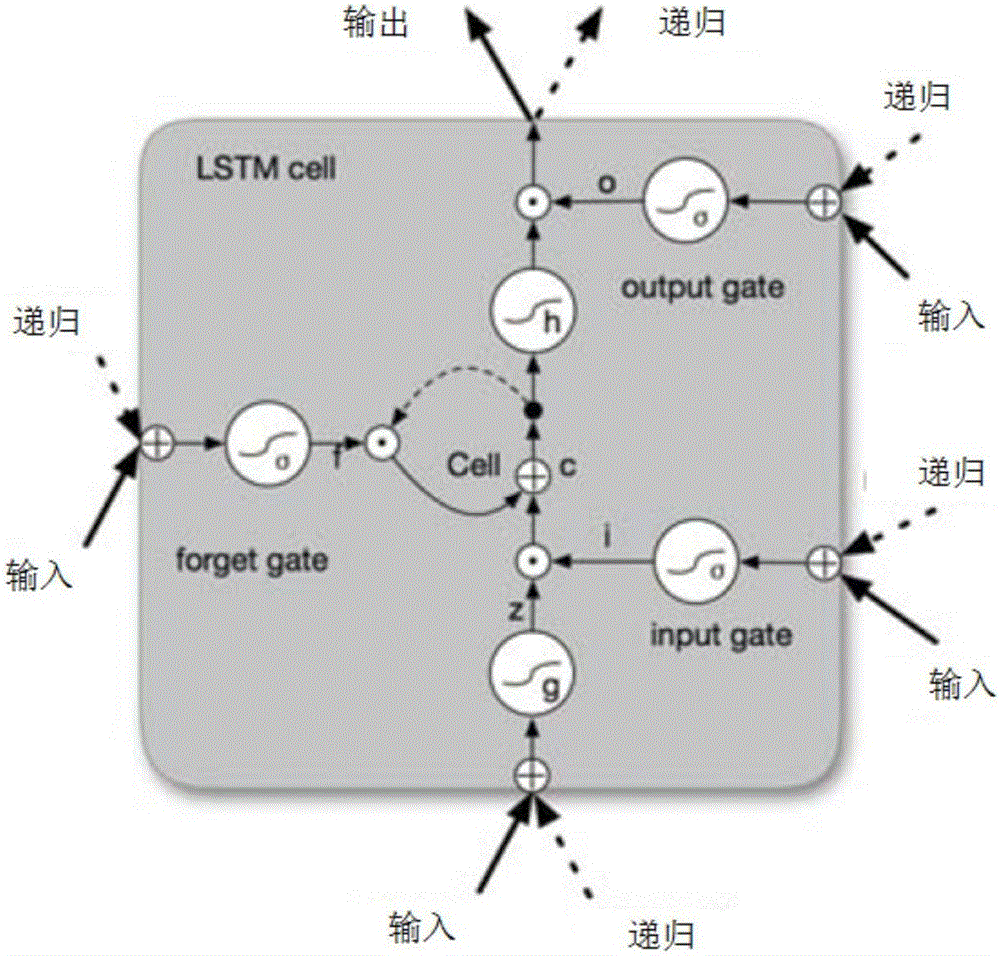

The invention discloses a text named entity recognition method based on Bi-LSTM, CNN and CRF. The method includes the following steps: (1) using a convolutional neural network to encode the character-level information of each word in the text into a character vector; (2) combining the character vector with the word vector and feeding the combination as input to a bidirectional LSTM neural network, which models the context of every word; and (3) at the output of the LSTM neural network, using continuous conditional random fields to decode the labels of the whole sentence and mark the entities in it. The invention is an end-to-end model that requires no data preprocessing other than word vectors pre-trained on an unlabeled corpus, so it can be widely applied to sentence labeling in different languages and domains.

Owner:ZHEJIANG UNIV
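Step (3) of the method above decodes the labels of a whole sentence with a CRF. A minimal sketch of the standard Viterbi decoder for a linear-chain CRF follows. It assumes the Bi-LSTM has already produced per-token emission scores and that a label-transition score matrix has been learned. The label set, scores and function name are hypothetical, and the abstract does not say exactly which decoding procedure the patent uses.

```python
def viterbi_decode(emissions, transitions, labels):
    """Return the highest-scoring label sequence for a linear-chain CRF.

    emissions[t][j]   : score of label j at position t (e.g. from the Bi-LSTM output)
    transitions[i][j] : score of moving from label i to label j
    """
    n_labels = len(labels)
    # best[j] = best score of any path ending in label j at the current position
    best = list(emissions[0])
    backpointers = []
    for t in range(1, len(emissions)):
        new_best, pointers = [], []
        for j in range(n_labels):
            # Choose the best previous label i for the current label j.
            scores = [best[i] + transitions[i][j] for i in range(n_labels)]
            i_best = max(range(n_labels), key=scores.__getitem__)
            new_best.append(scores[i_best] + emissions[t][j])
            pointers.append(i_best)
        best = new_best
        backpointers.append(pointers)
    # Trace back from the best final label.
    last = max(range(n_labels), key=best.__getitem__)
    path = [last]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return [labels[j] for j in path]


labels = ["O", "B-PER", "I-PER"]
NEG = -100.0  # effectively forbids a transition
transitions = [
    [0.0, 0.0, NEG],   # from O: O -> I-PER is not allowed
    [0.0, 0.0, 0.0],   # from B-PER
    [0.0, 0.0, 0.0],   # from I-PER
]
emissions = [
    [1.0, 0.5, 0.0],   # token 1
    [0.0, 0.0, 1.2],   # token 2
]
print(viterbi_decode(emissions, transitions, labels))  # ['B-PER', 'I-PER']
```

With these scores, a per-token argmax would output ['O', 'I-PER'], which is an invalid sequence. The transition penalty lets the decoder choose the valid path instead.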

Method for accelerating convolutional neural network hardware and AXI bus IP core thereof

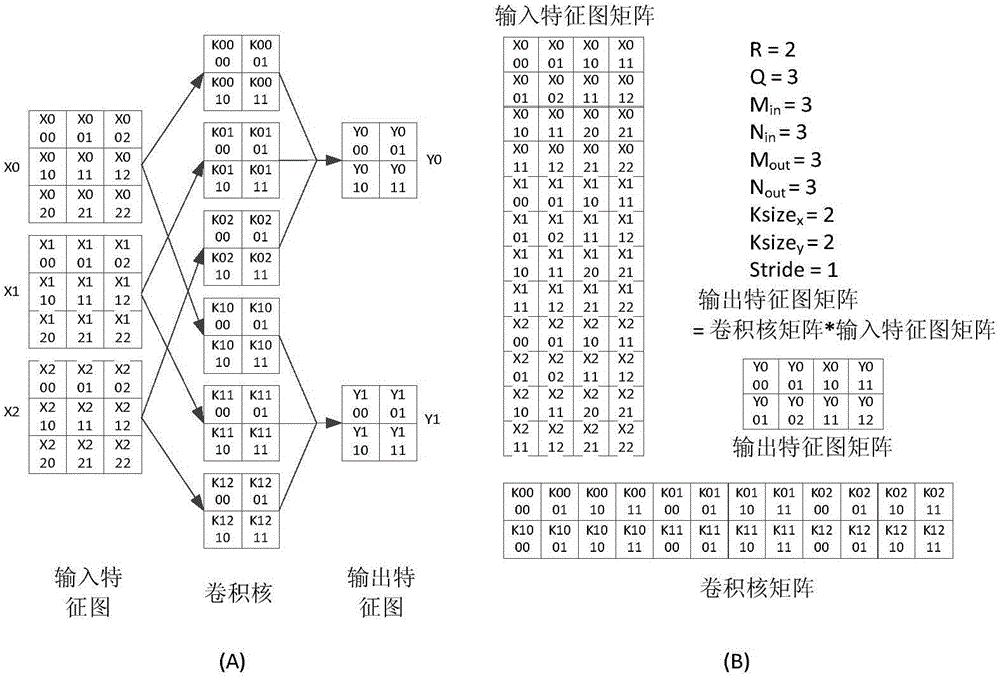

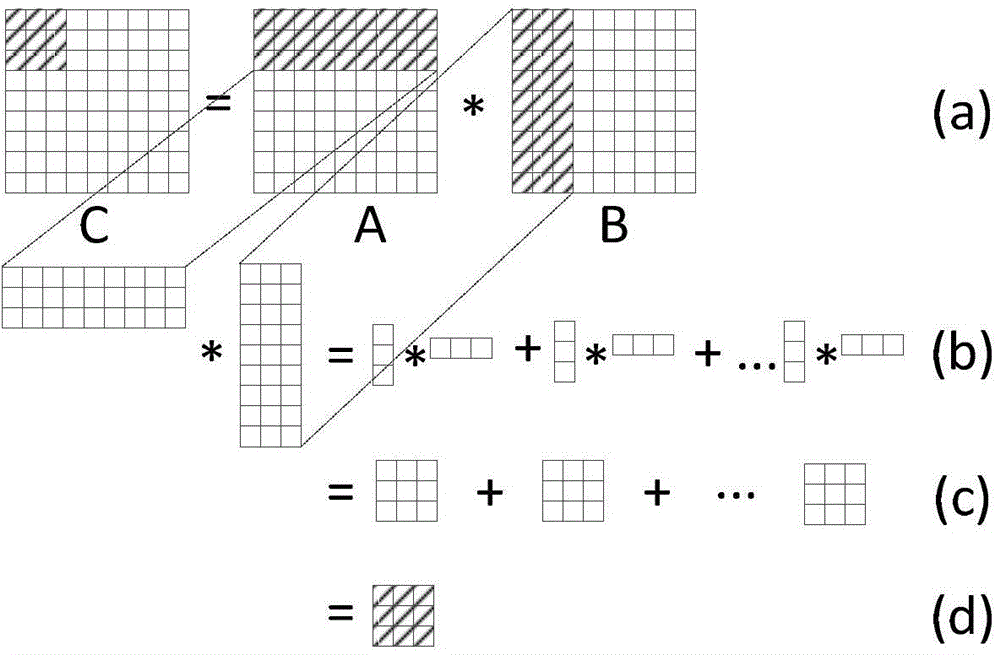

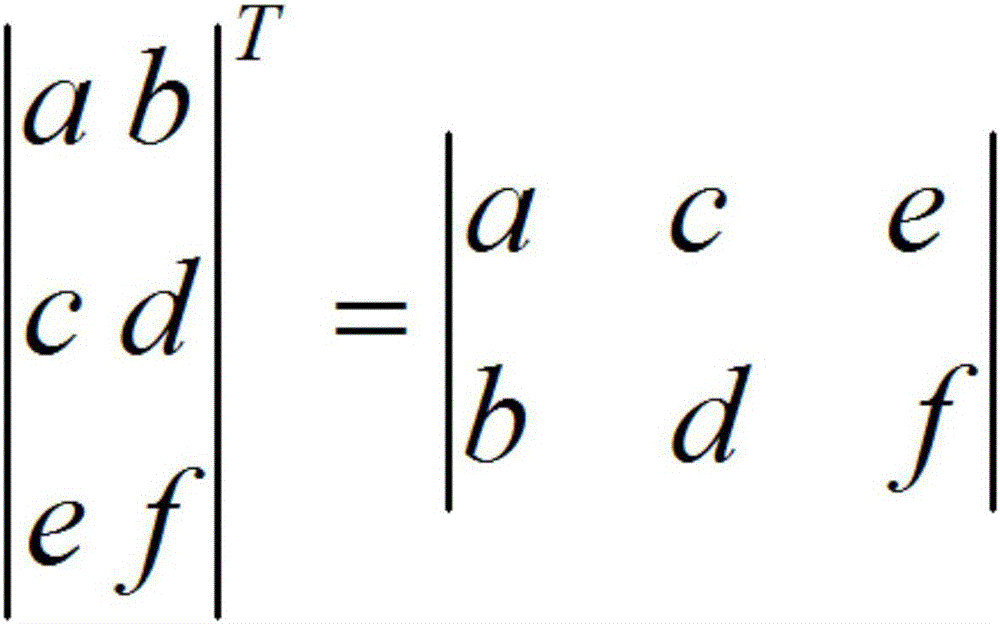

Active | CN104915322A | Improve adaptability, Increase flexibility, Resource allocation, Multiple digital computer combinations, Chain structure, Matrix multiplier

The invention discloses a method for accelerating convolutional neural network hardware and an AXI bus IP core thereof. The method comprises: first, converting the operation of a convolution layer into the multiplication of a matrix A with m rows and K columns by a matrix B with K rows and n columns; second, dividing the result matrix into matrix sub-blocks with m rows and n columns; third, starting a matrix multiplier to prefetch the operands of the matrix sub-blocks; and fourth, having the matrix multiplier compute the matrix sub-blocks and write the results back to main memory. The IP core comprises an AXI bus interface module, a prefetching unit, a flow mapper and a matrix multiplier. The matrix multiplier comprises a chain-type DMA and a processing unit array. The array is composed of multiple processing units arranged in a chain, and the processing unit at the head of the chain is connected to the chain-type DMA. The method supports various convolutional neural network structures and offers high computational efficiency and performance, low demands on on-chip storage resources and off-chip memory bandwidth, low communication overhead, easy upgrading and improvement of components, and good universality.

Owner:NAT UNIV OF DEFENSE TECH
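The first steps above recast a convolution layer as the product of an m x K matrix A and a K x n matrix B, then compute the result block by block. They can be illustrated with the common im2col lowering. This sketch assumes one particular layout, which may differ from the patent's exact scheme: A holds one flattened filter per row (m = number of filters, K = channels x kernel height x kernel width), and B holds one input patch per column (n = number of output positions). It is plain Python with hypothetical function names, and a comment marks where a hardware prefetch would happen.

```python
def im2col(x, kh, kw):
    """x is [channel][row][col]; returns B as a K x n matrix with
    K = channels * kh * kw rows and one column per output position (stride 1, no padding)."""
    c, h, w = len(x), len(x[0]), len(x[0][0])
    oh, ow = h - kh + 1, w - kw + 1
    rows = []
    for ch in range(c):
        for i in range(kh):
            for j in range(kw):
                # One row per (channel, kernel offset) pair.
                rows.append([x[ch][r + i][s + j] for r in range(oh) for s in range(ow)])
    return rows


def tiled_matmul(a, b, tile_m, tile_n):
    """C = A @ B computed one tile_m x tile_n output sub-block at a time."""
    m, k, n = len(a), len(b), len(b[0])
    c = [[0] * n for _ in range(m)]
    for i0 in range(0, m, tile_m):
        for j0 in range(0, n, tile_n):
            # A hardware design would prefetch the operands for this sub-block here.
            for i in range(i0, min(i0 + tile_m, m)):
                for j in range(j0, min(j0 + tile_n, n)):
                    c[i][j] = sum(a[i][p] * b[p][j] for p in range(k))
    return c


x = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]]]               # 1 channel, 3x3 input
filters = [[[[0, 1], [2, 0]]], [[[1, 0], [0, -1]]]]   # 2 filters, each 1 channel, 2x2
a = [[wt for ch in f for row in ch for wt in row] for f in filters]  # m x K = 2 x 4
b = im2col(x, 2, 2)                                    # K x n = 4 x 4
print(tiled_matmul(a, b, tile_m=1, tile_n=3))          # [[10, 13, 19, 22], [-4, -4, -4, -4]]
```

Each row of the result, reshaped to 2x2, is one filter's output feature map. The tile sizes only change the order of computation, not the result.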

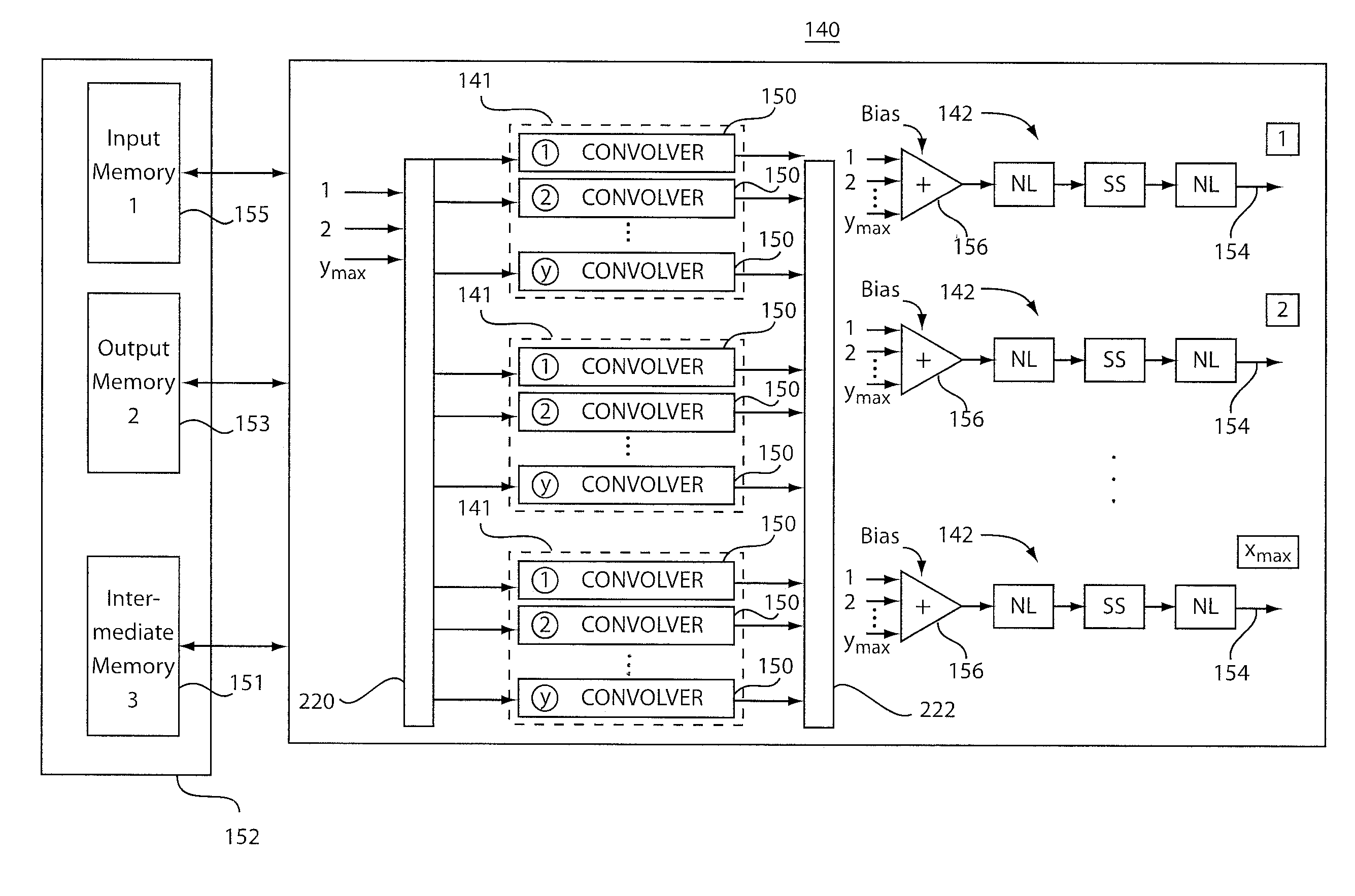

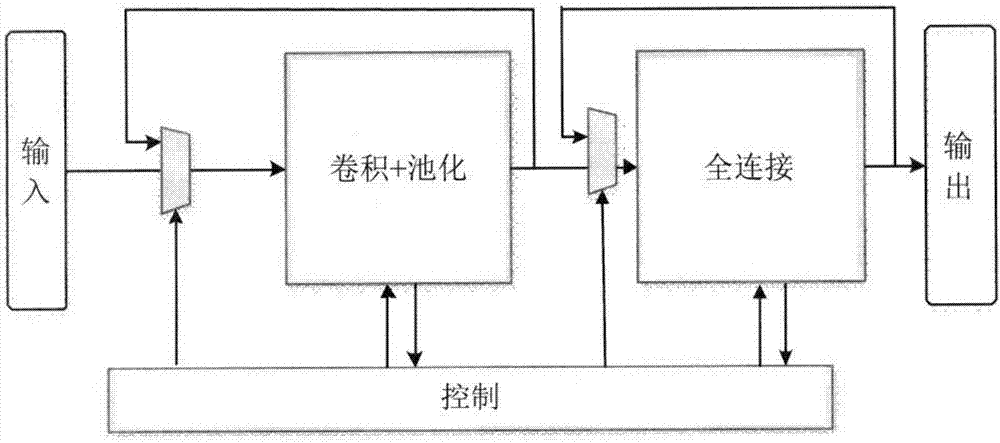

Dynamically configurable, multi-ported co-processor for convolutional neural networks

Active | US20110029471A1 | Improve feed-forward processing speed, General purpose stored program computer, Digital data, Coprocessor, Control signal

A coprocessor and method for processing convolutional neural networks includes a configurable input switch coupled to an input. A plurality of convolver elements are enabled in accordance with the input switch. An output switch is configured to receive outputs from the set of convolver elements to provide data to output branches. A controller is configured to provide control signals to the input switch and the output switch such that the set of convolver elements are rendered active and a number of output branches are selected for a given cycle in accordance with the control signals.

Owner:NEC CORP

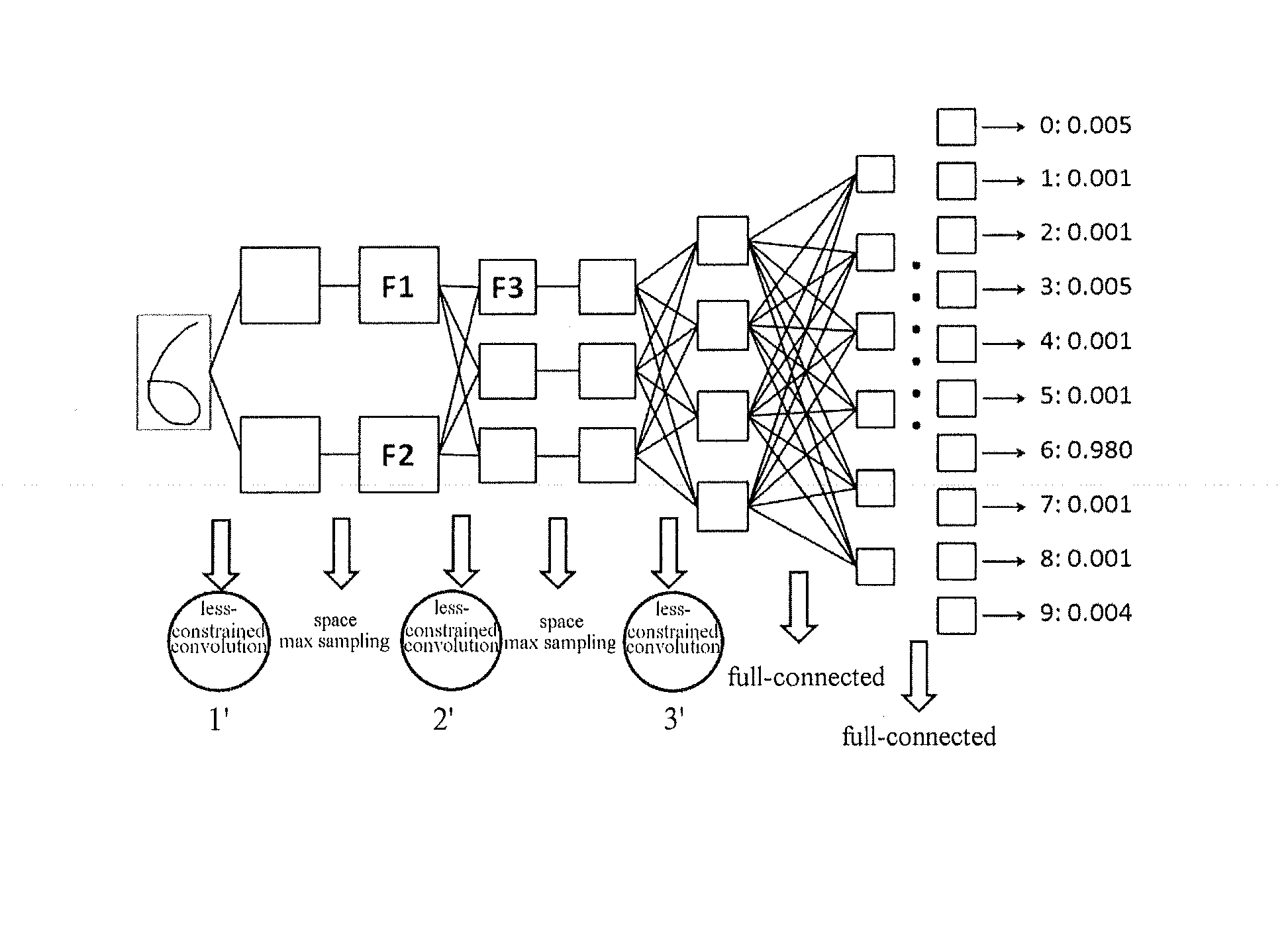

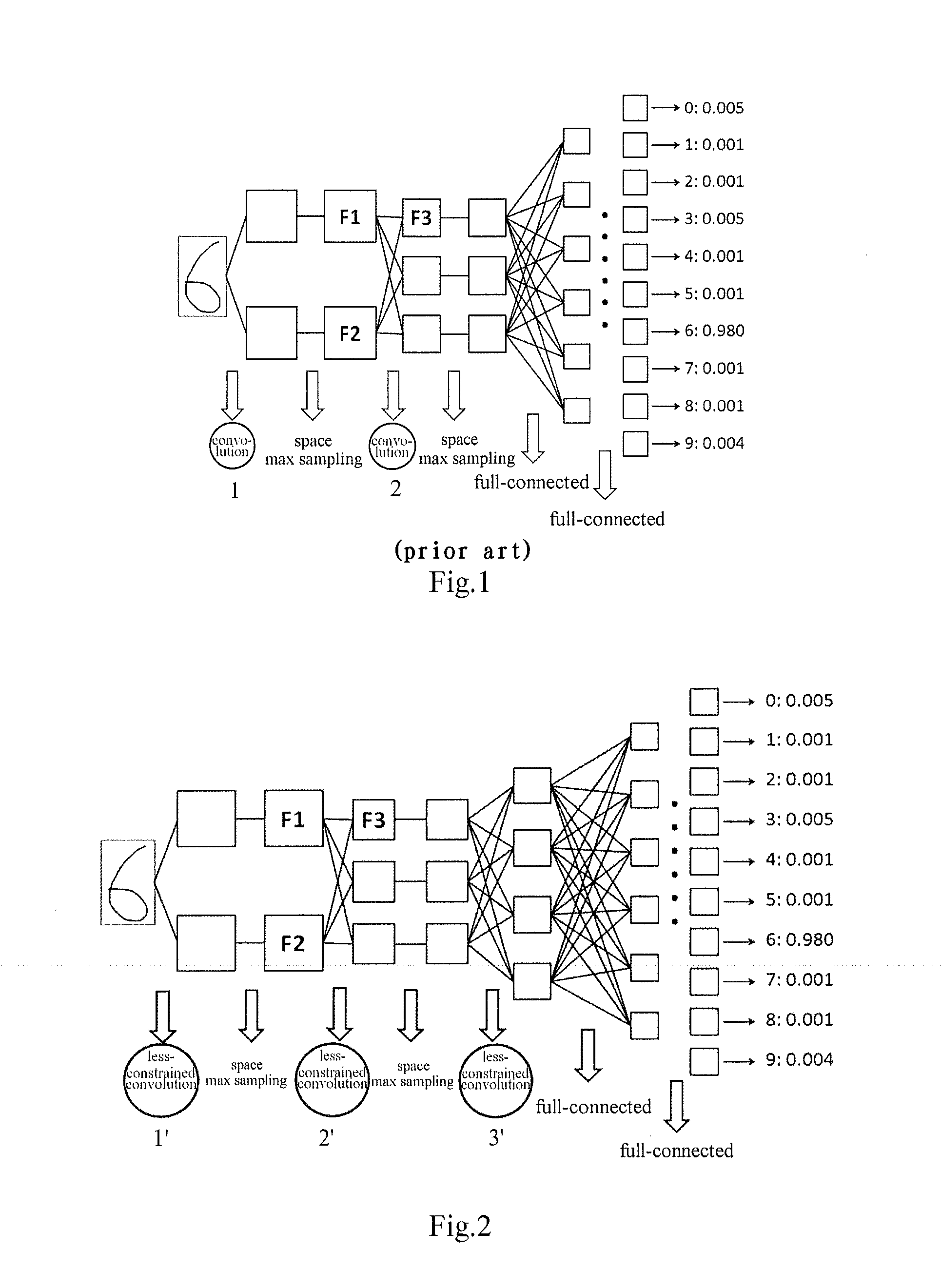

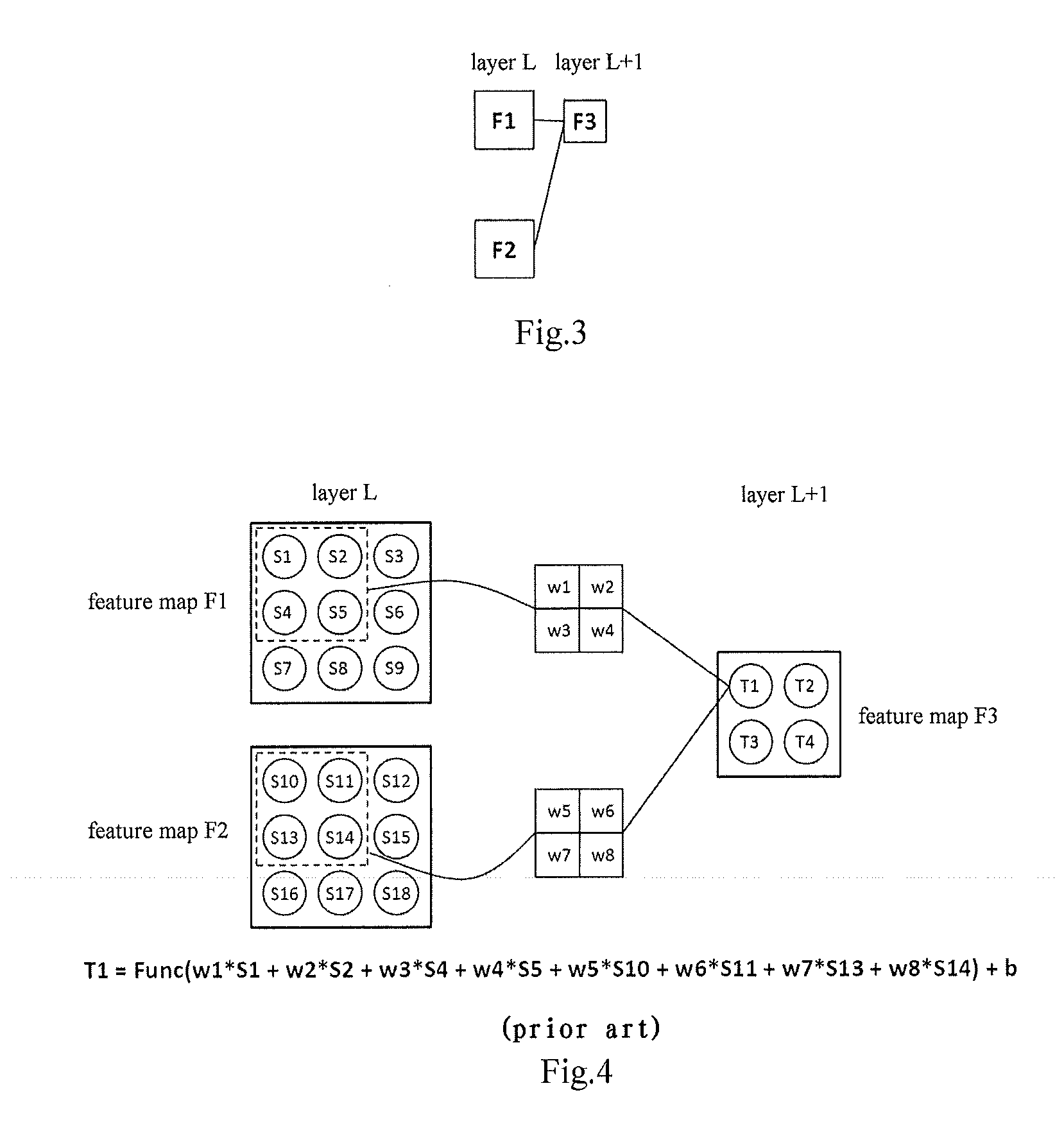

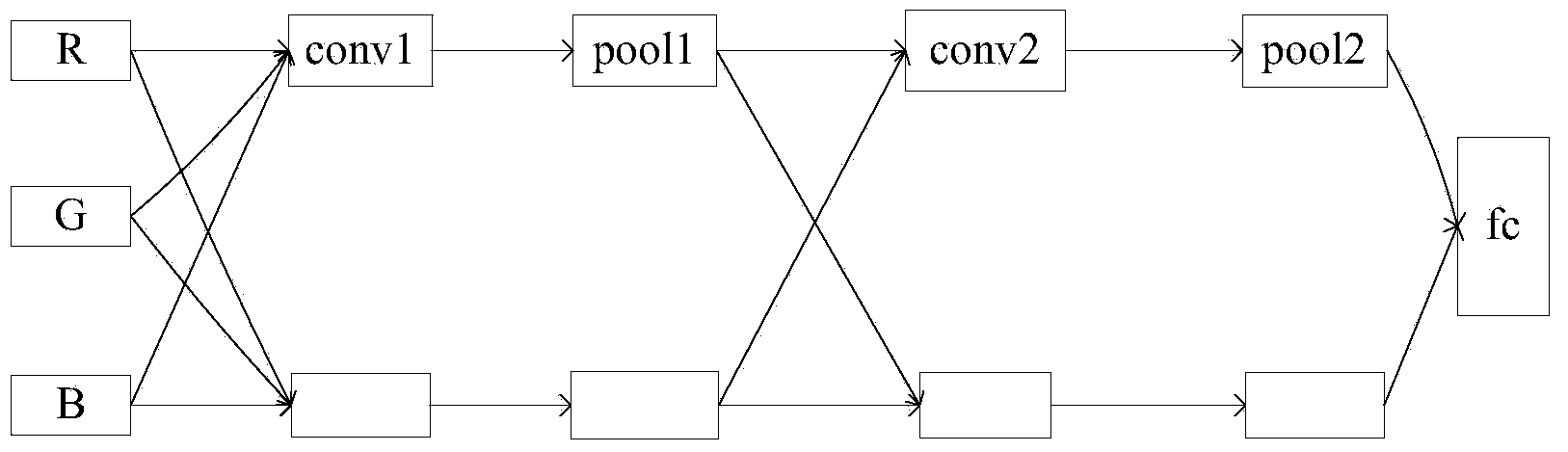

Convolutional-neural-network-based classifier and classifying method and training methods for the same

Inactive | US20150036920A1 | Character and pattern recognition, Neural learning methods, Classification methods, Neuron

The present invention relates to a convolutional-neural-network-based classifier, a classifying method by using a convolutional-neural-network-based classifier and a method for training the convolutional-neural-network-based classifier. The convolutional-neural-network-based classifier comprises: a plurality of feature map layers, at least one feature map in at least one of the plurality of feature map layers being divided into a plurality of regions; and a plurality of convolutional templates corresponding to the plurality of regions respectively, each of the convolutional templates being used for obtaining a response value of a neuron in the corresponding region.

Owner:FUJITSU LTD
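The region-specific convolutional templates described above can be sketched as a convolution whose kernel depends on the region an output neuron falls in. The abstract does not specify how the feature map is divided, so this sketch assumes equal-width vertical strips. The function name and region layout are hypothetical.

```python
def regional_conv(feature_map, kernels):
    """Valid 2-D correlation where output columns are split into len(kernels) vertical
    strips (hypothetical region layout), each strip using its own template."""
    kh, kw = len(kernels[0]), len(kernels[0][0])
    oh = len(feature_map) - kh + 1
    ow = len(feature_map[0]) - kw + 1
    strip = -(-ow // len(kernels))  # ceiling division: width of each region
    out = []
    for r in range(oh):
        row = []
        for c in range(ow):
            k = kernels[c // strip]  # template for the region this neuron belongs to
            row.append(sum(feature_map[r + i][c + j] * k[i][j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out


fmap = [[1, 2, 3, 4],
        [5, 6, 7, 8]]
# Two regions (left and right halves), each with its own 1x1 template.
print(regional_conv(fmap, [[[1]], [[10]]]))  # [[1, 2, 30, 40], [5, 6, 70, 80]]
```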

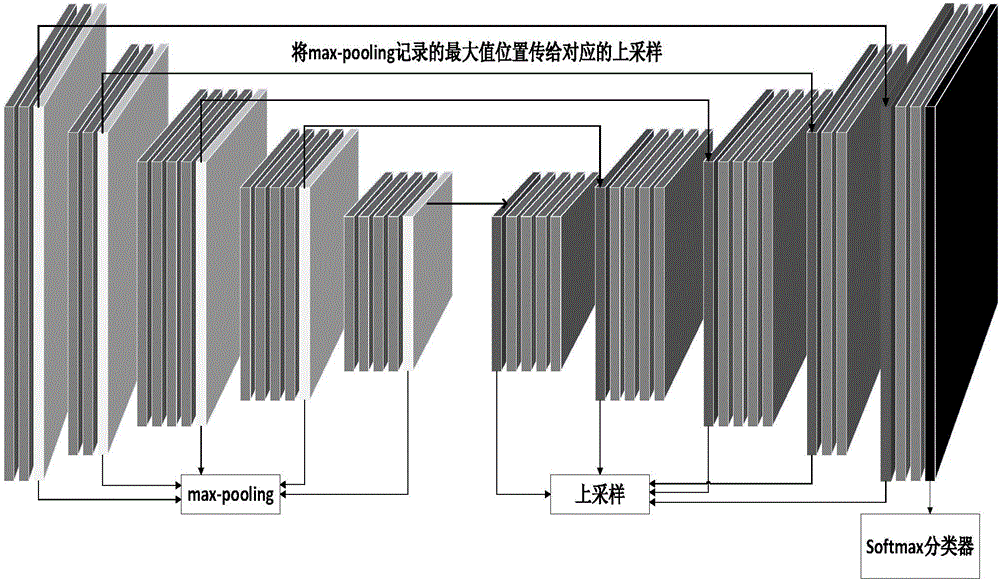

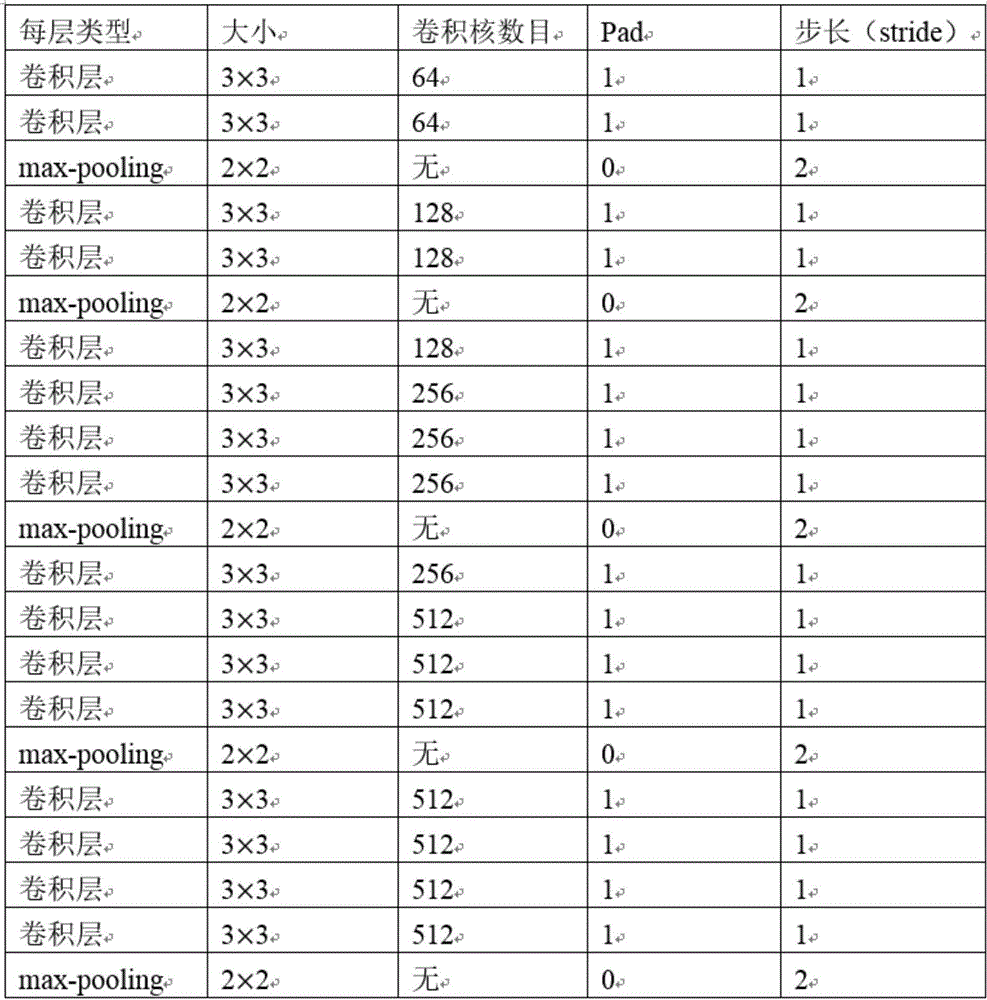

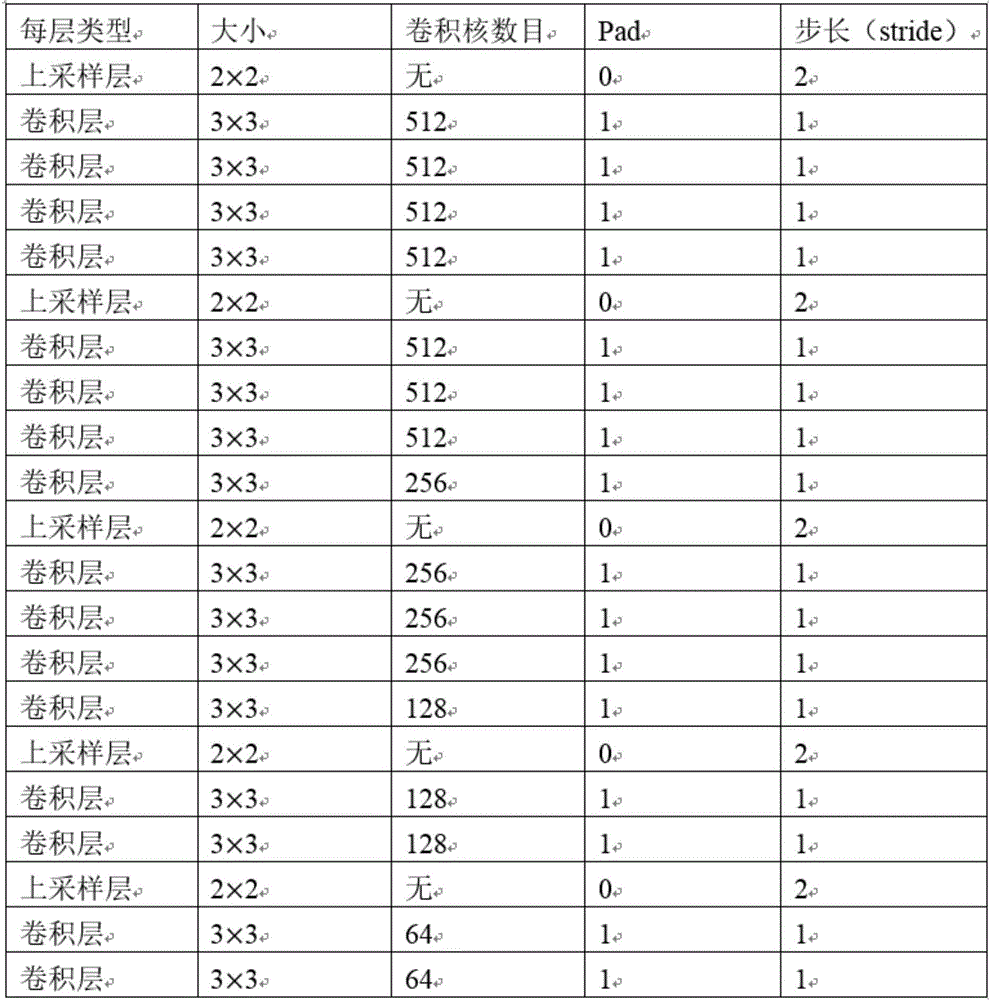

Fundus image retinal vessel segmentation method and system based on deep learning

Active | CN106408562A | Easy to classify, Improve accuracy, Image enhancement, Image analysis, Segmentation system, Blood vessel

The invention discloses a deep-learning-based method and system for segmenting retinal vessels in fundus images. The method comprises the steps of performing data augmentation on a training set, enhancing the images, training a convolutional neural network with the training set, and segmenting the image with the convolutional neural network segmentation model to obtain one segmentation result. A random forest classifier is trained on features from the convolutional neural network: the output of the last convolutional layer is extracted and used as input to the random forest classifier for pixel classification, giving a second segmentation result. The two segmentation results are fused to obtain the final segmented image. Compared with traditional vessel segmentation methods, the method uses a deep convolutional neural network for feature extraction, so the extracted features are richer and the segmentation precision and efficiency are higher.

Owner:SOUTH CHINA UNIV OF TECH

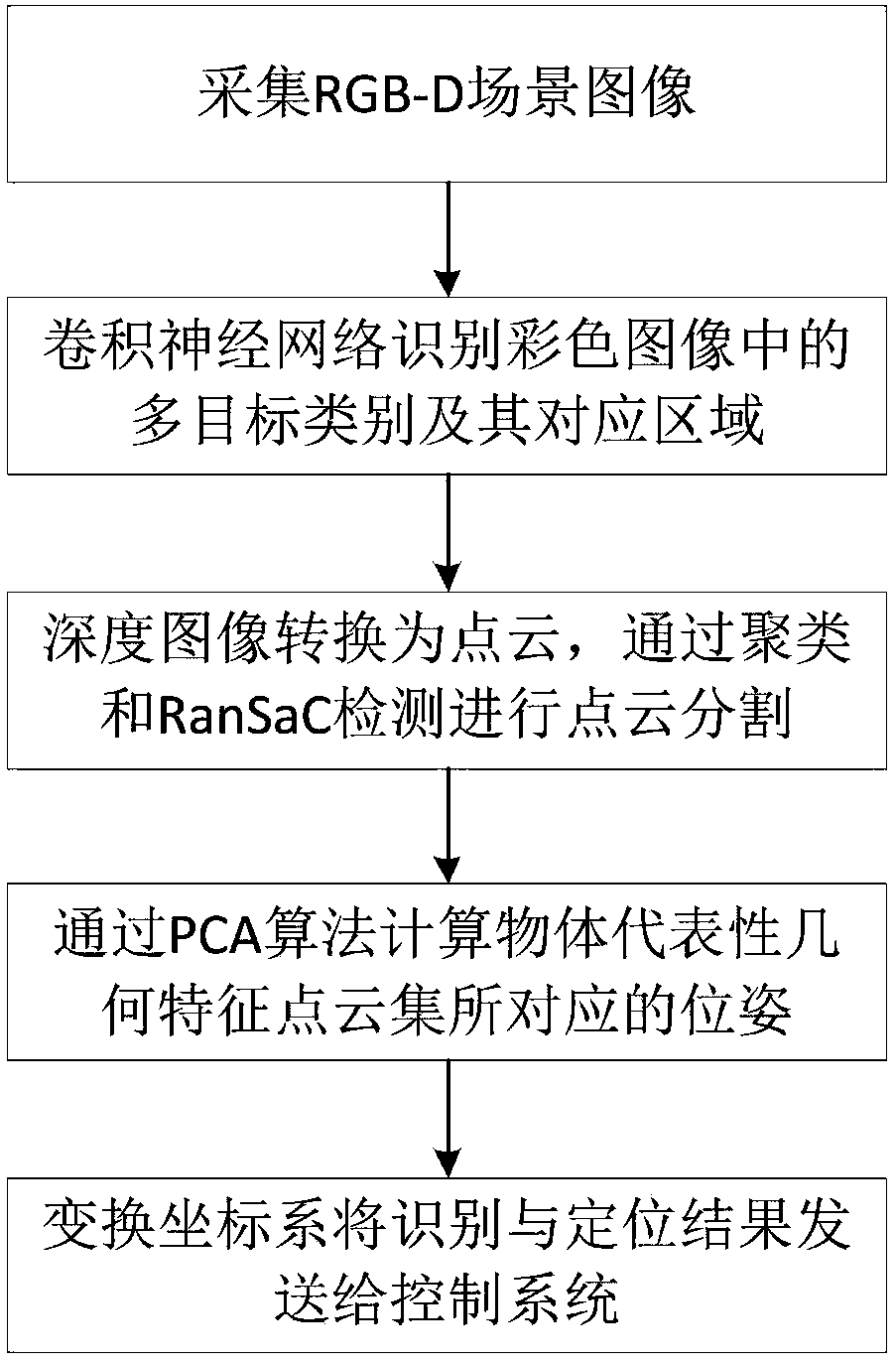

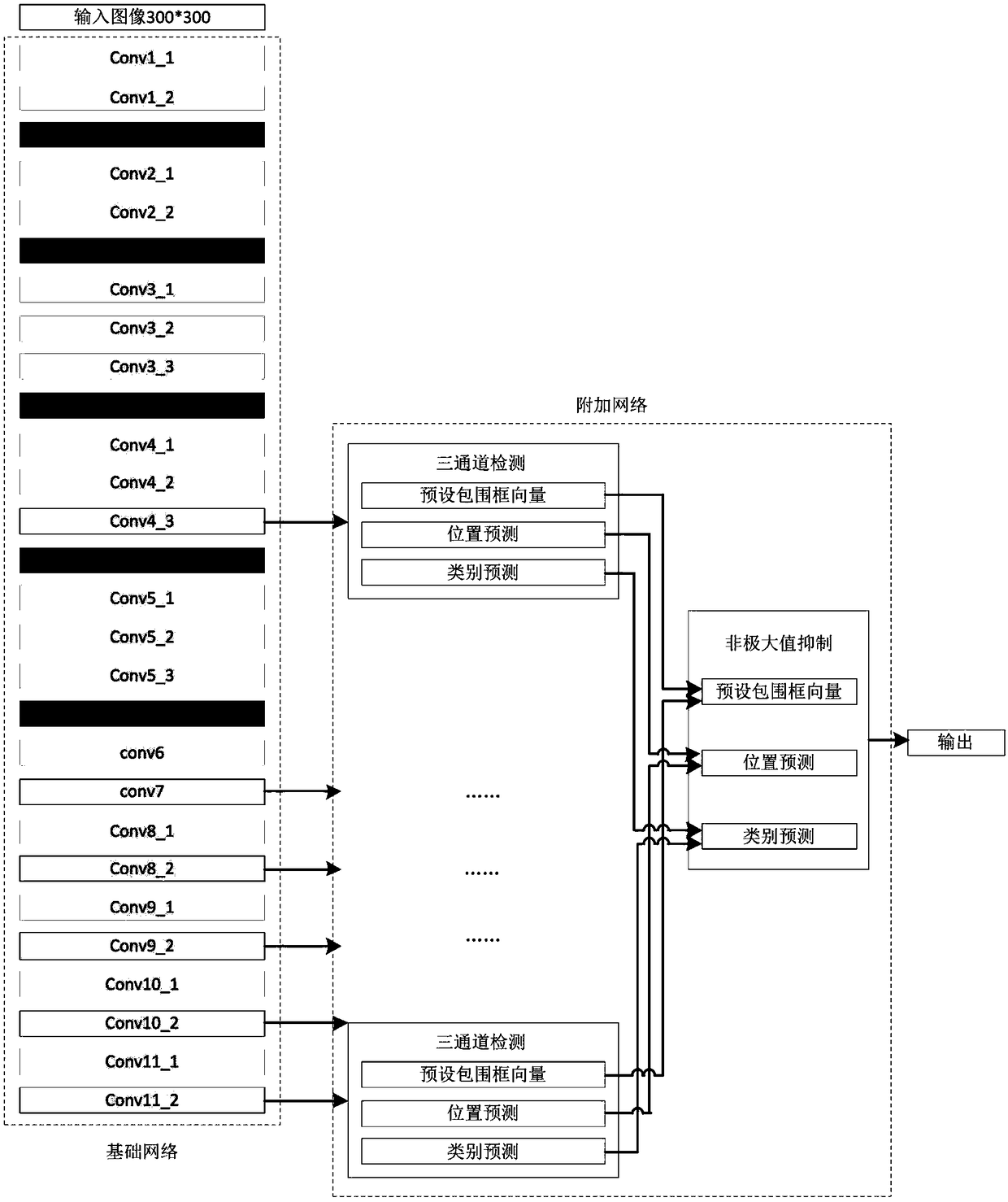

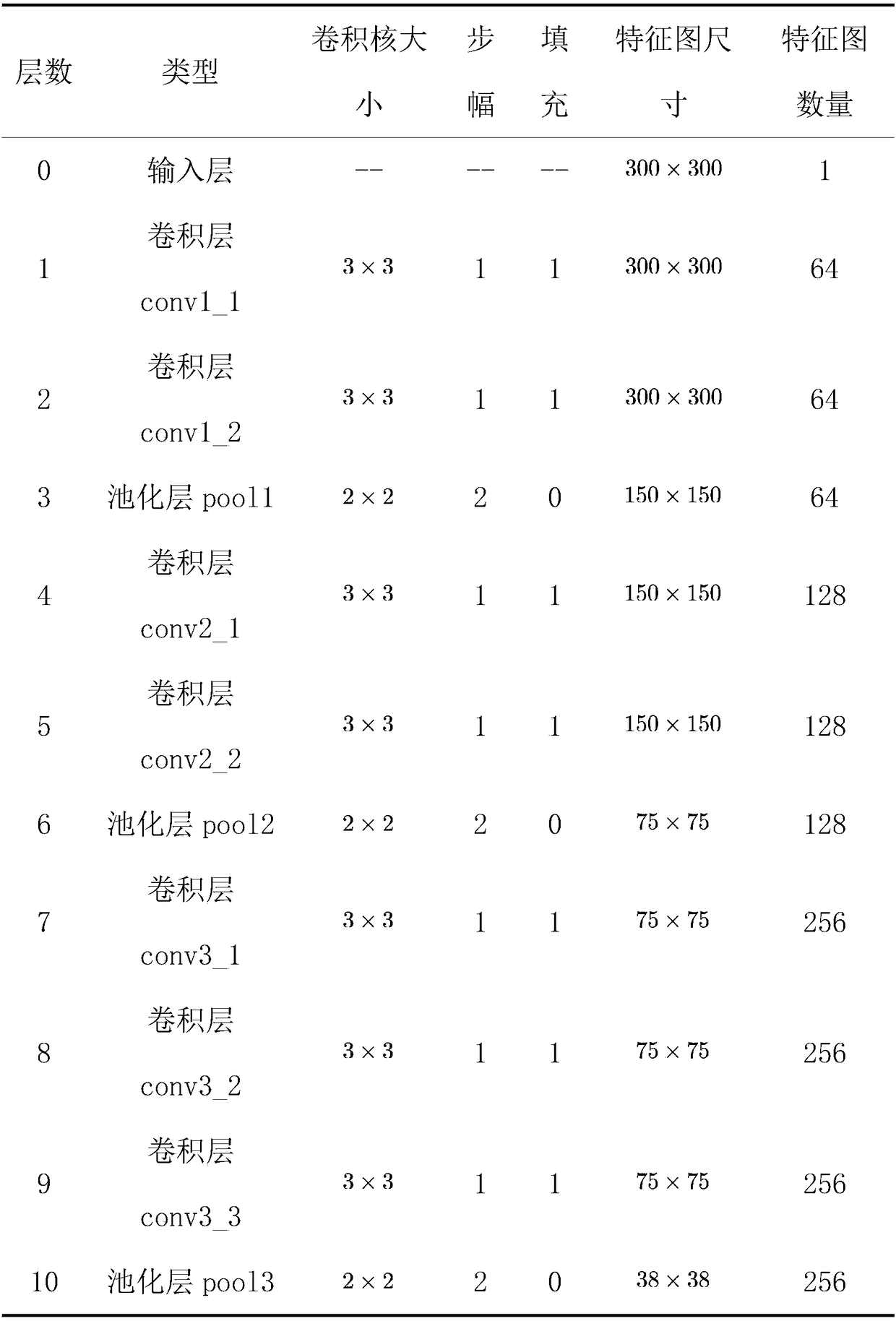

Visual recognition and positioning method for robot intelligent capture application

The invention relates to a visual recognition and positioning method for robot intelligent capture applications. In the method, an RGB-D scene image is collected, and a deep convolutional neural network trained with supervision recognizes the category of each target in the color image and its corresponding position region. The pose of the target is analyzed in combination with the depth image, and the pose information needed by the controller is obtained through coordinate transformation, completing visual recognition and positioning. With this method, both recognition and positioning can be achieved with a single visual sensor, which simplifies the existing target detection process and reduces application cost. Because the deep convolutional neural network learns the image features, the method is highly robust to many kinds of environmental interference, such as random target placement, changes in viewing angle, and illumination and background interference, and it improves recognition and positioning accuracy under complicated working conditions. In addition, the positioning method can obtain exact pose information once the spatial distribution of objects has been determined, which facilitates strategy planning for intelligent capture.

Owner:合肥哈工慧拣智能科技有限公司 (Hefei Hagong Huijian Intelligent Technology Co., Ltd.)
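The coordinate-transformation step above turns a detection in the color image, together with the depth image, into a position the robot controller can use. A minimal sketch under a standard pinhole camera model follows. The intrinsics (fx, fy, cx, cy), the camera-to-base transform, and the choice of the bounding-box center as the target pixel are all hypothetical. Estimating the target's orientation is not covered.

```python
def pixel_to_camera(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with metric depth into camera coordinates (pinhole model)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def camera_to_base(point, transform):
    """Apply a 4x4 homogeneous transform (camera frame -> robot base frame)."""
    p = (*point, 1.0)
    return tuple(sum(transform[r][k] * p[k] for k in range(4)) for r in range(3))


# Hypothetical intrinsics and extrinsics, for illustration only.
fx = fy = 500.0
cx, cy = 320.0, 240.0
cam_to_base = [
    [1.0, 0.0, 0.0, 0.5],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.2],
    [0.0, 0.0, 0.0, 1.0],
]
# Center of a detected bounding box and the depth read at that pixel.
u, v, depth = 420, 240, 0.8
p_cam = pixel_to_camera(u, v, depth, fx, fy, cx, cy)
p_base = camera_to_base(p_cam, cam_to_base)
print(p_cam, p_base)
```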

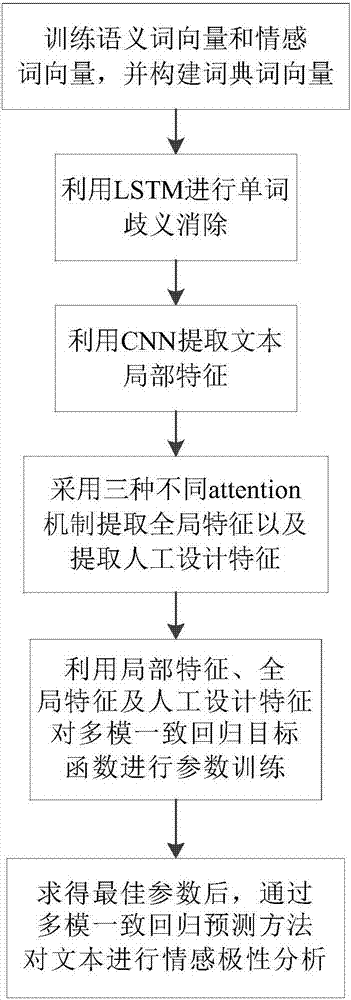

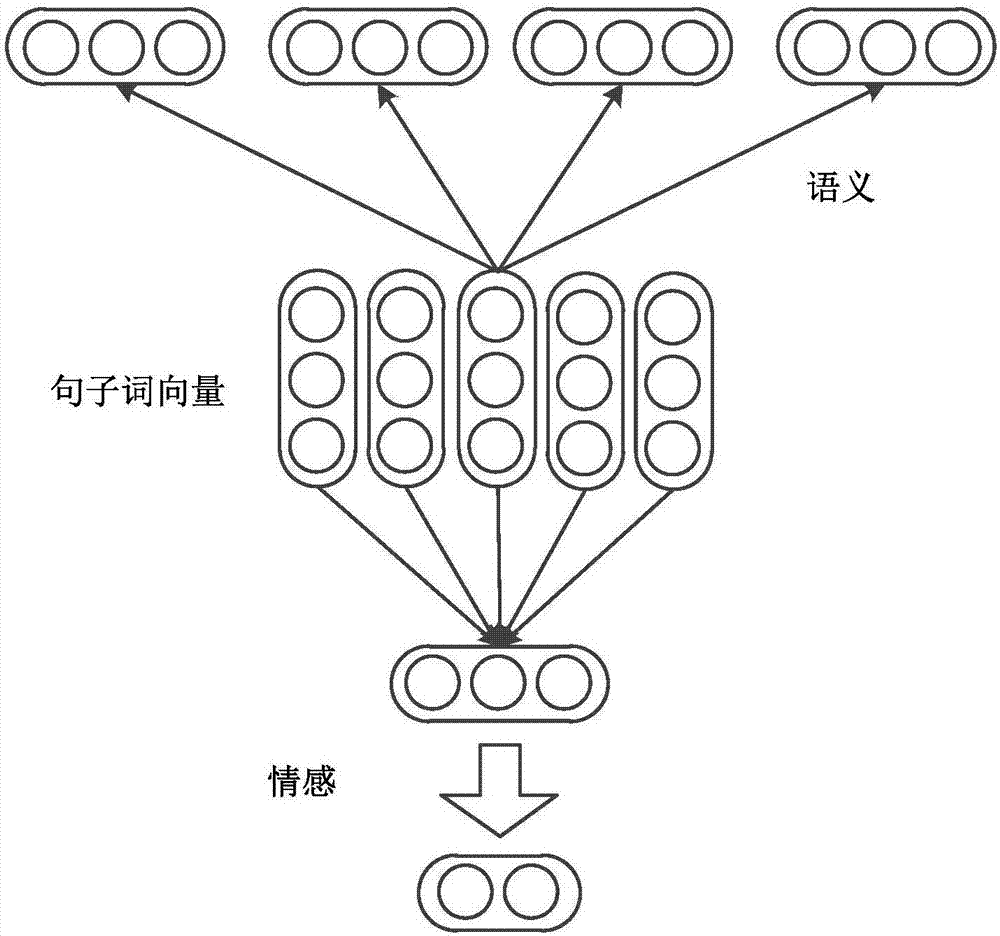

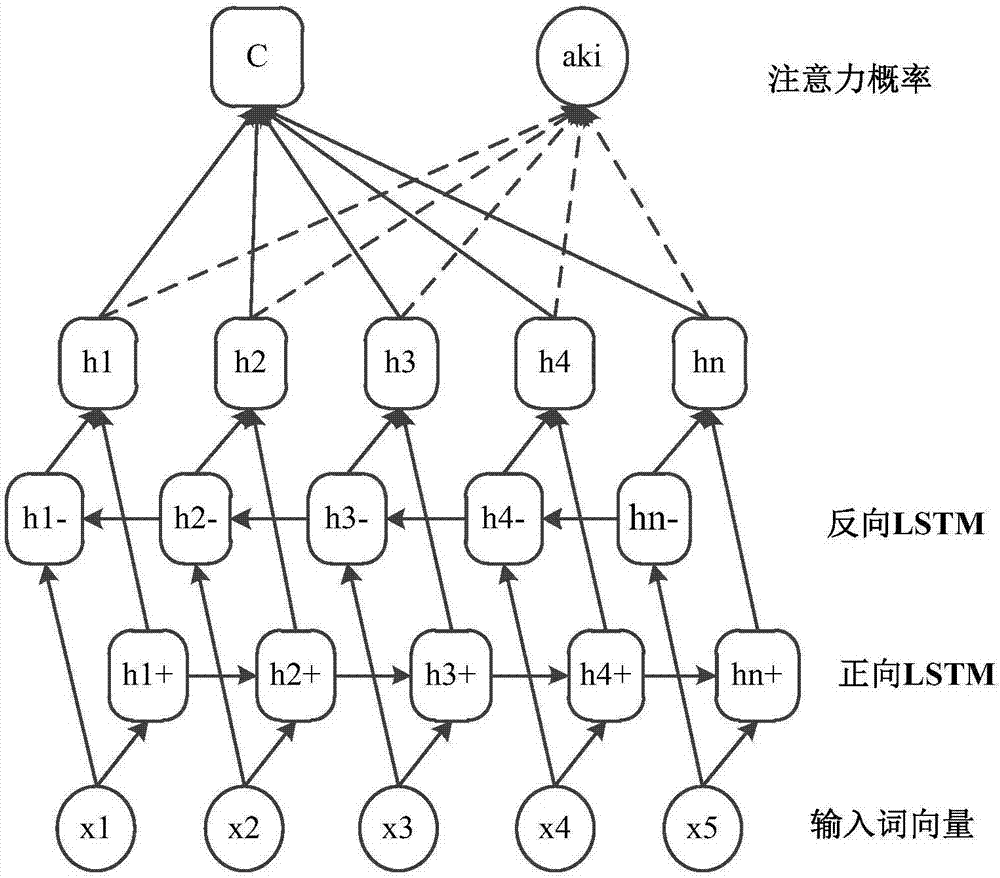

Attention CNNs and CCR-based text sentiment analysis method

Active | CN107092596A | High precision, Improve classification accuracy, Semantic analysis, Neural architectures, Feature extraction, Ambiguity

The invention discloses an attention CNNs and CCR-based text sentiment analysis method in the field of natural language processing. The method comprises the following steps: 1, training semantic word vectors and sentiment word vectors on the original text data, and building dictionary word vectors from a collected sentiment dictionary; 2, capturing the contextual semantics of words with a long short-term memory (LSTM) network to eliminate ambiguity; 3, extracting local features of the text with a convolutional neural network using convolution kernels of different filter lengths; 4, extracting global features with three different attention mechanisms; 5, extracting hand-crafted features from the original text data; 6, training a multimodal uniform regression objective function with the local, global and hand-crafted features; and 7, predicting sentiment polarity with a multimodal uniform regression prediction method. Compared with methods that use a single word vector or that extract only local text features, the method can further improve sentiment classification precision.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

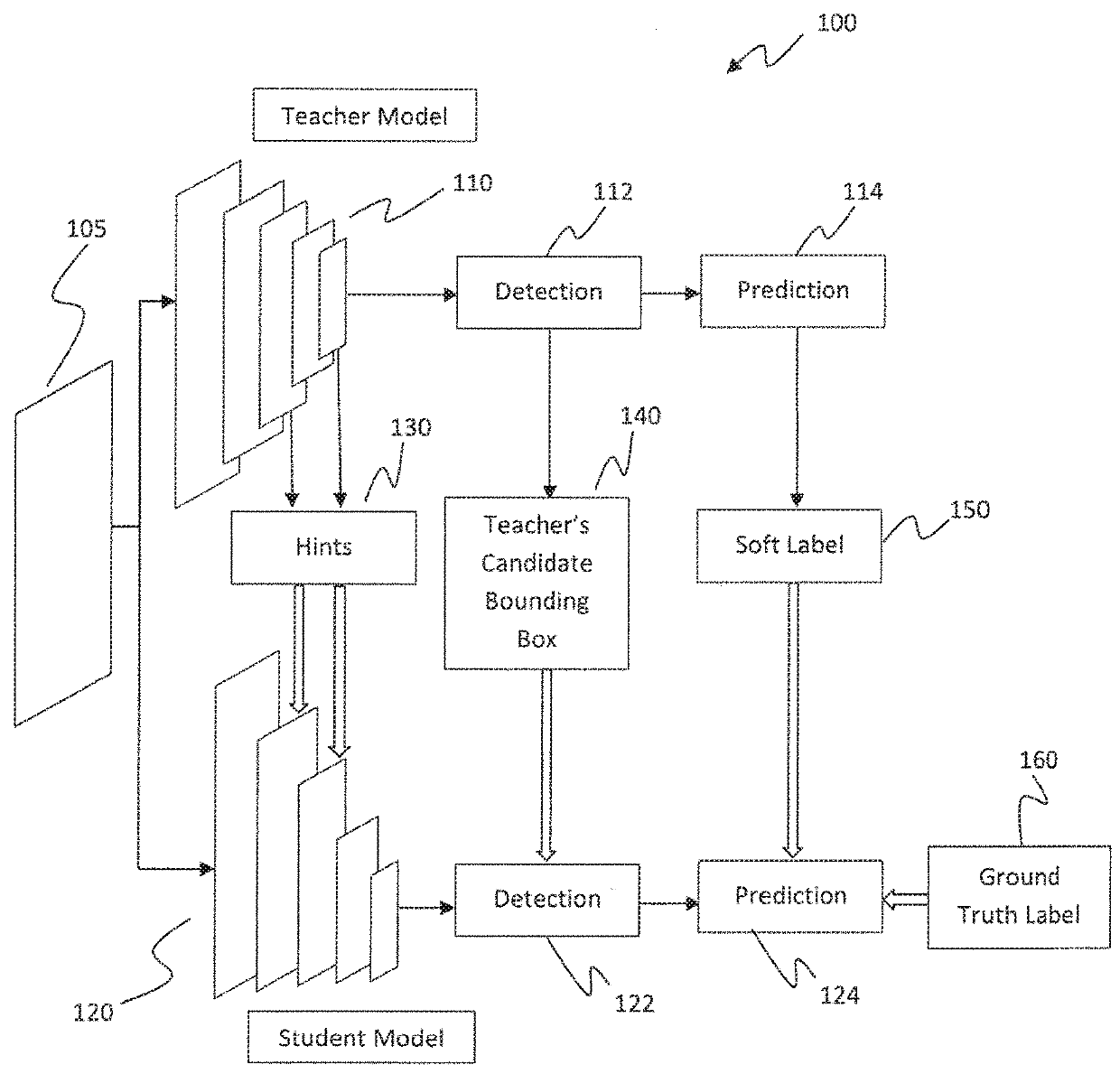

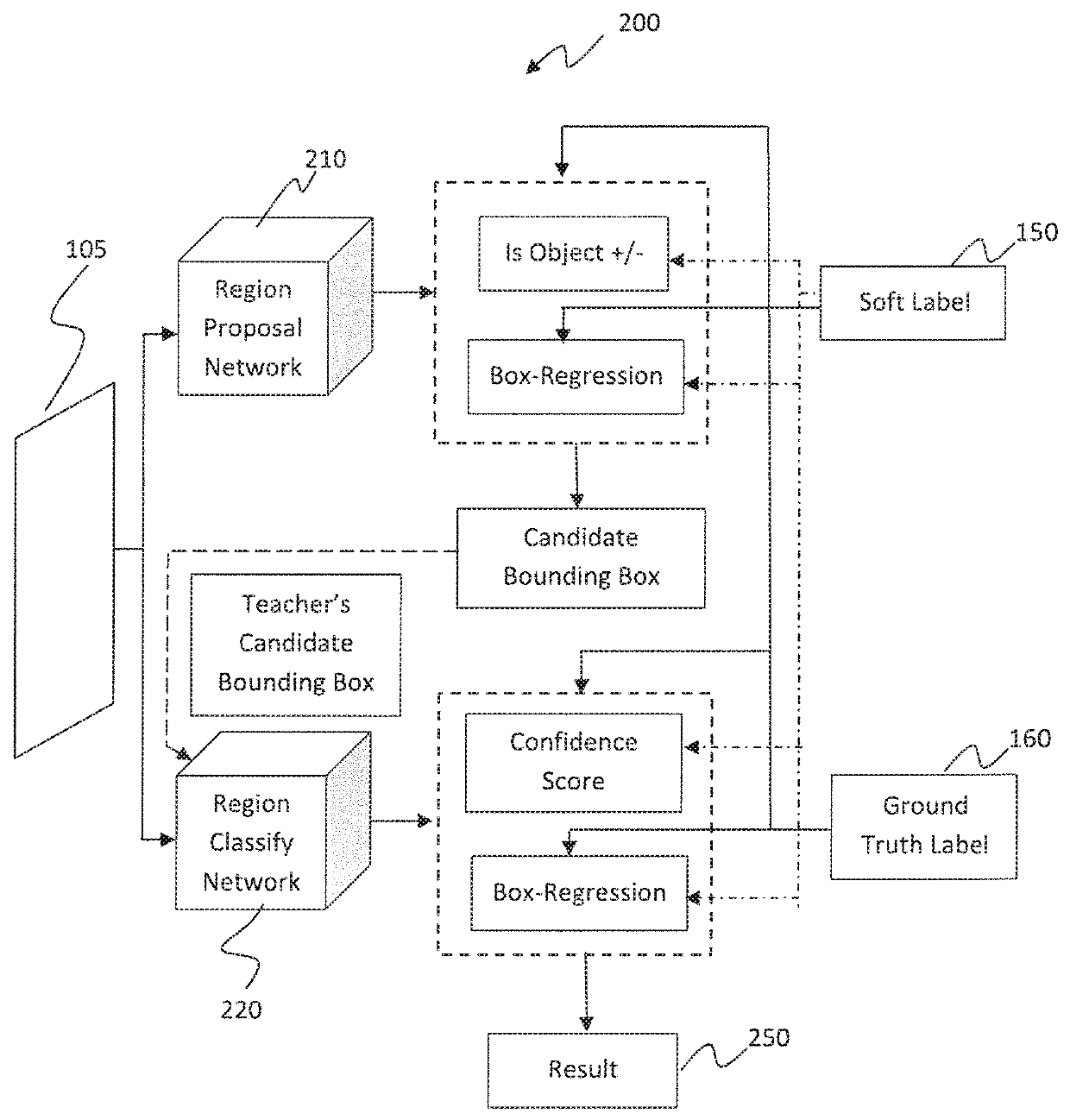

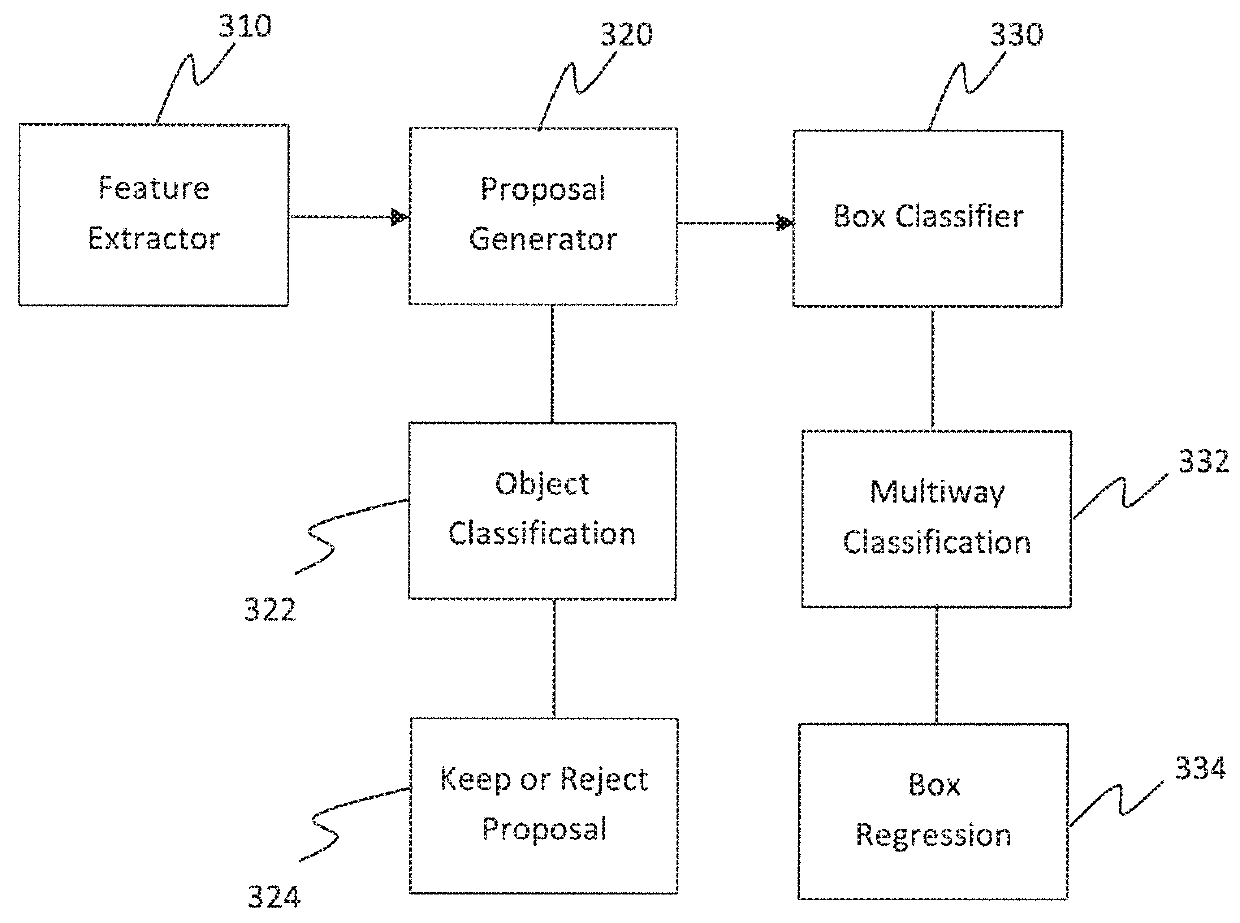

Learning efficient object detection models with knowledge distillation

Inactive | US20180268292A1 | Character and pattern recognition, Neural learning methods, Object Class, Distillation

A computer-implemented method, executed by at least one processor, for training fast models for real-time object detection with knowledge transfer is presented. The method includes employing a Faster Region-based Convolutional Neural Network (R-CNN) as an object detection framework for performing the real-time object detection, inputting a plurality of images into the Faster R-CNN, and training the Faster R-CNN by learning a student model from a teacher model. The training employs a weighted cross-entropy loss layer for classification that accounts for an imbalance between background classes and object classes, a boundary loss layer that enables transfer of knowledge of bounding box regression from the teacher model to the student model, and a confidence-weighted binary activation loss layer that trains intermediate layers of the student model to achieve a distribution of neurons similar to that of the teacher model.

Owner:NEC LAB AMERICA
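The two classification and regression distillation losses named above can be sketched in plain Python. The weighted cross-entropy uses the teacher's softmax outputs as soft targets with a per-class weight, so the background class (assumed here to be class 0) can be weighted differently to counter the imbalance. The bounded regression loss assumes the boundary loss penalizes the student's box error only when the student is not already better than the teacher by a margin m. The weights, margin and function names are illustrative assumptions, not values from the patent.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_soft_ce(student_logits, teacher_logits, class_weights):
    """-sum_c w_c * P_teacher(c) * log P_student(c); class 0 is assumed to be background."""
    p_t = softmax(teacher_logits)
    p_s = softmax(student_logits)
    return -sum(w * pt * math.log(ps) for w, pt, ps in zip(class_weights, p_t, p_s))


def bounded_regression_loss(student_box, teacher_box, target_box, margin=0.0):
    """Squared L2 error of the student's box, applied only when the student is not
    already better than the teacher by at least `margin`; otherwise zero."""
    err_s = sum((s - y) ** 2 for s, y in zip(student_box, target_box))
    err_t = sum((t - y) ** 2 for t, y in zip(teacher_box, target_box))
    return err_s if err_s + margin > err_t else 0.0


# Hypothetical values: 3 classes (background, car, person), background weighted 1.5.
weights = [1.5, 1.0, 1.0]
print(weighted_soft_ce([2.0, 0.5, 0.1], [3.0, 0.2, 0.1], weights))
print(bounded_regression_loss((1.0, 1.0), (0.5, 0.5), (0.0, 0.0)))  # 2.0
print(bounded_regression_loss((0.0, 0.0), (0.5, 0.5), (0.0, 0.0)))  # 0.0
```

Bounding the regression term keeps the student from being pulled toward the teacher's boxes once it already outperforms them.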

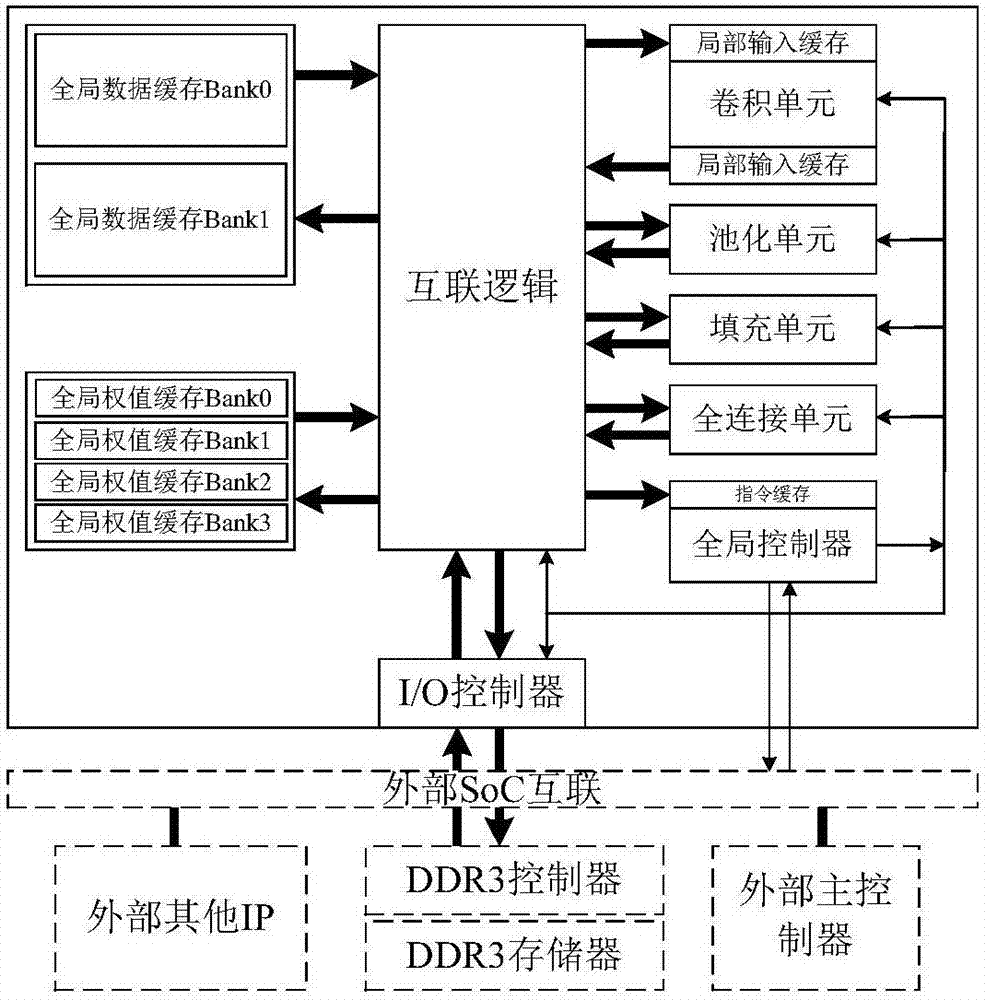

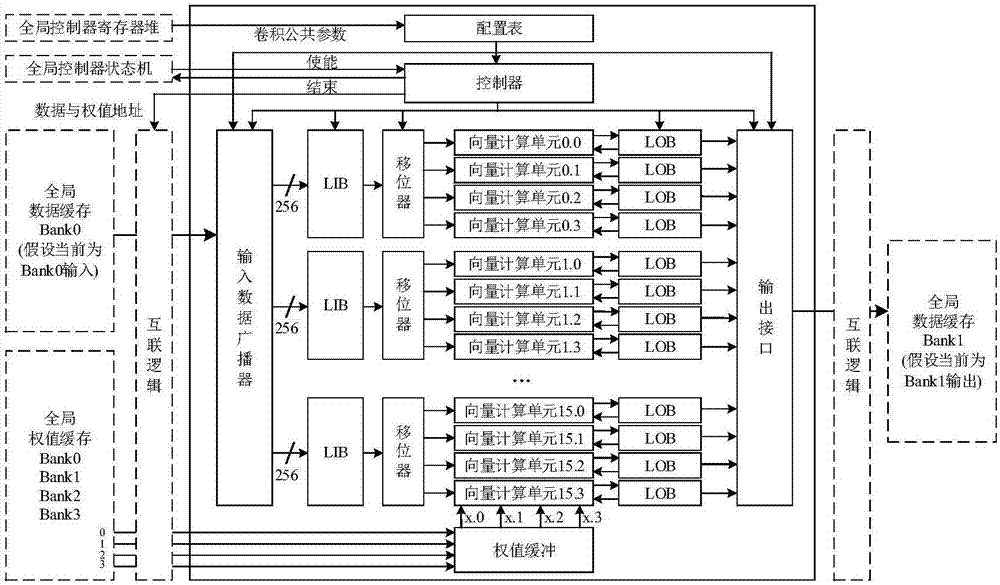

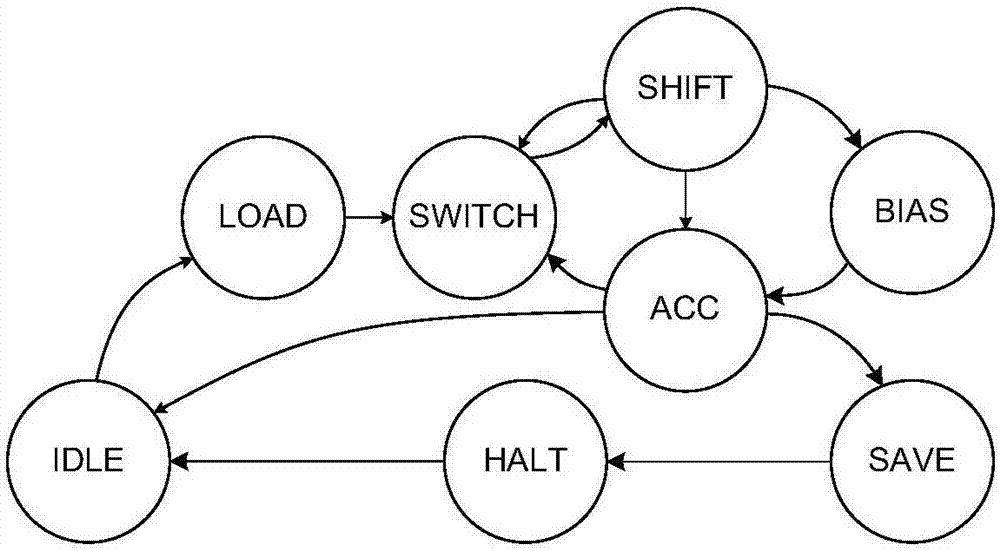

Co-processor IP core of programmable convolutional neural network

Active | CN106940815A | Reduce frequency, Reduce bandwidth pressure, Neural architectures, Physical realisation, Hardware structure, Instruction set design

The present invention discloses a co-processor IP core for a programmable convolutional neural network. The invention aims to accelerate convolutional neural network computation on a digital chip (FPGA or ASIC). The co-processor IP core comprises a global controller, an I/O controller, a multi-level cache system, a convolution unit, a pooling unit, a filling unit, a fully connected unit, an internal interconnection logic unit, and an instruction set designed for the co-processor IP. The proposed hardware structure supports the complete flow of convolutional neural networks of diverse scales. By fully exploiting hardware-level parallelism and a purpose-designed multi-level cache system, it achieves high performance and low power consumption. The operation flow is controlled by instructions, which makes the core programmable and configurable, so it can easily be applied to different application scenarios.

Owner:XI AN JIAOTONG UNIV

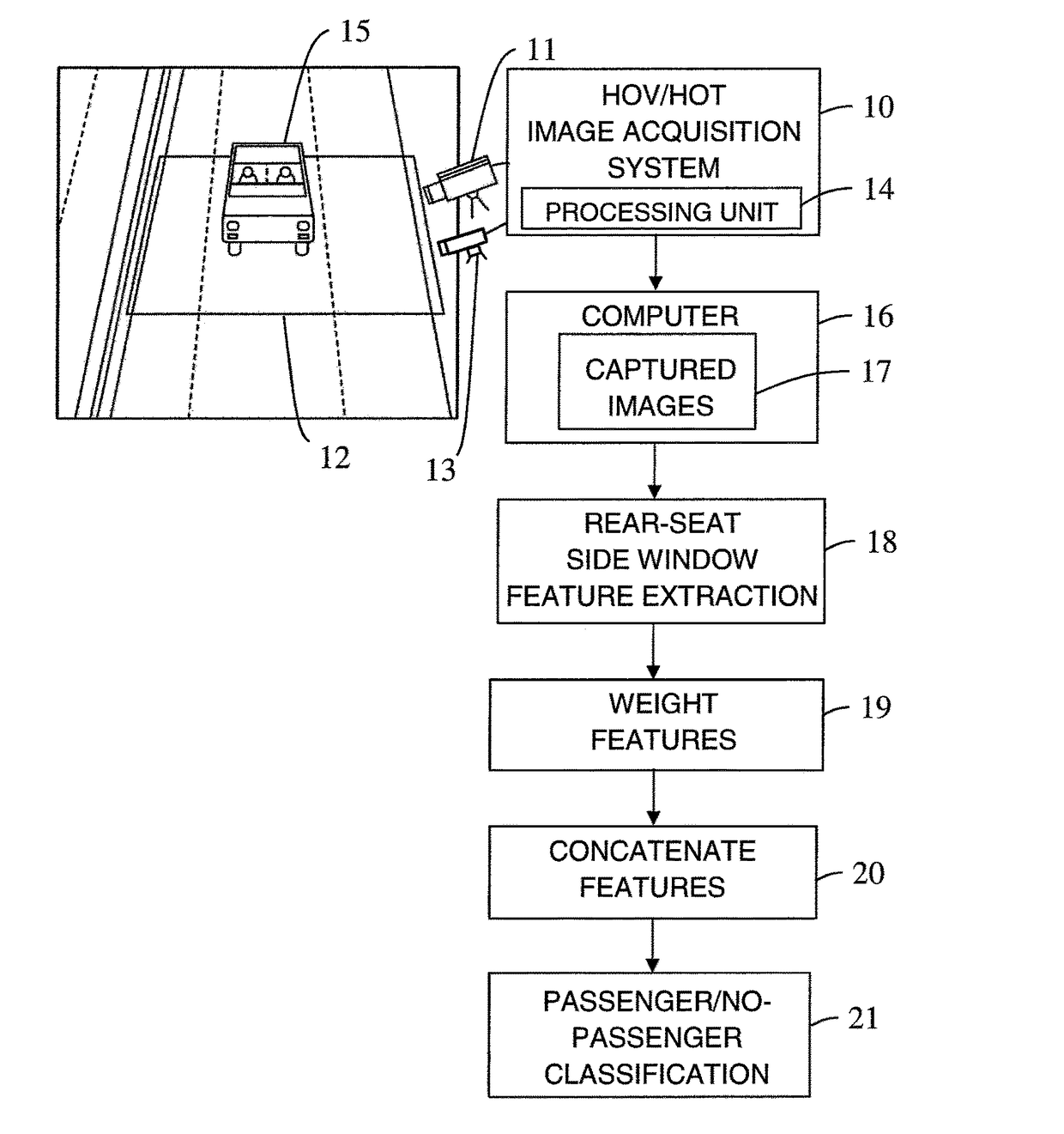

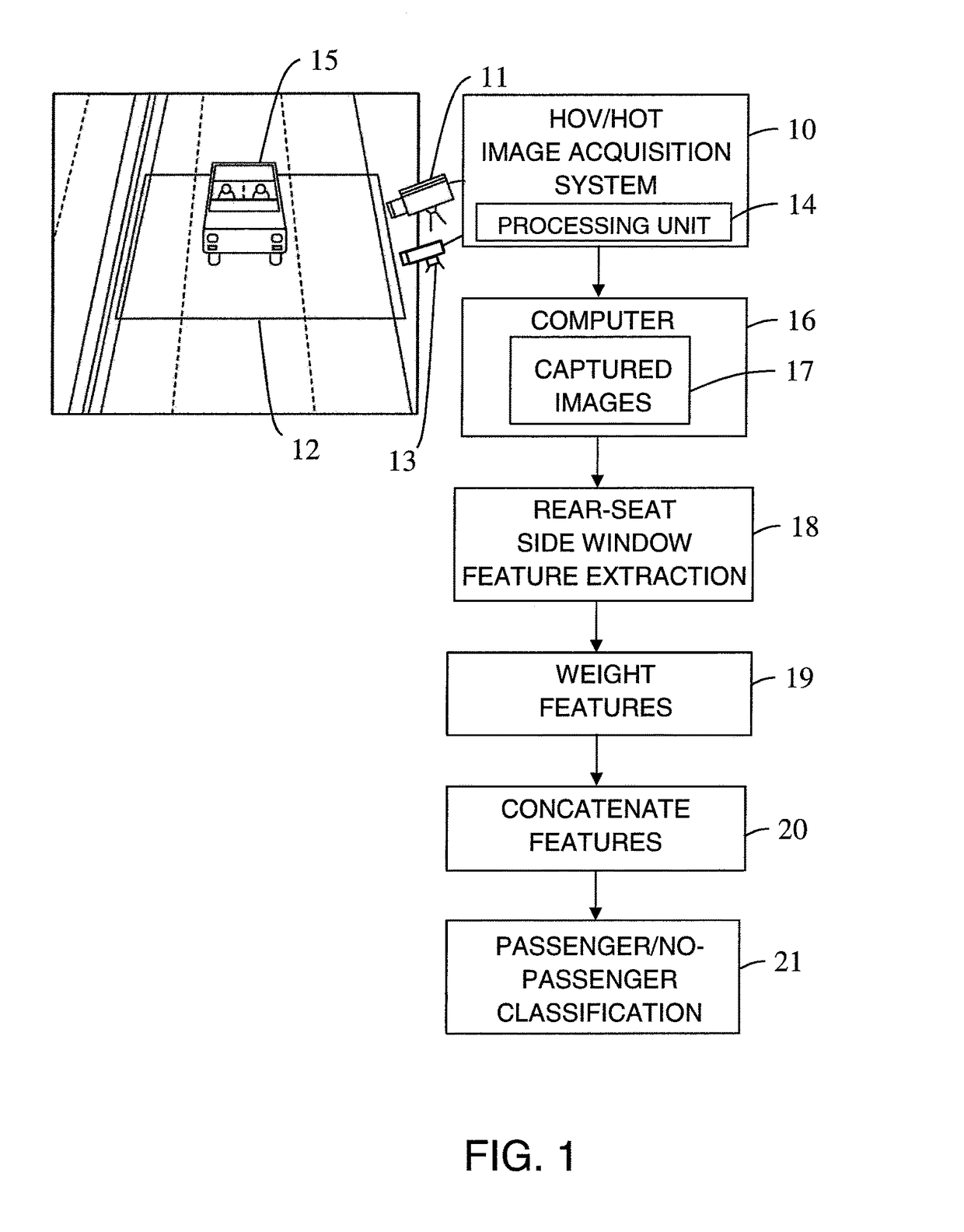

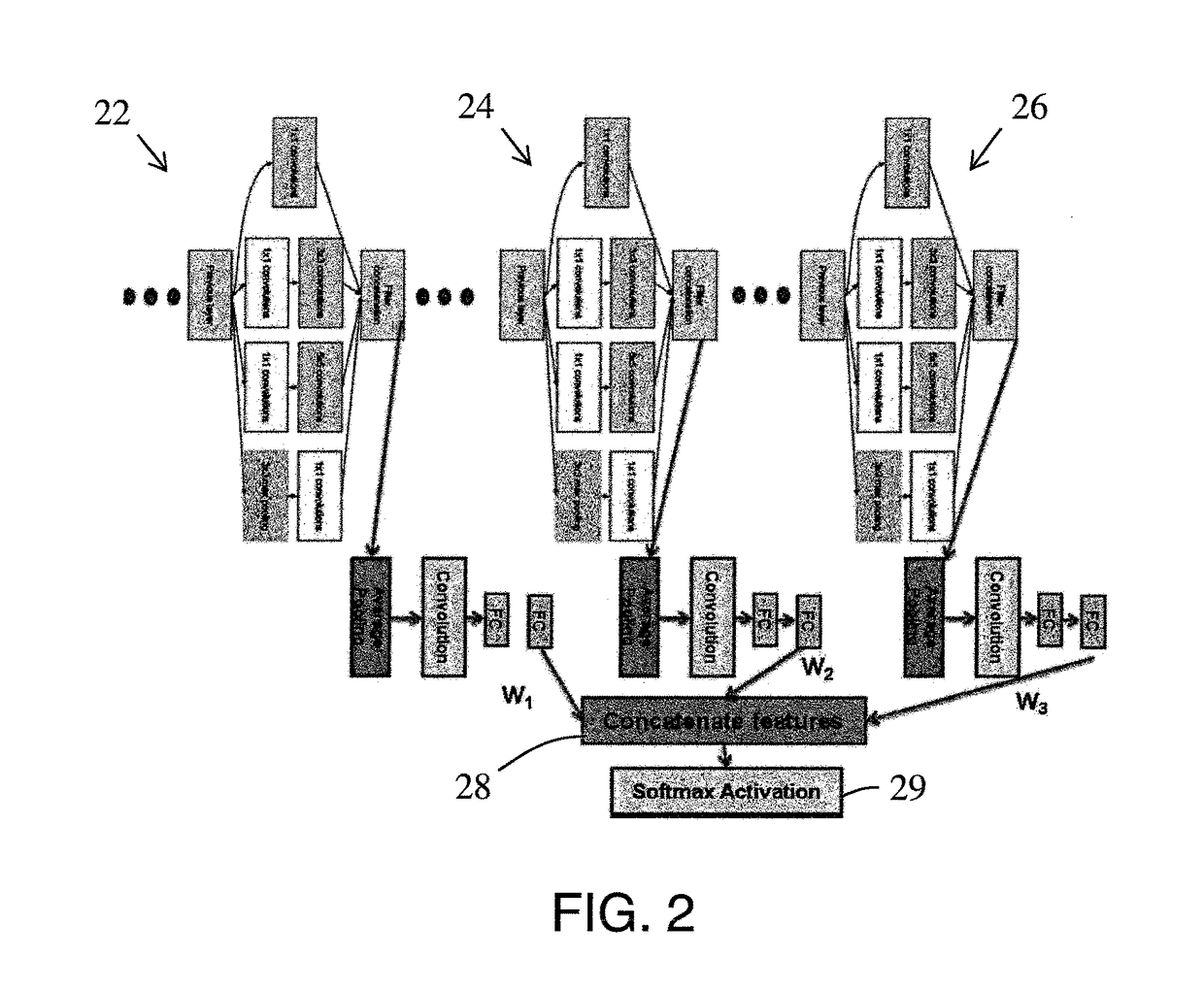

Multi-layer fusion in a convolutional neural network for image classification

Active | US20170140253A1 | Easy to adapt, Improve classification accuracy, Character and pattern recognition, Neural learning methods, Feature extraction, Two step

A method and system for domain adaptation based on multi-layer fusion in a convolutional neural network architecture for feature extraction and a two-step training and fine-tuning scheme. The architecture concatenates features extracted at different depths of the network to form a fully connected layer before the classification step. First, the network is trained with a large set of images from a source domain as a feature extractor. Second, for each new domain (including the source domain), the classification step is fine-tuned with images collected from the corresponding site. The features from different depths are concatenated and fine-tuned with weights adjusted for the specific task. The architecture is used for classifying high occupancy vehicle images.

Owner:CONDUENT BUSINESS SERVICES LLC
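The multi-layer fusion described above concatenates features taken at several depths. Feature maps from different layers usually differ in spatial size, so this sketch assumes each layer's maps are reduced by global average pooling before concatenation. The pooling choice and the function names are assumptions, not details from the abstract.

```python
def global_average_pool(feature_maps):
    """feature_maps: [channel][row][col] -> one mean value per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_maps]


def fuse_layers(layer_outputs):
    """Concatenate pooled features from several depths into one vector."""
    fused = []
    for maps in layer_outputs:
        fused.extend(global_average_pool(maps))
    return fused


shallow = [[[1, 2], [3, 4]], [[0, 0], [0, 4]]]  # 2 channels, 2x2 (early layer)
deep = [[[7]]]                                   # 1 channel, 1x1 (late layer)
print(fuse_layers([shallow, deep]))              # [2.5, 1.0, 7.0]
```

In the two-step scheme, the fused vector would feed the classification layers that are fine-tuned for each site, while the convolutional feature extractor stays as trained on the source domain.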

Synthesizing training data for broad area geospatial object detection

A system for broad area geospatial object recognition, identification, classification, location and quantification, comprising an image manipulation module to create synthetically-generated images to imitate and augment an existing quantity of orthorectified geospatial images; together with a deep learning module and a convolutional neural network serving as an image analysis module, to analyze a large corpus of orthorectified geospatial images, identify and demarcate a searched object of interest from within the corpus, locate and quantify the identified or classified objects from the corpus of geospatial imagery available to the system. The system reports results in a requestor's preferred format.

Owner:MAXAR INTELLIGENCE INC

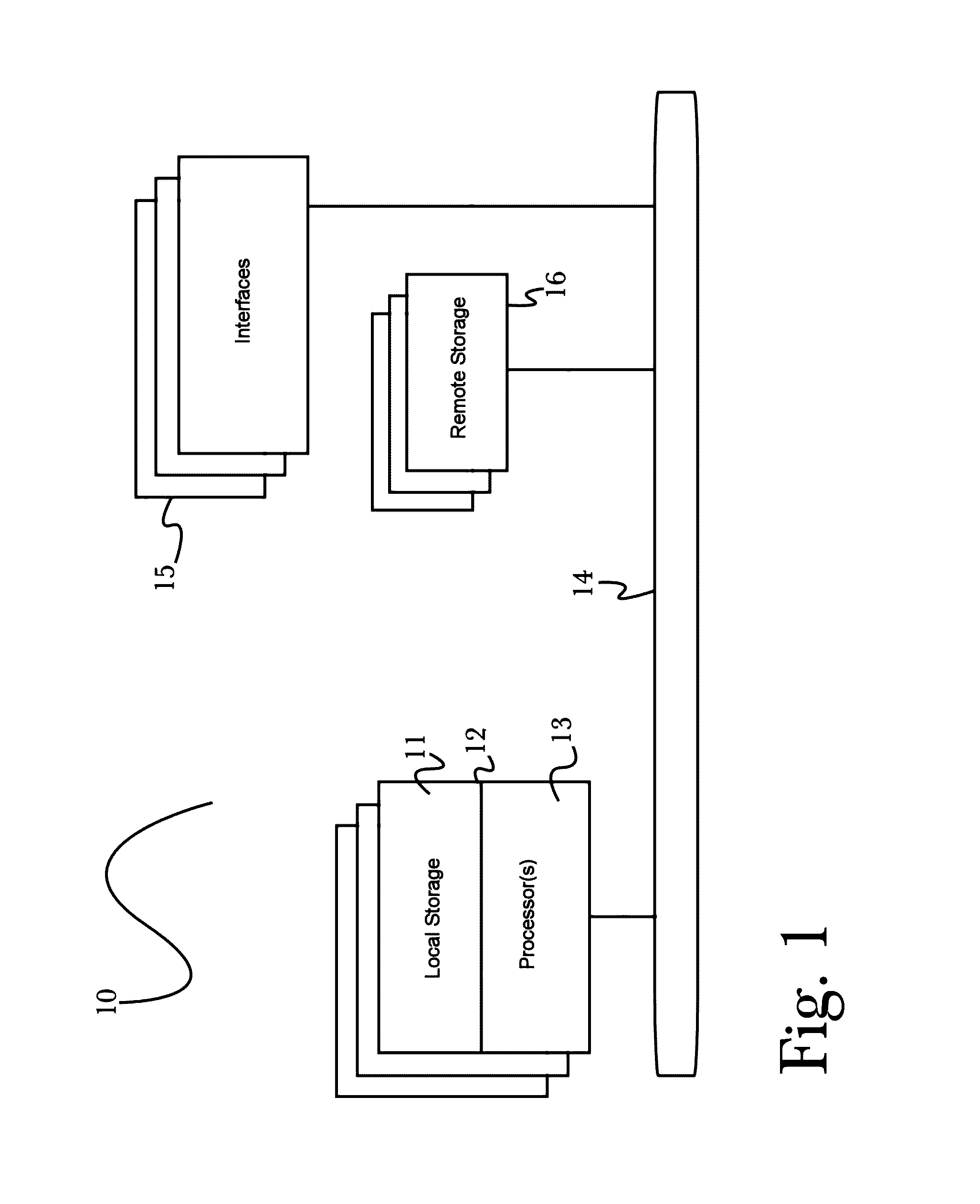

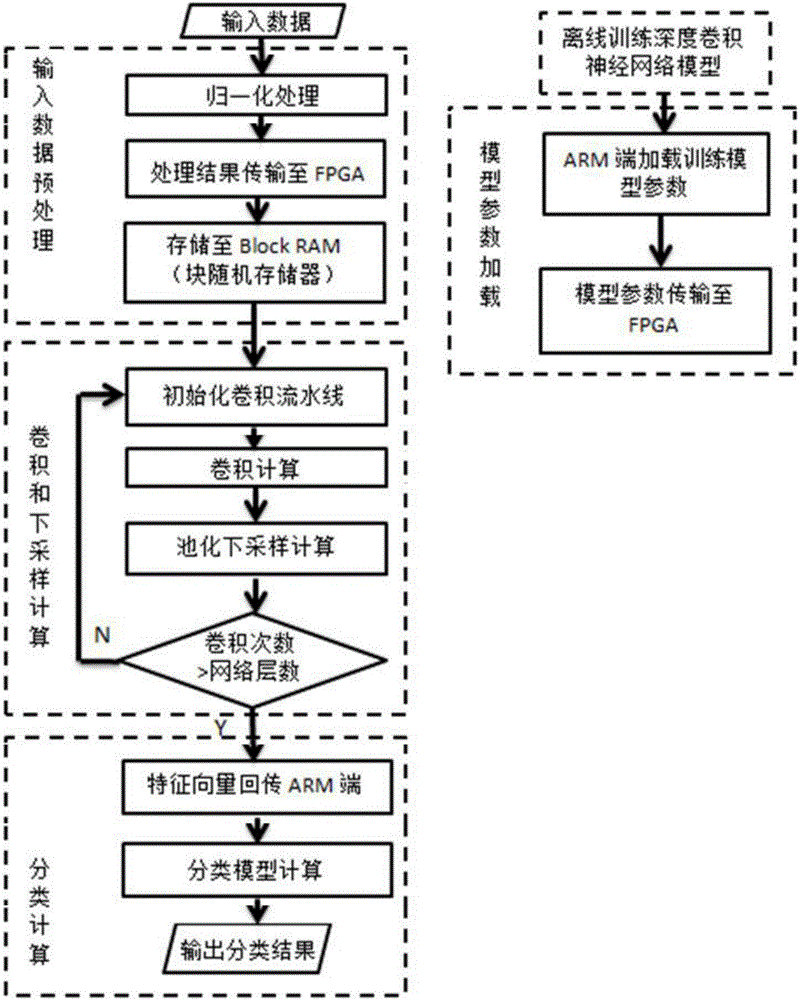

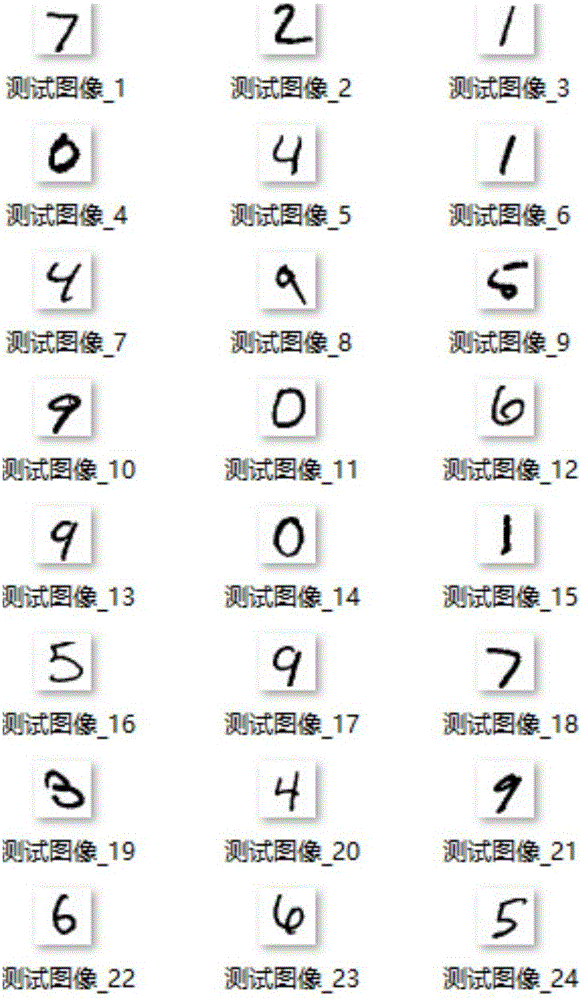

FPGA-based deep convolution neural network realizing method

Active | CN106228240A | Simple design, Reduce resource consumption, Character and pattern recognition, Speech recognition, Feature vector, Algorithm

The invention belongs to the technical field of digital image processing and pattern recognition, and specifically relates to an FPGA-based method for implementing a deep convolutional neural network. The hardware platform is a Xilinx ZYNQ-7030 programmable SoC, which integrates an FPGA and an ARM Cortex-A9 processor. Trained network model parameters are loaded to the FPGA side, input data is preprocessed on the ARM side, and the result is transmitted to the FPGA side. Convolution and down-sampling of the deep convolutional neural network are performed on the FPGA side to form data feature vectors, which are transmitted to the ARM side to complete the feature classification computation. The FPGA's fast parallel processing and its low-power, high-performance computing characteristics are used to implement convolution, the most computationally complex part of a deep convolutional neural network model. Algorithm efficiency is greatly improved, and power consumption is reduced while algorithm accuracy is maintained.

Owner:FUDAN UNIV

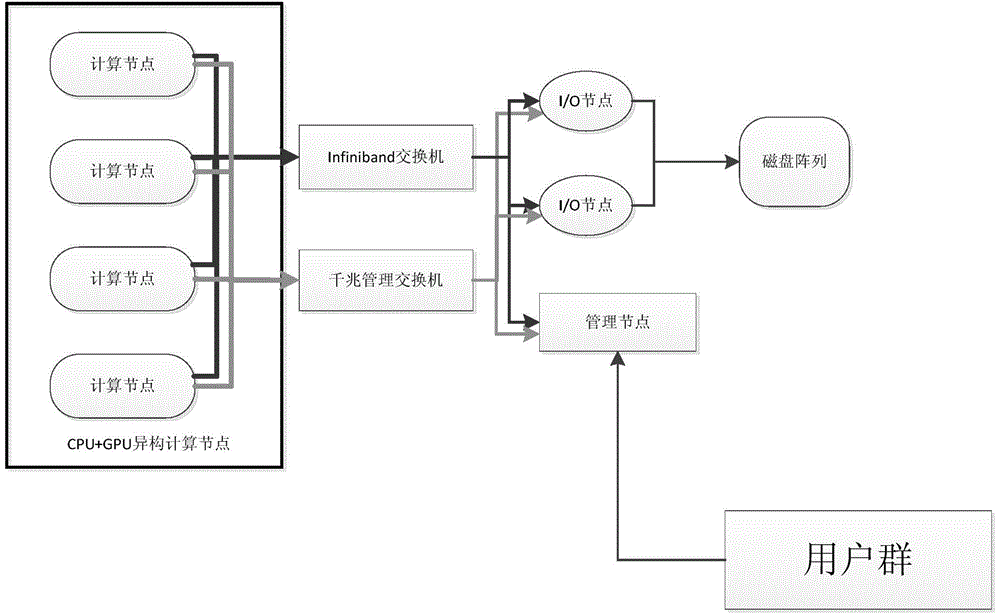

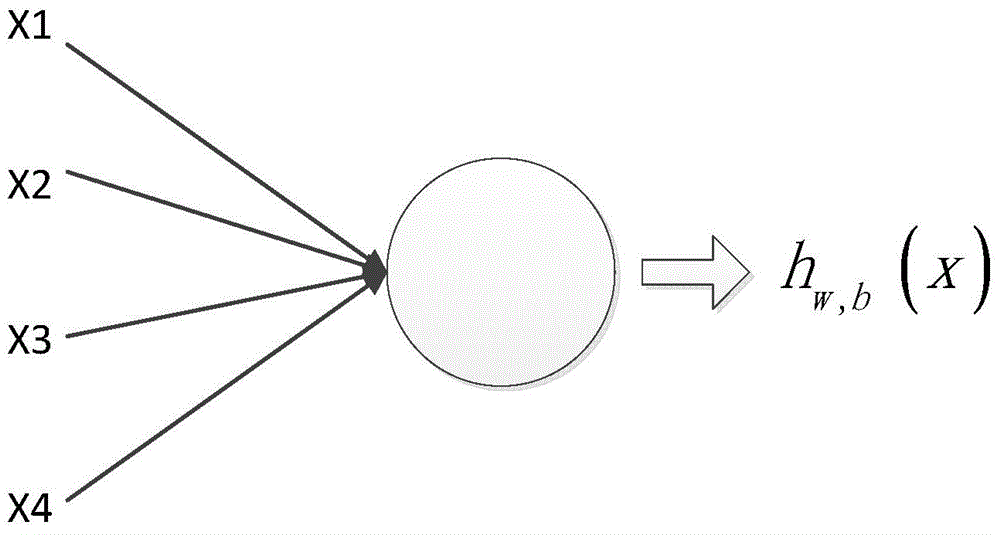

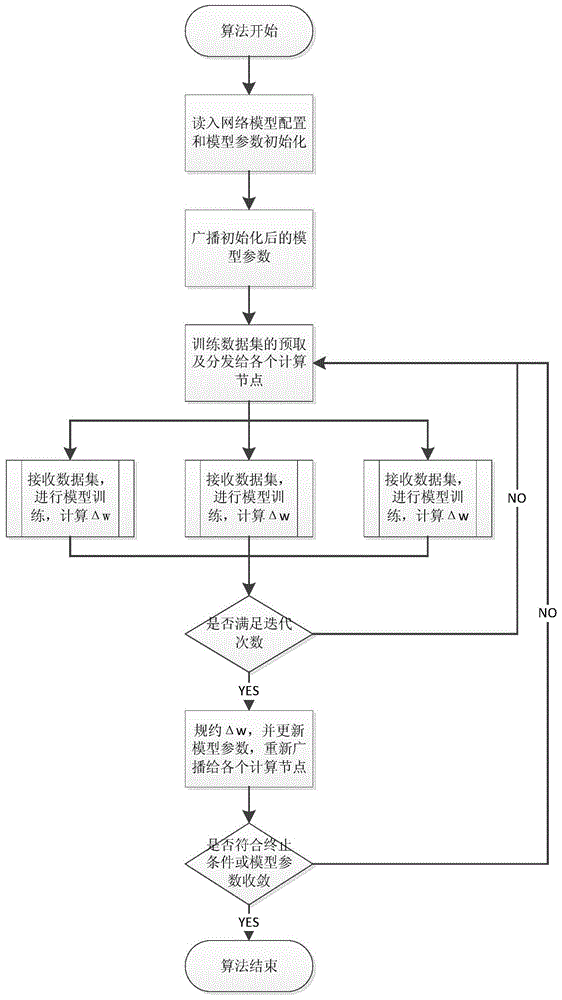

Convolution neural network parallel processing method based on large-scale high-performance cluster

Inactive | CN104463324A | Reduce training time, Improve computing efficiency, Biological neural network models, Concurrent instruction execution, NODAL, Algorithm

The invention discloses a convolutional neural network parallel processing method based on a large-scale high-performance cluster. The method comprises the following steps. (1) Multiple copies of the network model to be trained are constructed with identical model parameters. The number of copies equals the number of nodes in the high-performance cluster, and each node holds one model copy. One node is selected as the main node, which is responsible for broadcasting and collecting the model parameters. (2) The training set is divided into multiple subsets, and in each round the training subsets are distributed to the sub-nodes other than the main node, which compute parameter gradients together. The gradient values are accumulated, the accumulated value is used to update the model parameters on the main node, and the updated parameters are broadcast to all sub-nodes, until model training ends. The method achieves parallelization, improves the efficiency of model training, and shortens training time.

Owner:CHANGSHA MASHA ELECTRONICS TECH
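The main-node and sub-node loop in step (2) is synchronous data-parallel training. The sketch below simulates it in one process on a toy one-parameter linear model y = w * x, which stands in for the CNN only so that the result is easy to check. The main node broadcasts w, each simulated sub-node computes the gradient on its shard, and the main node accumulates the gradients and updates w. All names and hyperparameters are hypothetical.

```python
def split(data, n_parts):
    """Divide the training set into n_parts interleaved subsets."""
    return [data[i::n_parts] for i in range(n_parts)]


def sub_node_gradient(w, subset):
    """Gradient of the summed squared error for y_hat = w * x on one subset."""
    return sum(2 * (w * x - y) * x for x, y in subset)


def train(data, n_sub_nodes, lr, rounds):
    w = 0.0                      # parameters held by the main node
    shards = split(data, n_sub_nodes)
    for _ in range(rounds):
        # Main node broadcasts w; every sub-node holds an identical copy.
        grads = [sub_node_gradient(w, shard) for shard in shards]  # concurrent in a real cluster
        # Main node accumulates the gradients and updates its parameters.
        w -= lr * sum(grads) / len(data)
    return w


data = [(x, 3.0 * x) for x in range(1, 9)]  # toy data generated with w = 3
print(train(data, n_sub_nodes=4, lr=0.01, rounds=50))  # close to 3.0
```

Because the accumulated gradient equals the full-batch gradient, each round applies the same update a single node would compute. The speed-up in a real cluster comes from computing the shard gradients concurrently.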

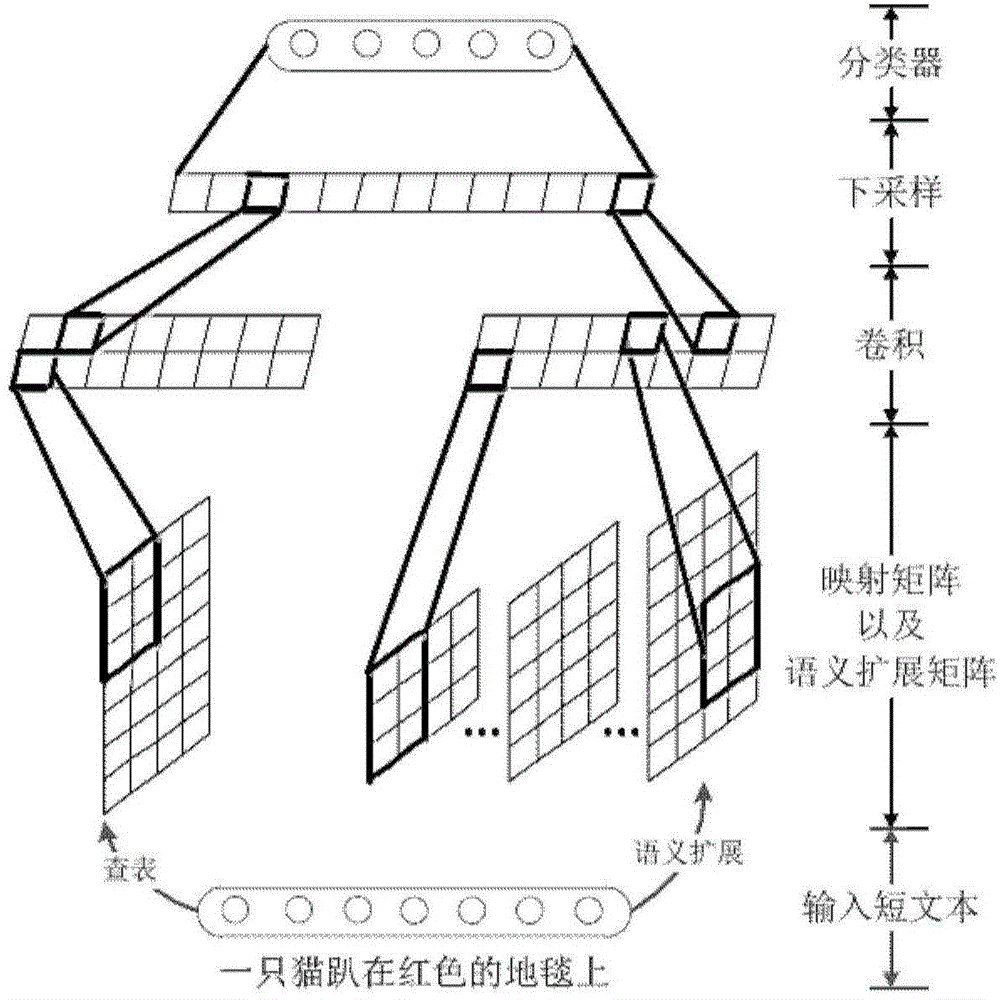

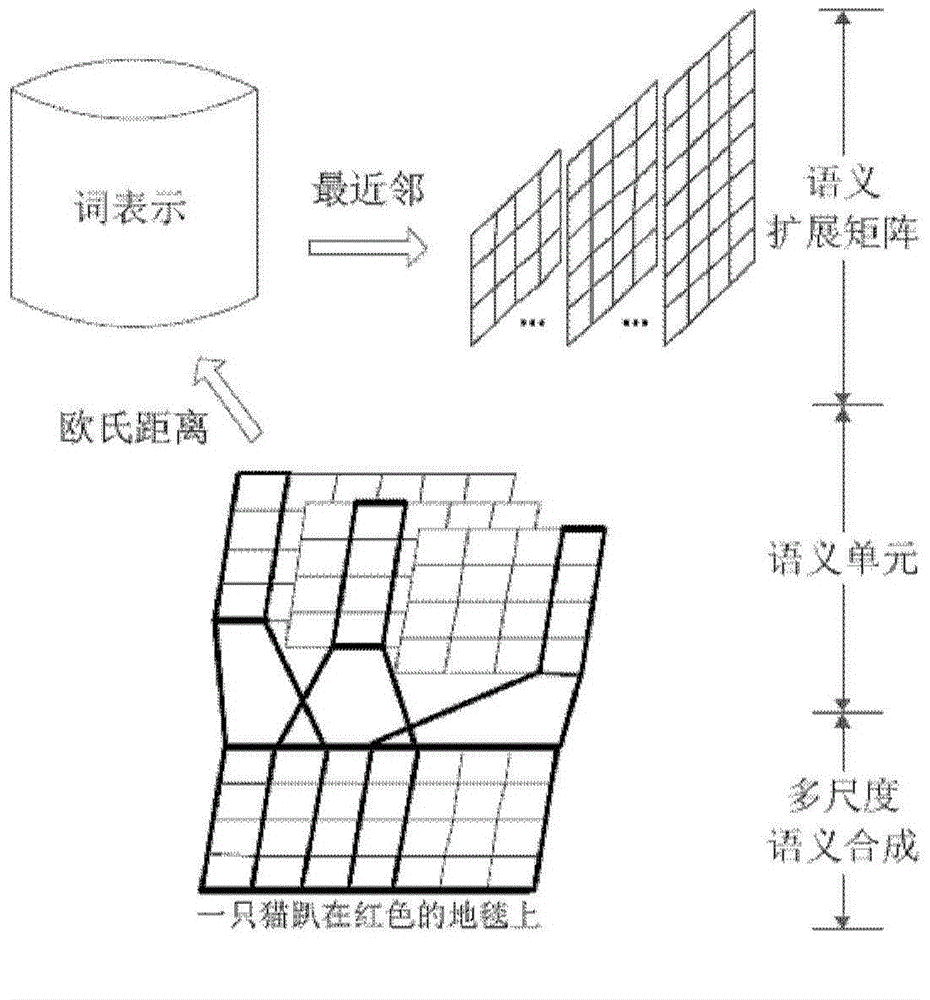

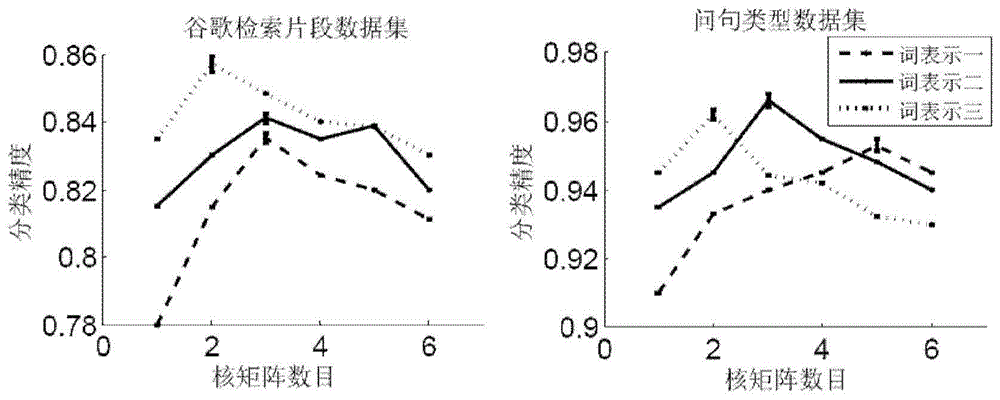

Short text classification method based on convolutional neural network

Active | CN104834747A | Improve semantic sensitivity issues, Improve classification performance, Input/output for user-computer interaction, Biological neural network models, Near neighbor, Classification methods

The invention discloses a short text classification method based on a convolutional neural network. The convolutional neural network comprises five layers. On the first layer, multi-scale candidate semantic units in a short text are obtained. On the second layer, the Euclidean distances between each candidate semantic unit and all word representation vectors in the vector space are calculated, the nearest-neighbor word representations are found, and all nearest-neighbor word representations that satisfy a preset Euclidean distance threshold are selected to construct a semantic expanding matrix. On the third layer, multiple kernel matrices of different widths and different weights perform two-dimensional convolution on the mapping matrix and the semantic expanding matrix of the short text, extracting local convolution features and generating a multi-layer local convolution feature matrix. On the fourth layer, down-sampling is performed on the multi-layer local convolution feature matrix to obtain a multi-layer global feature matrix, a nonlinear hyperbolic tangent transformation is applied to the global feature matrix, and the transformed matrix is converted into a fixed-length semantic feature vector. On the fifth layer, the semantic feature vector is fed to a classifier to predict the category of the short text.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
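The second layer's semantic expansion can be sketched as a thresholded nearest-neighbor search in the word-vector space. This sketch assumes each candidate semantic unit is already represented by a single vector and that the word representations are held in a plain dictionary. The vectors, threshold and function names are hypothetical.

```python
import math


def expand(candidate, vocab_vectors, threshold):
    """Words whose vectors lie within `threshold` (Euclidean) of the candidate, nearest first."""
    scored = []
    for word, vec in vocab_vectors.items():
        d = math.dist(candidate, vec)
        if d <= threshold:
            scored.append((d, word))
    scored.sort()
    return [word for _, word in scored]


def expansion_matrix(candidate, vocab_vectors, threshold):
    """Stack the selected neighbors' vectors row by row."""
    return [list(vocab_vectors[w]) for w in expand(candidate, vocab_vectors, threshold)]


vocab = {"cat": (1.0, 0.0), "kitten": (0.9, 0.1), "car": (0.0, 1.0)}
print(expand((1.0, 0.05), vocab, threshold=0.2))            # ['cat', 'kitten']
print(expansion_matrix((1.0, 0.05), vocab, threshold=0.2))  # [[1.0, 0.0], [0.9, 0.1]]
```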

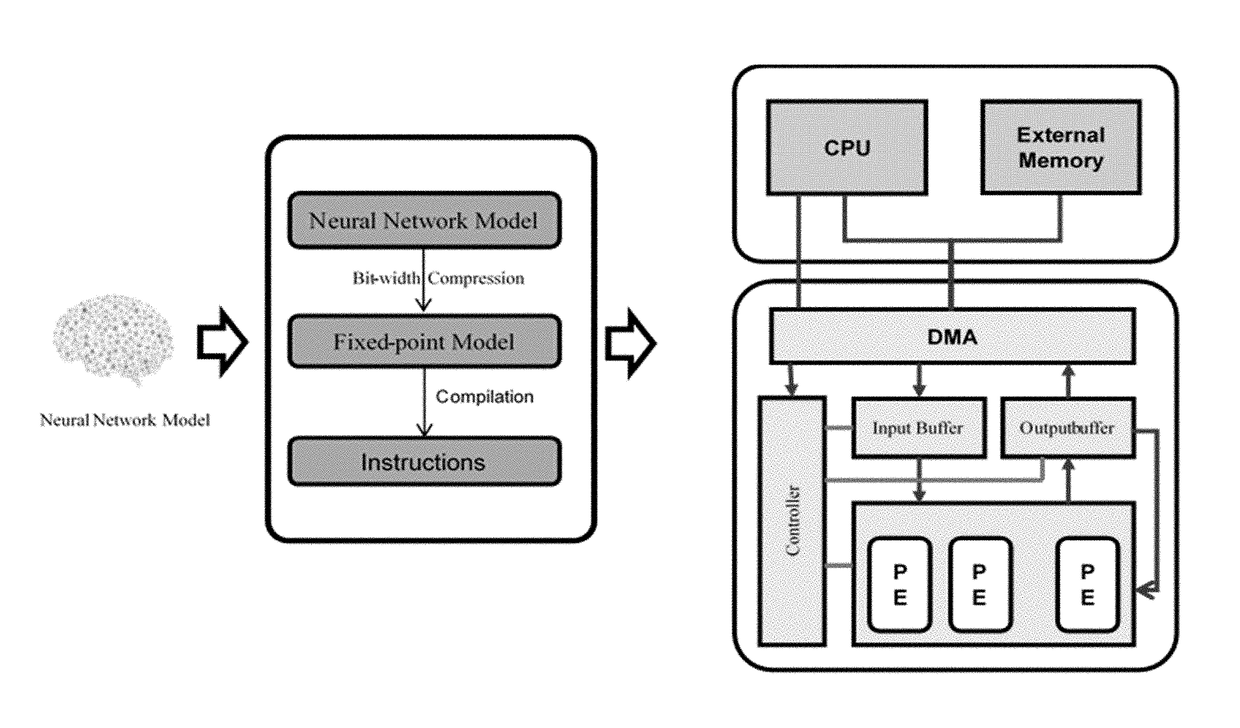

Method for optimizing an artificial neural network (ANN)

Active | US20180046894A1 | Digital data processing details, Speech analysis, Network model, Artificial neural network

The present invention relates to artificial neural networks, for example convolutional neural networks. In particular, it relates to how to implement and optimize a convolutional neural network on an embedded FPGA. Specifically, it proposes an overall design flow for compressing, fixed-point quantizing and compiling the neural network model.

Owner:XILINX TECH BEIJING LTD
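The fixed-point quantization step can be sketched as follows. The sketch assumes 8-bit signed codes and a per-layer choice of fractional bits based on the largest absolute value, which is one common dynamic fixed-point scheme. The bit width, rounding mode and function names are assumptions, since the abstract gives no details.

```python
def choose_frac_bits(values, total_bits=8):
    """Most fractional bits that still let max |value| fit in a signed total_bits word."""
    max_abs = max(abs(v) for v in values)
    int_bits = 0
    while max_abs >= 2 ** int_bits:
        int_bits += 1
    return total_bits - 1 - int_bits  # one bit is reserved for the sign


def quantize(values, frac_bits, total_bits=8):
    """Scale by 2**frac_bits, round to nearest (Python's round: ties to even), saturate."""
    lo, hi = -(2 ** (total_bits - 1)), 2 ** (total_bits - 1) - 1
    scale = 2 ** frac_bits
    return [max(lo, min(hi, round(v * scale))) for v in values]


def dequantize(codes, frac_bits):
    return [c / 2 ** frac_bits for c in codes]


weights = [0.5, -0.25, 0.9]
f = choose_frac_bits(weights)   # 7: all values lie in (-1, 1)
codes = quantize(weights, f)    # [64, -32, 115]
print(f, codes, dequantize(codes, f))
```

The reconstruction error per weight is at most half a step, here 2**-8, as long as no value saturates.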

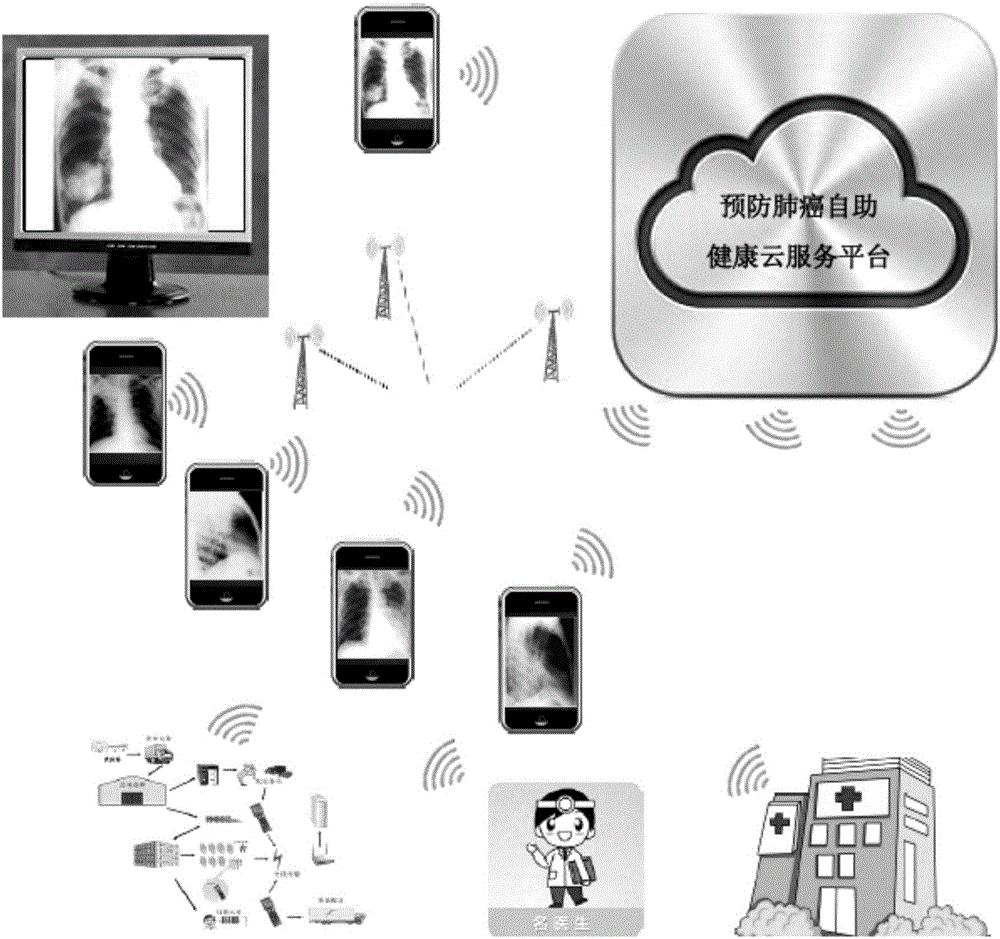

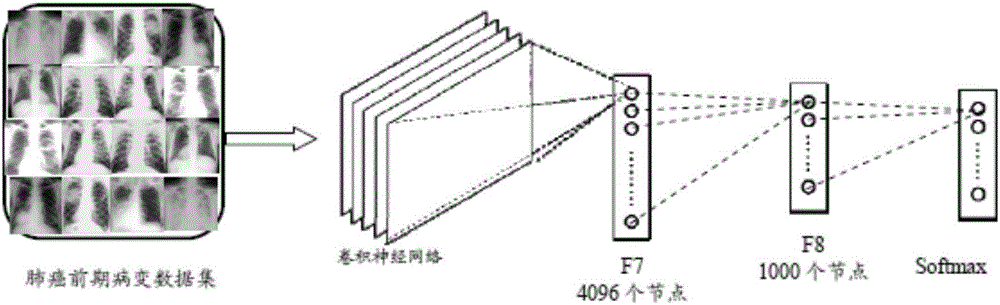

Deep convolutional neural network-based lung cancer preventing self-service health cloud service system

Active | CN106372390A | Improve informatization, Increase health awareness, Special data processing applications, Nerve network, Suspected lung cancer

Owner:汤一平 (Tang Yiping)

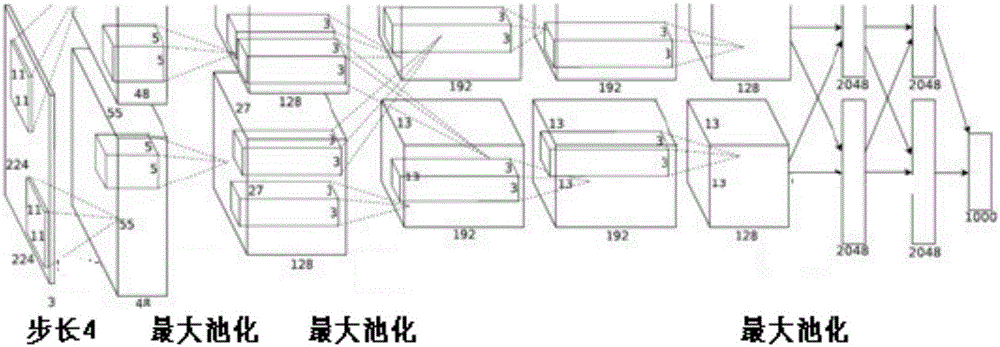

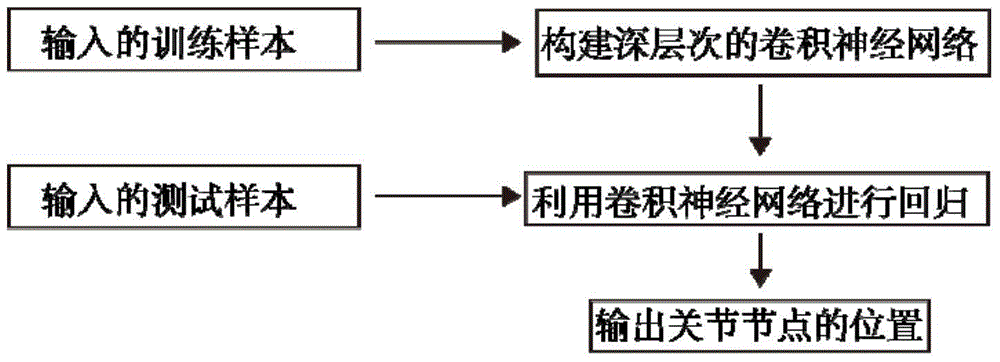

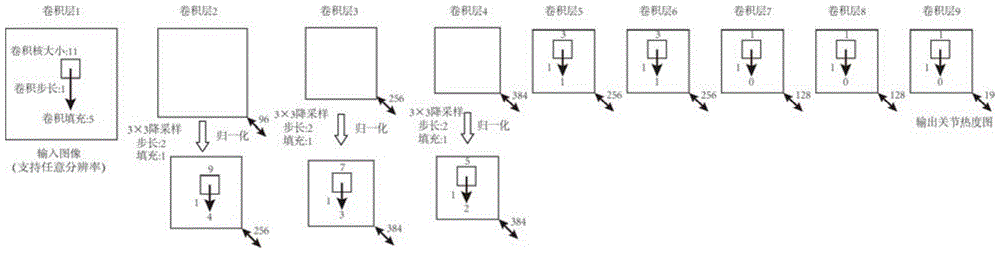

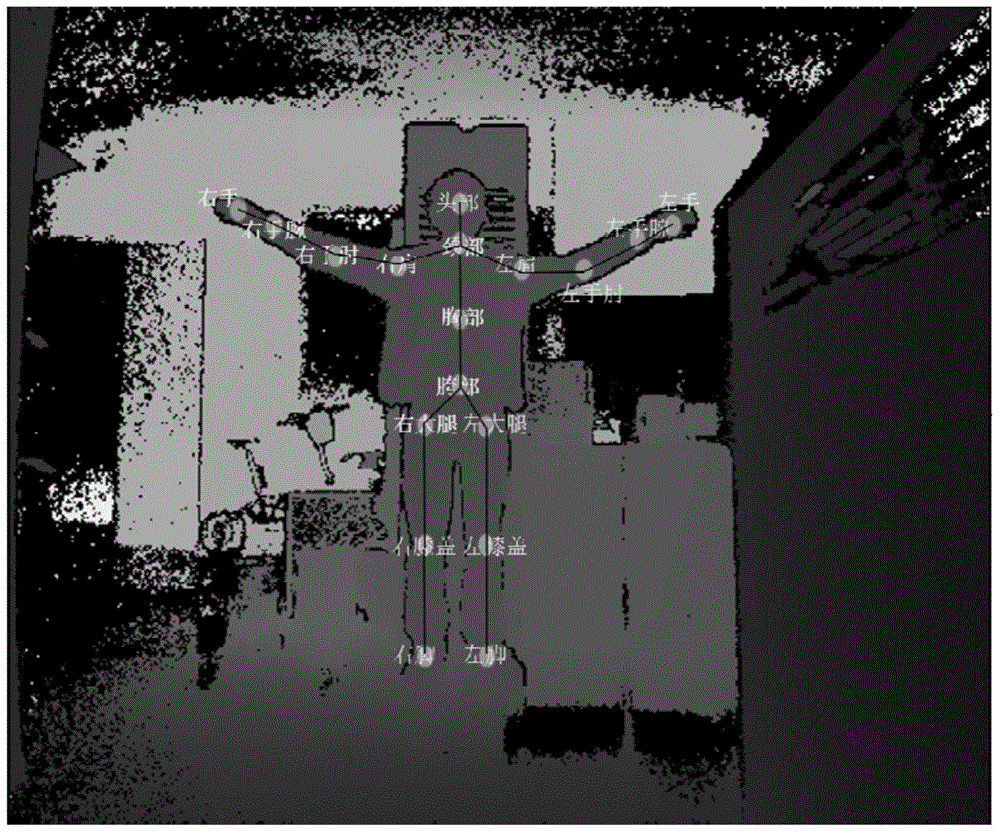

Depth image human body joint positioning method based on convolutional neural network

Active | CN105787439A | Character and pattern recognition, Neural learning methods, Human body, Forward algorithm

The invention discloses a method for locating human body joints in depth images based on a convolutional neural network. The method comprises a training process and a recognition process. The training process comprises the following steps: 1, inputting training samples; 2, initializing a deep convolutional neural network and its parameters, which comprise the weights and bias of each layer's connections; and 3, learning the parameters of the constructed convolutional neural network with forward and backward algorithms. The recognition process comprises the following steps: 4, inputting a test sample; and 5, performing regression on the test sample with the trained convolutional neural network to find the positions of the human body joints. By using a deep convolutional neural network and big data, the invention can withstand challenges such as occlusion and noise with high accuracy. Parallel computation also enables accurate, real-time localization of human body joints.

Owner:GUANGZHOU NEWTEMPO TECH

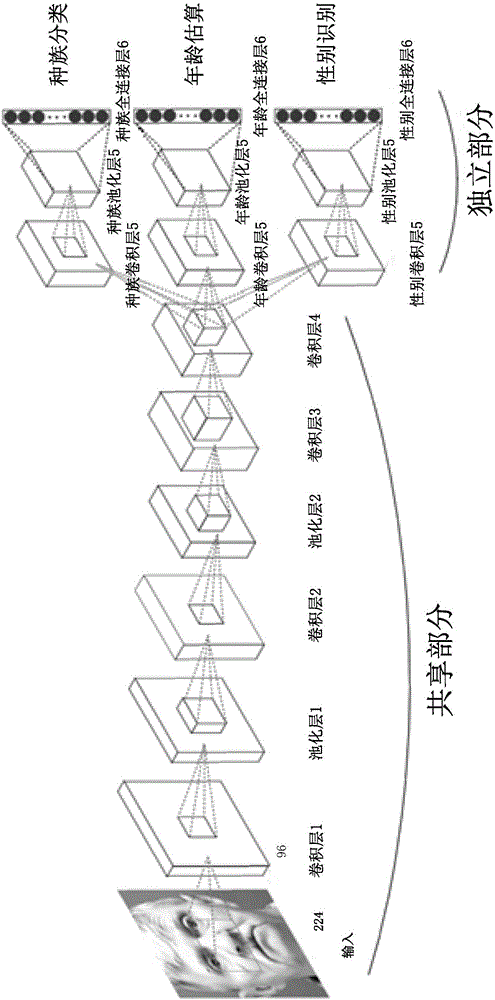

Multi-task learning convolutional neural network-based face attribute analysis method

Active | CN106529402A | Improve generalization ability, Calculation speed, Character and pattern recognition, Task network, Multi attribute analysis

The present invention discloses a face attribute analysis method based on a multi-task learning convolutional neural network (CNN). Using a convolutional neural network with multi-task learning, the method performs age estimation, gender identification and race classification on a face image simultaneously. Traditional methods require a separate computation for each attribute in face multi-attribute analysis, which wastes time and reduces the generalization ability of the model. In the method of the invention, three single-task networks are first trained separately. The weights of the network with the slowest convergence are used to initialize the shared part of a multi-task network, and its task-specific parts are initialized randomly. The multi-task network is then trained to obtain a multi-task CNN model, which performs age, gender and race analysis on an input face image simultaneously, saving time while achieving high accuracy.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

Apparatus and method for realizing accelerator of sparse convolutional neural network

Inactive | CN107239824A | Improve computing power, Reduce response latency, Digital data processing details, Neural architectures, Algorithm, Broadband

The invention provides an apparatus and method for implementing an accelerator for a sparse convolutional neural network. The apparatus includes a convolution and pooling unit, a fully connected unit and a control unit. The method includes the following steps: based on control information, reading the convolution parameter information, the input data and intermediate computation data, and reading the position information of the fully connected layer weight matrix; performing convolution and pooling on the input data for a first number of iterations in accordance with the convolution parameter information; and then performing the fully connected computation for a second number of iterations based on the position information of the fully connected layer weight matrix. Each input is divided into multiple sub-blocks, and the convolution and pooling unit and the fully connected unit each operate on the sub-blocks in parallel. The apparatus uses a dedicated circuit, supports sparse convolutional neural networks with sparse fully connected layers, and uses a parallel ping-pong buffer design and a pipeline design. It thereby effectively balances I/O bandwidth and computational efficiency and achieves a better performance-to-power ratio.

Owner:XILINX INC
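The fully connected stage relies on position information for the nonzero weights. This sketch assumes a compressed sparse row (CSR) layout (values, column indices, row pointers), one common way to store such positions. The patent does not state its format, and the function names are hypothetical.

```python
def to_csr(dense):
    """Keep only nonzero weights plus their positions (column indices and row pointers)."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, w in enumerate(row):
            if w != 0:
                values.append(w)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """y = W x, touching only the stored nonzero weights."""
    y = []
    for r in range(len(row_ptr) - 1):
        acc = 0
        for k in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[k] * x[col_idx[k]]
        y.append(acc)
    return y


W = [[0, 2, 0],
     [1, 0, 3]]
csr = to_csr(W)                       # ([2, 1, 3], [1, 0, 2], [0, 1, 3])
print(csr_matvec(*csr, [1, 2, 3]))    # [4, 10]
```

The multiply count scales with the number of nonzero weights rather than the full matrix size, which is the saving a sparse accelerator exploits.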

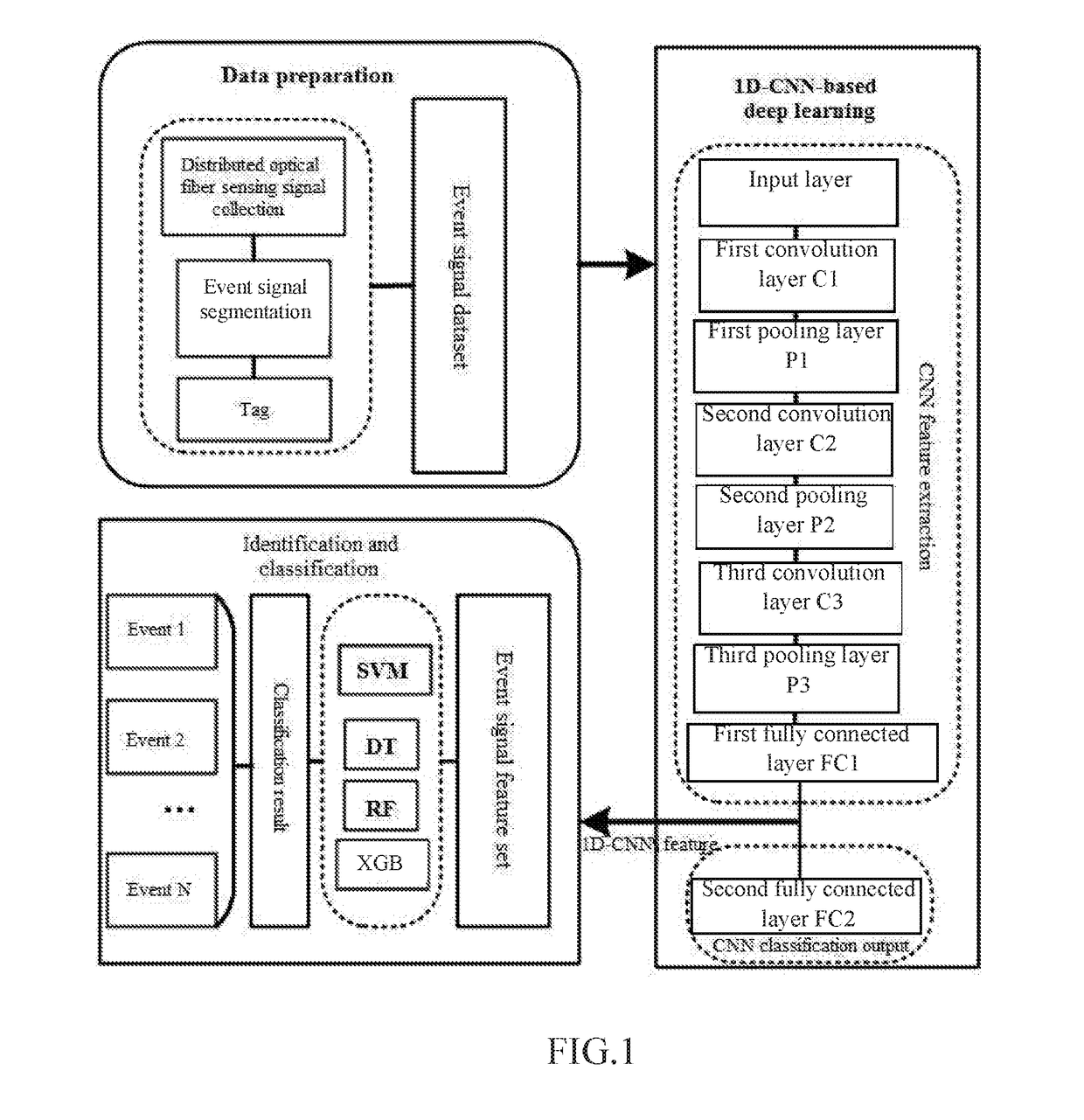

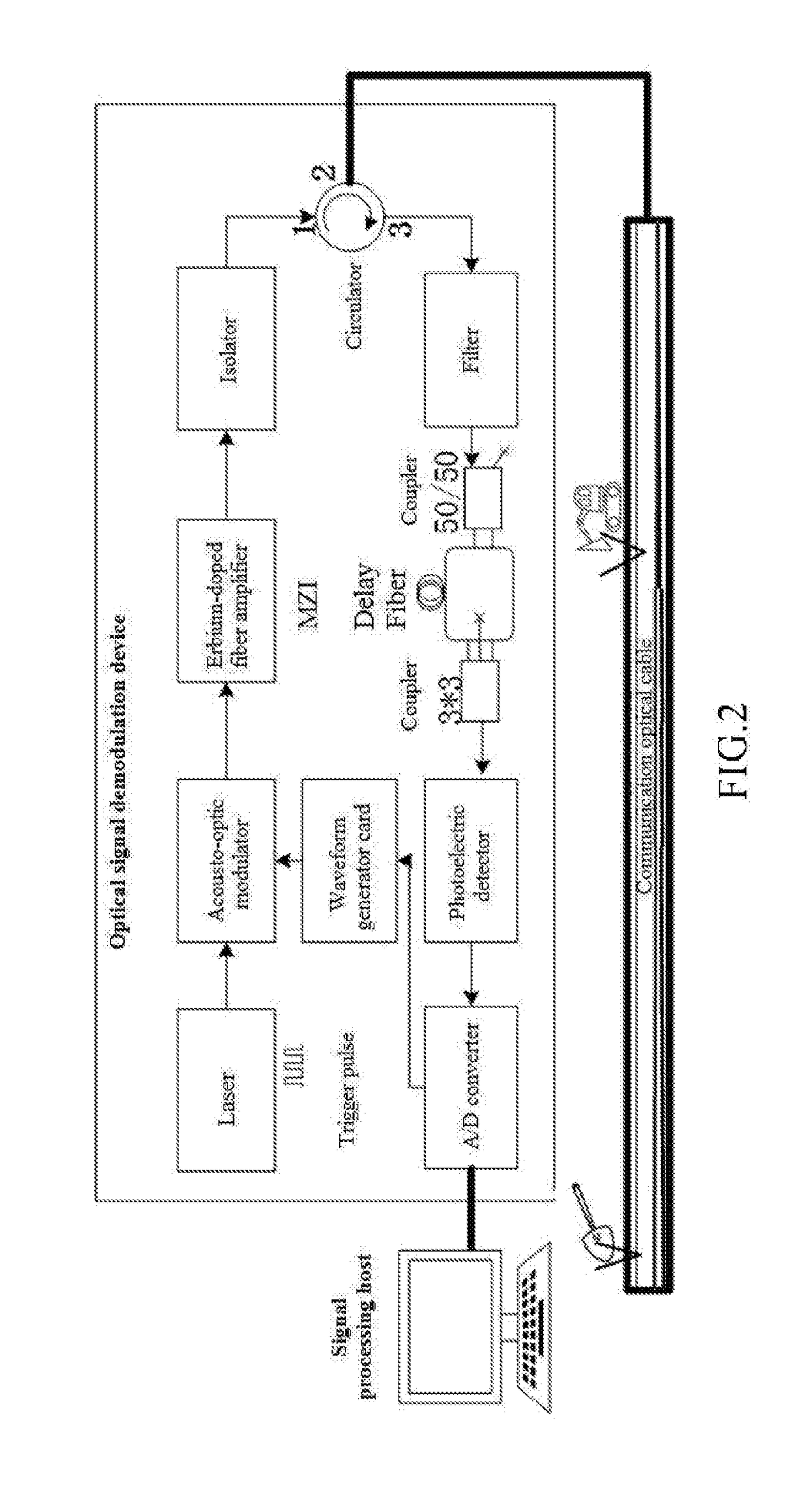

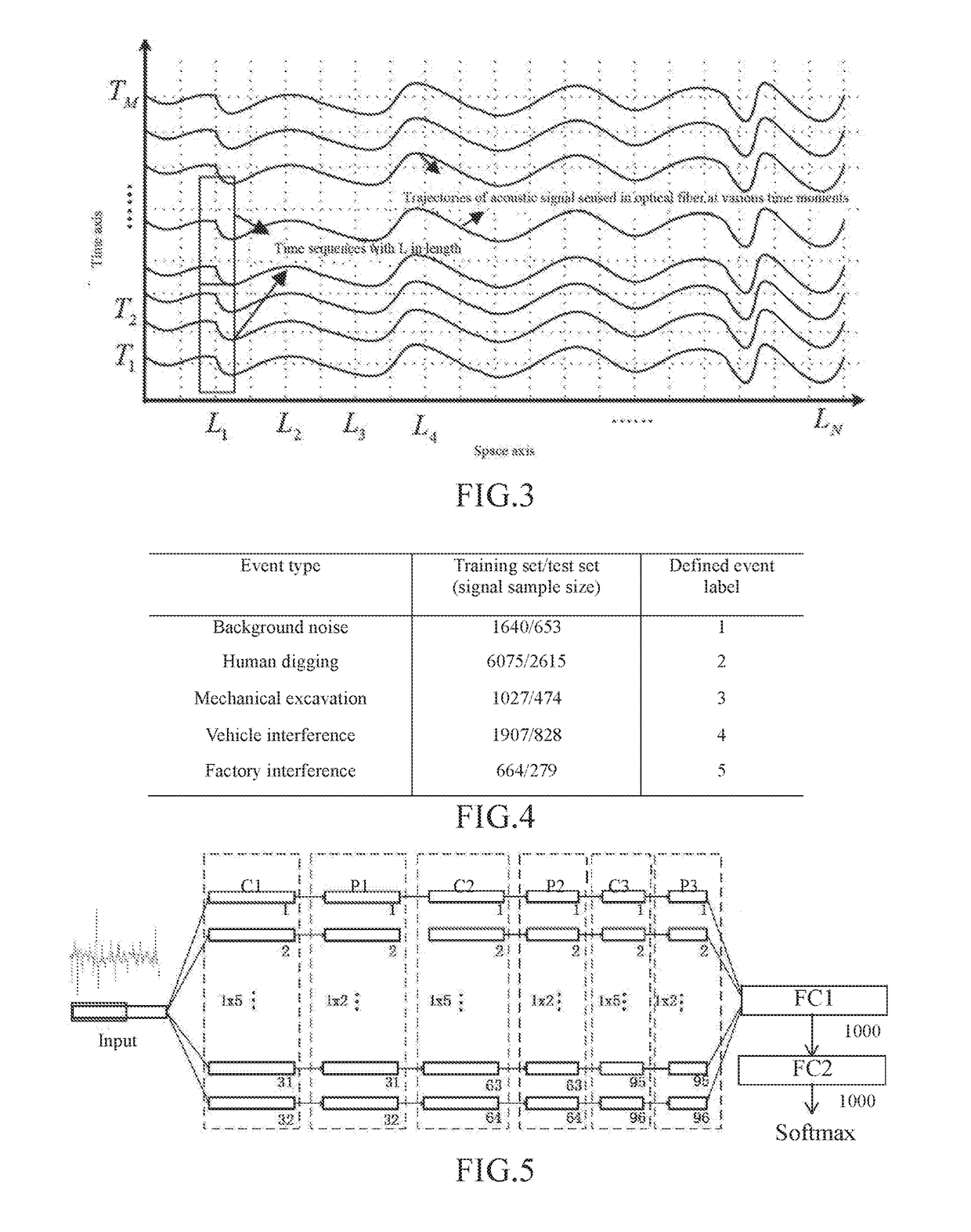

1D-CNN-Based Distributed Optical Fiber Sensing Signal Feature Learning and Classification Method

A 1D-CNN-based (one-dimensional convolutional neural network-based) distributed optical fiber sensing signal feature learning and classification method is provided. It addresses the problem that existing distributed optical fiber sensing systems adapt poorly to complex, changing environments and are time-consuming and laborious because they rely on manually extracted distinguishable event features. The method includes the steps of: segmenting the time sequences of distributed optical fiber sensing acoustic and vibration signals acquired at all spatial points, and building a typical event signal dataset; constructing a 1D-CNN model, iteratively training the network on the typical event signals in a training dataset to obtain optimal network parameters, and using the optimal network to learn and extract 1D-CNN distinguishable features of different types of events, yielding typical event signal feature sets; and, after training different types of classifiers on the typical event signal feature sets, selecting the optimal classifier.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
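The segmentation and 1D-CNN feature-extraction steps can be sketched in plain Python: the time series is cut into fixed-length windows, and each window passes through a 1-D convolution with ReLU followed by max pooling. The window length, stride, kernel values and function names are hypothetical. A trained network would learn many kernels rather than use one fixed kernel.

```python
def segment(signal, window, stride):
    """Cut a 1-D time series into fixed-length, possibly overlapping windows."""
    return [signal[s:s + window] for s in range(0, len(signal) - window + 1, stride)]


def conv1d_relu(x, kernel, bias=0.0):
    """Valid 1-D cross-correlation followed by ReLU."""
    k = len(kernel)
    return [max(0.0, sum(x[i + j] * kernel[j] for j in range(k)) + bias)
            for i in range(len(x) - k + 1)]


def max_pool1d(x, size):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]


# Hypothetical vibration trace with a short spike; one fixed "spike detector" kernel.
trace = [0, 0, 1, 3, 1, 0, 0, 0, 0, 0]
kernel = [-1, 2, -1]
for w in segment(trace, window=5, stride=5):
    print(max_pool1d(conv1d_relu(w, kernel), 3))
```

The first window, which contains the spike, yields [4.0], and the quiet second window yields [0.0]. Features like these would then be used to train the candidate classifiers.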

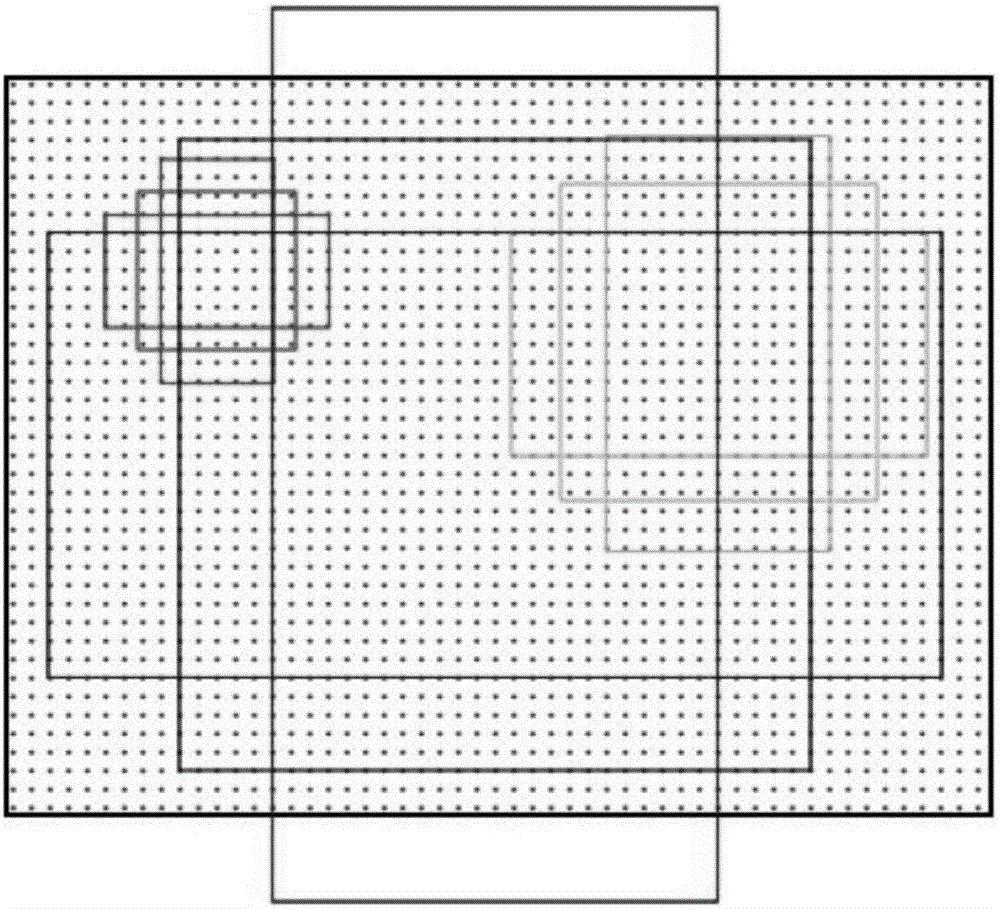

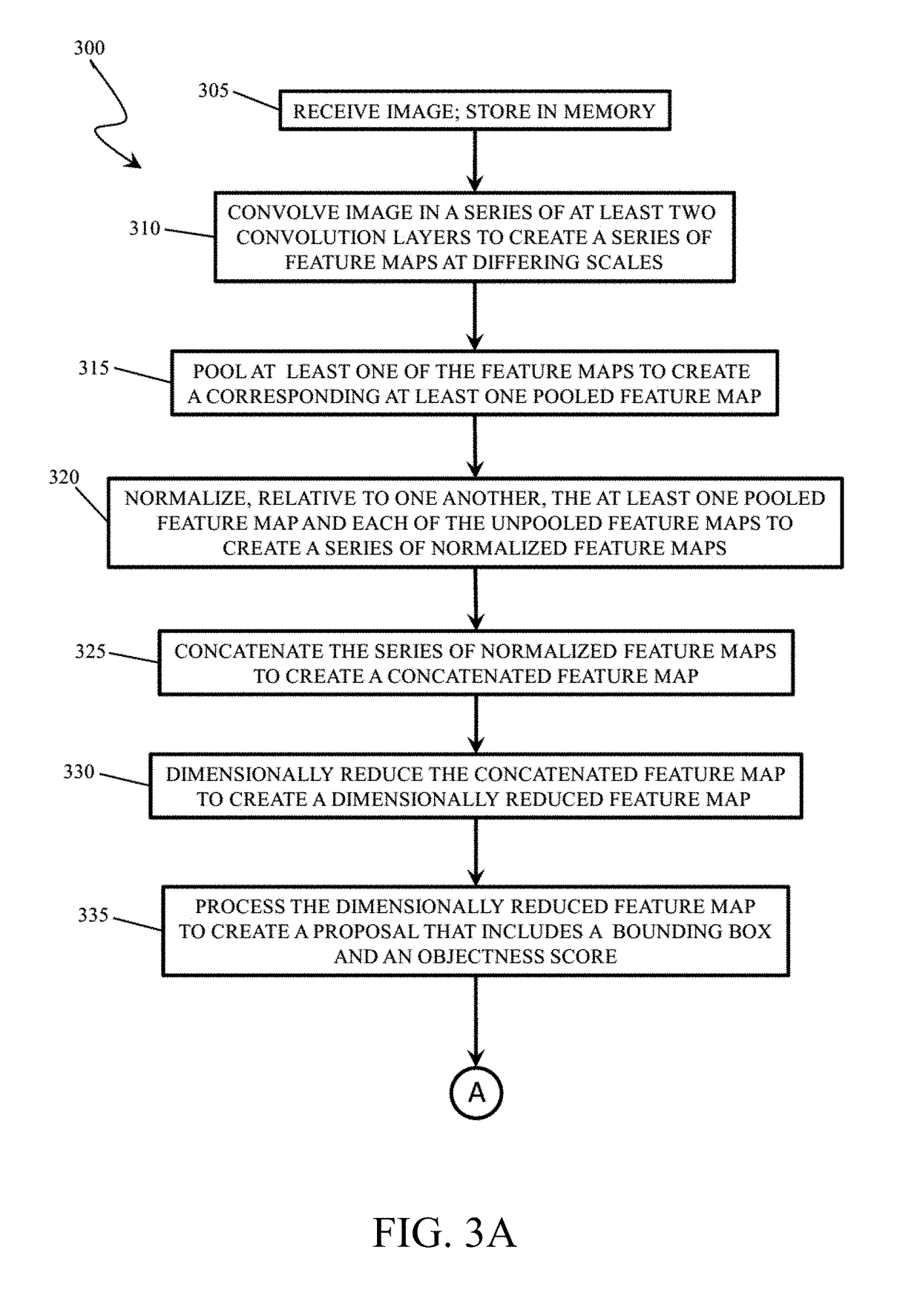

Methods and Software for Detecting Objects in an Image Using Contextual Multiscale Fast Region-Based Convolutional Neural Network

Active | US20180068198A1 | Character and pattern recognition, Neural architectures, Nerve network, Context based

Methods of detecting an object in an image using a convolutional neural-network-based architecture that processes multiple feature maps of differing scales from differing convolution layers within a convolutional network to create a regional-proposal bounding box. The bounding box is projected back to the feature maps of the individual convolution layers to obtain a set of regions of interest (ROIs) and a corresponding set of context regions that provide additional context for the ROIs. These ROIs and context regions are processed to create a confidence score representing a confidence that the object detected in the bounding box is the desired object. These processes allow the method to utilize deep features encoded in both the global and the local representation for object regions, allowing the method to robustly deal with challenges in the problem of object detection. Software for executing the disclosed methods within an object-detection system is also disclosed.

Owner:CARNEGIE MELLON UNIV
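The method above projects a proposal onto feature maps from several convolution layers and builds a matching context region. Both steps can be sketched as follows. The sketch assumes each layer's feature map is the image downsampled by a fixed stride (for example 8, 16 or 32), and that a context region is the proposal enlarged about its center by a fixed factor. The strides, the factor and the function names are hypothetical.

```python
import math


def project_box(box, stride):
    """Map an image-space box (x1, y1, x2, y2) onto a feature map with the given stride,
    rounding outward so the projected box still covers the original."""
    x1, y1, x2, y2 = box
    return (math.floor(x1 / stride), math.floor(y1 / stride),
            math.ceil(x2 / stride), math.ceil(y2 / stride))


def context_box(box, scale, img_w, img_h):
    """Enlarge a box about its center by `scale`, clipped to the image bounds."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    half_w, half_h = (x2 - x1) * scale / 2, (y2 - y1) * scale / 2
    return (max(0, cx - half_w), max(0, cy - half_h),
            min(img_w, cx + half_w), min(img_h, cy + half_h))


proposal = (40, 40, 120, 100)                       # from the region-proposal step
context = context_box(proposal, 1.5, 640, 480)      # (20.0, 25.0, 140.0, 115.0)
for stride in (8, 16, 32):                          # hypothetical layer strides
    print(stride, project_box(proposal, stride), project_box(context, stride))
```

Each projected pair gives the ROI and its context region on one layer's feature map, ready for region pooling.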

Face recognition method, device, system and apparatus based on convolutional neural network

Active | CN106951867A | Increased non-linear capabilities, Nonlinear Capability Leap, Character and pattern recognition, Neural architectures, Face detection, Imaging condition

The invention discloses a face recognition method, device, system and apparatus based on a convolutional neural network (CNN). The method comprises the following steps: S1, face detection, using a multilayer CNN feature architecture; S2, key point positioning, obtaining the positions of the facial key points with connected reference-frame regression networks cascaded in deep learning; S3, preprocessing, obtaining a face image of fixed size; S4, feature extraction, obtaining a representative feature vector with a feature extraction model; and S5, feature comparison, determining similarity against a threshold or giving a face recognition result by distance sorting. The method adds a multilayer CNN feature combination to the traditional single-layer CNN feature architecture to handle different imaging conditions. It uses a deep convolutional neural network algorithm to train a face detection network on massive image datasets that is robust in surveillance environments, which reduces the false detection rate and improves detection response speed.

Owner:南京擎声网络科技有限公司 (Nanjing Qingsheng Network Technology Co., Ltd.)
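Step S5 supports two uses: 1:1 verification against a threshold and 1:N identification by distance sorting. A minimal sketch follows, assuming the feature vectors come from the extraction model in S4. Cosine similarity for verification, Euclidean distance for ranking, the threshold and the function names are illustrative choices rather than details from the abstract.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def verify(feat_a, feat_b, threshold):
    """1:1 comparison: same person if the similarity reaches the threshold."""
    return cosine_similarity(feat_a, feat_b) >= threshold


def identify(query, gallery):
    """1:N search: gallery identities ranked by Euclidean distance to the query feature."""
    return sorted(gallery, key=lambda name: math.dist(query, gallery[name]))


gallery = {"alice": (0.9, 0.1), "bob": (0.0, 1.0)}   # hypothetical enrolled features
print(verify((1.0, 0.0), (0.9, 0.1), threshold=0.9))  # True
print(identify((1.0, 0.0), gallery))                  # ['alice', 'bob']
```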

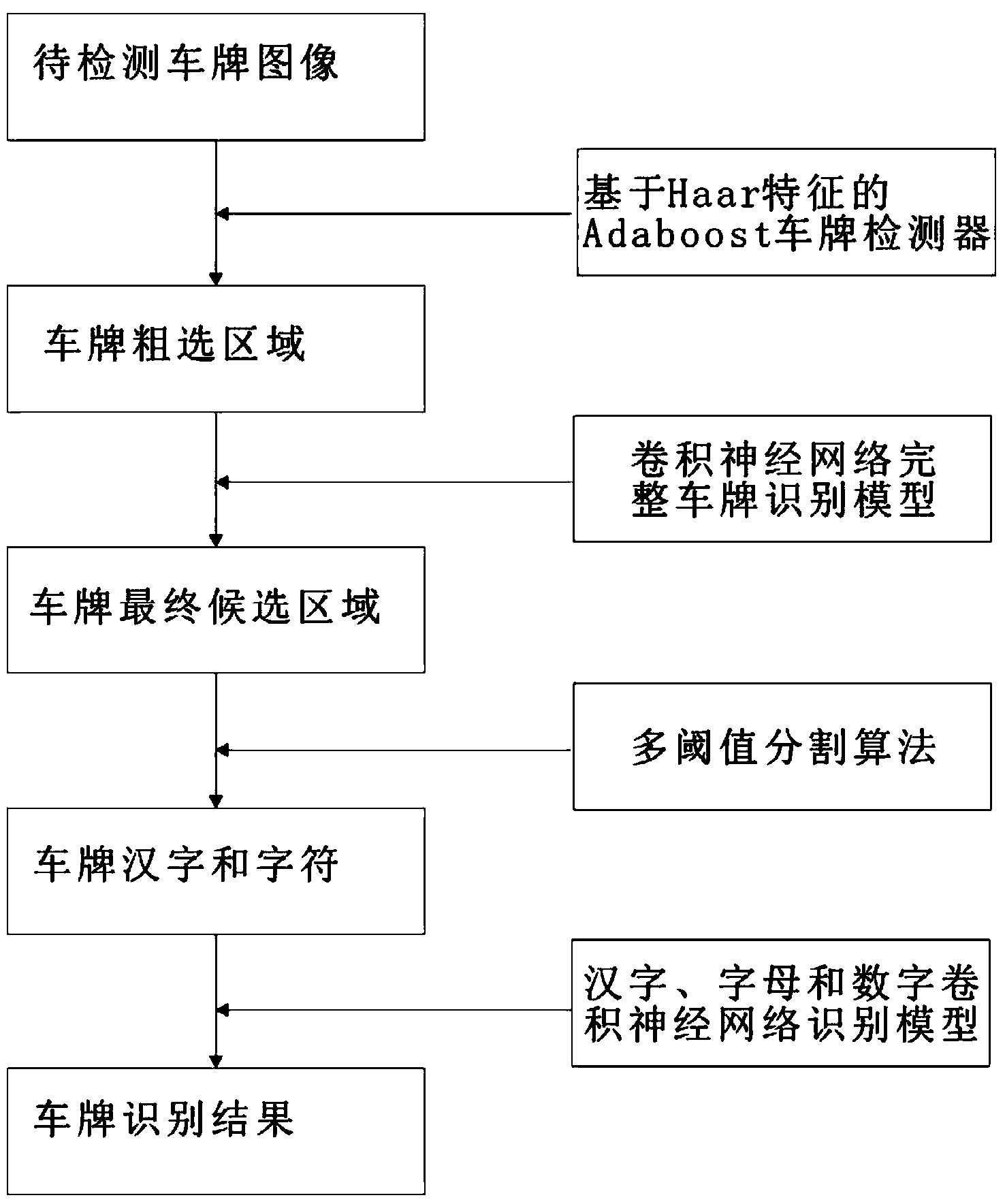

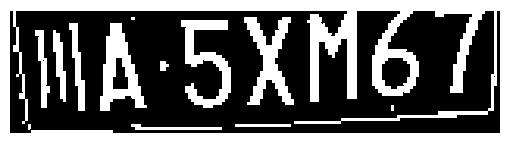

License plate detection method based on convolutional neural network

Inactive | CN104298976A | Accurate identification, Easy to identify, Character and pattern recognition, Chinese characters, License

The invention discloses a license plate detection method based on a convolutional neural network. An AdaBoost license plate detector based on Haar features first examines the images to be checked and obtains coarse license plate regions. A convolutional neural network model for complete license plate recognition then examines the coarse regions to obtain the final license plate candidate region. The final candidate region is segmented with a multi-threshold segmentation algorithm to obtain the license plate's Chinese characters, letters and digits. Finally, a convolutional neural network recognition model for Chinese characters, letters and digits recognizes them to produce the license plate recognition result. The Haar-feature AdaBoost detector and the complete-license-plate CNN recognition model allow license plate images captured under different conditions to be recognized accurately, while the multi-threshold segmentation algorithm makes character segmentation simpler and more convenient, giving good results in engineering applications.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
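
The abstract does not specify how the multi-threshold segmentation algorithm works. One plausible reading, sketched in Python as an assumption rather than the patented algorithm, is to binarize the plate region at several candidate thresholds, split it into characters by vertical projection (columns with no bright pixels separate characters), and keep the first threshold that yields the expected number of characters. The default of seven matches the standard mainland Chinese plate layout; the function names and the toy image are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Image = Sequence[Sequence[int]]  # grayscale rows, values 0-255
Segment = Tuple[int, int]        # [start, end) column range of one character


def column_segments(img: Image, threshold: int) -> List[Segment]:
    """Binarize at `threshold` and split at columns with no bright pixels."""
    width = len(img[0])
    # Vertical projection: number of bright (character) pixels per column.
    projection = [sum(1 for row in img if row[c] > threshold) for c in range(width)]
    segments: List[Segment] = []
    start: Optional[int] = None
    for c, count in enumerate(projection):
        if count > 0 and start is None:
            start = c                      # a character run begins
        elif count == 0 and start is not None:
            segments.append((start, c))    # a character run ends
            start = None
    if start is not None:
        segments.append((start, width))    # run touching the right edge
    return segments


def multi_threshold_segment(img: Image, thresholds: Sequence[int],
                            expected: int = 7) -> Optional[Tuple[int, List[Segment]]]:
    """Return the first threshold whose segmentation yields `expected` characters."""
    for t in thresholds:
        segments = column_segments(img, t)
        if len(segments) == expected:
            return t, segments
    return None


# Toy 2-row "plate": bright strokes (200) separated by mid-grey gaps (90).
plate = [[200, 90, 200, 90, 200],
         [200, 90, 200, 90, 200]]
print(multi_threshold_segment(plate, thresholds=[50, 100, 150], expected=3))
# → (100, [(0, 1), (2, 3), (4, 5)])
```

In the toy example a threshold of 50 treats the grey gaps as foreground and merges everything into one segment; trying several thresholds is what lets the segmentation adapt when illumination varies.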

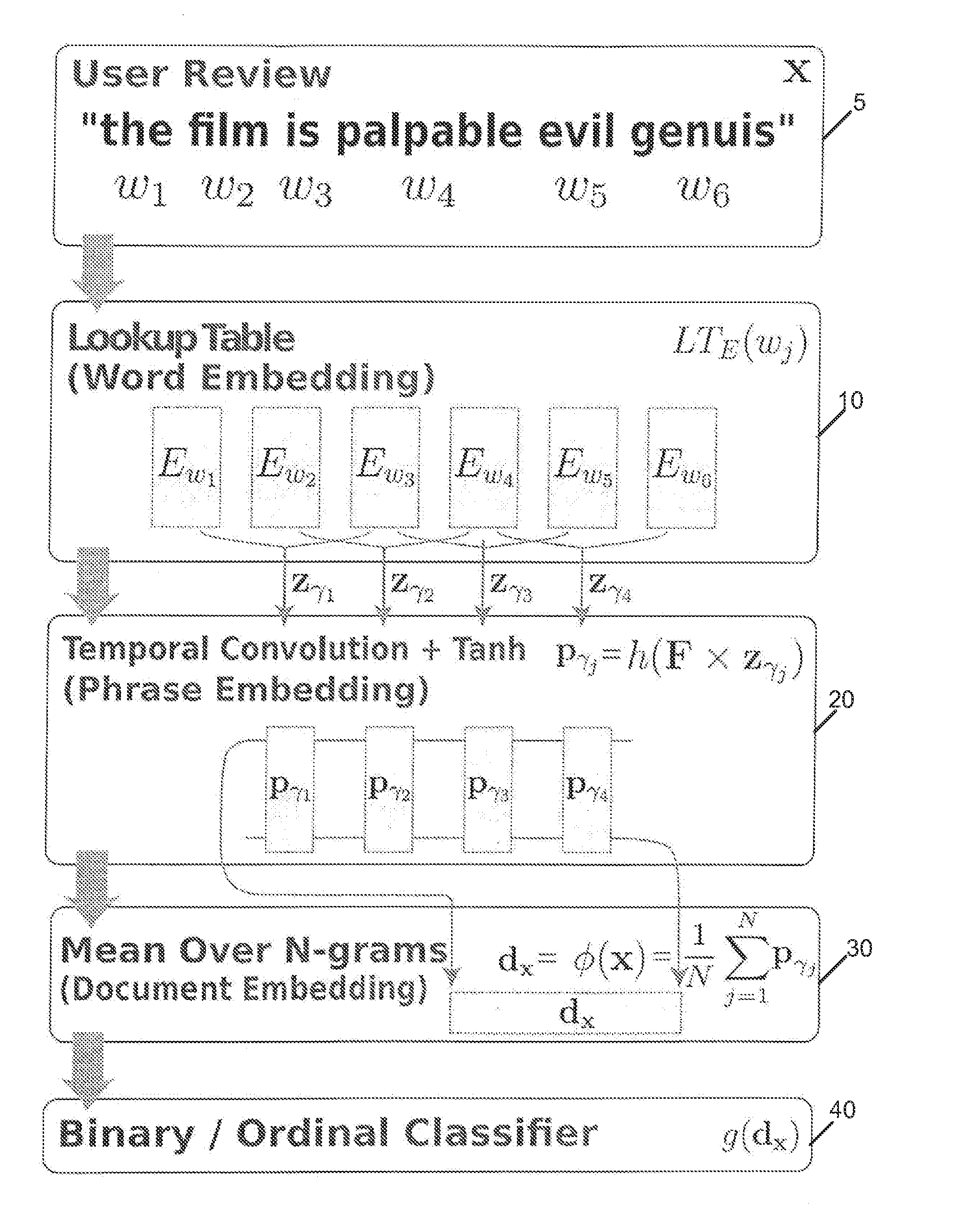

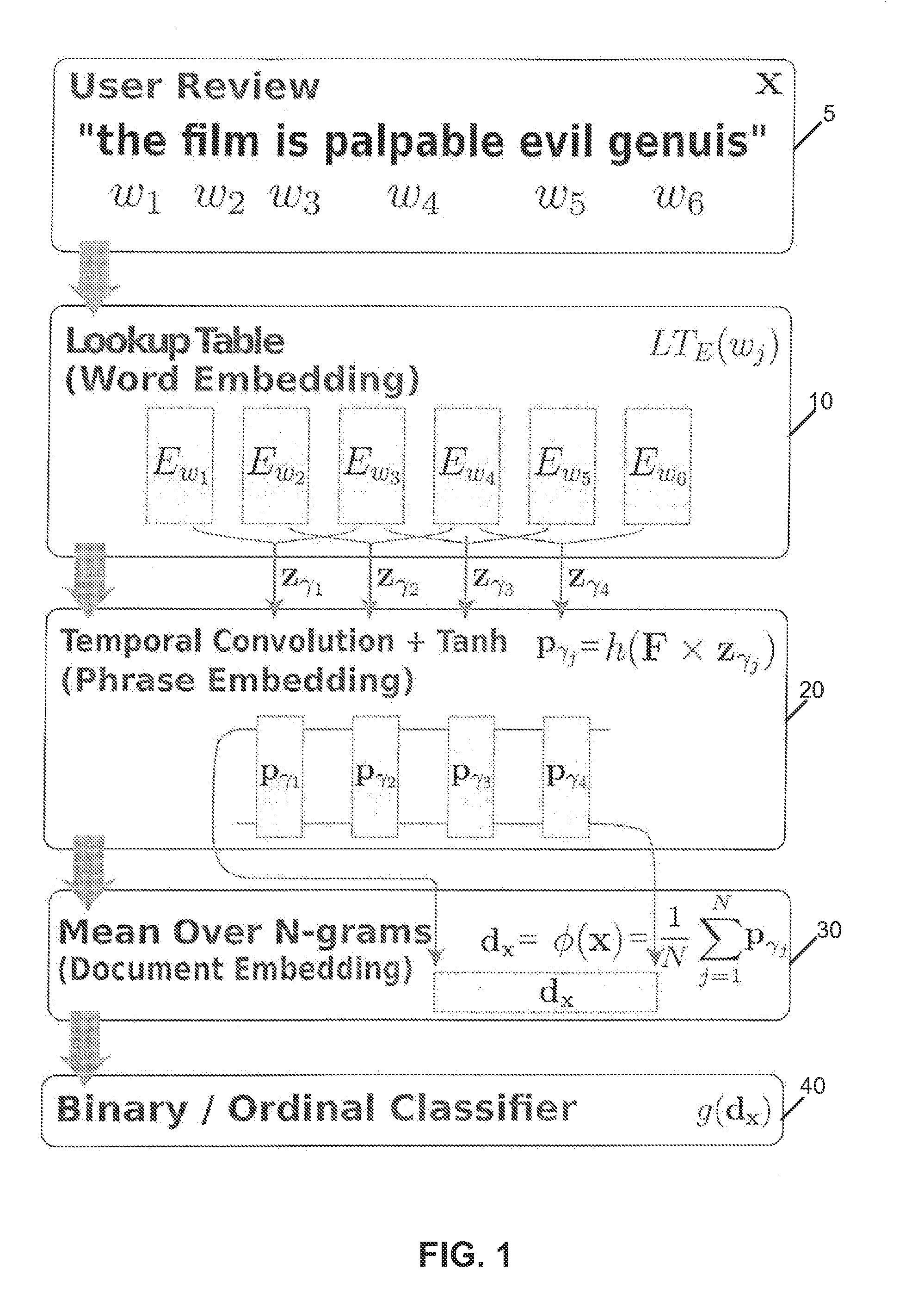

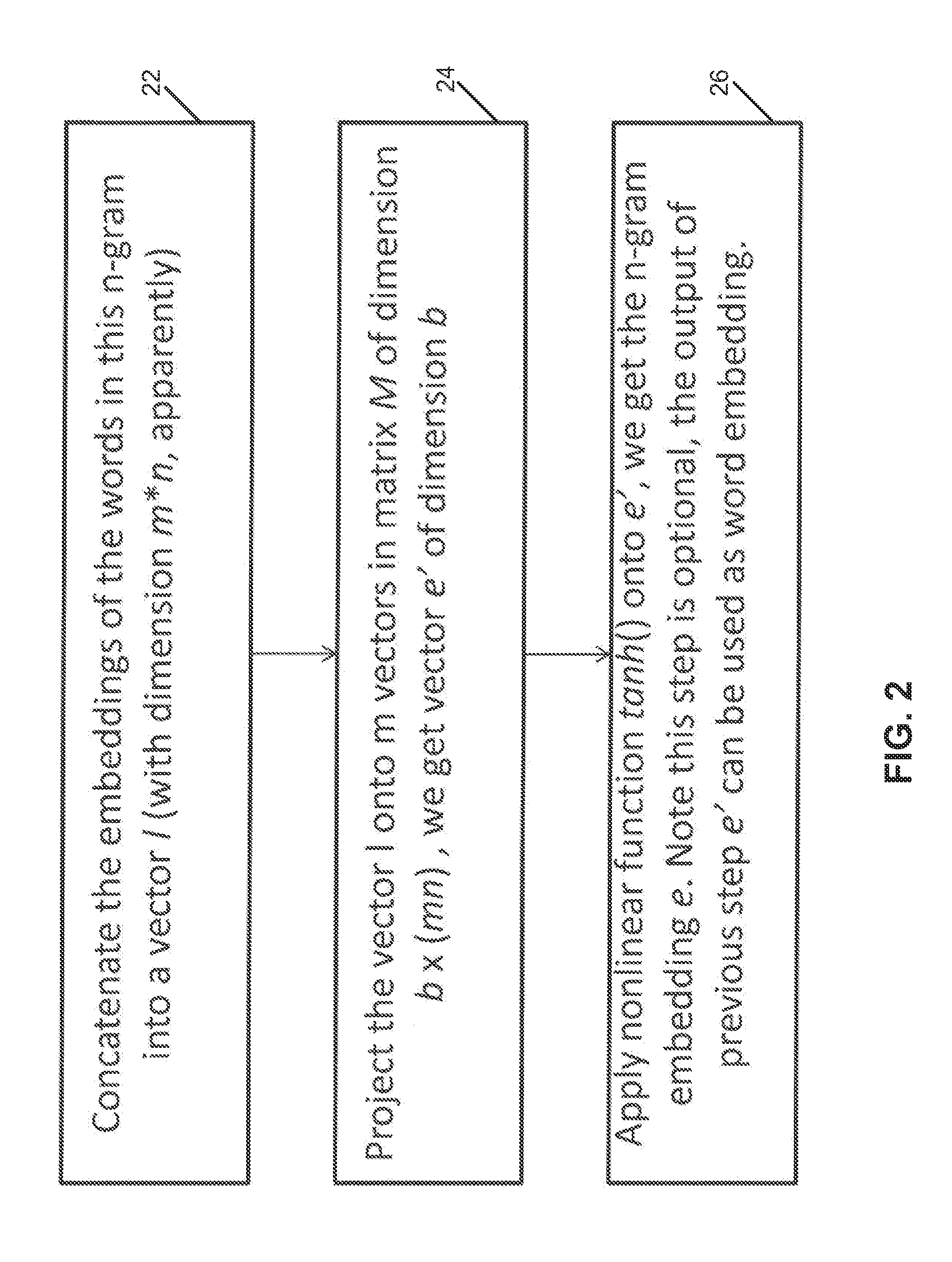

Sentiment Classification Based on Supervised Latent N-Gram Analysis

Inactive | US20120253792A1 | Digital data information retrieval; Special data processing applications; Semantic space; Emotion classification

A method for sentiment classification of a text document using high-order n-grams utilizes a multilevel embedding strategy to project n-grams into a low-dimensional latent semantic space where the projection parameters are trained in a supervised fashion together with the sentiment classification task. Using, for example, a deep convolutional neural network, the semantic embedding of n-grams, the bag-of-occurrence representation of text from n-grams, and the classification function from each review to the sentiment class are learned jointly in one unified discriminative framework.

Owner:NEC LAB AMERICA
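
The joint model can be sketched as a forward pass in Python. Assumptions: bigrams (n = 2); each n-gram projected into the latent space by a tanh layer over its concatenated word vectors, which is equivalent to a width-n one-dimensional convolution over the word sequence; averaging as the bag-of-n-grams pooling; and a logistic output for binary sentiment. The parameters are random and untrained here. In the described method they would be learned jointly from labelled reviews, a training step this sketch omits. The class name and vocabulary are hypothetical.

```python
import math
import random
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> List[Sequence[str]]:
    """All contiguous n-token windows of the input."""
    return [tokens[i:i + n] for i in range(len(tokens) - n + 1)]


class LatentNgramSentiment:
    """Forward pass only; all parameters would be trained jointly in practice."""

    def __init__(self, vocab: Sequence[str], word_dim: int = 4,
                 latent_dim: int = 3, n: int = 2, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.n = n
        self.word_dim = word_dim
        # Level 1: word embeddings (unknown words map to a zero vector).
        self.embed = {w: [rng.uniform(-0.5, 0.5) for _ in range(word_dim)] for w in vocab}
        # Level 2: projection of a concatenated n-gram into the latent space.
        self.proj = [[rng.uniform(-0.5, 0.5) for _ in range(word_dim * n)]
                     for _ in range(latent_dim)]
        # Classifier weights over the pooled document vector.
        self.w = [rng.uniform(-0.5, 0.5) for _ in range(latent_dim)]
        self.b = 0.0

    def embed_ngram(self, gram: Sequence[str]) -> List[float]:
        zero = [0.0] * self.word_dim
        x = [v for tok in gram for v in self.embed.get(tok, zero)]
        return [math.tanh(sum(p * xi for p, xi in zip(row, x))) for row in self.proj]

    def document_vector(self, tokens: Sequence[str]) -> List[float]:
        vecs = [self.embed_ngram(g) for g in ngrams(tokens, self.n)]
        if not vecs:  # document shorter than n tokens
            return [0.0] * len(self.w)
        return [sum(col) / len(vecs) for col in zip(*vecs)]

    def predict_proba(self, tokens: Sequence[str]) -> float:
        """Probability of the positive class via a logistic output."""
        d = self.document_vector(tokens)
        z = sum(wi * di for wi, di in zip(self.w, d)) + self.b
        return 1.0 / (1.0 + math.exp(-z))


model = LatentNgramSentiment(vocab=["a", "great", "boring", "movie"])
print(model.predict_proba("a great movie".split()))
```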

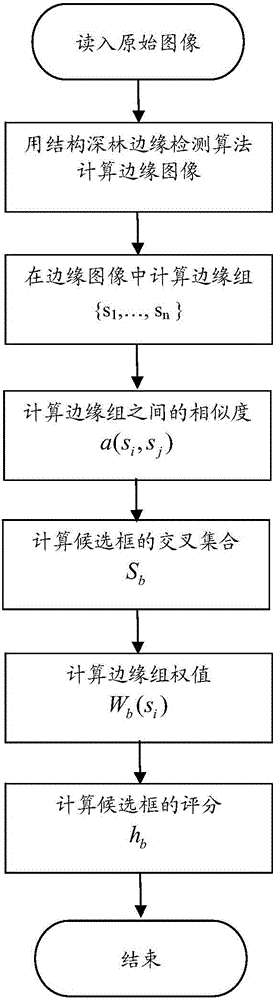

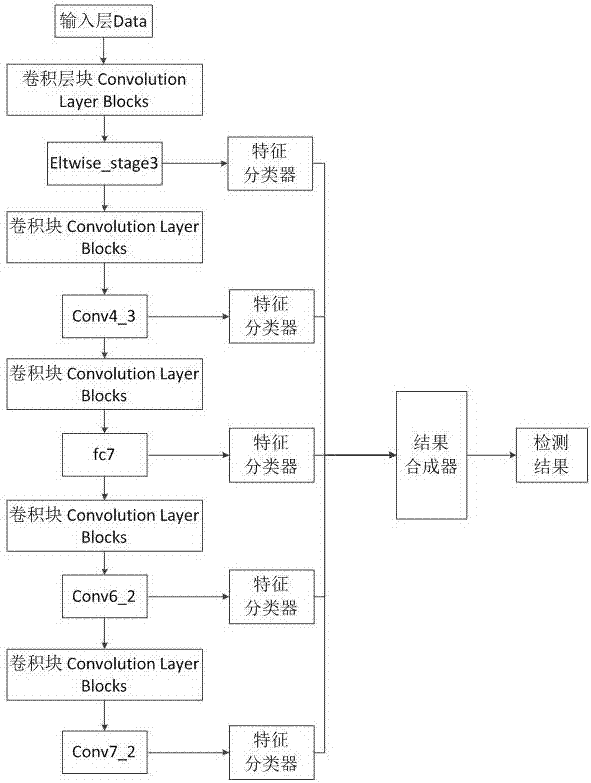

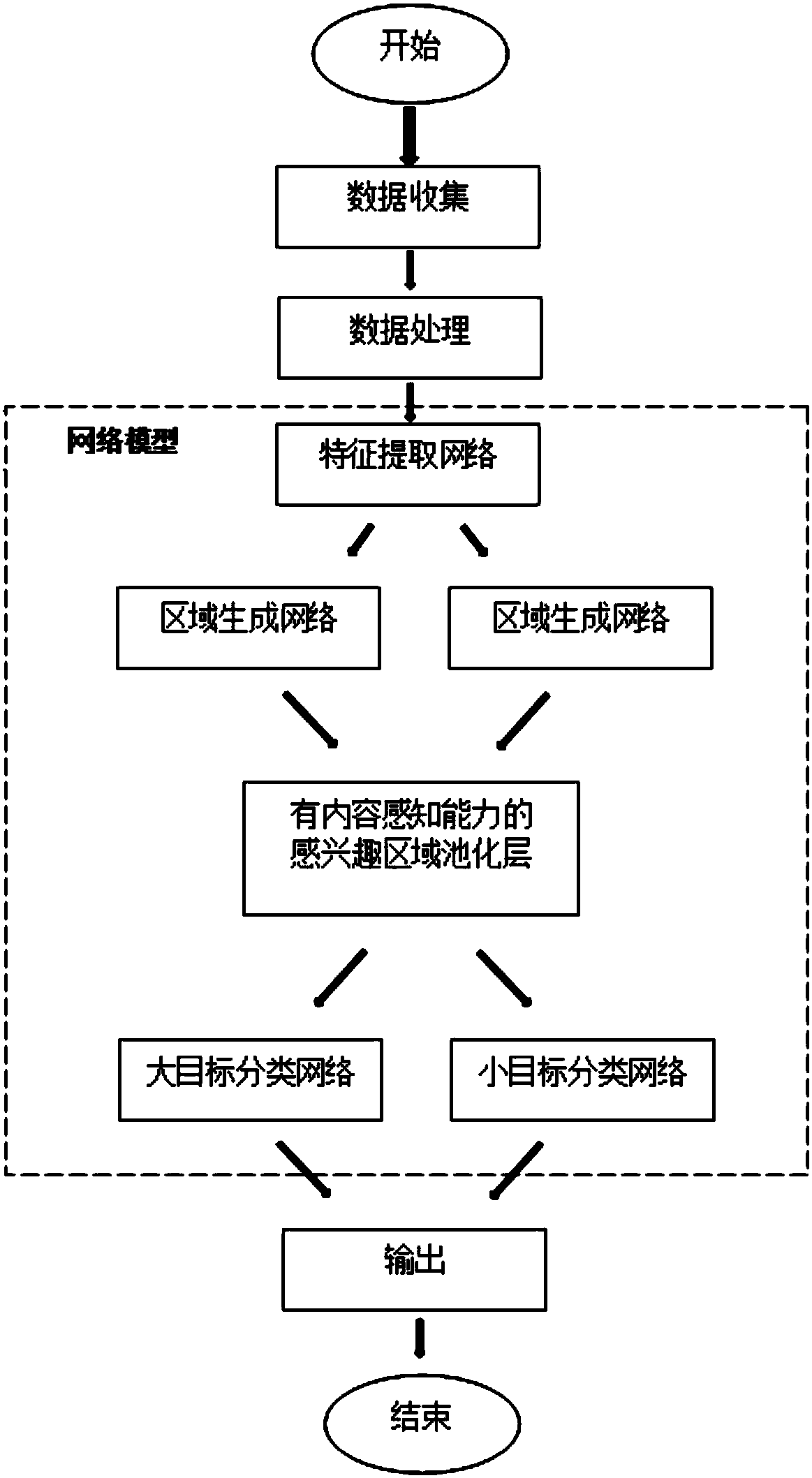

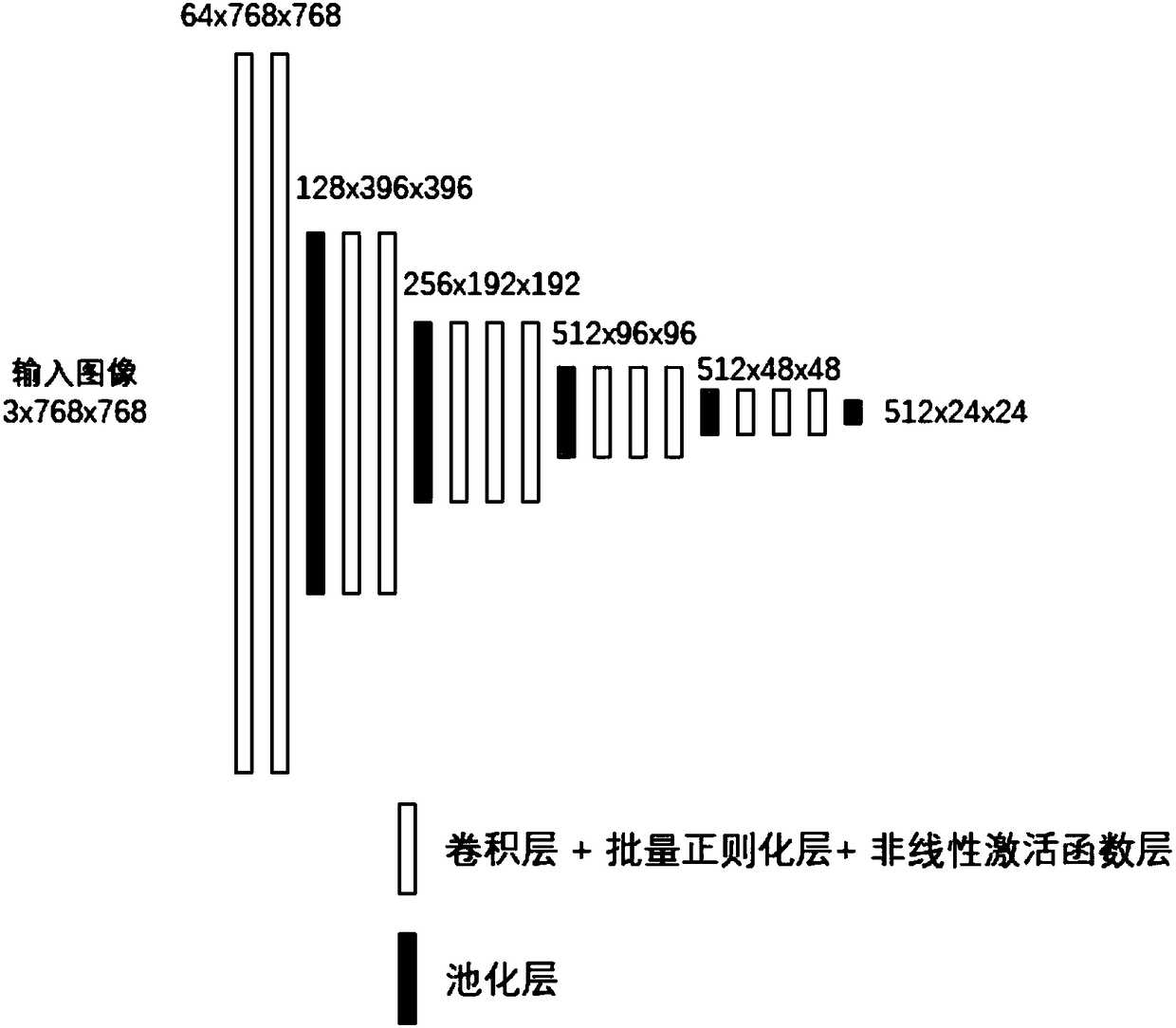

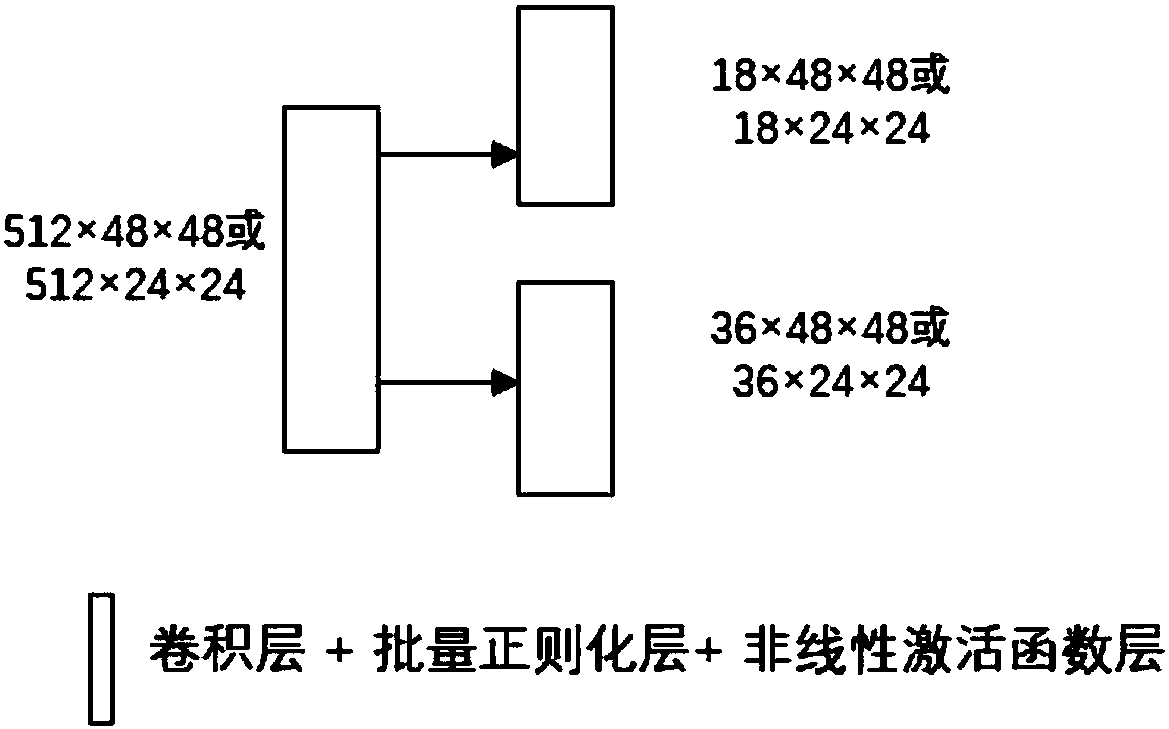

Multi-scale target detection method based on deep convolutional neural network

Active | CN108564097A | Accurate distinction; Accurate detection; Character and pattern recognition; Neural architectures; Neural network; Network generation

The invention discloses a multi-scale target detection method based on a deep convolutional neural network. The method comprises the steps of (1) data acquisition; (2) data processing; (3) model construction; (4) loss function definition; (5) model training; and (6) model verification. It combines the deep convolutional neural network's ability to extract high-level semantic information from images, the candidate-region generation ability of region proposal networks, the repair and mapping abilities of a content-aware region-of-interest (RoI) pooling layer, and the precise classification ability of multi-task classification networks, so that multi-scale target detection is performed more accurately and efficiently.

Owner:SOUTH CHINA UNIV OF TECH
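
The content-aware RoI pooling layer is not described in enough detail to reproduce, so the Python sketch below swaps in standard RoI max pooling (as popularized by Fast R-CNN). It illustrates why such a layer supports multi-scale detection: regions of different sizes on the feature map are all pooled to the same fixed output grid, so one classification head can process them. Coordinates are assumed to be in feature-map cells, with exclusive right and bottom edges; the toy feature map is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

FeatureMap = Sequence[Sequence[float]]


def roi_max_pool(fmap: FeatureMap, roi: Tuple[int, int, int, int],
                 out_h: int, out_w: int) -> List[List[float]]:
    """Max-pool the region roi = (x0, y0, x1, y1) into an out_h x out_w grid."""
    x0, y0, x1, y1 = roi
    h, w = y1 - y0, x1 - x0
    if h <= 0 or w <= 0:
        raise ValueError("empty region of interest")
    pooled = []
    for i in range(out_h):
        # Floor the start and ceil the end so every bin is non-empty
        # and the bins together cover the whole RoI.
        ys = y0 + (i * h) // out_h
        ye = y0 + math.ceil((i + 1) * h / out_h)
        row = []
        for j in range(out_w):
            xs = x0 + (j * w) // out_w
            xe = x0 + math.ceil((j + 1) * w / out_w)
            row.append(max(fmap[y][x] for y in range(ys, ye) for x in range(xs, xe)))
        pooled.append(row)
    return pooled


fmap = [[4 * r + c for c in range(4)] for r in range(4)]  # toy 4x4 feature map
print(roi_max_pool(fmap, (0, 0, 4, 4), 2, 2))  # 4x4 region → [[5, 7], [13, 15]]
print(roi_max_pool(fmap, (1, 1, 4, 3), 2, 2))  # 3x2 region → [[6, 7], [10, 11]]
```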

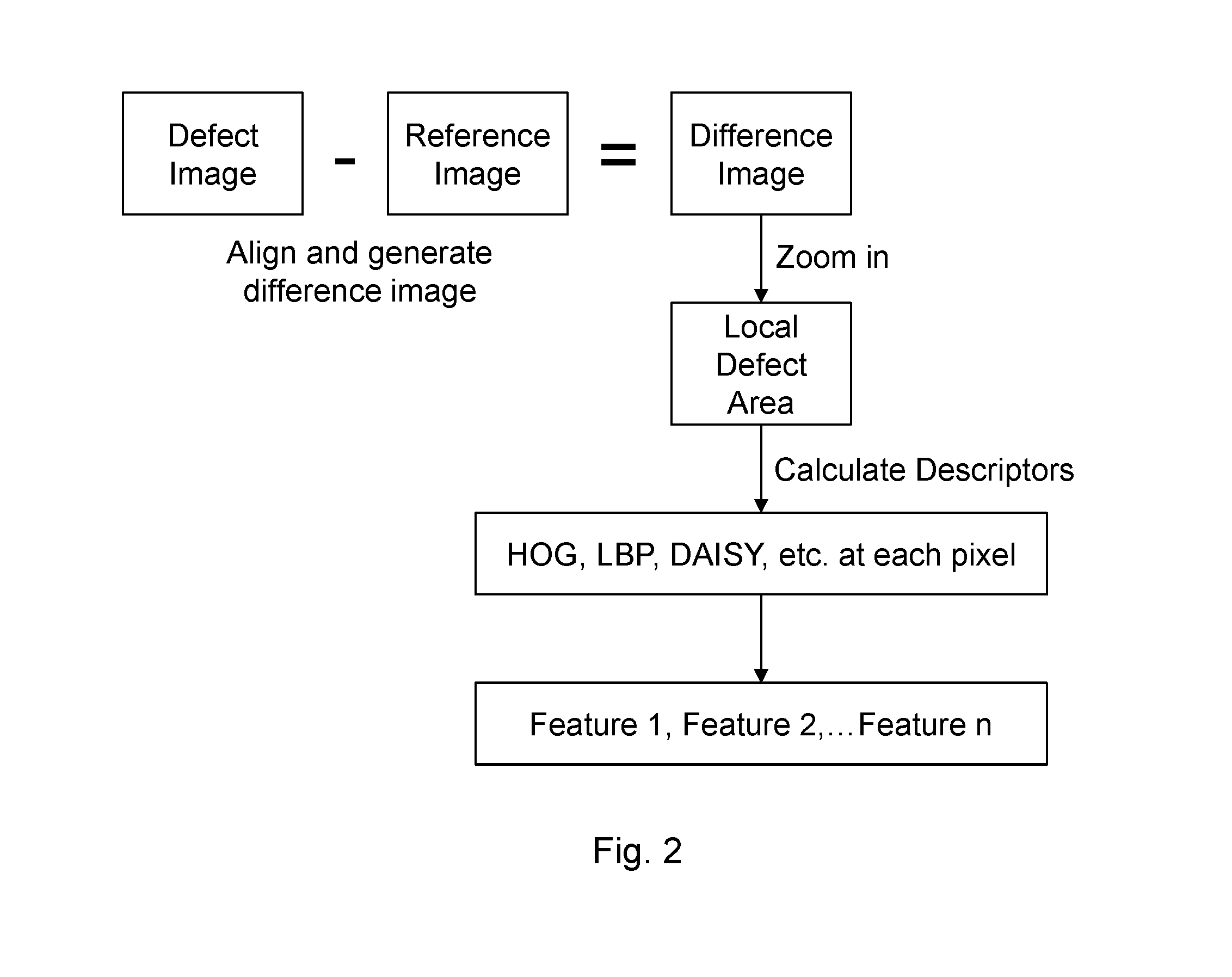

Automatic Defect Classification Without Sampling and Feature Selection

Active | US20160163035A1 | Small size; Reduce variation; Image enhancement; Image analysis; Electronic communication; Feature selection

Systems and methods for defect classification in a semiconductor process are provided. The system includes a communication line configured to receive a defect image of a wafer from the semiconductor process and a deep-architecture neural network in electronic communication with the communication line. The neural network has a first convolution layer of neurons configured to convolve pixels from the defect image with a filter to generate a first feature map. The neural network also includes a first subsampling layer configured to reduce the size and variation of the first feature map. A classifier is provided for determining a defect classification based on the feature map. The system may include multiple convolution layers and/or subsampling layers. A method includes extracting one or more features from a defect image using a deep-architecture neural network, for example a convolutional neural network.

Owner:KLA TENCOR TECH CORP
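
The convolution, subsampling and classification stages described above can be sketched in plain Python as a forward pass. The 2x2 filter, the ReLU, 2x2 max pooling as the subsampling operation, and the linear classifier with its defect labels are assumptions for illustration, not details from the patent. Like most CNN libraries, `conv2d_valid` computes cross-correlation (the filter is not flipped).

```python
from typing import List, Sequence

Matrix = List[List[float]]


def conv2d_valid(img: Sequence[Sequence[float]], kernel: Sequence[Sequence[float]]) -> Matrix:
    """Slide the filter over the image (no padding, stride 1) to build a feature map."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(img) - kh + 1, len(img[0]) - kw + 1
    return [[sum(img[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
             for j in range(ow)] for i in range(oh)]


def relu(fm: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in fm]


def max_pool(fm: Matrix, size: int = 2) -> Matrix:
    """Subsampling: keep the maximum of each non-overlapping size x size block."""
    return [[max(fm[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fm[0]) - size + 1, size)]
            for i in range(0, len(fm) - size + 1, size)]


def classify(fm: Matrix, weights: Sequence[Sequence[float]], labels: Sequence[str]) -> str:
    """Linear scores over the flattened feature map; return the top label."""
    flat = [v for row in fm for v in row]
    scores = [sum(w * x for w, x in zip(ws, flat)) for ws in weights]
    return labels[scores.index(max(scores))]


# Toy 5x5 "defect image" with a bright vertical line in column 2.
image = [[0, 0, 9, 0, 0] for _ in range(5)]
features = max_pool(relu(conv2d_valid(image, [[1, 1], [1, 1]])))
print(features)  # → [[18, 18], [18, 18]]
# Hypothetical weights and labels for a two-class decision.
print(classify(features, [[1, 1, 1, 1], [-1, -1, -1, -1]], ["scratch", "no_defect"]))
```

The pooling step halves each spatial dimension (4x4 to 2x2 here), which is the "reduce the size" effect of the subsampling layer; taking the block maximum also makes the output less sensitive to small shifts of the defect.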

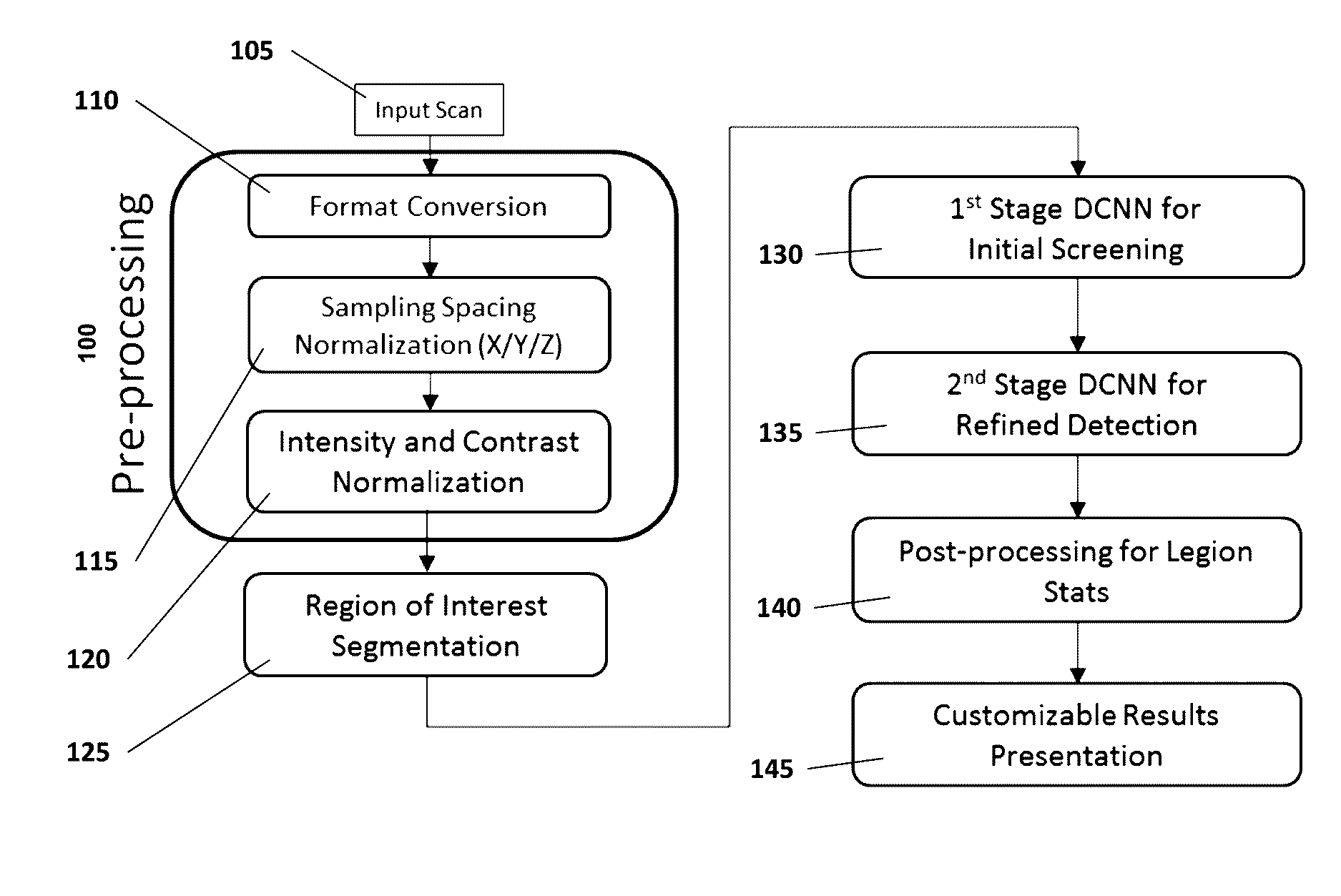

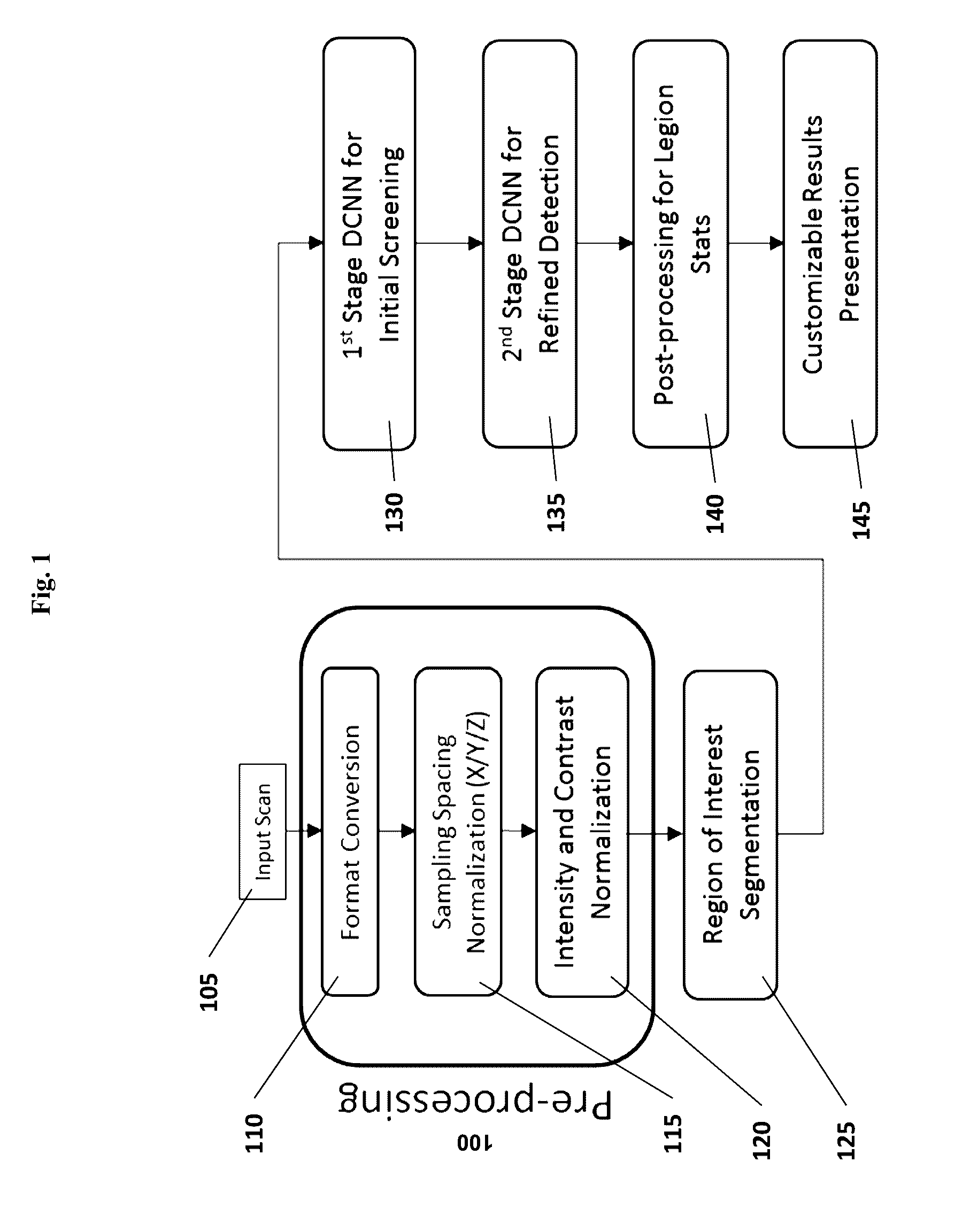

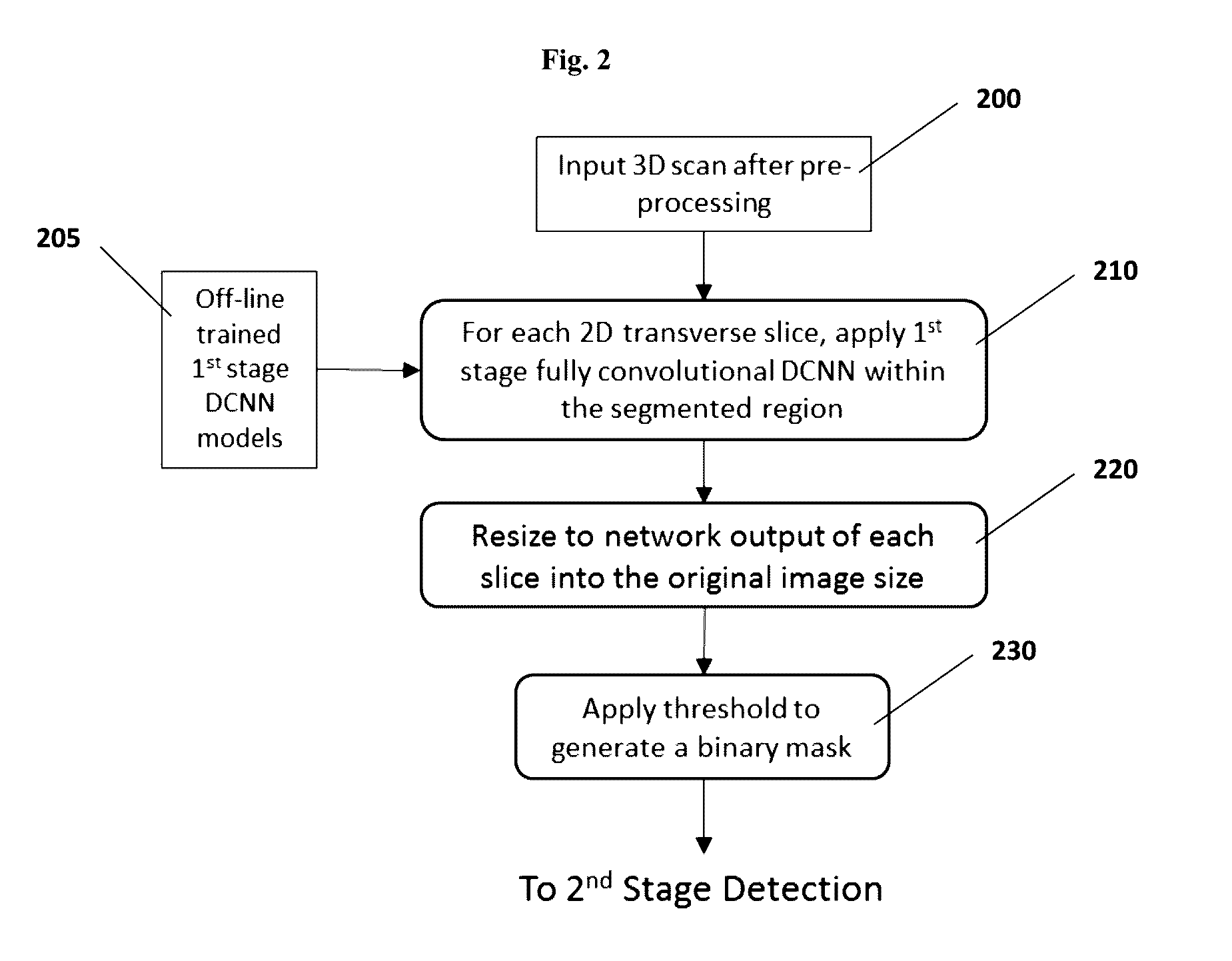

Computer-aided diagnosis system for medical images using deep convolutional neural networks

Inactive | US9589374B1 | Examination time can be shortened; Improve diagnostic accuracy; Ultrasonic/sonic/infrasonic diagnostics; Image enhancement; Computer vision; Aided diagnosis

Owner:12 SIGMA TECH

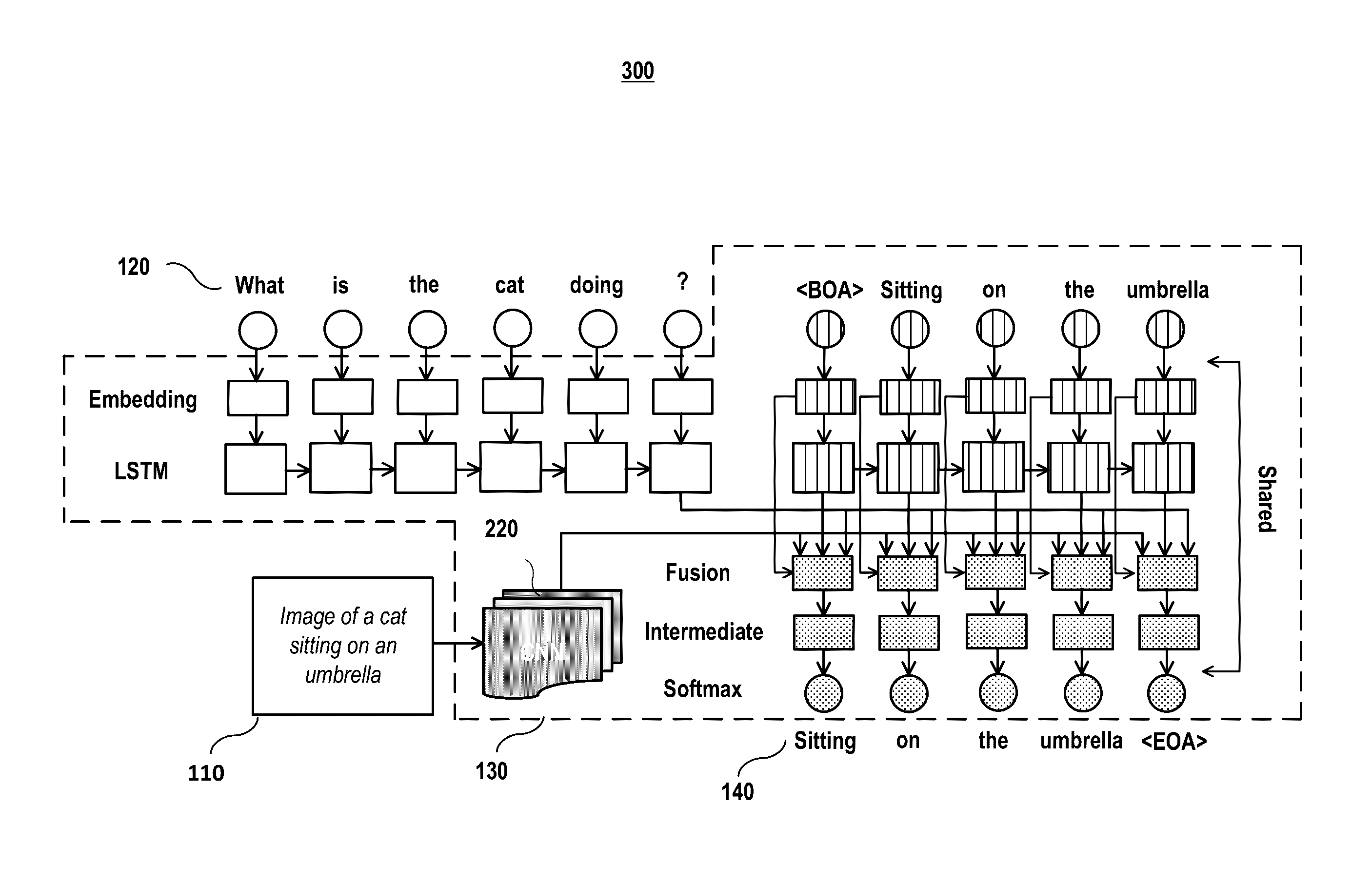

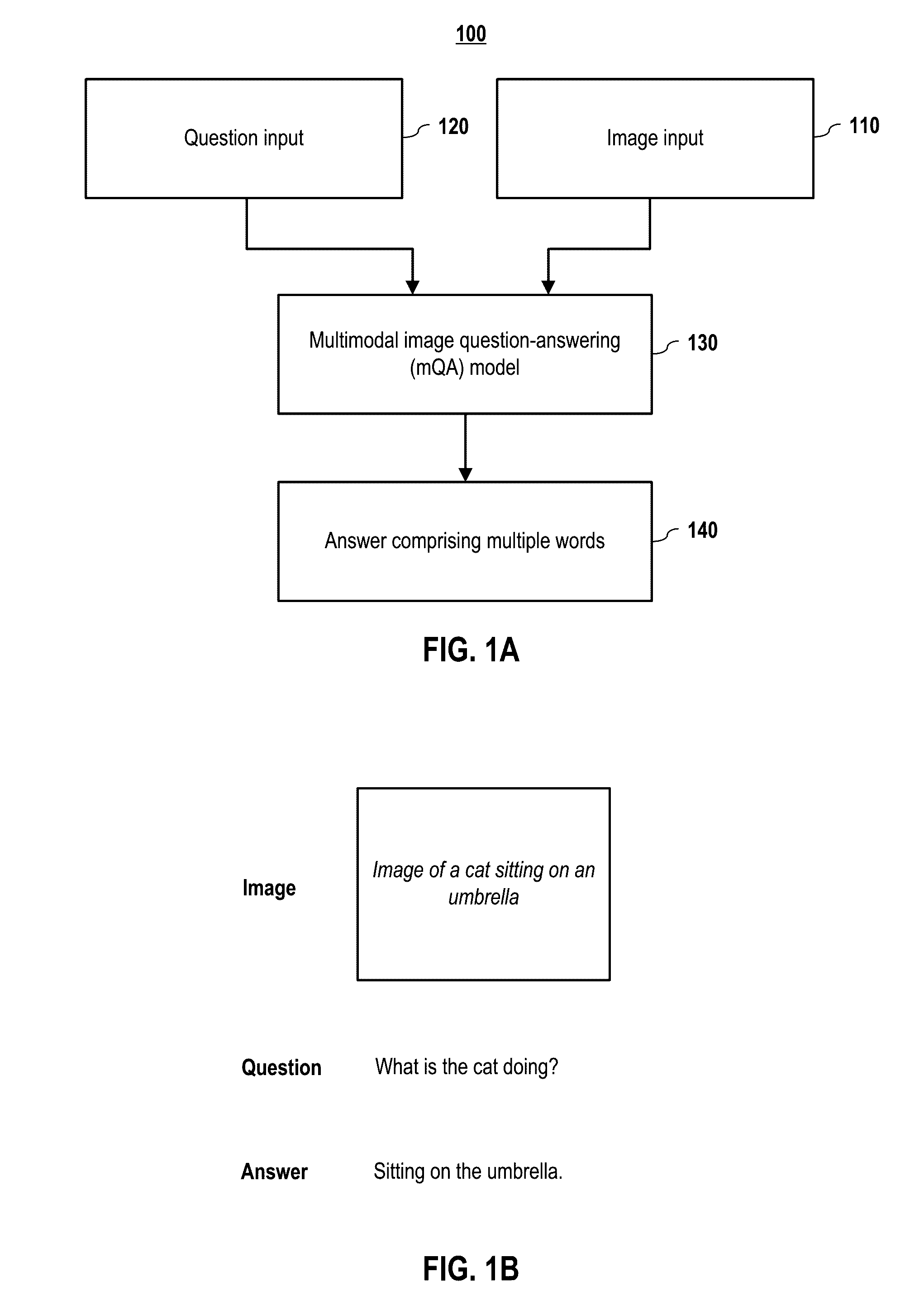

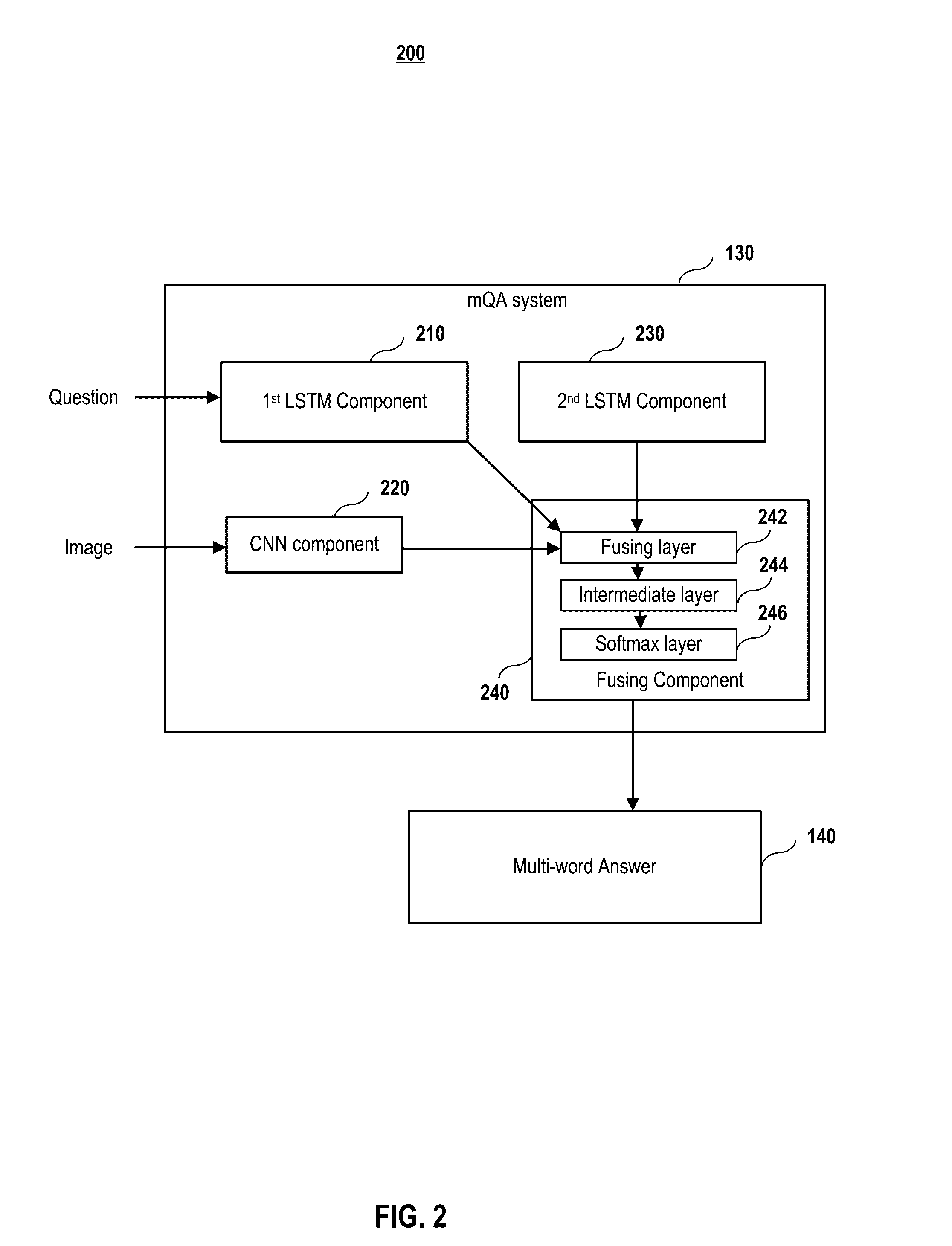

Multilingual image question answering

Active | US20160342895A1 | Natural language translation; Knowledge representation; Data mining; Long short term memory

Embodiments of a multimodal question answering (mQA) system are presented to answer a question about the content of an image. In embodiments, the model comprises four components: a Long Short-Term Memory (LSTM) component to extract the question representation; a Convolutional Neural Network (CNN) component to extract the visual representation; an LSTM component to store the linguistic context in an answer; and a fusing component to combine the information from the first three components and generate the answer. A Freestyle Multilingual Image Question Answering (FM-IQA) dataset was constructed to train and evaluate embodiments of the mQA model. The quality of the answers generated by the mQA model on this dataset is evaluated by human judges through a Turing Test.

Owner:BAIDU USA LLC
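
The fusing component can be sketched in Python under an assumed design: each of the three representations (question, image, answer context) is linearly projected into a shared space, the projections are summed and passed through tanh, and a softmax layer turns the fused vector into a distribution over the next answer word. The dimensions, activation, weights, and the three-word vocabulary are illustrative assumptions, not the patent's specification.

```python
import math
from typing import List, Sequence

Vector = List[float]
Weights = Sequence[Sequence[float]]


def matvec(m: Weights, v: Sequence[float]) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def fuse(question: Sequence[float], image: Sequence[float], context: Sequence[float],
         w_q: Weights, w_i: Weights, w_c: Weights) -> Vector:
    """Project each component into a shared space, sum, and apply tanh."""
    parts = (matvec(w_q, question), matvec(w_i, image), matvec(w_c, context))
    return [math.tanh(sum(values)) for values in zip(*parts)]


def softmax(logits: Sequence[float]) -> Vector:
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_word_probs(question: Sequence[float], image: Sequence[float],
                    context: Sequence[float], w_q: Weights, w_i: Weights,
                    w_c: Weights, w_out: Weights) -> Vector:
    """Distribution over a (hypothetical) answer vocabulary for the next word."""
    return softmax(matvec(w_out, fuse(question, image, context, w_q, w_i, w_c)))


vocab = ["red", "blue", "<eos>"]  # hypothetical answer vocabulary
probs = next_word_probs(
    question=[1.0, 0.0], image=[0.0, 0.0, 0.0], context=[0.0, 0.0],
    w_q=[[2.0, 0.0], [0.0, -2.0]],
    w_i=[[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]],
    w_c=[[0.0, 0.0], [0.0, 0.0]],
    w_out=[[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]],
)
print(vocab[probs.index(max(probs))])  # → red
```

Generating a full answer would repeat this step, feeding each chosen word back through the answer LSTM to update `context` until an end token is produced.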

© 2025 PatSnap. All rights reserved.