1831 results for "Loss function" patented technology

In mathematical optimization and decision theory, a loss function or cost function is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its negative (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized.
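As a minimal illustration of the definition above, assuming squared error as the loss, the sketch maps each (target, prediction) pair to a non-negative real cost, sums it over a few observations, and searches a grid for the constant prediction that minimizes the total. The negative of the loss serves as the objective to maximize. The data and grid are made up for illustration.

```python
def squared_loss(y_true: float, y_pred: float) -> float:
    """Map a (target, prediction) pair to a non-negative real 'cost'."""
    return (y_true - y_pred) ** 2


def total_loss(ys: list[float], c: float) -> float:
    """Total cost of predicting the constant c for every observation."""
    return sum(squared_loss(y, c) for y in ys)


def objective(ys: list[float], c: float) -> float:
    """The equivalent objective to maximize is the negative of the loss."""
    return -total_loss(ys, c)


ys = [1.0, 2.0, 6.0]
candidates = [i / 100 for i in range(0, 1001)]  # 0.00, 0.01, ..., 10.00
best = min(candidates, key=lambda c: total_loss(ys, c))
print(best)  # 3.0, the mean of ys
```

Minimizing the loss and maximizing the objective pick the same point; for squared error that point is the sample mean.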

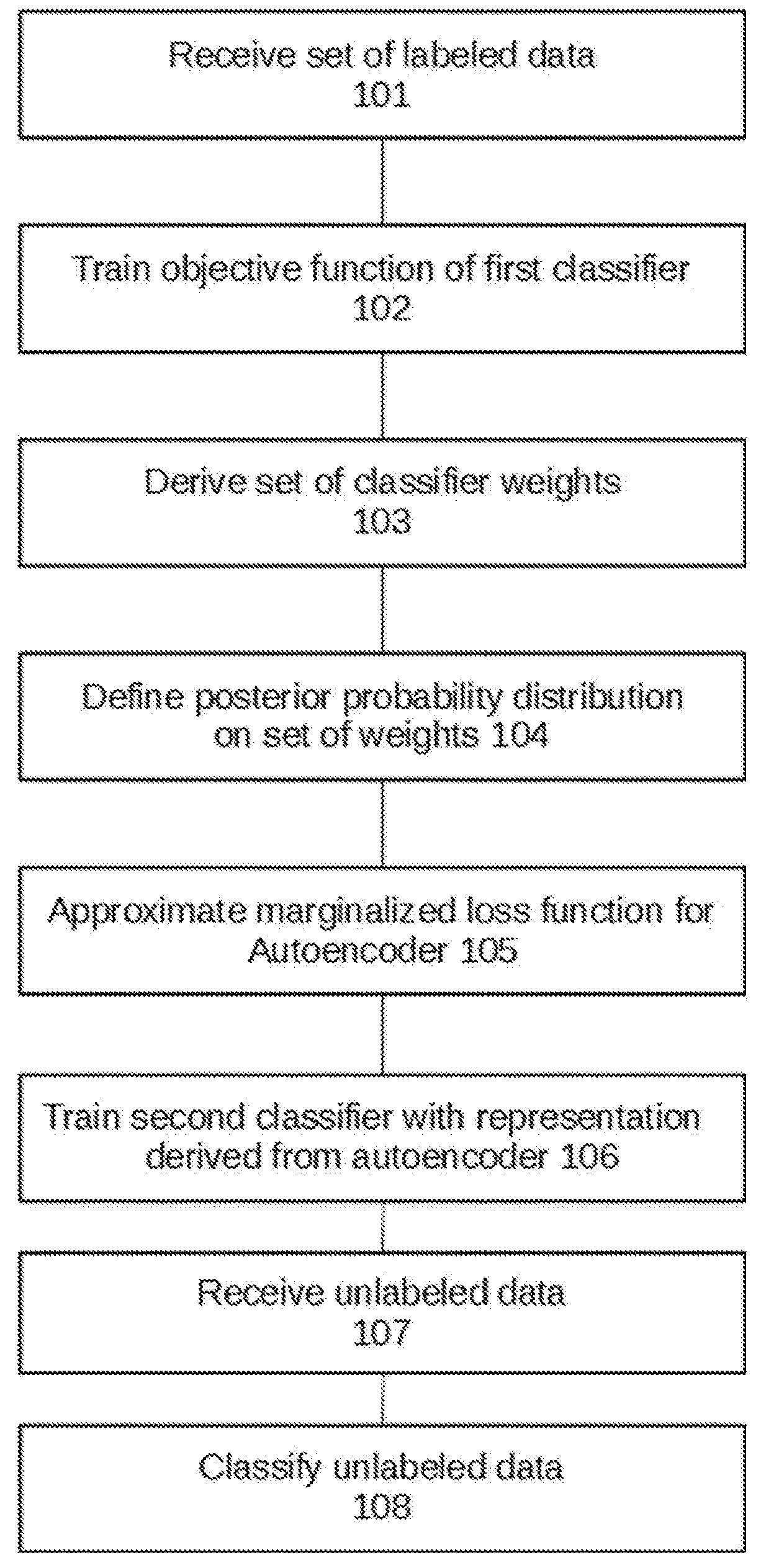

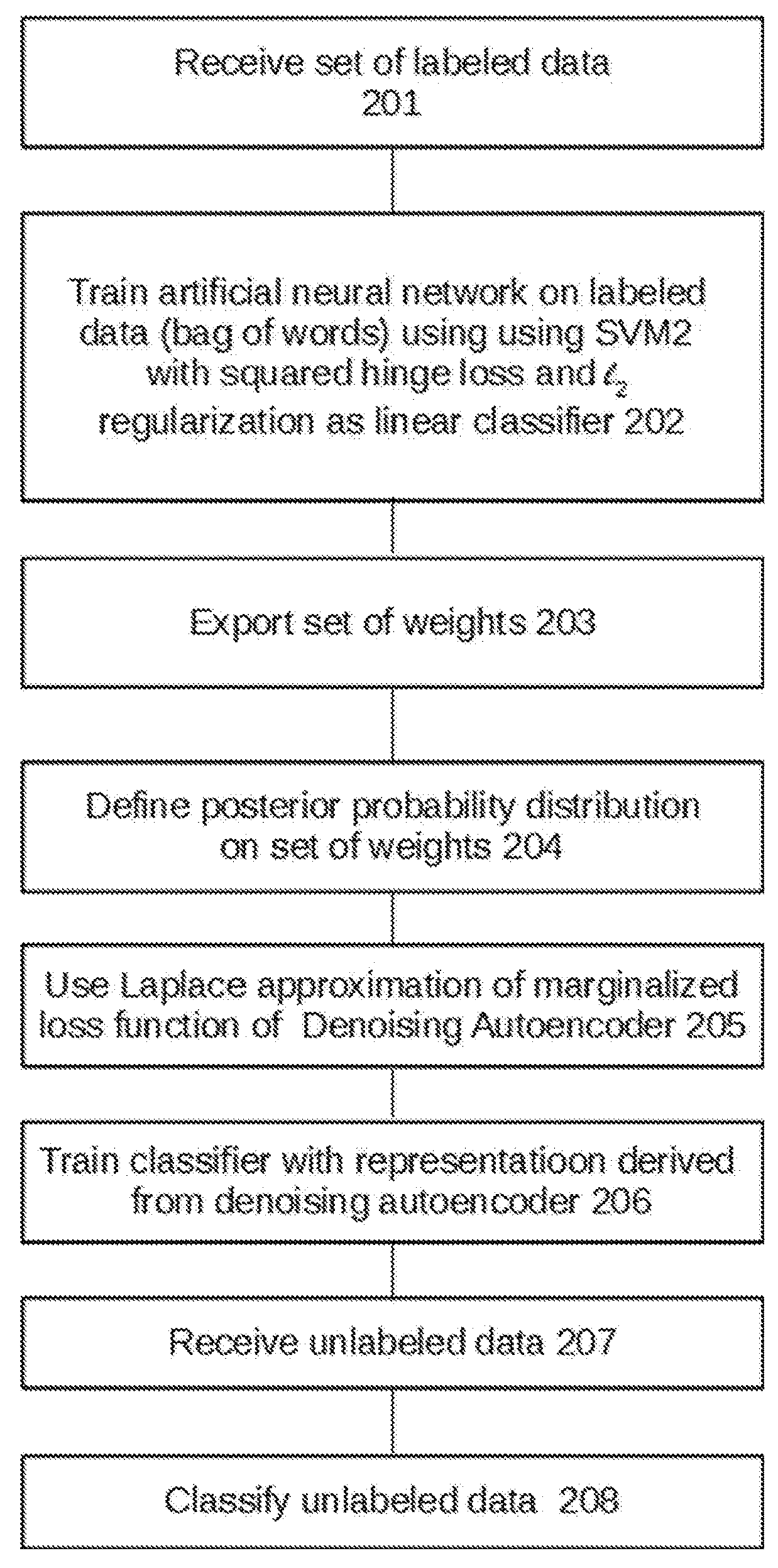

Semisupervised autoencoder for sentiment analysis

Status: Active | Publication No.: US20180165554A1 | Tags: Reduce bias, Improve performance, Mathematical models, Kernel methods, Labeled data, Computer science

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

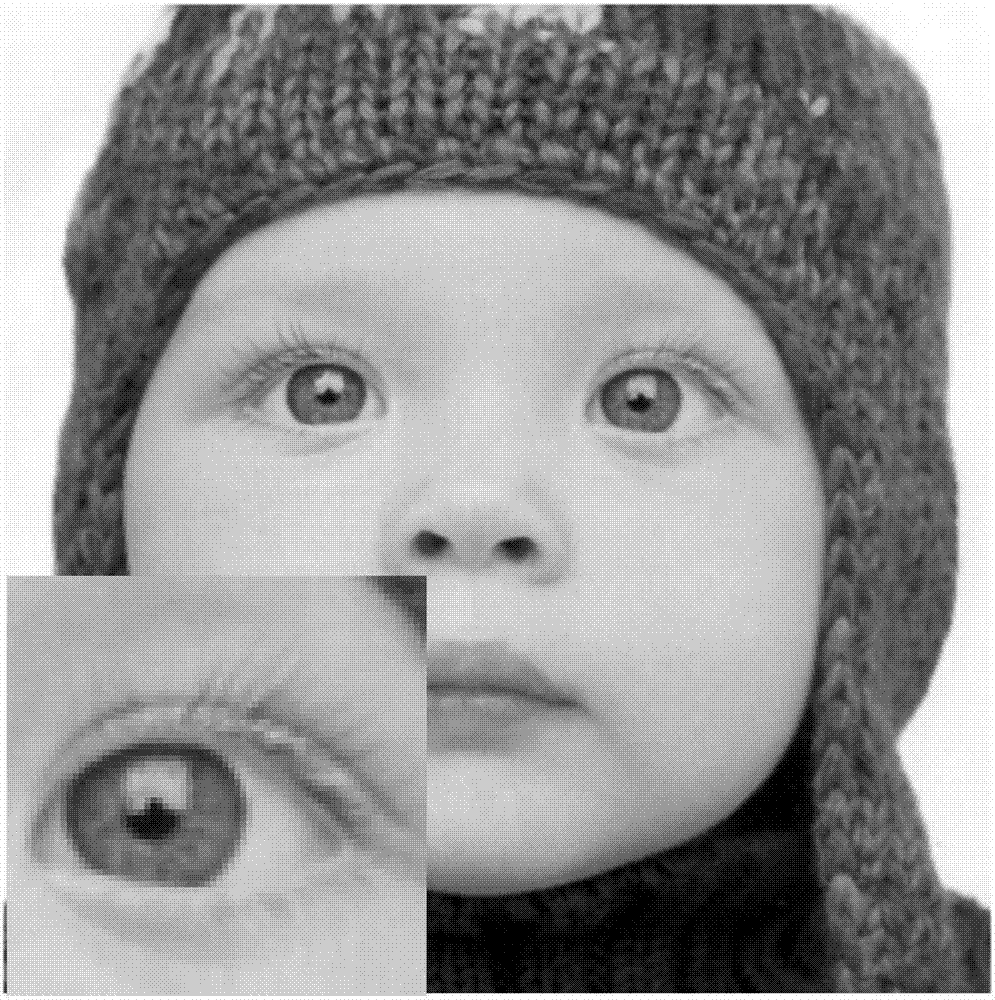

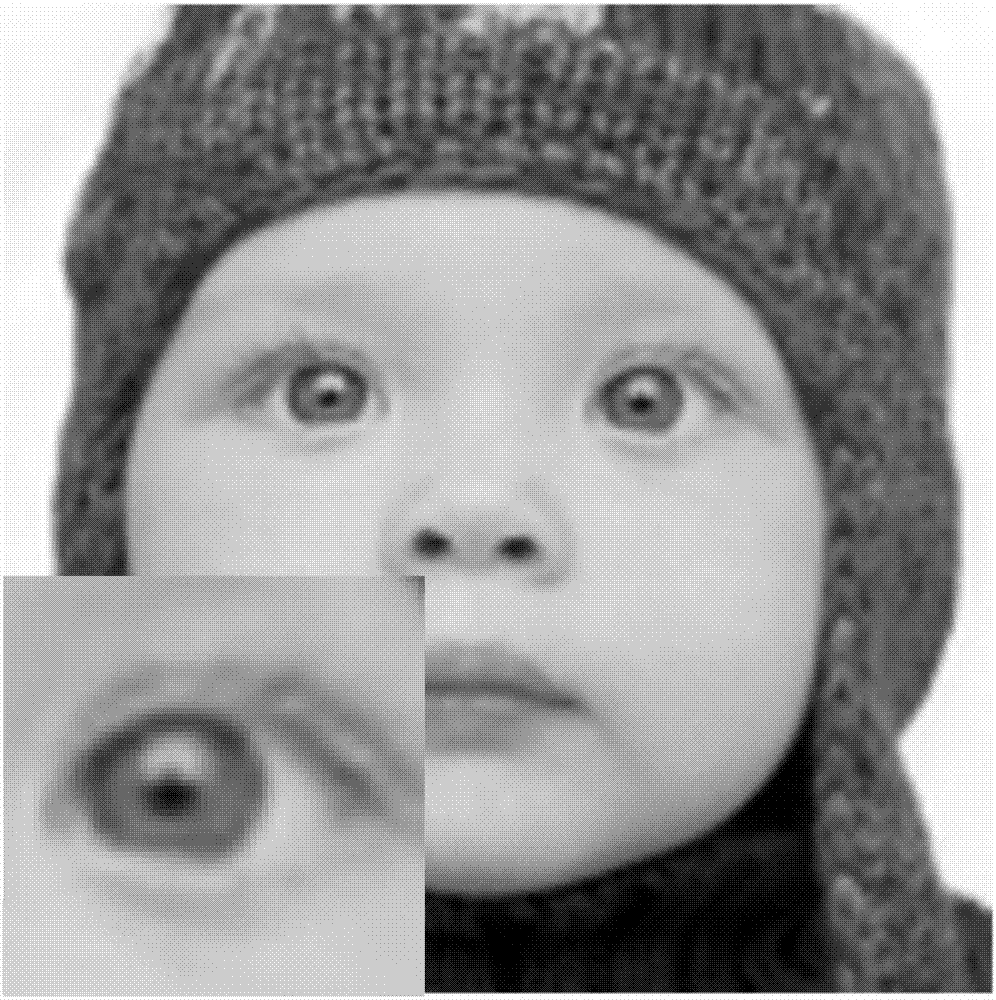

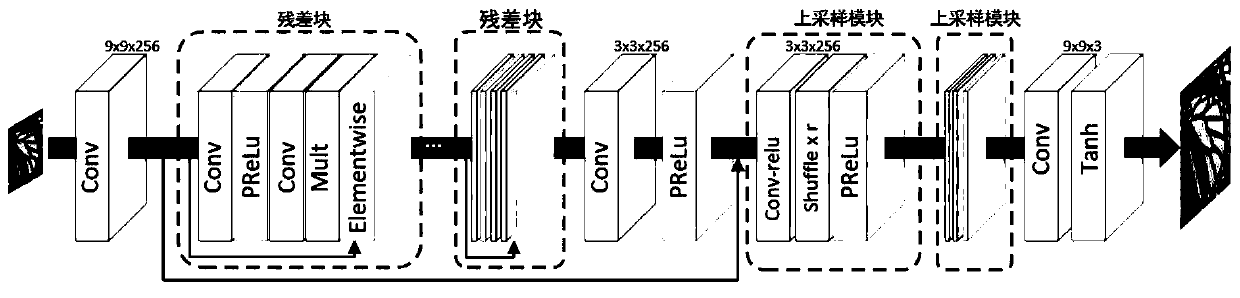

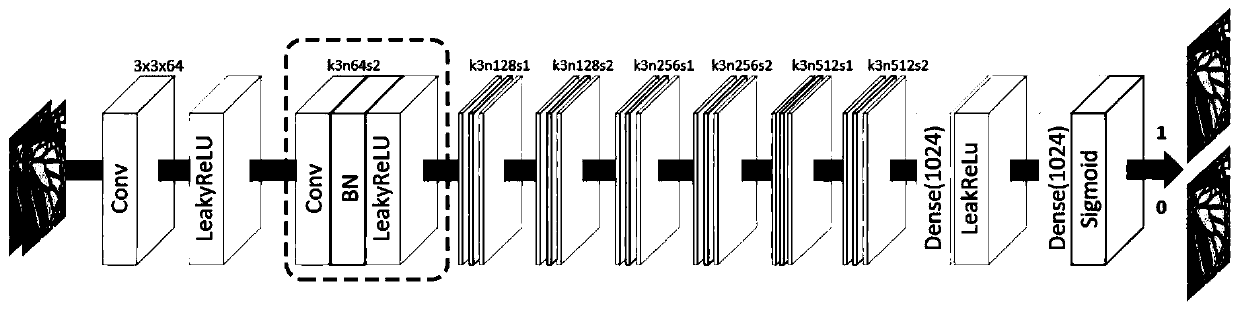

Human face super-resolution reconstruction method based on generative adversarial network and sub-pixel convolution

Status: Active | Publication No.: CN107154023A | Tags: Improve recognition accuracy, Clear outline of the face, Geometric image transformation, Neural architectures, Data set, Image resolution

The invention discloses a human face super-resolution reconstruction method based on a generative adversarial network and sub-pixel convolution. The method comprises the steps: A, preprocessing a commonly used public face data set to build a training set of low-resolution face images and their corresponding high-resolution face images; B, constructing a generative adversarial network for training, adding sub-pixel convolution to the network to generate super-resolution images, and introducing a weighted loss function that includes a feature loss; C, feeding the training set obtained in step A into the generative adversarial network model in sequence for training, adjusting the parameters until convergence; D, preprocessing a low-resolution face image to be processed, inputting it into the generative adversarial network model, and obtaining a high-resolution image after super-resolution reconstruction. The method generates high-resolution images with clearer face contours, more specific details, and invariant features. It improves face recognition accuracy and gives better face super-resolution reconstruction.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
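The abstract does not give the form of its weighted loss. A minimal sketch, assuming a pixel-wise MSE term, an MSE term on precomputed feature vectors (standing in for the "feature loss"), and a generator adversarial term, could look like this. The weights `w_pixel`, `w_feat`, `w_adv` and the inputs are hypothetical placeholders, not values from the patent.

```python
import math


def mse(a, b):
    """Mean squared error between two equal-length flat lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def weighted_sr_loss(sr_pixels, hr_pixels, sr_feats, hr_feats, d_fake,
                     w_pixel=1.0, w_feat=0.006, w_adv=1e-3):
    """Hypothetical weighted loss: pixel + feature + adversarial terms.

    sr_feats / hr_feats stand in for features of the super-resolved and the
    real high-resolution face taken from some fixed feature extractor;
    d_fake is the discriminator's probability that the SR image is real.
    """
    pixel_loss = mse(sr_pixels, hr_pixels)
    feature_loss = mse(sr_feats, hr_feats)
    adversarial_loss = -math.log(max(d_fake, 1e-12))  # small when D is fooled
    return w_pixel * pixel_loss + w_feat * feature_loss + w_adv * adversarial_loss
```

Changing the weights trades pixel fidelity against feature similarity and realism; the patent's own weighting is not stated.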

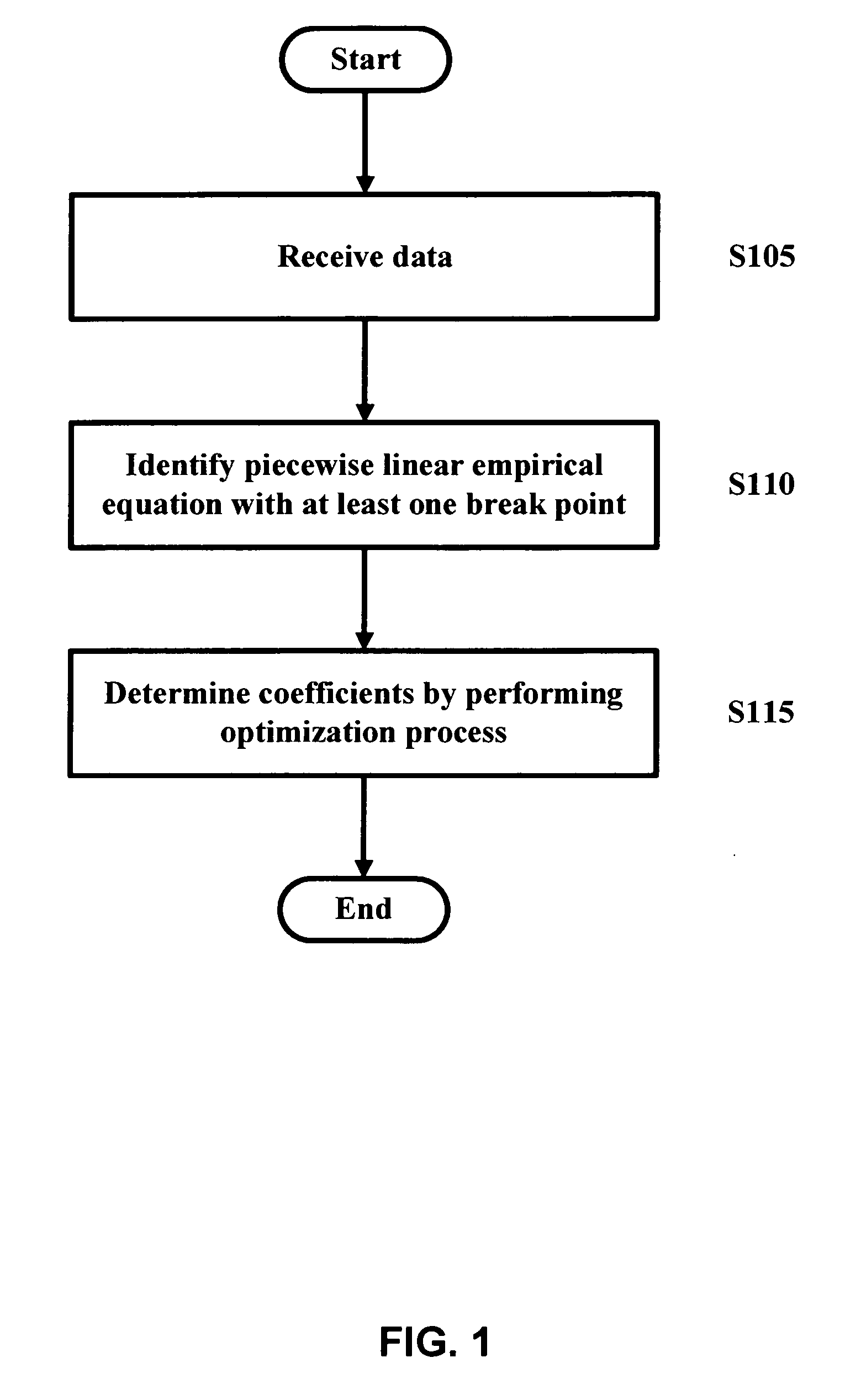

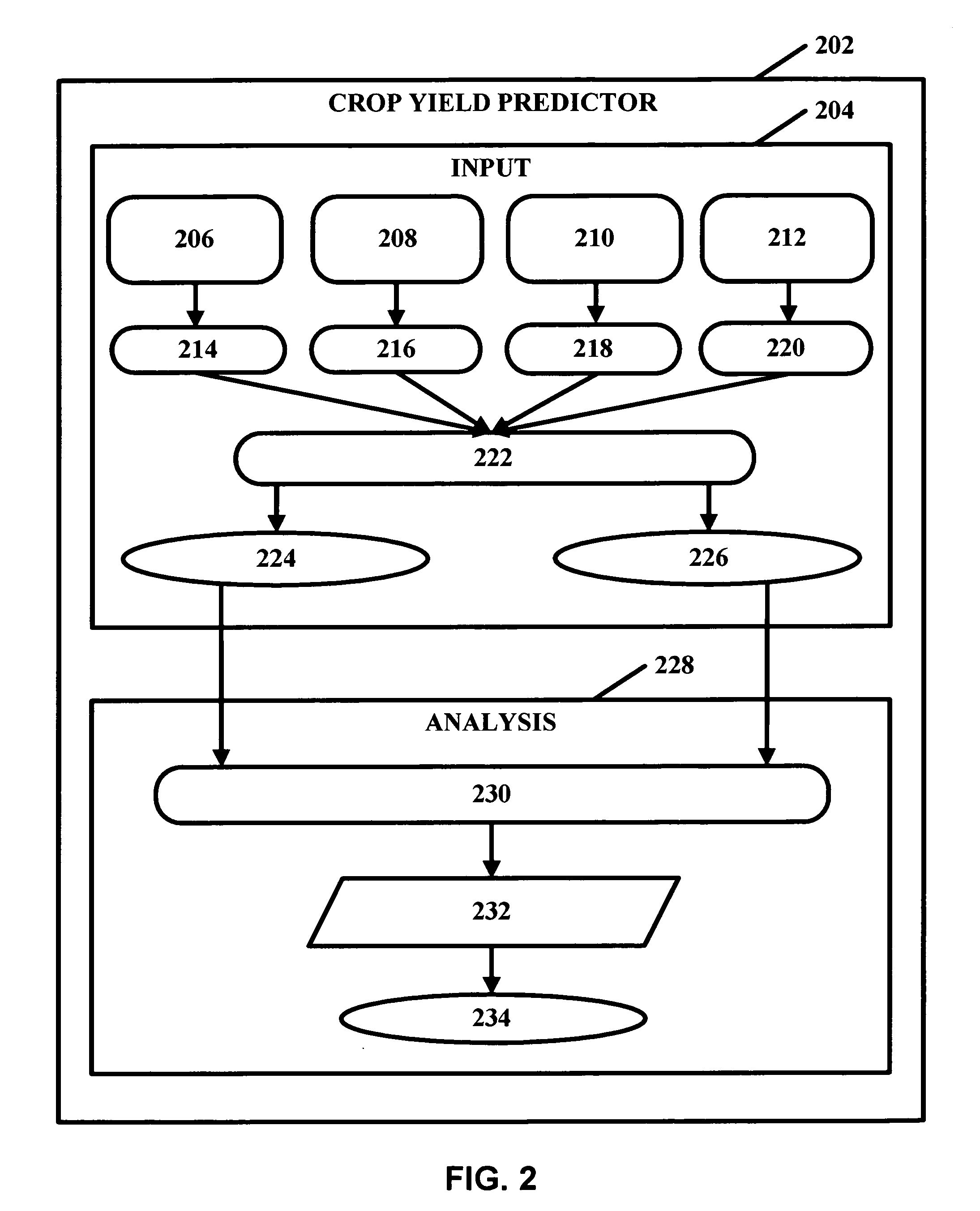

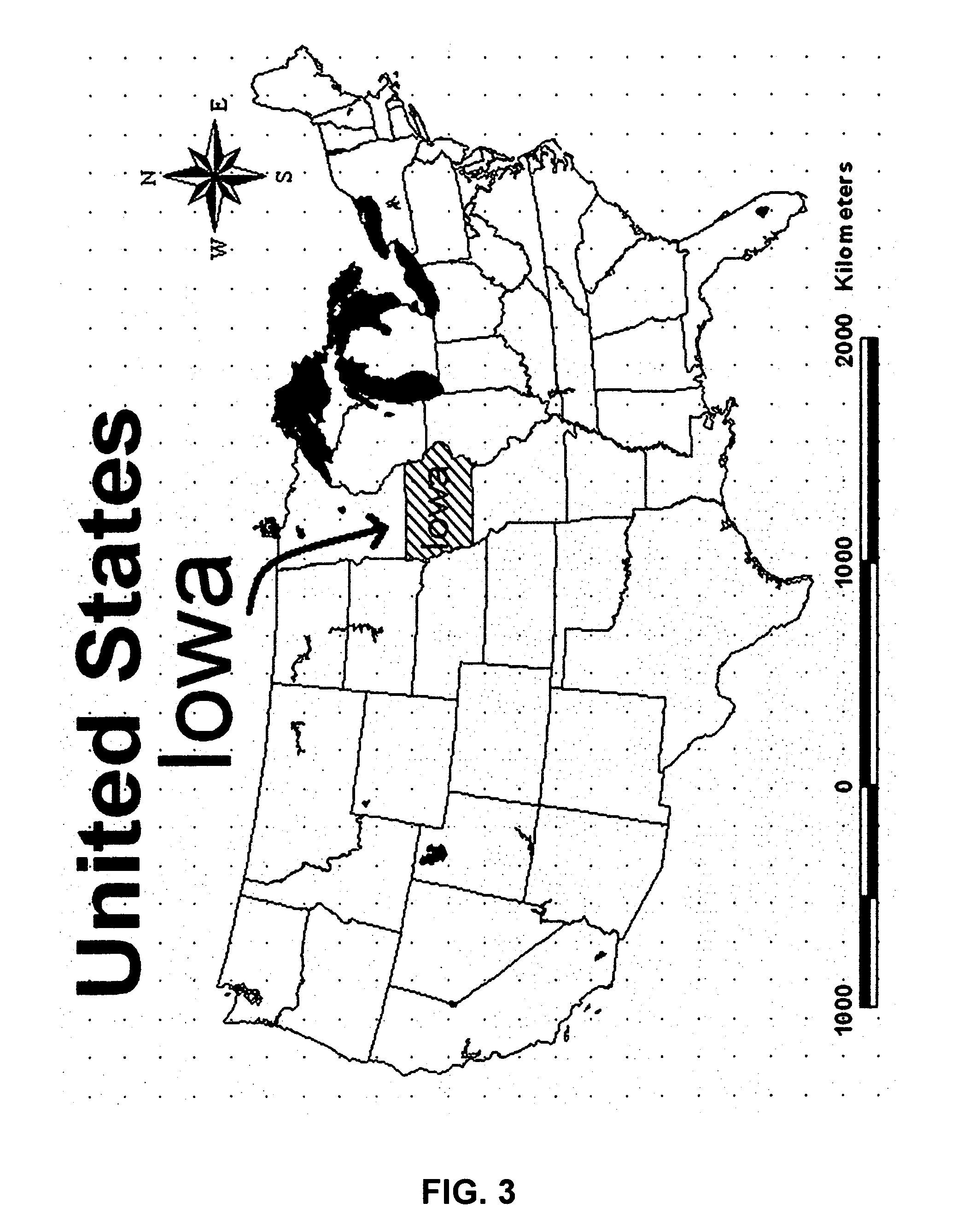

Crop yield prediction

Status: Inactive | Publication No.: US20050234691A1 | Tags: Climate change adaptation, Analogue computers for chemical processes, Non linear methods, Engineering

Crop yield may be assessed and predicted using a piecewise linear regression method with a break point and various weather and agricultural parameters, such as NDVI, surface parameters (soil moisture and surface temperature), and rainfall data. These parameters may help in estimating and predicting crop conditions. The overall crop production environment can include inherent sources of heterogeneity and their nonlinear behavior. A nonlinear multivariate optimization method may be used to derive an empirical crop yield prediction equation. A quasi-Newton method may be used in the optimization to minimize inconsistencies and errors in yield prediction. Minimization of a least-squares loss function through iterative convergence of a predefined empirical equation can be based on the piecewise linear regression method with a break point. This nonlinear method can achieve acceptably low residual values, with predicted values very close to the observed values. The present invention can be modified and tailored for different crops worldwide.

Owner:GEORGE MASON INTPROP INC
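A minimal sketch of piecewise linear regression with a break point under a least-squares loss, assuming a single predictor (for example NDVI) and a break point restricted to one of the observed x values. Note that it swaps in an exhaustive search over break points with closed-form per-segment fits instead of the quasi-Newton optimization the abstract describes; all names and data are illustrative.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line (slope, intercept) for one segment."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        return 0.0, my
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return slope, my - slope * mx


def sse(xs, ys, slope, intercept):
    """Least-squares loss (sum of squared residuals) of a line on a segment."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


def piecewise_fit(xs, ys, min_points=2):
    """Try every split; return (loss, break_x, left_line, right_line)."""
    pts = sorted(zip(xs, ys))
    best = None
    for k in range(min_points, len(pts) - min_points + 1):
        left, right = pts[:k], pts[k:]
        lx, ly = zip(*left)
        rx, ry = zip(*right)
        left_line, right_line = fit_line(lx, ly), fit_line(rx, ry)
        loss = sse(lx, ly, *left_line) + sse(rx, ry, *right_line)
        if best is None or loss < best[0]:
            best = (loss, left[-1][0], left_line, right_line)
    return best


xs = list(range(10))
ys = [x if x <= 4 else 3 * x - 5 for x in xs]  # regime change after x = 4
loss, break_x, left_line, right_line = piecewise_fit(xs, ys)
```

On this synthetic data the search recovers the break after x = 4 with zero residual; real yield data would leave a positive residual.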

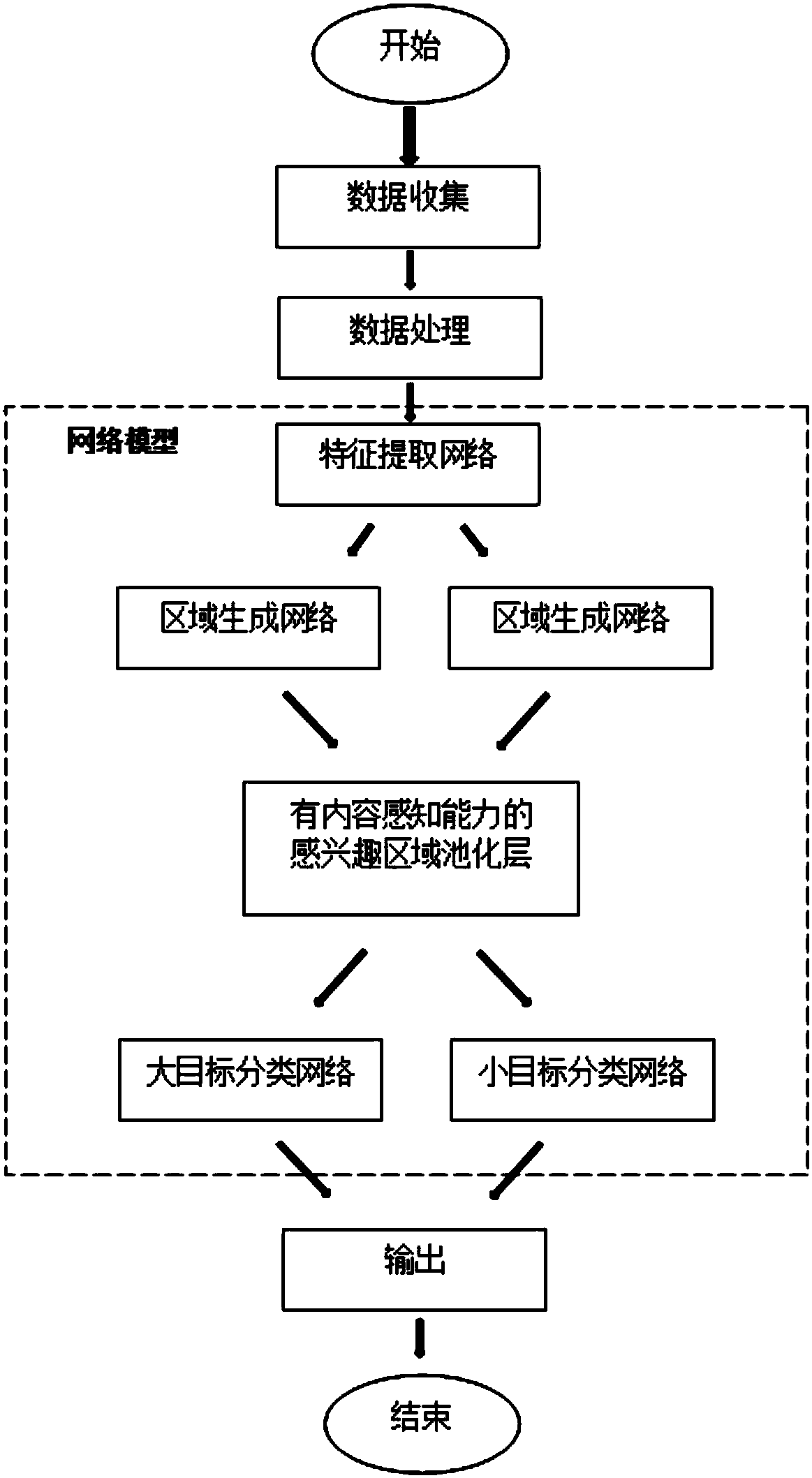

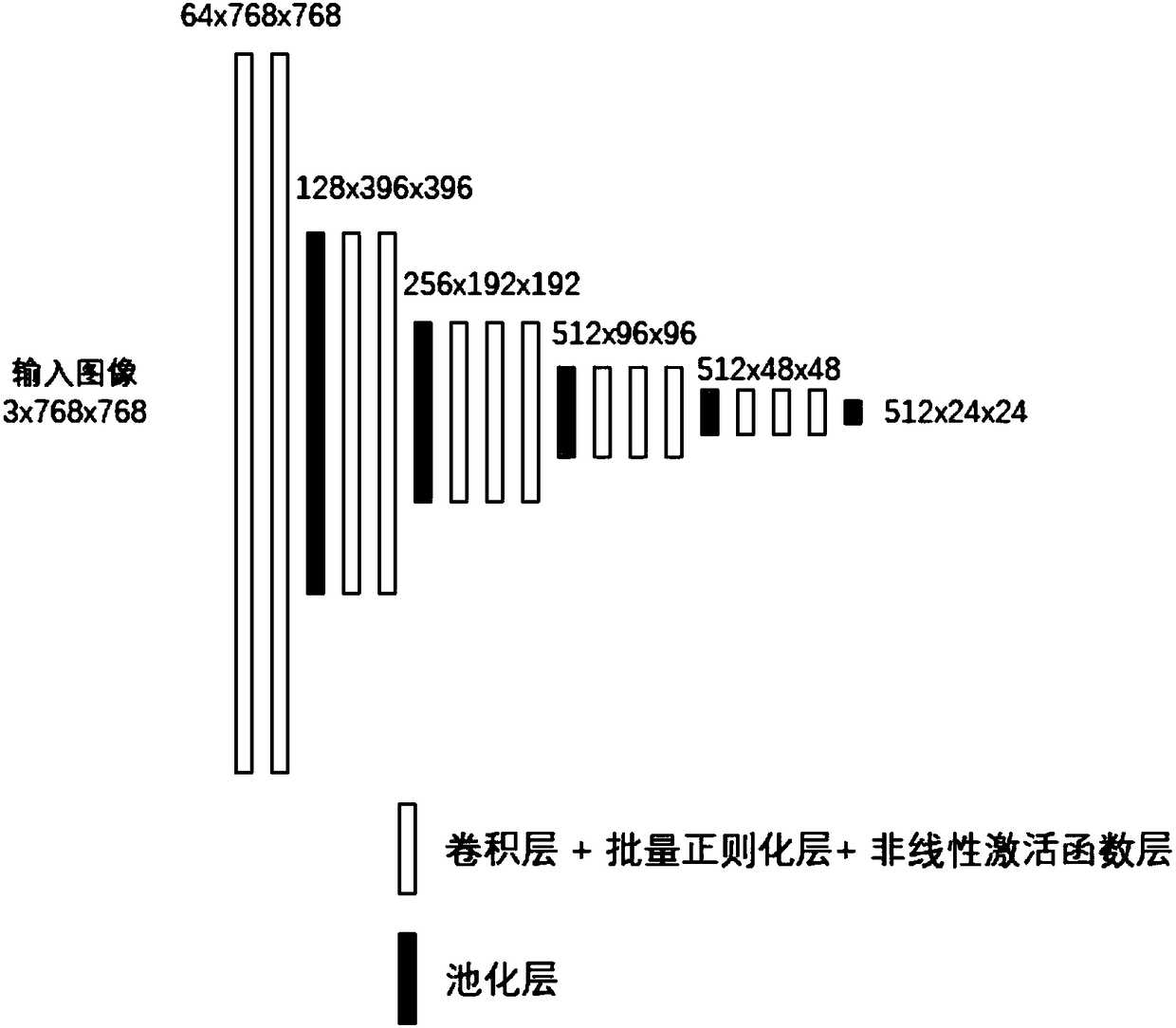

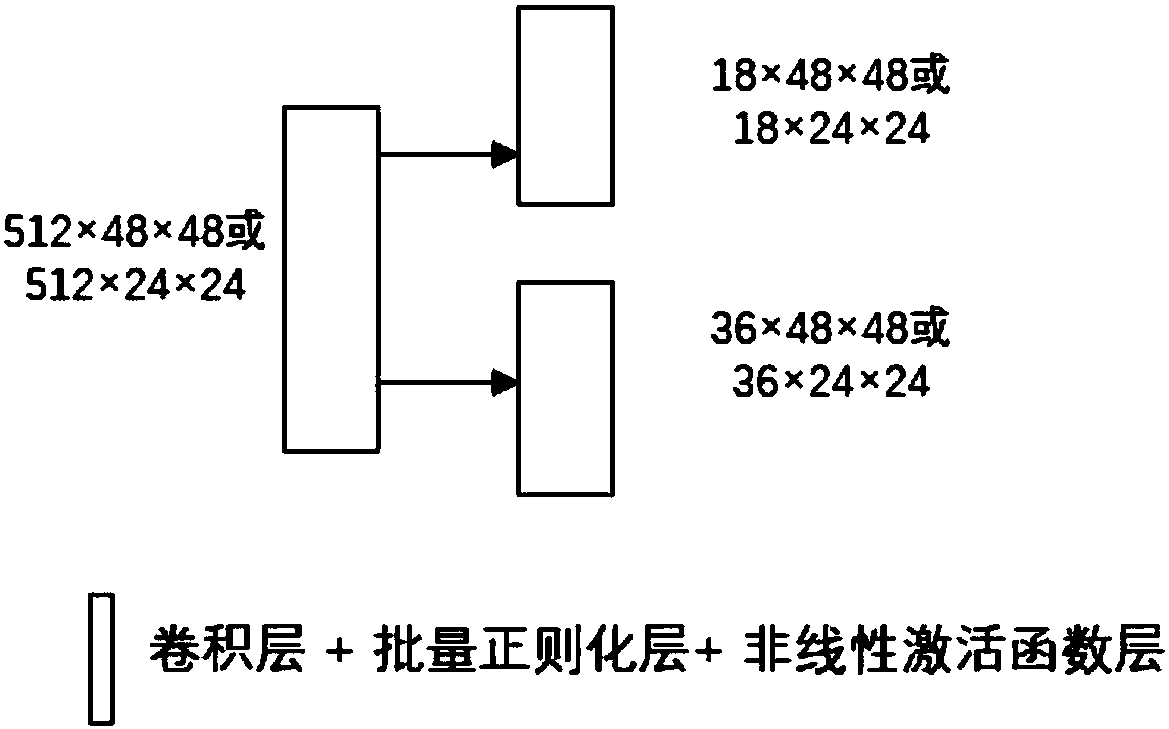

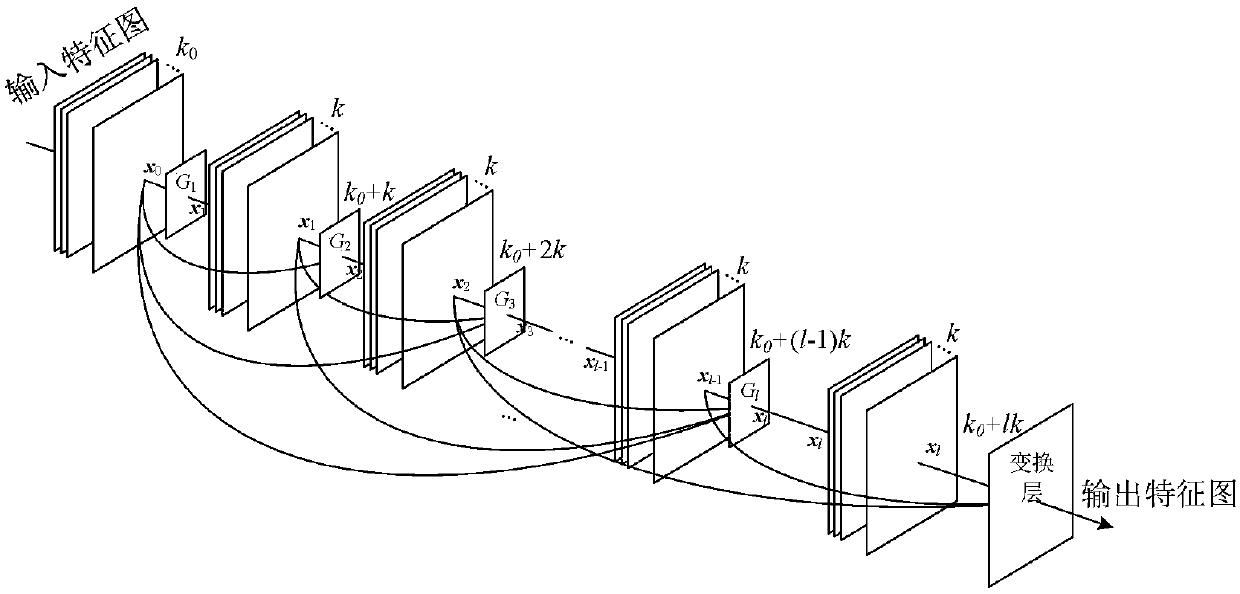

Multi-scale target detection method based on deep convolutional neural network

Status: Active | Publication No.: CN108564097A | Tags: Accurate distinction, Accurate detection, Character and pattern recognition, Neural architectures, Nerve network, Network generation

The invention discloses a multi-scale target detection method based on a deep convolutional neural network. The method comprises the steps of (1) data acquisition; (2) data processing; (3) model construction; (4) loss function definition; (5) model training; and (6) model verification. The method combines the ability of extracting image high-level semantic information of the deep convolutional neural network, the ability of generating candidate regions of region generation networks, the repair and mapping abilities of a content-aware region-of-interest pooling layer and the precise classification ability of multi-task classification networks, and therefore multi-scale target detection is completed more accurately and efficiently.

Owner:SOUTH CHINA UNIV OF TECH
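The abstract lists a loss-definition step for its multi-task classification network without giving the formula. A minimal sketch, assuming the common two-part detection loss (softmax cross-entropy for the class plus smooth L1 for box regression, with regression skipped for background proposals), is shown below. The weight `lam` and the `background` index are hypothetical.

```python
import math


def softmax_cross_entropy(logits, label):
    """Numerically stable -log softmax(logits)[label]."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]


def smooth_l1(pred, target, beta=1.0):
    """Quadratic for small errors, linear for large ones (robust to outliers)."""
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total


def multitask_loss(logits, label, box_pred, box_target, lam=1.0, background=0):
    """Classification loss plus box regression loss for foreground proposals."""
    cls = softmax_cross_entropy(logits, label)
    reg = smooth_l1(box_pred, box_target) if label != background else 0.0
    return cls + lam * reg
```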

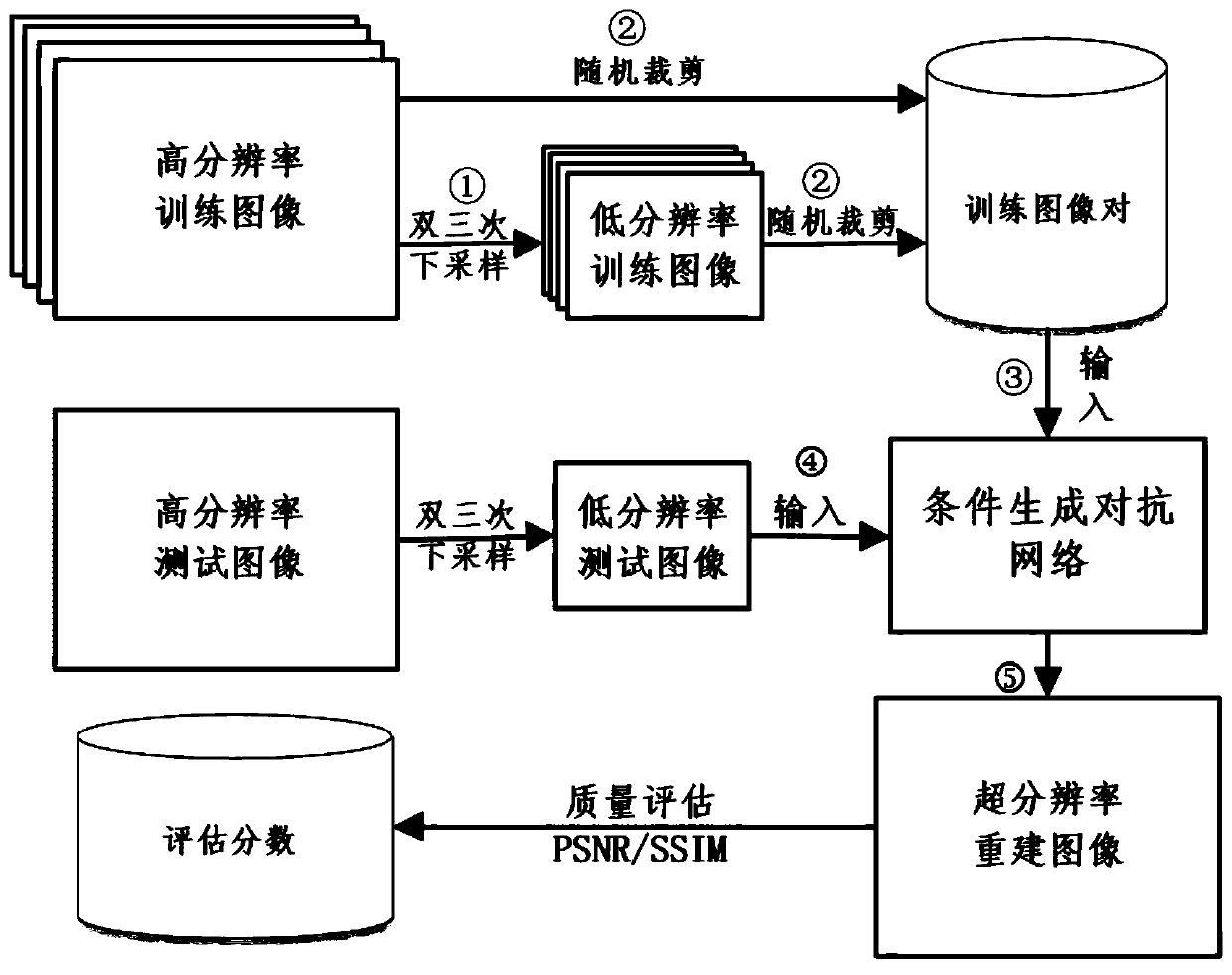

Single image super-resolution reconstruction method based on conditional generative adversarial network

Status: Active | Publication No.: CN110136063A | Tags: Improve discrimination accuracy, Improve performance, Geometric image transformation, Neural architectures, Generative adversarial network, Reconstruction method

The invention discloses a single image super-resolution reconstruction method based on a conditional generative adversarial network. A condition, namely the original real image, is added to the discriminator network of the generative adversarial network. A deep residual learning module is added to the generator network to learn high-frequency information and alleviate the vanishing-gradient problem. A single low-resolution image to be reconstructed is input into a pre-trained conditional generative adversarial network, and super-resolution reconstruction is performed to obtain a reconstructed high-resolution image. Learning the conditional generative adversarial network model includes: building the conditional adversarial network model; inputting the high-resolution and low-resolution training sets into the model, using pre-trained model parameters as the initialization parameters for training; judging the convergence of the whole network through a loss function; and, once the loss function has converged, obtaining the final trained conditional generative adversarial network model and storing its parameters.

Owner:NANJING UNIV OF INFORMATION SCI & TECH
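The abstract judges convergence "through a loss function" without giving a criterion. A minimal sketch, assuming a plateau test (the loss varied by less than `tol` over the last `window` epochs), is shown below; both parameters are hypothetical.

```python
def has_converged(losses, window=5, tol=1e-3):
    """True when the last window+1 recorded losses span less than tol."""
    if len(losses) < window + 1:
        return False  # not enough history to judge yet
    recent = losses[-(window + 1):]
    return max(recent) - min(recent) < tol
```

In a training loop one would append each epoch's loss to a list and stop (then store the model parameters) once this returns True.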

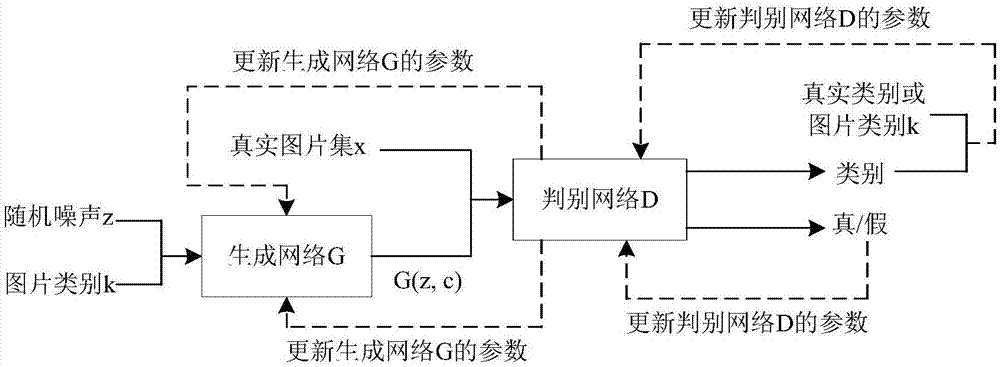

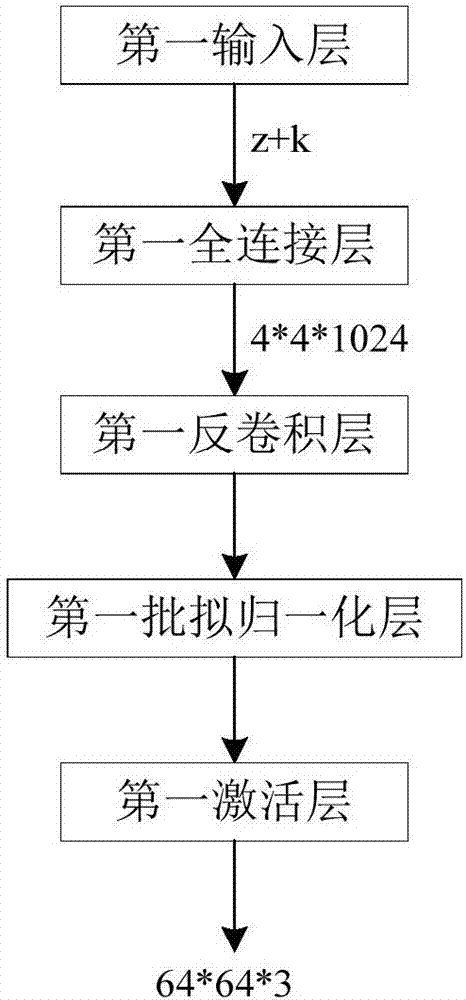

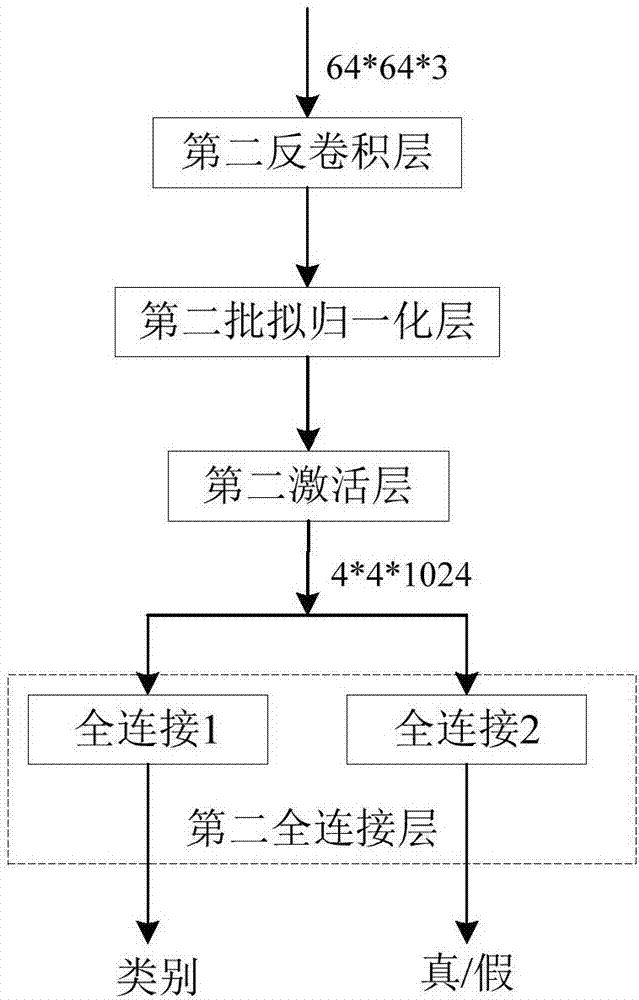

Picture generation method based on depth learning and generative adversarial network

The invention discloses a picture generation method based on deep learning and a generative adversarial network. The method comprises the following steps: (1) establishing a picture database by collecting multiple real pictures and classifying and labeling them, so that each picture has a unique class label k; (2) constructing the generation network G, inputting a vector that combines a random noise signal z with the class label k into G, and taking the generated data as input to a discrimination network D; (3) constructing the discrimination network D, whose loss function comprises a first loss function for distinguishing real from fake pictures and a second loss function for determining picture classes; (4) training the generation network; (5) generating the needed pictures by inputting the random noise signal z and the class label k into the generation network G trained in step (4) to obtain pictures of a designated class. The advantage of the method is that it can not only generate pictures but also generate pictures of a designated class.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
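The two-part discriminator loss in step (3) resembles the auxiliary-classifier GAN formulation. A minimal sketch under that assumption: a binary cross-entropy "real vs. fake" term plus a class cross-entropy term, evaluated for one real and one generated picture. The scores and class probabilities are assumed to be outputs of D; the function names are hypothetical.

```python
import math


def bce(p, target):
    """Binary cross-entropy for one probability p and a 0/1 target."""
    eps = 1e-12
    p = min(max(p, eps), 1 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def class_ce(probs, label):
    """Cross-entropy of the predicted class distribution against label k."""
    return -math.log(max(probs[label], 1e-12))


def discriminator_loss(real_score, real_class_probs, real_label,
                       fake_score, fake_class_probs, fake_label):
    # First loss: is the picture real (target 1) or generated (target 0)?
    source_loss = bce(real_score, 1.0) + bce(fake_score, 0.0)
    # Second loss: does D recover the class label k of each picture?
    class_loss = (class_ce(real_class_probs, real_label)
                  + class_ce(fake_class_probs, fake_label))
    return source_loss + class_loss
```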

Systems and Methods for Providing Convolutional Neural Network Based Image Synthesis Using Stable and Controllable Parametric Models, a Multiscale Synthesis Framework and Novel Network Architectures

Systems and methods for providing convolutional neural network based image synthesis using localized loss functions are disclosed. A first image including desired content and a second image including a desired style are received. The images are analyzed to determine a local loss function. The first and second images are merged using the local loss function to generate an image that includes the desired content presented in the desired style. Similar processes can also be used to generate image hybrids and to perform on-model texture synthesis. In a number of embodiments, Condensed Feature Extraction Networks are also generated using a convolutional neural network previously trained to perform image classification, where the Condensed Feature Extraction Networks approximate intermediate neural activations of the convolutional neural network used during training.

Owner:ARTOMATIX LTD

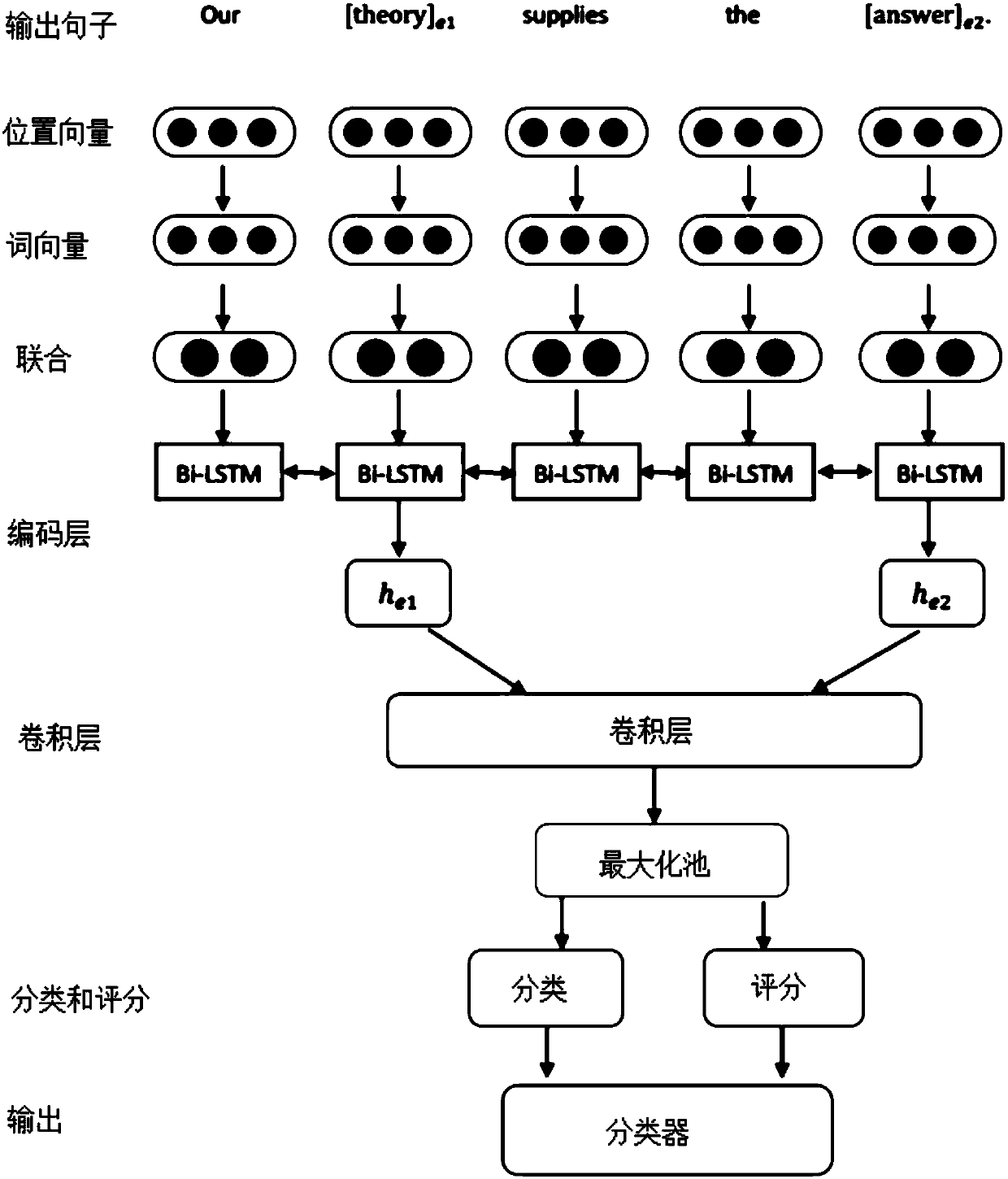

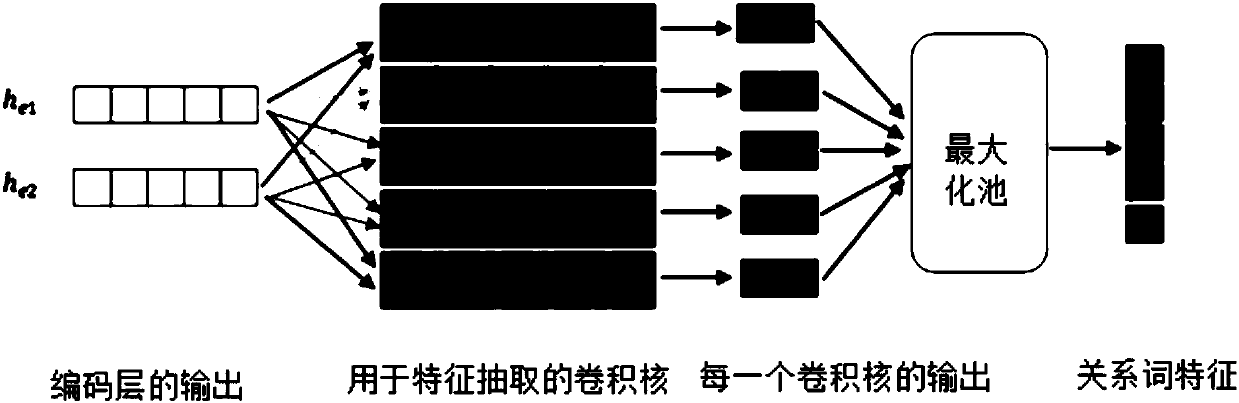

Method for relationship classification with LSTM and CNN joint model based on location

Status: Active | Publication No.: CN107832400A | Tags: Universal, Good effect, Natural language data processing, Neural architectures, Feature vector, High dimensional

The invention relates to a method for relationship classification with a location-based LSTM and CNN joint model. The method includes the steps of (1) preprocessing data; (2) training word vectors; (3) extracting location vectors: acquiring the location vector feature and the high-dimensional location feature vector of each word in a training set, and concatenating the word vector of each word with its high-dimensional location feature vector to obtain a joint feature; (4) building a model for the specific task: encoding the contextual and semantic information of entities with a bidirectional LSTM; taking the output vectors at the locations corresponding to the marked entities and inputting them to a CNN, which outputs the two entity nouns with their contextual information and relational word information; and inputting these into a classifier for classification; (5) training the model with a loss function. The method does not require manually extracted features, the joint model does not need additional natural language processing tools to preprocess the data, the algorithm is simple and clear, and the best current results are achieved.

Owner:SHANDONG UNIV
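The abstract does not say how its location vectors are computed. A minimal sketch, assuming the common relation-classification scheme of clipped relative offsets from each word to the two marked entities, shifted to non-negative indices for an embedding lookup, is shown below. `max_dist` and both helper names are hypothetical; the concatenation mirrors the "joint feature" of step (3).

```python
def relative_positions(num_tokens, e1, e2, max_dist=10):
    """For each token i: (clip(i - e1), clip(i - e2)), shifted by max_dist."""
    feats = []
    for i in range(num_tokens):
        d1 = max(-max_dist, min(max_dist, i - e1))
        d2 = max(-max_dist, min(max_dist, i - e2))
        feats.append((d1 + max_dist, d2 + max_dist))
    return feats


def joint_feature(word_vec, pos_vec1, pos_vec2):
    """Concatenate a word vector with its two position vectors."""
    return list(word_vec) + list(pos_vec1) + list(pos_vec2)


# "The kitchen is part of the house": entity 1 = token 1, entity 2 = token 6
positions = relative_positions(7, e1=1, e2=6)
```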

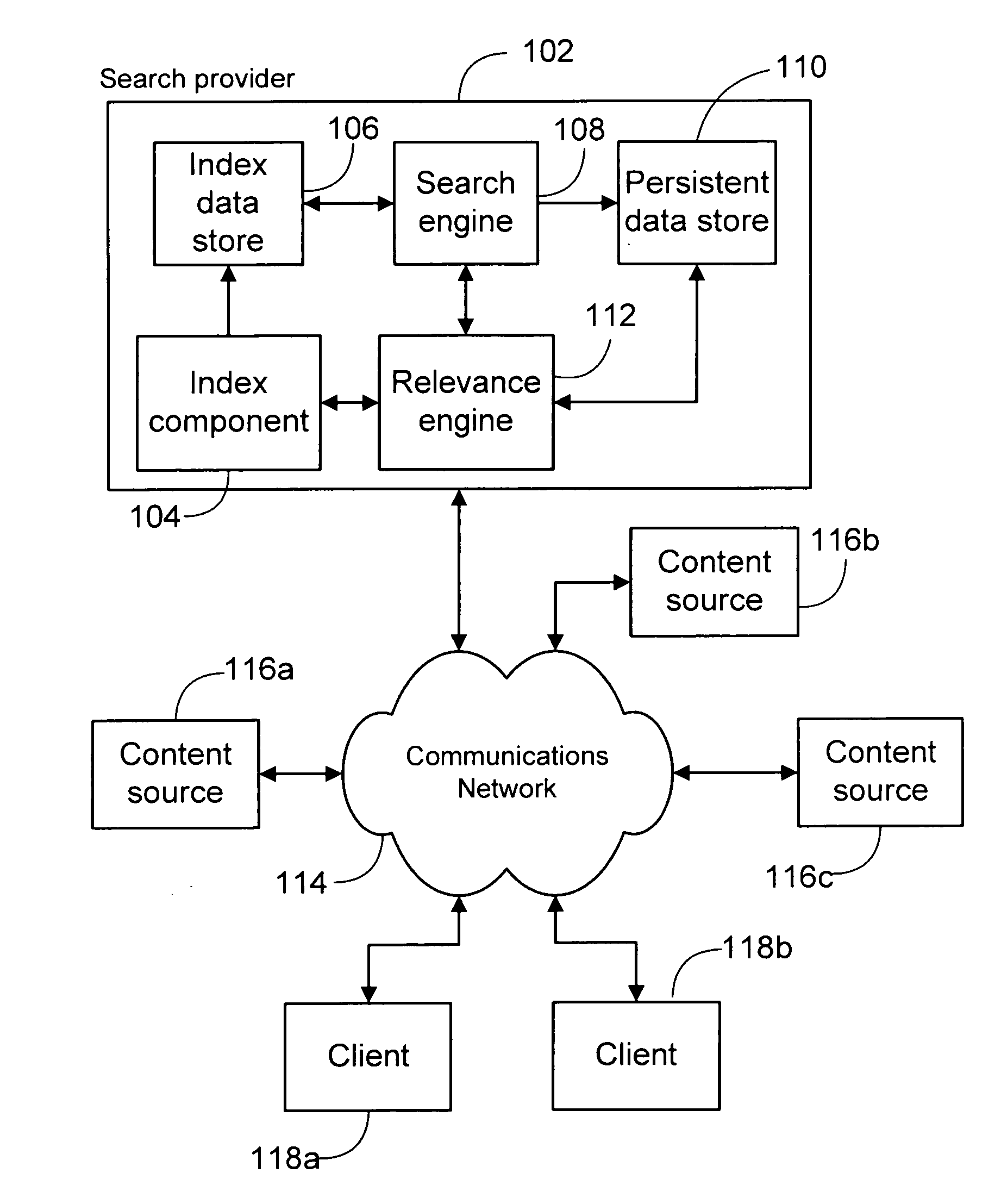

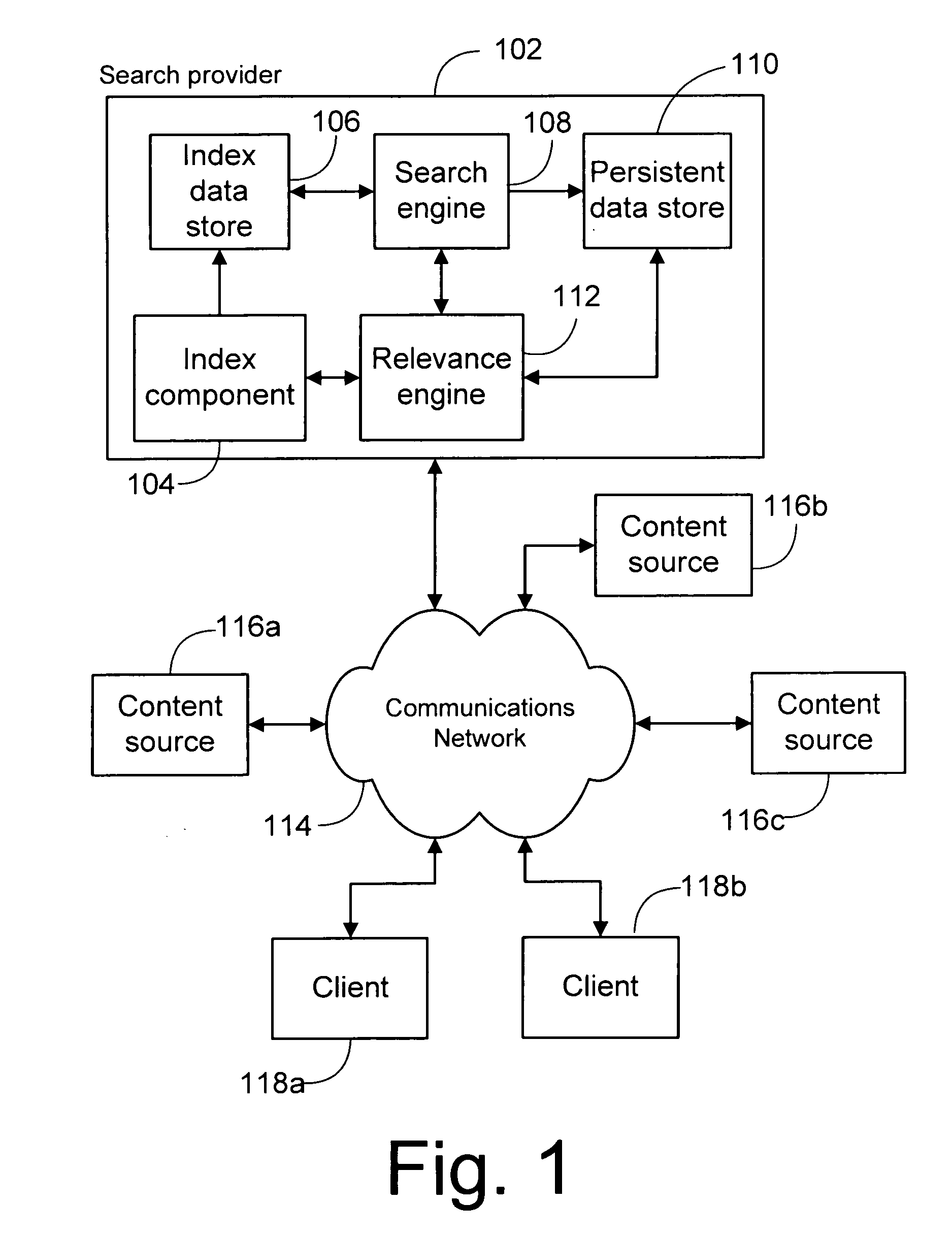

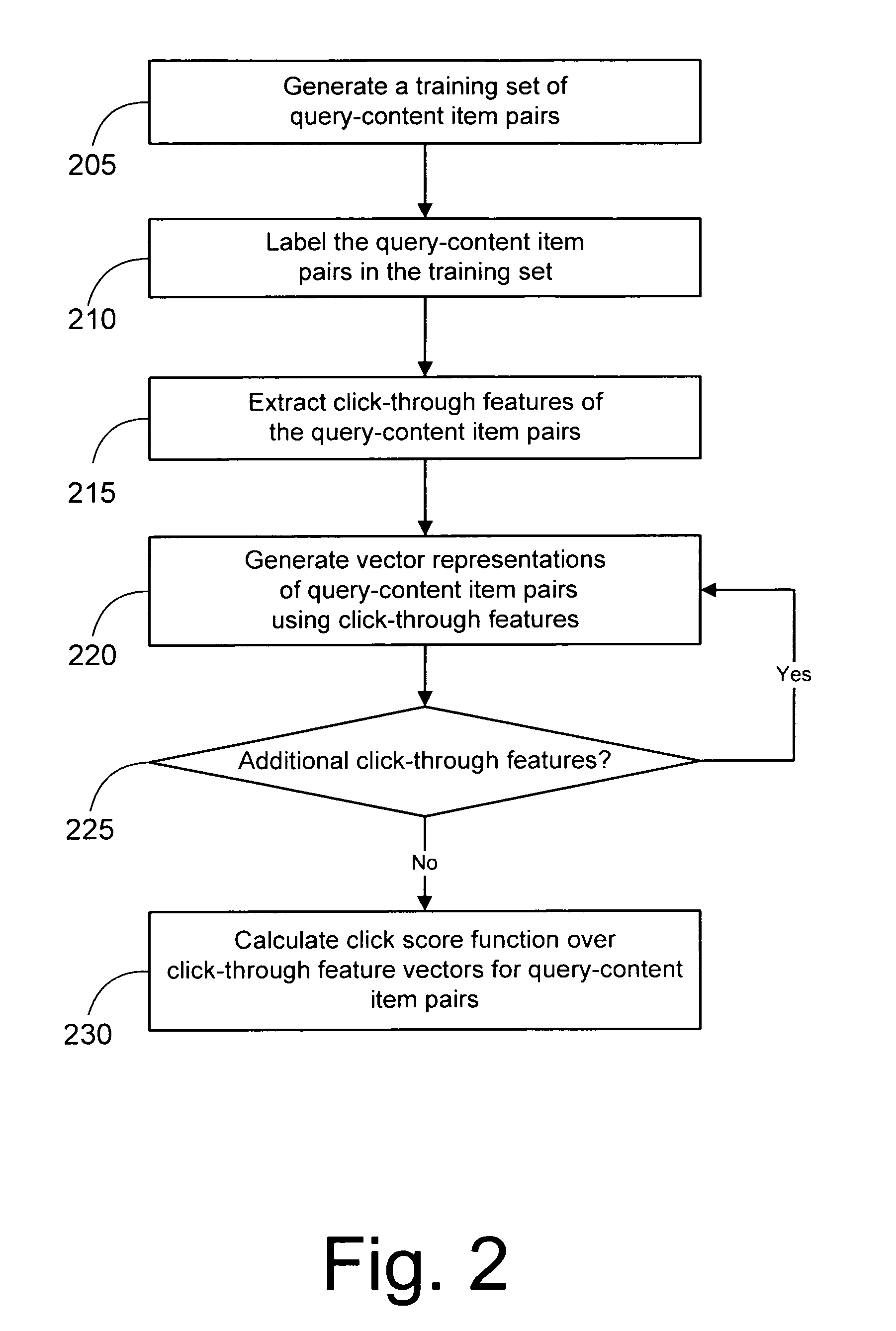

System and method for indexing web content using click-through features

Status: Active | Publication No.: US20070255689A1 | Tags: Minimize the difference, Increase search, Web data indexing, Digital data processing details, Uniform resource locator, Web content

The system and method of the present invention allow the relevance of a content item to a query to be determined through a machine-learned relevance function that incorporates click-through features of the content items. A method for selecting a relevance function to determine the relevance of a query-content item pair comprises generating a training set having one or more query-URL pairs labeled for relevance based on their click-through features. The labeled query-URL pairs are used to determine the relevance function by minimizing a loss function that accounts for click-through features of the content item. The computed relevance function is then applied to the click-through features of unlabeled content items to assign relevance scores to them. An inverted click-through index of query-score pairs is formed and combined with the content index to improve the relevance of search results.

Owner:PINTEREST
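The abstract does not specify the relevance function or the loss. A minimal sketch, assuming a linear relevance function over numeric click-through features, fitted by gradient descent on a mean squared loss against click-derived relevance labels, is shown below. The features, labels, learning rate and epoch count are all hypothetical.

```python
def relevance(w, b, x):
    """Linear relevance score for one query-URL feature vector x."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def fit_relevance(features, labels, lr=0.1, epochs=500):
    """Gradient descent on the mean squared loss between scores and labels."""
    n, d = len(features), len(features[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(features, labels):
            err = relevance(w, b, x) - y
            for j in range(d):
                grad_w[j] += 2.0 * err * x[j] / n
            grad_b += 2.0 * err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Hypothetical single click feature per pair, with click-derived labels.
w, b = fit_relevance([[0.0], [1.0], [2.0], [3.0]], [0.2, 0.7, 1.2, 1.7])
```

The fitted function can then score unlabeled pairs, as the abstract describes, before the scores are written into an index.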

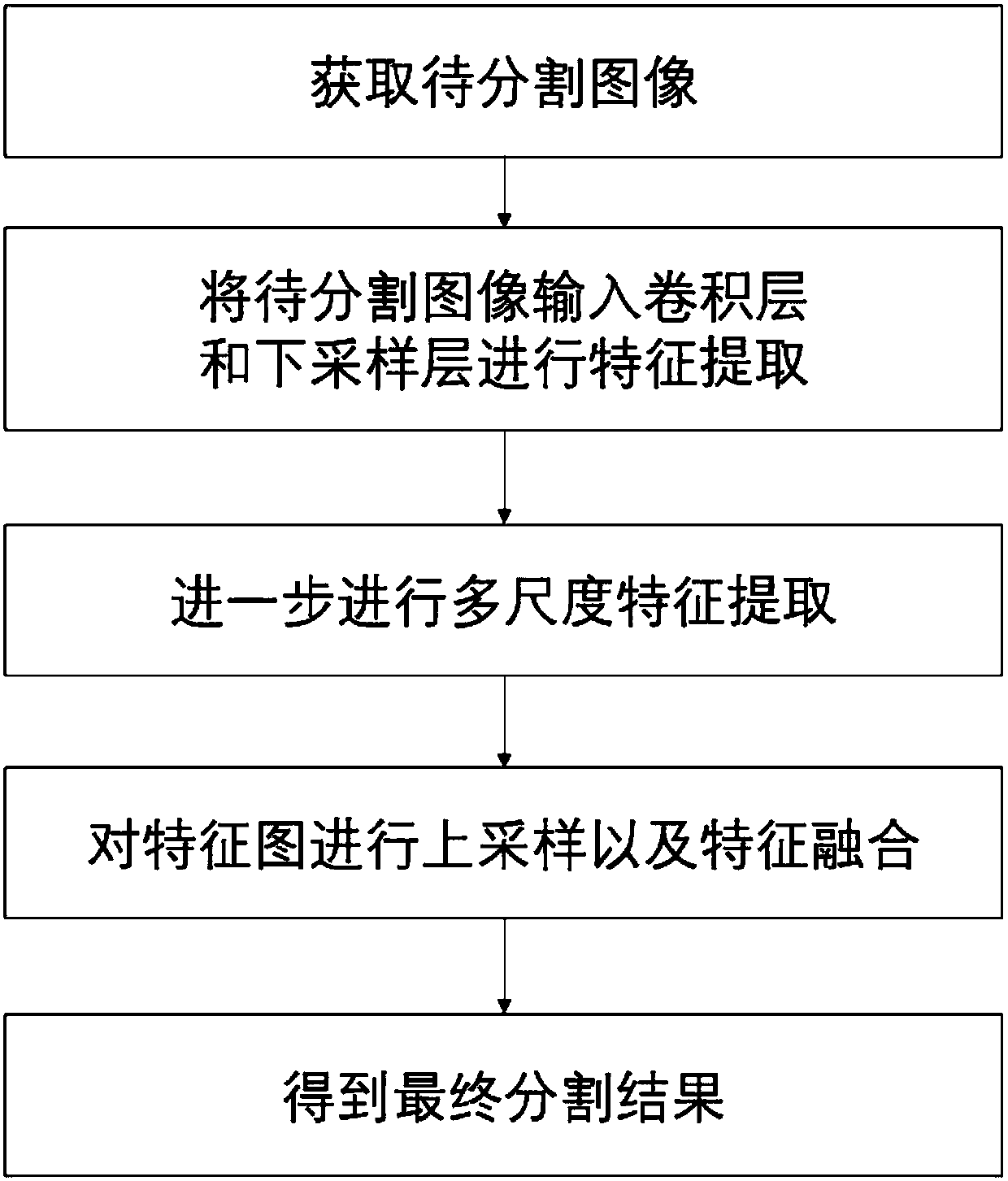

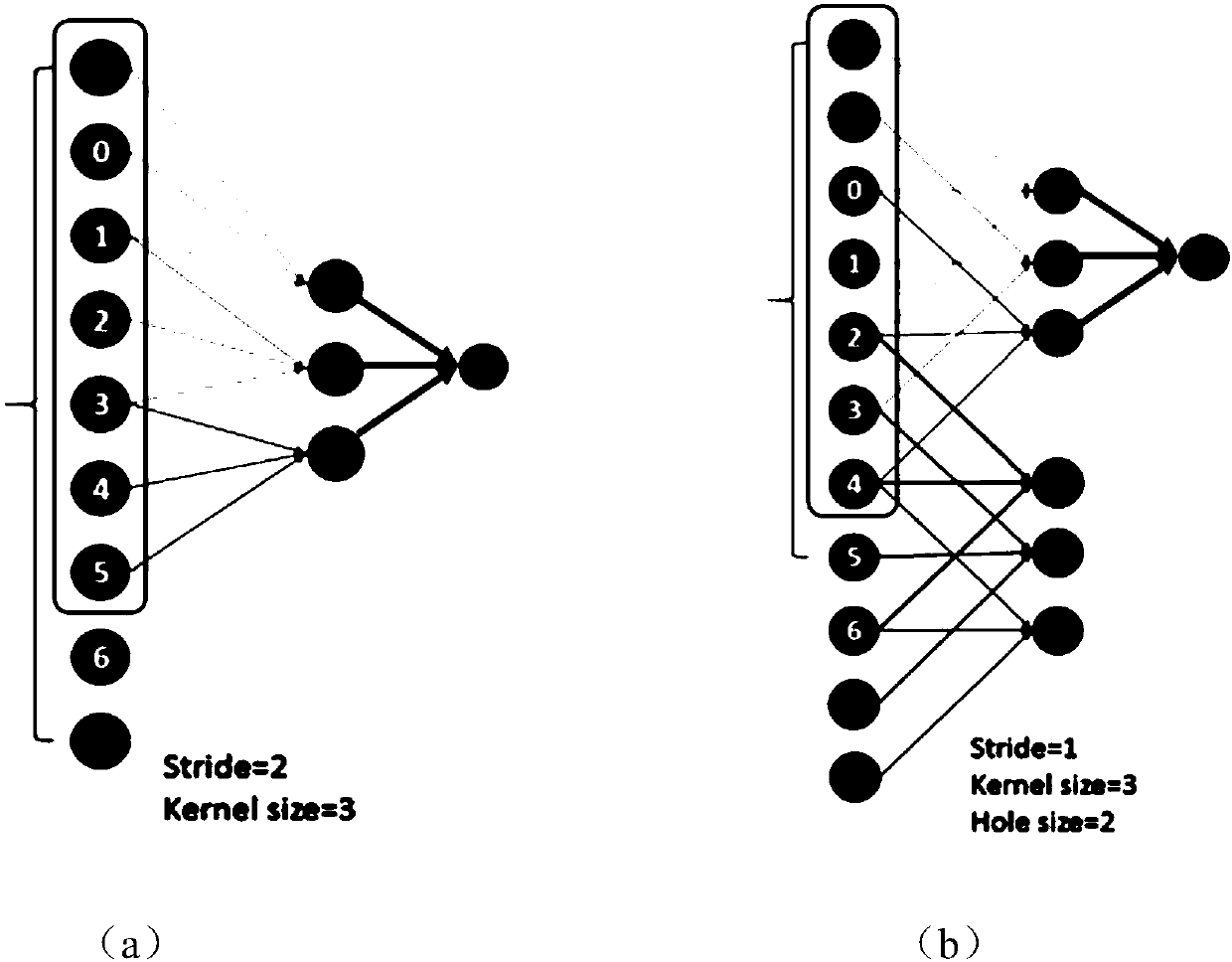

Multi-scale image semantic segmentation method

Status: Active | Publication No.: CN110232394A | Tags: Increase profit, Easy to handle, Character and pattern recognition, Neural architectures, Sample image, Minutiae

The invention discloses a multi-scale image semantic segmentation method. The method comprises the following steps: obtaining an image to be segmented and its corresponding label; constructing a fully convolutional deep neural network comprising a convolution module, a dilated (hole) convolution module, a pyramid pooling module, a 1 × 1 × depth convolution layer, and a deconvolution structure; implementing the dilated convolution as a channel-by-channel operation and making targeted use of low-scale, medium-scale, and high-scale features; training the fully convolutional deep neural network by establishing a loss function and determining the network parameters from the sample images; and inputting the image to be segmented into the trained network to obtain a semantic segmentation result. The method handles semantic segmentation of images with complex details, holes, and large targets well while reducing computation and the number of parameters, and it preserves the consistency of category labels while segmenting target edges well.

Owner:SOUTH CHINA UNIV OF TECH
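"Hole convolution" refers to dilated (atrous) convolution: the kernel taps are spaced `dilation` samples apart, which enlarges the receptive field without adding parameters. A minimal single-channel 1-D sketch (a "valid" cross-correlation, as deep-learning frameworks compute it) is shown below; a channel-by-channel version would apply this separately to each channel. The function name is hypothetical.

```python
def dilated_conv1d(signal, kernel, dilation=1):
    """Valid 1-D cross-correlation with dilation-1 gaps between kernel taps."""
    span = (len(kernel) - 1) * dilation + 1  # receptive field of one output
    out = []
    for start in range(len(signal) - span + 1):
        out.append(sum(k * signal[start + i * dilation]
                       for i, k in enumerate(kernel)))
    return out


x = [1, 2, 3, 4, 5, 6, 7]
dense = dilated_conv1d(x, [1, 1, 1], dilation=1)    # each output sees 3 samples
dilated = dilated_conv1d(x, [1, 1, 1], dilation=2)  # each output spans 5 samples
```

With the same three weights, dilation 2 widens each output's span from 3 to 5 input samples.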

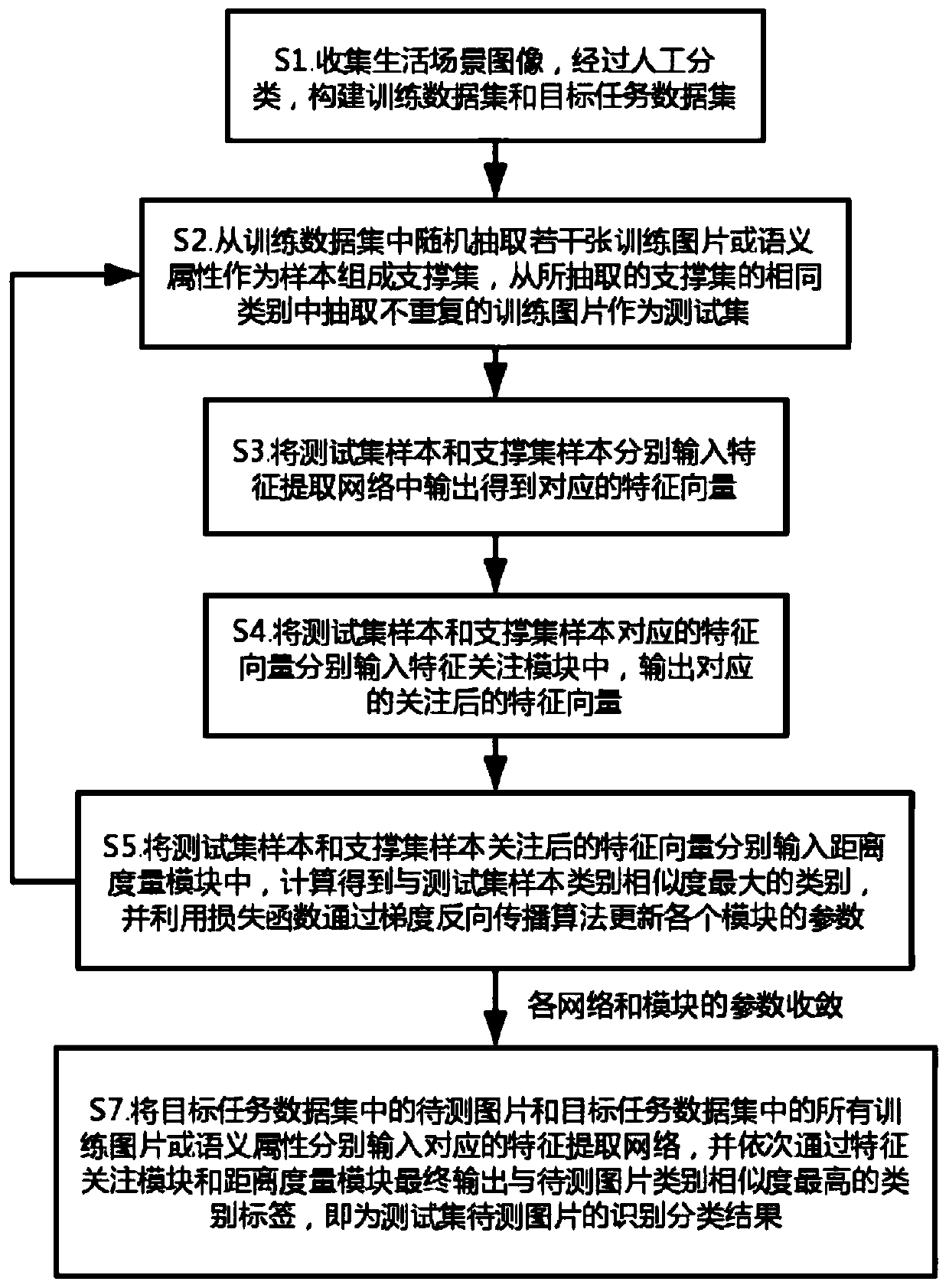

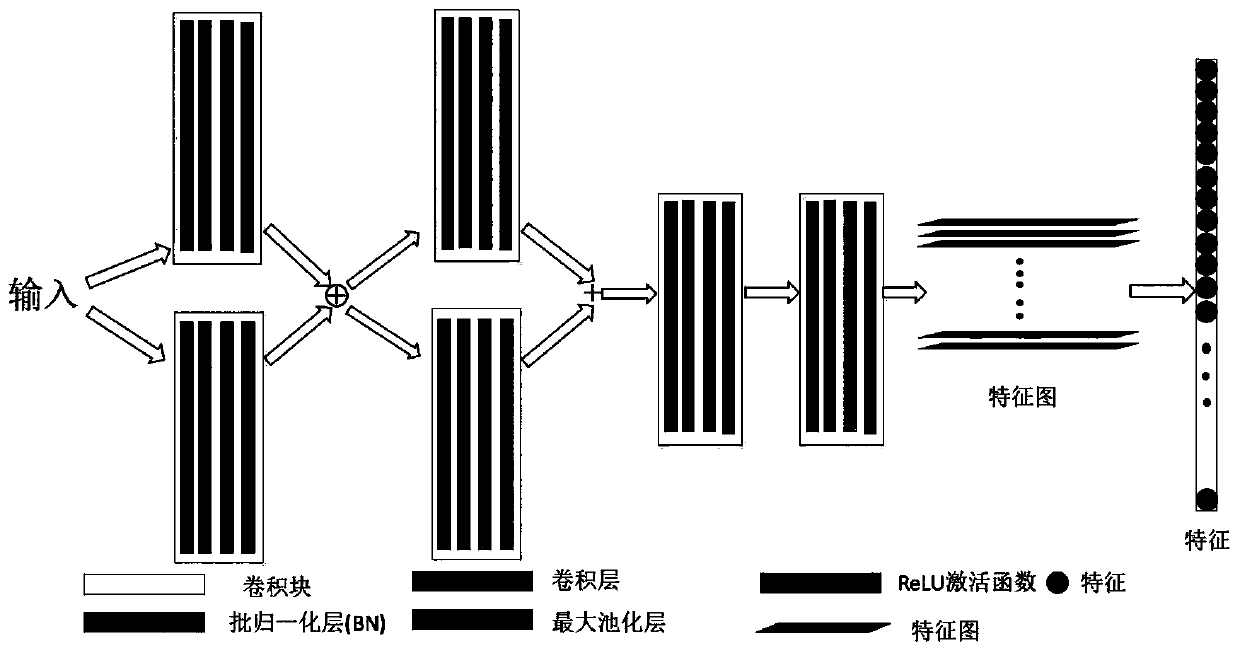

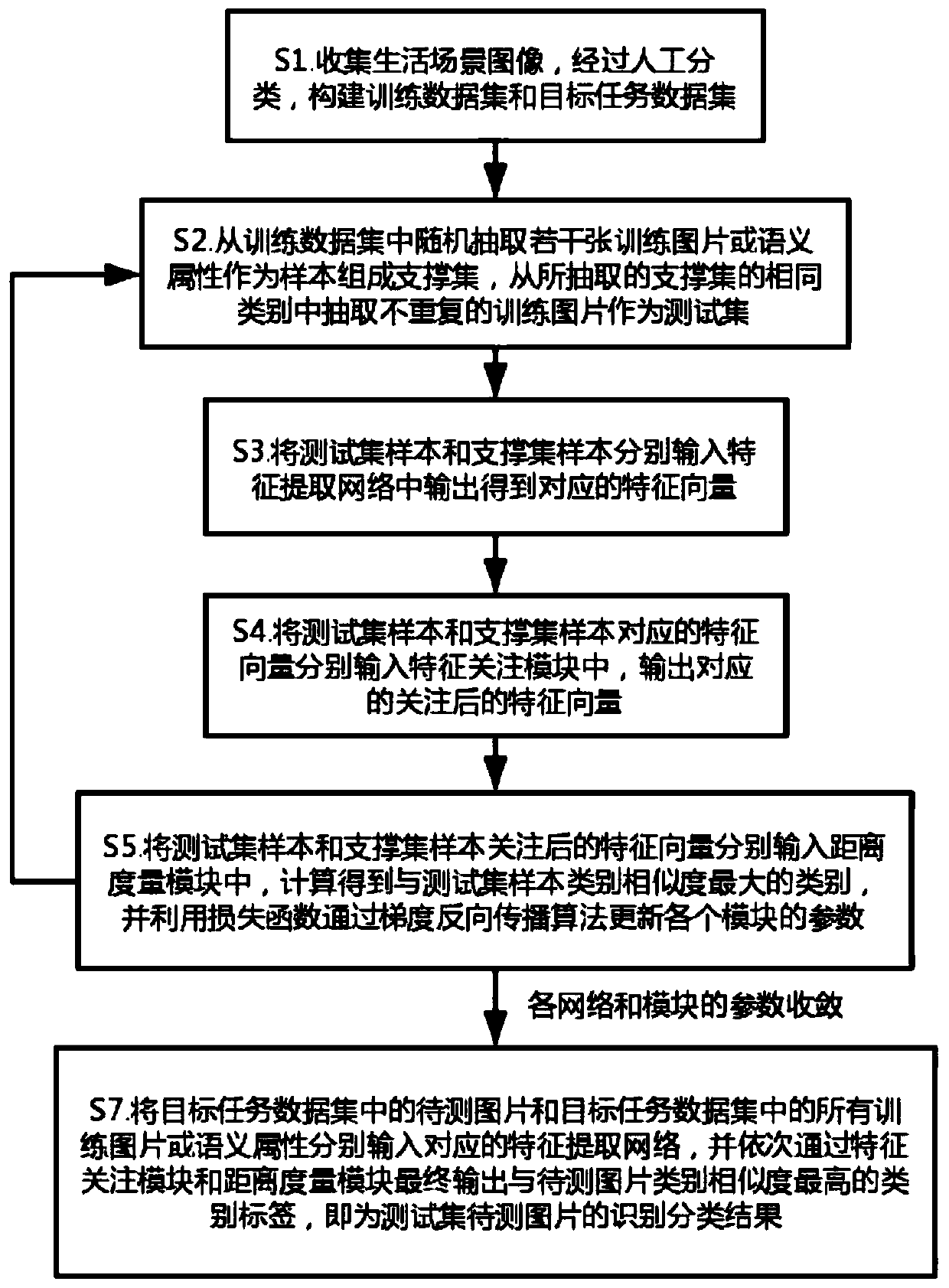

Small sample and zero sample image classification method based on metric learning and meta-learning

Status: Active | Publication No.: CN109961089A | Tags: Solve the recognition classification problem, Accurate classification, Character and pattern recognition, Energy efficient computing, Small sample, Data set

The invention relates to the fields of computer vision recognition and transfer learning, and provides a small-sample and zero-sample image classification method based on metric learning and meta-learning, which comprises the following steps: constructing a training data set and a target task data set; selecting a support set and a test set from the training data set; inputting samples of the test set and the support set into a feature extraction network to obtain feature vectors; inputting the feature vectors of the test set and the support set in turn into a feature attention module and a distance measurement module, calculating the category similarity between the test-set samples and the support-set samples, and updating the parameters of each module with a loss function; repeating these steps until the network parameters of the modules converge, which completes the training of the modules; and passing the picture to be tested and the training pictures in the target task data set through the feature extraction network, the feature attention module, and the distance measurement module in turn, and outputting the category label with the highest category similarity to obtain the classification result of the picture to be tested.

Owner:SUN YAT SEN UNIV
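A minimal sketch of distance-based episode training, assuming a prototypical-network-style stand-in: each class prototype is the mean of its support feature vectors, class probabilities are a softmax over negative squared distances, and the episode loss is the cross-entropy of the true class. It omits the patent's feature attention module; the feature vectors and class names are made up.

```python
import math


def mean_vec(vs):
    return [sum(col) / len(vs) for col in zip(*vs)]


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query, support):
    """support: dict class -> list of feature vectors; returns class probs."""
    protos = {c: mean_vec(vs) for c, vs in support.items()}
    scores = {c: -sq_dist(query, p) for c, p in protos.items()}
    m = max(scores.values())  # subtract max for numerical stability
    z = sum(math.exp(s - m) for s in scores.values())
    return {c: math.exp(s - m) / z for c, s in scores.items()}


def episode_loss(query, label, support):
    """Cross-entropy of the true class under the distance-based softmax."""
    return -math.log(max(classify(query, support)[label], 1e-300))


support = {"cat": [[0.0, 0.0], [0.0, 2.0]], "dog": [[10.0, 10.0], [10.0, 12.0]]}
probs = classify([0.0, 1.0], support)
```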

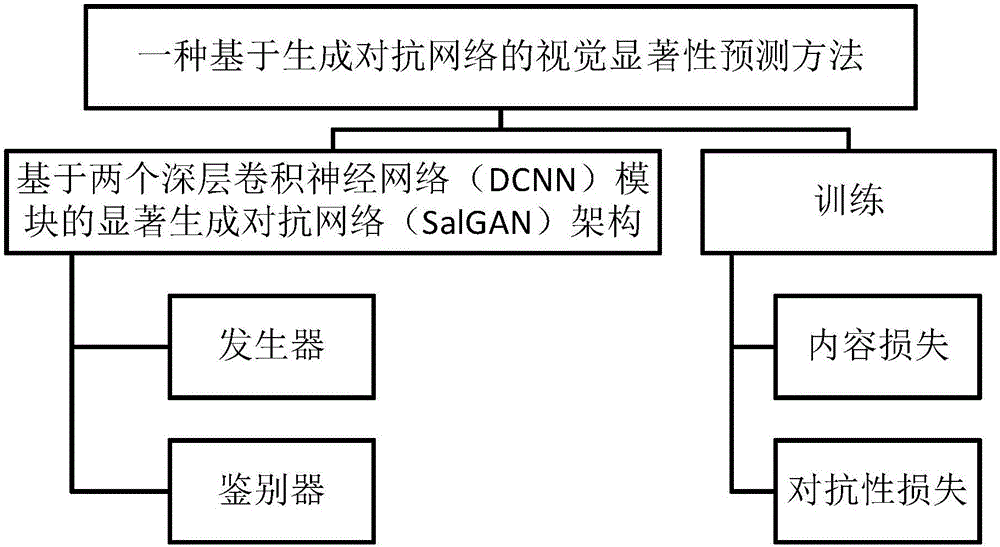

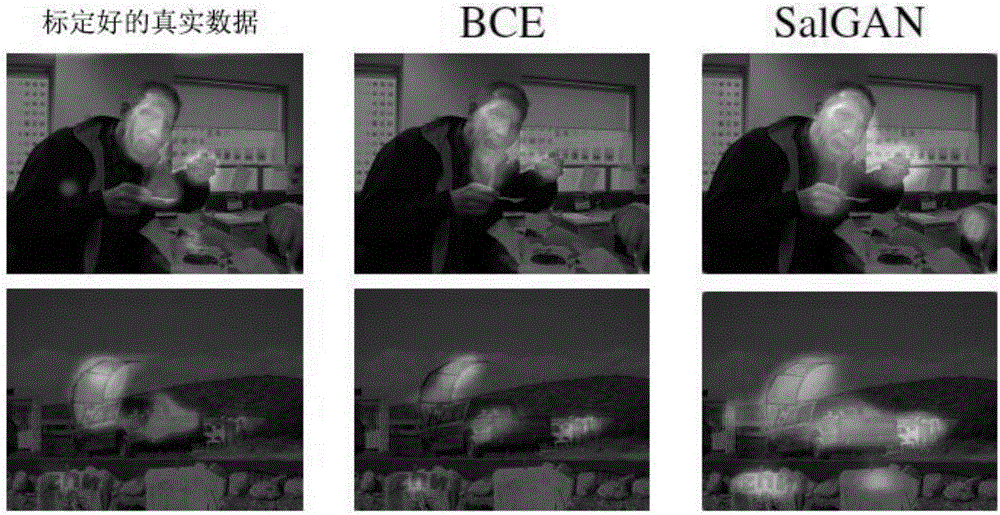

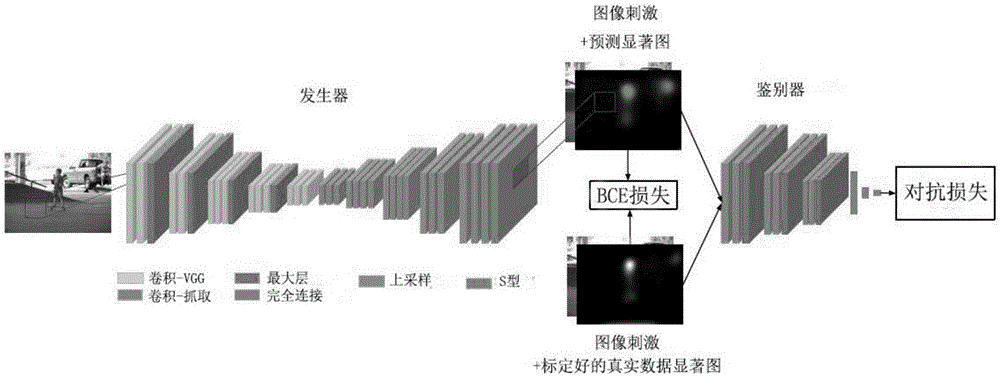

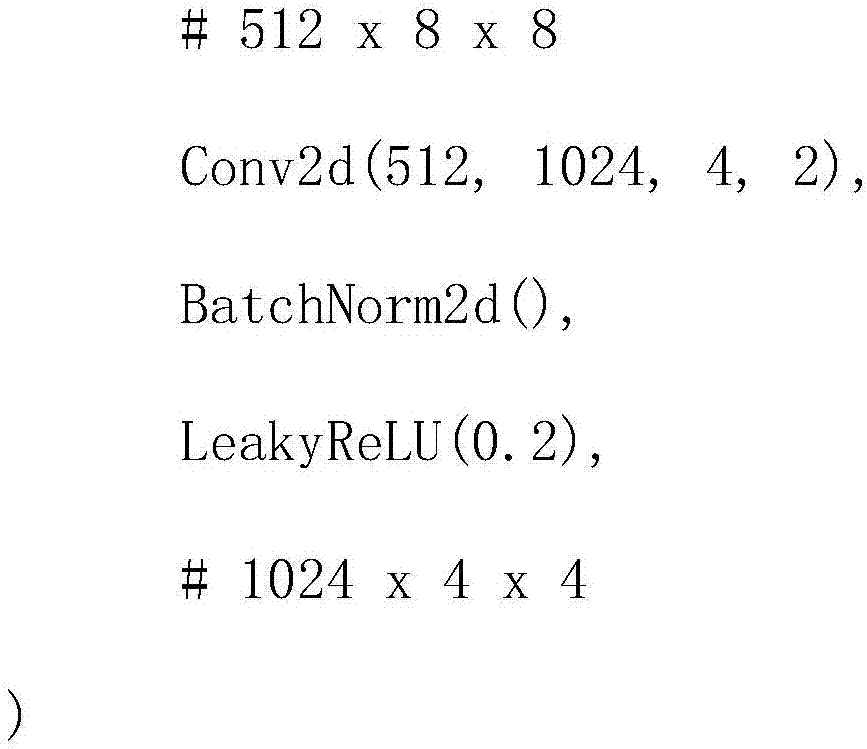

Visual significance prediction method based on generative adversarial network

Status: Inactive | Publication No.: CN106845471A | Tags: Character and pattern recognition, Neural learning methods, Discriminator, Visual saliency

The invention provides a visual saliency prediction method based on a generative adversarial network, which mainly comprises constructing a saliency generative adversarial network (SalGAN) based on two deep convolutional neural network (DCNN) modules, and training it. Specifically, the saliency generative adversarial network comprises a generator and a discriminator, which are combined to predict a visual saliency map of an input image, and the filter weights in SalGAN are trained with a perceptual loss that combines content loss and adversarial loss. The loss function in the method combines the error from the discriminator with the cross entropy relative to the ground-truth data, which improves the stability and convergence rate of adversarial training. Compared with further training using cross entropy alone, adversarial training improves performance, achieving higher speed and efficiency.

Owner:SHENZHEN WEITESHI TECH
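A minimal sketch of the combined generator loss described above, assuming a mean binary cross-entropy between the predicted and ground-truth saliency maps (flattened, values in [0, 1]) plus the adversarial term -log D(...). The weight `alpha` is a placeholder, not a value from the patent.

```python
import math


def bce_map(pred, truth, eps=1e-12):
    """Mean binary cross-entropy between two flattened saliency maps."""
    total = 0.0
    for p, t in zip(pred, truth):
        p = min(max(p, eps), 1 - eps)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(pred)


def generator_loss(pred, truth, d_score, alpha=0.005):
    """alpha * content (cross-entropy) loss + adversarial loss.

    d_score is the discriminator's probability that (image, pred) is real.
    """
    content = bce_map(pred, truth)
    adversarial = -math.log(max(d_score, 1e-12))
    return alpha * content + adversarial
```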

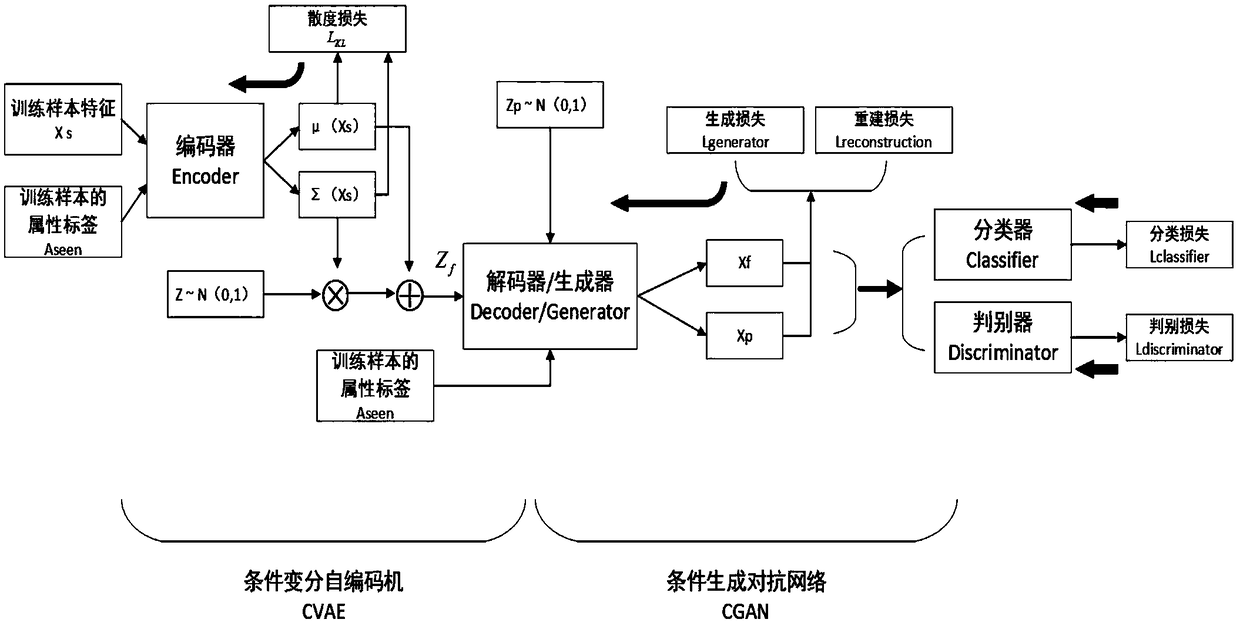

Zero sample image classification method based on combination of variational autocoder and adversarial network

Status: Active | Publication No.: CN108875818A | Tags: Implement classification, Make up for the problem of missing training samples of unknown categories, Character and pattern recognition, Physical realisation, Classification methods, Sample image

The invention discloses a zero-sample image classification method based on combining a variational autoencoder and an adversarial network. Samples of known categories are input during model training, with the category mapping of the training samples serving as a guiding condition; the network parameters are optimized by back-propagation through five loss functions: reconstruction loss, generation loss, discrimination loss, divergence loss, and classification loss. Pseudo-samples of the corresponding unknown categories are generated under the guidance of the category mapping of those unknown categories, and a classifier trained on the pseudo-samples is used to test samples of the unknown categories. Because the category-mapping guidance generates high-quality samples that benefit image classification, the lack of training samples for unknown categories in the zero-sample setting is addressed, and zero-sample learning is converted into supervised learning as in traditional machine learning. This improves the classification accuracy of traditional zero-sample learning, noticeably improves classification accuracy in generalized zero-sample learning, and offers zero-sample learning an approach of efficiently generating samples to improve classification accuracy.

Owner:XI AN JIAOTONG UNIV
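Of the five losses, the divergence term is the one with a standard closed form in a variational autoencoder. A minimal sketch, assuming a diagonal Gaussian encoder output (`mu`, `log_var`) and a standard normal prior, computes that KL divergence and combines the five terms with hypothetical weights (all 1.0 by default); the other four terms are taken as precomputed numbers.

```python
import math

LOSS_NAMES = ["reconstruction", "generation", "discrimination",
              "divergence", "classification"]


def gaussian_kl(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) in closed form."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mu, log_var))


def total_loss(terms, weights=None):
    """Weighted sum of the five loss terms (weights are hypothetical)."""
    weights = weights or {name: 1.0 for name in LOSS_NAMES}
    return sum(weights[name] * terms[name] for name in LOSS_NAMES)
```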

A novel biomedical image automatic segmentation method based on a U-net network structure

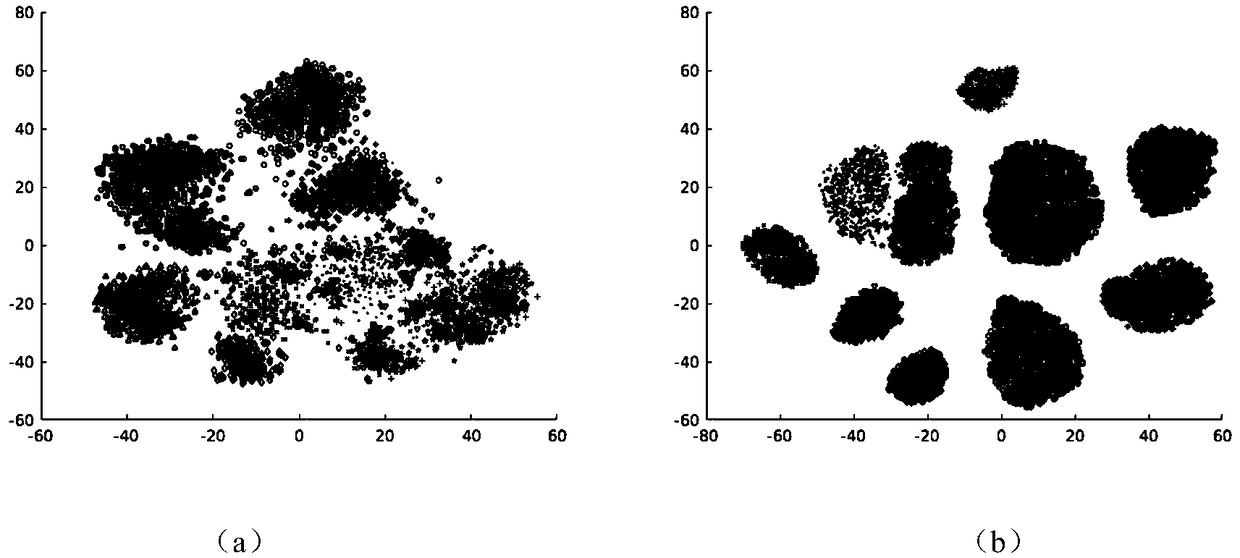

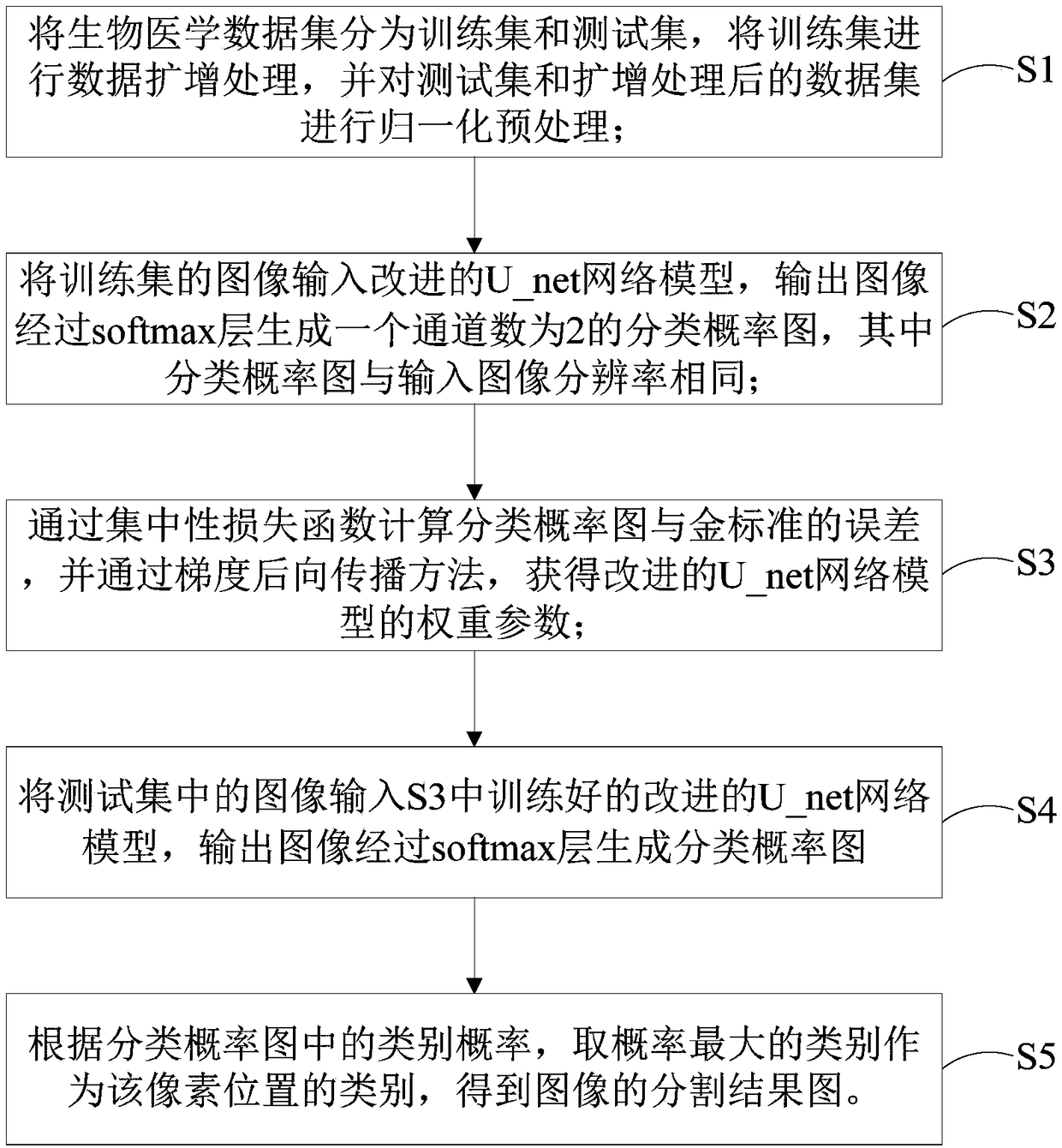

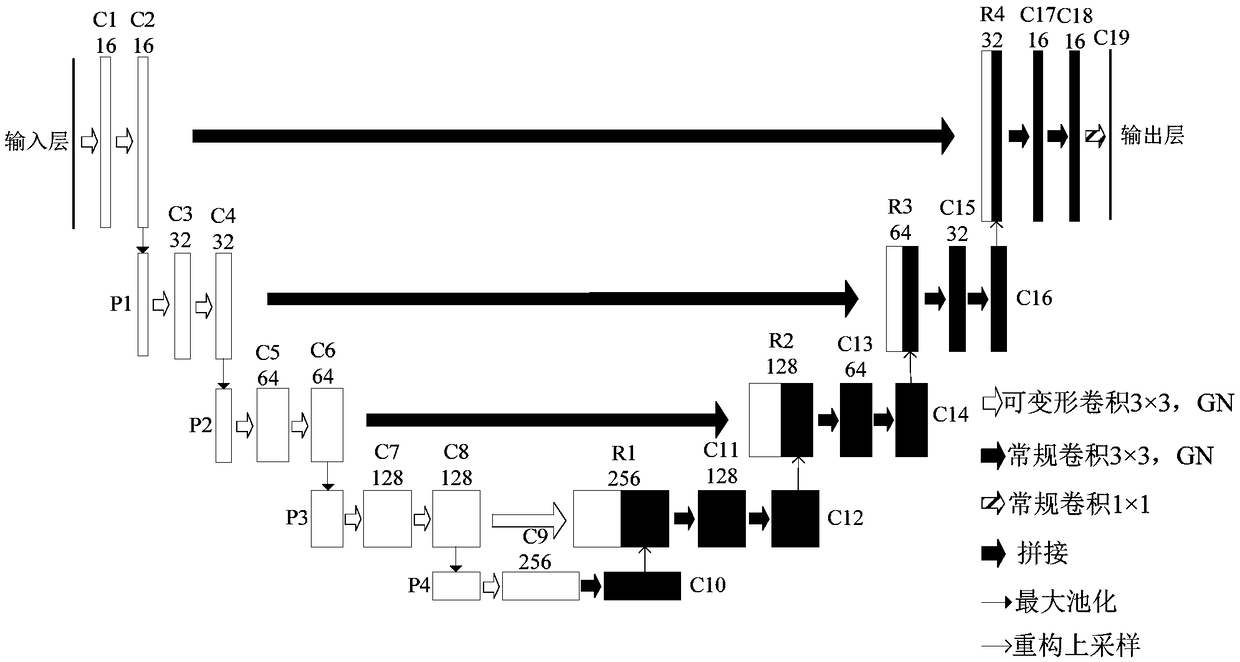

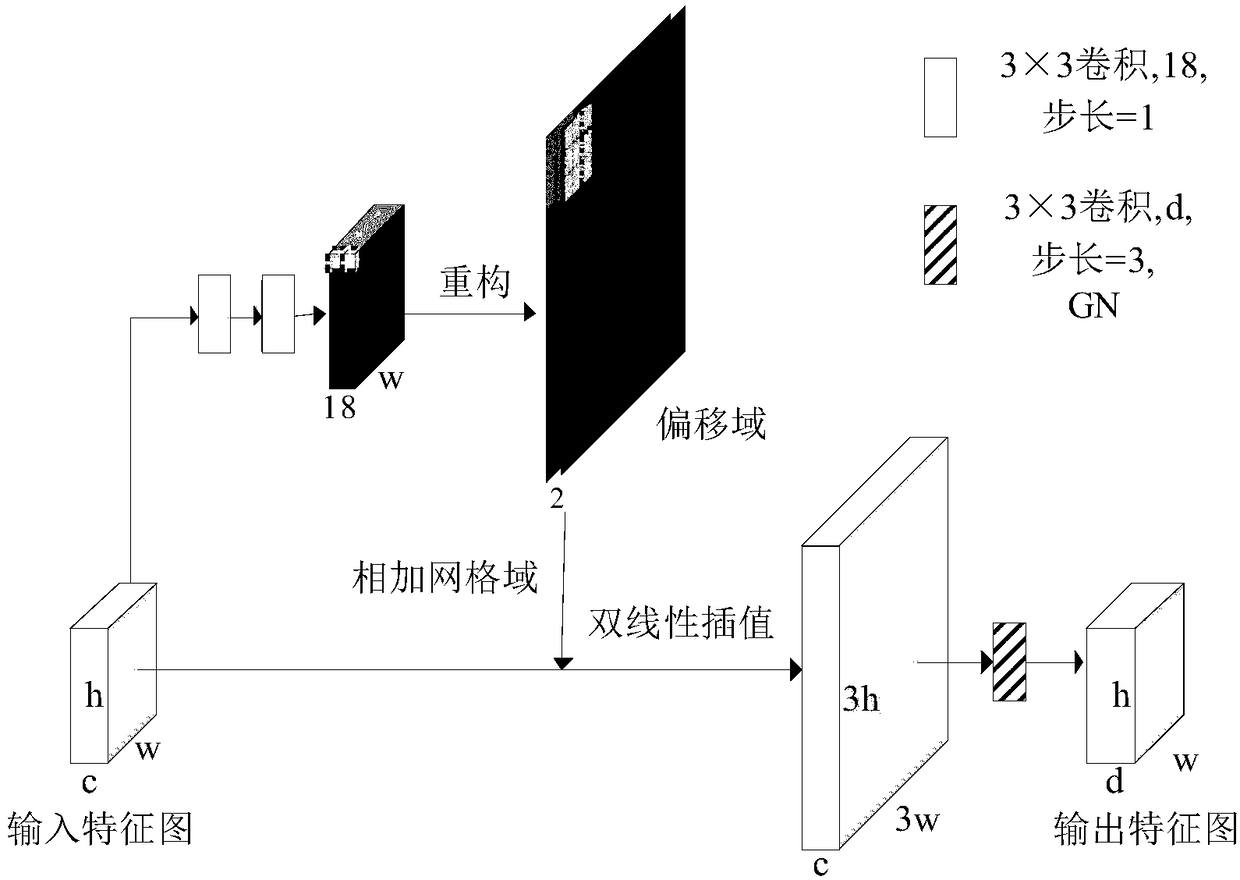

Status: Active | Publication No.: CN109191476A | Tags: Increase the number of, Improve segmentation, Image enhancement, Image analysis, Data set, Visual technology

The invention belongs to the technical field of image processing and computer vision and relates to a novel biomedical image automatic segmentation method based on a U-net network structure. The method includes dividing a biomedical data set into a training set and a test set, and normalizing the test set and the augmented test set; inputting the images of the training set into the improved U-net network model and passing the output through a softmax layer to generate a classification probability map; calculating the error between the classification probability map and the gold standard with a centralized loss function, and obtaining the weight parameters of the network model by gradient backpropagation; inputting the images of the test set into the improved U-net network model and passing the output through the softmax layer to generate a classification probability map; and obtaining the segmentation result of the image from the class probabilities in the classification probability map. The invention addresses the problem that, during image segmentation, easy samples contribute too much to the loss function, which prevents difficult samples from being learned well.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
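The "centralized loss function" is not defined in the abstract, but the stated goal (stop easy samples from dominating the loss) matches the behavior of focal loss. A minimal per-pixel binary sketch under that assumption: the factor (1 - p_t)^gamma shrinks the loss of well-classified pixels much more than that of hard ones. `gamma` and `alpha` are the usual focal-loss hyperparameters, given placeholder values here.

```python
import math


def focal_loss(p, target, gamma=2.0, alpha=0.25):
    """Binary focal loss for one pixel; p = predicted foreground probability."""
    eps = 1e-12
    p = min(max(p, eps), 1 - eps)
    p_t = p if target == 1 else 1 - p          # probability of the true class
    a_t = alpha if target == 1 else 1 - alpha  # class-balancing weight
    return -a_t * (1 - p_t) ** gamma * math.log(p_t)


easy, hard = focal_loss(0.95, 1), focal_loss(0.3, 1)
```

With gamma = 0 and alpha = 0.5 the expression reduces to half the ordinary cross-entropy, which makes the down-weighting effect of gamma easy to compare.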

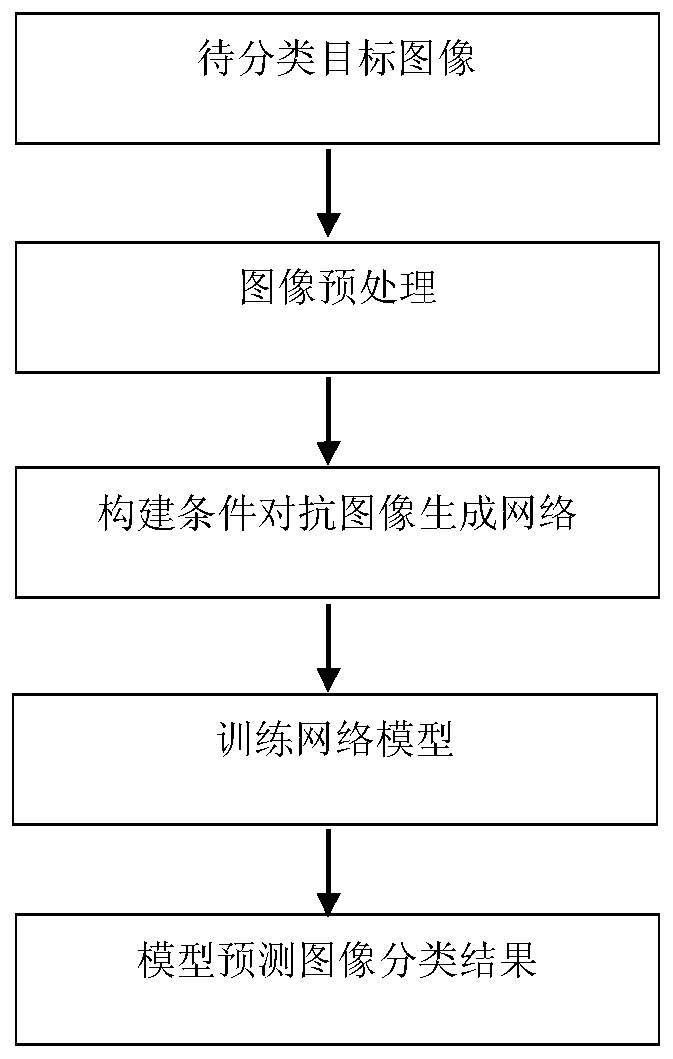

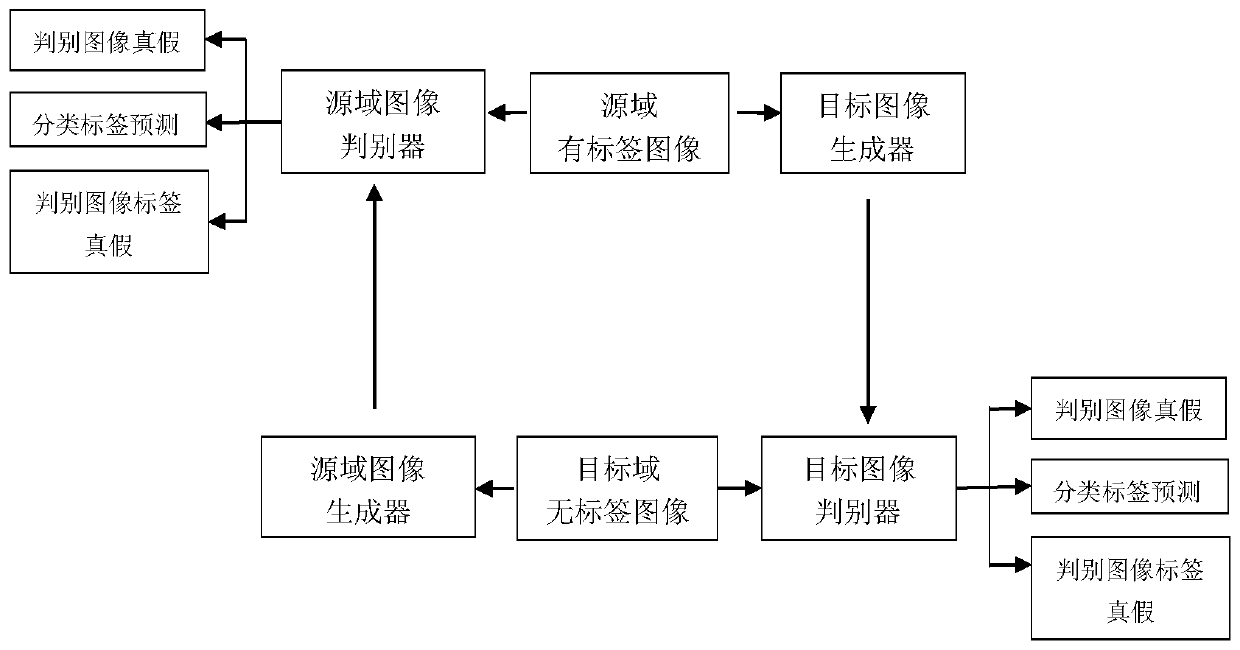

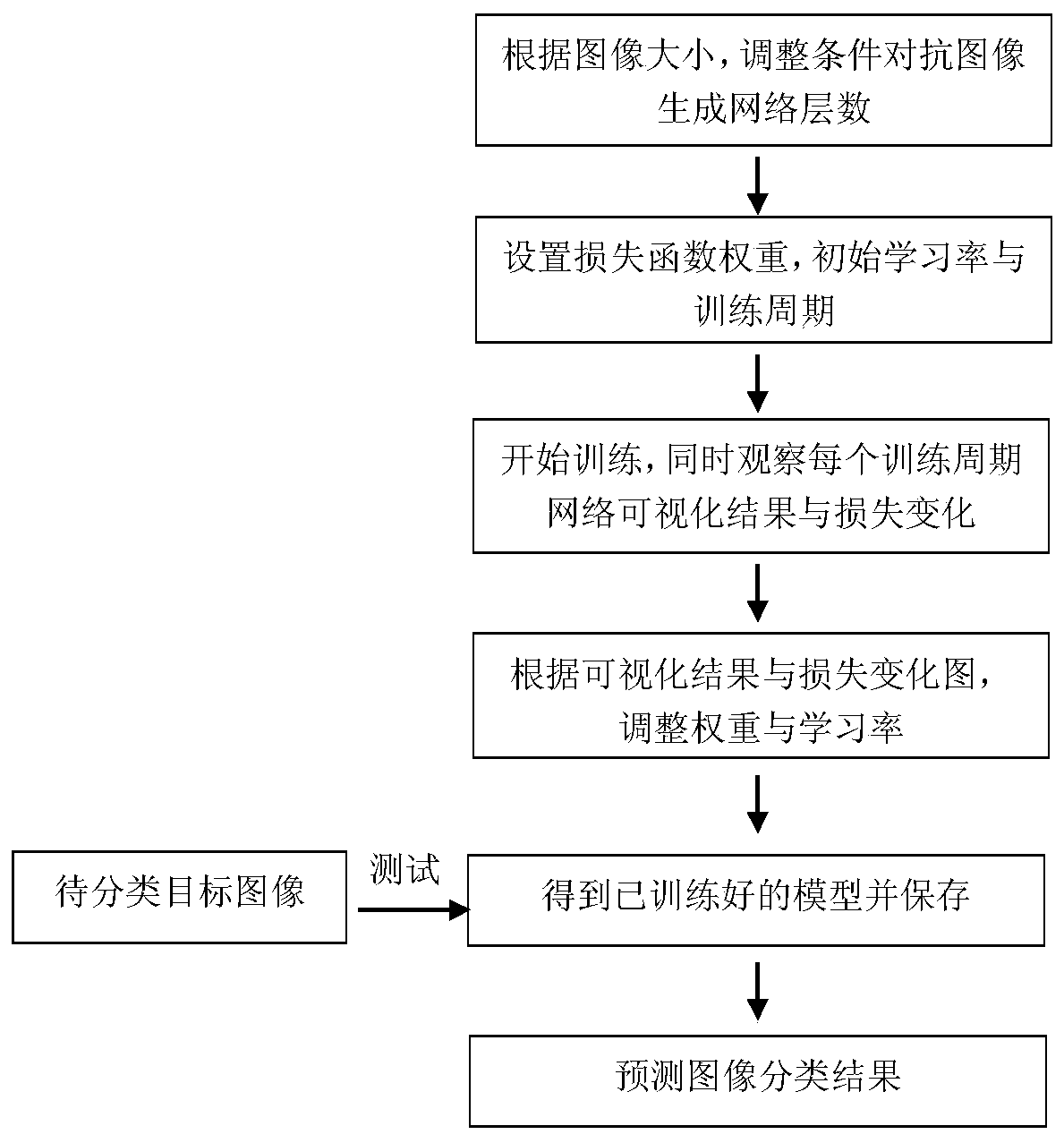

Unsupervised domain adaptive image classification method based on conditional generative adversarial network

Status: Active | Publication No.: CN109753992A | Tags: Realize mutual conversion, Improve domain adaptability, Character and pattern recognition, Neural architectures, Data set, Classification methods

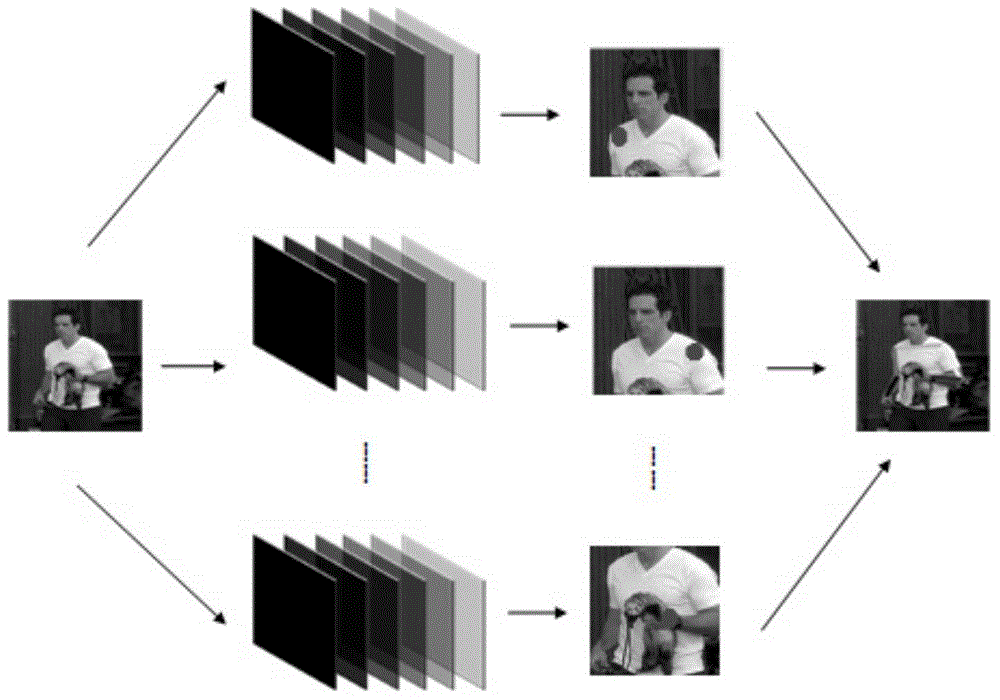

The invention discloses an unsupervised domain adaptive image classification method based on a conditional generative adversarial network. The method comprises the following steps: preprocessing an image data set; constructing a cross-domain conditional adversarial image generation network by adopting a cycle-consistent generative adversarial network and applying a constraint loss function; training the constructed conditional adversarial image generation network with the preprocessed image data set; and testing the target image to be classified with the trained network model to obtain the final classification result. The method uses a conditional adversarial cross-domain image translation algorithm to convert source-domain and target-domain image samples into each other, and applies a consistency loss constraint to the classification predictions of target images before and after conversion. Meanwhile, discriminative classification labels are used for conditional adversarial learning to align the joint distributions of source-domain and target-domain image labels, so that the labeled source-domain images can be used to train for the target domain, which achieves classification of the target images and improves classification precision.

Owner:NANJING NORMAL UNIVERSITY
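A minimal sketch of the image-level cycle-consistency term used by cycle-consistent GANs, assuming images are flattened lists and the two generators are plain callables: translating source to target and back (and vice versa) should reproduce the input, measured with an L1 distance. The patent's additional consistency constraint on classification predictions is not modeled; `G` and `F` in the usage are toy stand-ins.

```python
def l1(a, b):
    """Mean absolute difference between two flattened images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(xs, ys, G, F):
    """G: source -> target, F: target -> source (both callables on vectors)."""
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)   # x -> G -> F -> x
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)  # y -> F -> G -> y
    return forward + backward


G = lambda v: [2 * t for t in v]   # toy "source to target" generator
F = lambda v: [t / 2 for t in v]   # its exact inverse
```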

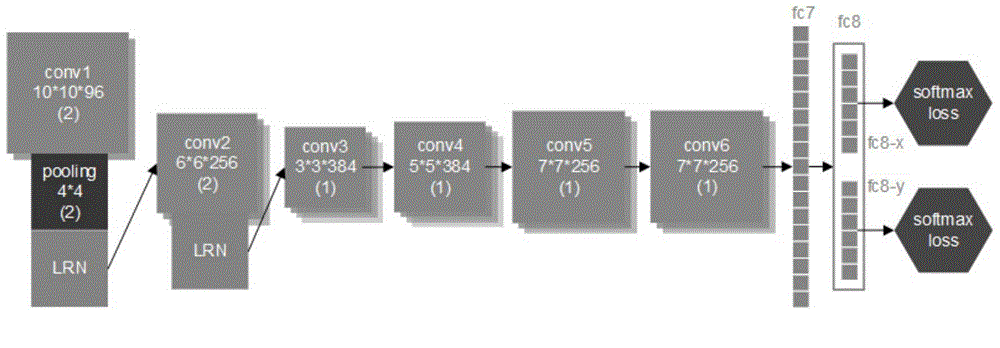

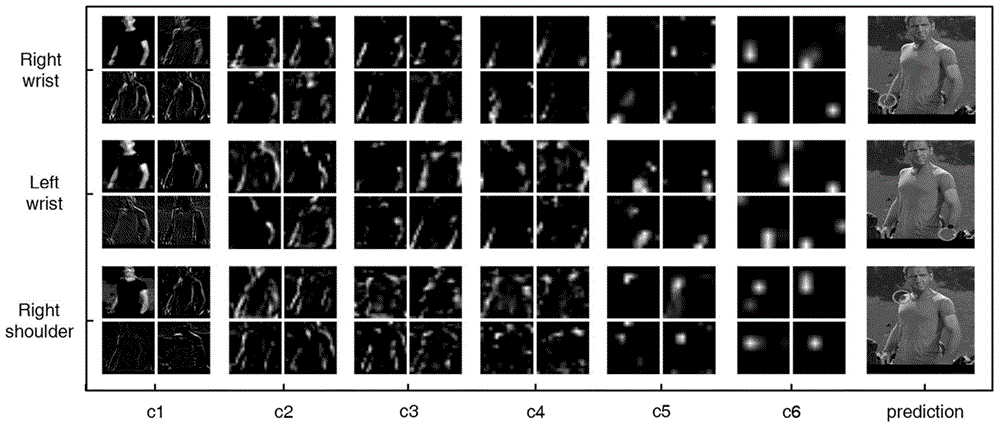

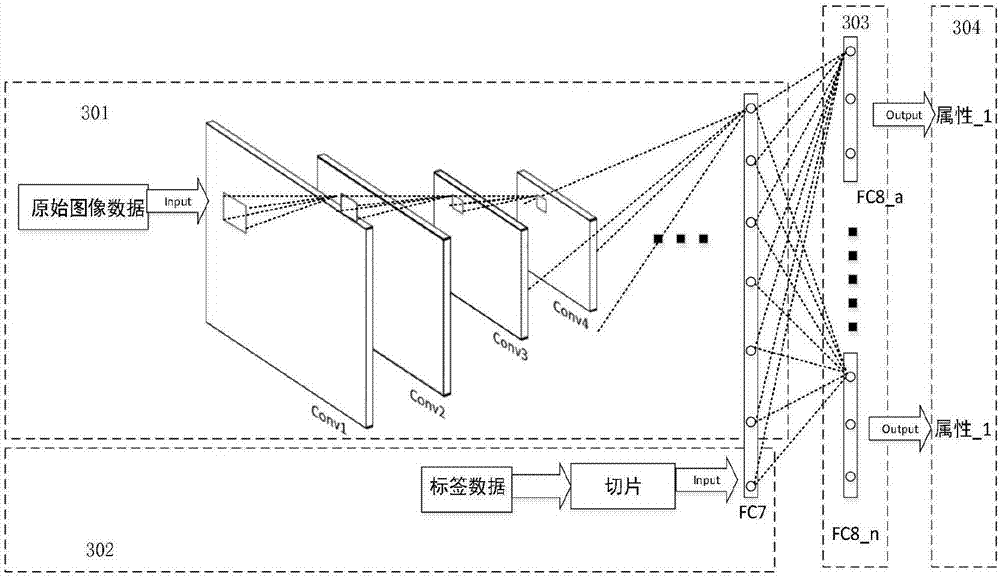

Human body gesture identification method based on depth convolution neural network

Status: Inactive | Publication No.: CN105069413A | Tags: Overcome limitations, Easy to train, Biometric pattern recognition, Human body, Information processing

The invention discloses a human body gesture identification method based on a deep convolutional neural network. It belongs to the technical field of pattern recognition and information processing, relates to behavior recognition tasks in computer vision, and in particular relates to the research and implementation of a human pose estimation system based on a deep convolutional neural network. The neural network has independent output layers and independent loss functions designed for localizing human body joints. ILPN consists of an input layer, seven hidden layers, and two independent output layers. The first through sixth hidden layers are convolutional layers used for feature extraction. The seventh hidden layer (fc7) is a fully connected layer. The output layer consists of two independent parts, fc8-x and fc8-y: fc8-x predicts the x coordinate of a joint, and fc8-y predicts its y coordinate. During model training, each output has an independent softmax loss function to guide the learning of the model. The method has the advantages of simple and fast training, a small amount of computation, and high accuracy.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
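Because each output uses a softmax loss, the coordinates are presumably quantized into discrete bins; that quantization is an assumption here. The sketch scores fc8-x and fc8-y logits with separate softmax cross-entropy losses, sums them for training, and decodes a joint location by taking the arg-max bin of each head.

```python
import math


def softmax_ce(logits, label):
    """Numerically stable softmax cross-entropy for one head."""
    m = max(logits)
    return m + math.log(sum(math.exp(z - m) for z in logits)) - logits[label]


def joint_loss(x_logits, y_logits, x_bin, y_bin):
    """Independent softmax losses for the x head and the y head, summed."""
    return softmax_ce(x_logits, x_bin) + softmax_ce(y_logits, y_bin)


def decode(x_logits, y_logits):
    """Predicted (x_bin, y_bin) = arg-max of each head independently."""
    return (max(range(len(x_logits)), key=x_logits.__getitem__),
            max(range(len(y_logits)), key=y_logits.__getitem__))
```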

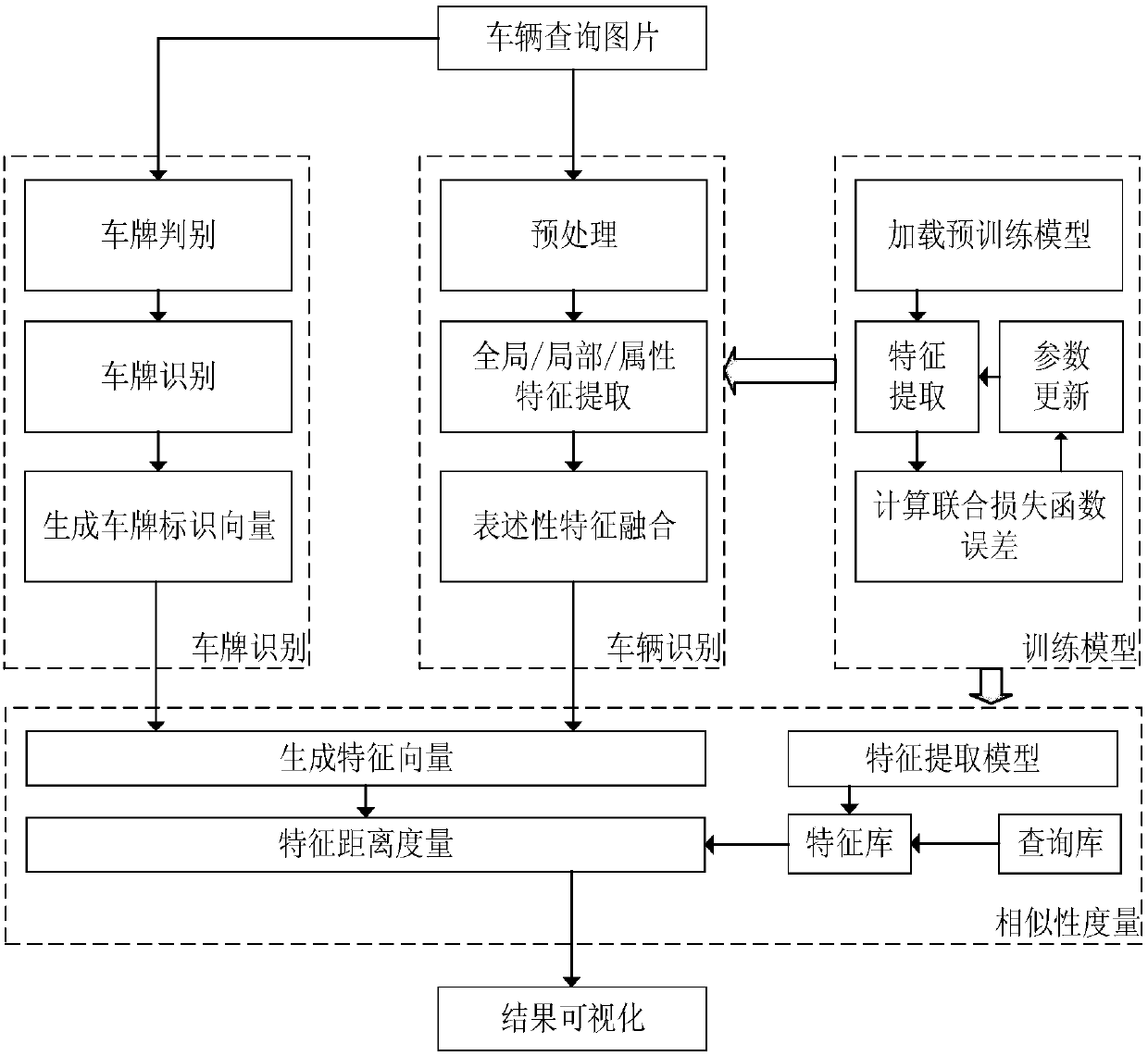

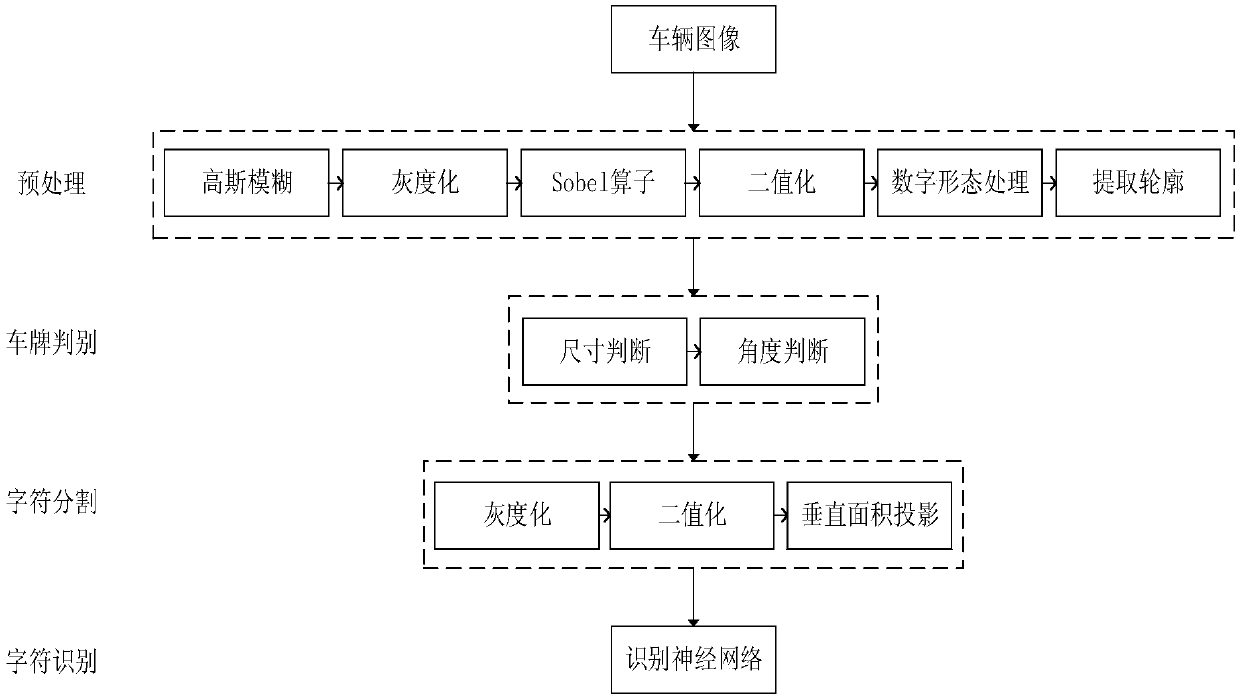

Multi-feature fusion vehicle re-identification method based on deep learning

Status: Active | Publication No.: CN107729818A | Tags: Improve feature extraction, Good presentation skills, Character and pattern recognition, Feature vector, Data set

The invention discloses a multi-feature fusion vehicle re-identification method based on deep learning. The method comprises five parts: model training, license plate recognition, vehicle recognition, similarity measurement, and visualization. A large-scale vehicle data set is used for model training with a staged joint training strategy over multiple loss functions; license plate recognition is performed on each vehicle image, and a license plate feature vector is generated according to the recognition result; the trained model extracts vehicle descriptive features and vehicle attribute features from the image to be analyzed and from the images in a query library, and the vehicle descriptive features and the license plate vector are combined into a unique re-identification feature vector for each vehicle image; in the similarity measurement stage, the re-identification feature vector of the image to be analyzed is compared with those of the images in the query library; and the search results that meet the requirements are retrieved and visualized.

Owner:BEIHANG UNIV
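A minimal sketch of the fusion and similarity-measurement stages, assuming the re-identification vector is a plain concatenation of descriptive features and the license plate vector, and that similarity is cosine similarity. The patent does not state either choice; vehicle IDs and vectors are made up.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def fuse(descriptive, plate):
    """Hypothetical fusion: concatenate descriptive and plate features."""
    return list(descriptive) + list(plate)


def rank_gallery(query, gallery, top_k=3):
    """Return gallery IDs ordered by similarity to the query vector."""
    scored = sorted(gallery.items(), key=lambda kv: cosine(query, kv[1]),
                    reverse=True)
    return [vid for vid, _ in scored[:top_k]]


gallery = {"a": [1.0, 0.0, 0.0], "b": [0.0, 1.0, 0.0], "c": [0.9, 0.1, 0.0]}
ranking = rank_gallery([1.0, 0.0, 0.0], gallery)
```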

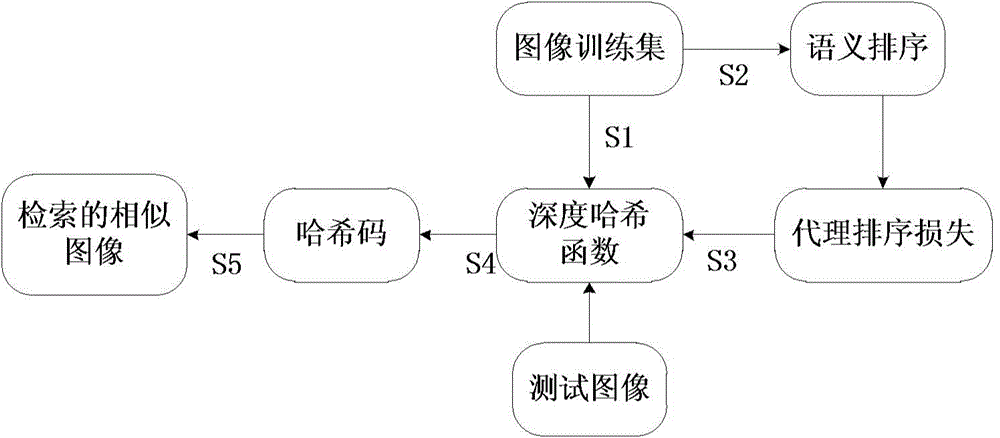

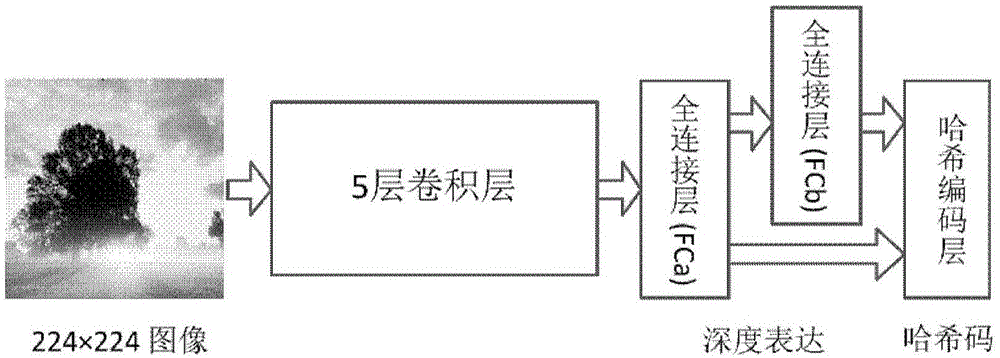

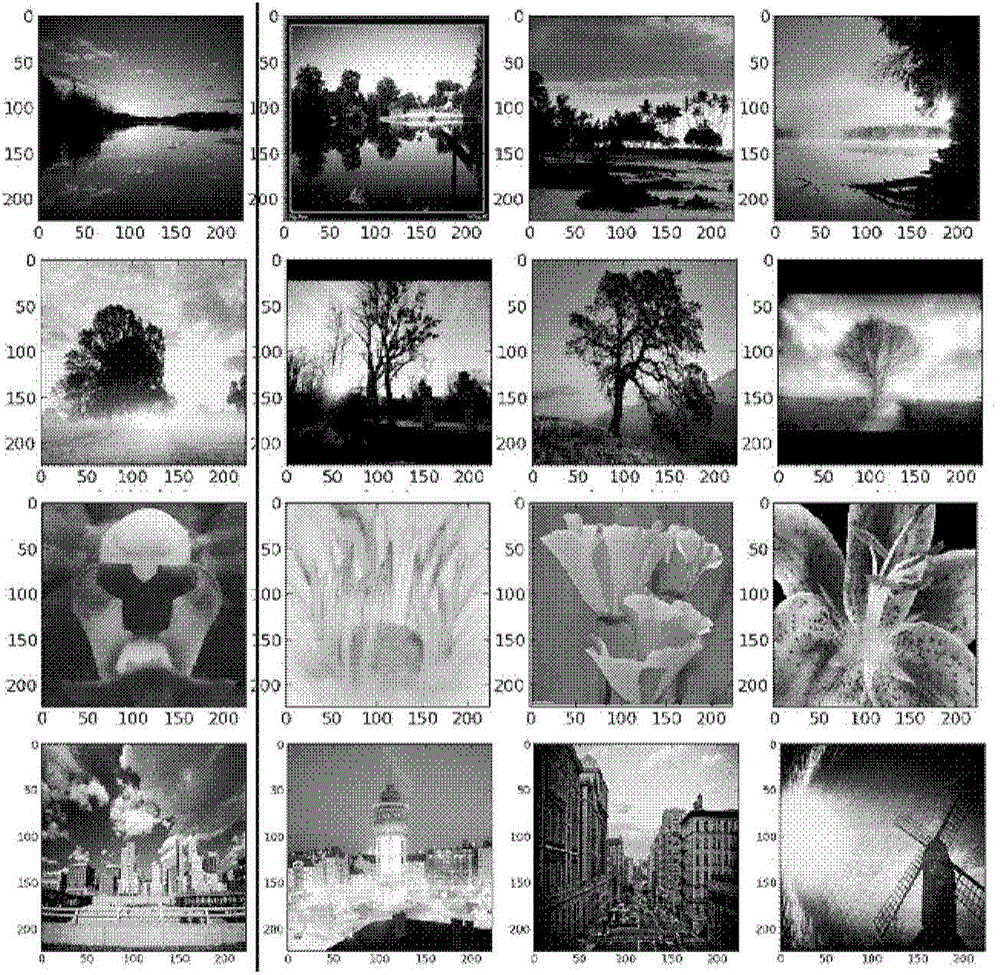

Image retrieval method utilizing deep semantic to rank hash codes

Status: Active | Publication No.: CN104834748A | Tags: Preserve multi-level similarity, Avoid lost, Still image data retrieval, Character and pattern recognition, Stochastic gradient descent, Hash function

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

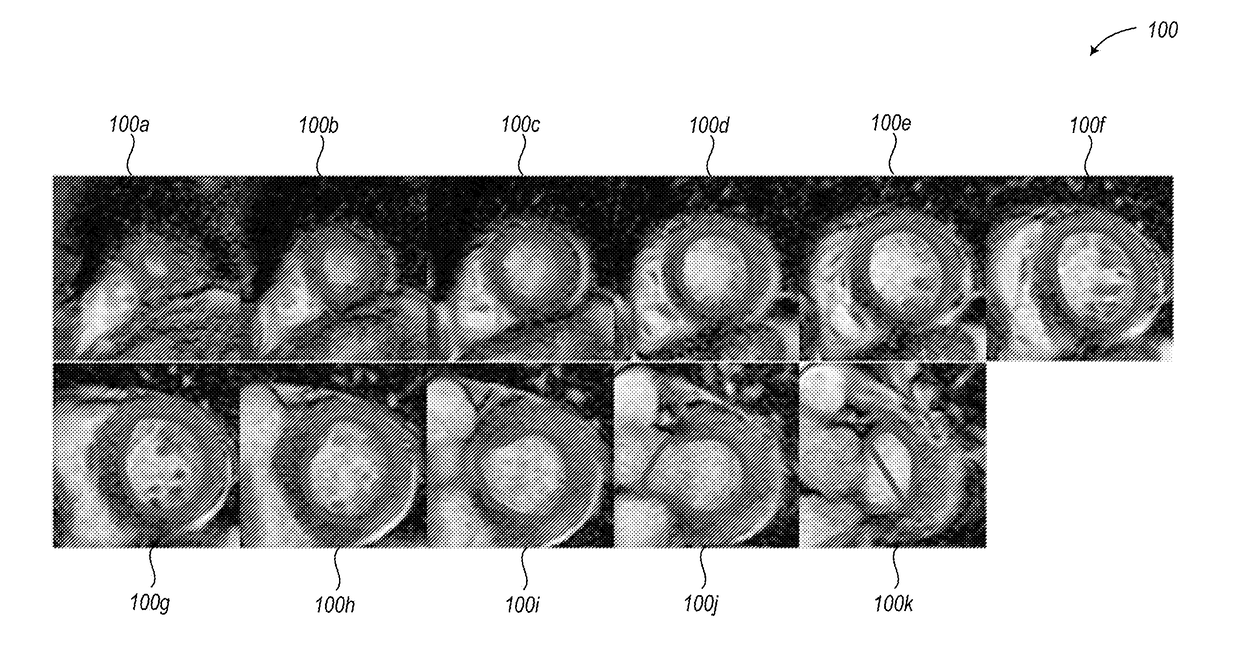

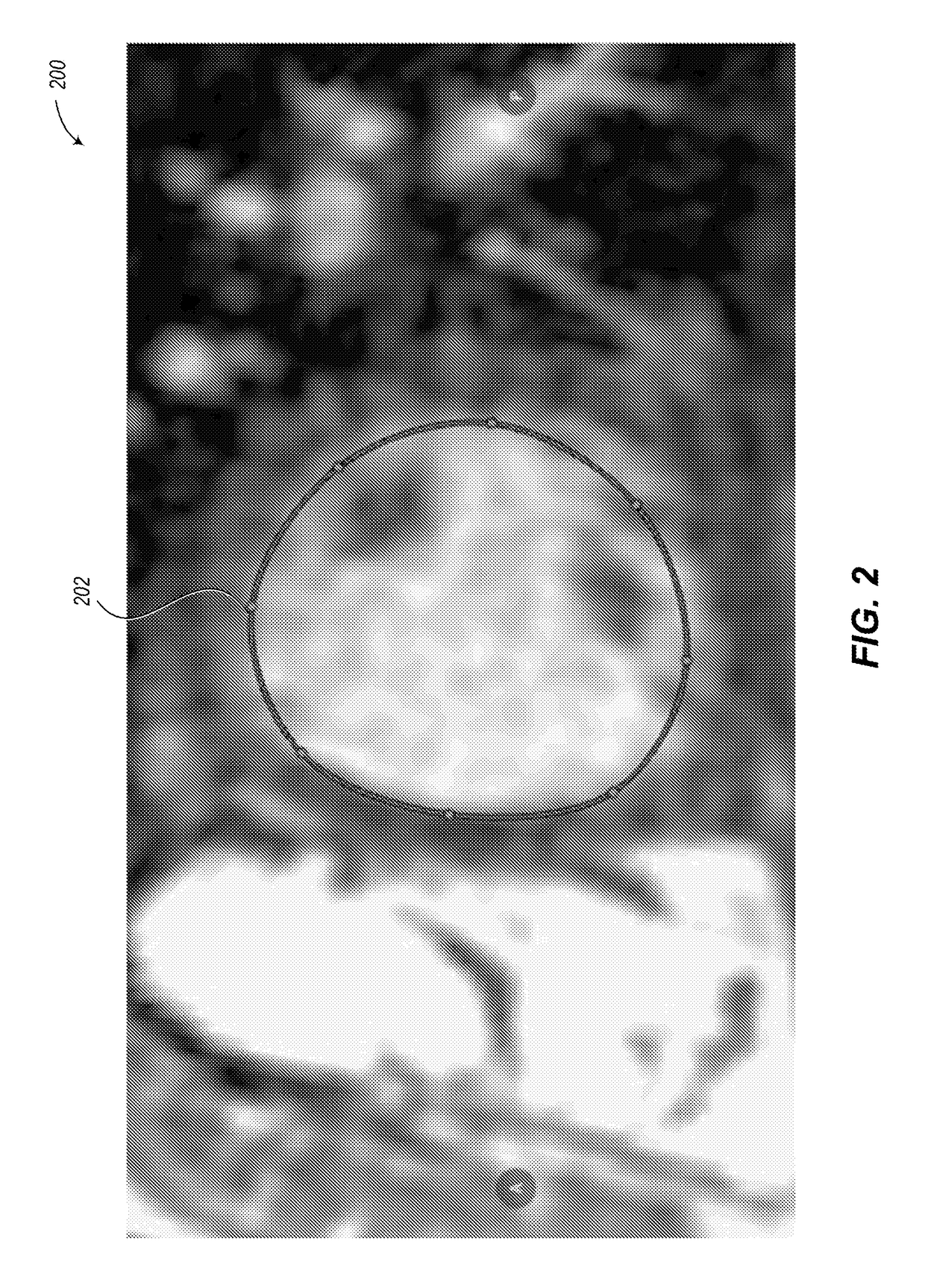

Automated cardiac volume segmentation

Status: Active | Publication No.: US20180259608A1 | Tags: Verify accuracy, Image enhancement, Image analysis, Anatomical structures, Missing data

Systems and methods for automated segmentation of anatomical structures, such as the human heart. The systems and methods employ convolutional neural networks (CNNs) to autonomously segment various parts of an anatomical structure represented by image data, such as 3D MRI data. The convolutional neural network utilizes two paths, a contracting path which includes convolution / pooling layers, and an expanding path which includes upsampling / convolution layers. The loss function used to validate the CNN model may specifically account for missing data, which allows for use of a larger training set. The CNN model may utilize multi-dimensional kernels (e.g., 2D, 3D, 4D, 6D), and may include various channels which encode spatial data, time data, flow data, etc. The systems and methods of the present disclosure also utilize CNNs to provide automated detection and display of landmarks in images of anatomical structures.

Owner:ARTERYS INC
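One simple way a loss can "account for missing data" is to mask it: pixels or voxels without an annotation contribute nothing, so partially labeled studies can still join the training set. A minimal sketch under that assumption, with per-pixel class probabilities and `None` marking an unlabeled pixel:

```python
import math


def masked_cross_entropy(probs, labels):
    """Mean cross-entropy over labeled pixels only.

    probs: list of per-pixel class-probability lists.
    labels: class index per pixel, or None where the annotation is missing.
    """
    total, count = 0.0, 0
    for p, y in zip(probs, labels):
        if y is None:
            continue  # missing annotation: excluded from the loss
        total += -math.log(max(p[y], 1e-12))
        count += 1
    return total / count if count else 0.0
```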

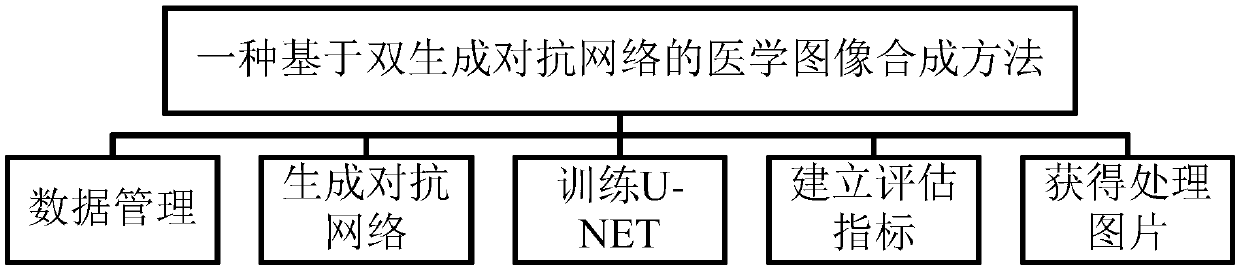

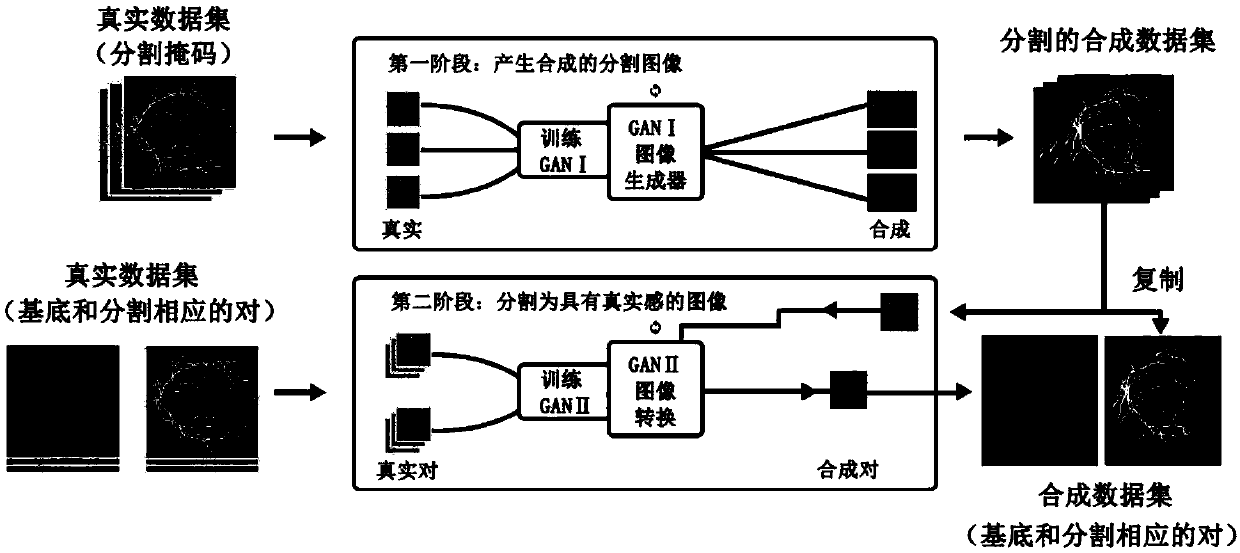

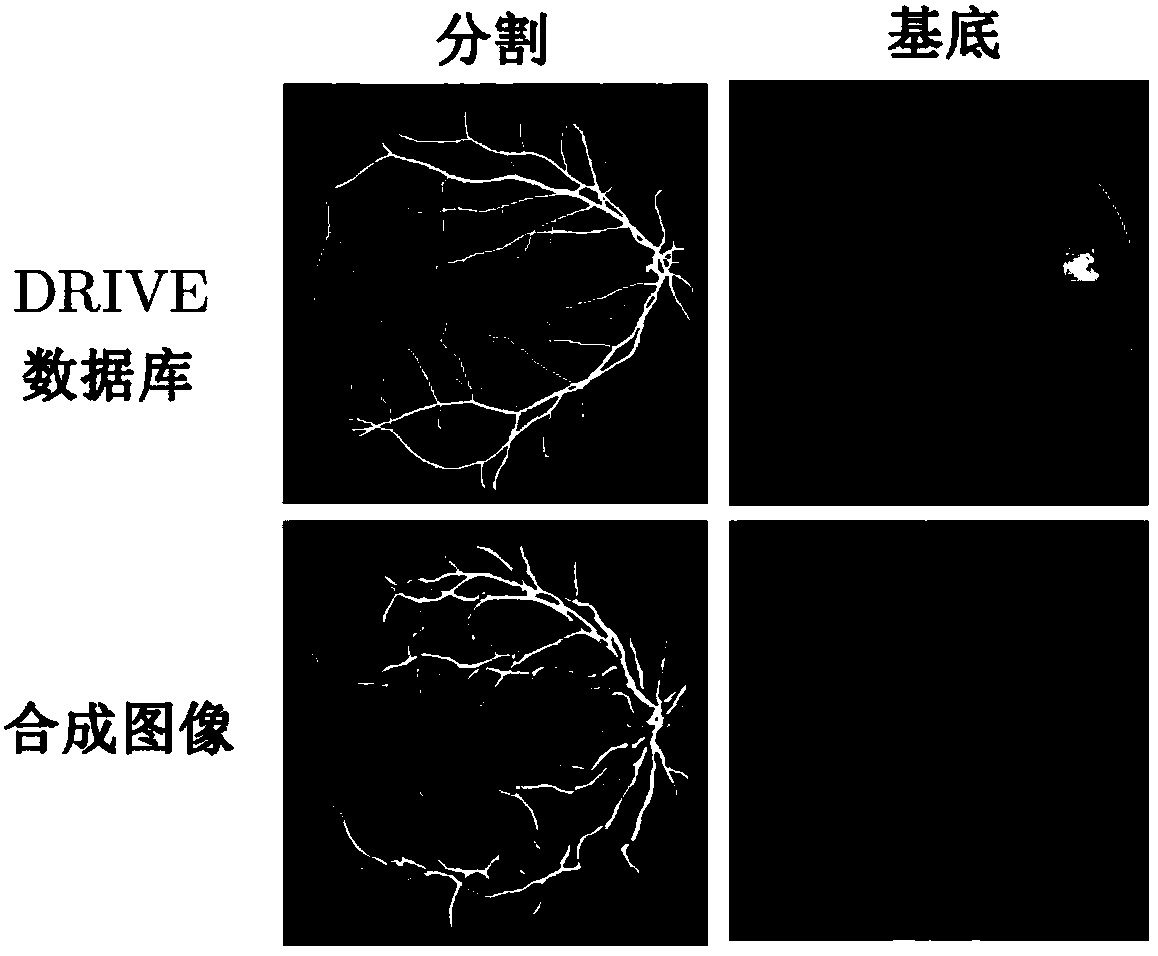

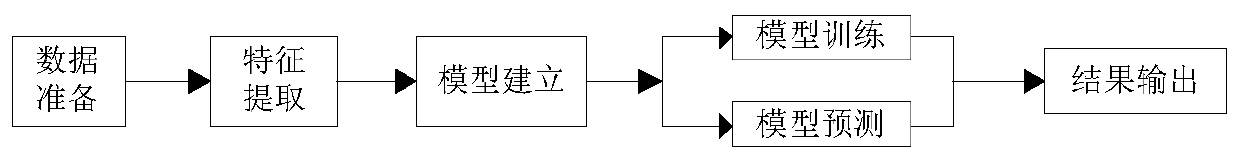

Medical image synthesis method based on double generative adversarial networks

The invention provides a medical image synthesis method based on double generative adversarial networks. The main content of the method comprises data management, building the generative adversarial networks, training a U-NET, establishing assessment indexes, and obtaining processed pictures. First, the DRIVE database is used for a first-stage GAN, which generates segmentation masks representing the variable geometry of the dataset; a second-stage GAN then converts the masks produced in the first stage into realistic images, with the generator minimizing the loss function of the real data in classification by the discriminator; next, the U-NET is trained to assess the reliability of the synthetic data; and finally, assessment indexes are established to measure the generative model. By using a pair of generative adversarial networks to create a new image generation path, the method addresses the problem of synthetic images containing artifacts and noise, improves the stability and realism of the images, and makes image details clearer.

Owner:SHENZHEN WEITESHI TECH

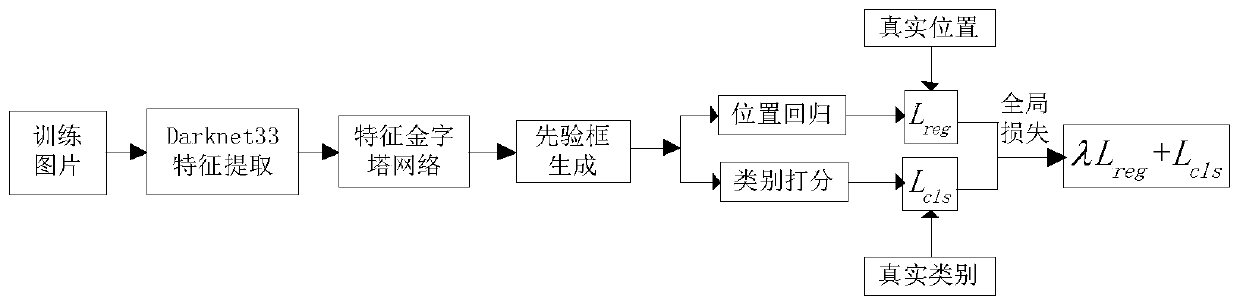

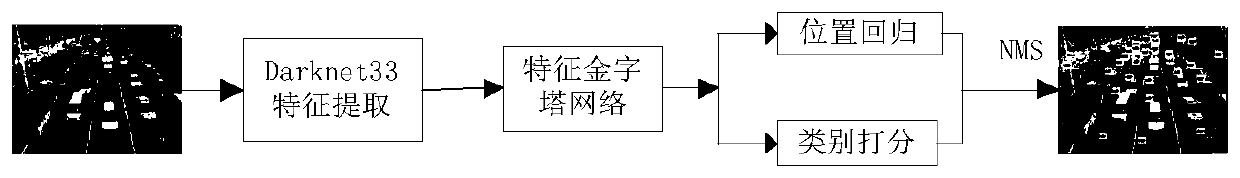

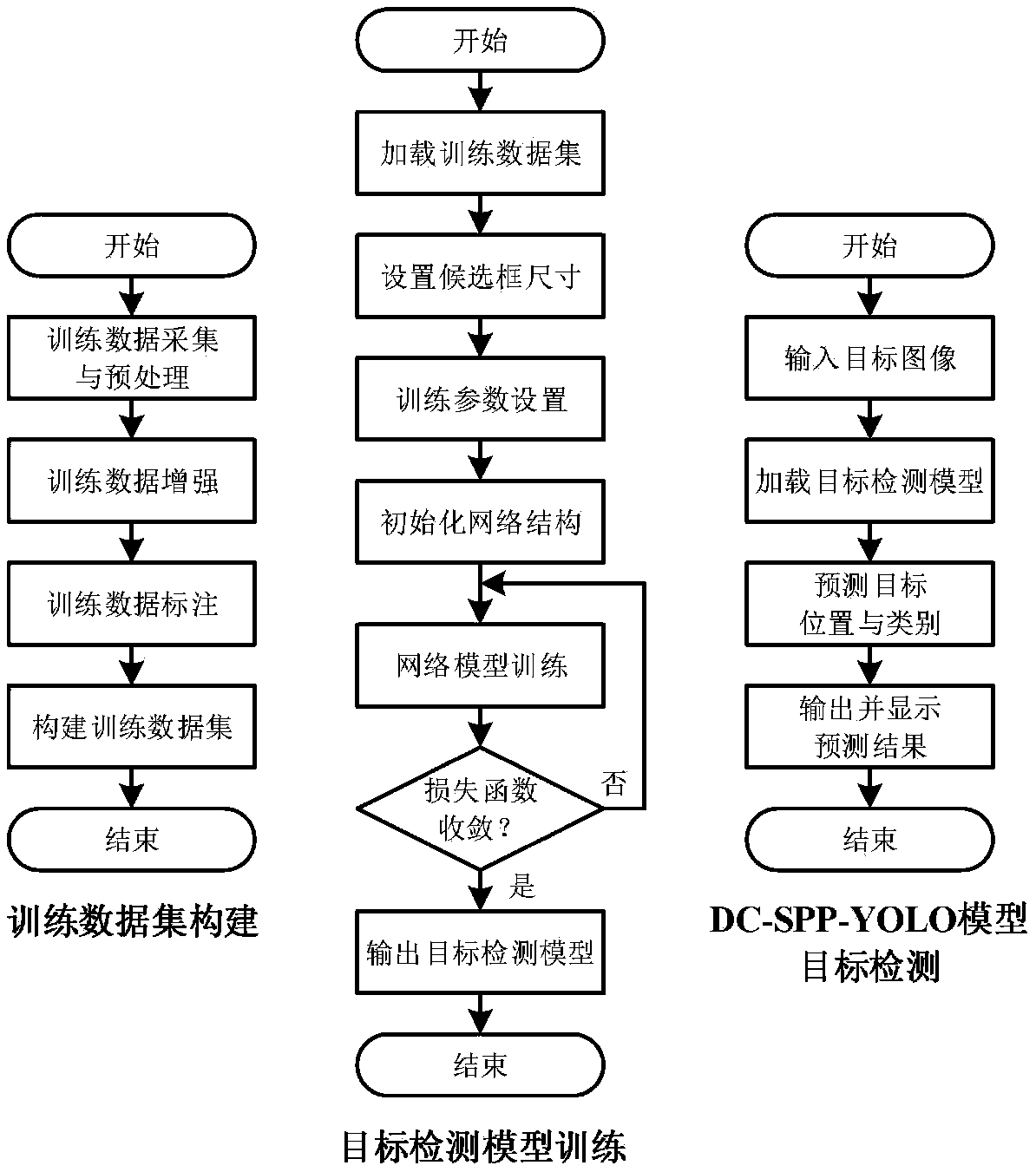

A pedestrian and vehicle detection method and system based on improved YOLOv3

Status: Active | Publication No.: CN109815886A | Tags: Detection match, High speed, Character and pattern recognition, Neural architectures, Multi-label classification, Vehicle detection

The invention discloses a pedestrian and vehicle detection method and system based on improved YOLOv3. In the method, an improved YOLOv3 network based on Darknet-33 is used as the backbone network to extract features; cross-layer fusion and reuse of multi-scale features in the backbone network are performed with a transmittable feature-map scale-reduction method; and a feature pyramid network is then constructed with a scale-amplification method. In the training stage, K-means clustering is applied to the training set, using the intersection over union (IoU) of a prediction box and a ground-truth box as the similarity measure to select prior (anchor) boxes; bounding-box (BBox) regression and multi-label classification are then performed according to the loss function. In the detection stage, non-maximum suppression is applied to all detection boxes to remove redundant ones according to their confidence scores and IOU values, and the optimal target object is predicted. By adopting the Darknet-33 feature extraction network with feature-map scale-reduction fusion, constructing a feature pyramid through feature-map scale-amplification migration fusion, and selecting prior boxes by clustering, the method can improve the speed and precision of pedestrian and vehicle detection.

Owner:NANJING UNIV OF POSTS & TELECOMM
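A minimal sketch of anchor selection by K-means with IoU as the similarity (distance d = 1 - IoU), assuming boxes are compared by width and height only with shared centers, as is common for YOLO anchor clustering. The deterministic "first k boxes" initialization and the sample boxes are simplifications for illustration.

```python
def wh_iou(a, b):
    """IoU of two (width, height) boxes that share the same center."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(boxes, k, iters=100):
    centroids = list(boxes[:k])  # simple deterministic initialization
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            # smallest 1 - IoU distance == largest IoU
            best = max(range(k), key=lambda i: wh_iou(box, centroids[i]))
            clusters[best].append(box)
        new = [(sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c))
               if c else centroids[i] for i, c in enumerate(clusters)]
        if new == centroids:
            break  # assignments are stable
        centroids = new
    return sorted(centroids)


boxes = [(10, 10), (100, 100), (12, 12), (11, 11), (98, 102), (102, 98)]
anchors = kmeans_anchors(boxes, k=2)
```

Using 1 - IoU rather than Euclidean distance keeps large boxes from dominating the clustering.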

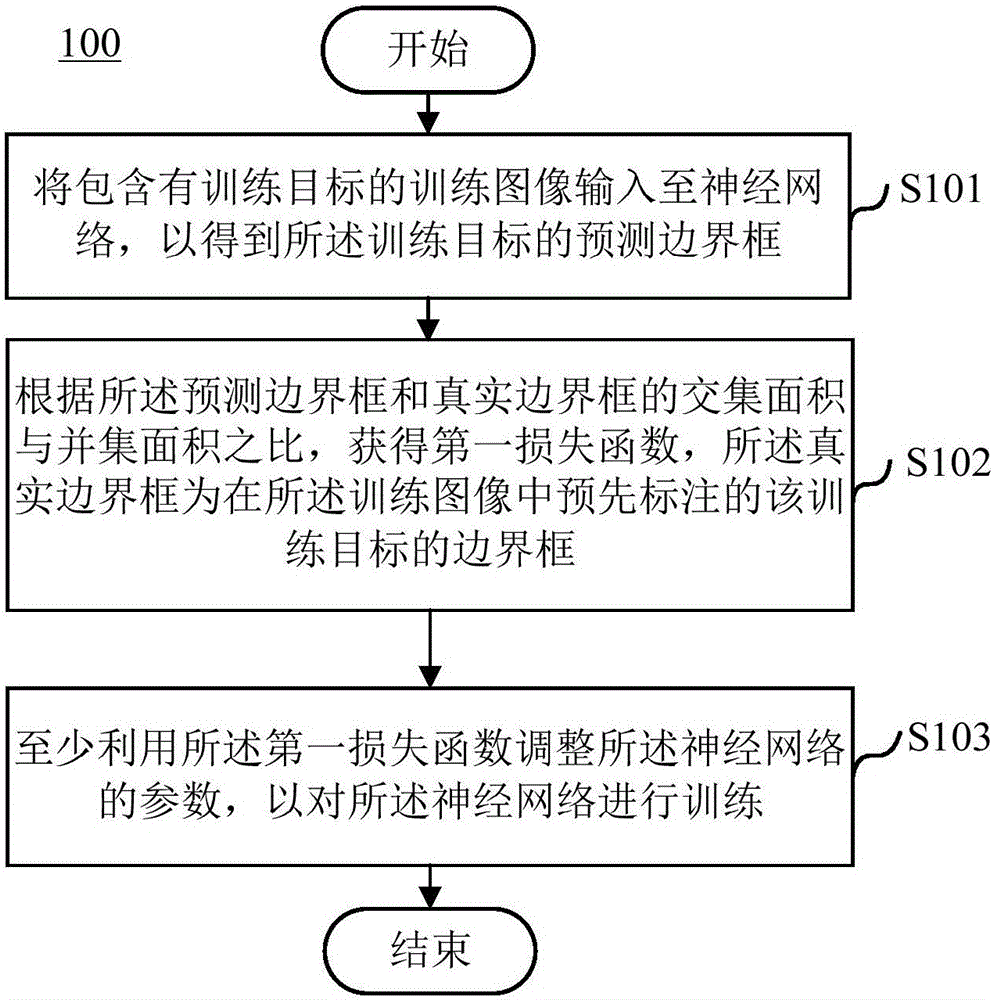

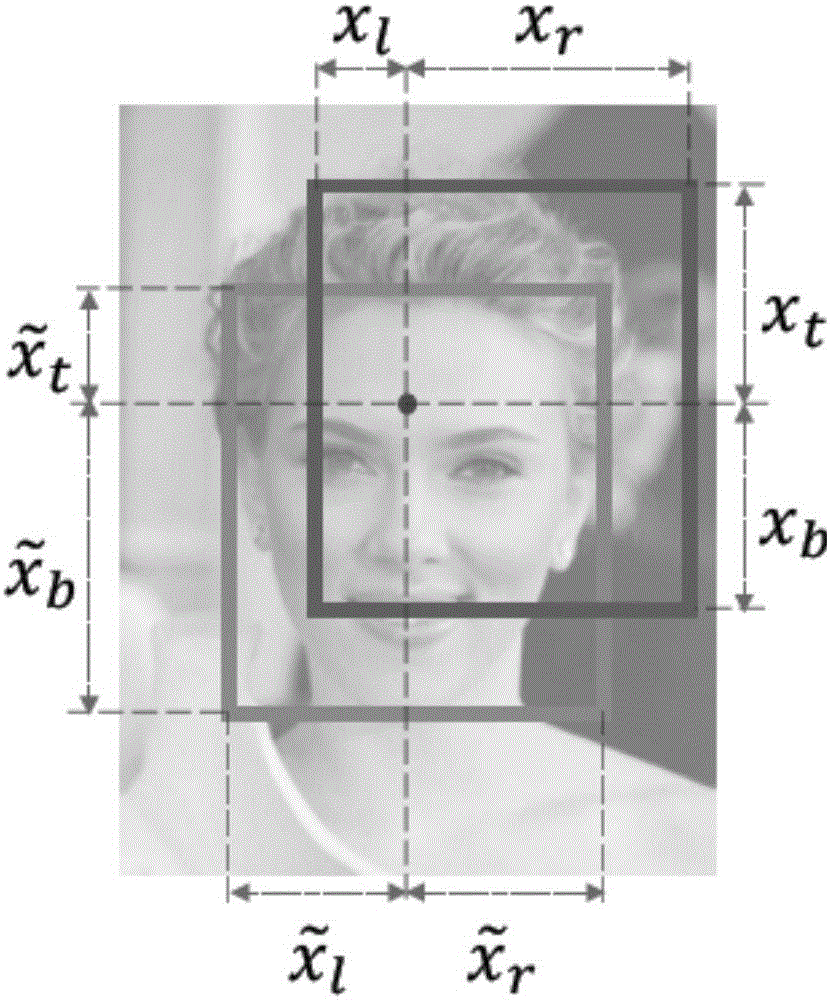

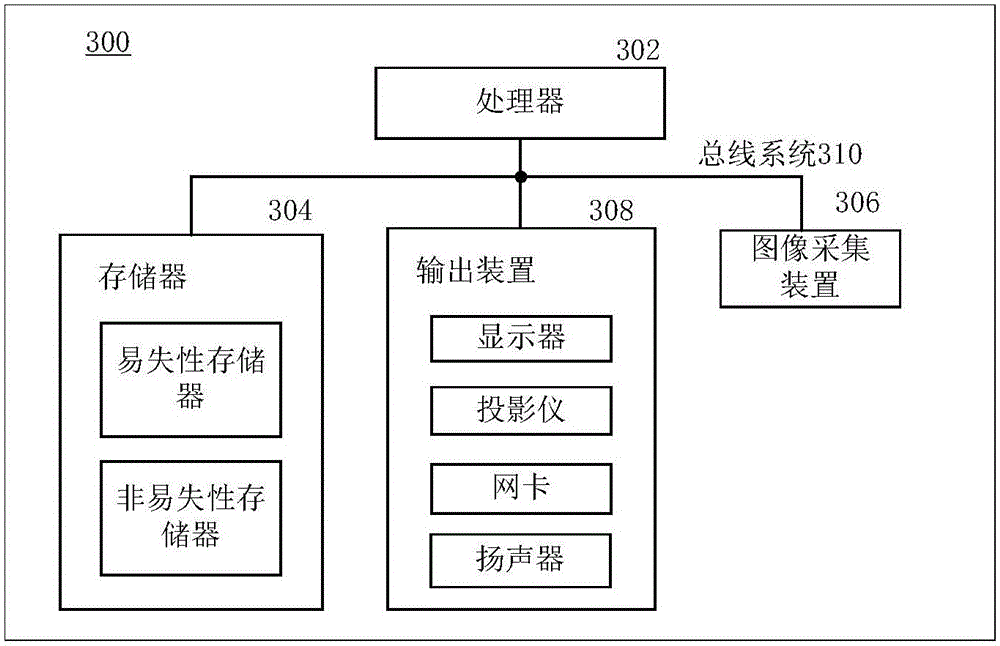

Neural network training and construction method and device, and object detection method and device

Status: Active | Publication No.: CN106295678A | Tags: Change size, Precise and effective target betting results, Image enhancement, Image analysis, Machine learning, Object detection

The embodiment of the invention provides a neural network training and construction method and device for object detection, and an object detection method and device based on a neural network. The neural network training and construction method for object detection comprises the steps of: inputting a training image containing a training object into the neural network to obtain a predicted bounding box of the training object; obtaining a first loss function from the ratio of the intersection area to the union area of the predicted bounding box and a ground-truth bounding box, where the ground-truth bounding box is the bounding box of the training object annotated in the training image in advance; and adjusting the parameters of the neural network at least through the first loss function to train the neural network. The first loss function regresses the target bounding box as a single integrated unit, which noticeably improves the object detection precision of the neural network. Moreover, the two-branch structure of the neural network can effectively improve training and detection efficiency.

Owner:BEIJING KUANGSHI TECH +1
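A minimal sketch of a loss built from the intersection-over-union ratio. The abstract only says the first loss is obtained from that ratio; -ln(IoU) is one common form and is an assumption here. Boxes are (x1, y1, x2, y2), and `eps` keeps the logarithm finite for disjoint boxes.

```python
import math


def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    def area(b):
        return (b[2] - b[0]) * (b[3] - b[1])

    union = area(box_a) + area(box_b) - inter
    return inter / union if union > 0 else 0.0


def iou_loss(pred, truth, eps=1e-9):
    """Treats the four box coordinates as one unit: -ln(IoU)."""
    return -math.log(iou(pred, truth) + eps)
```

Unlike per-coordinate L2 regression, this loss depends on the overlap of the whole box, so it is invariant to the box's absolute scale.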

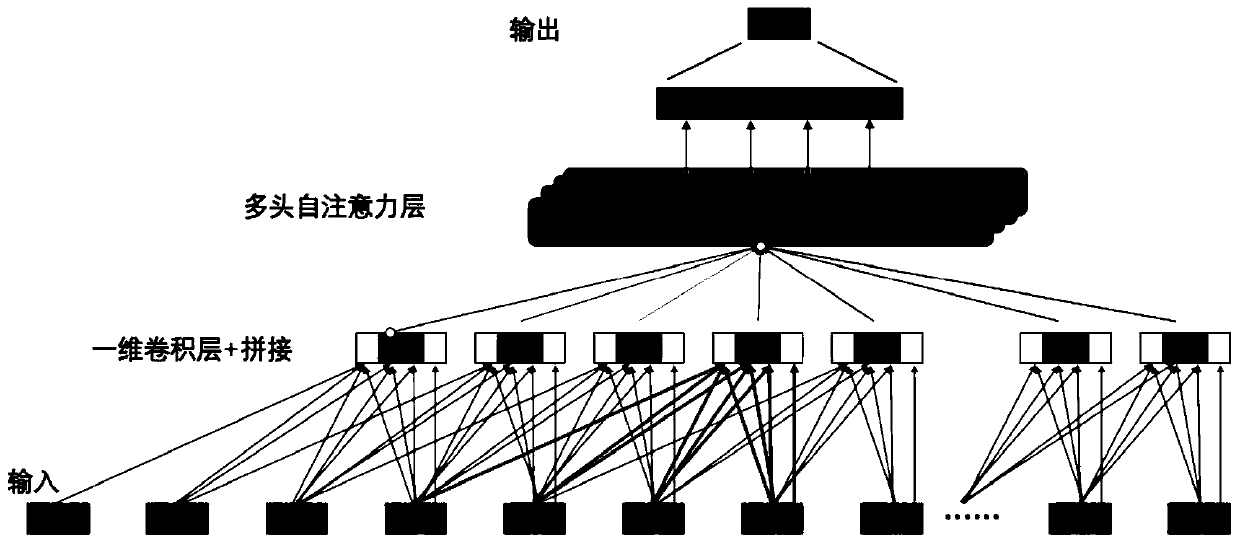

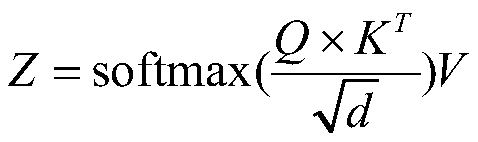

Personalized recommendation method based on deep learning

Status: Inactive | Publication No.: CN110196946A | Tags: Reduce serial operations, Improve training efficiency, Digital data information retrieval, Advertisements, Personalization, Recommendation model

The invention discloses a personalized recommendation method based on deep learning that predicts the next movie a user will watch from the user's time-ordered viewing behavior sequence. The method includes three stages: preprocessing the historical behavior feature data of the user's movie watching, modeling a personalized recommendation model, and training and testing the model with the user's time-ordered behavior feature sequence. In the preprocessing stage, the implicit feedback from user-movie interactions is used: each user's interaction data with movies is sorted by timestamp to obtain the corresponding time-ordered movie-watching sequence, and the movie data are then encoded. Modeling the personalized recommendation model comprises designing the embedding layer, the one-dimensional convolutional network layer, a self-attention mechanism, a classification output layer, and the loss function. By combining one-dimensional convolutional neural networks with the self-attention mechanism, the method achieves higher training efficiency with a relatively small number of parameters.

Owner:SOUTH CHINA UNIV OF TECH
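The preprocessing stage described in the recommendation abstract (sorting each user's implicit-feedback interactions by timestamp, then encoding the movies) can be sketched in plain Python. The `(user_id, movie_id, timestamp)` record layout, the sliding-window construction of next-movie training pairs, and all function names are assumptions made for illustration.

```python
from collections import defaultdict


def build_watch_sequences(interactions):
    """Group (user_id, movie_id, timestamp) records by user and sort by time.

    Implicit feedback: any recorded interaction counts as a 'watch'.
    """
    by_user = defaultdict(list)
    for user, movie, ts in interactions:
        by_user[user].append((ts, movie))
    return {user: [movie for _, movie in sorted(events)]
            for user, events in by_user.items()}


def encode_movies(sequences):
    """Map each movie id to an integer index; 0 is reserved for padding."""
    vocab = {}
    for seq in sequences.values():
        for movie in seq:
            if movie not in vocab:
                vocab[movie] = len(vocab) + 1
    return vocab


def next_item_pairs(sequence, window):
    """Pair every window of `window` consecutive movies with the next movie."""
    return [(sequence[i - window:i], sequence[i])
            for i in range(window, len(sequence))]
```

Each encoded window would feed the embedding layer, and the movie that follows it serves as the classification target.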

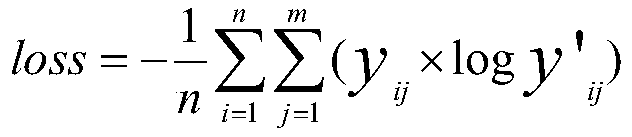

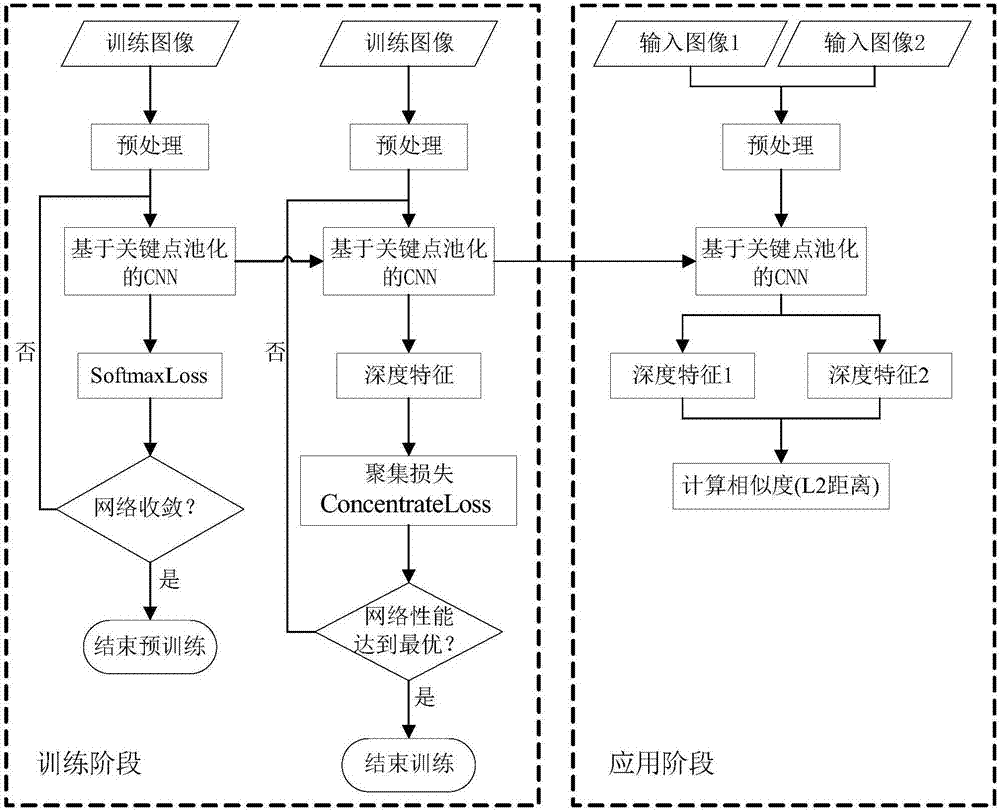

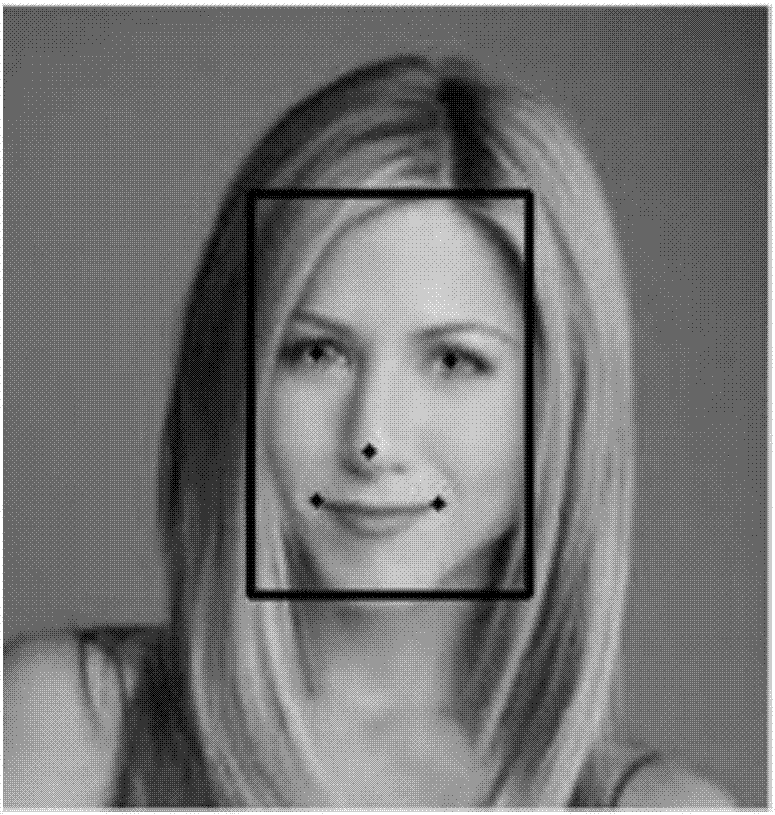

Face recognition method based on aggregate loss deep metric learning

Inactive | CN107103281A | Avoid hard case mining | Avoid training | Character and pattern recognition | Neural learning methods | Network model | Method of undetermined coefficients

The invention discloses a face recognition method based on aggregate-loss deep metric learning. The method comprises the steps of: 1) preprocessing the training images; 2) pre-training a deep convolutional neural network on the preprocessed images, using the softmax loss as the loss function and introducing key-point pooling; 3) feeding all training images into the pre-trained model and computing the initial center of each class; 4) fine-tuning the pre-trained model with the aggregate loss, which iteratively updates the network parameters and the class centers so that samples of the same class are pulled toward their class center while the distances between different class centers increase, thereby learning a robust, discriminative face feature representation; and 5) in application, preprocessing each input image, feeding it into the trained network model to extract its feature representation, and performing face recognition by computing the similarity between different faces. The method achieves relatively high face recognition accuracy while training on only a small amount of data.

Owner:SUN YAT SEN UNIV
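The aggregate-loss abstract describes the loss only qualitatively: pull samples toward their class center and push different class centers apart. The sketch below is one hypothetical way to write that down, using a squared-distance pull term, a hinge on the distance between centers with an assumed `margin`, and a moving-average center update with an assumed step `alpha`. In the actual method the network parameters are also updated by backpropagation, which is omitted here.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def class_centers(features, labels):
    """Mean feature vector of each class (the initial class centers of step 3)."""
    sums, counts = {}, {}
    for f, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(f))
        sums[y] = [s + x for s, x in zip(acc, f)]
        counts[y] = counts.get(y, 0) + 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def aggregate_loss(features, labels, centers, margin=1.0):
    """Hypothetical aggregate loss: intra-class pull plus inter-center push."""
    # Pull: mean squared distance from each sample to its own class center.
    pull = sum(sq_dist(f, centers[y]) for f, y in zip(features, labels)) / len(features)
    # Push: penalize every pair of centers closer together than `margin`.
    keys = sorted(centers)
    penalties = [
        max(0.0, margin - math.sqrt(sq_dist(centers[a], centers[b]))) ** 2
        for i, a in enumerate(keys)
        for b in keys[i + 1:]
    ]
    push = sum(penalties) / len(penalties) if penalties else 0.0
    return pull + push


def update_centers(features, labels, centers, alpha=0.5):
    """Move each center a fraction alpha toward its class's current mean."""
    means = class_centers(features, labels)
    return {
        y: [c + alpha * (m - c) for c, m in zip(center, means[y])] if y in means else center
        for y, center in centers.items()
    }
```

Alternating `aggregate_loss` (for the network update) with `update_centers` mirrors the iterative update of parameters and centers in step 4, under the assumptions above.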

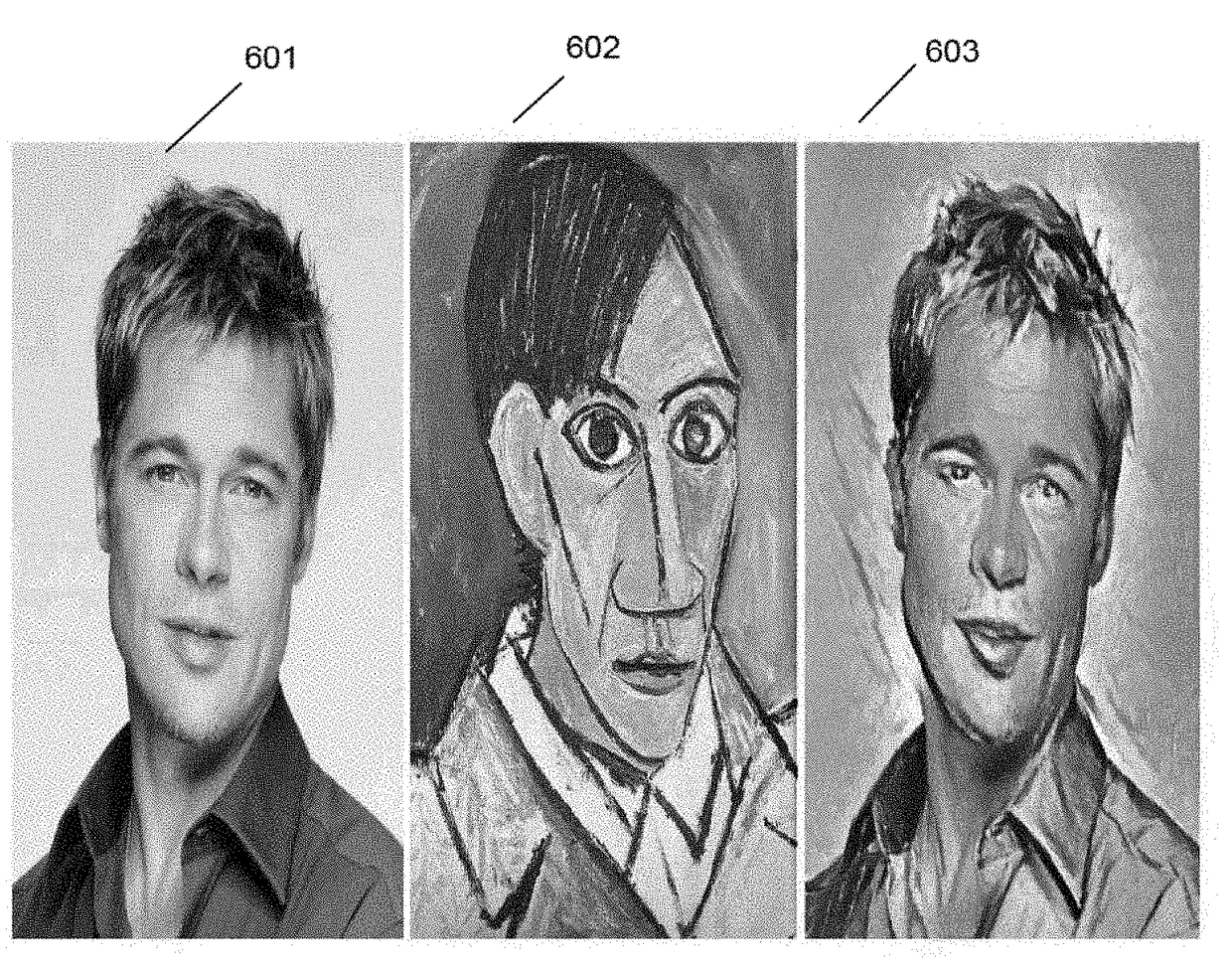

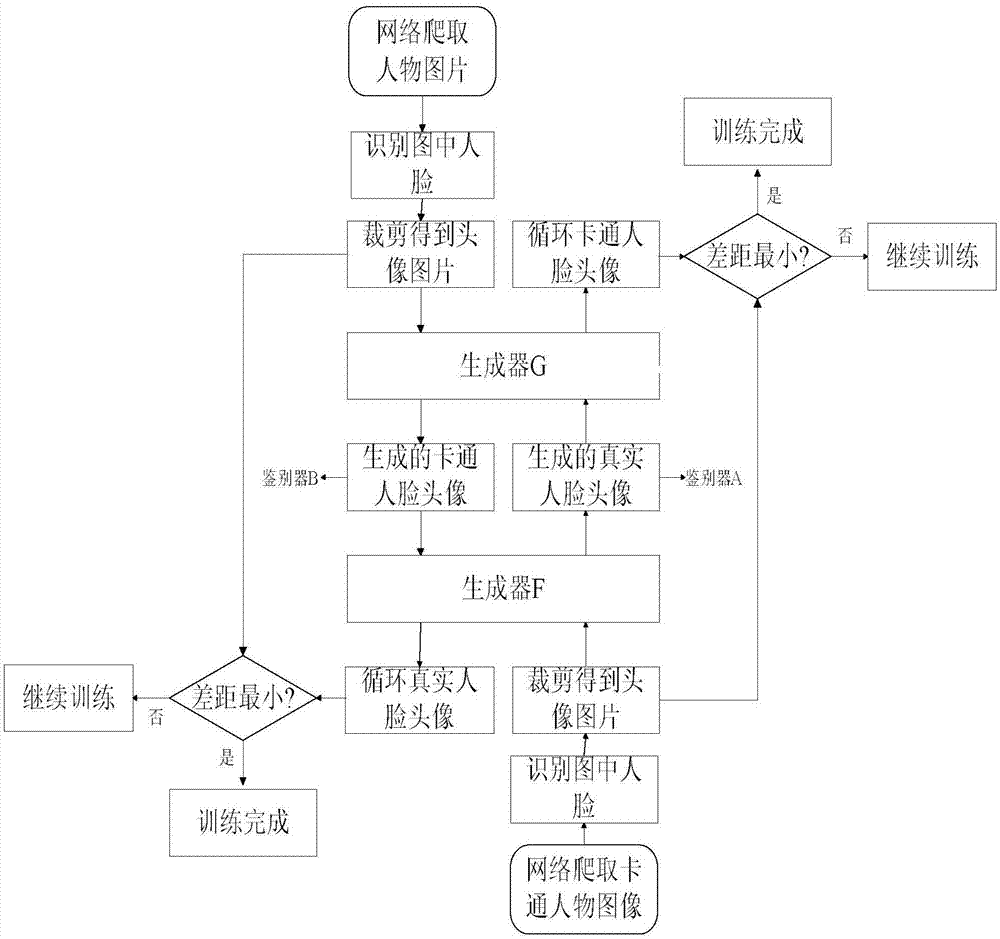

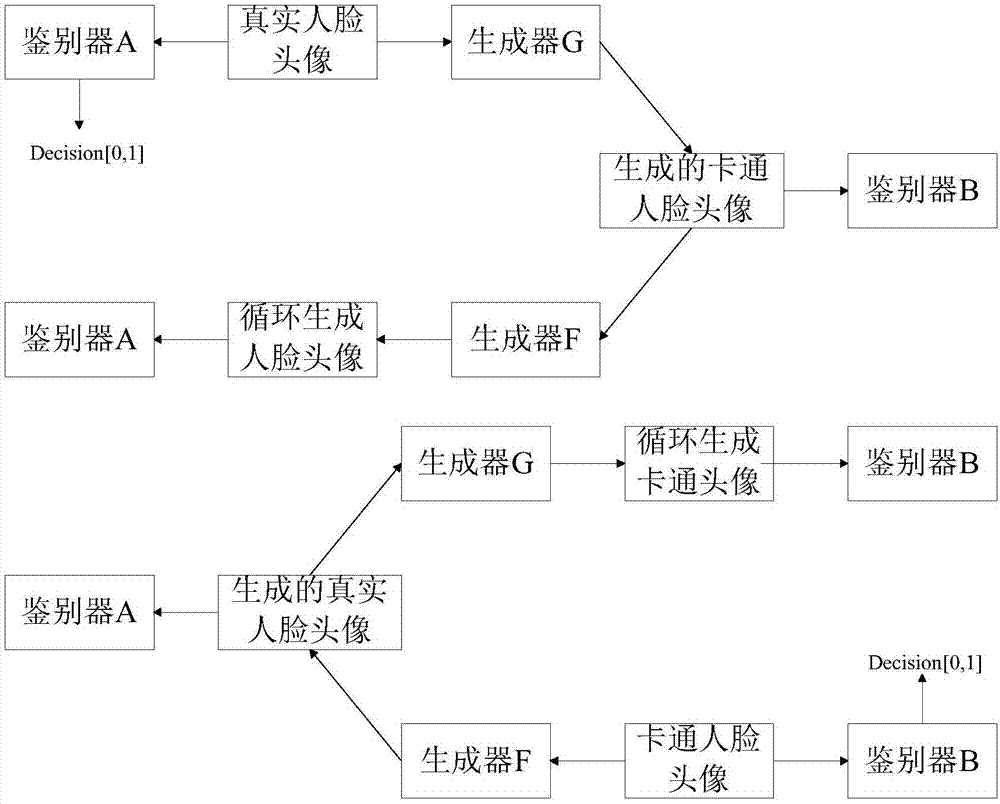

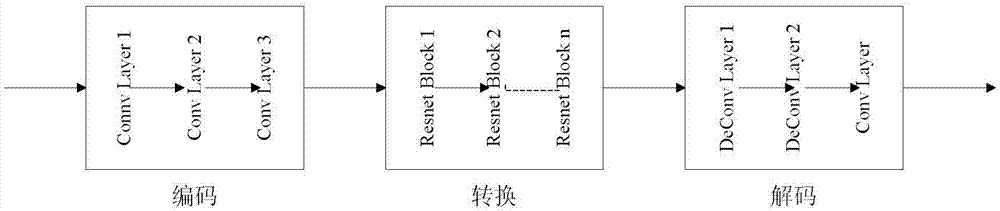

Facial avatar cartoonization realization method based on cyclic generative adversarial nets

Active | CN107577985A | Real-time | Be effective | Character and pattern recognition | Special data processing applications | Pattern recognition | Discriminator

The invention discloses a method for cartoonizing facial avatars based on cyclic generative adversarial nets. The method comprises: crawling a number of photos of real people and pictures of cartoon characters from the Internet; detecting the faces in these pictures with a face detection algorithm to obtain real face avatars and cartoon face avatars as training samples; constructing cyclic generative adversarial nets composed of generators and discriminators and designing a loss function; training the cyclic generative adversarial nets on the real and cartoon face avatars to minimize the loss function; and feeding a real face avatar to be processed into the generator of the trained nets to obtain the corresponding cartoon face avatar. Training drives the first generator, which maps real avatars to cartoon avatars, toward optimal performance, so that input real face avatars can be cartoonized effectively and in real time.

Owner:NANJING UNIV OF POSTS & TELECOMM
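The cartoonization abstract says a loss function is designed for the cyclic generative adversarial nets but does not state it. As an assumption, the sketch below uses the standard CycleGAN-style generator objective: a least-squares adversarial term for each direction plus a weighted L1 cycle-consistency term. `G` (real to cartoon), `F` (cartoon to real) and the discriminators are hypothetical callables on flat pixel lists, and `lam=10.0` is an assumed weight.

```python
def l1(a, b):
    """Mean absolute difference between two flat images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, real_faces, cartoon_faces):
    """real -> cartoon -> real and cartoon -> real -> cartoon should be identities."""
    forward = sum(l1(F(G(x)), x) for x in real_faces) / len(real_faces)
    backward = sum(l1(G(F(y)), y) for y in cartoon_faces) / len(cartoon_faces)
    return forward + backward


def lsgan_generator_loss(D, fakes):
    """Least-squares GAN generator term: push D's score on fakes toward 1."""
    return sum((D(f) - 1.0) ** 2 for f in fakes) / len(fakes)


def generator_objective(G, F, D_cartoon, D_real, real_faces, cartoon_faces, lam=10.0):
    """Adversarial terms for both directions plus lam times the cycle loss."""
    adv = (lsgan_generator_loss(D_cartoon, [G(x) for x in real_faces])
           + lsgan_generator_loss(D_real, [F(y) for y in cartoon_faces]))
    return adv + lam * cycle_consistency_loss(G, F, real_faces, cartoon_faces)
```

The cycle term is what lets the two generators train on unpaired real and cartoon avatars: neither set needs a matching counterpart in the other.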

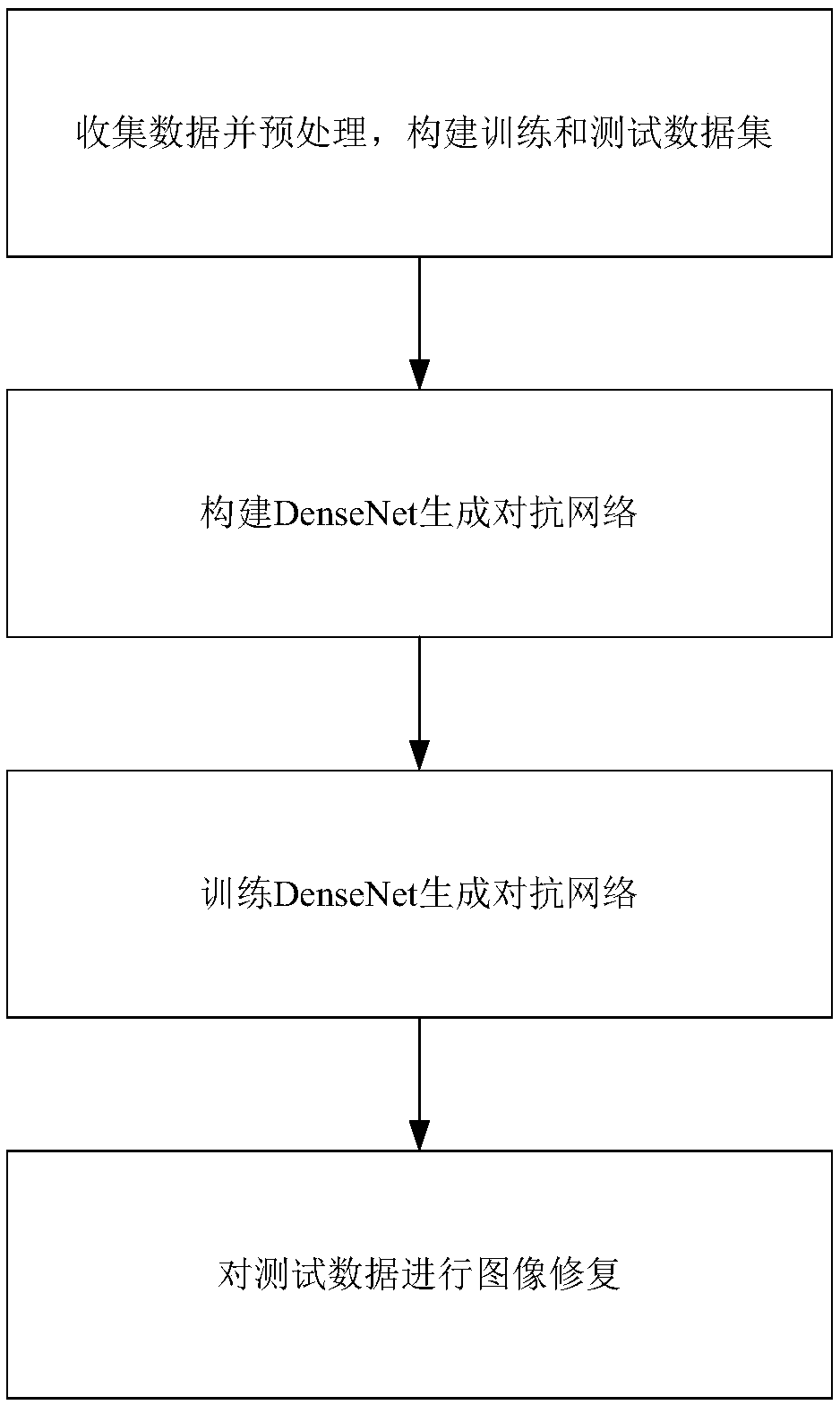

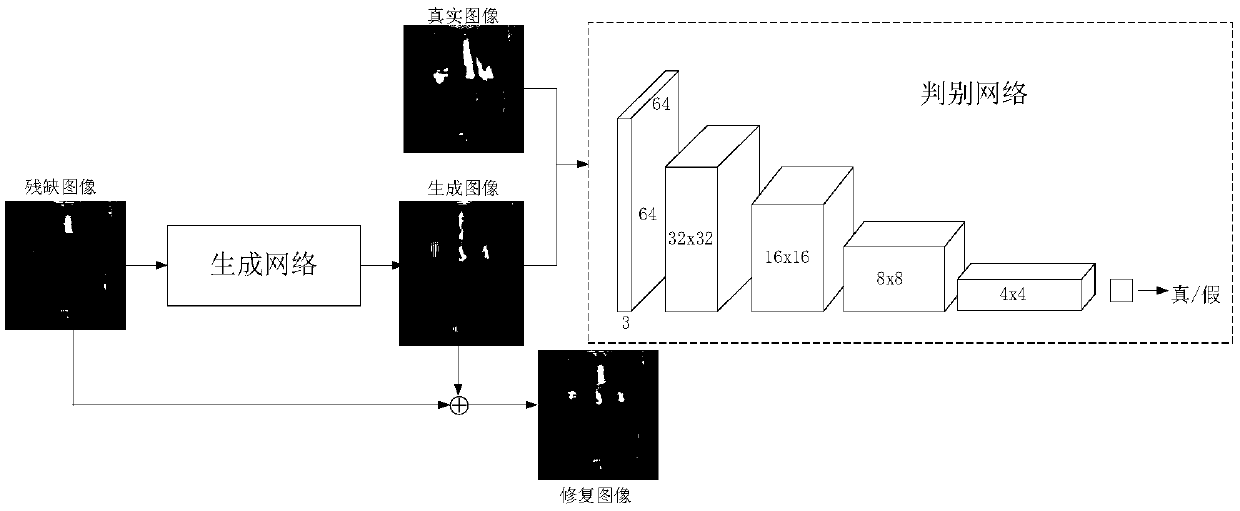

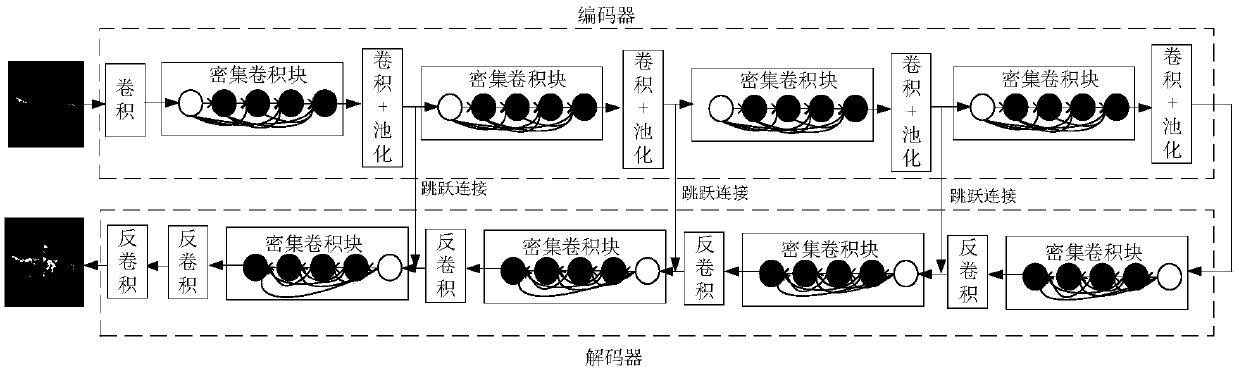

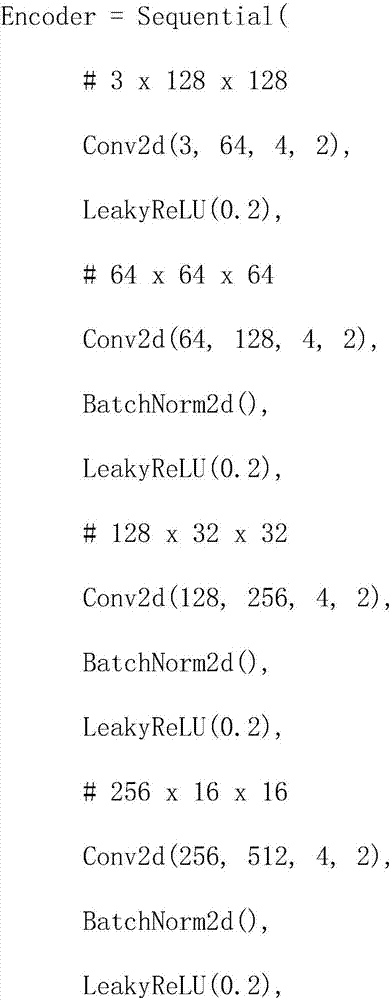

A semantic image restoration method based on a DenseNet generative adversarial network

The invention discloses a semantic image restoration method based on a DenseNet generative adversarial network. The method comprises the following steps: preprocessing the collected images and constructing training and test data sets; constructing a DenseNet generative adversarial network; training the DenseNet generative adversarial network; and finally, using the trained network to repair defective images. Within the generative adversarial network framework, a DenseNet structure is introduced and a new loss function is constructed to optimize the network. This mitigates vanishing gradients and reduces the number of network parameters while improving the propagation and reuse of features, and it improves the similarity and visual quality of restored images that are missing large areas of semantic information. Examples show that face images with severely missing information can be restored, and that the restoration results agree better with visual perception than those of other existing methods.

Owner:BEIJING UNIV OF TECH
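The DenseNet structure introduced in the restoration abstract is characterized by dense connectivity: each layer receives the concatenation of all earlier feature maps in its block and appends `k` new ones (the growth rate). The toy sketch below shows only that wiring, treating each channel as a single number and each layer as a hypothetical callable; a real dense layer would apply batch normalization, ReLU and convolution to tensors.

```python
def dense_block(x, layers):
    """Run a dense block: every layer sees all previous feature maps."""
    features = list(x)  # channels entering the block
    for layer in layers:
        new_maps = layer(features)      # input: concatenation of all earlier maps
        features = features + new_maps  # concatenate along the channel axis
    return features


def dense_block_channels(in_channels, growth_rate, num_layers):
    """Channel count leaving a dense block: k0 + L * k."""
    return in_channels + num_layers * growth_rate
```

Because earlier feature maps reach later layers directly, gradients have short paths back through the block, which is the usual explanation for DenseNet easing vanishing gradients while keeping each layer narrow and the parameter count small.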

Image reflection removing method based on depth convolution generative adversarial network

Active | CN107103590A | Flexibility to define | Averaging of dismantling results | Image enhancement | Character and pattern recognition | Pattern recognition | Generative adversarial network

The invention discloses an image reflection removal method based on a deep convolutional generative adversarial network. The method comprises the following steps: 1) data acquisition; 2) data processing; 3) model construction; 4) loss definition; 5) model training; and 6) model verification. By combining the ability of deep convolutional neural networks to extract high-level semantic information from images with the flexibility of generative adversarial networks in defining the loss function, the method overcomes the limitations of traditional methods that rely on low-level pixel information, and therefore adapts better to reflection removal in general images.

Owner:SOUTH CHINA UNIV OF TECH
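The reflection-removal abstract credits the generative adversarial network with flexibility in defining the loss (step 4) but does not give the loss itself. One common pattern, assumed here, is a weighted sum of a pixel-wise L1 term against the ground-truth reflection-free image and a non-saturating adversarial term `-log D(G(x))`; the weights `w_pix` and `w_adv` and all names are hypothetical.

```python
import math


def l1_loss(pred, target):
    """Mean absolute error between predicted and reflection-free pixels."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def adversarial_loss(d_prob_fake, eps=1e-7):
    """Non-saturating generator loss -log D(G(x)), with D's output in (0, 1]."""
    return -math.log(max(d_prob_fake, eps))


def generator_loss(pred, target, d_prob_fake, w_pix=100.0, w_adv=1.0):
    """Weighted sum; further terms could be added the same way."""
    return w_pix * l1_loss(pred, target) + w_adv * adversarial_loss(d_prob_fake)
```

The pixel term anchors the output to the ground truth, while the adversarial term rewards outputs the discriminator cannot tell apart from real reflection-free images; adjusting or adding terms is where the "flexibility to define the loss" shows up.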

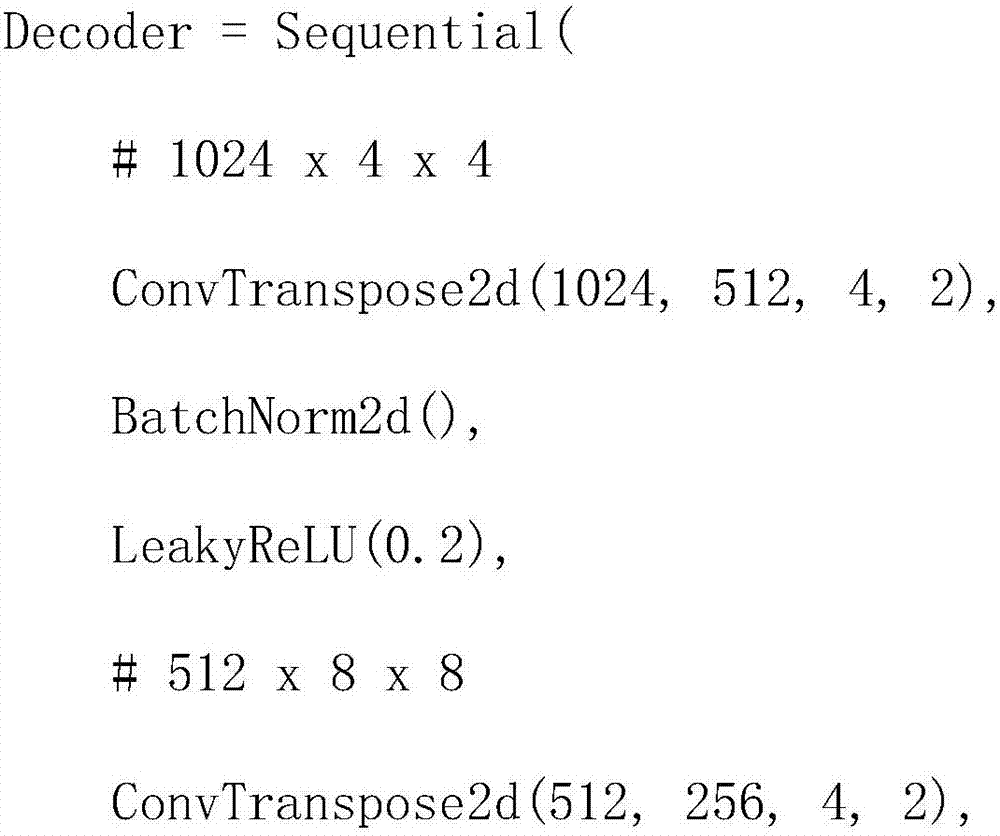

An image target detection method based on DC-SPP-YOLO

Active | CN109685152A | Enhancing feature propagation | High precision | Character and pattern recognition | Pattern recognition | Pyramid

The invention discloses an image target detection method based on DC-SPP-YOLO, comprising the following steps. First, the training image samples are preprocessed with a data augmentation method to construct a training sample set, and prior candidate boxes for target bounding-box prediction are selected with the k-means clustering algorithm. Next, the connection mode of the convolutional layers in the YOLOv2 model is changed from layer-by-layer connection to dense connection, spatial pyramid pooling is introduced between the convolutional module and the target detection layer, and the DC-SPP-YOLO target detection model is established. Finally, a loss function is constructed from the sum of squared errors between the predicted and true values, and the model weight parameters are updated iteratively until the loss function converges, yielding the DC-SPP-YOLO model for target detection. The invention addresses the vanishing gradients caused by deepening the convolutional network and the insufficient use of multi-scale local region features in the YOLOv2 model, and constructs the DC-SPP-YOLO target detection model on improved dense connections between convolutional layers and spatial pyramid pooling, which improves target detection accuracy.

Owner:BEIJING UNIV OF CHEM TECH
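The DC-SPP-YOLO abstract selects the prior boxes with k-means clustering but does not name the distance measure. The sketch below assumes the YOLOv2 convention of clustering box widths and heights with distance d = 1 - IoU, where IoU is computed as if the two boxes shared a center, so that large boxes do not dominate as they would under Euclidean distance. The deterministic initialization (the first k boxes) and the function names are simplifications for illustration.

```python
def wh_iou(a, b):
    """IoU of two boxes given as (width, height), aligned at a common center."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(boxes, k, max_iters=100):
    """k-means over (w, h) pairs with distance 1 - IoU (YOLOv2-style)."""
    centroids = [tuple(b) for b in boxes[:k]]  # simple deterministic init
    for _ in range(max_iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            # Assign each box to the centroid with the smallest 1 - IoU.
            nearest = min(range(k), key=lambda i: 1 - wh_iou(box, centroids[i]))
            clusters[nearest].append(box)
        new_centroids = []
        for i, members in enumerate(clusters):
            if members:
                n = len(members)
                new_centroids.append((sum(w for w, _ in members) / n,
                                      sum(h for _, h in members) / n))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # assignments have stabilized
        centroids = new_centroids
    return centroids
```

For example, `kmeans_anchors([(10, 10), (12, 12), (100, 50), (110, 55), (11, 11), (105, 52)], 2)` separates the small square boxes from the large wide ones and returns one anchor for each group.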

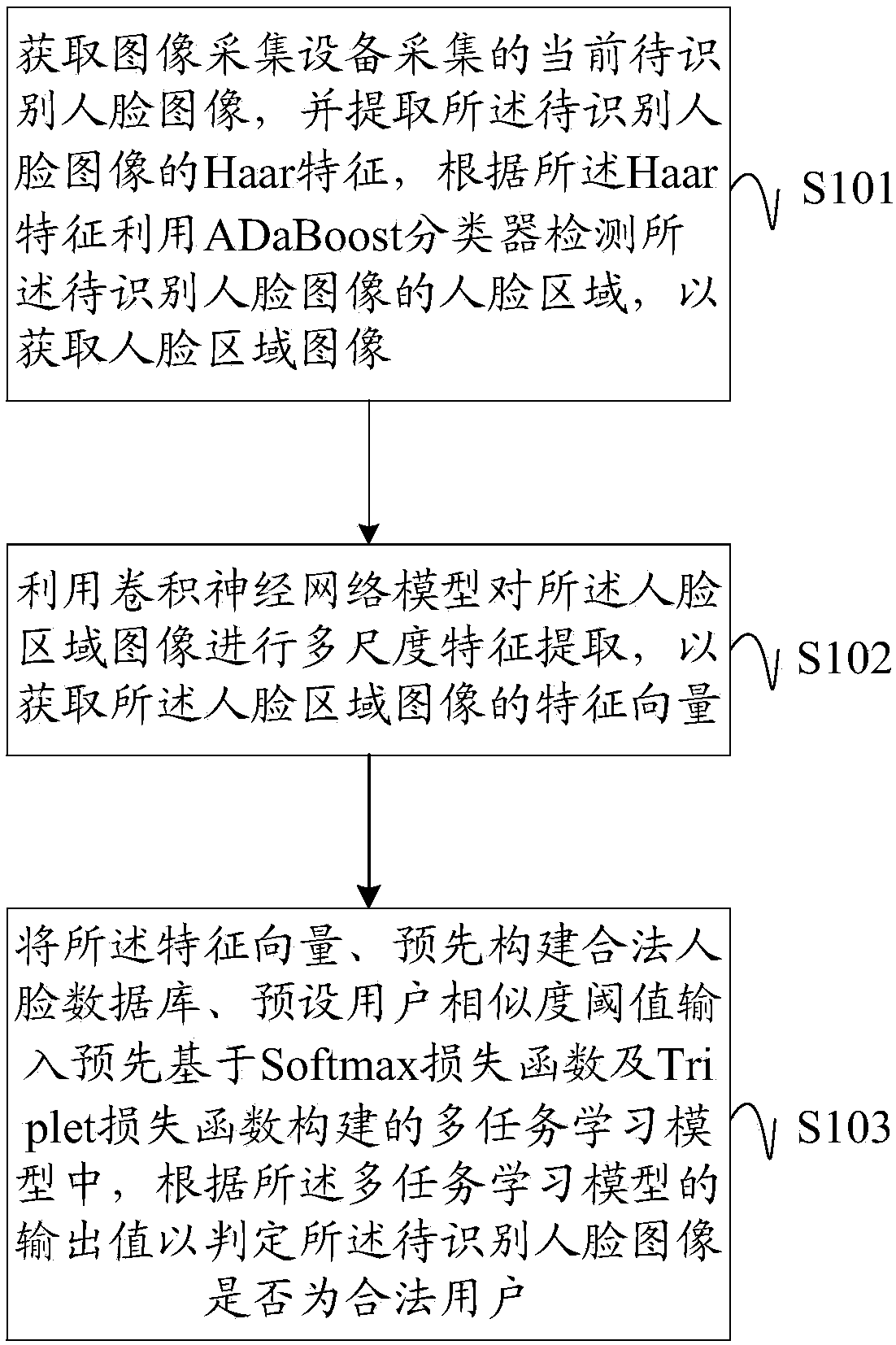

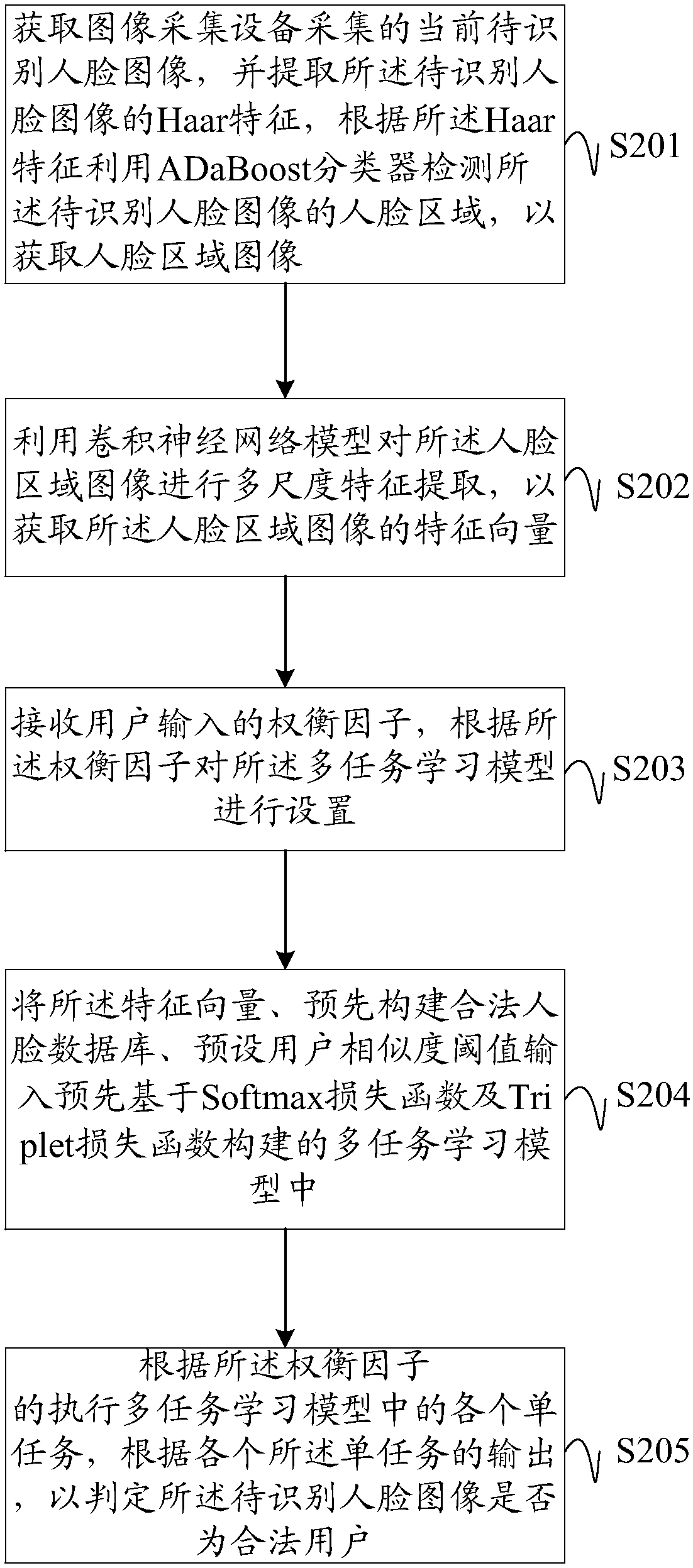

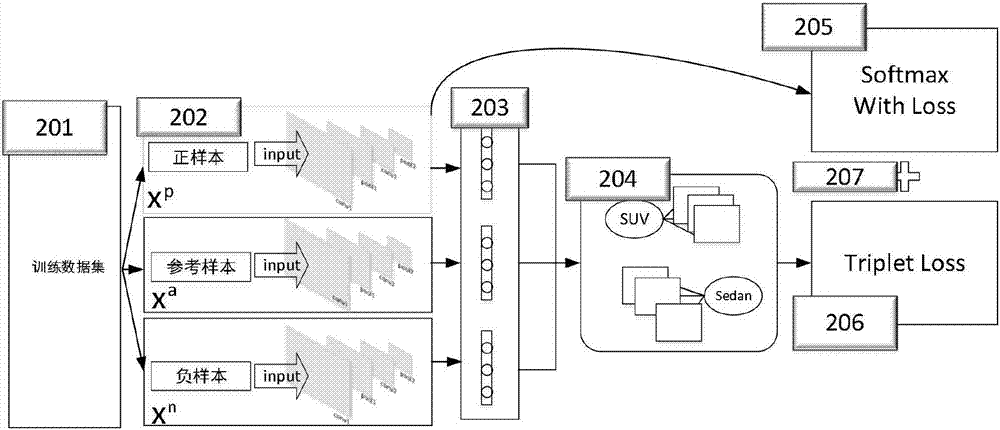

Face recognition method and device

Active | CN107423690A | Improve accuracy | Calculation speed | Character and pattern recognition | Feature vector | Feature extraction

The embodiment of the invention discloses a face recognition method and device. The method comprises: extracting Haar features from the current face image to be recognized and detecting its face region with an AdaBoost classifier to obtain a face-region image; performing multi-scale feature extraction on the face-region image with a convolutional neural network model to obtain its feature vector; and feeding the feature vector, a pre-built database of legitimate users' faces, and a preset user-similarity threshold into a multi-task learning model constructed in advance from a Softmax loss function and a Triplet loss function, then judging from the model's output whether the face image to be recognized belongs to a legitimate user. The extracted features are robust and generalize well, which improves both the face recognition rate and the recognition accuracy, and thus the security of identity authentication.

Owner:GUANGDONG UNIV OF TECH
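The face recognition abstract combines a Softmax loss and a Triplet loss in a multi-task model, then compares features against an enrolled database using a similarity threshold. The sketch below shows the standard forms of both losses on plain vectors, summed with an assumed weight `w_triplet`, and an assumed cosine-similarity decision rule; the margin, threshold and function names are illustrative, not taken from the patent.

```python
import math


def softmax_cross_entropy(logits, label):
    """Softmax loss for one sample, computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def triplet_loss(anchor, positive, negative, margin=0.2):
    """max(0, ||a - p||^2 - ||a - n||^2 + margin) on embedding vectors."""
    d_ap = sum((a - p) ** 2 for a, p in zip(anchor, positive))
    d_an = sum((a - n) ** 2 for a, n in zip(anchor, negative))
    return max(0.0, d_ap - d_an + margin)


def multitask_loss(logits, label, anchor, positive, negative, w_triplet=1.0):
    """Joint objective: identity classification plus a metric (triplet) term."""
    return softmax_cross_entropy(logits, label) + w_triplet * triplet_loss(anchor, positive, negative)


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def is_legitimate_user(embedding, enrolled, threshold=0.8):
    """Accept if the best match in the enrolled database reaches the threshold."""
    return max(cosine(embedding, e) for e in enrolled) >= threshold
```

The softmax term separates known identities, while the triplet term shapes distances in the embedding space, which is what a threshold-based accept/reject decision relies on.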

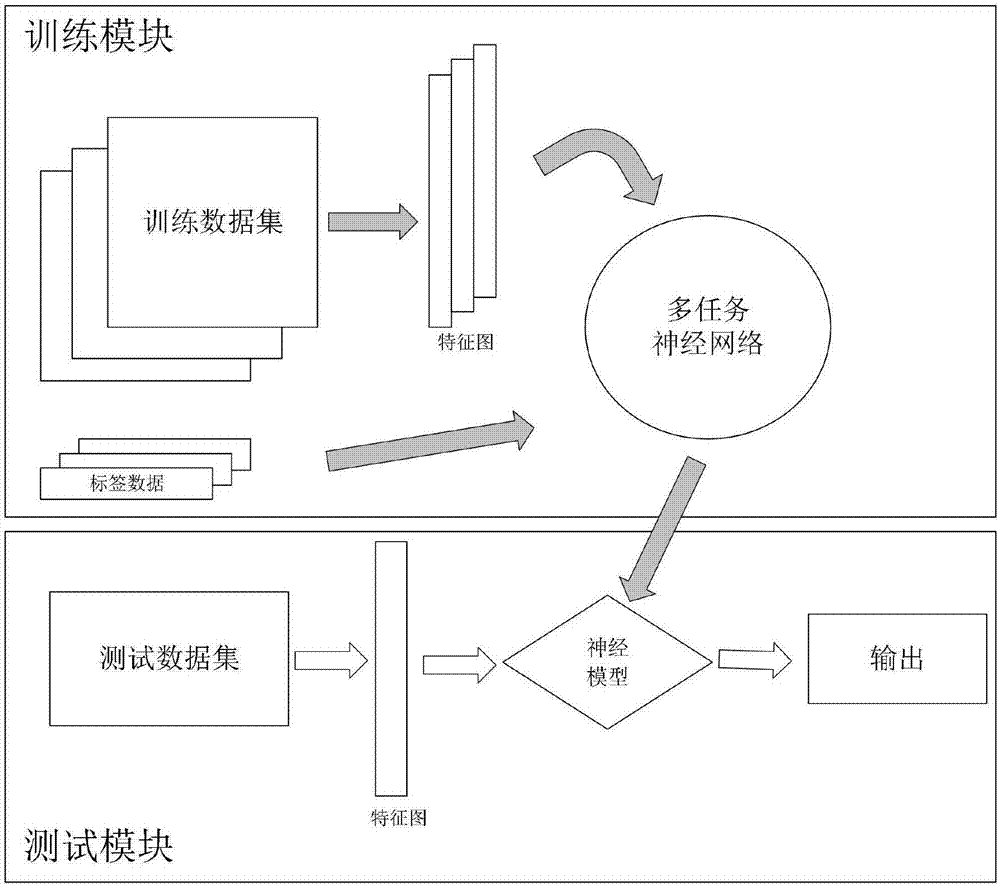

Fine granularity vehicle multi-property recognition method based on convolutional neural network

Active | CN107886073A | Reduce human intervention | Character and pattern recognition | Neural architectures | Extensibility | Data set

The invention relates to a fine-grained vehicle multi-property recognition method based on a convolutional neural network, in the technical field of computer vision recognition. The method comprises the following steps: designing a neural network structure comprising convolutional layers, pooling layers and fully connected layers, where the convolutional and pooling layers perform feature extraction and the classification result is output by computing the objective loss function on the last fully connected layer; training the neural network by supervised learning on a fine-grained vehicle data set and a label data set, using stochastic gradient descent to adjust the weight matrices and biases; and using the trained neural network model to recognize vehicle properties. Applied to multi-property vehicle recognition, the method uses the fine-grained vehicle data set and the multi-property label data set to obtain, through the convolutional neural network, a more abstract high-level representation of the vehicle, learning from a large number of training samples the latent features that reflect the essential characteristics of the vehicle to be recognized. As a result, the method is more extensible and achieves higher recognition precision.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
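The vehicle abstract trains the network by supervised learning with stochastic gradient descent, computing the objective loss on the last fully connected layer. Assuming one softmax head per vehicle property and a loss equal to the sum of the per-property cross-entropies (neither is specified in the abstract), the sketch below shows that loss and one SGD update of a single fully connected softmax layer's weight matrix and bias. Property names such as `color` are hypothetical.

```python
import math


def softmax(z):
    """Numerically stable softmax."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Negative log-probability of the true class."""
    return -math.log(softmax(logits)[label])


def multi_property_loss(head_logits, labels):
    """Assumed objective: sum of one cross-entropy per vehicle property head."""
    return sum(cross_entropy(head_logits[name], labels[name]) for name in labels)


def linear(W, b, x):
    """Fully connected layer z = W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def sgd_step(W, b, x, label, lr=0.1):
    """One SGD update of W and b for softmax cross-entropy on sample (x, label)."""
    p = softmax(linear(W, b, x))
    # dL/dz = softmax(z) - onehot(label)
    grad_z = [pi - (1.0 if i == label else 0.0) for i, pi in enumerate(p)]
    # dL/dW[i][j] = grad_z[i] * x[j]; dL/db[i] = grad_z[i]
    W_new = [[w - lr * g * xi for w, xi in zip(row, x)] for row, g in zip(W, grad_z)]
    b_new = [bi - lr * g for bi, g in zip(b, grad_z)]
    return W_new, b_new
```

In a full network the same gradient would be propagated further back through the convolutional and pooling layers; only the last layer is shown here.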

© 2025 PatSnap. All rights reserved.