380 results about "Cross entropy" patented technology

In information theory, the cross entropy between two probability distributions p and q over the same underlying set of events measures the average number of bits needed to identify an event drawn from the set if a coding scheme used for the set is optimized for an estimated probability distribution q, rather than the true distribution p.
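As a minimal sketch of the definition above, the cross entropy in bits can be computed as H(p, q) = -Σ p(x) log2 q(x). The function name and the example distributions below are illustrative assumptions, not taken from any of the listed patents.

```python
import math

def cross_entropy_bits(p, q):
    """Cross entropy H(p, q) in bits for two discrete distributions given as lists."""
    # Events with p_i == 0 contribute nothing; q_i must be > 0 wherever p_i > 0.
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

p = [0.5, 0.25, 0.25]  # true distribution (illustrative values)
q = [0.25, 0.25, 0.5]  # estimated distribution (illustrative values)

entropy = cross_entropy_bits(p, p)  # H(p, p) is the entropy of p
cross = cross_entropy_bits(p, q)    # coding with q costs at least as many bits
print(entropy, cross)
```

The gap between the two values is the Kullback-Leibler divergence from q to p, which is why minimizing cross entropy against a fixed p pushes q toward p.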

Learning efficient object detection models with knowledge distillation

Inactive | US20180268292A1 | Character and pattern recognition | Neural learning methods | Object Class | Distillation

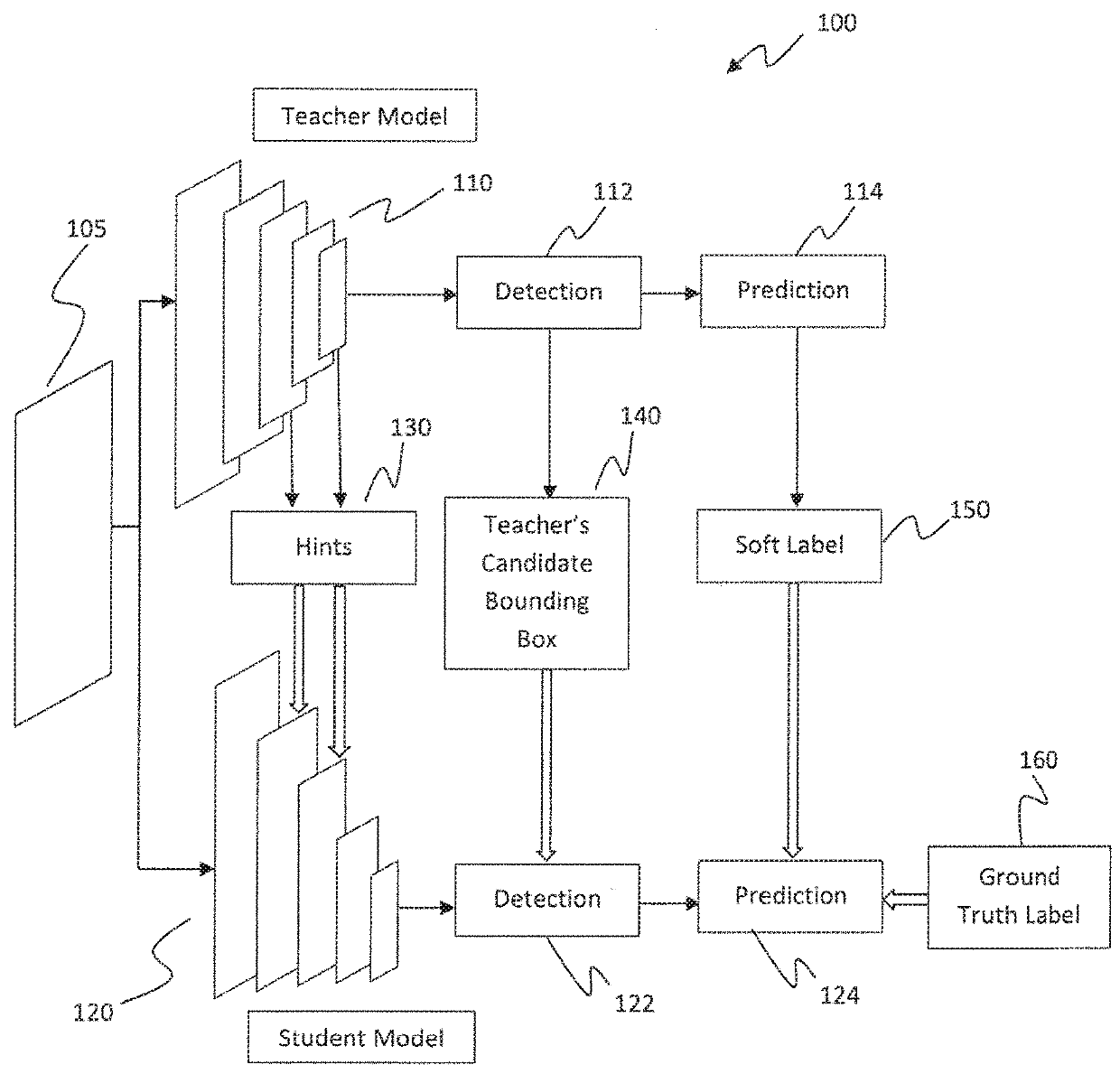

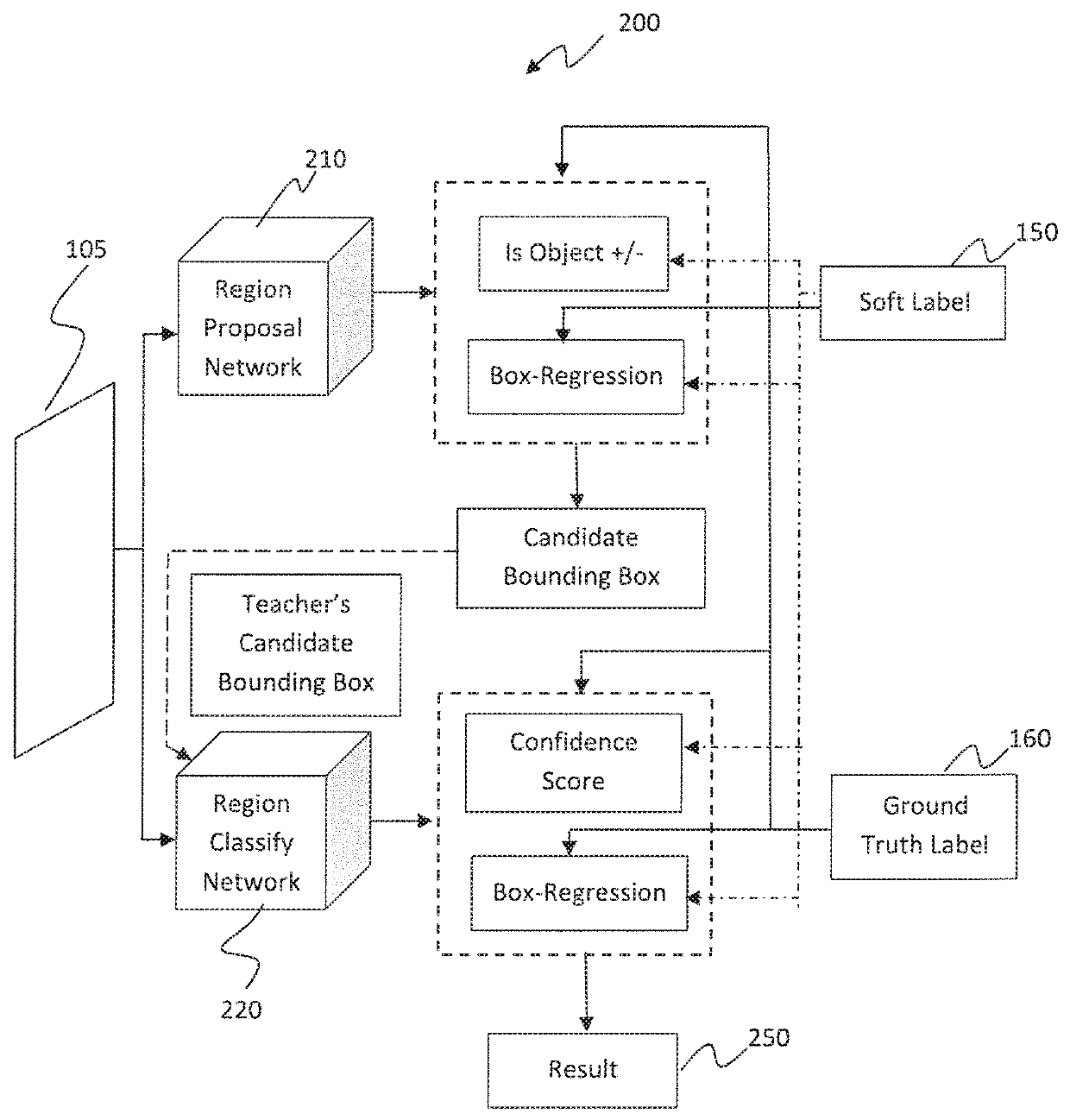

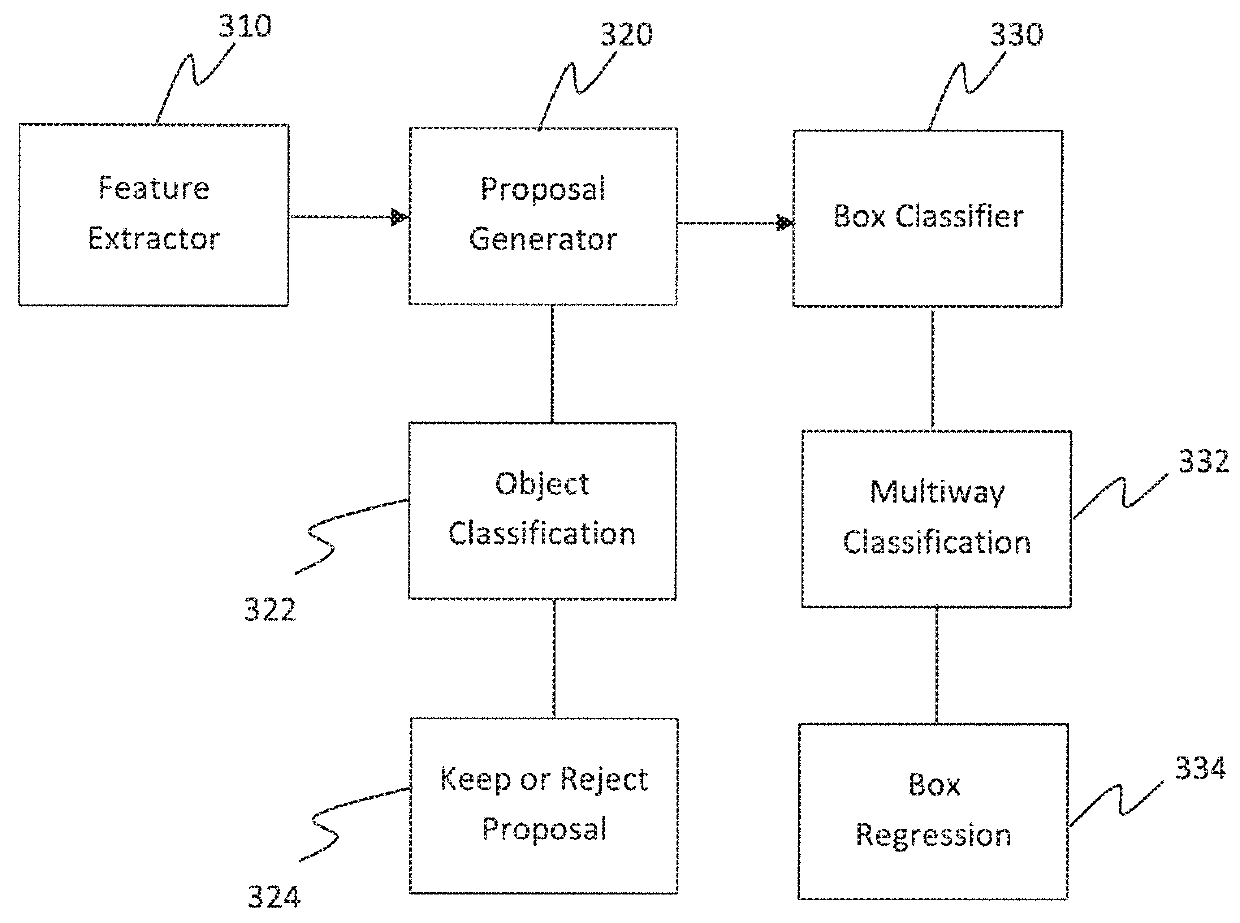

A computer-implemented method executed by at least one processor for training fast models for real-time object detection with knowledge transfer is presented. The method includes employing a Faster Region-based Convolutional Neural Network (R-CNN) as an object detection framework for performing the real-time object detection, inputting a plurality of images into the Faster R-CNN, and training the Faster R-CNN by learning a student model from a teacher model by employing a weighted cross-entropy loss layer for classification accounting for an imbalance between background classes and object classes, employing a boundary loss layer to enable transfer of knowledge of bounding box regression from the teacher model to the student model, and employing a confidence-weighted binary activation loss layer to train intermediate layers of the student model to achieve similar distribution of neurons as achieved by the teacher model.
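One way to read the weighted cross-entropy term is as a per-class weight applied to the cross entropy between teacher and student class probabilities. The sketch below assumes that reading; the weight values, the probabilities, and the function name are hypothetical and are not taken from the patent.

```python
import math

def weighted_cross_entropy(target_probs, student_probs, class_weights):
    """Compute -sum_c w_c * t_c * log(s_c) for one sample (natural log)."""
    return -sum(
        w * t * math.log(s)
        for w, t, s in zip(class_weights, target_probs, student_probs)
        if t > 0
    )

# Class 0 stands for background; a larger weight there is an assumed way
# to account for the imbalance between background and object classes.
weights = [1.5, 1.0, 1.0]  # hypothetical weights
teacher = [0.7, 0.2, 0.1]  # teacher soft labels (illustrative)
student = [0.6, 0.3, 0.1]  # student predictions (illustrative)

loss_weighted = weighted_cross_entropy(teacher, student, weights)
loss_plain = weighted_cross_entropy(teacher, student, [1.0, 1.0, 1.0])
print(loss_weighted, loss_plain)
```

With these numbers the weighted loss exceeds the unweighted one only through the background term, so errors on background proposals are penalized more heavily.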

Owner:NEC LAB AMERICA

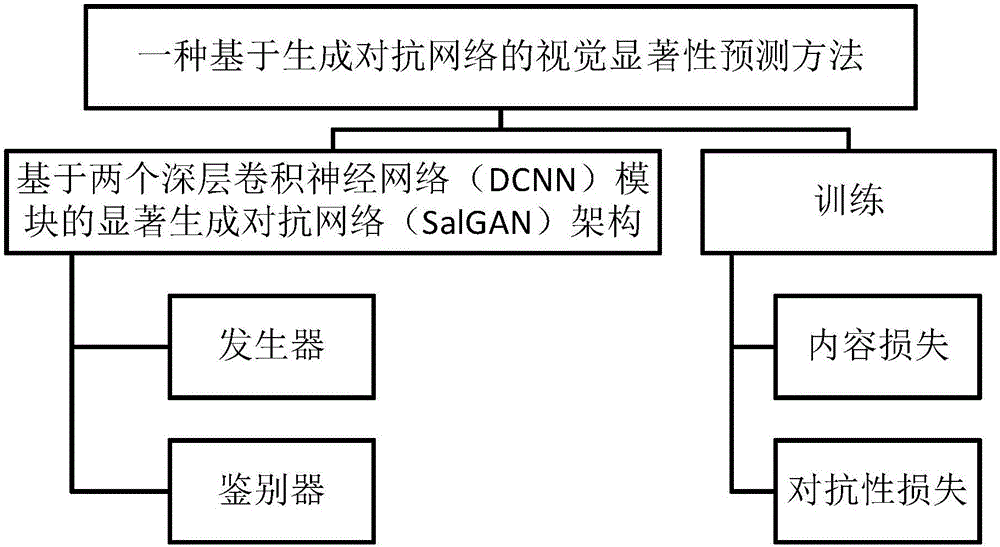

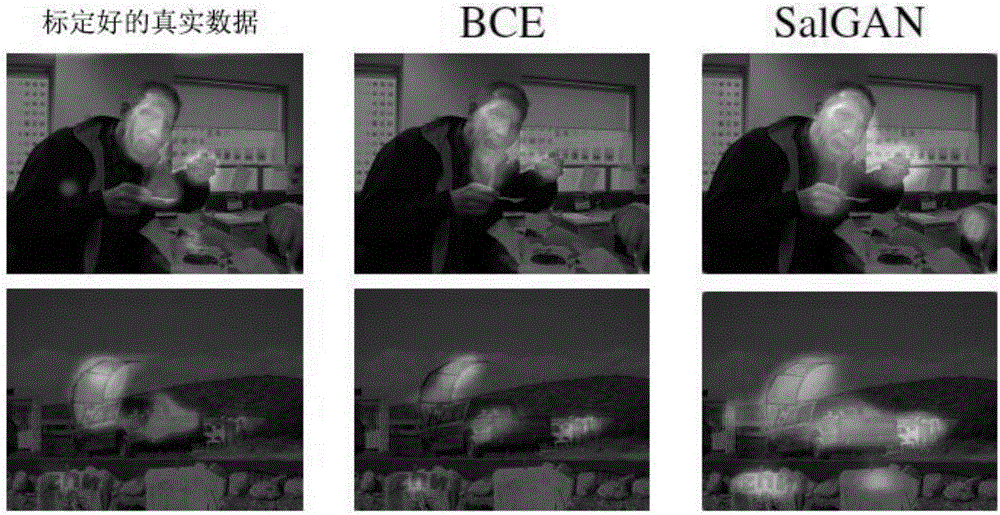

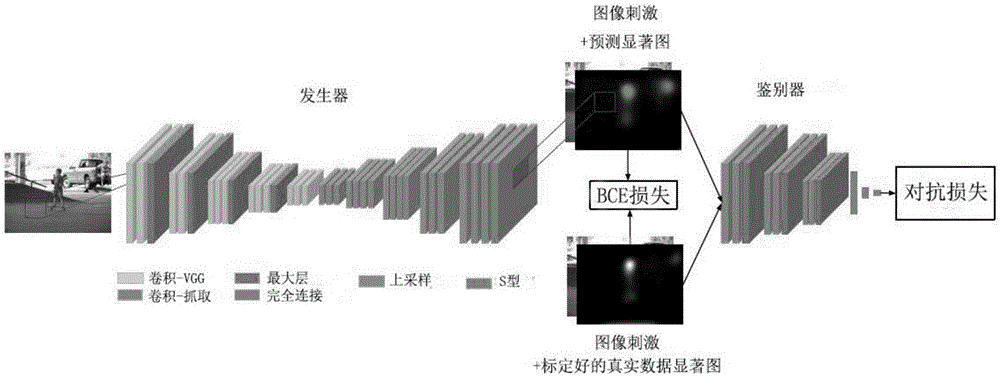

Visual significance prediction method based on generative adversarial network

Inactive | CN106845471A | Character and pattern recognition | Neural learning methods | Discriminator | Visual saliency

The invention provides a visual saliency prediction method based on a generative adversarial network. The method constructs a saliency generative adversarial network (SalGAN) from two deep convolutional neural network (DCNN) modules, a generator and a discriminator, which are combined to predict the visual saliency map of a given input image. The filter weights in SalGAN are trained with a perceptual loss that combines content loss and adversarial loss. The loss function combines the error from the discriminator with the cross entropy relative to the ground-truth data, which improves stability and convergence rate in adversarial training. Compared with further training on cross entropy alone, adversarial training improves performance, so that higher speed and higher efficiency are realized.

Owner:SHENZHEN WEITESHI TECH

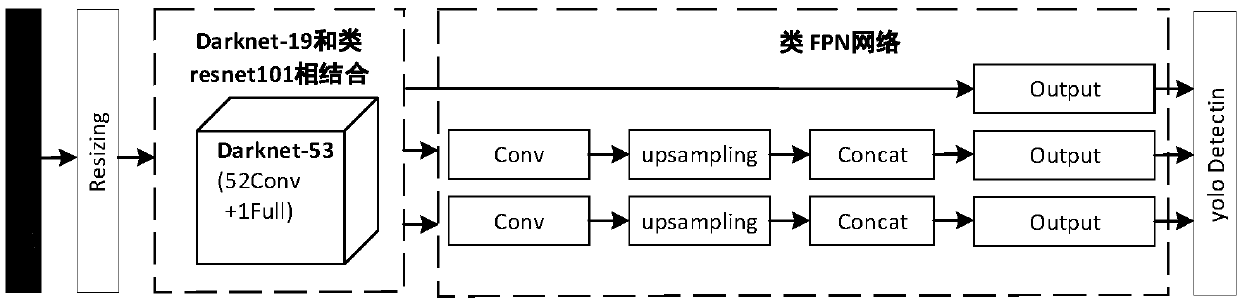

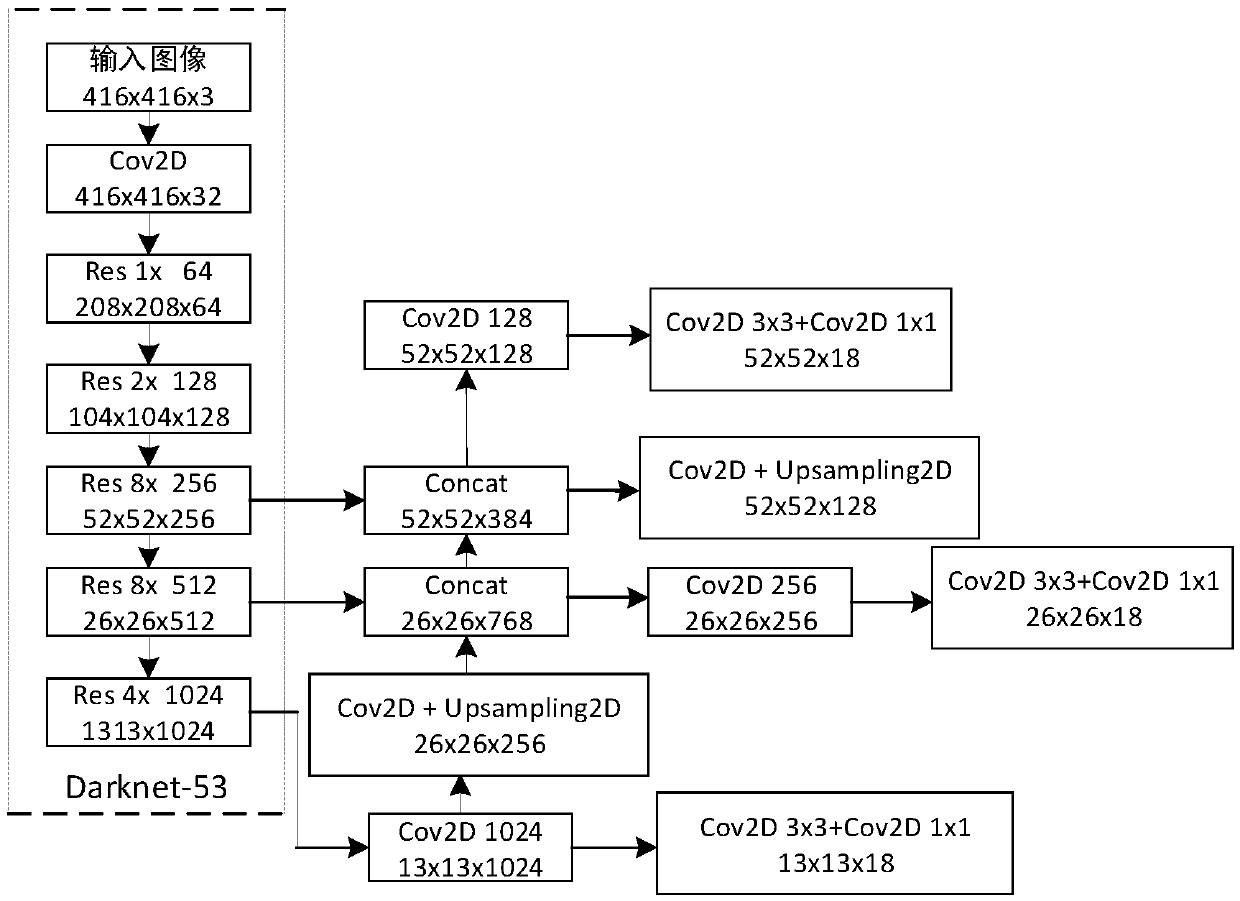

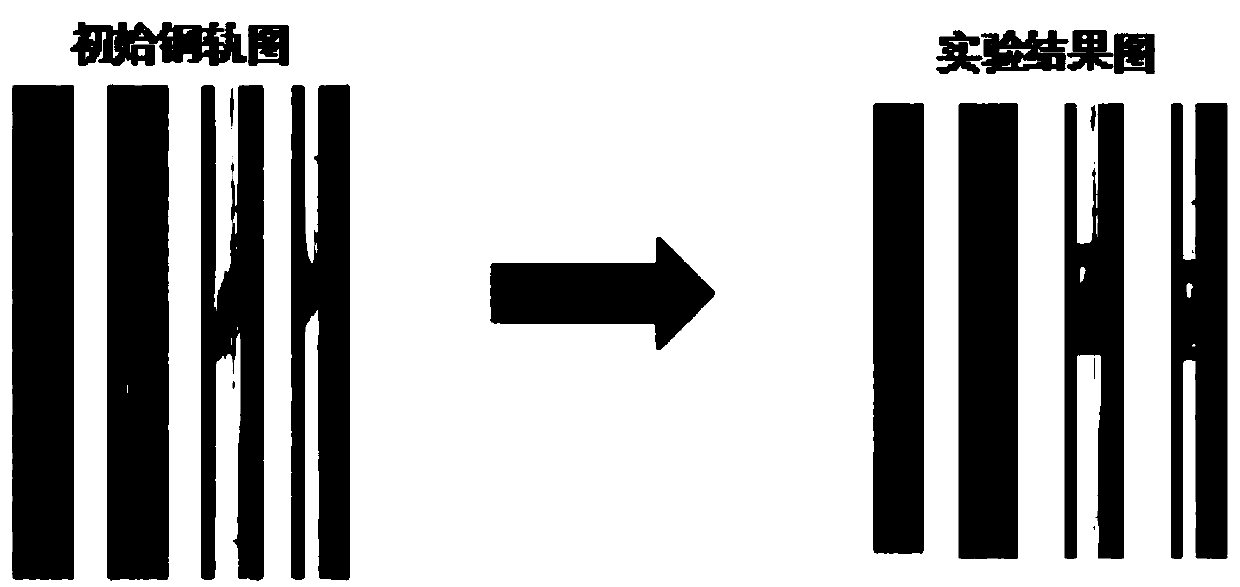

Rail surface defect detection method based on depth learning network

Inactive | CN109064461A | Resolve overlap | Targeting is accurate | Image analysis | Optically investigating flaws/contamination | Image extraction | Network model

The invention belongs to the field of deep learning and provides a rail surface defect detection method based on a deep learning network, aiming to solve various problems in prior rail detection methods. The method first automatically resizes the input rail image to 416*416, then extracts features from the image and processes them. Feature extraction is performed mainly by the Darknet-53 model, and the output processing is performed mainly by an FPN-like network model. First, the rail image is divided into cells. According to the position of the defects in the cells, the width, height, and center-point coordinates of the defects are calculated by a dimension clustering method, and the coordinates are normalized. At the same time, logistic regression is used to predict the objectness score of each bounding box, binary cross-entropy loss is used to predict the categories contained in the bounding box, and the confidence level is calculated; the output is then processed by convolution, up-sampling, and network feature fusion to obtain the prediction results. The invention can accurately identify defects and effectively improve the detection and identification rate of rail surface defects.

Owner:CHANGSHA UNIVERSITY OF SCIENCE AND TECHNOLOGY

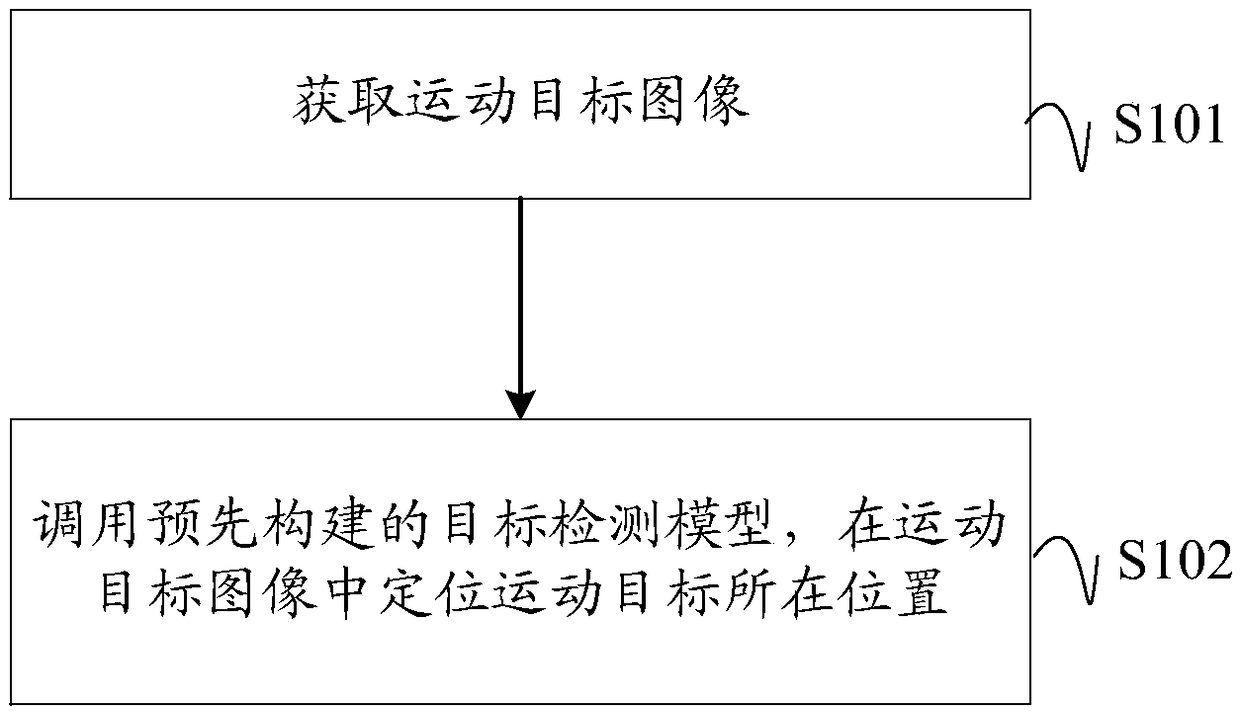

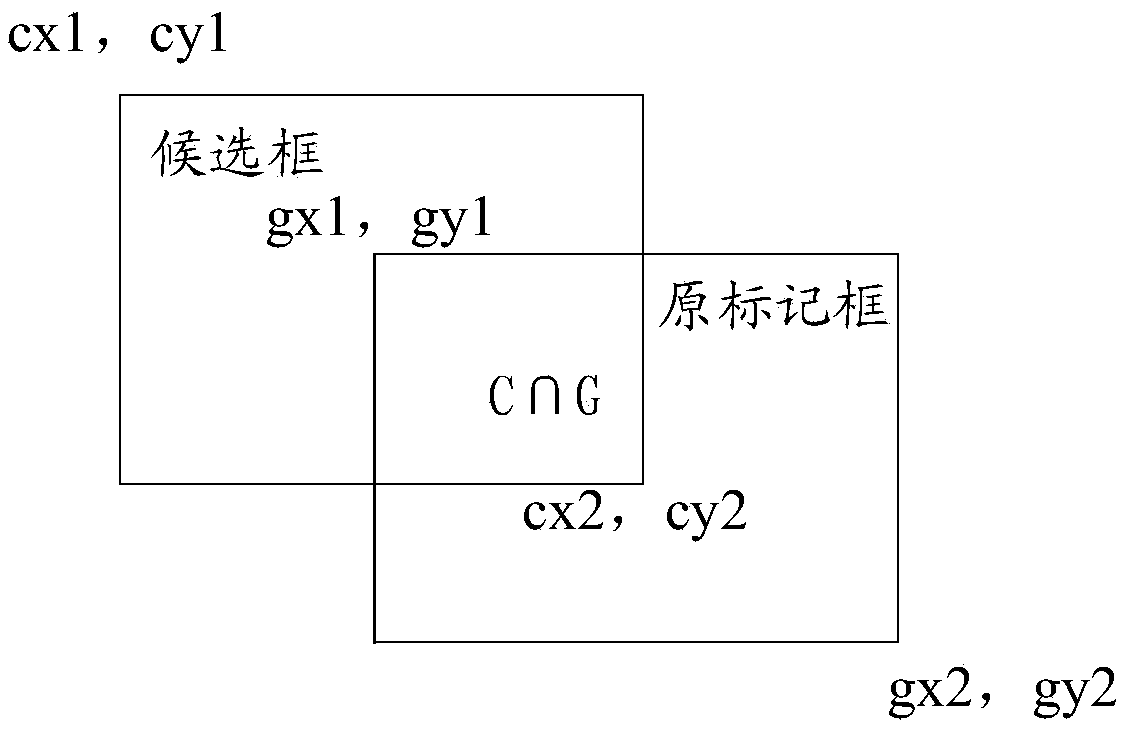

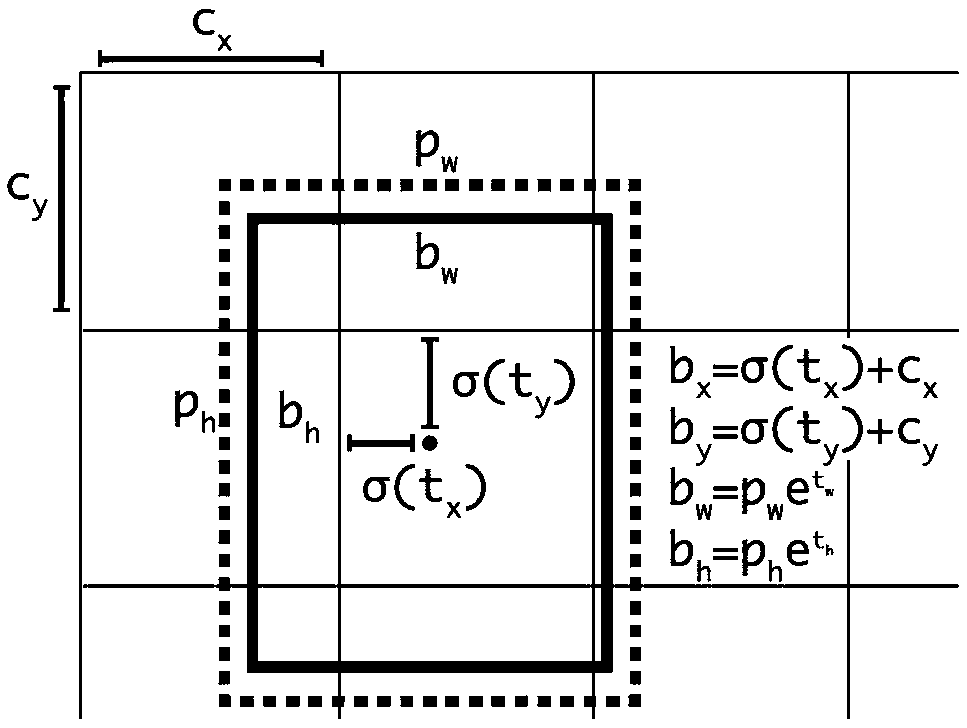

Moving object behavior tracking method, device, apparatus, and readable storage medium

Inactive | CN109117794A | Solve the detection speed is slow | Improve positioning accuracy | Character and pattern recognition | Cross entropy | Non maximum suppression

The embodiment of the invention discloses a moving target behavior tracking method, device, apparatus, and computer-readable storage medium. The method includes invoking a pre-constructed target detection model to detect the target in a moving object image so as to locate the position of the moving object in that image. The target detection model is based on the YOLOv3 algorithm and is trained with regularization and binary cross entropy as the loss function. The model selects the target bounding box that satisfies preset conditions according to the intersection-over-union ratio and a non-maximum suppression algorithm, and determines the center coordinates of that bounding box to locate the moving target. The present application uses the target detection model to locate a moving object in each captured frame, and by locating the moving object in each of a plurality of successive frames, tracks the behavior of the moving object in a video. The present application improves the detection speed and positioning accuracy for moving objects.

Owner:GUANGDONG UNIV OF TECH

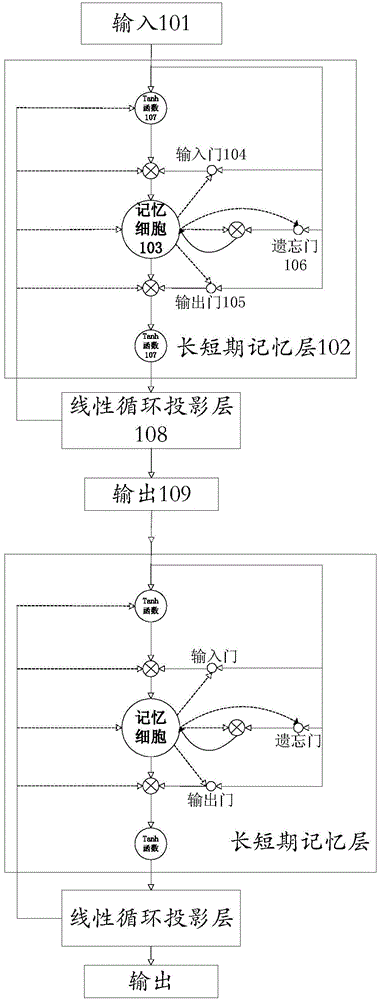

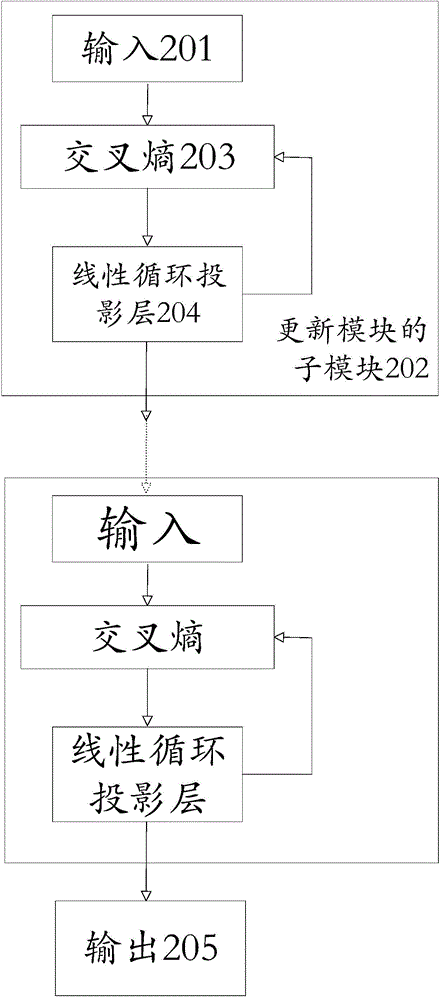

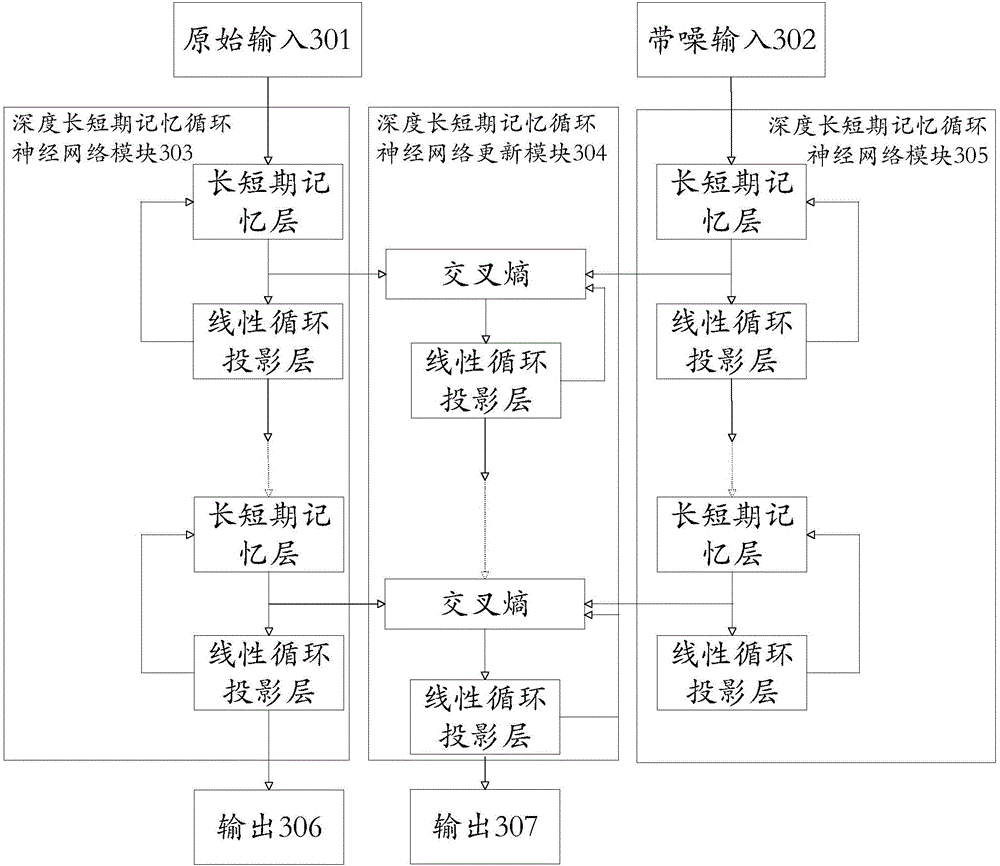

Continuous voice recognition method based on deep long and short term memory recurrent neural network

Active | CN104538028A | Valid description | Improve noise immunity | Speech recognition | Environmental noise | Computation complexity

The invention provides a continuous voice recognition method based on a deep long and short term memory recurrent neural network. According to the method, a noisy voice signal and an original pure voice signal are used as training samples, two deep long and short term memory recurrent neural network modules with the same structure are established, the difference between each deep long and short term memory layer of one module and the corresponding layer of the other module is obtained through cross entropy calculation, a cross entropy parameter is updated through a linear recurrent projection layer, and a deep long and short term memory recurrent neural network acoustic model robust to environmental noise is finally obtained. By establishing this acoustic model, the method improves the voice recognition rate for noisy voice signals and avoids the problem that, because the scale of deep neural network parameters is large, most of the calculation work needs to be completed on a GPU; the method has low calculation complexity and a high convergence rate. The method can be widely applied to machine learning fields involving voice recognition, such as speaker recognition, keyword recognition, and human-machine interaction.

Owner:TSINGHUA UNIV

Model training method and device and storage medium

Active | CN110163234A | Improve accuracy | Improve visual effects | Geometric image transformation | Character and pattern recognition | Computer science | Machine learning

The embodiment of the invention discloses a model training method and device and a storage medium. The method includes: obtaining a multi-label image training set; selecting a target training image currently used for model training from the multi-label image training set; carrying out label prediction on the target training image by adopting a deep neural network model to obtain a plurality of prediction labels of the target training image; obtaining a cross entropy loss function corresponding to the sample label, the positive label loss in the cross entropy loss function being provided with a weight, and the weight being greater than 1; and converging the prediction label and the sample label of the target training image according to the cross entropy loss function to obtain a trained deep neural network model. According to the scheme, the model accuracy and visual expressive force can be improved.
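The positive-label weighting described above can be sketched as a multi-label binary cross entropy in which the positive term is scaled by a weight greater than 1. The value `pos_weight=2.0`, the function name, and the sample data are assumptions for illustration; the patent only states that the weight exceeds 1.

```python
import math

def weighted_multilabel_bce(labels, probs, pos_weight=2.0):
    """Mean over labels of -[w * y * log(p) + (1 - y) * log(1 - p)]."""
    eps = 1e-12  # clamp probabilities to avoid log(0)
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)
        total += -(pos_weight * y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)

labels = [1, 0, 1, 0]         # multi-label ground truth (illustrative)
probs = [0.9, 0.2, 0.4, 0.1]  # predicted per-label probabilities (illustrative)

loss_w = weighted_multilabel_bce(labels, probs, pos_weight=2.0)
loss_1 = weighted_multilabel_bce(labels, probs, pos_weight=1.0)
print(loss_w, loss_1)
```

Raising the positive weight makes a missed positive label cost more than a false positive, which is a common way to counter the scarcity of positive labels in multi-label sets.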

Owner:TENCENT TECH (SHENZHEN) CO LTD

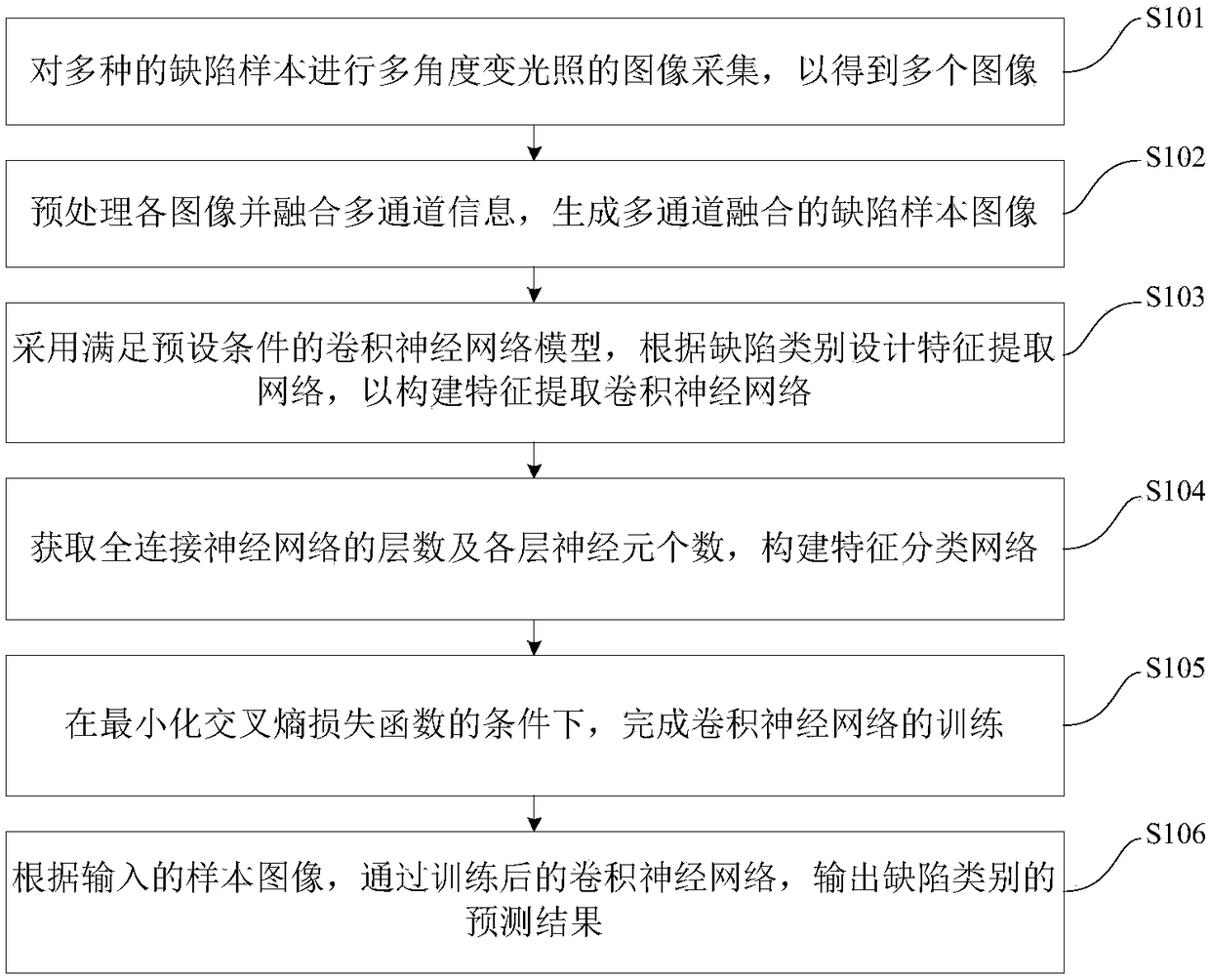

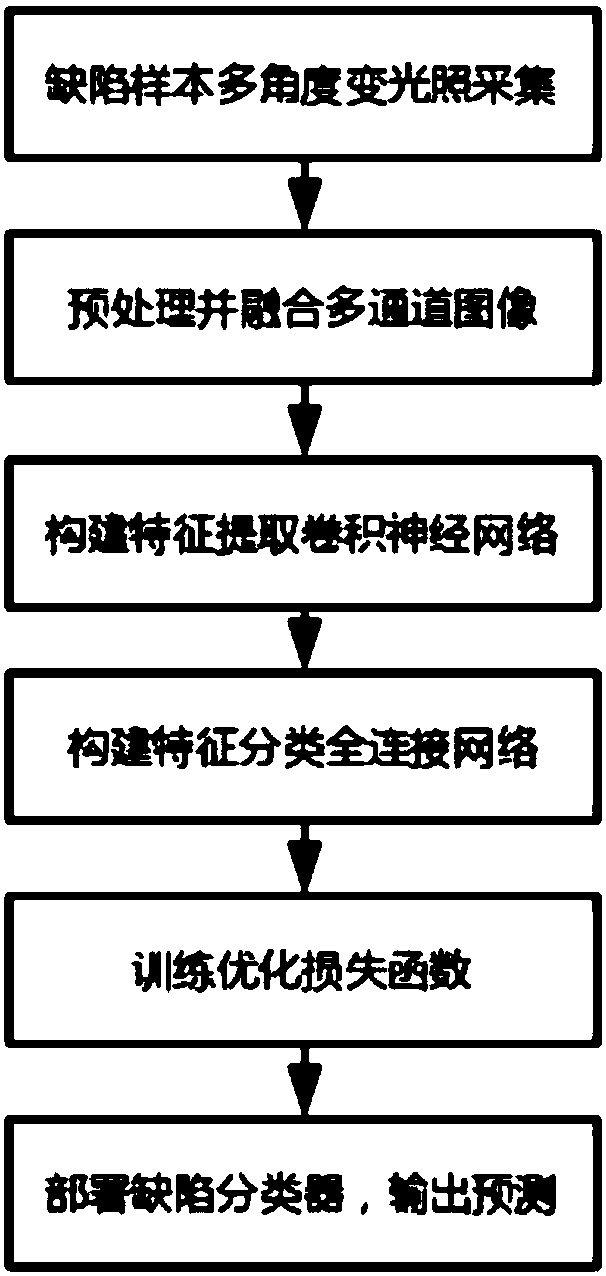

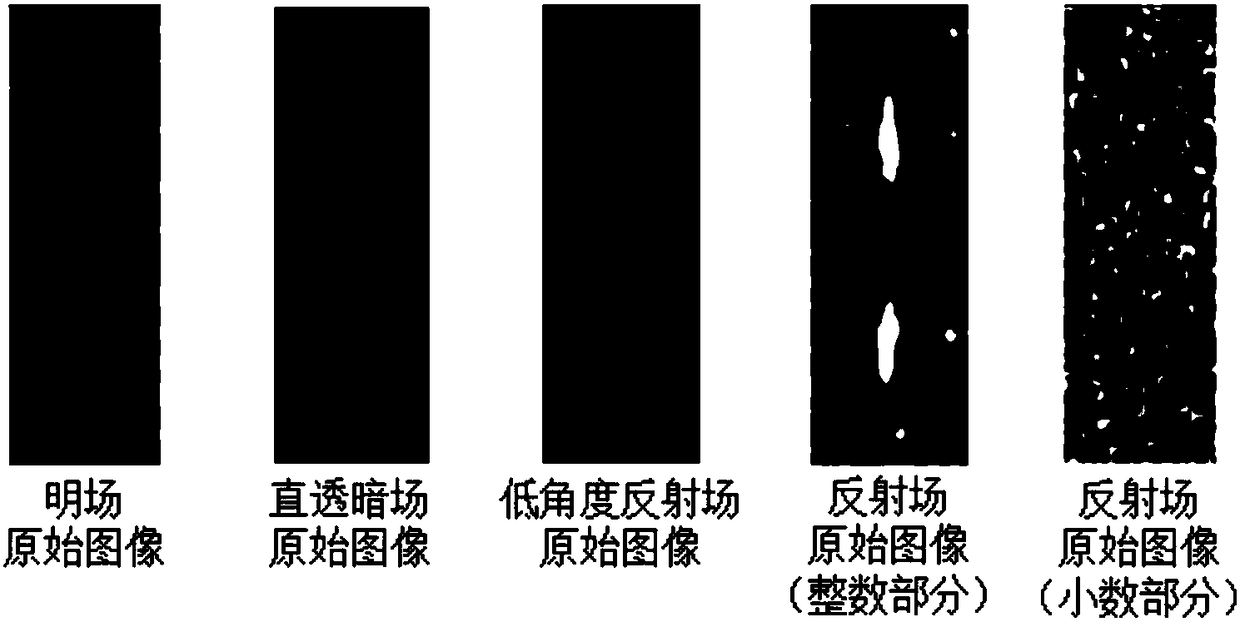

Convolutional neural network-based photovoltaic glass defect classification method and device

Active | CN108108768A | Reduce consumption | Guaranteed generalization ability | Character and pattern recognition | Neural architectures | Classification methods | Network model

The invention discloses a convolutional neural network-based photovoltaic glass defect classification method and device. The method comprises the following steps: carrying out multi-angle and variable-illumination image acquisition on a plurality of defect samples to obtain a plurality of images; preprocessing the images to fuse the multi-channel information and generate a multi-channel-fused defect sample image; adopting a convolutional neural network model which meets a preset condition, designing a feature extraction network according to the defect categories, and constructing a feature extraction convolutional neural network; obtaining the number of fully connected layers and the number of neurons in each layer, and constructing a feature classification network; completing the training of the convolutional neural network by minimizing the cross entropy loss function; and, for an input sample image, outputting a prediction of the defect category through the trained convolutional neural network. The method effectively addresses insufficient training sets while preserving the generalization ability and prediction precision of the model, and achieves high classification precision with a small number of glass defect samples.

Owner:TSINGHUA UNIV

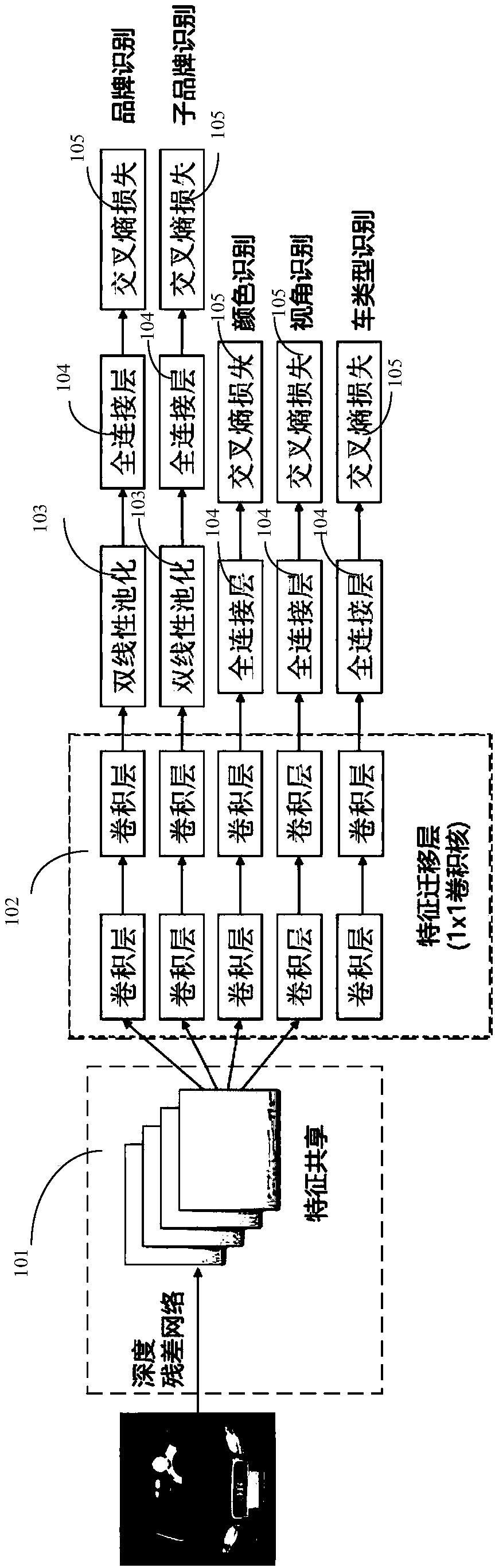

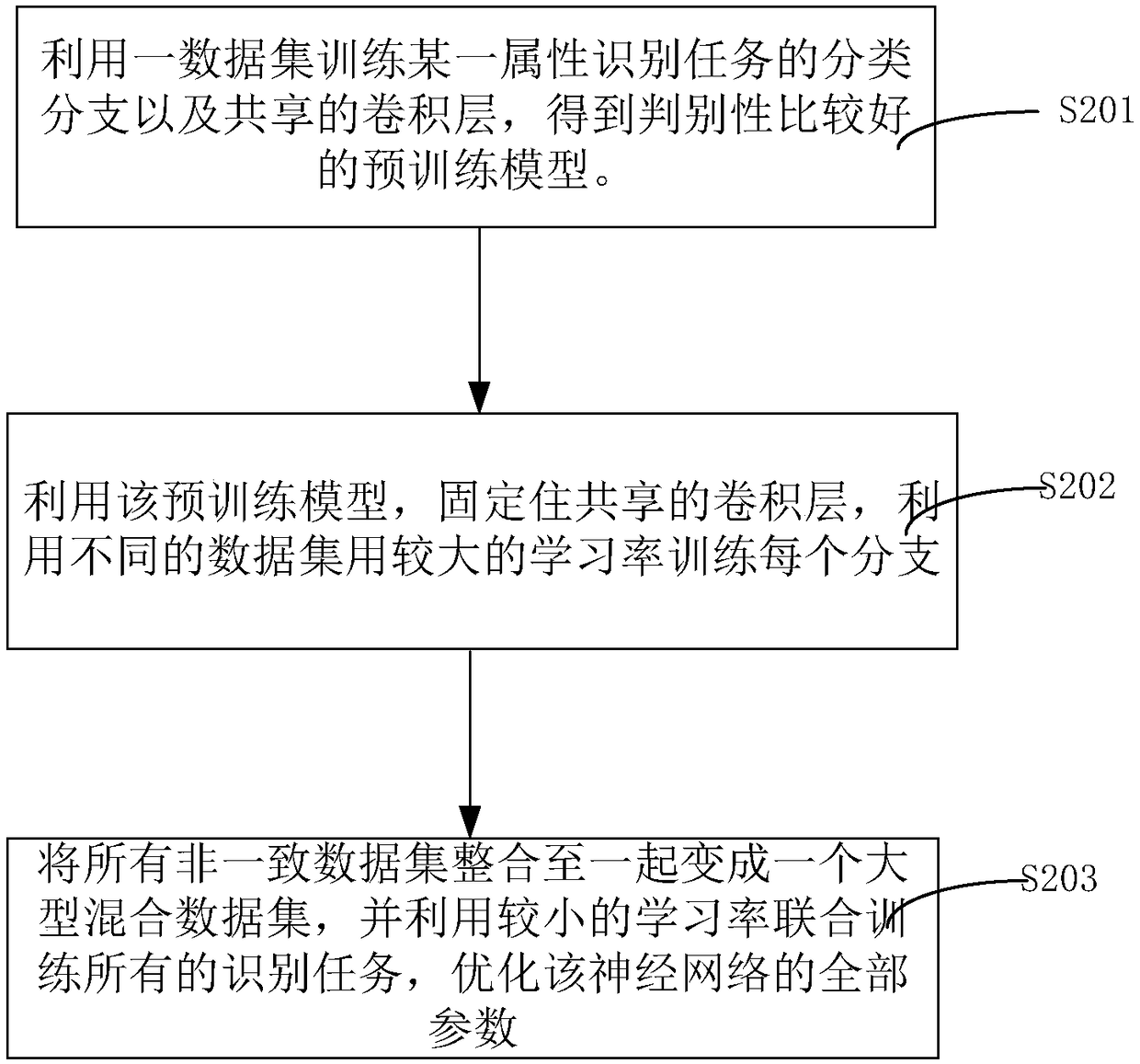

Deep neural network for fine recognition of vehicle attributes and training method thereof

Inactive | CN108549926A | Improve accuracy | Does not affect the recognition effect | Kernel methods | Neural architectures | Stochastic gradient descent | Feature vector

The invention discloses a deep neural network for the fine recognition of vehicle attributes and a training method thereof. The network comprises a deep residual network, a feature migration layer, a plurality of fully connected layers, a plurality of loss calculation units, and a plurality of parameter updating units. The deep residual network carries out feature extraction on an input image to obtain a feature image. The feature migration layer comprises a plurality of feature migration units, each of which adapts the features shared by all attribute identification tasks to a specific task. The fully connected layers correspond to the branches of the attribute identification tasks and are connected with the feature migration layer so as to obtain the feature vector for each attribute identification task. The loss calculation units correspond to the branches of the attribute identification tasks and are respectively connected with the fully connected layers; they calculate the loss using cross entropy as the multi-class classification loss function. The parameter updating units correspond to the attribute identification tasks and are connected with the loss calculation units; they back-propagate the loss and update the parameters using the stochastic gradient descent optimization algorithm. According to the invention, various fine vehicle attributes can be identified at the same time by adopting only one neural network.

Owner:SUN YAT SEN UNIV

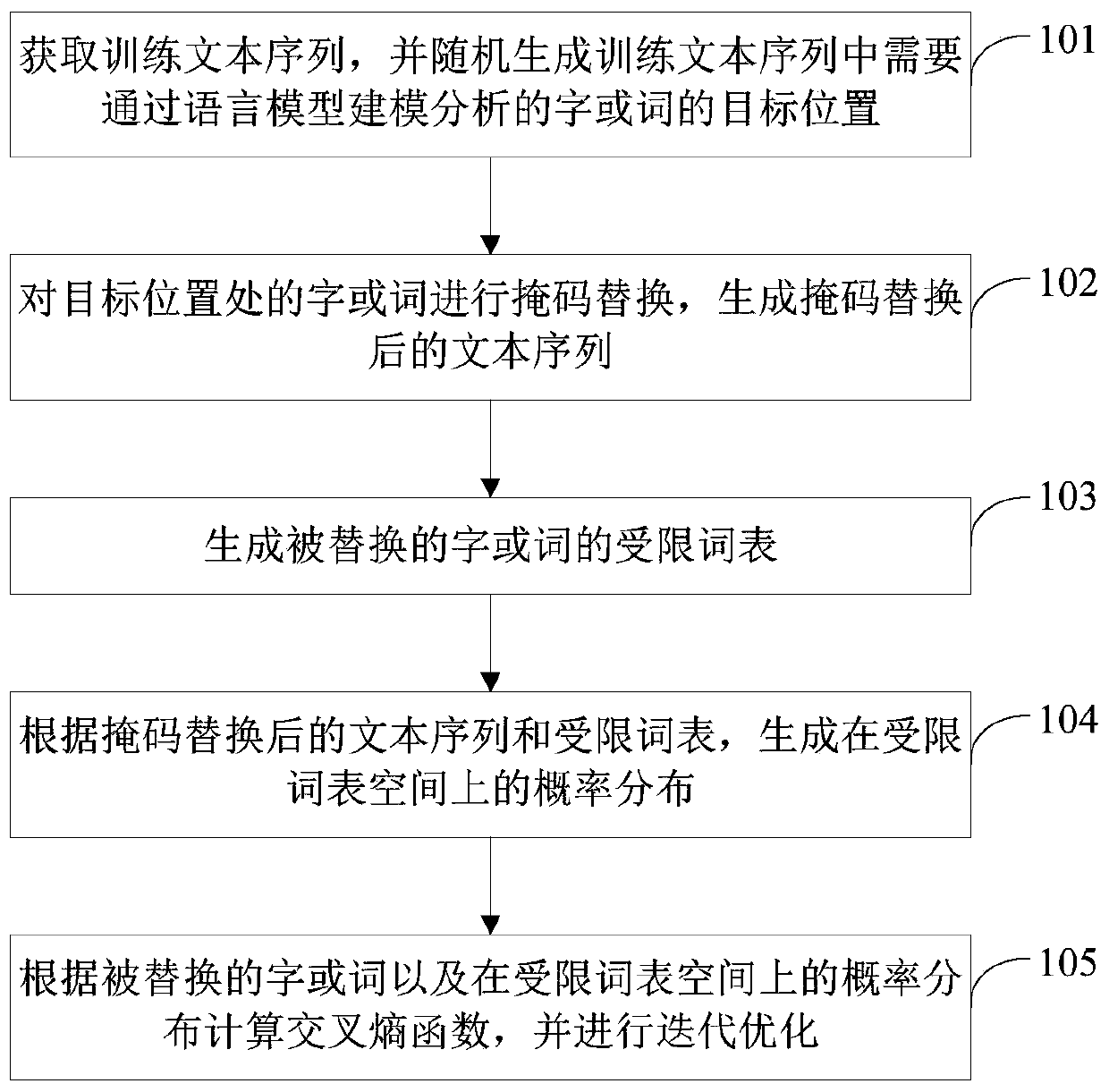

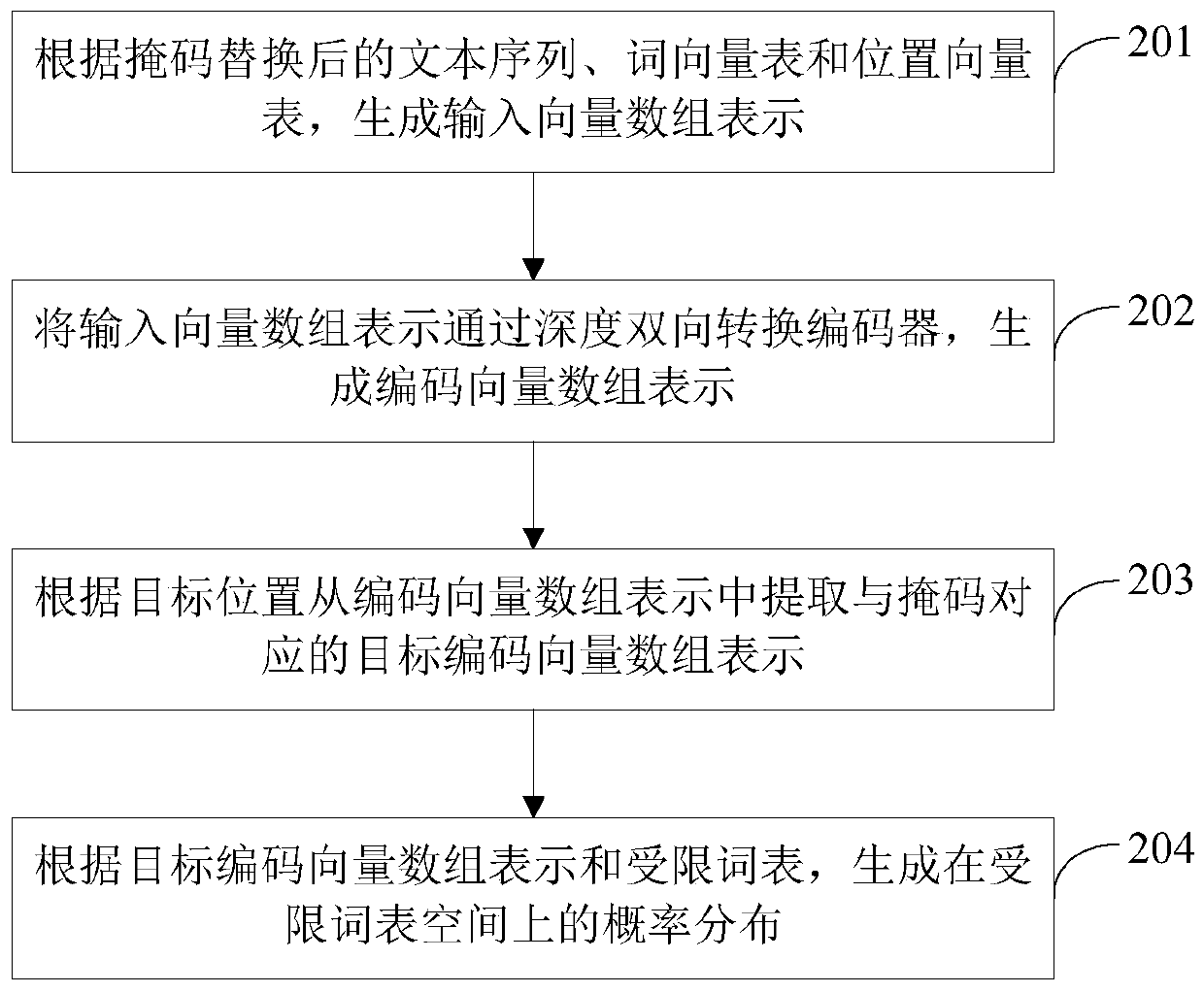

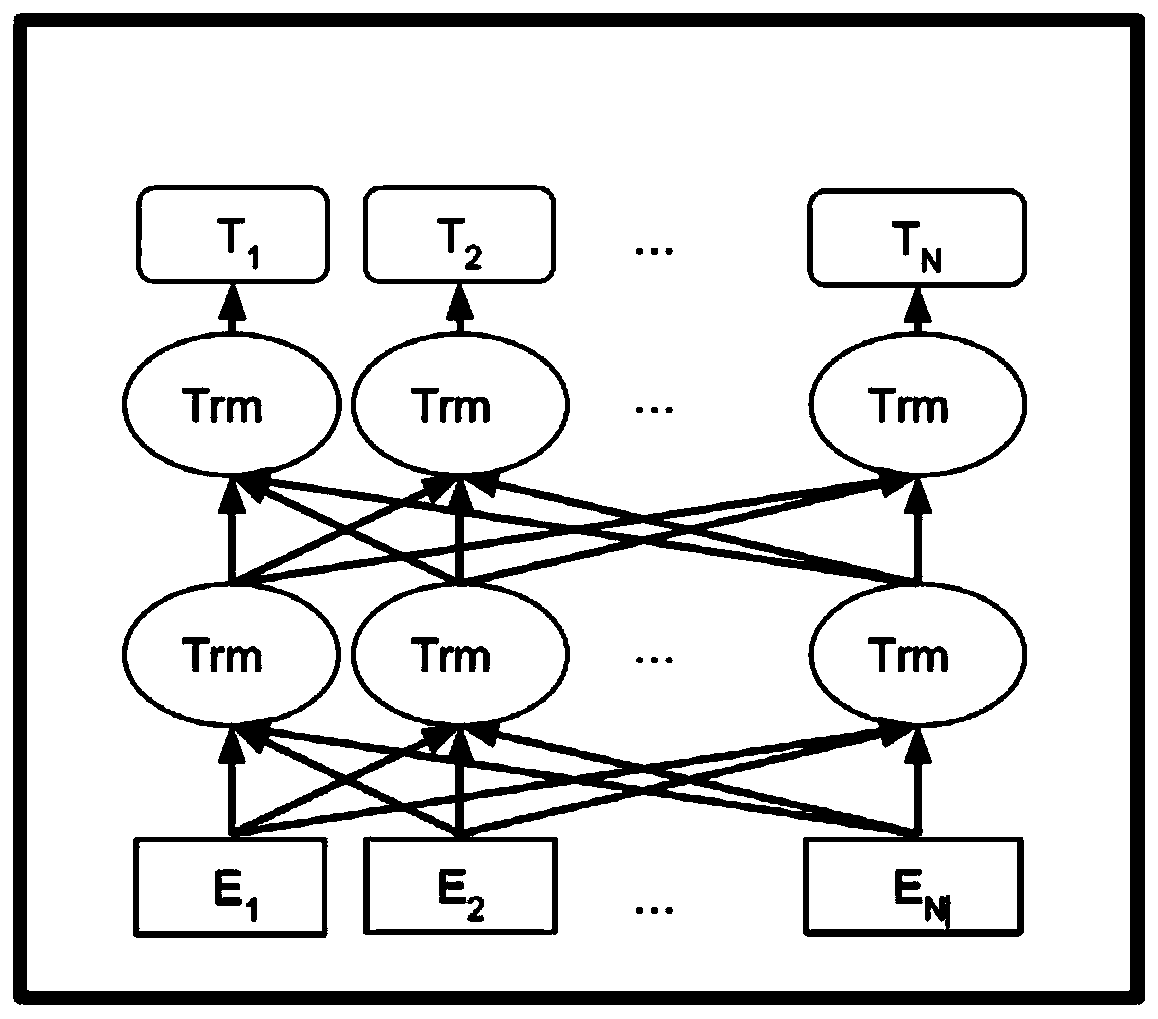

Language model training method and prediction method

Active | CN110196894A | Easy to detect | Improve performance on tasks such as error correction | Text database querying | Special data processing applications | Algorithm | Cross entropy

The invention provides a language model training method and a prediction method. The training method comprises the following steps: obtaining training text sequences; randomly generating a target position of a character or word in the training text sequence that needs to be modeled and analyzed by the language model; carrying out mask replacement on the characters or words at the target position to generate a mask-replaced text sequence; generating a limited word list for the replaced characters or words; generating a probability distribution over the limited word list space according to the mask-replaced text sequence and the limited word list; calculating a cross entropy function according to the replaced characters or words and the probability distribution over the limited word list space; and carrying out iterative optimization. According to the method, the limited word list is introduced at the decoding end of the model and the original word information is fully utilized during model training, so that the language model distinguishes confusable words more easily and its effect on tasks such as error detection or error correction is improved.
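The cross entropy over a limited word list can be sketched as a softmax restricted to the candidate words, followed by the negative log probability of the original word. The logits, the candidate list, and the function name below are hypothetical; how the patent actually builds the list and scores candidates is not specified here.

```python
import math

def restricted_vocab_cross_entropy(logits, candidates, target):
    """Softmax over `candidates` only, then return -log p(target)."""
    if target not in candidates:
        raise ValueError("target must be in the restricted word list")
    scores = [logits[w] for w in candidates]
    m = max(scores)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_z - logits[target]

# Hypothetical model scores for a masked position; "banana" is outside the list.
logits = {"their": 2.0, "there": 1.0, "they're": 0.5, "banana": 3.0}
confusable = ["their", "there", "they're"]

loss = restricted_vocab_cross_entropy(logits, confusable, "there")
print(loss)
```

Because the normalization runs only over the candidate list, words outside it (here "banana") have no effect on the loss, which concentrates the training signal on telling confusable words apart.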

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

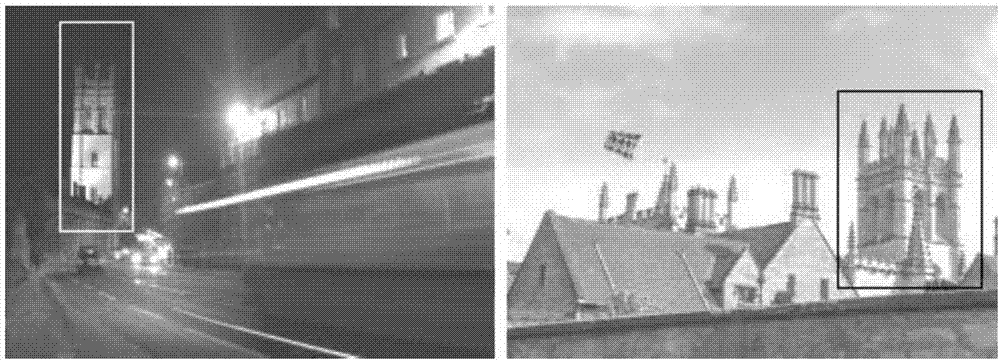

Visual target retrieval method and system based on target detection

Active | CN107515895A | Improve retrieval speed | High precision | Character and pattern recognition | Special data processing applications | Feature vector | Data set

The invention relates to a visual target retrieval method and system based on target detection. The method comprises the steps that an IDF weighted cross entropy loss function is adopted to train a public target detection dataset, and a preliminary target detection model is generated; a retrieval dataset containing a target type designated by a user is adopted to slightly adjust the preliminary target detection model, and a final target detection model is generated; and feature extraction is performed on a visual target in a to-be-retrieved picture through the final target detection model, multiple convolution feature graphs of the to-be-retrieved picture are generated, the convolution feature graphs are aggregated through a spatial attention matrix, aggregate feature vectors are generated, and a picture matched with the aggregate feature vectors is retrieved in a picture library. According to the method, visual target retrieval and detection are associated, so that a candidate window prediction step is avoided; and the attention matrix is obtained by selectively accumulating the feature graphs, local descriptors of a convolution layer are aggregated into a global feature expression in a weighted mode, the global feature expression is used for visual target retrieval, and retrieval speed and precision are improved.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

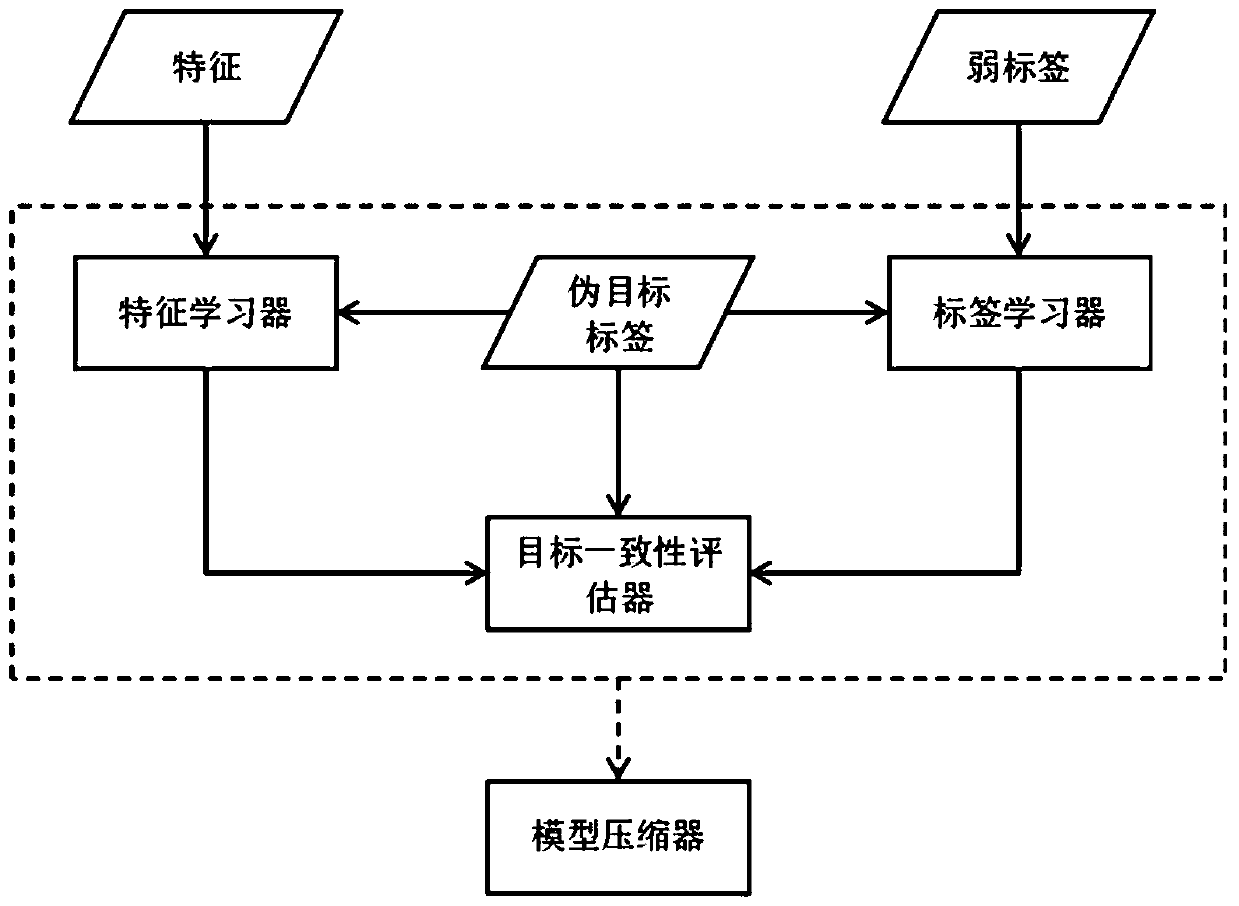

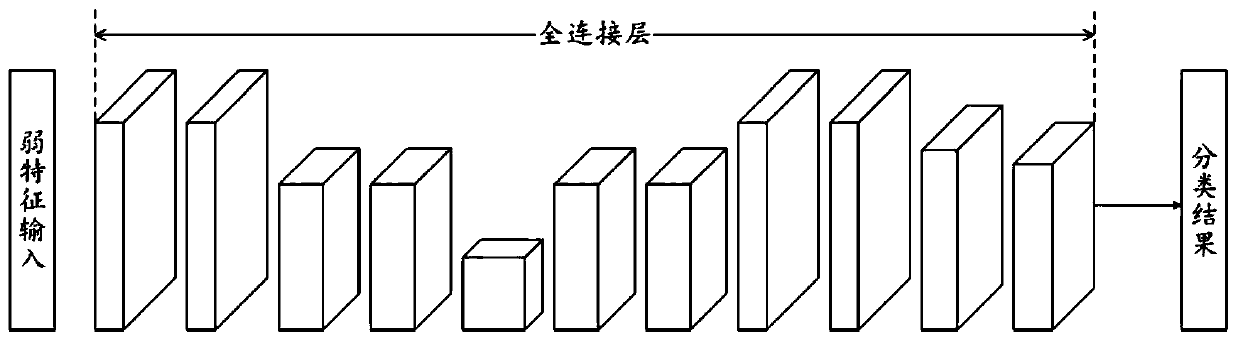

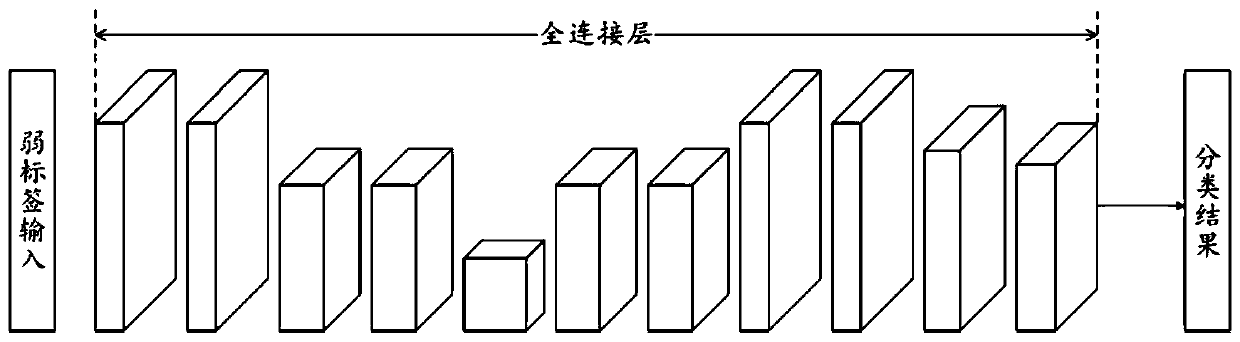

Neural network model training method and device for weak annotation data

Active | CN110070183A | Fewer design layers | Compact structure | Neural learning methods | Network model | Labeled data

The invention relates to a neural network model training method and device for weakly labeled data. The method comprises the following steps: 1) learning tag prediction from input features through a feature flow deep neural network, and outputting a prediction result of a target tag; 2) learning tag prediction from the input multi-view weak tags through a tag flow deep neural network, and outputting a prediction result of a target tag; and 3) using generalized cross entropy loss to define the consistency of the tags, and optimizing the prediction result of the target tag by jointly training the feature flow deep neural network and the tag flow deep neural network. According to the method, tag prediction is learned in two ways, from features and from tags; models and knowledge are fused in a unified mode through double-flow collaboration; weak features and weak tags are considered at the same time; a model collaborative optimization strategy is constructed; and model optimization is guided through knowledge cross validation of the model and the weak features.

Owner:INST OF INFORMATION ENG CHINESE ACAD OF SCI

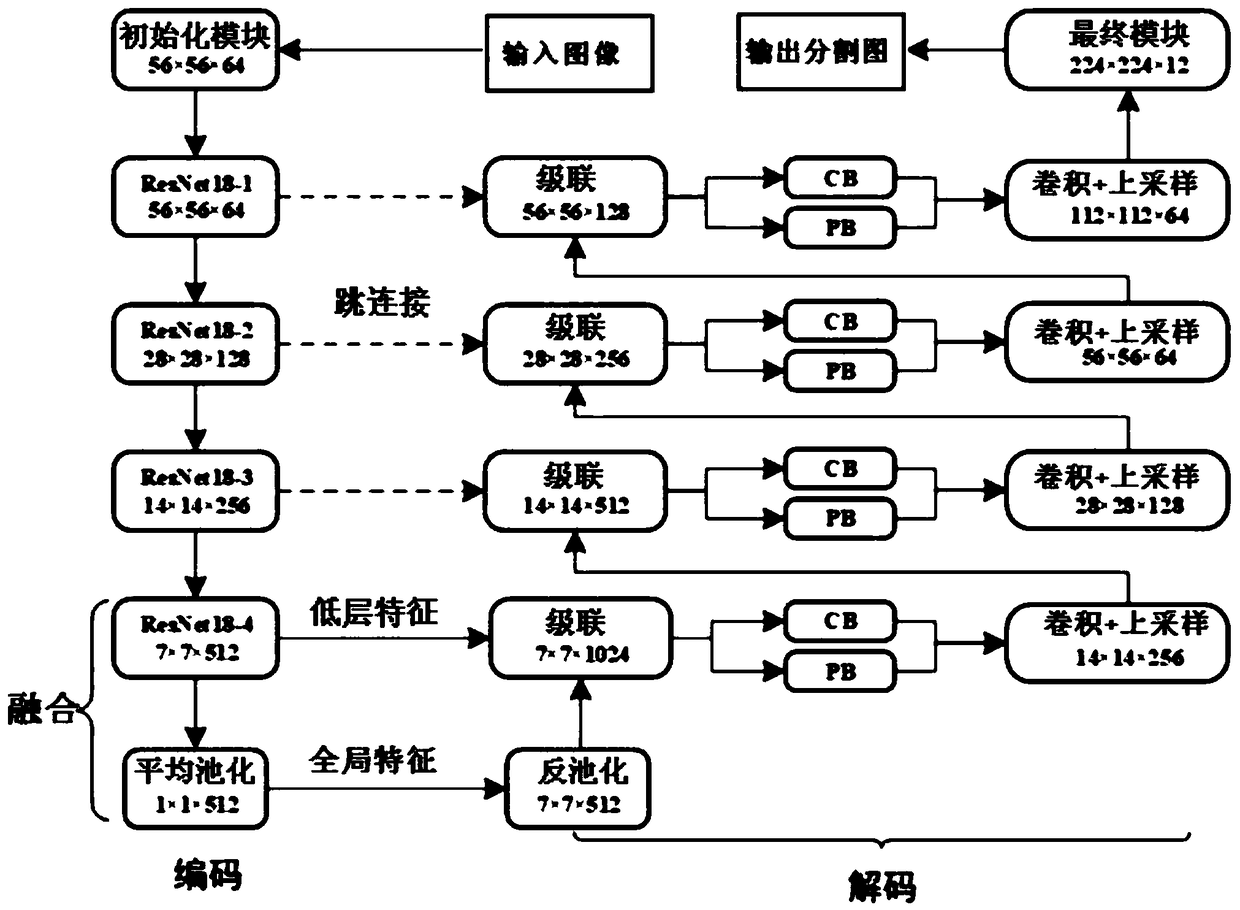

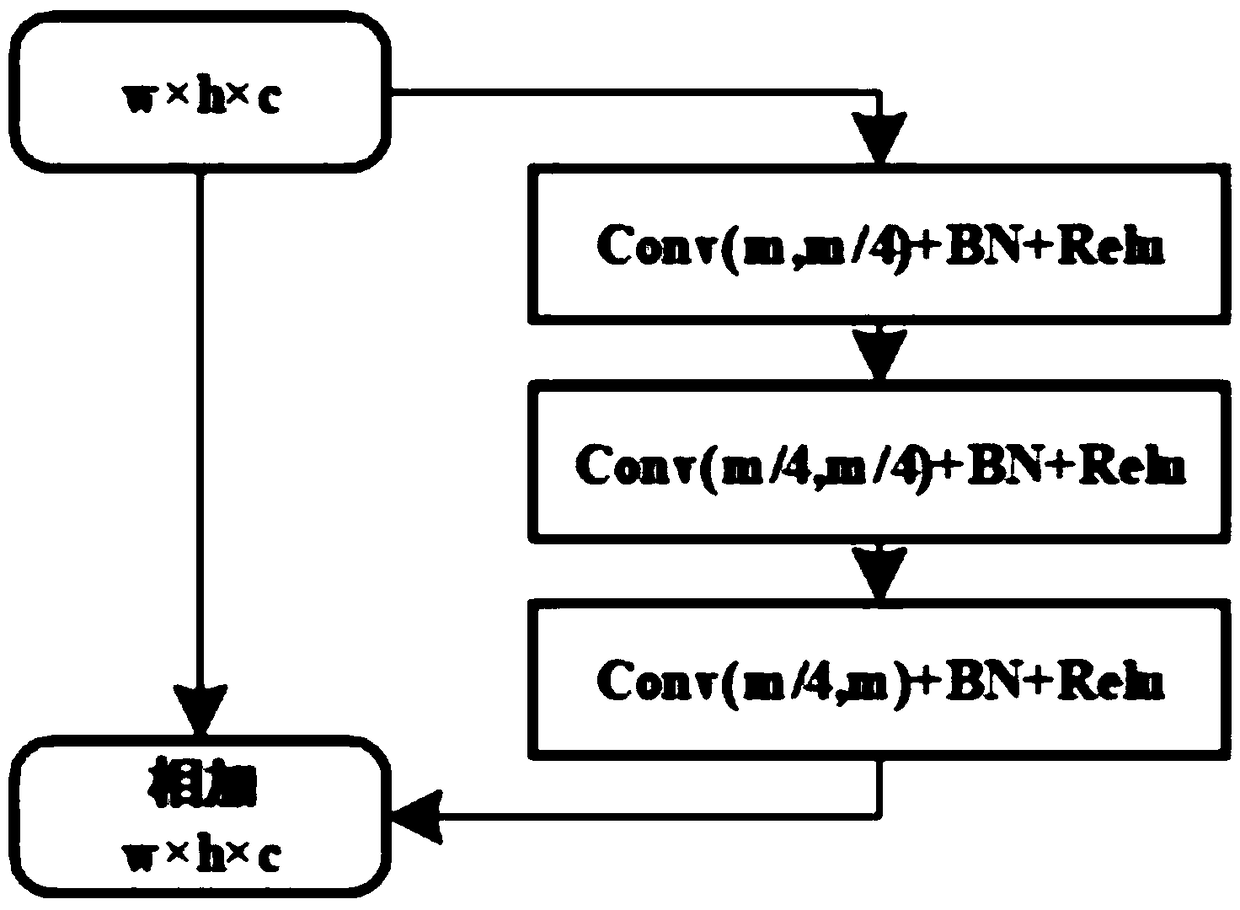

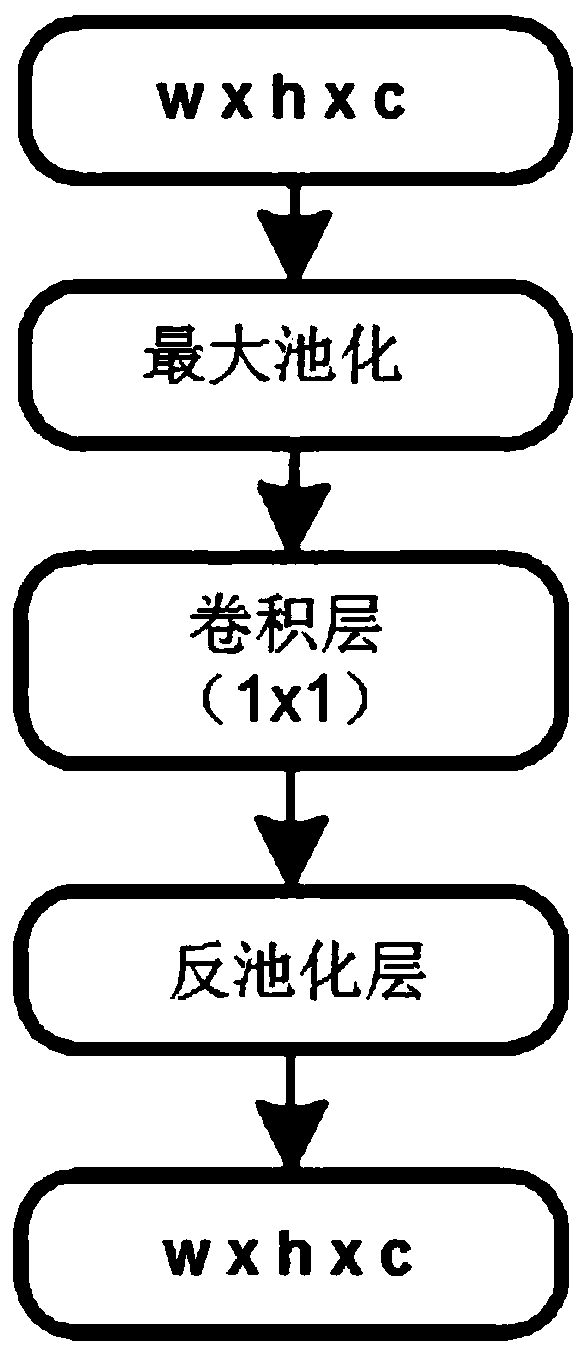

Image semantic segmentation based on global and local features of deep learning

Active | CN109190752A | Reduce noise | Clear boundaries | Character and pattern recognition | Neural architectures | Pattern recognition | Network model

The invention relates to an image semantic segmentation method based on global features and local features of deep learning. The method comprises the following steps: at the encoding end, the basic deep features of an image are extracted by using a convolutional neural network model based on deep learning; meanwhile, the features are divided into low-level features and high-level features according to the depth of the convolution layer; the feature fusion module fuses the low-level features and the high-level features into an enhanced deep feature; after the deep feature is acquired, it is input to the decoding end; the cross-entropy loss function is used to train the network and mIoU is used to evaluate the performance of the network. The invention is reasonable in design: it uses the deep convolutional neural network model to extract the global and local features of an image, fully utilizes the complementarity of the global and local features, further improves performance by utilizing the stacked pooling layer, and effectively improves the accuracy of image semantic segmentation.

Owner:ACADEMY OF BROADCASTING SCI STATE ADMINISTRATION OF PRESS PUBLICATION RADIO FILM & TELEVISION +1

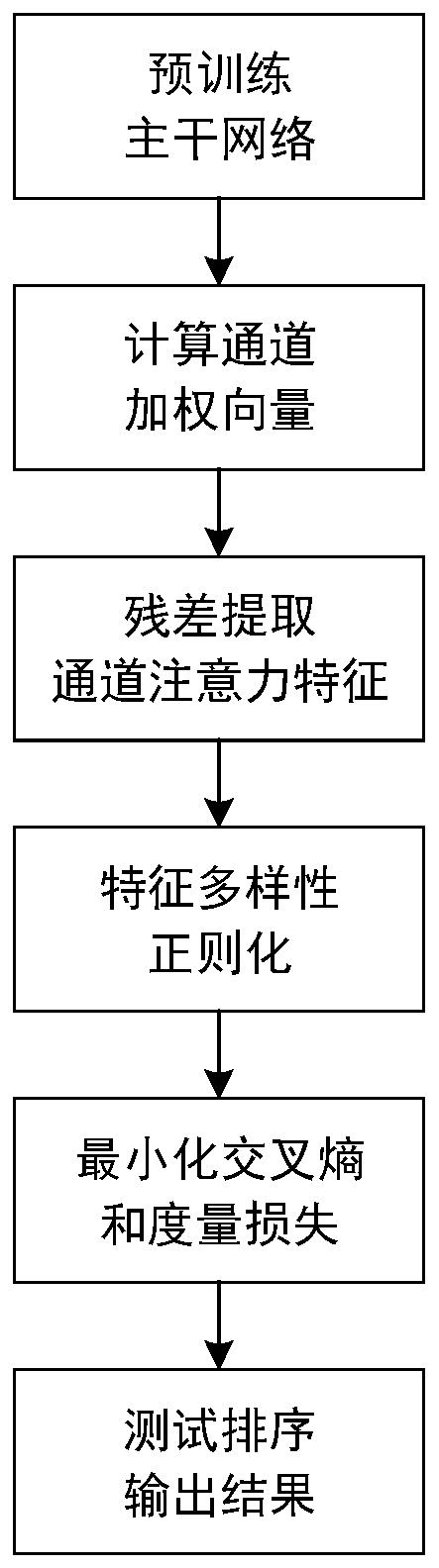

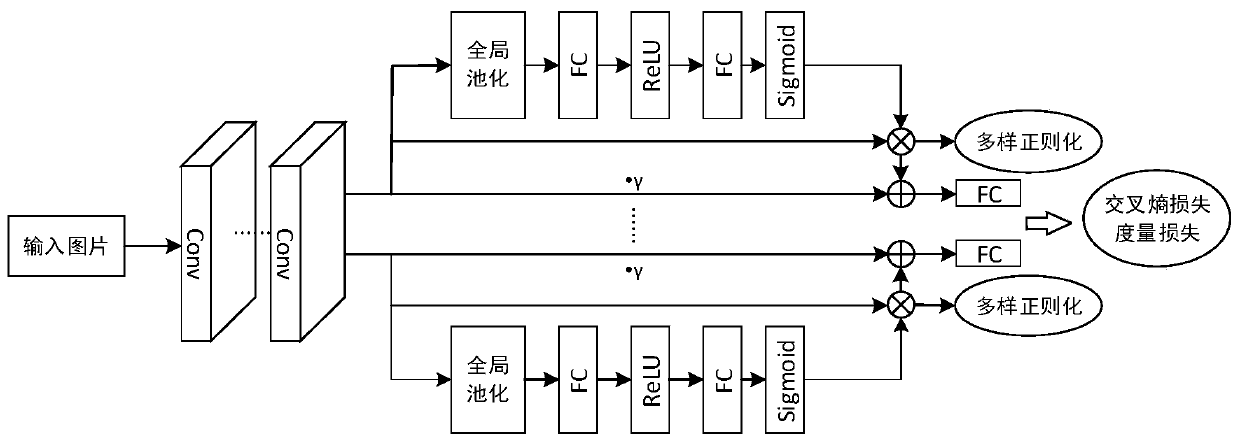

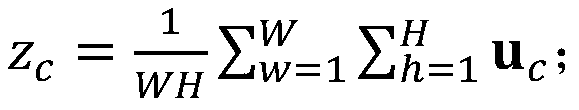

Pedestrian re-identification method based on multi-channel attention characteristics

Active | CN110110642A | Improve accuracy | Precise attention | Biometric pattern recognition | Neural architectures | Re identification | Test set

The invention discloses a pedestrian re-identification method based on multi-channel attention characteristics, which comprises the following steps: 1) constructing a convolutional neural network model based on channel attention, and pre-training a trunk network; 2) extracting output characteristics of the pedestrian picture in the trunk network, and calculating channel weighted vectors of the characteristics after global pooling; 3) multiplying the weighted vector by the output characteristic of the trunk network, and adding the result to that output characteristic to obtain a channel attention characteristic; 4) repeatedly extracting a plurality of attention characteristics, and performing characteristic diversity regularization by adopting a Hellinger distance; 5) inputting the attention characteristics into a full connection layer and a classifier, and performing training to minimize cross entropy loss and metric loss; and 6) inputting the test set pictures into the trained model to extract features, and realizing pedestrian re-identification through metric sorting. The method extracts discriminative features of pedestrians based on the attention mechanism and limits repeated extraction of similar attention features, so that the accuracy and robustness of pedestrian re-identification are effectively improved.
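The diversity regularization in step 4 appears to refer to the Hellinger distance between attention distributions. The sketch below assumes the attention vectors are normalized to sum to 1 and that the penalty is one minus the mean pairwise distance; both the `diversity_penalty` helper and that penalty form are hypothetical illustrations, not the patent's formulation.

```python
import math

def hellinger(p, q):
    """Hellinger distance between two discrete distributions; lies in [0, 1]."""
    return math.sqrt(0.5 * sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q)))

def diversity_penalty(attention_vectors):
    """Hypothetical regularizer: small when attention vectors differ from one another."""
    n = len(attention_vectors)
    pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
    mean_dist = sum(
        hellinger(attention_vectors[i], attention_vectors[j]) for i, j in pairs
    ) / len(pairs)
    return 1.0 - mean_dist

similar = [[0.5, 0.3, 0.2], [0.5, 0.3, 0.2]]  # two identical attention vectors
diverse = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # two disjoint attention vectors
print(diversity_penalty(similar), diversity_penalty(diverse))
```

Under these assumptions, identical attention vectors receive the maximum penalty and vectors with disjoint support receive none, which matches the stated goal of limiting repeated extraction of similar attention features.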

Owner:SOUTH CHINA UNIV OF TECH

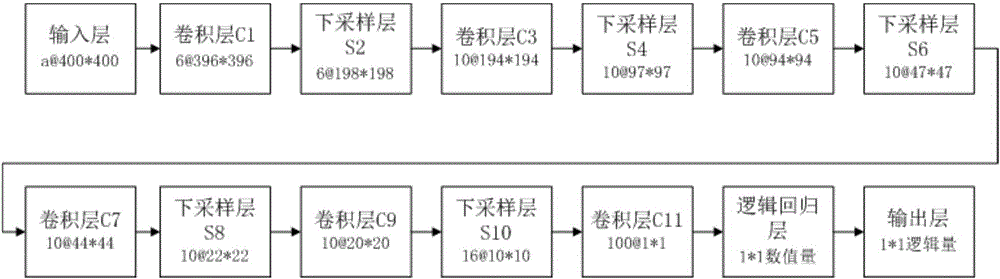

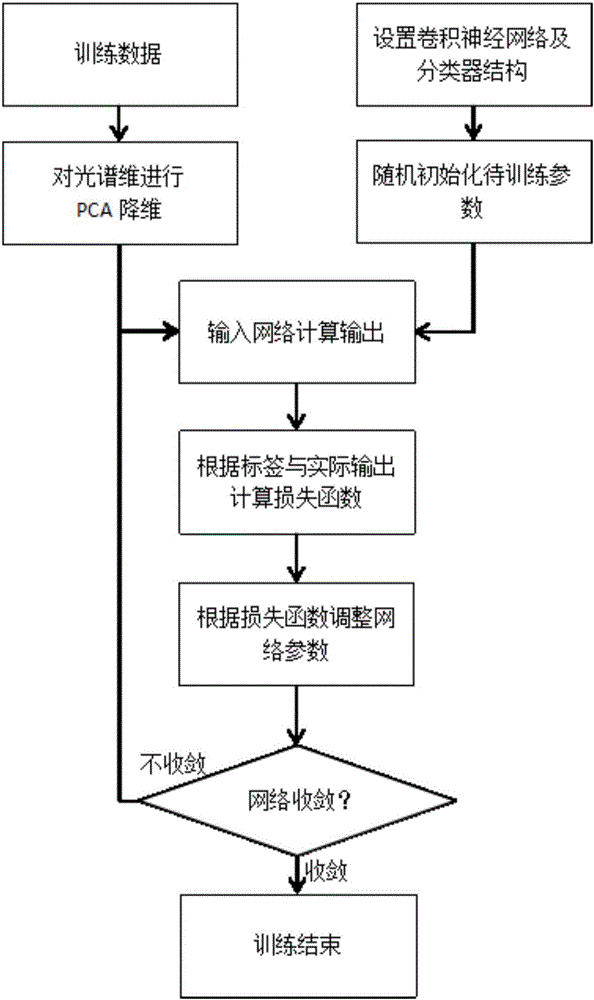

Gastrointestinal tumor microscopic hyper-spectral image processing method based on convolutional neural network

Inactive | CN106097355A | Increase the amount of information | Improve efficiency | Image enhancement | Image analysis | Batch processing | Hyperspectral image processing

The invention discloses a gastrointestinal tumor microscopic hyper-spectral image processing method based on a convolutional neural network, comprising the following steps: reducing and de-noising the spectral dimension of an acquired gastrointestinal tissue hyper-spectral training image; constructing a convolutional neural network structure; and inputting obtained hyper-spectral data principal components (namely, a plurality of 2D gray images, which are equivalent to a plurality of feature maps of an input layer) as input images into the constructed convolutional neural network structure using a batch processing method, and by taking a cross entropy function as a loss function and using an error back propagation algorithm, training the parameters in the convolutional neural network and the parameters of a logistic regression layer according to the average loss function in a training batch until the network converges. According to the invention, the dimension of a hyper-spectral image is reduced using a principal component analysis method, enough spectral information and spatial texture information are retained, the complexity of the algorithm is reduced greatly, and the efficiency of the algorithm is improved.

Owner:SHANDONG UNIV

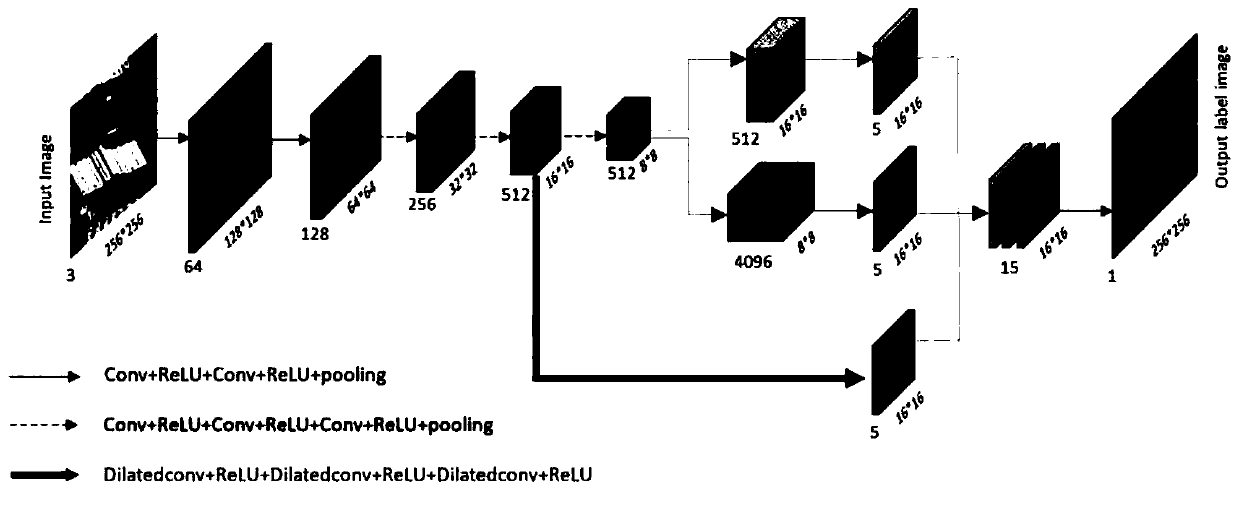

Remote sensing image semantic segmentation method based on migration VGG network

Active | CN110059772A | Good segmentation effect | Short training time | Character and pattern recognition | Neural architectures | Pattern recognition | Nerve network

The invention discloses a remote sensing image semantic segmentation technology based on a VGG network. The method comprises the following steps: randomly cutting the high-resolution remote sensing images used for training and the corresponding label images into small images; dividing the network structure into an encoding part and a decoding part; adopting an unpooling path and a deconvolution path to double the resolution of the encoded information; carrying out channel concatenation of the feature image with the result of dilated convolution; recovering the feature image to the original size through deconvolution upsampling; inputting the output label image into a PPB module for multi-scale aggregation processing; finally, updating network parameters by stochastic gradient descent with cross entropy as the loss function; and inputting the small images formed by sequentially cutting the test images into the neural network to predict the corresponding label images, then splicing the label images back to the original size. According to the technical scheme, the segmentation precision of the model is improved, the complexity of the network is reduced, and the training time is saved.

Owner:WENZHOU UNIVERSITY

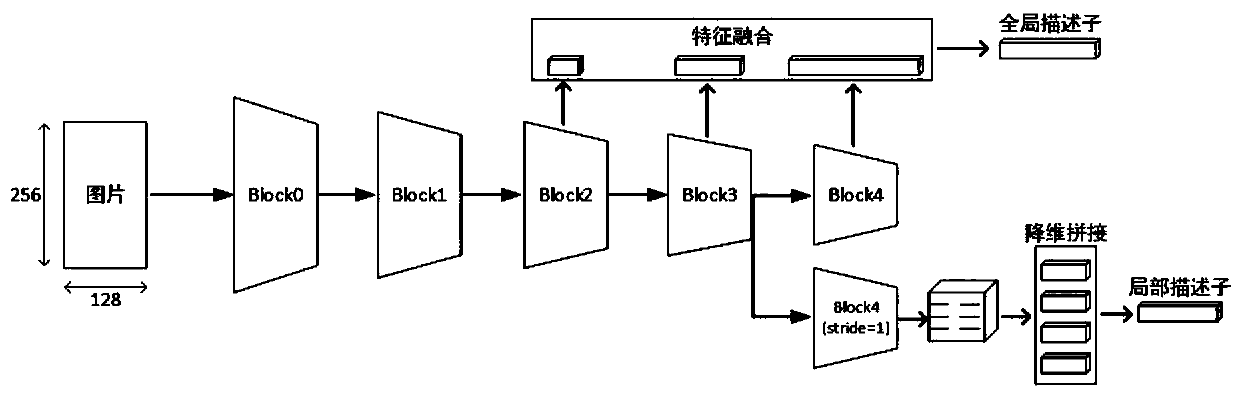

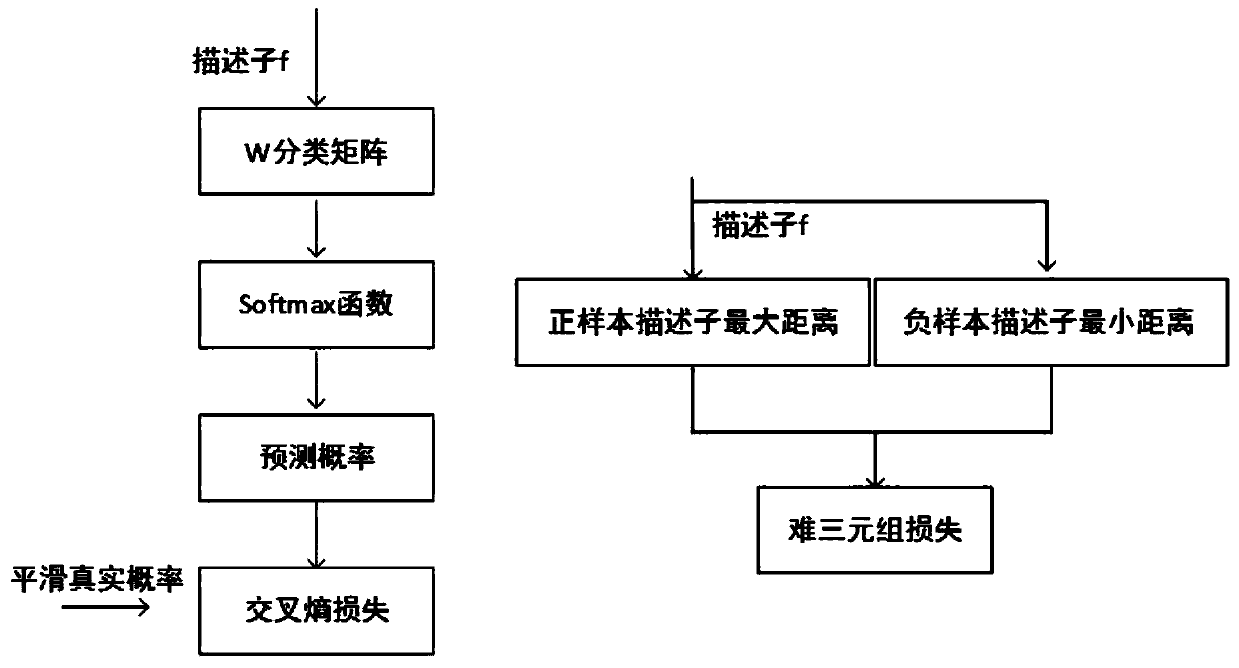

Pedestrian re-identification method based on multi-scale feature cutting and fusion

Inactive | CN109784258A | Exempt from importing | Realize learning and training | Character and pattern recognition | Neural architectures | Robustification | Re identification

The invention provides a pedestrian re-identification method based on multi-scale feature cutting and fusion. Specifically, it provides a way of training a pedestrian re-identification network based on multi-scale deep feature cutting and fusion, and a pedestrian re-identification method based on that network, which performs re-identification through multi-scale global descriptor extraction and local descriptor extraction. The global descriptor is extracted by average pooling and feature fusion over feature maps from different layers of the deep network; the local descriptors are extracted by horizontally dividing the feature map of the deepest layer into a plurality of blocks and extracting the local descriptor corresponding to each block. In the training process, the network parameters are trained with the objective of minimizing a smoothed cross entropy cost function and a hard-sample-mining triplet cost function. By adopting the technical scheme of the invention, the problem of feature mismatching caused by factors such as pedestrian posture change and camera color cast in pedestrian re-identification can be solved, and the influence caused by background can be eliminated, so that the robustness and precision of pedestrian re-identification are improved.
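If the "smooth cross entropy" cost is read as cross entropy with label smoothing (an assumption; the abstract does not define it), a minimal sketch looks like the following. The smoothing factor `epsilon=0.1`, the probabilities, and the function name are illustrative.

```python
import math

def label_smoothing_cross_entropy(probs, target, epsilon=0.1):
    """Cross entropy against a smoothed one-hot target (natural log)."""
    k = len(probs)
    loss = 0.0
    for i, p in enumerate(probs):
        # Smoothed target: epsilon / K on every class, plus 1 - epsilon on the true class.
        t = epsilon / k + (1.0 - epsilon if i == target else 0.0)
        loss -= t * math.log(p)
    return loss

probs = [0.7, 0.2, 0.1]  # predicted identity probabilities (illustrative)
loss_smooth = label_smoothing_cross_entropy(probs, target=0, epsilon=0.1)
loss_hard = label_smoothing_cross_entropy(probs, target=0, epsilon=0.0)
print(loss_smooth, loss_hard)
```

Setting `epsilon=0.0` recovers the ordinary cross entropy; a positive value discourages over-confident identity predictions, which is a common motivation for smoothing in re-identification training.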

Owner:SOUTH CHINA UNIV OF TECH +2

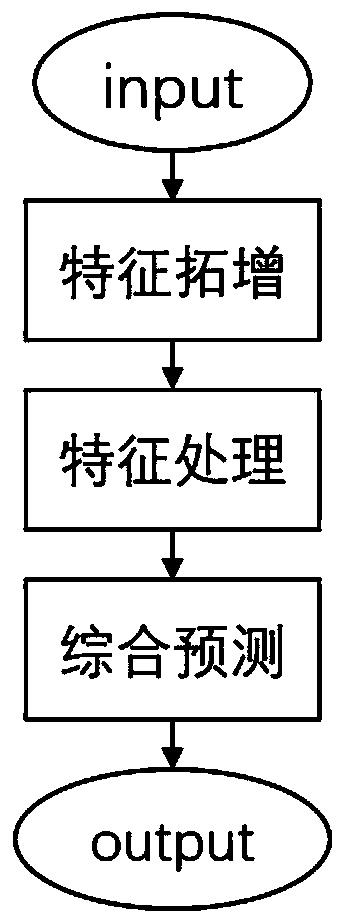

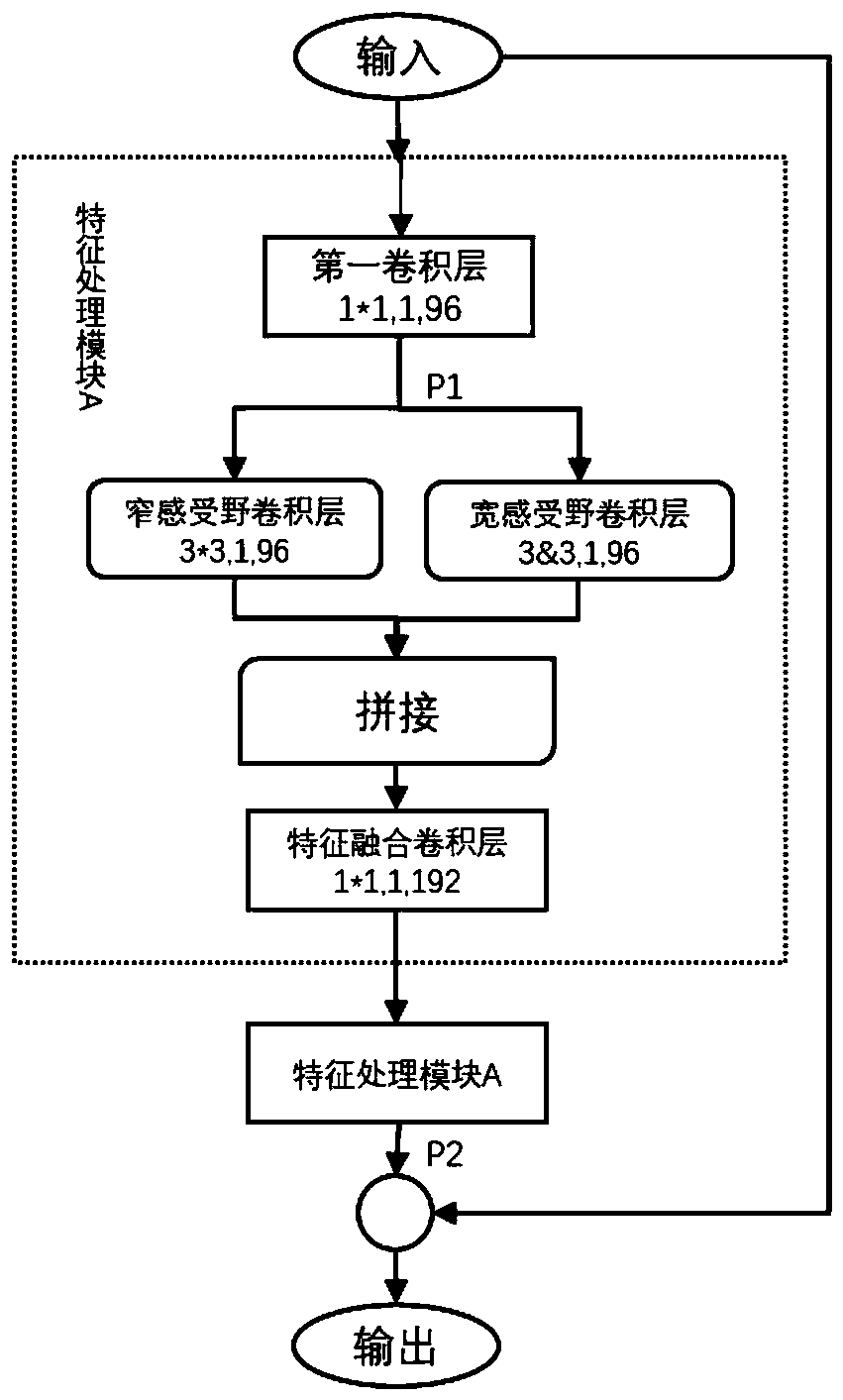

Real-time image semantic segmentation method based on lightweight full convolutional neural network

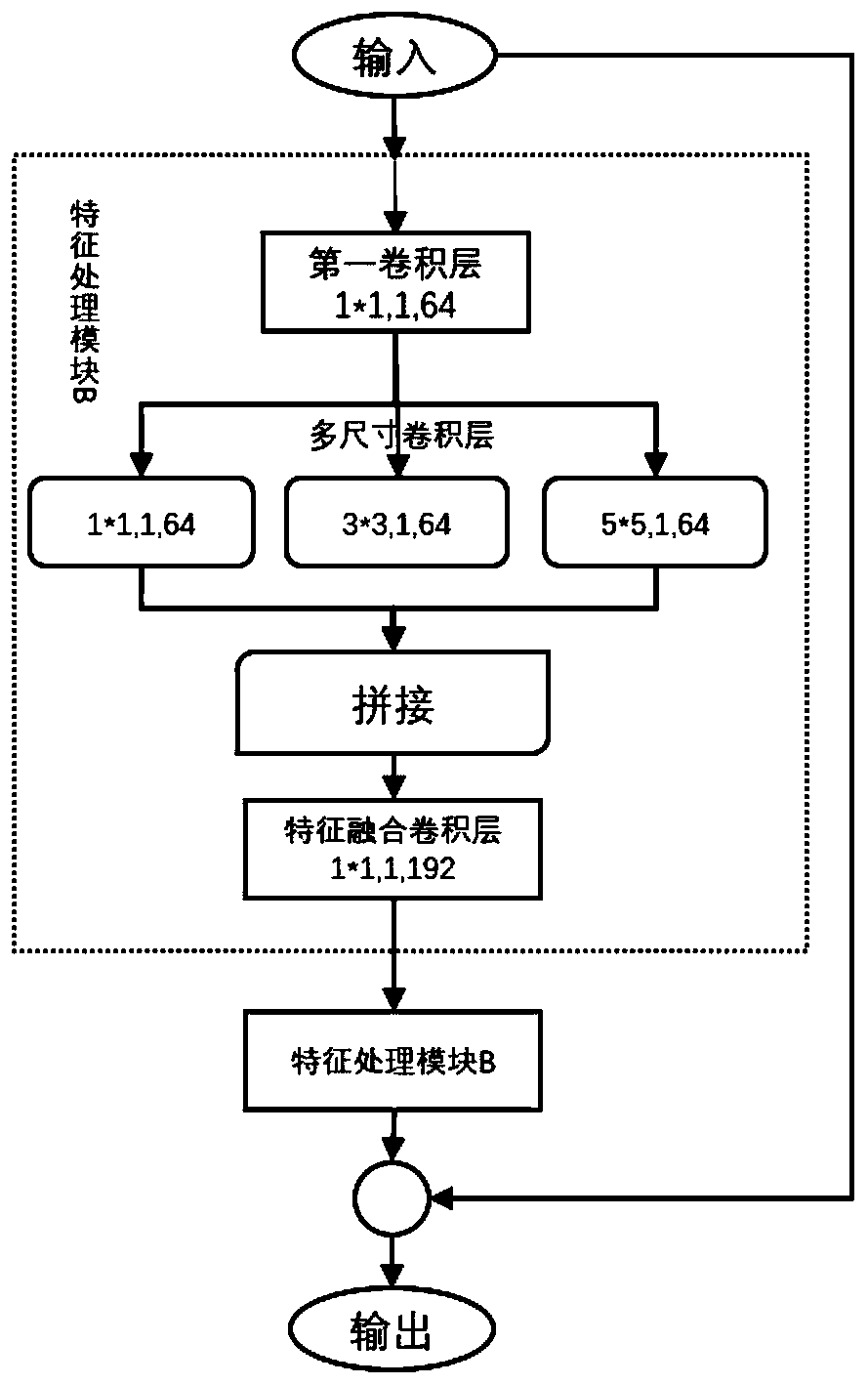

Pending | CN110110692A | Reduce memory usage | Reduce the amount of calculation data | Character and pattern recognition | Data set | Algorithm

The invention discloses a real-time image semantic segmentation method based on a lightweight full convolutional neural network. The method comprises the following steps: 1) constructing a full convolutional neural network by using the design elements of a lightweight neural network, wherein the network comprises three stages, namely a feature extension stage, a feature processing stage, and a comprehensive prediction stage, and the feature processing stage uses a multi-receptive-field feature fusion structure, a multi-size convolutional fusion structure, and a receptive field amplification structure; 2) at the training stage, training the network on a semantic segmentation data set, using a cross entropy function as the loss function, the Adam algorithm as the parameter optimization algorithm, and an online hard-sample retraining strategy in the process; and 3) at the test stage, inputting the test image into the network to obtain a semantic segmentation result. By adjusting the network structure and adapting it to the semantic segmentation task while controlling the scale of the model, the invention obtains a high-precision real-time semantic segmentation method suitable for running on a mobile terminal platform.

Owner:NANJING UNIV

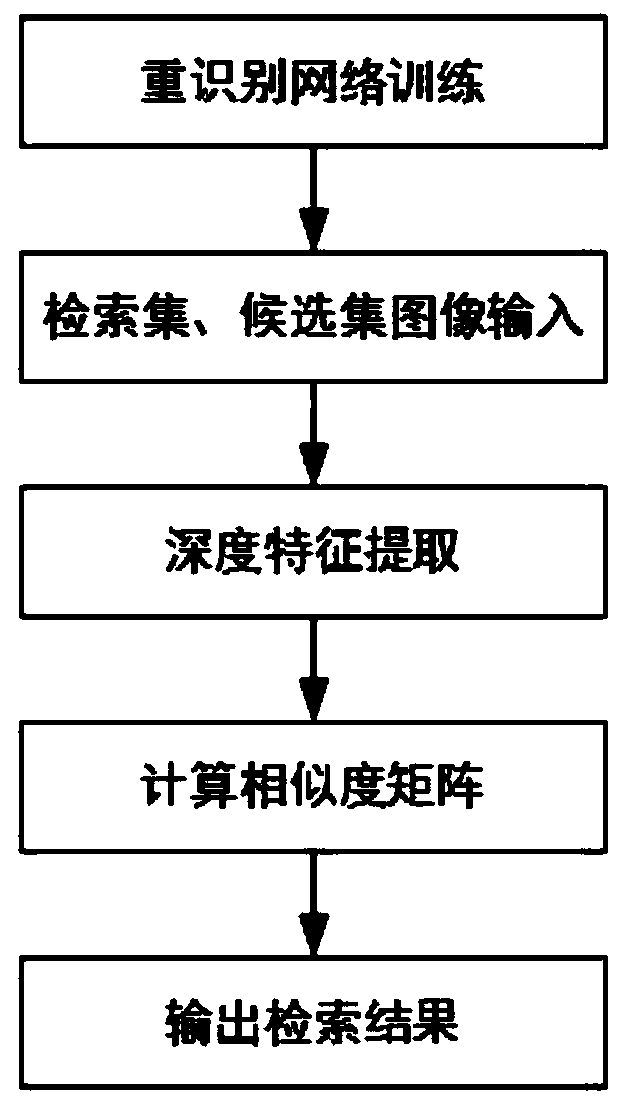

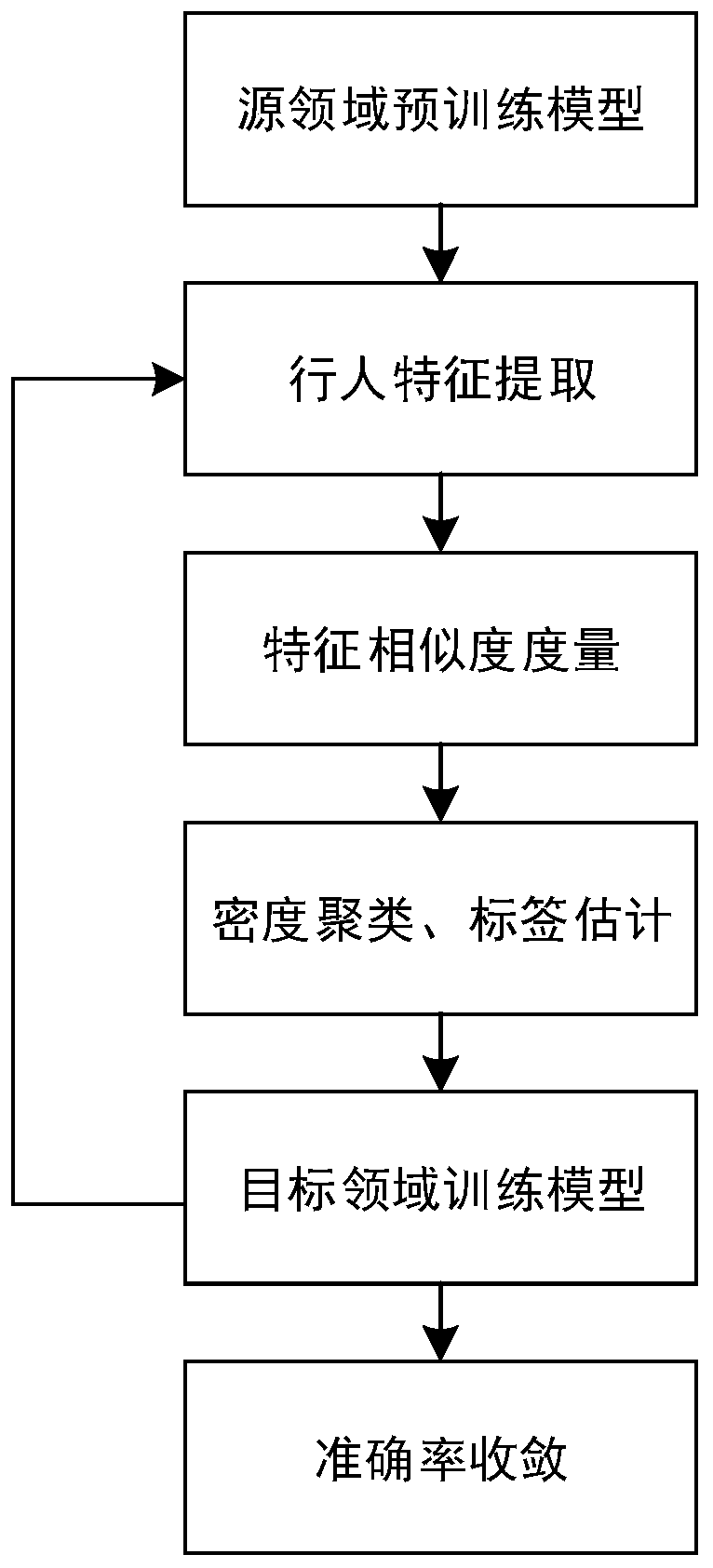

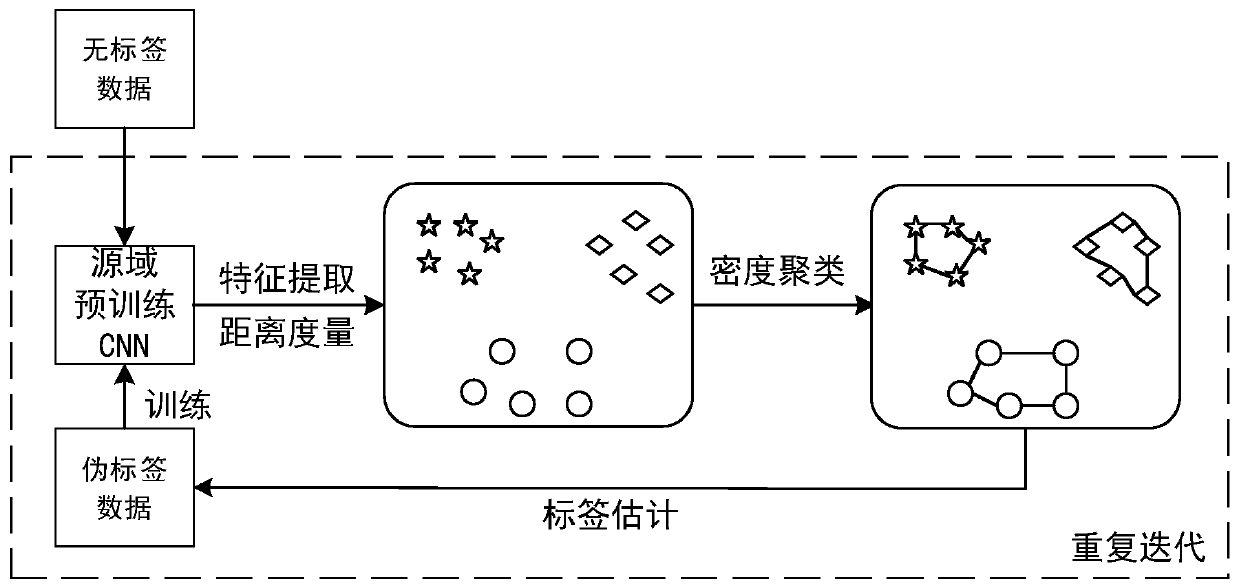

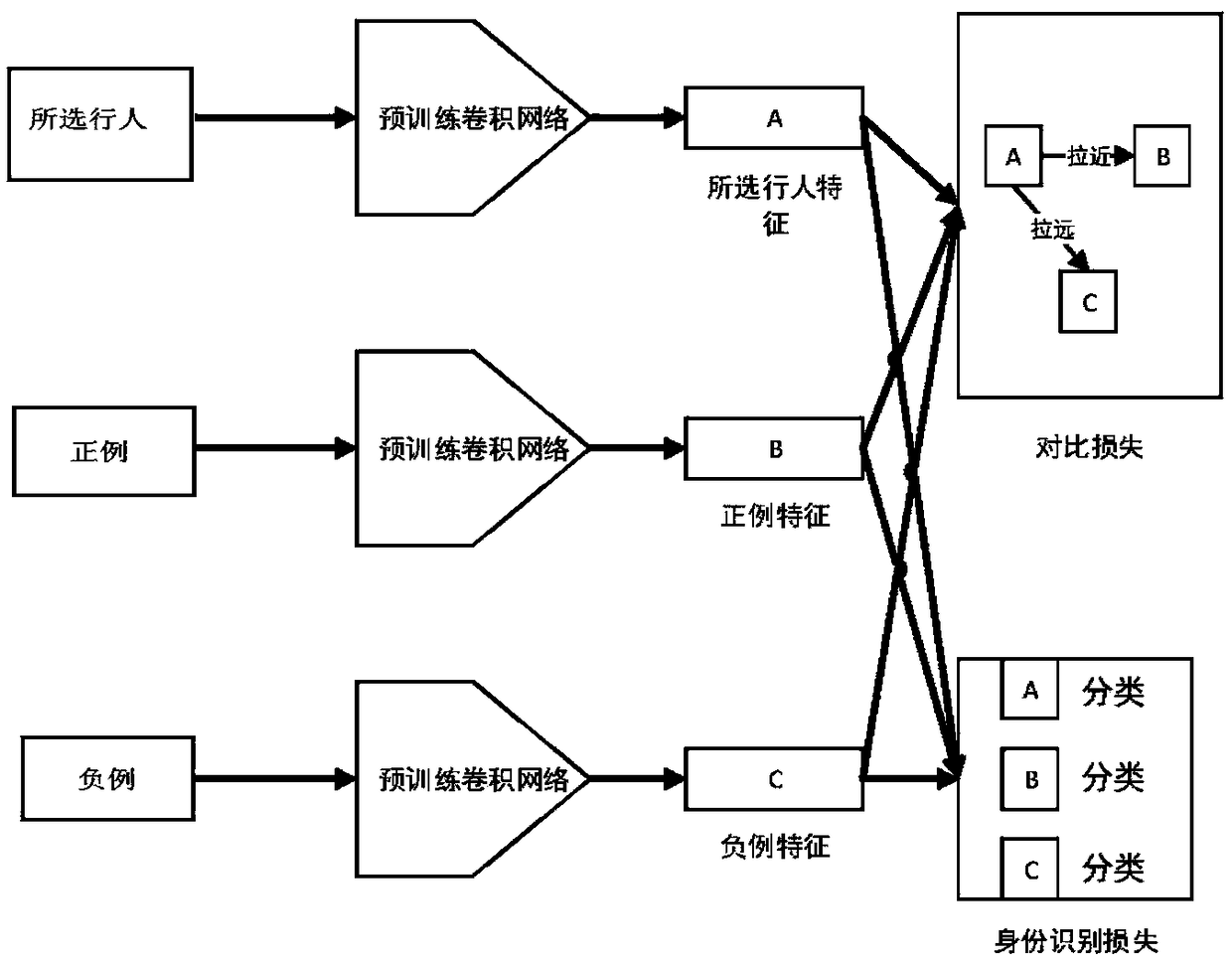

Unsupervised pedestrian re-identification method based on transfer learning

Inactive | CN110135295A | Implement the re-identification method | Improve learning effect | Biometric pattern recognition | Data set | Feature set

The invention discloses an unsupervised pedestrian re-identification method based on transfer learning, which comprises the following steps: 1) pre-training a CNN model on a labeled source data set, employing cross entropy loss and triplet metric loss as the target optimization function; 2) extracting pedestrian characteristics of the label-free target data set; 3) calculating a feature similarity matrix by combining the candidate column distance and the absolute distance; 4) performing density clustering on the similarity matrix, setting a label for each feature set whose distance is smaller than a preset threshold value, and recombining the feature sets into a labeled target data set; 5) training the CNN model on the recombined data set until convergence; 6) repeating steps 2) to 5) for a preset number of iterations; and 7) inputting the test pictures into the model to extract features, and sorting the test pictures according to feature similarity to obtain a result. The source domain labeled data and the target domain unlabeled data are both put to use, the accuracy of pedestrian re-identification is improved in the target domain, and the strong dependence on labeled data is reduced.

Owner:SOUTH CHINA UNIV OF TECH

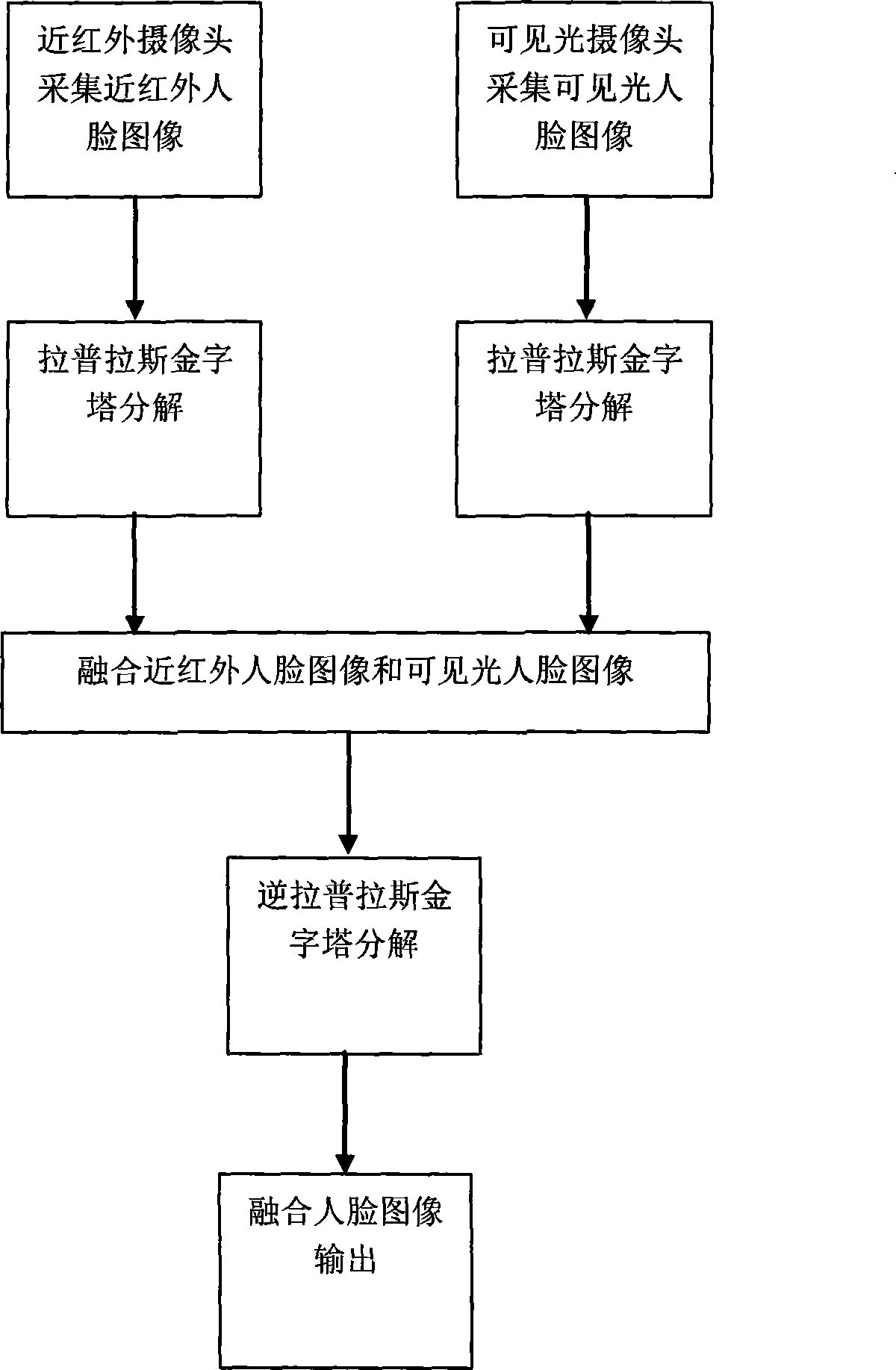

Face image fusing method based on laplacian-pyramid

Inactive | CN101425137A | Rich in details | Easy to observe | Image enhancement | Character and pattern recognition | Mean square | Weight coefficient

The invention relates to a human face image fusing method based on the Laplacian pyramid. On the basis of Laplacian pyramid decomposition, the invention applies a local binary pattern operator in the fusion rule. For the infrared and visible-light human face images in each layer of the Laplacian pyramid, the average gradient of the image, the standard deviation of the image histogram, and a chi-square statistic similarity measurement are used to determine the respective weighting coefficients of the infrared and visible-light images, and fusion is performed with the determined weighting coefficients so as to obtain a fused image which is rich in detail information, clear and stable, and easy for human eyes to observe. Compared with visible-light images and near-infrared images, the fused image provided by the invention has greater information entropy but smaller average cross-entropy and root-mean-square cross-entropy, and shows a more remarkable image fusion effect.

Owner:NORTH CHINA UNIVERSITY OF TECHNOLOGY +1

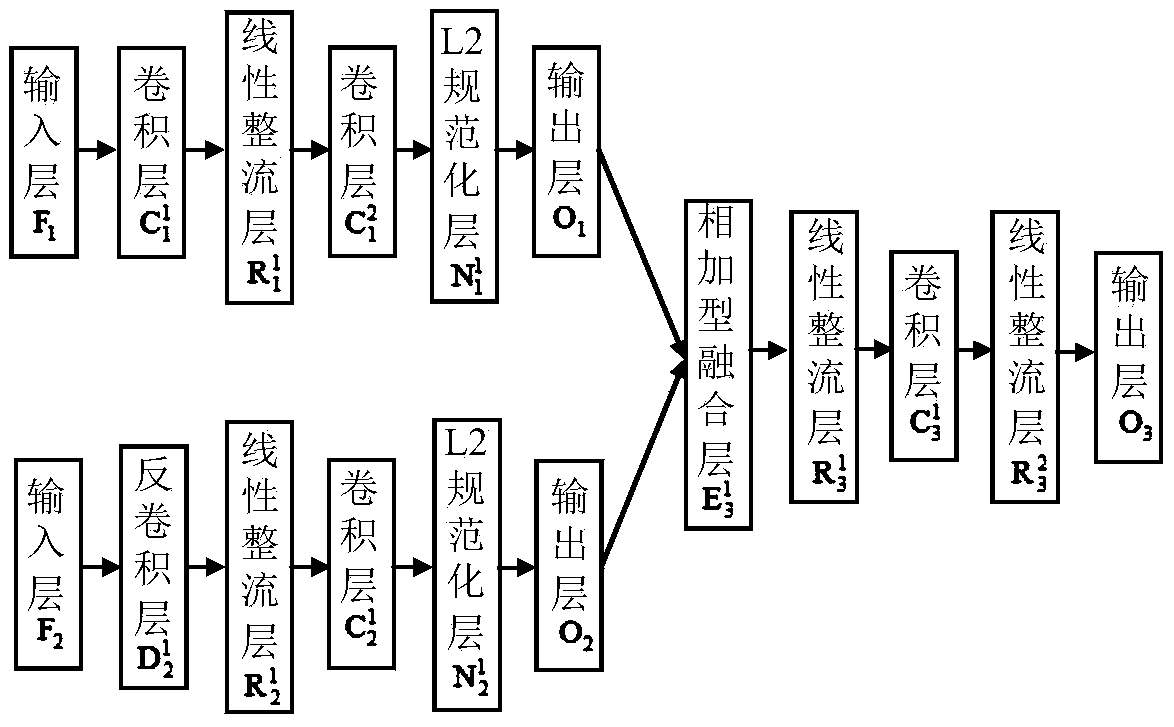

Multi-source remote sensing image classification method based on two-way attention fusion neural network

Active | CN109993220A | Improve the problem of mutual separation | Fusion classification results are accurate | Character and pattern recognition | Classification methods | Network model

The invention discloses a multi-source remote sensing image classification method based on a two-way attention fusion neural network, and mainly solves the problem of low classification precision of multi-source remote sensing images in the prior art. The implementation scheme comprises the following steps: 1) preprocessing and dividing hyperspectral data and laser radar data to obtain a training sample and a test sample; 2) designing an attention fusion layer based on an attention mechanism to carry out weighted screening and fusion on spectral data and laser radar data, and establishing a two-way interconnection convolutional neural network; 3) training the interconnection convolutional neural network with multi-class cross entropy as the loss function to obtain a trained network model; and 4) predicting a test sample by using the trained model to obtain a final classification result. The method can extract the features of the multi-source remote sensing data and effectively fuse and classify them, alleviates the problem of excessively high dimension in fusion, improves the average classification precision, and can be used for fusing remote sensing images obtained by two different sensors.

Owner:XIDIAN UNIV

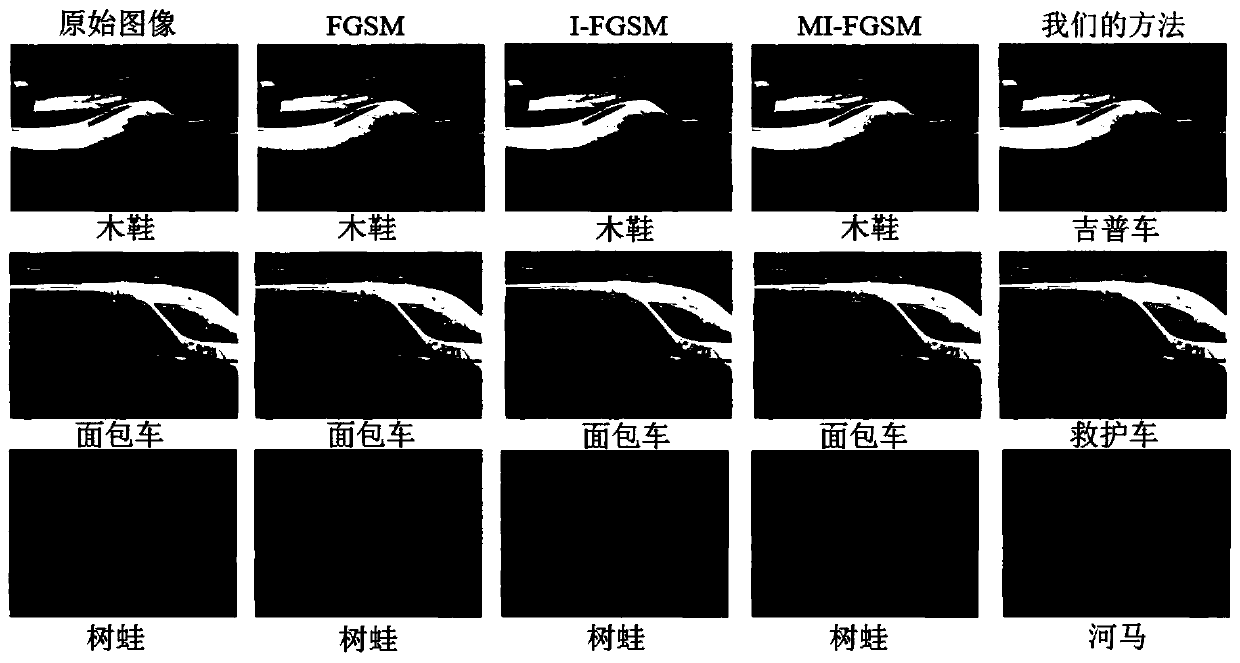

A step size self-adaptive attack resisting method based on model extraction

Active | CN109948663A | Good attack performance | Strong non-black box attack capability | Character and pattern recognition | Neural architectures | Model extraction | Data set

The invention discloses a step-size self-adaptive adversarial attack method based on model extraction, comprising the following steps: Step 1, constructing an image data set; Step 2, training a convolutional neural network on the image set IMG to serve as the target model to be attacked; Step 3, calculating a cross-entropy loss function, performing model extraction of the convolutional neural network, and initializing the gradient value and the step size g1 of the iterative attack; Step 4, forming a new adversarial sample x1; Step 5, recalculating the cross-entropy loss function and using the new gradient value to update the step size for adding adversarial noise in the next iteration; Step 6, repeating the process of inputting the image, calculating the cross-entropy loss function, computing the step size, and updating the adversarial sample, that is, repeating Step 5 T-1 times, to obtain the final iterative adversarial sample x'i, which is input into the target model for classification to obtain a classification result N(x'i). Compared with the prior art, the method achieves a better attack effect, and compared with current iterative methods, it has a stronger non-black-box attack capability.

Owner:TIANJIN UNIV
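The iteration in Steps 3 to 6 above can be illustrated with a toy gradient-sign attack whose step size is rescaled from the current gradient at every iteration. This is only a sketch under stated assumptions: the model is a tiny linear softmax classifier attacked directly (no model extraction), and the step-size rule (`base * min(2, |g_t| / |g_0|)`) is a hypothetical stand-in, since the abstract does not state the patent's update formula.

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def loss_and_grad(W, b, x, y):
    """Cross-entropy of a linear softmax classifier and its gradient w.r.t. x."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]
    p = softmax(logits)
    # d loss / d logits = p - onehot(y); chain rule through logits = W x + b
    dz = [pk - (1.0 if k == y else 0.0) for k, pk in enumerate(p)]
    grad = [sum(dz[k] * W[k][j] for k in range(len(W))) for j in range(len(x))]
    return -math.log(p[y]), grad

def adaptive_step_attack(W, b, x, y, eps=0.3, steps=10):
    x_adv, base, g0_norm = list(x), eps / steps, None
    for _ in range(steps):
        _, g = loss_and_grad(W, b, x_adv, y)
        g_norm = sum(abs(v) for v in g)
        if g_norm == 0.0:
            break
        g0_norm = g0_norm or g_norm
        step = base * min(2.0, g_norm / g0_norm)  # hypothetical adaptive rule
        for j, gj in enumerate(g):
            moved = x_adv[j] + step * ((gj > 0) - (gj < 0))  # signed ascent step
            x_adv[j] = min(x[j] + eps, max(x[j] - eps, moved))  # stay in eps-ball
    return x_adv

W, b = [[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]
x, y = [1.0, 0.0], 0
clean_loss, _ = loss_and_grad(W, b, x, y)
x_adv = adaptive_step_attack(W, b, x, y)
adv_loss, _ = loss_and_grad(W, b, x_adv, y)
```

The perturbation stays within `eps` of the original input in every coordinate while the cross-entropy loss on the true label rises.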

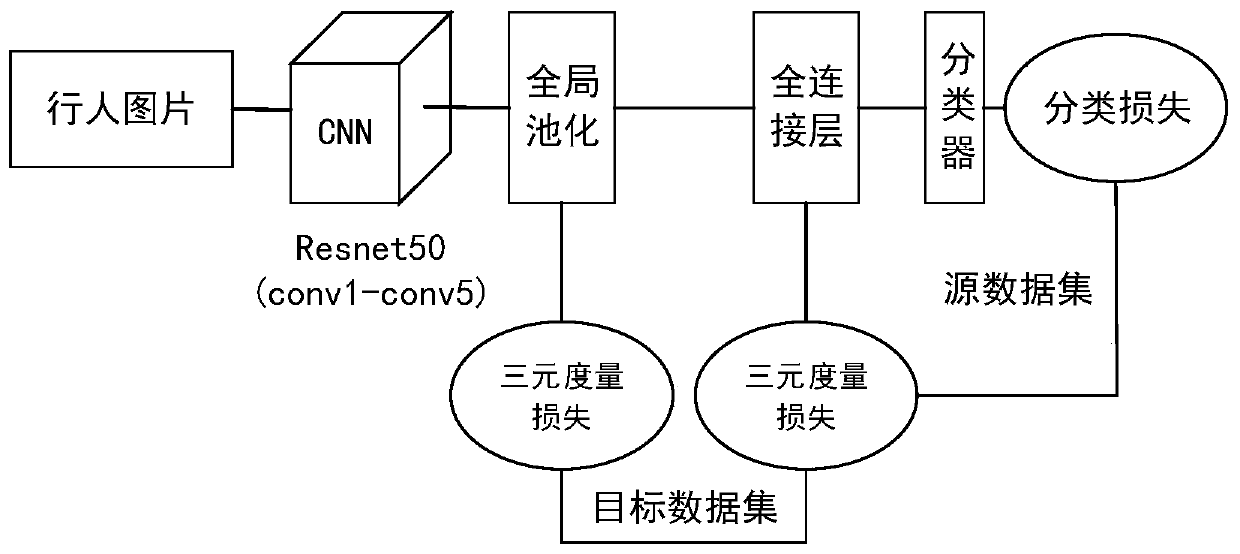

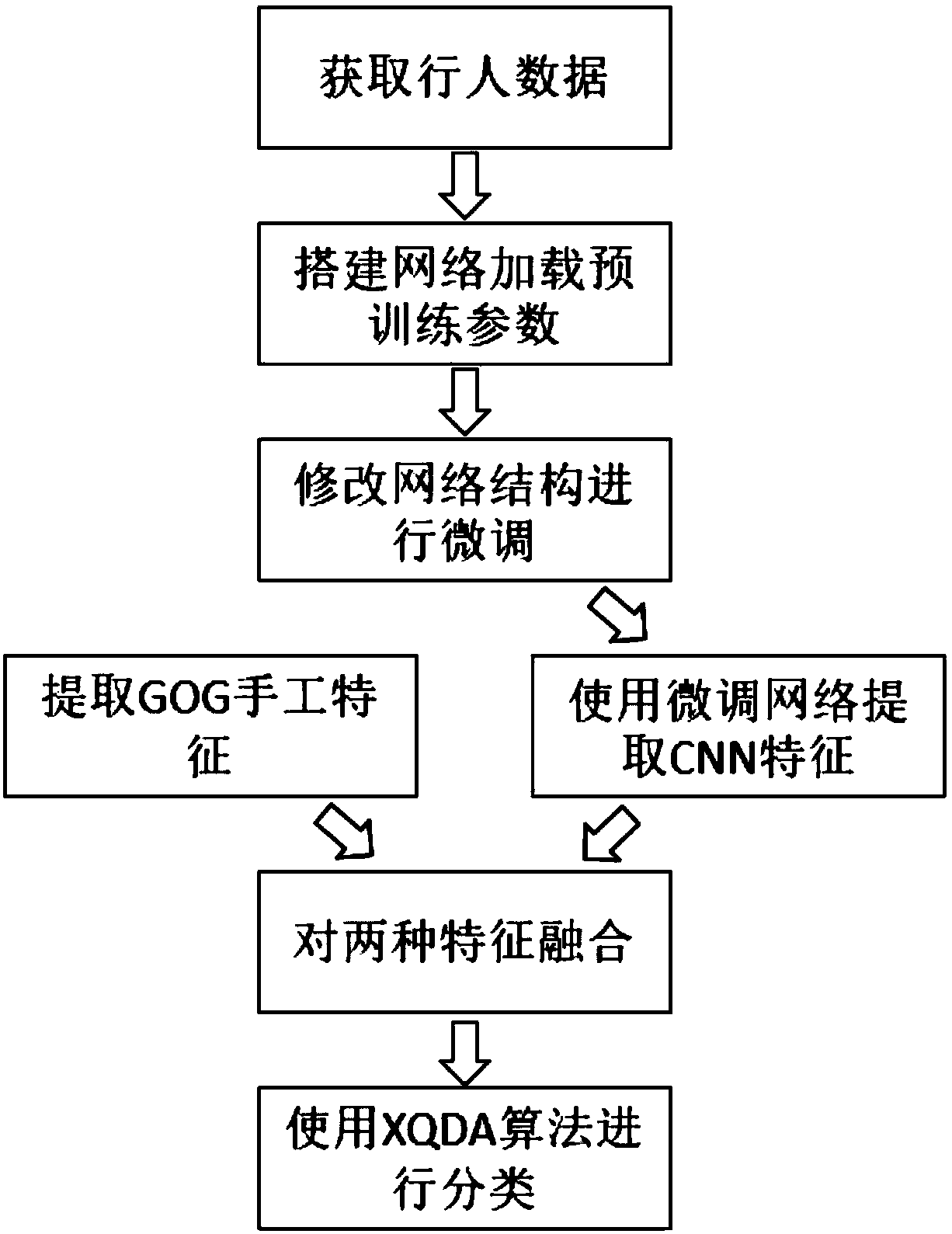

A pedestrian re-recognition method based on transfer learning and feature fusion

Active · CN109446898A · Improve generalization ability · Improve portability · Biometric pattern recognition · Neural architectures · Lower grade · Re identification

The invention discloses a pedestrian re-identification method based on transfer learning and feature fusion, comprising the following steps: acquiring pedestrian data; carrying out initial training with a neural network, then modifying the network structure and retraining on the data set with an improved loss function; extracting both manual (hand-crafted) features and neural network features; fusing the two kinds of features to obtain high-low level features; and using the XQDA algorithm on the high-low level features for classification and verification to obtain the re-identification results. The method adopts the cross-entropy loss function together with the triplet loss function to constrain the whole network more strongly. It then fuses manual features with convolutional network features to form high-low level features that cover different levels of pedestrian feature expression, which yields better recognition results. Fine-tuning reduces the training time, and the method generalizes and transfers well to small data sets.

Owner:JINAN UNIVERSITY
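A combined objective of the kind described above, cross-entropy for identity classification plus a triplet term on feature distances, could be sketched as follows. The margin, the weighting factor, and all function names are assumptions for illustration; the abstract does not specify how the two losses are combined.

```python
import math

def cross_entropy(probs, label):
    """Negative log-probability assigned to the true identity."""
    return -math.log(probs[label])

def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def triplet_loss(anchor, positive, negative, margin=0.3):
    """Zero once the negative is farther than the positive by at least `margin`."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

def joint_loss(probs, label, anchor, positive, negative, margin=0.3, weight=1.0):
    """Assumed combination: classification loss plus weighted triplet loss."""
    return cross_entropy(probs, label) + weight * triplet_loss(anchor, positive, negative, margin)

# Well-separated triplet: the triplet term vanishes.
easy = triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0])
# Positive farther than negative: the term is 5 - 1 + 0.3.
hard = triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 1.0])
total = joint_loss([0.5, 0.5], 0, [0.0, 0.0], [0.0, 1.0], [3.0, 4.0])
```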

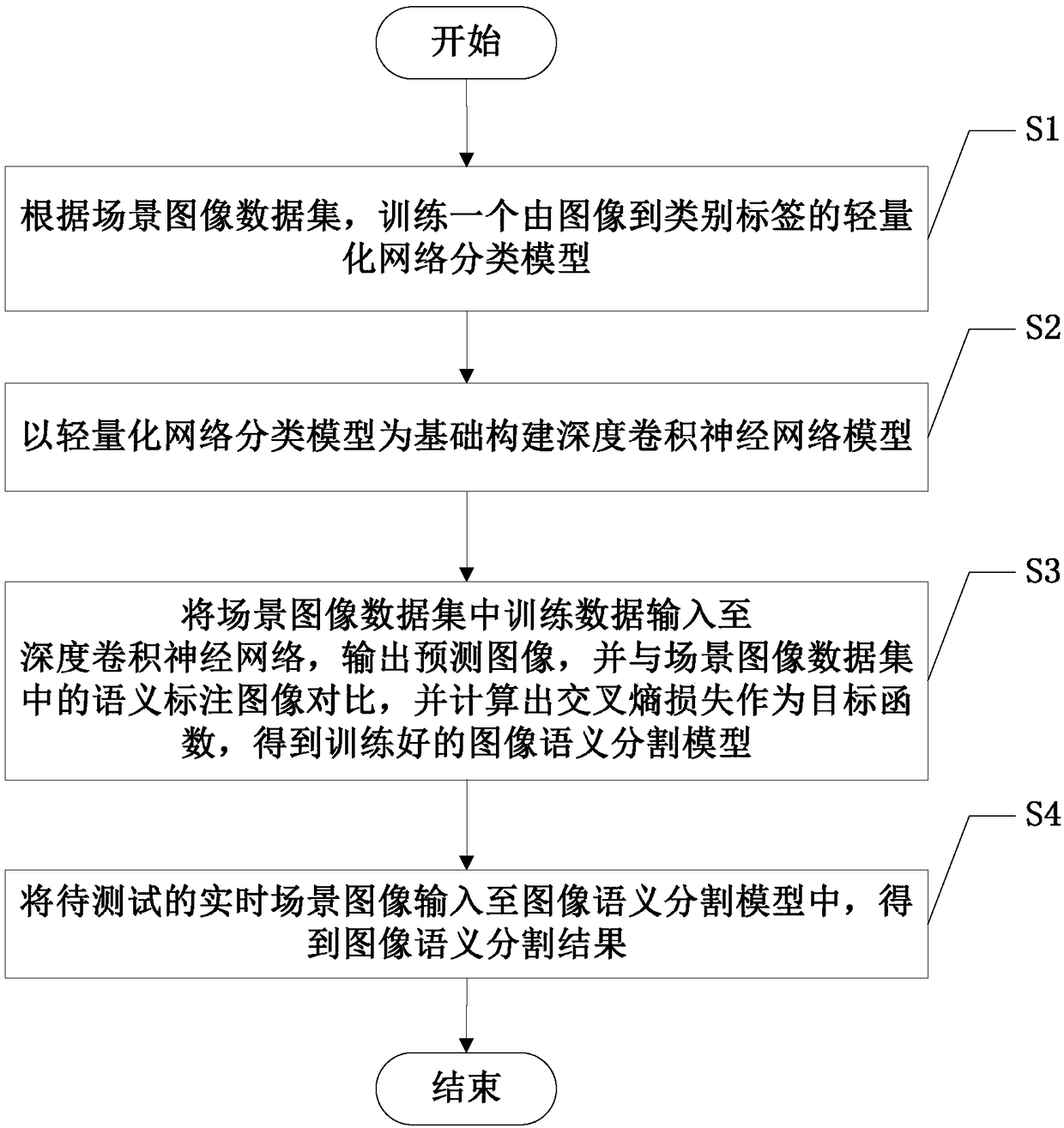

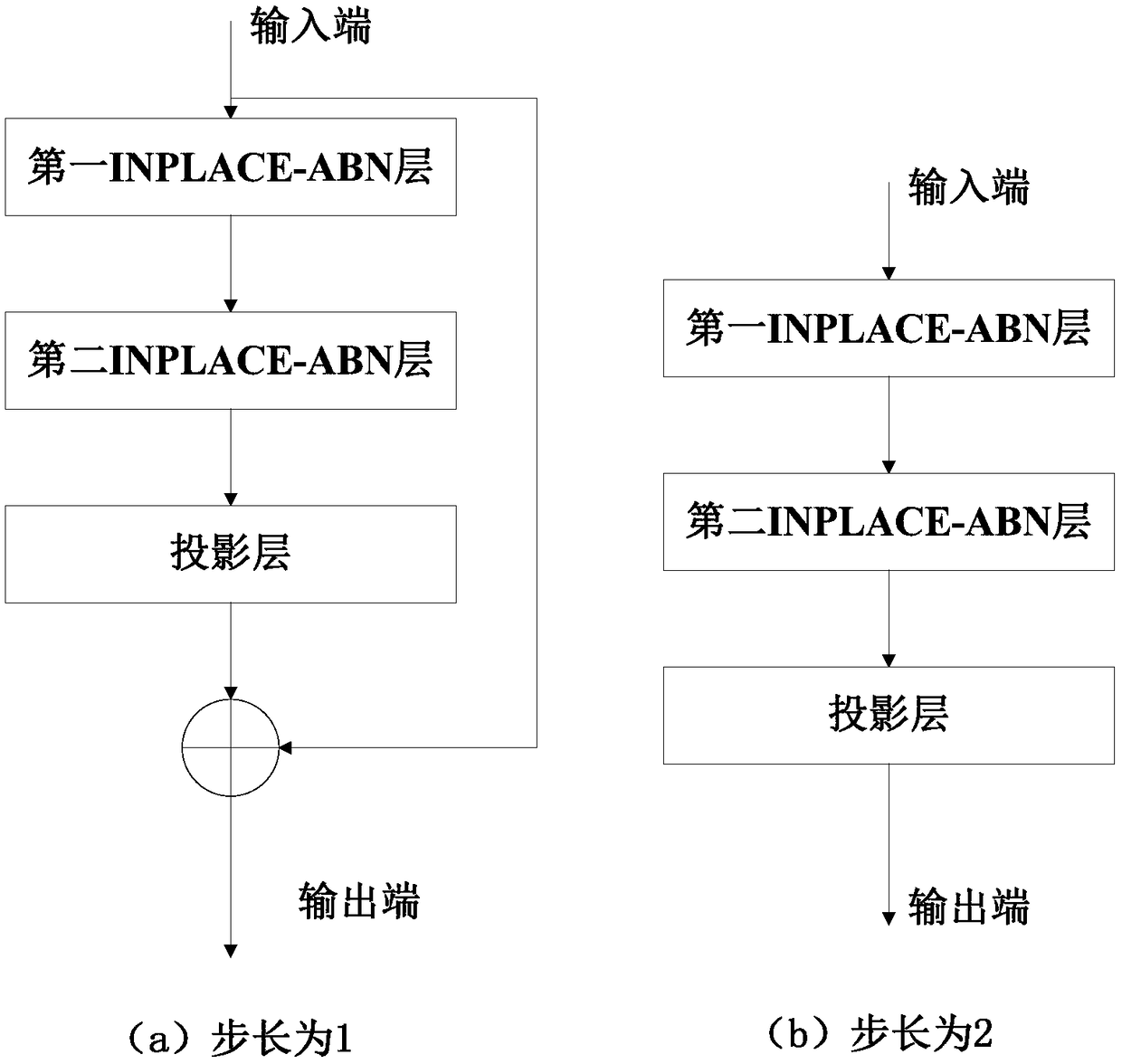

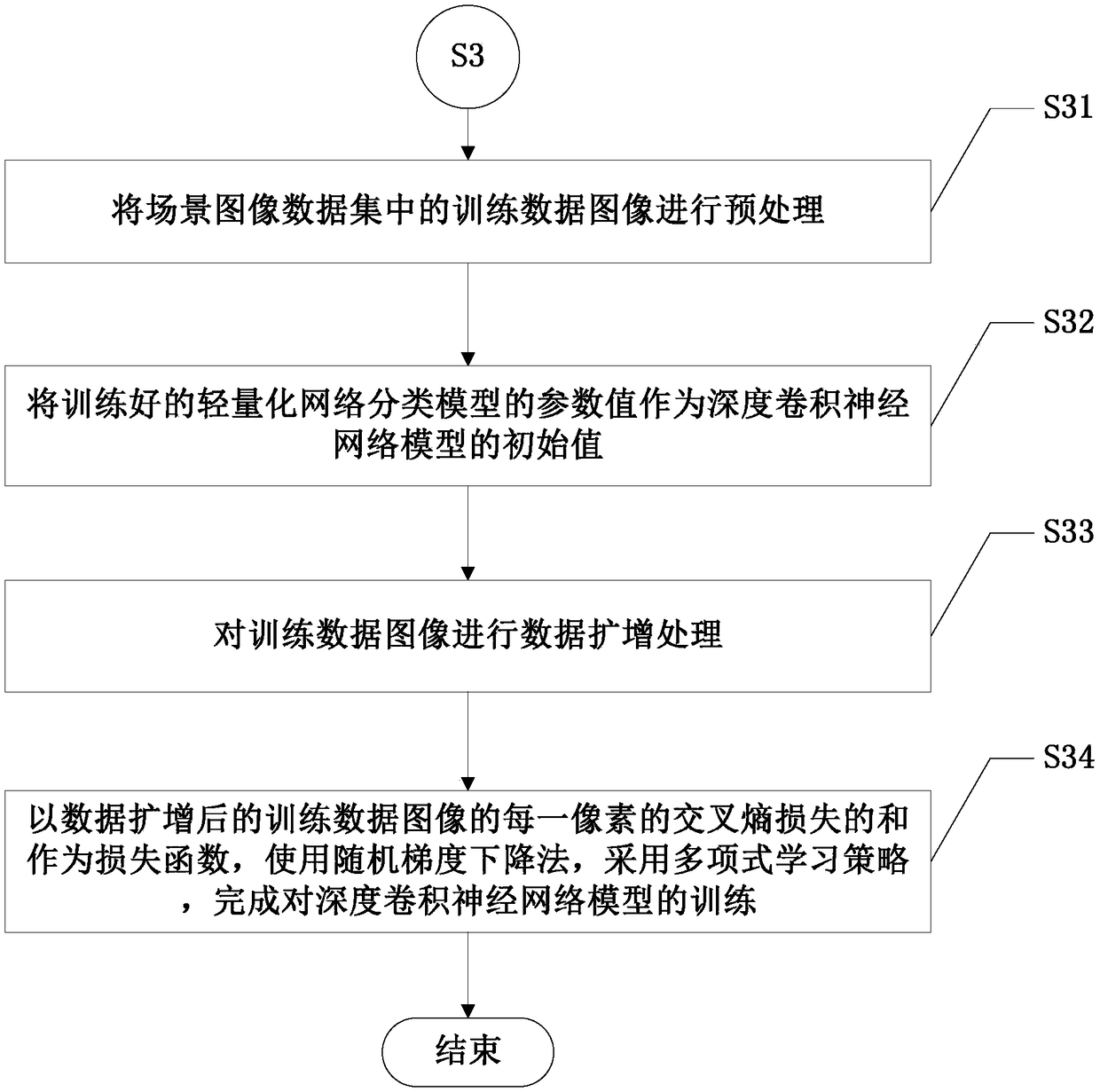

A real-time scene image semantic segmentation method based on lightweight network

Inactive · CN109145983A · Efficient extraction · Efficient use of · Character and pattern recognition · Data set · Imaging Feature

The invention discloses a real-time scene image semantic segmentation method based on a lightweight network, comprising the following steps: S1, training a lightweight network classification model on a scene image data set; S2, constructing a deep convolutional neural network model based on the lightweight network classification model; S3, inputting the training data of the scene image data set into the deep convolutional neural network, outputting a predicted image, comparing the predicted image with the semantic label image of the scene image data set, and calculating a cross-entropy loss as the objective function to obtain a trained image semantic segmentation model; S4, inputting the real-time scene image to be tested into the image semantic segmentation model to obtain the image semantic segmentation result. By taking a modified MobileNetV2 as the base network, the invention extracts image features efficiently. A shortcut connection block is used in the upsampling process, which makes parameter utilization more efficient and further improves the speed of the semantic segmentation model.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

A safety helmet wearing detection method based on depth features and video object detection

Inactive · CN109447168A · Realize wearing detection · Achieve delivery · Character and pattern recognition · Data set · Data acquisition

The invention discloses a safety helmet wearing detection method based on depth features and video object detection, comprising the following steps: video data acquisition; data annotation, in which the data collected in Step 1 are manually labeled; data set preparation, in which the data set is divided into a training set, a test set, and a verification set, each containing pictures corresponding to the original video, with the training and verification sets also containing annotation data for each picture; network construction, in which features are extracted from key frames of the input video and propagated to neighboring frames, the key-frame features being transferred to and reused as the features of the current frame by means of optical flow; target classification and bounding box prediction; and network training, in which the loss function of each ROI is the sum of a cross-entropy loss and a bounding box regression loss.

Owner:江苏德劭信息科技有限公司
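The per-ROI loss described above, a cross-entropy term plus a bounding box regression term, can be sketched as below. The abstract does not name the regression loss, so smooth L1 is assumed here, and skipping the box term for background ROIs is likewise an assumption borrowed from common two-stage detectors; all names are illustrative.

```python
import math

def smooth_l1(pred, target, beta=1.0):
    """Quadratic for small errors, linear for large ones, summed over coordinates."""
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total

def roi_loss(class_probs, label, box_pred, box_target, background=0):
    """Sum of classification and box regression losses for one ROI."""
    cls = -math.log(class_probs[label])
    # Assumption: background ROIs have no ground-truth box to regress to.
    reg = smooth_l1(box_pred, box_target) if label != background else 0.0
    return cls + reg

reg_only = smooth_l1([0.5, 2.0], [0.0, 0.0])  # 0.125 + 1.5
helmet_roi = roi_loss([0.5, 0.5], 1, [0.5, 2.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0])
background_roi = roi_loss([0.5, 0.5], 0, [0.5, 2.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0])
```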

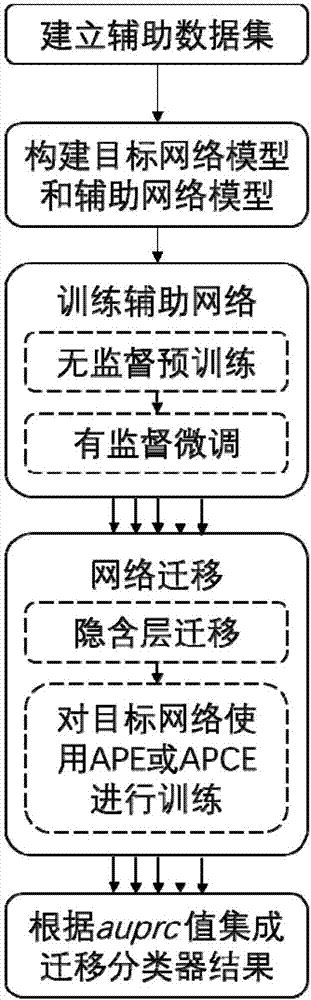

Deep transfer learning-based unbalanced classification ensemble method

Active · CN107316061A · For classification problems apply · Improve classification performance · Character and pattern recognition · Neural learning methods · Learning based · Data set

The invention discloses a deep transfer learning-based unbalanced classification ensemble method. The method comprises the following steps: an auxiliary data set is established; an auxiliary deep network model and a target deep network model are constructed; the auxiliary deep network is trained; the structure and parameters of the auxiliary deep network are transferred to the target deep network; and the products of AUPRC values are calculated and used as classifier weights, with a weighted ensemble performed over the classification results of the transfer classifiers to obtain an ensemble classification result, which is the output of the ensemble classifier. The method adopts an improved average precision variance loss function (APE) and an average precision cross-entropy loss function (APCE). When the loss cost of samples is calculated, the sample weights are adjusted dynamically: smaller weights are assigned to majority-class samples and larger weights to minority-class samples. The trained deep network therefore attaches more importance to the minority-class samples, which makes the method better suited to classifying unbalanced data.

Owner:SOUTH CHINA UNIV OF TECH
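The weighting idea above (smaller weights for majority-class samples, larger for minority-class samples) can be illustrated with a class-weighted cross-entropy. This is not the patent's APCE loss, whose definition is not given in the abstract; the inverse-frequency weights below are a static, assumed stand-in for its dynamically adjusted weights.

```python
import math
from collections import Counter

def inverse_frequency_weights(labels):
    """Weight each class by n / (k * count), so rare classes weigh more."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}

def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean of -log p(true class), normalized by the total weight."""
    num = sum(-weights[y] * math.log(p[y]) for p, y in zip(probs, labels))
    den = sum(weights[y] for y in labels)
    return num / den

labels = [0, 0, 0, 1]  # class 1 is the minority
weights = inverse_frequency_weights(labels)
# Same predicted probability (0.5) for the true class of every sample.
probs = [[0.5, 0.5]] * 4
loss = weighted_cross_entropy(probs, labels, weights)
```

With these weights the single minority sample contributes as much to the loss as the three majority samples together.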

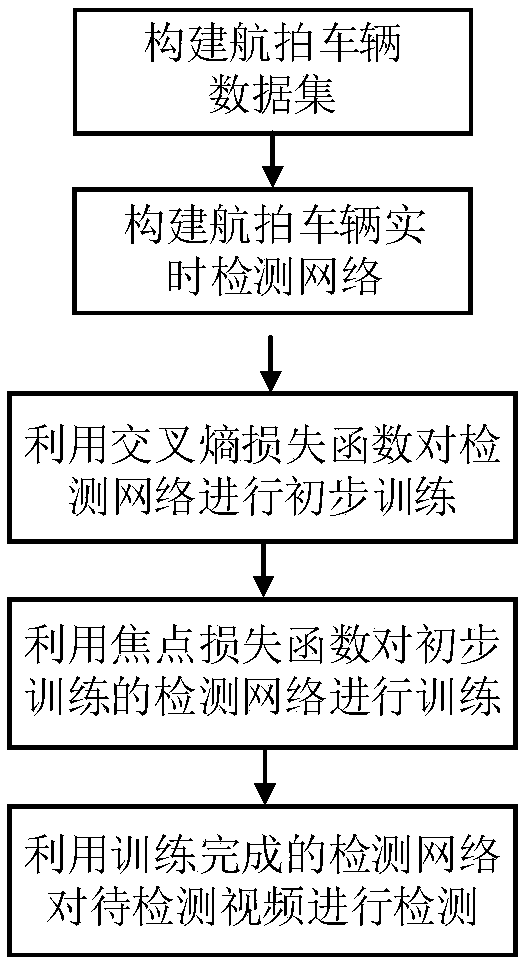

Aerially-photographed vehicle real-time detection method based on deep learning

Active · CN108647665A · Improve detection accuracy · Rich in features · Scene recognition · Data set · Aerial photography

The invention provides an aerially-photographed vehicle real-time detection method based on deep learning. It mainly aims to solve the problem that, in the prior art, it is difficult to detect aerially-photographed vehicle targets precisely in complicated scenes while maintaining real-time performance. The method comprises the following implementation steps: 1, an aerially-photographed vehicle dataset is constructed; 2, a multi-scale feature fusion module is designed, and the RefineDet real-time target detection network based on deep learning is optimized in combination with the module to obtain an aerially-photographed vehicle real-time detection network; 3, a cross-entropy loss function and a focal loss function are used in sequence to train the detection network; and 4, the trained detection model is used to detect vehicles in the aerially-photographed vehicle video to be detected. The designed multi-scale feature fusion module effectively increases the information utilization rate for aerially-photographed vehicle targets, and training with the two loss functions lets the network learn from the dataset more thoroughly, so that the detection accuracy for aerially-photographed vehicle targets in complicated scenes is improved.

Owner:XIDIAN UNIV
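The two losses named in step 3 above differ only by a modulating factor. The sketch below uses the standard focal loss form, `-alpha * (1 - p)^gamma * log(p)`, where `p` is the probability assigned to the true class; the `gamma` and `alpha` values are common defaults chosen for illustration, not values from the patent.

```python
import math

def cross_entropy(p_true):
    """Standard cross-entropy for the probability given to the true class."""
    return -math.log(p_true)

def focal_loss(p_true, gamma=2.0, alpha=1.0):
    """Cross-entropy scaled by (1 - p)^gamma, which down-weights easy examples."""
    return -alpha * (1.0 - p_true) ** gamma * math.log(p_true)

easy_ce, easy_fl = cross_entropy(0.9), focal_loss(0.9)
hard_ce, hard_fl = cross_entropy(0.1), focal_loss(0.1)
# Fraction of the cross-entropy kept by the focal loss for each example.
easy_ratio = easy_fl / easy_ce  # (1 - 0.9)^2
hard_ratio = hard_fl / hard_ce  # (1 - 0.1)^2
```

An easy example keeps about 1% of its cross-entropy while a hard one keeps about 81%, so training on the focal loss concentrates on hard examples. Setting `gamma=0` recovers plain cross-entropy.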

Rapid threshold segmentation method based on gray level-gradient two-dimensional symmetrical Tsallis cross entropy

The invention relates to a rapid threshold segmentation method based on gray level-gradient two-dimensional symmetrical Tsallis cross entropy. It addresses two problems of the conventional gray level-average gray level histogram: it relies on an approximation assumption, and the calculation must search the whole solution space, so segmentation is inaccurate and inefficient. The invention provides an improved two-dimensional symmetrical Tsallis cross entropy threshold segmentation method and a rapid recursive algorithm for it. To segment a gray image accurately, a new gray level-gradient two-dimensional histogram is adopted and combined with two-dimensional symmetrical Tsallis cross entropy theory, which has a superior segmentation effect, so that gray level image segmentation accuracy is effectively improved. To meet the on-line timeliness requirement of an industrial assembly line, a novel rapid recursive algorithm is adopted to reduce redundant calculation. After a gray level image from the industrial assembly line is processed, the interior of each image region is uniform, the contour boundary is accurate, and the texture detail is clear; the method also has good universality.

Owner:WUXI XINJIE ELECTRICAL +1
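For orientation, the general idea of cross-entropy thresholding can be shown with a much simpler relative of the method above: one-dimensional minimum cross-entropy thresholding on a gray-level histogram with the ordinary (Shannon) logarithm. This is explicitly not the patent's two-dimensional symmetrical Tsallis formulation or its recursive algorithm; it is the exhaustive search that such fast recursions are meant to avoid, and the function name is hypothetical.

```python
import math

def min_cross_entropy_threshold(hist):
    """hist[i] is the pixel count at gray level i.

    Returns t such that levels < t and levels >= t form the two classes
    minimizing sum_i i * h_i * log(i / class_mean).
    """
    best_t, best_cost = None, float("inf")
    for t in range(1, len(hist)):
        # Gray level 0 is skipped because log(0) is undefined.
        low = [(i, h) for i, h in enumerate(hist[:t]) if h > 0 and i > 0]
        high = [(i, h) for i, h in enumerate(hist[t:], start=t) if h > 0]
        if not low or not high:
            continue  # both classes must be non-empty
        cost = 0.0
        for part in (low, high):
            mass = sum(h for _, h in part)
            mean = sum(i * h for i, h in part) / mass
            cost += sum(i * h * math.log(i / mean) for i, h in part)
        if cost < best_cost:
            best_t, best_cost = t, cost
    return best_t

# Toy bimodal histogram: all pixels are at gray level 50 or 200.
hist = [0] * 256
hist[50], hist[200] = 100, 100
threshold = min_cross_entropy_threshold(hist)
```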

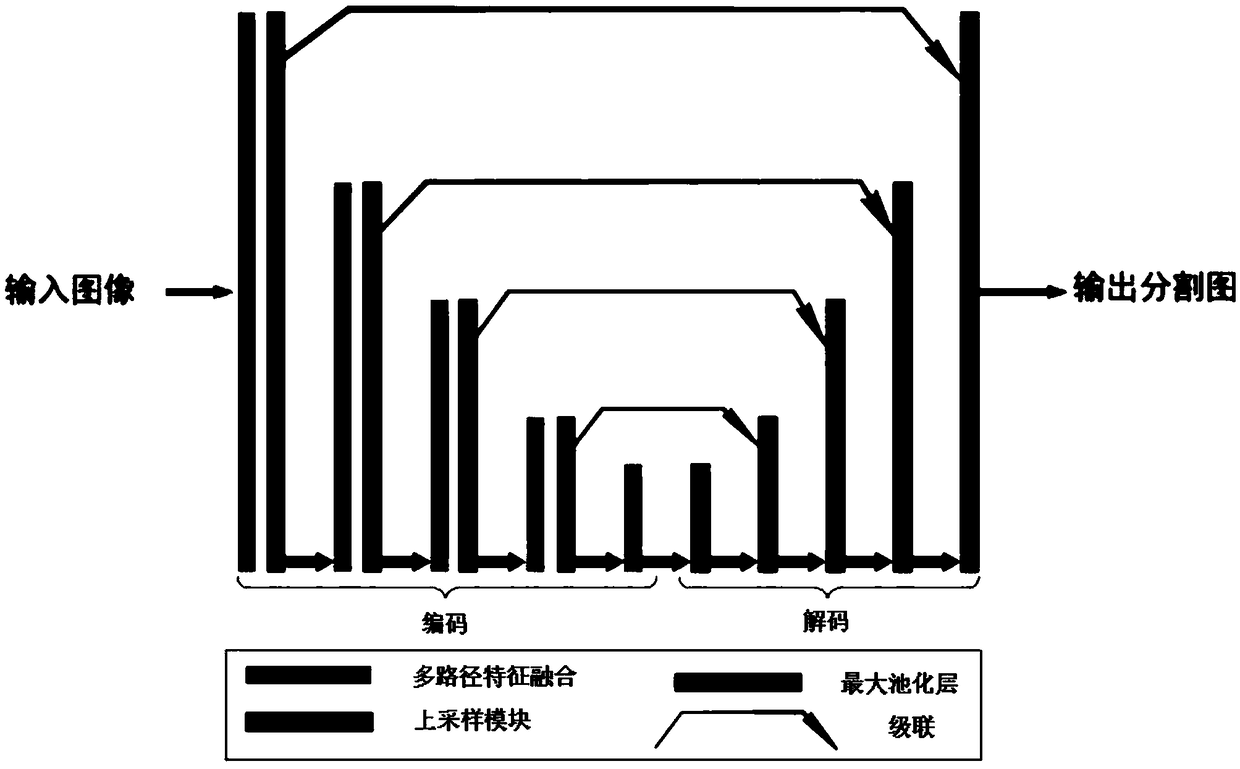

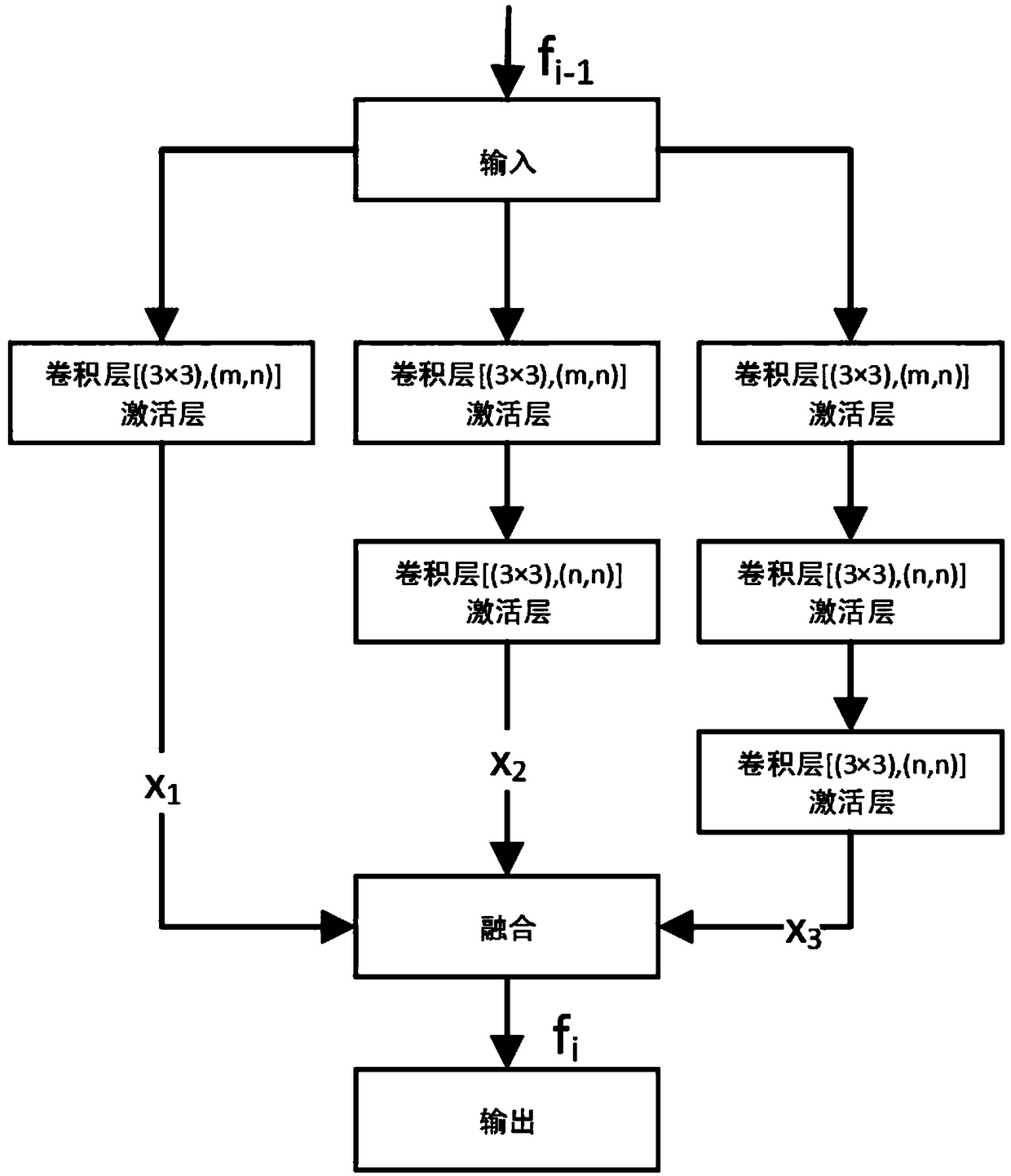

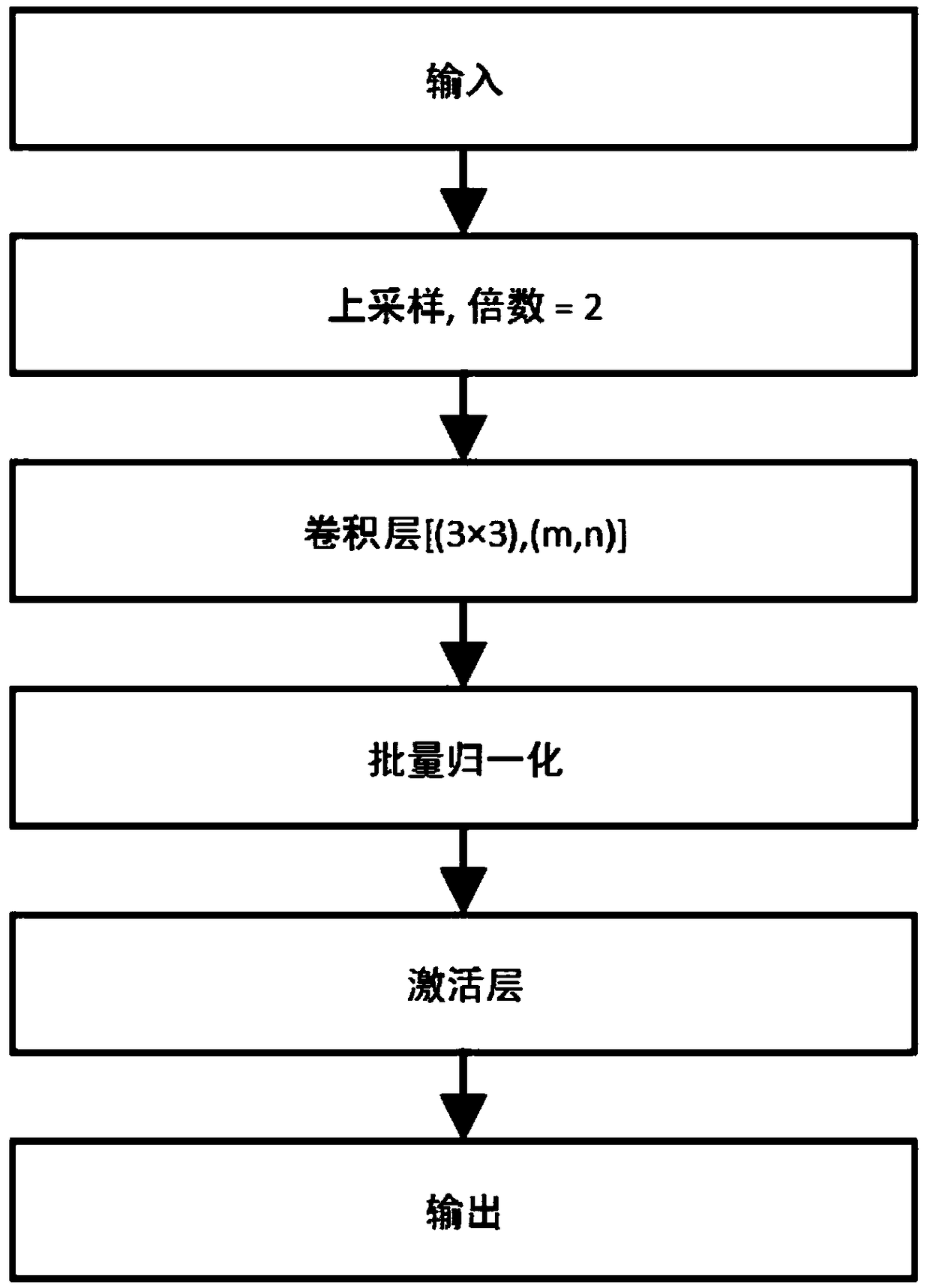

A semantic segmentation method of multi-path feature fusion based on deep learning

Inactive · CN109190626A · Improve accuracy · Reasonable design · Character and pattern recognition · Neural architectures · Image resolution · Global information

The invention relates to a semantic segmentation method of multi-path feature fusion based on deep learning, comprising the following steps: extracting basic deep features of an image by using a multi-path feature fusion method; passing the extracted basic deep features through a decoder network to restore the original image resolution and generate the segmentation result; training the network with the cross-entropy loss function, and evaluating its performance with accuracy and mIoU. The design is reasonable and takes full account of both local and global information. The output of the network is a segmentation map with the same resolution as the original image. The segmentation accuracy is calculated using the existing labels of the image. The network is trained to minimize the cross-entropy loss function, which effectively improves the accuracy of image semantic segmentation.

Owner:ACADEMY OF BROADCASTING SCI STATE ADMINISTATION OF PRESS PUBLICATION RADIO FILM & TELEVISION +1
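The two evaluation measures mentioned above, pixel accuracy and mean intersection over union (mIoU), can be computed from a predicted label map and a ground-truth label map as in this minimal sketch. The label maps are nested lists of class indices, the toy data is hypothetical, and classes absent from both maps are left out of the mean.

```python
def pixel_accuracy(pred, truth):
    """Fraction of pixels whose predicted class equals the label."""
    pairs = [(p, t) for prow, trow in zip(pred, truth) for p, t in zip(prow, trow)]
    return sum(p == t for p, t in pairs) / len(pairs)

def mean_iou(pred, truth, num_classes):
    """Average over classes of |pred AND truth| / |pred OR truth|."""
    ious = []
    for c in range(num_classes):
        inter = union = 0
        for prow, trow in zip(pred, truth):
            for p, t in zip(prow, trow):
                inter += (p == c) and (t == c)
                union += (p == c) or (t == c)
        if union:  # skip classes that appear in neither map
            ious.append(inter / union)
    return sum(ious) / len(ious)

pred = [[0, 0],
        [1, 1]]
truth = [[0, 1],
         [1, 1]]
acc = pixel_accuracy(pred, truth)            # 3 of 4 pixels match
miou = mean_iou(pred, truth, num_classes=2)  # (1/2 + 2/3) / 2
```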

Full convolution network based remote-sensing image land cover classifying method

Inactive · CN108537192A · Improve classification performance · Practical · Scene recognition · Neural architectures · Stochastic gradient descent · Land cover

The invention relates to a full convolution network based remote-sensing image land cover classifying method. The method comprises the following steps: S1, performing data enhancement on a data set with limited data quantity to generate a training set whose quantity and quality meet the training requirement; S2, combining the improved full convolution network FCN4s and the improved U-type full convolution network U-NetBN to build a remote-sensing image land cover classifying model; S3, minimizing the cross-entropy loss by stochastic gradient descent to learn the optimal parameters of the model and obtain the trained remote-sensing image land cover classifying model; and S4, performing pixel-wise class prediction on the remote-sensing image to be predicted with the trained model. The method combines the properties of the FCN full convolution network and the U-Net full convolution network, so that remote-sensing image land cover classification performance is improved.

Owner:FUZHOU UNIV
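The optimization in S3, minimizing a cross-entropy loss by stochastic gradient descent, can be illustrated on a model far smaller than a full convolution network. The sketch below fits a one-feature logistic classifier with per-sample updates; the data, learning rate, and epoch count are arbitrary choices for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def mean_cross_entropy(w, b, xs, ys):
    """Average binary cross-entropy of the classifier sigmoid(w * x + b)."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(xs)

def sgd_train(xs, ys, lr=0.1, epochs=50):
    """One parameter update per sample, in a fixed order for reproducibility."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # gradient of the loss w.r.t. the logit
            w -= lr * err * x
            b -= lr * err
    return w, b

xs, ys = [-2.0, -1.0, 1.0, 2.0], [0, 0, 1, 1]
initial_loss = mean_cross_entropy(0.0, 0.0, xs, ys)  # log(2) at initialization
w, b = sgd_train(xs, ys)
final_loss = mean_cross_entropy(w, b, xs, ys)
```

In practice the samples would be shuffled each epoch and the same update would be applied to every weight of the network through backpropagation.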

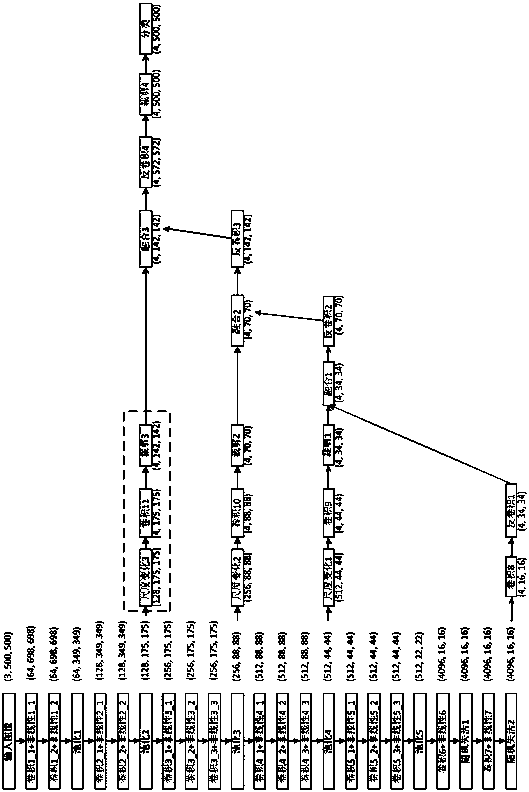

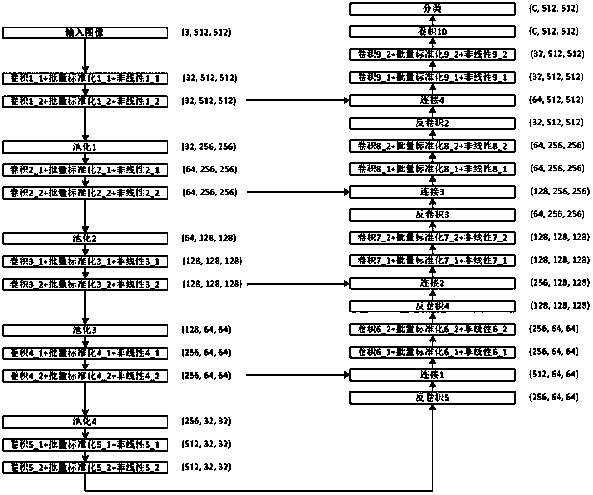

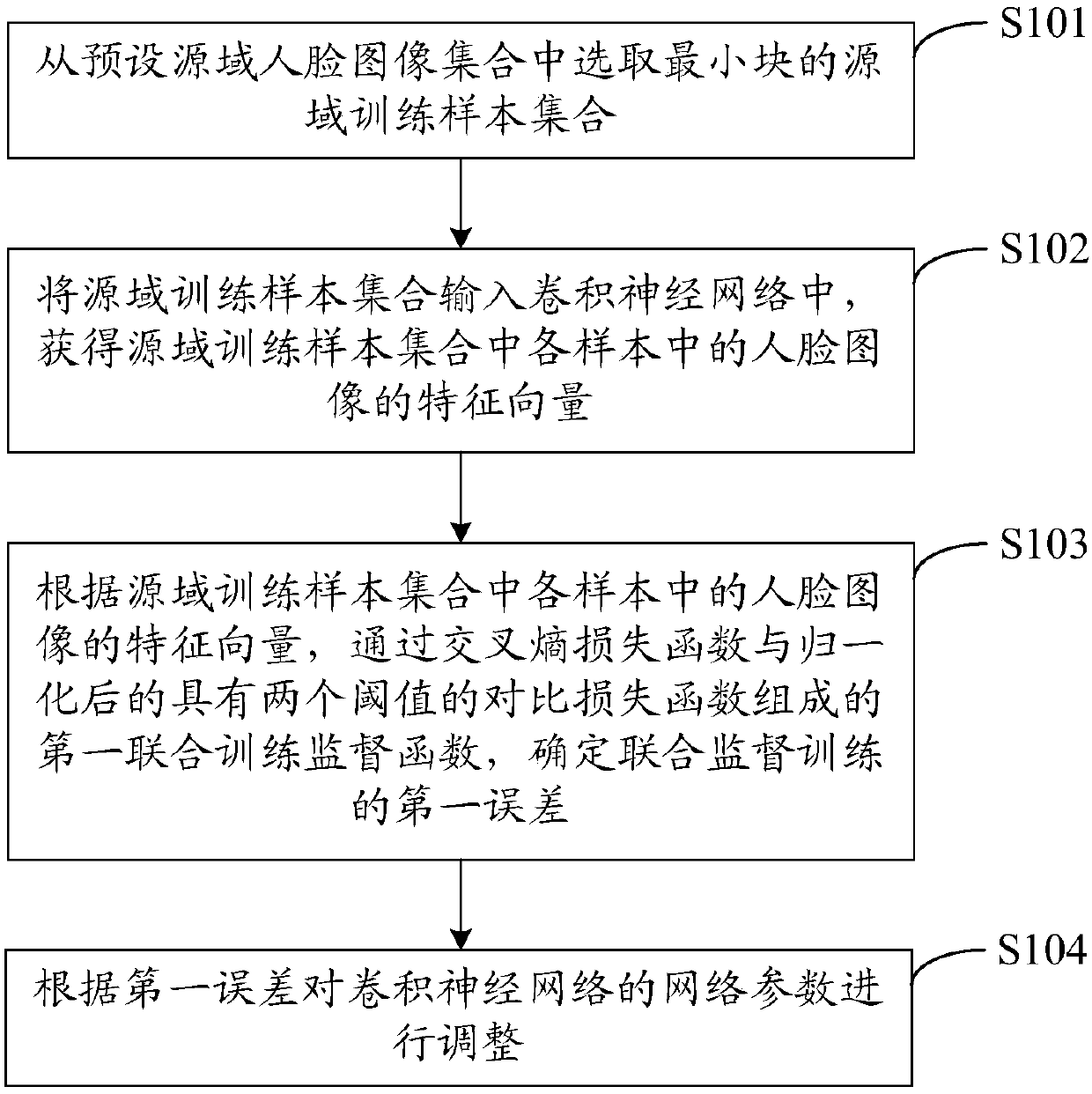

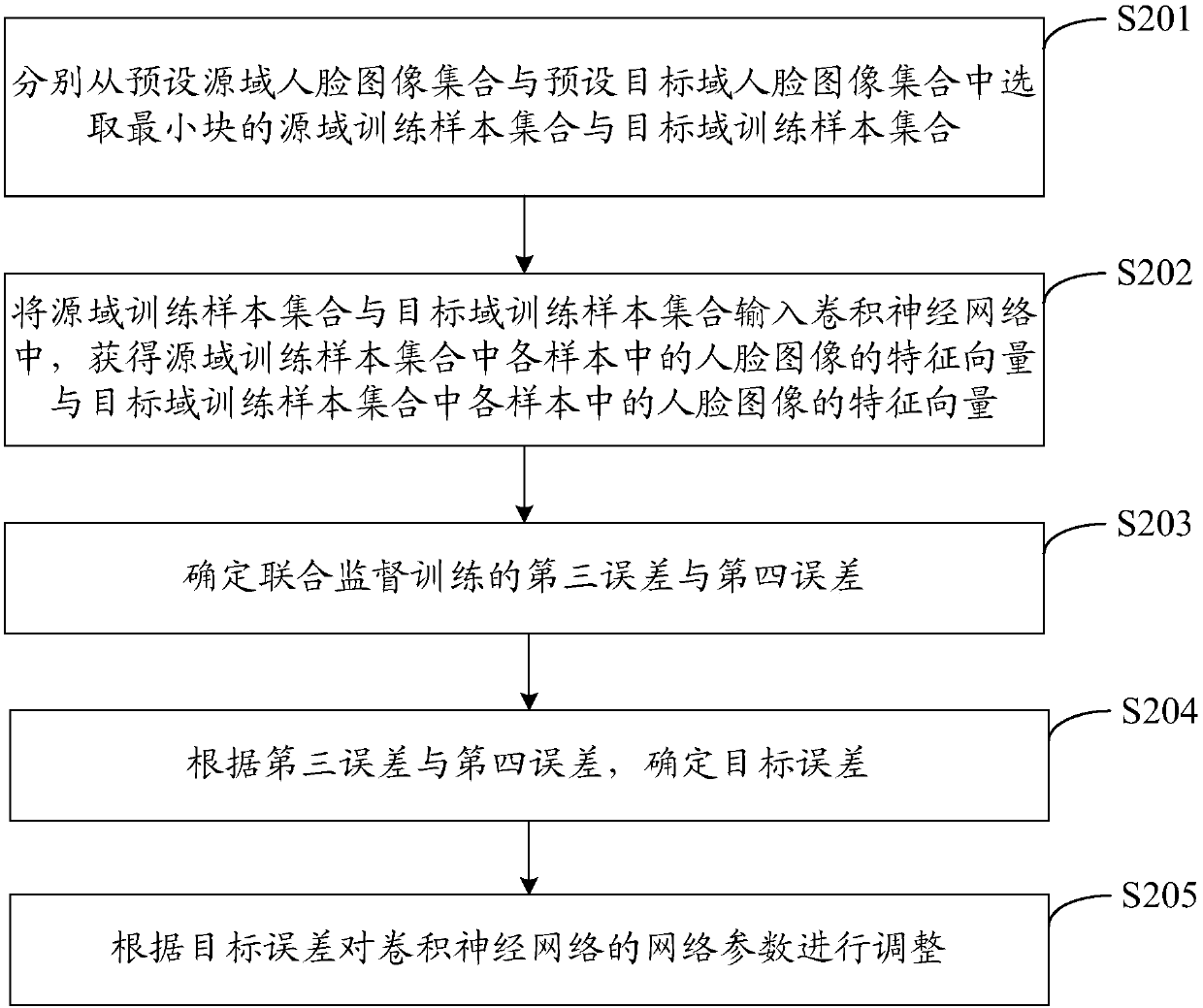

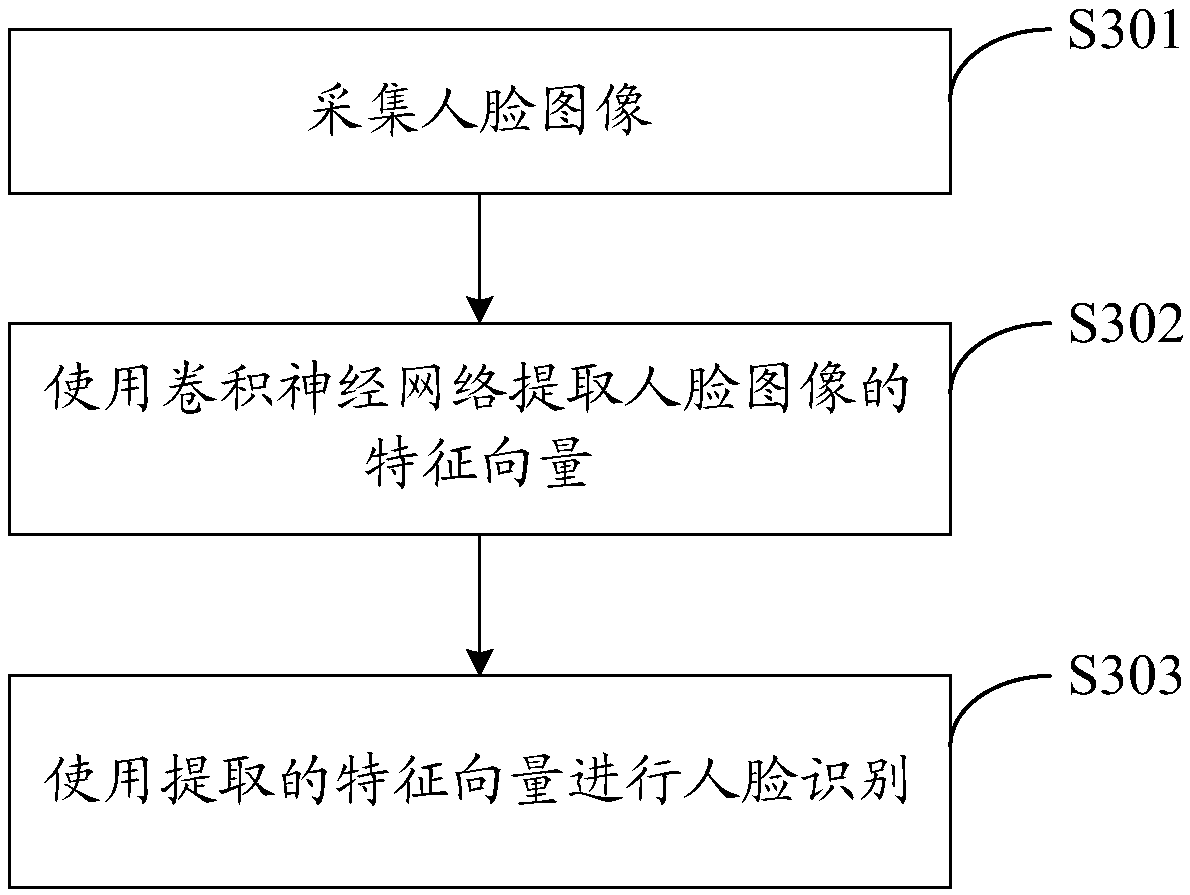

Training method of convolutional neural network (CNN) and face identification method and device

Active · CN108182394A · Improve generalization ability · Improve training efficiency · Character and pattern recognition · Neural architectures · Feature vector · Positive sample

The present invention discloses a training method of a convolutional neural network and a face identification method and device. In the training method, a first error is determined from the feature vectors of the face images in the samples of a source domain training sample set, using a first joint training supervision function composed of a cross-entropy loss function and a normalized contrastive loss function that has two threshold values. The network parameters of the convolutional neural network are then adjusted according to the first error. The first threshold value is compared with the Euclidean distance between the feature vectors of the two face images in a positive sample pair, and the second threshold value is compared with the Euclidean distance between the feature vectors of the two face images in a negative sample pair. The supervised training of both negative and positive sample pairs can therefore be controlled, which improves the training efficiency and accuracy of the CNN. Accordingly, the generalization ability of the face identification method is improved when the trained CNN is applied to it.

Owner:ZHEJIANG DAHUA TECH CO LTD
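A pair loss with two thresholds, as described above, can be sketched like this: positive pairs are penalized only when their distance exceeds the first threshold, negative pairs only when their distance falls below the second. The squared-hinge form, the threshold values, and the way it is added to the cross-entropy term are assumptions; the abstract fixes only the role of the two thresholds and the use of normalized features.

```python
import math

def l2_normalize(v):
    n = math.sqrt(sum(a * a for a in v)) or 1.0
    return [a / n for a in v]

def pair_loss(f1, f2, same_identity, pos_threshold=0.5, neg_threshold=1.2):
    """Two-threshold contrastive loss on L2-normalized feature vectors."""
    a, b = l2_normalize(f1), l2_normalize(f2)
    d = math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    if same_identity:
        return max(0.0, d - pos_threshold) ** 2  # pull positives within threshold
    return max(0.0, neg_threshold - d) ** 2      # push negatives beyond threshold

def joint_supervision(class_prob_true, f1, f2, same_identity, weight=1.0):
    """Assumed joint objective: cross-entropy plus weighted pair loss."""
    return -math.log(class_prob_true) + weight * pair_loss(f1, f2, same_identity)

close_positive = pair_loss([1.0, 0.0], [2.0, 0.0], True)   # distance 0 after normalization
far_positive = pair_loss([1.0, 0.0], [-1.0, 0.0], True)    # distance 2
far_negative = pair_loss([1.0, 0.0], [0.0, 1.0], False)    # distance sqrt(2) > 1.2
close_negative = pair_loss([1.0, 0.0], [1.0, 0.0], False)  # distance 0
```

Pairs that already satisfy their threshold contribute no gradient, so training effort goes to the pairs that still violate it.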


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com