422 results about "Image fusion" patented technology

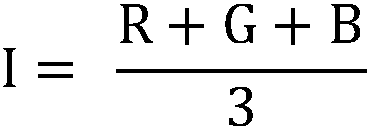

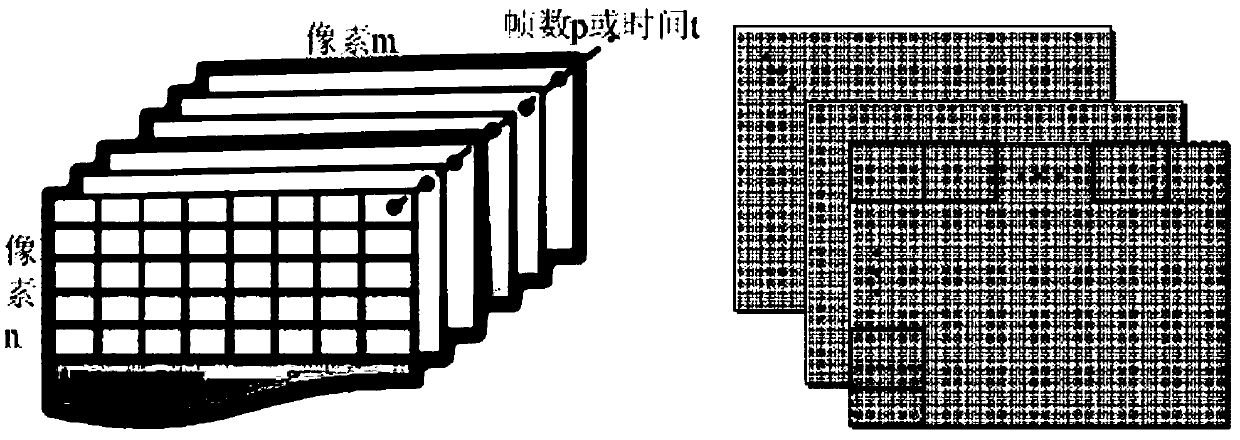

Image fusion is the process of gathering the important information from multiple images and combining it into fewer images, usually a single one. The fused image is intended to be more informative and accurate than any single source image while retaining all the necessary information. The purpose of image fusion is not only to reduce the amount of data but also to construct images that are better suited to human and machine perception. In computer vision, multisensor image fusion combines relevant information from two or more images, typically captured by different sensors, into a single image that is more informative than any of the inputs.
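The definition above can be made concrete with the simplest pixel-level fusion rule: a weighted average of co-registered source images. This is a minimal sketch, assuming grayscale images stored as nested lists that are already registered and of equal size; the function name and the weights are illustrative and are not taken from any of the patents listed here.

```python
from typing import List

Image = List[List[float]]


def weighted_average_fusion(images: List[Image], weights: List[float]) -> Image:
    """Fuse co-registered, same-sized grayscale images by a per-pixel weighted mean."""
    if not images or len(images) != len(weights):
        raise ValueError("provide one weight per image")
    total = sum(weights)
    rows, cols = len(images[0]), len(images[0][0])
    fused = [[0.0] * cols for _ in range(rows)]
    for img, w in zip(images, weights):
        for r in range(rows):
            for c in range(cols):
                # Each source contributes in proportion to its normalised weight.
                fused[r][c] += w * img[r][c] / total
    return fused
```

Plain averaging tends to lower contrast, which is why many of the methods below use region-based or transform-domain selection rules instead.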

System and method for image fusion

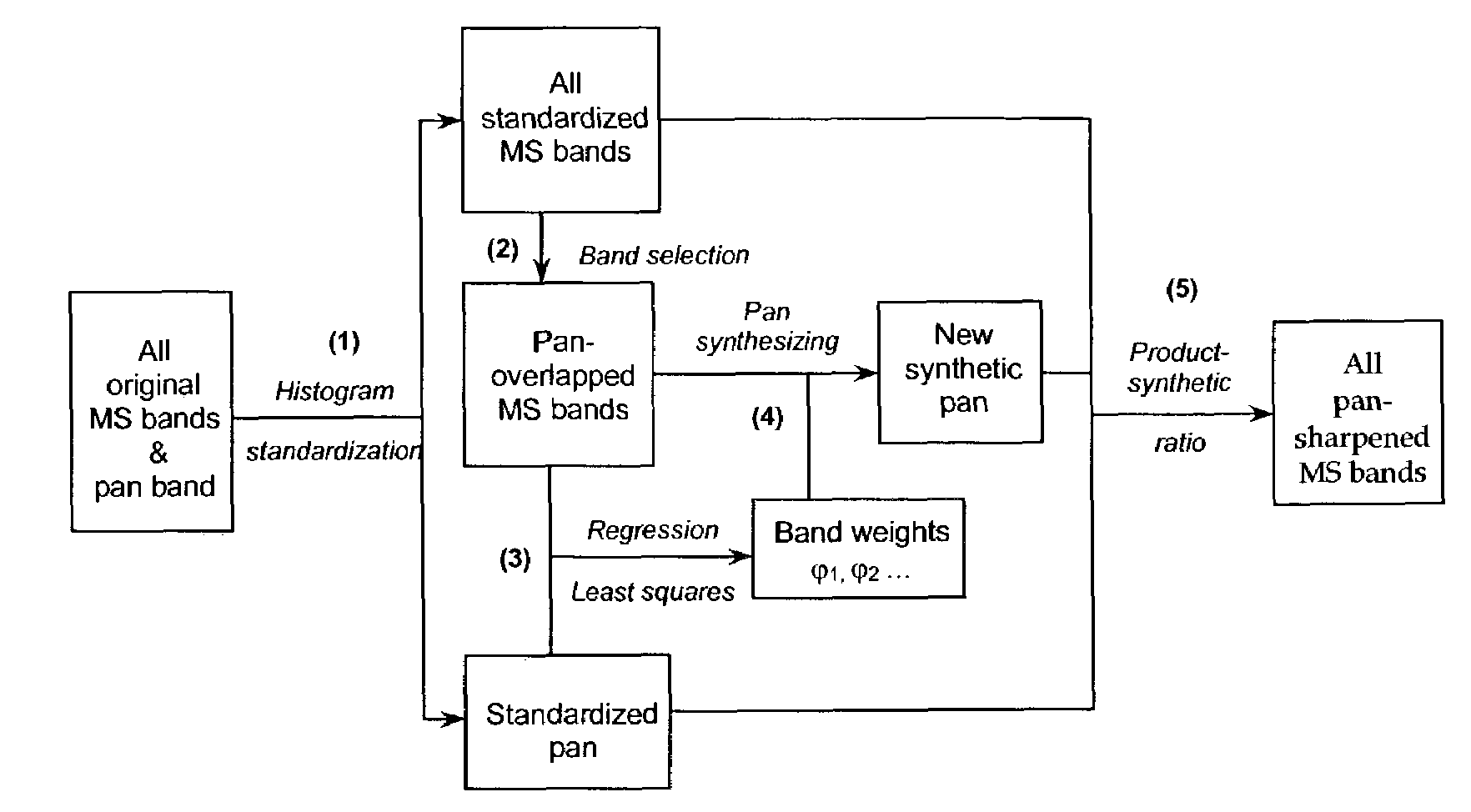

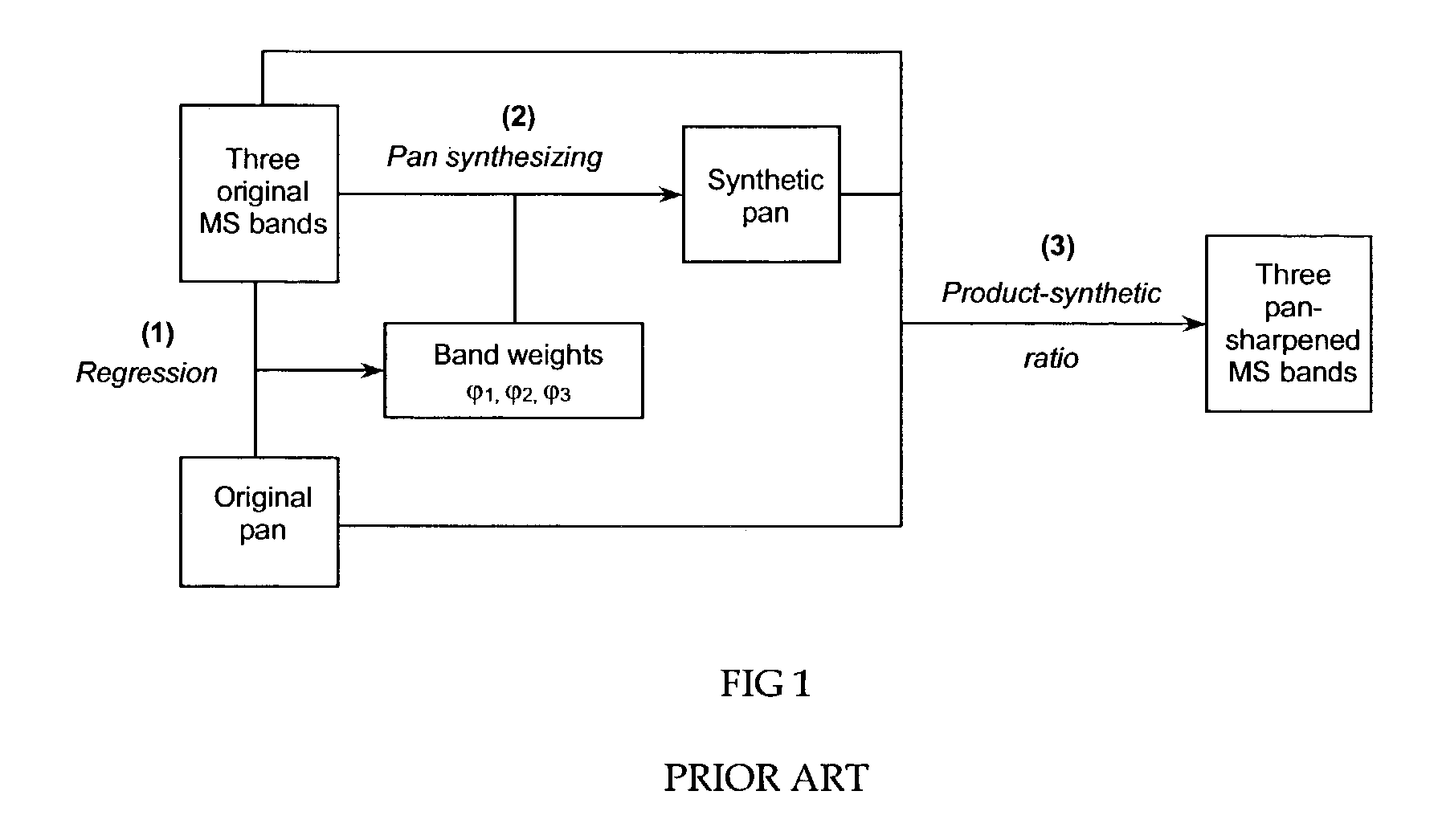

A method of fusing a low-resolution multispectral image with a high-resolution panchromatic (pan) image, comprising the step of generating a new synthetic pan image from the bands of the multispectral image.

Owner:NEW BRUNSWICK UNIV OF THE
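The abstract above states only the key step, building a synthetic pan image from the multispectral bands. A minimal sketch, assuming the multispectral bands have already been resampled to the pan grid and that the band weights are given inputs (the abstract does not say how they are chosen). The ratio-based detail injection used afterwards is a Brovey-style rule picked for illustration, not necessarily the patented fusion step; all names are hypothetical.

```python
from typing import List, Sequence

Band = List[List[float]]


def synthetic_pan(ms_bands: Sequence[Band], weights: Sequence[float]) -> Band:
    """Approximate the panchromatic band as a weighted sum of multispectral bands."""
    rows, cols = len(ms_bands[0]), len(ms_bands[0][0])
    return [[sum(w * band[r][c] for band, w in zip(ms_bands, weights))
             for c in range(cols)]
            for r in range(rows)]


def ratio_pansharpen(ms_bands: Sequence[Band], pan: Band,
                     weights: Sequence[float], eps: float = 1e-9) -> List[Band]:
    """Scale each band by pan / synthetic pan (Brovey-style detail injection)."""
    synth = synthetic_pan(ms_bands, weights)
    rows, cols = len(pan), len(pan[0])
    return [[[band[r][c] * pan[r][c] / (synth[r][c] + eps)  # eps avoids division by zero
              for c in range(cols)]
             for r in range(rows)]
            for band in ms_bands]
```

When the real pan band equals the synthetic one, the bands come back unchanged; wherever the pan band is brighter or darker, that spatial detail is transferred to every band.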

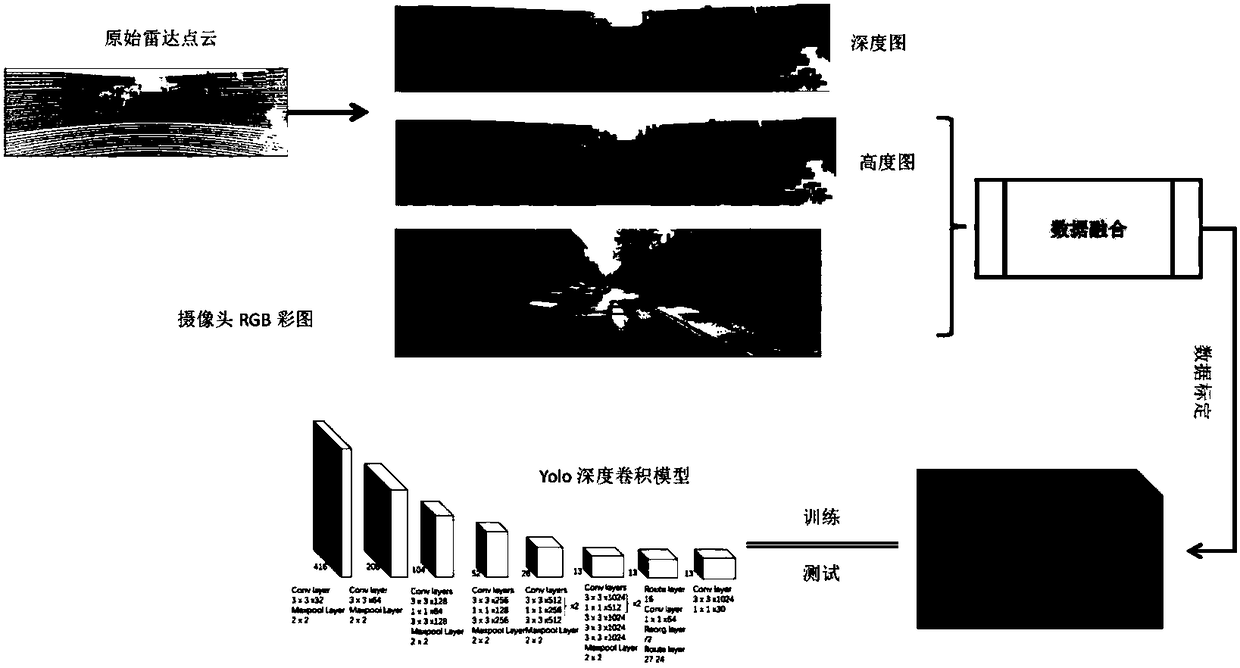

Vehicle-mounted barrier detection method based on radar data and image data fusion and deep learning

Active | CN108229366A | Complementary Imaging Uncertainties; Complementary instability; Scene recognition; Electromagnetic wave reradiation; Point cloud; Characteristic type

The invention discloses a target detection algorithm based on sensor data fusion on intelligent equipment and deep learning. Fusing radar point cloud data with camera data enriches the types of features the detection model can perceive. Models are trained on fused data with different channel configurations and the best configuration is selected, which improves detection accuracy while lowering computational power consumption. Tests on real data determine the channel configuration suited to real-world conditions, so that the fused data can be processed by a YOLO deep convolutional neural network model to detect obstacles in road scenes.

Owner:BEIHANG UNIV
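The abstract above compares models trained on different channel configurations of fused radar and camera data. One such configuration can be sketched as follows, under stated assumptions: the radar points have already been projected into pixel coordinates using known calibration, and range is encoded as a hypothetical "nearness" channel appended to the RGB channels. The names, the encoding, and `max_range` are illustrative; the abstract does not give the patent's actual channel layout.

```python
from typing import List, Sequence, Tuple

RadarReturn = Tuple[int, int, float]  # (row, col, range in metres) after projection


def radar_channel(returns: Sequence[RadarReturn], height: int, width: int,
                  max_range: float) -> List[List[float]]:
    """Rasterise projected radar returns into one extra image channel.

    Empty pixels stay 0.0; nearer returns get values closer to 1.0.
    """
    channel = [[0.0] * width for _ in range(height)]
    for row, col, rng in returns:
        if 0 <= row < height and 0 <= col < width and 0.0 < rng <= max_range:
            nearness = 1.0 - rng / max_range
            # If several returns hit one pixel, keep the nearest one.
            channel[row][col] = max(channel[row][col], nearness)
    return channel


def stack_channels(rgb: List[List[Sequence[float]]],
                   extra: List[List[float]]) -> List[List[List[float]]]:
    """Append the radar channel to every RGB pixel, giving an H x W x 4 input."""
    return [[list(pixel) + [value] for pixel, value in zip(rgb_row, extra_row)]
            for rgb_row, extra_row in zip(rgb, extra)]
```

Returns that fall outside the image or beyond `max_range` are simply dropped, so the detector sees radar evidence only where it overlaps the camera view.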

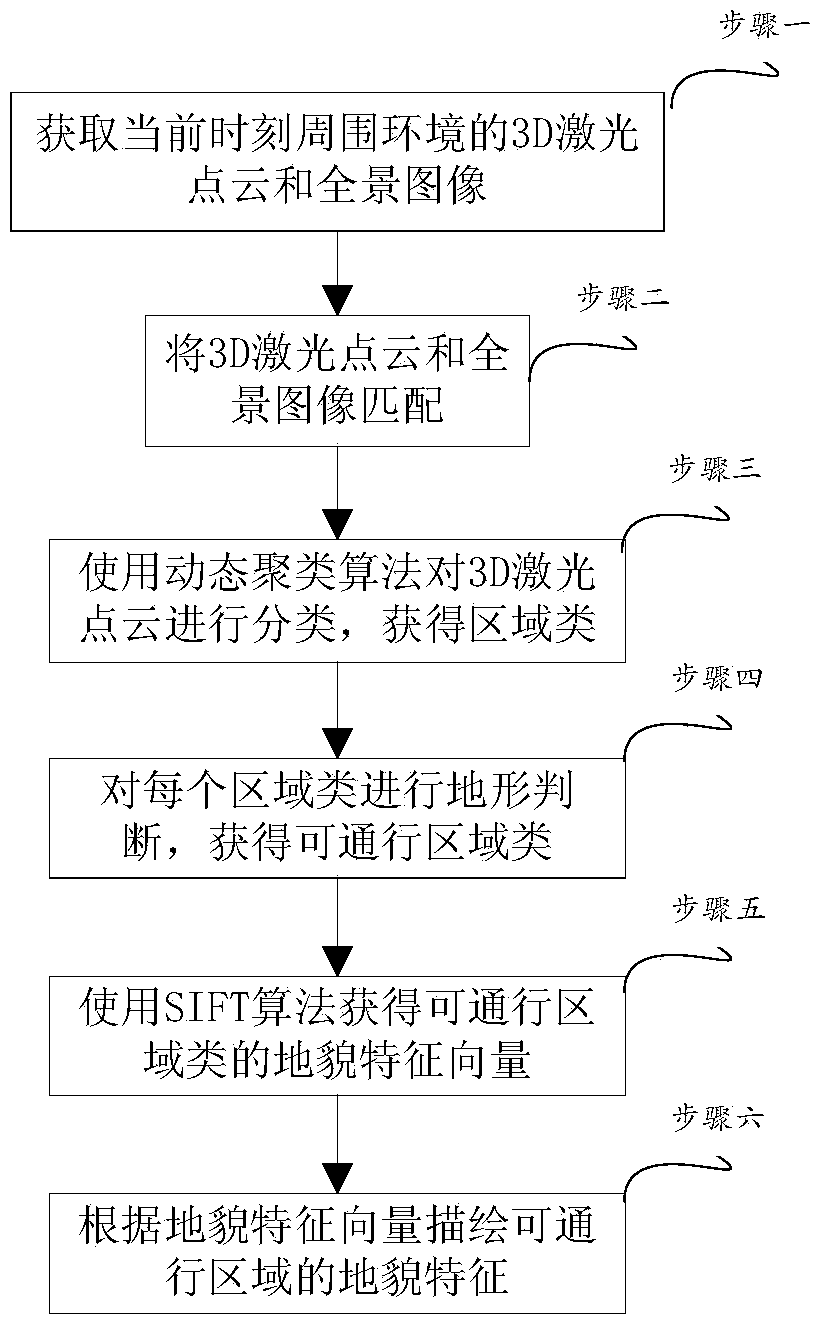

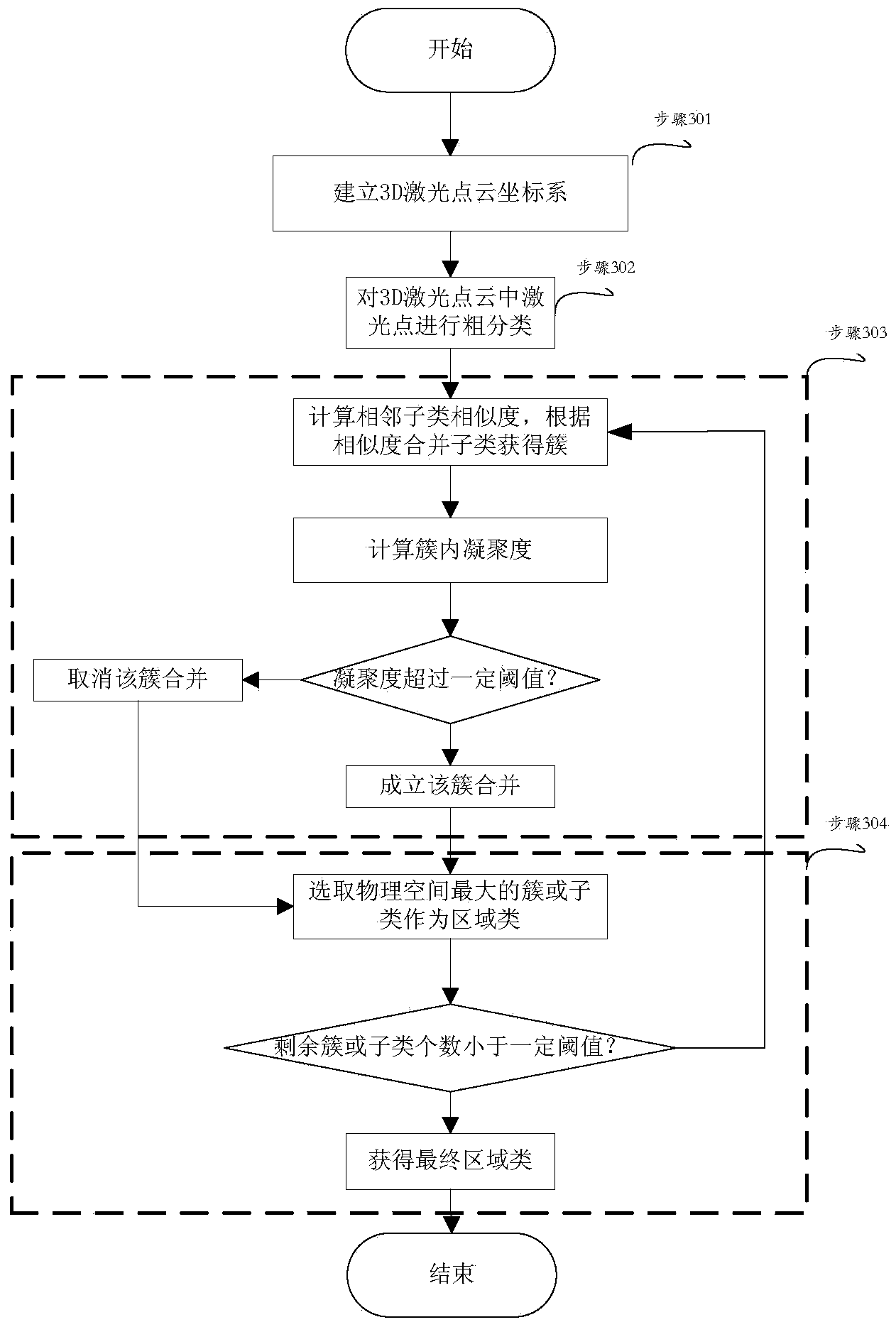

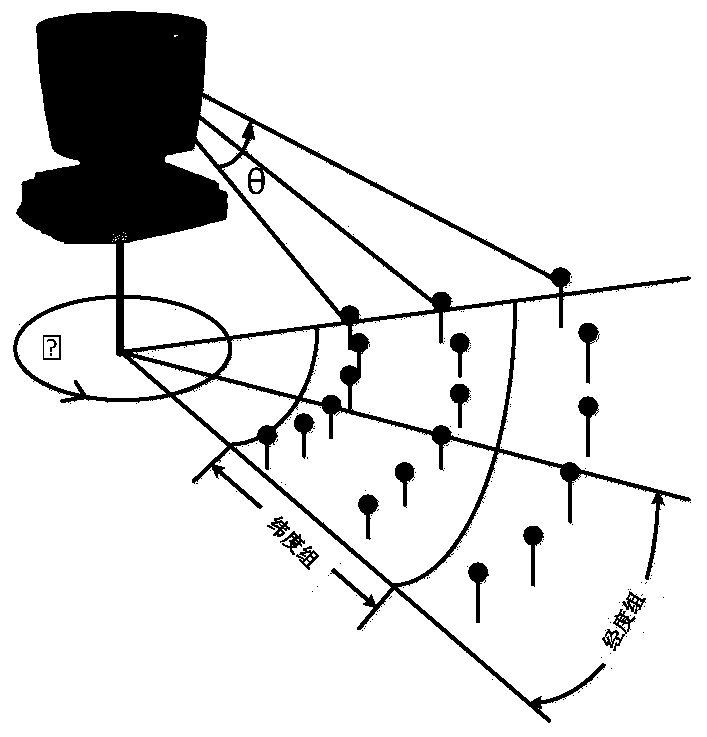

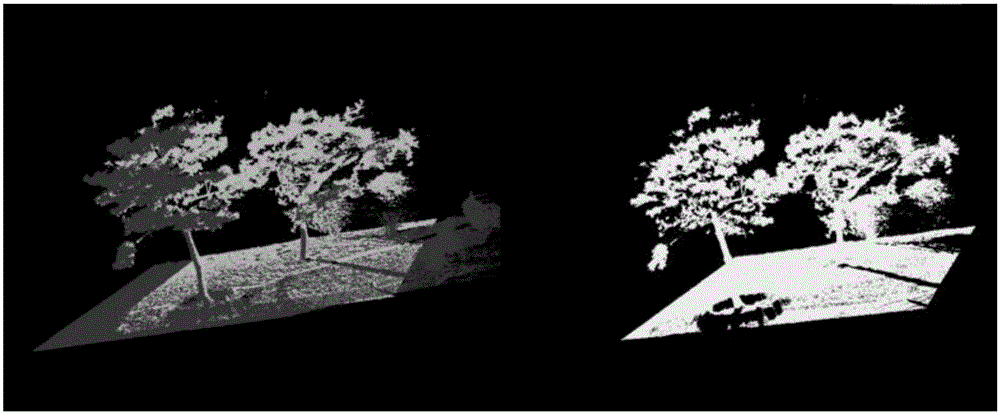

Geographic and geomorphic characteristic construction method based on laser radar and image data fusion

Active | CN103645480A | Guaranteed accuracy; Reduce complexity; Electromagnetic wave reradiation; Automatic control; Point cloud

The invention discloses a method for constructing geographic and geomorphic (terrain) features based on the fusion of laser radar and image data, in the field of automatic control. The method comprises: 1) acquiring 3D laser point clouds and panoramic images of the current surroundings of an unmanned ground mobile platform; 2) matching the 3D laser point clouds with the panoramic images to obtain matched images; 3) segmenting the 3D laser point cloud according to the distribution characteristics of each laser point, and clustering with a dynamic clustering algorithm for each distribution characteristic to obtain multiple region classes; 4) identifying the passable region classes among them according to the traversal capability of the unmanned ground mobile platform; 5) computing landform identification vectors for the passable region classes with a dense SIFT algorithm; and 6) classifying the landform of the passable region classes from these vectors with a classifier. The method is suited to constructing the passable terrain features needed by unmanned ground mobile platforms.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

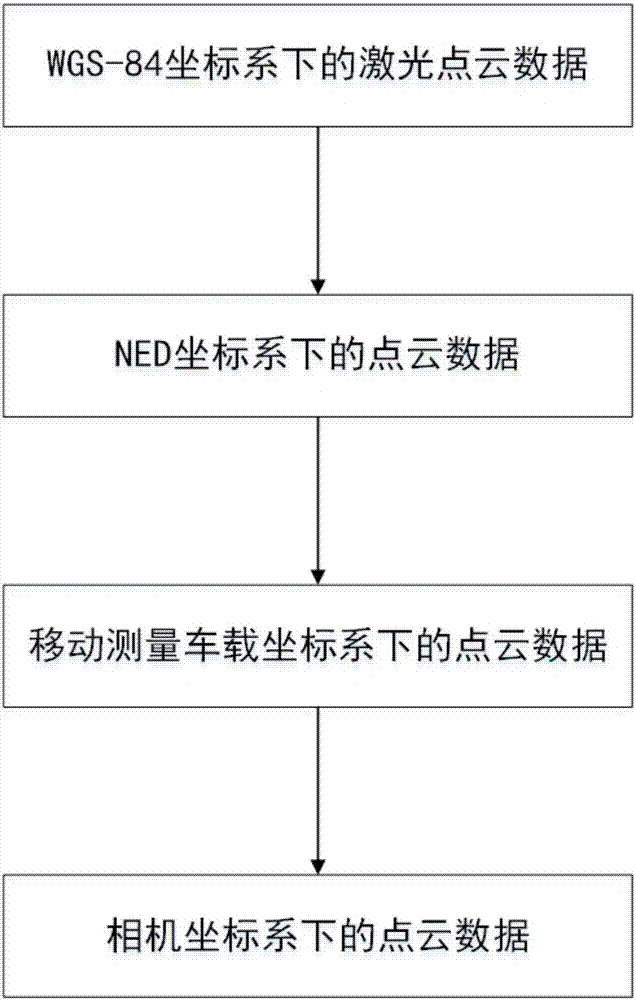

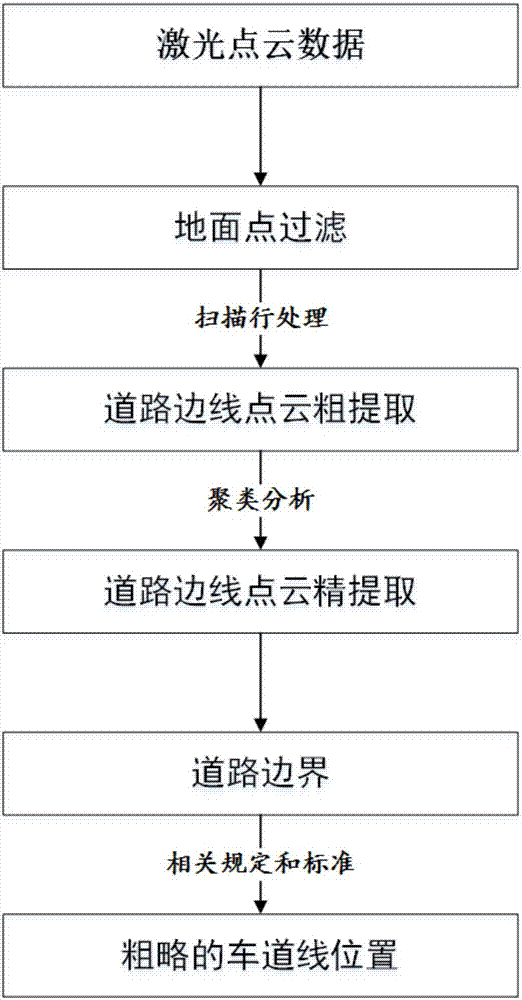

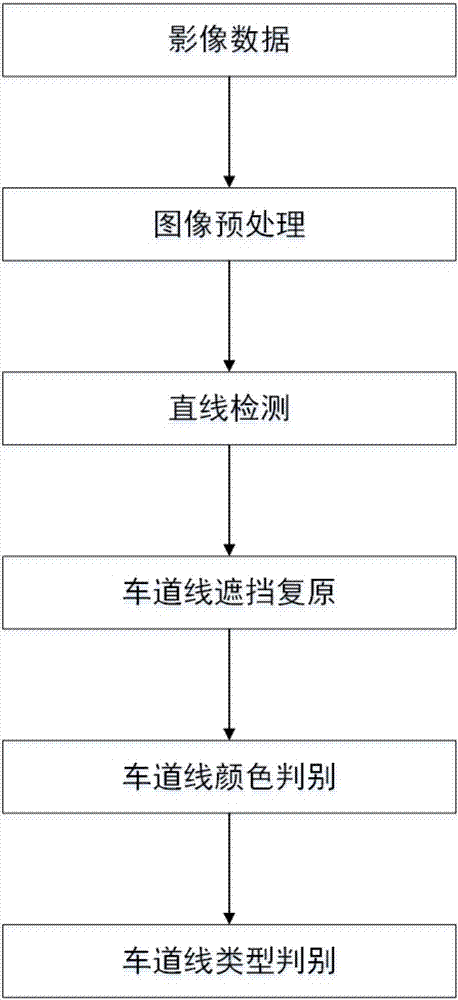

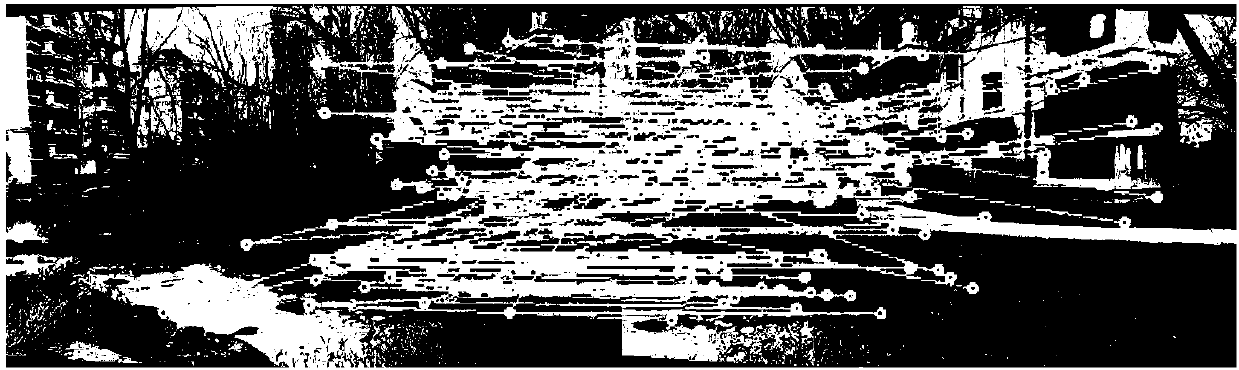

Laser point cloud and image data fusion-based lane line extraction method

Active | CN107463918A | Accurate and Robust Extraction; Image enhancement; Image analysis; Point cloud; Road surface

The invention relates to a lane line extraction method based on the fusion of laser point cloud and image data. The method comprises the following steps: 1, preprocessing the original laser point cloud to extract the road surface point cloud, and preprocessing the original image to remove the effects of noise, illumination, and similar factors; 2, extracting the road boundary point cloud from the road surface point cloud obtained in step 1, and determining the position of the lane line point cloud using the principle that the distance between a lane line and the road boundary is constant; 3, registering the lane line point cloud obtained in step 2 with the preprocessed image to roughly locate the lane line in the image; and 4, performing accurate lane line detection within the image region determined in step 3. By fully exploiting the complementary strengths of point cloud and image data, the method extracts lane lines more accurately and robustly.

Owner:WUHAN UNIV
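Step 2 of the method above relies on the lane line lying at a constant distance from the road boundary. A minimal 2D sketch of that idea, assuming the boundary has already been extracted as an ordered list of distinct ground-plane points and that the lateral offset (for example, one lane width) is known: each boundary vertex is shifted along the local normal. The function and parameter names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def offset_polyline(boundary: List[Point], offset: float) -> List[Point]:
    """Shift each boundary vertex by `offset` along the left-hand normal of the local direction."""
    if len(boundary) < 2:
        raise ValueError("need at least two boundary points")
    n = len(boundary)
    shifted = []
    for i, (x, y) in enumerate(boundary):
        # Local direction from the neighbouring vertices (one-sided at the ends).
        x0, y0 = boundary[max(i - 1, 0)]
        x1, y1 = boundary[min(i + 1, n - 1)]
        dx, dy = x1 - x0, y1 - y0
        length = math.hypot(dx, dy)
        nx, ny = -dy / length, dx / length  # unit normal to the left of travel
        shifted.append((x + offset * nx, y + offset * ny))
    return shifted
```

A positive offset places the candidate lane line to the left of the boundary's direction of travel and a negative one to the right; the result only seeds the image-based refinement in steps 3 and 4.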

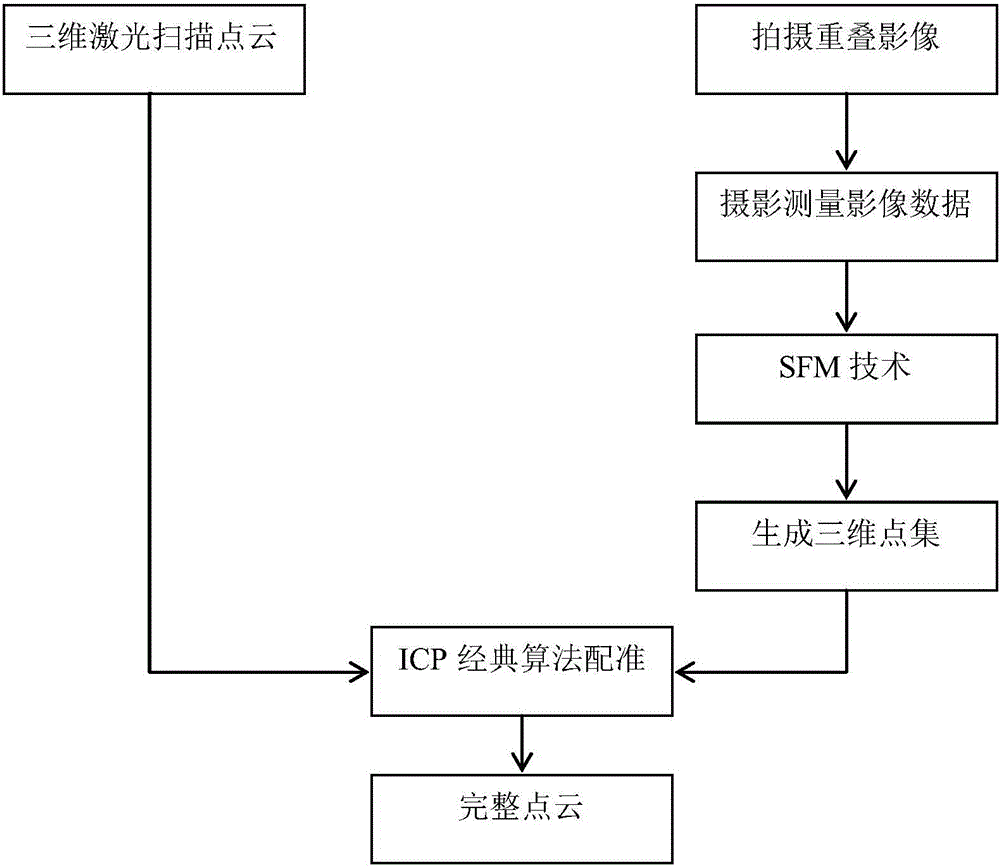

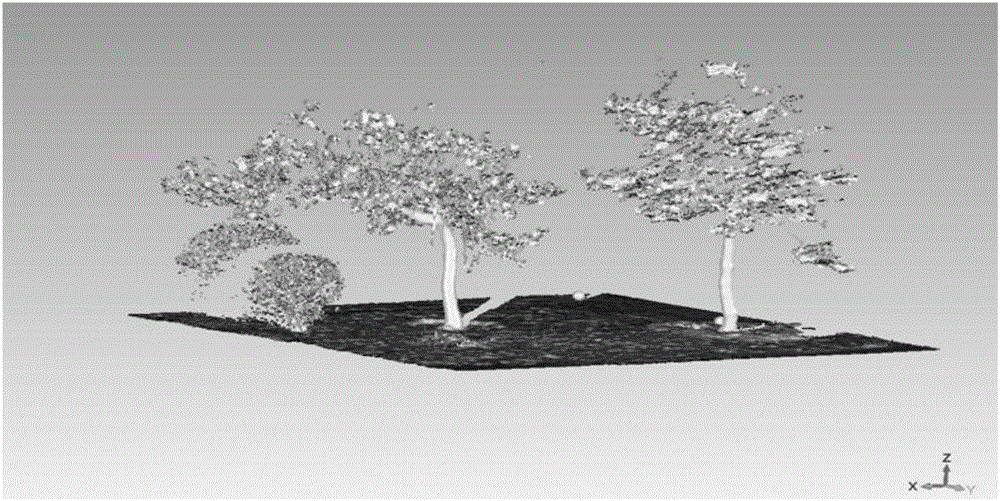

Ground three-dimensional laser scanning point cloud and image fusion and registration method

Inactive | CN105931234A | Improve noise immunity; Ensuring rotation invariance; Image enhancement; Image analysis; Laser scanning; Image fusion

The present invention provides a method for fusing and registering ground 3D laser scanning point clouds with images, overcoming a limitation of ground 3D laser scanning. Point clouds obtained by ground 3D laser scanning often contain areas that cannot be measured, which produce holes in the point cloud and lose local information about the scanned object. These holes prevent the model from being visualized correctly and also affect its subsequent processing. To solve these problems, the invention uses the SIFT algorithm to mosaic the collected photographs into a panoramic image, performs dense reconstruction from the panoramic image to obtain a 3D point cloud generated from the photographs, and uses the iterative closest point (ICP) algorithm to register the laser scanning point cloud with the image-generated point cloud.

Owner:NORTHEAST FORESTRY UNIVERSITY
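The final registration step in the method above uses ICP. A minimal 2D sketch of the algorithm, assuming a small initial misalignment: each iteration matches every source point to its nearest target point and then fits the best rigid motion to those matches. The patent works with 3D point clouds, where the rotation would be fitted with an SVD rather than the closed-form 2D angle used here; the helper names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def best_rigid_2d(src: Sequence[Point], dst: Sequence[Point]) -> Tuple[float, float, float]:
    """Least-squares rotation angle and translation mapping src[i] onto dst[i]."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    cross = dot = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy  # centre both sets
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(source: Sequence[Point], target: Sequence[Point],
           iterations: int = 20) -> List[Point]:
    """Iterative closest point: match to nearest targets, re-fit the rigid motion, repeat."""
    current = list(source)
    for _ in range(iterations):
        # Brute-force nearest neighbours; real point clouds would use a k-d tree.
        matches = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        theta, tx, ty = best_rigid_2d(current, matches)
        c, s = math.cos(theta), math.sin(theta)
        current = [(c * x - s * y + tx, s * x + c * y + ty) for x, y in current]
    return current
```

ICP only converges to the right answer when the initial nearest-neighbour matches are mostly correct, which is why a coarse alignment usually precedes it.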

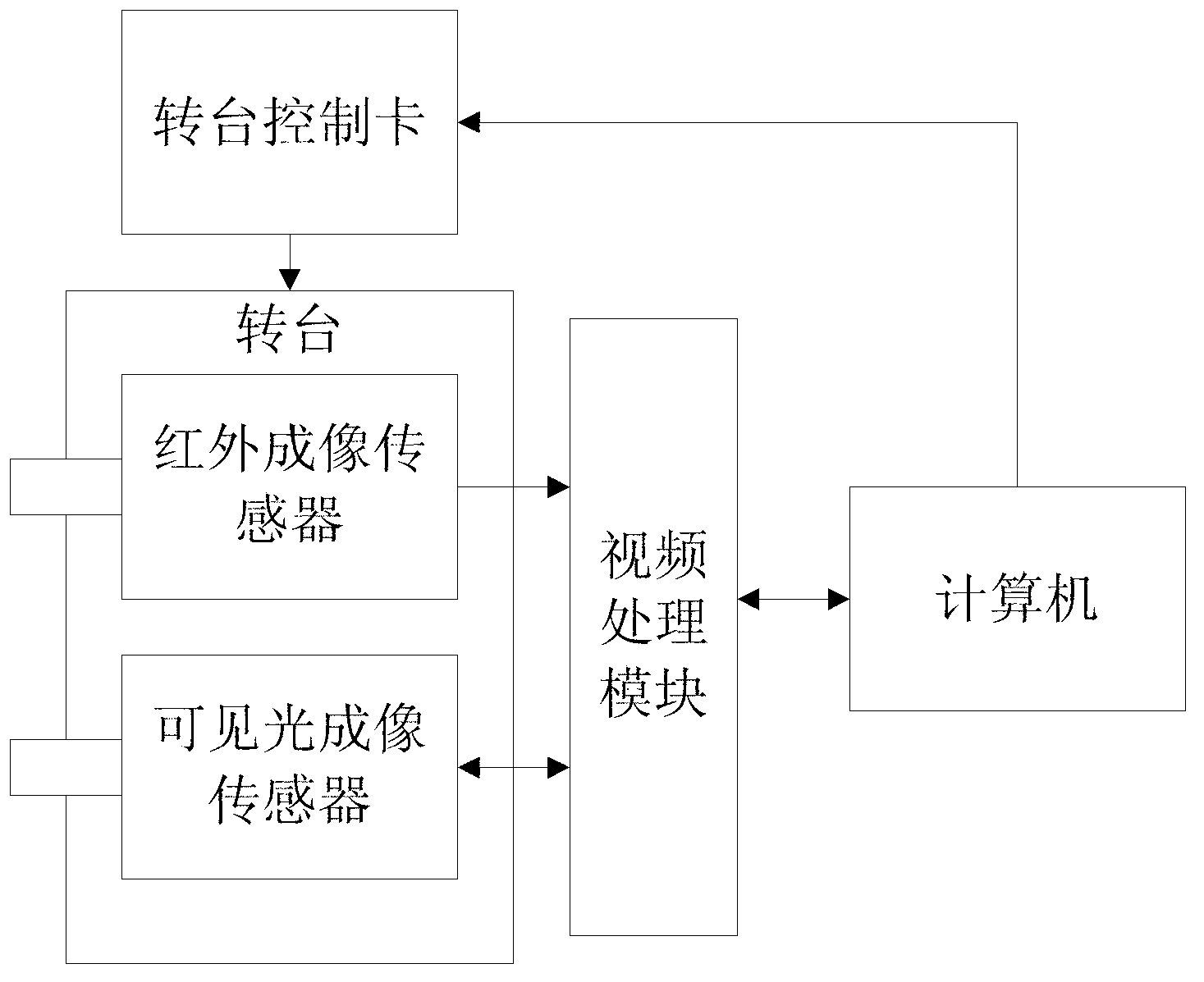

Fusion method of infrared image and visible light dynamic image and fusion device of infrared image and visible light dynamic image

Inactive | CN102982518A | Small amount of calculation; Meet real-time requirements; Image enhancement; Imaging processing; Image fusion

The invention discloses a method and a device for fusing an infrared image with a dynamic visible-light image, in the field of image processing. The image fusion method comprises calculating image registration parameters; collecting the infrared image and the visible-light image and registering them according to those parameters; and fusing the registered infrared and visible-light images. Because registration during fusion uses the precomputed registration parameters, the amount of numerical computation is greatly reduced, computation and therefore fusion speed are improved, and the real-time requirements of image processing are met.

Owner:YANGZHOU WANFANG ELECTRONICS TECH

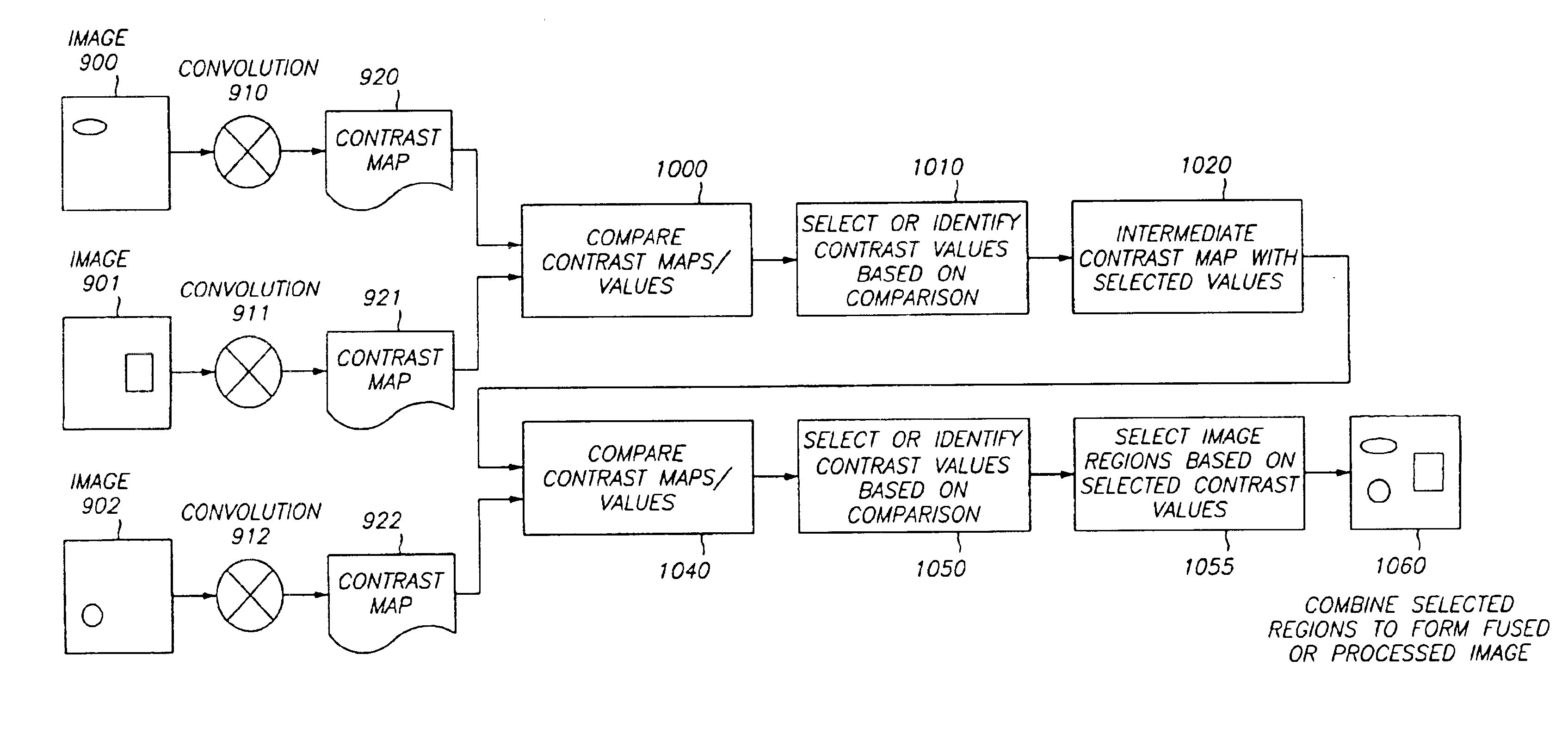

Image fusion system and method

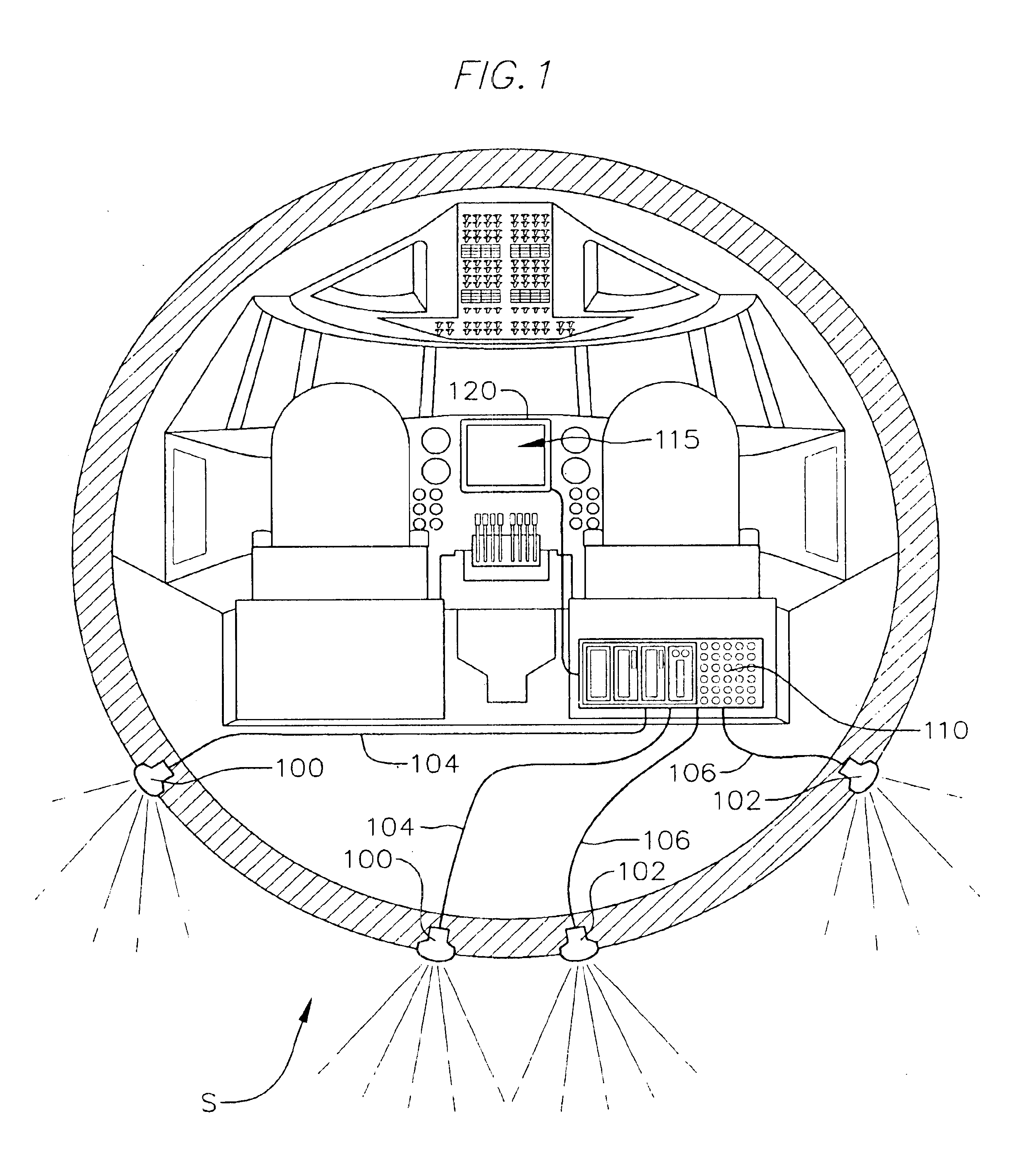

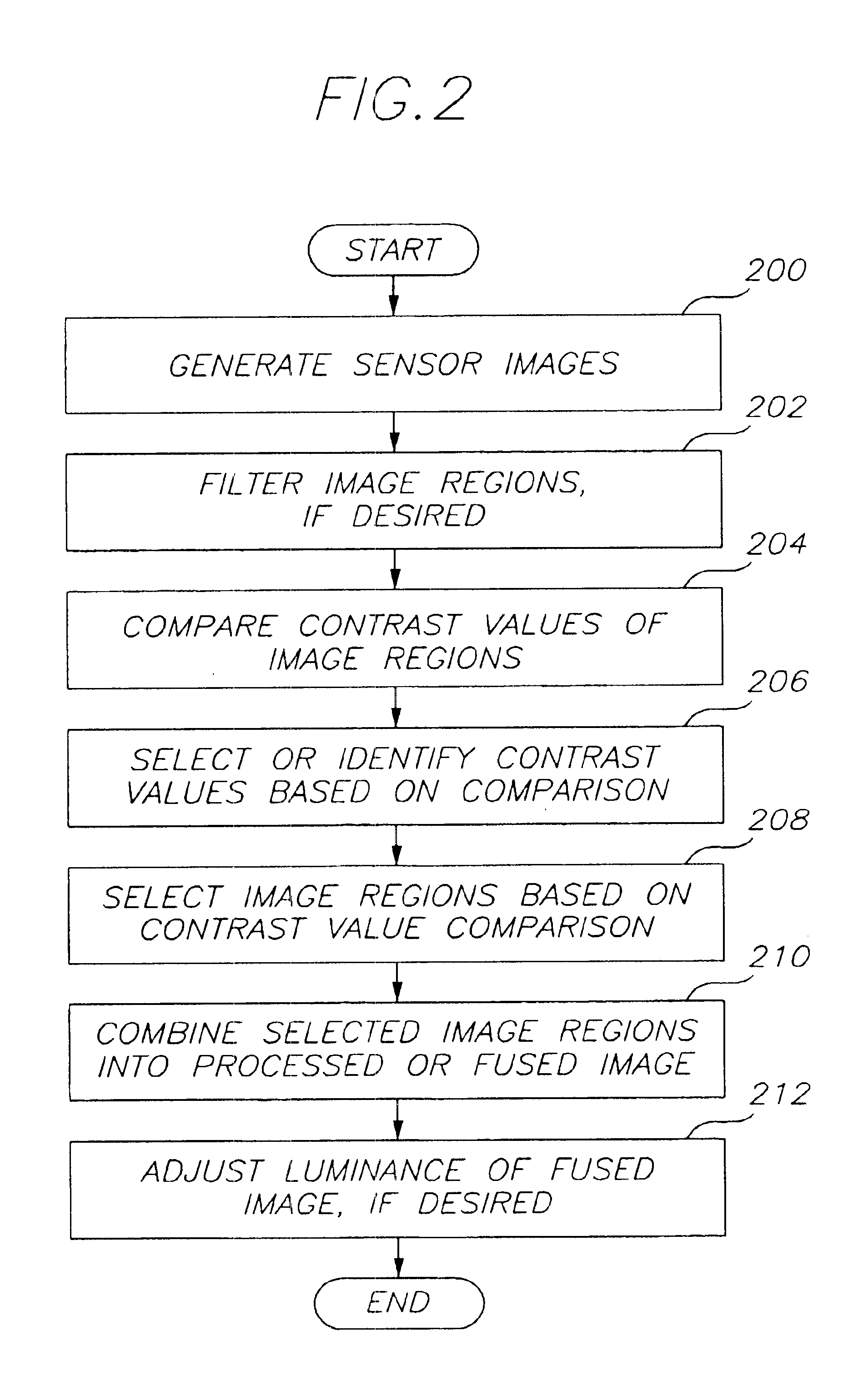

A contrast-based image fusion system and method process multiple images to form a fused image composed of regions selected from one or more of the images. The images are divided into image regions, and portions of the images are filtered if necessary. A contrast map is generated for each image with a convolution kernel, giving a contrast value for each image region. The contrast values are compared, and image regions are selected according to a selection criterion or process, such as greater or maximum contrast. The selected image regions form the fused image. If necessary, the luminance of one or more portions of the fused image is adjusted: one sensor is selected as the reference sensor, the average intensity of each region of the reference sensor image is determined, and the intensity of one or more regions of the final image is adjusted by combining these average luminance values with the intensity values of the final image.

Owner:BAE SYSTEMS CONTROLS INC
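The core of the contrast-based system above can be sketched as follows: build a contrast map per image, compare regions, and copy each region from the higher-contrast source. Assumptions: grayscale, co-registered images; square blocks as the "image regions"; the absolute Laplacian as the contrast kernel (the abstract says only "a convolution kernel"). The luminance adjustment against a reference sensor is omitted, and all names are hypothetical.

```python
from typing import List

Image = List[List[float]]

# Assumed contrast kernel: the absolute Laplacian response.
LAPLACIAN = [[0, -1, 0], [-1, 4, -1], [0, -1, 0]]


def contrast_map(img: Image) -> Image:
    """Convolve with LAPLACIAN (borders replicated) and take the absolute value."""
    h, w = len(img), len(img[0])

    def px(r: int, c: int) -> float:
        return img[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    return [[abs(sum(LAPLACIAN[i][j] * px(r + i - 1, c + j - 1)
                     for i in range(3) for j in range(3)))
             for c in range(w)]
            for r in range(h)]


def fuse_max_contrast(images: List[Image], block: int = 2) -> Image:
    """Copy each block x block region from the source image with the highest summed contrast."""
    h, w = len(images[0]), len(images[0][0])
    maps = [contrast_map(img) for img in images]
    fused = [[0.0] * w for _ in range(h)]
    for r0 in range(0, h, block):
        for c0 in range(0, w, block):
            rows = range(r0, min(r0 + block, h))
            cols = range(c0, min(c0 + block, w))
            scores = [sum(m[r][c] for r in rows for c in cols) for m in maps]
            best = scores.index(max(scores))  # maximum-contrast selection criterion
            for r in rows:
                for c in cols:
                    fused[r][c] = images[best][r][c]
    return fused
```

Larger blocks give more stable selections but coarser transitions between sources; the patent's optional luminance adjustment addresses brightness jumps at those transitions.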

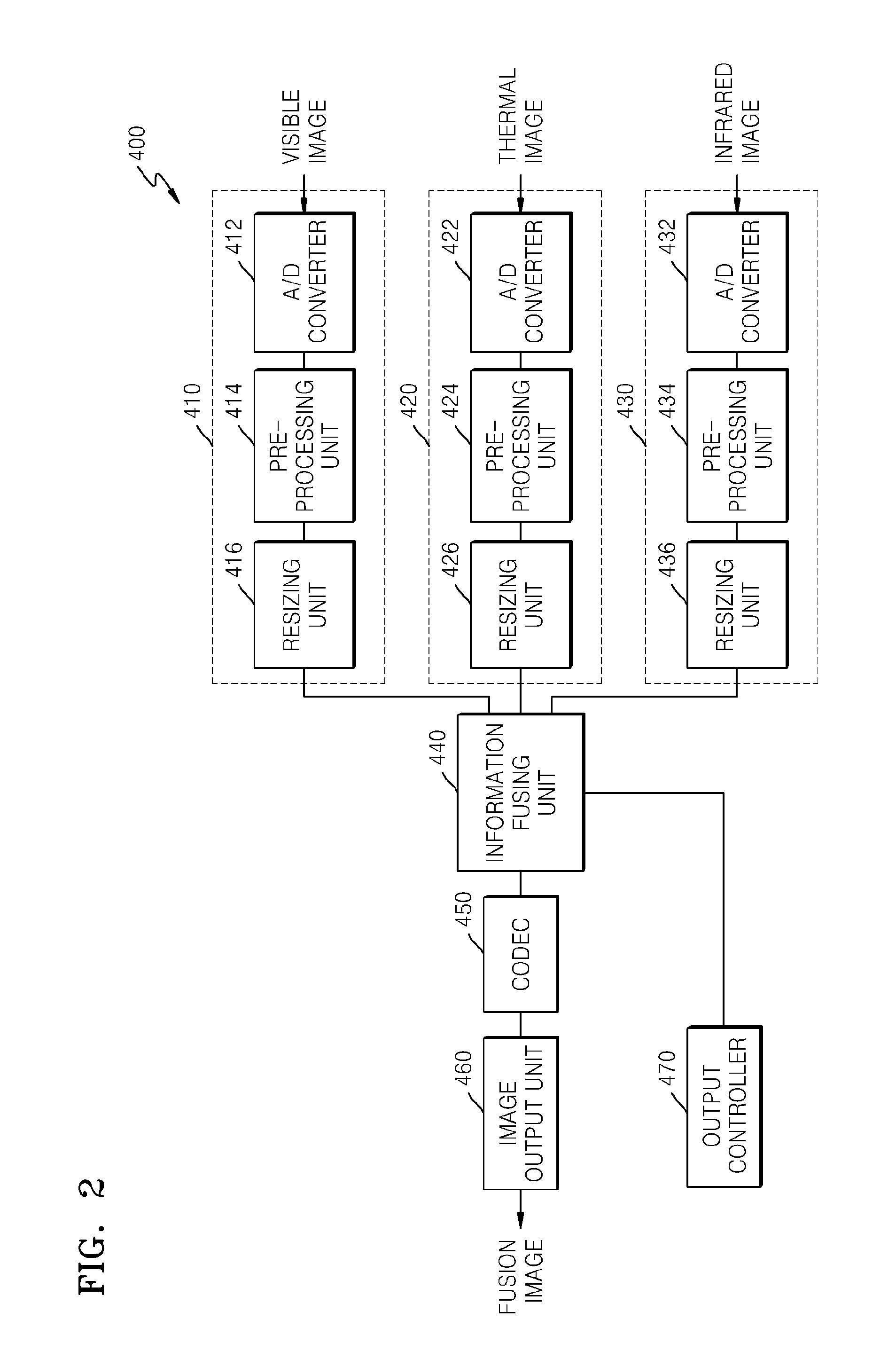

Image fusion system and method

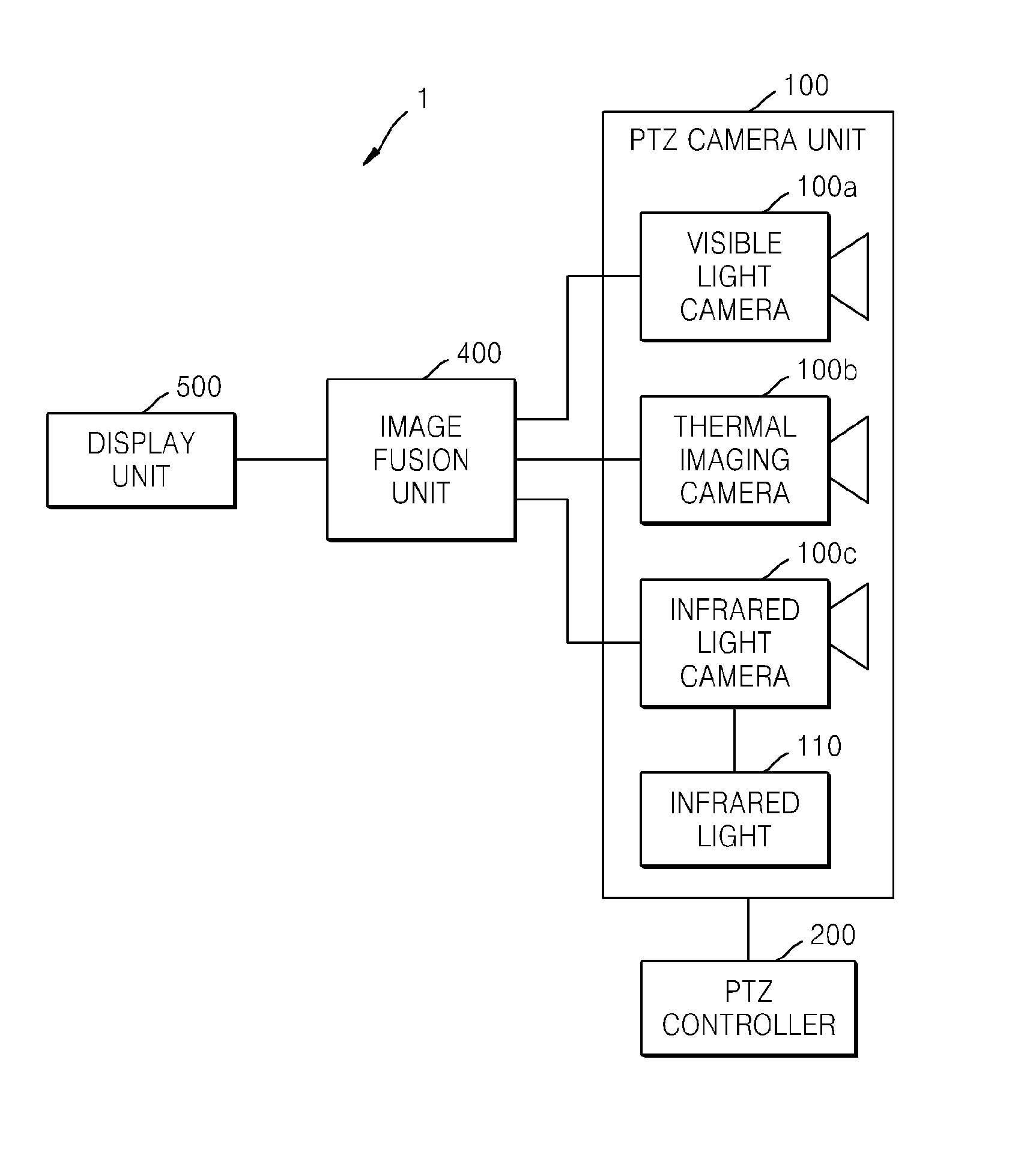

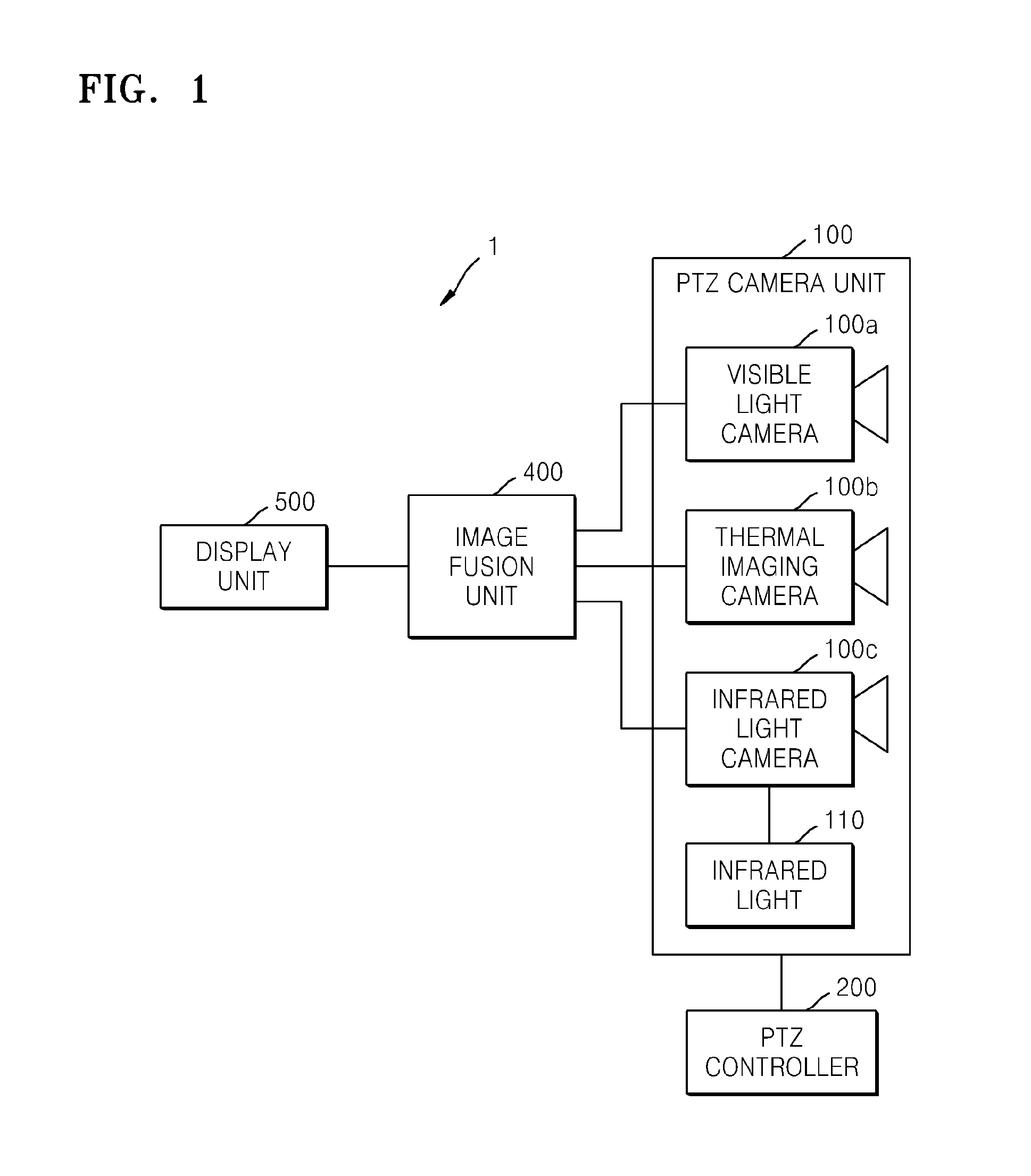

An image fusion system and method are provided. The system includes a plurality of cameras configured to generate a plurality of images, respectively, and an image fusion unit configured to fuse the plurality of images into a single image.

Owner:HANWHA VISION CO LTD

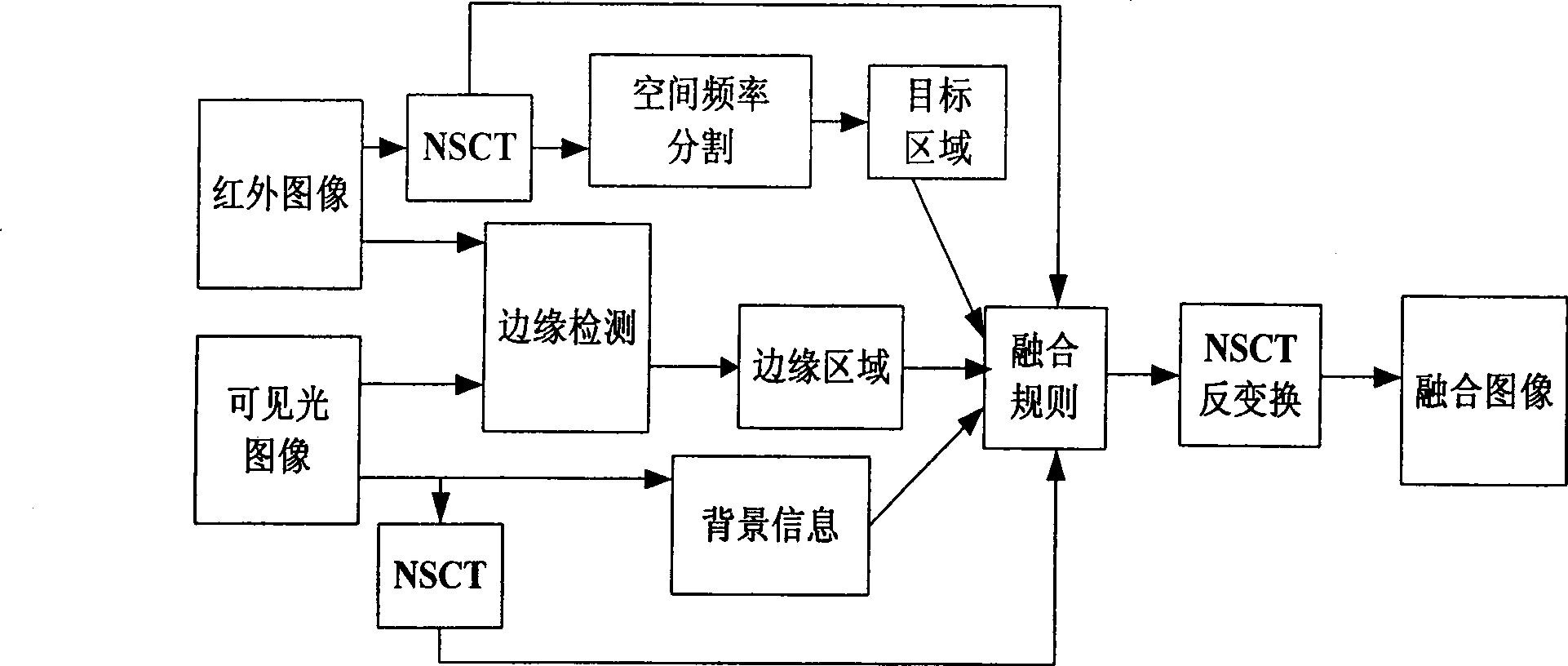

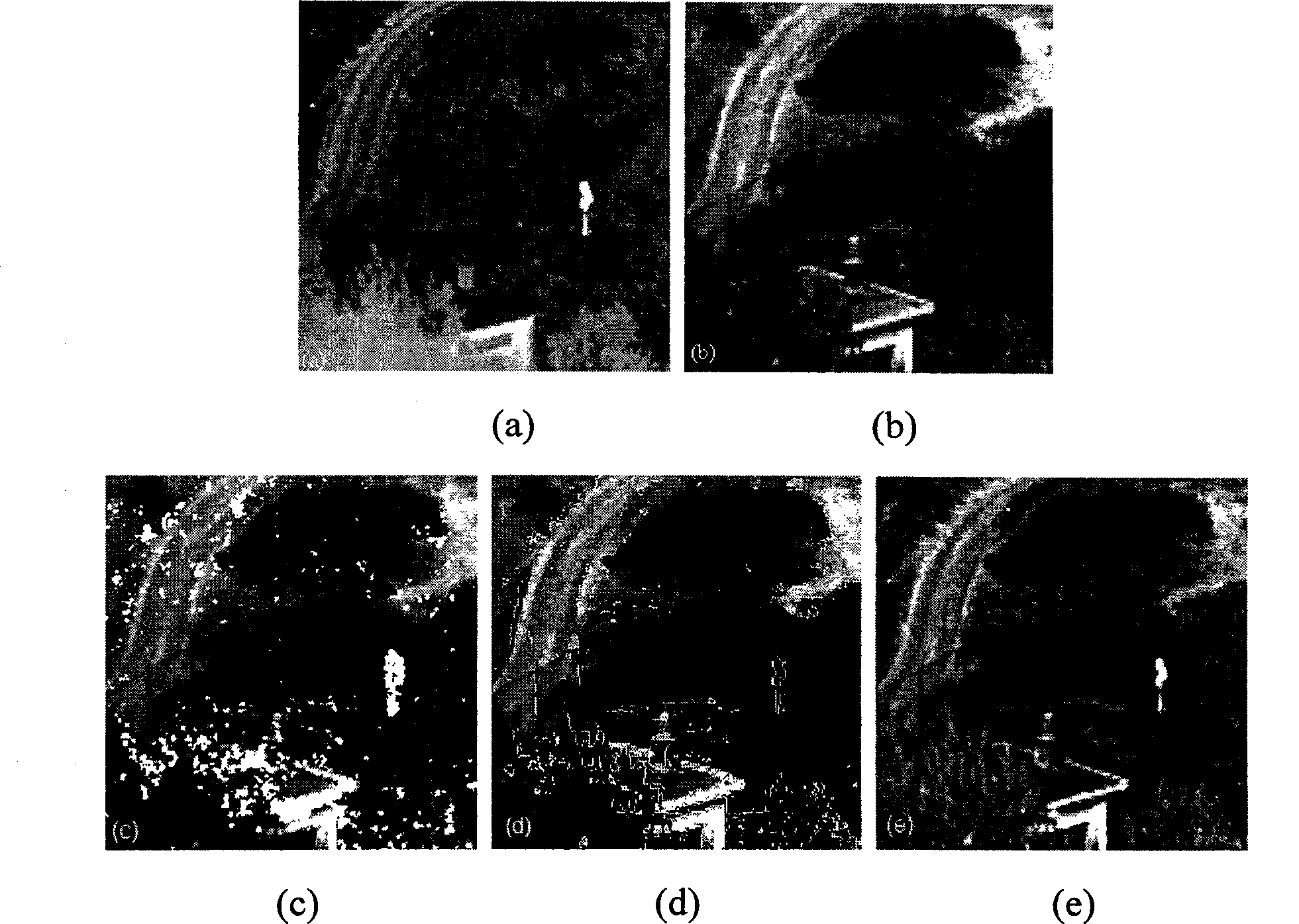

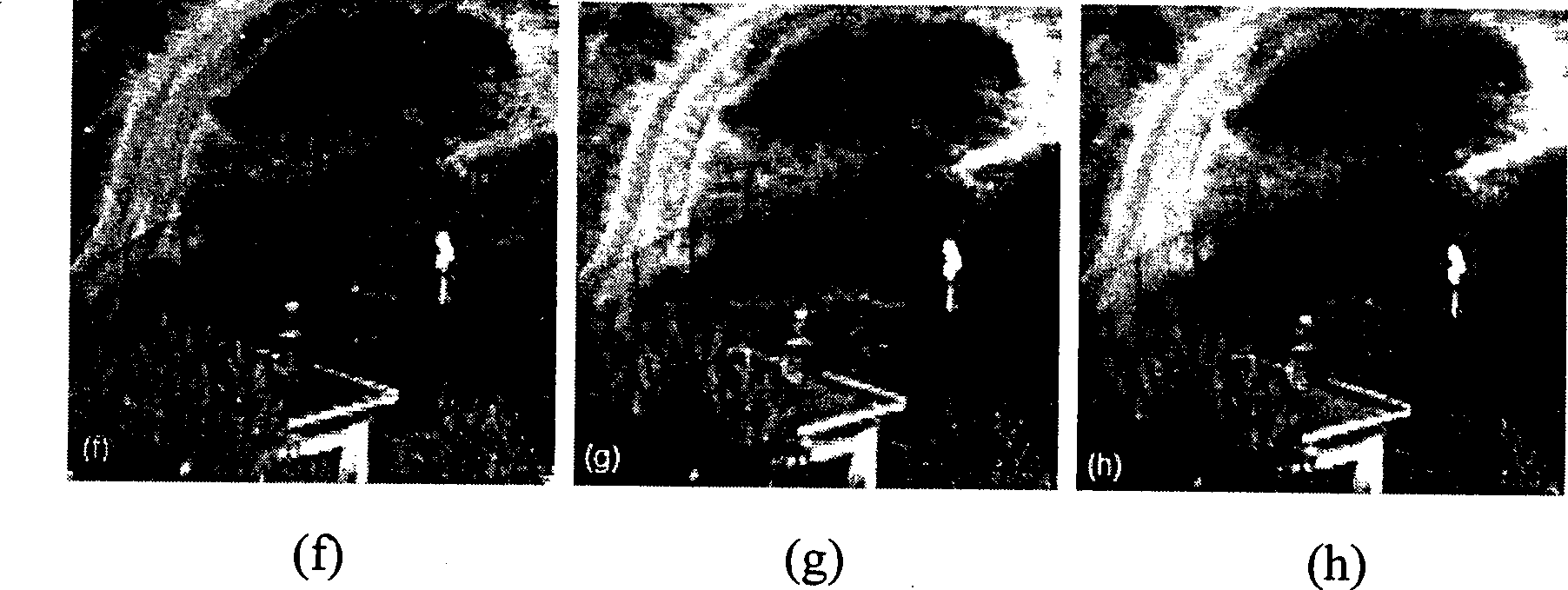

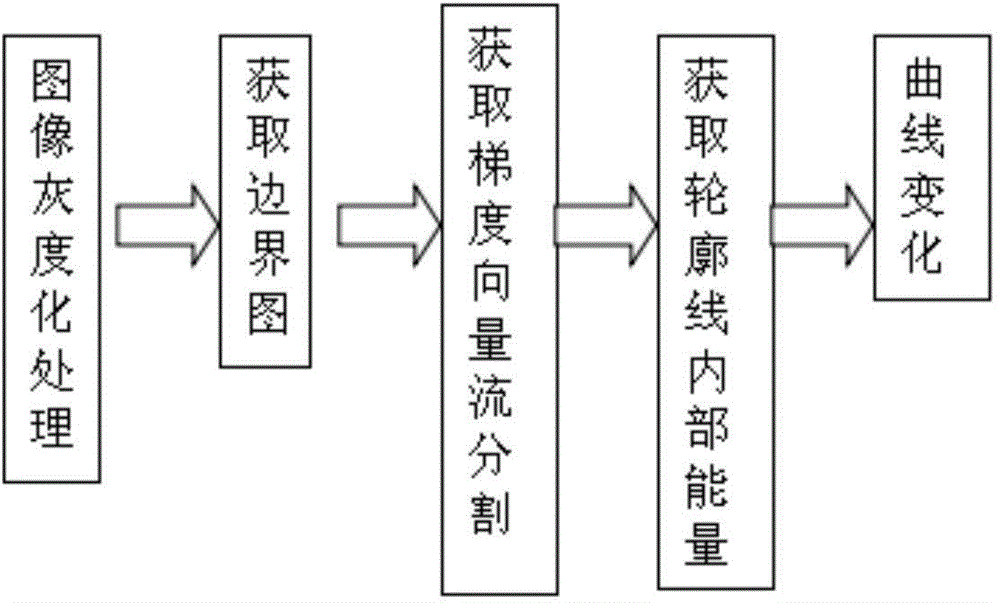

Image fusion of sequence infrared and visible light based on region segmentation

Active | CN101546428A | Efficient capture; Good multi-resolution; Image enhancement; Image analysis; Contourlet; Image fusion

The invention relates to region-segmentation-based fusion of infrared and visible-light image sequences. Infrared images are segmented into different regions according to inter-frame target changes and the degree of gray-level change; the images are decomposed into different frequency bands and directions with the non-subsampled Contourlet transform; different fusion rules are selected according to the characteristics of each region in each band; and the image is reconstructed from the processed coefficients to obtain the final fusion result. Because the method takes the characteristics of each region into account, the algorithm effectively reduces fusion errors caused by noise and low matching accuracy and is more robust.

Owner:JIANGSU HUAYI GARMENT CO LTD +1

Virtual viewpoint rendering method

The invention relates to a virtual viewpoint rendering method in the fields of image processing and stereo imaging that achieves high-quality virtual viewpoint rendering. The method comprises the following steps: (1) preprocessing the depth maps; (3) fusing the two virtual viewpoint images with distance-based weights, which eliminates most holes and produces an initial fused virtual view; and (4) filling the remaining holes in the initial virtual view with a method based on foreground-background segmentation to obtain the final virtual viewpoint image. The method is mainly applicable to image processing and stereo imaging.

Owner:TIANJIN UNIV
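Step (3) of the rendering method above fuses the two warped virtual-view images with distance-based weights; this removes most holes because a pixel missing from one view is often visible in the other. A minimal sketch, assuming both reference views have already been warped to the virtual viewpoint, holes are marked with `None`, and `d_left`/`d_right` are the distances from the virtual viewpoint to each reference camera (the abstract does not give the exact weighting). Pixels missing from both views are left for the hole filling of step (4).

```python
from typing import List, Optional

View = List[List[Optional[float]]]  # None marks a hole left by 3D warping


def blend_views(left: View, right: View, d_left: float, d_right: float) -> View:
    """Distance-weighted fusion of two warped views; the nearer reference view weighs more."""
    w_left = d_right / (d_left + d_right)
    w_right = d_left / (d_left + d_right)
    fused: View = []
    for row_l, row_r in zip(left, right):
        row: List[Optional[float]] = []
        for a, b in zip(row_l, row_r):
            if a is not None and b is not None:
                row.append(w_left * a + w_right * b)
            elif a is not None:
                row.append(a)  # hole only in the right view: take the left pixel
            elif b is not None:
                row.append(b)  # hole only in the left view: take the right pixel
            else:
                row.append(None)  # hole in both views: left for hole filling
        fused.append(row)
    return fused
```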

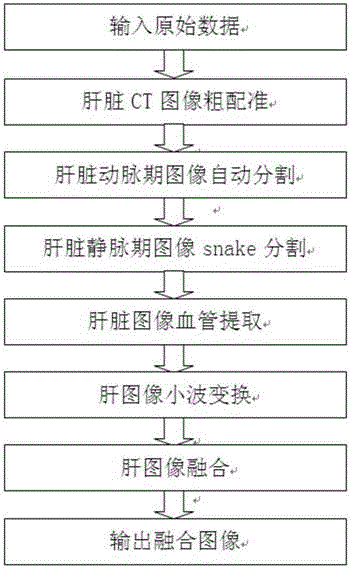

Liver multi-phase CT image fusion method

Inactive | CN104835112A | High precision; Efficient extraction; Image enhancement; Image analysis; Source image; Mutual information

The invention discloses a liver multi-phase CT image fusion method. First, the source image sequences are coarsely registered with a multi-resolution CT registration method based on a joint histogram. The liver is then segmented automatically with a region growing algorithm combined with confidence connectedness and with a gradient vector flow snake model, which effectively extracts the liver boundary. Blood vessels are extracted from the liver image with a directional region growing algorithm, and non-rigid registration of the liver images is performed using B-spline free-form deformation and spatially weighted mutual information, so that image pairs at the same spatial position are found accurately. Finally, the images are fused with the wavelet transform. The method is tailored to the characteristics of liver CT images; combining the segmentation and registration processes with the fusion process greatly improves fusion precision.

Owner:XIAMEN UNIV
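The final step of the liver CT method above fuses the registered phases in the wavelet domain. A minimal sketch of one common wavelet fusion rule, assuming a single-level 2D Haar transform on equally sized grayscale slices with even side lengths: the approximation band is averaged and, for each detail coefficient, the one with the larger magnitude is kept. The abstract does not specify the wavelet, the number of levels, or the fusion rule, so all of these are assumptions.

```python
from typing import List, Tuple

Image = List[List[float]]


def _haar_block(img: Image, r: int, c: int) -> Tuple[float, float, float, float]:
    """One-level 2D Haar coefficients (LL, LH, HL, HH) of the 2x2 block at (r, c)."""
    p00, p01 = img[r][c], img[r][c + 1]
    p10, p11 = img[r + 1][c], img[r + 1][c + 1]
    ll = (p00 + p01 + p10 + p11) / 4  # approximation
    lh = (p00 - p01 + p10 - p11) / 4  # column-difference detail
    hl = (p00 + p01 - p10 - p11) / 4  # row-difference detail
    hh = (p00 - p01 - p10 + p11) / 4  # diagonal detail
    return ll, lh, hl, hh


def haar_fuse(img_a: Image, img_b: Image) -> Image:
    """Average the approximation band, keep the larger-magnitude detail coefficients."""
    h, w = len(img_a), len(img_a[0])
    if h % 2 or w % 2:
        raise ValueError("image sides must be even")
    fused = [[0.0] * w for _ in range(h)]
    for r in range(0, h, 2):
        for c in range(0, w, 2):
            ca, cb = _haar_block(img_a, r, c), _haar_block(img_b, r, c)
            ll = (ca[0] + cb[0]) / 2
            lh, hl, hh = (x if abs(x) >= abs(y) else y for x, y in zip(ca[1:], cb[1:]))
            # Inverse Haar transform of the fused coefficients.
            fused[r][c] = ll + lh + hl + hh
            fused[r][c + 1] = ll - lh + hl - hh
            fused[r + 1][c] = ll + lh - hl - hh
            fused[r + 1][c + 1] = ll - lh - hl + hh
    return fused
```

Fusing an image with itself returns it unchanged, and fusing a flat region with an edge keeps the edge, which is the behaviour the max-magnitude detail rule is chosen for.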

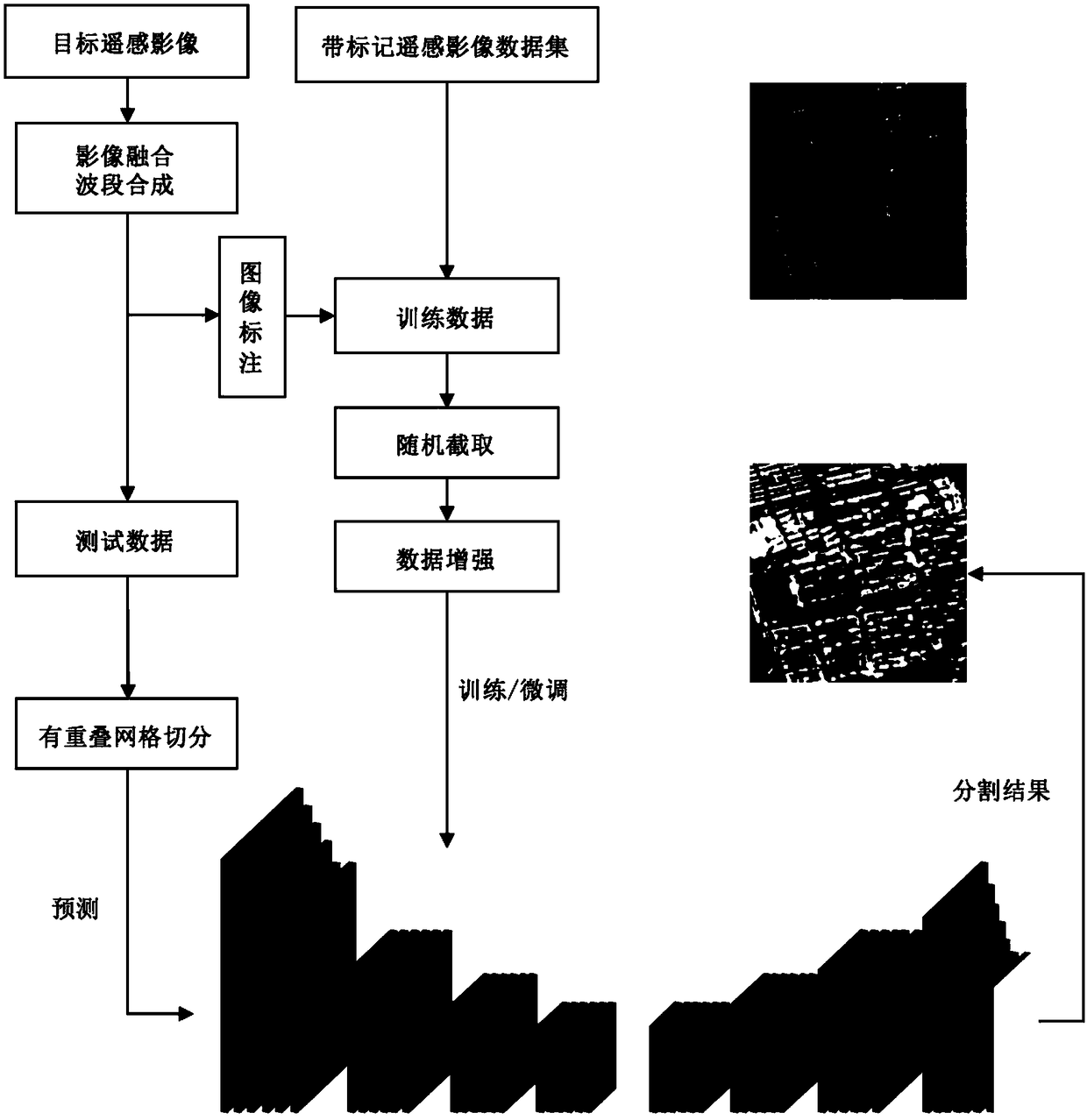

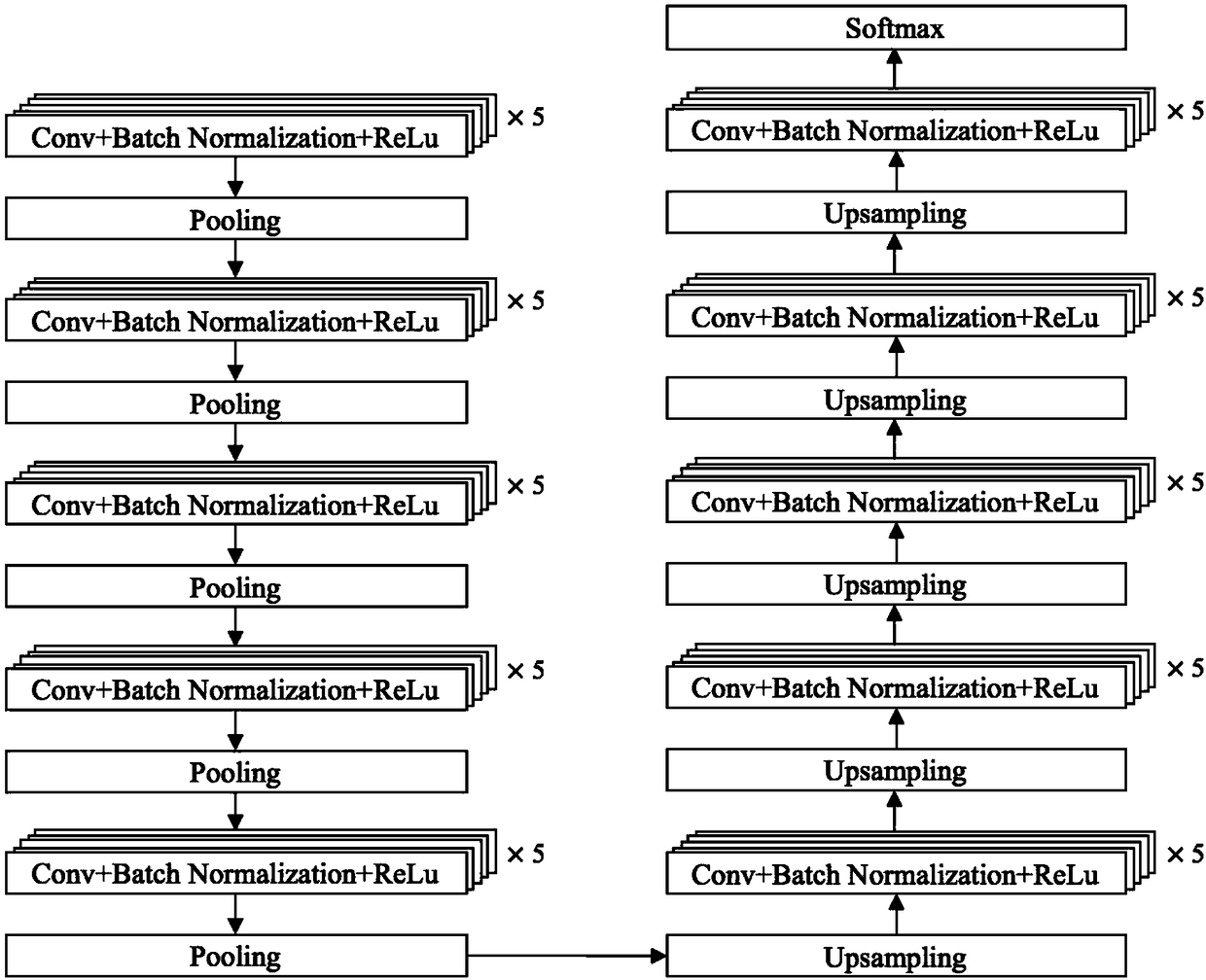

Full convolutional neural network-based large-range remote sensing image semantic segmentation method

The invention discloses a semantic segmentation method for large-area remote sensing images based on a fully convolutional neural network. The method comprises three stages: data labeling, model training, and result prediction. To suit the high precision, large coverage, and multispectral information of remote sensing images, the method preprocesses them with band synthesis, image fusion, and image segmentation; data augmentation is applied to the training set to increase sample diversity; and if a labeled data set is available, it can first be used to train a model that then initializes training on the target data, reducing the manual labeling workload. To improve the accuracy of the result, the method divides the images into an overlapping grid, predicts each tile, stitches the predicted tiles together in sequence, and applies median filtering to reduce noise and jagged regions, achieving relatively high accuracy.

Owner:ZHEJIANG UNIV
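The prediction stage of the segmentation method above divides the image into an overlapping grid, predicts tile by tile, stitches the results, and median-filters them. A minimal sketch of the tiling arithmetic and the final filter, assuming square tiles, a fixed overlap in pixels, and integer label maps; the tile size, overlap, and function names are hypothetical, and the network itself is omitted.

```python
from statistics import median
from typing import List


def tile_starts(length: int, tile: int, overlap: int) -> List[int]:
    """Start offsets of tiles of size `tile` covering [0, length), sharing at least `overlap` pixels."""
    if tile <= overlap:
        raise ValueError("tile must be larger than overlap")
    if length <= tile:
        return [0]
    stride = tile - overlap
    starts = list(range(0, length - tile, stride))
    starts.append(length - tile)  # last tile flush with the image border
    return starts


def median3x3(labels: List[List[int]]) -> List[List[int]]:
    """3x3 median filter on a label map (borders replicated) to suppress isolated noise."""
    h, w = len(labels), len(labels[0])

    def px(r: int, c: int) -> int:
        return labels[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    return [[median([px(r + i, c + j) for i in (-1, 0, 1) for j in (-1, 0, 1)])
             for c in range(w)]
            for r in range(h)]
```

Applying `tile_starts` to the rows and to the columns gives the 2D grid. A median over class indices is most meaningful for binary masks; for many classes a majority (mode) filter is a common alternative.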

Image splicing method based on improved image fusion

Inactive | CN107146201A | Improve accuracy; Improve real-time performance; Image enhancement; Image analysis; Dynamic planning; Reference image

The invention relates to an image splicing (stitching) method based on improved image fusion, which mainly addresses the low real-time performance, visible seams, and ghosting of the prior art. The method extracts feature points from a target image and a reference image with the A-KAZE algorithm and builds sets of feature descriptors; constructs a KD-tree to index the feature points, matches them with a bidirectional KNN matching algorithm to obtain initial matches, removes outliers and keeps inliers with the RANSAC algorithm, and thereby completes image registration; and fuses the images with an improved seam-based Laplacian multi-resolution fusion algorithm, in which an optimal seam is found by dynamic programming, the fusion range is limited according to that seam, and Laplacian multi-resolution fusion is performed within the range to complete the stitching. The method solves these problems relatively well and can be used for image stitching.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
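The improved fusion step in the stitching method above first finds an optimal seam by dynamic programming and then restricts blending to a band around it. A minimal sketch of the seam search, assuming a precomputed cost map over the overlap region (for example, squared intensity differences between the registered images); the cost definition and names are assumptions, and the Laplacian blending inside the band is not shown.

```python
from typing import List


def optimal_seam(cost: List[List[float]]) -> List[int]:
    """Minimum-cost top-to-bottom seam by dynamic programming, one column index per row.

    The seam may shift by at most one column between consecutive rows.
    """
    h, w = len(cost), len(cost[0])
    acc = [list(cost[0])]  # acc[r][c]: cost of the best seam ending at (r, c)
    back = [[0] * w]       # back[r][c]: column in row r - 1 that achieves it
    for r in range(1, h):
        acc_row, back_row = [], []
        for c in range(w):
            best_val, best_k = min((acc[r - 1][k], k) for k in (c - 1, c, c + 1) if 0 <= k < w)
            acc_row.append(cost[r][c] + best_val)
            back_row.append(best_k)
        acc.append(acc_row)
        back.append(back_row)
    # Start from the cheapest end point and trace the seam back to the top row.
    c = min(range(w), key=lambda k: acc[-1][k])
    seam = [c]
    for r in range(h - 1, 0, -1):
        c = back[r][c]
        seam.append(c)
    return seam[::-1]
```

Because the seam follows low-difference pixels, moving objects that appear in only one image tend to fall on one side of it, which is how seam-based fusion reduces ghosting.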

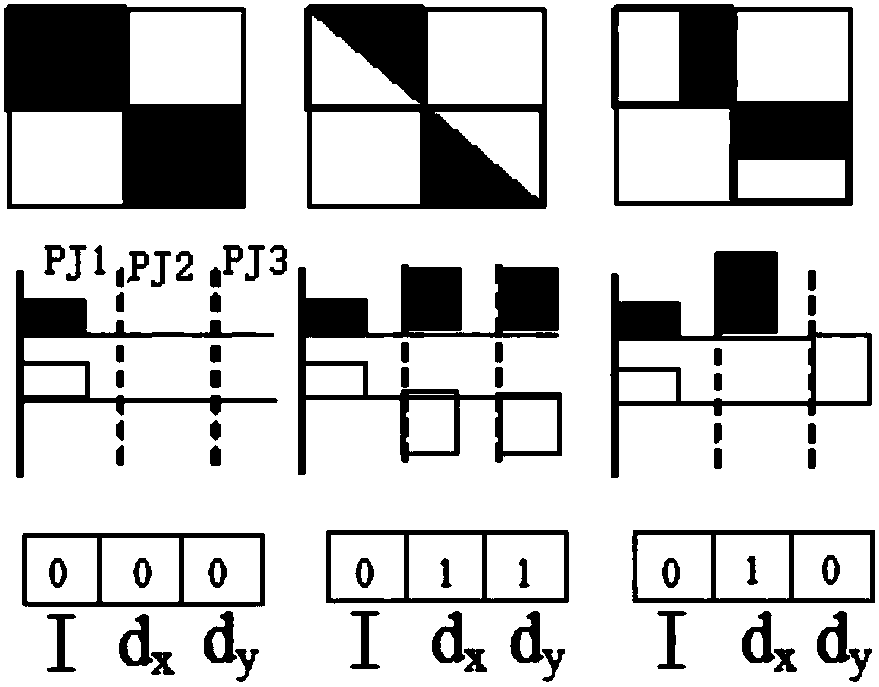

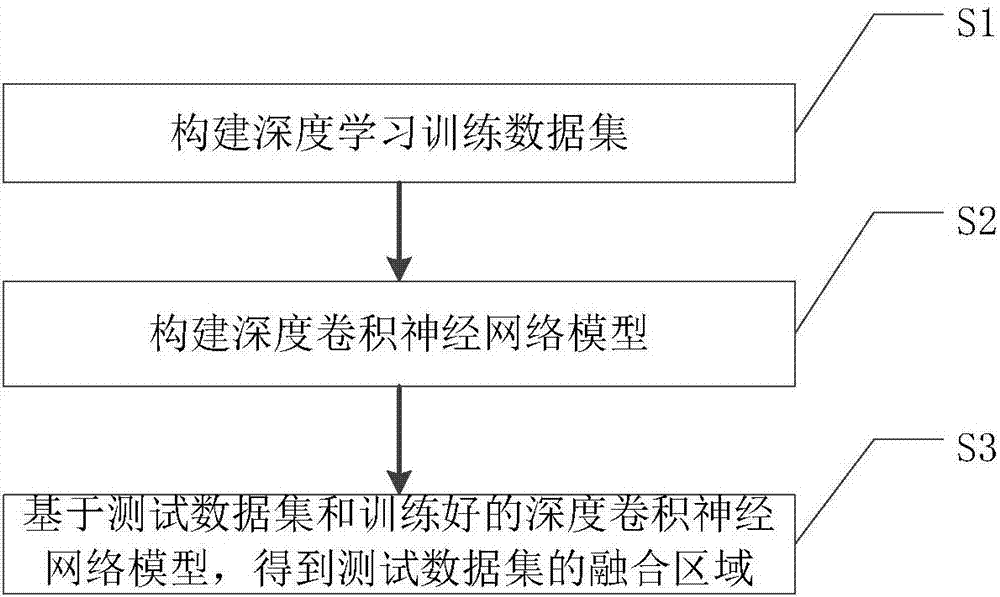

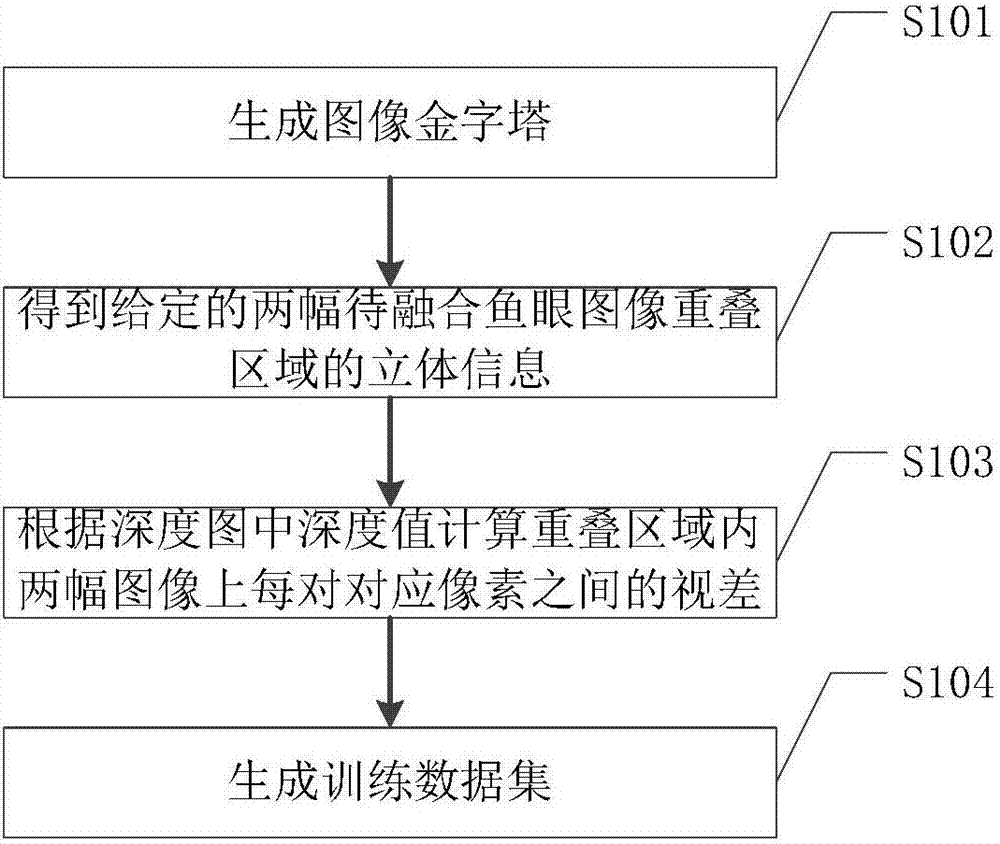

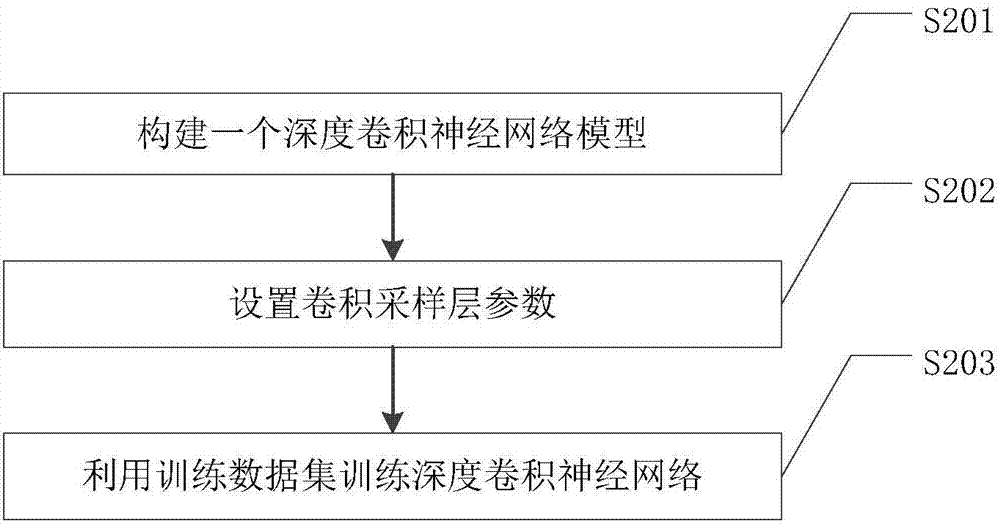

Panoramic image fusion method based on depth convolution neural network and depth information

Inactive | CN106934765A | Reduce ghosting; Implement automatic selection; Image enhancement; Image analysis; Data set; Semantic representation

The invention discloses a panoramic image fusion method based on a deep convolutional neural network and depth information. The method comprises: (S1) constructing a deep learning training data set by selecting, for training, the overlap regions xe1 and xe2 of two fisheye images to be fused and the ideal fusion region ye of the panoramic image formed by fusing them, and building a training set {xe1, xe2, ye} of pairs of image blocks to be fused and panoramic image blocks; (S2) constructing a convolutional neural network model; and (S3) obtaining the fusion region for a test data set using the trained deep convolutional neural network model. The method represents images more comprehensively and deeply, achieves semantic representations at multiple levels of abstraction, and improves the accuracy of image fusion.

Owner:CHANGSHA PANODUX TECH CO LTD

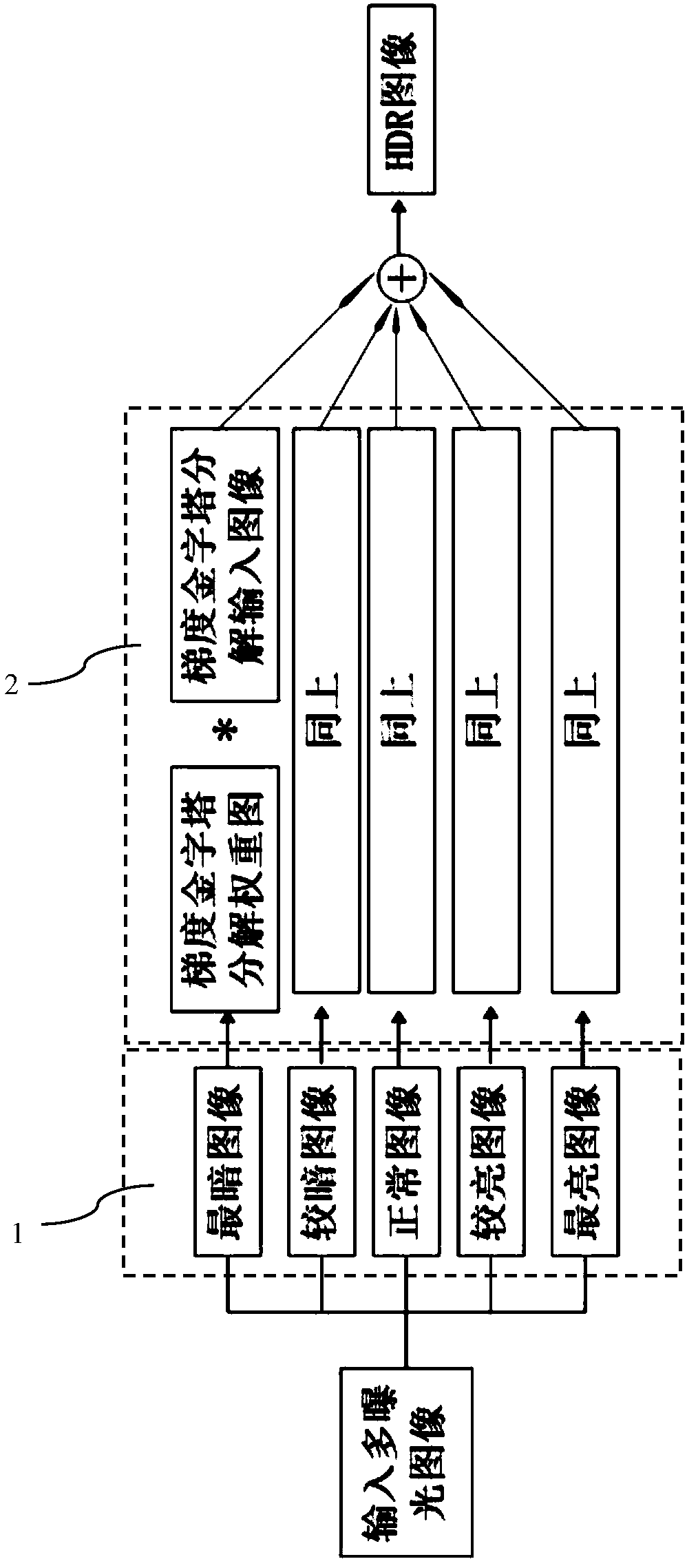

HDR image fusion method based on a plurality of LDR images with different exposure degrees

Inactive | CN107220956A | Improve direction; Enhance edge information; Image enhancement; Image analysis; Decomposition; Image fusion

The invention discloses an HDR image fusion method based on multiple LDR images with different exposures. The method comprises two steps: first, dividing the input LDR images into dark and bright regions and calculating a weight map for each image from the contrast, saturation, exposure, and brightness in each region; second, decomposing the images with a gradient pyramid and fusing them at multiple resolutions. Compared with the prior art, using a gradient pyramid instead of a Laplacian pyramid preserves more directional and edge information, which improves the resulting HDR image; and because brightness is included when computing the weights, the HDR image has vivid colors, high contrast, and clear details. The method is a useful reference for HDR imaging.

Owner:TIANJIN UNIV
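The weight-map step of the HDR method above can be illustrated with exposure-fusion-style per-pixel weights. A minimal sketch, assuming aligned RGB images with channels in [0, 1]; it uses only two of the four cues named in the abstract (saturation and well-exposedness, with a hypothetical `sigma`), skips the dark/bright region split, and blends at a single scale instead of with the patent's gradient pyramid.

```python
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]  # channel values in [0, 1]


def pixel_weight(px: RGB, sigma: float = 0.2) -> float:
    """Saturation times well-exposedness; a tiny epsilon avoids all-zero weights."""
    mean = sum(px) / 3
    saturation = math.sqrt(sum((ch - mean) ** 2 for ch in px) / 3)
    # Channels near mid-grey (0.5) count as well exposed.
    well_exposed = math.prod(math.exp(-((ch - 0.5) ** 2) / (2 * sigma ** 2)) for ch in px)
    return saturation * well_exposed + 1e-12


def fuse_exposures(images: List[List[List[RGB]]]) -> List[List[RGB]]:
    """Per-pixel normalised weighted average of differently exposed, aligned LDR images."""
    h, w = len(images[0]), len(images[0][0])
    fused = []
    for r in range(h):
        row = []
        for c in range(w):
            pixels = [img[r][c] for img in images]
            weights = [pixel_weight(p) for p in pixels]
            total = sum(weights)
            row.append(tuple(sum(wt * p[k] for wt, p in zip(weights, pixels)) / total
                             for k in range(3)))
        fused.append(row)
    return fused
```

Single-scale blending like this can leave visible transitions where the weights change abruptly, which is the problem multi-resolution blending (the gradient pyramid in the patent) is meant to solve.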

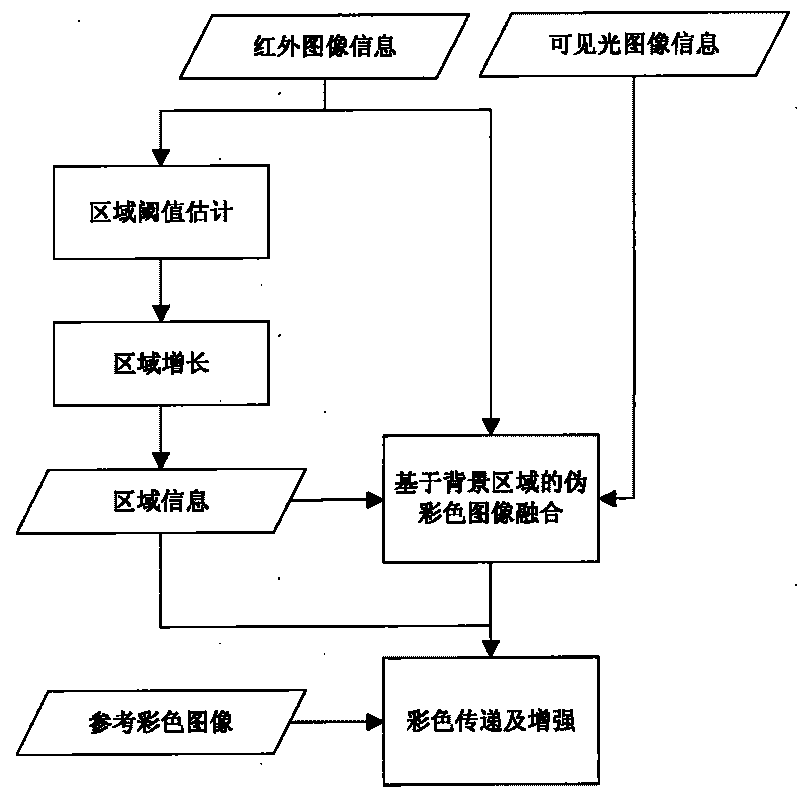

Infrared and visual pseudo-color image fusion and enhancement method

Active | CN101714251A | Multi-region feature information; Improve color quality; Image enhancement; Image analysis; Color image; Background information

The invention relates to an infrared and visible-light pseudo-color image fusion and enhancement method. The method first partitions the infrared image into three kinds of regions (background, target, and cold target) and preserves their characteristic information; it then performs pseudo-color fusion of the infrared and visible images in the YUV color space to obtain a pseudo-color fused image; finally, it applies color transfer and color enhancement using a given color reference image to obtain the final fused image. The final fused images carry more regional feature information, and their color quality is maximized while the system remains real-time.

Owner:SHANGHAI UNIVERSITY OF ELECTRIC POWER +1

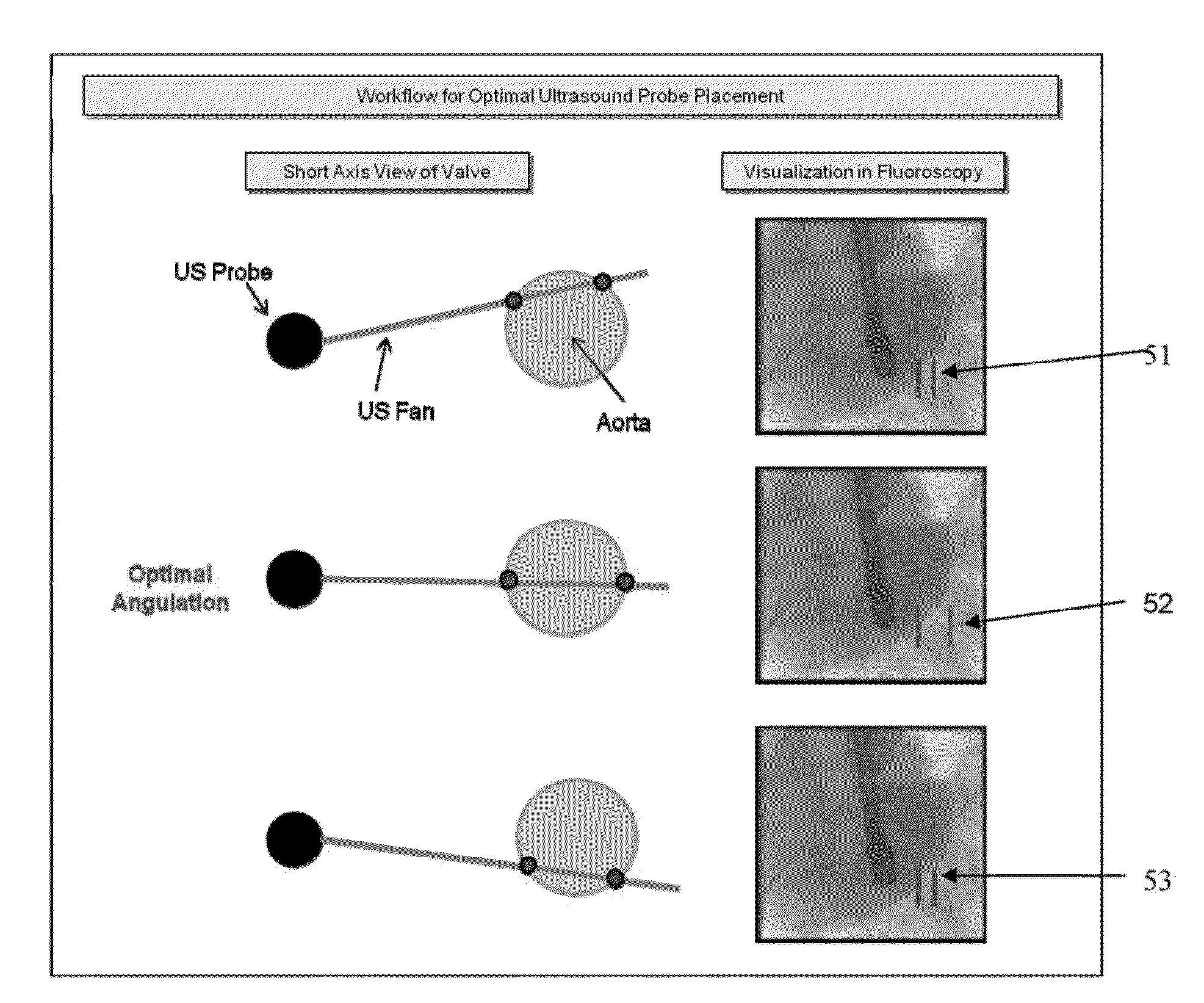

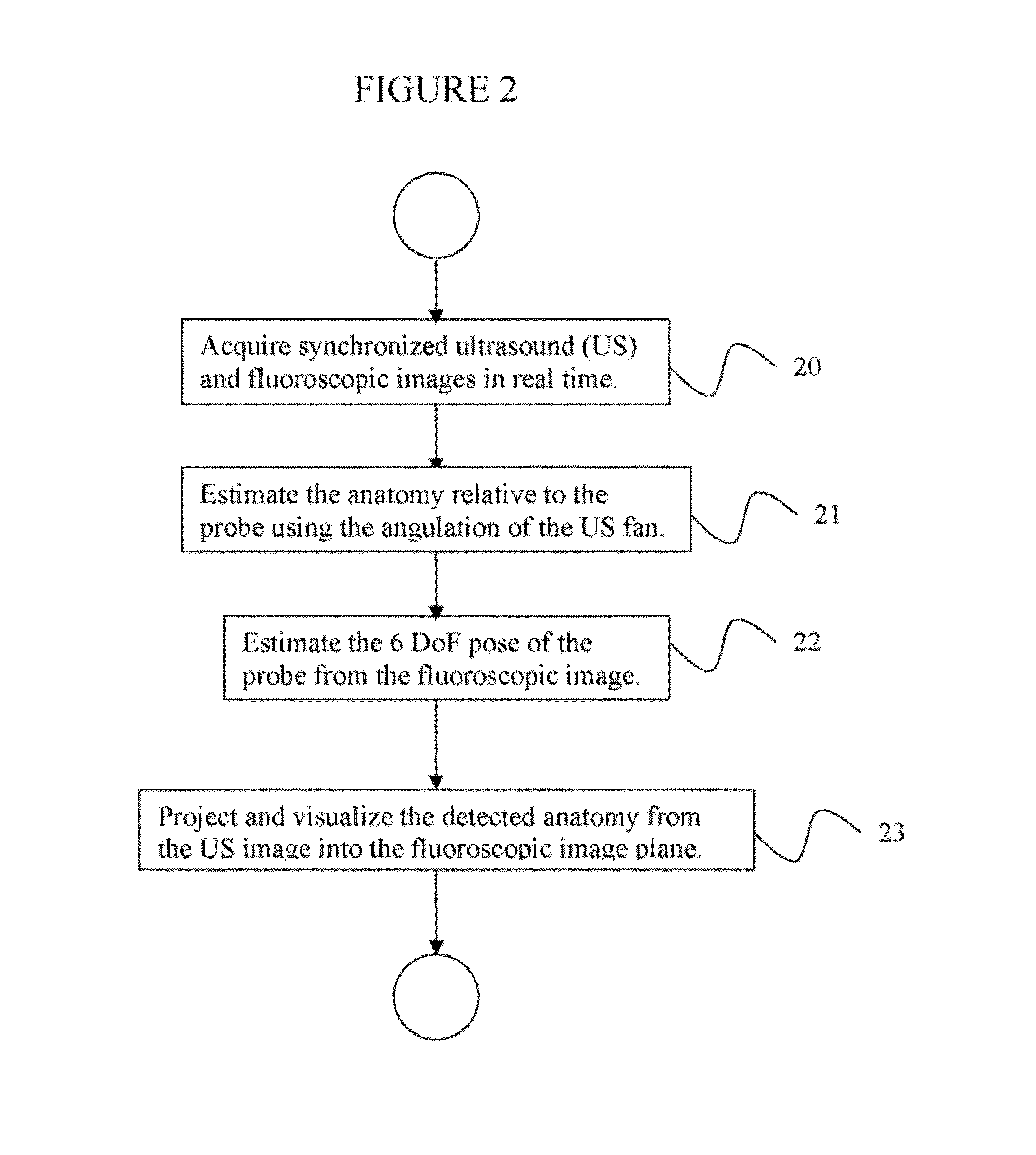

Image fusion for interventional guidance

Active | US20130259341A1 | Minimizing mapping error; Operation is required; Ultrasonic/sonic/infrasonic diagnostics; Image enhancement; Fluoroscopic image; Radiology

A method for real-time fusion of a 2D cardiac ultrasound (US) image with a 2D cardiac fluoroscopic image includes acquiring real-time synchronized US and fluoroscopic images, detecting a surface contour of an aortic valve in the 2D cardiac US image relative to a US probe, detecting the pose of the US probe in the 2D cardiac fluoroscopic image, and using the pose parameters of the US probe to transform the aortic valve contour from the 2D cardiac US image to the 2D cardiac fluoroscopic image.

Owner:SIEMENS HEALTHCARE GMBH

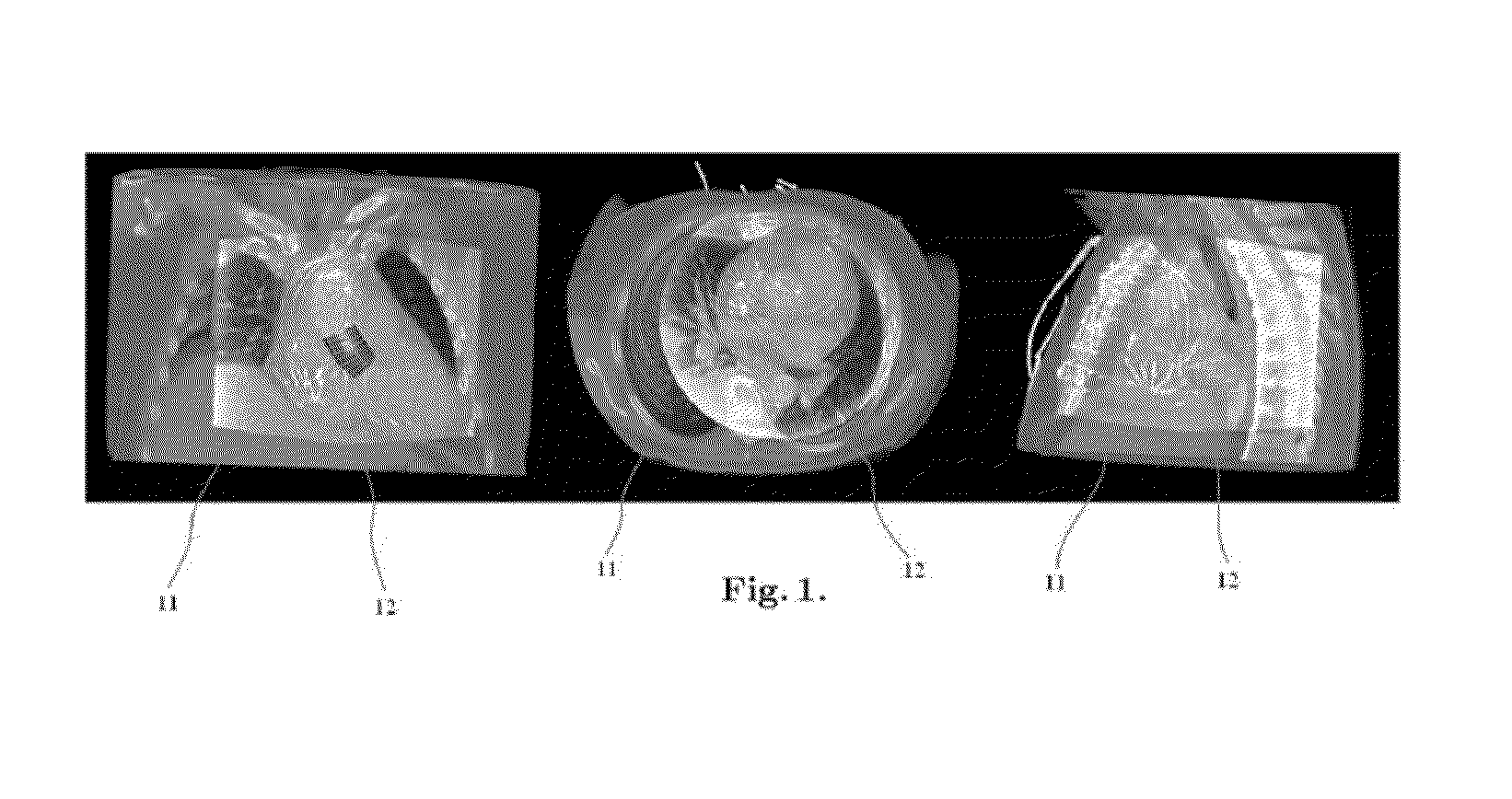

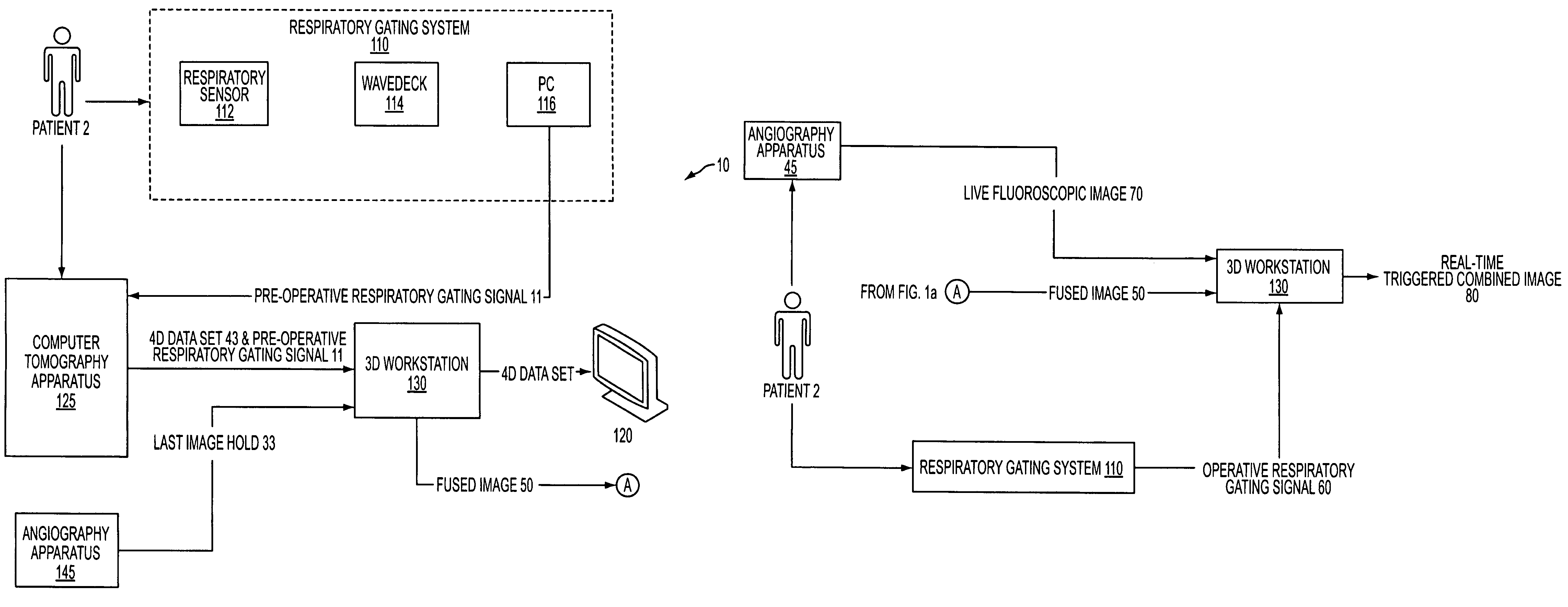

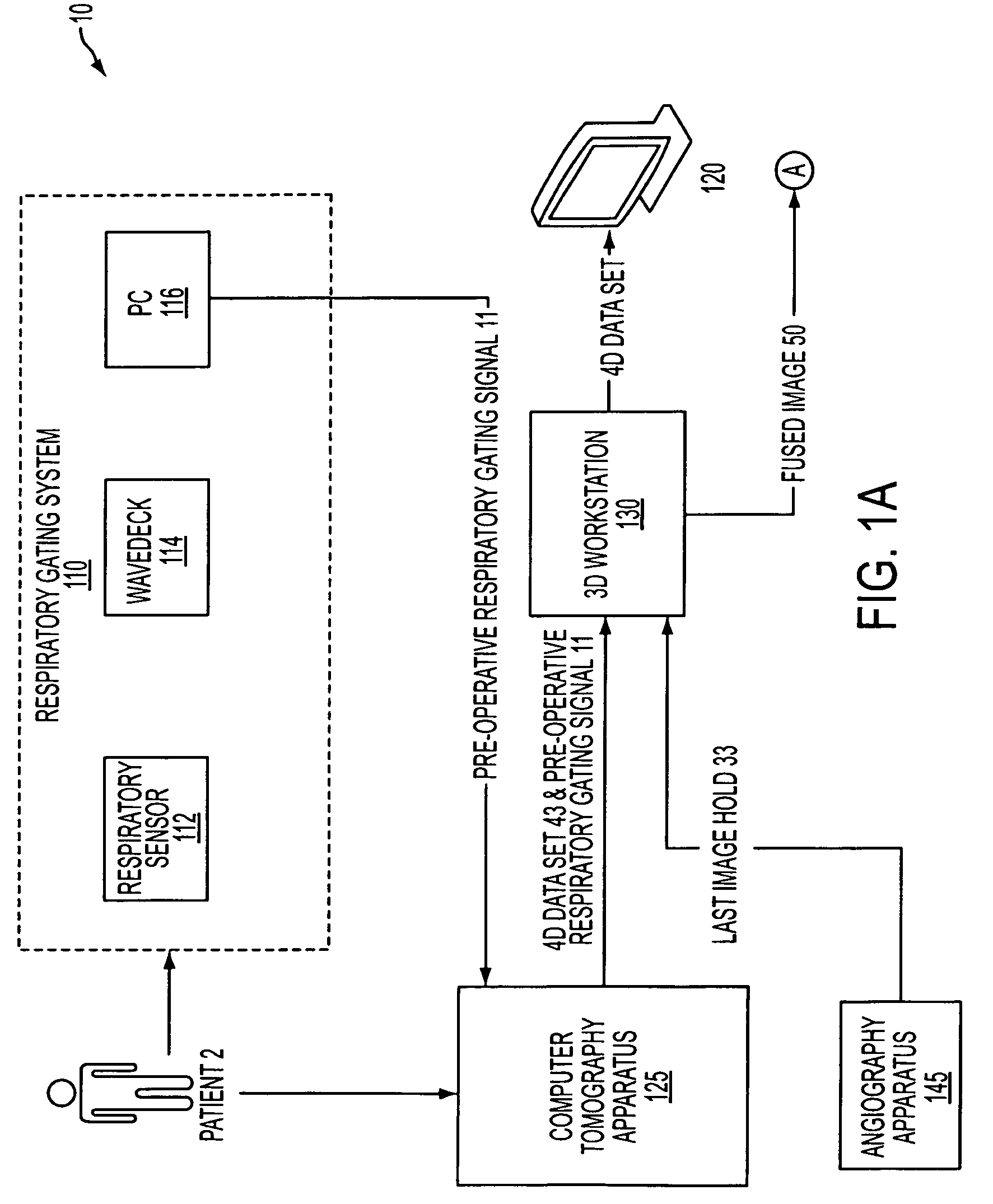

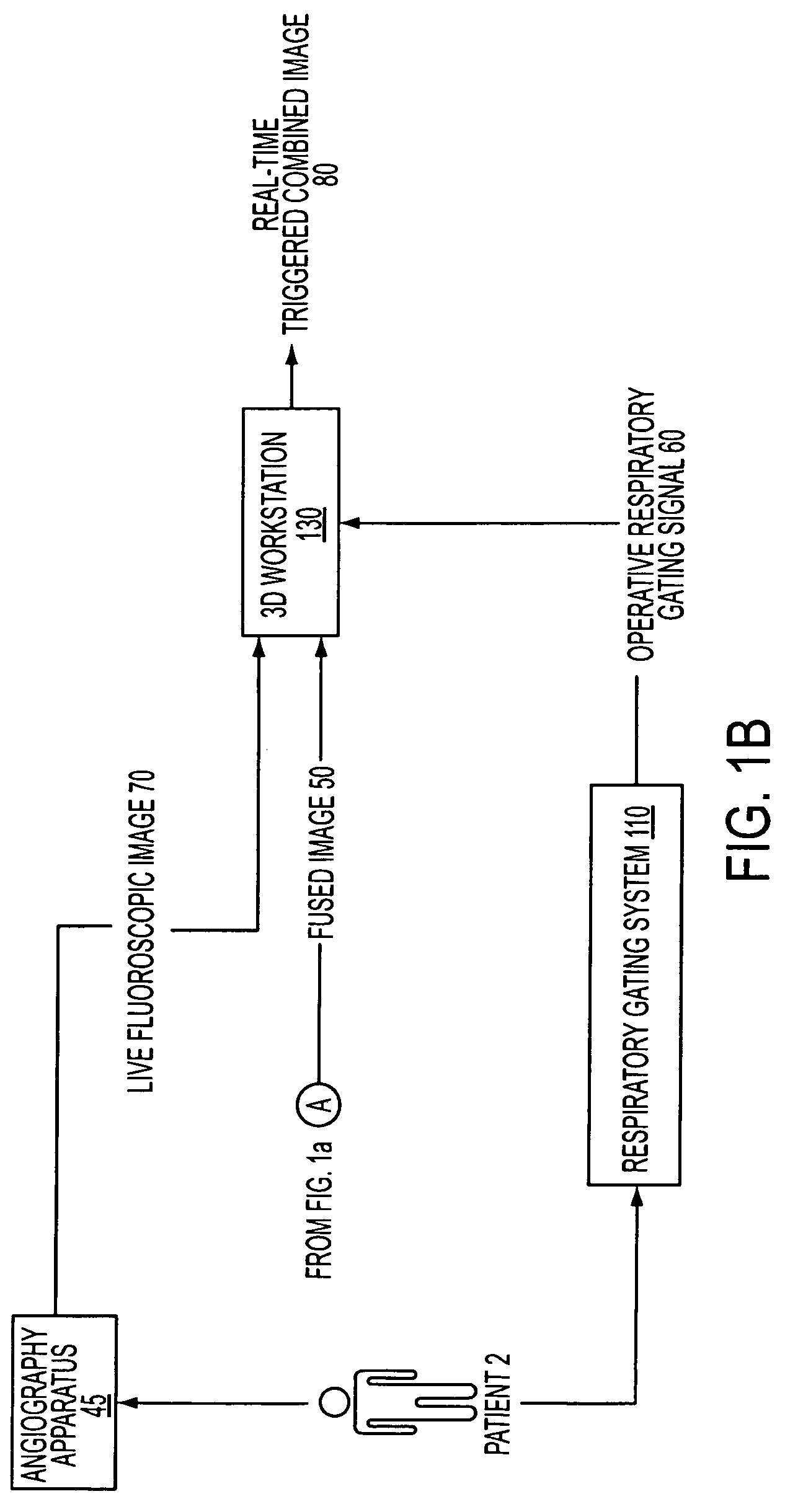

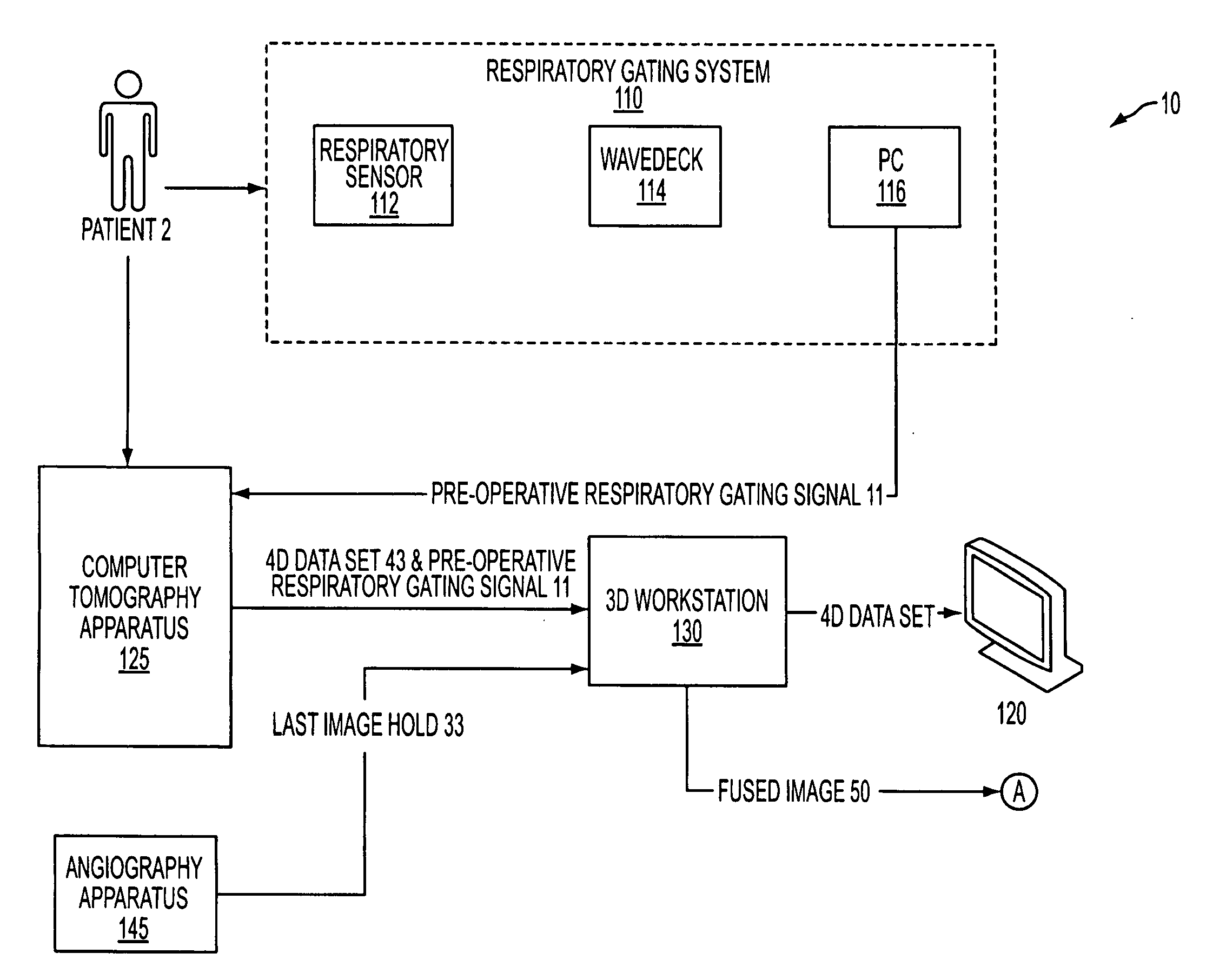

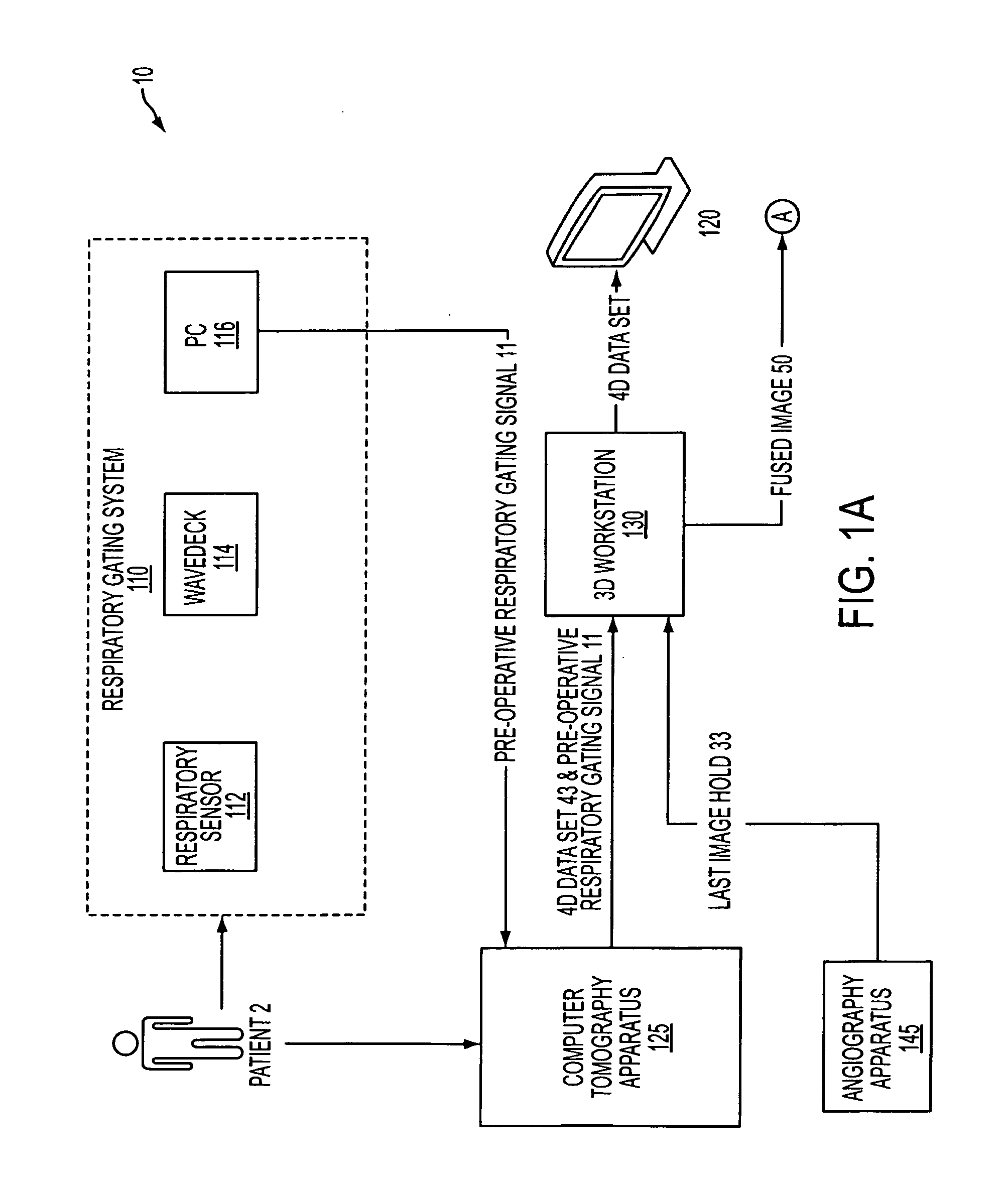

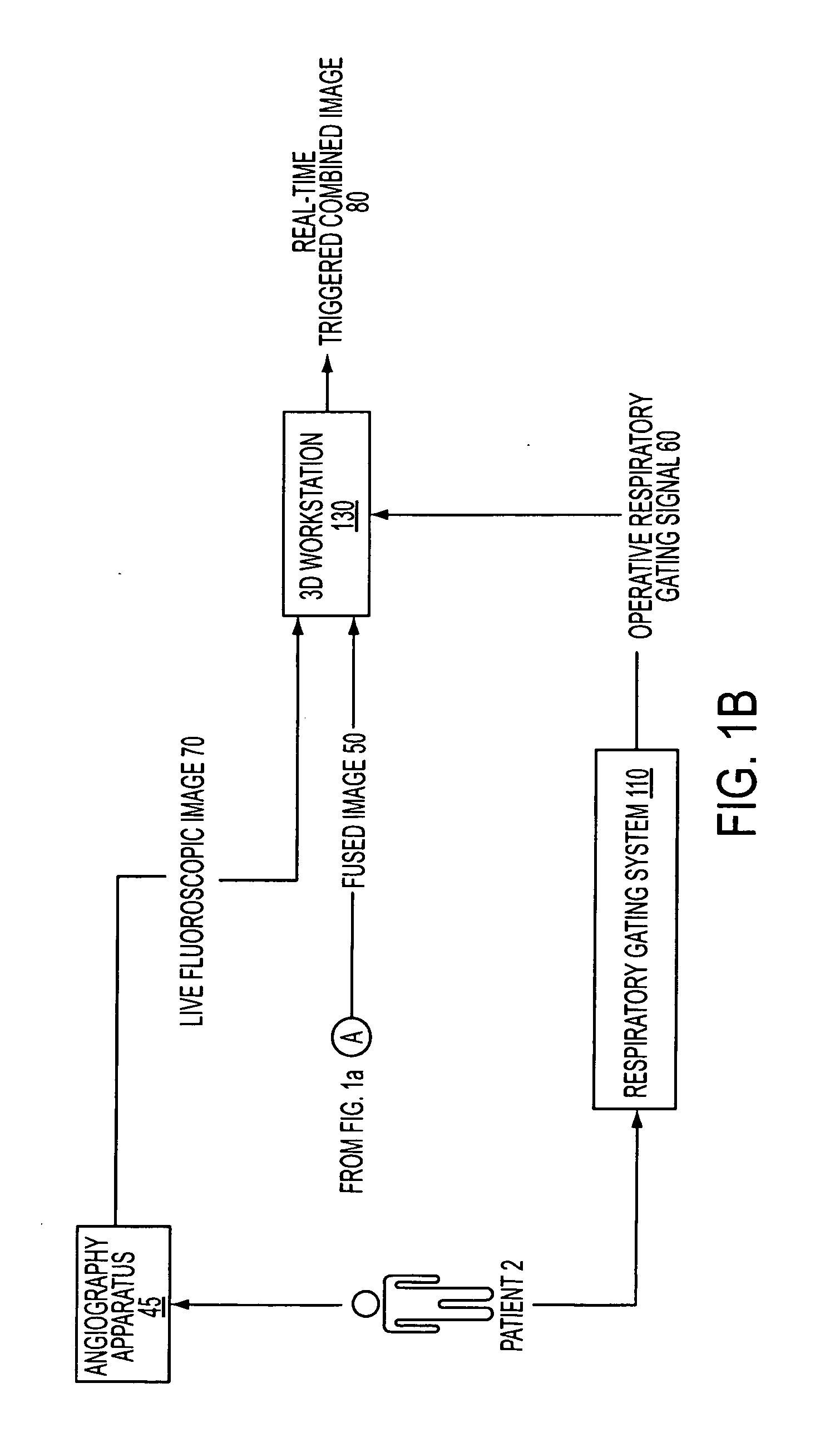

Respiratory gated image fusion of computed tomography 3D images and live fluoroscopy images

Inactive | US7467007B2 | Efficient and safe intervention; Reduce negative impact; Diagnostic recording/measuring; Tomography; Human body; Diagnostic Radiology Modality

A system and method are provided for improved real-time image guidance that uniquely combines the capabilities of two well-known imaging modalities, anatomical 3D data from computed tomography (CT) and real-time live fluoroscopy images from an angiography/fluoroscopy system, to enable more efficient and safer interventions such as punctures, drainages, and biopsies in the abdominal and thoracic regions of the human body.

Owner:SIEMENS HEALTHCARE GMBH

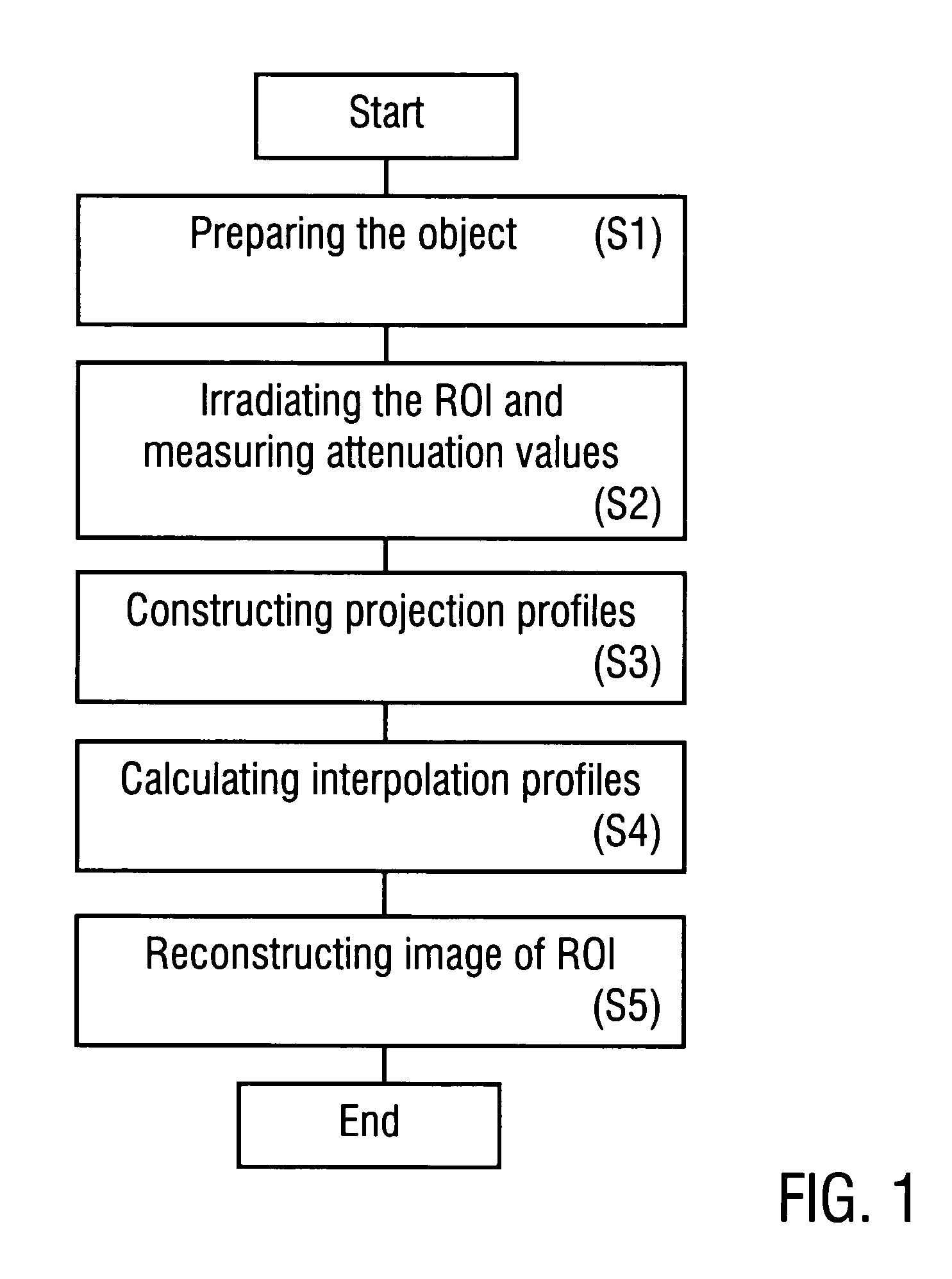

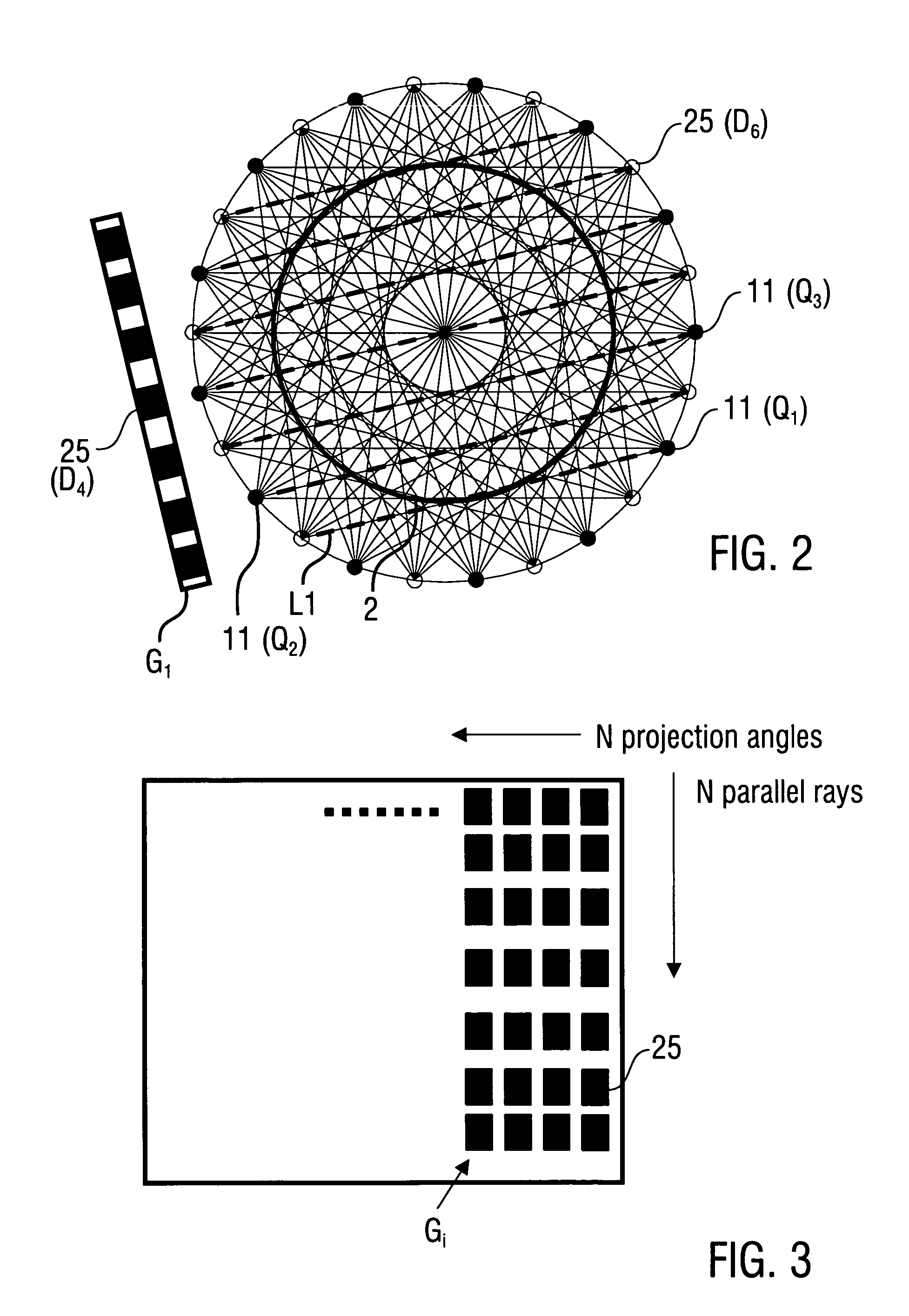

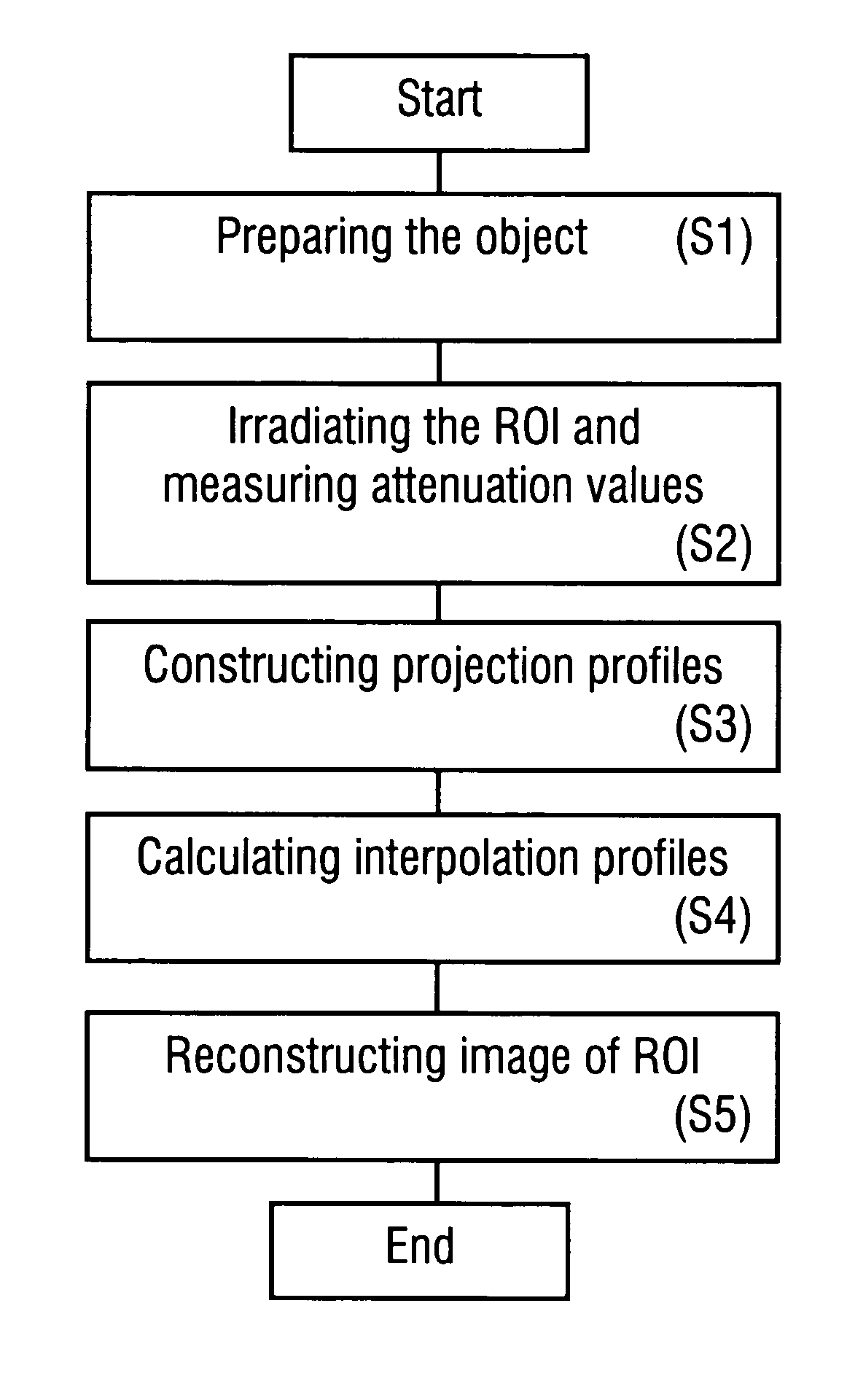

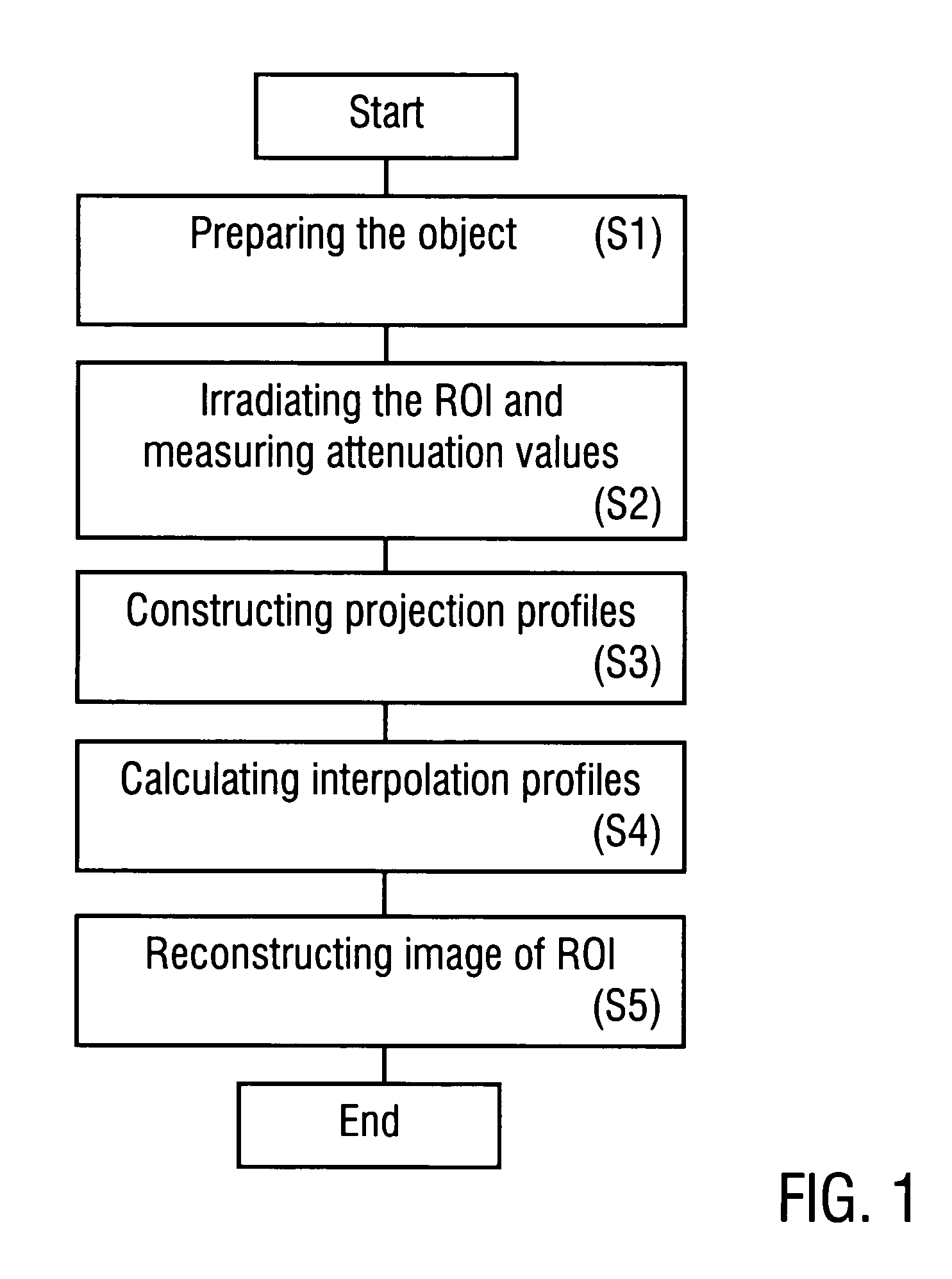

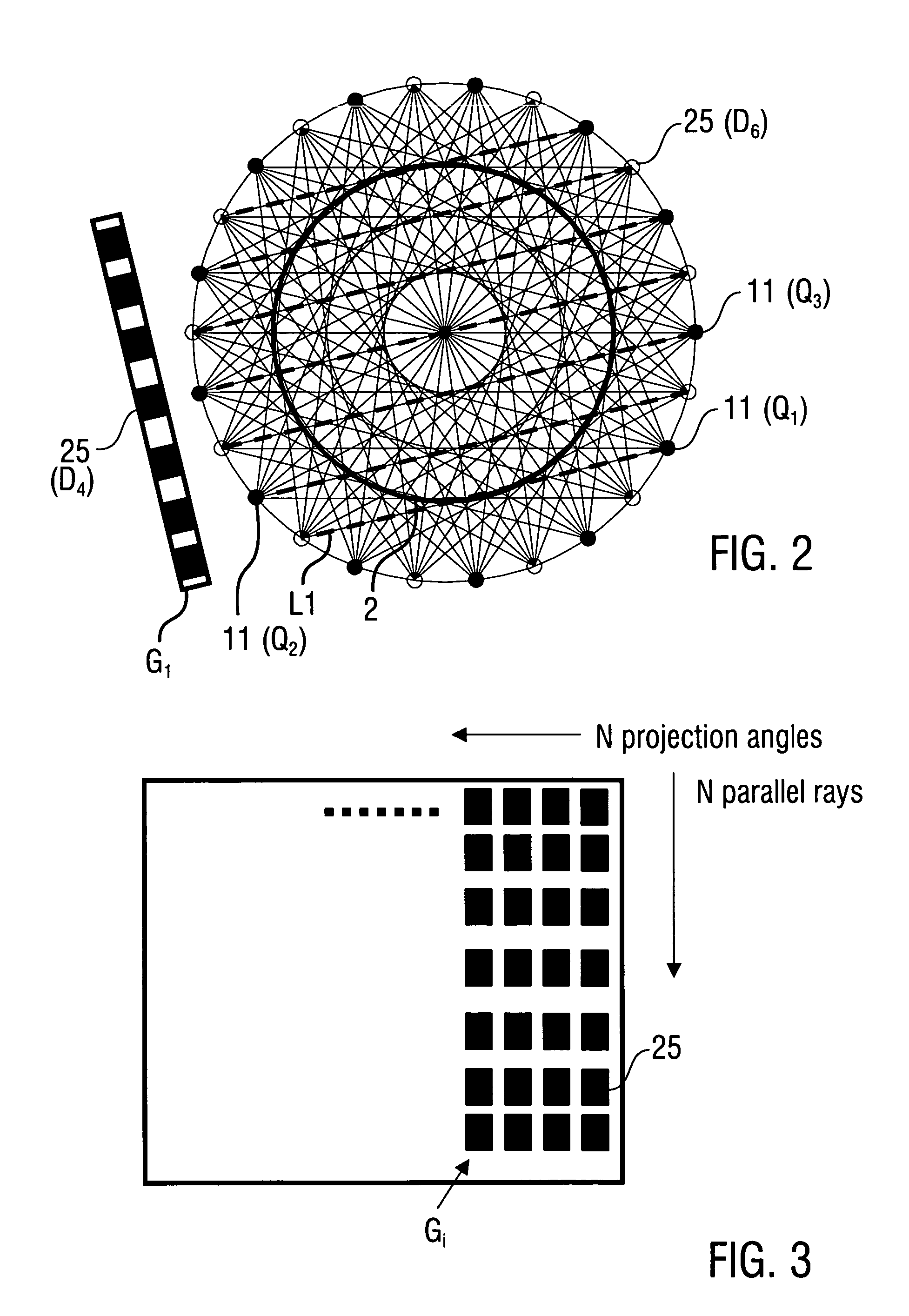

Method of reconstructing an image function from Radon data

Inactive | US20090297009A1 | Increase the scope of application; Improve imaging resolution; Reconstruction from projection; Material analysis using wave/particle radiation; Reconstruction algorithm; Image fusion

A method of reconstructing an image function on the basis of a plurality of projection profiles corresponding to a plurality of projection directions through a region of investigation, each projection profile including a series of value positions, wherein measured projection values corresponding to projection lines parallel to the respective projection direction are assigned to respective value positions and a plurality of remaining value positions are empty, comprises the steps of (a) assigning first interpolation values to the empty value positions for constructing a plurality of interpolation profiles on the basis of the projection profiles, wherein the first interpolation values are obtained from a first interpolation within a group of measured projection values having the same value position in different projection profiles, and (b) determining the image function by applying a predetermined reconstruction algorithm on the interpolation profiles.

Owner:UNIVERSITY OF OREGON +1
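Step (a) of the reconstruction method above fills empty value positions by interpolating among measured values that share the same value position in different projection profiles. A minimal sketch, assuming linear interpolation between the nearest measured profiles, profiles indexed in order of projection direction, no wrap-around between the last and first direction, and nearest-value extension at the ends; the abstract says only "a first interpolation", so these choices are assumptions. Step (b), the reconstruction algorithm, is not shown.

```python
from typing import List, Optional

Profile = List[Optional[float]]  # None marks an empty value position


def fill_across_profiles(profiles: List[Profile]) -> List[Profile]:
    """Fill empty entries by interpolating, at the same value position j,
    between measured values in neighbouring projection profiles.

    profiles[k][j] is value position j of the profile for projection direction k.
    """
    n_dirs, n_pos = len(profiles), len(profiles[0])
    filled = [list(p) for p in profiles]
    for j in range(n_pos):
        measured = [k for k in range(n_dirs) if profiles[k][j] is not None]
        if not measured:
            continue  # no measurement at this position: leave it empty
        for k in range(n_dirs):
            if profiles[k][j] is not None:
                continue
            lower = [m for m in measured if m < k]
            upper = [m for m in measured if m > k]
            if lower and upper:
                k0, k1 = lower[-1], upper[0]
                t = (k - k0) / (k1 - k0)
                filled[k][j] = (1 - t) * profiles[k0][j] + t * profiles[k1][j]
            else:
                nearest = lower[-1] if lower else upper[0]
                filled[k][j] = profiles[nearest][j]
    return filled
```

Because parallel-beam projection directions are periodic over 180 degrees, a fuller implementation might interpolate across the wrap-around as well.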

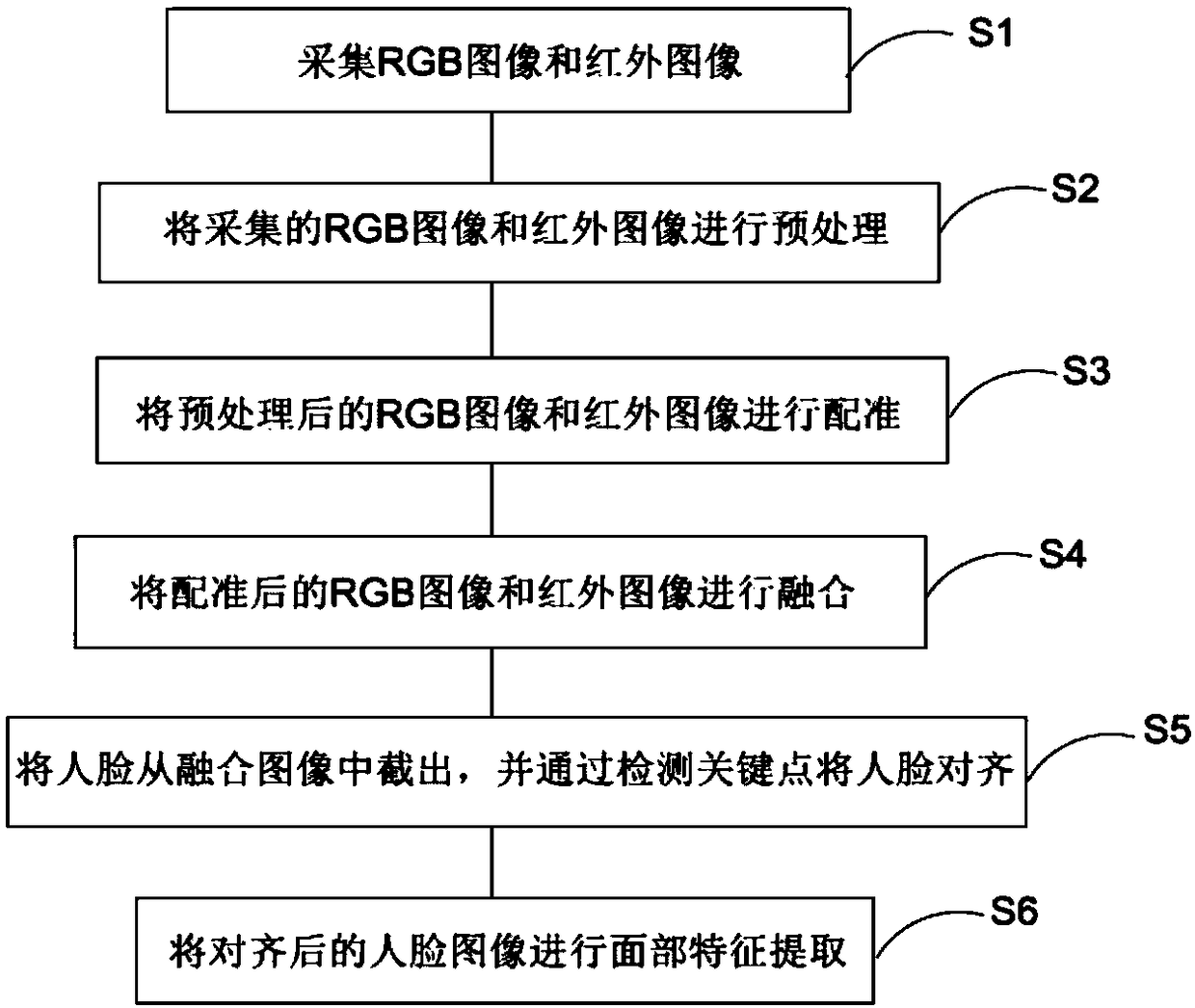

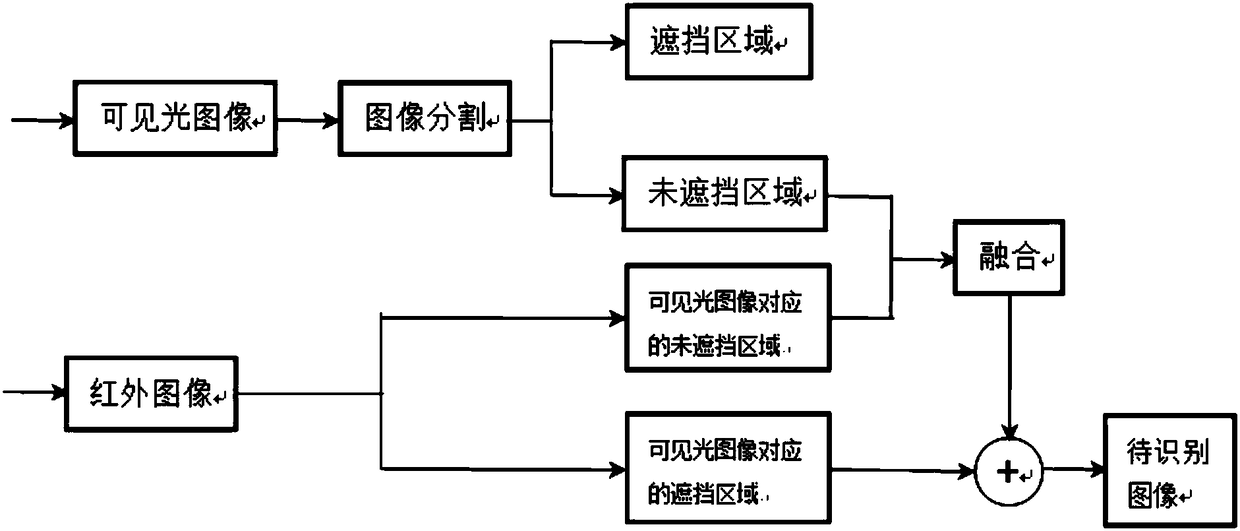

Face recognition method and device based on multispectral fusion

Inactive | CN108090477A | Prevent the impact of collection; Avoid influence; Character and pattern recognition; Feature extraction; RGB image

The invention discloses a face recognition method and device based on multispectral fusion. The method comprises: preprocessing collected RGB images and infrared images; registering the preprocessed RGB and infrared images; fusing the preprocessed RGB and infrared images; cropping faces from the fused images and aligning them using detected key points; and extracting facial features from the aligned faces. By fusing infrared and visible-light captures, the method combines the advantages of both: it avoids the degradation of visible-light capture in dim light and under occlusion, and it overcomes the weaknesses of infrared capture, which is easily affected by ambient temperature and cannot easily see through glass objects such as eyeglasses, which then appear as dark shadows and lower image quality. Face recognition accuracy under special conditions such as dim light and occlusion is thereby improved.

Owner:BEIJING EASY AI TECHNOLOGY CO LTD

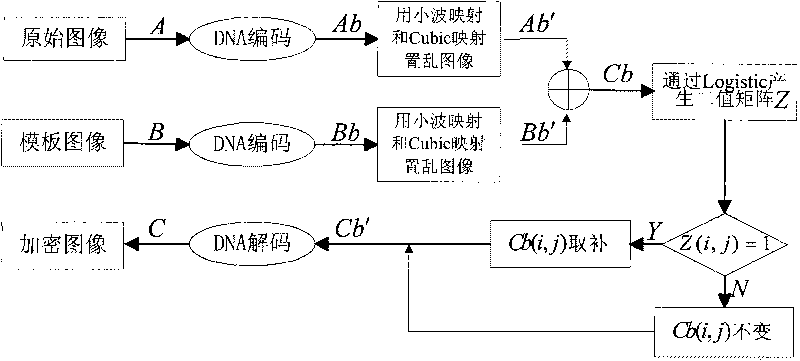

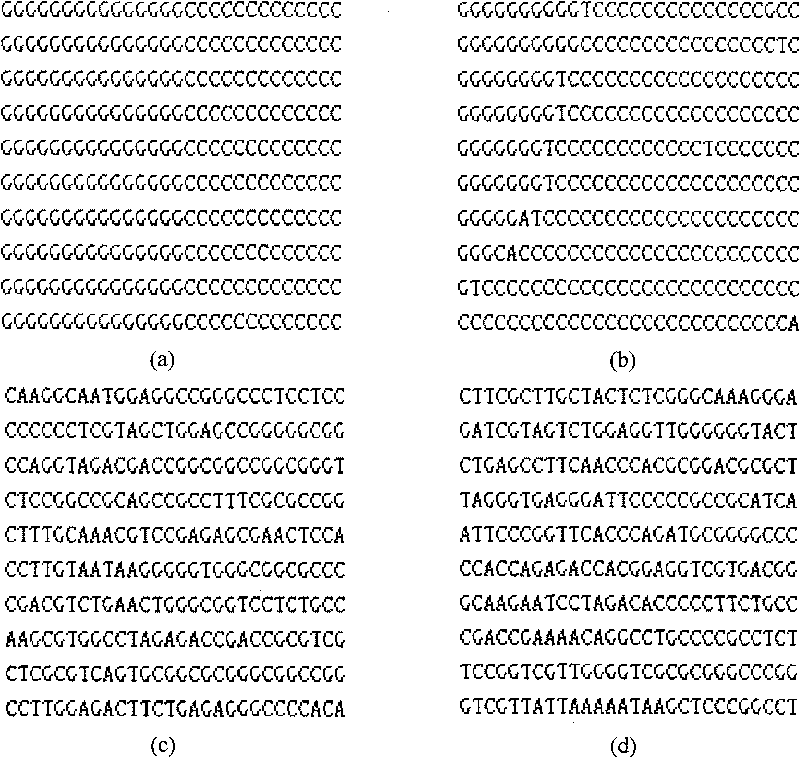

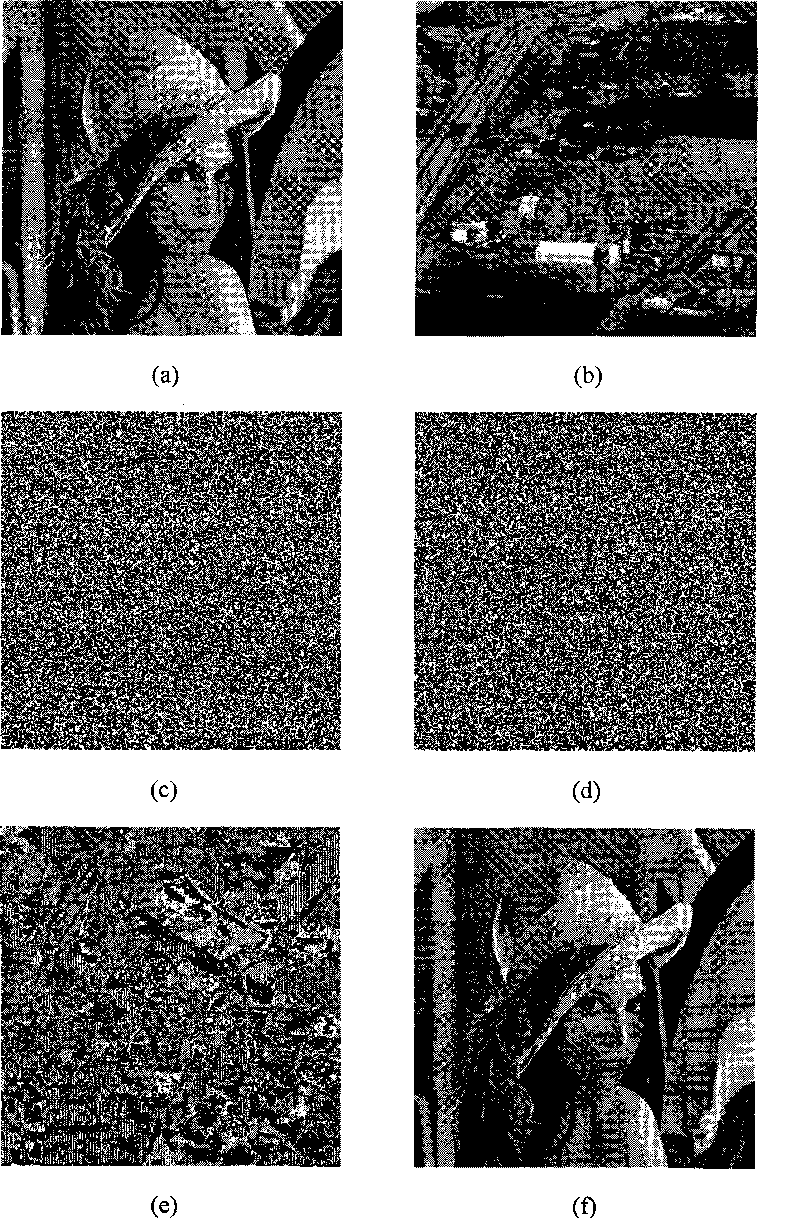

Image fusion encryption method based on DNA sequences and multiple chaotic mappings

Active | CN101706947A | Good encryption; Large key space; Genetic models; Chaos models; Chaotic systems; Image fusion

The invention discloses an image fusion encryption algorithm based on DNA sequences and multiple chaotic maps, in the fields of DNA computing and image encryption. Traditional chaos-based encryption algorithms have drawbacks such as a small key space and chaotic systems that are easy to analyze and predict, while existing image-fusion-based encryption methods have fusion parameters that are hard to control and offer low security. To overcome these drawbacks, the invention first scrambles and encodes the original image and a template image, using two-dimensional chaotic sequences generated with the Cubic map and a wavelet function, to obtain two DNA sequence matrices; it then adds the two scrambled DNA sequence matrices; and finally it combines the chaotic sequence generated by the Logistic map with the summed DNA sequence matrix to obtain the encrypted image. Experimental results show that the algorithm encrypts digital images effectively and offers higher security.

Owner:DALIAN UNIV
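The DNA-coding and chaotic-keystream ideas in the scheme above can be sketched as follows. This is a simplified illustration, not the patented algorithm: it uses one assumed base-coding rule, a single Logistic-map keystream with hypothetical parameters `x0` and `mu`, and DNA addition realised as addition modulo 4 on the 2-bit base codes. The Cubic-map scrambling and the template image are omitted, and the sketch is not a vetted cipher.

```python
from typing import List

BASES = "ACGT"  # assumed coding rule: A=00, C=01, G=10, T=11


def byte_to_dna(value: int) -> List[int]:
    """Split a byte into four 2-bit base codes, most significant first."""
    return [(value >> shift) & 0b11 for shift in (6, 4, 2, 0)]


def dna_to_byte(codes: List[int]) -> int:
    """Reassemble four 2-bit base codes into a byte."""
    value = 0
    for code in codes:
        value = (value << 2) | code
    return value


def to_strand(codes: List[int]) -> str:
    return "".join(BASES[code] for code in codes)


def logistic_keystream(x0: float, mu: float, n: int, burn_in: int = 100) -> List[int]:
    """Base codes 0..3 from the Logistic map x <- mu * x * (1 - x)."""
    x = x0
    for _ in range(burn_in):  # discard the transient
        x = mu * x * (1 - x)
    stream = []
    for _ in range(n):
        x = mu * x * (1 - x)
        stream.append(int(x * 4) % 4)
    return stream


def dna_add_cipher(pixels: List[int], x0: float, mu: float, decrypt: bool = False) -> List[int]:
    """DNA addition (mod 4 on base codes) with a chaotic key; decryption subtracts it."""
    codes = [code for p in pixels for code in byte_to_dna(p)]
    key = logistic_keystream(x0, mu, len(codes))
    sign = -1 if decrypt else 1
    mixed = [(code + sign * k) % 4 for code, k in zip(codes, key)]
    return [dna_to_byte(mixed[i:i + 4]) for i in range(0, len(mixed), 4)]
```

Decryption regenerates the same keystream from the same `x0` and `mu`, so those two values act as the key; the Logistic map's sensitivity to them is what chaos-based schemes rely on.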

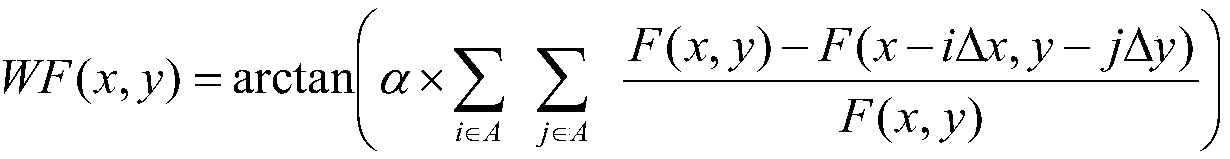

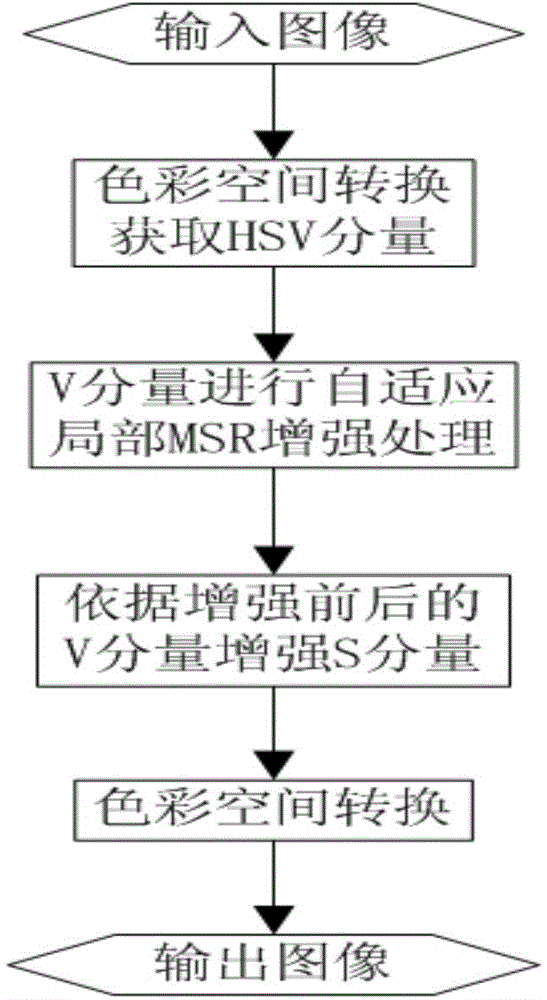

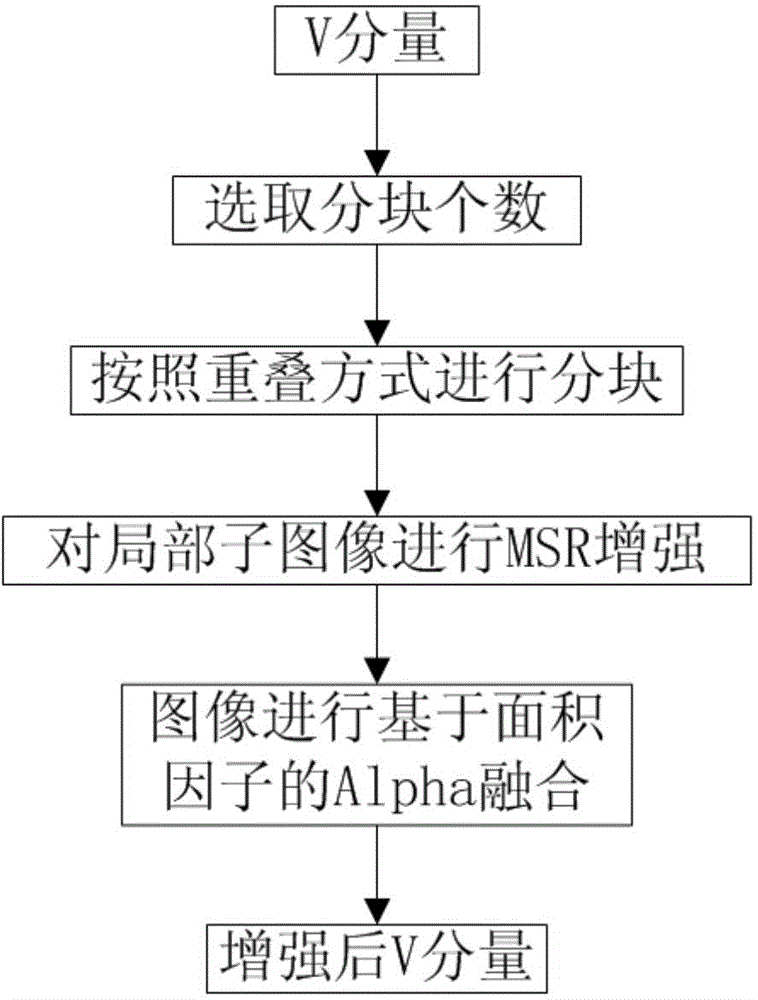

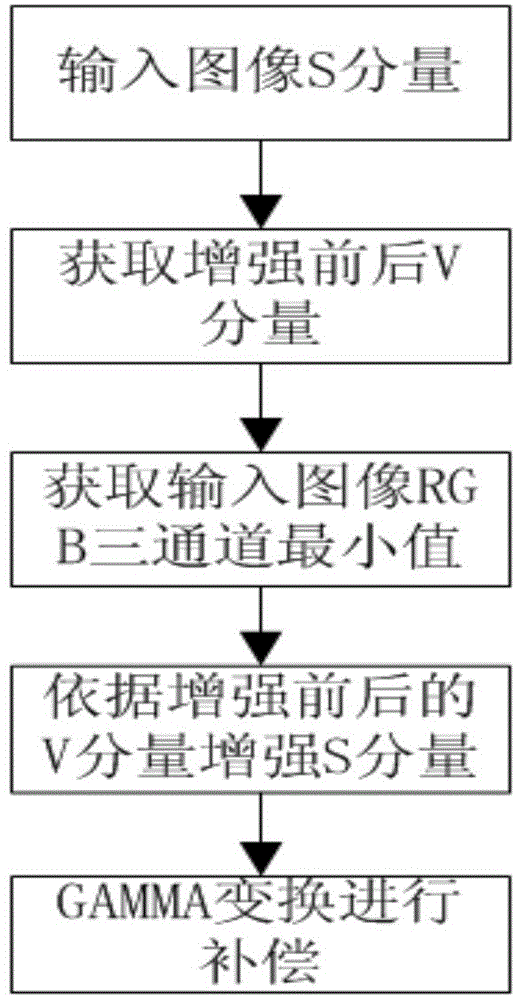

Local Retinex enhancement algorithm based on HSV color spaces

Active | CN104537615A | Does not affect offset; Increase contrast; Image enhancement; Image contrast; Contrast enhancement

The invention discloses a local Retinex dehazing algorithm based on the HSV color space, used to enhance foggy-weather images in which the fog is unevenly distributed. The method includes the following steps: A, processing the multi-layered foggy image in different color spaces to enhance contrast and improve clarity; B, obtaining the image size, adaptively determining the sub-block size, partitioning the luminance image of the foggy image into sub-images, and enhancing each sub-image of the luminance component to improve clarity; C, calculating area proportion factors for all pixels in the overlapping sub-images and fusing the image by a weighted summation of the overlapping sub-images; D, processing the saturation component of the image to restore its color. The algorithm restores scene details at different levels clearly and quickly, improves the visual quality of the image, and is suitable for aerial photography systems in different regions.

Owner:DALIAN UNIV OF TECH

Method of reconstructing an image function from Radon data

Inactive | US8094910B2 | Improve imaging resolution; Simple method; Reconstruction from projection; Material analysis using wave/particle radiation; Reconstruction algorithm; Image fusion

A method of reconstructing an image function on the basis of a plurality of projection profiles corresponding to a plurality of projection directions through a region of investigation, each projection profile including a series of value positions, wherein measured projection values corresponding to projection lines parallel to the respective projection direction are assigned to respective value positions and a plurality of remaining value positions are empty, comprises the steps of (a) assigning first interpolation values to the empty value positions for constructing a plurality of interpolation profiles on the basis of the projection profiles, wherein the first interpolation values are obtained from a first interpolation within a group of measured projection values having the same value position in different projection profiles, and (b) determining the image function by applying a predetermined reconstruction algorithm on the interpolation profiles.

Owner:UNIVERSITY OF OREGON +1

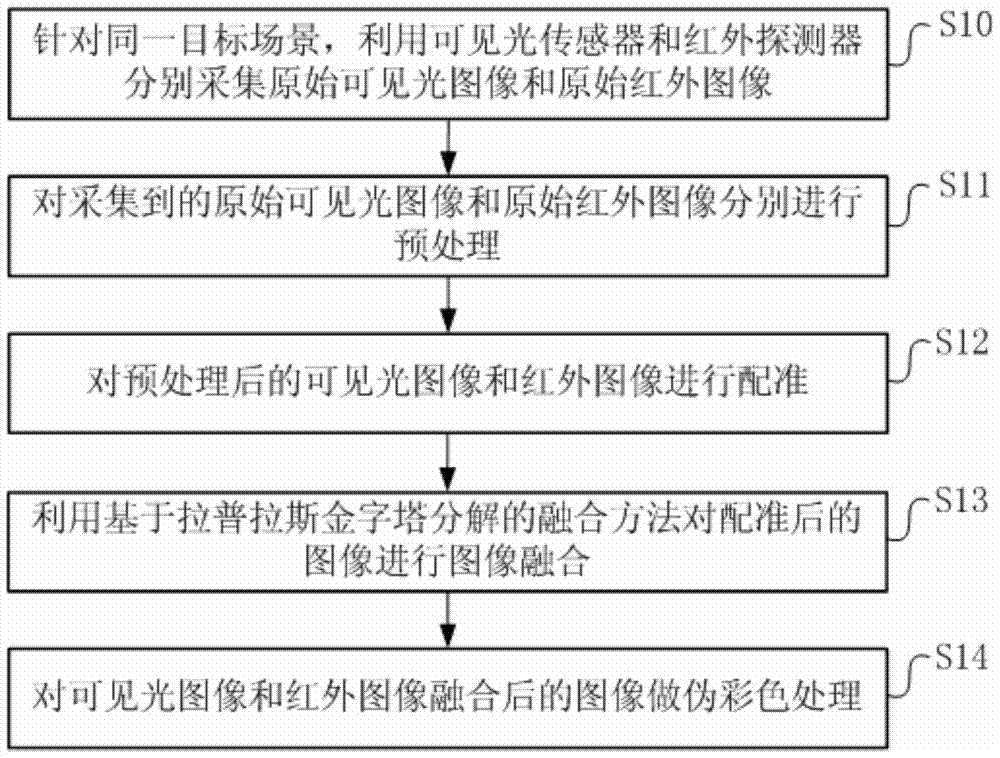

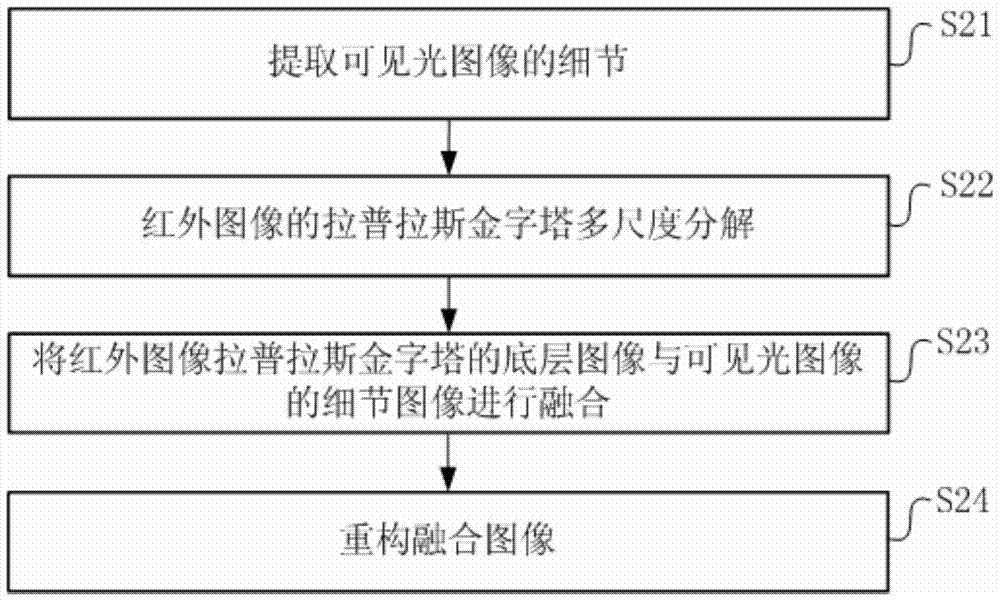

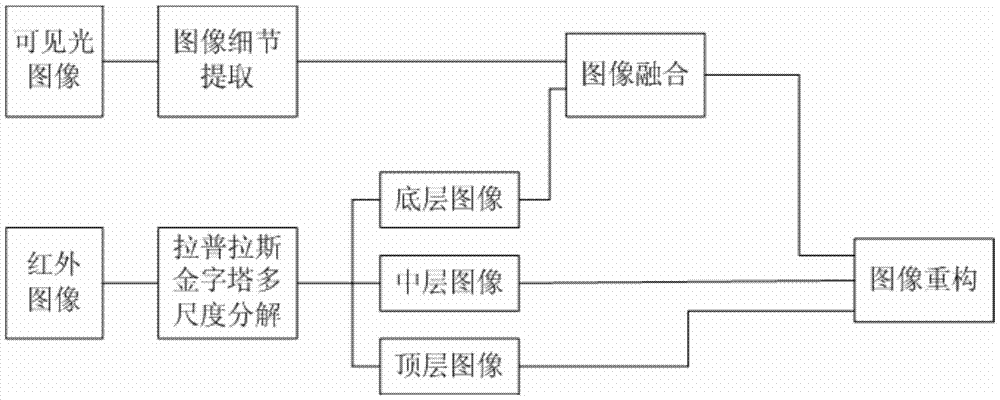

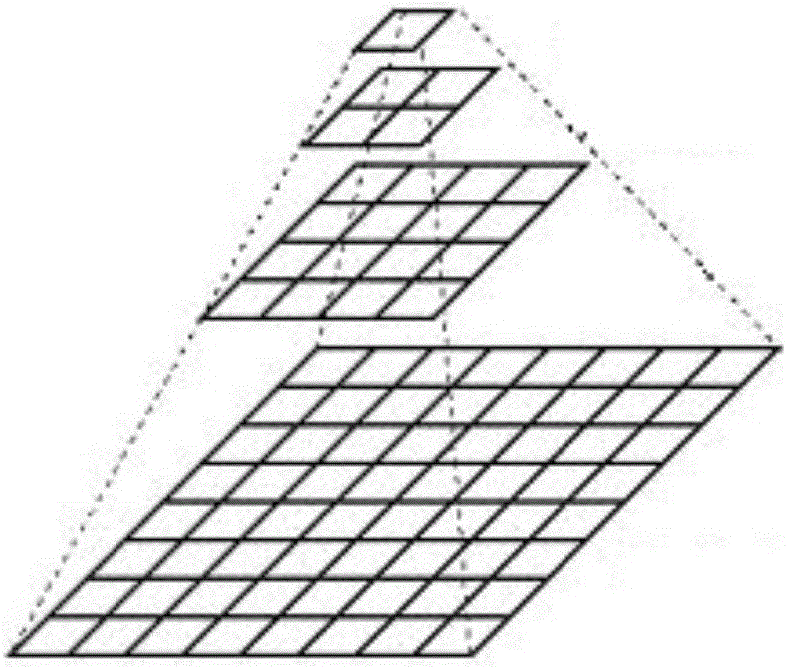

Visible light and infrared double wave band image fusion enhancing method

Inactive | CN106960428A | Strong complementarity; Compensate for visual quality effects; Image enhancement; Image resolution; Image fusion

The invention provides a visible-light and infrared dual-band image fusion enhancement method comprising the following steps: a) acquiring an original visible-light image and an original infrared image of the same target scene with a visible-light sensor and an infrared detector, respectively; b) preprocessing the two acquired images; c) registering the preprocessed images so that the imaging fields of view of the visible-light sensor and the infrared detector coincide; and d) fusing the registered images with a fusion method based on Laplacian pyramid decomposition. The method compensates for the effect of the detector's limited resolution on the visual quality of the output images, simplifies the fusion rule, and greatly increases fusion speed while preserving the quality of the fused images.

Owner:ZHEJIANG DALI TECH
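Step d) of the dual-band method above fuses the registered images by Laplacian pyramid decomposition. A minimal sketch, assuming grayscale images whose sides are divisible by `2 ** levels`, a 2x2 box filter with nearest-neighbour expansion in place of the usual Gaussian reduce/expand filters, and a common fusion rule (largest-magnitude detail, averaged base); the patent's own simplified fusion rule is not described in the abstract.

```python
from typing import List

Image = List[List[float]]


def downsample(img: Image) -> Image:
    """Halve each side by averaging 2x2 blocks (a box filter stands in for a Gaussian)."""
    return [[(img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4
             for c in range(0, len(img[0]), 2)]
            for r in range(0, len(img), 2)]


def upsample(img: Image) -> Image:
    """Double each side by nearest-neighbour repetition."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.extend([wide, list(wide)])
    return out


def laplacian_pyramid(img: Image, levels: int) -> List[Image]:
    """Detail levels (image minus expanded coarser image), then the coarsest image."""
    pyramid, current = [], img
    for _ in range(levels):
        smaller = downsample(current)
        expanded = upsample(smaller)
        pyramid.append([[a - b for a, b in zip(ra, rb)] for ra, rb in zip(current, expanded)])
        current = smaller
    pyramid.append(current)
    return pyramid


def reconstruct(pyramid: List[Image]) -> Image:
    """Invert laplacian_pyramid by expanding and adding the details back, coarse to fine."""
    current = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        expanded = upsample(current)
        current = [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(detail, expanded)]
    return current


def fuse_laplacian(img_a: Image, img_b: Image, levels: int = 2) -> Image:
    """Max-magnitude rule on detail levels, average on the coarsest level."""
    pa, pb = laplacian_pyramid(img_a, levels), laplacian_pyramid(img_b, levels)
    fused = [[[x if abs(x) >= abs(y) else y for x, y in zip(ra, rb)]
              for ra, rb in zip(la, lb)]
             for la, lb in zip(pa[:-1], pb[:-1])]
    base = [[(x + y) / 2 for x, y in zip(ra, rb)] for ra, rb in zip(pa[-1], pb[-1])]
    return reconstruct(fused + [base])
```

Selecting details per level lets sharp visible-light texture and strong infrared contrast survive together, while averaging only the coarsest level keeps overall brightness balanced.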

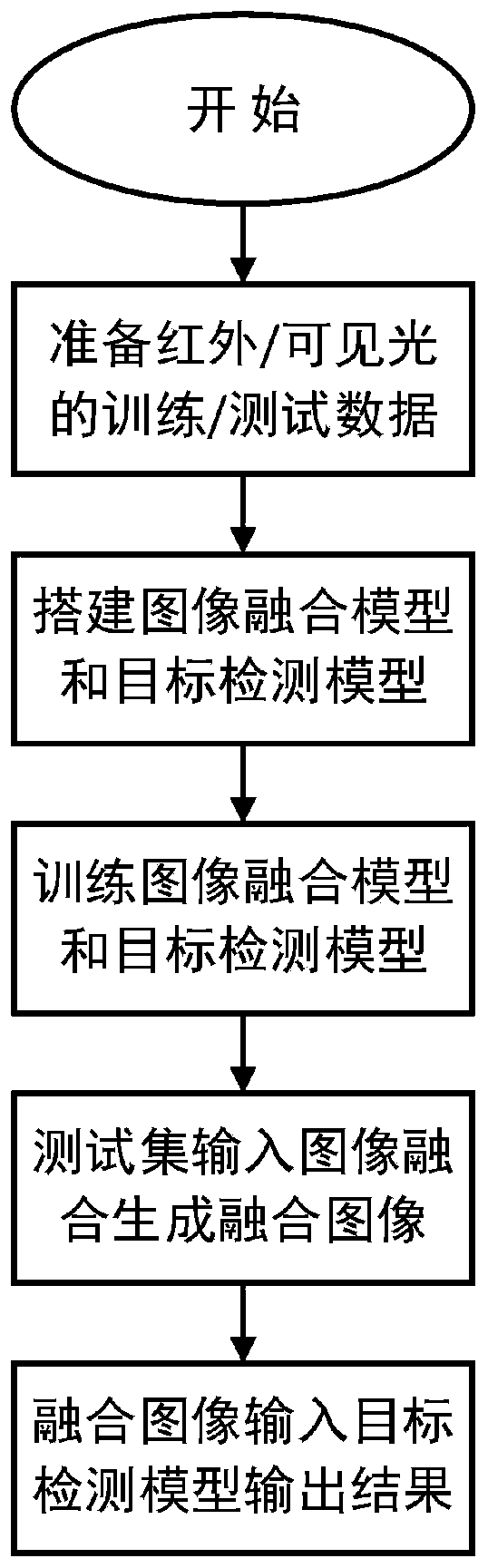

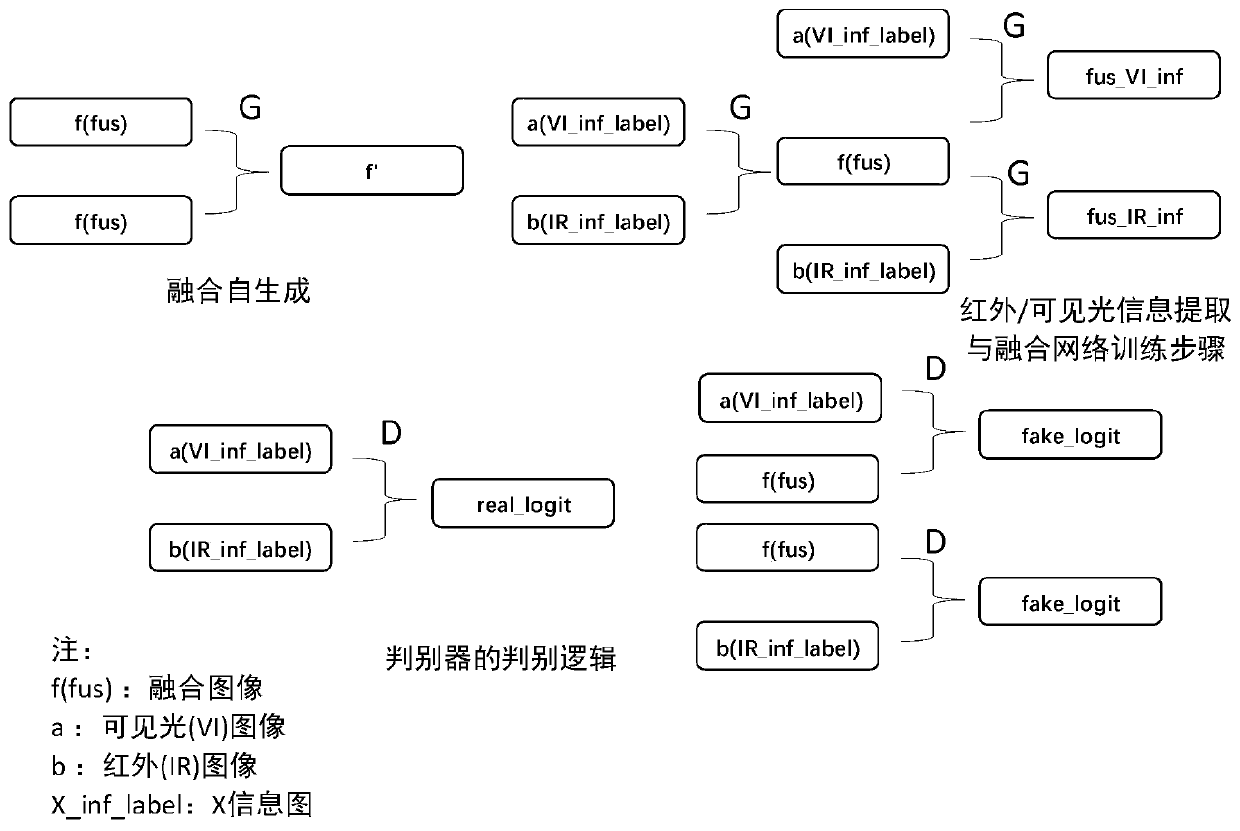

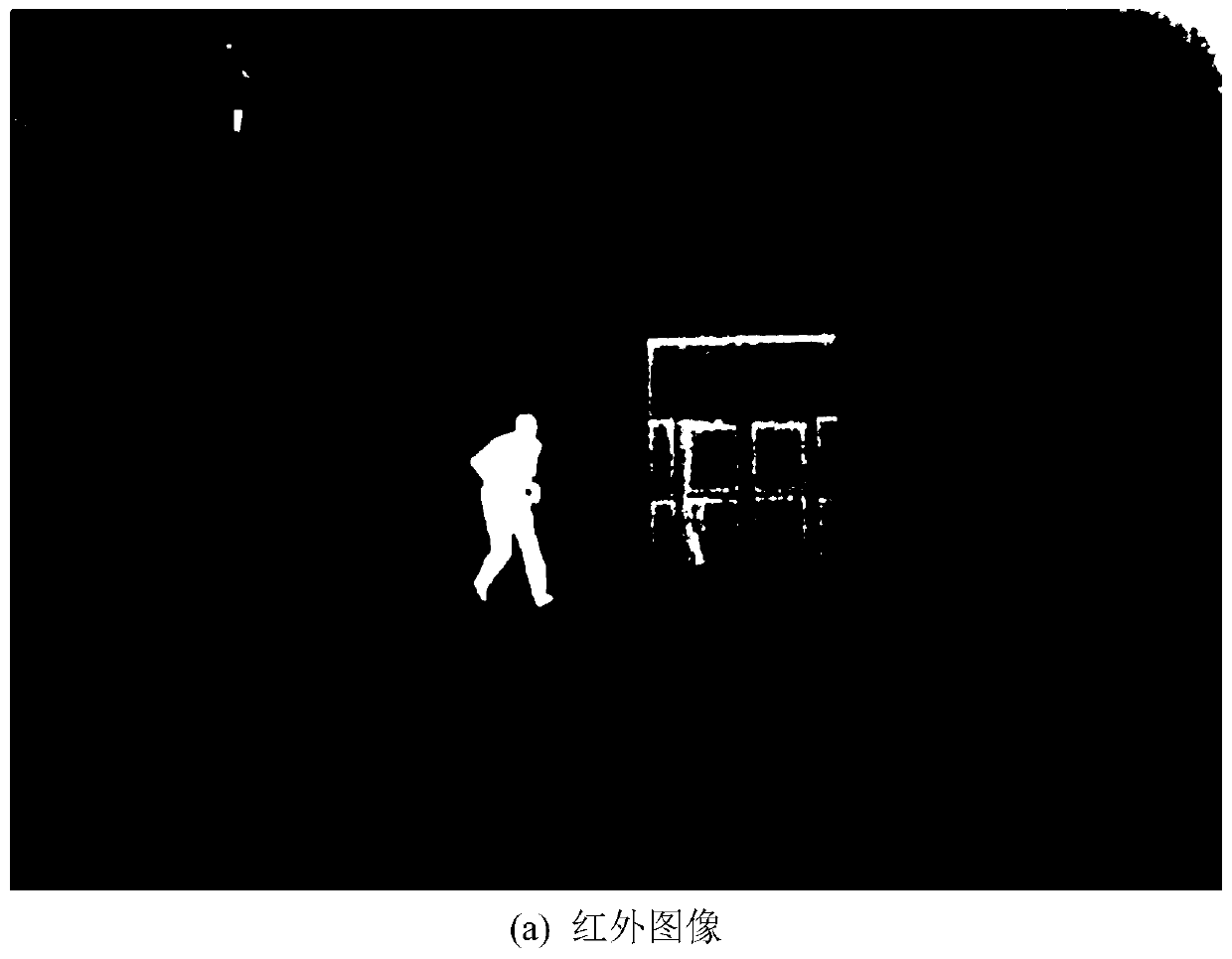

Multi-modal image target detection method based on image fusion

The invention relates to a multi-modal image target detection method based on image fusion, comprising the steps of: 1) building a multi-modal image data set from infrared and visible-light images collected in advance; 2) feeding the preprocessed image pairs into the generator model G of the fusion model. The generator G is based on a U-Net fully convolutional network and uses a residual-network-based convolutional neural network as its structure; it comprises a contracting path and an expanding path, where the contracting path consists of repeated convolution + ReLU activation + max pooling blocks and the number of feature channels doubles at each downsampling step, and it outputs a generated fused image. The fused image is then fed into the discriminator network model of the fusion model. During training, the learning rate is adjusted according to the number of iterations based on how the loss function changes. Training on the in-house multi-modal image data set yields an image fusion model that retains both the thermal radiation characteristics of the infrared image and the structural texture characteristics of the visible-light image.

Owner:TIANJIN UNIV

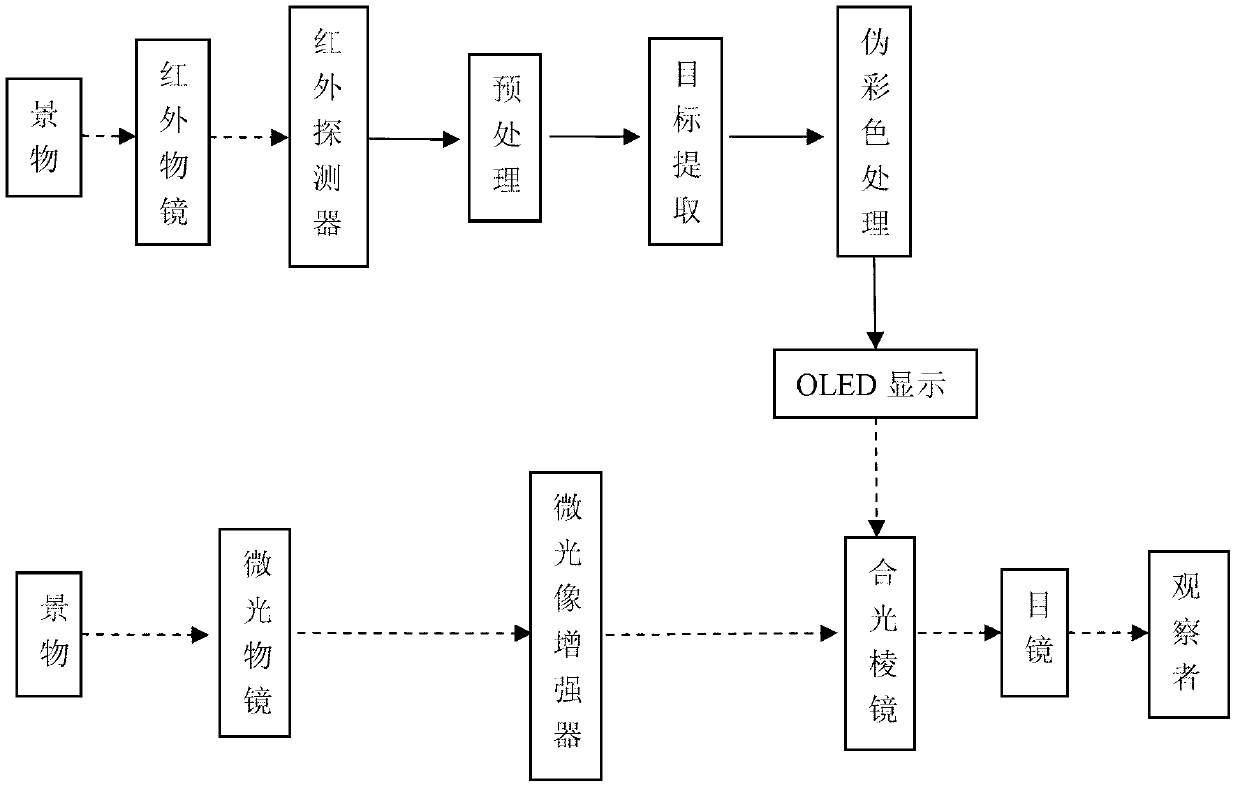

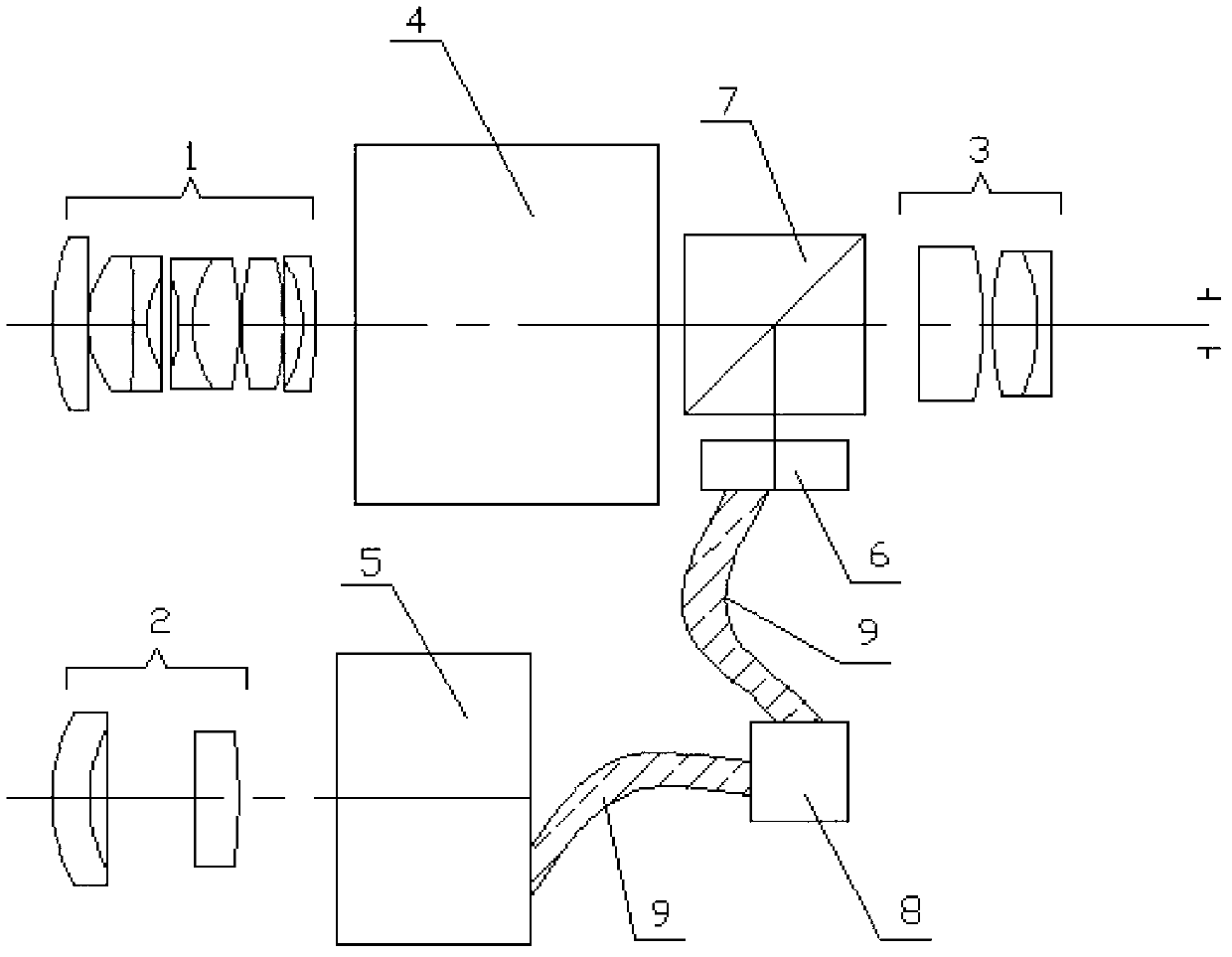

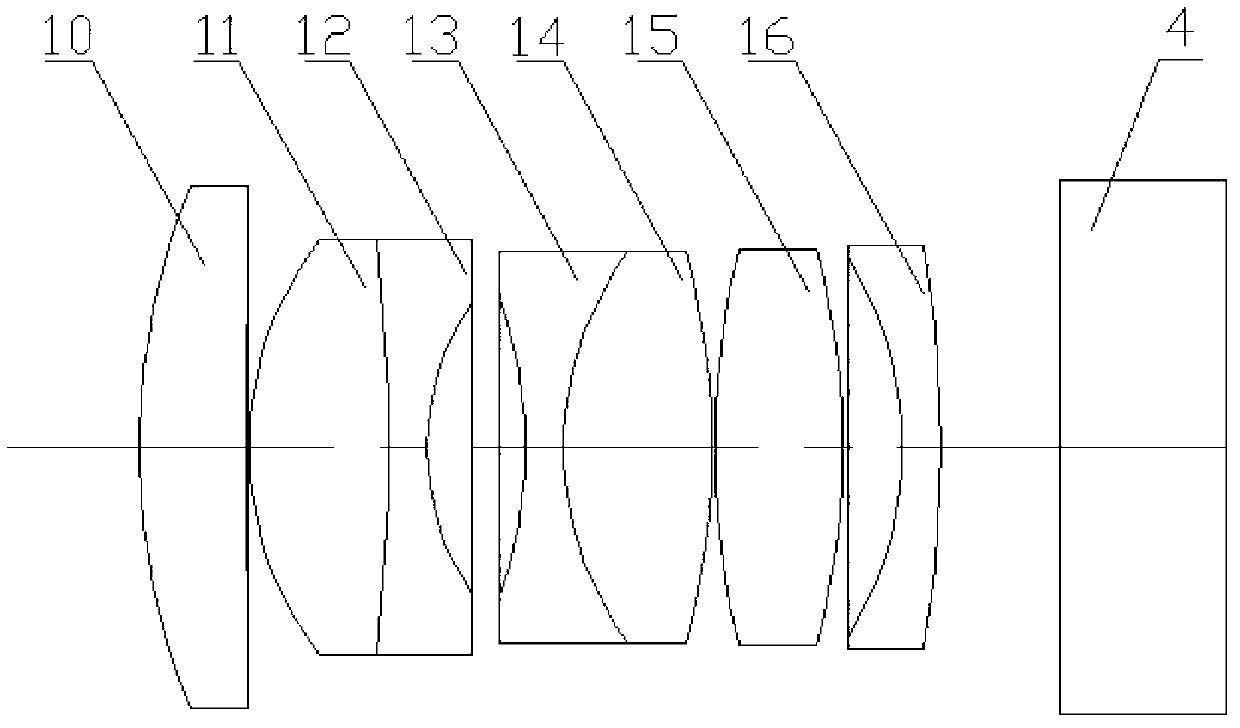

Infrared/ glimmer image fusion night vision system

The invention discloses an infrared/low-light-level (glimmer) image fusion night vision system consisting of a low-light objective lens group, a low-light image intensifier, an infrared objective lens group, an uncooled long-wave infrared detector, an image processing circuit module, an electrical signal transmission line, an OLED (organic light-emitting diode) micro-display, an integrated optical prism, and an eyepiece system. The system uses the low-light image as the background and fuses the low-light and infrared channels by optically projecting a pseudo-color infrared target onto it. To obtain a good fusion effect, both the low-light and the infrared objective lens groups have unit (1x) magnification; the infrared image undergoes denoising and enhancement preprocessing, target extraction, and electronic registration; the grayscale image is then pseudo-colored and output to the OLED micro-display for color display. The fused image has a clear low-light background and prominent infrared targets. The system is small and light, can operate for long periods, and is especially suitable for night driving and for a single person to carry, observe, and use.

Owner:KUNMING INST OF PHYSICS
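In the system above the fusion itself happens optically in the prism, but the digital steps (target extraction followed by pseudo-coloring over a low-light background) can be sketched in software. This is a minimal illustration, assuming the two images are already registered, that targets are extracted by a simple intensity threshold, and that targets are rendered in red; `pseudo_color_fuse` and `threshold` are hypothetical names, not part of the patent.

```python
# Hypothetical sketch: threshold-based target extraction and a red pseudo-color
# are illustrative assumptions, not the patent's actual processing.

def pseudo_color_fuse(lowlight, infrared, threshold=200):
    """Overlay thermal targets in red on a gray low-light background.

    lowlight, infrared: equally sized 2D lists of 0-255 ints, assumed already
    registered (same field of view, unit magnification on both channels).
    Pixels whose infrared value exceeds `threshold` are treated as targets.
    Returns a 2D list of (R, G, B) tuples.
    """
    fused = []
    for ll_row, ir_row in zip(lowlight, infrared):
        out_row = []
        for ll, ir in zip(ll_row, ir_row):
            if ir > threshold:
                # Target pixel: infrared intensity drives the red channel,
                # a dimmed copy of the background fills green and blue.
                out_row.append((ir, ll // 2, ll // 2))
            else:
                # Background pixel: plain gray low-light image.
                out_row.append((ll, ll, ll))
        fused.append(out_row)
    return fused


if __name__ == "__main__":
    lowlight = [[100, 120], [80, 90]]
    infrared = [[30, 250], [40, 210]]
    for row in pseudo_color_fuse(lowlight, infrared):
        print(row)
```

Keeping the background gray and coloring only the extracted targets matches the stated goal of a clear low-light background with a prominent infrared target.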

Respiratory gated image fusion of computed tomography 3D images and live fluoroscopy images

Inactive | US20070270689A1 | Efficient and safe intervention | Reduce negative impact | Diagnostic recording/measuring | Sensors | Human body | Diagnostic Radiology Modality

A system and method for improved real-time image guidance that combines the capabilities of two well-known imaging modalities: anatomical 3D data from computed tomography (CT) and real-time data from live fluoroscopy images provided by an angiography/fluoroscopy system. The combination is intended to make interventions such as punctures, drainages and biopsies in the abdominal and thoracic regions of the human body more efficient and safer.

Owner:SIEMENS HEALTHCARE GMBH

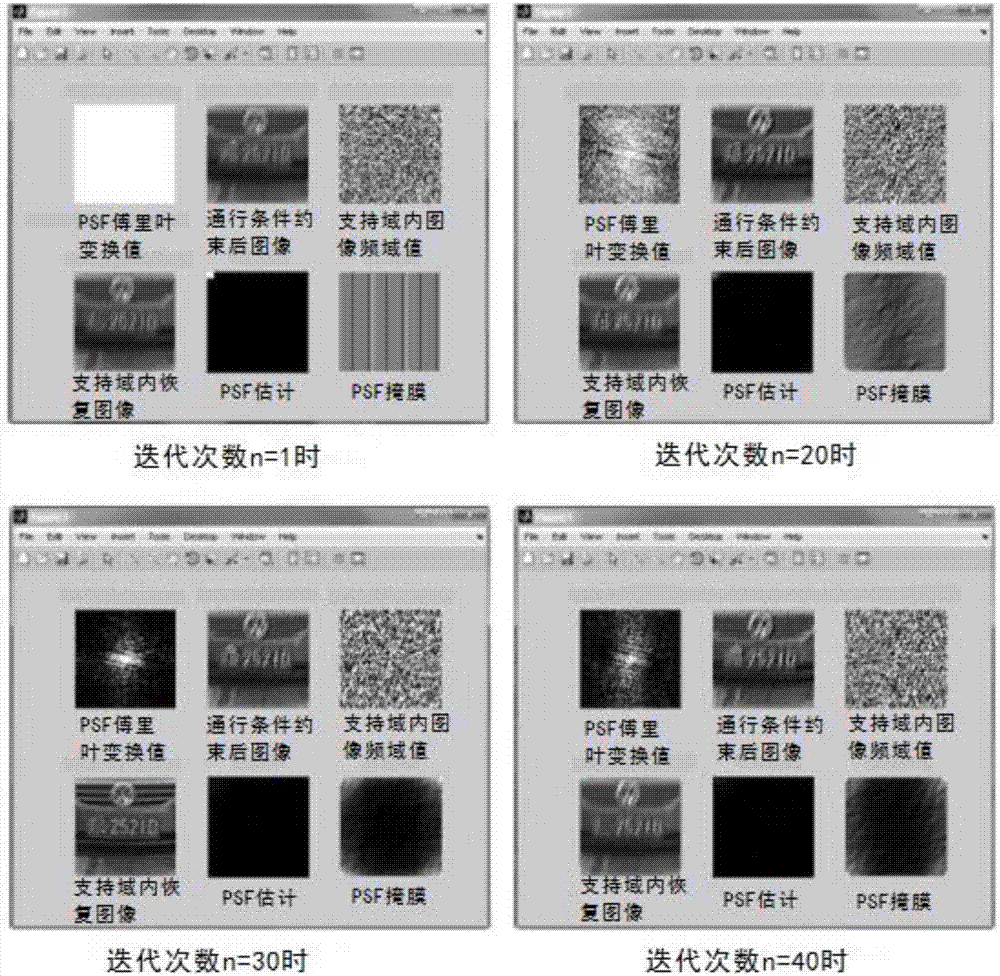

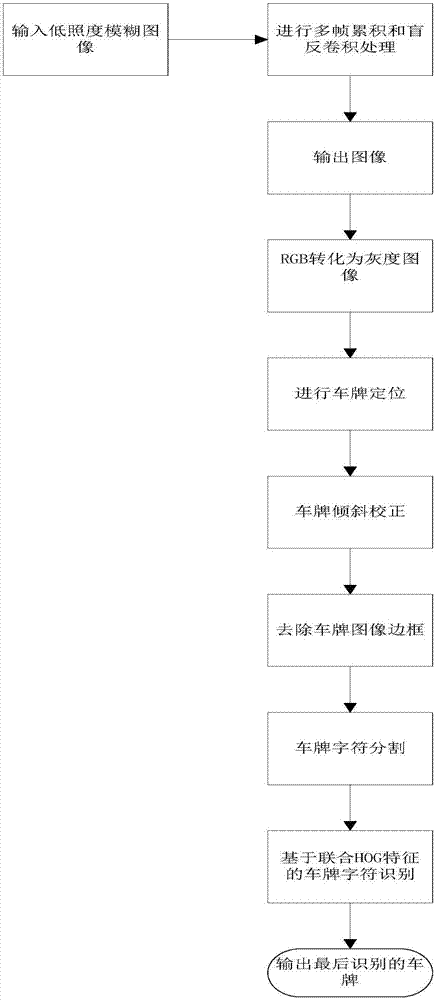

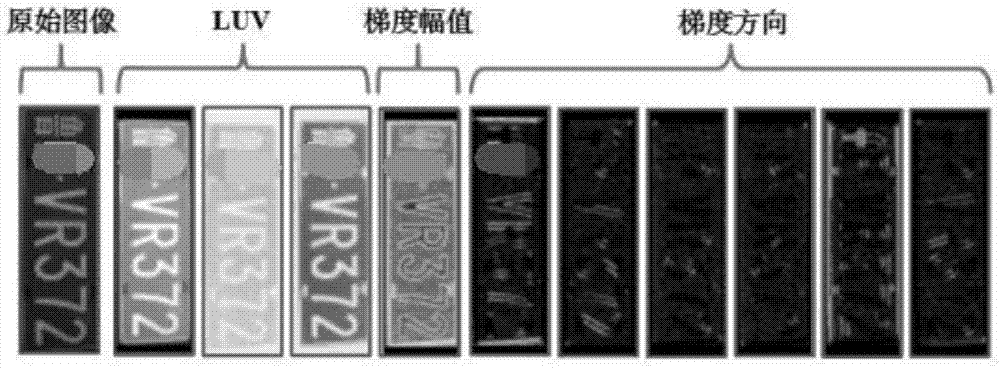

Blurred license plate image identification algorithm based on image fusion and blind deconvolution

Inactive | CN107103317A | Improve read reliability | Easy to identify | Character and pattern recognition | Visual perception | Image based

The present invention relates to the field of computer vision, in particular to a blurred license plate image identification algorithm based on image fusion and blind deconvolution. The algorithm comprises five steps: 1) multi-frame image fusion to improve the distinguishability of low-illumination license plate images; 2) blurred image restoration using a blind deconvolution algorithm; 3) license plate localization and tilt estimation; 4) segmentation of the license plate characters; and 5) recognition and output of the segmented characters. When license plate images are blurred or poorly captured because of low illumination at night, vehicle speeding or similar conditions, the algorithm reads license plate characters reliably, identifies them well and is robust. In addition, each step is computationally simple, so the algorithm stays efficient and meets real-time requirements.

Owner:HUNAN VISION SPLEND PHOTOELECTRIC TECH
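Step 1 above (multi-frame fusion of low-illumination images) can be illustrated with the simplest possible fusion rule: a pixel-wise mean of several frames. This is a minimal sketch, assuming the frames are already registered to each other (a moving plate would first need alignment) and that the noise is independent between frames; the abstract does not specify the actual fusion rule, and `fuse_frames` and `noise_std` are hypothetical helpers.

```python
import random

# Hypothetical sketch: pixel-wise averaging is an assumed stand-in for the
# patent's unspecified multi-frame fusion rule.

def fuse_frames(frames):
    """Pixel-wise mean of N registered frames, each a 2D list of floats.

    Averaging N frames with independent zero-mean noise reduces the noise
    standard deviation by roughly a factor of sqrt(N).
    """
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(cols)]
            for r in range(rows)]


def noise_std(image, true_value):
    """RMS deviation of every pixel from a known constant scene value."""
    errors = [v - true_value for row in image for v in row]
    return (sum(e * e for e in errors) / len(errors)) ** 0.5


if __name__ == "__main__":
    random.seed(0)
    scene = 50.0  # hypothetical flat, dark plate region
    frames = [[[scene + random.gauss(0, 10) for _ in range(32)] for _ in range(32)]
              for _ in range(16)]
    print("single frame noise:", round(noise_std(frames[0], scene), 2))
    print("16-frame fusion noise:", round(noise_std(fuse_frames(frames), scene), 2))
```

Averaging only suppresses random sensor noise; the motion and defocus blur that remain are what the blind deconvolution in step 2 is meant to address.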

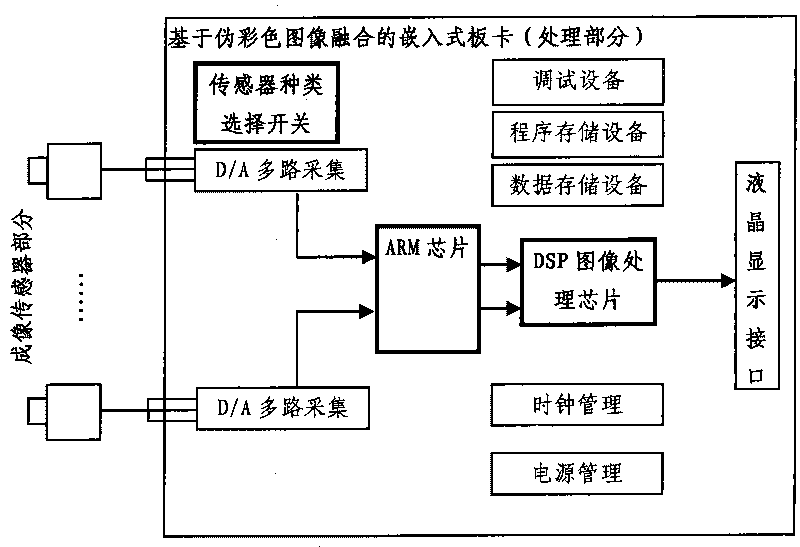

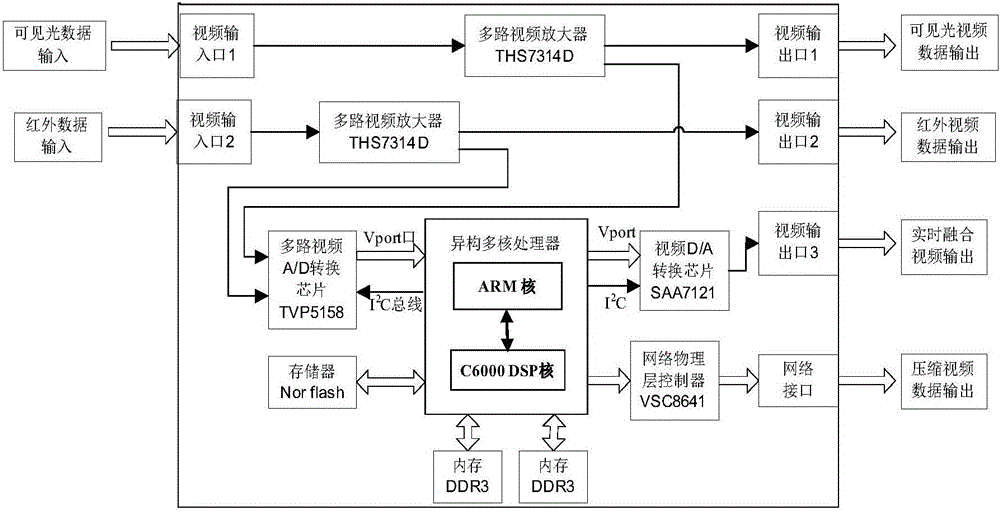

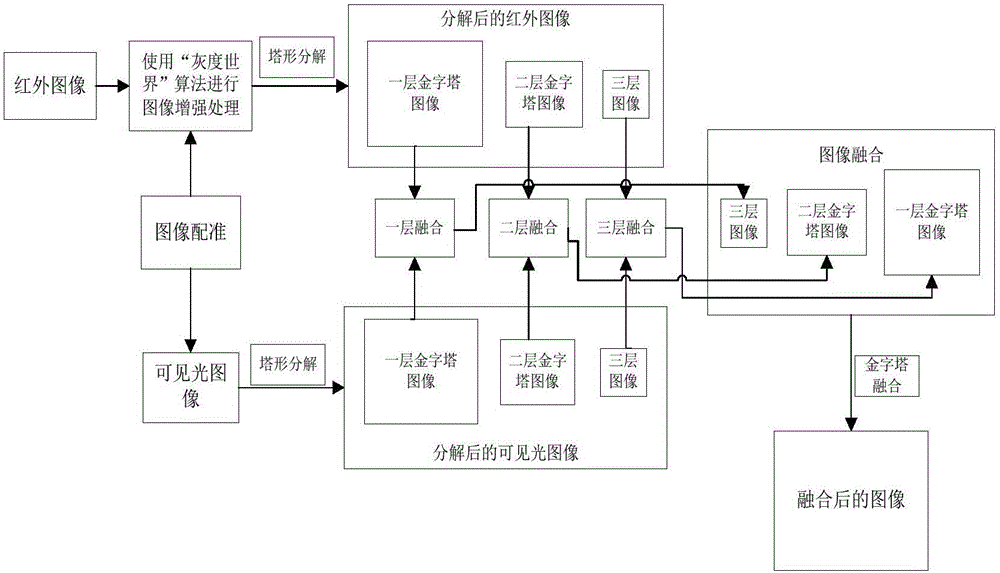

Infrared and visible light image real-time fusion system based on heterogeneous multi-core architecture

Inactive | CN105678727A | Efficient development | Improve scalability | Image enhancement | Image analysis | Software development | Video image

The invention discloses an infrared and visible light image real-time fusion system based on a heterogeneous multi-core architecture. The system uses a heterogeneous multi-core processor containing an ARM core and a DSP core, which together handle the control flow and the data transmission, reception and processing logic of the whole system: they collect video data, enhance and fuse the infrared and visible light images in real time, and display and output the fused video. The ARM core is responsible for real-time collection of both the infrared and visible light video data and for real-time transmission of the compressed data after fusion. The DSP core is responsible for registration and enhancement preprocessing of the infrared input image, real-time fusion of the infrared and visible light video data, and real-time encoding and compression of the fused video. The real-time fusion uses a three-layer Laplacian pyramid. The system offers high computational efficiency, good compatibility, high extensibility and adaptability, and convenient, efficient software development.

Owner:SICHUAN UNIV
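The three-layer Laplacian pyramid fusion mentioned above can be sketched as follows: each image is split into two band-pass detail layers plus a low-pass base, the layers are merged, and the result is collapsed back into one image. This is a simplified illustration, not the DSP implementation. It assumes 2x2 block averaging for down-sampling and nearest-neighbour repetition for up-sampling (instead of Gaussian filtering), a max-absolute-value rule for detail layers and a plain average for the base; all function names are hypothetical. Image sides must be divisible by 4.

```python
# Hypothetical sketch: the filters and fusion rules are assumptions; the patent
# only states that a three-layer Laplacian pyramid is used.

def downsample(img):
    """Halve each dimension by averaging 2x2 blocks (dimensions must be even)."""
    return [[(img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4
             for c in range(0, len(img[0]), 2)]
            for r in range(0, len(img), 2)]


def upsample(img):
    """Double each dimension by nearest-neighbour repetition."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def sub_images(a, b):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def add_images(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def laplacian_pyramid(img, levels=3):
    """Return [detail_0, ..., detail_{levels-2}, base]: `levels` layers in total."""
    pyramid, current = [], img
    for _ in range(levels - 1):
        smaller = downsample(current)
        pyramid.append(sub_images(current, upsample(smaller)))  # band-pass detail
        current = smaller
    pyramid.append(current)  # low-pass base
    return pyramid


def reconstruct(pyramid):
    """Collapse a pyramid back into a full-resolution image."""
    current = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        current = add_images(upsample(current), detail)
    return current


def fuse_laplacian(ir, vis, levels=3):
    """Fuse two registered images: max-abs rule on details, mean on the base."""
    p_ir, p_vis = laplacian_pyramid(ir, levels), laplacian_pyramid(vis, levels)
    fused = [[[a if abs(a) >= abs(b) else b for a, b in zip(ra, rb)]
              for ra, rb in zip(d_ir, d_vis)]
             for d_ir, d_vis in zip(p_ir[:-1], p_vis[:-1])]
    fused.append([[(a + b) / 2 for a, b in zip(ra, rb)]
                  for ra, rb in zip(p_ir[-1], p_vis[-1])])
    return reconstruct(fused)


if __name__ == "__main__":
    ir = [[float((r * 7 + c * 3) % 11) for c in range(8)] for r in range(8)]
    vis = [[float(r + c) for c in range(8)] for r in range(8)]
    for row in fuse_laplacian(ir, vis):
        print([round(v, 2) for v in row])
```

The max-abs rule keeps whichever source has the stronger edge at each position, while averaging the base blends overall brightness; both are common textbook choices rather than rules confirmed by the patent.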

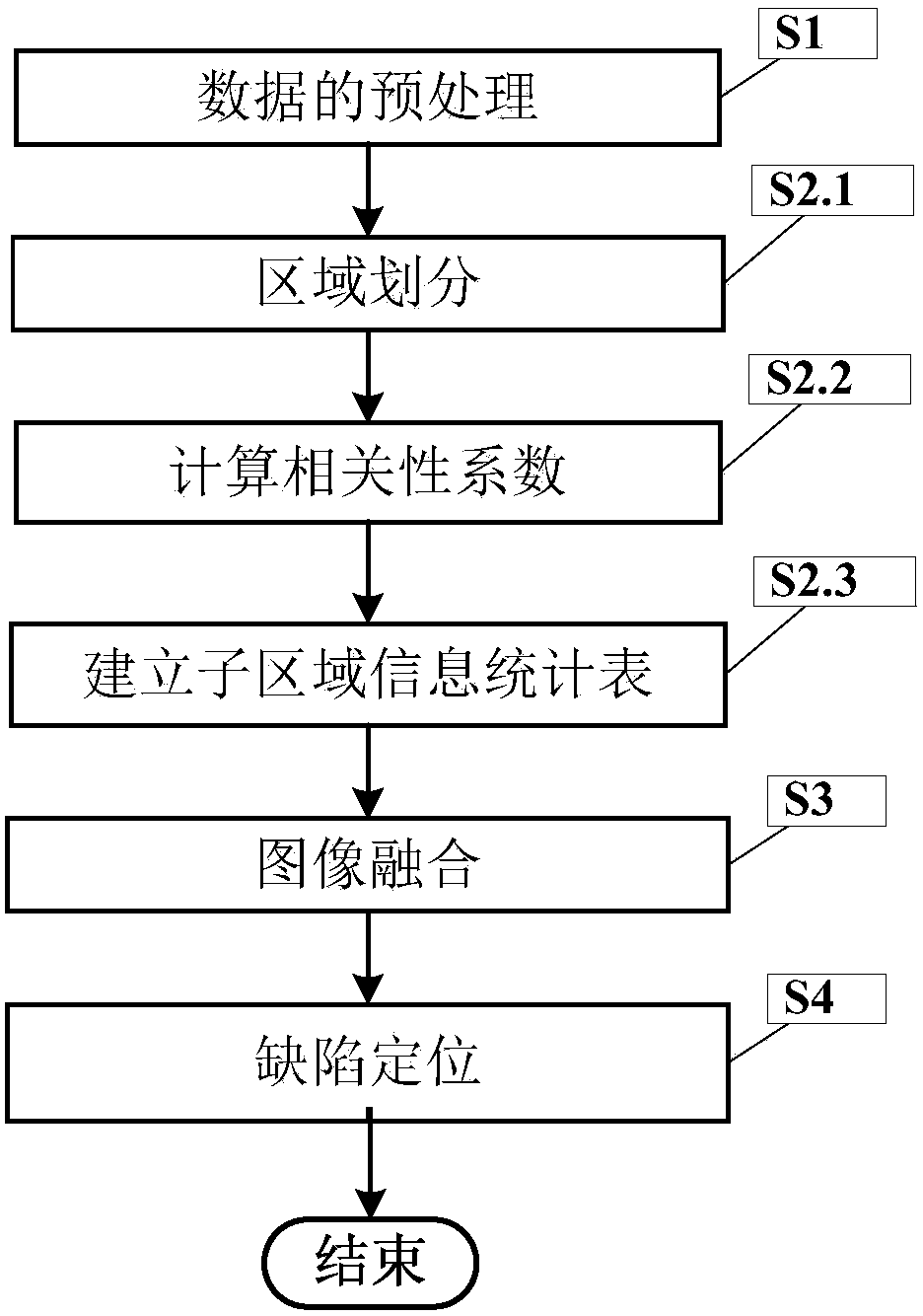

Infrared thermal image processing method based on region segmentation and image fusion

Active | CN108198181A | Easy to detect | Achieve positioning | Image enhancement | Image analysis | Spatial correlation | Correlation coefficient

The invention discloses an infrared thermal image processing method based on region segmentation and image fusion. Video stream data from the heating stage is collected online in real time. The images are then preprocessed to extract edge information, each thermal image in the sequence is divided into equal sub-regions, and the spatial correlation of each sub-region is analyzed; that is, for every sub-region, correlation coefficients between adjacent frames are computed in time order. The correlation coefficients are compared with a threshold, and for the sub-regions that fail the threshold condition the upper-left-corner coordinates and corresponding frame numbers are returned. These coordinates and frame numbers are used to fuse a single image frame, which enhances the defect information so that defects are identified rapidly and detected quantitatively.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
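The region-correlation procedure above can be sketched in a few functions: split each frame into equal square blocks, compute the Pearson correlation of each block with the same block in the previous frame, flag blocks below a threshold by (top, left, frame number), and paste the flagged blocks into one composite frame. This is a minimal sketch under several assumptions: it works on raw intensities rather than the extracted edge information, the block size and `threshold=0.9` are hypothetical, flat blocks get an assumed correlation value, and the fused frame starts from the first frame of the sequence. All names are illustrative.

```python
# Hypothetical sketch: block size, threshold, flat-block handling and the
# composition rule are assumptions, not details disclosed in the patent.

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length value lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 and vy == 0:
        return 1.0  # assumption: two flat blocks are treated as unchanged
    if vx == 0 or vy == 0:
        return 0.0  # assumption: flat vs. textured block is treated as uncorrelated
    return cov / (vx * vy) ** 0.5


def block(frame, top, left, size):
    """Flatten the size x size sub-region whose upper-left corner is (top, left)."""
    return [frame[r][c] for r in range(top, top + size) for c in range(left, left + size)]


def find_low_correlation_regions(frames, size, threshold=0.9):
    """Return (top, left, frame_index) for every sub-region whose correlation
    with the same sub-region in the previous frame falls below `threshold`."""
    hits = []
    rows, cols = len(frames[0]), len(frames[0][0])
    for t in range(1, len(frames)):
        for top in range(0, rows - size + 1, size):
            for left in range(0, cols - size + 1, size):
                rho = pearson(block(frames[t - 1], top, left, size),
                              block(frames[t], top, left, size))
                if rho < threshold:
                    hits.append((top, left, t))
    return hits


def fuse_defect_frame(frames, hits, size):
    """Start from the first frame and paste each flagged sub-region from the
    frame in which it was flagged (later hits overwrite earlier ones)."""
    fused = [list(row) for row in frames[0]]
    for top, left, t in hits:
        for r in range(top, top + size):
            fused[r][left:left + size] = frames[t][r][left:left + size]
    return fused


if __name__ == "__main__":
    first = [[1, 2, 3, 4], [2, 1, 4, 3], [5, 6, 7, 8], [6, 5, 8, 7]]
    second = [row[:] for row in first]
    second[2][2:4], second[3][2:4] = [8, 7], [7, 8]  # change one 2x2 block
    hits = find_low_correlation_regions([first, second], size=2)
    print(hits)
    print(fuse_defect_frame([first, second], hits, size=2))
```

Working block by block keeps each correlation cheap to compute, which suits online processing of a video stream.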


© 2025 PatSnap. All rights reserved.