35713 results about "Feature extraction" patented technology

In machine learning, pattern recognition and in image processing, feature extraction starts from an initial set of measured data and builds derived values (features) intended to be informative and non-redundant, facilitating the subsequent learning and generalization steps, and in some cases leading to better human interpretations. Feature extraction is related to dimensionality reduction.
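
As a minimal sketch of this idea, the Python below reduces a raw one-dimensional signal to a short feature vector. The three features (mean, variance, zero-crossing rate) and the function name `extract_features` are illustrative assumptions, not taken from any patent listed here.

```python
def extract_features(signal):
    """Reduce a raw 1-D signal to a small feature vector.

    The choice of features is an assumption made for illustration only.
    """
    n = len(signal)
    if n == 0:
        raise ValueError("signal must be non-empty")
    mean = sum(signal) / n
    variance = sum((x - mean) ** 2 for x in signal) / n
    # Zero-crossing rate: fraction of adjacent sample pairs whose signs differ.
    crossings = sum(1 for a, b in zip(signal, signal[1:]) if (a < 0) != (b < 0))
    zcr = crossings / (n - 1) if n > 1 else 0.0
    return [mean, variance, zcr]
```

However long the input is, the output has three values, which is the dimensionality-reduction aspect mentioned above.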

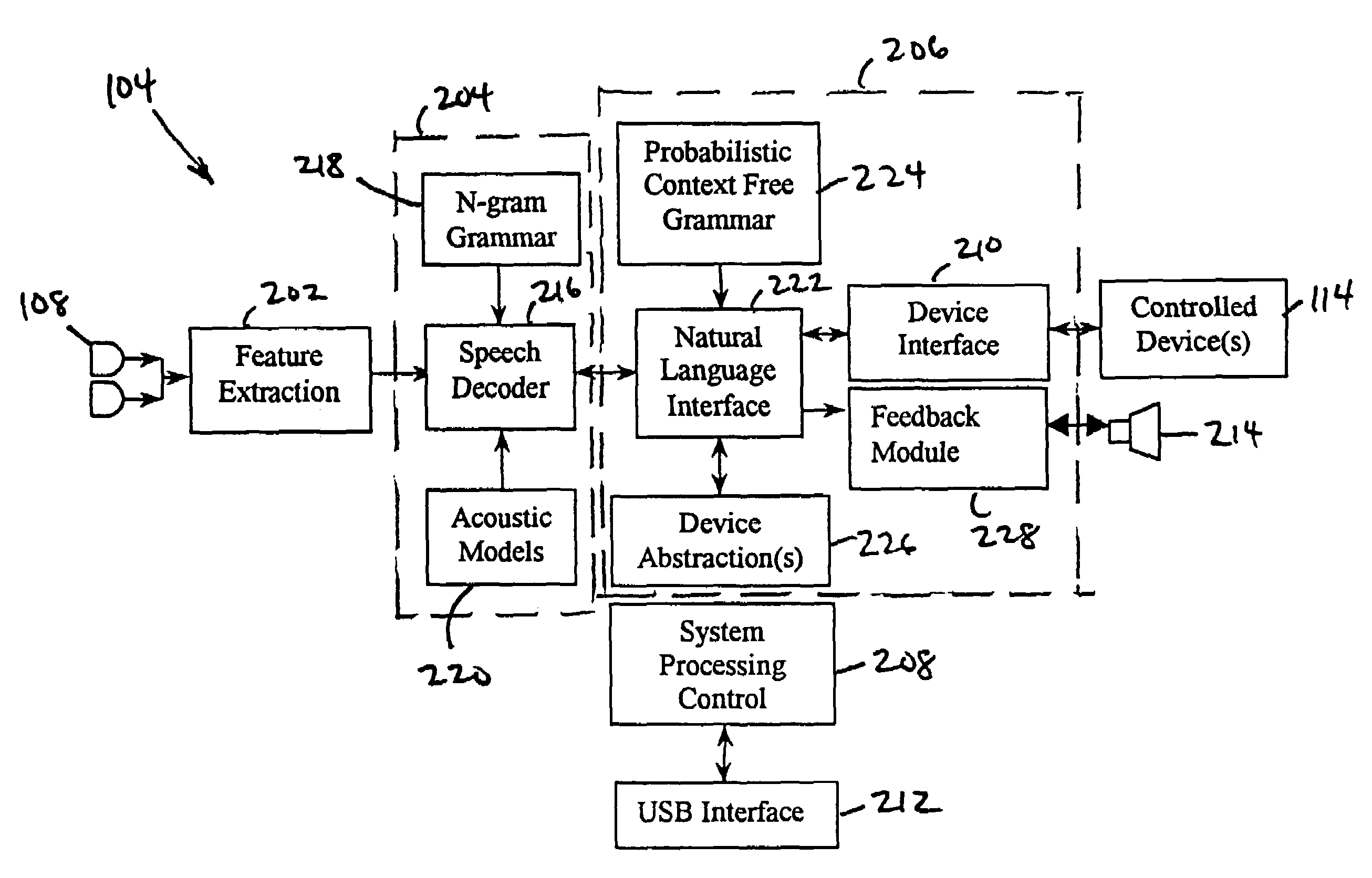

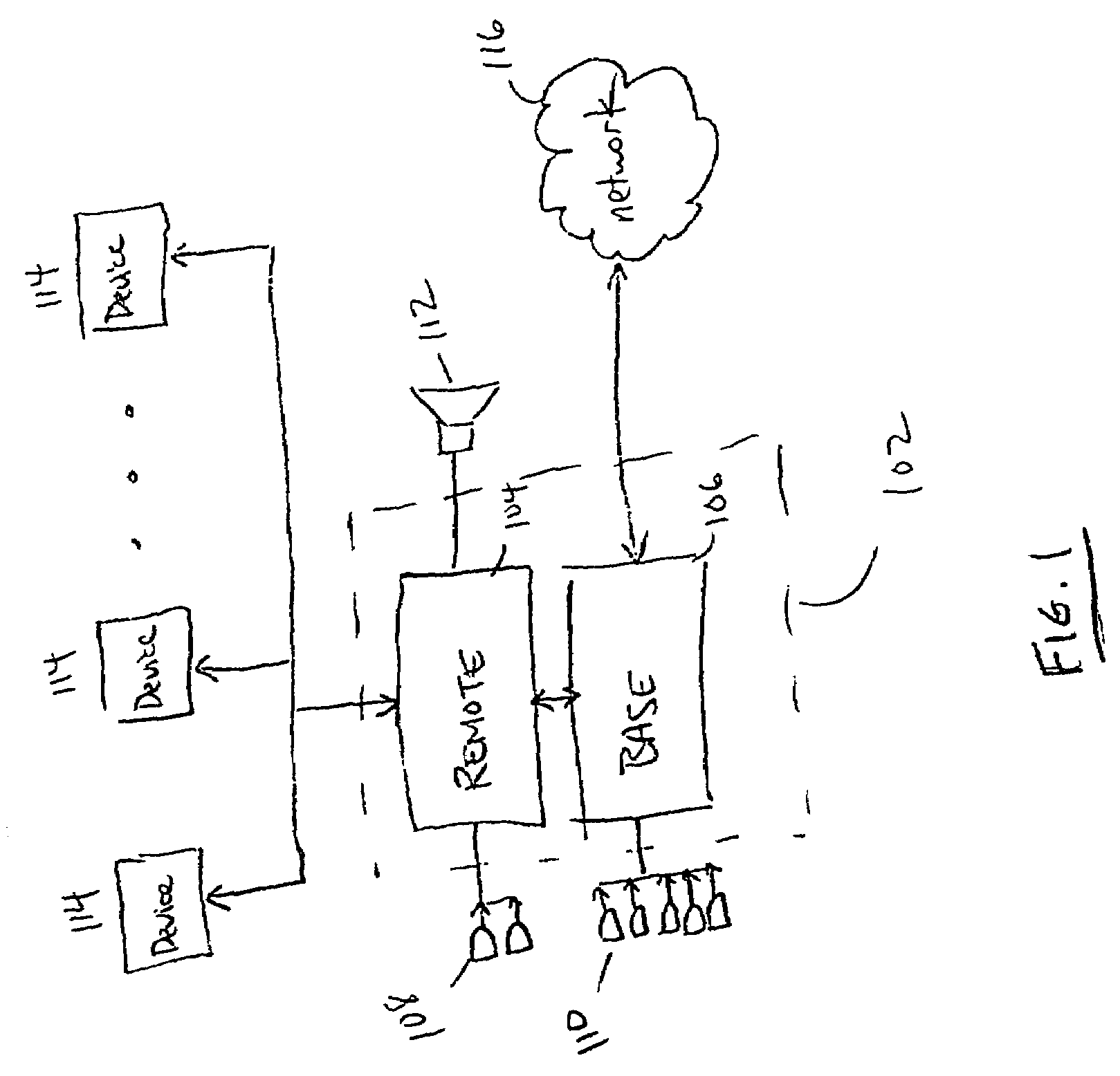

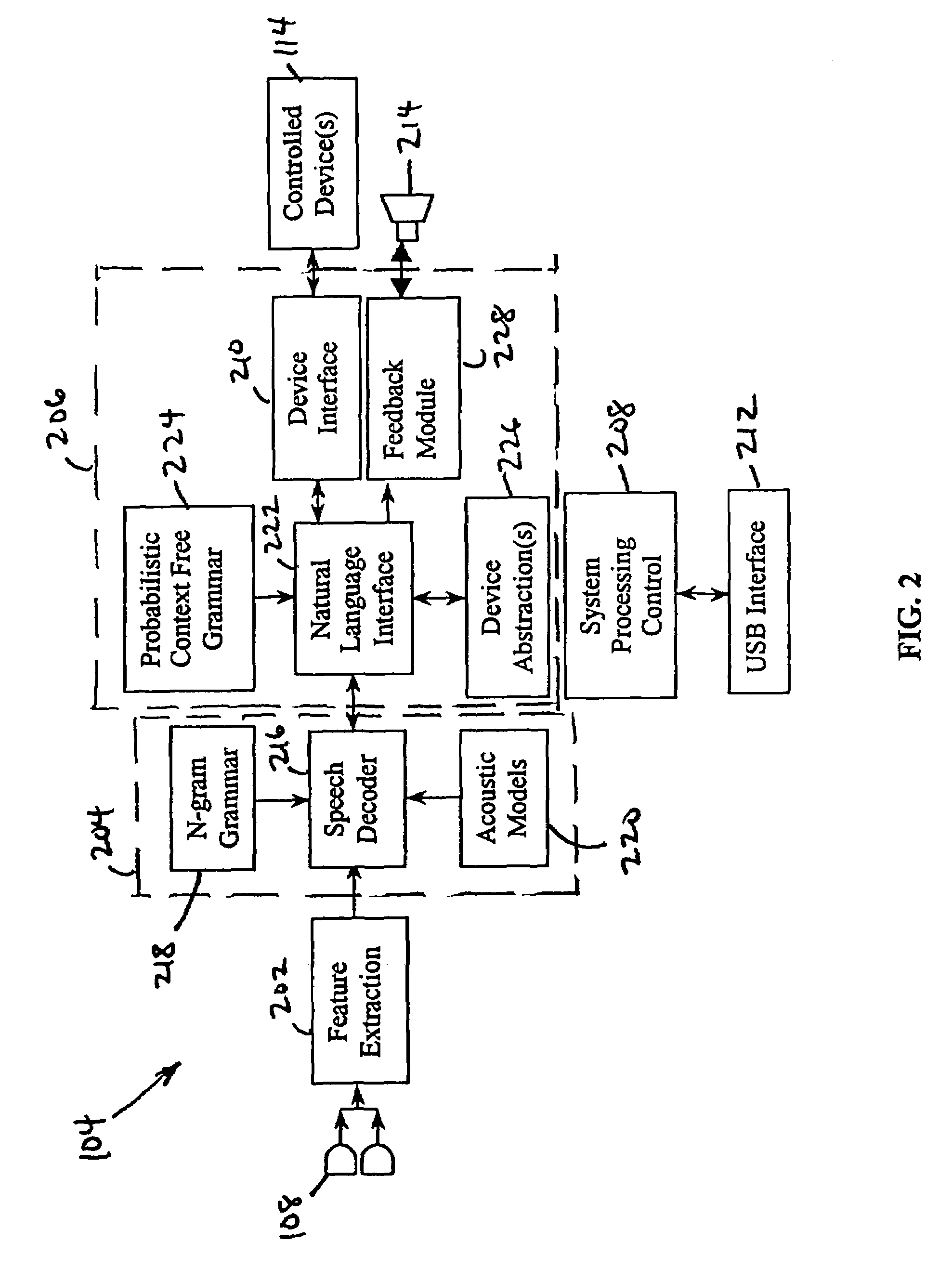

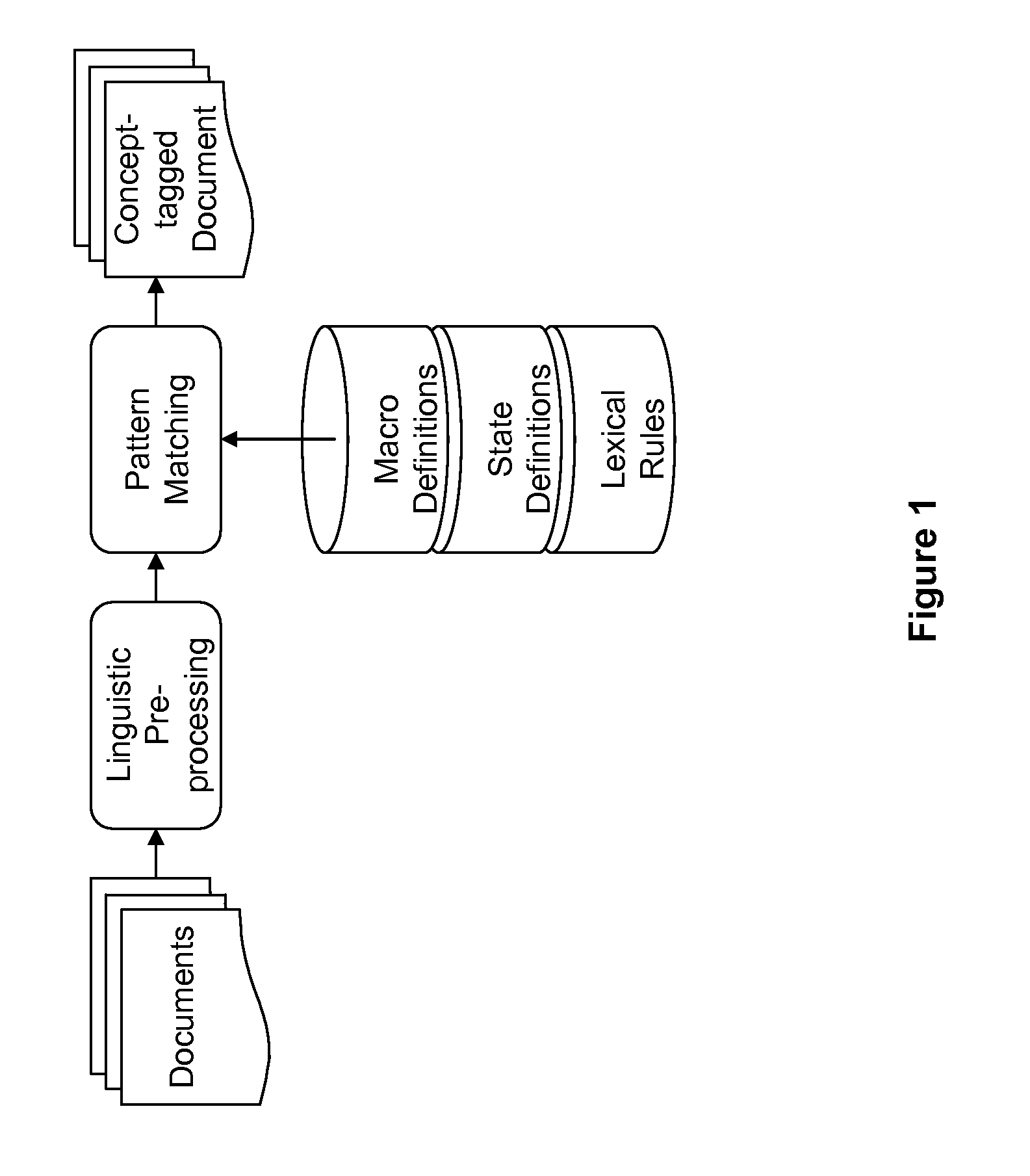

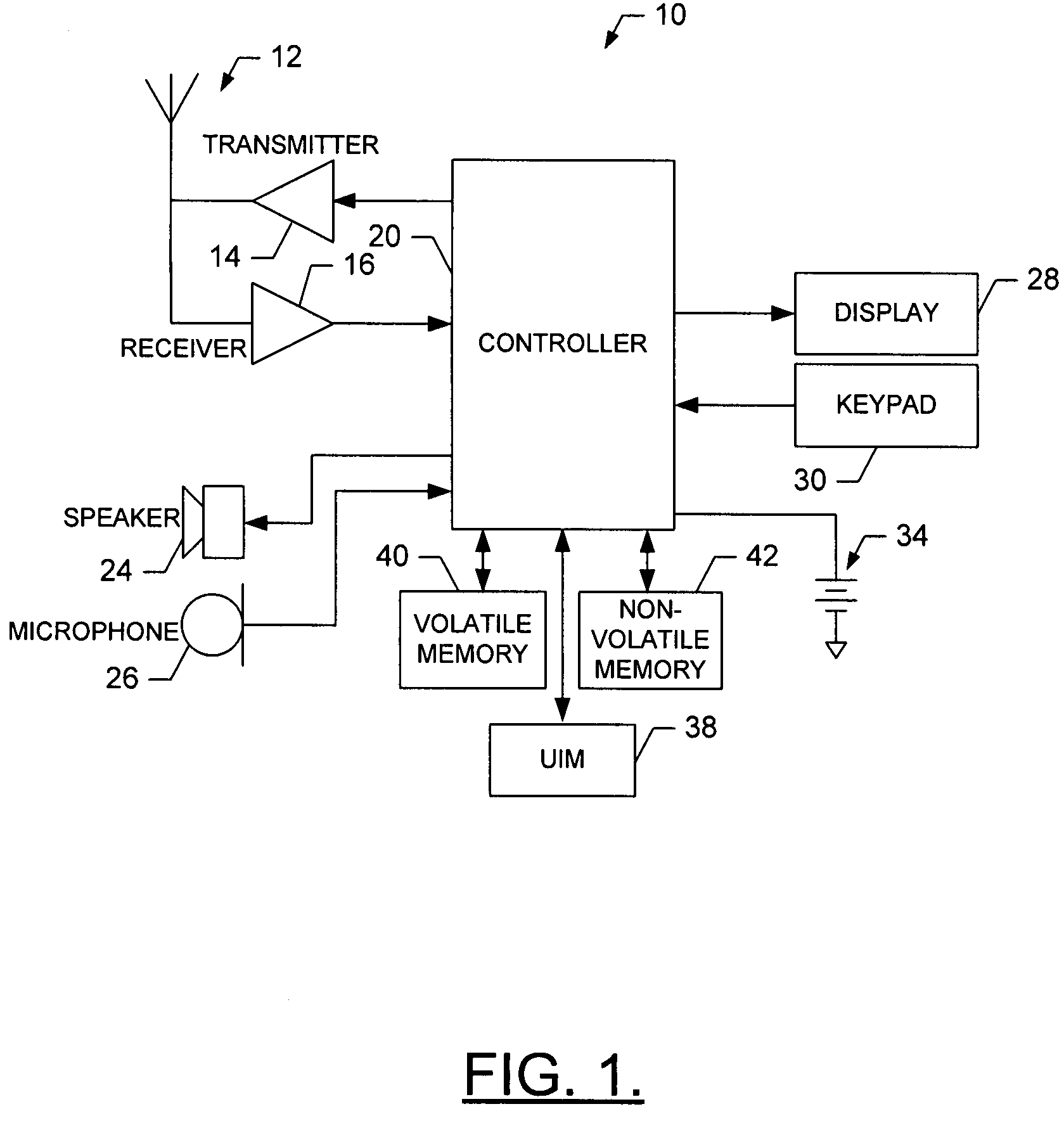

Natural language interface control system

Inactive | US7447635B1 | Speech recognition | Special data processing applications | Feature extraction | Hidden Markov model

A natural language interface control system for operating a plurality of devices consists of a first microphone array, a feature extraction module coupled to the first microphone array, and a speech recognition module coupled to the feature extraction module, wherein the speech recognition module utilizes hidden Markov models. The system also comprises a natural language interface module coupled to the speech recognition module and a device interface coupled to the natural language interface module, wherein the natural language interface module is for operating a plurality of devices coupled to the device interface based upon non-prompted, open-ended natural language requests from a user.

Owner:SONY CORP +1

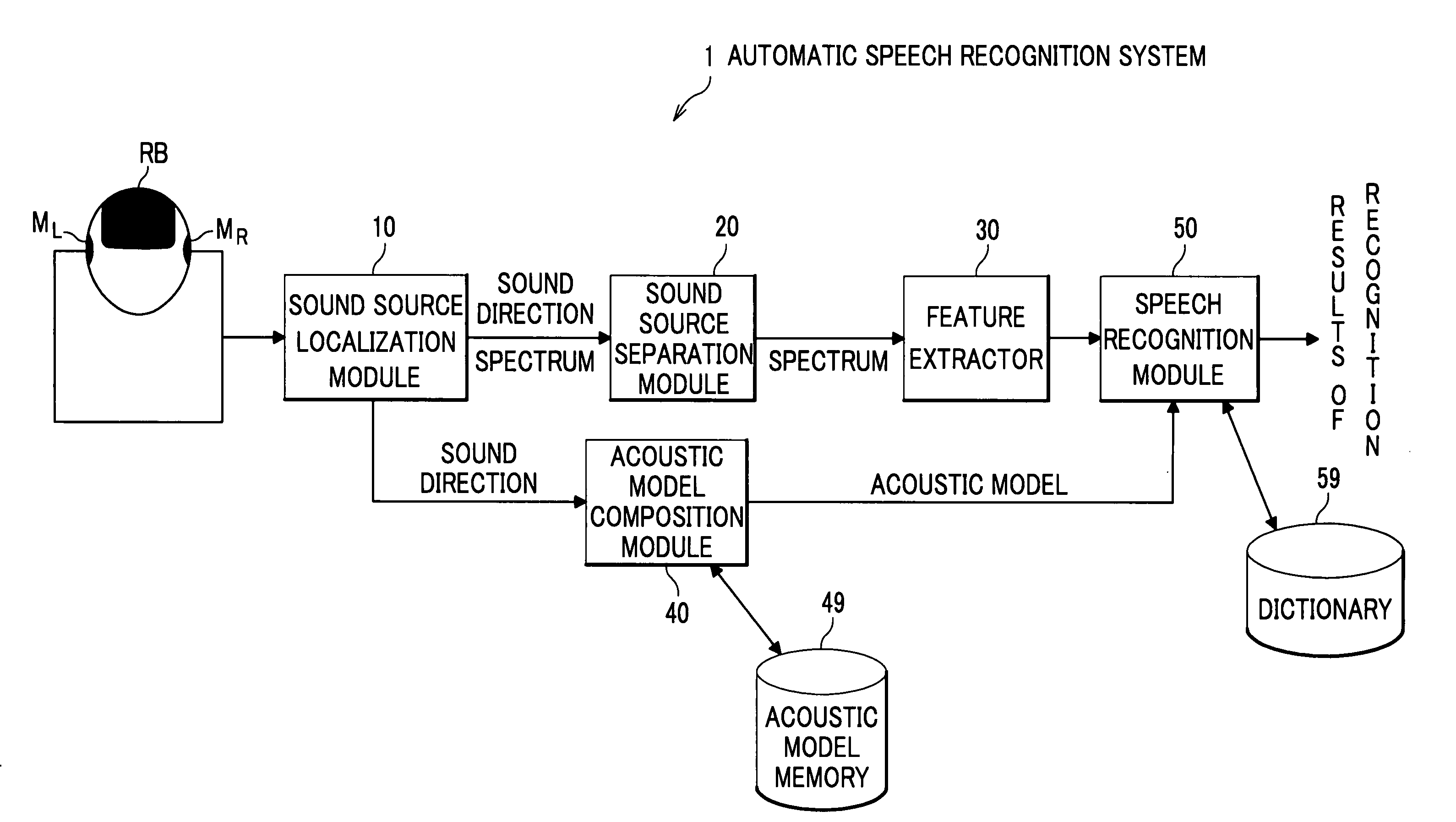

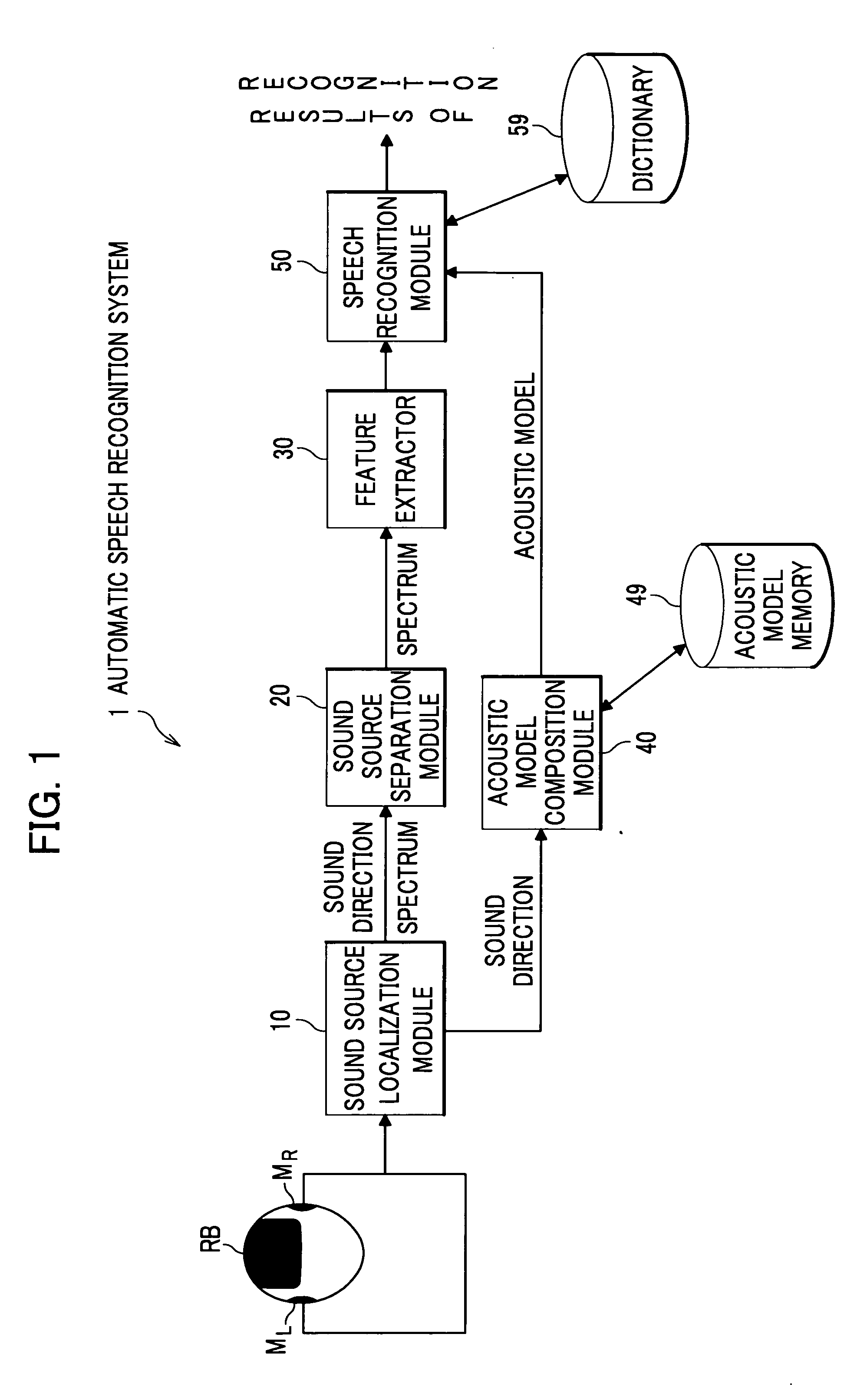

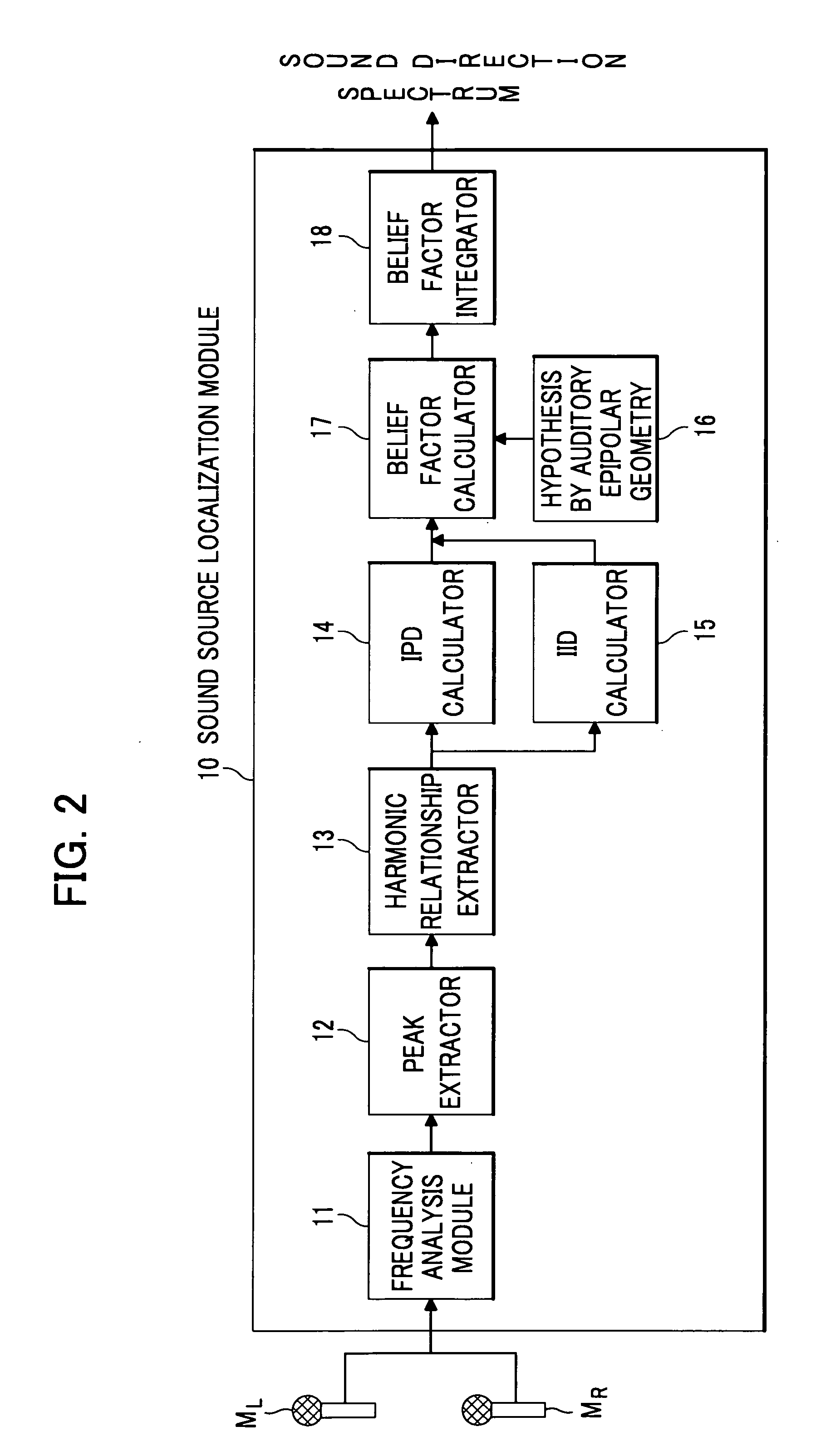

Automatic Speech Recognition System

Inactive | US20090018828A1 | Improve speech recognition rate | Improve accuracy | Speech recognition | Sound source separation | Feature extraction

An automatic speech recognition system includes: a sound source localization module for localizing a sound direction of a speaker based on the acoustic signals detected by the plurality of microphones; a sound source separation module for separating a speech signal of the speaker from the acoustic signals according to the sound direction; an acoustic model memory which stores direction-dependent acoustic models that are adjusted to a plurality of directions at intervals; an acoustic model composition module which composes an acoustic model adjusted to the sound direction, which is localized by the sound source localization module, based on the direction-dependent acoustic models, the acoustic model composition module storing the acoustic model in the acoustic model memory; and a speech recognition module which recognizes the features extracted by a feature extractor as character information using the acoustic model composed by the acoustic model composition module.

Owner:HONDA MOTOR CO LTD

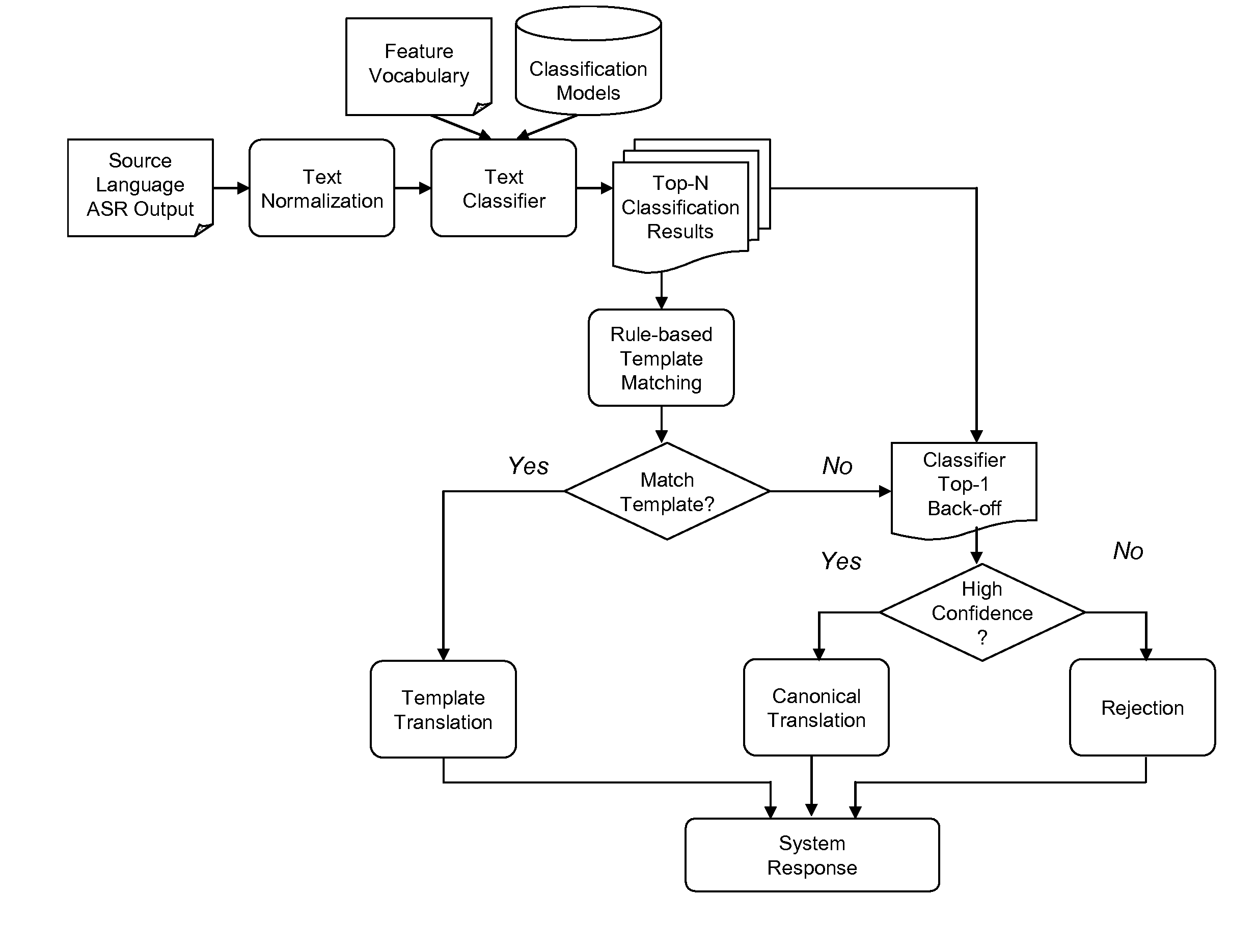

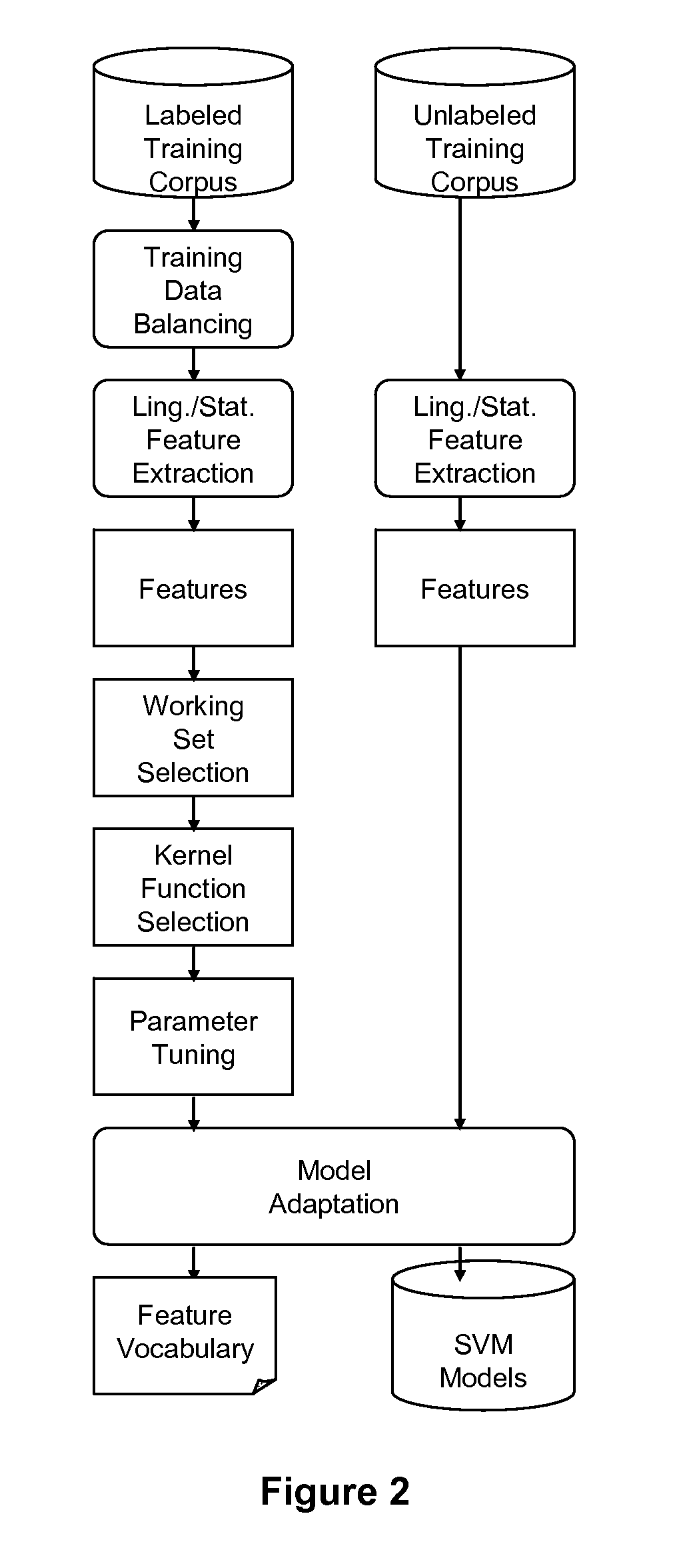

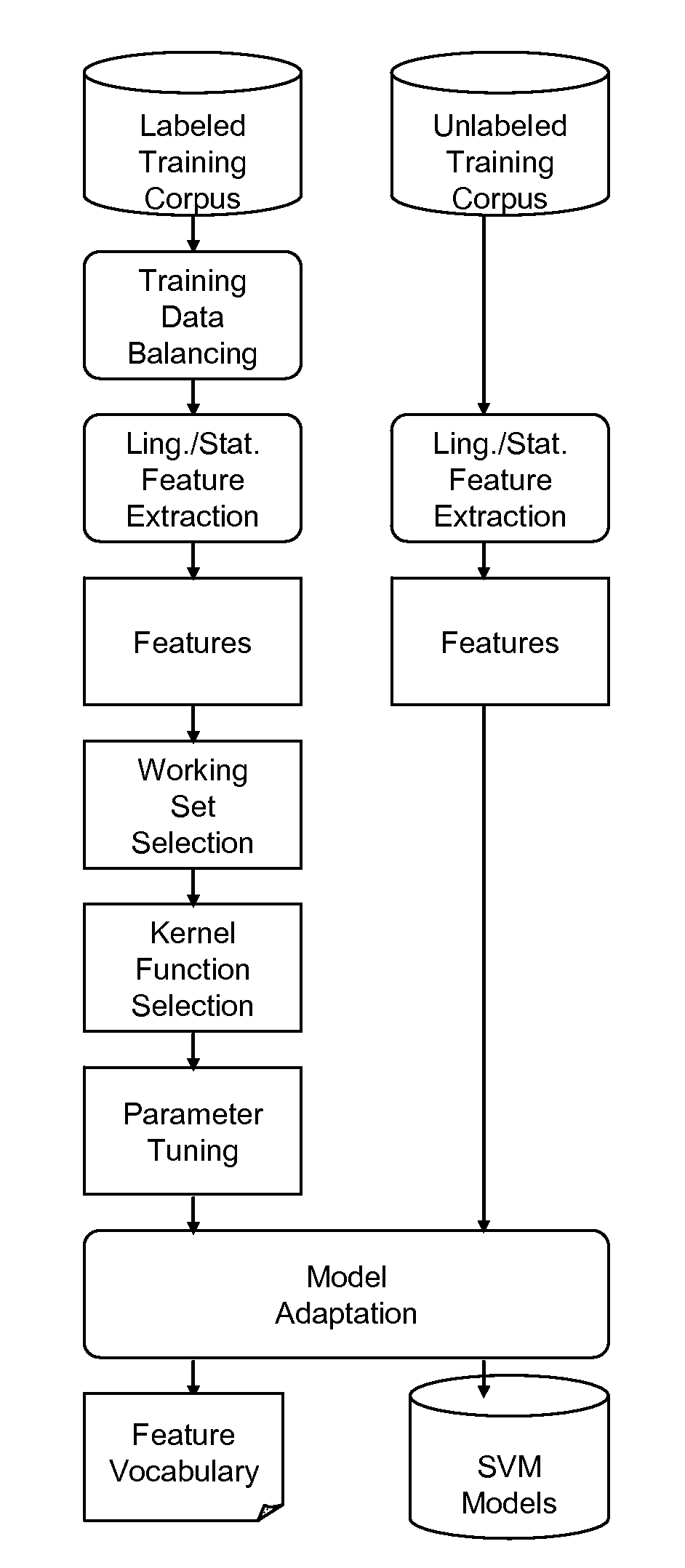

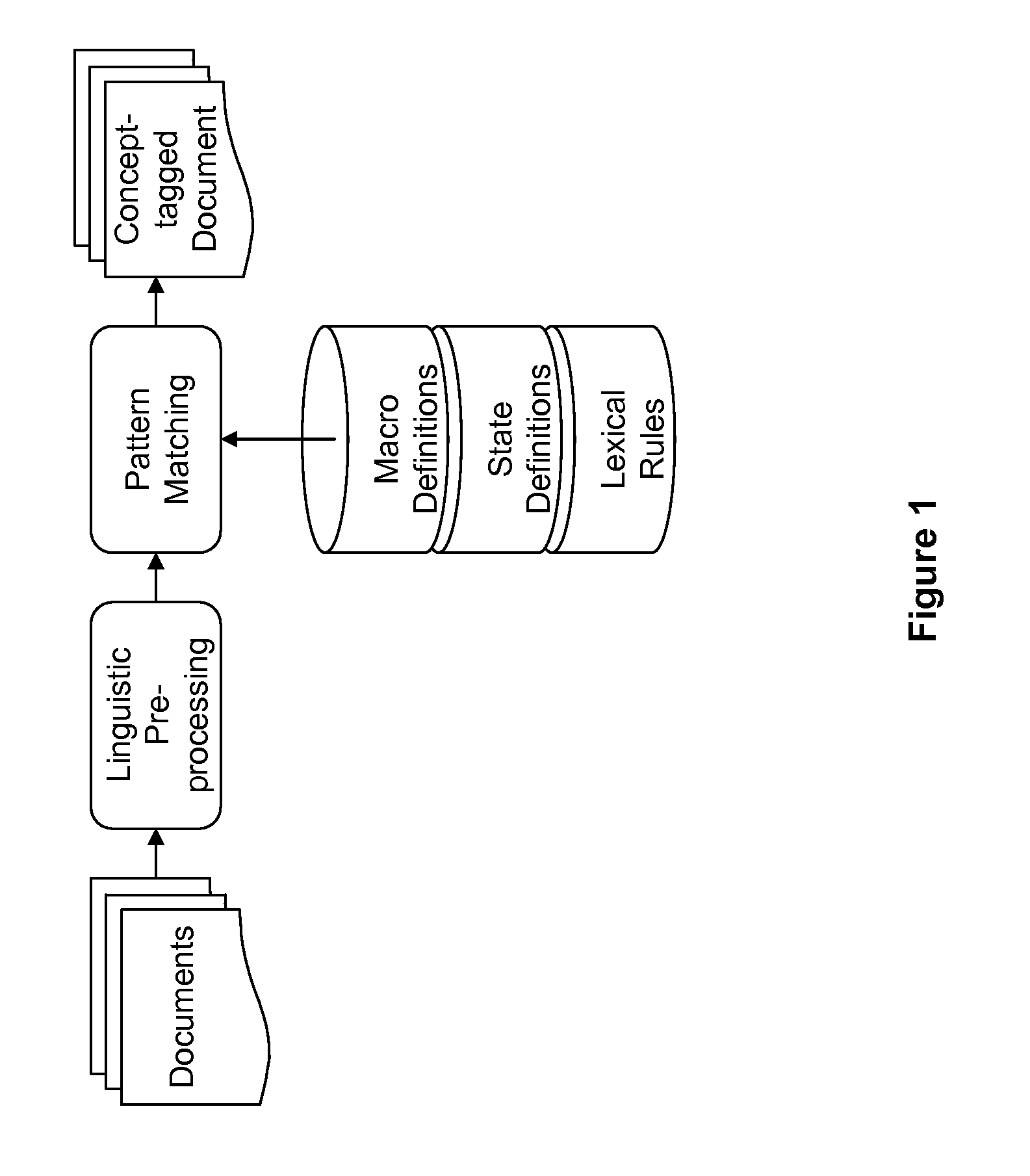

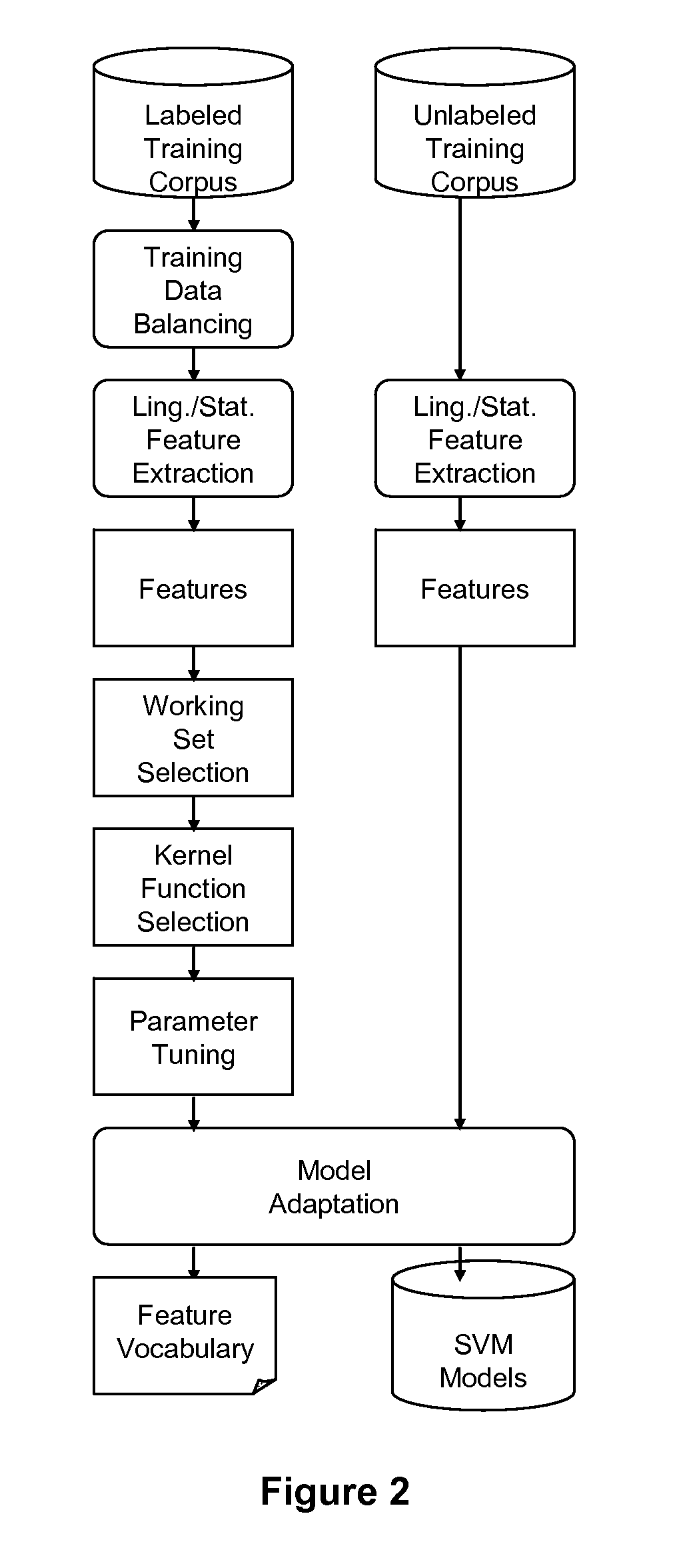

Robust information extraction from utterances

Active | US8583416B2 | Increase cross-entropy | High precision | Speech recognition | Special data processing applications | Feature extraction | Text categorization

The performance of traditional speech recognition systems (as applied to information extraction or translation) decreases significantly with larger domain size, scarce training data, and noisy environmental conditions. This invention mitigates these problems through the introduction of a novel predictive feature extraction method which combines linguistic and statistical information for representation of information embedded in a noisy source language. The predictive features are combined with text classifiers to map the noisy text to one of the semantically or functionally similar groups. The features used by the classifier can be syntactic, semantic, and statistical.

Owner:NANT HLDG IP LLC

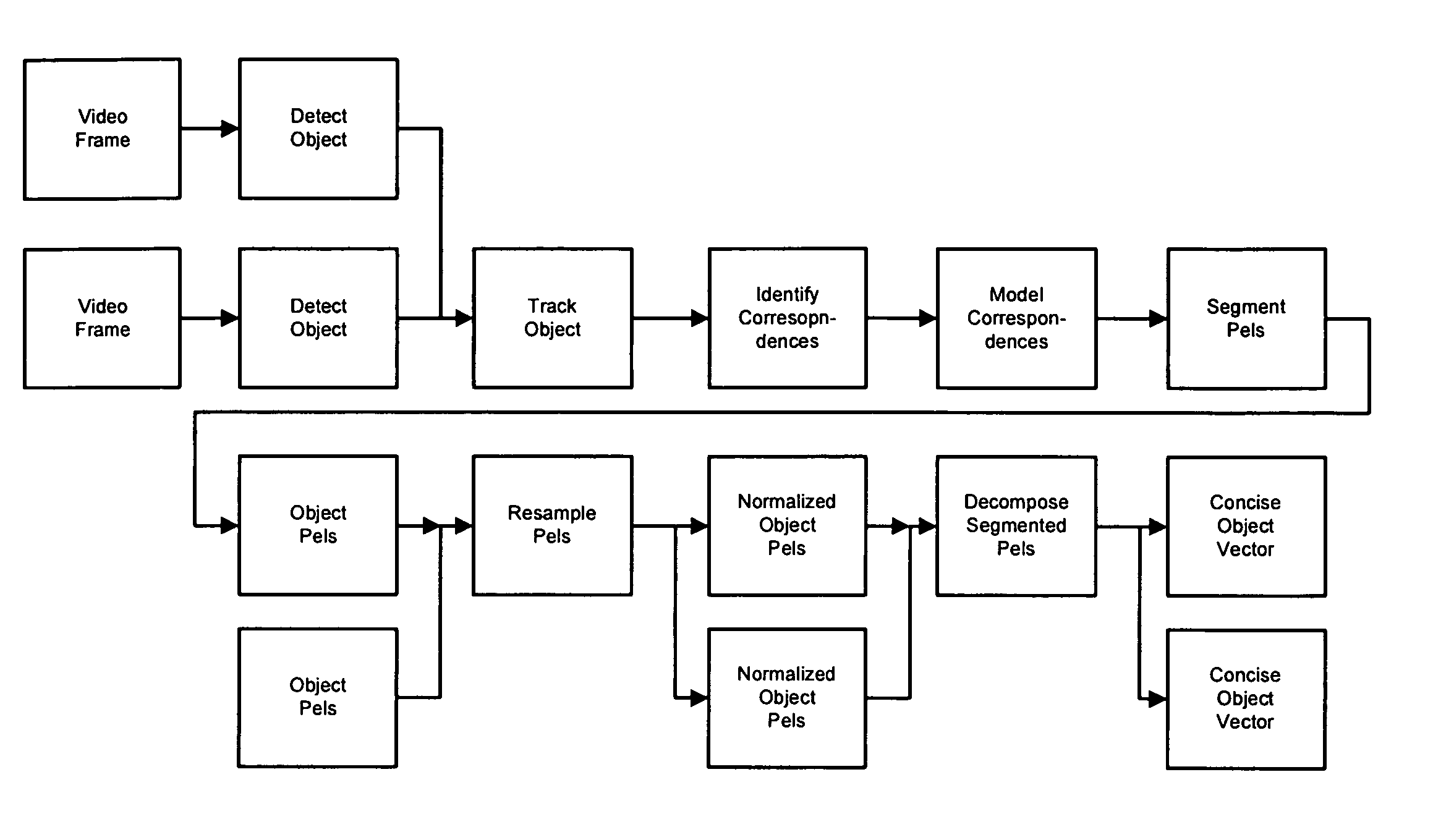

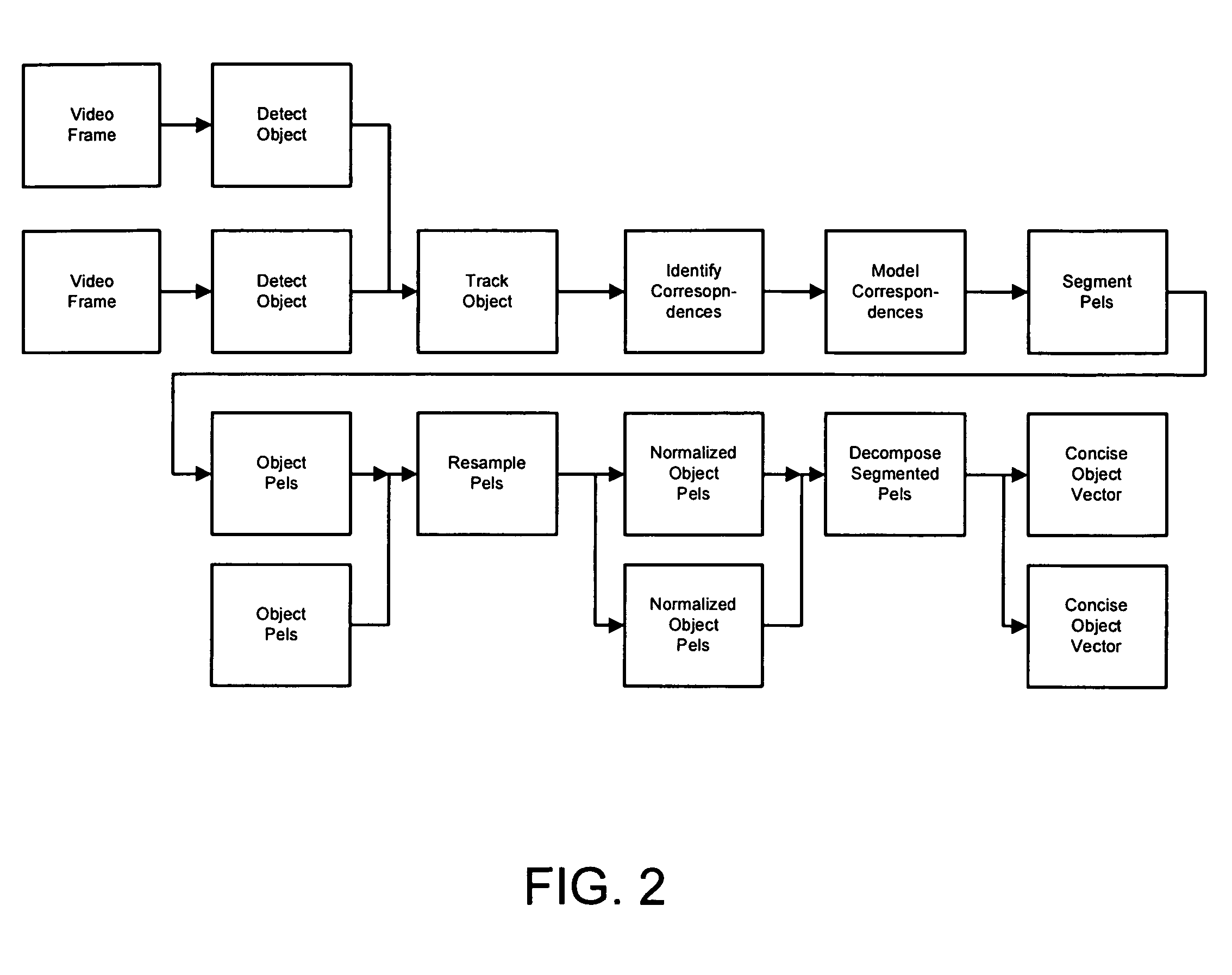

Apparatus and method for processing video data

Active | US7158680B2 | Increases robustness and applicability | Reduce non-linearity | Image enhancement | Image analysis | Feature extraction | Computer graphics (images)

An apparatus and methods for processing video data are described. The invention provides a representation of video data that can be used to assess agreement between the data and a fitting model for a particular parameterization of the data. This allows different parameterization techniques to be compared and the optimum one to be selected for continued video processing of the particular data. The representation can be utilized in intermediate form as part of a larger process or as a feedback mechanism for processing video data. When utilized in its intermediate form, the invention can be used in processes for storage, enhancement, refinement, feature extraction, compression, coding, and transmission of video data. The invention serves to extract salient information in a robust and efficient manner while addressing the problems typically associated with video data sources.

Owner:EUCLID DISCOVERIES LLC

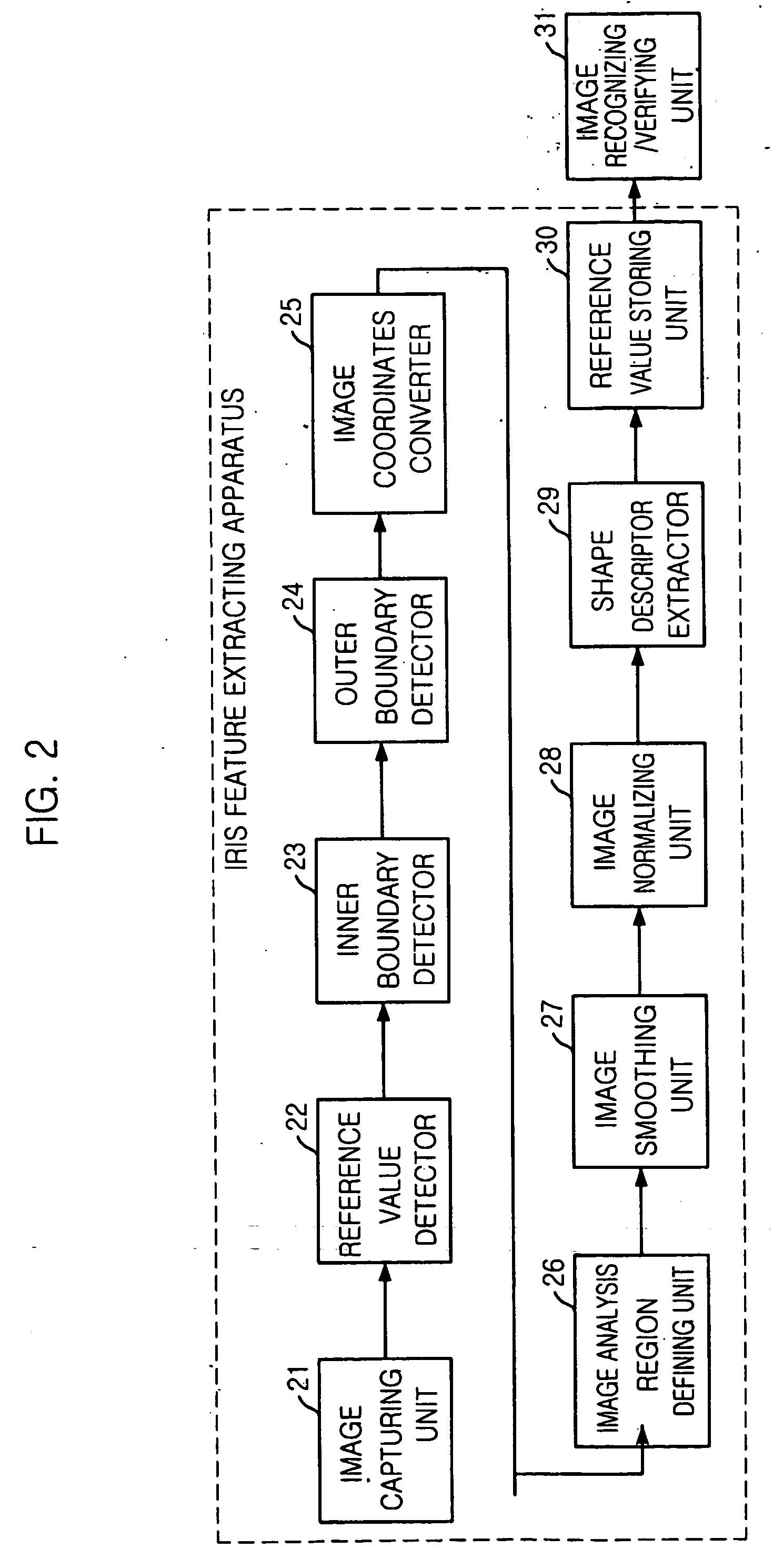

Computer-aided image analysis

Inactive | US6996549B2 | Improve abilities | Great dimensionality | Medical data mining | Image analysis | Learning machine | Computer-aided

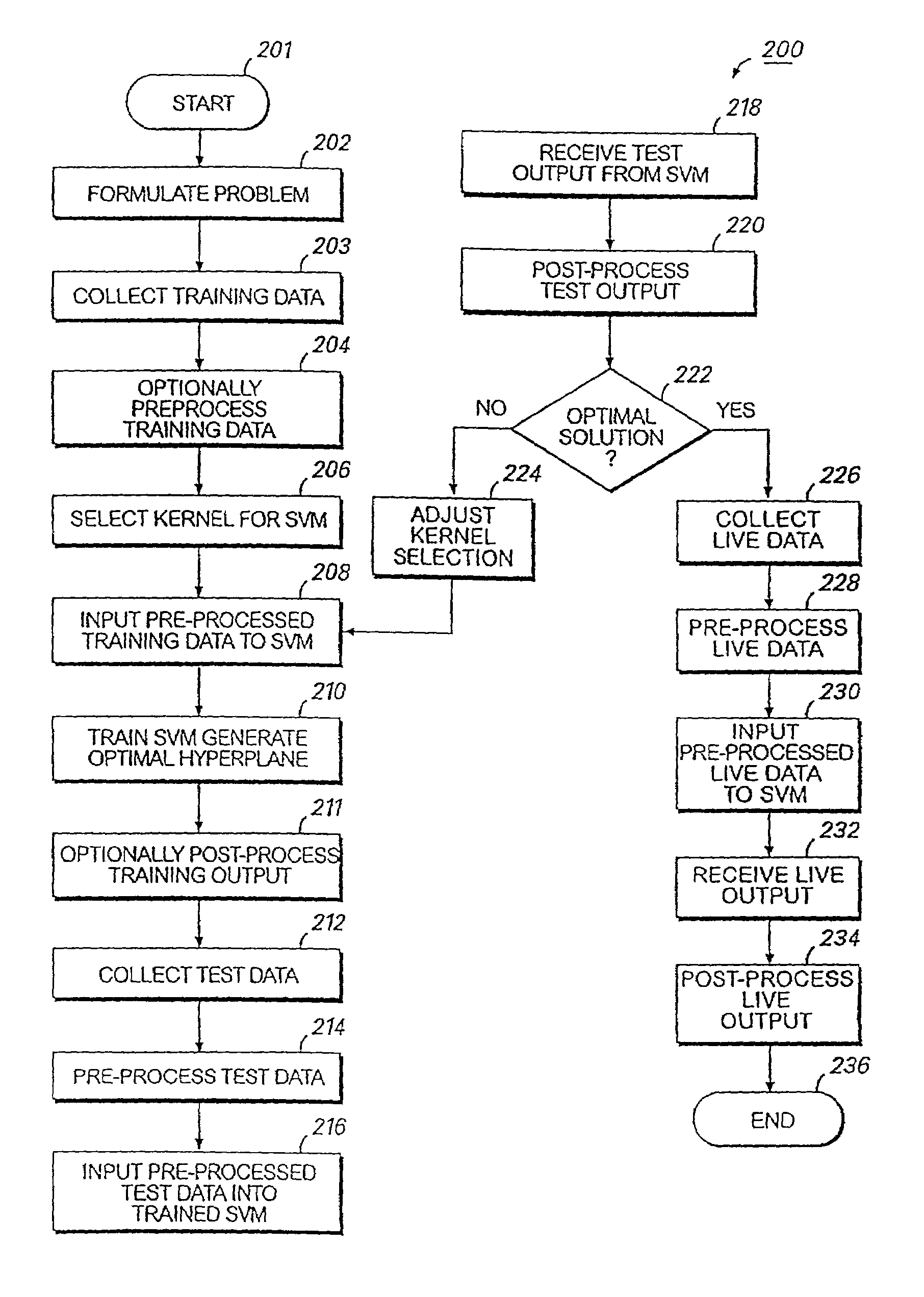

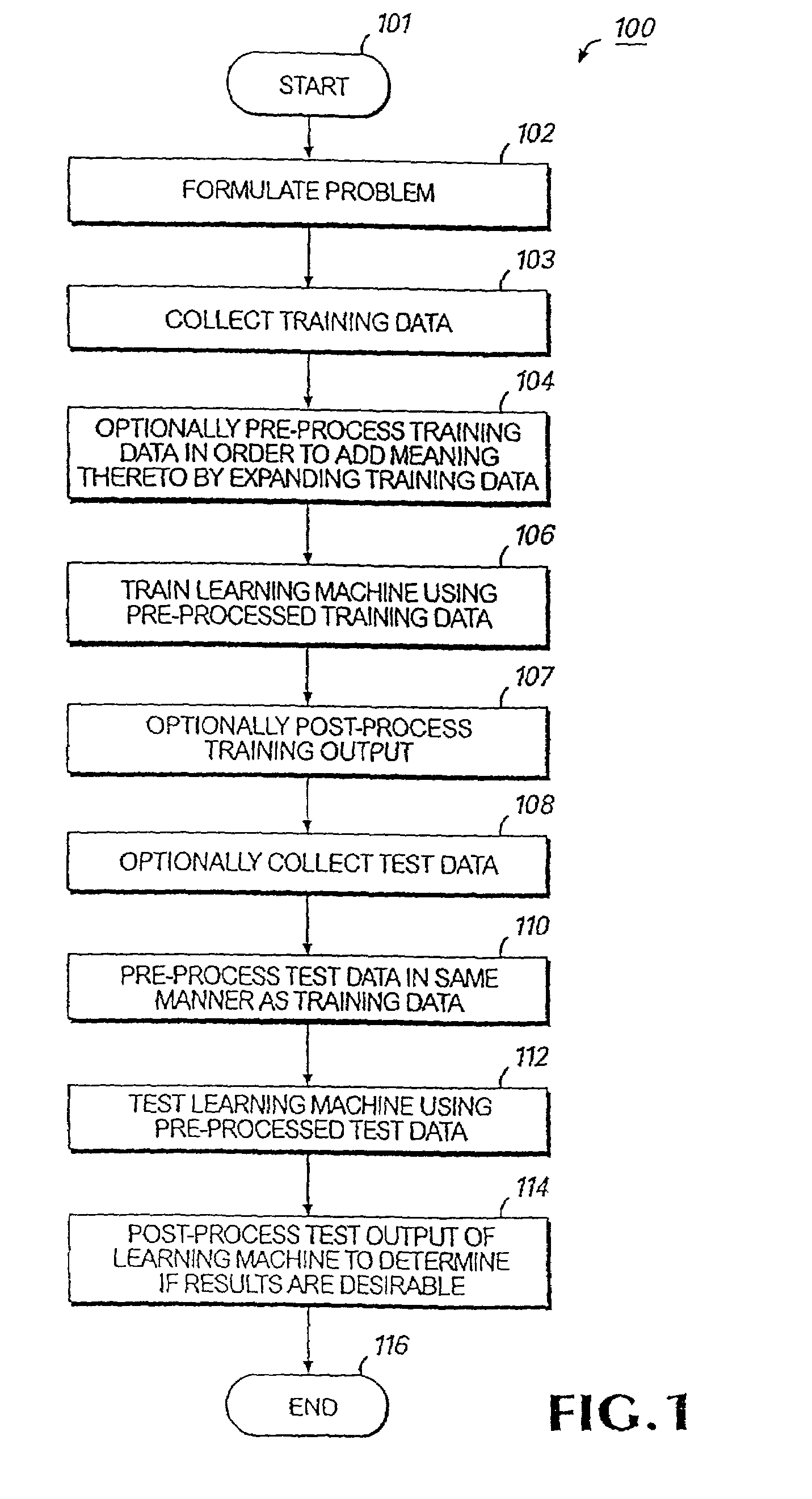

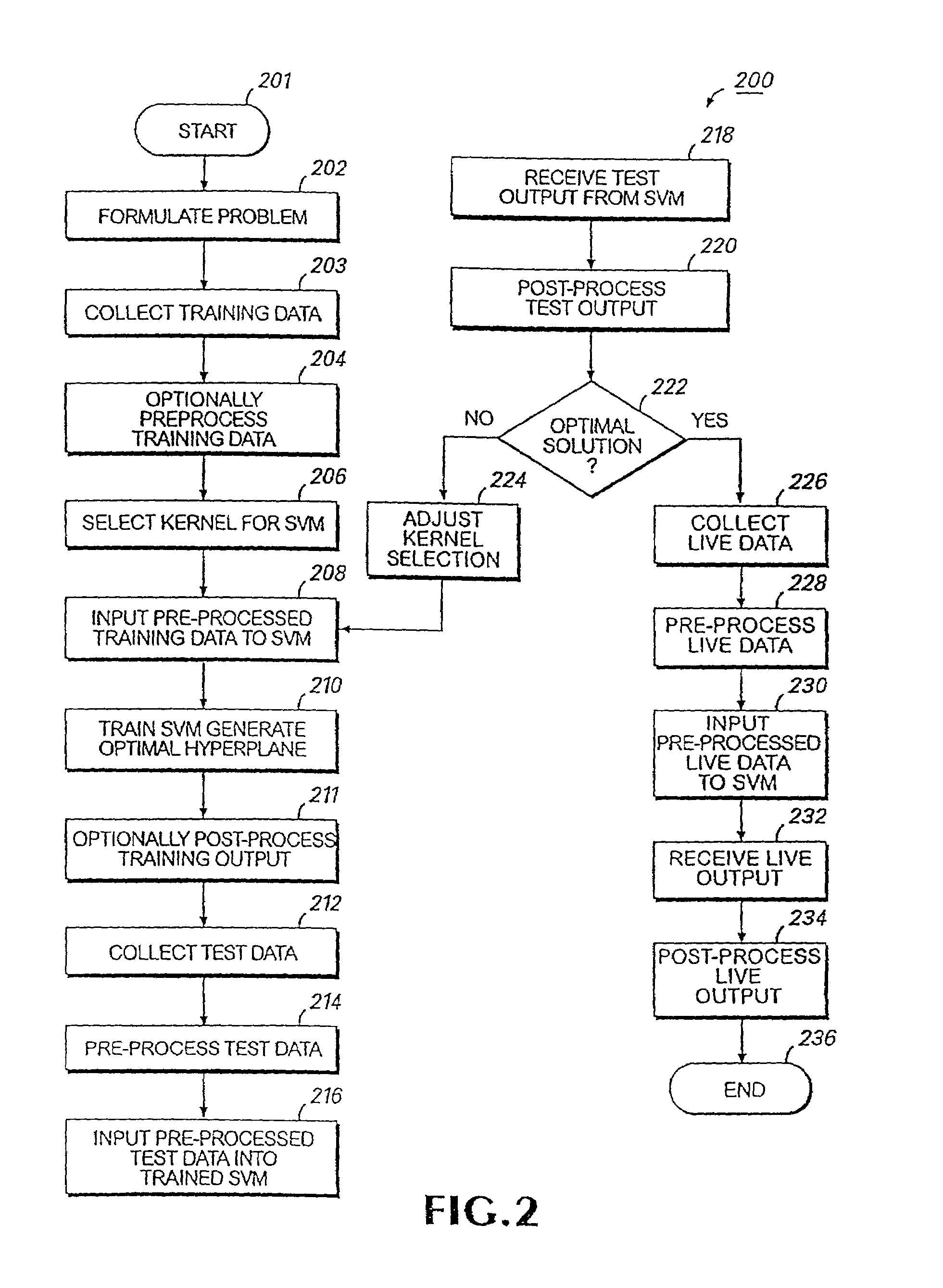

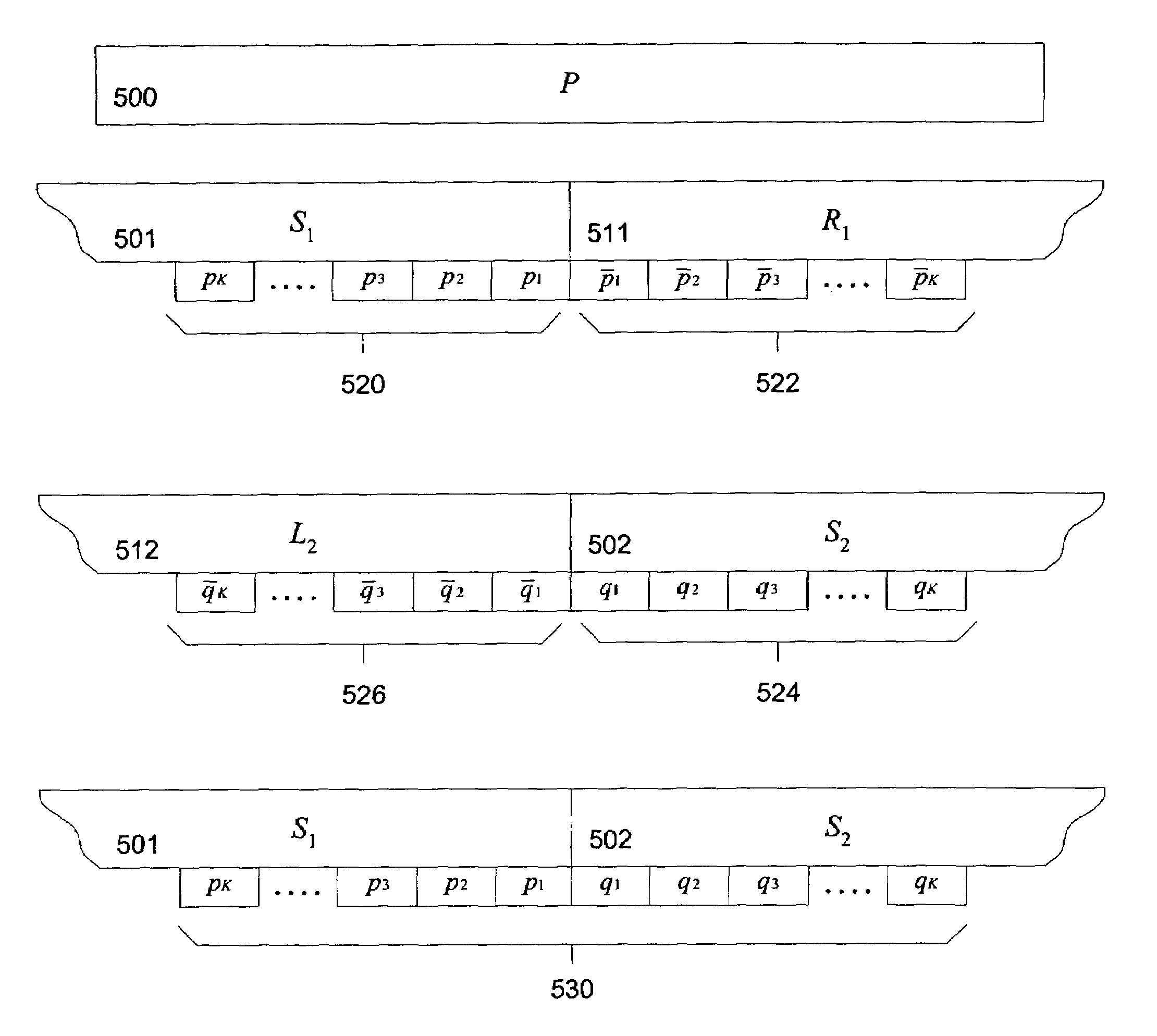

Digitized image data are input into a processor where a detection component identifies the areas (objects) of particular interest in the image and, by segmentation, separates those objects from the background. A feature extraction component formulates numerical values relevant to the classification task from the segmented objects. Results of the preceding analysis steps are input into a trained learning machine classifier which produces an output which may consist of an index discriminating between two possible diagnoses, or some other output in the desired output format. In one embodiment, digitized image data are input into a plurality of subsystems, each subsystem having one or more support vector machines. Pre-processing may include the use of known transformations which facilitate extraction of the useful data. Each subsystem analyzes the data relevant to a different feature or characteristic found within the image. Once each subsystem completes its analysis and classification, the output for all subsystems is input into an overall support vector machine analyzer which combines the data to make a diagnosis, decision or other action which utilizes the knowledge obtained from the image.

Owner:HEALTH DISCOVERY CORP +1

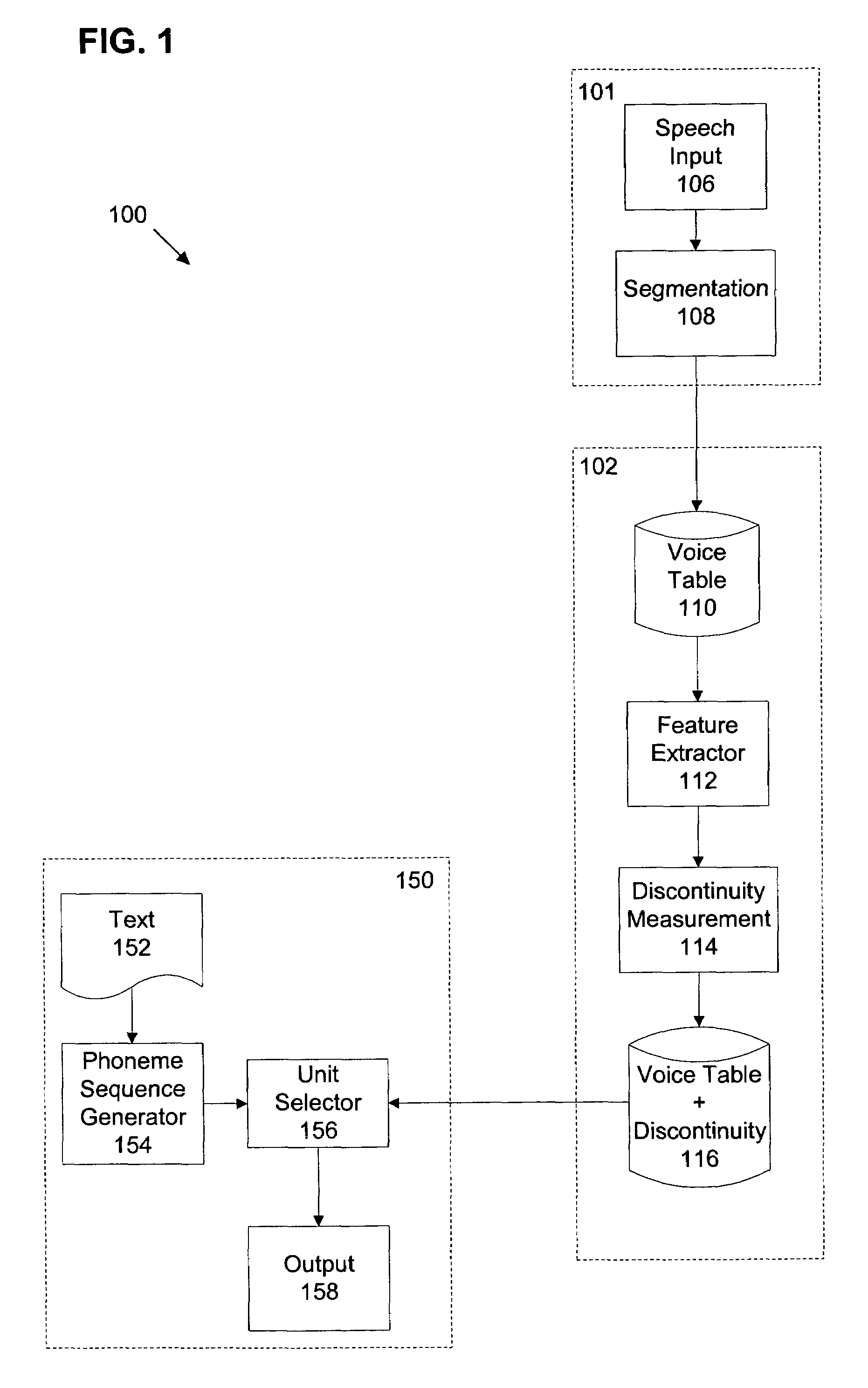

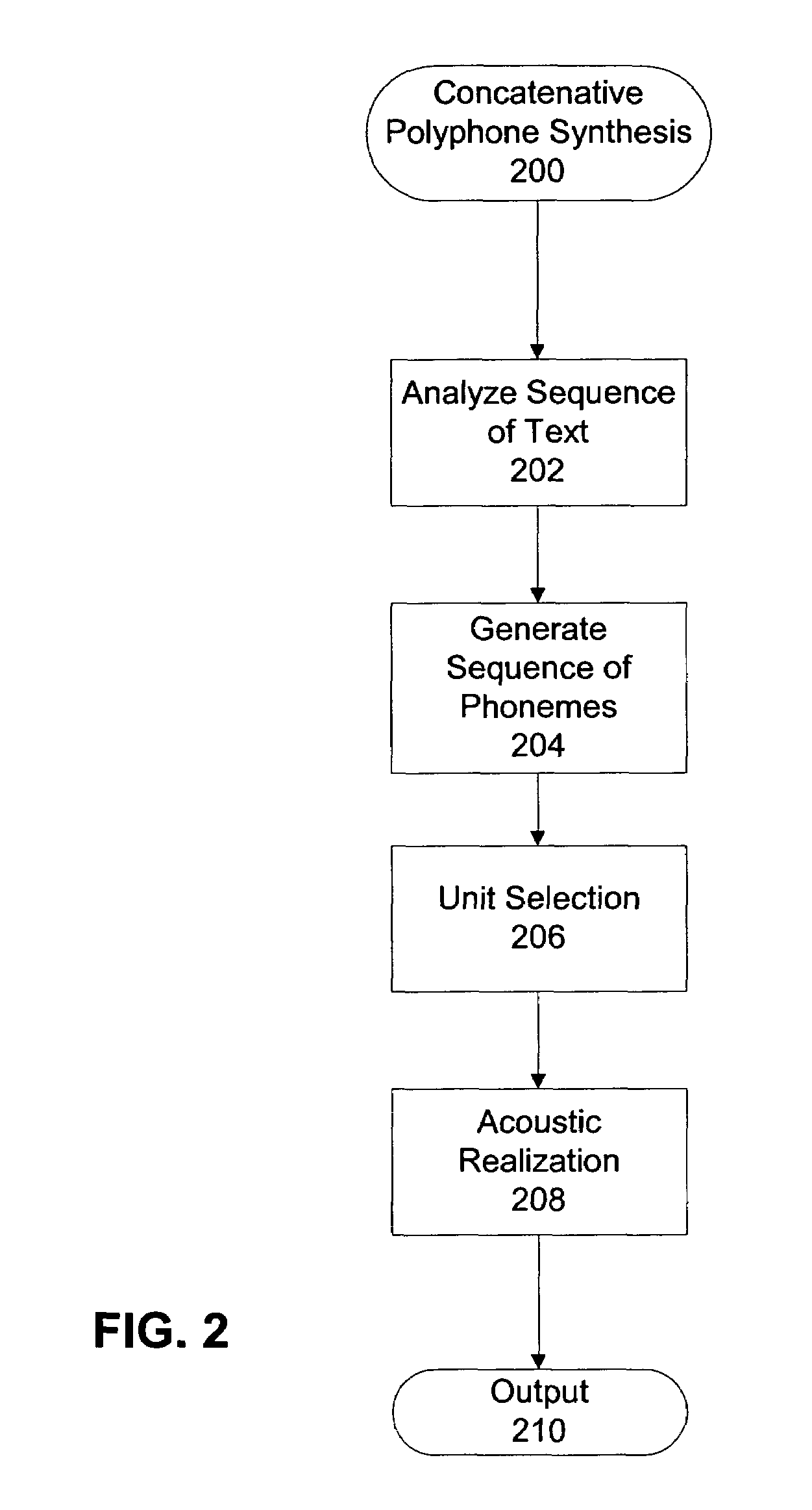

Global boundary-centric feature extraction and associated discontinuity metrics

Portions from time-domain speech segments are extracted. Feature vectors that represent the portions in a vector space are created. The feature vectors incorporate phase information of the portions. A distance between the feature vectors in the vector space is determined. In one aspect, the feature vectors are created by constructing a matrix W from the portions and decomposing the matrix W. In one aspect, decomposing the matrix W comprises extracting global boundary-centric features from the portions. In one aspect, the portions include at least one pitch period. In another aspect, the portions include centered pitch periods.

Owner:APPLE INC

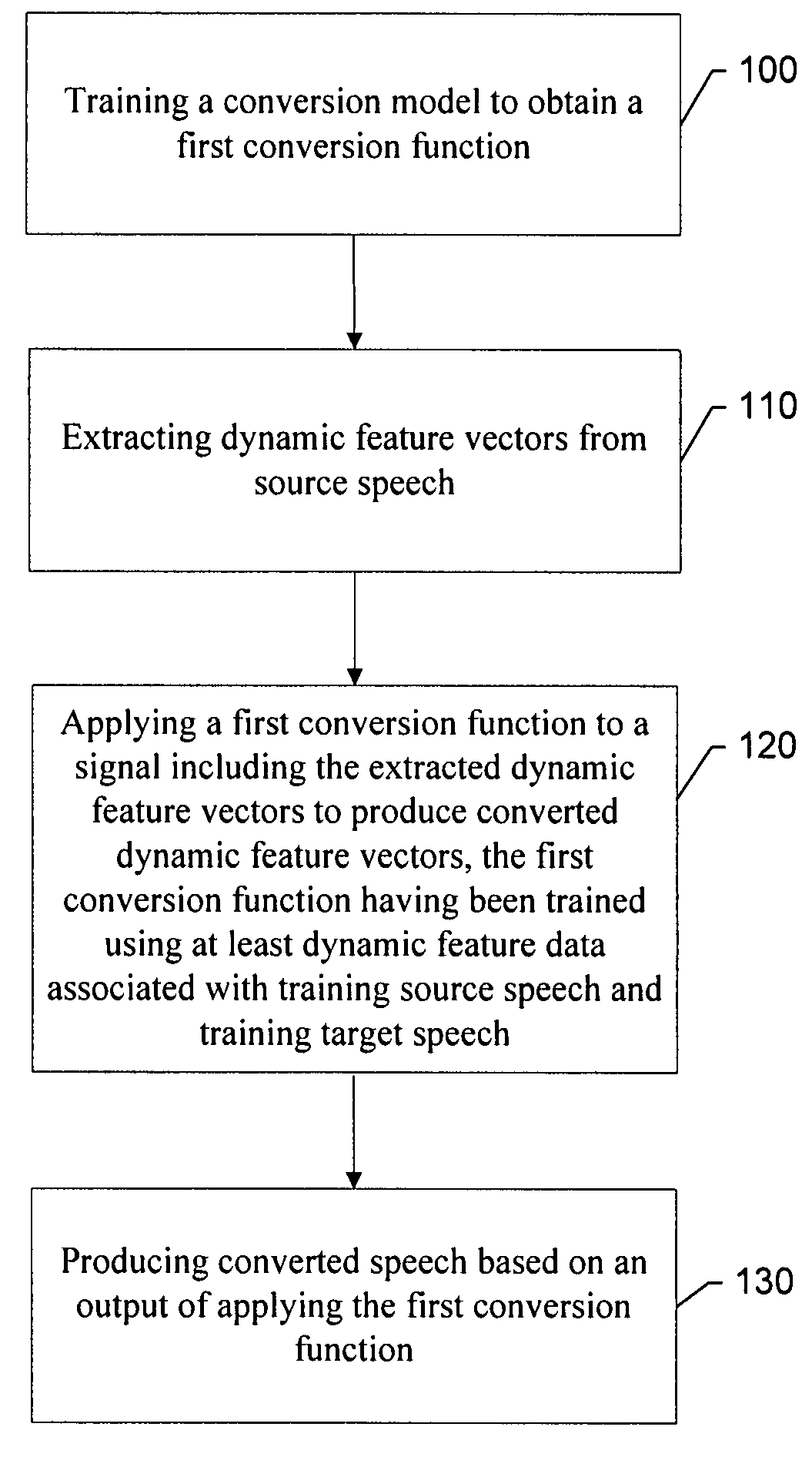

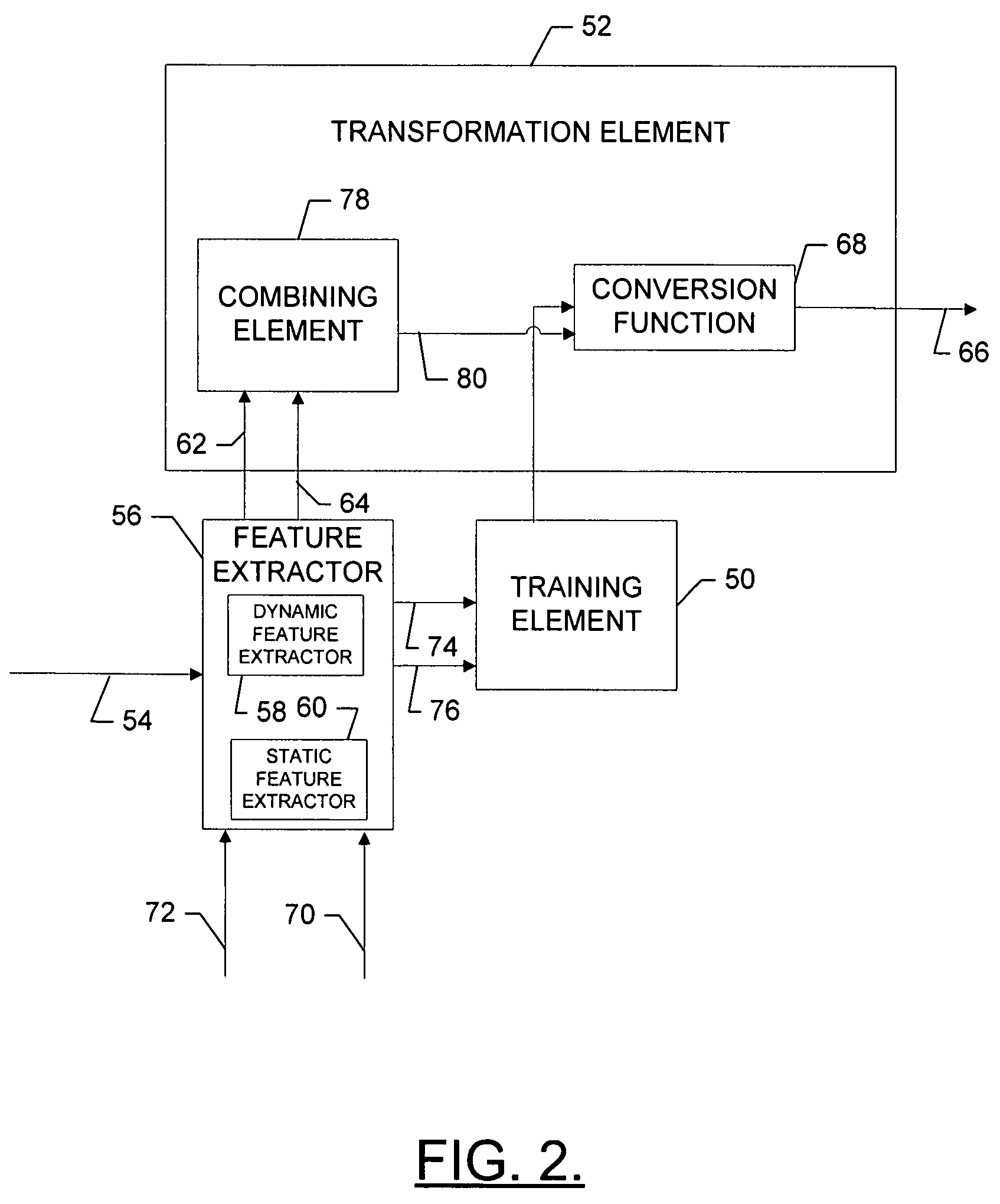

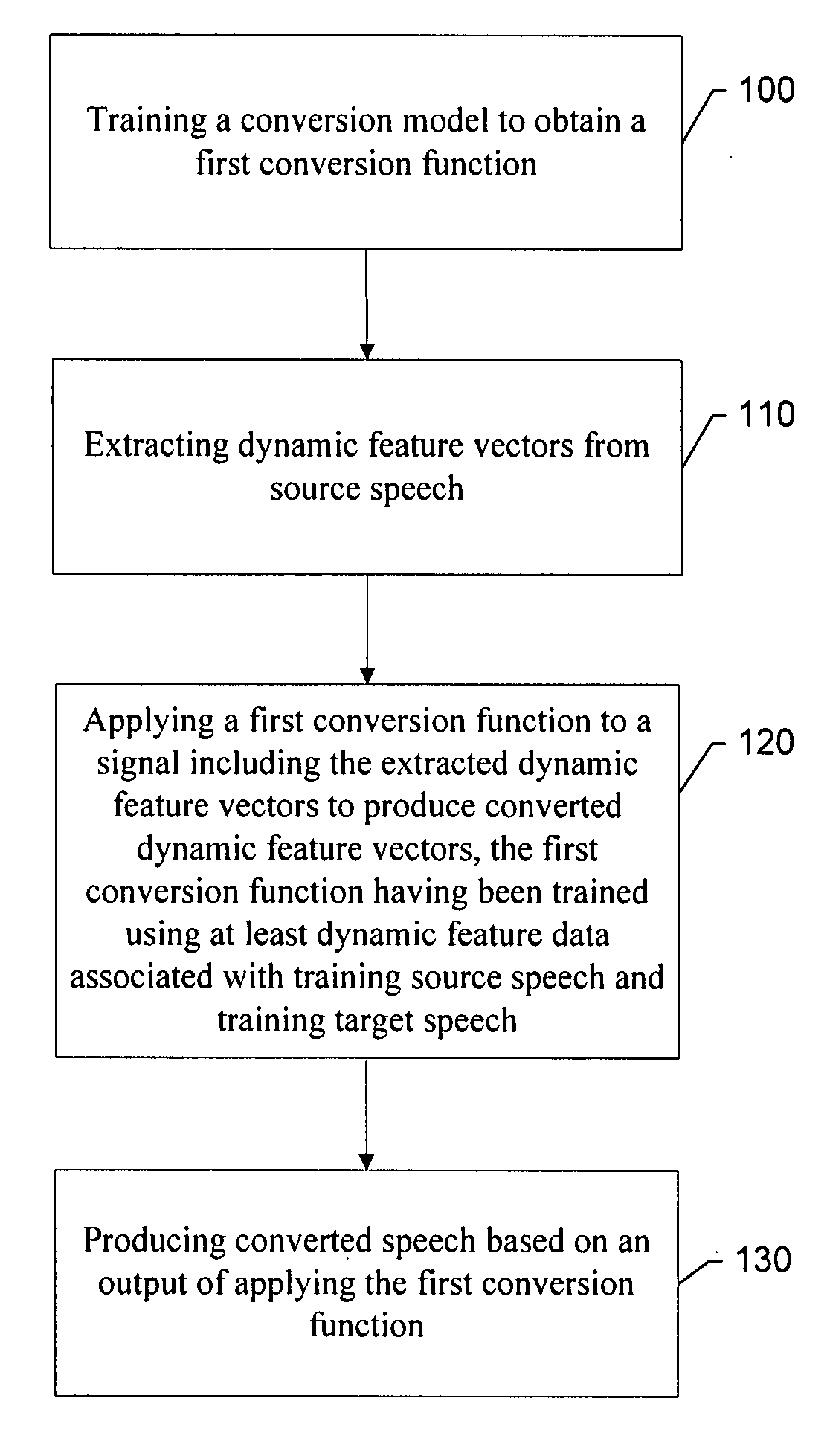

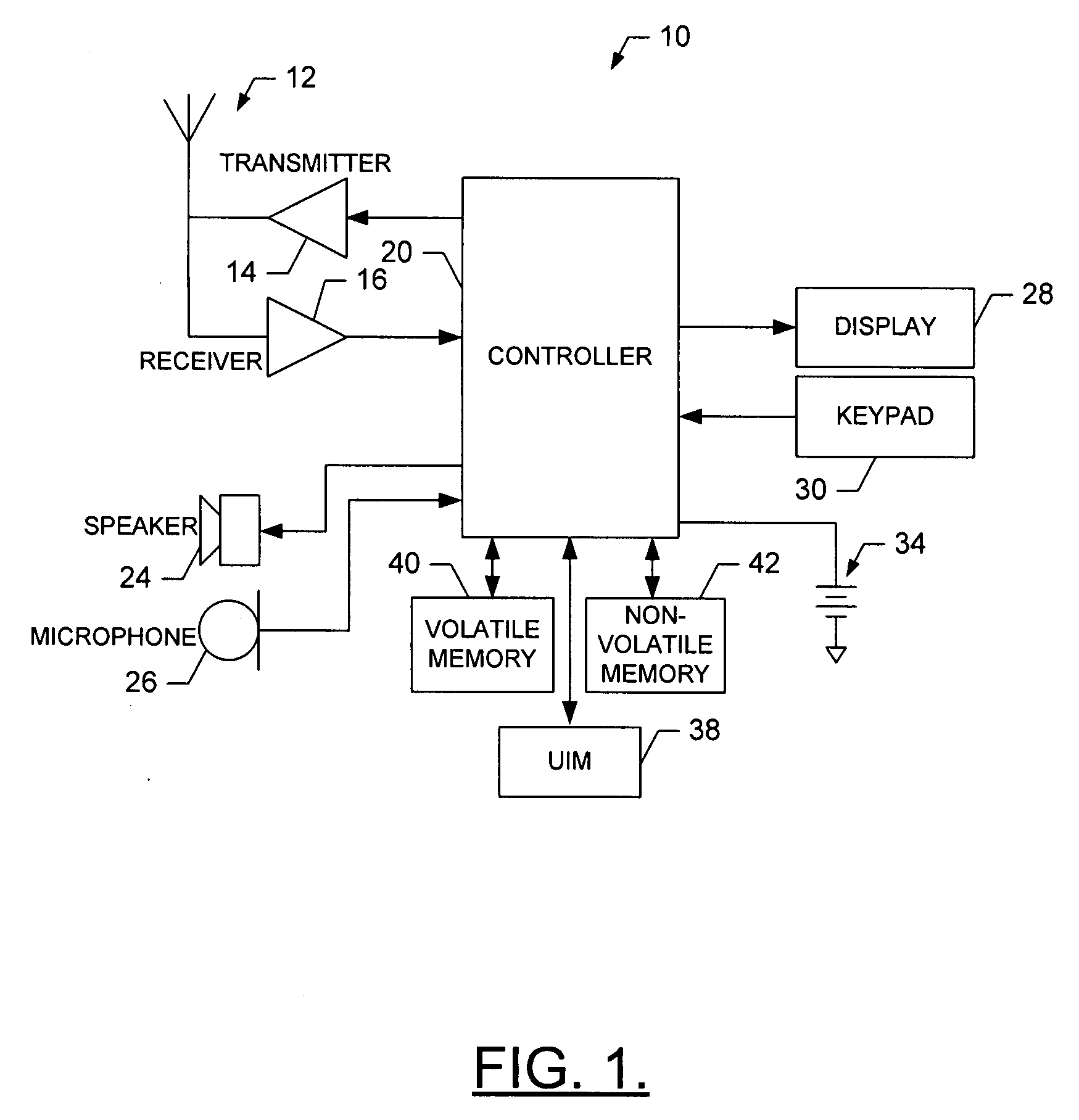

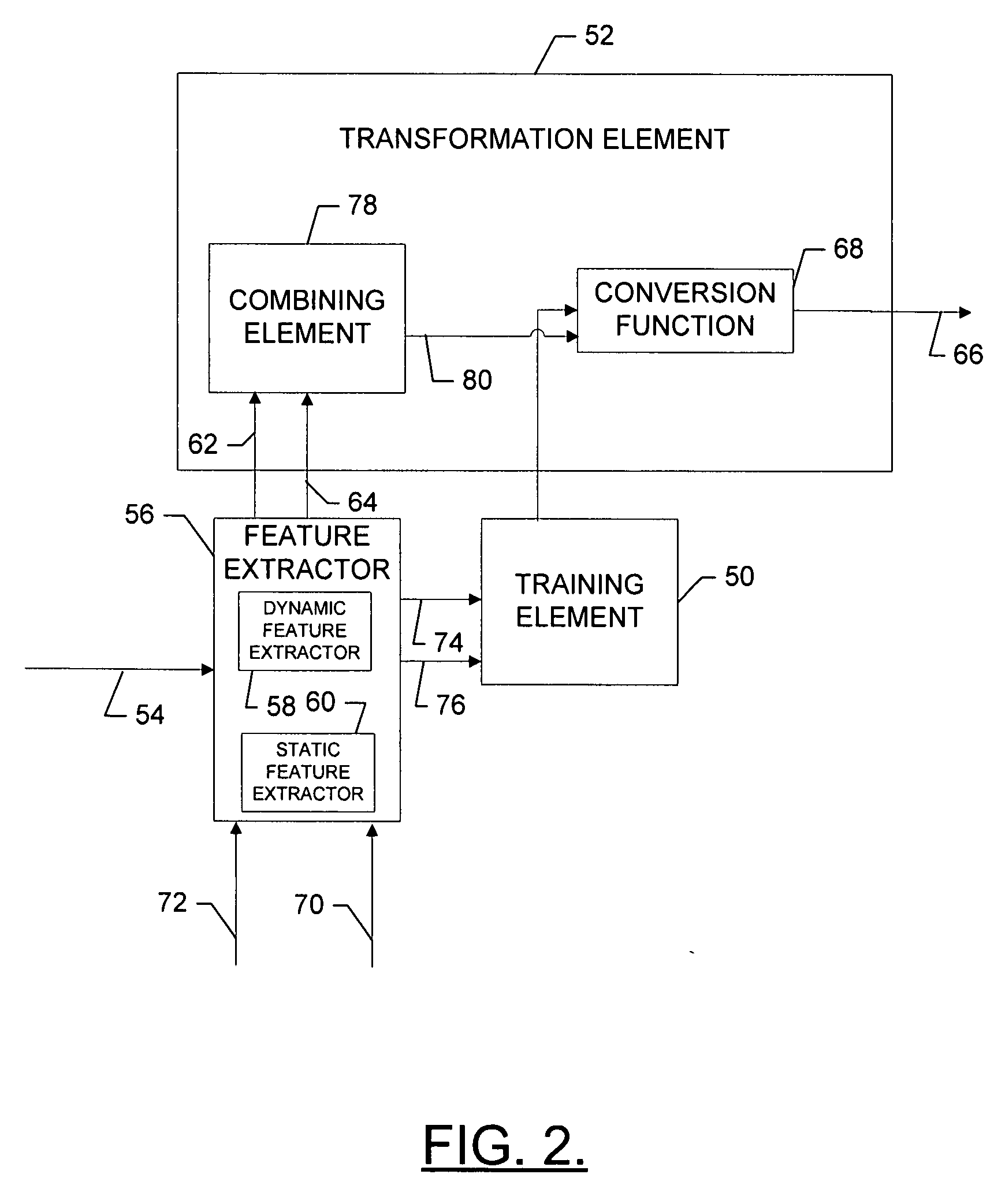

Method, apparatus and computer program product for providing voice conversion using temporal dynamic features

Active | US7848924B2 | Improved quality and naturalness | Speed up the conversion process | Speech synthesis | Feature extraction | Voice transformation

An apparatus for providing voice conversion using temporal dynamic features includes a feature extractor and a transformation element. The feature extractor may be configured to extract dynamic feature vectors from source speech. The transformation element may be in communication with the feature extractor and configured to apply a first conversion function to a signal including the extracted dynamic feature vectors to produce converted dynamic feature vectors. The first conversion function may have been trained using at least dynamic feature data associated with training source speech and training target speech. The transformation element may be further configured to produce converted speech based on an output of applying the first conversion function.

Owner:WSOU INVESTMENTS LLC

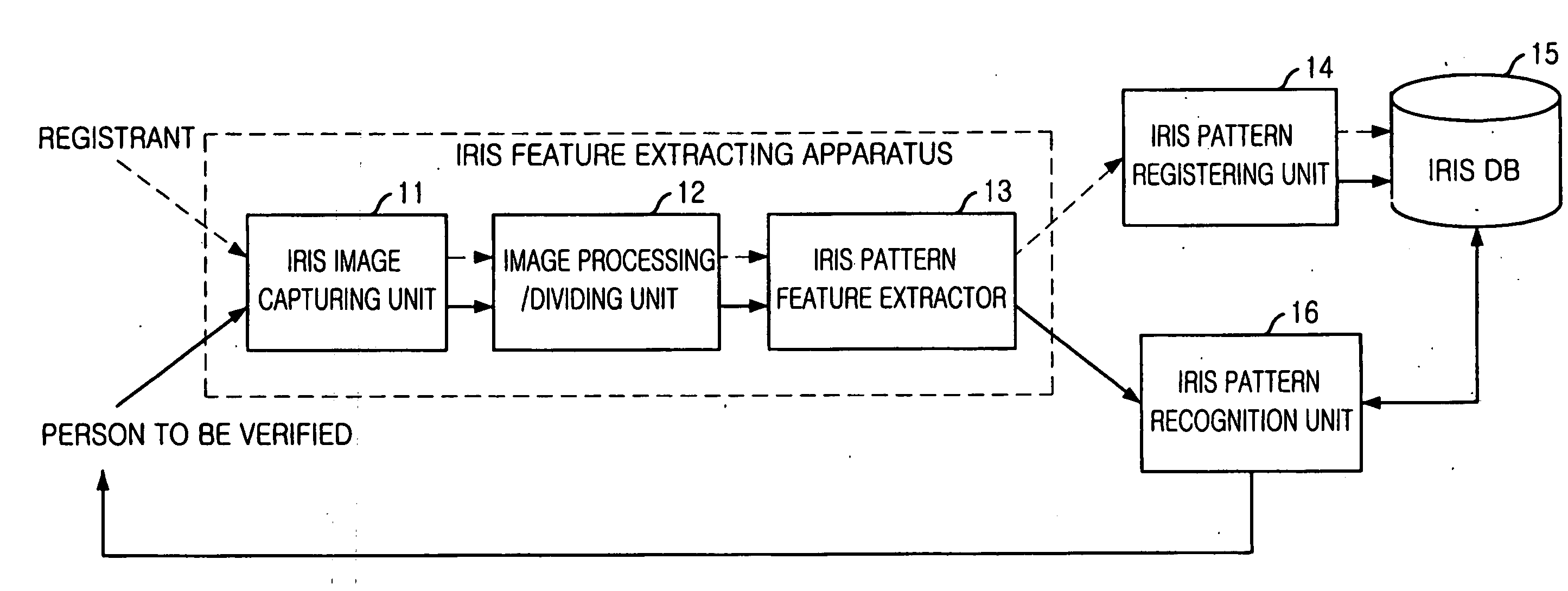

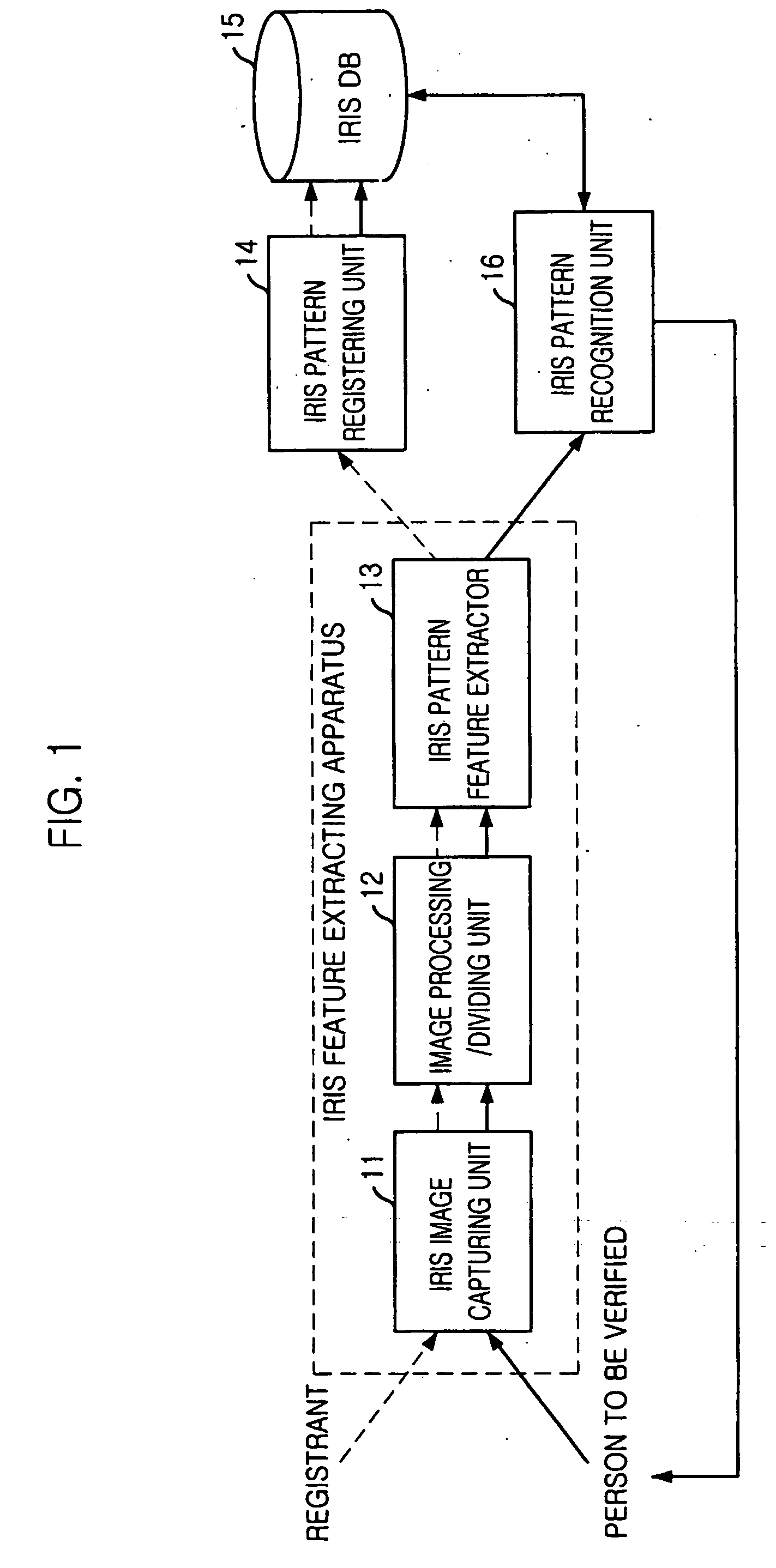

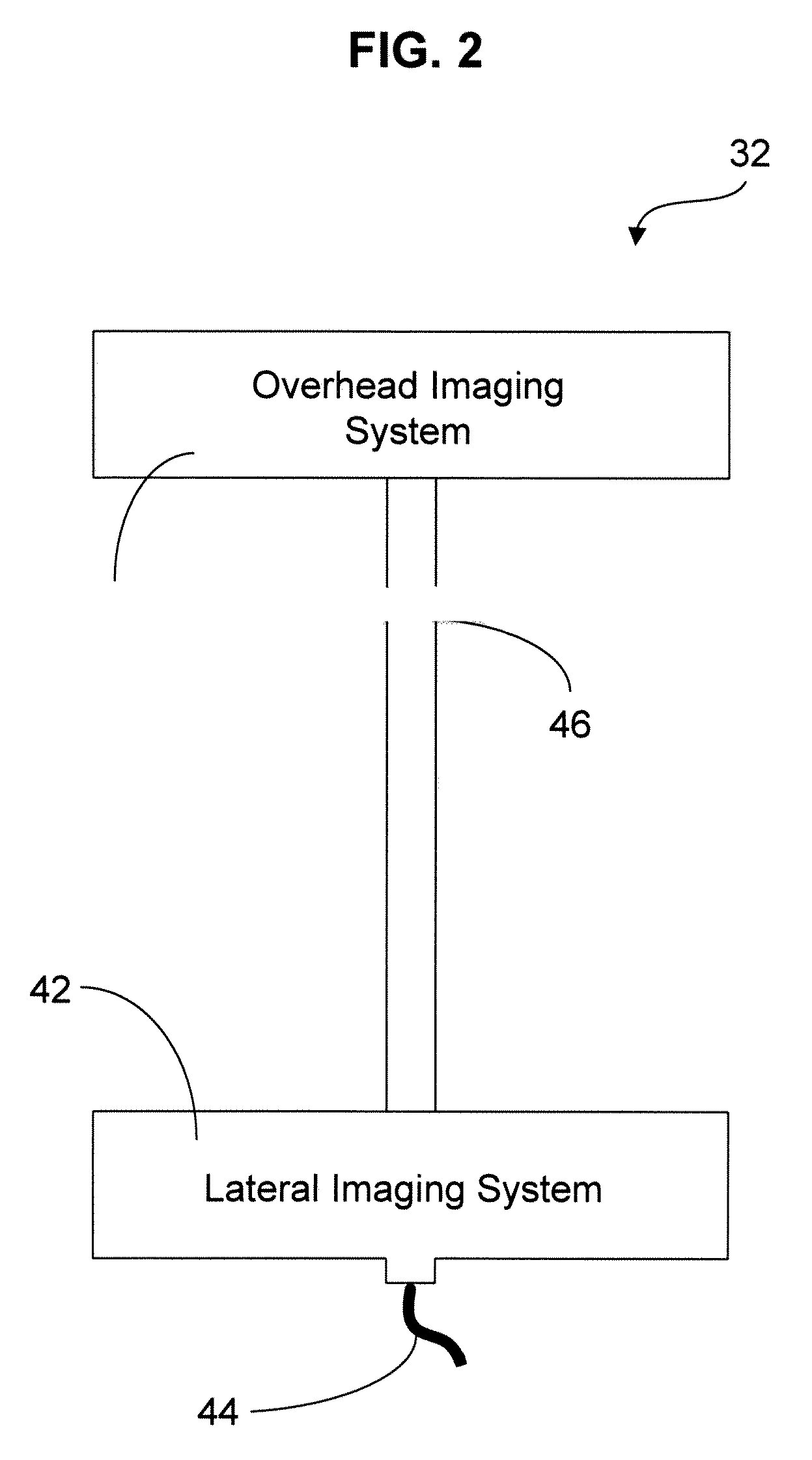

Pupil detection method and shape descriptor extraction method for iris recognition, iris feature extraction apparatus and method, and iris recognition system and method using the same

Provided are a pupil detection method and a shape descriptor extraction method for iris recognition, an iris feature extraction apparatus and method, and an iris recognition system and method using the same. The method for detecting a pupil for iris recognition includes the steps of: a) detecting light sources in the pupil from an eye image as two reference points; b) determining first boundary candidate points located between the iris and the pupil of the eye image, which cross over a straight line between the two reference points; c) determining second boundary candidate points located between the iris and the pupil of the eye image, which cross over a perpendicular bisector of a straight line between the first boundary candidate points; and d) determining a location and a size of the pupil by obtaining a radius of a circle and coordinates of a center of the circle based on a center candidate point, wherein the center candidate point is a center point of the perpendicular bisectors of the straight lines between neighboring boundary candidate points, thereby detecting the pupil.

Owner:JIRIS
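
One way to read step d) of the pupil detection method above is as a circumcircle fit: the perpendicular bisectors of the chords between boundary points meet at the circle's center. The sketch below shows only that geometric step, assuming three boundary candidate points are already known. The function name `circle_from_points` and the three-point simplification are assumptions, not the patented procedure.

```python
import math

def circle_from_points(p1, p2, p3):
    """Return ((cx, cy), radius) of the circle through three boundary points.

    The center is where the perpendicular bisectors of the chords
    p1-p2 and p2-p3 intersect (the circumcenter).
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    d = 2.0 * (x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2))
    if abs(d) < 1e-12:
        raise ValueError("points are collinear; no unique circle")
    s1 = x1 * x1 + y1 * y1
    s2 = x2 * x2 + y2 * y2
    s3 = x3 * x3 + y3 * y3
    cx = (s1 * (y2 - y3) + s2 * (y3 - y1) + s3 * (y1 - y2)) / d
    cy = (s1 * (x3 - x2) + s2 * (x1 - x3) + s3 * (x2 - x1)) / d
    radius = math.hypot(x1 - cx, y1 - cy)
    return (cx, cy), radius
```

With more than three candidate points, a practical system would likely combine several such center estimates; how the patent does so is not stated in the abstract.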

Robust Information Extraction from Utterances

Active | US20090171662A1 | Increase cross-entropy | Improve precision | Speech recognition | Special data processing applications | Feature extraction | Text categorization

The performance of traditional speech recognition systems (as applied to information extraction or translation) decreases significantly with larger domain size, scarce training data, and noisy environmental conditions. This invention mitigates these problems through the introduction of a novel predictive feature extraction method which combines linguistic and statistical information for representation of information embedded in a noisy source language. The predictive features are combined with text classifiers to map the noisy text to one of the semantically or functionally similar groups. The features used by the classifier can be syntactic, semantic, and statistical.

Owner:NANT HLDG IP LLC

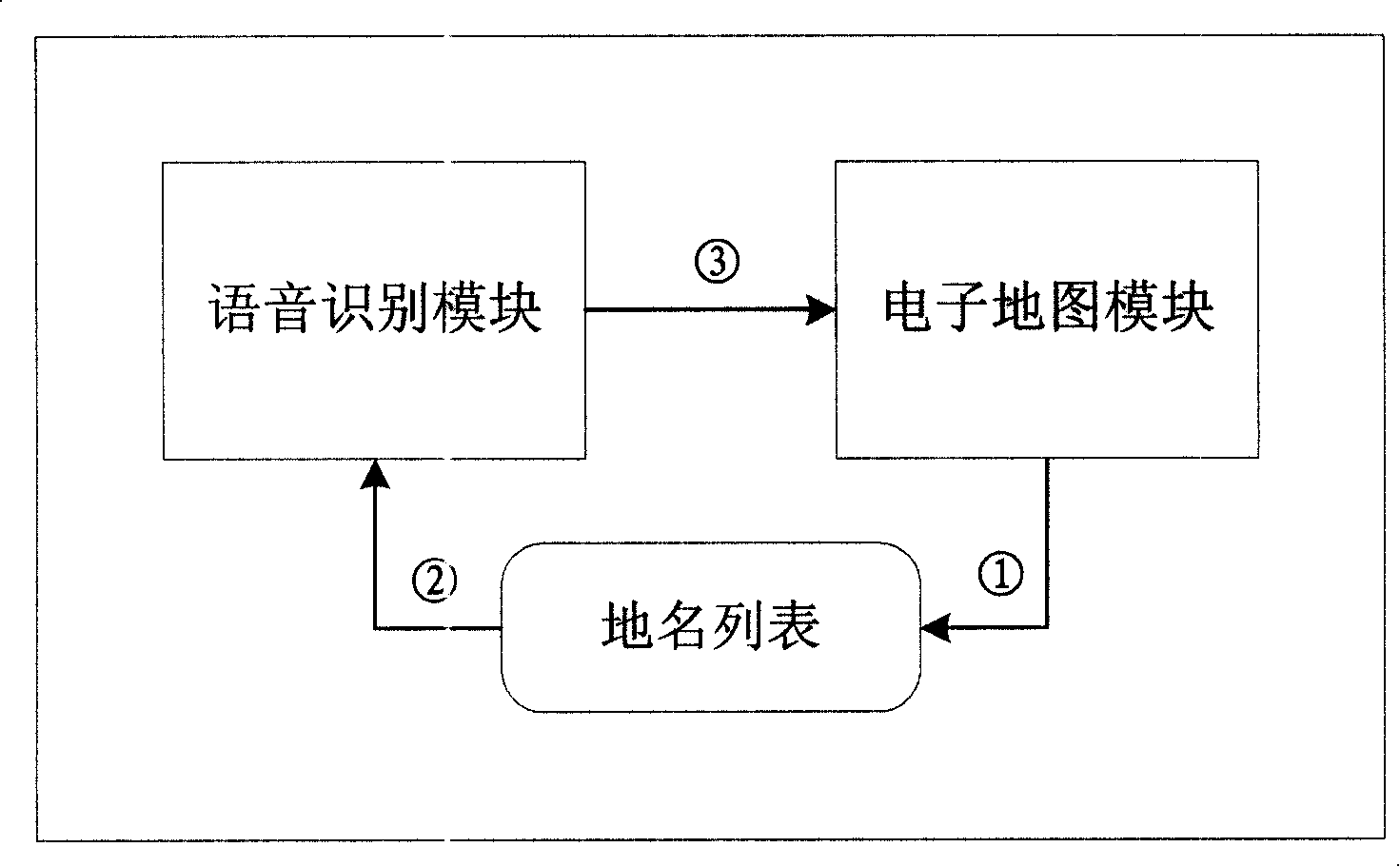

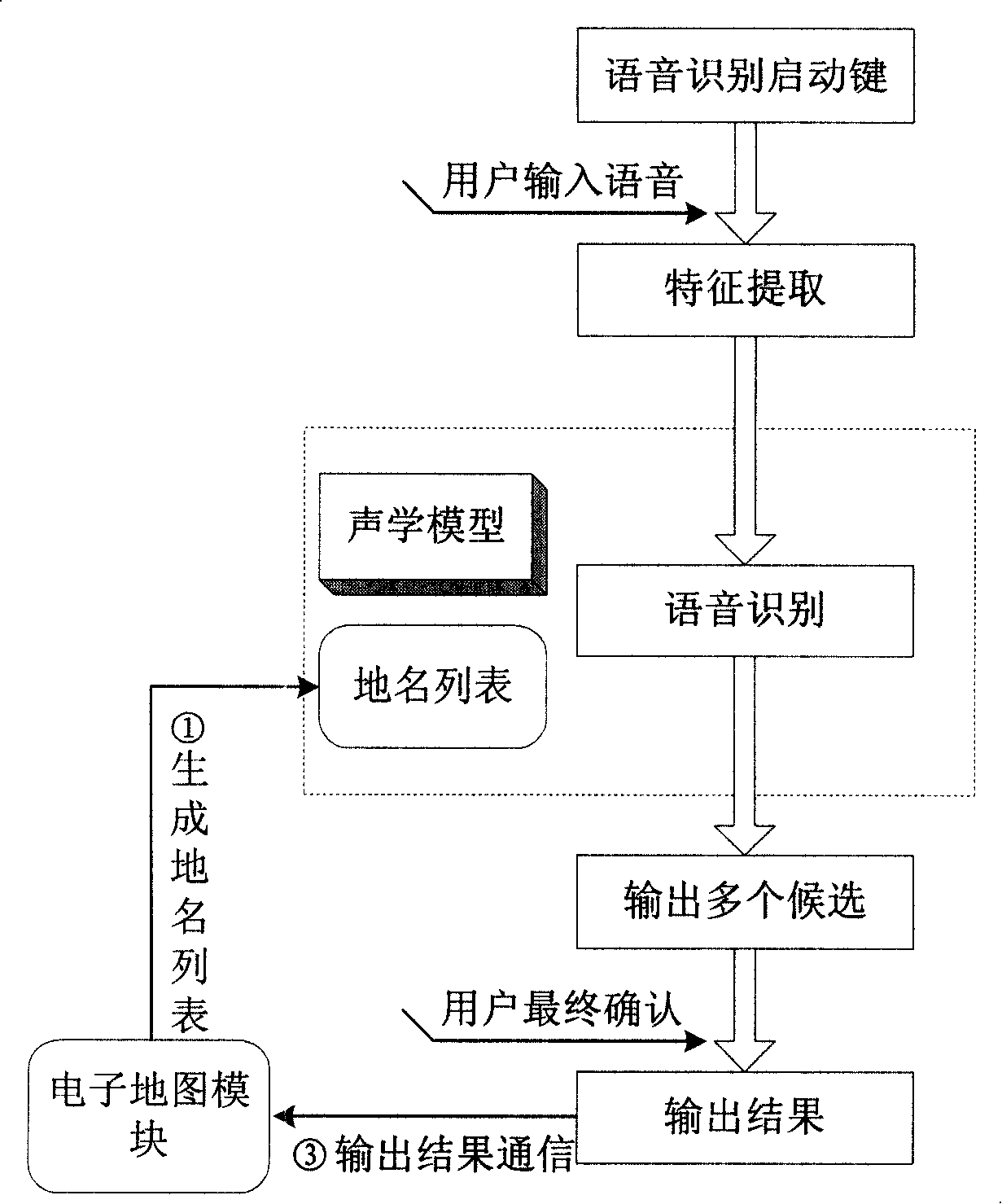

Voice controlled vehicle mounted GPS guidance system and method for realizing same

Inactive | CN101162153A | Easy to operate | Ensure safe driving | Instruments for road network navigation | Navigational calculation instruments | Guidance system | Navigation system

The invention relates to a voice-controlled vehicle GPS navigation system and a method for realizing the same, comprising the following steps: 1, an electronic map module compiles the place names in a map into a place name list, which is used as the recognition set for a voice recognition module and is sent to that module; 2, the start-up key of the voice recognition module is turned on; 3, the voice recognition module receives input voice and extracts features of the input voice; 4, voice recognition is performed after the features are extracted; 5, after the voice recognition is finished, a plurality of candidates are output for final confirmation by the user; 6, the result confirmed by the user is transmitted to the electronic map module and mapped to the corresponding map coordinates for display on a screen. The invention not only simplifies the operation of the GPS device but also helps keep drivers safe, and at the same time facilitates the popularization of GPS.

Owner:丁玉国

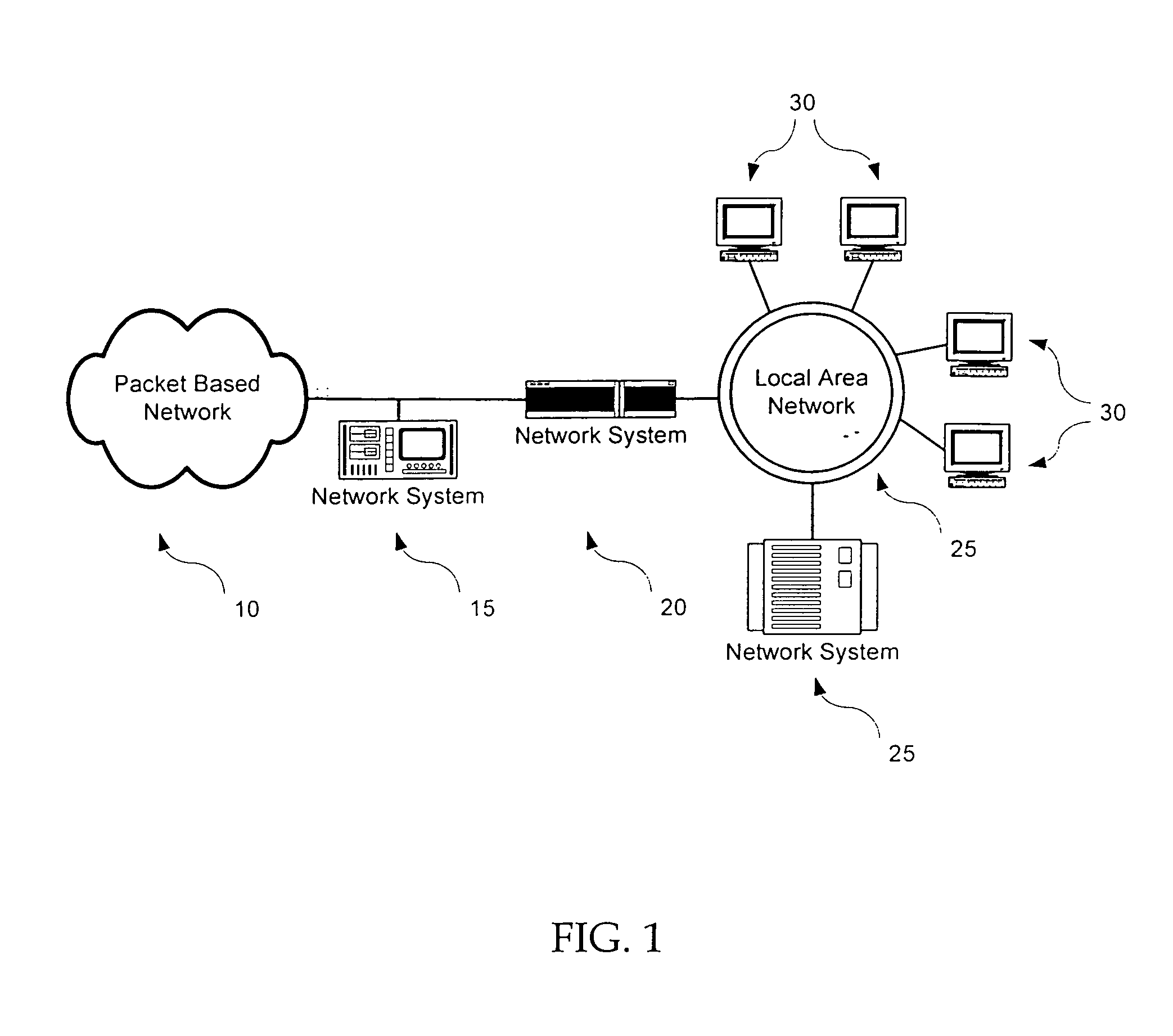

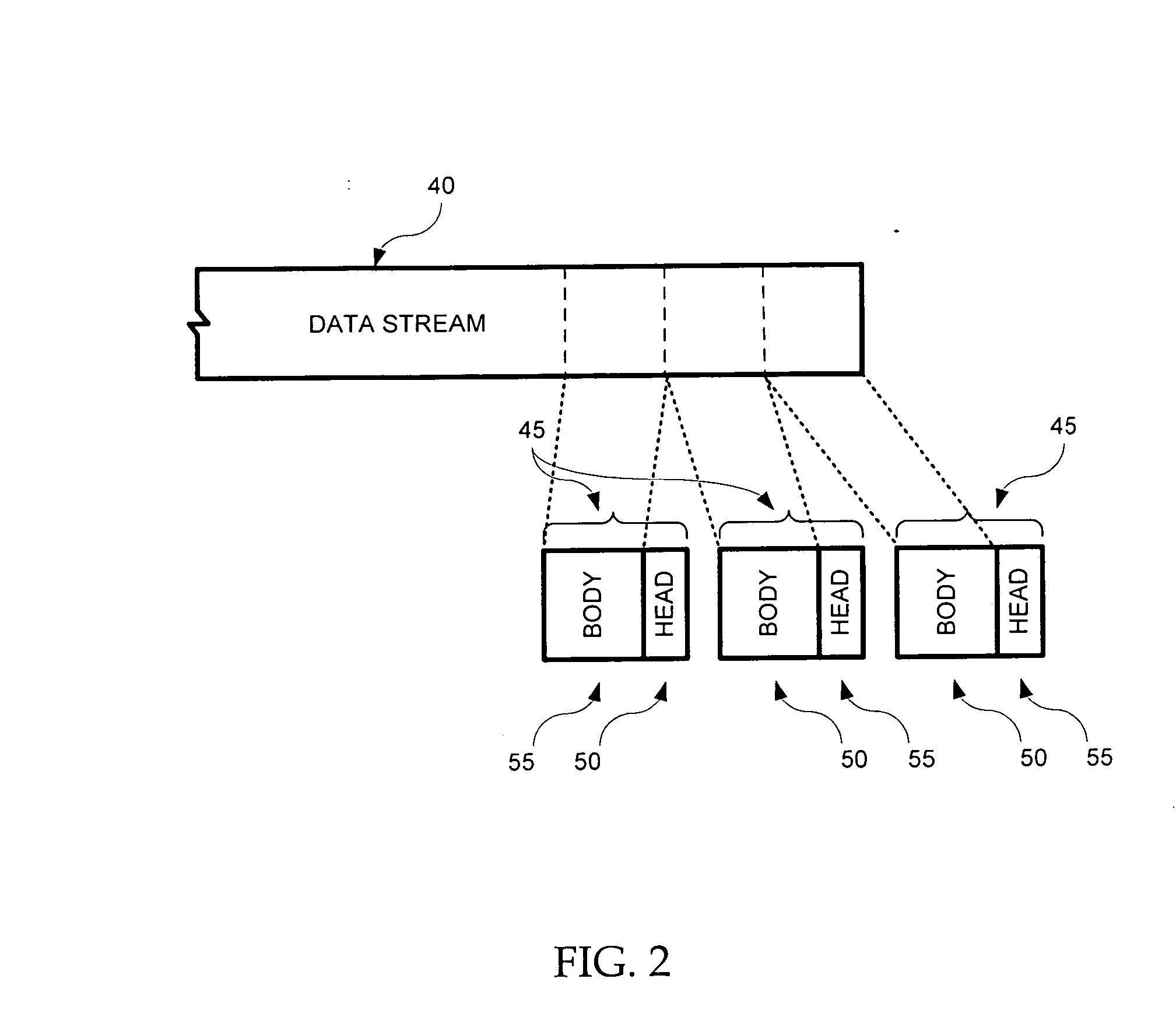

Statistical classification of high-speed network data through content inspection

Inactive | US20050060295A1 | Good margin | Easy to separate | Data switching networks | Special data processing applications | Feature extraction | Statistical classification

A network data classifier statistically classifies received data at wire-speed by examining, in part, the payloads of packets in which such data are disposed and without having a priori knowledge of the classification of the data. The network data classifier includes a feature extractor that extracts features from the packets it receives. Such features include, for example, textual or binary patterns within the data or profiling of the network traffic. The network data classifier further includes a statistical classifier that classifies the received data into one or more pre-defined categories using the numerical values representing the features extracted by the feature extractor. The statistical classifier may generate a probability distribution function for each of a multitude of classes for the received data. The data so classified are subsequently processed by a policy engine. Depending on the policies, different categories may be treated differently.

Owner:INTEL CORP +1
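
To illustrate what "numerical values representing the features" of a packet payload might look like in the network data classifier above, the sketch below computes two simple statistics from raw bytes. Byte entropy and printable-character ratio are assumed example features, and `payload_features` is a hypothetical name; the abstract does not say which features the patent uses.

```python
import math
from collections import Counter

def payload_features(payload: bytes):
    """Turn a raw payload into numeric features (assumed, illustrative choice)."""
    n = len(payload)
    if n == 0:
        return {"entropy": 0.0, "printable_ratio": 0.0}
    counts = Counter(payload)
    # Shannon entropy of the byte distribution, in bits per byte.
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())
    # Share of bytes in the printable ASCII range.
    printable = sum(1 for b in payload if 32 <= b < 127)
    return {"entropy": entropy, "printable_ratio": printable / n}
```

Text-like traffic tends toward a high printable ratio and moderate entropy, while compressed or encrypted payloads tend toward high entropy, so a statistical classifier could plausibly separate the two using values like these.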

Method, apparatus and computer program product for providing voice conversion using temporal dynamic features

Active | US20080262838A1 | Improve voice conversion | Quality improvement | Speech synthesis | Feature extraction | Voice transformation

An apparatus for providing voice conversion using temporal dynamic features includes a feature extractor and a transformation element. The feature extractor may be configured to extract dynamic feature vectors from source speech. The transformation element may be in communication with the feature extractor and configured to apply a first conversion function to a signal including the extracted dynamic feature vectors to produce converted dynamic feature vectors. The first conversion function may have been trained using at least dynamic feature data associated with training source speech and training target speech. The transformation element may be further configured to produce converted speech based on an output of applying the first conversion function.

Owner:WSOU INVESTMENTS LLC

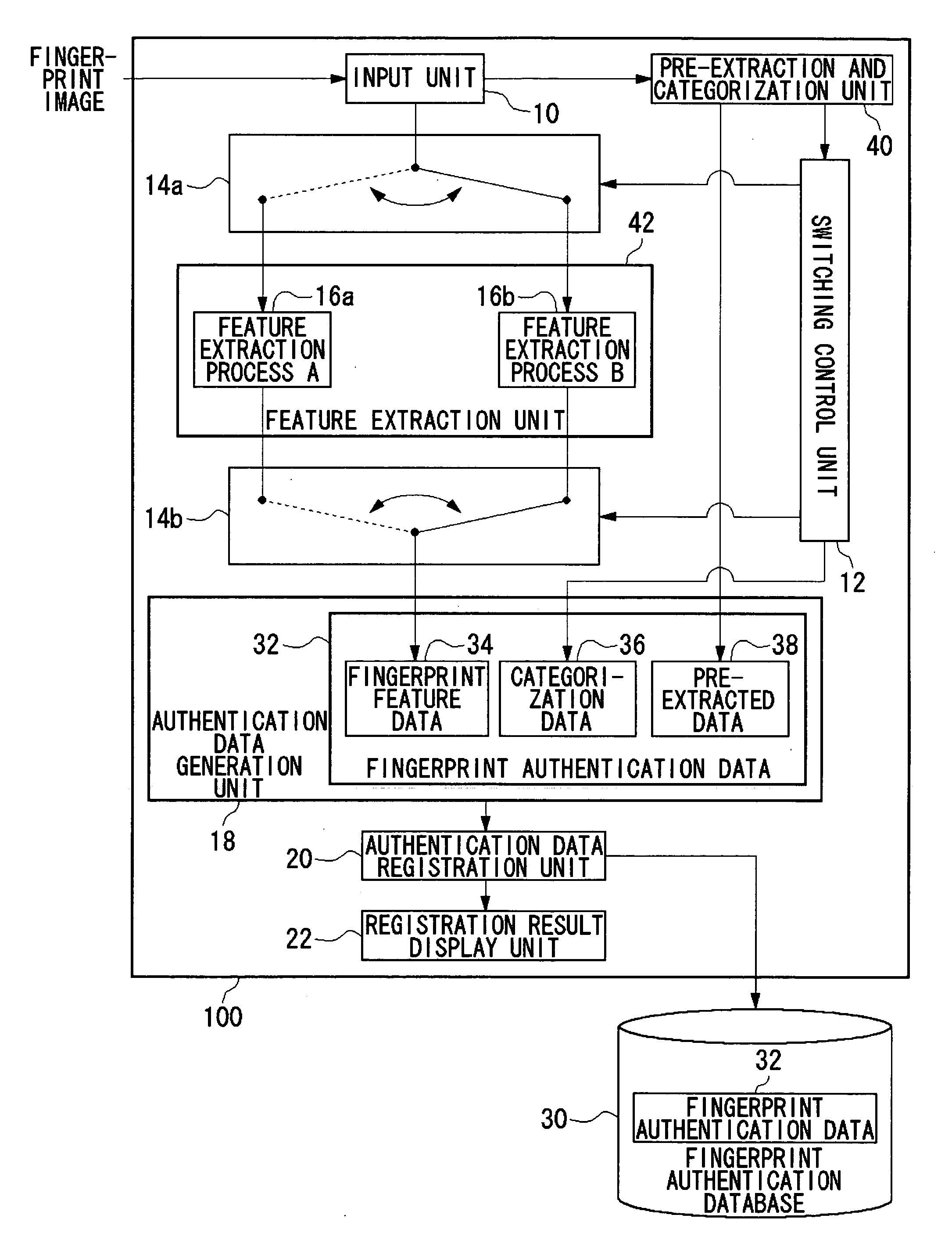

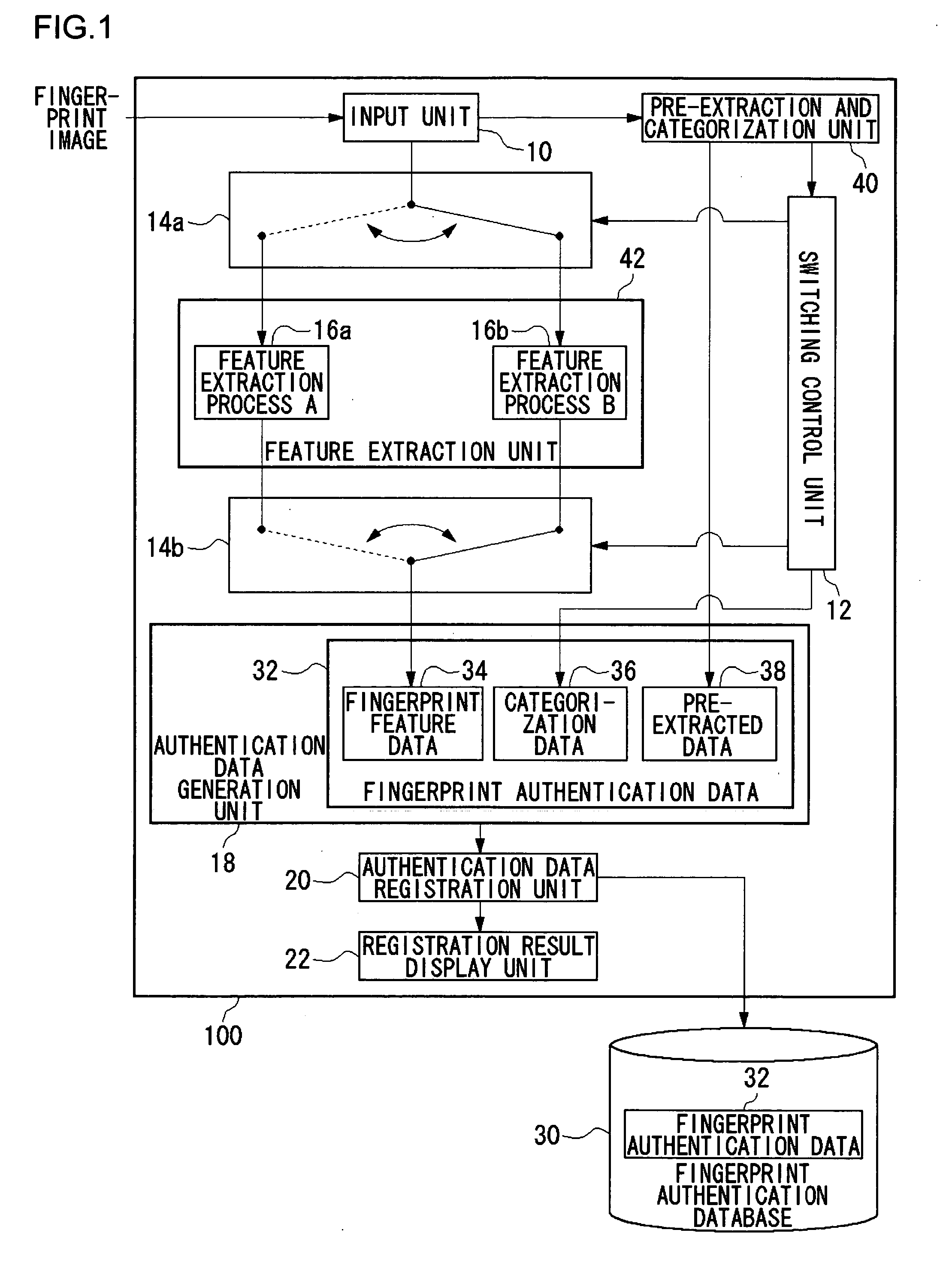

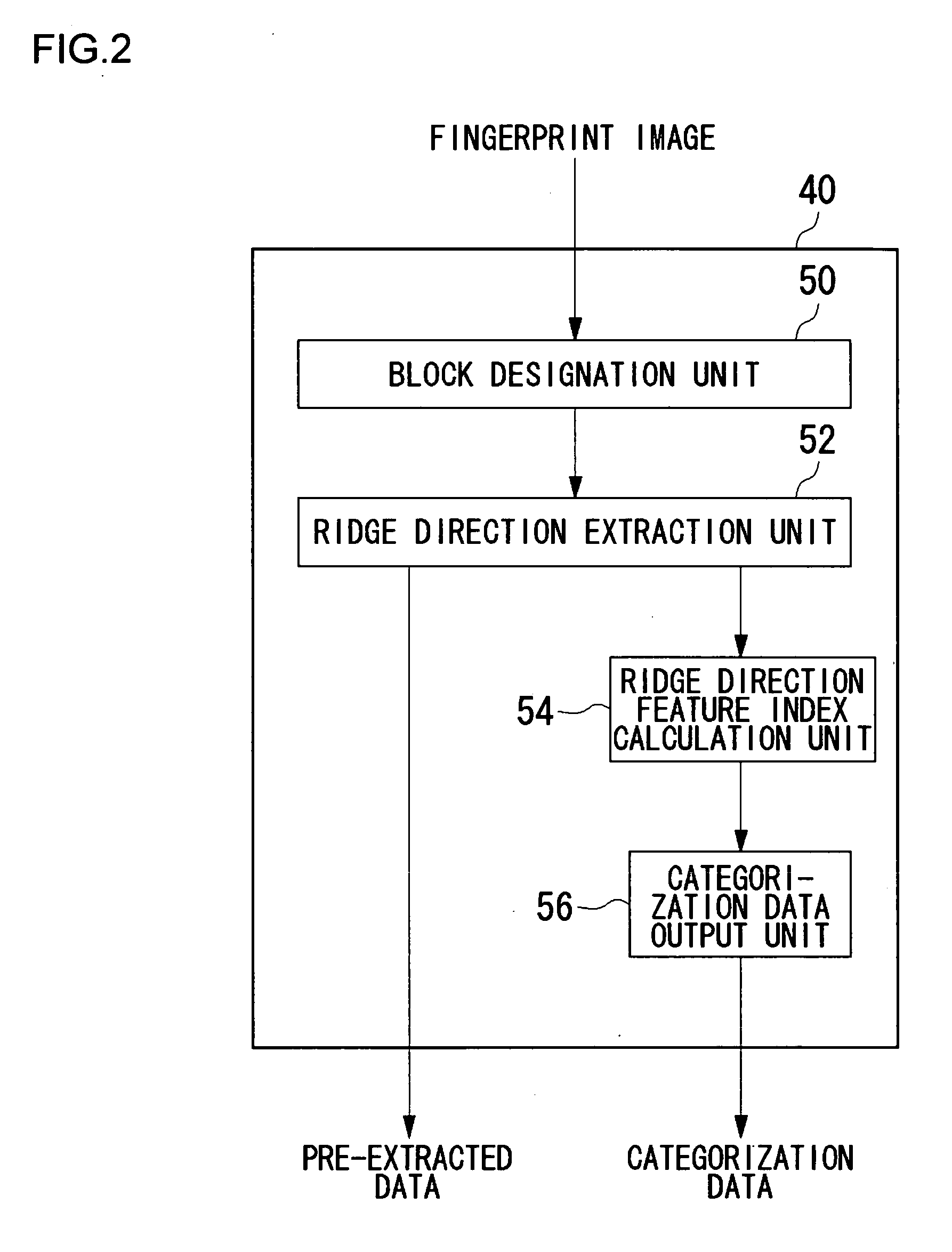

User authentication using biometric information

Inactive | US20070036400A1 | High precision | Efficiently retrieve the reference biometric information | Character and pattern recognition | Data matching | User verification

An input unit accepts a fingerprint image of a user. A pre-extraction and categorization unit generates pre-extracted data from the fingerprint image and uses the data to categorize the input fingerprint image into one of multiple groups. A feature extraction unit extracts fingerprint feature data from the fingerprint image by processing methods defined for the respective groups. A feature data matching processing unit matches the fingerprint feature data against fingerprint authentication data registered in a fingerprint authentication database by processing methods defined for the respective groups. An integrated authentication unit authenticates a user with the input fingerprint image based upon a result of matching.

Owner:SANYO ELECTRIC CO LTD

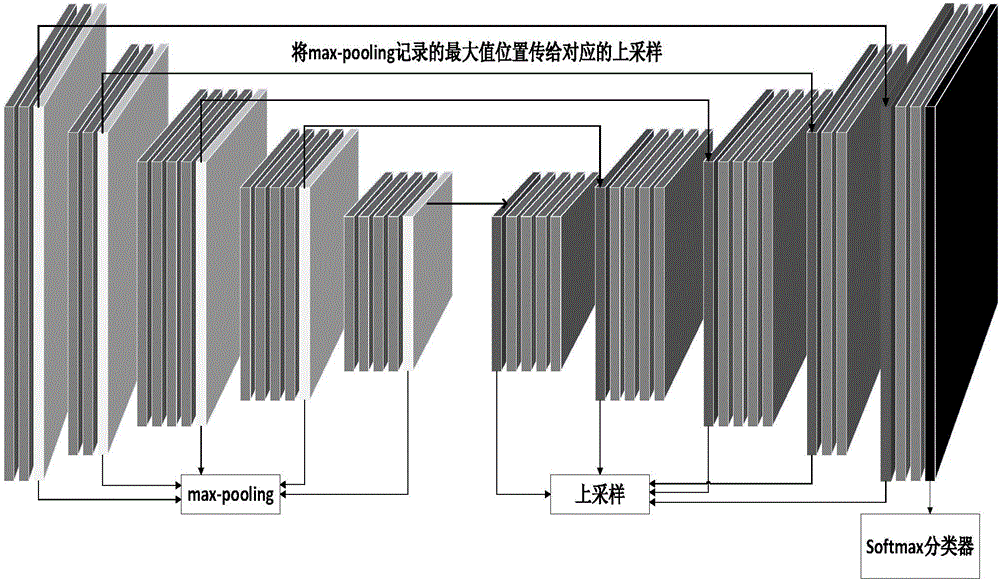

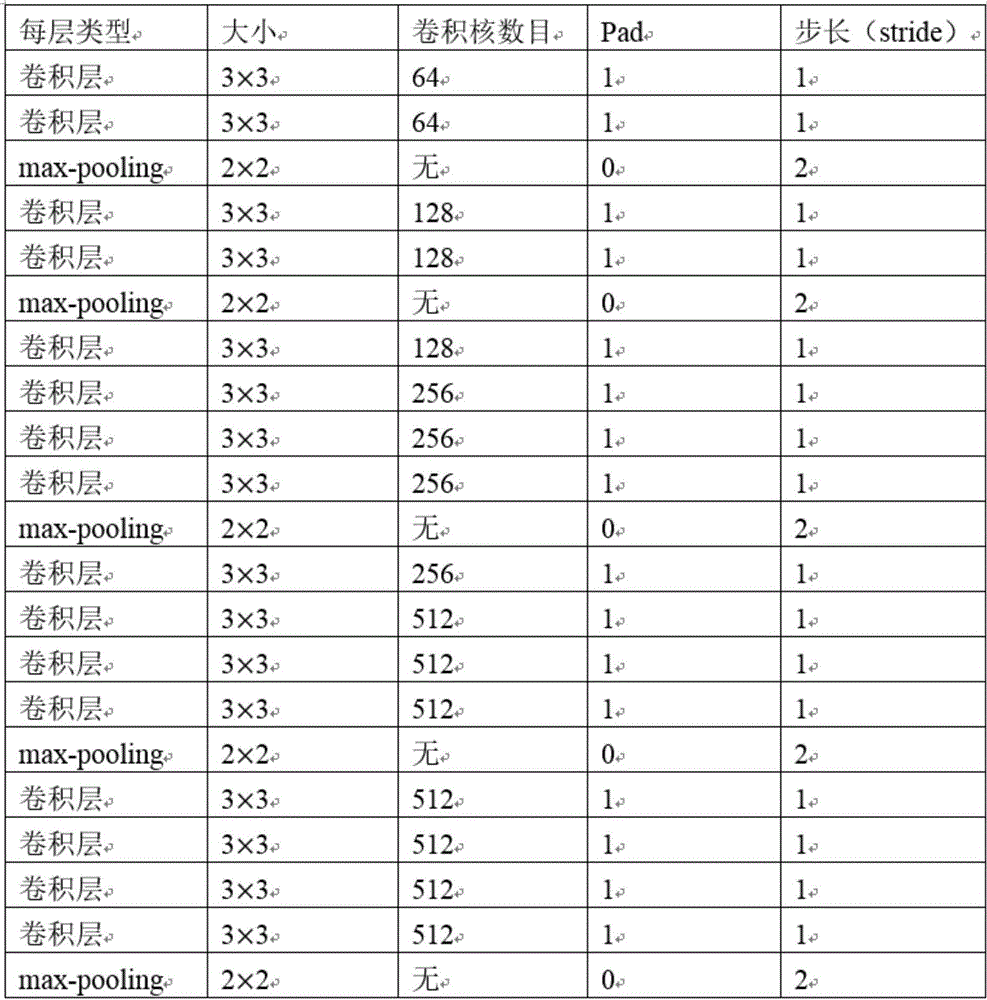

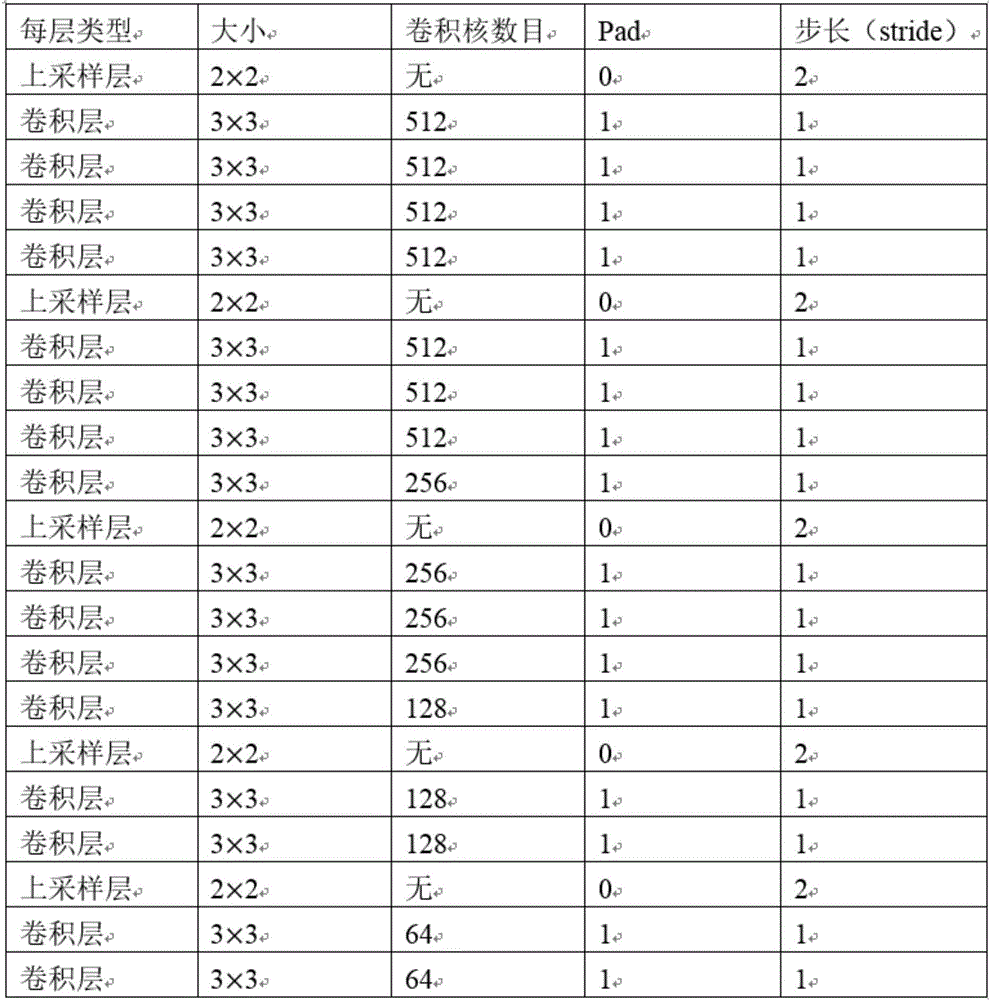

Fundus image retinal vessel segmentation method and system based on deep learning

Active | CN106408562A | Easy to classify | Improve accuracy | Image enhancement | Image analysis | Segmentation system | Blood vessel

The invention discloses a fundus image retinal vessel segmentation method and system based on deep learning. The method comprises the steps of performing data augmentation on a training set, enhancing the images, training a convolutional neural network on the training set, segmenting an image with the convolutional neural network segmentation model to obtain a first segmentation result, training a random forest classifier on features of the convolutional neural network, extracting the output of the last convolutional layer of the network and using it as input to the random forest classifier for pixel classification to obtain a second segmentation result, and fusing the two segmentation results to obtain the final segmented image. Compared with traditional vessel segmentation methods, the method uses a deep convolutional neural network for feature extraction, so the extracted features are richer and the segmentation precision and efficiency are higher.

Owner:SOUTH CHINA UNIV OF TECH

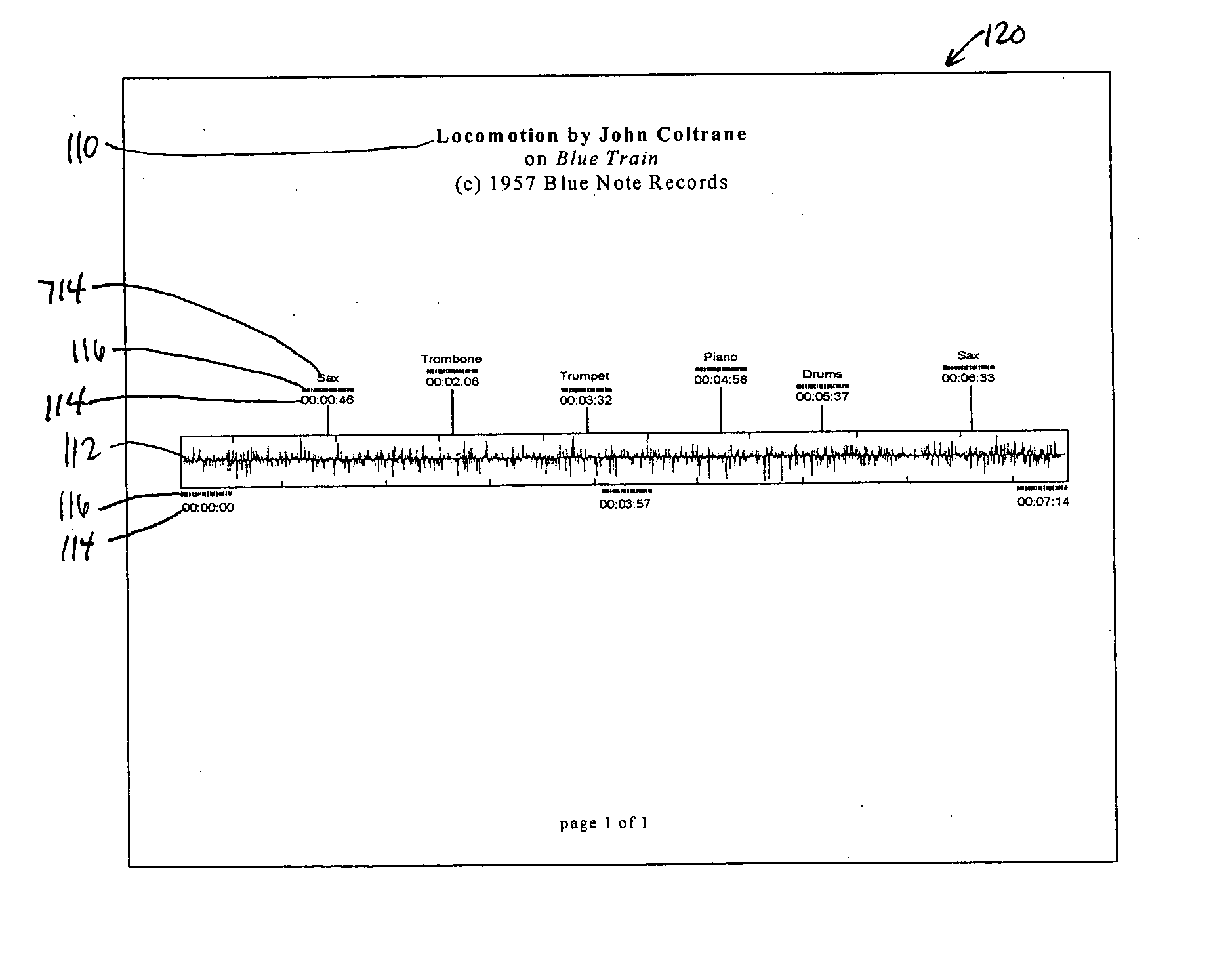

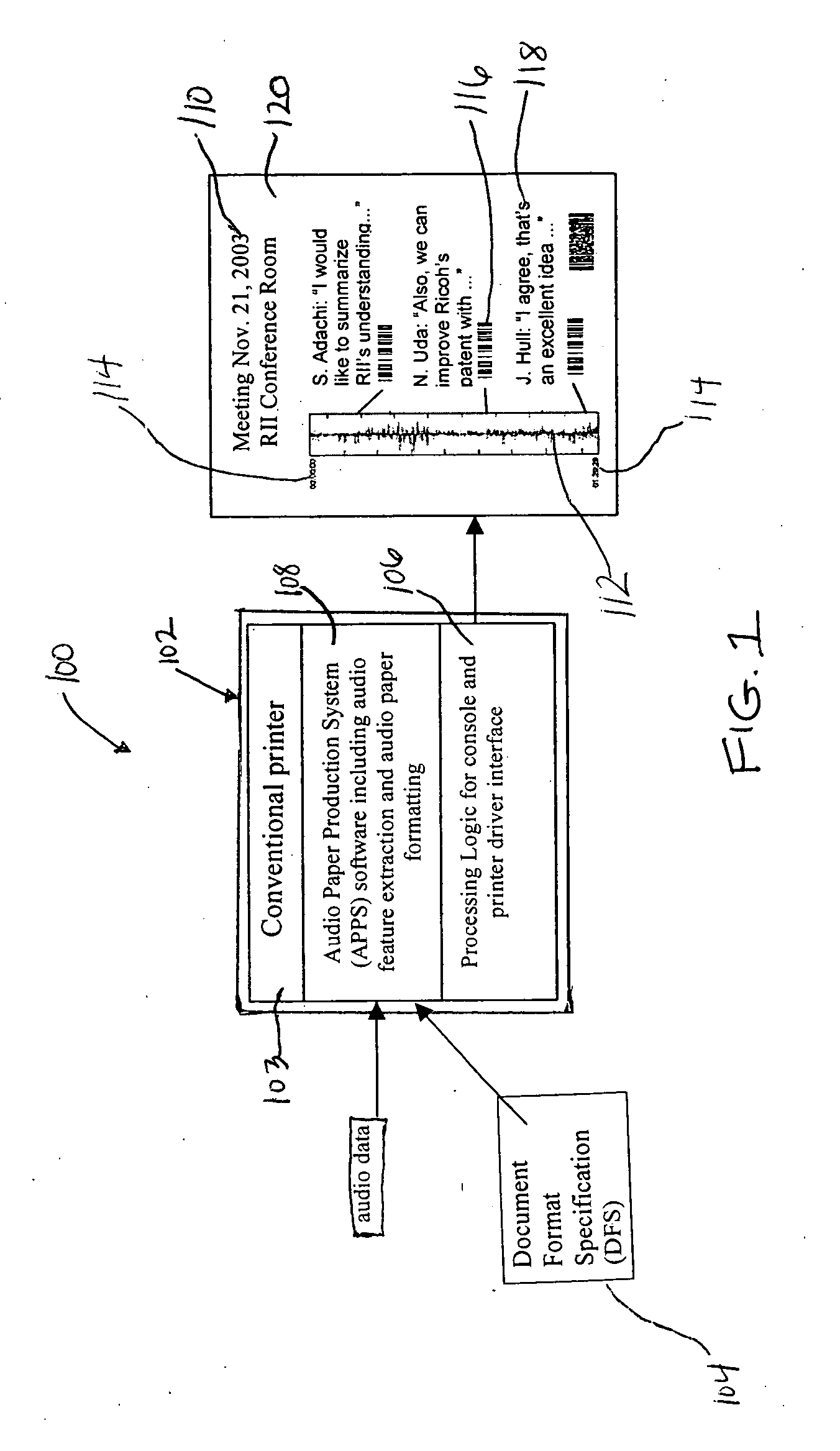

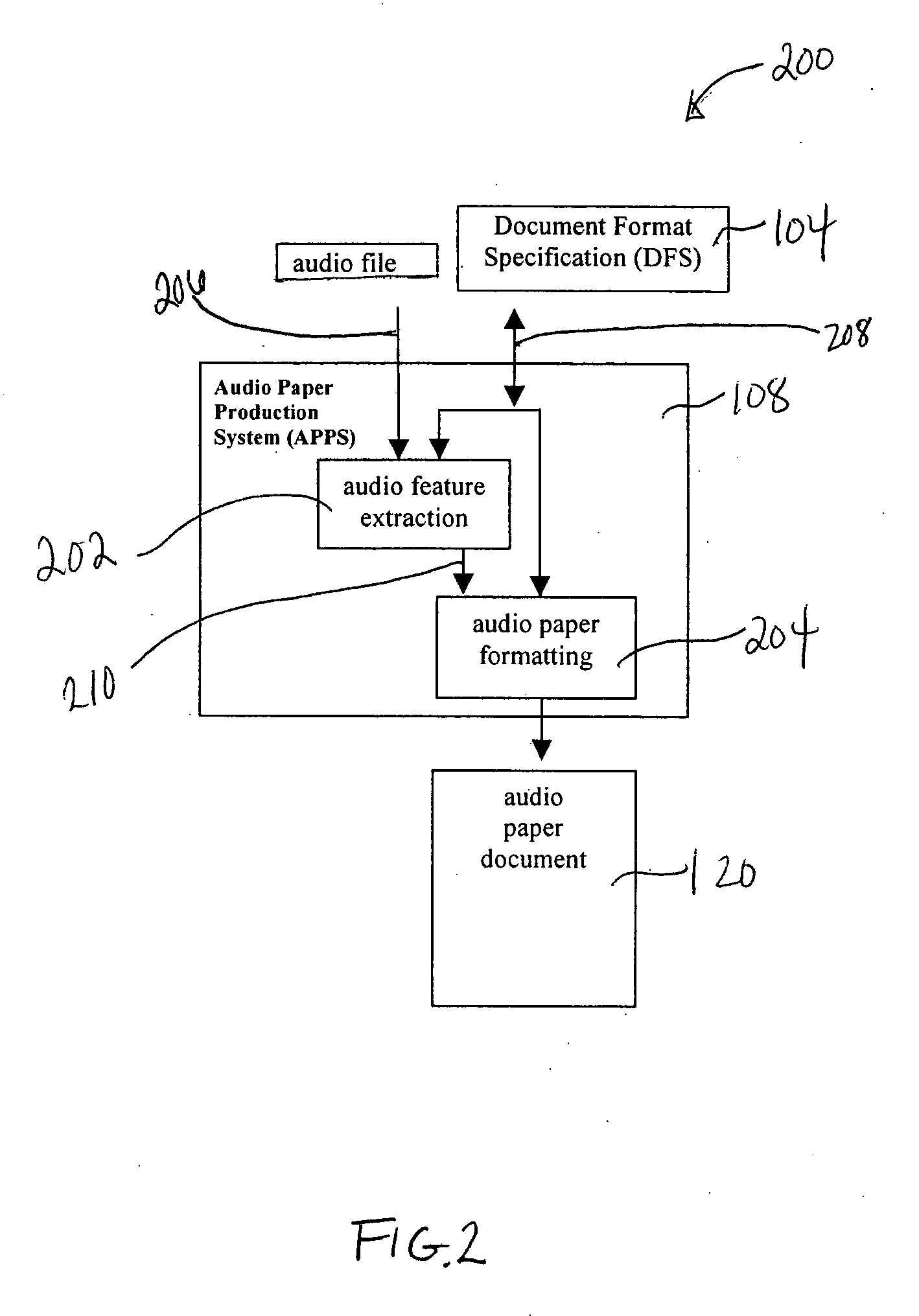

Printable representations for time-based media

Inactive | US20050010409A1 | Overcome limitations | Electrophonic musical instruments | Digital data processing details | Feature extraction | Output device

The system of the present invention allows a user to generate a representation of time-based media. The system includes a feature extraction module for extracting features from media content. For example, the feature extraction module can detect solos in a musical performance, or can detect music, applause, speech, and the like. A formatting module formats a media representation generated by the system. The formatting module also applies feature extraction information to the representation, and formats the representation according to a representation specification. In addition, the system can include an augmented output device that generates a media representation based on the feature extraction information and the representation specification. The methods of the present invention include extracting features from media content, and formatting a media representation being generated using the extracted features and based on a specification or data structure specifying the representation format. The methods can also include generating a media representation based on the results of the formatting.

Owner:RICOH KK

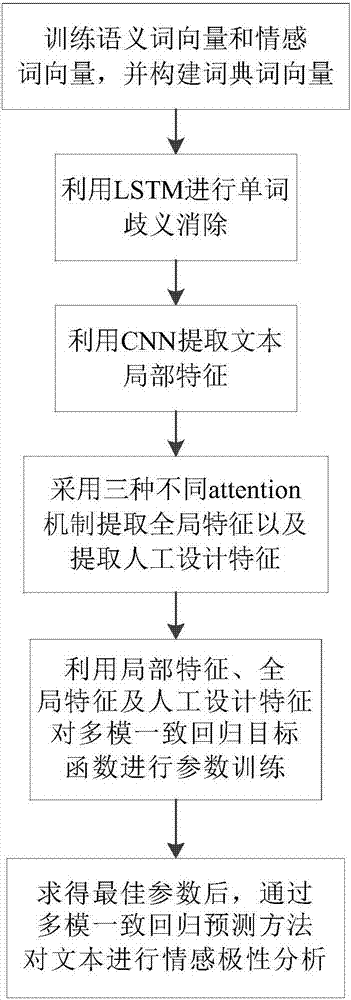

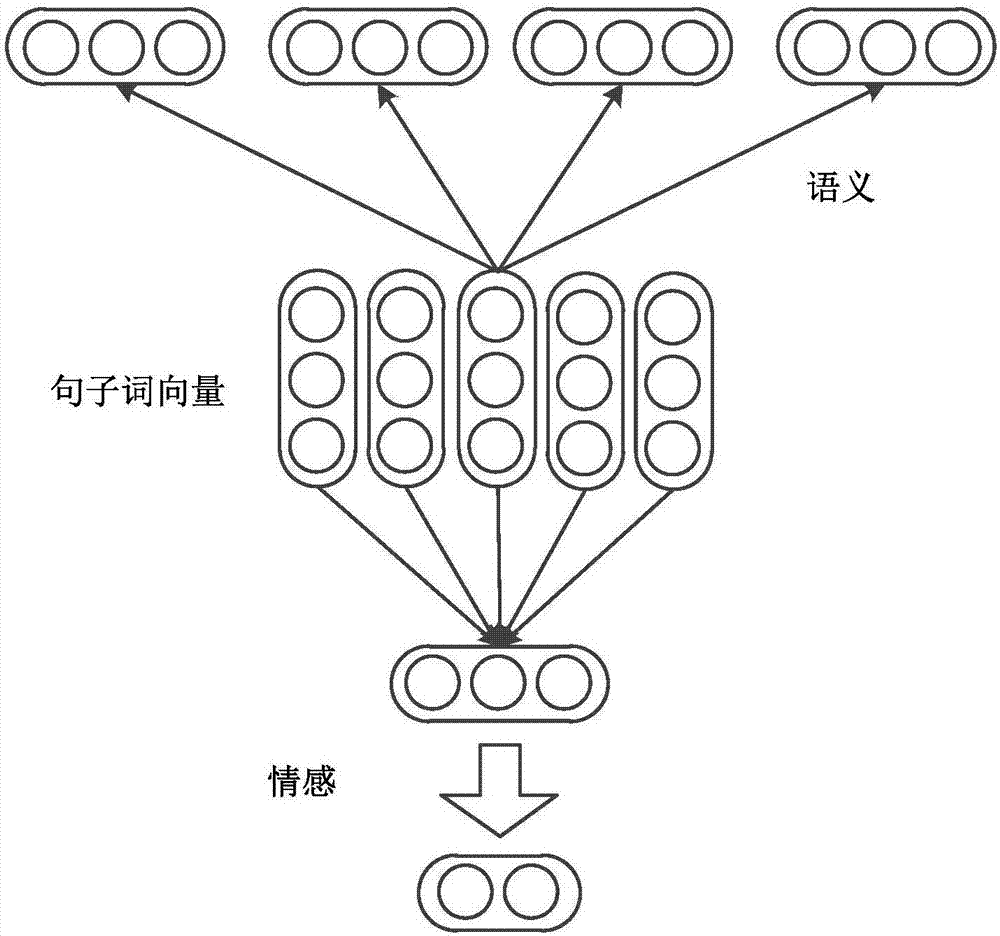

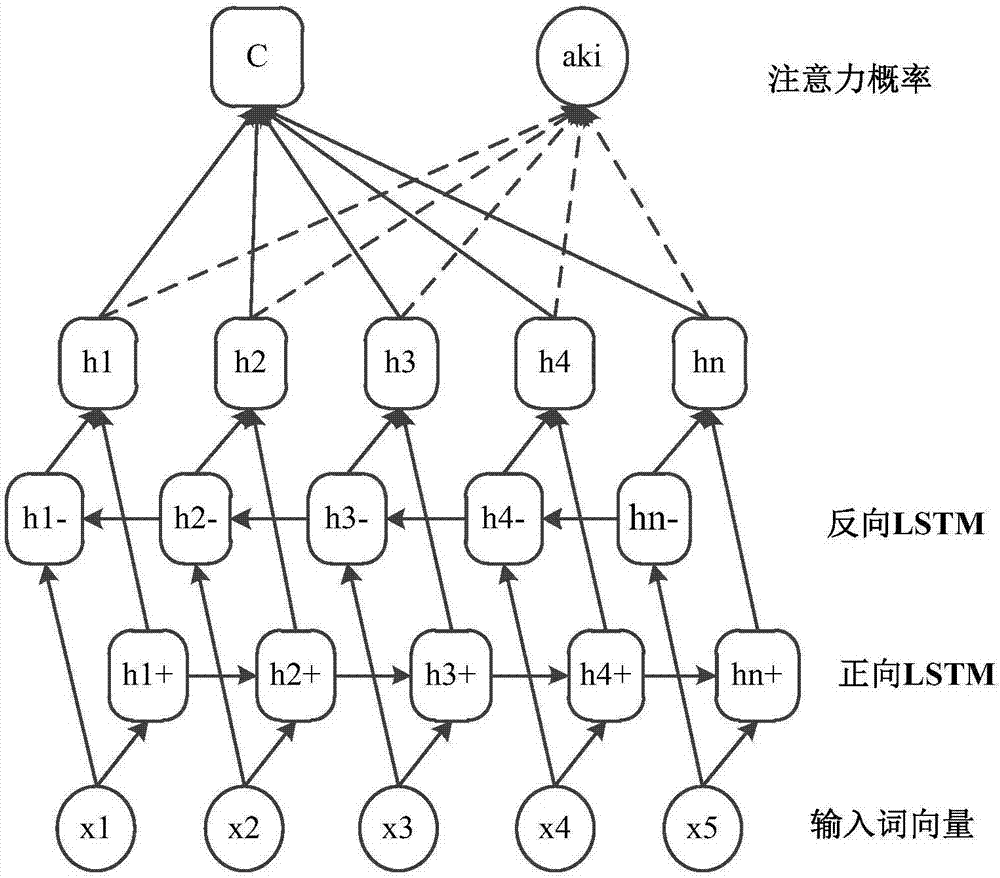

Attention CNNs and CCR-based text sentiment analysis method

Active | CN107092596A | High precision | Improve classification accuracy | Semantic analysis | Neural architectures | Feature extraction | Ambiguity

The invention discloses an attention CNNs and CCR-based text sentiment analysis method and belongs to the field of natural language processing. The method comprises the following steps: 1, training a semantic word vector and a sentiment word vector on the original text data, and building dictionary word vectors from a collected sentiment dictionary; 2, capturing the context semantics of words with a long short-term memory (LSTM) network to eliminate ambiguity; 3, extracting local features of the text with a convolutional neural network that combines convolution kernels of different filter lengths; 4, extracting global features with three different attention mechanisms; 5, performing hand-crafted feature extraction on the original text data; 6, training a multimodal uniform regression target function on the local features, the global features and the hand-crafted features; and 7, predicting sentiment polarity with a multimodal uniform regression prediction method. Compared with methods that use a single word vector or extract only the local features of the text, the method can further improve sentiment classification precision.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

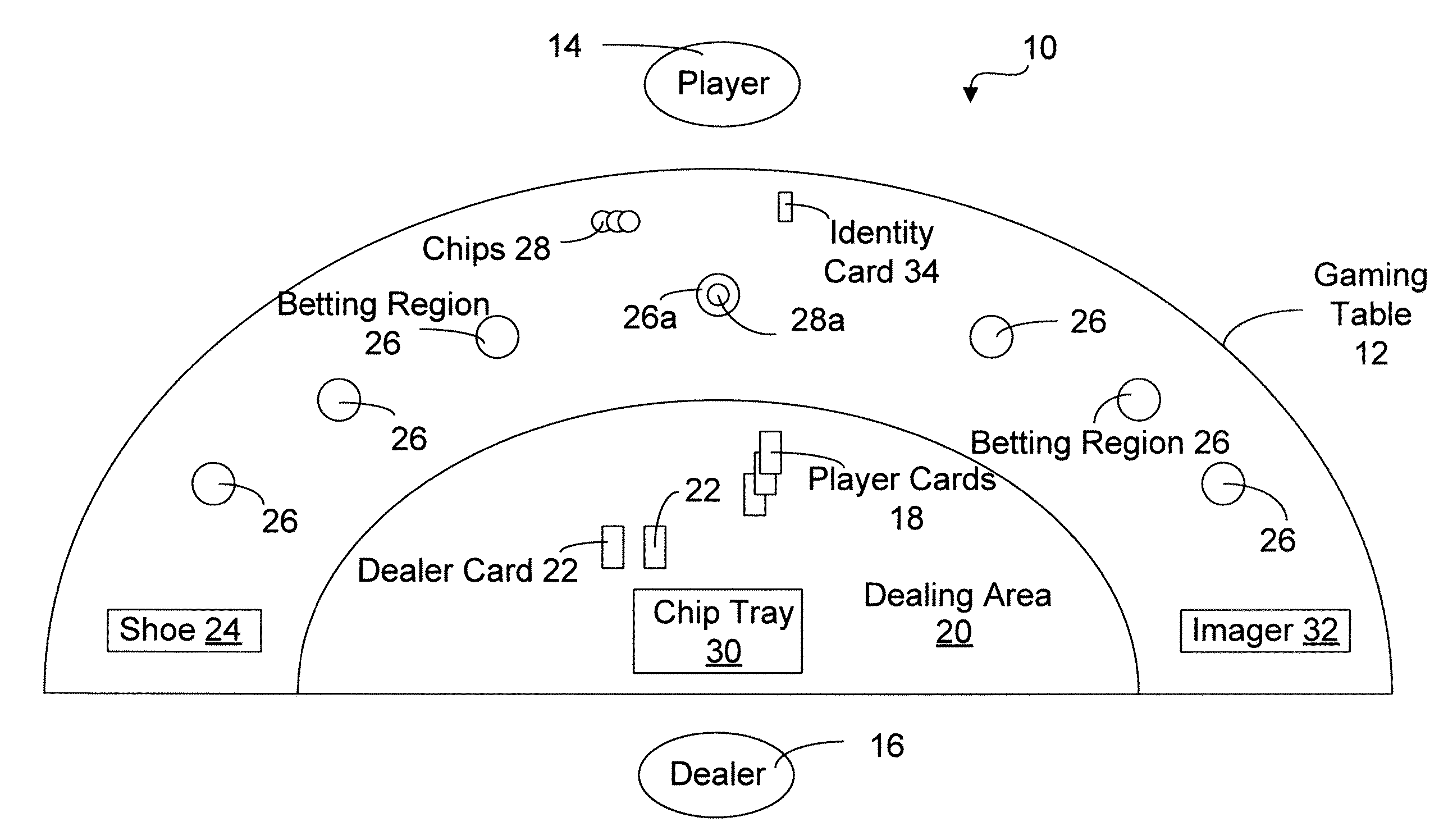

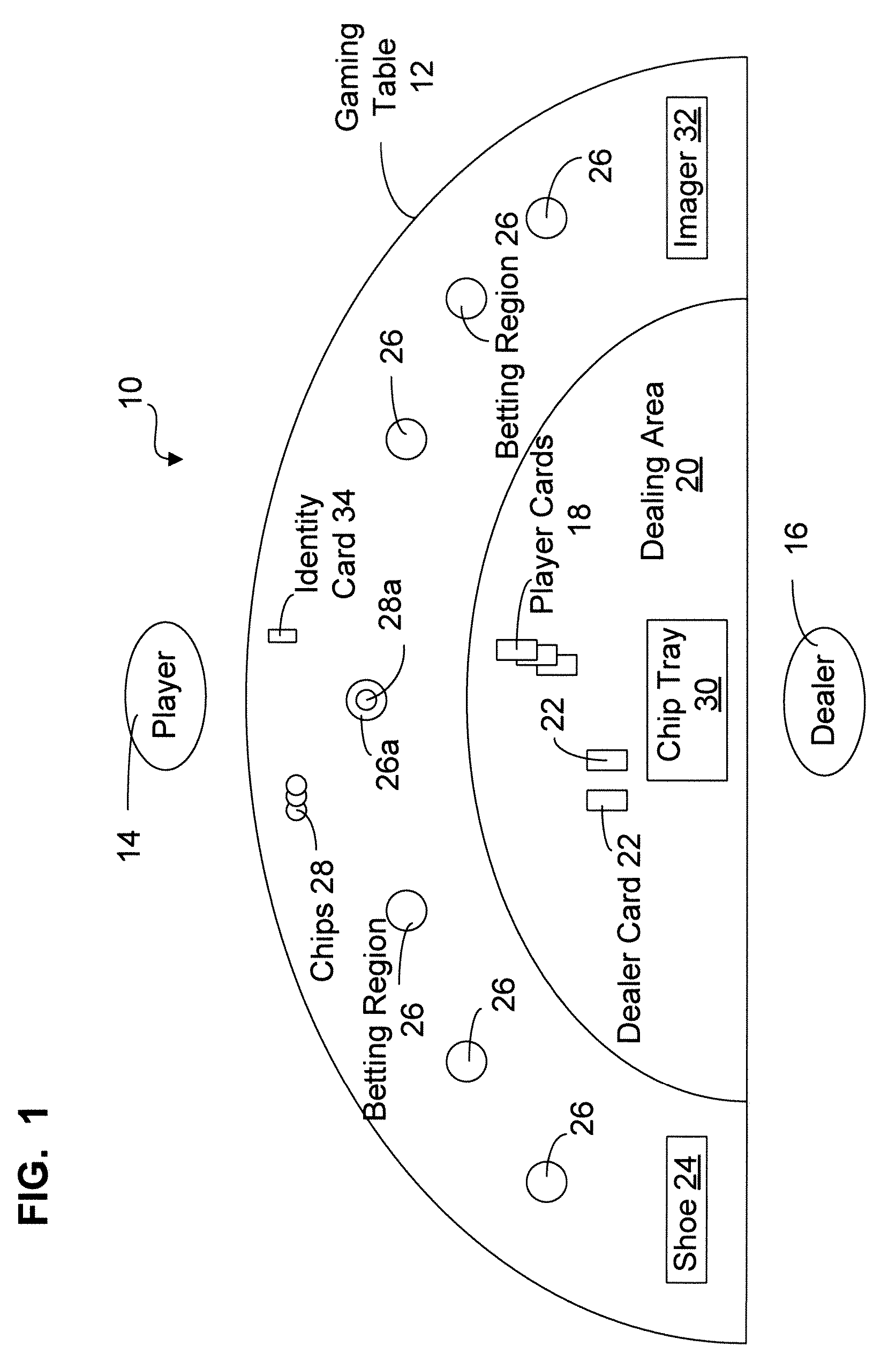

Gaming object recognition

Inactive | US20070077987A1 | Accurate and efficient | Apparatus for meter-controlled dispensing | Video games | Feature extraction | Playing card

The present invention relates to a system and method for identifying and tracking gaming objects. The system comprises an overhead camera for capturing an image of the table, a detection module for detecting a feature of the object on the image, a search module for extracting a region of interest of the image that describes the object from the feature, a feature space module for transforming a feature space of the region of interest to obtain a transformed region of interest, and an identity module comprising a statistical classifier trained to recognize the object from the transformed region. The search module is able to extract a region of interest of an image from any detected feature indicative of its position. The system may be operated in conjunction with a card reader to provide two different sets of playing card data to a tracking module, which may reconcile the provided data in order to detect inconsistencies with respect to playing cards dealt on the table.

Owner:TANGAM GAMING TECH

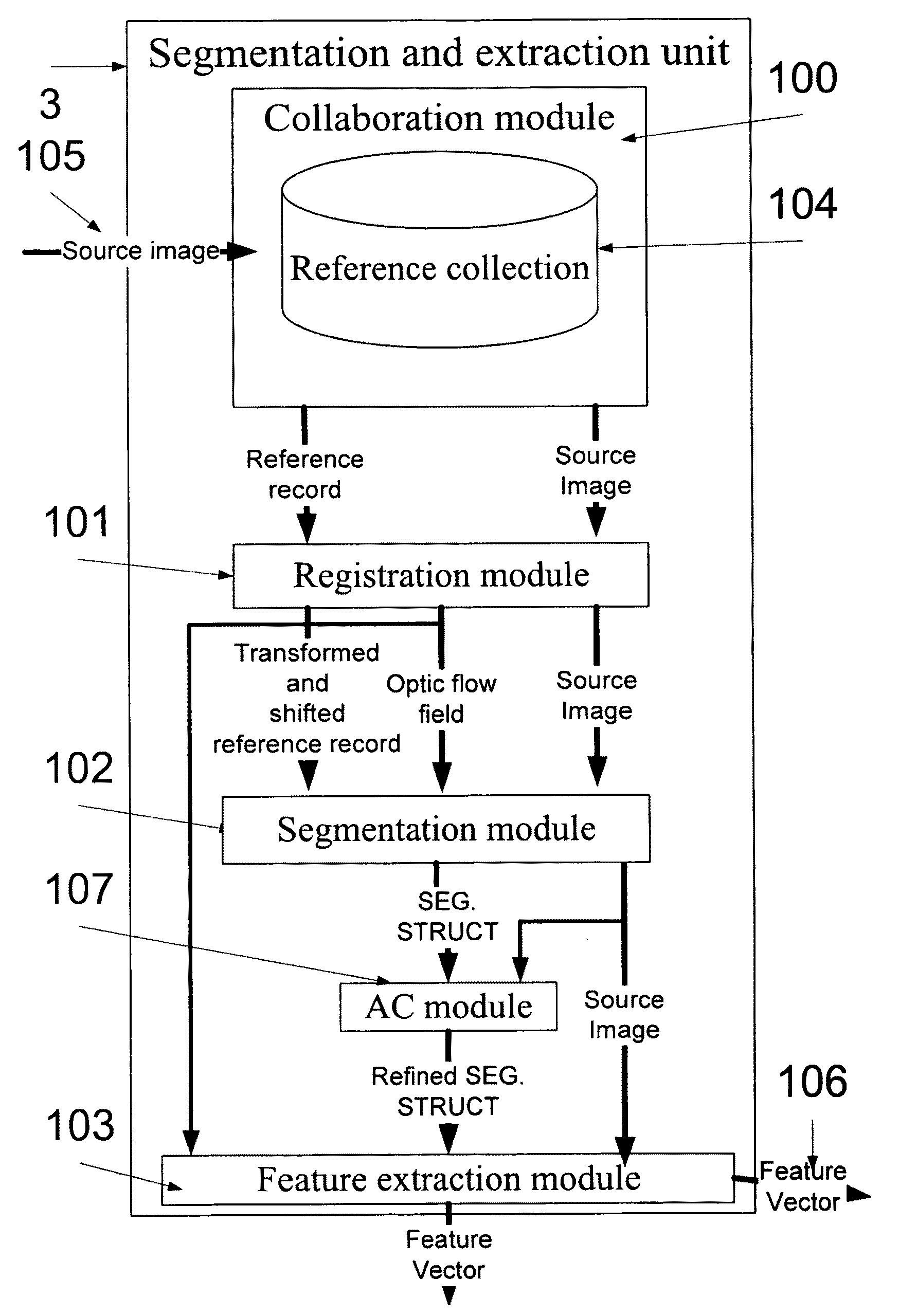

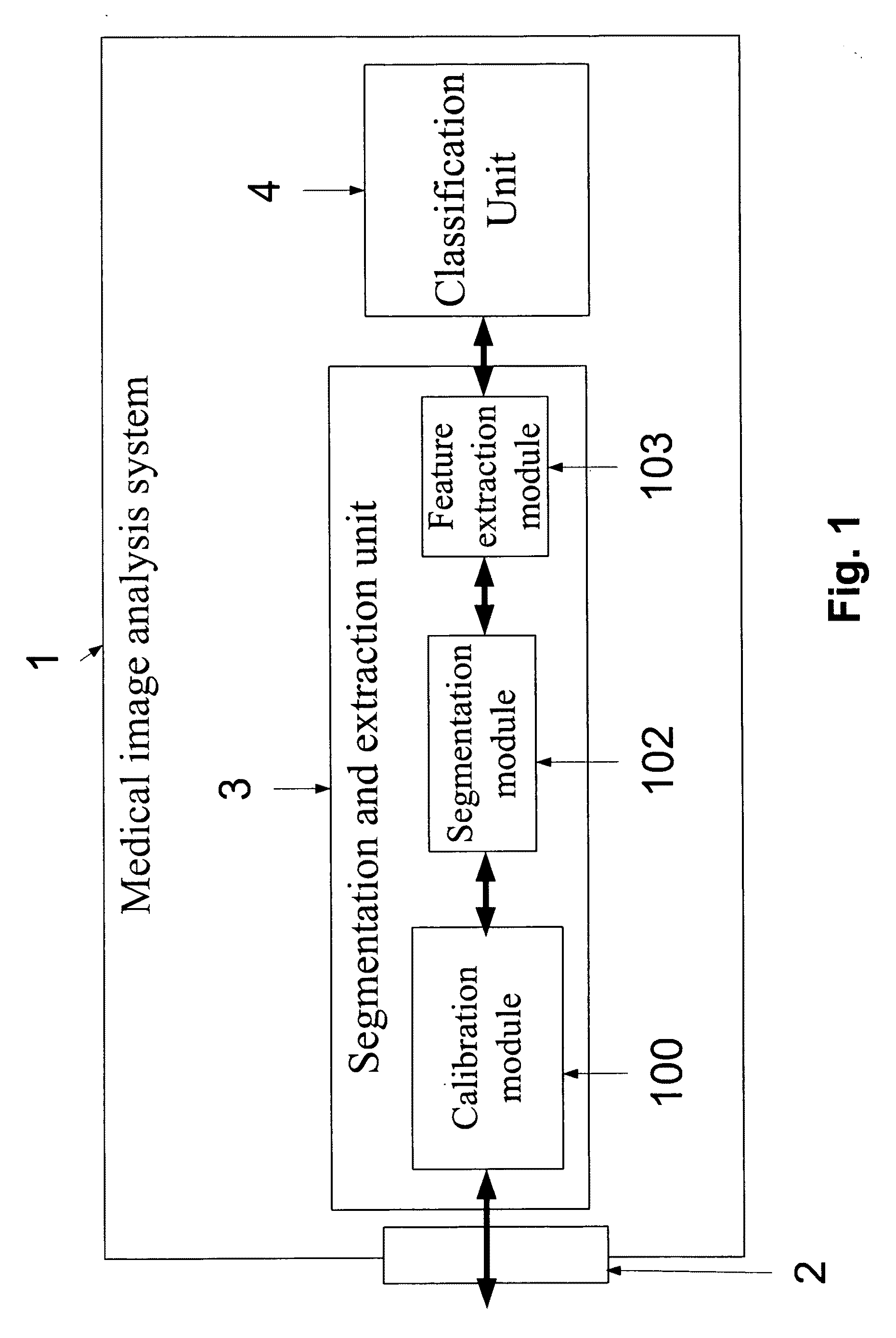

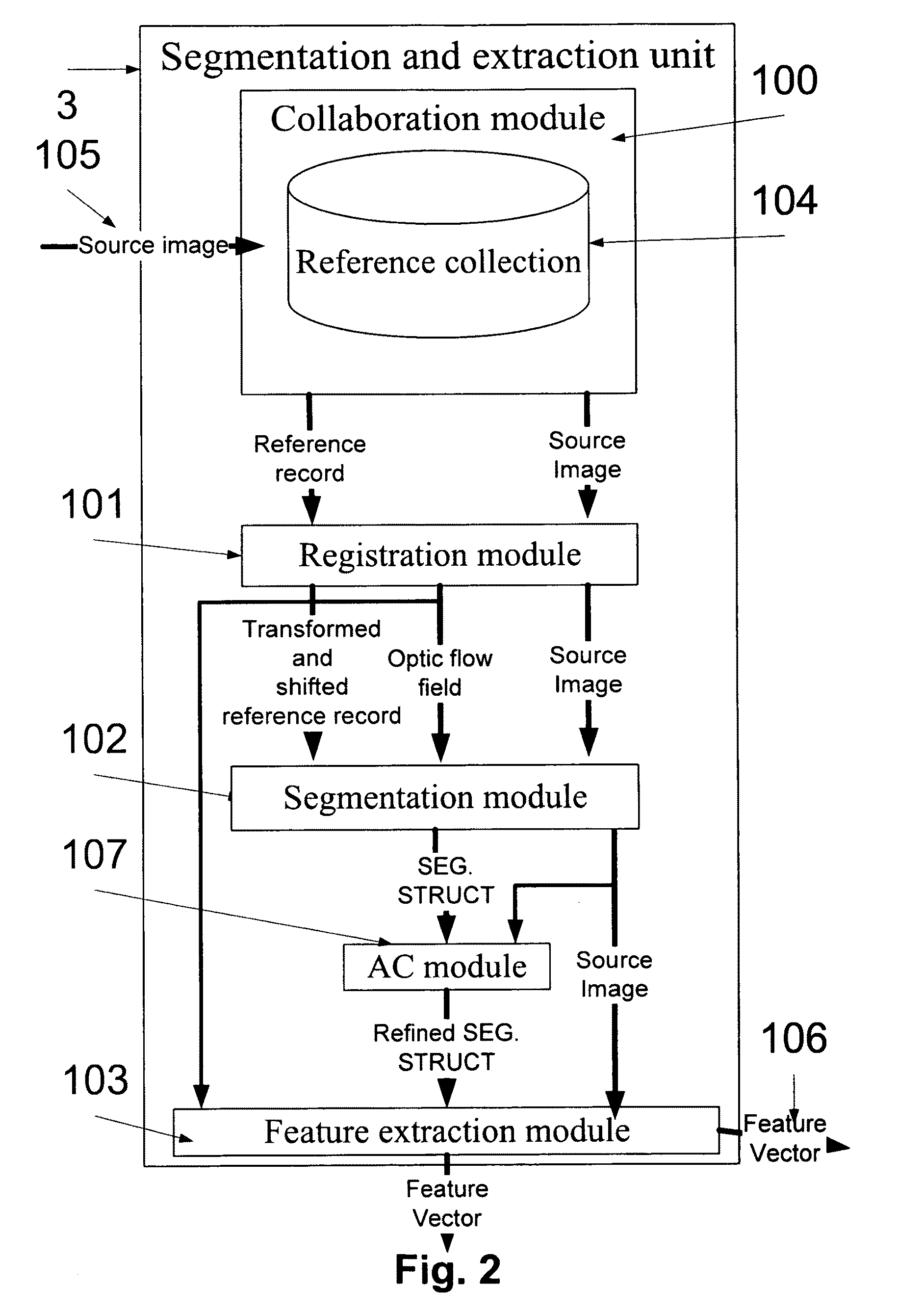

System and Method of Automatic Prioritization and Analysis of Medical Images

A system for analyzing a source medical image of a body organ. The system comprises an input unit for obtaining the source medical image having three dimensions or more, a feature extraction unit that is designed for obtaining a number of features of the body organ from the source medical image, and a classification unit that is designed for estimating a priority level according to the features.

Owner:MEDIC VISION BRAIN TECH

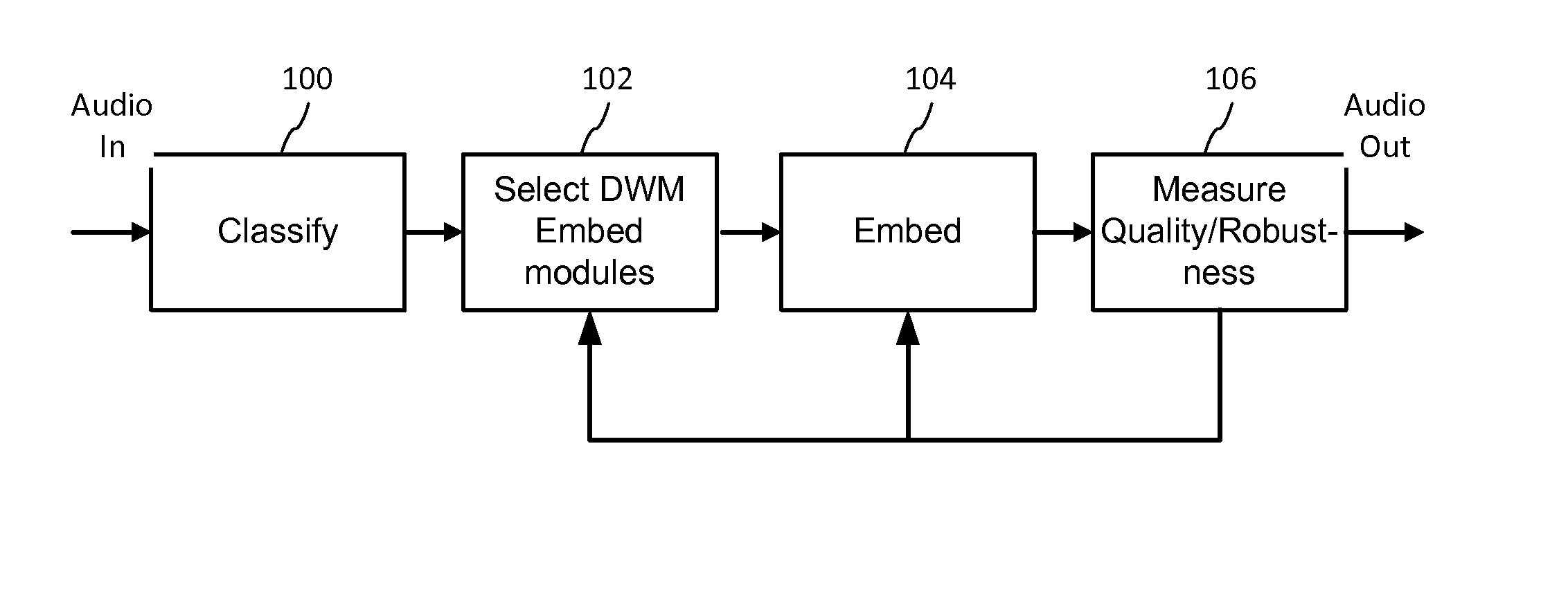

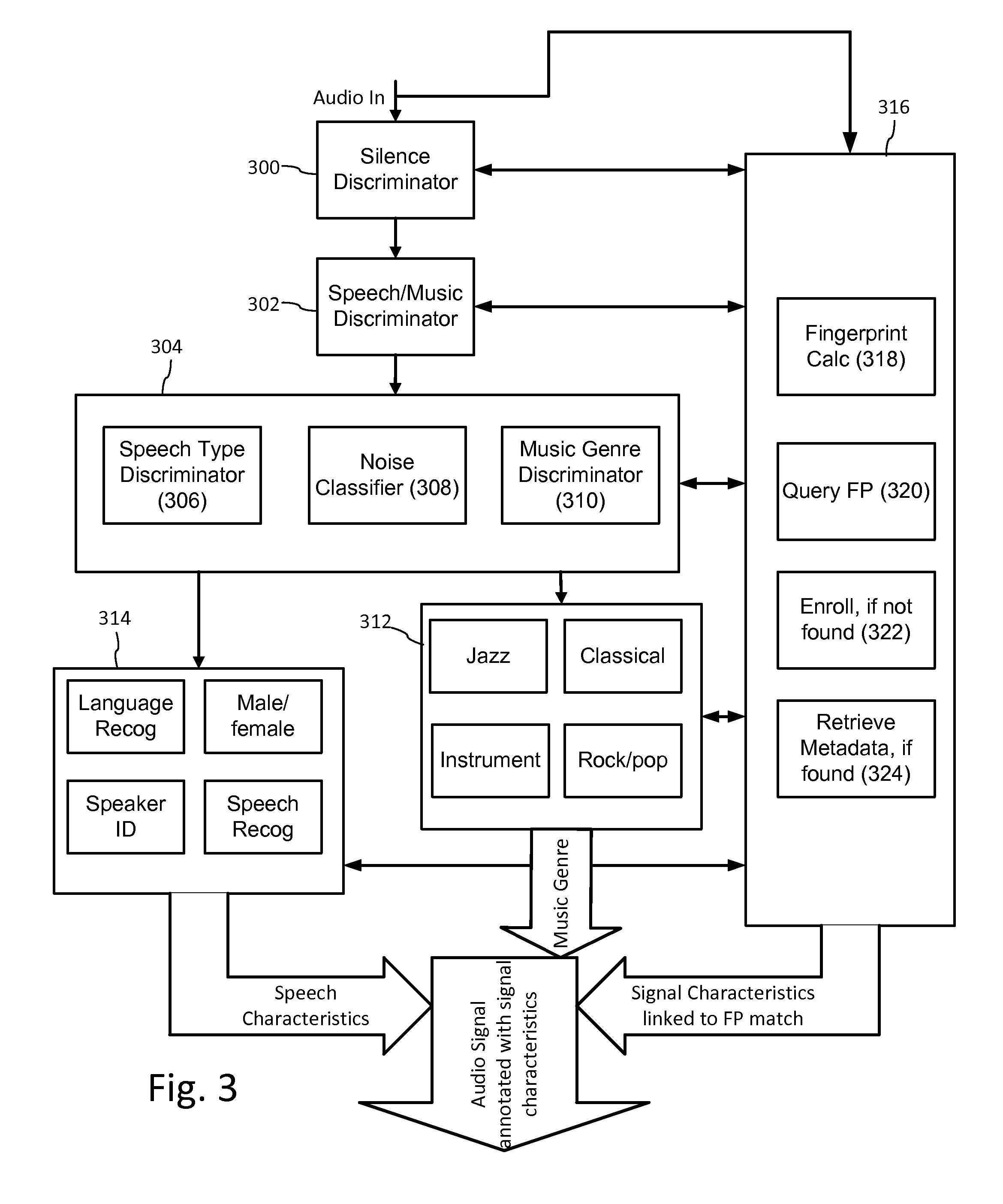

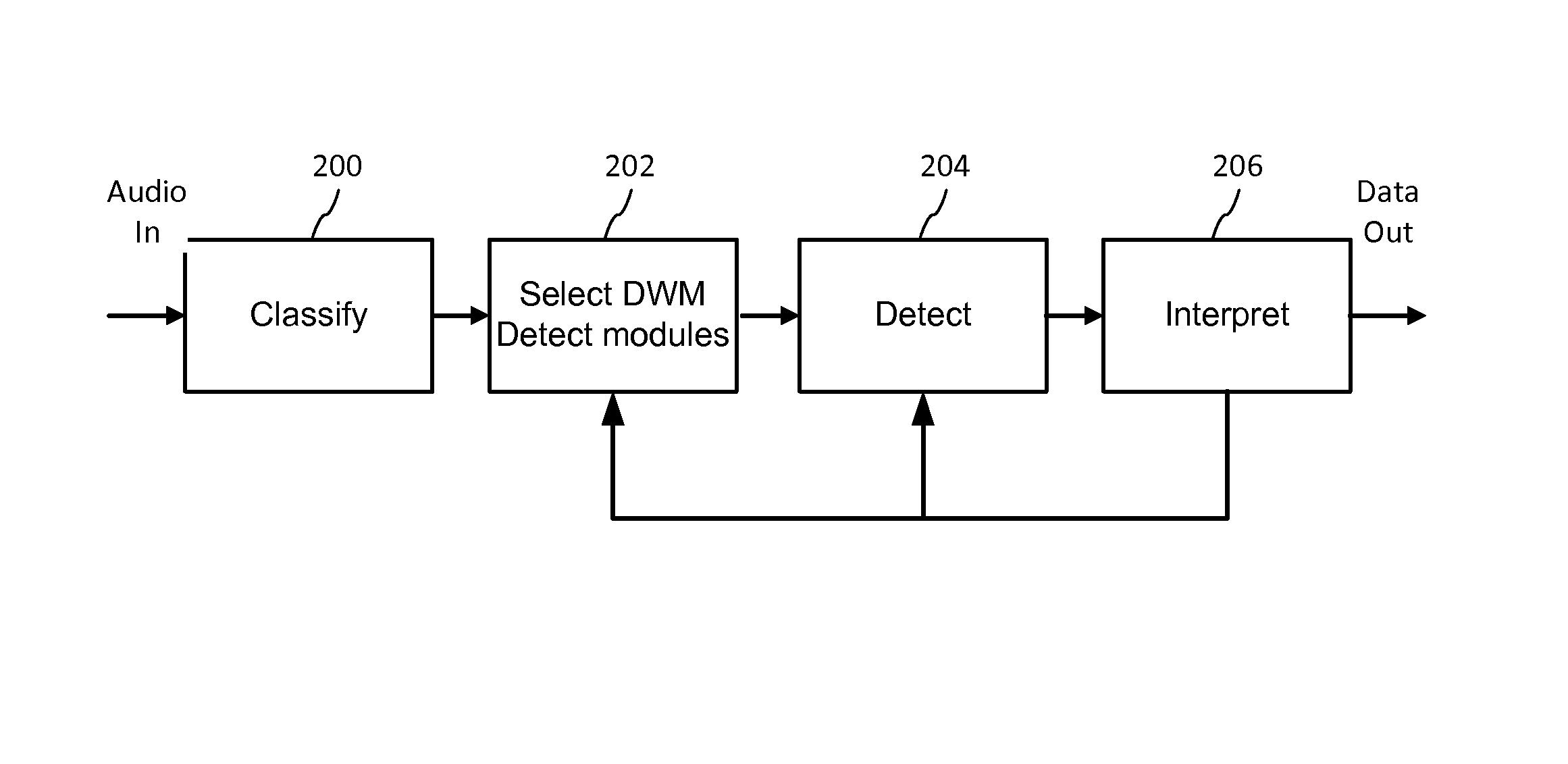

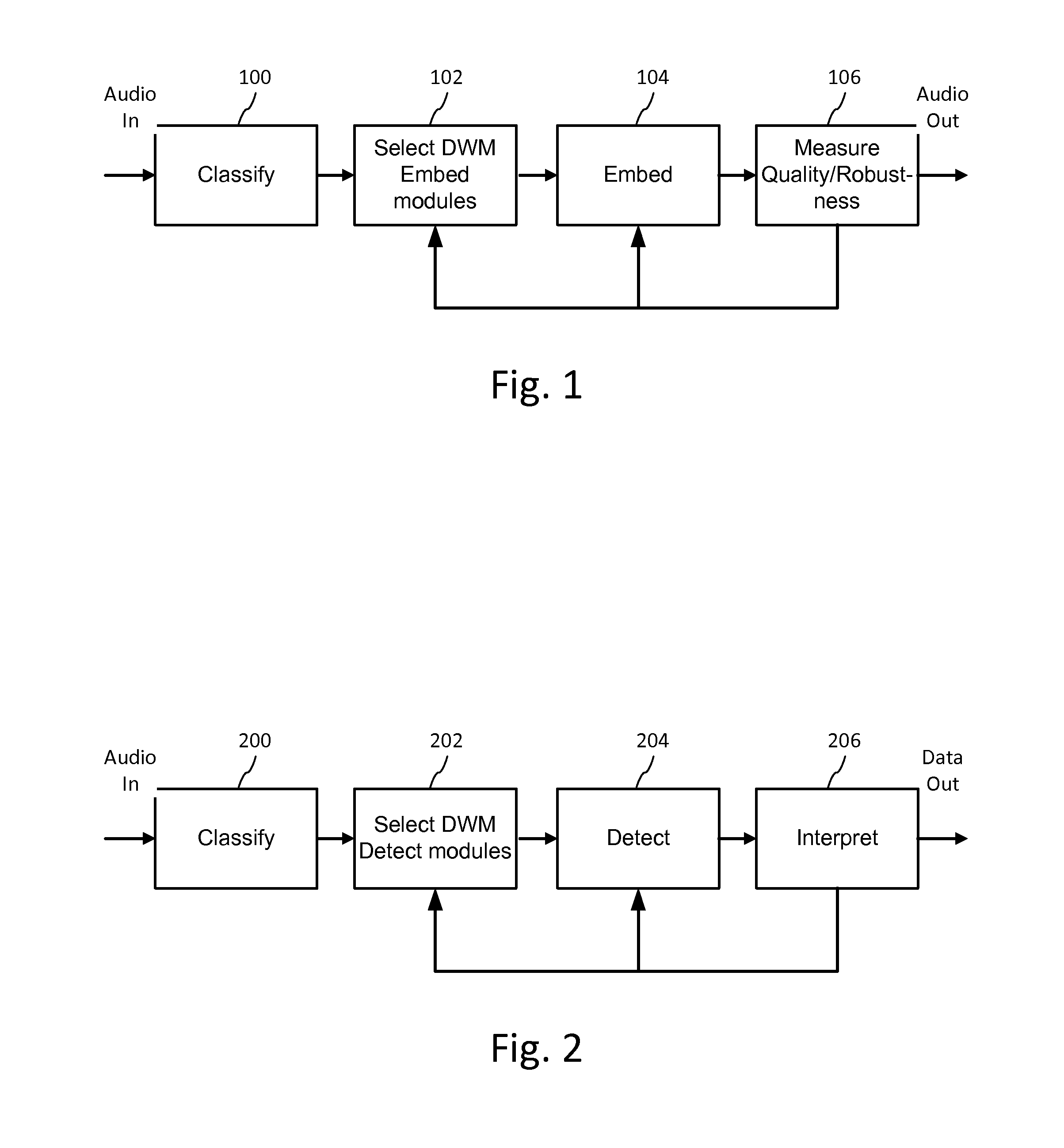

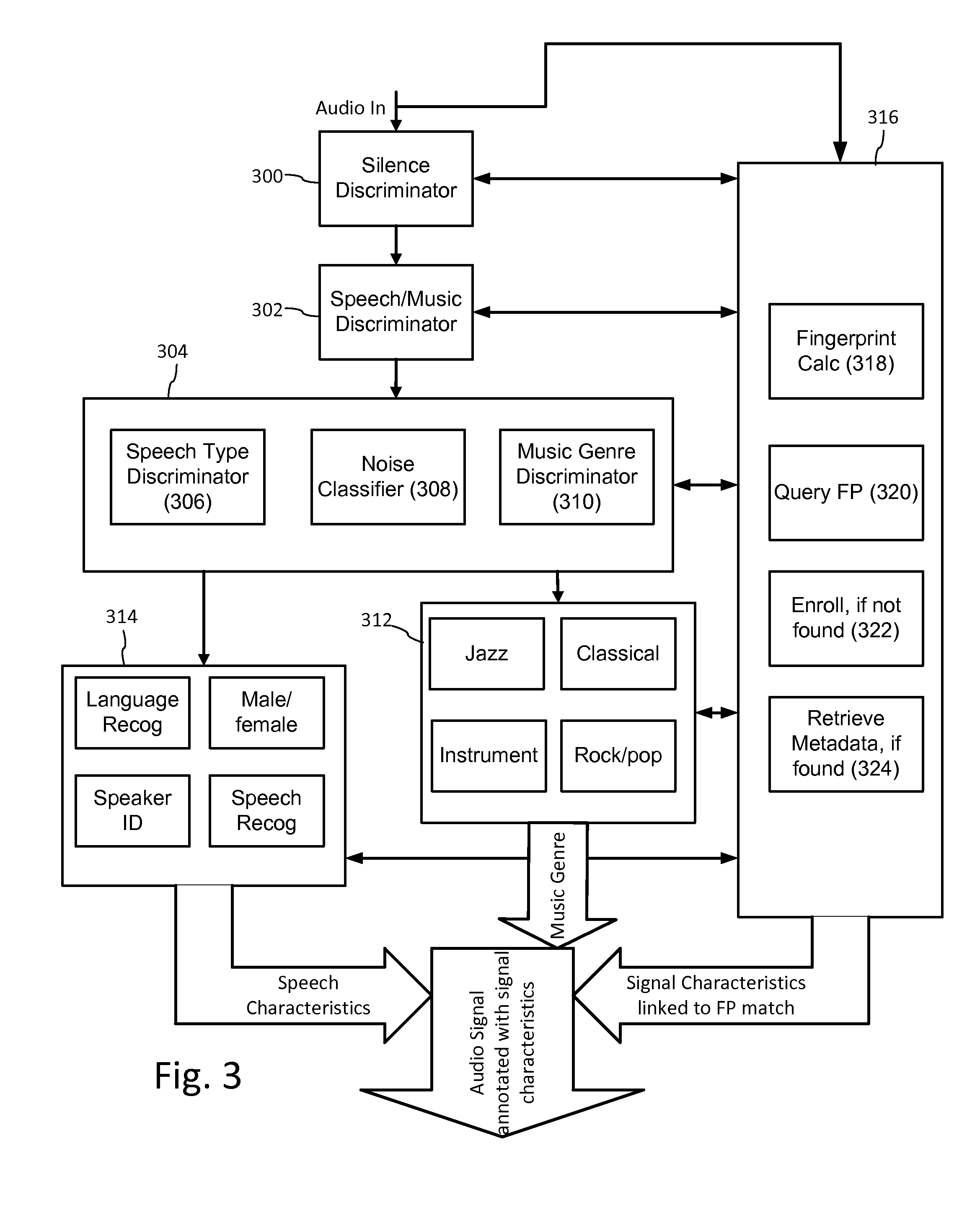

Multi-mode audio recognition and auxiliary data encoding and decoding

Active | US20140108020A1 | Improving communication over network | Optimize network | Speech analysis | Data capacity | Feature extraction

Audio signal processing enhances audio watermark embedding and detecting processes. Audio signal processes include audio classification and adapting watermark embedding and detecting based on classification. Advances in audio watermark design include adaptive watermark signal structure data protocols, perceptual models, and insertion methods. Perceptual and robustness evaluation is integrated into audio watermark embedding to optimize audio quality relative to the original signal, and to optimize robustness or data capacity. These methods are applied to audio segments in audio embedder and detector configurations to support real time operation. Feature extraction and matching are also used to adapt audio watermark embedding and detecting.

Owner:DIGIMARC CORP

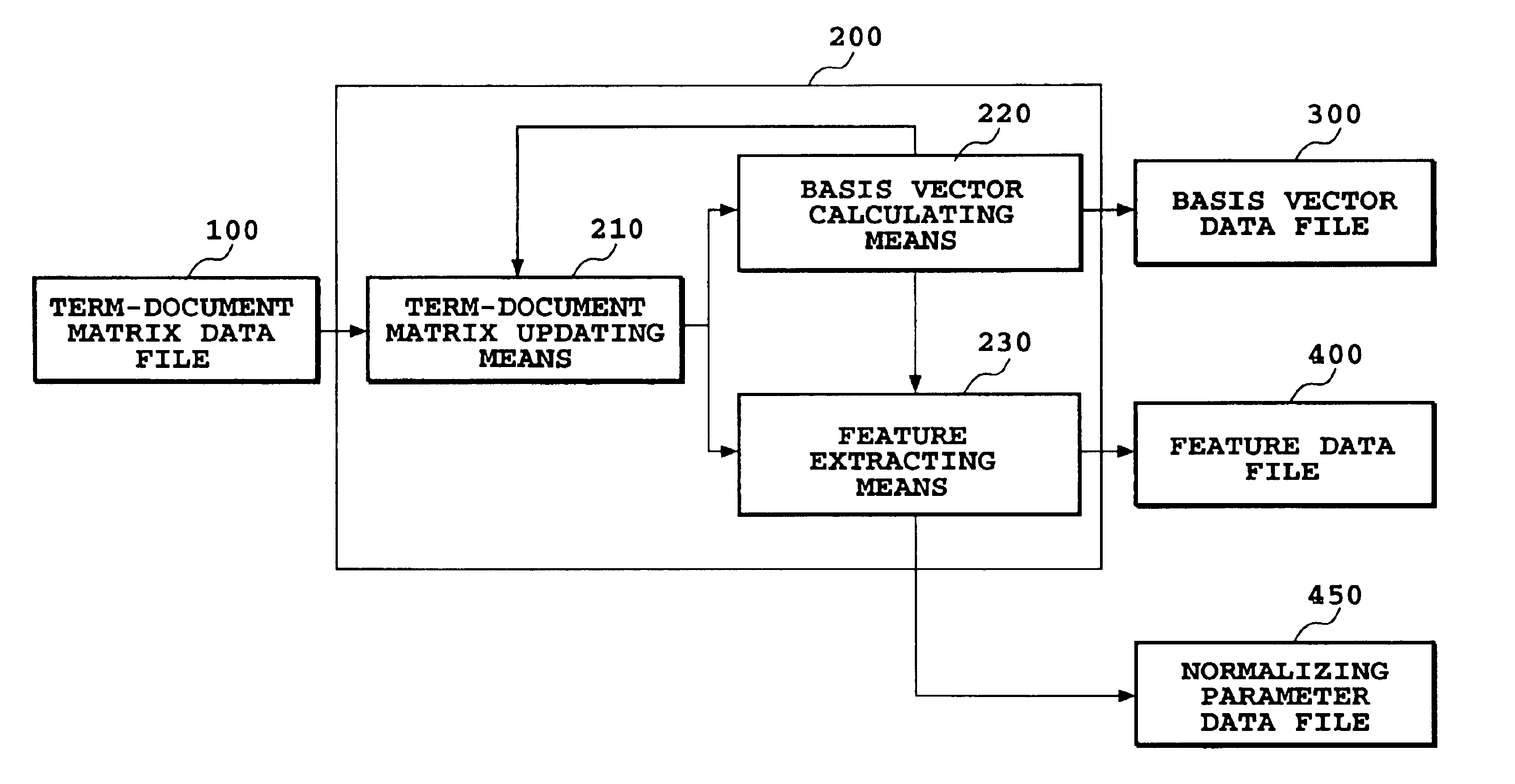

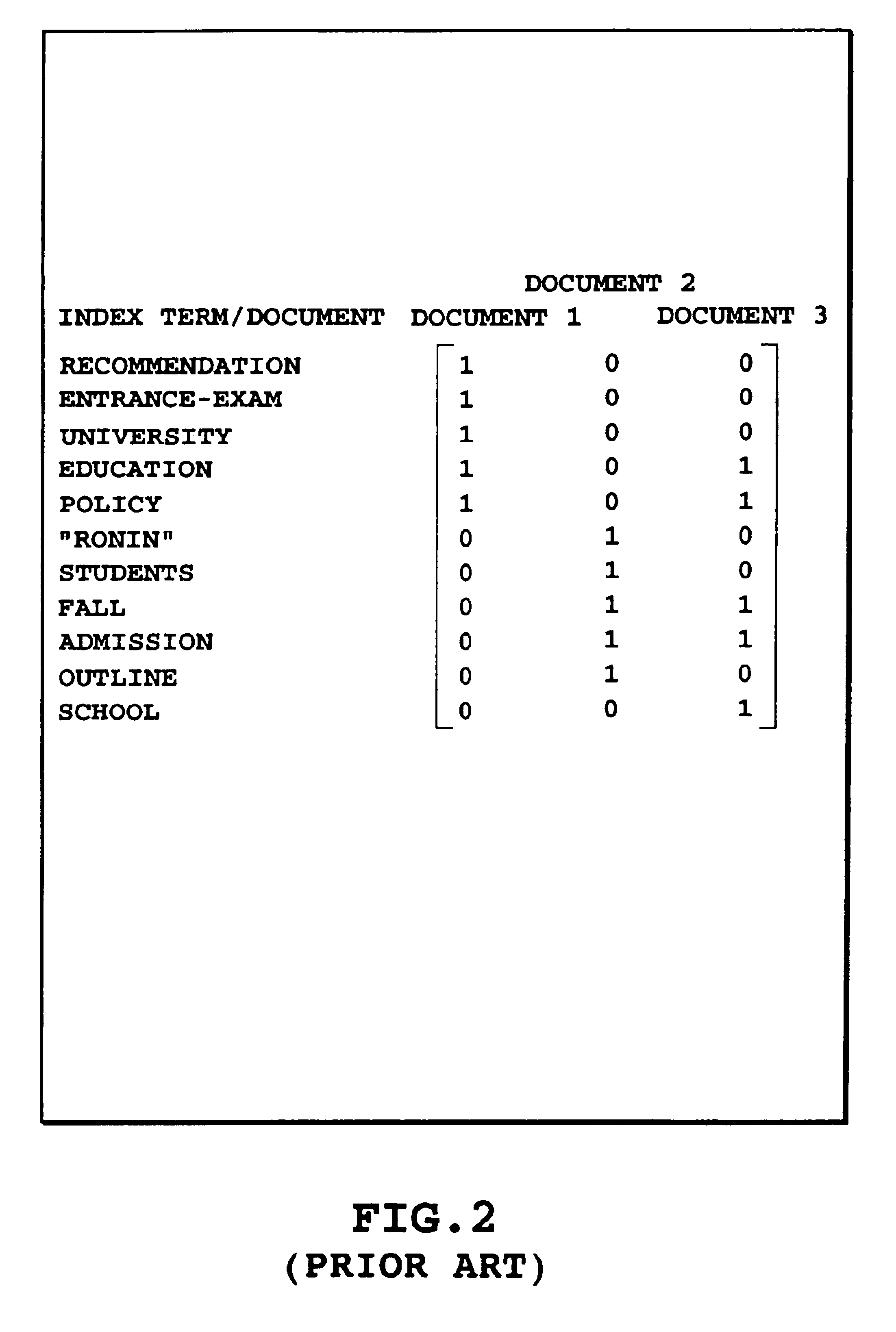

Text mining method and apparatus for extracting features of documents

Inactive | US6882747B2 | Little memory space | Easy to implement | Digital data information retrieval | Data processing applications | Feature extraction | Text mining

Concerning feature extraction of documents in text mining, a method and an apparatus are provided for extracting features of the same nature as those produced by latent semantic analysis (LSA), while requiring less memory space and a simpler program and apparatus than an apparatus that executes LSA. Features of each document are extracted by feature extracting acts on the basis of a term-document matrix updated by term-document updating acts and of a basis vector, spanning a space of effective features, calculated by basis vector calculations. Execution of the respective acts is repeated until a predetermined requirement given by a user is satisfied.

Owner:SSR +1

Multi-mode audio recognition and auxiliary data encoding and decoding

Active | US20140142958A1 | Improving communication over network | Optimize network | Speech analysis | Data capacity | Feature extraction

Audio signal processing enhances audio watermark embedding and detecting processes. Audio signal processes include audio classification and adapting watermark embedding and detecting based on classification. Advances in audio watermark design include adaptive watermark signal structure data protocols, perceptual models, and insertion methods. Perceptual and robustness evaluation is integrated into audio watermark embedding to optimize audio quality relative to the original signal, and to optimize robustness or data capacity. These methods are applied to audio segments in audio embedder and detector configurations to support real time operation. Feature extraction and matching are also used to adapt audio watermark embedding and detecting.

Owner:DIGIMARC CORP

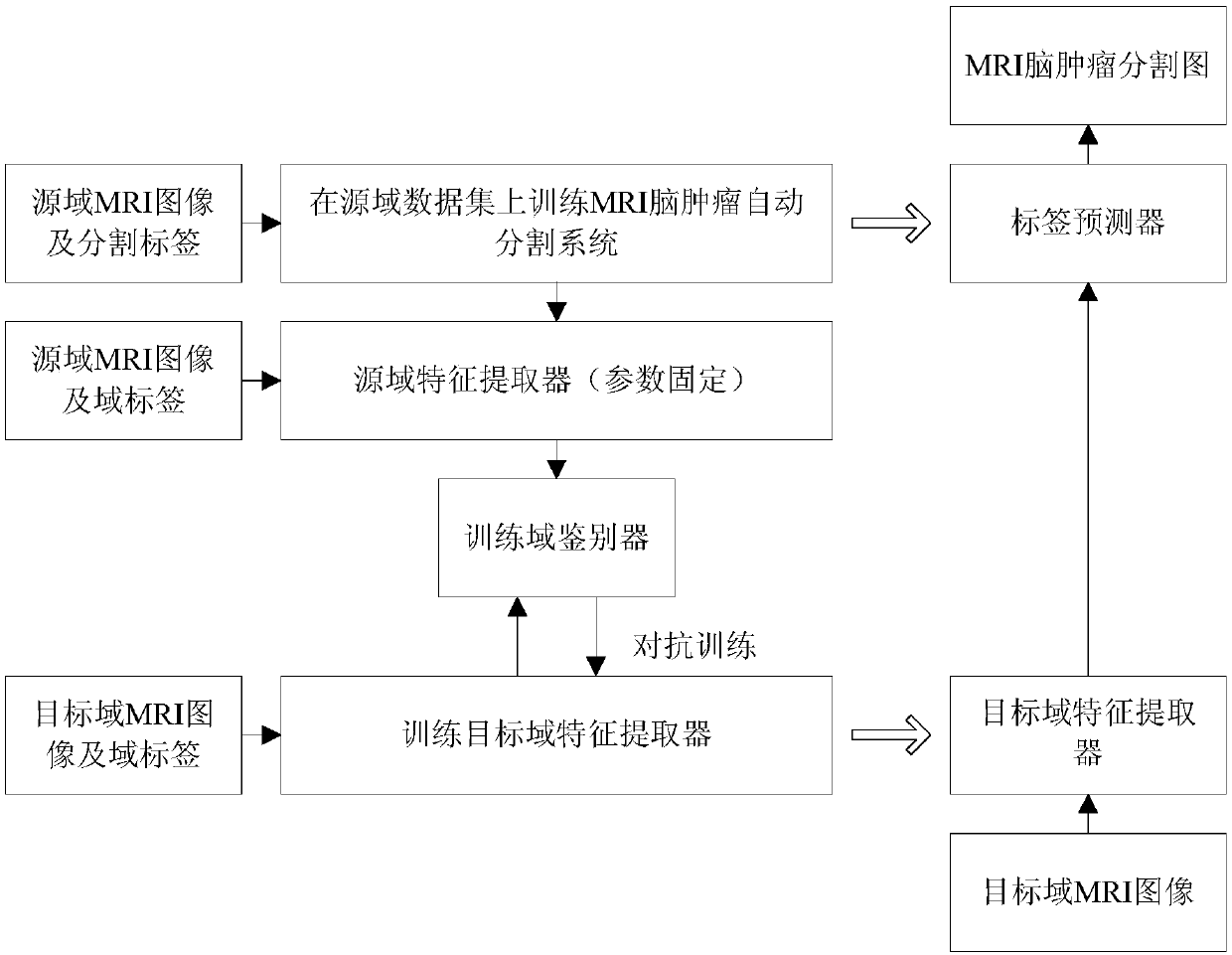

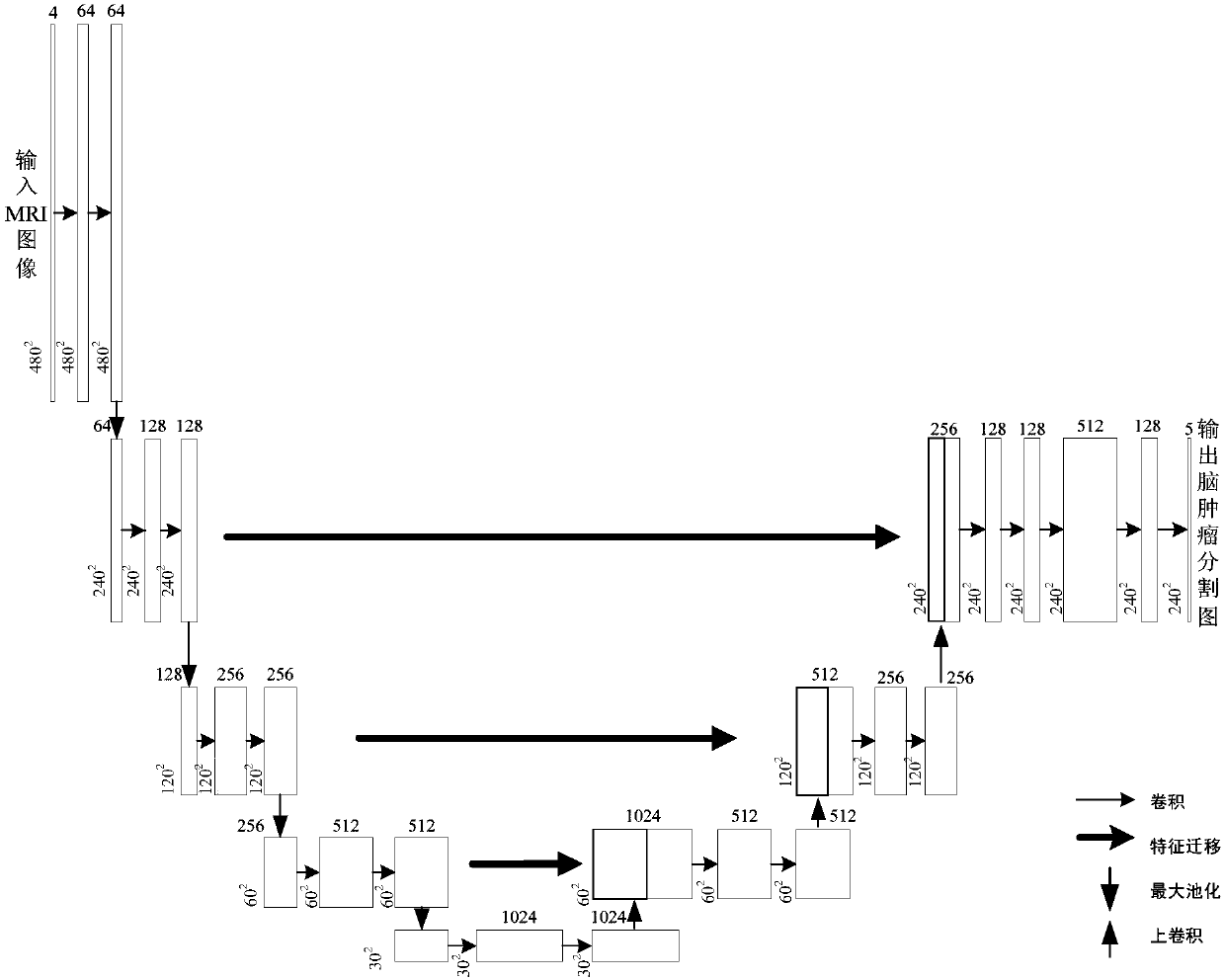

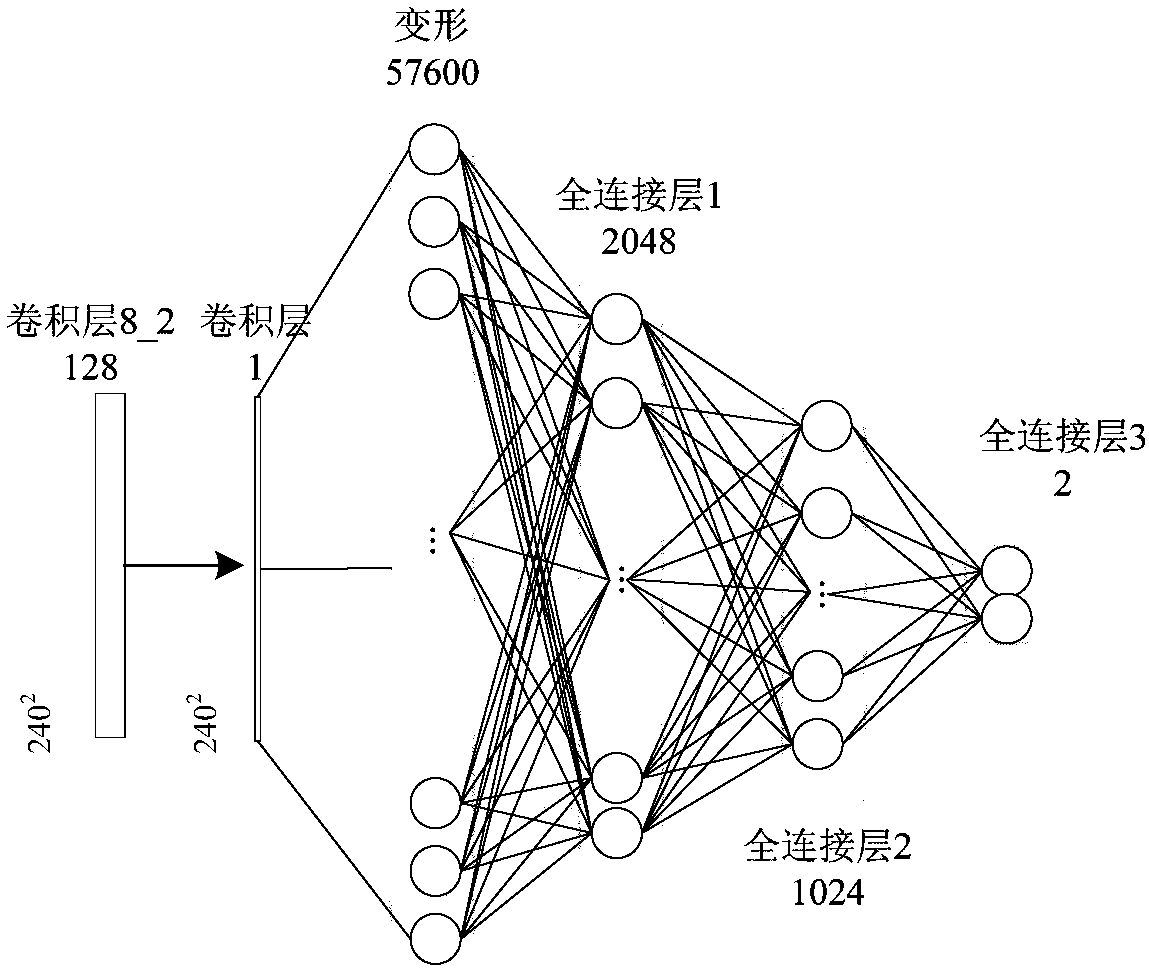

Unsupervised domain-adaptive brain tumor semantic segmentation method based on deep adversarial learning

Inactive | CN108062753A | Accurate prediction | Easy to train | Image enhancement | Image analysis | Discriminator | Network model

The invention provides an unsupervised domain-adaptive brain tumor semantic segmentation method based on deep adversarial learning. The method comprises the steps of deep coding-decoding full-convolution network segmentation system model setup, domain discriminator network model setup, segmentation system pre-training and parameter optimization, adversarial training and target domain feature extractor parameter optimization and target domain MRI brain tumor automatic semantic segmentation. According to the method, high-level semantic features and low-level detailed features are utilized to jointly predict pixel tags by the adoption of a deep coding-decoding full-convolution network modeling segmentation system, a domain discriminator network is adopted to guide a segmentation model to learn domain-invariable features and a strong generalization segmentation function through adversarial learning, a data distribution difference between a source domain and a target domain is minimized indirectly, and a learned segmentation system has the same segmentation precision in the target domain as in the source domain. Therefore, the cross-domain generalization performance of the MRI brain tumor full-automatic semantic segmentation method is improved, and unsupervised cross-domain adaptive MRI brain tumor precise segmentation is realized.

Owner:CHONGQING UNIV OF TECH

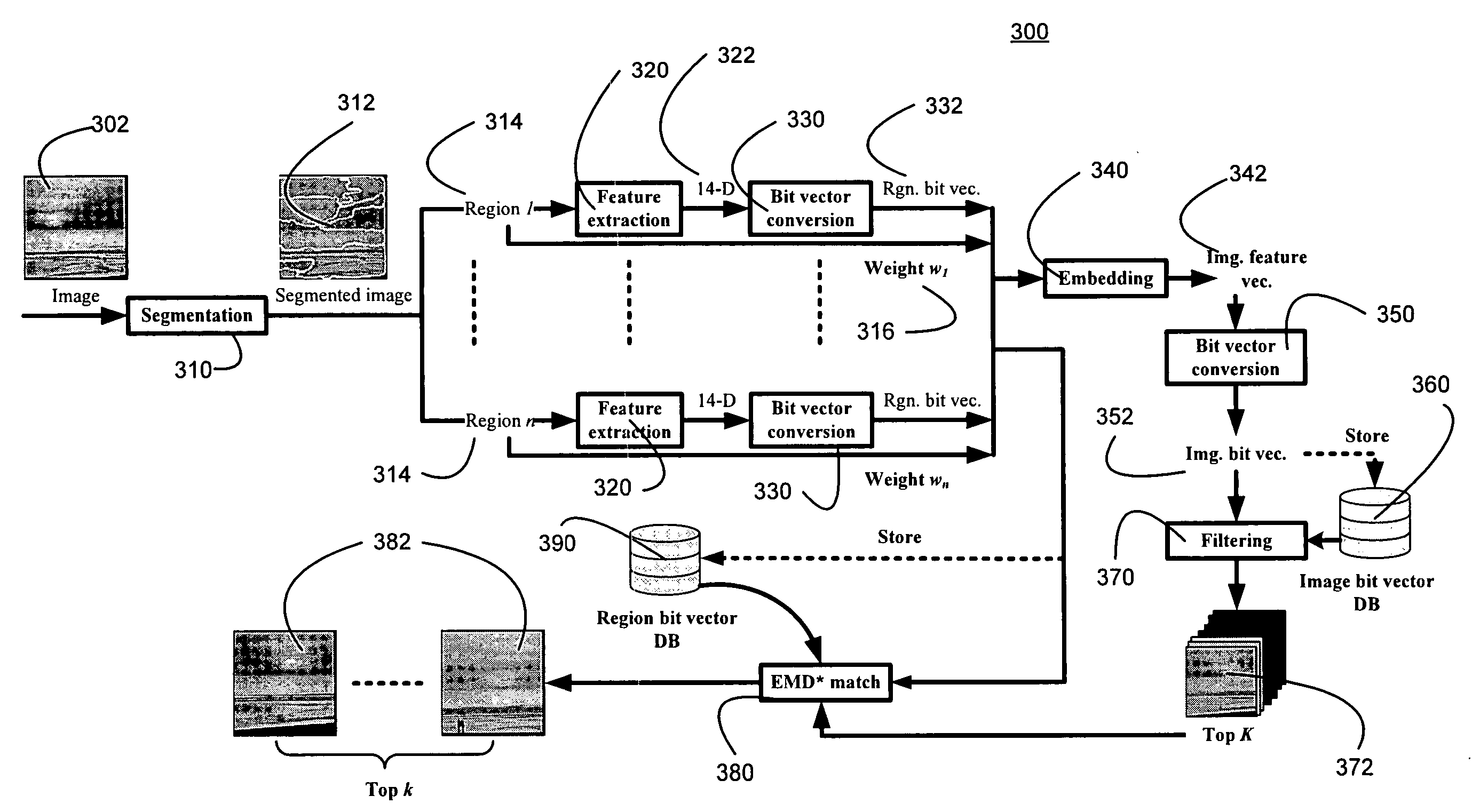

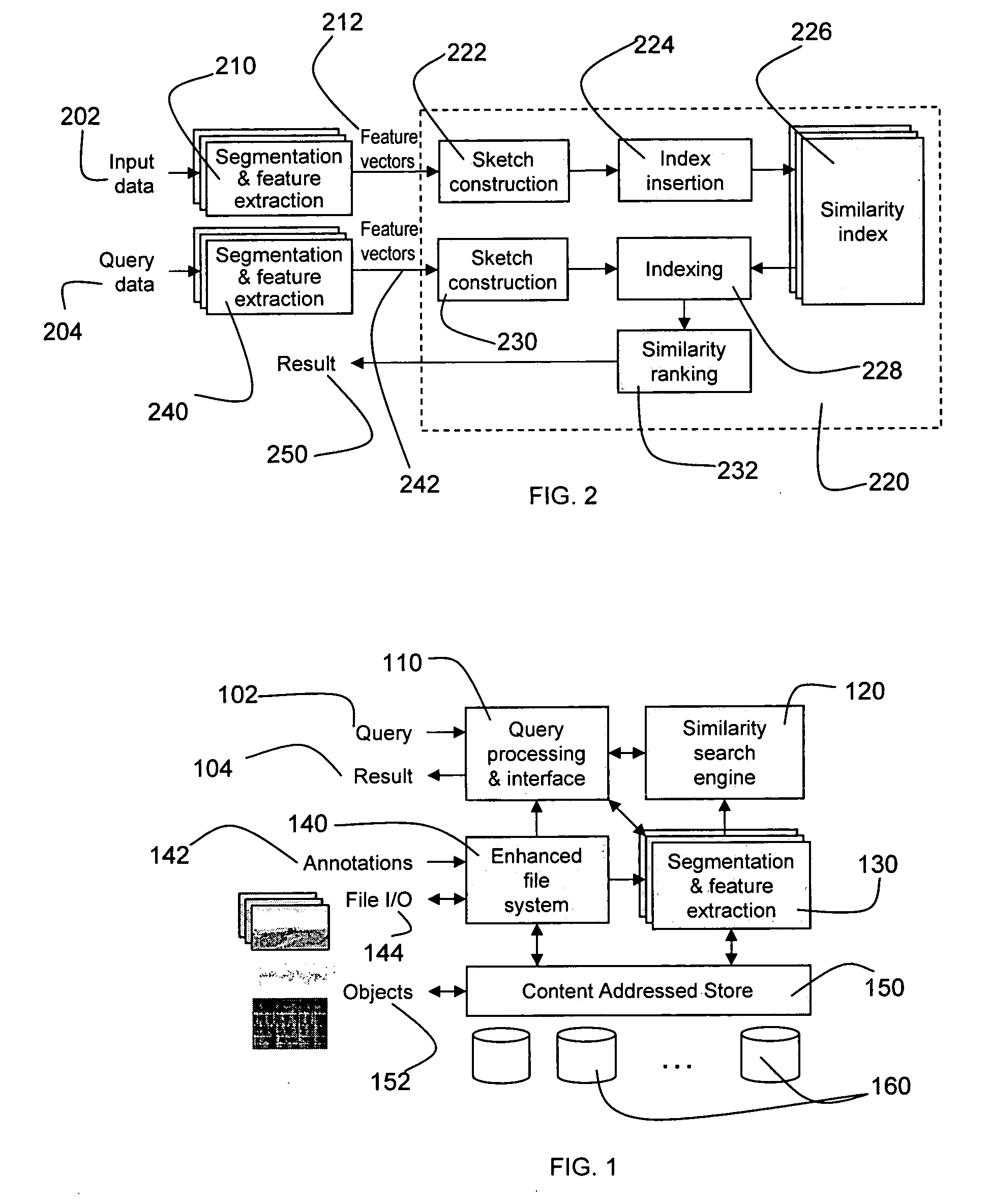

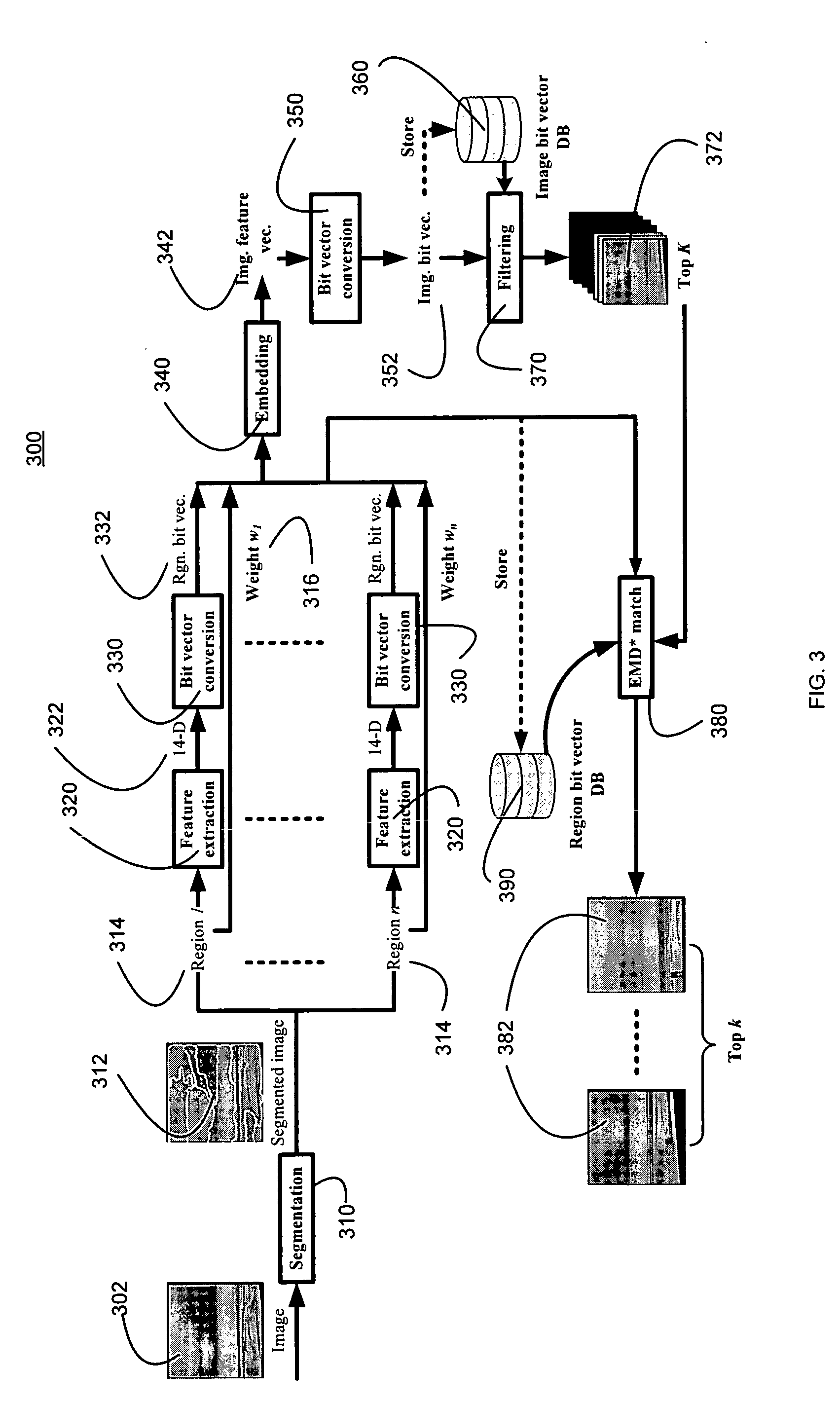

Similarity search system with compact data structures

Inactive | US20060101060A1 | Minimal amount of work | Avoid calculation | Digital data information retrieval | Digital data processing details | Feature vector | Feature extraction

A content-addressable and searchable storage system for managing and exploring massive amounts of feature-rich data such as images, audio or scientific data, is shown. The system comprises a segmentation and feature extraction unit for segmenting data corresponding to an object into a plurality of data segments and generating a feature vector for each data segment; a sketch construction component for converting a feature vector into a compact bit-vector corresponding to the object; a similarity index comprising a plurality of compact bit-vectors corresponding to a plurality of objects; and an index insertion component for inserting a compact bit-vector corresponding to an object into the similarity index. The system may further comprise an indexing unit for identifying a candidate set of objects from said similarity index based upon a compact bit-vector corresponding to a query object. Still further, the system may additionally comprise a similarity ranking component for ranking objects in said candidate set by estimating their distances to the query object.

Owner:THE TRUSTEES FOR PRINCETON UNIV
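
The sketch-then-rank pipeline in the similarity search system above can be illustrated as follows. The abstract does not say how a feature vector becomes a compact bit-vector, so this sketch assumes one common choice: one bit per random hyperplane, set by the sign of the dot product, with Hamming distance as the distance estimate. All function names here are hypothetical.

```python
import random

def make_hyperplanes(dim, n_bits, seed=0):
    """Draw n_bits random hyperplanes in dim dimensions (assumed scheme)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]

def sketch(vector, hyperplanes):
    """Pack one sign bit per hyperplane into a single integer."""
    bits = 0
    for plane in hyperplanes:
        dot = sum(v * w for v, w in zip(vector, plane))
        bits = (bits << 1) | (1 if dot >= 0 else 0)
    return bits

def hamming(a, b):
    """Number of differing bits between two sketches."""
    return bin(a ^ b).count("1")

def rank_candidates(query_sketch, index):
    """Order object names in the index by estimated distance to the query."""
    return sorted(index, key=lambda name: hamming(query_sketch, index[name]))
```

Here `index` is a plain dict from object name to sketch, standing in for the similarity index. Vectors pointing in similar directions share most bits, so a small Hamming distance suggests a small angle between the original feature vectors.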

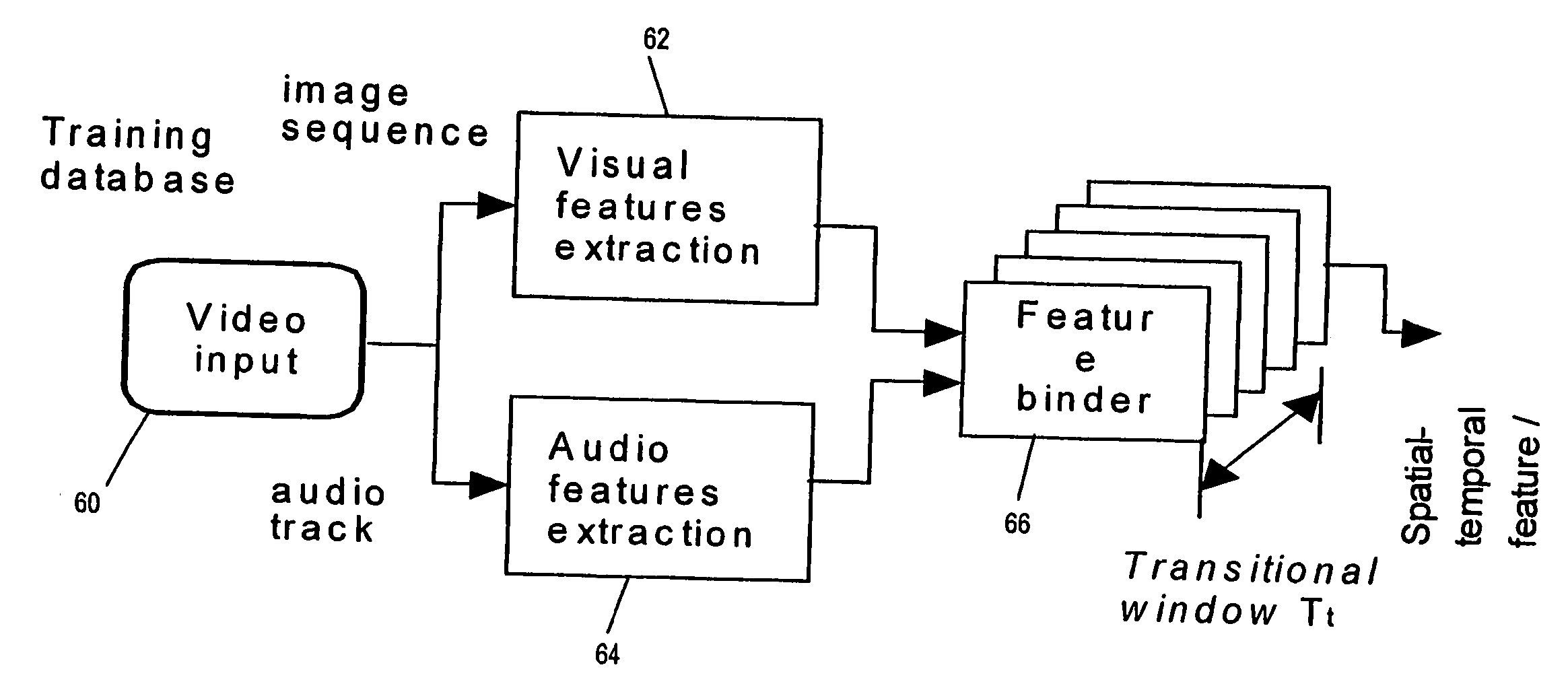

Method and system for classification of semantic content of audio/video data

Inactive | US20050238238A1 | Minimising within-class variance | Maximising between-class variance | Digital data information retrieval | Character and pattern recognition | Feature vector | Feature extraction

Audio/visual data is classified into semantic classes such as news, sports, music video or the like by providing class models for each class and comparing input audio/visual data to the models. The class models are generated by extracting feature vectors from training samples, and then subjecting the feature vectors to kernel discriminant analysis or principal component analysis to give discriminatory basis vectors. These vectors are then used to obtain further feature vectors of much lower dimension than the original feature vectors, which may then be used directly as a class model, or used to train a Gaussian mixture model or the like. During classification of unknown input data, the same feature extraction and analysis steps are performed to obtain the low-dimensional feature vectors, which are then fed into the previously created class models to identify the data genre.

Owner:BRITISH TELECOMM PLC

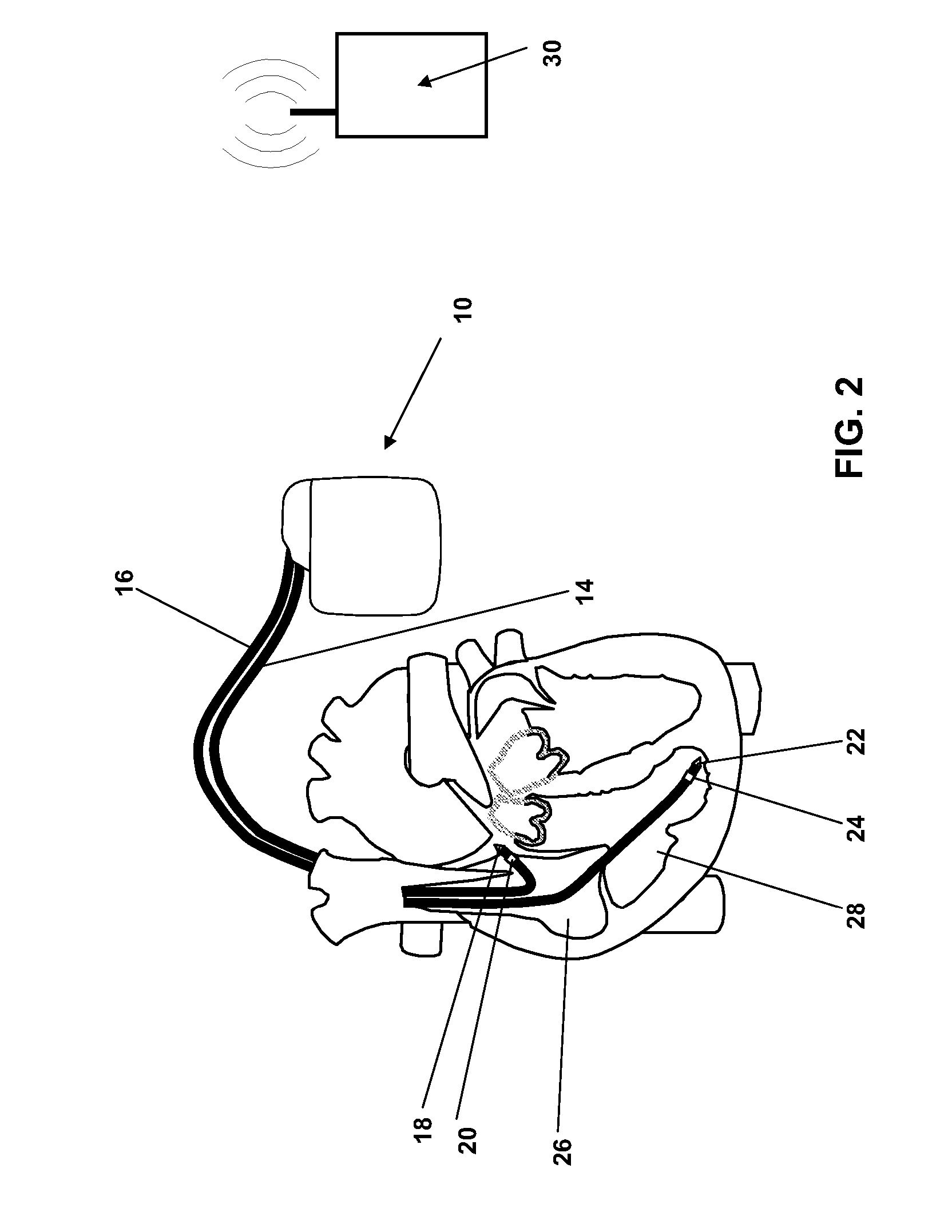

Wavelet based feature extraction and dimension reduction for the classification of human cardiac electrogram depolarization waveforms

Active | US20080109041A1 | Improve accuracy | Precise process | Electrocardiography | Medical automated diagnosis | Cardiac pacemaker electrode | Classification methods

A depolarization waveform classifier based on the modified lifting line wavelet transform is described that overcomes problems in existing rate-based event classifiers. A task for pacemakers/defibrillators is the accurate identification of rhythm categories so that correct electrotherapy can be administered. Because some rhythms cause a rapid, dangerous drop in cardiac output, it is desirable to categorize depolarization waveforms on a beat-to-beat basis to accomplish rhythm classification as rapidly as possible. Although rate-based methods of event categorization have served well in implanted devices, these methods suffer in sensitivity and specificity when atrial and ventricular rates are similar. Human experts differentiate rhythms by morphological features of strip chart electrocardiograms. The wavelet transform approximates this expert analysis because it correlates distinct morphological features at multiple scales. The accuracy of implanted rhythm determination can then be improved by using human-appreciable time domain features enhanced by time scale decomposition of depolarization waveforms.

Owner:BIOTRONIK SE & CO KG

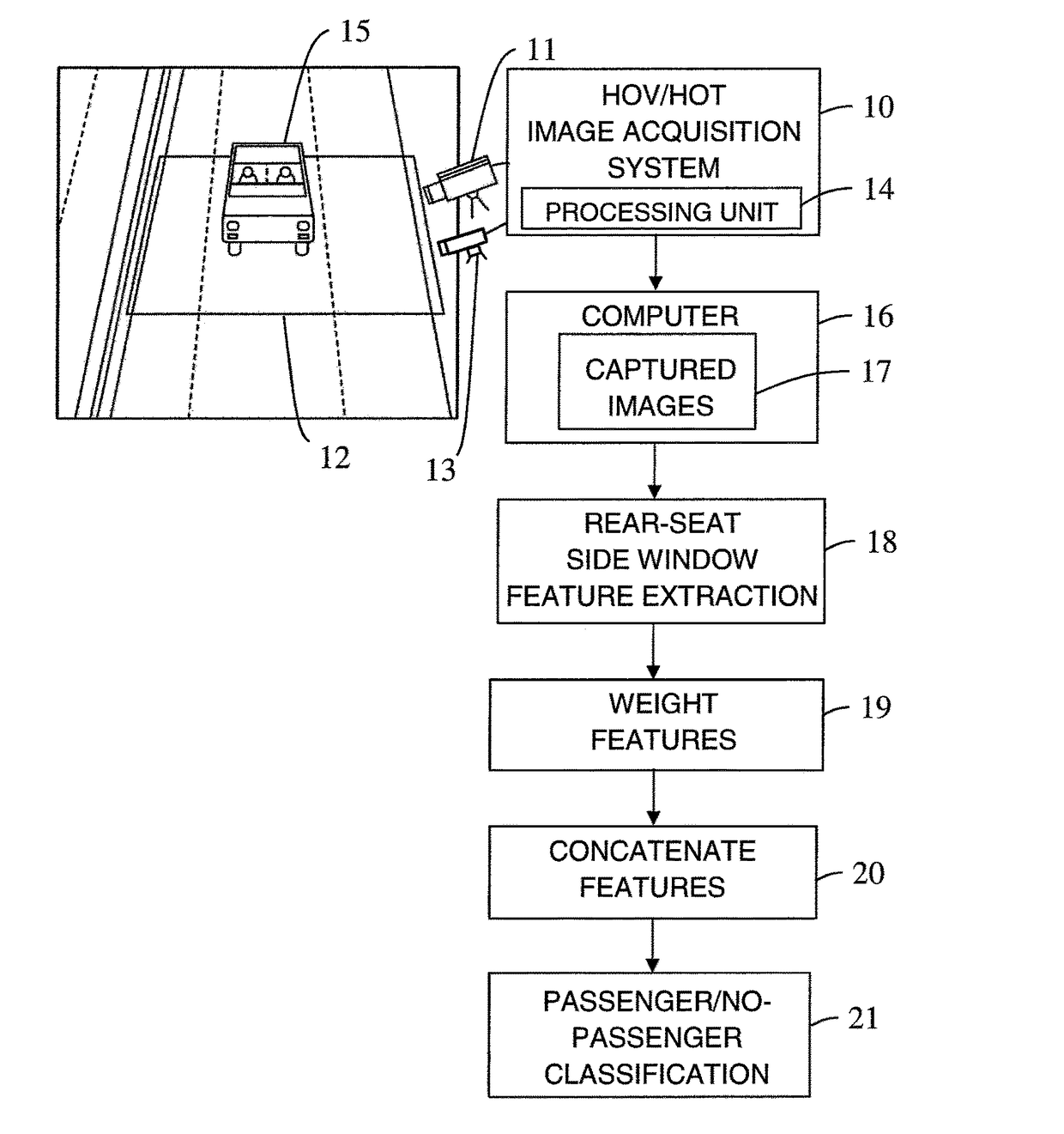

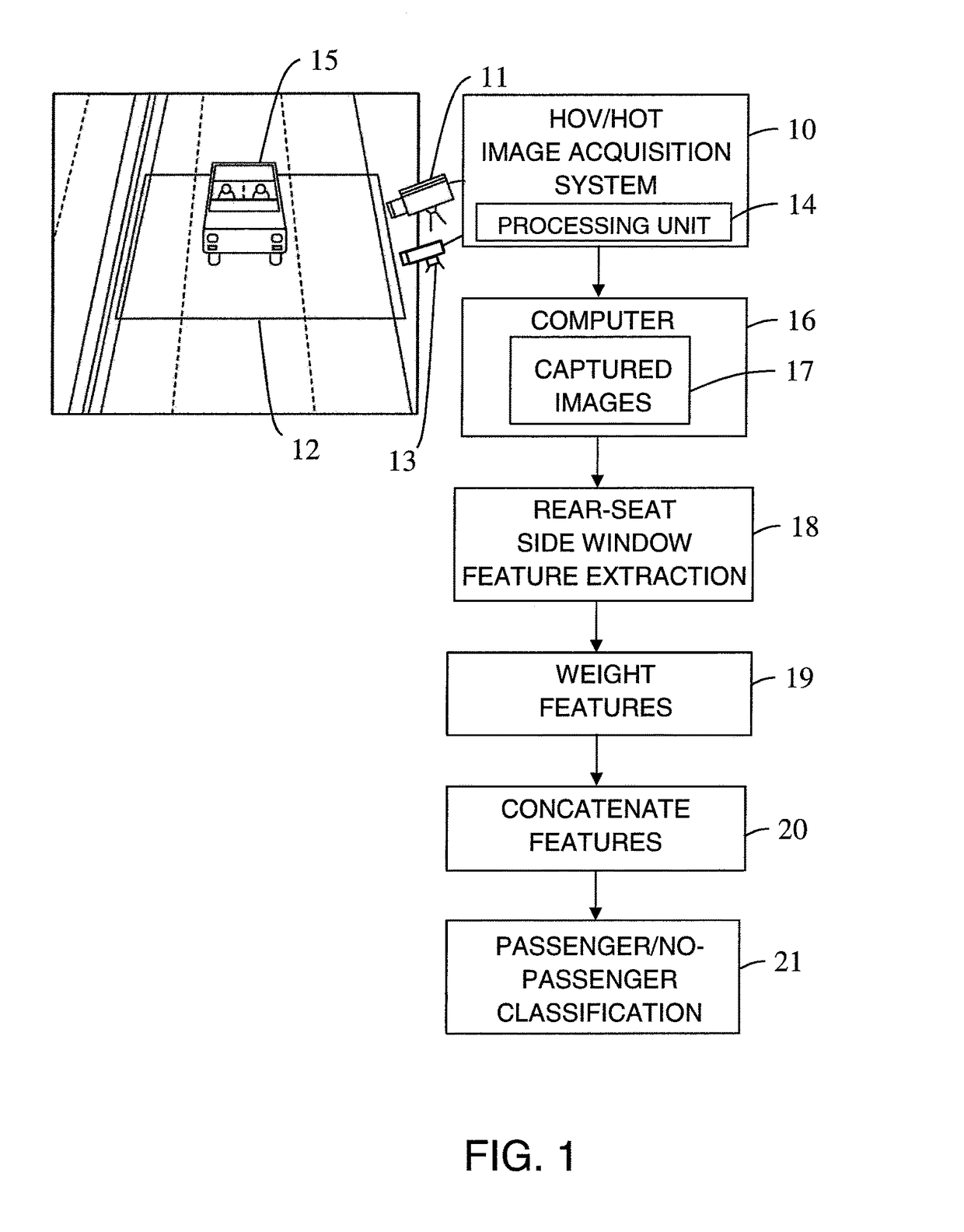

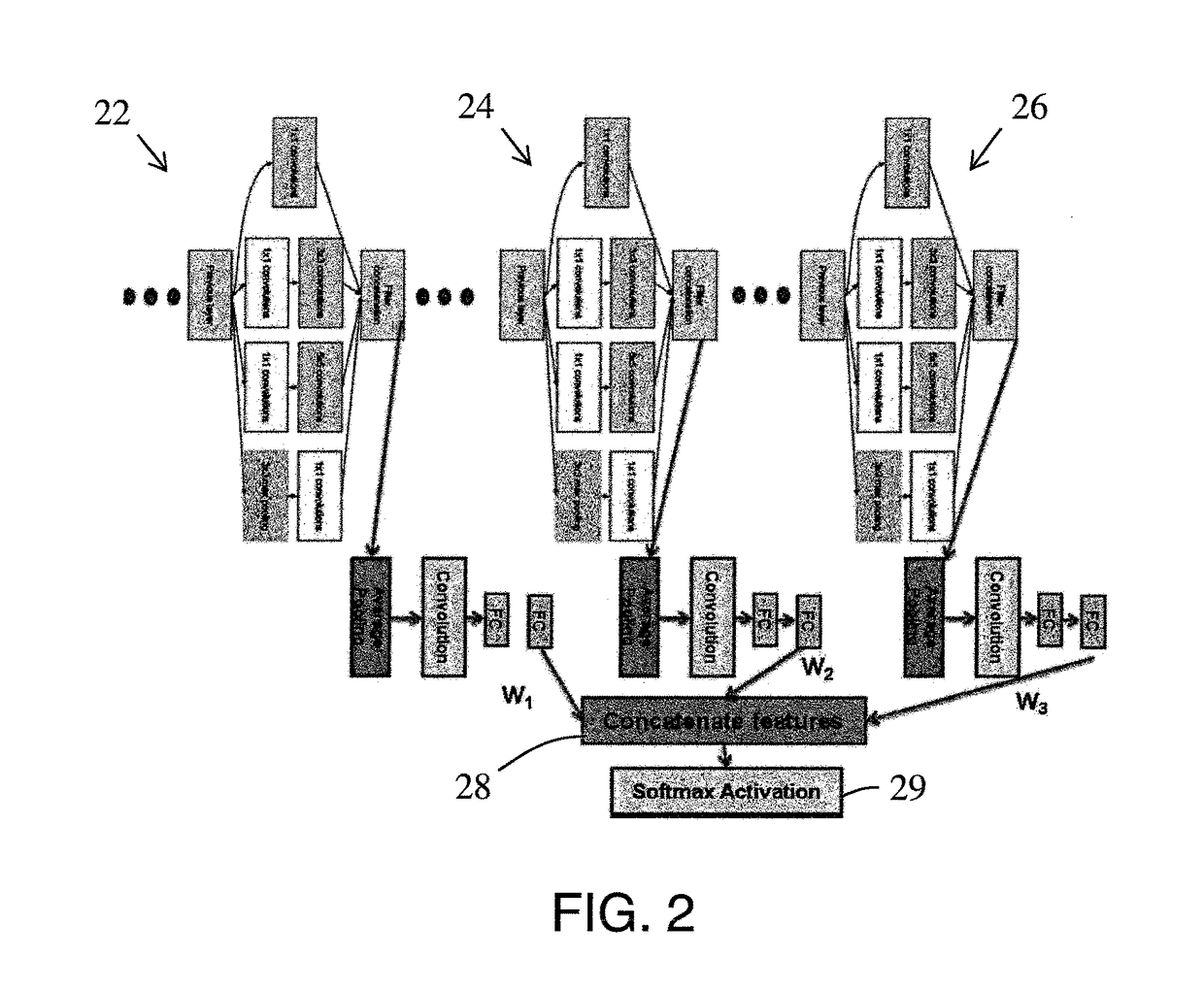

Multi-layer fusion in a convolutional neural network for image classification

Active | US20170140253A1 | Easy to adapt | Improve classification accuracy | Character and pattern recognition | Neural learning methods | Feature extraction | Two step

A method and system are provided for domain adaptation based on multi-layer fusion in a convolutional neural network architecture for feature extraction, with a two-step training and fine-tuning scheme. The architecture concatenates features extracted at different depths of the network to form a fully connected layer before the classification step. First, the network is trained with a large set of images from a source domain as a feature extractor. Second, for each new domain (including the source domain), the classification step is fine-tuned with images collected from the corresponding site. The features from different depths are concatenated and fine-tuned with weights adjusted for a specific task. The architecture is used for classifying high occupancy vehicle images.

Owner:CONDUENT BUSINESS SERVICES LLC

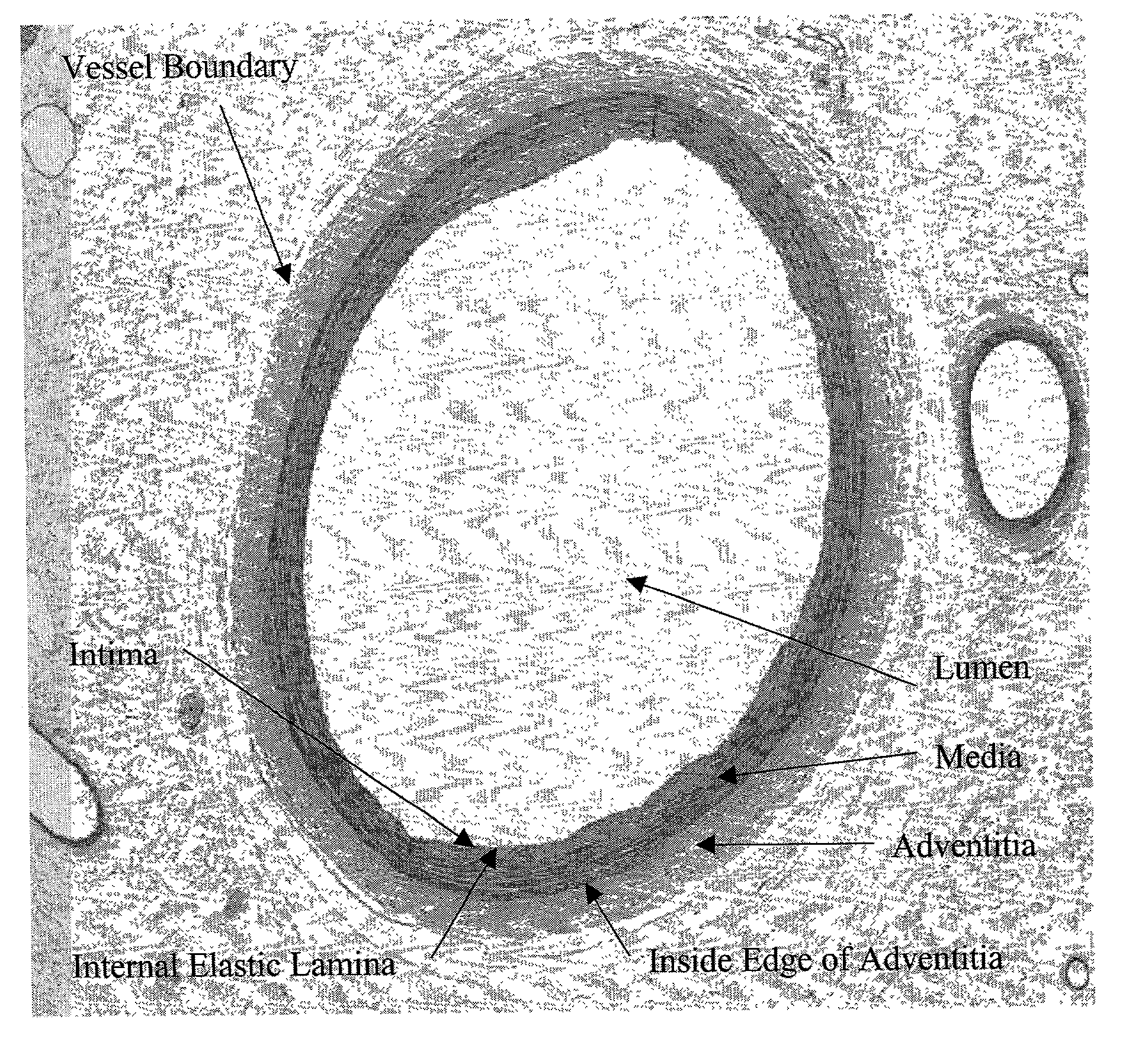

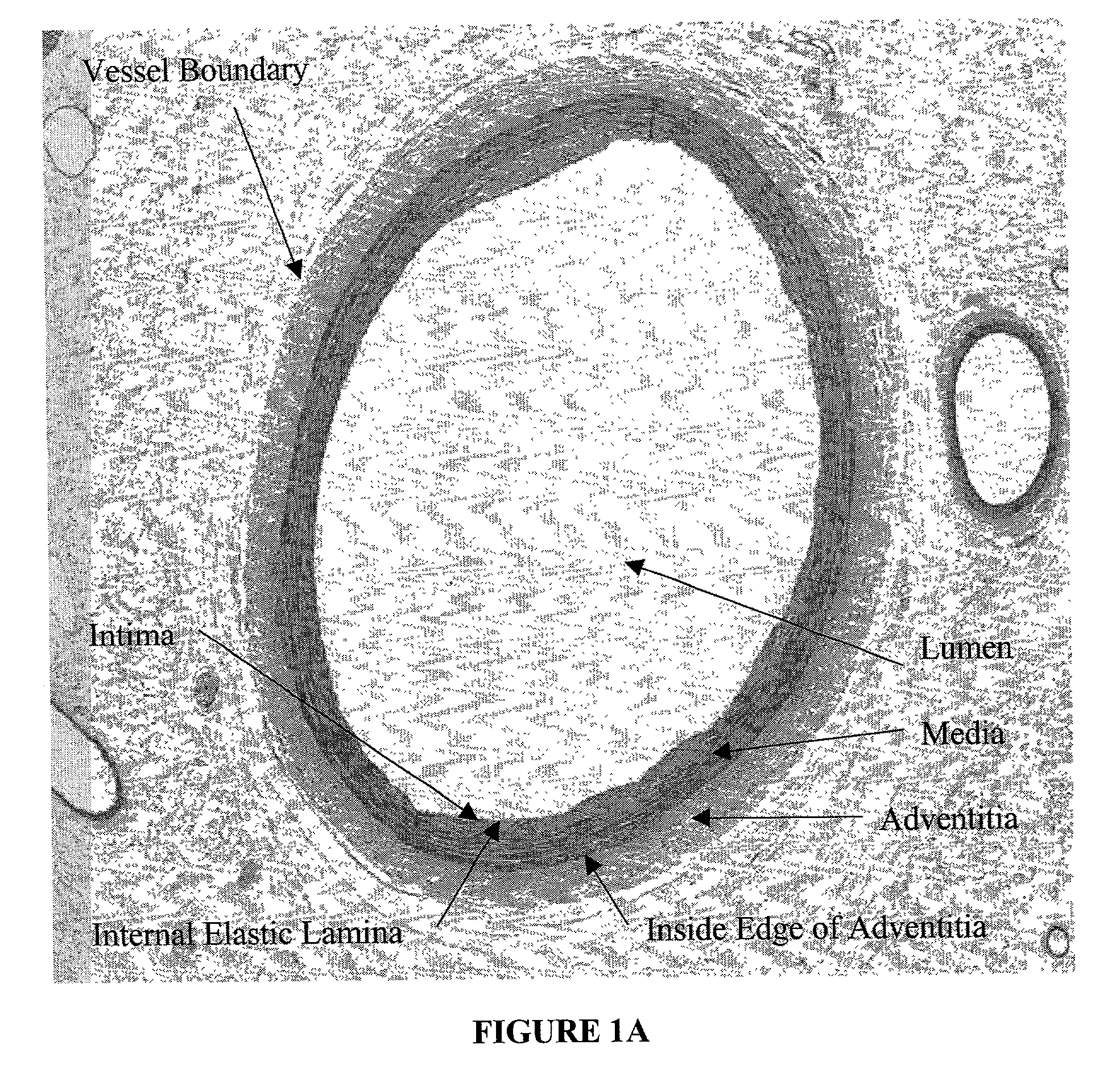

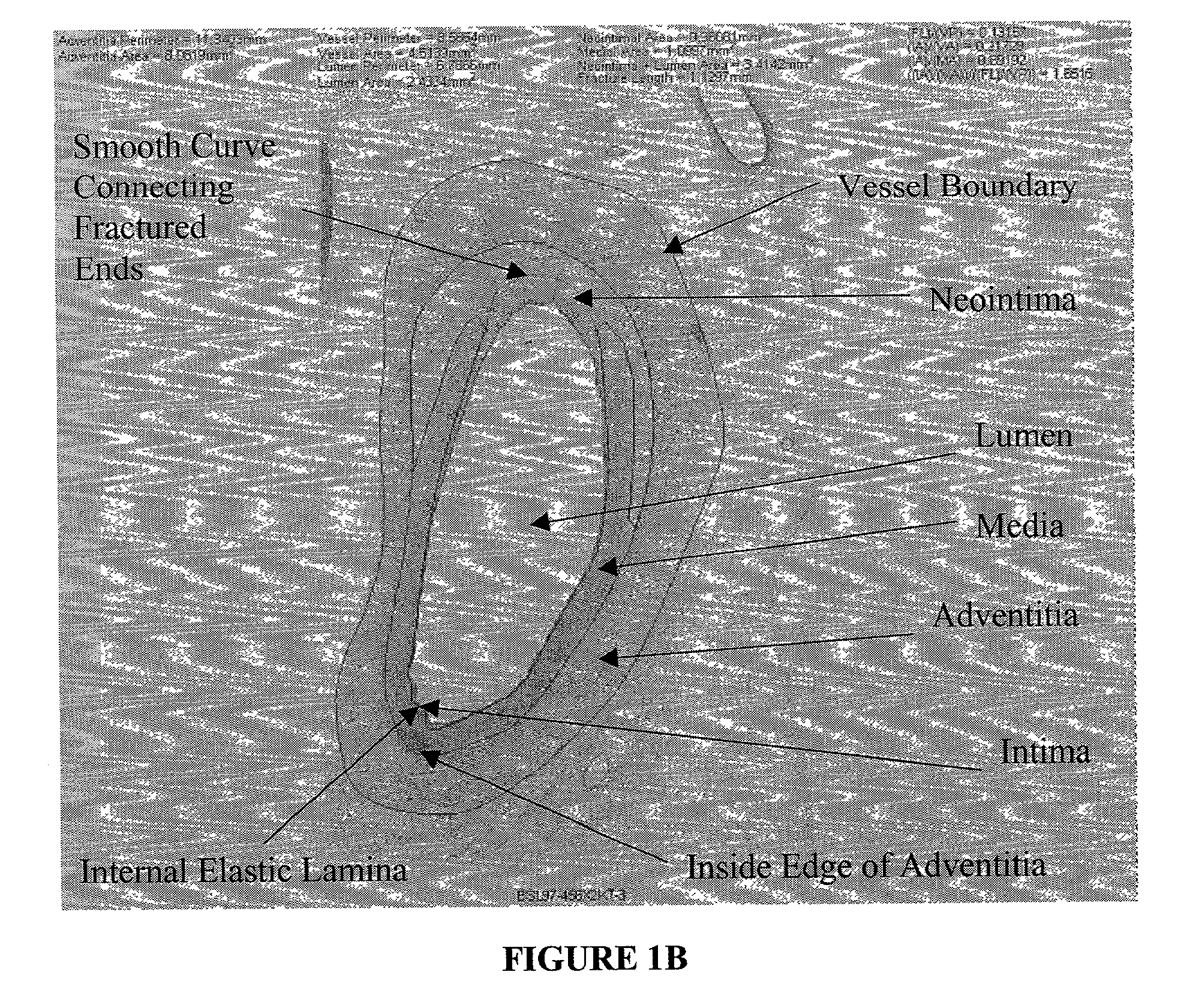

Method for quantitative analysis of blood vessel structure

We disclose quantitative geometrical analysis enabling the measurement of several features of images of tissues including perimeter, area, and other metrics. Automation of feature extraction creates a high throughput capability that enables analysis of serial sections for more accurate measurement of tissue dimensions. Measurement results are input into a relational database where they can be statistically analyzed and compared across studies. As part of the integrated process, results are also imprinted on the images themselves to facilitate auditing of the results. The analysis is fast, repeatable and accurate while allowing the pathologist to control the measurement process.

Owner:ICORIA

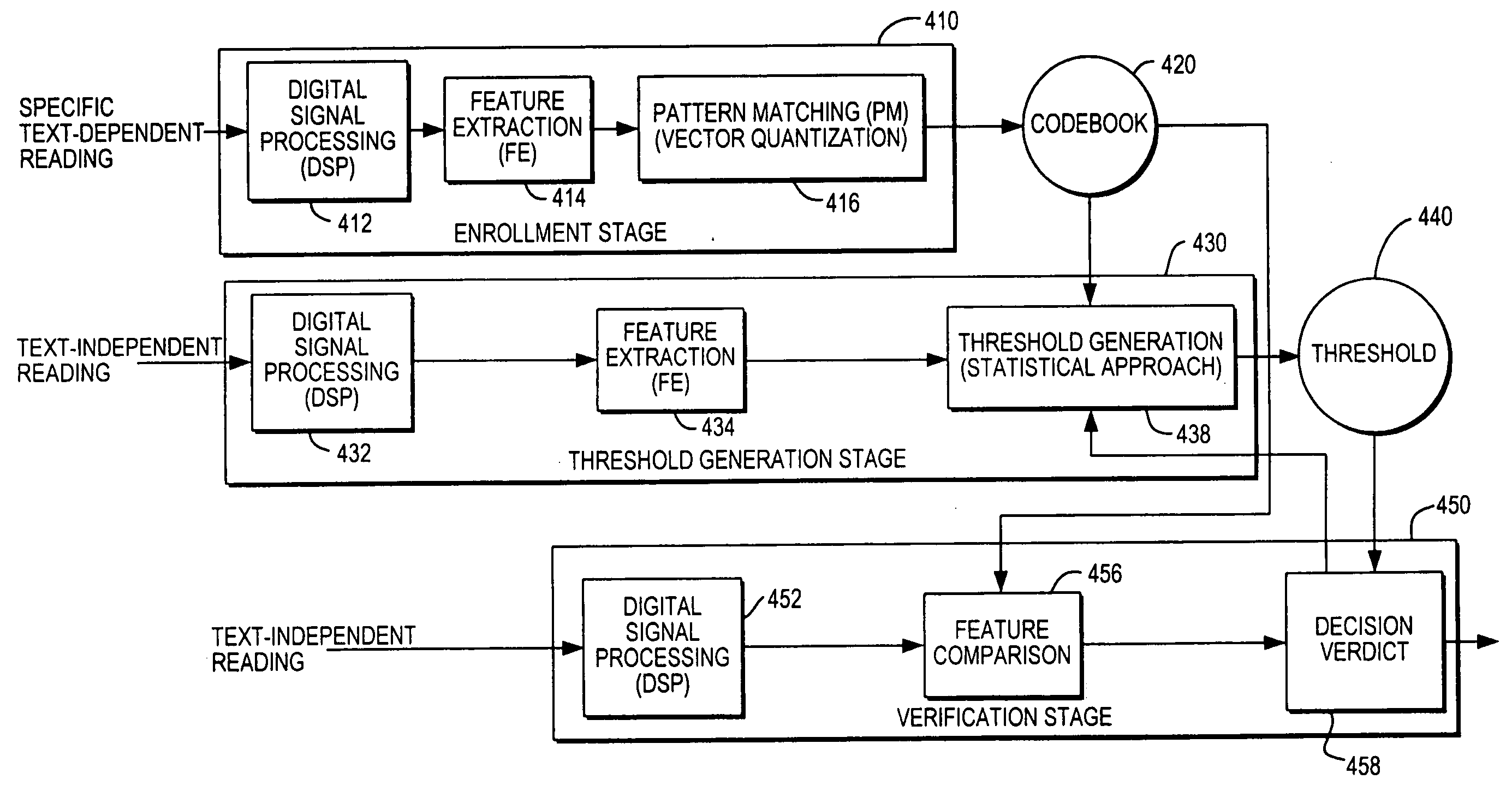

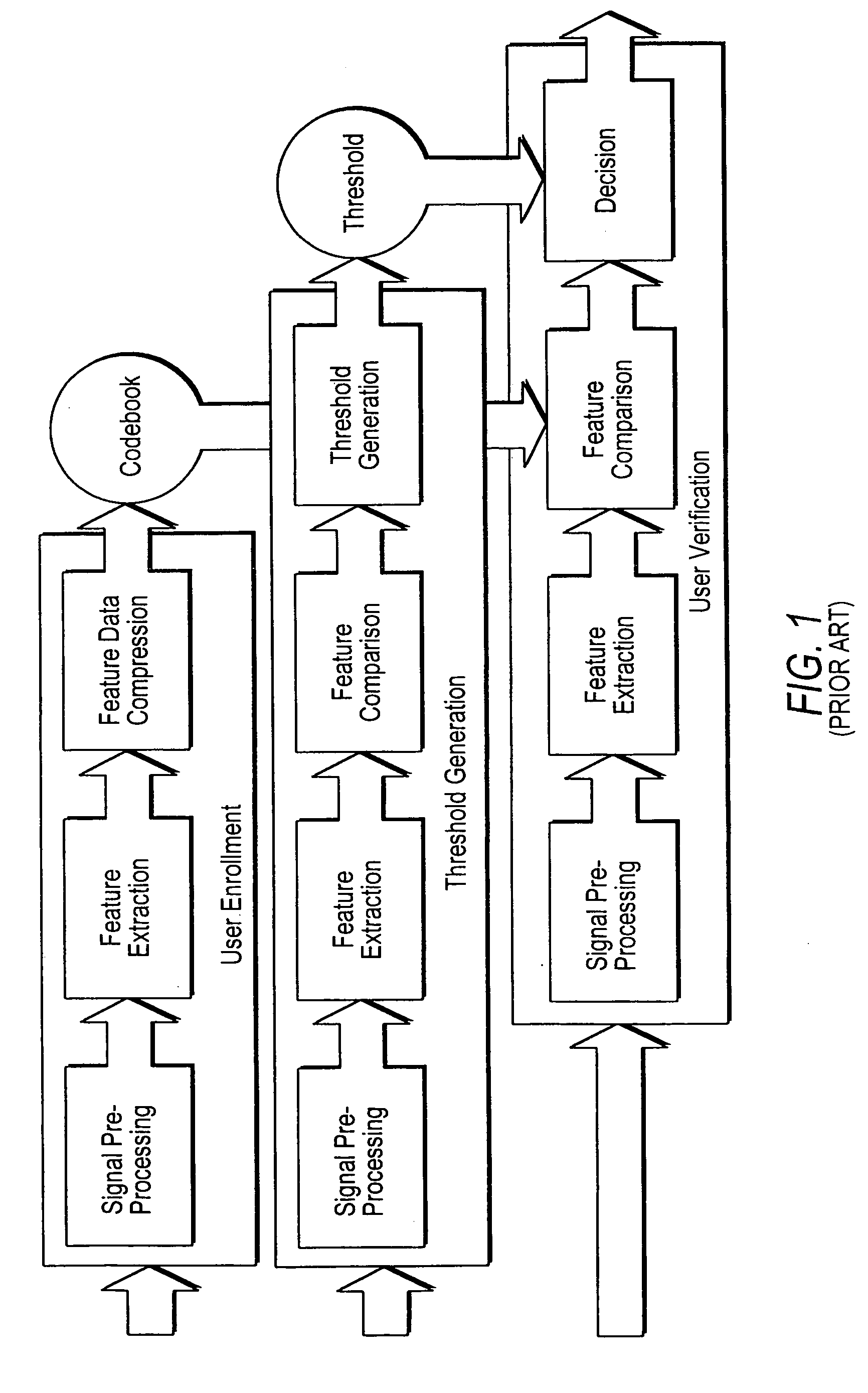

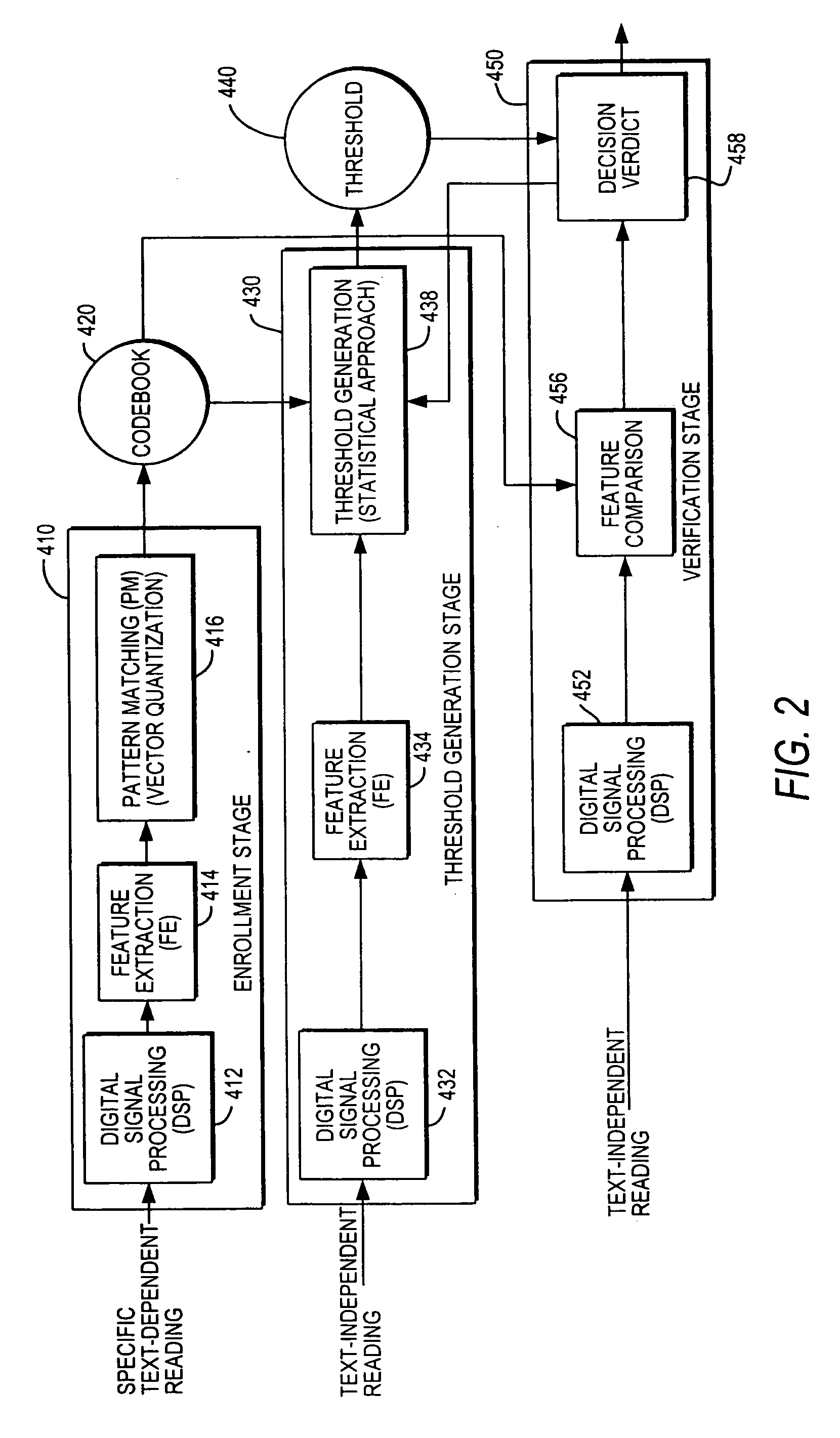

Speaker verification system

Active | US20100268537A1 | High precision | Less-powerful microprocessor | Programme control | Electric signal transmission systems | Feature extraction | Pattern matching

A text-independent speaker verification system utilizes mel frequency cepstral coefficients analysis in the feature extraction blocks, template modeling with vector quantization in the pattern matching blocks, an adaptive threshold and an adaptive decision verdict and is implemented in a stand-alone device using less powerful microprocessors and smaller data storage devices than used by comparable systems of the prior art.

Owner:SAUDI ARABIAN OIL CO
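
The pattern-matching stage of the speaker verification system above (template modeling with vector quantization) can be sketched as follows. The sketch assumes the feature vectors, such as mel frequency cepstral coefficients, have already been computed, and it uses a fixed threshold where the abstract describes an adaptive one. `avg_distortion` and `verify` are hypothetical names.

```python
import math

def avg_distortion(features, codebook):
    """Mean distance from each feature vector to its nearest codeword."""
    if not features:
        raise ValueError("features must be non-empty")
    total = 0.0
    for f in features:
        total += min(math.dist(f, c) for c in codebook)
    return total / len(features)

def verify(features, codebook, threshold):
    """Accept the claimed speaker if the utterance fits the enrolled codebook.

    A fixed threshold is a simplification; the abstract describes an
    adaptive threshold whose rule is not given there.
    """
    return avg_distortion(features, codebook) <= threshold
```

The codebook acts as the enrolled speaker's template: an utterance from the same speaker should fall close to the stored codewords and so yield a low average distortion.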

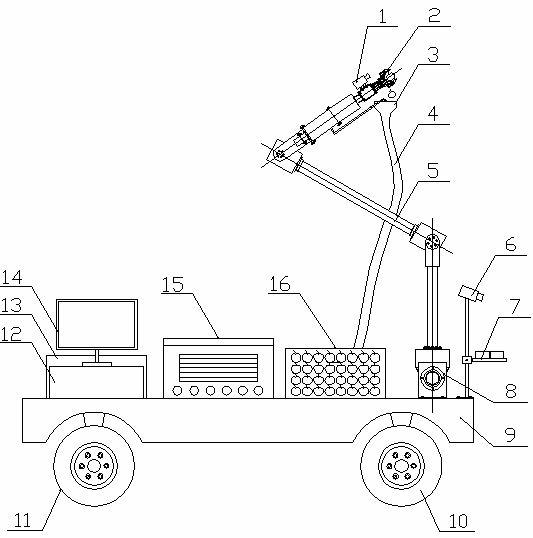

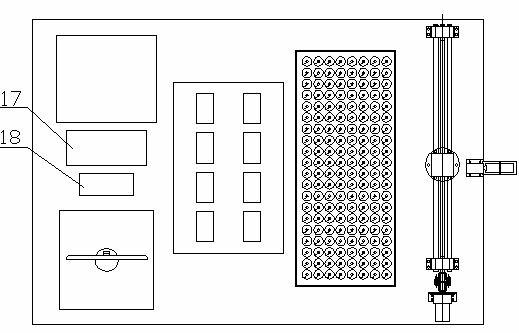

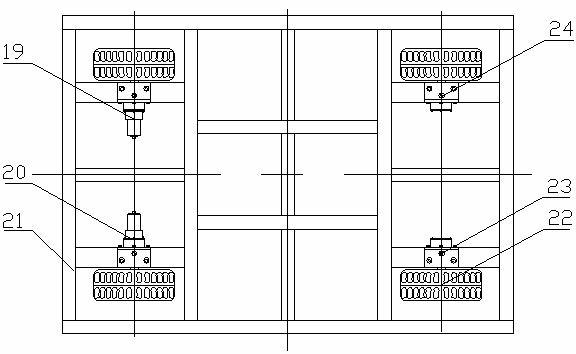

Wheel type mobile fruit picking robot and fruit picking method

Inactive | CN102124866A | Reduce energy consumption | Shorten speed | Programme-controlled manipulator | Picking devices | Ultrasonic sensor | Data acquisition

Owner:NANJING AGRICULTURAL UNIVERSITY

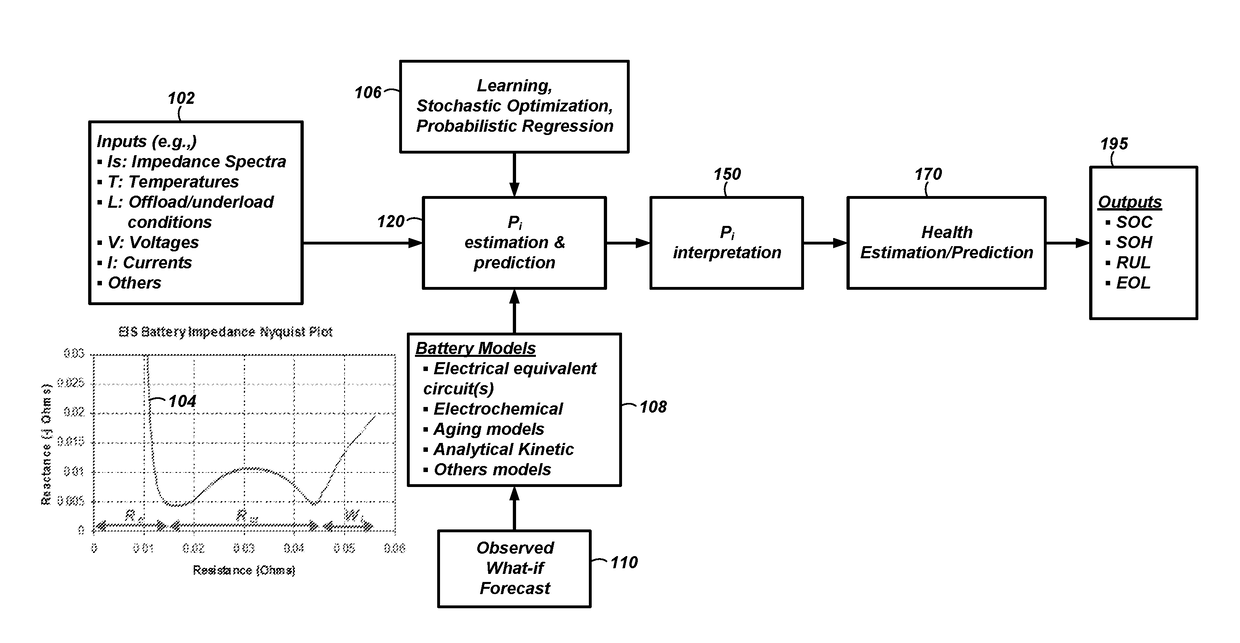

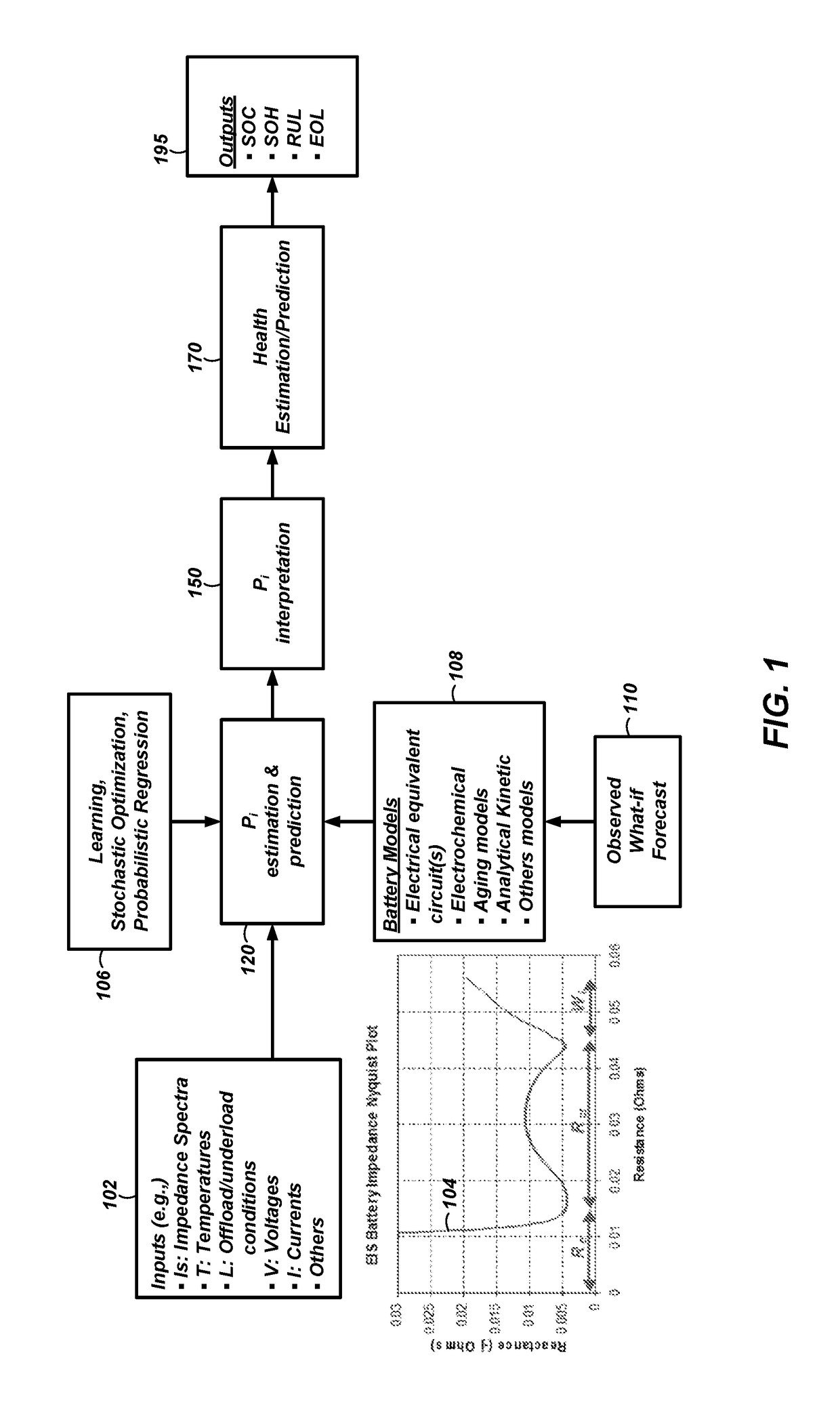

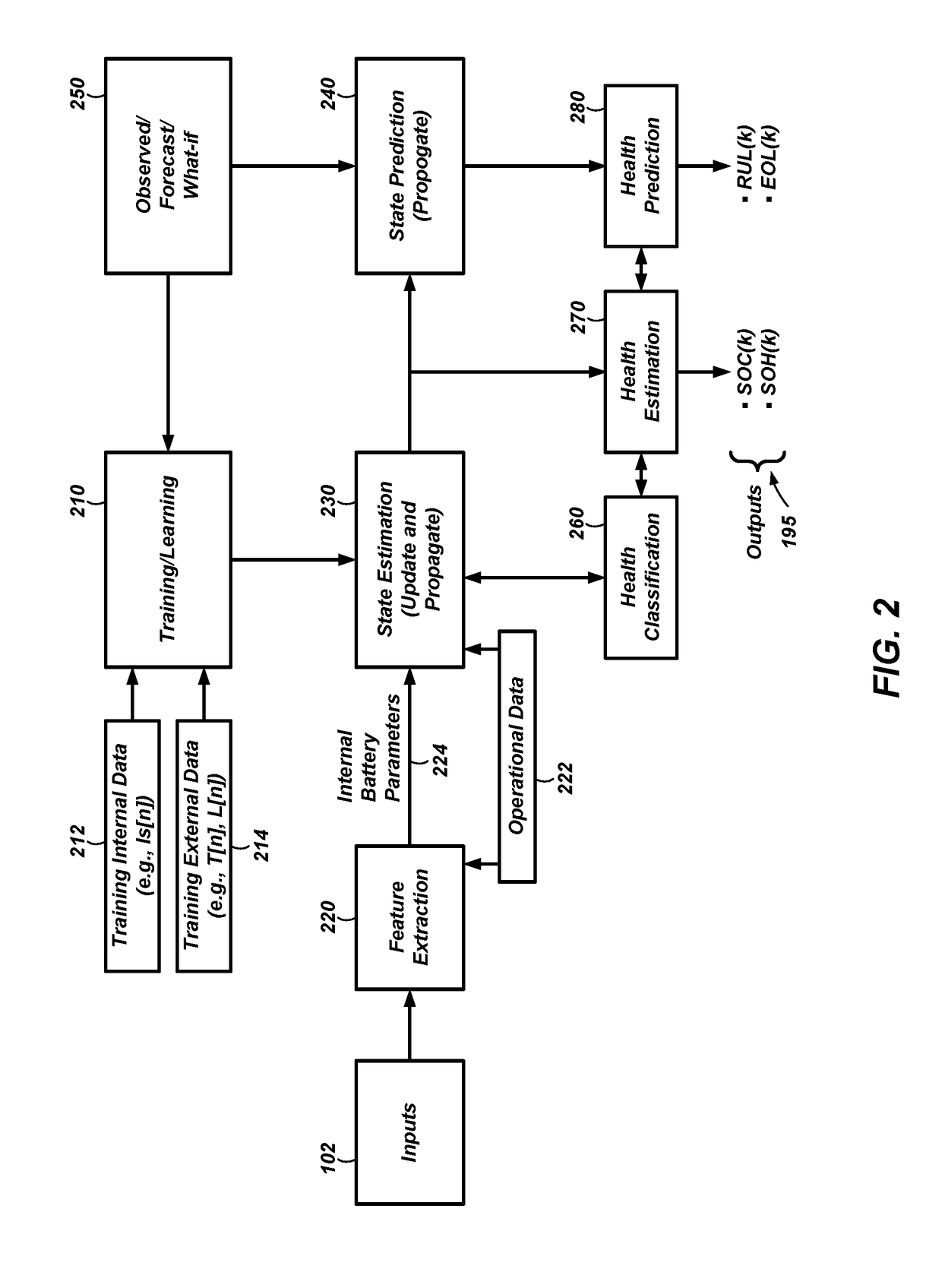

Systems and methods for estimation and prediction of battery health and performance

Active | US20180143257A1 | Testing/monitoring control systems | Electrical testing | Moving average | State of health

Systems and computer-implemented methods are used for analyzing battery information. The battery information may be acquired from both passive data acquisition and active data acquisition. Active data may be used for feature extraction and parameter identification responsive to the input data relative to an electrical equivalent circuit model to develop geometric-based parameters and optimization-based parameters. These parameters can be combined with a decision fusion algorithm to develop internal battery parameters. Analysis processes including particle filter analysis, neural network analysis, and auto regressive moving average analysis can be used to analyze the internal battery parameters and develop battery health metrics. Additional decision fusion algorithms can be used to combine the internal battery parameters and the battery health metrics to develop state-of-health estimations, state-of-charge estimations, remaining-useful-life predictions, and end-of-life predictions for the battery.

Owner:BATTELLE ENERGY ALLIANCE LLC
