
162 results for "Sound source separation" patented technology


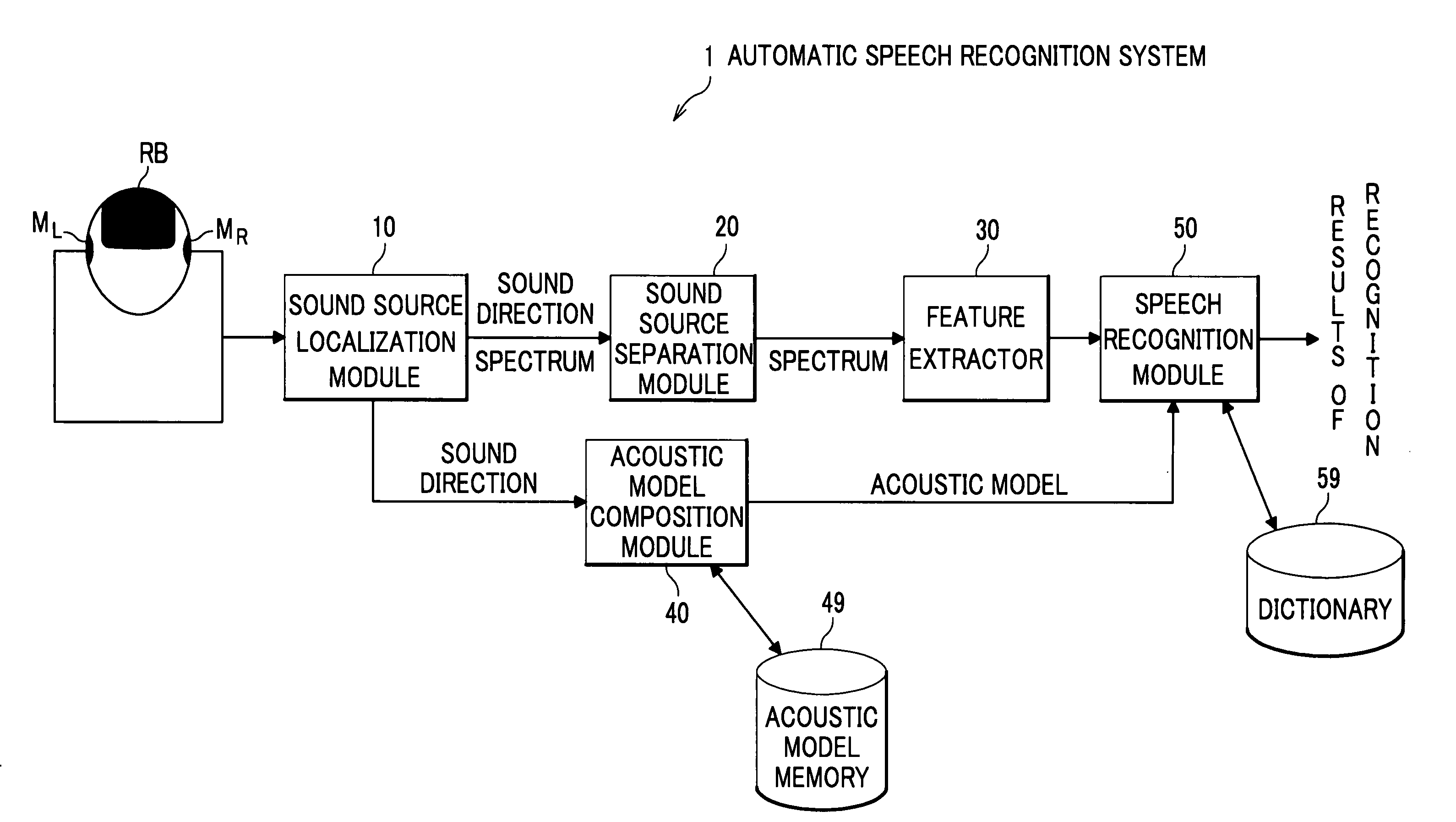

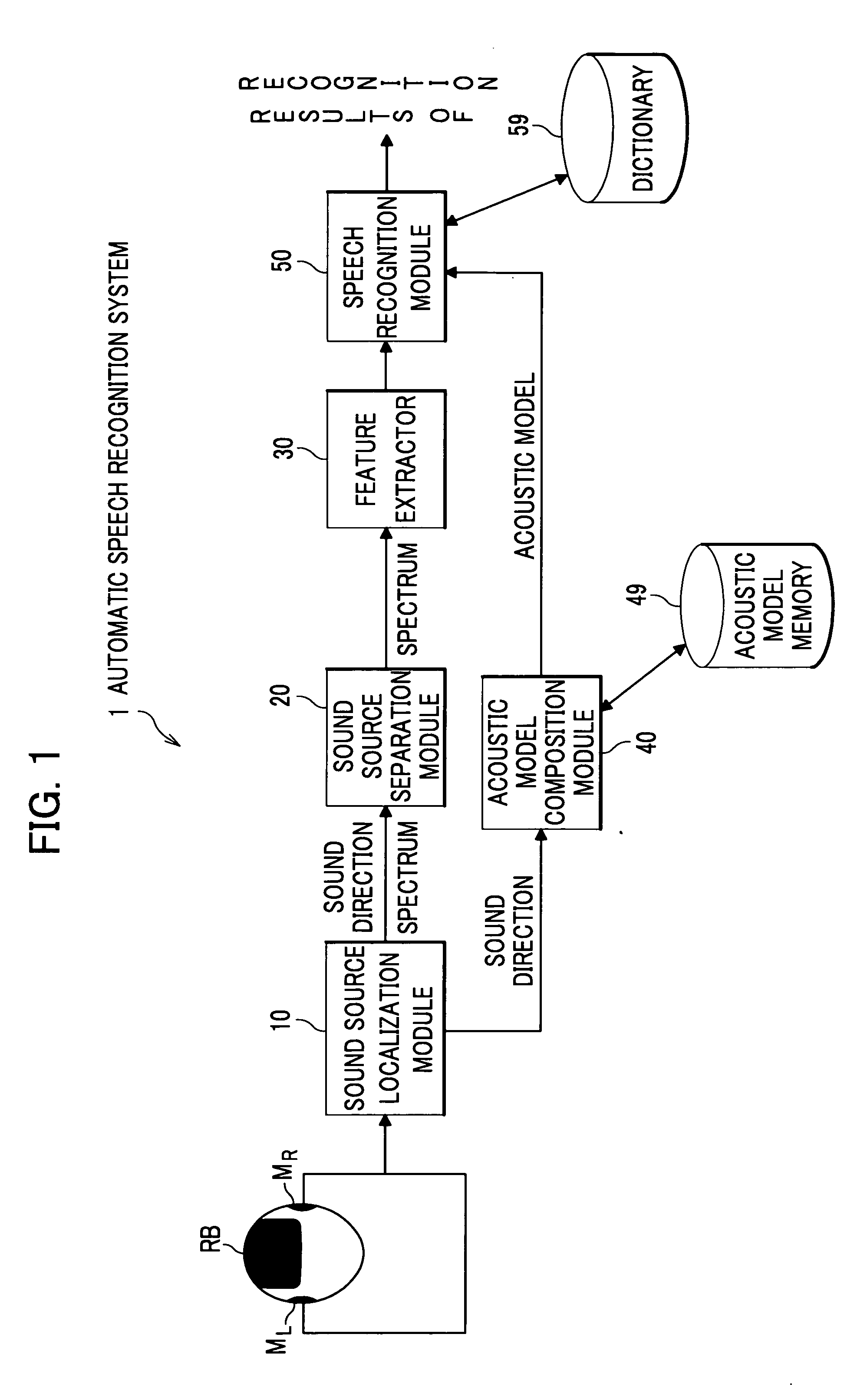

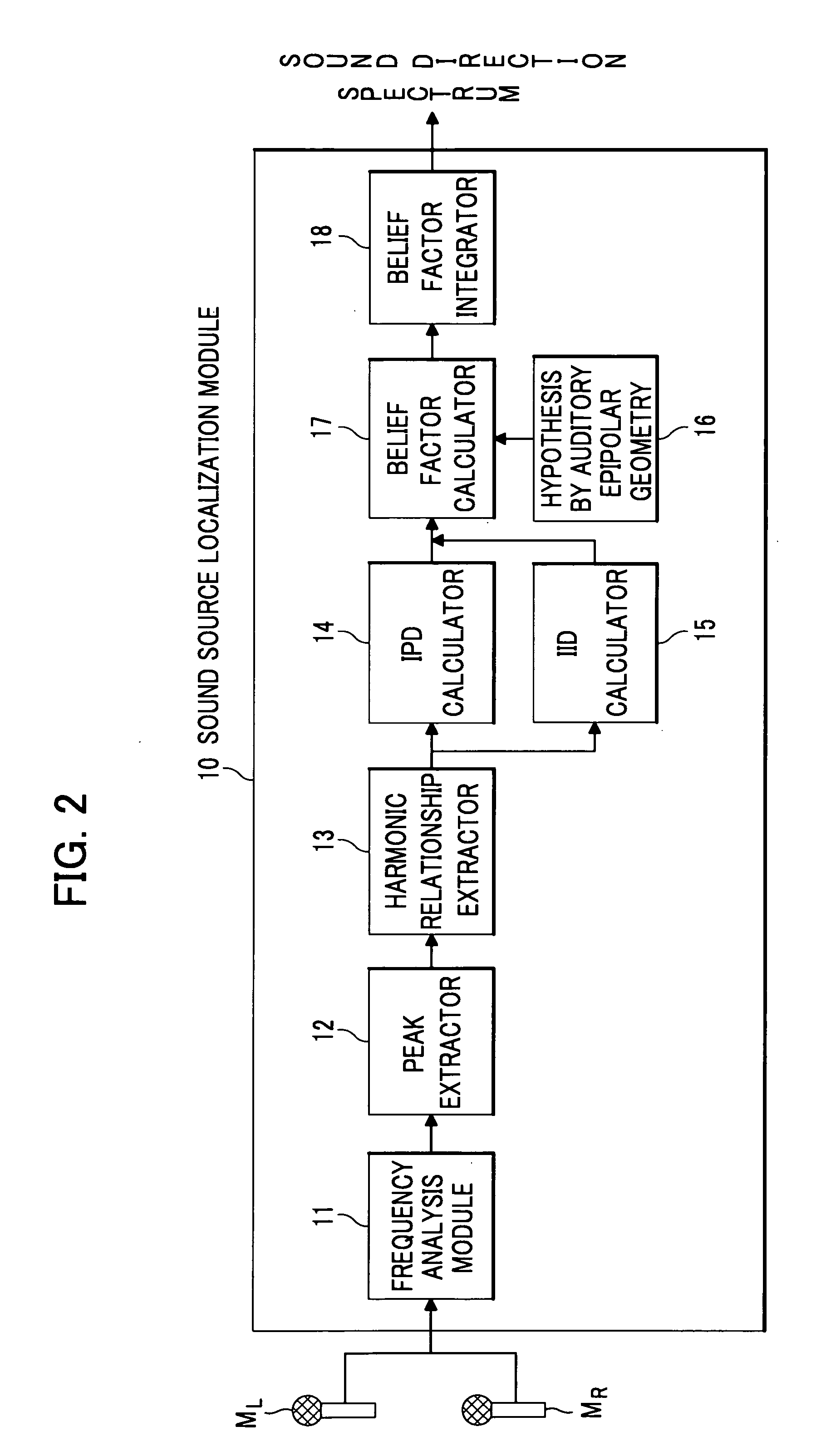

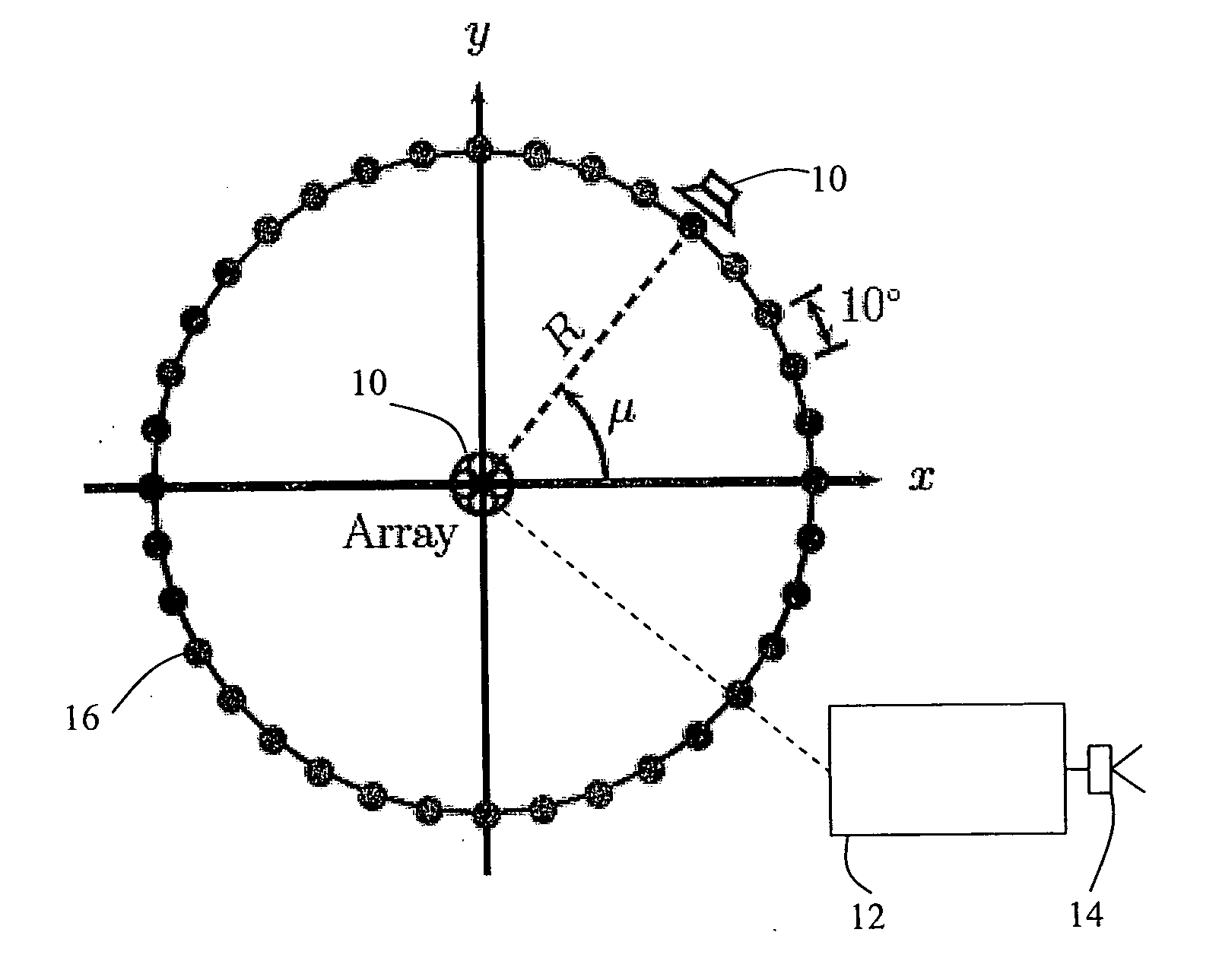

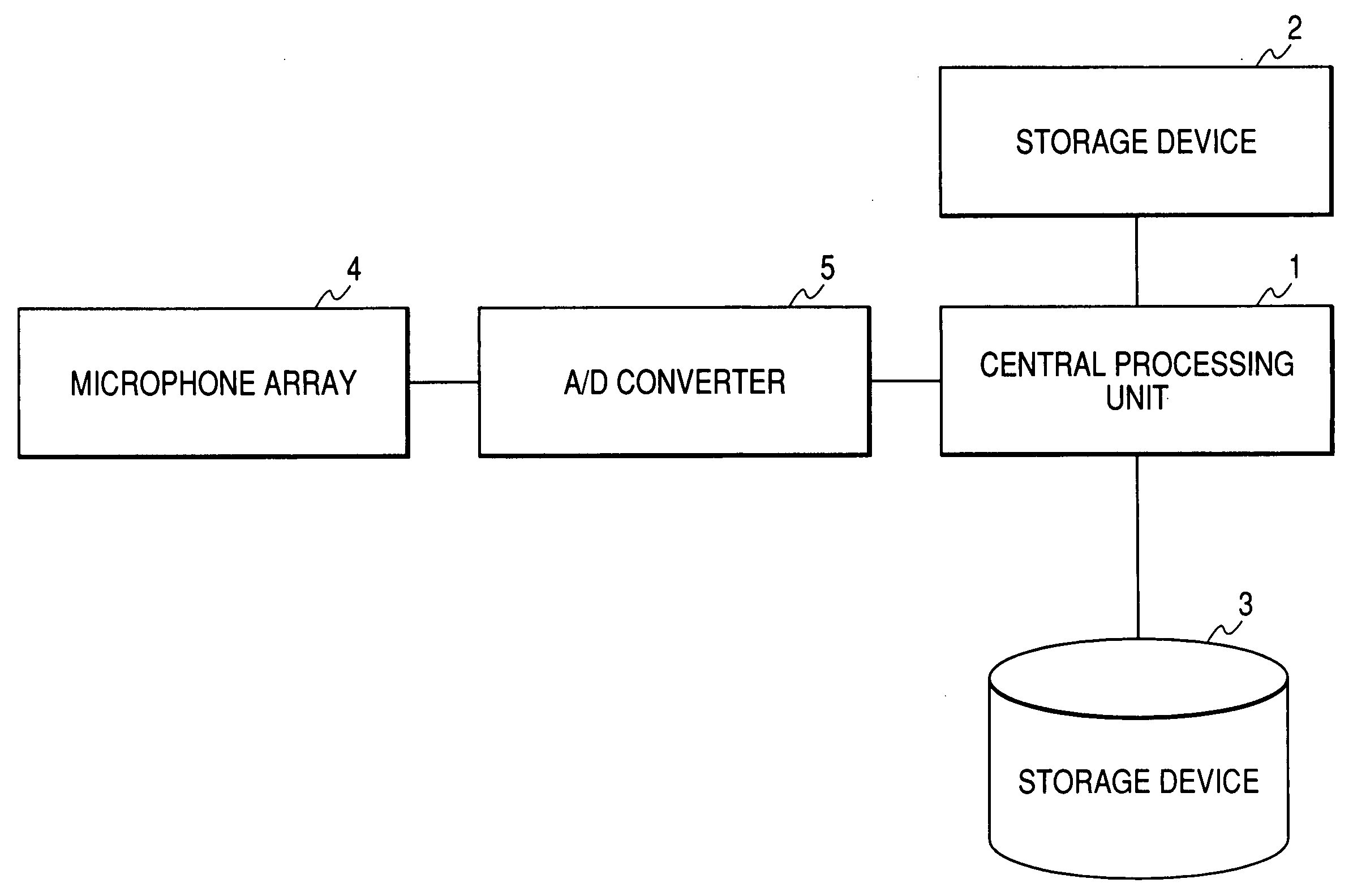

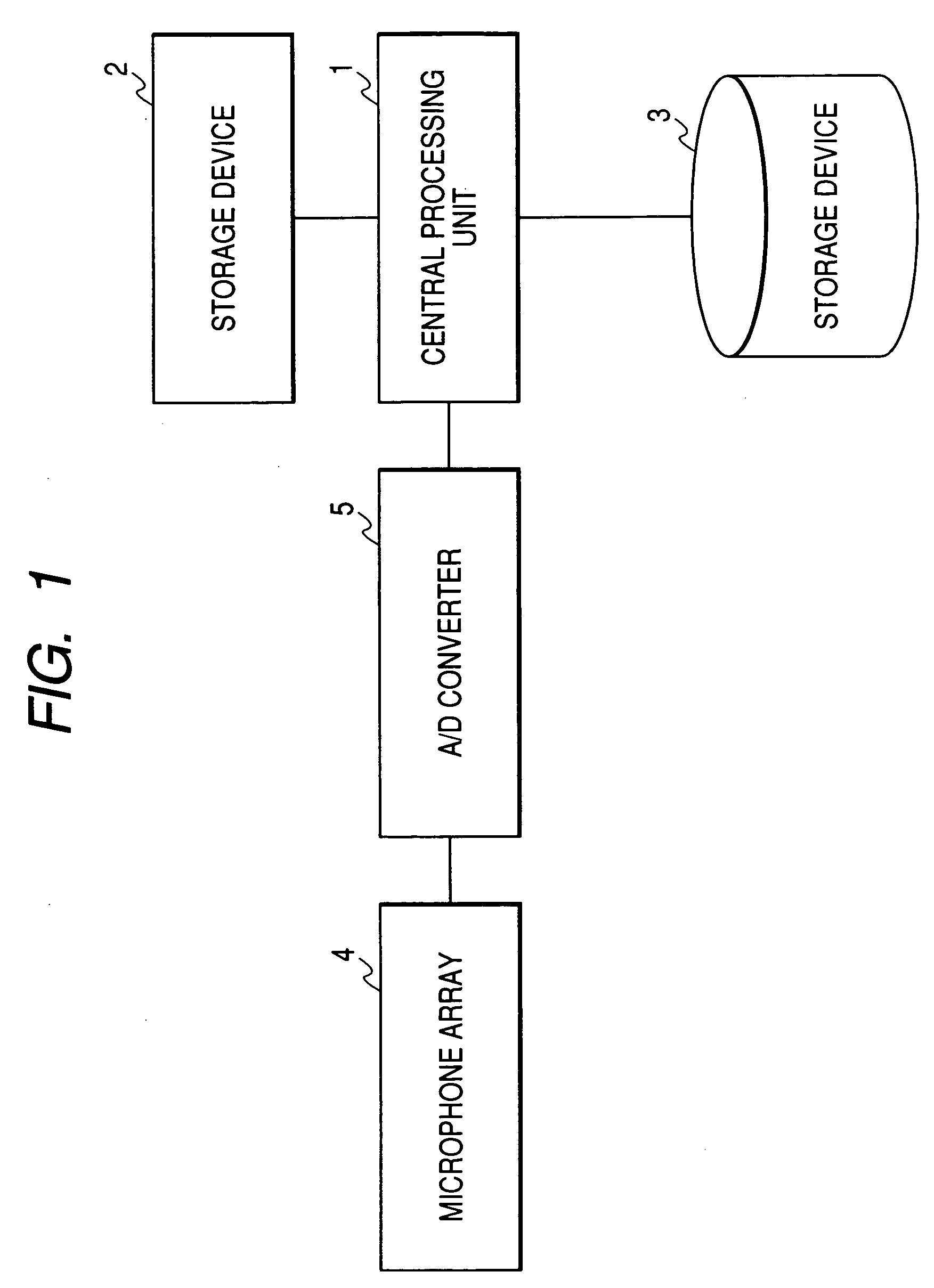

Automatic Speech Recognition System

Status: Inactive | US20090018828A1 | Topics: Improve speech recognition rate; Improve accuracy; Speech recognition; Sound source separation; Feature extraction

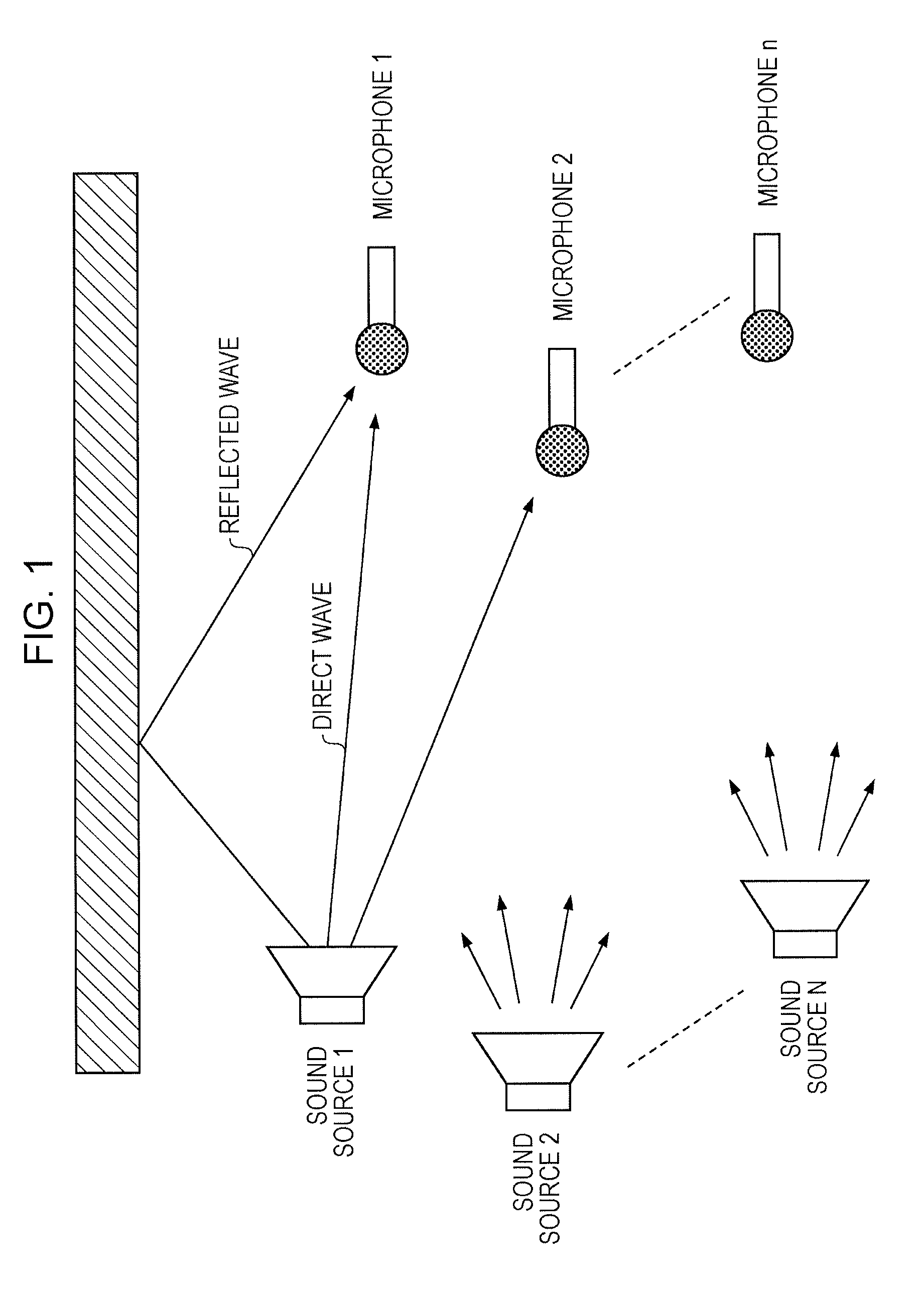

An automatic speech recognition system includes: a sound source localization module for localizing the sound direction of a speaker based on the acoustic signals detected by a plurality of microphones; a sound source separation module for separating the speech signal of the speaker from the acoustic signals according to the sound direction; an acoustic model memory that stores direction-dependent acoustic models adjusted to a plurality of directions at intervals; an acoustic model composition module that composes an acoustic model adjusted to the sound direction localized by the sound source localization module, based on the direction-dependent acoustic models, and stores the composed acoustic model in the acoustic model memory; and a speech recognition module that recognizes the features extracted by a feature extractor as character information using the acoustic model composed by the acoustic model composition module.

Owner:HONDA MOTOR CO LTD

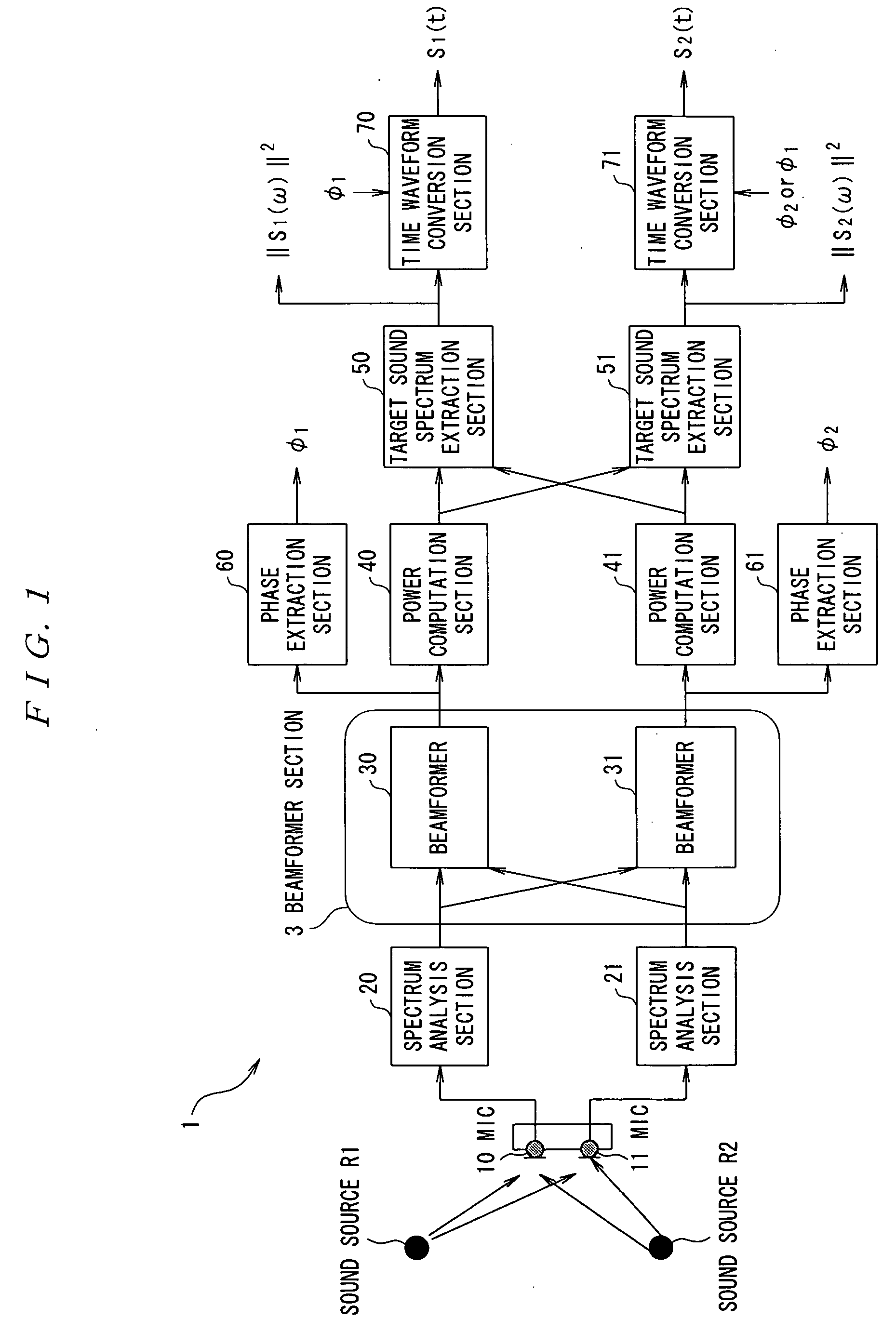

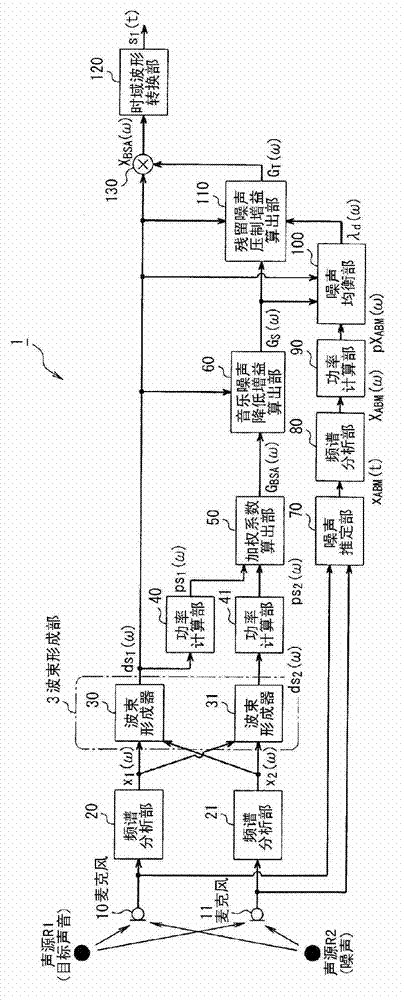

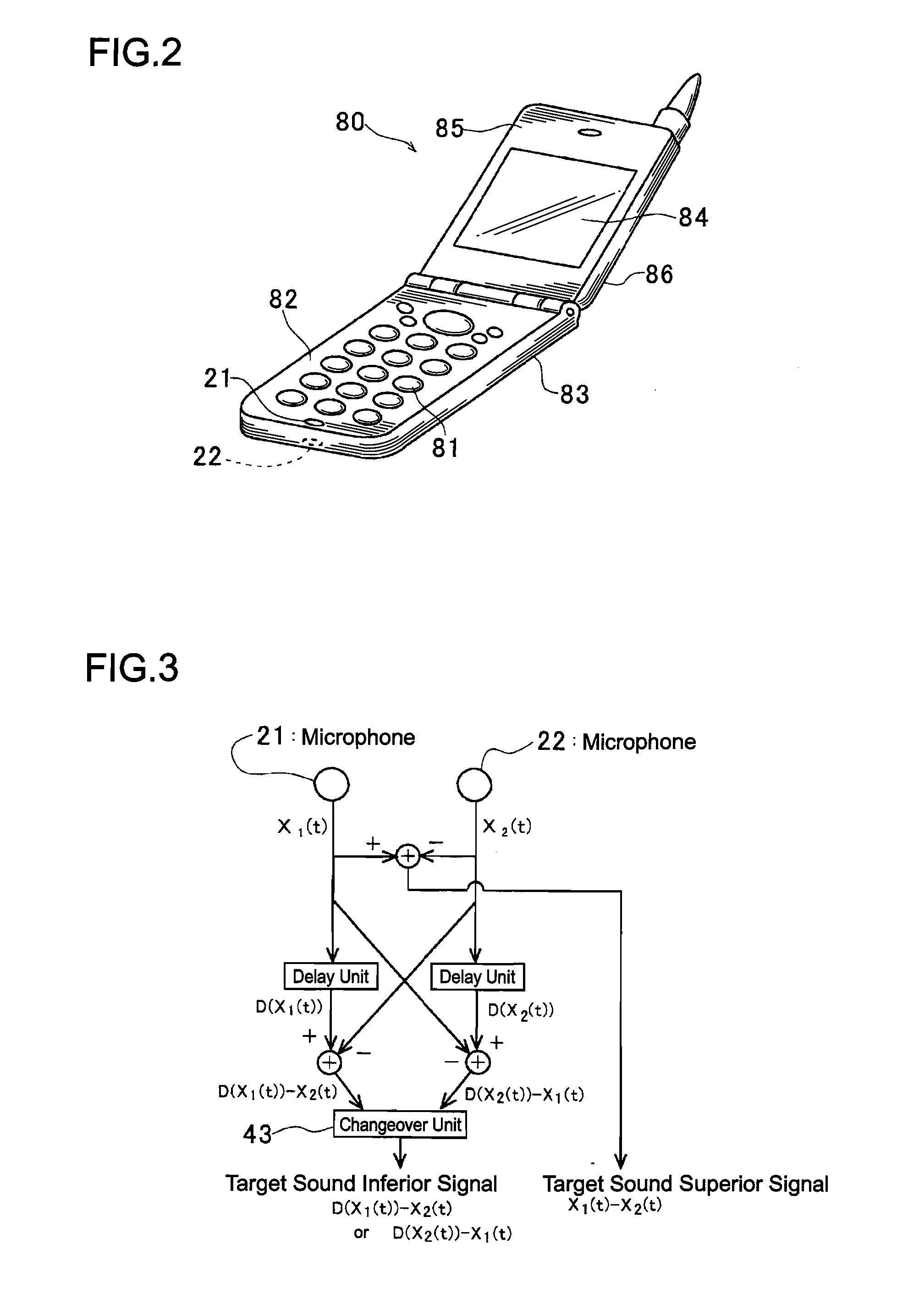

Sound Source Separation Device, Speech Recognition Device, Mobile Telephone, Sound Source Separation Method, and Program

Status: Active | US20090055170A1 | Topics: Reduce system performance; Signal processing; Speech recognition; Sound source separation; Frequency spectrum

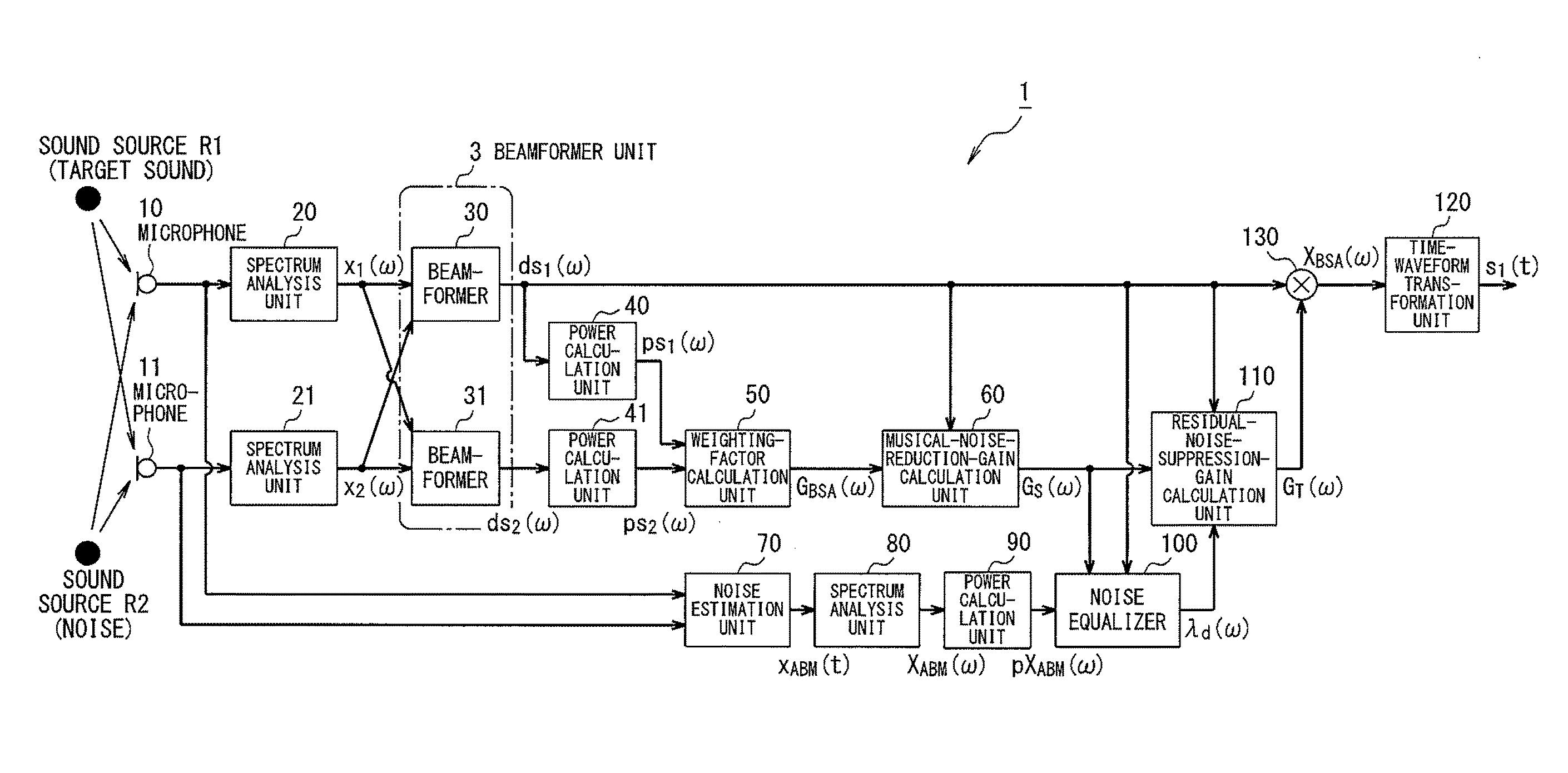

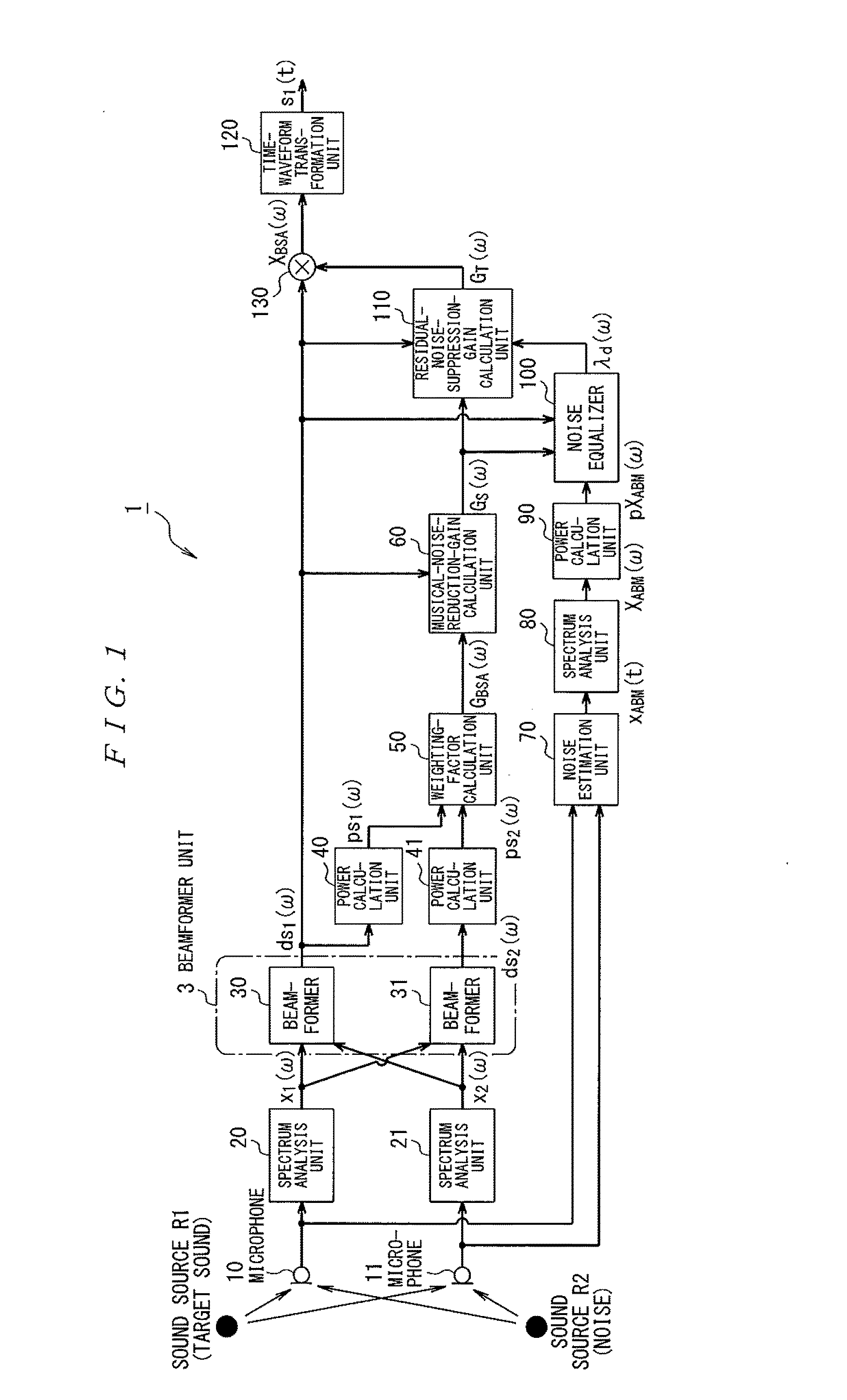

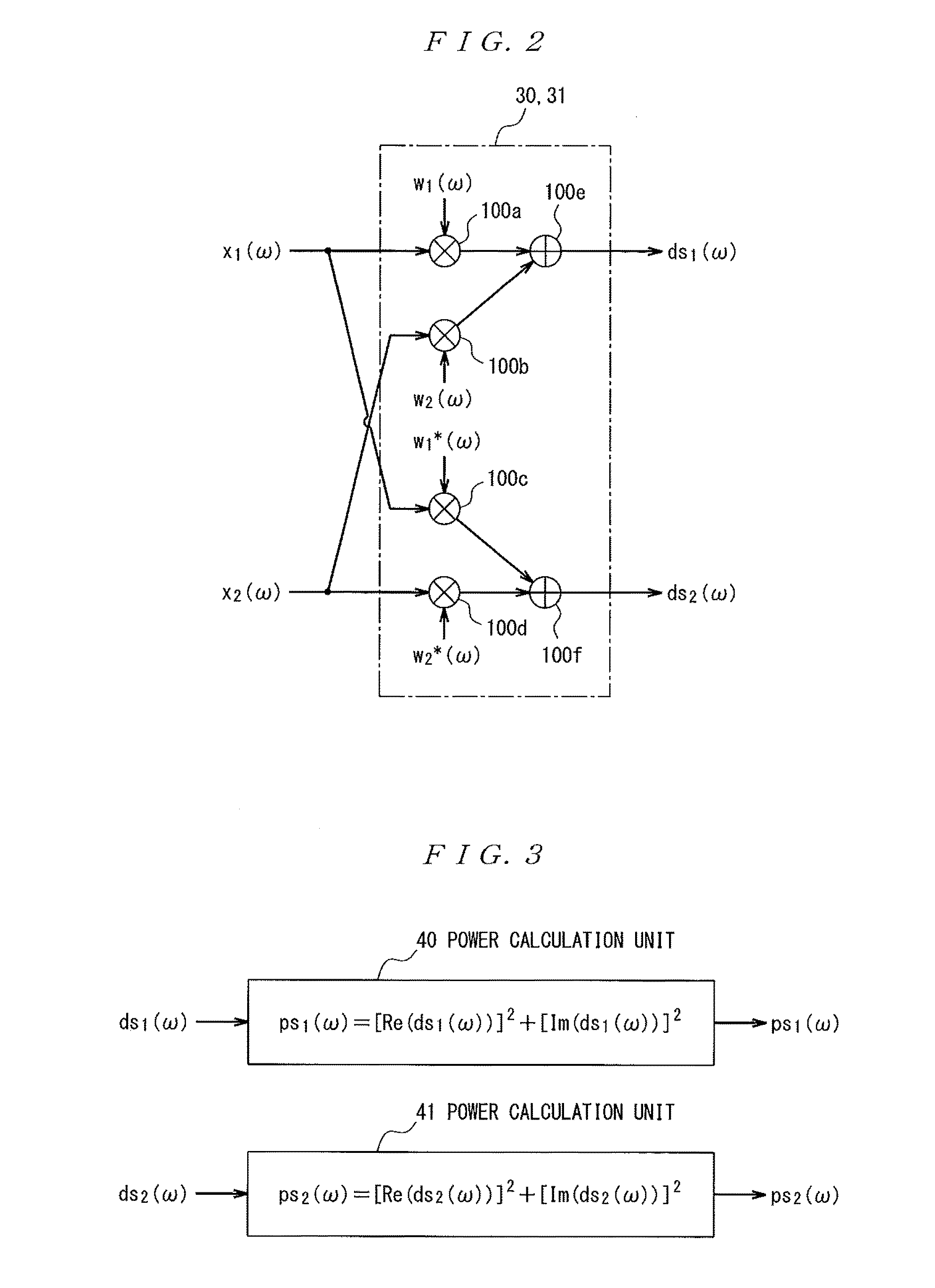

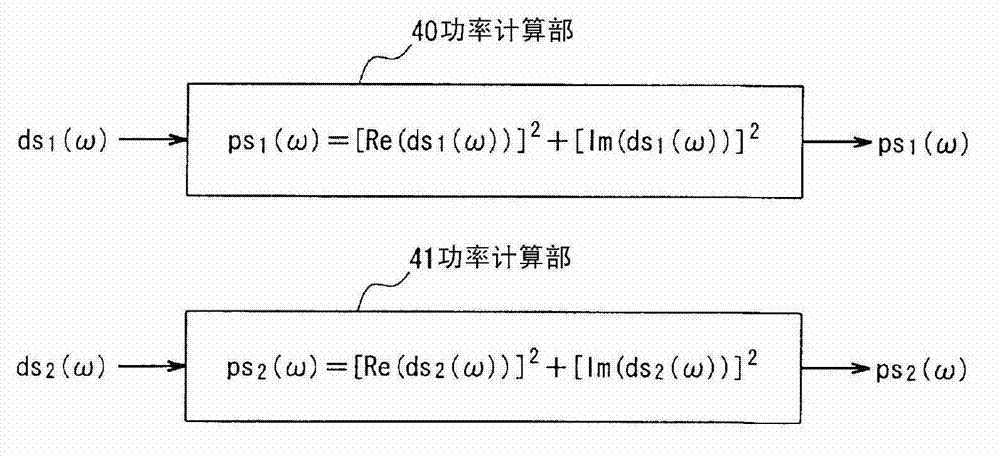

A sound source signal from a target sound source can be separated from a mixed sound consisting of sound source signals emitted from a plurality of sound sources, without being affected by uneven sensitivity of the microphone elements. A beamformer section 3 of a source separation device 1 performs beamforming that attenuates sound source signals arriving from directions symmetric about the perpendicular to the straight line connecting two microphones 10 and 11, by multiplying the spectrum-analyzed output signals of microphones 10 and 11 by weighting coefficients that are complex conjugates of each other. Power computation sections 40 and 41 compute power spectrum information, and target sound spectrum extraction sections 50 and 51 extract spectrum information of the target sound source based on the difference between the power spectrum information.

Owner:ASAHI KASEI KK
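As a rough illustration of the two conjugate-weighted beams and the power-difference step in the abstract above, here is a minimal single-frequency-bin sketch. It is not Asahi Kasei's implementation: it assumes an ideal free-field plane-wave model, two microphones a hypothetical `spacing_m` apart, angles measured from the perpendicular to the microphone axis, and simple null-steering weights; the names `steering`, `null_weights` and `extract_target` are illustrative. One beam nulls the mirror image of the target direction, the complex-conjugate weights null the target direction itself, and the positive part of the power difference is attributed to the target.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def half_phase(theta_deg, freq_hz, spacing_m):
    """Half of the inter-microphone phase difference for a plane wave arriving
    from theta (degrees from the perpendicular to the microphone axis)."""
    tau = spacing_m * math.sin(math.radians(theta_deg)) / SPEED_OF_SOUND
    return math.pi * freq_hz * tau


def steering(theta_deg, freq_hz, spacing_m):
    """Free-field steering vector for two mics placed symmetrically about the centre."""
    phi = half_phase(theta_deg, freq_hz, spacing_m)
    return [cmath.exp(1j * phi), cmath.exp(-1j * phi)]


def null_weights(theta_deg, freq_hz, spacing_m):
    """Weights w with w^H a(theta) == 0, i.e. a spatial null towards theta."""
    phi = half_phase(theta_deg, freq_hz, spacing_m)
    return [cmath.exp(1j * phi) / 2, -cmath.exp(-1j * phi) / 2]


def beam(weights, x):
    """Beamformer output y = w^H x for one frequency bin."""
    return sum(w.conjugate() * xi for w, xi in zip(weights, x))


def extract_target(x, target_deg, freq_hz, spacing_m):
    """Estimate target power in one bin from the difference of two beam powers."""
    w = null_weights(-target_deg, freq_hz, spacing_m)  # null at the mirror direction
    w_conj = [wi.conjugate() for wi in w]              # conjugate weights: null at the target
    p_target_side = abs(beam(w, x)) ** 2
    p_mirror_side = abs(beam(w_conj, x)) ** 2
    return max(p_target_side - p_mirror_side, 0.0)


if __name__ == "__main__":
    f, d = 1000.0, 0.05
    mix = [t + 0.5 * i for t, i in zip(steering(30, f, d), steering(-30, f, d))]
    print(extract_target(mix, 30, f, d) > 0)               # True
    print(extract_target(steering(-30, f, d), 30, f, d))   # 0.0
```

With a target at +30° and a weaker interferer at -30°, `extract_target` returns a positive value for the mixed bin and 0 for an interferer-only bin. A real system would apply this to every STFT bin and would also have to account for the frequency-dependent beam gain, which this sketch ignores.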

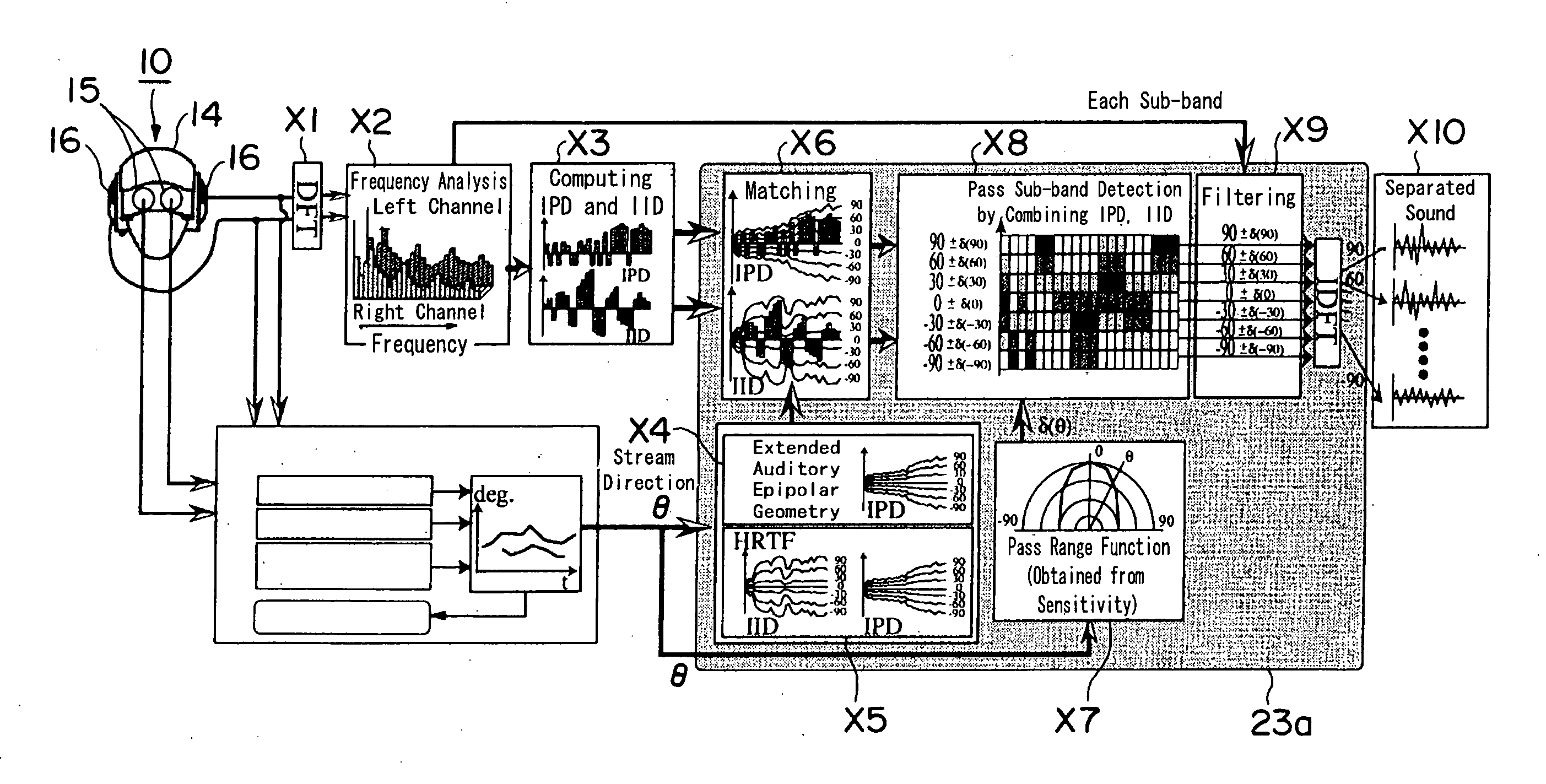

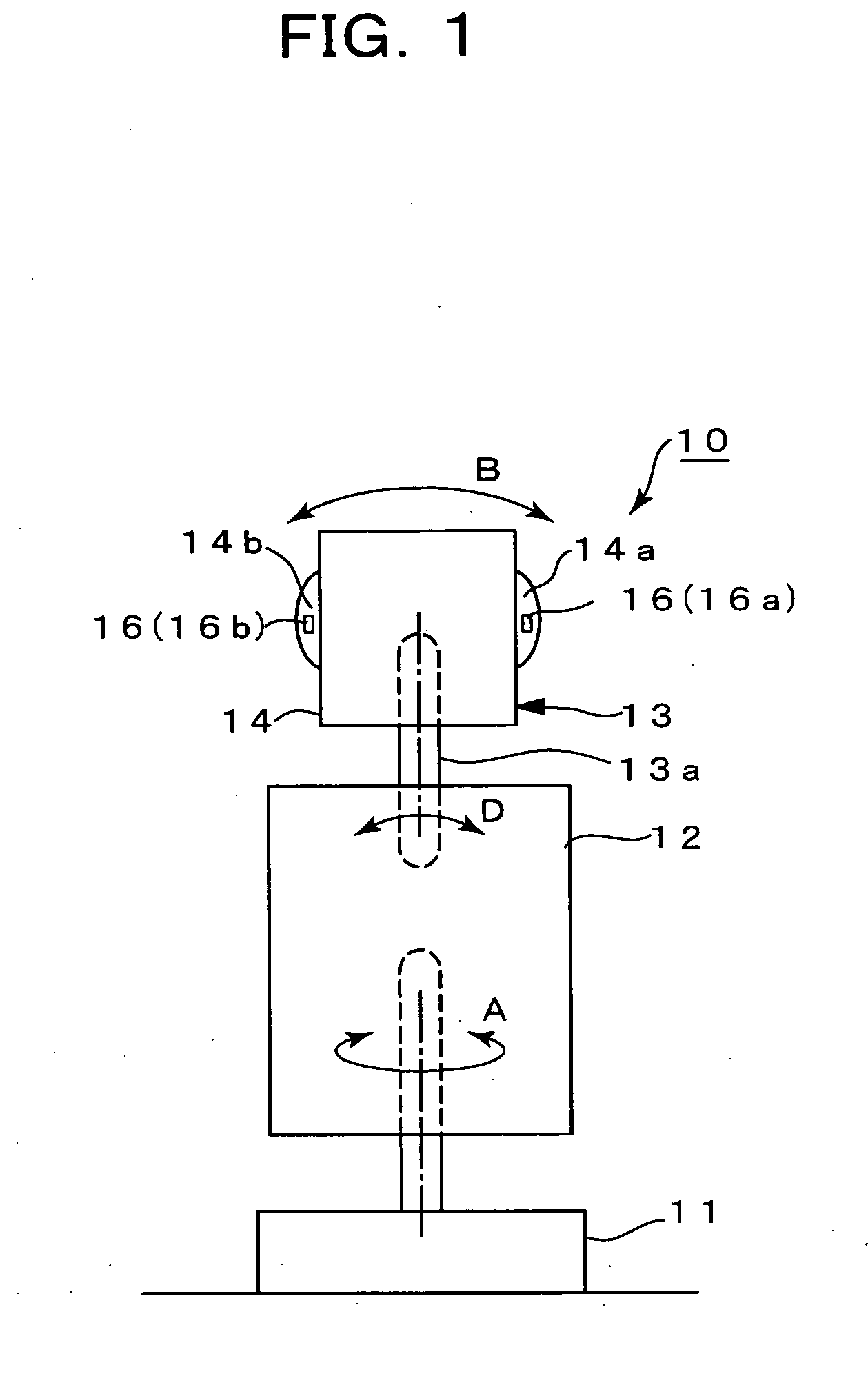

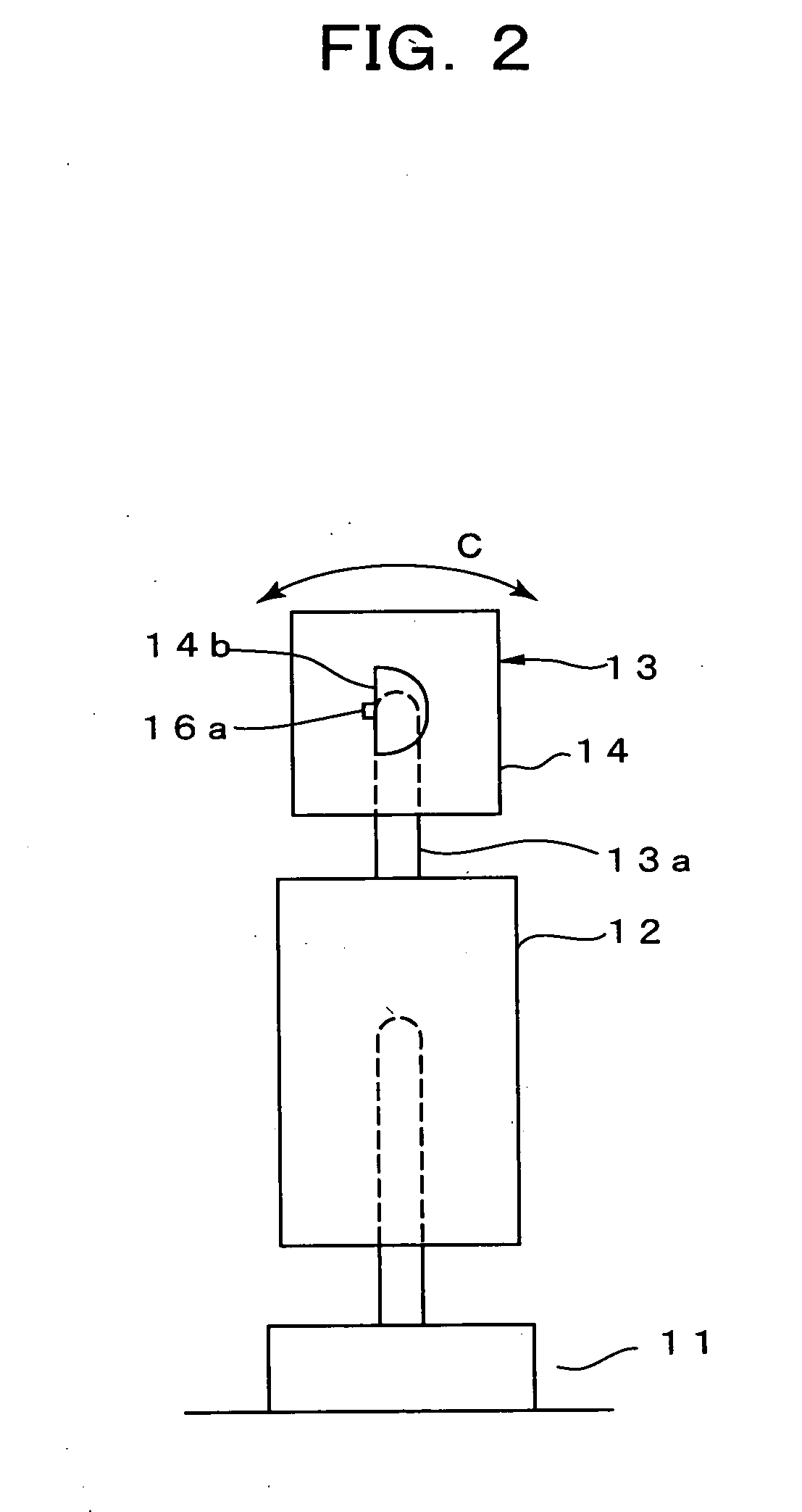

Robotics visual and auditory system

Status: Inactive | US20090030552A1 | Topics: Accurate collection; Accurately localize; Programme control; Computer control; Sound source separation; Phase difference

A robotics visual and auditory system is provided with an auditory module (20), a face module (30), a stereo module (37), a motor control module (40), and an association module (50) that controls these modules. The auditory module (20) uses an active direction pass filter (23a) to collect sub-bands whose interaural phase difference (IPD) or interaural intensity difference (IID) lies within a predetermined range; following auditory characteristics, the filter's pass range is narrowest in the frontal direction and widens as the angle increases to the left and right, and it is set based on accurate sound source direction information from the association module (50). The auditory module separates sound sources by reconstructing the waveform of each source, performs speech recognition on the separated signals from the respective sources using a plurality of acoustic models (27d), integrates the recognition results of the acoustic models with a selector, and judges which of these results is the most reliable.

Owner:JAPAN SCI & TECH CORP
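The direction-dependent pass range can be sketched as follows. This is a simplification under stated assumptions, not the JST system: it replaces the robot-head model with a free-field two-microphone IPD model, uses only IPD (not IID), and the pass-width rule in `pass_width_deg` (10° at the front plus 0.3° per degree of azimuth) is hypothetical. Each sub-band's IPD is converted to an azimuth, and the sub-band is kept only if that azimuth falls inside the pass range around the target direction.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s (assumed)


def pass_width_deg(theta_deg, frontal_width=10.0, slope=0.3):
    """Hypothetical pass range: narrowest at the front (0 deg), wider to the sides."""
    return frontal_width + slope * abs(theta_deg)


def ipd_to_azimuth(ipd, freq_hz, spacing_m):
    """Invert the free-field model ipd = 2*pi*f*d*sin(theta)/c."""
    s = ipd * SPEED_OF_SOUND / (2 * math.pi * freq_hz * spacing_m)
    s = max(-1.0, min(1.0, s))  # clamp values made impossible by noise
    return math.degrees(math.asin(s))


def direction_pass_mask(left, right, freqs_hz, target_deg, spacing_m):
    """One boolean per sub-band: True if its IPD-derived azimuth lies inside
    the pass range around target_deg."""
    width = pass_width_deg(target_deg)
    mask = []
    for xl, xr, f in zip(left, right, freqs_hz):
        ipd = cmath.phase(xr * xl.conjugate())  # phase of right channel relative to left
        theta = ipd_to_azimuth(ipd, f, spacing_m)
        mask.append(abs(theta - target_deg) <= width)
    return mask
```

Sub-bands flagged True would be kept and resynthesized into the separated waveform. The sign convention (positive IPD for positive azimuth) is an assumption, and the free-field inversion only holds below the spatial-aliasing frequency c / (2d).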

Sound source-separating device and sound source-separating method

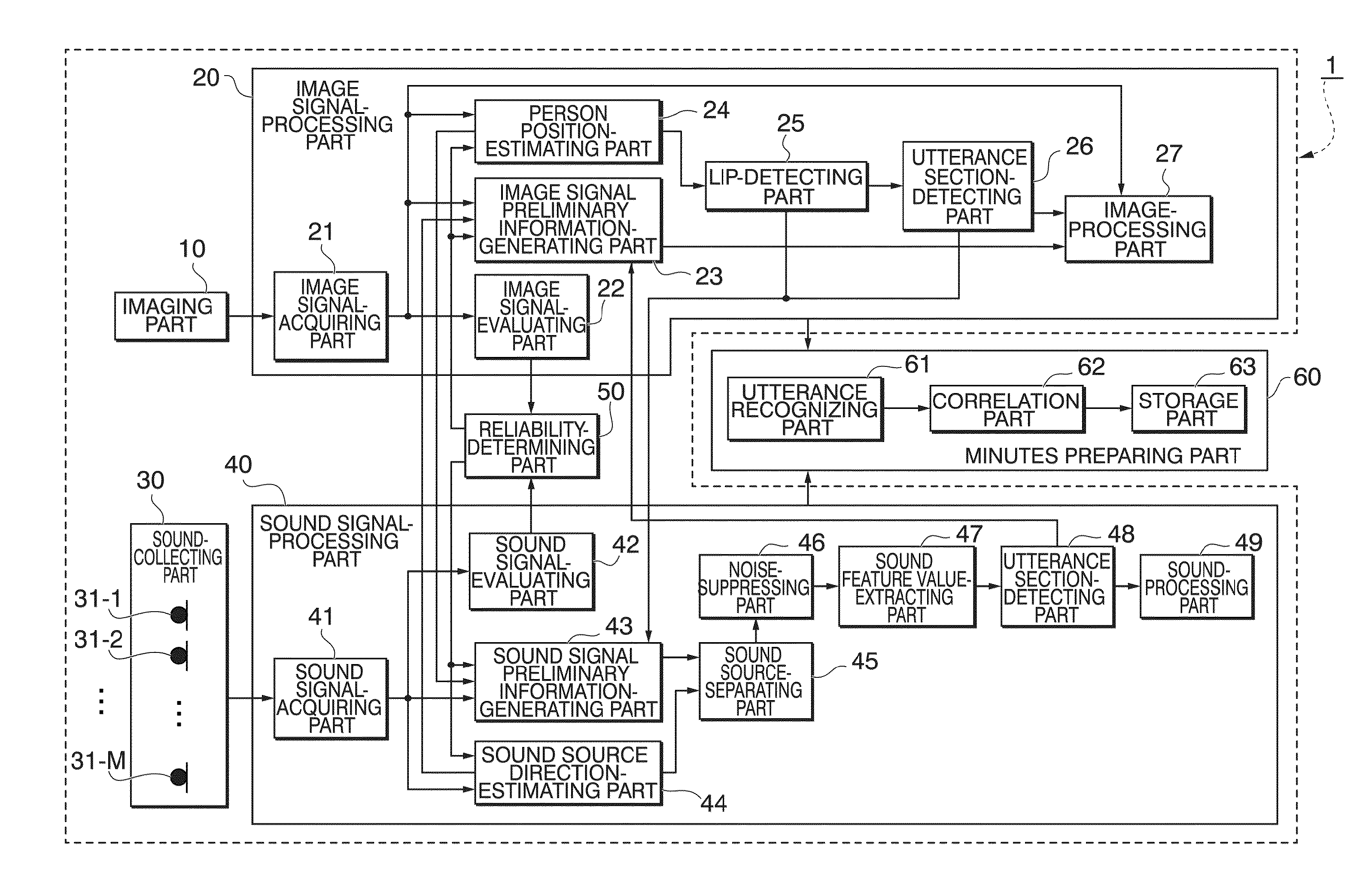

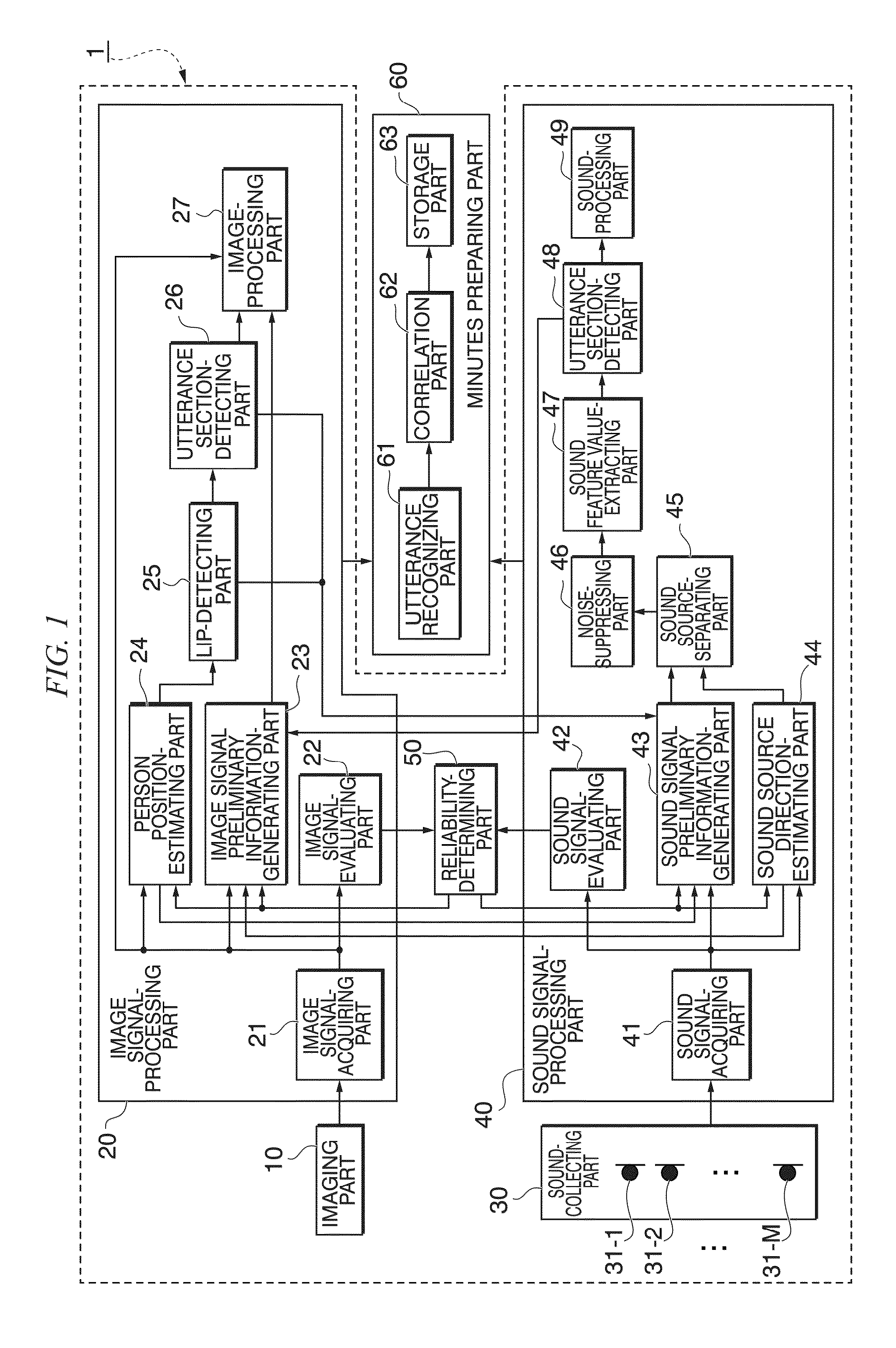

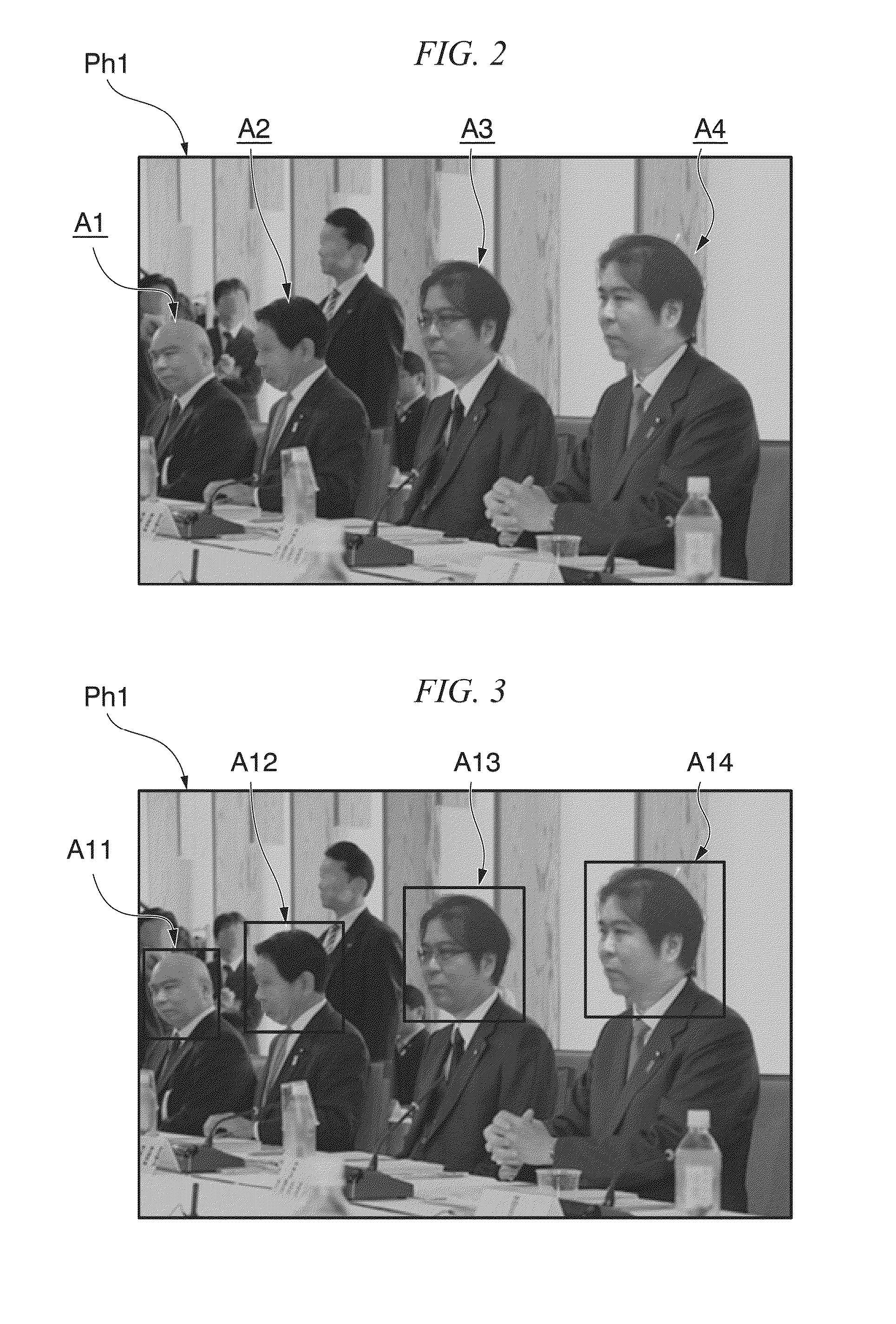

Status: Active | US20160064000A1 | Topics: Improve text recognition accuracy; Improve accuracy; Image enhancement; Signal processing; Image extraction; Sound source separation

A sound source-separating device includes a sound-collecting part, an imaging part, a sound signal-evaluating part, an image signal-evaluating part, a selection part that selects whether to estimate a sound source direction based on the first sound signal or the first image signal, a person position-estimating part that estimates a sound source direction using the first image signal, a sound source direction-estimating part that estimates a sound source direction, a sound source-separating part that extracts a second sound signal corresponding to the sound source direction from the first sound signal, an image-extracting part that extracts a second image signal of an area corresponding to the estimated sound source direction from the first image signal, and an image-combining part that changes a third image signal of an area other than the area for the second image signal and combines the third image signal with the second image signal.

Owner:HONDA MOTOR CO LTD

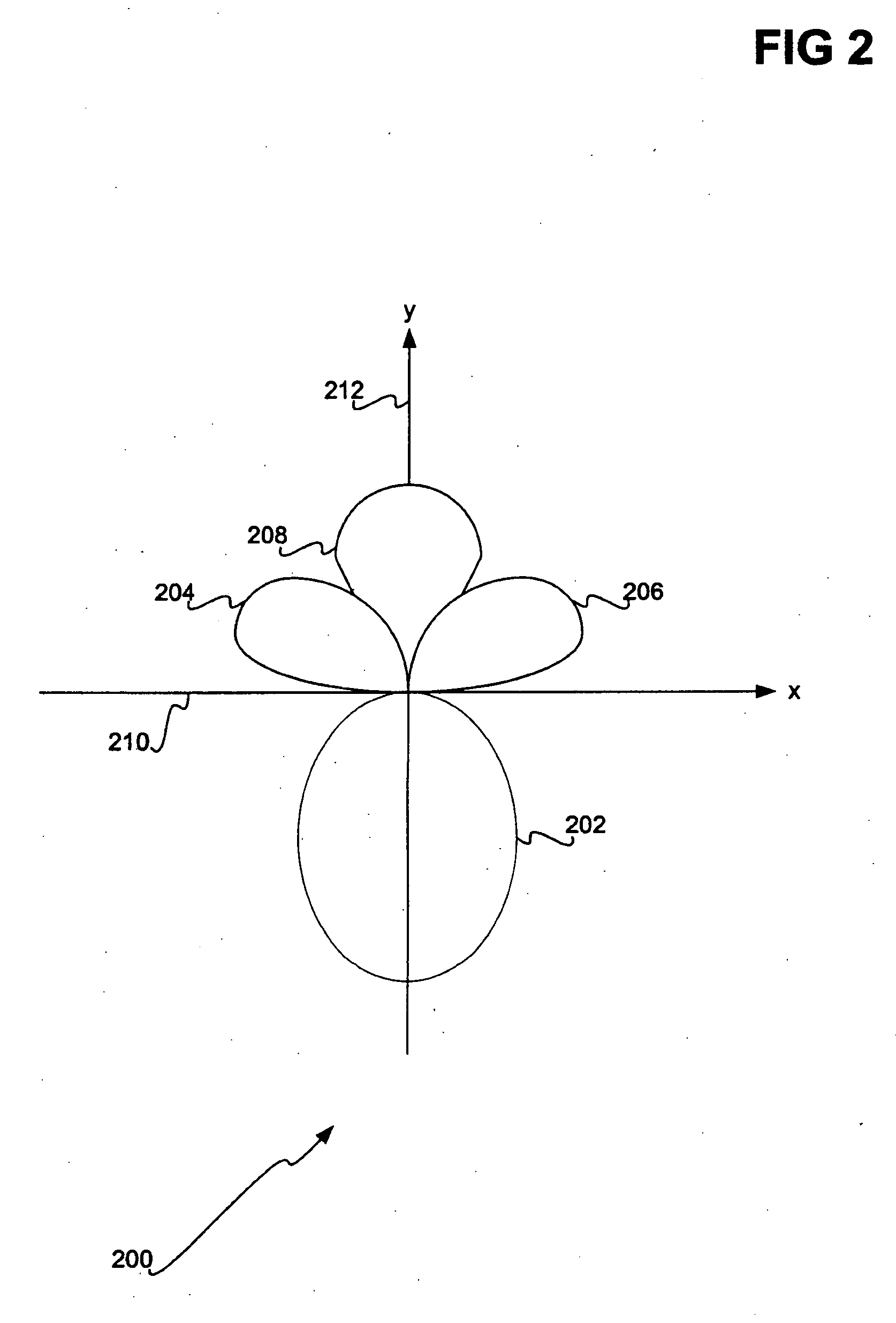

Sound source separation device, sound source separation method and program

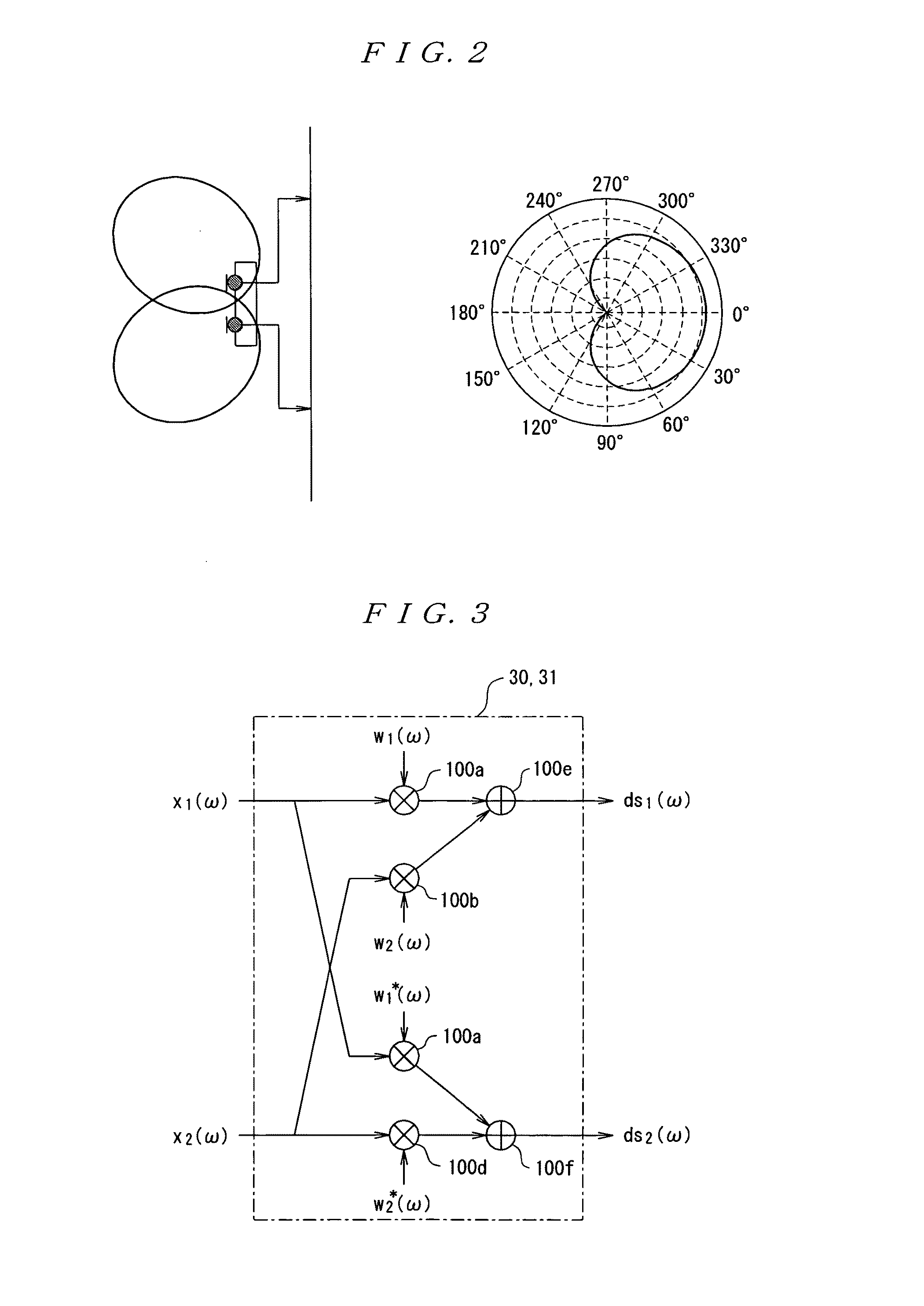

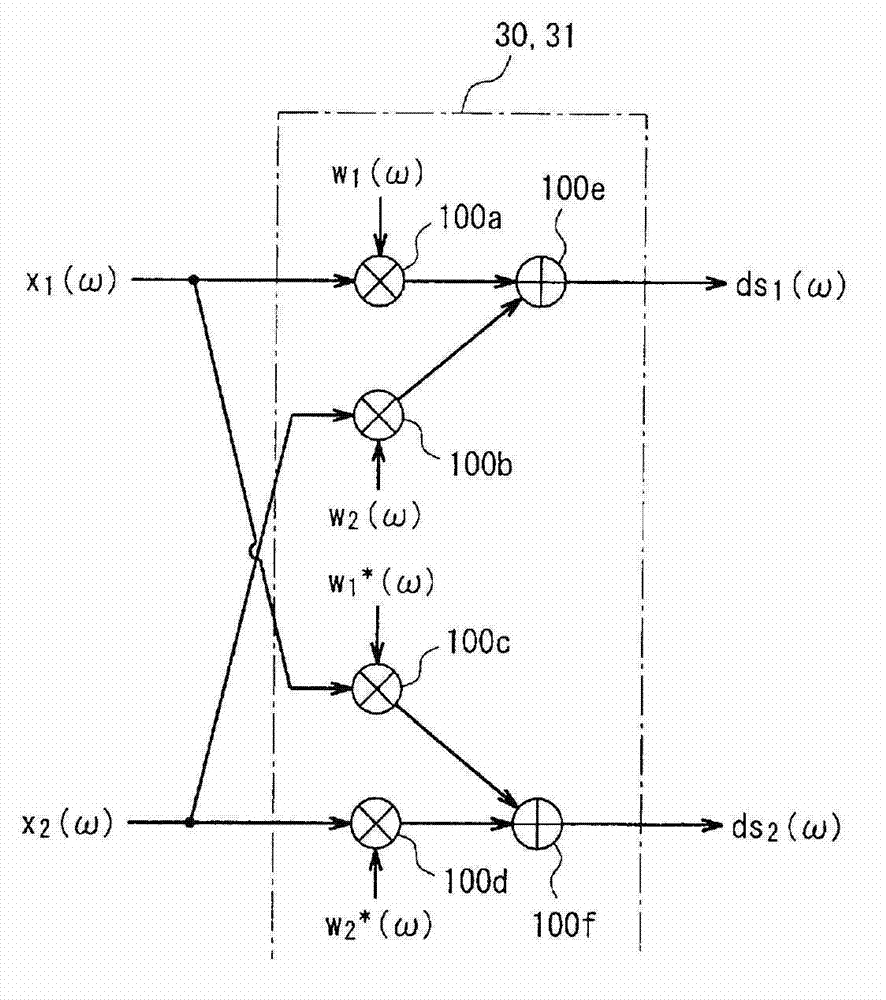

Status: Inactive | US20130142343A1 | Topics: Suppress generation of musical noise; Signal processing; Ear treatment; Sound source separation; Frequency spectrum

In conventional sound source separation devices, specific frequency bands are significantly attenuated in environments with diffuse noise that does not come from a particular direction; as a result, the diffuse noise is filtered irregularly, regardless of the separation results, which gives rise to musical noise. In one embodiment of the present invention, a beamformer unit (3) of a sound source separation device (1) applies weighting coefficients that are complex conjugates of each other to the spectrum-analyzed output signals of microphones (10, 11), thereby attenuating sound source signals arriving from the region that contains the approximate direction of the target sound source and from the region opposite to it, with respect to a plane that intersects the line segment joining the two microphones (10, 11). A weighting coefficient computation unit (50) computes a weighting coefficient based on the difference between the power spectrum information calculated by power calculation units (40, 41).

Owner:ASAHI KASEI KK
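The abstract says only that the weighting coefficient is computed from the difference between the two power spectra. One plausible reading, shown here as a hypothetical sketch rather than the patented formula, is a per-bin gain of (P1 - P2) / P1 clipped to a floor and optionally smoothed across frames; flooring and temporal smoothing are common generic countermeasures against musical noise. `difference_gain`, `smooth_gains`, `apply_gain` and the default `floor` and `alpha` values are assumptions.

```python
def difference_gain(p_target_side, p_other_side, floor=0.1):
    """Per-bin weighting coefficient from the power difference, clipped to
    [floor, 1] so that no bin is ever fully zeroed."""
    gains = []
    for p1, p2 in zip(p_target_side, p_other_side):
        if p1 <= 0.0:
            gains.append(floor)  # no energy on the target side: use the floor
            continue
        g = (p1 - p2) / p1
        gains.append(min(1.0, max(floor, g)))
    return gains


def smooth_gains(prev, current, alpha=0.7):
    """First-order recursive smoothing across frames: alpha*prev + (1-alpha)*current."""
    return [alpha * gp + (1 - alpha) * gc for gp, gc in zip(prev, current)]


def apply_gain(spectrum, gains):
    """Scale each complex bin of a beamformer output by its weighting coefficient."""
    return [g * x for g, x in zip(gains, spectrum)]
```

The floor keeps isolated bins from switching abruptly between full and zero gain, which is what produces the "twinkling" character of musical noise; the smoothing further limits frame-to-frame jumps at the cost of some temporal smearing.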

Sound source separation device, speech recognition device, mobile telephone, sound source separation method, and program

Status: Active | US8112272B2 | Topics: Reduce system performance; Signal processing; Transducer details; Sound source separation; Frequency spectrum

A sound source signal from a target sound source can be separated from a mixed sound consisting of sound source signals emitted from a plurality of sound sources, without being affected by uneven sensitivity of the microphone elements. A beamformer section 3 of a source separation device 1 performs beamforming that attenuates sound source signals arriving from directions symmetric about the perpendicular to the straight line connecting two microphones 10 and 11, by multiplying the spectrum-analyzed output signals of microphones 10 and 11 by weighting coefficients that are complex conjugates of each other. Power computation sections 40 and 41 compute power spectrum information, and target sound spectrum extraction sections 50 and 51 extract spectrum information of the target sound source based on the difference between the power spectrum information.

Owner:ASAHI KASEI KK

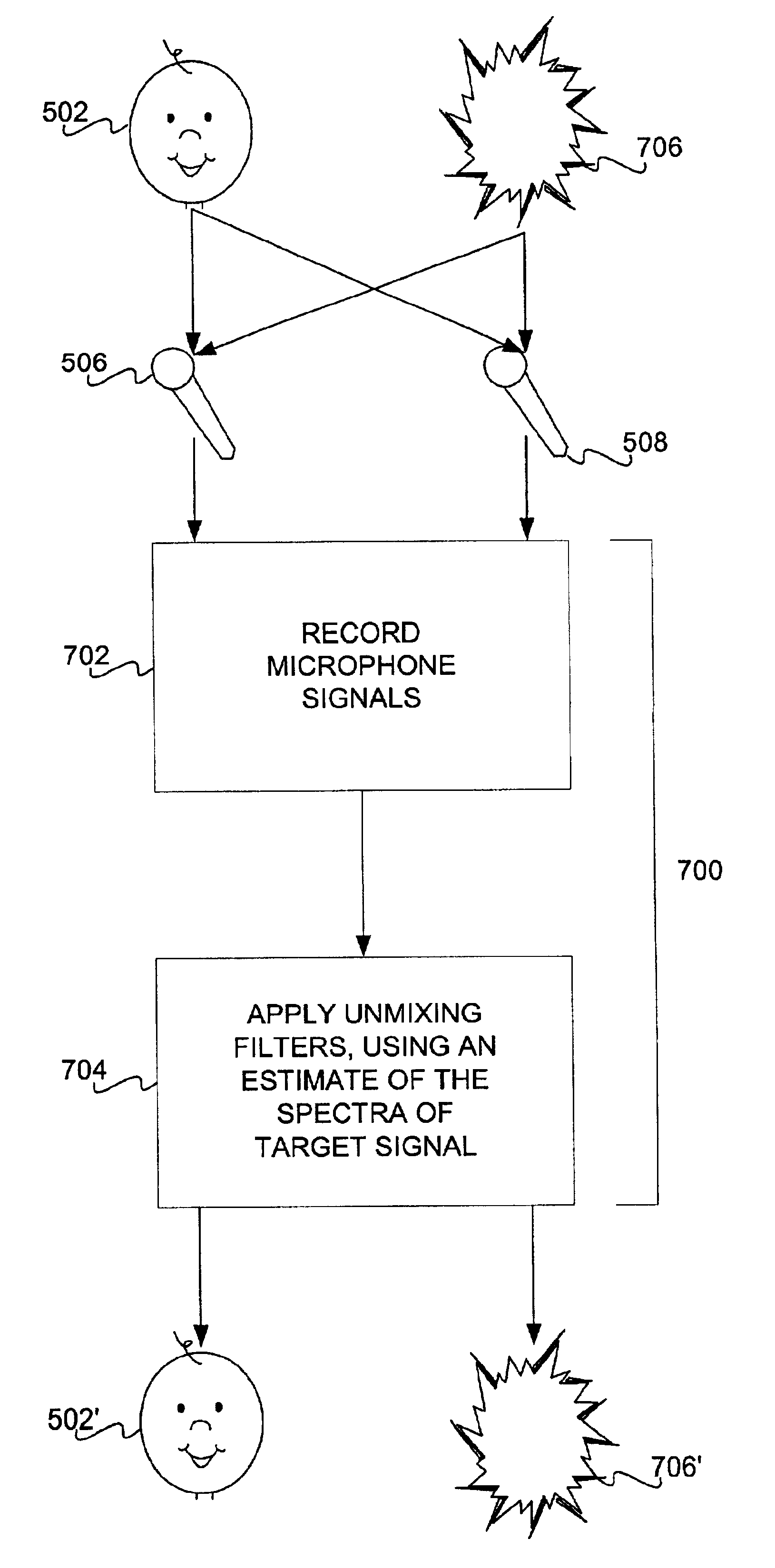

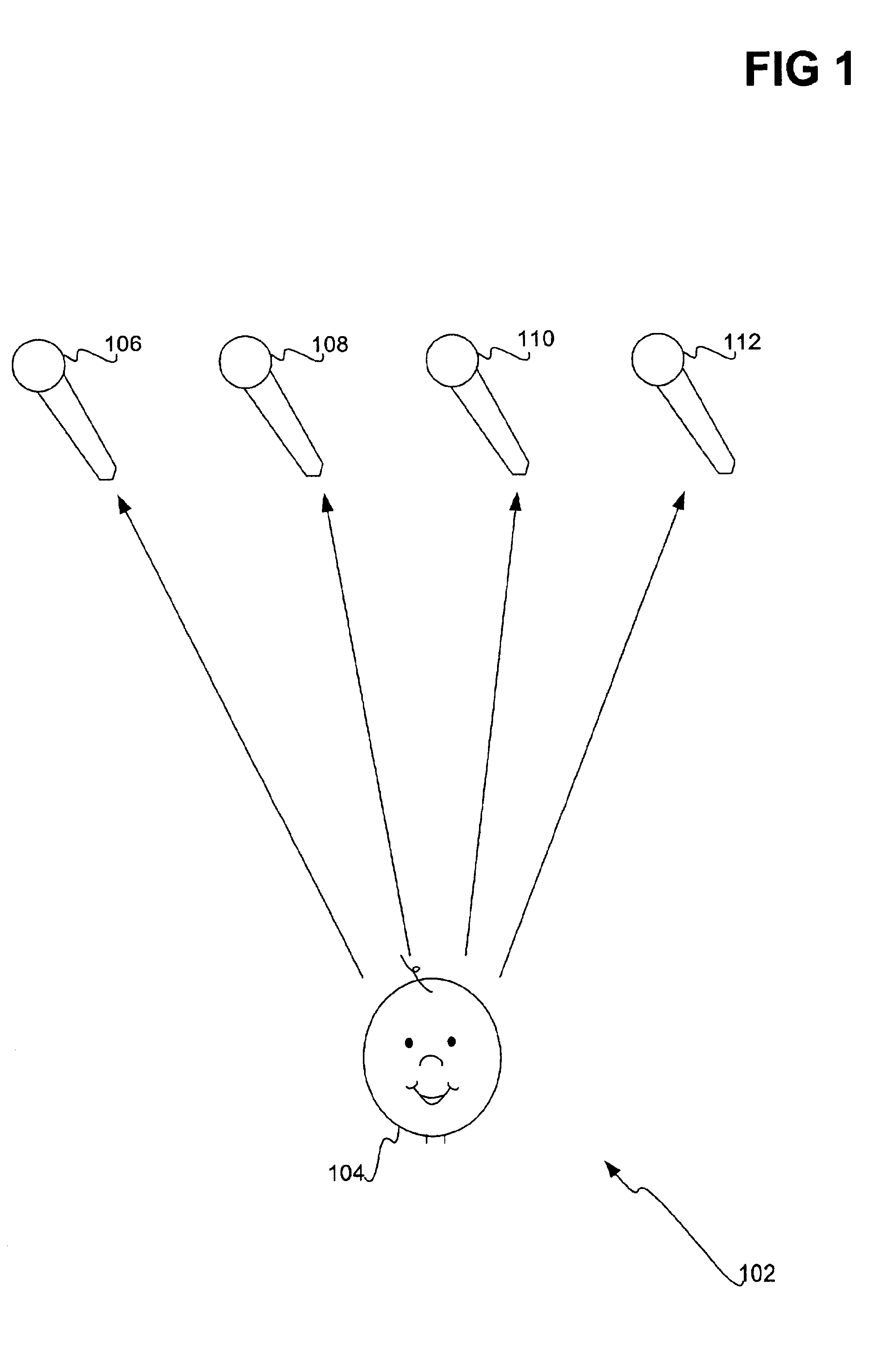

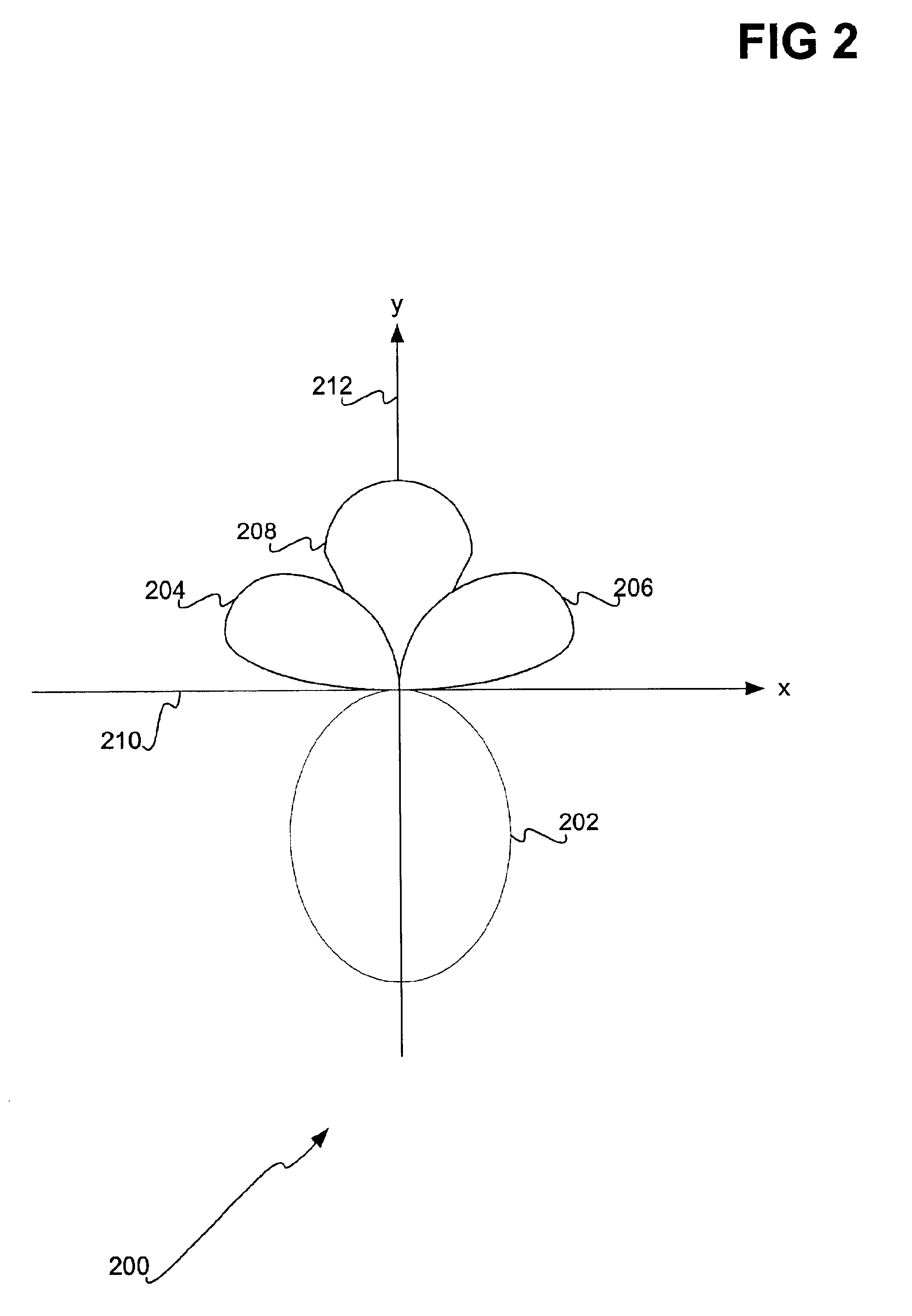

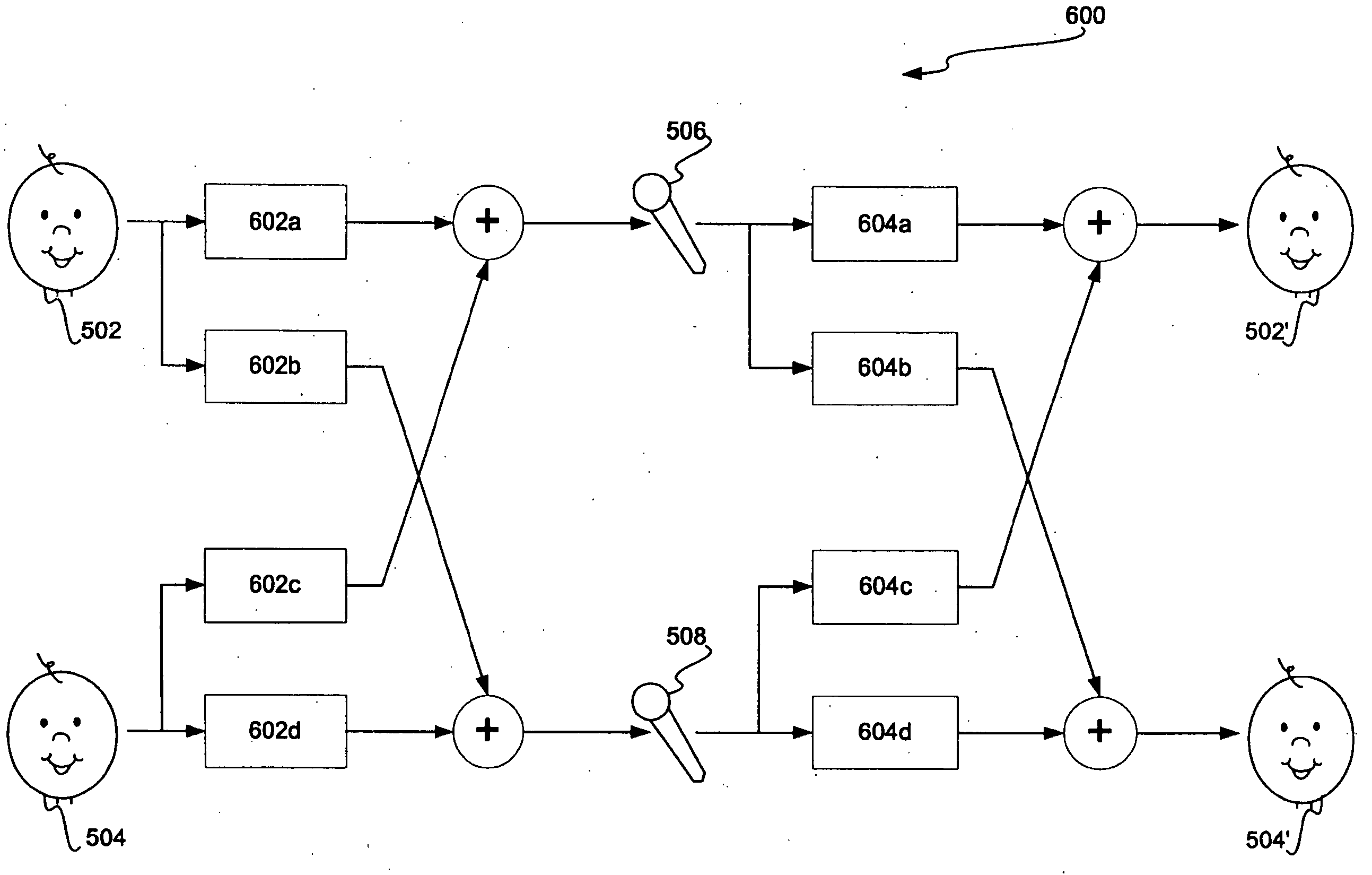

Sound source separation using convolutional mixing and a priori sound source knowledge

Status: Inactive | US6879952B2 | Topics: Achieve separation; Ear treatment; Speech analysis; Sound source separation; Frequency spectrum

Sound source separation without permutation, using convolutive-mixing independent component analysis based on a priori knowledge of the target sound source, is disclosed. The target sound source can be a human speaker. The reconstruction filters used in the sound source separation take into account a priori knowledge of the target sound source, such as an estimate of the spectra of the target sound source. More generally, the filters may be constructed based on a speech recognition system; matching the words of the speech recognition system's dictionary to a reconstructed signal indicates whether proper separation has occurred. More specifically, the filters may be constructed based on a vector quantization codebook of vectors representing typical sound source patterns; matching the vectors of the codebook to a reconstructed signal indicates whether proper separation has occurred. The vectors may be linear prediction vectors, among others.

Owner:MICROSOFT TECH LICENSING LLC
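The codebook-matching criterion can be illustrated with a small sketch. It assumes feature vectors (for example, linear prediction vectors) have already been extracted from each candidate reconstruction, uses squared Euclidean distance as the distortion measure, and treats lower average distortion as evidence of proper separation; it does not reproduce the convolutive ICA filter construction itself. All function names are hypothetical.

```python
def sq_dist(u, v):
    """Squared Euclidean distance between two feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def codebook_distortion(frames, codebook):
    """Mean distance from each feature frame to its nearest codebook vector."""
    return sum(min(sq_dist(f, c) for c in codebook) for f in frames) / len(frames)


def pick_best_separation(candidates, codebook):
    """Index of the candidate reconstruction whose frames best match the codebook."""
    scores = [codebook_distortion(frames, codebook) for frames in candidates]
    return min(range(len(scores)), key=scores.__getitem__)
```

For instance, a candidate whose frames all lie close to codebook entries scores a low distortion and is preferred over a candidate whose frames fall far from every entry.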

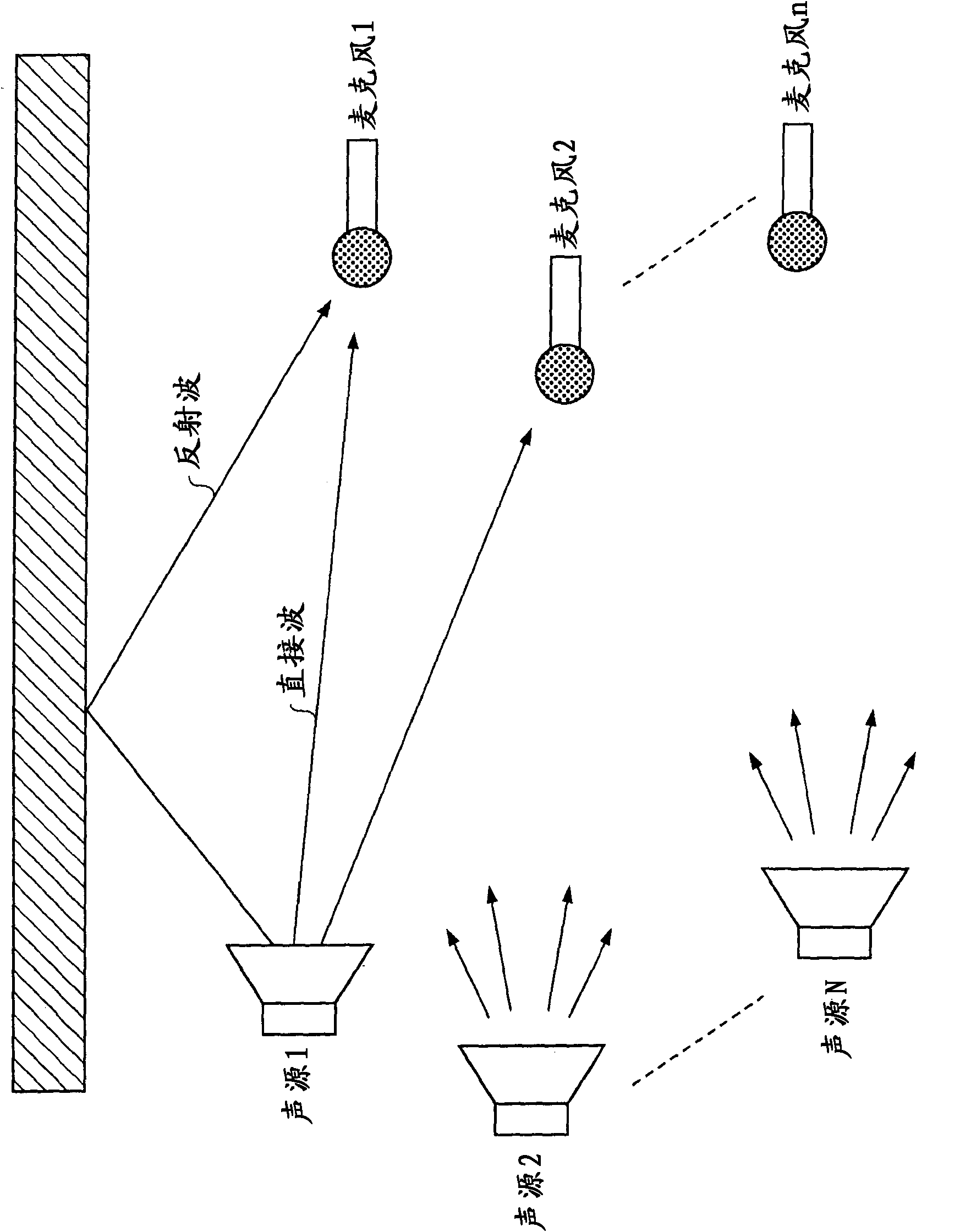

Apparatus and method for speech recognition based on sound source separation and sound source identification

Status: Inactive | US20100070274A1 | Topics: Improve user convenience; Speech recognition; Transmission noise suppression; Sound source separation; Sound source identification

An apparatus for speech recognition based on sound source separation and identification includes: a sound source separator that separates mixed signals input to two or more microphones into sound source signals using independent component analysis (ICA) and estimates direction information for the separated sound source signals; and a speech recognizer that calculates normalized log-likelihood probabilities of the separated sound source signals. The apparatus further includes a speech signal identifier that identifies the sound source corresponding to the user's speech signal using both the estimated direction information and reliability information based on the normalized log-likelihood probabilities.

Owner:ELECTRONICS & TELECOMM RES INST
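A hedged sketch of how direction information and normalized log-likelihood reliability might be combined is shown below. The abstract does not give the combination rule, so the linear weighting, the linear direction score with a 30° tolerance, and the min-max scaling of reliabilities are all assumptions; `identify_user_source` and its tuple input format are illustrative.

```python
def normalized_log_likelihood(total_log_lik, num_frames):
    """Per-frame log likelihood, so utterances of different lengths compare fairly."""
    return total_log_lik / num_frames


def direction_score(theta_deg, expected_deg, tolerance_deg=30.0):
    """1.0 at the expected user direction, falling linearly to 0 at the tolerance."""
    return max(0.0, 1.0 - abs(theta_deg - expected_deg) / tolerance_deg)


def identify_user_source(sources, expected_deg, weight=0.5):
    """sources: list of (direction_deg, total_log_lik, num_frames) tuples.
    Returns the index of the source judged most likely to be the user's speech."""
    nlls = [normalized_log_likelihood(ll, n) for _, ll, n in sources]
    lo, hi = min(nlls), max(nlls)
    span = (hi - lo) or 1.0  # avoid division by zero when all scores are equal
    best, best_score = 0, float("-inf")
    for i, (theta, _, _) in enumerate(sources):
        reliability = (nlls[i] - lo) / span  # min-max scaled to [0, 1]
        score = weight * direction_score(theta, expected_deg) + (1 - weight) * reliability
        if score > best_score:
            best, best_score = i, score
    return best
```

The `weight` parameter decides how much the expected direction is trusted relative to the recognizer's reliability; raising it favours the source nearest the expected direction even when its likelihood is lower.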

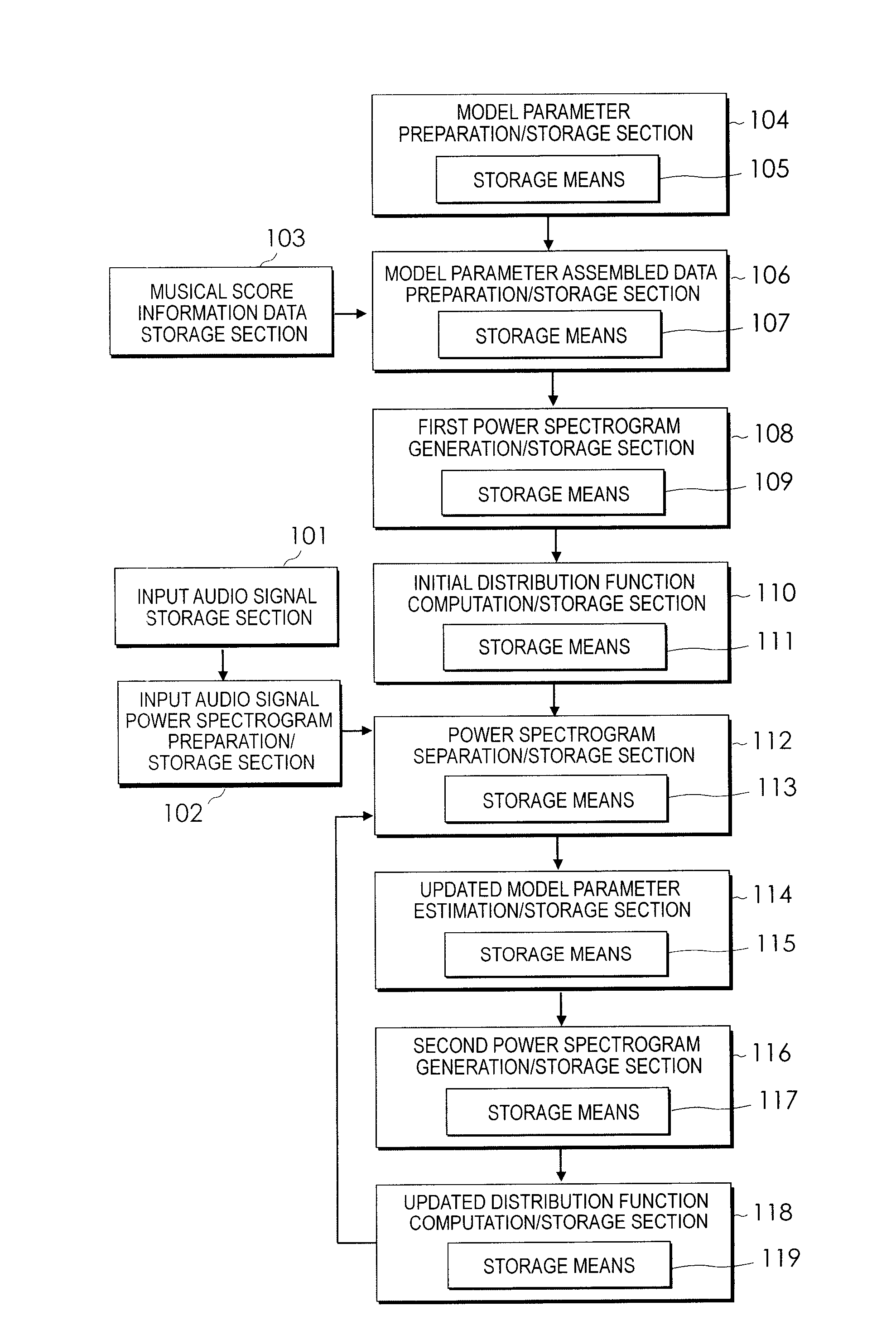

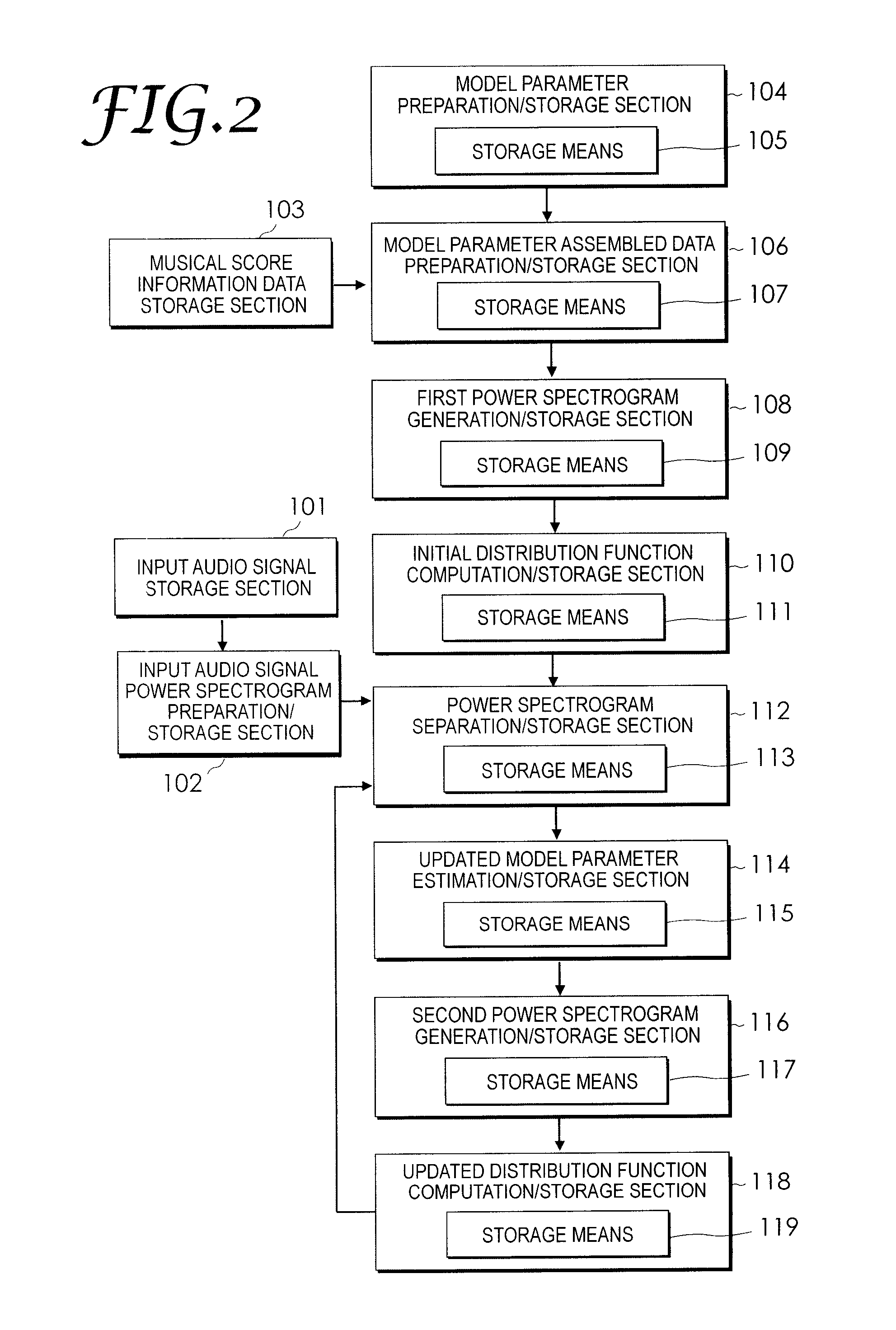

Sound source separation system, sound source separation method, and computer program for sound source separation

Status: Inactive | US20100131086A1 | Topics: Minimize cost function; High precision; Electrophonic musical instruments; Analogue computers for electric apparatus; Sound source separation; Model parameters

An audio signal produced by playing a plurality of musical instruments is separated into sound sources corresponding to the individual instrument sounds. Each time a separation process is performed, the updated model parameter estimation/storage section 114 estimates the parameters contained in the updated model parameters such that the updated power spectrograms gradually shift from a state close to the initial power spectrograms toward a state close to the plurality of power spectrograms most recently stored in a power spectrogram separation/storage section. The respective sections, including the power spectrogram separation/storage section 112 and an updated distribution function computation/storage section 118, repeat their operations until the updated power spectrograms have changed from the state close to the initial power spectrograms to the state close to the power spectrograms most recently stored in the power spectrogram separation/storage section 112. The final updated power spectrograms are close to the power spectrograms of the single tones of one musical instrument contained in the input audio signal, using a model that contains both harmonic and inharmonic components.

Owner:NAT INST OF ADVANCED IND SCI & TECH

Sound source separator device, sound source separator method, and program

Status: Inactive | CN103098132A | Topics: Music noise reduction; Signal processing; Speech analysis; Sound source separation; Frequency spectrum

In conventional sound source separation devices, specific frequency bands are significantly attenuated in environments with diffuse noise that does not come from a particular direction; as a result, the diffuse noise is filtered irregularly, regardless of the separation results, which gives rise to musical noise. In one embodiment of the present invention, a beamformer unit (3) of a sound source separation device (1) applies weighting coefficients that are complex conjugates of each other to the spectrum-analyzed output signals of microphones (10, 11), thereby attenuating sound source signals arriving from the region that contains the approximate direction of the target sound source and from the region opposite to it, with respect to a plane that intersects the line segment joining the two microphones (10, 11). A weighting coefficient computation unit (50) computes a weighting coefficient based on the difference between the power spectrum information calculated by power calculation units (40, 41).

Owner:ASAHI KASEI KK

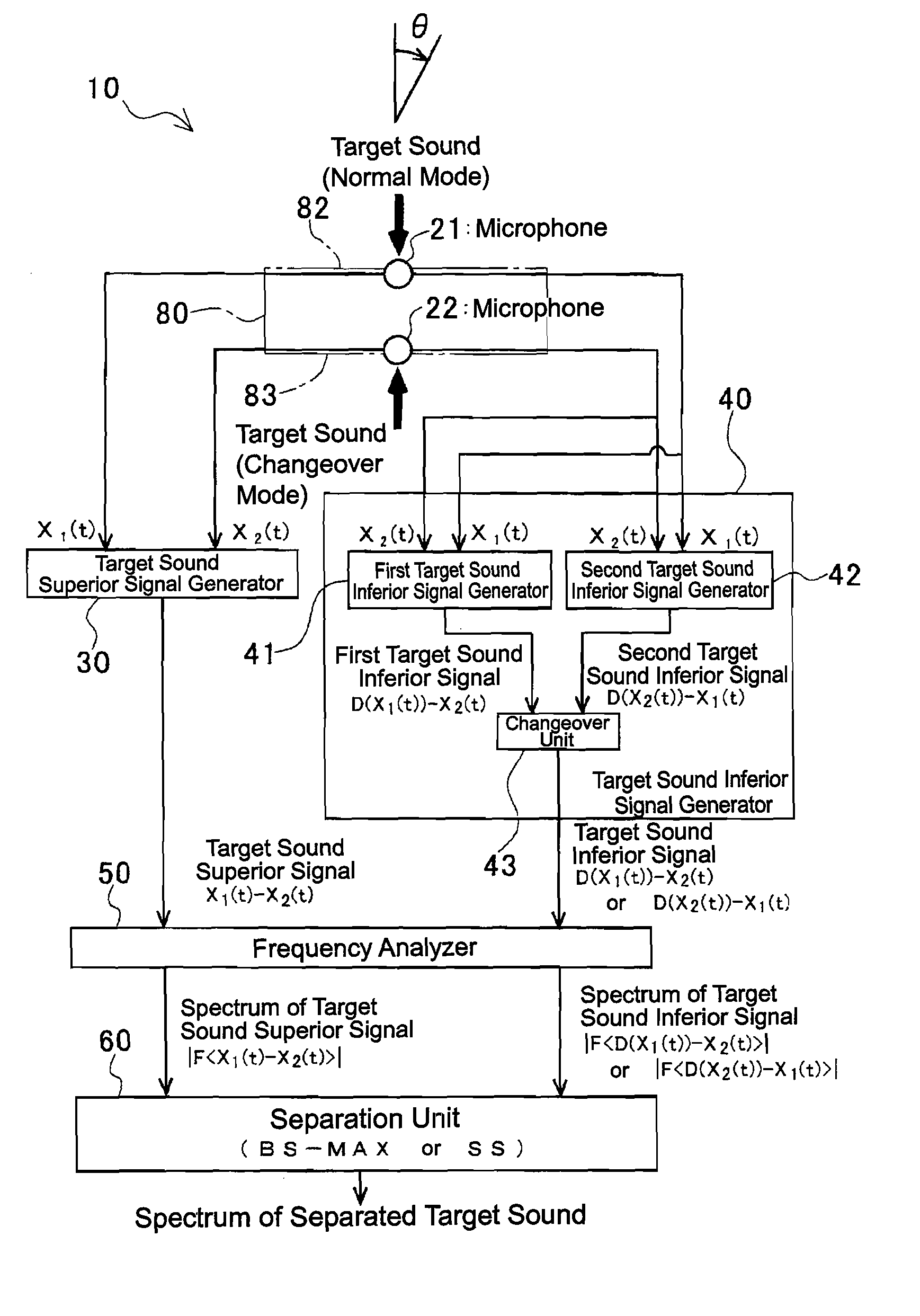

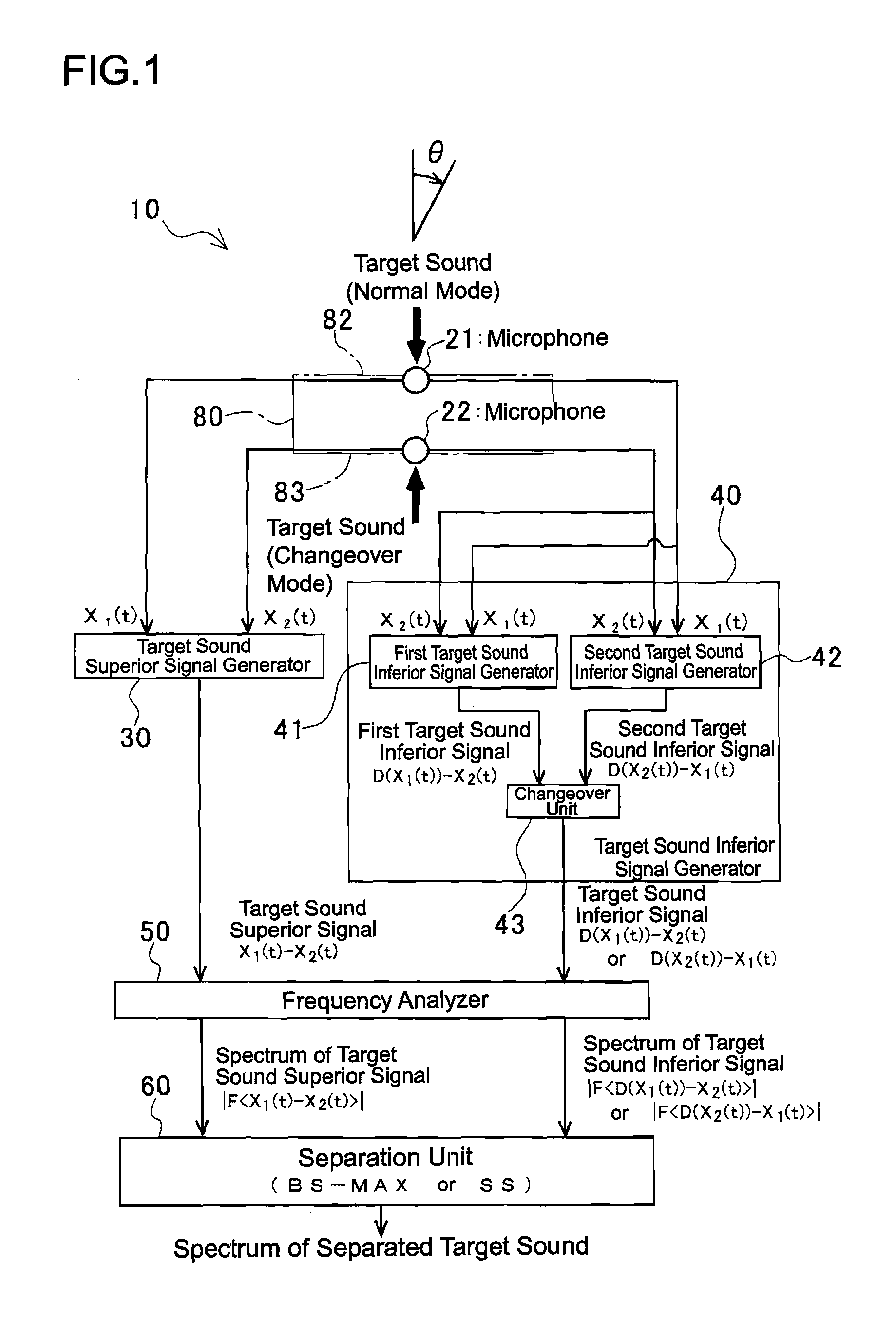

Sound source separation system, sound source separation method, and acoustic signal acquisition device

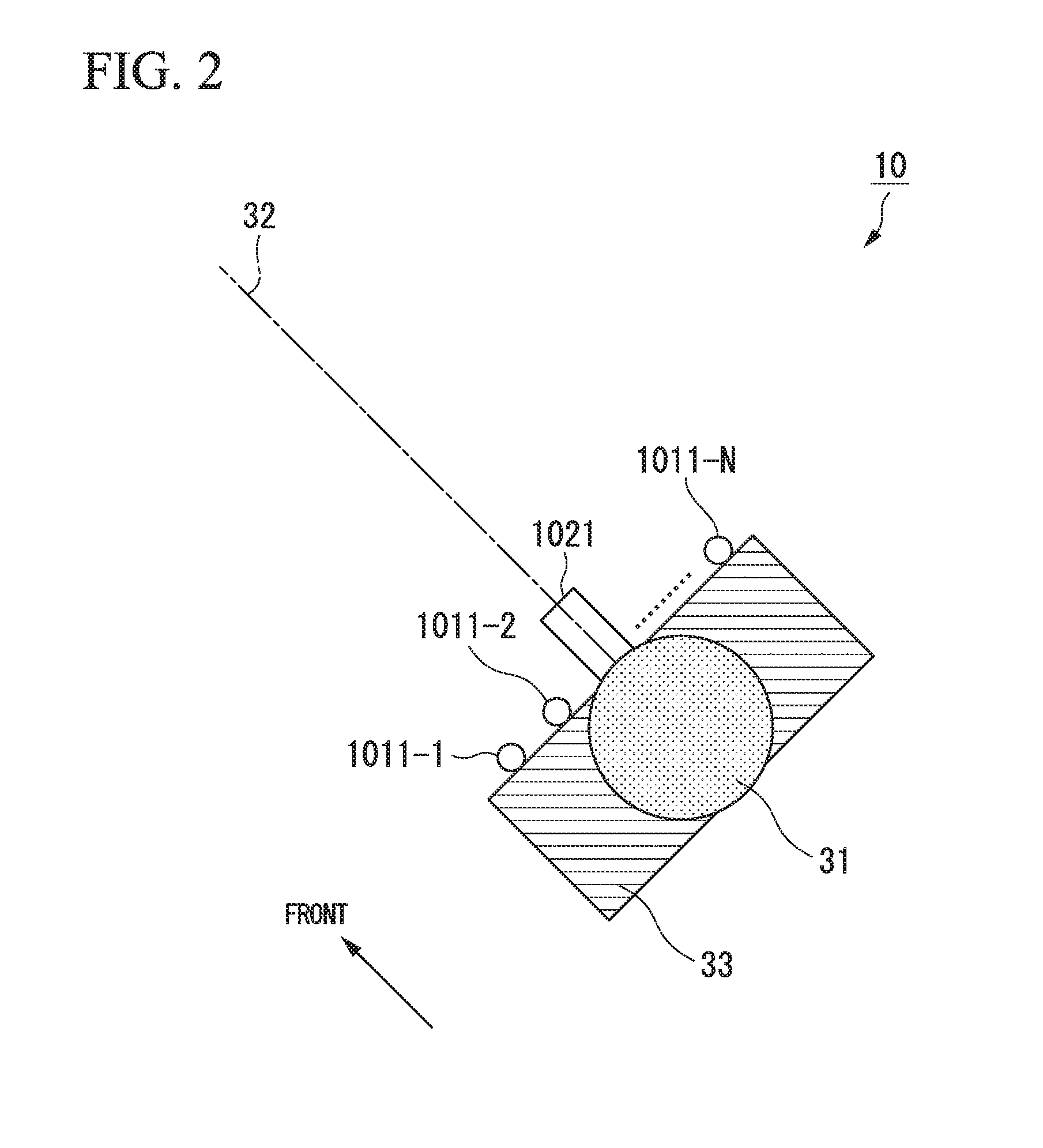

Status: Inactive | US20090323977A1 | Topics: Accurate separation; Device can be miniaturized; Ear treatment; Noise generation; Sound source separation; Frequency spectrum

The invention provides a sound source separation system, a sound source separation method, and an acoustic signal acquisition device that can precisely separate a target sound from a disturbance sound coming from an arbitrary direction while allowing the device to be miniaturized. A sound source separation system 10 comprises: two microphones 21, 22 disposed side by side along the direction from which the target sound comes; a target sound superior signal generator 30 that performs a linear combination process emphasizing the target sound, using the received sound signals of the microphones, to generate a target sound superior signal; a target sound inferior signal generator 40 that performs a linear combination process suppressing the target sound, using the received sound signals of the microphones 21, 22, to generate a target sound inferior signal; and a separation unit 60 that separates the target sound and the disturbance sound using the target sound superior signal spectrum and the target sound inferior signal spectrum.

Owner:WASEDA UNIV
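The two linear combinations can be sketched for one frequency bin of an endfire microphone pair. This is a minimal illustration under assumptions not stated in the abstract: microphone 21 is nearer the target, propagation is free-field, the superior signal is a delay-and-sum and the inferior signal a delay-and-subtract combination, and the separation rule in `separate_bin` (power subtraction that keeps the superior signal's phase) is a hypothetical stand-in for the separation unit 60.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s (assumed)


def superior_inferior(x1, x2, freq_hz, spacing_m):
    """Linear combinations for an endfire pair (mic 1 nearer the target).
    Returns (target-superior, target-inferior) values for one frequency bin."""
    tau = spacing_m / SPEED_OF_SOUND
    x1_delayed = x1 * cmath.exp(-2j * math.pi * freq_hz * tau)  # align target with mic 2
    superior = 0.5 * (x1_delayed + x2)  # target adds coherently
    inferior = 0.5 * (x1_delayed - x2)  # target cancels
    return superior, inferior


def separate_bin(superior, inferior, beta=1.0):
    """Hypothetical separation rule: subtract beta * inferior power from the
    superior power and keep the superior phase. Returns the target estimate."""
    p_sup, p_inf = abs(superior) ** 2, abs(inferior) ** 2
    gain = math.sqrt(max(p_sup - beta * p_inf, 0.0) / p_sup) if p_sup > 0 else 0.0
    return gain * superior
```

For a target-only bin the inferior signal is zero and the superior signal passes unchanged. How well a rear disturbance is suppressed depends on frequency and spacing: at some frequencies a rear wave produces equal superior and inferior power and is removed completely, while elsewhere it is only partly suppressed.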

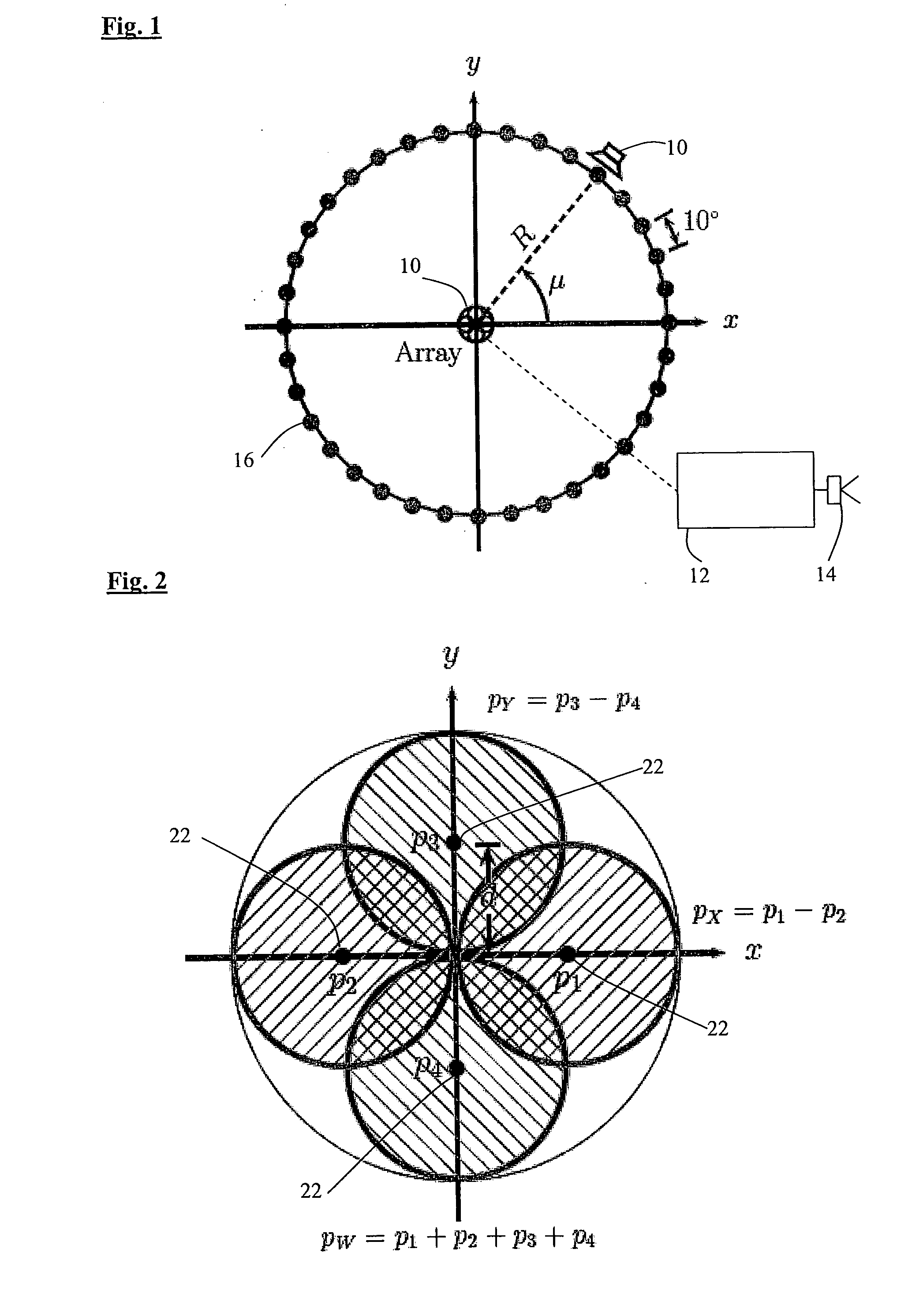

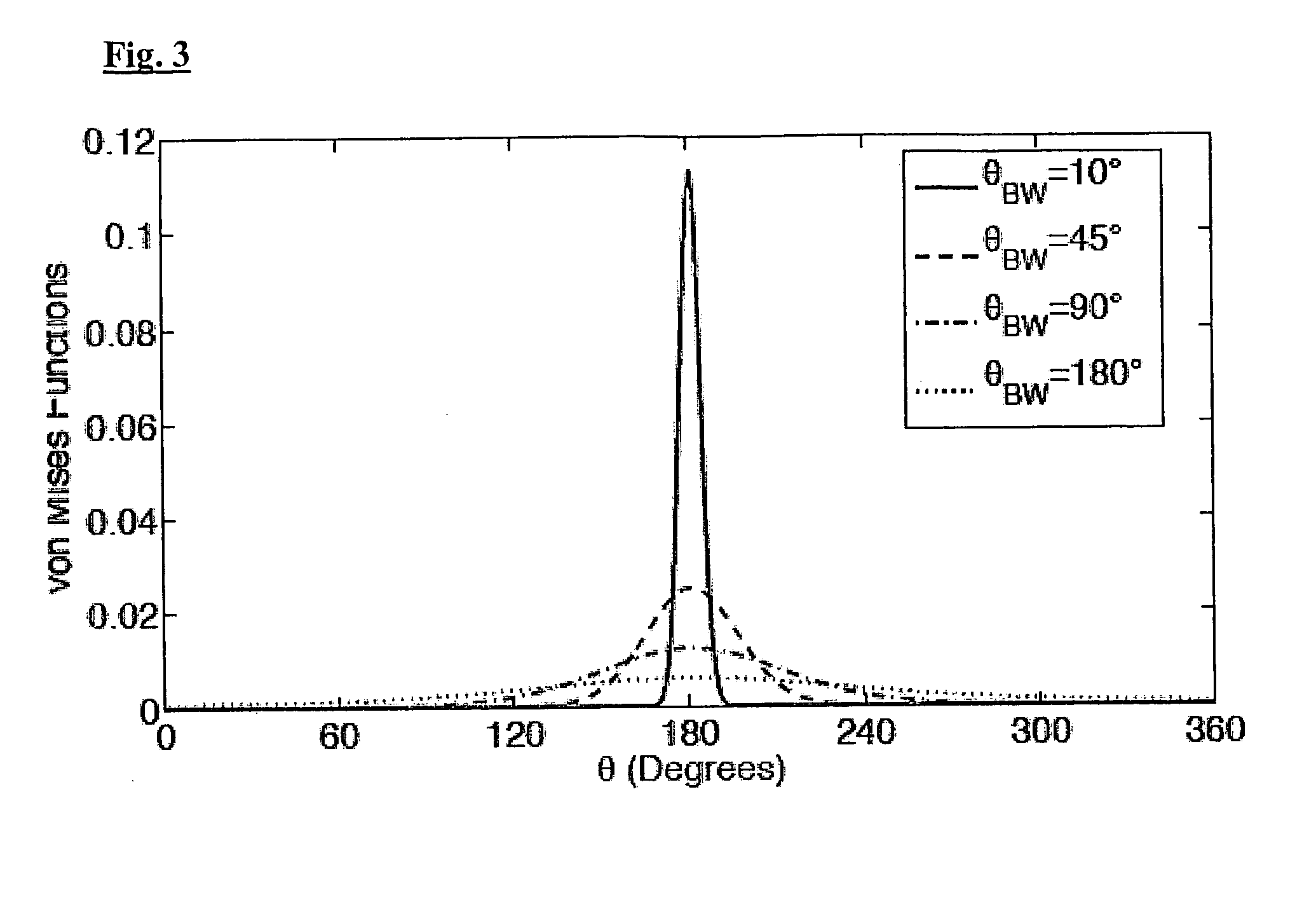

Acoustic source separation

Status: Active | US20110015924A1 | Topics: Avoid problems; Hearing aids signal processing; Microphones signal combination; Sound source separation; Engineering

A method of separating a mixture of acoustic signals from a plurality of sources comprises: providing pressure signals indicative of time-varying acoustic pressure in the mixture; defining a series of time windows; and, for each time window: a) providing from the pressure signals a series of sample values of measured directional pressure gradient; b) identifying different frequency components of the pressure signals; c) for each frequency component, defining an associated direction; and d) generating a separated signal for one of the sources from the frequency components and their associated directions.

Owner:UNIVERSITY OF SURREY
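The per-frequency direction step can be sketched with an active-intensity estimate from the pressure and two horizontal pressure-gradient channels. This is an assumed technique, not necessarily the Surrey method: it assumes gradient channels scaled like figure-of-eight (B-format X/Y) signals, so that a single plane wave from azimuth θ gives gx = p·cos θ and gy = p·sin θ, and it assigns each frequency component to the nearest known source direction with a binary mask. Function names are hypothetical.

```python
import math


def bin_direction_deg(p, gx, gy):
    """Direction of one frequency component from pressure p and gradient
    components gx, gy (figure-of-eight convention assumed)."""
    ix = (p.conjugate() * gx).real  # active-intensity-like x component
    iy = (p.conjugate() * gy).real  # active-intensity-like y component
    return math.degrees(math.atan2(iy, ix))


def angular_gap(a_deg, b_deg):
    """Smallest absolute difference between two angles in degrees."""
    d = (a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)


def separate_frame(p_bins, gx_bins, gy_bins, source_dirs_deg, which):
    """Keep only bins whose direction is closest to source `which`;
    all other bins are zeroed (binary time-frequency mask)."""
    out = []
    for p, gx, gy in zip(p_bins, gx_bins, gy_bins):
        theta = bin_direction_deg(p, gx, gy)
        nearest = min(range(len(source_dirs_deg)),
                      key=lambda k: angular_gap(theta, source_dirs_deg[k]))
        out.append(p if nearest == which else 0j)
    return out
```

Running `separate_frame` once per source and per time window, then inverse-transforming each masked frame, yields one separated signal per source.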

Speech recognition system and speech recognizing method

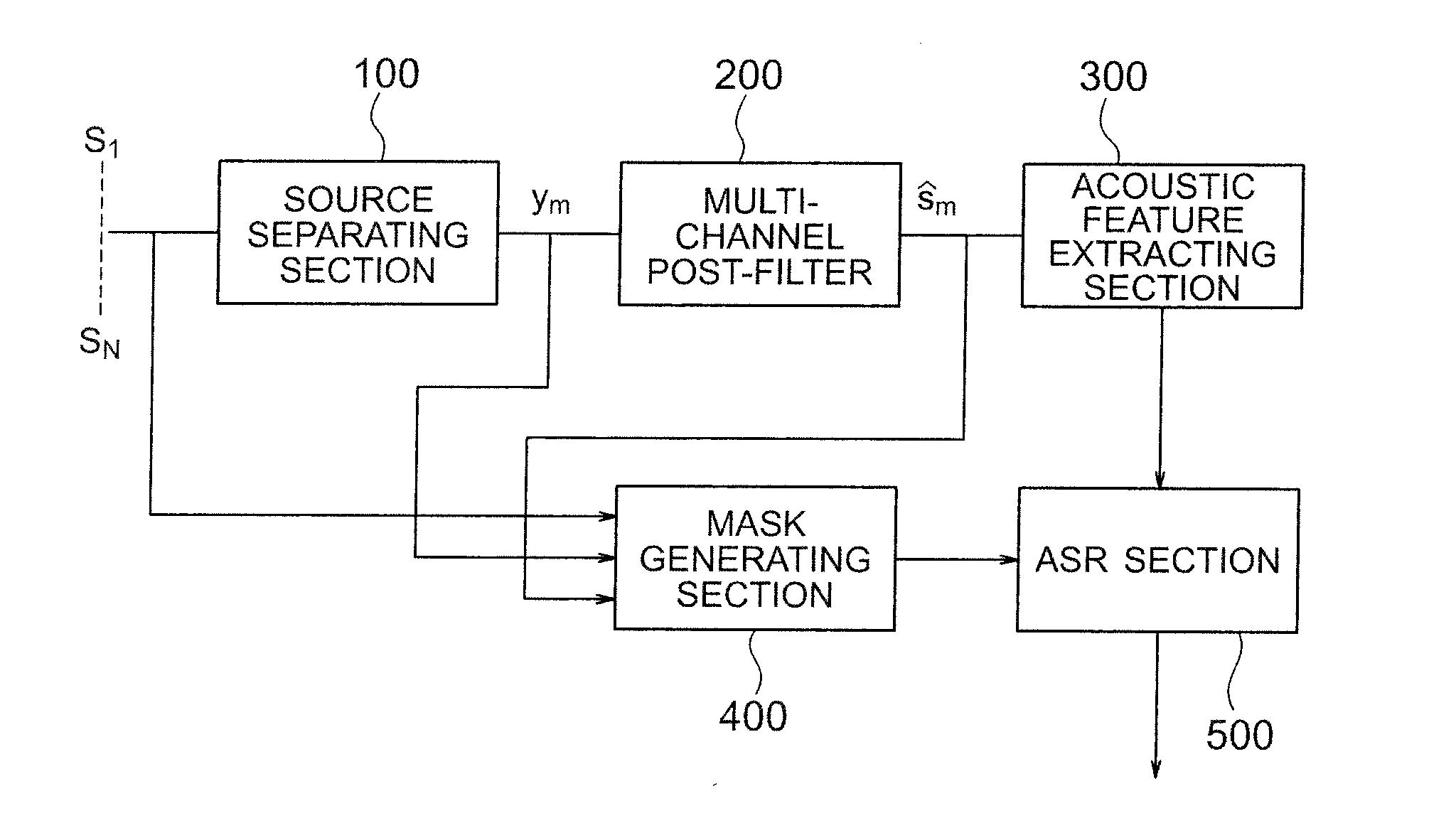

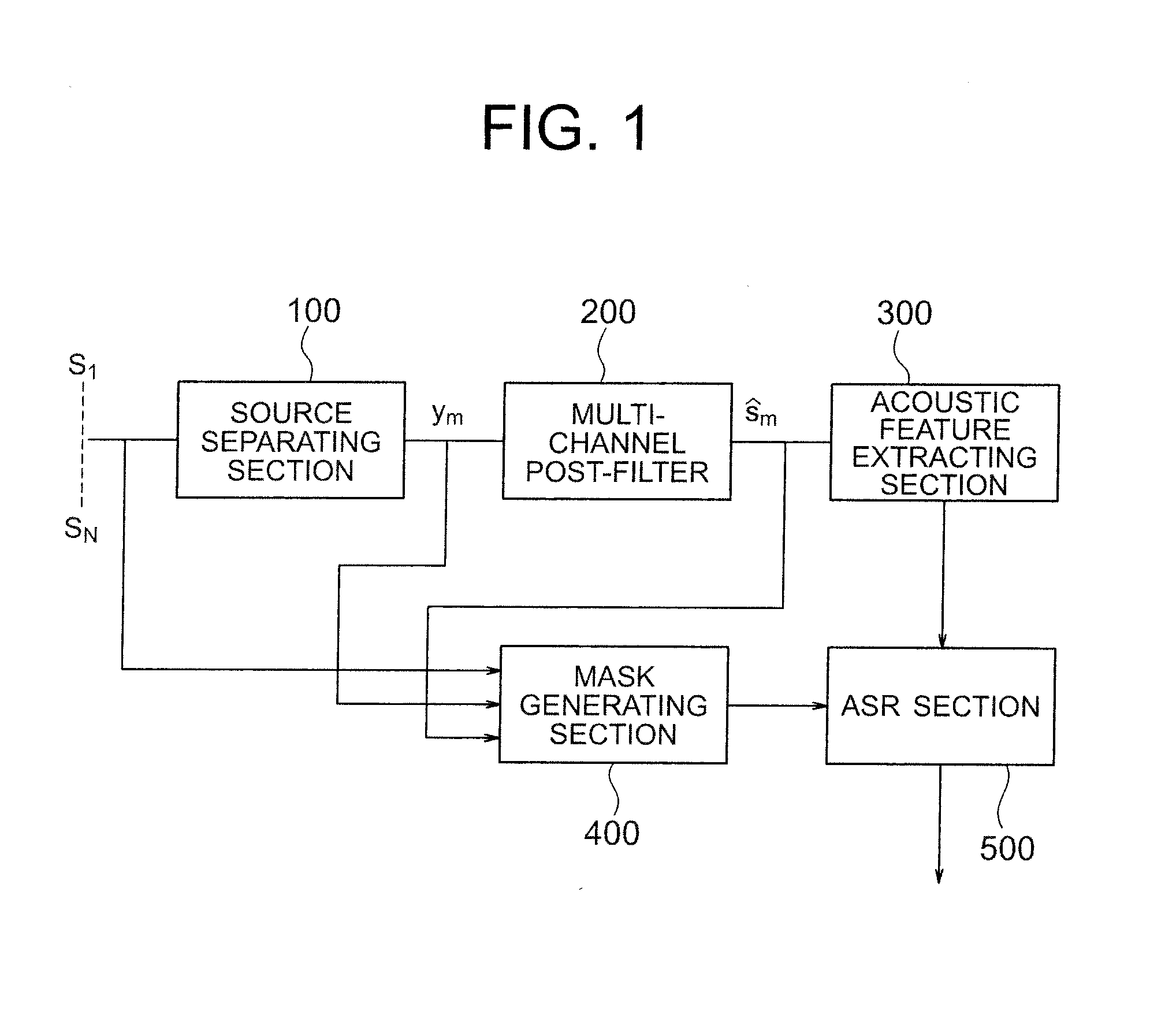

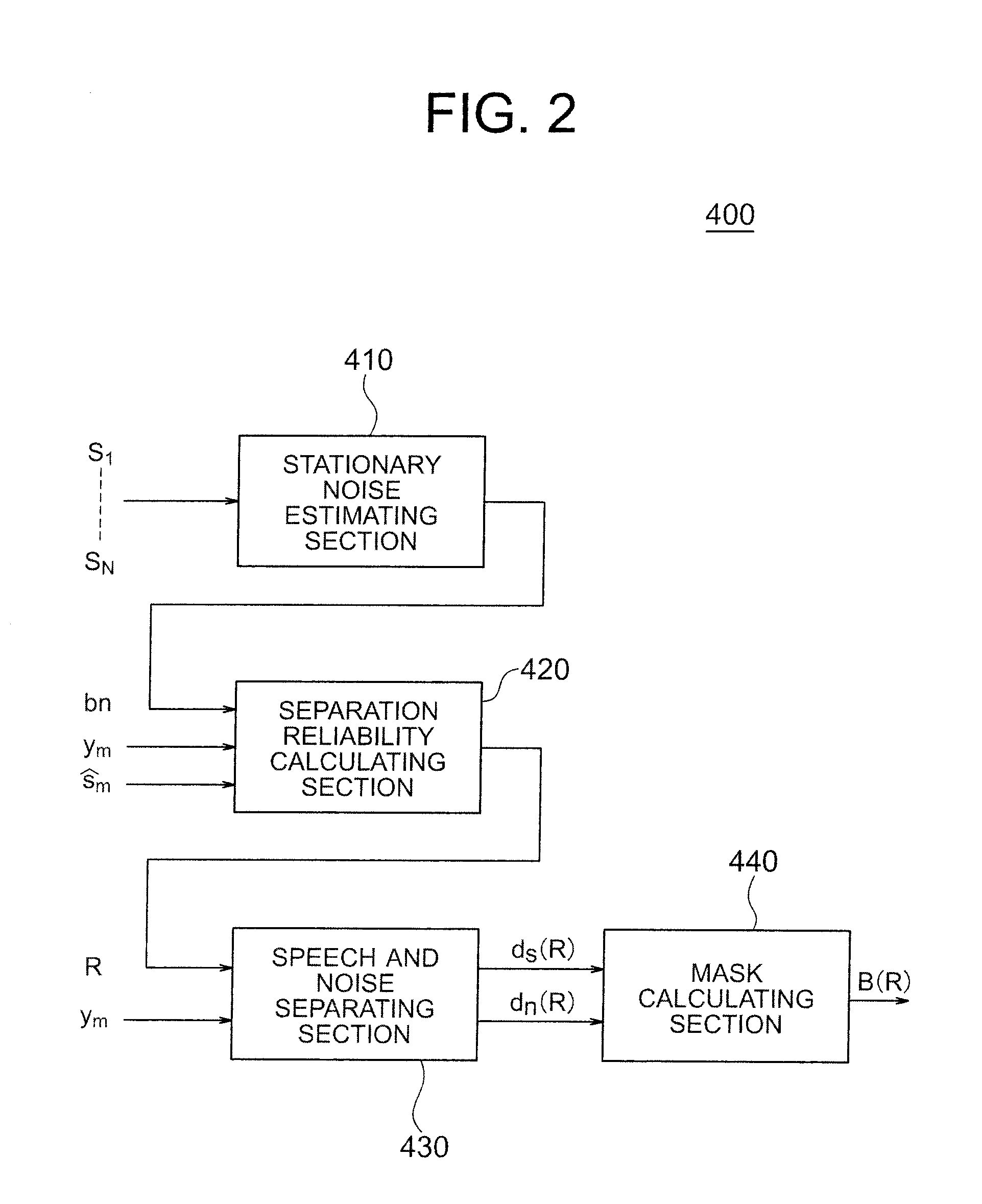

Status: Active | US20110224980A1 | Topics: Speech recognition; Simplified generation; Sound source separation; Frequency spectrum

A speech recognition system according to the present invention includes: a sound source separating section that separates mixed speech from multiple sound sources; a mask generating section that generates, for each frequency spectral component of a separated speech signal, a soft mask that can take continuous values between 0 and 1, using the distributions of speech and noise with respect to the separation reliability of the separated speech signal; and a speech recognizing section that recognizes the speech separated by the sound source separating section using the soft masks generated by the mask generating section.

Owner:HONDA MOTOR CO LTD
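The soft mask can be sketched as the posterior probability of speech given the separation reliability. The abstract mentions distributions of speech and noise against reliability but not their form; the Gaussian shape and the means, standard deviations and prior used here are hypothetical placeholders that a real system would estimate from data.

```python
import math


def gaussian_pdf(x, mean, std):
    """Normal probability density."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def soft_mask(reliabilities, speech=(0.8, 0.15), noise=(0.2, 0.15), prior_speech=0.5):
    """Map each spectral component's separation reliability to a value in [0, 1]:
    the posterior probability that the component is speech, assuming Gaussian
    reliability distributions (mean, std) for speech and for noise."""
    mask = []
    for r in reliabilities:
        ps = prior_speech * gaussian_pdf(r, *speech)
        pn = (1 - prior_speech) * gaussian_pdf(r, *noise)
        mask.append(ps / (ps + pn))
    return mask
```

Unlike a binary mask, the posterior varies smoothly with reliability, so components with ambiguous reliability contribute partially to recognition instead of being kept or discarded outright.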

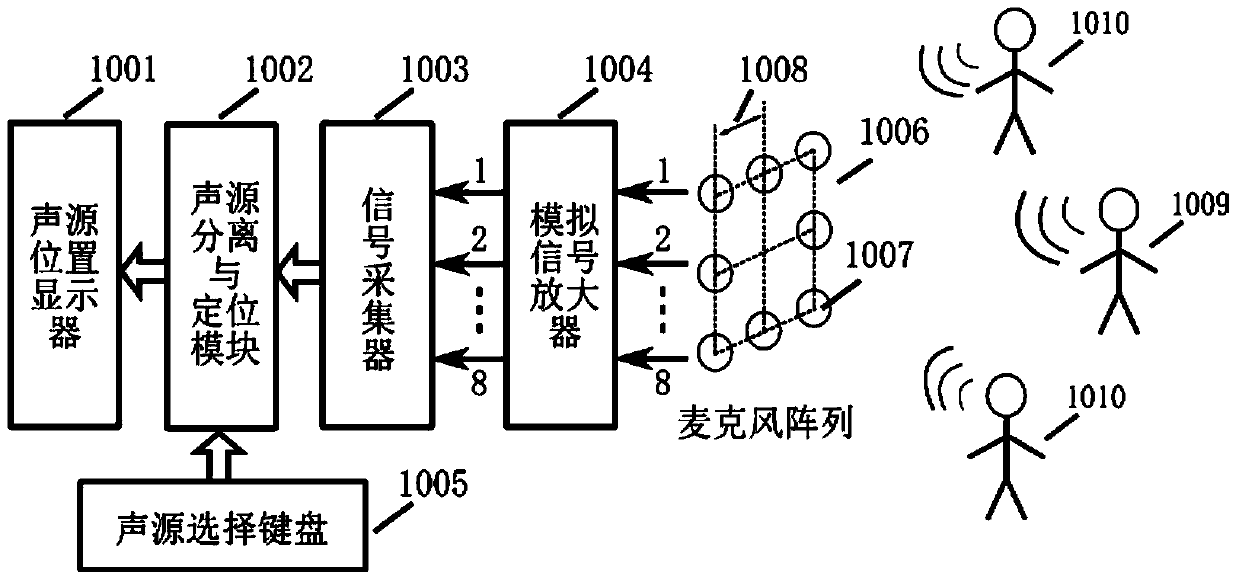

Hearing aid device and method for separating and positioning sound sources in noise environments

Status: Active | CN104053107A | Topics: Guaranteed real-time; Reduce computational complexity; Speech analysis; Deaf amplification systems; Sound sources; Display device

The invention relates to a hearing aid device and method, in particular a hearing aid device and method for separating and positioning sound sources in noisy environments. The hearing aid device comprises a microphone array, a sound source position display, a sound source separation and positioning module, a signal collector, an analog signal amplifier, and a sound source selection keyboard. The sound source separation and positioning module uses a cross-correlation method to process the collected microphone signals and obtain eight initial positions of the sound sources relative to the microphone array, and then processes the collected signals with a blind source separation method based on spatial search to obtain sound sources containing the voices of the talker and of other persons. The user selects the sound source containing the talker's voice with the sound source selection keyboard, the accurate position of the talker relative to the microphone array is computed from the selected source, and that position is shown on the sound source position display. The device and method automatically separate the voice signals of two people in a conversation in a noisy environment, add a sound source positioning function, and are convenient to use.

Owner:CHONGQING UNIV
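The cross-correlation localization step can be illustrated for a single microphone pair: find the lag that maximizes the cross-correlation, then convert that time difference of arrival to a far-field angle. The sample rate, spacing and `max_lag` are assumptions, and the sketch does not cover the eight initial positions or the space-search blind source separation described in the abstract.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s (assumed)


def cross_correlation_lag(x, y, max_lag):
    """Lag (in samples) at which y best matches x; positive means y lags x."""
    best_lag, best_val = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        total = 0.0
        for n in range(len(x)):
            m = n + lag
            if 0 <= m < len(y):
                total += x[n] * y[m]
        if total > best_val:
            best_lag, best_val = lag, total
    return best_lag


def lag_to_angle_deg(lag, fs_hz, spacing_m):
    """Far-field direction (degrees from broadside) from a time difference of arrival."""
    s = lag * SPEED_OF_SOUND / (fs_hz * spacing_m)
    return math.degrees(math.asin(max(-1.0, min(1.0, s))))
```

With a 16 kHz sample rate and 0.2 m spacing, a 3-sample lag corresponds to roughly 19° off broadside. Practical systems usually use a frequency-weighted variant such as GCC-PHAT for robustness to reverberation.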

Sound source separation apparatus and sound source separation method

Status: Inactive | US20070133811A1 | Topics: High sound source separation performance; Easy to separate; Speech analysis; Stereophonic arrangements; Pattern recognition; Sound source separation

Owner:KOBE STEEL LTD

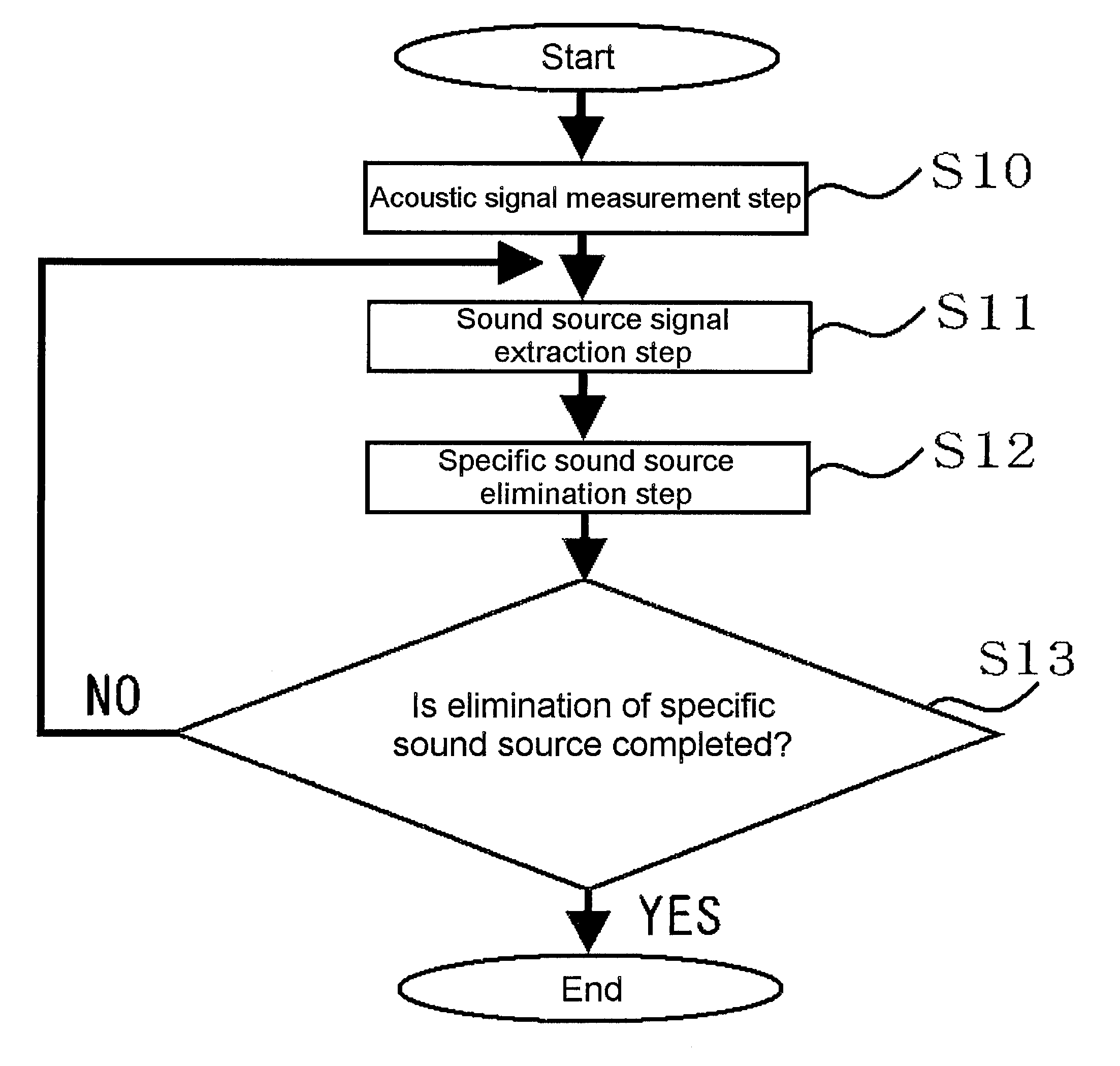

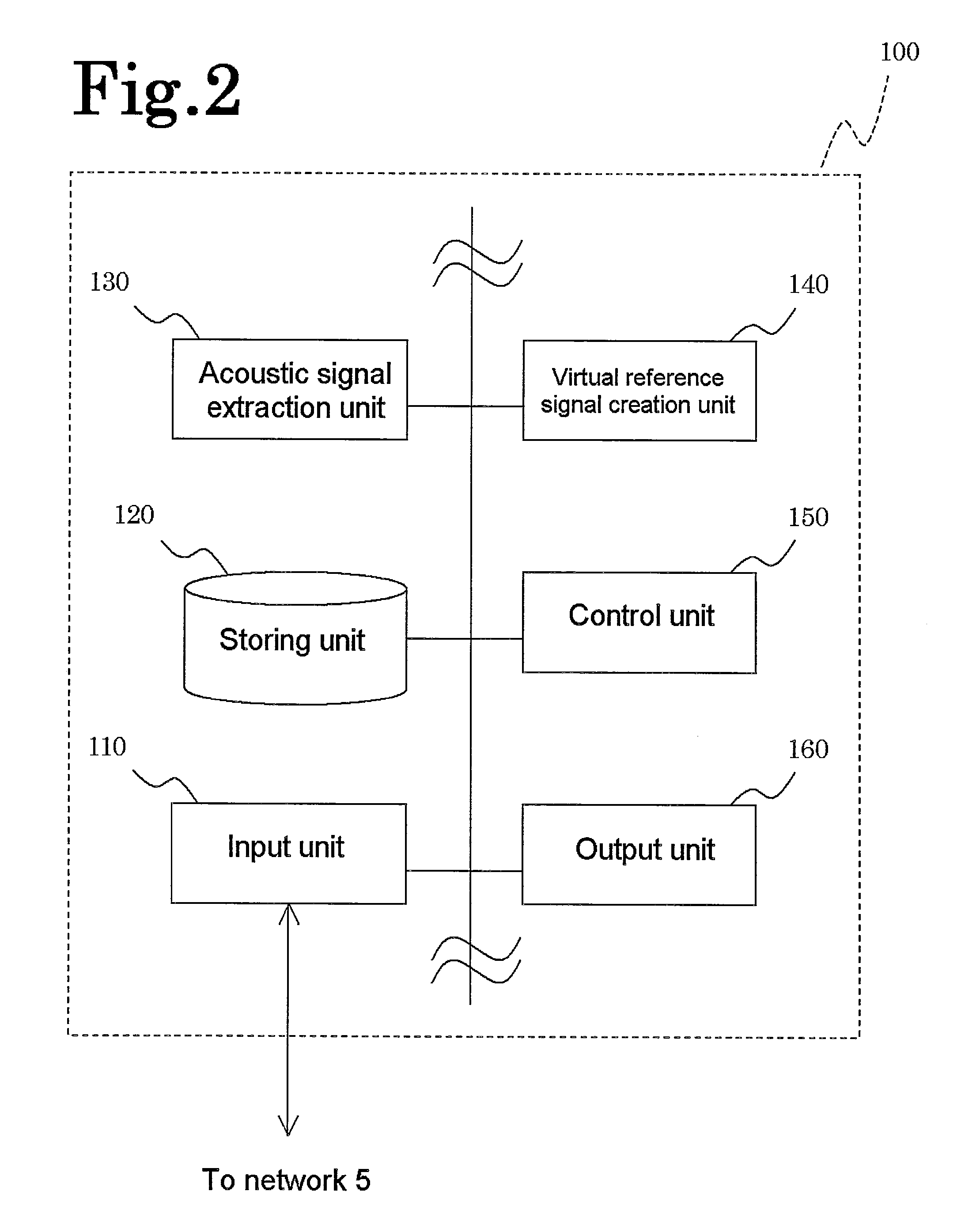

Sound source separation and display method, and system thereof

Status: Inactive | US20110075860A1 | Topics: Ease of evaluation; Easy to perform; Vibration measurement in fluid; Multi-channel direction finding; Sound source identification; Sound source separation

The present invention relates to a sound source separation and display method and a system thereof, in particular a method and system intended to eliminate a specific sound source. To separate a plurality of sound sources with a single microphone array, the result of sound source identification processing is used. More specifically, the signal from one direction is extracted from the result of the sound source identification processing, and a field limited to that signal, or with its effect eliminated, is calculated and displayed. This operation can be repeated. A virtual reference signal can be created in the time domain.

Owner:NITTOBO ACOUSTIC ENG CO LTD


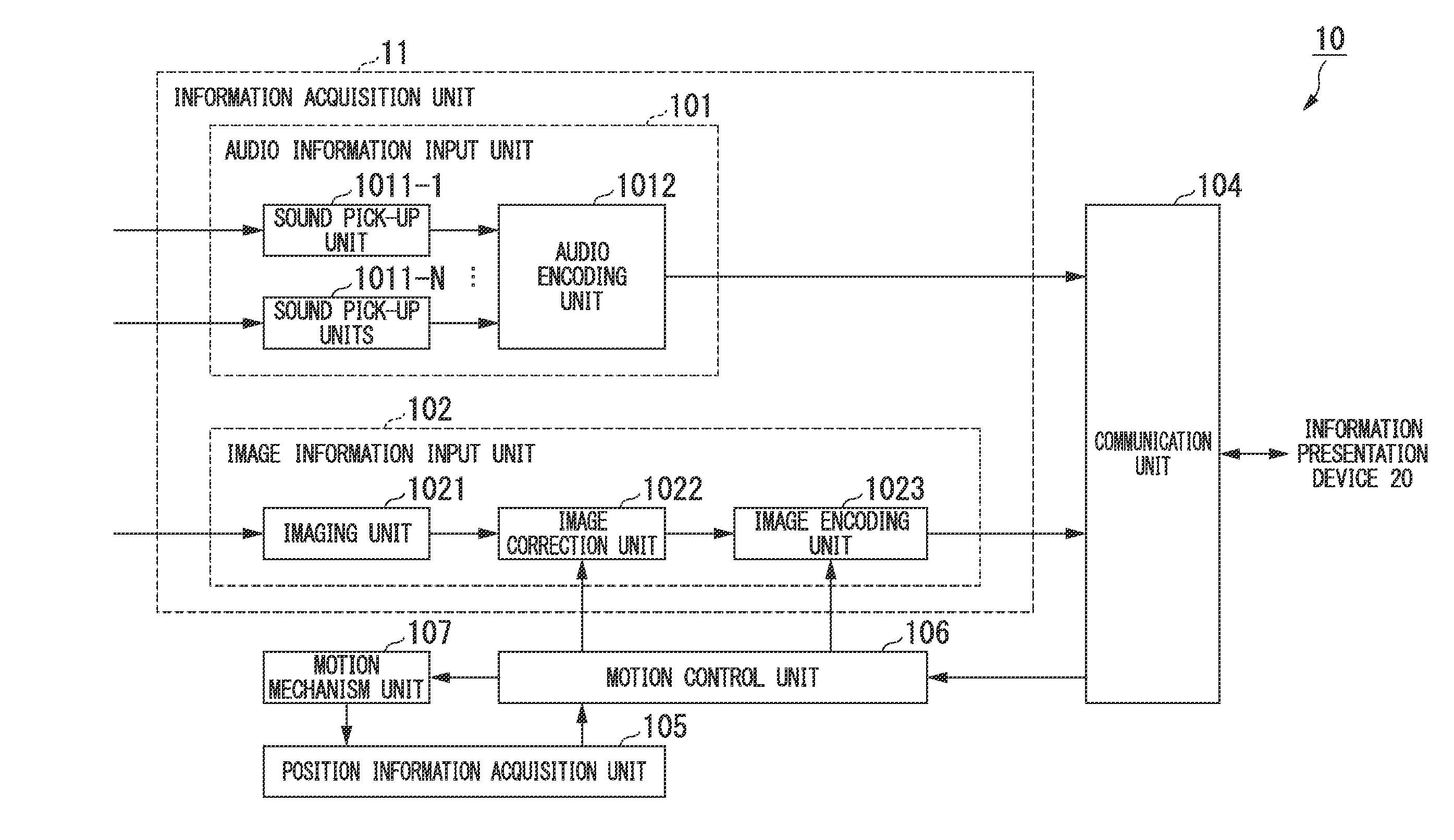

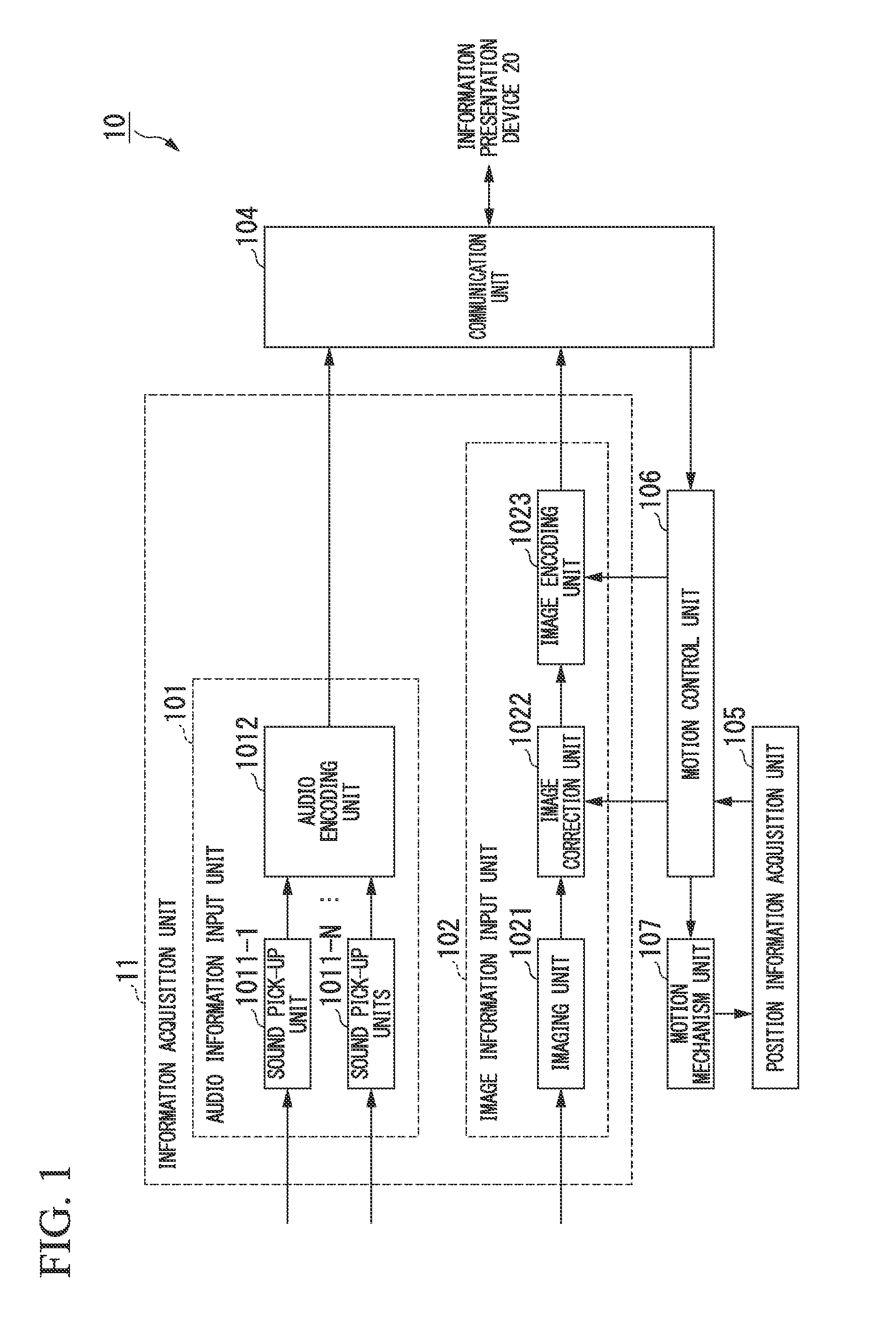

Information presentation device, information presentation method, information presentation program, and information transmission system

Status: Active | US20130151249A1 | Topics: Degradation of display image; Reduce degradation; Closed circuit television systems; Visible signalling systems; Sound source separation; Information transmission

An information presentation device includes: an audio signal input unit configured to input an audio signal; an image signal input unit configured to input an image signal; an image display unit configured to display an image indicated by the image signal; a sound source localization unit configured to estimate direction information for each sound source based on the audio signal; a sound source separation unit configured to separate the audio signal into sound-source-classified audio signals, one for each sound source; an operation input unit configured to receive an operation input and generate coordinate designation information indicating a part of a region of the image; and a sound source selection unit configured to select the sound-source-classified audio signal of a sound source associated with a coordinate that is included in the region indicated by the coordinate designation information and that corresponds to the direction information.

Owner:HONDA MOTOR CO LTD
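One way the coordinate designation could be mapped to a sound source is sketched below, assuming the camera and microphone array share an axis, a linear mapping from horizontal pixel position to azimuth over a hypothetical horizontal field of view `hfov_deg`, and positive azimuths to the right. The abstract does not specify this mapping; the function names are illustrative.

```python
def pixel_to_azimuth_deg(x_px, image_width_px, hfov_deg):
    """Linear approximation: left edge -> -hfov/2, right edge -> +hfov/2."""
    return (x_px / image_width_px - 0.5) * hfov_deg


def select_sources(region_x0, region_x1, image_width_px, hfov_deg, source_dirs_deg):
    """Indices of sources whose estimated azimuth falls inside the horizontal
    span of the user-designated image region."""
    a0 = pixel_to_azimuth_deg(min(region_x0, region_x1), image_width_px, hfov_deg)
    a1 = pixel_to_azimuth_deg(max(region_x0, region_x1), image_width_px, hfov_deg)
    return [i for i, d in enumerate(source_dirs_deg) if a0 <= d <= a1]
```

A pinhole camera model would replace the linear mapping with an arctangent of the pixel offset over the focal length; the linear form is adequate only for modest fields of view.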

Sound source separation apparatus and sound source separation method

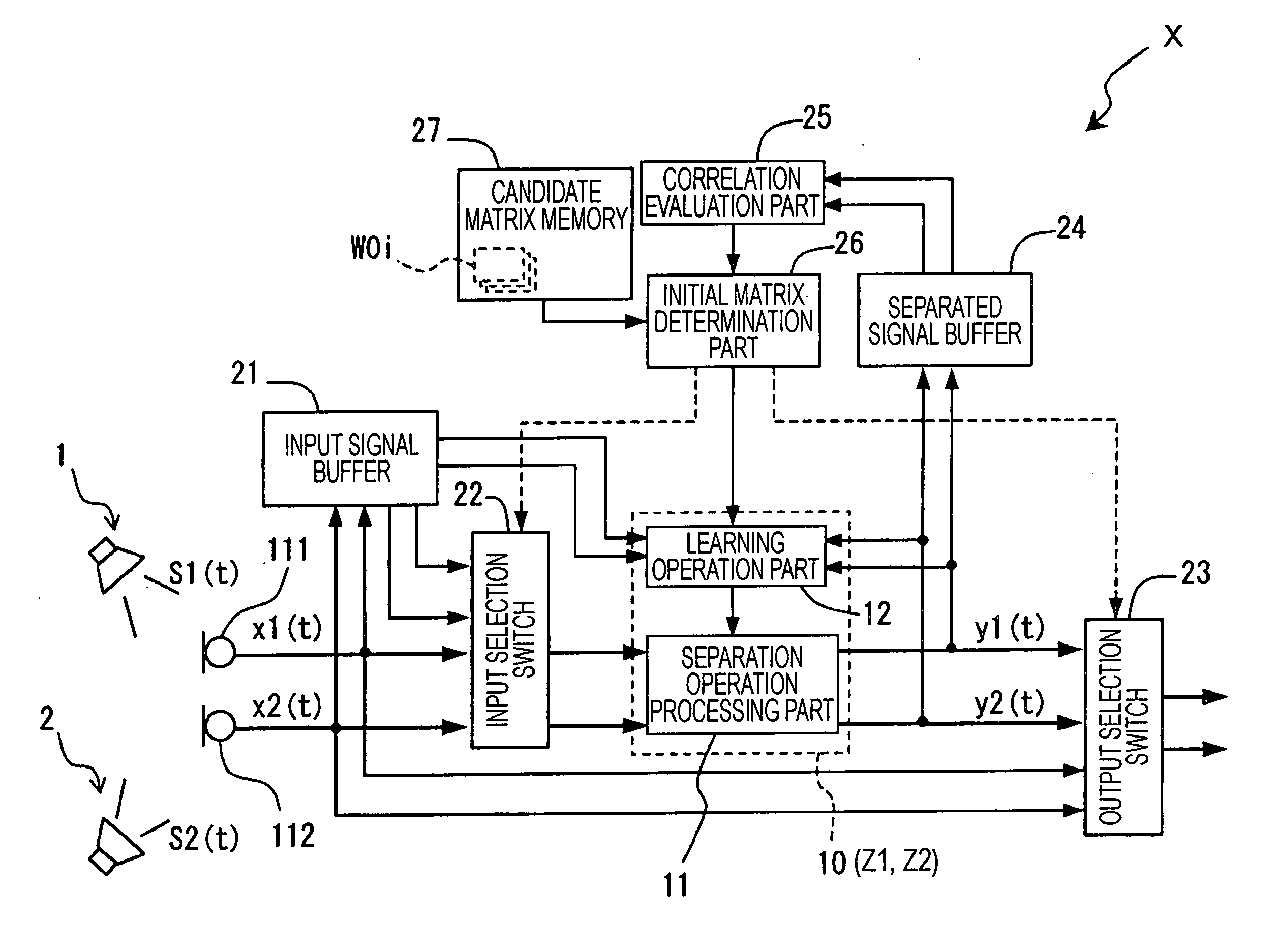

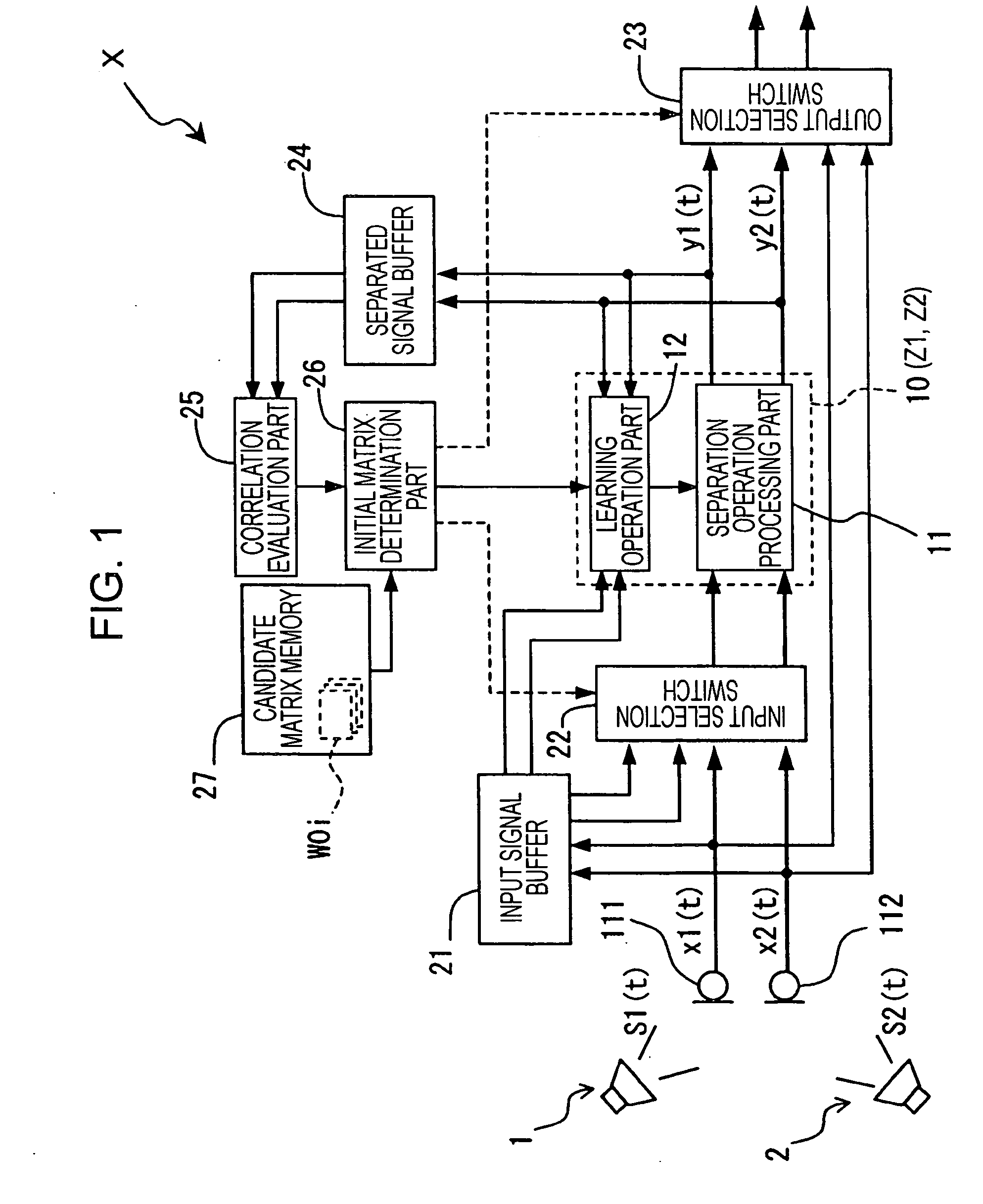

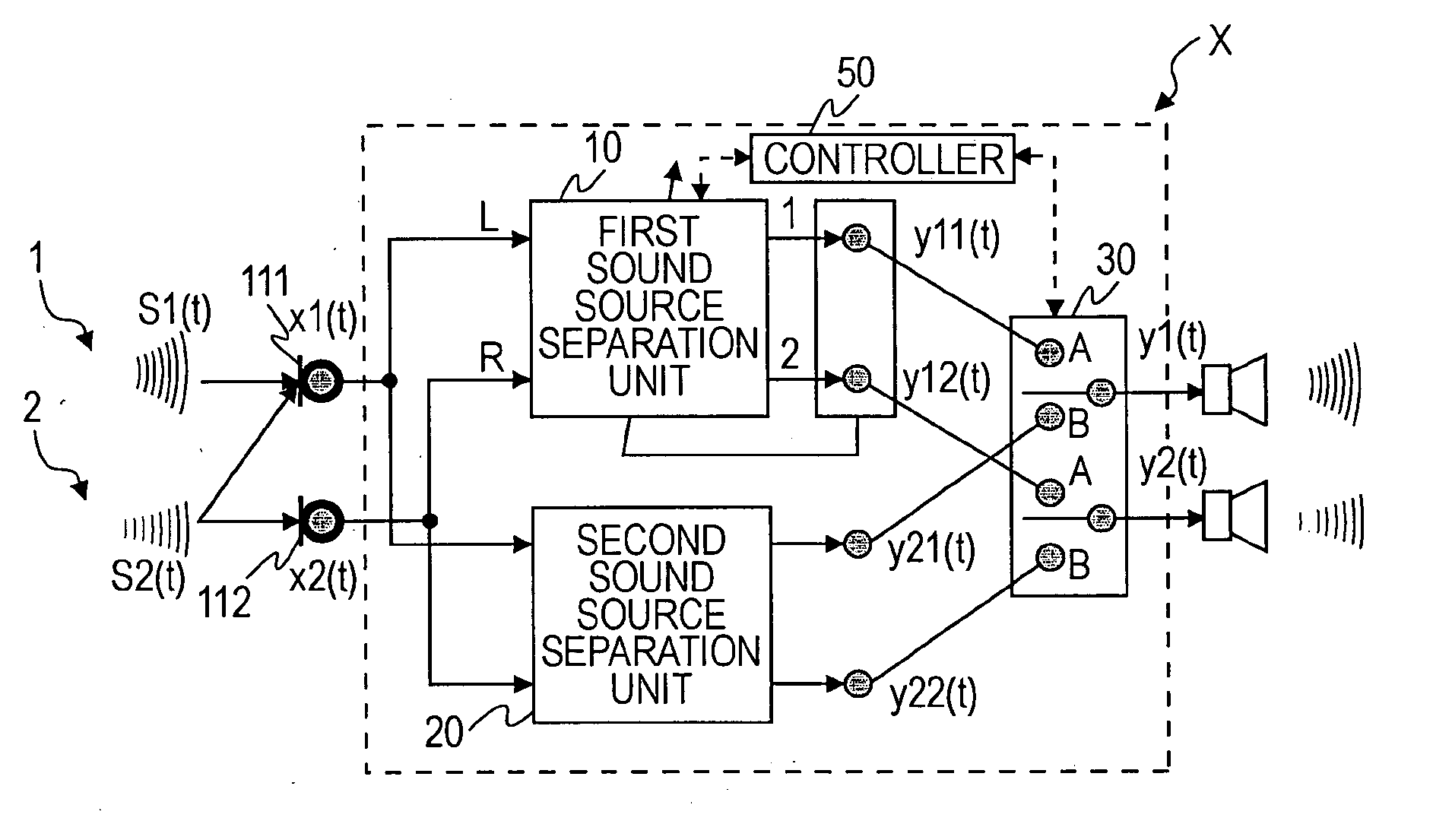

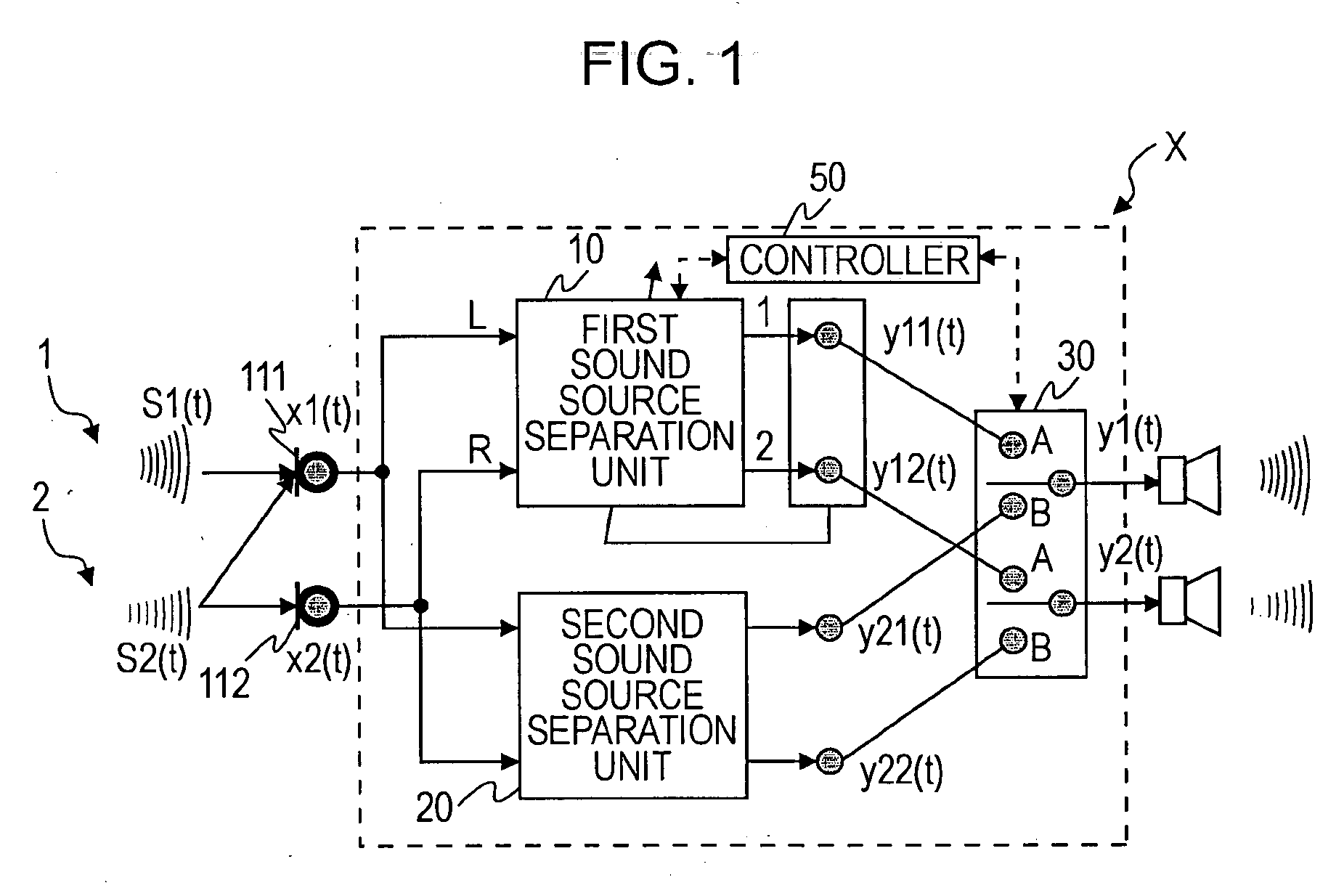

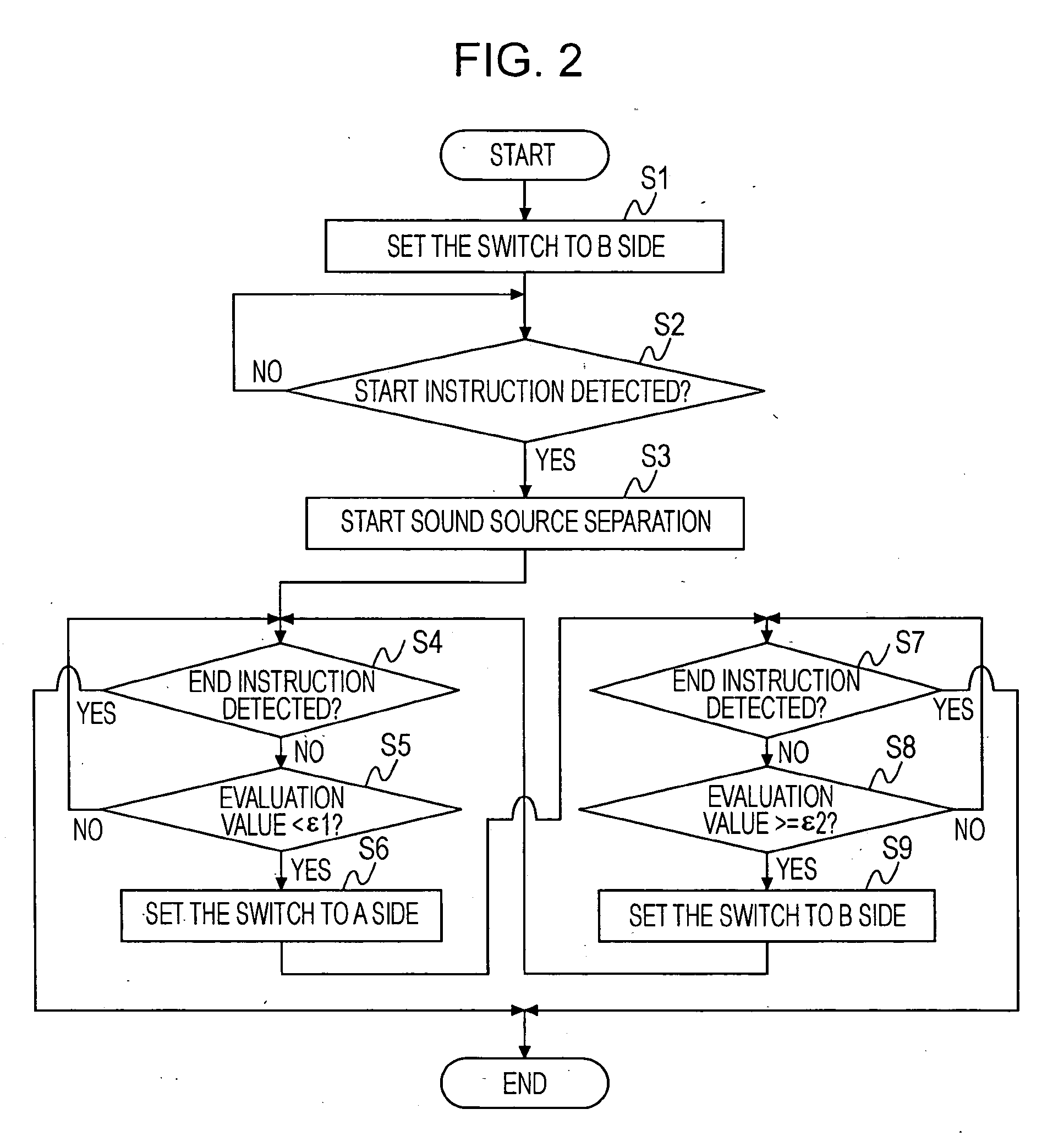

Status: Inactive | US20070025556A1 | Topics: Maximize sound source separation performance; Performance maximization; Speech analysis; Two-channel systems; Sound source separation; Multiplexer

A sound source separation apparatus includes a first sound source separation unit that performs blind source separation based on independent component analysis to separate a sound source signal from a plurality of mixed sound signals, thereby generating a first separated signal; a second sound source separation unit that performs real-time sound source separation by using a method other than the blind source separation based on independent component analysis to generate a second separated signal; and a multiplexer that selects one of the first separated signal and the second separated signal as an output signal. The first sound source separation unit continues processing regardless of the selection state of the multiplexer. When the first separated signal is selected as an output signal, the number of sequential calculations of a separating matrix performed in the first sound source separation unit is limited to a number that allows for real-time processing.

Owner:KOBE STEEL LTD

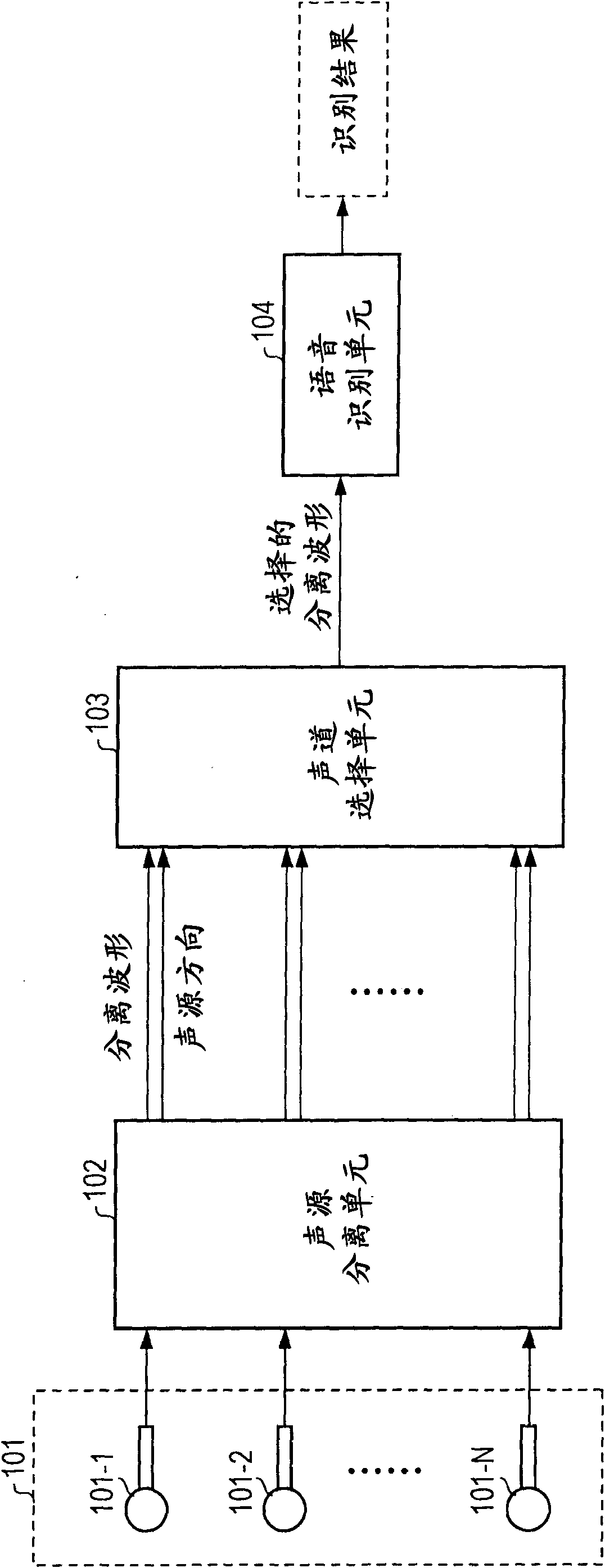

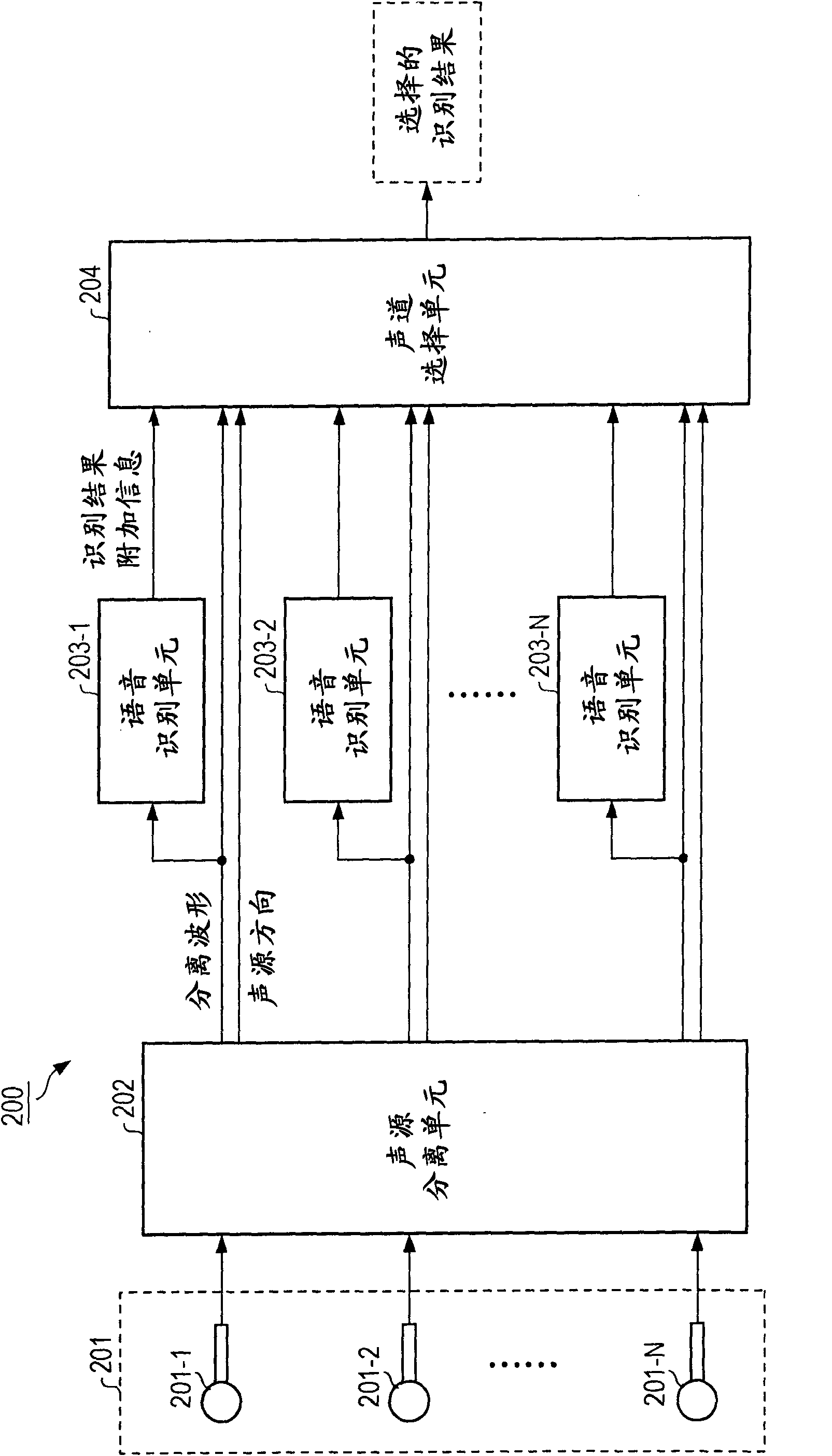

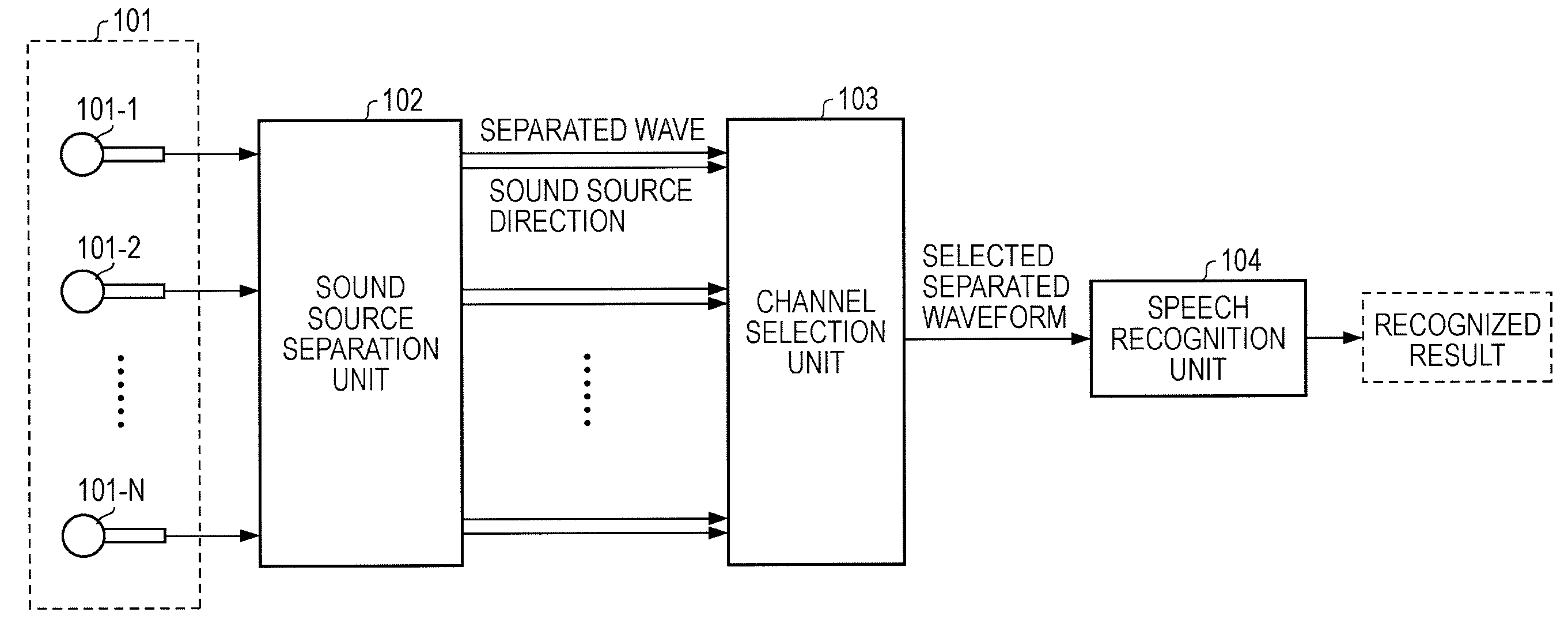

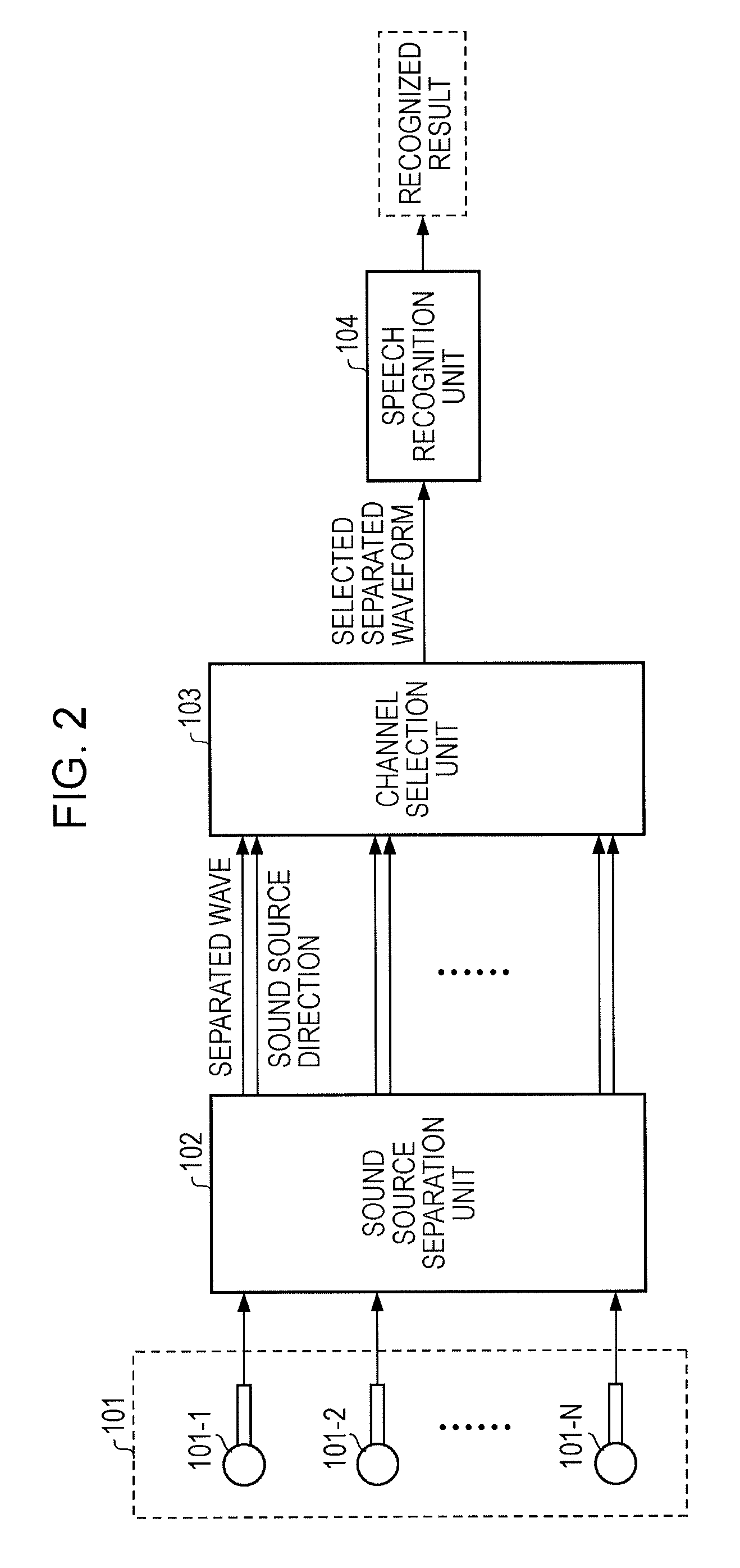

Speech recognition device, speech recognition method, and program

The present invention relates to a speech recognition device, a speech recognition method, and a program. The speech recognition device includes: a sound source separation unit configured to separate a mixed signal of the outputs of a plurality of sound sources into signals corresponding to the individual sound sources, generating separation signals for a plurality of channels; a speech recognition unit configured to receive the multi-channel separation signals generated by the sound source separation unit, perform a speech recognition process on them, generate a speech recognition result for each channel, and generate additional information serving as evaluation information on each channel's result; and a channel selection unit configured to receive the speech recognition results and the additional information, calculate a score for each channel's speech recognition result by applying the additional information, and select and output a speech recognition result with a high score.

Owner:SONY CORP
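A minimal sketch of the channel selection step follows. The abstract does not say what the additional information contains, so the two fields `confidence` and `speech_ratio` and the product scoring rule are hypothetical; the point is only that each channel's result is scored with its evaluation information and the best-scoring result is output.

```python
from dataclasses import dataclass


@dataclass
class ChannelResult:
    text: str            # recognition hypothesis for this separated channel
    confidence: float    # hypothetical evaluation info in [0, 1]
    speech_ratio: float  # hypothetical evaluation info: fraction of speech frames


def channel_score(result):
    """Hypothetical scoring rule combining the two pieces of additional information."""
    return result.confidence * result.speech_ratio


def select_channel(results):
    """Return the recognition text of the highest-scoring channel."""
    return max(results, key=channel_score).text
```

Multiplying the two terms means a channel must look both well recognized and speech-like to win; a confident hypothesis on a channel that is mostly noise is penalized.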

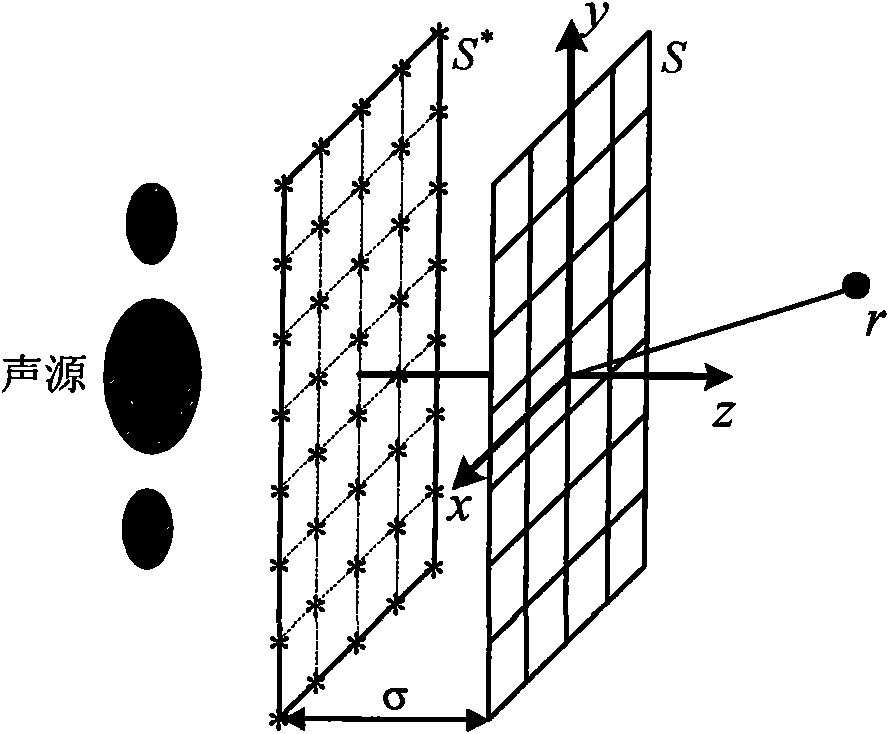

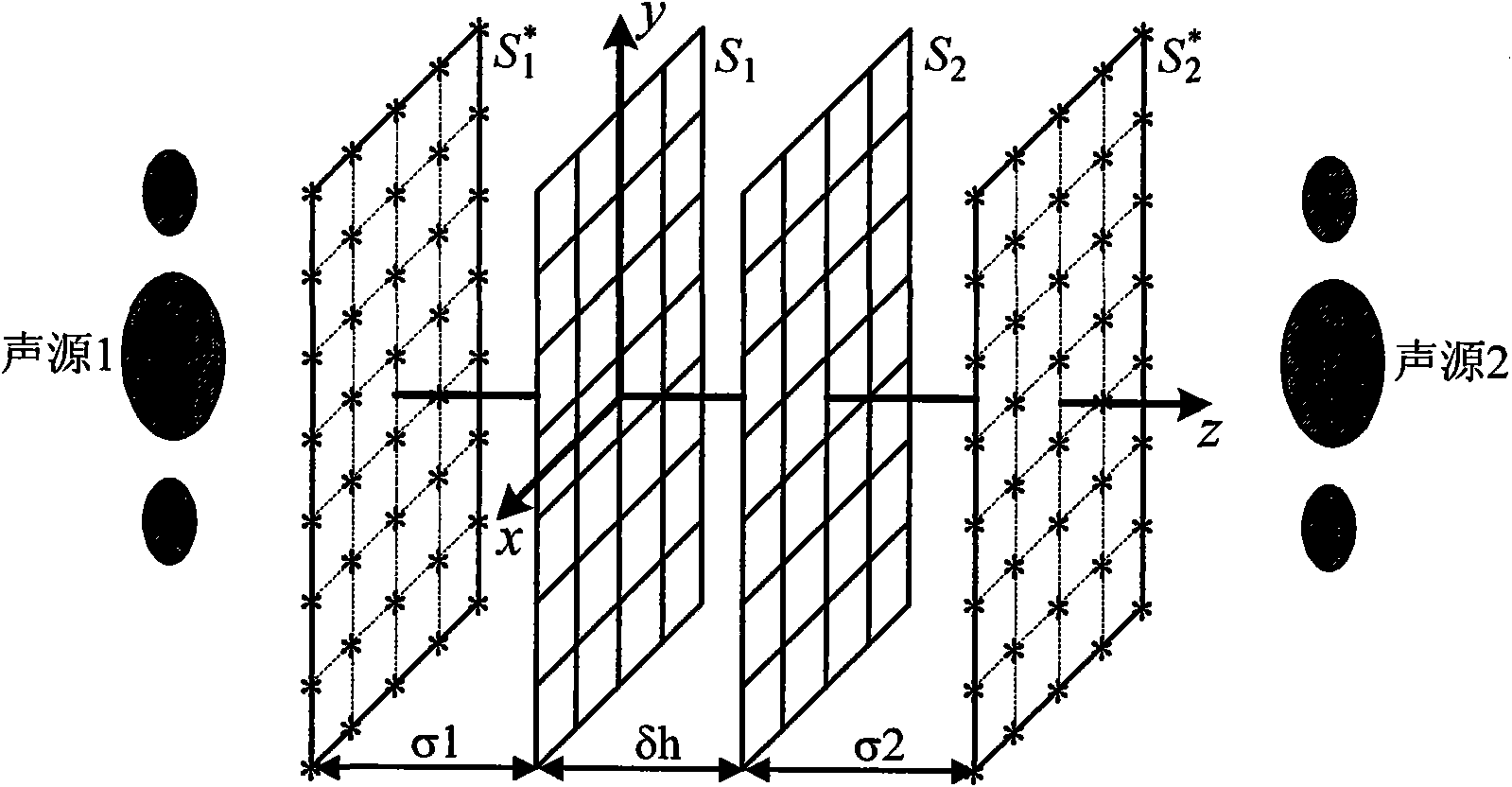

Method for sound field separation by double plane vibration speed measurement and equivalent source method

Status: Inactive | CN101566496A | Topics: High precision; Good calculation stability; Material analysis using sonic/ultrasonic/infrasonic waves; Velocity propagation; Equivalent source method; Source plane

The invention provides a method for sound field separation using double-plane vibration velocity measurement and the equivalent source method. A measurement plane S1 and an auxiliary measurement plane S2, parallel to S1 at a distance Δh from it, are placed in the measured sound field, and the normal particle vibration velocities on the two planes are measured. Two imaginary source planes S1* and S2* are defined, and equivalent sources are distributed on them. Transfer relationships between the equivalent sources and the normal particle vibration velocities on the two measurement planes are established, and the strength of each equivalent source on the imaginary source planes S1* and S2* is determined from these relationships. The sound pressure and normal particle vibration velocity radiated by the sound sources on the two sides of the measurement planes are then separated according to the equivalent source strengths on the two imaginary source planes. The invention uses the equivalent source method as the sound source separation algorithm, which offers good computational stability, high computational accuracy, and simple implementation; because the normal vibration velocities on the two measurement planes are used as the input to the separation, the separated normal particle velocity is highly accurate. The method is widely applicable to near-field acoustic holography measurements in interior sound fields or noisy environments, to material reflection coefficient measurement, and to scattered sound field separation.

Owner:HEFEI UNIV OF TECH

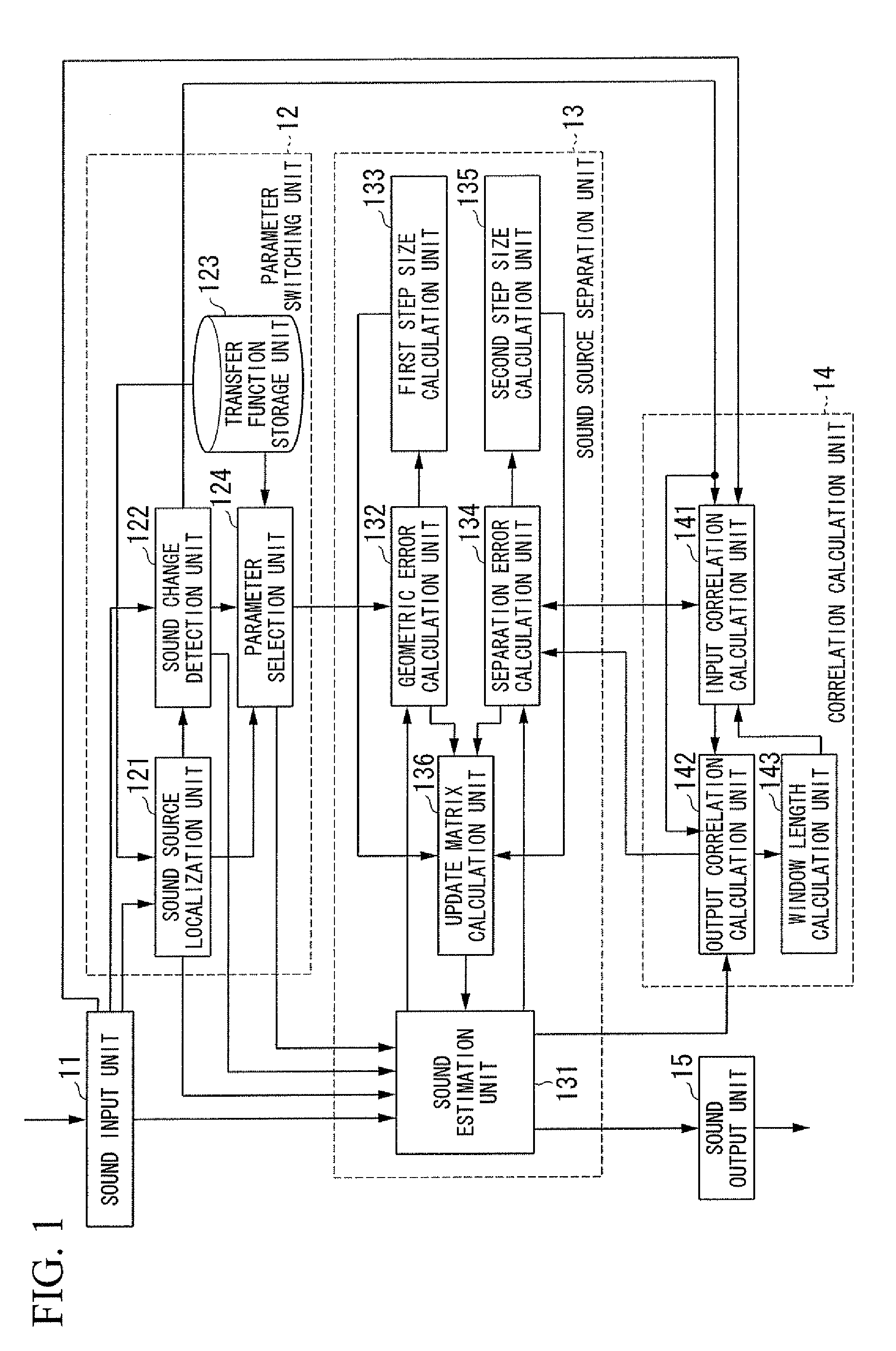

Sound source separation apparatus and sound source separation method

Status: Active | US20120045066A1 | Topics: Degree of reduction; Reduce components; Speech analysis; Stereophonic arrangements; Sound source separation; Computer science

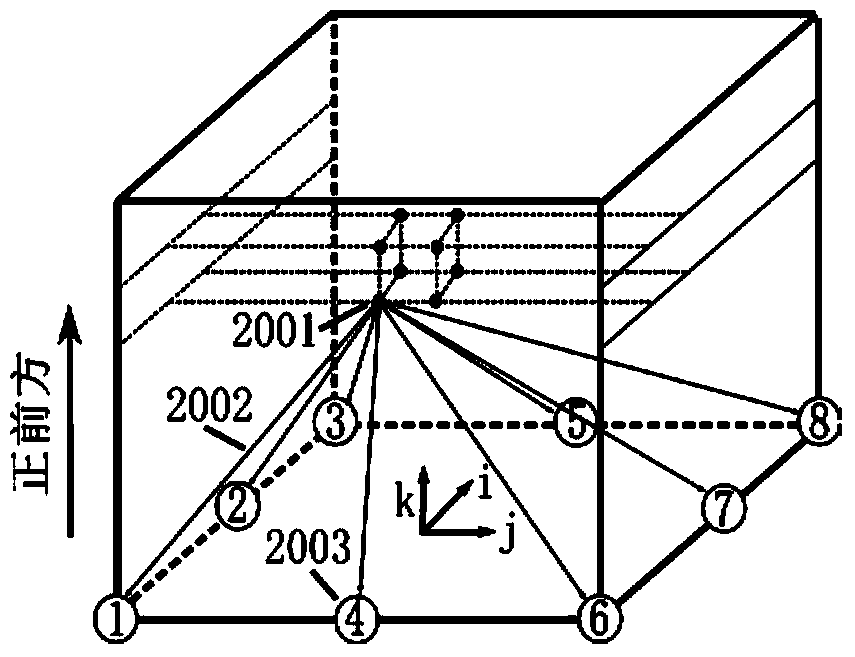

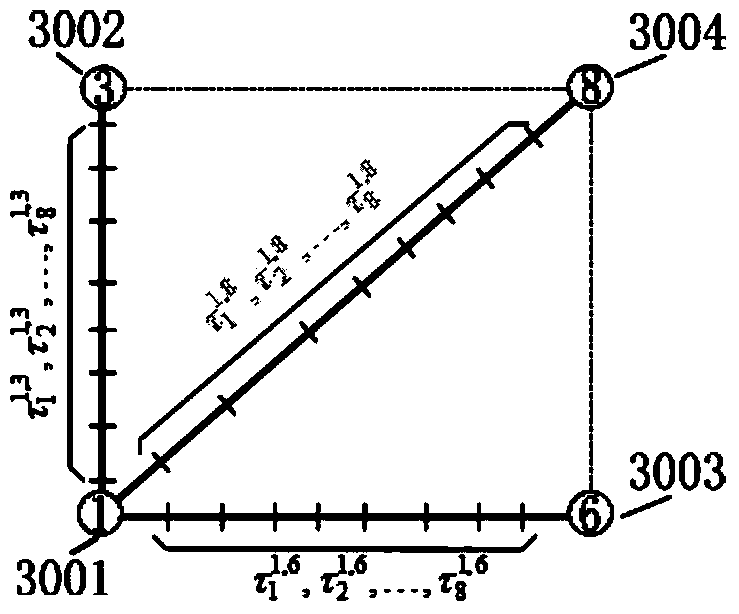

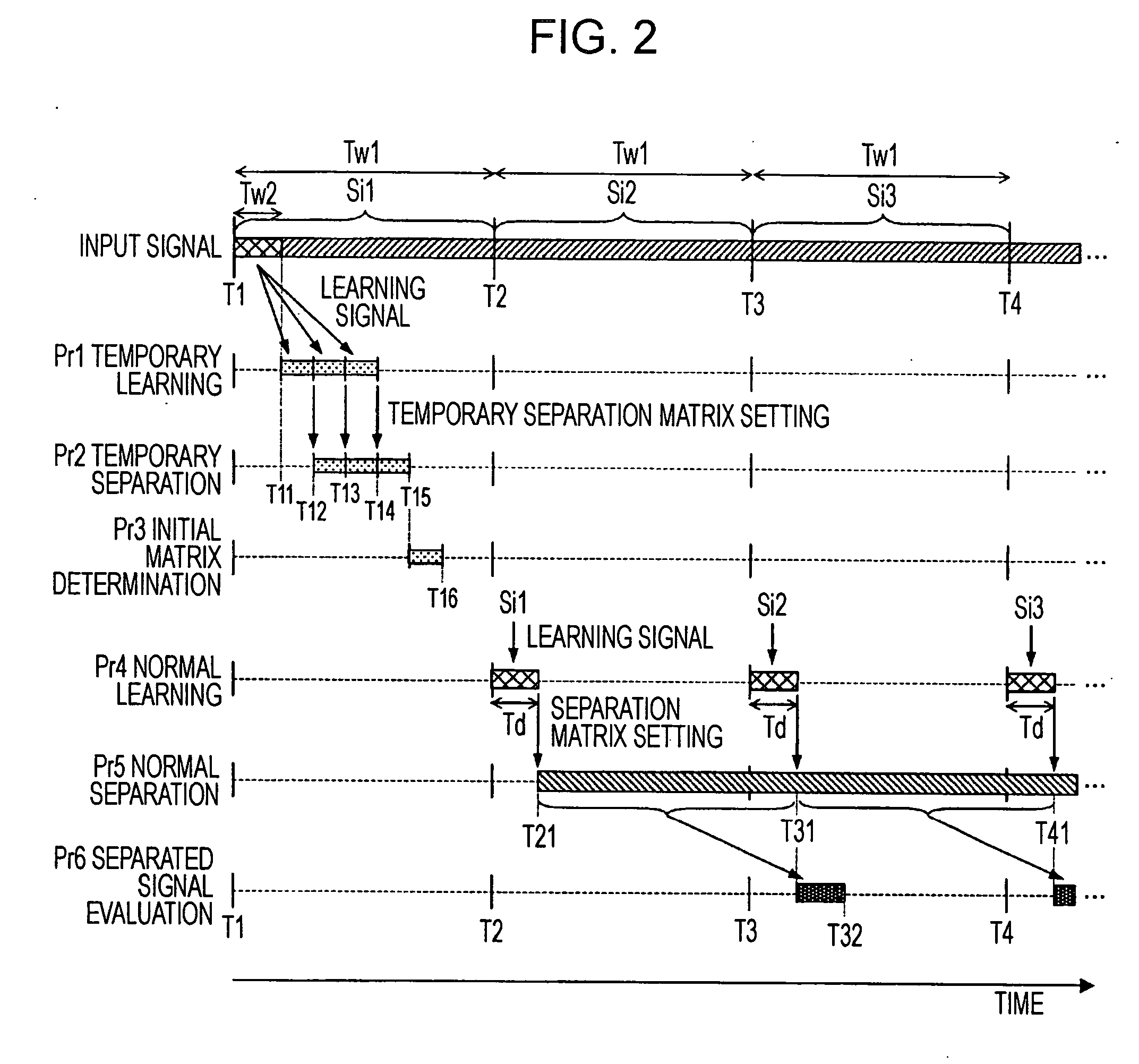

A sound source separation apparatus includes a transfer function storage unit that stores a transfer function from a sound source, a sound change detection unit that generates change state information indicating a change of the sound source on the basis of an input signal input from a sound input unit, a parameter selection unit that calculates an initial separation matrix on the basis of the change state information generated by the sound change detection unit, and a sound source separation unit that separates the sound source from the input signal input from the sound input unit using the initial separation matrix calculated by the parameter selection unit.

Owner:HONDA MOTOR CO LTD

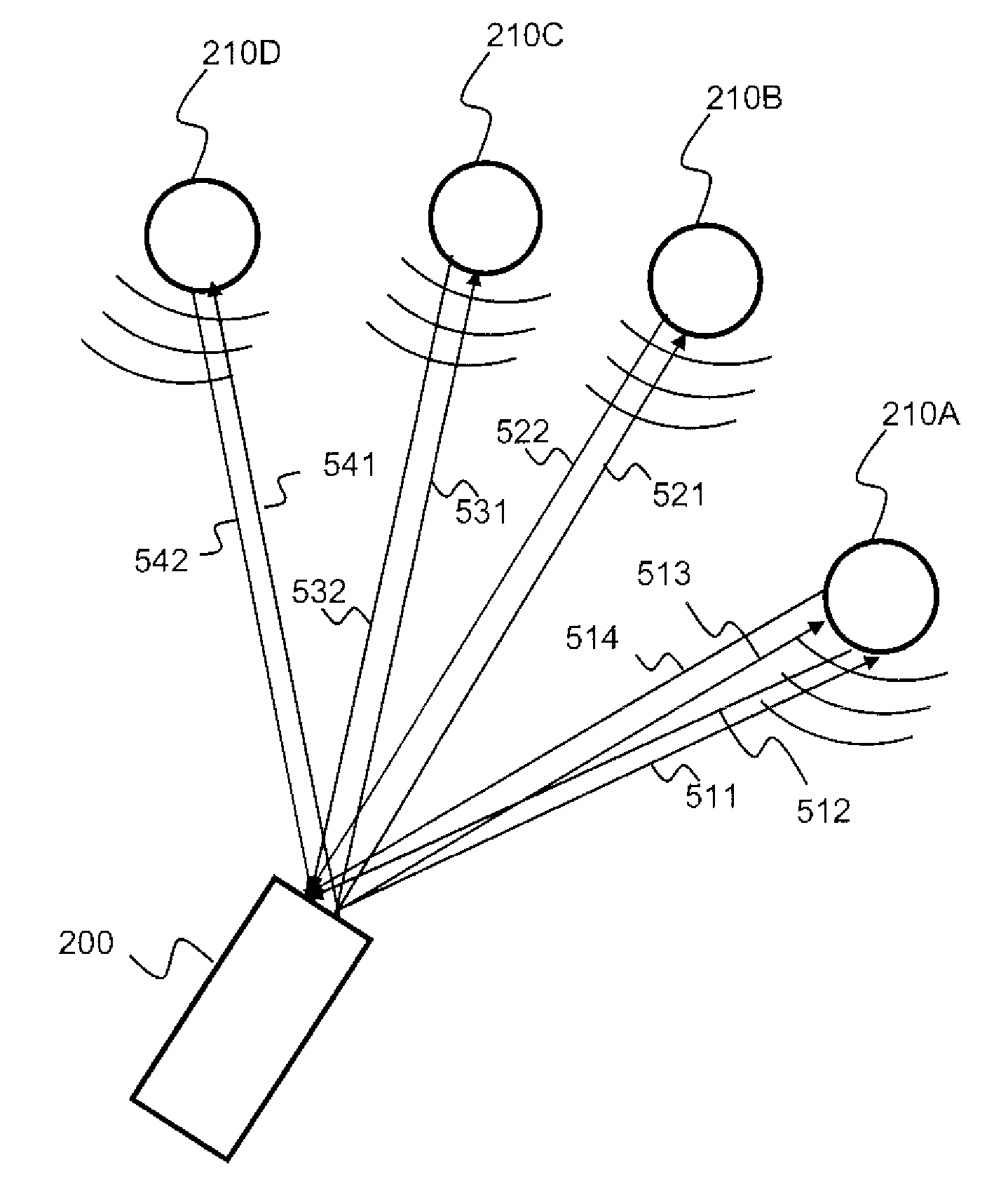

Sound sources separation and monitoring using directional coherent electromagnetic waves

Status: Inactive | US7775113B2 | Topics: Eliminate noise components; Ensures independence; Vibration measurement in solids; Multiple-port networks; Sound source separation; Light beam

An apparatus and a method achieve physical separation of sound sources by pointing a beam of coherent electromagnetic waves (i.e., a laser) directly at them. Analyzing the physical properties of the beam reflected from the vibrating sound source enables reconstruction of the sound signal it generates, eliminating the noise component added to the original sound signal. In addition, using multiple electromagnetic beams, or a single beam that rapidly skips from one sound source to another, allows these sound sources to be physically separated. Aiming each beam at a different sound source ensures that the sound signals are independent and therefore provides full source separation.

Owner:VOCALZOOM SYST

Robotics visual and auditory system

Status: Inactive | US20060241808A1 | Topics: The effect is accurate; Improve robustness; Programme-controlled manipulator; Computer control; Sound source separation; Phase difference

A robotics visual and auditory system is provided that can accurately localize a target sound source by associating visual and auditory information about the target. The system comprises an audition module (20), a face module (30), a stereo module (37), a motor control module (40), an association module (50) that generates streams by associating events from each of these modules (20, 30, 37, and 40), and an attention control module (57) that performs attention control based on the streams generated by the association module (50). The association module (50) generates an auditory stream (55) and a visual stream (56) from an auditory event (28) from the audition module (20), a face event (39) from the face module (30), a stereo event (39a) from the stereo module (37), and a motor event (48) from the motor control module (40), as well as an association stream (57) that associates these streams. The audition module (20) uses an active direction pass filter (23a) to collect sub-bands whose interaural phase difference (IPD) or interaural intensity difference (IID) lies within a preset range; following auditory characteristics, the filter's pass range is narrowest in the frontal direction and widens as the angle increases to the left and right, and it is set based on accurate sound source direction information from the association module (50). The audition module then performs sound source separation by reconstructing the waveform of the sound source.

Owner:HONDA MOTOR CO LTD

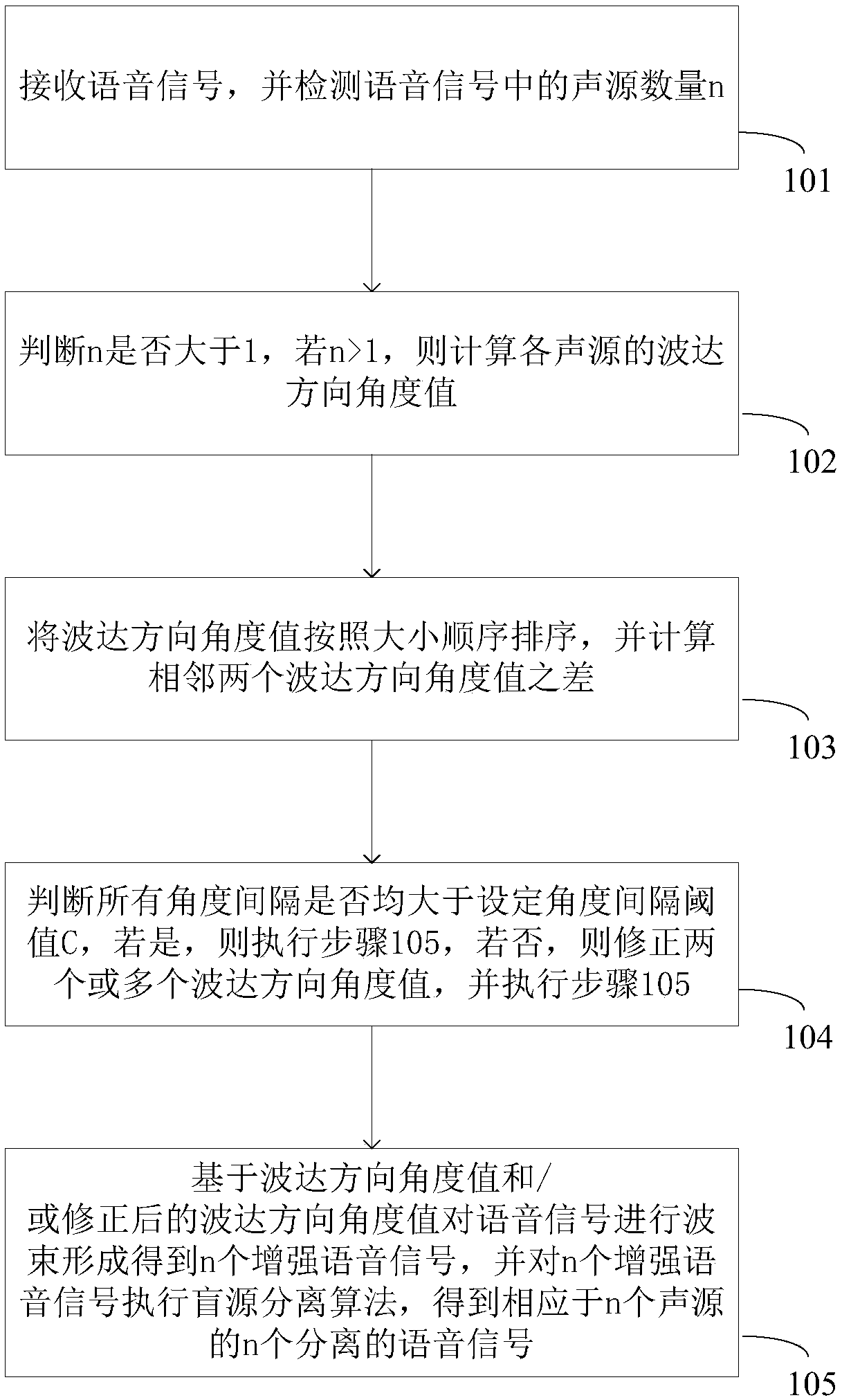

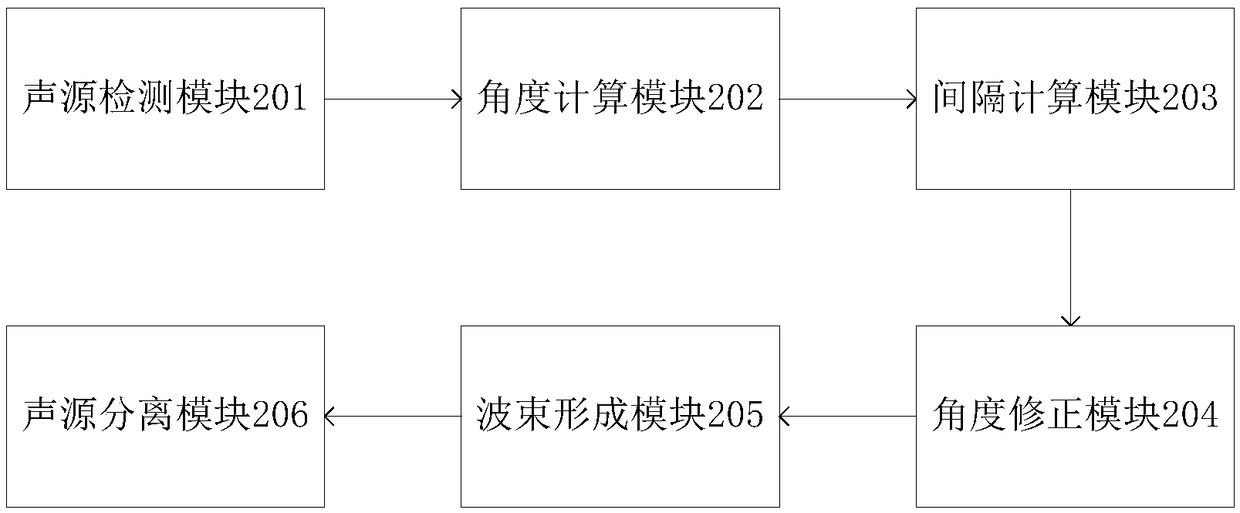

Method and system for performing sound source separation on sound signal acquired by microphone array

Status: Active | CN108735227A | Topics: Easy to separate; Improve the problem of unsatisfactory separation effect; Speech analysis; Sound source separation; Speech sound

The invention provides a method and a system for performing sound source separation on a sound signal acquired by a microphone array. The method comprises the steps of: receiving the sound signal and detecting the number n of sound sources in it; determining whether n is greater than one and, if so, calculating a direction-of-arrival (DOA) angle for each sound source; sorting the DOA angles by magnitude and calculating the difference between each pair of adjacent values; determining whether every angle interval is greater than or equal to a preset angle interval threshold C and, if so, proceeding to the next step, otherwise correcting two or more of the DOA angles before proceeding; performing beamforming based on the DOA angles and/or the corrected DOA angles to obtain n enhanced speech signals; and executing a blind source separation algorithm on the n enhanced speech signals to obtain n separated sound signals corresponding to the n sound sources. The method and system of the invention achieve better sound source separation performance.

Owner:北京三听科技有限公司 (Beijing Santing Technology Co., Ltd.)
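
The DOA spacing check in this abstract can be sketched in Python as follows. The abstract does not say how angles that are too close together are corrected. `correct_doas` therefore uses a hypothetical greedy rule that raises each angle until it sits at least C degrees above its predecessor. The beamforming and blind source separation stages are not shown.

```python
def check_doa_spacing(doas_deg, min_gap_deg):
    """Sort DOA angles and report whether every adjacent gap is at least min_gap_deg."""
    angles = sorted(doas_deg)
    gaps = [b - a for a, b in zip(angles, angles[1:])]
    return angles, gaps, all(g >= min_gap_deg for g in gaps)


def correct_doas(sorted_doas_deg, min_gap_deg):
    """Hypothetical correction: push each angle up until it is min_gap_deg above its predecessor."""
    corrected = list(sorted_doas_deg)
    for i in range(1, len(corrected)):
        corrected[i] = max(corrected[i], corrected[i - 1] + min_gap_deg)
    return corrected


def prepare_beam_directions(doas_deg, min_gap_deg):
    """Return beam steering angles: the sorted DOAs if well spaced, otherwise corrected ones."""
    angles, _gaps, ok = check_doa_spacing(doas_deg, min_gap_deg)
    return angles if ok else correct_doas(angles, min_gap_deg)


print(prepare_beam_directions([40.0, 10.0, 45.0], 15.0))  # [10.0, 40.0, 55.0]
print(prepare_beam_directions([0.0, 30.0, 90.0], 15.0))   # [0.0, 30.0, 90.0]
```

A greedy push can move the last angle outside the array's valid angular range, so a practical version would also need to clip or redistribute the corrected angles.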

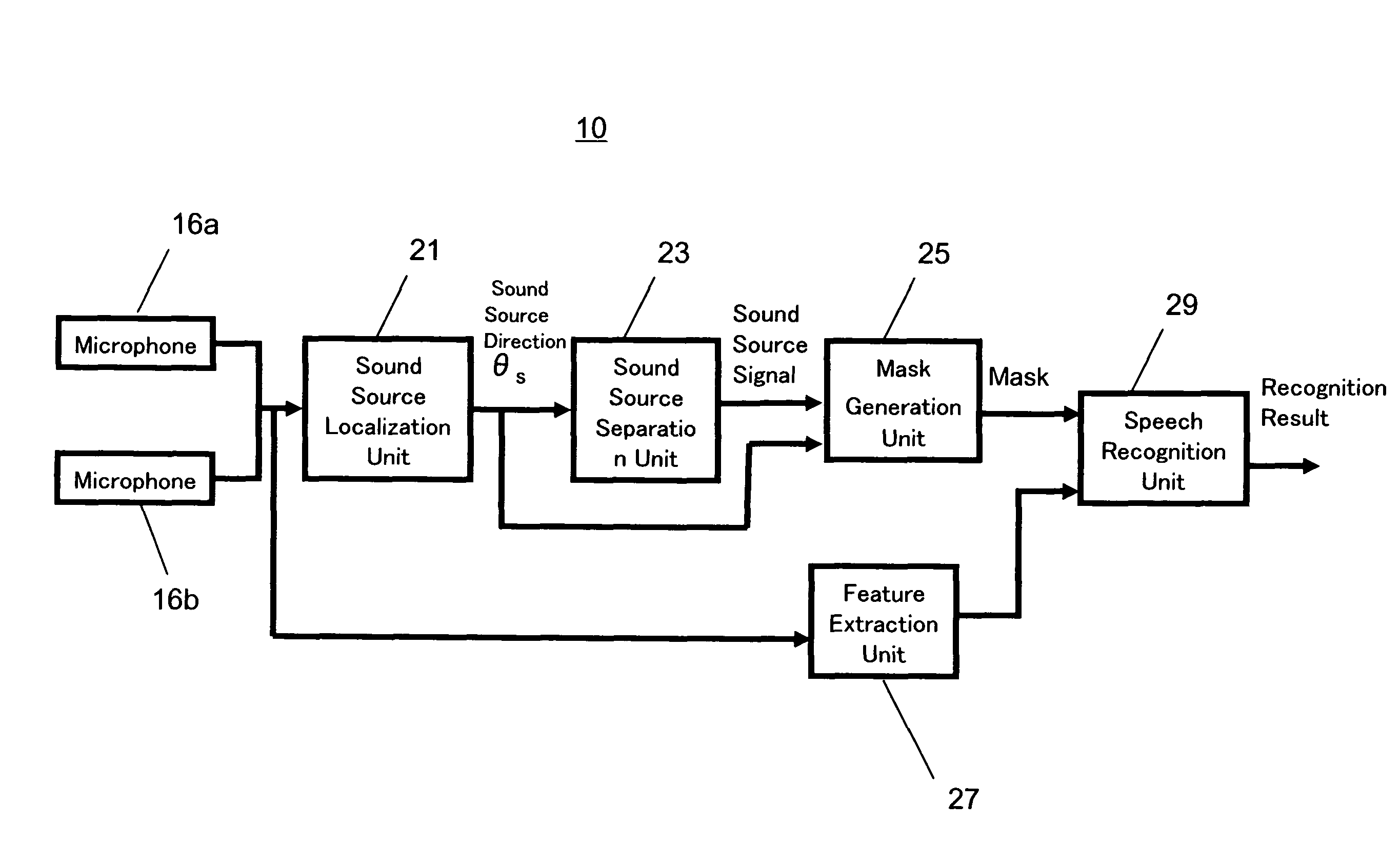

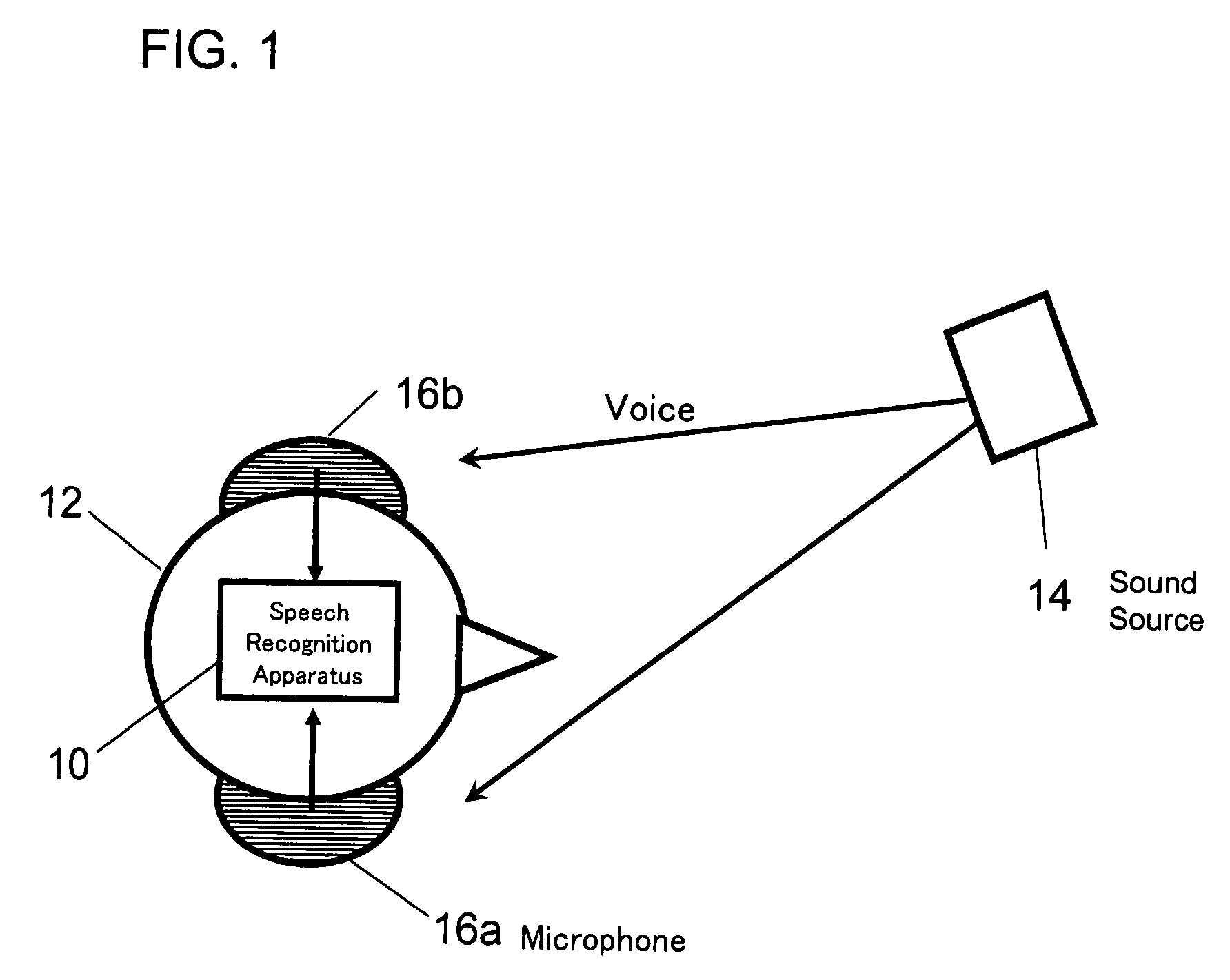

Speech recognition apparatus and method recognizing a speech from sound signals collected from outside

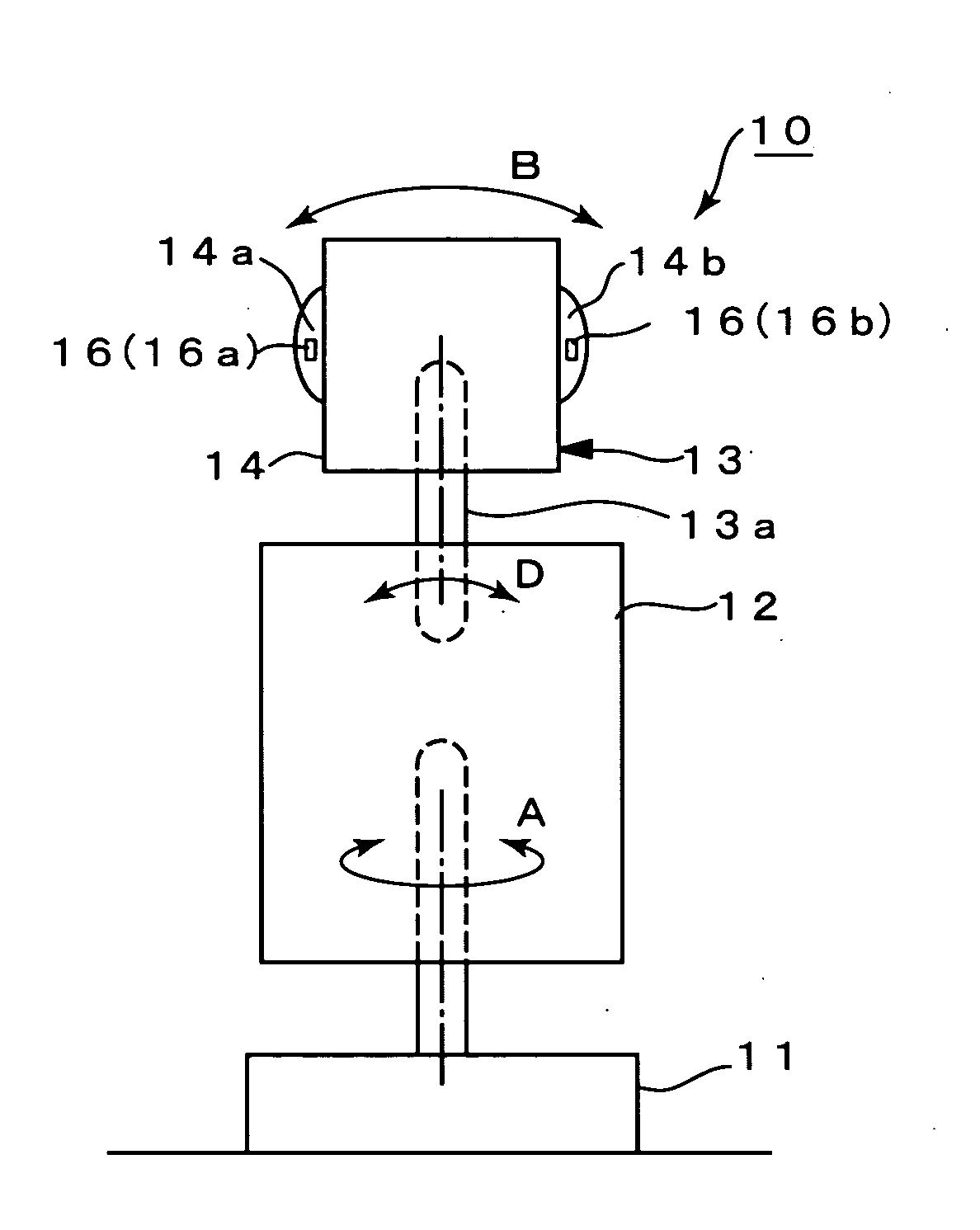

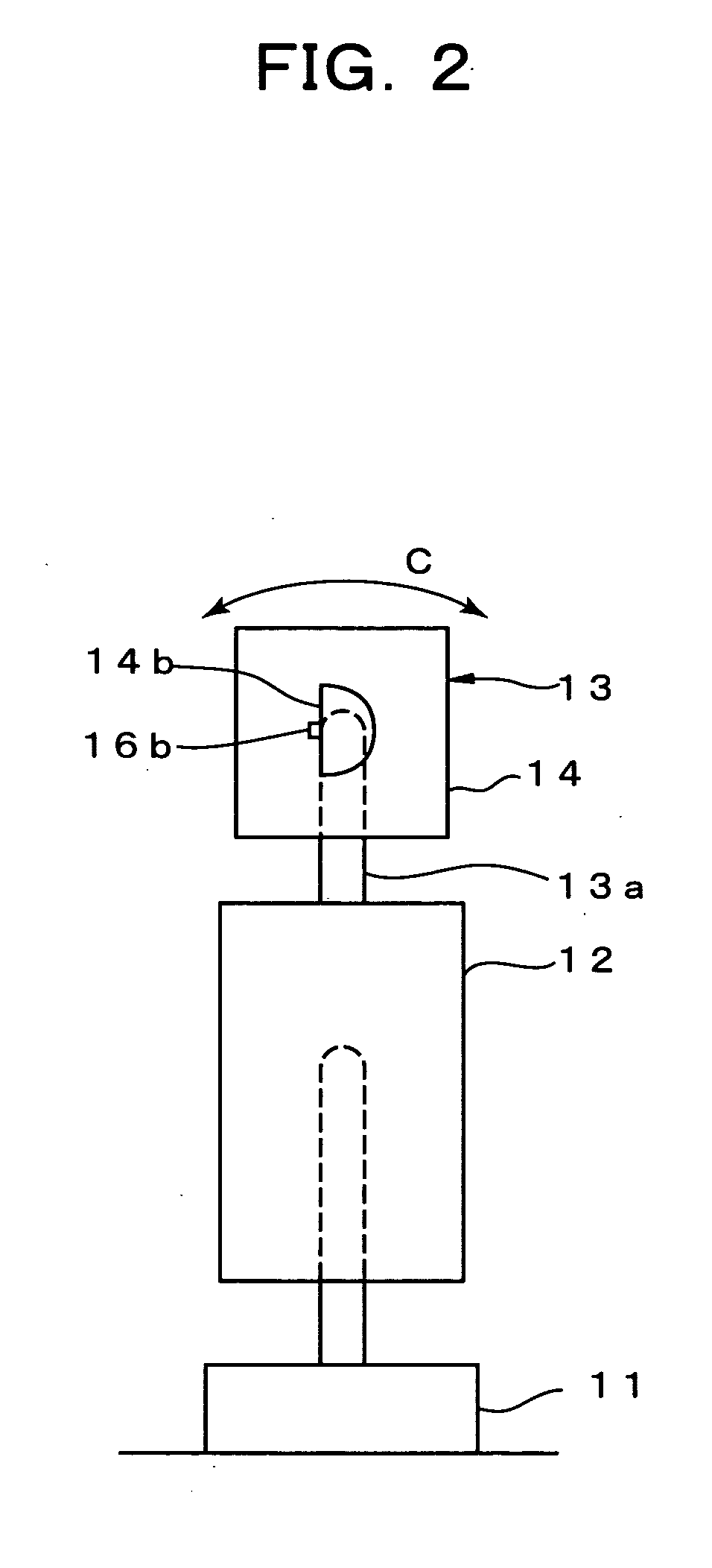

Active | US8073690B2 | Improve robustness | Improve reliability | Speech recognition | Sound detection | Sound source separation

A voice recognition system (10) improves the robustness of voice recognition for voice input whose degraded features cannot be completely identified. The system comprises:

- at least two sound detecting means (16a, 16b) for detecting a sound signal;
- a sound source localizing unit (21) for determining the direction of a sound source based on the sound signal;
- a sound source separating unit (23) for separating the sound of that source from the sound signal based on the sound source direction;
- a mask producing unit (25) for producing mask values according to the reliability of the separation results;
- a feature extracting unit (27) for extracting features of the sound signal; and
- a voice recognizing unit (29) that applies the mask to the features to recognize speech in the sound signal.

Owner:HONDA MOTOR CO LTD
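
One way to read "applies the mask to the features" is missing-feature recognition. In that approach, unreliable feature dimensions are excluded from the acoustic-model likelihood. The Python sketch below assumes diagonal-Gaussian models, a hypothetical energy-ratio reliability measure, and a hard 0.5 threshold. The patent's actual mask computation may differ.

```python
import numpy as np


def reliability_mask(target_power, interference_power, threshold=0.5):
    """Hypothetical reliability: share of each feature band's energy attributed to the target."""
    reliability = target_power / (target_power + interference_power + 1e-12)
    return reliability >= threshold


def masked_log_likelihood(features, mask, mean, var):
    """Diagonal-Gaussian log-likelihood summed over reliable dimensions only.

    For a diagonal Gaussian, dropping a dimension is the same as marginalizing it out.
    """
    per_dim = -0.5 * (np.log(2.0 * np.pi * var) + (features - mean) ** 2 / var)
    return float(np.sum(per_dim[mask]))


def recognize(features, mask, models):
    """Return the name of the model with the highest masked log-likelihood."""
    return max(models, key=lambda name: masked_log_likelihood(features, mask, *models[name]))


models = {  # hypothetical class models: (mean, variance)
    "a": (np.array([1.0, 1.0, 1.0]), np.ones(3)),
    "b": (np.array([5.0, 5.0, 9.0]), np.ones(3)),
}
features = np.array([1.0, 1.0, 9.0])  # third band corrupted by leakage from another source
mask = reliability_mask(np.array([1.0, 1.0, 0.1]), np.array([0.1, 0.1, 1.0]))
print(mask)                                                  # [ True  True False]
print(recognize(features, mask, models))                     # a
print(recognize(features, np.ones(3, dtype=bool), models))   # b
```

In this example, masking out the unreliable third band changes the decision. Without the mask, the corrupted band dominates the likelihood.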

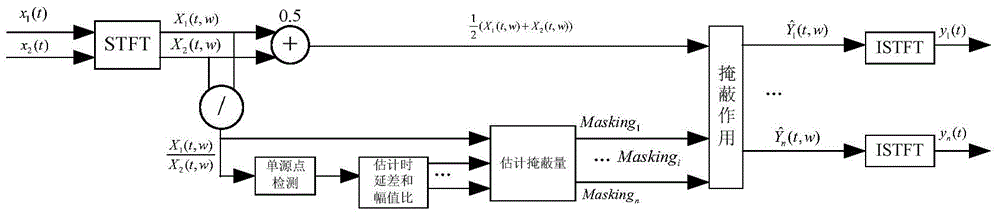

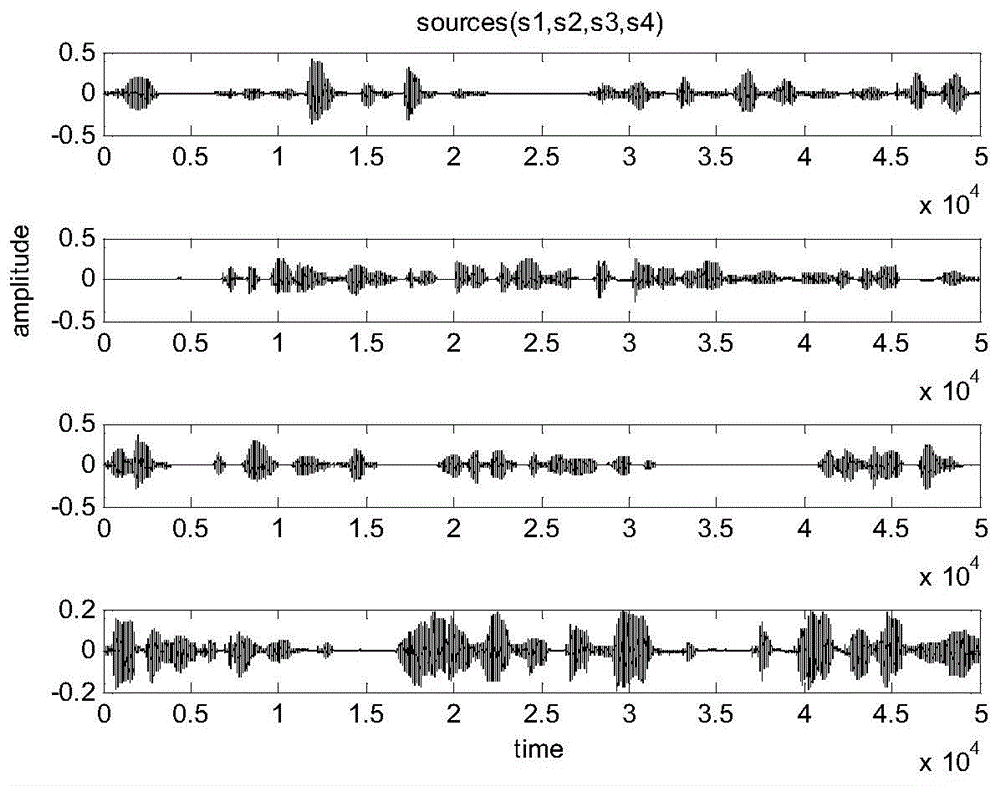

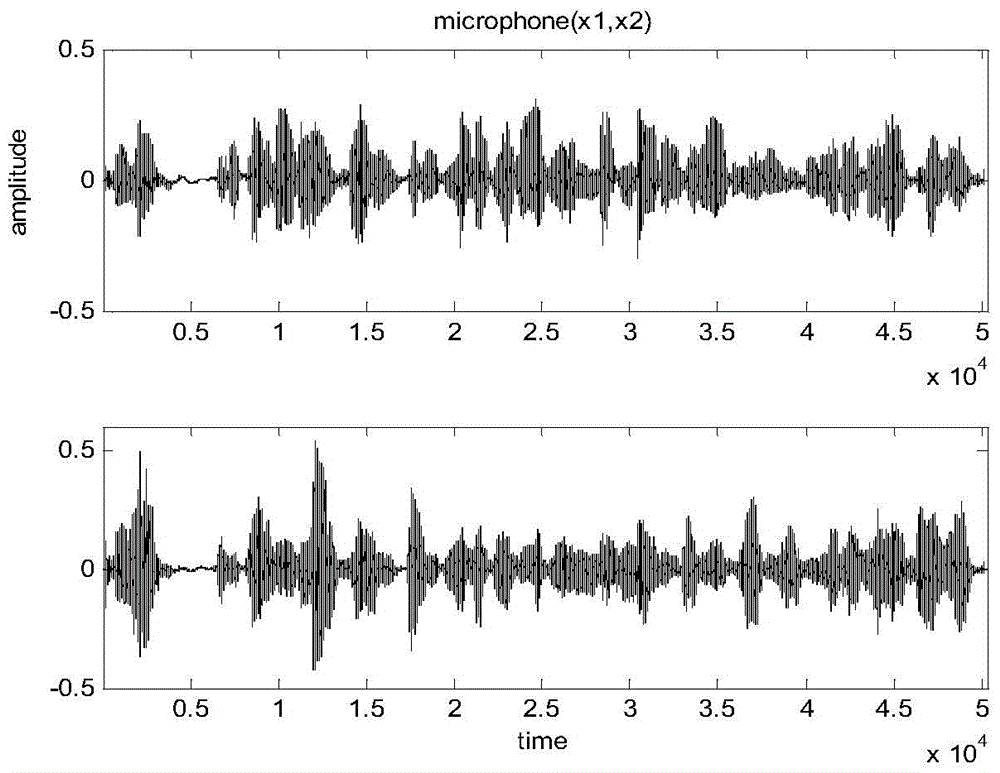

Quick source signal reconstruction method achieving blind sound source separation of two microphones

Inactive | CN104167214A | Avoid problems | Small amount of calculation | Speech analysis | Sound source separation | Time domain

The invention discloses a fast source-signal reconstruction method for blind sound source separation with two microphones. It belongs to the field of speech signal processing and specifically addresses two-microphone sound source separation when the number of sound sources and the surrounding environment are unknown. A small additional phase is added to each frequency component of the reconstructed signals, without affecting their temporal envelopes. The contribution of each source signal to each time-frequency point is then approximated to model that source's proportion at the point, and the source signals are restored accordingly. This avoids the traditional step of solving an underdetermined system of equations, simplifies the computation, and makes the method fast. Compared with existing algorithms, the method requires less computation and maintains a high signal-to-noise ratio as the number of sound sources increases.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
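
The Python sketch below illustrates the general idea of distributing each time-frequency point of the mixture among the sources in proportion to an estimated contribution. It is a swapped-in, DUET-style phase-difference soft mask, not the patent's additional-phase method. It assumes that each source's inter-microphone delay is already known. `soft_mask_separate` and its `sharpness` parameter are hypothetical.

```python
import numpy as np


def soft_mask_separate(X1, X2, freqs_hz, source_delays_s, sharpness=10.0):
    """Split each time-frequency point of X1 among sources in proportion to a phase-fit weight.

    X1, X2: (F, T) complex STFTs of microphones 1 and 2.
    freqs_hz: (F,) bin centre frequencies.
    source_delays_s: assumed-known inter-mic delay per source, with x2(t) = x1(t - tau).
    """
    observed = np.angle(X2 * np.conj(X1))                      # (F, T) inter-mic phase difference
    weights = []
    for tau in source_delays_s:
        predicted = -2.0 * np.pi * freqs_hz[:, None] * tau     # (F, 1) phase implied by this delay
        error = np.angle(np.exp(1j * (observed - predicted)))  # phase error wrapped to (-pi, pi]
        weights.append(np.exp(-sharpness * error ** 2))
    weights = np.array(weights)                                # (K, F, T)
    masks = weights / weights.sum(axis=0, keepdims=True)       # proportions sum to 1 per point
    sources = masks * X1[None, :, :]                           # (K, F, T) reconstructed STFTs
    return sources, masks


rng = np.random.default_rng(0)
freqs = np.linspace(100.0, 2000.0, 64)
X1 = rng.standard_normal((64, 10)) + 1j * rng.standard_normal((64, 10))
X2 = X1 * np.exp(-2j * np.pi * freqs[:, None] * 2e-4)  # a single active source with 0.2 ms delay
sources, masks = soft_mask_separate(X1, X2, freqs, [0.0, 2e-4])
print(np.allclose(sources.sum(axis=0), X1))            # True: the masks partition the mixture
```

The per-point proportions sum to one, so the reconstructed sources add back up to the microphone-1 mixture and no underdetermined system of equations is solved. This matches the motivation given in the abstract, though the weighting rule here is different.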

Speech recognition device, speech recognition method, and program

A speech recognition device includes:

- a sound source separation unit configured to separate a mixed signal of the outputs of a plurality of sound sources into signals corresponding to the individual sound sources, and to generate separation signals for a plurality of channels;
- a speech recognition unit configured to receive the multi-channel separation signals generated by the sound source separation unit, perform speech recognition, generate a speech recognition result for each channel, and generate additional information that serves as evaluation information on each channel's result; and
- a channel selection unit configured to receive the speech recognition results and the additional information, calculate a score for each channel's speech recognition result by applying the additional information, and select and output a speech recognition result with a high score.

Owner:SONY CORP
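
A minimal Python sketch of the channel selection unit follows. The abstract does not define the additional information or the scoring rule. The `additional_info` field and the linear weighting in `channel_score` are therefore hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class ChannelResult:
    channel: int
    text: str
    recognition_score: float  # assumed: recognizer confidence normalized to [0, 1]
    additional_info: float    # hypothetical evaluation info, e.g. a speech-likeness value in [0, 1]


def channel_score(result, weight=0.5):
    """Hypothetical linear combination of recognizer confidence and the additional information."""
    return (1.0 - weight) * result.recognition_score + weight * result.additional_info


def select_result(results, weight=0.5):
    """Return the channel result with the highest combined score."""
    return max(results, key=lambda r: channel_score(r, weight))


results = [
    ChannelResult(0, "turn on the lights", 0.8, 0.2),
    ChannelResult(1, "turn off the lights", 0.6, 0.9),
]
best = select_result(results)
print(best.channel, best.text)  # 1 turn off the lights
```

With `weight=0.0` the selection falls back to recognizer confidence alone and picks channel 0. This shows how the additional information can override a confidently recognized but poorly separated channel.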

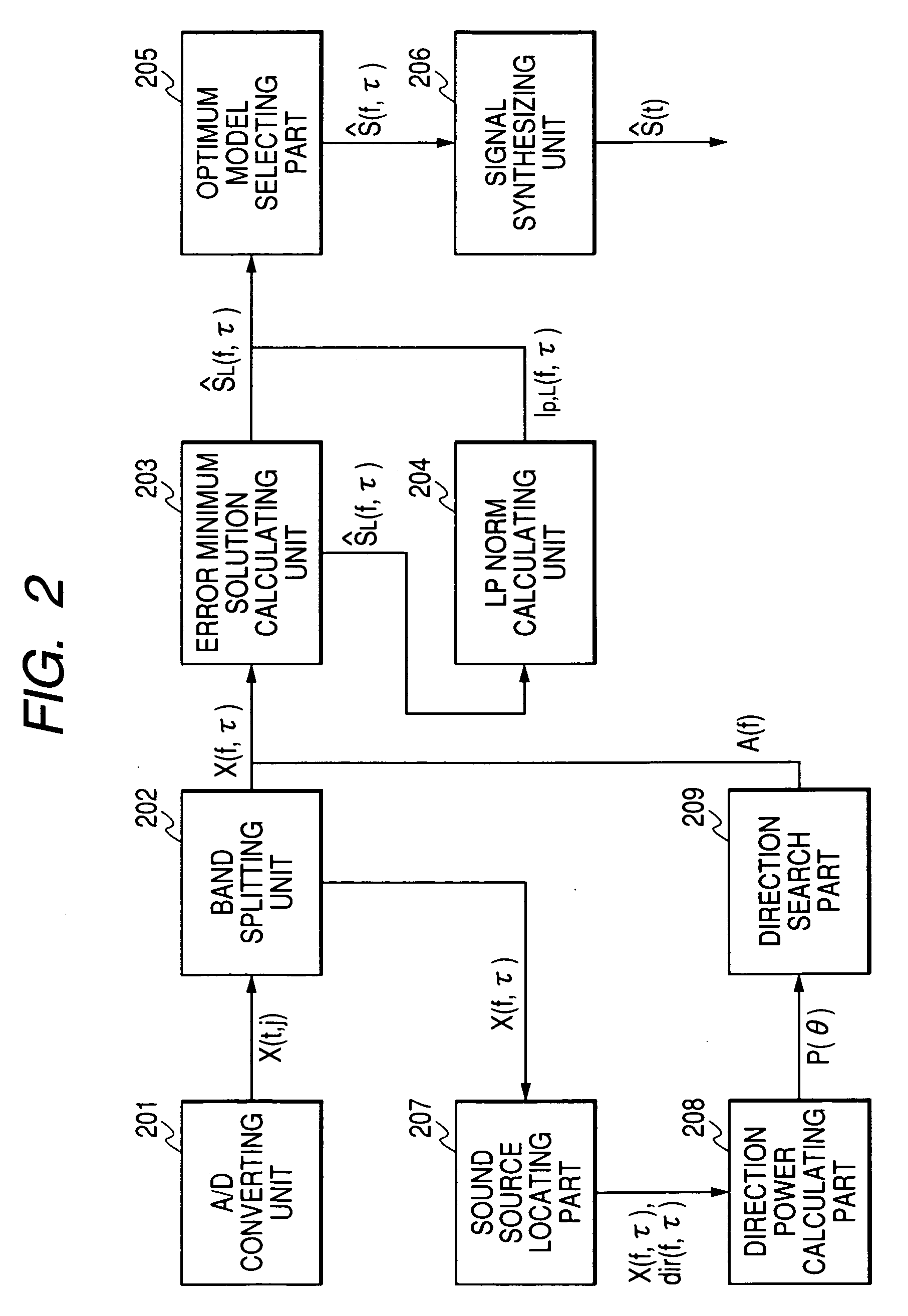

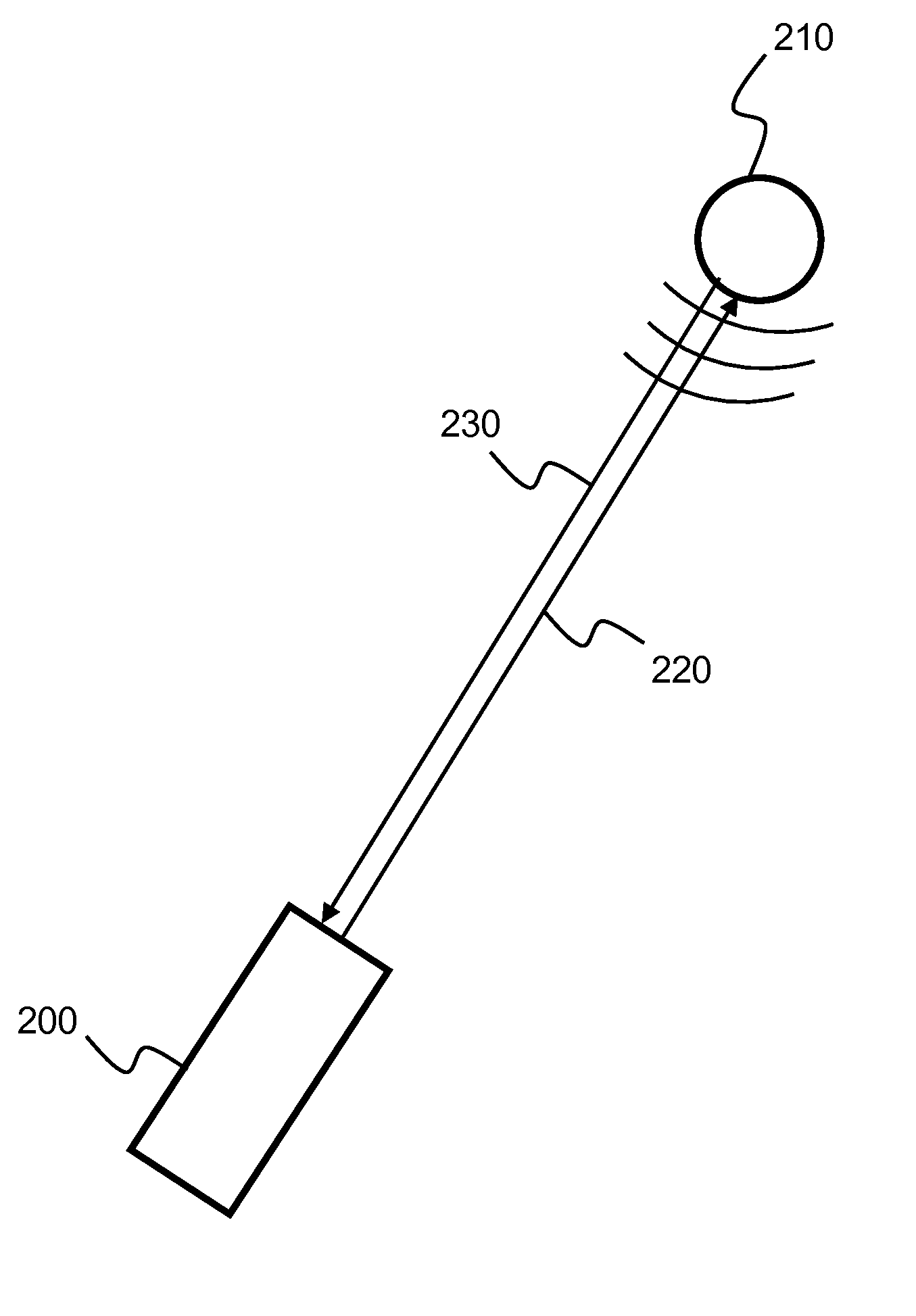

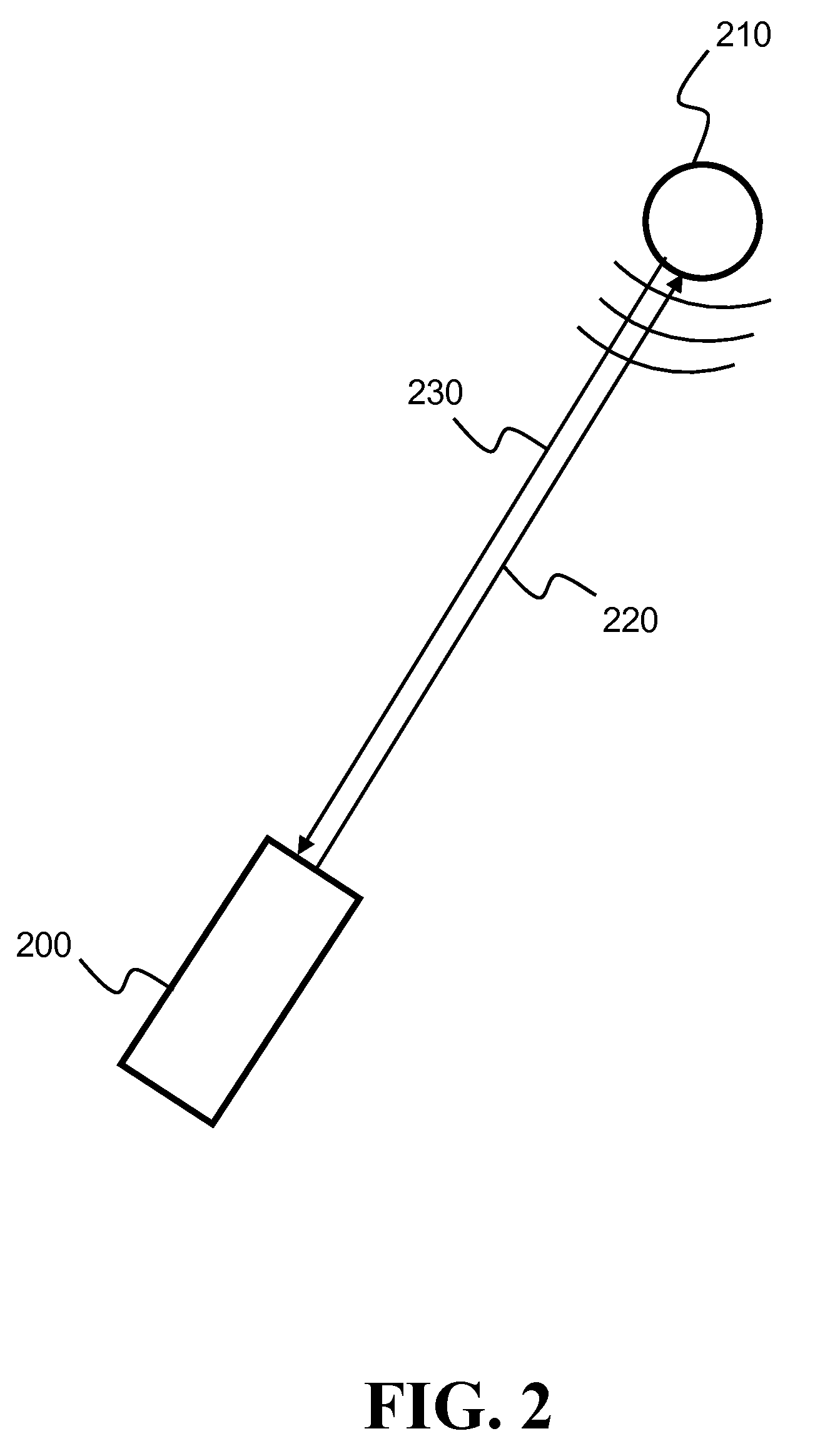

Sound source separating device, method, and program

Inactive | US20070223731A1 | Speech analysis | Microphones signal combination | Sound source separation | Independent component analysis

Conventional independent component analysis performs poorly when the number of sound sources exceeds the number of microphones. The conventional ℓ1-norm minimization method assumes that no noise other than the sound sources exists. As a result, its performance degrades in environments containing non-speech noise such as echoes and reverberation. When separating sounds, the present invention uses the power of the noise component as a cost function, in addition to the ℓ1 norm used as the cost function in the ℓ1-norm minimization method. The conventional method defines its cost function on the assumption that speech is uncorrelated along the time direction. The present invention instead defines the cost function on the assumption that speech is correlated along the time direction, so by construction a solution that is correlated in time is readily selected.

Owner:HITACHI LTD
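
The combination of a noise-power term and an ℓ1 term can be illustrated for a single time-frequency bin with the iterative shrinkage-thresholding algorithm (ISTA). ISTA is a standard solver chosen here for illustration and is not necessarily the one Hitachi uses. The Python sketch minimizes ½‖x − As‖² + λ‖s‖₁ with an assumed, known mixing matrix A. The time-direction correlation that distinguishes the invention is not modeled.

```python
import numpy as np


def soft_threshold(z, t):
    """Complex soft-thresholding: shrink each magnitude by t and keep the phase."""
    mag = np.abs(z)
    return np.where(mag > t, (1.0 - t / np.maximum(mag, 1e-12)) * z, 0.0)


def l1_separate_bin(x, A, lam=0.01, n_iter=2000):
    """ISTA for min_s 0.5*||x - A s||^2 + lam*||s||_1 at one time-frequency bin.

    x: (M,) microphone observations; A: (M, N) assumed mixing matrix with N > M sources.
    The squared residual plays the role of the noise-power cost term.
    """
    step = 1.0 / np.linalg.norm(A, 2) ** 2      # 1 / Lipschitz constant of the gradient
    s = np.zeros(A.shape[1], dtype=complex)
    for _ in range(n_iter):
        grad = A.conj().T @ (A @ s - x)         # gradient of the residual-power term
        s = soft_threshold(s - step * grad, step * lam)
    return s


A = np.array([[1.0, 0.0, np.sqrt(0.5)],
              [0.0, 1.0, np.sqrt(0.5)]])        # 2 microphones, 3 sources (underdetermined)
x = A @ np.array([0.0, 0.0, 1.0])               # only source 3 is active in this bin
s = l1_separate_bin(x, A)
print(int(np.argmax(np.abs(s))))                # 2
```

The ℓ1 term picks the sparsest explanation (one active source) even though the two-microphone system has infinitely many exact solutions. The residual term tolerates a small mismatch instead of forcing x = As exactly.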

Sound sources separation and monitoring using directional coherent electromagnetic waves

Active | US8286493B2 | Eliminate noise components | Ensures independence | Vibration measurement in solids | Multiple-port networks | Sound source separation | Light beam

An apparatus and a method that achieve physical separation of sound sources by pointing a beam of coherent electromagnetic waves (i.e., a laser) directly at each source. Analyzing the physical properties of the beam reflected from the vibrating sound source makes it possible to reconstruct the sound signal generated by that source while eliminating the noise component added to the original sound signal. In addition, using multiple electromagnetic beams, or a single beam that rapidly hops from one sound source to another, allows these sound sources to be physically separated. Aiming each beam at a different sound source ensures that the sound signals are independent and therefore provides full source separation.

Owner:VOCALZOOM SYST
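
The abstract does not say which physical properties of the reflected beam are analyzed. A common approach in laser vibrometry is to read the surface displacement from the interferometric phase, as in the minimal Python sketch below. It assumes a homodyne interferometer with quadrature (I/Q) outputs and an illustrative 1550 nm wavelength. `displacement_from_iq` is a hypothetical helper.

```python
import numpy as np


def displacement_from_iq(i_signal, q_signal, wavelength_m):
    """Recover surface displacement from quadrature (I/Q) interferometer outputs.

    Assumes a homodyne interferometer in which a displacement d changes the round-trip
    path by 2*d, shifting the optical phase by 4*pi*d / wavelength. It also assumes the
    phase moves by less than pi between consecutive samples, so np.unwrap can track it.
    """
    phase = np.unwrap(np.arctan2(q_signal, i_signal))
    return wavelength_m * phase / (4.0 * np.pi)


fs = 48_000                                  # sample rate (Hz), assumed
t = np.arange(fs // 10) / fs                 # 100 ms of samples
wavelength = 1.55e-6                         # assumed laser wavelength (m)
true_d = 1e-6 * np.sin(2 * np.pi * 200 * t)  # 1 um, 200 Hz surface vibration
phi = 4 * np.pi * true_d / wavelength        # optical phase produced by that vibration
d_hat = displacement_from_iq(np.cos(phi), np.sin(phi), wavelength)
print(np.max(np.abs(d_hat - true_d)) < 1e-12)  # True
```

The recovered displacement is the vibration of the surface the beam is aimed at. Airborne noise from other sources does not enter this signal, which is the basis for the physical separation the patent describes.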

© 2025 PatSnap. All rights reserved.