4841 results about "Acquiring/recognising eyes" patented technology

Apparatus and method for determining eye gaze from stereo-optic views

Inactive | US8824779B1 | Improve accuracy | Improve image processing capabilities | Image enhancement | Image analysis | Wide field | Optical axis

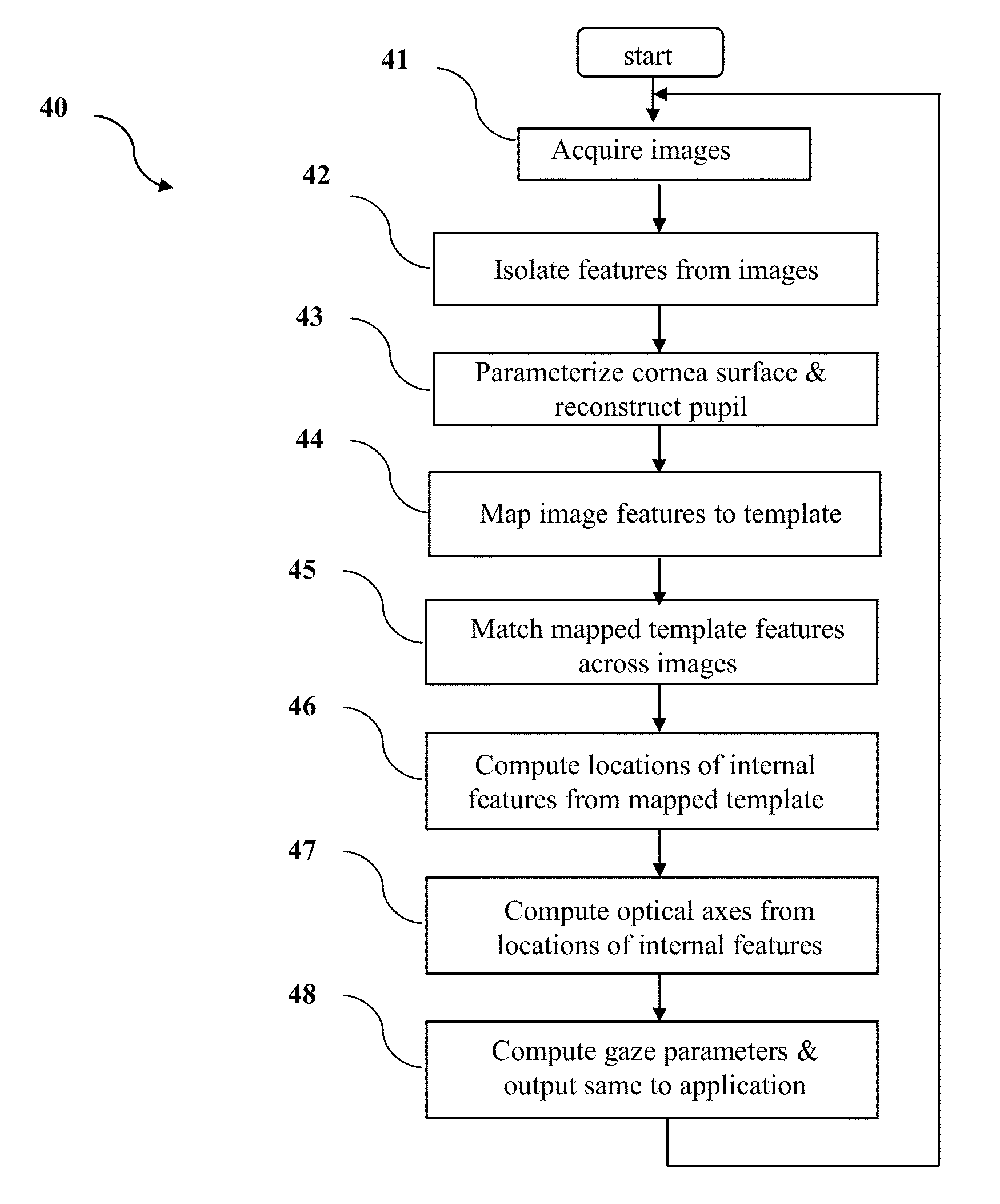

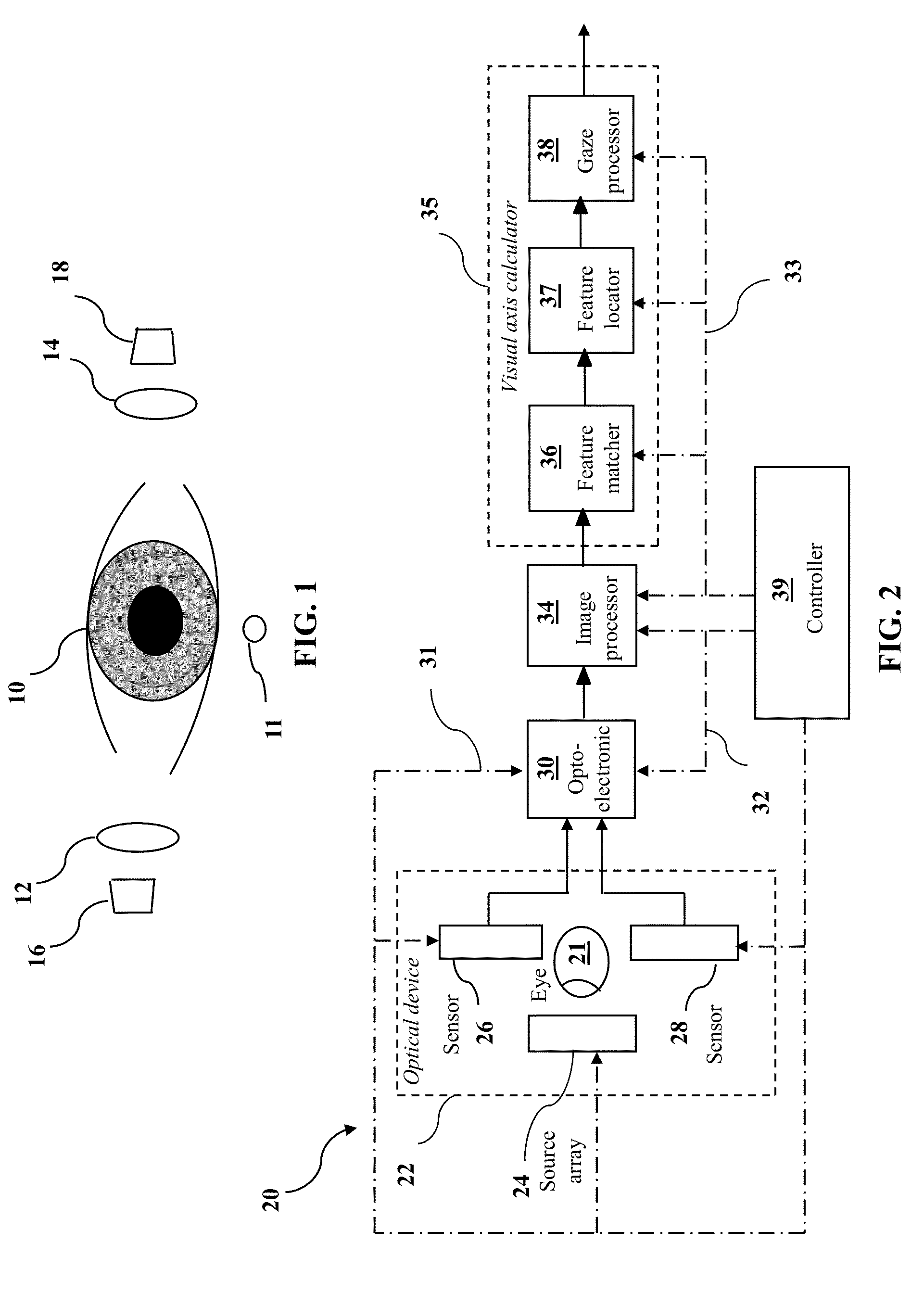

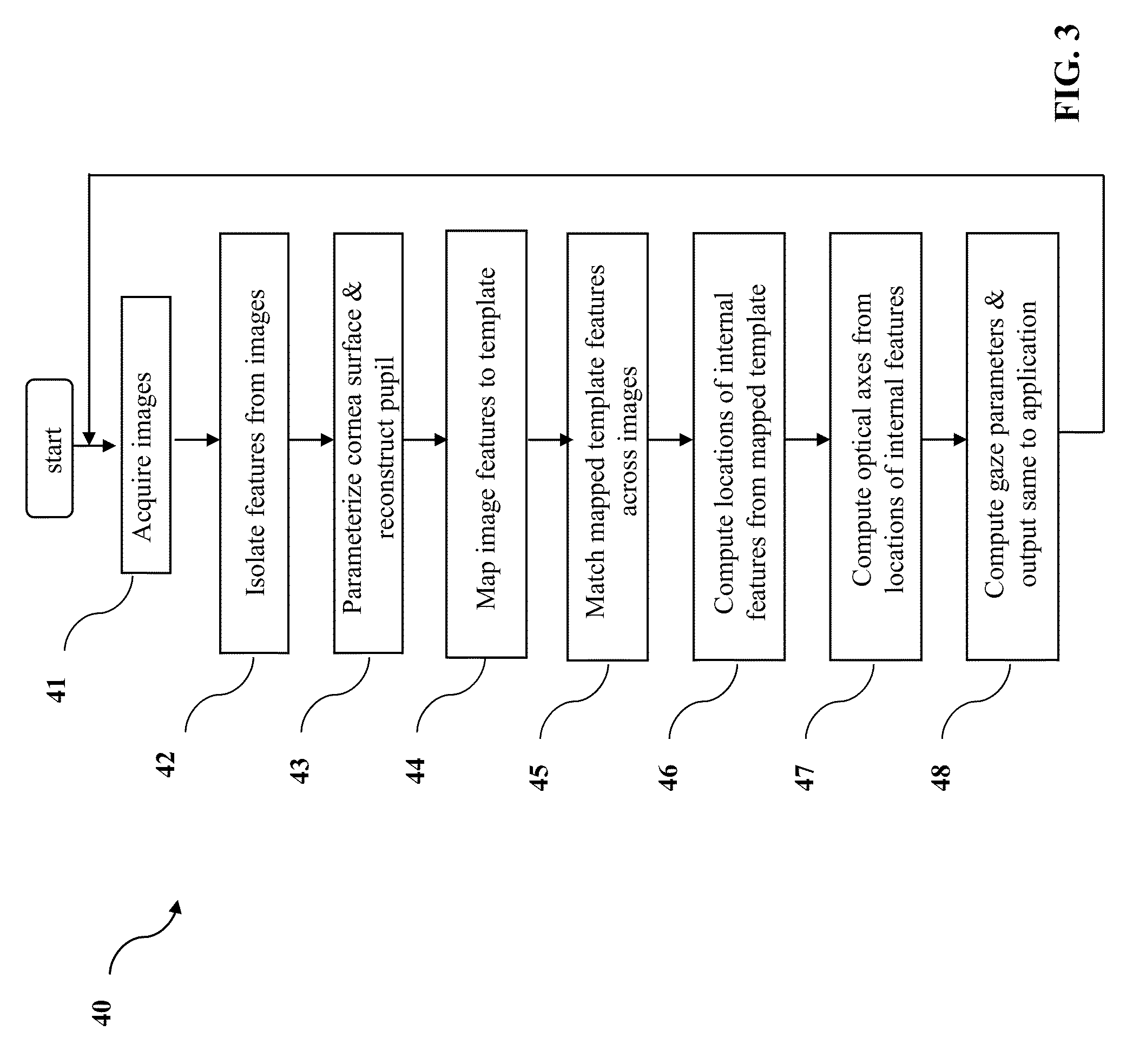

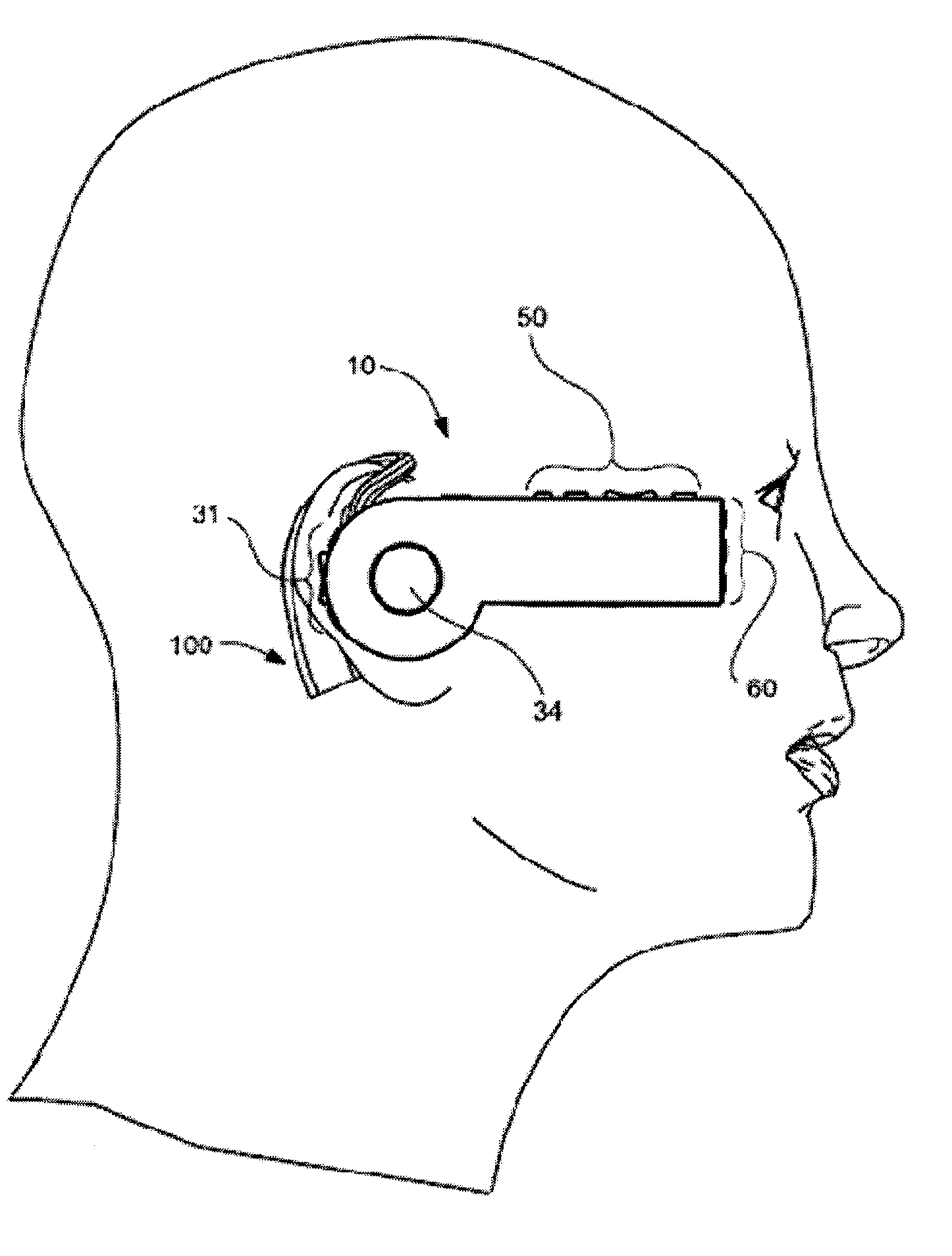

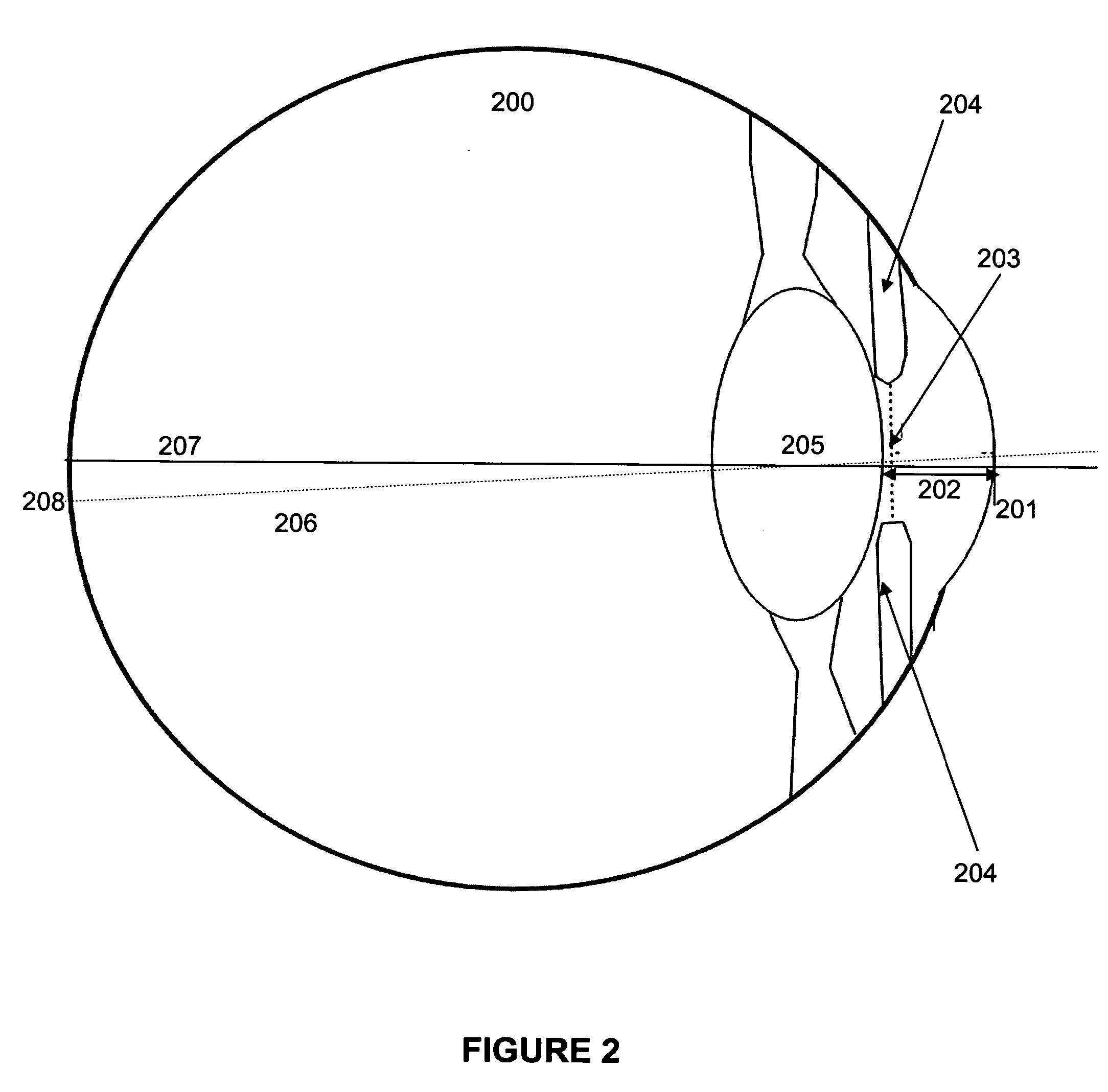

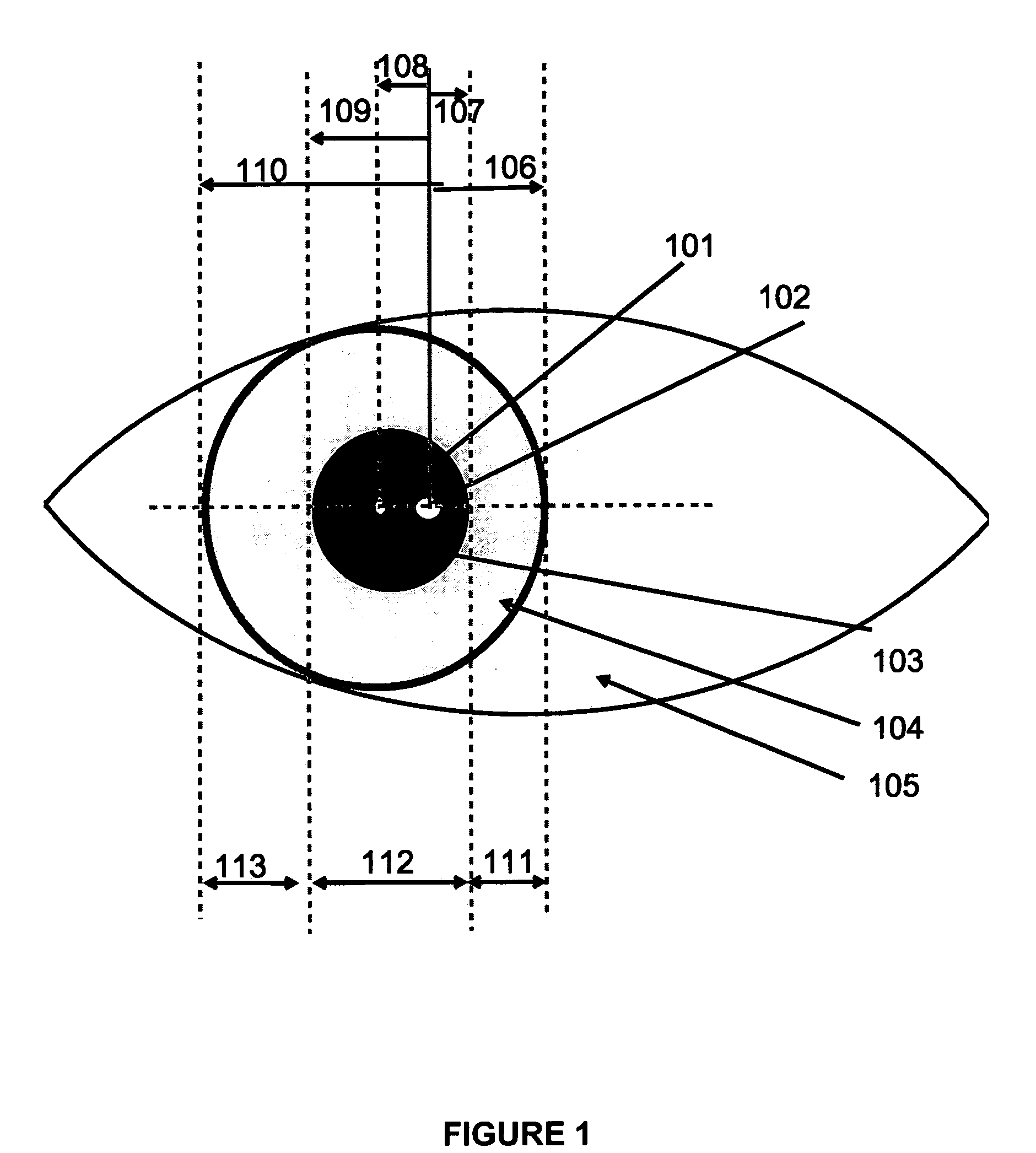

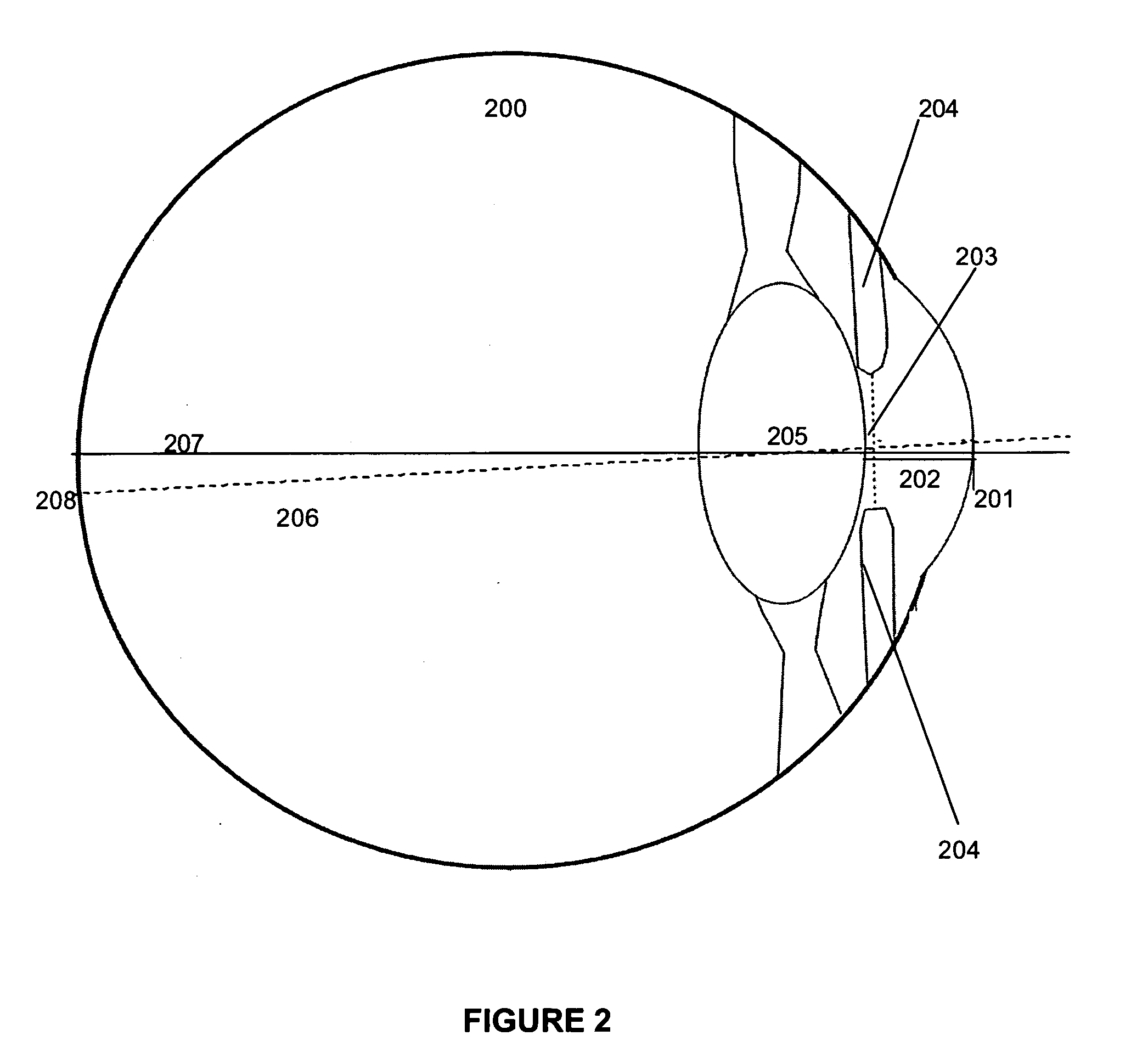

The invention, exemplified as a single lens stereo optics design with a stepped mirror system for tracking the eye, isolates landmark features in the separate images, locates the pupil in the eye, matches landmarks to a template centered on the pupil, mathematically traces refracted rays back from the matched image points through the cornea to the inner structure, and locates these structures from the intersection of the rays for the separate stereo views. Having located in this way structures of the eye in the coordinate system of the optical unit, the invention computes the optical axes and from that the line of sight and the torsion roll in vision. Along with providing a wider field of view, this invention has an additional advantage since the stereo images tend to be offset from each other and for this reason the reconstructed pupil is more accurately aligned and centered.
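The step of locating an inner eye structure "from the intersection of the rays for the separate stereo views" can be illustrated with a small geometric sketch. In practice two back-traced rays rarely meet exactly, so this example takes the midpoint of the shortest segment between them. It assumes the rays have already been traced back through the cornea; the refraction step is not modeled, and the function name `triangulate_from_two_rays` and the tuple-based vector type are hypothetical choices made for illustration, not taken from the patent.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _point_on_line(p: Vec3, d: Vec3, t: float) -> Vec3:
    return (p[0] + t * d[0], p[1] + t * d[1], p[2] + t * d[2])


def triangulate_from_two_rays(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3,
                              eps: float = 1e-12) -> Vec3:
    """Midpoint of the shortest segment joining the lines p1 + t*d1 and p2 + s*d2.

    Hypothetical helper: each (p, d) pair stands for one stereo view's
    back-traced ray, given as an origin and a direction.
    """
    w = _sub(p1, p2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < eps:
        raise ValueError("rays are parallel; no unique closest point")
    t = (b * e - c * d) / denom  # parameter of the closest point on line 1
    s = (a * e - b * d) / denom  # parameter of the closest point on line 2
    q1 = _point_on_line(p1, d1, t)
    q2 = _point_on_line(p2, d2, s)
    return ((q1[0] + q2[0]) / 2, (q1[1] + q2[1]) / 2, (q1[2] + q2[2]) / 2)
```

For example, rays from `(-1, 0, 0)` along `(1, 0, 1)` and from `(1, 0, 0)` along `(-1, 0, 1)` meet at `(0, 0, 1)`, which is the point the function returns.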

Owner:CORTICAL DIMENSIONS LLC

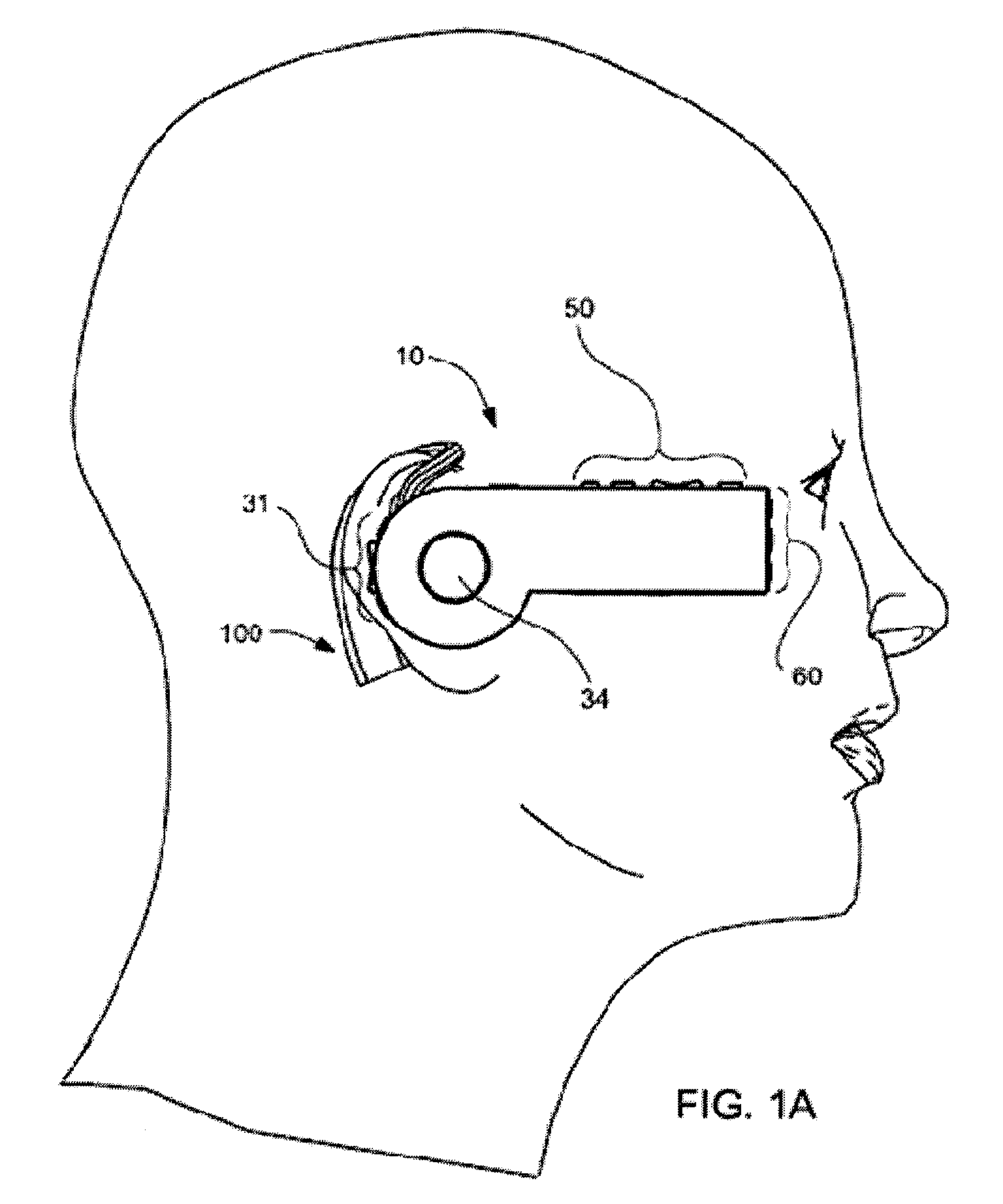

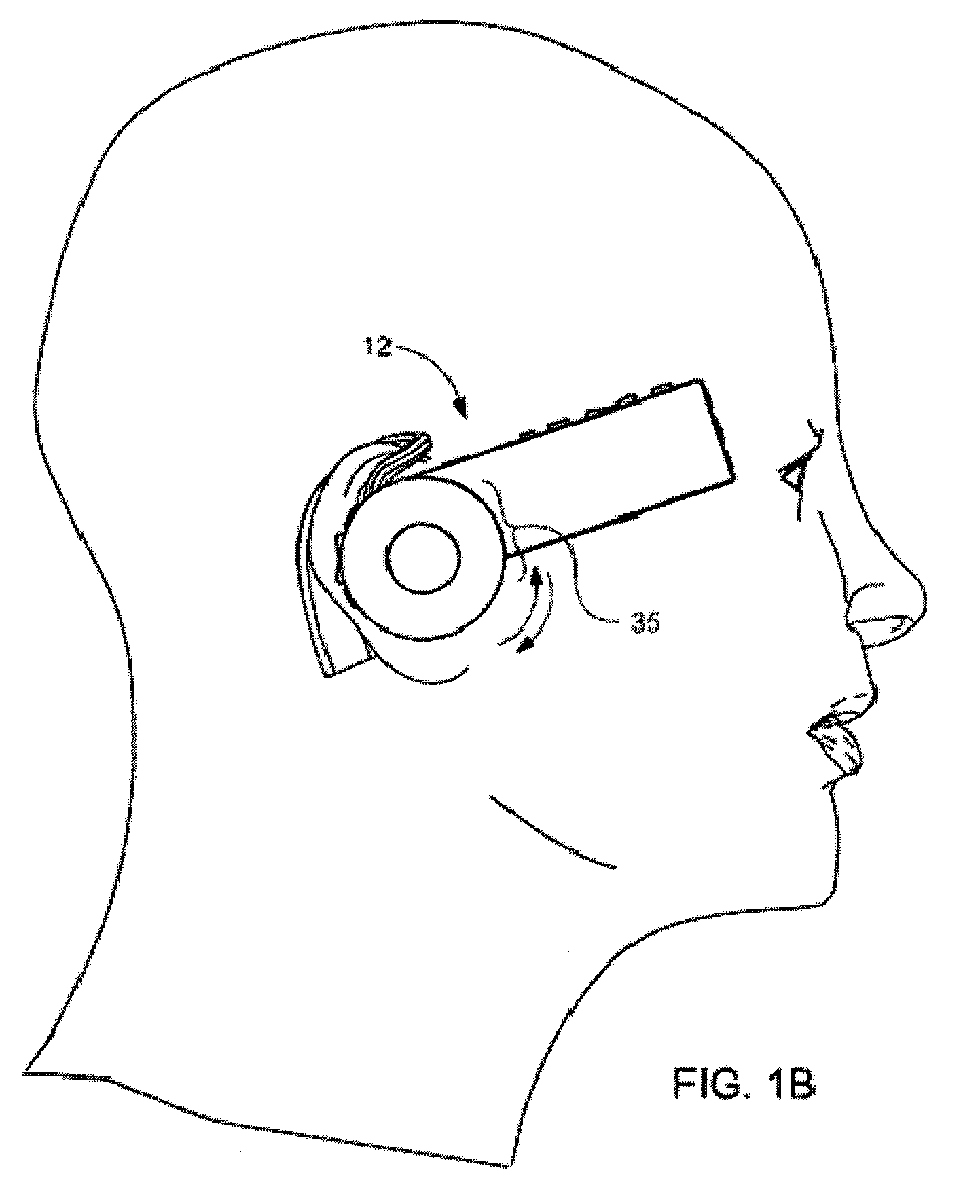

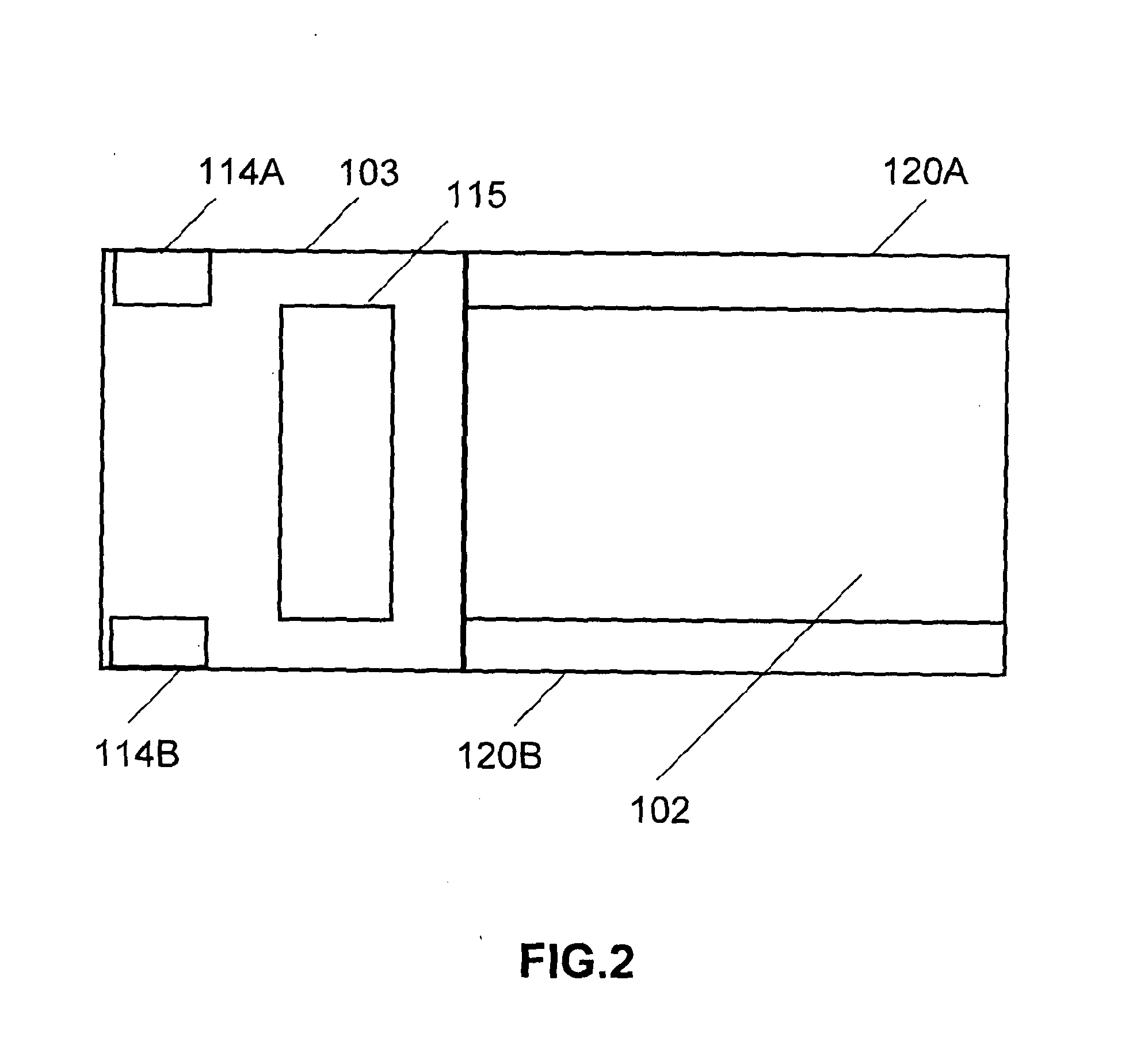

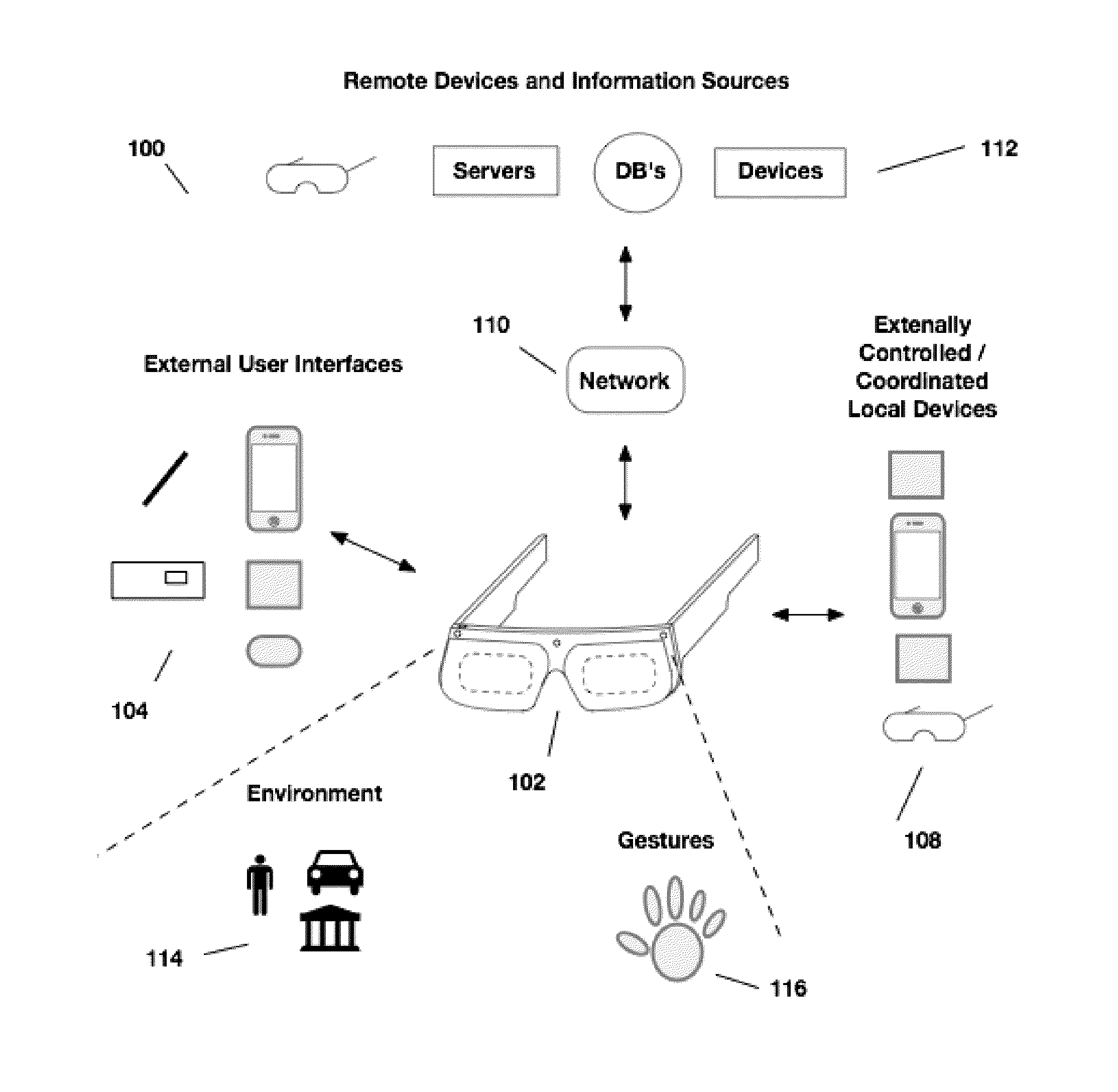

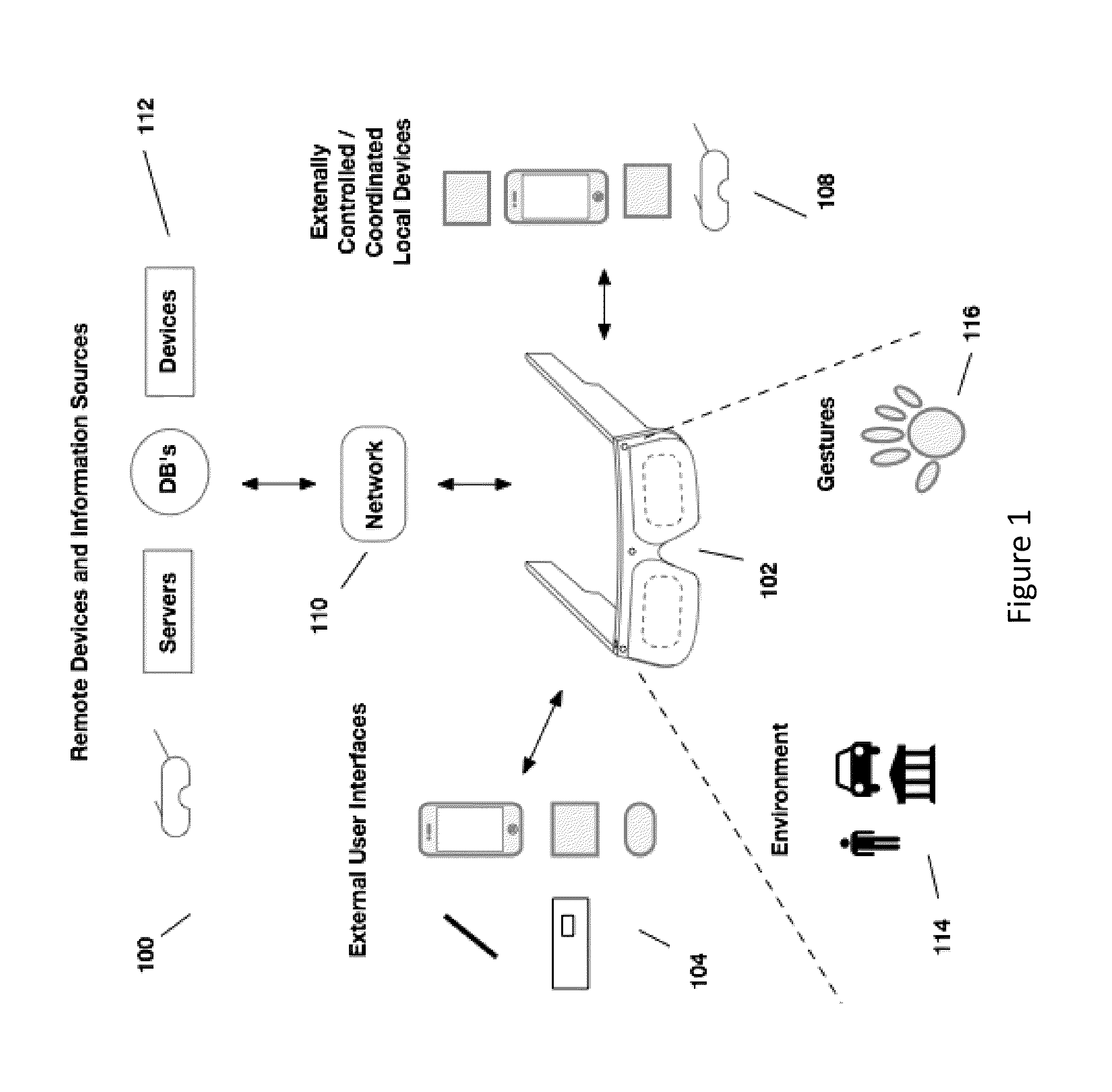

Headset-Based Telecommunications Platform

Active | US20100245585A1 | Extend battery life | Television system details | Optical rangefinders | Data stream | Peer-to-peer

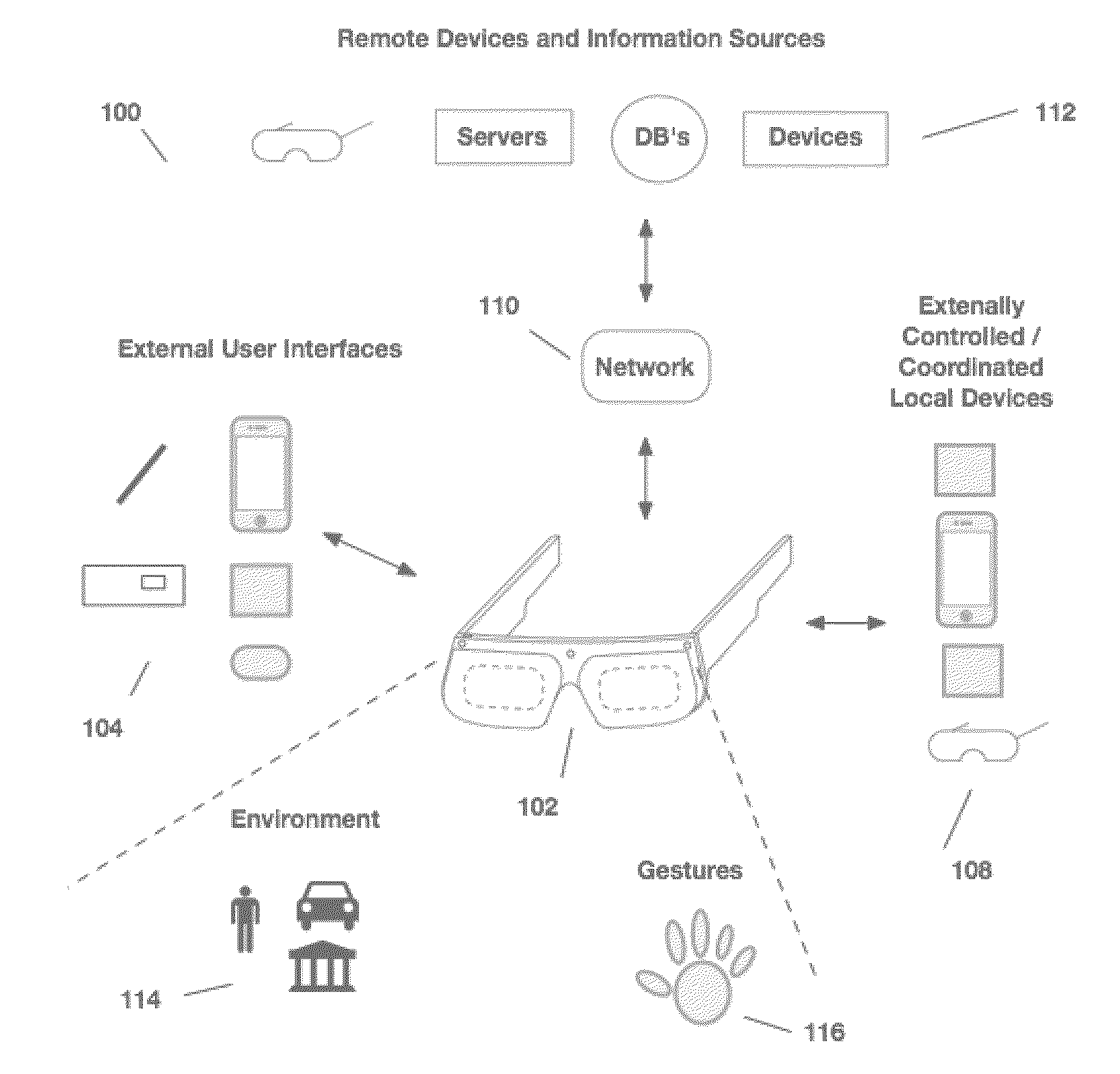

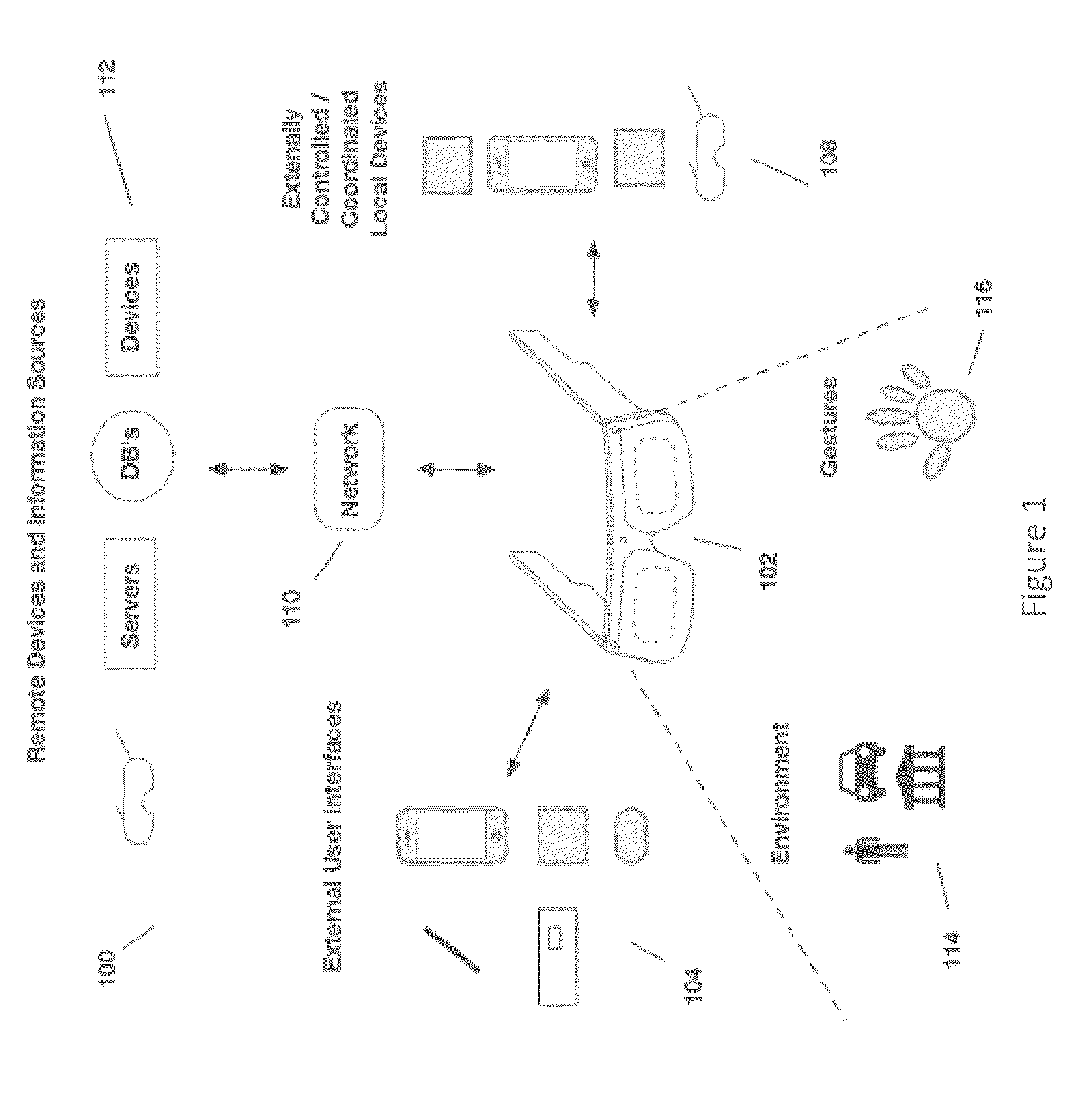

A hands-free wireless wearable GPS enabled video camera and audio-video communications headset, mobile phone and personal media player, capable of real-time two-way and multi-feed wireless voice, data and audio-video streaming, telecommunications, and teleconferencing, coordinated applications, and shared functionality between one or more wirelessly networked headsets or other paired or networked wired or wireless devices and optimized device and data management over multiple wired and wireless network connections. The headset can operate in concert with one or more wired or wireless devices as a paired accessory, as an autonomous hands-free wide area, metro or local area and personal area wireless audio-video communications and multimedia device and / or as a wearable docking station, hot spot and wireless router supporting direct connect multi-device ad-hoc virtual private networking (VPN). The headset has built-in intelligence to choose amongst available network protocols while supporting a variety of onboard, and remote operational controls including a retractable monocular viewfinder display for real time hands-free viewing of captured or received video feed and a duplex data-streaming platform supporting multi-channel communications and optimized data management within the device, within a managed or autonomous federation of devices or other peer-to-peer network configuration.

Owner:EYECAM INC

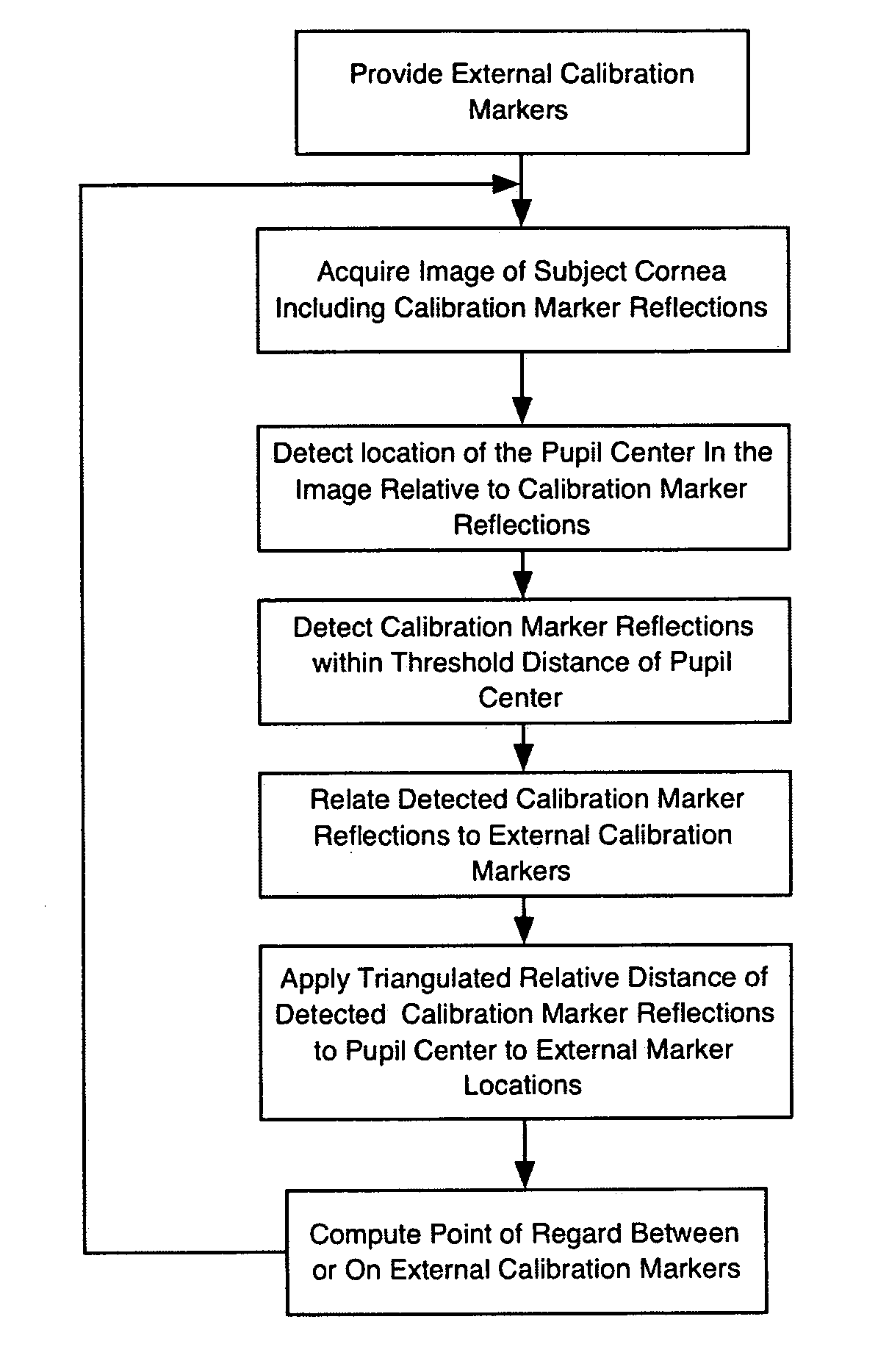

Method and apparatus for calibration-free eye tracking

Active | US20060110008A1 | Extended duration | Image enhancement | Image analysis | Corneal surface | Angular distance

A system and method for eye gaze tracking in human or animal subjects without calibration of cameras, specific measurements of eye geometries or the tracking of a cursor image on a screen by the subject through a known trajectory. The preferred embodiment includes one uncalibrated camera for acquiring video images of the subject's eye(s) and optionally having an on-axis illuminator, and a surface, object, or visual scene with embedded off-axis illuminator markers. The off-axis markers are reflected on the corneal surface of the subject's eyes as glints. The glints indicate the distance between the point of gaze in the surface, object, or visual scene and the corresponding marker on the surface, object, or visual scene. The marker that causes a glint to appear in the center of the subject's pupil is determined to be located on the line of regard of the subject's eye, and to intersect with the point of gaze. Point of gaze on the surface, object, or visual scene is calculated as follows. First, by determining which marker glints, as provided by the corneal reflections of the markers, are closest to the center of the pupil in either or both of the subject's eyes. This subset of glints forms a region of interest (ROI). Second, by determining the gaze vector (relative angular or Cartesian distance to the pupil center) for each of the glints in the ROI. Third, by relating each glint in the ROI to the location or identification (ID) of a corresponding marker on the surface, object, or visual scene observed by the eyes. Fourth, by interpolating the known locations of each of these markers on the surface, object, or visual scene, according to the relative angular distance of their corresponding glints to the pupil center.
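The four steps in the abstract above can be sketched roughly as follows. This is a minimal illustration only: the abstract does not state the interpolation formula, so inverse-distance weighting is used here as an assumption, distances are plain Cartesian image distances, and the function name `estimate_point_of_gaze` and the ROI size `k` are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def estimate_point_of_gaze(pupil_center: Point,
                           glints: List[Tuple[Point, Point]],
                           k: int = 3,
                           eps: float = 1e-9) -> Point:
    """Estimate the point of gaze from glints paired with their markers.

    glints: list of (glint_xy_in_image, marker_xy_on_surface) pairs.
    """
    if not glints:
        raise ValueError("at least one glint is required")
    # Steps 1-3: keep the k glints closest to the pupil center (the ROI),
    # each carrying its distance and the location of its marker.
    roi = sorted(((math.dist(g, pupil_center), m) for g, m in glints),
                 key=lambda item: item[0])[:k]
    # A glint at the pupil center means its marker lies on the line of regard.
    if roi[0][0] < eps:
        return roi[0][1]
    # Step 4 (assumed form): inverse-distance weighted interpolation
    # of the known marker locations.
    weights = [1.0 / dist for dist, _ in roi]
    total = sum(weights)
    x = sum(w * m[0] for w, (_, m) in zip(weights, roi)) / total
    y = sum(w * m[1] for w, (_, m) in zip(weights, roi)) / total
    return (x, y)
```

With two glints equally far from the pupil center, the estimate falls midway between their markers; as one glint approaches the center, the estimate moves toward that glint's marker.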

Owner:CHENG DANIEL +3

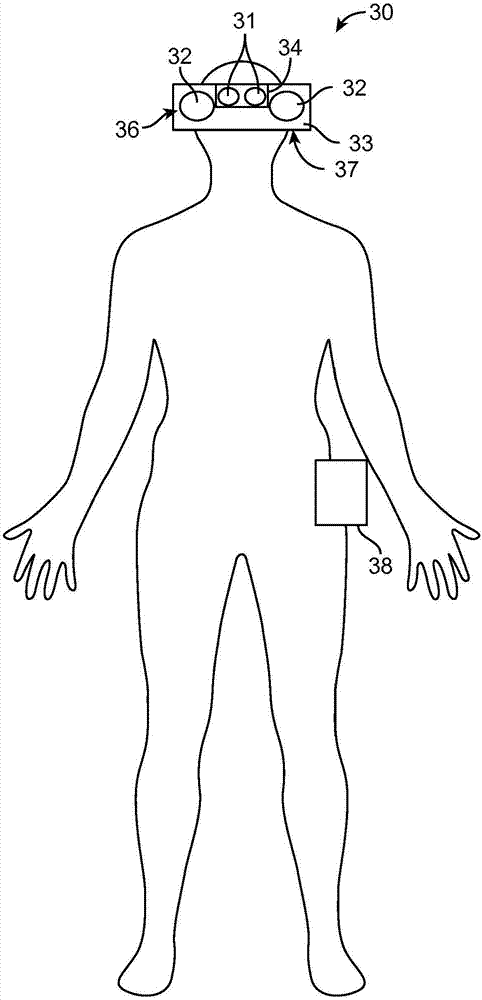

Visual attention and emotional response detection and display system

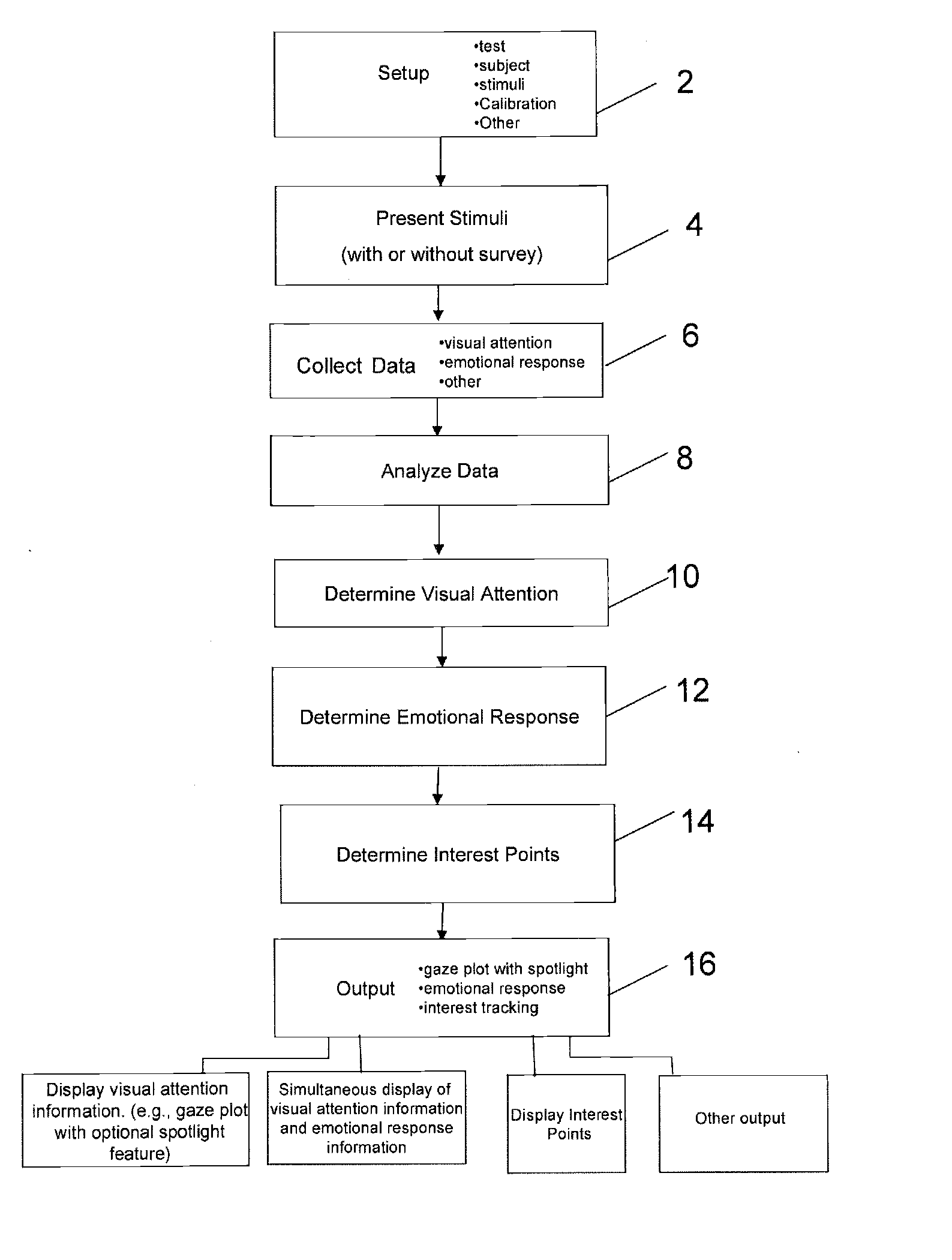

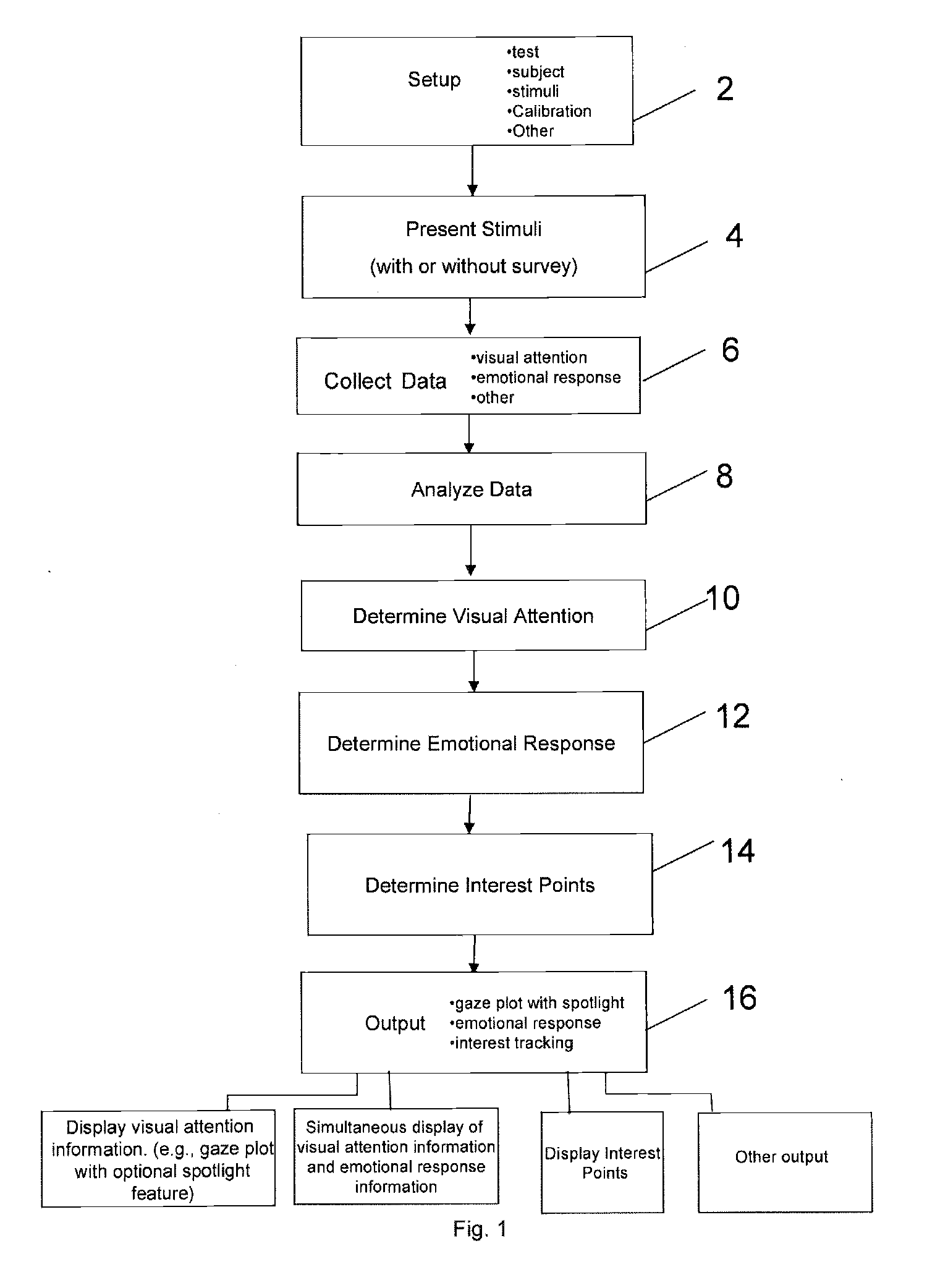

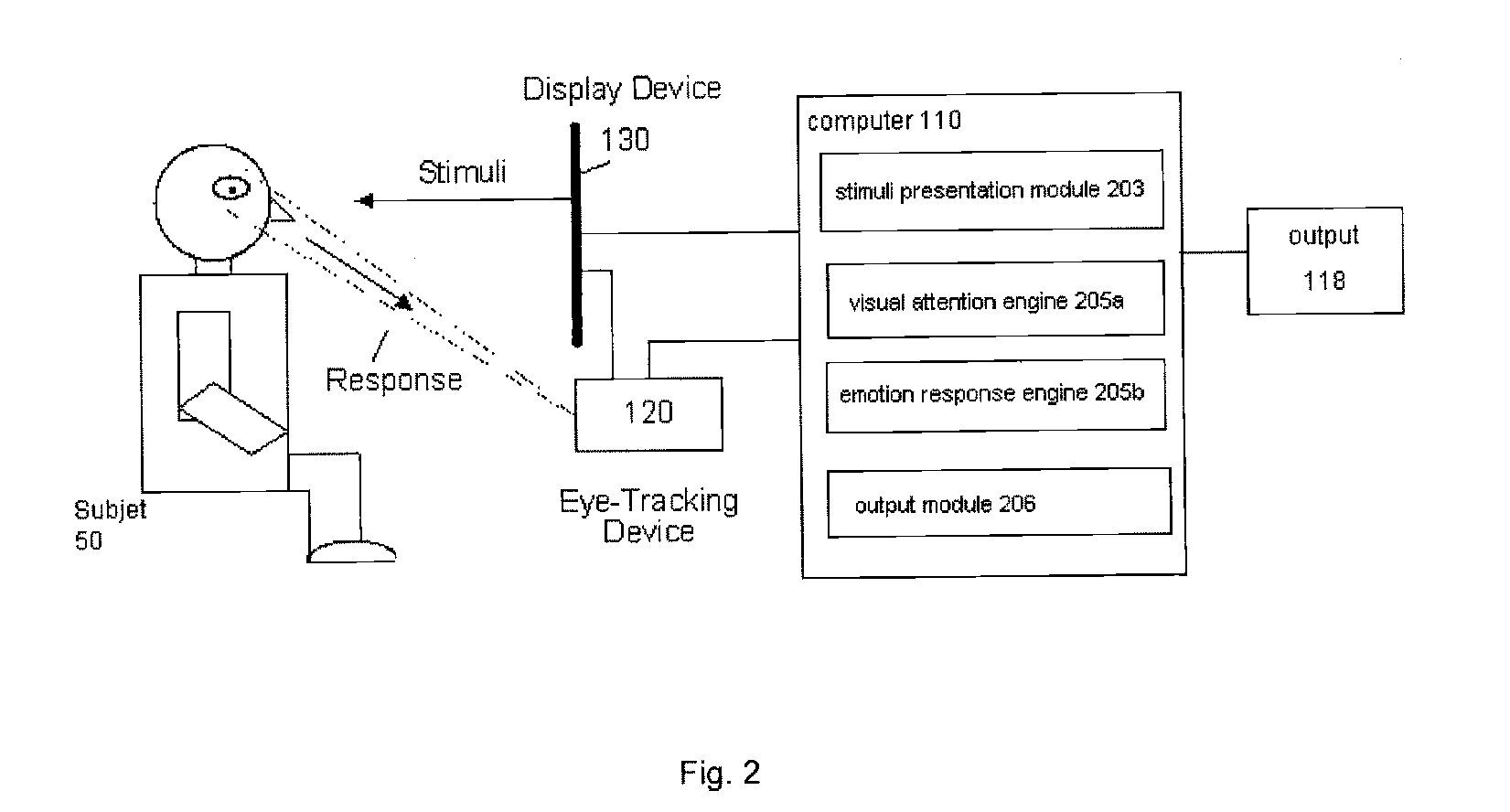

The invention is a system and method for determining visual attention that supplements eye-tracking measurements with other physiological signal measurements, such as those indicating emotion. The system and method are capable of registering stimulus-related emotions from eye-tracking data. An eye-tracking device of the system and other sensors collect eye properties and/or other physiological properties, which allow a subject's emotional and visual attention to be observed and analyzed in relation to stimuli.

Owner:IMOTIONS EMOTION TECH

Red-eye filter method and apparatus

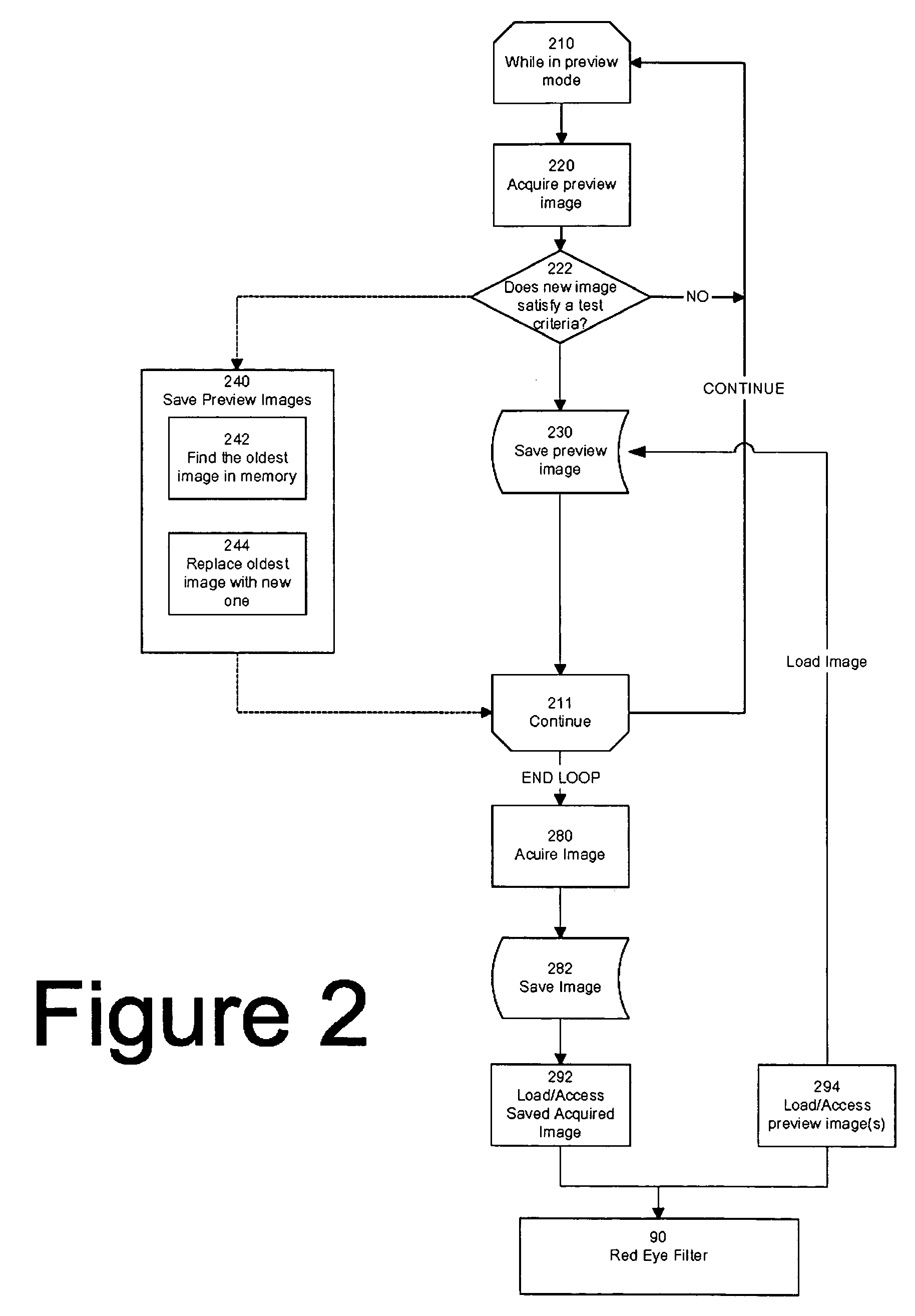

A digital image acquisition system having no photographic film, such as a digital camera, has a flash unit for providing illumination during image capture and a red-eye filter for detecting a region within a captured image indicative of a red-eye phenomenon, the detection being based upon a comparison of the captured image and a reference image of nominally the same scene taken without flash. In the embodiment the reference image is a preview image of lower pixel resolution than the captured image, the filter matching the pixel resolutions of the captured and reference images by up-sampling the preview image and / or sub-sampling the captured image. The filter also aligns at least portions of the captured image and reference image prior to comparison to allow for, e.g. movement in the subject.
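As a rough illustration of the comparison described above, the sketch below up-samples a low-resolution preview to the captured image's resolution and flags pixels that became much redder under flash. Several things here are assumptions rather than details from the abstract: the redness measure, the threshold value, nearest-neighbor up-sampling, and the function names. The alignment step mentioned in the abstract is omitted.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]          # (R, G, B)
Image = List[List[Pixel]]


def upsample_nearest(img: Image, factor: int) -> Image:
    """Repeat every pixel `factor` times horizontally and vertically."""
    return [[px for px in row for _ in range(factor)]
            for row in img for _ in range(factor)]


def redness(px: Pixel) -> int:
    """Assumed redness measure: how far red exceeds the other channels."""
    r, g, b = px
    return r - max(g, b)


def red_eye_candidates(flash: Image, preview: Image, factor: int,
                       threshold: int = 80) -> List[Tuple[int, int]]:
    """Return (row, col) positions that are much redder in the flash image
    than in the up-sampled, non-flash preview."""
    reference = upsample_nearest(preview, factor)
    return [(y, x)
            for y, row in enumerate(flash)
            for x, px in enumerate(row)
            if redness(px) - redness(reference[y][x]) > threshold]
```

A 1x1 preview compared against a 2x2 flash image with `factor=2` would flag only the pixels whose redness rose by more than the threshold.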

Owner:FOTONATION LTD

Method and apparatus for calibration-free eye tracking using multiple glints or surface reflections

Inactive | US20050175218A1 | Input/output for user-computer interaction | Image enhancement | Corneal surface | Angular distance

A system and method for eye gaze tracking in human or animal subjects without calibration of cameras, specific measurements of eye geometries or the tracking of a cursor image on a screen by the subject through a known trajectory. The preferred embodiment includes one uncalibrated camera for acquiring video images of the subject's eye(s) and optionally having an on-axis illuminator, and a surface, object, or visual scene with embedded off-axis illuminator markers. The off-axis markers are reflected on the corneal surface of the subject's eyes as glints. The glints indicate the distance between the point of gaze in the surface, object, or visual scene and the corresponding marker on the surface, object, or visual scene. The marker that causes a glint to appear in the center of the subject's pupil is determined to be located on the line of regard of the subject's eye, and to intersect with the point of gaze. Point of gaze on the surface, object, or visual scene is calculated as follows. First, by determining which marker glints, as provided by the corneal reflections of the markers, are closest to the center of the pupil in either or both of the subject's eyes. This subset of glints forms a region of interest (ROI). Second, by determining the gaze vector (relative angular or Cartesian distance to the pupil center) for each of the glints in the ROI. Third, by relating each glint in the ROI to the location or identification (ID) of a corresponding marker on the surface, object, or visual scene observed by the eyes. Fourth, by interpolating the known locations of each of these markers on the surface, object, or visual scene, according to the relative angular distance of their corresponding glints to the pupil center.

Owner:CHENG DANIEL +3

Eye glint imaging in see-through computer display systems

Active | US20160116979A1 | Improve transmittance | Input/output for user-computer interaction | Acquiring/recognising eyes | Display device | Computer science

Owner:OSTERHOUT GROUP INC

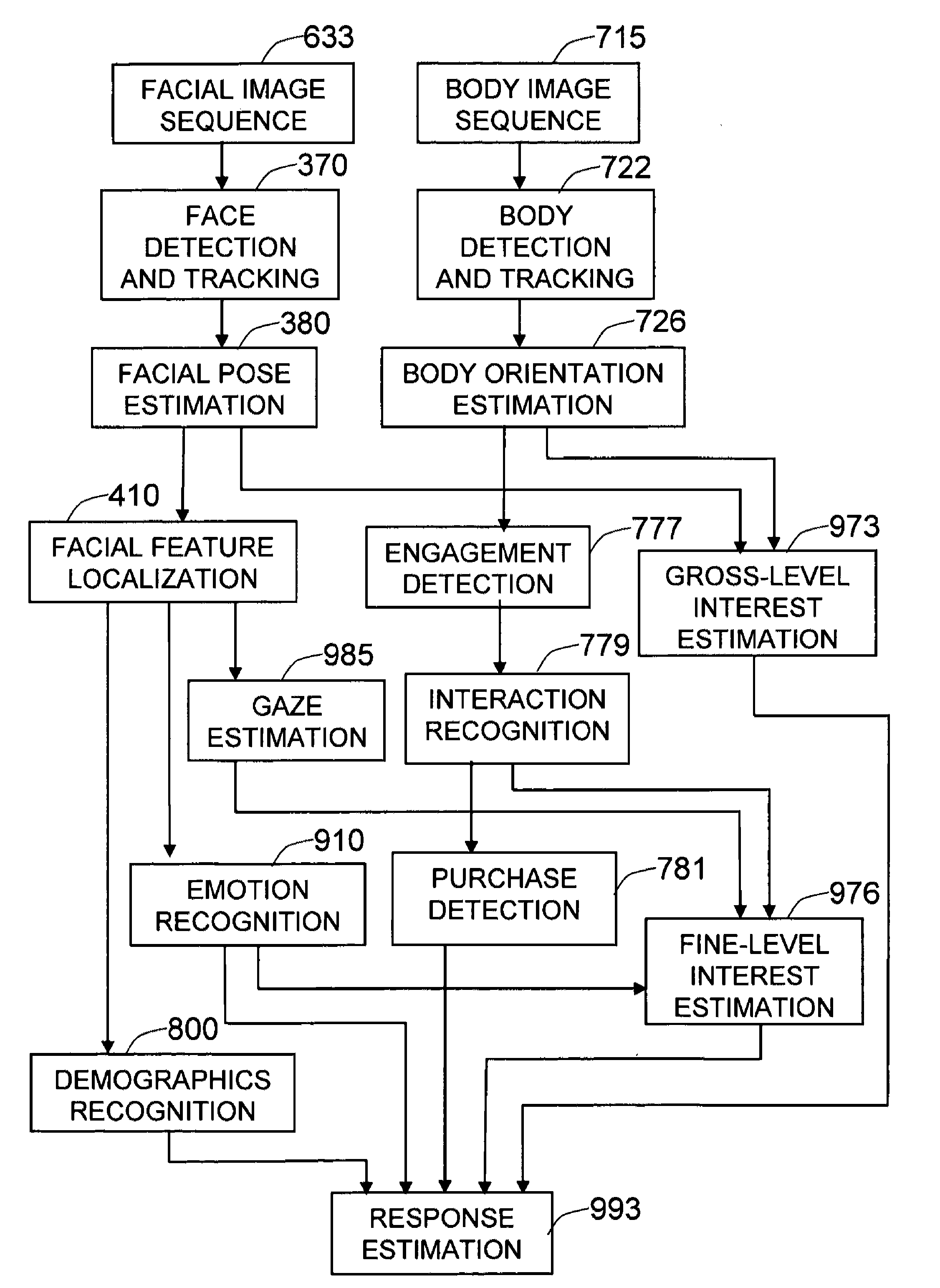

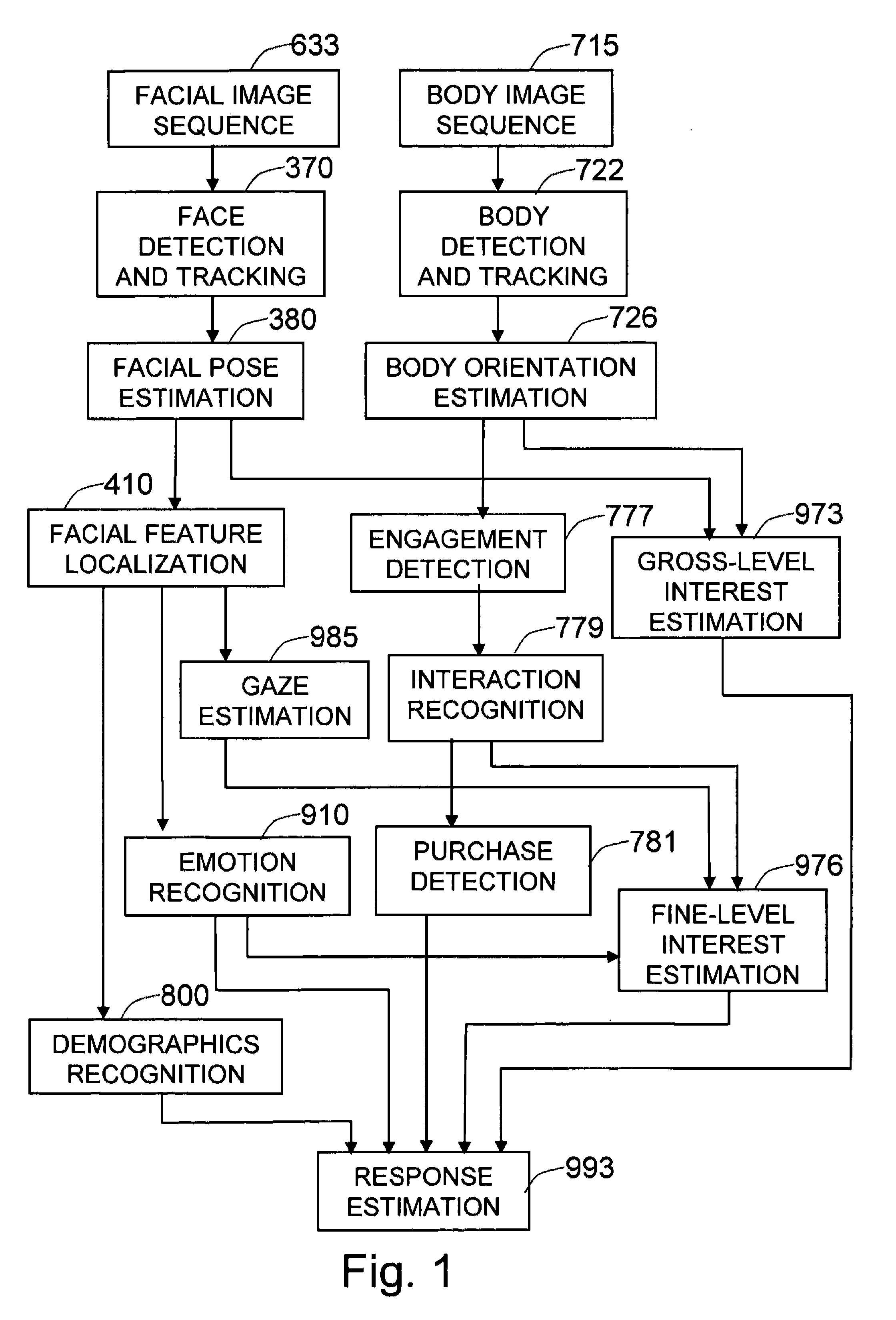

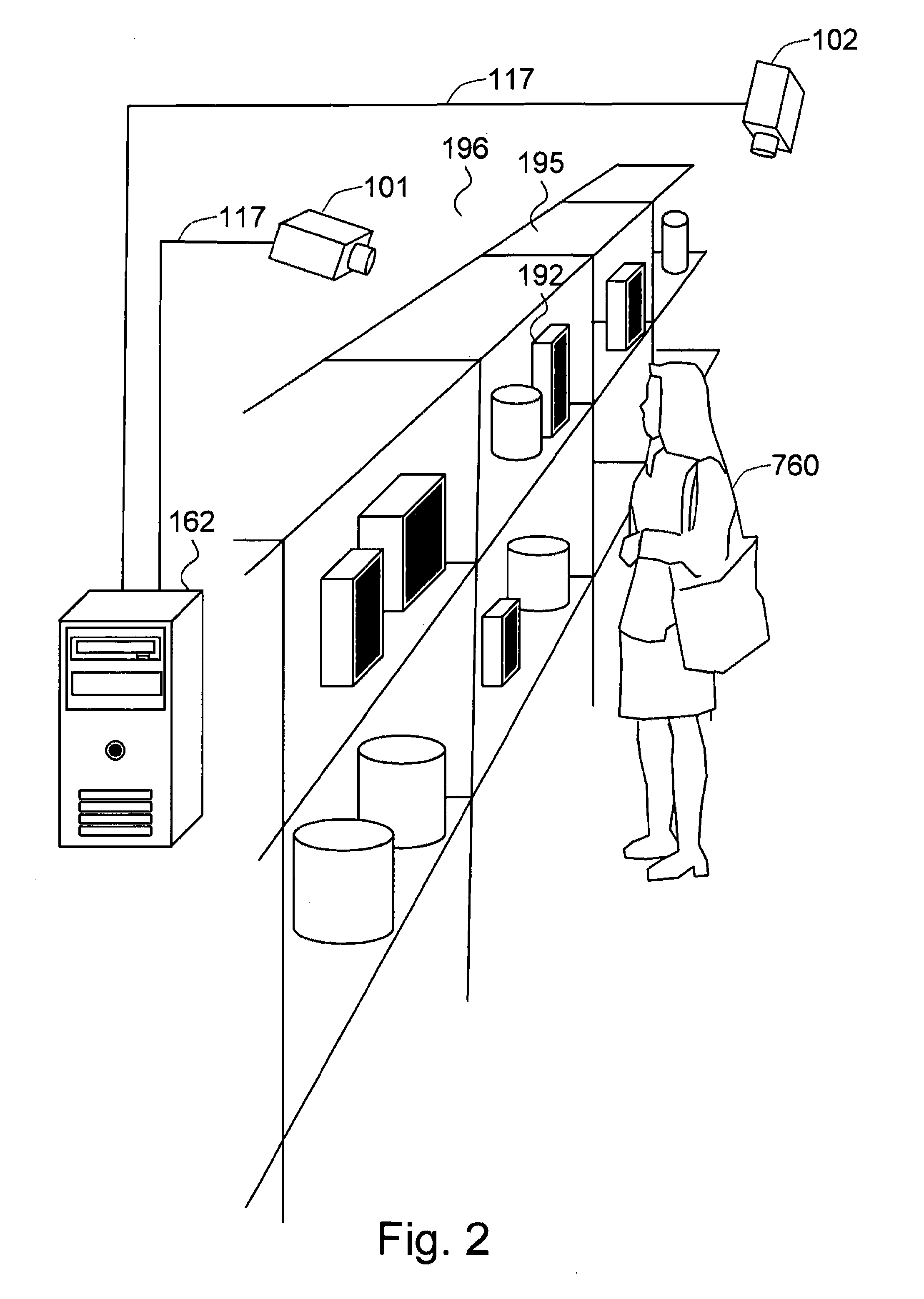

Method and system for measuring shopper response to products based on behavior and facial expression

Active | US8219438B1 | Reliable information | Accurate location | Market predictions | Acquiring/recognising eyes | Pattern recognition | Product base

The present invention is a method and system for measuring human response to retail elements, based on the shopper's facial expressions and behaviors. From a facial image sequence, the facial geometry (facial pose and facial feature positions) is estimated to facilitate the recognition of facial expressions, gaze, and demographic categories. The recognized facial expression is translated into an affective state of the shopper, and the gaze is translated into the target and the level of interest of the shopper. The body image sequence is processed to identify the shopper's interaction with a given retail element, such as a product, a brand, or a category. The dynamic changes of the affective state and the interest toward the retail element, measured from the facial image sequence, are analyzed in the context of the recognized shopper's interaction with the retail element and the demographic categories, to estimate both the shopper's changes in attitude toward the retail element and the end response, such as a purchase decision or a product rating.

Owner:PARMER GEORGE A

Systems and methods for identifying gaze tracking scene reference locations

A system is provided for identifying reference locations within the environment of a device wearer. The system includes a scene camera mounted on eyewear or headwear coupled to a processing unit. The system may recognize objects with known geometries that occur naturally within the wearer's environment or objects that have been intentionally placed at known locations within the wearer's environment. One or more light sources may be mounted on the headwear that illuminate reflective surfaces at selected times and wavelengths to help identify scene reference locations and glints projected from known locations onto the surface of the eye. The processing unit may control light sources to adjust illumination levels in order to help identify reference locations within the environment and corresponding glints on the surface of the eye. Objects may be identified substantially continuously within video images from scene cameras to provide a continuous data stream of reference locations.

Owner:GOOGLE LLC

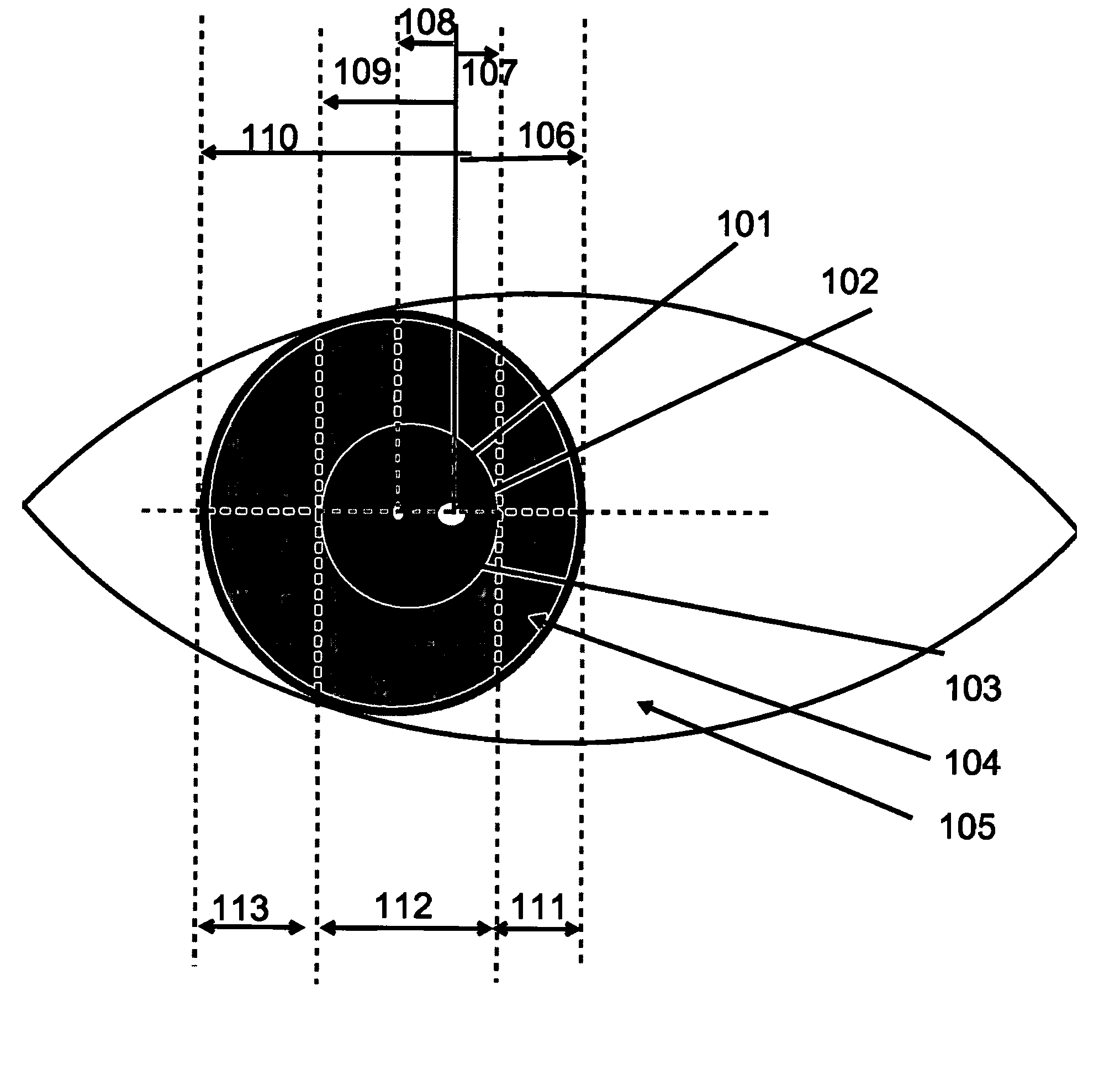

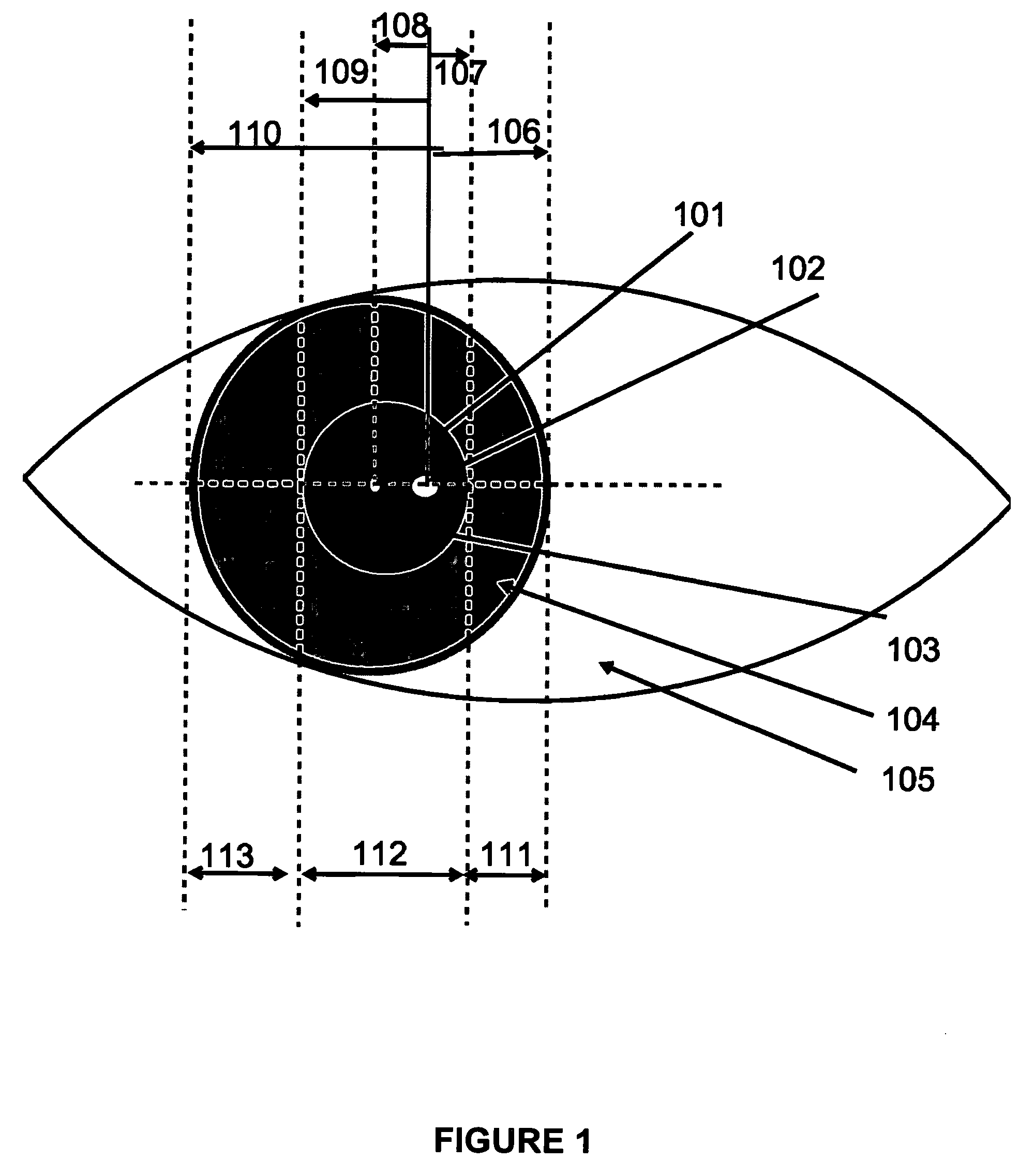

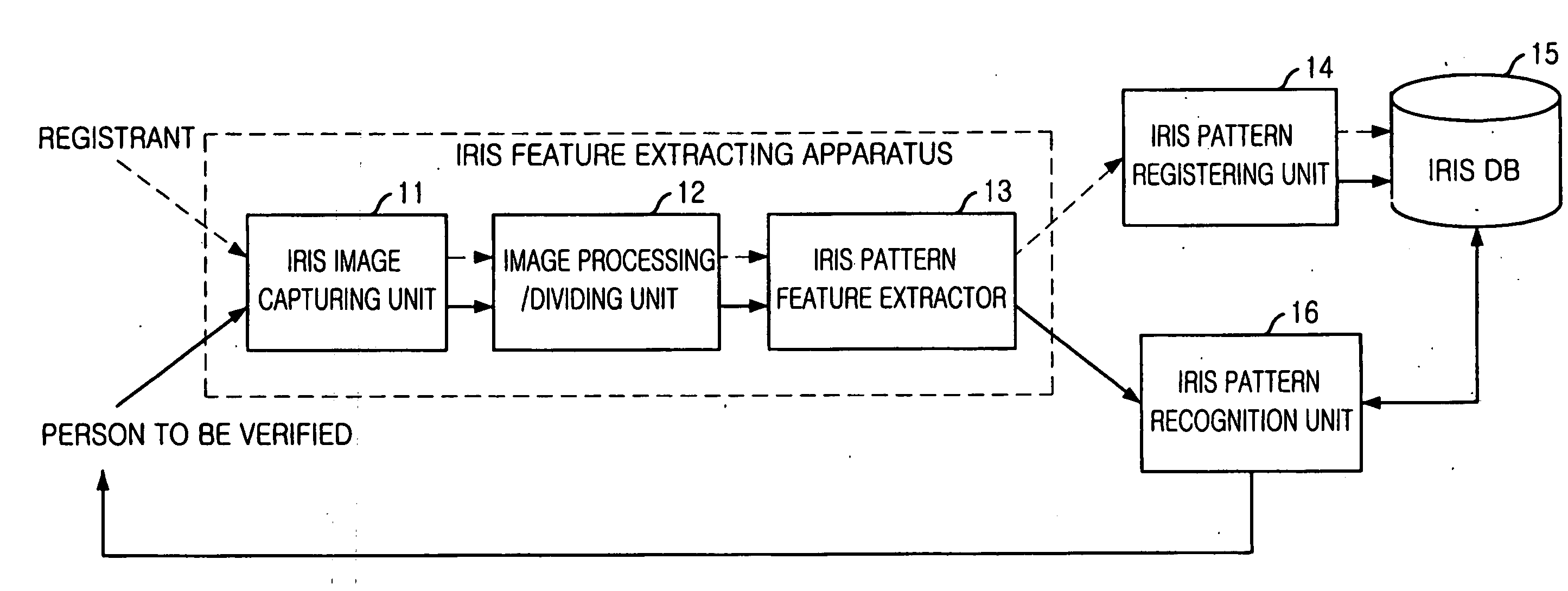

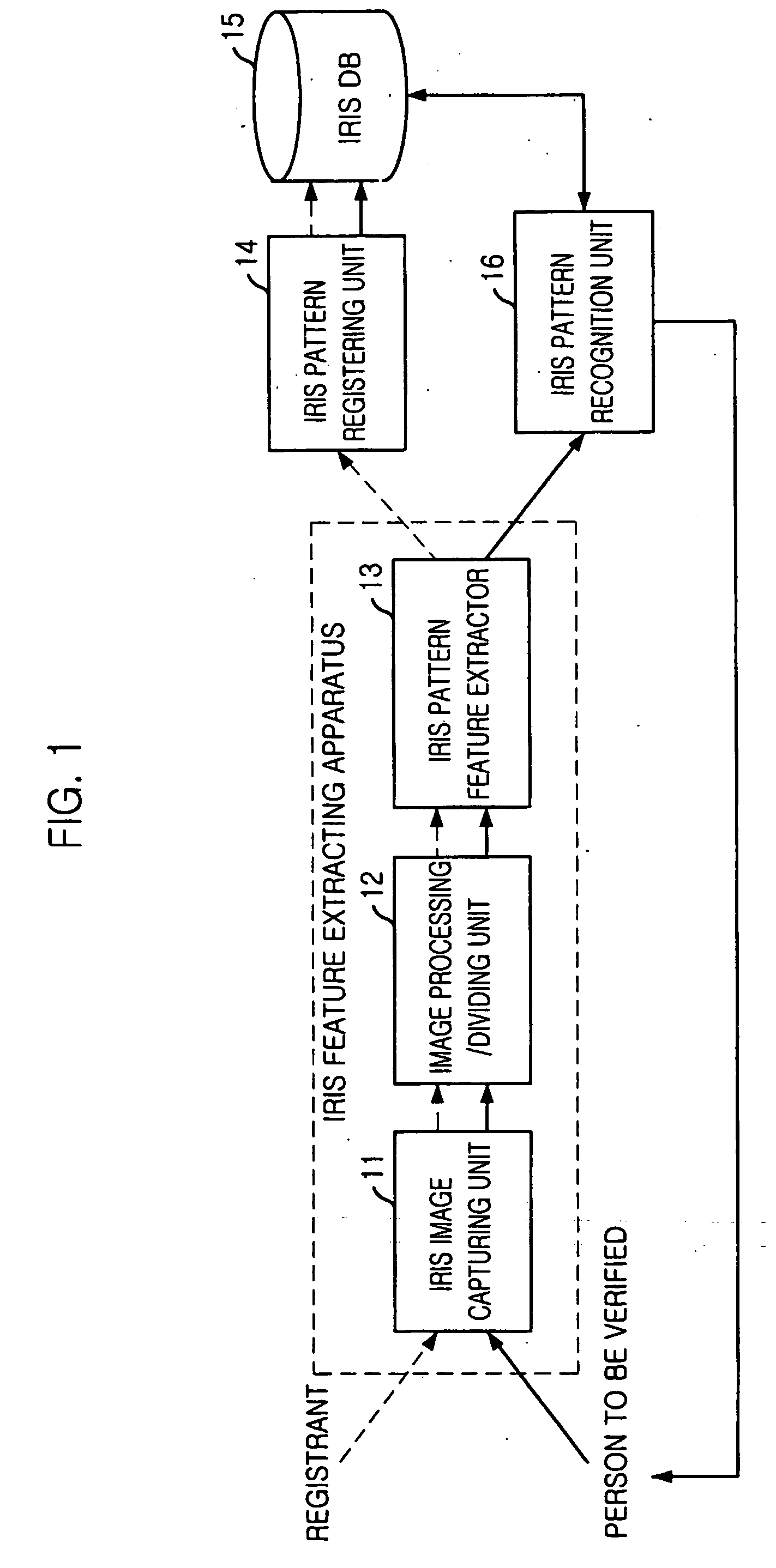

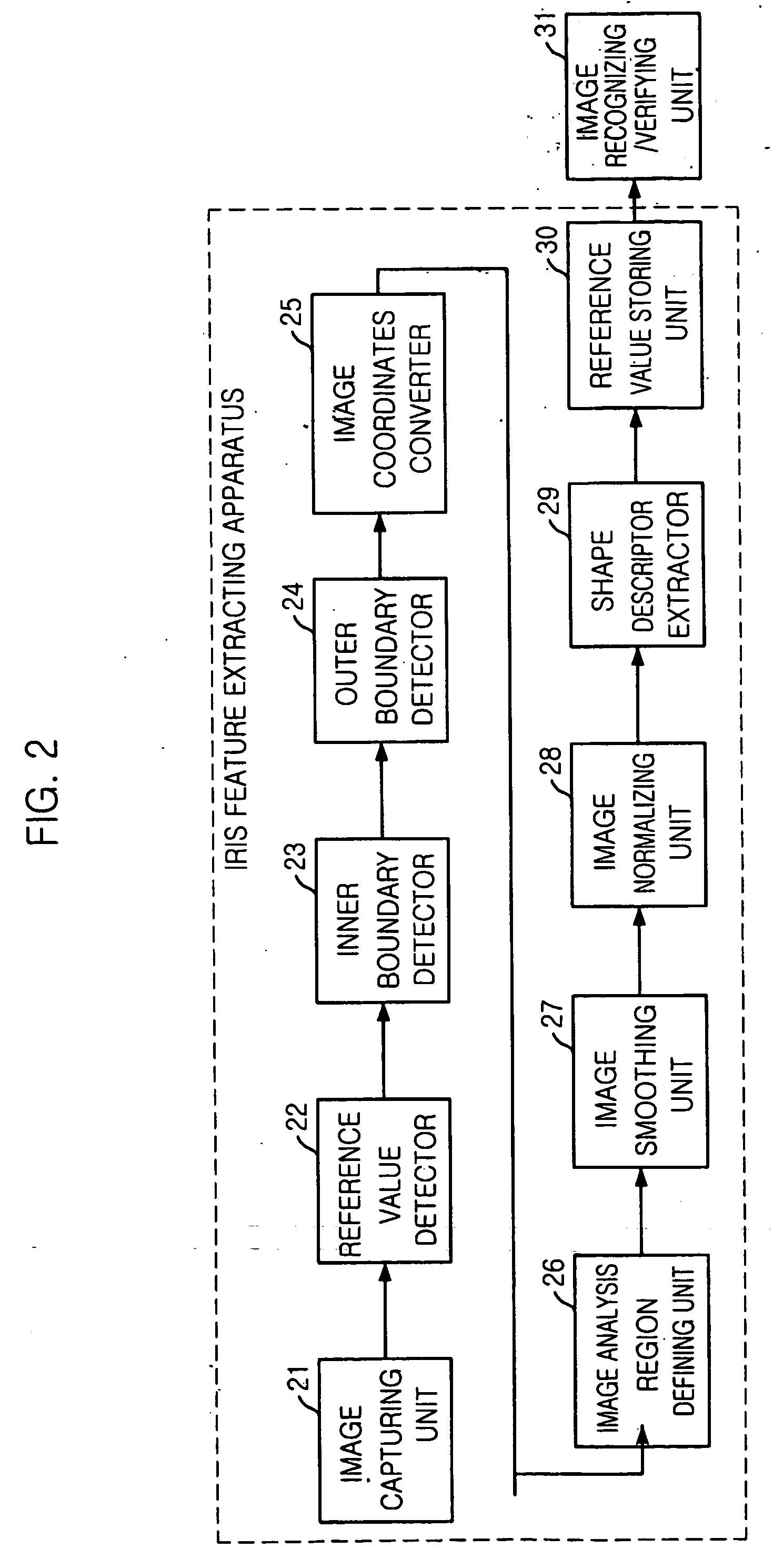

Pupil detection method and shape descriptor extraction method for a iris recognition, iris feature extraction apparatus and method, and iris recognition system and method using its

Provided are a pupil detection method and a shape descriptor extraction method for iris recognition, an iris feature extraction apparatus and method, and an iris recognition system and method using the same. The method for detecting a pupil for iris recognition includes the steps of: a) detecting light sources in the pupil from an eye image as two reference points; b) determining first boundary candidate points located between the iris and the pupil of the eye image, which cross over a straight line between the two reference points; c) determining second boundary candidate points located between the iris and the pupil of the eye image, which cross over a perpendicular bisector of a straight line between the first boundary candidate points; and d) determining a location and a size of the pupil by obtaining a radius of a circle and coordinates of a center of the circle based on a center candidate point, wherein the center candidate point is a center point of the perpendicular bisectors of the straight lines between neighboring boundary candidate points, to thereby detect the pupil.
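Step d) rests on a standard geometric fact: the perpendicular bisectors of chords between points on a circle meet at the circle's center. The sketch below shows that idea for three boundary points, using the closed-form circumcenter. It is an illustration of the geometry only; the function name `circle_from_three_points` is hypothetical, and the patent's handling of multiple candidate points and noise is not reproduced.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def circle_from_three_points(p1: Point, p2: Point, p3: Point
                             ) -> Tuple[Point, float]:
    """Return (center, radius) of the circle through three boundary points.

    The center is where the perpendicular bisectors of p1-p2 and p2-p3 meet.
    """
    ax, ay = p1
    bx, by = p2
    cx, cy = p3
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:
        raise ValueError("points are collinear; no unique circle")
    a2 = ax * ax + ay * ay
    b2 = bx * bx + by * by
    c2 = cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    radius = math.hypot(ax - ux, ay - uy)
    return (ux, uy), radius
```

Given boundary points `(1, 0)`, `(0, 1)`, and `(-1, 0)`, the function recovers a pupil centered at the origin with radius 1.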

Owner:JIRIS

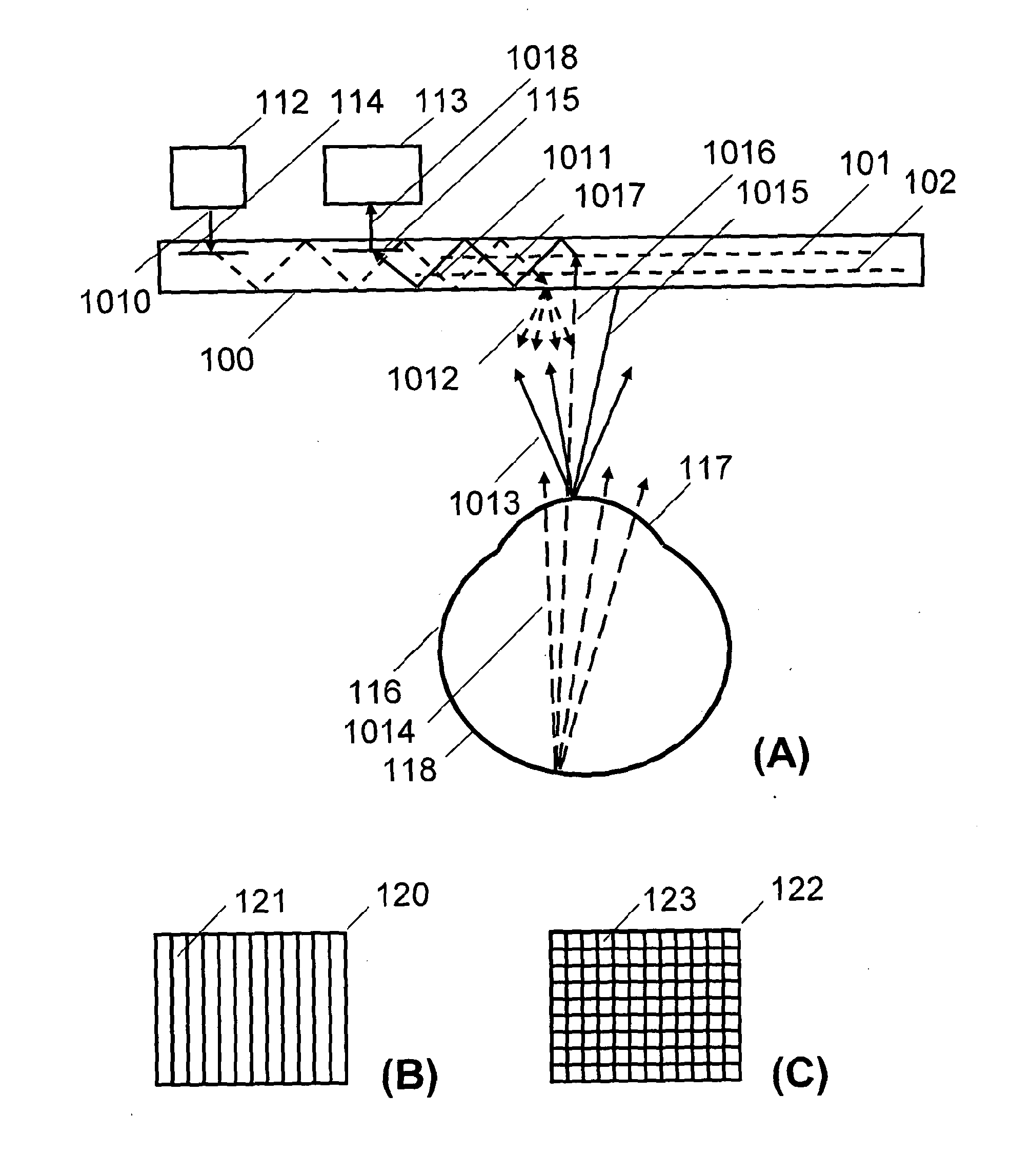

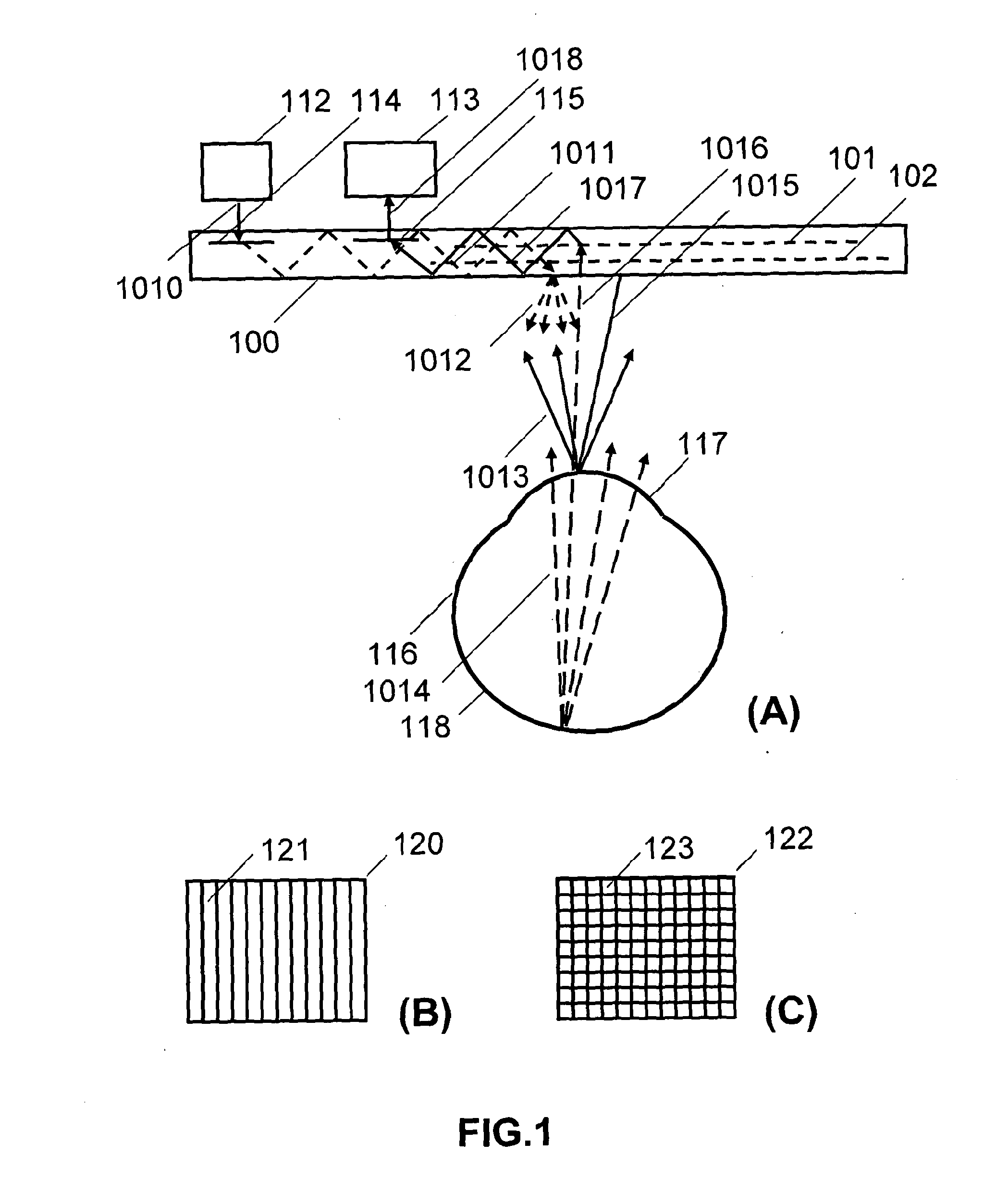

Apparatus for eye tracking

Active | US20150289762A1 | Large field of view | High transparency | Television system details | Acquiring/recognising eyes | Grating | Optical coupling

An eye tracker having a waveguide for propagating illumination light towards an eye and propagating image light reflected from at least one surface of an eye, a light source optically coupled to the waveguide, and a detector optically coupled to the waveguide. Disposed in the waveguide is at least one grating lamina for deflecting the illumination light towards the eye along a first waveguide path and deflecting the image light towards the detector along a second waveguide path.

Owner:DIGILENS

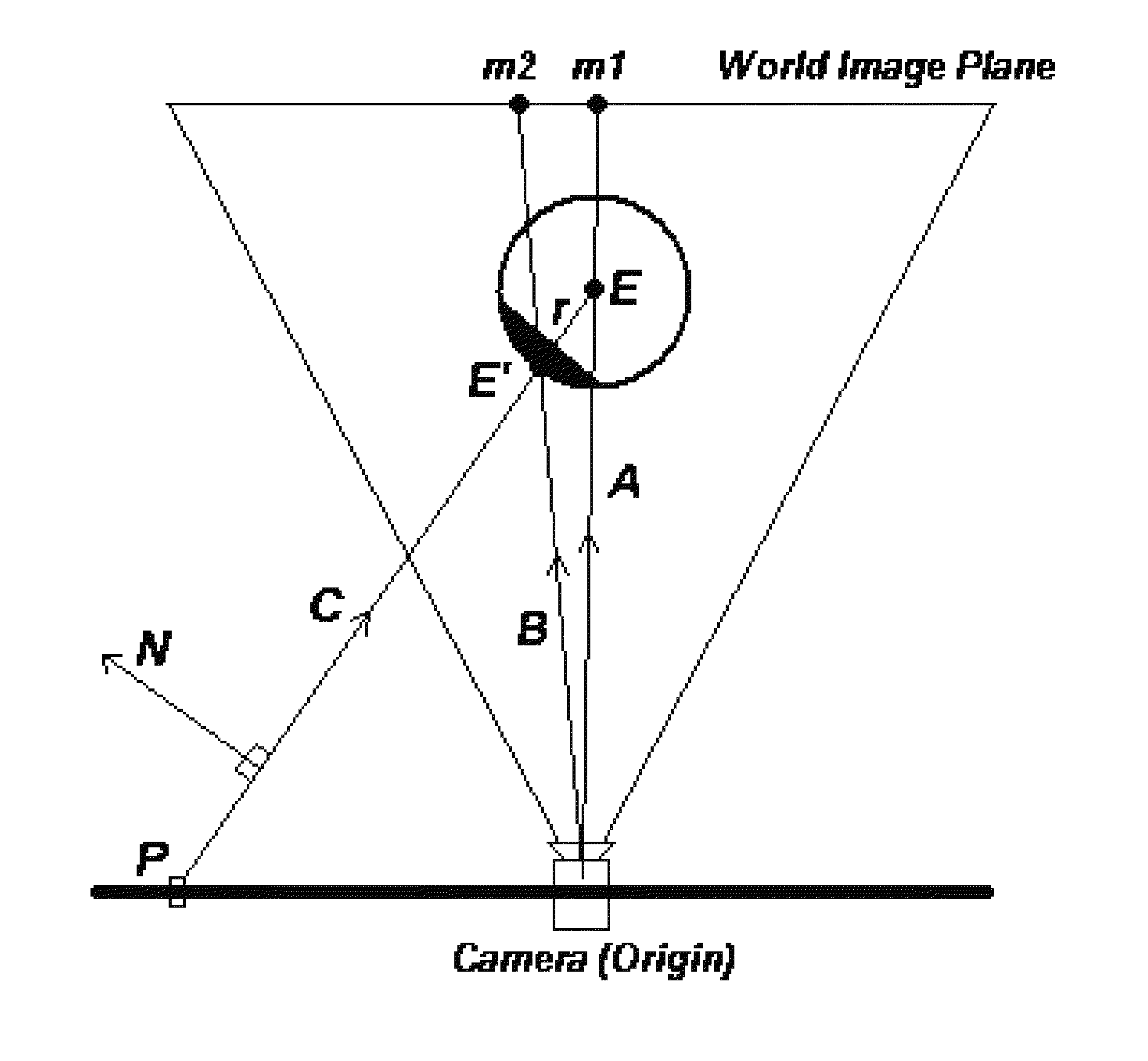

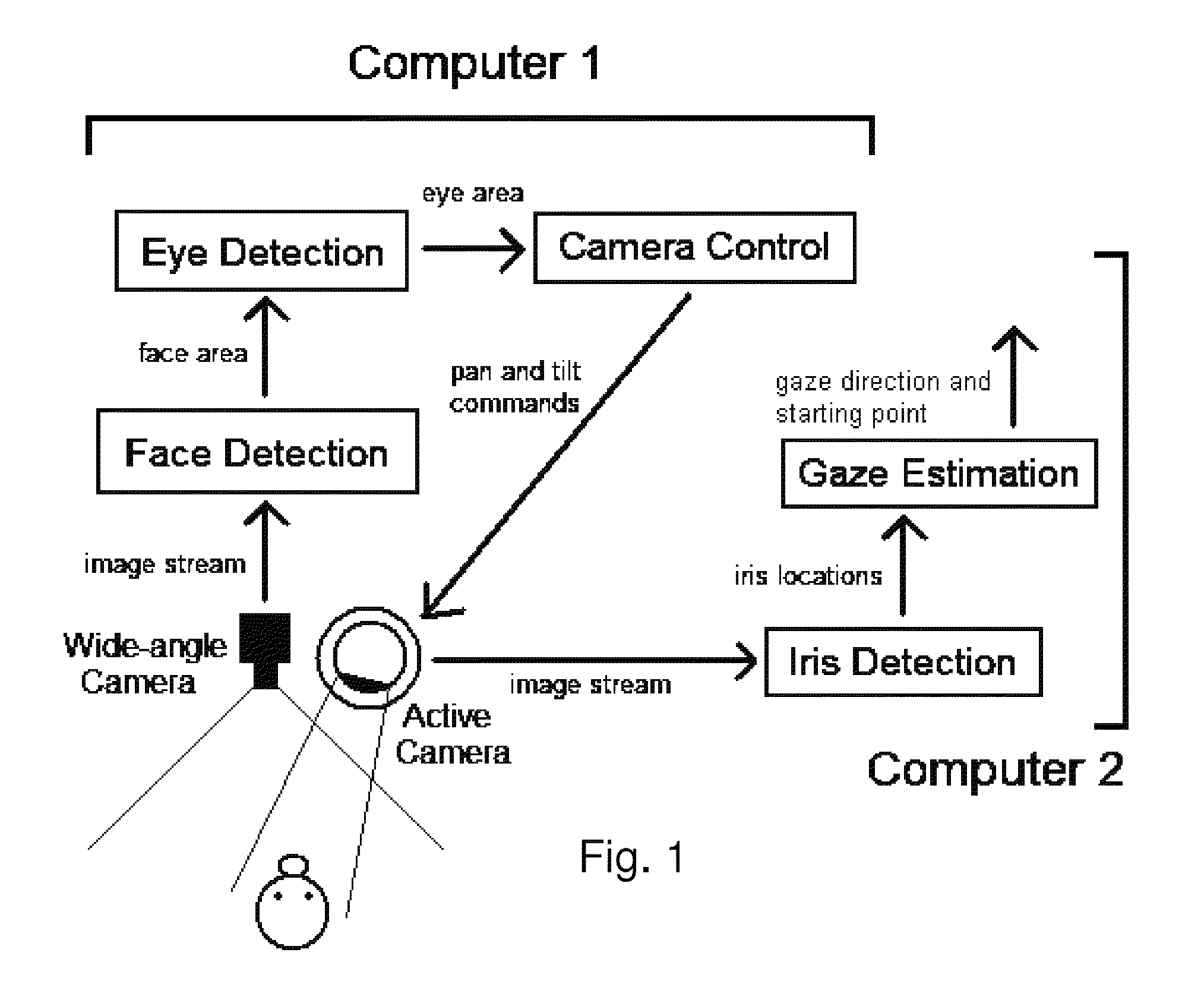

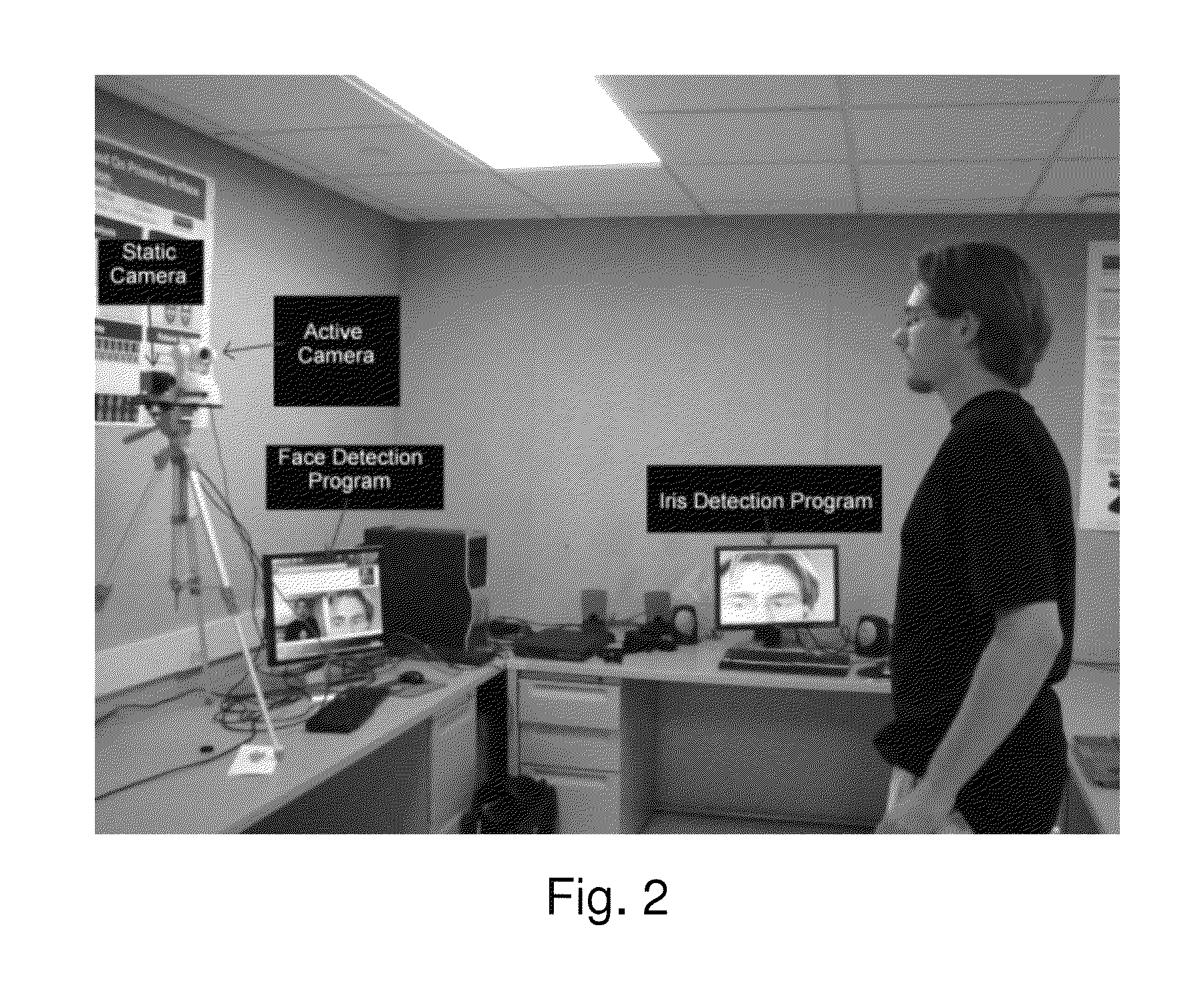

Real time eye tracking for human computer interaction

Active | US8885882B1 | Improve interactivity | More intelligent behavior | Image enhancement | Image analysis | Optical axis | Gaze directions

A gaze direction determining system and method is provided. A two-camera system detects the face from a fixed, wide-angle camera, estimates a rough location for the eye region using an eye detector based on topographic features, and directs another active pan-tilt-zoom camera to focus in on this eye region. An eye gaze estimation approach employs point-of-regard (PoG) tracking on a large viewing screen. To allow for greater head pose freedom, a calibration approach is provided to find the 3D eyeball location, eyeball radius, and fovea position. Both the iris center and iris contour points are mapped to the eyeball sphere (creating a 3D iris disk) to get the optical axis; the fovea is then rotated accordingly and the final visual-axis gaze direction is computed.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

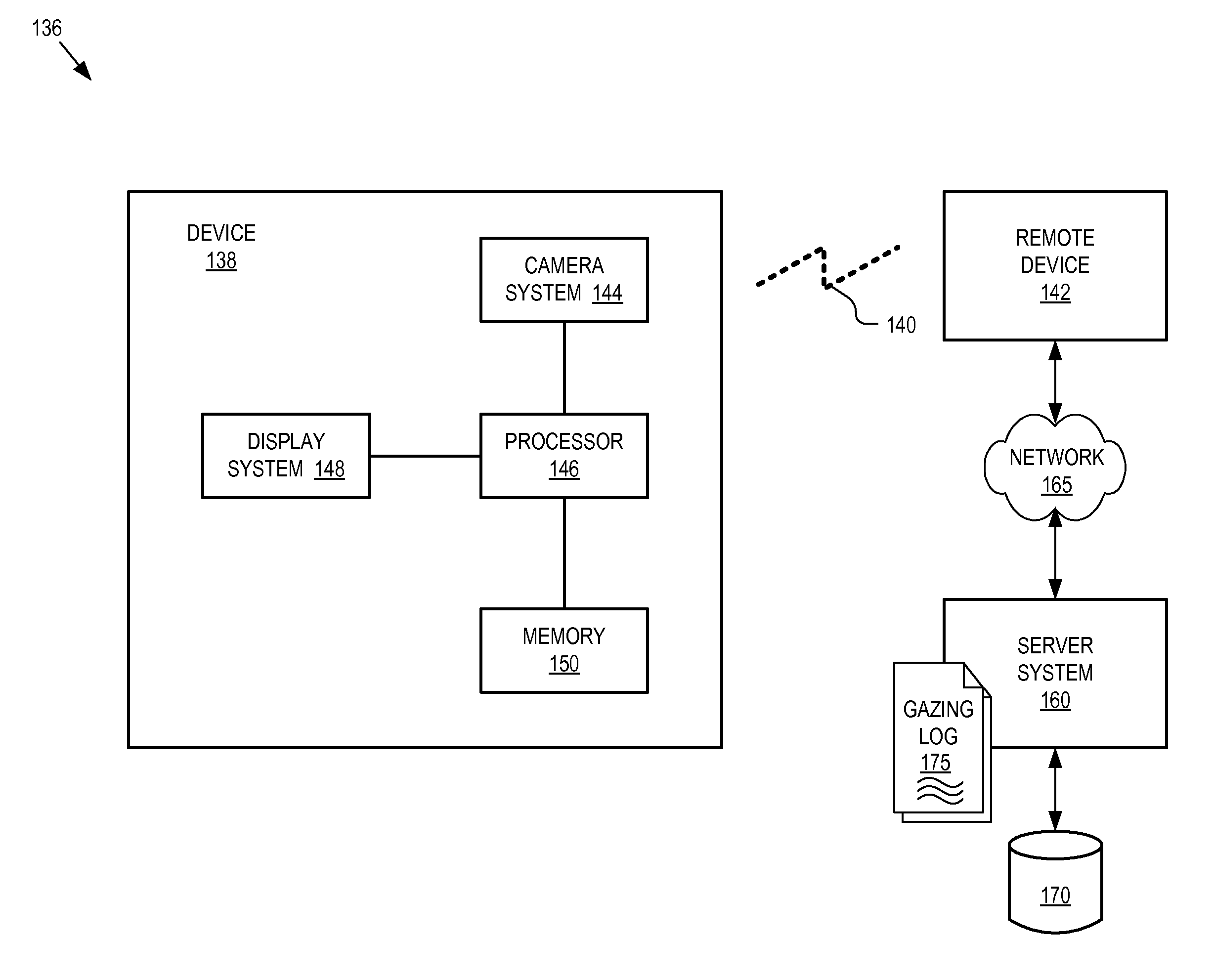

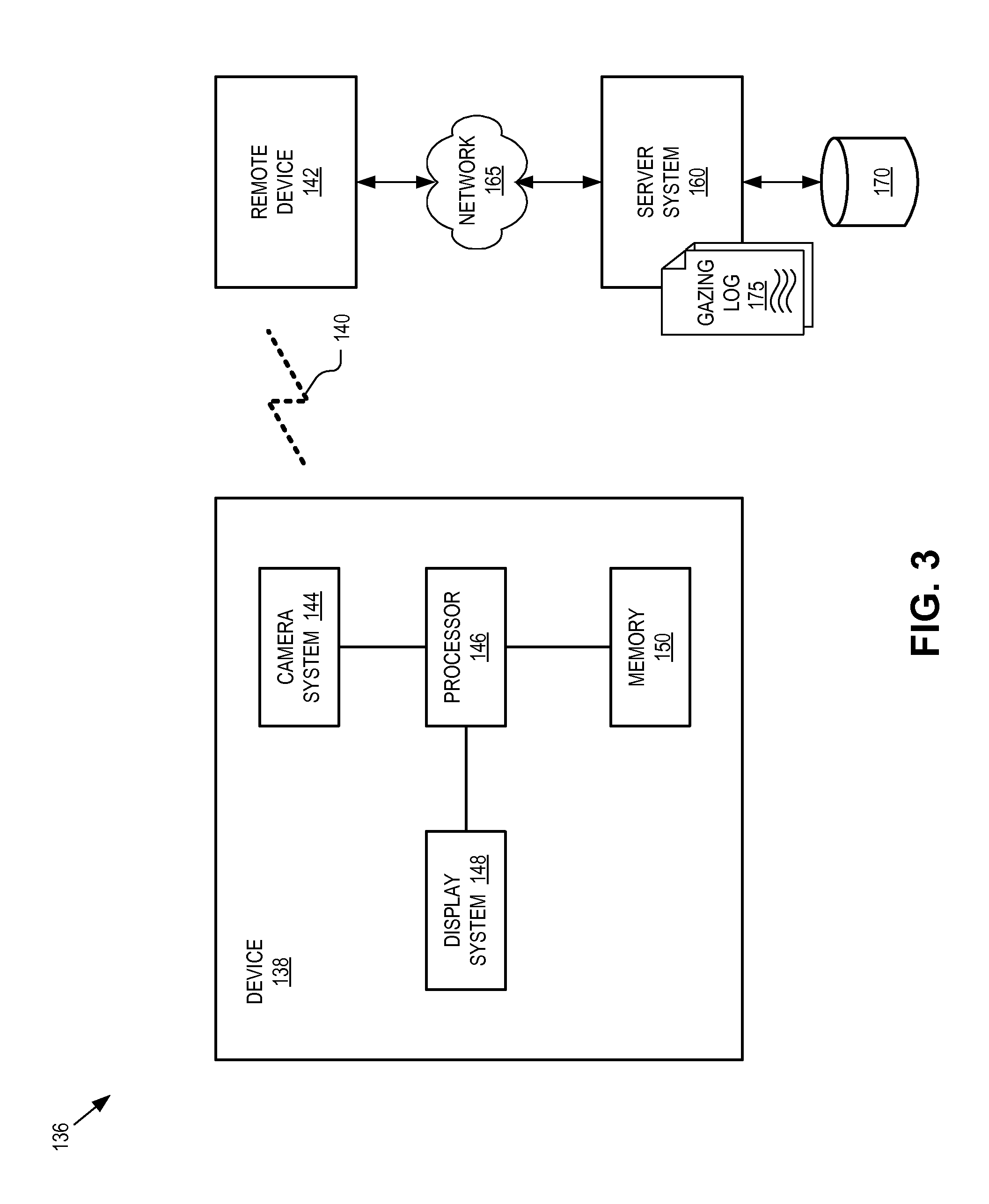

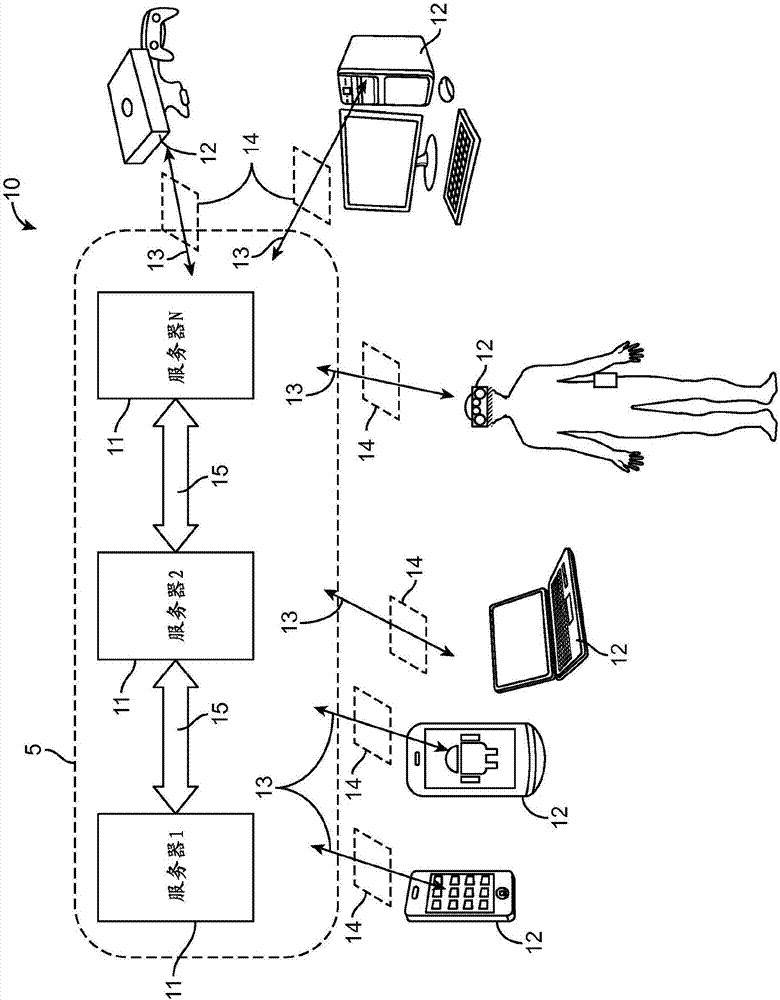

Gaze tracking system

A gaze tracking technique is implemented with a head mounted gaze tracking device that communicates with a server. The server receives scene images from the head mounted gaze tracking device which captures external scenes viewed by a user wearing the head mounted device. The server also receives gaze direction information from the head mounted gaze tracking device. The gaze direction information indicates where in the external scenes the user was gazing when viewing the external scenes. An image recognition algorithm is executed on the scene images to identify items within the external scenes viewed by the user. A gazing log tracking the identified items viewed by the user is generated.

Owner:GOOGLE LLC

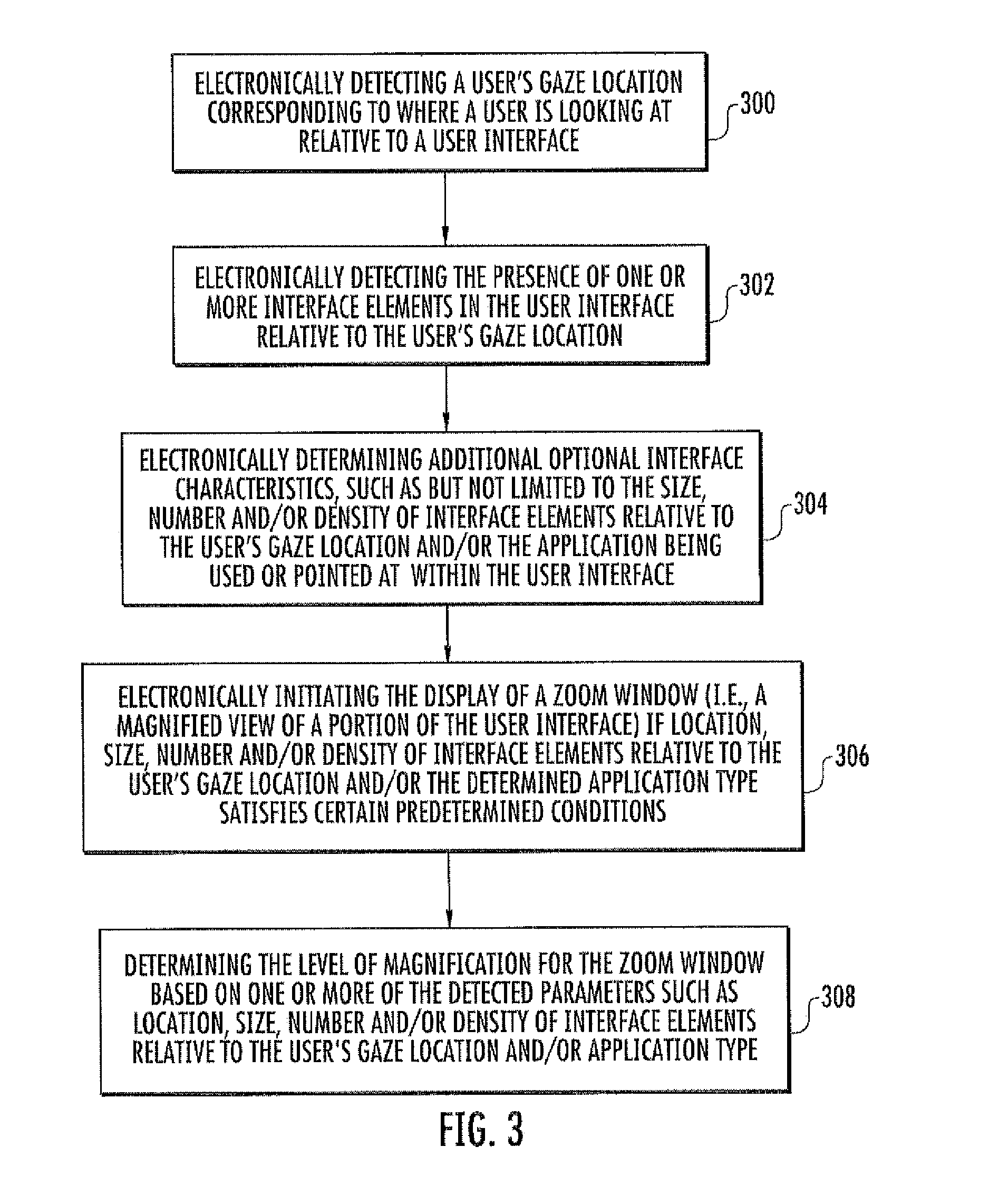

Calibration free, motion tolerent eye-gaze direction detector with contextually aware computer interaction and communication methods

Inactive | US20120105486A1 | Improved eye tracking system | Improve method | Acquiring/recognising eyes | Color television details | Human–computer interaction

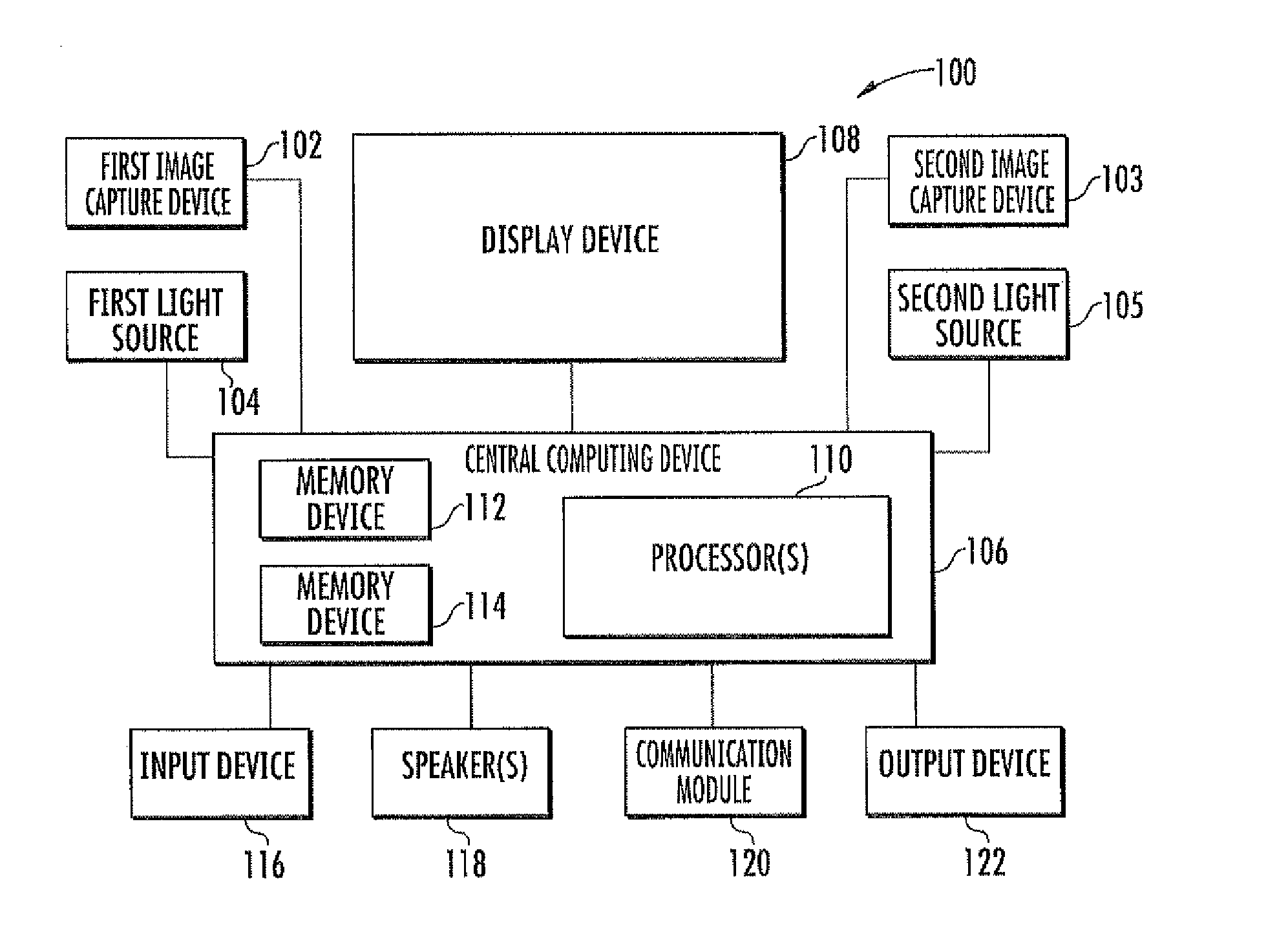

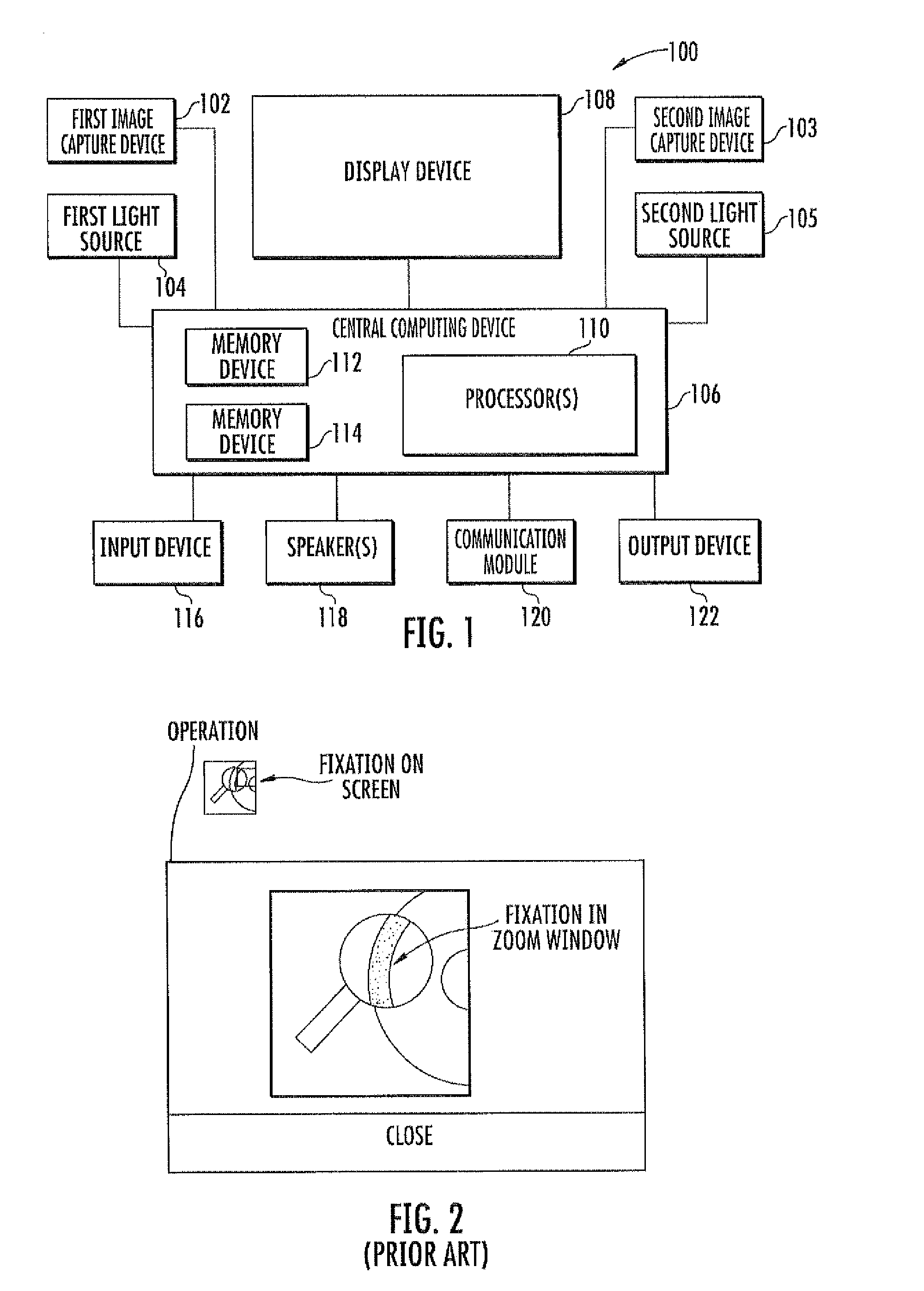

Eye tracking systems and methods include such exemplary features as a display device, at least one image capture device and a processing device. The display device displays a user interface including one or more interface elements to a user. The at least one image capture device detects a user's gaze location relative to the display device. The processing device electronically analyzes the location of user elements within the user interface relative to the user's gaze location and dynamically determines whether to initiate the display of a zoom window. The dynamic determination of whether to initiate display of the zoom window may further include analysis of the number, size and density of user elements within the user interface relative to the user's gaze location, the application type associated with the user interface or at the user's gaze location, and/or the structure of eye movements relative to the user interface.

Owner:DYNAVOX SYST

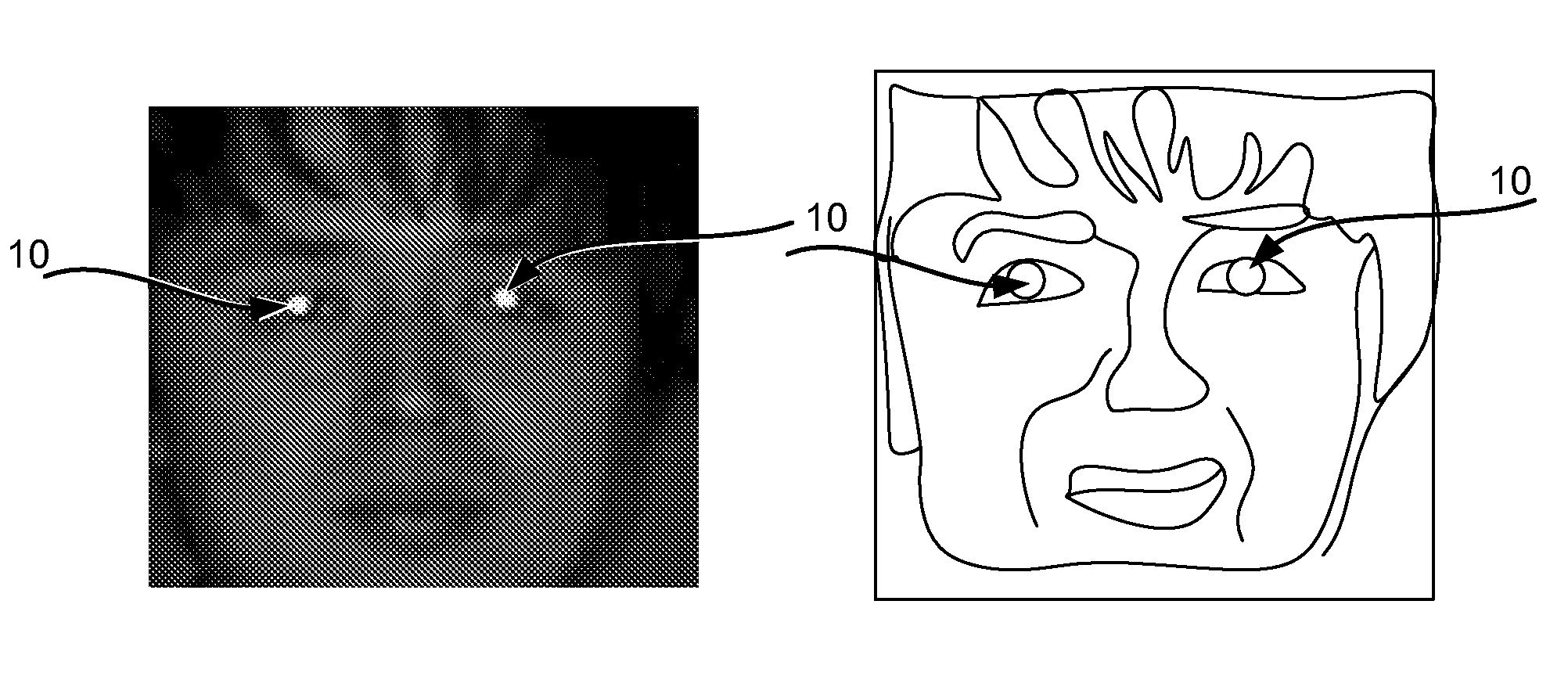

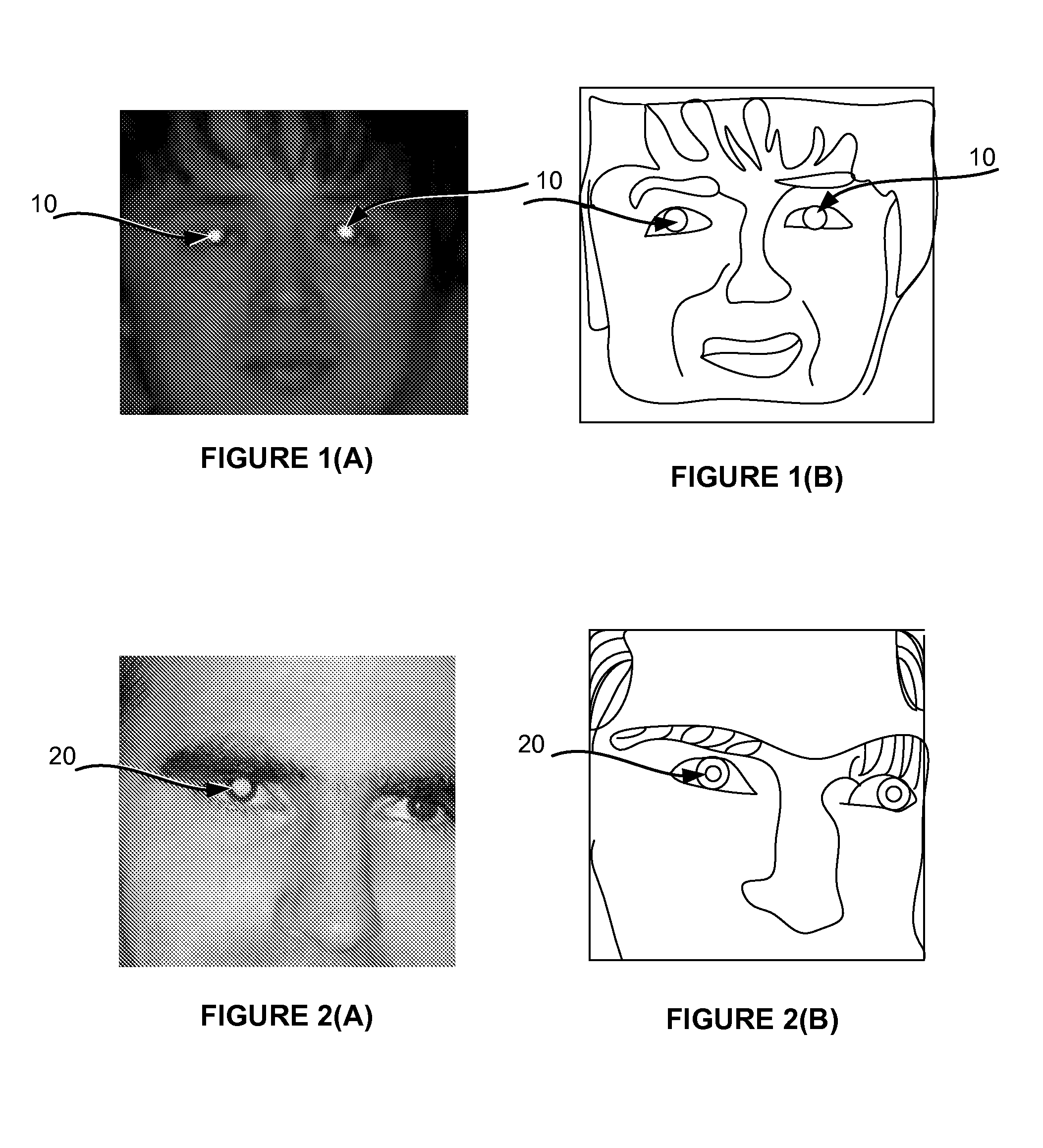

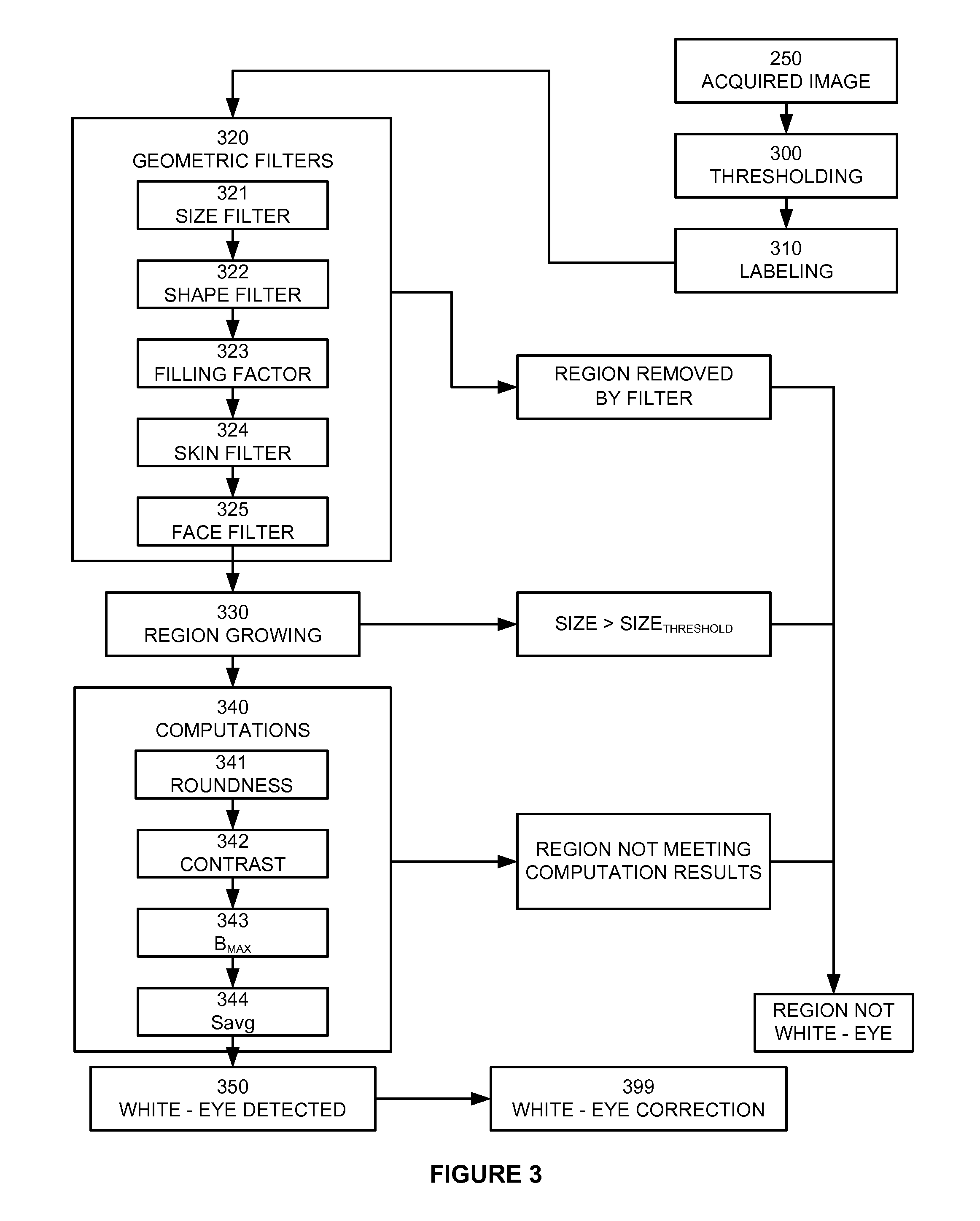

Automatic detection and correction of non-red eye flash defects

Owner:FOTONATION LTD

Method and apparatus for determining and analyzing a location of visual interest

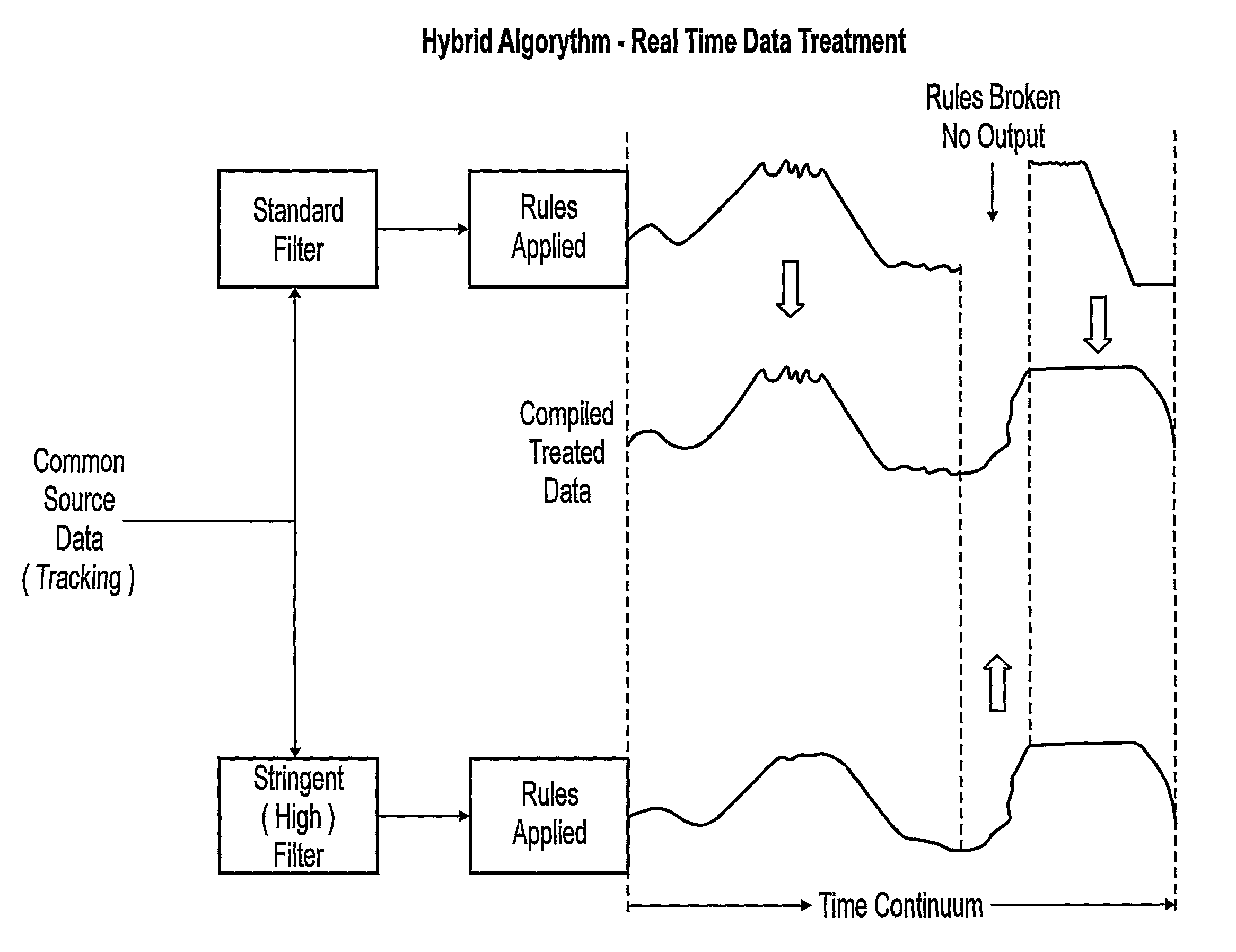

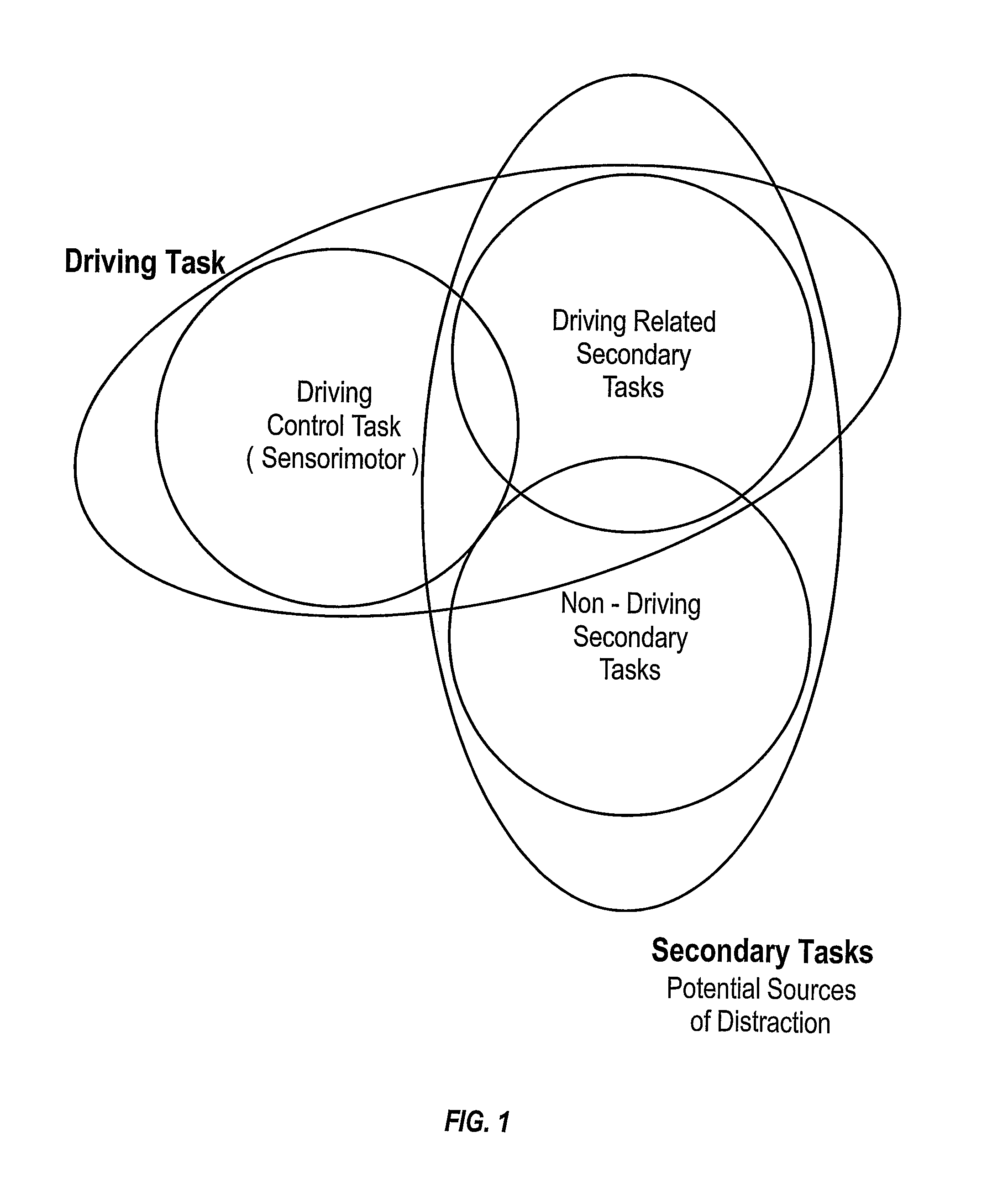

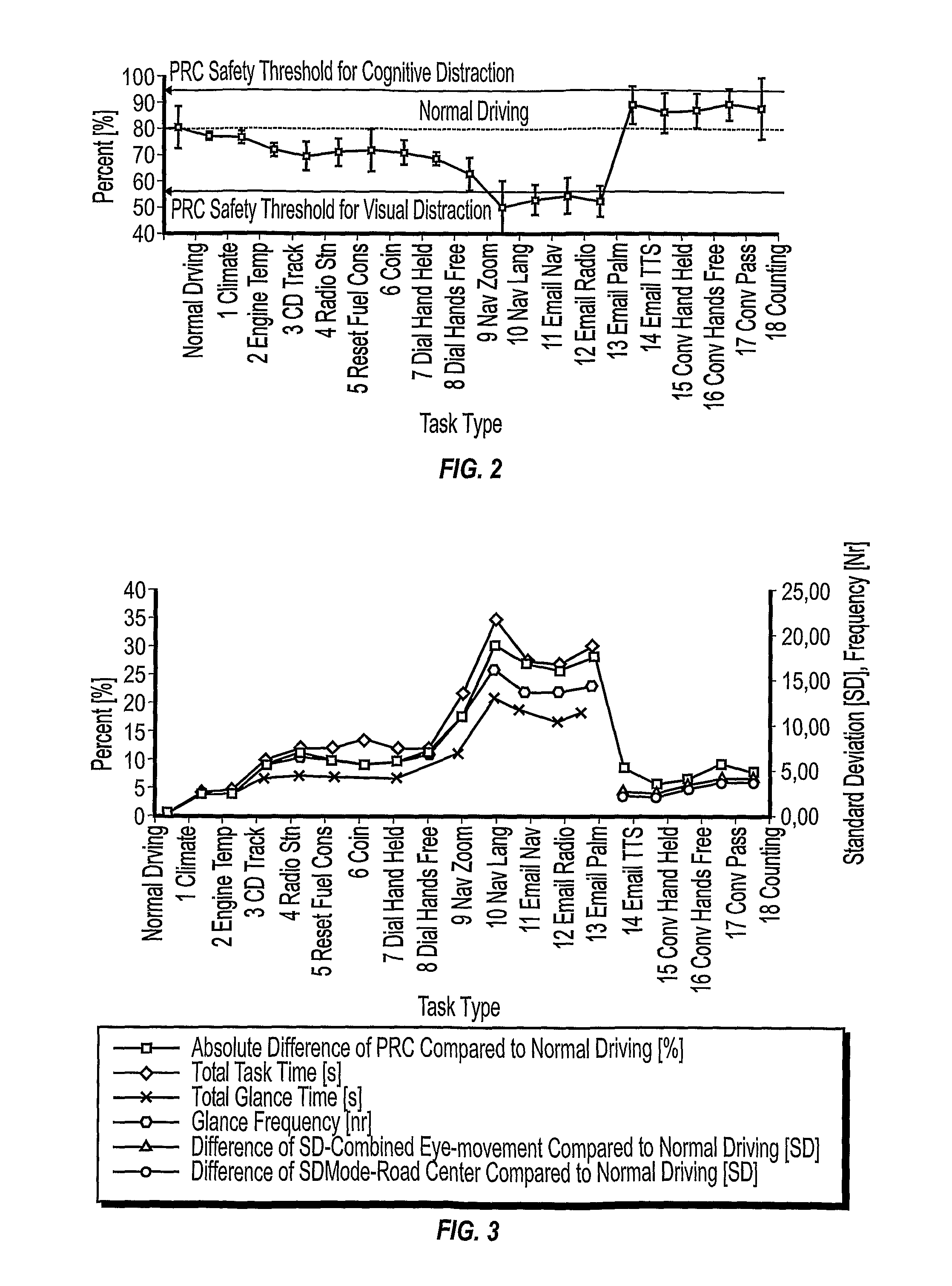

Inactive | US20100033333A1 | Effect of occurrence is minimized | Minimize impact | Electric devices | Acquiring/recognising eyes | Pattern recognition | Driver/operator

A method of analyzing data based on the physiological orientation of a driver is provided. Data descriptive of a driver's gaze direction is processed, and criteria defining a location of driver interest are determined. Based on the determined criteria, gaze-direction instances are classified as either on-location or off-location. The classified instances can then be used for further analysis, generally relating to times of elevated driver workload and not driver drowsiness. The classified instances are transformed into one of two binary values (e.g., 1 and 0) representative of whether the respective classified instance is on or off location. The use of binary values makes processing and analysis of the data faster and more efficient. Furthermore, classification of at least some of the off-location gaze-direction instances can be inferred from the failure to meet the determined criteria for being classified as an on-location driver gaze-direction instance.
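The on-location/off-location classification can be sketched in a few lines. The abstract does not say what shape the location criteria take, so this example assumes a circular region in (yaw, pitch) degrees; the function names and the use of `None` for a missing sample are also hypothetical. Samples that fail the criterion, including missing ones, are treated as off-location, which mirrors the inference described in the last sentence of the abstract.

```python
import math
from typing import List, Optional, Tuple

Gaze = Tuple[float, float]  # (yaw_deg, pitch_deg)


def classify_gaze(samples: List[Optional[Gaze]],
                  center: Gaze,
                  radius_deg: float) -> List[int]:
    """Map each gaze sample to 1 (on-location) or 0 (off-location).

    Anything that does not meet the on-location criterion, including a
    missing sample (None), is classified as off-location.
    """
    result = []
    for sample in samples:
        on_location = (
            sample is not None
            and math.hypot(sample[0] - center[0],
                           sample[1] - center[1]) <= radius_deg
        )
        result.append(1 if on_location else 0)
    return result


def on_location_fraction(flags: List[int]) -> float:
    """Share of samples classified as on-location (0.0 for empty input)."""
    return sum(flags) / len(flags) if flags else 0.0
```

Because the output is a plain list of 0s and 1s, later analysis such as the fraction of time spent on-location reduces to simple sums.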

Owner:VOLVO LASTVAGNAR AB

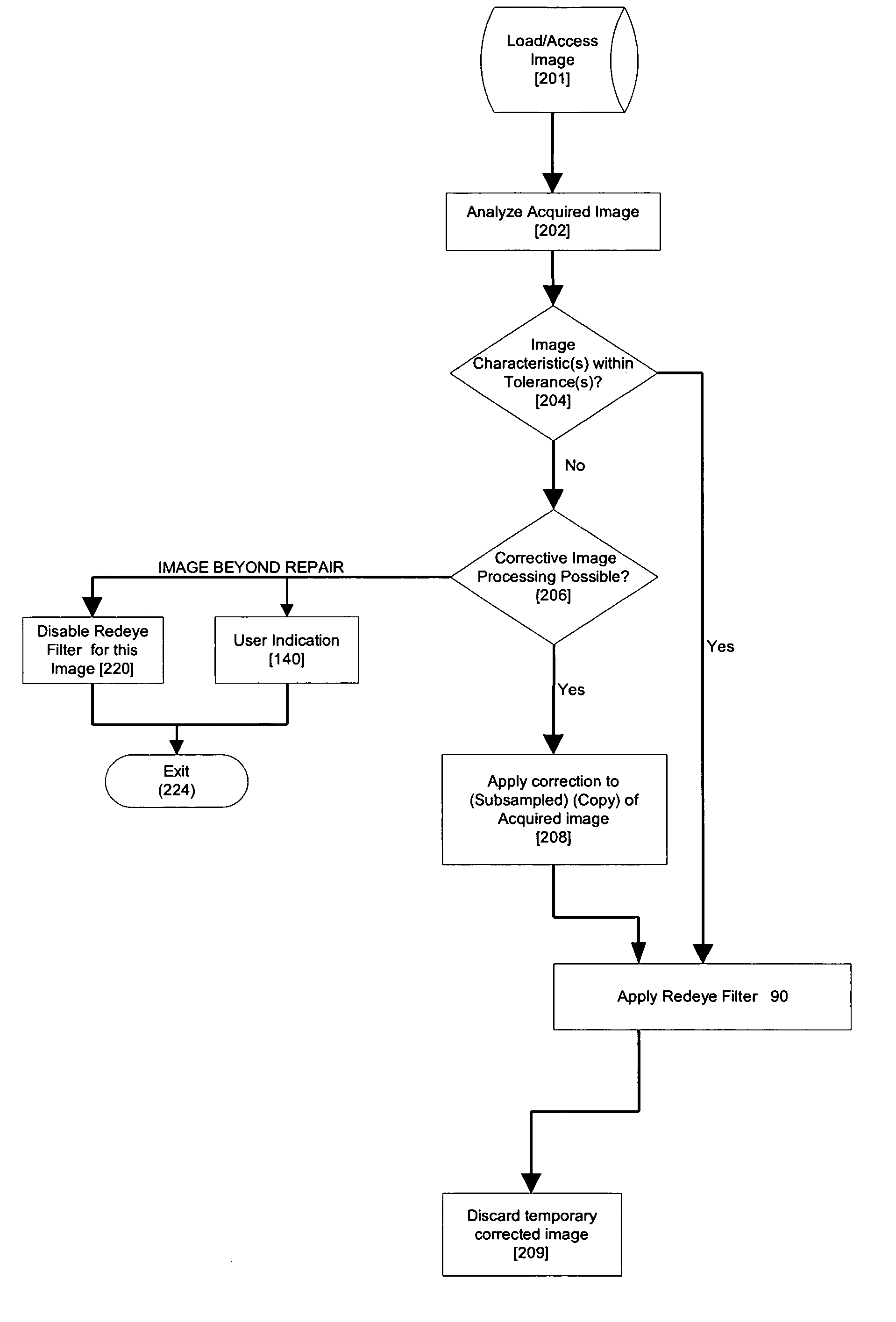

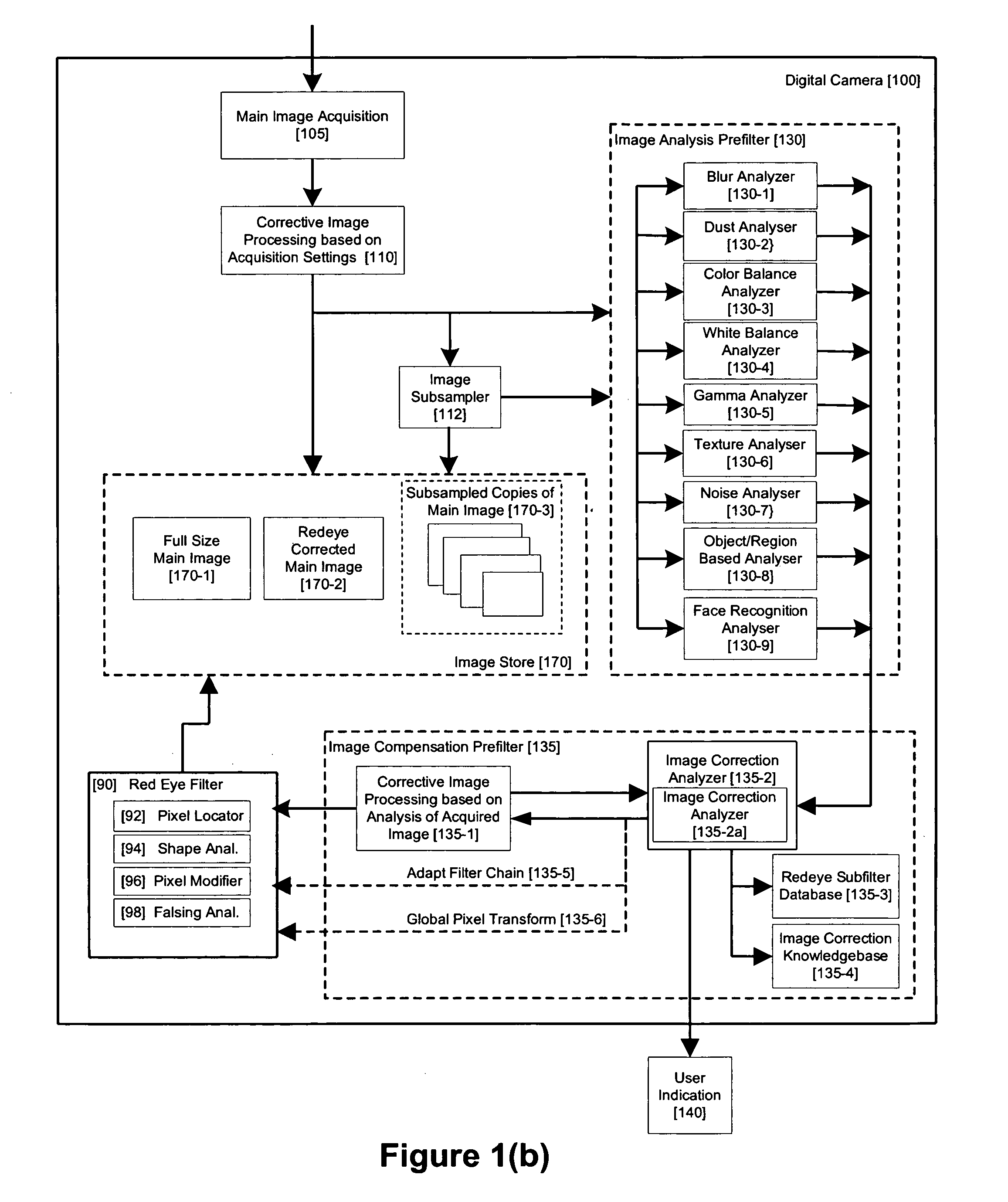

Method and apparatus for red-eye detection in an acquired digital image

Active | US20060120599A1 | Reduce false alarm rate | Increase success rate | Image enhancement | Image analysis | Imaging quality | Digital image

A method for red-eye detection in an acquired digital image comprises acquiring a first image and analyzing the first acquired image to provide a plurality of characteristics indicative of image quality. The process then determines if one or more corrective processes can be beneficially applied to the first acquired image according to the characteristics. Any such corrective processes are then applied to the first acquired image. Red-eye defects are then detected in a second acquired image using the corrected first acquired image. Defect detection can comprise applying a chain of one or more red-eye filters to the first acquired image. In this case, prior to the detecting step, it is determined if the red-eye filter chain can be adapted in accordance with the plurality of characteristics; and the red-eye filter is adapted accordingly.

Owner:FOTONATION LTD

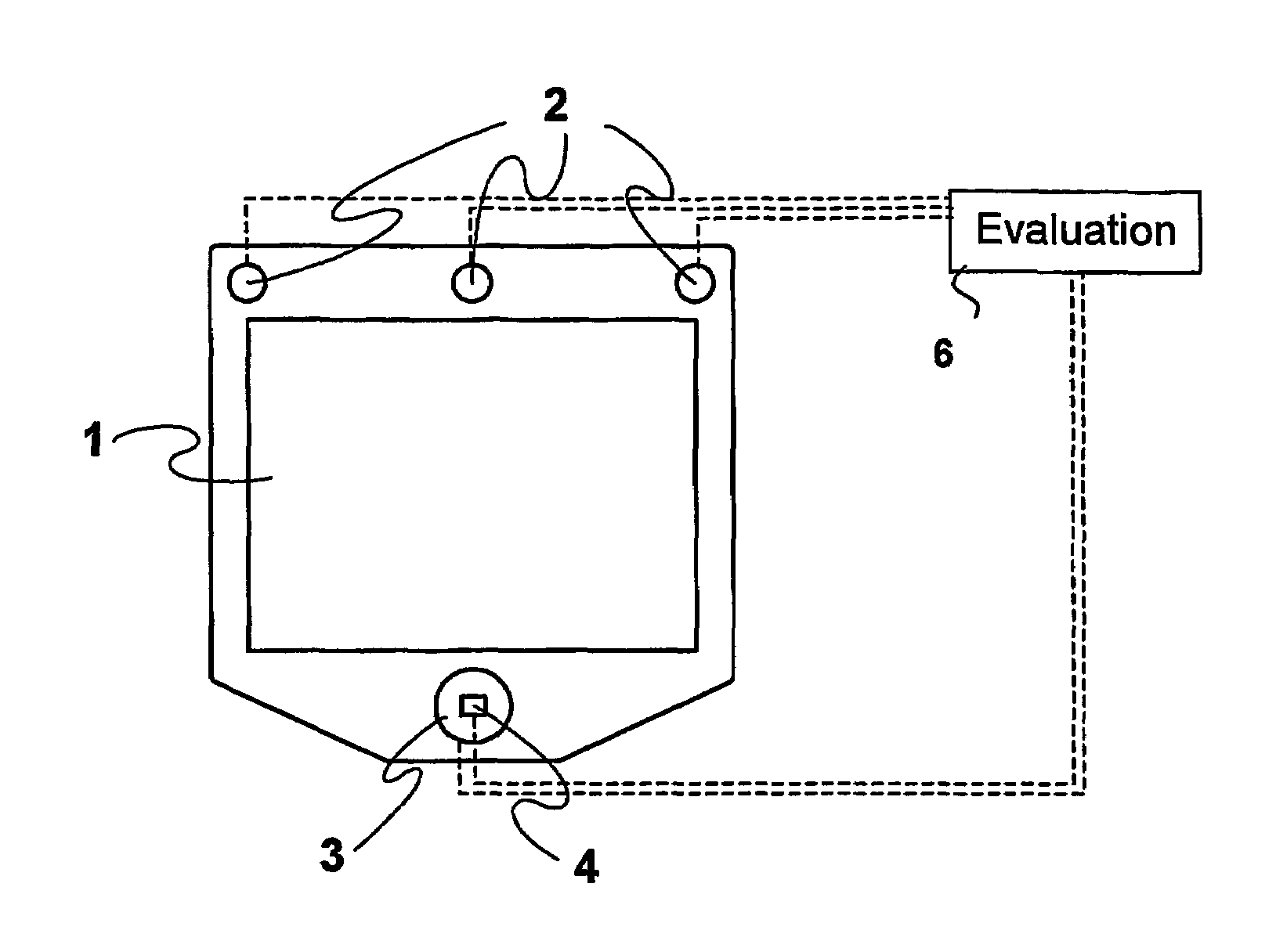

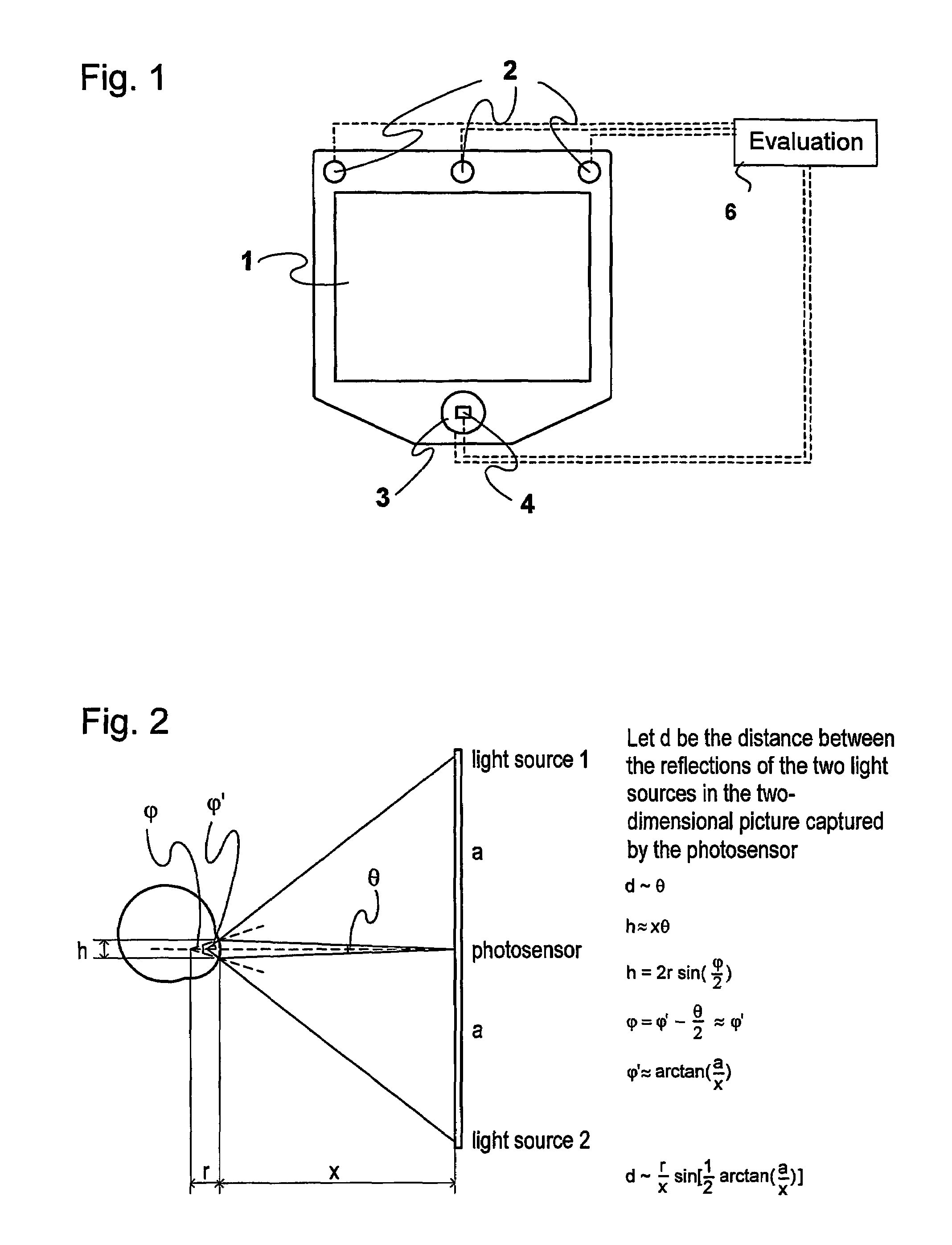

Method and installation for detecting and following an eye and the gaze direction thereof

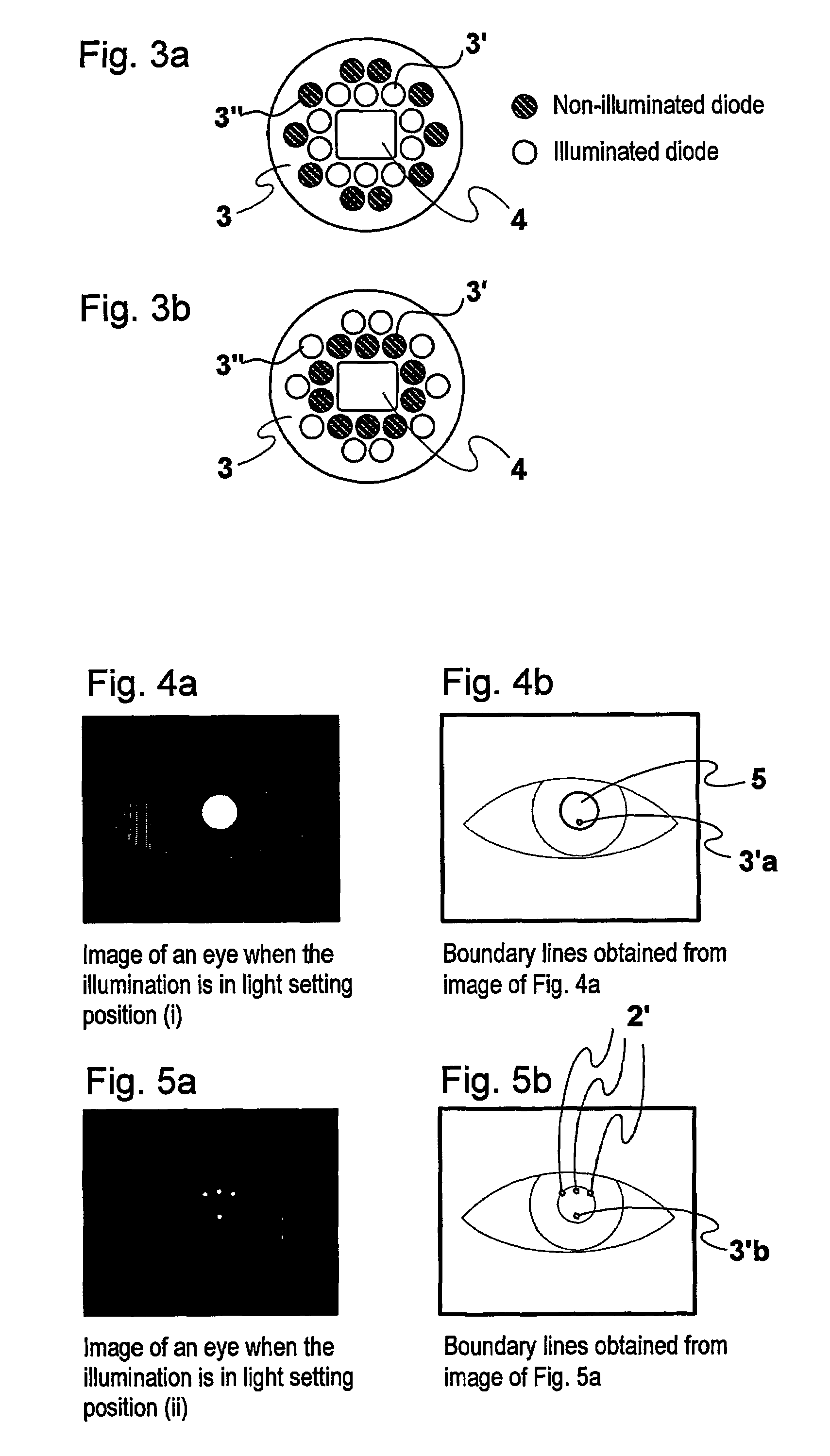

When detecting the position and gaze direction of eyes, a photo sensor (1) and light sources (2, 3) placed around a display (1) and a calculation and control unit (6) are used. One of the light sources is placed around the sensor and includes inner and outer elements (3′; 3″). When only the inner elements are illuminated, a strong bright eye effect in a captured image is obtained, resulting in a simple detection of the pupils and thereby a safe determination of gaze direction. When only the outer elements and the outer light sources (2) are illuminated, a determination of the distance of the eye from the photo sensor is made. After it has been possible to determine the pupils in an image, in the following captured images only those areas around the pupils are evaluated where the images of the eyes are located. Which of the eyes is the left eye and which is the right eye can be determined by following the images of the eyes and evaluating the positions thereof in successively captured images.

Owner:TOBII TECH AB

Systems and methods for high-resolution gaze tracking

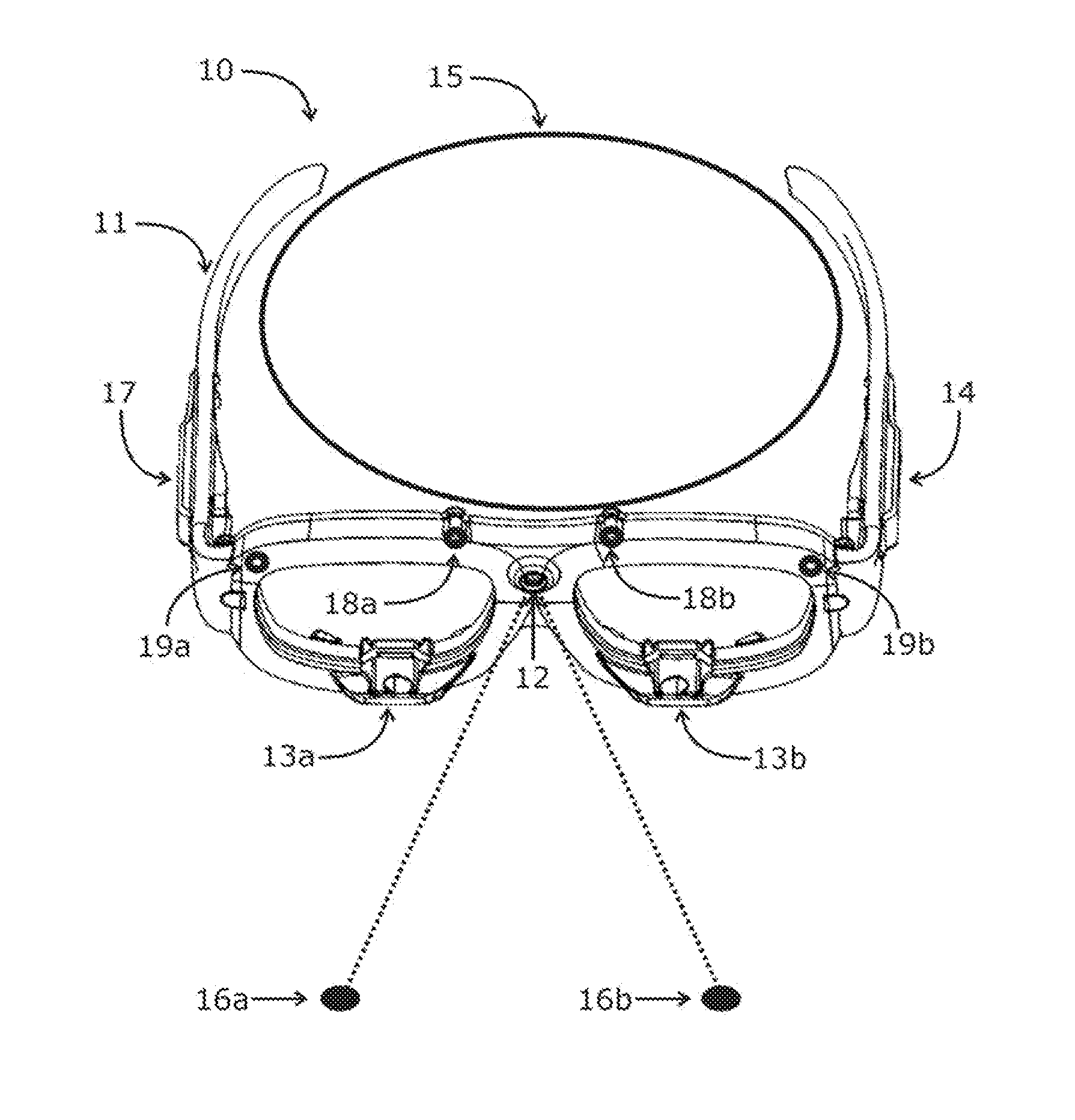

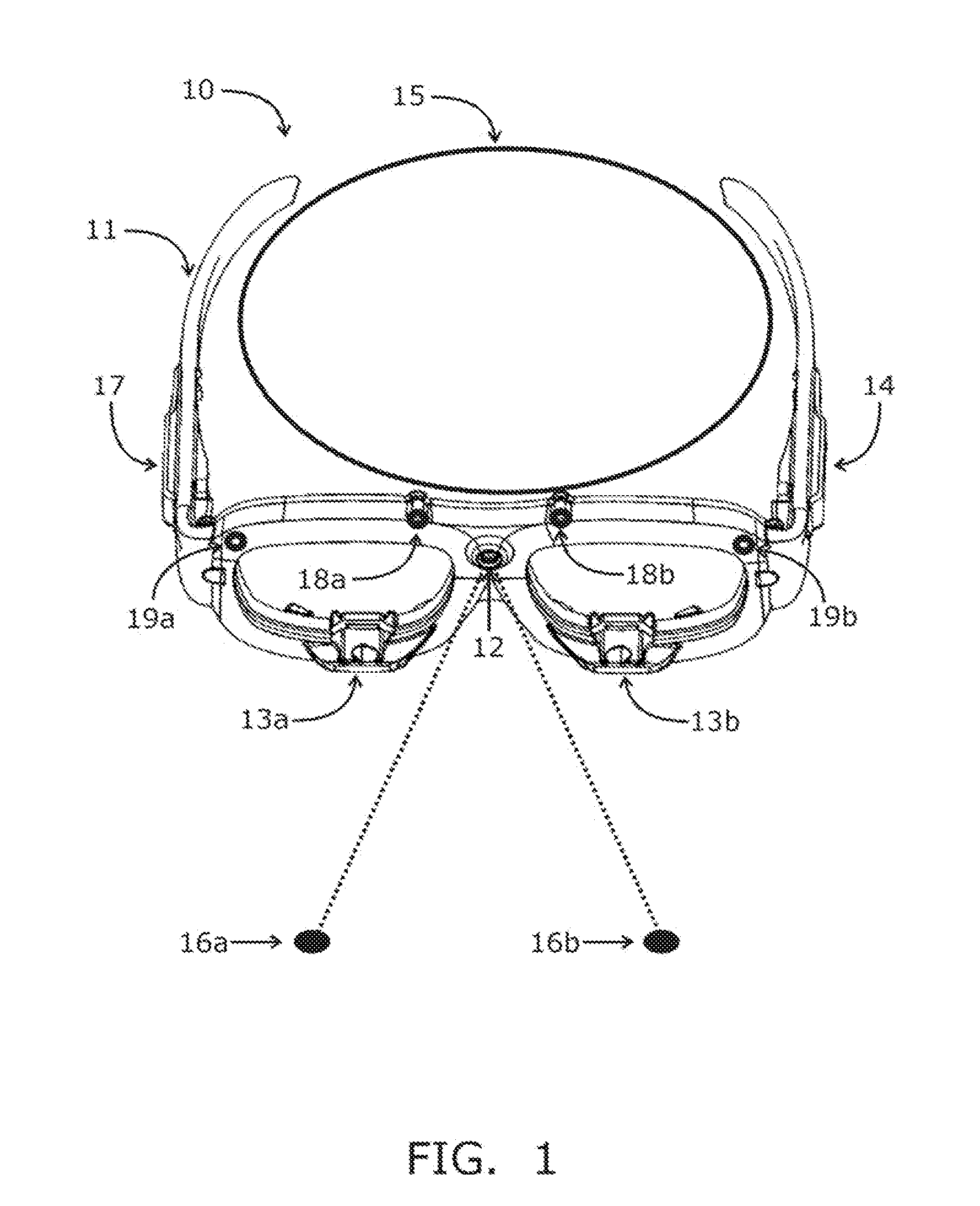

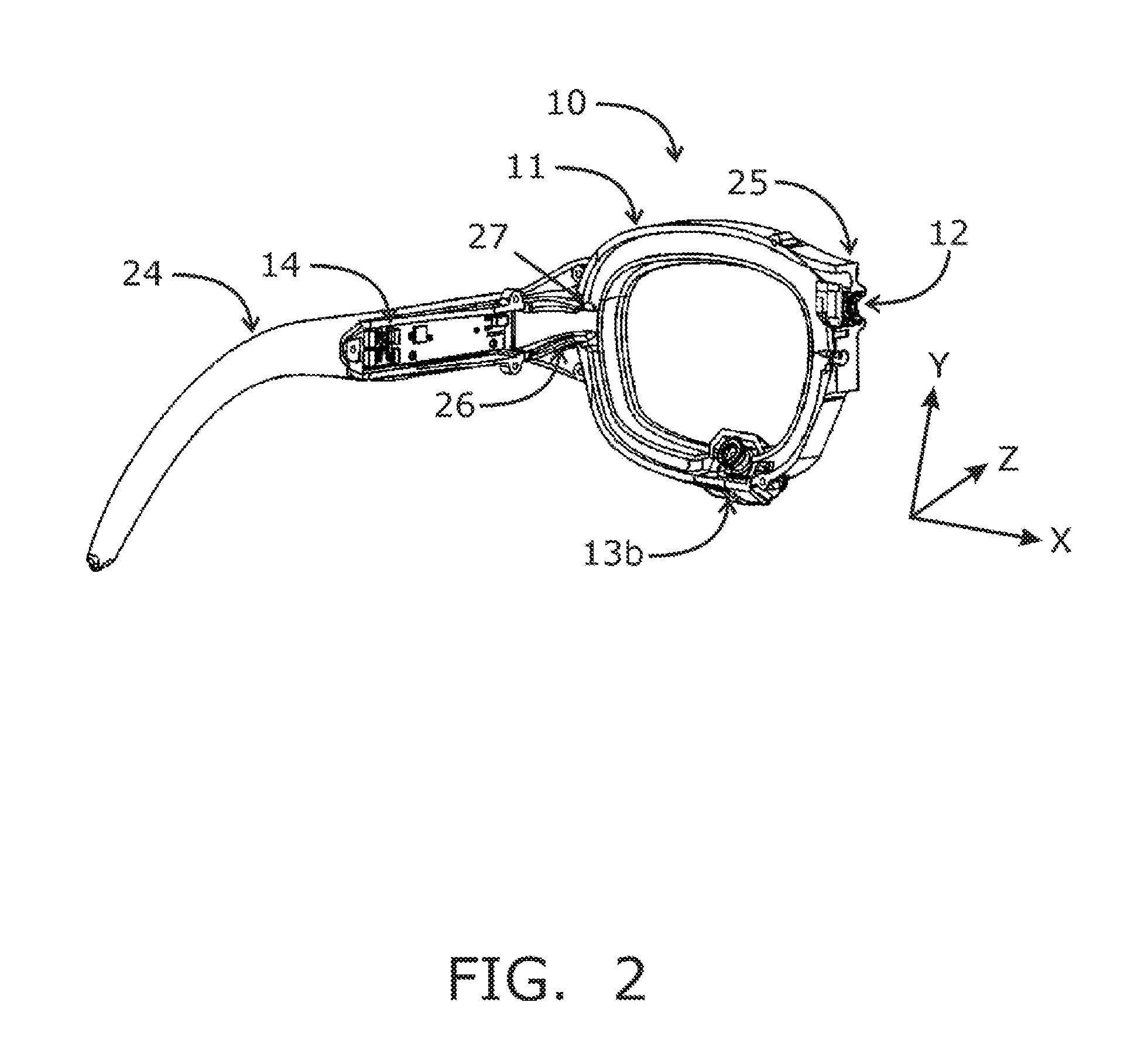

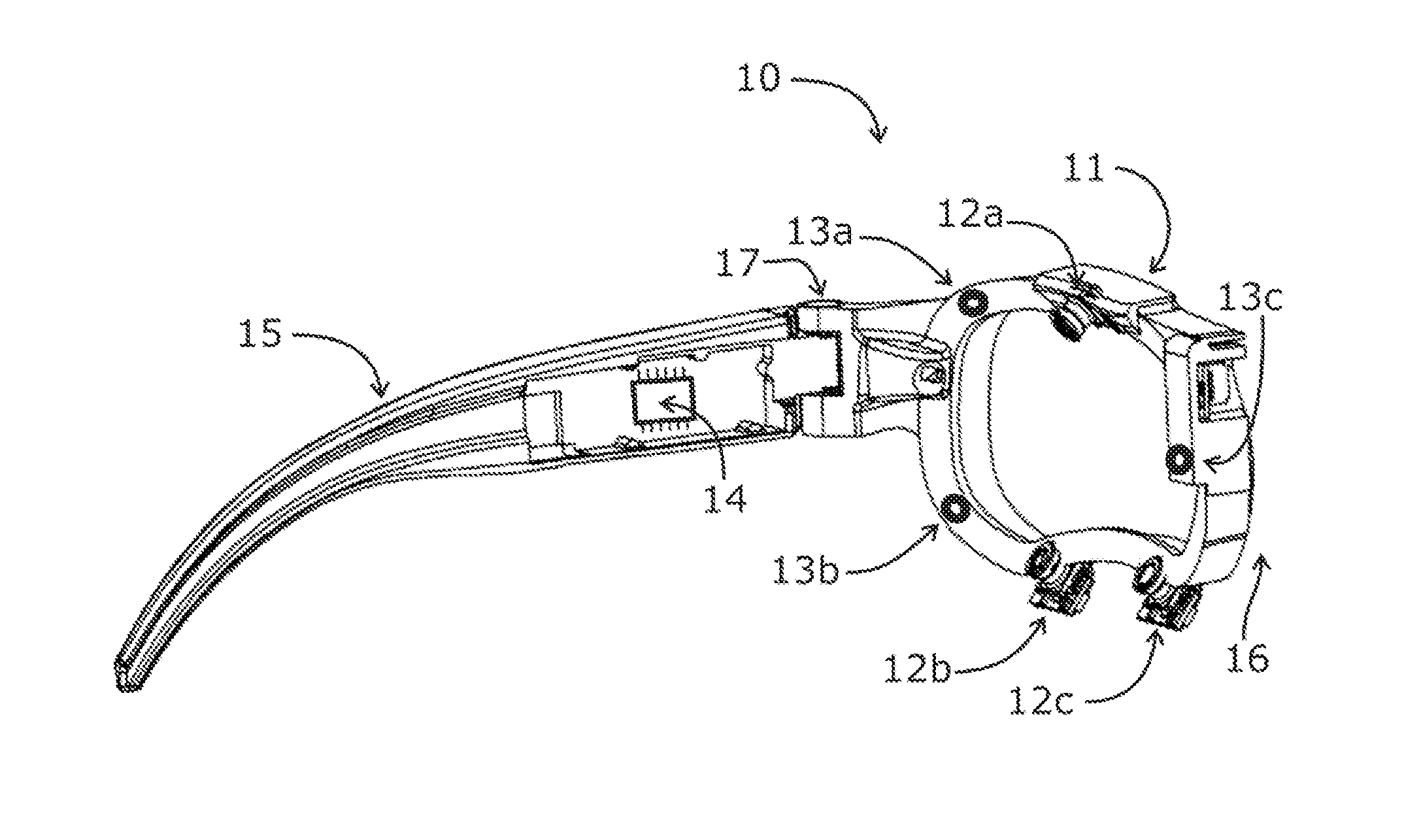

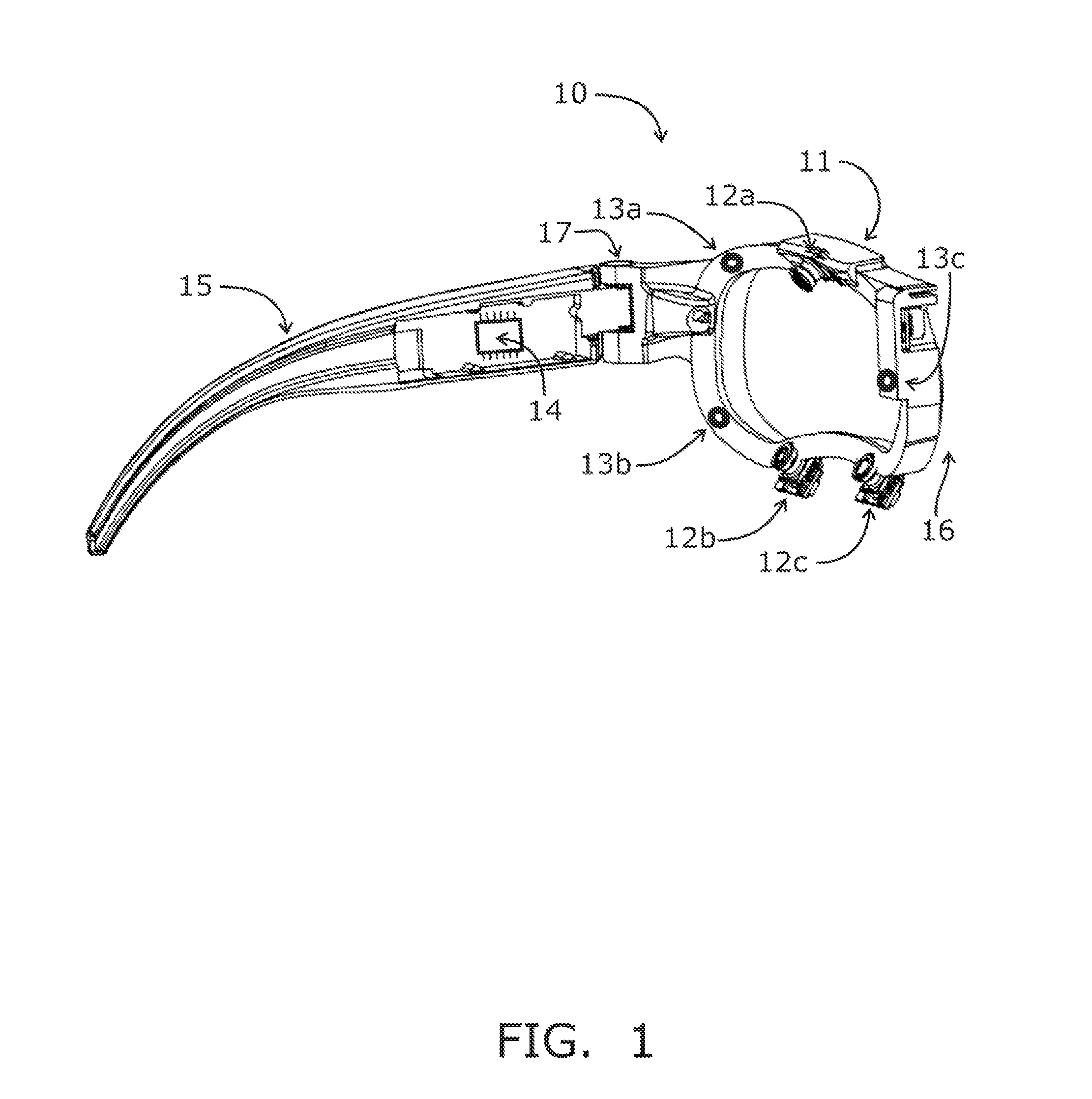

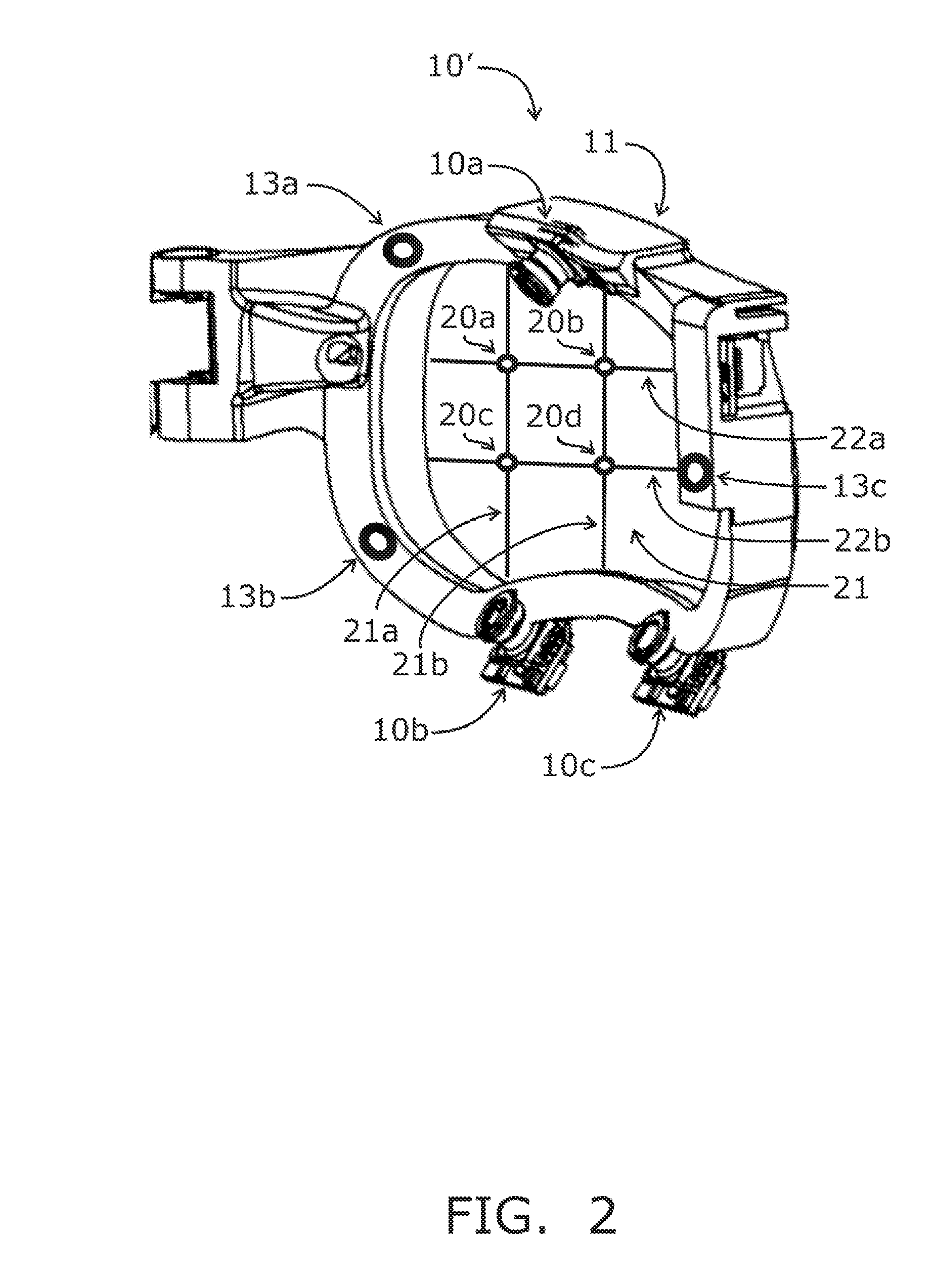

Active | US20130114850A1 | Accurate tracking | Sure easy | Image enhancement | Image analysis | Reference vector | Eyewear

A system is mounted within eyewear or headwear to unobtrusively produce and track reference locations on the surface of one or both eyes of an observer. The system utilizes multiple illumination sources and/or multiple cameras to generate and observe glints from multiple directions. The use of multiple illumination sources and cameras can compensate for the complex, three-dimensional geometry of the head and anatomical variations of the head and eye region that occur among individuals. The system continuously tracks the initial placement and any slippage of eyewear or headwear. In addition, the use of multiple illumination sources and cameras can maintain high-precision, dynamic eye tracking as an eye moves through its full physiological range. Furthermore, illumination sources placed in the normal line-of-sight of the device wearer increase the accuracy of gaze tracking by producing reference vectors that are close to the visual axis of the device wearer.

Owner:GOOGLE LLC

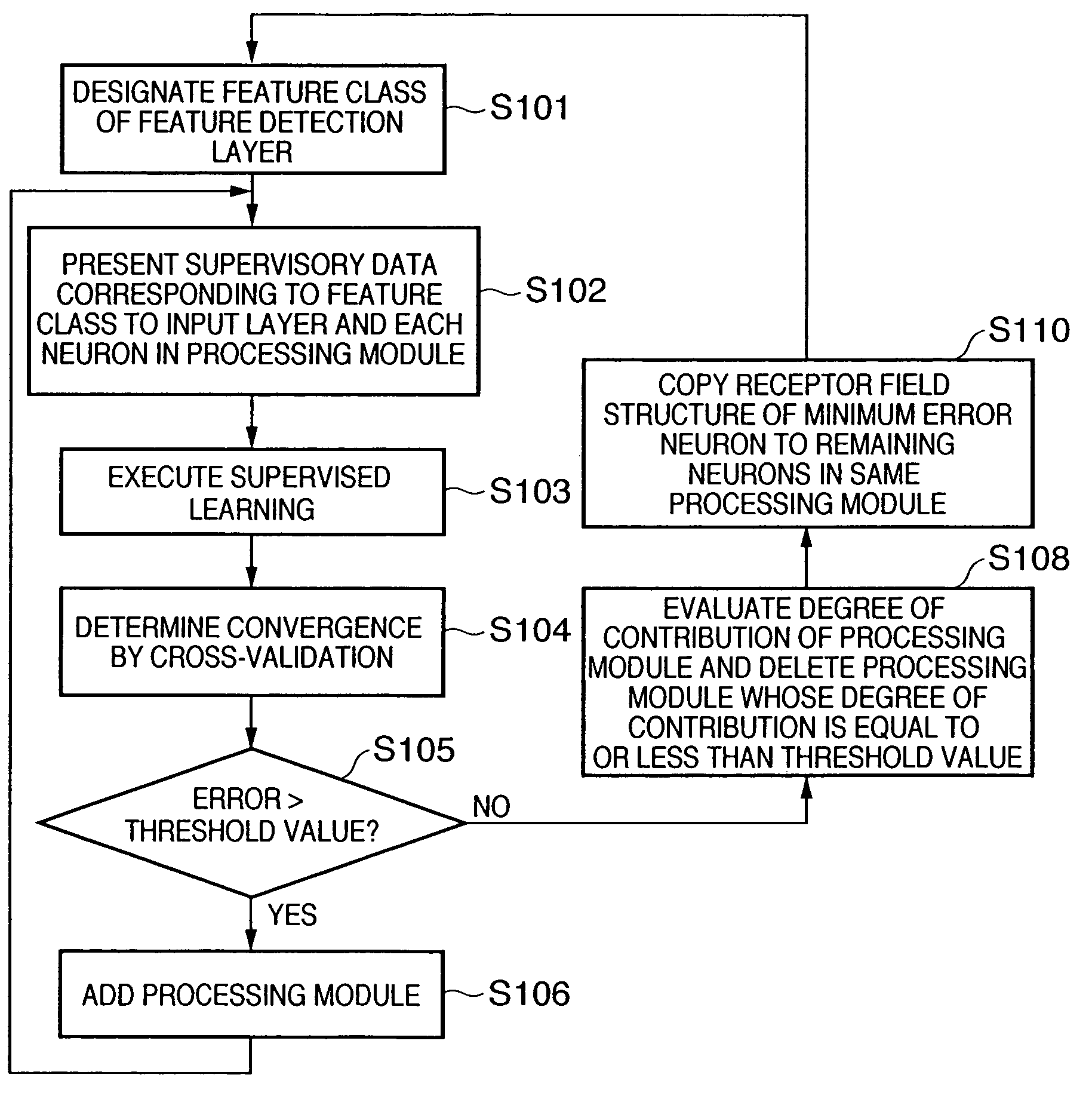

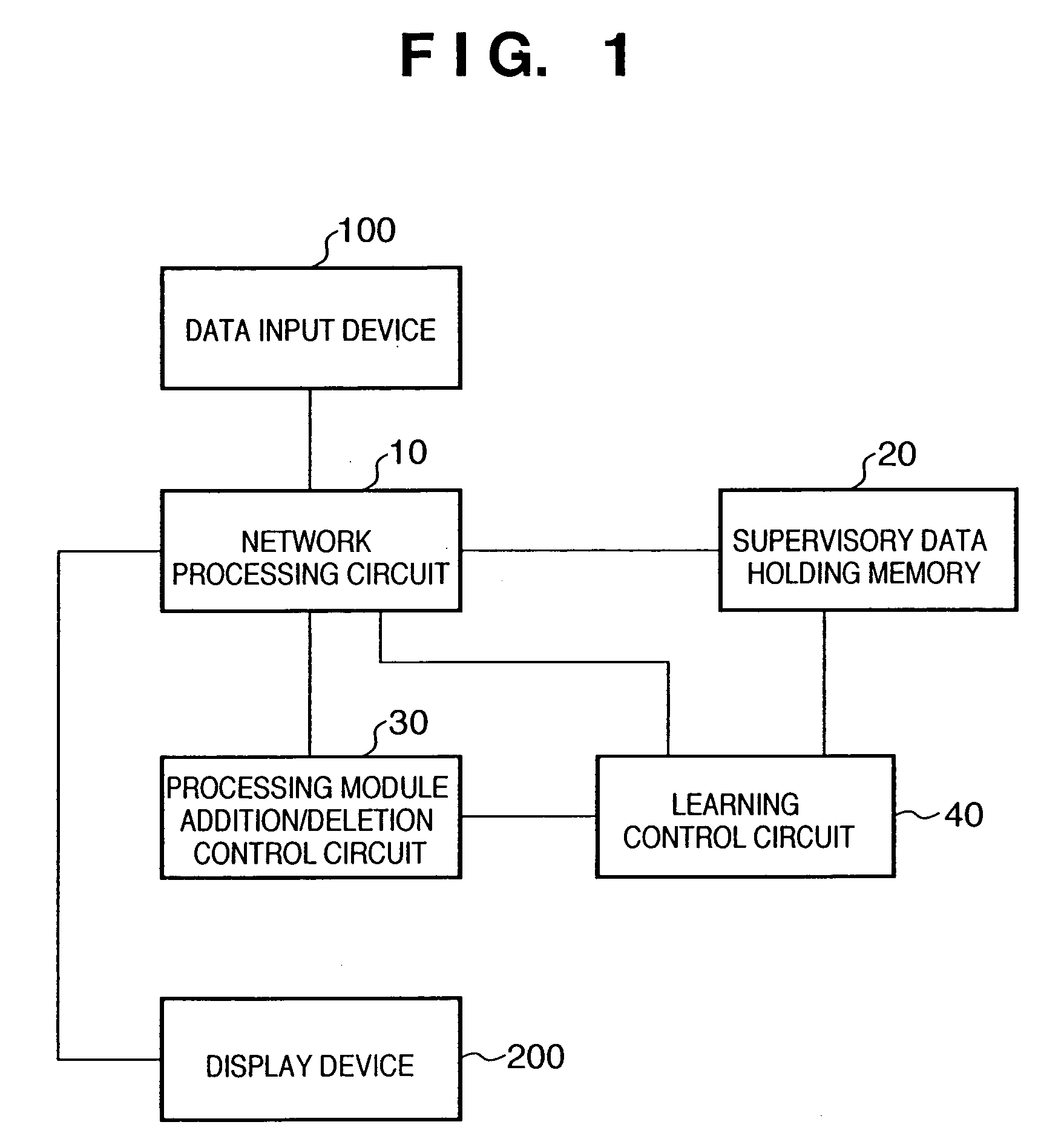

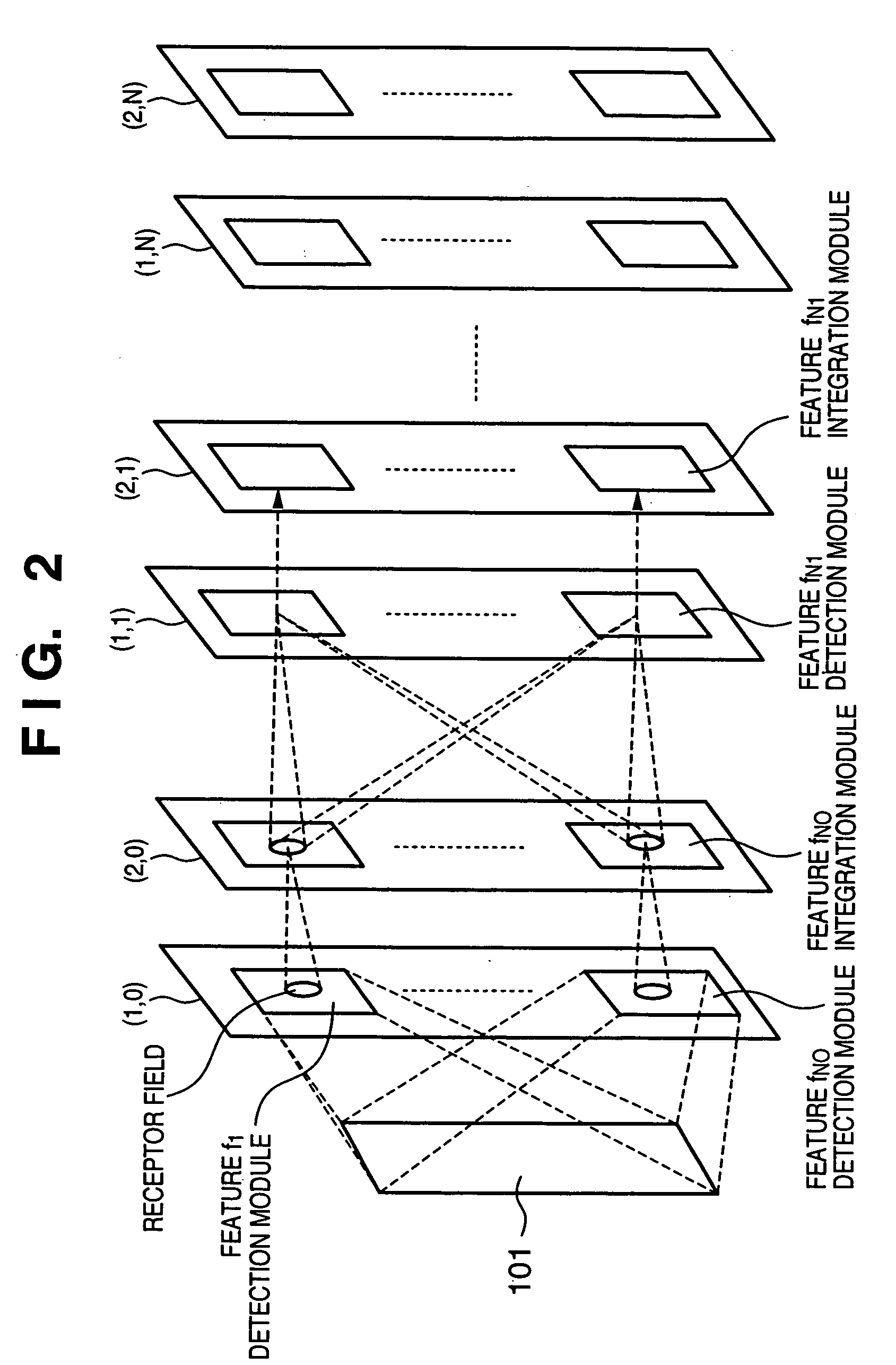

Information processing apparatus, information processing method, pattern recognition apparatus, and pattern recognition method

Inactive | US20050283450A1 | Image analysis | Digital computer details | Pattern recognition | Information processing

In a hierarchical neural network having a module structure, learning necessary for detection of a new feature class is executed by a processing module which has not finished learning yet and includes a plurality of neurons which should learn an unlearned feature class and have an undetermined receptor field structure by presenting a predetermined pattern to a data input layer. Thus, a feature class necessary for subject recognition can be learned automatically and efficiently.

Owner:CANON KK

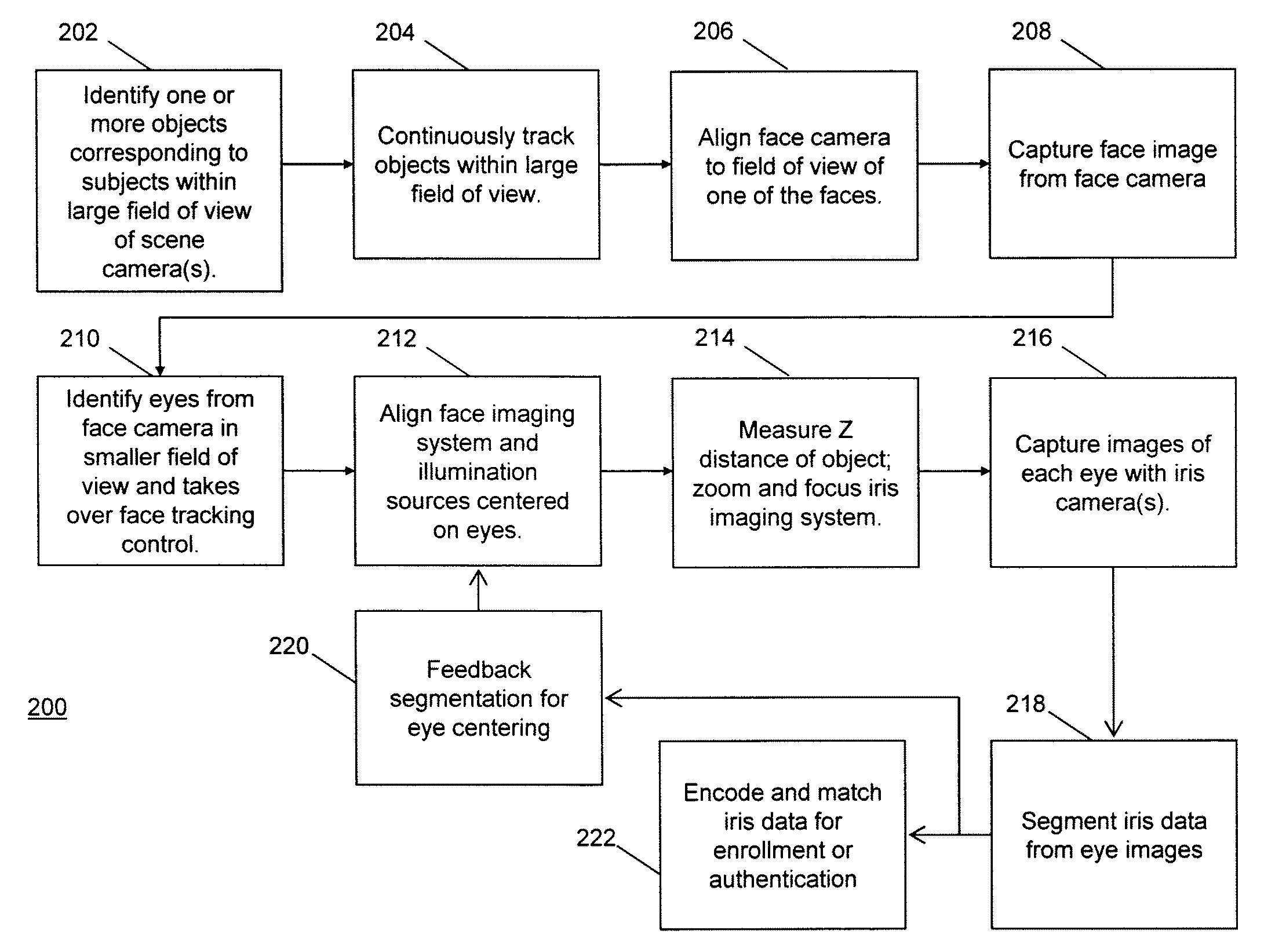

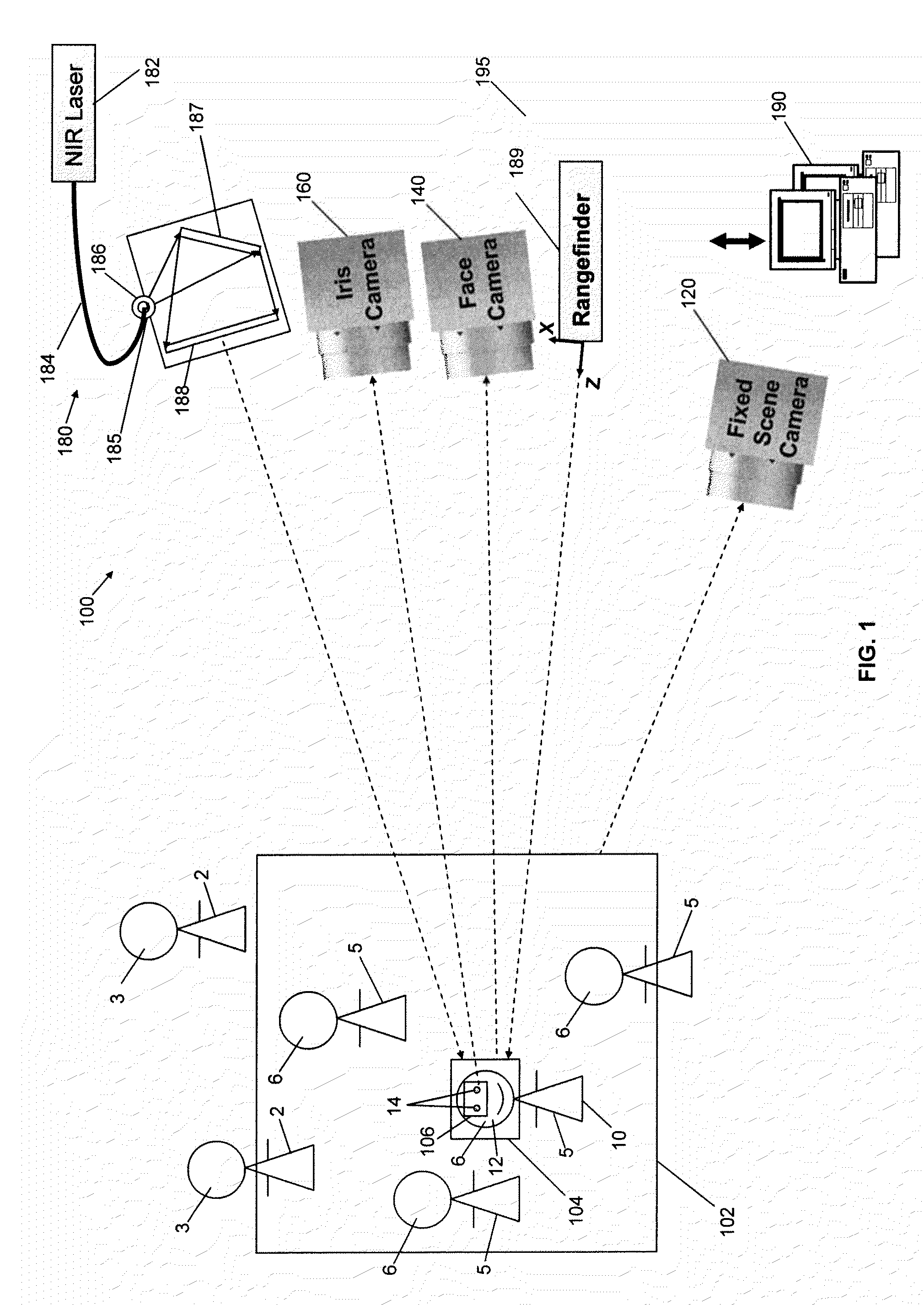

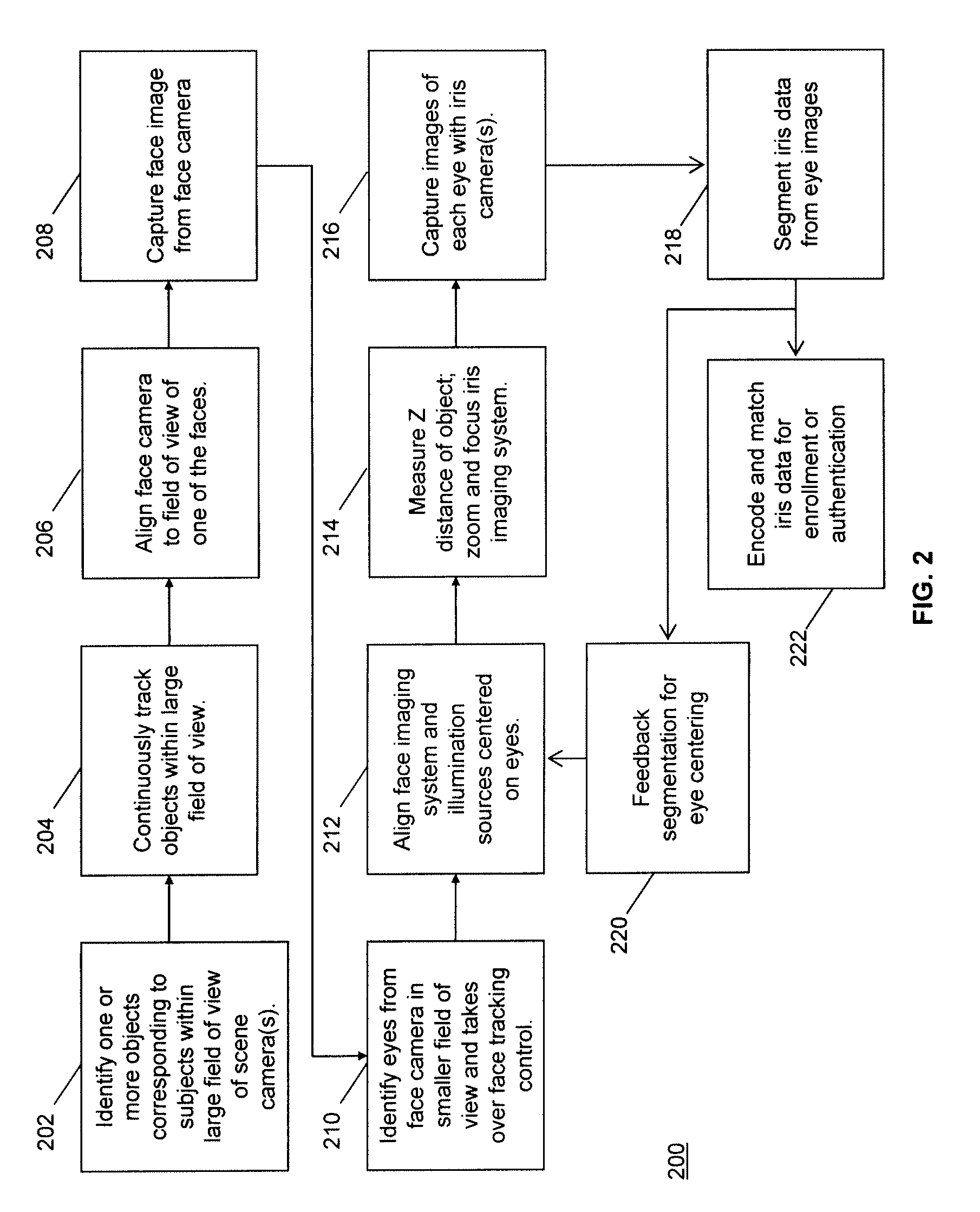

Long distance multimodal biometric system and method

Inactive | US20100290668A1 | Good compatibility | Few constraints | Acquiring/recognising eyes | Color television details | Computer science | Field of view

A system for multimodal biometric identification has a first imaging system that detects one or more subjects in a first field of view, including a targeted subject having a first biometric characteristic and a second biometric characteristic; a second imaging system that captures a first image of the first biometric characteristic according to first photons, where the first biometric characteristic is positioned in a second field of view smaller than the first field of view, and the first image includes first data for biometric identification; a third imaging system that captures a second image of the second biometric characteristic according to second photons, where the second biometric characteristic is positioned in a third field of view which is smaller than the first and second fields of view, and the second image includes second data for biometric identification. At least one active illumination source emits the second photons.

Owner:MORPHOTRUST USA

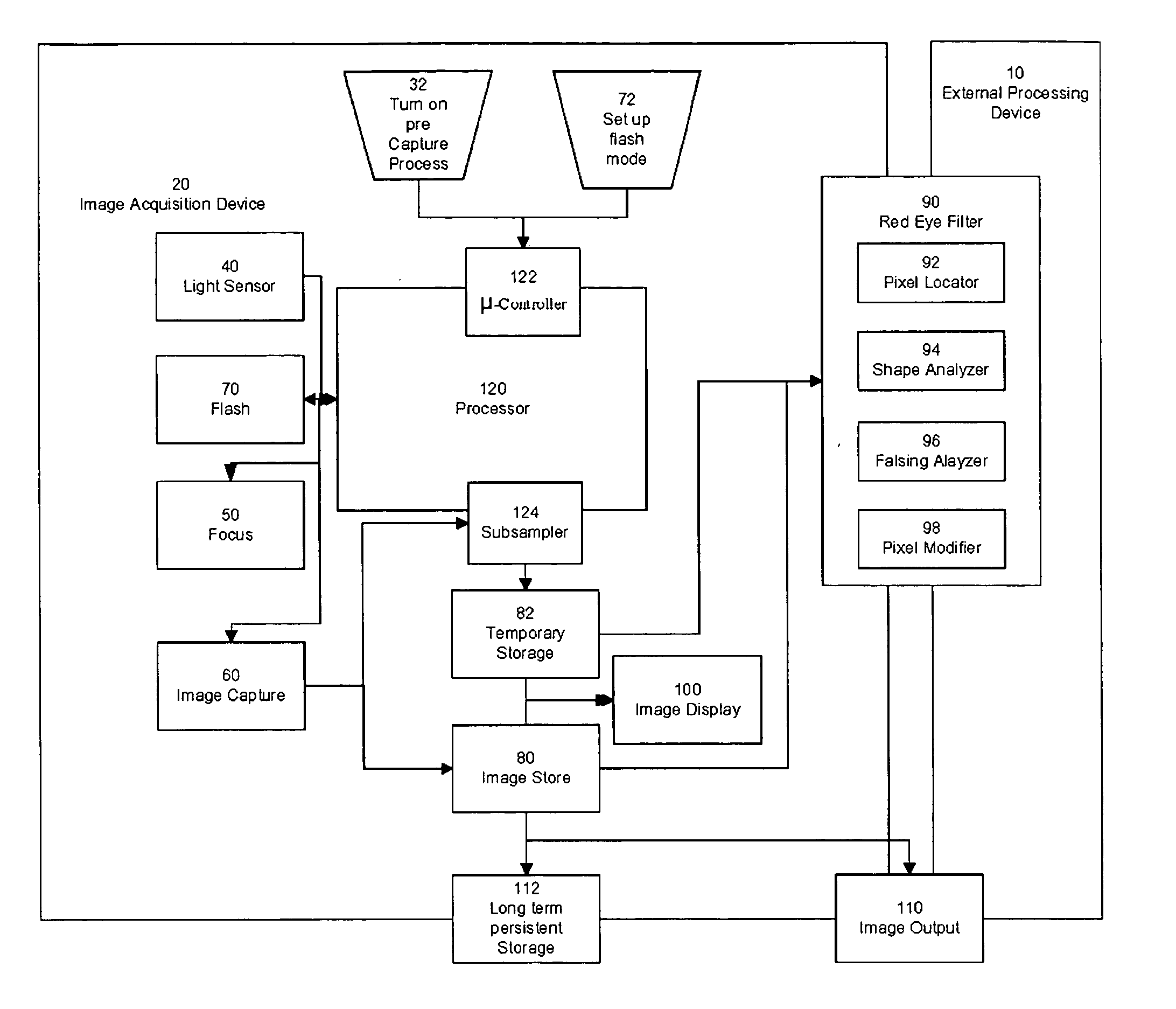

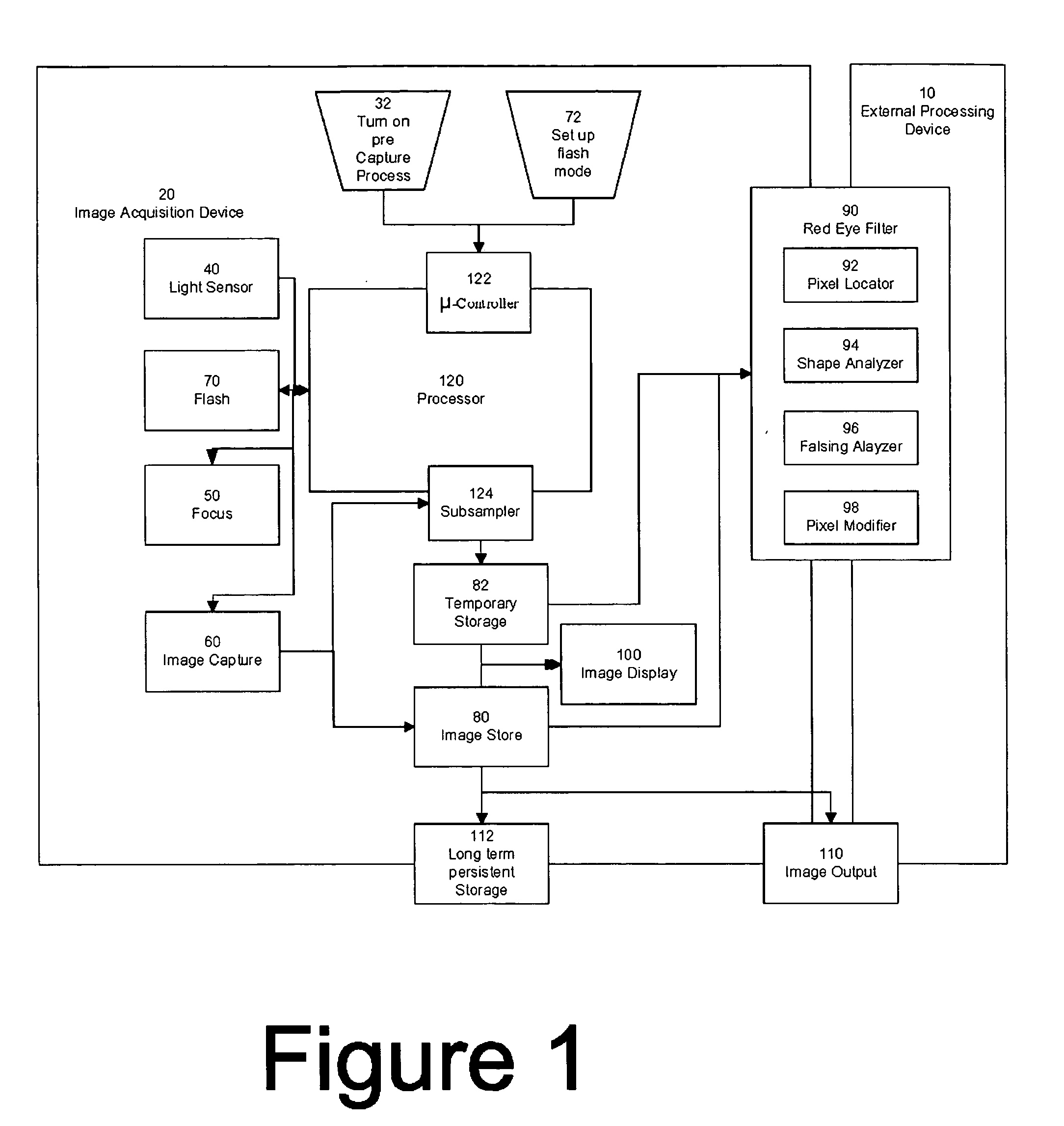

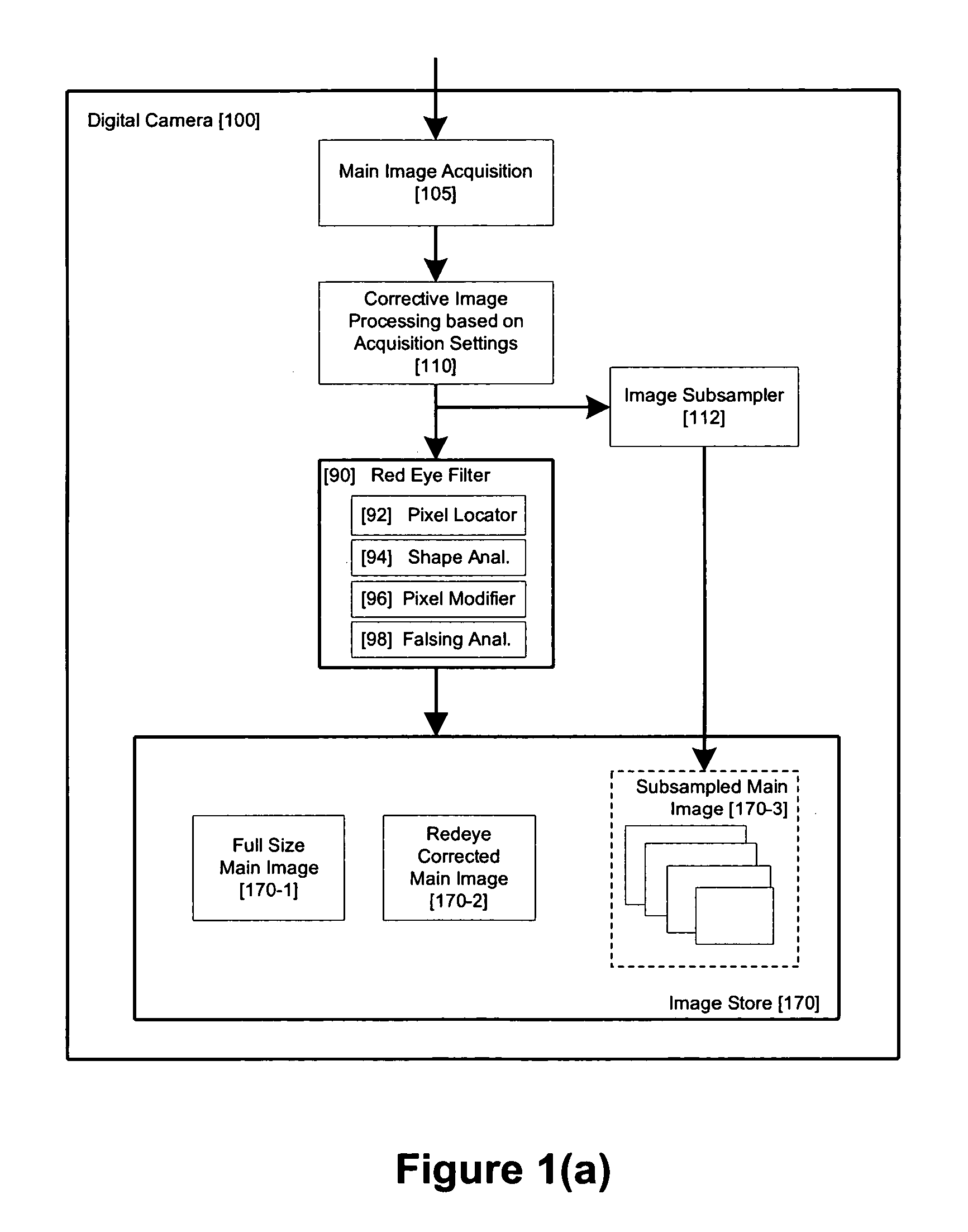

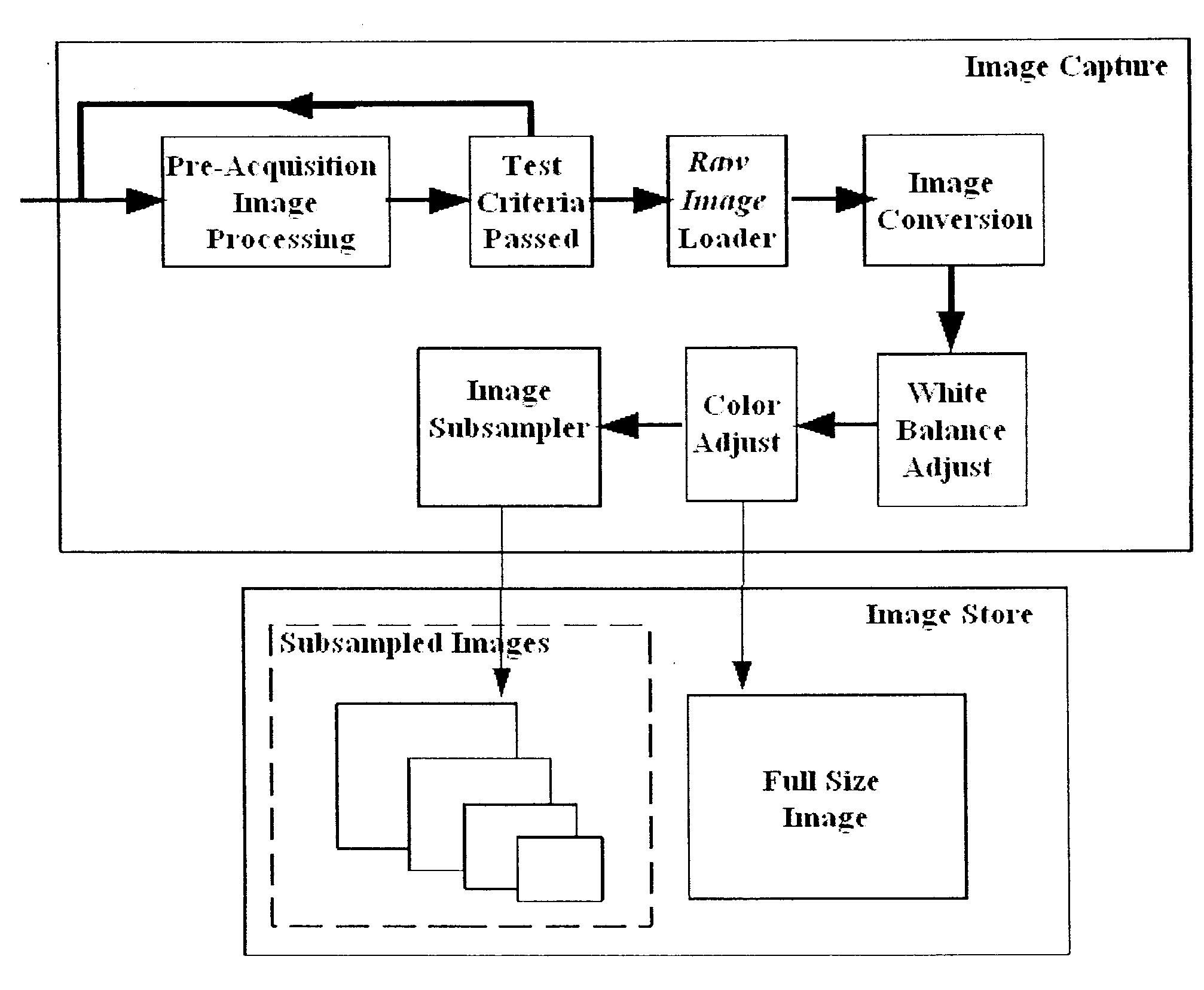

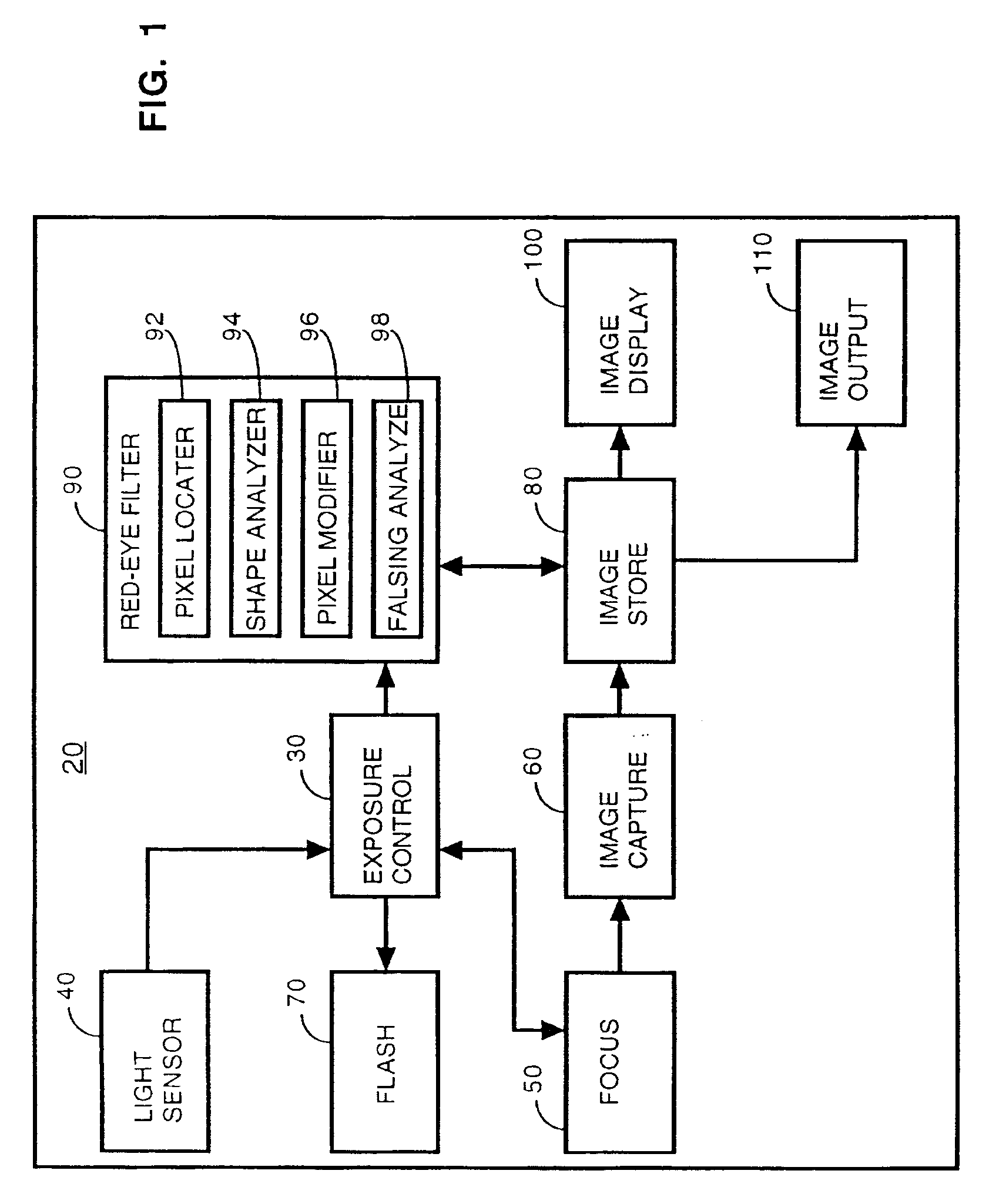

Optimized Performance and Performance for Red-Eye Filter Method and Apparatus

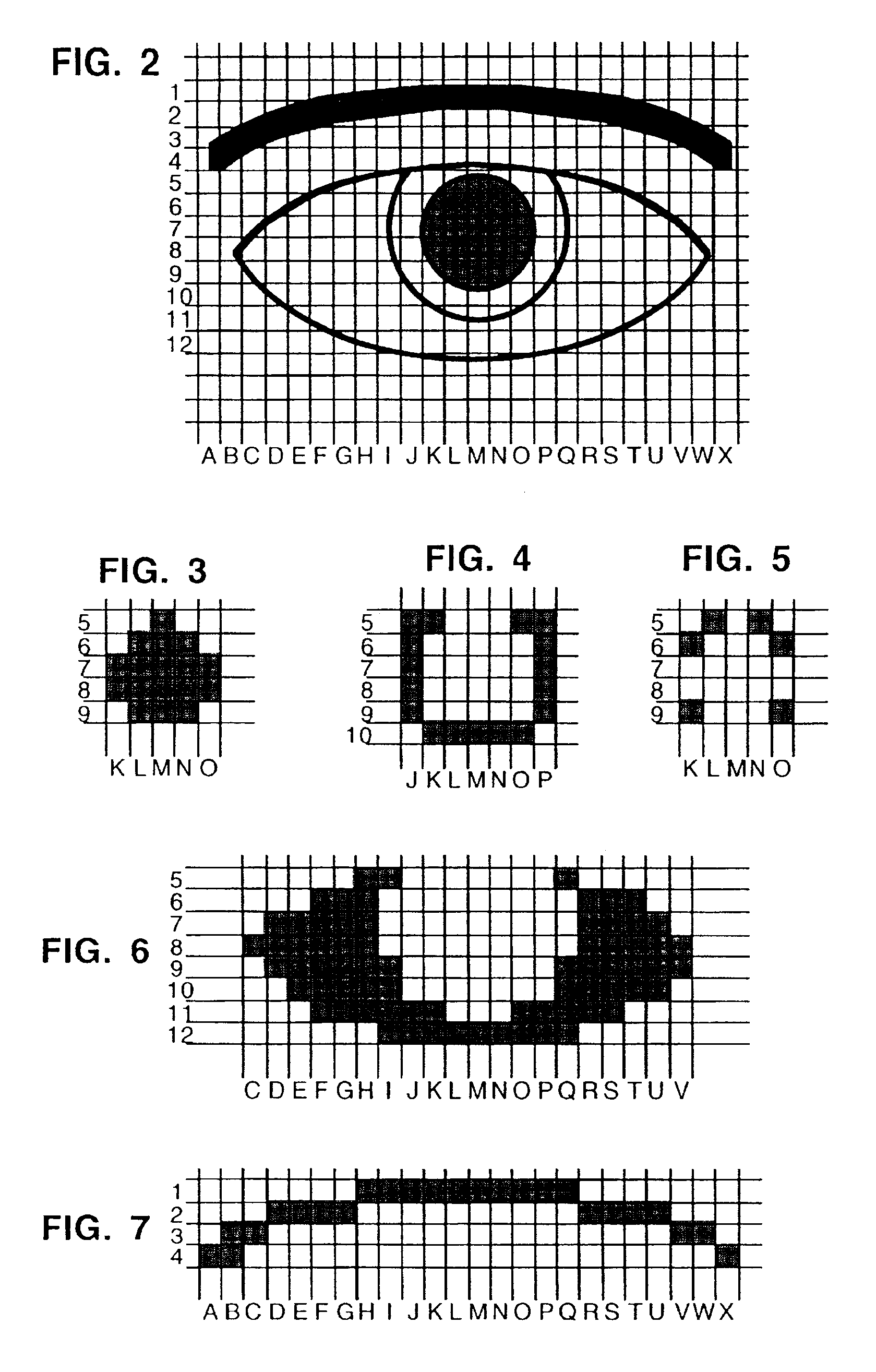

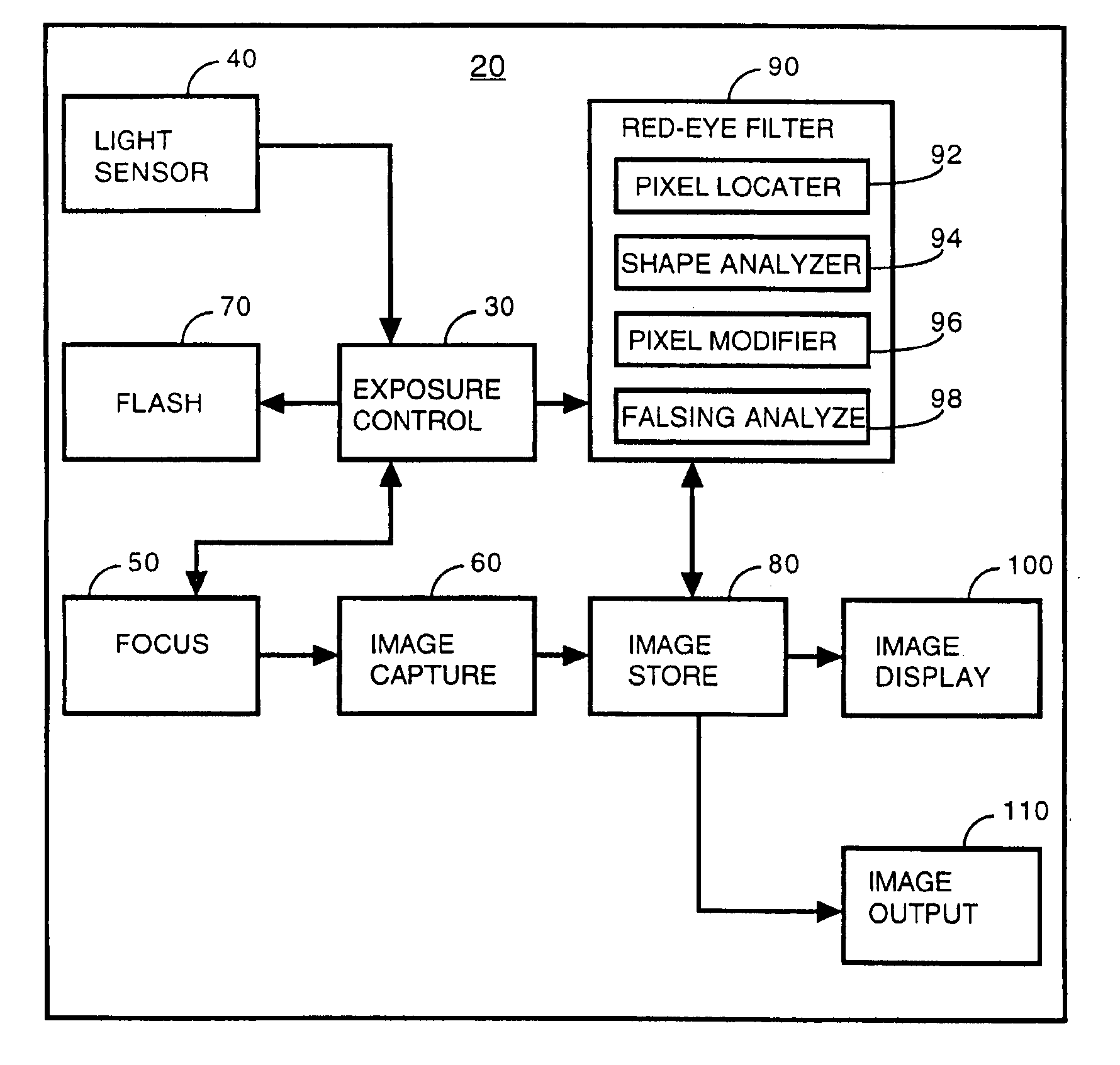

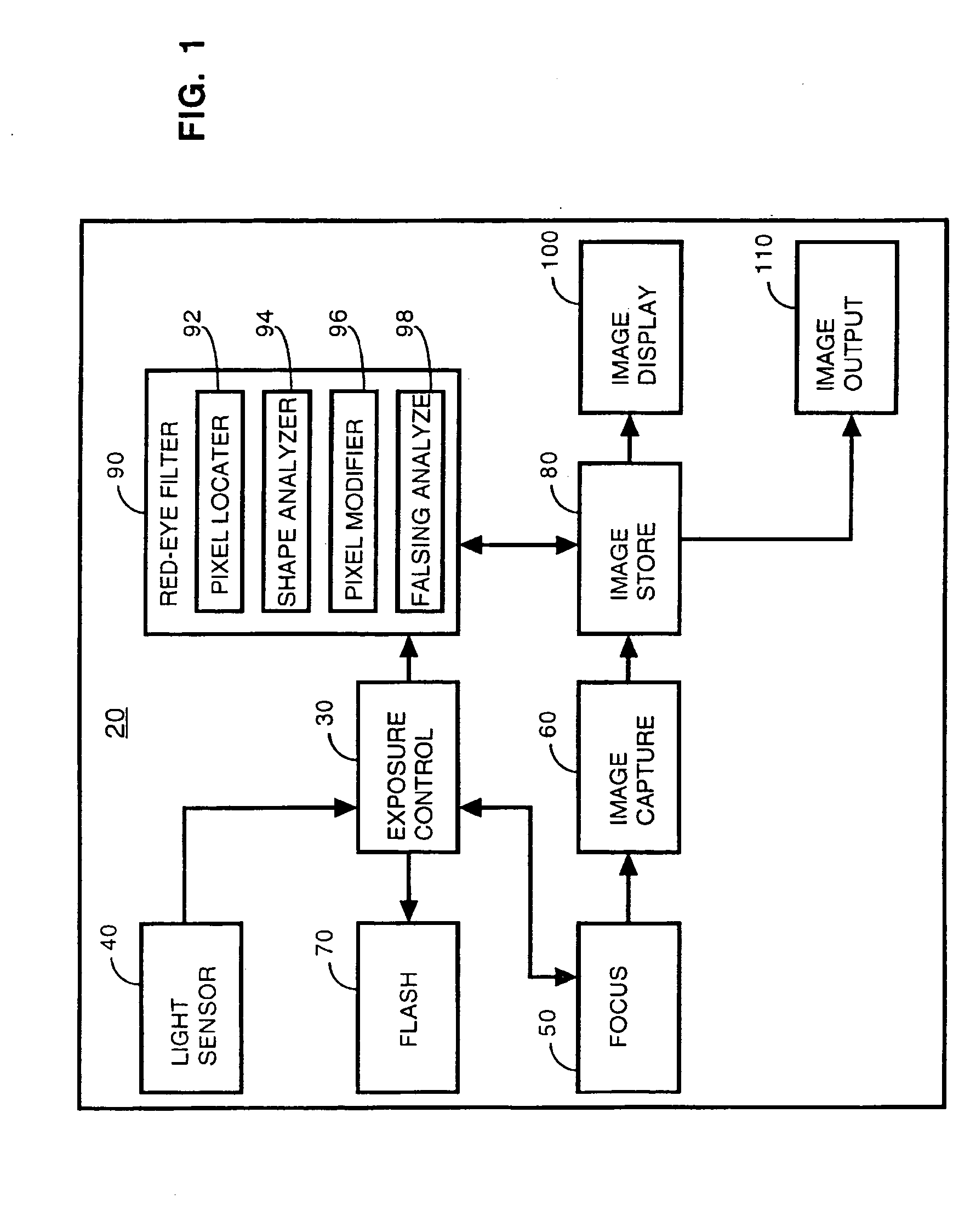

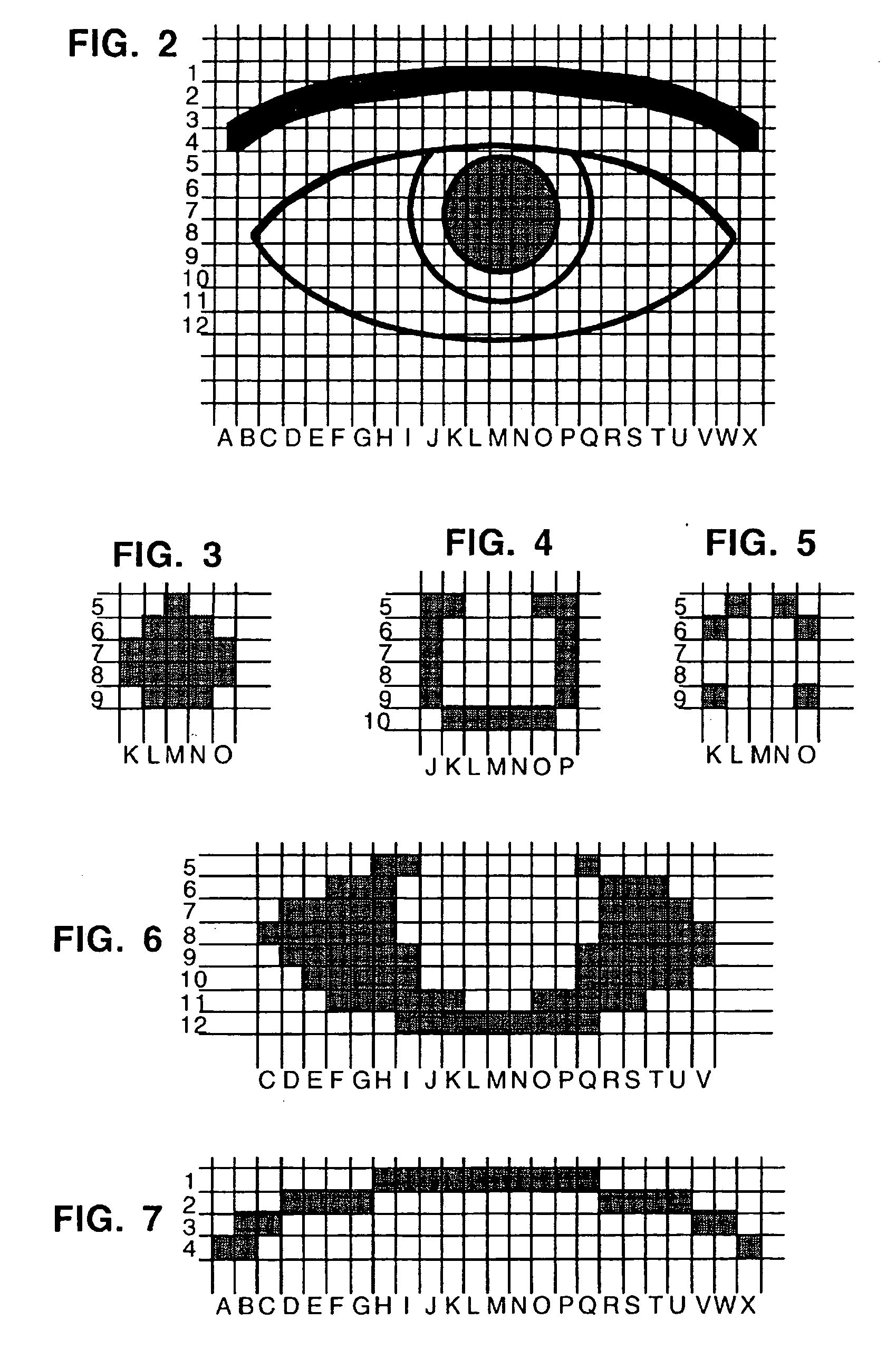

A digital camera has an integral flash and stores and displays a digital image. Under certain conditions, a flash photograph taken with the camera may result in a red-eye phenomenon due to a reflection within an eye of a subject of the photograph. A digital apparatus has a red-eye filter which analyzes the stored image for the red-eye phenomenon and modifies the stored image to eliminate the red-eye phenomenon by changing the red area to black. The modification of the image is enabled when a photograph is taken under conditions indicative of the red-eye phenomenon. The modification is subject to anti-falsing analysis which further examines the area around the red-eye area for indicia of the eye of the subject. The detection and correction can be optimized for performance and quality by operating on subsample versions of the image when appropriate.

Owner:TESSERA TECH IRELAND LTD

Optimized performance and performance for red-eye filter method and apparatus

A digital camera has an integral flash and stores and displays a digital image. Under certain conditions, a flash photograph taken with the camera may result in a red-eye phenomenon due to a reflection within an eye of a subject of the photograph. A digital apparatus has a red-eye filter which analyzes the stored image for the red-eye phenomenon and modifies the stored image to eliminate the red-eye phenomenon by changing the red area to black. The modification of the image is enabled when a photograph is taken under conditions indicative of the red-eye phenomenon. The modification is subject to anti-falsing analysis which further examines the area around the red-eye area for indicia of the eye of the subject. The detection and correction can be optimized for performance and quality by operating on subsample versions of the image when appropriate.

Owner:DIGITALPTICS EURO

Method and apparatus for determining and analyzing a location of visual interest

Inactive | US8487775B2 | Efficient analysis | Easy to handle | Electric devices | Acquiring/recognising eyes | Driver/operator | Workload

A method of analyzing data based on the physiological orientation of a driver is provided. Data descriptive of a driver's gaze direction is processed, and criteria defining a location of driver interest are determined. Based on the determined criteria, gaze-direction instances are classified as either on-location or off-location. The classified instances can then be used for further analysis, generally relating to times of elevated driver workload and not driver drowsiness. The classified instances are transformed into one of two binary values (e.g., 1 and 0) representative of whether the respective classified instance is on or off location. The use of binary values makes processing and analysis of the data faster and more efficient. Furthermore, classification of at least some of the off-location gaze-direction instances can be inferred from the failure to meet the determined criteria for being classified as an on-location driver gaze-direction instance.

Owner:VOLVO LASTVAGNAR AB

Methods and systems for creating virtual and augmented reality

Configurations are disclosed for presenting virtual reality and augmented reality experiences to users. The system may comprise an image capturing device to capture one or more images, the one or more images corresponding to a field of view of a user of a head-mounted augmented reality device, and a processor communicatively coupled to the image capturing device to extract a set of map points from the set of images, to identify a set of sparse points and a set of dense points from the extracted set of map points, and to perform a normalization on the set of map points.

Owner:MAGIC LEAP INC

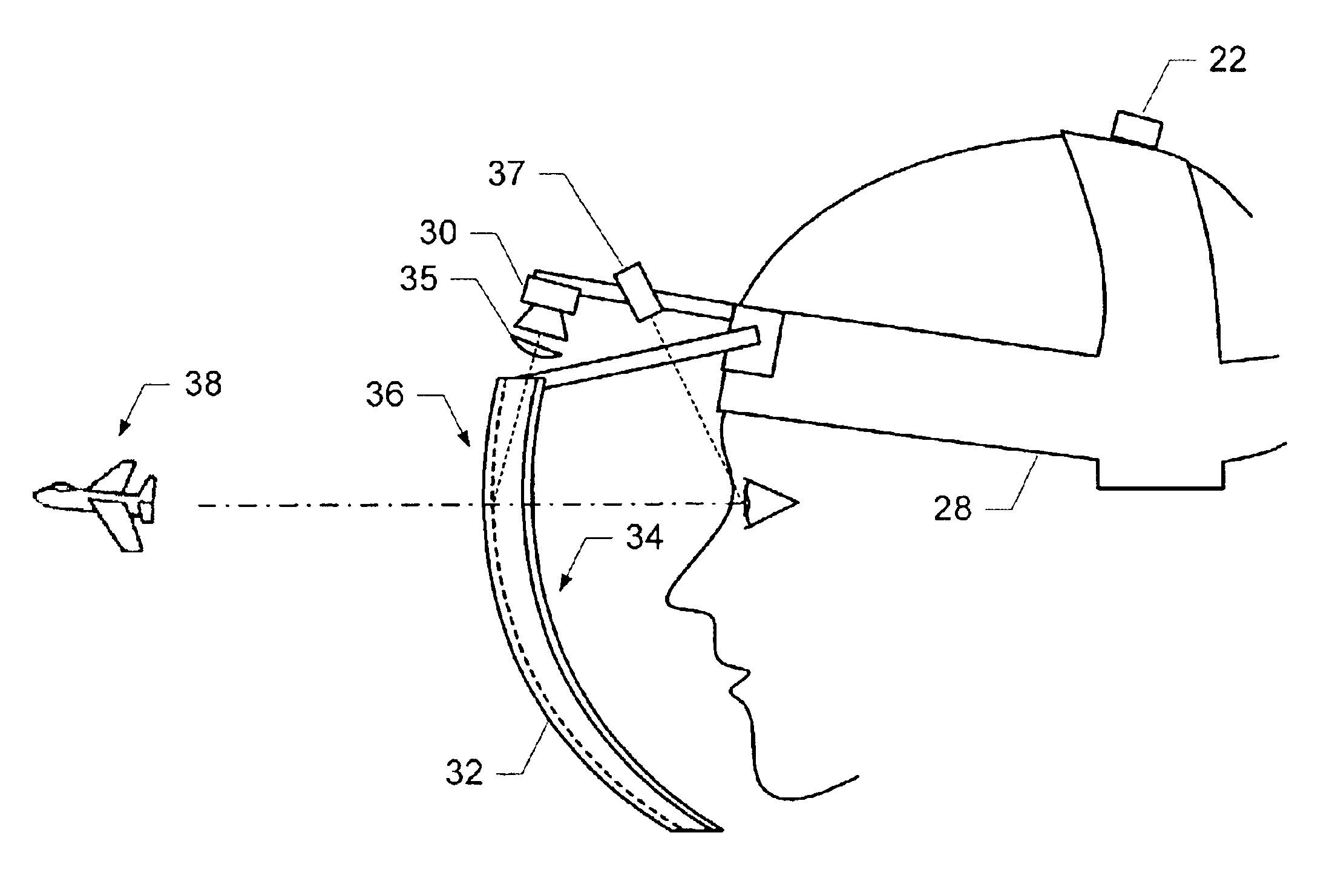

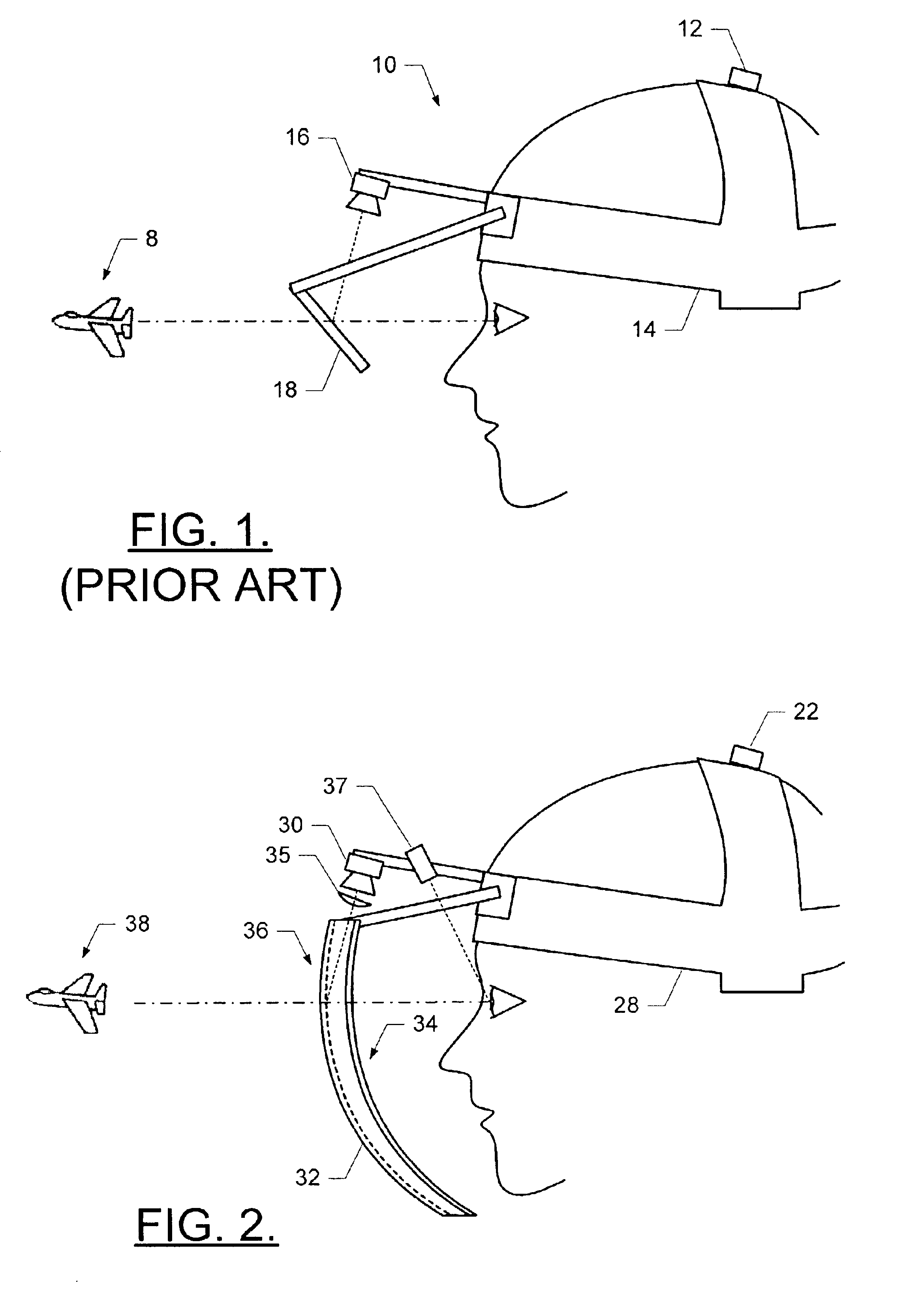

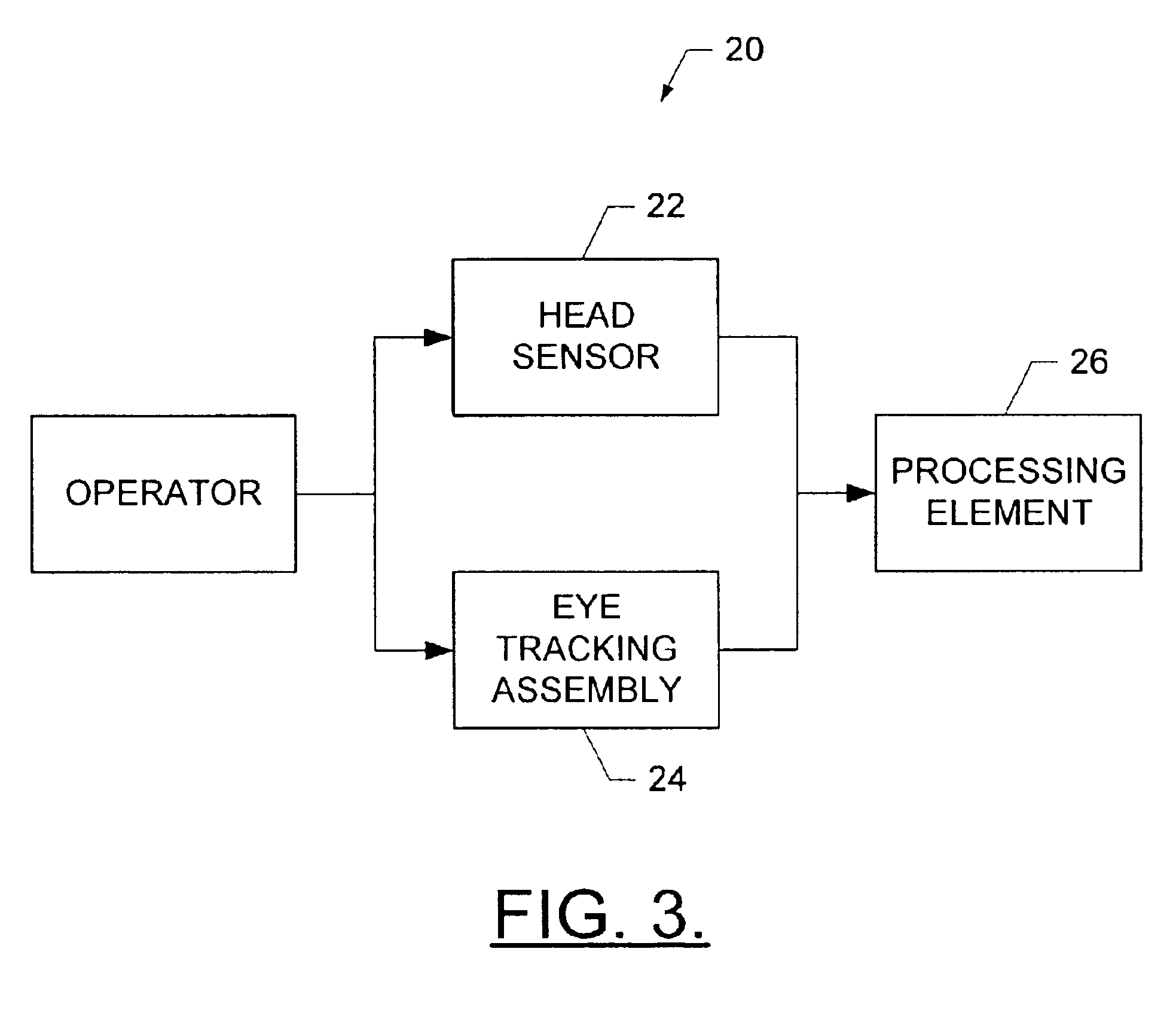

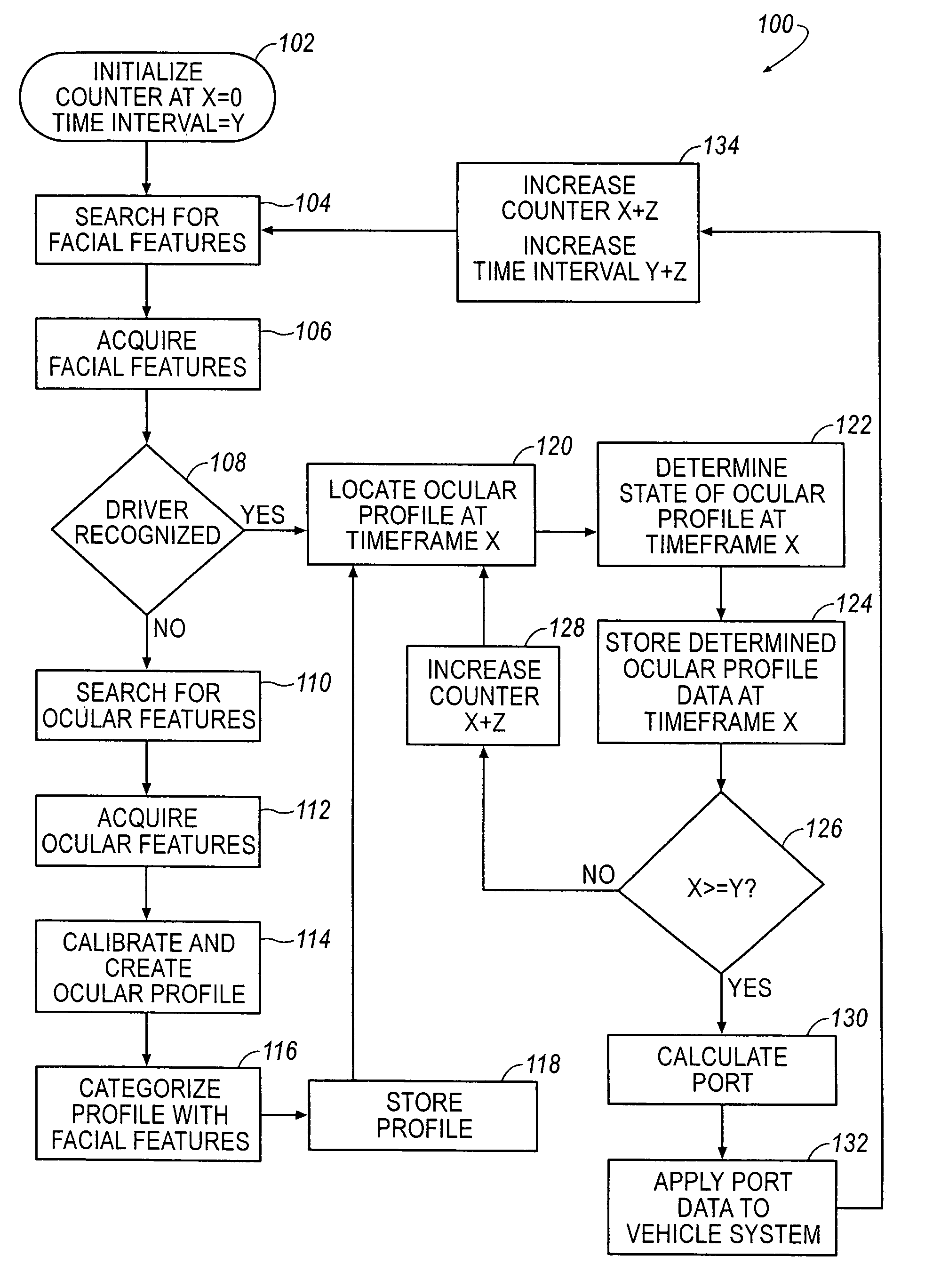

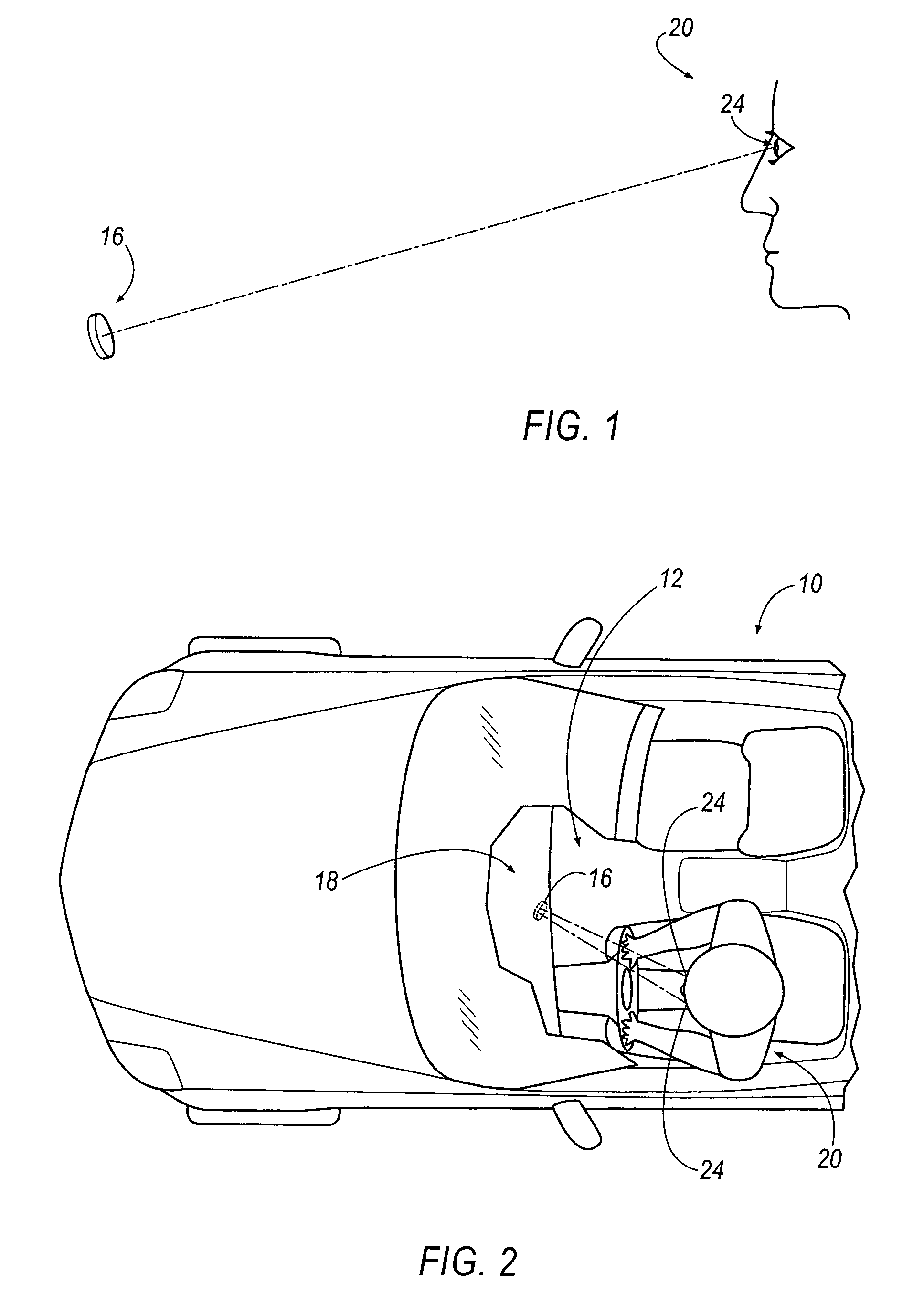

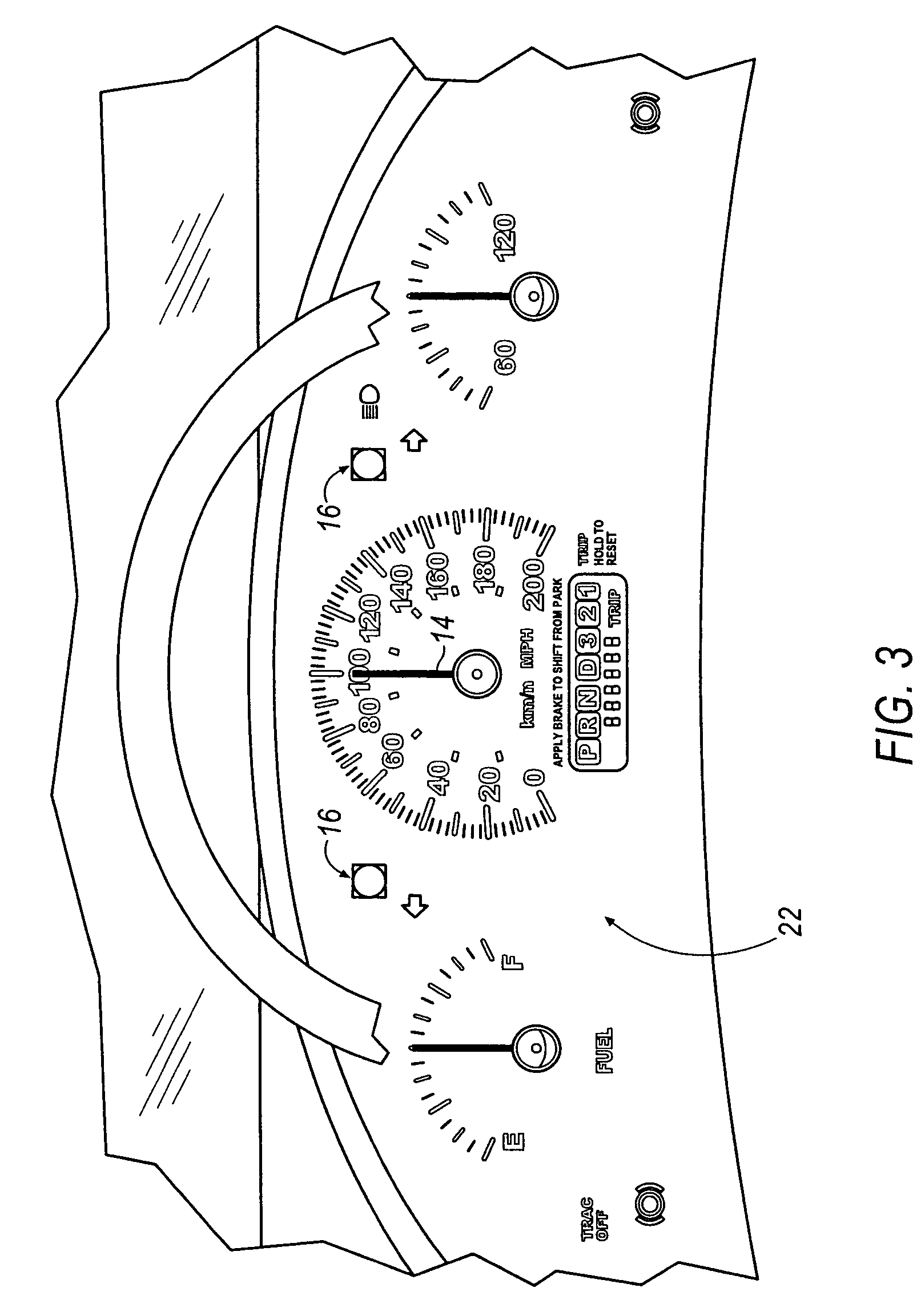

Gaze tracking system, eye-tracking assembly and an associated method of calibration

Inactive | US6943754B2 | Natural environment | Accurate for calibrating gaze tracking system | Input/output for user-computer interaction | Cosmonautic condition simulations | Position dependent | Processing element

A system for tracking a gaze of an operator includes a head-mounted eye tracking assembly, a head-mounted head tracking assembly and a processing element. The head-mounted eye tracking assembly comprises a visor having an arcuate shape including a concave surface and an opposed convex surface. The visor is capable of being disposed such that at least a portion of the visor is located outside a field of view of the operator. The head-mounted head tracking sensor is capable of repeatedly determining a position of the head to thereby track movement of the head. In this regard, each position of the head is associated with a position of the at least one eye. Thus, the processing element can repeatedly determine the gaze of the operator, based upon each position of the head and the associated position of the eyes, thereby tracking the gaze of the operator.

Owner:THE BOEING CO

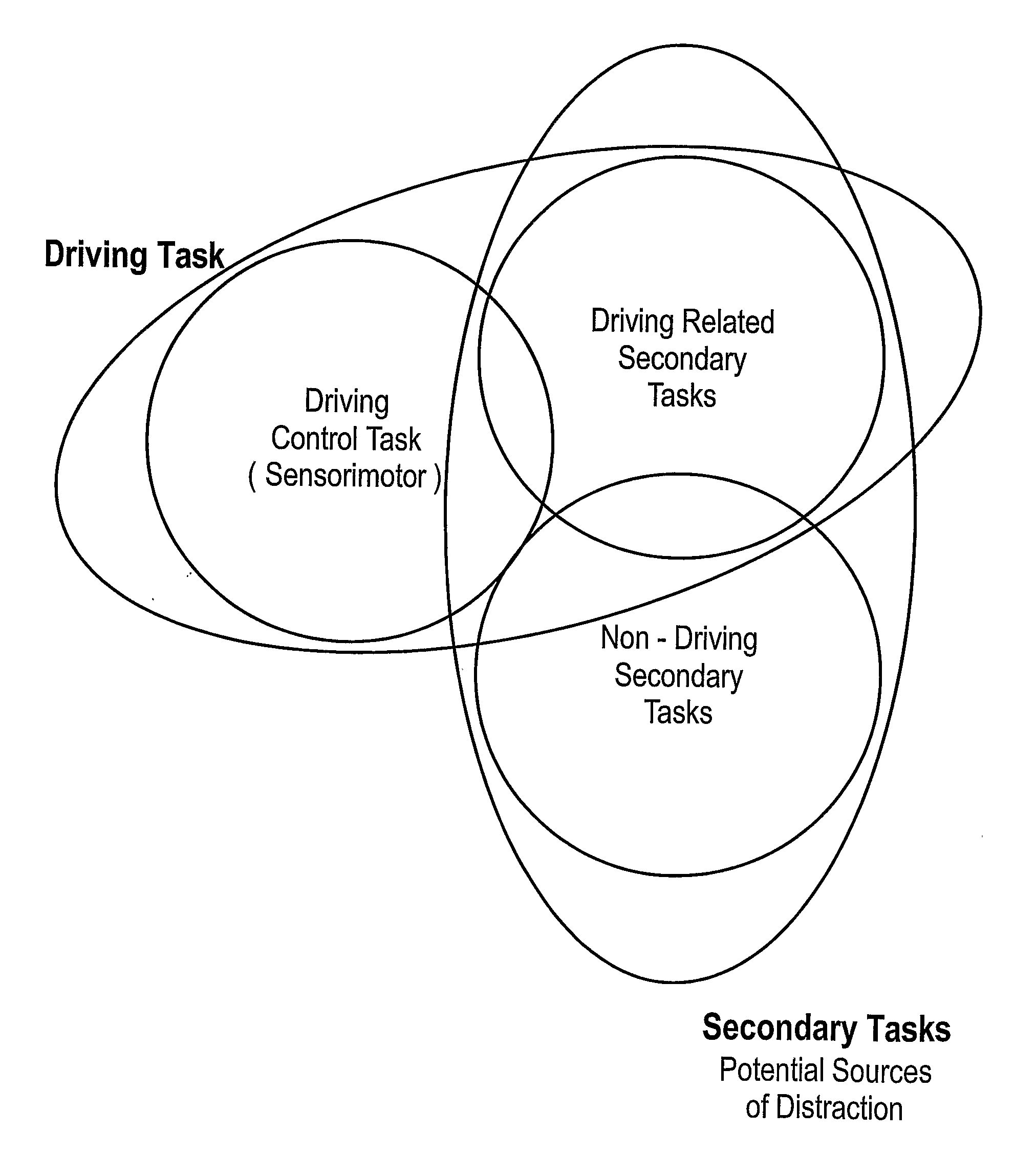

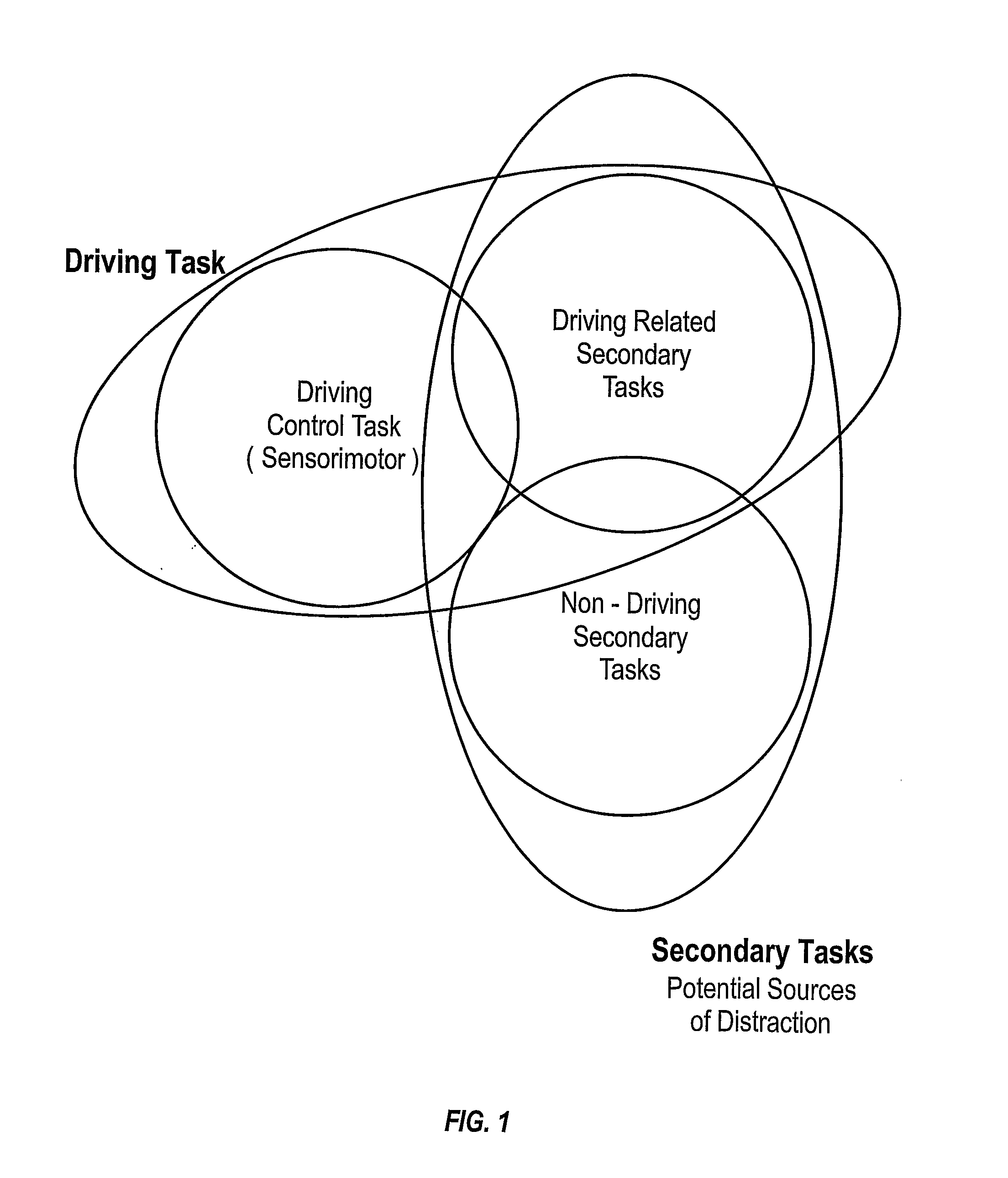

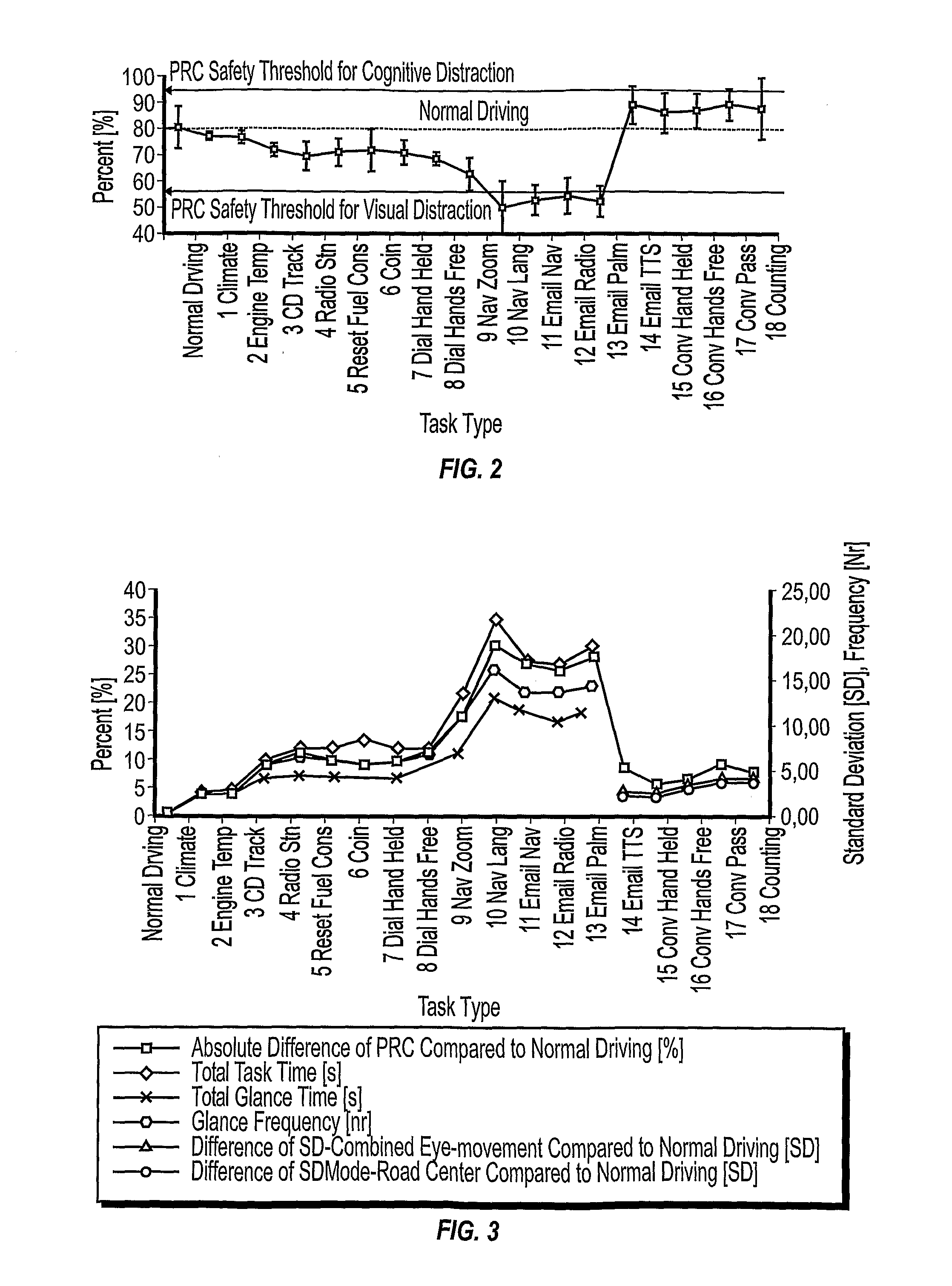

Method of mitigating driver distraction

Active | US7835834B2 | Reduce distractions | Digital data processing details | Acquiring/recognising eyes | Driver/operator | In vehicle

A driver alert for mitigating driver distraction is issued based on a proportion of off-road gaze time and the duration of a current off-road gaze. The driver alert is ordinarily issued when the proportion of off-road gaze exceeds a threshold, but is not issued if the driver's gaze has been off-road for at least a reference time. In vehicles equipped with forward-looking object detection, the driver alert is also not issued if the closing speed of an in-path object exceeds a calibrated closing rate.
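The alert conditions in the abstract above translate directly into a small decision function. The sketch below follows the three stated rules; the numeric defaults (`proportion_threshold`, `reference_time_s`, `max_closing_rate`) and the function name `should_alert` are placeholder assumptions, since the abstract gives no values.

```python
from typing import Optional


def should_alert(off_road_proportion: float,
                 current_off_road_s: float,
                 closing_speed: Optional[float] = None,
                 *,
                 proportion_threshold: float = 0.3,   # assumed value
                 reference_time_s: float = 2.0,       # assumed value
                 max_closing_rate: float = 5.0        # assumed value
                 ) -> bool:
    """Decide whether to issue a distraction alert.

    closing_speed is None when the vehicle has no forward-looking
    object detection.
    """
    # Rule 1: alert only when the off-road gaze proportion exceeds the threshold.
    if off_road_proportion <= proportion_threshold:
        return False
    # Rule 2: no alert if the current off-road gaze has lasted at least
    # the reference time.
    if current_off_road_s >= reference_time_s:
        return False
    # Rule 3: no alert if an in-path object is closing faster than the
    # calibrated closing rate.
    if closing_speed is not None and closing_speed > max_closing_rate:
        return False
    return True
```

Keeping the thresholds as keyword-only parameters makes it explicit that they are calibration values to be supplied by the integrator rather than constants from the patent.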

Owner:APTIV TECH LTD

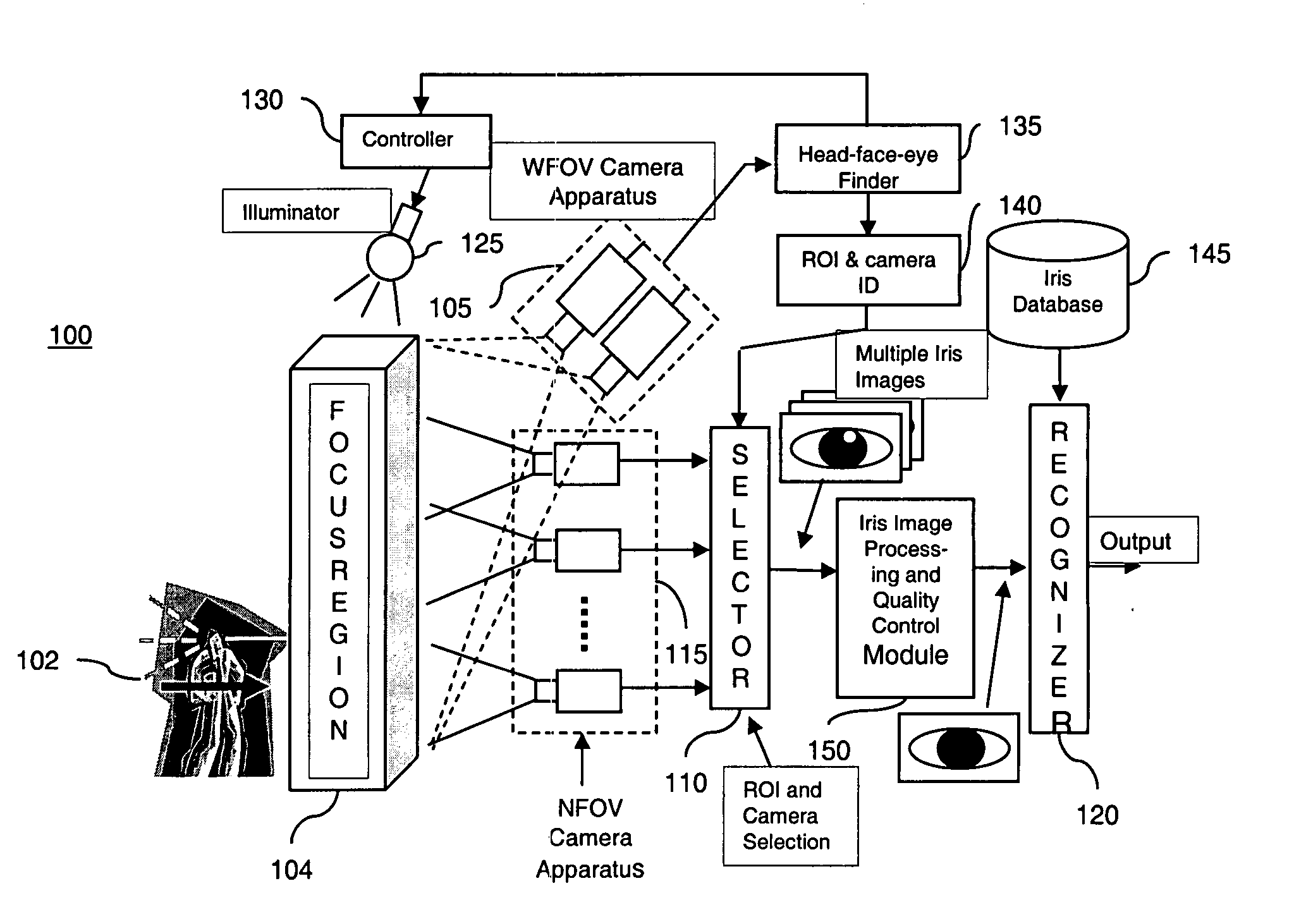

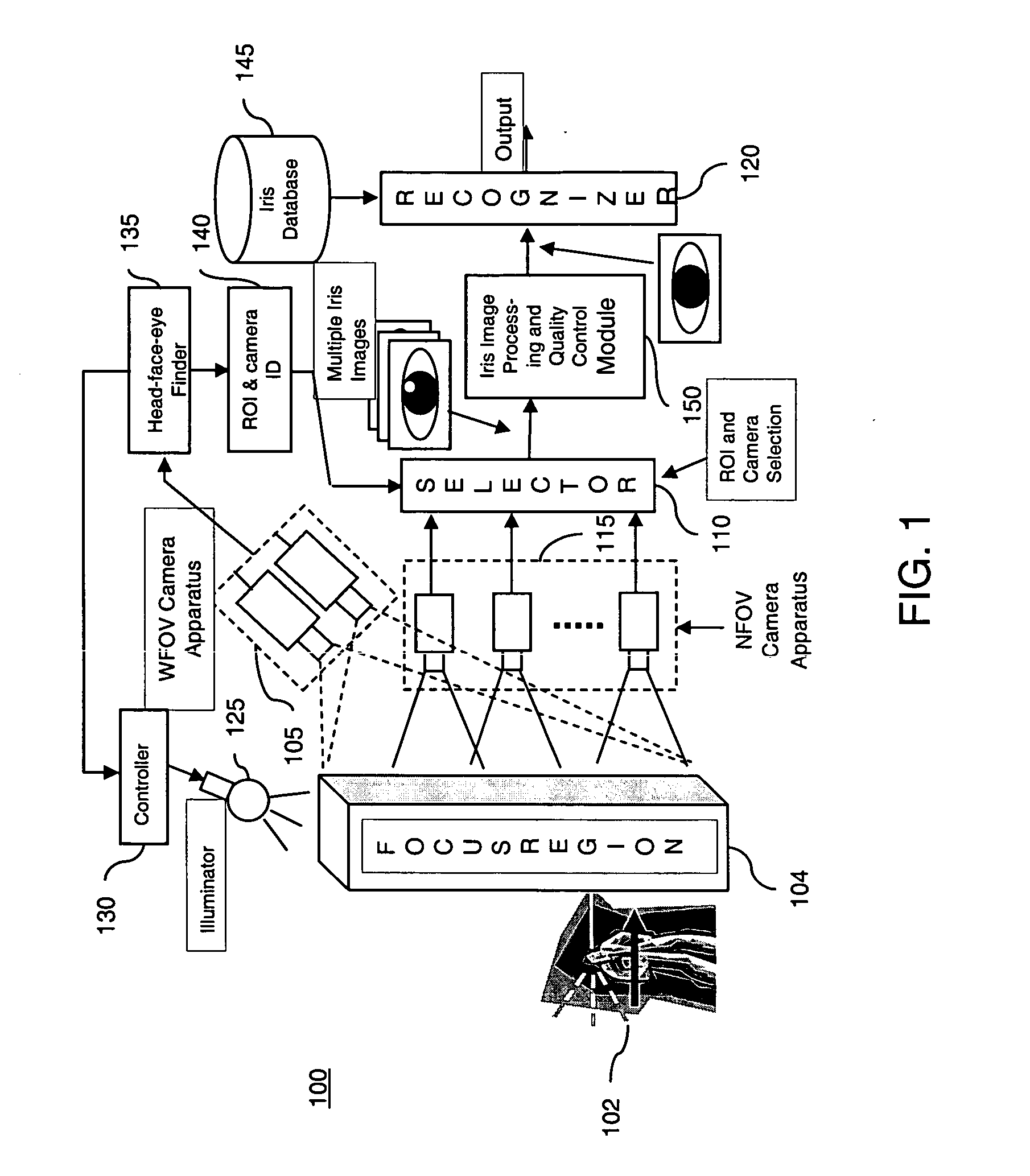

Method and apparatus for performing iris recognition from an image

Inactive | US20050084179A1 | Acquiring/recognising eyes | Closed circuit television systems | Computer vision | Iris recognition

A method and apparatus for performing iris recognition from at least one image is disclosed. A plurality of cameras is used to capture a plurality of images where at least one of the images contains a region having at least a portion of an iris. At least one of the plurality of images is then processed to perform iris recognition.

Owner:SARNOFF CORP

Power management for head worn computing

Inactive | US20160133201A1 | Input/output for user-computer interaction | Acquiring/recognising eyes | Computer hardware | Computer engineering

Owner:MENTOR ACQUISITION ONE LLC
