11667 results about "Virtual reality" patented technology

Virtual reality (VR) is a simulated experience that can be similar to or completely different from the real world. Applications of virtual reality include entertainment (e.g. gaming) and education (e.g. medical or military training). Other, distinct types of VR-style technology include augmented reality and mixed reality.

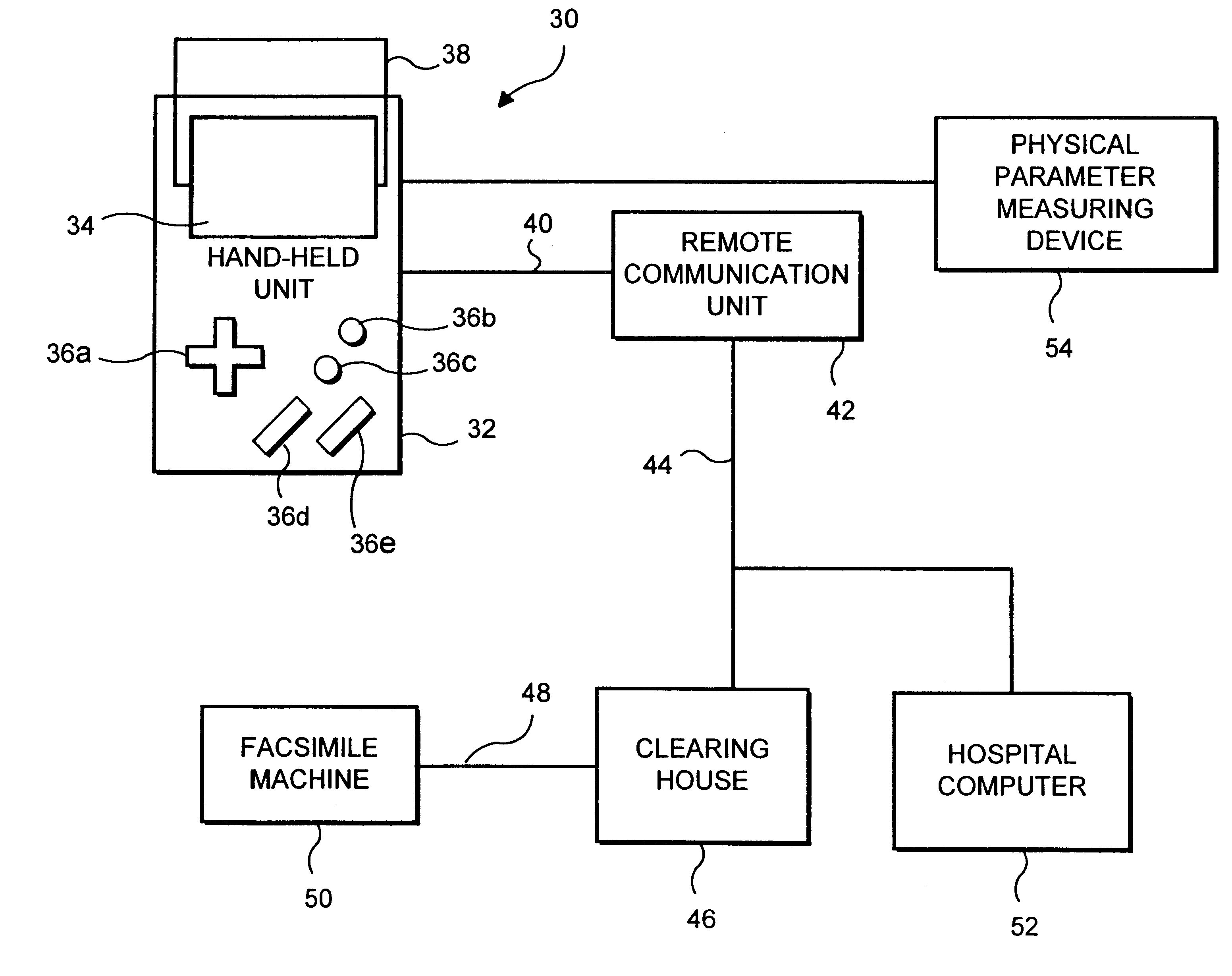

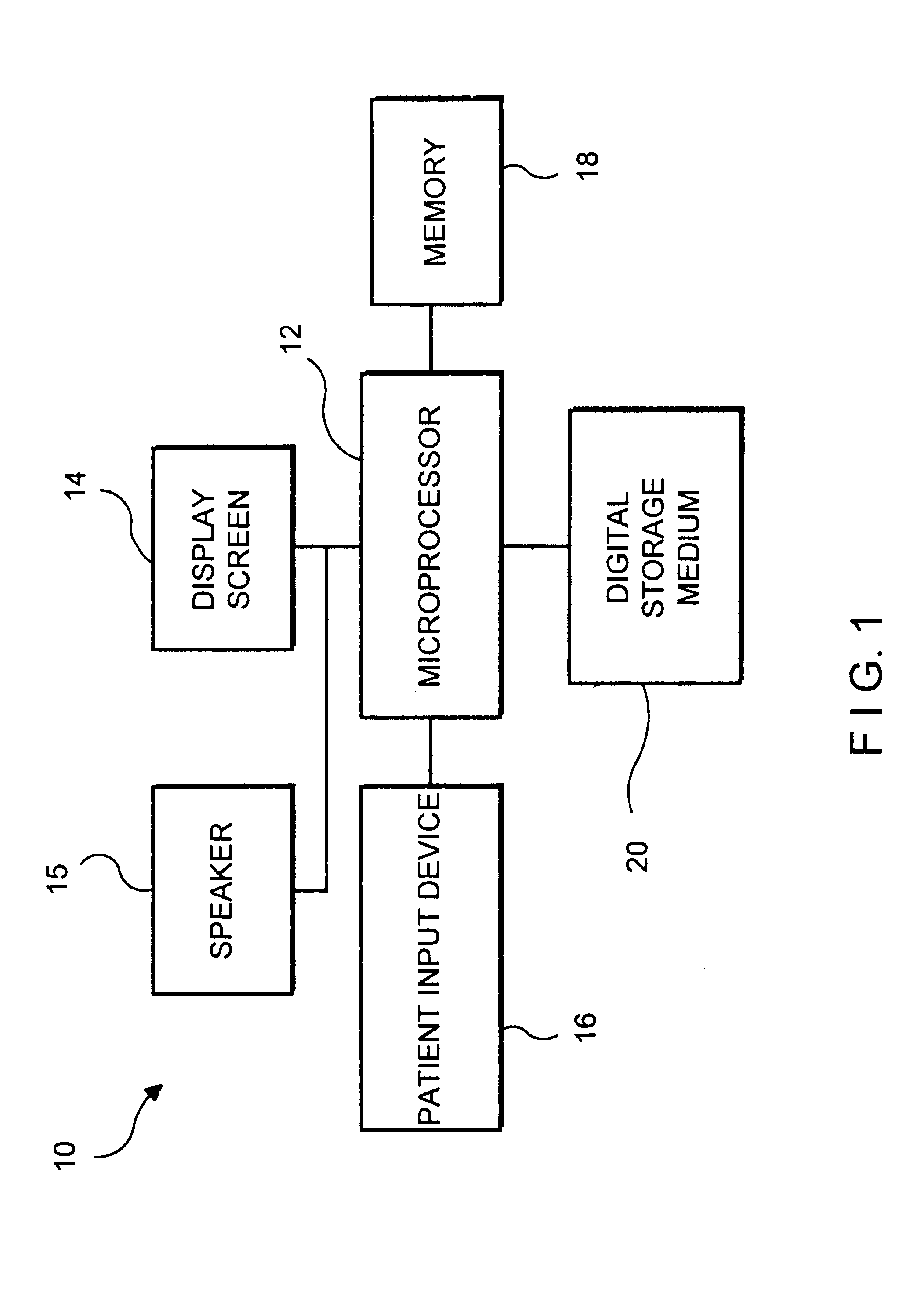

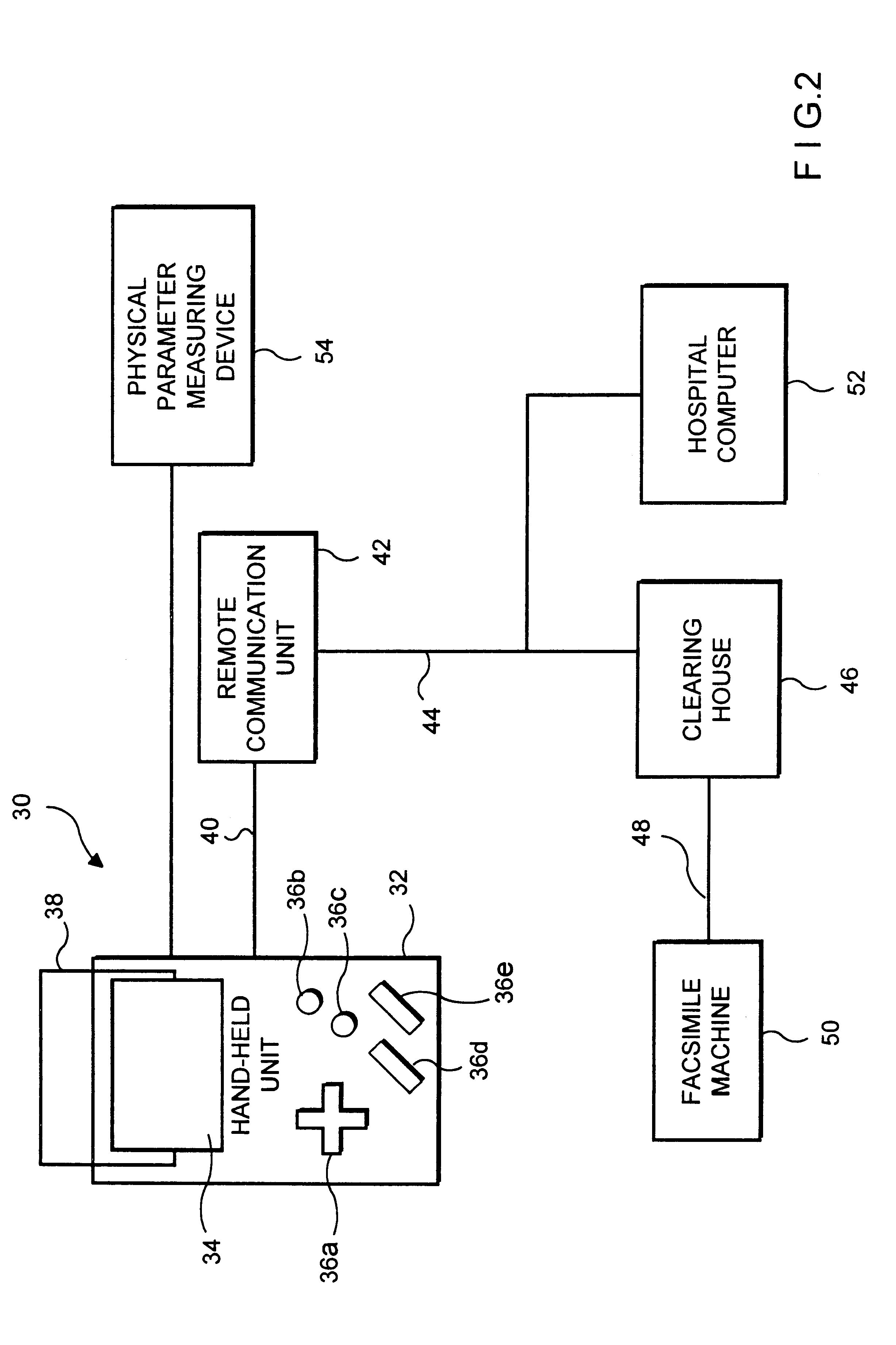

Method for diagnosis and treatment of psychological and emotional conditions using a microprocessor-based virtual reality simulator

Methods and systems for monitoring, diagnosing and / or treating psychological conditions and / or disorders in patients with the aid of computer-based virtual reality simulations. Pursuant to one preferred embodiment, a computer program product is used to control a computer. The program product includes a computer-readable medium, and a controlling mechanism that directs the computer to generate an output signal for controlling a video display device. The video display device is equipped to display representations of three-dimensional images, and the output signal represents a virtual reality simulation directed to diagnosis and / or treatment of a psychological condition and / or disorder.

Owner:HEALTH HERO NETWORK

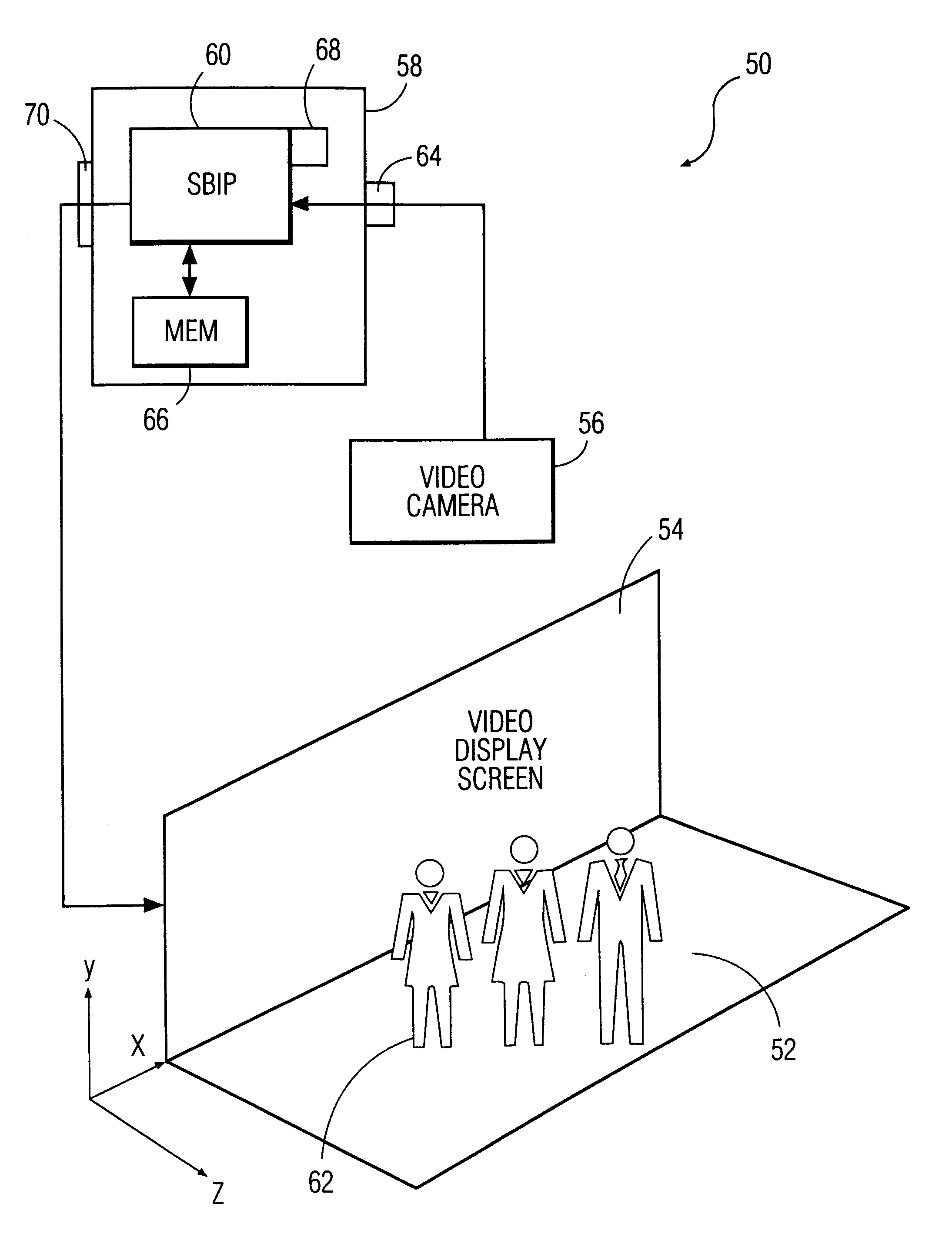

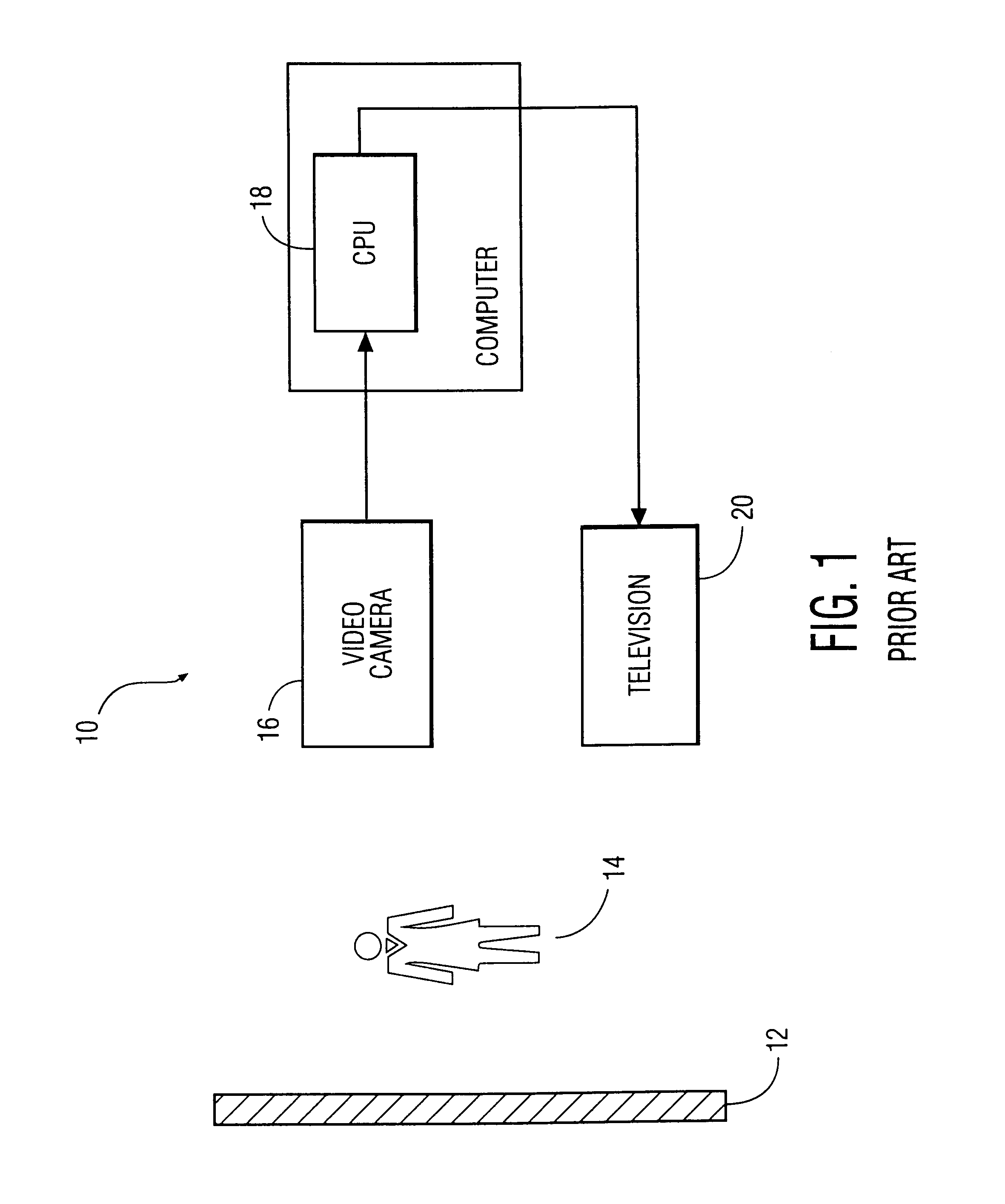

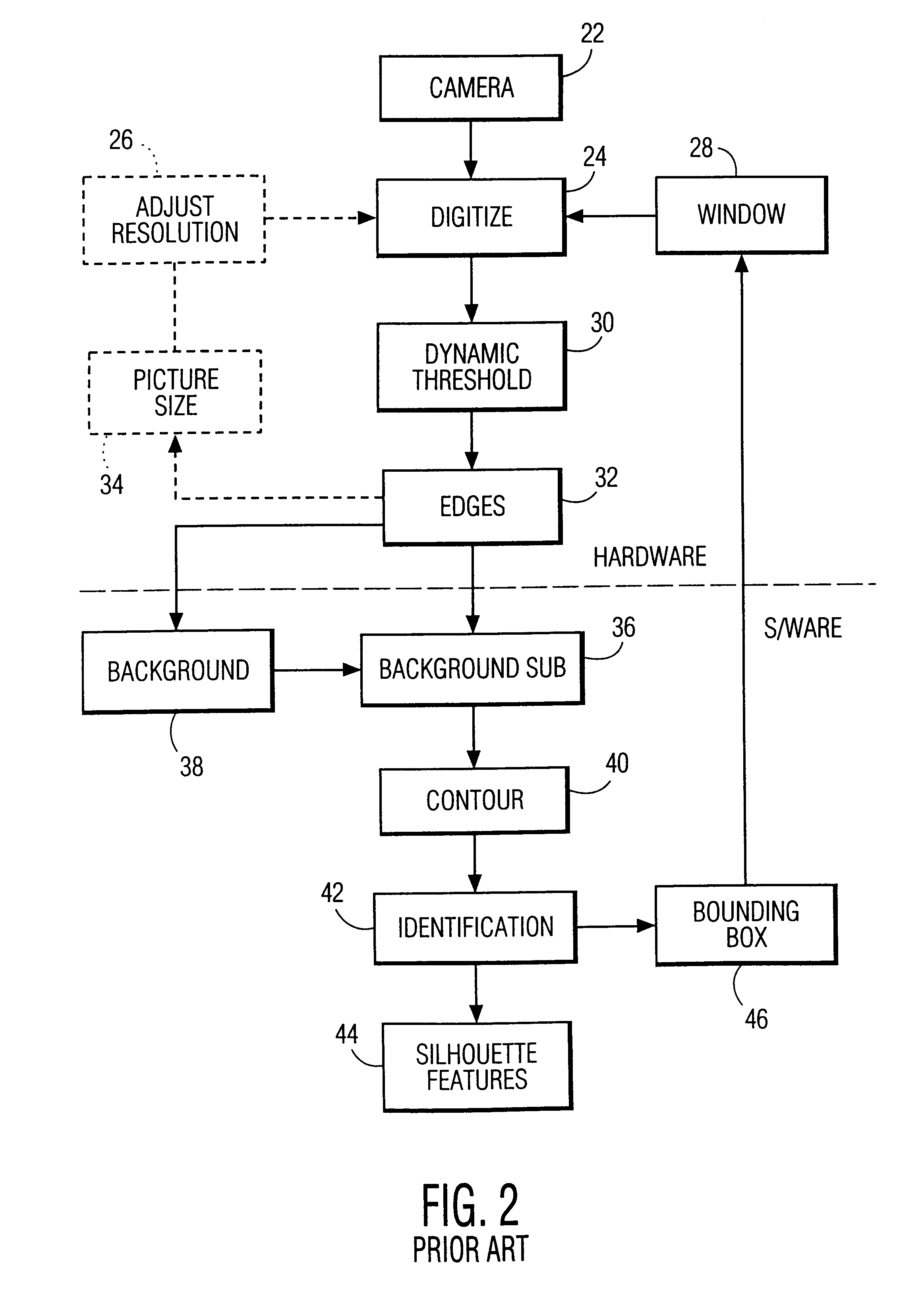

System and method for permitting three-dimensional navigation through a virtual reality environment using camera-based gesture inputs

Inactive | US6181343B1 | Input/output for user-computer interaction | Cosmonautic condition simulations | Display device | Three dimensional graphics

A system and method for permitting three-dimensional navigation through a virtual reality environment using camera-based gesture inputs of a system user. The system comprises a computer-readable memory, a video camera for generating video signals indicative of the gestures of the system user and an interaction area surrounding the system user, and a video image display. The video image display is positioned in front of the system user. The system further comprises a microprocessor for processing the video signals, in accordance with a program stored in the computer-readable memory, to determine the three-dimensional positions of the body and principal body parts of the system user. The microprocessor constructs three-dimensional images of the system user and interaction area on the video image display based upon the three-dimensional positions of the body and principal body parts of the system user. The video image display shows three-dimensional graphical objects within the virtual reality environment, and movement by the system user permits apparent movement of the three-dimensional objects displayed on the video image display so that the system user appears to move throughout the virtual reality environment.

Owner:PHILIPS ELECTRONICS NORTH AMERICA

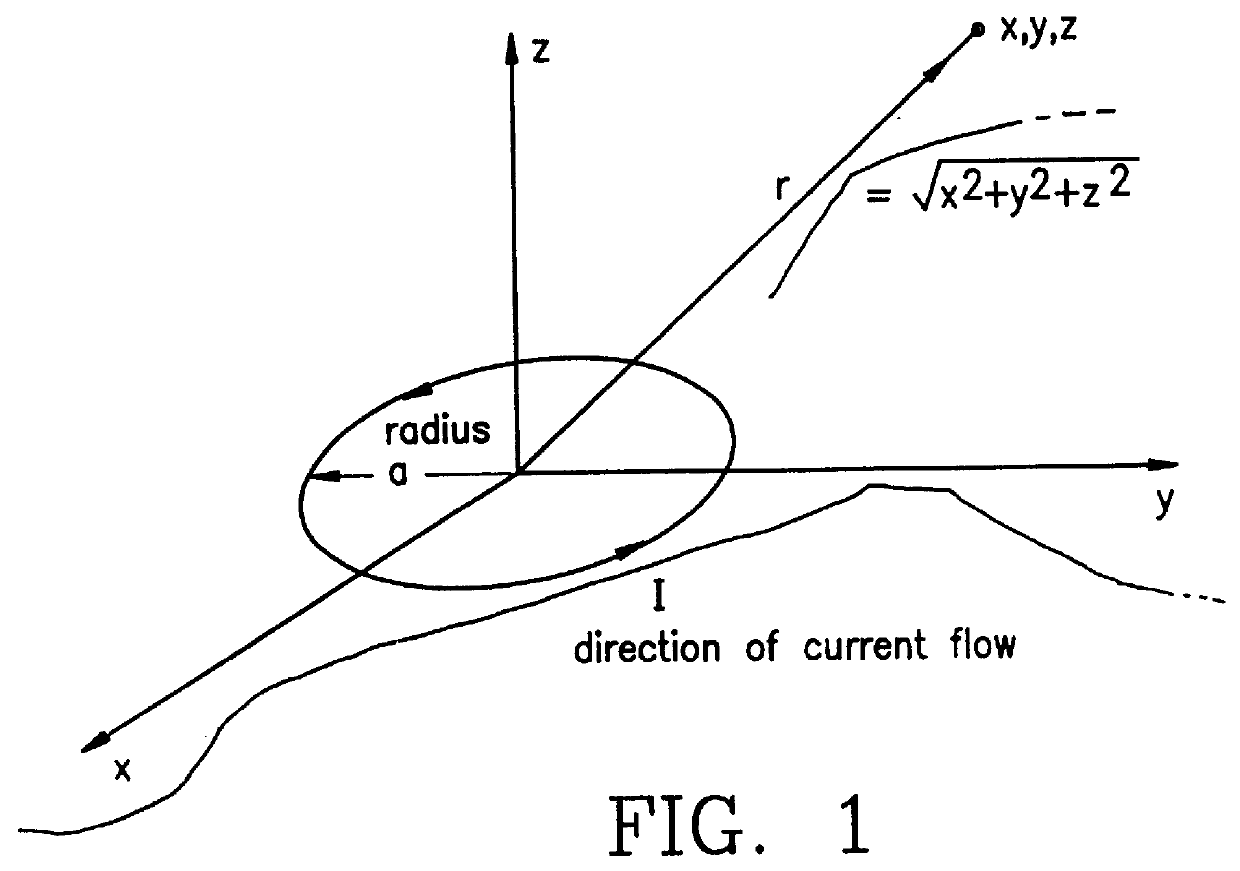

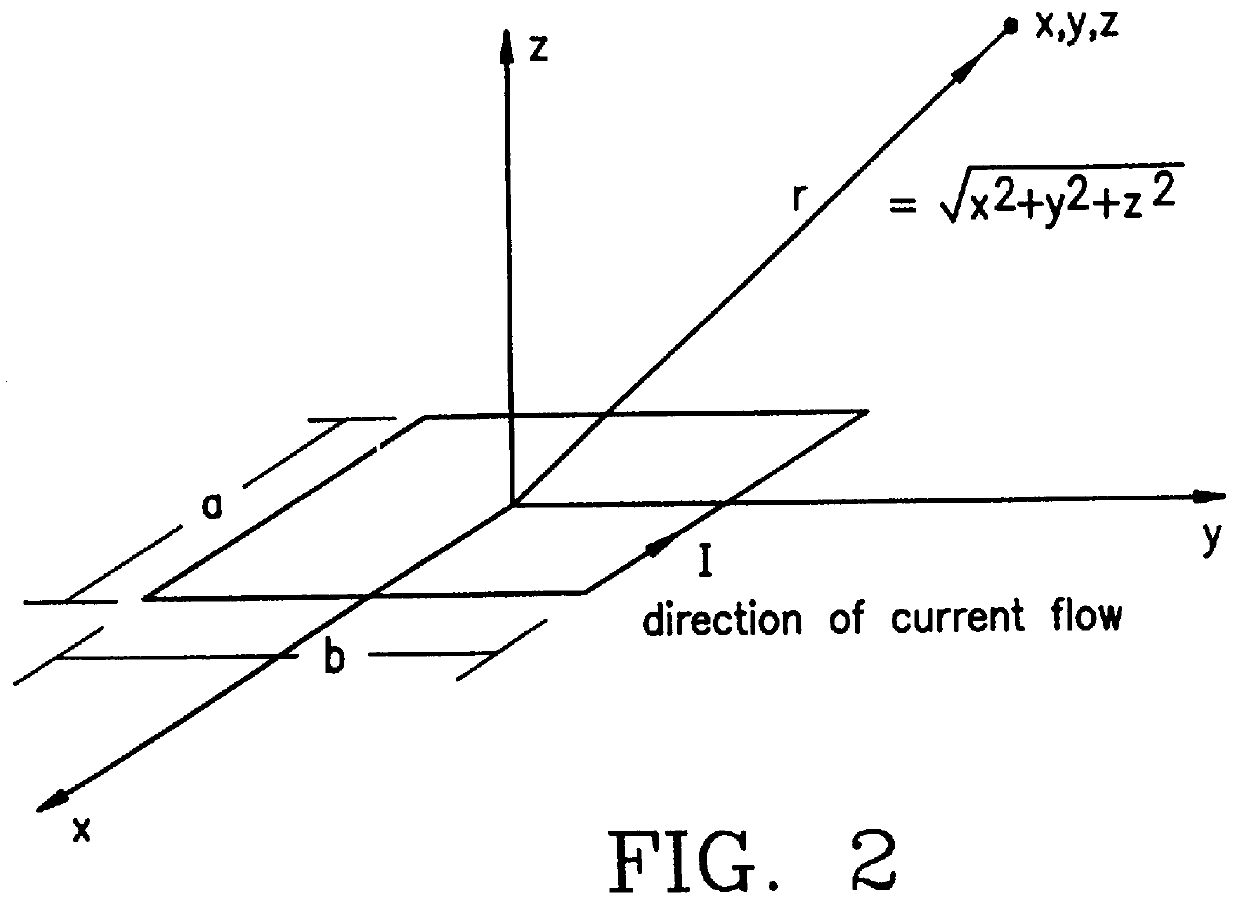

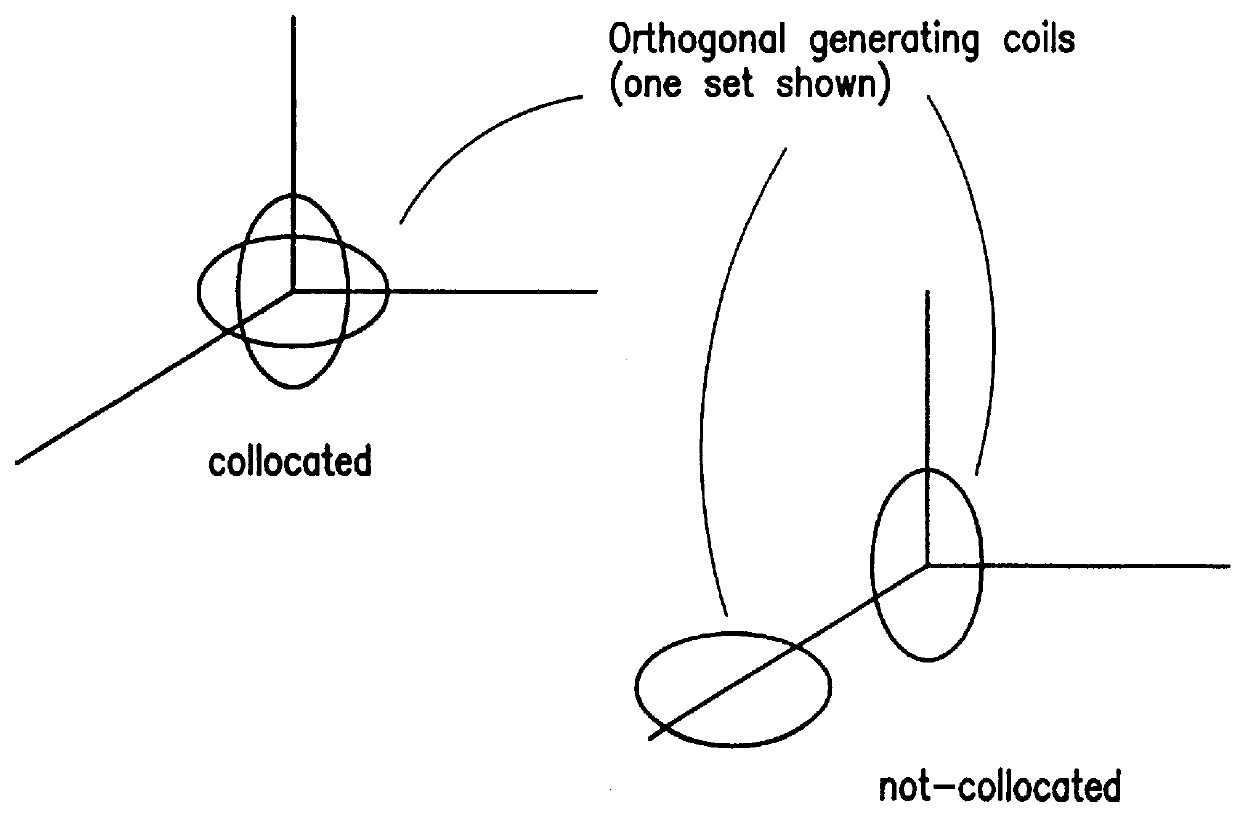

Measuring position and orientation using magnetic fields

Inactive | US6073043A | Precise positioning | Minimize time | Magnetic measurements | Surgery | Magnetic tracking | Magnetic field coupling

A method and apparatus for determining the position and orientation of a remote object relative to a reference coordinate frame includes a plurality of field-generating elements for generating electromagnetic fields, a drive for applying, to the generating elements, signals that generate a plurality of electromagnetic fields that are distinguishable from one another, a remote sensor having one or more field-sensing elements for sensing the fields generated and a processor for processing the outputs of the sensing element(s) into remote object position and orientation relative to the generating element reference coordinate frame. The position and orientation solution is based on the exact formulation of the magnetic field coupling as opposed to approximations used elsewhere. The system can be used for locating the end of a catheter or endoscope, digitizing objects for computer databases, virtual reality and motion tracking. The methods presented here can also be applied to other magnetic tracking technologies as a final "polishing" stage to improve the accuracy of their P&O solution.

Owner:CORMEDICA

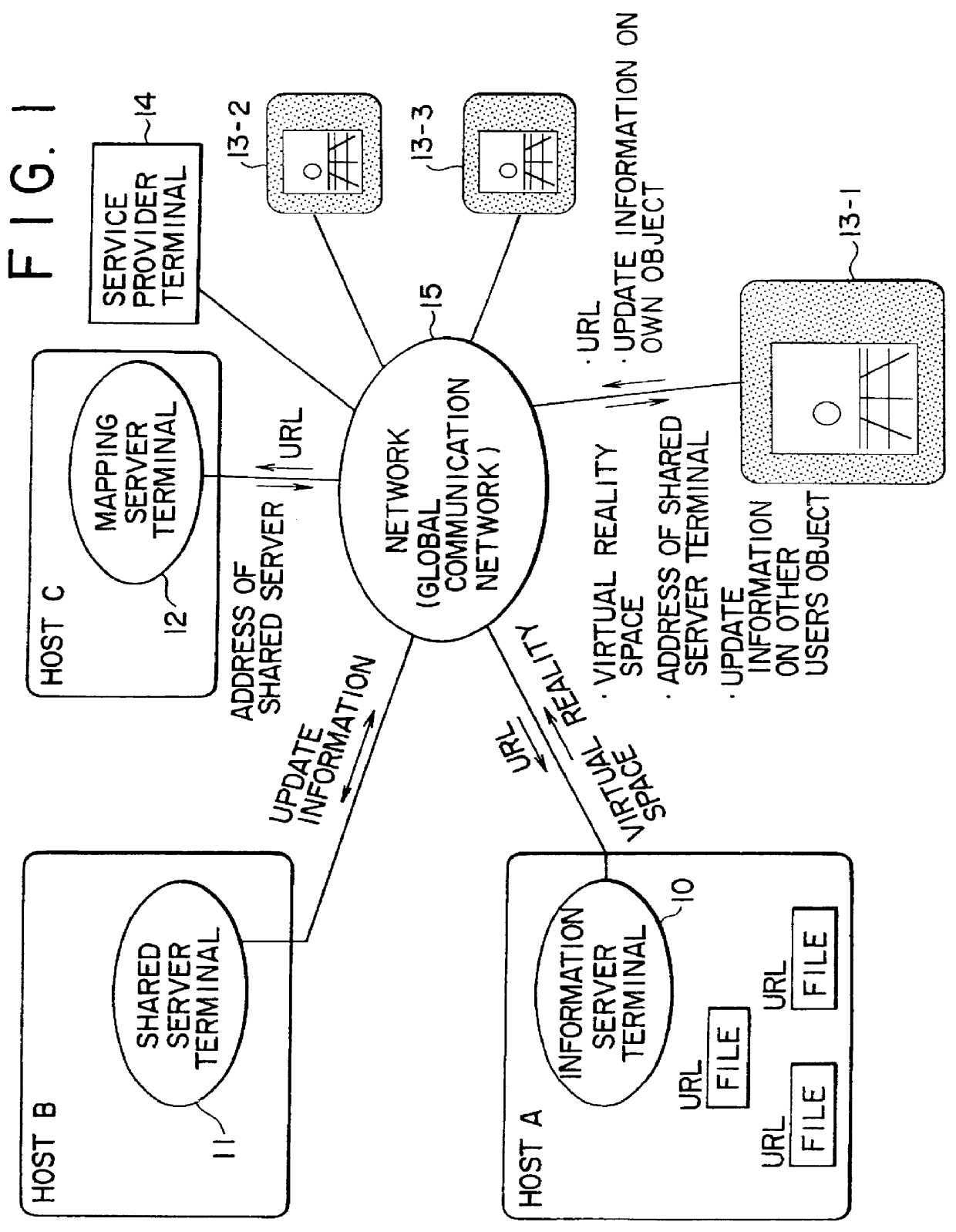

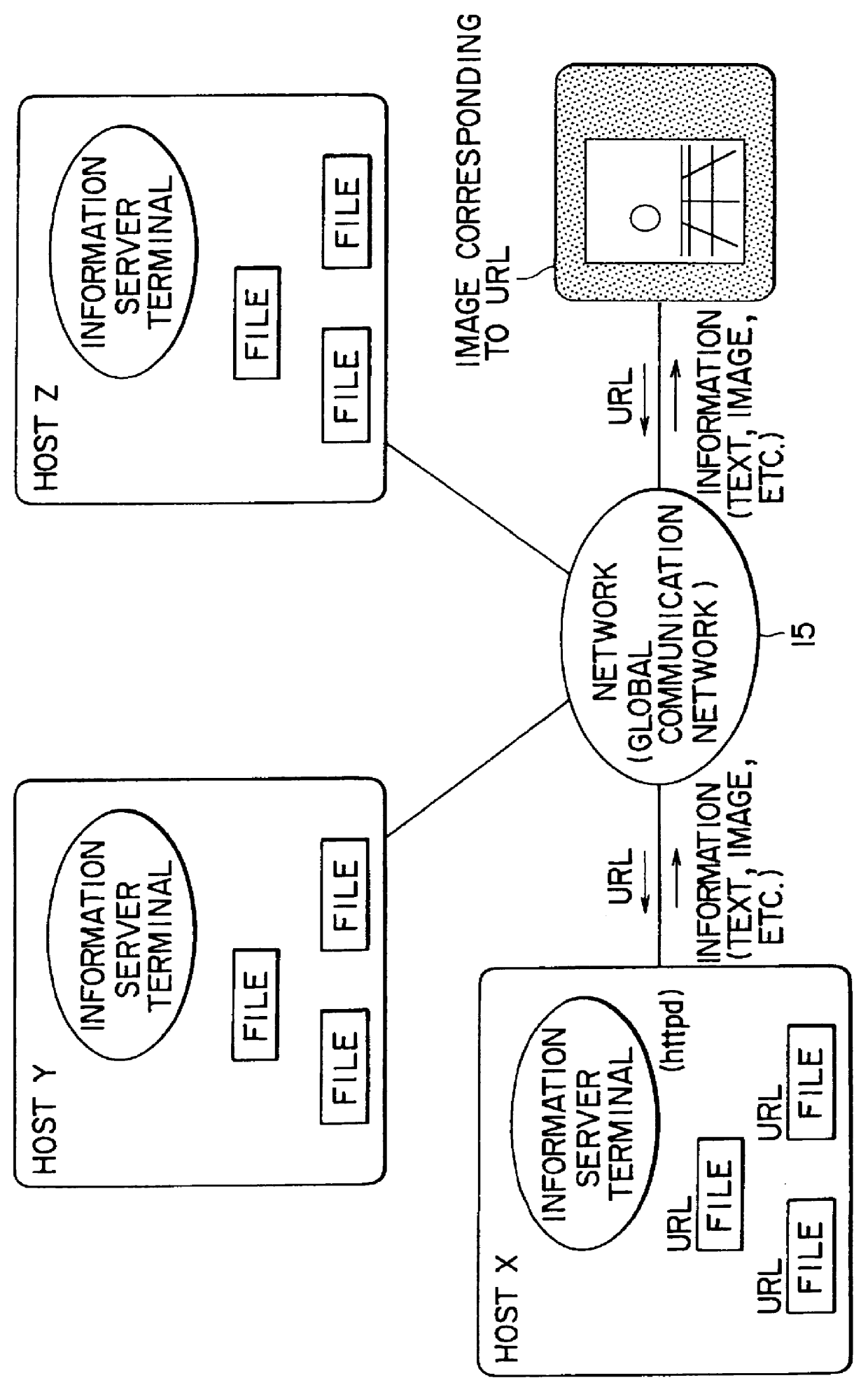

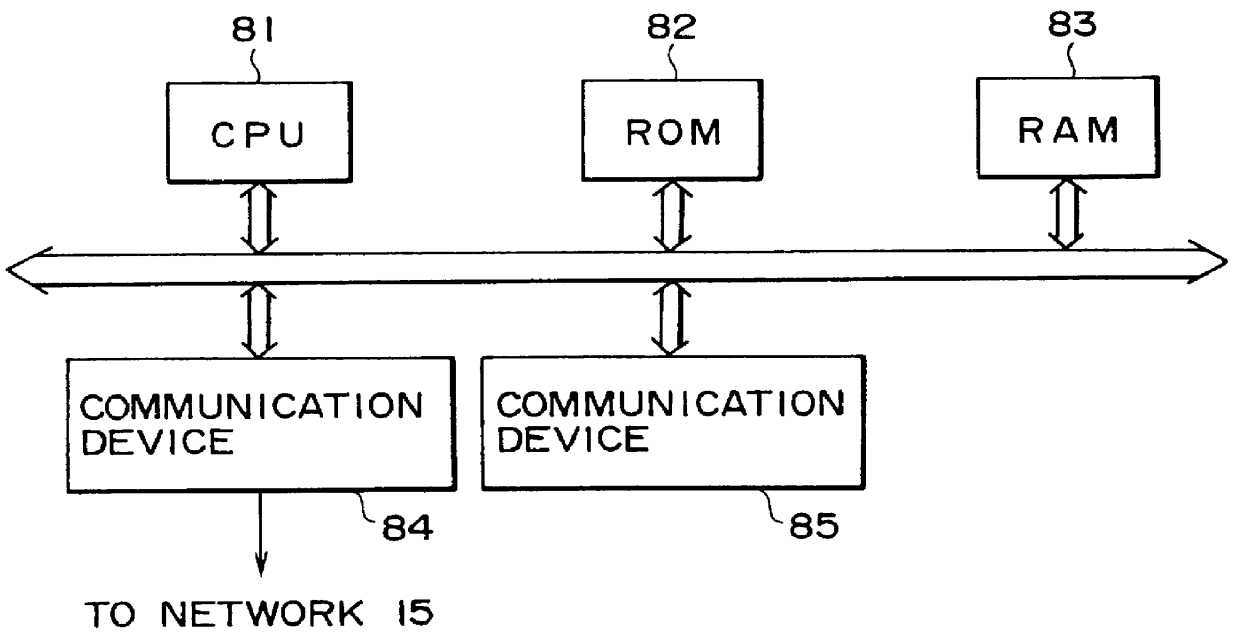

3D virtual reality multi-user interaction with superimposed positional information display for each user

Positions of users other than a particular user in a virtual reality space shared by many users can be recognized with ease and in a minimum display space. The center (or intersection) of the cross of a radar map corresponds to the particular user, and the positions of other users (to be specific, the avatars of the other users) around the particular user are indicated in the radar map by dots or squares colored, for example, red. This radar map is superimposed on the virtual reality space image.

Owner:SONY CORP
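
The radar-map idea in the abstract above can be sketched as a coordinate transform: each other avatar's position is expressed relative to the particular user and scaled into the small overlay. This is only an illustration, not the patented method; the function name `radar_dots` and the parameters `world_range` and `radar_radius` are hypothetical, and the abstract does not say how out-of-range users are handled (here they are simply skipped).

```python
import math


def radar_dots(me, others, world_range=50.0, radar_radius=40):
    """Map other avatars' world (x, y) positions to radar-map pixel offsets.

    The particular user sits at the centre of the cross, i.e. offset (0, 0).
    world_range and radar_radius are assumed tuning values, not from the source.
    """
    scale = radar_radius / world_range
    dots = []
    for ox, oy in others:
        dx, dy = ox - me[0], oy - me[1]
        # Assumption: avatars farther than world_range are not drawn.
        if math.hypot(dx, dy) > world_range:
            continue
        dots.append((round(dx * scale), round(dy * scale)))
    return dots


if __name__ == "__main__":
    print(radar_dots((10, 10), [(20, 10), (10, 35), (200, 200)]))
```

The returned offsets would then be drawn as colored dots on top of the rendered scene, which is what keeps the display footprint small.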

Method and apparatus for delivering a virtual reality environment

Inactive | US20030046689A1 | Easy to get | Convenient transaction | Television system details | Digital computer details | Personalization | Virtual intelligence

This invention involves a virtual environment created through the combination of technologies. The invention employs the knowledge and experience of a Personal Assistant or Host, created through Artificial Intelligence applications, which assists and guides the user of the environment to products and / or services that they will most likely be interested in purchasing or requiring. The intelligent assistant's choices are based on its experiences with the specific user. The intelligent assistant communicates with the user by means of a speech recognition and speech synthesis device. This invention is an easy to use virtual reality environment that takes advantage of existing technologies and global communications networks such as the Internet without requiring any given degree of computer literacy. This invention includes a virtual intelligent assistant for each user which adapts to its user as it provides individualized guidance. The intelligent assistant or avatar projects human-like features and behaviors appropriate to the preferences of its user and appears as a virtual person to the user.

Owner:MISSION THE

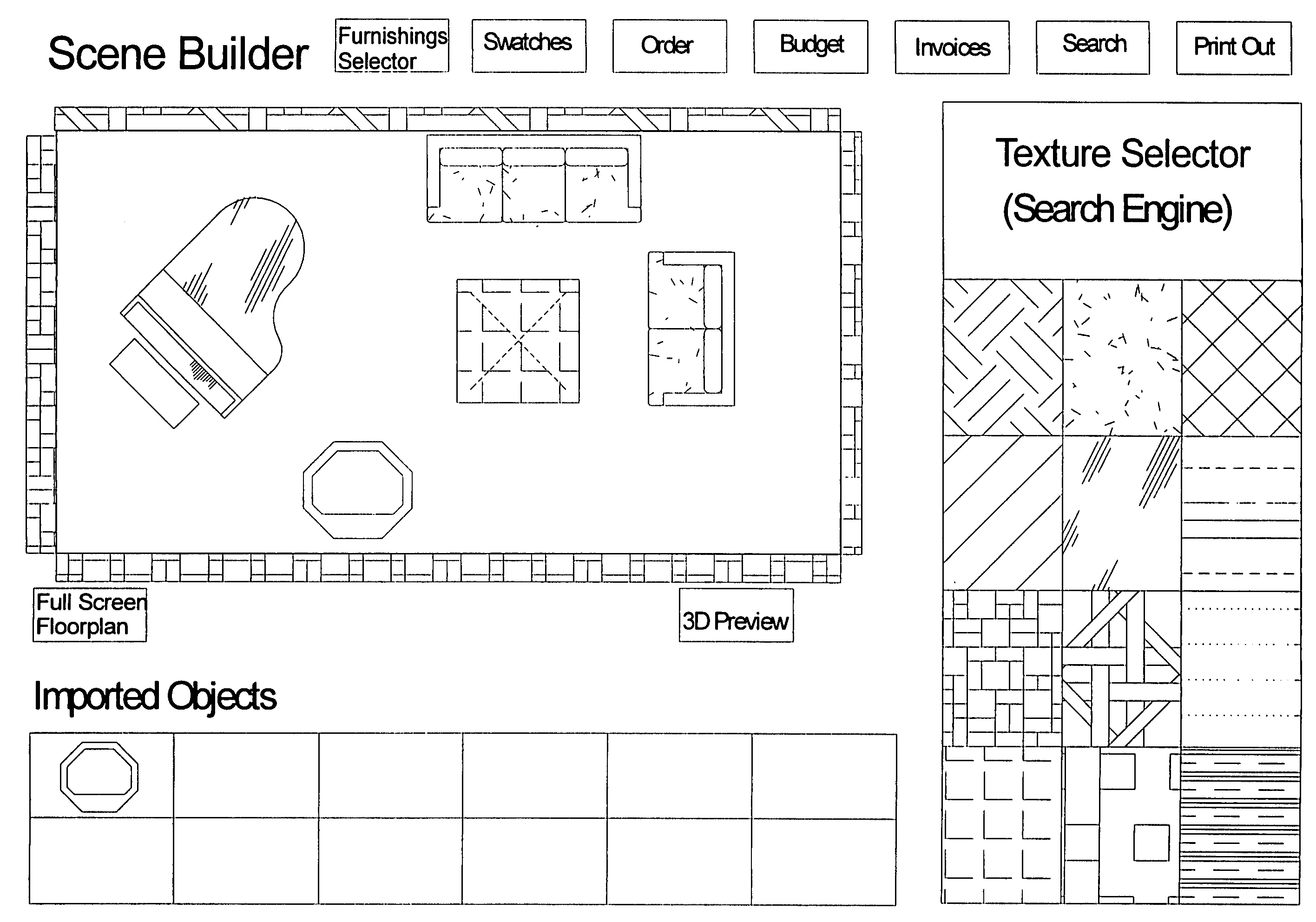

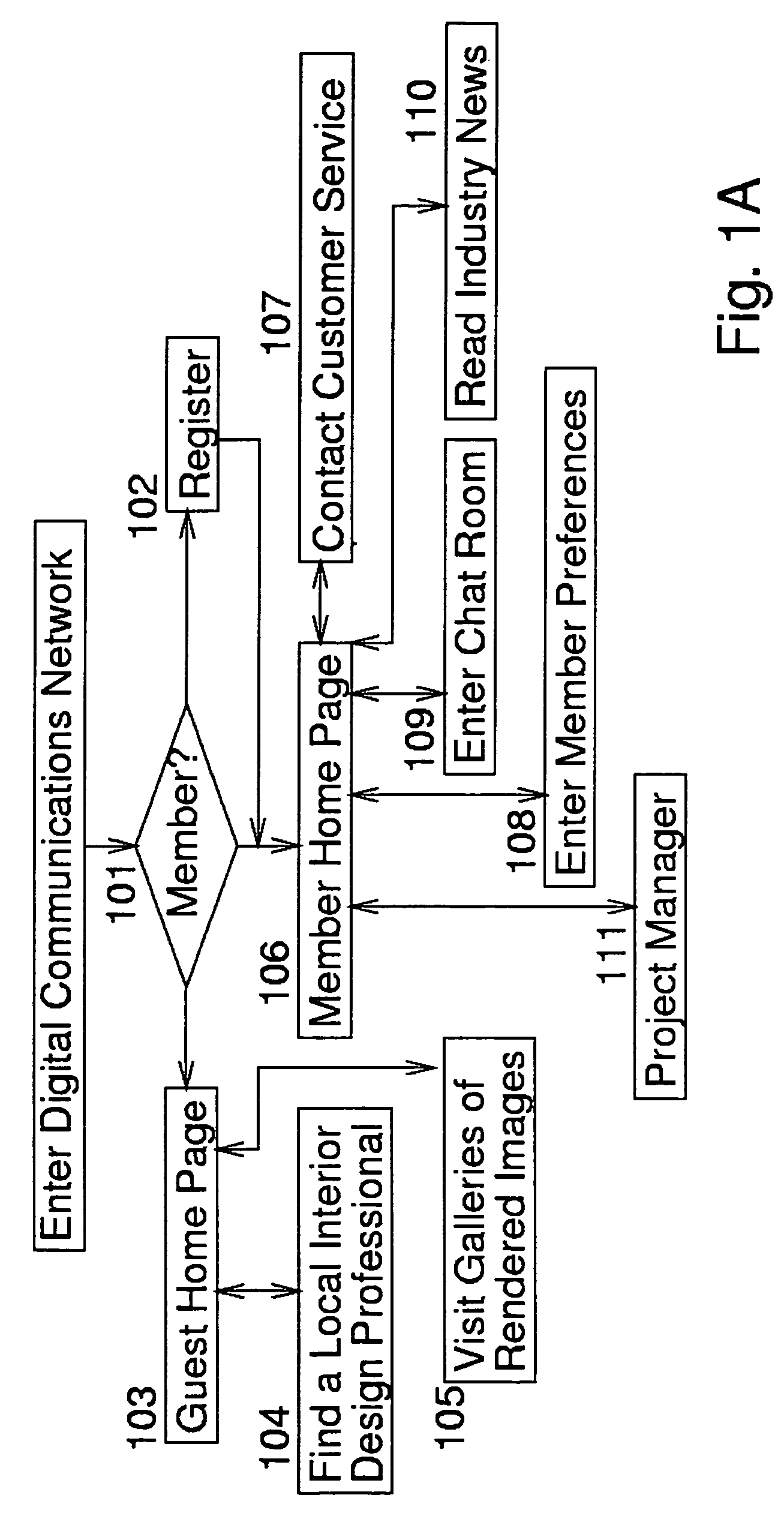

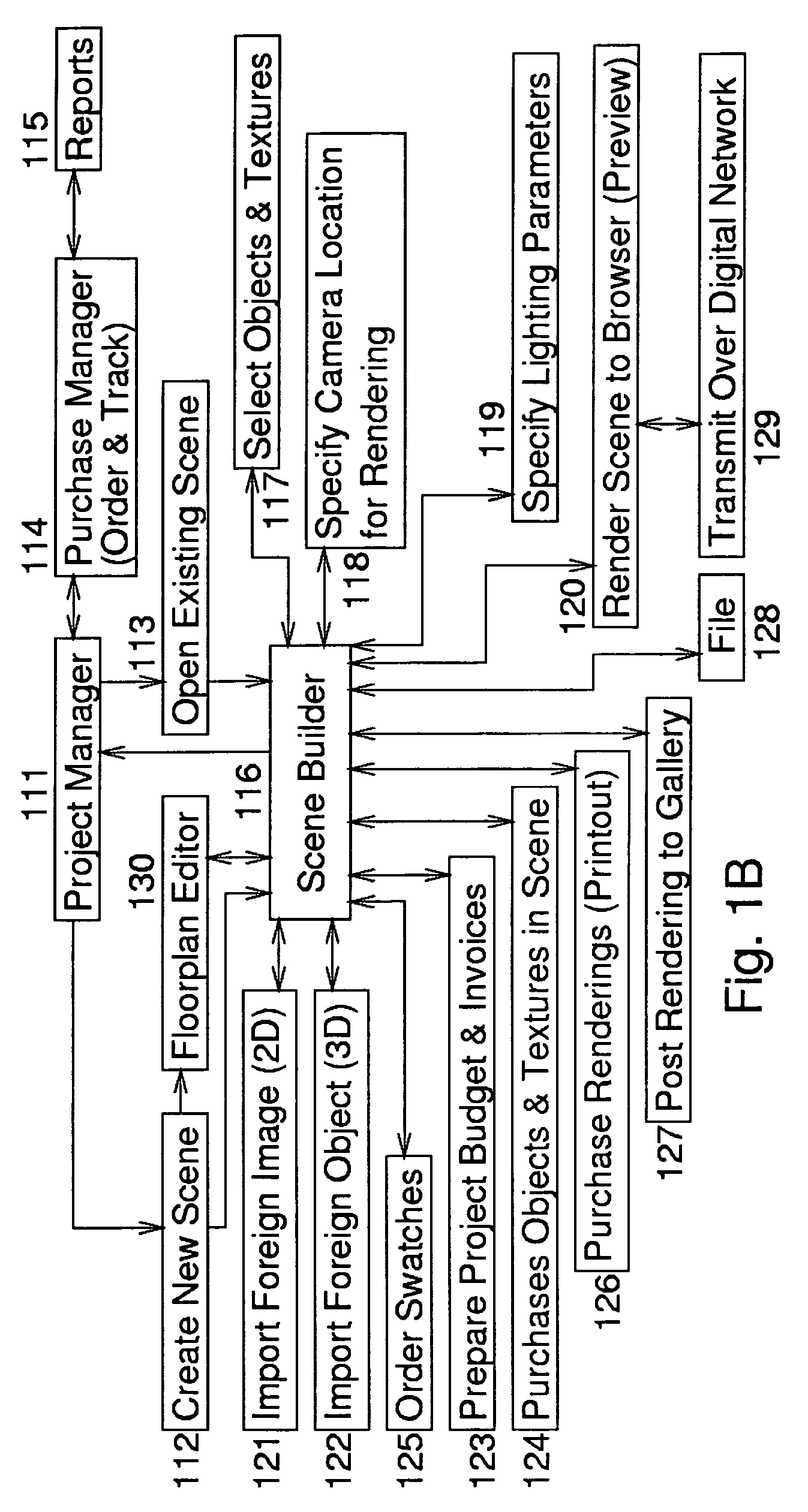

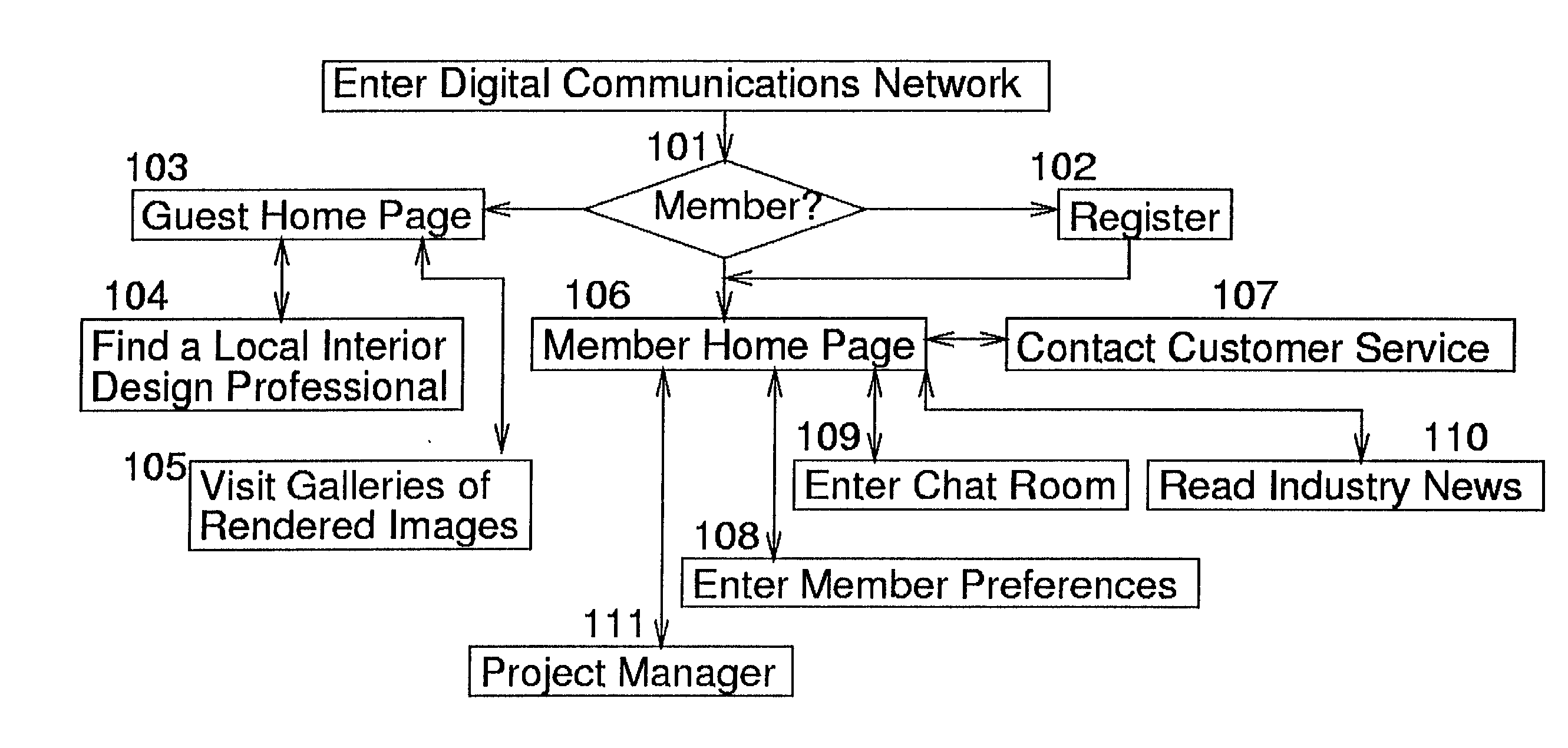

Network-linked interactive three-dimensional composition and display of saleable objects in situ in viewer-selected scenes for purposes of promotion and procurement

Inactive | US7062722B1 | Sufficiently accurate | Sufficiently appealing | Special data processing applications | Marketing | Full custom | 3d image

A design professional such as an interior designer running a browser program at a client computer (i) optionally causes a digital image of a room, or a room model, or room images to be transmitted across the world wide web to a graphics server computer, and (ii) interactively selects furnishings from this server computer, so as to (iii) receive and display to his or her client a high-fidelity high-quality virtual-reality perspective-view image of furnishings displayed in, most commonly, an actual room of a client's home. Opticians may, for example, (i) upload one or more images of a client's head, and (ii) select eyeglass frames and components, to (iii) display to a prospective customer eyeglasses upon the customer's own head. The realistic images, optionally provided to bona fide design professionals for free, promote the sale to the client of goods which are normally obtained through the graphics service provider, profiting both the service provider and the design professional. Models of existing objects are built as necessary from object views. Full custom objects, including furniture and eyeglasses not yet built, are readily presented in realistic virtual image. Also, a method of interactive advertising permits a prospective customer of a product, such as a vehicle, to view a virtual image of the selected product located within a customer-selected virtual scene, such as the prospective customer's own home driveway. Imaging for all purposes is supported by comprehensive and complete 2D to 3D image translation with precise object placement, scaling, angular rotation, coloration, shading and lighting so as to deliver flattering perspective images that, by selective lighting, arguably look better than actual photographs of real world objects within the real world.

Owner:CARLIN BRUCE +3

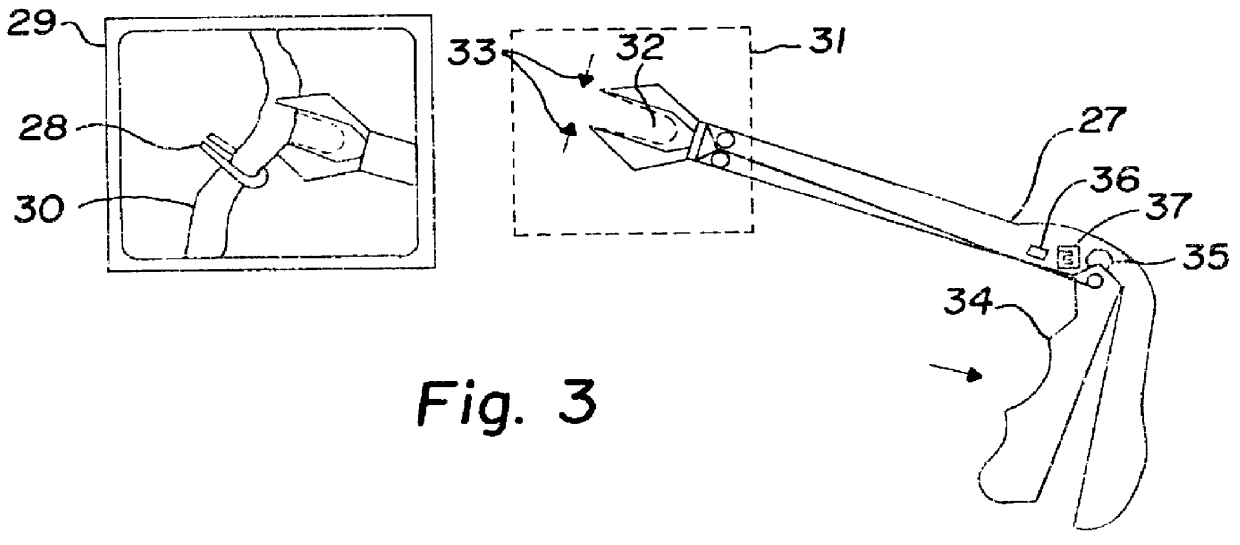

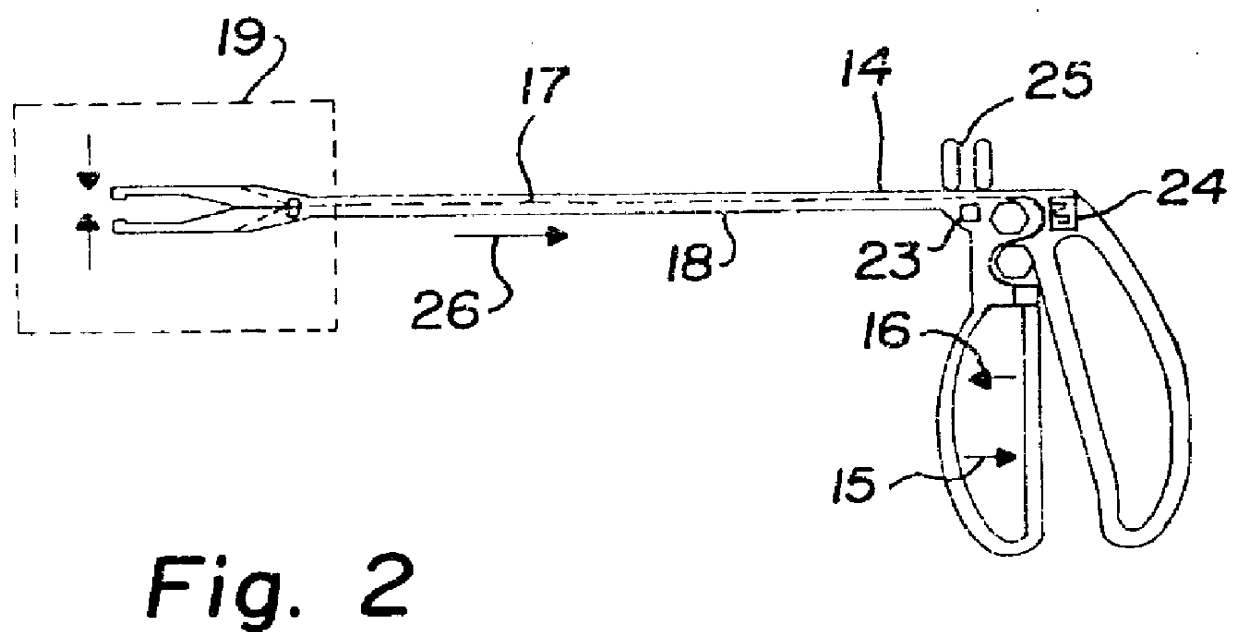

Selectable instruments with homing devices for haptic virtual reality medical simulation

Invention is apparatus for using selectable instruments in virtual medical simulations with input devices actuated by user and resembling medical instruments which transmit various identifying data to the virtual computer model from said instruments which have been selected; then, said apparatus assist in creating full immersion for the user in the virtual reality model by tracking and homing to instruments with haptic, or force feedback generating, receptacles with which said instruments dock by means of a numerical grid, creating a seamless interface of instrument selection and use in the virtual reality anatomy.

Owner:HON DAVID C

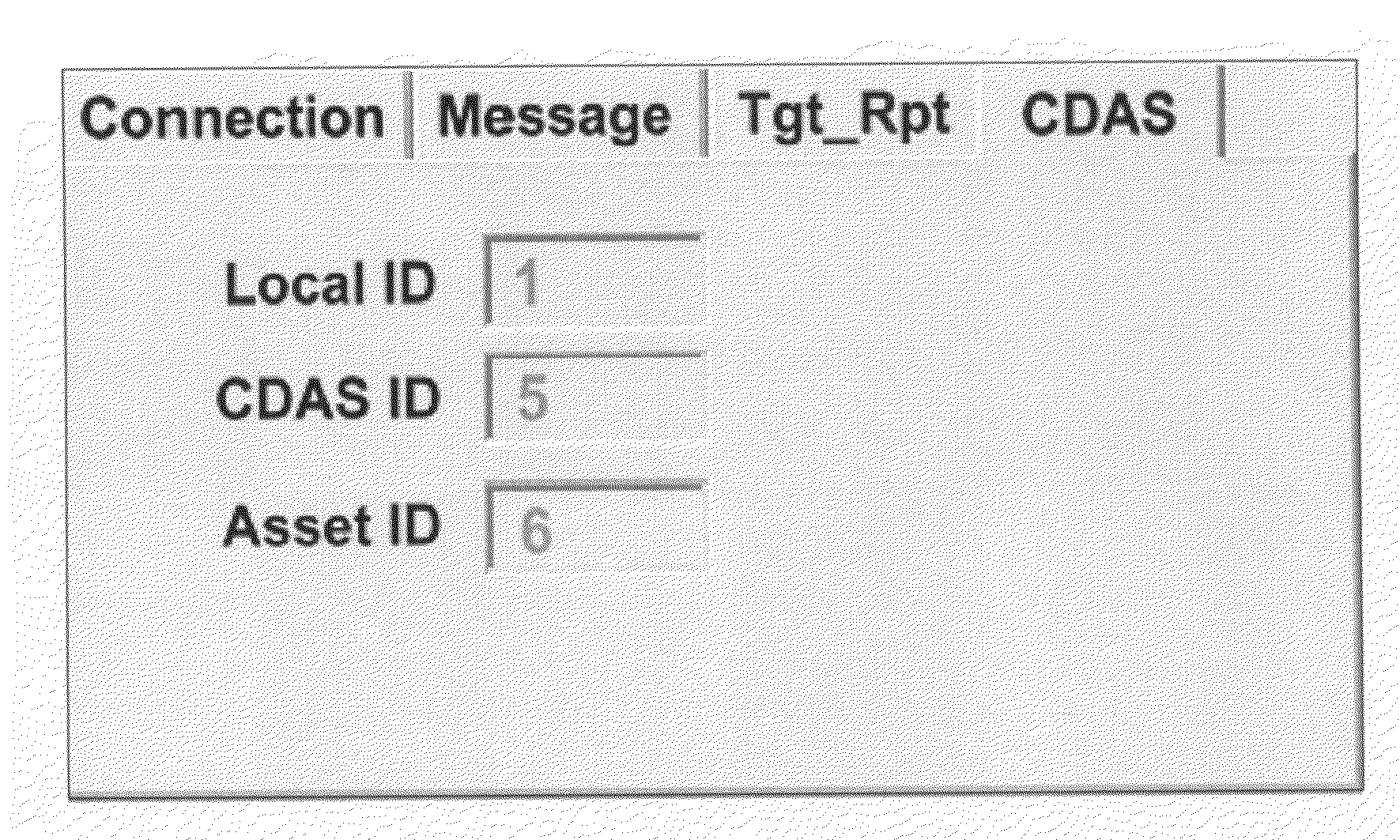

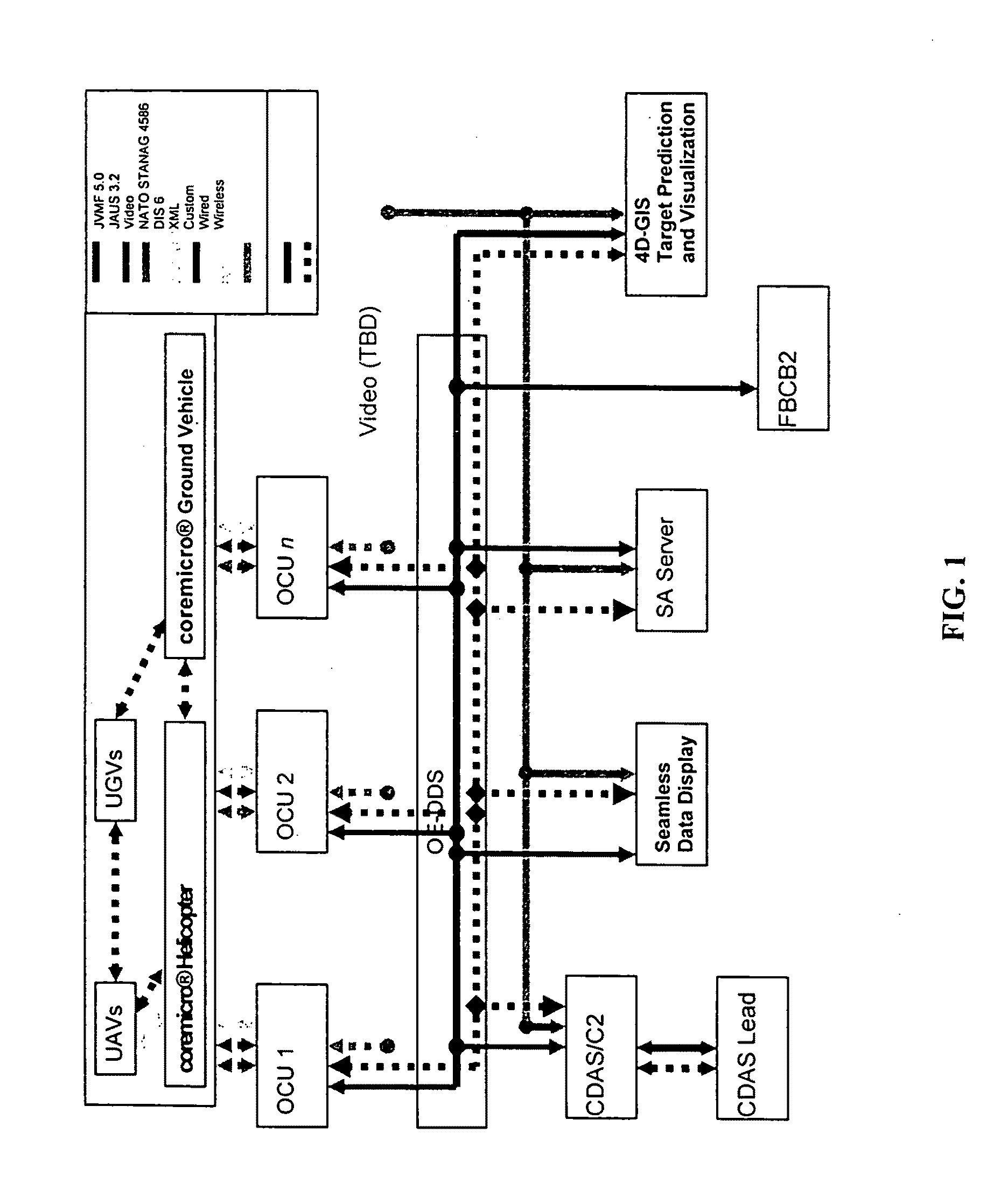

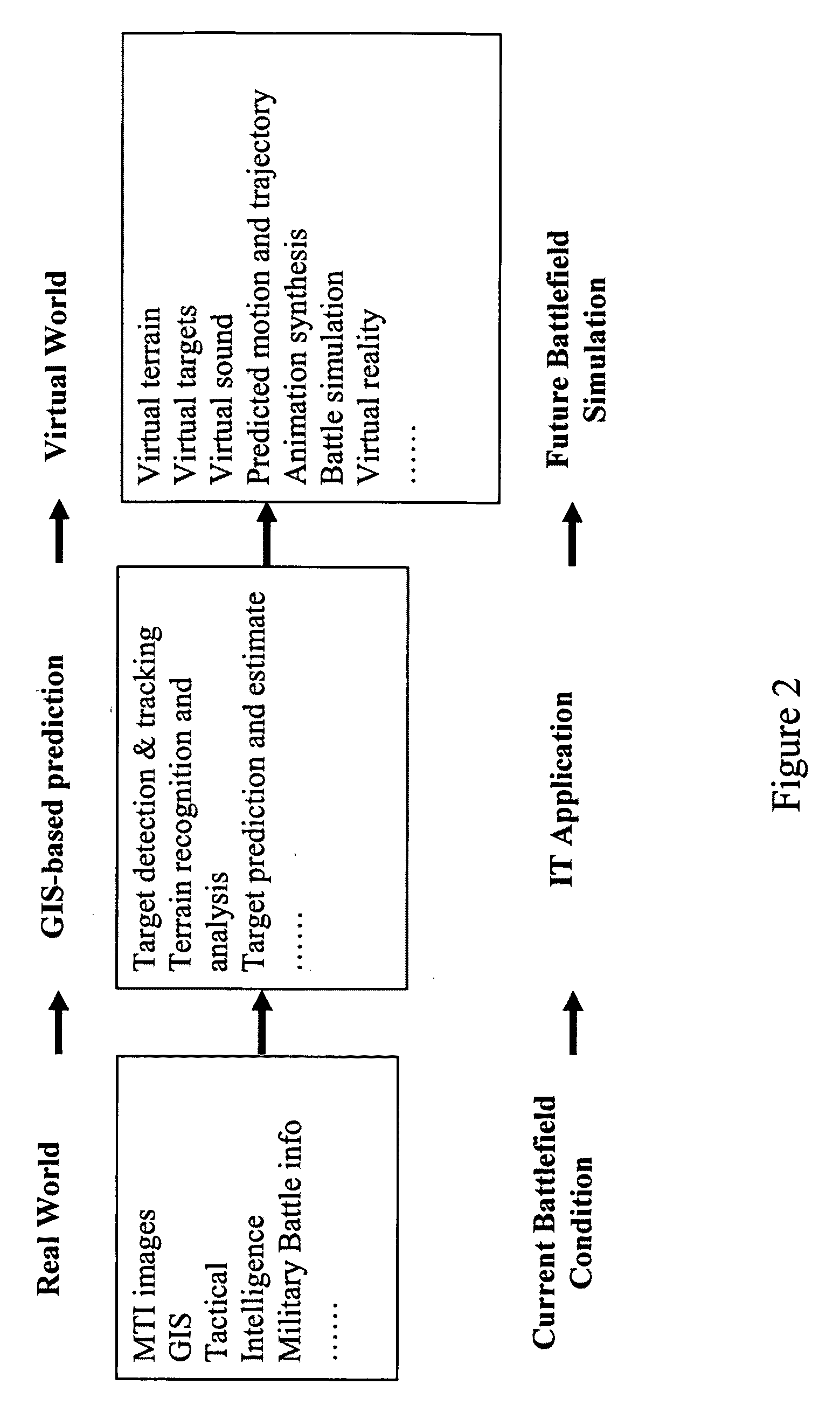

4D GIS based virtual reality for moving target prediction

Active | US20090087029A1 | Enhanced degree of confidence | Precise process | Image enhancement | Image analysis | Moving average | Terrain

The technology of the 4D-GIS system deploys a GIS-based algorithm used to determine the location of a moving target through registering the terrain image obtained from a Moving Target Indication (MTI) sensor or small Unmanned Aerial Vehicle (UAV) camera with the digital map from GIS. For motion prediction the target state is estimated using an Extended Kalman Filter (EKF). In order to enhance the prediction of the moving target's trajectory a fuzzy logic reasoning algorithm is used to estimate the destination of a moving target through synthesizing data from GIS, target statistics, tactics and other past experience derived information, such as, likely moving direction of targets in correlation with the nature of the terrain and surmised mission.

Owner:AMERICAN GNC
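
The abstract above says the target state is estimated with an Extended Kalman Filter but gives no motion model. As a rough sketch only, the prediction step of an EKF could look like the following, assuming a hypothetical state `[x, y, speed, heading]` with constant speed and heading between updates. The helper names (`matmul`, `transpose`, `ekf_predict`) and the motion model are assumptions for illustration, not details from the patent.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def ekf_predict(state, cov, dt, process_noise):
    """EKF prediction step for an assumed constant-speed, constant-heading target.

    state: [x, y, speed, heading]; cov and process_noise: 4x4 lists of rows.
    """
    x, y, speed, heading = state
    c, s = math.cos(heading), math.sin(heading)
    # Nonlinear motion model: move along the current heading for dt seconds.
    new_state = [x + speed * c * dt, y + speed * s * dt, speed, heading]
    # Jacobian of the motion model with respect to the state.
    jac = [
        [1.0, 0.0, c * dt, -speed * s * dt],
        [0.0, 1.0, s * dt, speed * c * dt],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]
    # Covariance propagation: P' = F P F^T + Q.
    fpf = matmul(matmul(jac, cov), transpose(jac))
    new_cov = [[fpf[i][j] + process_noise[i][j] for j in range(4)]
               for i in range(4)]
    return new_state, new_cov
```

A full filter would follow each prediction with a measurement update, for example using the target position obtained by registering the sensor image against the GIS map; that step is omitted here.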

Network-linked interactive three-dimensional composition and display of saleable objects in situ in viewer-selected scenes for purposes of object promotion and procurement, and generation of object advertisements

A design professional such as an interior designer, furniture sales associate or advertising designer running a browser program at a client computer (i) uses the world wide web to connect to a graphics server computer, and (ii) interactively selects or specifies furnishings or other objects from this server computer and previews the scene and communicates with the server, so as to (iii) receive and display to his or her client a high-fidelity high-quality virtual-reality perspective-view photorealistic image of furnishings or other objects displayed in, most commonly, a virtual representation of an actual room of a client's home or an advertisement scene. The photorealistic images, optionally provided to bona fide design professionals and their clients for free, but typically paid for by the product's manufacturer, promote the sale to the client of goods which are normally obtained through the graphics service provider's customer's distributor, profiting both the service provider and the design professional. Models, textures and maps of existing objects are built as necessary from object views or actual objects. Full custom objects, including furniture and other products not yet built, are readily presented in realistic virtual image. Also, a method of interactive advertising permits a prospective customer of a product, such as furniture, to view a virtual but photorealistic image of a selected product located within a customer-selected scene, such as the prospective customer's own home, to allow in-context visualization.

Owner:CARLIN BRUCE

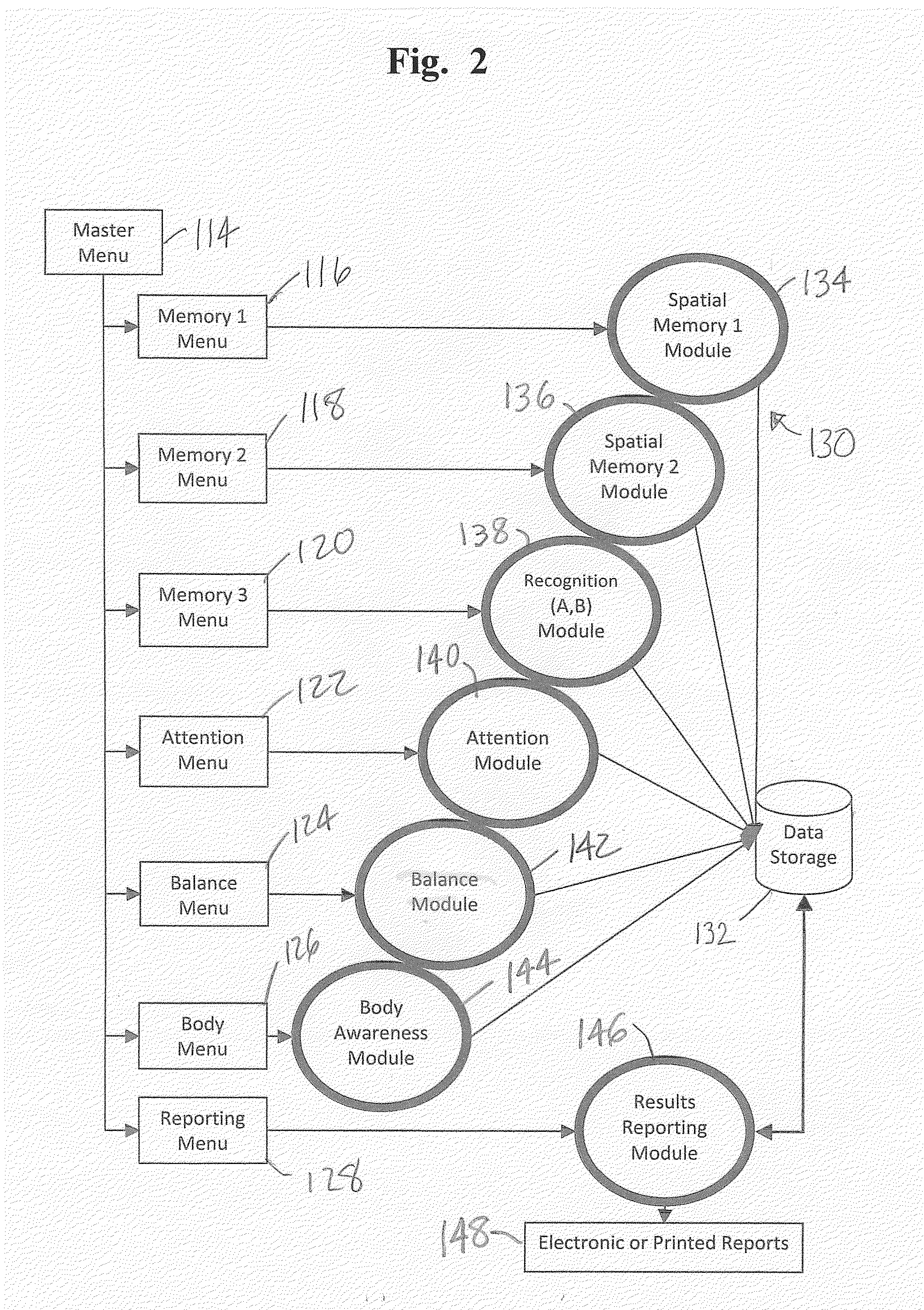

Assessment and Rehabilitation of Cognitive and Motor Functions Using Virtual Reality

Inactive | US20120108909A1 | Easy to identify | Easily track rehabilitation progress | Health-index calculation | Sensors | Skill sets | Motor skill

A user-friendly reliable process is provided to help diagnose (assess) and treat (rehabilitate) impairment or deficiencies in a person (subject or patient) caused by a traumatic brain injury (TBI) or other neurocognitive disorders. The economical, safe, effective process can include: generating and electronically displaying a virtual reality environment (VRE) with moveable images; identifying and letting the TBI person perform a task in the VRE; electronically inputting and recording the performance data with an electronic interactive communications device; electronically evaluating the person's performance and assessing the person's impairment by electronically determining a deficiency in the person's cognitive function (e.g. memory, recall, recognition, attention, spatial awareness) and / or motor function (i.e. motor skills, e.g. balance) as a result of the TBI or other neurocognitive disorder.

Owner:HEADREHAB

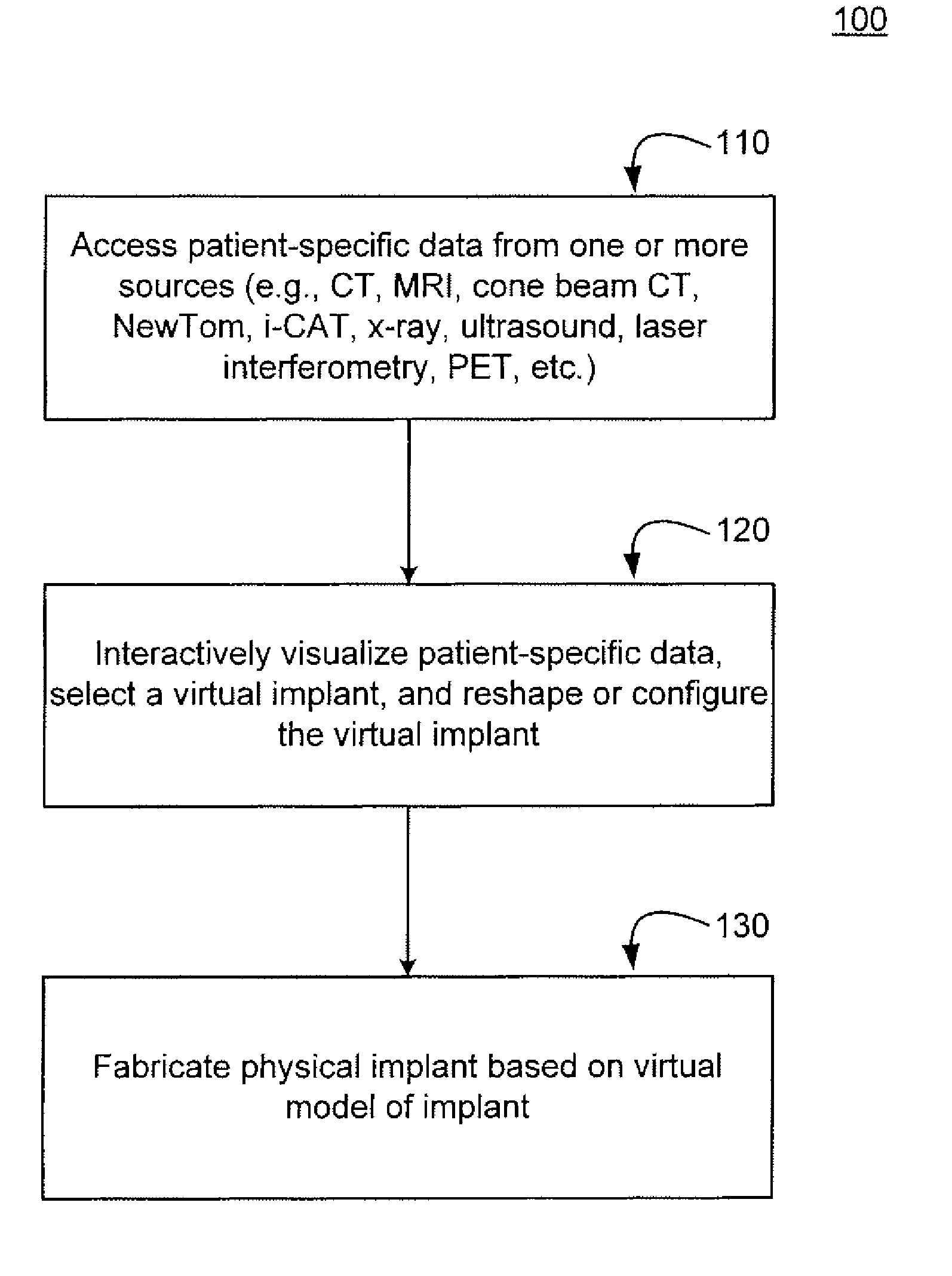

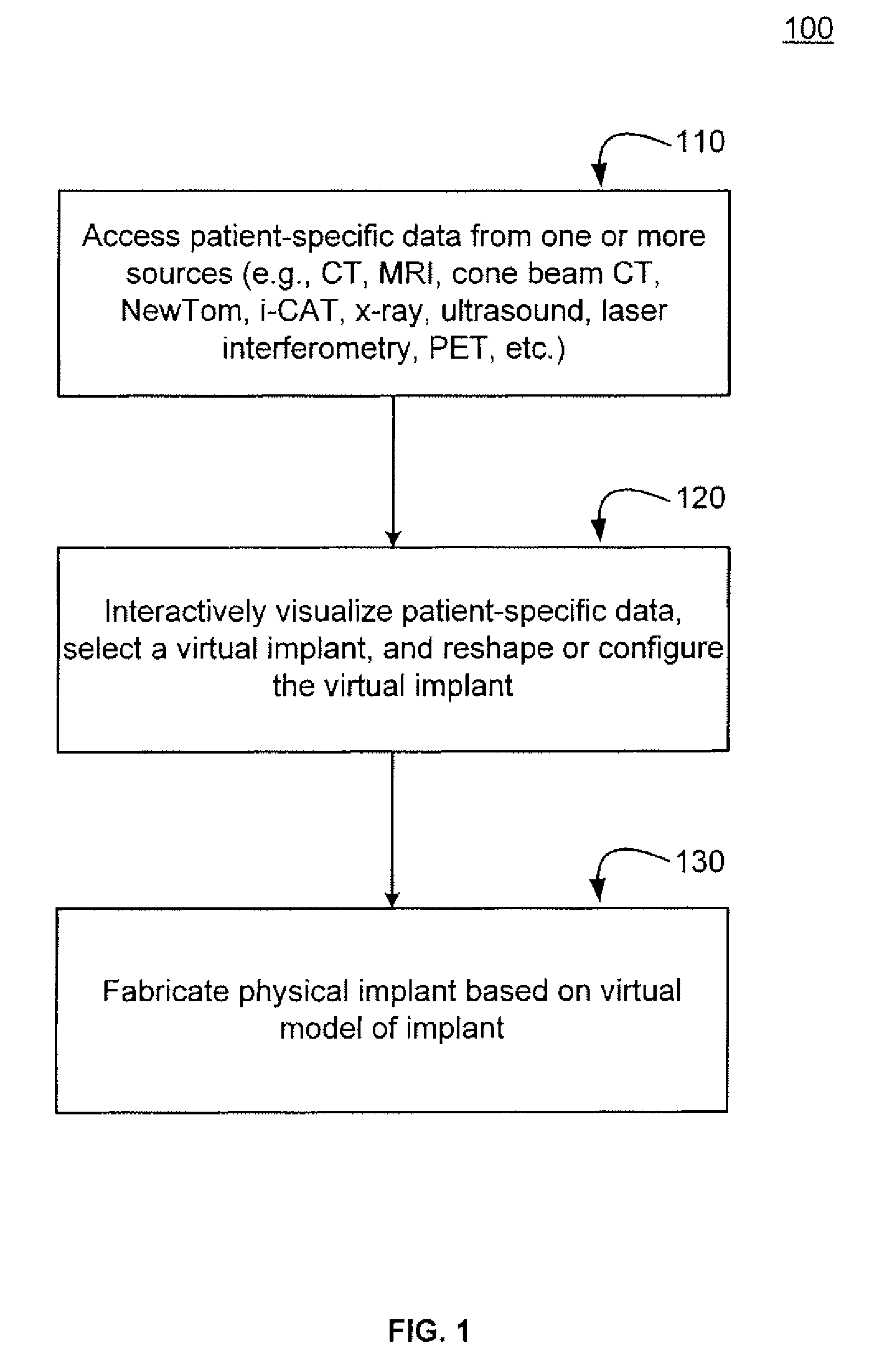

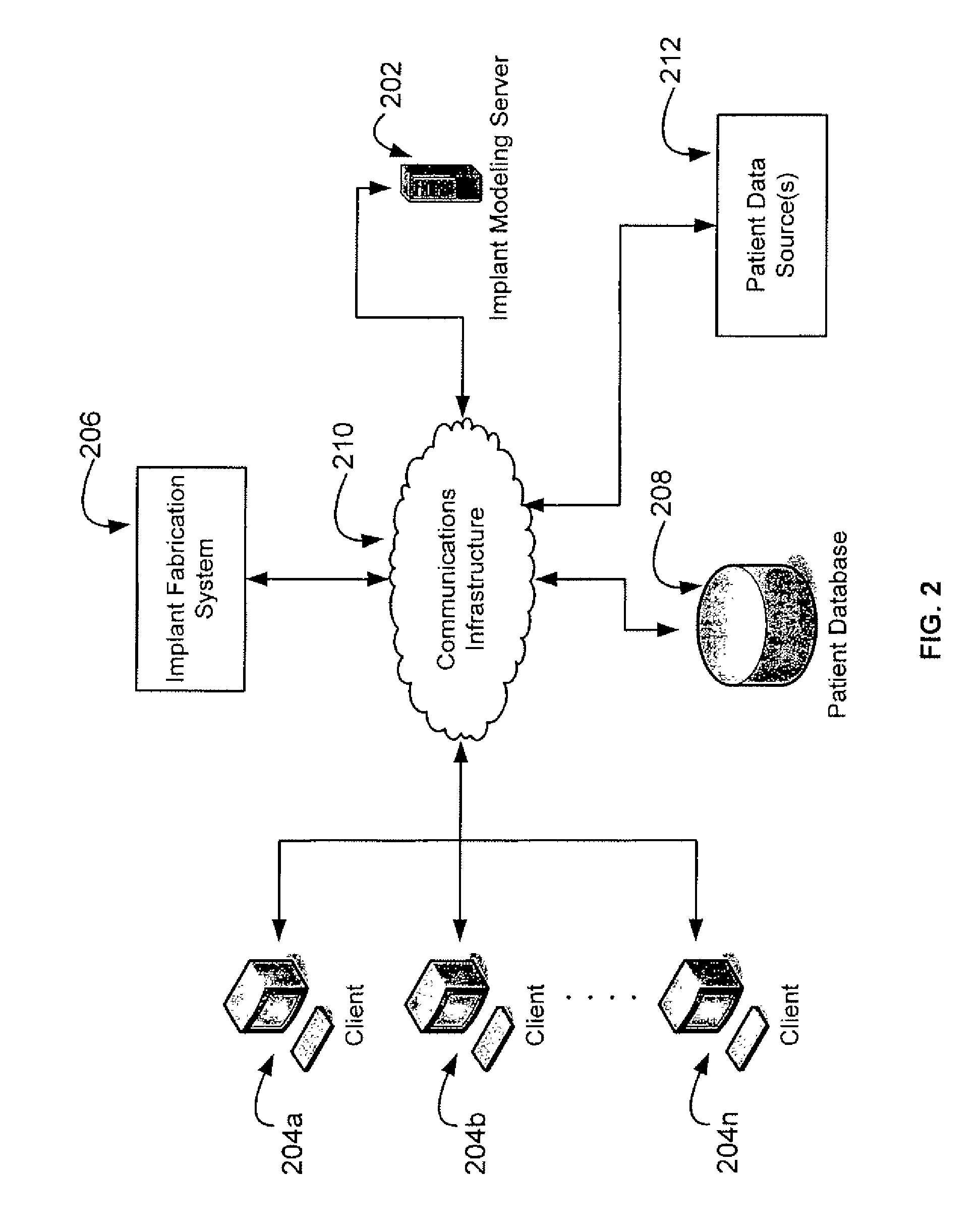

Methods, systems, and computer program products for shaping medical implants directly from virtual reality models

Inactive | US20090149977A1 | Improve aesthetics | Reduce probability | Medical simulation | Special data processing applications | Anatomical structures | X-ray

A virtual interactive environment enables a surgeon or other medical professional to manipulate implants, prostheses, or other instruments using patient-specific data from virtual reality models. The patient data includes a combination of volumetric data, surface data, and fused images from various sources (e.g., CT, MRI, x-ray, ultrasound, laser interferometry, PET, etc.). The patient data is visualized to permit a surgeon to manipulate a virtual image of the patient's anatomy, the implant, or both, until the implant is ideally positioned within the virtual model as the surgeon would position a physical implant in actual surgery. Thus, the interactive tools can simulate changes in an anatomical structure (e.g., bones or soft tissue), and their effects on the external, visual appearance of the patient. CAM software is executed to fabricate the implant, such that it is customized for the patient without having to modify the structures during surgery or to produce a better fit.

Owner:SCHENDEL STEPHEN A

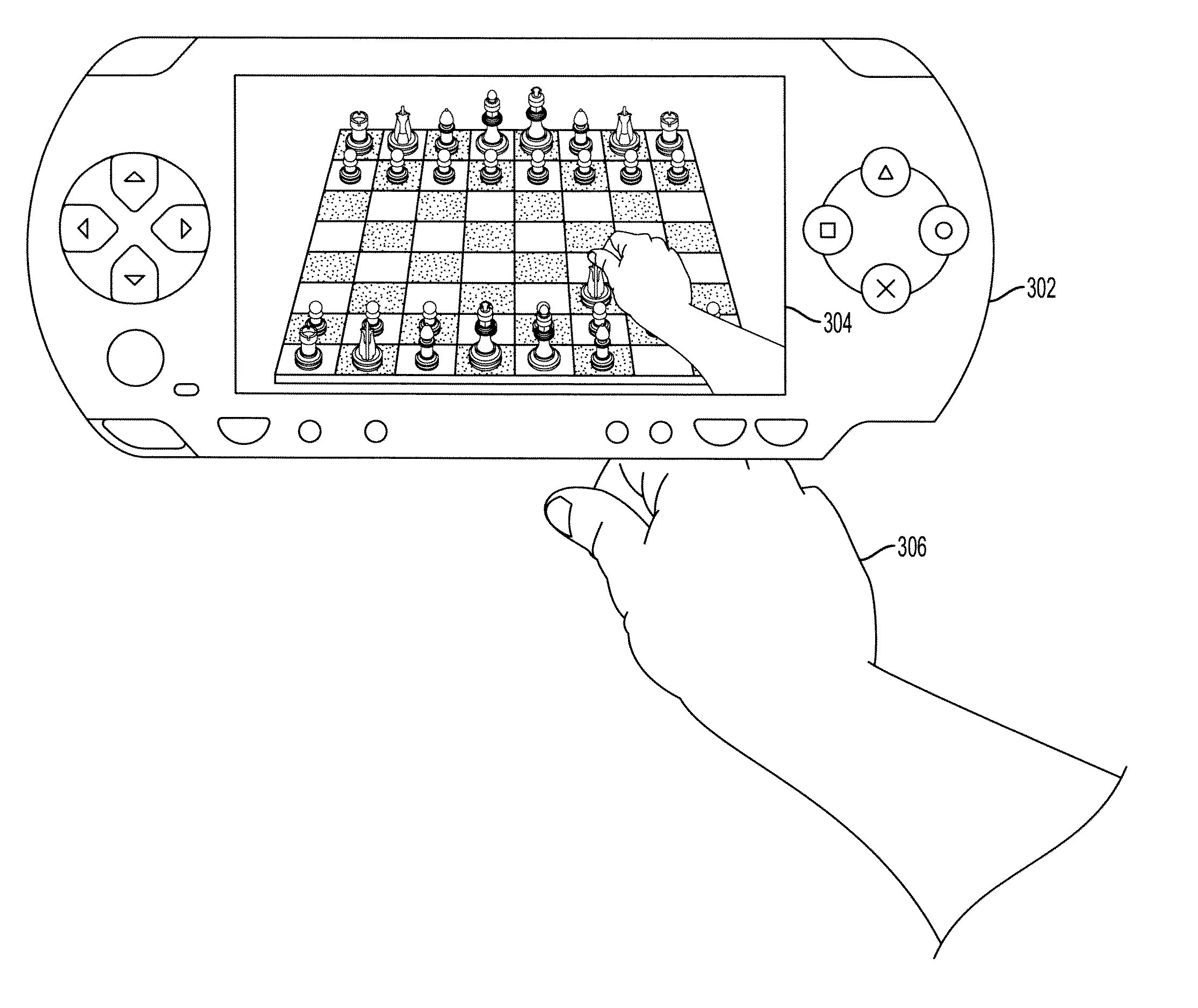

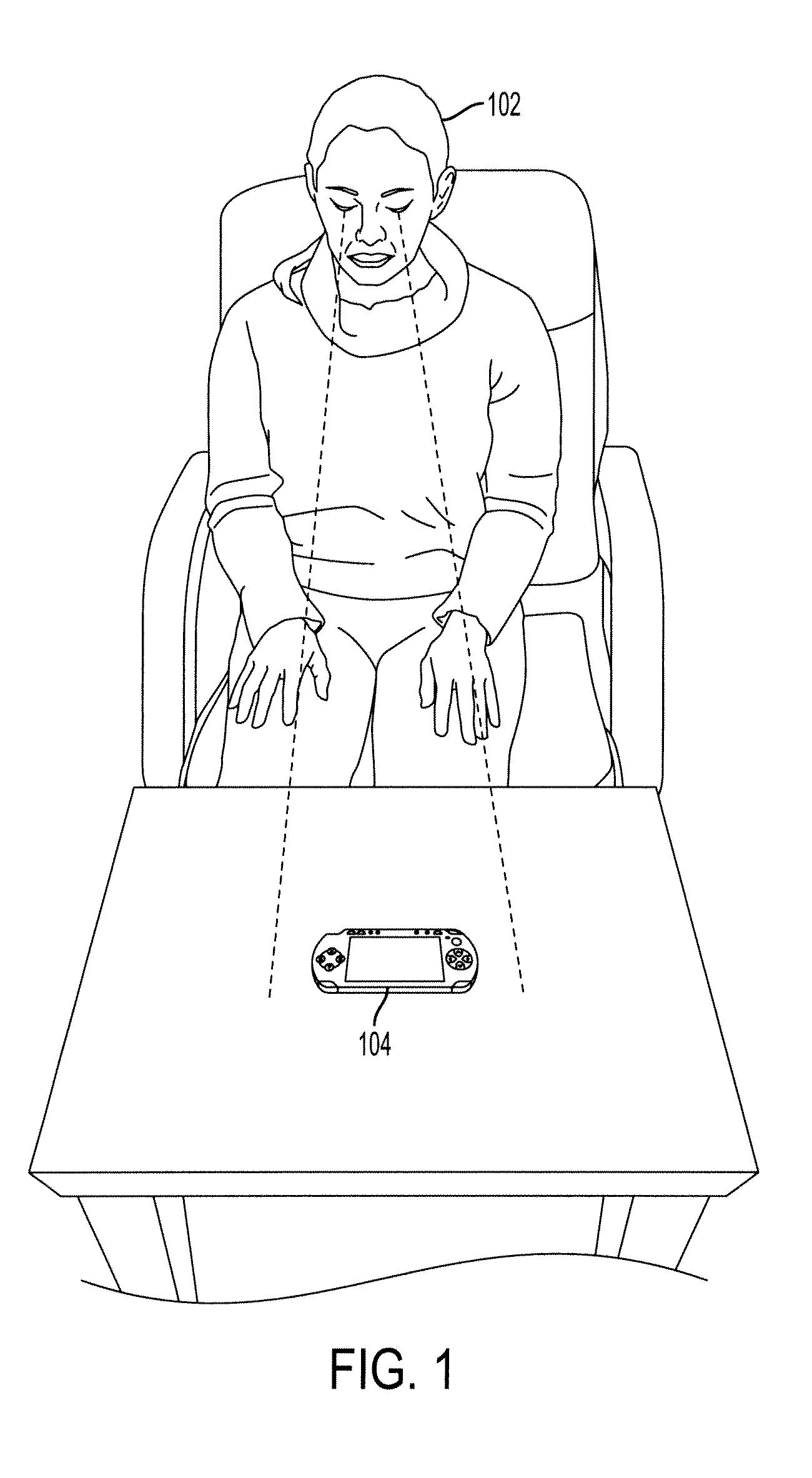

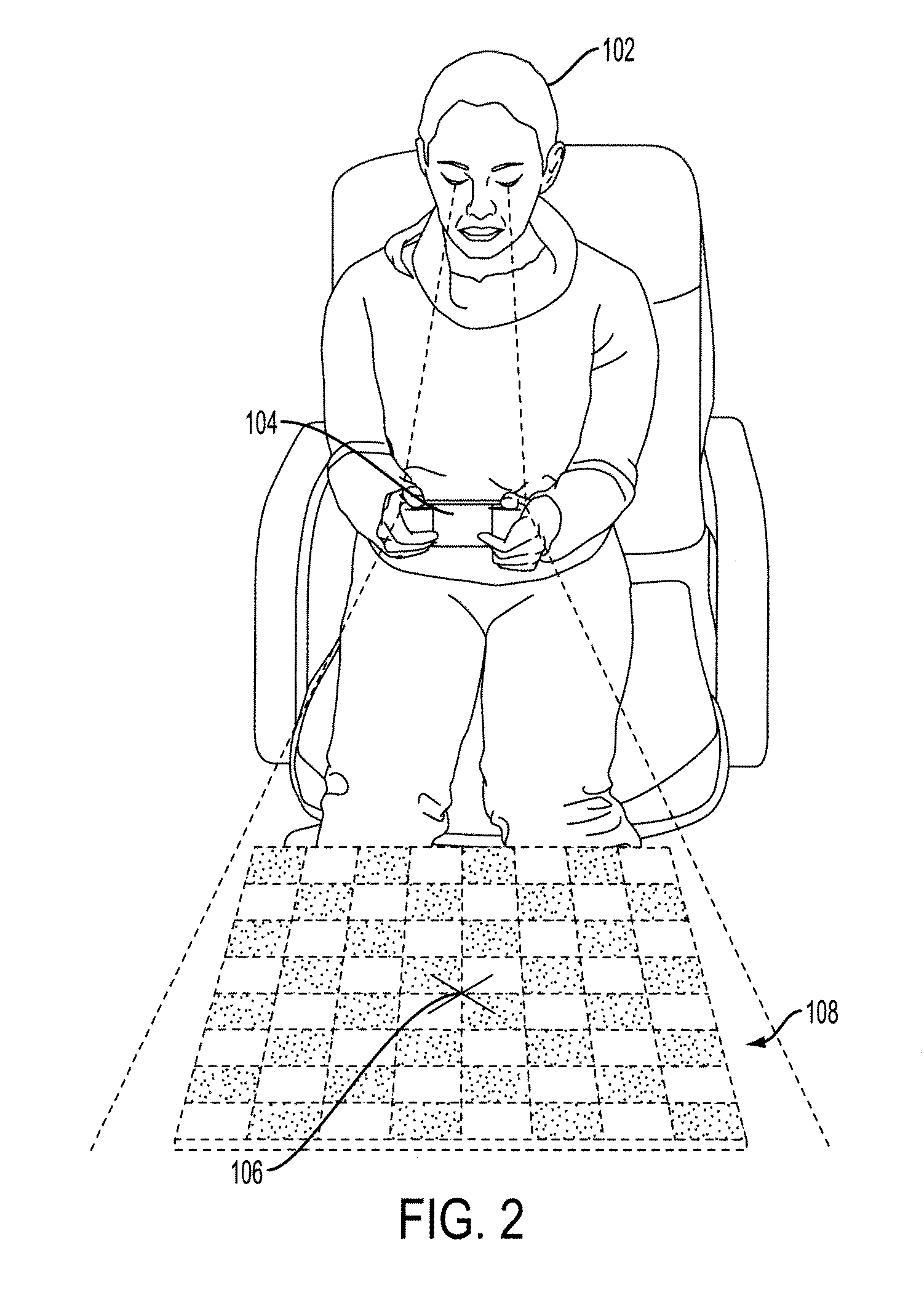

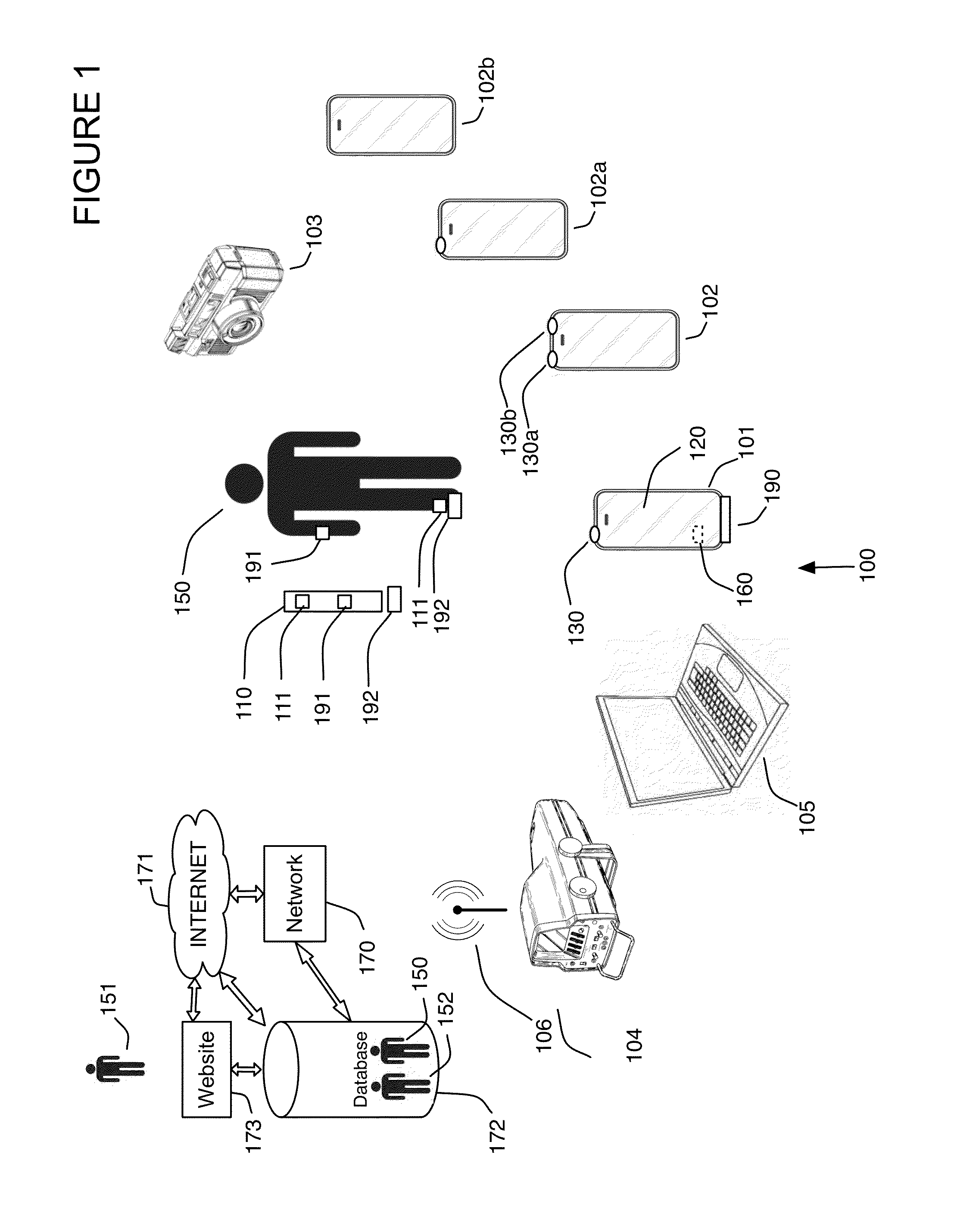

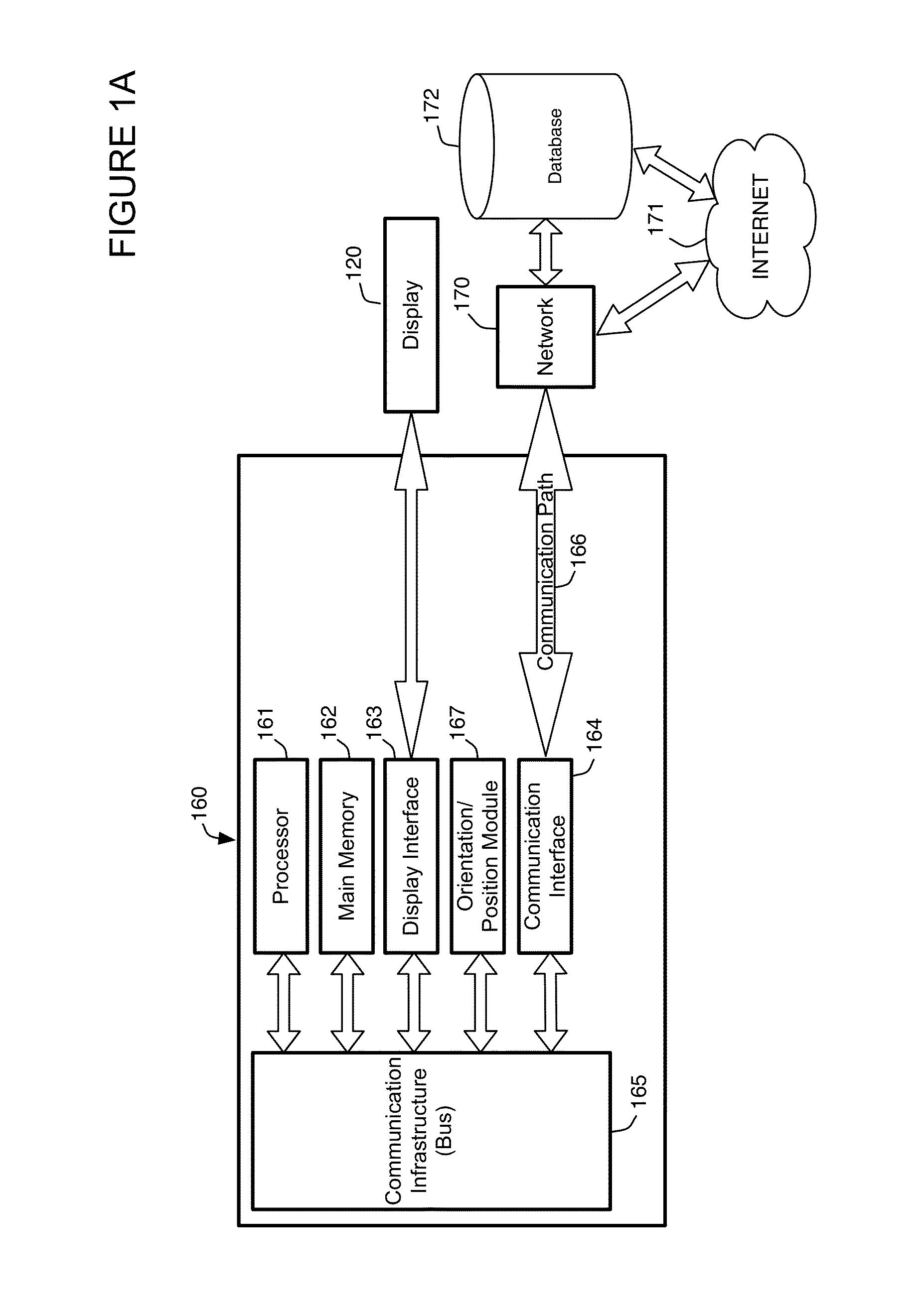

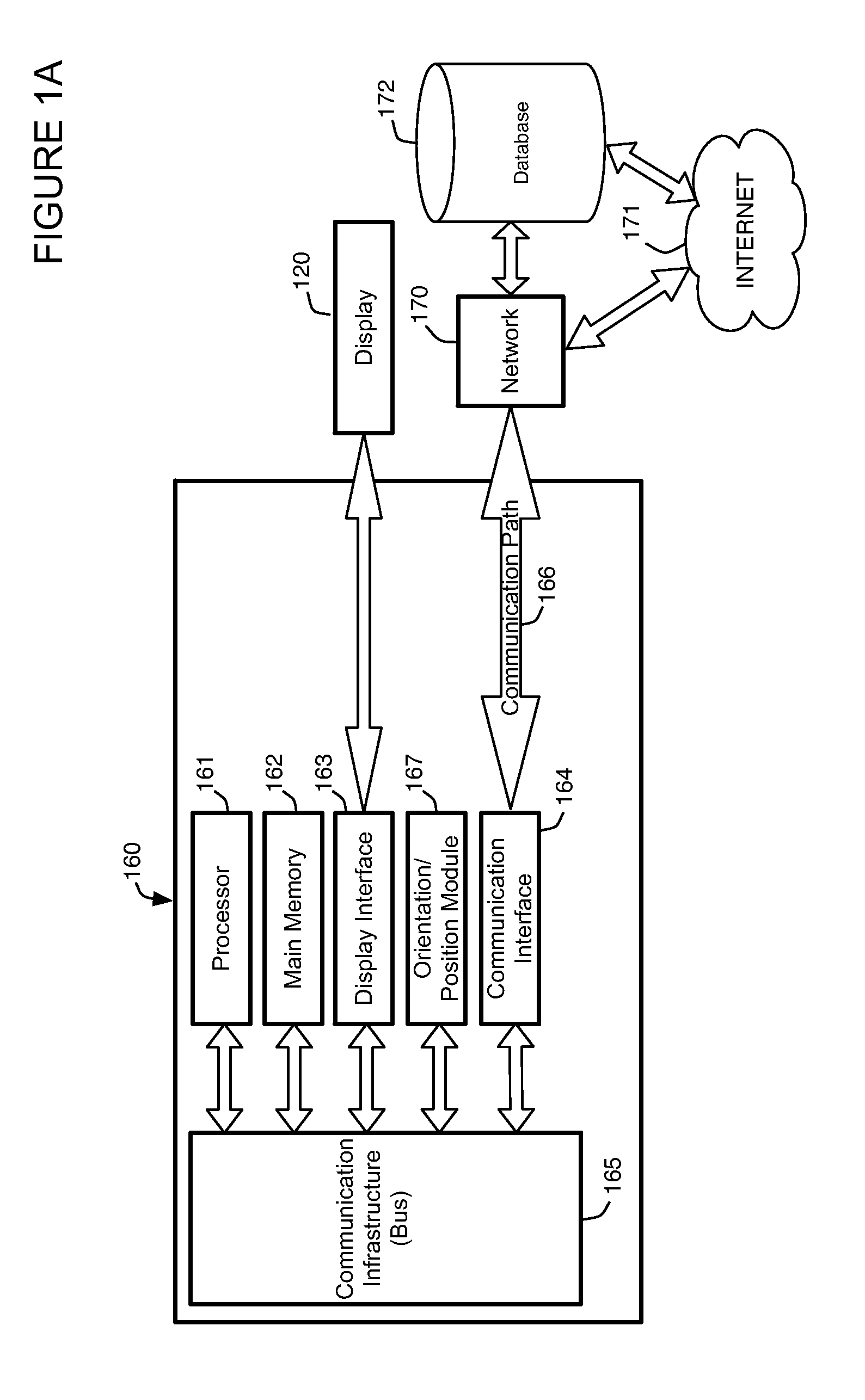

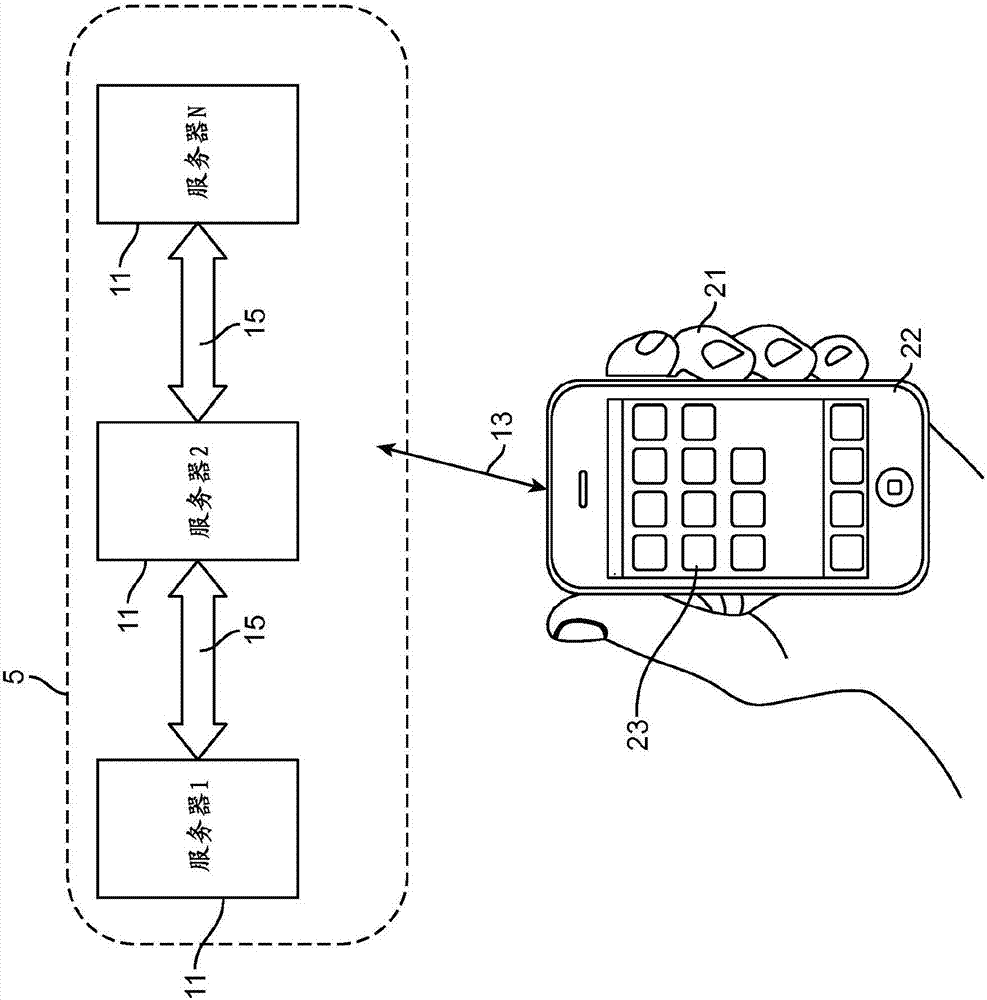

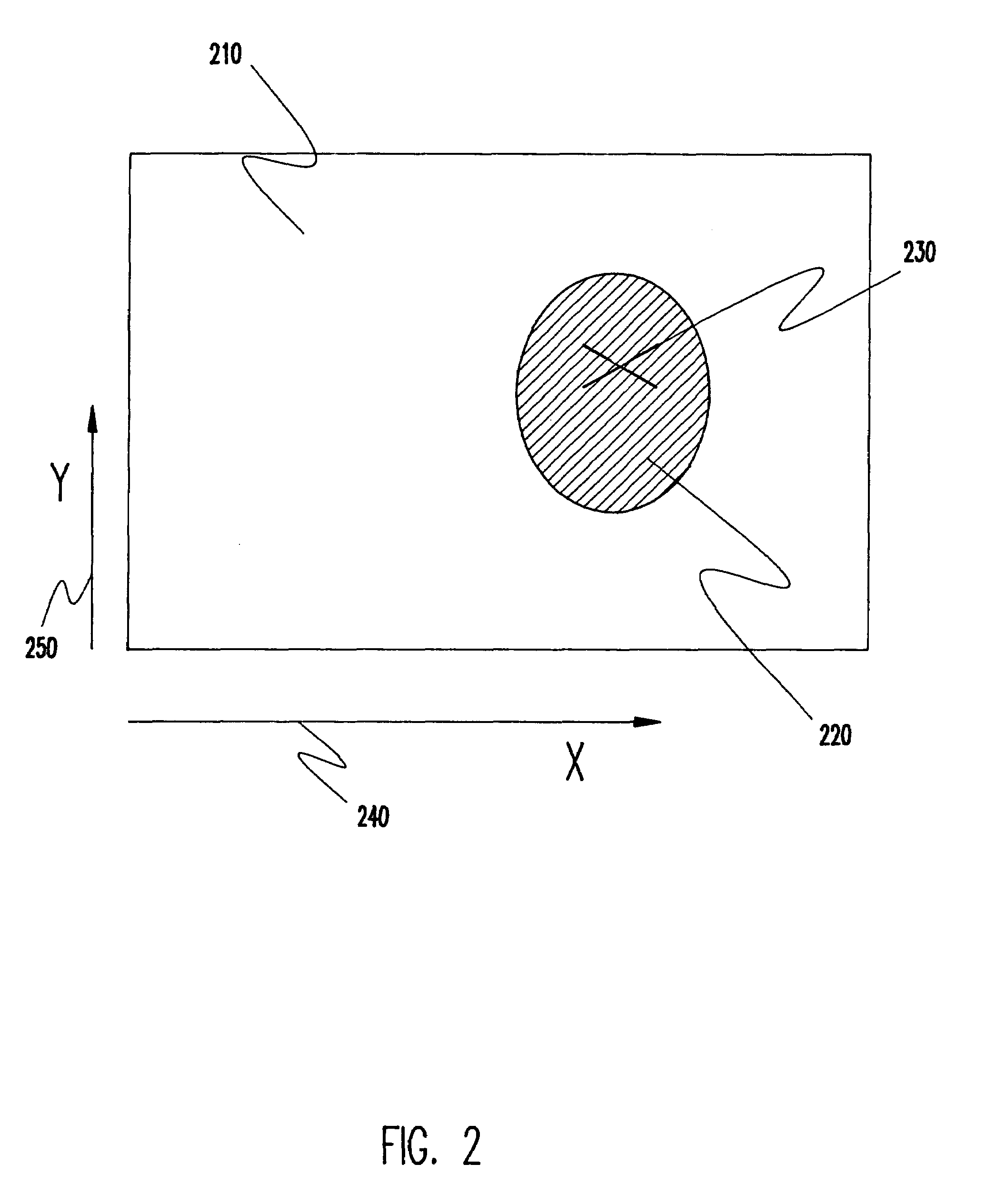

Maintaining Multiple Views on a Shared Stable Virtual Space

Methods, apparatus, and computer programs for controlling a view of a virtual scene with a portable device are presented. In one method, a signal is received and the portable device is synchronized to make the location of the portable device a reference point in a three-dimensional (3D) space. A virtual scene, which includes virtual reality elements, is generated in the 3D space around the reference point. Further, the method determines the current position in the 3D space of the portable device with respect to the reference point and creates a view of the virtual scene. The view represents the virtual scene as seen from the current position of the portable device and with a viewing angle based on the current position of the portable device. Additionally, the created view is displayed in the portable device, and the view of the virtual scene is changed as the portable device is moved by the user within the 3D space. In another method, multiple players share the virtual reality and interact with each other while viewing the objects in the virtual reality.

Owner:SONY INTERACTIVE ENTERTAINMENT LLC

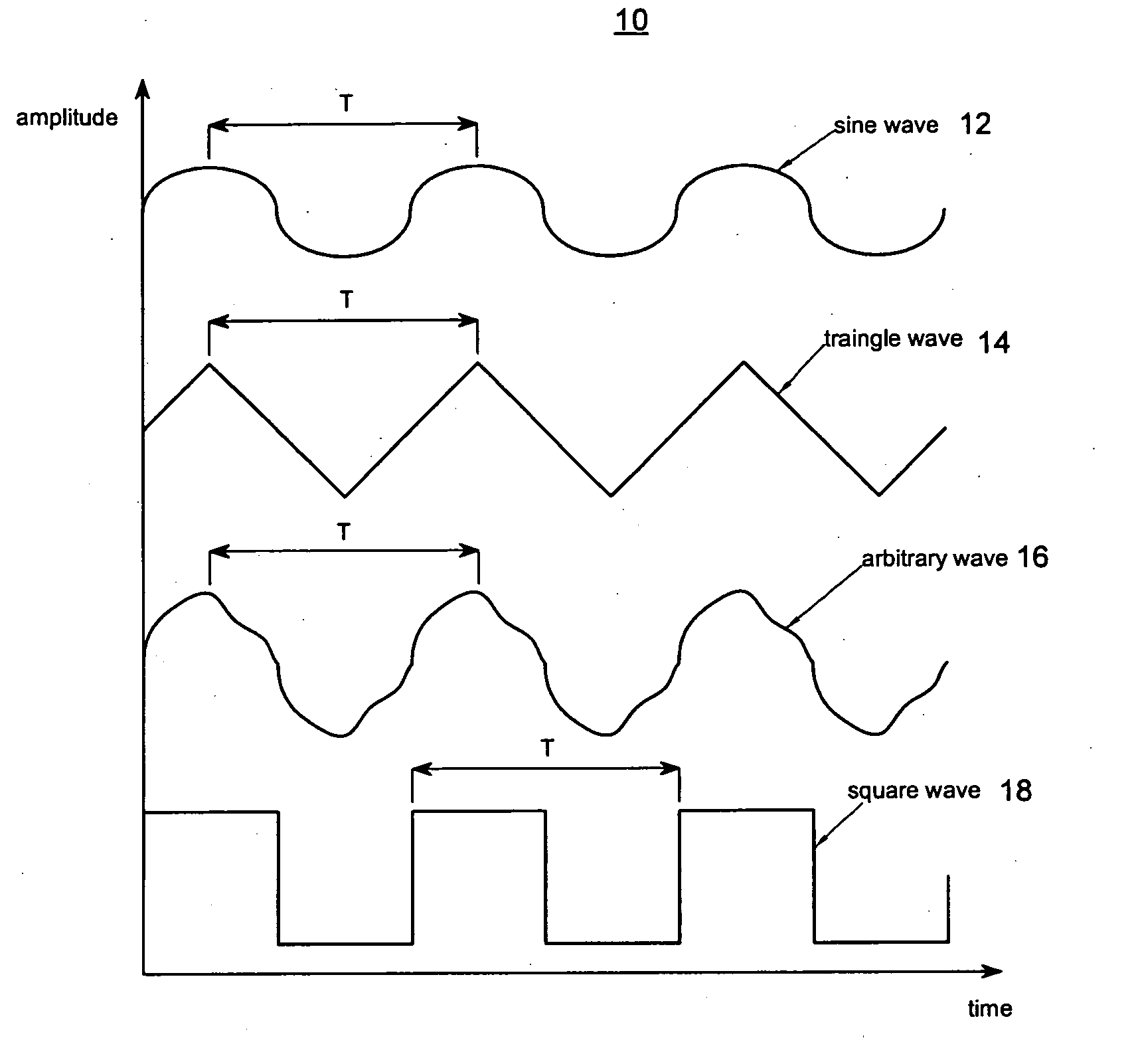

Synchronized vibration device for haptic feedback

Active | US20060290662A1 | DC motor speed/torque control | Ac-dc conversion without reversal | Driver circuit | Vibration control

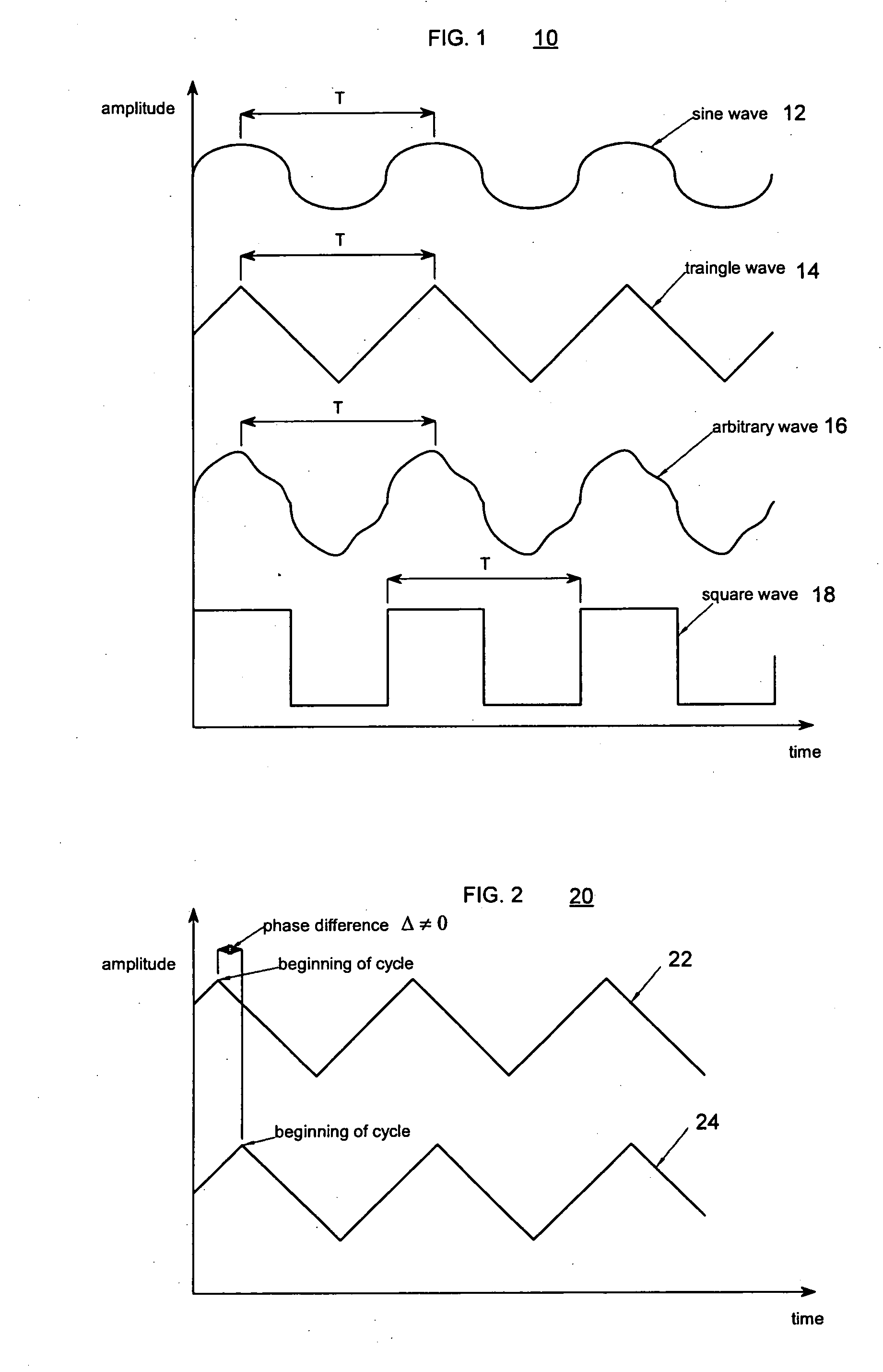

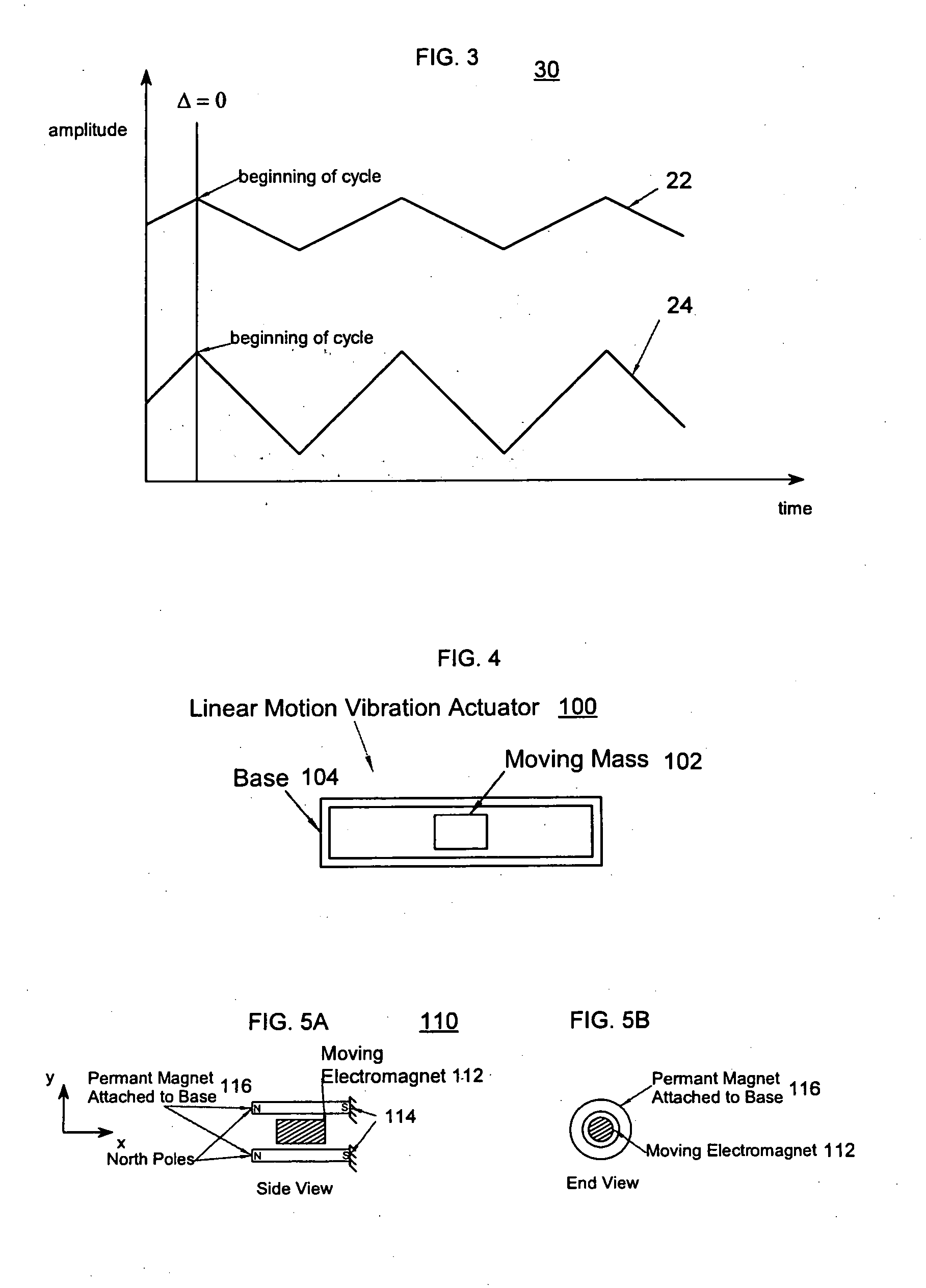

The present invention relates to synchronized vibration devices that can provide haptic feedback to a user. A wide variety of actuator types may be employed to provide synchronized vibration, including linear actuators, rotary actuators, rotating eccentric mass actuators, and rocking mass actuators. A controller may send signals to one or more driver circuits for directing operation of the actuators. The controller may provide direction and amplitude control, vibration control, and frequency control to direct the haptic experience. Parameters such as frequency, phase, amplitude, duration, and direction can be programmed or input as different patterns suitable for use in gaming, virtual reality and real-world situations.

Owner:COACTIVE DRIVE CORP
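
One way to see how phase can give direction control with rotating eccentric mass actuators is to add the forces of two masses spinning in opposite senses. The sketch below is a physics illustration under stated assumptions, not the patented controller: both masses are assumed to rotate at the same frequency with equal force amplitude, and the name `net_force` and its parameters are hypothetical.

```python
import math


def net_force(t, frequency_hz, amplitude, direction_rad):
    """Sum the forces of two counter-rotating eccentric masses at time t.

    Assumption: equal frequency and amplitude, with phases chosen so both
    force vectors point along direction_rad at t = 0.
    """
    w = 2.0 * math.pi * frequency_hz
    a1 = direction_rad + w * t  # mass 1 rotates counter-clockwise
    a2 = direction_rad - w * t  # mass 2 rotates clockwise
    fx = amplitude * (math.cos(a1) + math.cos(a2))
    fy = amplitude * (math.sin(a1) + math.sin(a2))
    return fx, fy
```

Under these assumptions the components perpendicular to `direction_rad` cancel, so the net force oscillates along a single axis with peak magnitude `2 * amplitude`; changing the relative phase rotates that axis.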

Panoramic image-based virtual reality/telepresence audio-visual system and method

Inactive | US20080024594A1 | Reduce distortion | Television system details | Selective content distribution | Computer graphics (images) | Display device

A panoramic system generally employs a panoramic input component, a processing component, and a panoramic display component. The panoramic input component includes a panoramic sensor assembly and / or image selectors that can be used on an individual or network basis. The processing component provides various applications such as video capture / control, image stabilization, target / feature selection, image stitching, image mosaicing, 3-D modeling / texture mapping, perspective / distortion correction and interactive game control. The panoramic display component can be embodied as a head mounted display device or system, a portable device, or a room.

Owner:RITCHEY KURTIS J

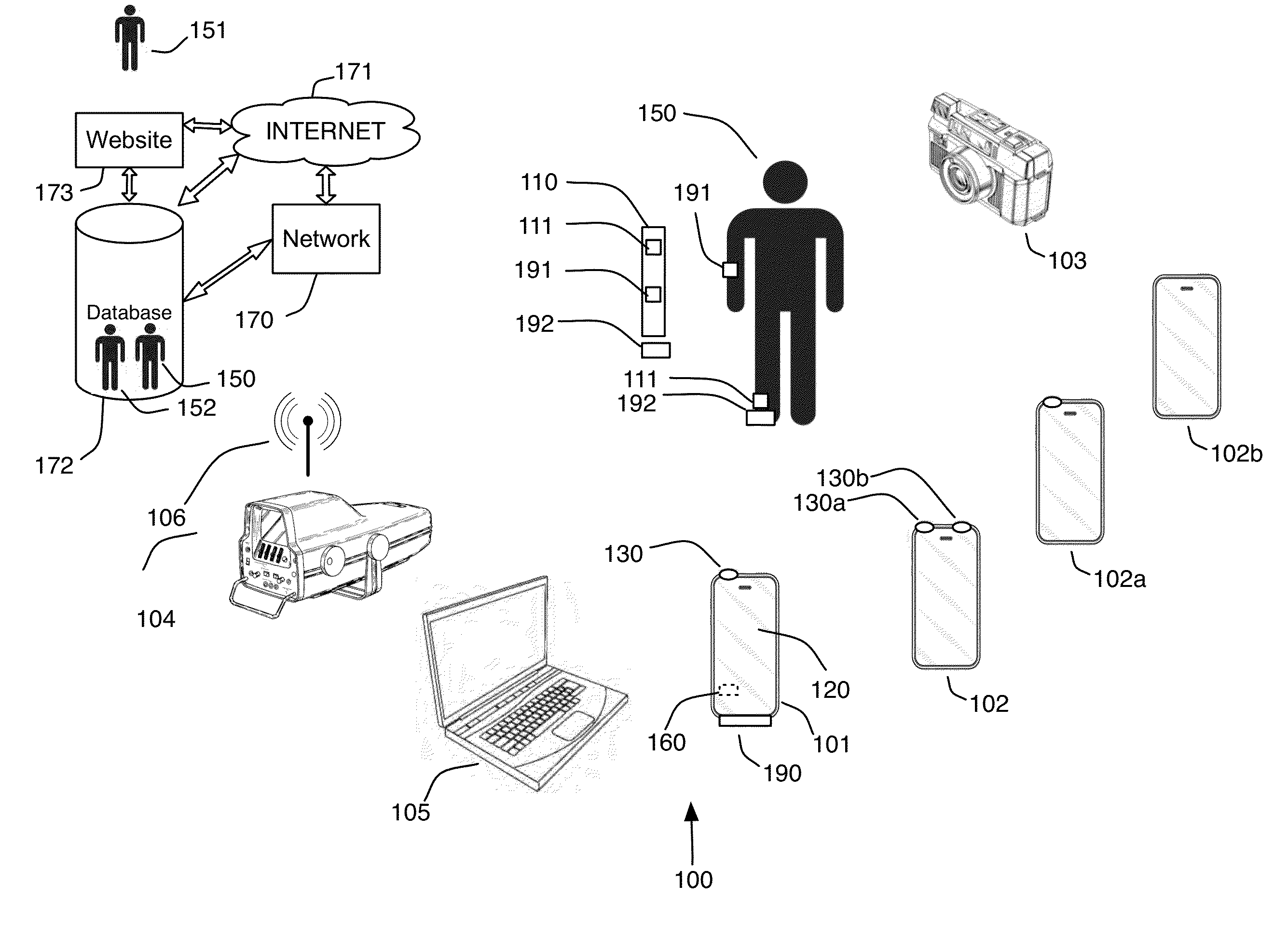

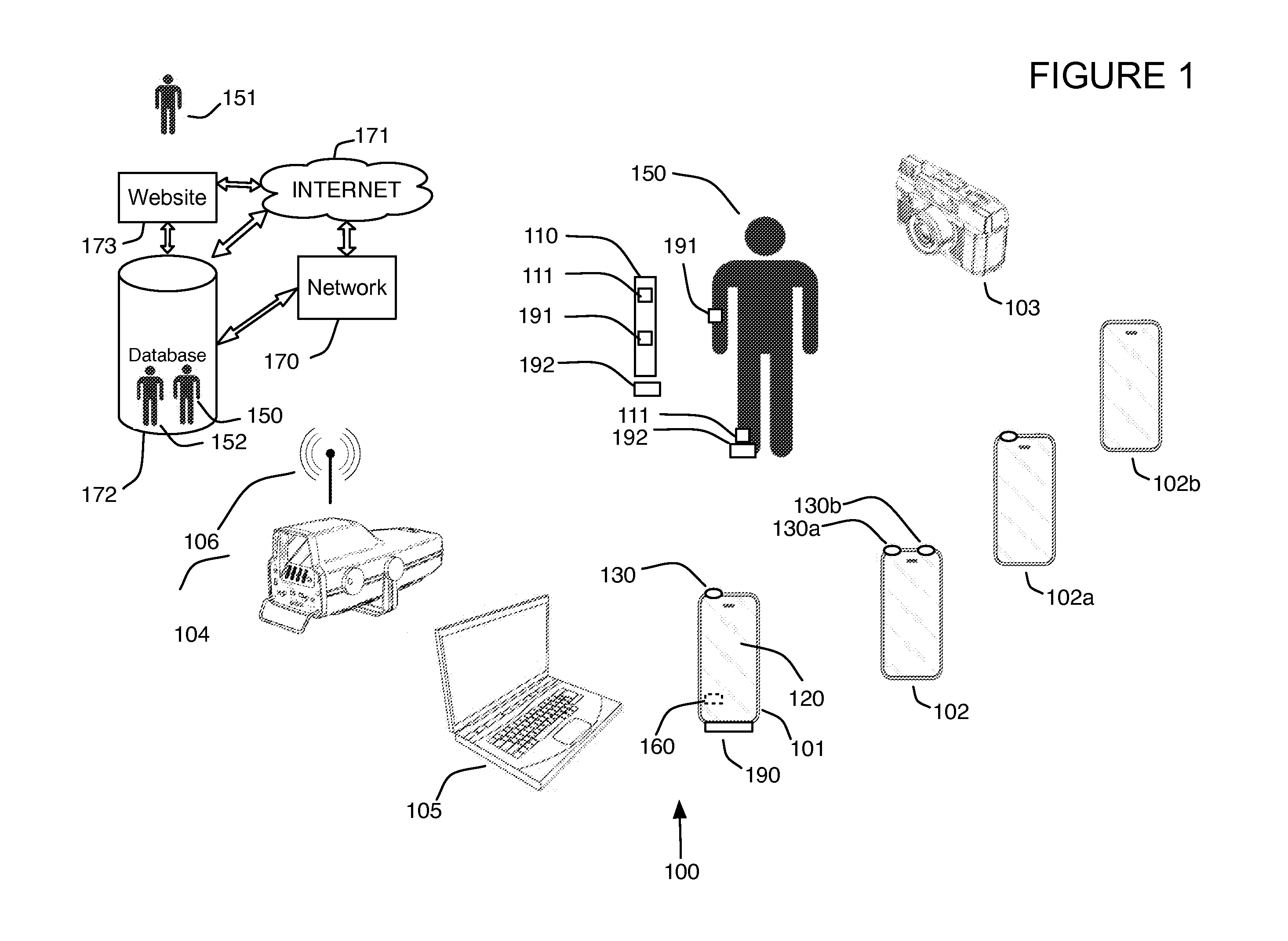

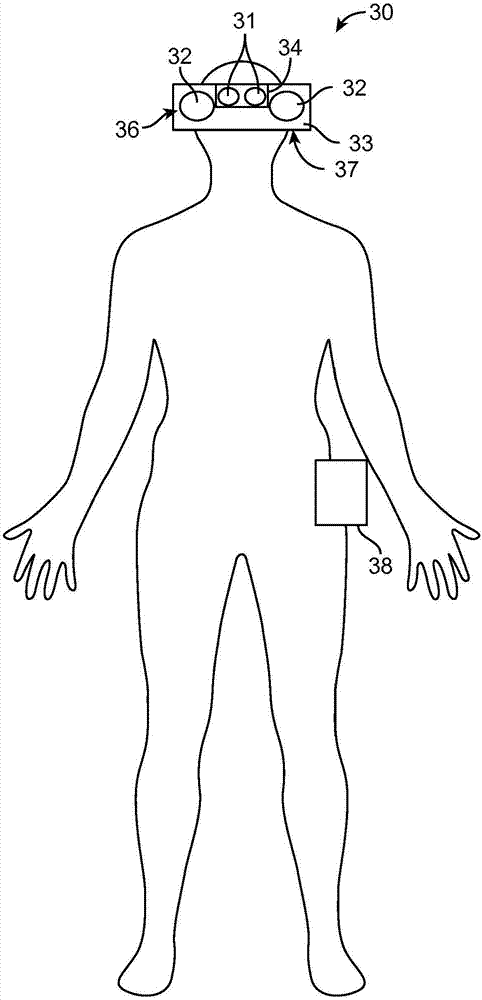

Intelligent motion capture element

Active | US20130128022A1 | Accurate and power efficient | Increase capacity | Gymnastic exercising | Devices with sensor | Power application | Analysis data

Intelligent motion capture element that includes sensor personalities that optimize the sensor for specific movements and / or pieces of equipment and / or clothing and may be retrofitted onto existing equipment or interchanged therebetween and automatically detected for example to switch personalities. May be used for low power applications and accurate data capture for use in healthcare compliance, sporting, gaming, military, virtual reality, industrial, retail loss tracking, security, baby and elderly monitoring and other applications for example obtained from a motion capture element and relayed to a database via a mobile phone. System obtains data from motion capture elements, analyzes data and stores data in database for use in these applications and / or data mining. Enables unique displays associated with the user, such as 3D overlays onto images of the user to visually depict the captured motion data. Enables performance related equipment fitting and purchase. Includes active and passive identifier capabilities.

Owner:NEWLIGHT CAPITAL LLC

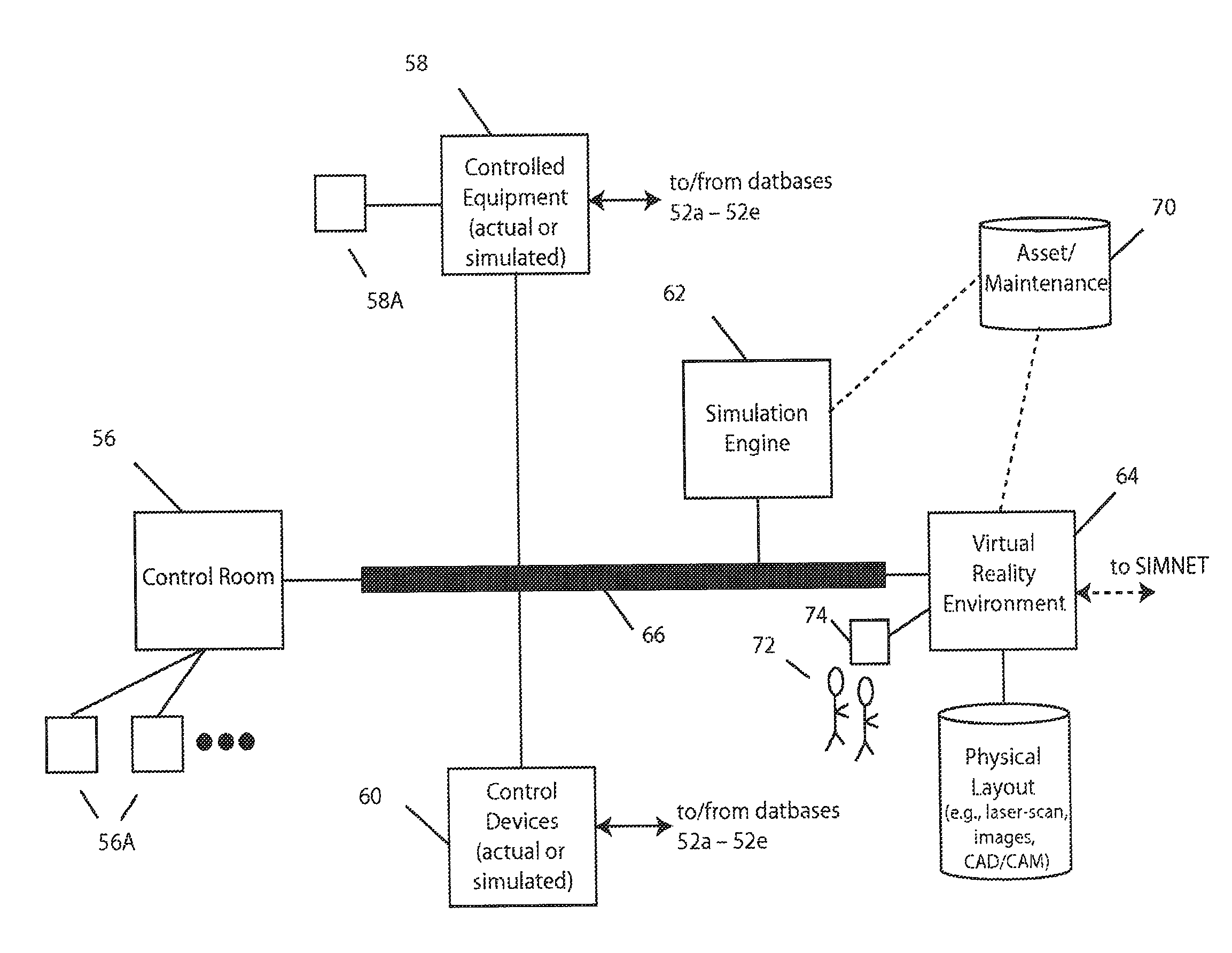

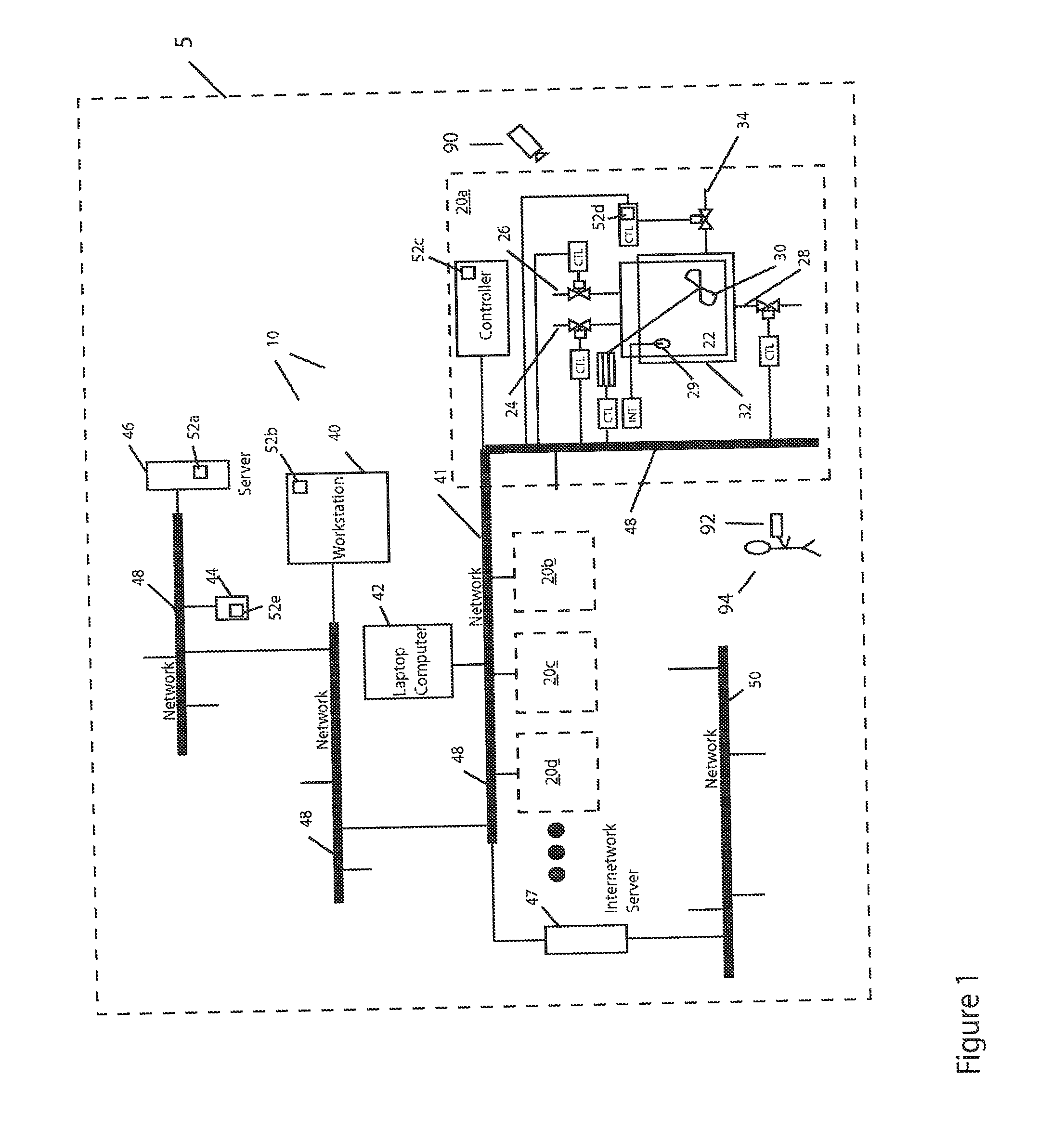

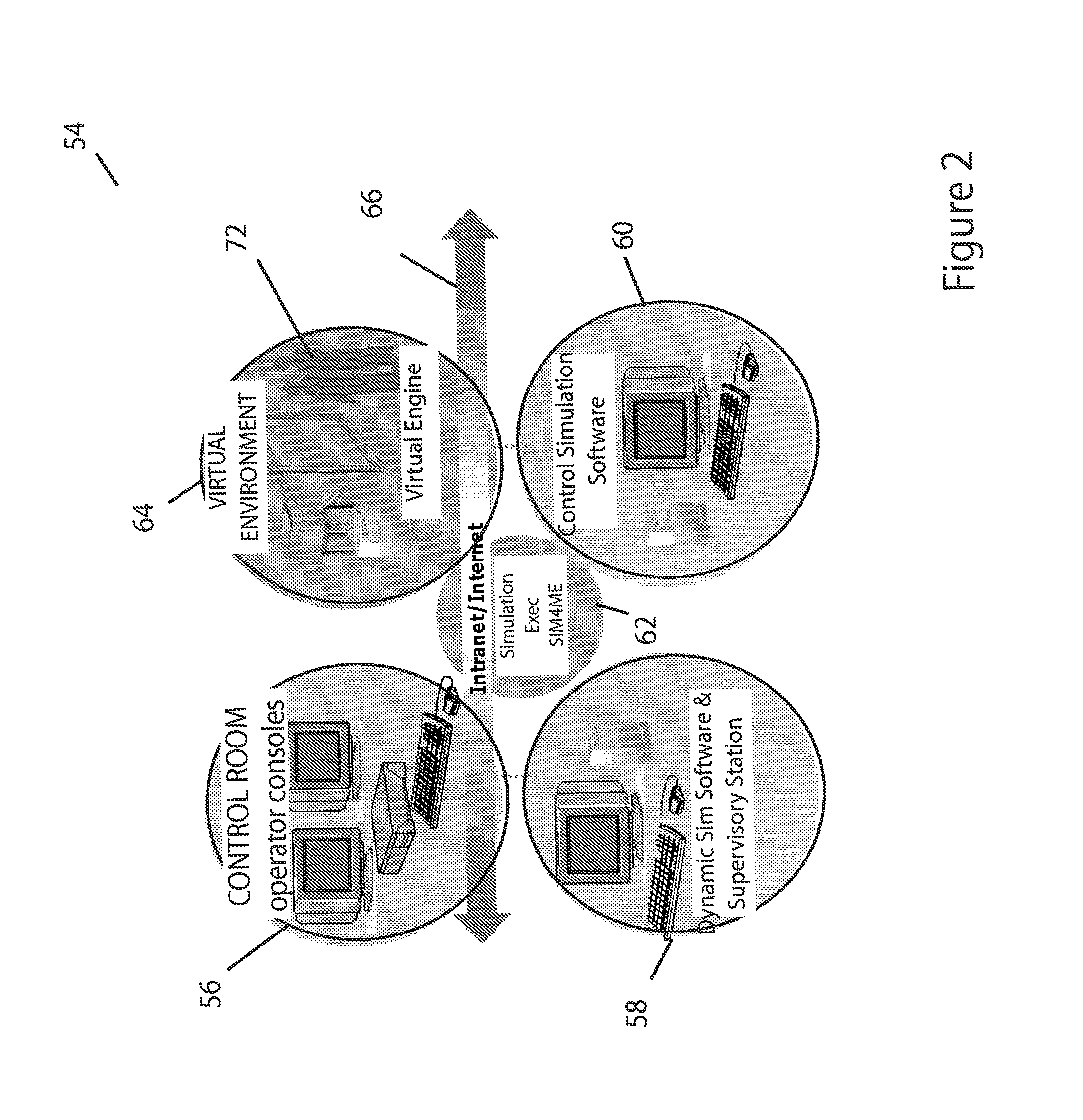

Systems and methods for immersive interaction with actual and/or simulated facilities for process, environmental and industrial control

The invention provides, in some aspects, systems for interaction with a control environment that includes controlled equipment along with control devices that monitor and control that controlled equipment. According to some of those aspects, such a system includes first functionality that generates output representing an operational status of the controlled equipment, as well as second functionality that generates output representing an operational status of one or more of the control devices. An engine coordinates the first functionality and the second functionality to generate an operational status of the control environment. A virtual reality environment generates, as a function of that operational status and one or more physical aspects of the control environment, a three-dimensional (“3D”) display of the control environment. The virtual reality environment is responsive to user interaction with one or more input devices to generate the 3D display so as to permit the user to interact with at least one of the control devices and the controlled equipment at least as represented by the 3D display of the control environment. The engine applies to at least one of the first and second functionality indicia of those interactions to discern resulting changes in the operational status of the control environment. It applies indicia of those changes to the virtual reality environment to effect corresponding variation in the 3D display of the control environment, i.e., variation indicative of the resulting change in the control environment.

Owner:INVENSYS SYST INC

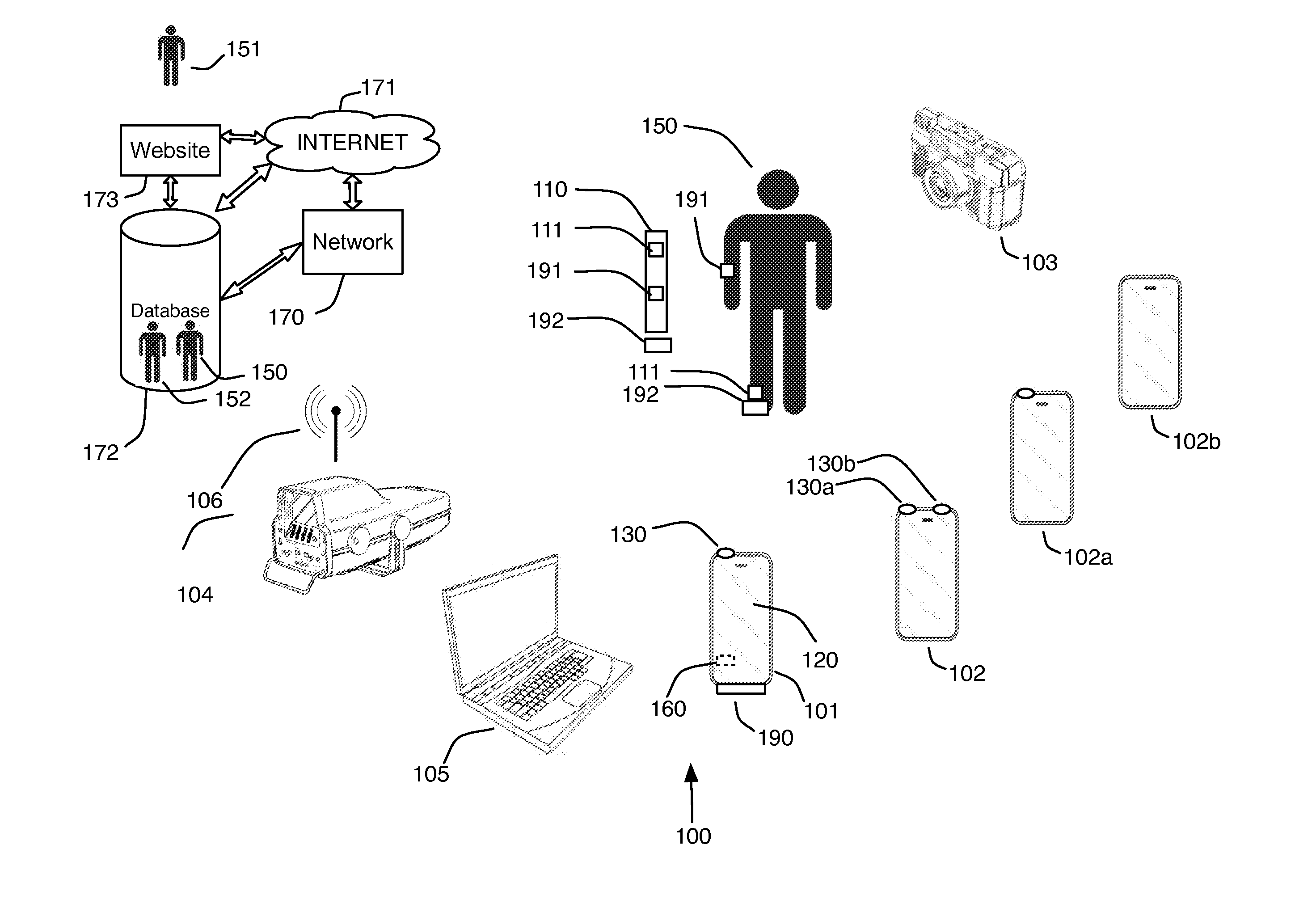

Motion capture element

Active | US20120116548A1 | Accurate and power efficient | Increase capacity | Diagnostic recording/measuring | Sensors | Data dredging | Simulation

Motion capture element for low power and accurate data capture for use in healthcare compliance, sporting, gaming, military, virtual reality, industrial, retail loss tracking, security, baby and elderly monitoring and other applications for example obtained from a motion capture element and relayed to a database via a mobile phone. System obtains data from motion capture elements, analyzes data and stores data in database for use in these applications and / or data mining, which may be charged for. Enables unique displays associated with the user, such as 3D overlays onto images of the user to visually depict the captured motion data. Ratings, compliance, ball flight path data can be calculated and displayed, for example on a map or timeline or both. Enables performance related equipment fitting and purchase. Includes active and passive identifier capabilities.

Owner:NEWLIGHT CAPITAL LLC

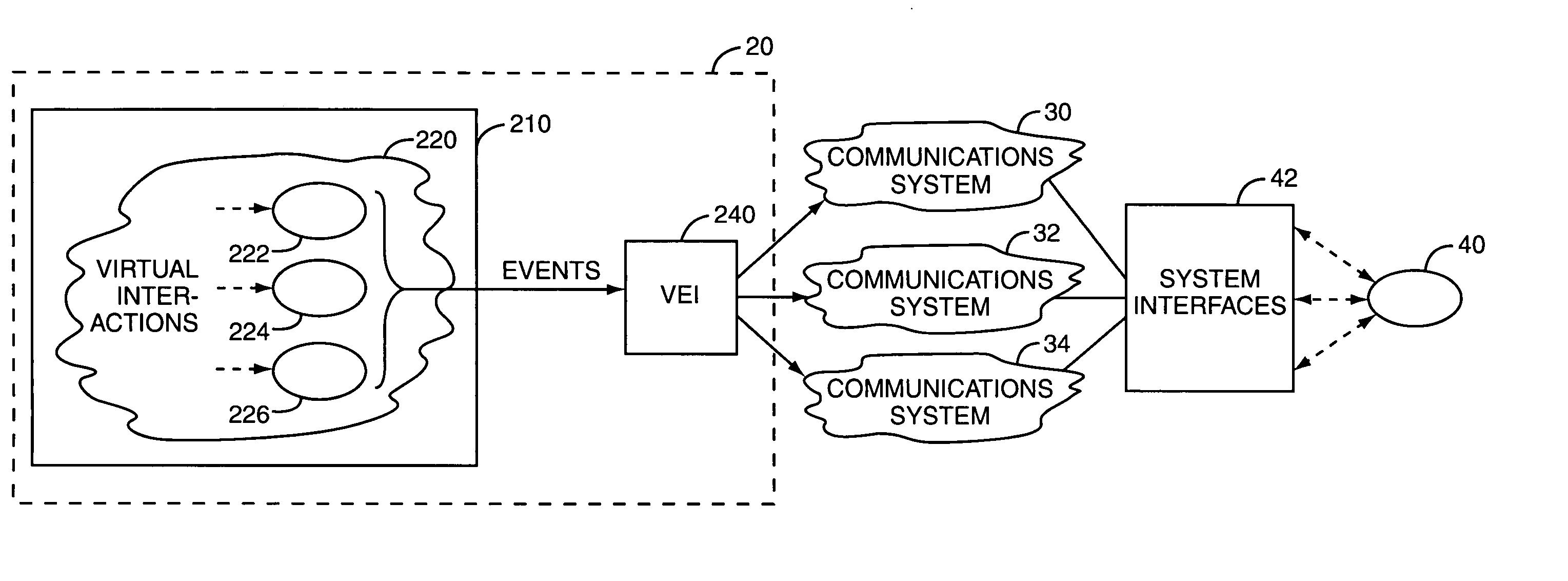

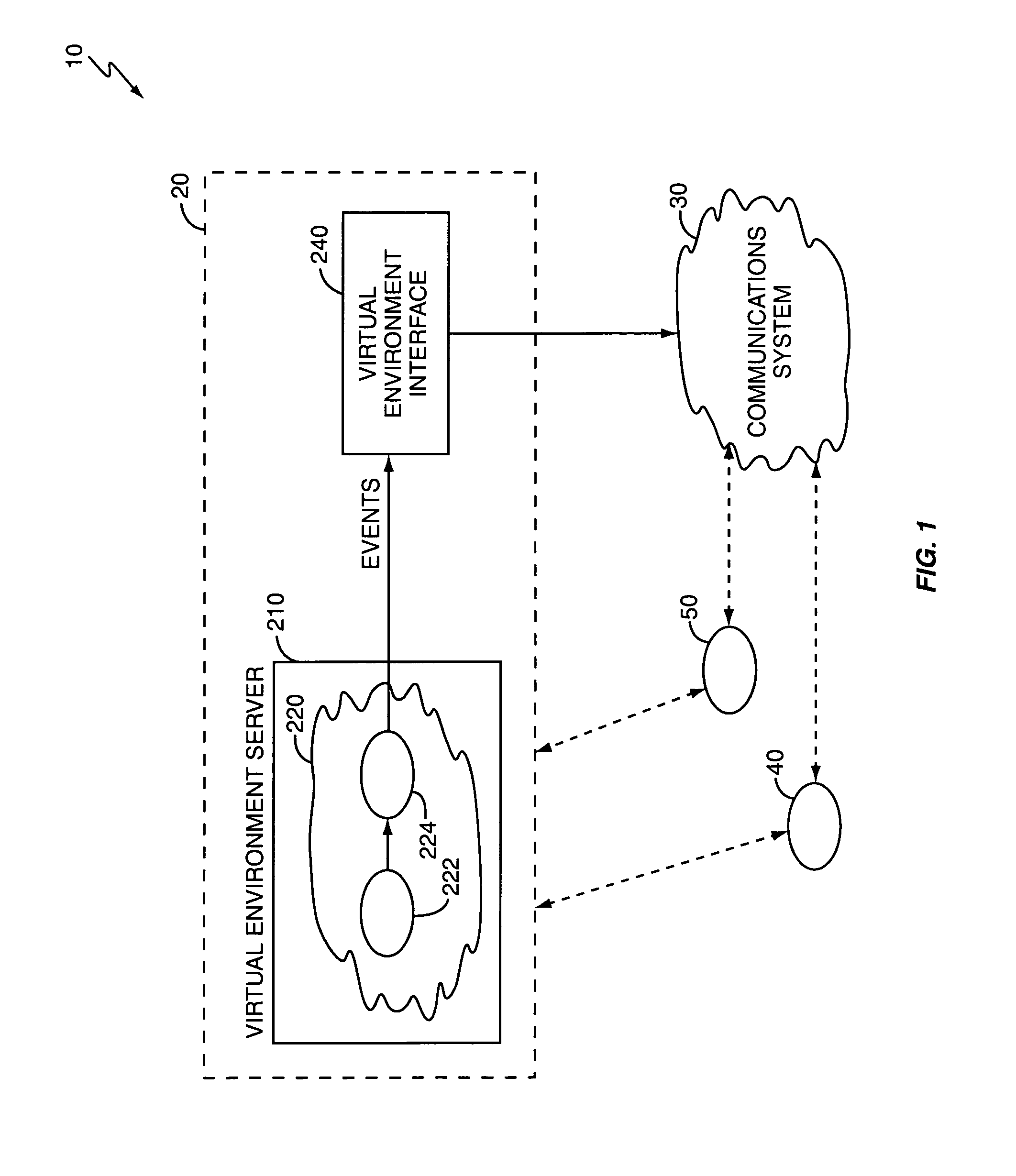

Controlling communications through a virtual reality environment

Inactive | US7036082B1 | Maximum flexibility | Suitable for communication | Program control | Network connections | Telecommunications link | Communications system

A virtual reality system initiates desired real world actions in response to defined events occurring within a virtual environment. A variety of systems, such as communications devices, computer networks, and software applications, may be interfaced with the virtual reality system and made responsive to virtual events. For example, the virtual reality system may trigger a communications system to establish a communications link between people in response to a virtual event. Users, represented as avatars within the virtual environment, generate events by interacting with virtual entities, such as other avatars, virtual objects, and virtual locations. Virtual entities can be associated with specific users, and users can define desired behaviors for associated entities. Behaviors control the real world actions triggered by virtual events. Users can modify these behaviors, and the virtual reality system may change behaviors based on changing conditions, such as time of day or the whereabouts of a particular user.

Owner:AVAYA INC
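
The event-to-action flow in the abstract above (users define behaviors for virtual entities, and virtual events trigger real-world actions) resembles an event dispatcher. The following minimal sketch assumes hypothetical names throughout (`VirtualWorld`, `define_behavior`, `emit`, the `"knock"` event), and the "real-world action" is simulated by returning a string rather than actually placing a call.

```python
class VirtualWorld:
    """Toy registry that maps (entity, event type) pairs to behaviors."""

    def __init__(self):
        self._behaviors = {}

    def define_behavior(self, entity, event_type, action):
        """Attach a callable to run when event_type occurs on entity."""
        self._behaviors.setdefault((entity, event_type), []).append(action)

    def emit(self, avatar, entity, event_type):
        """An avatar interacts with an entity; run every matching behavior."""
        actions = self._behaviors.get((entity, event_type), [])
        return [action(avatar, entity) for action in actions]


def place_call(avatar, entity):
    # Stand-in for triggering a real communications system (assumption).
    return f"establish call: {avatar} -> owner of {entity}"


if __name__ == "__main__":
    world = VirtualWorld()
    world.define_behavior("alice_office_door", "knock", place_call)
    print(world.emit("bob", "alice_office_door", "knock"))
```

Because behaviors are plain callables stored per entity, a user could swap them at run time, which loosely mirrors the abstract's point that behaviors may change with conditions such as time of day.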

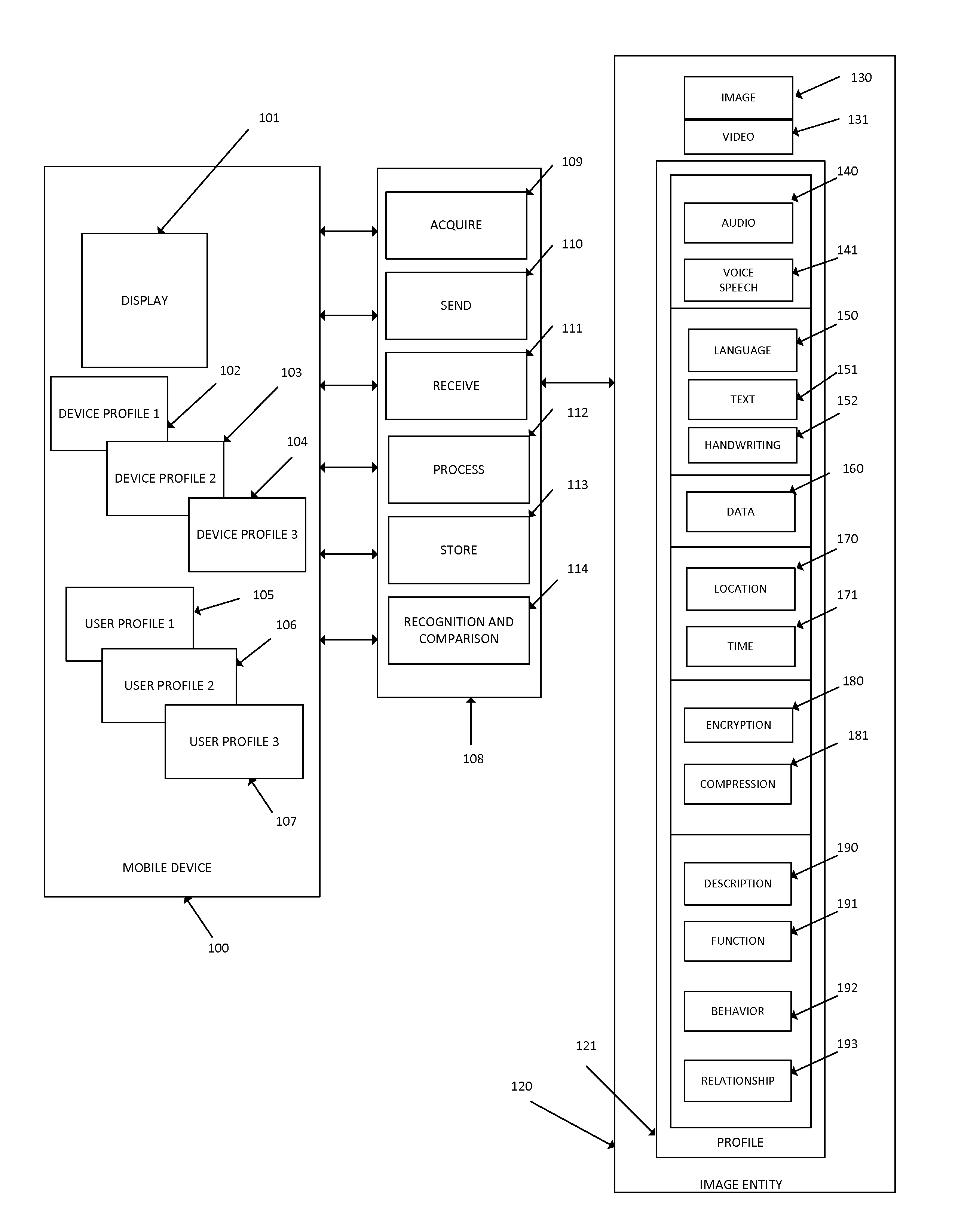

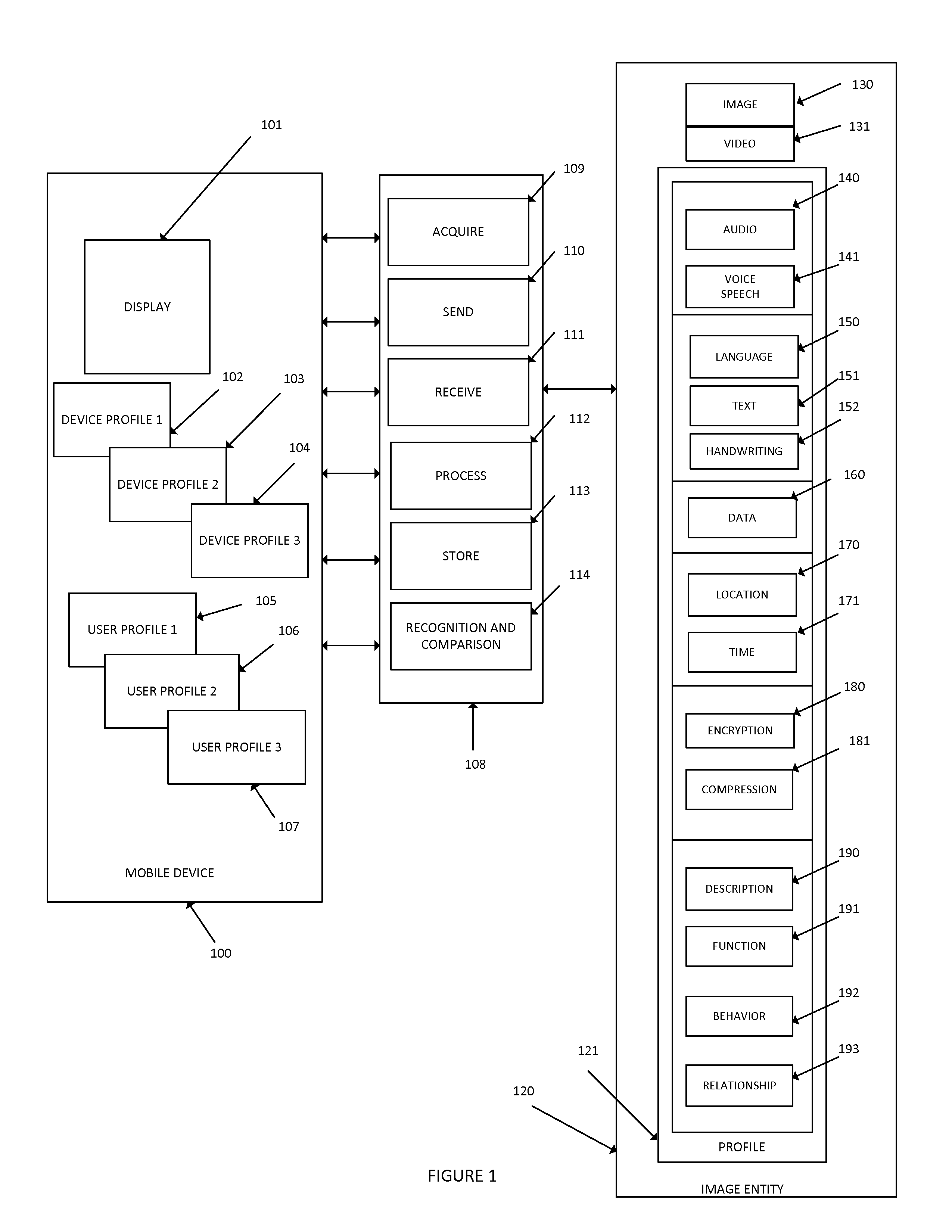

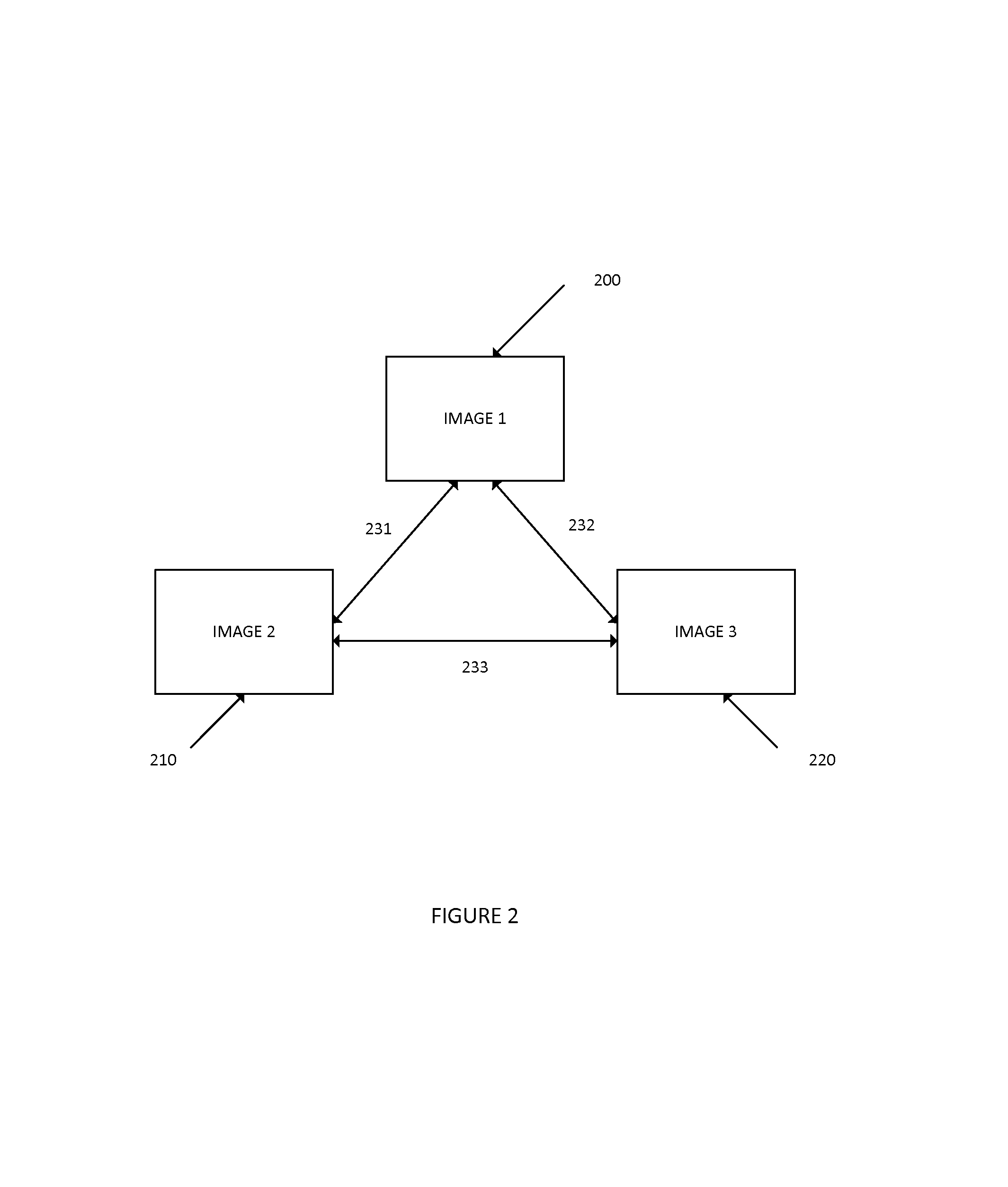

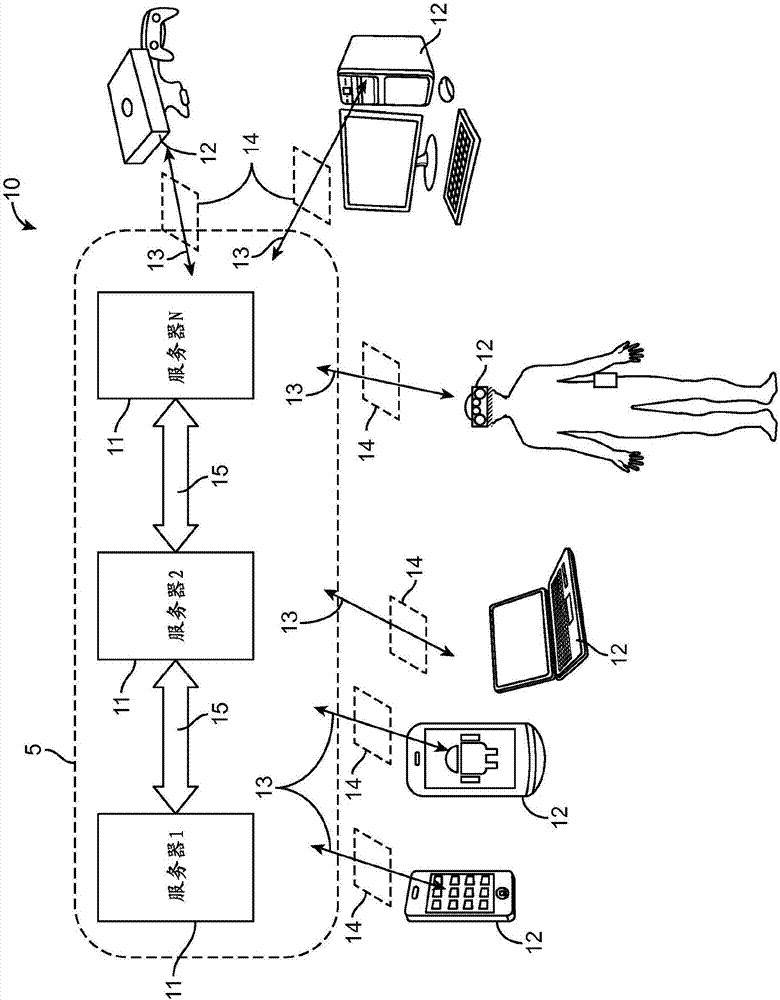

Image and augmented reality based networks using mobile devices and intelligent electronic glasses

A mobile communication system based on digital content including images and video that may be acquired, processed, and displayed using a plurality of mobile devices, smartphones, tablet computers, stationary computers, intelligent electronic glasses, smart glasses, headsets, watches, smart devices, vehicles, and servers. Content may be acquired continuously by input and output devices and displayed in a social network. Images may have associated additional properties including nature of voice, audio, data and other information. This content may be displayed and acted upon in an augmented reality or virtual reality system. The image-based network system may have the ability to learn and form intelligent association between aspects, people and other entities; between images and the associated data relating to both animate and inanimate entities for intelligent image based communication in a network. Acquired content may be processed for predictive analytics. Individuals may be imaged for targeted and personalized advertising.

Owner:AIRSPACE REALITY

Panoramic image-based virtual reality/telepresence audio-visual system and method

Inactive | US20070182812A1 | Readily available | High resolution | Television system details | Selective content distribution | Computer graphics (images) | Virtual reality

A panoramic system comprises means for obtaining a panoramic image, and means for displaying the panoramic image.

Owner:RITCHEY KURTIS J

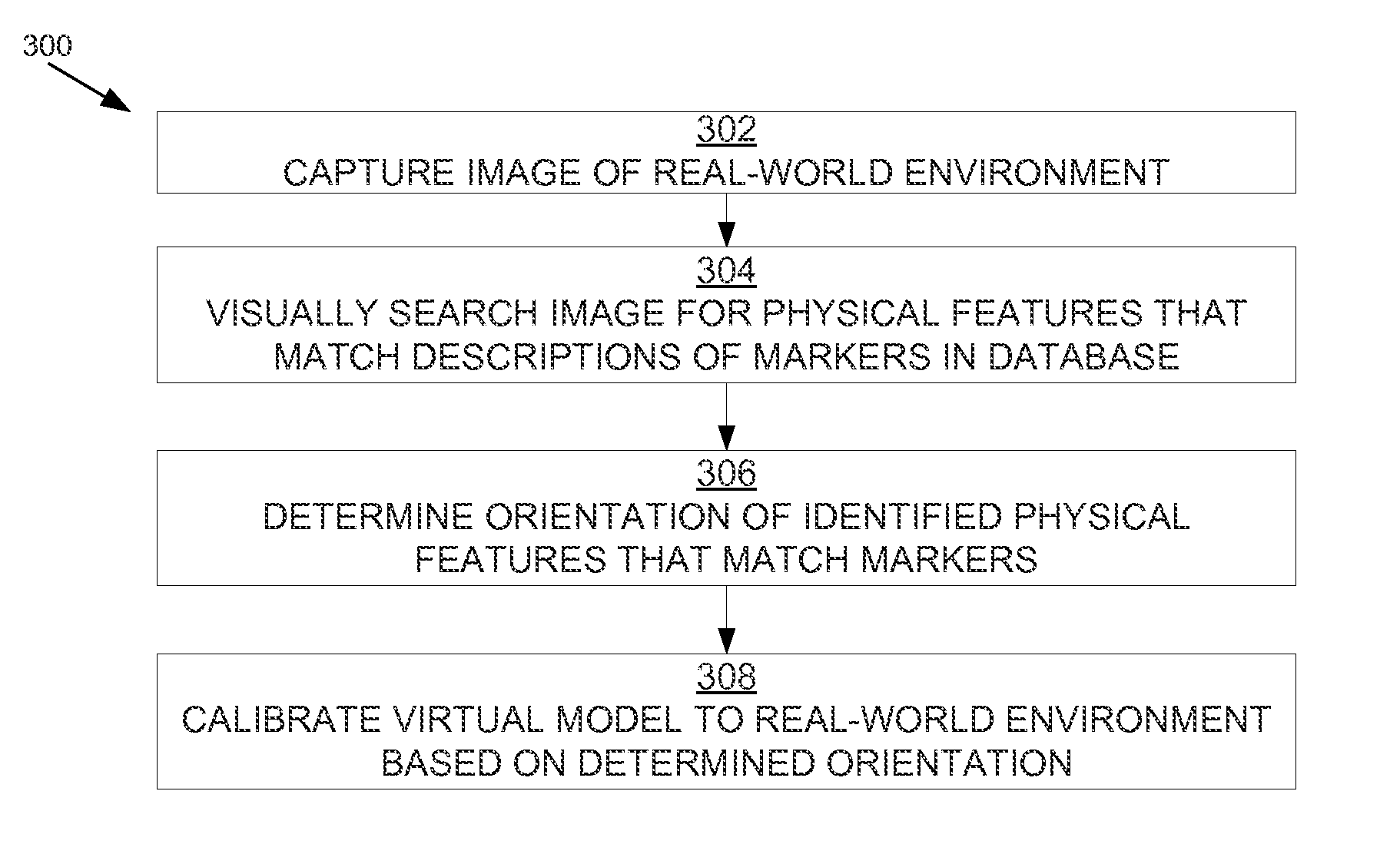

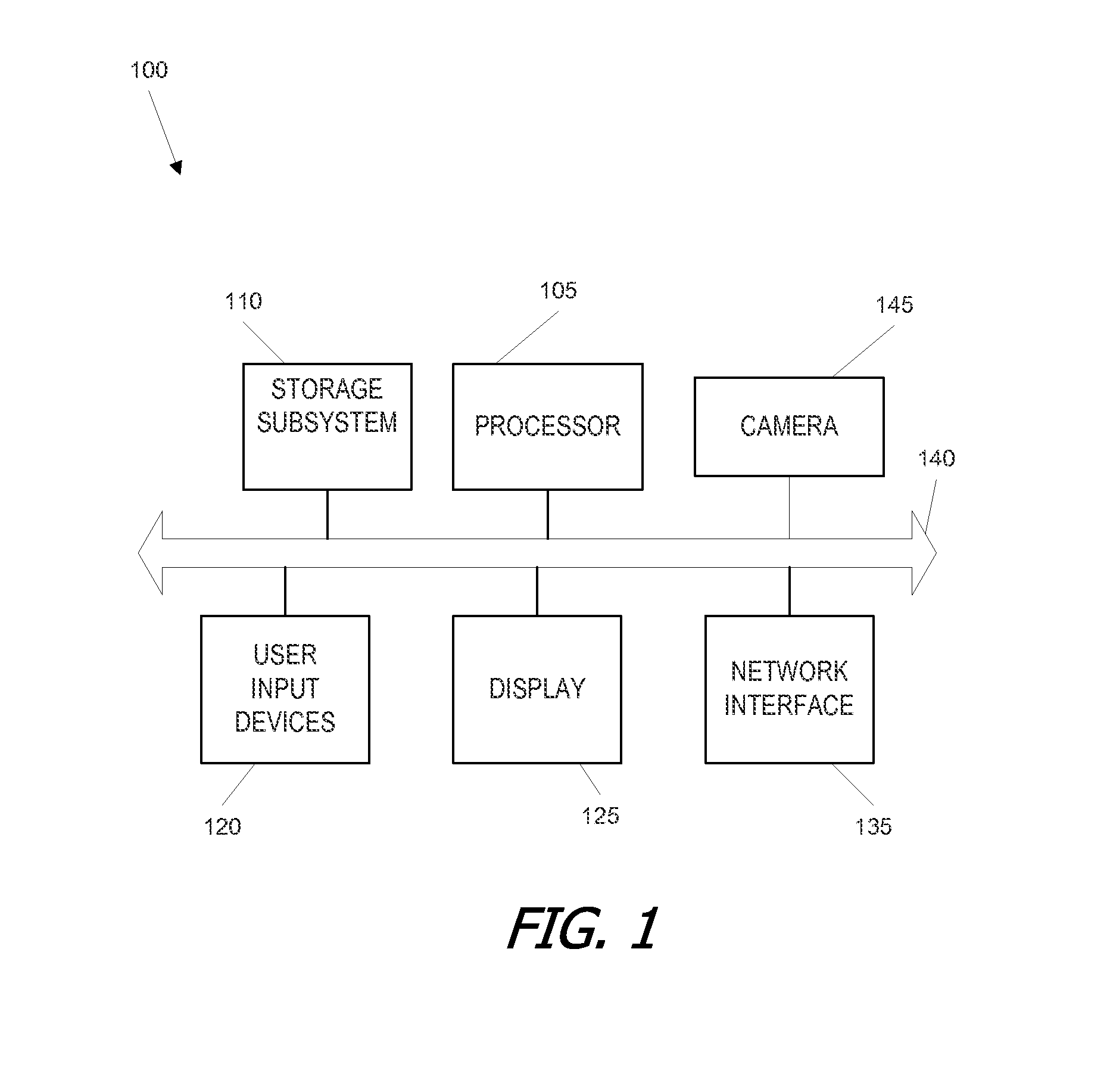

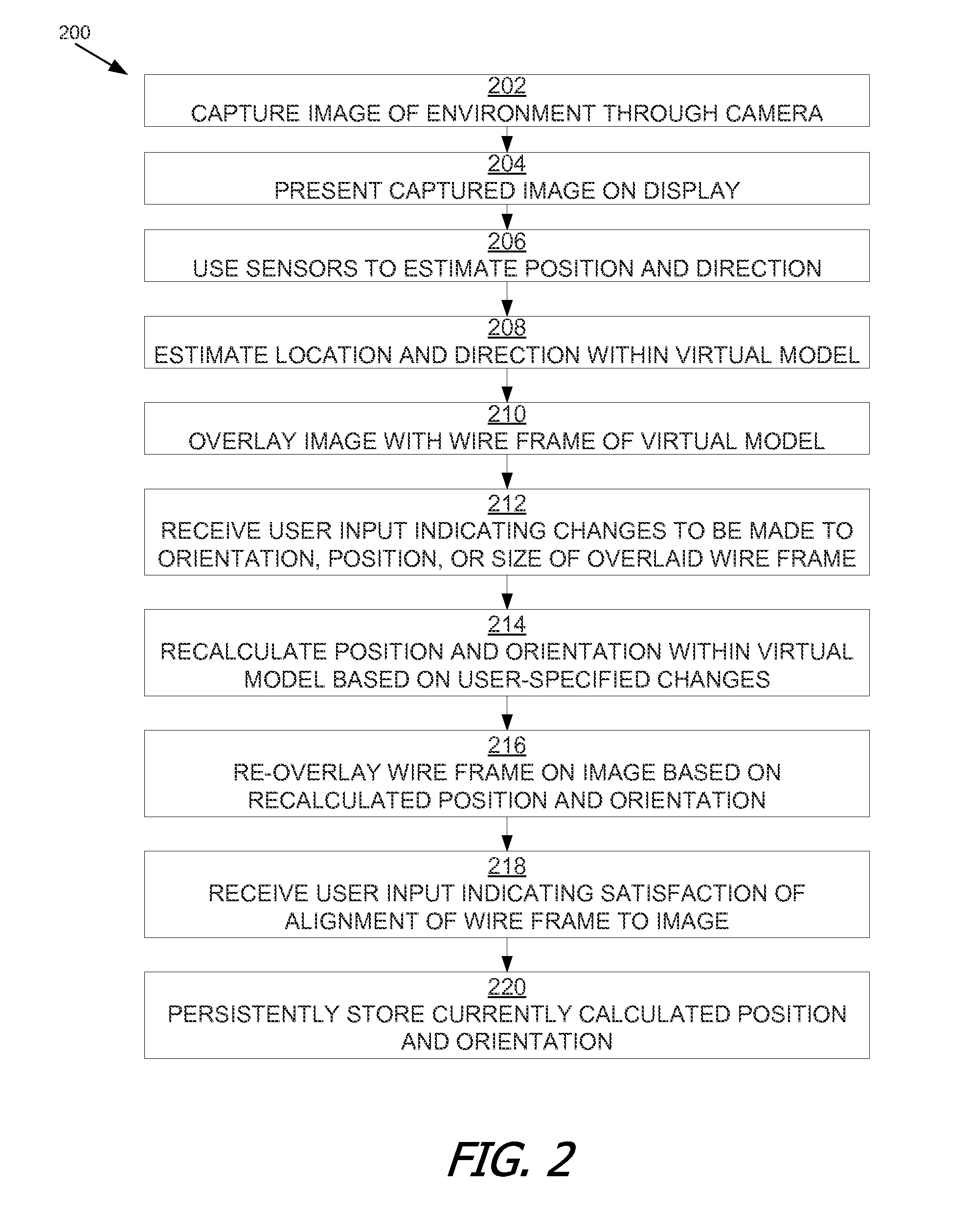

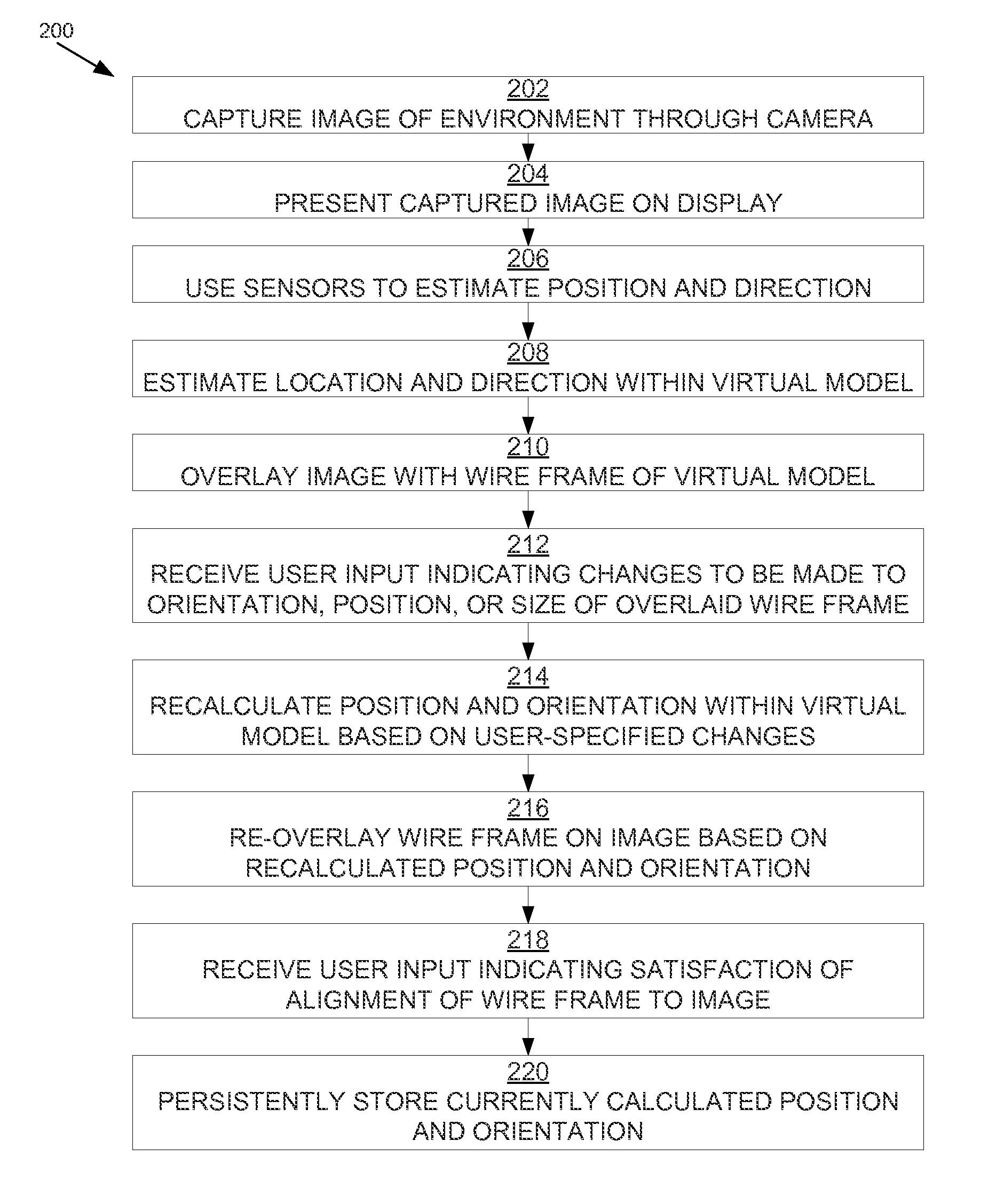

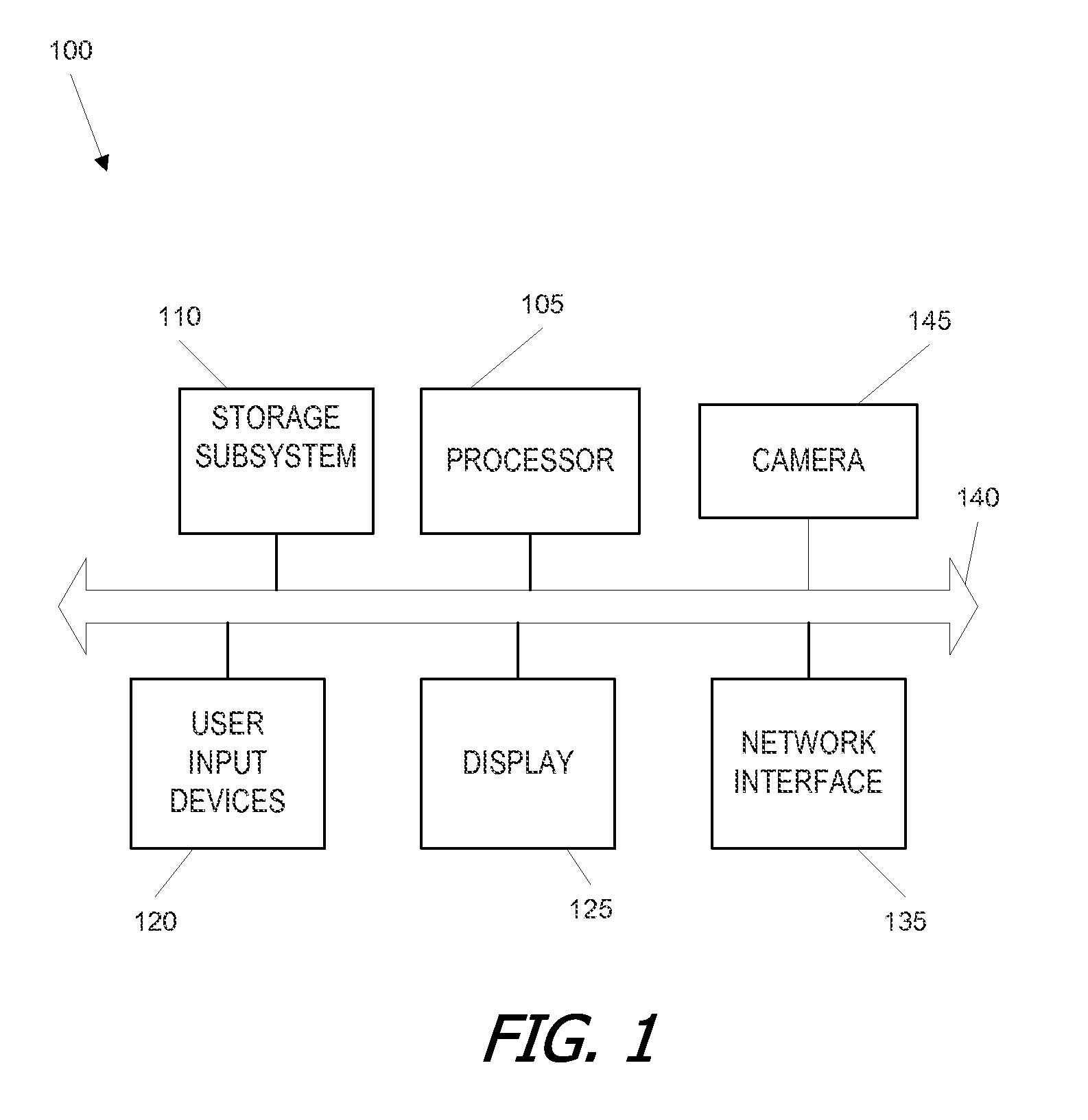

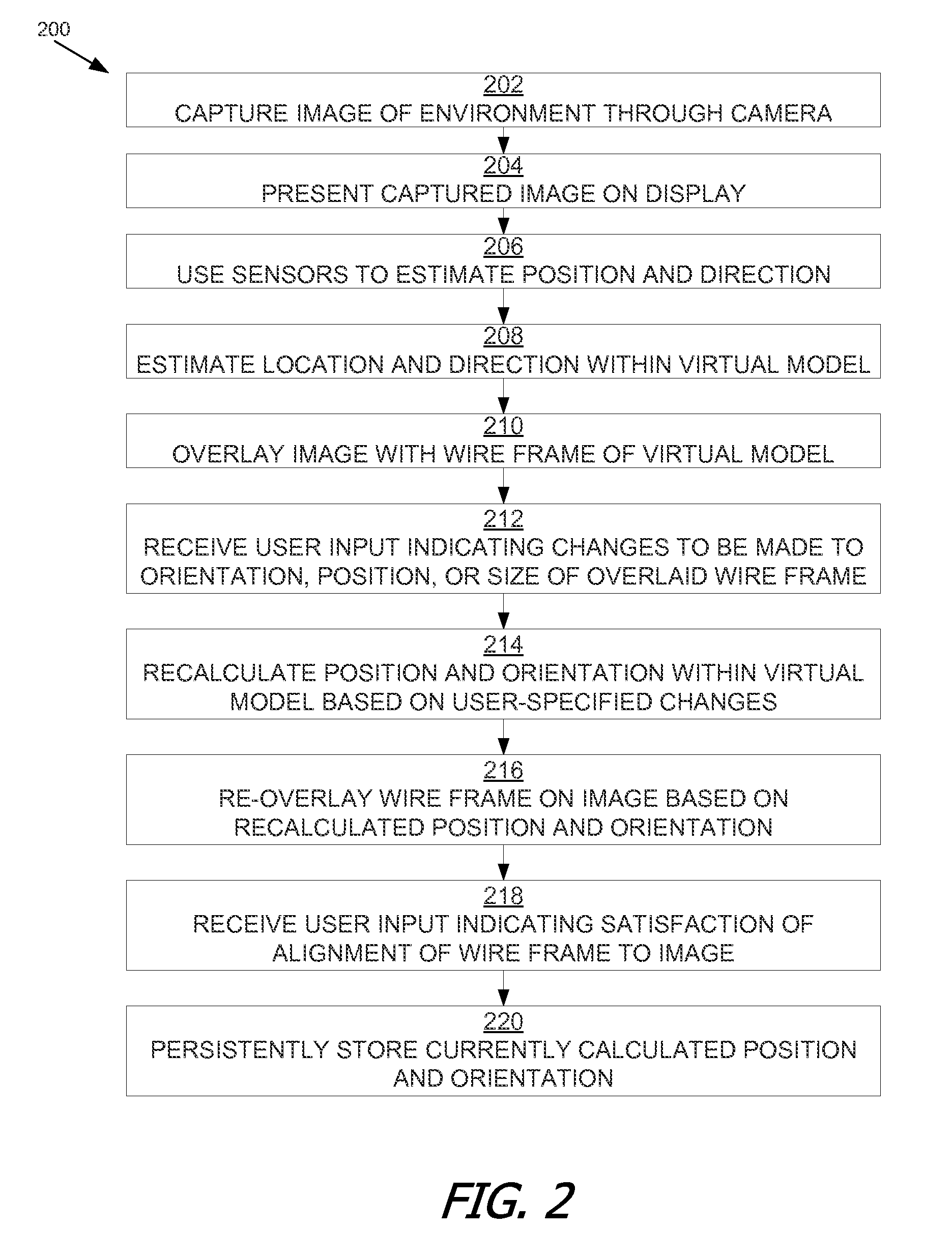

Registration between actual mobile device position and environmental model

Active | US20140247279A1 | Precise positioning | Cathode-ray tube indicators | Image data processing | Computer graphics (images) | Display device

A user interface enables a user to calibrate the position of a three dimensional model with a real-world environment represented by that model. Using a device's sensor, the device's location and orientation is determined. A video image of the device's environment is displayed on the device's display. The device overlays a representation of an object from a virtual reality model on the video image. The position of the overlaid representation is determined based on the device's location and orientation. In response to user input, the device adjusts a position of the overlaid representation relative to the video image.

Owner:APPLE INC

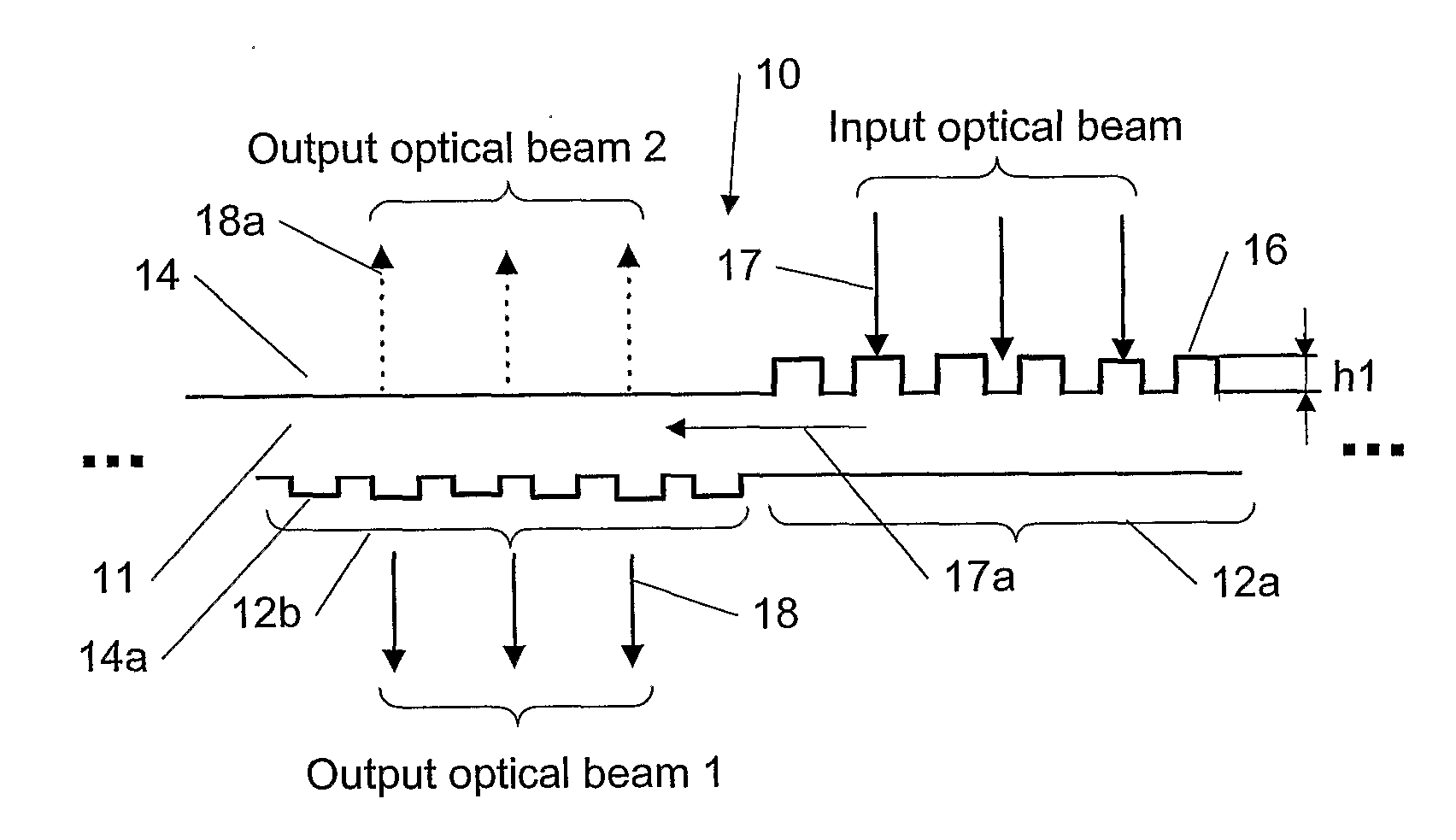

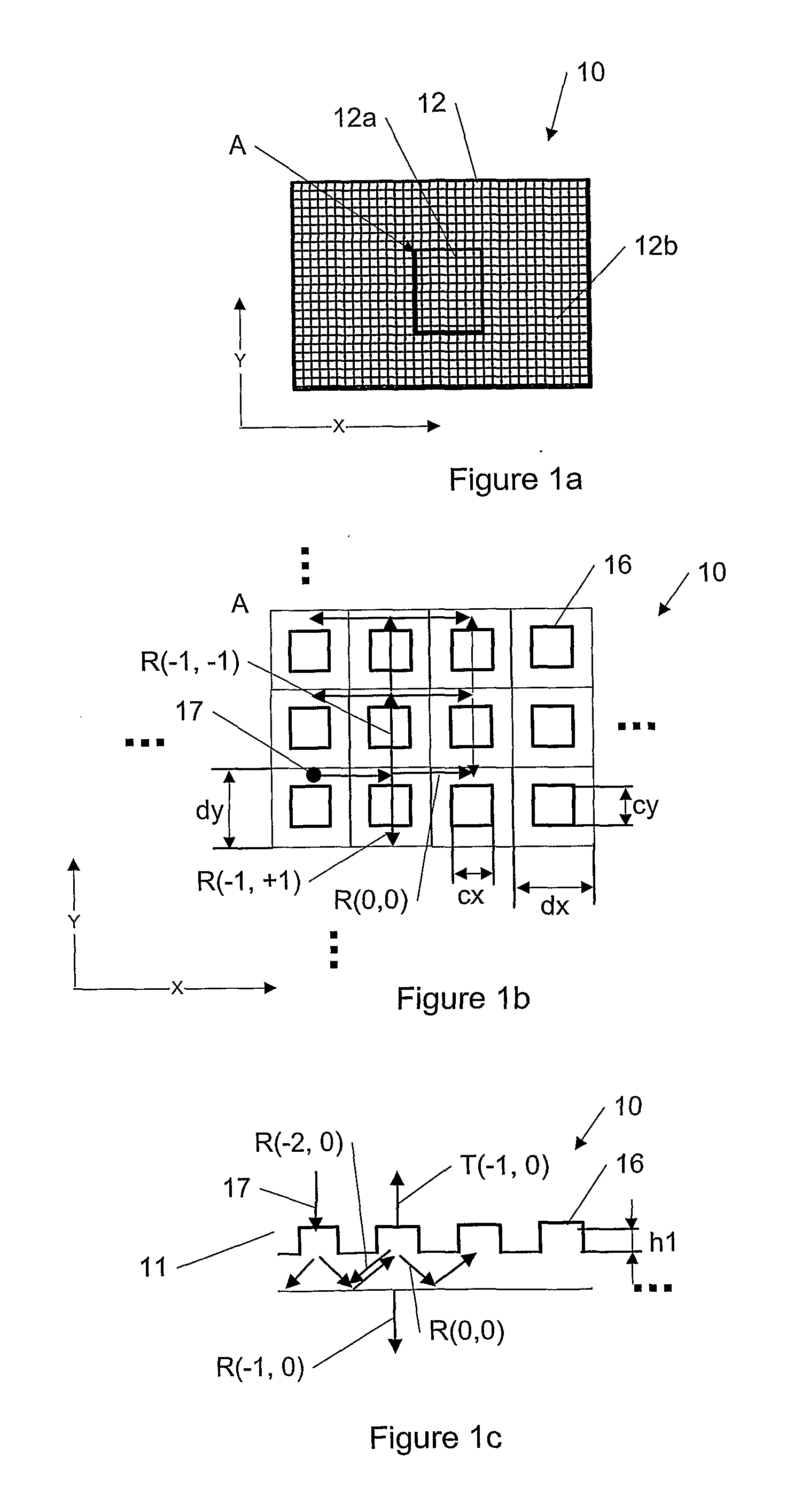

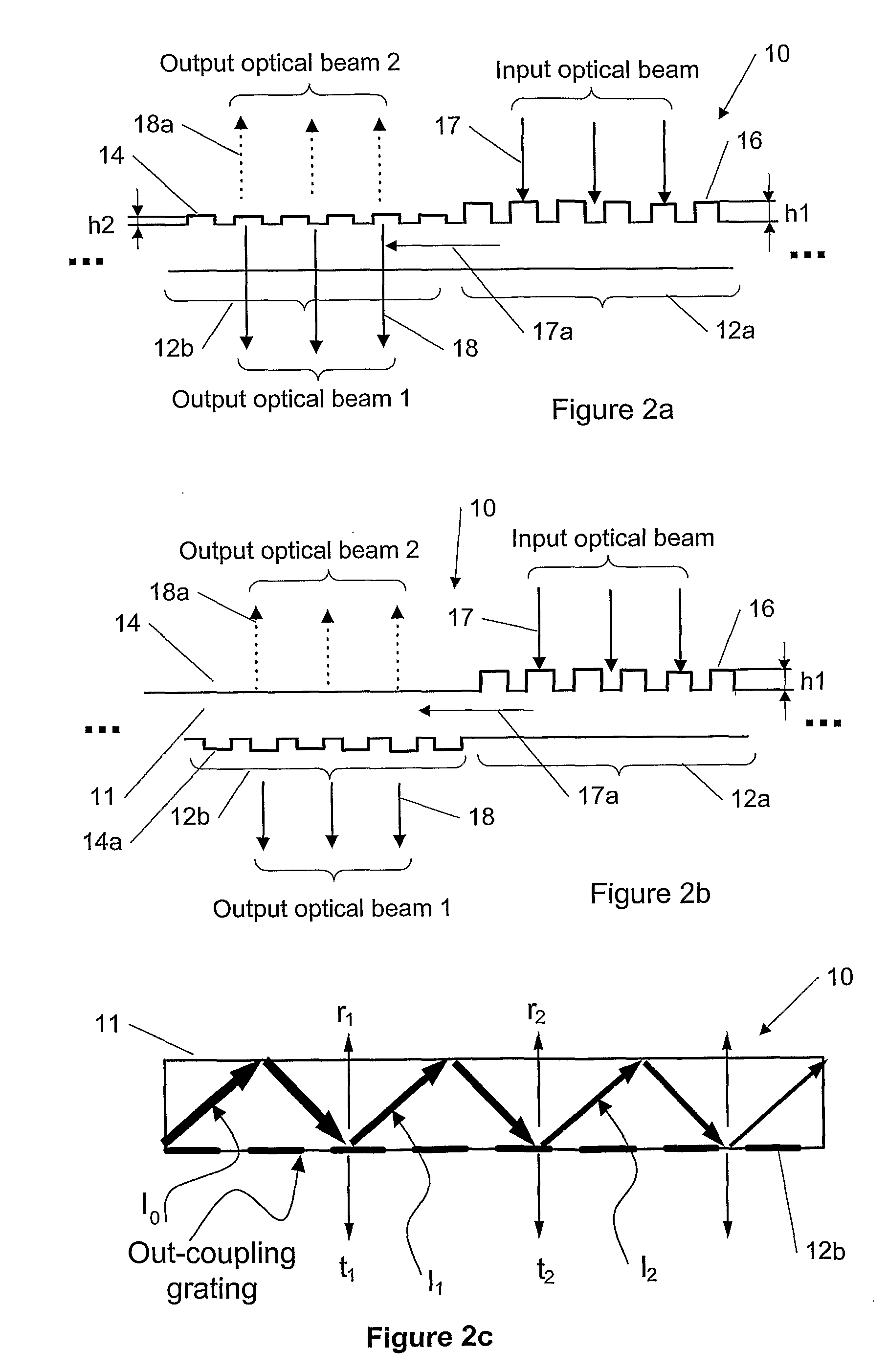

Beam expansion with three-dimensional diffractive elements

The specification and drawings present a new apparatus and method for using a three-dimensional (3D) diffractive element (e.g., a 3D diffractive grating) for expanding in one or two dimensions the exit pupil of an optical beam in electronic devices. Various embodiments of the present invention can be applied, but are not limited, to forming images in virtual reality displays, to illuminating of displays (e.g., backlight illumination in liquid crystal displays) or keyboards, etc.

Owner:MAGIC LEAP

Methods and systems for creating virtual and augmented reality

Configurations are disclosed for presenting virtual reality and augmented reality experiences to users. The system may comprise an image capturing device to capture one or more images, the one or more images corresponding to a field of view of a user of a head-mounted augmented reality device, and a processor communicatively coupled to the image capturing device to extract a set of map points from the set of images, to identify a set of sparse points and a set of dense points from the extracted set of map points, and to perform a normalization on the set of map points.

Owner:MAGIC LEAP INC

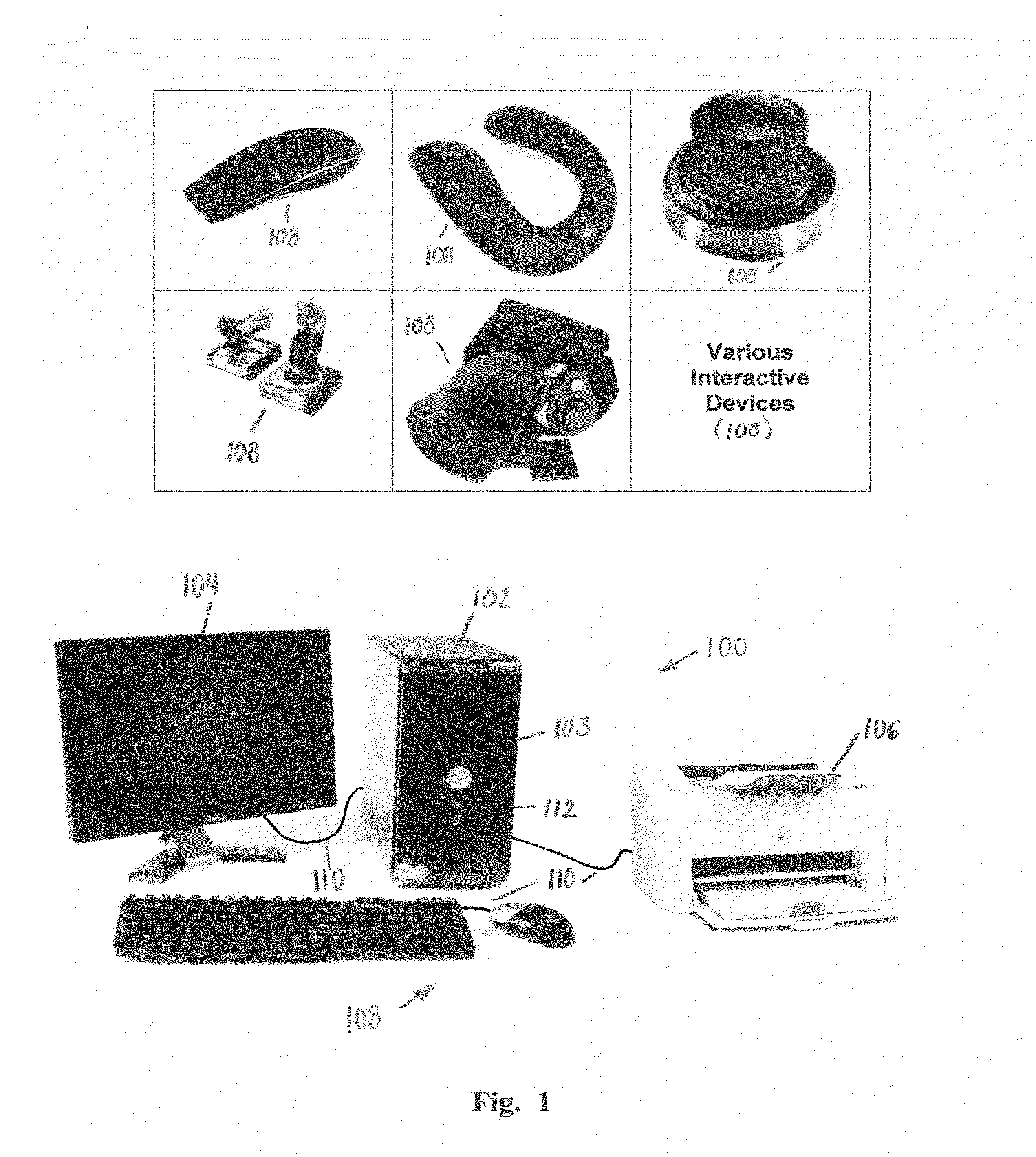

To a combined fingerprint acquisition and control device

The present invention combines the functionality of a computer pointing device with a fingerprint authentication system. In the preferred embodiment, by regularly scanning fingerprints acquired from the pointing device touch pad, fingerprint features may be extracted and compared to stored data on authorized users for passive authentication. Furthermore, calculations based upon the acquired fingerprint images and associated features allow the system to determine six degrees of freedom of the finger, allowing the user to control a variety of functions or to manipulate a three-dimensional model or virtual reality system.

Owner:SYNAPTICS INC

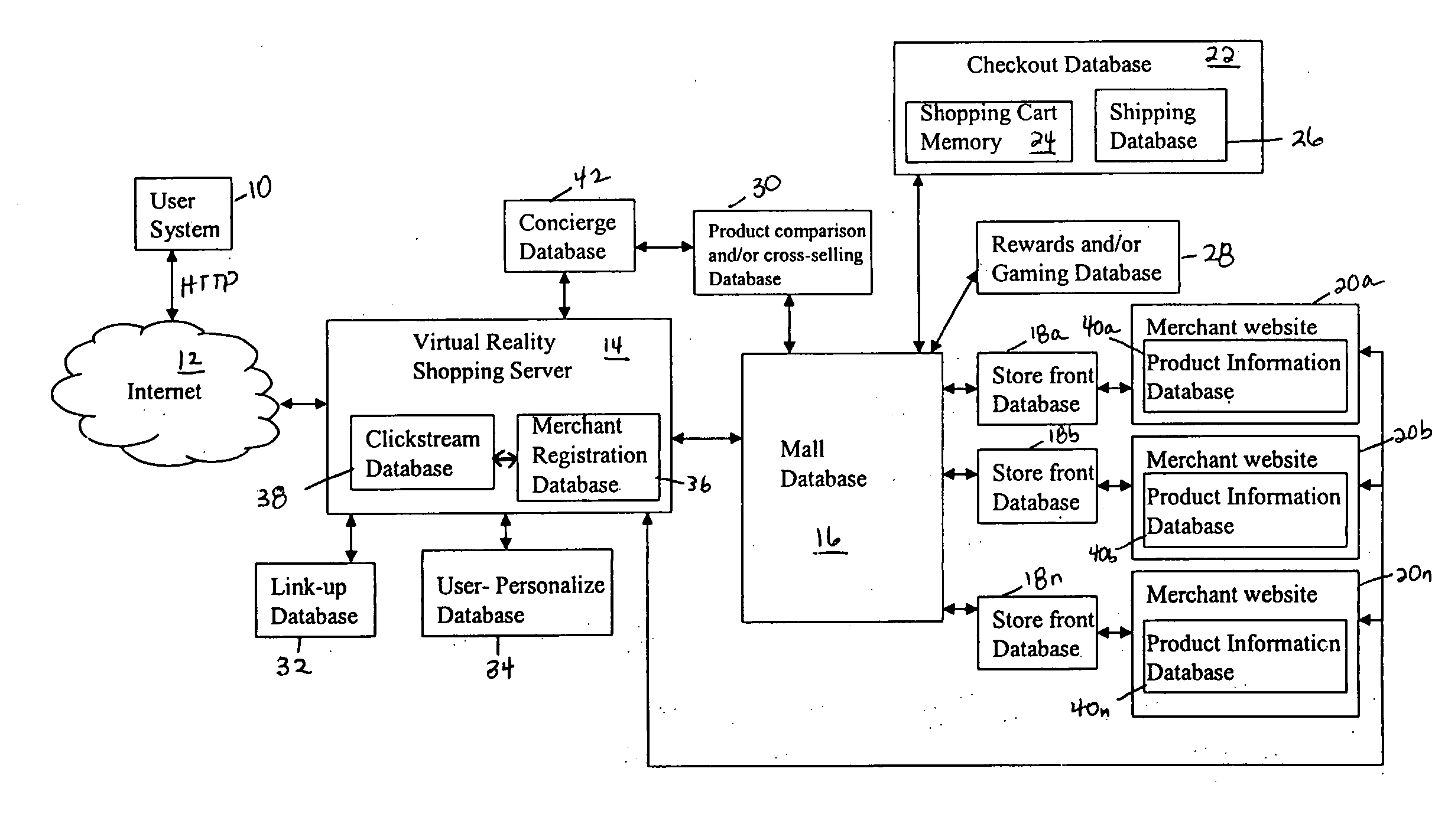

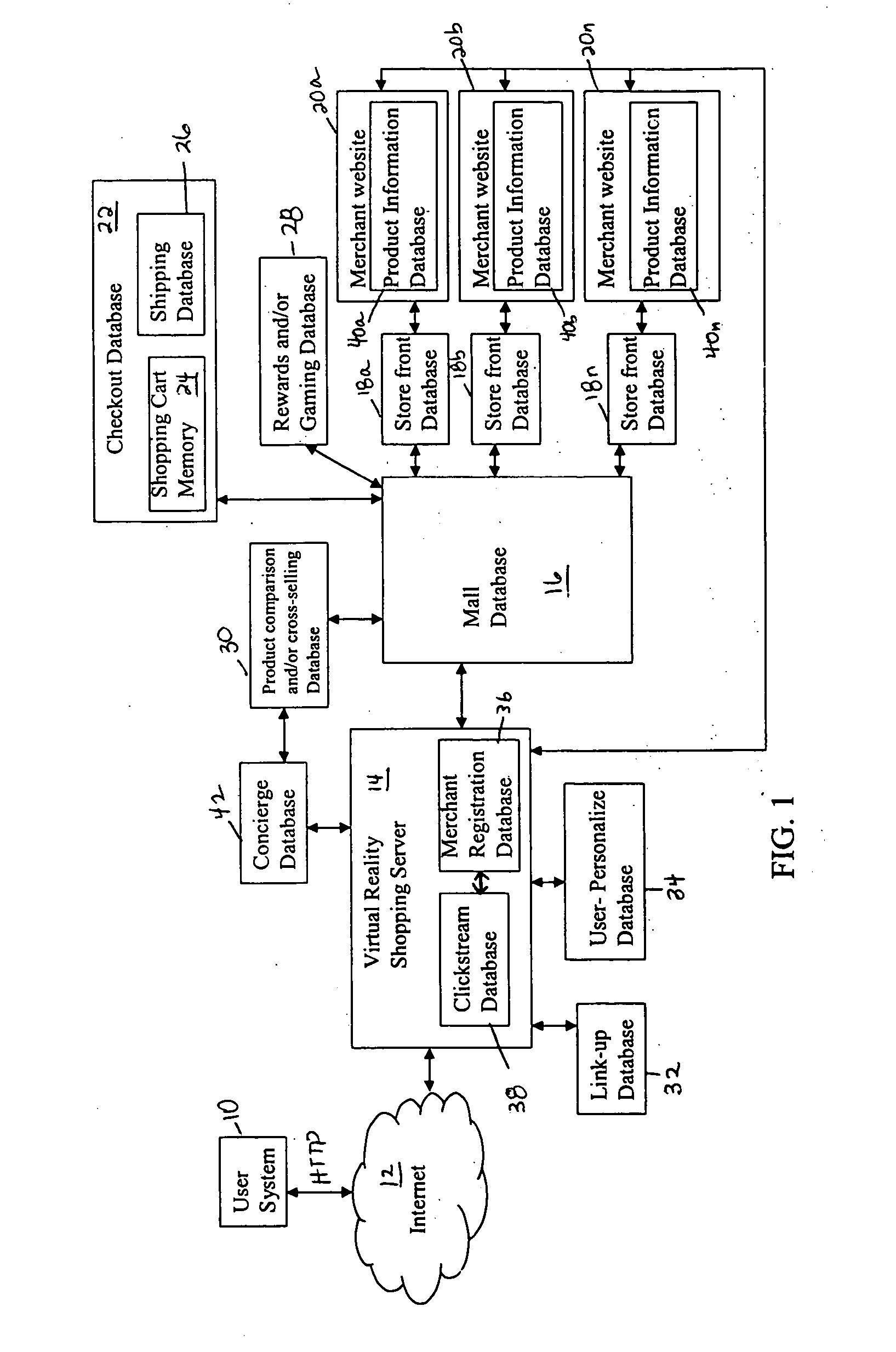

Virtual reality shopping experience

A solution is provided for a method for a user to shop online in a three dimensional (3D) virtual reality (VR) setting by receiving a request at a shopping server to view a shopping location, having at least one store, and displaying the shopping location to the user's computer in a 3D interactive simulation view via a web browser to emulate a real-life shopping experience for the user. The server then obtains a request to enter into one of the stores and displays the store website to the user in the same web browser. The store website has one or more enhanced VR features. The server then receives a request to view at least one product and the product is presented in a 3D interactive simulation view to emulate a real-life viewing of the product.

Owner:LIBERTY PEAK VENTURES LLC

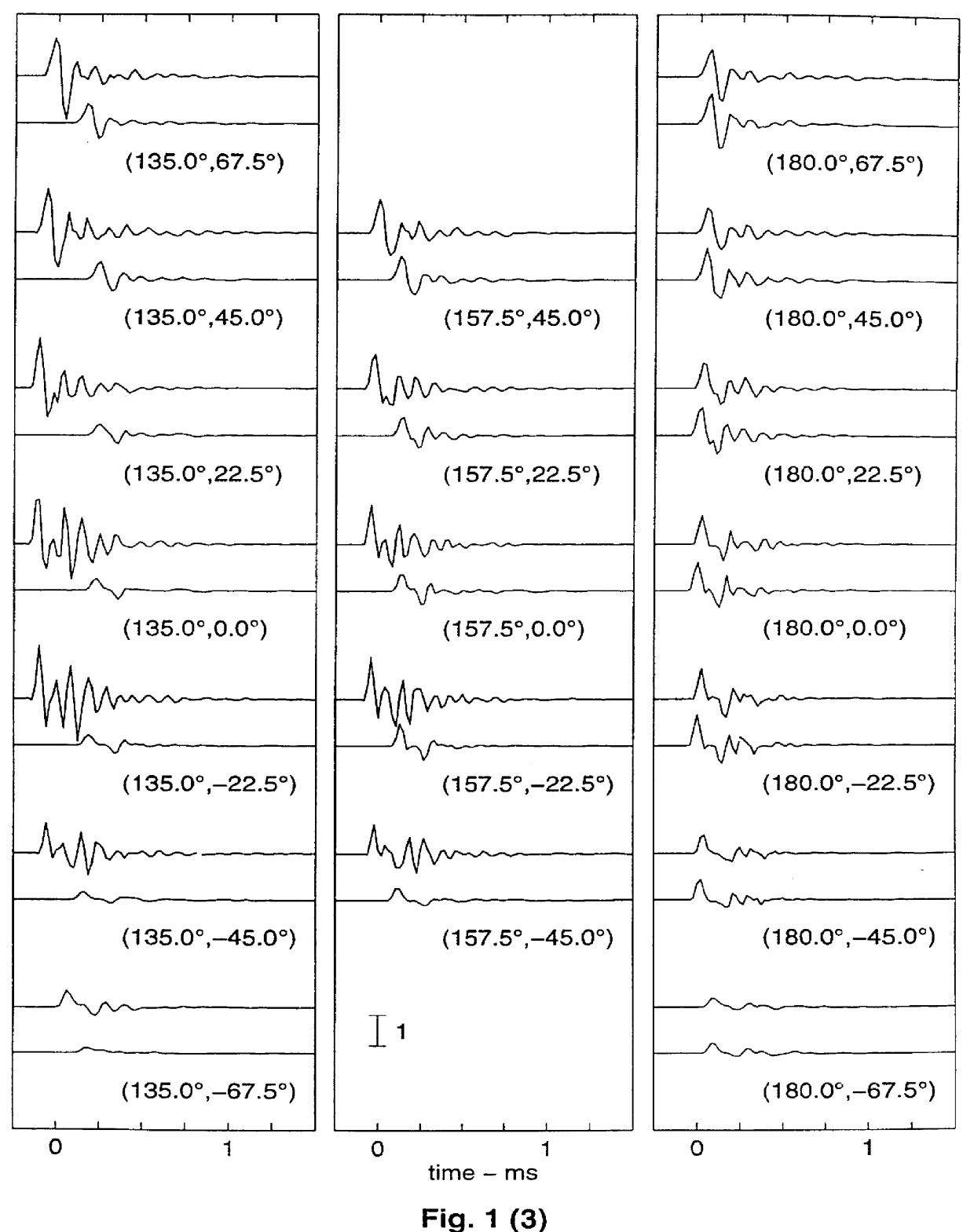

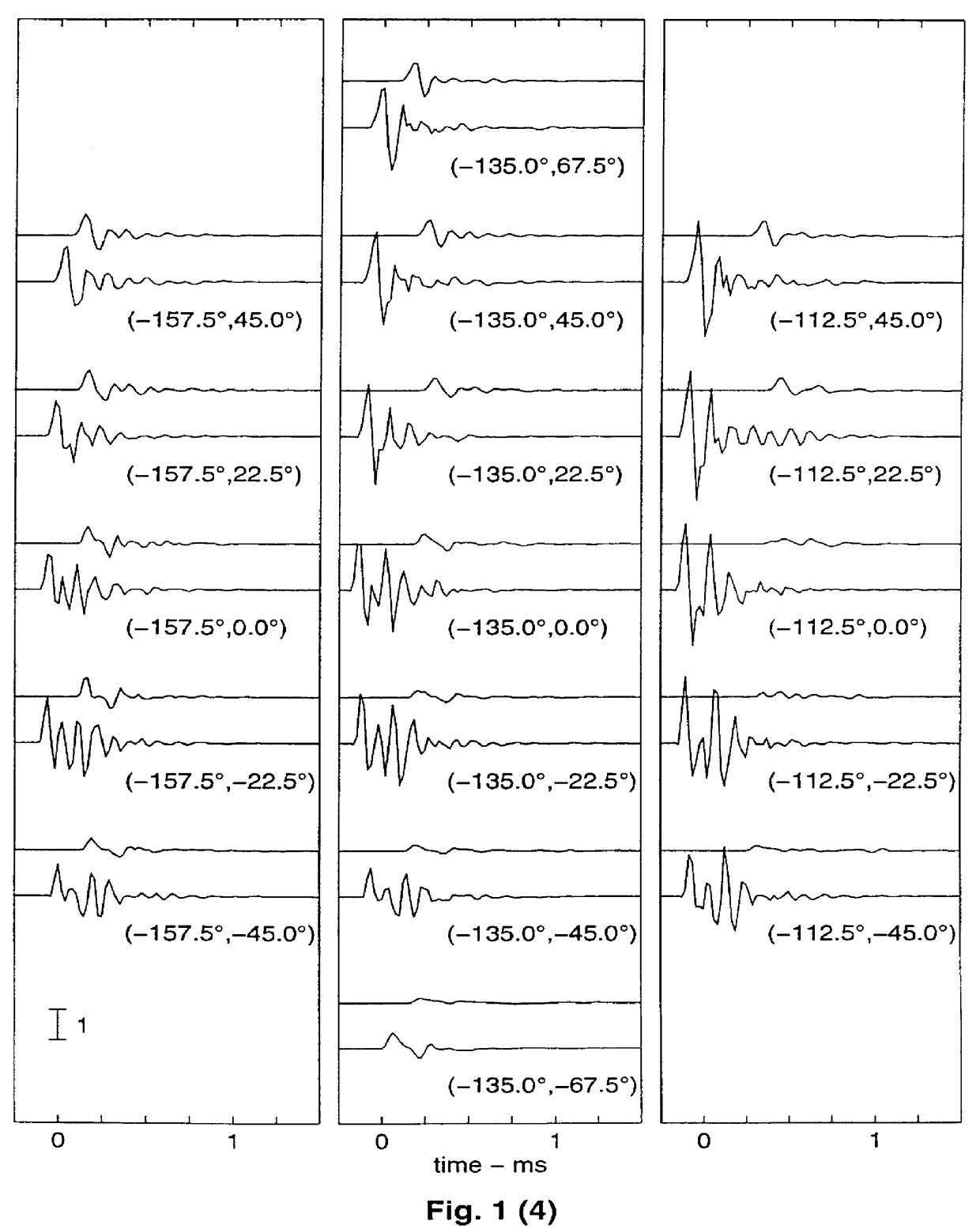

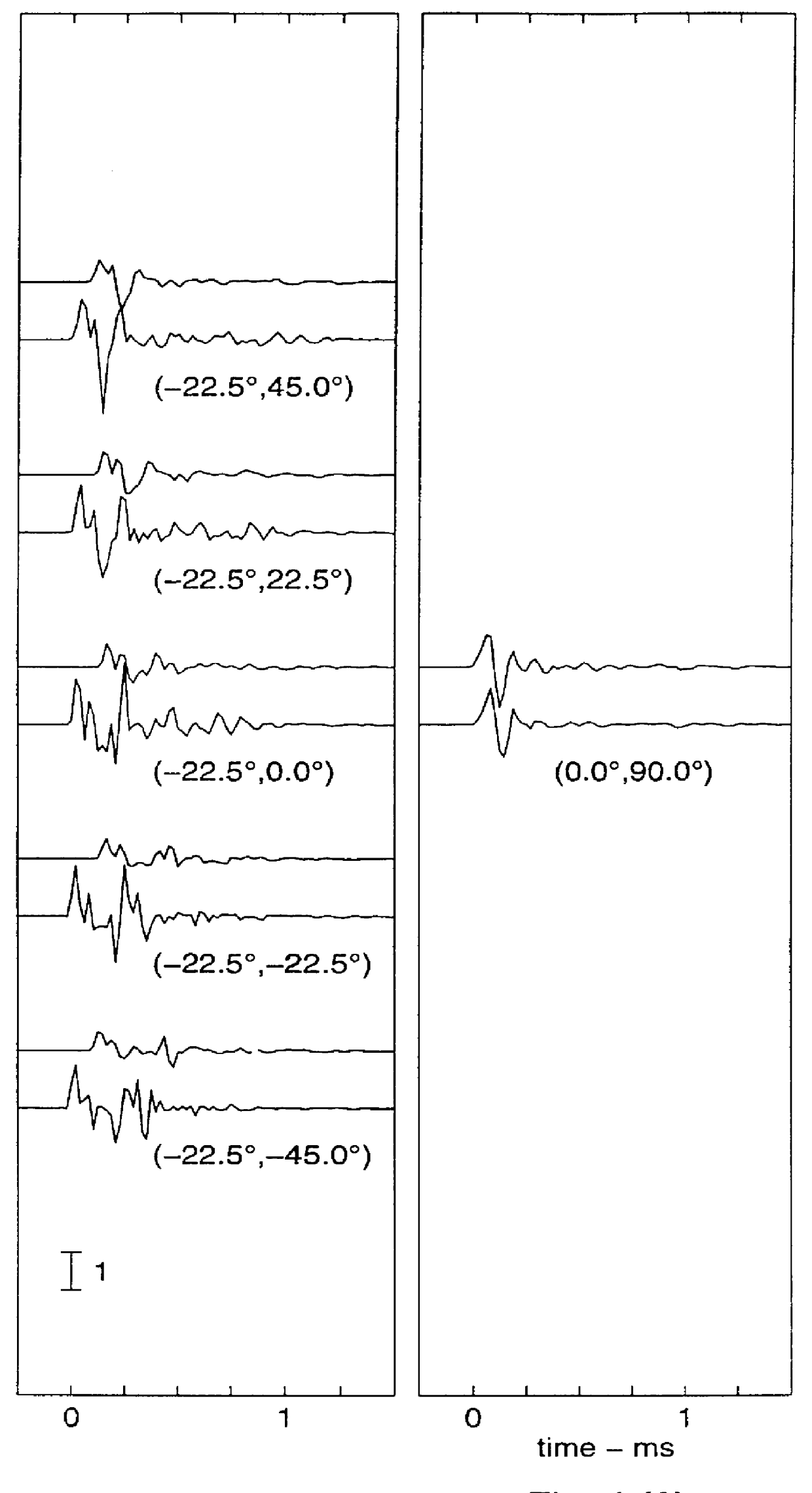

Binaural synthesis, head-related transfer functions, and uses thereof

Inactive | US6118875A | Reduce the difference | Function increase | Two-channel systems | Loudspeaker spatial/constructional arrangements | Time domain | Sound sources

PCT No. PCT/DK95/00089; Sec. 371 Date Dec. 27, 1996; Sec. 102(e) Date Dec. 27, 1996; PCT Filed Feb. 27, 1995; PCT Pub. No. WO95/23493; PCT Pub. Date Aug. 31, 1995.

A method and apparatus for simulating the transmission of sound from sound sources to the ear canals of a listener encompasses novel head-related transfer functions (HTFs), novel methods of measuring and processing HTFs, and novel methods of changing or maintaining the directions of the sound sources as perceived by the listener. The measurement methods enable the measurement and construction of HTFs for which the time domain descriptions are surprisingly short, and for which the differences between listeners are surprisingly small. The novel HTFs can be exploited in any application concerning the simulation of sound transmission, measurement, simulation, or reproduction. The invention is particularly advantageous in the field of binaural synthesis, specifically, the creation, by means of two sound sources, of the perception in the listener of listening to sound generated by a multichannel sound system. It is also particularly useful in the designing of electronic filters used, for example, in virtual reality systems, and in the designing of an "artificial head" having HTFs that approximate the HTFs of the invention as closely as possible in order to make the best possible representation of humans by the artificial head, thereby making artificial head recordings of optimal quality.

Owner:MØLLER HENRIK +3
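
In general, binaural synthesis filters one source signal with a separate head-related impulse response for each ear. The sketch below shows that generic technique with plain time-domain convolution; it does not reproduce the patent's HTFs or measurement method. The function names and the tiny two-tap impulse responses in the usage example are made-up placeholders.

```python
def convolve(signal, impulse_response):
    """Direct-form convolution of two sample lists."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def binaural_render(mono, hrir_left, hrir_right):
    """Filter one mono source with the impulse response for each ear."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


if __name__ == "__main__":
    # Placeholder responses: the right ear hears the source later and quieter.
    left, right = binaural_render([1.0, 0.5], [1.0, 0.0], [0.0, 0.5])
    print(left, right)
```

The cost of direct convolution grows with the impulse response length, which is one reason short time-domain descriptions, as claimed in the abstract, are attractive for real-time use.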

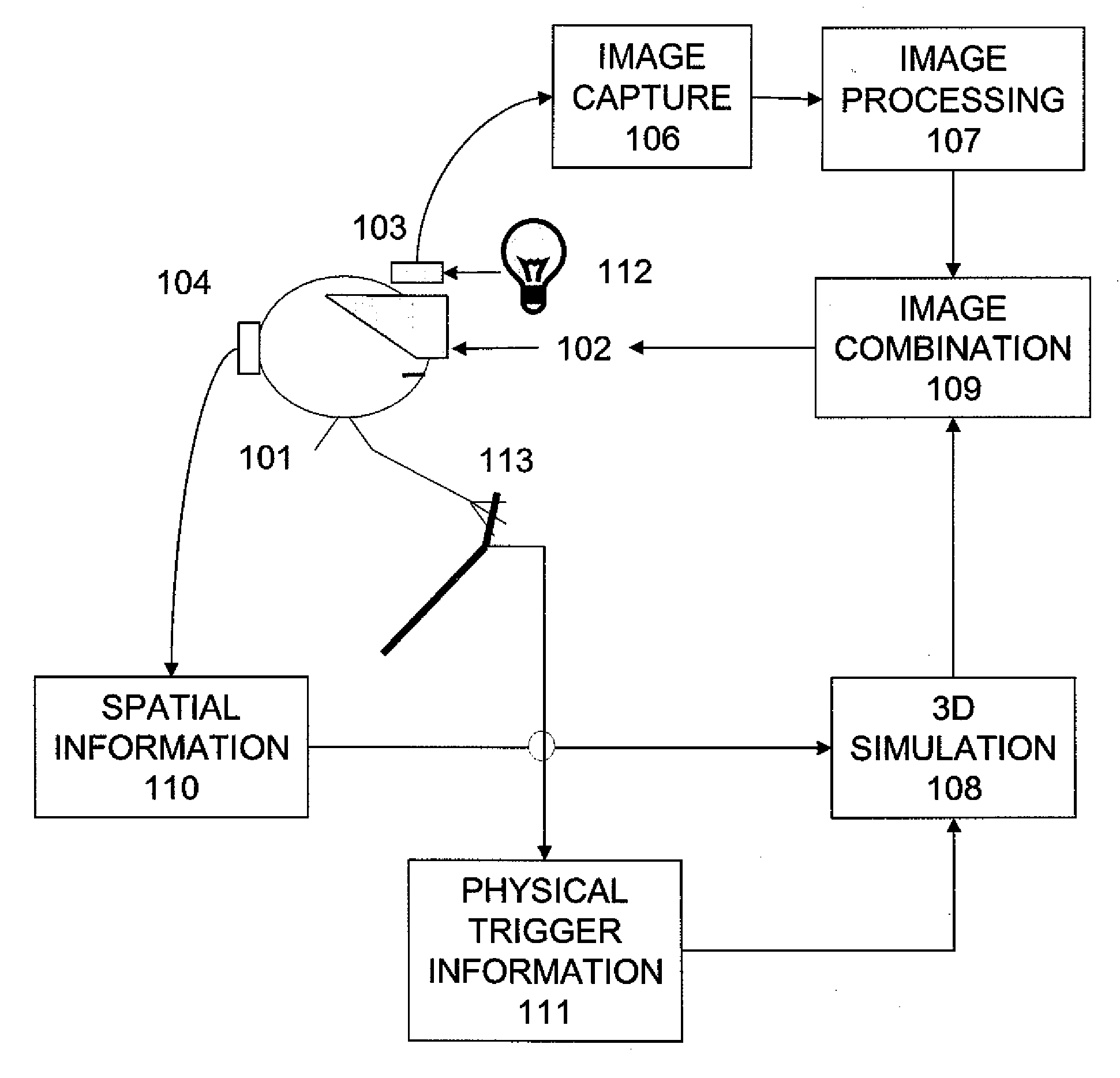

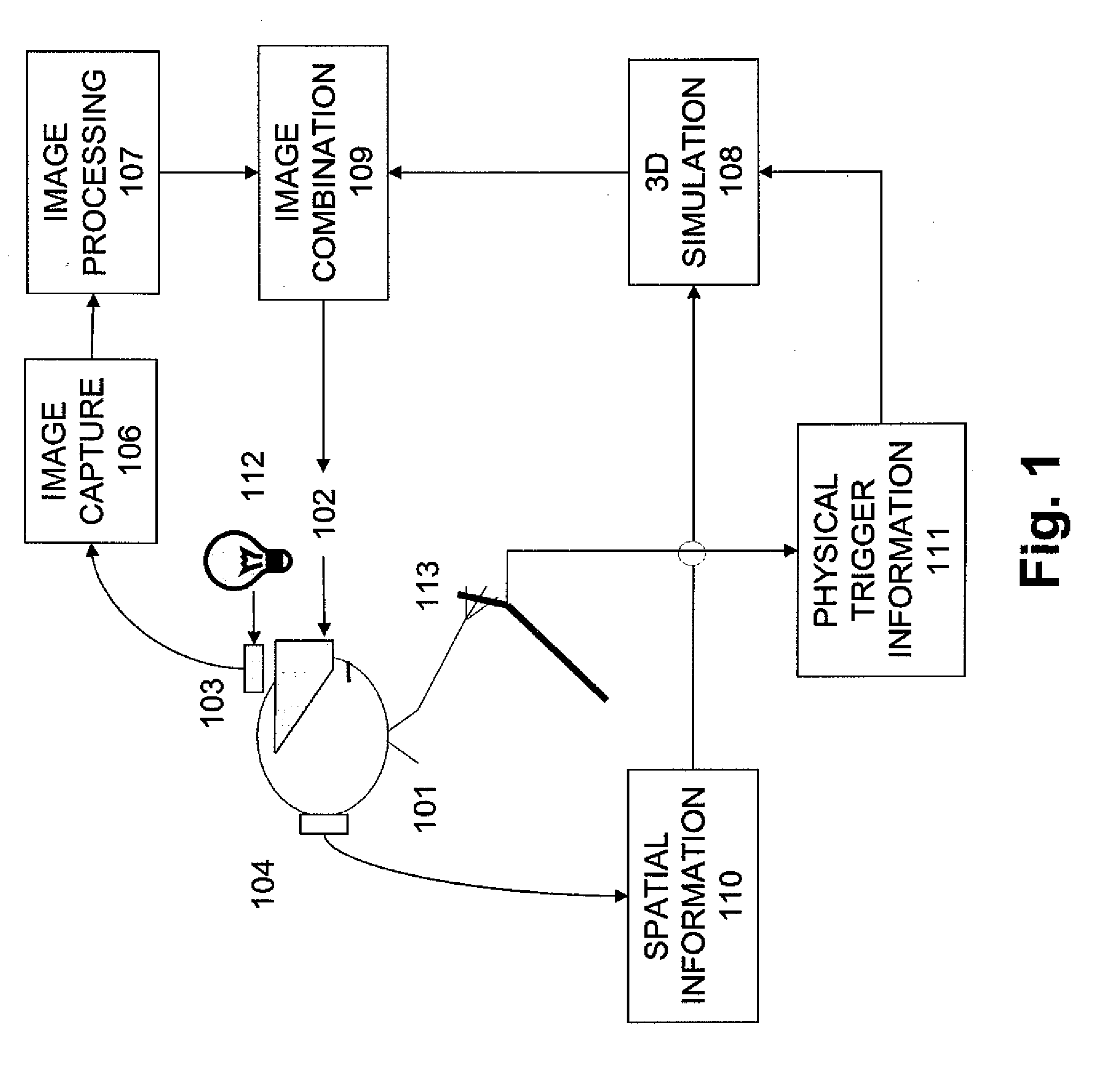

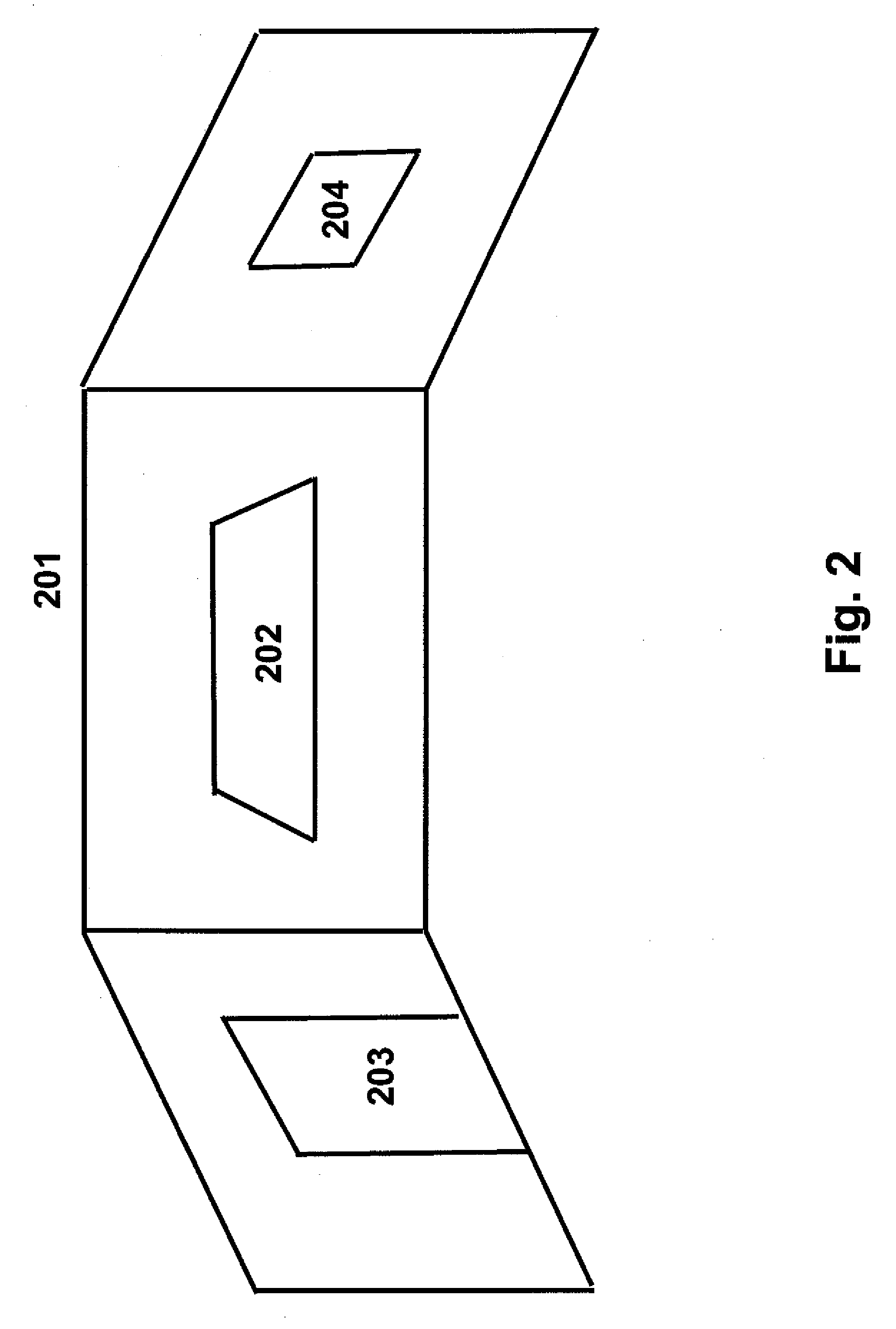

Systems and methods for combining virtual and real-time physical environments

Systems, methods and structures for combining virtual reality and real-time environment by combining captured real-time video data and real-time 3D environment renderings to create a fused, that is, combined environment, including capturing video imagery in RGB or HSV / HSV color coordinate systems and processing it to determine which areas should be made transparent, or have other color modifications made, based on sensed cultural features, electromagnetic spectrum values, and / or sensor line-of-sight, wherein the sensed features can also include electromagnetic radiation characteristics such as color, infra-red, ultra-violet light values, cultural features can include patterns of these characteristics, such as object recognition using edge detection, and whereby the processed image is then overlaid on, and fused into a 3D environment to combine the two data sources into a single scene to thereby create an effect whereby a user can look through predesignated areas or “windows” in the video image to see into a 3D simulated world, and / or see other enhanced or reprocessed features of the captured image.

Owner:BACHELDER EDWARD N +1

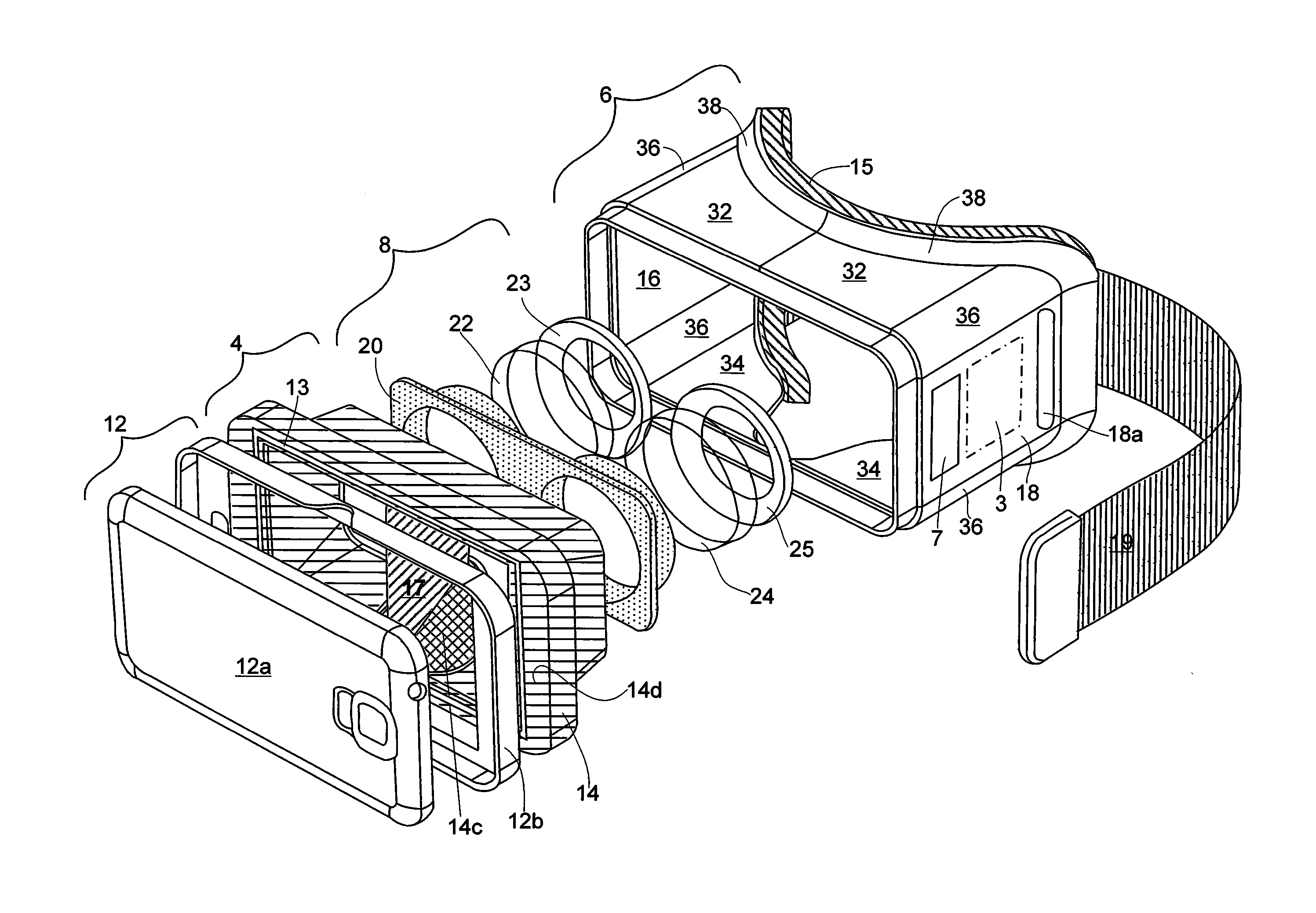

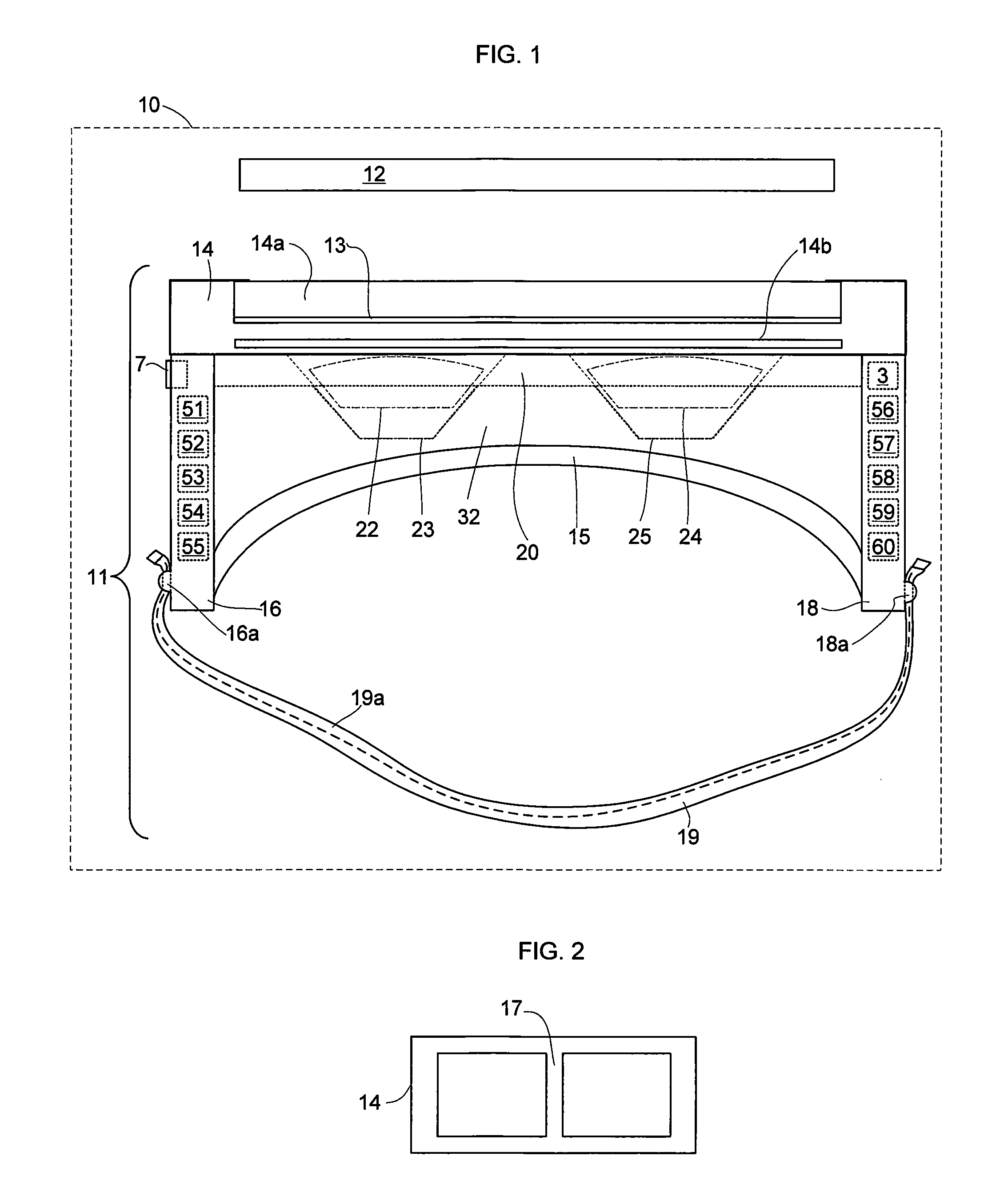

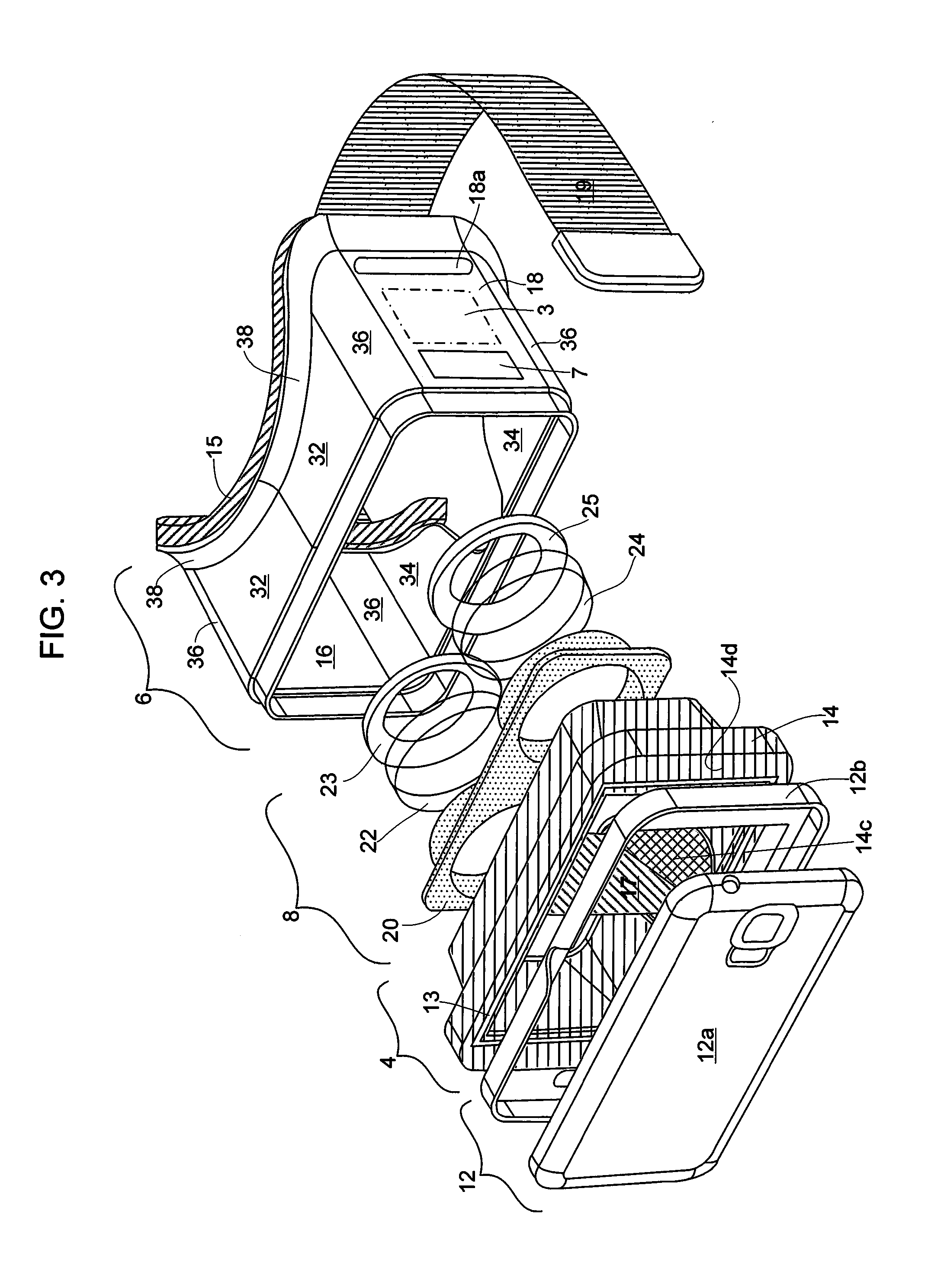

Modular and Convertible Virtual Reality Headset System

Inactive | US20150253574A1 | Easy to customize | Facilitate specific us | Optical elements | Modularity | Computer module

A modular and customizable virtual reality headset system comprises an optional mobile device case, a support module, a device module with a dock for a mobile device or for the mobile device case, and a lens module. The modules preferably removably attach together with nesting extensions and a removable plug that extends through aligned holes defined by the extensions. The support module comprises one or more walls, optional corners, and optional edges. The system further comprises control and processing components, one or more input devices, a comfort module, and a strap. Additional features include a microphone, headphones, a camera, a display, communication components, motion sensors and movement trackers, filters, battery chargers, and warning devices. The basic components of the VR headset system and the optional features and components are preferably all customizable and upgradeable to match the user's aesthetic preferences and technical requirements.

Owner:ION VIRTUAL TECH

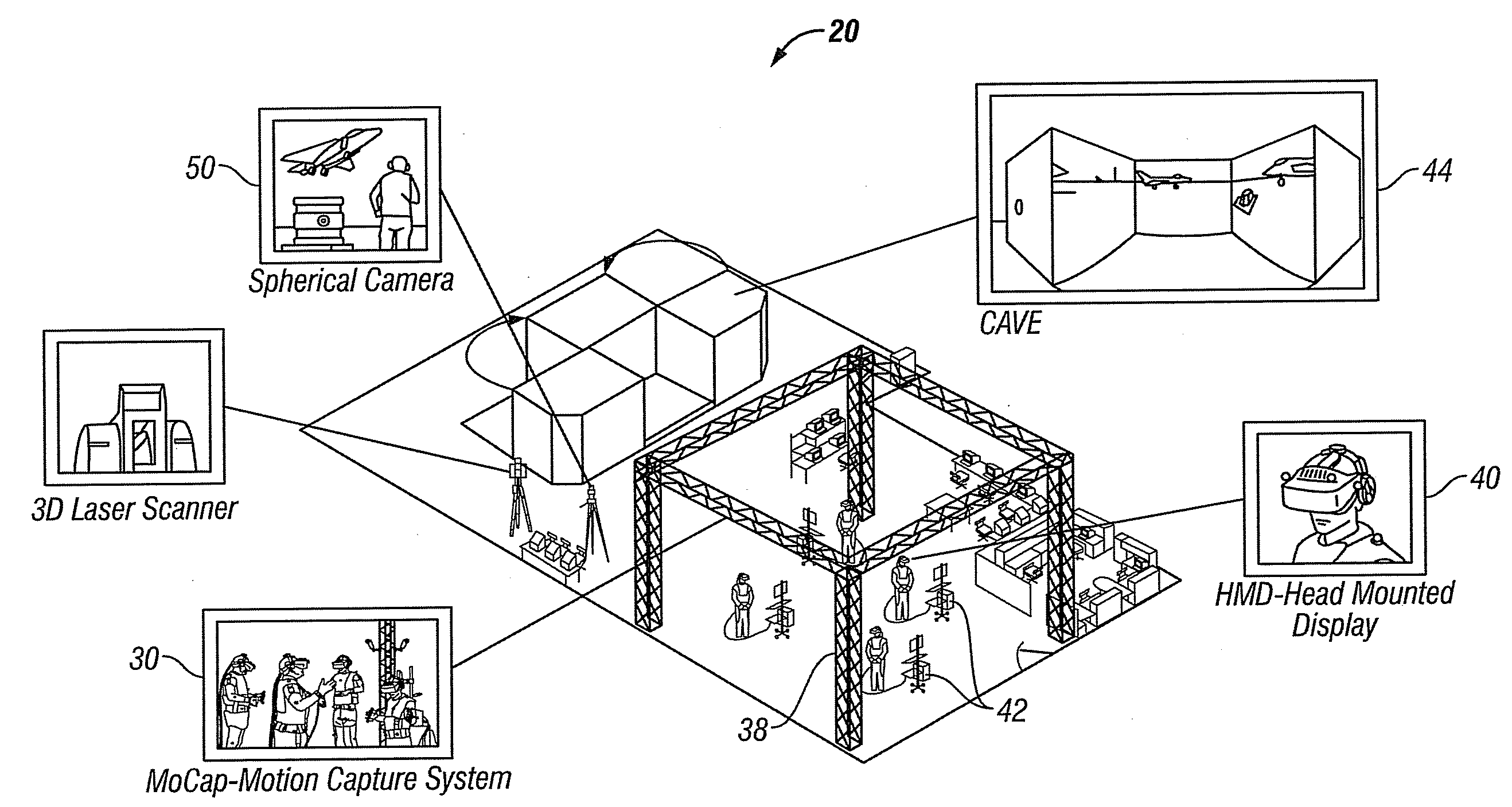

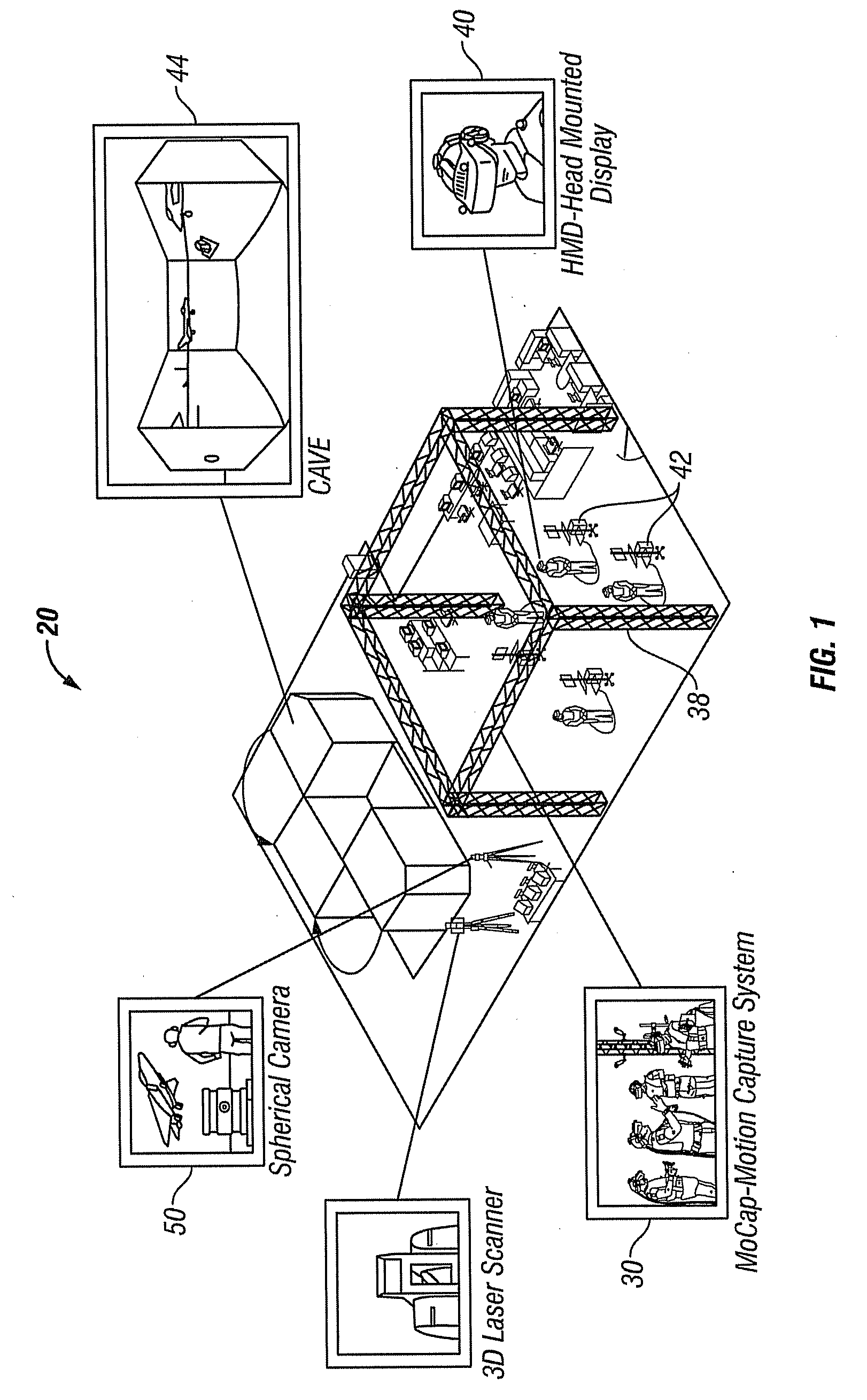

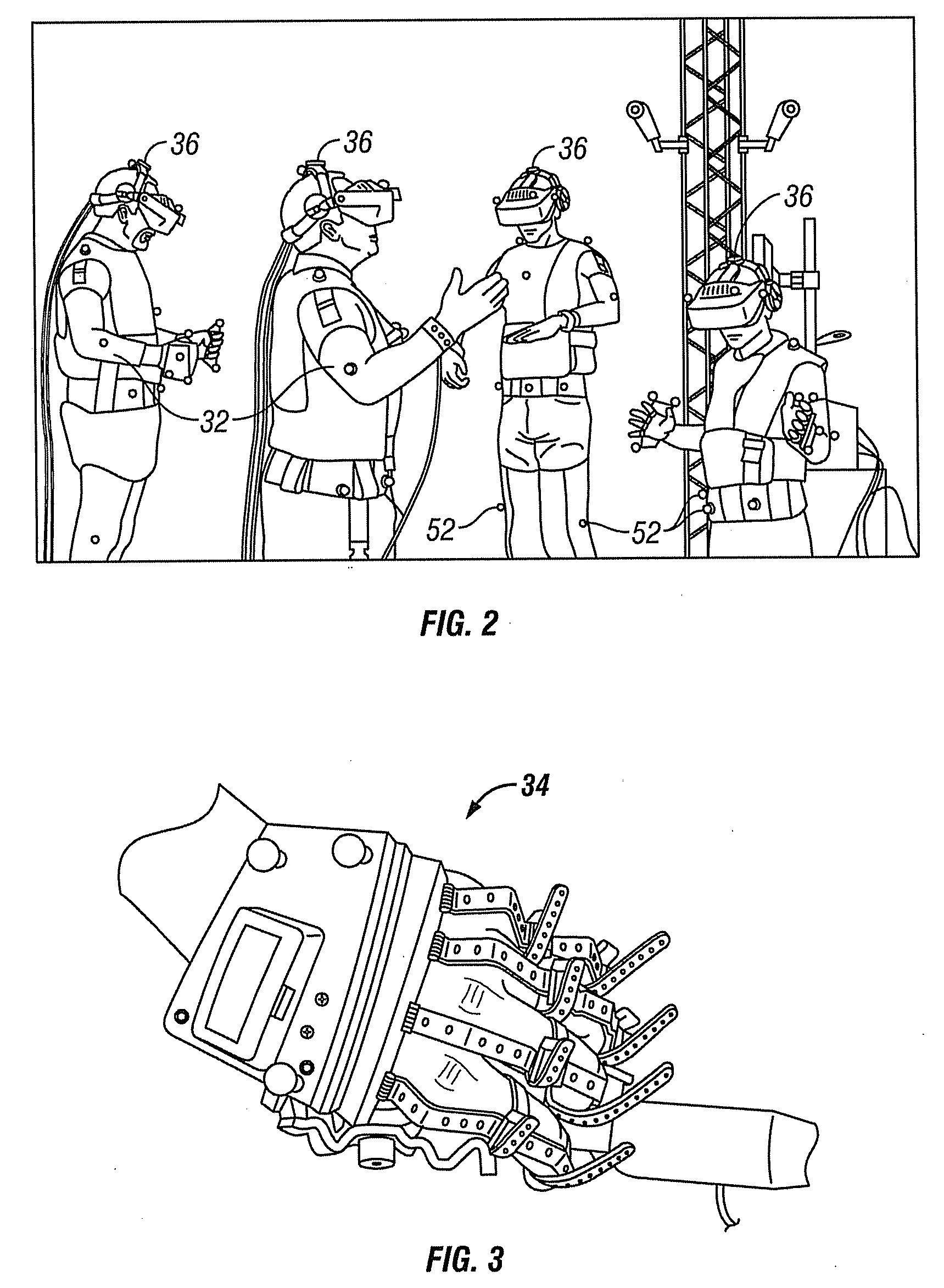

Immersive Collaborative Environment Using Motion Capture, Head Mounted Display, and Cave

Active | US20090187389A1 | Cathode-ray tube indicators | Special data processing applications | Computer Aided Design | Kinematics

A collaborative visualization system integrates motion capture and virtual reality, along with kinematics and computer-aided design (CAD), for the purpose, for example, of evaluating an engineering design. A virtual reality simulator creates a full-scale, three-dimensional virtual reality simulation responsive to computer-aided design (CAD) data. Motion capture data is obtained from users simultaneously interacting with the virtual reality simulation. The virtual reality simulator animates in real time avatars responsive to motion capture data from the users. The virtual reality simulation, including the interactions of the one or more avatars and also objects, is displayed as a three-dimensional image in a common immersive environment using one or more head mounted displays so that the users can evaluate the CAD design to thereby verify that tasks associated with a product built according to the CAD design can be performed by a predetermined range of user sizes.

Owner:LOCKHEED MARTIN CORP

Federated mobile device positioning

Active | US20140247280A1 | Precise positioning | Cathode-ray tube indicators | Image data processing | Computer graphics (images) | Video image

A user interface enables a user to calibrate the position of a three dimensional model with a real-world environment represented by that model. Using a device's sensor suite, the device's location and orientation is determined. A video image of the device's environment is displayed on the device's display. The device overlays a representation of an object from a virtual reality model on the video image. The position of the overlaid representation is determined based on the device's location and orientation. In response to user input, the device adjusts a position of the overlaid representation relative to the video image.
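The overlay step in this abstract can be pictured as a camera projection followed by a user-supplied correction. The sketch below assumes a simple pinhole camera that rotates only about the vertical axis, which is a simplification introduced here and not something the abstract specifies. The `Pose` type, the focal length, and the screen center are hypothetical values for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Pose:
    """Device position in metres and heading in radians.

    yaw = 0 looks along +y; positive yaw turns toward +x. z is up.
    """
    x: float
    y: float
    z: float
    yaw: float


def overlay_position(
    point: Tuple[float, float, float],
    pose: Pose,
    user_offset: Tuple[float, float] = (0.0, 0.0),
    focal_px: float = 800.0,                      # assumed focal length in pixels
    center: Tuple[float, float] = (640.0, 360.0),  # assumed screen center
) -> Optional[Tuple[float, float]]:
    """Project a model point onto the video image, then apply the user's nudge.

    Returns None when the point is behind the camera.
    """
    dx, dy, dz = point[0] - pose.x, point[1] - pose.y, point[2] - pose.z
    # Express the world-space offset in the camera's right/forward axes.
    right = dx * math.cos(pose.yaw) - dy * math.sin(pose.yaw)
    forward = dx * math.sin(pose.yaw) + dy * math.cos(pose.yaw)
    if forward <= 0.0:
        return None
    u = center[0] + focal_px * right / forward
    v = center[1] - focal_px * dz / forward  # screen y grows downward
    return (u + user_offset[0], v + user_offset[1])


pose = Pose(x=0.0, y=0.0, z=0.0, yaw=0.0)
sensed = overlay_position((1.0, 10.0, 2.0), pose)
adjusted = overlay_position((1.0, 10.0, 2.0), pose, user_offset=(-5.0, 3.0))
print(sensed, adjusted)
```

Keeping the user's adjustment as a separate offset, rather than overwriting the sensed pose, means the correction can be stored and reapplied as the device moves.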

Owner:APPLE INC


© 2025 PatSnap. All rights reserved.