5483 results about "Edge detection" patented technology

Edge detection includes a variety of mathematical methods that aim at identifying points in a digital image at which the image brightness changes sharply or, more formally, has discontinuities. The points at which image brightness changes sharply are typically organized into a set of curved line segments termed edges. The same problem of finding discontinuities in one-dimensional signals is known as step detection and the problem of finding signal discontinuities over time is known as change detection. Edge detection is a fundamental tool in image processing, machine vision and computer vision, particularly in the areas of feature detection and feature extraction.
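As a minimal sketch of the definition above, the following marks pixels where brightness changes sharply by thresholding a gradient magnitude. The Sobel operator, the function names and the threshold value are assumptions chosen for illustration; the text does not prescribe any particular method.

```python
def sobel_magnitude(img):
    """Return the Sobel gradient magnitude for interior pixels of a 2-D list."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal and vertical brightness differences (3x3 Sobel kernels).
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


def edge_points(img, threshold):
    """List (row, column) positions whose gradient magnitude exceeds threshold."""
    mag = sobel_magnitude(img)
    return [(y, x) for y, row in enumerate(mag)
            for x, m in enumerate(row) if m > threshold]


# A dark-to-bright vertical step between columns 2 and 3.
image = [[0, 0, 0, 10, 10, 10] for _ in range(5)]
edges = edge_points(image, threshold=20)
```

Only the interior pixels on either side of the step are reported; border pixels are skipped because the 3x3 kernel does not fit there.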

Barcode reader with edge detection enhancement

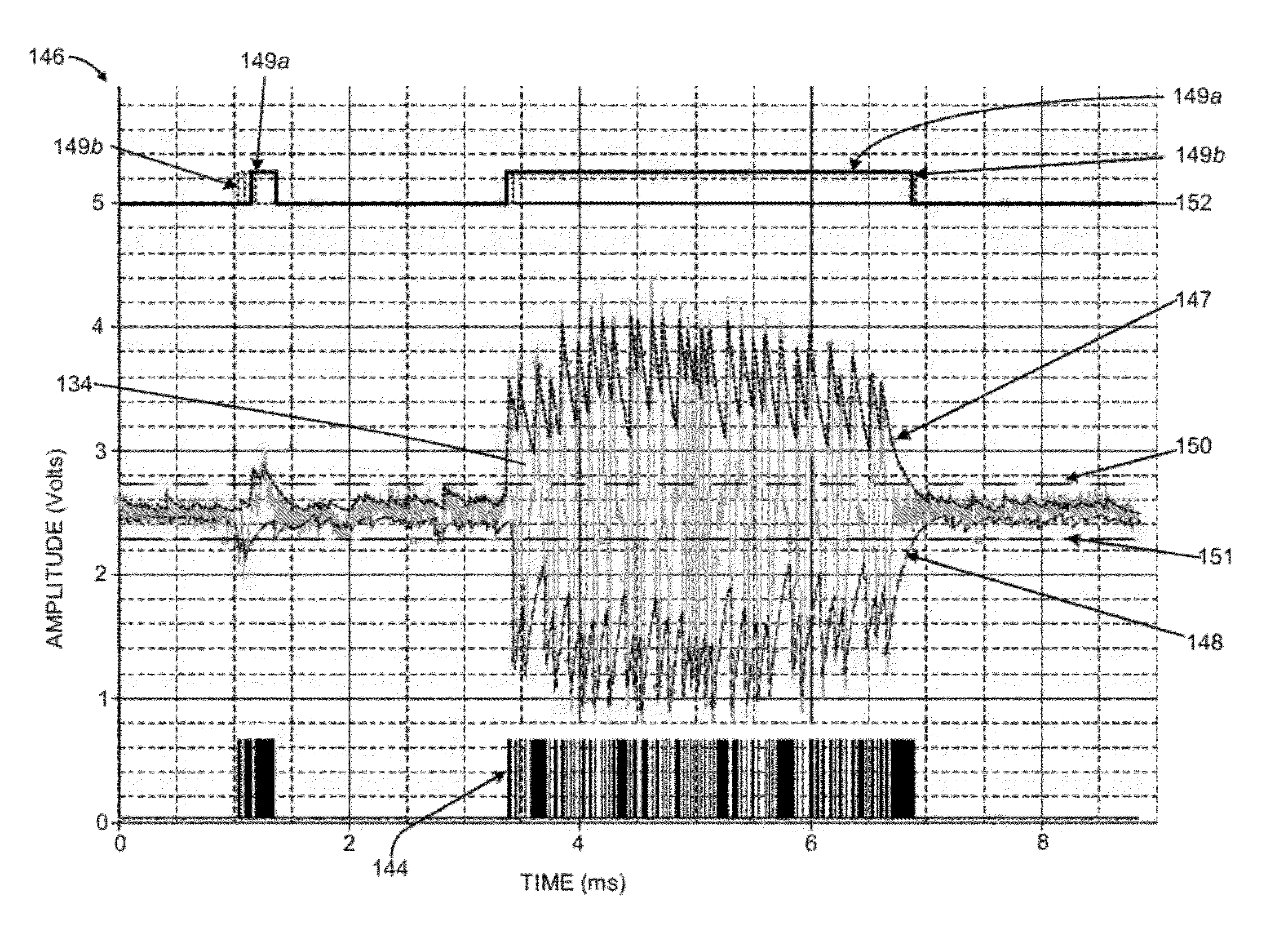

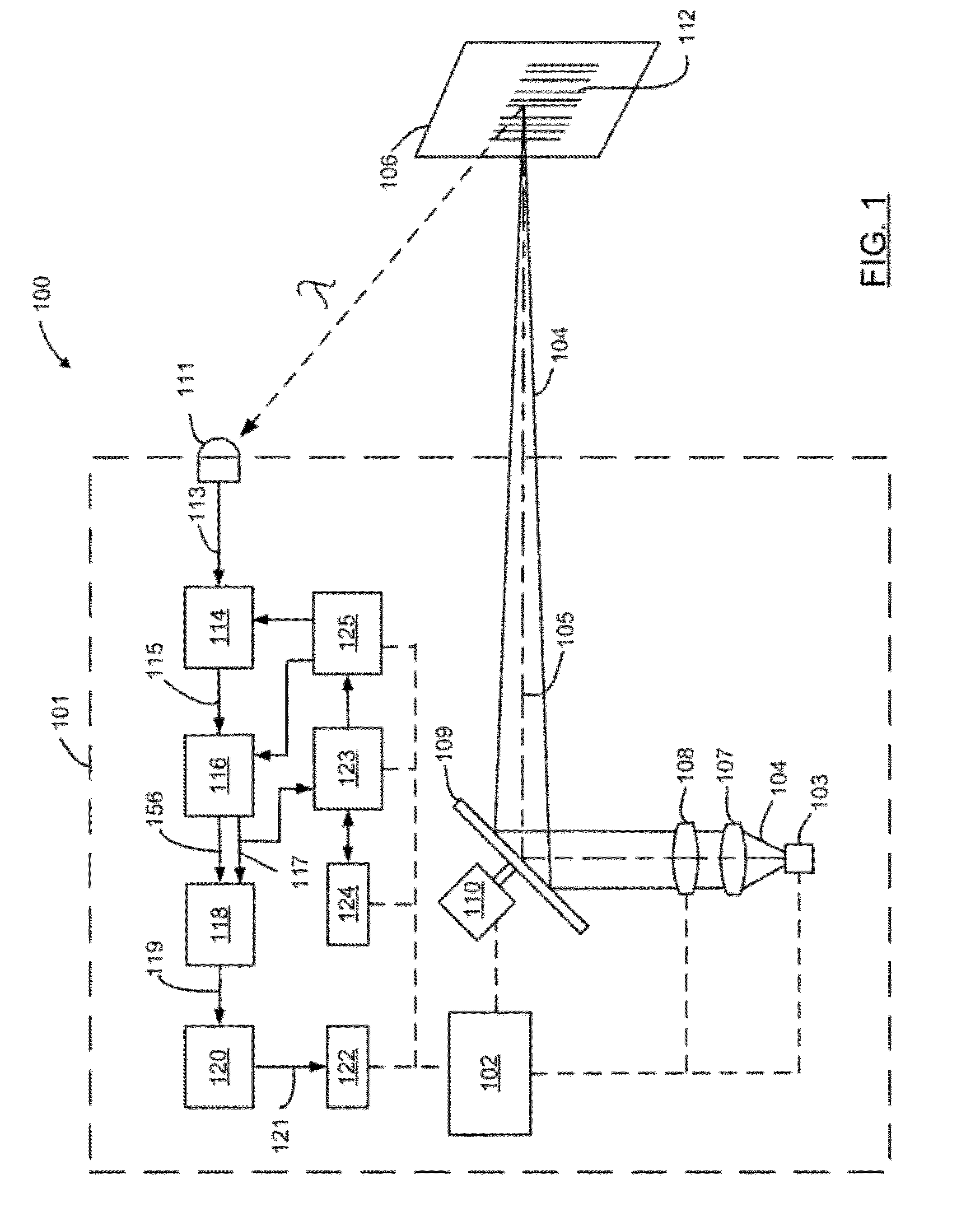

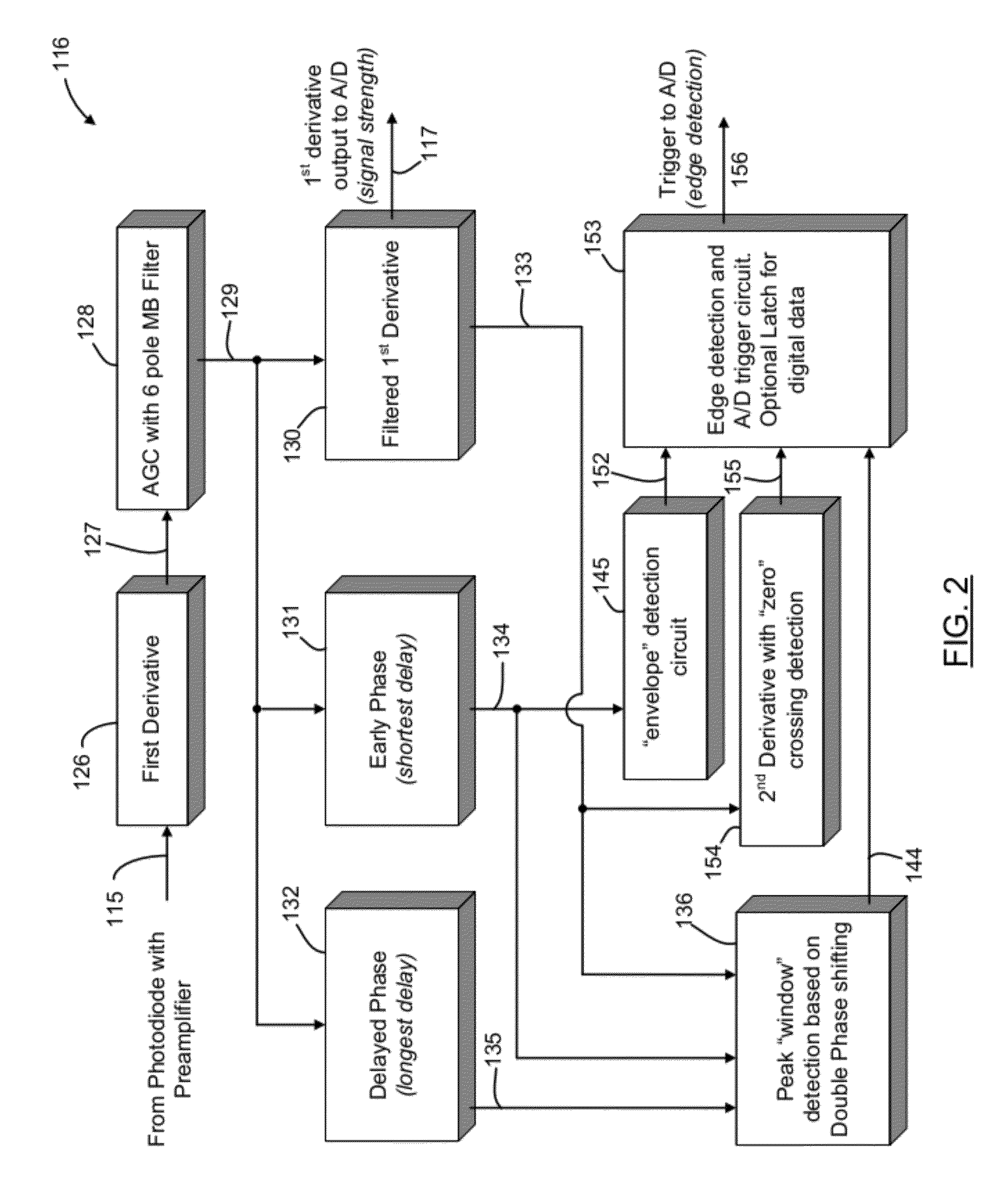

Active · US8950678B2 · Character and pattern recognition · Electric pulse generator · Propagation delay · Telecommunications

An optical reader for decoding an encoded symbol character of a symbology includes a scan data signal processor having as an input a scan data signal encoding information representative of the encoded symbol character. The scan data signal processor includes a first time delay stage adapted to provide a primary phase waveform from the scan data signal, a second time delay stage adapted to provide an early phase waveform from the scan data signal, and a third time delay stage adapted to provide a delayed phase waveform from the scan data signal. The early phase waveform has a propagation delay less than the primary phase waveform, and the delayed phase waveform has a propagation delay greater than the primary phase waveform. The scan data signal processor further includes a peak window detection stage for generating a peak window timeframe when an amplitude of the primary phase waveform is greater than, less than, or equal to both an amplitude of the early phase waveform and the delayed phase waveform. The optical reader further includes a digitizer circuit adapted to accept, within the peak window timeframe, the scan data signal processor output.
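One way to read the peak window stage is as a local-extremum test on three differently delayed copies of the same signal. The sketch below works on a sampled signal and is only an interpretation: the sample-based delays, the `step` parameter and the function name are hypothetical, and the abstract describes an analog circuit rather than software.

```python
def peak_window_times(signal, step=1):
    """Return sample times at which the primary phase is a local extremum.

    At time t the early phase (smallest delay) shows signal[t], the primary
    phase shows signal[t - step], and the delayed phase shows
    signal[t - 2 * step]. The delay values are illustrative assumptions.
    """
    times = []
    for t in range(2 * step, len(signal)):
        early = signal[t]
        primary = signal[t - step]
        late = signal[t - 2 * step]
        is_max = primary >= early and primary >= late
        is_min = primary <= early and primary <= late
        if is_max or is_min:
            times.append(t)
    return times


scan = [0, 2, 5, 2, 0, 1, 4, 1]
windows = peak_window_times(scan)
```

Each reported time lags the actual extremum by `step` samples, because the primary phase is itself a delayed copy of the scan data signal.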

Owner:HAND HELD PRODS +1

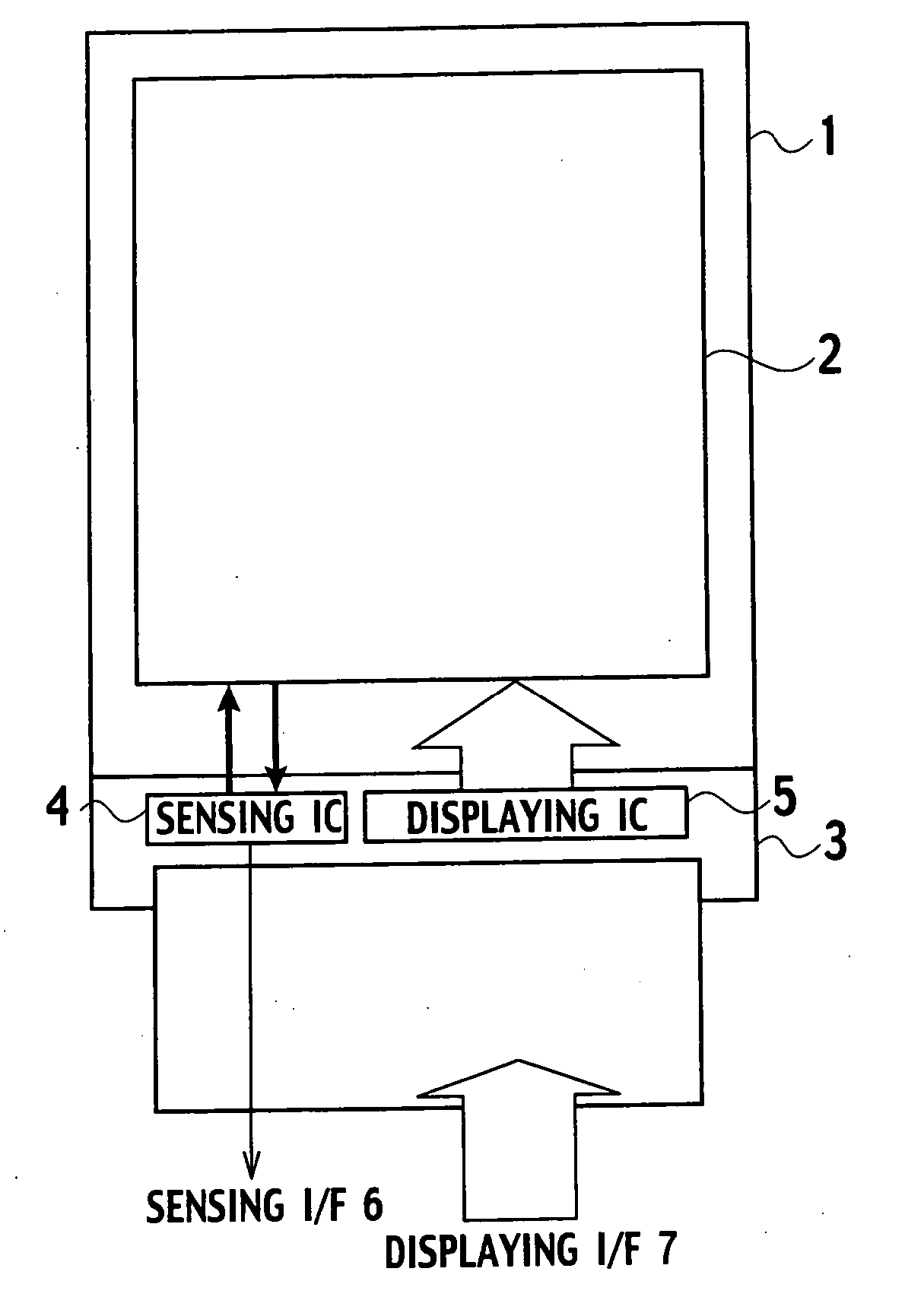

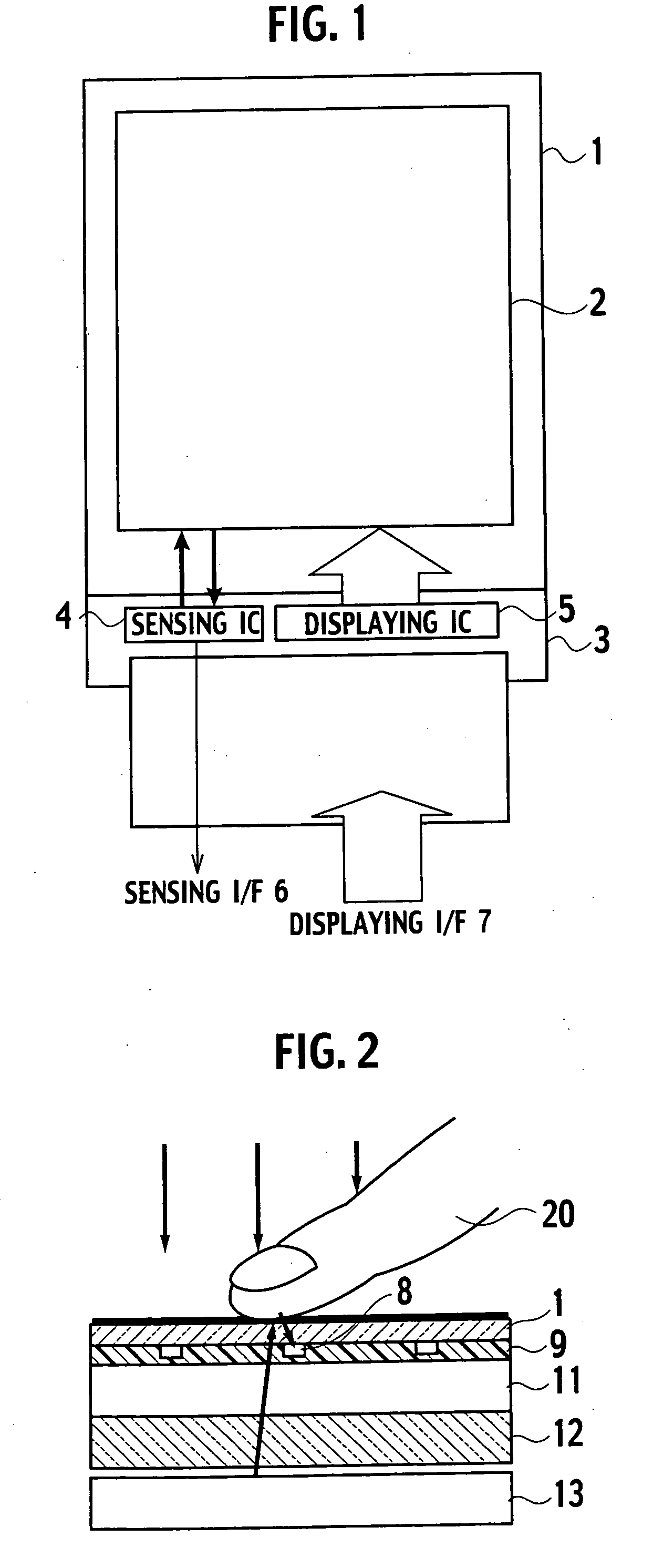

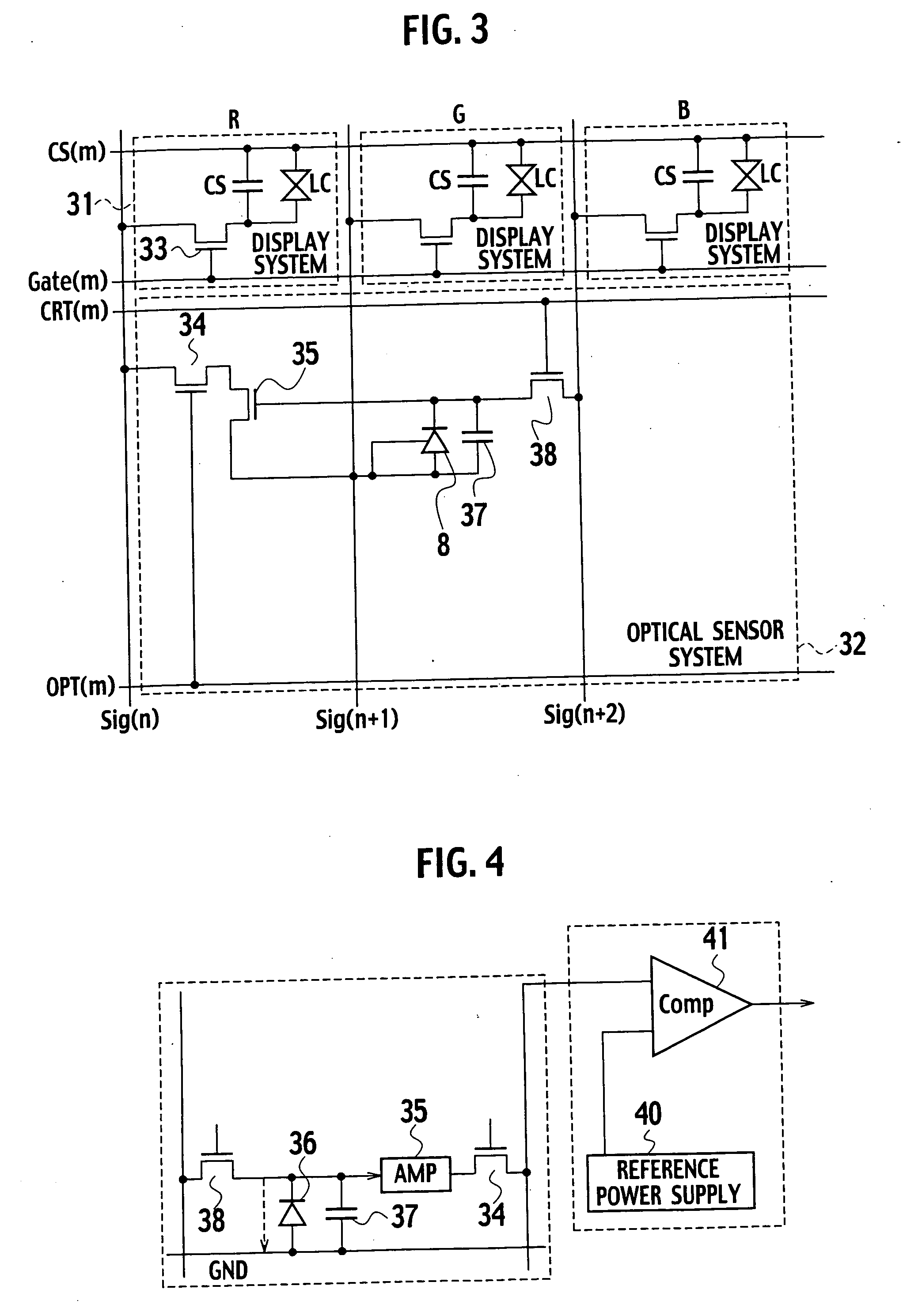

Display device including function to input information from screen by light

Active · US20060170658A1 · Ensure correct execution · High measurement accuracy · Input/output for user-computer interaction · Cathode-ray tube indicators · Display device · Computer science

In order to enhance accuracy of determination as to whether an object has contacted a screen and to enhance accuracy of calculation of a coordinate position of the object, edges of an imaged image are detected by an edge detection circuit 76, and by using the edges, it is determined by a contact determination circuit 77 whether or not the object has contacted the screen. Moreover, in order to appropriately control sensitivity of optical sensors in response to external light, by a calibration circuit 93, a drive condition of the optical sensors is changed based on output values of the optical sensors, which are varied in response to the external light.

Owner:JAPAN DISPLAY CENT INC

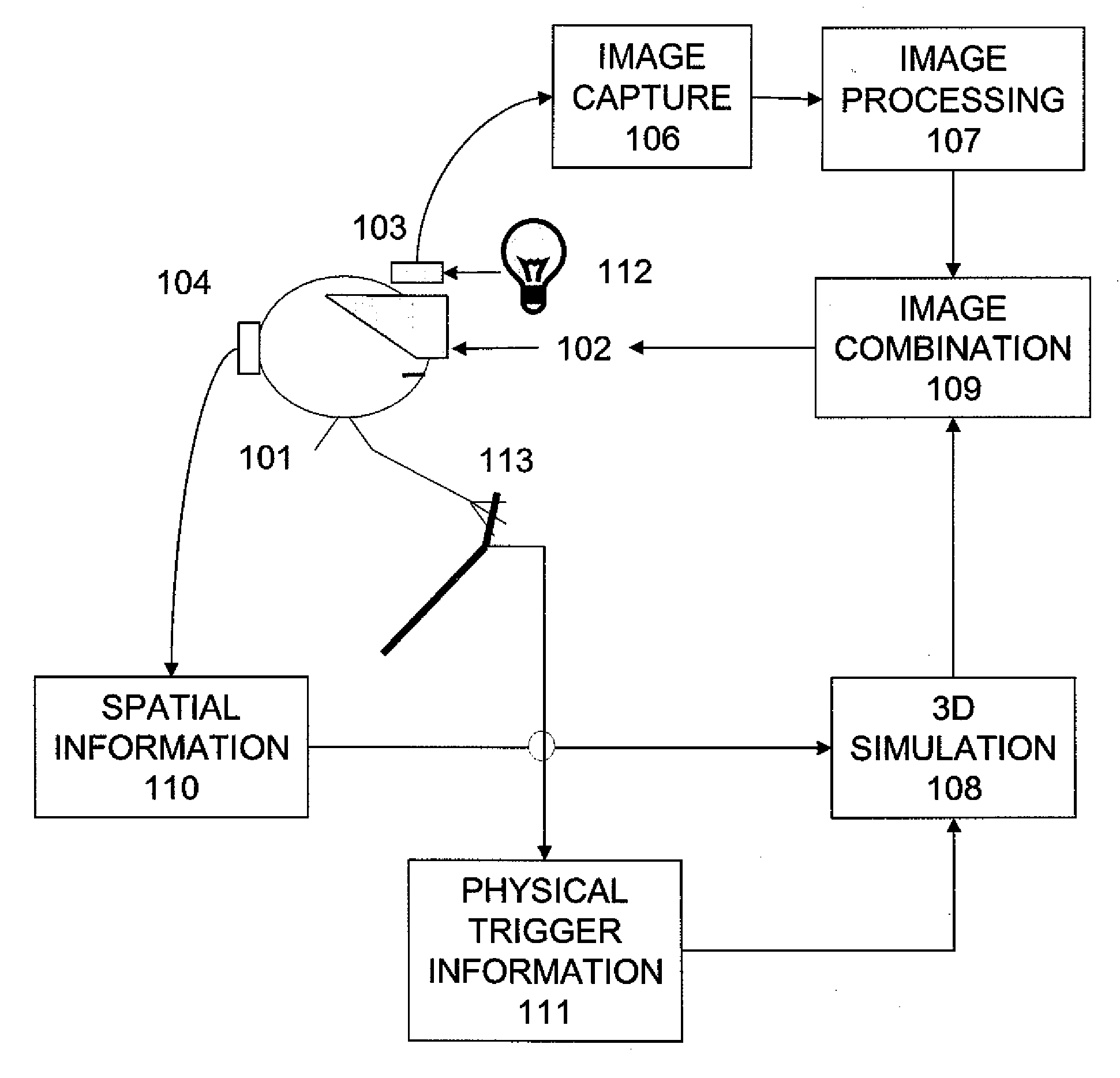

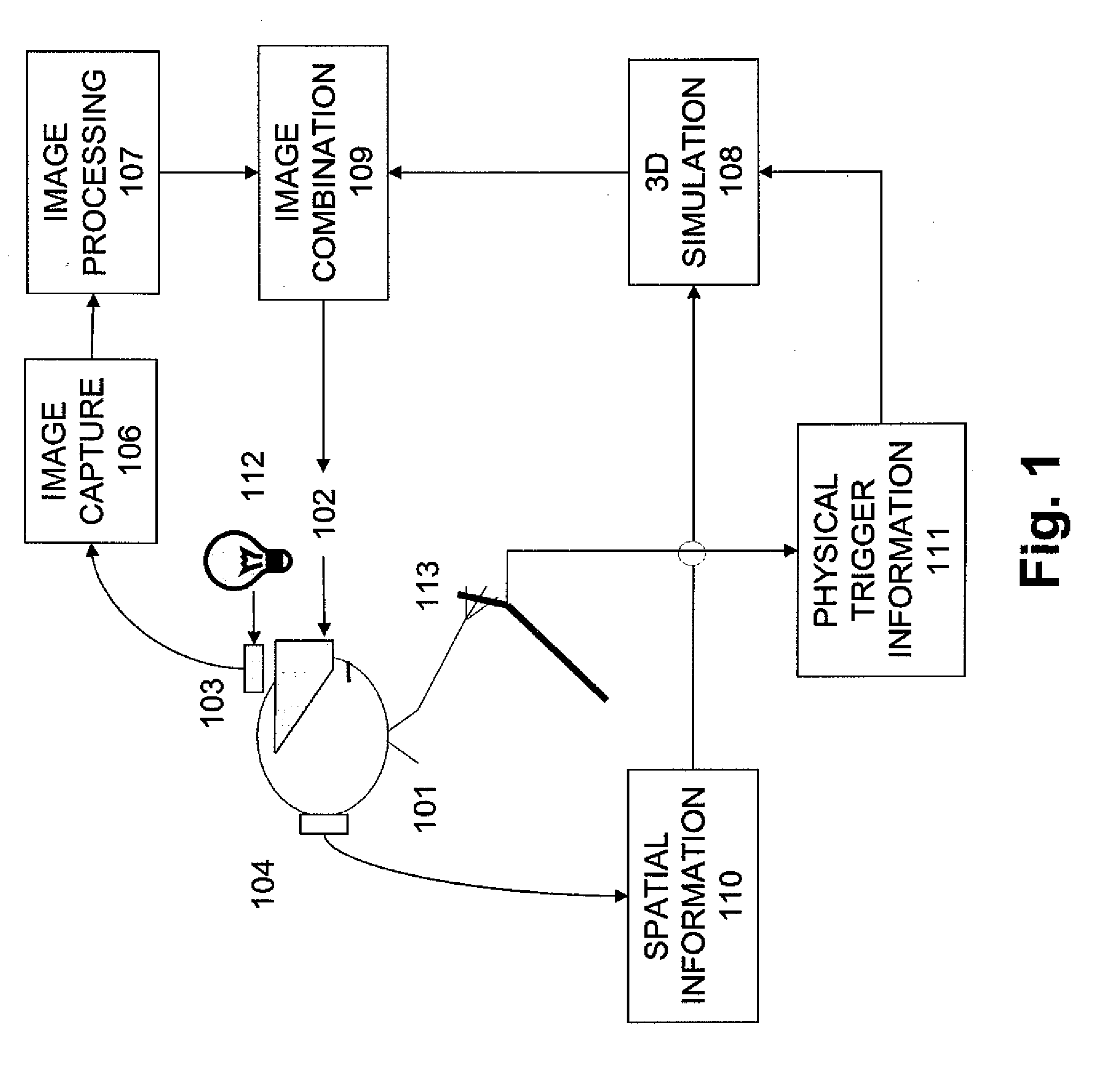

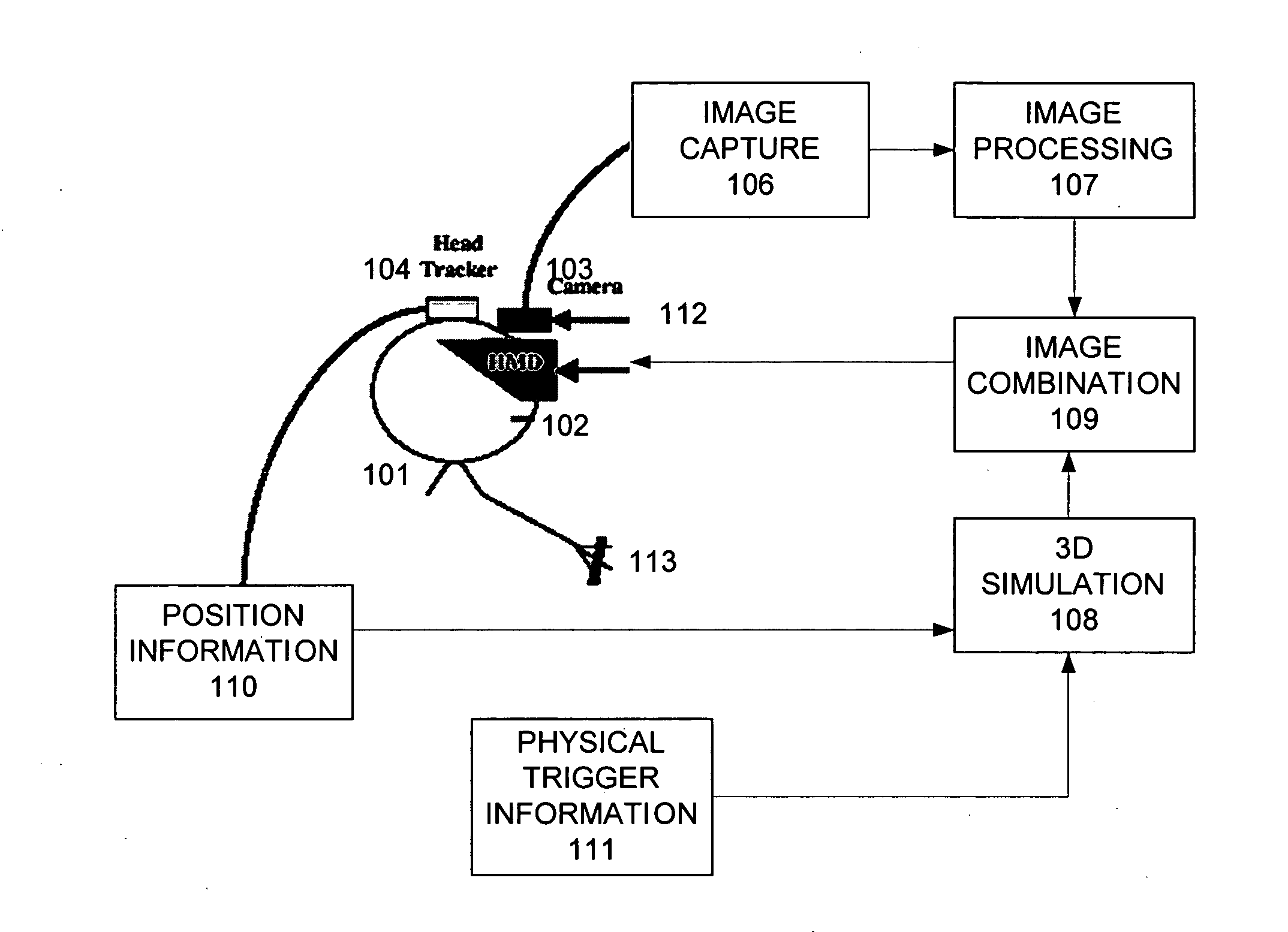

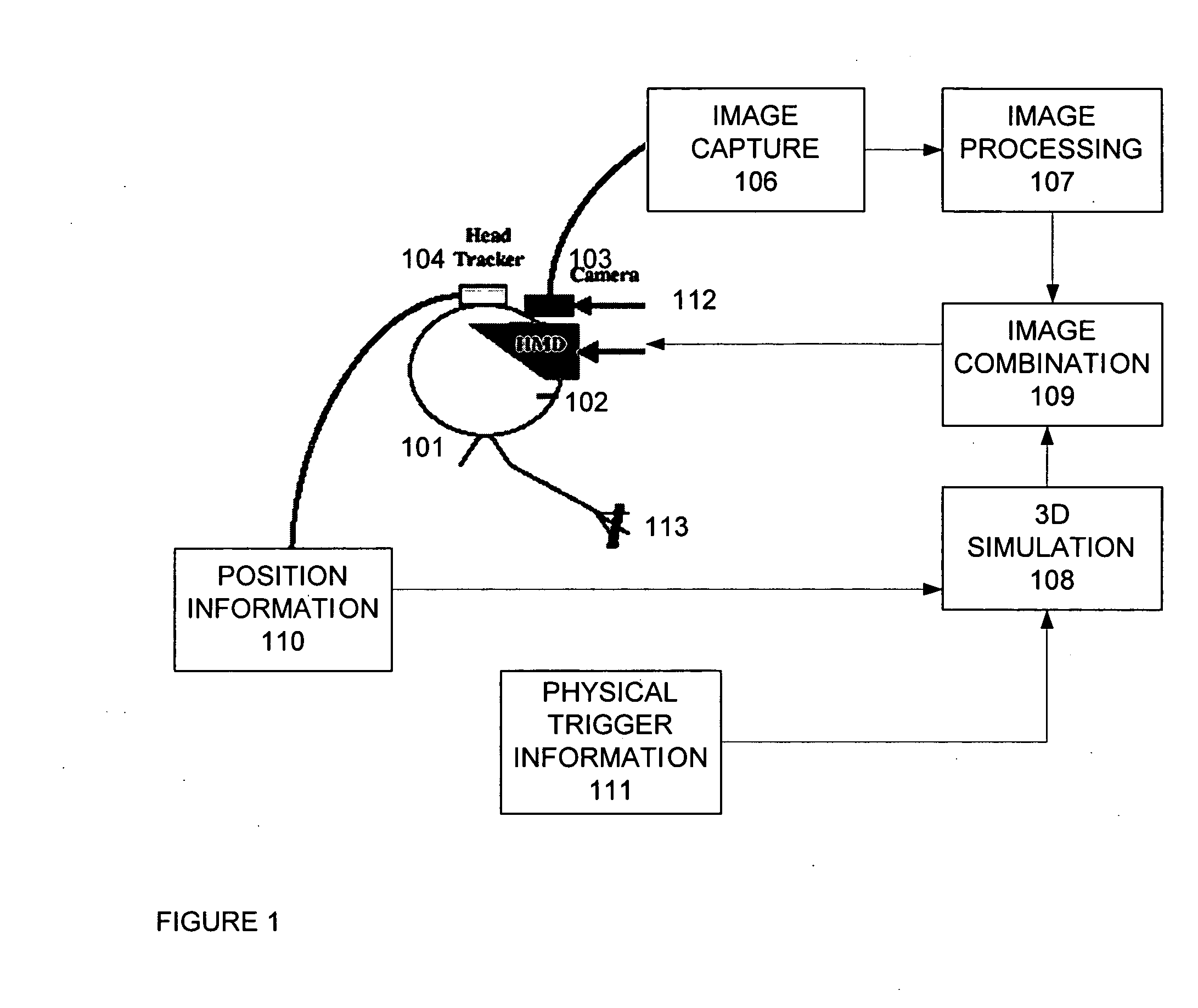

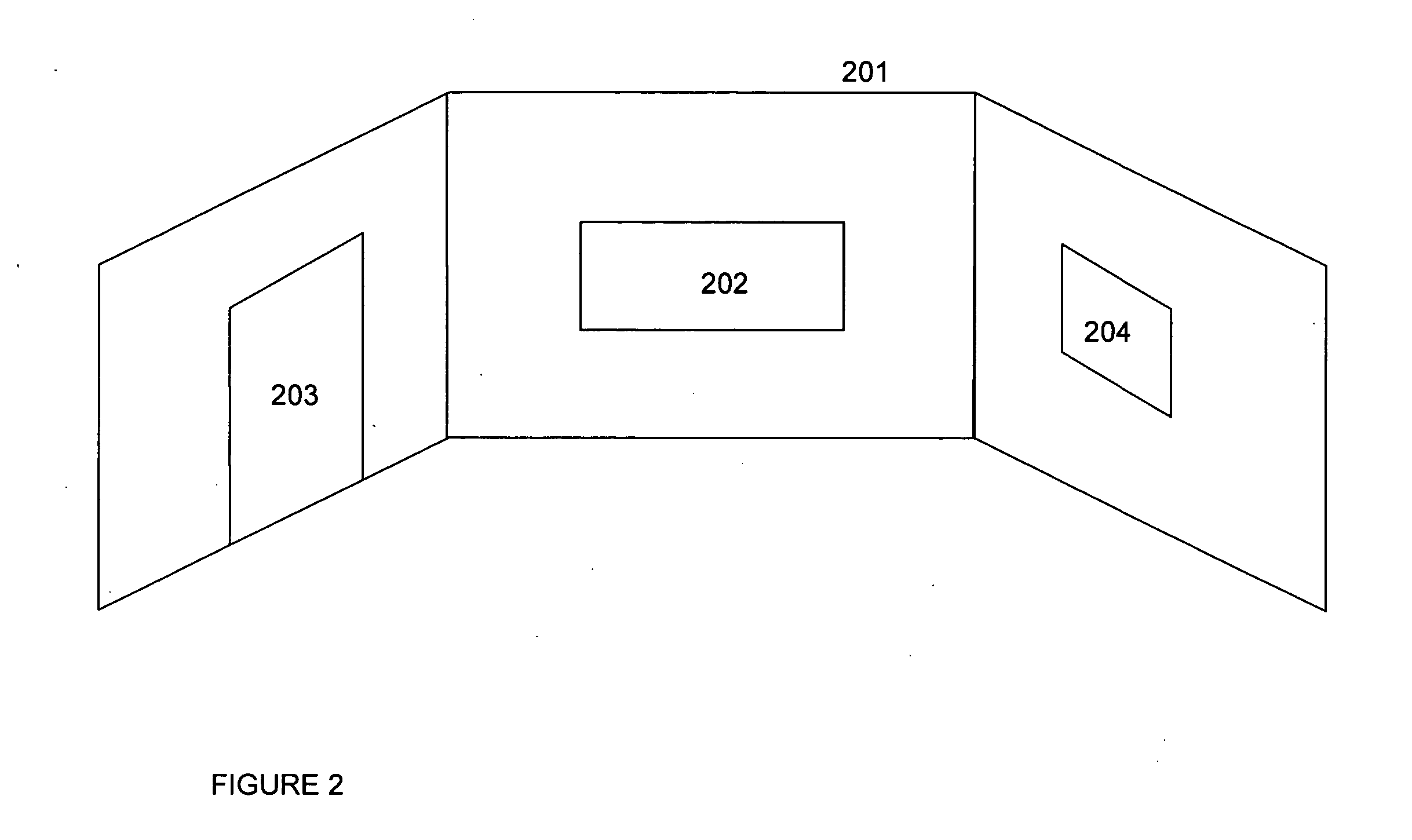

Systems and methods for combining virtual and real-time physical environments

Systems, methods and structures for combining virtual reality and real-time environment by combining captured real-time video data and real-time 3D environment renderings to create a fused, that is, combined environment, including capturing video imagery in RGB or HSV color coordinate systems and processing it to determine which areas should be made transparent, or have other color modifications made, based on sensed cultural features, electromagnetic spectrum values, and/or sensor line-of-sight, wherein the sensed features can also include electromagnetic radiation characteristics such as color, infra-red, ultra-violet light values, cultural features can include patterns of these characteristics, such as object recognition using edge detection, and whereby the processed image is then overlaid on, and fused into a 3D environment to combine the two data sources into a single scene to thereby create an effect whereby a user can look through predesignated areas or “windows” in the video image to see into a 3D simulated world, and/or see other enhanced or reprocessed features of the captured image.

Owner:BACHELDER EDWARD N +1

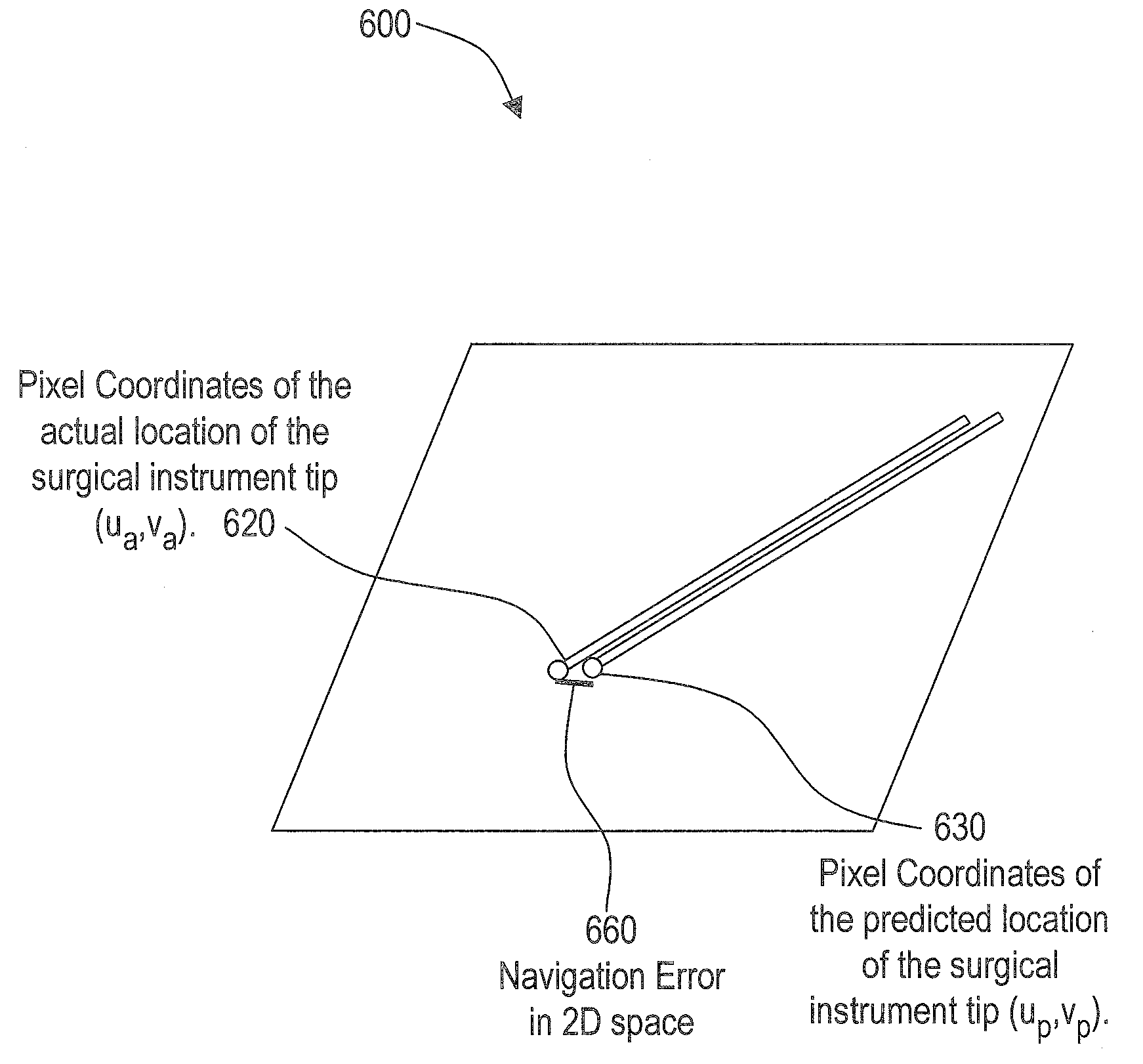

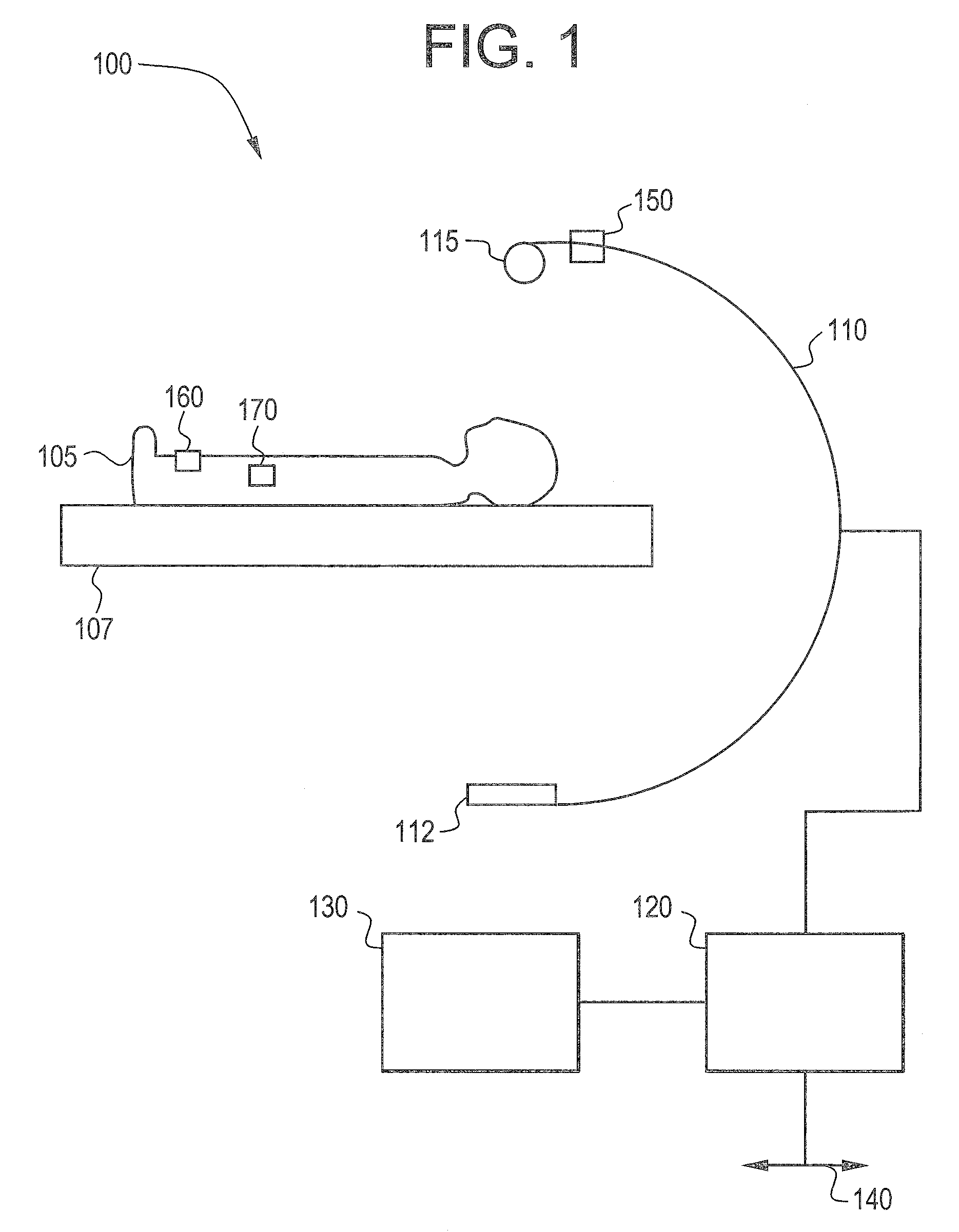

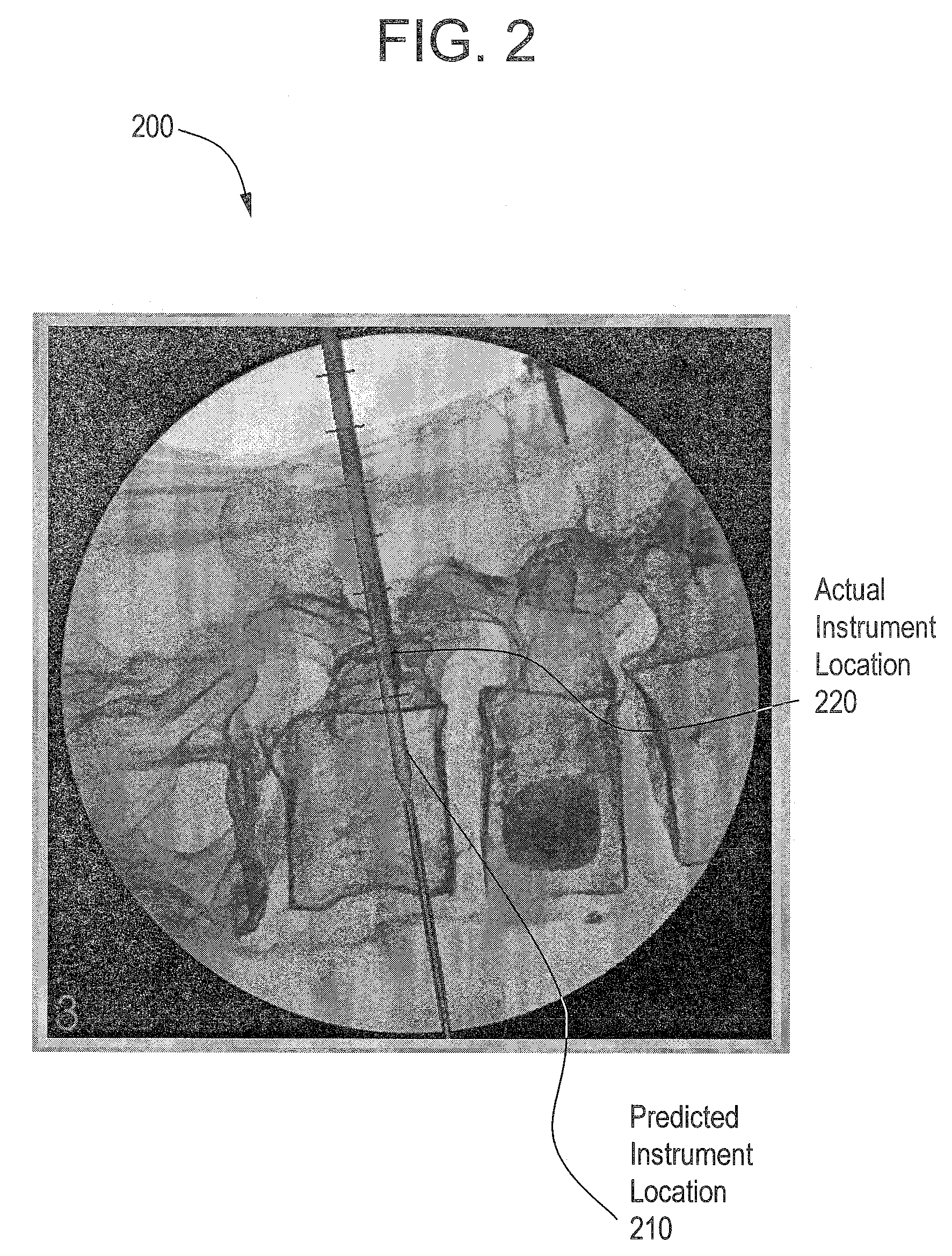

System and method for accuracy verification for image based surgical navigation

Active · US20080319311A1 · Material analysis using wave/particle radiation · Image analysis · Engineering · Navigation system

Certain embodiments of the present invention provide for a system and method for assessing the accuracy of a surgical navigation system. The method may include acquiring an X-ray image that captures a surgical instrument. The method may also include segmenting the surgical instrument in the X-ray image. In an embodiment, the segmenting may be performed using edge detection or pattern recognition. The distance between the predicted location of the surgical instrument tip and the actual location of the surgical instrument tip may be computed. The distance between the predicted location of the surgical instrument tip and the actual location of the surgical instrument tip may be compared with a threshold value. If the distance between the predicted location of the surgical instrument tip and the actual location of the surgical instrument tip is greater than the threshold value, the user may be alerted.

Owner:STRYKER EURO OPERATIONS HLDG LLC

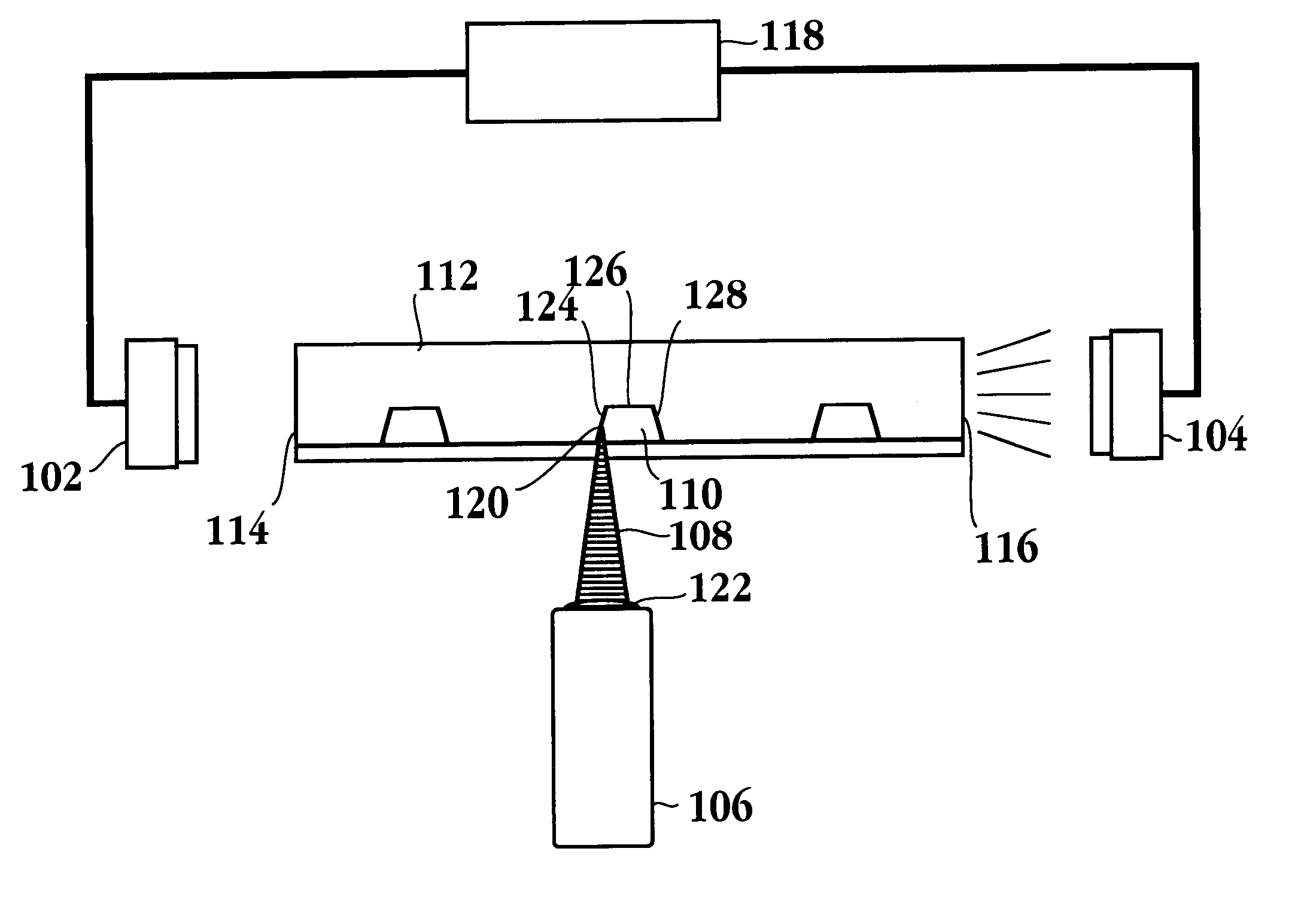

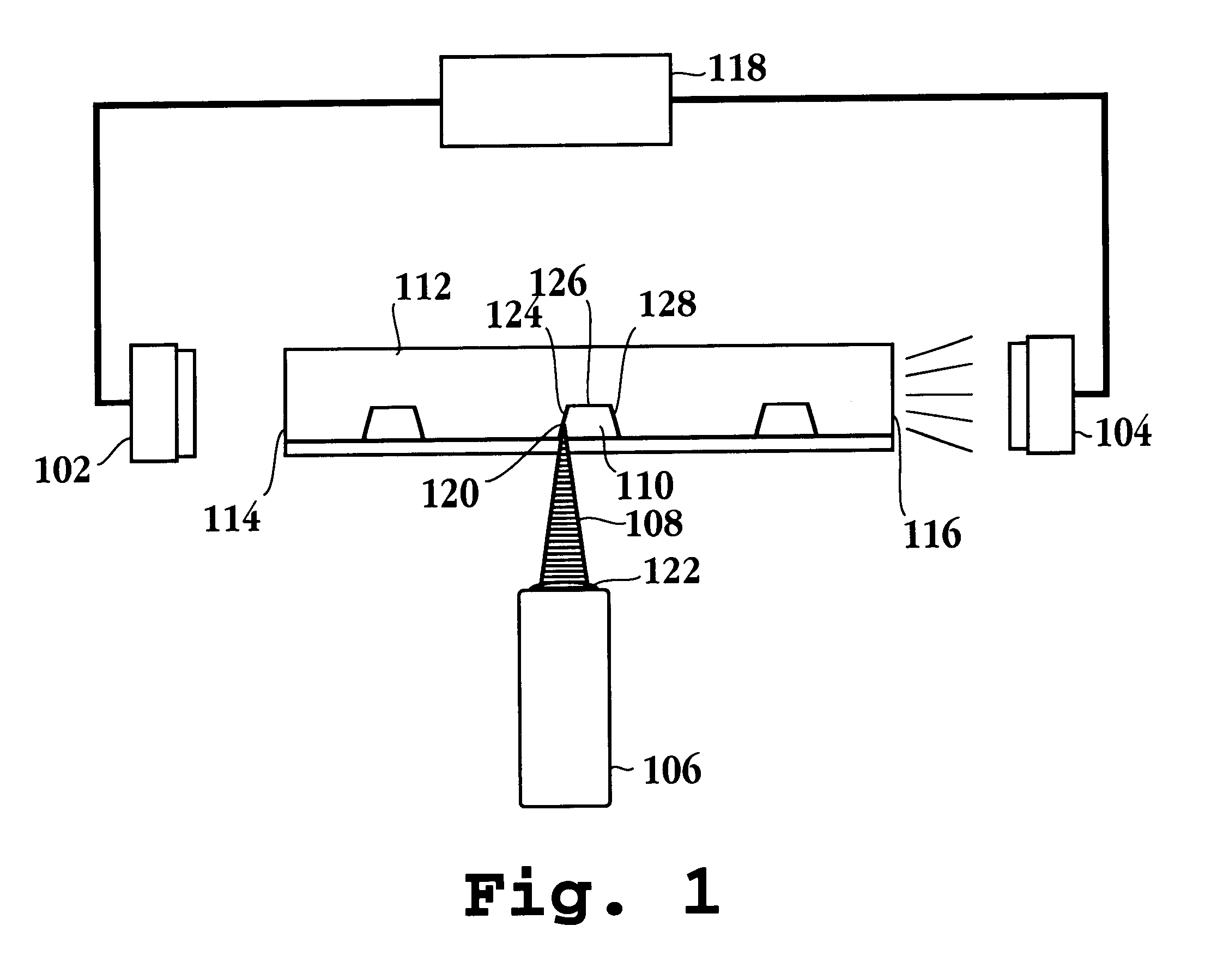

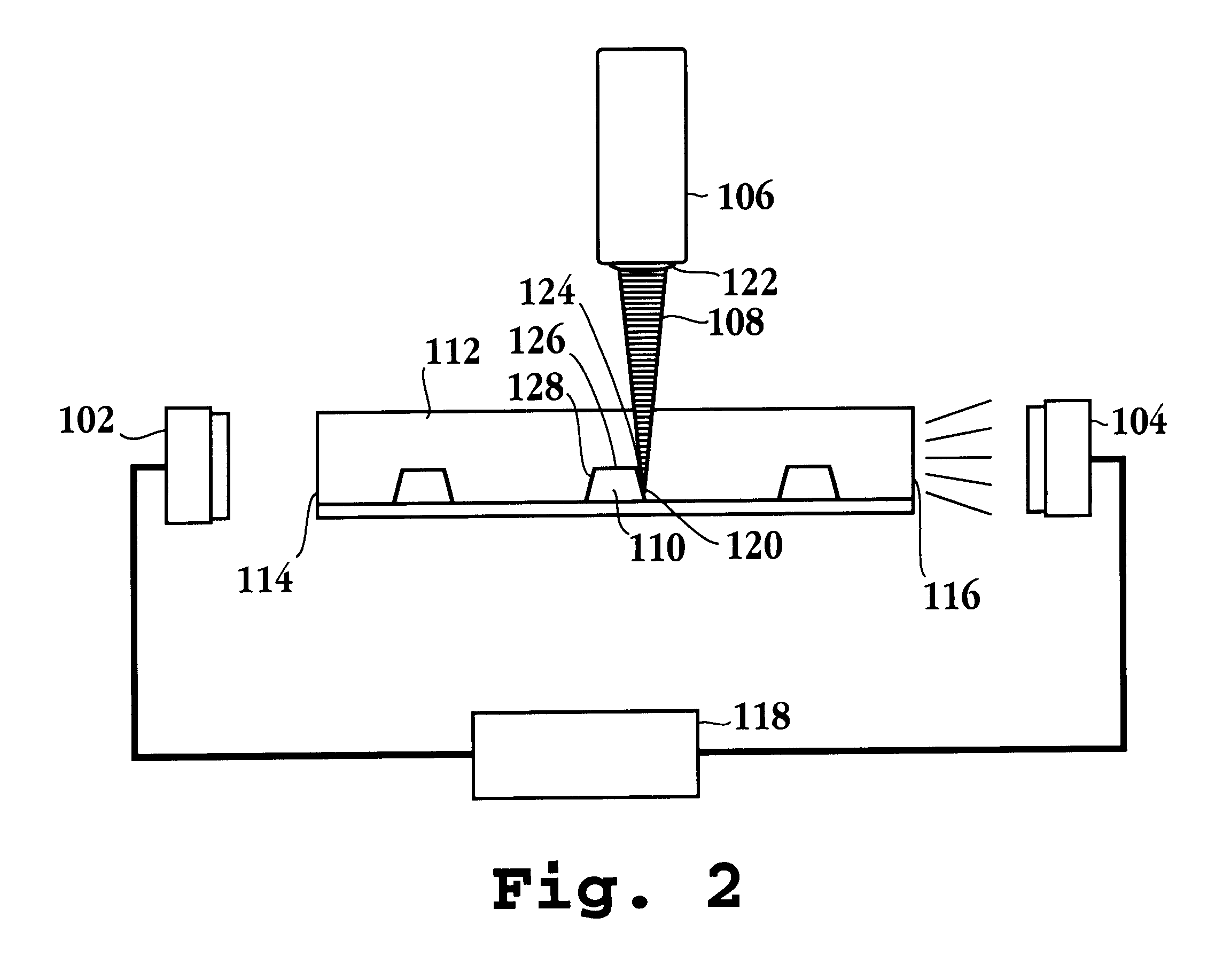

Optical alignment in capillary detection using capillary wall scatter

The present invention provides for methods and apparatus for locating a capillary channel that is disposed within a lab chip. The method provides for scanning the channel with a light source, monitoring the resulting light at the edges of the lab chip, and interpreting this information whereby the light detected at the edges of the lab chip can be used as a means for characterizing the location of the side walls of the channel within the lab chip. The apparatus provides for a carriage system to which a light source and the lab chip are attached. It also provides for one or more scatter detectors directed towards the edges of the chip and connected to a computer processor for purposes of analysis.

Owner:MONOGRAM BIOSCIENCES

Context and Epsilon Stereo Constrained Correspondence Matching

Inactive · US20130002828A1 · Television system details · Character and pattern recognition · Parallax · Greek letter epsilon

A catadioptric camera having a perspective camera and multiple curved mirrors images the multiple curved mirrors and uses the epsilon constraint to establish a vertical parallax between points in one mirror and their corresponding reflection in another. An ASIFT transform is applied to all the mirror images to establish a collection of corresponding feature points, and edge detection is applied on mirror images to identify edge pixels. A first edge pixel in a first imaged mirror is selected, its 25 nearest feature points are identified, and a rigid transform is applied to them. The rigid transform is fitted to 25 corresponding feature points in a second imaged mirror. The closest edge pixel to the expected location as determined by the fitted rigid transform is identified, and its distance to the vertical parallax is determined. If the distance is not greater than a predefined maximum, then it is deemed to correlate to the edge pixel in the first imaged mirror.

Owner:SEIKO EPSON CORP

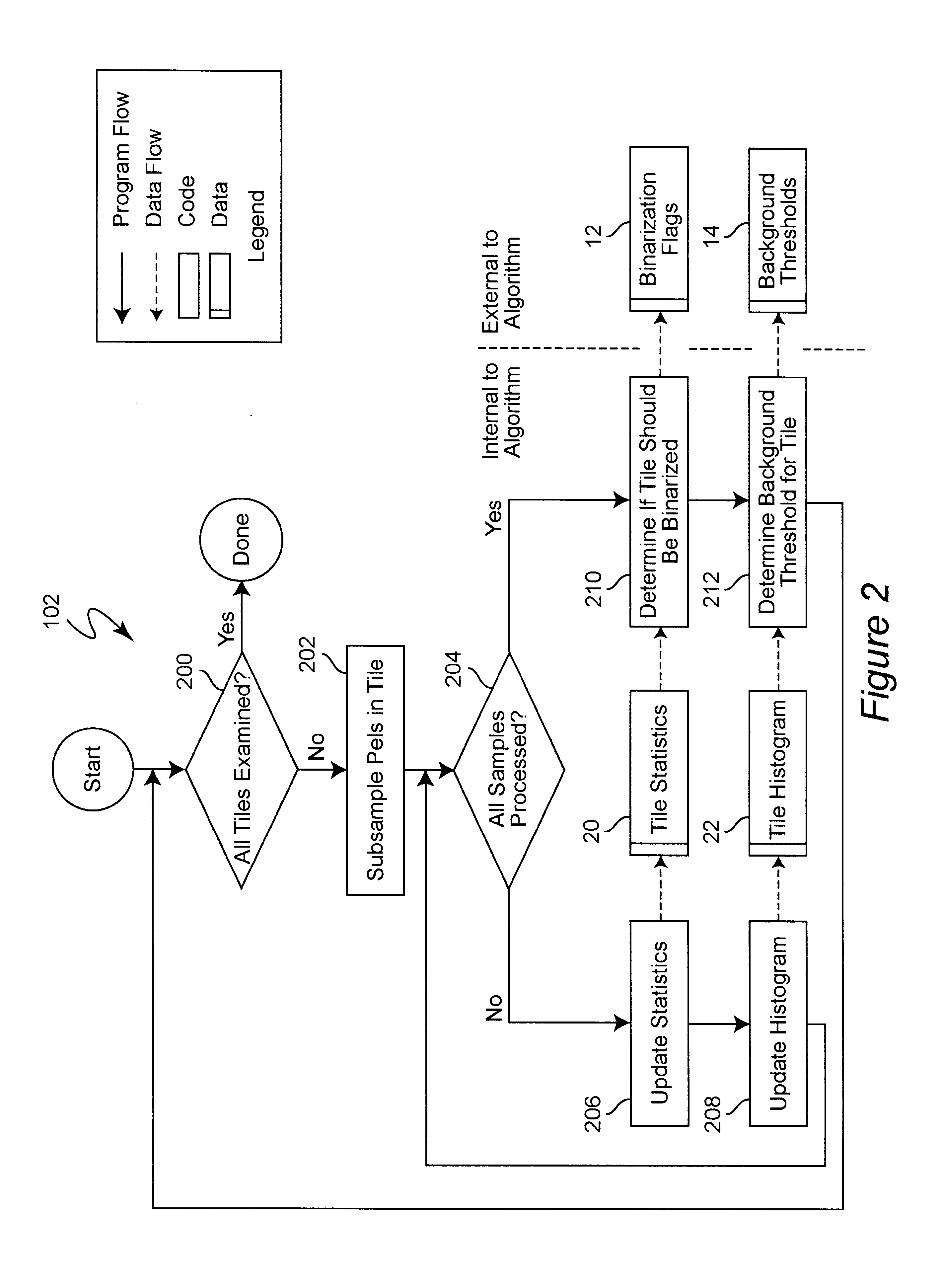

Real time binarization of gray images

Inactive · US6738496B1 · Promote results · Convenient statistics · Character recognition · Computer vision · Grayscale

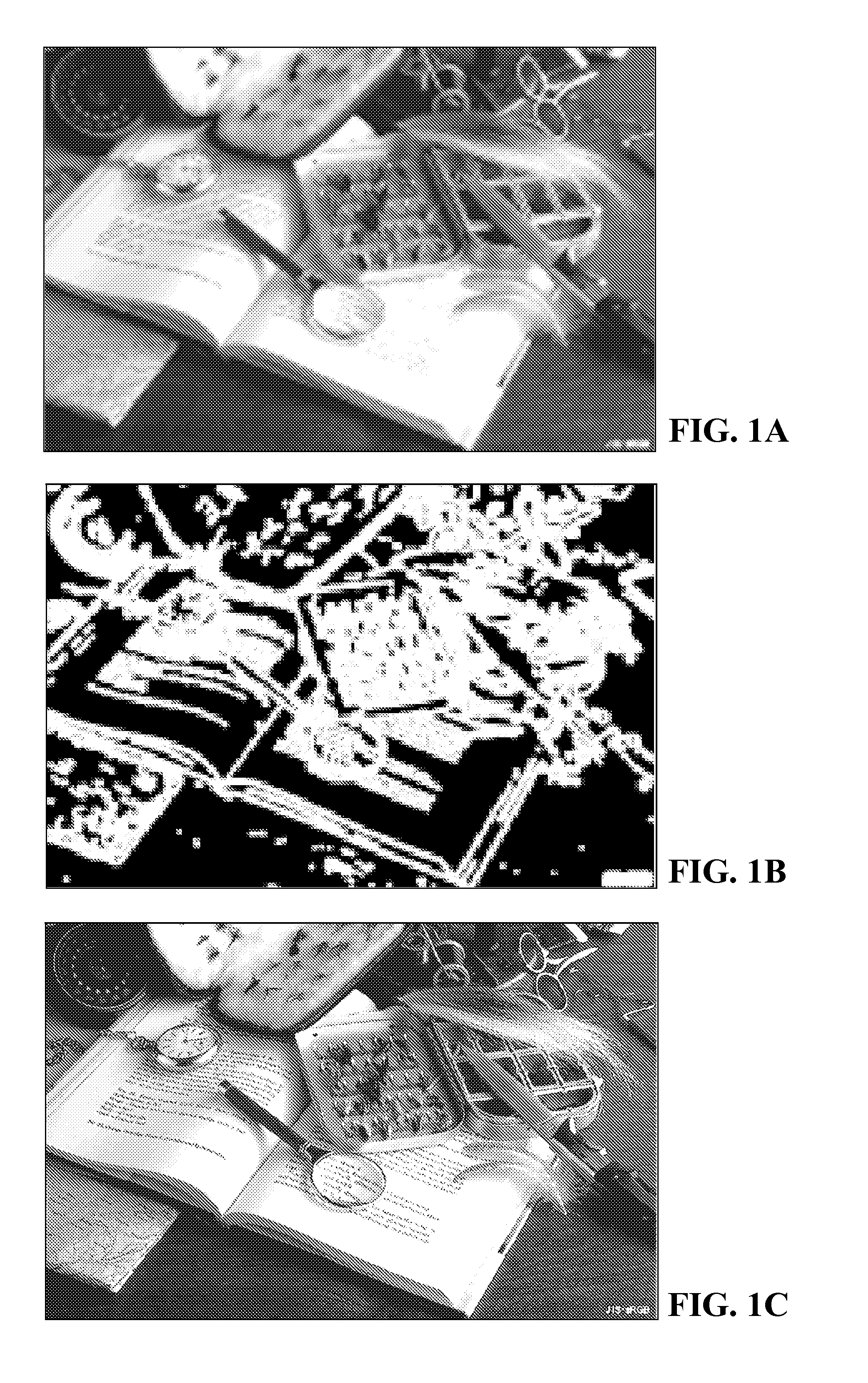

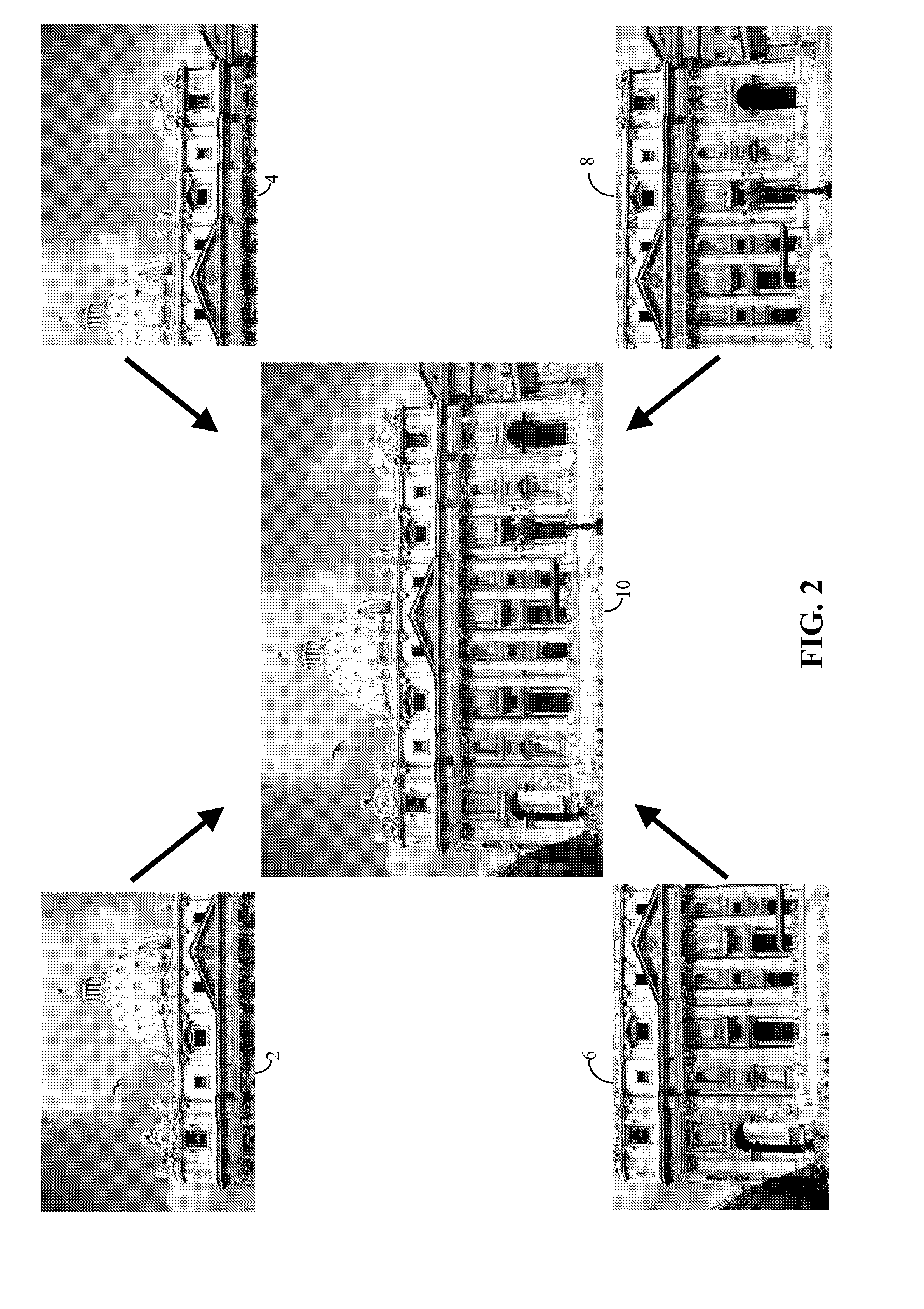

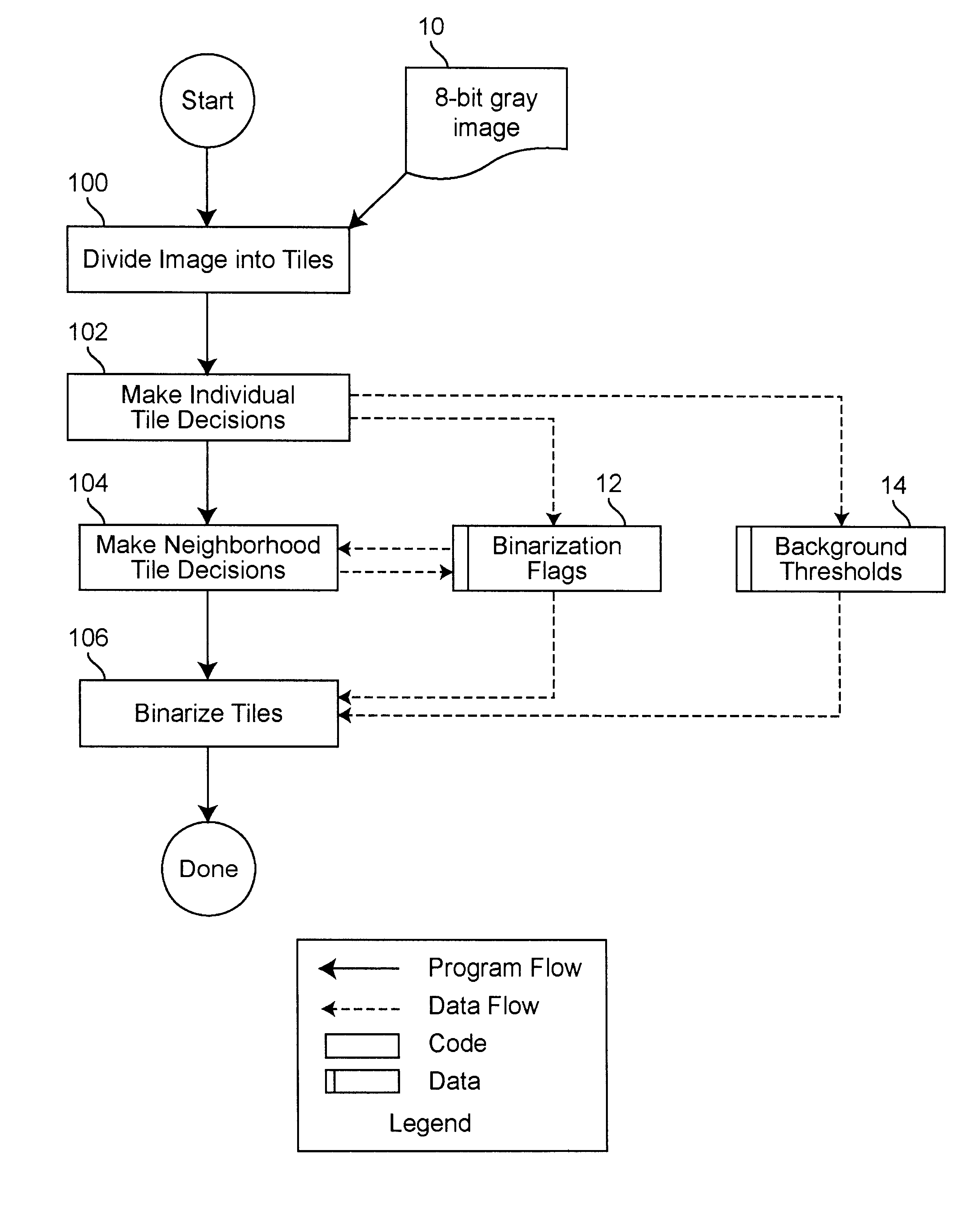

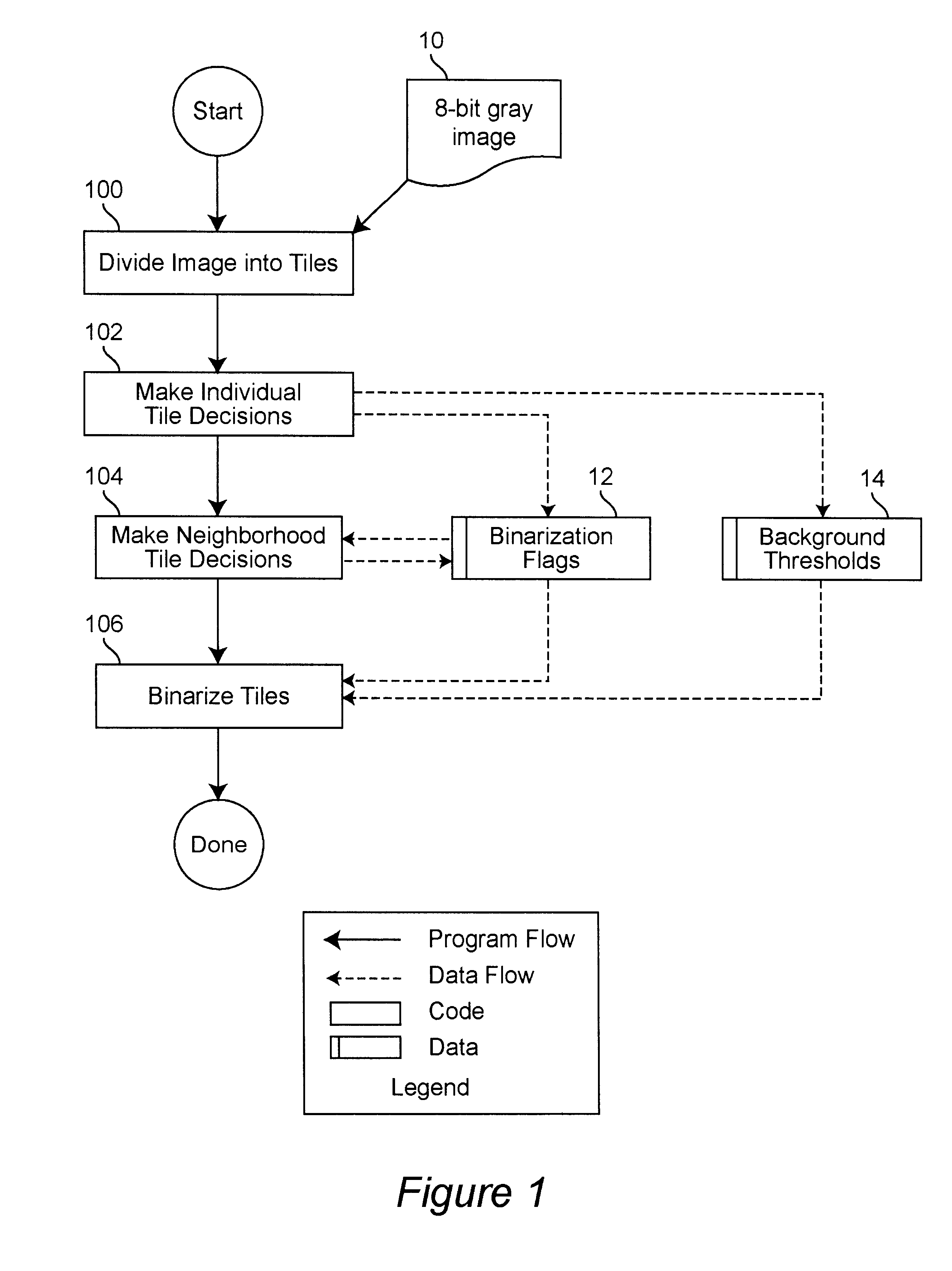

A binarization method for gray address images which combines high quality and high speed. The method is designed specifically for efficient software implementation. Two binarization approaches, localized background thresholds and Laplacian edge enhancement, are combined into a process to enhance the strengths of the two methods and eliminate their weaknesses. The image is divided into tiles, making binarization decisions for each tile. Tile decisions are modified based on adjacent tile decisions and then the tiles are binarized. Binarization of pixels is performed by performing background thresholding and edge detection thresholding. Only pixels exceeding both thresholds are selected as "on".
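The final rule, that a pixel is "on" only when it passes both the background threshold and the edge threshold, can be sketched as follows. This is a simplified illustration under several assumptions that are not in the abstract: dark ink on a light background, a single tile covering the whole image, the tile mean as the background estimate, and a 4-neighbour Laplacian. The tile decision and tile adjustment steps are omitted.

```python
def binarize(img, bg_thresh, edge_thresh):
    """Mark a pixel 'on' (1) only if it passes both threshold tests."""
    h, w = len(img), len(img[0])
    # Background estimate for one tile: here the tile is the whole image.
    background = sum(map(sum, img)) / (h * w)
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Test 1: how much darker than the local background is the pixel?
            darkness = background - img[y][x]
            # Test 2: 4-neighbour Laplacian, positive at dark spots and strokes.
            laplacian = (img[y - 1][x] + img[y + 1][x]
                         + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x])
            if darkness > bg_thresh and laplacian > edge_thresh:
                out[y][x] = 1
    return out


# Light page (200) with one dark ink pixel (50) and one faint smudge (180).
page = [[200] * 5 for _ in range(5)]
page[2][2] = 50
page[2][3] = 180
result = binarize(page, bg_thresh=50, edge_thresh=100)
```

The smudge is rejected by the background test even though it produces some Laplacian response, which illustrates why the two tests are combined.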

Owner:LOCKHEED MARTIN CORP

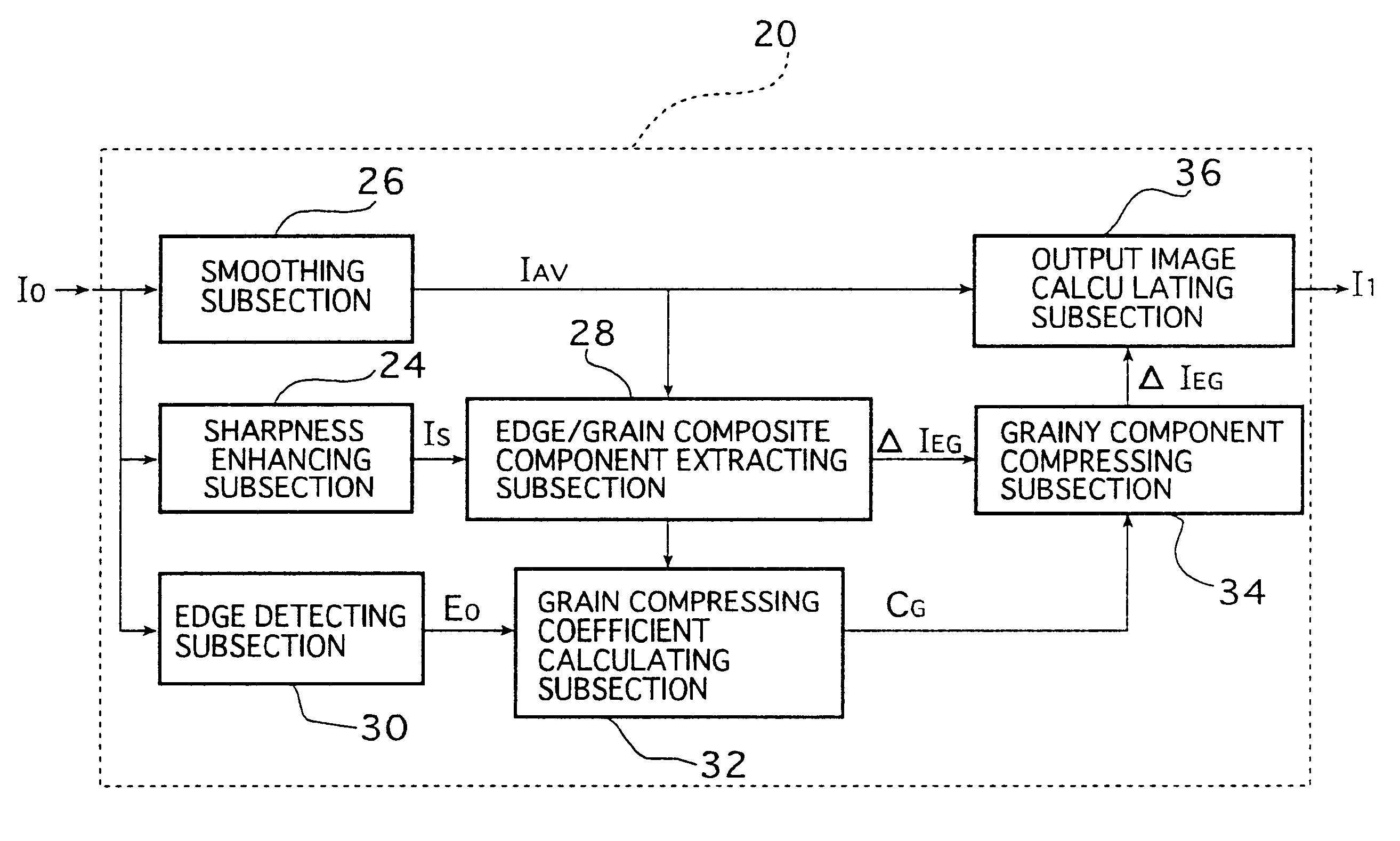

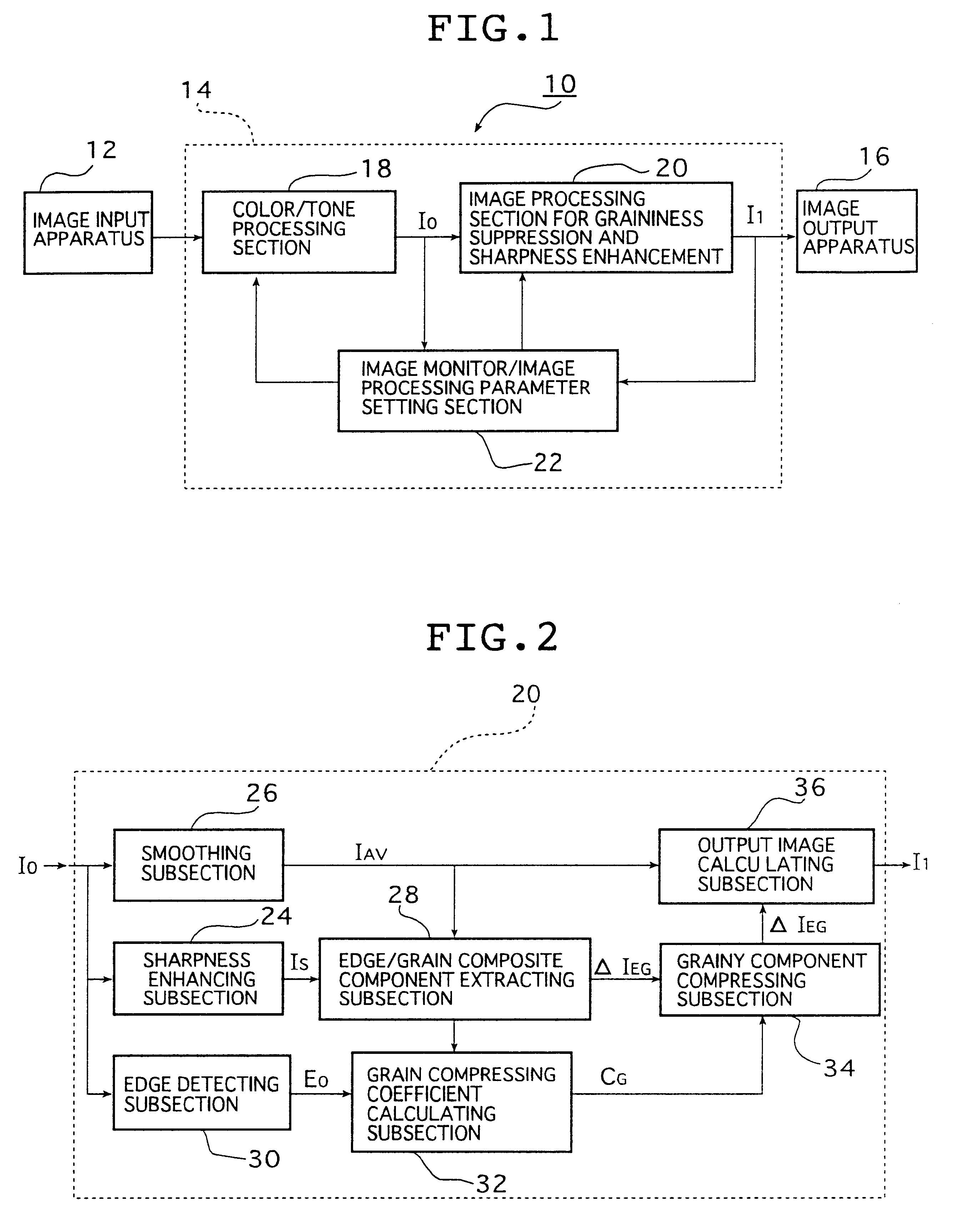

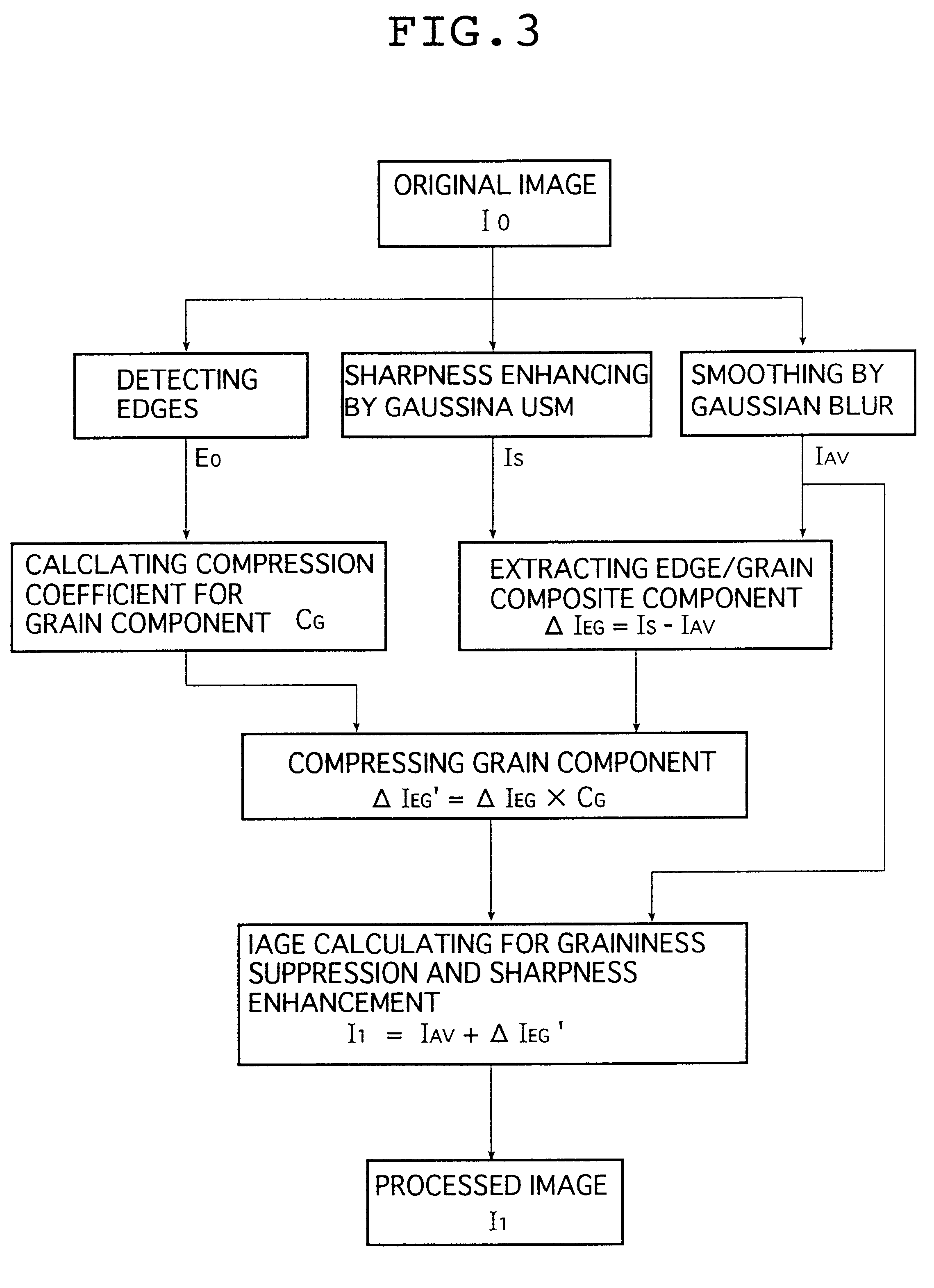

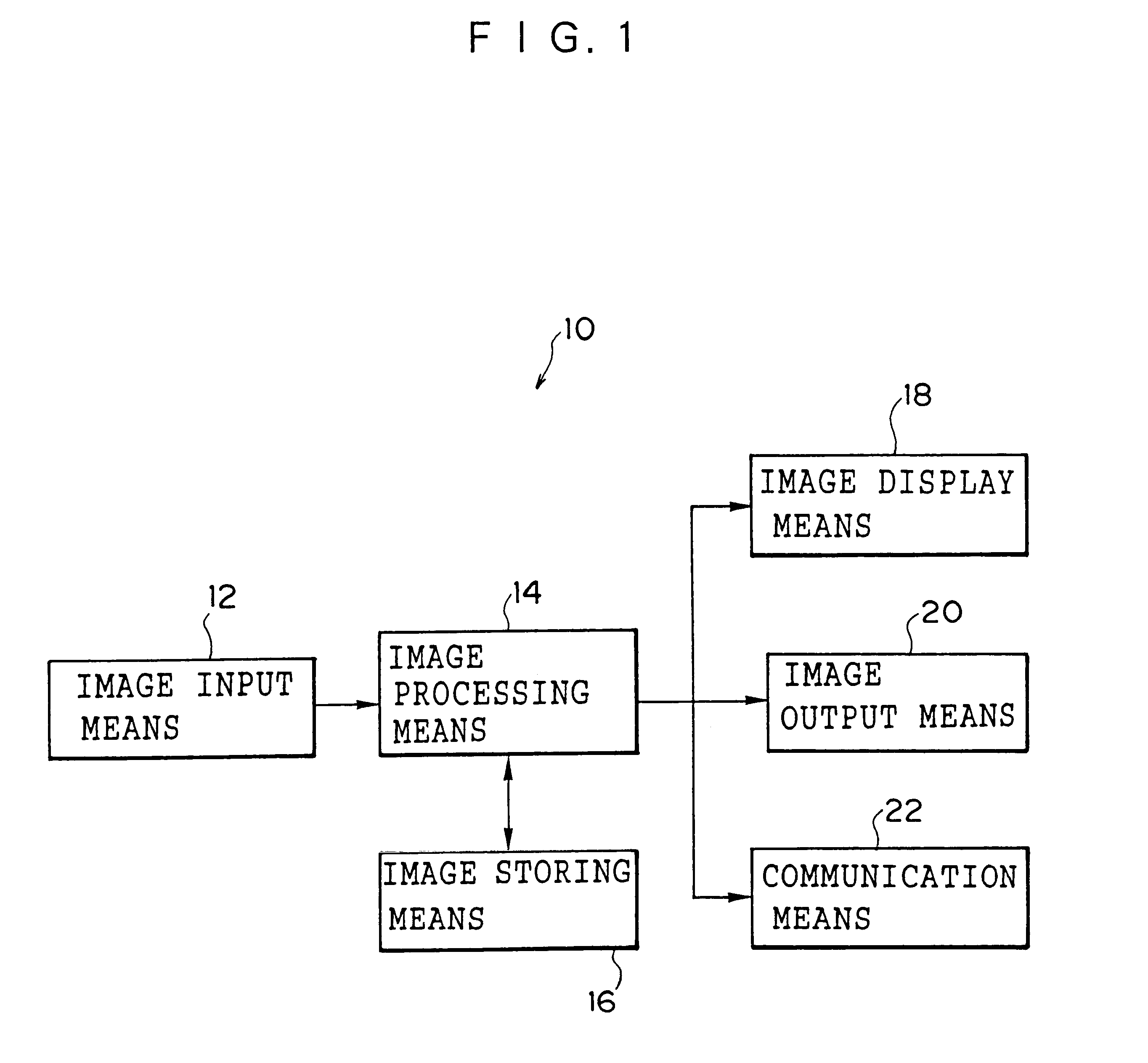

Method and apparatus for image processing

Inactive · US6373992B1 · Improve clarity · Reduce the amplitude · Image enhancement · Image analysis · Imaging processing · Digital image

There is provided a method for processing a digital image for noise suppression and sharpness enhancement, comprising the steps of performing a sharpness enhancing process on original image data to create sharpness enhanced image data; performing a smoothing process on the original image data to create smoothed image data; subtracting the smoothed image data from the sharpness enhanced image data to create first edge/grain composite image data; performing an edge detection to determine edge intensity data; using the edge intensity data to determine grainy fluctuation compressing coefficient data for compressing the amplitude of a grainy fluctuation component in the grainy region; multiplying the first edge/grain composite image data by the grainy fluctuation compressing coefficient data to compress only the grainy fluctuation component in the grainy region, and to thereby create second edge/grain composite image data; and adding the second edge/grain composite image data to the smoothed image data to thereby create a processed image. There is also provided an image processing apparatus for implementing the above method. An improvement is thus achieved in both graininess and sharpness without causing any artificiality and oddities due to "blurry graininess".
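The sequence of steps in this abstract can be sketched on a one-dimensional signal. Everything concrete here is an assumption made for illustration: the 3-tap box filter, unsharp masking as the sharpening step, a central difference as the edge detector, and the `gain`, `edge_scale` and `min_coeff` parameters. The abstract does not specify any of these.

```python
def smooth(x):
    """3-tap box filter with edge replication."""
    n = len(x)
    return [(x[max(i - 1, 0)] + x[i] + x[min(i + 1, n - 1)]) / 3
            for i in range(n)]


def suppress_grain(signal, gain=1.0, edge_scale=10.0, min_coeff=0.2):
    n = len(signal)
    smoothed = smooth(signal)
    # Sharpness enhancement, here by unsharp masking (an assumption).
    sharpened = [s + gain * (s - m) for s, m in zip(signal, smoothed)]
    # First edge/grain composite: sharpened minus smoothed.
    composite = [a - b for a, b in zip(sharpened, smoothed)]
    # Edge intensity from a central difference on the smoothed data.
    edge = [abs(smoothed[min(i + 1, n - 1)] - smoothed[max(i - 1, 0)])
            for i in range(n)]
    # Compression coefficient: small where edge intensity is low (grainy
    # region), 1.0 at strong edges so that edges keep their full boost.
    coeff = [min(1.0, max(min_coeff, e / edge_scale)) for e in edge]
    # Second composite added back onto the smoothed data.
    return [m + c * k for m, c, k in zip(smoothed, composite, coeff)]


noisy_flat = [10, 10, 13, 10, 10, 10]   # a single grain spike in a flat area
cleaned = suppress_grain(noisy_flat)
```

In this example the spike of 13 is reduced to about 11.8, because its edge intensity is zero and the composite term is multiplied by the minimum coefficient.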

Owner:FUJIFILM CORP +1

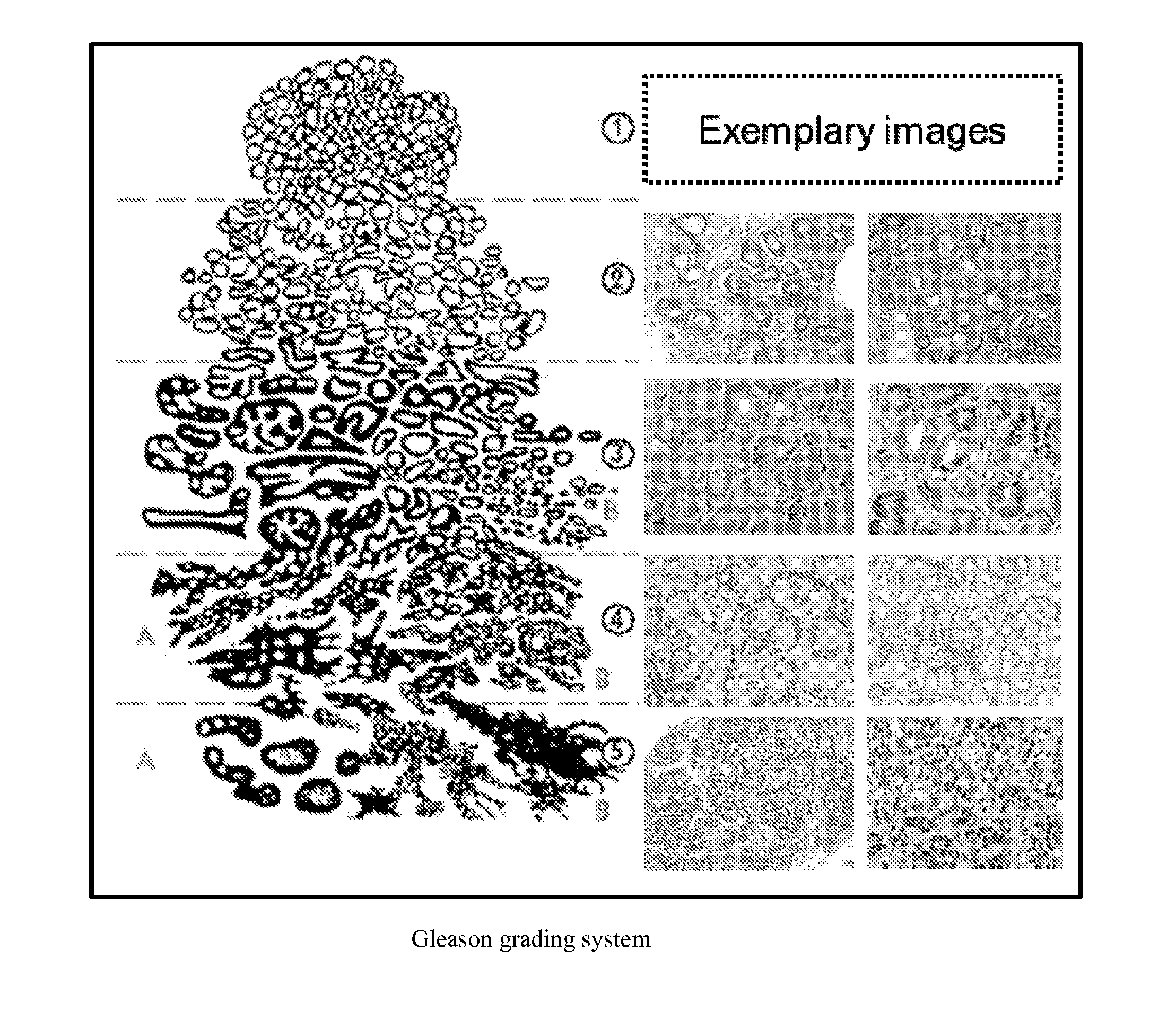

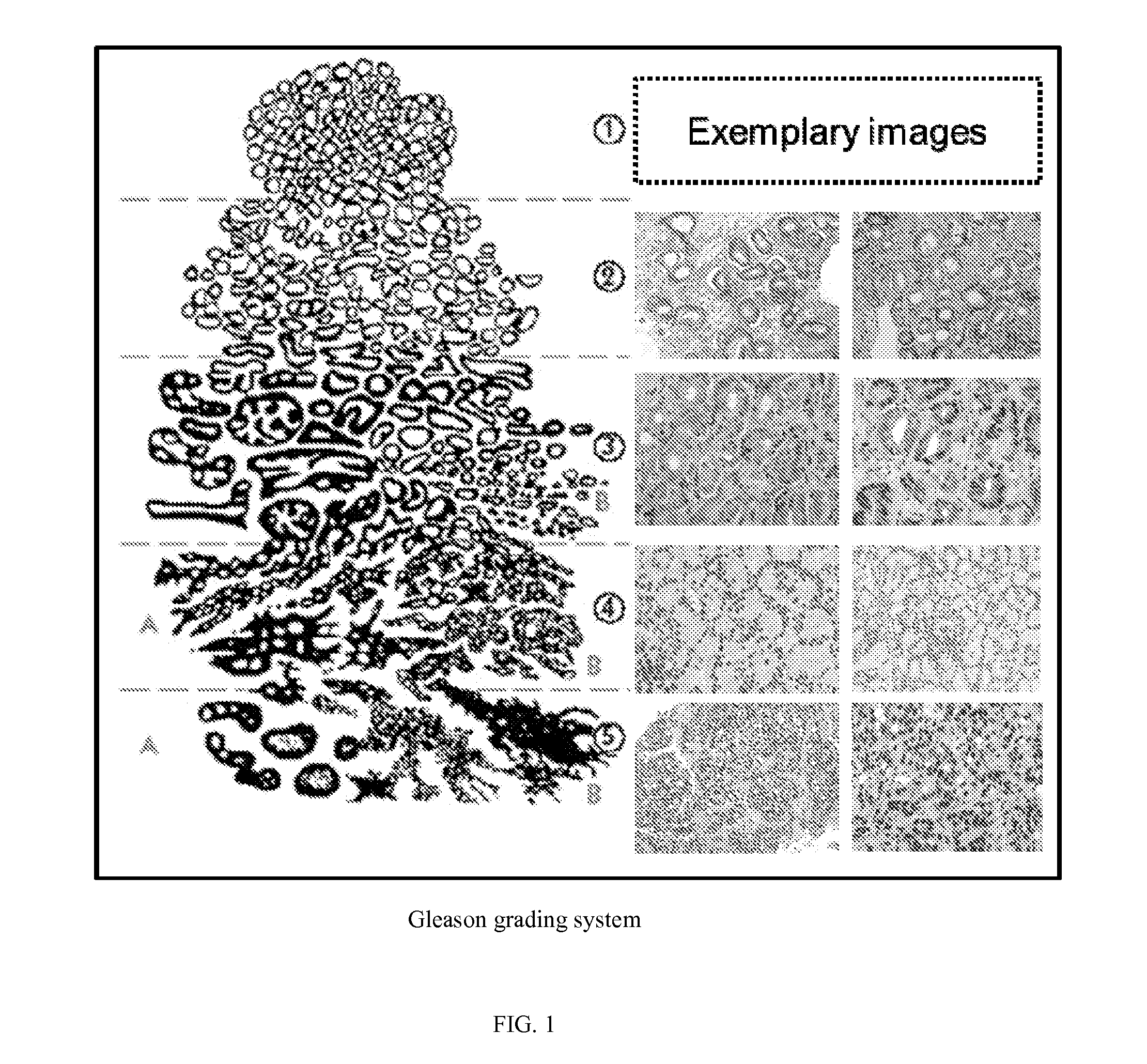

Systems and methods for automated screening and prognosis of cancer from whole-slide biopsy images

Inactive · US20140233826A1 · Accurate and unambiguous measure · Reduce dependence · Image enhancement · Medical data mining · Feature set · Prostate cancer

The invention provides systems and methods for detection, grading, scoring and tele-screening of cancerous lesions. A complete scheme for automated quantitative analysis and assessment of human and animal tissue images of several types of cancers is presented. Various aspects of the invention are directed to the detection, grading, prediction and staging of prostate cancer on serial sections / slides of prostate core images, or biopsy images. Accordingly, the invention includes a variety of sub-systems, which could be used separately or in conjunction to automatically grade cancerous regions. Each system utilizes a different approach with a different feature set. For instance, in the quantitative analysis, textural-based and morphology-based features may be extracted at image- and (or) object-levels from regions of interest. Additionally, the invention provides sub-systems and methods for accurate detection and mapping of disease in whole slide digitized images by extracting new features through integration of one or more of the above-mentioned classification systems. The invention also addresses the modeling, qualitative analysis and assessment of 3-D histopathology images which assist pathologists in visualization, evaluation and diagnosis of diseased tissue. Moreover, the invention includes systems and methods for the development of a tele-screening system in which the proposed computer-aided diagnosis (CAD) systems. In some embodiments, novel methods for image analysis (including edge detection, color mapping characterization and others) are provided for use prior to feature extraction in the proposed CAD systems.

Owner:BOARD OF RGT THE UNIV OF TEXAS SYST

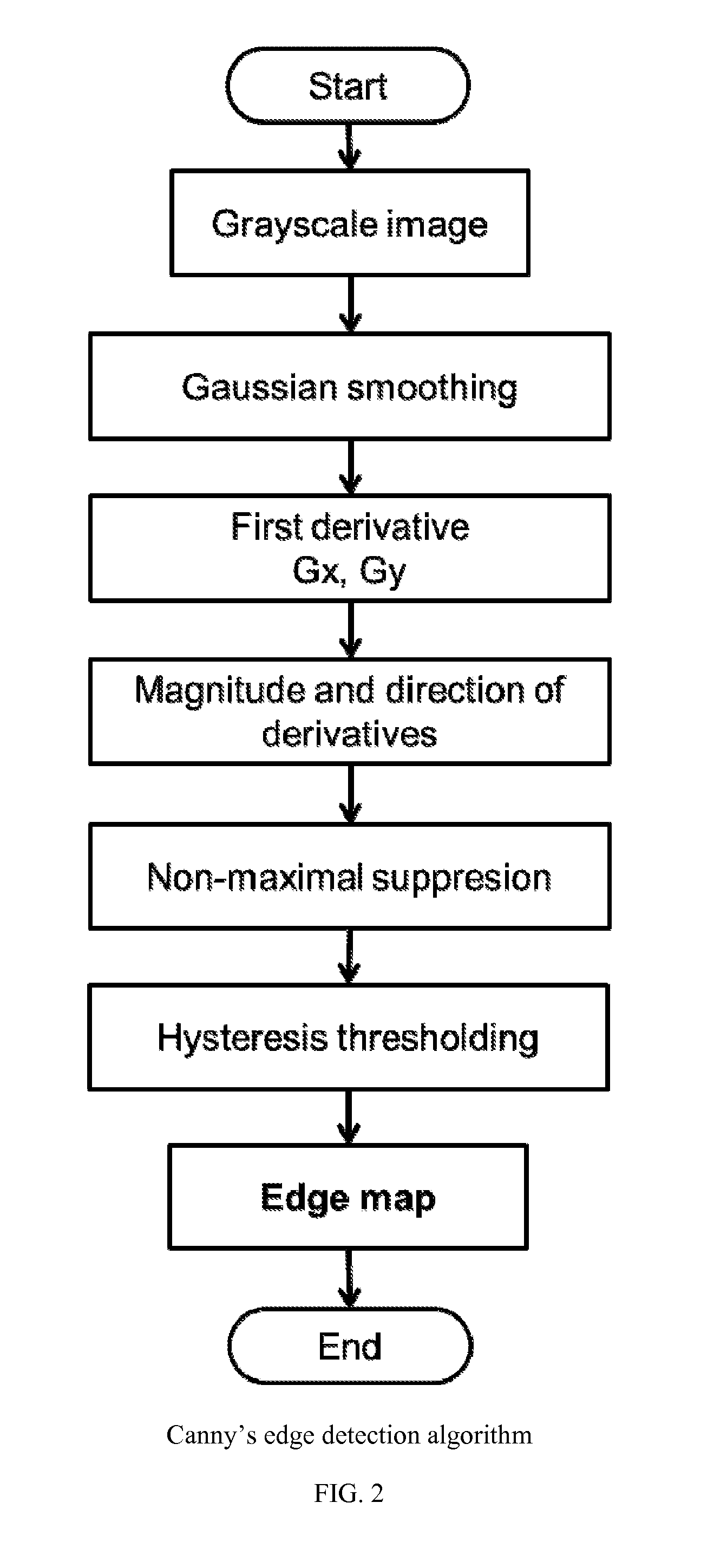

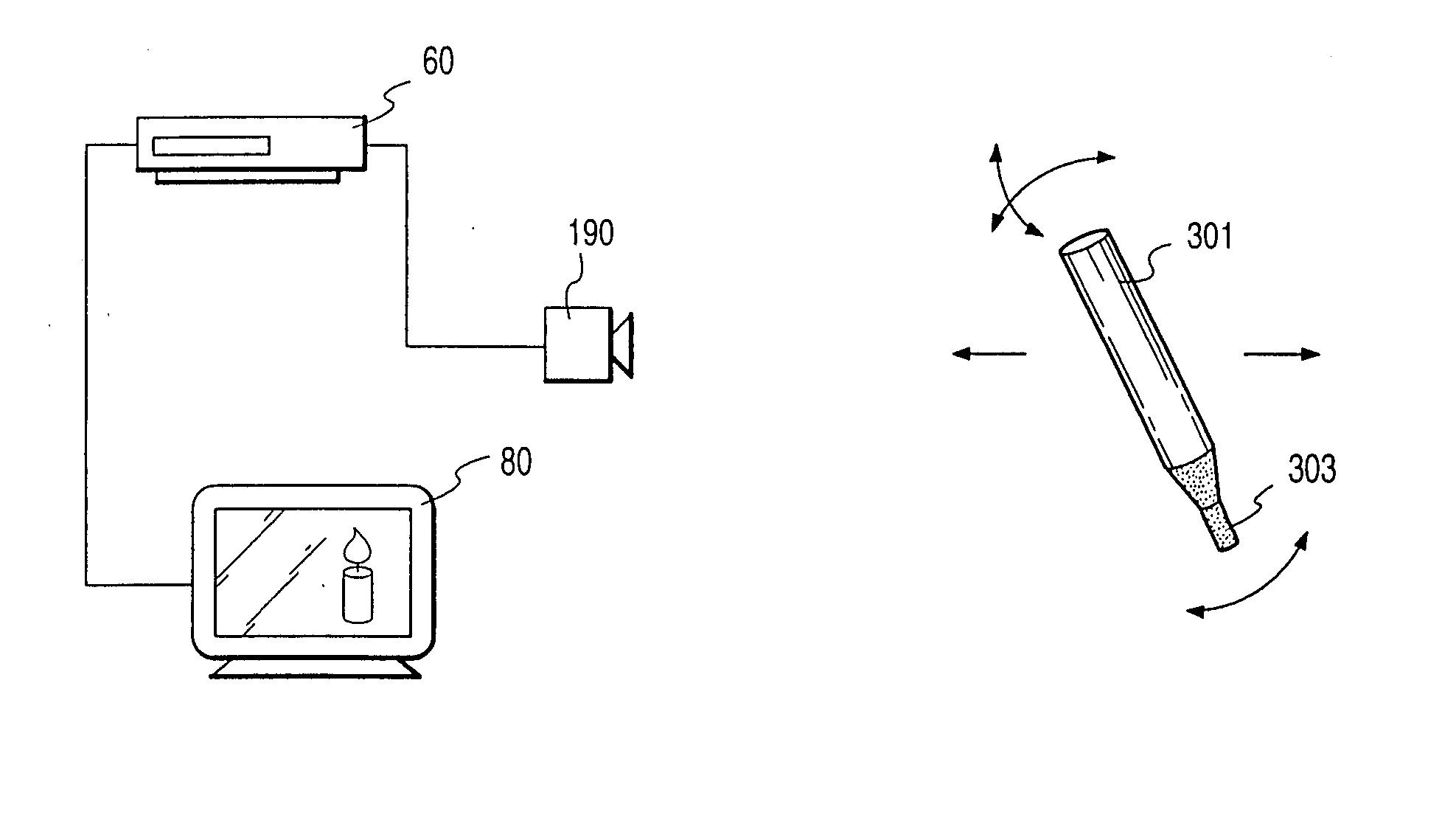

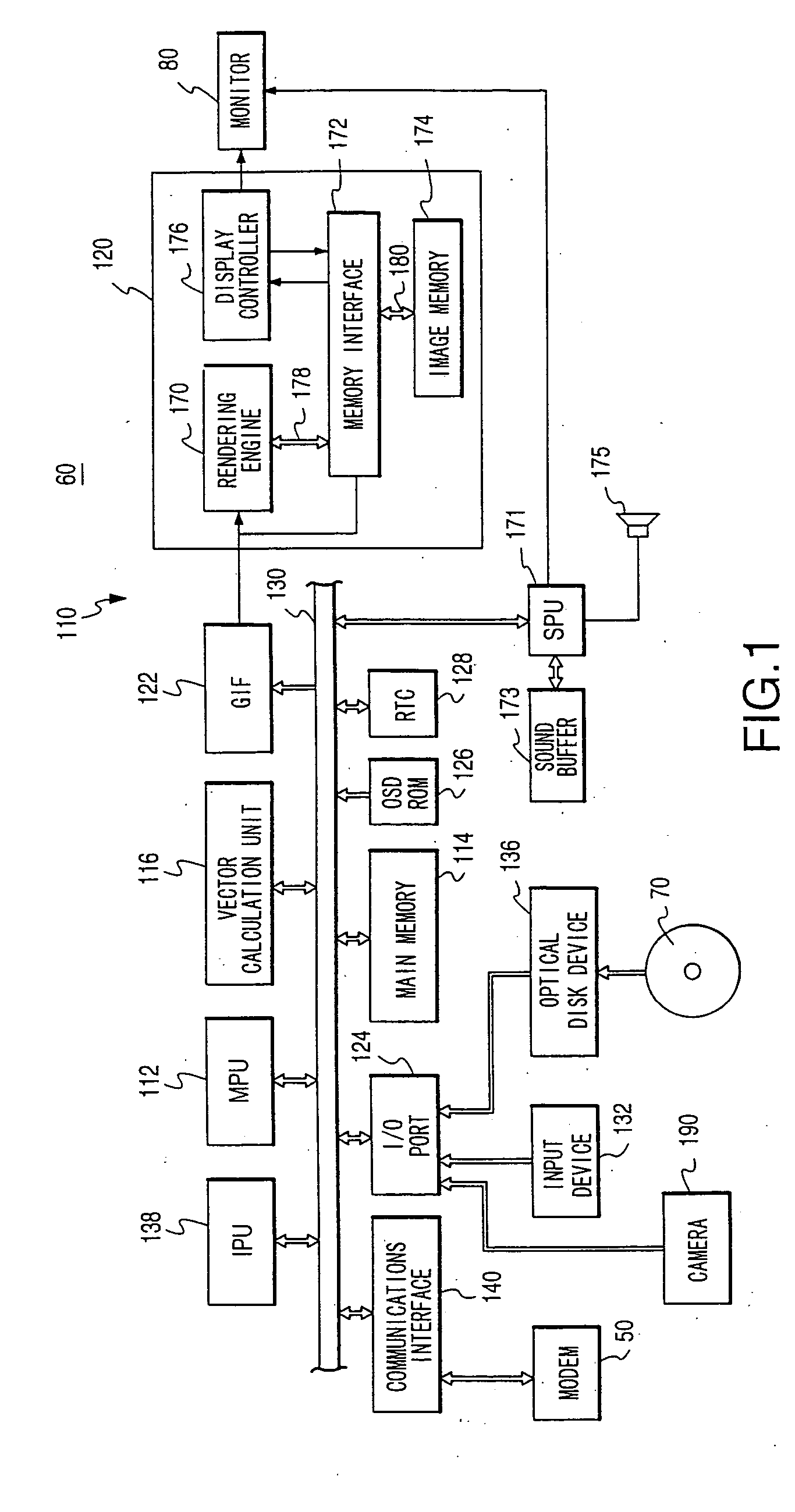

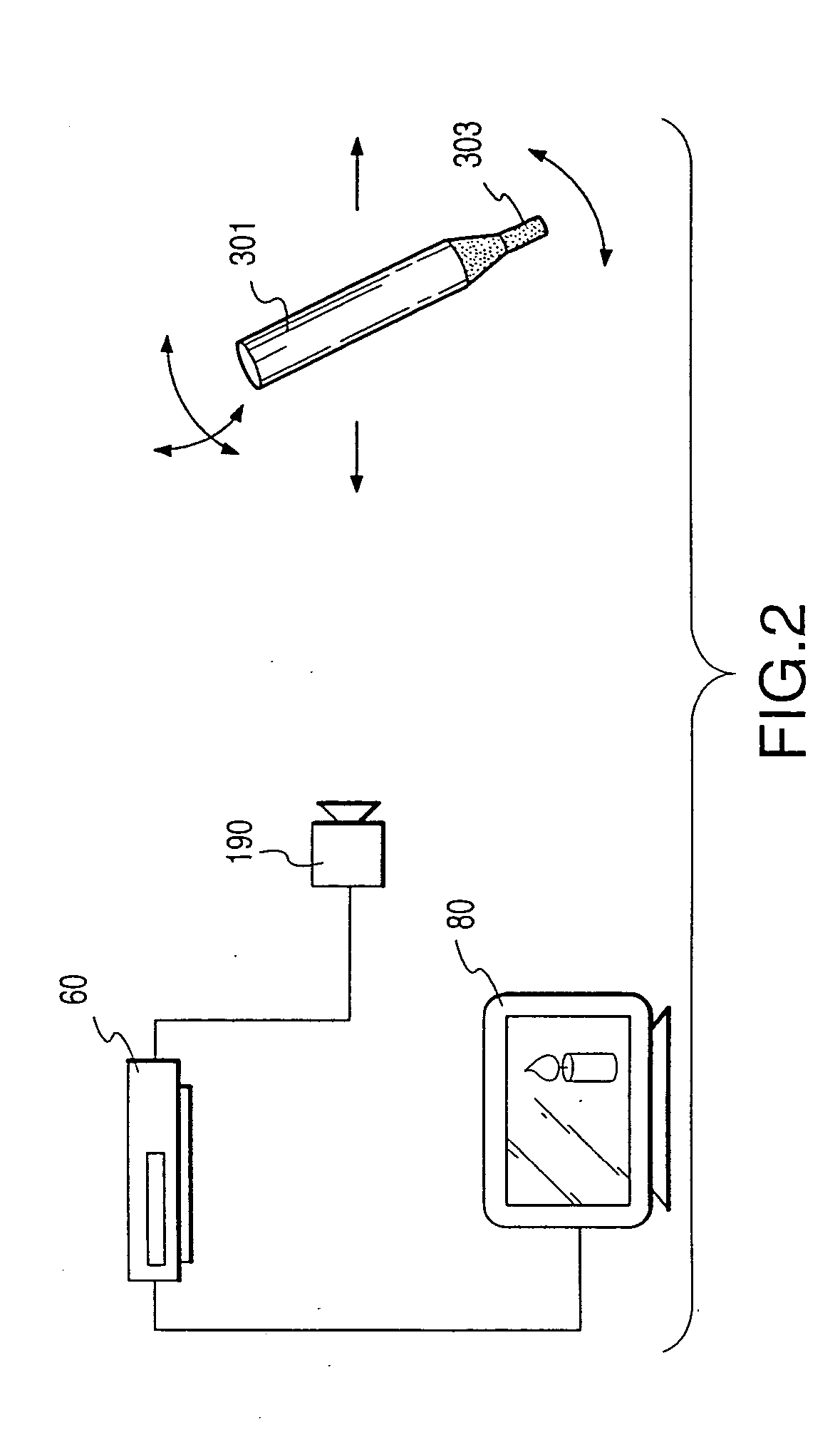

System and method for object tracking

Inactive · US20050026689A1 · Maximizing ability · Image analysis · Image data processing details · Camera image · Color transformation

A hand-manipulated prop is picked up via a single video camera, and the camera image is analyzed to isolate the part of the image pertaining to the object for mapping the position and orientation of the object into a three-dimensional space, wherein the three-dimensional description of the object is stored in memory and used for controlling action in a game program, such as rendering of a corresponding virtual object in a scene of a video display. Algorithms for deriving the three-dimensional descriptions for various props employ geometry processing, including area statistics, edge detection and/or color transition localization, to find the position and orientation of the prop from two-dimensional pixel data. Criteria are proposed for the selection of colors of stripes on the props which maximize separation in the two-dimensional chrominance color space, so that instead of detecting absolute colors, significant color transitions are detected. Thus, the need to calibrate the system for lighting conditions, which tend to affect apparent colors, can be avoided.

Owner:SONY COMPUTER ENTERTAINMENT INC

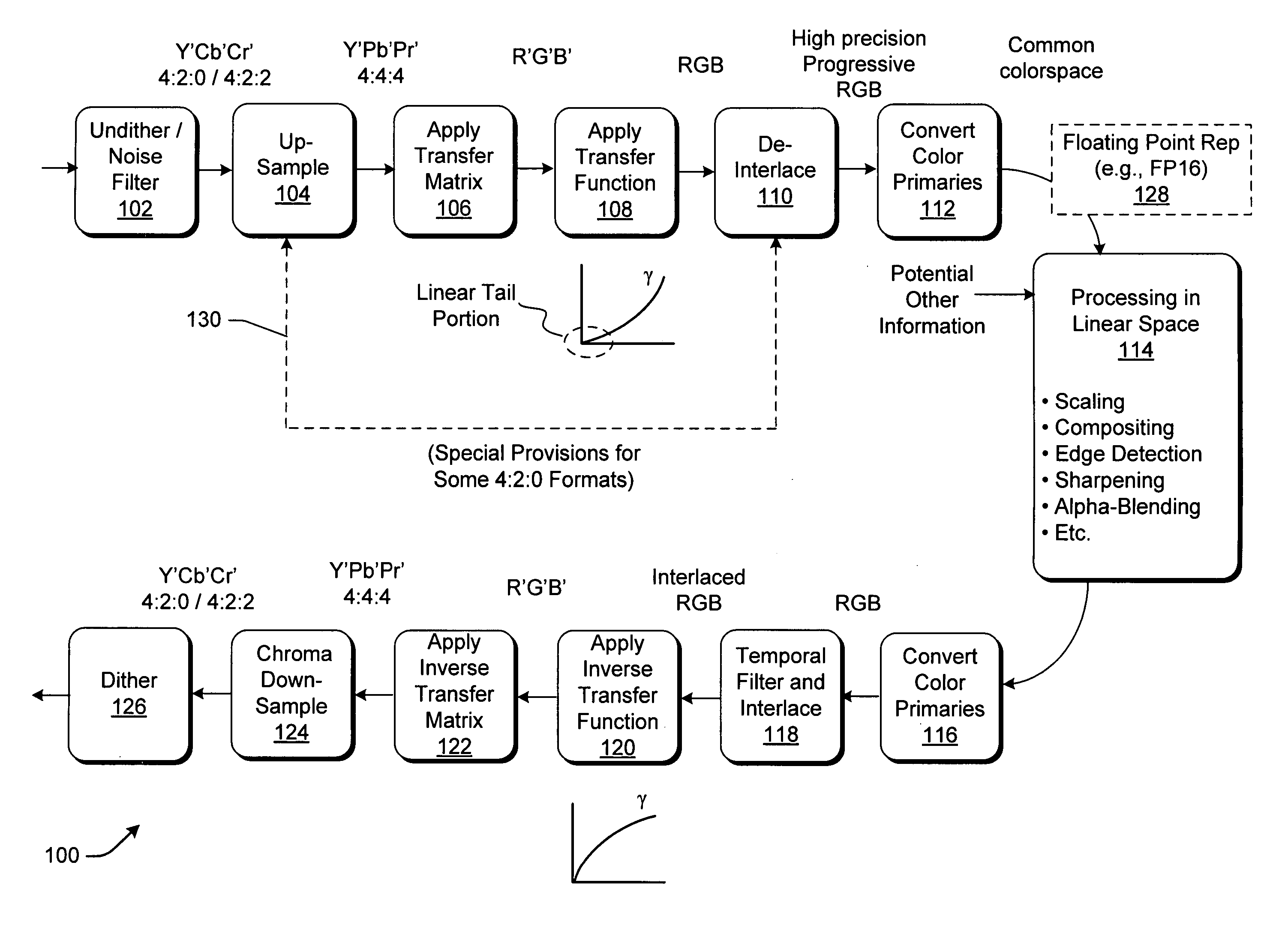

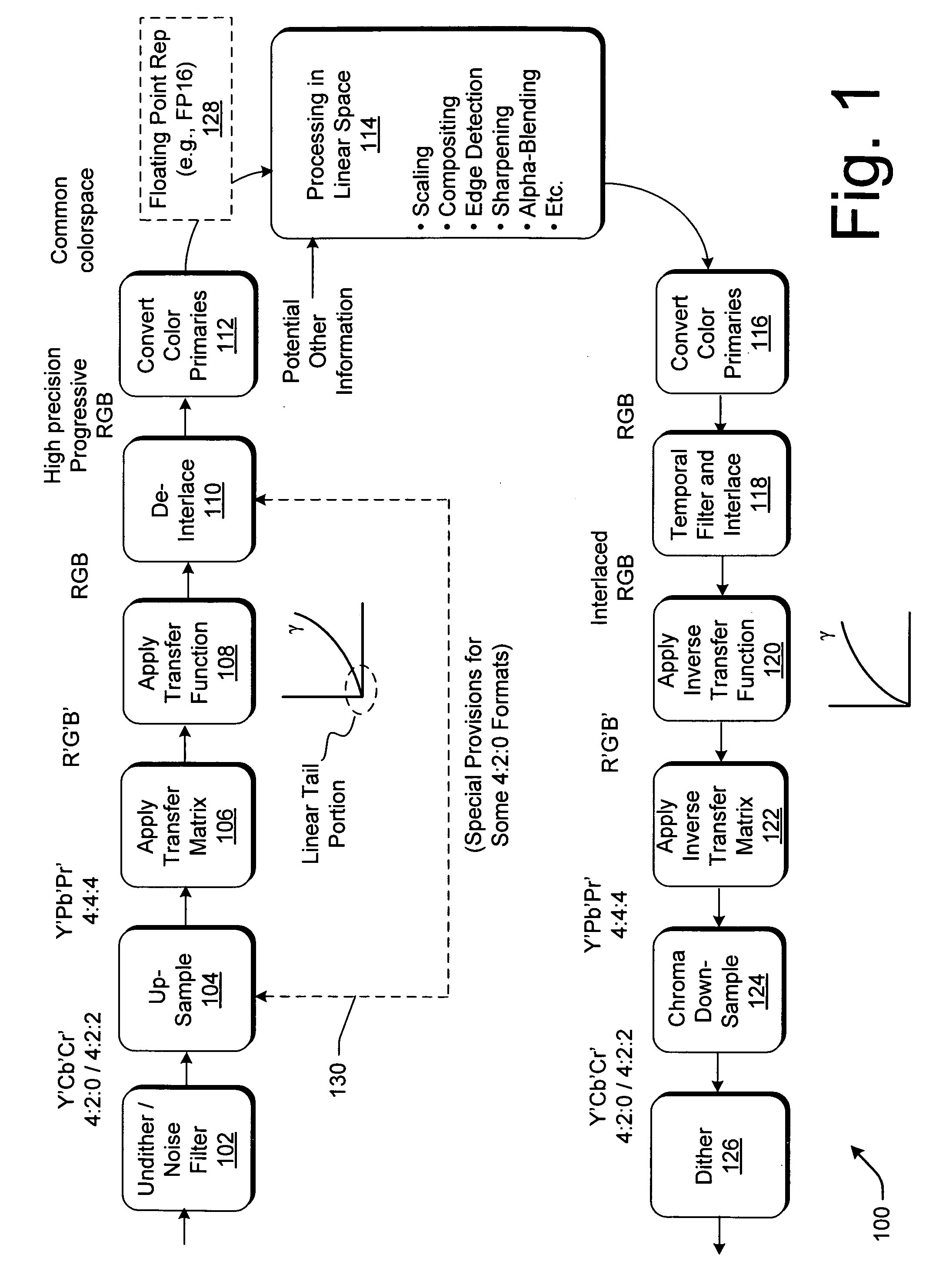

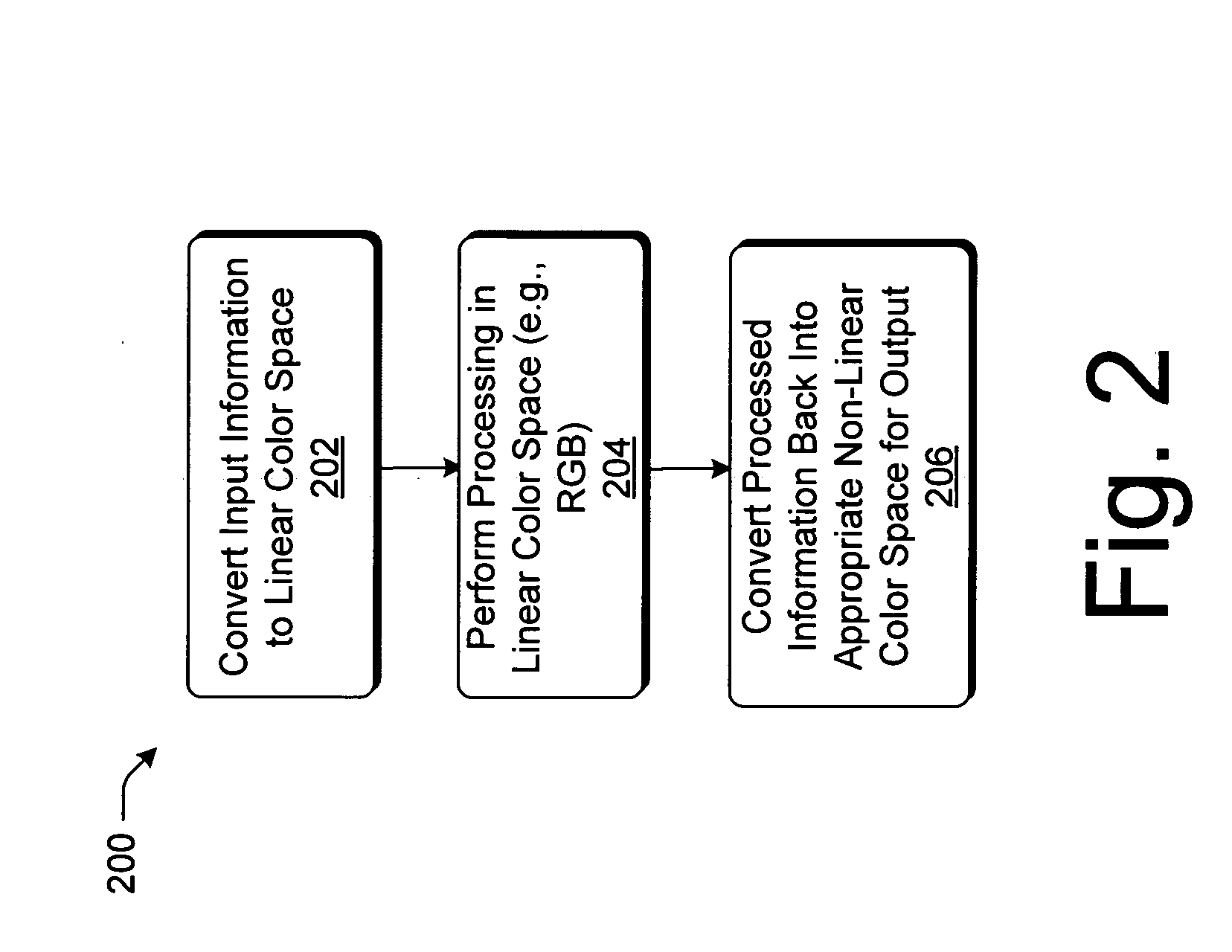

Image processing using linear light values and other image processing improvements

Active · US20050063586A1 · Reduce the amount required · Improve accuracy · Character and pattern recognition · Pictorial communication · Imaging processing · Floating point

Strategies are described for processing image information in a linear form to reduce the amount of artifacts (compared to processing the data in nonlinear form). Exemplary types of processing operations can include scaling, compositing, alpha-blending, edge detection, and so forth. In a more specific implementation, strategies are described for processing image information that is: a) linear; b) in the RGB color space; c) high precision (e.g., provided by floating point representation); d) progressive; and e) full channel. Other improvements provide strategies for: a) processing image information in a pseudo-linear space to improve processing speed; b) implementing an improved error dispersion technique; c) dynamically calculating and applying filter kernels; d) producing pipeline code in an optimal manner; and e) implementing various processing tasks using novel pixel shader techniques.
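To illustrate why processing in linear form differs from processing nonlinear data, the sketch below blends two pixel values both ways. It assumes the sRGB transfer function as the nonlinearity and uses floating point values in the range 0 to 1; the abstract does not name a specific encoding, and the function names are hypothetical.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded value in [0, 1] to linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(l):
    """Encode a linear-light value in [0, 1] back to sRGB."""
    return 12.92 * l if l <= 0.0031308 else 1.055 * l ** (1 / 2.4) - 0.055


def blend_nonlinear(a, b):
    """Average two encoded values directly (the nonlinear approach)."""
    return (a + b) / 2


def blend_linear(a, b):
    """Decode, average in linear light, then re-encode."""
    mixed = (srgb_to_linear(a) + srgb_to_linear(b)) / 2
    return linear_to_srgb(mixed)


black, white = 0.0, 1.0
naive = blend_nonlinear(black, white)
physical = blend_linear(black, white)
```

Averaging black and white directly gives an encoded value of 0.5, while averaging in linear light gives roughly 0.735, which is visibly brighter. The same discrepancy affects scaling, compositing and other filters applied to encoded data.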

Owner:MICROSOFT TECH LICENSING LLC

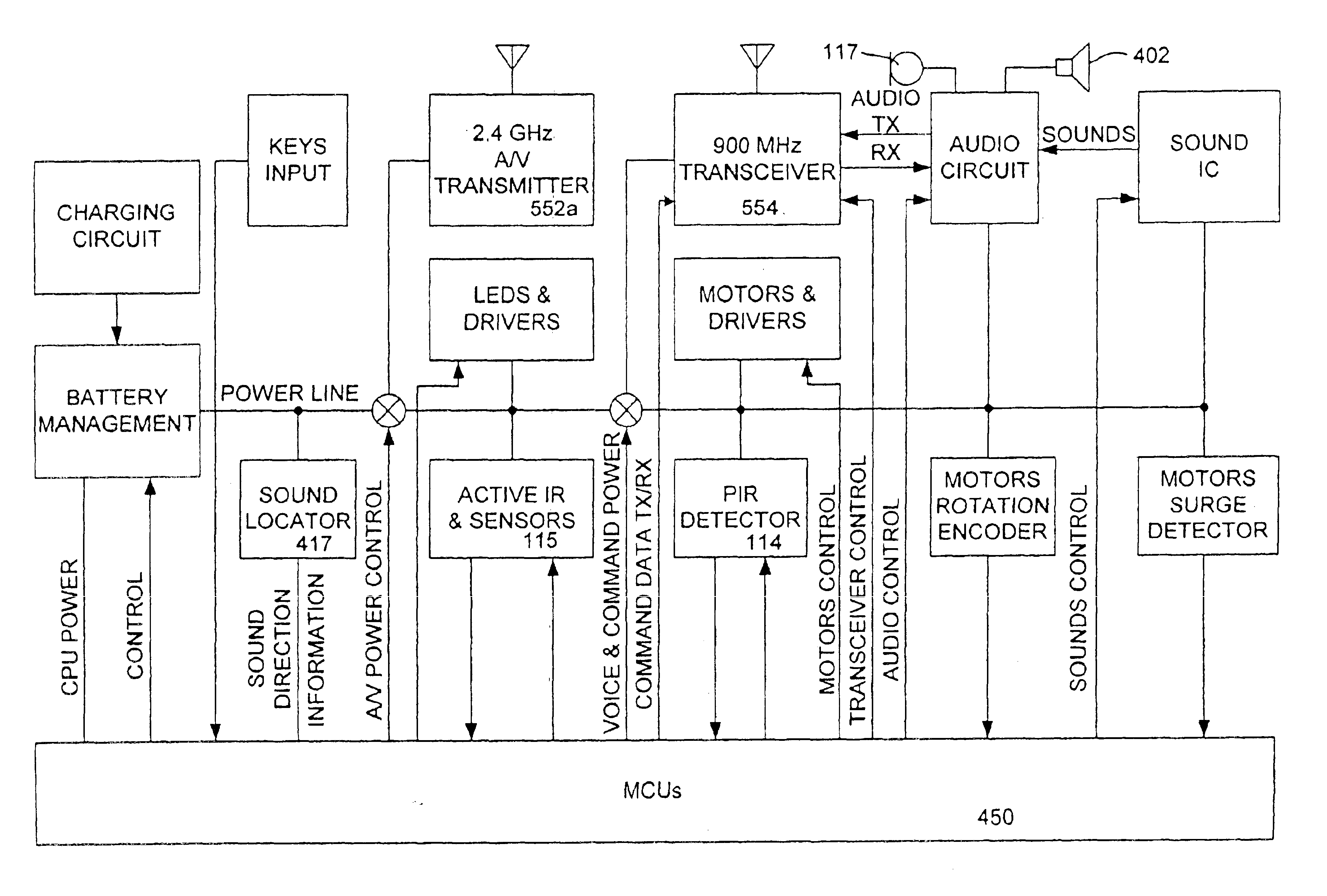

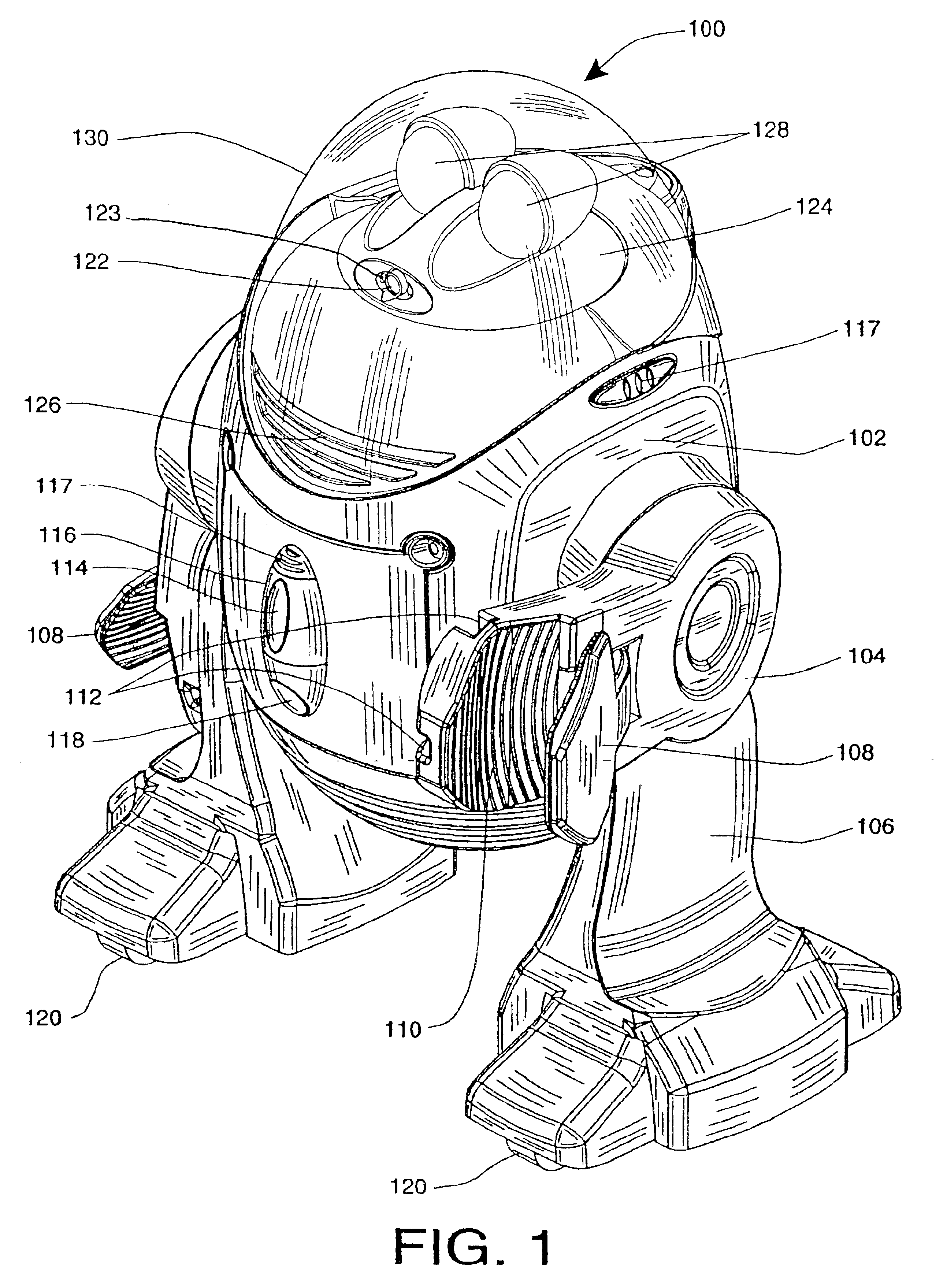

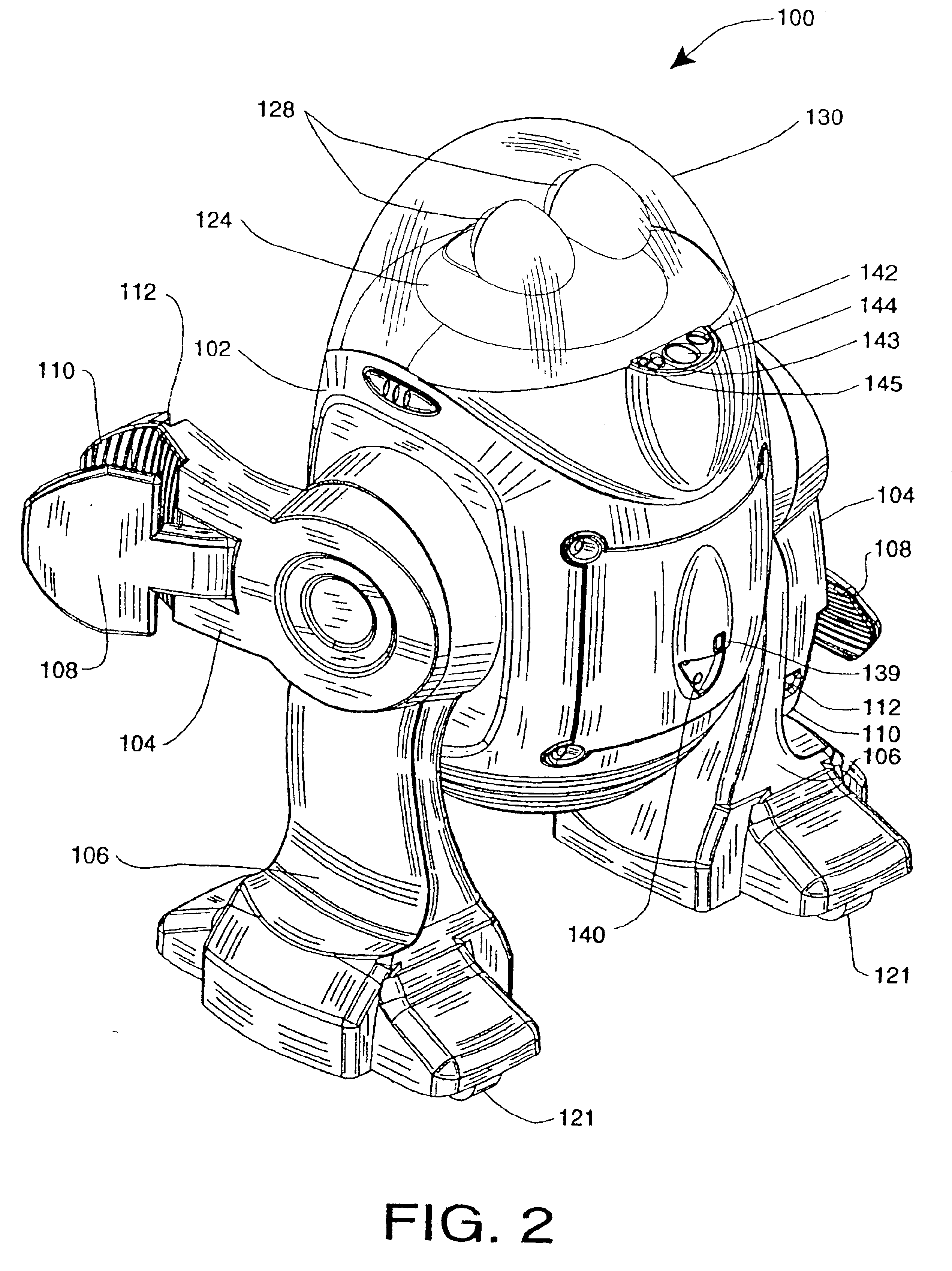

Robot capable of detecting an edge

Inactive · US6865447B2 · Provide security · Easy to operate · Programme control · Computer control · Engineering · Edge based

Owner:SHARPER IMAGE ACQUISITION LLC A DELAWARE LIMITED LIABILITY

Methods and systems for text detection in mixed-context documents using local geometric signatures

Inactive · US7043080B1 · Quality and · Speed and · Character and pattern recognition · Visual presentation · Graphics · Imaging processing

Embodiments of the present invention relate to methods and systems for detection and delineation of text characters in images which may contain combinations of text and graphical content. Embodiments of the present invention employ intensity contrast edge detection methods and intensity gradient direction determination methods in conjunction with analyses of intensity curve geometry to determine the presence of text and verify text edge identification. These methods may be used to identify text in mixed-content images, to determine text character edges and to achieve other image processing purposes.

Owner:SHARP KK

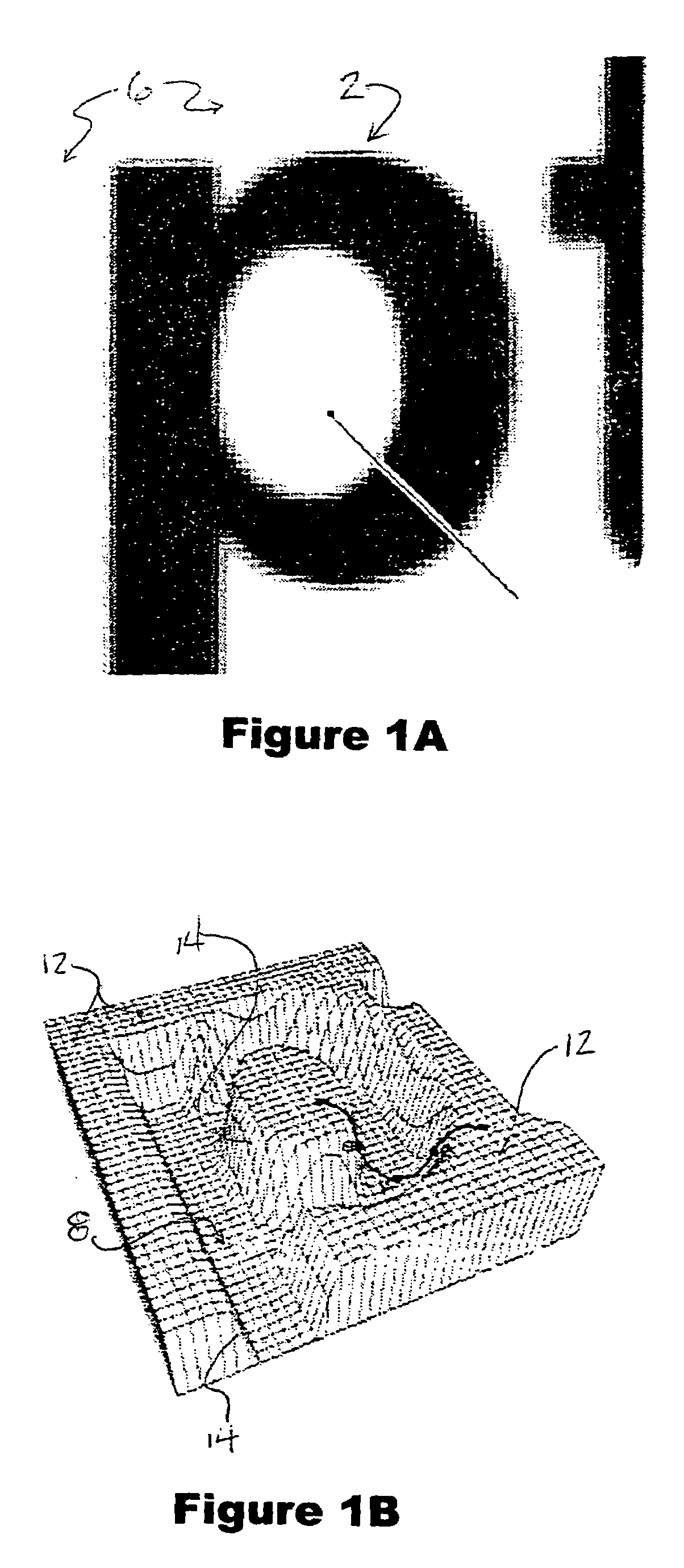

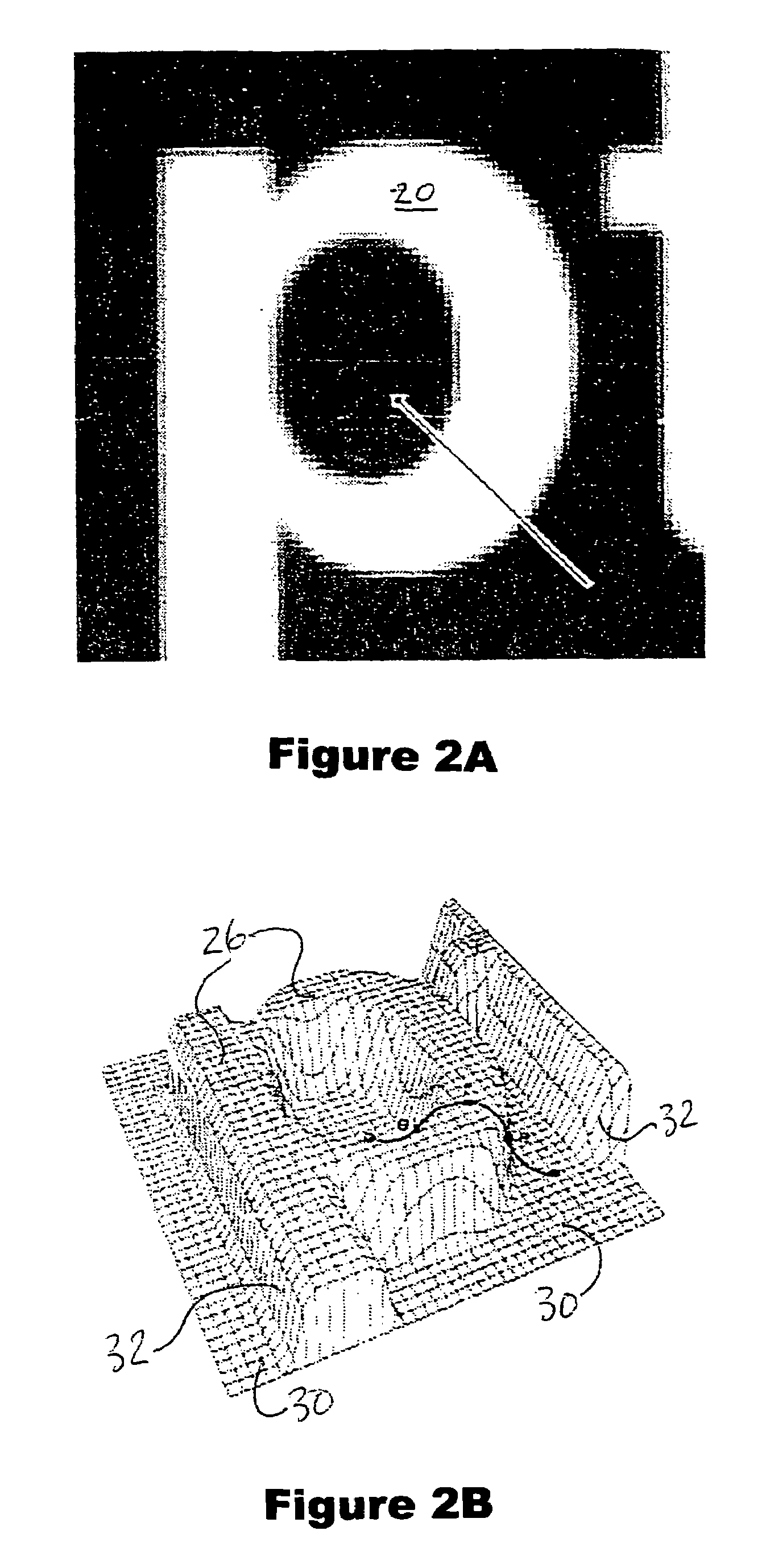

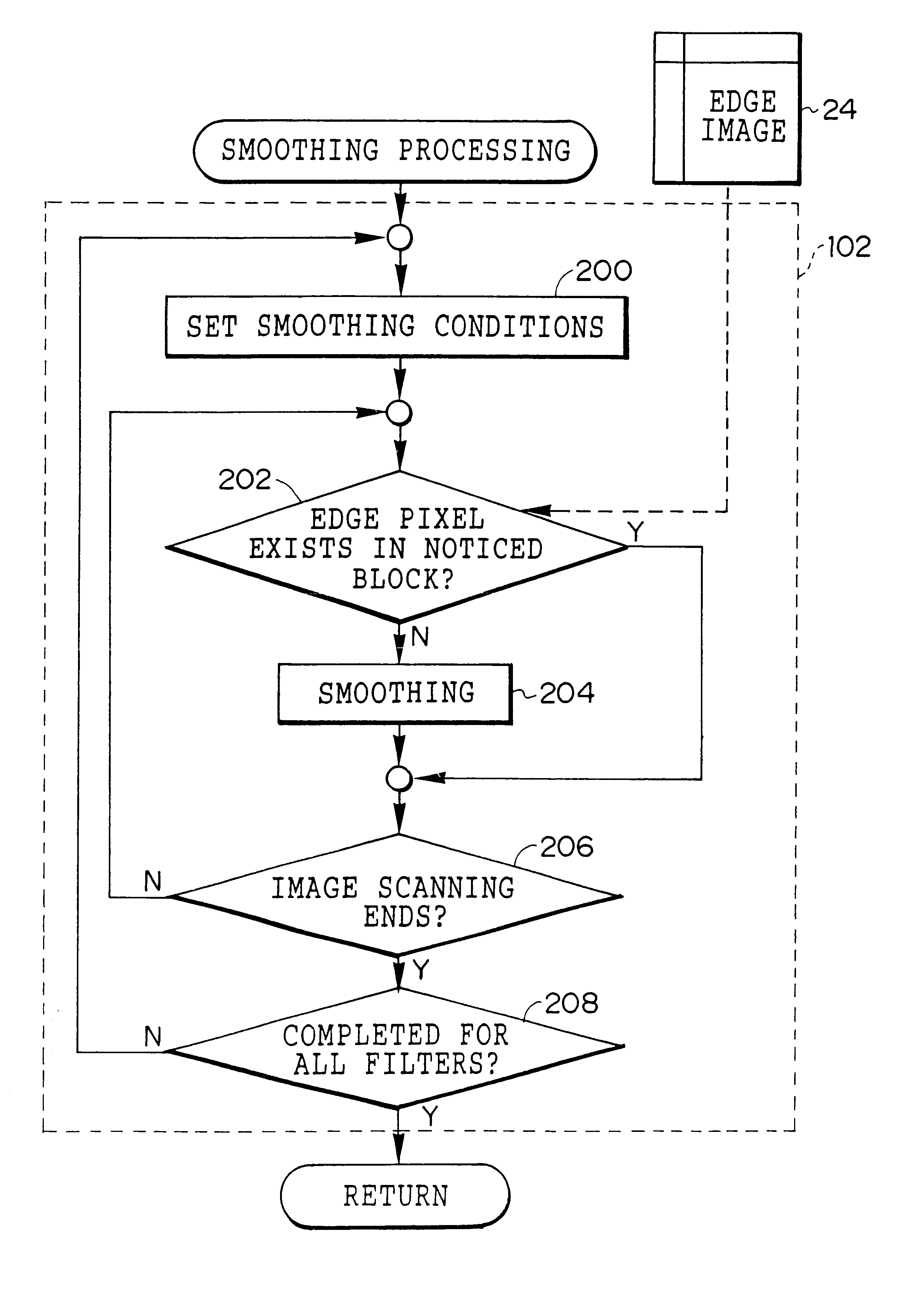

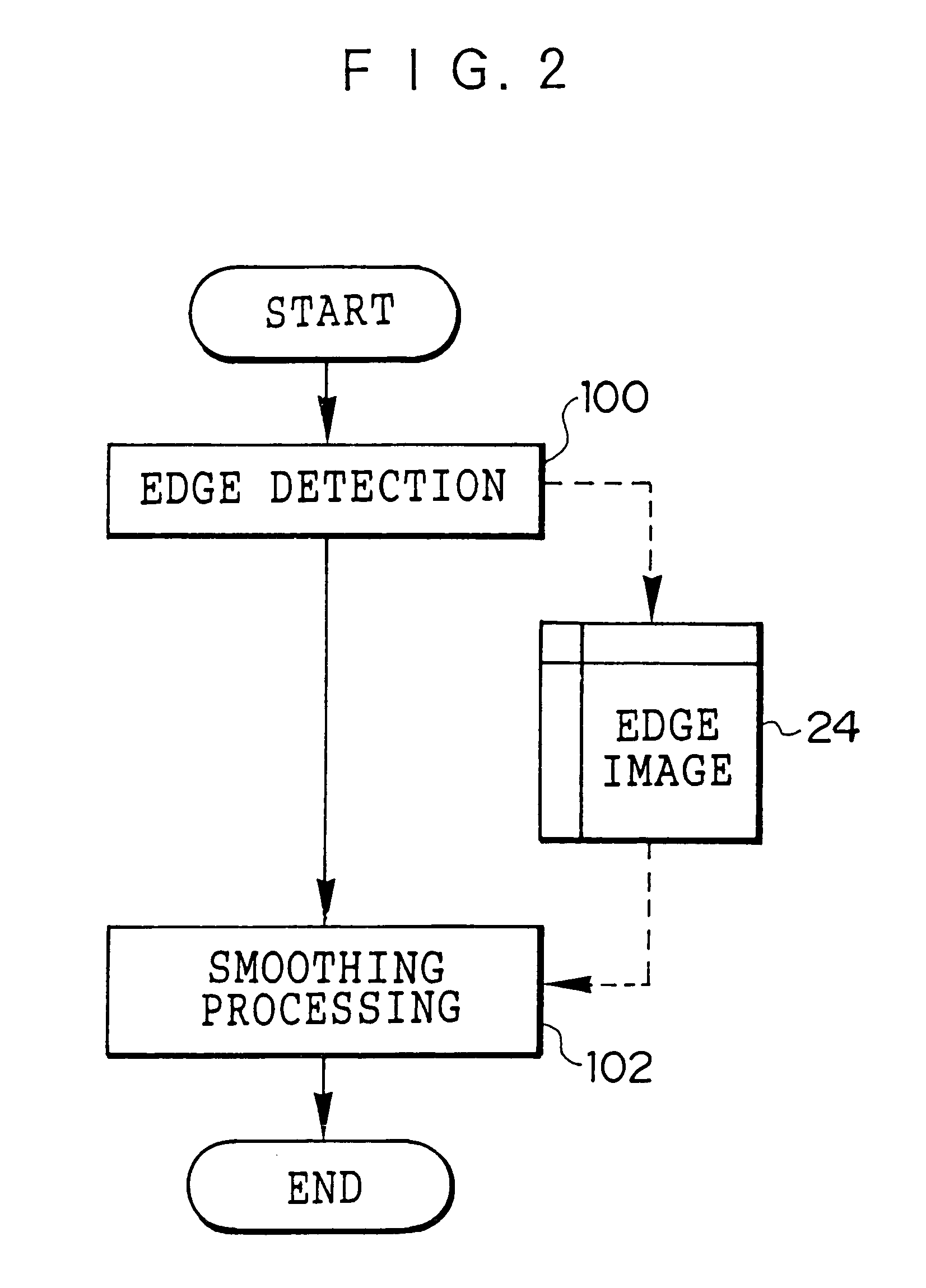

Image processing device and recording medium

Inactive · US6901170B1 · Low dispersion · Quality image · Image enhancement · Image analysis · Imaging processing · Recording media

An image processing apparatus and a recording medium which can improve the quality of a color document image. Smoothing conditions which are defined by three parameters of “filter size”, “offset”, and “overlap” are set. An edge image which was generated in an edge detection processing is referred to, and a determination is made as to whether an edge pixel exists in a noticed region. When the edge pixel does not exist in the noticed region, a smoothing processing is carried out. When the edge pixel exists in the noticed region, it is determined inappropriate to effect the smoothing processing, and the process goes to a subsequent processing without effecting the smoothing processing. The processing is carried out until the image scanning ends. When the image scanning ends, the next smoothing conditions are set and the processing which is the same as the one described above is performed.
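The core decision described here, smoothing a region only when the edge image shows no edge pixel inside it, might look like the following. This is a reduced sketch: it uses a single "filter size" with non-overlapping square regions and replaces each region by its mean, while the "offset" and "overlap" parameters and the repeated passes with new smoothing conditions are left out. The function name is hypothetical.

```python
def smooth_without_edges(img, edge, size):
    """Replace each size x size region by its mean unless it contains an edge."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y0 in range(0, h - size + 1, size):
        for x0 in range(0, w - size + 1, size):
            cells = [(y, x) for y in range(y0, y0 + size)
                     for x in range(x0, x0 + size)]
            # Skip the region if any edge pixel lies inside it.
            if any(edge[y][x] for y, x in cells):
                continue
            mean = sum(img[y][x] for y, x in cells) / len(cells)
            for y, x in cells:
                out[y][x] = mean
    return out


picture = [[1, 3, 0, 10],
           [3, 1, 0, 10]]
edge_image = [[0, 0, 0, 1],
              [0, 0, 0, 1]]
smoothed = smooth_without_edges(picture, edge_image, size=2)
```

The left region is flattened to its mean, and the right region, which contains edge pixels, is passed through unchanged so the edge stays sharp.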

Owner:FUJIFILM BUSINESS INNOVATION CORP +1

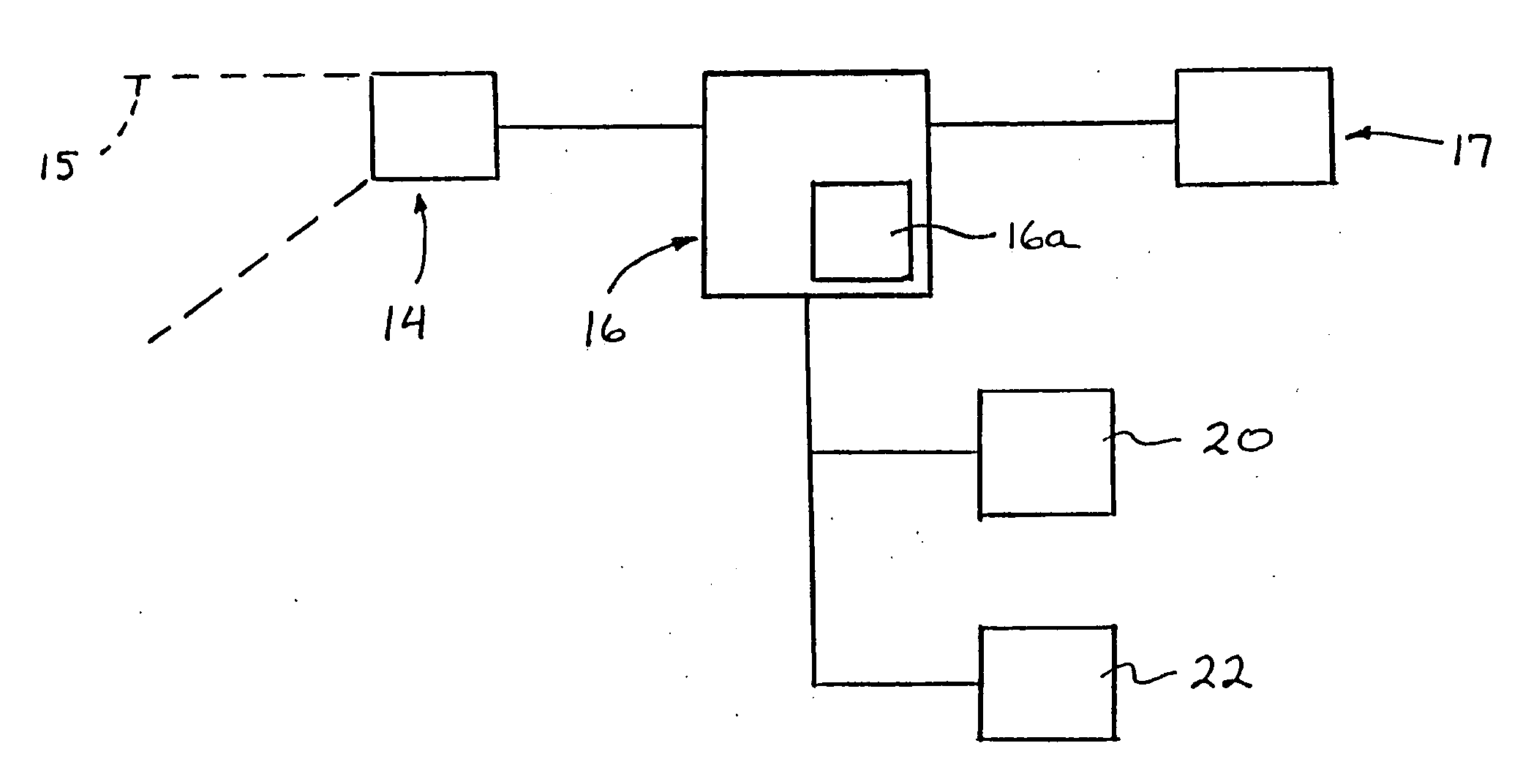

Object detection system for vehicle

Inactive · US20060206243A1 · Reduce processing requirements · Reduce the possibility · Image enhancement · Image analysis · Lane departure warning system · Data set

An imaging system for a vehicle includes an imaging sensor and a control. The imaging sensor is operable to capture an image of a scene occurring exteriorly of the vehicle. The control receives the captured image, which comprises an image data set representative of the exterior scene. The control may apply an edge detection algorithm to a reduced image data set of the image data set. The reduced image data set is representative of a target zone of the captured image. The control may be operable to process the reduced image data set more than other image data, which are representative of areas of the captured image outside of the target zone, to detect objects present within the target zone. The imaging system may be associated with a side object detection system, a lane change assist system, a lane departure warning system and / or the like.

Owner:MAGNA ELECTRONICS INC

Systems and methods for tracking objects in video sequences

Inactive · US6901110B1 · Reduce computational overhead · Prevent stepping · Image enhancement · Television system details · Motion vector · Mean difference

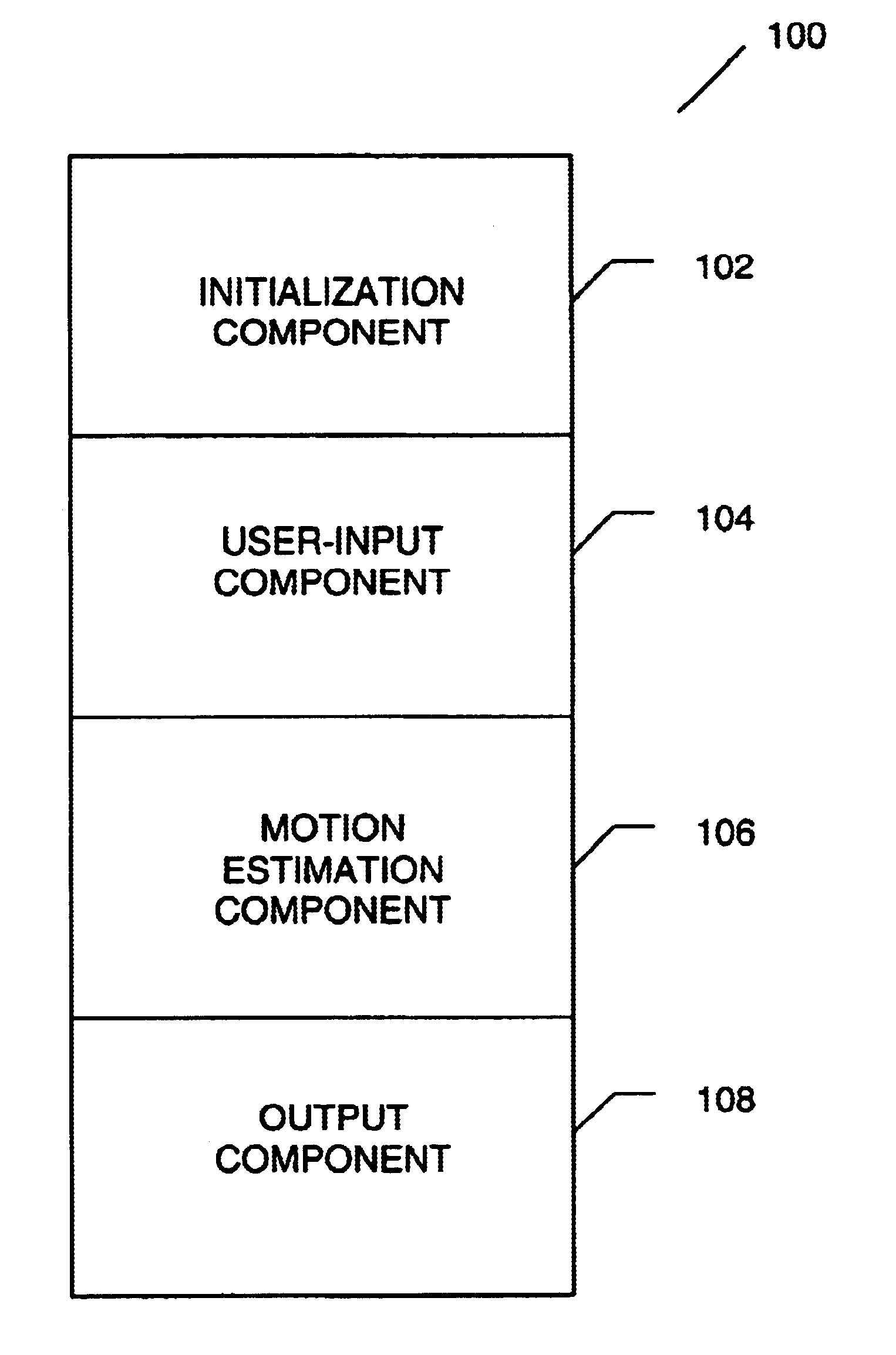

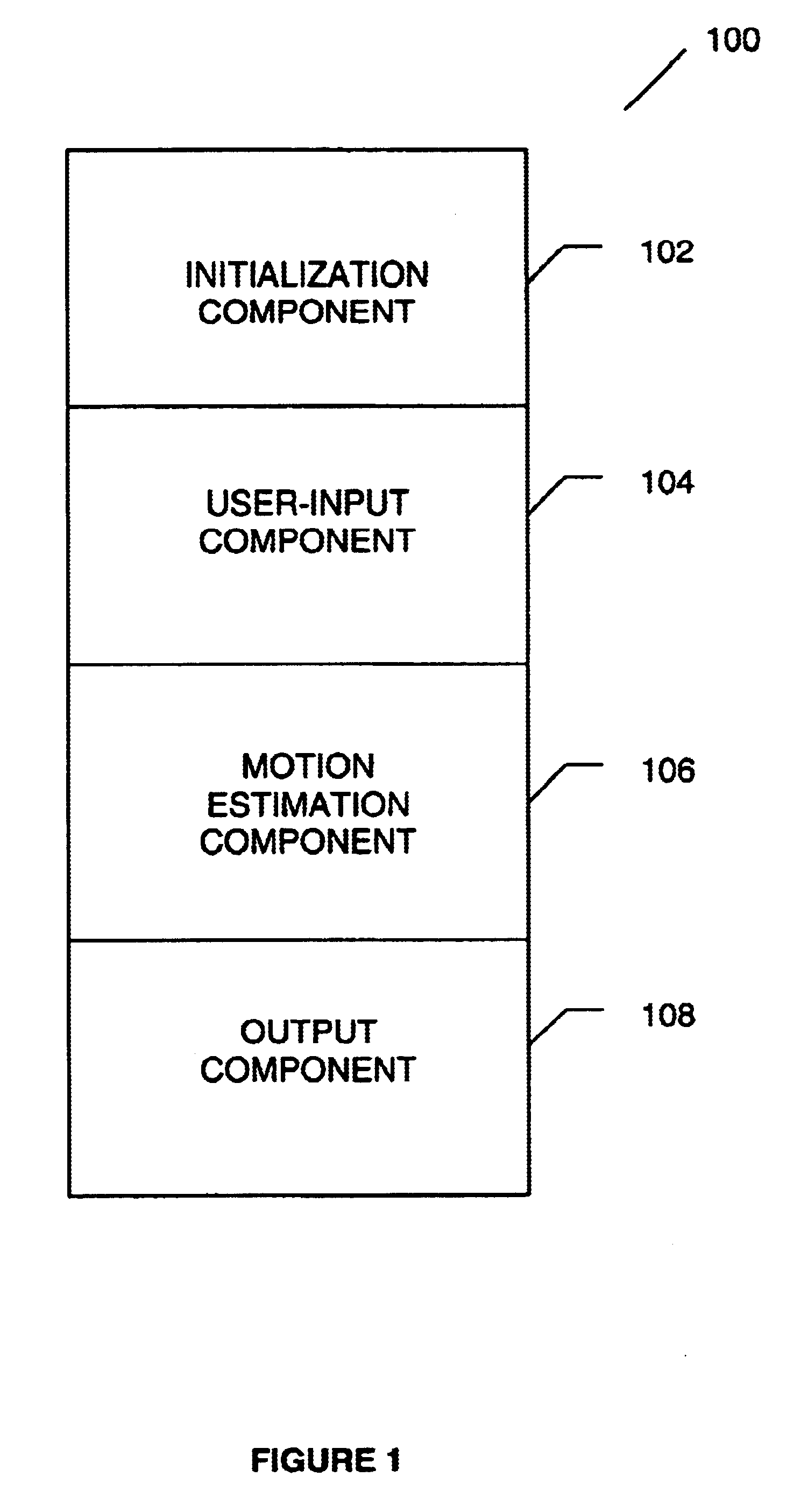

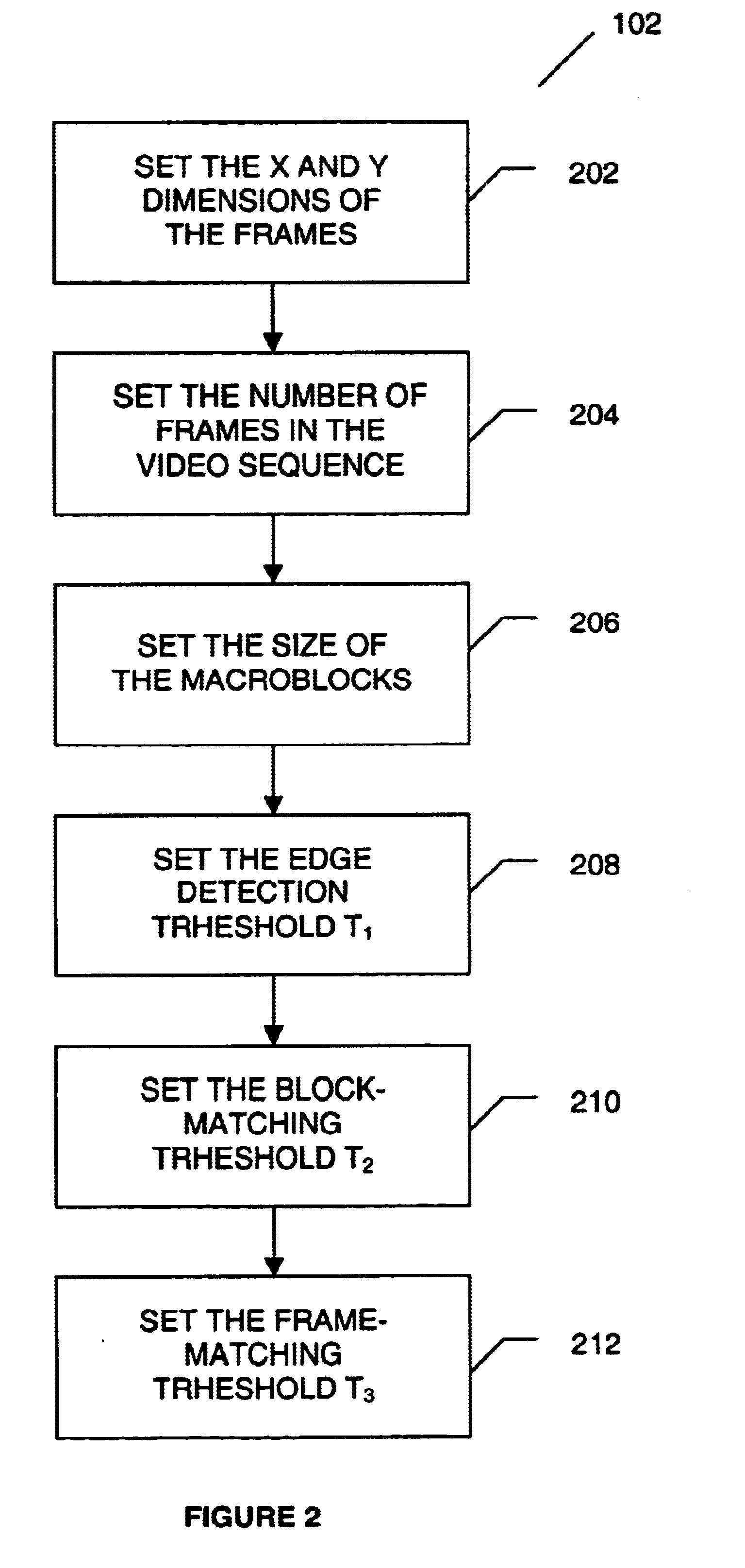

A method for tracking one or multiple objects from an input video sequence allows a user to select one or more regions that contain the object(s) of interest in the first and the last frame of their choice. An initialization component selects the current and the search frame and divides the selected region into equal sized macroblocks. An edge detection component computes the gradient of the current frame for each macroblock and a threshold component decides then which of the macroblocks contain sufficient information for tracking the desired object. A motion estimation component computes for each macroblock in the current frame its position in the search frame. The motion estimation component utilizes a search component that executes a novel search algorithm to find the best match. The mean absolute difference between two macroblocks is used as the matching criterion. The motion estimation component returns the estimated displacement vector for each block. An output component collects the motion vectors of all the predicted blocks and calculates the new position of the object in the next frame.
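The matching criterion named in this abstract, the mean absolute difference (MAD) between two macroblocks, can be sketched with a plain exhaustive search. The exhaustive search is a stand-in: the abstract refers to a novel search algorithm that it does not describe. The gradient-based selection of informative macroblocks is also omitted, and all function names are hypothetical.

```python
def mad(a, b, ay, ax, by, bx, n):
    """Mean absolute difference between two n x n blocks."""
    total = 0
    for dy in range(n):
        for dx in range(n):
            total += abs(a[ay + dy][ax + dx] - b[by + dy][bx + dx])
    return total / (n * n)


def best_displacement(cur, search, y, x, n, radius):
    """Find the (dy, dx) within radius that minimises MAD for the block at (y, x)."""
    h, w = len(search), len(search[0])
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            ny, nx = y + dy, x + dx
            if 0 <= ny <= h - n and 0 <= nx <= w - n:
                cost = mad(cur, search, y, x, ny, nx, n)
                if best is None or cost < best[0]:
                    best = (cost, dy, dx)
    return best[1], best[2]


def frame_with_block(top, left, size=6):
    """Build a dark test frame with one bright 2 x 2 block."""
    frame = [[0] * size for _ in range(size)]
    for yy in range(top, top + 2):
        for xx in range(left, left + 2):
            frame[yy][xx] = 9
    return frame


current = frame_with_block(1, 1)
later = frame_with_block(2, 3)
vector = best_displacement(current, later, y=1, x=1, n=2, radius=2)
```

Here the block moves one row down and two columns right, so the estimated displacement vector is `(1, 2)`. Collecting such vectors for all tracked macroblocks gives the object's new position.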

Owner:SONY CORP

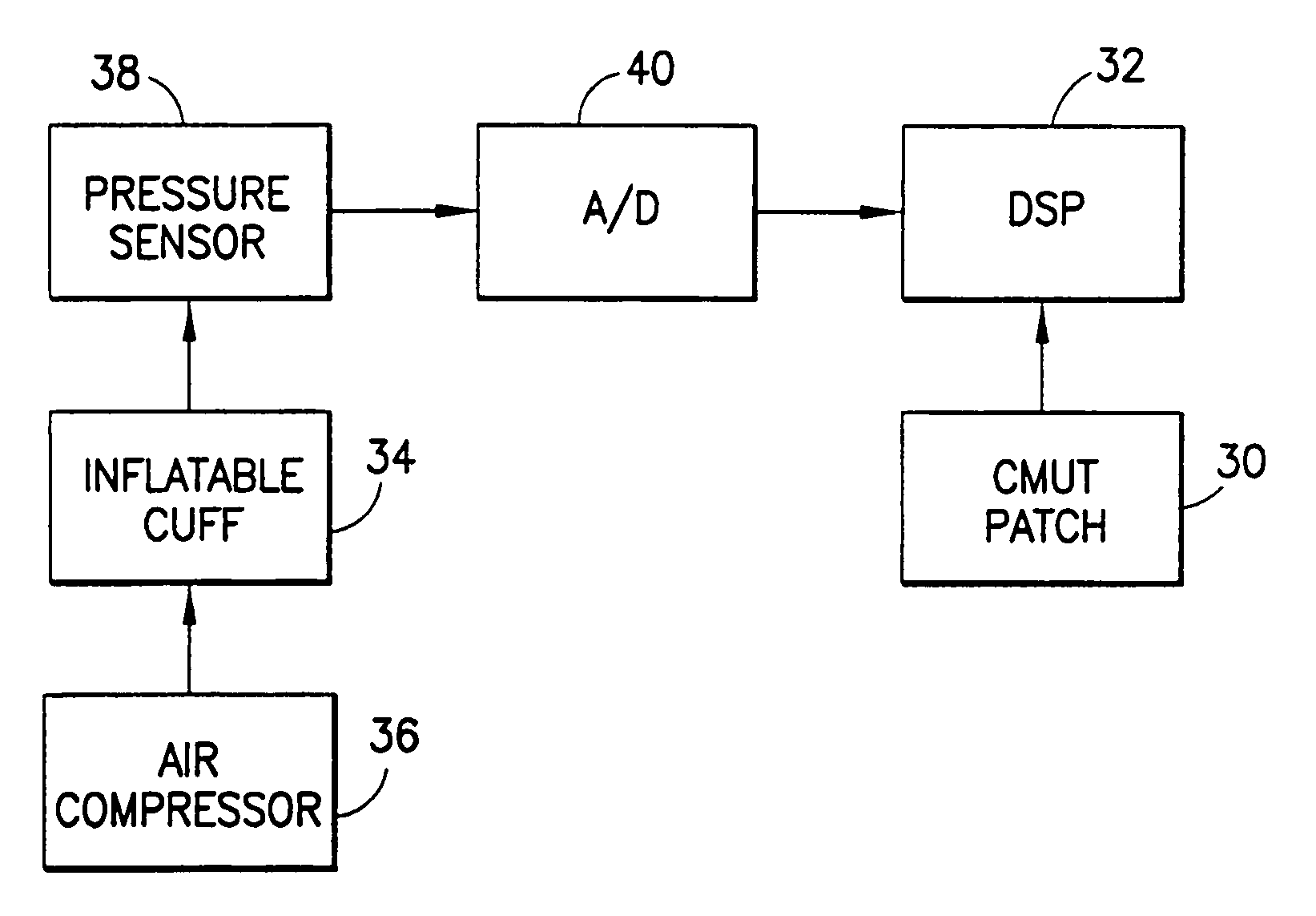

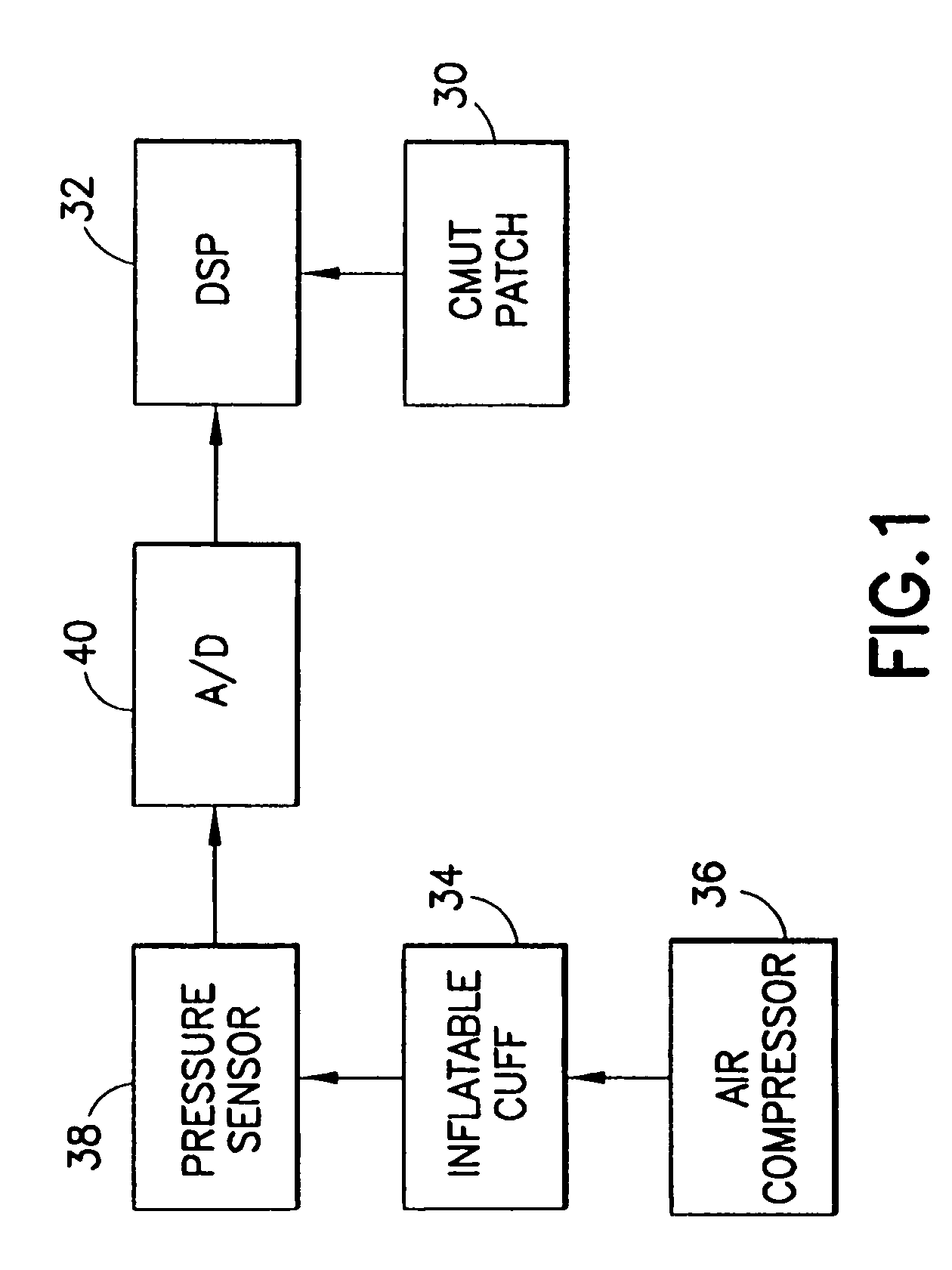

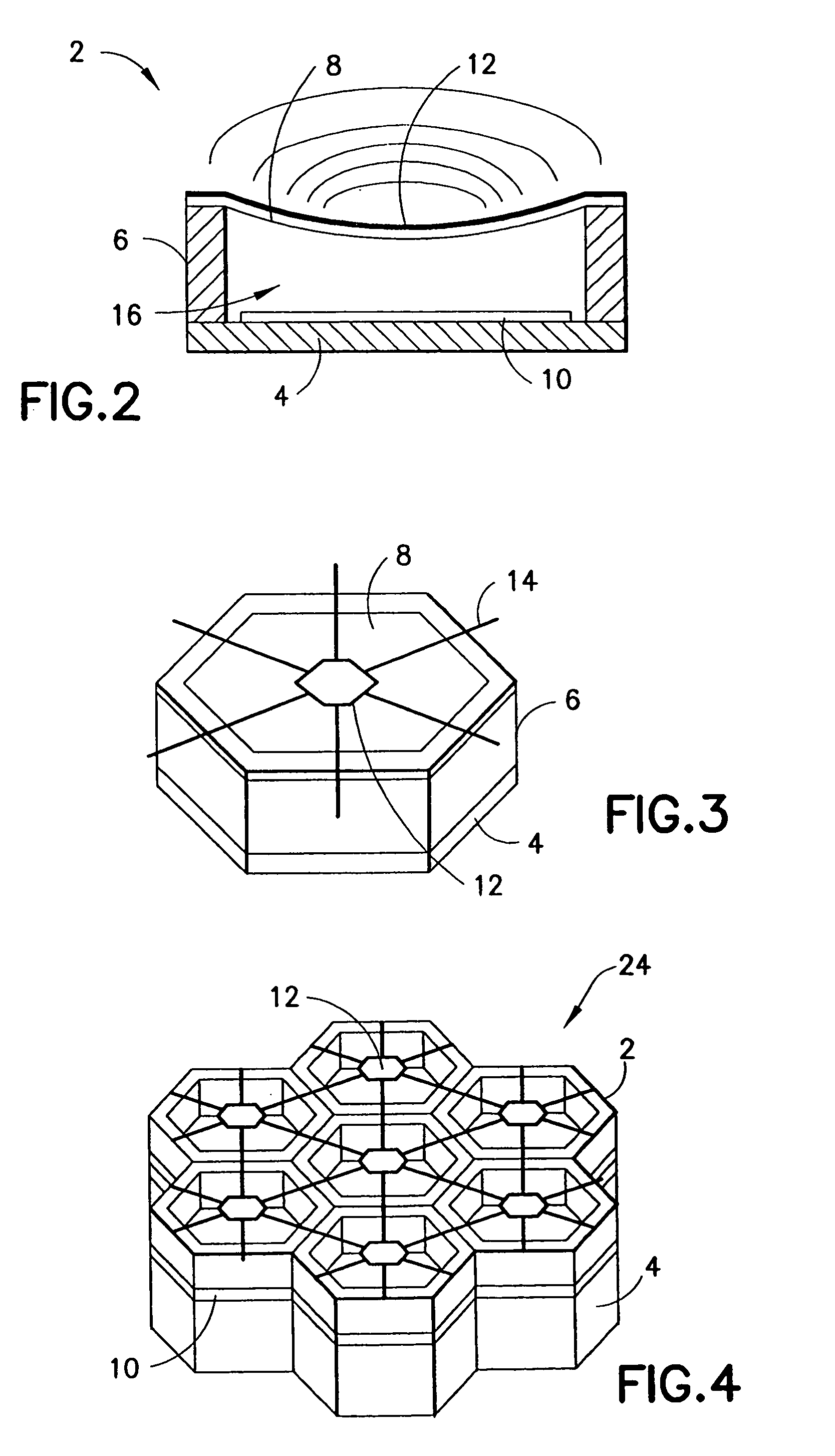

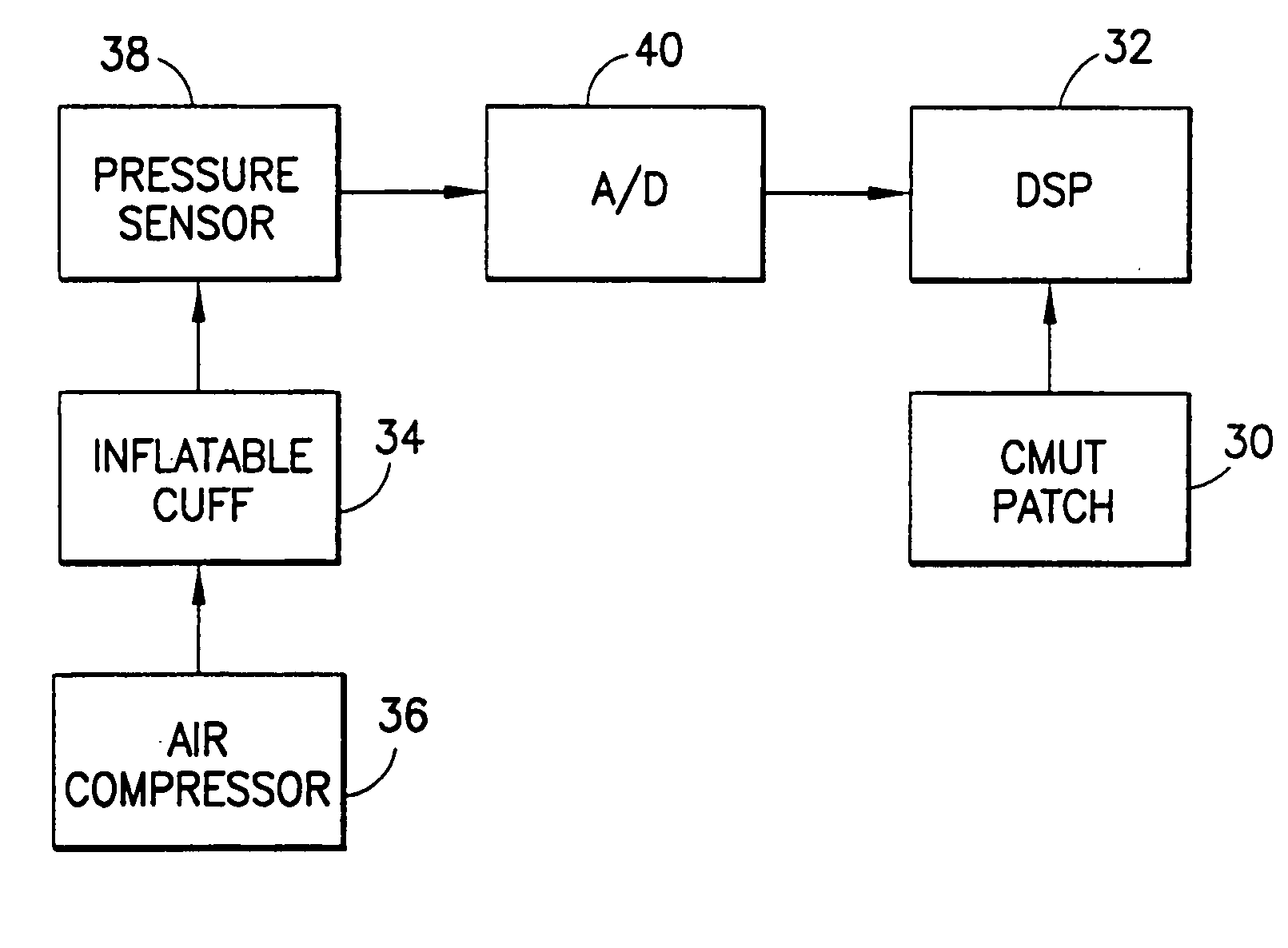

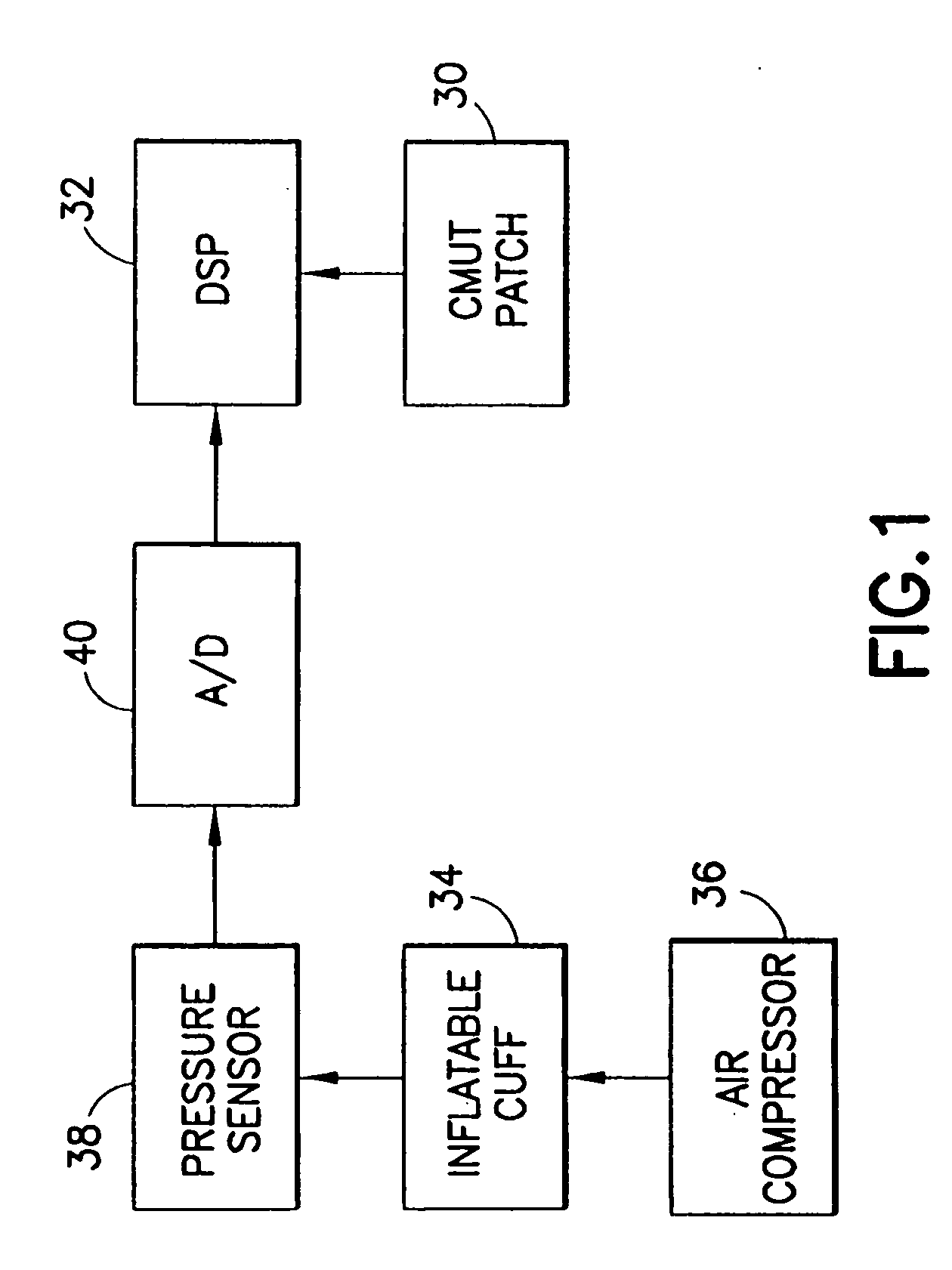

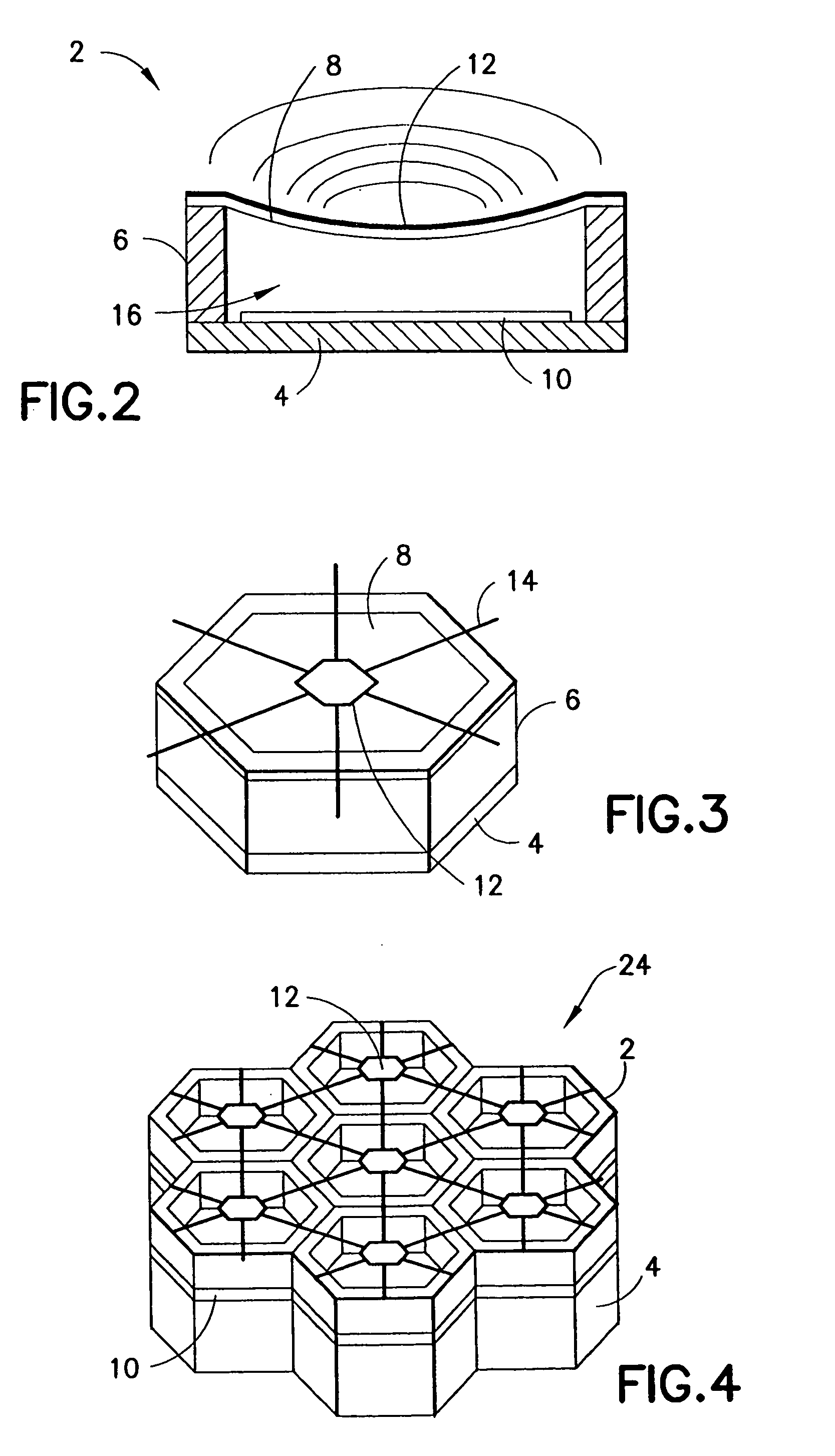

Method and apparatus for ultrasonic continuous, non-invasive blood pressure monitoring

Ultrasound is used to provide input data for a blood pressure estimation scheme. The use of transcutaneous ultrasound provides arterial lumen area and pulse wave velocity information. In addition, ultrasound measurements are taken in such a way that all the data describes a single, uniform arterial segment. Therefore a computed area relates only to the arterial blood volume present. Also, the measured pulse wave velocity is directly related to the mechanical properties of the segment of elastic tube (artery) for which the blood volume is being measured. In a patient monitoring application, the operator of the ultrasound device is eliminated through the use of software that automatically locates the artery in the ultrasound data, e.g., using known edge detection techniques. Autonomous operation of the ultrasound system allows it to report blood pressure and blood flow traces to the clinical users without those users having to interpret an ultrasound image or operate an ultrasound imaging device.

Owner:GENERAL ELECTRIC CO

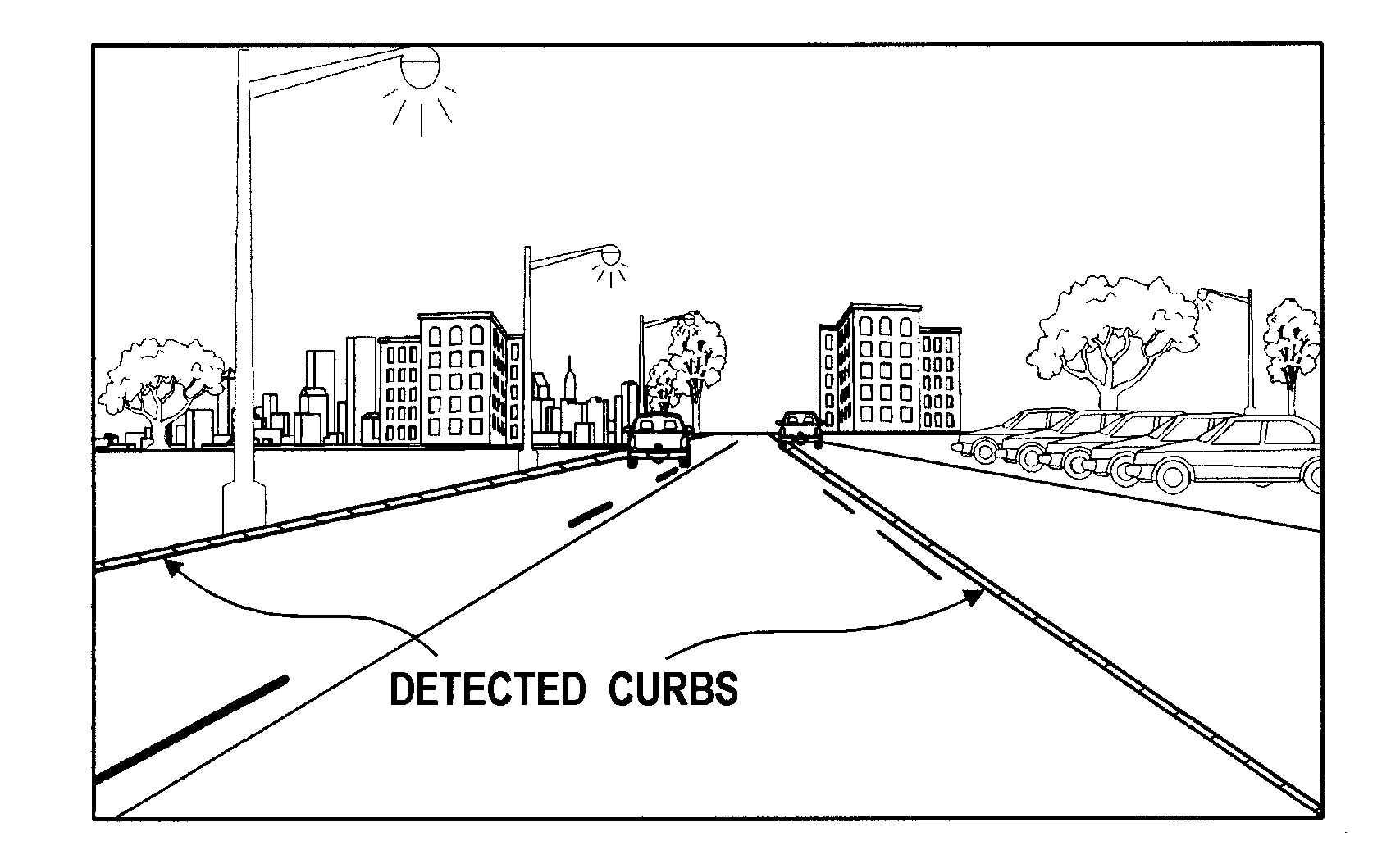

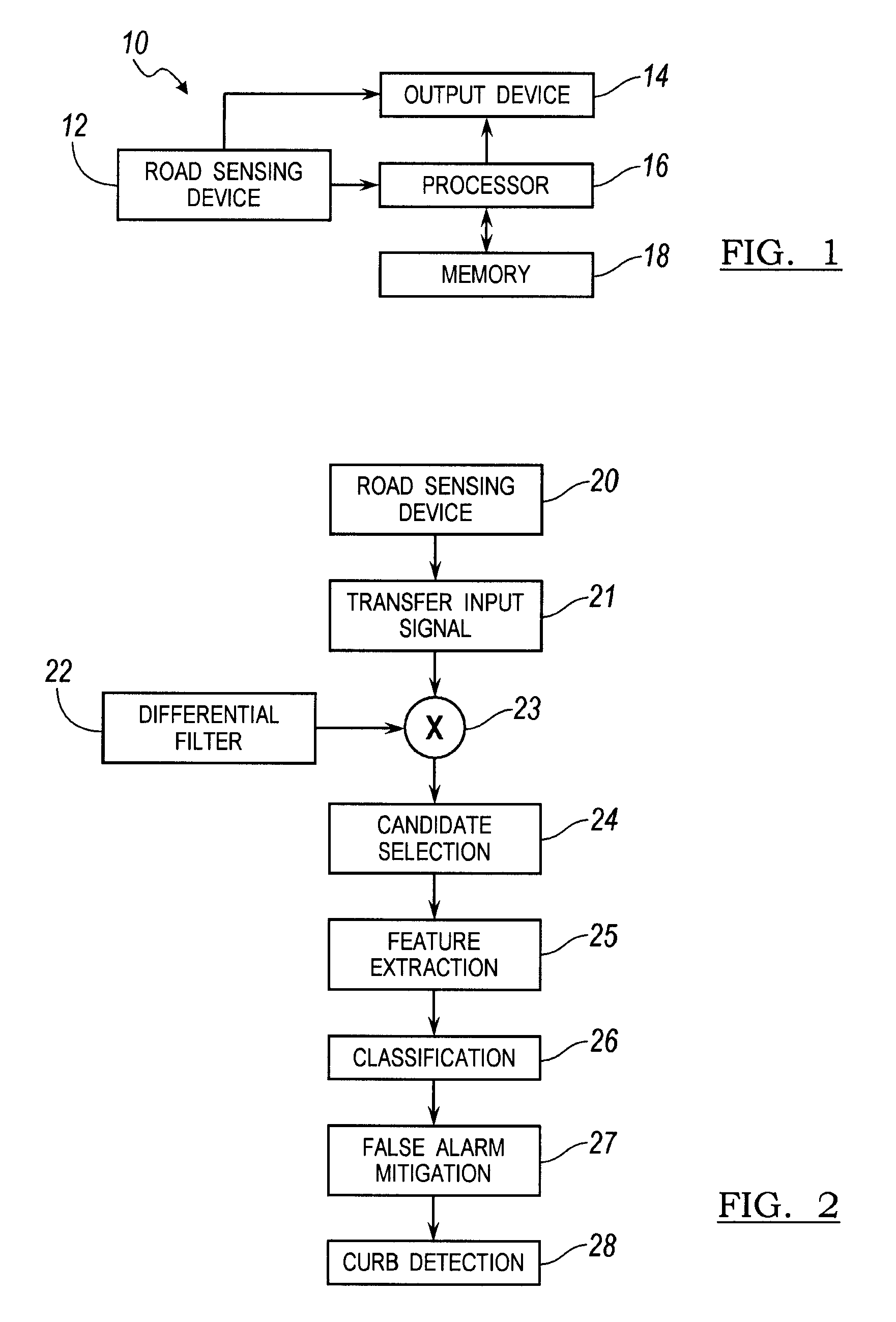

Road-edge detection

Active · US20100017060A1 · Increase speed · Easy to detect · Image enhancement · Image analysis · Ground plane · Computer science

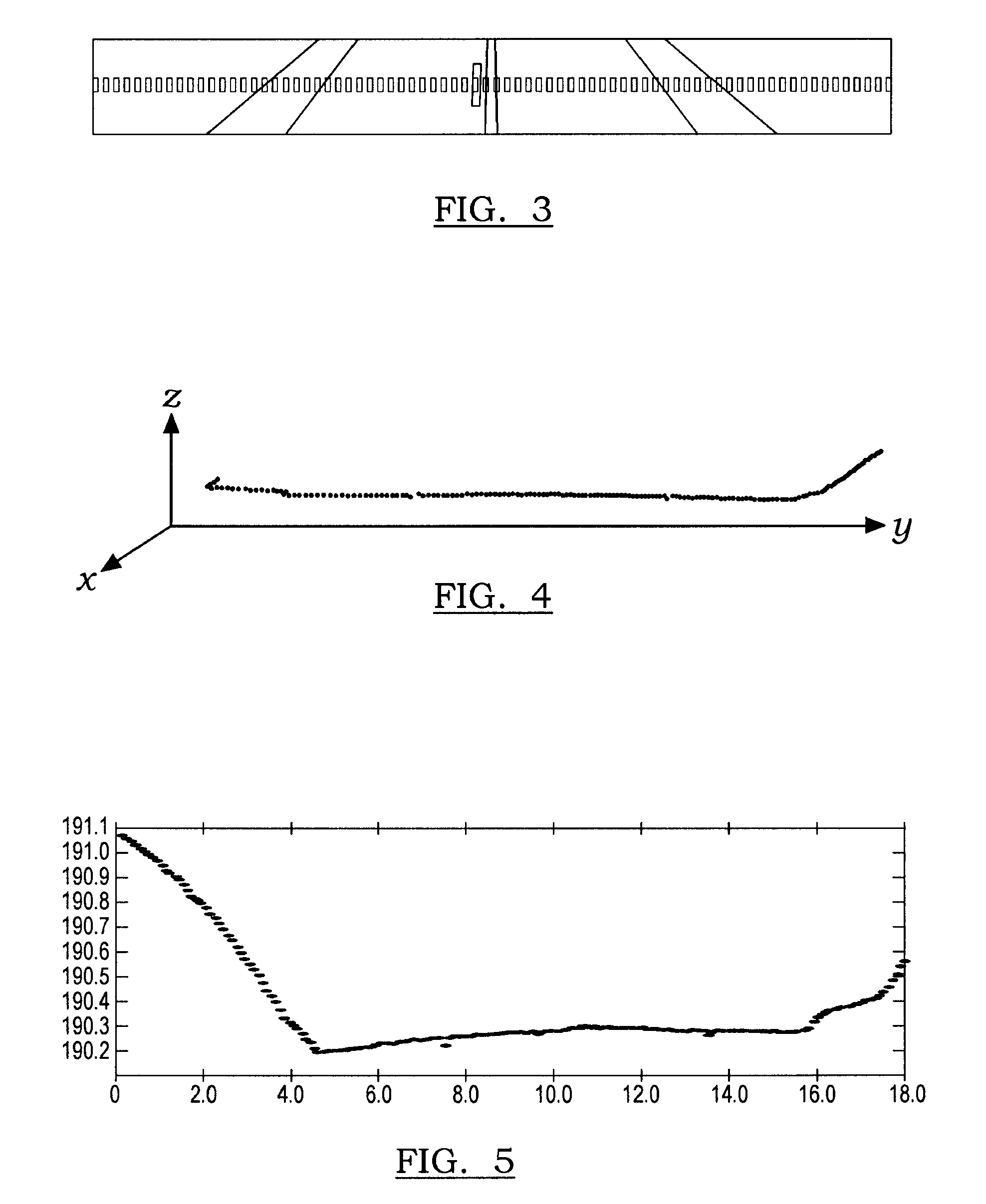

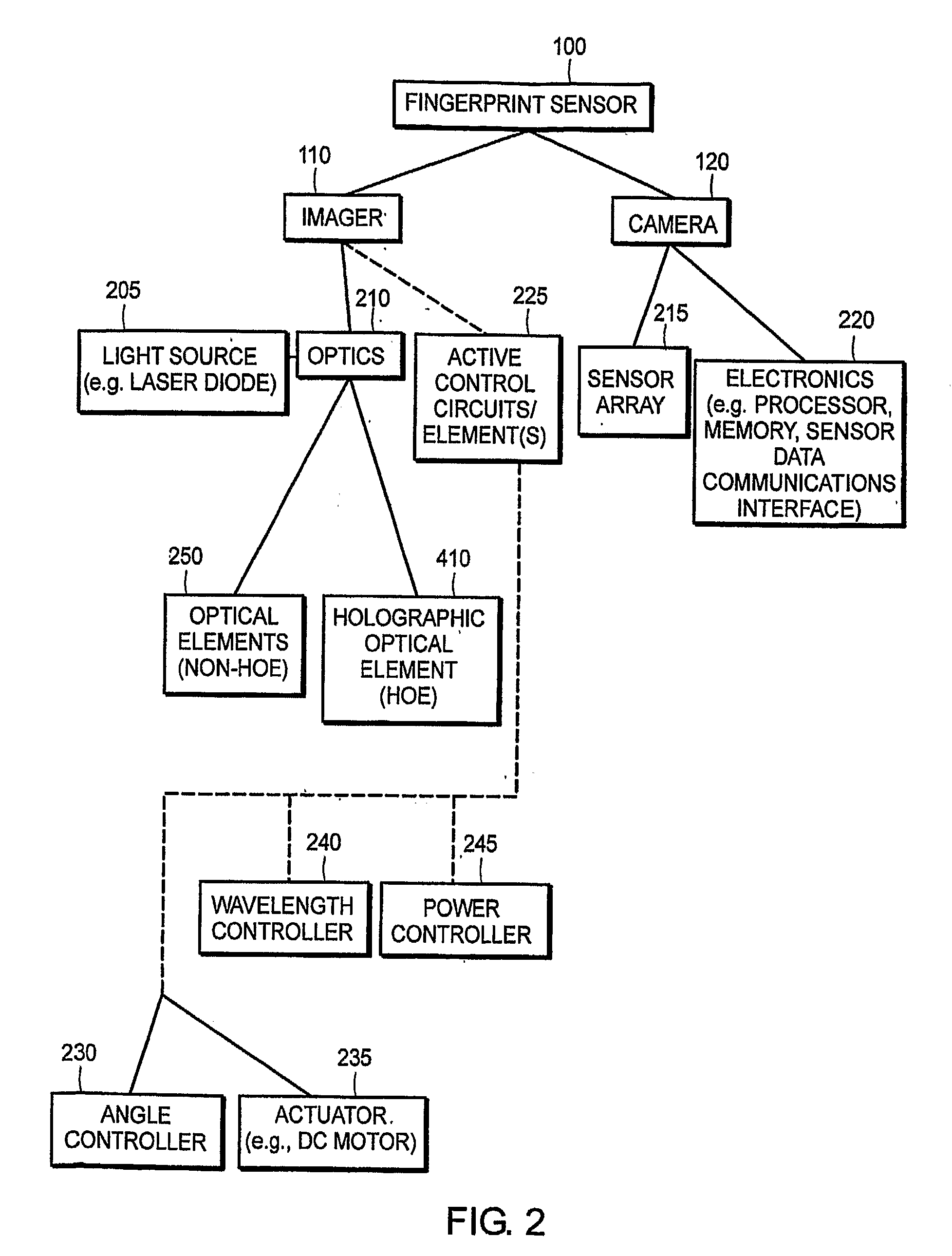

A method is provided for detecting road-side edges in a road segment using a light-based sensing system. Input range data is captured using the light-based sensing system. An elevation-based road segment is generated based on the captured input range data. The elevation-based road segment is processed by filtering techniques to identify the road segment candidate region and by pattern recognition techniques to determine whether the candidate region is a road segment. The input range data is also projected onto the ground plane for further validation. The line representation of the projected points is identified. The line representation of the candidate regions is compared to a simple road/road-edge model in the top-down view to determine whether the candidate region is a road segment with its edges. The proposed method provides fast processing speeds and reliable detection performance for both road and road-side edges simultaneously in the captured range data.

Owner:GM GLOBAL TECH OPERATIONS LLC

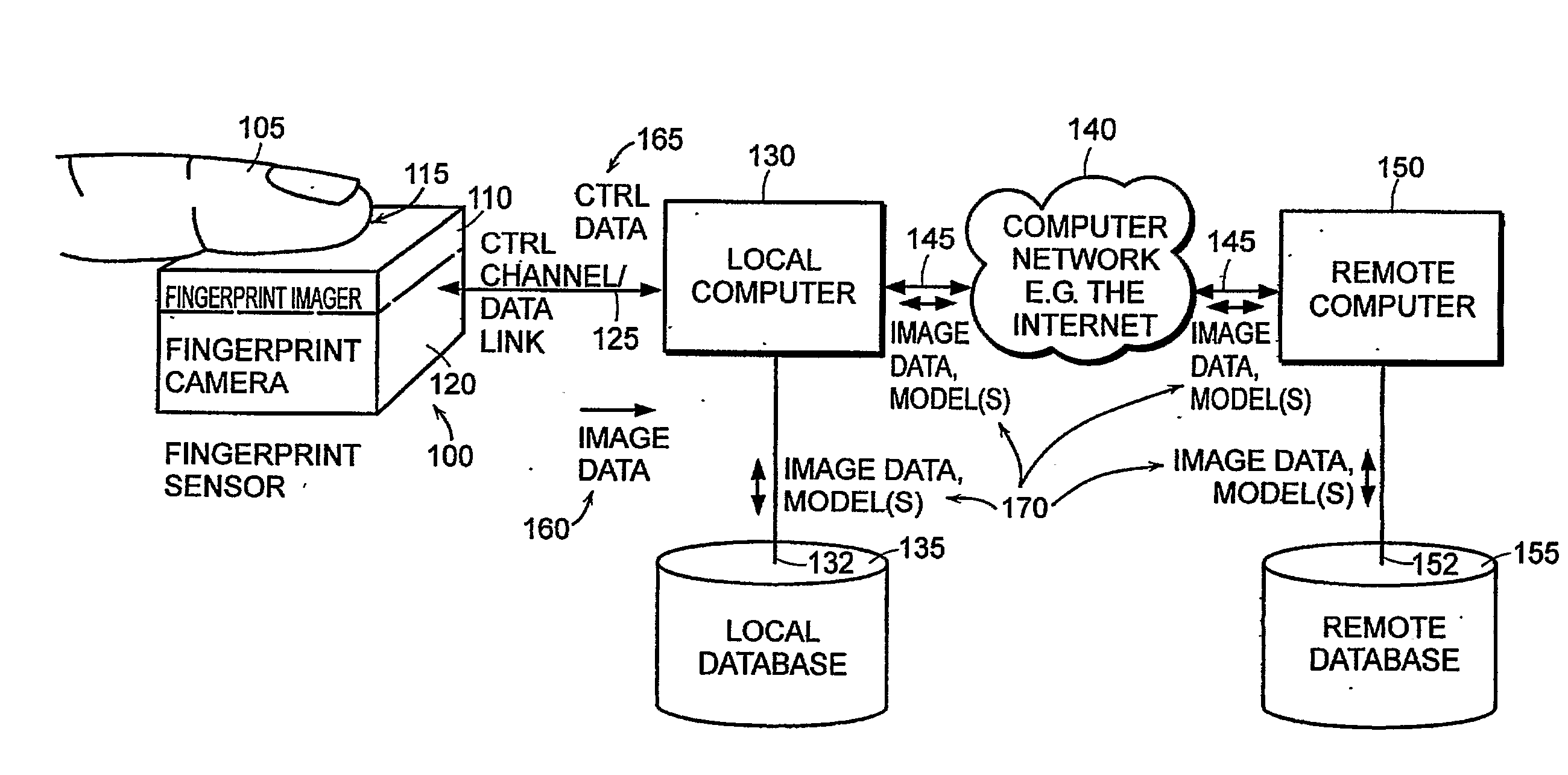

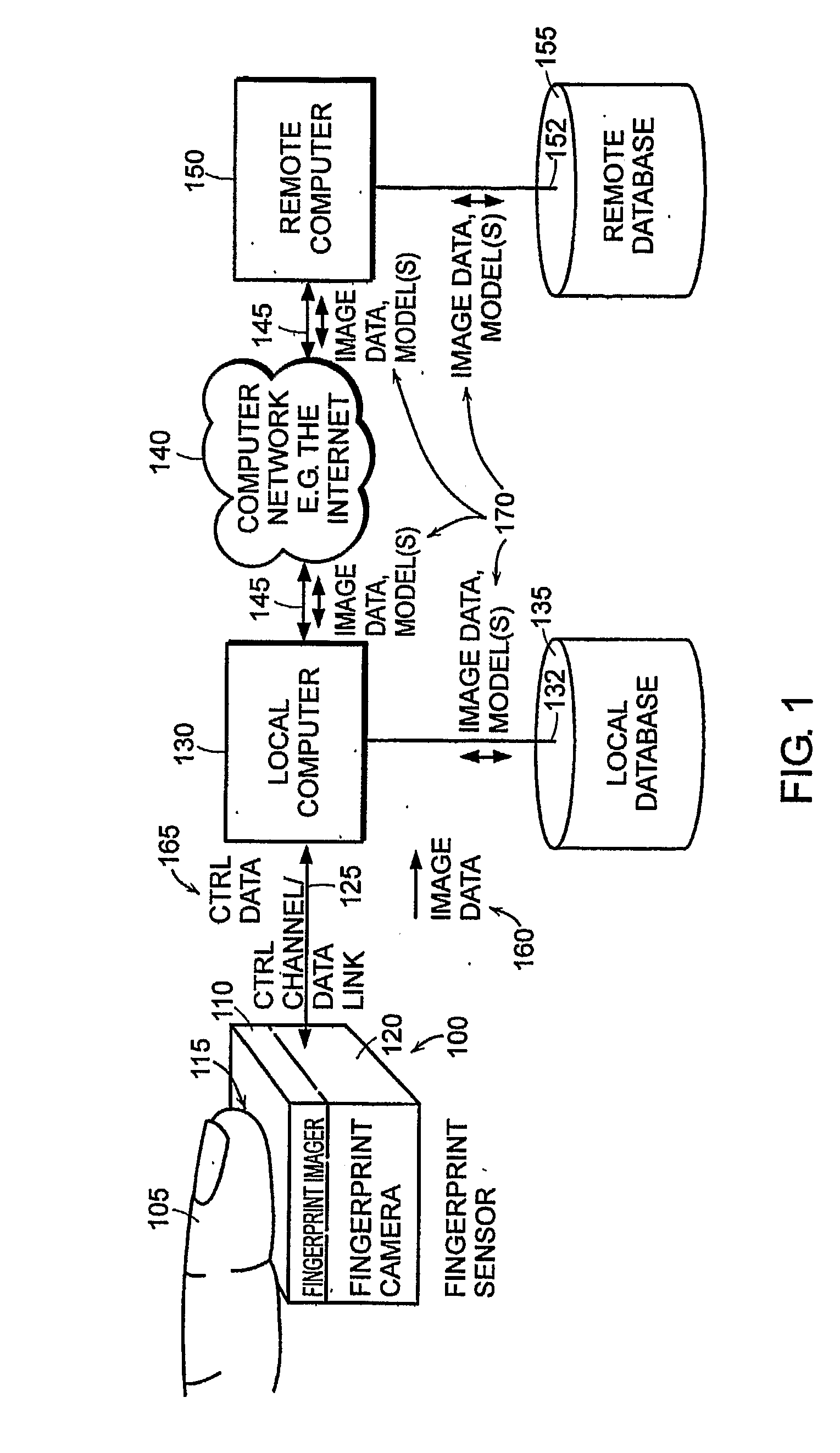

Method and apparatus for processing biometric images

Inactive · US20090226052A1 · Highly accurate matching · Less difference · Image analysis · Character and pattern recognition · Image resolution · High resolution image

A method and apparatus for applying gradient edge detection to detect features in a biometric, such as a fingerprint, based on data representing an image of at least a portion of the biometric. The image is modeled as a function of the features. Data representing an image of the biometric is acquired, and features of the biometric are modeled for at least two resolutions. The method and apparatus improves analysis of both high-resolution images of biometrics of friction ridge containing skin that include resolved pores and lower resolution images of biometrics without resolved pores.

Owner:APRILIS

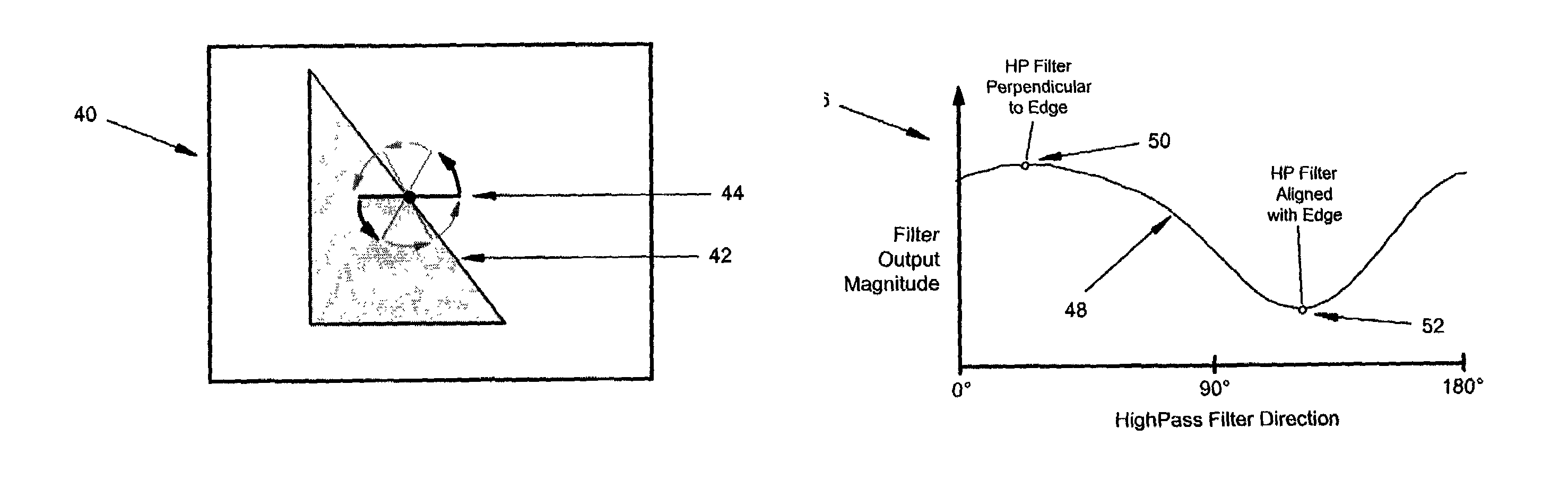

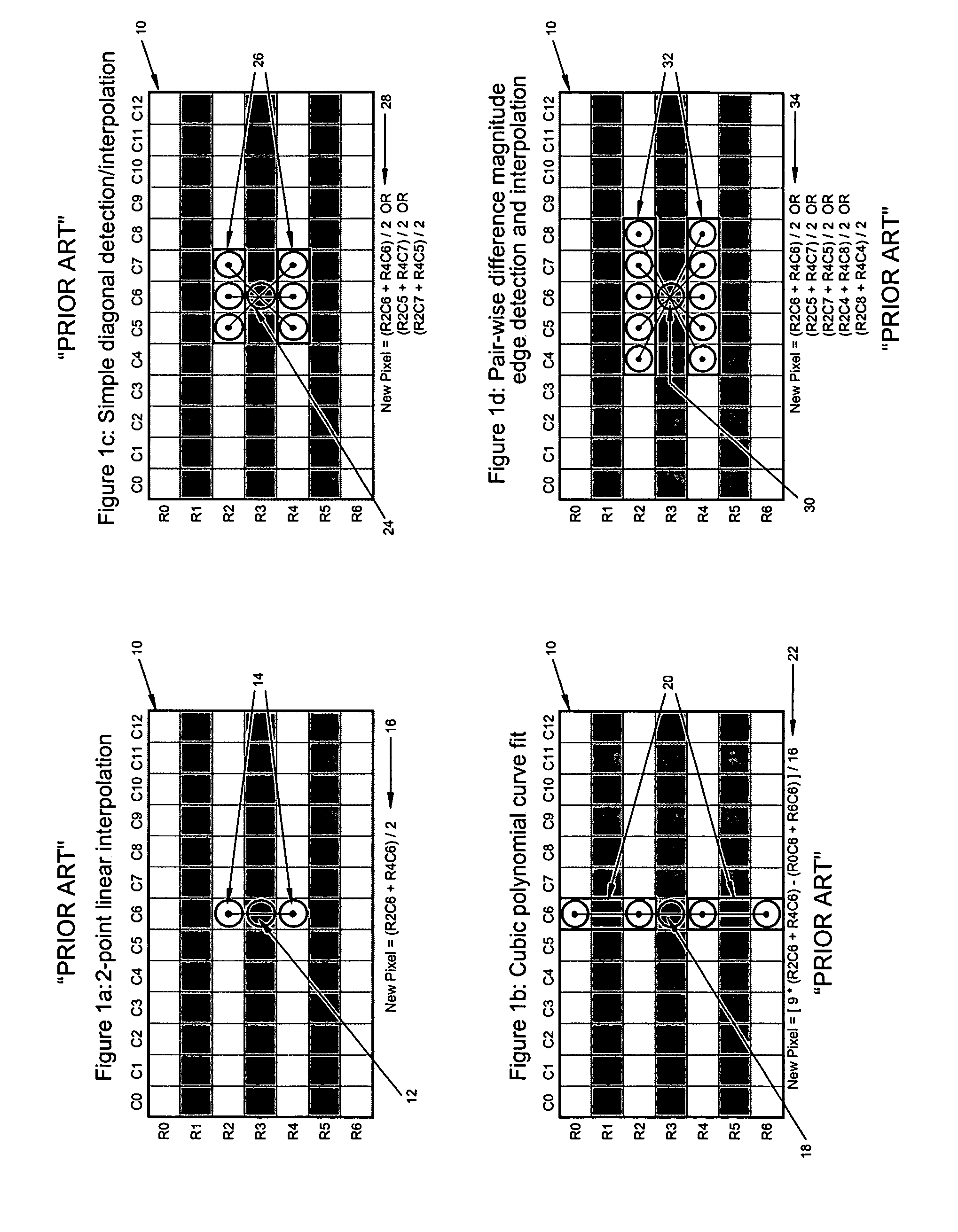

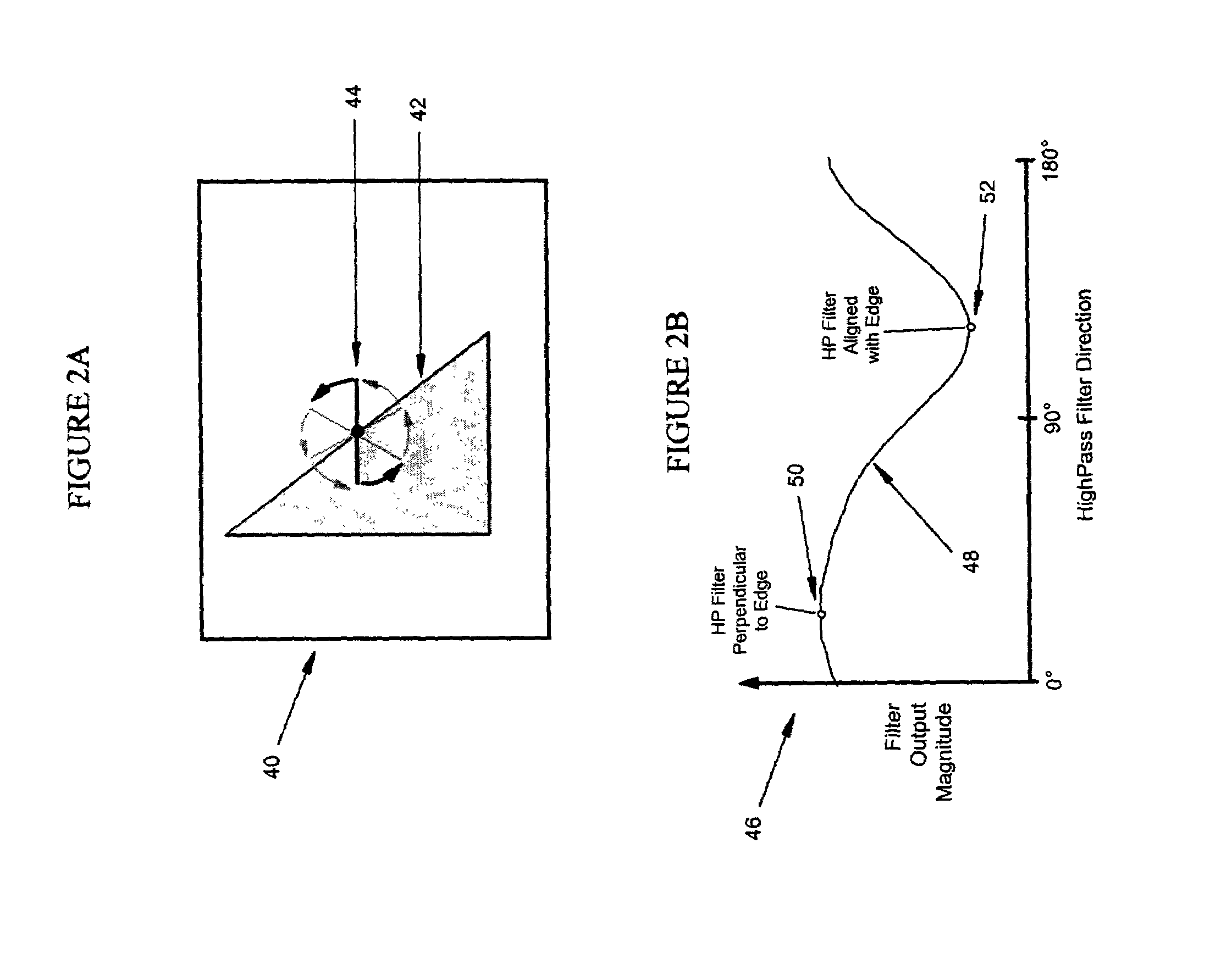

Deinterlacing of video sources via image feature edge detection

Active · US7023487B1 · Reduce artifacts · Preserves maximum amount of vertical detail · Image enhancement · Television system details · Interlaced video · Progressive scan

An interlaced to progressive scan video converter which identifies object edges and directions, and calculates new pixel values based on the edge information. Source image data from a single video field is analyzed to detect object edges and the orientation of those edges. A 2-dimensional array of image elements surrounding each pixel location in the field is high-pass filtered along a number of different rotational vectors, and a null or minimum in the set of filtered data indicates a candidate object edge as well as the direction of that edge. A 2-dimensional array of edge candidates surrounding each pixel location is characterized to invalidate false edges by determining the number of similar and dissimilar edge orientations in the array, and then disqualifying locations which have too many dissimilar or too few similar surrounding edge candidates. The surviving edge candidates are then passed through multiple low-pass and smoothing filters to remove edge detection irregularities and spurious detections, yielding a final edge detection value for each source image pixel location. For pixel locations with a valid edge detection, new pixel data for the progressive output image is calculated by interpolating from source image pixels which are located along the detected edge orientation.

Owner:LATTICE SEMICON CORP

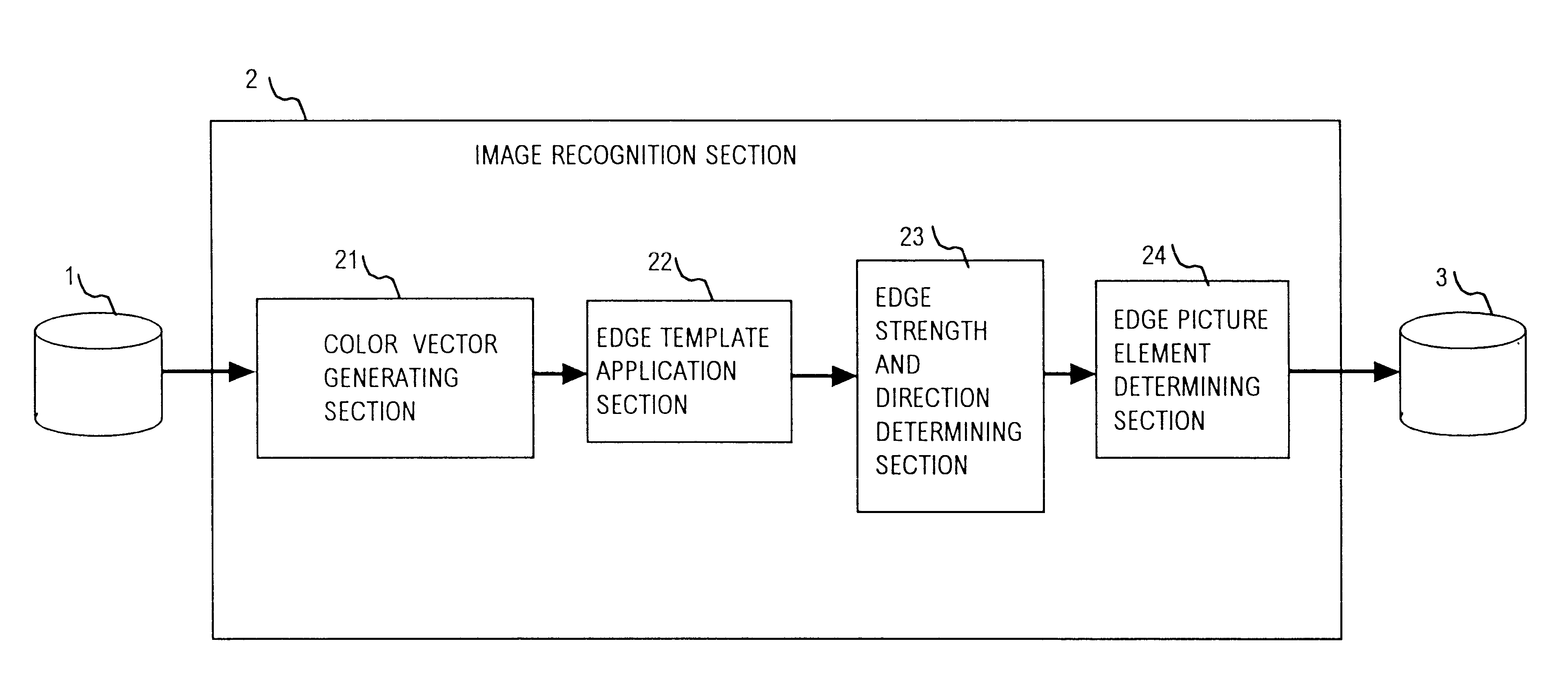

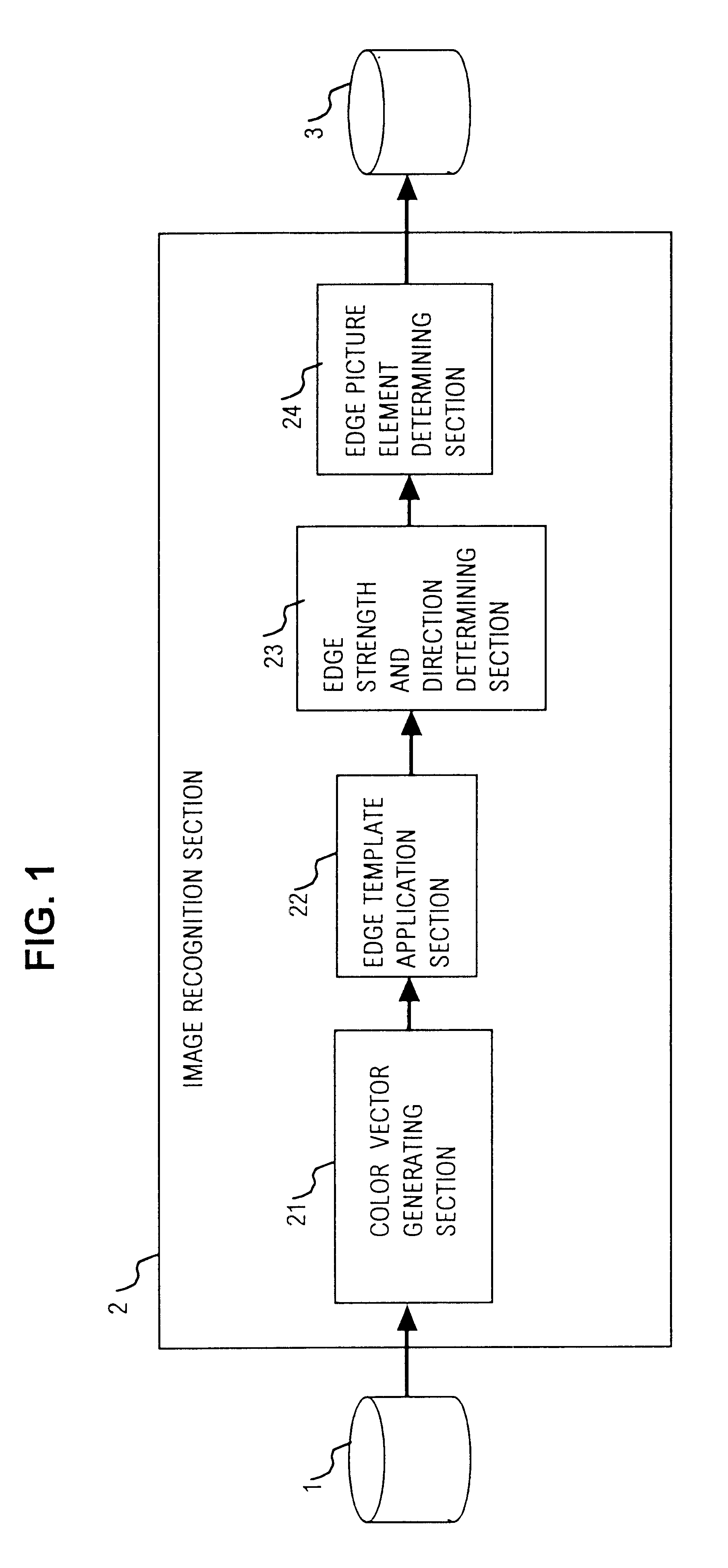

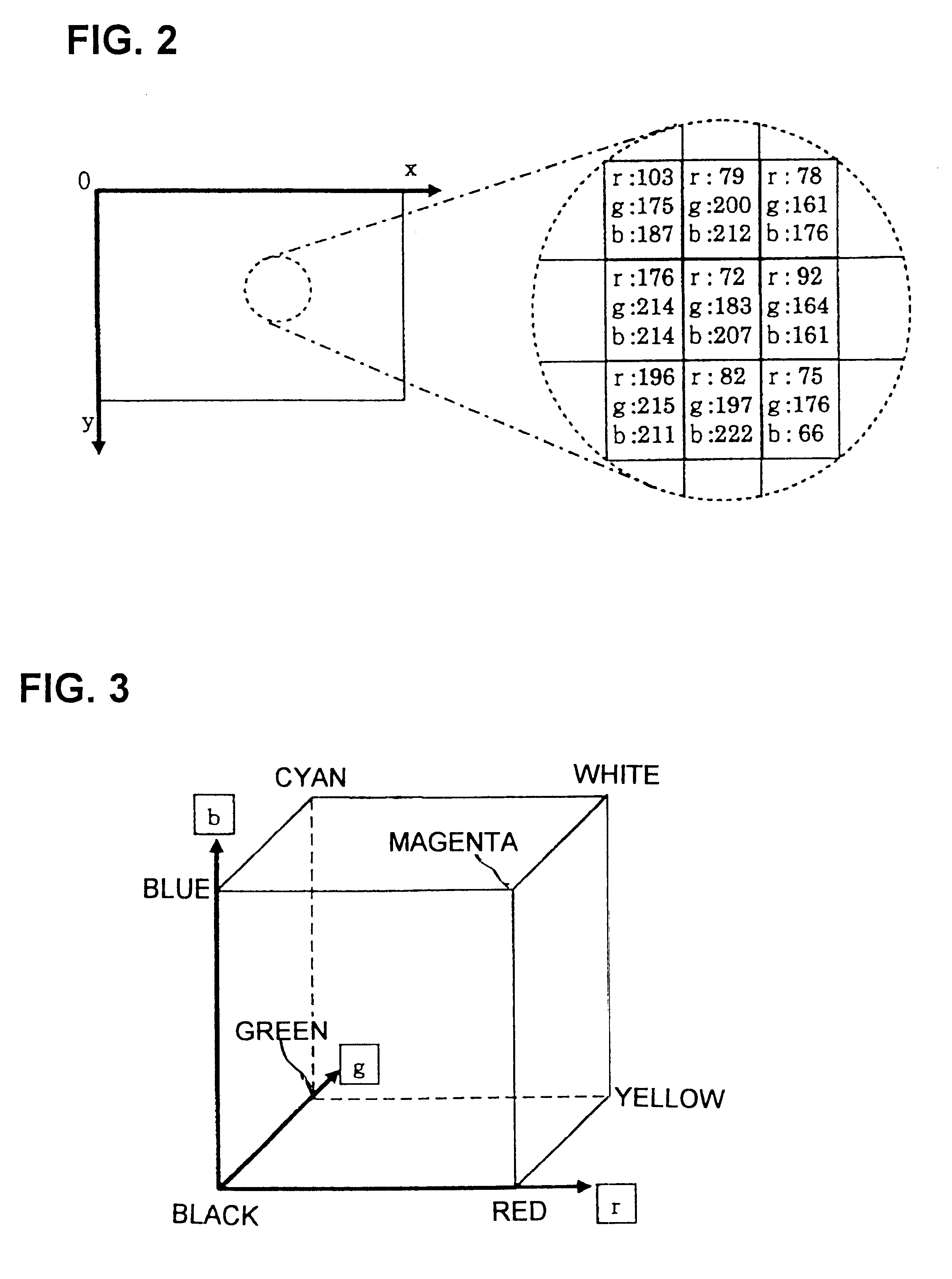

Image recognition method and apparatus utilizing edge detection based on magnitudes of color vectors expressing color attributes of respective pixels of color image

Inactive · US6665439B1 · Easy to identify · Reduce precision · Image enhancement · Image analysis · Color image · Array data structure

An image recognition apparatus operates on data of a color image to obtain an edge image expressing the shapes of objects appearing in the color image, the apparatus including a section for expressing the color attributes of each pixel of the image as a color vector, in the form of a set of coordinates of an orthogonal color space, a section for applying predetermined arrays of numeric values as edge templates to derive for each pixel a number of edge vectors each corresponding to a specific edge direction, with each edge vector obtained as the difference between weighted vector sums of respective sets of color vectors of two sets of pixels which are disposed symmetrically opposing with respect to the corresponding edge direction, and a section for obtaining the maximum modulus of these edge vectors as a value of edge strength for the pixel which is being processed. By comparing the edge strength of a pixel with those of immediately adjacent pixels and with a predetermined threshold value, a decision can be reliably made for each pixel as to whether it is actually located on an edge and, if so, the direction of that edge.

Owner:PANASONIC CORP

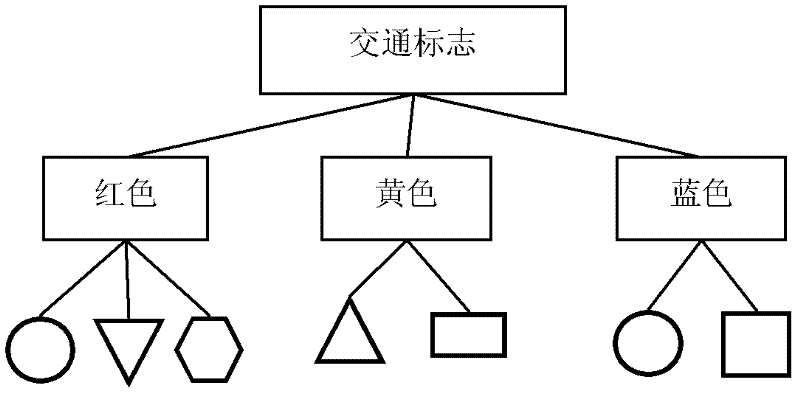

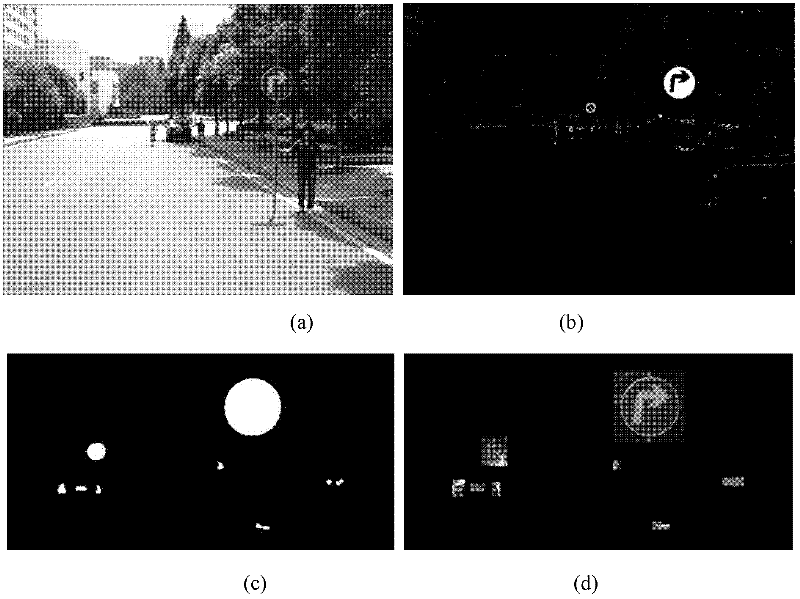

Method for recognizing road traffic sign for unmanned vehicle

Inactive · CN102542260A · Fast extraction · Fast matching · Detection of traffic movement · Character and pattern recognition · Classification methods · Near neighbor

The invention discloses a method for recognizing road traffic signs for an unmanned vehicle, comprising the following steps: (1) transforming the RGB (red, green and blue) pixel values of an image to enhance regions with traffic-sign feature colors, and segmenting the image using a threshold; (2) performing edge detection and edge linking on the gray-level image to reconstruct regions of interest; (3) extracting a labeled graph of each region of interest as its shape feature, classifying the shape of the region using a nearest neighbor classification method, and removing non-traffic-sign regions; and (4) converting the traffic-sign region of interest to grayscale and normalizing it, applying a dual-tree complex wavelet transform to form a feature vector of the image, reducing the dimension of the feature vector using two-dimensional independent component analysis, and feeding the feature vector into a support vector machine with a radial basis function kernel to determine the type of traffic sign in the region of interest. With this method, various types of traffic signs in the driving environment of an unmanned vehicle can be detected and recognized stably and efficiently.

Owner:CENT SOUTH UNIV

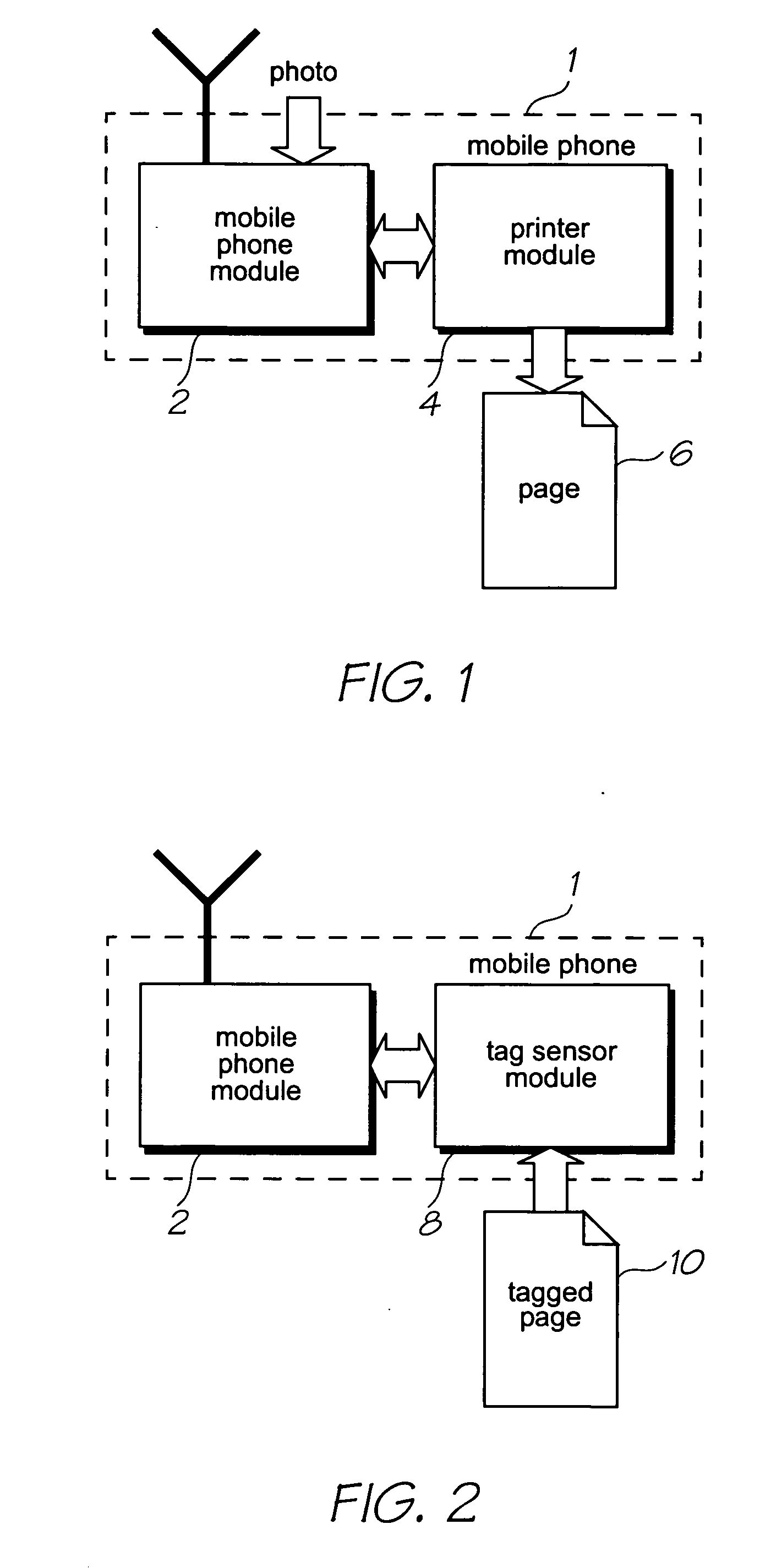

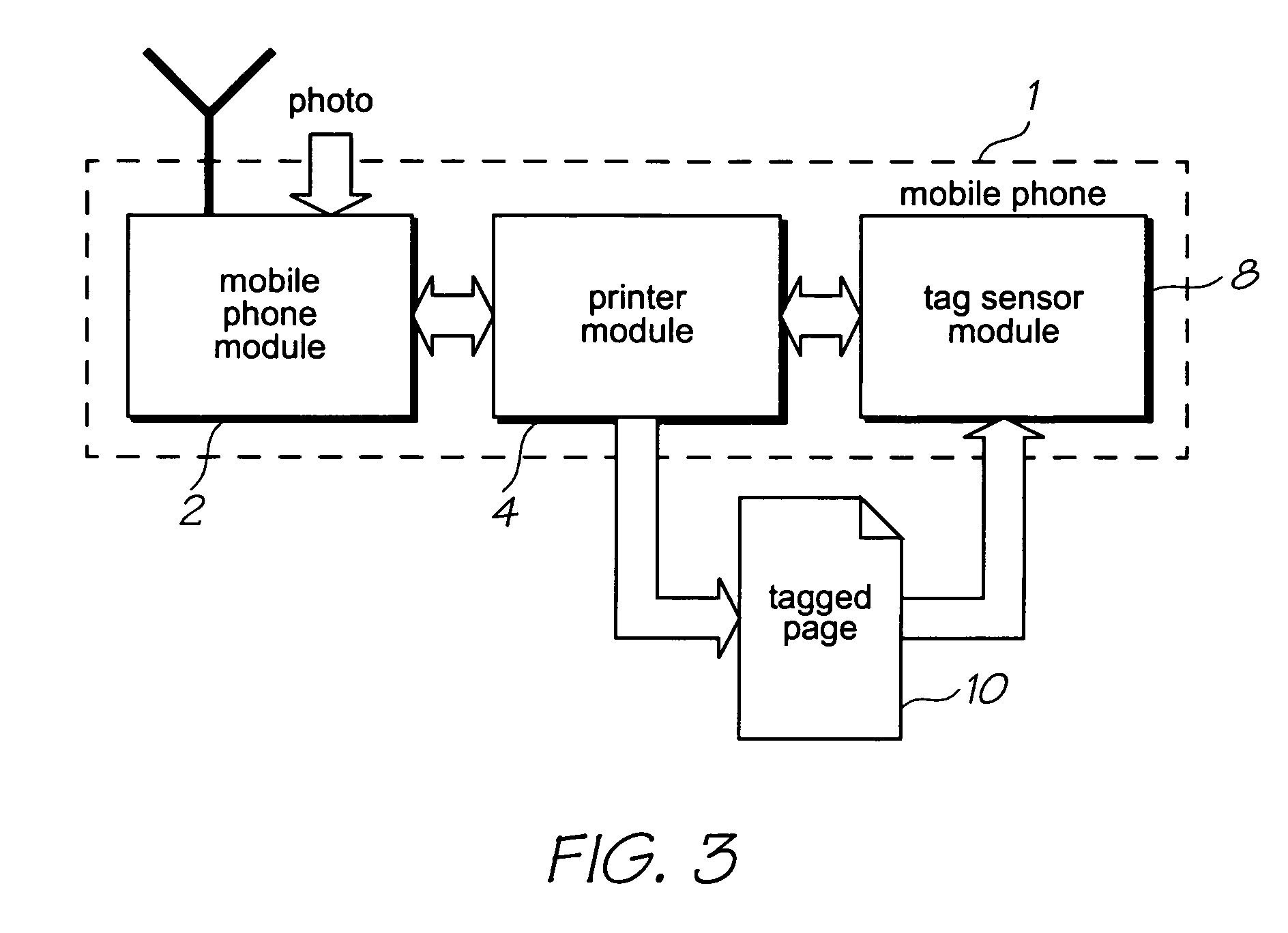

Mobile telecommunications device with media edge detection

Inactive · US20060250479A1 · Increased complexity · Increase in size · Typewriters · Other printing apparatus · Paper sheet · Electrical and Electronics engineering

A mobile telecommunications device comprising: a printhead for printing a sheet of media substrate, the sheet of media substrate having coded data on at least part of its surface; a media feed assembly for feeding the sheet of media substrate along a feed path past the printhead; a print engine controller for operatively controlling the printhead; and, a sensor for reading the coded data and generating a signal indicative of at least one dimension of the sheet, and transmitting the signal to the print engine controller; such that, the print engine controller uses the signal to initiate the printing when the sheet is at a predetermined position relative to the printhead.

Owner:SILVERBROOK RES PTY LTD

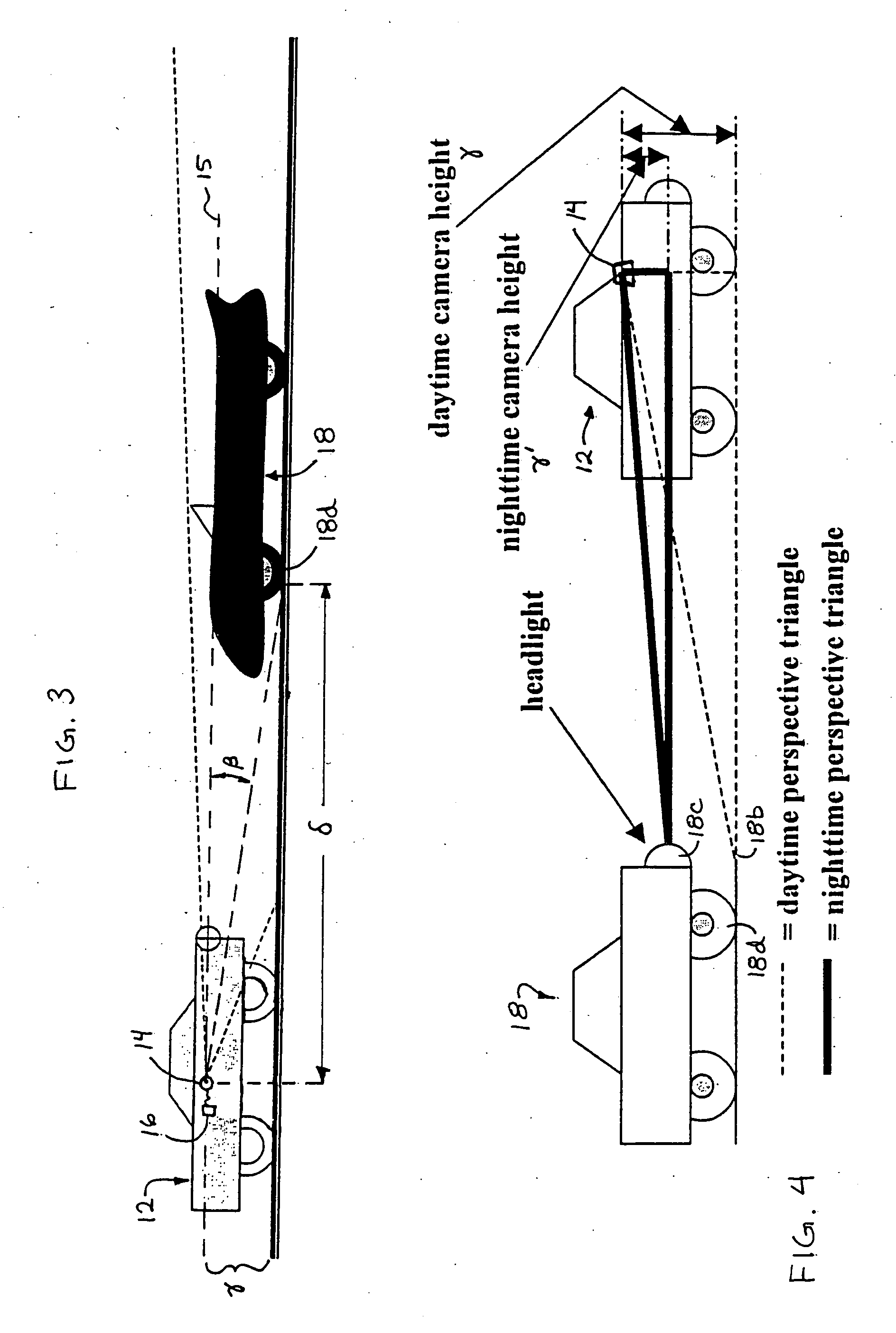

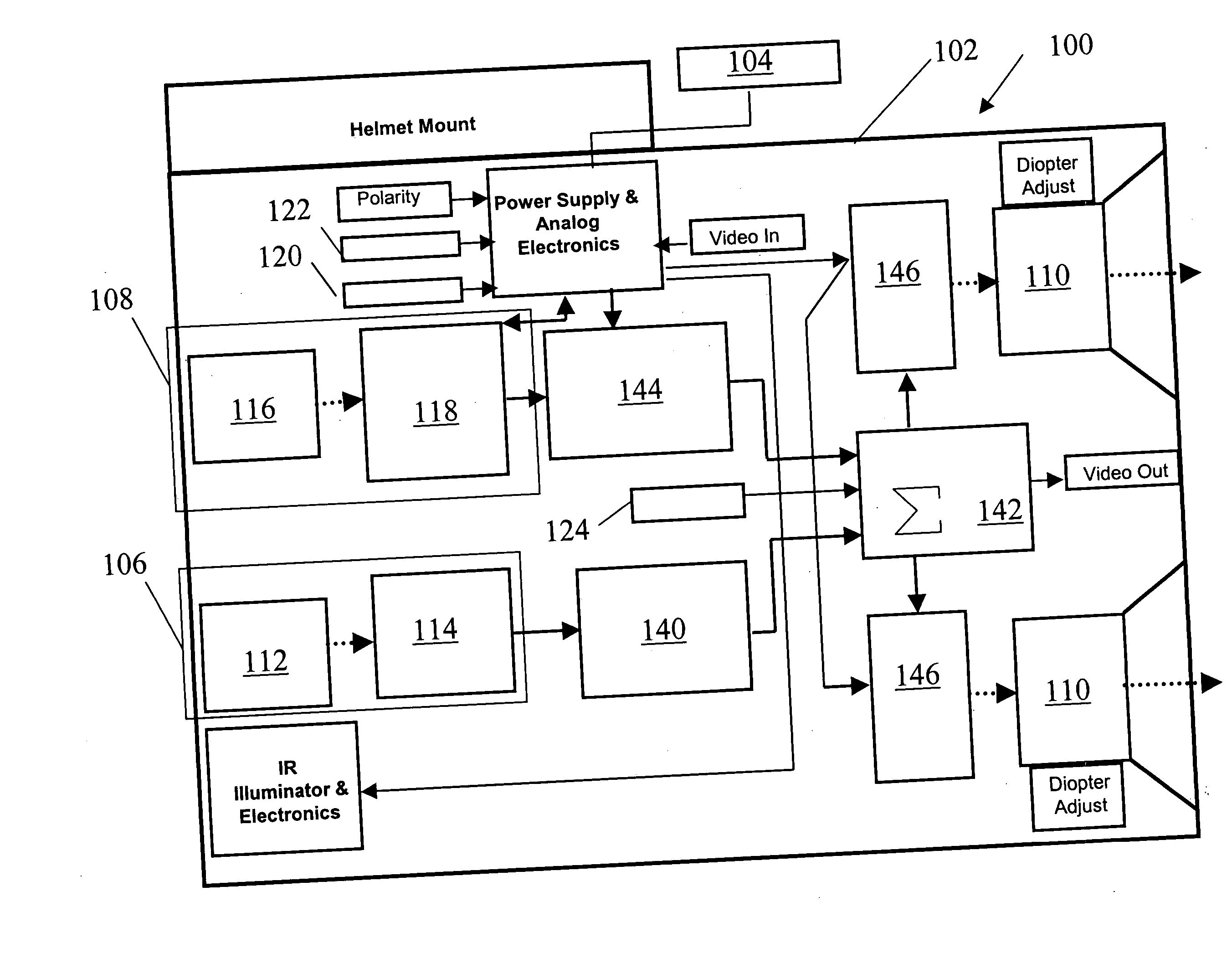

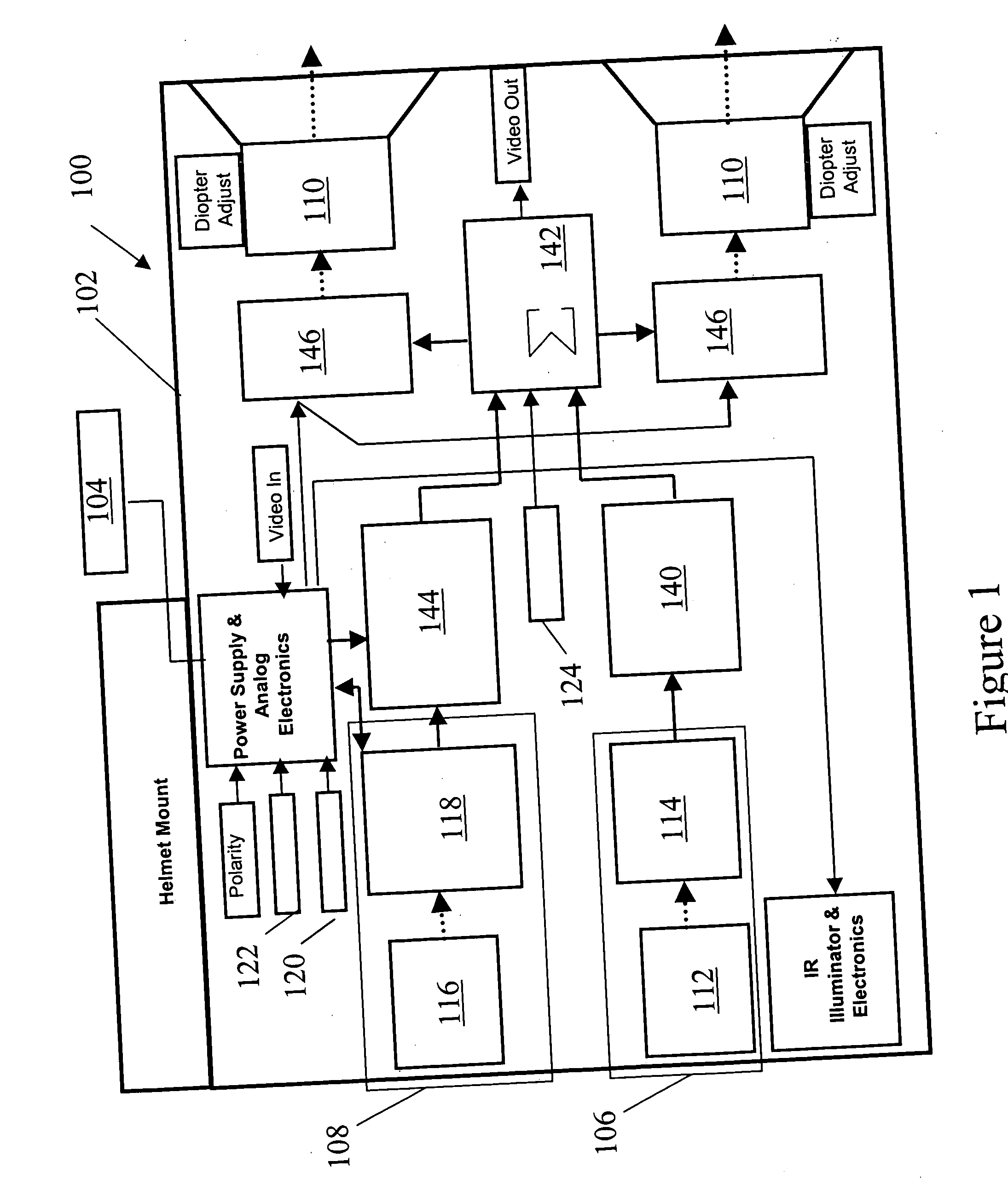

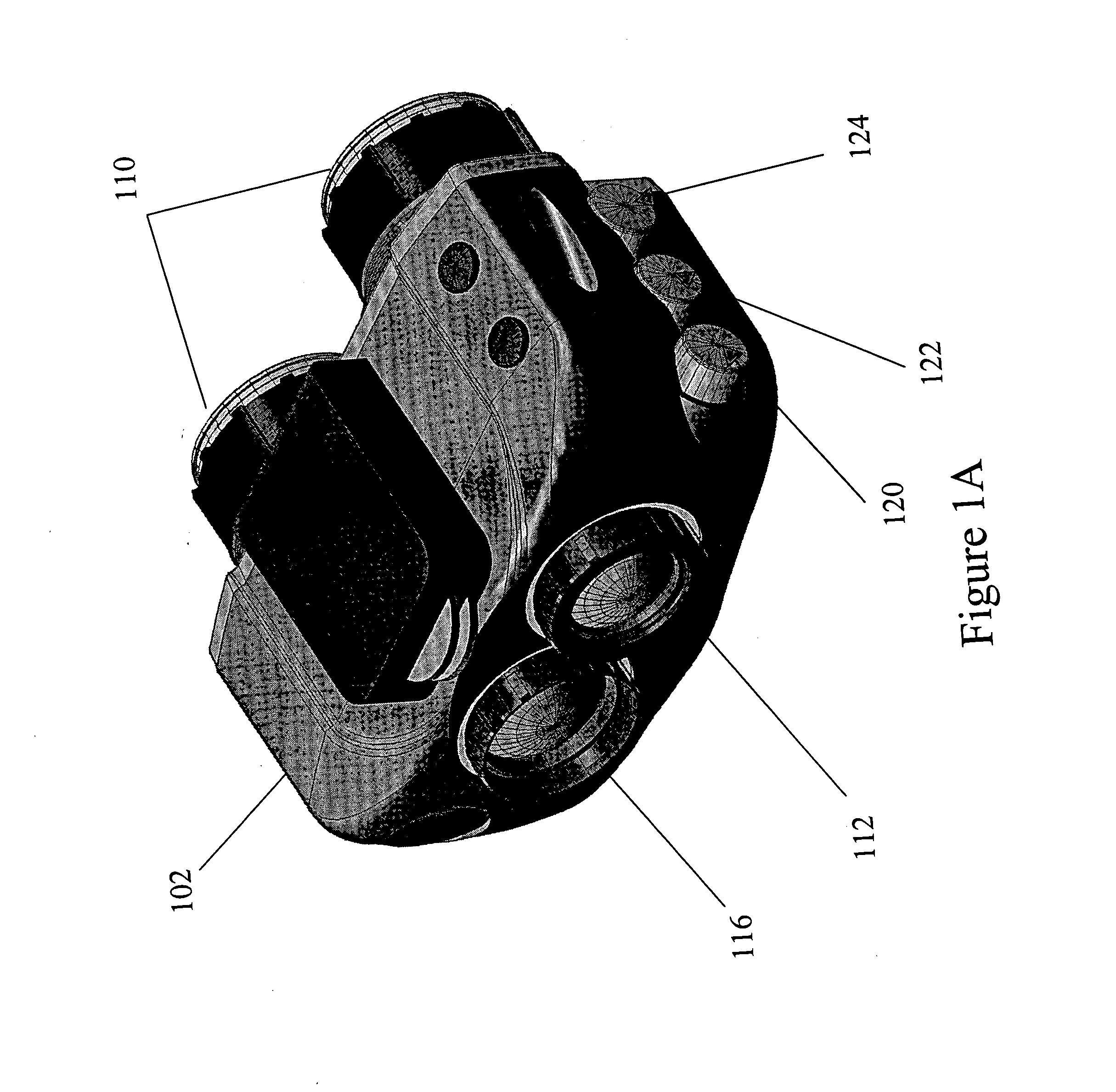

Fusion night vision system

Active · US20070235634A1 · Enhance the image · Television system details · Radiation pyrometry · Parallax · Night vision

A fusion night vision system having image intensification and thermal imaging capabilities includes an edge detection filter circuit to aid in acquiring and identifying targets. An outline of the thermal image is generated and combined with the image intensification image without obscuration of the image intensification image. The fusion night vision system may also include a parallax compensation circuit to overcome parallax problems as a result of the image intensification channel being spaced from the thermal channel. The fusion night vision system may also include a control circuit configured to maintain a perceived brightness through an eyepiece over a mix of image intensification information and thermal information. The fusion night vision system may incorporate a targeting mode that allows an operator to acquire a target without having the scene saturated by a laser pointer. The night vision system may also include a detector, an image combiner for forming a fused image from the detector and a display, and a camera aligned with the image combiner for recording scene information processed by the first detector.

Owner:L 3 COMM INSIGHT TECH

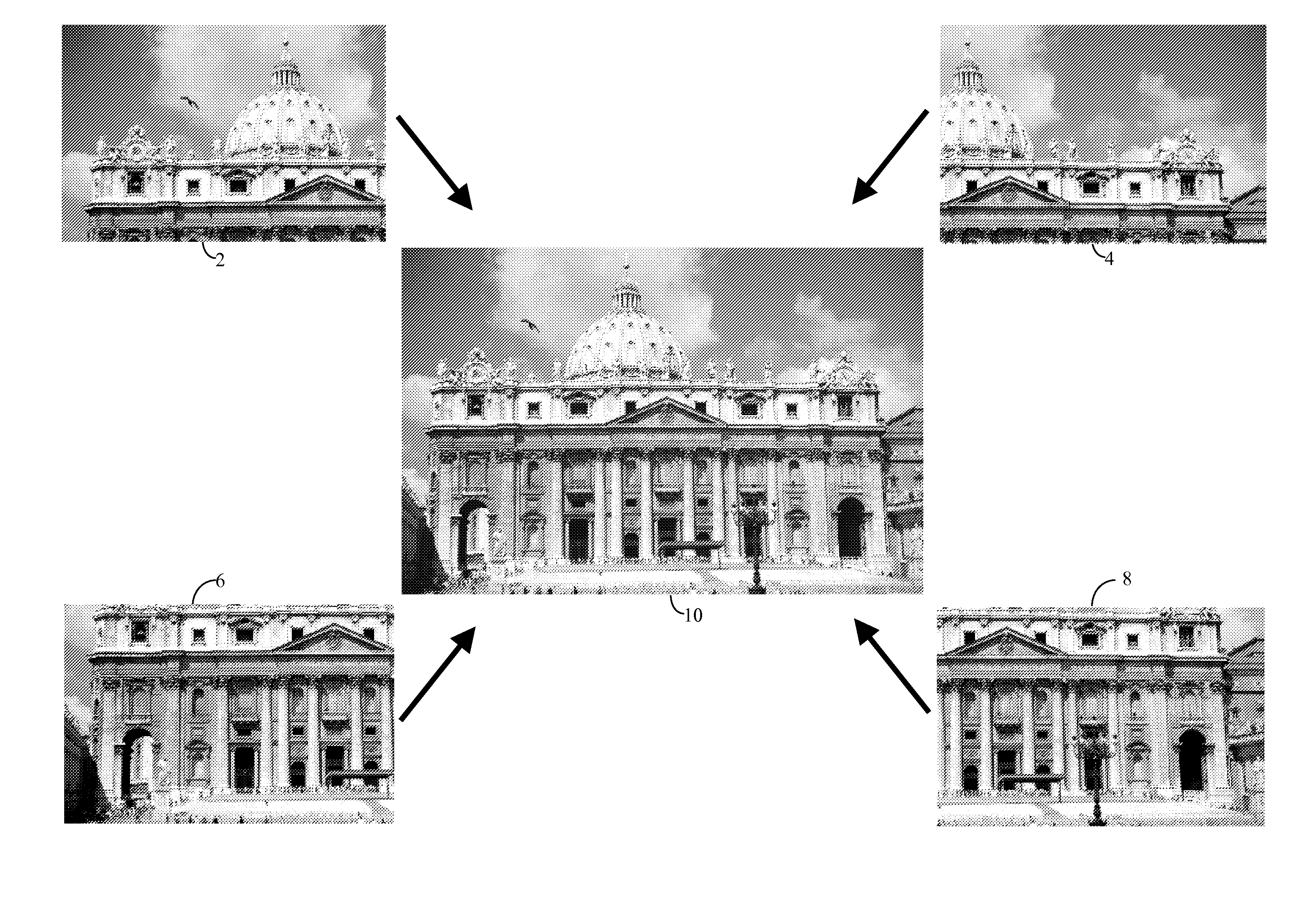

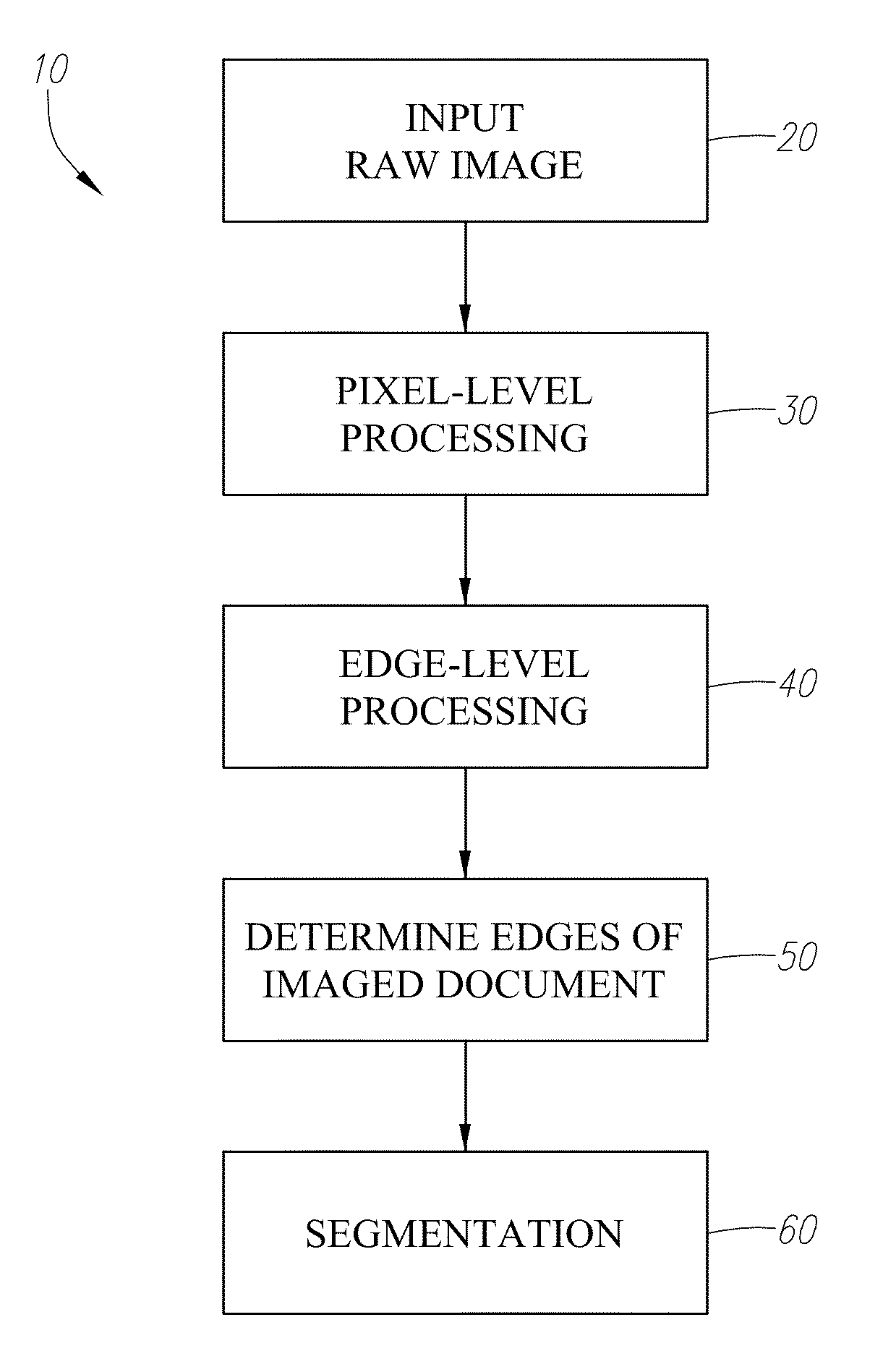

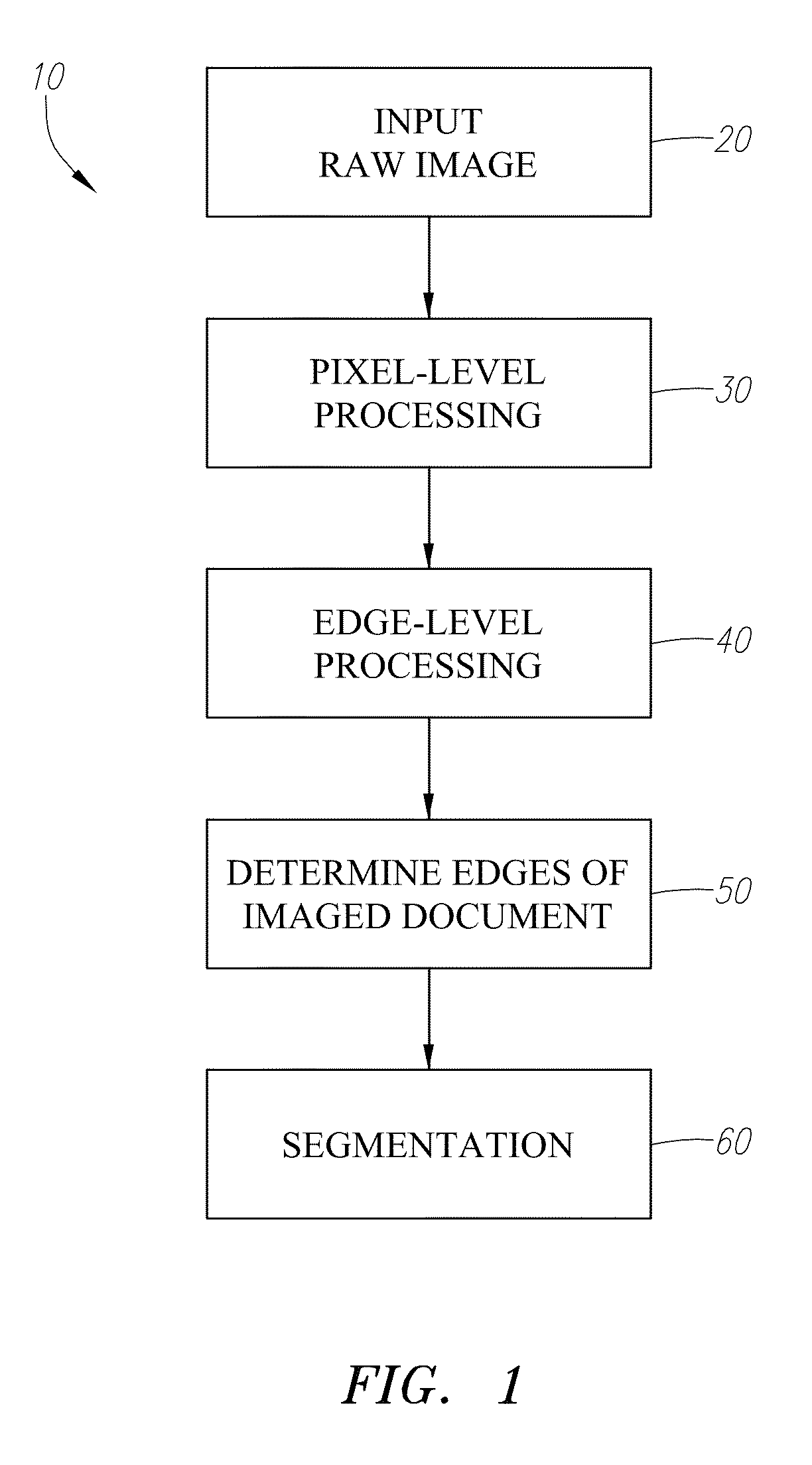

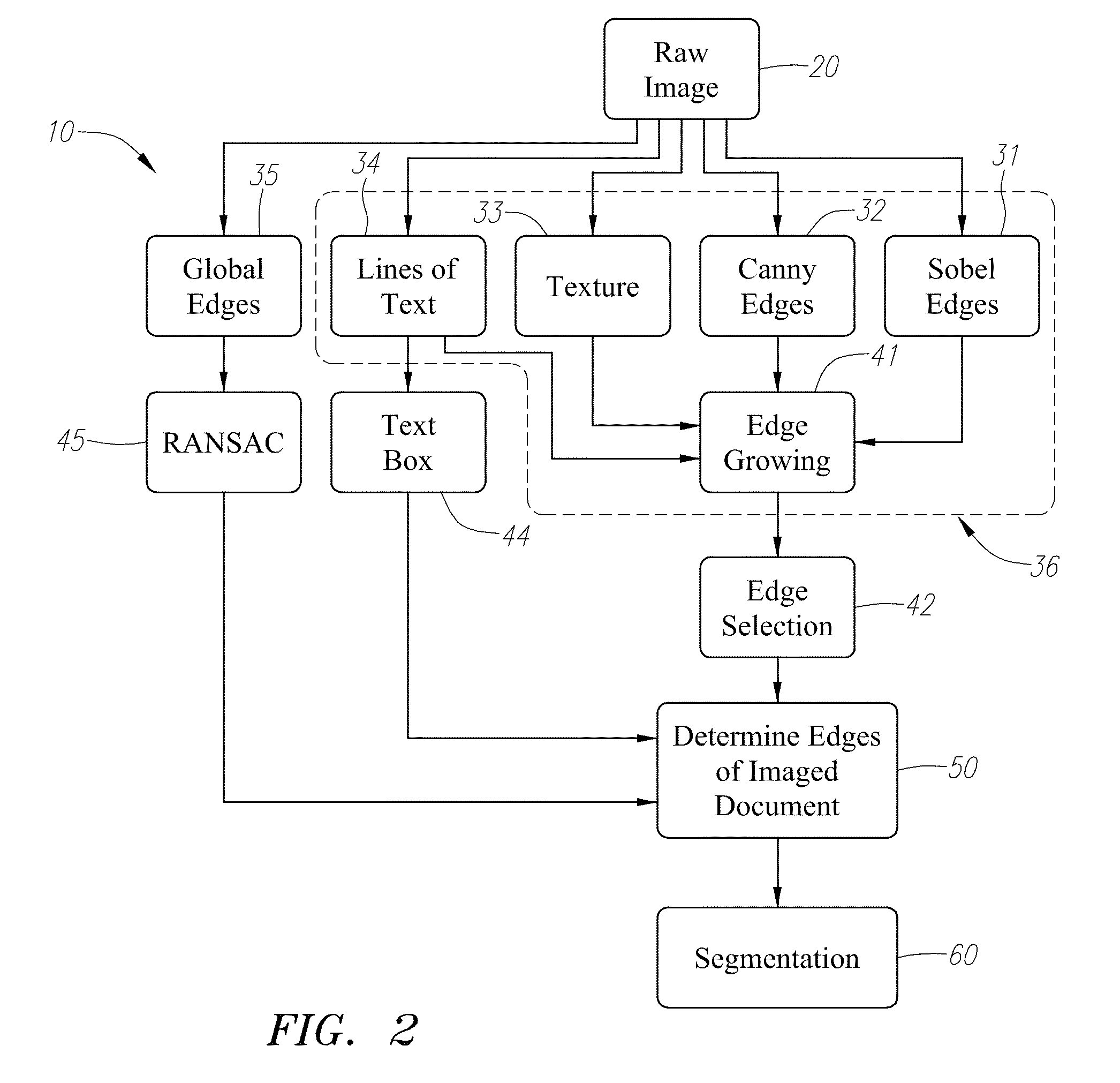

Photo-document segmentation method and system

The present application provides an improved segmentation method and system for processing digital images that include an imaged document and surrounding image. A plurality of edge detection techniques are used to determine the edges of the imaged document and then segment the imaged document from the surrounding image.

Owner:COMPULINK MANAGEMENT CENT

Regional depth edge detection and binocular stereo matching-based three-dimensional reconstruction method

Active · CN101908230A · Stable matching cost · Reduce mistakes · Image analysis · 3D modelling · Object point · Reconstruction method

The invention discloses a three-dimensional reconstruction method based on regional depth edge detection and binocular stereo matching, implemented by the following steps: (1) capturing images of a calibration plate with marker points from two suitable angles using two monochrome cameras; (2) keeping the shooting angles constant and capturing two images of the target object simultaneously using the same cameras; (3) performing epipolar rectification of the two images of the target object according to the camera calibration data; (4) searching the neighborhood of each pixel of the two rectified images for a closed regional depth edge and building a support window; (5) within the built window, computing the normalized cross-correlation coefficient of the supported pixels to obtain the matching cost of the central pixel; (6) obtaining the disparity using a belief propagation optimization method with an accelerated update scheme; (7) refining the disparity to subpixel accuracy; and (8) computing the three-dimensional coordinates of the actual object points from the camera calibration data and the pixel matching relationship, thereby reconstructing the three-dimensional point cloud of the object and recovering the three-dimensional information of the target.
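Two of the numerical pieces in these steps can be sketched briefly: the normalized cross-correlation of the pixels in a support window, and the conversion of a disparity to depth for a rectified camera pair. The zero-mean form of the correlation, the cost defined as one minus the correlation, and all function and parameter names are assumptions for illustration. The depth relation Z = f·B/d is the standard one for rectified stereo, not a detail taken from the abstract.

```python
import math


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-length pixel lists."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((p - mean_a) * (q - mean_b) for p, q in zip(a, b))
    den = math.sqrt(sum((p - mean_a) ** 2 for p in a)
                    * sum((q - mean_b) ** 2 for q in b))
    return num / den if den else 0.0


def matching_cost(left_pixels, right_pixels):
    """Cost of matching two support windows: 0 for a perfect match (assumed form)."""
    return 1.0 - ncc(left_pixels, right_pixels)


def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth for a rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


left_window = [1, 2, 3, 4]
same_pattern_brighter = [2, 4, 6, 8]
inverted_pattern = [4, 3, 2, 1]
```

Because the correlation is normalized, the brighter copy of the same pattern still matches with a cost near zero, while the inverted pattern gets a cost near two. This insensitivity to gain is one reason normalized cross-correlation is a common choice when the two cameras differ in exposure.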

Owner:江苏省华强纺织有限公司 +1
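
Step (5) of the abstract above scores candidate matches with a normalized cross-correlation coefficient. The sketch below illustrates that score on a single rectified scanline. It is a simplification under stated assumptions: it uses a fixed-width 1-D window instead of the depth-edge-bounded support window, and a plain best-score search instead of the patent's optimization step. The names `ncc` and `best_disparity` are hypothetical.

```python
import math


def ncc(window_a, window_b):
    """Normalized cross-correlation of two equally sized lists of pixel values."""
    if len(window_a) != len(window_b) or not window_a:
        raise ValueError("windows must be non-empty and the same size")
    mean_a = sum(window_a) / len(window_a)
    mean_b = sum(window_b) / len(window_b)
    numerator = sum((a - mean_a) * (b - mean_b) for a, b in zip(window_a, window_b))
    denominator = math.sqrt(
        sum((a - mean_a) ** 2 for a in window_a)
        * sum((b - mean_b) ** 2 for b in window_b)
    )
    # A flat window has zero variance; treat it as uncorrelated.
    return numerator / denominator if denominator > 0 else 0.0


def best_disparity(left_row, right_row, x, half_width, max_disparity):
    """Return (disparity, score) maximizing NCC for the left pixel at column x.

    Assumes rectified rows, so the match for left column x lies at right
    column x - d for some disparity d >= 0.
    """
    left_window = left_row[x - half_width : x + half_width + 1]
    best_d, best_score = 0, -2.0  # NCC is always >= -1
    for d in range(max_disparity + 1):
        start = x - d - half_width
        if start < 0:
            break  # window would fall off the left edge of the image
        right_window = right_row[start : start + 2 * half_width + 1]
        score = ncc(left_window, right_window)
        if score > best_score:
            best_d, best_score = d, score
    return best_d, best_score


# Hypothetical scanlines: the same intensity peak, shifted two pixels in the right view.
left = [10, 10, 10, 50, 90, 50, 10, 10, 10, 10]
right = [10, 50, 90, 50, 10, 10, 10, 10, 10, 10]
disparity, score = best_disparity(left, right, x=4, half_width=1, max_disparity=4)
```

Subtracting the window means and dividing by the standard deviations makes the score insensitive to brightness and contrast differences between the two cameras, which is a common reason for choosing NCC as a matching cost.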

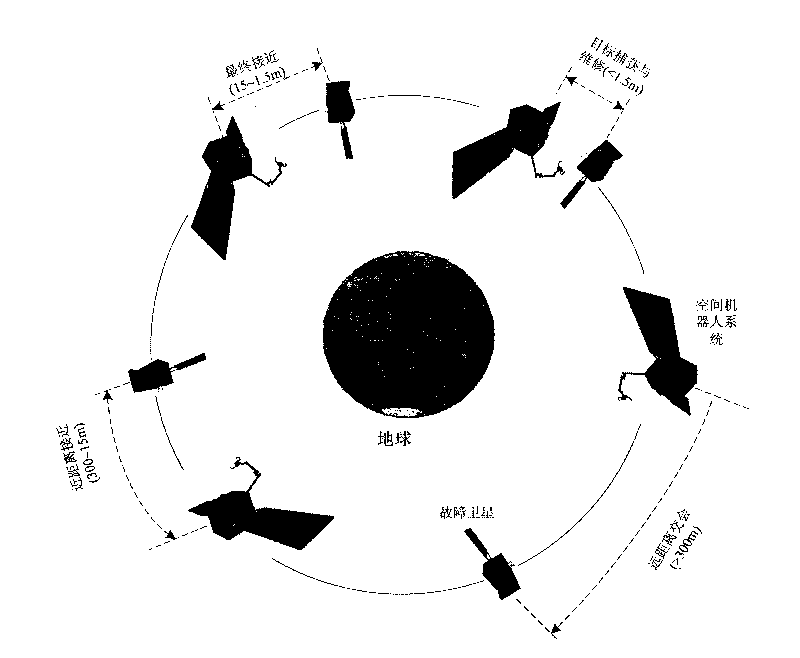

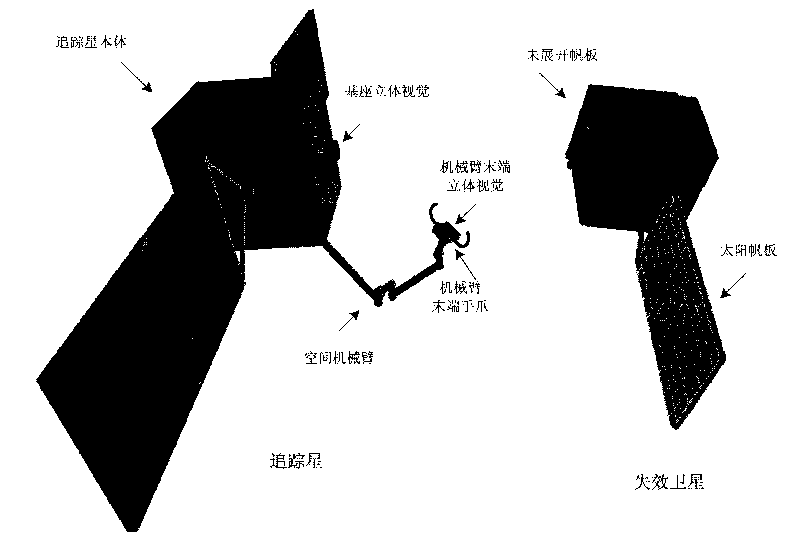

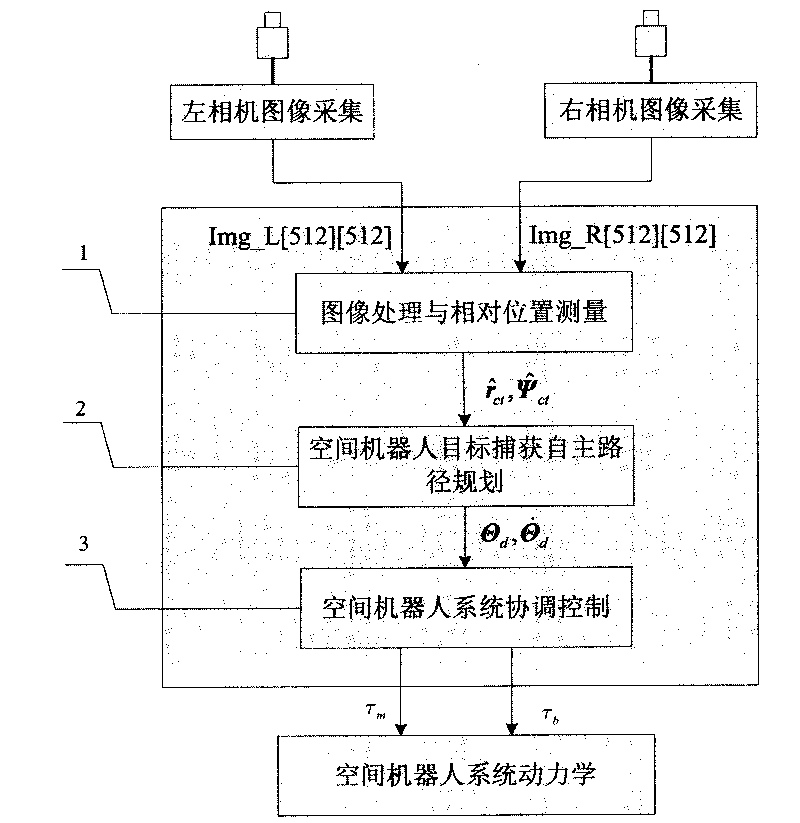

Autonomously identifying and capturing method of non-cooperative target of space robot

Inactive | CN101733746A | Real-time prediction of motion status | Predict interference in real time | Programme-controlled manipulator | Tools | Kinematics | Target capture

The invention relates to a method by which a space robot autonomously identifies and captures a non-cooperative target. The main steps are (1) pose measurement based on stereoscopic vision, (2) autonomous path planning for target capture by the space robot, and (3) coordinated control of the space robot system. Pose measurement is realized by processing the images of a left camera and a right camera in real time, with processing that includes smoothing filtering, edge detection, and line extraction, among other operations, and by computing the pose of the non-cooperative target satellite relative to the base and the end effector. Autonomous path planning is realized by planning the motion trajectories of the joints in real time according to the pose measurement results. Coordinated control is realized by jointly controlling the mechanical arms and the base to achieve the optimal control performance of the whole system. The method directly uses a part of the spacecraft itself as the object to be identified and captured, without installing a marker or a corner reflector on the target satellite or knowing the geometric dimensions of the object, and the planned path effectively avoids dynamic and kinematic singularities.

Owner:HARBIN INST OF TECH

Method and apparatus for ultrasonic continuous, non-invasive blood pressure monitoring

Ultrasound is used to provide input data for a blood pressure estimation scheme. The use of transcutaneous ultrasound provides arterial lumen area and pulse wave velocity information. In addition, ultrasound measurements are taken in such a way that all the data describes a single, uniform arterial segment. Therefore a computed area relates only to the arterial blood volume present. Also, the measured pulse wave velocity is directly related to the mechanical properties of the segment of elastic tube (artery) for which the blood volume is being measured. In a patient monitoring application, the operator of the ultrasound device is eliminated through the use of software that automatically locates the artery in the ultrasound data, e.g., using known edge detection techniques. Autonomous operation of the ultrasound system allows it to report blood pressure and blood flow traces to the clinical users without those users having to interpret an ultrasound image or operate an ultrasound imaging device.

Owner:GENERAL ELECTRIC CO

System for combining virtual and real-time environments

Active | US20070035561A1 | Cathode-ray tube indicators | Image data processing | Computer graphics (images) | Data source

The present invention relates to a method and an apparatus for combining virtual reality with a real-time environment. The present invention provides a system that combines captured real-time video data and real-time 3D environment rendering to create a fused (combined) environment. The system captures video imagery and processes it to determine which areas should be made transparent (or have other color modifications made), based on sensed cultural features and/or sensor line-of-sight. Sensed features can include electromagnetic radiation characteristics (e.g., color, infra-red, ultra-violet light). Cultural features can include patterns of these characteristics (e.g., object recognition using edge detection). This processed image is then overlaid on a 3D environment to combine the two data sources into a single scene. This creates an effect where a user can look through ‘windows’ in the video image into a 3D simulated world, and/or see other enhanced or reprocessed features of the captured image.

Owner:SUBARU TECNICA INTERNATIONAL
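
The overlay described in the abstract above can be illustrated with a per-pixel composite: video pixels that match a sensed characteristic become transparent, so the rendered 3D scene shows through. The sketch below assumes the characteristic is closeness to a single key color; the function name `composite`, the key color, and the tolerance are hypothetical, and the patent's feature sensing (patterns, line-of-sight) is not modeled.

```python
def composite(video, rendered, key_color, tolerance):
    """Overlay a video frame on a rendered frame of the same size.

    Frames are lists of rows of (r, g, b) tuples. A video pixel whose every
    channel is within `tolerance` of `key_color` is treated as a transparent
    "window" and replaced by the rendered pixel (an assumed keying rule).
    """
    fused = []
    for video_row, rendered_row in zip(video, rendered):
        fused_row = []
        for video_pixel, rendered_pixel in zip(video_row, rendered_row):
            is_window = all(
                abs(channel - key) <= tolerance
                for channel, key in zip(video_pixel, key_color)
            )
            fused_row.append(rendered_pixel if is_window else video_pixel)
        fused.append(fused_row)
    return fused


# Hypothetical 1x3 frames: the middle video pixel is close to the green key color.
video_frame = [[(200, 30, 30), (5, 250, 8), (40, 40, 40)]]
rendered_frame = [[(1, 1, 1), (2, 2, 2), (3, 3, 3)]]
fused_frame = composite(video_frame, rendered_frame, key_color=(0, 255, 0), tolerance=10)
```

Only the keyed pixel is replaced, so the rest of the captured video remains visible around the "window" into the simulated scene.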

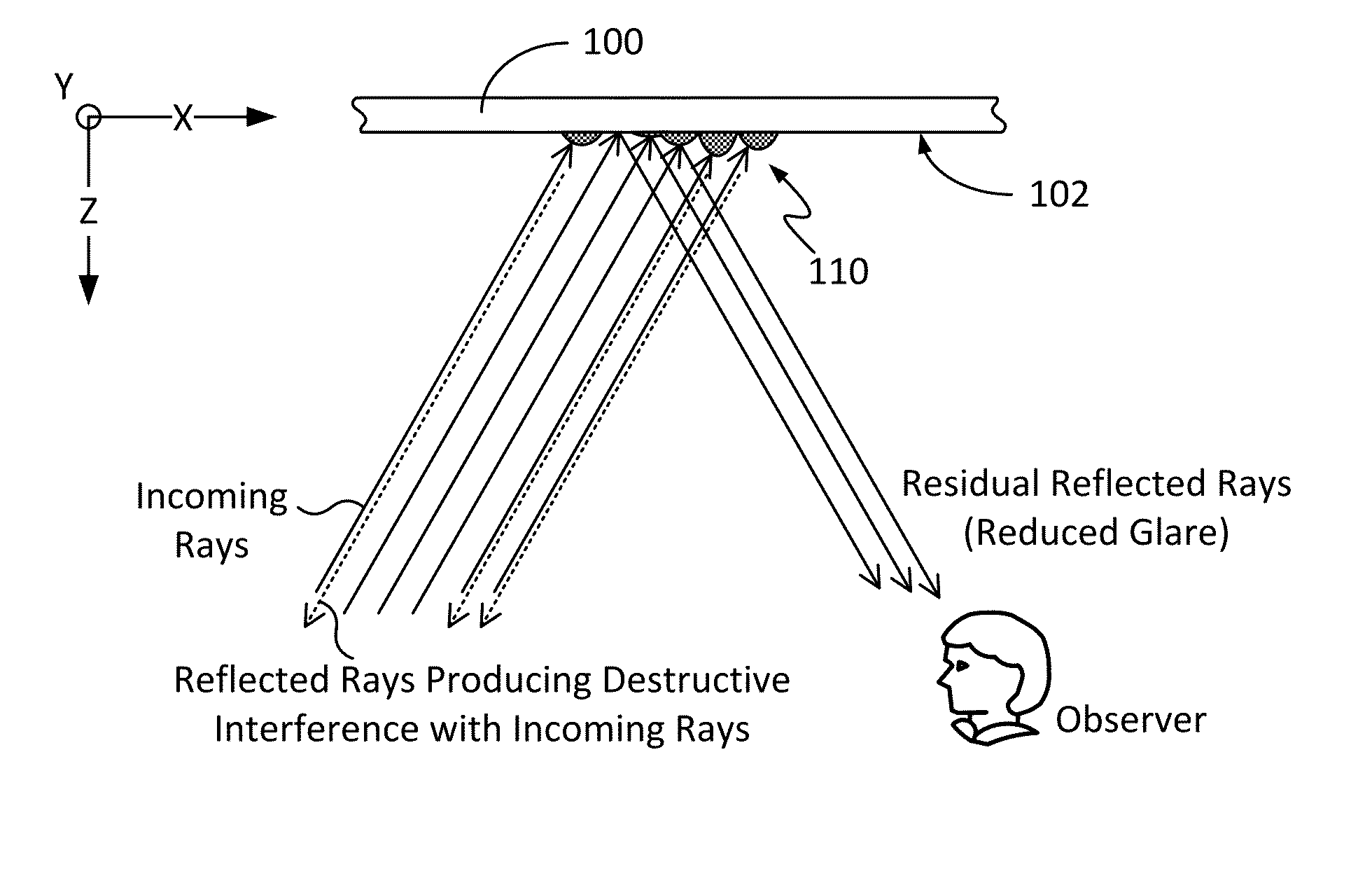

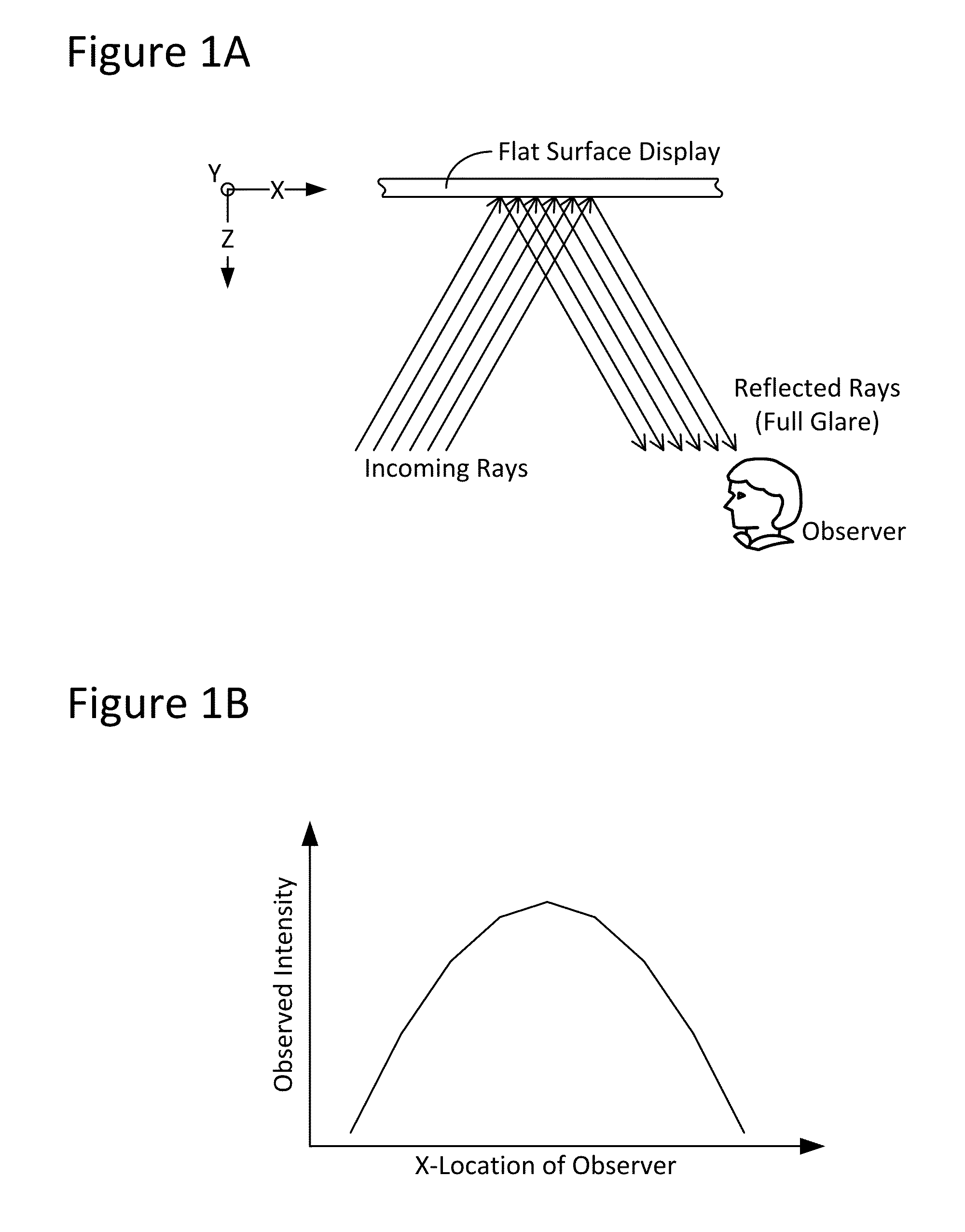

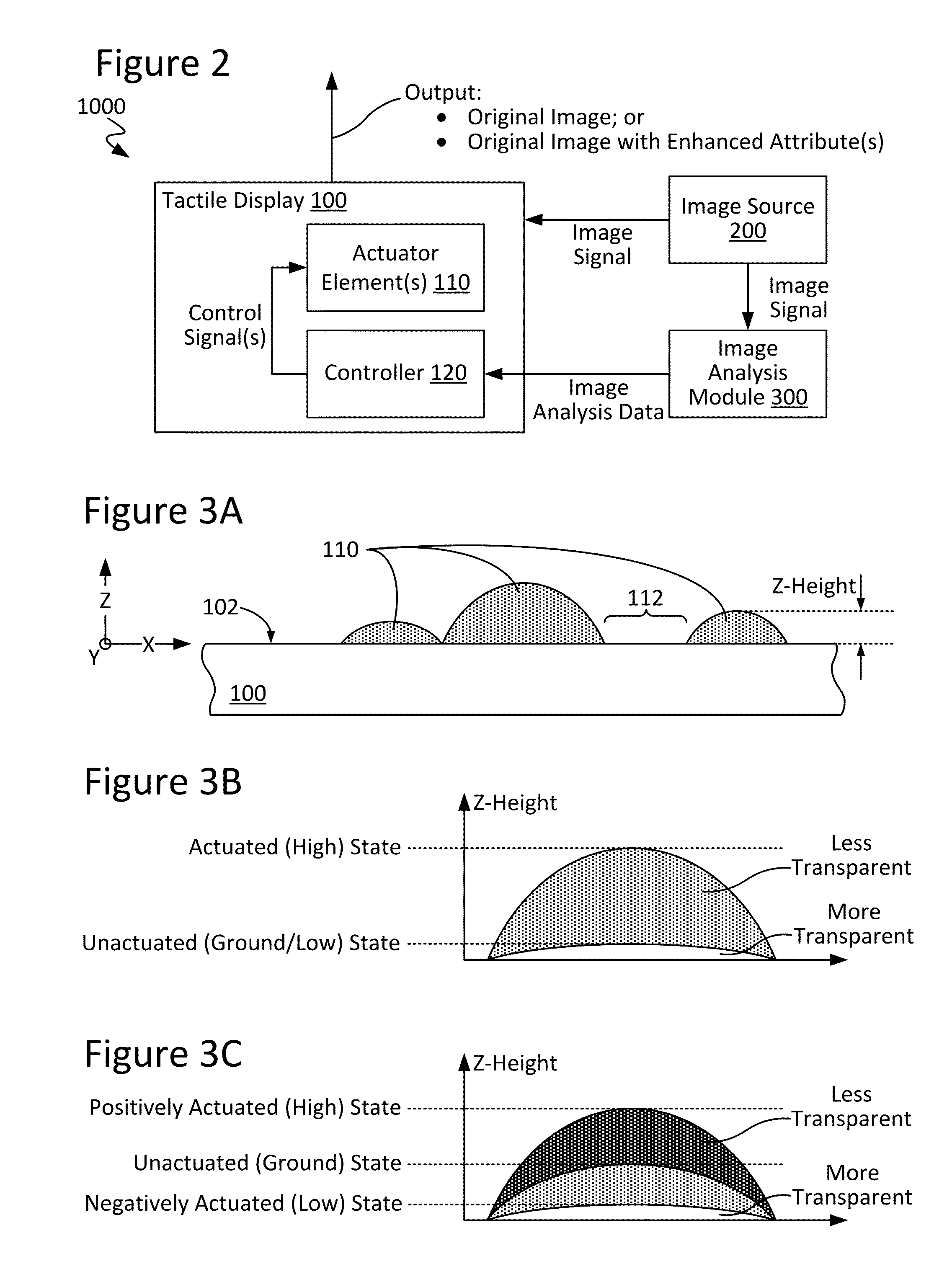

Techniques for image enhancement using a tactile display

Techniques are disclosed for enhancing the quality of a displayed image using a tactile or other texture display. In particular, the disclosed techniques leverage active-texture display technology to enhance the quality of graphics by providing, for example, outlining and/or shading when presenting a given image, so as to create the effect of increased contrast and image quality and/or to reduce observable glare. These effects can be present even at high viewing angles and in environments of high light reflection. To these ends, one or more graphics processes, such as edge-detection and/or shading, may be applied to an image to be displayed. In turn, an actuator element (e.g., microelectromechanical systems, or MEMS, devices) of the tactile display may be manipulated (e.g., in Z-height) to provide fine-grain adjustment of image attributes such as: pixel brightness/intensity; pixel color; edge highlighting; object outlining; effective shading; image contrast; and/or viewing angle.

Owner:INTEL CORP


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com