
138 results about "Epipolar line" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

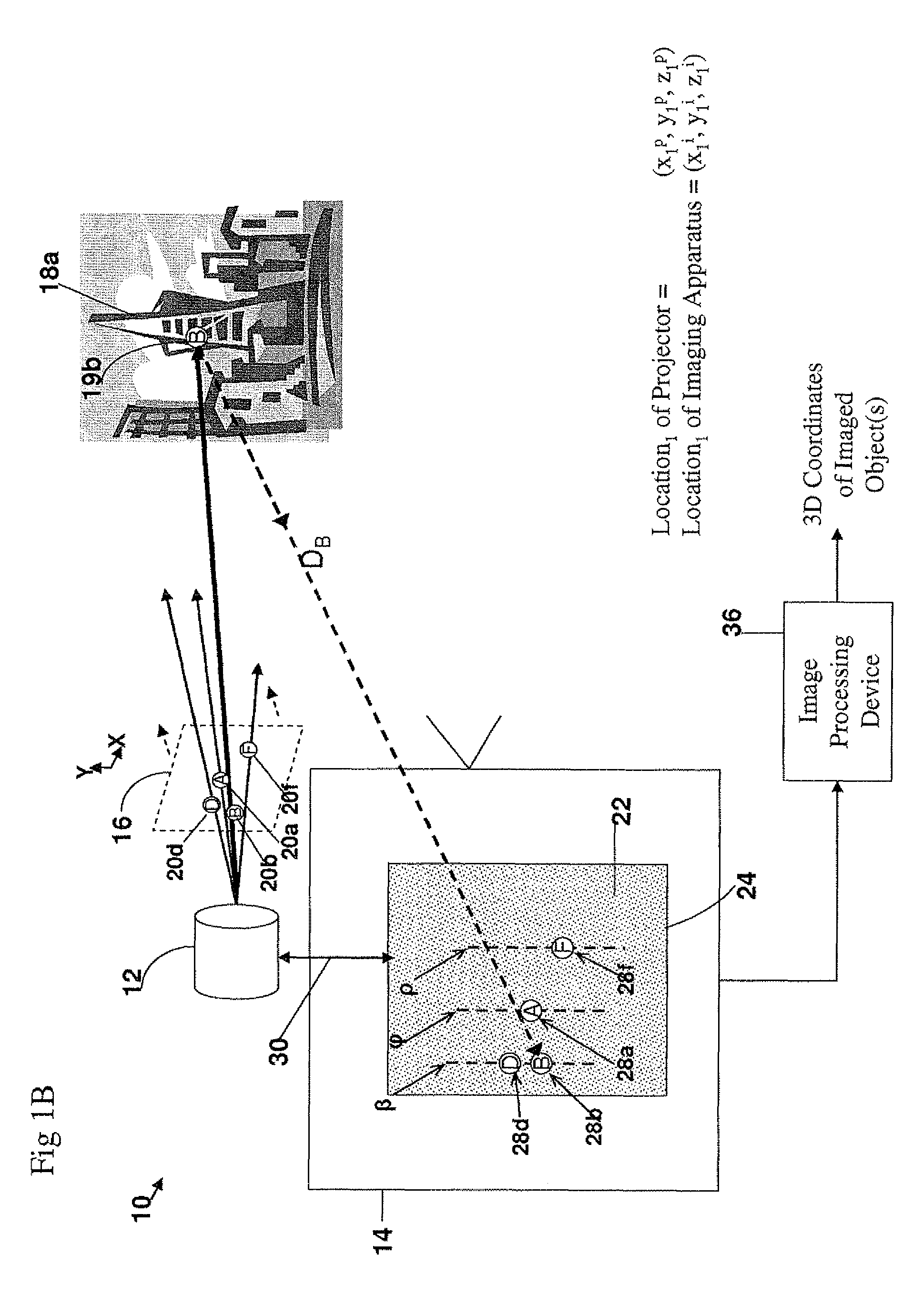

3D Geometric Modeling And Motion Capture Using Both Single And Dual Imaging

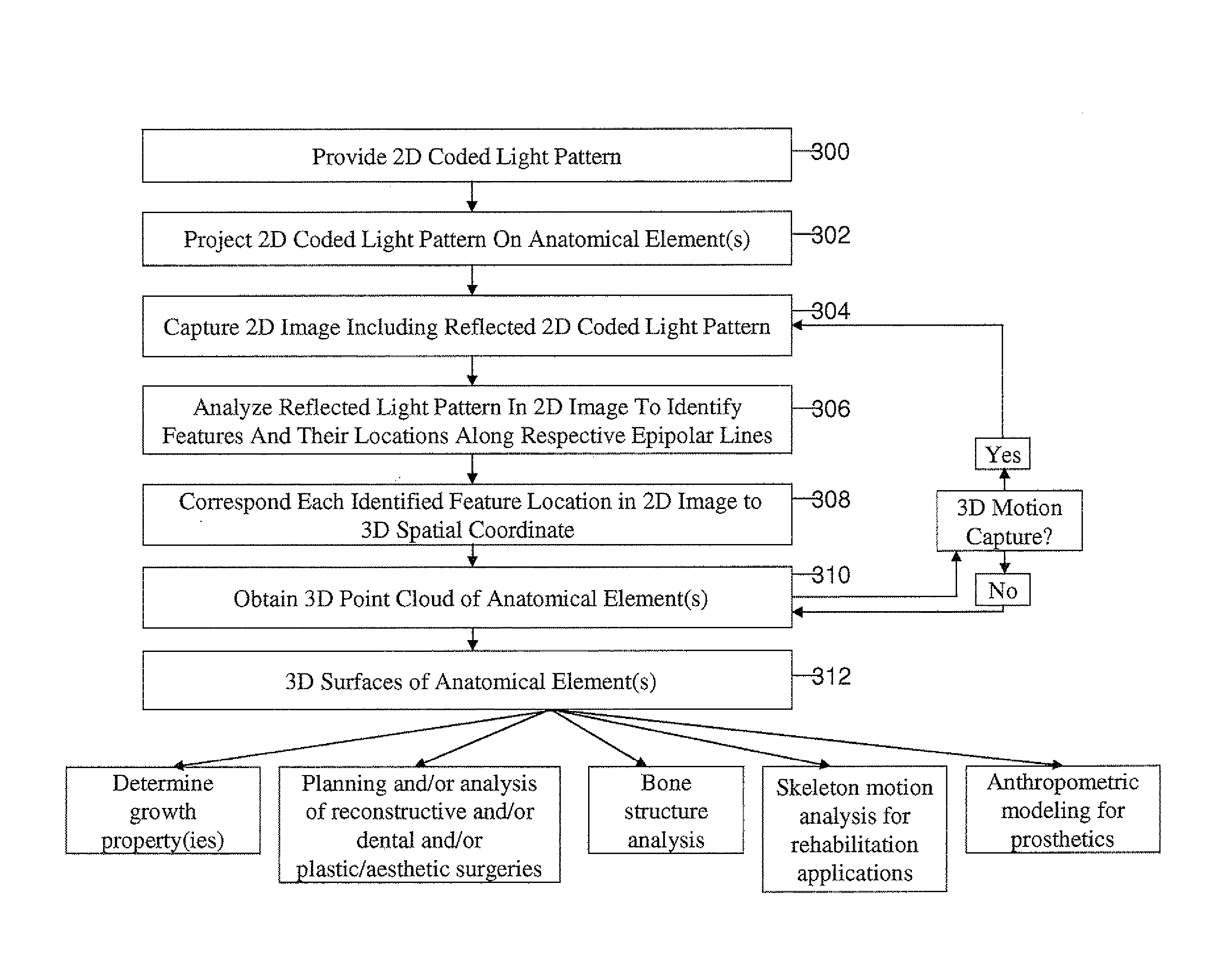

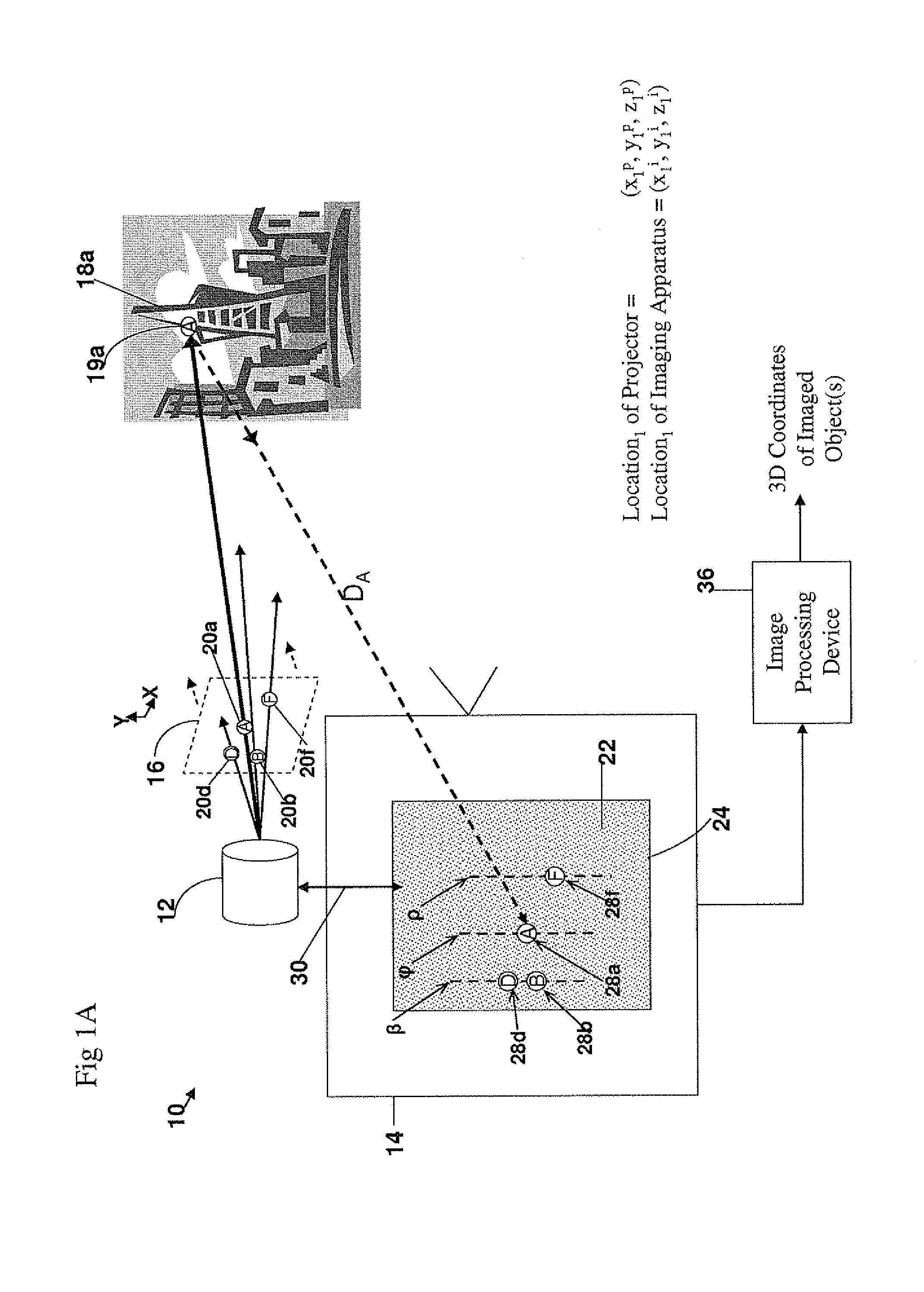

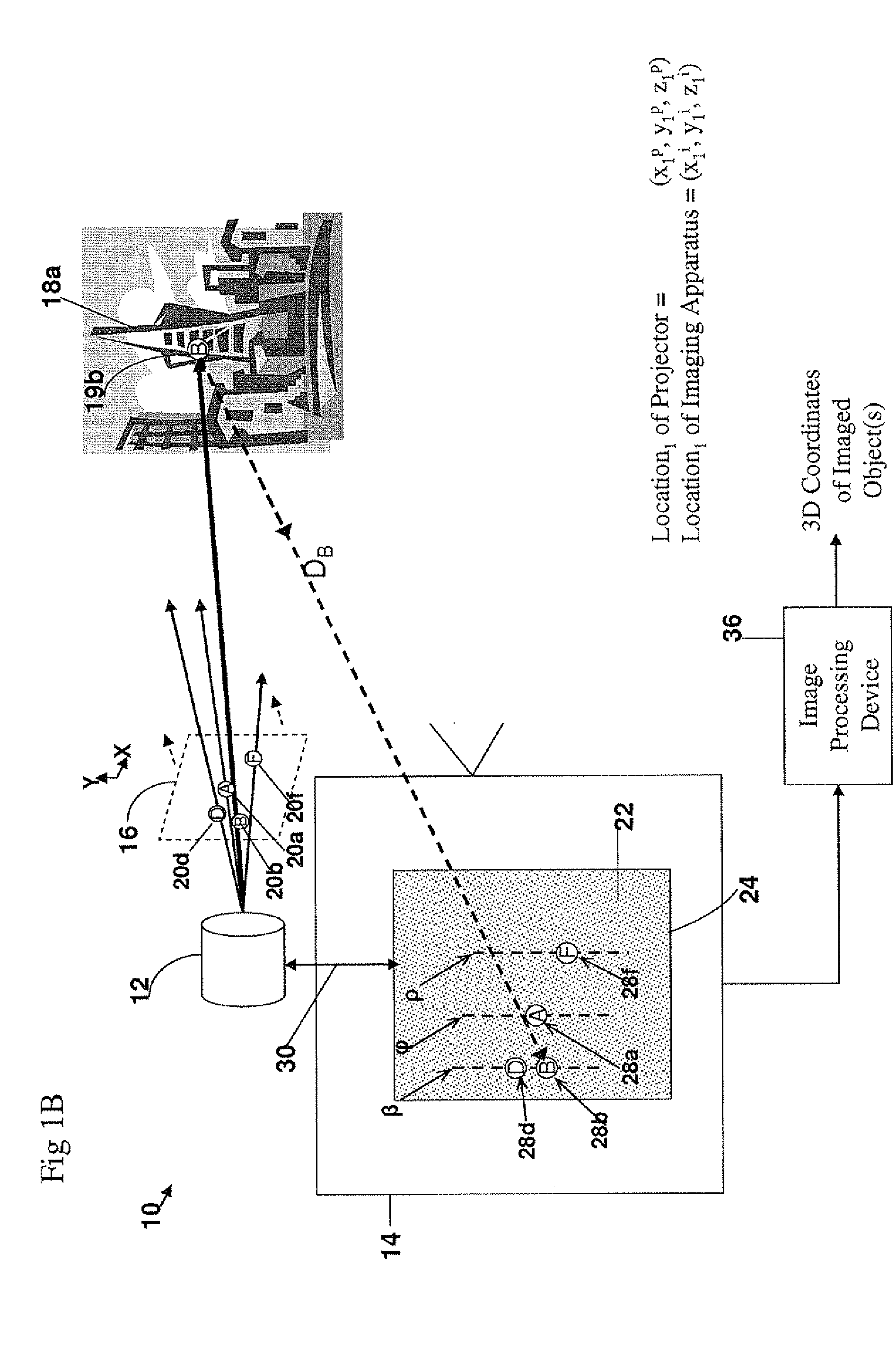

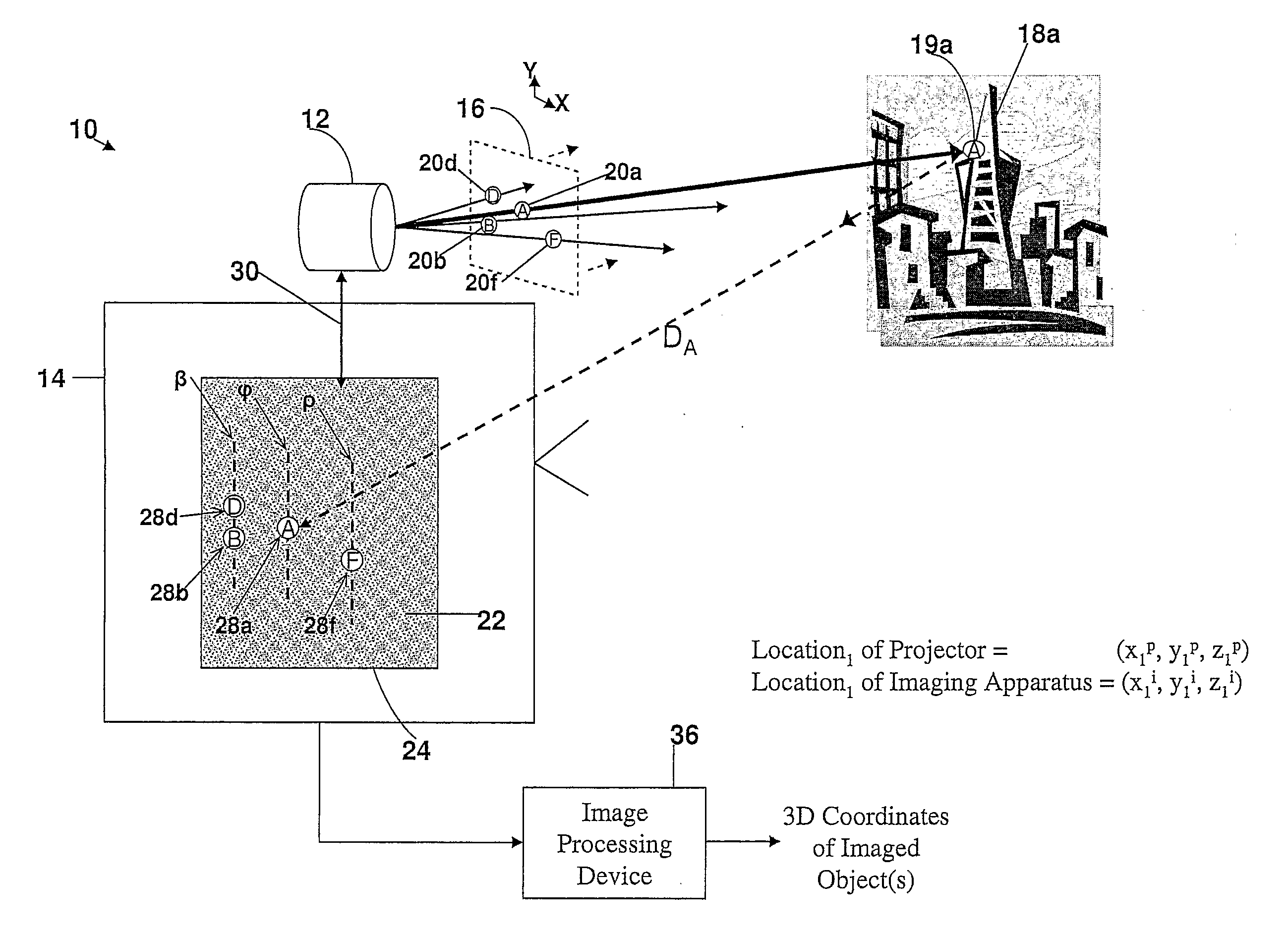

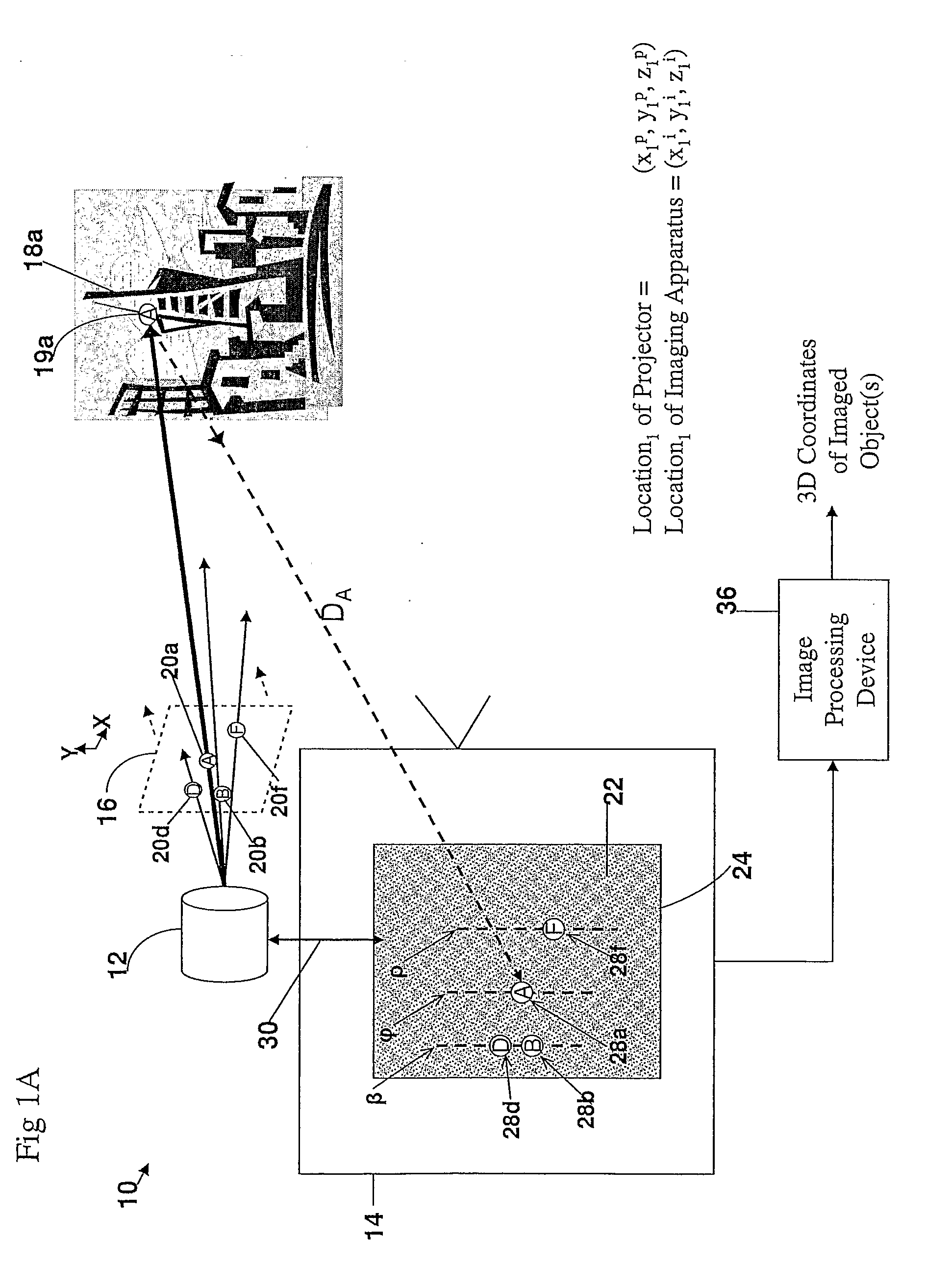

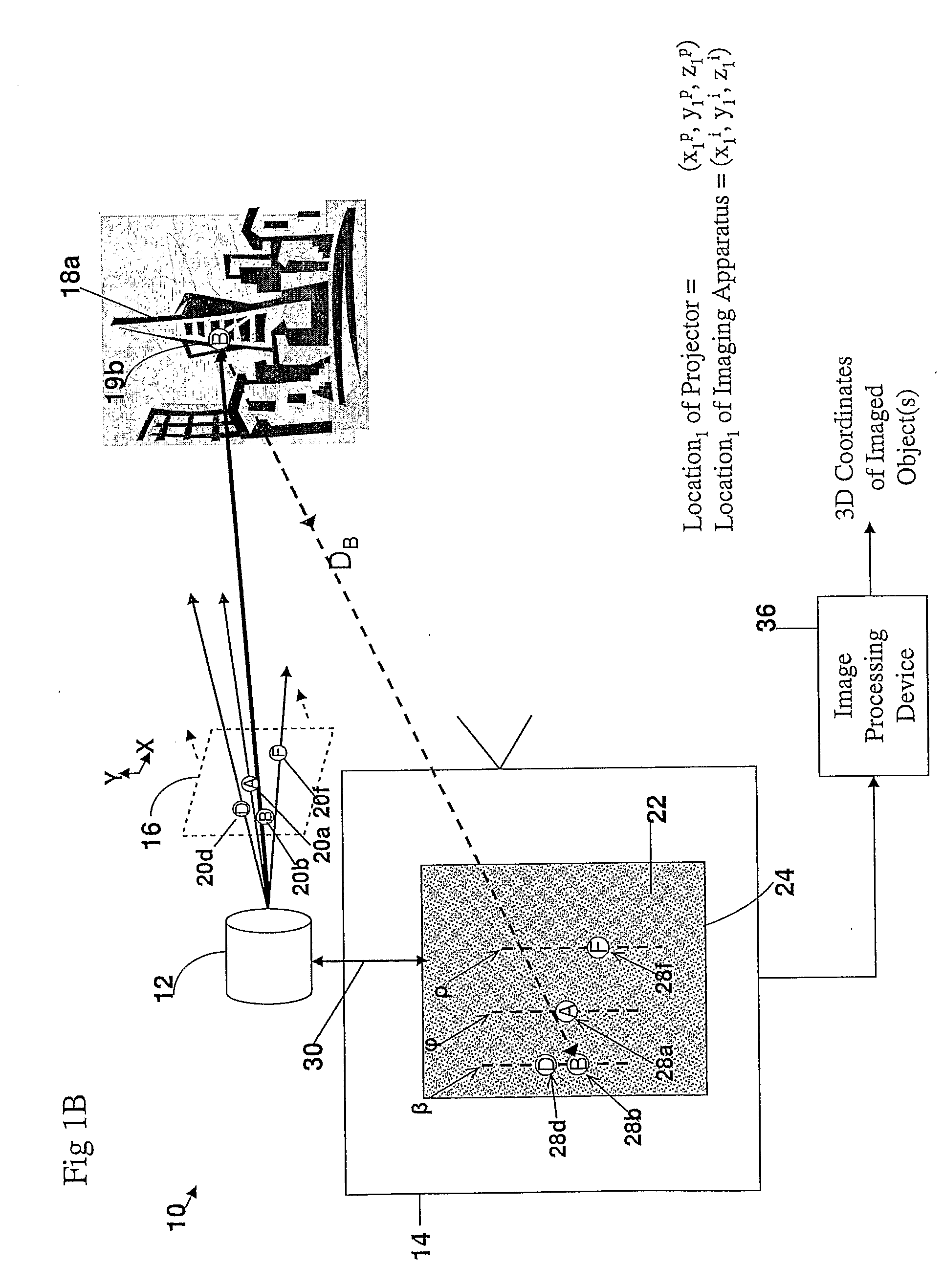

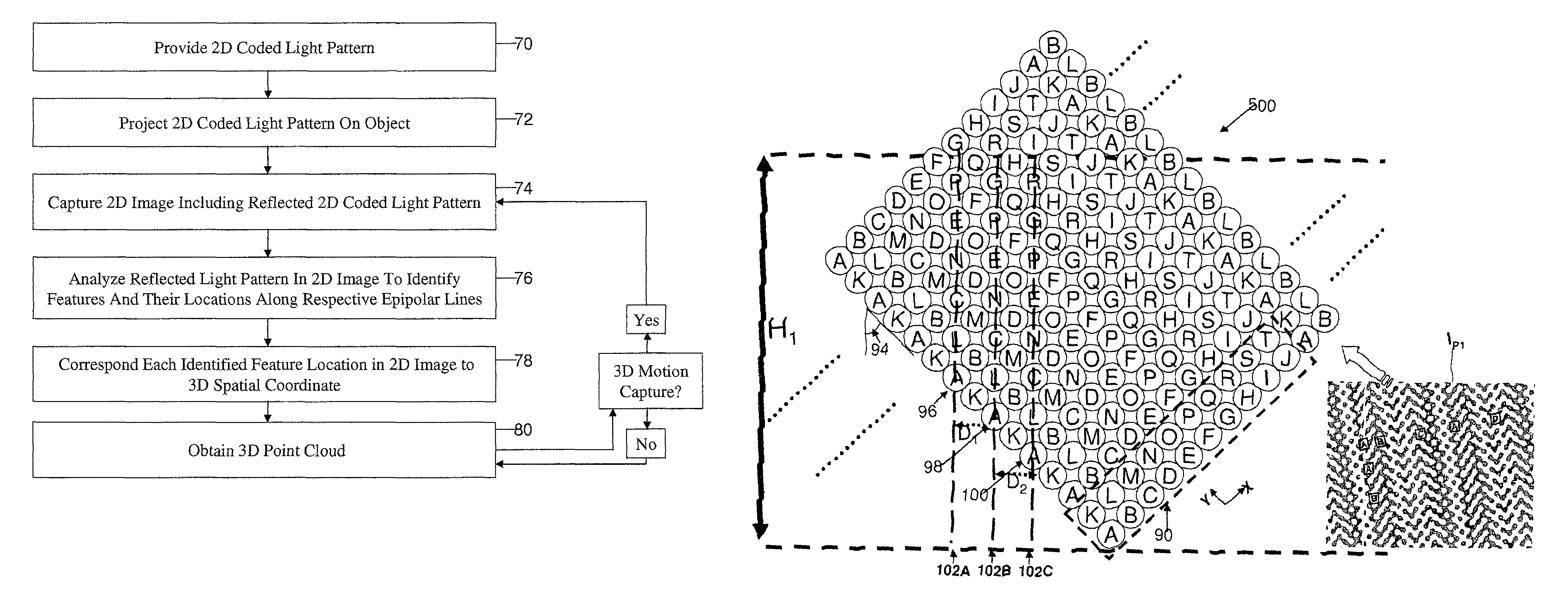

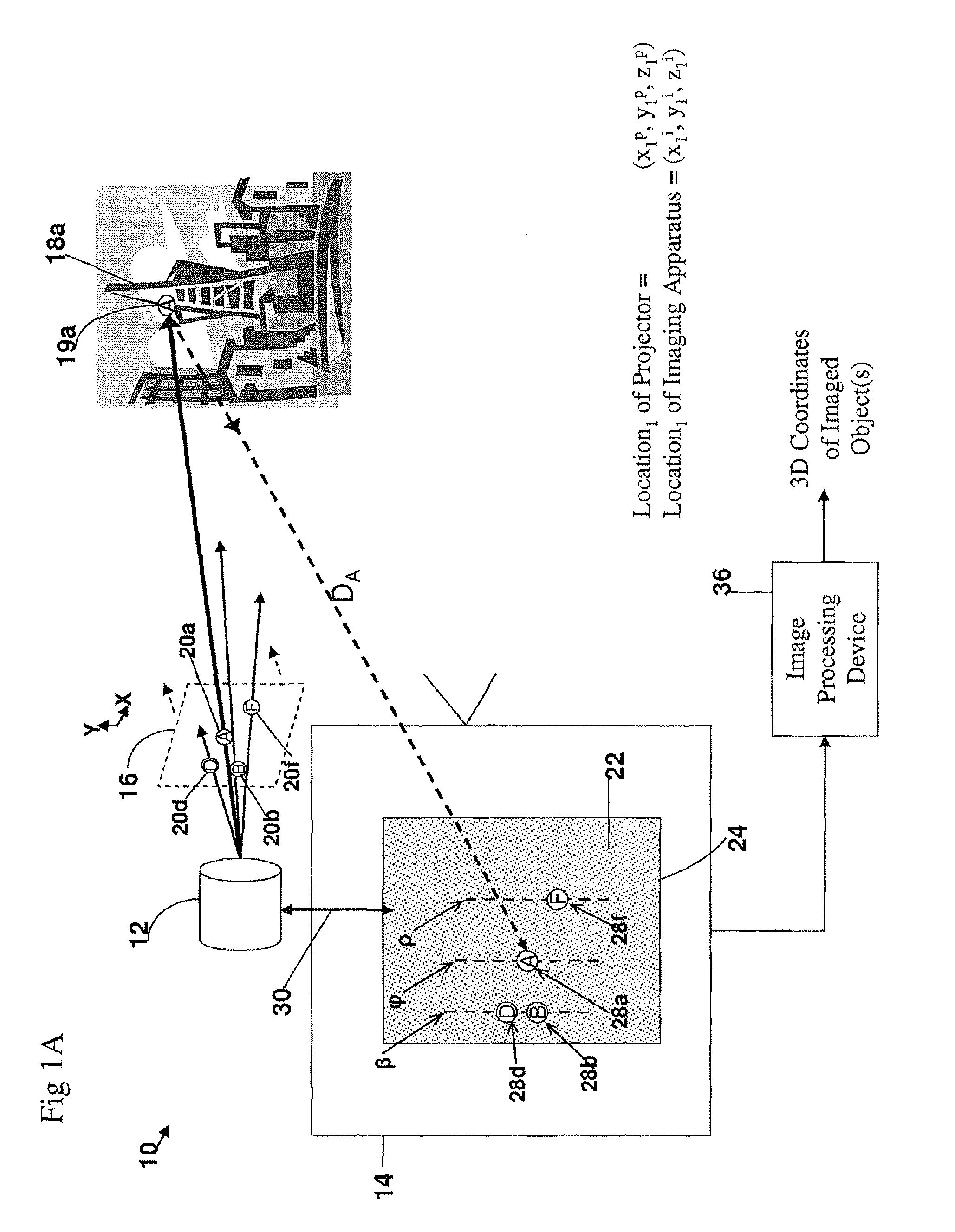

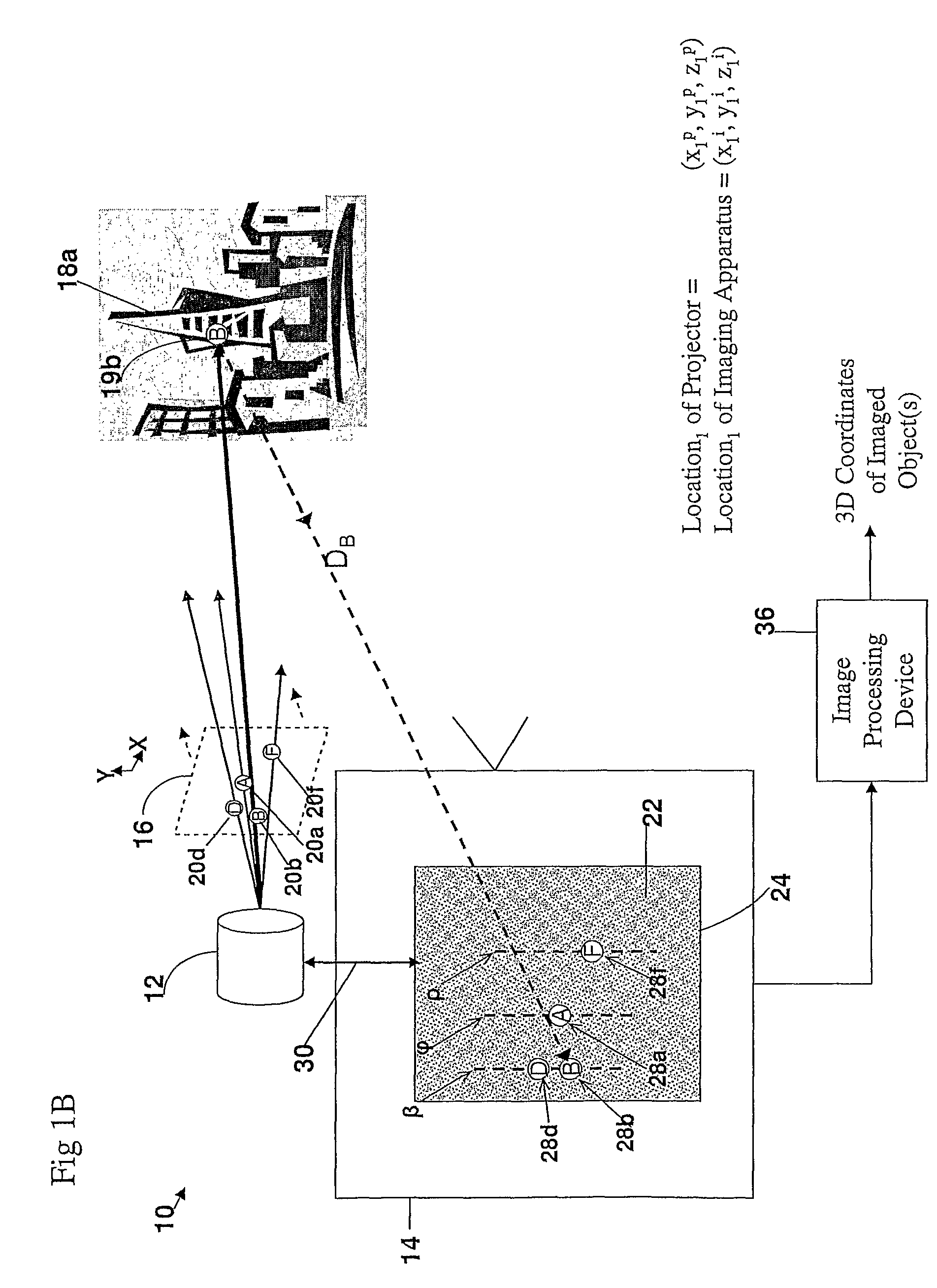

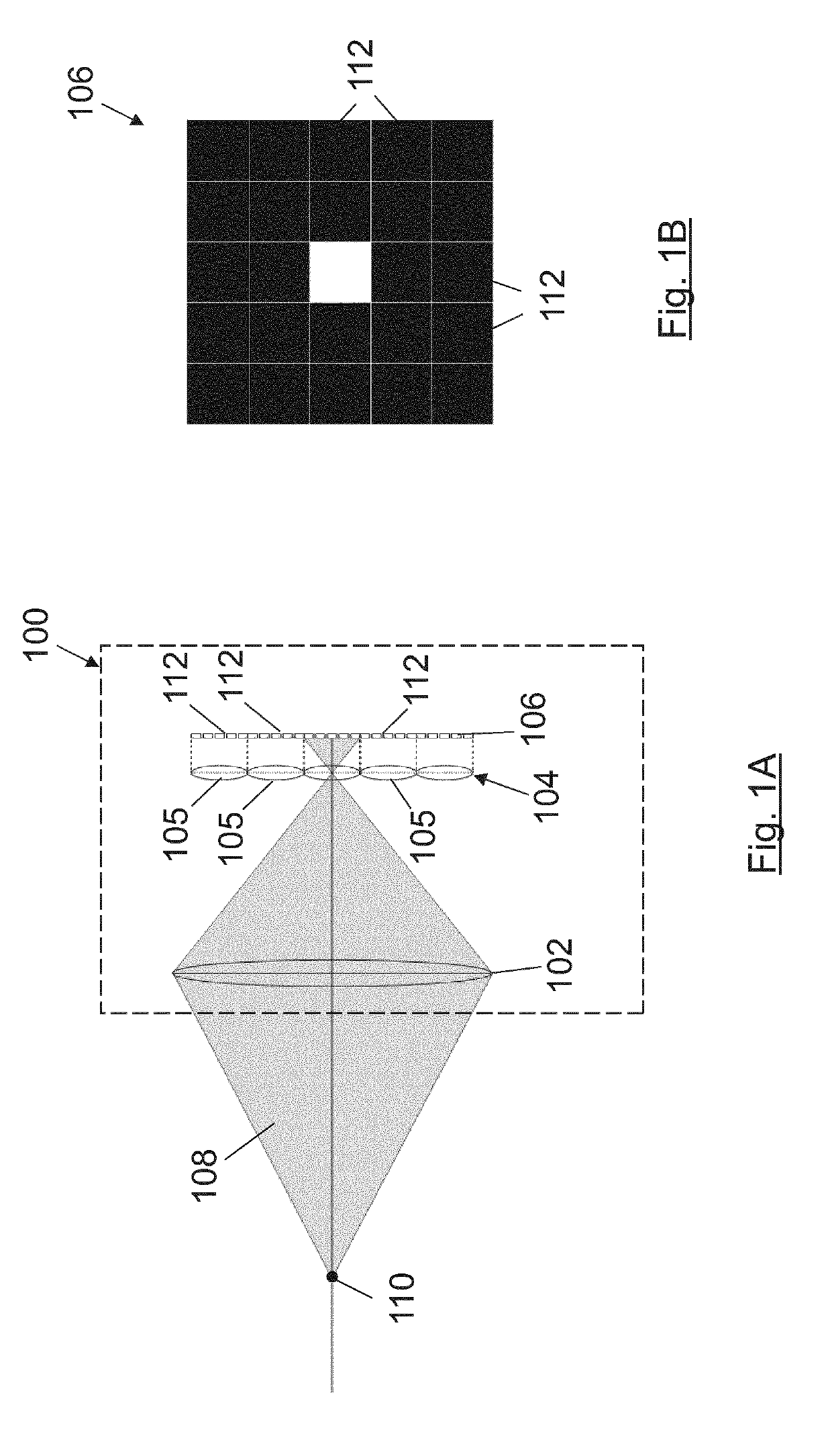

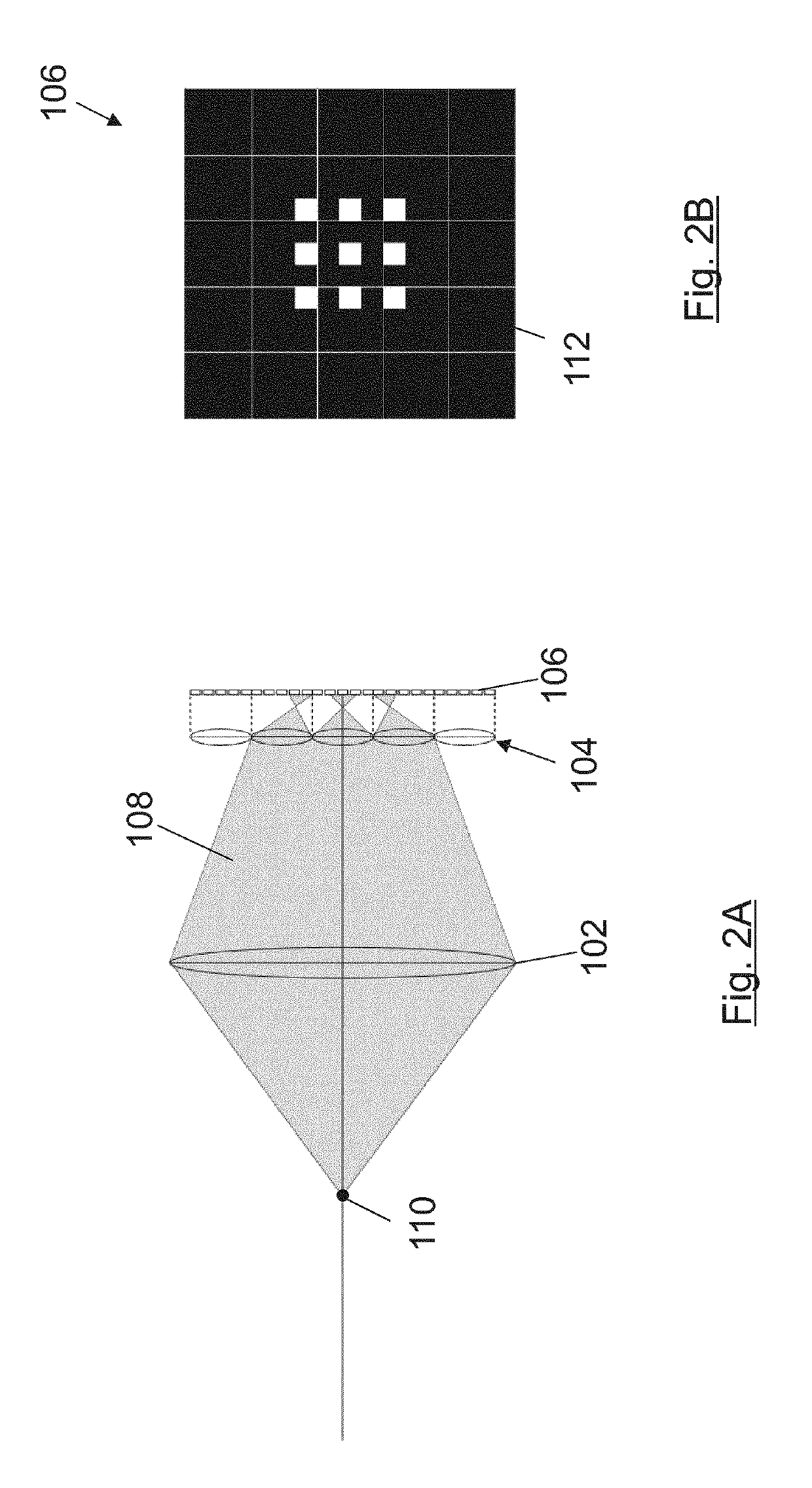

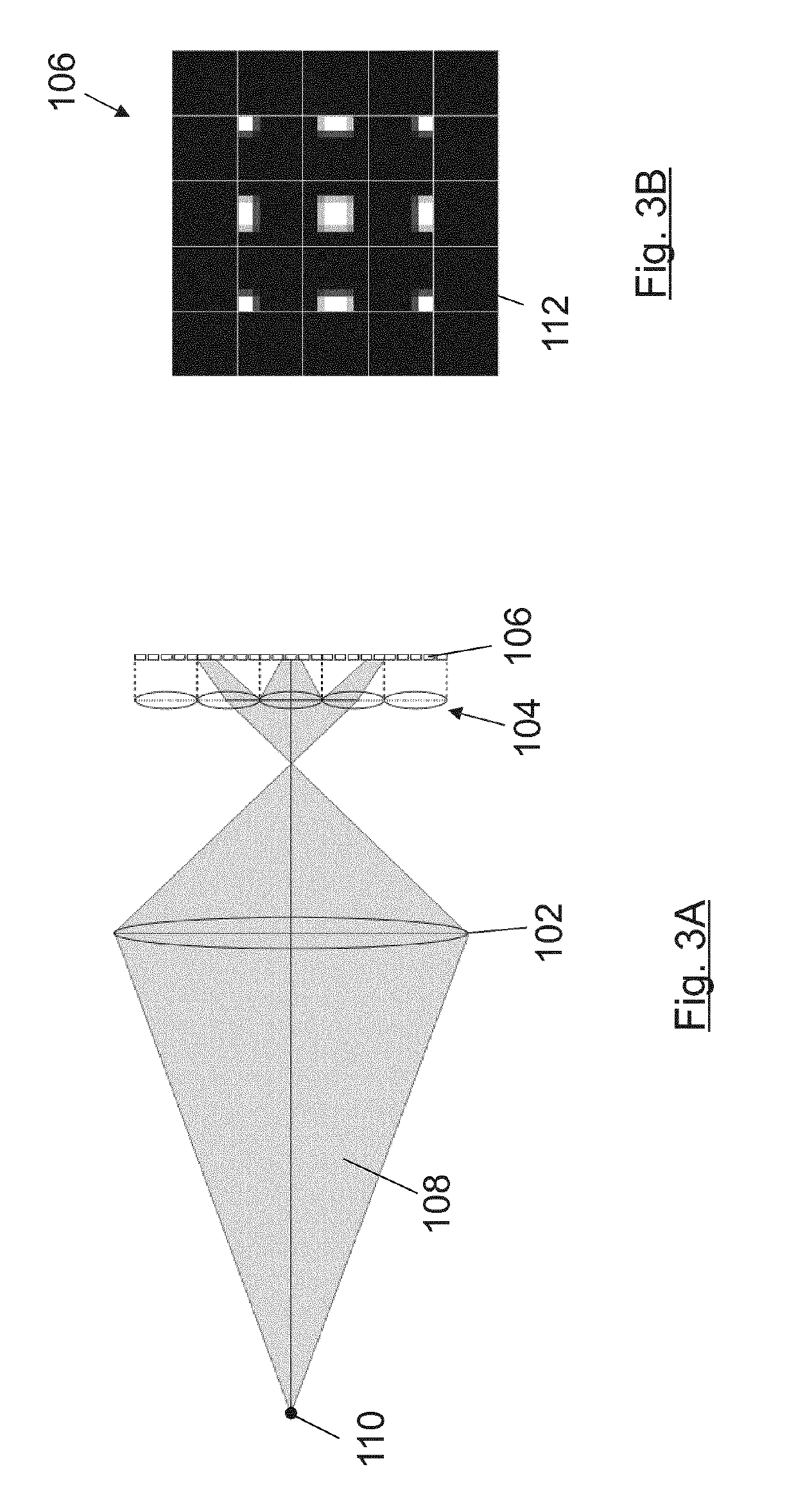

A method and apparatus for obtaining an image to determine the three-dimensional shape of a stationary or moving object using a bi-dimensional coded light pattern having a plurality of distinct identifiable feature types. The coded light pattern is projected on the object such that each of the identifiable feature types appears at most once on predefined sections of distinguishable epipolar lines. An image of the object is captured, and the reflected feature types are extracted along with their locations on known epipolar lines in the captured image. The displacements of the reflected feature types along their epipolar lines from reference coordinates determine the corresponding three-dimensional coordinates in space, and thus a 3D map or model of the object's shape at any point in time.

Owner:MANTIS VISION LTD

3D geometric modeling and 3D video content creation

A system, apparatus and method of obtaining data from a 2D image in order to determine the 3D shape of objects appearing in said 2D image, said 2D image having distinguishable epipolar lines, said method comprising: (a) providing a predefined set of types of features, giving rise to feature types, each feature type being distinguishable according to a unique bi-dimensional formation; (b) providing a coded light pattern comprising multiple appearances of said feature types; (c) projecting said coded light pattern on said objects such that the distance between epipolar lines associated with substantially identical features is less than the distance between corresponding locations of two neighboring features; (d) capturing a 2D image of said objects having said coded light pattern projected thereupon, said 2D image comprising said reflected feature types; and (e) extracting: (i) said reflected feature types according to the unique bi-dimensional formations; and (ii) locations of said reflected feature types on respective said epipolar lines in said 2D image.

Owner:MANTIS VISION

3D geometric modeling and motion capture using both single and dual imaging

Owner:MANTIS VISION LTD

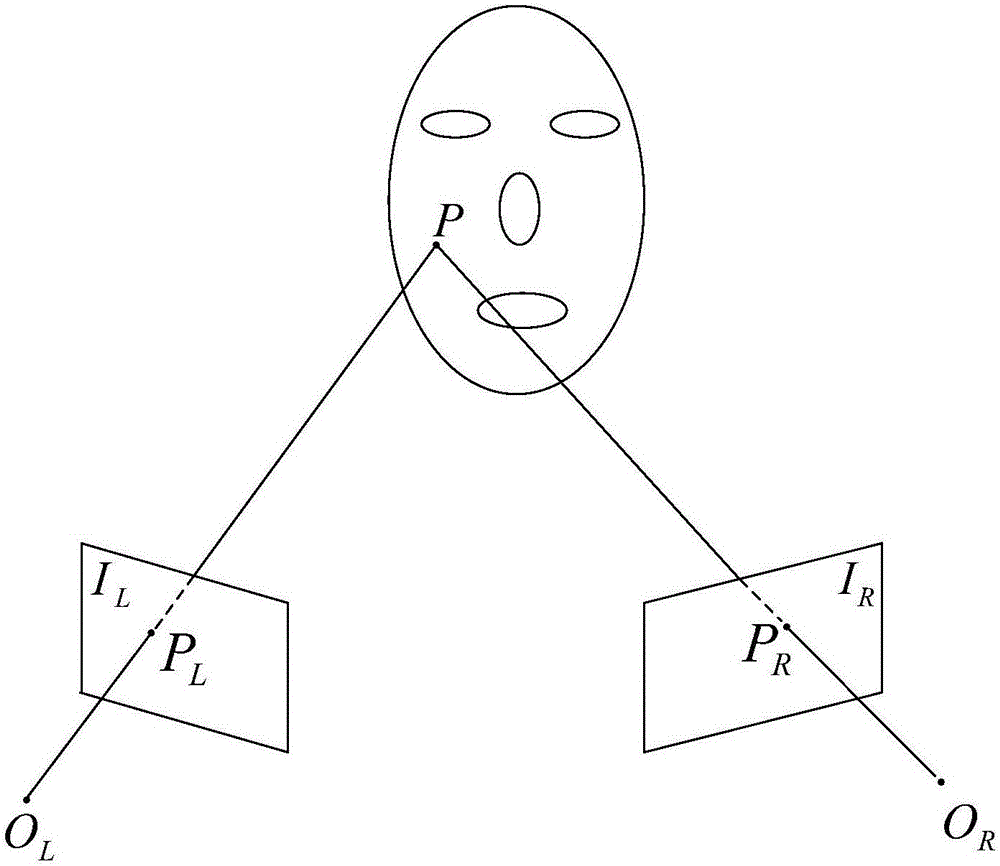

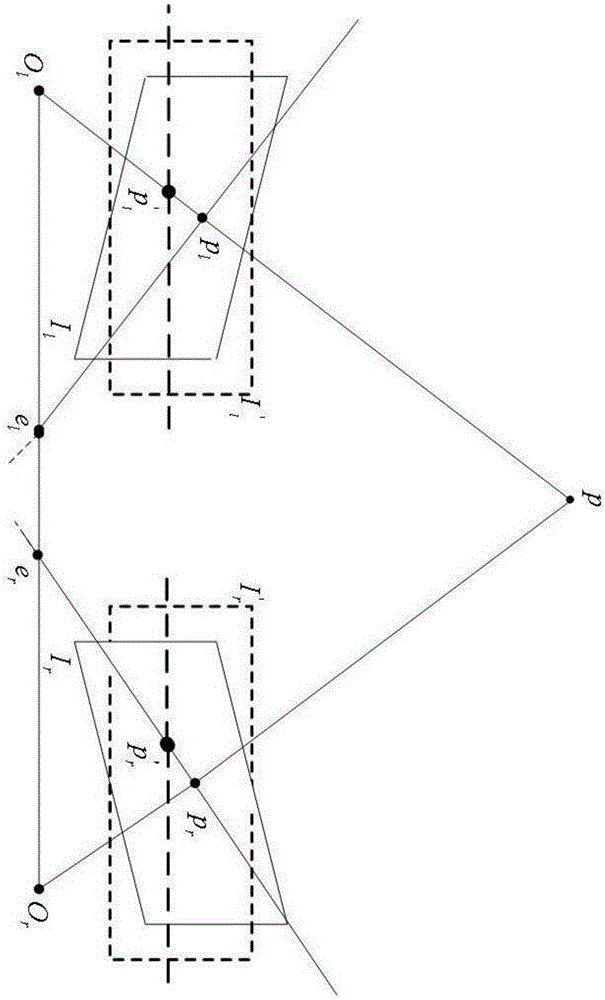

Regional depth edge detection and binocular stereo matching-based three-dimensional reconstruction method

Active | CN101908230A | Stable matching cost; Reduce mistakes; Image analysis; 3D modelling; Object point; Reconstruction method

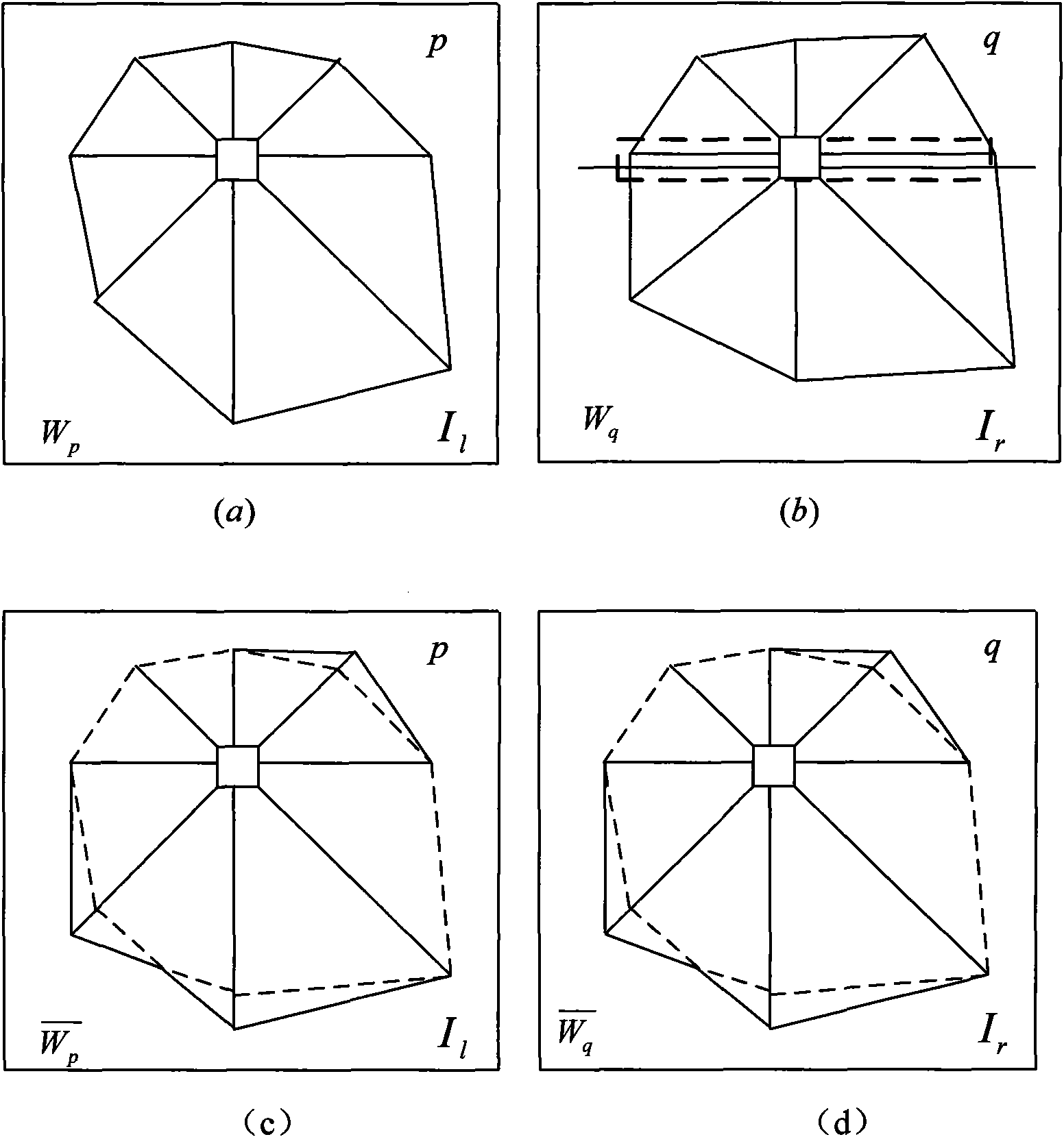

The invention discloses a three-dimensional reconstruction method based on regional depth edge detection and binocular stereo matching, implemented by the following steps: (1) capturing images of a calibration board with marker points using two black-and-white cameras placed at two suitable angles; (2) keeping the camera angles unchanged, simultaneously capturing two images of the target object with the same cameras; (3) performing epipolar rectification of the two target-object images using the camera calibration data; (4) searching the neighbourhood of each pixel in the two rectified images for a closed regional depth edge and building a support window; (5) within the built window, computing the normalized cross-correlation coefficient of the supported pixels to obtain the matching cost of the central pixel; (6) obtaining the disparity with a belief propagation optimization method that uses an accelerated update scheme; (7) refining the disparity to sub-pixel accuracy; and (8) computing the three-dimensional coordinates of the actual object points from the camera calibration data and the pixel correspondences, thereby reconstructing the three-dimensional point cloud of the object and recovering the target's three-dimensional information.

Owner:Jiangsu Huaqiang Textile Co., Ltd. (江苏省华强纺织有限公司) +1
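Steps (5) and (8) of the entry above can be illustrated with a minimal sketch, assuming an already rectified pair so that the epipolar line of a left pixel is the same row in the right image. It uses a fixed 1-D window rather than the patent's edge-bounded support window, and omits belief propagation and sub-pixel refinement; all function names are hypothetical.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation of two equal-length lists of intensities."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den > 0 else 0.0


def window(row, center, half):
    """Fixed 1-D window (an assumption; the patent uses an edge-bounded support window)."""
    return row[center - half:center + half + 1]


def best_disparity(left_row, right_row, x, half, max_disp):
    """Scan the rectified epipolar line (same row) and keep the disparity with the highest NCC."""
    ref = window(left_row, x, half)
    best_d, best_score = 0, -2.0
    for d in range(max_disp + 1):
        xr = x - d
        if xr - half < 0:  # candidate window would leave the image
            break
        score = ncc(ref, window(right_row, xr, half))
        if score > best_score:
            best_d, best_score = d, score
    return best_d, best_score


def depth_from_disparity(d, focal_px, baseline_m):
    """Triangulation for a rectified pinhole pair: Z = f * B / d."""
    if d <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / d


if __name__ == "__main__":
    left = [10, 10, 50, 90, 50, 10, 10, 10]
    right = [50, 90, 50, 10, 10, 10, 10, 10]  # same profile shifted 2 px left
    d, score = best_disparity(left, right, x=3, half=1, max_disp=4)
    print(d, score, depth_from_disparity(d, focal_px=800.0, baseline_m=0.1))
```

Here the peak at column 3 of the left row is found at column 1 of the right row, so the search returns a disparity of 2.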

3D geometric modeling and 3D video content creation

A system, apparatus and method of obtaining data from a 2D image in order to determine the 3D shape of objects appearing in said 2D image, said 2D image having distinguishable epipolar lines, said method comprising: (a) providing a predefined set of types of features, giving rise to feature types, each feature type being distinguishable according to a unique bi-dimensional formation; (b) providing a coded light pattern comprising multiple appearances of said feature types; (c) projecting said coded light pattern on said objects such that the distance between epipolar lines associated with substantially identical features is less than the distance between corresponding locations of two neighboring features; (d) capturing a 2D image of said objects having said coded light pattern projected thereupon, said 2D image comprising said reflected feature types; and (e) extracting: (i) said reflected feature types according to the unique bi-dimensional formations; and (ii) locations of said reflected feature types on respective said epipolar lines in said 2D image.

Owner:MANTIS VISION LTD

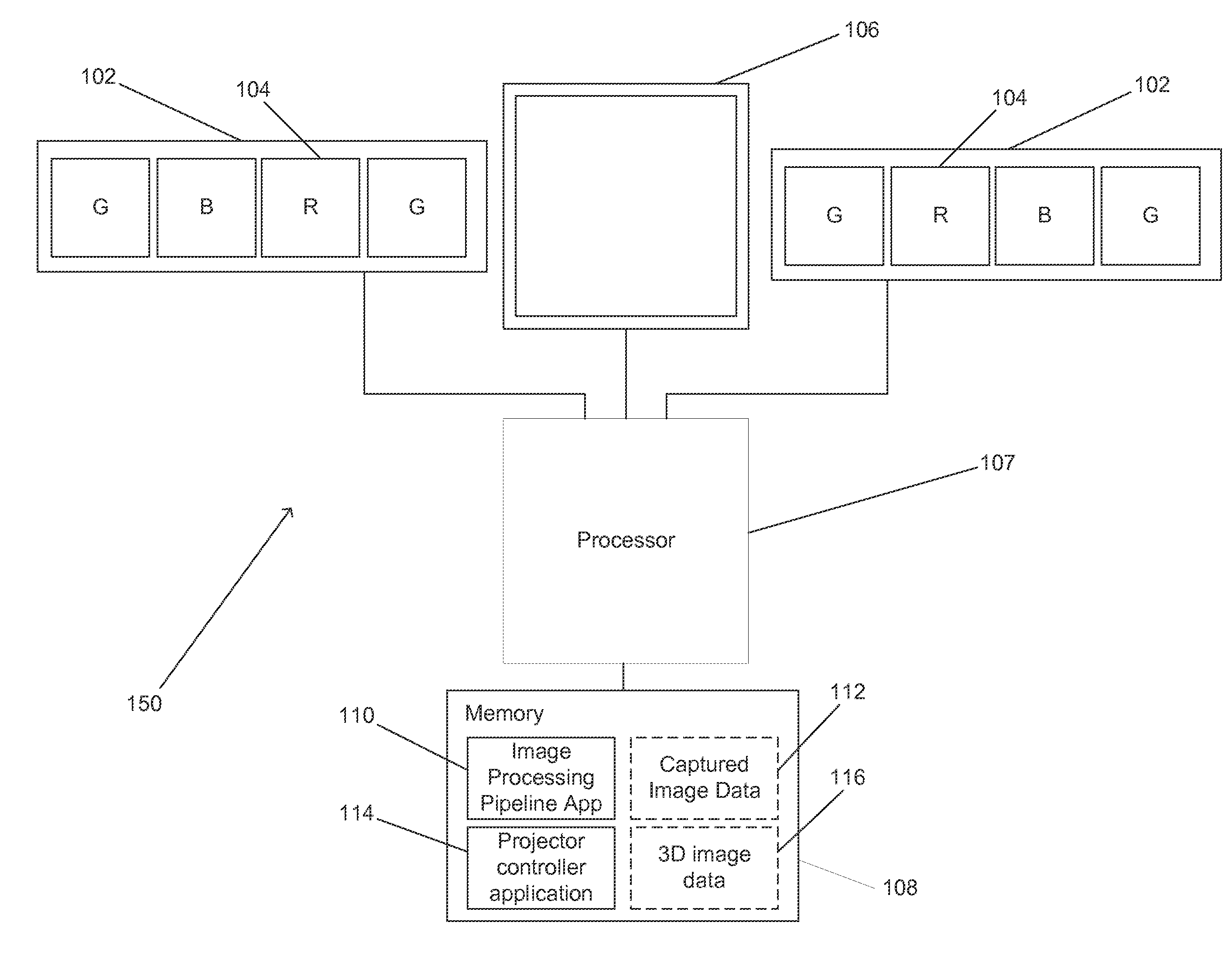

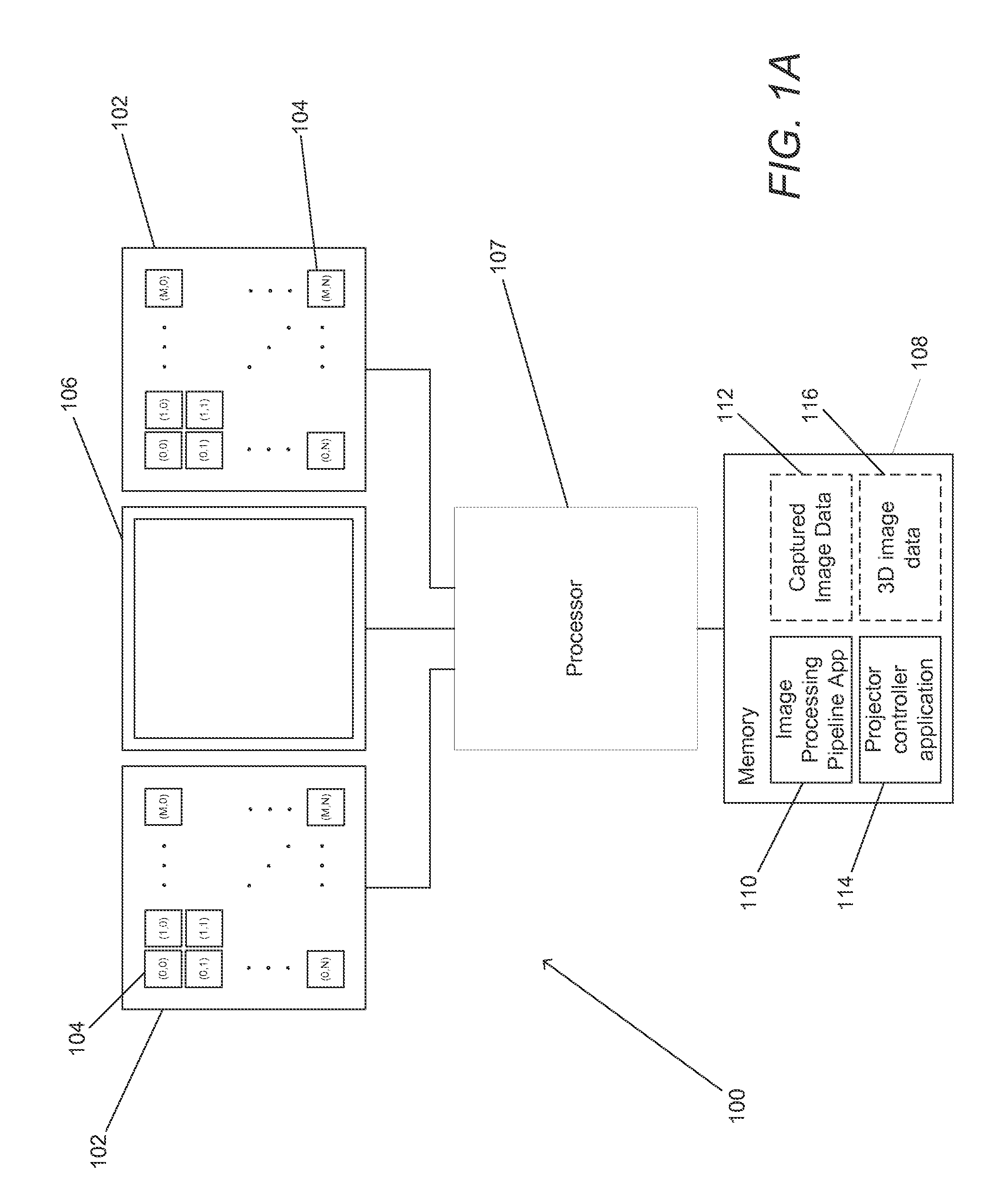

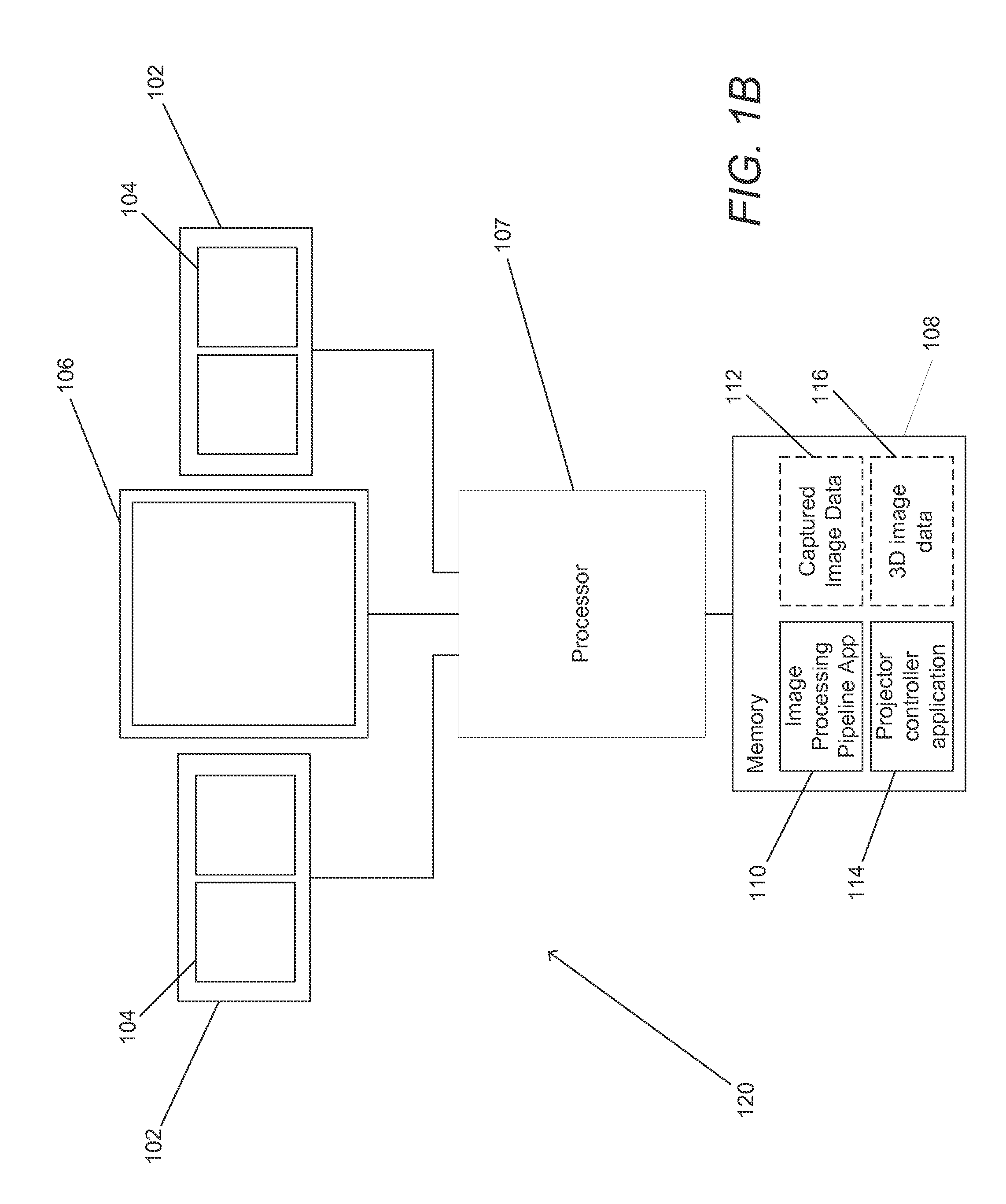

Systems and Methods for Estimating Depth from Projected Texture using Camera Arrays

Systems and methods in accordance with embodiments of the invention estimate depth from projected texture using camera arrays. One embodiment of the invention includes: at least one two-dimensional array of cameras comprising a plurality of cameras; an illumination system configured to illuminate a scene with a projected texture; a processor; and memory containing an image processing pipeline application and an illumination system controller application. The illumination system controller application directs the processor to control the illumination system to illuminate a scene with a projected texture. The image processing pipeline application directs the processor to: use the illumination system controller application to control the illumination system to illuminate a scene with a projected texture; capture a set of images of the scene illuminated with the projected texture; and determine depth estimates for pixel locations in an image from a reference viewpoint using at least a subset of the set of images. Generating a depth estimate for a given pixel location in the image from the reference viewpoint includes: identifying pixels in the at least a subset of the set of images that correspond to the given pixel location, based upon expected disparity at a plurality of depths along a plurality of epipolar lines aligned at different angles; comparing the similarity of the corresponding pixels identified at each of the plurality of depths; and selecting the depth at which the identified corresponding pixels have the highest degree of similarity as the depth estimate for the given pixel location.

Owner:FOTONATION LTD

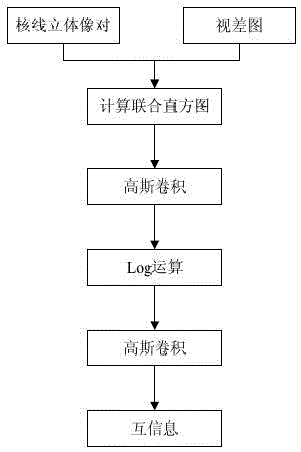

Stereoscopic vision-based real low-texture image reconstruction method

Inactive | CN101887589A | Increase the difference; Improve recognition; Image analysis; 2D-image generation; Filtration; Object point

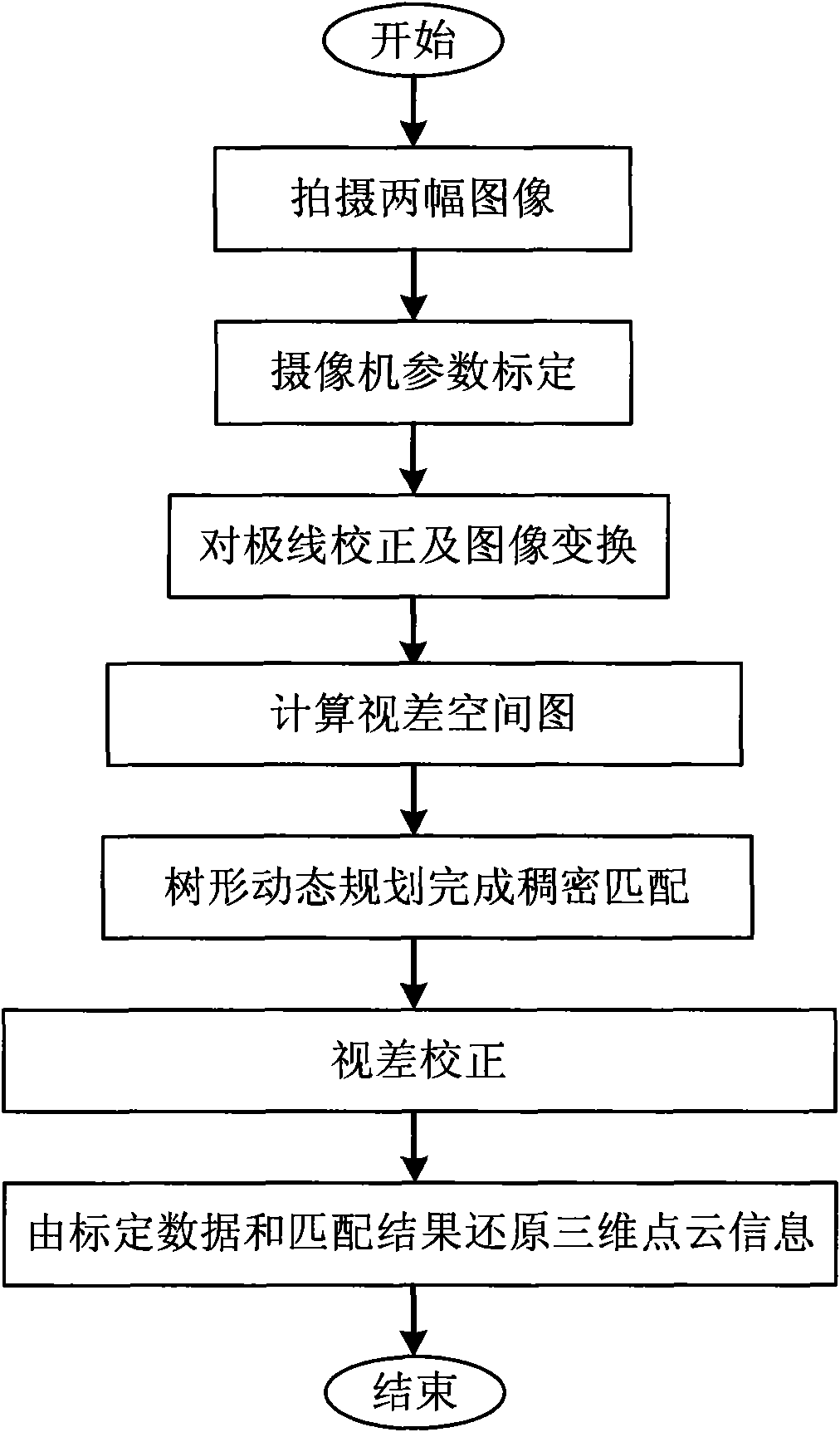

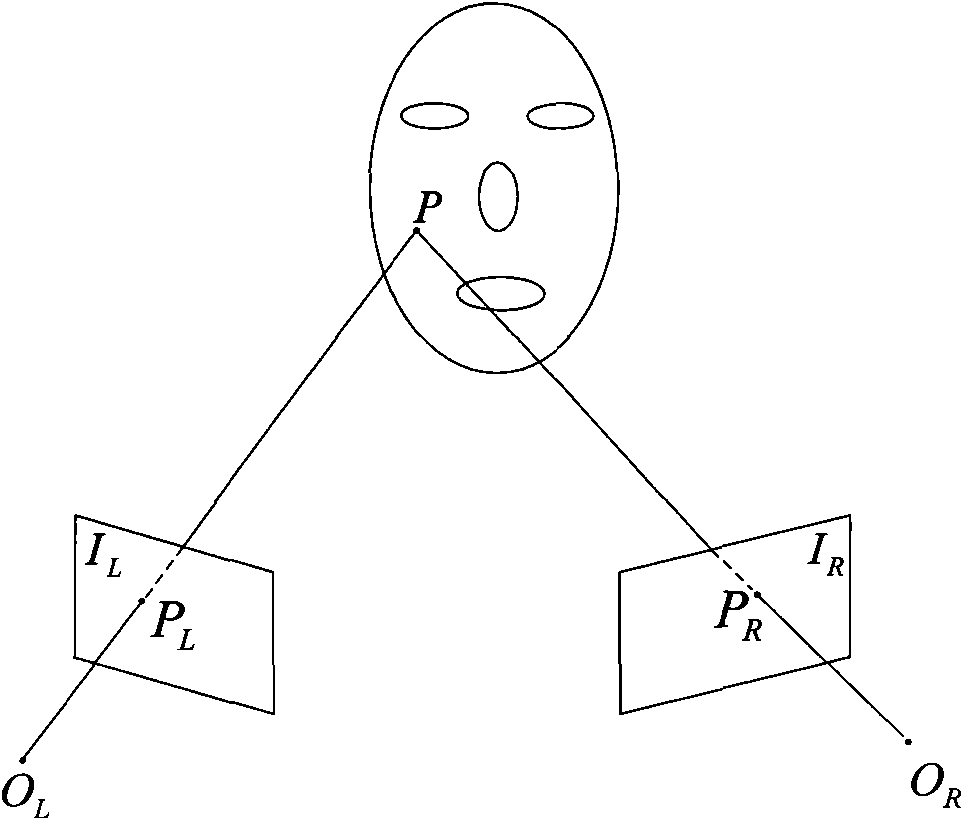

The invention discloses a stereoscopic vision-based reconstruction method for real low-texture images, implemented by the following steps: (1) simultaneously capturing images with two cameras placed at two suitable angles, one image serving as the reference image and the other as the image to be registered; (2) calibrating the intrinsic and extrinsic parameter matrices of each camera; (3) performing epipolar rectification, image transformation and Gaussian filtering according to the calibration data; (4) computing an adaptive polygonal support window for each point in the two rectified images and computing pixel matching costs to obtain a disparity space image; (5) completing dense matching by running a tree-based dynamic programming algorithm pixel by pixel over the whole image; (6) detecting mismatched points with a left-right consistency check and performing disparity correction to obtain the final disparity map; and (7) computing the three-dimensional coordinates of the actual object points from the calibration data and the matching relationship to construct the three-dimensional point cloud of the object.

Owner:Nantong Ruiyin Apparel Co., Ltd. (南通瑞银服饰有限公司) +1
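Step (6) of the entry above relies on a left-right consistency check. A minimal sketch, assuming integer disparity maps computed separately with the left and the right image as reference (function name and conventions are hypothetical): a left pixel is kept only if the right image's disparity at its match points back to it.

```python
def left_right_check(disp_left, disp_right, tol=1):
    """Flag pixels whose left->right->left disparity round trip disagrees by more than tol.

    disp_left[y][x]:  integer disparity of left pixel x (its match is right pixel x - d).
    disp_right[y][x]: integer disparity of right pixel x (its match is left pixel x + d).
    Returns a mask with True for consistent pixels and False for mismatched or occluded ones.
    """
    mask = []
    for row_l, row_r in zip(disp_left, disp_right):
        row_mask = []
        for x, d in enumerate(row_l):
            xr = x - d  # column of the match in the right image
            ok = 0 <= xr < len(row_r) and abs(row_r[xr] - d) <= tol
            row_mask.append(ok)
        mask.append(row_mask)
    return mask


if __name__ == "__main__":
    print(left_right_check([[0, 1, 1, 3]], [[1, 1, 0, 0]], tol=0))
```

With `tol=0` this prints `[[False, True, True, False]]`; the pixels marked False are the ones a subsequent disparity-correction step would fill in.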

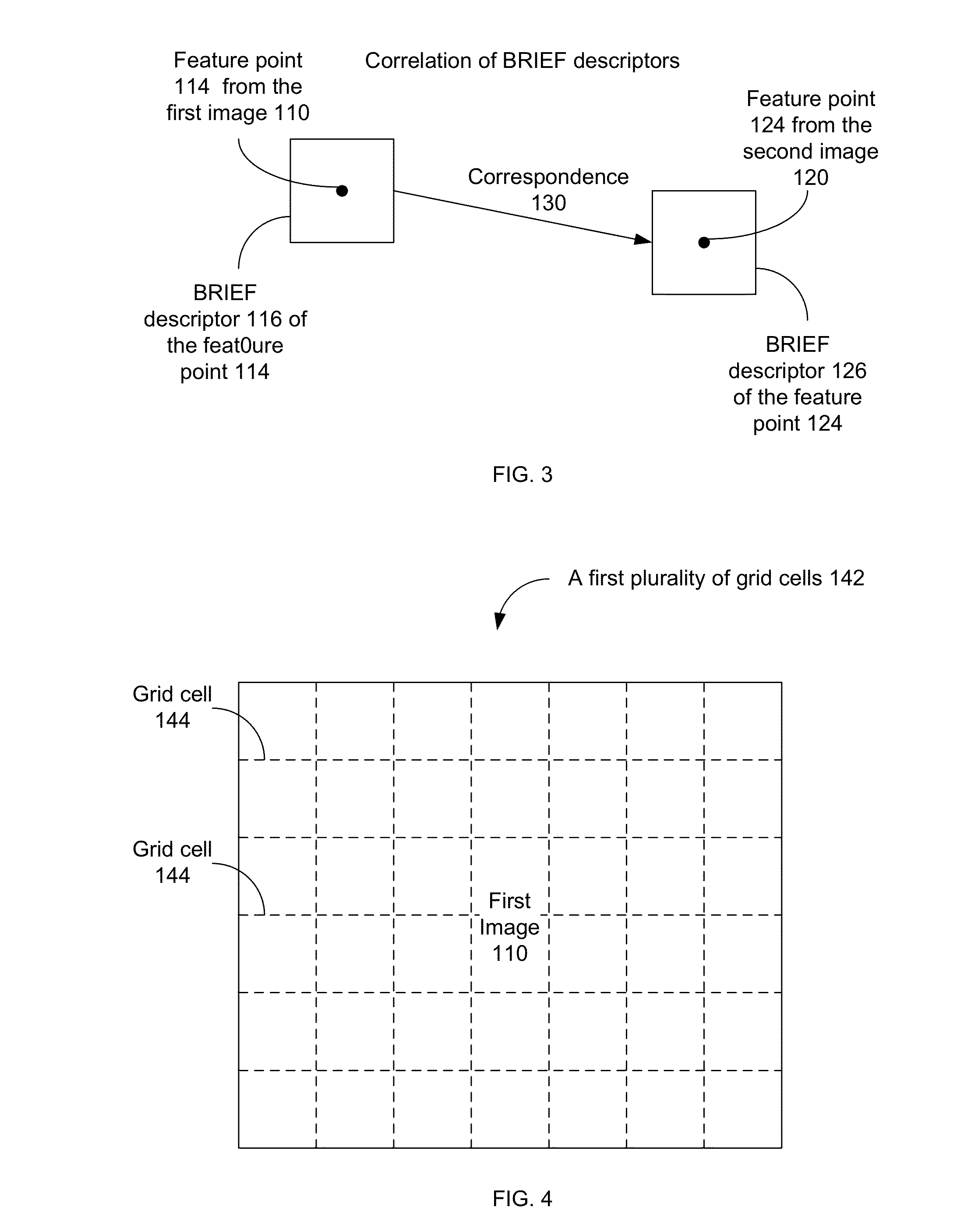

Image characteristic matching method

Inactive | CN102693542A | High precision; Evenly distributed; Image analysis; Correlation coefficient; Space environment

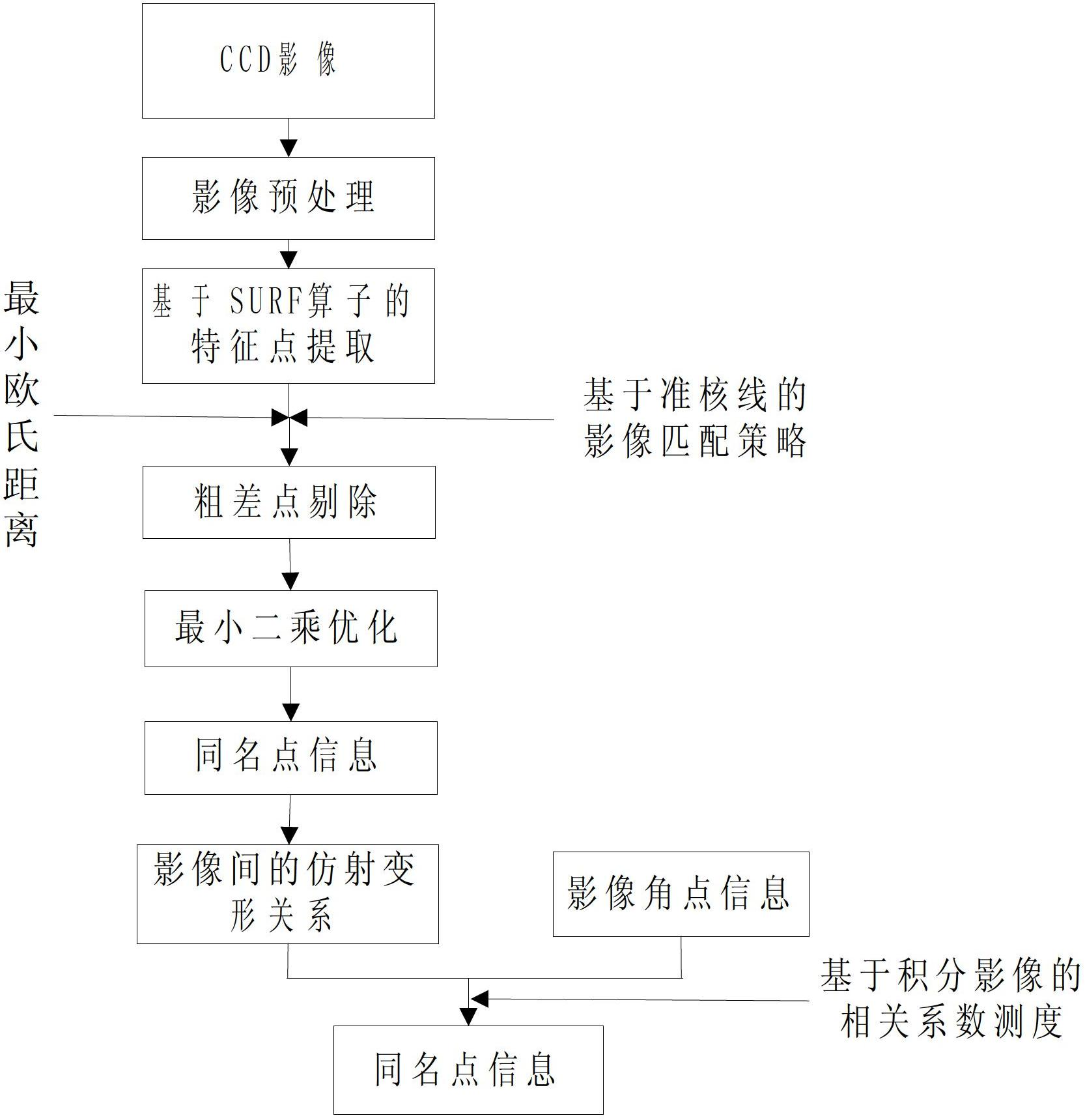

The invention relates to an image feature matching method comprising the steps of: pre-processing the acquired CCD (charge-coupled device) images; extracting feature points from the pre-processed CCD images with the SURF operator and matching them under a quasi-epipolar constraint and a minimum Euclidean distance criterion to obtain corresponding-point information; establishing an affine deformation relationship between the CCD images from the obtained corresponding points; extracting feature points of the reference image with the Harris corner operator and projecting them onto the search image through the affine transformation to obtain points to be matched; computing, in the neighbourhood around each point to be matched, the correlation coefficient between the feature point and the points in that neighbourhood, and taking the extreme point as the corresponding point; and combining the results of the two matching passes as the final corresponding-point information. The method can be applied to surface images of deep-space celestial bodies acquired in a deep-space environment, yielding high-precision corresponding-point information for the CCD images and thereby achieving feature matching.

Owner:THE PLA INFORMATION ENG UNIV

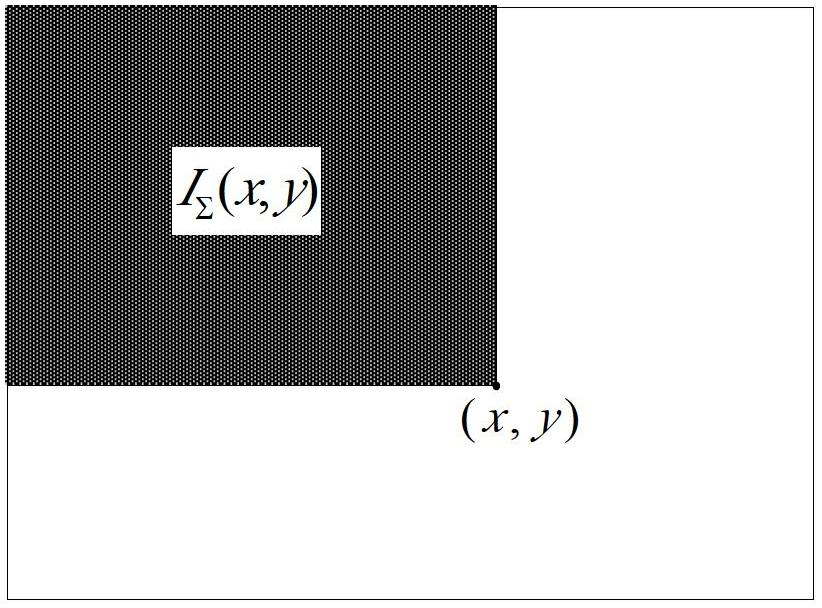

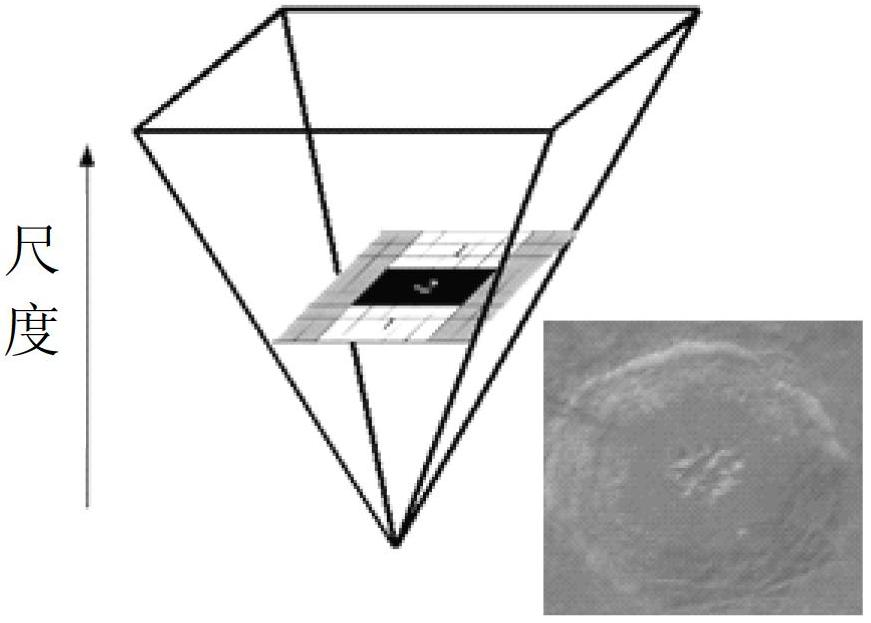

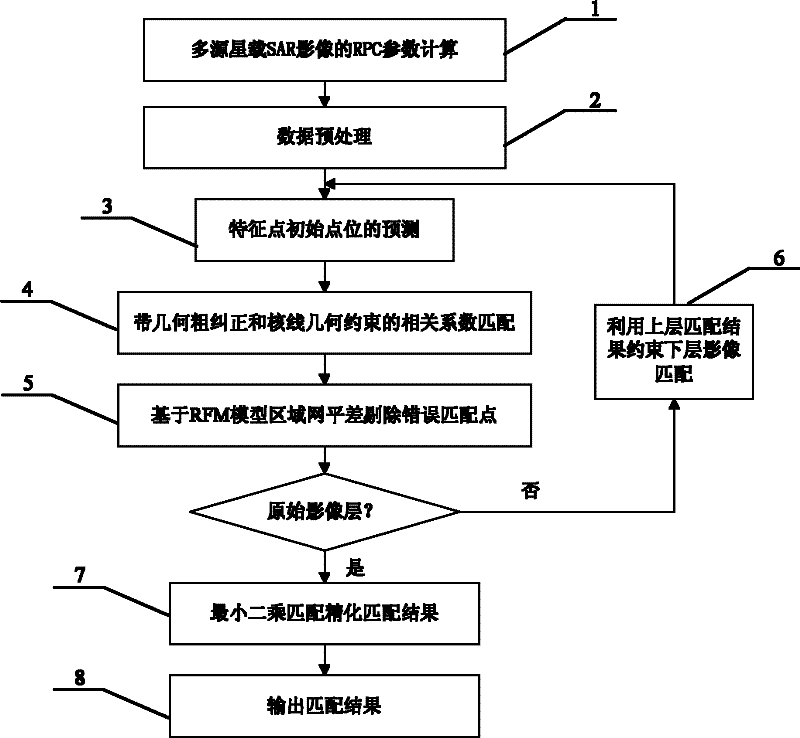

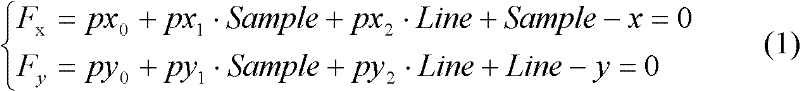

Method for automatically matching multisource space-borne SAR (Synthetic Aperture Radar) images based on RFM (Rational Function Model)

Active | CN102213762A | Automatic and reliable matching; Meet the requirements for co-location; Radio wave reradiation/reflection; Synthetic aperture radar; Workload

The invention discloses a method for automatically matching multisource space-borne SAR (Synthetic Aperture Radar) images based on an RFM (Rational Function Model). The method comprises the following steps: calculating the RPC (Rational Polynomial Coefficient) parameters of each image; on every pyramid level, using the images' RPC parameters to predict the initial positions of the points to be matched, to construct approximate epipolar geometric constraints for matching, and to geometrically rough-correct the matching window images, and removing wrong matching points from each level's matching result by RFM-based block adjustment; refining the RPC parameters of the images and calculating the object-space coordinates of the matching points; propagating the matching result level by level down to the original image; and refining the matching result by least-squares image matching, so that common points of multisource space-borne SAR images are matched automatically and reliably. Because the RFM is introduced into the automatic matching of multisource space-borne SAR images and RFM-based block adjustment is integrated into the matching process on every pyramid level, wrong matching points can be removed effectively during matching, and the workload of manually measuring common points is reduced.

Owner:CCCC SECOND HIGHWAY CONSULTANTS CO LTD

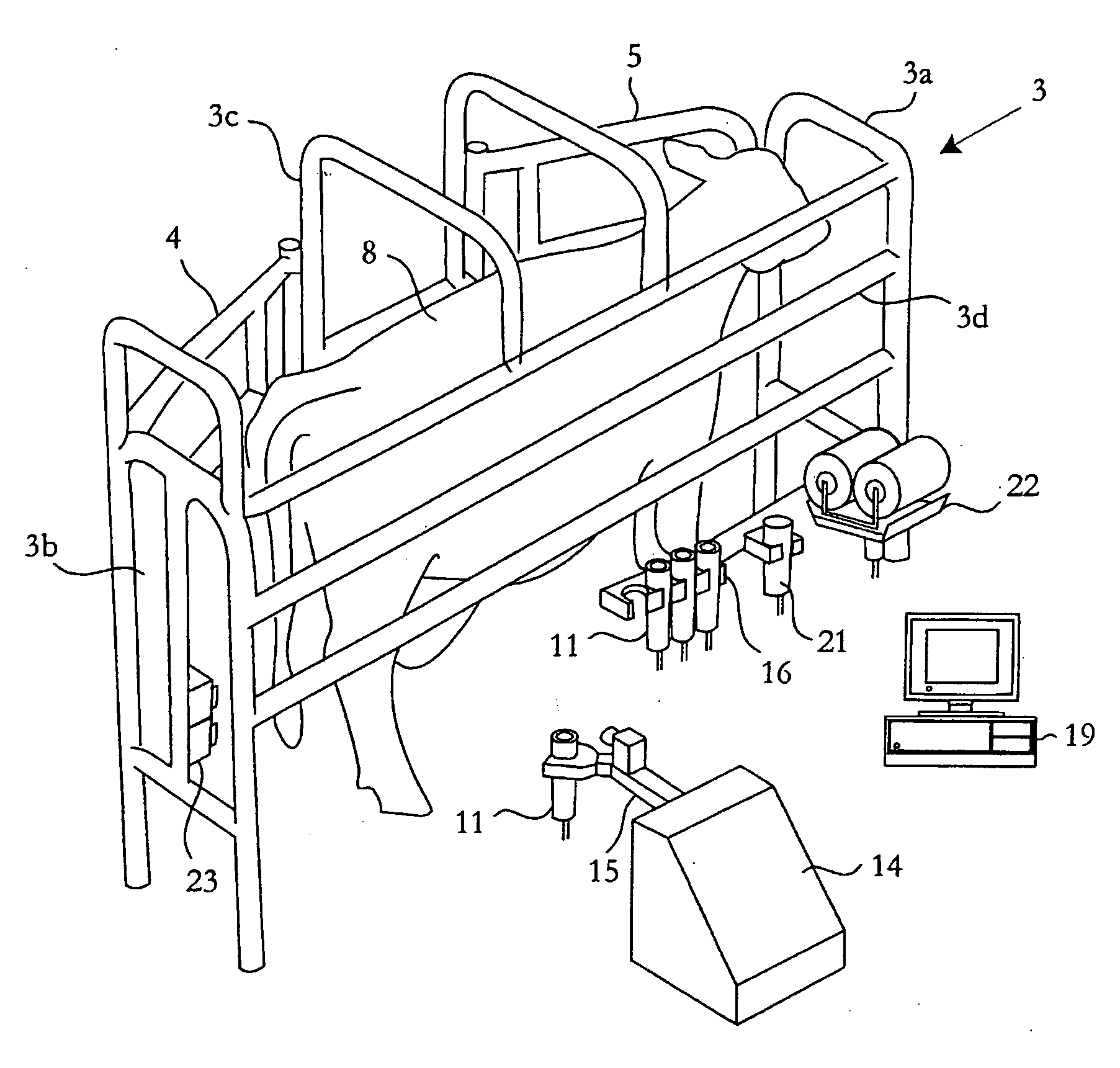

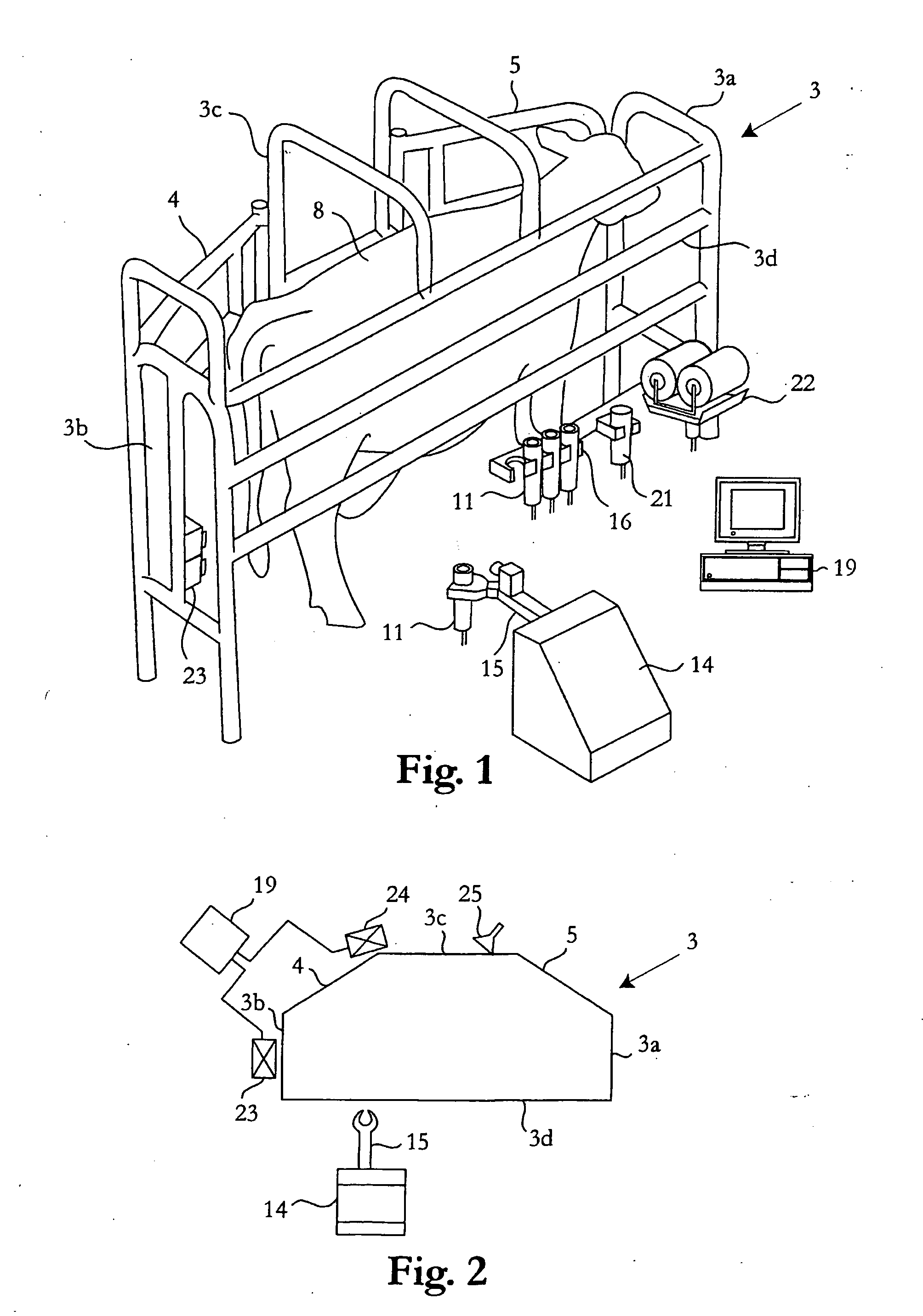

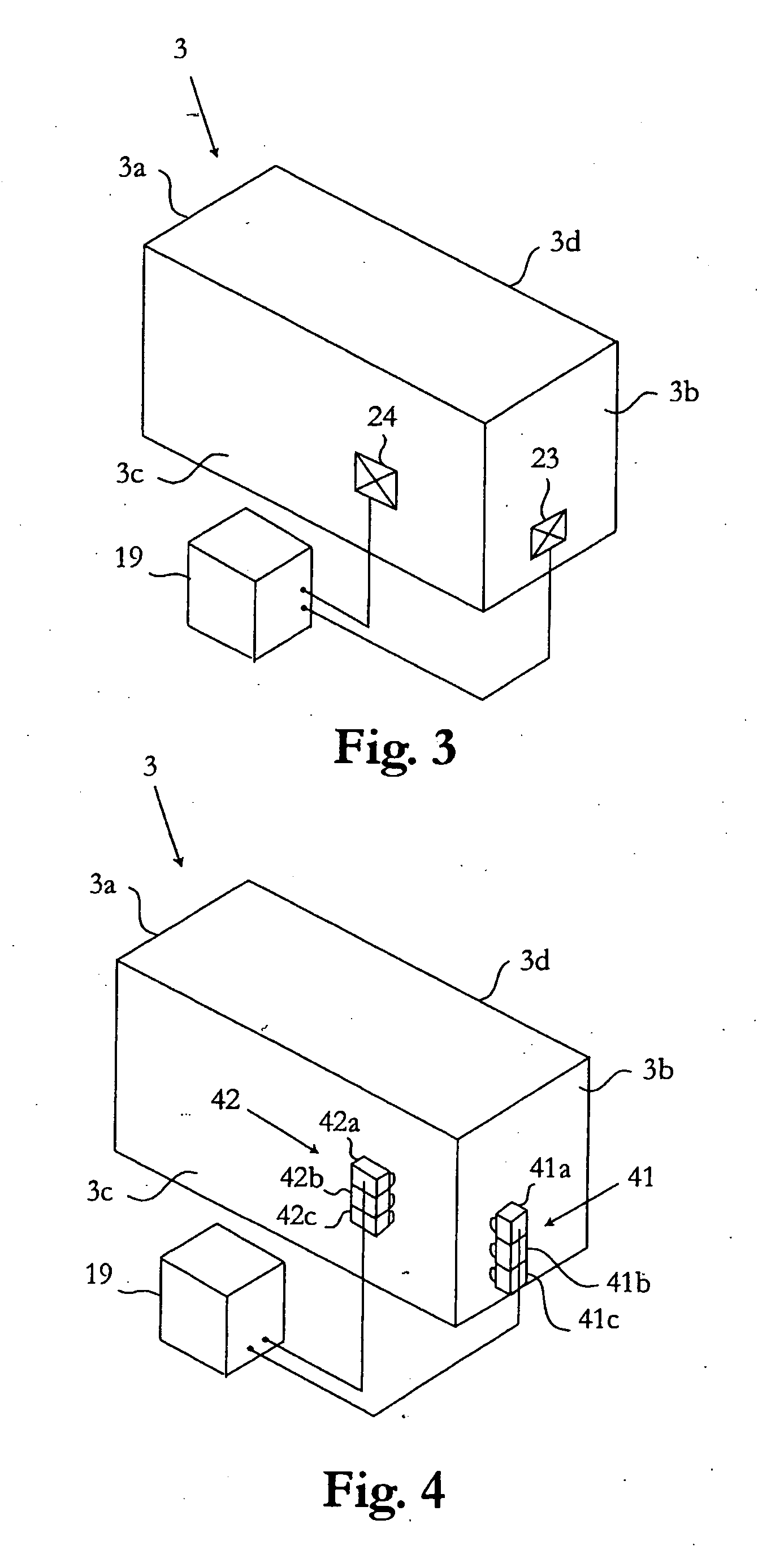

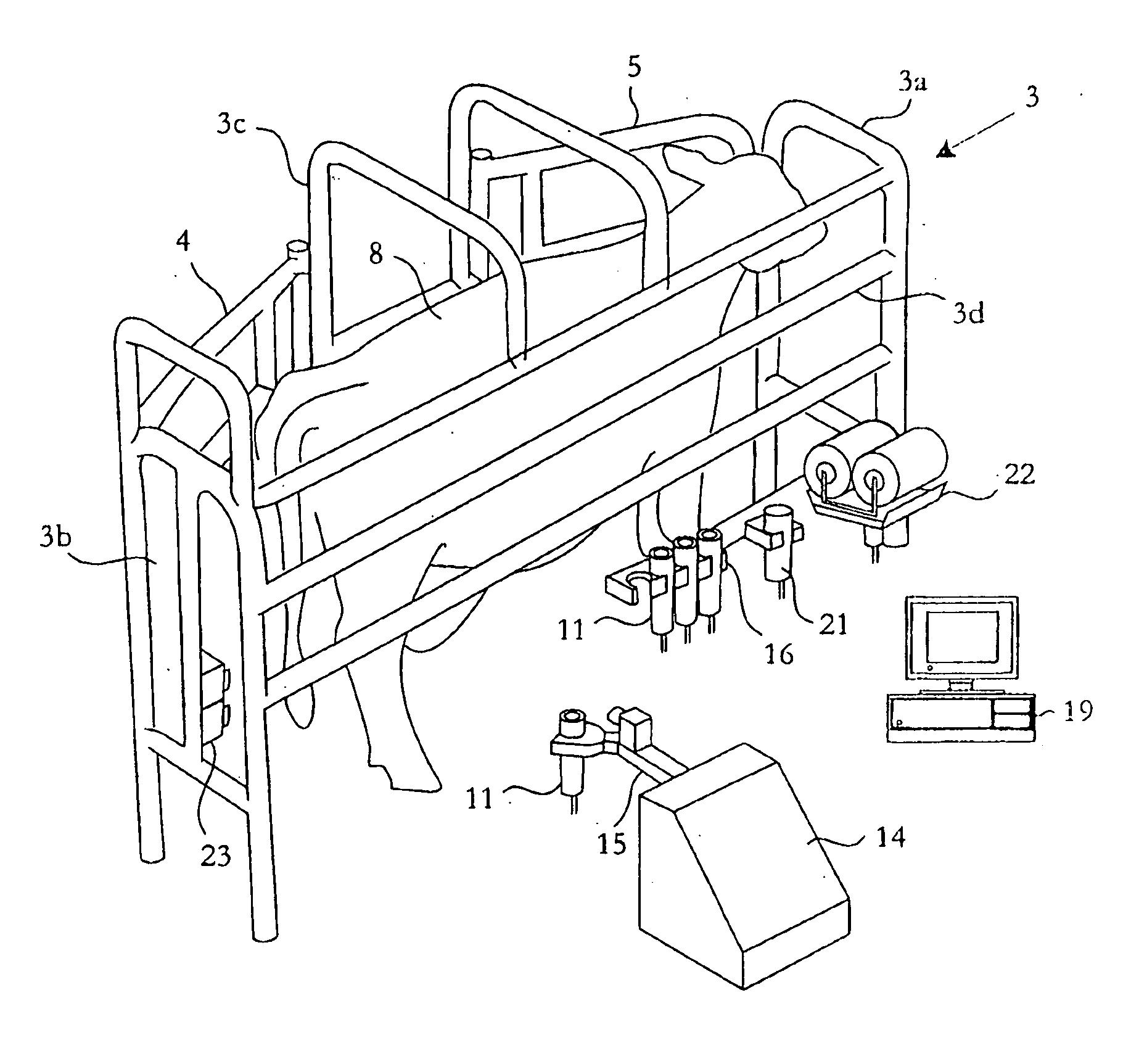

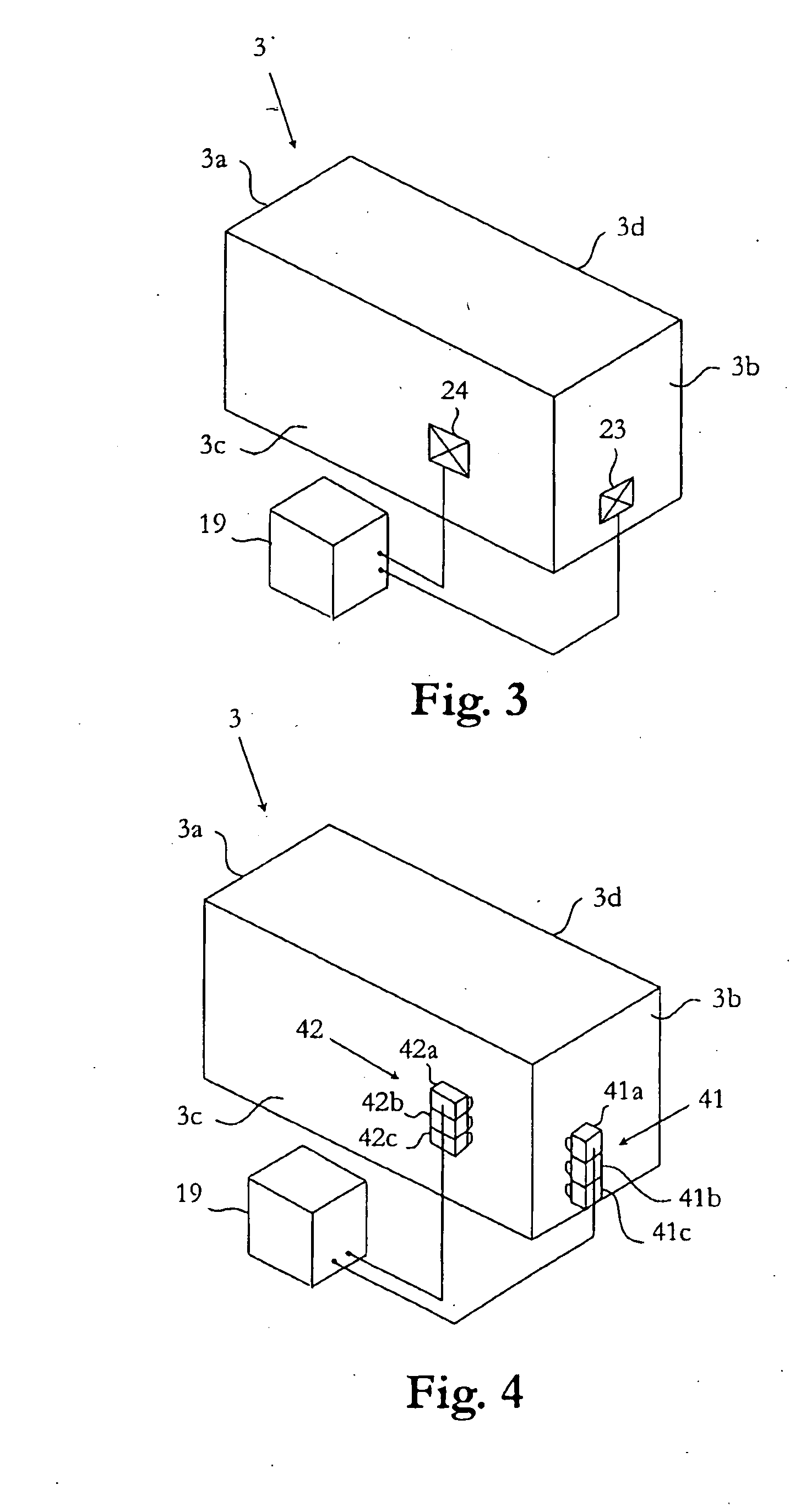

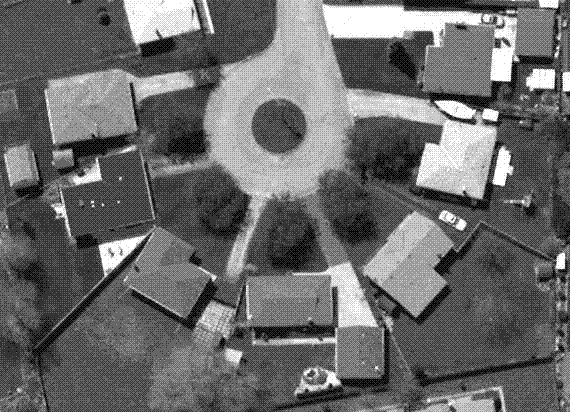

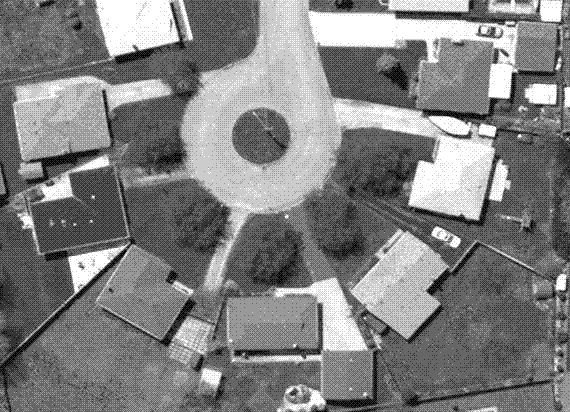

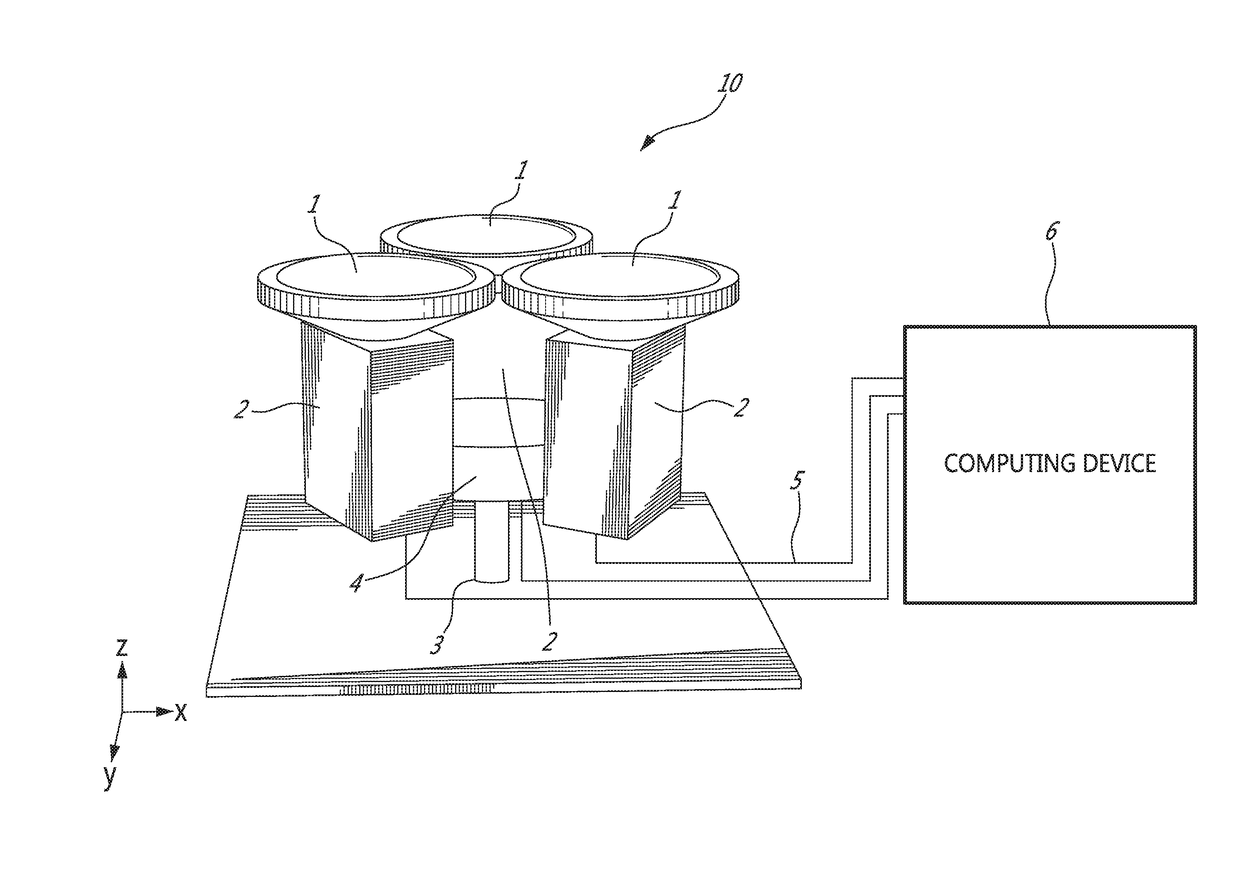

Arrangement and method for determining positions of the teats of a milking animal

Inactive | US20100199915A1 | Easy and efficient; Reduce dispersion; Image enhancement; Image analysis; Imaging processing; Engineering

An arrangement for determining positions of the teats of an animal is provided in a milking system comprising a robot arm for automatically attaching teat cups to the teats of an animal when being located in a position to be milked, and a control device for controlling the movement of the robot arm based on determined positions of the teats of the animal. The arrangement comprises a camera pair directed towards the teats of the animal for repeatedly recording pairs of images, and an image processing device for repeatedly detecting the teats of the animal and determining their positions by a stereoscopic calculation method based on the repeatedly recorded pairs of images, wherein the cameras of the camera pair are arranged vertically one above the other, and the image processing device is provided, for each teat and for each pair of images, to define the position of the lower tip of the teat in the pair of images as conjugate points, and to find the conjugate points along a substantially vertical epipolar line.

Owner:DELAVAL HLDG AB

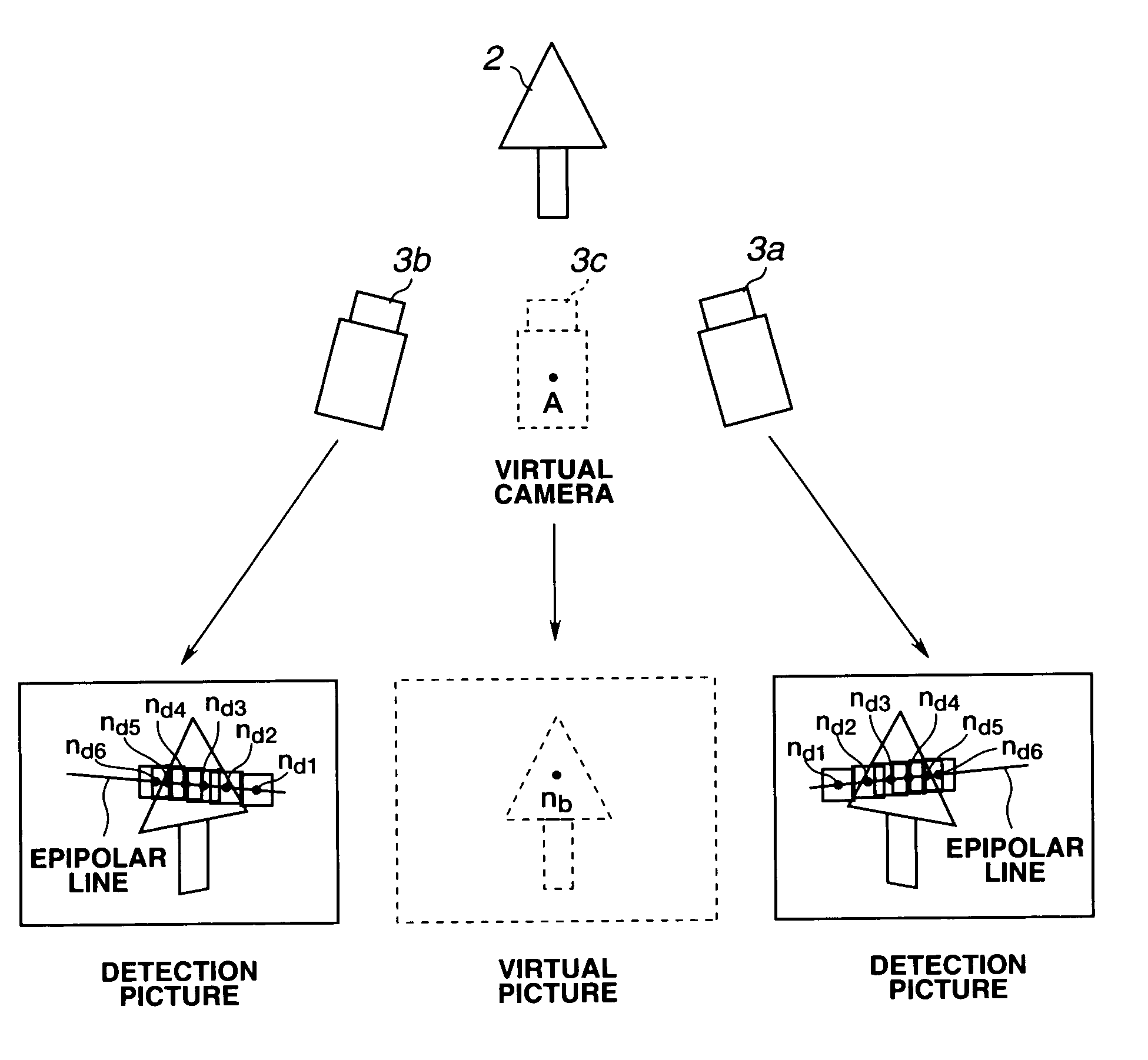

Picture generating apparatus and picture generating method

Inactive | US7015951B1 | Television system details; Character and pattern recognition; Pattern recognition; Computer graphics (images)

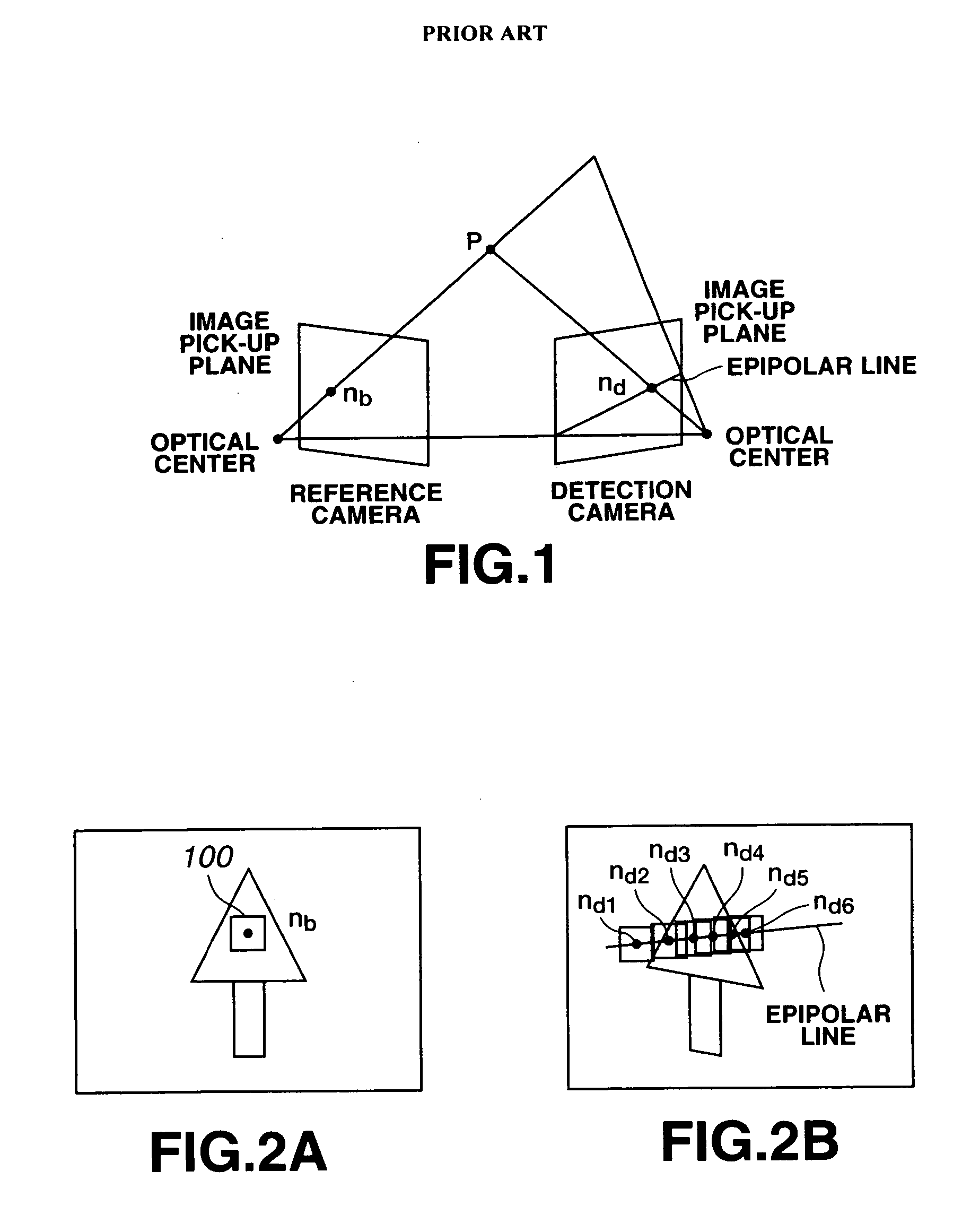

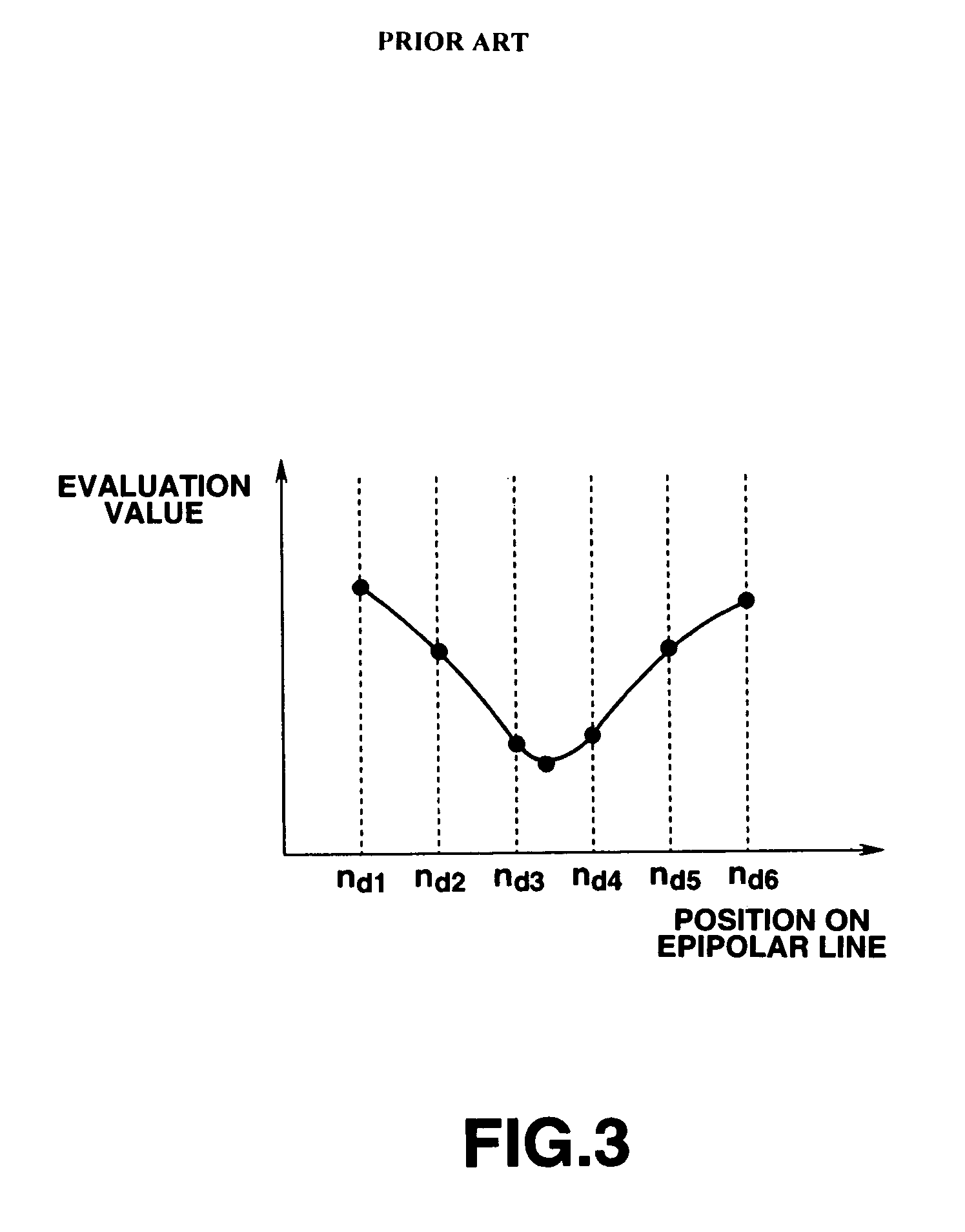

A picture generating apparatus according to this invention comprises: two or more image pick-up devices 3a, 3b, disposed at different positions, for picking up images of an object 2 to generate picture data; a correlation detecting section 5 for comparing the picture data generated by the respective image pick-up devices 3a, 3b with each other on an epipolar line, determined by connecting the correspondence points of the line of sight from a virtual position A to the object 2 and the lines of sight from each of the image pick-up devices 3a, 3b to the object 2, to detect the correlation between them; and a distance picture generating section 5 for generating a distance picture indicating the distance between the virtual position and the object on the basis of the correlation detected by the correlation detecting section 5.

Owner:SONY CORP

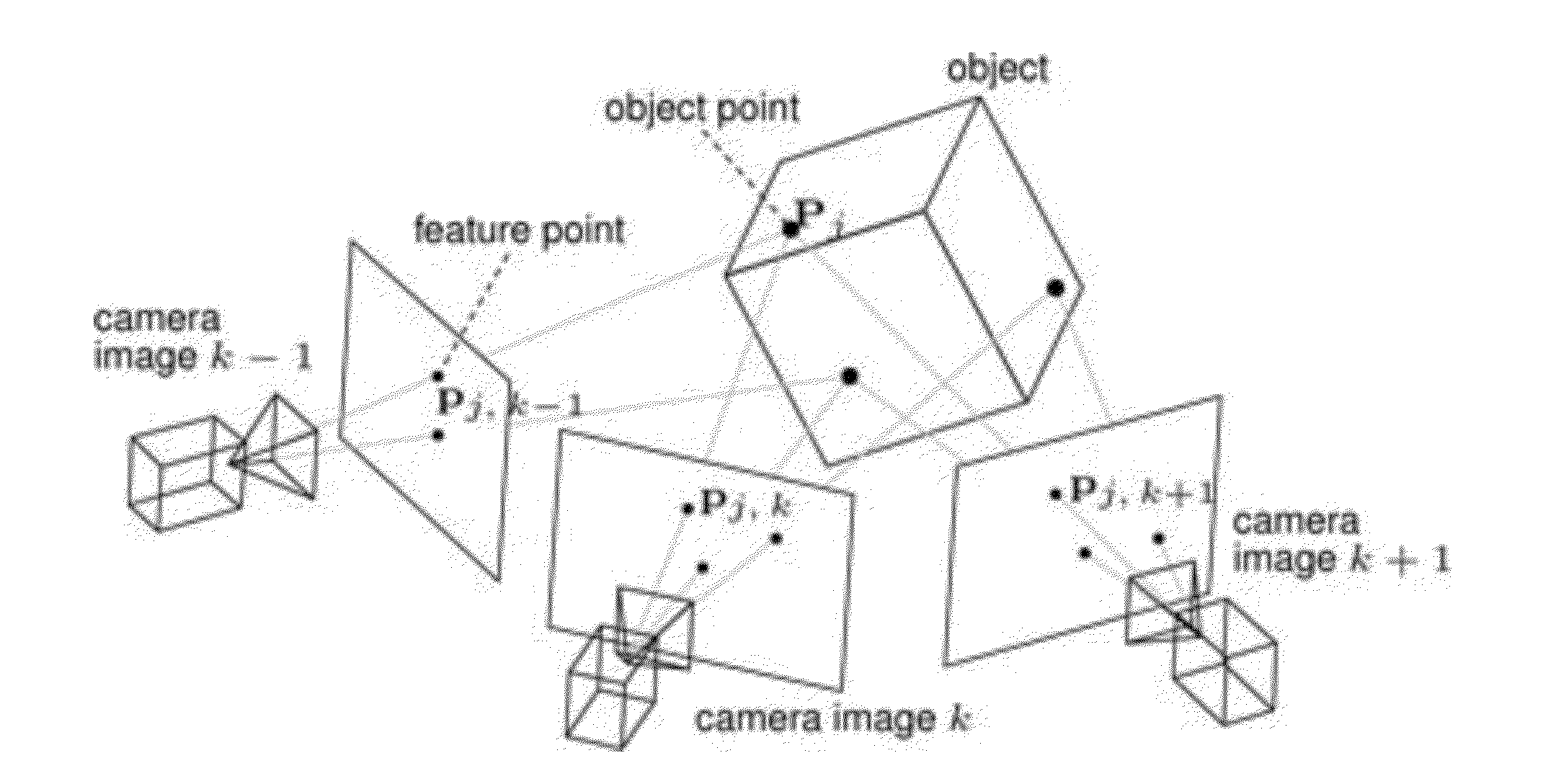

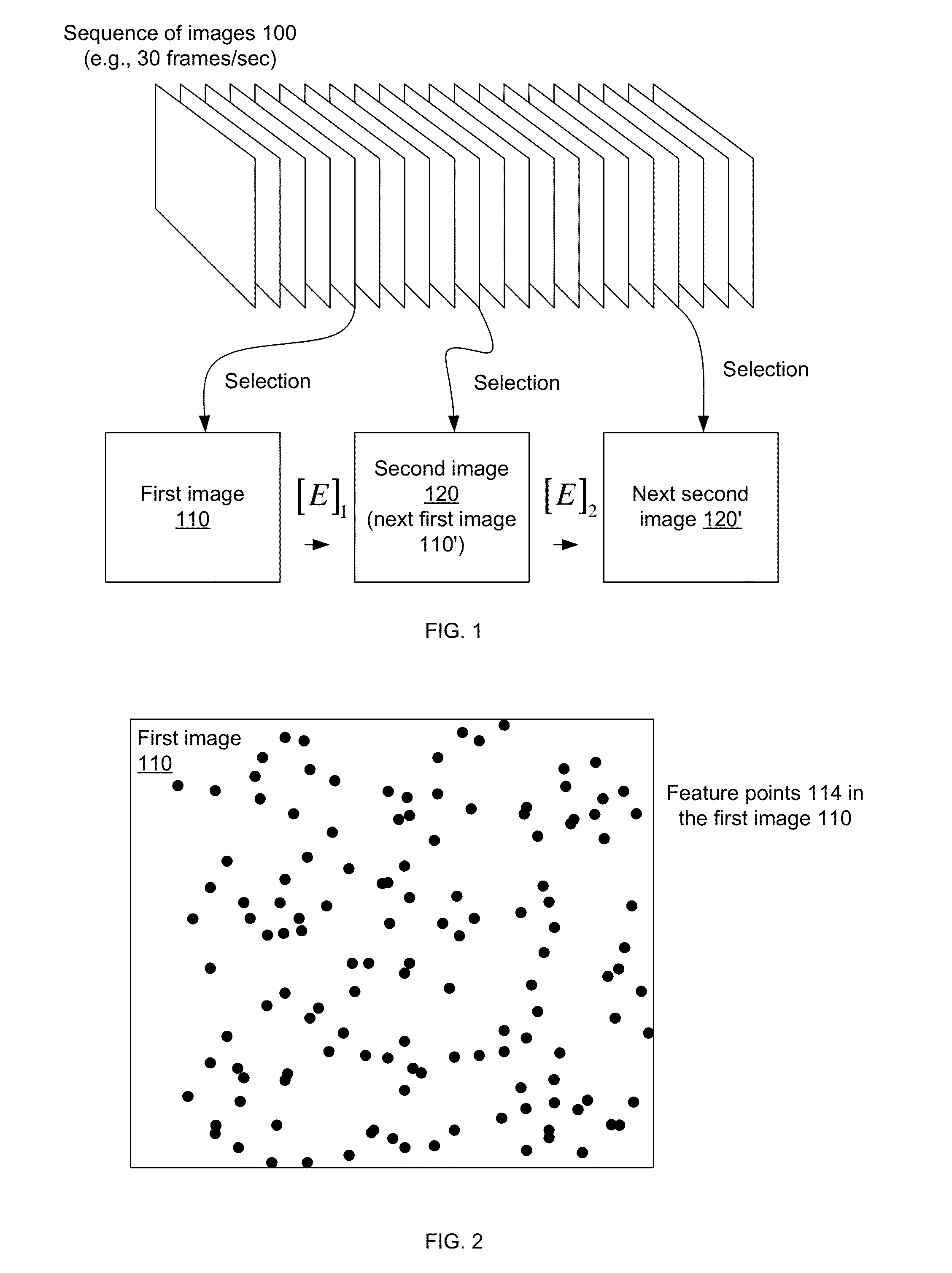

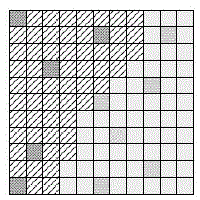

Fast 3-D point cloud generation on mobile devices

A system, apparatus and method for determining a 3-D point cloud is presented. First, a processor detects feature points in the first 2-D image, in the second 2-D image, and so on. This set of feature points is first matched across images using an efficient transitive matching scheme. The matches are then pruned to remove outliers in two passes: a first pass using projection models, such as a planar homography model computed on a grid placed on the images, and a second pass using an epipolar line constraint, resulting in a set of matches across the images. This set of matches can be used to triangulate and form a 3-D point cloud of the 3-D object. The processor may recreate the 3-D object as a 3-D model from the 3-D point cloud.

Owner:QUALCOMM INC
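The second pruning pass in the entry above (the epipolar line constraint) can be sketched as follows, assuming a fundamental matrix `F` is already known, for example from calibration; estimating `F` is not shown. For a point `p` in the first image, the epipolar line in the second image is `l' = F·p`, and a match is kept only if its partner lies within a pixel tolerance of that line. Function names and the threshold are hypothetical.

```python
import math


def epipolar_line(F, p):
    """Return (a, b, c) with a*u' + b*v' + c = 0, i.e. l' = F x for x = (u, v, 1)."""
    x = (p[0], p[1], 1.0)
    return tuple(sum(F[i][j] * x[j] for j in range(3)) for i in range(3))


def point_line_distance(line, q):
    """Perpendicular distance in pixels from point q = (u', v') to the line (a, b, c)."""
    a, b, c = line
    norm = math.hypot(a, b)
    if norm == 0:
        return math.inf  # degenerate line (e.g. p coincides with the epipole)
    return abs(a * q[0] + b * q[1] + c) / norm


def prune_matches(F, matches, max_dist=1.0):
    """Keep only matches (p, q) whose q lies within max_dist of the epipolar line of p."""
    return [(p, q) for p, q in matches
            if point_line_distance(epipolar_line(F, p), q) <= max_dist]


if __name__ == "__main__":
    # F for a rectified pair with pure horizontal translation: the constraint reduces to v' = v.
    F = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]
    matches = [((10, 20), (5, 20)), ((30, 40), (25, 47))]
    print(prune_matches(F, matches, max_dist=1.0))
```

The second match is 7 pixels off its epipolar line and is discarded, so only `((10, 20), (5, 20))` is printed.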

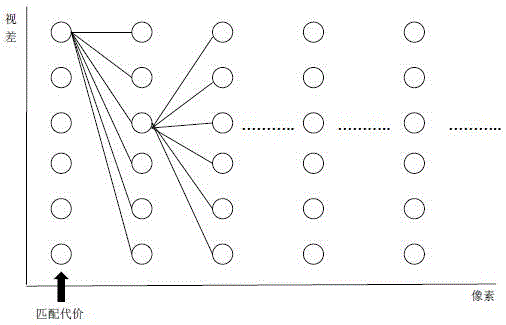

Stereoscopic image dense matching method and system based on LiDAR point cloud assistance

In a stereoscopic image dense matching method and system based on LiDAR point cloud assistance, the LiDAR points within the overlap range of the acquired stereo pair are first filtered in parallel, and the filtered points are projected onto the epipolar stereo pair to determine the disparity range for the subsequent dense matching. An image pyramid is then built. Starting from the top level, the cost matrix is modified using a triangulation network constraint, SGM dense matching is performed, and a left-right consistency check is applied to obtain the final disparity image of the top level. The disparity image of the current level is passed to the next level as its initial disparity image, the disparity range of the next level is determined from it, and the next level becomes the new current level. The same processing is repeated on each new current level until the bottom level of the pyramid is reached, and the disparity image of the original image is output. Finally, the corresponding image points of the stereo pair are obtained from this disparity image and the densely matched point cloud is generated.

Owner:WUHAN UNIV

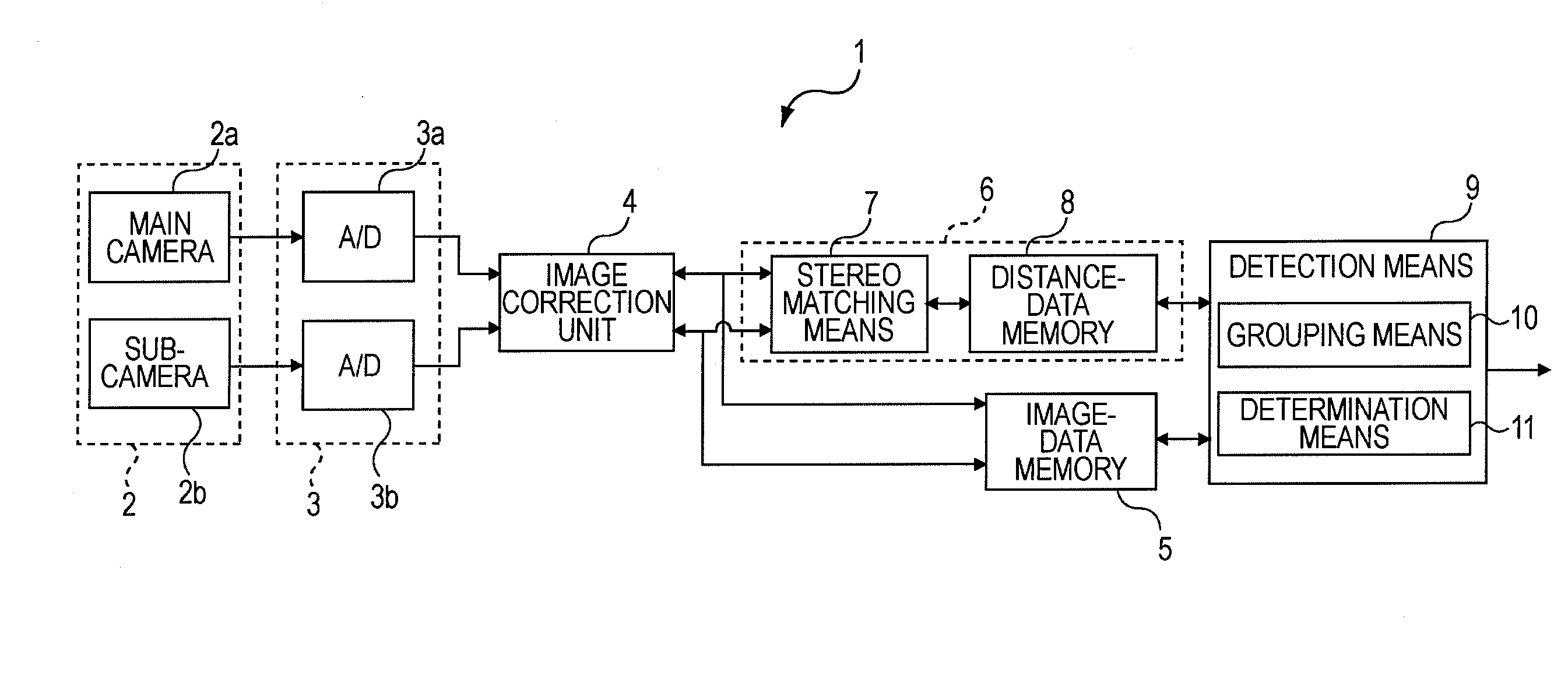

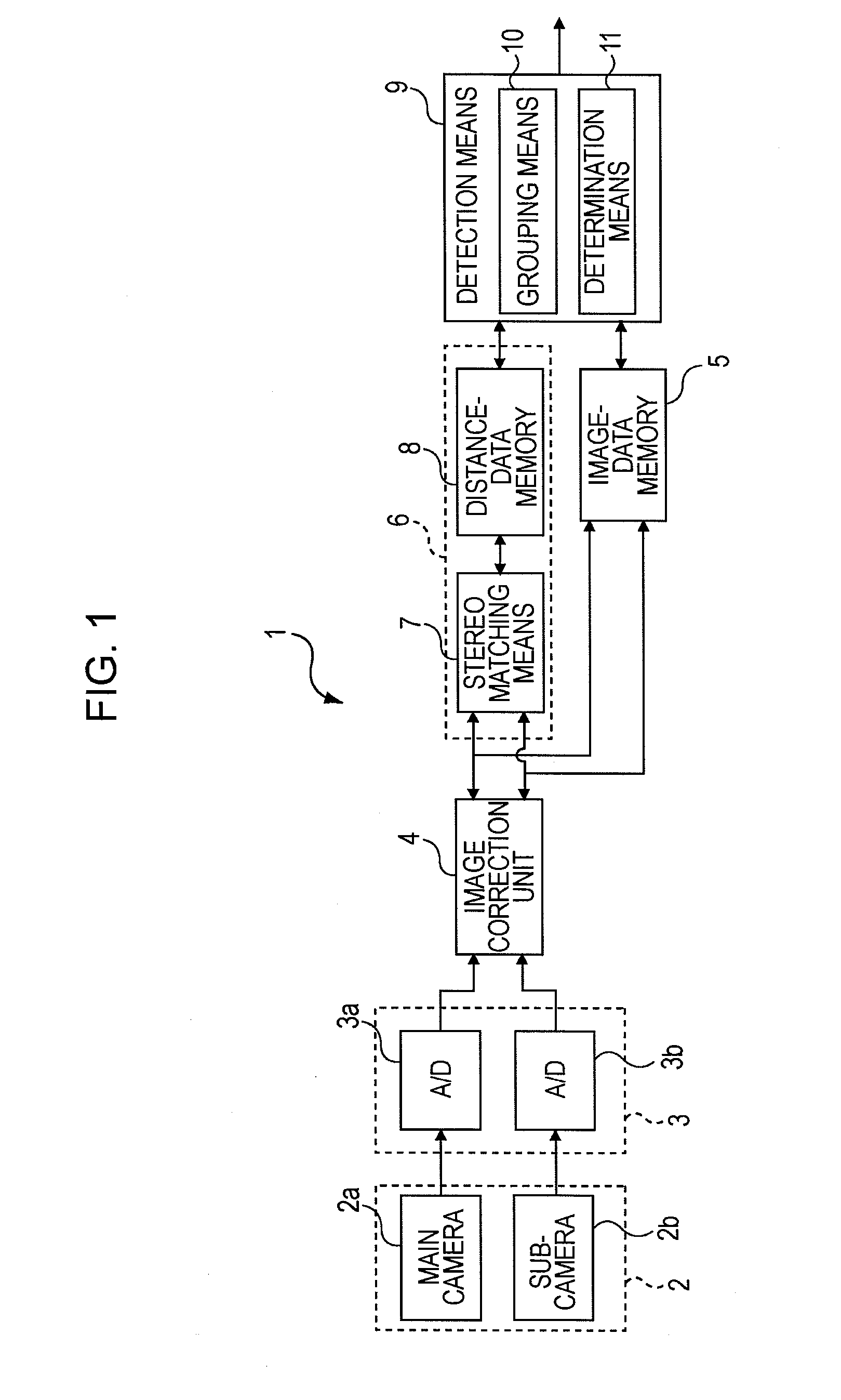

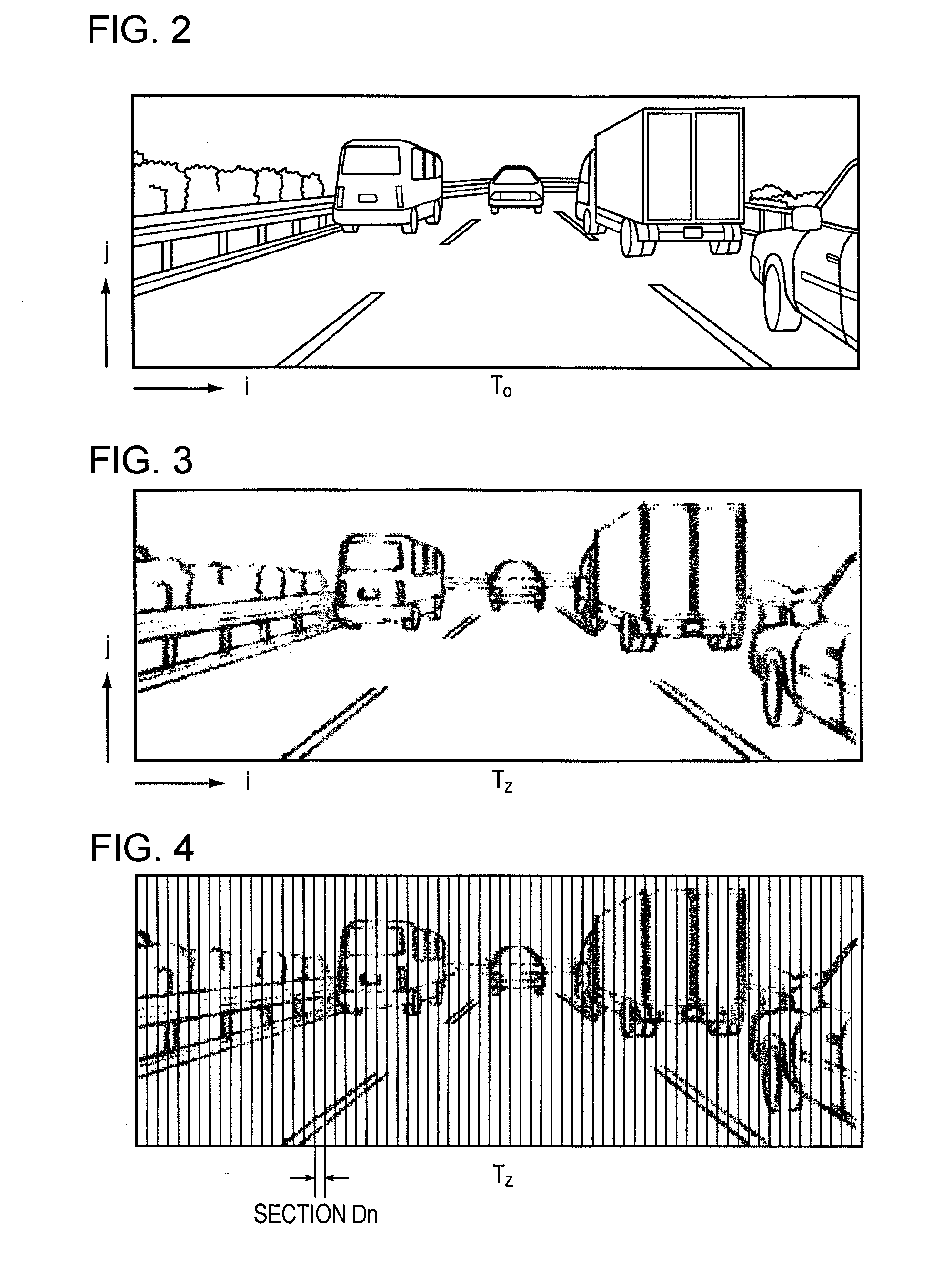

Object Detecting System

Active | US20090237491A1 | Accurate distinction; Road vehicles traffic control; Scene recognition; Parallax; Stereo matching

An object detecting system includes stereo-image taking means for taking images of an object and outputting the images as a reference image and a comparative image, stereo matching means for calculating parallaxes by stereo matching, and determination means for setting regions of objects in the reference image on the basis of the parallaxes grouped by grouping means, and performing stereo matching again for an area on the left side of a comparative pixel block specified on an epipolar line in the comparative image corresponding to a reference pixel block in a left end portion of each region. When a comparative pixel block that is different from the specified comparative pixel block and provides the local minimum SAD value less than or equal to a threshold value is detected, the determination means determines that the object in the region is mismatched.

Owner:SUBARU CORP
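The re-matching check in the entry above can be sketched on a single rectified row: compute the SAD curve of the reference block along the epipolar line and report a mismatch if another local minimum at or below a threshold lies to the left of the block that was originally matched. This is a simplified illustration, assuming 1-D blocks and a hand-picked threshold; the names are hypothetical and region grouping is omitted.

```python
def sad(a, b):
    """Sum of absolute differences between two equal-length blocks."""
    return sum(abs(x - y) for x, y in zip(a, b))


def sad_curve(ref_block, comp_row):
    """SAD of ref_block at every block position along the comparative row."""
    w = len(ref_block)
    return [sad(ref_block, comp_row[x:x + w]) for x in range(len(comp_row) - w + 1)]


def local_minima(costs):
    """Indices that are strictly below the left neighbour and not above the right one."""
    out = []
    for i, c in enumerate(costs):
        left = costs[i - 1] if i > 0 else float("inf")
        right = costs[i + 1] if i + 1 < len(costs) else float("inf")
        if c < left and c <= right:
            out.append(i)
    return out


def is_mismatched(ref_block, comp_row, matched_x, threshold):
    """True if a different local SAD minimum <= threshold exists left of matched_x."""
    costs = sad_curve(ref_block, comp_row)
    return any(i < matched_x and costs[i] <= threshold for i in local_minima(costs))


if __name__ == "__main__":
    block = [10, 50, 10]
    row = [10, 50, 10, 0, 0, 10, 50, 10]  # repetitive texture, e.g. a fence
    print(sad_curve(block, row))
    print(is_mismatched(block, row, matched_x=5, threshold=5))
```

The repeated pattern produces a second zero-cost minimum at position 0, so the match at position 5 is flagged as ambiguous.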

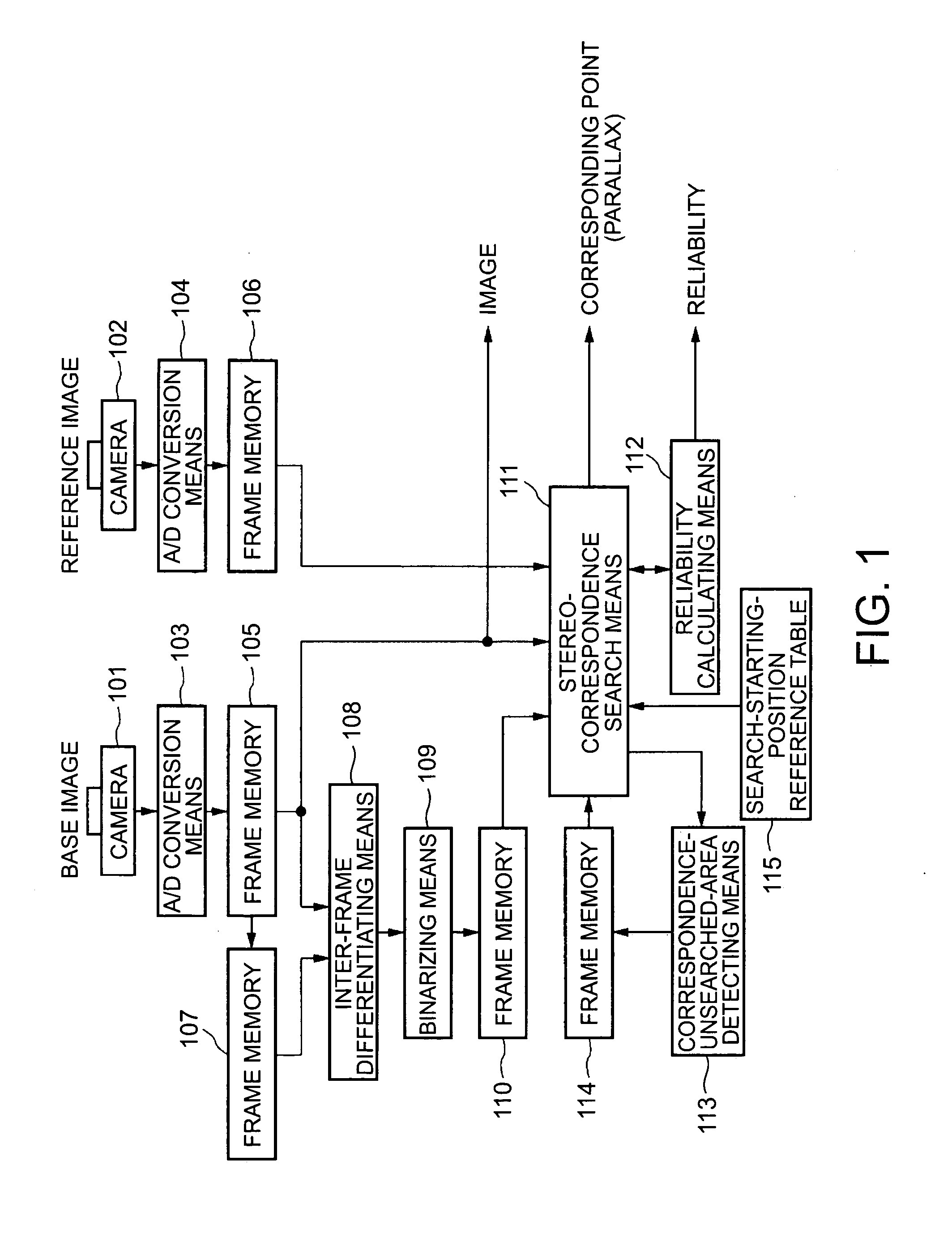

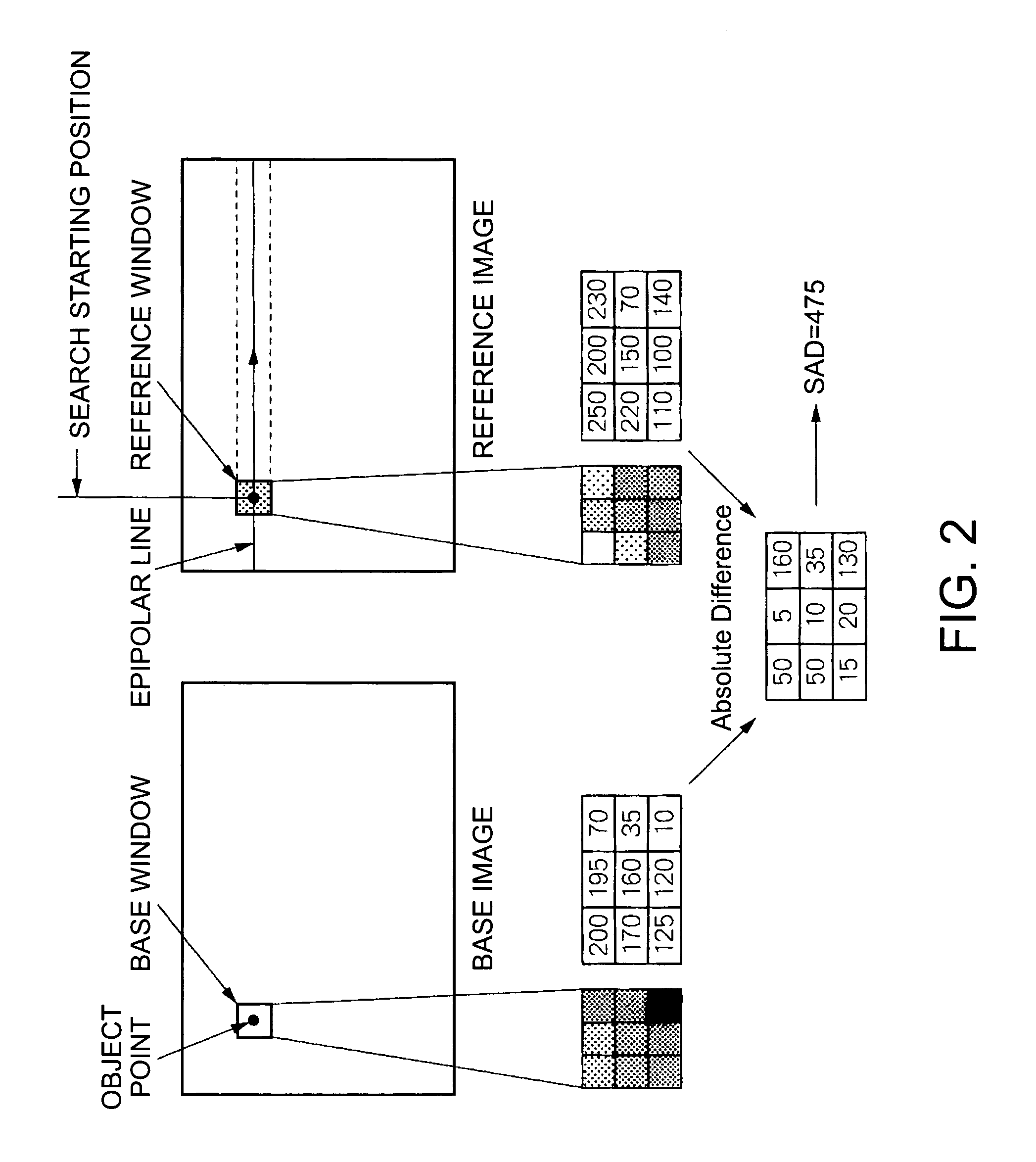

Method, apparatus, and program for processing stereo image

Inactive | US20050008220A1 | Accurate grasp; Improve reliability; Image analysis; Optical rangefinders; Parallax; Reference window

A method for processing a stereo image is provided which includes the steps of: setting a specified area of a base window around a point in the base image for which a corresponding point is to be obtained; setting a reference window of the same size as the base window in the reference image; evaluating the difference between the base window and the reference window while scanning the reference window along an epipolar line; determining the position of the reference window with the minimum difference as the stereo corresponding point; measuring the parallax from the difference between the position of the base window and the position of the reference window; calculating the reliability of the corresponding point (parallax) from a first reliability, based on the sharpness of the peak of the evaluation-value distribution of the pattern difference between the base window and the reference window, and a second reliability, based on the shape of the peaks; and outputting the reliability value of the parallax together with the parallax information.

Owner:SEIKO EPSON CORP

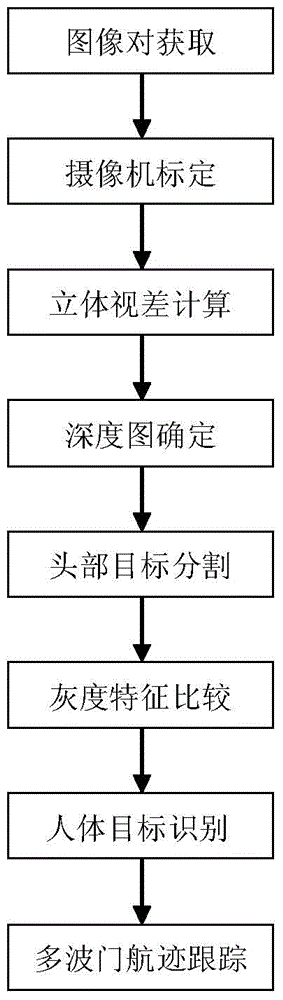

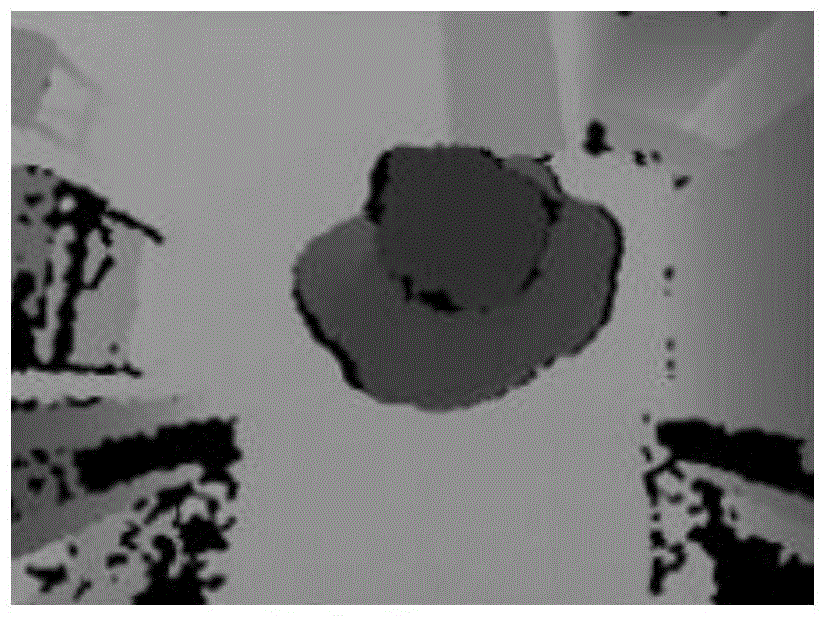

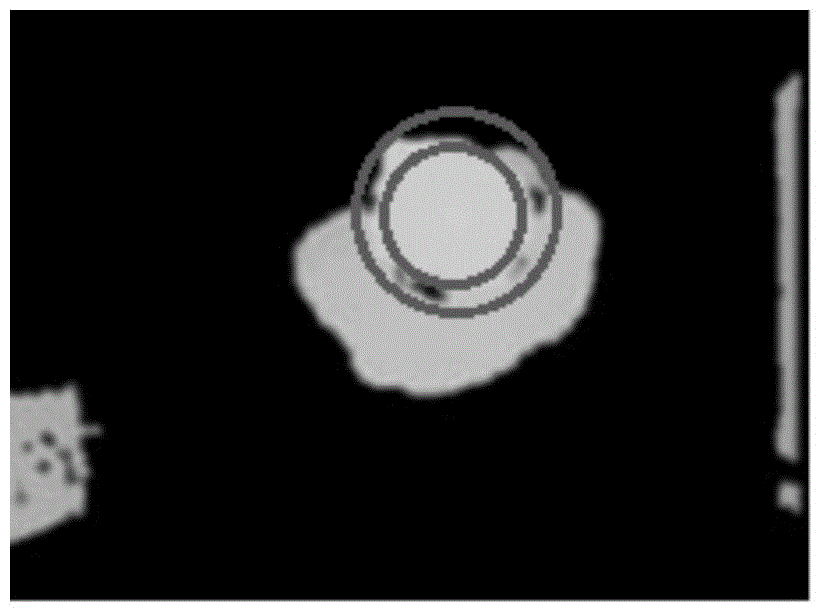

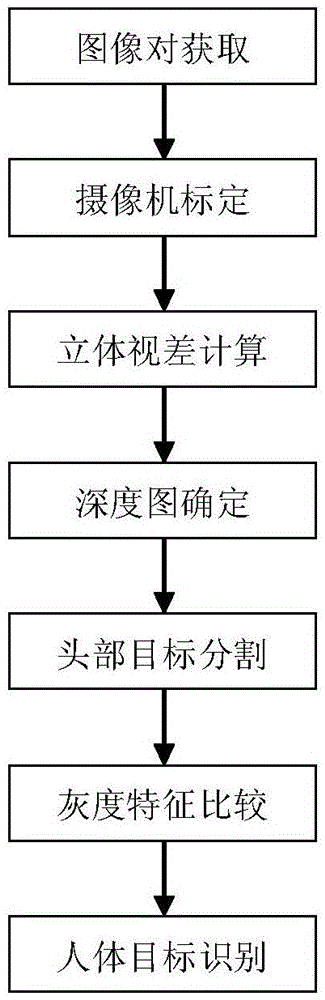

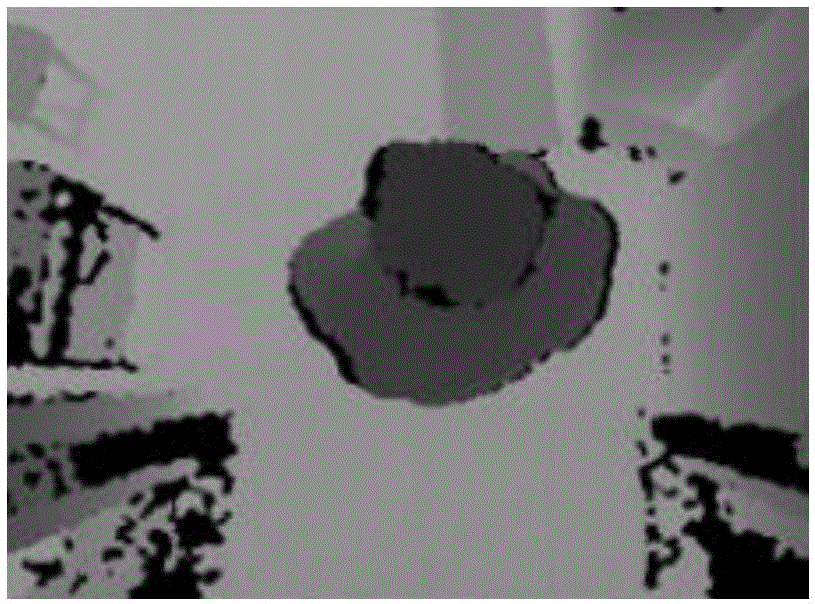

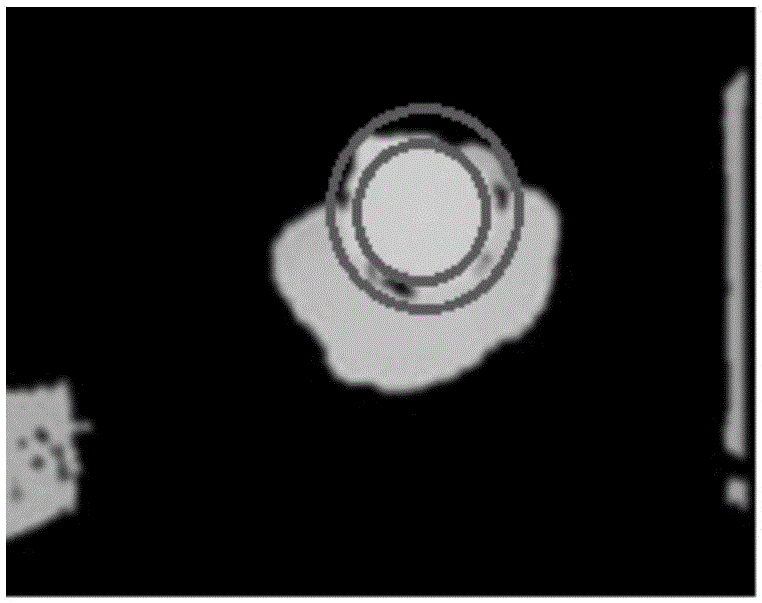

Human body target identifying and tracking method based on stereoscopic vision technology

Inactive | CN106485735A | Accurate identification; Small amount of calculation; Image enhancement; Image analysis; Human body; Parallax

The invention discloses a human body target identifying and tracking method based on stereoscopic vision technology. The method comprises the following steps: simultaneously obtaining images of the same scene from two different angles with two cameras to form a stereo image pair; determining the internal and external parameters of the cameras by camera calibration and establishing an imaging model; using a window-based matching algorithm, creating a window centred on the point to be matched in one image and an identical sliding window in the other image, moving the sliding window along the epipolar line one pixel at a time, calculating the window matching degree, finding the optimal matching point, obtaining the three-dimensional geometric information of the target by the parallax principle, and generating a depth image; distinguishing head and shoulder information using a one-dimensional maximum entropy threshold segmentation method and gray-level features; and tracking the human body target with an adaptive gate tracking method to obtain the target track. The disclosed method achieves accurate identification, tracking and counting of human body targets, effectively removes background noise, and avoids environmental interference.

Owner:NANJING UNIV OF SCI & TECH

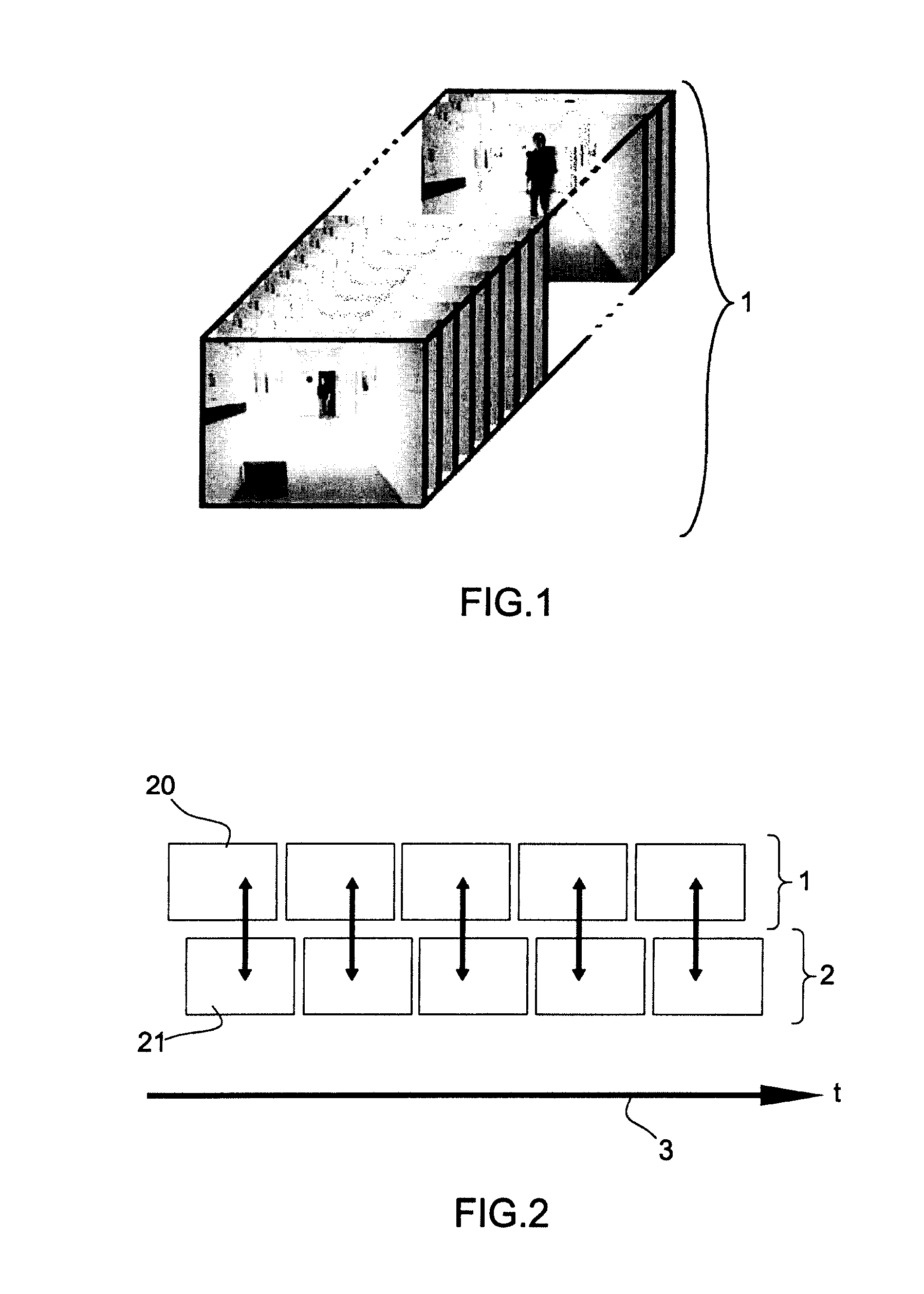

Method for synchronizing video streams

Inactive | US20110043691A1 | Television system details; Carrier indexing/addressing/timing/synchronising; Computer graphics (images); Video recording

A method for synchronizing at least two video streams originating from at least two cameras having a common visual field. The method includes acquiring the video streams and recording the images composing each video stream on a video recording medium; rectifying the images of the video streams along epipolar lines; extracting an epipolar line from each rectified image of each video stream; composing an image of a temporal epipolar line for each video stream; computing a temporal shift value δ between the video streams by matching the temporal epipolar line images of the video streams, for each epipolar line; computing a temporal desynchronization value Dt between the video streams by taking into account the temporal shift values δ computed for each epipolar line; and synchronizing the video streams by taking into account the computed temporal desynchronization value Dt.

Owner:THALES SA
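The δ and Dt computation in the entry above can be sketched with two simplifying assumptions that are not taken from the patent: each temporal epipolar line image is reduced to a 1-D signal over time (for example, one pixel's intensity per frame), and the per-line shifts are combined with a median. Function names are hypothetical.

```python
from statistics import median


def best_shift(sig_a, sig_b, max_shift):
    """Integer frame shift s minimizing the mean |sig_a[t] - sig_b[t + s]| over the overlap.

    A positive s means stream B lags stream A by s frames.
    """
    best_s, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        pairs = [(sig_a[t], sig_b[t + s]) for t in range(len(sig_a)) if 0 <= t + s < len(sig_b)]
        if not pairs:
            continue
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best_s, best_cost = s, cost
    return best_s


def desynchronization(lines_a, lines_b, max_shift):
    """Per-epipolar-line shifts (delta) and a combined estimate Dt (median is an assumption)."""
    shifts = [best_shift(a, b, max_shift) for a, b in zip(lines_a, lines_b)]
    return median(shifts), shifts


if __name__ == "__main__":
    a = [0, 0, 5, 9, 5, 0, 0, 0, 0]
    b = [0, 0, 0, 0, 5, 9, 5, 0, 0]  # the same event seen two frames later
    print(desynchronization([a, a], [b, b], max_shift=3))
```

Because large shifts leave only a short overlap, `max_shift` should stay well below the signal length; otherwise a spurious low cost on a few frames can win.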

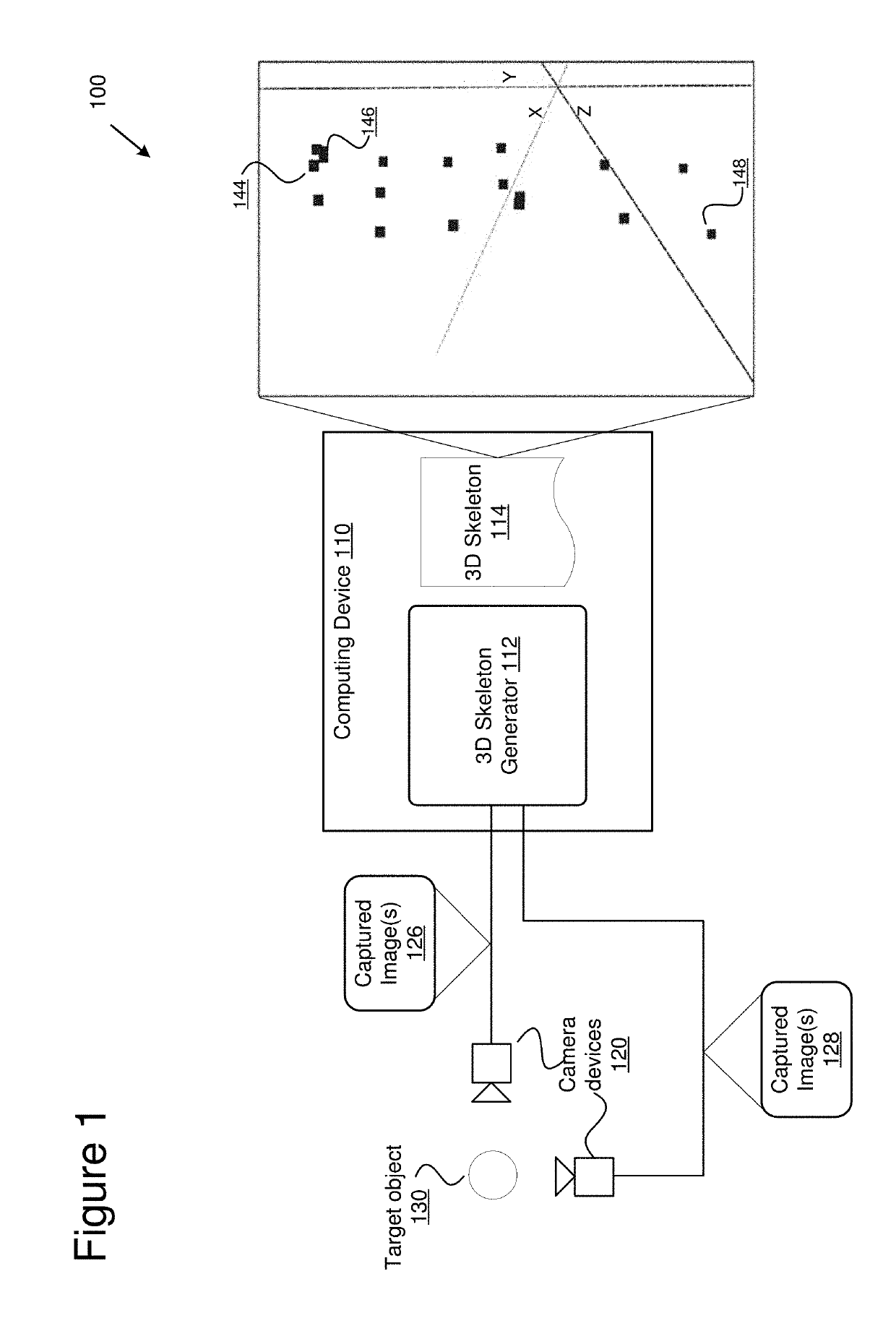

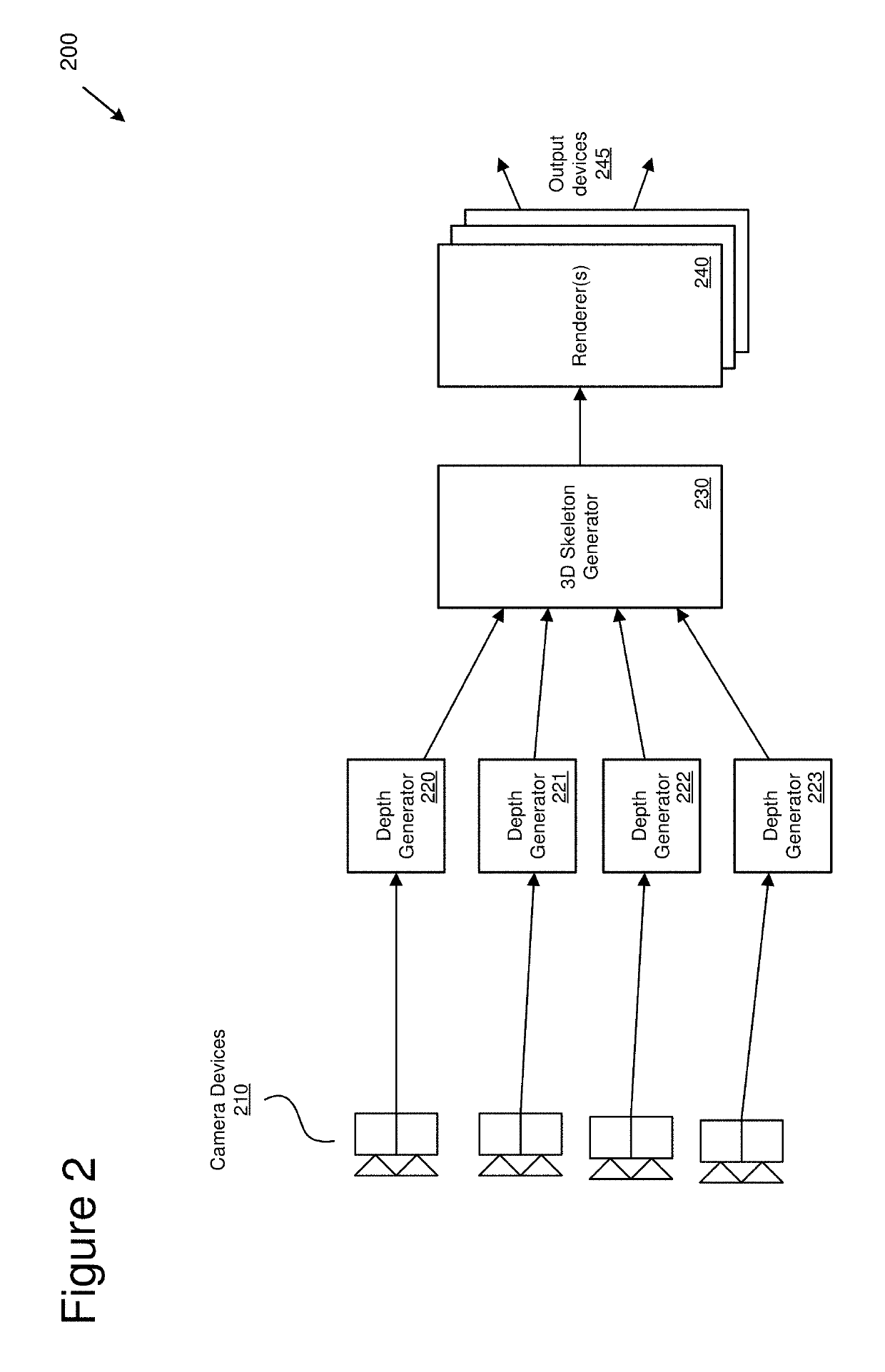

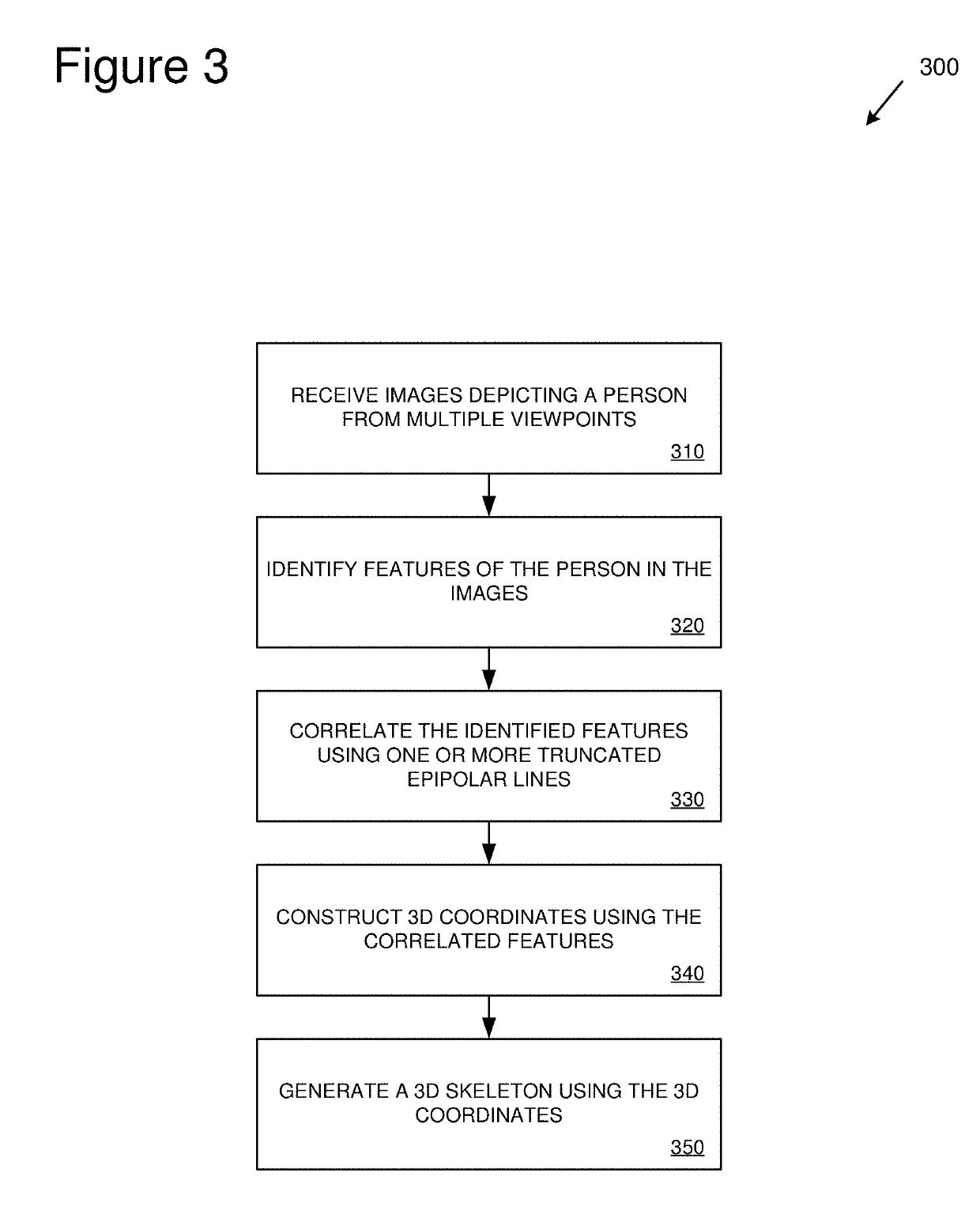

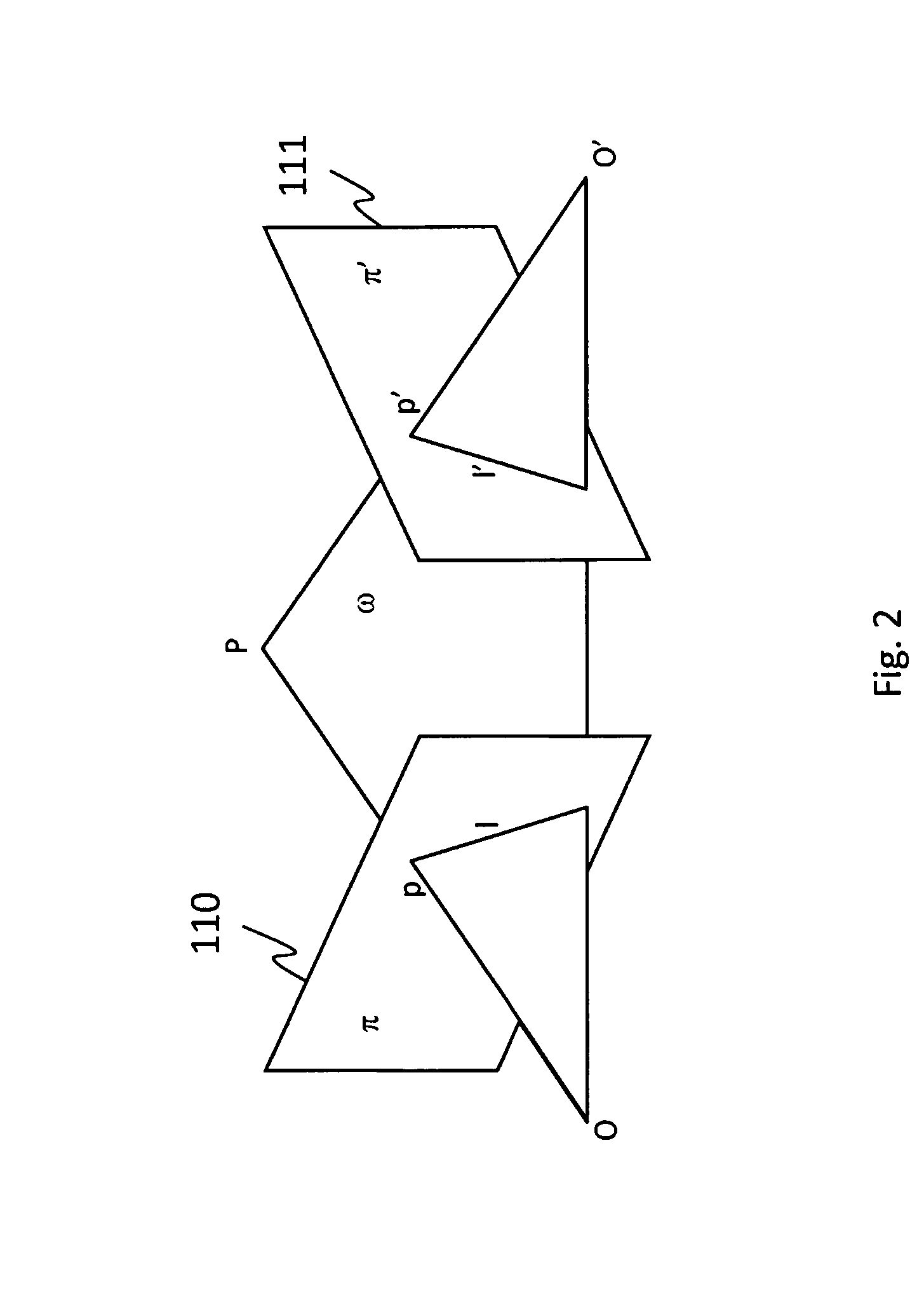

3D skeletonization using truncated epipolar lines

Technologies are provided for generating three-dimensional (3D) skeletons of target objects using images of the target objects captured from different viewpoints. Images of an object (such as a person) can be captured from different camera angles. Feature keypoints of the object can be identified in the captured images. Keypoints that identify a same feature in separate images can be correlated using truncated epipolar lines. For example, depth information for a keypoint can be used to truncate an epipolar line that is created using the keypoint. The correlated feature keypoints can be used to create 3D feature coordinates for the associated features of the object. A 3D skeleton can be generated using the 3D feature coordinates. One or more 3D models can be mapped to the 3D skeleton and rendered. The rendered one or more 3D models can be displayed on one or more display devices.

Owner:MICROSOFT TECH LICENSING LLC
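The truncation idea in the entry above can be sketched for two pinhole cameras, assuming both share the intrinsics `f, cx, cy` and that a rigid transform `p2 = R·p1 + t` maps camera-1 coordinates to camera-2 coordinates. The keypoint is back-projected at the minimum and maximum plausible depths, and the two results are projected into camera 2. The segment between them is the truncated epipolar line. All names and parameter values are hypothetical.

```python
def backproject(u, v, z, f, cx, cy):
    """3-D point in camera-1 coordinates for pixel (u, v) at depth z."""
    return ((u - cx) * z / f, (v - cy) * z / f, z)


def transform(R, t, p):
    """Rigid transform p2 = R p + t (3x3 nested list R, 3-vector t)."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(p, f, cx, cy):
    """Pinhole projection of a camera-frame point to pixel coordinates."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (f * x / z + cx, f * y / z + cy)


def truncated_epipolar_segment(uv, z_min, z_max, f, cx, cy, R, t):
    """Endpoints in camera 2 of the epipolar line of uv, limited to depths [z_min, z_max]."""
    u, v = uv
    return tuple(project(transform(R, t, backproject(u, v, z, f, cx, cy)), f, cx, cy)
                 for z in (z_min, z_max))


if __name__ == "__main__":
    R = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    t = (-0.2, 0.0, 0.0)  # camera 2 sits 0.2 m to the right of camera 1
    print(truncated_epipolar_segment((420, 240), 2.0, 4.0, 500.0, 320.0, 240.0, R, t))
```

For this configuration the search in camera 2 is confined to roughly u' = 370 to 395 on row 240, instead of the whole epipolar line.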

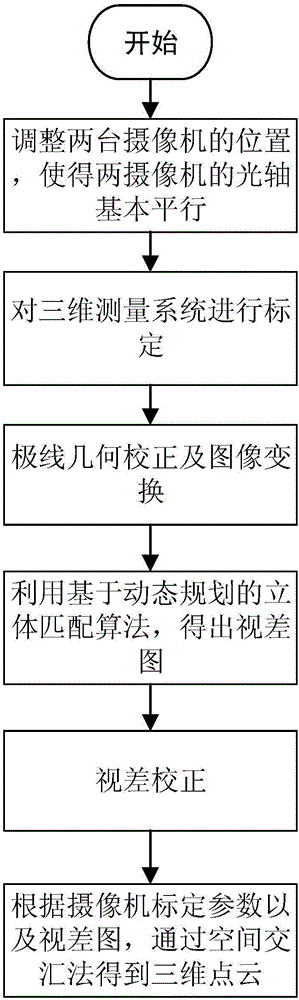

Stereo matching three-dimensional reconstruction method based on dynamic programming

Inactive | CN106228605A | High precision; Low efficiency; Details involving processing steps; Image enhancement; Parallax; Stereo matching

The invention discloses a stereo matching three-dimensional reconstruction method based on dynamic programming. The system used by the method consists of two video cameras. The method comprises the following steps: (1) adjusting the positions of the two video cameras so that their imaging planes are as parallel as possible; (2) calibrating the three-dimensional measuring system to obtain the intrinsic and extrinsic parameters of the two video cameras and the correspondence between pixel coordinates in the image and the world coordinate system; (3) performing epipolar rectification and image conversion; (4) obtaining a disparity map with a stereo matching algorithm based on dynamic programming; (5) performing disparity correction; and (6) obtaining a three-dimensional point cloud from the camera calibration parameters and the disparity map by spatial intersection. The method offers high disparity-map precision and good real-time performance, and the three-dimensional point cloud of the image can be reconstructed accurately, quickly and automatically.

Owner:SOUTHEAST UNIV
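The abstract above does not specify its dynamic programming formulation. The sketch below shows one common variant for step (4), assuming a rectified scanline: each pixel chooses a disparity, the energy adds an absolute intensity difference per pixel and a smoothness penalty `smooth * |d_x - d_{x-1}|` between neighbours, and the optimum is recovered by backtracking. There is no explicit occlusion handling, and the names are hypothetical.

```python
def scanline_dp(left_row, right_row, max_disp, smooth=1.0):
    """Per-pixel disparities on one rectified scanline minimizing
    sum_x |L[x] - R[x - d_x]| + smooth * sum_x |d_x - d_{x-1}|."""
    n = len(left_row)
    inf = float("inf")
    disps = range(max_disp + 1)

    def data_cost(x, d):
        # Matching right pixel must lie inside the image.
        return abs(left_row[x] - right_row[x - d]) if x - d >= 0 else inf

    cost = [[data_cost(0, d) for d in disps]]
    back = [[0] * len(disps)]
    for x in range(1, n):
        row_cost, row_back = [], []
        for d in disps:
            # Best predecessor disparity, including the smoothness penalty.
            prev = min(disps, key=lambda p: cost[x - 1][p] + smooth * abs(d - p))
            row_cost.append(data_cost(x, d) + cost[x - 1][prev] + smooth * abs(d - prev))
            row_back.append(prev)
        cost.append(row_cost)
        back.append(row_back)

    # Backtrack from the cheapest disparity at the last pixel.
    d = min(disps, key=lambda p: cost[n - 1][p])
    path = [d]
    for x in range(n - 1, 0, -1):
        d = back[x][d]
        path.append(d)
    return path[::-1]


if __name__ == "__main__":
    left = [10, 10, 10, 80, 80, 10, 10, 10]
    right = [10, 10, 80, 80, 10, 10, 10, 10]  # true disparity is 1
    print(scanline_dp(left, right, max_disp=2, smooth=1.0))
```

Pixels at the left border cannot take disparities larger than their column index, which is why the first entries may differ from the true disparity. The interior pixels settle on disparity 1.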

Apparatus and methods for image alignment

Active | US9792709B1 | Reduce measurement; Television system details; Geometric image transformation; Parallax; Source image

Multiple images may be combined to obtain a composite image. Individual images may be obtained with different camera sensors and/or at different time instances. To obtain the composite image, the source images may be aligned in order to produce a seamless stitch. The source images may be characterized by a region of overlap. A disparity measure may be determined for pixels along a border region between the source images. A warp transformation may be determined using an optimization process configured to determine the displacement of pixels in the border region based on the disparity. Pixel displacement at a given location may be constrained to a direction tangential to the epipolar line corresponding to that location. The warp transformation may be propagated to the pixels of the image. Spatial and/or temporal smoothing may be applied. To obtain an optimized solution, the warp transformation may be determined at multiple spatial scales.

Owner:GOPRO

Device and method for obtaining distance information from views

Active | US20190236796A1 | Improve quality resolution; Precision in depth measurement; Image enhancement; Image analysis; Image capture; Image acquisition

A device and method for obtaining depth information from a light field is provided. The method comprises generating a plurality of epipolar images from a light field captured by a light field acquisition device; an edge detection step for detecting, in the epipolar images, edges of objects in the scene captured by the light field acquisition device; detecting, for each epipolar image, valid epipolar lines formed by a set of edges; and determining the slopes of the valid epipolar lines. In a preferred embodiment, the method extends the epipolar images with additional information from images captured by additional image acquisition devices and obtains extended epipolar lines. The edge detection step calculates a second spatial derivative for each pixel of the epipolar images and detects the zero-crossings of the second spatial derivatives.

Owner:PHOTONIC SENSORS & ALGORITHMS SL
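The slope-determination step in the entry above can be sketched with a least-squares line fit. This assumes edges have already been detected as `(s, u)` points in an epipolar image, where `s` is the view index and `u` the pixel position, and that views are evenly spaced by a baseline `B`. Under those assumptions, a scene point traces `u = u0 + m·s`, with `m` the per-view disparity, and a pinhole model gives `Z = f·B / |m|`. The depth relation and all names are illustrative assumptions, not the patent's stated formula.

```python
def fit_epi_line(points):
    """Least-squares fit u = u0 + m * s to edge points (s, u) of one epipolar line."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two edge points")
    mean_s = sum(s for s, _ in points) / n
    mean_u = sum(u for _, u in points) / n
    sxx = sum((s - mean_s) ** 2 for s, _ in points)
    if sxx == 0:
        raise ValueError("edge points must span more than one view")
    sxu = sum((s - mean_s) * (u - mean_u) for s, u in points)
    m = sxu / sxx
    return mean_u - m * mean_s, m


def depth_from_slope(m, focal_px, view_spacing):
    """Z = f * B / |m|; a zero slope (no parallax) corresponds to infinite depth."""
    if m == 0:
        return float("inf")
    return focal_px * view_spacing / abs(m)


if __name__ == "__main__":
    edges = [(0, 100), (1, 102), (2, 104), (3, 106)]
    u0, m = fit_epi_line(edges)
    print(u0, m, depth_from_slope(m, focal_px=1000.0, view_spacing=0.01))
```

A line fit over all views, rather than a disparity from one pair, is what lets the slope estimate average out edge-localization noise across the light field.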

Arrangement and Method for Determining Positions of the Teats of a Milking Animal

Inactive | US20080314324A1 | Easy and efficient; Easy to use; Image enhancement; Image analysis; Imaging processing; Engineering

An arrangement for determining positions of the teats of an animal is provided in a milking system comprising a robot arm for automatically attaching teat cups to the teats of an animal when being located in a position to be milked, and a control device for controlling the movement of the robot arm based on determined positions of the teats of the animal. The arrangement comprises a camera pair directed towards the teats of the animal for repeatedly recording pairs of images, and an image processing device for repeatedly detecting the teats of the animal and determining their positions by a stereoscopic calculation method based on the repeatedly recorded pairs of images, wherein the cameras of the camera pair are arranged vertically one above the other, and the image processing device is provided, for each teat and for each pair of images, to define the position of the lower tip of the teat in the pair of images as conjugate points, and to find the conjugate points along a substantially vertical epipolar line.

Owner:DELAVAL HLDG AB

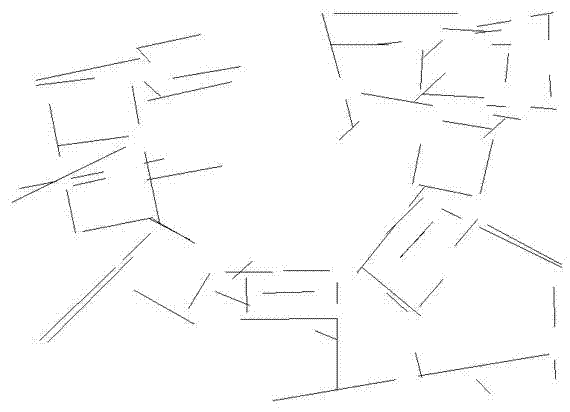

Line matching method based on affine invariant feature and homography

Inactive | CN102930525A | Compensating for cases of strong geometric constraints; Precise delivery; Image analysis; Pattern recognition; Image pair

The invention relates to a line matching method based on affine invariant features and homography. Unlike point matching, line matching lacks an effective geometric constraint such as the epipolar constraint, so a homography constraint is introduced as the geometric constraint for line segment matching to make up for this lack of a strong geometric constraint. The invention additionally discloses an automatic line segment matching method based on the homography constraint. Line segments are transferred and overlaid between images through the homography, which reduces the difficulty of searching for corresponding line segments and improves matching accuracy; after the initial matching, corresponding line segments are searched in reverse so that mismatches are removed and the matching accuracy is further improved. The method achieves automatic line segment matching for remote-sensing image pairs.

Owner:WUHAN UNIV +1
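The transfer step in the entry above can be sketched as follows, assuming a 3×3 homography `H` is already available; estimating it from affine-invariant features is not shown. A segment's endpoints are mapped through `H`, and target segments are kept as candidates when their midpoint and undirected orientation are close to those of the transferred segment. The tolerances and names are hypothetical, and the reverse search is omitted.

```python
import math


def apply_homography(H, p):
    """Map point p = (x, y) through the 3x3 homography H (nested lists)."""
    x, y = p
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity")
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


def midpoint_and_angle(seg):
    """Midpoint and undirected orientation in [0, pi) of segment ((x1, y1), (x2, y2))."""
    (x1, y1), (x2, y2) = seg
    return ((x1 + x2) / 2, (y1 + y2) / 2), math.atan2(y2 - y1, x2 - x1) % math.pi


def candidate_segments(H, seg, targets, max_px=5.0, max_angle=math.radians(5)):
    """Target segments close in position and orientation to seg transferred by H."""
    moved = tuple(apply_homography(H, p) for p in seg)
    (mx, my), ang = midpoint_and_angle(moved)
    out = []
    for t in targets:
        (tx, ty), tang = midpoint_and_angle(t)
        dang = abs(ang - tang)
        dang = min(dang, math.pi - dang)  # orientations wrap around at pi
        if math.hypot(tx - mx, ty - my) <= max_px and dang <= max_angle:
            out.append(t)
    return out


if __name__ == "__main__":
    H = [[1, 0, 10], [0, 1, 5], [0, 0, 1]]  # pure translation, for illustration only
    targets = [((11, 5), (29, 6)), ((10, 40), (30, 40)), ((20, -5), (20, 15))]
    print(candidate_segments(H, ((0, 0), (20, 0)), targets))
```

Only the first target survives: the second is too far away, and the third has the right midpoint but a perpendicular orientation.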

Human body target identification method based on three-dimensional visual technology

Inactive | CN106503605A | Accurate identification; Small amount of calculation; Image enhancement; Image analysis; Human body; Pattern recognition

The invention discloses a human body target identification method based on three-dimensional vision technology. The method comprises the following steps: acquiring images of the same scene from two different angles with two cameras to form a stereo image pair; calibrating the cameras to determine their internal and external parameters and establishing an imaging model; using a window-based matching algorithm, creating a window centred on the point to be matched in one image and an identical sliding window in the other image, moving the sliding window along the epipolar line one pixel at a time, calculating the window matching measure, finding the optimal matching point, and then obtaining the three-dimensional geometric information of the target by the parallax principle to generate a depth image; and distinguishing head and shoulder information with a one-dimensional maximum entropy threshold segmentation method combined with gray-scale features, thereby identifying the human body target. The method requires little computation and identifies human body targets quickly and accurately from simple images.

Owner:NANJING UNIV OF SCI & TECH

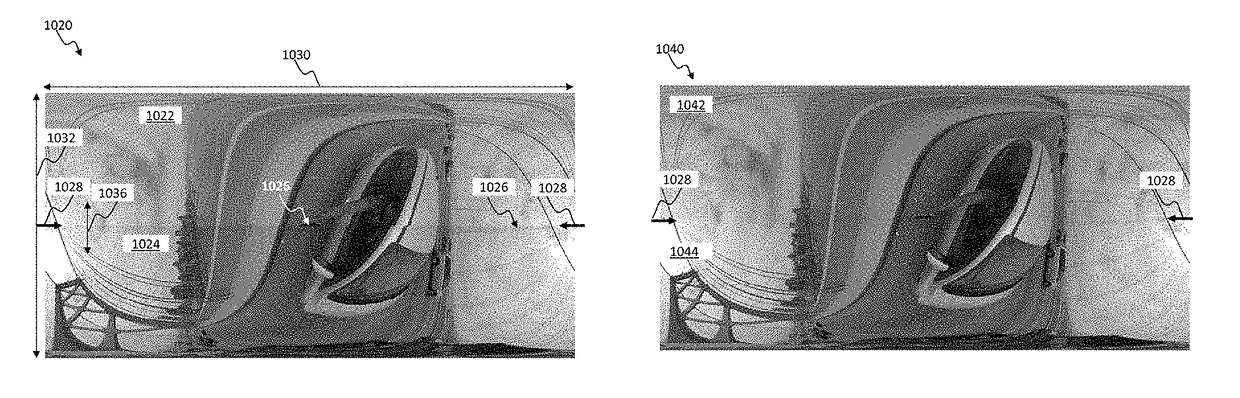

Omnistereo imaging

Active | US20170280056A1 | Reduce misalignment | Television system details | Geometric image transformation | Visible camera | Field of view

A method for generating a 360-degree view. N input images are captured from N cameras fixed at a baseline height and spaced equidistantly around a circle in an omnipolar camera setup, where N ≥ 3. Two epipolar lines are defined per camera field of view, dividing the field of view of each camera into four parts. Image portions from each camera are stitched together along vertical stitching planes passing through the epipolar lines. Regions corresponding to a visible camera are removed from the image portions using deviations performed along the epipolar lines. Output images for the left-eye and right-eye omnistereo views are formed by stitching together the first of the four parts from each field of view and the second of the four parts from each field of view, respectively.
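A small sketch of the camera geometry, assuming (an interpretation, not stated in the abstract) that the two epipolar lines of each camera are the ones through the epipoles of its two neighbors on the circle. It places N cameras equidistantly on a circle of hypothetical radius `radius` and returns, in the horizontal plane, the directions from camera `i` toward its neighbors; vertical stitching planes containing these directions would pass through the corresponding epipolar lines.

```python
import math
from typing import List, Tuple


def camera_centers(n: int, radius: float) -> List[Tuple[float, float]]:
    """Horizontal positions of n cameras spaced equidistantly on a circle."""
    if n < 3:
        raise ValueError("an omnipolar setup needs at least 3 cameras")
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n)) for k in range(n)]


def neighbor_directions(n: int, radius: float, i: int) -> Tuple[float, float]:
    """Angles (radians) from camera i toward its next and previous neighbors,
    i.e. the horizontal directions of the assumed epipoles in camera i's view."""
    centers = camera_centers(n, radius)
    cx, cy = centers[i]
    nx, ny = centers[(i + 1) % n]
    px, py = centers[(i - 1) % n]
    return math.atan2(ny - cy, nx - cx), math.atan2(py - cy, px - cx)
```

For equidistant cameras the direction toward the next neighbor works out to θ_i + π/N + π/2, where θ_i = 2πi/N is the angular position of camera i, so the split of each field of view depends only on N and not on the radius.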

Owner:VALORISATION RECH LLP

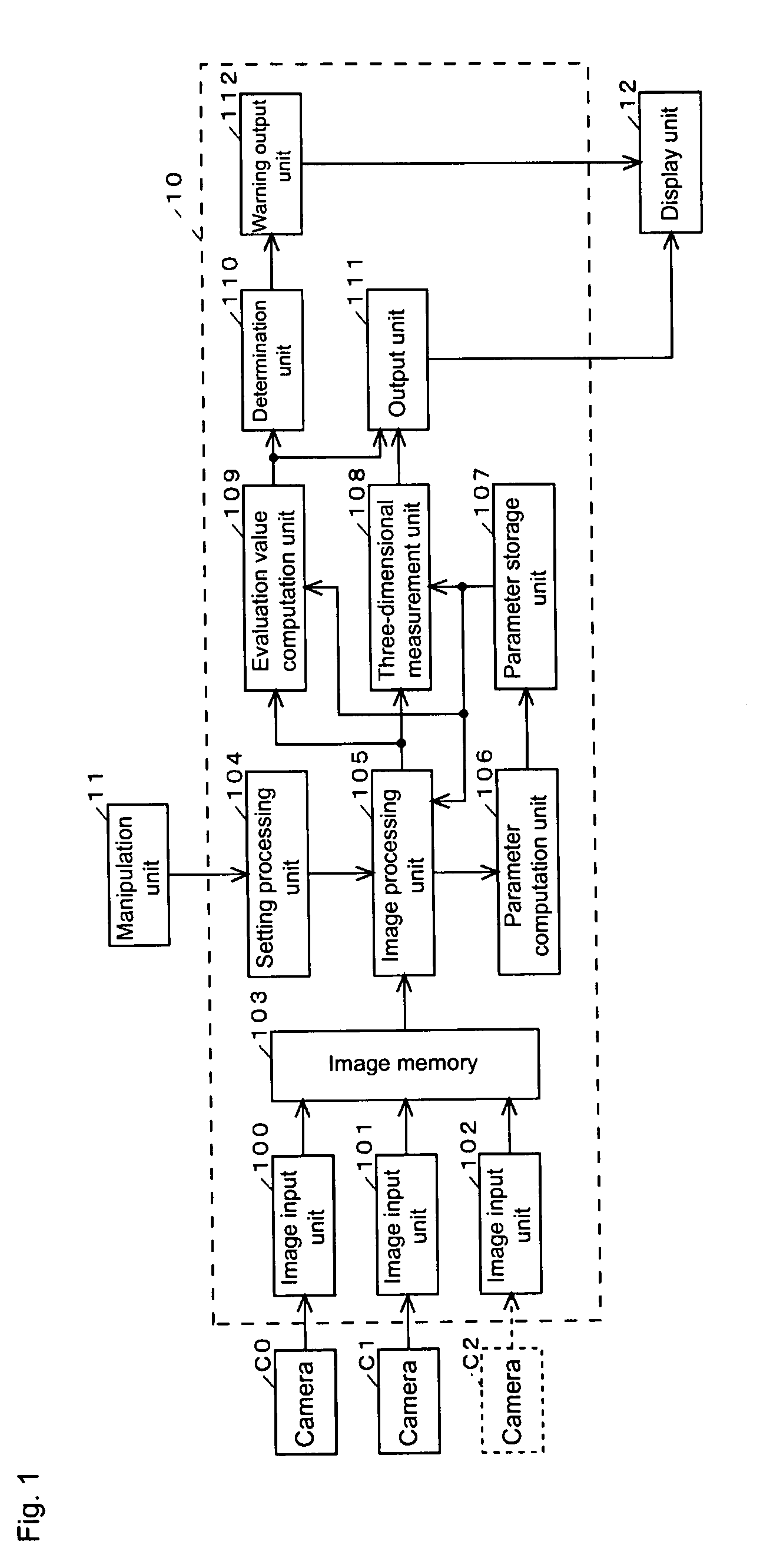

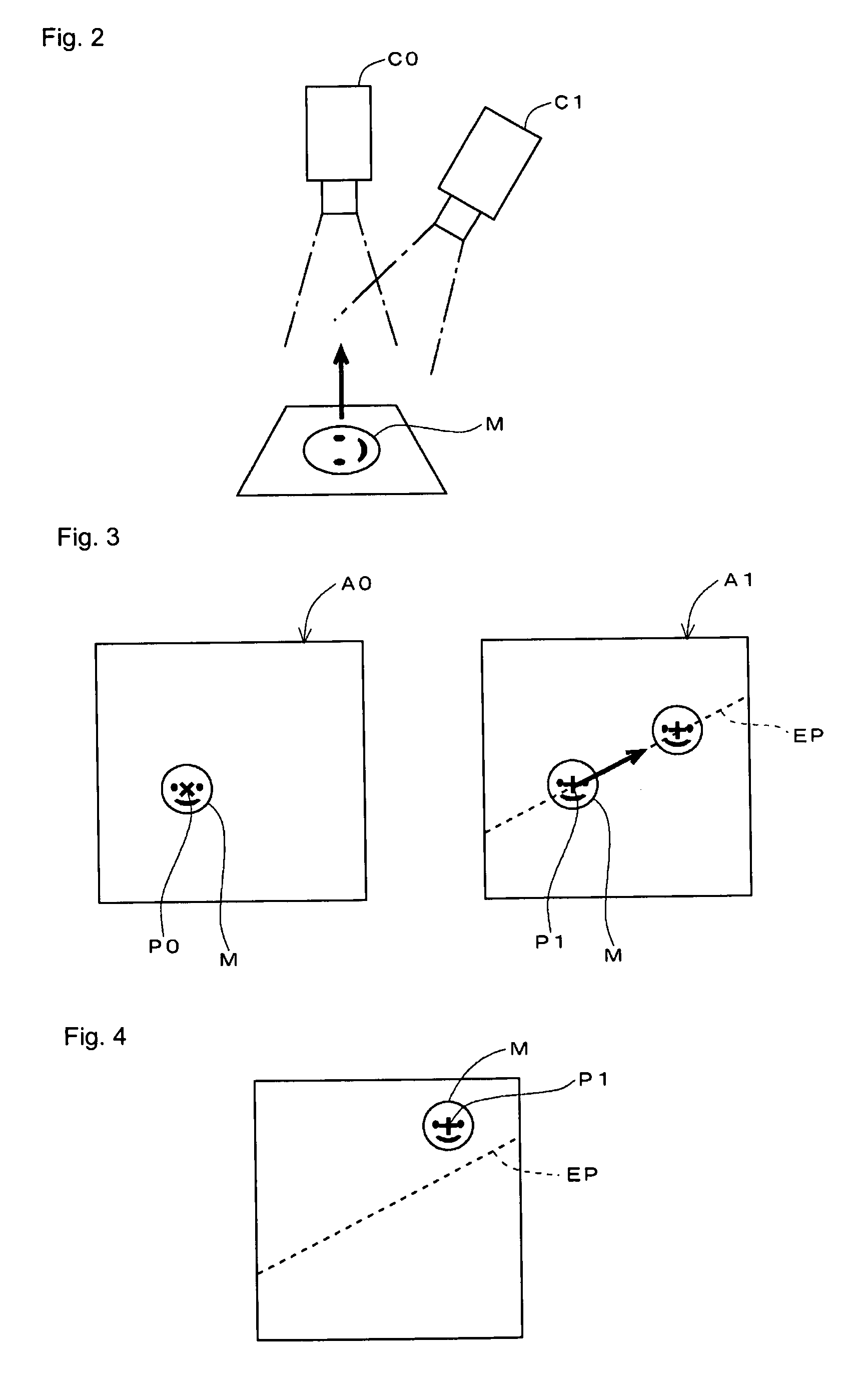

Method and apparatus for performing three-dimensional measurement

Active | US20090066968A1 | Convenient and accurate | Improve accuracy | Using optical means | Reference image | Three dimensional measurement

A control processing unit simultaneously drives the cameras to produce images of a measurement object. For each representative point in a reference image produced by one camera, the control processing unit searches for the corresponding point in a comparative image produced by the other camera, and it computes a three-dimensional coordinate from the coordinates of each pair of points correlated by the search. The control processing unit also obtains the shift amount of the corresponding point found by the search from the epipolar line, which is specified based on the coordinate of the representative point on the reference-image side or on a parameter indicating the relationship between the cameras. It outputs this shift amount, together with the three-dimensional measurement result, as an evaluation value indicating the accuracy of the measurement.
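The shift amount used as an accuracy measure can be illustrated as the perpendicular distance between the matched point and the epipolar line of the representative point. This sketch assumes the camera relationship is encoded as a 3x3 fundamental matrix `F` with the convention `x'^T F x = 0`; the abstract does not specify the parameterization, and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def epipolar_line(F: Sequence[Sequence[float]], pt: Point) -> Tuple[float, float, float]:
    """Epipolar line l' = F x in the comparative image, as (a, b, c) with a*u + b*v + c = 0."""
    x = (pt[0], pt[1], 1.0)
    return tuple(sum(F[r][j] * x[j] for j in range(3)) for r in range(3))


def epipolar_shift(F: Sequence[Sequence[float]], ref_pt: Point, cmp_pt: Point) -> float:
    """Perpendicular distance (pixels) of the matched point from the epipolar line."""
    a, b, c = epipolar_line(F, ref_pt)
    norm = math.hypot(a, b)
    if norm == 0:
        raise ValueError("degenerate epipolar line")
    return abs(a * cmp_pt[0] + b * cmp_pt[1] + c) / norm
```

For a rectified pair with a purely horizontal baseline, `F = [[0, 0, 0], [0, 0, -1], [0, 1, 0]]` and the epipolar line of `(u, v)` is simply row `v`, so the shift reduces to the vertical offset of the match; a large value flags a doubtful correspondence.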

Owner:OMRON CORP

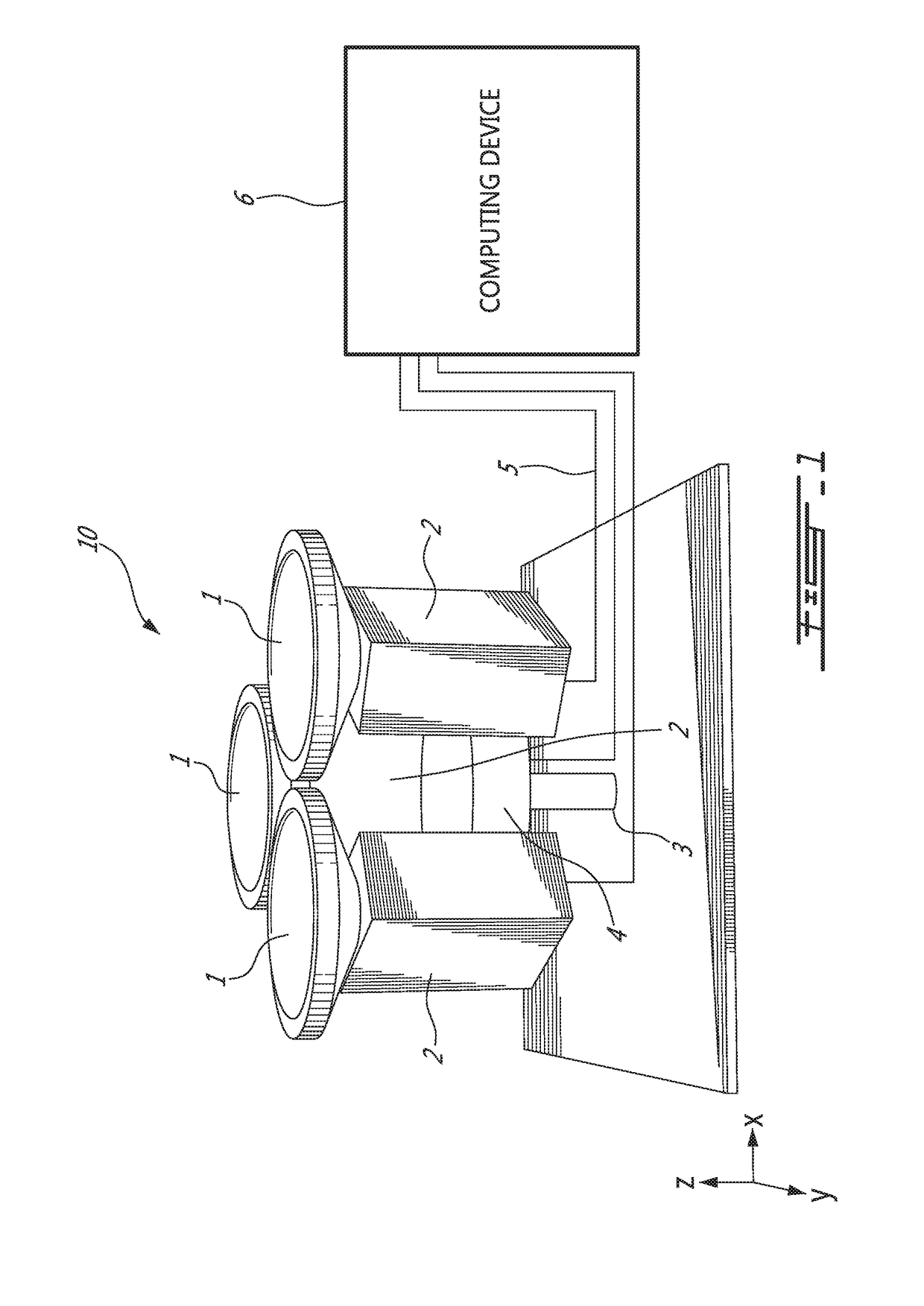

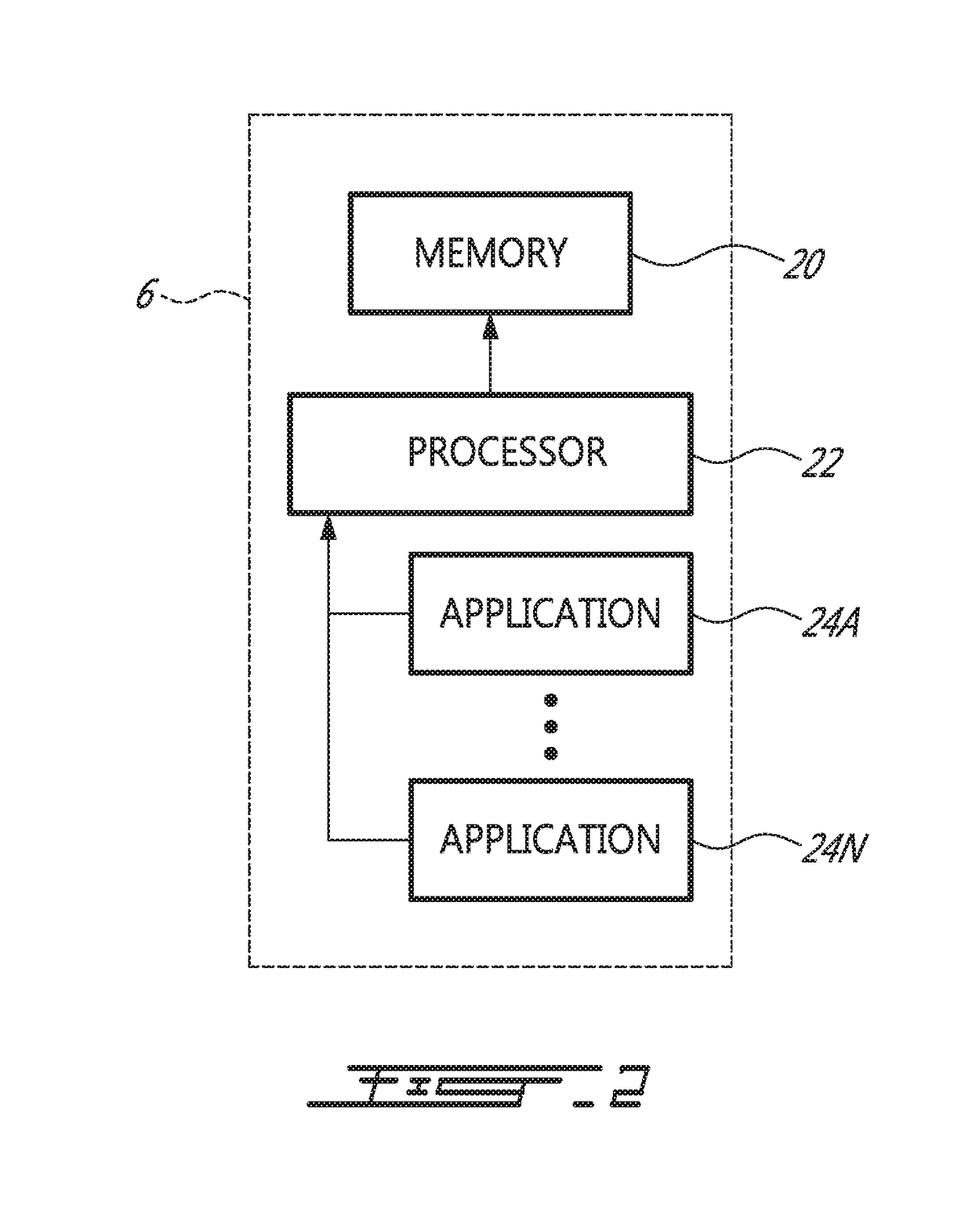

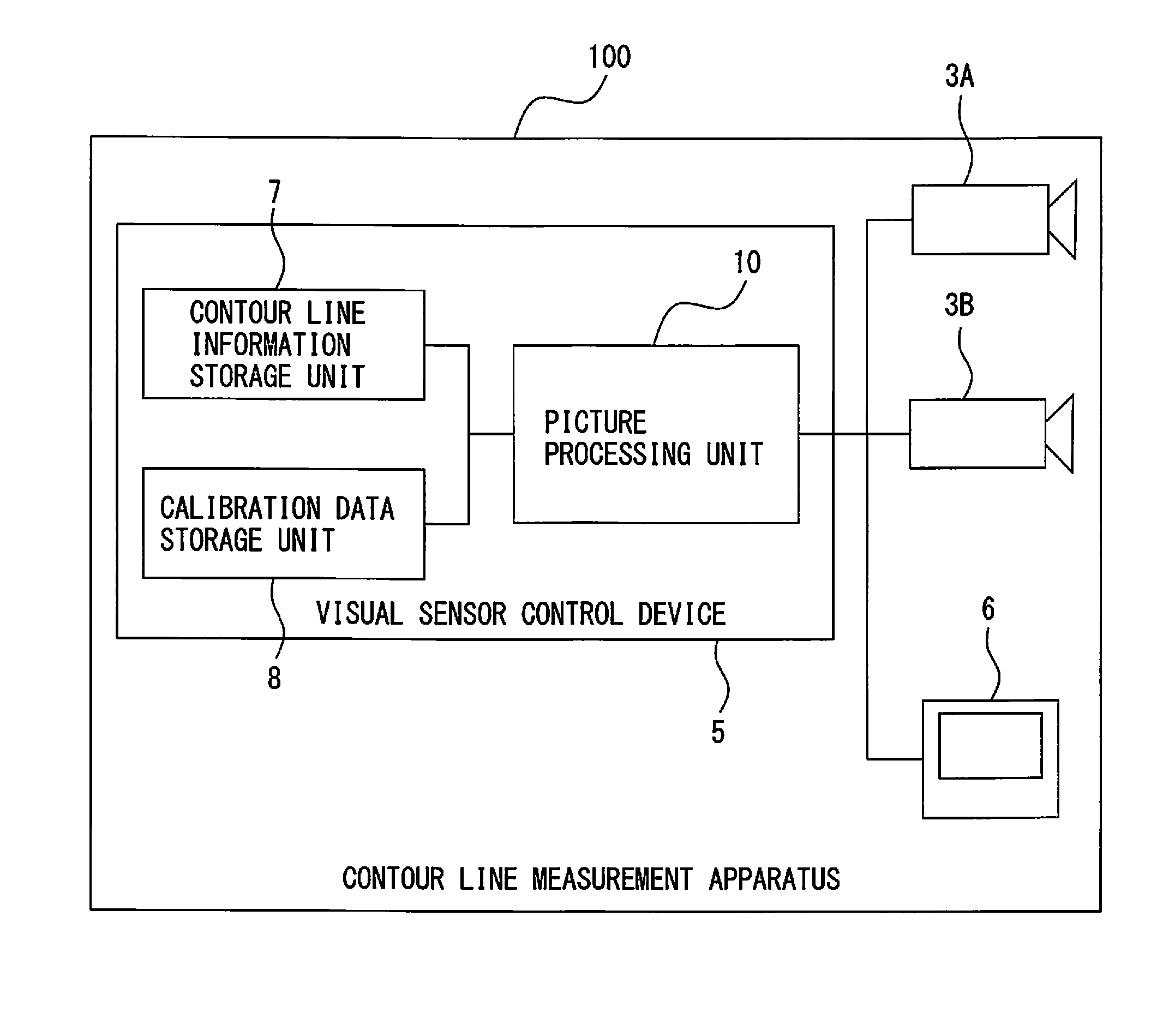

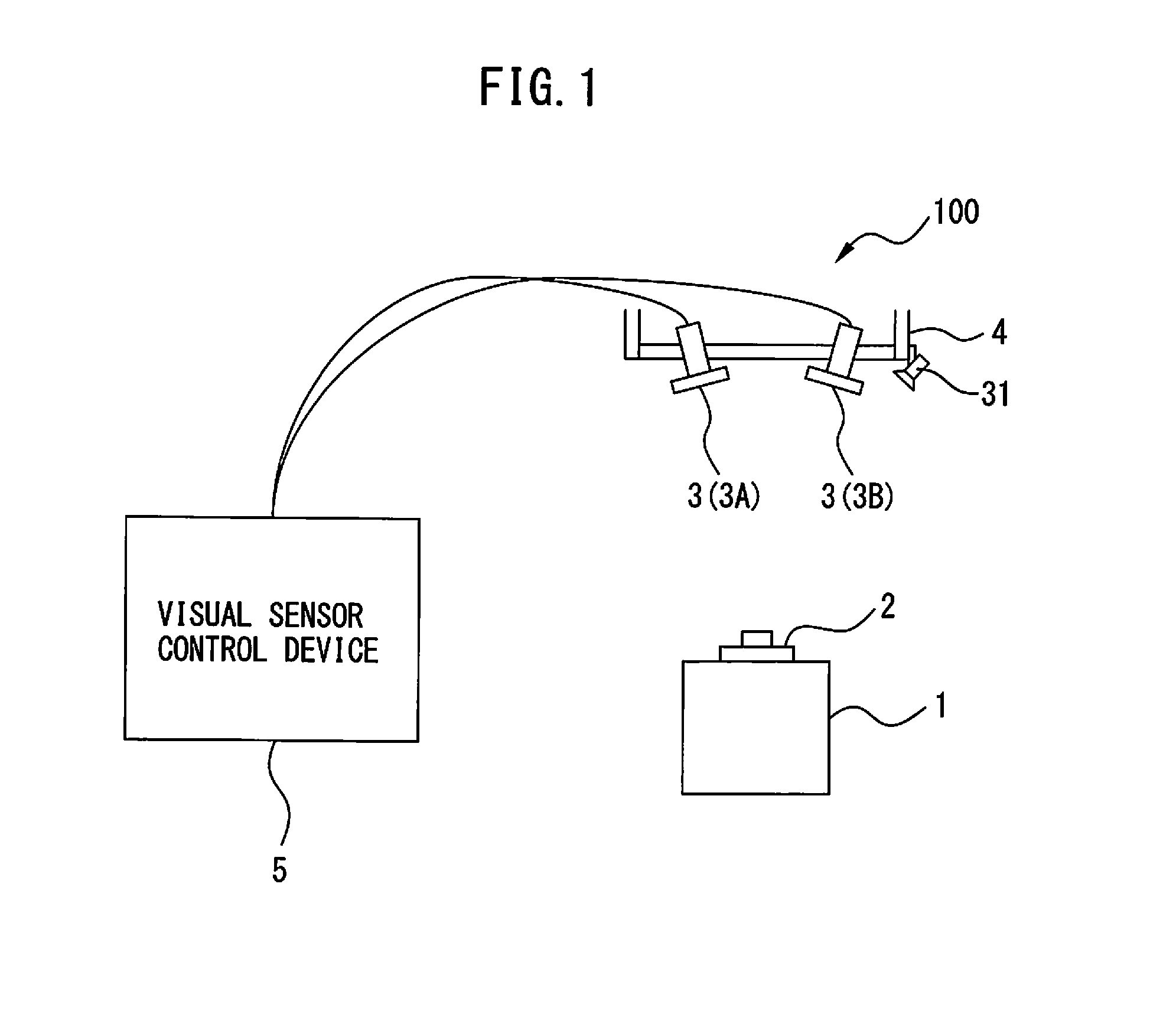

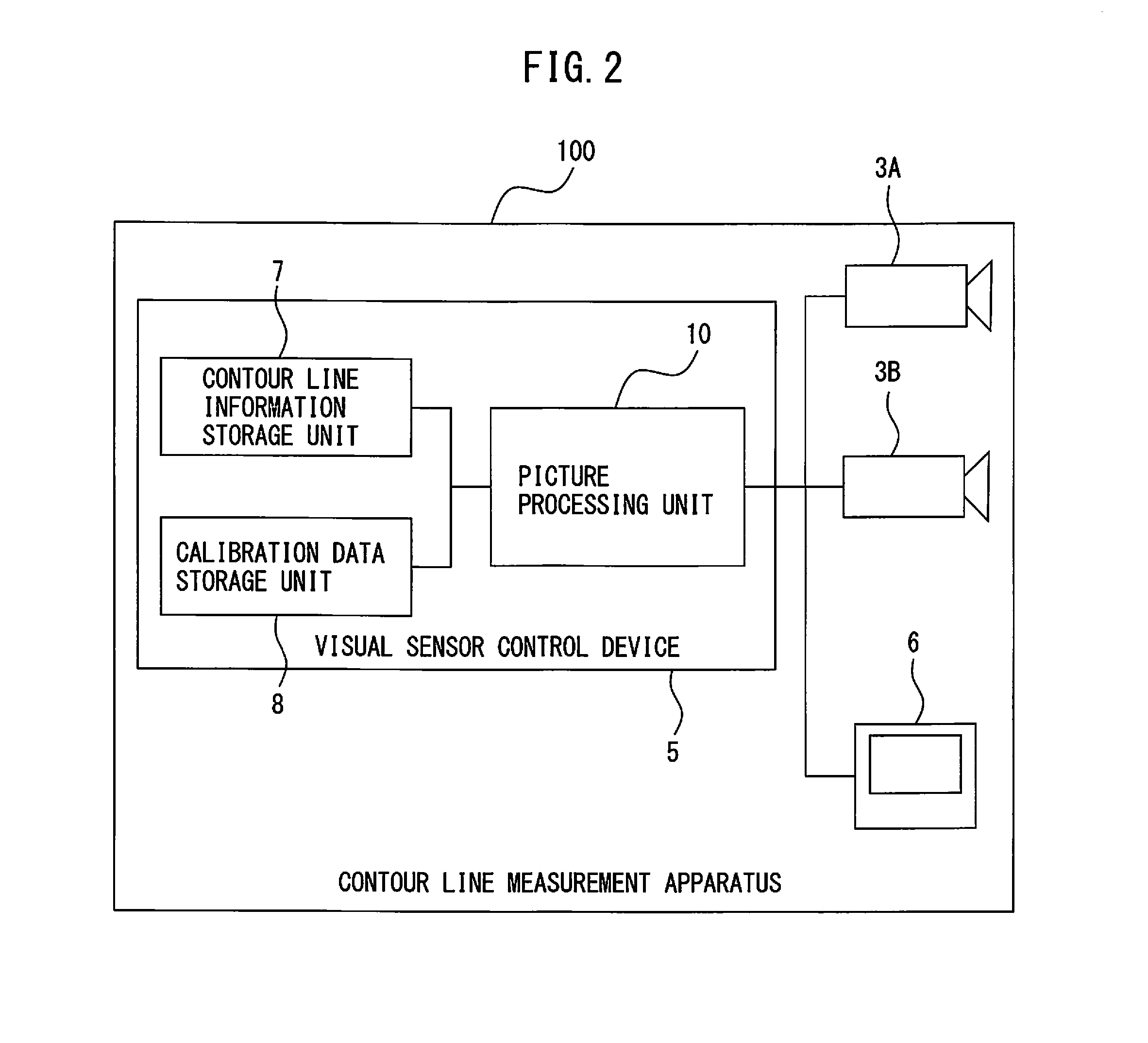

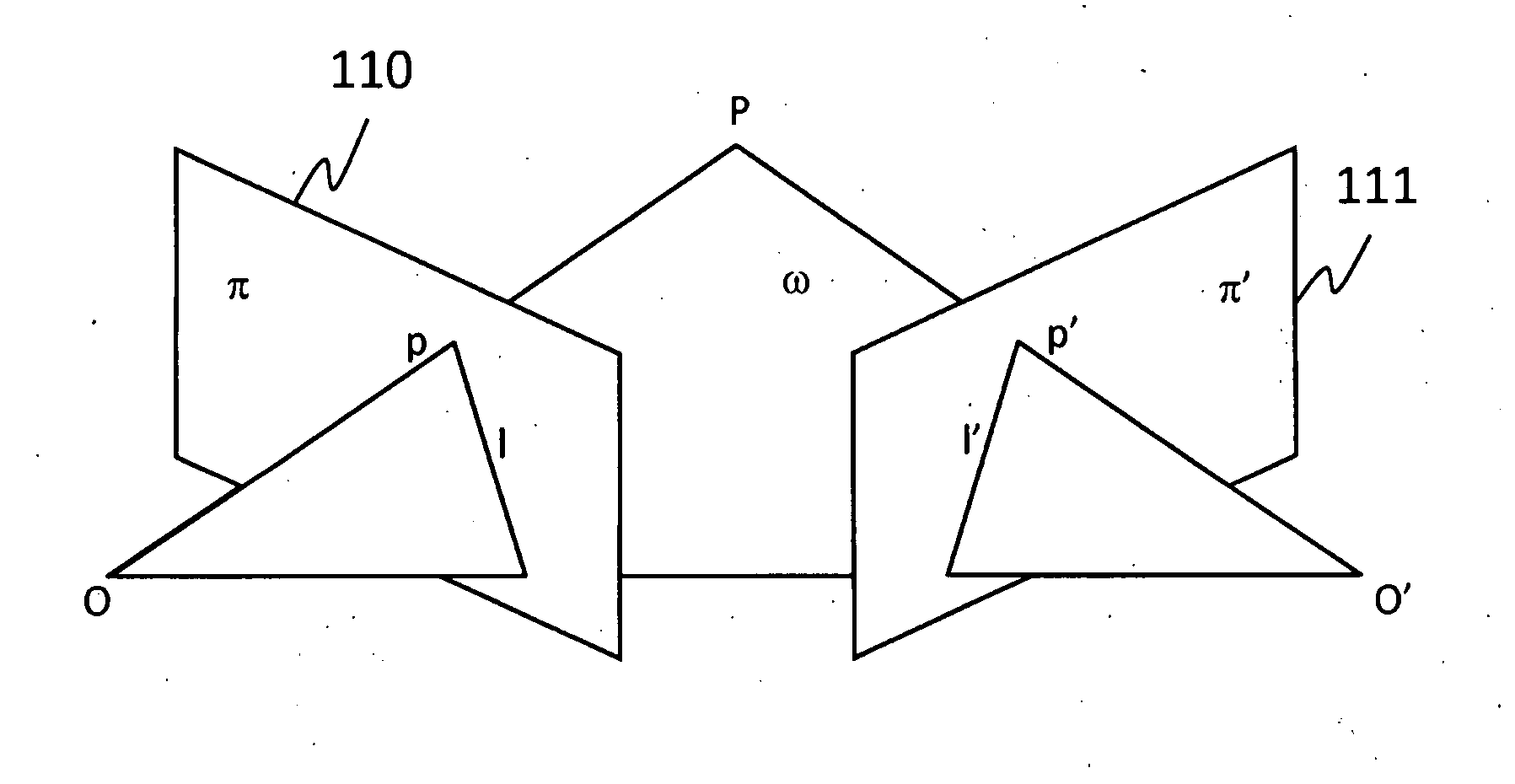

Contour line measurement apparatus and robot system

A contour line measurement apparatus includes: an edge line extraction unit that sets an image processing region and extracts an edge line from the object image in each region; an edge point generation unit that generates edge points at the intersections of the edge lines and epipolar lines; a corresponding point selection unit that selects, from the plurality of edge points, a pair of edge points corresponding to the same portion of the reference contour line; and a three-dimensional point calculation unit that calculates a three-dimensional point on the contour line of the object from the lines of sight of the cameras passing through the pair of edge points.
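Two geometric steps in this apparatus can be sketched with standard techniques, as an illustration only: an edge point as the intersection of an image edge line and an epipolar line (in homogeneous coordinates, both the line through two points and the intersection of two lines are cross products), and a 3D point from two lines of sight using the midpoint method. Midpoint triangulation is an assumed choice; the abstract does not say how the lines of sight are combined, and the function names are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def line_through(p: Tuple[float, float], q: Tuple[float, float]) -> Vec3:
    """Homogeneous image line through two points."""
    return cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))


def intersect(l1: Vec3, l2: Vec3) -> Tuple[float, float]:
    """Edge point: intersection of an edge line with an epipolar line."""
    x, y, w = cross(l1, l2)
    if abs(w) < 1e-12:
        raise ValueError("lines are parallel")
    return (x / w, y / w)


def midpoint_triangulate(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between lines of sight p1 + s*d1 and p2 + t*d2."""
    w0 = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("lines of sight are parallel")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    q1 = tuple(p + s * k for p, k in zip(p1, d1))  # closest point on the first ray
    q2 = tuple(p + t * k for p, k in zip(p2, d2))  # closest point on the second ray
    return tuple((u + v) / 2 for u, v in zip(q1, q2))
```

With noise-free data the two lines of sight intersect and the midpoint is that intersection; with real edge points it splits the residual gap between the rays.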

Owner:FANUC LTD

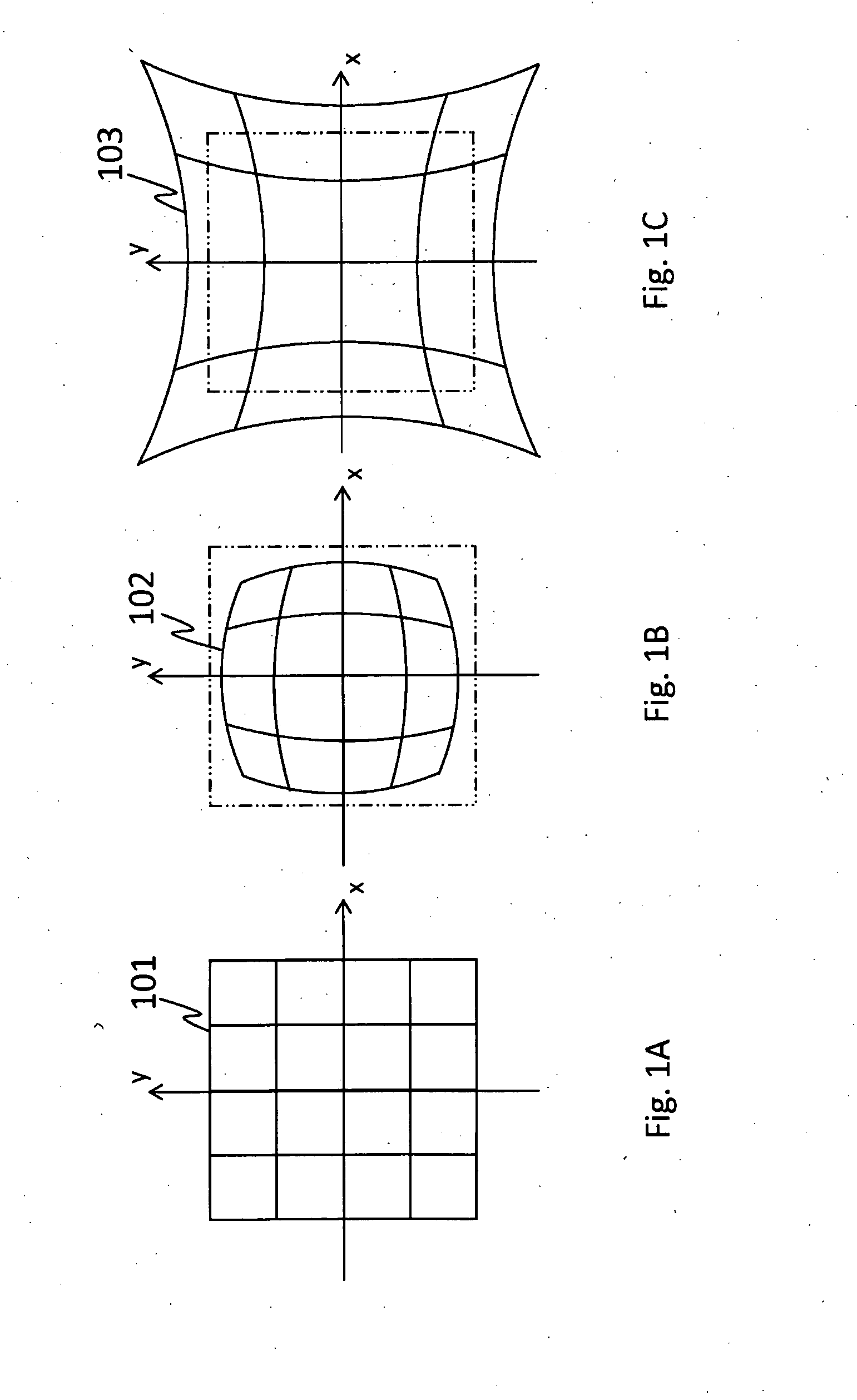

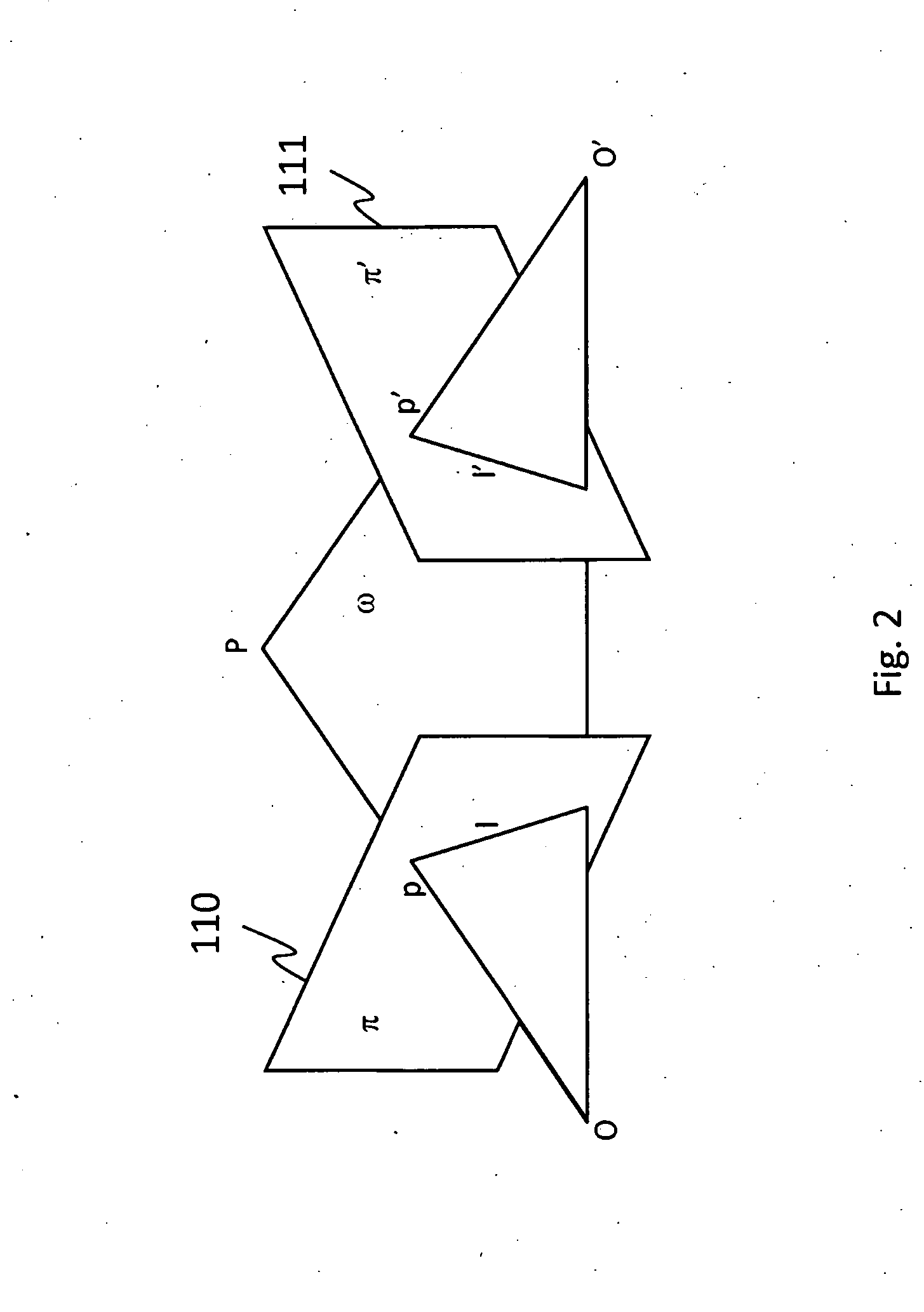

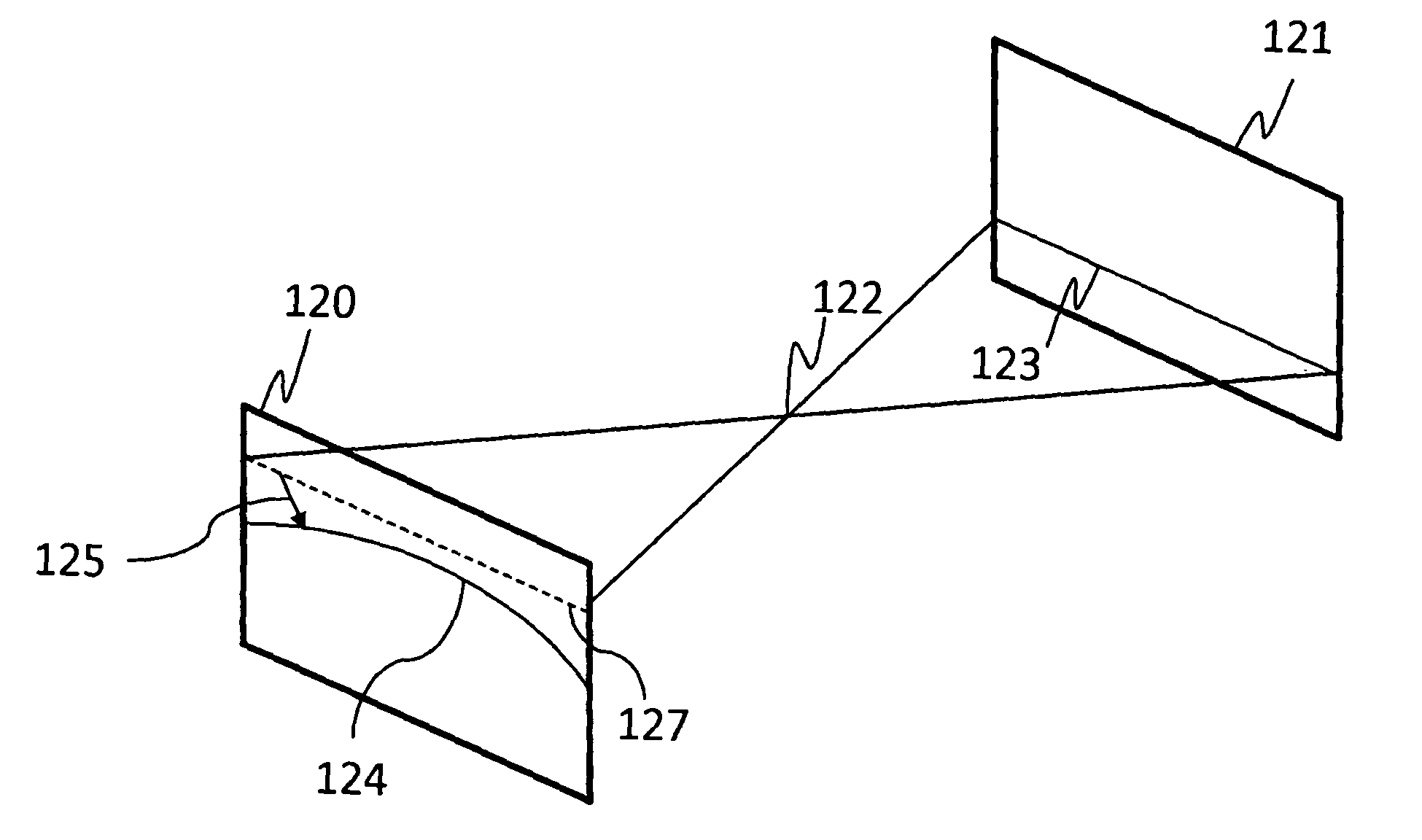

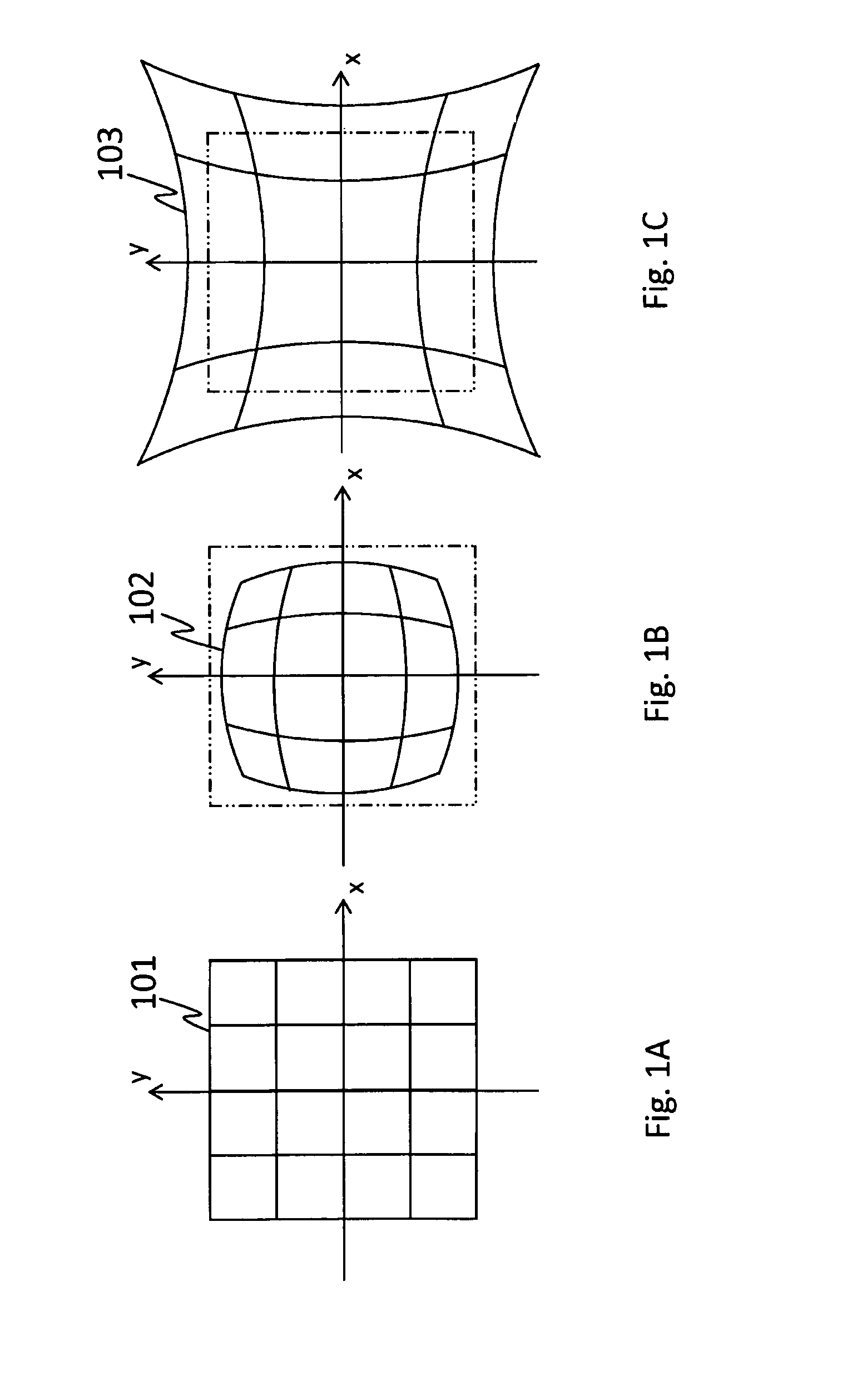

Method and system for alignment of a pattern on a spatial coded slide image

A method for preparing a spatial coded slide image in which the pattern of the slide image is aligned along epipolar lines at the output of a projector in a 3D measurement system, comprising: obtaining distortion vectors for projector coordinates, each vector representing the distortion from predicted coordinates caused by the projector; retrieving an ideal pattern image, which is an ideal image of the spatial coded pattern aligned on ideal epipolar lines; and creating a real slide image by, for each real pixel coordinate of the real slide image, retrieving the current distortion vector, removing the distortion from the real pixel coordinates using the current distortion vector to obtain ideal pixel coordinates in the ideal pattern image, extracting the pixel value at the ideal pixel coordinates in the ideal pattern image, and copying that pixel value to the real pixel coordinates in the real slide image.
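The per-pixel loop in this claim amounts to an inverse warp. This sketch assumes the distortion is given by a hypothetical callable `distortion(x, y) -> (dx, dy)`, that removing it means subtracting the vector, and that the ideal pattern is sampled with nearest-neighbor lookup; a real system may use a different sign convention or interpolation.

```python
from typing import Callable, List, Tuple

Image = List[List[int]]


def make_real_slide(ideal: Image,
                    distortion: Callable[[int, int], Tuple[float, float]],
                    fill: int = 0) -> Image:
    """Build the real slide image so that, after projector distortion,
    the projected pattern lands on the ideal epipolar lines."""
    h, w = len(ideal), len(ideal[0])
    real = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = distortion(x, y)          # current distortion vector
            ix, iy = round(x - dx), round(y - dy)  # ideal pixel coordinates
            if 0 <= ix < w and 0 <= iy < h:
                real[y][x] = ideal[iy][ix]     # copy the ideal pixel value
    return real
```

Iterating over the real pixels (rather than pushing ideal pixels forward) guarantees that every pixel of the slide receives exactly one value, with no holes.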

Owner:CREAFORM INC

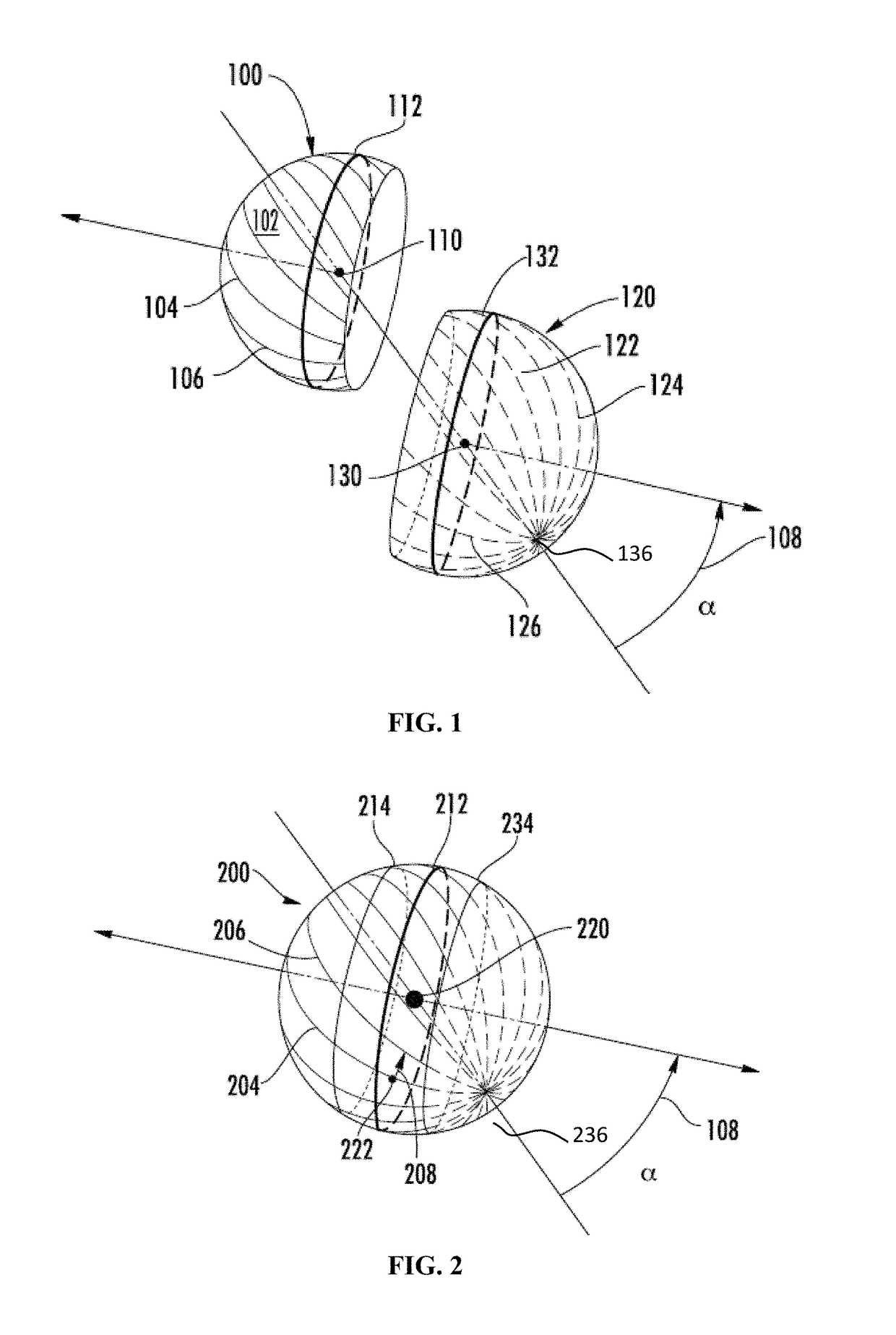

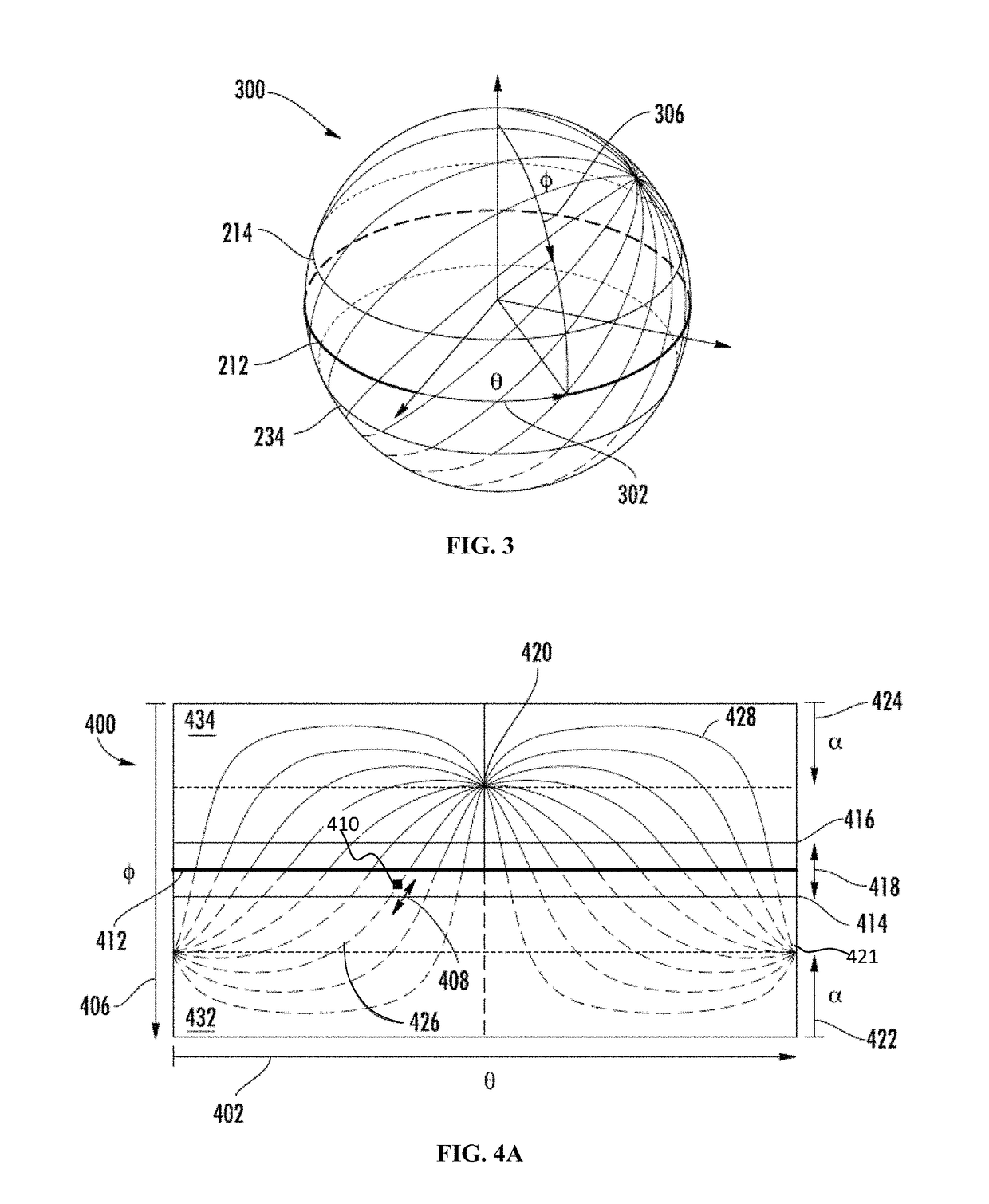

Catadioptric single camera systems having radial epipolar geometry and methods and means thereof

Inactive | US7420750B2 | High depth and spatial resolution | Image analysis | Character and pattern recognition | Plane mirror | Stereo image

Catadioptric single-camera systems capable of sampling the light field of a scene from a locus of circular viewpoints, and the methods thereof, are described. The epipolar lines of the system are radial, and the systems have foveated vision characteristics. A first embodiment of the invention is directed to a camera that looks at a scene through a cylinder with a mirrored inside surface. A second embodiment uses a truncated cone with a mirrored inside surface. A third embodiment uses a first truncated cone with a mirrored outside surface and a second truncated cone with a mirrored inside surface. A fourth embodiment uses a planar mirror with a truncated cone with a mirrored inside surface. The invention allows high-quality depth information to be gathered by capturing stereo images with radial epipolar lines in a simple and efficient manner.
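Because the epipolar lines are radial, the search for a correspondence reduces to one dimension along a ray from the image center, much as rectification reduces it to a row in conventional stereo. A minimal sketch, assuming the epipolar lines pass through a known image center (for example, the projection of the mirror axis); the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def to_polar(center: Point, point: Point) -> Tuple[float, float]:
    """Radius and angle of a point about the center of the radial epipolar lines."""
    dx, dy = point[0] - center[0], point[1] - center[1]
    return math.hypot(dx, dy), math.atan2(dy, dx)


def radial_epipolar_samples(center: Point, point: Point,
                            radii: Sequence[float]) -> List[Point]:
    """Sample positions along the radial epipolar line through `point`:
    the angle is fixed, so only the radius varies during the search."""
    _, theta = to_polar(center, point)
    return [(center[0] + r * math.cos(theta), center[1] + r * math.sin(theta))
            for r in radii]
```

Resampling the image into (radius, angle) coordinates turns every radial epipolar line into a single row, so a row-wise matcher can then be reused unchanged.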

Owner:THE TRUSTEES OF COLUMBIA UNIV IN THE CITY OF NEW YORK


© 2025 PatSnap. All rights reserved.