453 results about "Virtual position" patented technology

A virtual job refers to a job that can be performed outside of an employer’s office. Also known as remote, work from home, or telecommuting jobs, these positions have become increasingly common because of technology like telephones, internet, email, and video conference calls, which help to facilitate employer-employee communication.

Method and apparatus for synthesizing new video and/or still imagery from a collection of real video and/or still imagery

Active | US7085409B2 | Quality improvement | Increase speed | Image enhancement | Image analysis | Viewpoints | Virtual position

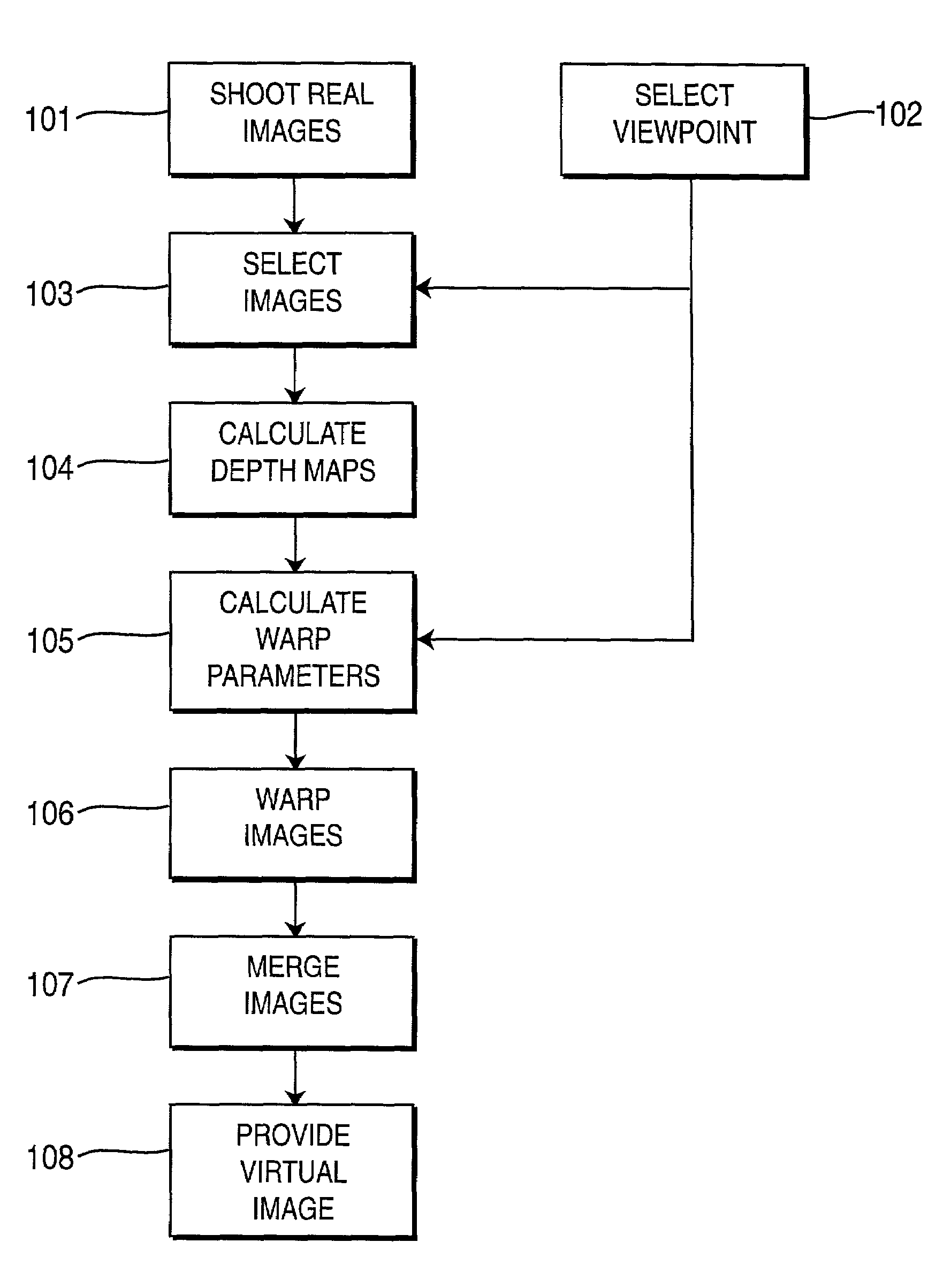

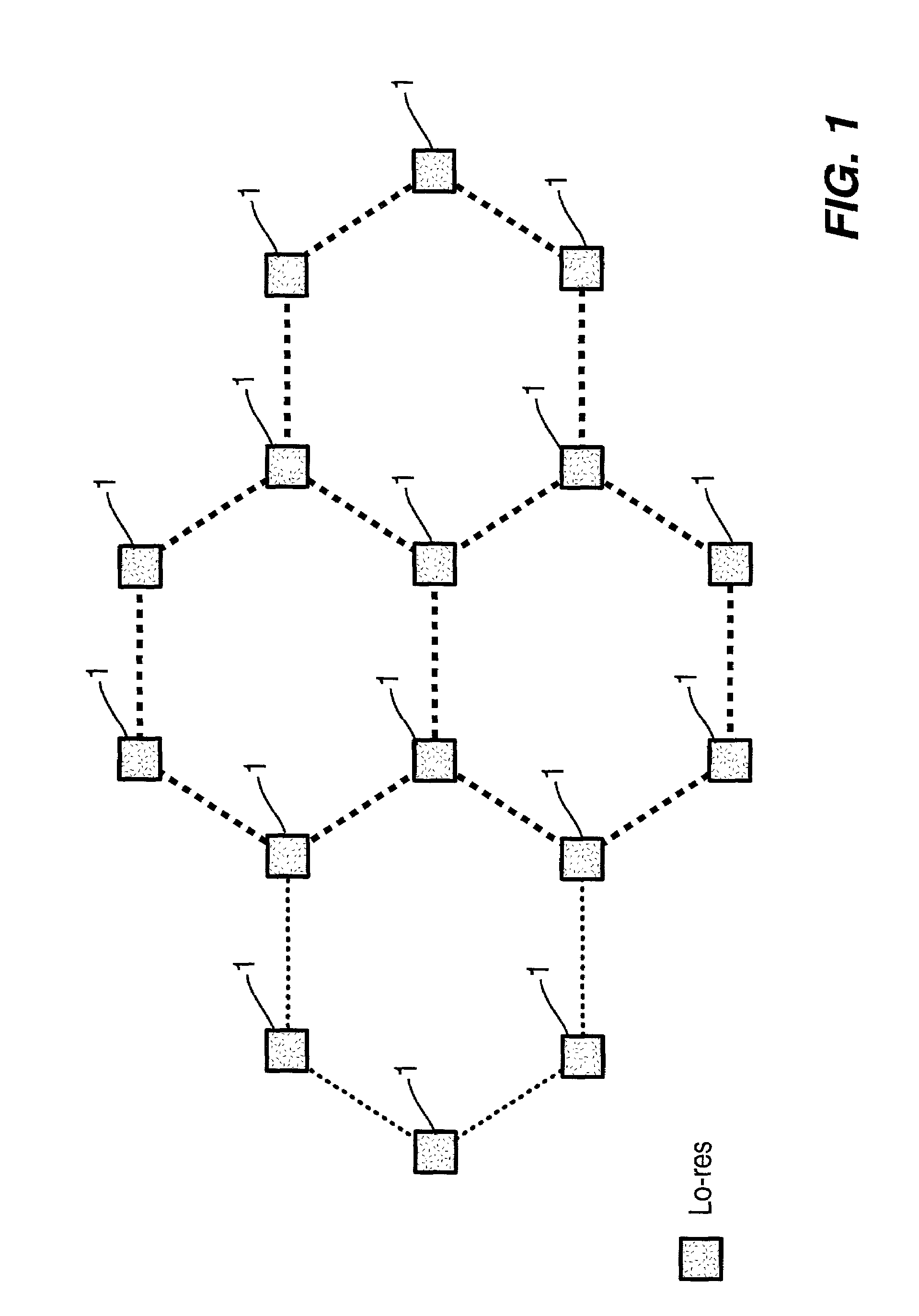

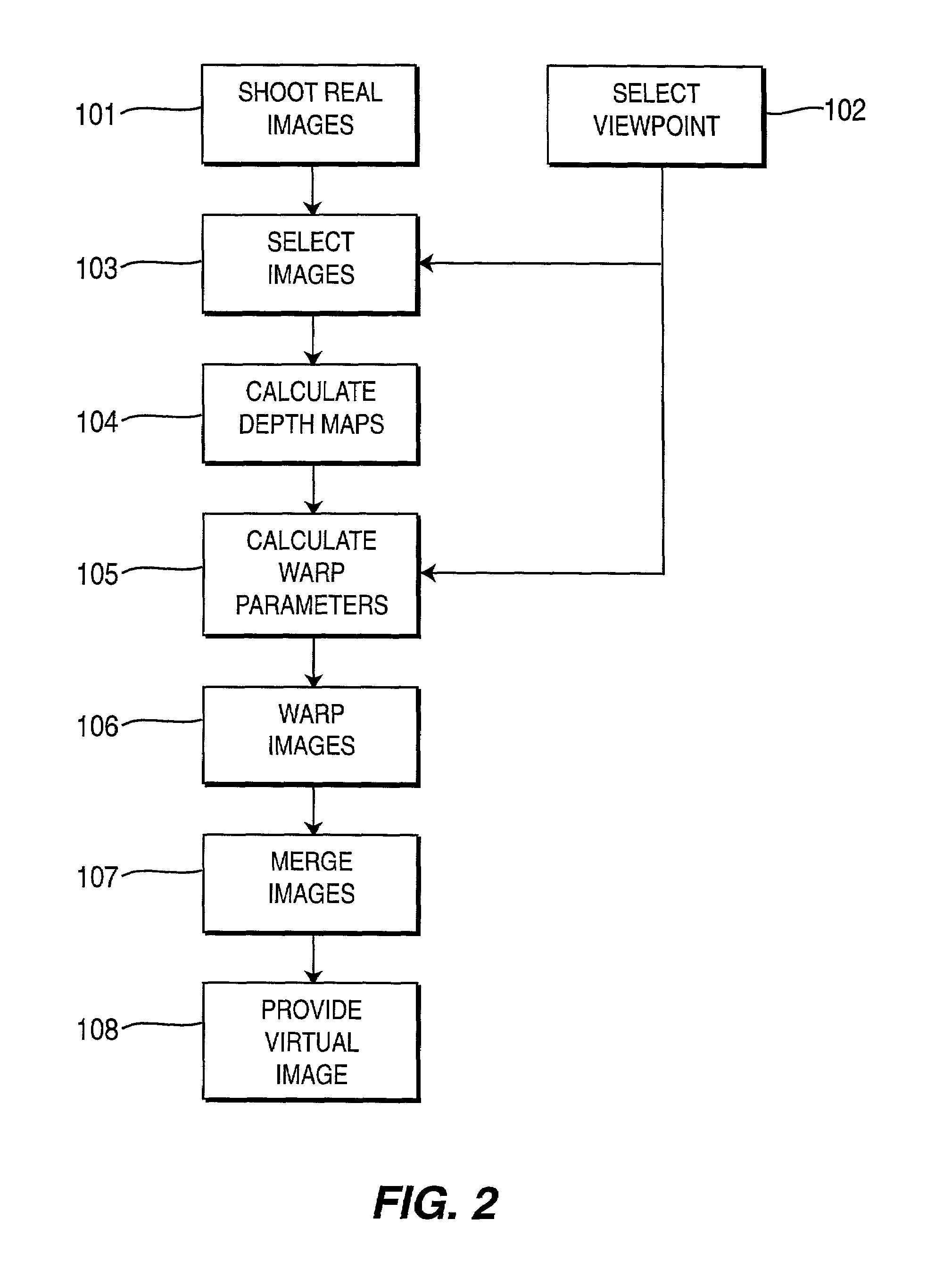

An image-based tele-presence system forward warps video images selected from a plurality of fixed imagers using local depth maps and merges the warped images to form high quality images that appear as seen from a virtual position. At least two of the images produced by the imagers are selected for creating a virtual image. Depth maps are generated corresponding to each of the selected images. Selected images are warped to the virtual viewpoint using warp parameters calculated using corresponding depth maps. Finally, the warped images are merged to create the high quality virtual image as seen from the selected viewpoint. The system employs a video blanket of imagers, which helps both optimize the number of imagers and attain higher resolution. In an exemplary video blanket, cameras are deployed in a geometric pattern on a surface.

Owner:SRI INTERNATIONAL

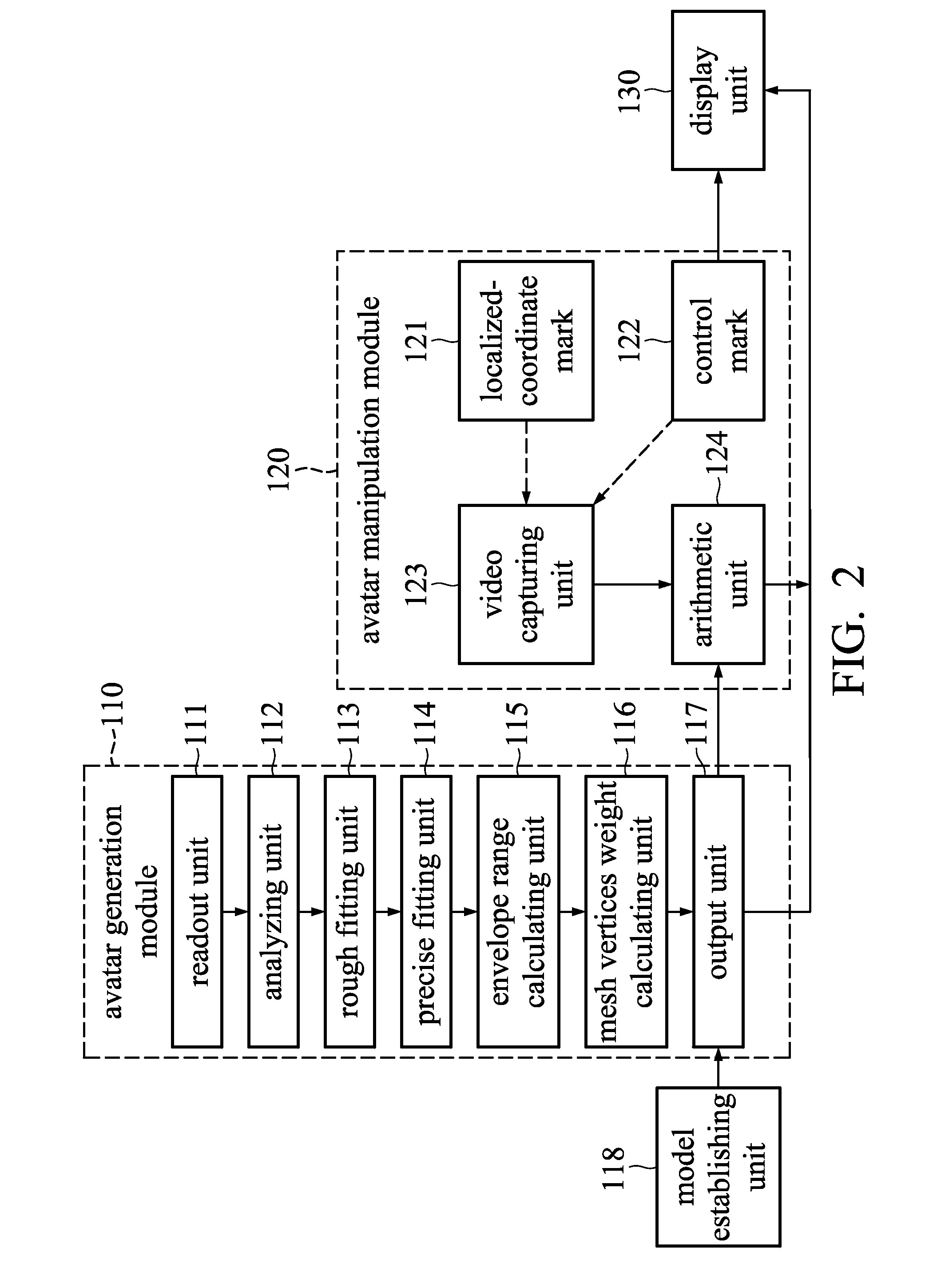

Animation generation systems and methods

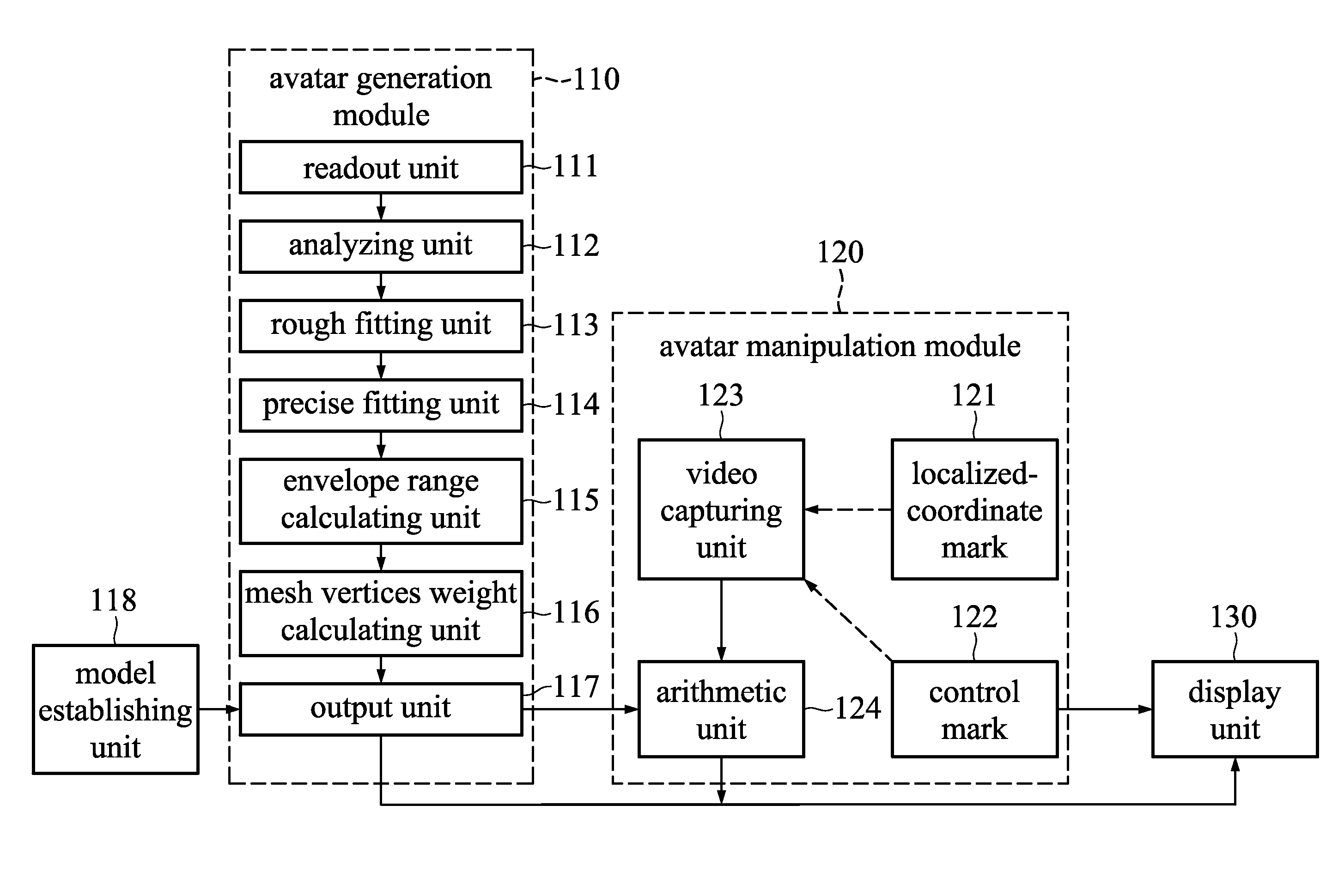

The animation generation system includes: an avatar generation module for generating an avatar in a virtual space, wherein the avatar has a set of skeletons and a skin, and the movable nodes of the set of skeletons are manipulated so that a motion of the skin is induced; and an avatar manipulation module for manipulating the movable nodes, including: a position mark which is moved to at least one first real position in a real space; at least one control mark which is moved to at least one second real position in the real space; and a video capturing unit for capturing the images of the real space; an arithmetic unit for identifying the first real position and the second real position from the images of the real space, and converting the first real position into a first virtual position, and the second real position into a second virtual position.

Owner:IND TECH RES INST

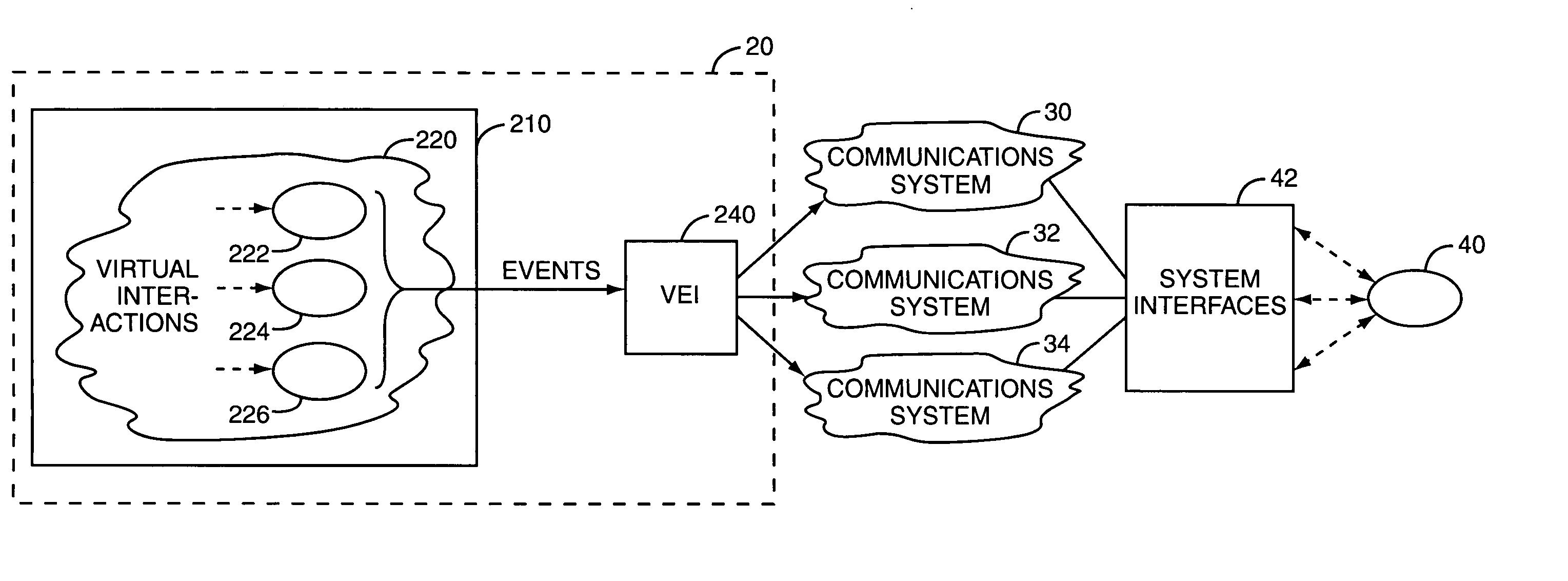

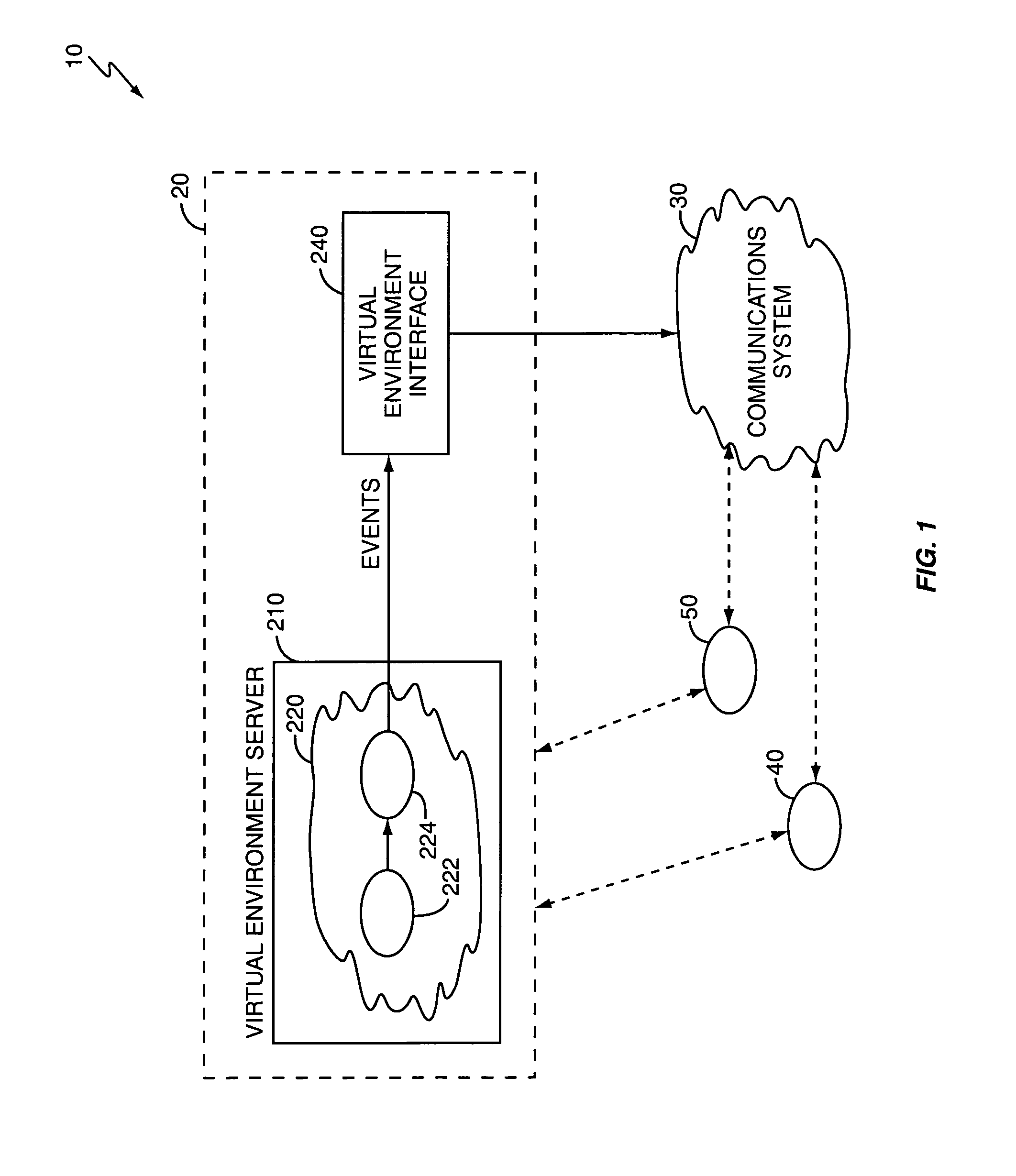

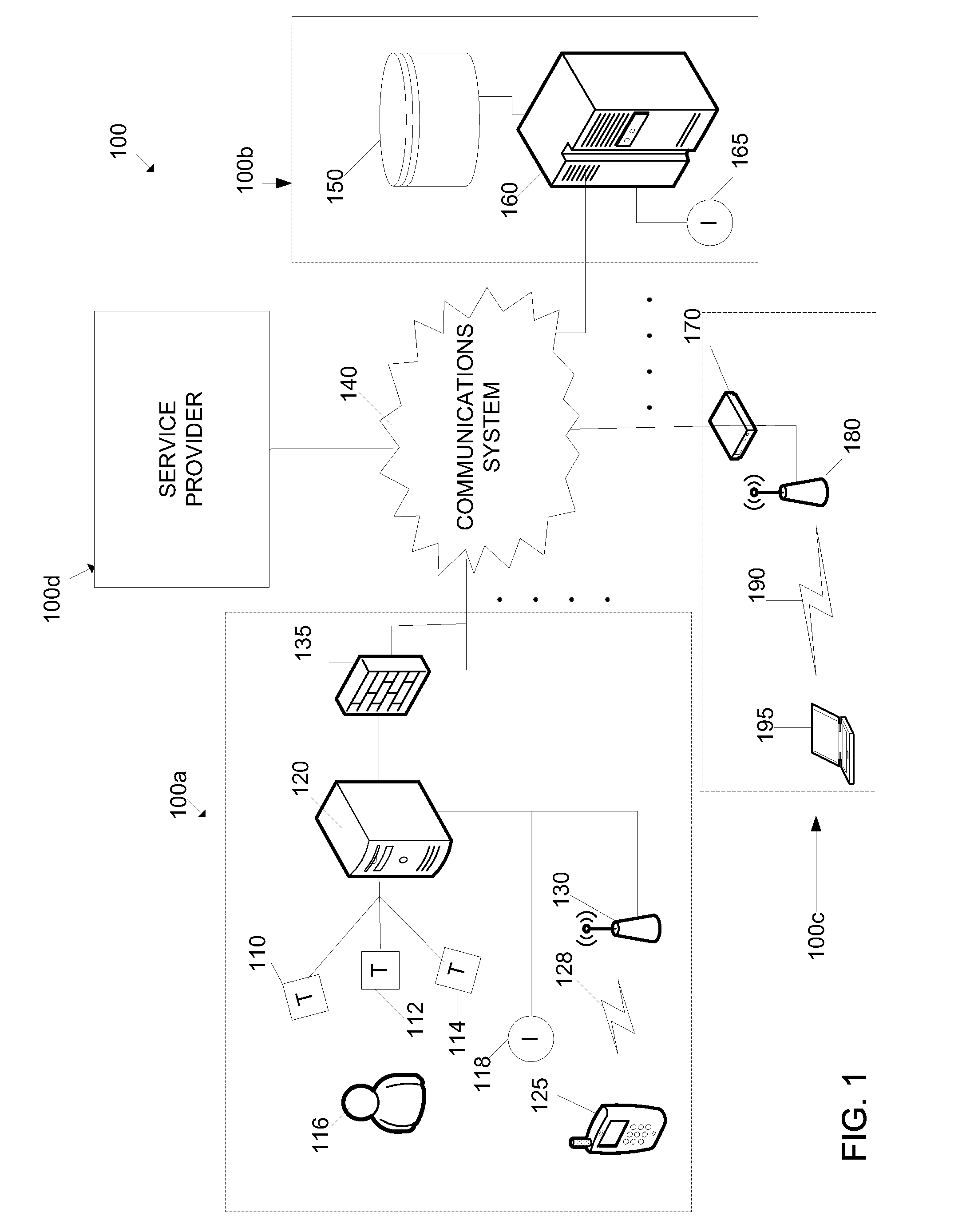

Controlling communications through a virtual reality environment

Inactive | US7036082B1 | Maximum flexibility | Suitable for communication | Program control | Network connections | Telecommunications link | Communications system

A virtual reality system initiates desired real world actions in response to defined events occurring within a virtual environment. A variety of systems, such as communications devices, computer networks, and software applications, may be interfaced with the virtual reality system and made responsive to virtual events. For example, the virtual reality system may trigger a communications system to establish a communications link between people in response to a virtual event. Users, represented as avatars within the virtual environment, generate events by interacting with virtual entities, such as other avatars, virtual objects, and virtual locations. Virtual entities can be associated with specific users, and users can define desired behaviors for associated entities. Behaviors control the real world actions triggered by virtual events. Users can modify these behaviors, and the virtual reality system may change behaviors based on changing conditions, such as time of day or the whereabouts of a particular user.

Owner:AVAYA INC

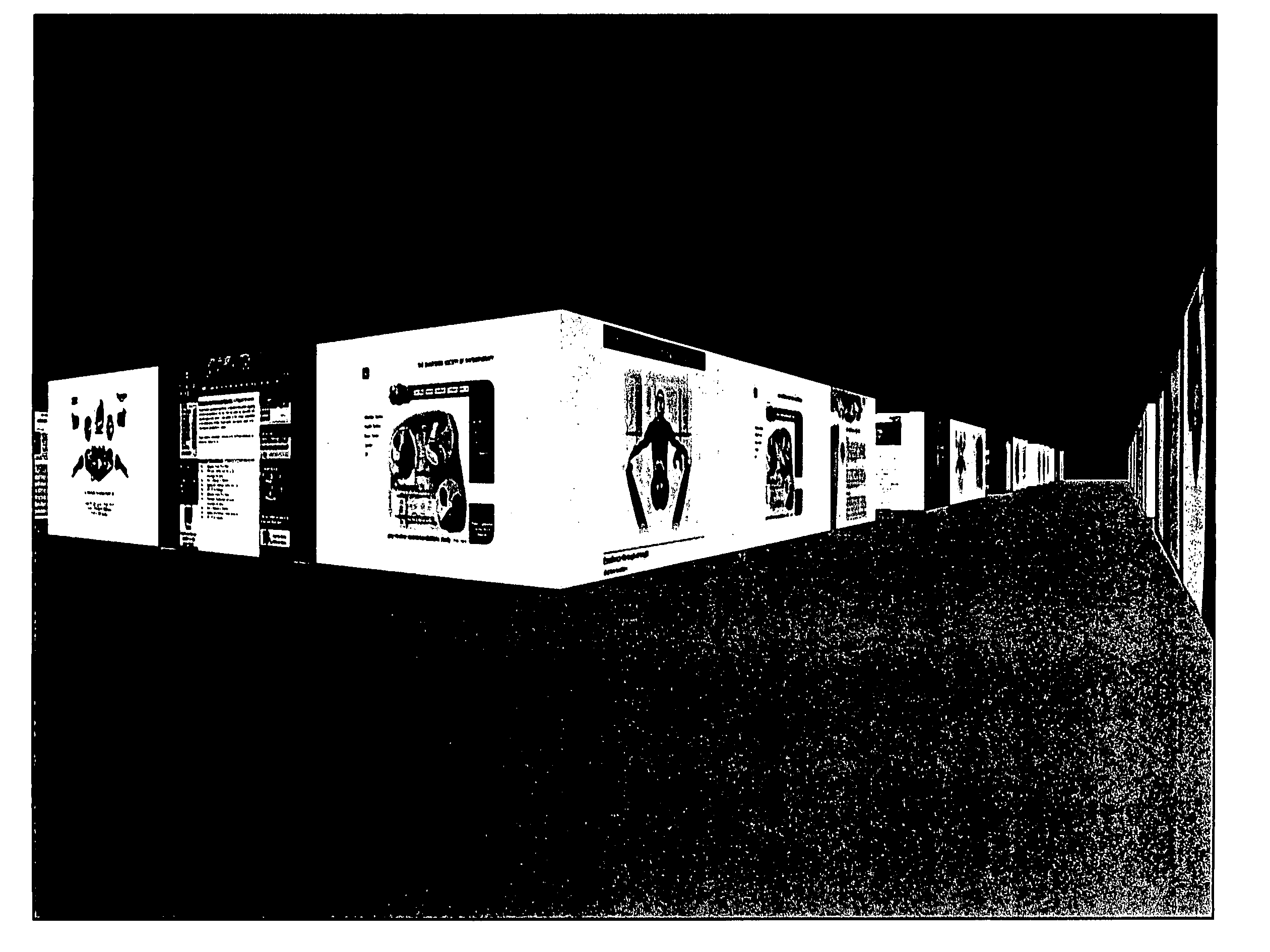

Information display

Inactive | US20050022139A1 | Precise positioning | Cathode-ray tube indicators | Web data navigation | Computer graphics (images) | Virtual space

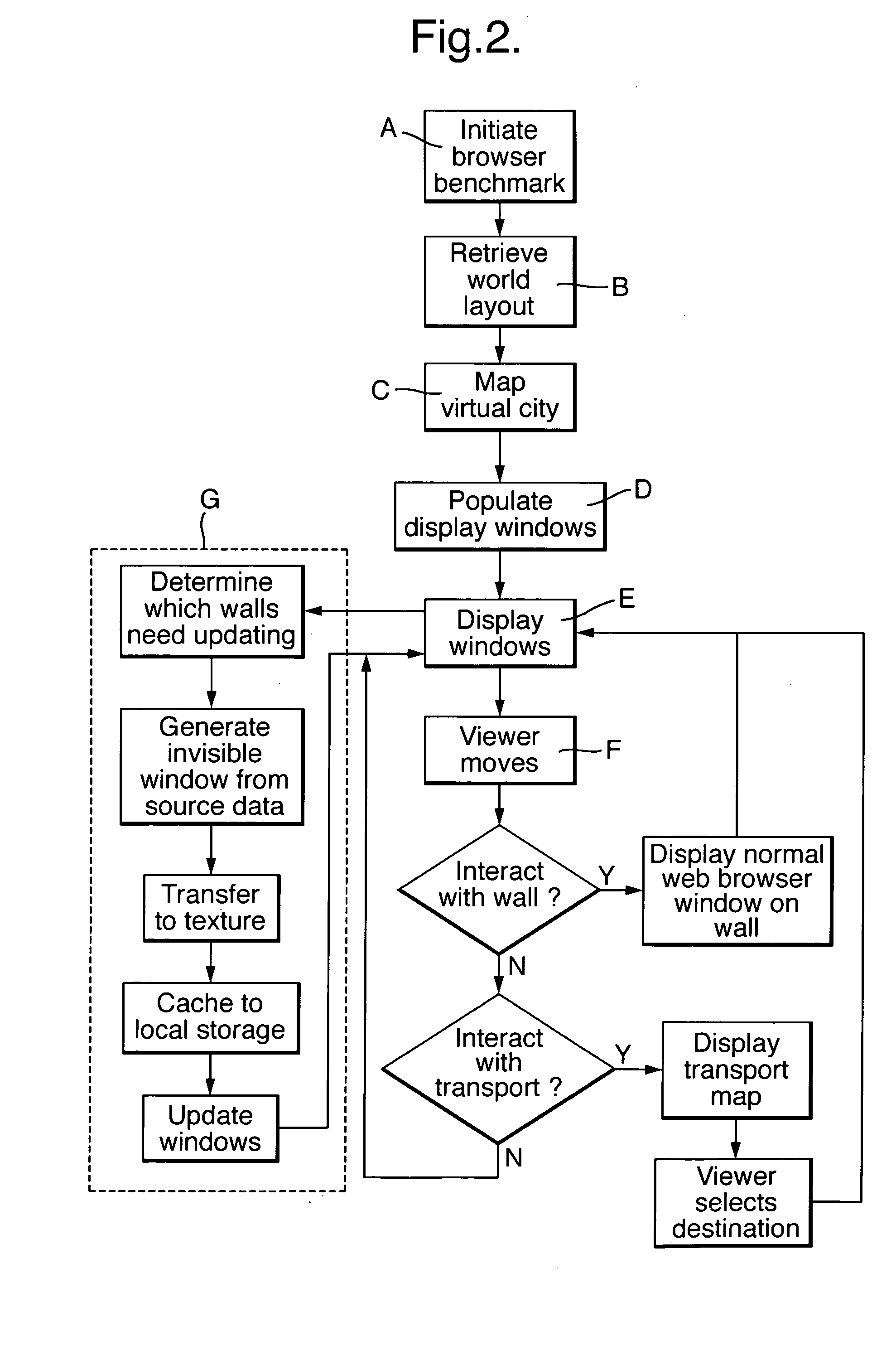

A method for organizing and presenting material content on a display to a viewer, the method comprising: mapping a plurality of display windows within a virtual three-dimensional space so that each display window is allocated a specific and predetermined position in the space, rendering each display window in three-dimensional perspective according to its position and angle relative to a viewer's virtual position in the virtual space, cross-referencing the position of each display window to a storage location of the material content that is designated to be rendered in that particular display window at a particular time based on at least one predetermined condition, allocating at least part of the three-dimensional virtual space to display windows whose content is not chosen or determined by the viewer, selecting, retrieving and preparing material content for possible subsequent display according to a predetermined algorithm, selecting and rendering prepared material content within its cross-referenced display window according to a predetermined algorithm, providing a means of virtual navigation that changes the viewer's position in the space in such a manner as to simulate movement through a plurality of predefined channels in the virtual space.

Owner:THREE B INT

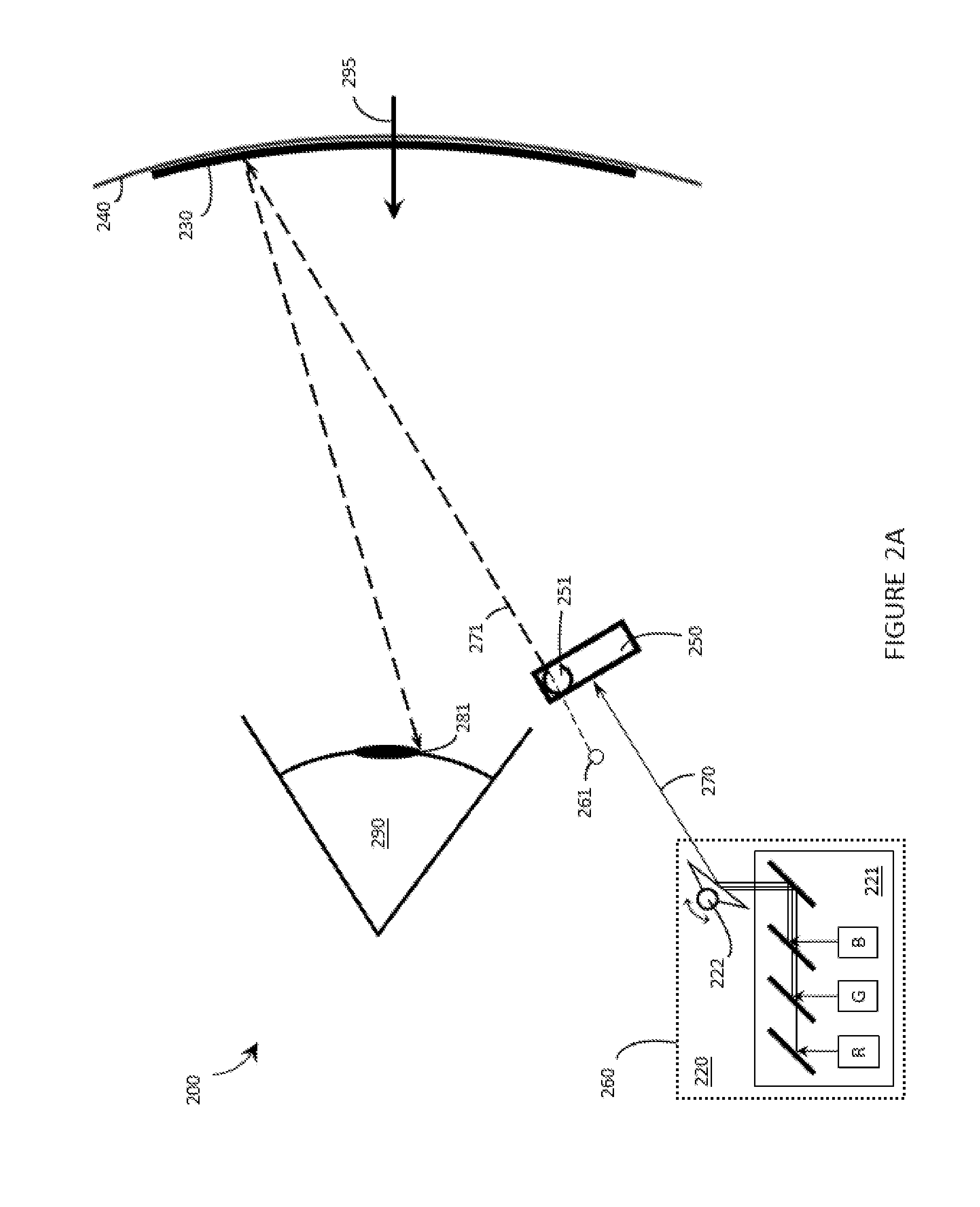

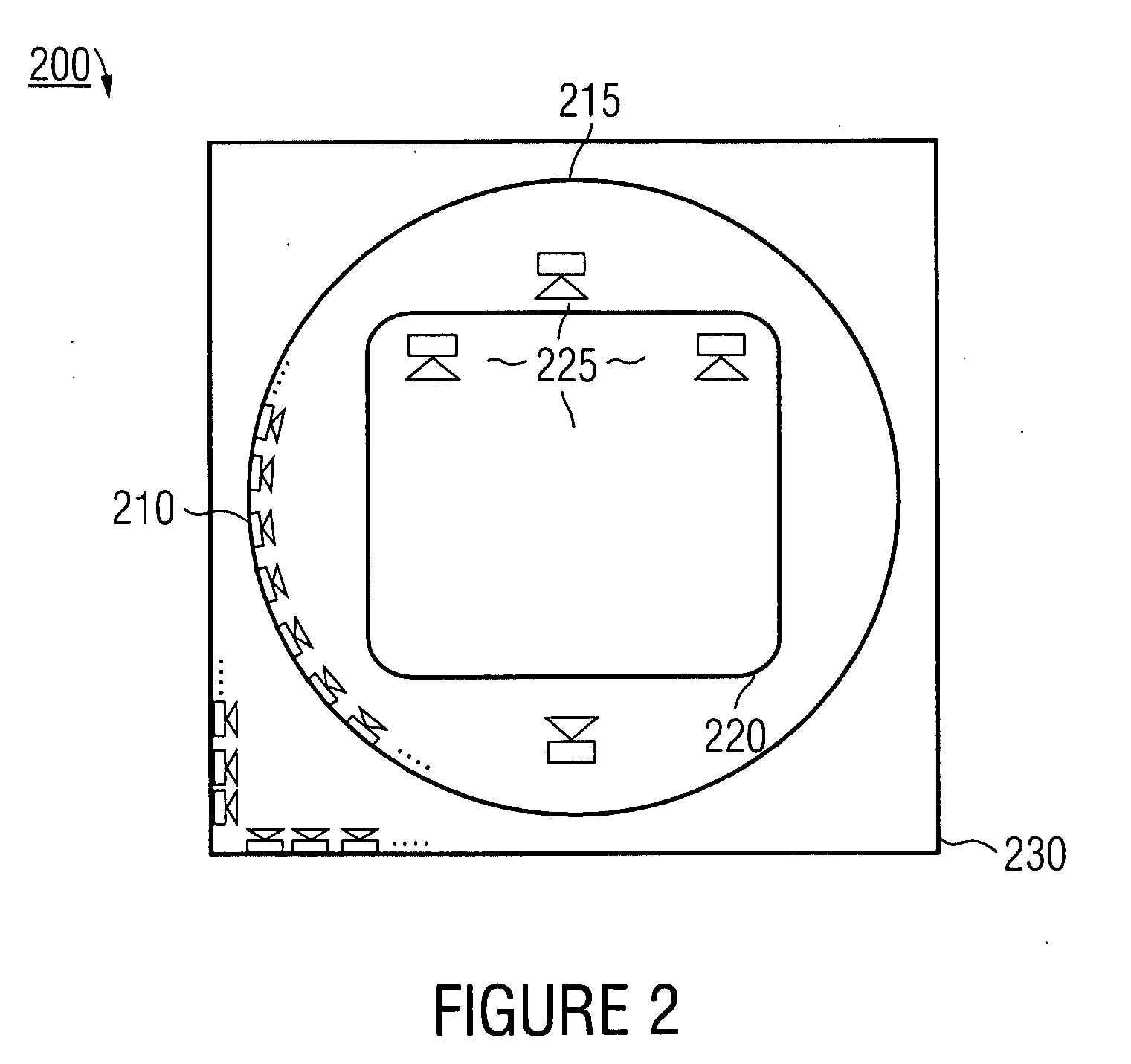

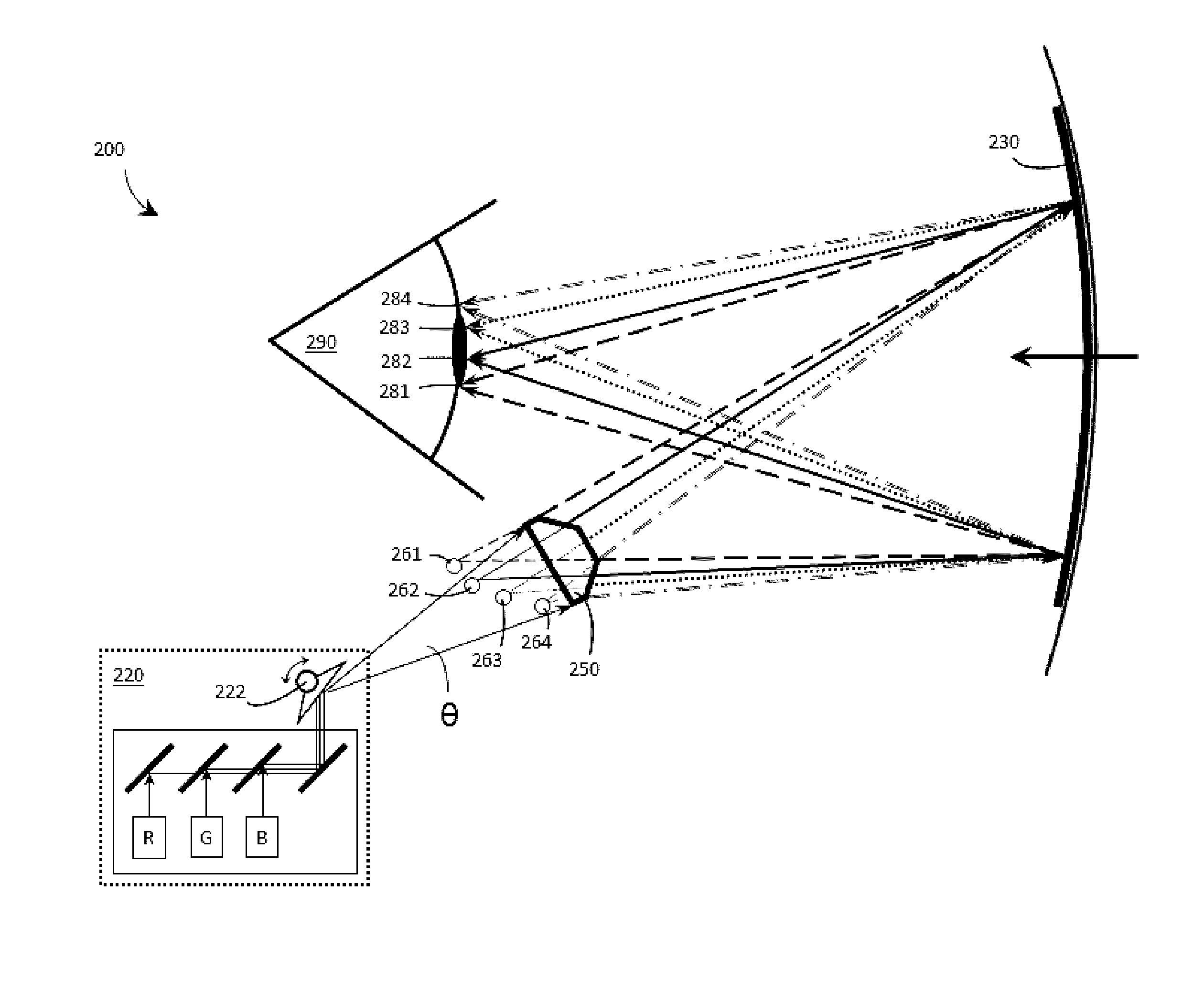

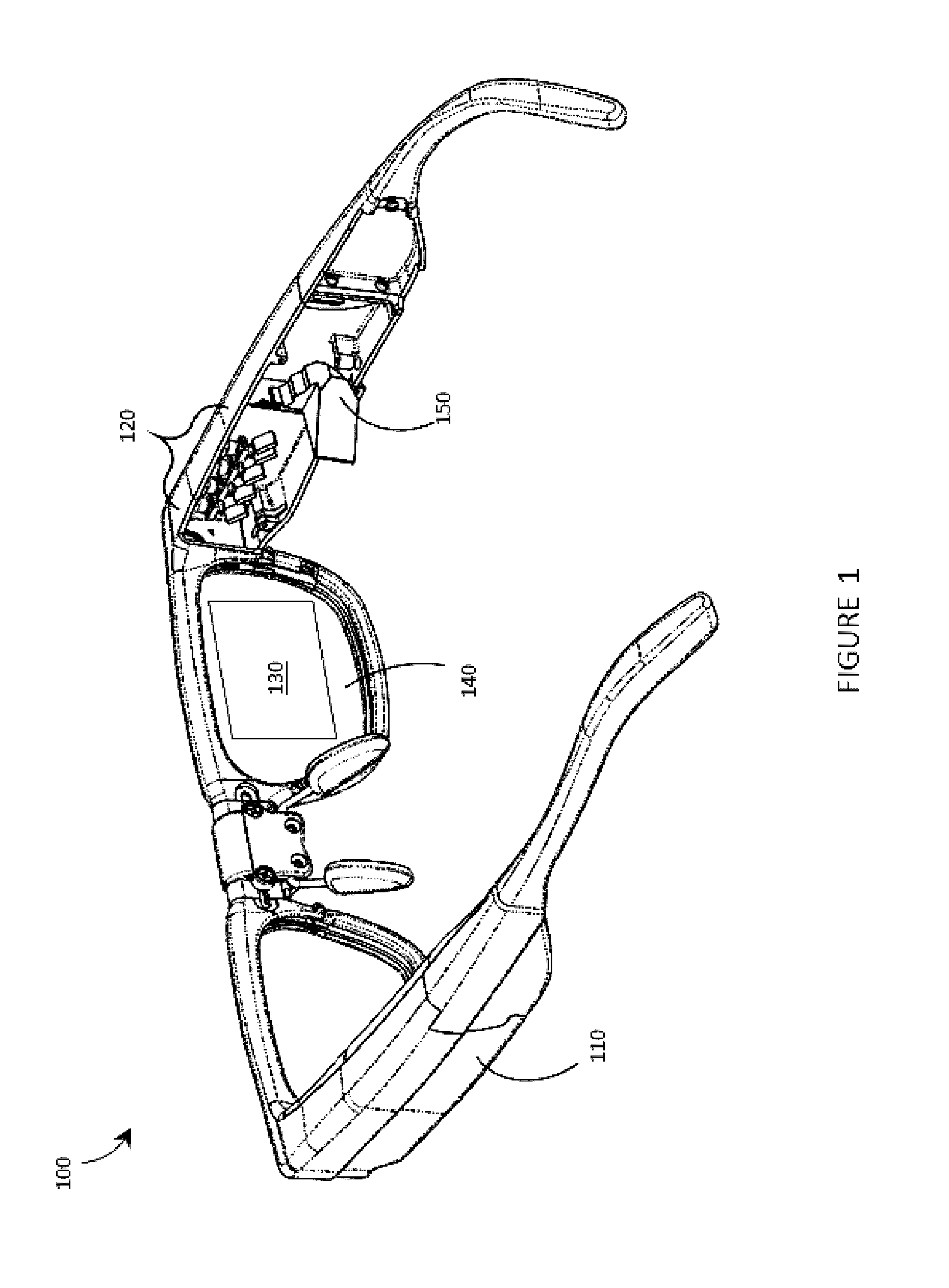

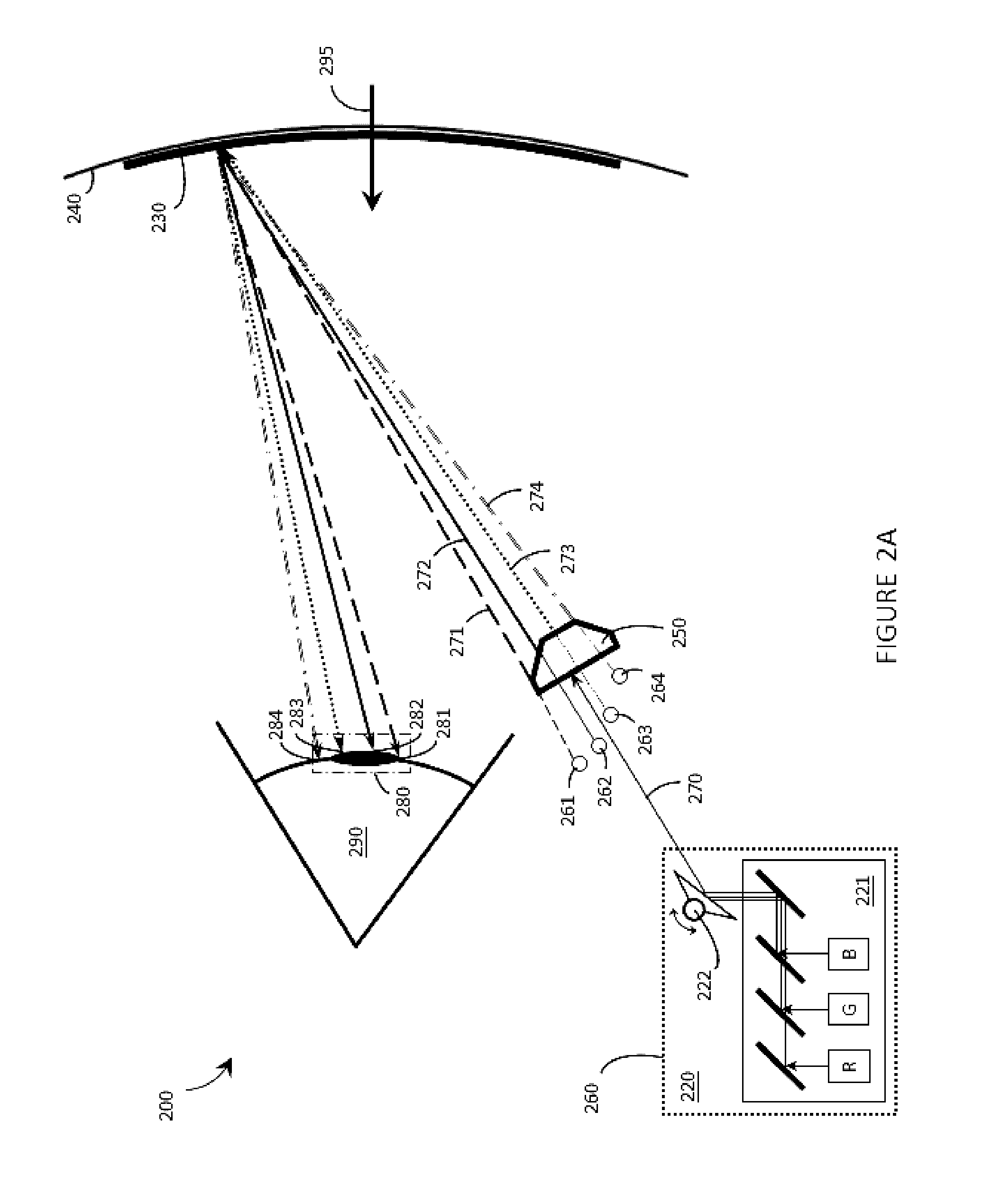

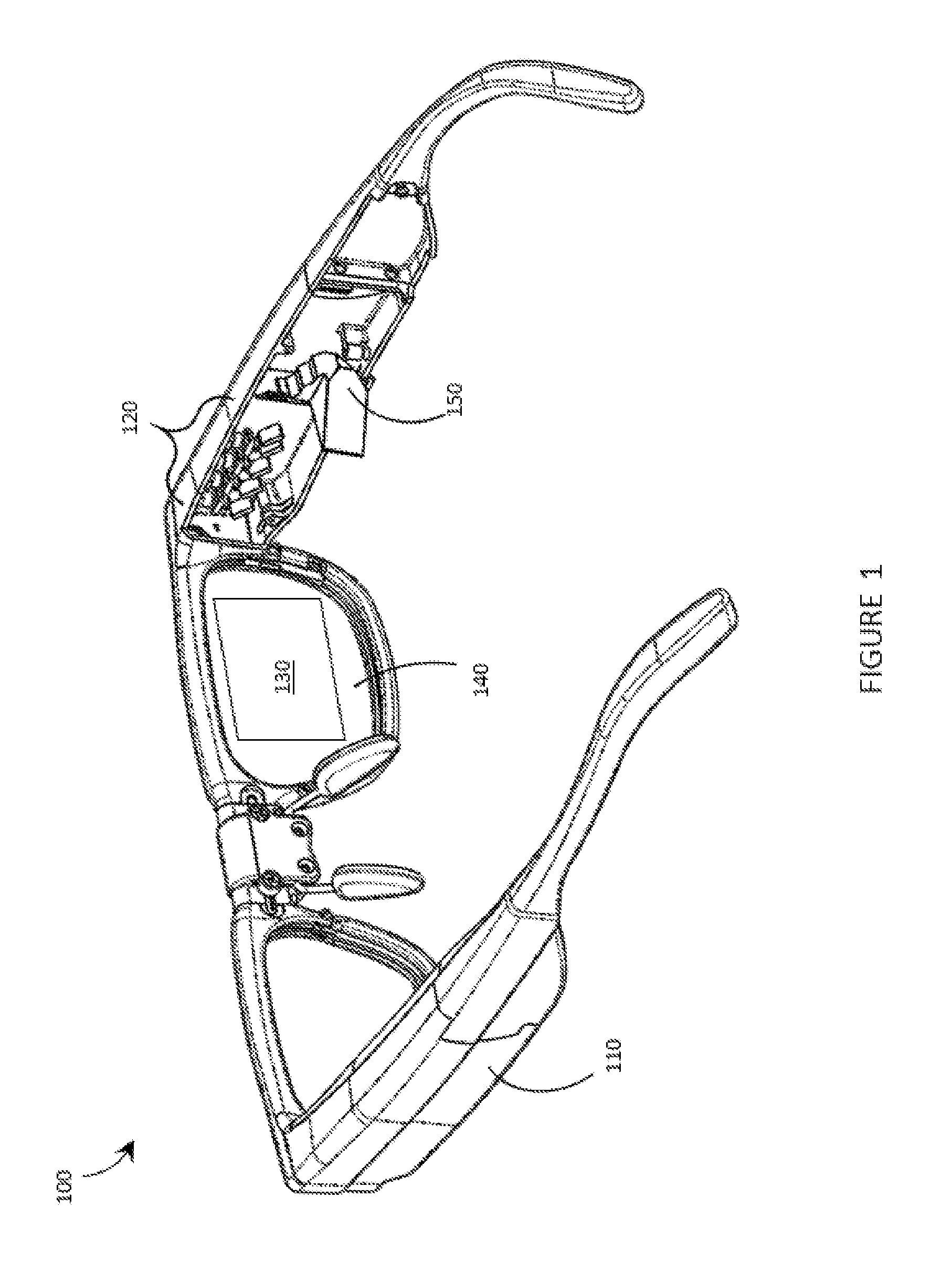

Systems, devices, and methods for eyebox expansion in wearable heads-up displays

Active | US20160238845A1 | Input/output for user-computer interaction | Static indicating devices | Head-up display | Exit pupil

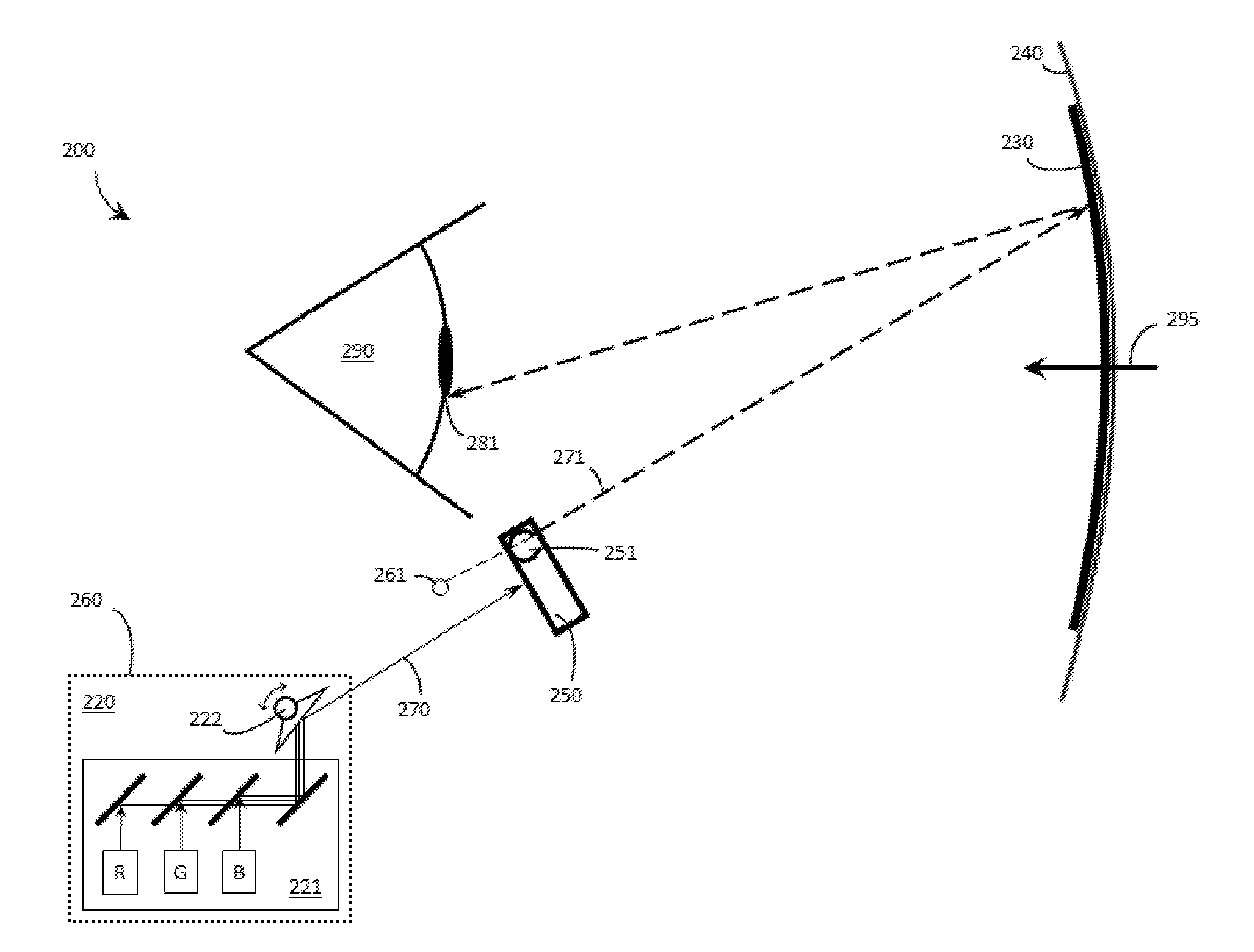

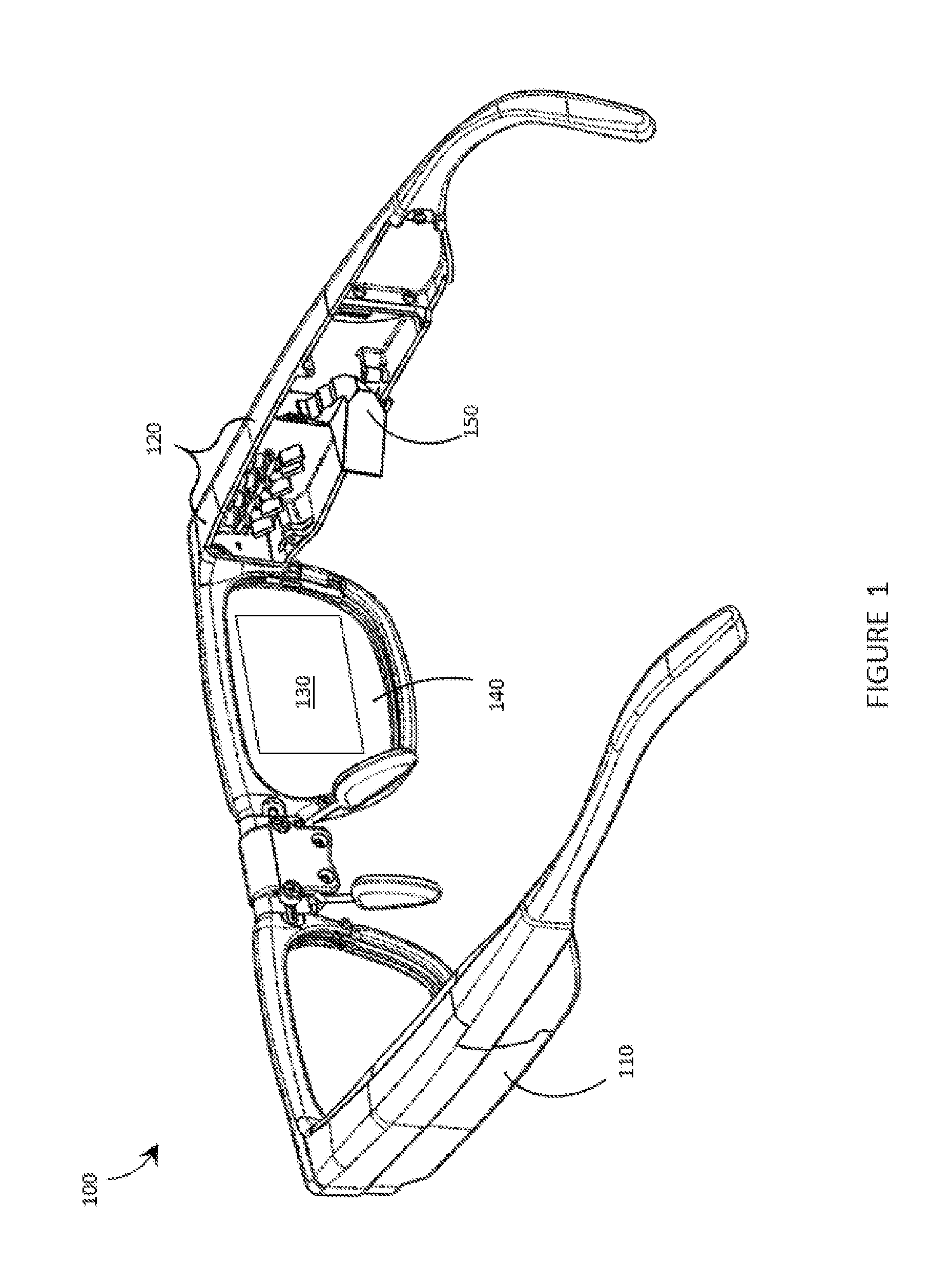

Systems, devices, and methods for eyebox expansion by exit pupil replication in wearable heads-up displays (“WHUDs”) are described. A WHUD includes a scanning laser projector (“SLP”), a holographic combiner, and an exit pupil selector positioned in the optical path therebetween. The exit pupil selector is controllably switchable into and between N different configurations. In each of the N configurations, the exit pupil selector receives a light signal from the SLP and redirects the light signal towards the holographic combiner effectively from a respective one of N virtual positions for the SLP. The holographic combiner converges the light signal to a particular one of N exit pupils at the eye of the user based on the particular virtual position from which the light signal is made to effectively originate. In this way, multiple instances of the exit pupil are distributed over the eye and the eyebox of the WHUD is expanded.

Owner:GOOGLE LLC

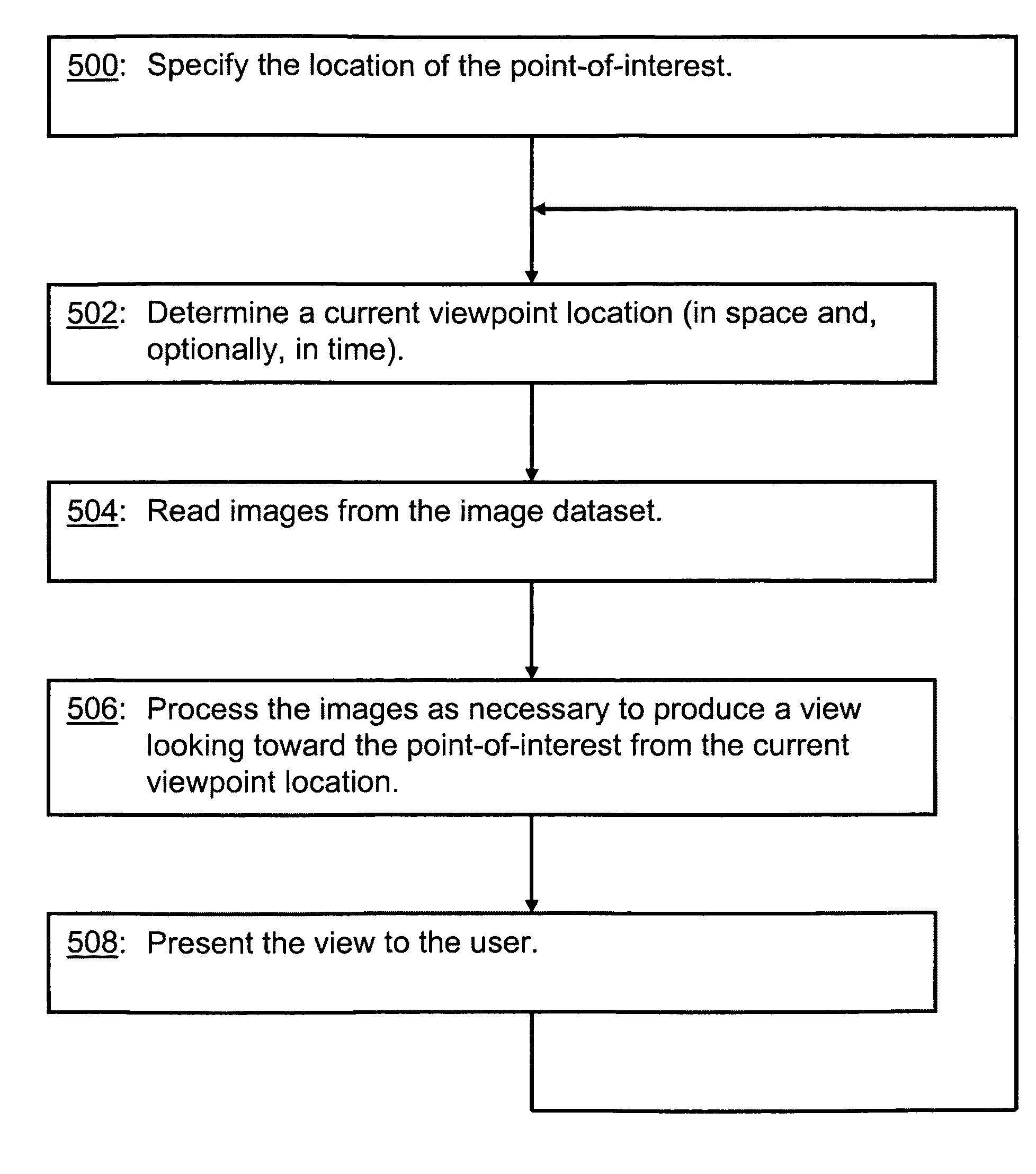

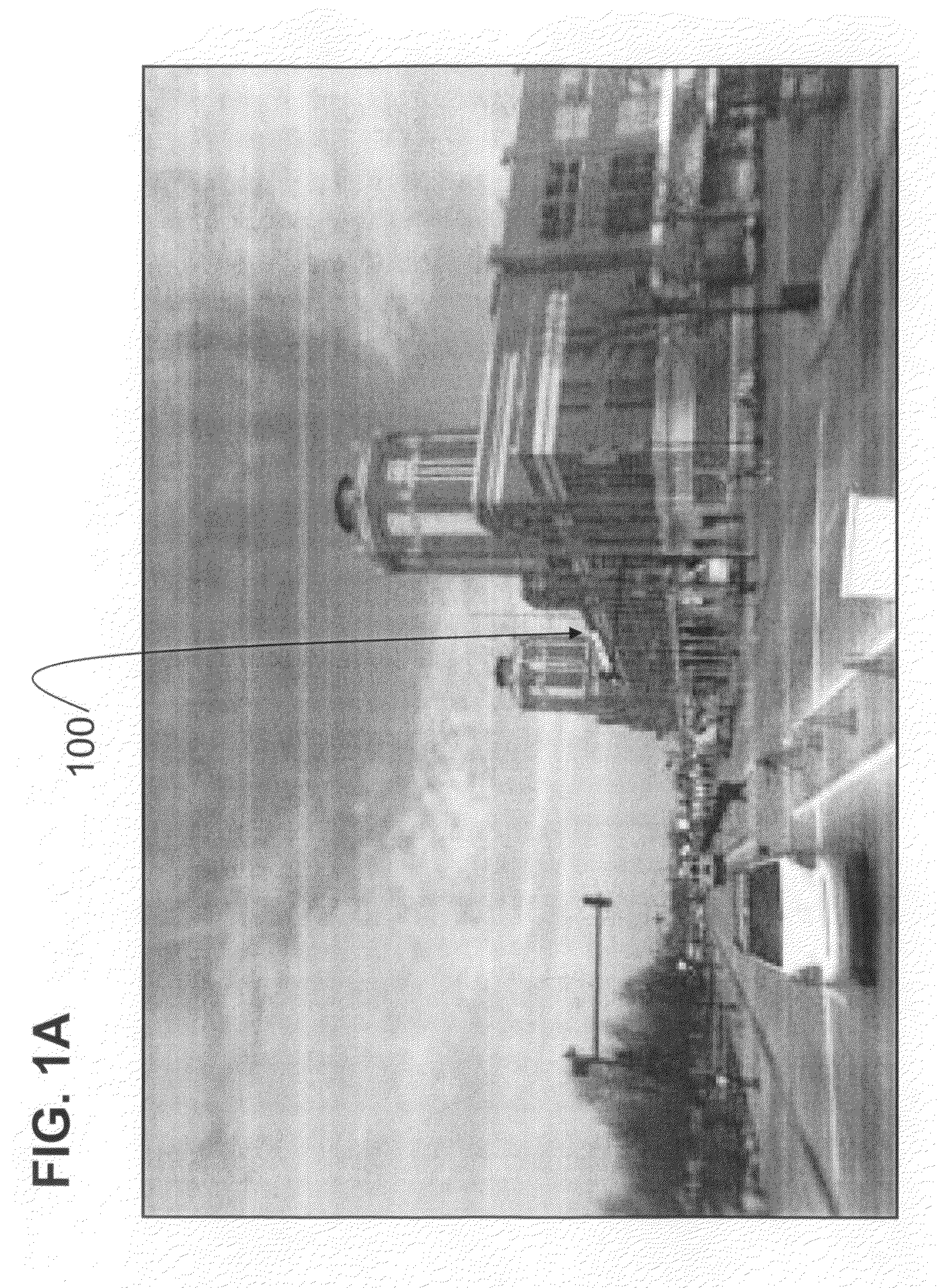

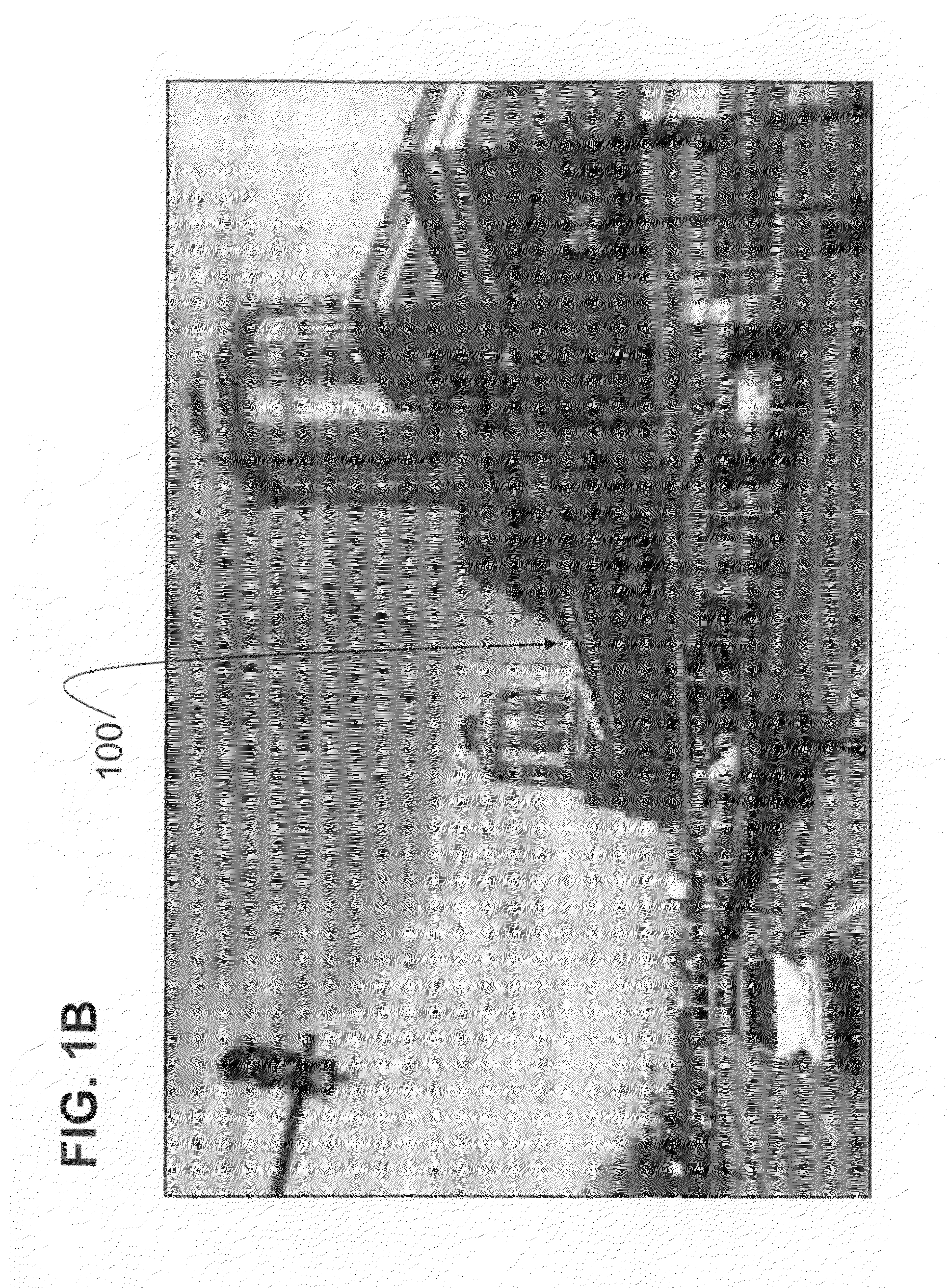

System and method for producing multi-angle views of an object-of-interest from images in an image dataset

Active | US20090153549A1 | Road vehicles traffic control | Geometric image transformation | Computer animation | Data set

Disclosed are a system and method for creating multi-angle views of an object-of-interest from images stored in a dataset. A user specifies the location of an object-of-interest. As the user virtually navigates through the locality represented by the image dataset, his current virtual position is determined. Using the user's virtual position and the location of the object-of-interest, images in the image dataset are selected and interpolated or stitched together, if necessary, to present to the user a view from his current virtual position looking toward the object-of-interest. The object-of-interest remains in the view no matter where the user virtually travels. From the same image dataset, another user can select a different object-of-interest and virtually navigate in a similar manner, with his own object-of-interest always in view. The object-of-interest also can be “virtual,” added by computer-animation techniques to the image dataset. For some image datasets, the user can virtually navigate through time as well as through space.

Owner:HERE GLOBAL BV

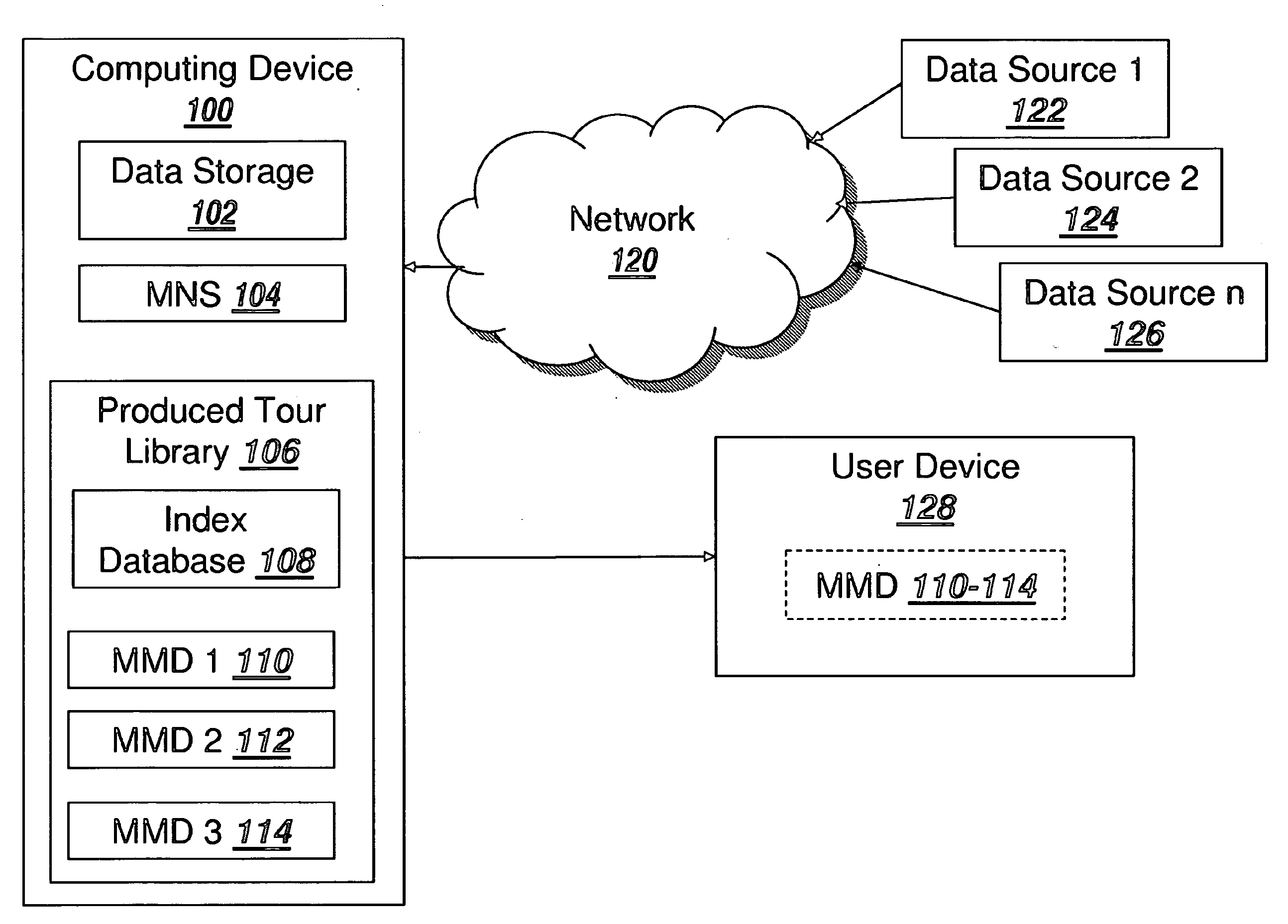

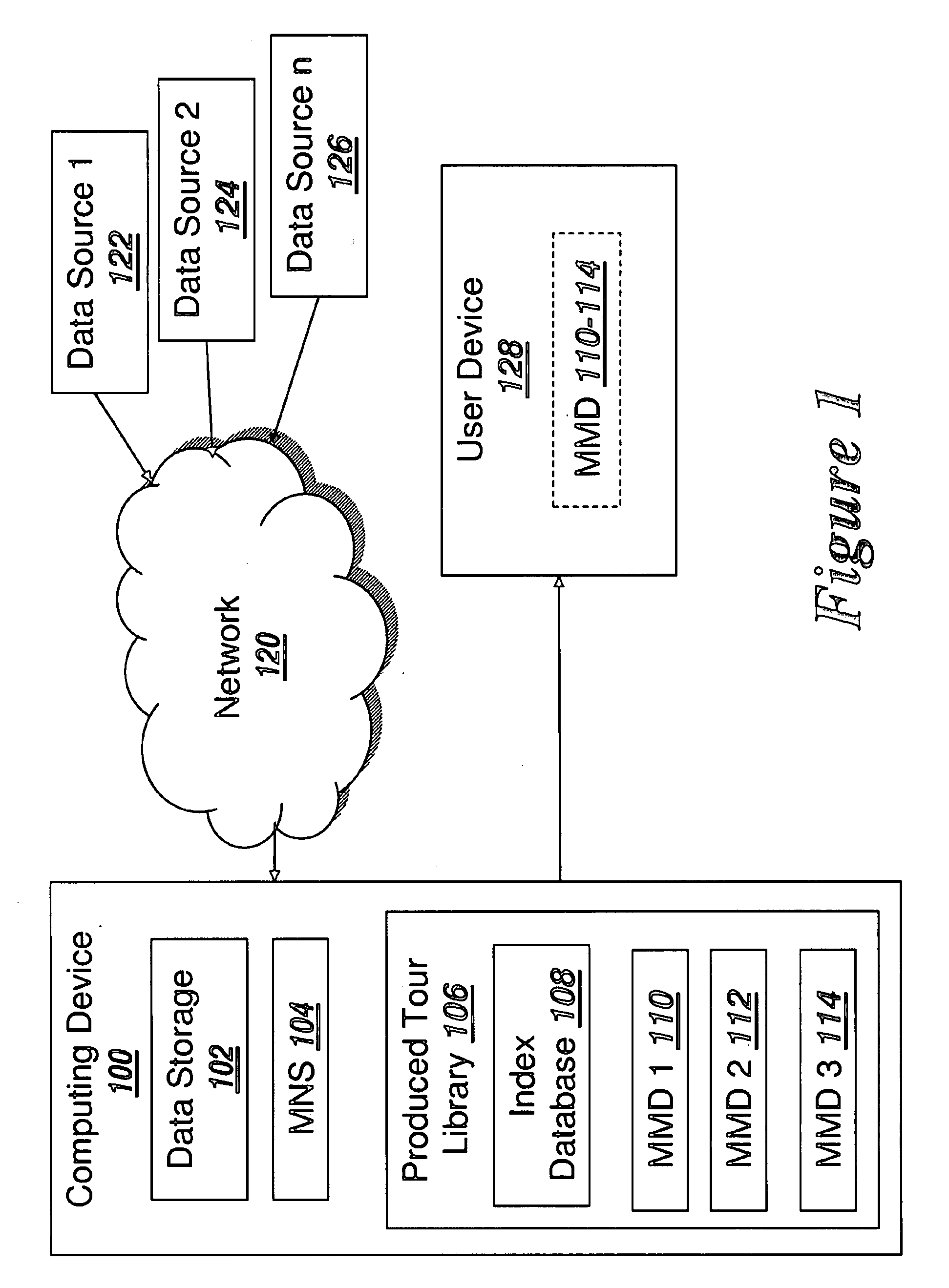

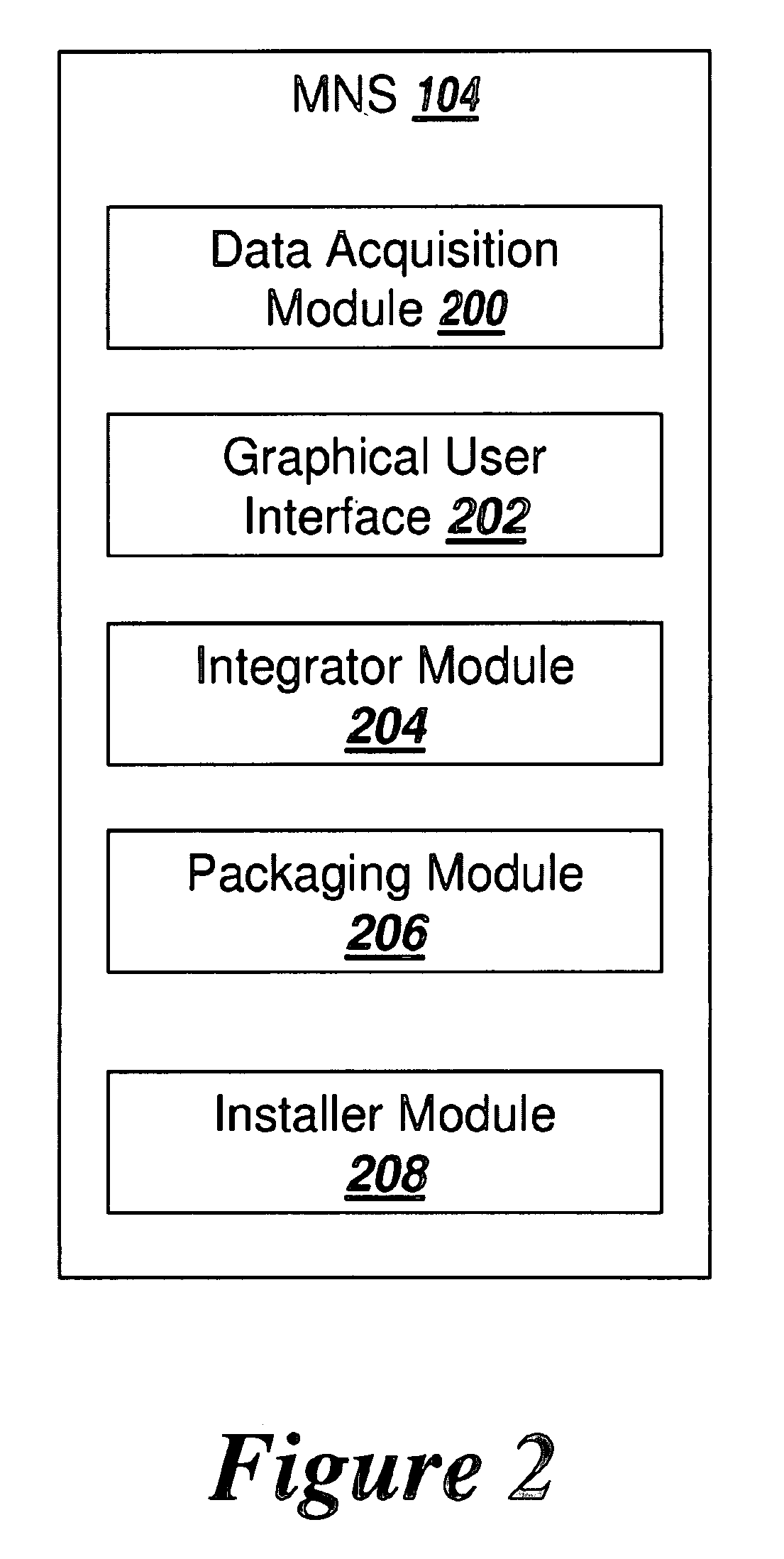

System and method for using known path data in delivering enhanced multimedia content to mobile devices

Inactive | US20080177793A1 | Instruments for road network navigation | Geographical information databases | Graphics | Interactive content

An authoring tool supporting the creation, editing and use of a “Mobile Media Documentary” (MMD) is discussed. An MMD is an interactive tour of physical and virtual locations that is accompanied by multimedia content mapped to spatial data for a known path. The multimedia content includes character-driven audio narrative supplemented by graphics, text, video and interactive content.

Owner:UNTRAVEL MEDIA

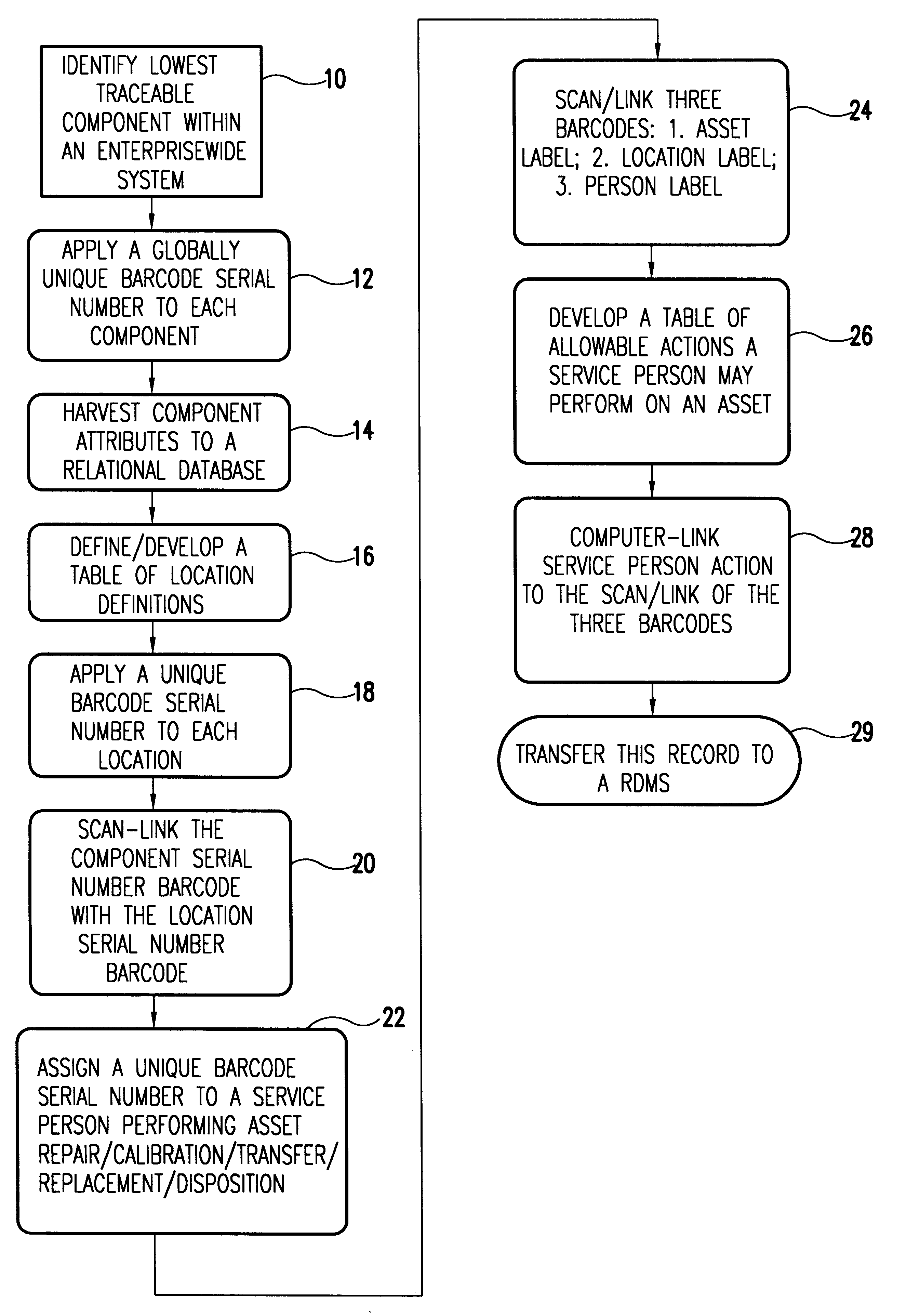

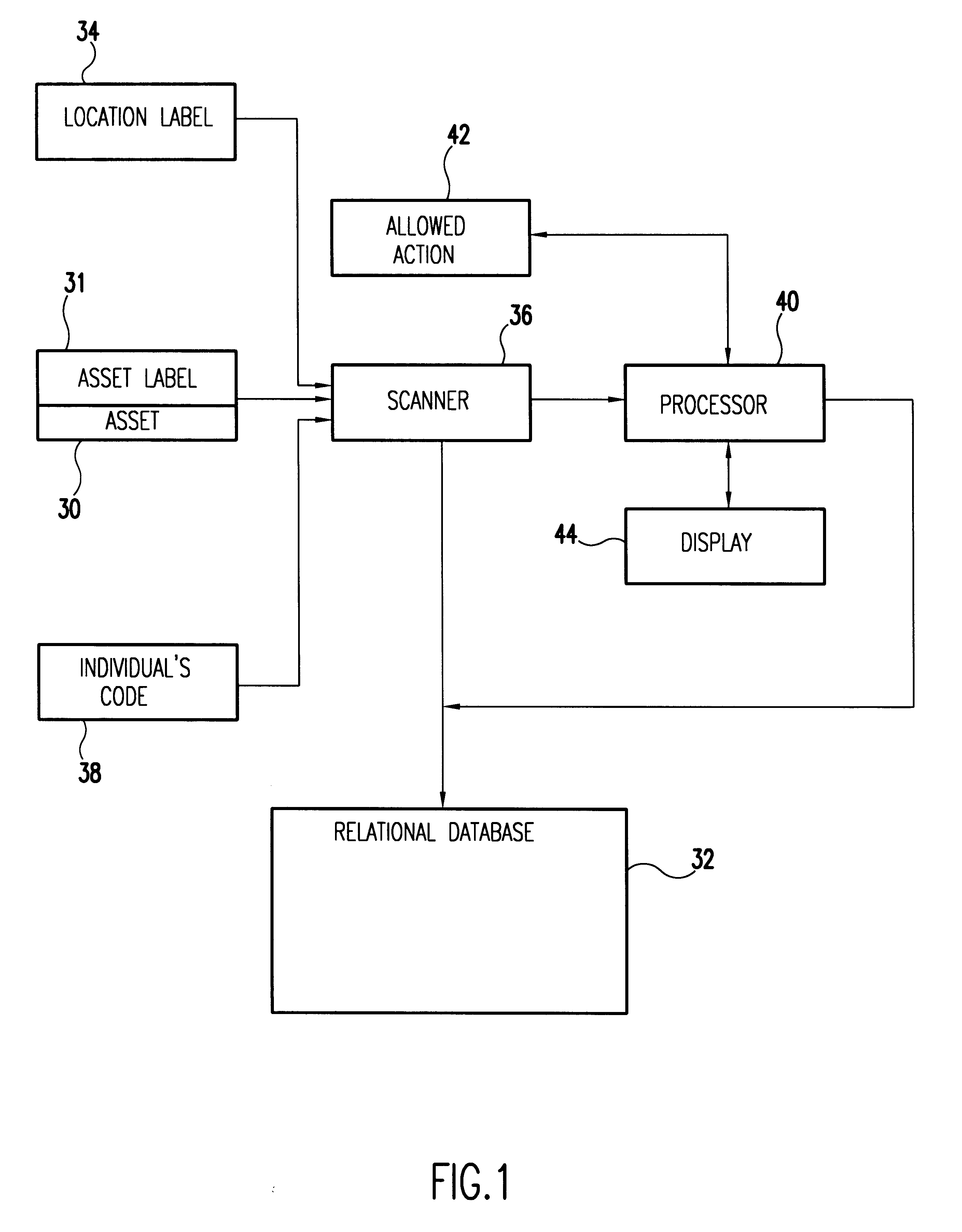

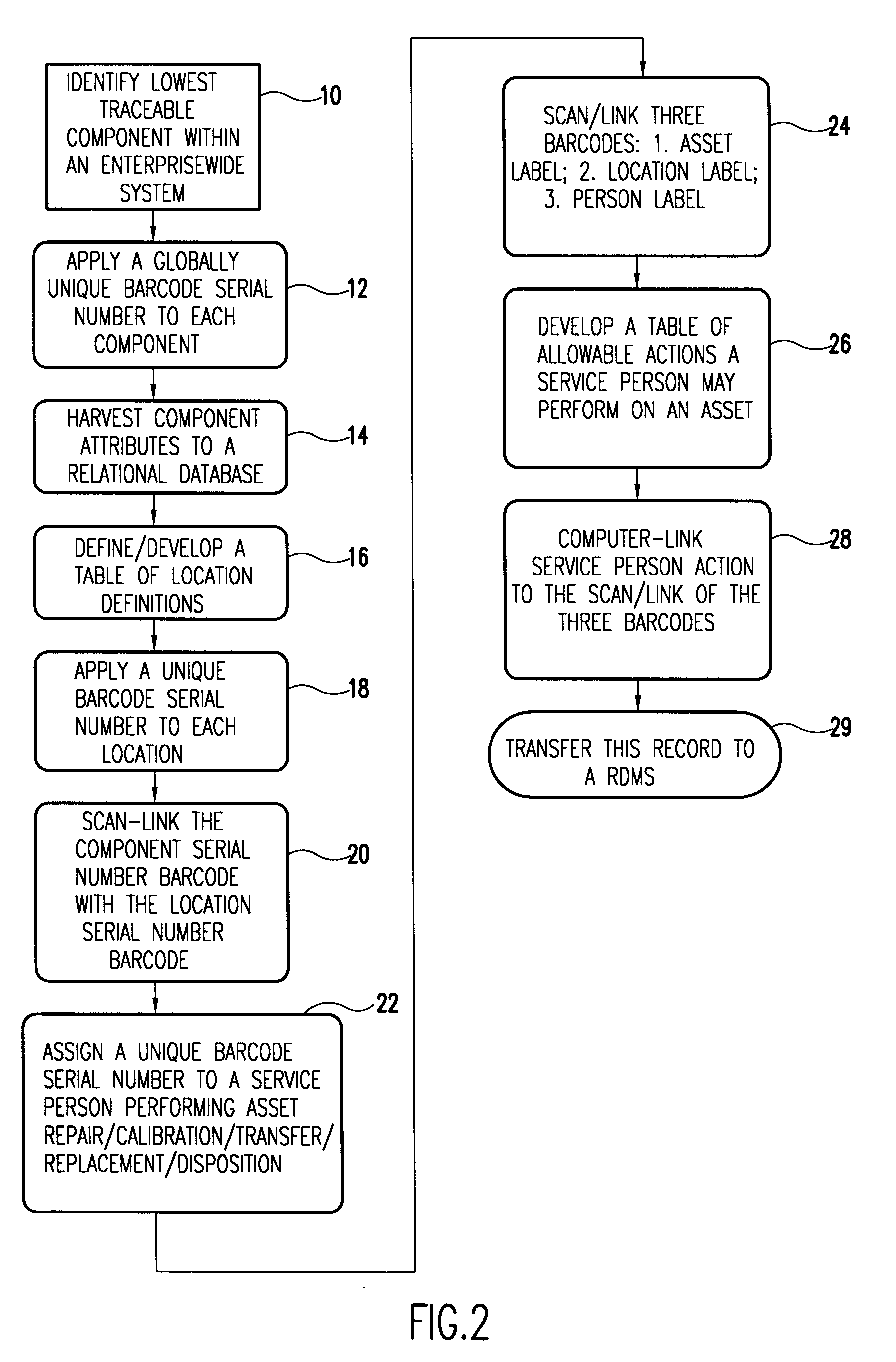

Asset tracking within and across enterprise boundaries

Inactive | US6237051B1 | Hand manipulated computer devices | Special service for subscribers | Relational database | Geolocation

A unique data label is affixed to each tracked asset, and a unique data label is assigned to each location in the enterprise, both real and virtual. Location history data of the asset is related to other asset data in a relational database. Assets typically include system components down to the least repairable/replaceable unit (LRU). The data label, in the preferred embodiment of the invention, is a code label using a code that ensures each label is unique to the asset or location to which it is attached. Here the word location is an inclusive term. It includes the geographical location and the identity of the building in which the asset is housed. Location also includes the identity of the system of which a component is a part and, if relevant, location within the system. It also includes any real or virtual location of interest for subsequent analysis and is ultimately defined by the nature of the system being tracked.

People assigned to install, upgrade, maintain, and do other work on the system are identified, and this identifying data is entered into the database along with each activity performed on the system and its components. Preferably, in response to a scanned asset label, a menu of allowable activities is presented so that the person assigned to do a task associated with the asset can easily enter into the database the code assigned to the task performed. With asset data, asset location, and task record (including the person performing the task) entered into the relational database, it is relatively easy to track the components of complex systems in a large enterprise over time and build complex relational records.

Owner:RATEZE REMOTE MGMT LLC +1

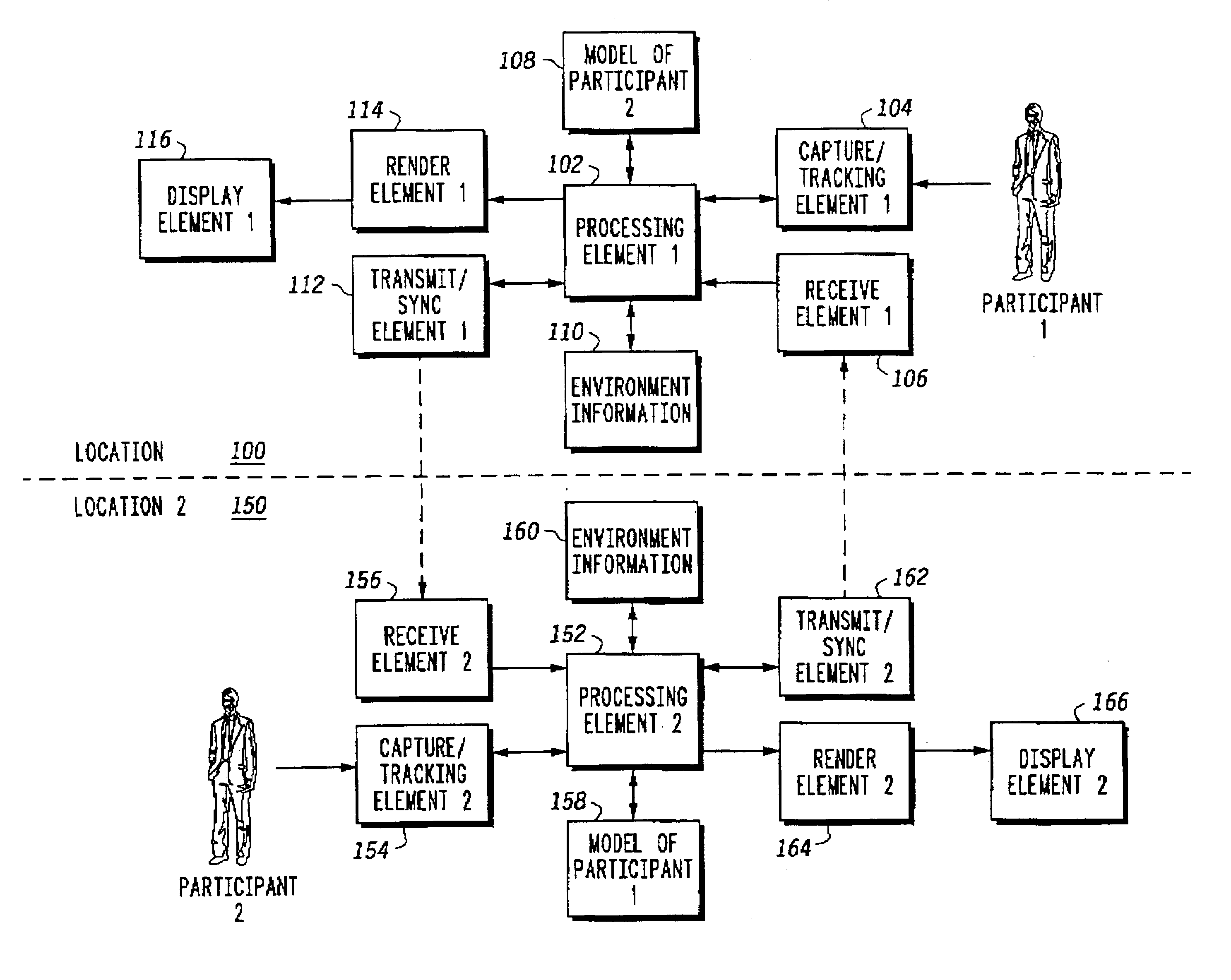

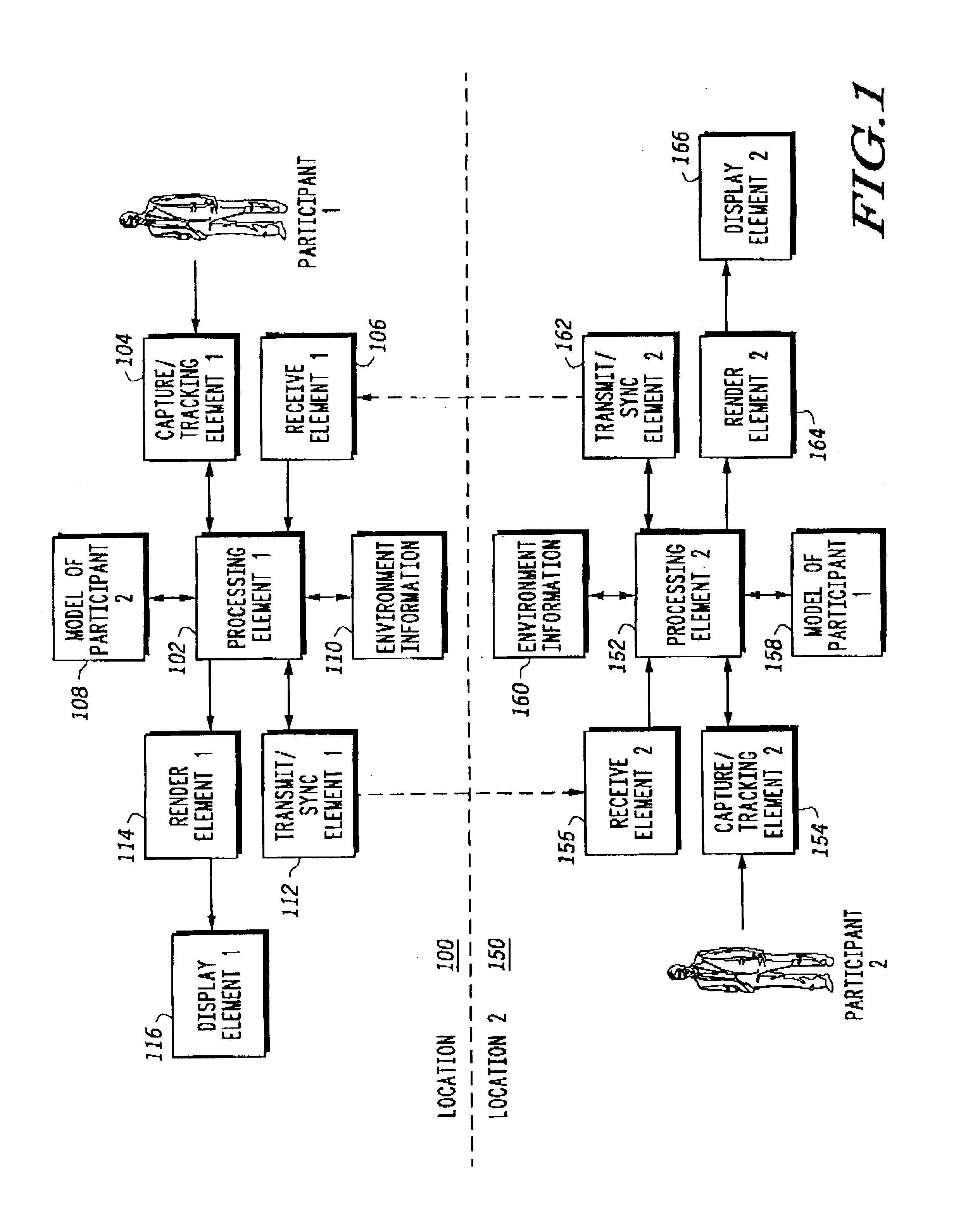

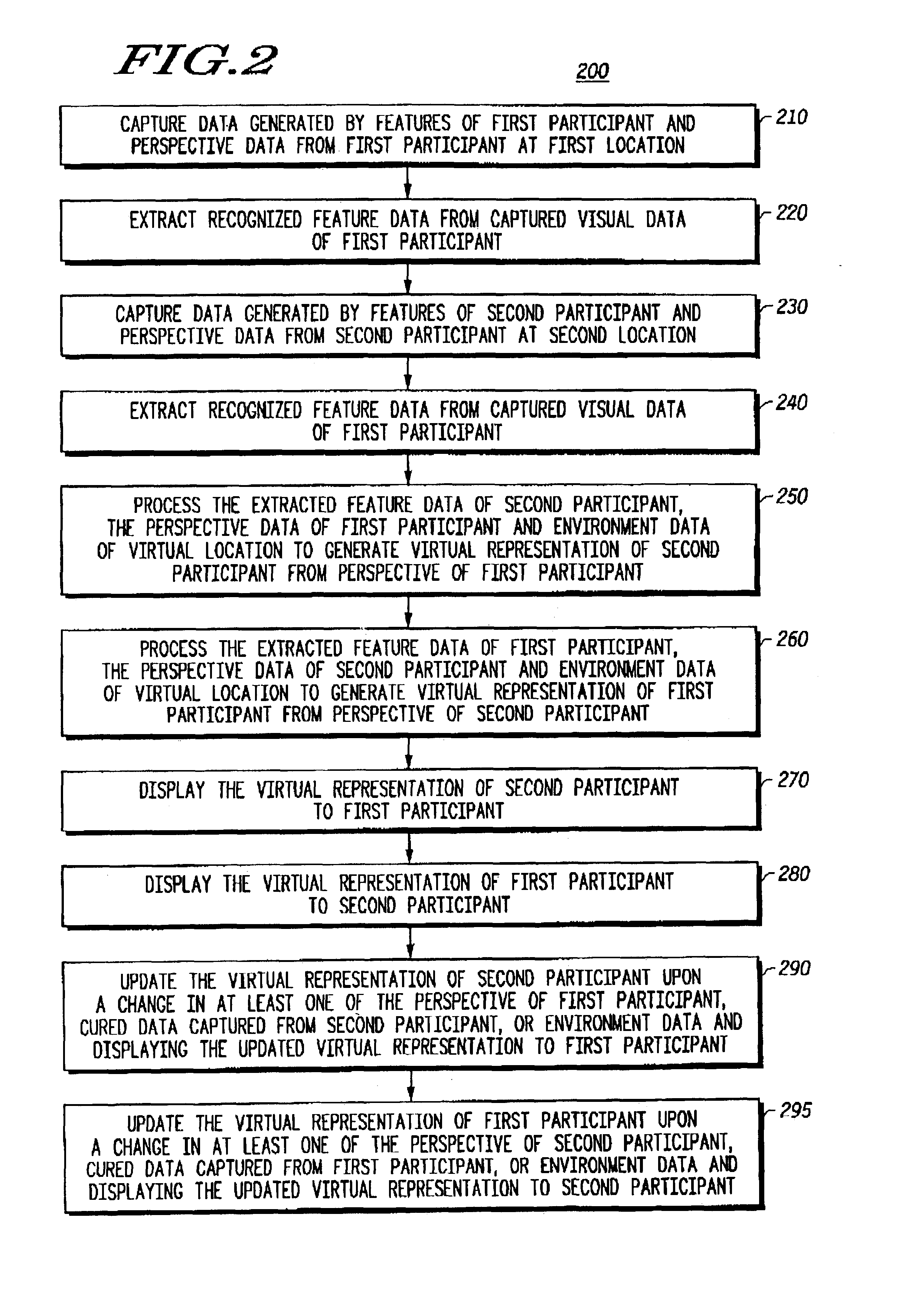

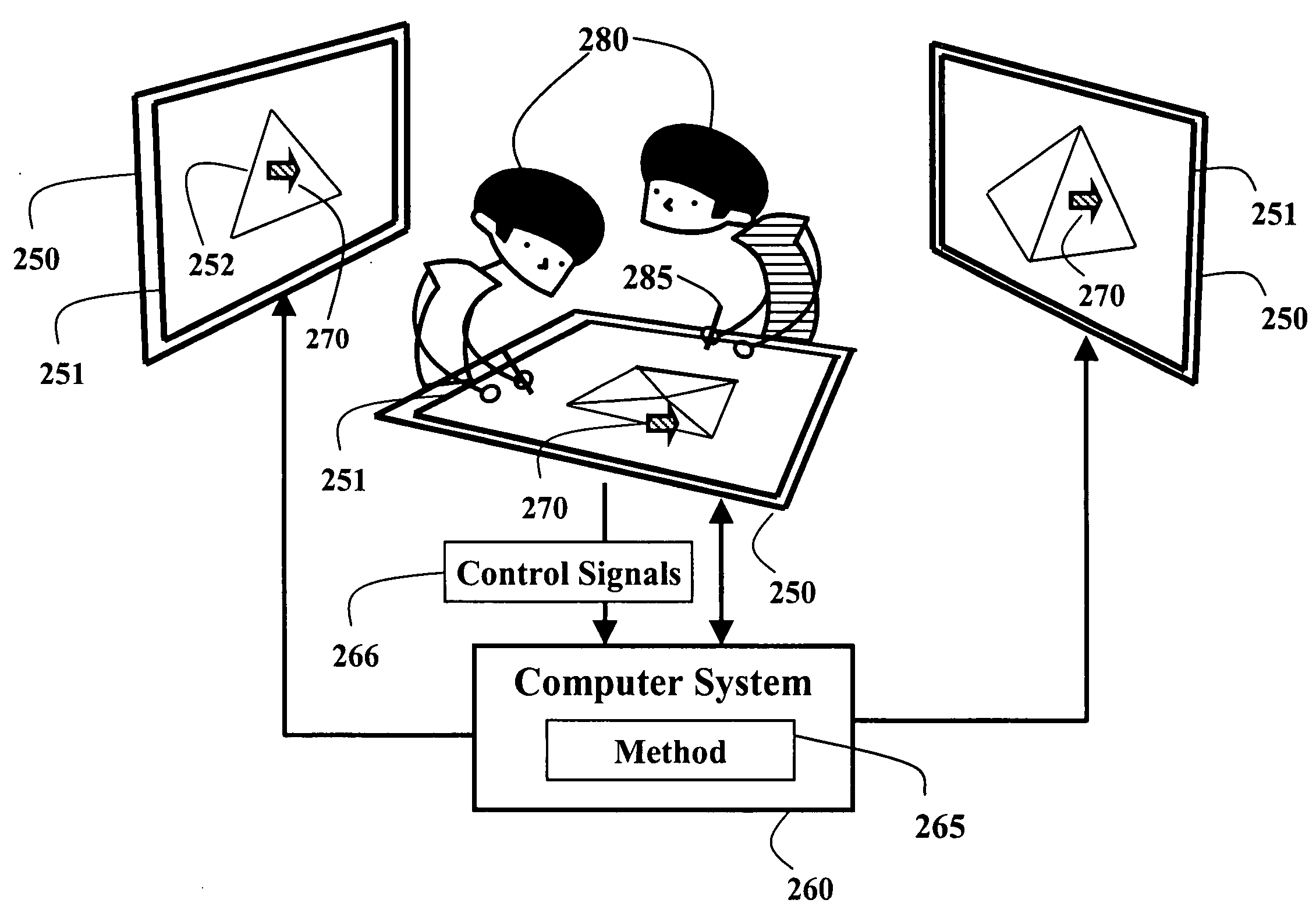

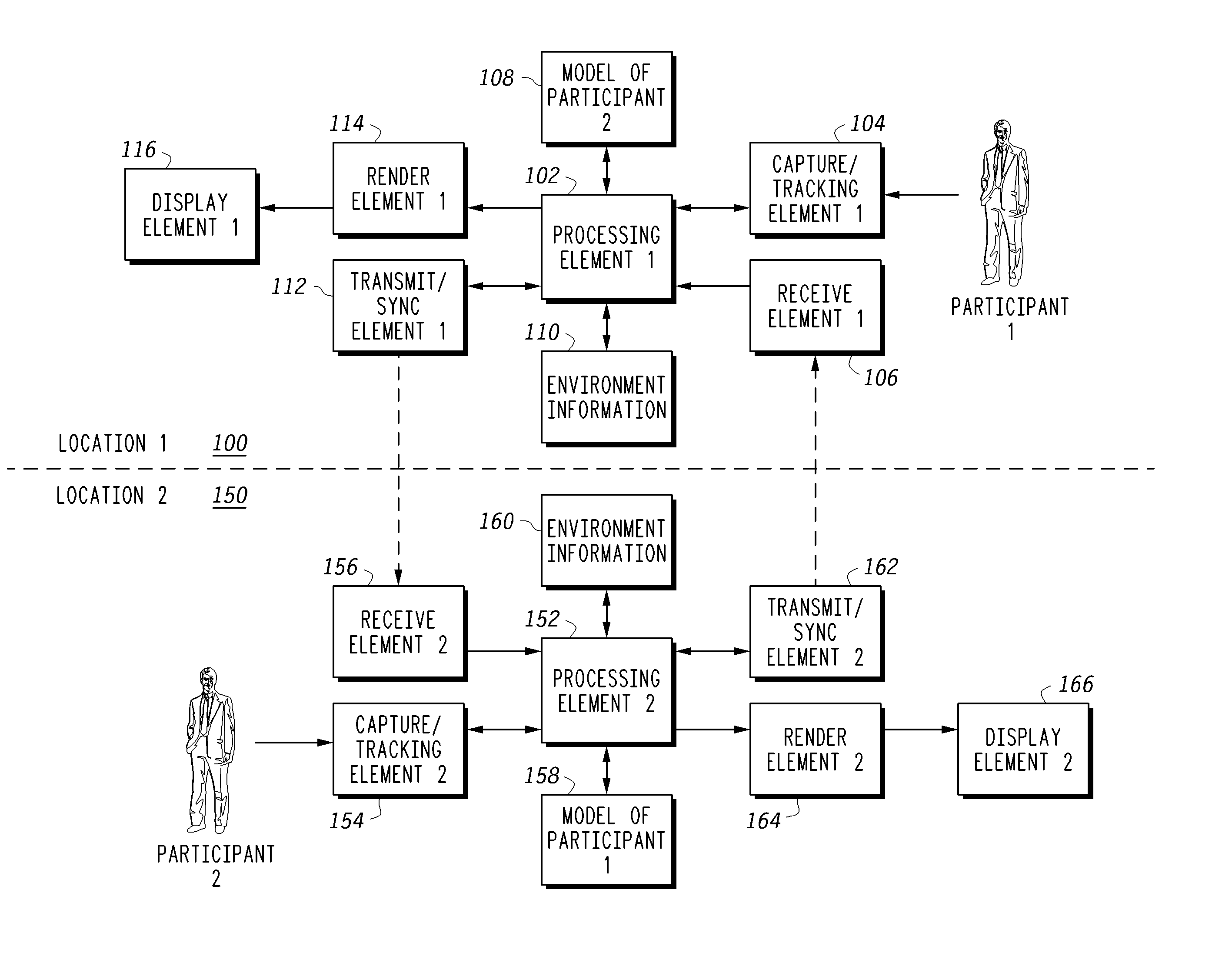

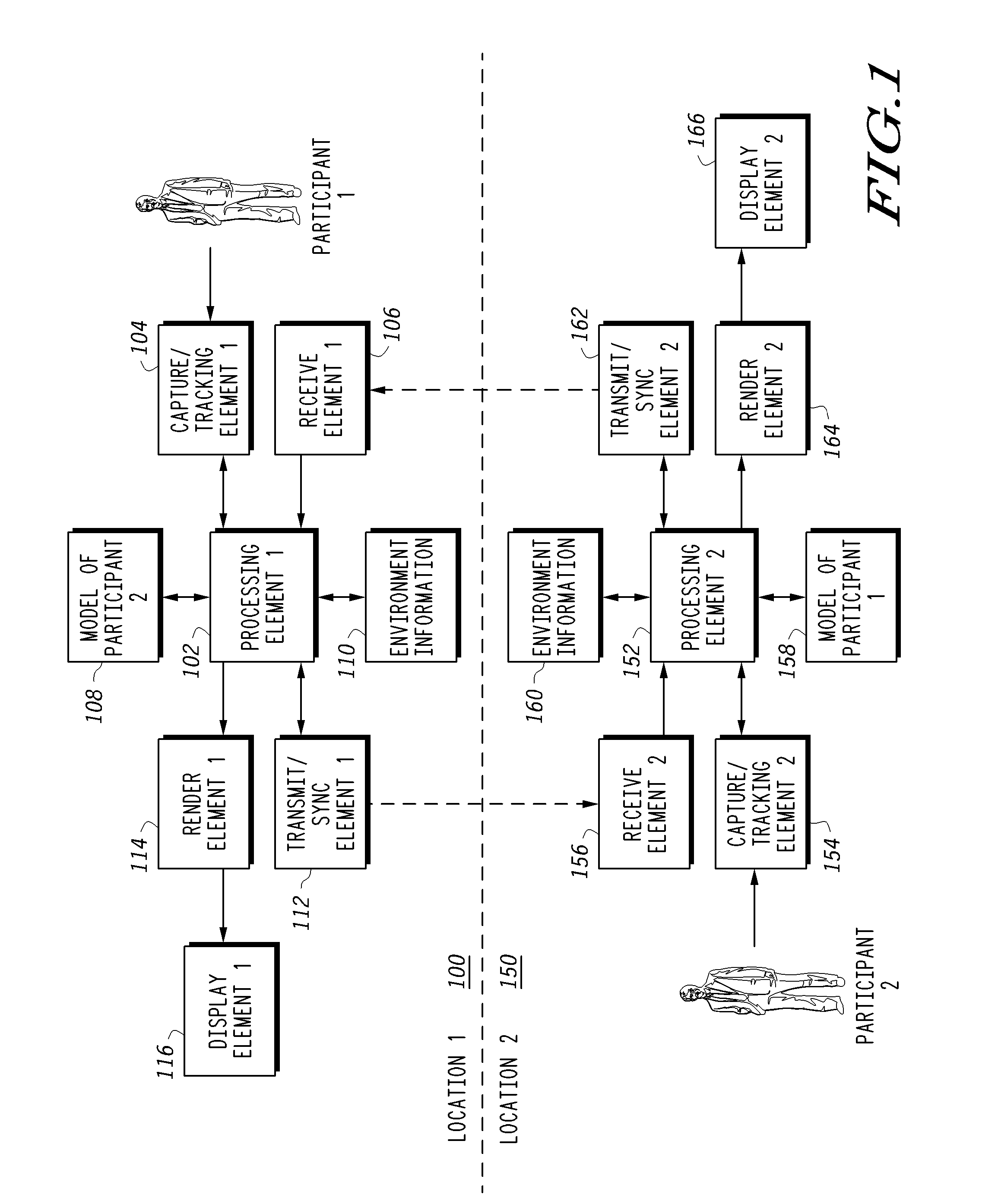

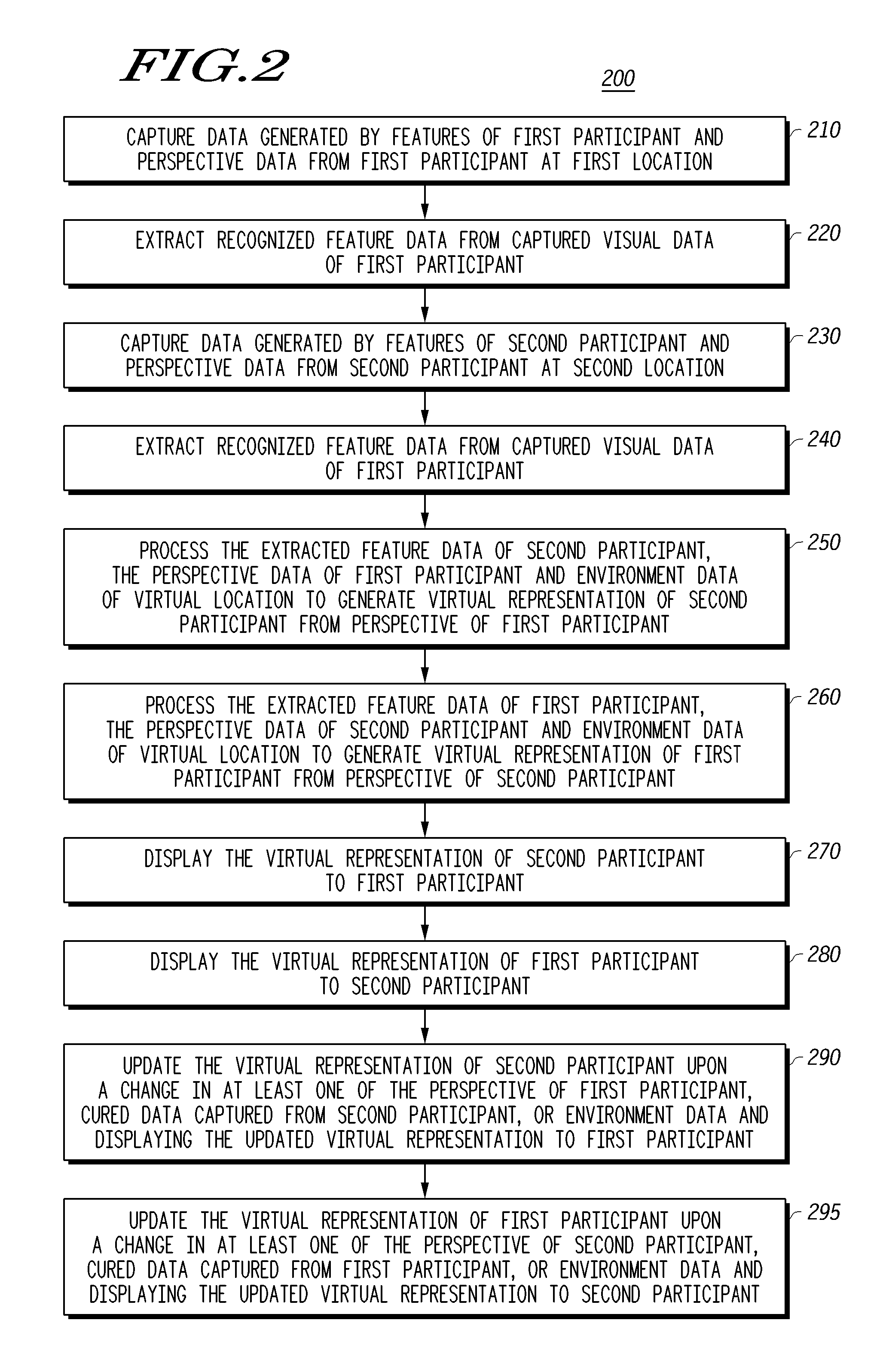

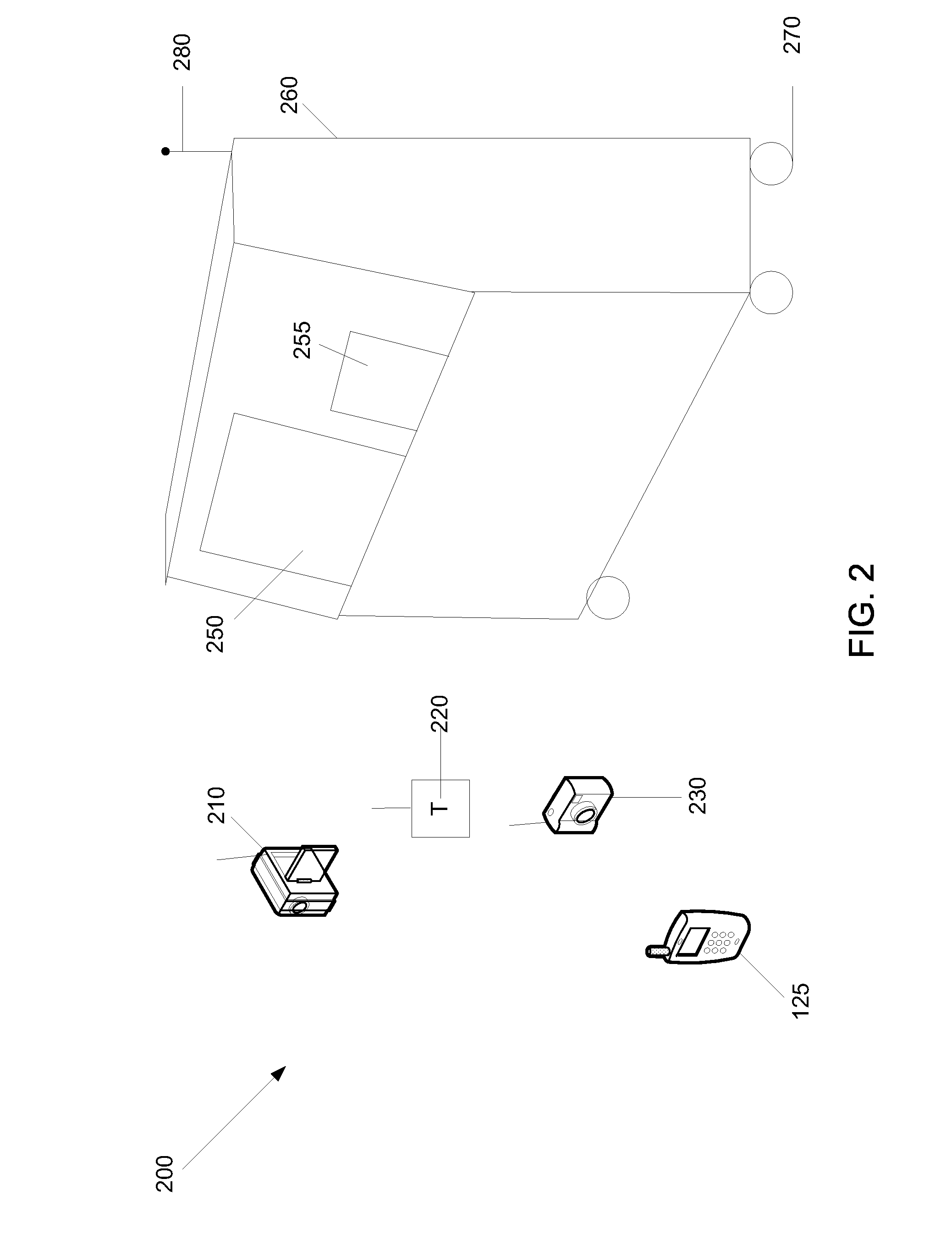

Method, system and apparatus for telepresence communications

Active | US7106358B2 | Two-way working systems | Input/output processes for data processing | Feature extraction | Virtual position

An apparatus, system and method for telepresence communications at a virtual location between two or more participants at multiple locations (100, 200). First perspective data descriptive of the perspective of the virtual location environment experienced by a first participant at a first location and feature data extracted from features of a second participant at a second location (210, 220) are processed to generate a first virtual representation of the second participant in the virtual environment from the perspective of the first participant (250). Likewise, second perspective data descriptive of the perspective of the virtual location environment experienced by the second participant and feature data extracted from features of the first participant (230, 240) are processed to generate a second virtual representation of the first participant in the virtual environment from the perspective of the second participant (260). The first and second virtual representations are rendered and then displayed to the first and second participants, respectively (260, 270). The first and second virtual representations are updated and redisplayed to the participants upon a change in one or more of the perspective data and extracted feature data from which they are generated (290, 295). The apparatus, system and method are scalable to two or more participants.

Owner:GOOGLE TECH HLDG LLC

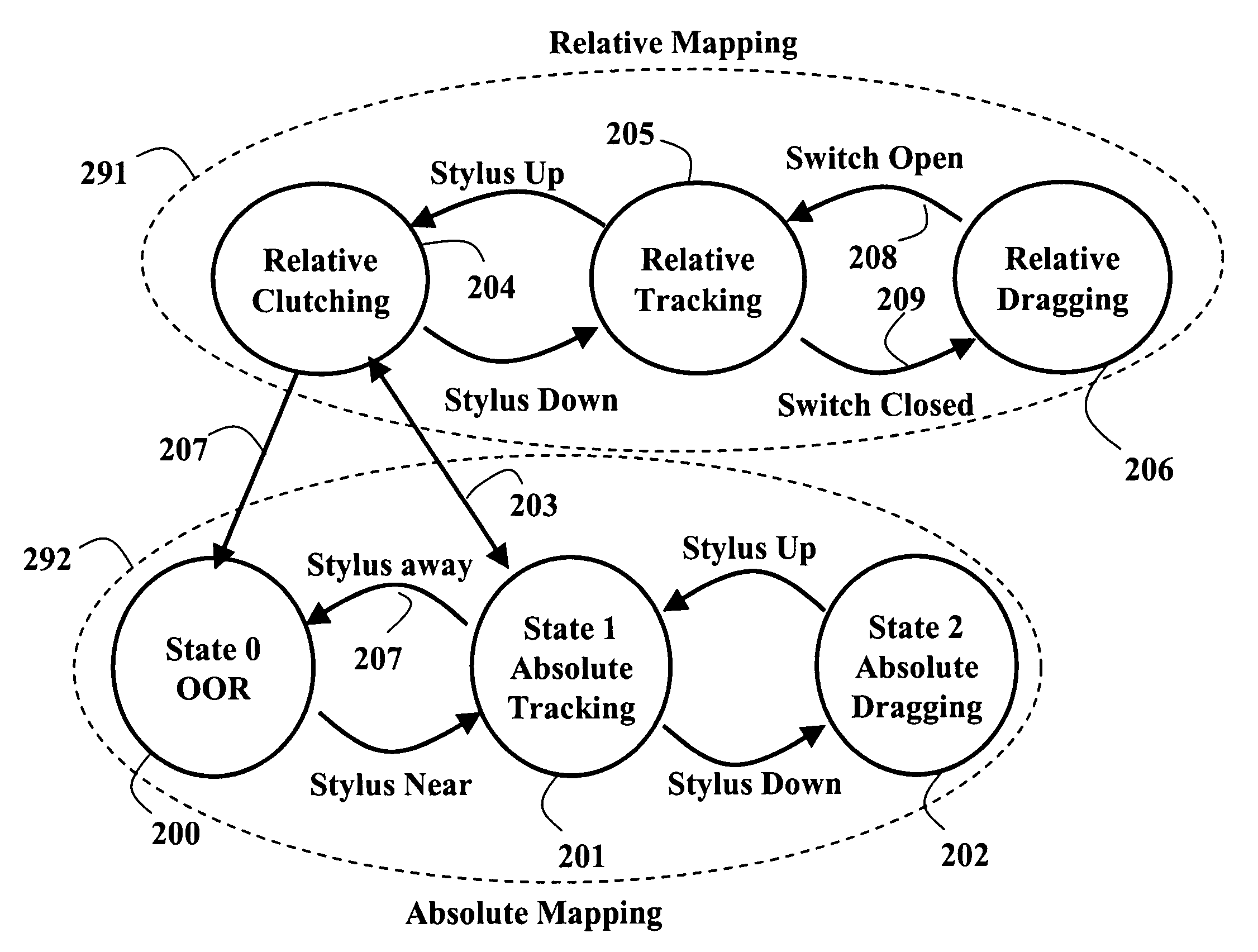

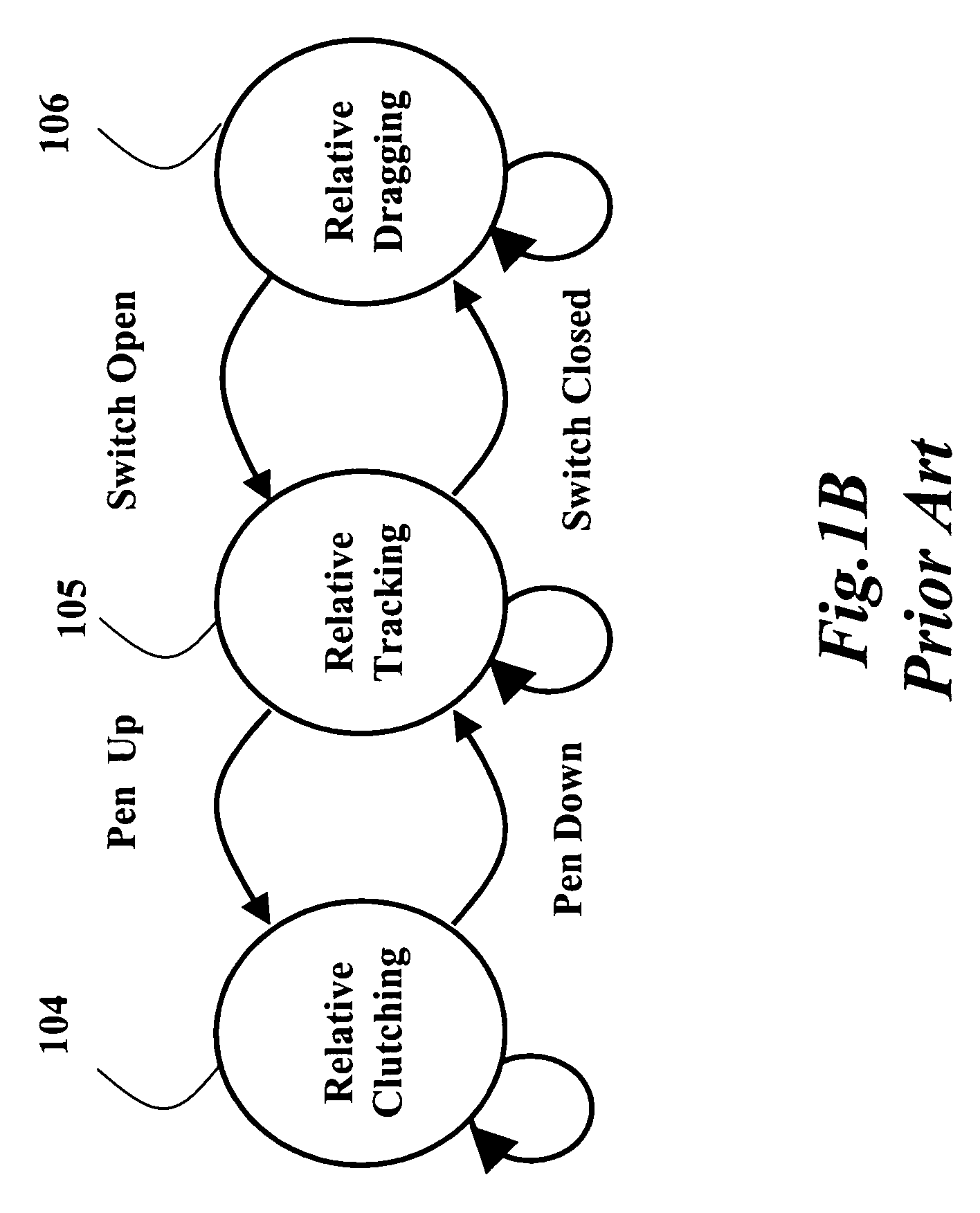

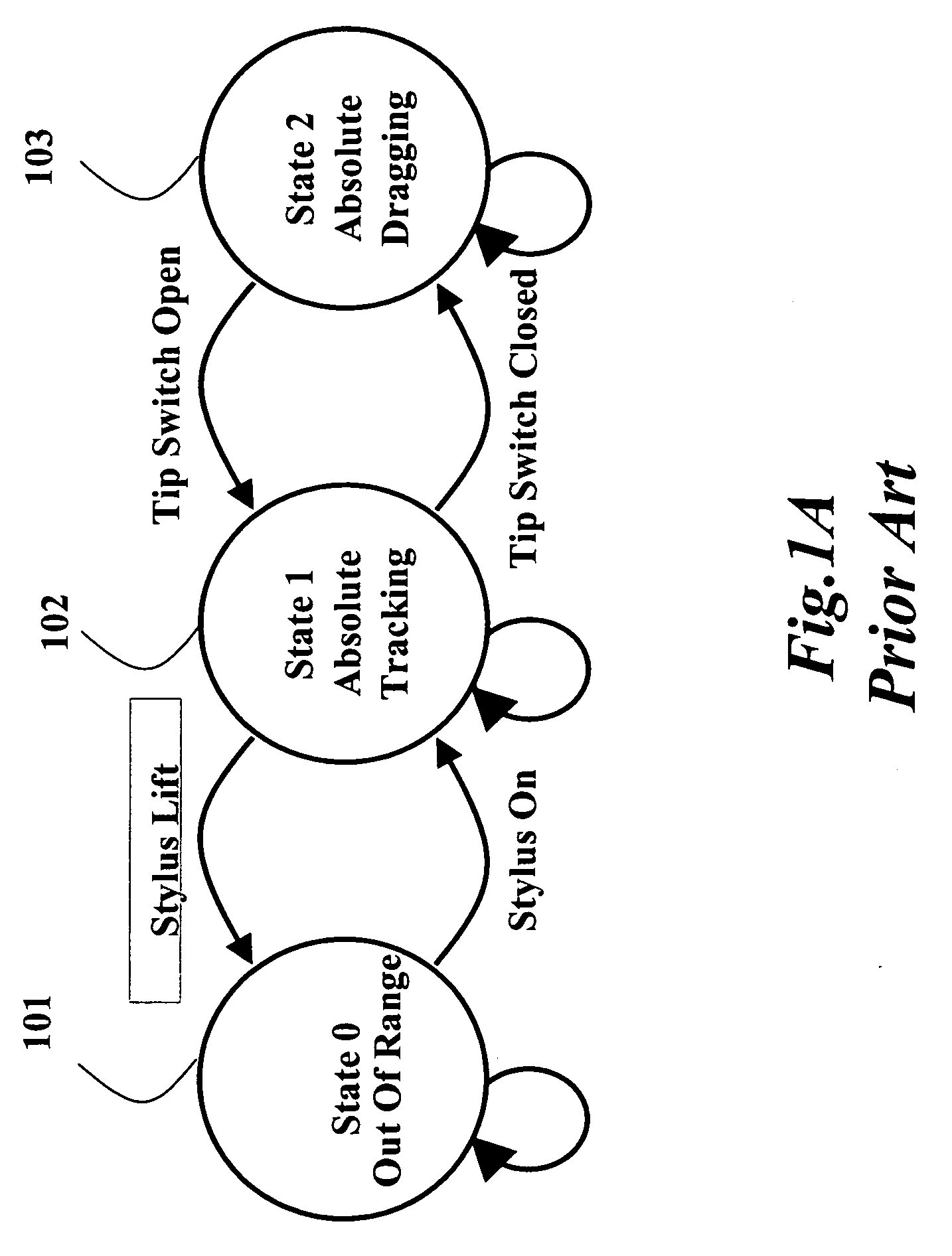

Method and system for switching between absolute and relative pointing with direct input devices

Inactive | US7640518B2 | Cathode-ray tube indicators | Input/output processes for data processing | Control signal | Virtual position

A method maps positions of a direct input device to locations of a pointer displayed on a surface. The method performs absolute mapping between physical positions of a direct input device and virtual locations of a pointer on a display device when operating in an absolute mapping mode, and relative mapping between the physical positions of the input device and the locations of the pointer when operating in a relative mapping mode. Switching between the absolute mapping and the relative mapping is in response to control signals detected from the direct input device.

Owner:MITSUBISHI ELECTRIC RES LAB INC
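The two mapping modes described in the abstract above can be illustrated with a minimal sketch. This is not the patented method: the class name `PointerMapper`, the `gain` parameter, and the rule that the first sample after a mode switch only re-anchors the device position are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class PointerMapper:
    """Hypothetical mapper from device positions to pointer locations."""
    x: float = 0.0           # current pointer location
    y: float = 0.0
    absolute: bool = True    # start in absolute mapping mode
    gain: float = 1.0        # assumed scale factor for relative motion
    _last: Optional[Tuple[float, float]] = field(default=None, repr=False)

    def toggle_mode(self) -> None:
        """Stand-in for the control signal that switches modes."""
        self.absolute = not self.absolute
        self._last = None    # forget the old device position

    def update(self, px: float, py: float) -> Tuple[float, float]:
        """Feed one device position and return the pointer location."""
        if self.absolute:
            # Absolute mode: pointer sits exactly where the device is.
            self.x, self.y = px, py
        elif self._last is not None:
            # Relative mode: pointer moves by the device displacement.
            self.x += self.gain * (px - self._last[0])
            self.y += self.gain * (py - self._last[1])
        self._last = (px, py)
        return self.x, self.y
```

In this sketch, switching to relative mode leaves the pointer where it was, so the user can lift the device, reposition it, and continue moving the pointer from its last location.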

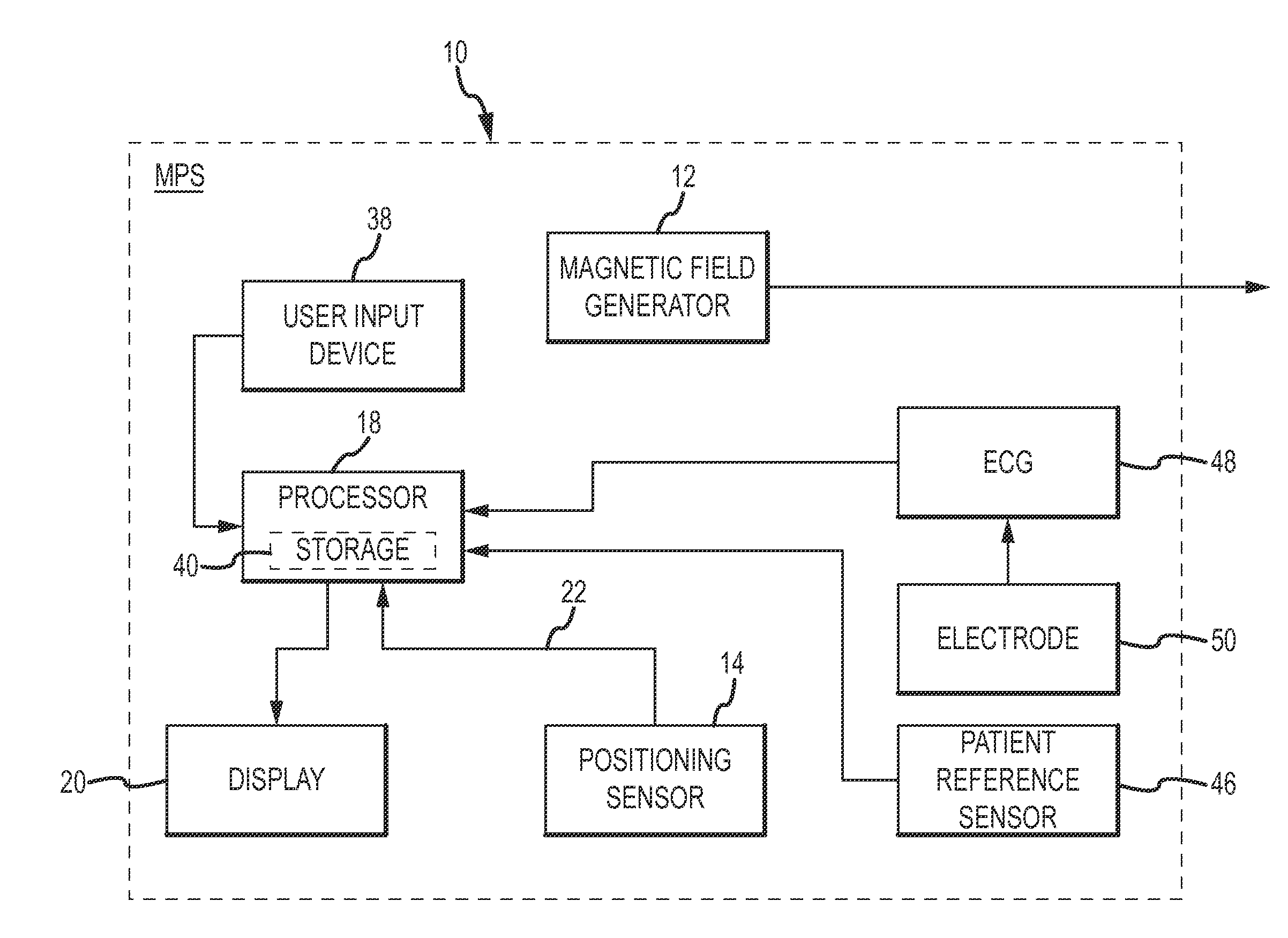

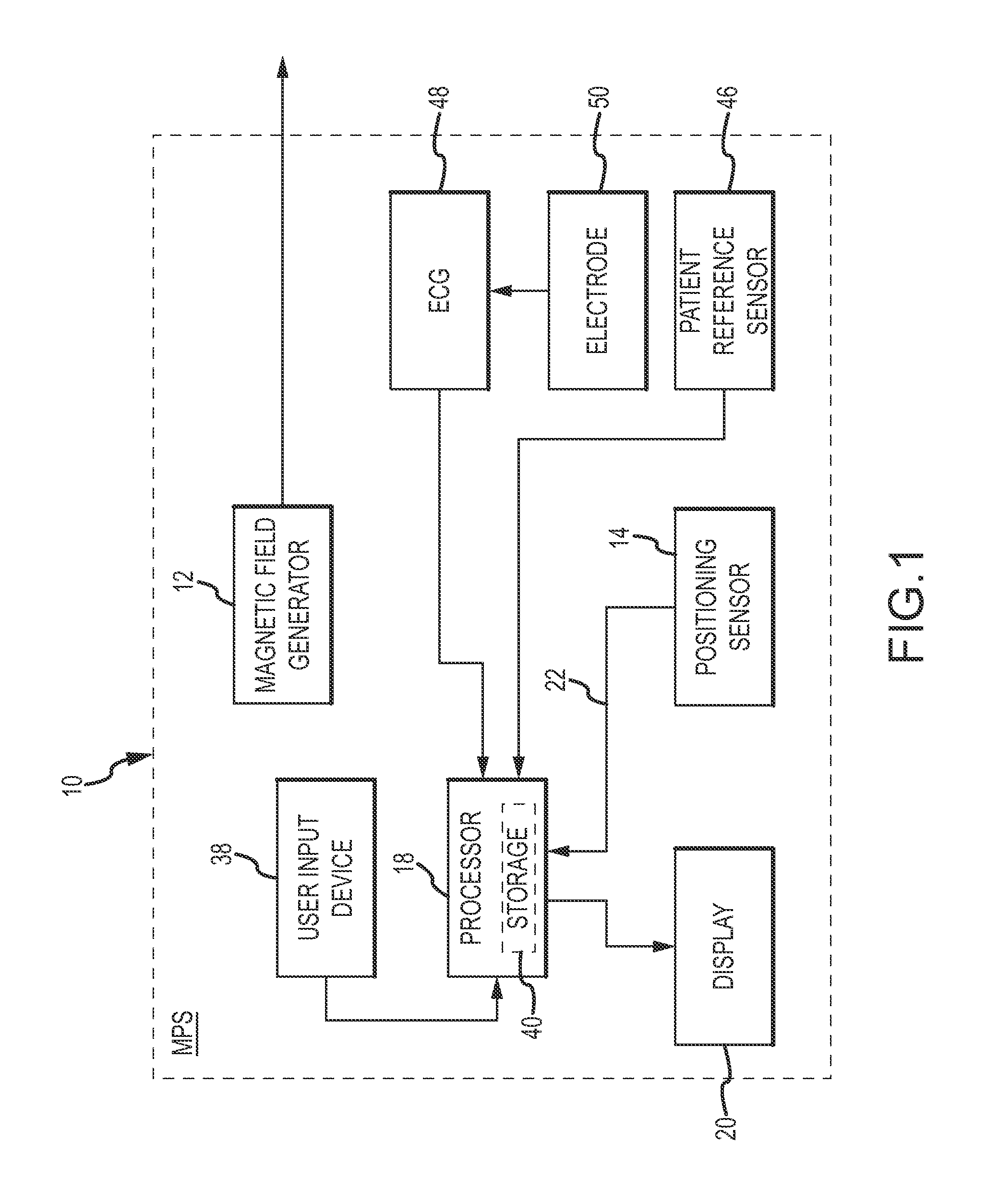

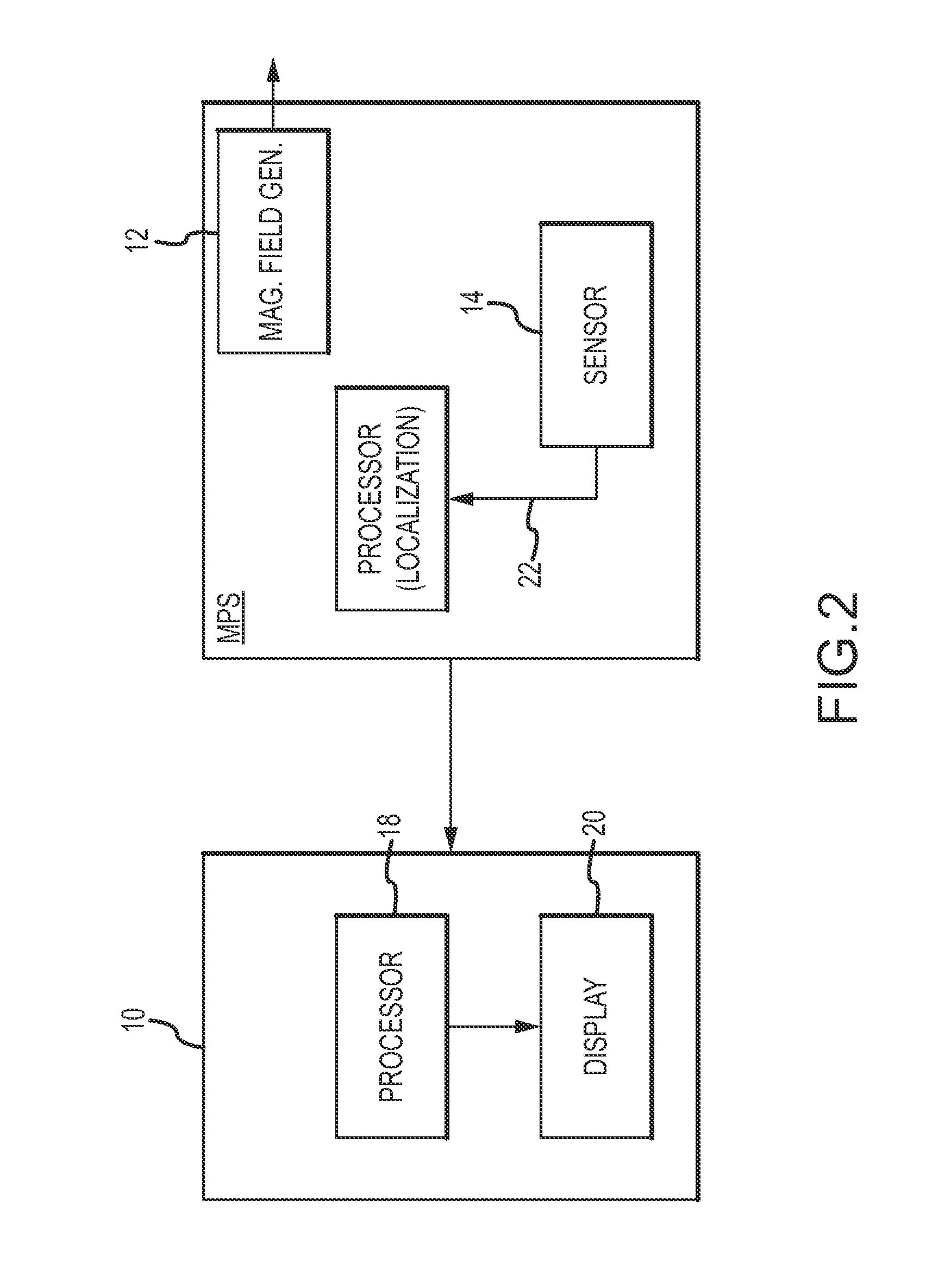

System and method for real-time surface and volume mapping of anatomical structures

A method and system for mapping a volume of an anatomical structure includes a processor for computing a contour of a medical device as a function of positional and/or shape constraints, and for translating the contour into known and virtual 3D positions. The processor is configured to determine a spatial volume based on a virtual position, and to render a 3D representation of the spatial volume. A method and system for mapping a surface of an anatomical structure includes a processor configured to obtain an image of the structure. The processor is further configured to receive a signal indicative of a medical device contacting the surface of the anatomical structure, and to determine a position of the device when contact is made. The processor is configured to superimpose marks on the image indicative of contact points between the device and the structure.

Owner:ST JUDE MEDICAL INT HLDG SARL

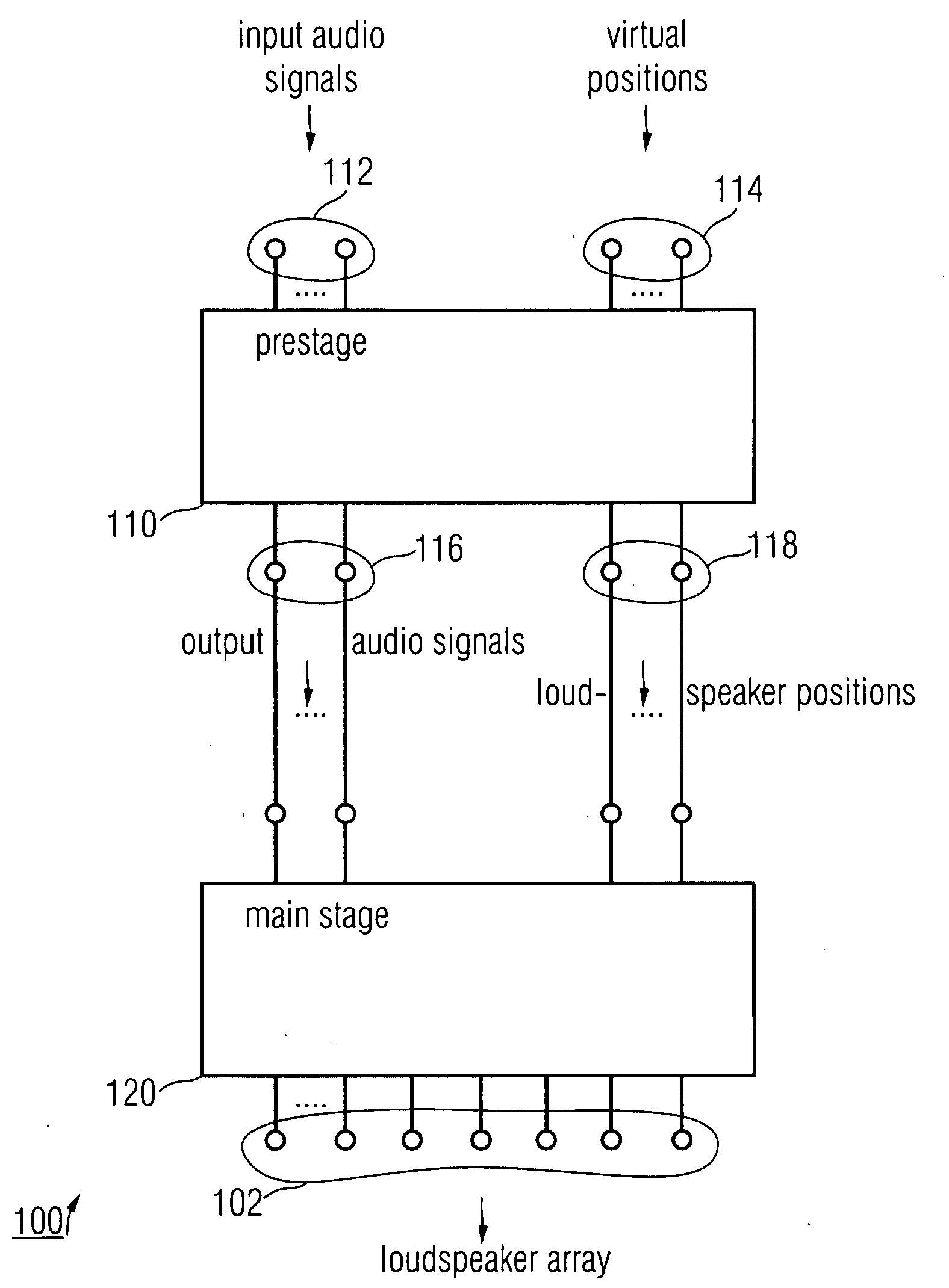

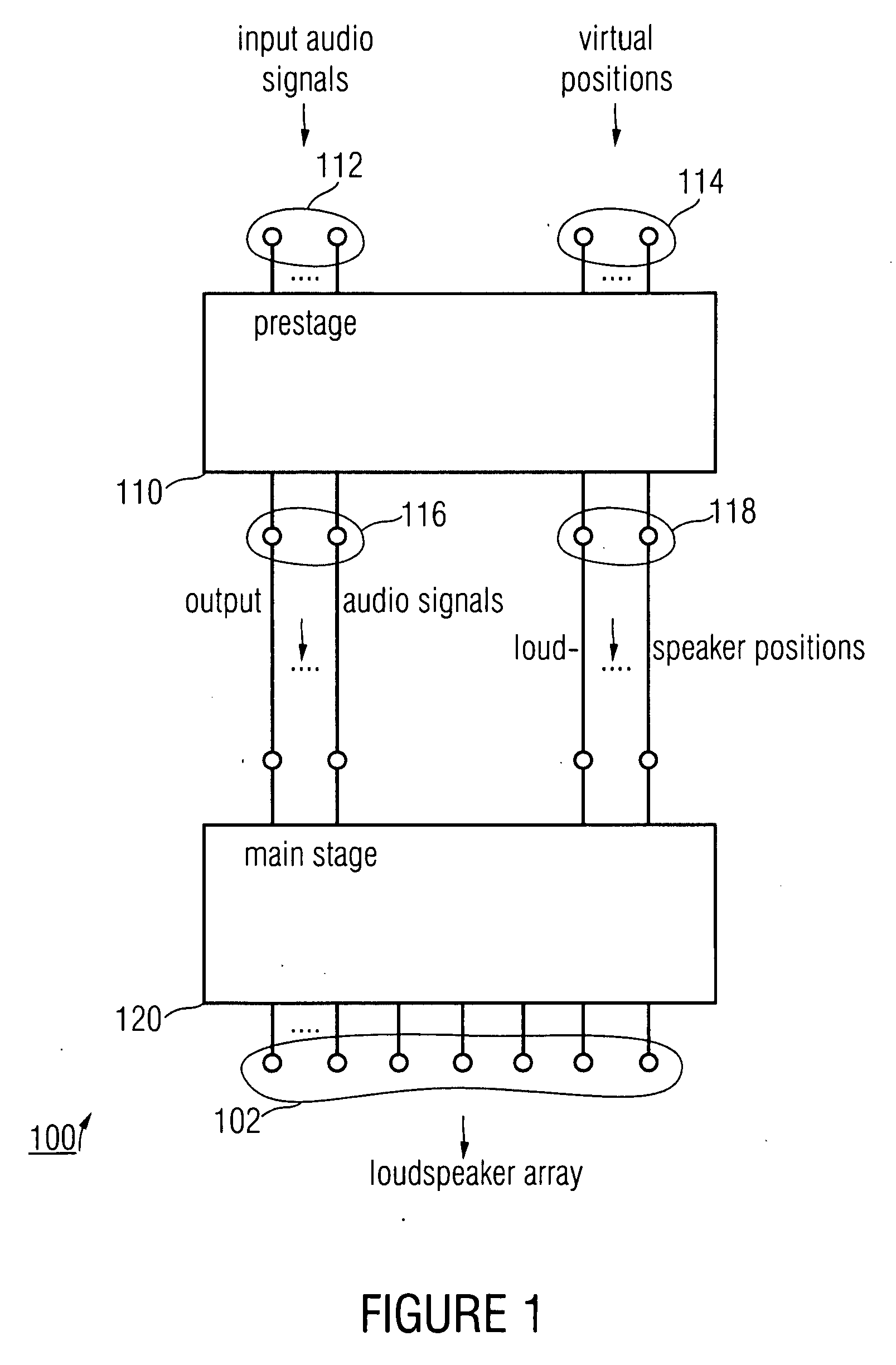

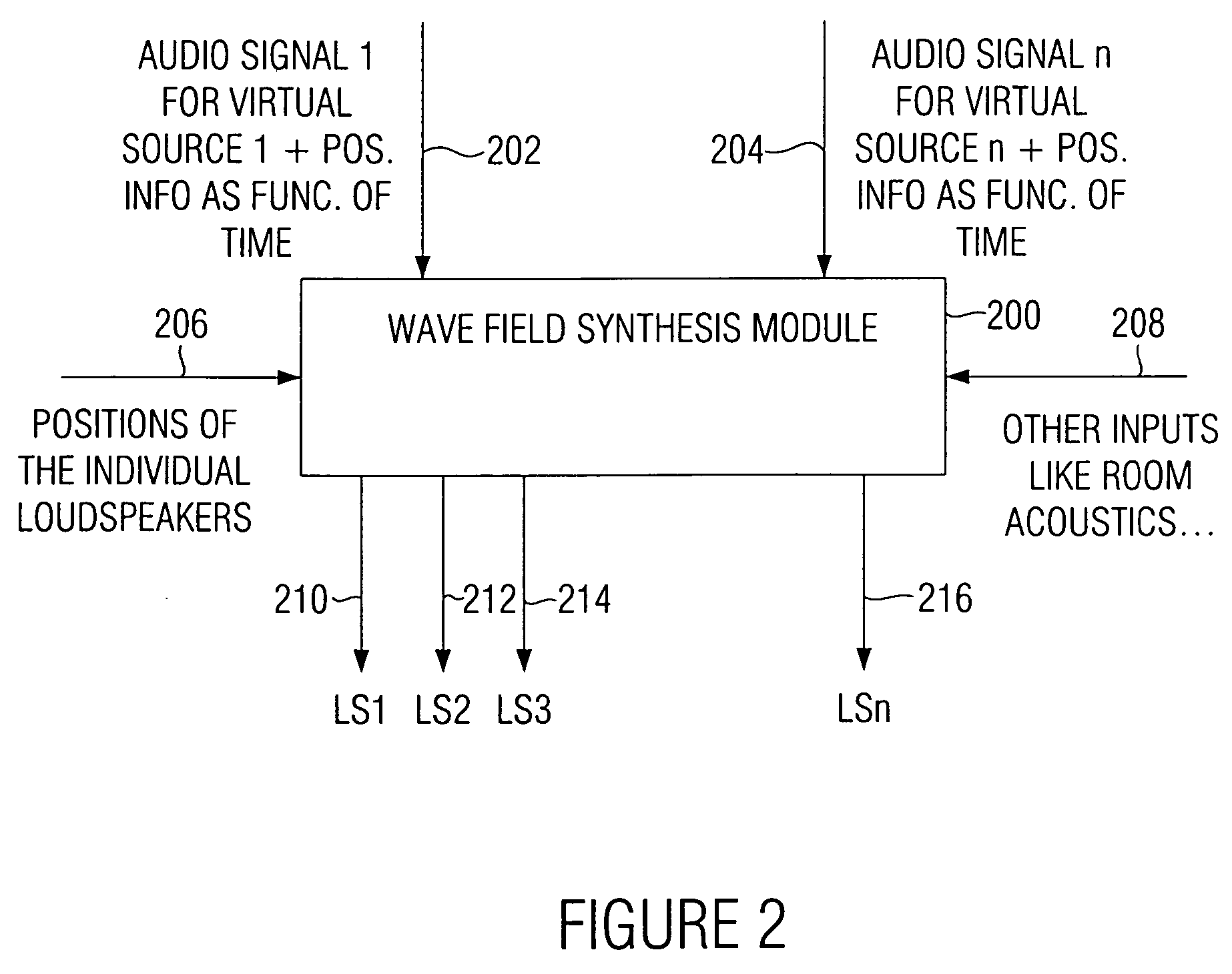

Apparatus and method for generating a number of loudspeaker signals for a loudspeaker array which defines a reproduction space

Active | US20100092014A1 | Improve spatial resolution | Computing expenditure can be kept | DC motor speed/torque control | Dynamo-electric converter control | Virtual position | Audio signal flow

An apparatus for generating a number of loudspeaker signals for a loudspeaker array defining a reproduction space includes a prestage configured to generate a plurality of output audio signals while using one or more audio signals associated with one or more virtual positions, each output audio signal being associated with a loudspeaker position such that the plurality of output audio signals together replicate a reproduction of the input audio signal(s) at the virtual position(s), and a number of output audio signals being smaller than a number of loudspeaker signals. The apparatus further includes a main stage configured to obtain the plurality of output audio signals and further to obtain, as a virtual position for each output audio signal, the loudspeaker positions, and to generate the number of loudspeaker signals for the loudspeaker array such that the loudspeaker positions are replicated as virtual sources by the loudspeaker array.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV

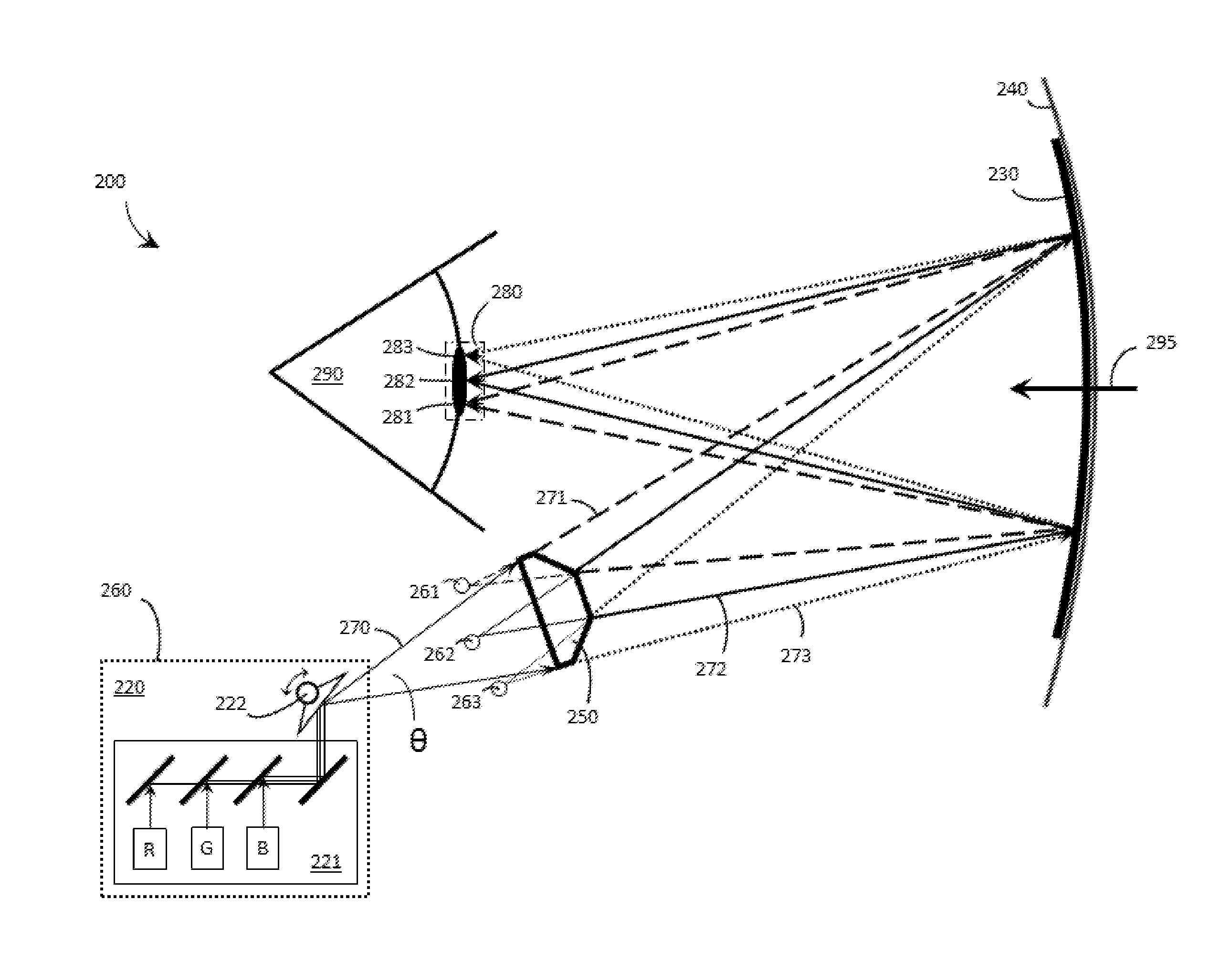

Systems, devices, and methods for eyebox expansion in wearable heads-up displays

Active | US20160377865A1 | Input/output for user-computer interaction | Cathode-ray tube indicators | Head-up display | Exit pupil

Systems, devices, and methods for eyebox expansion by exit pupil replication in scanning laser-based wearable heads-up displays (“WHUDs”) are described. The WHUDs described herein each include a scanning laser projector (“SLP”), a holographic combiner, and an optical replicator positioned in the optical path therebetween. For each light signal generated by the SLP, the optical replicator receives the light signal and redirects each one of N>1 instances of the light signal towards the holographic combiner effectively from a respective one of N spatially-separated virtual positions for the SLP. The holographic combiner converges each one of the N instances of the light signal to a respective one of N spatially-separated exit pupils at the eye of the user. In this way, multiple instances of the exit pupil are distributed over the area of the eye and the eyebox of the WHUD is expanded.

Owner:GOOGLE LLC

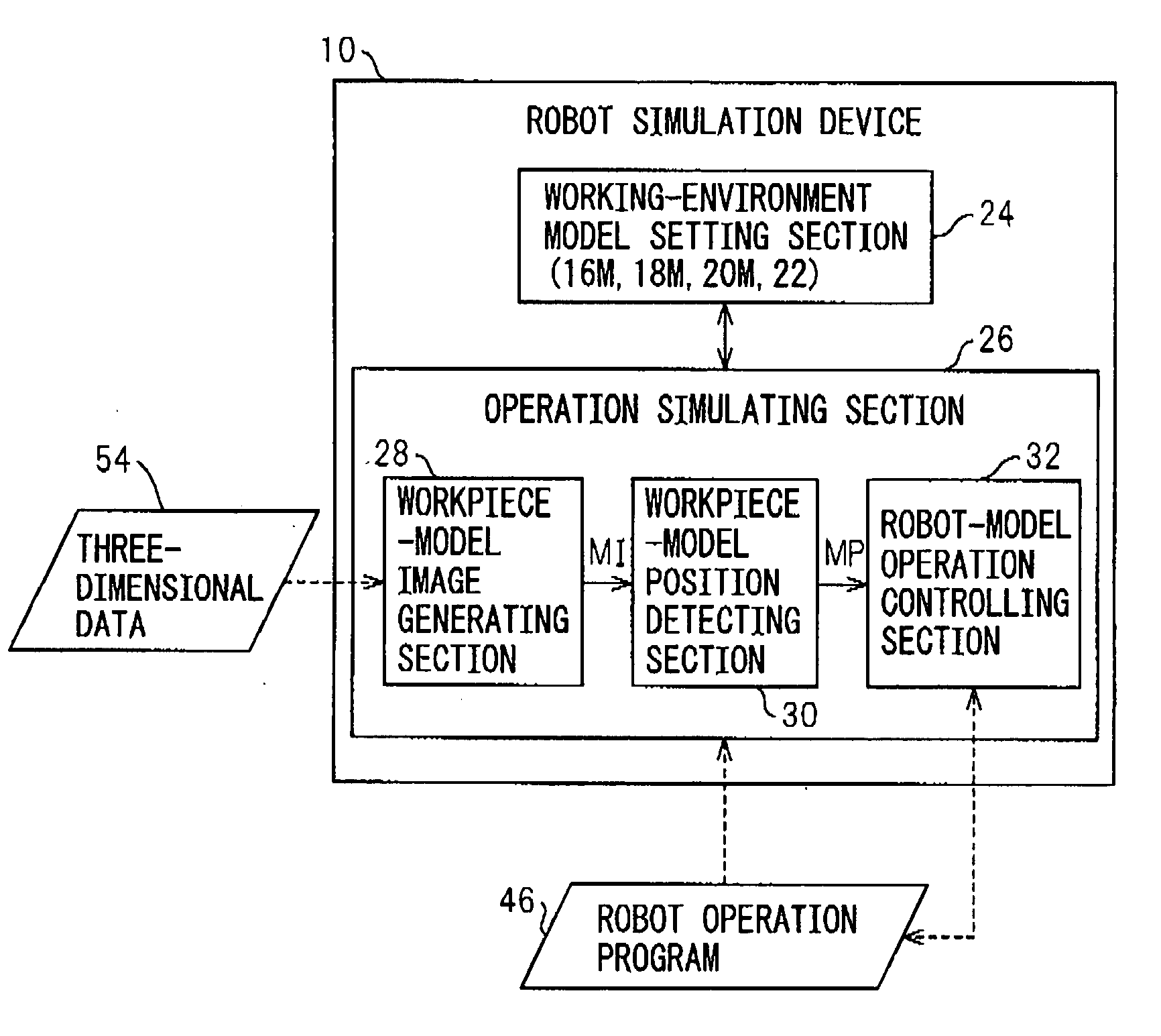

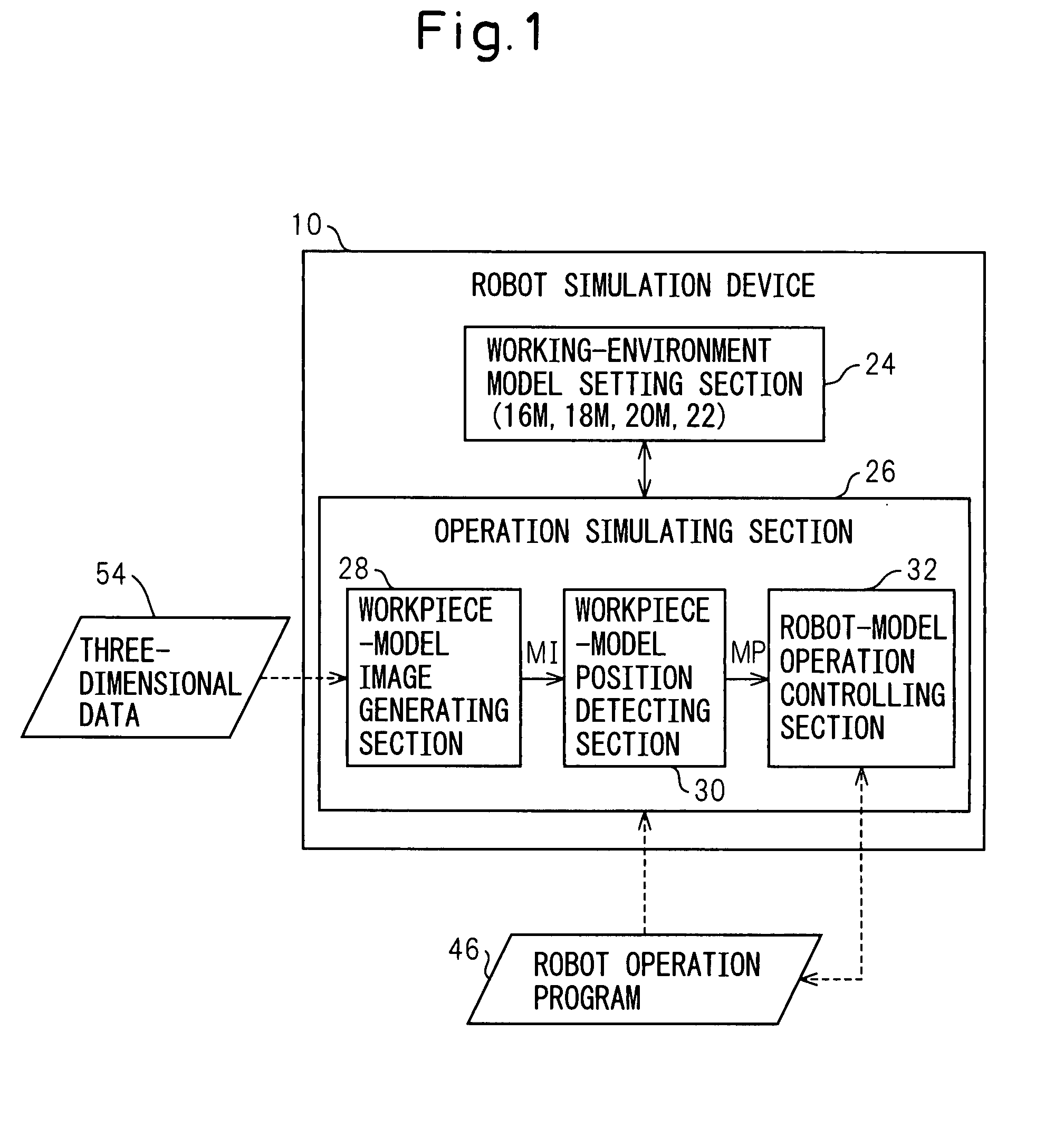

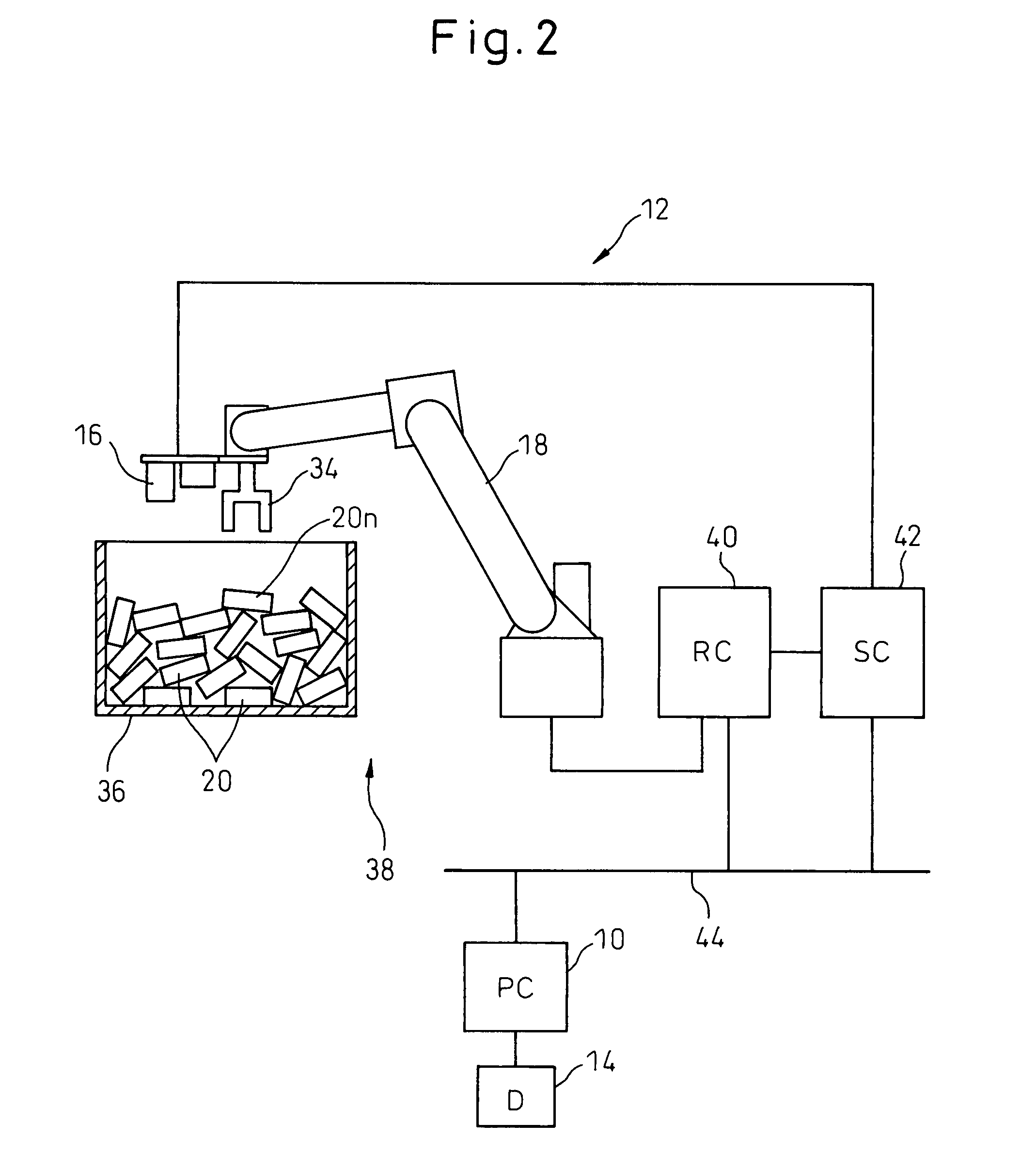

Device, program, recording medium and method for robot simulation

Inactive | US20070213874A1 | Quick calculation | Easy to operate | Programme control | Programme-controlled manipulator | Location detection | Simulation

A robot simulation device for simulating an operation of a robot having a vision sensor in an off-line mode. The device includes a working-environment model setting section for arranging a sensor model, a robot model and a plurality of irregularly piled workpiece models in a virtual working environment; and an operation simulating section for allowing the sensor model and the robot model to simulate a workpiece detecting operation and a bin picking motion. The operation simulating section includes a workpiece-model image generating section for allowing the sensor model to pick up the workpiece models and generating a virtual image thereof; a workpiece-model position detecting section for identifying an objective workpiece model from the virtual image and detecting a virtual position thereof; and a robot-model operation controlling section for allowing the robot model to pick out the objective workpiece model based on the virtual position.

Owner:FANUC LTD

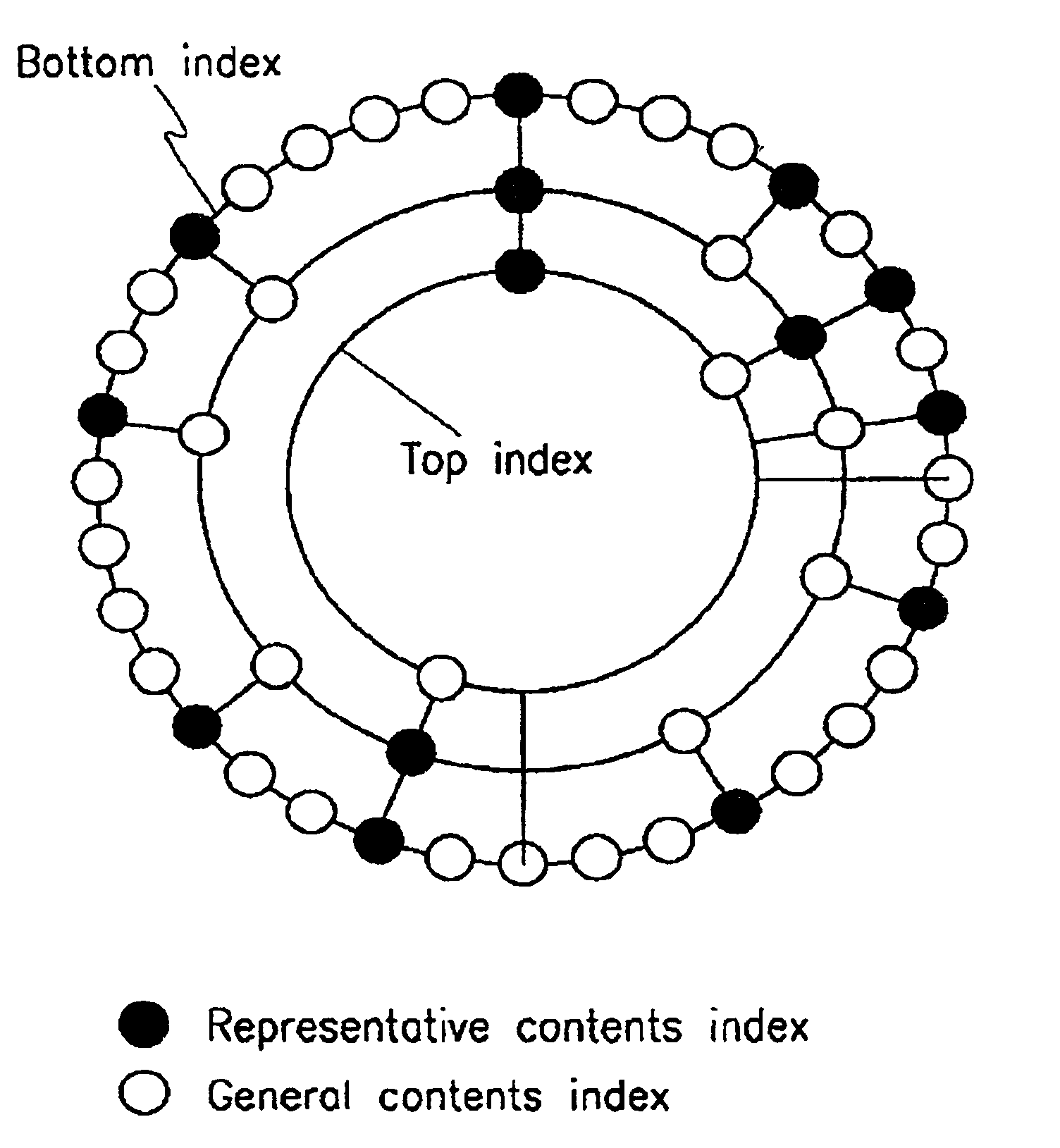

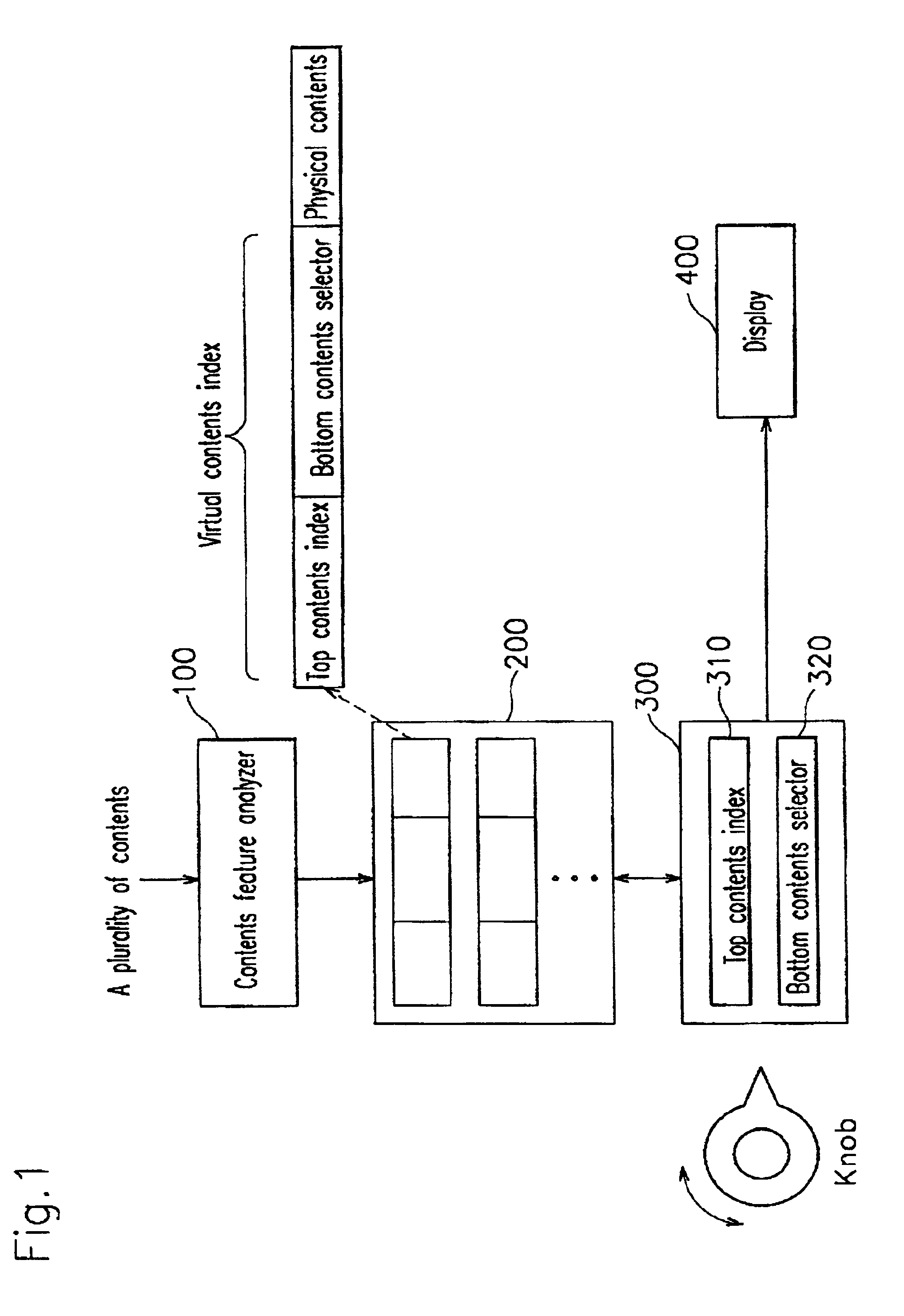

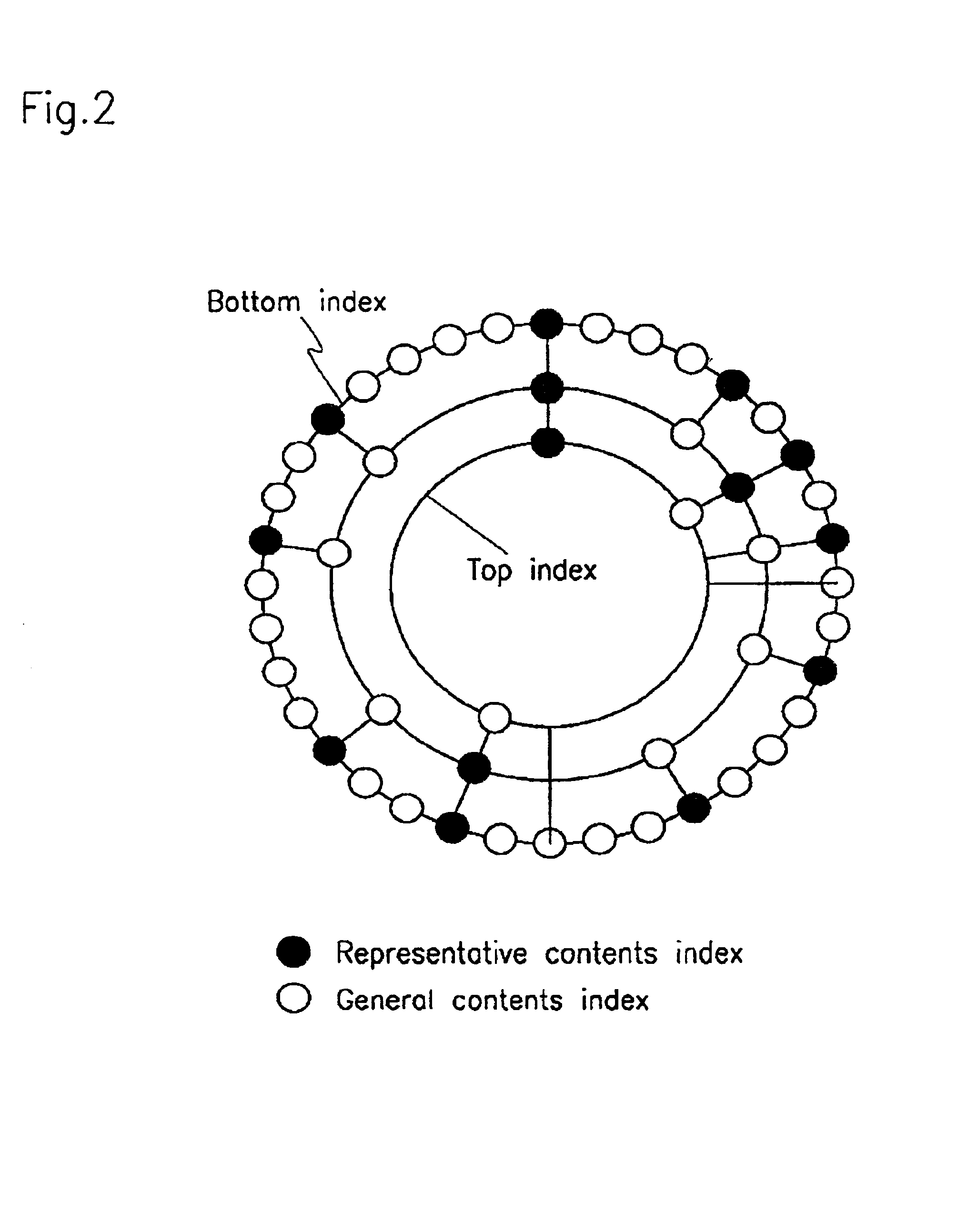

Contents browsing system with multi-level circular index and automated contents analysis function

Inactive | US6920445B2 | Effective classification | Easy to display | Data processing applications | Digital data information retrieval | Virtual position | Confusion

Disclosed is a circular index structure. The contents feature analyzer analyzes the contents and stores in a memory information about the virtual locations at which the respective contents are to be placed on the indexes, together with information on the physical indexes for displaying the corresponding contents. The contents selector extracts the information on the physical indexes from the memory according to the user's request and displays the contents. The user can therefore more easily select desired contents across various channels and categories, such as digital television and the web; confusion caused by information overload is prevented, and the user can quickly access the contents.

Owner:MIND FUSION LLC

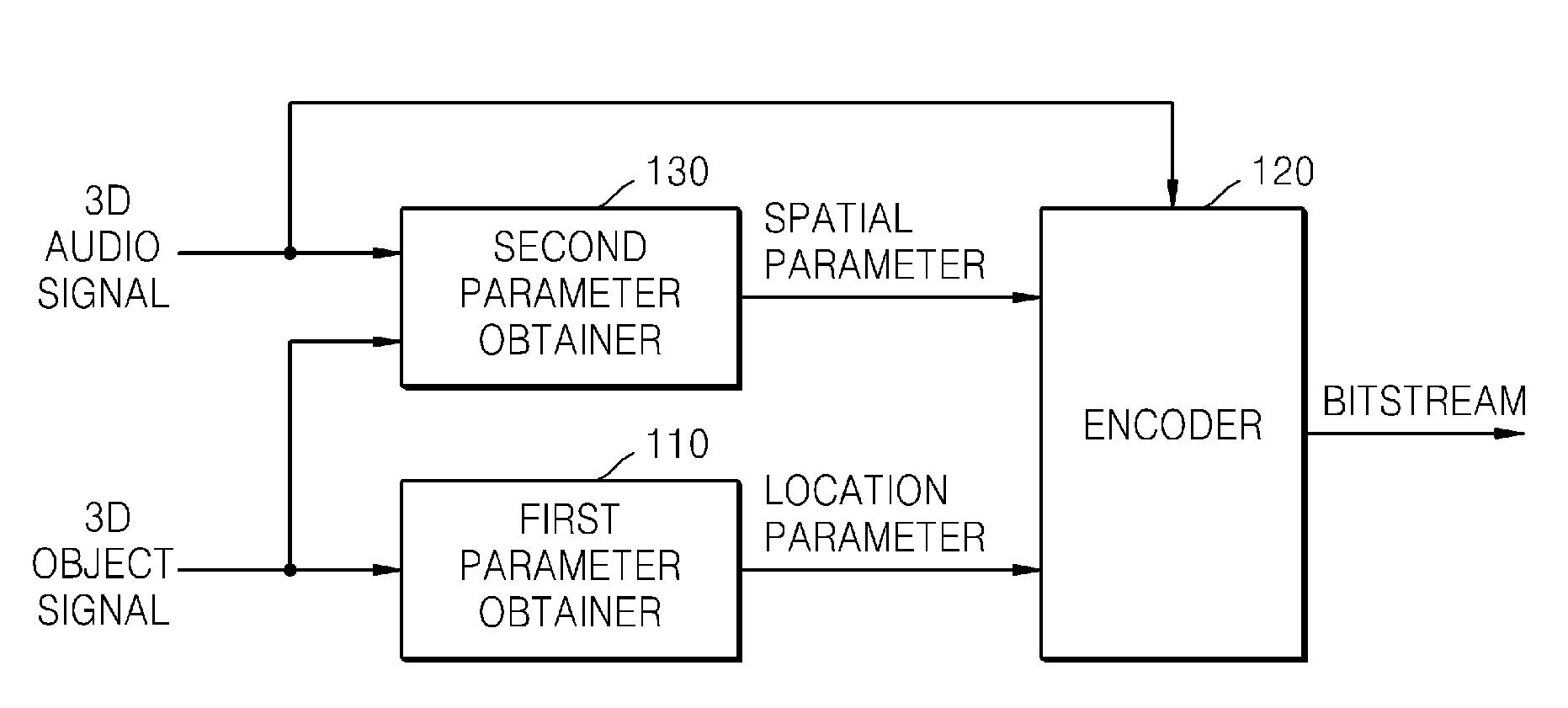

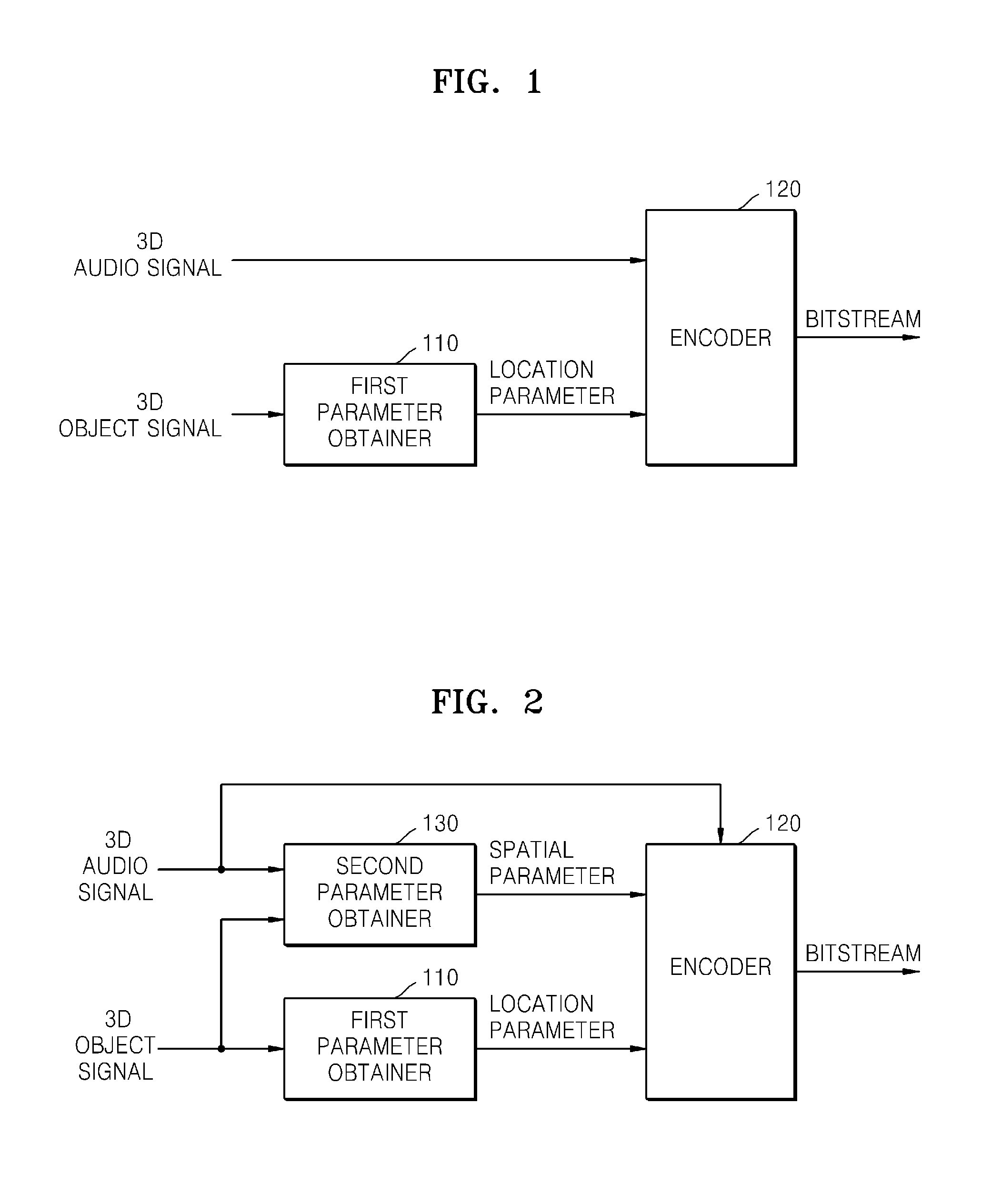

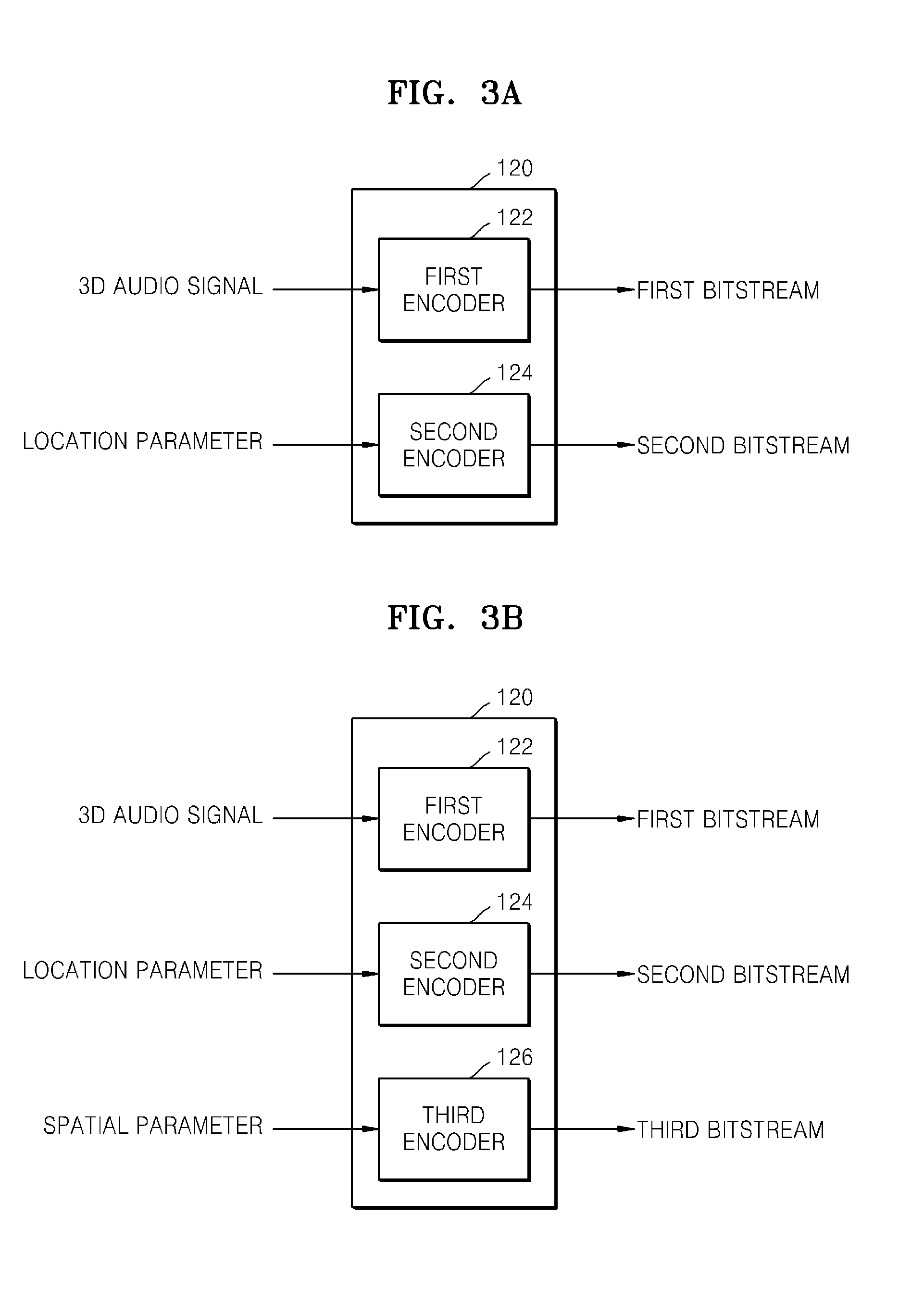

Method and apparatus for encoding and decoding 3-dimensional audio signal

Active | US20120314875A1 | Precise maintenance | Speech analysis | Stereophonic arrangements | Signal on | Virtual position

A method of encoding a multi-channel 3-dimensional (3D) audio signal mixed with a multi-channel 3D object signal is provided. The method includes: obtaining a location parameter indicating a virtual location of the multi-channel 3D object signal on a multi-channel speaker layout based on a gain value of the multi-channel 3D object signal for each channel; and encoding the multi-channel 3D audio signal and the location parameter.

Owner:SAMSUNG ELECTRONICS CO LTD
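One way to picture a location parameter derived from per-channel gains is a gain-weighted average of the speaker directions. The sketch below is an illustrative assumption, not the encoding defined in the patent: the function name `location_parameter`, the horizontal-only layout, and the azimuth convention (0 degrees straight ahead, positive to the left) are all hypothetical.

```python
import math
from typing import Sequence


def location_parameter(gains: Sequence[float],
                       speaker_azimuths_deg: Sequence[float]) -> float:
    """Return an azimuth (degrees) summarising where an object sits
    on a horizontal speaker layout, given its gain in each channel."""
    if len(gains) != len(speaker_azimuths_deg):
        raise ValueError("one gain is required per speaker")
    total = sum(gains)
    if total <= 0:
        raise ValueError("gains must sum to a positive value")
    # Gain-weighted average of the unit vectors pointing at each speaker.
    x = sum(g * math.cos(math.radians(a))
            for g, a in zip(gains, speaker_azimuths_deg)) / total
    y = sum(g * math.sin(math.radians(a))
            for g, a in zip(gains, speaker_azimuths_deg)) / total
    return math.degrees(math.atan2(y, x))
```

With a stereo pair at +30 and -30 degrees, equal gains place the object straight ahead, while a signal present only in the +30 degree channel is located at that speaker.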

Systems, devices, and methods for eyebox expansion in wearable heads-up displays

Active | US20160377866A1 | Cathode-ray tube indicators | Details for portable computers | Head-up display | Exit pupil

Systems, devices, and methods for eyebox expansion by exit pupil replication in wearable heads-up displays (“WHUDs”) are described. A WHUD includes a scanning laser projector (“SLP”), a holographic combiner, and an optical splitter positioned in the optical path therebetween. The optical splitter receives light signals generated by the SLP and separates the light signals into N sub-ranges based on the point of incidence of each light signal at the optical splitter. The optical splitter redirects the light signals corresponding to respective ones of the N sub-ranges towards the holographic combiner effectively from respective ones of N spatially-separated virtual positions for the SLP. The holographic combiner converges the light signals to respective ones of N spatially-separated exit pupils at the eye of the user. In this way, multiple instances of the exit pupil are distributed over the area of the eye and the eyebox of the WHUD is expanded.

Owner:GOOGLE LLC

Method and system for switching between absolute and relative pointing with direct input devices

Inactive | US20070291007A1 | Cathode-ray tube indicators | Input/output processes for data processing | Control signal | Virtual position

A method maps positions of a direct input device to locations of a pointer displayed on a surface. The method performs absolute mapping between physical positions of a direct input device and virtual locations of a pointer on a display device when operating in an absolute mapping mode, and relative mapping between the physical positions of the input device and the locations of the pointer when operating in a relative mapping mode. Switching between the absolute mapping and the relative mapping is in response to control signals detected from the direct input device.

Owner:MITSUBISHI ELECTRIC RES LAB INC

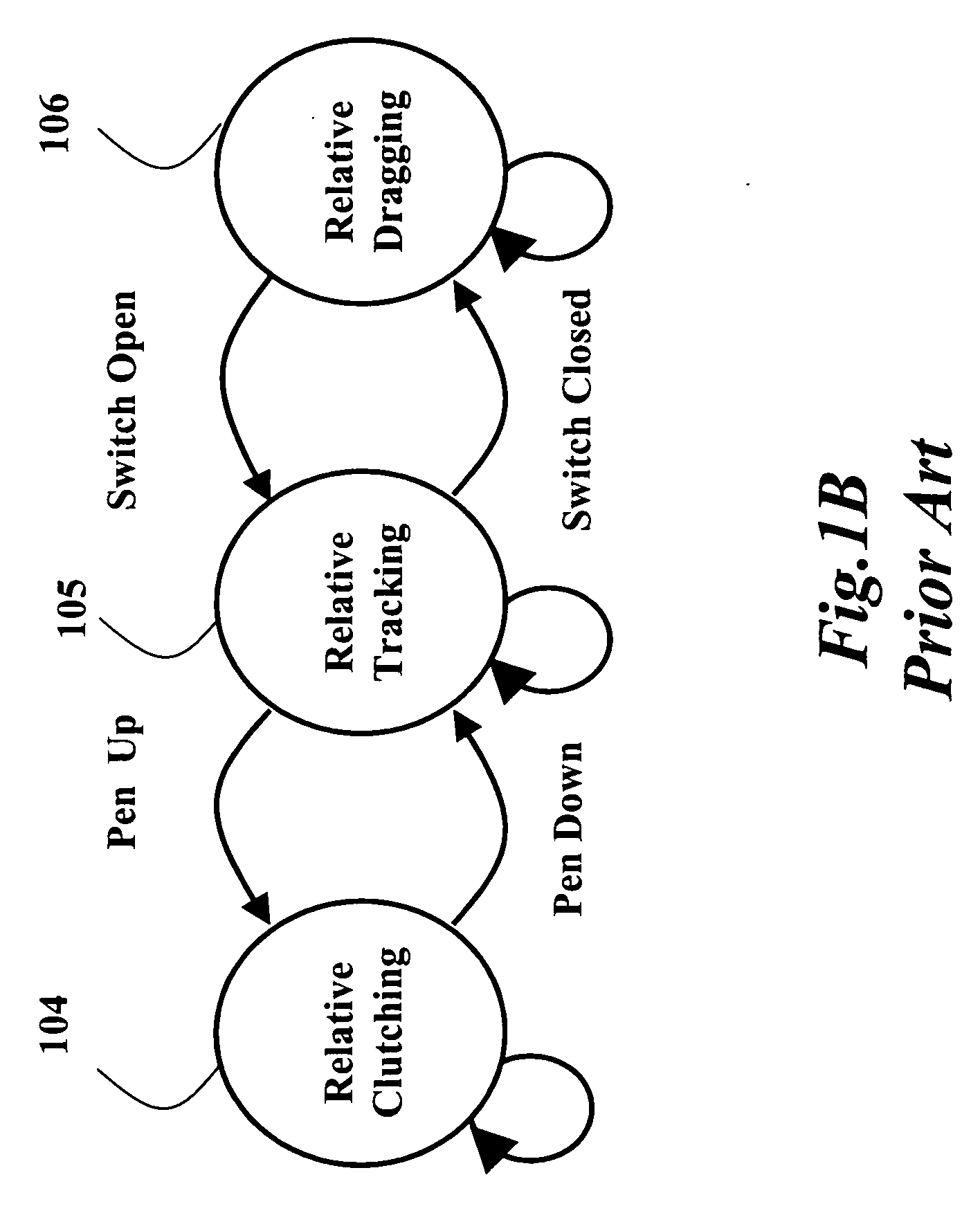

Image processing method, image processing system, and modifying-data producing method

Inactive | US6661931B1 | Reduces amount of modification | Reduce the amount required | Image enhancement | Image analysis | Imaging processing | Virtual screen

A method of processing batches of object-part-image data representing object-part images which are taken by one or more image-taking devices from parts of an object, respectively, and thereby obtaining one or more optical characteristic values of the object, the object-part images imaging the parts of the object such that a first object-part image images a first part and a second object-part image images a second part adjacent to the first part in the object and includes an overlapping portion imaging a portion of the first part, each of the batches of object-part-image data including optical characteristic values respectively associated with physical positions, and thereby defining a corresponding one of physical screens, the method including the steps of designating at least one virtual position on a virtual screen corresponding to the parts of the object, modifying, based on predetermined modifying data, the at least one virtual position on the virtual screen, and thereby determining at least one physical position corresponding to the at least one virtual position, on one of the physical screens, and obtaining at least one optical characteristic value associated with the at least one physical position on the one physical screen, as at least one optical characteristic value associated with the at least one virtual position on the virtual screen and as the at least one optical characteristic value of the object.

Owner:FUJI MASCH MFG CO LTD

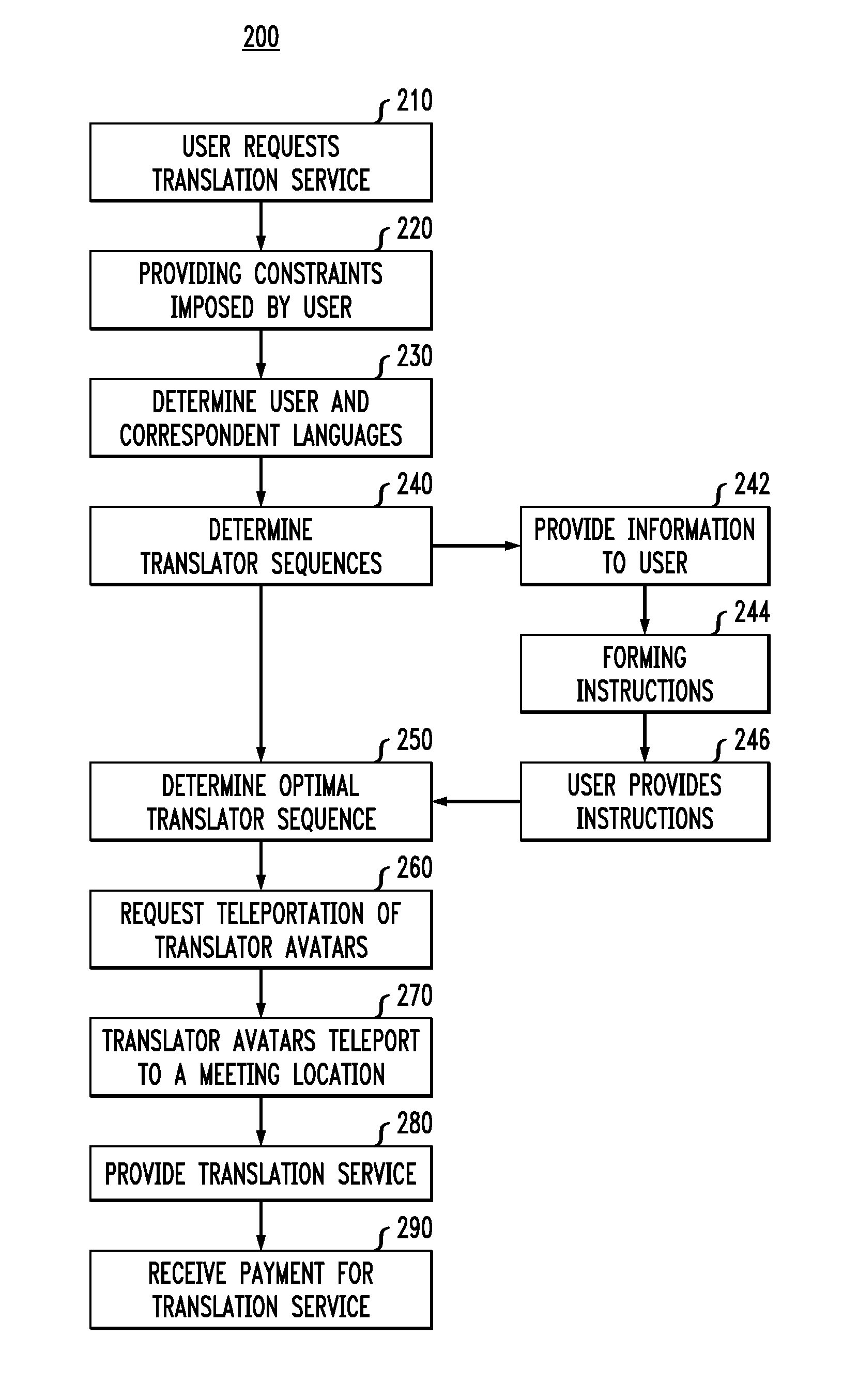

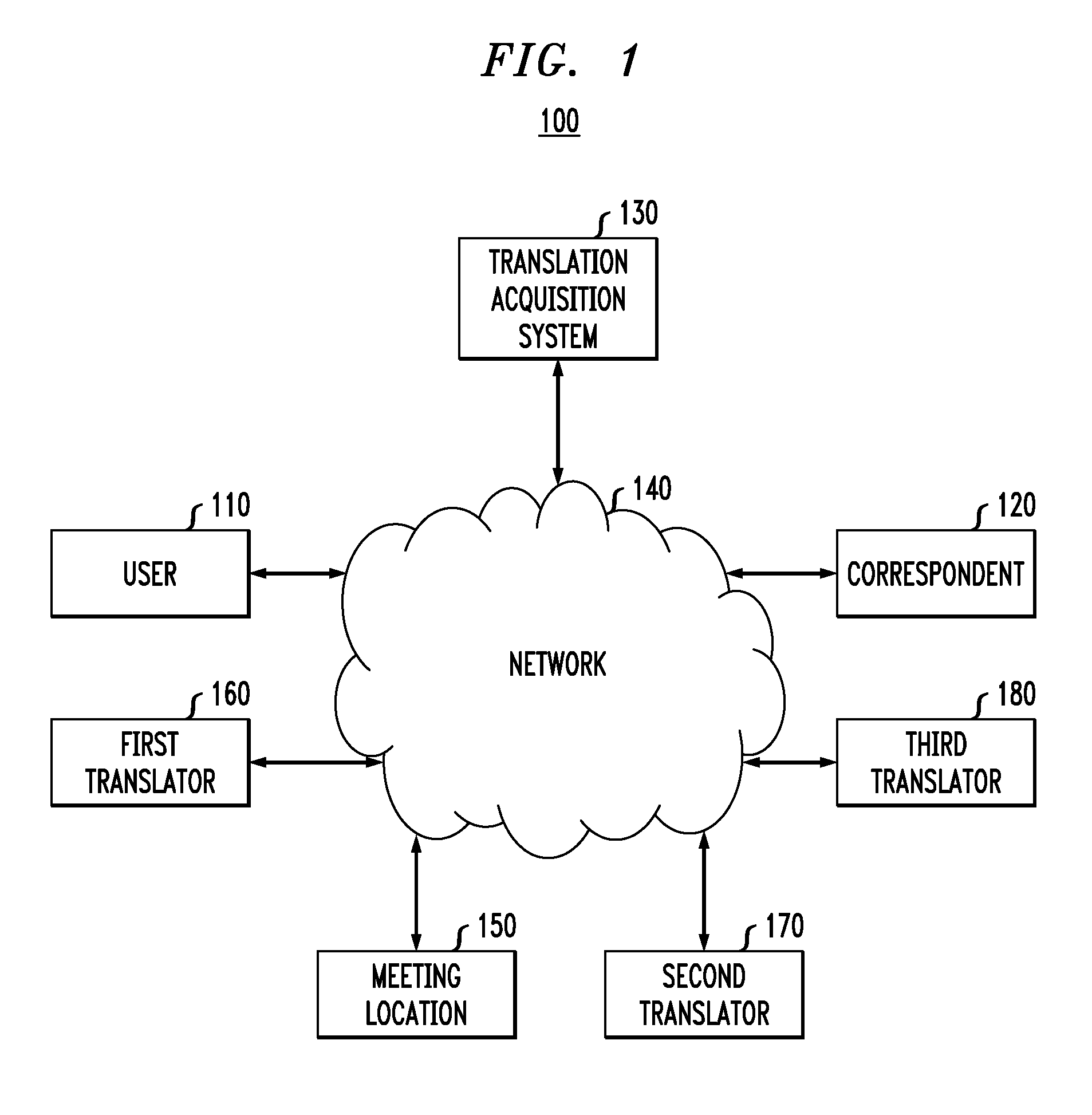

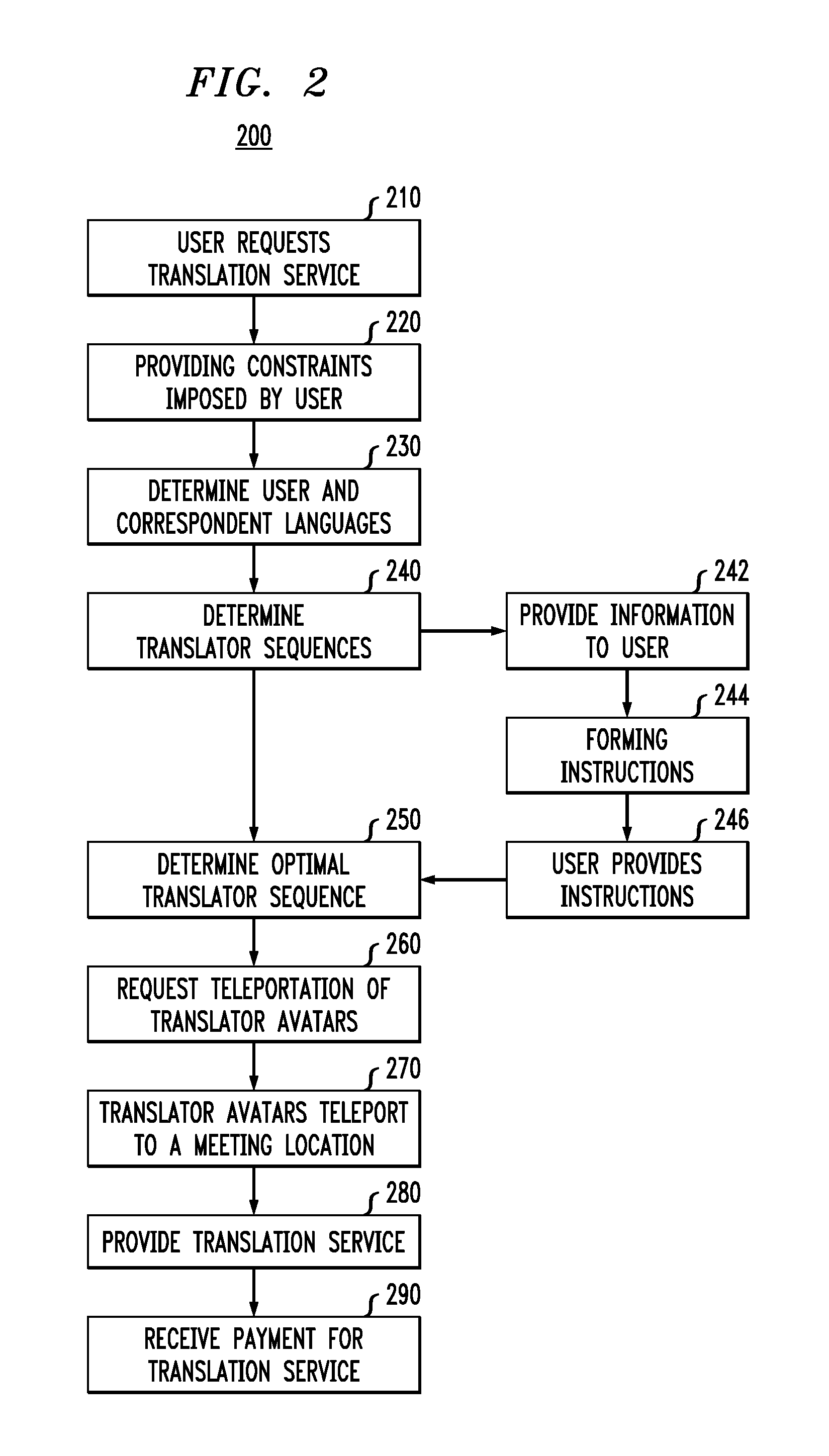

Language Translation in an Environment Associated with a Virtual Application

Active | US20110077934A1 | Facilitate translated communication | Natural language translation | Finance | Application software | Human–computer interaction

Methods and apparatus for language translation in a computing environment associated with a virtual application are presented. For example, a method for providing language translation includes determining languages of a user and a correspondent; determining one or more sequences of translators; determining a selected sequence of selected translators from the one or more sequences of the translators; requesting a change in virtual locations, within the computing environment associated with the virtual application, of one or more selected translator virtual representations of the selected translators to a virtual meeting location within the computing environment associated with the virtual application; and changing virtual locations of the one or more selected translator virtual representations to the virtual meeting location. One or more of determining languages, determining one or more sequences, determining a selected sequence, requesting a change in virtual locations, and changing virtual locations occur on a processor device.

Owner:IBM CORP

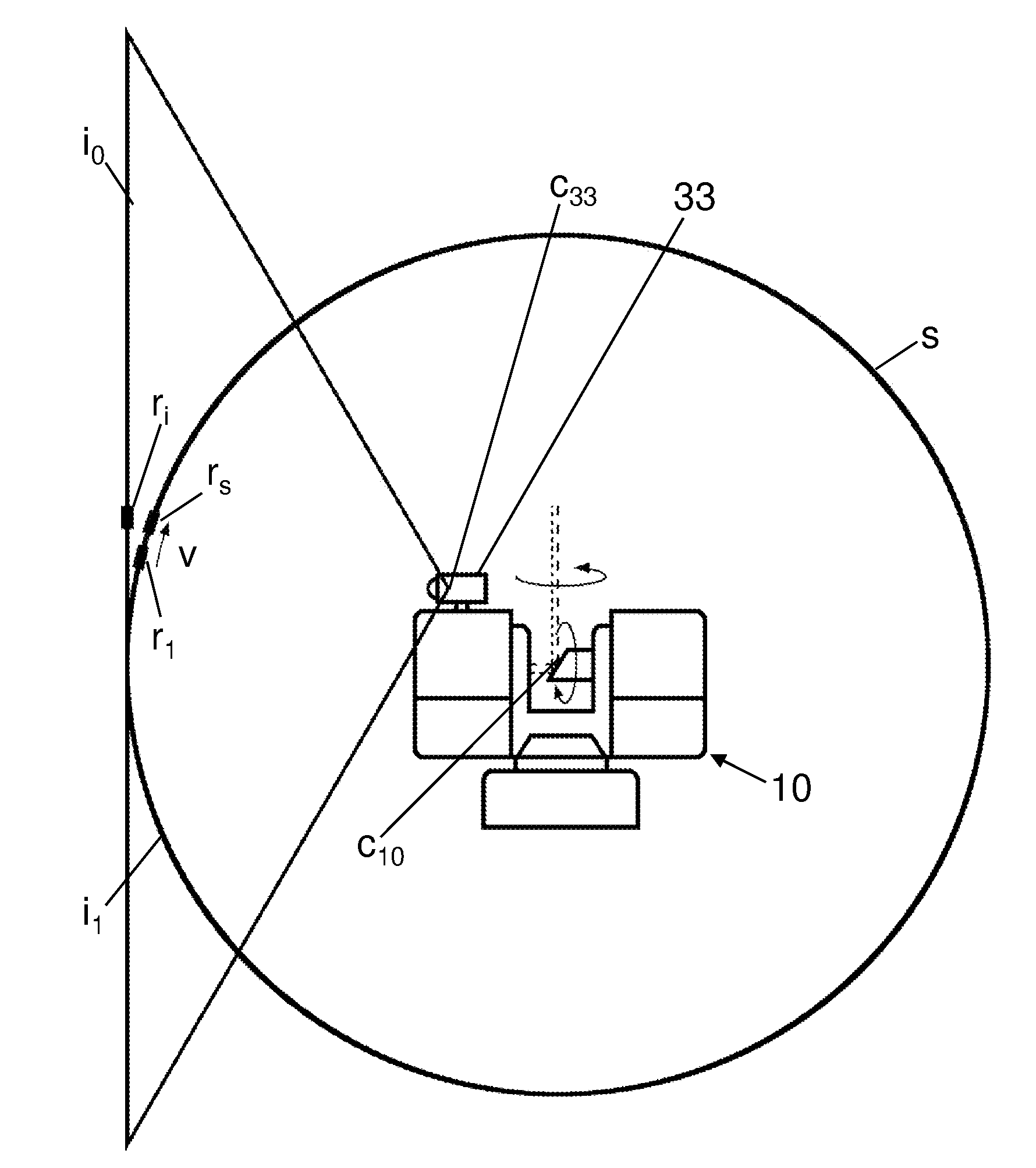

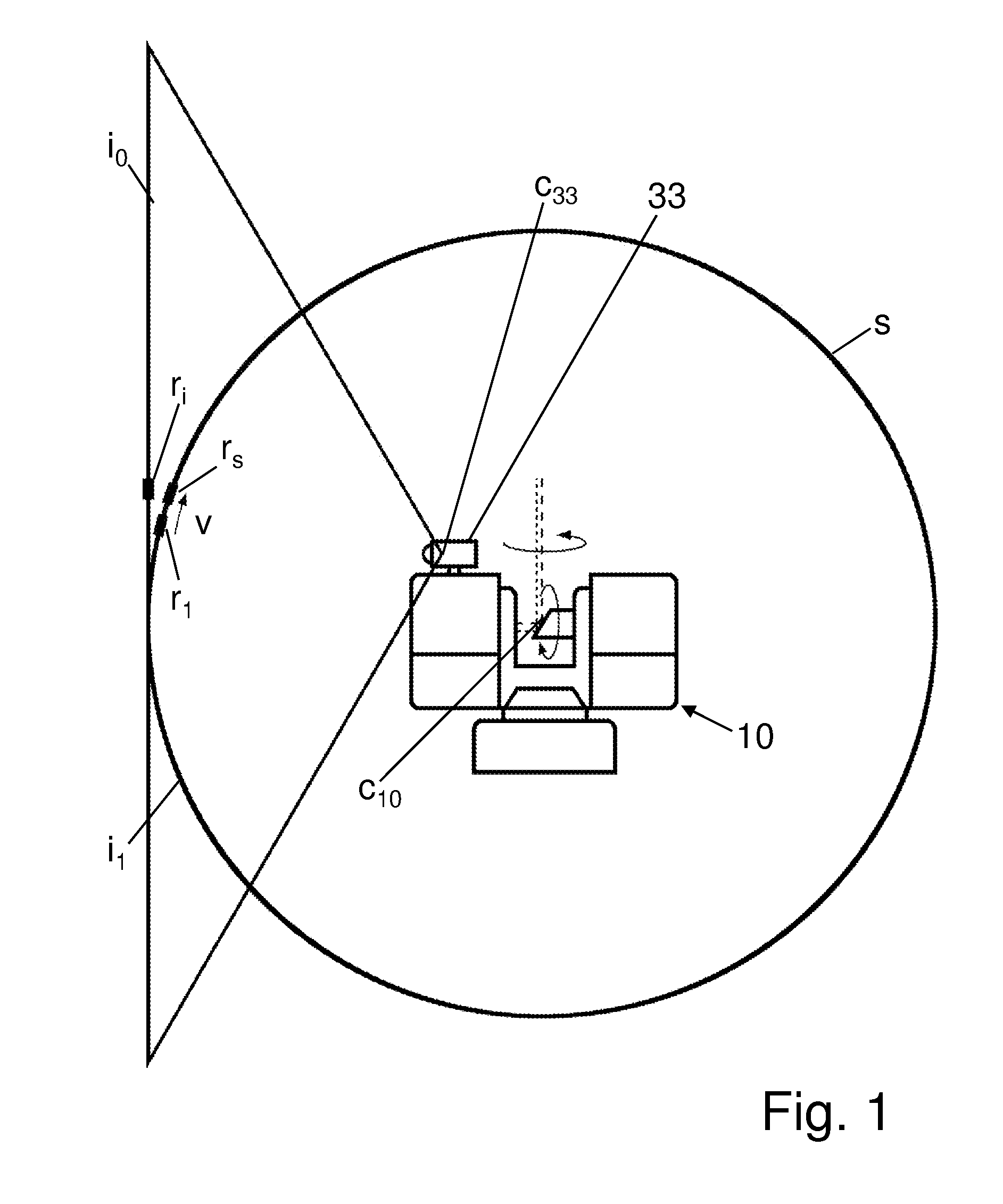

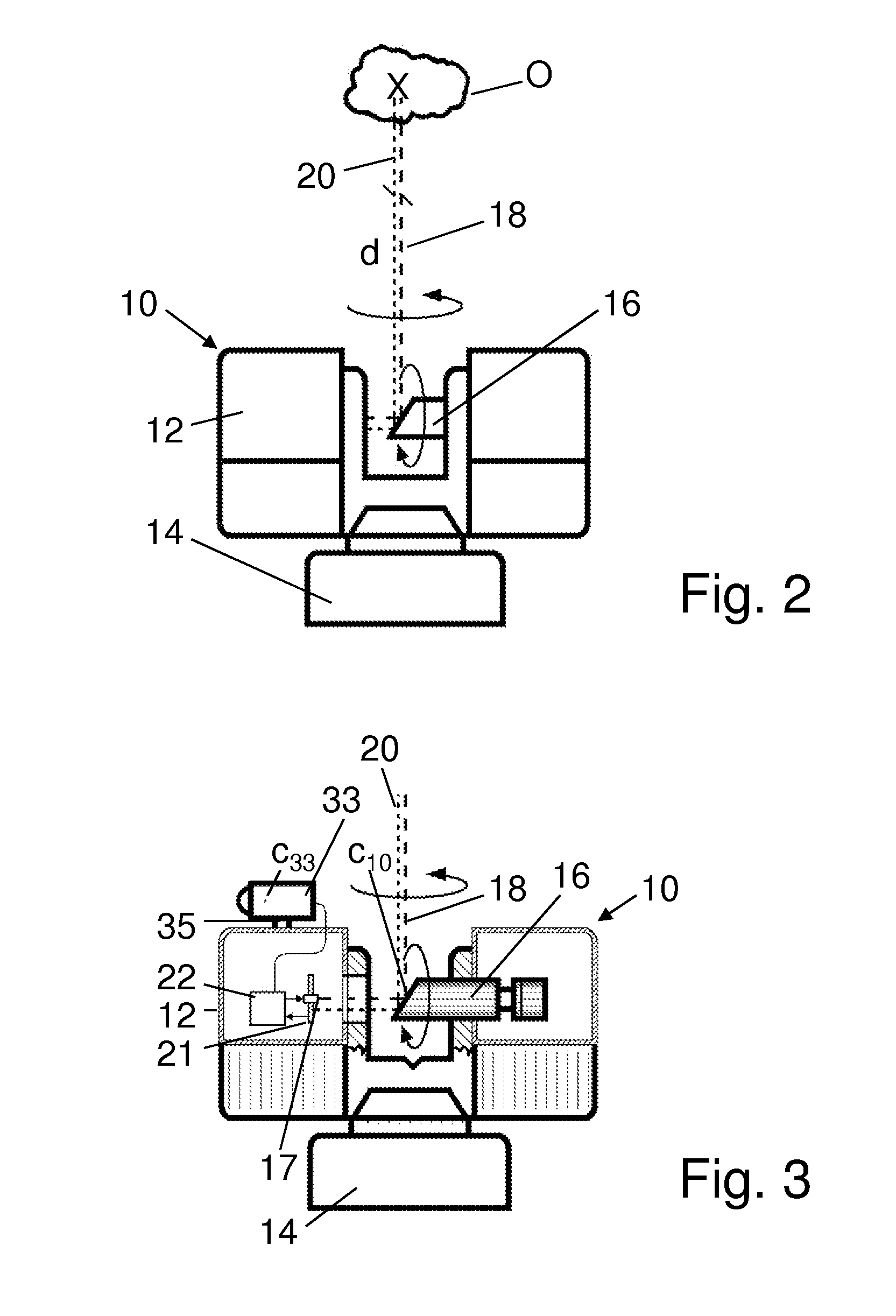

Method for optically scanning and measuring an environment

Inactive | US20120070077A1 | Correct deviation | Improve performance | Active open surveying means | Character and pattern recognition | Color image | Laser scanning

A method for optically scanning and measuring an environment uses a laser scanner that has a center and that, to make a scan, optically scans and measures its environment by means of light beams and evaluates it by means of a control and evaluation unit. A color camera, which also has a center, takes colored images of the environment that must be linked with the scan. The control and evaluation unit of the laser scanner, to which the color camera is connected, links the scan and the colored images and corrects deviations of the center and/or the orientation of the color camera relative to the center and/or the orientation of the laser scanner. It does so by virtually moving the color camera iteratively for each colored image and transforming at least part of the colored image for this new virtual position and/or orientation of the color camera, until the projection of the colored image and the projection of the scan onto a common reference surface agree as closely as possible.

Owner:FARO TECH INC

A method system and apparatus for telepresence communications utilizing video avatars

Active | US20060210045A1 | Special service for subscribers | Two-way working systems | Feature extraction | Virtual position

An apparatus, system and method for telepresence communications in an environment of a virtual location between two or more participants at multiple locations. First perspective data descriptive of the perspective of the virtual location environment experienced by a first participant at a first location and feature data extracted and / or otherwise captured from a second participant at a second location are processed to generate a first virtual representation of the second participant in the virtual environment from the perspective of the first participant. Likewise, second perspective data descriptive of the perspective of the virtual location environment experienced by the second participant and feature data extracted and / or otherwise captured from features of the first participant are processed to generate a second virtual representation of the first participant in the virtual environment from the perspective of the second participant. The first and second virtual representations are rendered and then displayed to the first and second participants, respectively. The first and second virtual representations are updated and redisplayed to the participants upon a change in one or more of the perspective data and feature data from which they are generated. The apparatus, system and method are scalable to two or more participants.

Owner:GOOGLE TECH HLDG LLC

Computer simulation of visual images using 2d spherical images extracted from 3D data

A system, method, and computer-readable instructions for virtual real-time computer simulation of visual images of perspective scenes. A plurality of 2D spherical images are saved as a data set including 3D positional information of the 2D spherical images corresponding to a series of locations in a 3D terrain, wherein each 2D spherical image comprises a defined volume and has an adjacency relation with adjacent 2D spherical images in 3D Euclidean space. As the input for a current virtual position changes based on simulated movement of the observer in the 3D terrain, a processor updates the current 2D spherical image to an adjacent 2D spherical image as the current virtual position crosses into the adjacent 2D spherical image.

Owner:LOCKHEED MARTIN CORP
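The update step in the abstract above, where the current spherical image is swapped for an adjacent one as the observer moves, can be sketched as a nearest-center test restricted to neighbours. The abstract does not say how each image's "defined volume" is shaped, so this sketch assumes that a position belongs to whichever image center is closest; the function name and the dictionary-based data layout are likewise hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def update_current_image(current: str,
                         position: Point,
                         centers: Dict[str, Point],
                         adjacency: Dict[str, List[str]]) -> str:
    """Return the id of the spherical image to display at `position`.

    Only the current image and its adjacent images are considered, so
    each update costs time proportional to the number of neighbours.
    """
    best = current
    best_dist = math.dist(position, centers[current])
    for neighbour in adjacency[current]:
        d = math.dist(position, centers[neighbour])
        if d < best_dist:
            # The observer has crossed into the neighbour's volume.
            best, best_dist = neighbour, d
    return best
```

Because only adjacent images are tested, a large jump in position moves the view one image at a time; calling the function repeatedly until the result stops changing would walk to the image that actually contains the observer.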

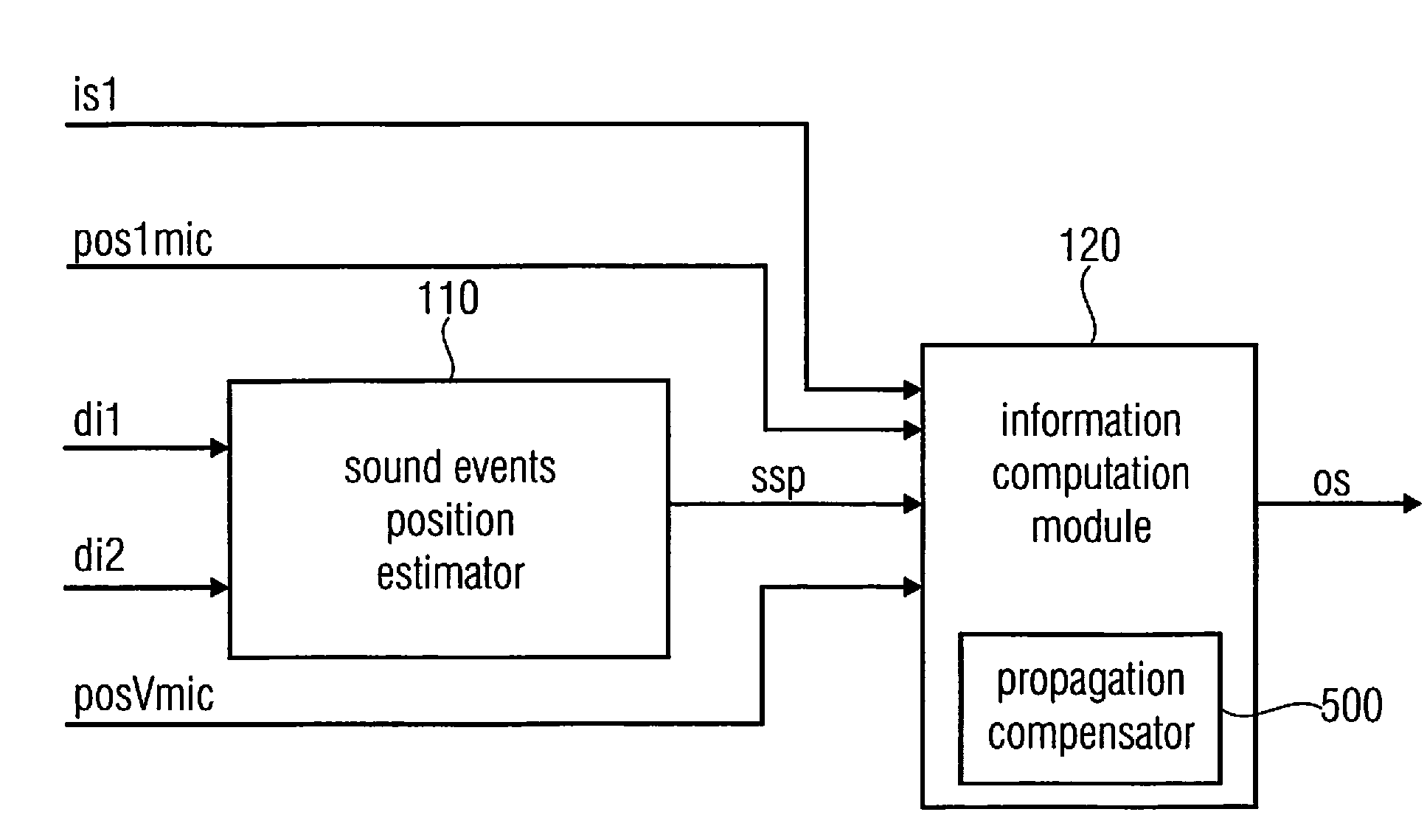

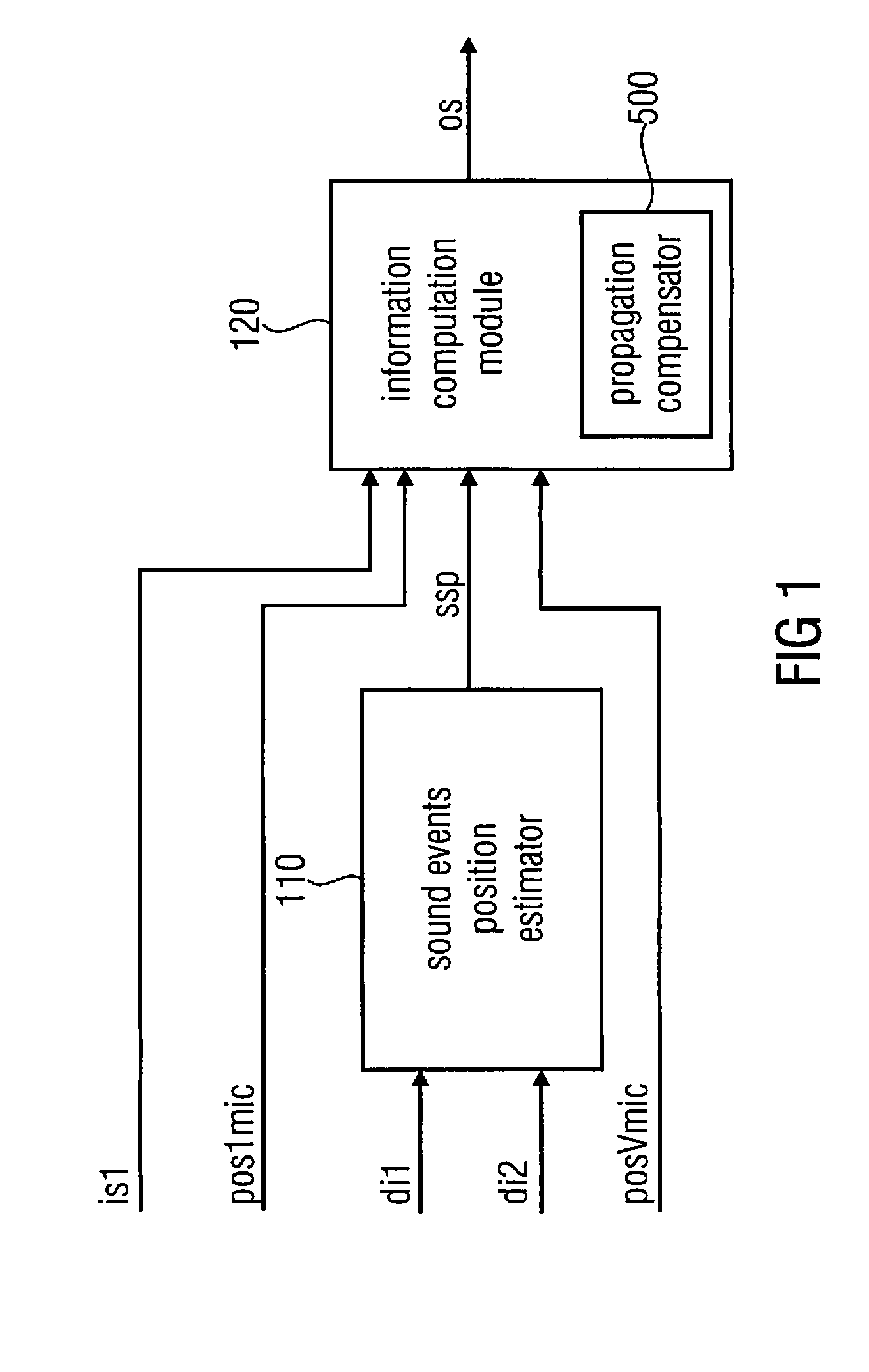

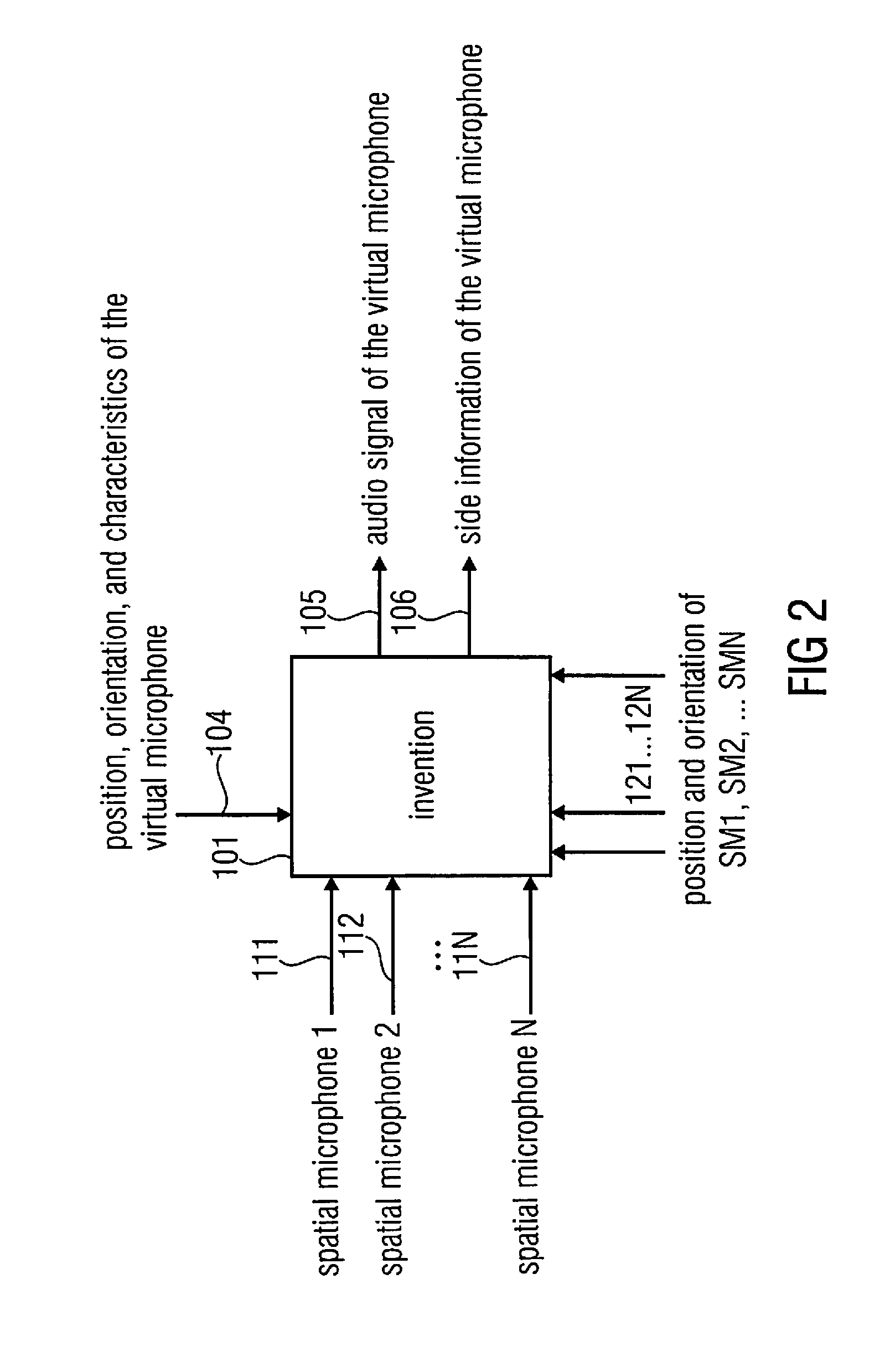

Sound acquisition via the extraction of geometrical information from direction of arrival estimates

An apparatus for generating an audio output signal to simulate a recording of a virtual microphone at a configurable virtual position in an environment includes a sound events position estimator and an information computation module. The former is adapted to estimate a sound source position indicating a position of a sound source in the environment, wherein the sound events position estimator is adapted to estimate the sound source position based on first and second direction information provided by first and second real spatial microphones, respectively, located at first and second real microphone positions in the environment, respectively. The information computation module is adapted to generate the audio output signal based on a first recorded audio input signal, on the first real microphone position, on the virtual position of the virtual microphone, and on the sound source position.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV
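The position estimate in the abstract above relies on direction information from two real microphones. A minimal 2D sketch of that idea is to intersect the two direction-of-arrival rays. The second function, which scales the recorded signal by an inverse-distance ratio, is a simplifying assumption added for illustration and is not taken from the patent; both function names are hypothetical.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def estimate_source_position(mic1: Vec2, angle1: float,
                             mic2: Vec2, angle2: float) -> Vec2:
    """Intersect two direction-of-arrival rays (angles in radians)."""
    d1 = (math.cos(angle1), math.sin(angle1))
    d2 = (math.cos(angle2), math.sin(angle2))
    denom = d1[0] * d2[1] - d1[1] * d2[0]      # 2D cross product d1 x d2
    if abs(denom) < 1e-12:
        raise ValueError("directions are parallel; no unique intersection")
    dx, dy = mic2[0] - mic1[0], mic2[1] - mic1[1]
    t1 = (dx * d2[1] - dy * d2[0]) / denom     # distance along ray 1
    return (mic1[0] + t1 * d1[0], mic1[1] + t1 * d1[1])


def virtual_mic_gain(source: Vec2, real_mic: Vec2, virtual_mic: Vec2) -> float:
    """Assumed 1/r model: gain applied to the real microphone's signal
    to approximate the level at the virtual microphone position."""
    r_virtual = math.dist(source, virtual_mic)
    if r_virtual == 0:
        raise ValueError("virtual microphone coincides with the source")
    return math.dist(source, real_mic) / r_virtual
```

For microphones at (0, 0) and (2, 0) hearing the source at 45 and 135 degrees respectively, the rays meet at (1, 1); a virtual microphone at (1, 0) is closer to the source than the first real microphone, so the gain is greater than one.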

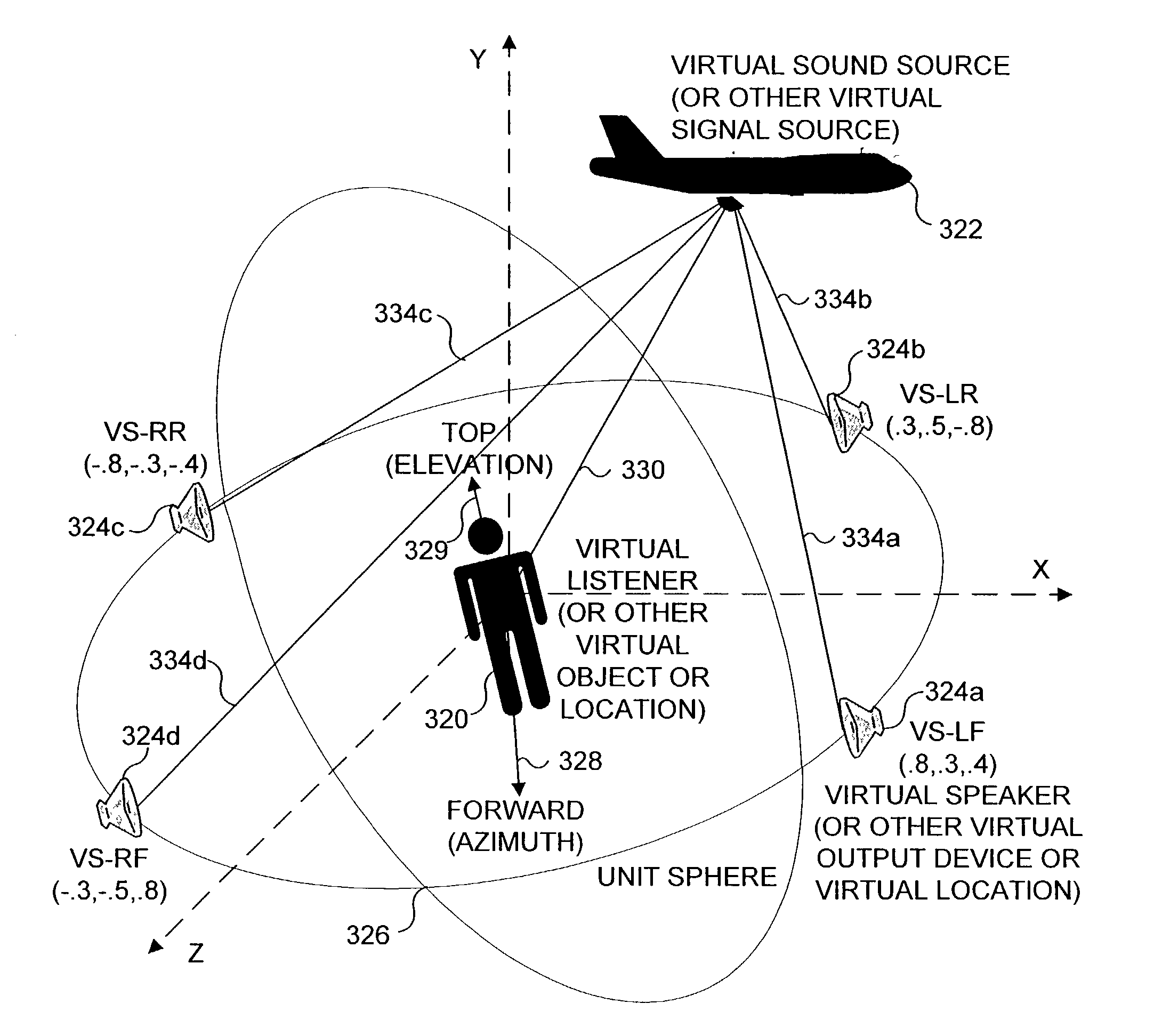

Virtual sound source positioning

Active | US7113610B1 | Simple calculation | Stereophonic systems | Loudspeaker spatial/constructional arrangements | Sound sources | Virtual position

Indicating a spatial location of a virtual sound source by determining an output for each of one or more physical speakers as a function of an orientation of corresponding virtual speakers that track the position and orientation of a virtual listener relative to the virtual sound source in a virtual environment or game simulation. A vector distance between the virtual sound source and each virtual speaker is used to determine a volume level for each corresponding physical speaker. Each virtual speaker is specified at a fixed location on a unit sphere centered on the virtual listener, and the virtual sound source is normalized to a virtual position on the unit sphere. All computations are performed in Cartesian coordinates. Preferably, each virtual speaker vector distance is used in a nonlinear function to compute a volume attenuation factor for the corresponding physical speaker output.

Owner:MICROSOFT TECH LICENSING LLC
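The volume computation in the abstract above can be sketched in a few lines: normalize the source onto the unit sphere around the listener, measure the distance to each virtual speaker, and pass that distance through a nonlinear function. The abstract does not give the attenuation function, so the power curve used here, the `exponent` parameter, and the function names are assumptions. The sketch also ignores listener orientation, that is, it assumes the listener's axes coincide with the world axes.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Sequence[float]) -> Vec3:
    """Scale a 3D vector to unit length (Cartesian coordinates)."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0:
        raise ValueError("cannot normalize a zero-length vector")
    return (v[0] / n, v[1] / n, v[2] / n)


def speaker_volumes(source: Vec3, listener: Vec3,
                    virtual_speakers: Sequence[Vec3],
                    exponent: float = 2.0) -> List[float]:
    """Return one volume factor in [0, 1] per virtual speaker."""
    # Project the source onto the unit sphere centered on the listener.
    s = normalize(tuple(a - b for a, b in zip(source, listener)))
    volumes = []
    for speaker in virtual_speakers:
        d = math.dist(s, normalize(speaker))   # chord length, 0..2
        # Assumed nonlinear attenuation: full volume at d = 0, silent at d = 2.
        volumes.append(max(0.0, 1.0 - d / 2.0) ** exponent)
    return volumes
```

With virtual speakers on the listener's left and right and the source directly to the right, the right speaker plays at full volume and the left is silent; a source straight ahead yields equal, reduced volumes on both.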

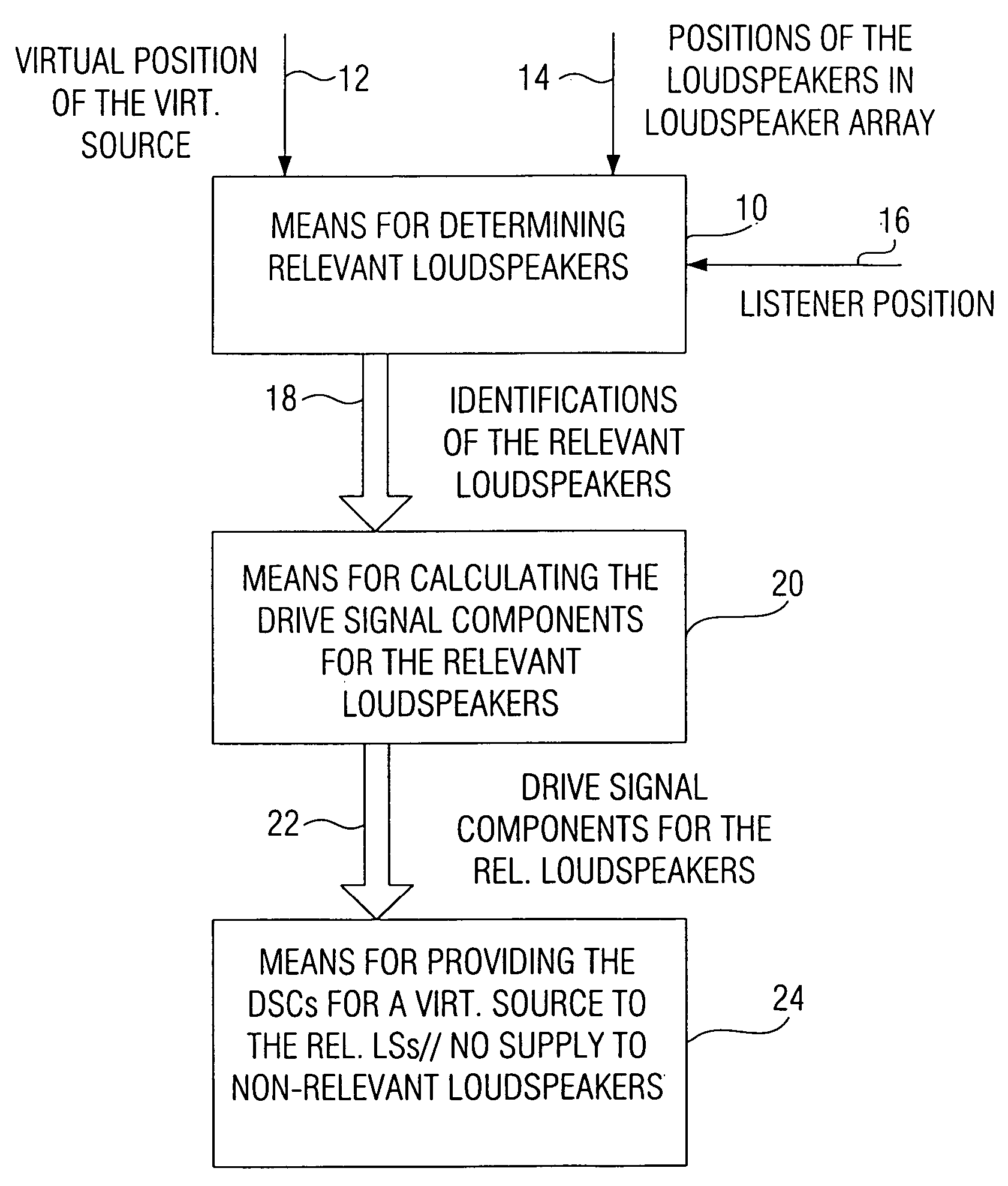

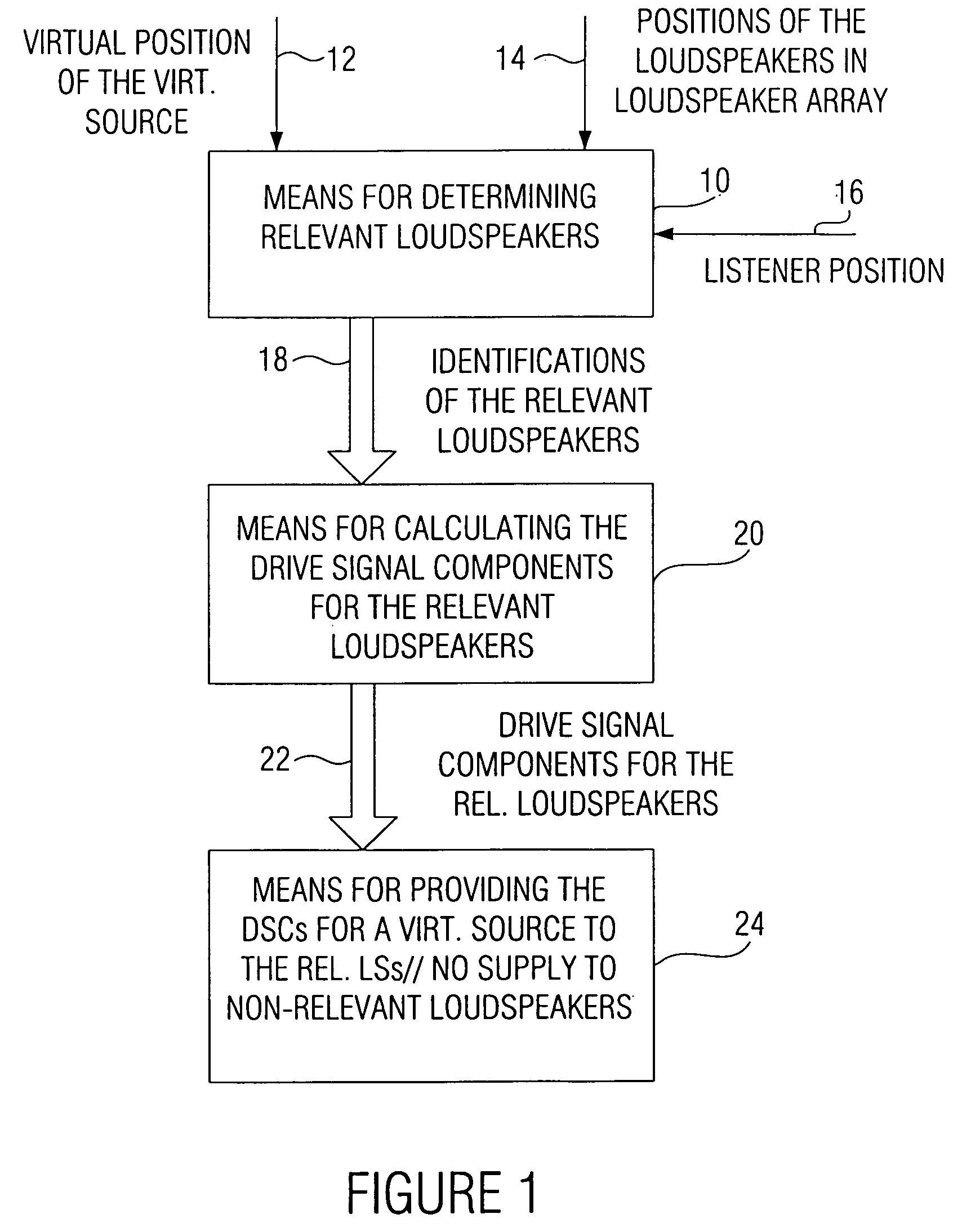

Wave field synthesis apparatus and method of driving an array of loudspeakers

Active | US20060098830A1 | Reduce artifacts | Substation/switching arrangement details | Line-transmission | Wave field synthesis | Wave field

A wave field synthesis apparatus drives an array of loudspeakers with drive signals, the loudspeakers being arranged at different defined positions. A drive signal for a loudspeaker is based on an audio signal associated with a virtual source having a virtual position with reference to the loudspeaker array, and on the defined position of the loudspeaker. First, relevant loudspeakers of the loudspeaker array are determined on the basis of the position of the virtual source, a predefined listener position, and the defined positions of the loudspeakers, so that artifacts due to loudspeaker signals moving opposite to a direction from the virtual source to the predefined listener position are reduced. Downstream of the means for calculating the drive signal components for the relevant loudspeakers and for a virtual source, there is a means for providing those drive signal components to the relevant loudspeakers; no drive signals for the virtual source are provided to loudspeakers of the array that do not belong to the relevant loudspeakers. In this way, artifacts in an area of the audience room due to a generation wave field are suppressed, so that in this area only the useful wave field is heard in an artifact-free manner.

Owner:FRAUNHOFER GESELLSCHAFT ZUR FOERDERUNG DER ANGEWANDTEN FORSCHUNG EV
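
One way to picture the selection of relevant loudspeakers is the sketch below. The abstract does not give the selection rule, so the dot-product test used here is an assumption: a loudspeaker counts as relevant when its signal reaches the listener travelling broadly in the same direction as the source-to-listener vector. The delay and gain model is a simple point-source stand-in rather than an actual wave field synthesis driving function, and all names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second, assumed room conditions


def relevant_speakers(source, listener, speakers):
    """Return indices of loudspeakers assumed relevant for this source.

    Assumed rule: keep a loudspeaker if the vector from it to the listener
    has a positive dot product with the vector from source to listener.
    """
    src_to_listener = (listener[0] - source[0], listener[1] - source[1])
    relevant = []
    for index, spk in enumerate(speakers):
        spk_to_listener = (listener[0] - spk[0], listener[1] - spk[1])
        dot = (src_to_listener[0] * spk_to_listener[0]
               + src_to_listener[1] * spk_to_listener[1])
        if dot > 0.0:
            relevant.append(index)
    return relevant


def drive_components(source, listener, speakers):
    """Compute (delay_s, gain) only for the relevant loudspeakers.

    Loudspeakers that are not relevant get no entry at all, mirroring the
    abstract's point that they receive no drive signal for this source.
    """
    components = {}
    for index in relevant_speakers(source, listener, speakers):
        distance = math.dist(source, speakers[index])
        components[index] = (distance / SPEED_OF_SOUND,
                             1.0 / max(distance, 1e-6))
    return components


# Three loudspeakers on the front wall, one on the back wall.
array = [(-2.0, 5.0), (0.0, 5.0), (2.0, 5.0), (0.0, -5.0)]
components = drive_components(source=(0.0, 10.0), listener=(0.0, 0.0),
                              speakers=array)
```

With the virtual source behind the front wall, the back-wall loudspeaker would radiate toward the listener against the source direction, so it is left out of `components`.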

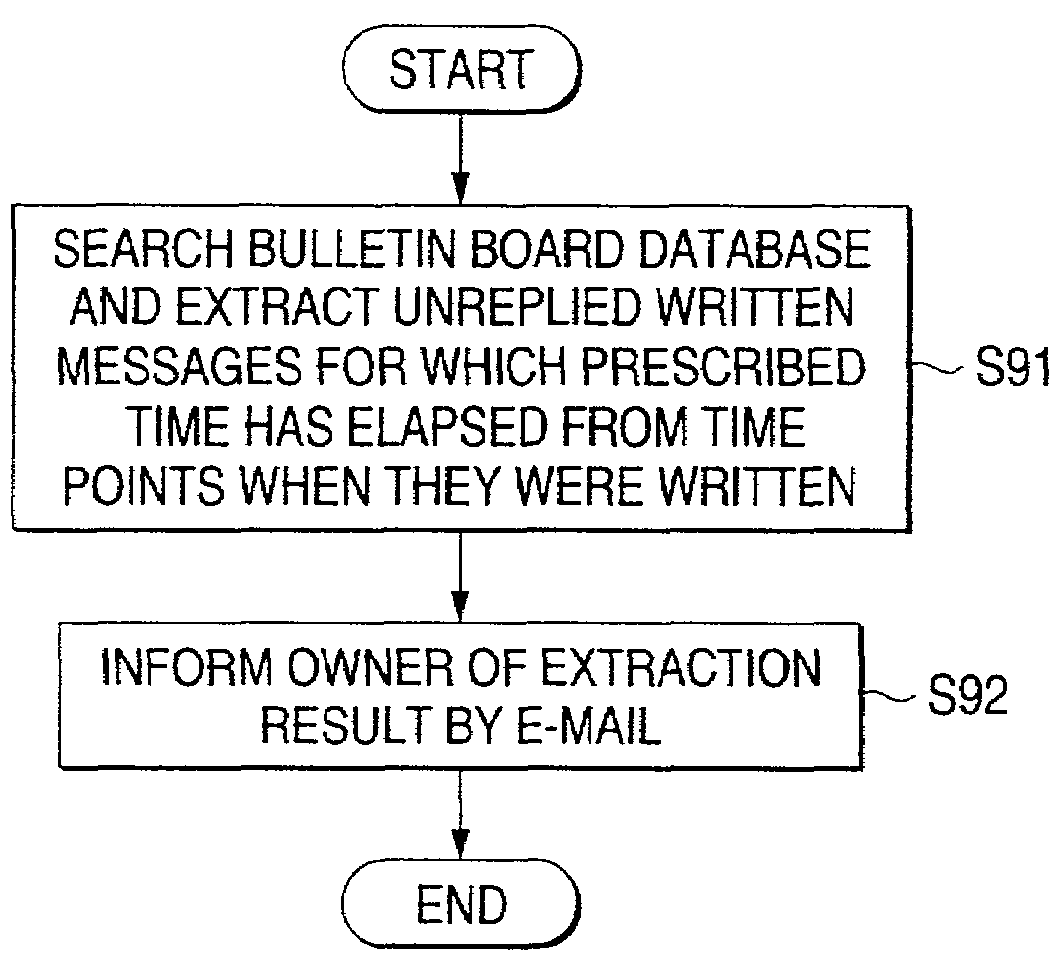

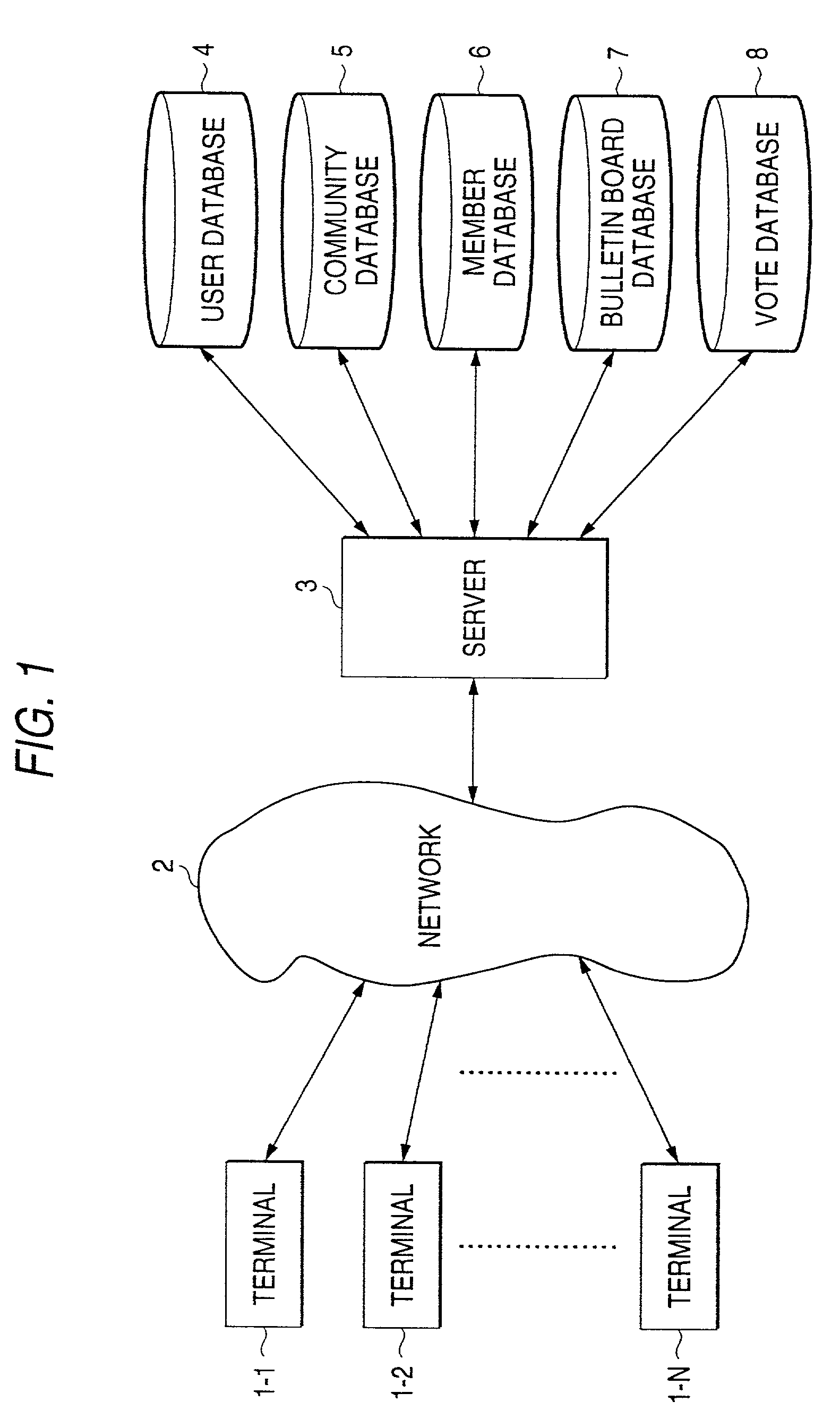

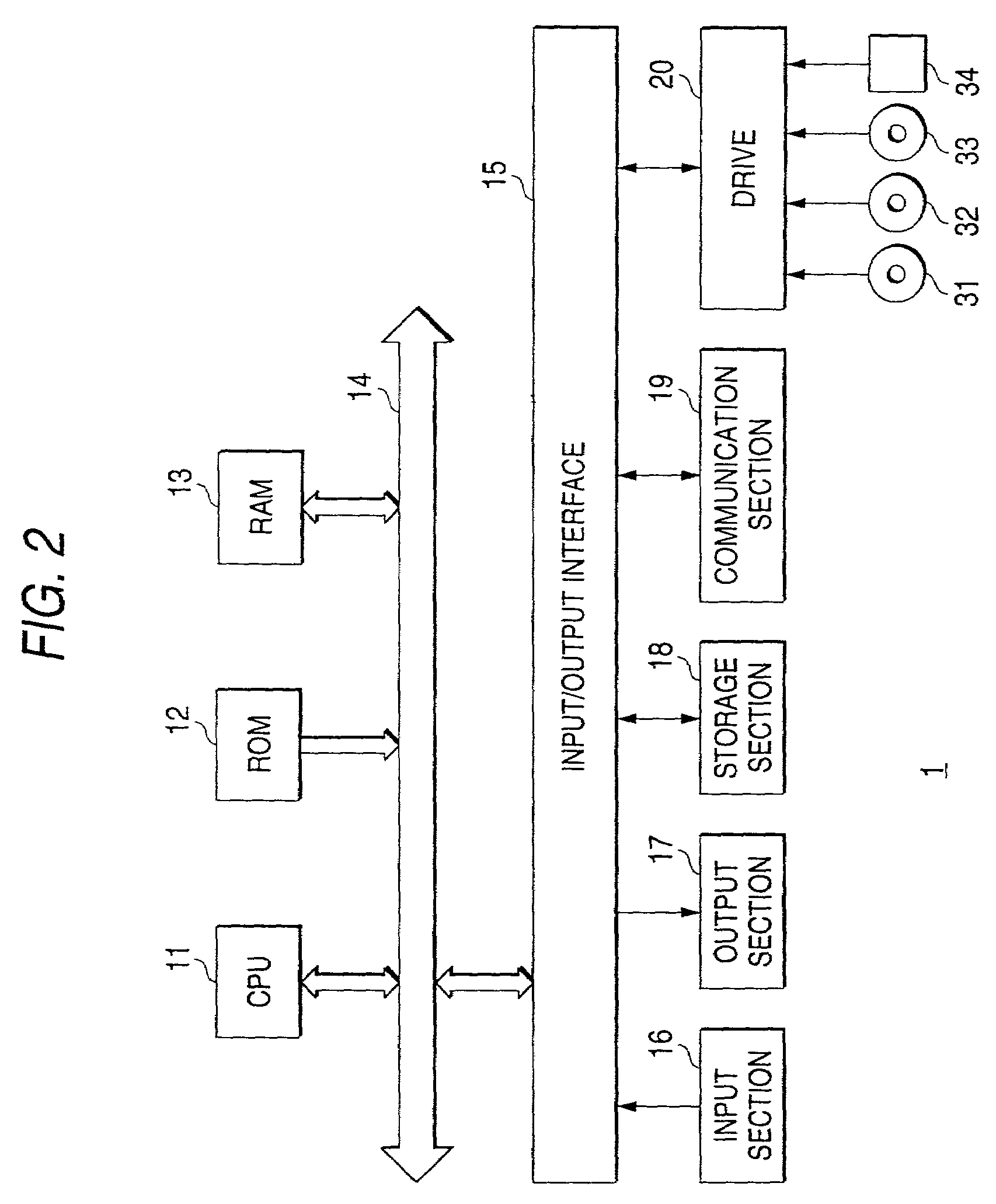

Advertising and managing communities within a virtual space

A method and an information processing apparatus are provided for efficiently advertising a community provided in a virtual space. A user transmits a request to generate a community in a virtual space. Data relating to the community is newly generated and stored. Greeting sentences are thereafter sent to communities that are near the newly generated community in terms of their virtual positions within the virtual space.

Owner:LINE CORPORATION
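
The flow in the abstract above could look something like the following sketch. The class names, the fixed greeting radius, the Euclidean distance measure, and the greeting text are all assumptions made for illustration; the abstract does not specify how nearness is measured.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Community:
    name: str
    position: tuple                      # virtual (x, y) position
    inbox: list = field(default_factory=list)


class VirtualSpace:
    """Hypothetical store of communities placed in a 2D virtual space."""

    def __init__(self, greeting_radius=10.0):
        self.communities = []
        self.greeting_radius = greeting_radius  # assumed nearness threshold

    def create_community(self, name, position):
        """Store a new community, then greet the existing ones nearby."""
        new_community = Community(name, position)
        neighbors = [
            c for c in self.communities
            if math.dist(c.position, position) <= self.greeting_radius
        ]
        self.communities.append(new_community)
        for neighbor in neighbors:
            neighbor.inbox.append(f"Hello from the new community '{name}'!")
        return new_community, neighbors


space = VirtualSpace(greeting_radius=5.0)
space.create_community("chess", (0.0, 0.0))
space.create_community("sailing", (100.0, 0.0))
_, greeted = space.create_community("go", (3.0, 4.0))
```

Here only the "chess" community lies within the radius of the new "go" community, so it alone receives a greeting.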

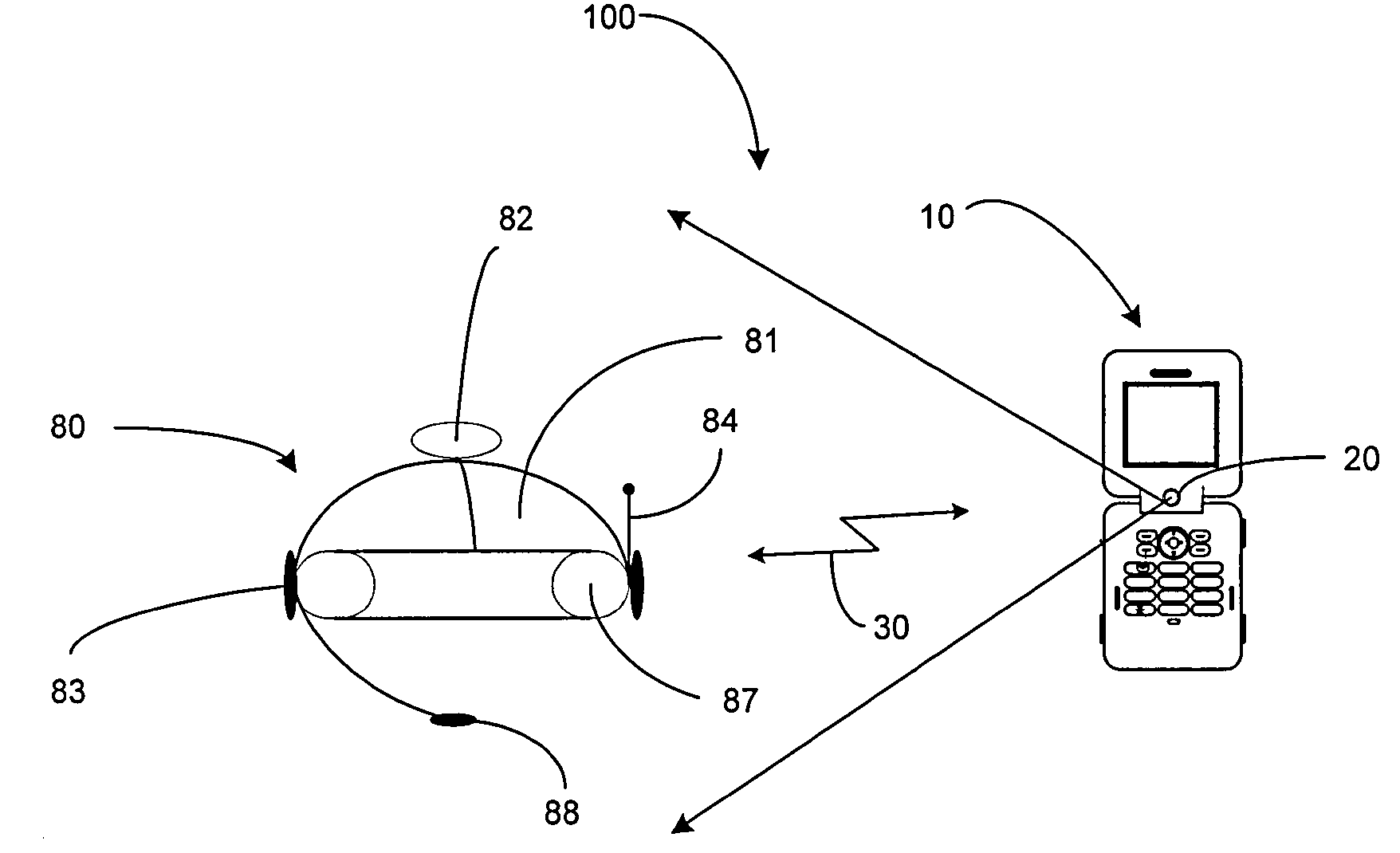

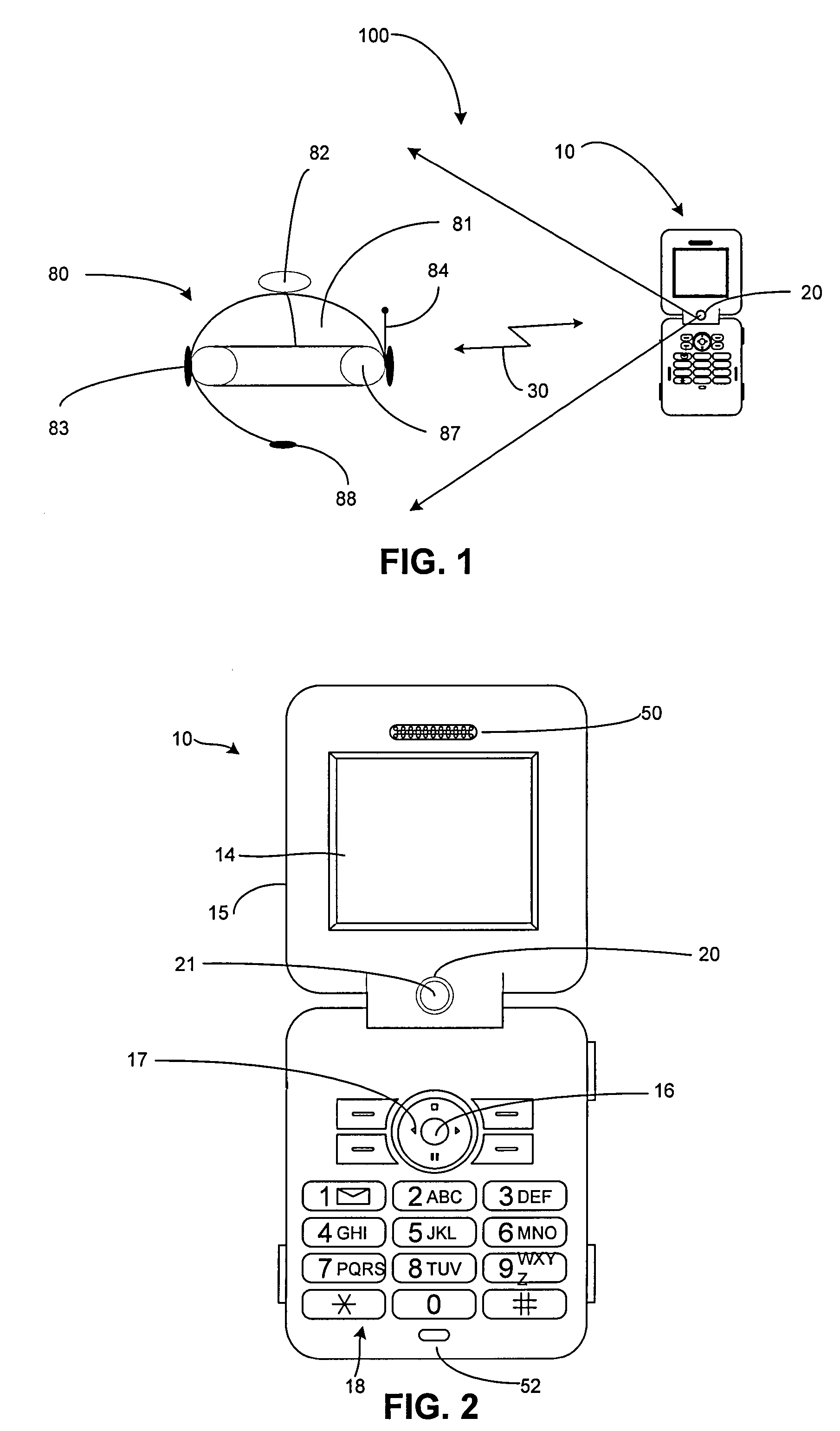

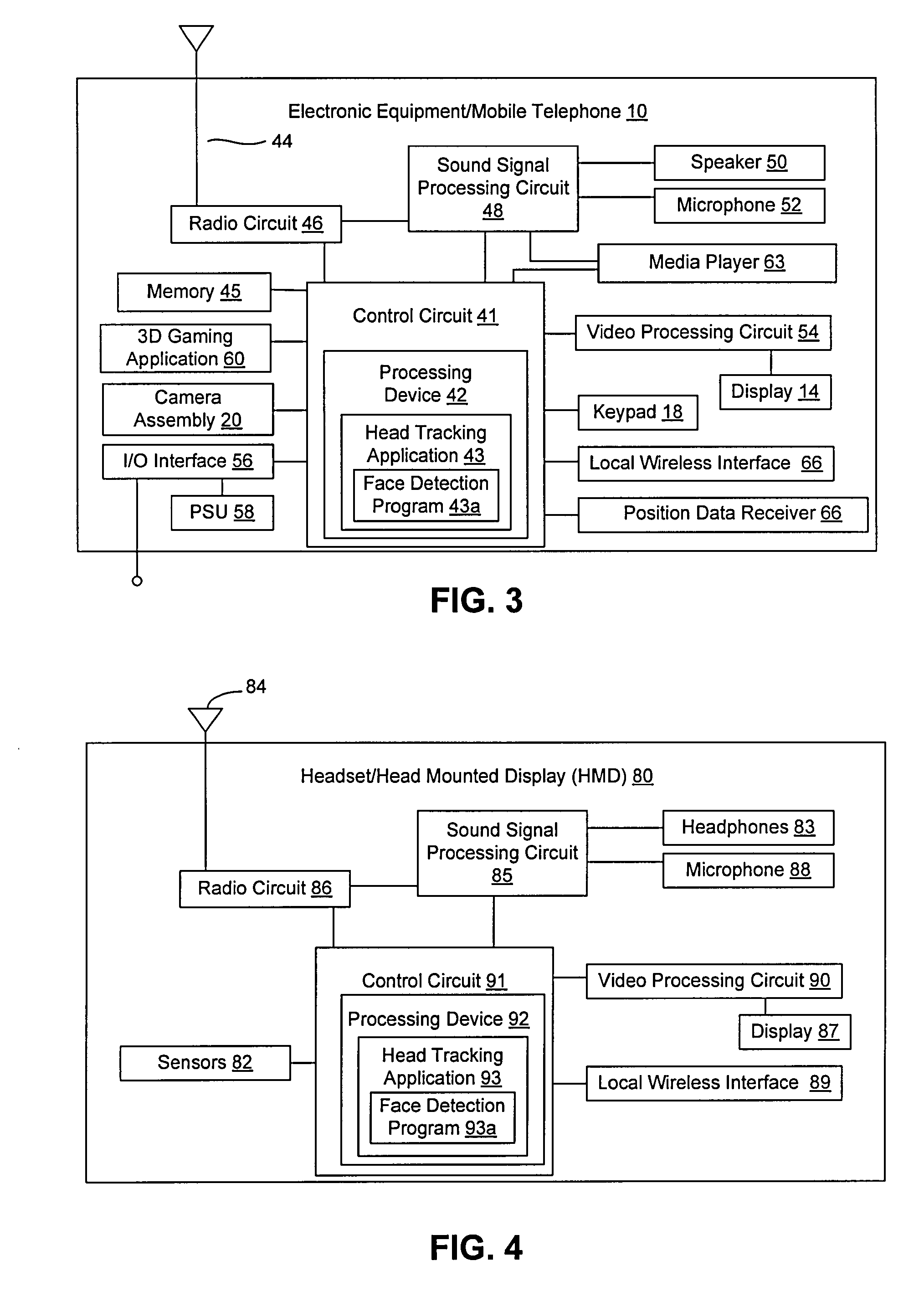

Head tracking for enhanced 3D experience using face detection

Inactive | US20090219224A1 | Improve consumer experience | Enhancing multimedia experience | Cathode-ray tube indicators | Stereophonic systems | Face detection | Computer graphics (images)

Embodiments of the present invention provide a system and method for enhanced rendering of a virtual environment. The system may include a portable electronic device having a video camera with a lens that faces the user, and a display. A speaker system is in communication with the portable electronic device. A head tracking application uses face detection to render a user's virtual position in the virtual environment. A video portion of the virtual environment may be rendered on the display. An audio portion of the virtual environment may be rendered in the speaker system in a manner that imitates the directional component of an audio source within the environment. The speaker system may be part of a headset in wireless communication with the portable electronic device. In one embodiment, the system is used with a 3D video gaming application.

Owner:SONY ERICSSON MOBILE COMM AB
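
As a rough illustration of how a detected face could drive the user's virtual position, the sketch below converts a face bounding box into normalized head offsets. It assumes a face detector has already produced the box; the function name, the coordinate conventions, and the `reference_face_width` calibration value are hypothetical and not taken from the abstract.

```python
def head_offset(face_box, frame_size, reference_face_width=0.25):
    """Map a face bounding box to an approximate head offset.

    face_box is (x, y, width, height) in pixels, with the origin at the
    top-left corner of the camera frame. frame_size is (width, height).
    Returns (dx, dy, dz): dx and dy lie in [-1, 1] relative to the frame
    centre, and dz is a relative distance (1.0 at the assumed reference
    face width, larger when the face appears smaller).
    """
    x, y, w, h = face_box
    frame_w, frame_h = frame_size
    if w <= 0 or h <= 0:
        raise ValueError("face box must have positive size")

    centre_x = (x + w / 2.0) / frame_w
    centre_y = (y + h / 2.0) / frame_h

    dx = (centre_x - 0.5) * 2.0   # negative: face left of frame centre
    dy = (0.5 - centre_y) * 2.0   # positive: face above frame centre
    dz = reference_face_width / (w / frame_w)
    return dx, dy, dz


# A face centred in a 640x480 frame at the reference size.
centred = head_offset((240, 160, 160, 160), (640, 480))
```

A renderer could shift the virtual camera by `dx` and `dy` and scale its field of view with `dz`, while the same offsets could steer the directional audio rendering mentioned in the abstract.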

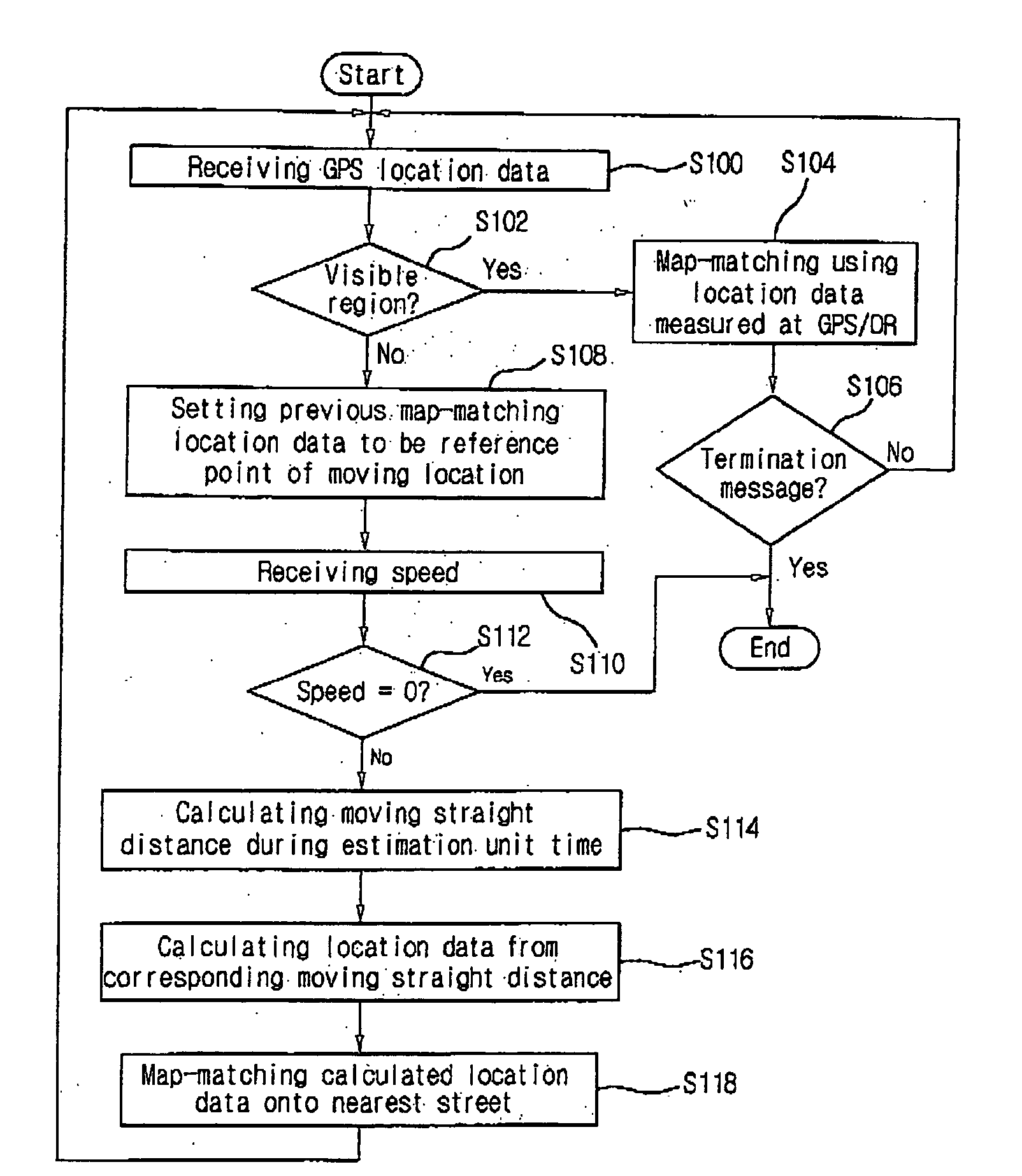

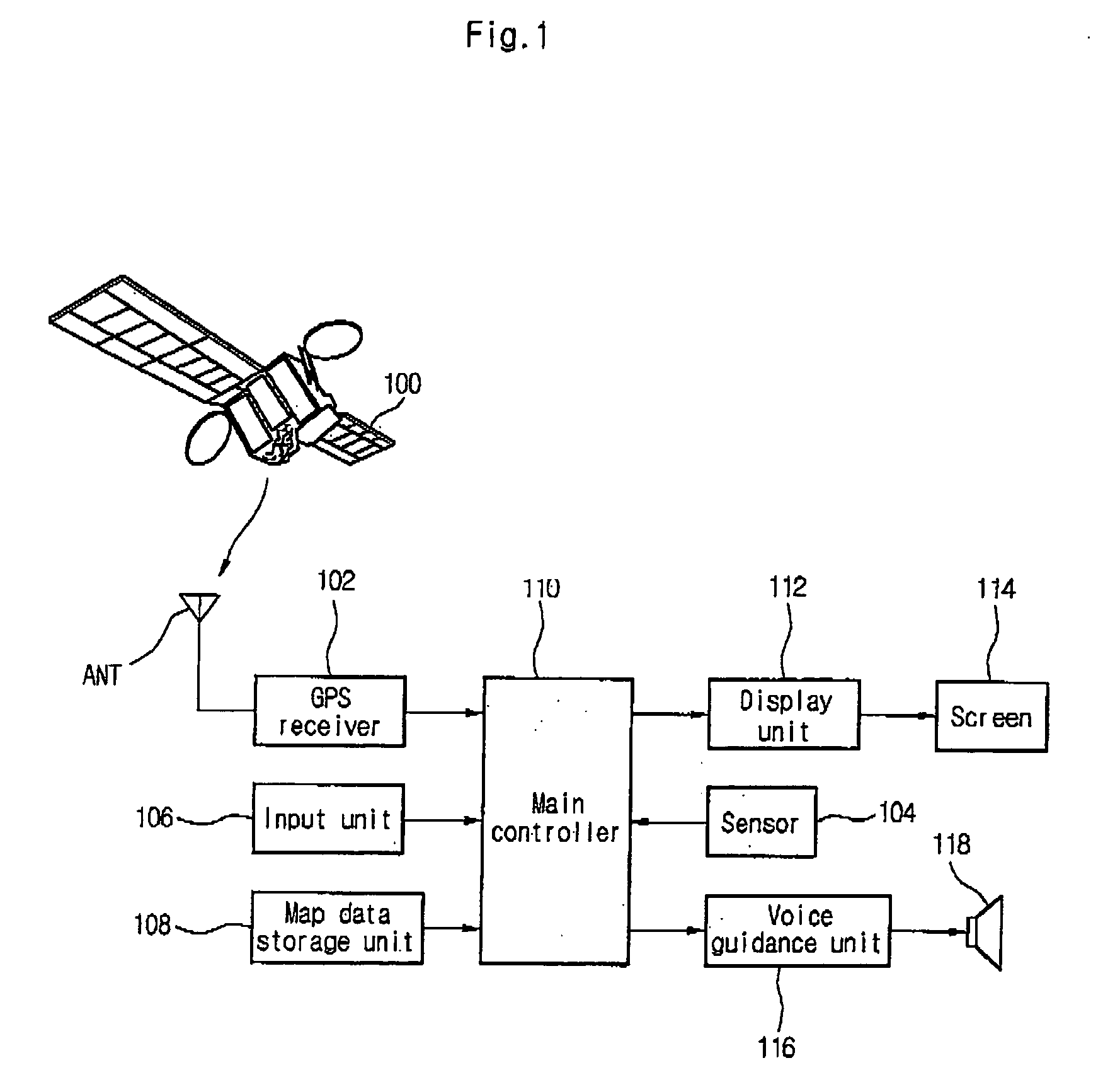

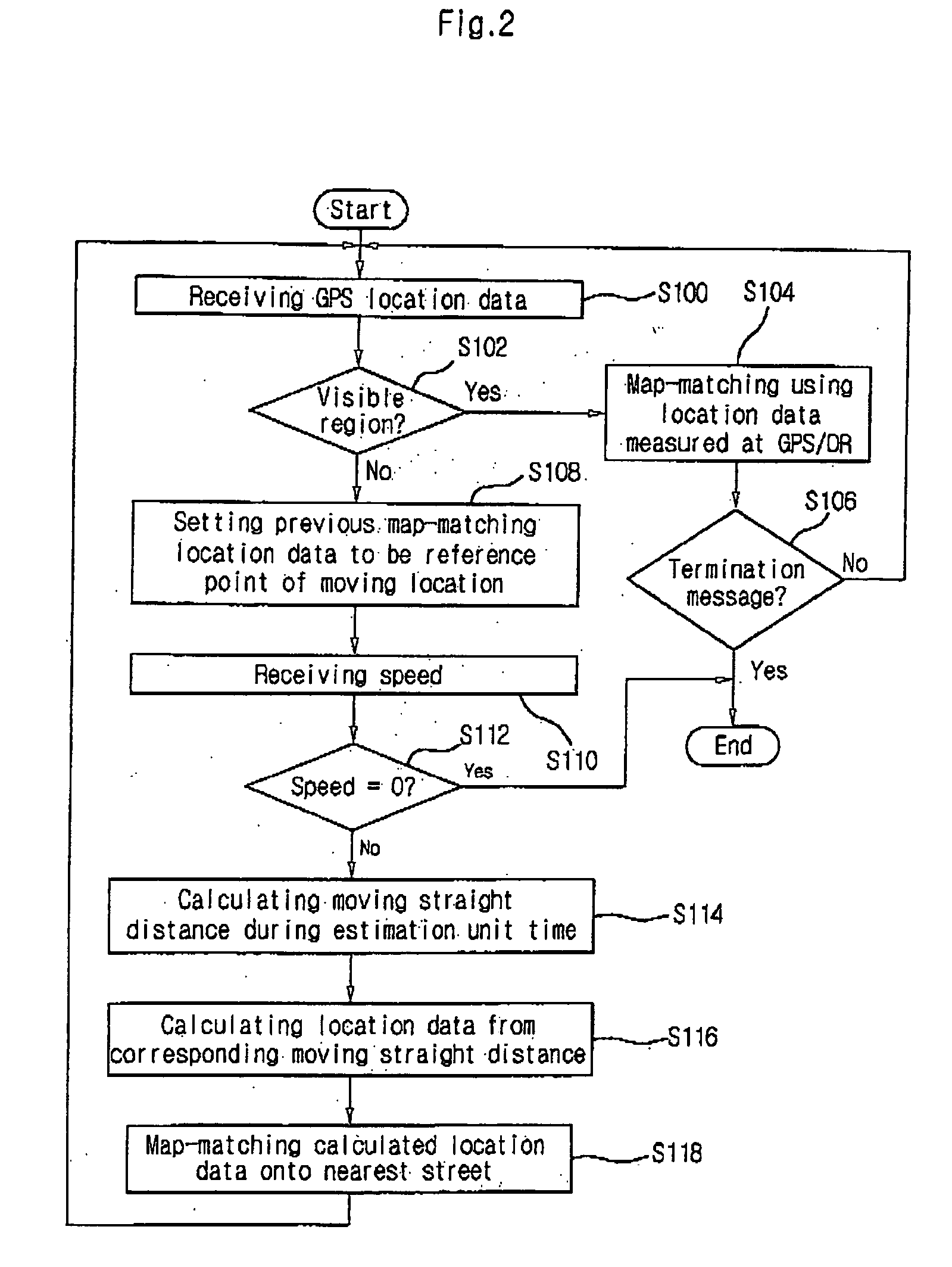

Method for estimating location of moving object in navigation system

Inactive | US20040073364A1 | Accurate estimate | Instruments for road network navigation | Road vehicles traffic control | Virtual position | Navigation system

The present invention relates to a method for estimating the location of a moving object in a navigation system, capable of accurately estimating the object's location in a GPS shadow area so that navigation service can be provided. The method includes the steps of: (a) receiving GPS location data from a moving object; (b) determining a GPS shadow area using the received GPS location data; (c) when the moving object is in a GPS shadow area, calculating the straight-line distance the object has moved with reference to the last GPS location data from a visible region; (d) calculating virtual location data using the calculated straight-line distance; and (e) calculating the estimated location as the position on a digital numeric map nearest to the virtual location data, and performing map-matching to provide a navigation service.

Owner:LG ELECTRONICS INC
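
Steps (b) through (e) of the abstract above can be sketched as follows. This is a simplified illustration under several assumptions that the abstract does not state: positions are in a local planar frame in metres, the shadow test is a minimum satellite count, the straight-line distance is speed multiplied by elapsed time along the last known heading, and the map is a single road polyline. All function and field names are hypothetical.

```python
import math


def in_shadow(fix, min_satellites=4):
    """Step (b): treat a missing or weak fix as a GPS shadow area."""
    return fix is None or fix["satellites"] < min_satellites


def virtual_location(last_fix, speed_mps, elapsed_s):
    """Steps (c) and (d): project straight ahead from the last good fix."""
    distance = speed_mps * elapsed_s          # assumed straight-line travel
    heading = last_fix["heading_rad"]         # measured from the +x axis
    return (last_fix["x"] + distance * math.cos(heading),
            last_fix["y"] + distance * math.sin(heading))


def nearest_on_segment(p, a, b):
    """Closest point to p on the segment from a to b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return a
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))                 # stay within the segment
    return (a[0] + t * dx, a[1] + t * dy)


def map_match(p, road):
    """Step (e): snap p to the nearest point on a road polyline."""
    candidates = [nearest_on_segment(p, a, b)
                  for a, b in zip(road, road[1:])]
    return min(candidates, key=lambda q: math.dist(p, q))


last_fix = {"x": 0.0, "y": 0.0, "heading_rad": 0.0, "satellites": 7}
road = [(0.0, 2.0), (100.0, 2.0)]

current_fix = None  # no GPS data, for example inside a tunnel
if in_shadow(current_fix):
    estimate = map_match(virtual_location(last_fix, 10.0, 5.0), road)
```

A production system would more likely work in geodetic coordinates and take the travelled distance from an odometer or speed sensor, but the order of operations would be the same.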

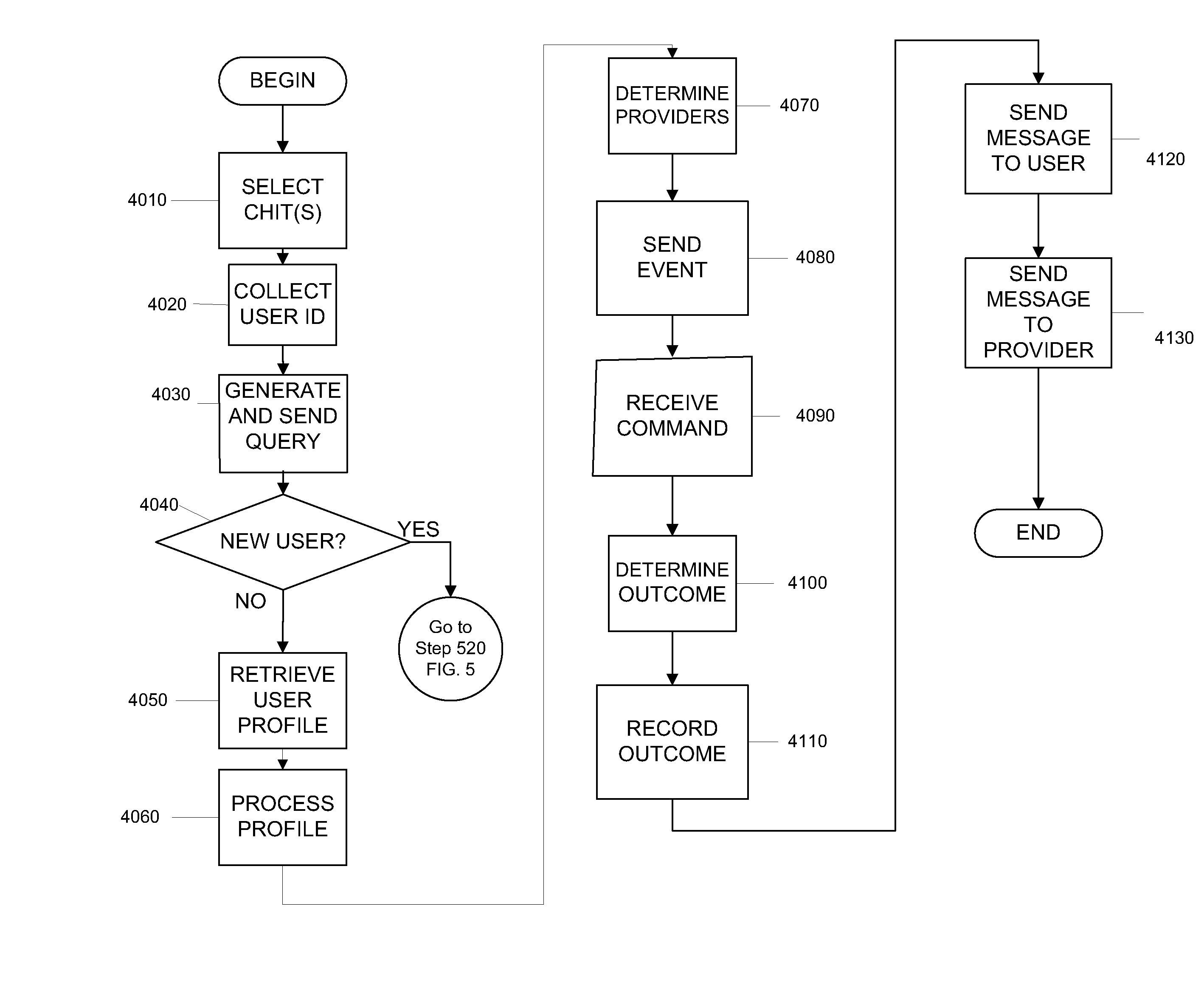

System, program and method for experientially inducing user activity

A unified experiential multimedia inducement and entertainment system, computer program and method for providing broadcasts of multimedia content from a plurality of providers to one or more users. The system, computer program and method provide experience-based entertainment and inducement to individual users over one or more diverse communications media, inducing the users to visit, frequent, or stay longer at a provider's physical or virtual location.

Owner:GREEN JERMON D


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com