3,230 results for "Vision sensor" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

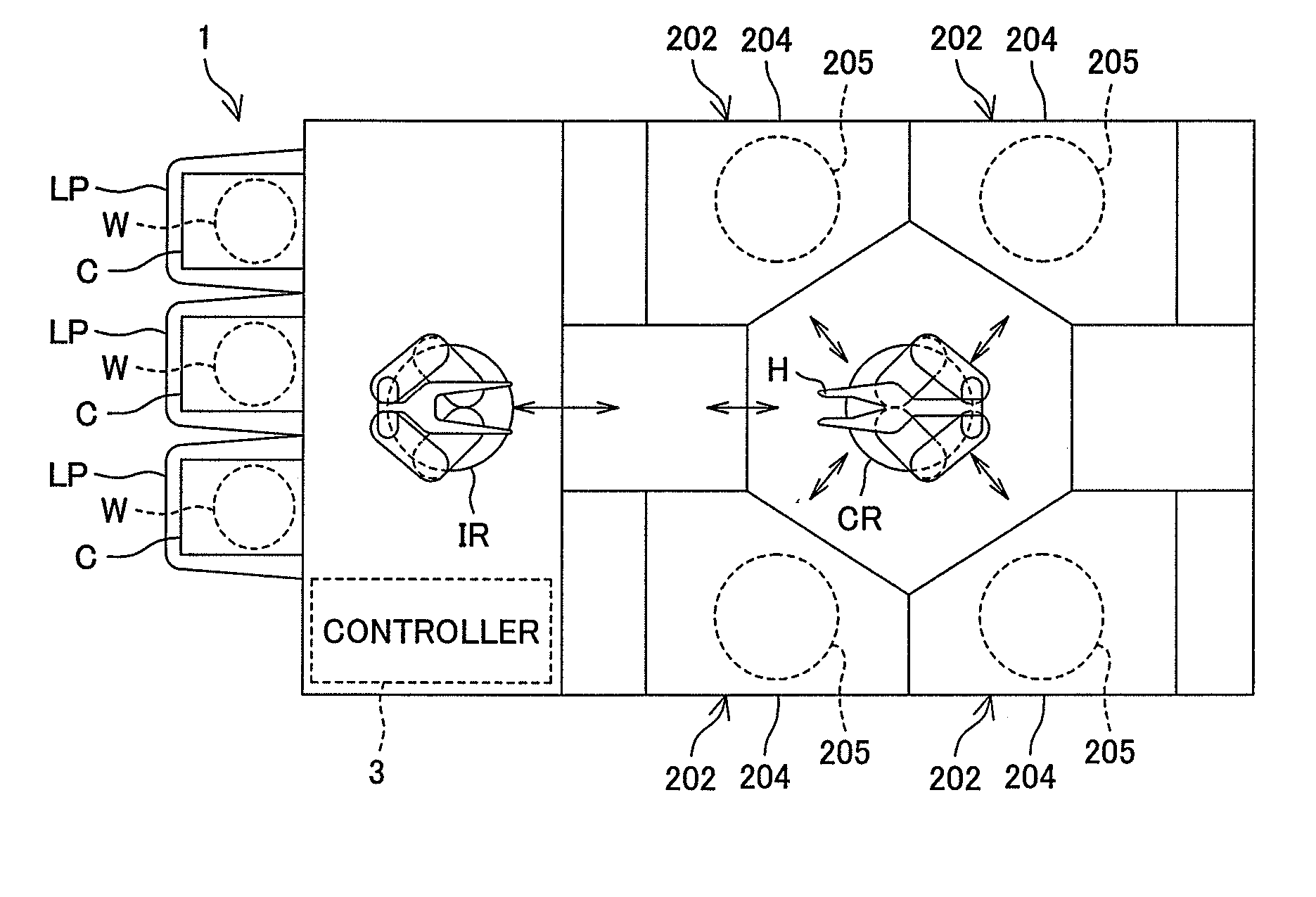

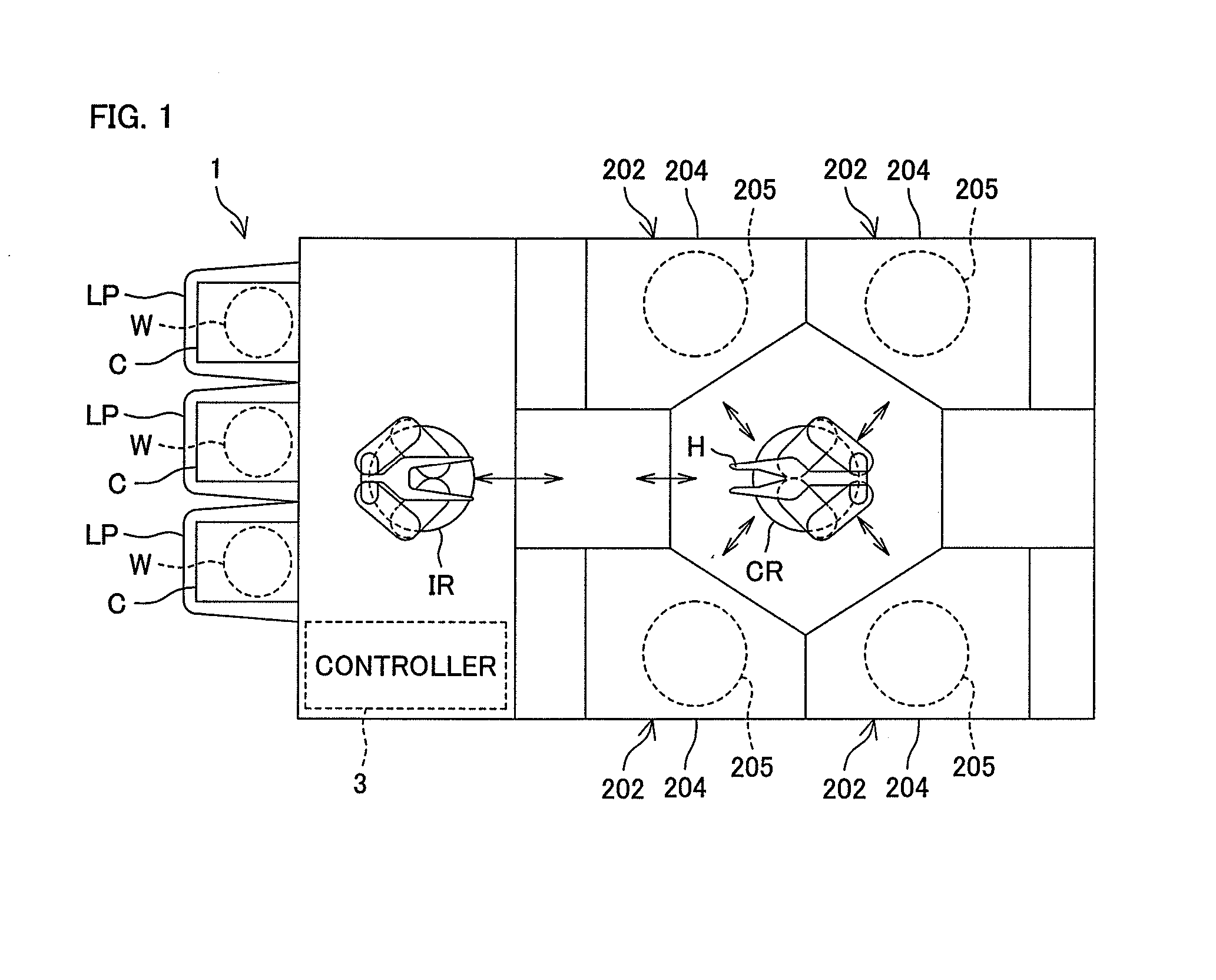

Substrate processing apparatus and substrate processing method

Inactive | US20150270146A1 | Supply is stopped; Dry surface; Semiconductor/solid-state device testing/measurement; Semiconductor/solid-state device manufacturing; Organic solvent; Engineering

In parallel with a substrate heating step, a liquid surface sensor is used to monitor the raising of an IPA liquid film. An organic solvent removing step is started in response to the raising of the IPA liquid film over the upper surface of the substrate. At the end of the organic solvent removing step, a visual sensor is used to determine whether or not IPA droplets remain on the upper surface of the substrate.

Owner:DAINIPPON SCREEN MTG CO LTD

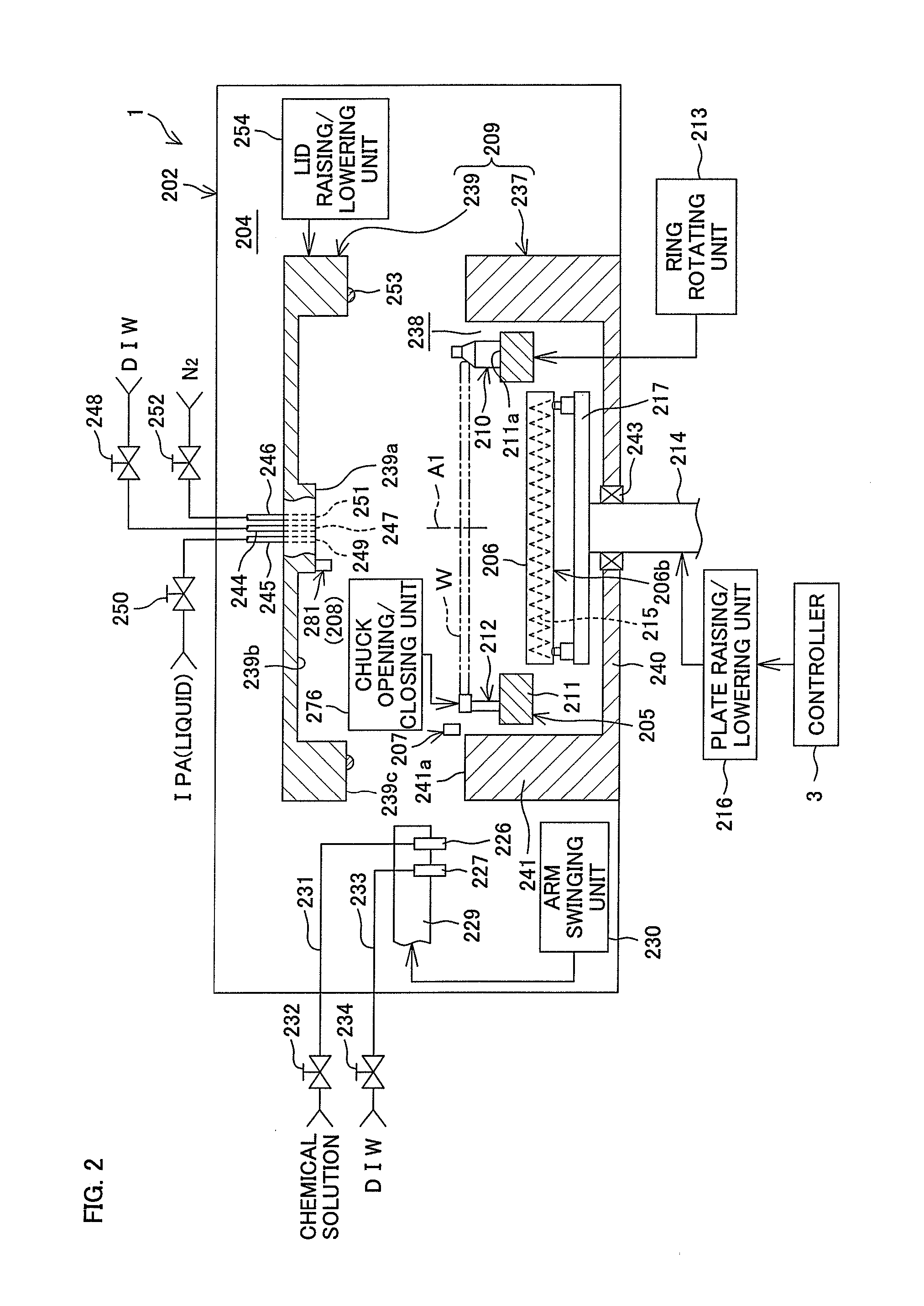

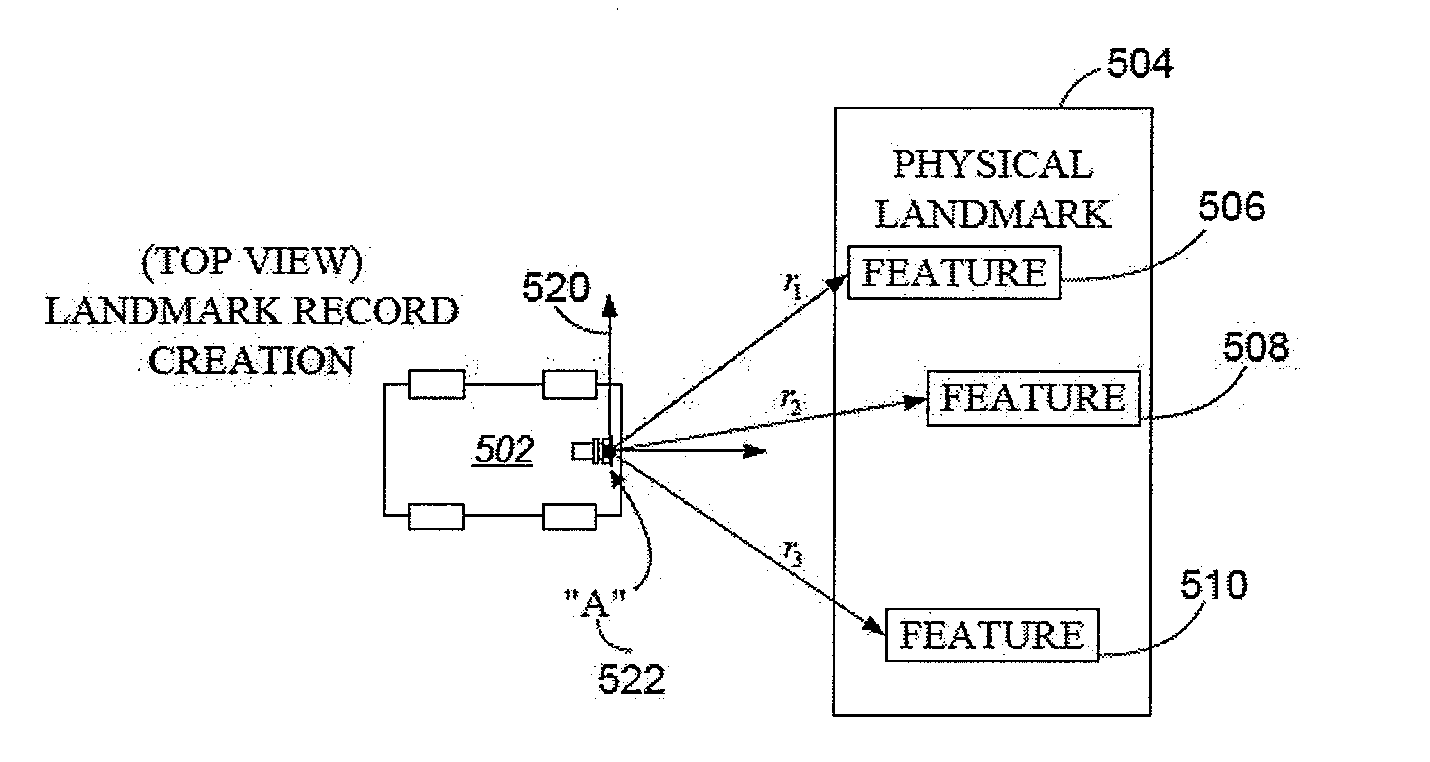

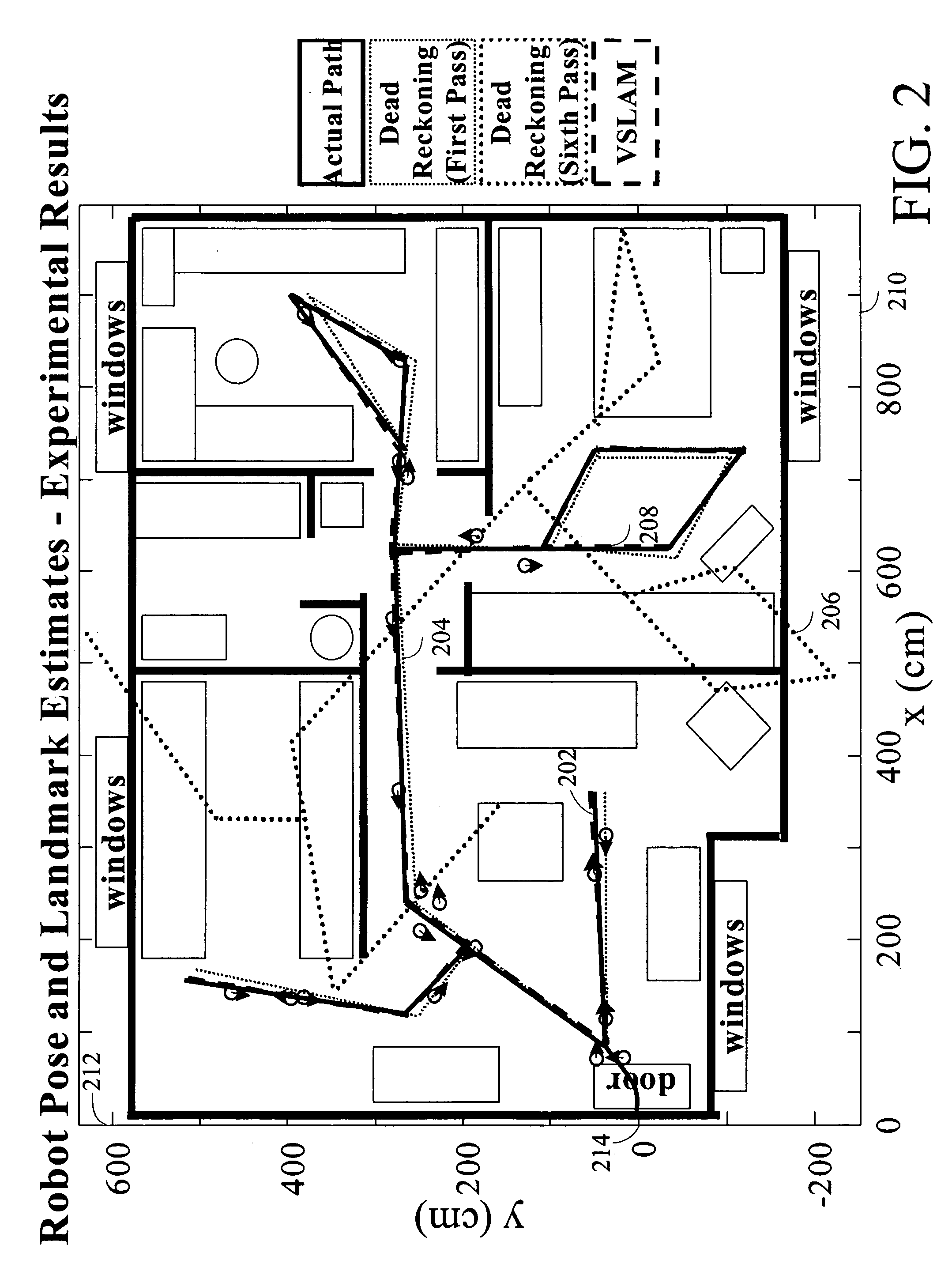

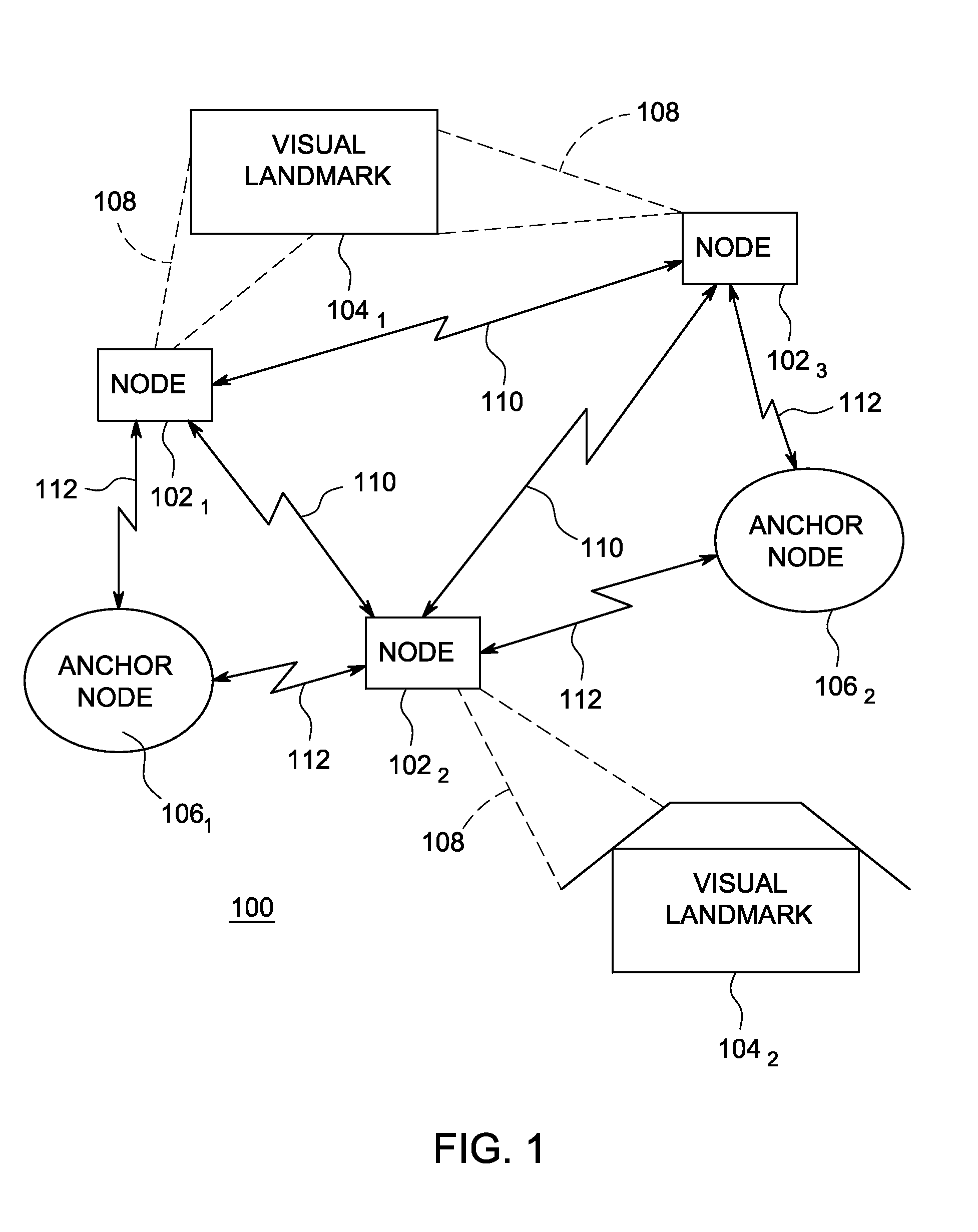

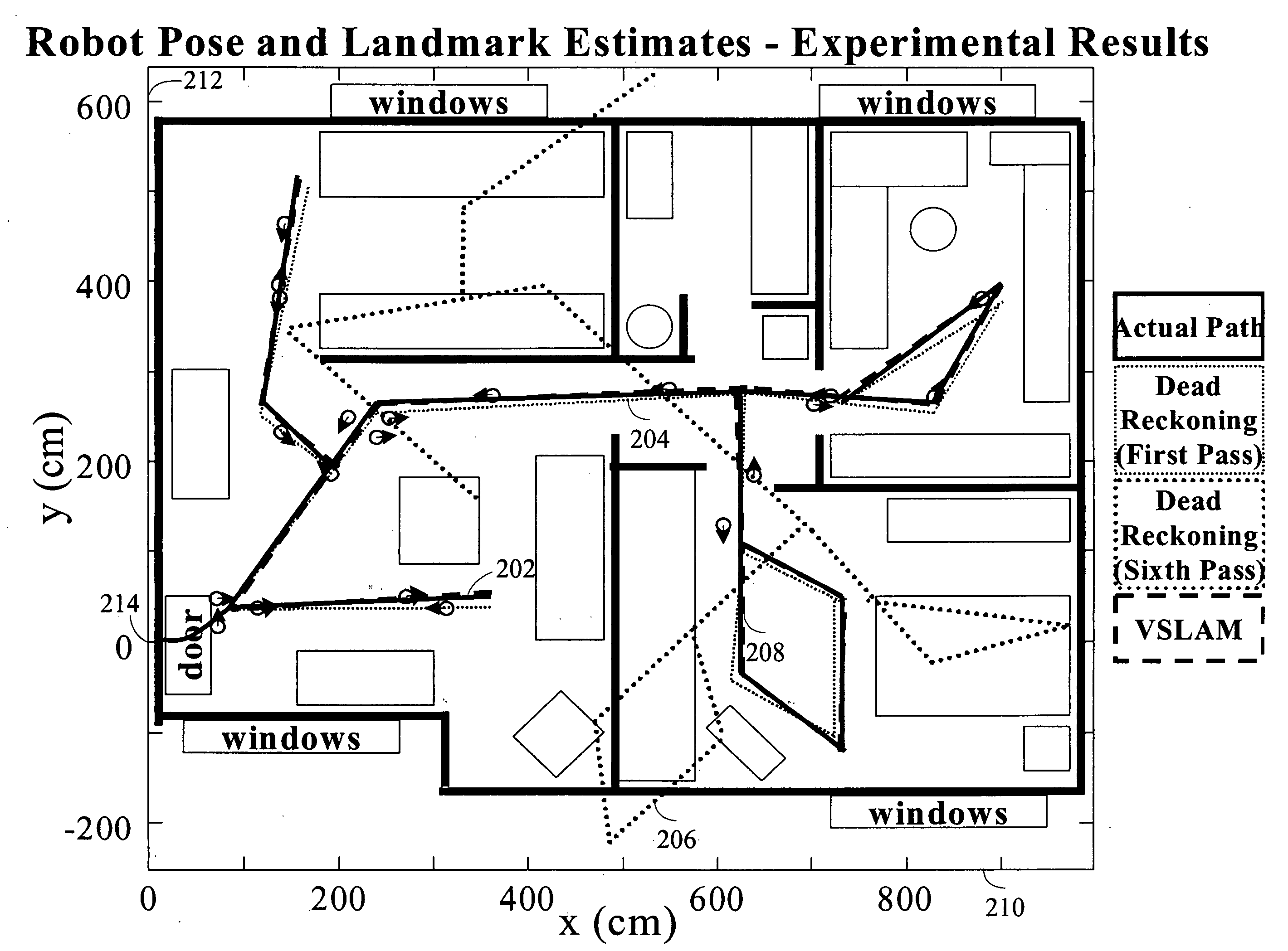

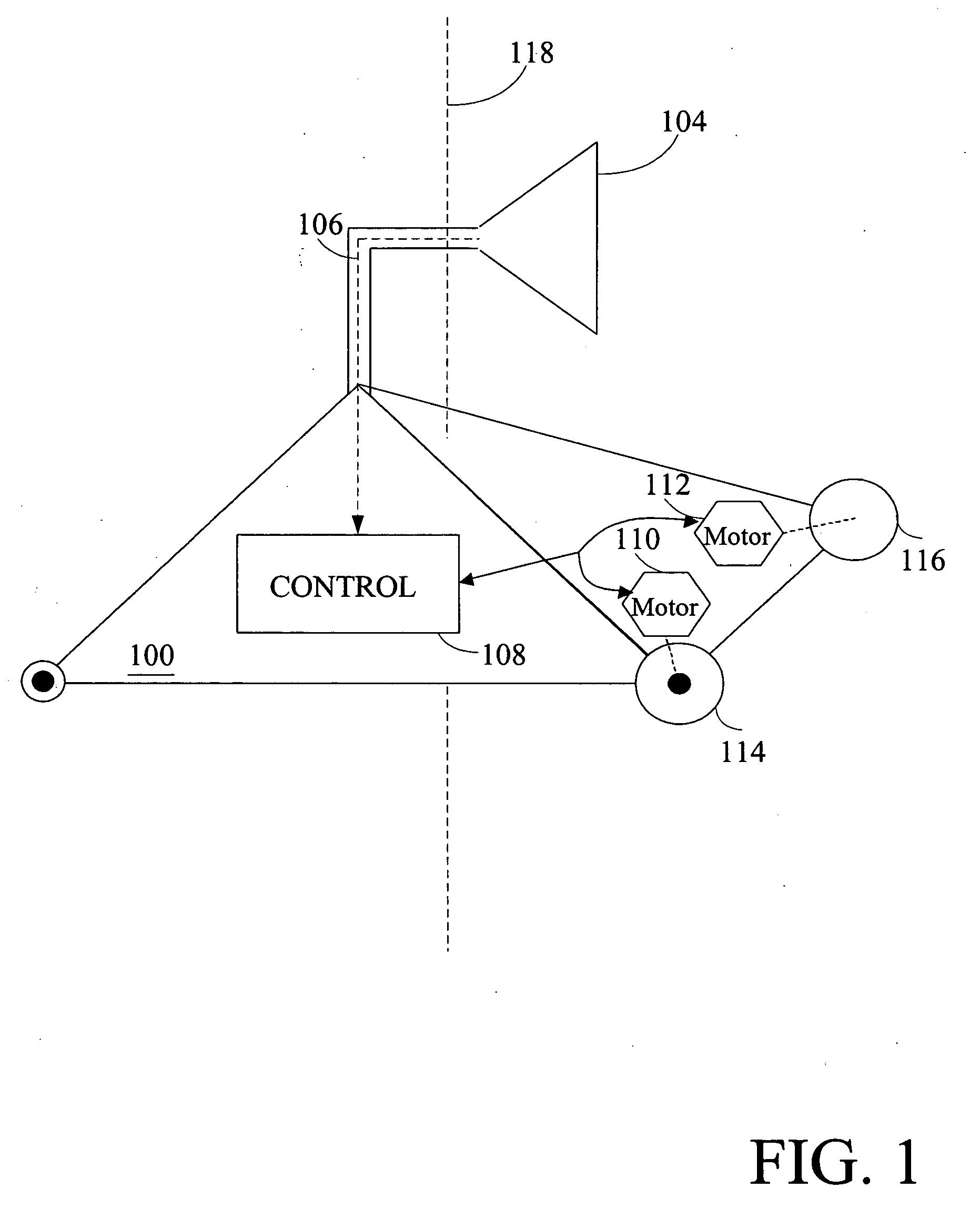

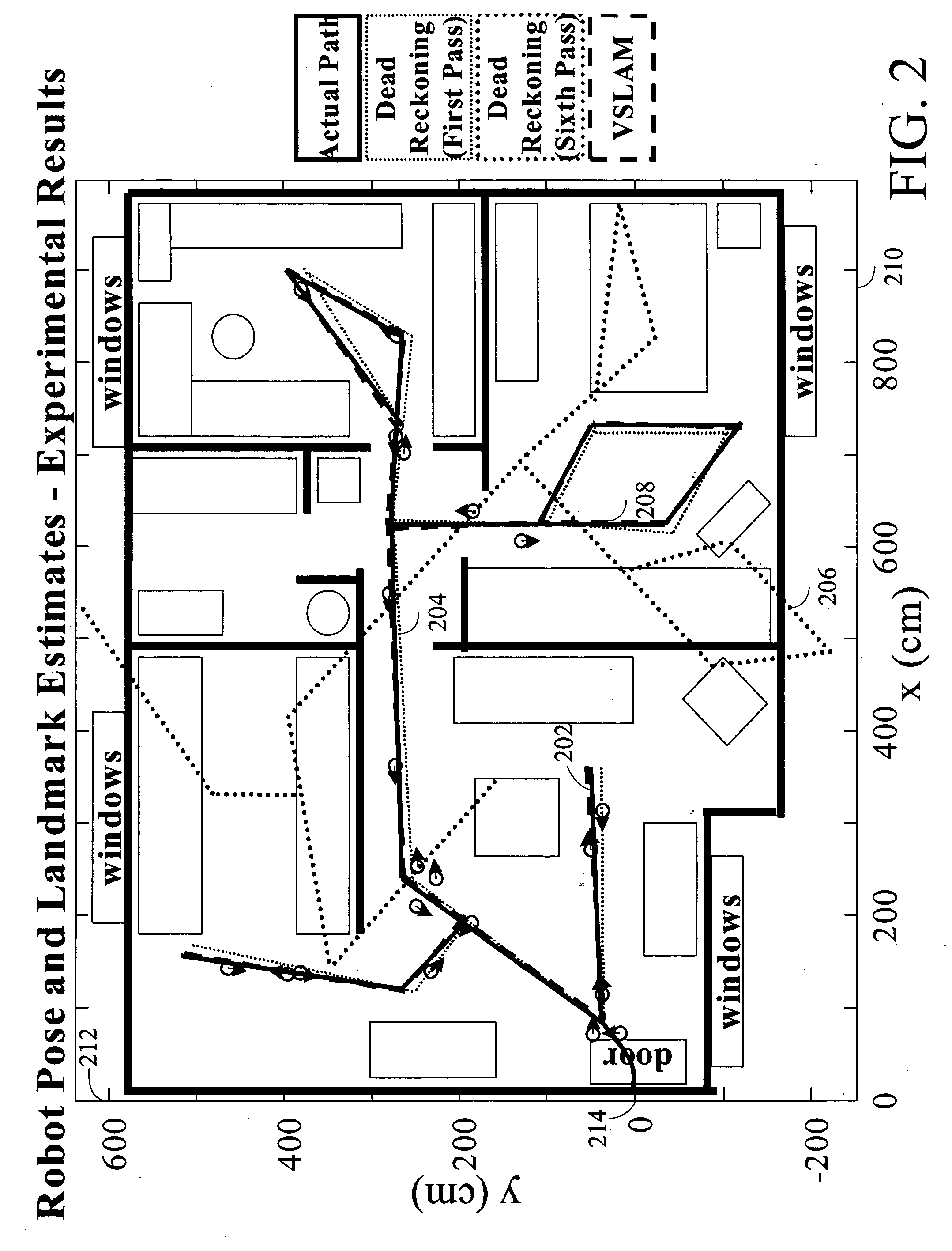

Systems and methods for VSLAM optimization

Active | US20120121161A1 | Database querying; Character and pattern recognition; Simultaneous localization and mapping; Landmark matching

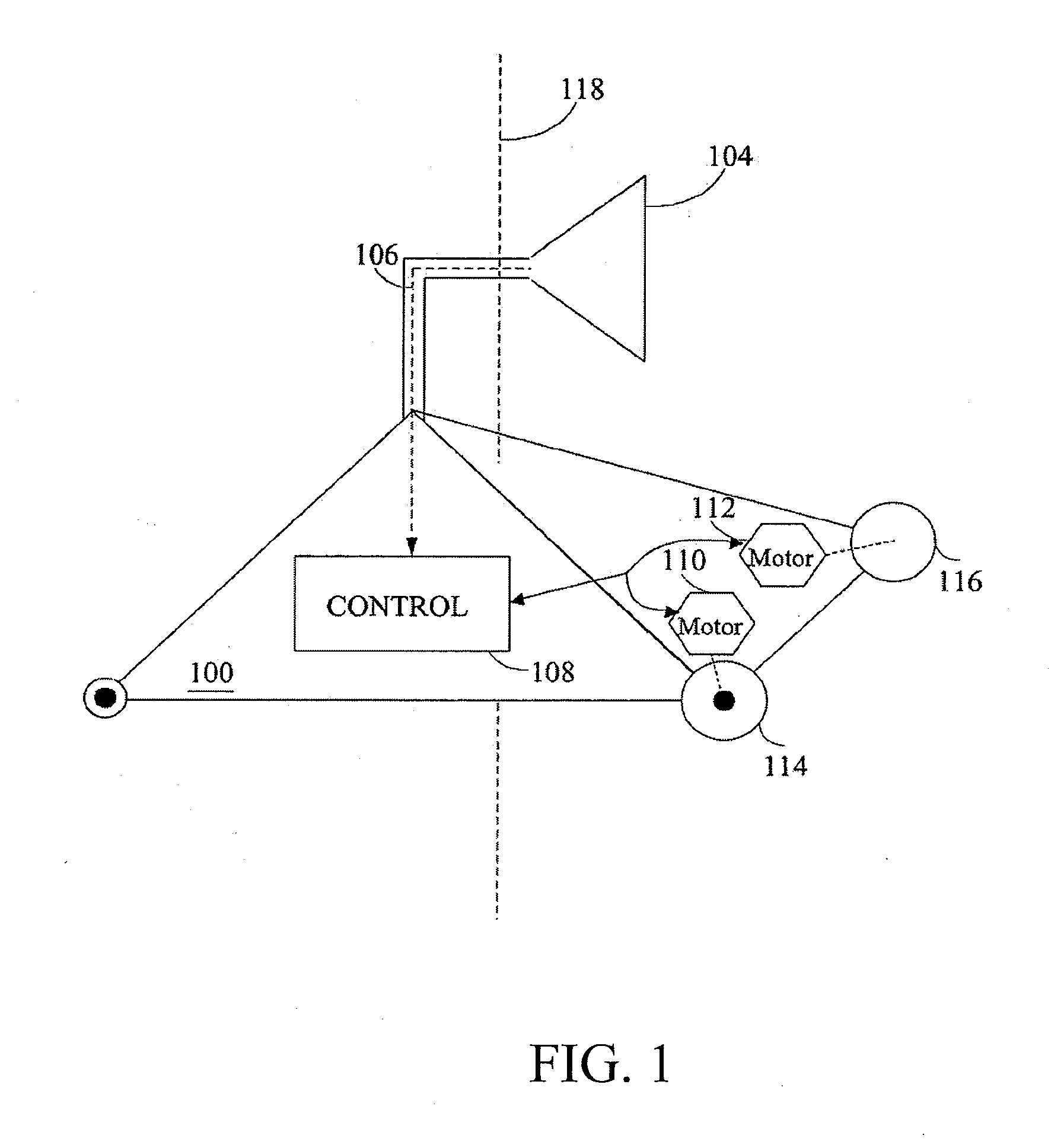

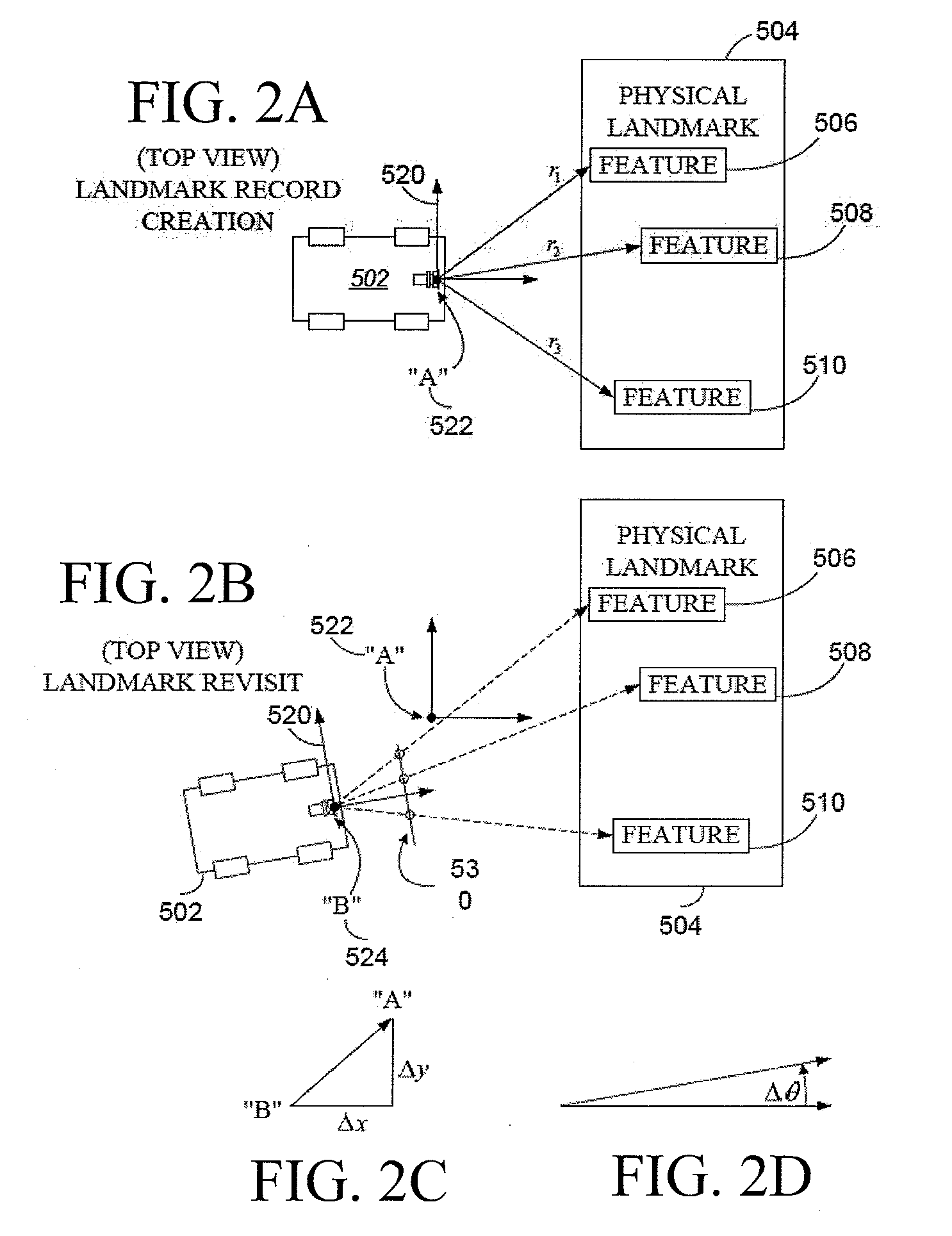

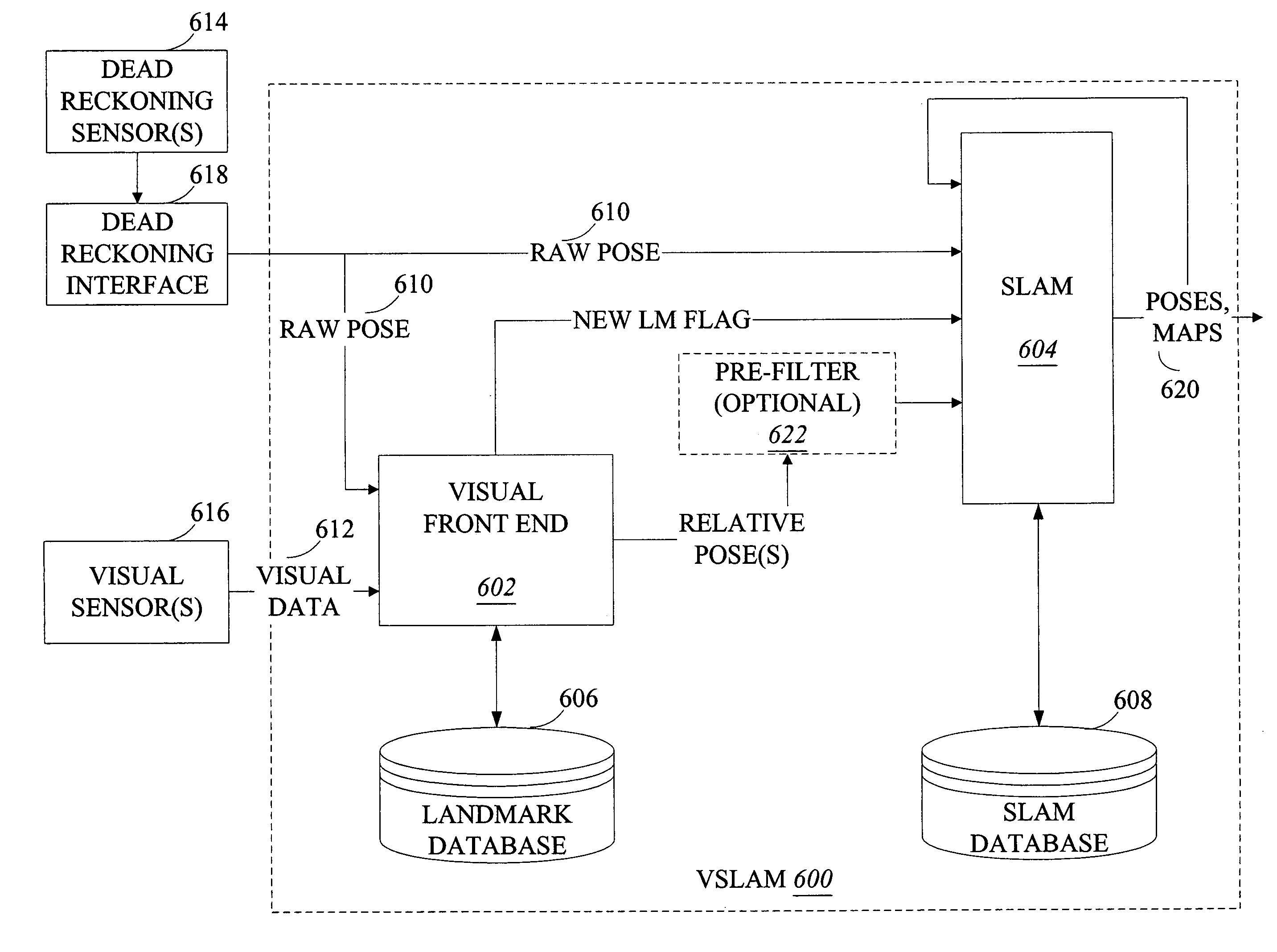

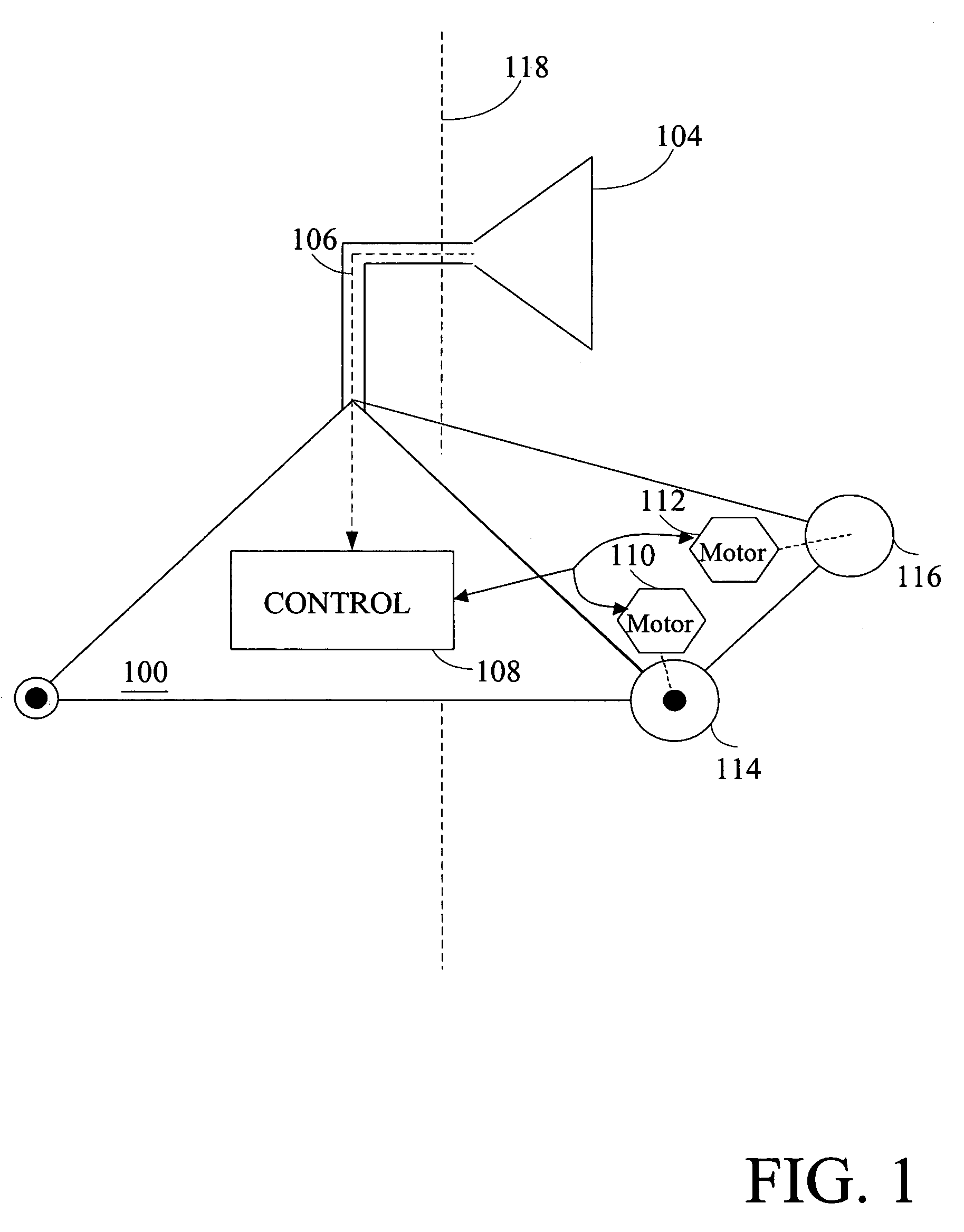

The invention is related to methods and apparatus that use a visual sensor and dead reckoning sensors to process Simultaneous Localization and Mapping (SLAM). These techniques can be used in robot navigation. Advantageously, such visual techniques can be used to autonomously generate and update a map. Unlike with laser rangefinders, the visual techniques are economically practical in a wide range of applications and can be used in relatively dynamic environments, such as environments in which people move. Certain embodiments contemplate improvements to the front-end processing in a SLAM-based system. Particularly, certain of these embodiments contemplate a novel landmark matching process. Certain of these embodiments also contemplate a novel landmark creation process. Certain embodiments contemplate improvements to the back-end processing in a SLAM-based system. Particularly, certain of these embodiments contemplate algorithms for modifying the SLAM graph in real-time to achieve a more efficient structure.

Owner:IROBOT CORP

Systems and methods for incrementally updating a pose of a mobile device calculated by visual simultaneous localization and mapping techniques

The invention is related to methods and apparatus that use a visual sensor and dead reckoning sensors to process Simultaneous Localization and Mapping (SLAM). These techniques can be used in robot navigation. Advantageously, such visual techniques can be used to autonomously generate and update a map. Unlike with laser rangefinders, the visual techniques are economically practical in a wide range of applications and can be used in relatively dynamic environments, such as environments in which people move. One embodiment further advantageously uses multiple particles to maintain multiple hypotheses with respect to localization and mapping. Further advantageously, one embodiment maintains the particles in a relatively computationally-efficient manner, thereby permitting the SLAM processes to be performed in software using relatively inexpensive microprocessor-based computer systems.

Owner:IROBOT CORP
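
The abstract above keeps multiple localization and mapping hypotheses as particles but does not say how the particle set is kept bounded. As a rough illustration only, a generic particle filter does this with a resampling step; the sketch below assumes systematic (low-variance) resampling, a standard technique not named in the patent, and `systematic_resample` is a hypothetical name.

```python
import random

def systematic_resample(particles, weights, rng=random):
    """Draw len(particles) particles with probability proportional to weights.

    Systematic (low-variance) resampling, assumed here for illustration:
    one random offset, then evenly spaced pointers over the cumulative weights.
    """
    n = len(particles)
    if n == 0 or len(weights) != n:
        raise ValueError("need one weight per particle")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    step = total / n
    offset = rng.uniform(0.0, step)
    resampled = []
    i = 0
    cumulative = weights[0]
    for k in range(n):
        pointer = offset + k * step
        # Advance to the particle whose cumulative-weight interval holds the pointer;
        # the bound on i guards against floating-point overshoot at the end.
        while pointer > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(particles[i])
    return resampled
```

High-weight pose hypotheses are duplicated and low-weight ones tend to drop out, which keeps the number of hypotheses fixed and the per-step cost predictable on modest hardware.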

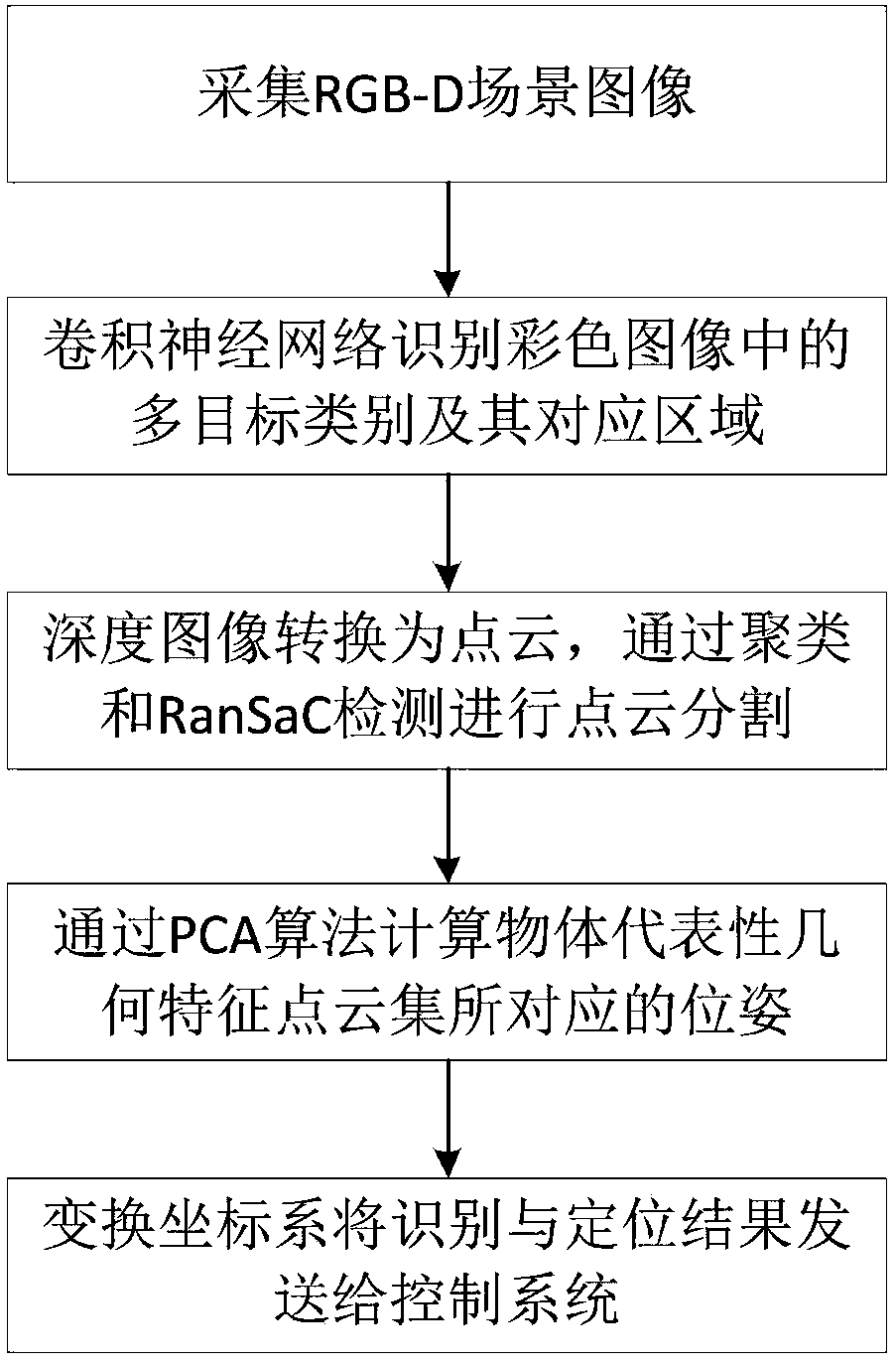

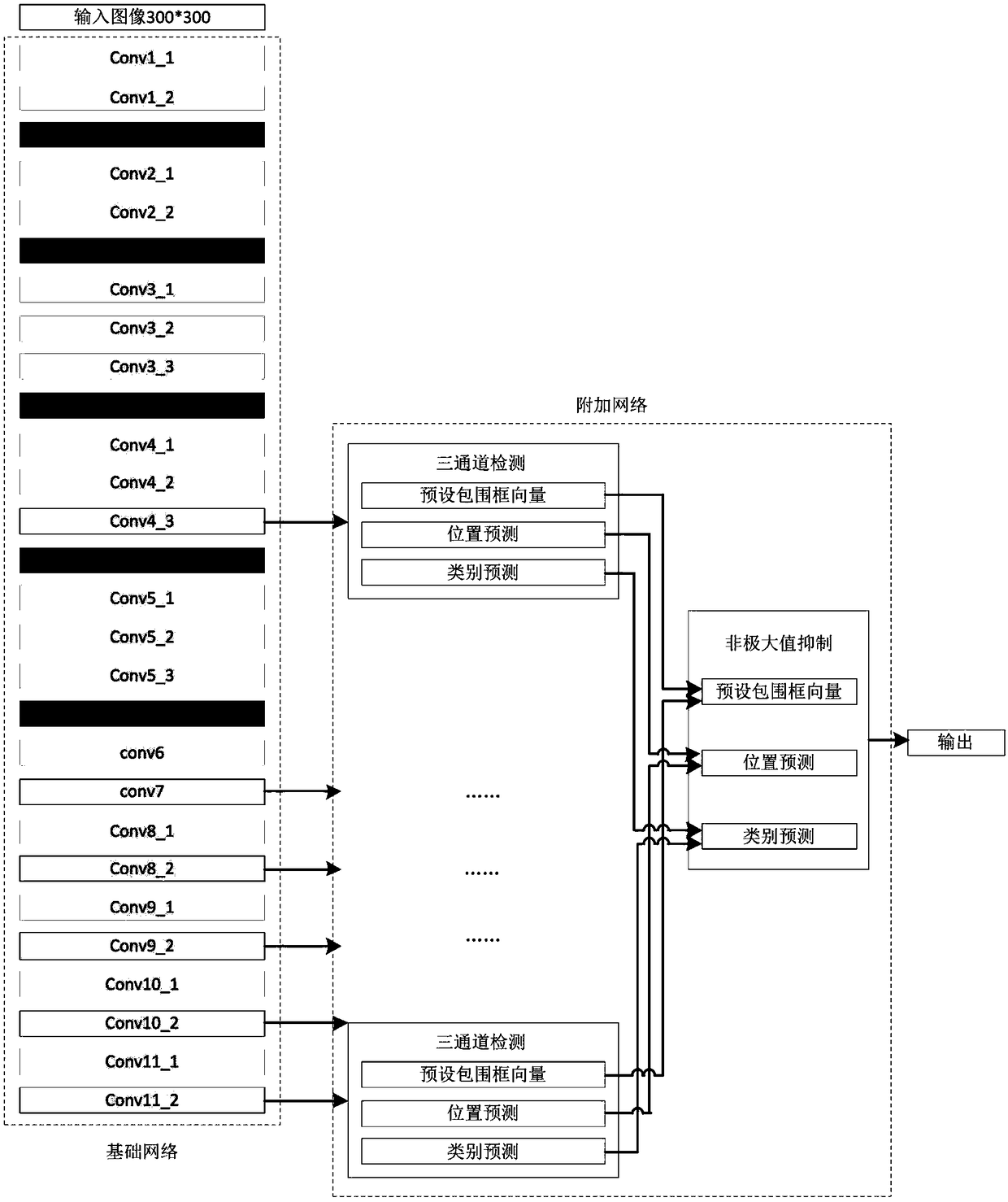

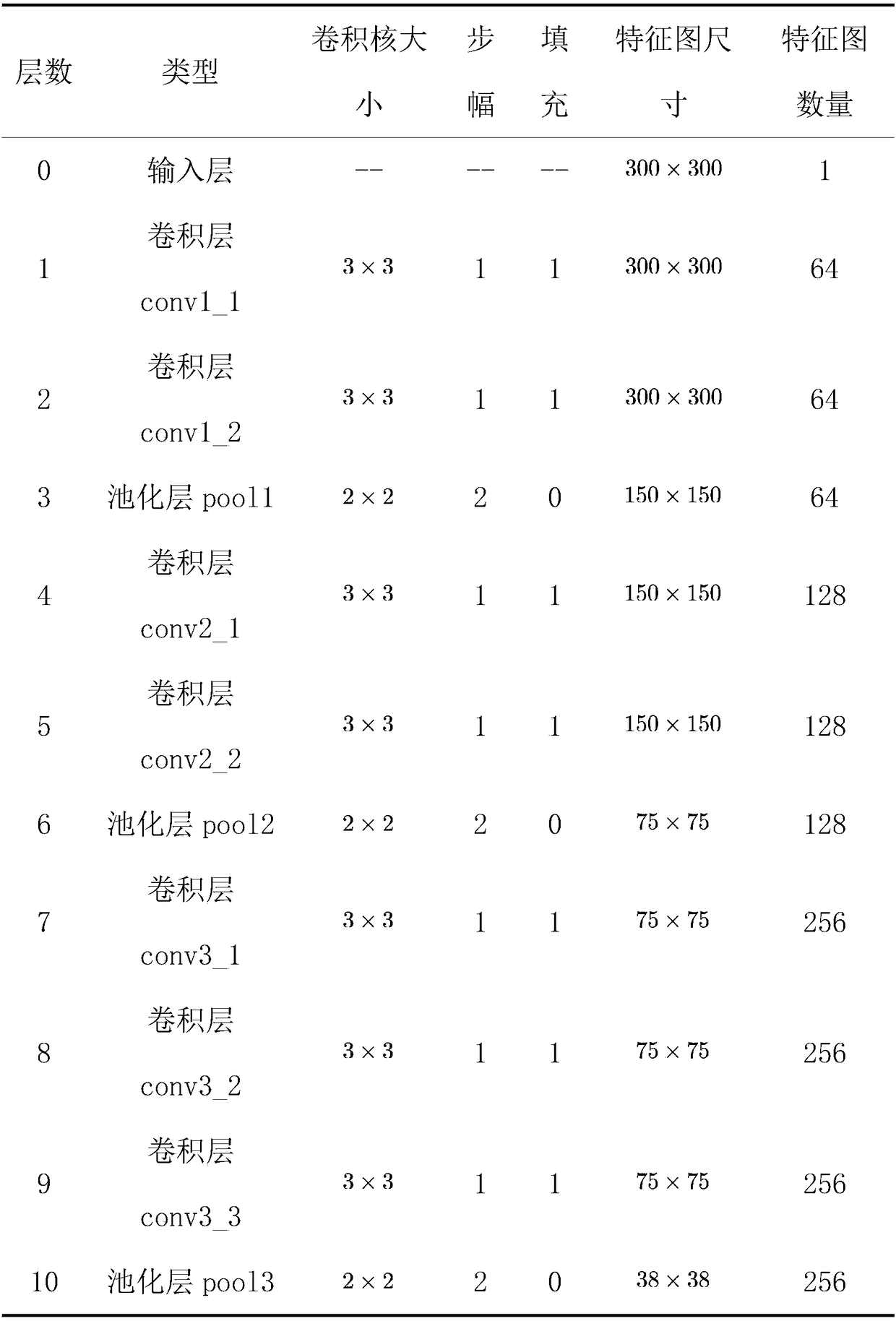

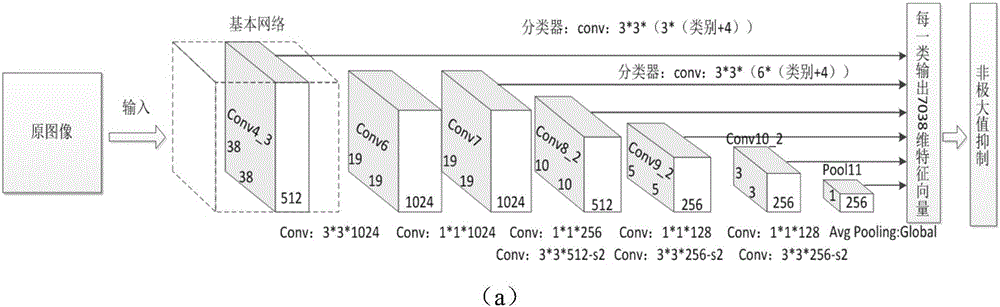

Visual recognition and positioning method for robot intelligent capture application

The invention relates to a visual recognition and positioning method for robot intelligent capture (grasping) applications. According to the method, an RGB-D scene image is collected; a supervised, trained deep convolutional neural network recognizes the category of each target in the color image and its corresponding position region; the pose state of the target is analyzed in combination with the depth image; the pose information needed by the controller is obtained through coordinate transformation; and visual recognition and positioning are completed. The method achieves both recognition and positioning with a single visual sensor, simplifying the existing target detection process and saving application cost. Because the deep convolutional neural network learns the image features, the method is highly robust to many kinds of environmental interference, such as random target placement, changing viewing angles, and illumination or background interference, and recognition and positioning accuracy under complicated working conditions is improved. In addition, the positioning method can obtain exact pose information on top of determining the spatial position distribution of objects, which supports strategy planning for intelligent grasping.

Owner:Hefei Hagong Huijian Intelligent Technology Co., Ltd. (合肥哈工慧拣智能科技有限公司)

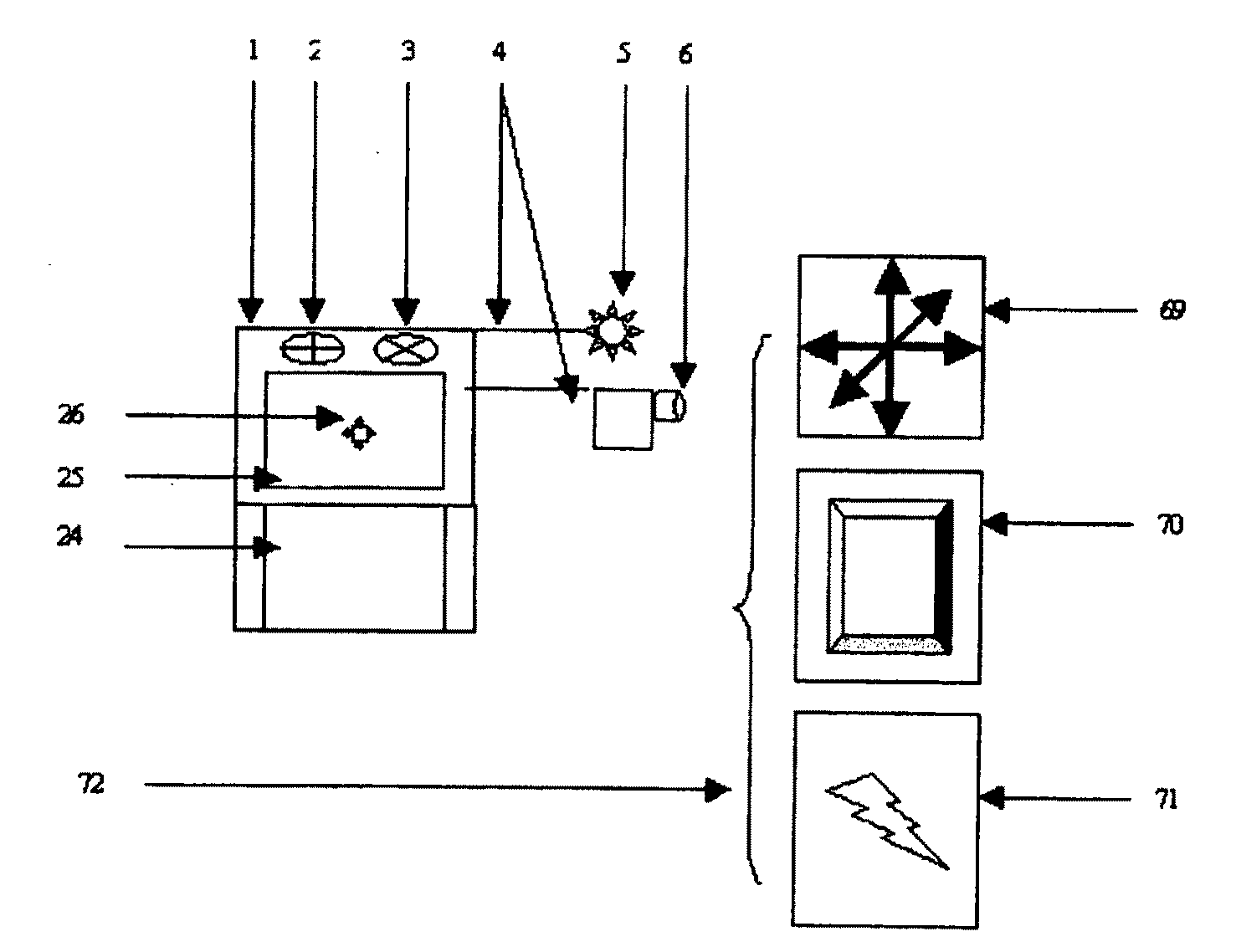

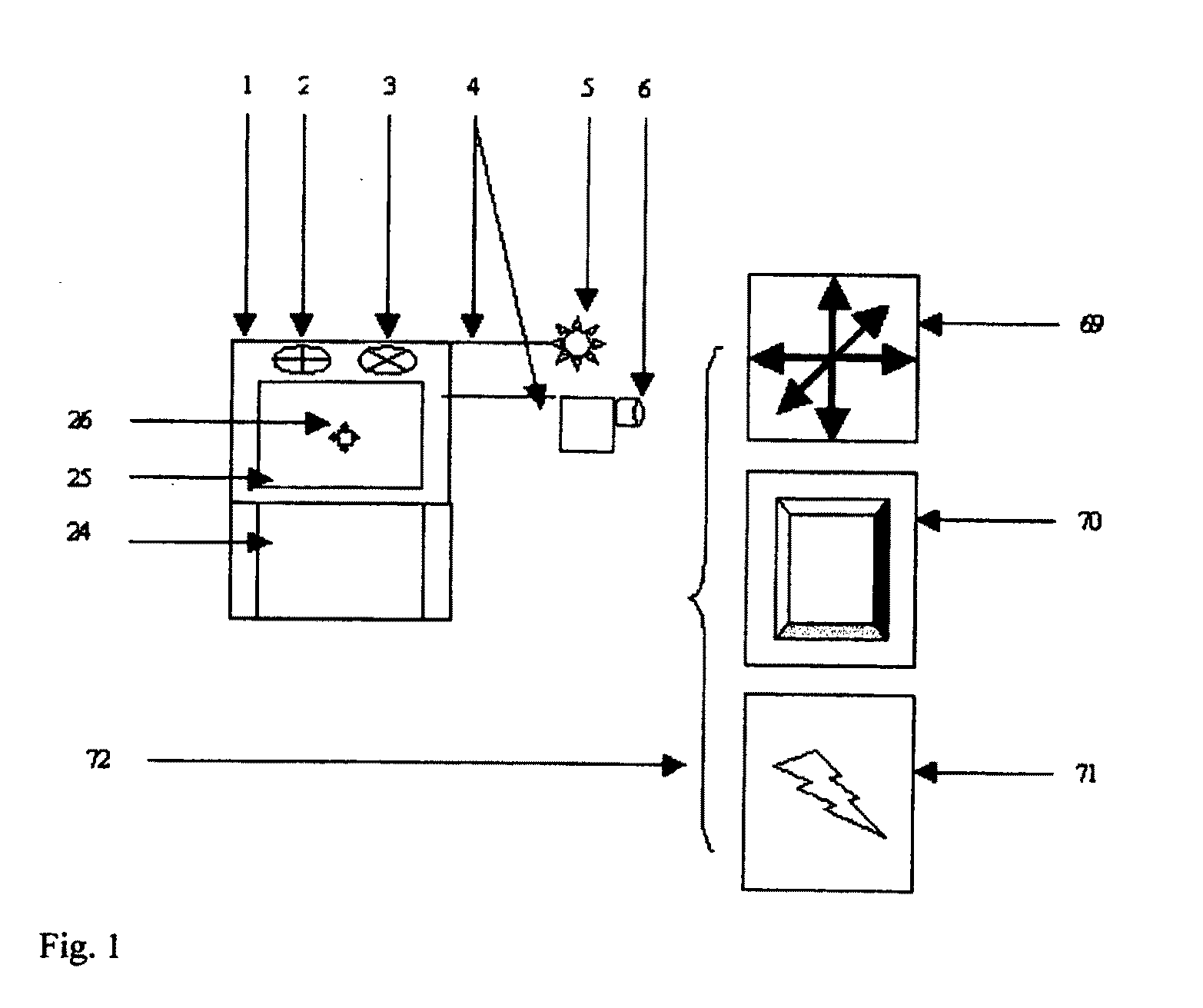

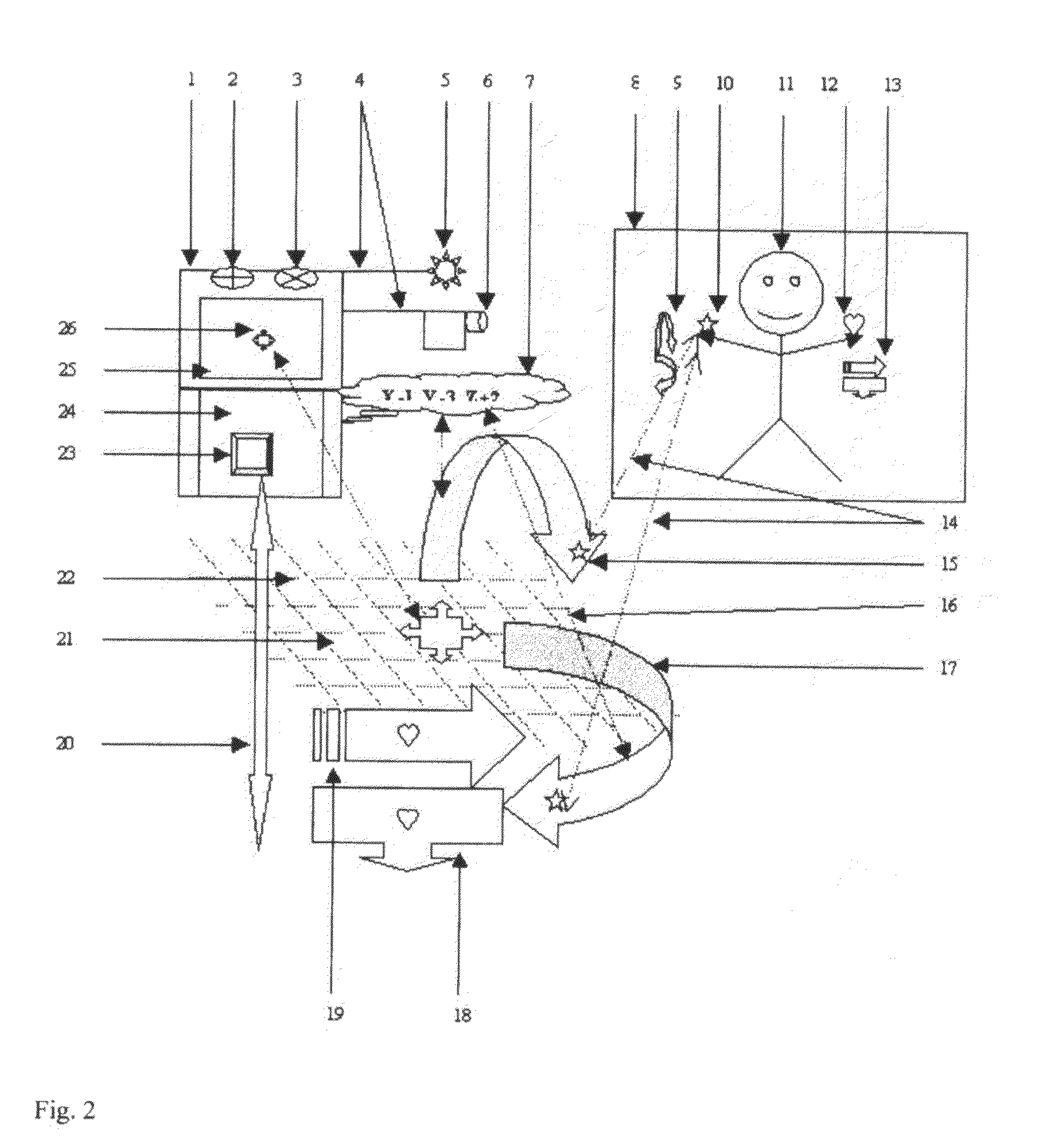

Intelligent robotic interface input device

Inactive | US20100103106A1 | Shorten the time; Save space; Programme control; Input/output for user-computer interaction; Virtual space; Vision sensor

A universal video computer-vision input robot provides a virtual-space mouse, keyboard and control panel. Its computer system uses video vision camera sensors and logical vision-sensor programming as trainable computer vision that tracks object movements along the X, Y and Z dimensions, recognizing users' commands from their hand gestures and/or from combined actions of enhanced symbols and colored objects, so that users can virtually input data and commands to operate computers and machines. The robot automatically calibrates the working space between the user and itself into a Space Mouse Zone, a Space Keyboard Zone and a Hand-Sign Language Zone. It automatically translates the coordinates of the user's combined hand-gesture actions at customizable puzzle-cell positions in the working space, maps them to its software mapping list for each puzzle-cell position definition, and converts these virtual-space hand and/or body gestures into data and commands for meaningful computer, machine and home-appliance operations.

Owner:CHIU HSIEN HSIANG

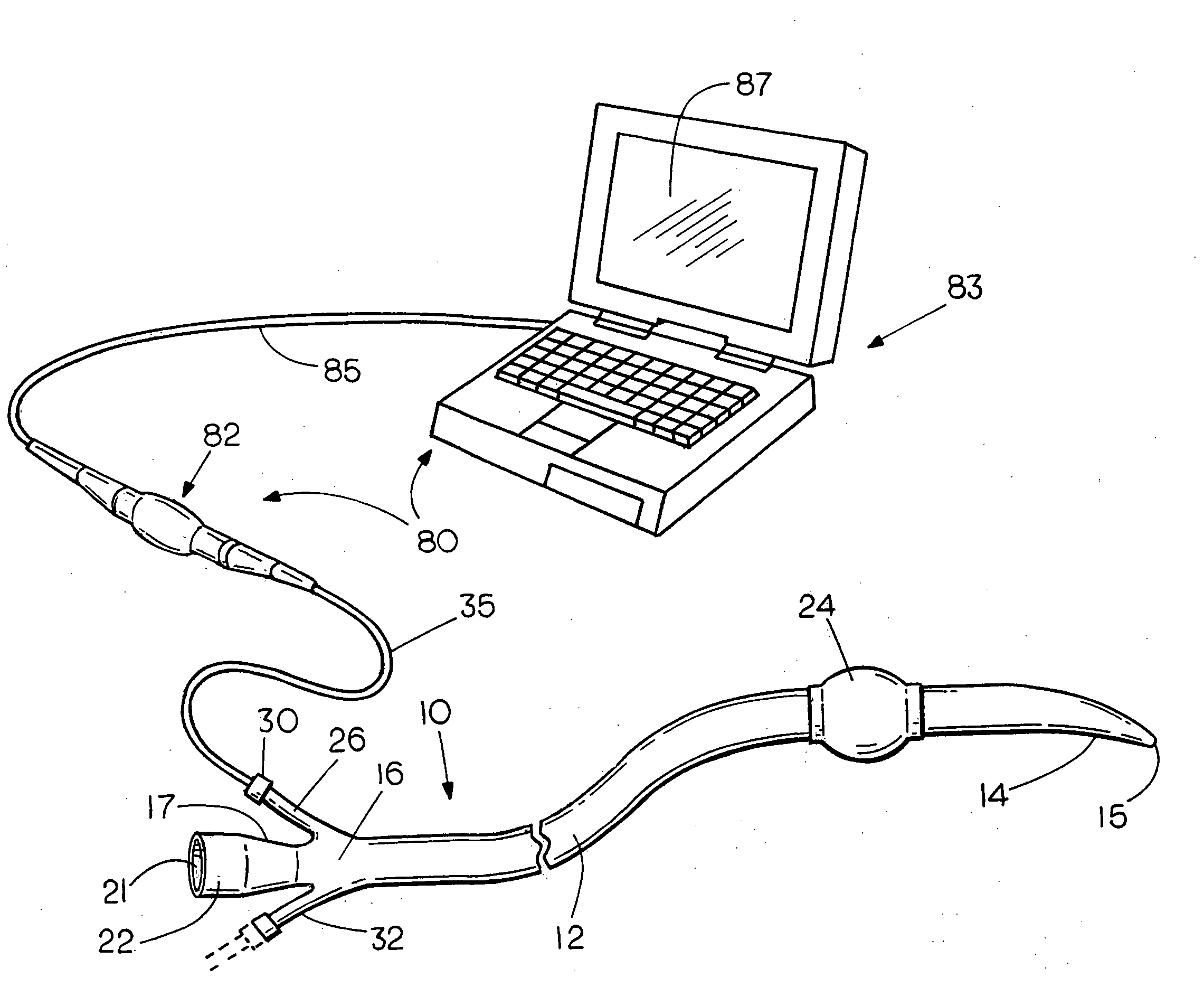

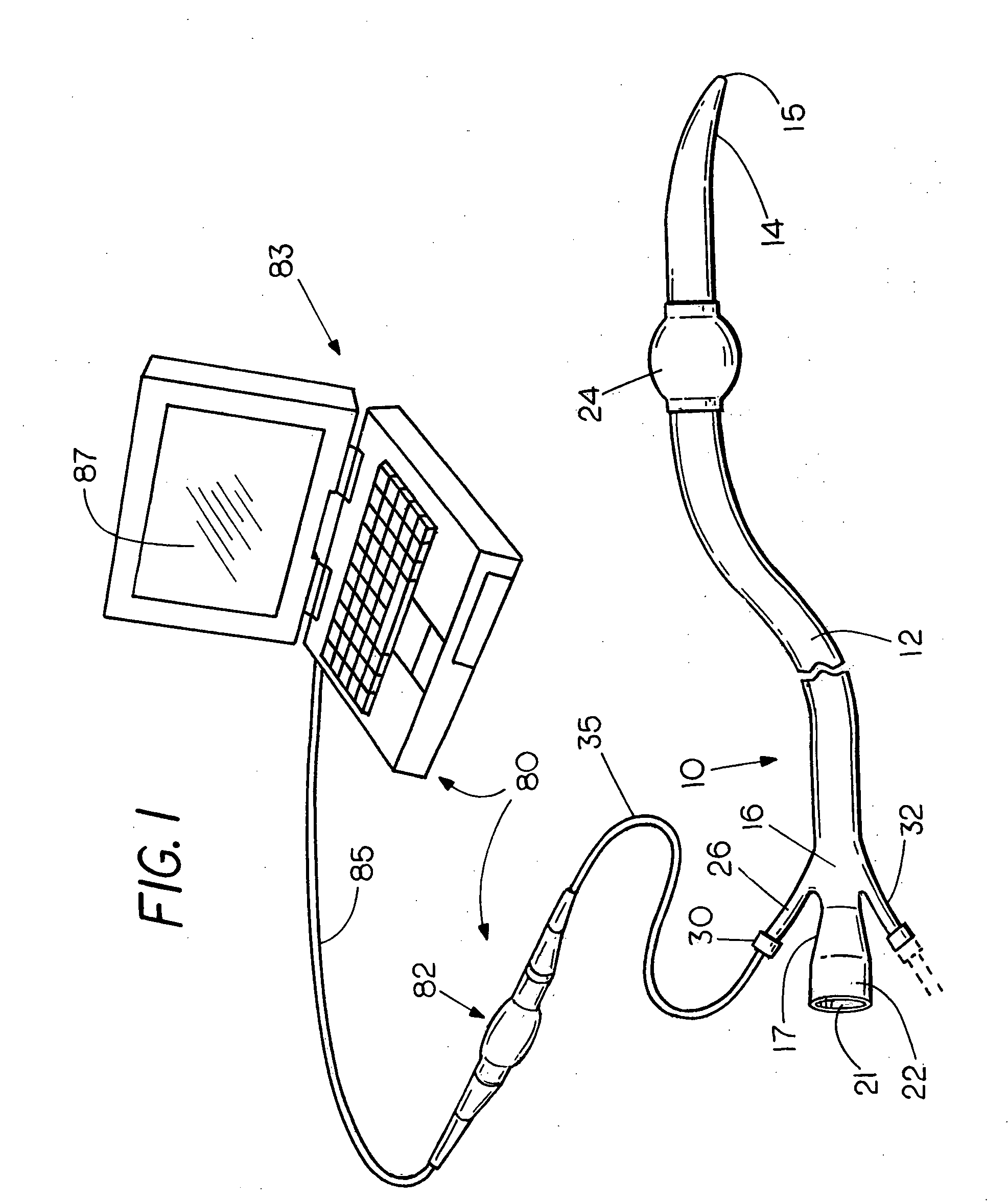

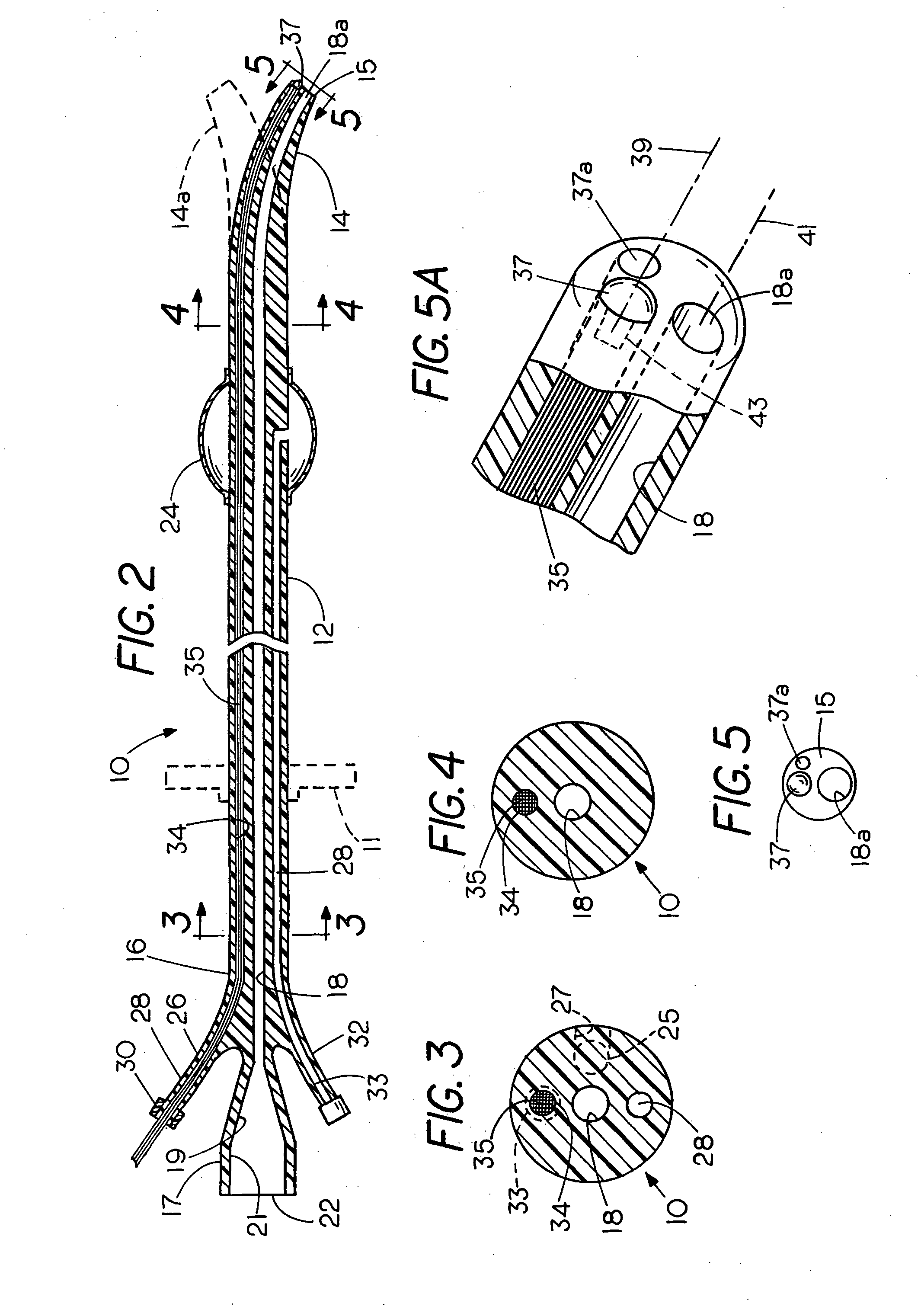

Flexible visually directed medical intubation instrument and method

Owner:PERCUVISION

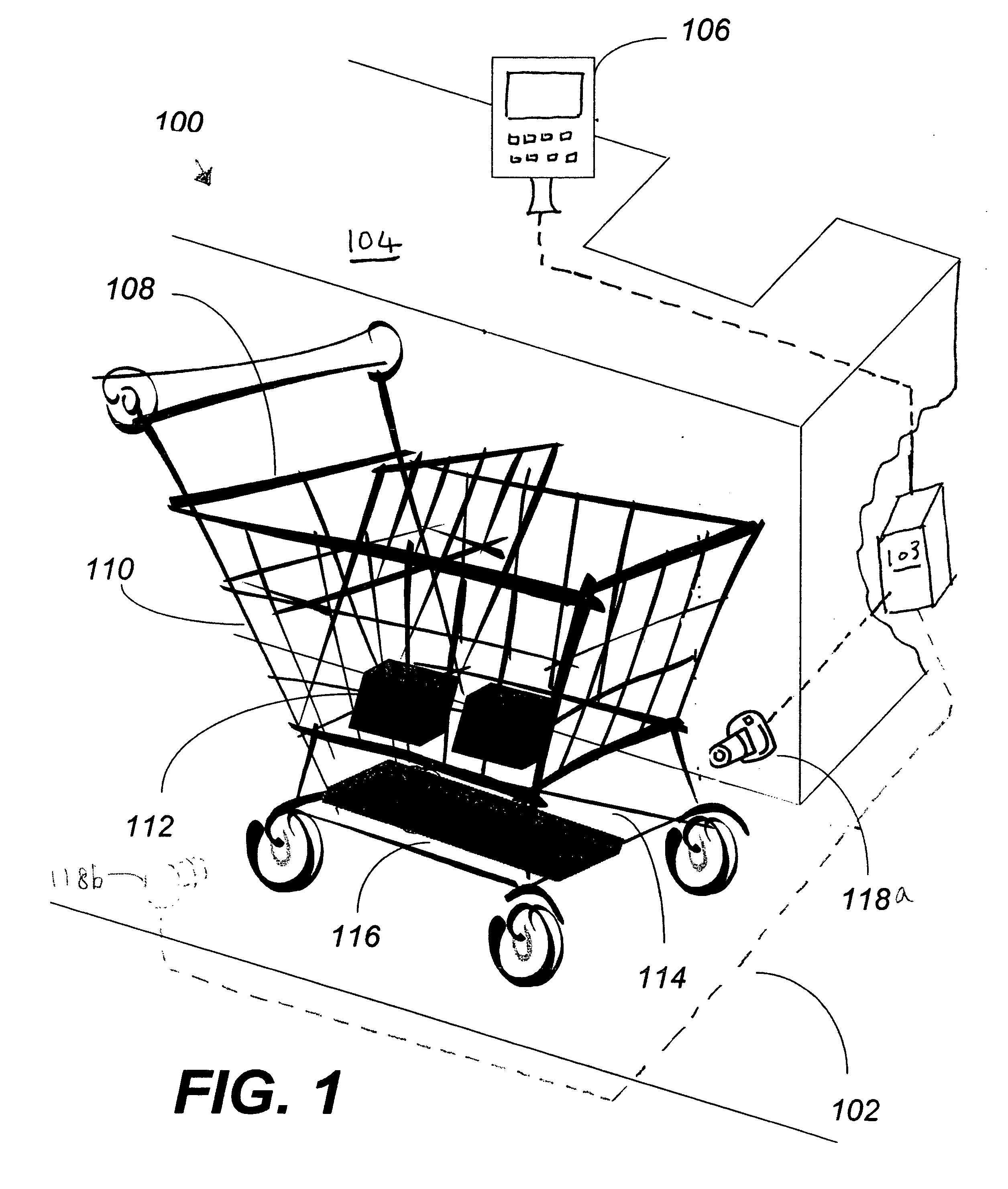

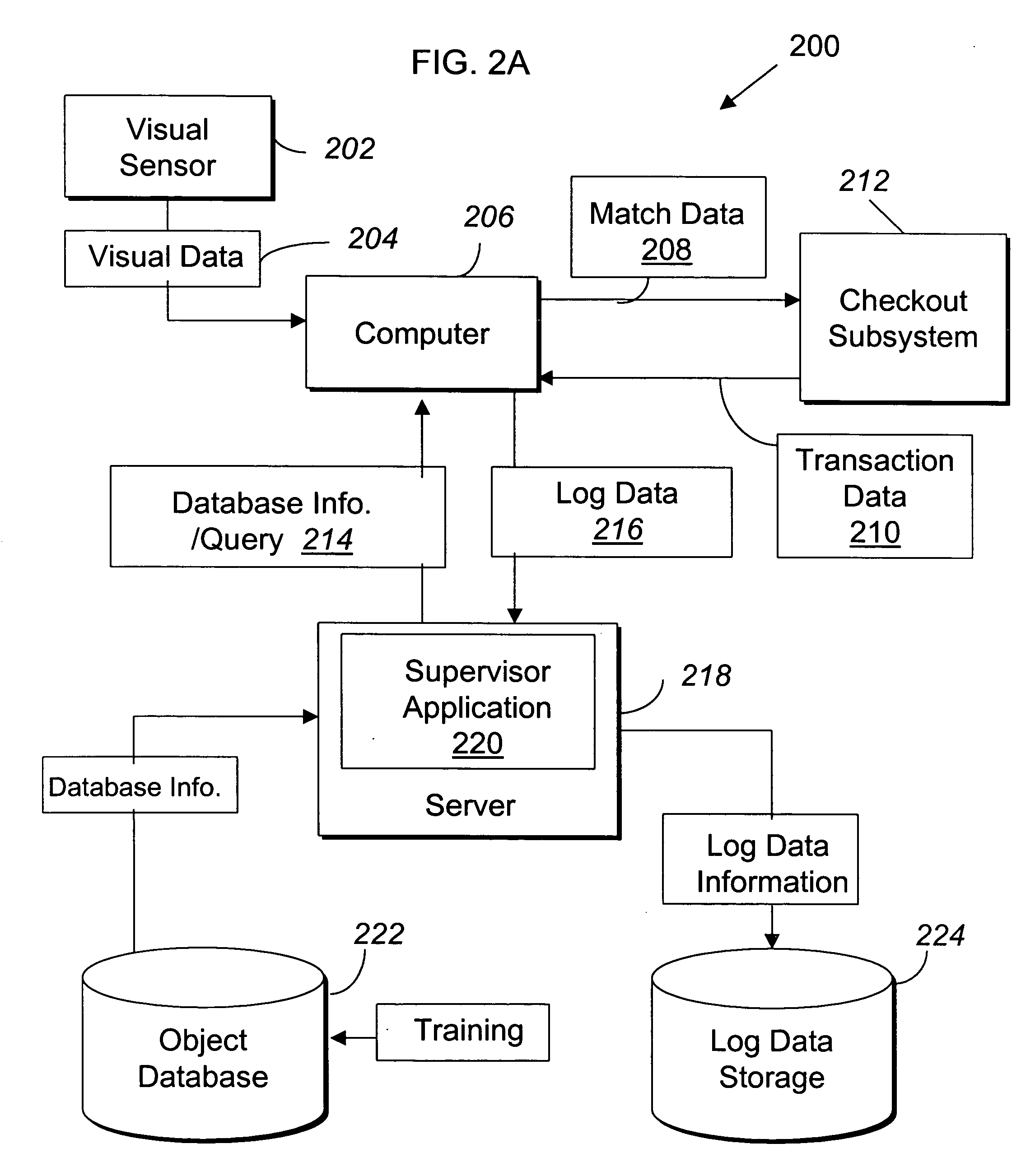

Method of merchandising for checkout lanes

Active | US20050189412A1 | Reduces and prevents bottom-of-the-basket loss; Improve checkout speed; Credit registering devices actuation; Cash registers; Coming out; Artificial intelligence

Methods and computer-readable media for recognizing and identifying items located on the belt of a counter and/or in a shopping cart in a store environment, for the purposes of reducing or preventing bottom-of-the-basket loss, checking out items automatically, reducing checkout time, preventing consumer fraud, increasing revenue, and replacing a conventional UPC scanning system to increase checkout speed. Images of the items taken by visual sensors may be analyzed to extract features using the scale-invariant feature transform (SIFT) method. The extracted features are then compared with those of trained images stored in a database to find a set of matches. Based on the set of matches, the items are recognized and associated with one or more instructions, commands or actions without the need for personnel to see the items visually, for example by coming out from behind a checkout counter or peering over it.

Owner:DATALOGIC ADC
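
The abstract above compares extracted features with those of trained images to find a set of matches, without saying how matches are accepted or counted. A minimal sketch, assuming each database item is stored as a list of descriptor vectors (real SIFT descriptors are 128-dimensional; toy 2-D vectors are used here) and that matches are filtered with Lowe's nearest-neighbour ratio test; `ratio_test_matches`, `recognize` and the `min_matches` threshold are hypothetical names and parameters, not taken from the patent.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def ratio_test_matches(query, trained, ratio=0.8):
    """Return (query_index, trained_index) pairs that pass the ratio test.

    A query descriptor is accepted only if its nearest trained descriptor is
    clearly closer than the second nearest (d1 < ratio * d2).
    """
    matches = []
    if len(trained) < 2:
        return matches
    for qi, q in enumerate(query):
        dists = sorted((euclidean(q, t), ti) for ti, t in enumerate(trained))
        (d1, best), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches

def recognize(query, database, min_matches=2, ratio=0.8):
    """Pick the database item with the most accepted matches, or None.

    database: hypothetical mapping of item name -> list of trained descriptors.
    """
    best_item, best_count = None, 0
    for item, descriptors in database.items():
        count = len(ratio_test_matches(query, descriptors, ratio))
        if count > best_count:
            best_item, best_count = item, count
    return best_item if best_count >= min_matches else None
```

The ratio test discards ambiguous descriptors, which matters when many packages share similar texture; a production system would also verify geometric consistency among the matches.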

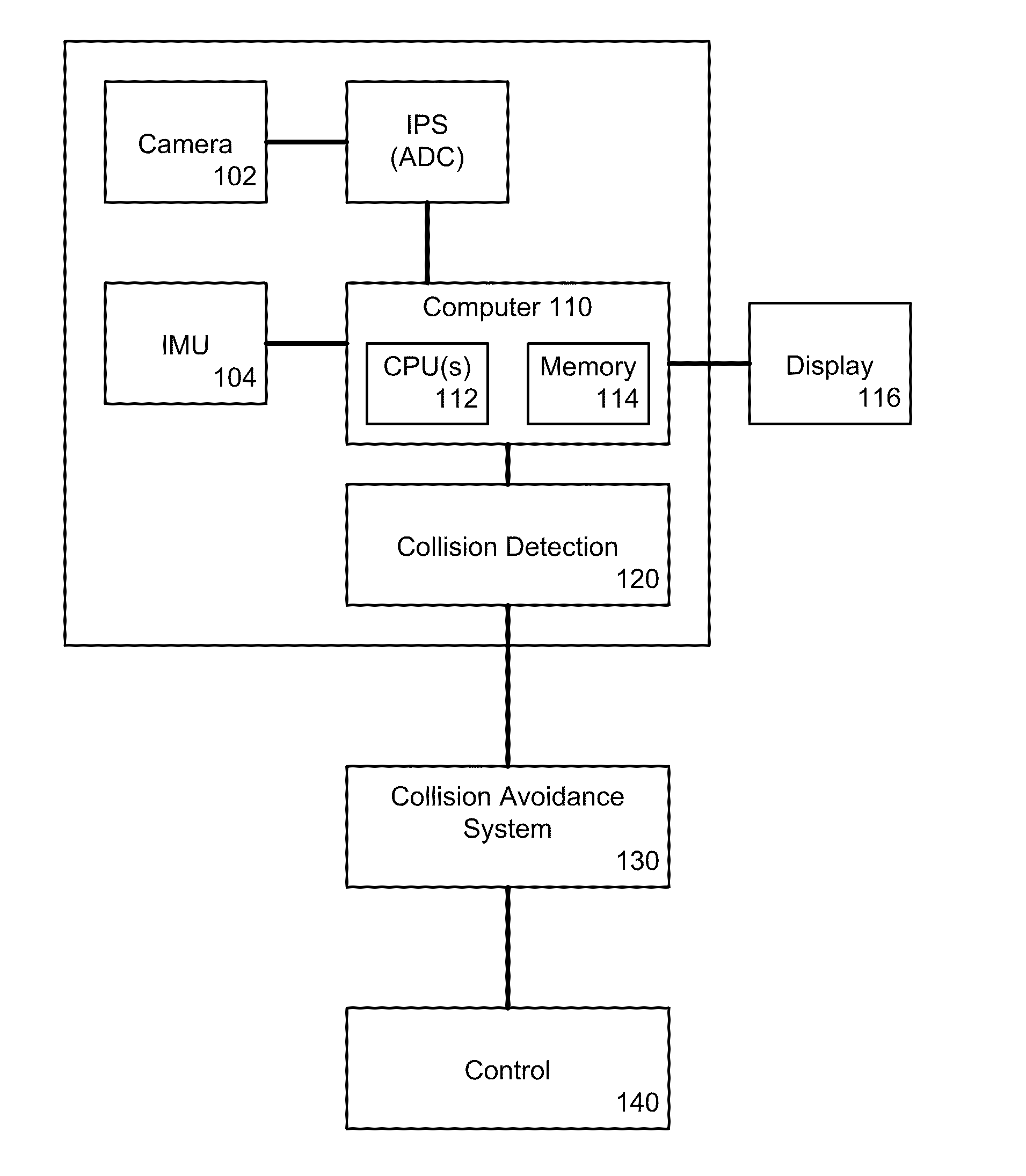

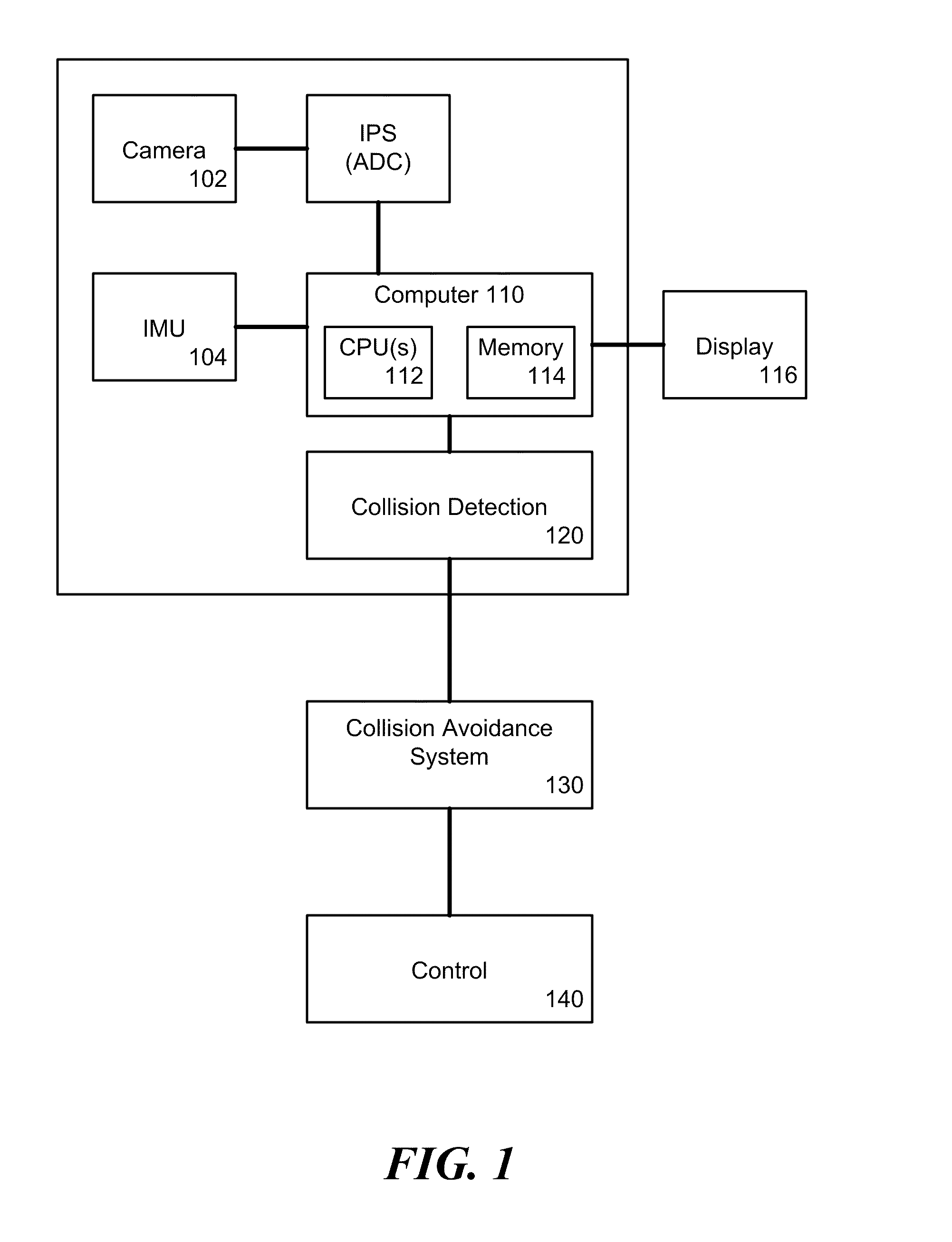

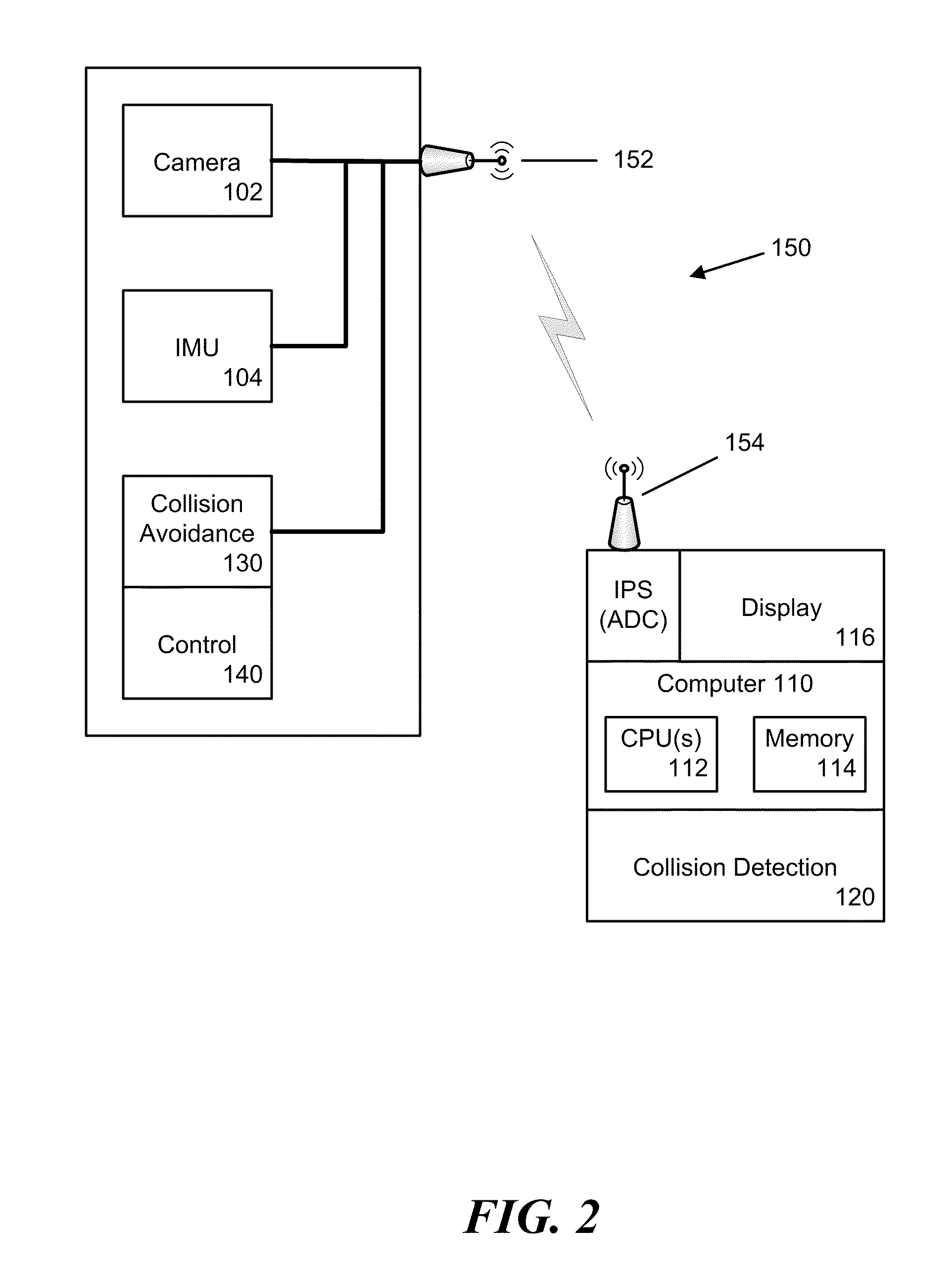

Method and System for Visual Collision Detection and Estimation

Inactive | US20100305857A1 | Optimized time to collision estimation; Convenient time; Image enhancement; Image analysis; Collision detection; Vision sensor

Collision detection and estimation from a monocular visual sensor is an important enabling technology for safe navigation of small or micro air vehicles in near-earth flight. In this paper, we introduce a new approach called expansion segmentation, which simultaneously detects “collision danger regions” of significant positive divergence in inertial-aided video, and estimates maximum likelihood time to collision (TTC) in a correspondenceless framework within the danger regions. This approach was motivated by a literature review which showed that existing approaches make strong assumptions about scene structure or camera motion, or pose collision detection without determining obstacle boundaries, both of which limit the operational envelope of a deployable system. Expansion segmentation is based on a new formulation of 6-DOF inertial-aided TTC estimation, and a new derivation of a first-order TTC uncertainty model due to subpixel quantization error and epipolar geometry uncertainty. Proof-of-concept results are shown in a custom-designed urban flight simulator and on operational flight data from a small air vehicle.

Owner:BYRNE JEFFREY +1
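
The expansion-segmentation method itself (inertial aiding, maximum-likelihood estimation, uncertainty model) is not reproduced here. The sketch below shows only the basic relation that divergence-based TTC builds on: under a pinhole camera and constant closing speed, image size is inversely proportional to range, so TTC equals image size divided by its growth rate. The function name and the two-frame form are illustrative assumptions.

```python
import math

def time_to_collision(size_prev, size_curr, dt):
    """Estimate time to collision (seconds) at the current frame from image expansion.

    With image size s proportional to 1/Z and constant closing speed, the exact
    two-frame relation is tau = dt * s_prev / (s_curr - s_prev).
    Returns math.inf when the object is not expanding (not approaching).
    """
    if dt <= 0 or size_prev <= 0:
        raise ValueError("dt and size_prev must be positive")
    growth = size_curr - size_prev
    if growth <= 0:
        return math.inf
    return dt * size_prev / growth
```

For example, an object at 10 m closing at 2 m/s is at 9 m half a second later, so its true TTC is 4.5 s; image sizes of 100/10 and 100/9 pixels give the same value.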

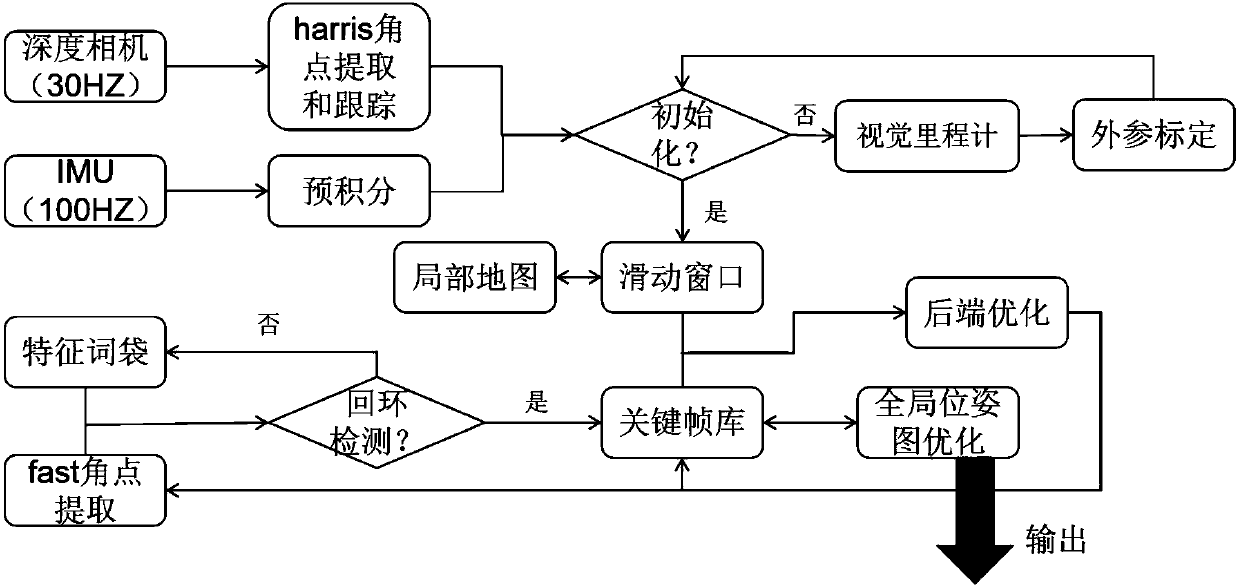

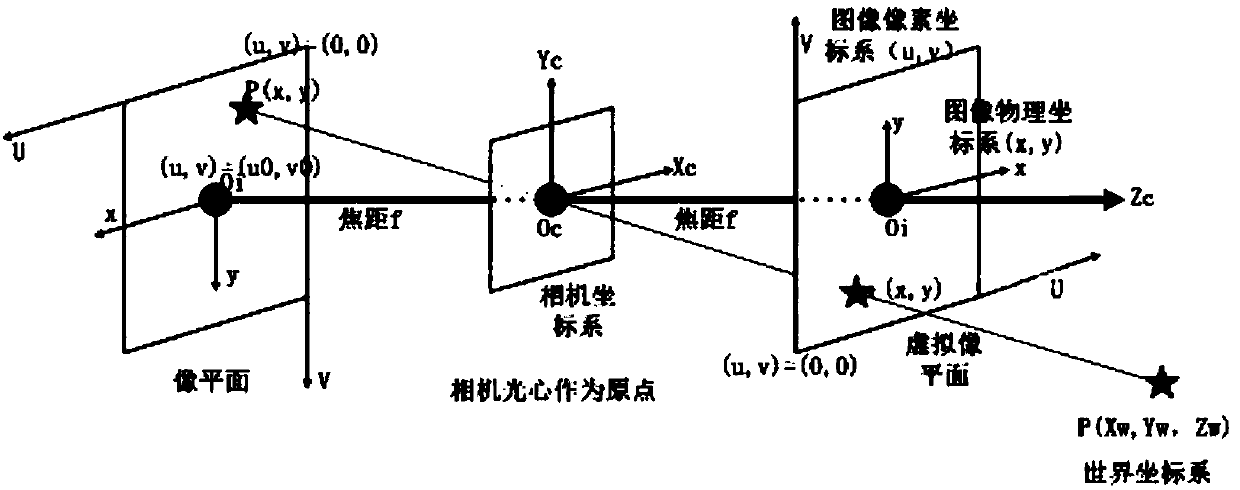

Positioning method and system based on visual inertial navigation information fusion

Active | CN107869989A | Easy to assemble; Easy to disassemble; Navigational calculation instruments; Navigation by speed/acceleration measurements; RGB image; Vision based

The invention discloses a positioning method and system based on visual inertial navigation information fusion. The method comprises the following steps: preprocessing the acquired sensor information, which comprises the RGB image and depth image information of a depth vision sensor and IMU (inertial measurement unit) data; acquiring the external (extrinsic) parameters of the system formed by the depth vision sensor and the IMU; processing the preprocessed sensor information and the external parameters with an IMU pre-integration model and a depth camera model to acquire pose information; and correcting the pose information by loop detection to acquire globally consistent pose information. The method is robust during positioning and improves positioning accuracy.

Owner:NORTHEASTERN UNIV

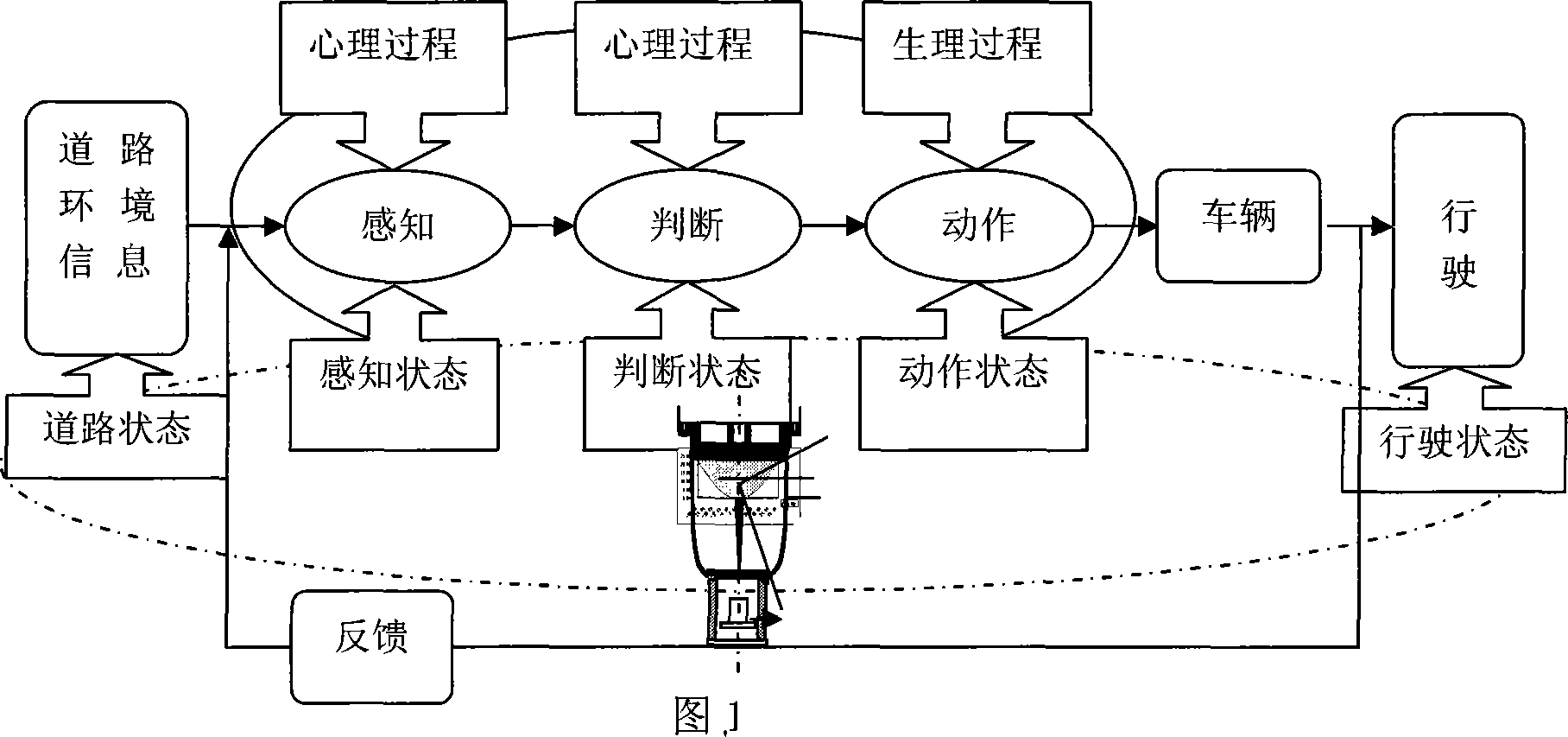

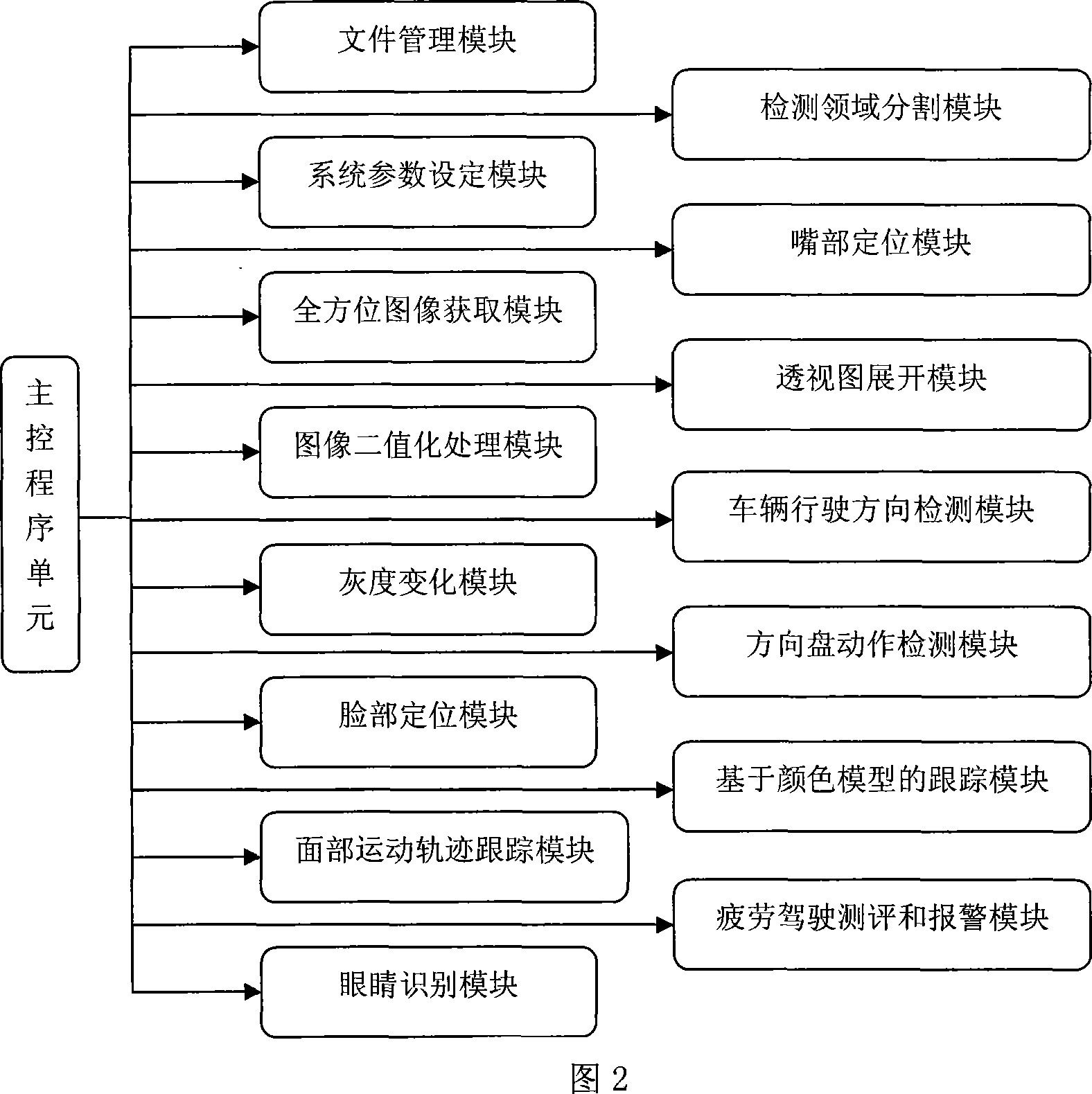

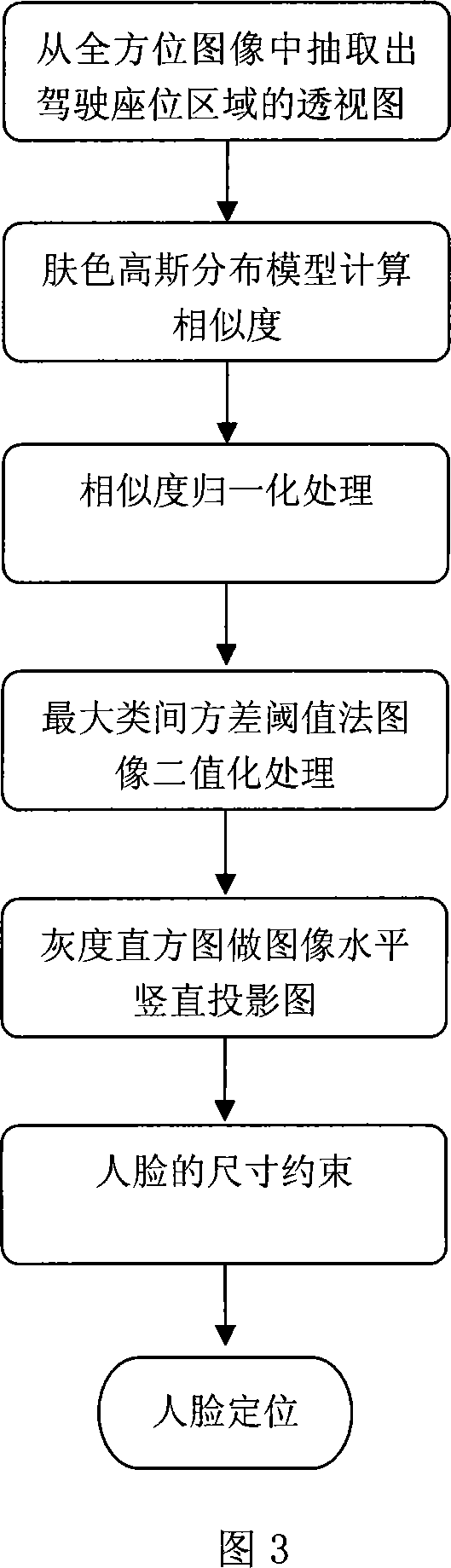

Safe driving auxiliary device based on omnidirectional computer vision

Active | CN101032405A | Improve reliability; High measurement accuracy; Psychotechnic devices; Special data processing applications; Eye state; Steering wheel

The driving-safety assistance device based on omnidirectional computer vision includes an omnidirectional vision sensor that acquires omnidirectional video information from outside and inside the vehicle, and a safety-assistance controller that detects fatigued driving and warns of it. The omnidirectional vision sensor, installed to the right of the driver's seat and connected to the controller, detects the driver's facial, eye and mouth states and the steering-wheel state, monitors the vehicle's direction, speed and so on, and issues a warning when a fatigued-driving state is detected. The invention detects characteristic parameters of the fatigued-driving state with high judgment precision and high measurement precision.

Owner:STRAIT INNOVATION INTERNET CO LTD

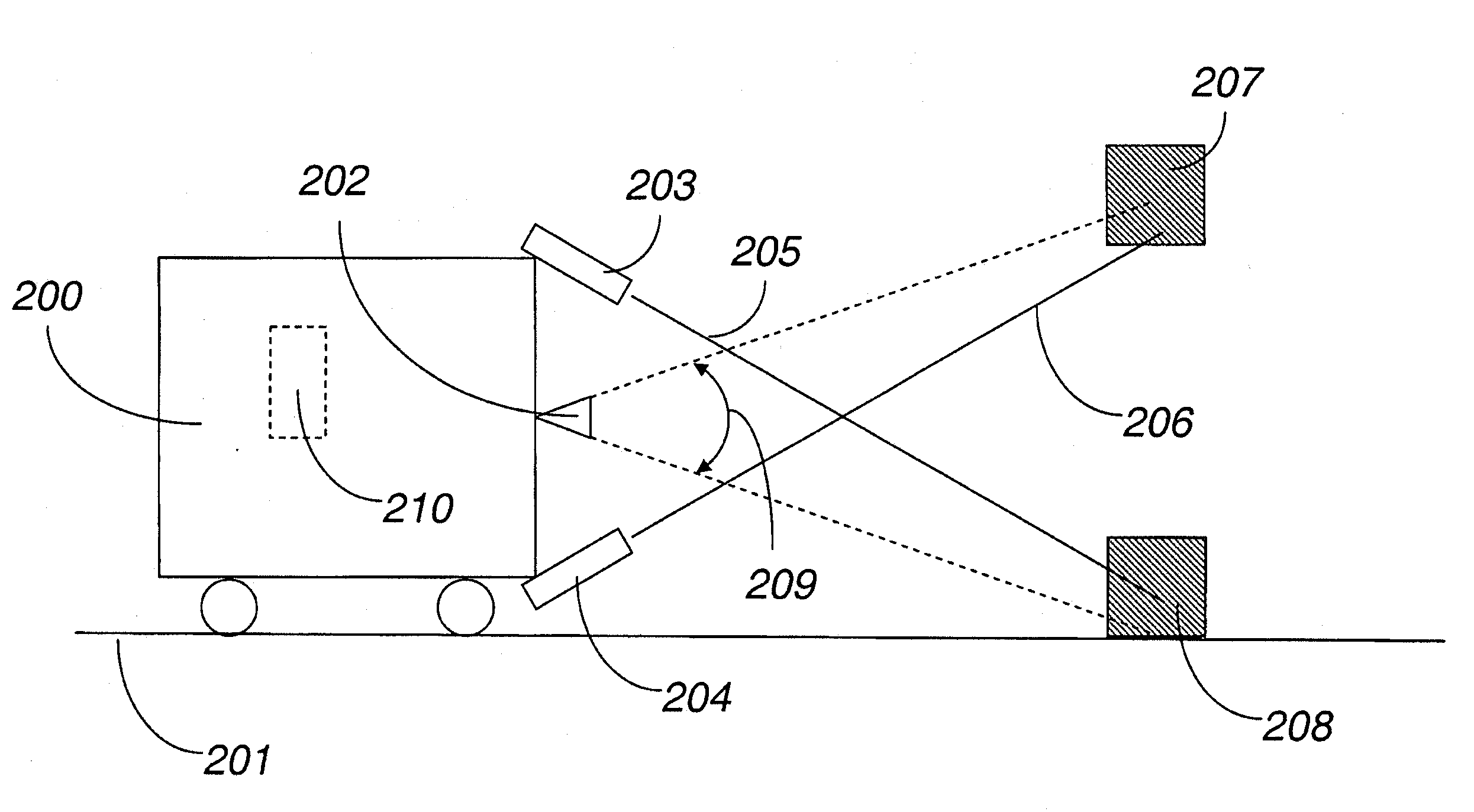

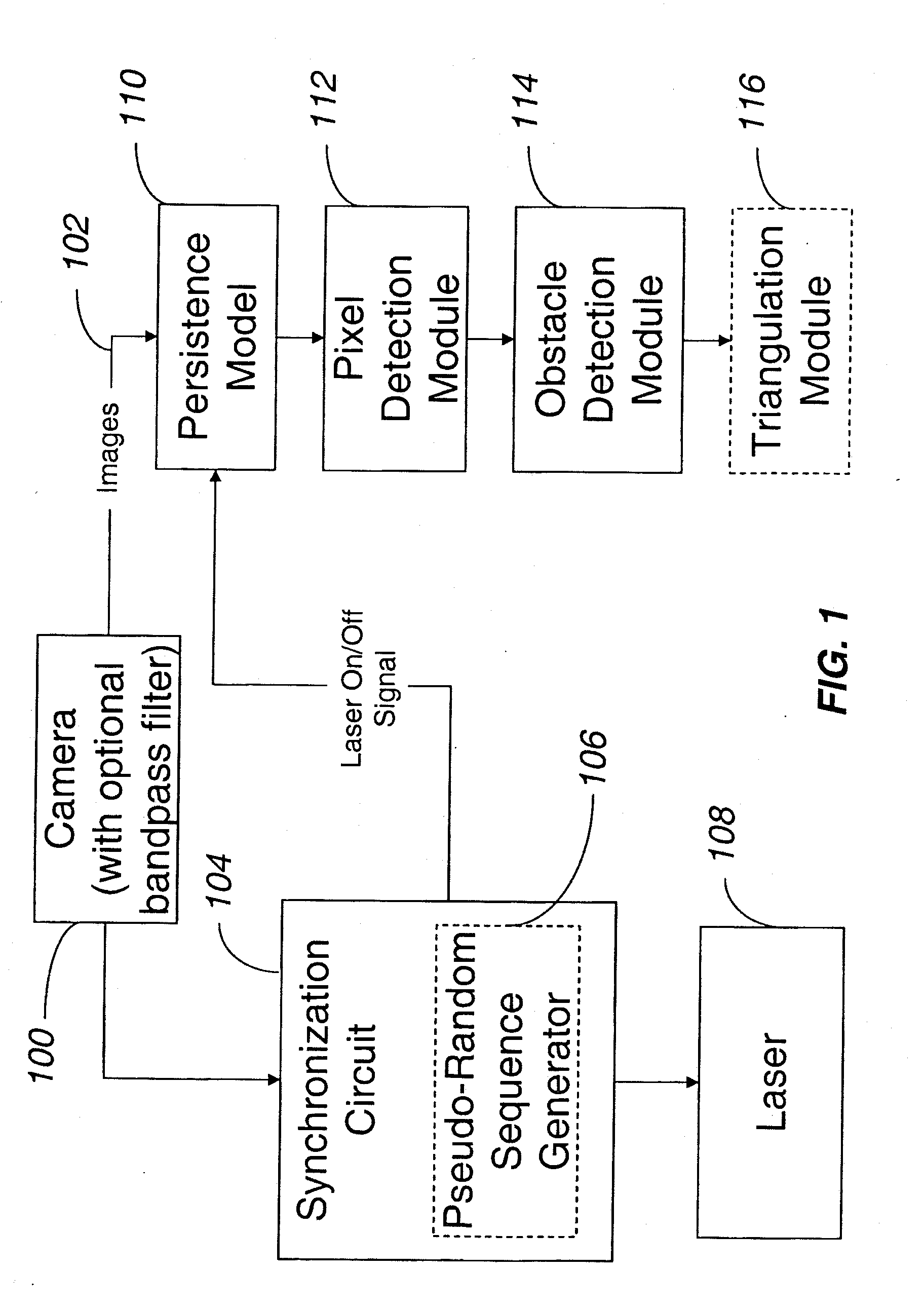

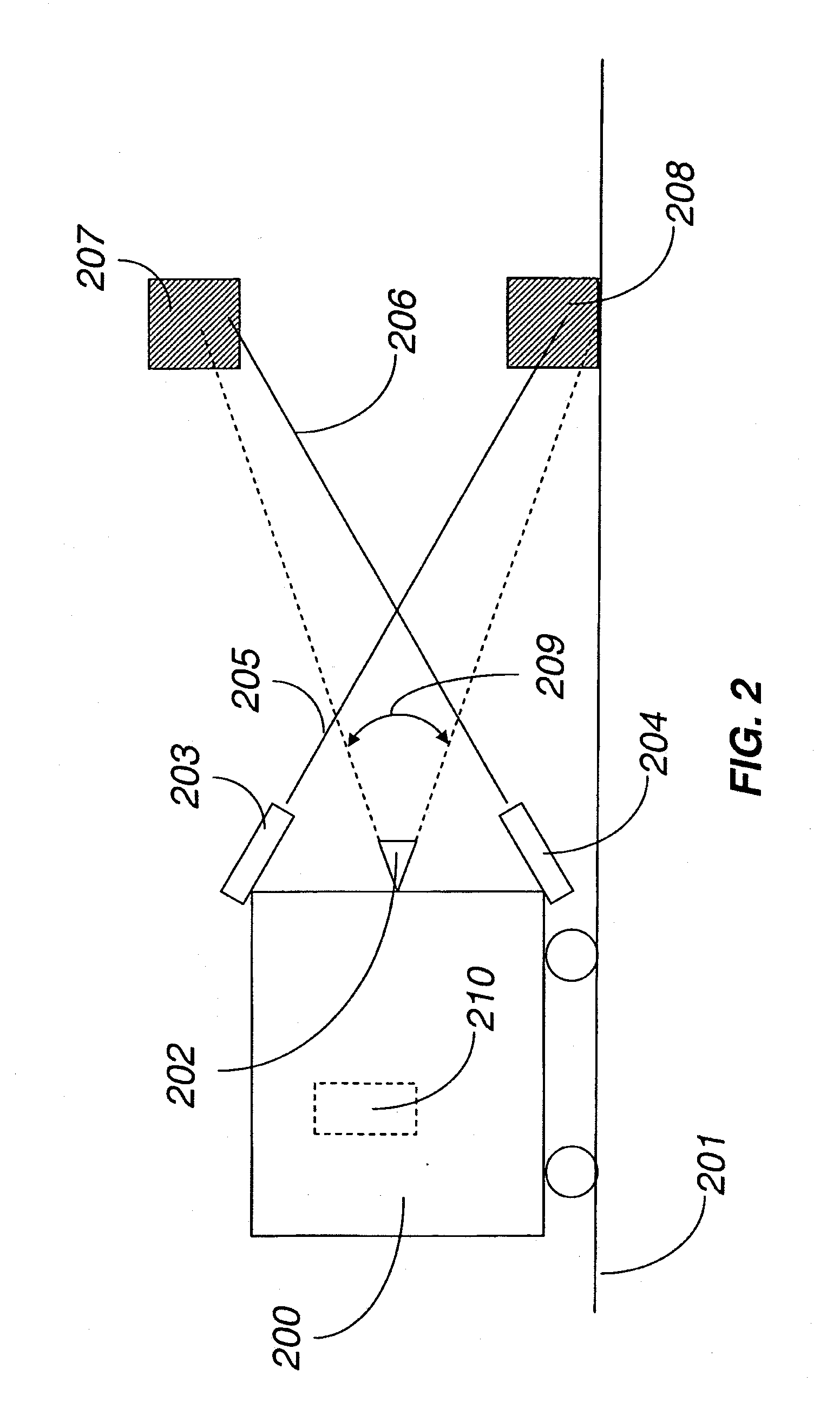

Methods and systems for obstacle detection using structured light

Active | US20150168954A1 | Robust detection; Improve accuracy; Programme control; Computer control; Processing element; Vision sensor

An obstacle detector for a mobile robot in motion is disclosed. The detector preferably includes at least one light source configured to project pulsed light in the path of the robot; a visual sensor for capturing a plurality of images of light reflected from the path of the robot; a processing unit configured to extract the reflections from the images; and an obstacle detection unit configured to detect an obstacle in the path of the robot based on the extracted reflections. In the preferred embodiment, the reflections of the projected light are extracted by subtracting pairs of images in which each pair includes a first image captured with the at least one light source on and a second image captured with the at least one light source off, and then combining images of two or more extracted reflections to suppress the background.

Owner:IROBOT CORP
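
The on/off subtraction described above can be sketched as follows. Images are plain nested lists of grey levels and negative differences are clipped to zero. Because the abstract does not say how two or more extracted reflections are combined, a pixel-wise minimum is assumed here, so only pixels lit in every pair survive; all function names and the threshold are hypothetical.

```python
def extract_reflection(img_on, img_off):
    """Subtract the light-off frame from the light-on frame, clipping at zero."""
    return [
        [max(on - off, 0) for on, off in zip(row_on, row_off)]
        for row_on, row_off in zip(img_on, img_off)
    ]

def combine_reflections(reflections):
    """Pixel-wise minimum over several extracted reflections (assumed combination rule).

    The projected pattern appears in every difference image, while residue from
    background motion usually appears in only one, so the minimum suppresses it.
    """
    first = reflections[0]
    return [
        [min(r[y][x] for r in reflections) for x in range(len(first[0]))]
        for y in range(len(first))
    ]

def reflection_pixels(image, threshold):
    """Return (row, col) coordinates of pixels at or above the threshold."""
    return [
        (y, x)
        for y, row in enumerate(image)
        for x, value in enumerate(row)
        if value >= threshold
    ]
```

A full detector would then check where the lit pattern deviates from its expected position on the floor; that step is not shown.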

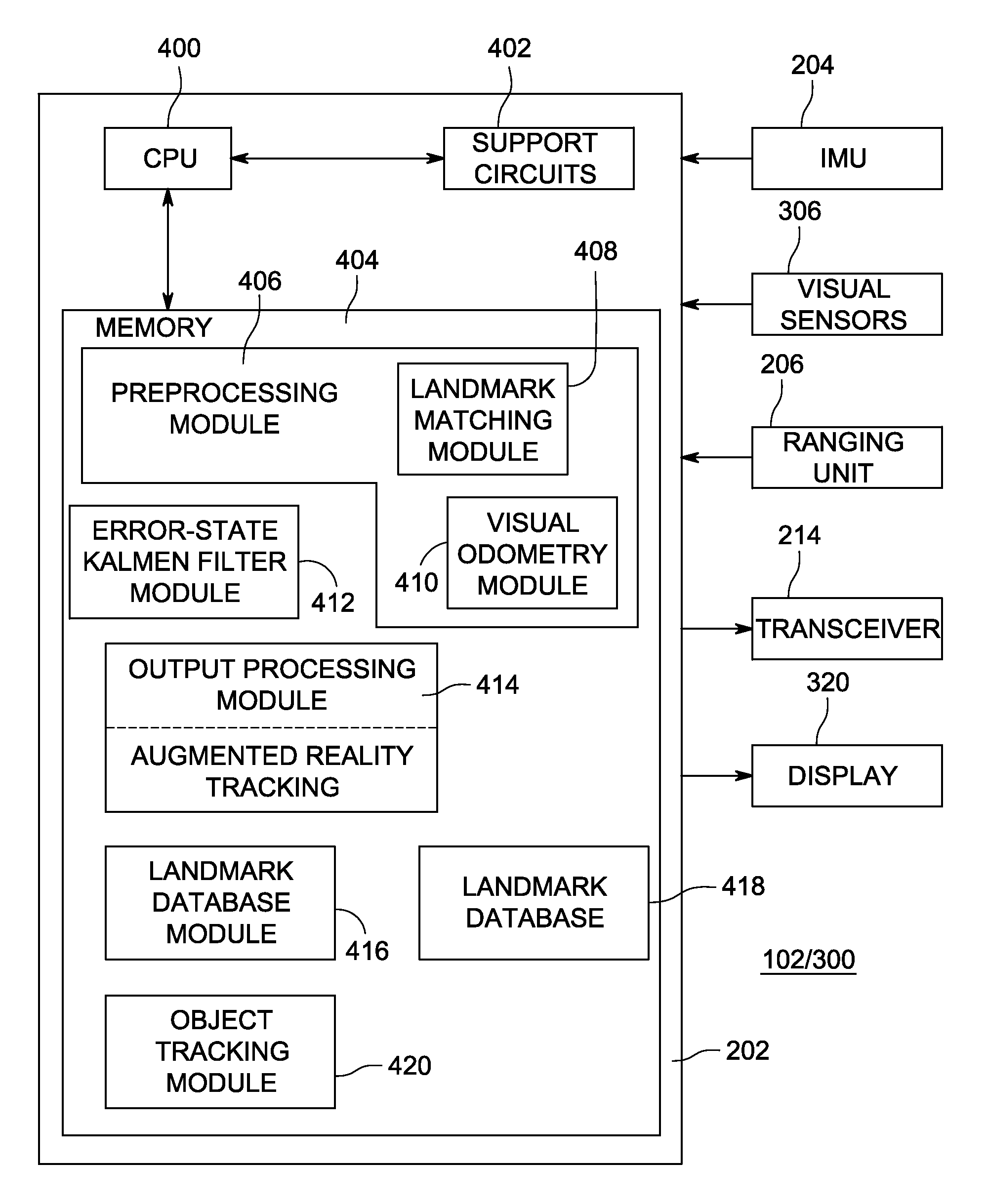

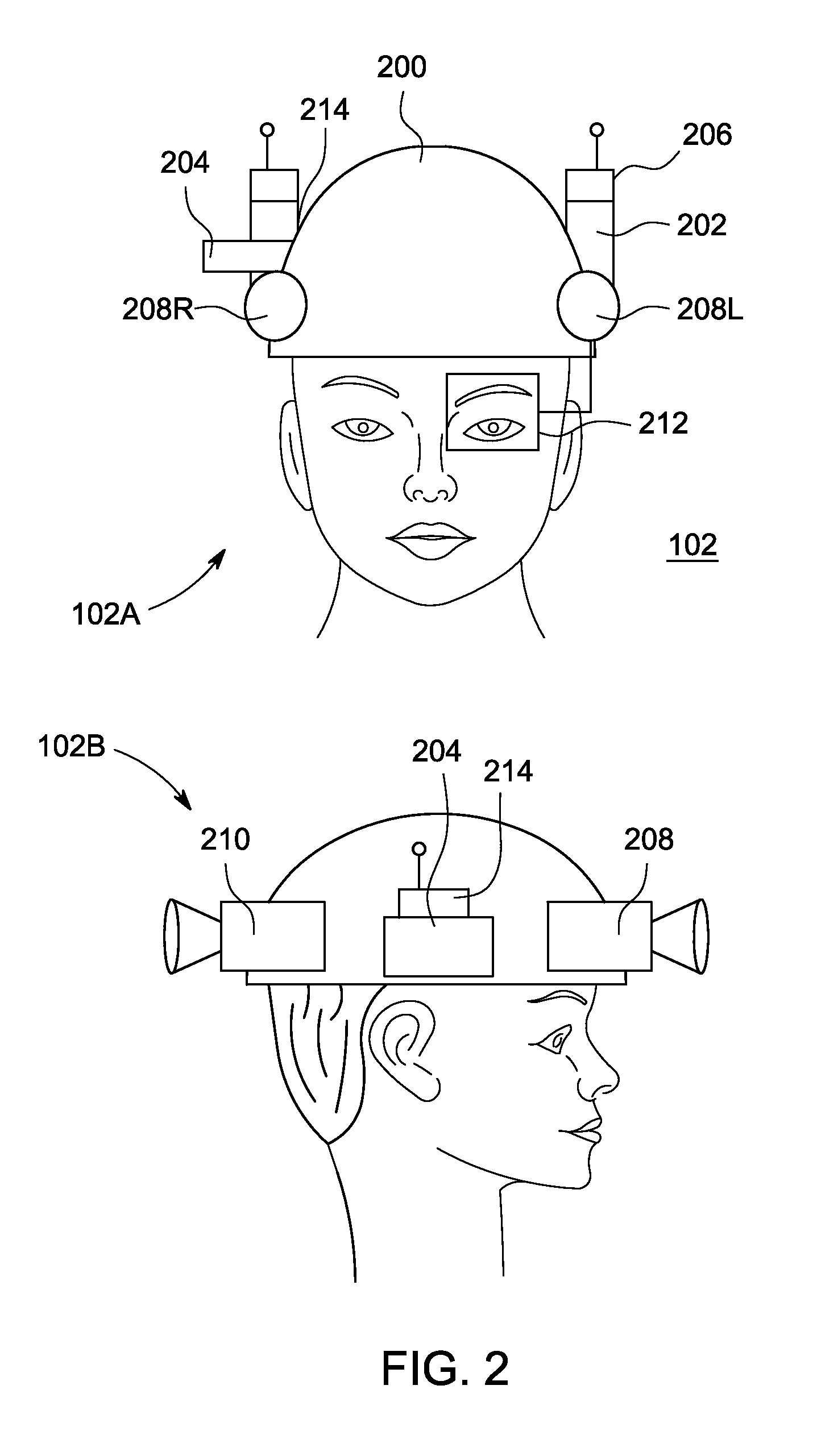

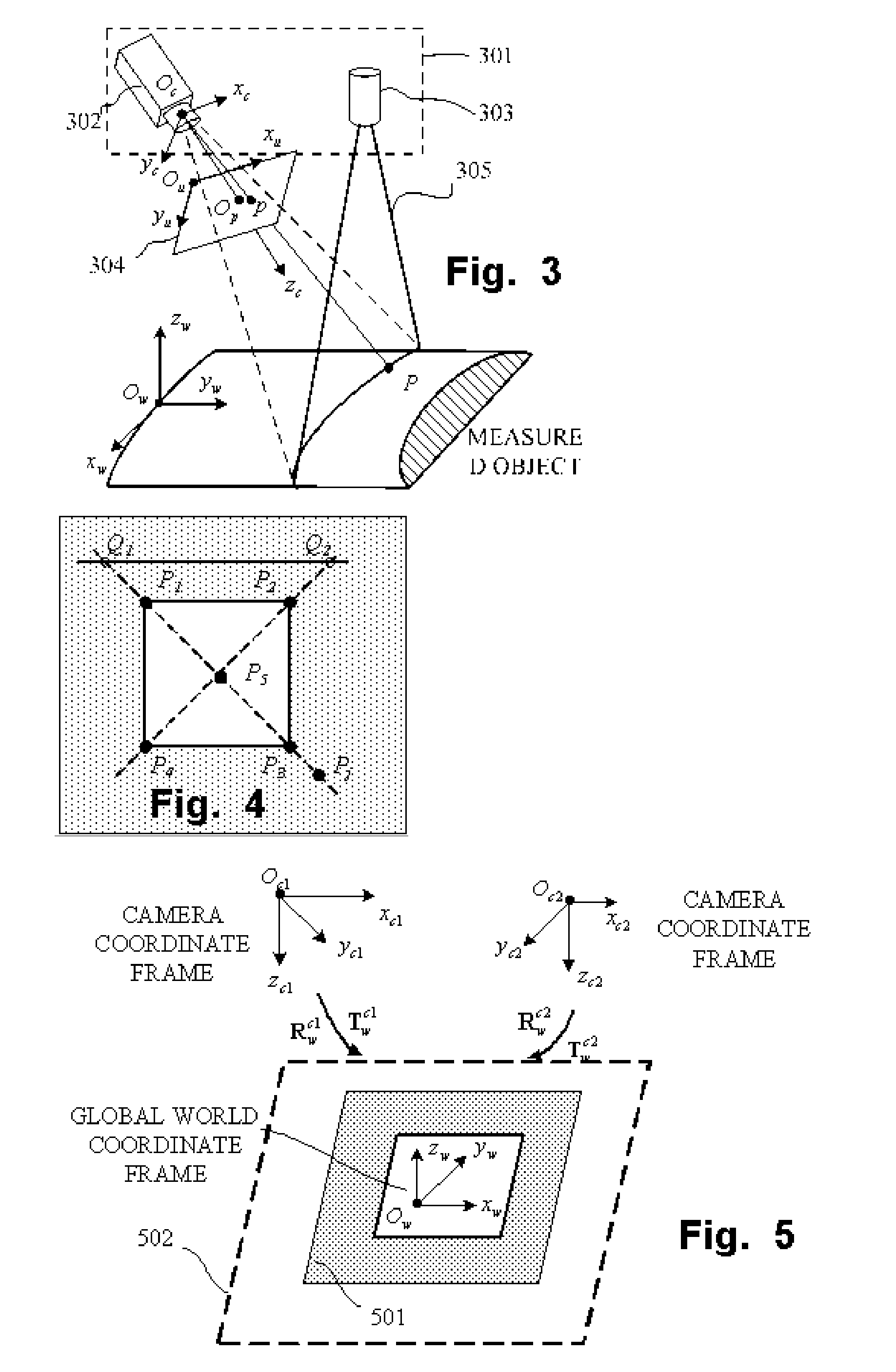

Method and apparatus for generating three-dimensional pose using multi-modal sensor fusion

A method and apparatus for providing three-dimensional navigation for a node comprising an inertial measurement unit for providing gyroscope, acceleration and velocity information (collectively IMU information); a ranging unit for providing distance information relative to at least one reference node; at least one visual sensor for providing images of an environment surrounding the node; a preprocessor, coupled to the inertial measurement unit, the ranging unit and the plurality of visual sensors, for generating error states for the IMU information, the distance information and the images; and an error-state predictive filter, coupled to the preprocessor, for processing the error states to produce a three-dimensional pose of the node.

Owner:SRI INTERNATIONAL

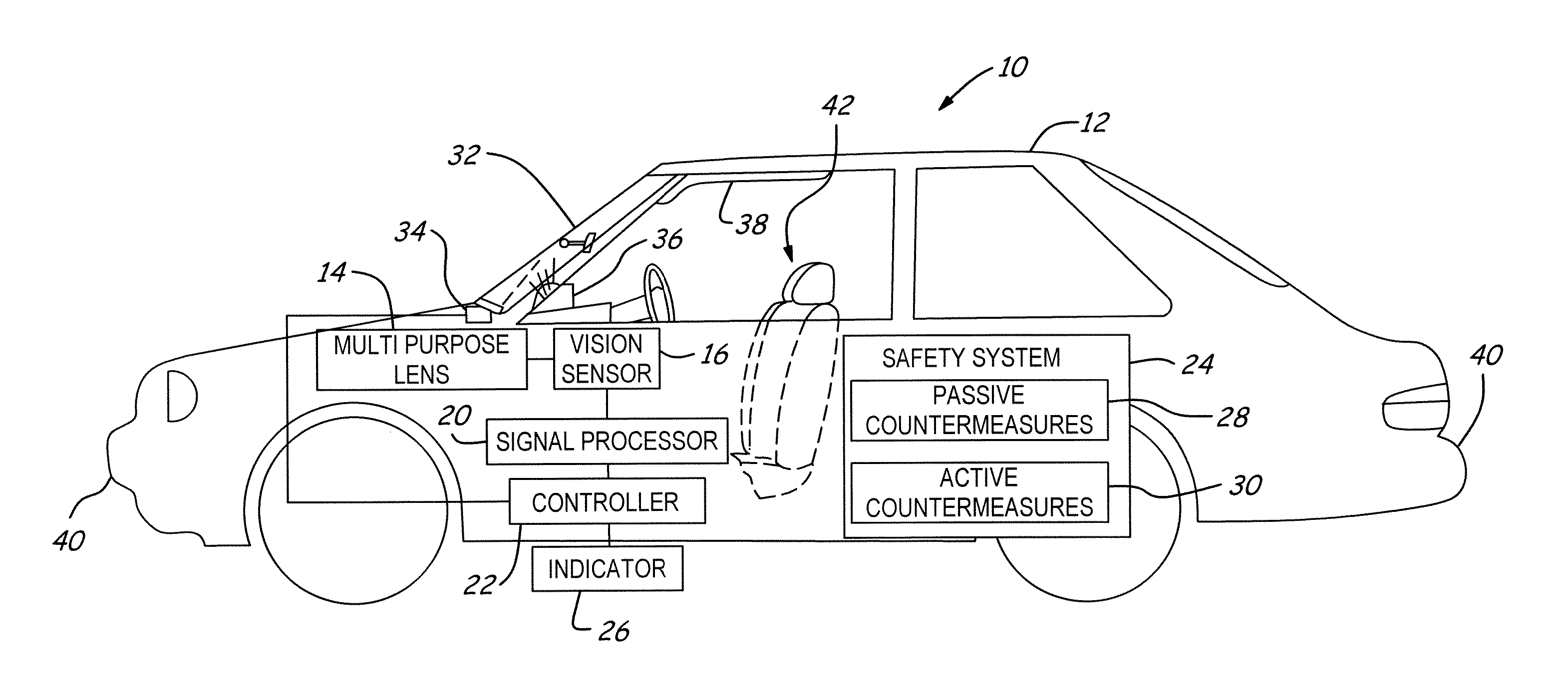

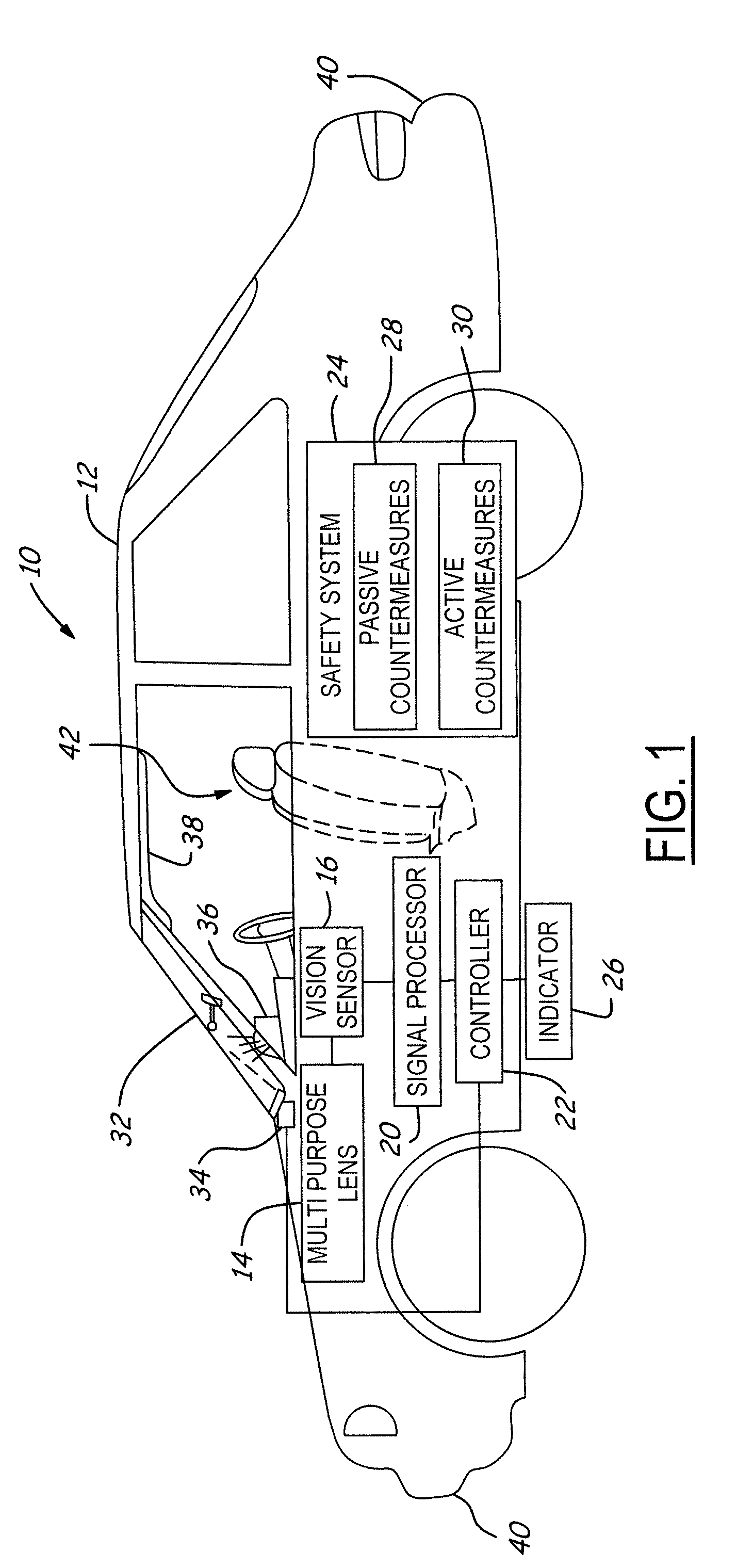

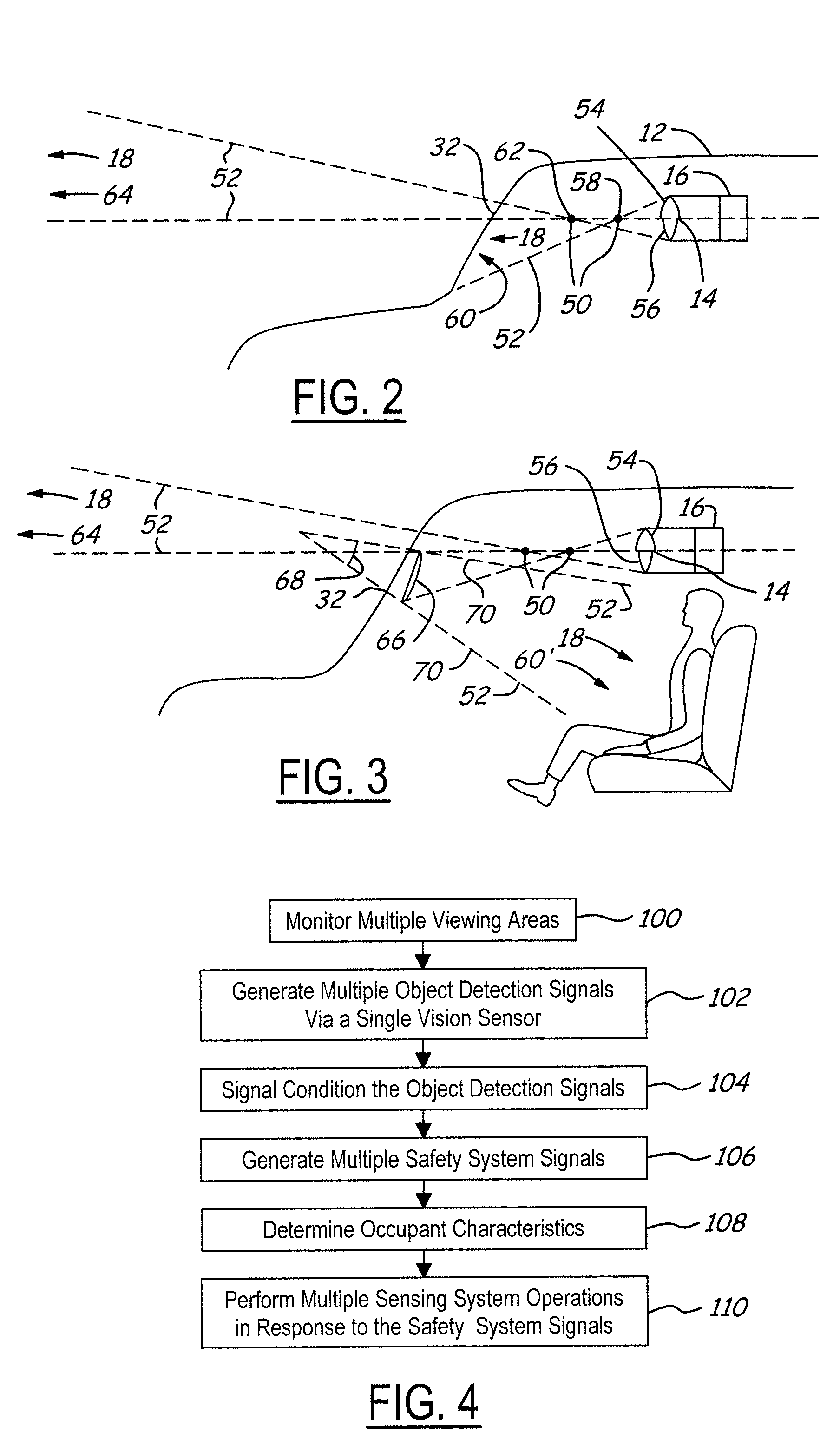

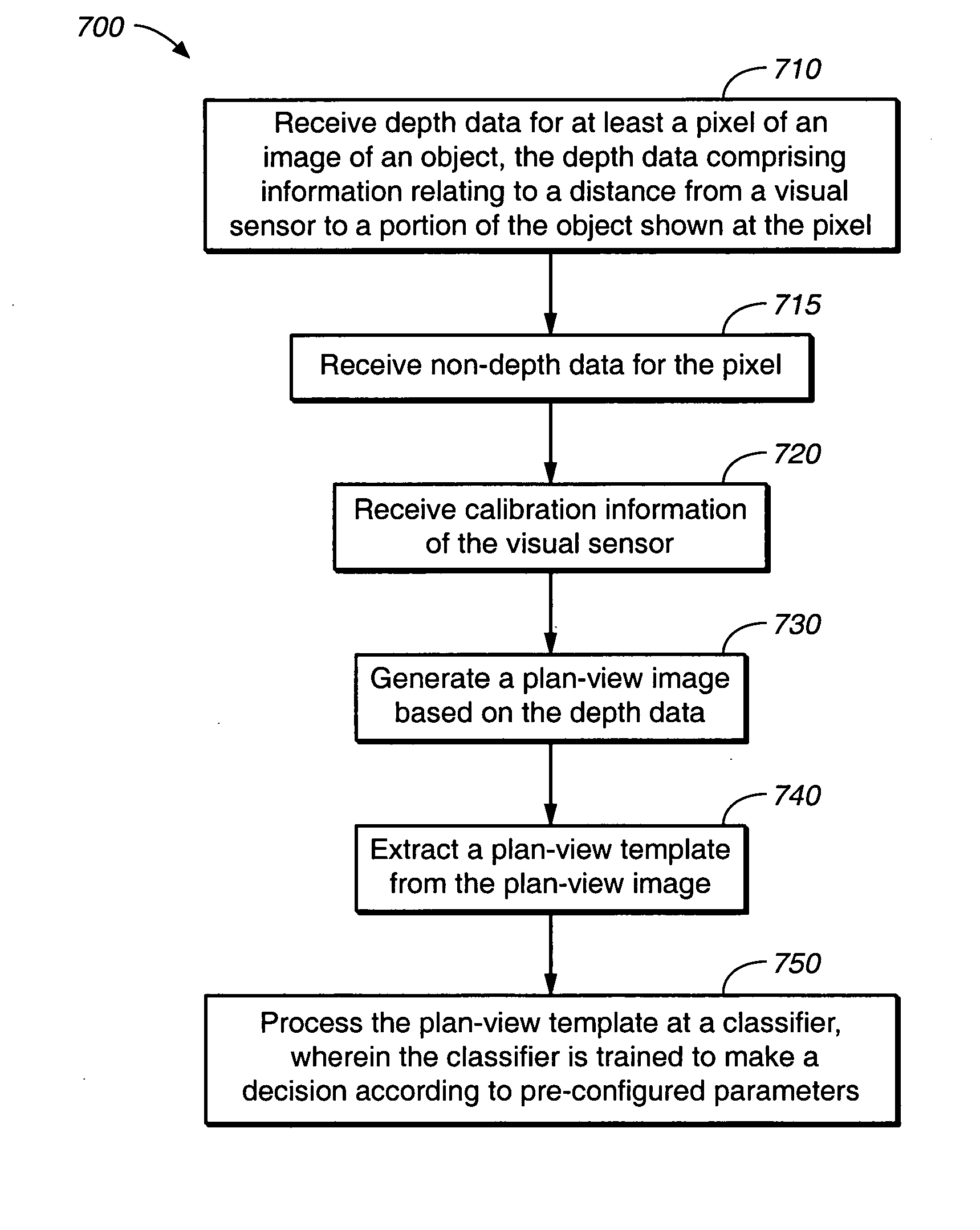

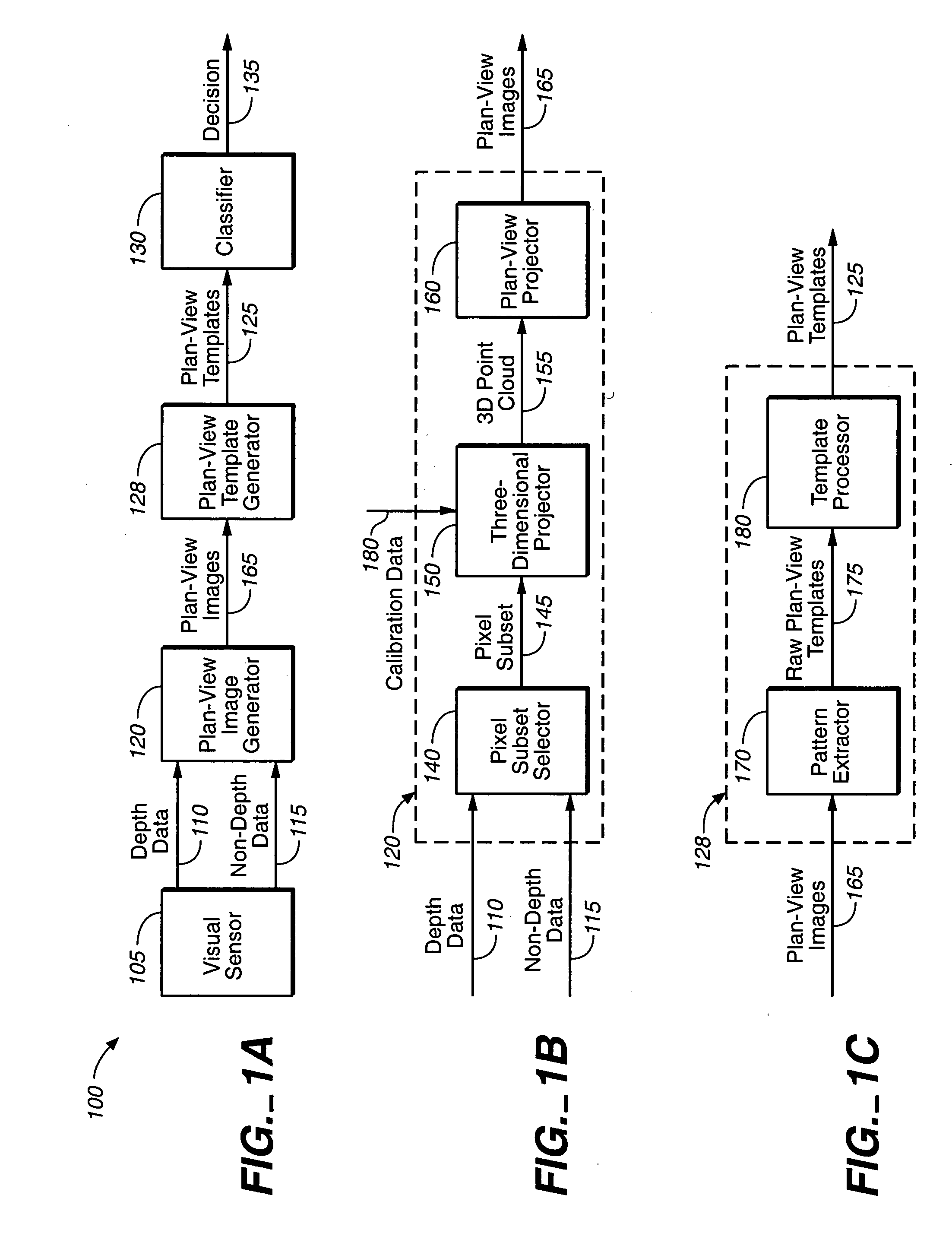

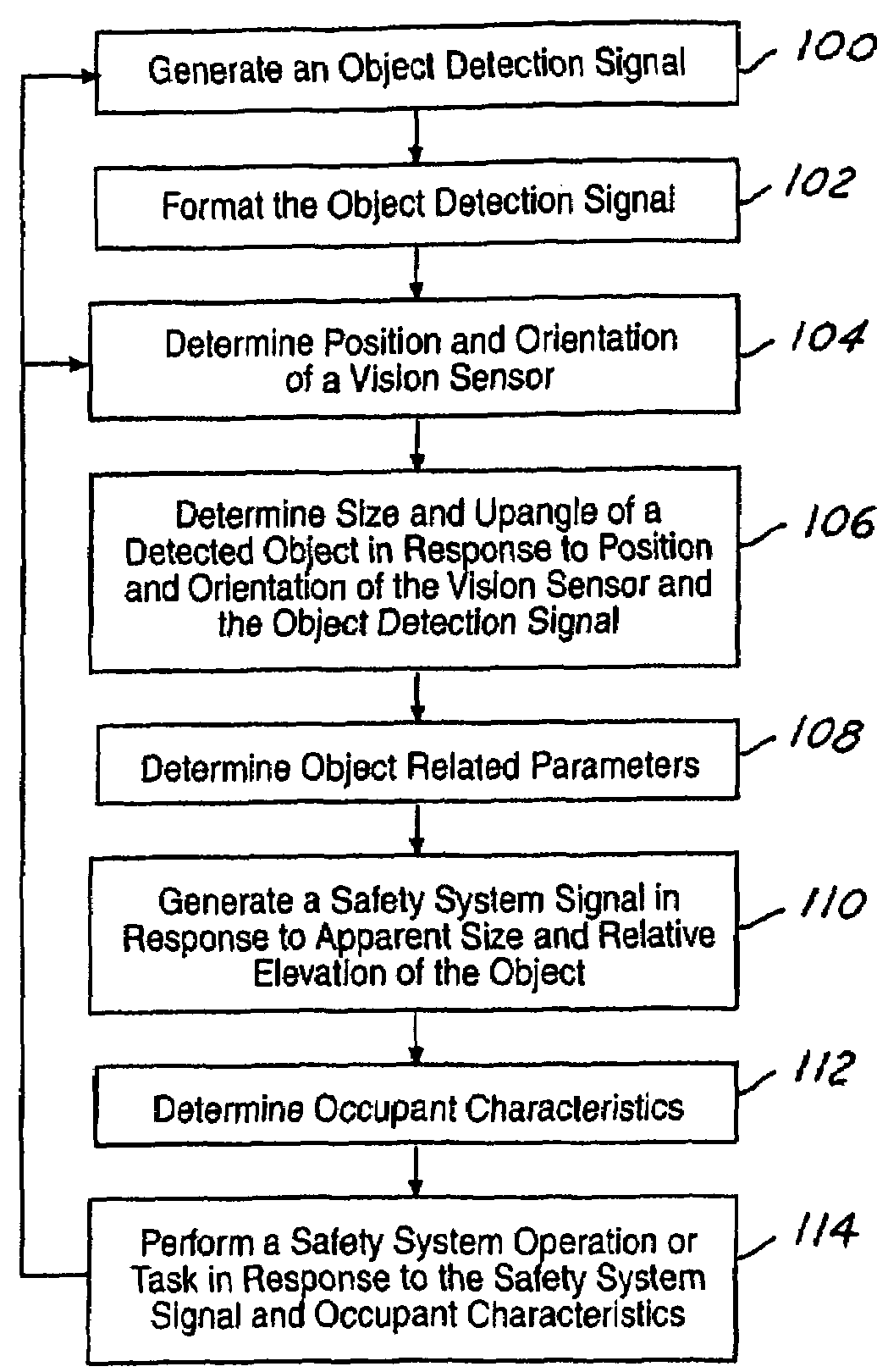

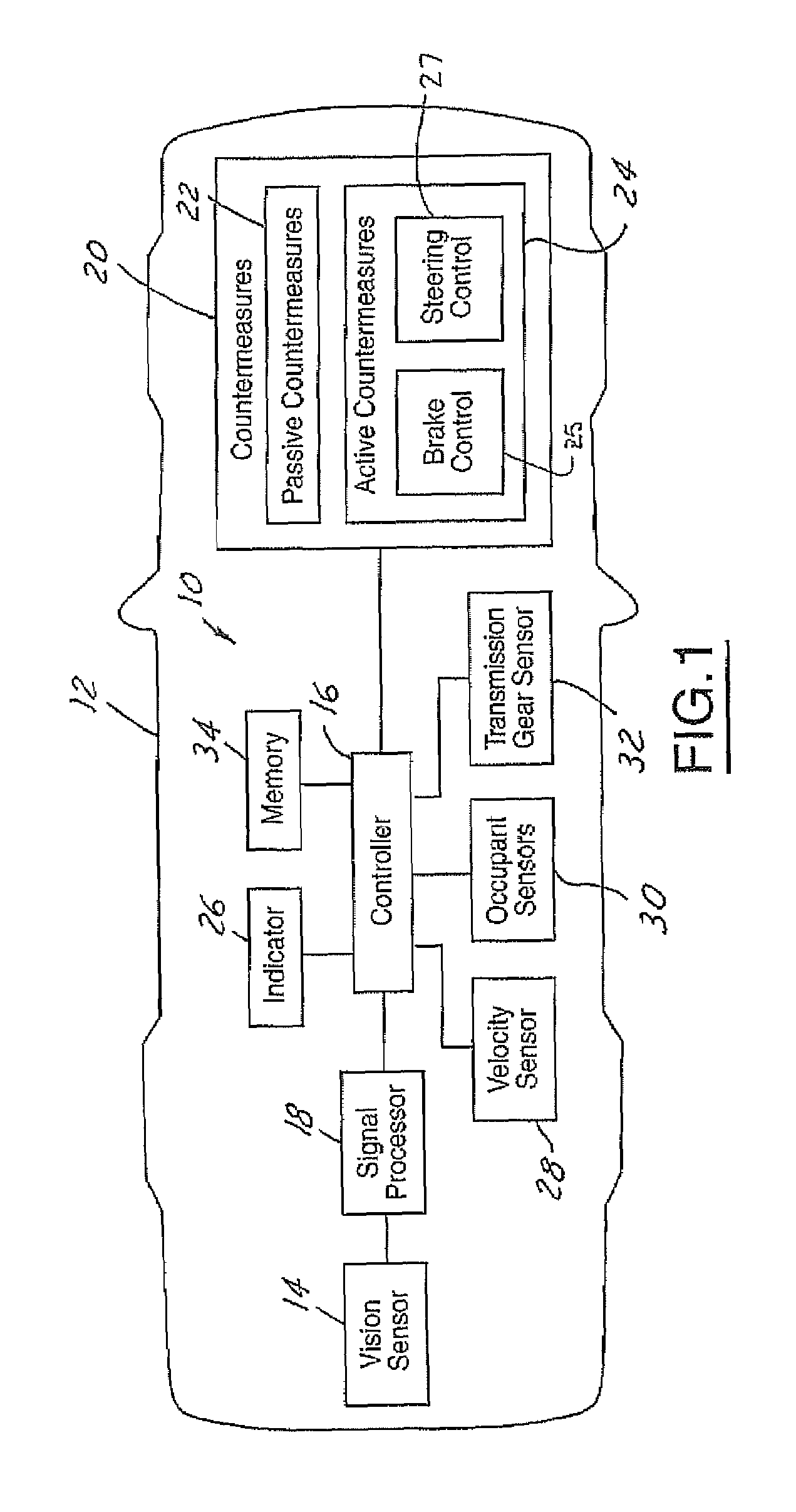

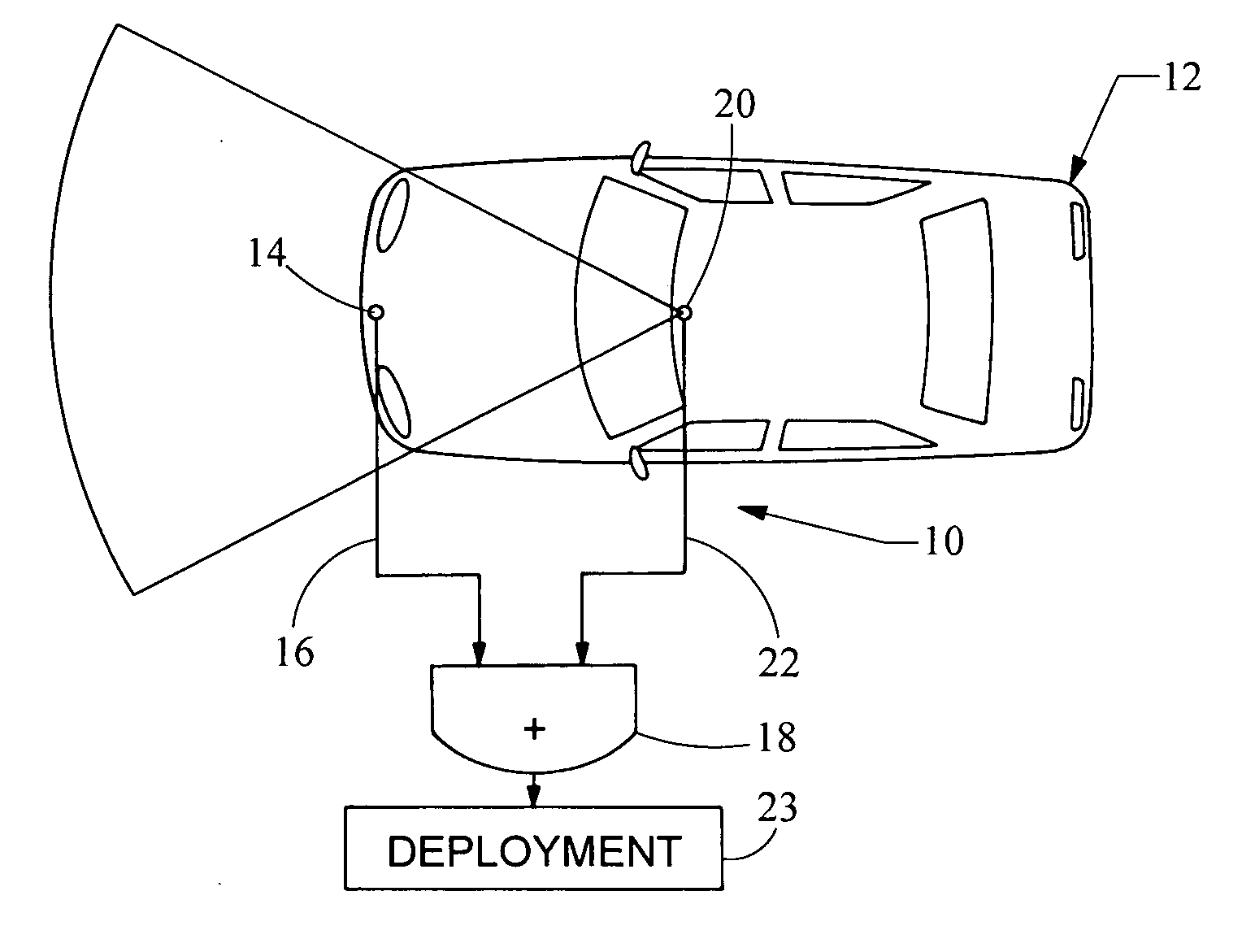

Multipurpose vision sensor system

Inactive | US6958683B2 | Low cost; Minimize the number; Digital data processing details; Pedestrian/occupant safety arrangement; Engineering; Vision sensor

A multipurpose sensing system (10) for a vehicle (12) includes an optic (14) that is directed at multiple viewing areas (18). A vision sensor (16) is coupled to the optic (14) and generates multiple object detection signals corresponding to the viewing areas (18). A controller (22) is coupled to the vision sensor (16) and generates multiple safety system signals in response to the object detection signals.

Owner:FORD GLOBAL TECH LLC

Method for visual-based recognition of an object

Owner:HEWLETT PACKARD DEV CO LP

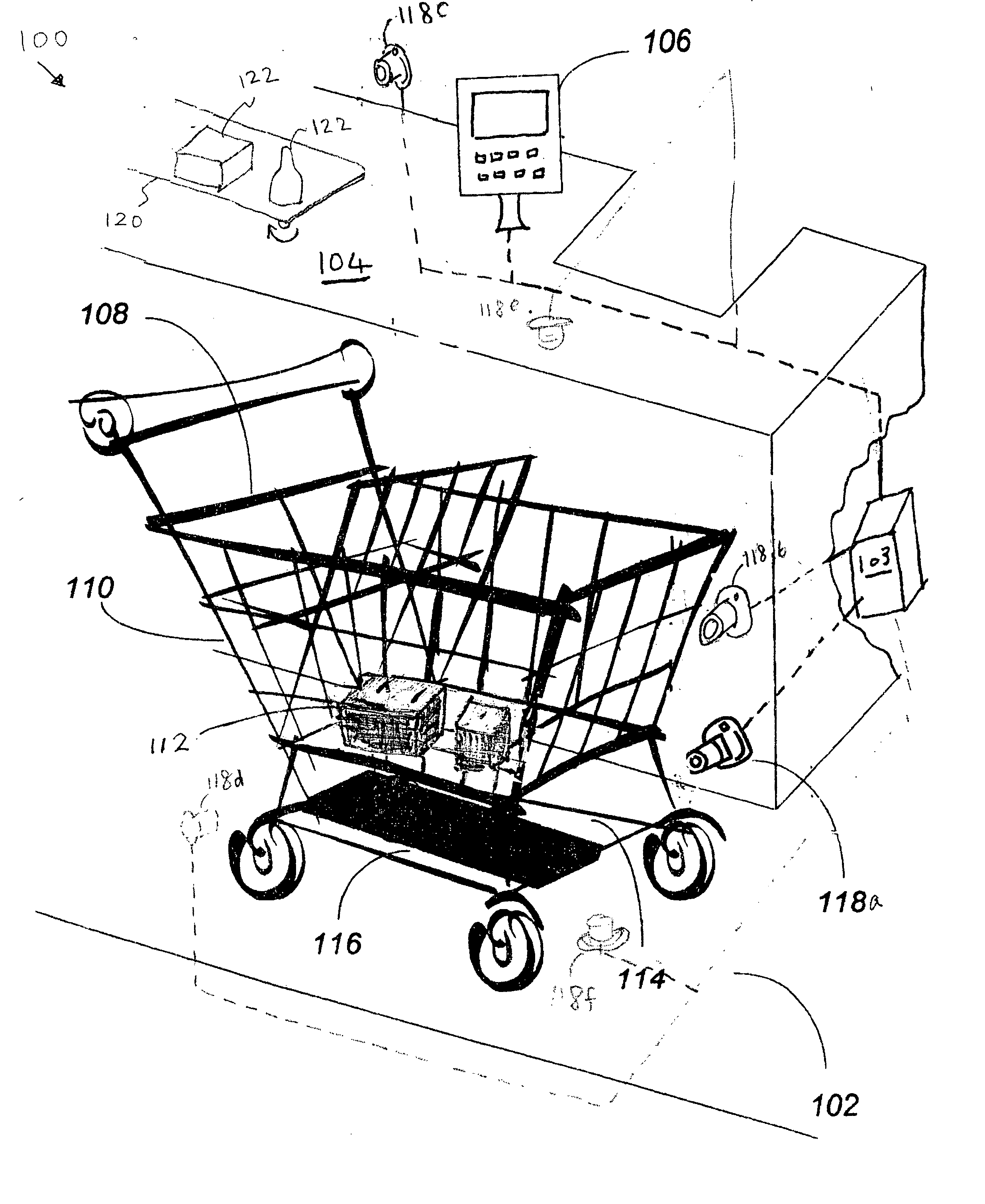

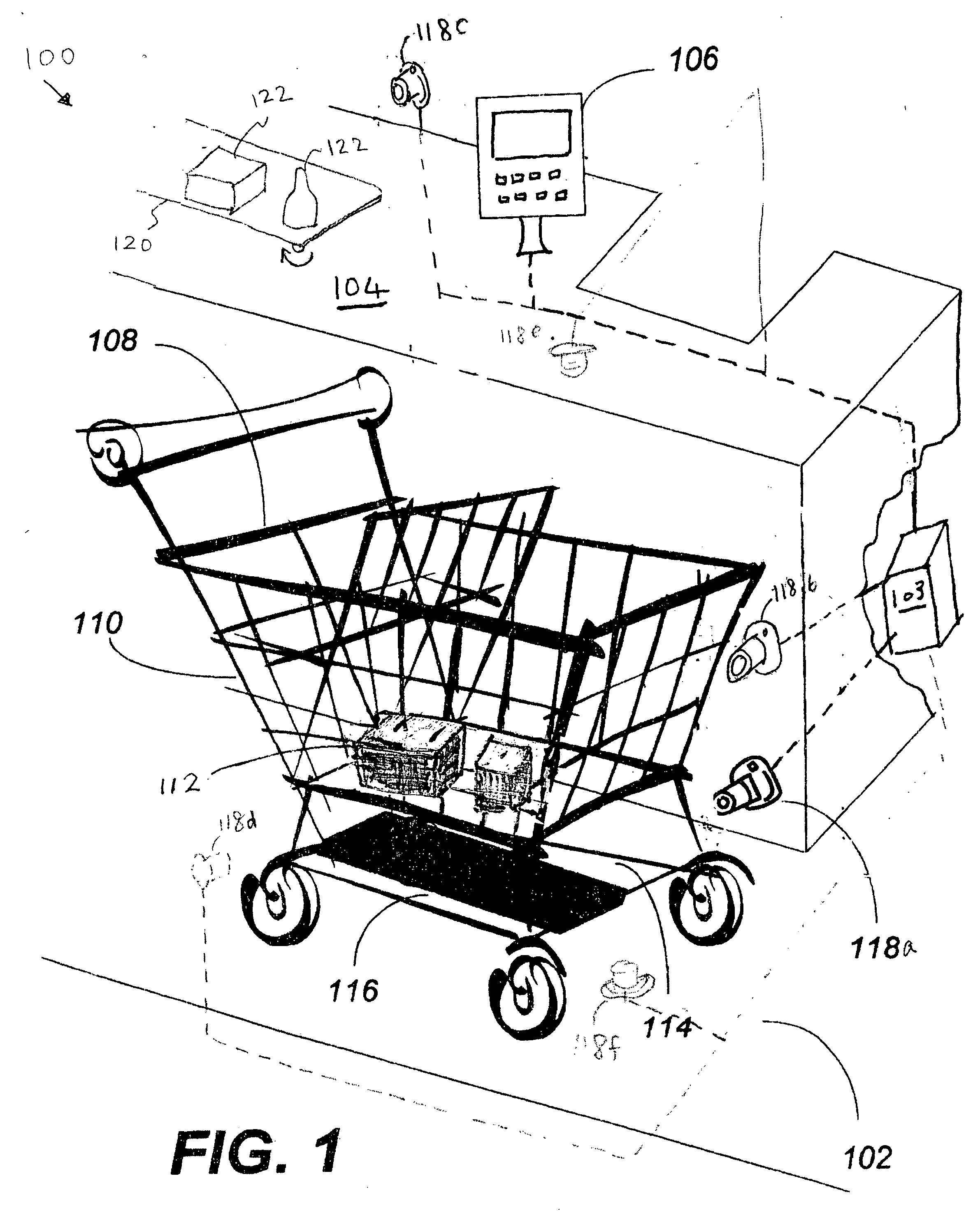

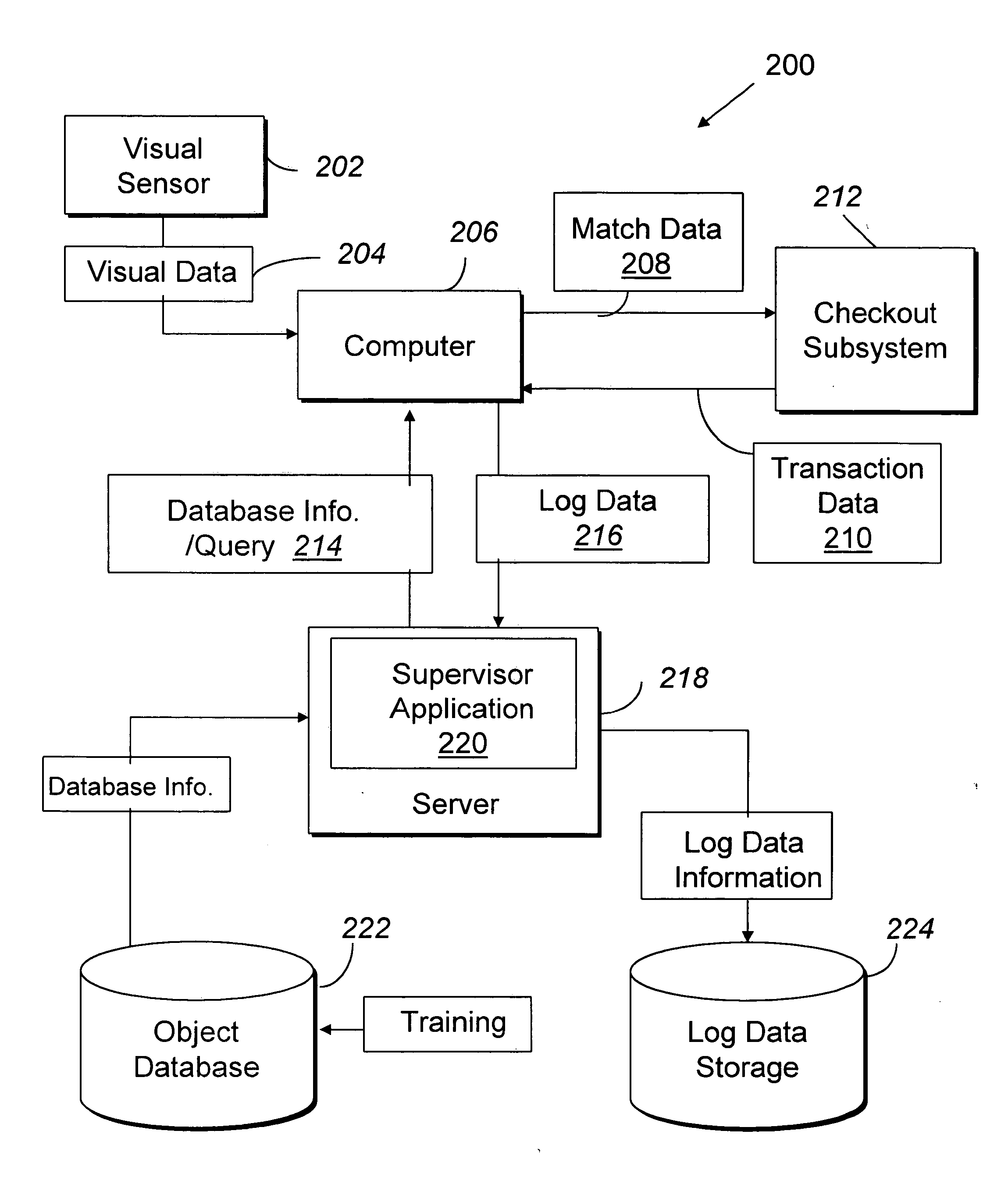

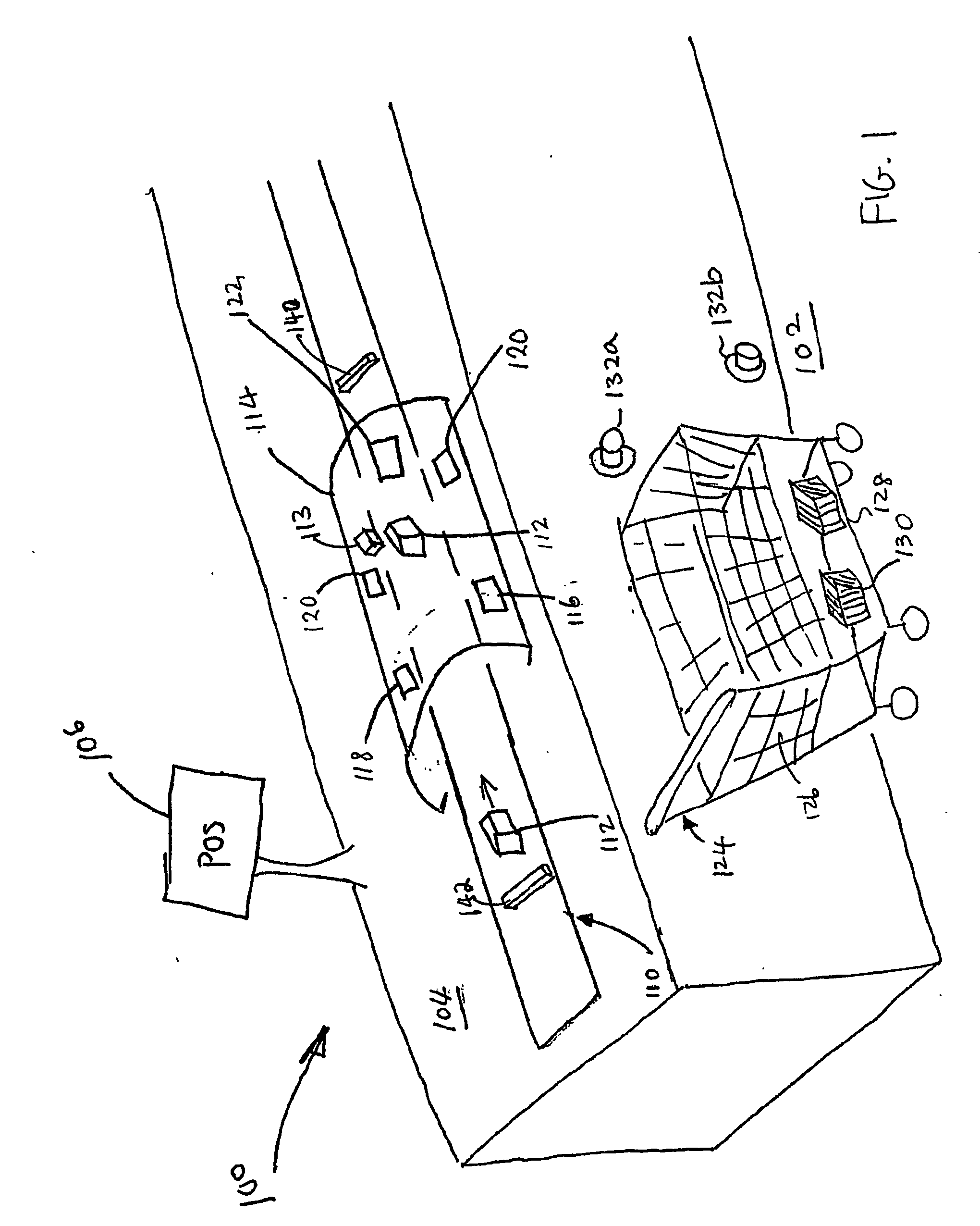

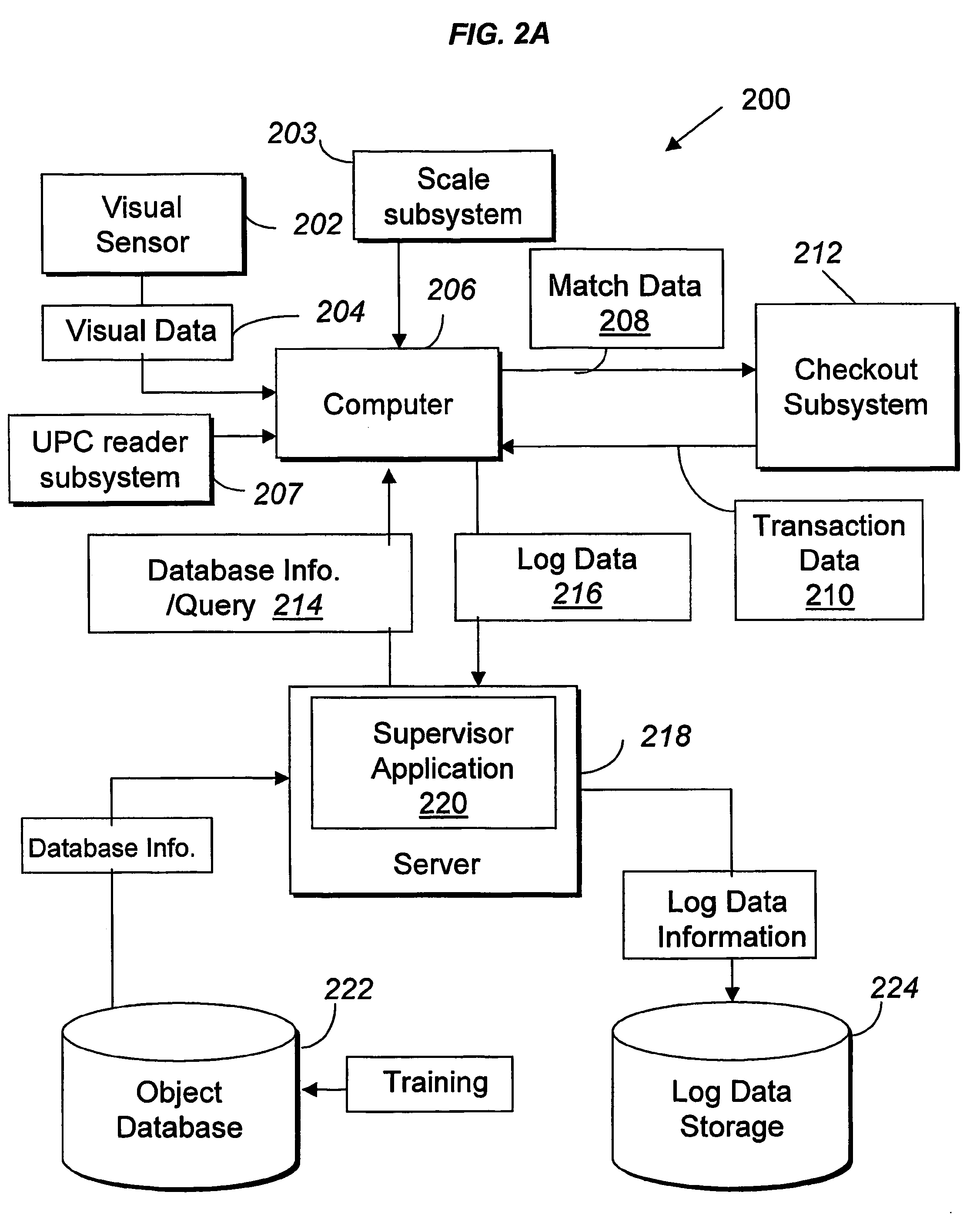

Systems and methods for merchandise checkout

Active | US20050189411A1 | Reduce and prevent loss; Reduce and prevent fraud; Credit registering devices actuation; Cash registers; Pattern recognition; Vision sensor

Systems and methods for recognizing and identifying items located on the lower shelf of a shopping cart in a checkout lane of a retail store environment, for the purpose of reducing or preventing loss or fraud and increasing the efficiency of the checkout process. The system includes one or more visual sensors that can take images of items and a computer system that receives the images from the one or more visual sensors and automatically identifies the items. The system can be trained to recognize the items using images taken of the items. It matches visual features from training images against features extracted from images taken at the checkout lane. Using the scale-invariant feature transform (SIFT) method, for example, the system can compare the visual features of the images with the features stored in a database to find one or more matches, which are then used to identify the items.

Owner:DATALOGIC ADC

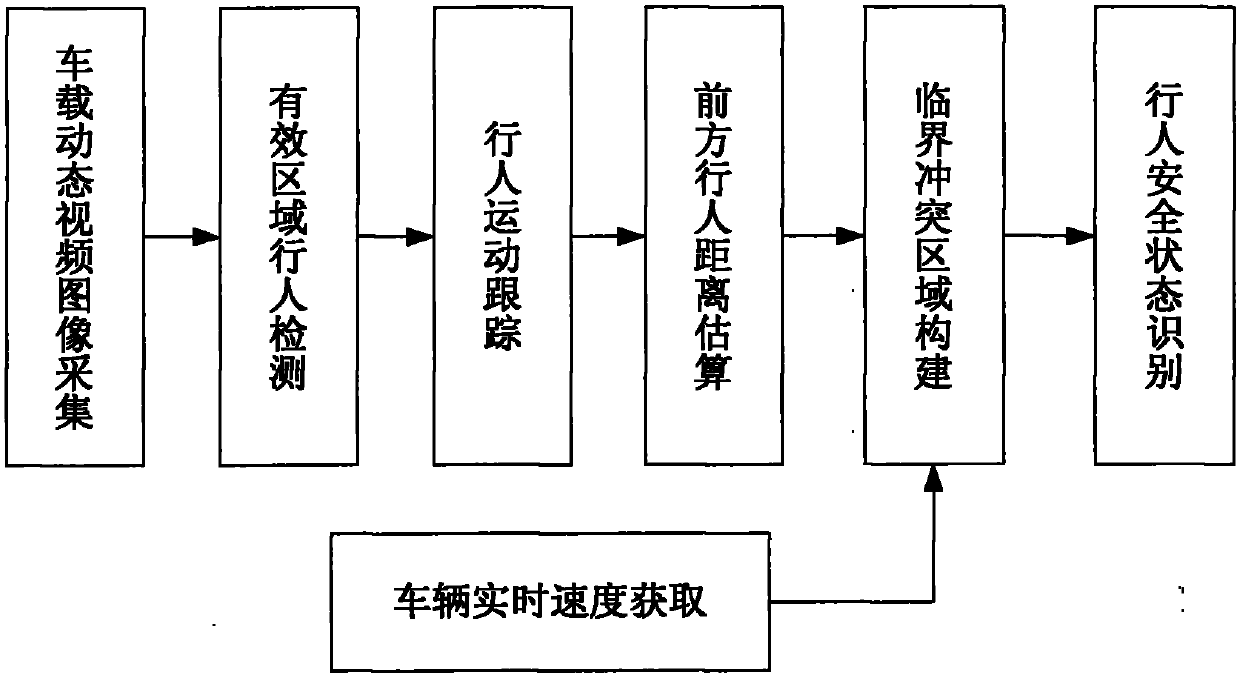

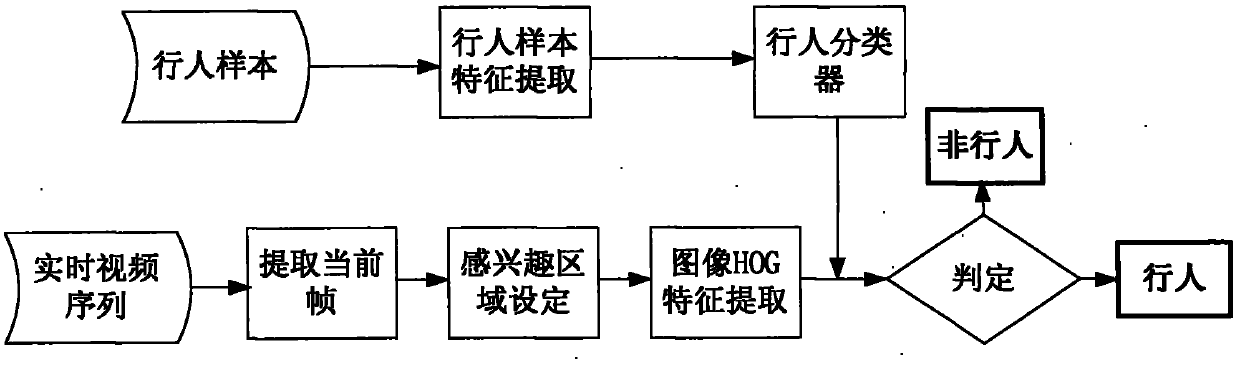

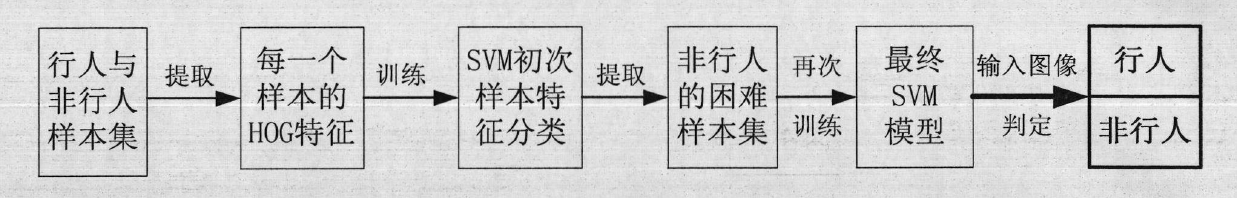

Safe state recognition system for people on basis of machine vision

Inactive | CN102096803A | Ensure safety; High technology content; Image analysis; Anti-collision systems; Driver/operator; Secure state

The invention discloses a machine-vision-based system for recognizing the safe state of people, aiming to solve the problem in the prior art that intelligent control decisions on vehicle driving behaviour cannot be made according to the safe state of pedestrians. The method comprises the following steps: collecting vehicle-mounted dynamic video images; detecting and recognizing pedestrians in a region of interest in front of the vehicle; tracking moving pedestrians; detecting and calculating the distance to pedestrians in front of the vehicle; obtaining the vehicle's real-time speed; and recognizing the safe state of the pedestrian. Recognizing the pedestrian's safe state comprises: building a critical conflict area; judging the safe state when the pedestrian is outside the conflict area during relative motion; and judging the safe state when the pedestrian is inside the conflict area during relative motion. Whether the pedestrian will enter the dangerous area can be predicted from the relative speed and relative position of the motor vehicle and the pedestrian, obtained by the vision sensor in the above steps. The system can help drivers take measures to avoid colliding with pedestrians.

Owner:JILIN UNIV
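
The abstract above predicts whether a pedestrian will enter the conflict area from relative position and relative speed, but gives neither the shape of the area nor the motion model. A minimal sketch, assuming a rectangular conflict area ahead of the vehicle and constant relative velocity over a short horizon; the function name, the rectangle and the time step are hypothetical.

```python
def predict_conflict_entry(rel_pos, rel_vel, half_width, length, horizon=3.0, step=0.05):
    """Return the first time (s) at which the pedestrian enters the conflict area, or None.

    rel_pos = (x, y): pedestrian position relative to the vehicle front,
    x lateral (m), y longitudinal distance ahead (m).
    rel_vel = (vx, vy): pedestrian velocity relative to the vehicle (m/s).
    Assumed conflict area: the rectangle |x| <= half_width, 0 <= y <= length.
    Assumed motion model: constant relative velocity over the horizon.
    """
    x0, y0 = rel_pos
    vx, vy = rel_vel
    for k in range(int(round(horizon / step)) + 1):
        t = k * step
        x, y = x0 + vx * t, y0 + vy * t
        if abs(x) <= half_width and 0.0 <= y <= length:
            return t
    return None
```

For a pedestrian 4 m to the side and 10 m ahead, crossing at 2 m/s while the vehicle closes at 5 m/s, the relative velocity is (-2, -5) and the predicted entry time is about 1.5 s; a pedestrian walking away laterally never enters.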

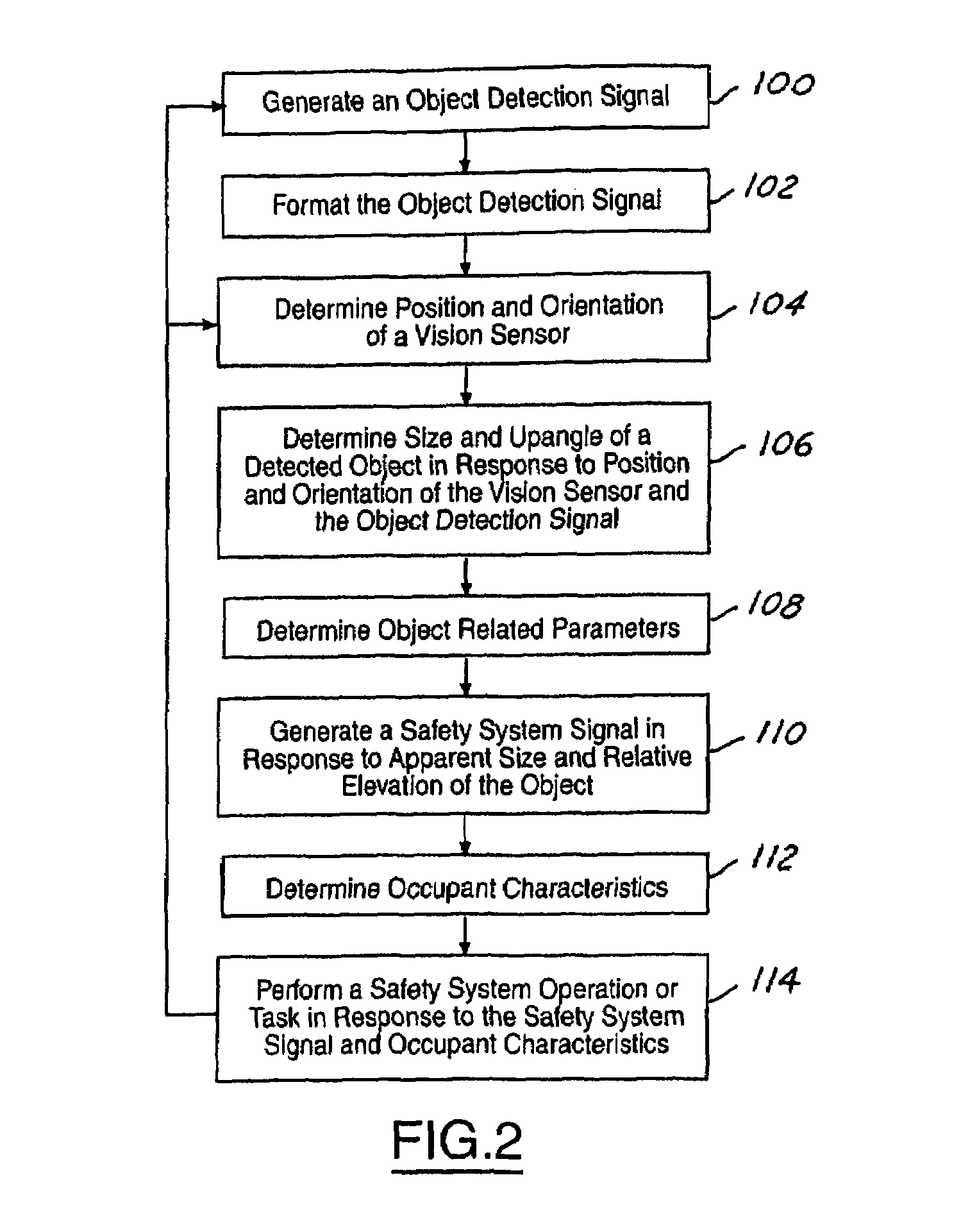

Single vision sensor object detection system

Inactive | US7389171B2 | Improve system performance; Vehicle fittings; Digital data processing details; Engineering; Vision sensor

A sensing system (10) for a vehicle (12) includes a single vision sensor (14) that has a position on the vehicle (12). The single vision sensor (14) detects an object (40) and generates an object detection signal. A controller (16) is coupled to the vision sensor (14) and generates a safety system signal in response to the position of the vision sensor (14) and the object detection signal.

Owner:FORD GLOBAL TECH LLC

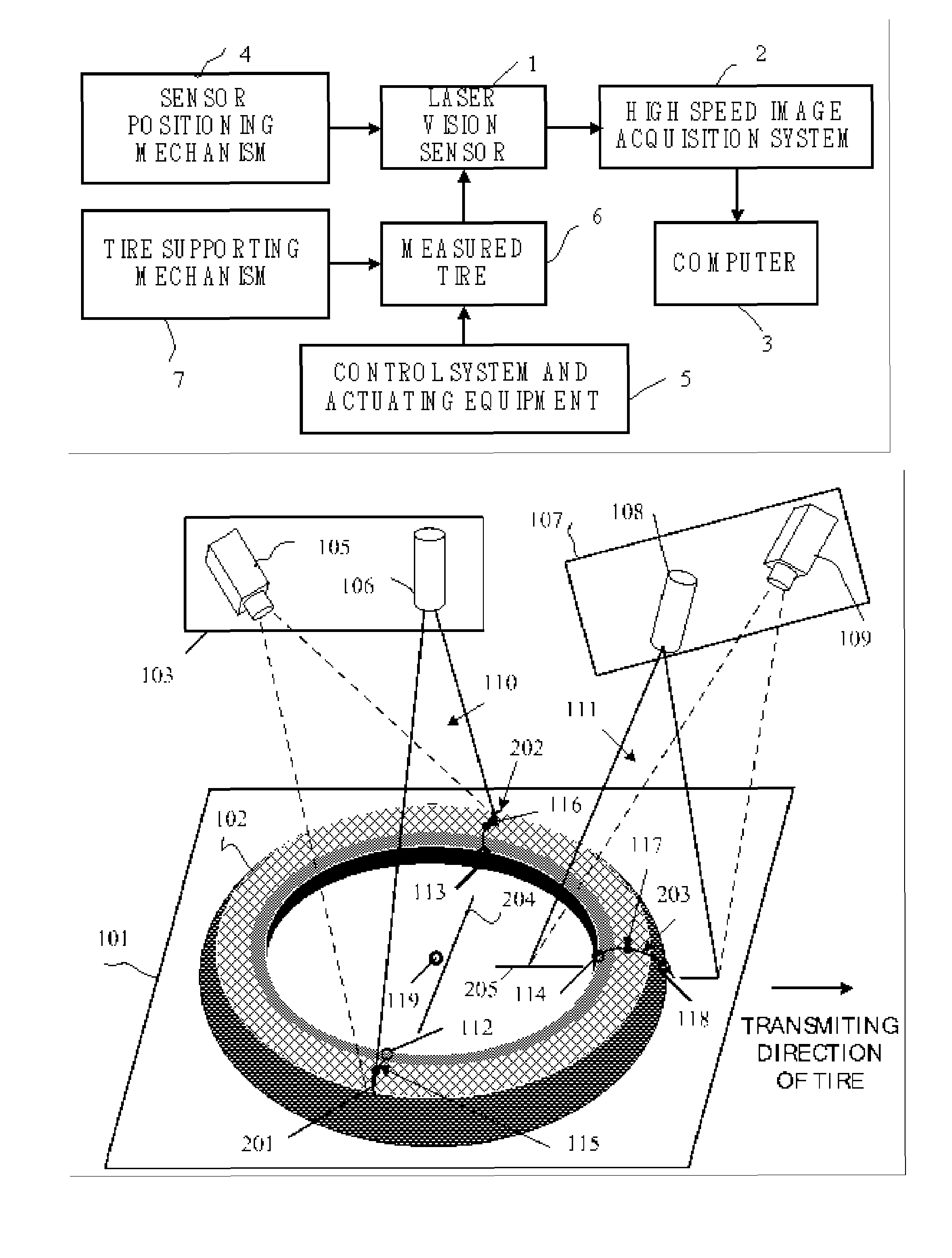

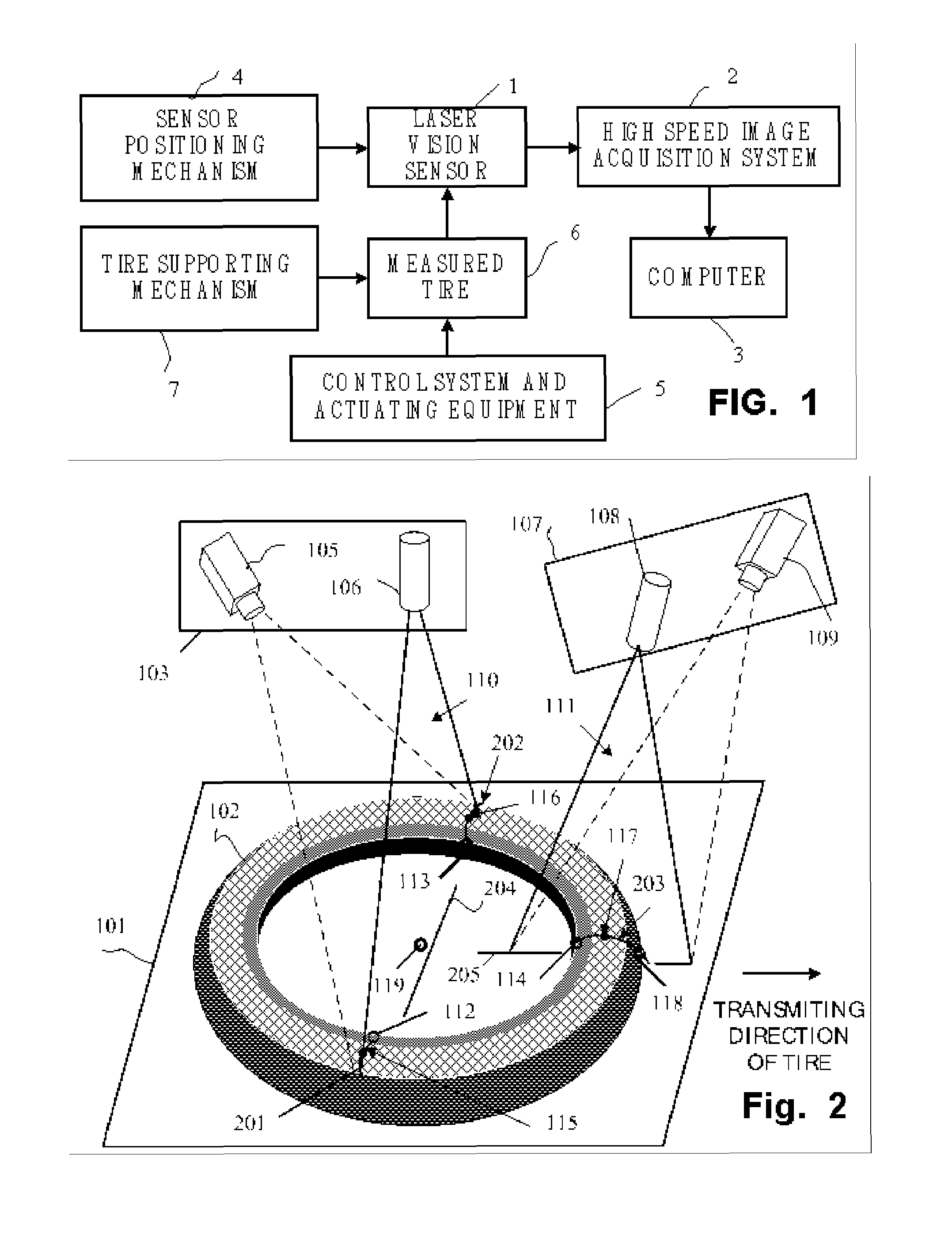

Method and apparatus for dynamic measuring three-dimensional parameters of tire with laser vision

Active | US7177740B1 | Reduce equipment costs; High measurement accuracy; Vehicle testing; Registering/indicating working of vehicles; Engineering; Vision sensor

Three-dimensional parameters of a tire are measured dynamically and without contact using laser vision technology. Two laser vision sensors are calibrated and then used for the actual measurement. The system offers a wide measuring range, high accuracy and high efficiency even while the tire is moving, minimizes the size of the measuring apparatus, and reduces its cost.

Owner:BEIHANG UNIV

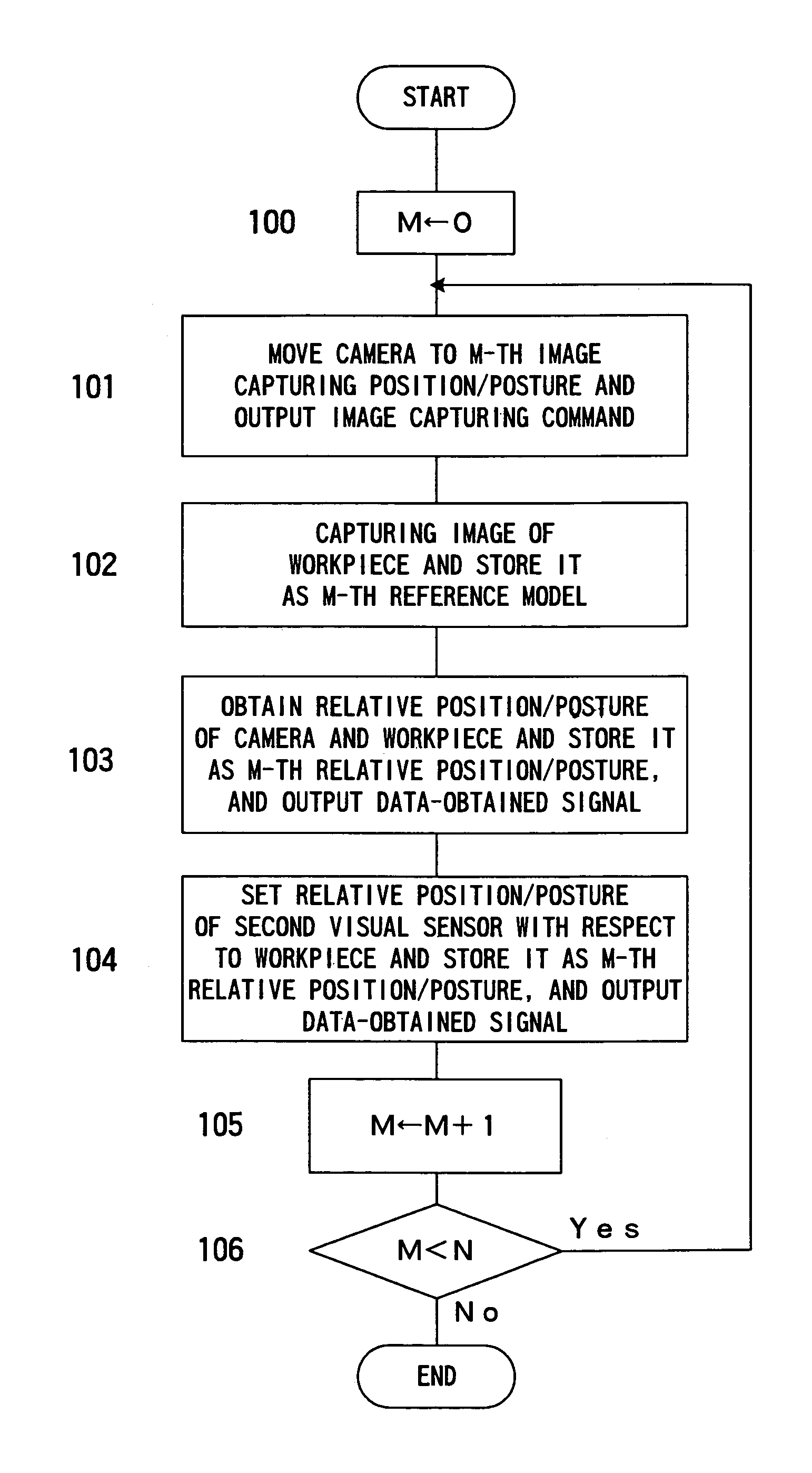

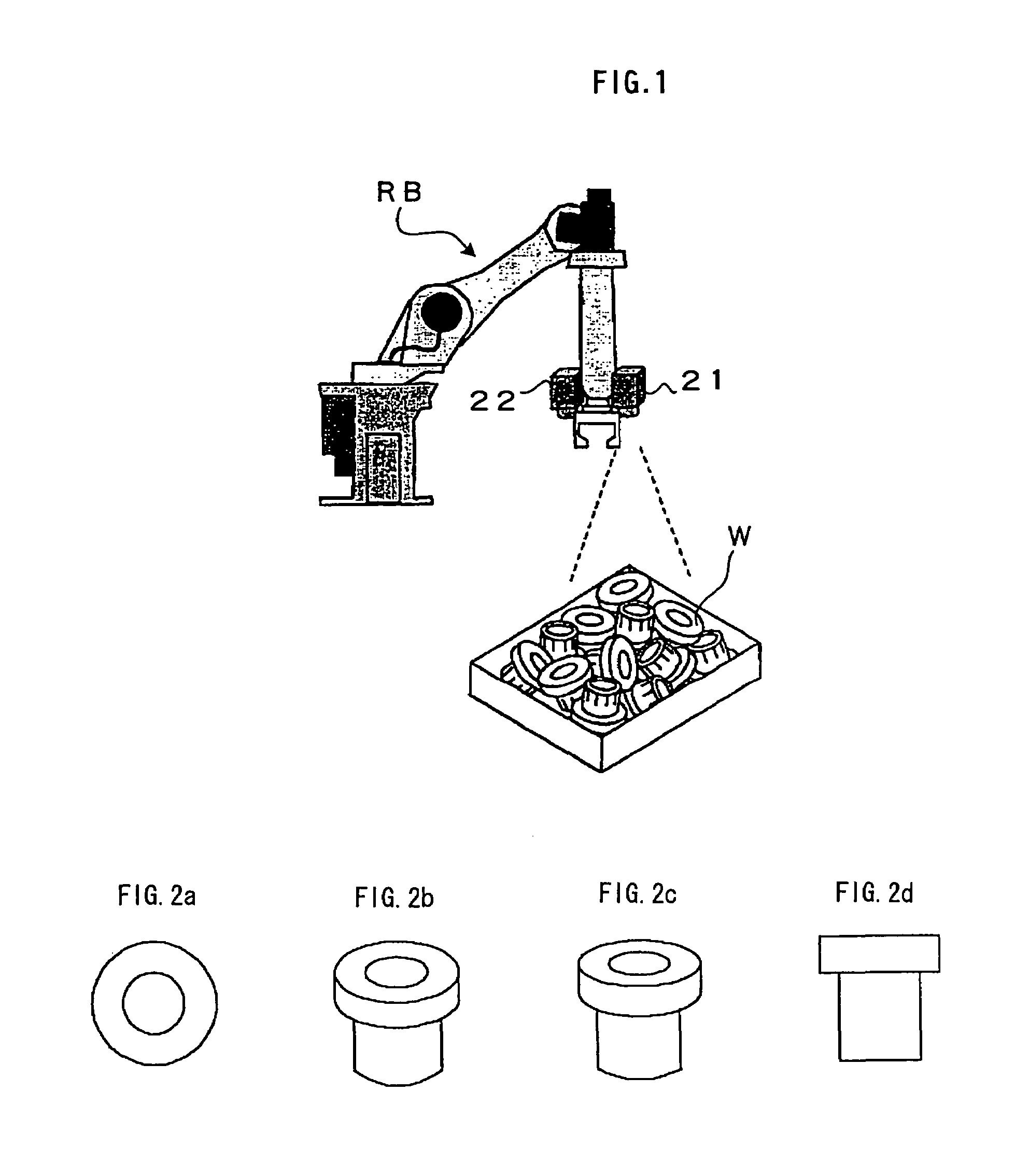

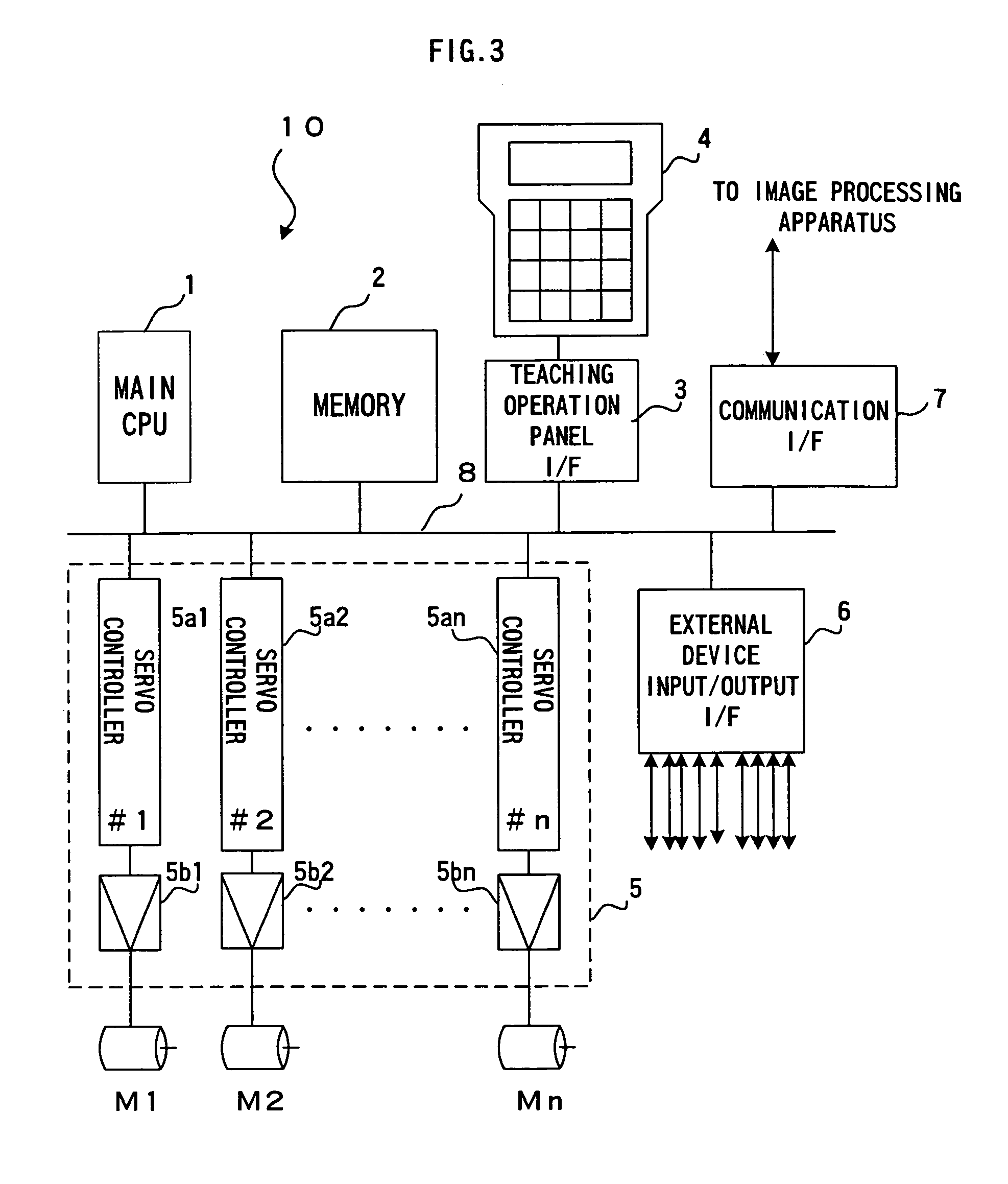

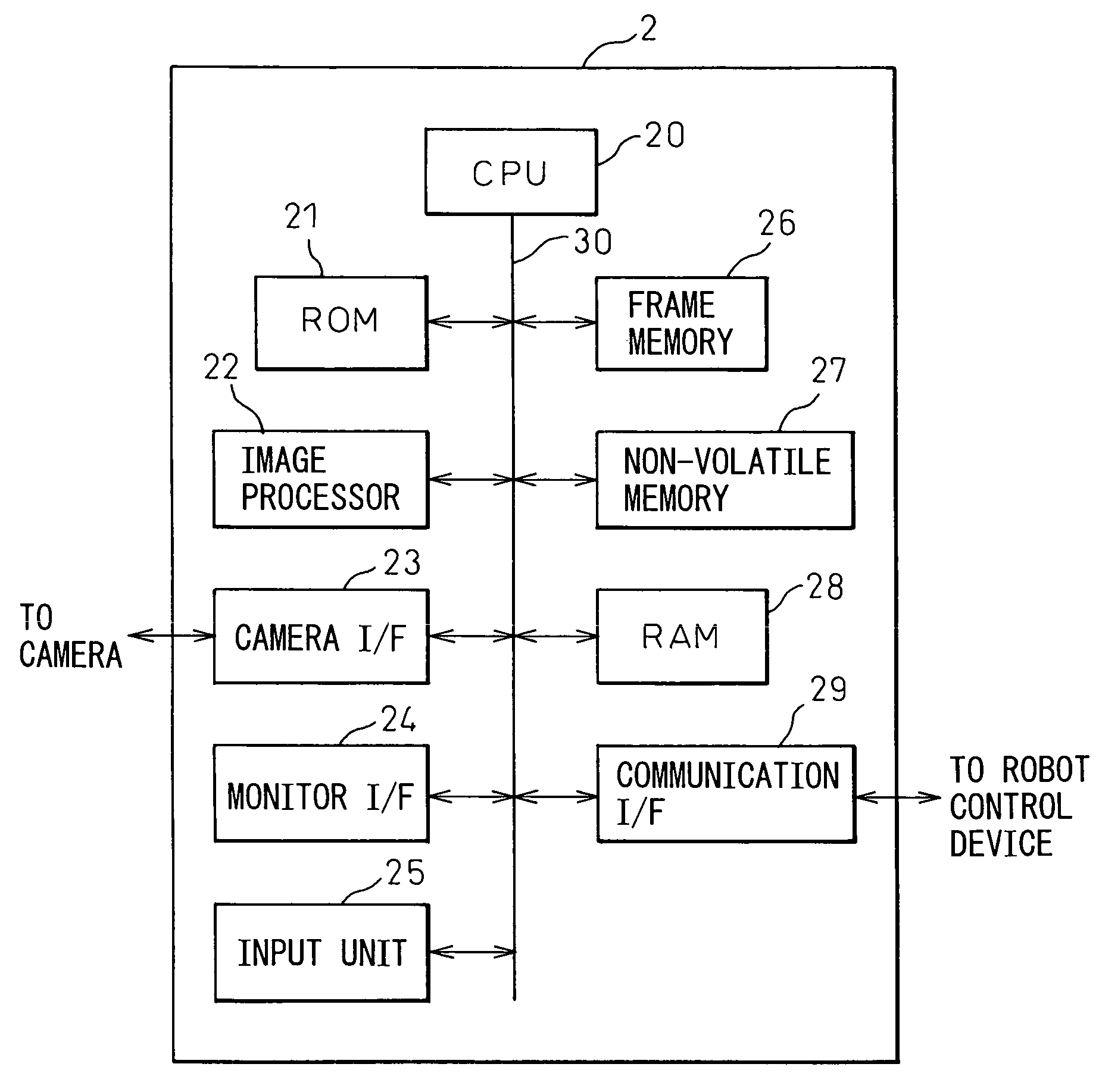

Robot system having image processing function

A robot system having an image processing function capable of detecting the position and/or posture of individual workpieces randomly arranged in a stack, and of determining the posture, or posture and position, of a robot operation suited to the detected position and/or posture of the workpiece. Reference models are created from two-dimensional images of a reference workpiece captured from a plurality of directions by a first visual sensor, and are stored. The relative positions/postures of the first visual sensor with respect to the workpiece at each image capture, and the relative position/posture at which a second visual sensor is to be situated with respect to the workpiece, are also stored. Matching is performed between an image of a stack of workpieces captured by the camera and the reference models, and an image of a workpiece matching one reference model is selected. A three-dimensional position/posture of the workpiece is determined from the image of the selected workpiece, the selected reference model, and the position/posture information associated with that reference model. The position/posture at which the second visual sensor is to be situated for measurement is determined from the determined position/posture of the workpiece and the stored relative position/posture of the second visual sensor, and the precise position/posture of the workpiece is measured by the second visual sensor at that position/posture. Based on the measurement results of the second visual sensor, the robot can pick out individual workpieces from a randomly arranged stack.

Owner:FANUC LTD

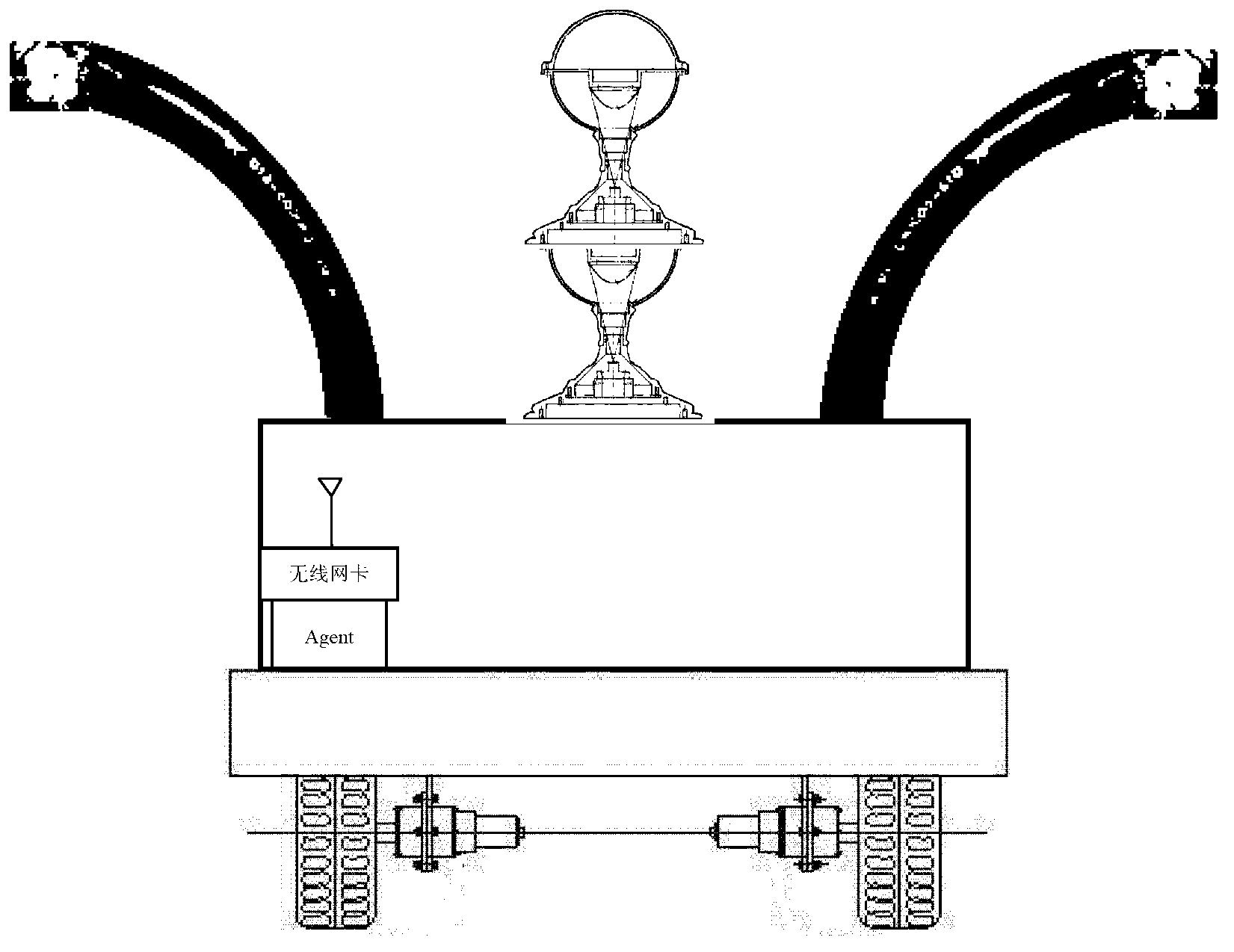

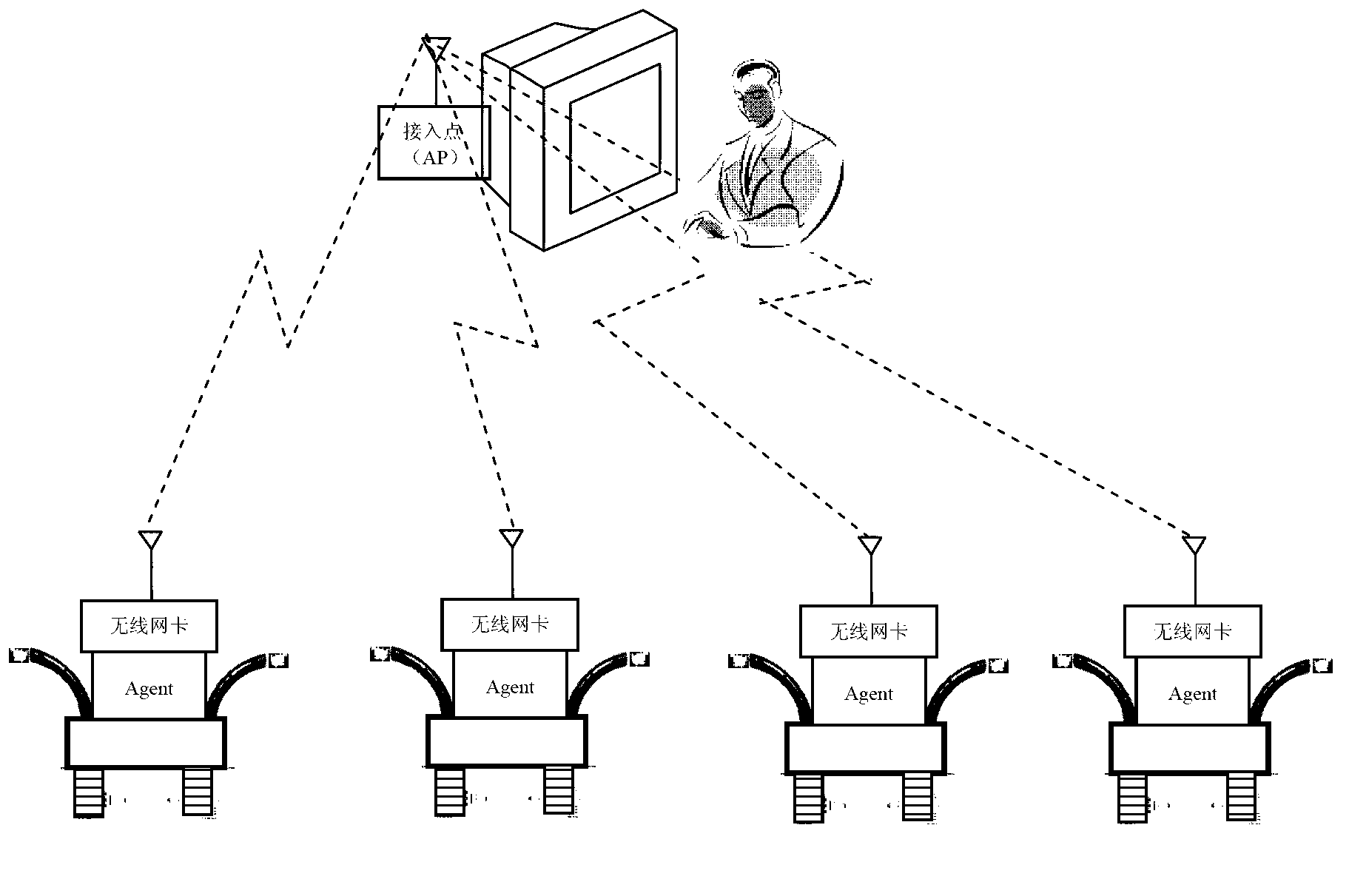

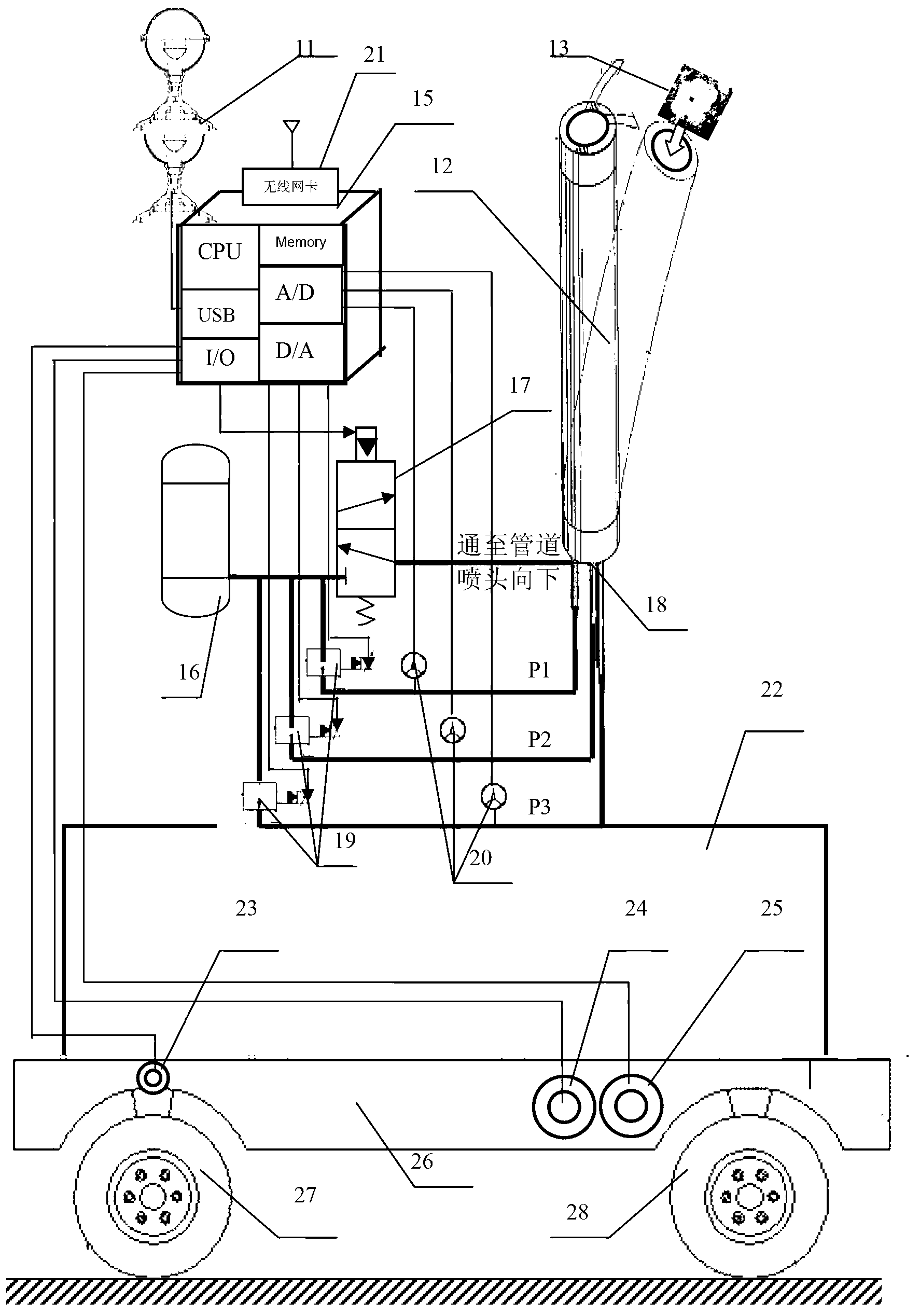

Autonomous navigation and man-machine coordination picking operating system of picking robot

Active | CN102914967A | Improve practicality; Reduce the level of intelligent control required; Picking devices; Adaptive control; Stereoscopic video; Man machine

An autonomous navigation and man-machine coordinated picking operating system for a picking robot comprises a travelling unit, a picking robot arm, an Agent, a wireless sensor network, a computer and a panoramic stereoscopic visual sensor. The Agent provides autonomous navigation, obstacle avoidance, positioning, path planning and similar functions; the wireless sensor network handles the information exchange for man-machine coordinated picking; the computer lets picking administrators intervene and manage remotely during man-machine coordinated picking; and the panoramic stereoscopic visual sensor acquires panoramic stereoscopic video images of the picking area. The system offers good natural flexibility, a simple mechanism, low control complexity, a limited intelligence requirement, high picking efficiency, good environmental adaptability, and low manufacturing and maintenance costs, and the picked produce and the crop are not damaged during picking.

Owner:ZHEJIANG UNIV OF TECH

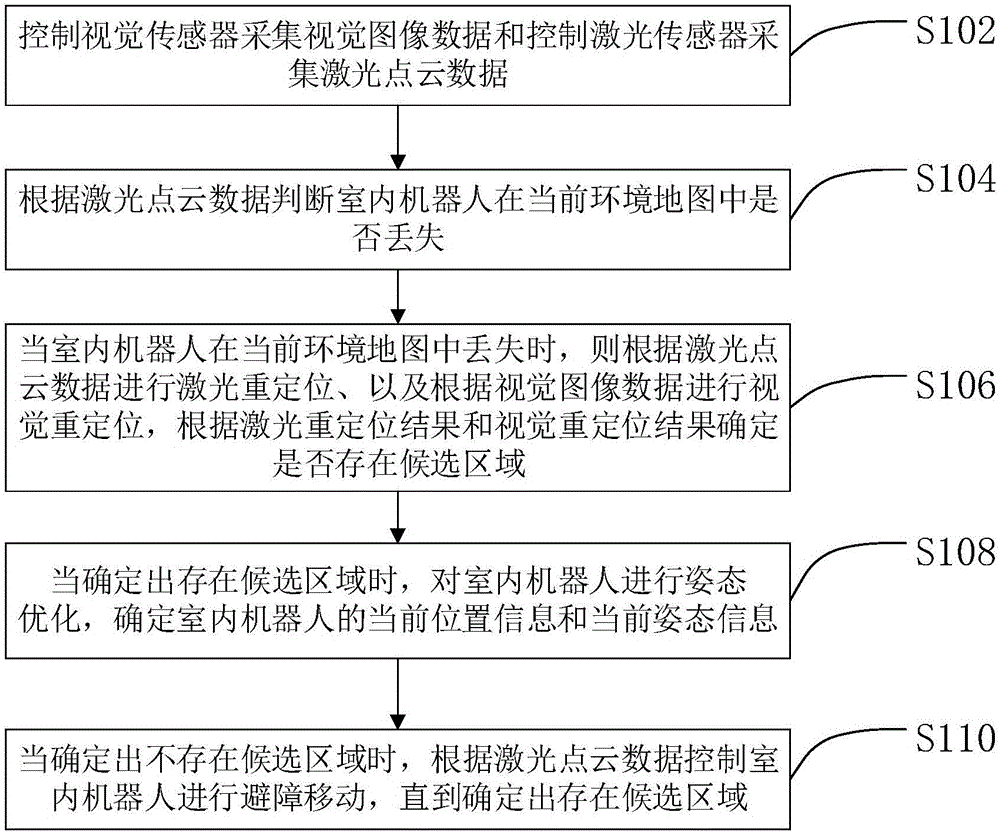

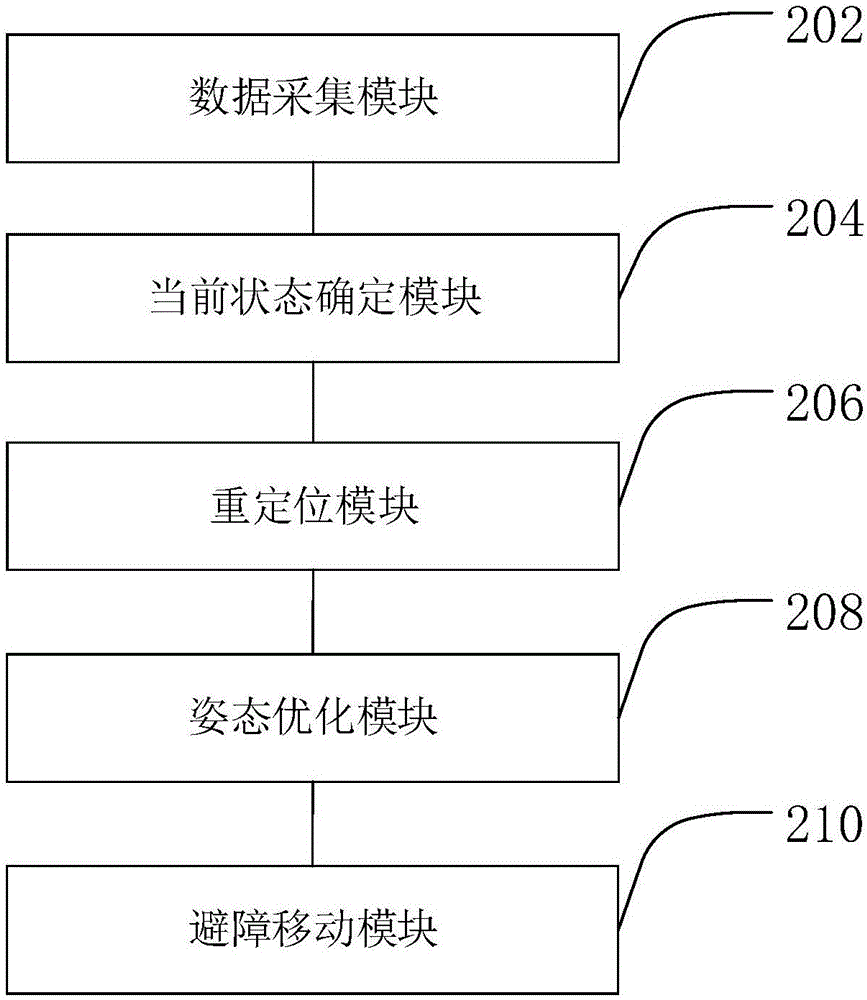

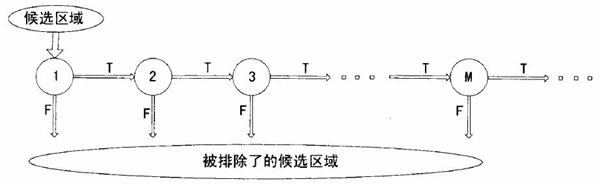

Relocating method and apparatus of indoor robot

Active | CN106092104A | Improve accuracy; Accurate Autonomous Navigation; Navigational calculation instruments; Laser sensor; Cloud data

The invention provides a relocalization method and apparatus for an indoor robot. The method comprises the following steps: controlling a visual sensor to acquire visual image data, and controlling a laser sensor to acquire laser point cloud data; judging, according to the laser point cloud data, whether the robot is lost in the current environment map; if so, relocalizing the robot by laser according to the laser point cloud data and visually according to the visual image data, and determining whether a candidate area exists according to the laser relocalization result and the visual relocalization result; when a candidate area exists, optimizing the robot's pose and determining its current position and posture information; and when no candidate area exists, controlling the robot to perform obstacle-avoidance motion according to the laser point cloud data until a candidate area is determined. Because the robot is relocalized by combining the laser sensor and the visual sensor, relocalization accuracy is improved and the robot can navigate accurately and autonomously.

Owner:SHENZHEN WEIFU ROBOT TECH CO LTD

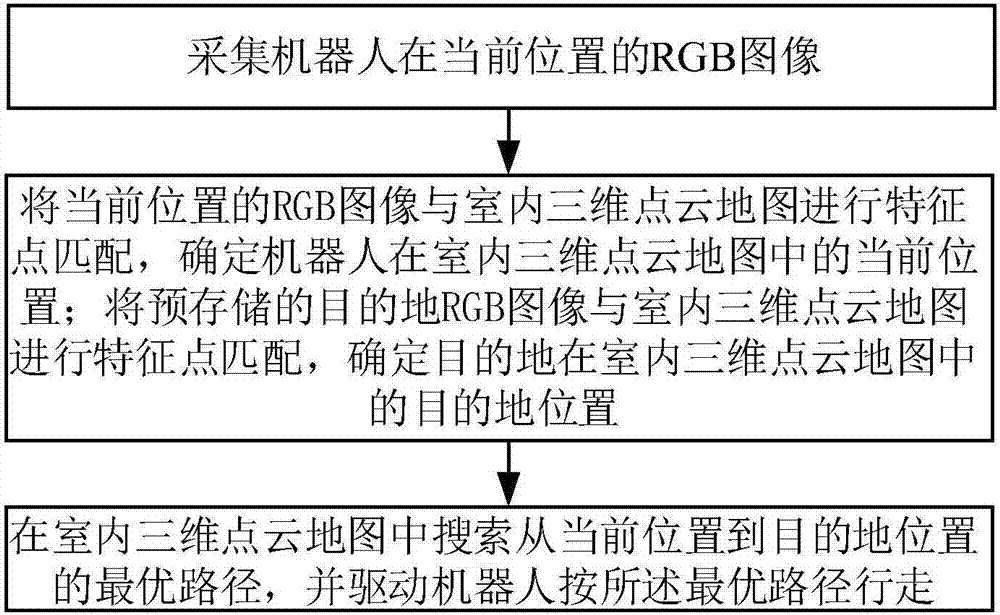

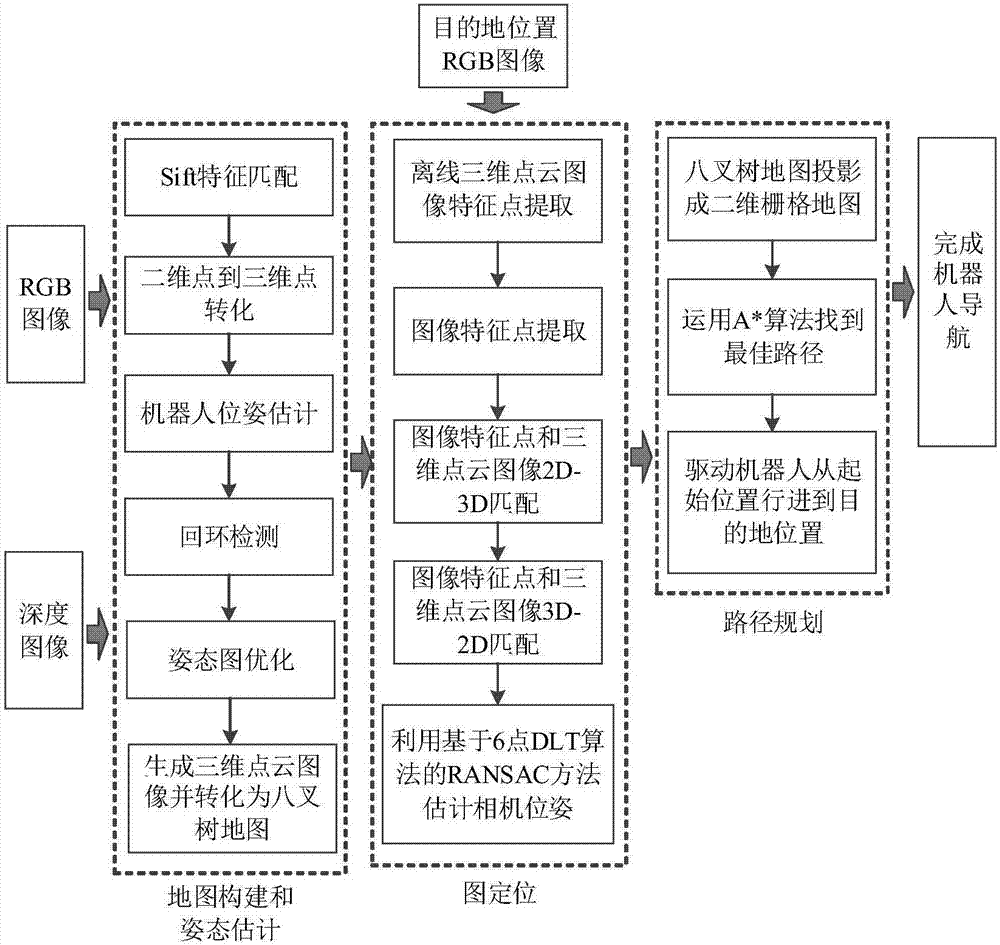

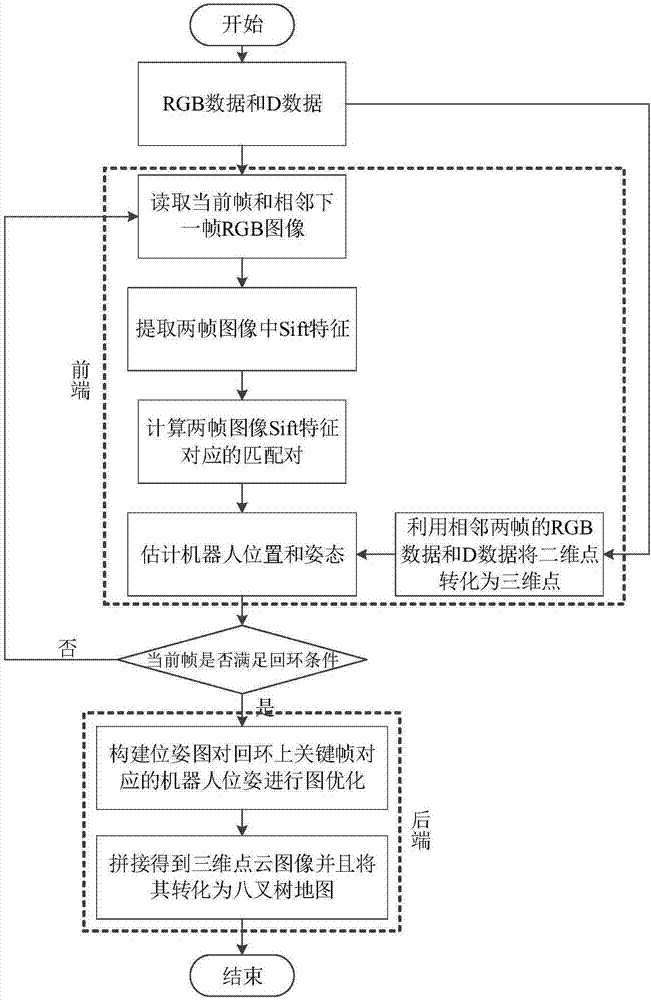

Autonomous location and navigation method and autonomous location and navigation system of robot

Active | CN106940186A | Automate processing; Fair price; Navigational calculation instruments; Point cloud; RGB image

The invention discloses an autonomous localization and navigation method and system for a robot. The method comprises the following steps: acquiring an RGB image at the robot's current position; performing feature point matching between the RGB image of the current position and an indoor three-dimensional (3D) point cloud map to determine the robot's current position in the map; performing feature point matching between a pre-stored RGB image of the destination and the indoor 3D point cloud map to determine the position of the destination in the map; and searching for an optimal path from the current position to the destination in the indoor 3D point cloud map and driving the robot along that path. The method and system complete autonomous localization and navigation using a visual sensor and offer a simple device structure, low cost, simple operation and highly real-time path planning; they can be used in unmanned driving and in indoor localization and navigation.

Owner:HUAZHONG UNIV OF SCI & TECH
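
The abstract above searches for an optimal path in the indoor 3D point cloud map but does not name the search algorithm. A common choice, assumed here, is A* on a 2-D occupancy grid obtained by projecting the map onto the floor plane (0 = free, 1 = occupied), with 4-connected unit-cost moves and a Manhattan-distance heuristic. The grid representation and the function name are illustrative assumptions.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = occupied).

    Returns the path as a list of (row, col) cells from start to goal,
    or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {}
    cost_so_far = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > cost_so_far[cell]:
            continue  # stale heap entry superseded by a cheaper route
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < cost_so_far.get((nr, nc), float("inf")):
                    cost_so_far[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)))
    return None
```

With an admissible heuristic, A* returns a shortest path on the grid; the resulting cell sequence would still need to be converted into motion commands for the robot.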

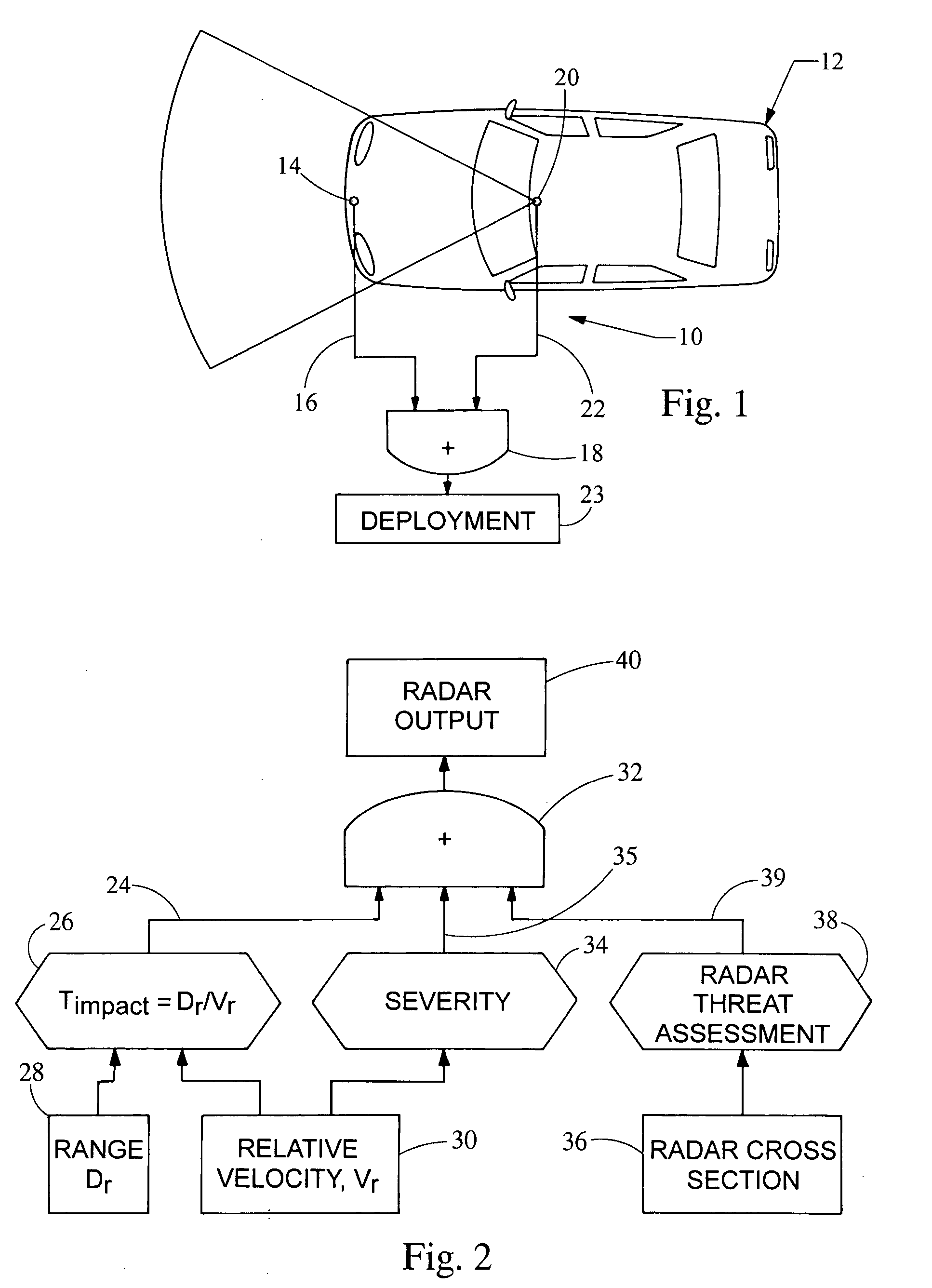

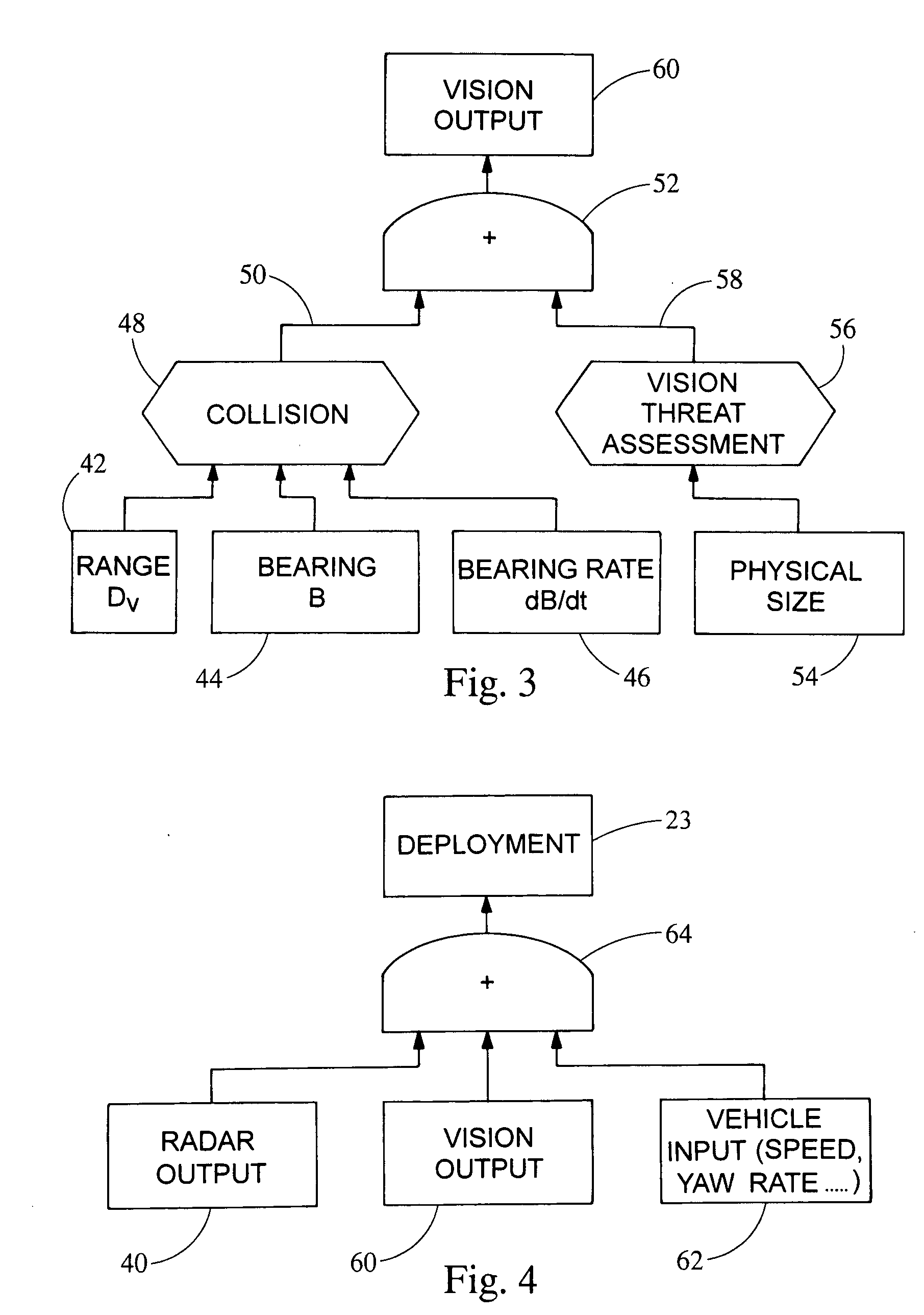

Sensor system with radar sensor and vision sensor

Inactive | US20060091654A1 | Improve reliability; Improved occupant protection; Pedestrian/occupant safety arrangement; Electromagnetic wave reradiation; Radar; Airbag deployment

A motor vehicle crash sensor system for activating an external safety system such as an airbag in response to the detection of an impending collision target. The system includes a radar sensor carried by the vehicle providing a radar output related to the range and relative velocity of the target. A vision sensor is carried by the vehicle which provides a vision output related to the bearing and bearing rate of the target. An electronic control module receives the radar output and the vision output for producing a deployment signal for the safety system.

Owner:AUTOLIV ASP INC
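
One way to picture the fusion described above: radar supplies range r and range rate, the vision sensor supplies bearing and bearing rate, and together they give the target's position and velocity in the vehicle frame. The sketch below converts these polar measurements to Cartesian form and applies a simple deployment rule; the rule, the vehicle half-width and the time threshold are hypothetical values, not taken from the patent.

```python
import math

def fuse_radar_vision(r, r_dot, bearing, bearing_rate):
    """Combine radar range/range rate with vision bearing/bearing rate (radians).

    Returns (x, y, vx, vy) in the vehicle frame, x forward and y to the left:
      x = r cos(theta),  y = r sin(theta)
      vx = r_dot cos(theta) - r theta_dot sin(theta)
      vy = r_dot sin(theta) + r theta_dot cos(theta)
    """
    c, s = math.cos(bearing), math.sin(bearing)
    x, y = r * c, r * s
    vx = r_dot * c - r * bearing_rate * s
    vy = r_dot * s + r * bearing_rate * c
    return x, y, vx, vy

def should_deploy(r, r_dot, bearing, bearing_rate, half_width=1.0, ttc_threshold=0.2):
    """Hypothetical rule: deploy if the target reaches the vehicle front (x = 0) within
    ttc_threshold seconds and is then within half_width of the vehicle centreline."""
    x, y, vx, vy = fuse_radar_vision(r, r_dot, bearing, bearing_rate)
    if x <= 0 or vx >= 0:  # target behind the front, or not closing
        return False
    t_impact = -x / vx
    y_impact = y + vy * t_impact
    return t_impact <= ttc_threshold and abs(y_impact) <= half_width
```

Radar measures range and closing speed well, while a camera measures angle well; the fused state uses each sensor for the quantity it measures best.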

Systems and methods for incrementally updating a pose of a mobile device calculated by visual simultaneous localization and mapping techniques

Active | US20060012493A1 | Image enhancement; Instruments for road network navigation; Pattern recognition; Simultaneous localization and mapping

The invention is related to methods and apparatus that use a visual sensor and dead reckoning sensors to process Simultaneous Localization and Mapping (SLAM). These techniques can be used in robot navigation. Advantageously, such visual techniques can be used to autonomously generate and update a map. Unlike with laser rangefinders, the visual techniques are economically practical in a wide range of applications and can be used in relatively dynamic environments such as environments in which people move. One embodiment further advantageously uses multiple particles to maintain multiple hypotheses with respect to localization and mapping. Further advantageously, one embodiment maintains the particles in a relatively computationally-efficient manner, thereby permitting the SLAM processes to be performed in software using relatively inexpensive microprocessor-based computer systems.

Owner:IROBOT CORP

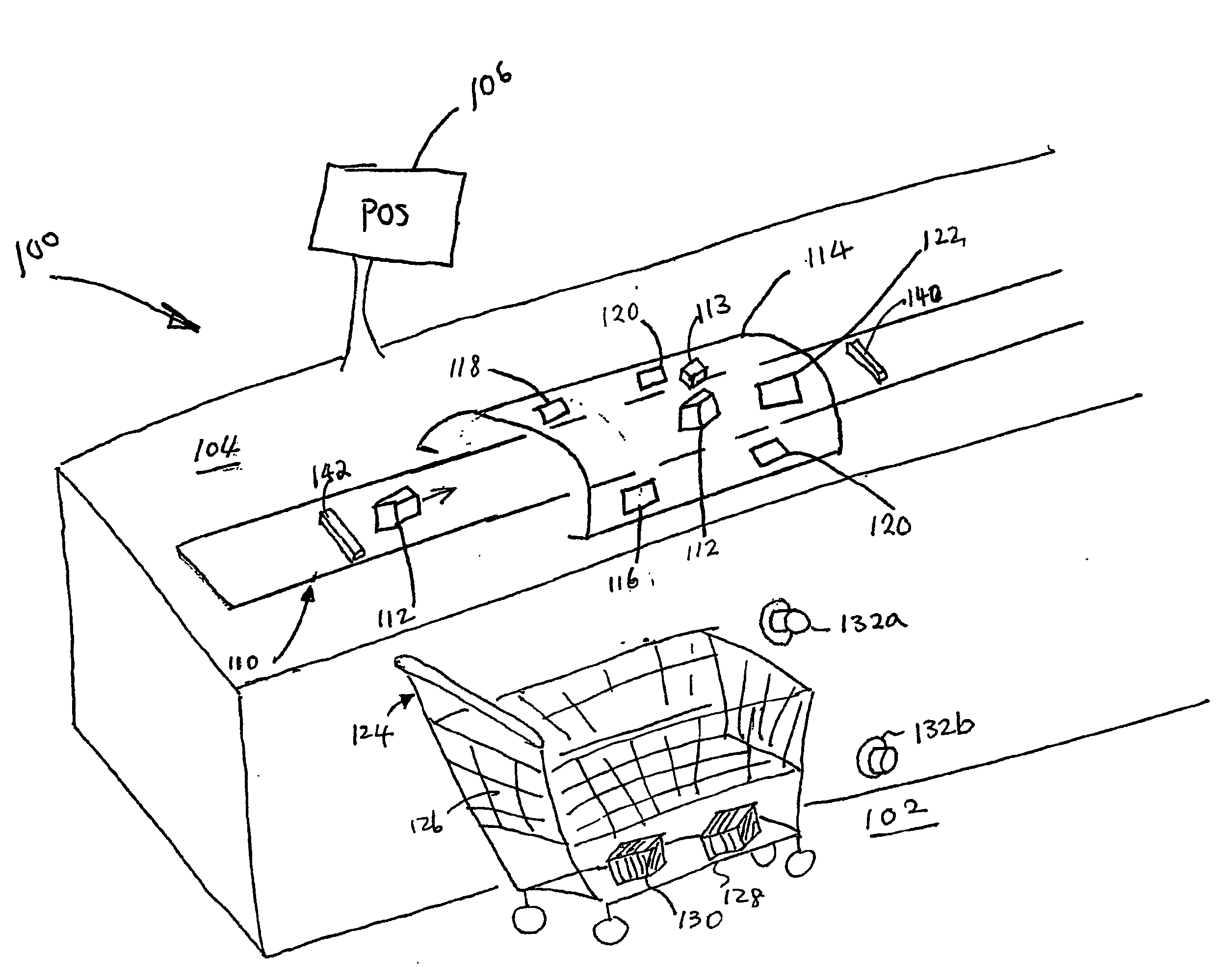

Systems and methods for merchandise automatic checkout

Systems and methods for automatically checking out items located on a moving conveyor belt, for the purpose of increasing the efficiency of the checkout process and revenue at a point of sale. The system includes a conveyor subsystem for moving the items, a housing that encloses a portion of the conveyor subsystem, a lighting subsystem that illuminates an area within the housing, visual sensors that can take images of the items, including their UPCs, and a checkout system that receives the images from the visual sensors and automatically identifies the items. The system may include a scale subsystem located under the conveyor subsystem to measure the weights of the items, where the weight of each item is used to check whether the corresponding item has been identified correctly. The system matches visual features of images stored in a database against features extracted from images taken by the visual sensors.

Owner:DATALOGIC ADC
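
The abstract above uses each item's measured weight to check whether the visual identification is correct, without giving the acceptance rule. A minimal sketch, assuming a hypothetical catalog of nominal weights and a tolerance equal to the larger of a relative and an absolute margin; the function name and both tolerance values are illustrative assumptions.

```python
def weight_consistent(item, measured_g, expected_weights_g, rel_tol=0.05, abs_tol_g=5.0):
    """Return True if the scale reading is plausible for the visually identified item.

    expected_weights_g: hypothetical catalog mapping item identifiers to nominal
    weights in grams. The tolerance is the larger of a relative and an absolute
    margin; both values are illustrative assumptions.
    """
    expected = expected_weights_g[item]
    tolerance = max(abs_tol_g, rel_tol * expected)
    return abs(measured_g - expected) <= tolerance
```

The absolute floor keeps light items from being rejected over small scale noise, while the relative term scales the allowance for heavier items.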

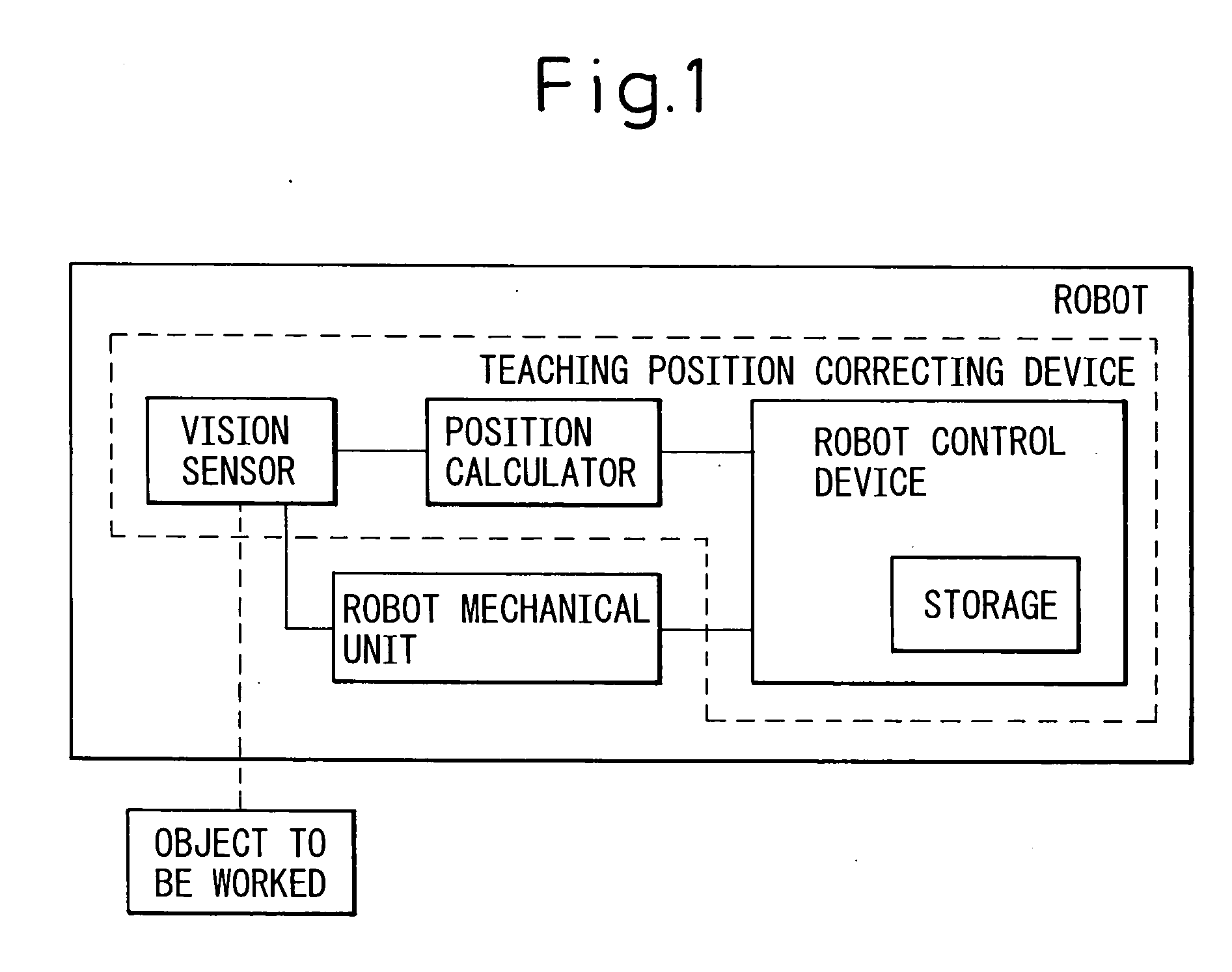

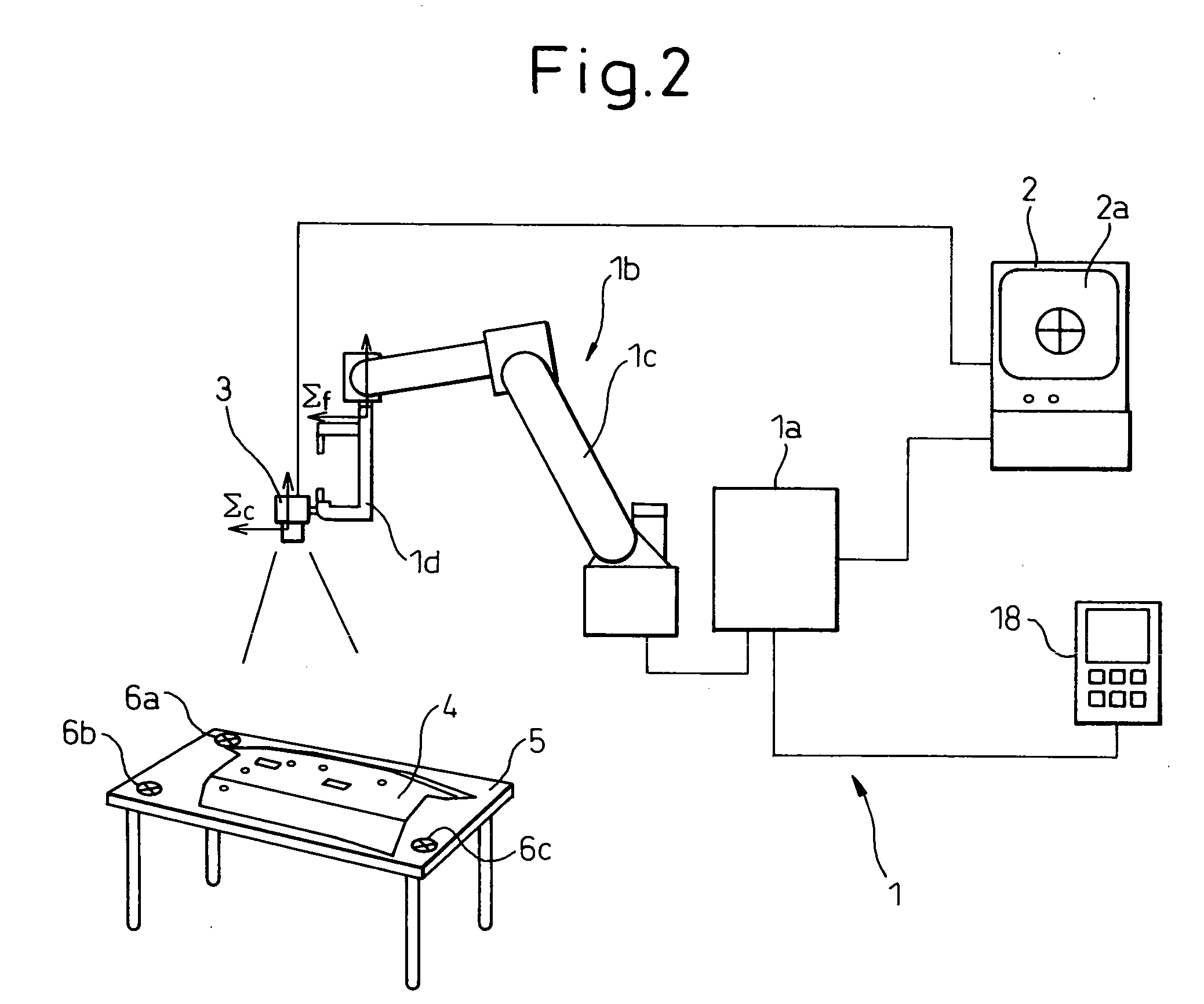

Teaching position correcting device

Inactive | US20050107920A1 | Easy to correct; Reduce load; Programme-controlled manipulator; Computer control; CCD camera; Vision sensor

A teaching position correcting device that can easily and precisely correct teaching positions after at least one of a robot and an object worked on by the robot has been shifted. Calibration is carried out using a vision sensor (i.e., a CCD camera) mounted on a work tool. The vision sensor measures the three-dimensional positions of at least three reference marks on the object that do not lie on a straight line. The vision sensor is optionally detached from the work tool, and at least one of the robot and the object is shifted. After the shift, calibration (which can be omitted if the vision sensor was not detached) and measurement of the three-dimensional positions of the reference marks are carried out again. The change in the relative positional relationship between the robot and the object is obtained from the three-dimensional positions of the reference marks measured before and after the shift. To compensate for this change, the teaching position data valid before the shift are corrected. The robot can have a measuring robot mechanical unit with the vision sensor and a separate working robot mechanical unit that works on the object; in this case, the positions of the working robot mechanical unit before and after the shift are also measured.

Owner:FANUC LTD
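
The abstract above obtains the change in the robot-object relationship from reference marks measured before and after the shift, without stating the computation. One standard way, assumed here, is to fit a rigid transform to the mark positions with the Kabsch (SVD) method and apply it to the stored teaching points. The sketch assumes the marks and teaching points are expressed in the same robot frame and that the teaching points move with the object; function names are hypothetical, and NumPy provides the SVD.

```python
import numpy as np

def rigid_transform(before, after):
    """Least-squares rotation R and translation t such that after ~= R @ before + t.

    before, after: (N, 3) arrays of the same reference marks, N >= 3, not collinear.
    Uses the Kabsch/SVD method with a reflection guard.
    """
    P = np.asarray(before, dtype=float)
    Q = np.asarray(after, dtype=float)
    if P.shape != Q.shape or P.ndim != 2 or P.shape[1] != 3 or P.shape[0] < 3:
        raise ValueError("need matching (N, 3) arrays with N >= 3")
    p_mean, q_mean = P.mean(axis=0), Q.mean(axis=0)
    H = (P - p_mean).T @ (Q - q_mean)          # 3x3 cross-covariance of centred marks
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t

def correct_teaching_positions(points, R, t):
    """Map teaching positions recorded before the shift into the post-shift layout."""
    return np.asarray(points, dtype=float) @ R.T + t
```

Three non-collinear marks are the minimum that fixes a 3-D rotation, which matches the patent's requirement; additional marks average out measurement noise in the least-squares fit.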

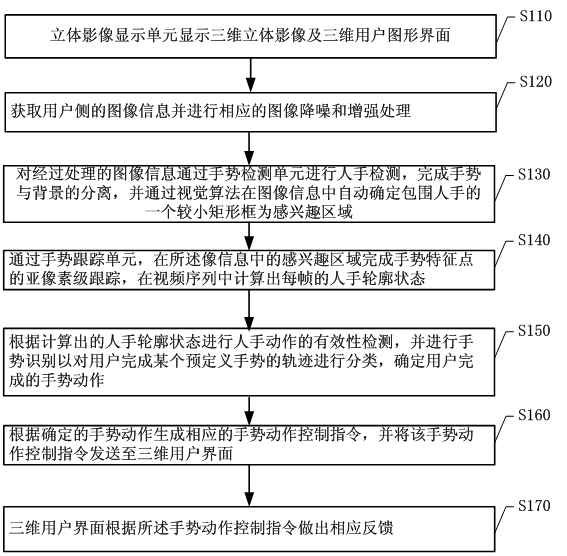

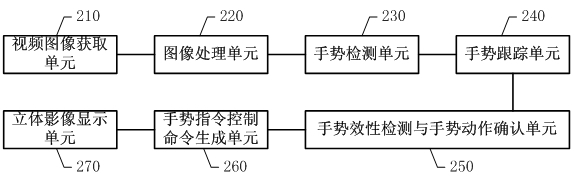

Man-machine interactive system and real-time gesture tracking processing method for same

Inactive | CN102426480A | Real-time hand detection; Real-time tracking; Input/output for user-computer interaction; Character and pattern recognition; Vision algorithms; Man machine

The invention discloses a man-machine interactive system and a real-time gesture tracking processing method for the same. The method comprises the following steps: obtaining image information from the user side; performing gesture detection with a gesture detection unit to separate the gesture from the background, and automatically determining, with a vision algorithm, a small rectangular frame surrounding the hand as the region of interest in the image; calculating the hand profile state in each frame of the video sequence with a gesture tracking unit; performing a validity check on the hand actions according to the calculated hand profile state and determining the gesture action completed by the user; and generating a corresponding gesture-action control instruction according to the determined gesture action, to which a three-dimensional user interface responds with corresponding feedback. By sensing all or part of the user's gesture actions, the system and method track gestures accurately, providing a real-time, robust solution for an effective gesture-based man-machine interface built on an ordinary vision sensor.

Owner:KONKA GROUP
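
The abstract above automatically selects a small rectangle surrounding the hand as the region of interest after separating gesture from background, but does not give the vision algorithm. Assuming the separation step yields a binary mask (1 = hand pixel), the rectangle can be sketched as the mask's bounding box grown by a small margin; the mask format, the margin and `hand_roi` are illustrative assumptions.

```python
def hand_roi(mask, margin=1):
    """Smallest rectangle (top, left, bottom, right), inclusive, around the nonzero
    pixels of a binary hand mask, grown by `margin` pixels and clipped to the image.
    Returns None if the mask contains no hand pixels."""
    hand_rows = [r for r, row in enumerate(mask) if any(row)]
    if not hand_rows:
        return None
    width = len(mask[0])
    hand_cols = [c for c in range(width) if any(row[c] for row in mask)]
    top = max(hand_rows[0] - margin, 0)
    bottom = min(hand_rows[-1] + margin, len(mask) - 1)
    left = max(hand_cols[0] - margin, 0)
    right = min(hand_cols[-1] + margin, width - 1)
    return top, left, bottom, right
```

Restricting later tracking to this rectangle reduces the pixels processed per frame, which helps keep the tracking real-time.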

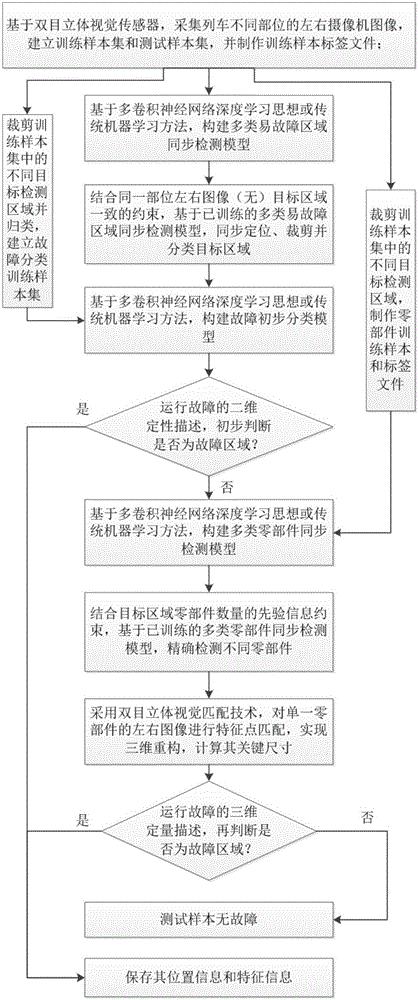

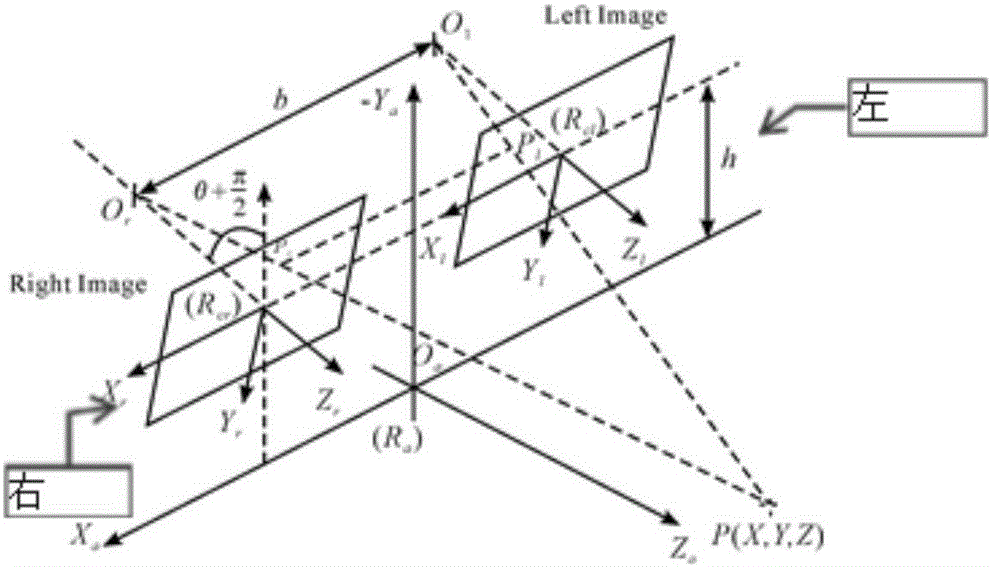

Train operation fault automatic detection system and method based on binocular stereoscopic vision

Active | CN106600581A | Reduce maintenance costs; Realize the second judgment of fault; Image analysis; Material analysis by optical means; Camera image; Study methods

The invention discloses a system and method for automatic detection of train operation faults based on binocular stereoscopic vision. The method comprises the following steps: collecting left and right camera images of different parts of a train with a binocular stereoscopic vision sensor; synchronously and precisely locating the various types of target regions where faults are liable to occur, using a deep-learning multi-layer convolutional neural network or a conventional machine learning method combined with the left-right image consistency constraint (fault or no fault) for the same part; performing preliminary fault classification and recognition on the located regions; synchronously and precisely locating multiple parts in non-fault regions using prior information on the number of parts in the target regions; and matching feature points between the left and right images of the same part with binocular stereoscopic vision, performing three-dimensional reconstruction, calculating key dimensions, and quantitatively describing fine faults and gradually developing hidden faults such as loosening or play. The method achieves synchronous, precise detection of deformation, displacement and falling-off faults of all large train parts, or a three-dimensional quantitative description of fine and gradually developing hidden faults, making detection more complete, timely and accurate.

Owner:BEIHANG UNIV
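The three-dimensional reconstruction and key-size step can be illustrated with standard rectified-stereo triangulation, where depth is Z = f·B/d for focal length f, baseline B, and disparity d. This is a sketch under assumptions the abstract does not state: the image pair is rectified, a pinhole model applies, feature points are already matched, and `f` (pixels), `baseline` (metres), `cx`, and `cy` come from calibration. The function names, the tolerance check, and all numbers are hypothetical.

```python
import math
from typing import Tuple

Point3 = Tuple[float, float, float]


def triangulate_rectified(u_left: float, v_left: float, u_right: float,
                          f: float, baseline: float, cx: float, cy: float) -> Point3:
    """3-D point in the left-camera frame from one matched pixel pair (rectified rig)."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("a point in front of the rig needs positive disparity")
    z = f * baseline / disparity   # depth: Z = f * B / d
    x = (u_left - cx) * z / f      # back-project through the left pinhole camera
    y = (v_left - cy) * z / f
    return (x, y, z)


def key_size(p: Point3, q: Point3) -> float:
    """Euclidean distance between two reconstructed points (a 'key size')."""
    return math.dist(p, q)


def drifted(measured: float, nominal: float, tolerance: float) -> bool:
    """Flag a gradually developing fault when a key size leaves its tolerance band."""
    return abs(measured - nominal) > tolerance


# Hypothetical calibration and matched points.
a = triangulate_rectified(700, 400, 640, f=1000.0, baseline=0.12, cx=640.0, cy=360.0)
b = triangulate_rectified(760, 400, 700, f=1000.0, baseline=0.12, cx=640.0, cy=360.0)
gap = key_size(a, b)                               # approximately 0.12 (metres)
print(drifted(gap, nominal=0.10, tolerance=0.01))  # True
```

Tracking a key size like `gap` over successive inspections is one way a slowly loosening part could show up as a quantitative trend rather than a single pass/fail image decision.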

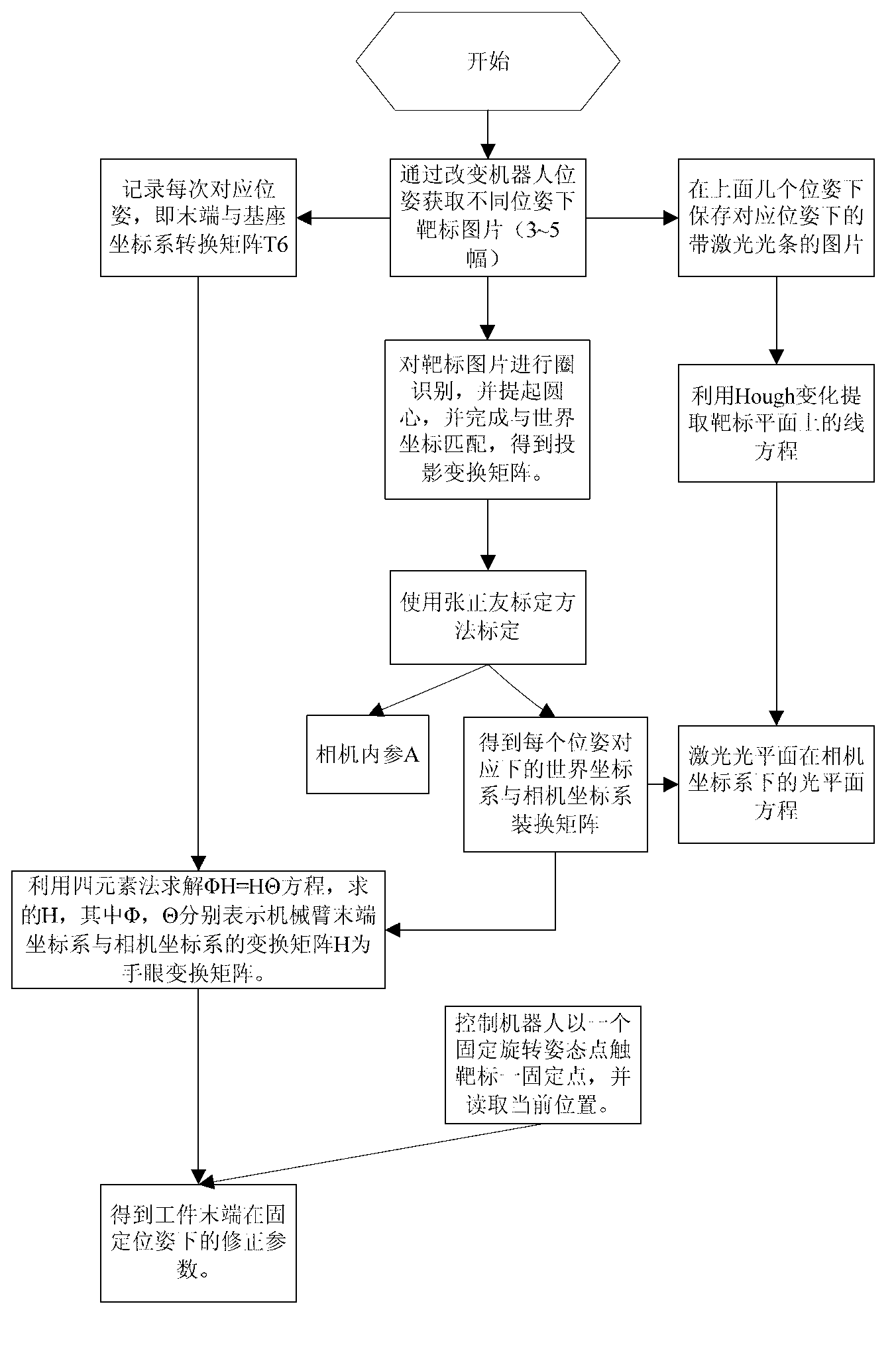

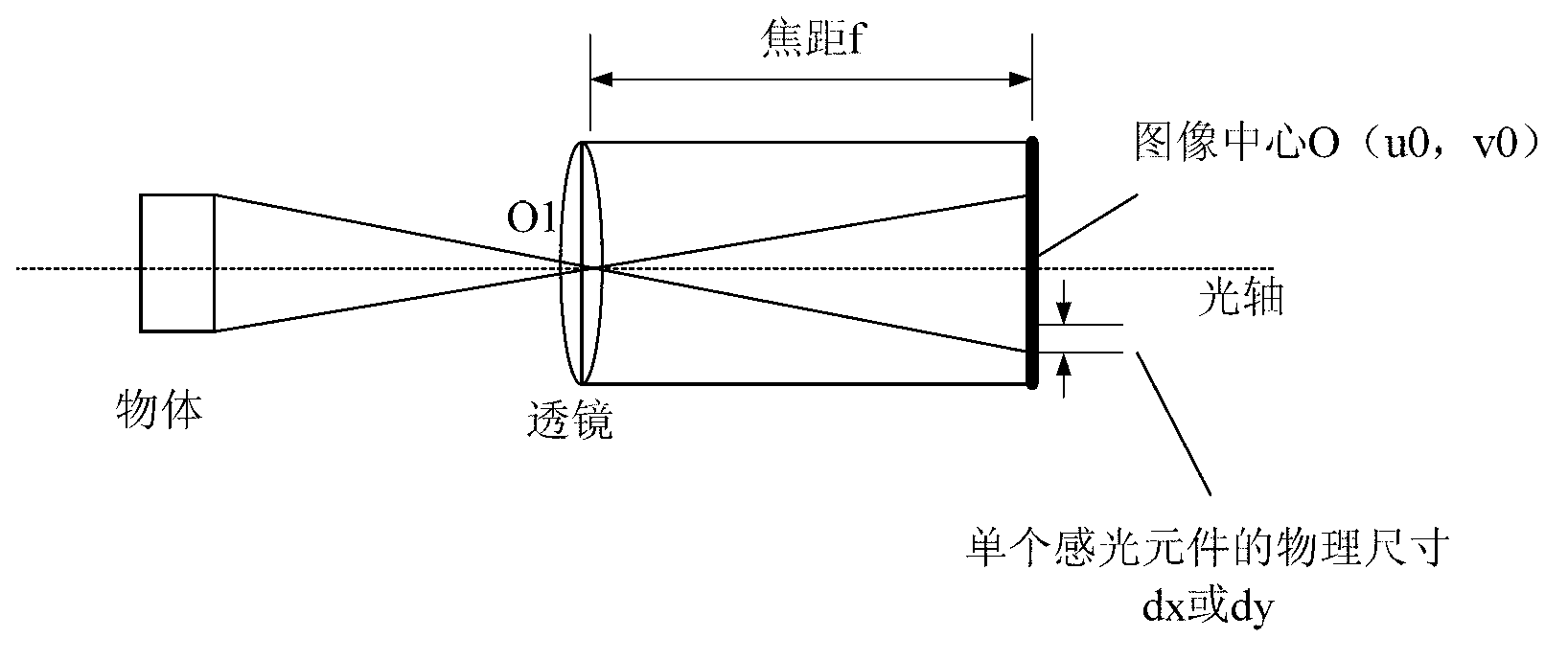

Systematic calibration method of welding robot guided by line structured light vision sensor

Active | CN102794763A | Improve tracking accuracy | Increase flexibility | Programme-controlled manipulator | Welding/cutting auxiliary devices | Quaternion | Engineering

The invention relates to a systematic calibration method for a welding robot guided by a line structured light vision sensor, comprising the following steps: first, controlling the mechanical arm to change pose, acquiring images of a circular target with the camera, matching the circular target image to world coordinates, and obtaining the camera's intrinsic parameter matrix and extrinsic parameter matrix RT; second, solving the line equation of the laser stripe by Hough transform and using the extrinsic matrix RT from the first step to obtain the equation of the laser stripe plane in the camera coordinate system; third, calculating the transformation matrix between the end-effector coordinate system and the base coordinate system of the mechanical arm using a quaternion method; and fourth, calculating the coordinates of the end point of the welding workpiece in the mechanical arm coordinate system, then combining them with the arm's pose to calculate the workpiece's offset. The method is flexible, simple, and fast, with high precision and generality, good stability and timeliness, and a small computational load.

Owner:JIANGNAN UNIV +1
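Steps two to four can be sketched as laser-plane triangulation followed by a chain of rigid transforms. The sketch assumes the camera intrinsics (`fx`, `fy`, `cx`, `cy`), the laser plane coefficients in the camera frame, the camera-to-end-effector (hand-eye) pose, and the end-effector-to-base pose (a unit quaternion `(w, x, y, z)` plus translation) are already known. Hough-transform stripe extraction is omitted, the quaternion-to-matrix formula is the standard Hamilton convention rather than anything taken from the patent, and all names and numbers are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]   # (w, x, y, z)
Plane = Tuple[float, float, float, float]  # (a, b, c, d) in a*X + b*Y + c*Z + d = 0


def pixel_to_laser_point(u: float, v: float, fx: float, fy: float,
                         cx: float, cy: float, plane: Plane) -> Vec3:
    """Intersect the camera ray through stripe pixel (u, v) with the laser plane.
    Both the plane and the returned point are in the camera frame."""
    a, b, c, d = plane
    ray = ((u - cx) / fx, (v - cy) / fy, 1.0)  # ray direction scaled so that Z = 1
    denom = a * ray[0] + b * ray[1] + c * ray[2]
    if abs(denom) < 1e-12:
        raise ValueError("camera ray is parallel to the laser plane")
    t = -d / denom
    if t <= 0:
        raise ValueError("intersection lies behind the camera")
    return (t * ray[0], t * ray[1], t * ray[2])


def quat_to_matrix(q: Quat):
    """Rotation matrix of a Hamilton quaternion (w, x, y, z), normalised first."""
    n = math.sqrt(sum(component * component for component in q))
    w, x, y, z = (component / n for component in q)
    return (
        (1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)),
        (2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)),
        (2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)),
    )


def apply_pose(q: Quat, t: Vec3, p: Vec3) -> Vec3:
    """Express point p (given in a child frame) in its parent frame: R(q) p + t."""
    rot = quat_to_matrix(q)
    return tuple(sum(rot[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def offset(measured: Vec3, nominal: Vec3) -> Vec3:
    """Deviation of the measured weld point from its nominal (taught) position."""
    return tuple(m - n for m, n in zip(measured, nominal))


# Hypothetical values: laser plane Z = 0.5 m in the camera frame.
p_cam = pixel_to_laser_point(400, 240, 800.0, 800.0, 320.0, 240.0, (0.0, 0.0, 1.0, -0.5))
p_tool = apply_pose((1.0, 0.0, 0.0, 0.0), (0.0, 0.0, 0.1), p_cam)     # hand-eye pose
half = math.sqrt(0.5)
p_base = apply_pose((half, 0.0, 0.0, half), (1.0, 0.0, 0.0), p_tool)  # 90 degrees about Z
print(offset(p_base, (1.0, 0.05, 0.58)))  # approximately (0.0, 0.0, 0.02)
```

Keeping each transform as a separate `apply_pose` call mirrors the calibration chain (camera to end-effector to base), so a re-estimated hand-eye pose or a new arm pose can be swapped in independently.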

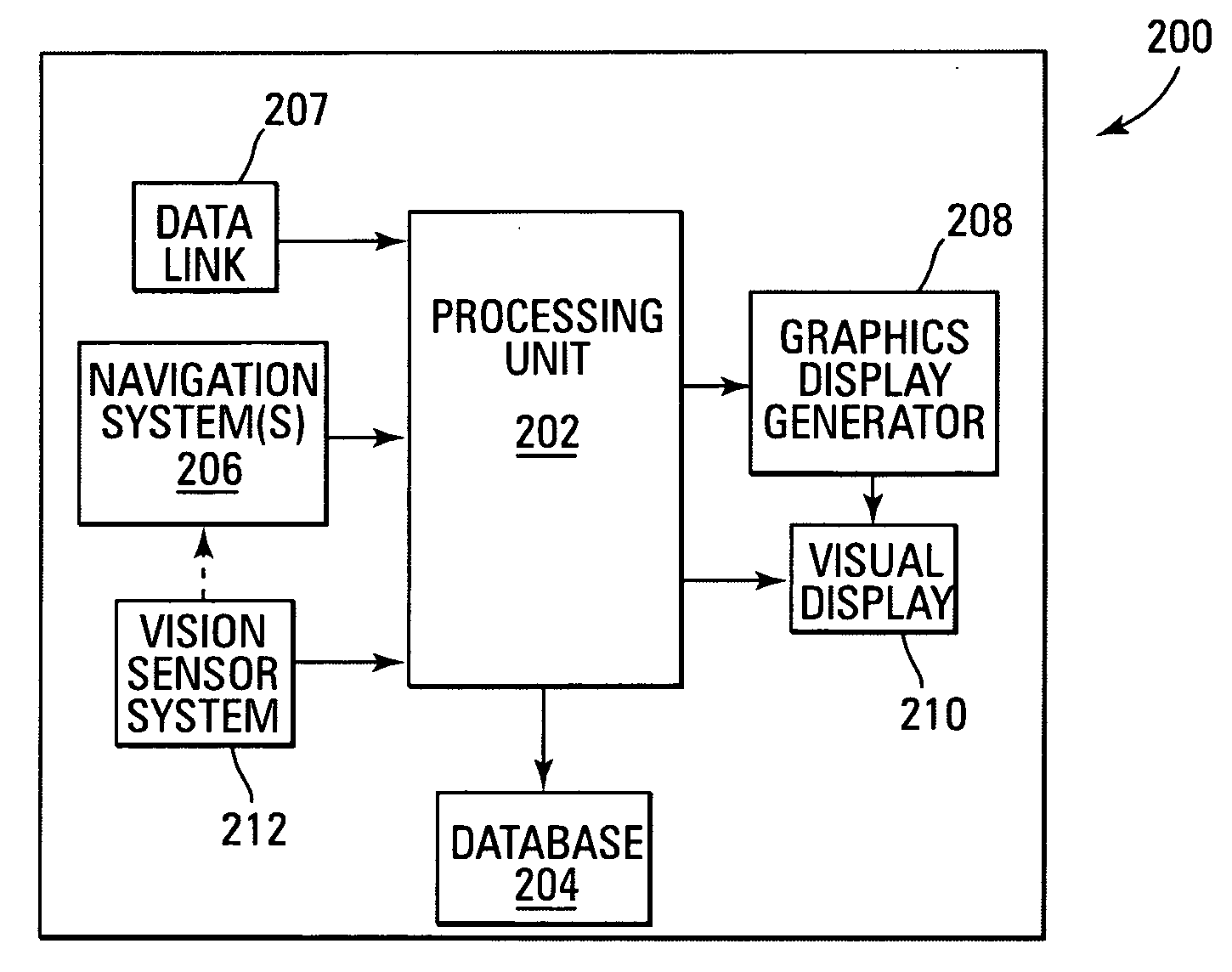

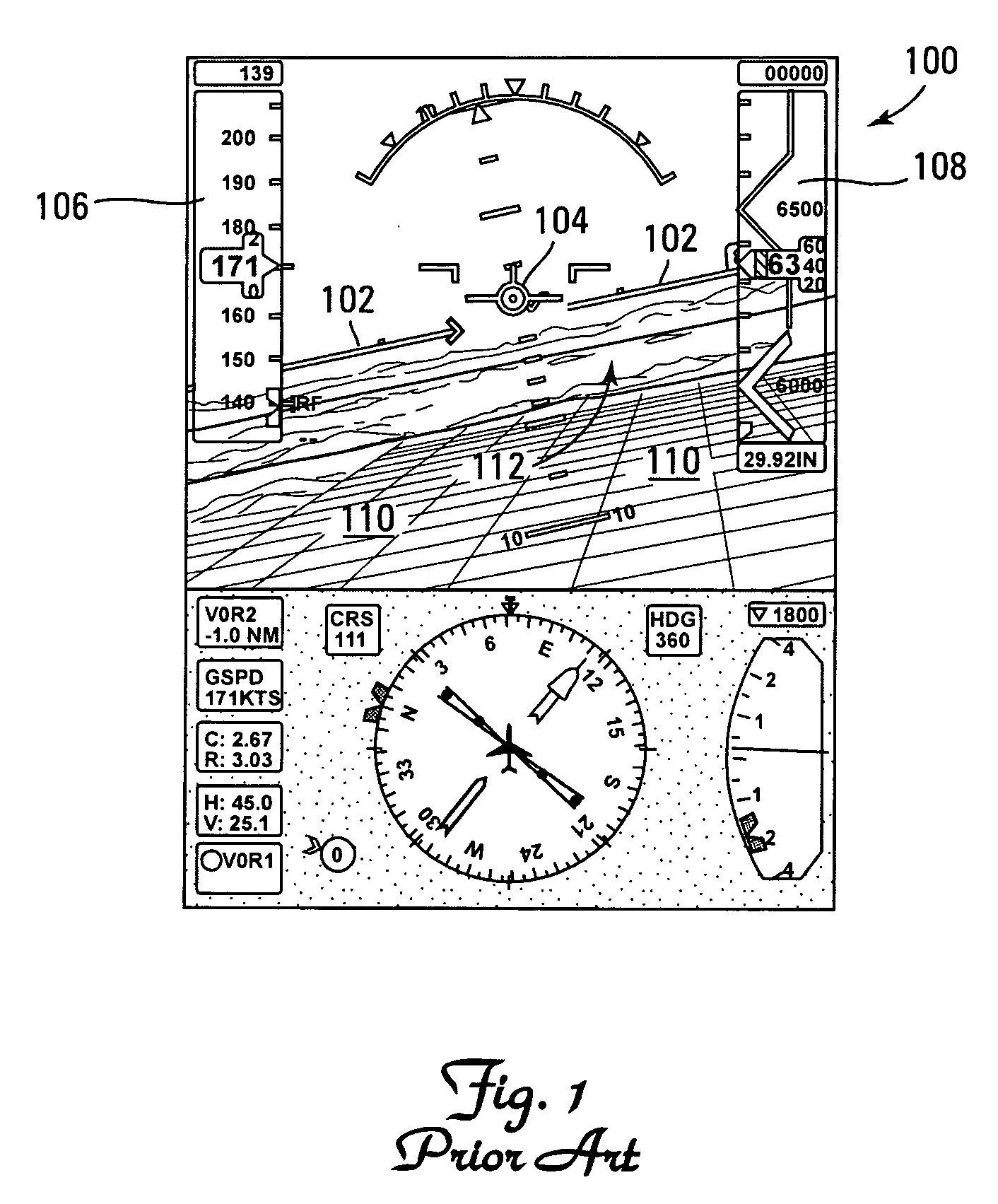

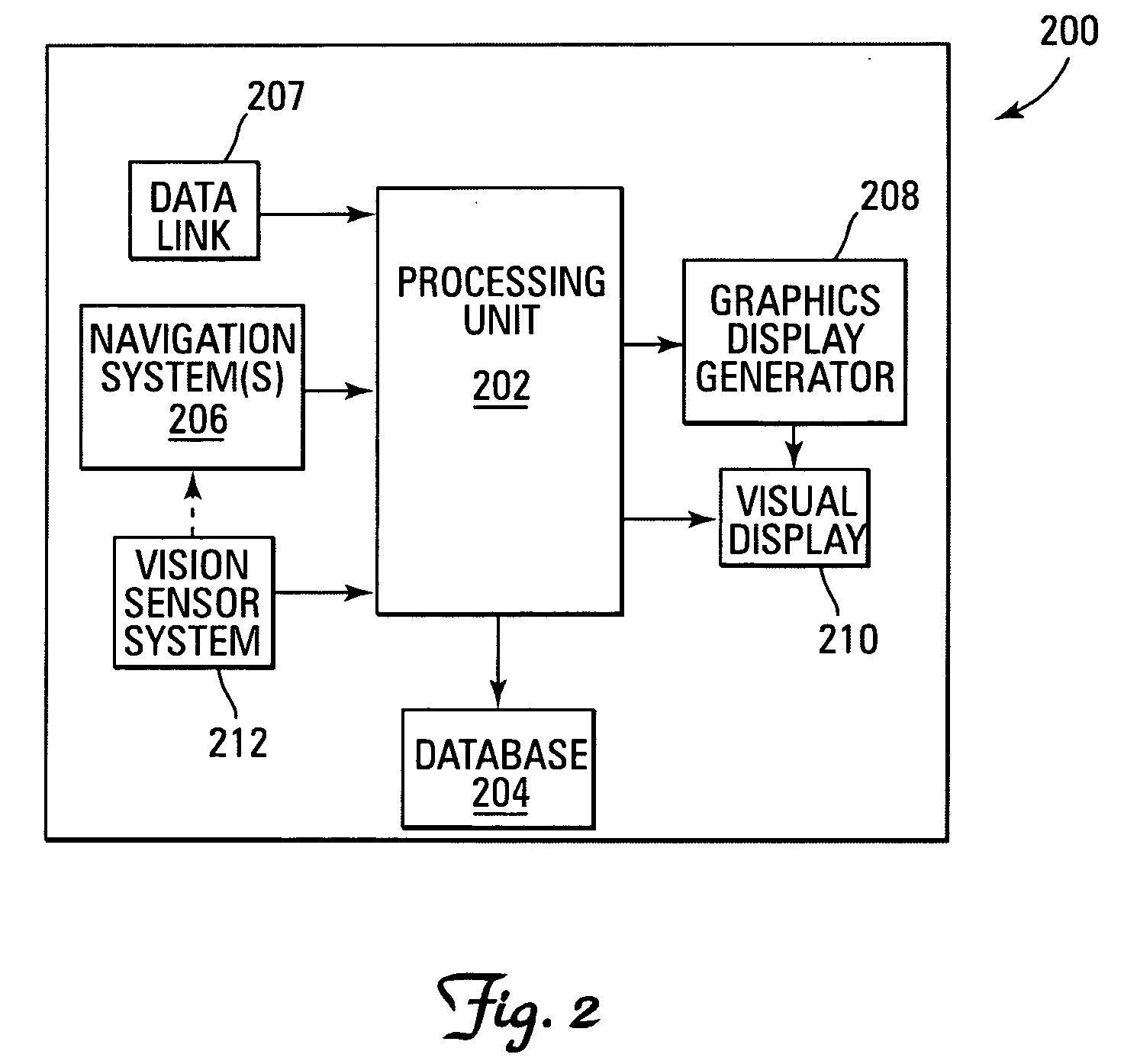

System and method for enhancing computer-generated images of terrain on aircraft displays

Active | US20070005199A1 | Increase awareness | Quick initialization | Analogue computers for vehicles | Digital data processing details | Terrain | Display device

A system and method are disclosed for enhancing the visibility and ensuring the correctness of terrain and navigation information on aircraft displays, such as continuous, three-dimensional perspective-view aircraft displays conformal to the visual environment. More specifically, an aircraft display system is disclosed that includes a processing unit, a navigation system, a database for storing high-resolution terrain data, a graphics display generator, and a visual display. One or more independent, higher-precision databases with localized position data, such as navigation data or position data, are onboard. Also, one or more onboard vision sensor systems associated with the navigation system provide real-time spatial position data for display, and one or more data links are available to receive precision spatial position data from ground-based stations. Essentially, before terrain and navigational objects (e.g., runways) are displayed, a real-time correction and augmentation of the terrain data is performed for those regions that are relevant and/or critical to flight operations, to ensure that the correct terrain data is displayed with the highest possible integrity. These corrections and augmentations are based on higher-precision but localized onboard data, such as navigational object data, sensor data, or data uplinked from ground stations. Whenever discrepancies exist, lower-integrity terrain data can be corrected in real time using data from a higher-integrity source. A predictive data loading approach substantially reduces the computational workload, enabling the processing unit to perform these augmentation and correction operations in real time.

Owner:HONEYWELL INT INC
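The integrity-based correction rule described above can be sketched as a merge over a terrain grid. This is a minimal illustration, not Honeywell's implementation: the grid-cell keys, the integer integrity scale, the discrepancy tolerance, and the `relevant` region filter are all assumptions, and predictive data loading is not modelled.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Set, Tuple

Cell = Tuple[int, int]  # hypothetical (row, col) index into a terrain grid


@dataclass(frozen=True)
class Sample:
    elevation_m: float
    integrity: int  # hypothetical scale: a larger value means a more trustworthy source


def correct_terrain(
    terrain: Dict[Cell, Sample],
    overlays: Iterable[Dict[Cell, Sample]],
    tolerance_m: float = 5.0,
    relevant: Optional[Set[Cell]] = None,
) -> Dict[Cell, Sample]:
    """Return a corrected copy of `terrain`; the input dict is left unchanged.

    Only cells in `relevant` are touched (every cell if it is None). A cell missing
    from the terrain database is filled from an overlay (augmentation). An existing
    cell is replaced only when the overlay has strictly higher integrity and its
    elevation disagrees by more than `tolerance_m` (correction)."""
    corrected = dict(terrain)
    for overlay in overlays:
        for cell, sample in overlay.items():
            if relevant is not None and cell not in relevant:
                continue
            current = corrected.get(cell)
            if current is None:
                corrected[cell] = sample
            elif (sample.integrity > current.integrity
                  and abs(sample.elevation_m - current.elevation_m) > tolerance_m):
                corrected[cell] = sample
    return corrected


# Hypothetical data: a low-integrity database and a higher-integrity vision-sensor overlay.
database = {(0, 0): Sample(100.0, 1), (0, 1): Sample(102.0, 1)}
vision = {(0, 0): Sample(120.0, 3), (0, 1): Sample(104.0, 3), (0, 2): Sample(99.0, 3)}
fixed = correct_terrain(database, [vision], relevant={(0, 0), (0, 1)})
# (0, 0) becomes 120.0 (corrected), (0, 1) stays 102.0 (within tolerance),
# and (0, 2) is skipped because it lies outside the relevant region.
```

Limiting the merge to `relevant` cells reflects the abstract's point that correction is applied only where it matters for flight operations, which keeps the per-frame work bounded.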


© 2025 PatSnap. All rights reserved.