120 results about "Epipolar geometry" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

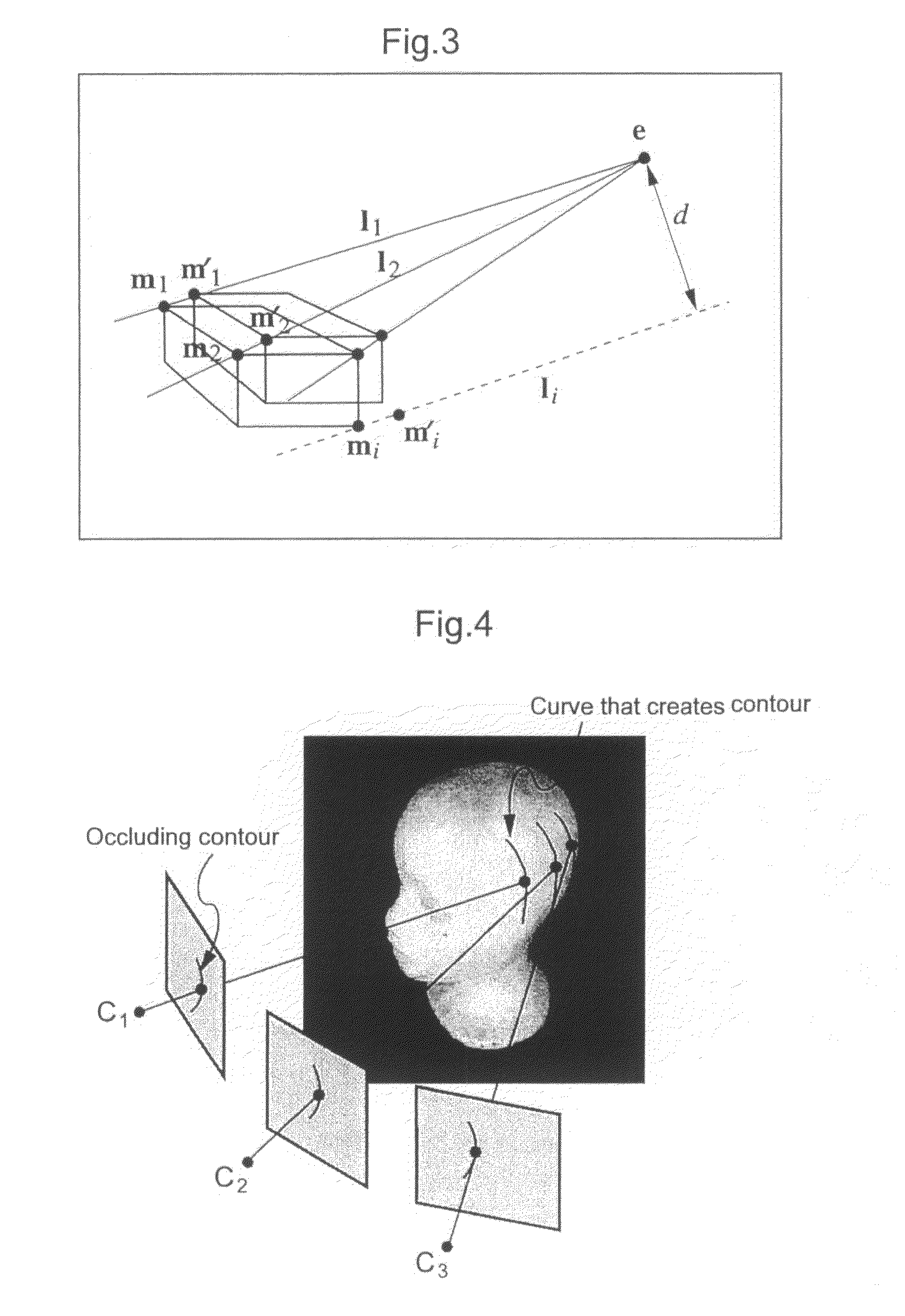

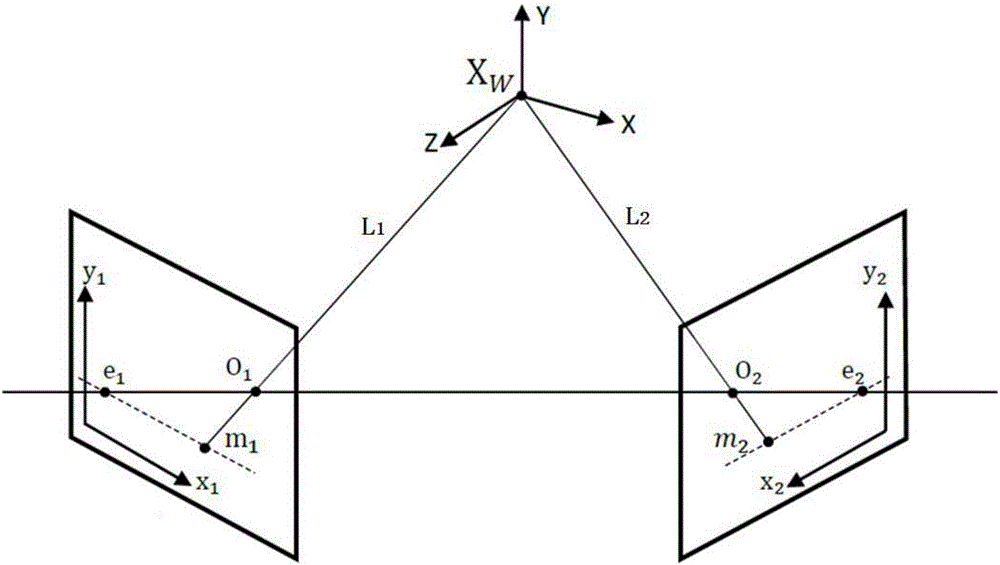

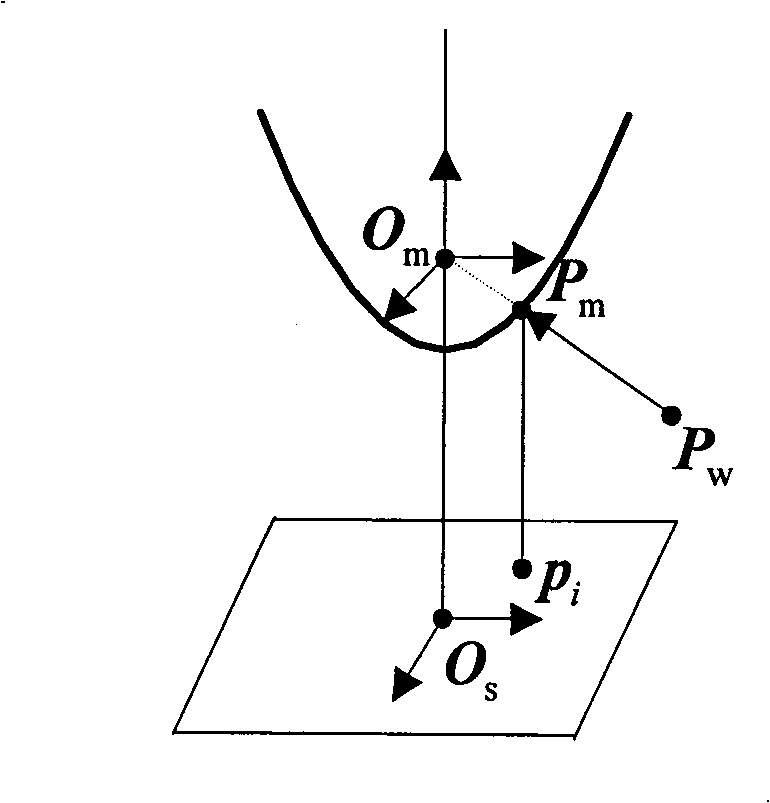

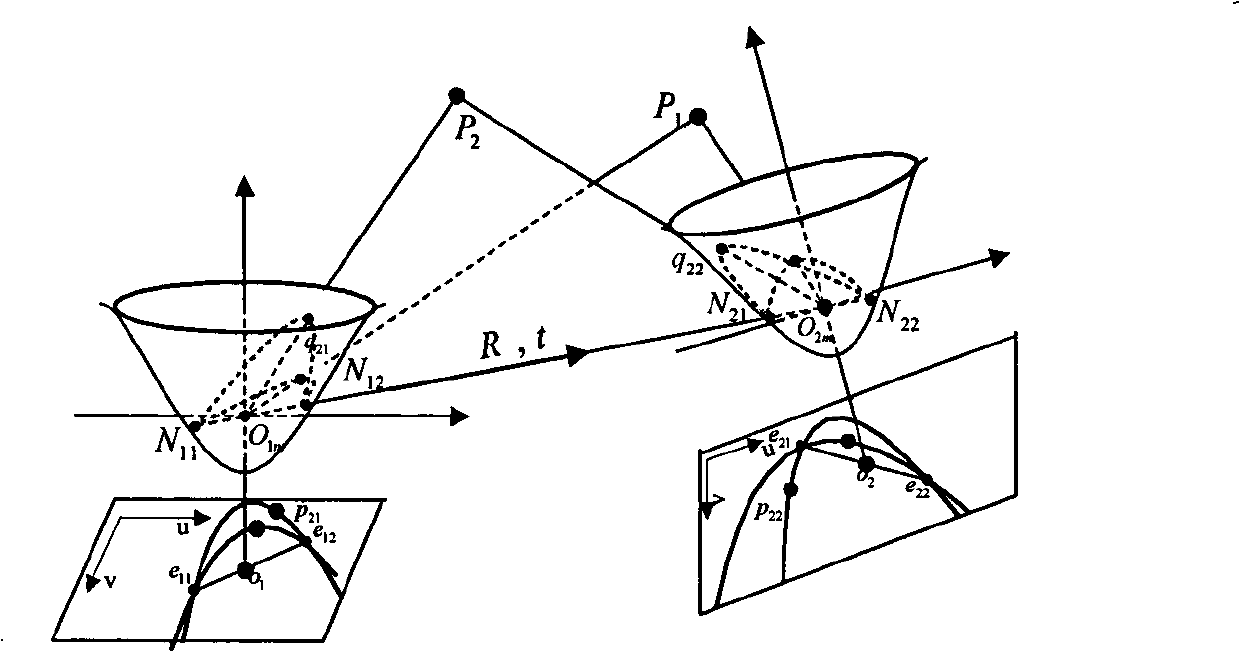

Epipolar geometry is the geometry of stereo vision. When two cameras view a 3D scene from two distinct positions, there are a number of geometric relations between the 3D points and their projections onto the 2D images that lead to constraints between the image points. These relations are derived based on the assumption that the cameras can be approximated by the pinhole camera model.
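
For calibrated cameras, these relations reduce to the epipolar constraint x2ᵀ E x1 = 0, where E = [t]×R is the essential matrix and x1, x2 are normalized image coordinates of the same scene point. The minimal Python sketch below checks the constraint on a synthetic point. The rotation, translation and 3D point are arbitrary illustrative values, and the convention X2 = R·X1 + t is an assumption.

```python
import math

def skew(t):
    """3x3 cross-product matrix [t]_x, so that skew(t) @ v == t x v."""
    tx, ty, tz = t
    return [[0.0, -tz, ty], [tz, 0.0, -tx], [-ty, tx, 0.0]]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def matvec(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

def rot_y(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def project(X):
    """Pinhole projection to normalized homogeneous image coordinates."""
    return [X[0] / X[2], X[1] / X[2], 1.0]

# Assumed convention: camera-2 coordinates are X2 = R @ X1 + t
R = rot_y(0.1)
t = [1.0, 0.2, 0.0]
E = matmul(skew(t), R)  # essential matrix E = [t]_x R

X1 = [0.5, -0.3, 4.0]                           # scene point in camera-1 frame
X2 = [a + b for a, b in zip(matvec(R, X1), t)]  # same point in camera-2 frame
x1, x2 = project(X1), project(X2)

# x2^T E x1 vanishes (up to rounding) for a true correspondence
residual = sum(x2[i] * v for i, v in enumerate(matvec(E, x1)))
print(f"x2^T E x1 = {residual:.2e}")
```

Moving x2 off its epipolar line, for example by shifting its x coordinate, makes the residual clearly non-zero, which is what matching methods exploit to reject false correspondences.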

Method for Determining Scattered Disparity Fields in Stereo Vision

Status: Active | Publication: US20090207235A1 | Tags: Quality improvement, Compensating for such error, Image enhancement, Image analysis, Computer science, Visual perception

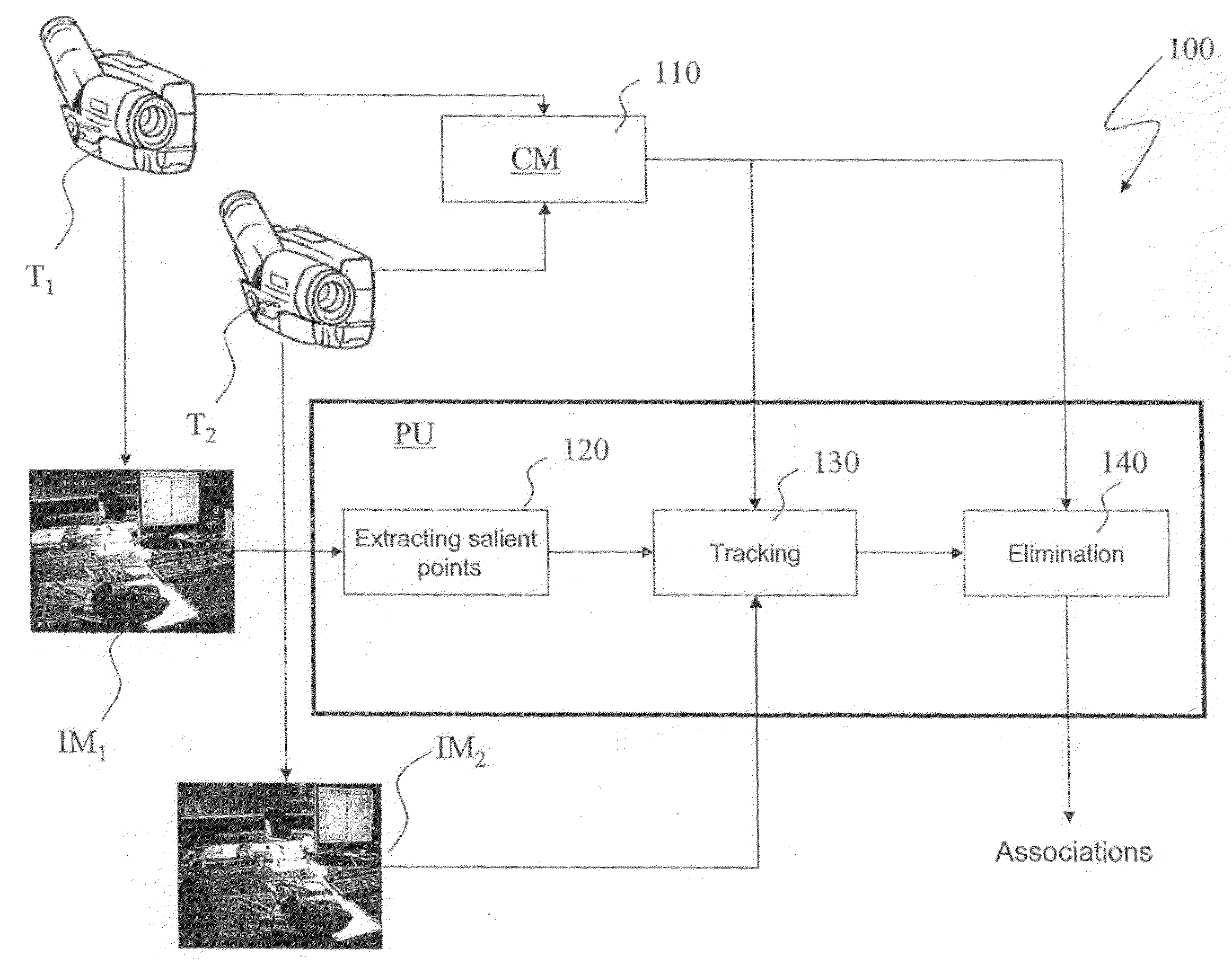

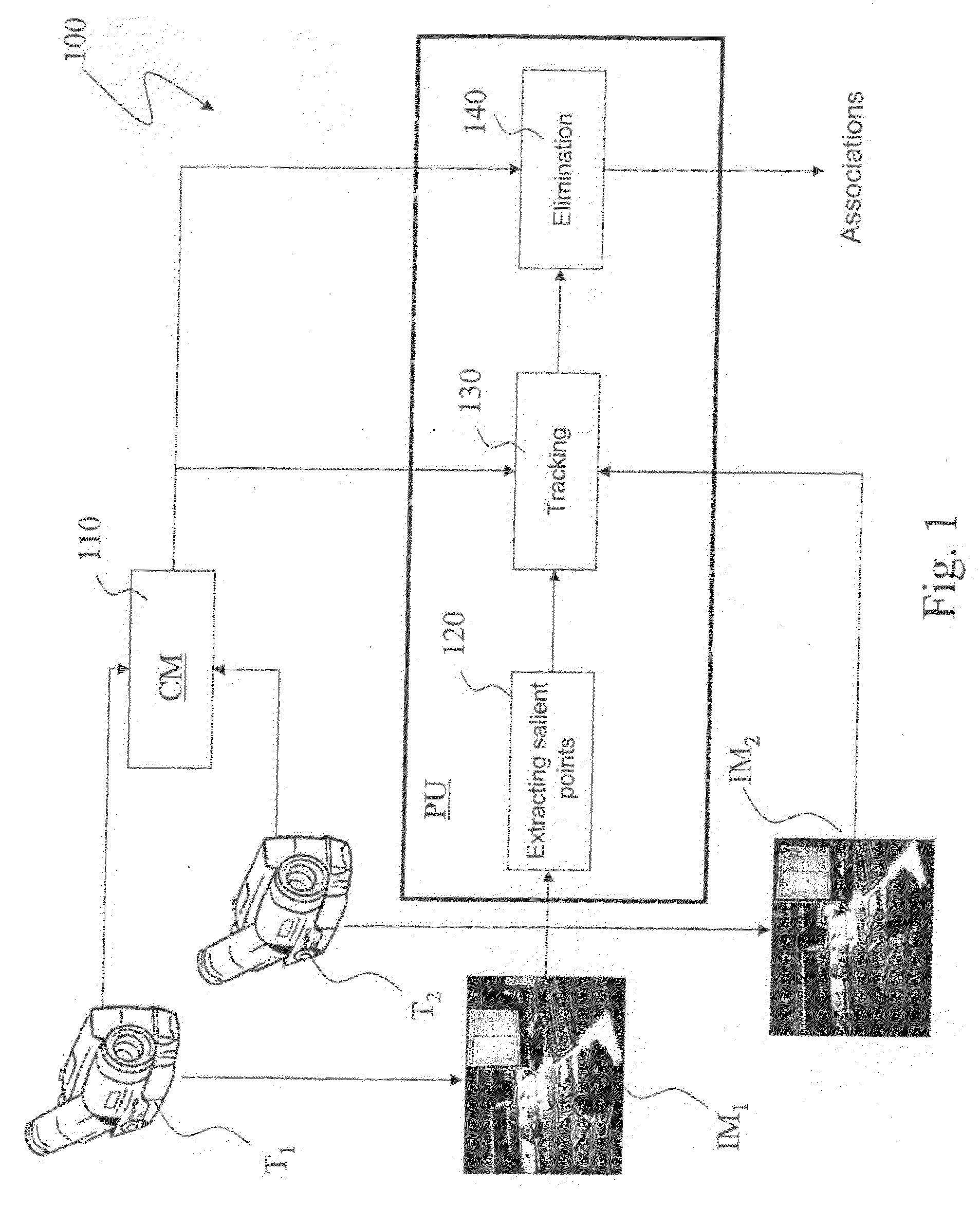

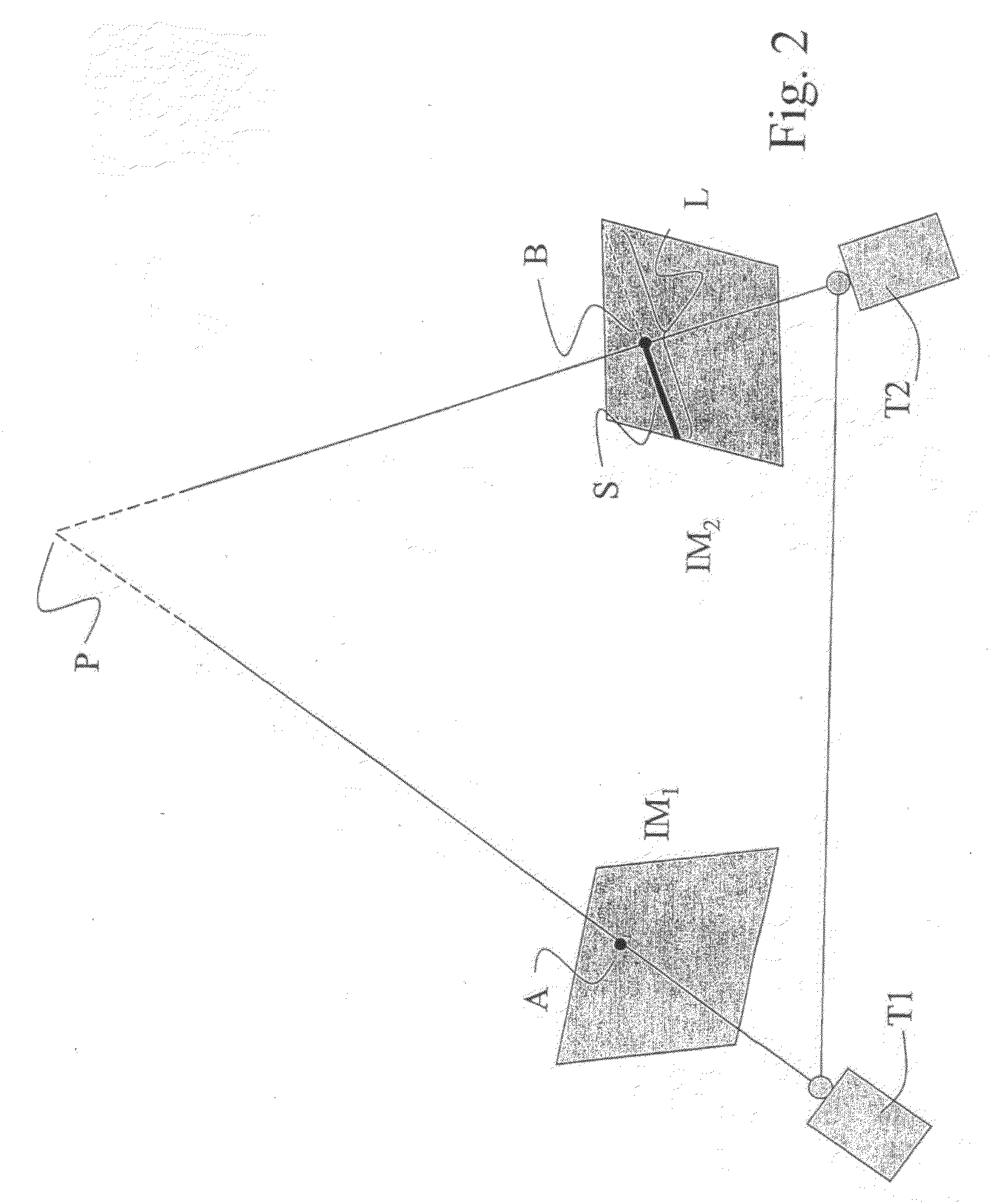

In a system for stereo vision including two cameras shooting the same scene, a method is performed for determining scattered disparity fields when the epipolar geometry is known. The method includes the steps of: capturing, through the two cameras, first and second images of the scene from two different positions; selecting at least one pixel in the first image, the pixel being associated with a point of the scene, the second image containing a point also associated with that scene point; and computing the displacement from the pixel to the point in the second image by minimising a cost function. The cost function includes a term that depends on the difference between the first and second images and a term that depends on the distance of the point in the second image from an epipolar straight line. A subsequent check determines whether the point belongs to an allowability area around a subset of the epipolar straight line, in which the presence of the point is allowed, so as to take into account errors or uncertainties in calibrating the cameras.

Owner:TELECOM ITALIA SPA
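
The cost described in this abstract can be sketched as follows. This is a hypothetical simplification, not Telecom Italia's implementation: it assumes grayscale images stored as nested lists, an epipolar line given as (a, b, c) with a·x + b·y + c = 0, and illustrative parameters `search` (window half-size), `lam` (weight of the epipolar term) and `tol` (half-width of the allowability band). For brevity the band check is applied during the search rather than as a separate step afterwards.

```python
import math

def line_distance(point, line):
    """Perpendicular distance from (x, y) to the line a*x + b*y + c = 0."""
    a, b, c = line
    x, y = point
    return abs(a * x + b * y + c) / math.hypot(a, b)

def best_match(img1, img2, p, line, search=2, lam=1.0, tol=1.0):
    """Return the position in img2 (and its cost) minimising
    |img1(p) - img2(q)| + lam * dist(q, epipolar line), considering only
    candidates q inside the band of half-width tol around the line."""
    px, py = p
    best, best_cost = None, math.inf
    for y in range(max(0, py - search), min(len(img2), py + search + 1)):
        for x in range(max(0, px - search), min(len(img2[0]), px + search + 1)):
            dist = line_distance((x, y), line)
            if dist > tol:  # outside the allowability band
                continue
            cost = abs(img1[py][px] - img2[y][x]) + lam * dist
            if cost < best_cost:
                best, best_cost = (x, y), cost
    return best, best_cost

# Toy example: the bright pixel moves one column to the right; a look-alike
# pixel two rows away from the epipolar line y = 2 is excluded by the band.
img1 = [[0] * 5 for _ in range(5)]
img1[2][2] = 9
img2 = [[0] * 6 for _ in range(5)]
img2[2][3] = 9  # true match, on the line
img2[4][3] = 9  # distractor, off the line
match, cost = best_match(img1, img2, (2, 2), (0.0, 1.0, -2.0))
print(match, cost)
```

Widening `tol` models larger calibration uncertainty at the price of admitting more ambiguous candidates.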

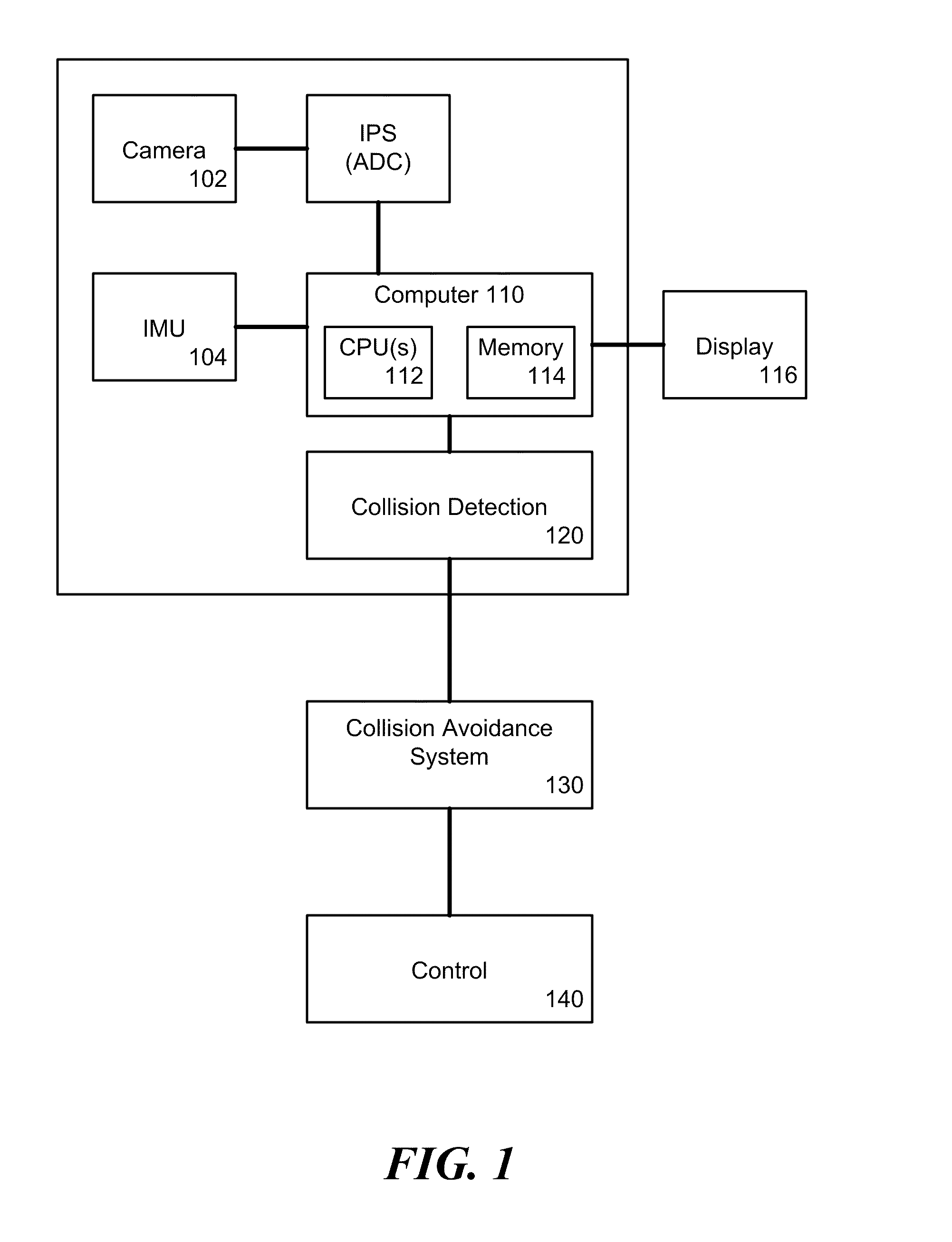

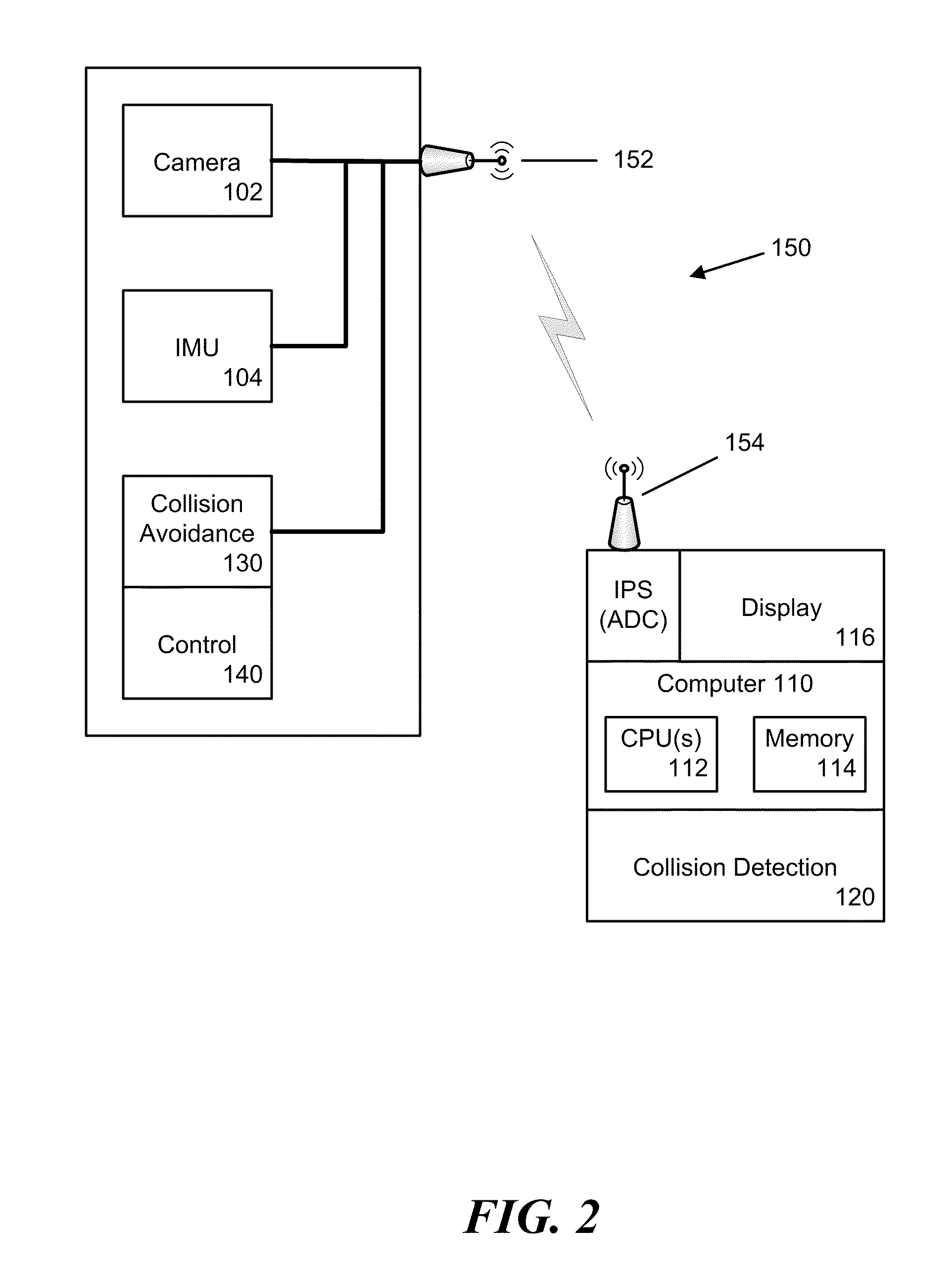

Method and System for Visual Collision Detection and Estimation

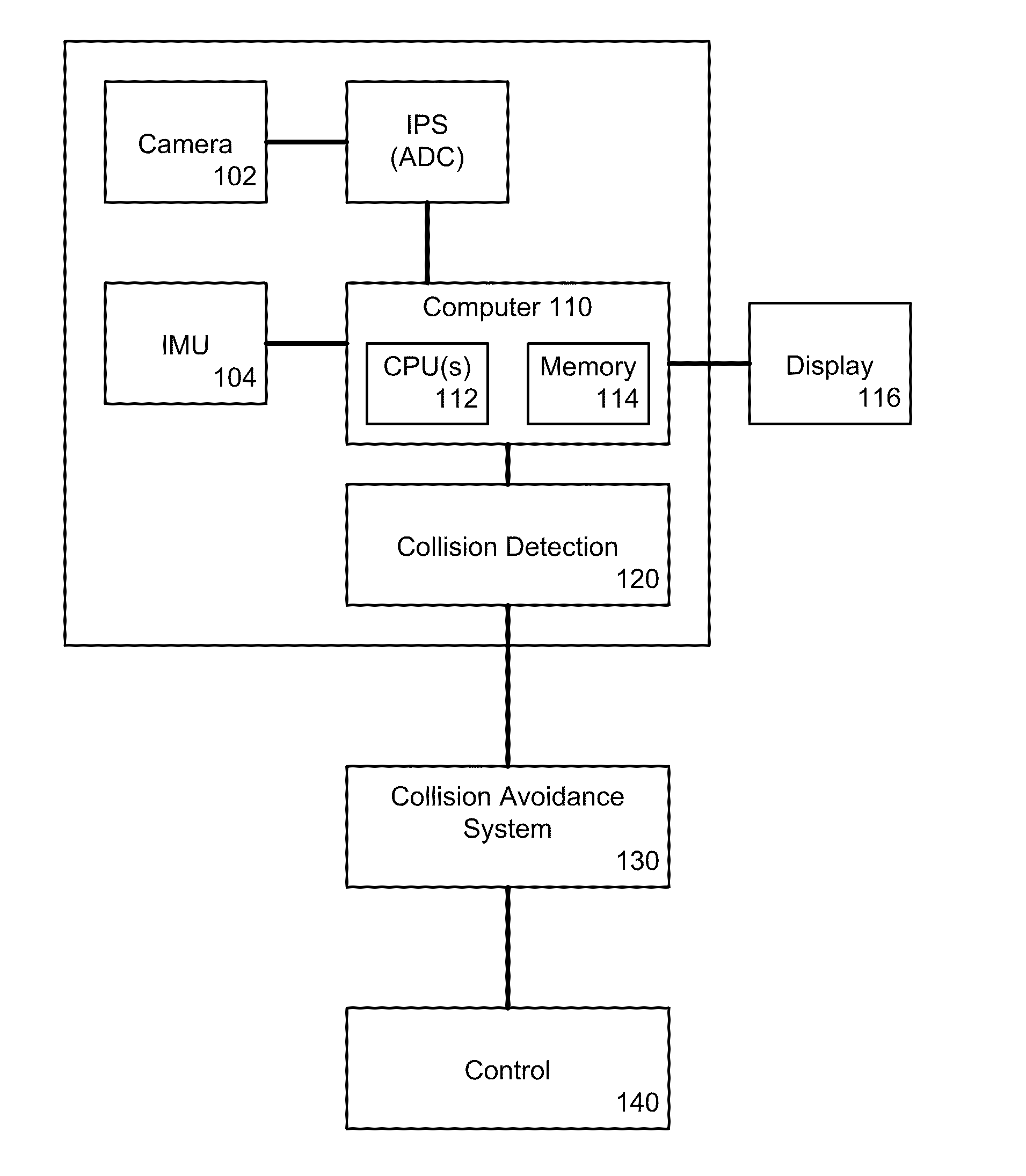

Status: Inactive | Publication: US20100305857A1 | Tags: Optimized time to collision estimation, Convenient time, Image enhancement, Image analysis, Collision detection, Vision sensor

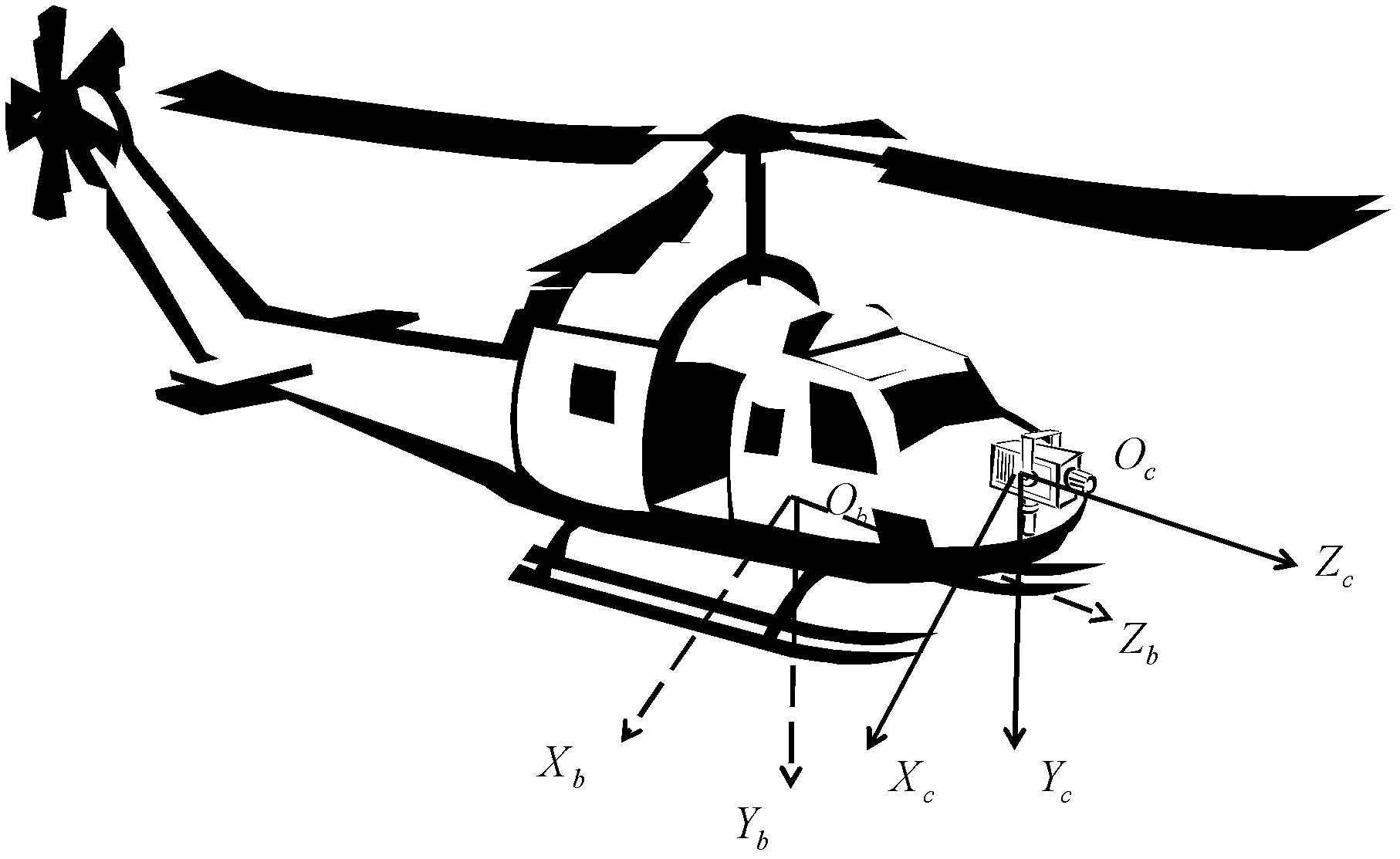

Collision detection and estimation from a monocular visual sensor is an important enabling technology for safe navigation of small or micro air vehicles in near-earth flight. In this paper, we introduce a new approach called expansion segmentation, which simultaneously detects "collision danger regions" of significant positive divergence in inertial-aided video and estimates the maximum-likelihood time to collision (TTC) within the danger regions in a correspondenceless framework. This approach was motivated by a literature review showing that existing approaches either make strong assumptions about scene structure or camera motion, or pose collision detection without determining obstacle boundaries, both of which limit the operational envelope of a deployable system. Expansion segmentation is based on a new formulation of 6-DOF inertial-aided TTC estimation and a new derivation of a first-order TTC uncertainty model due to subpixel quantization error and epipolar geometry uncertainty. Proof-of-concept results are shown in a custom-designed urban flight simulator and on operational flight data from a small air vehicle.

Owner:BYRNE JEFFREY +1
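
The expansion cue behind TTC estimation can be illustrated with the standard scale-expansion relation τ = s / (ds/dt): an object of apparent size s = f·W/Z approaching at constant speed v grows at rate s·v/Z, so s / (ds/dt) = Z/v. The sketch below is not the patent's expansion segmentation or its inertial-aided formulation; it only shows the two-frame version of that relation, which is exact for a constant closing speed along the optical axis.

```python
def time_to_collision(size_prev, size_curr, dt):
    """Time to collision (s) at the current frame, from the apparent size of
    an object in two frames taken dt seconds apart.

    Assumes constant closing speed along the optical axis. With s = f*W/Z,
    dt * s_prev / (s_curr - s_prev) equals Z_curr / v exactly. Returns inf
    when the object is not expanding (no approach)."""
    expansion = size_curr - size_prev
    if expansion <= 0:
        return float("inf")
    return dt * size_prev / expansion

# Example: f = 500 px, object width W = 2 m, distance 10 m then 9 m, 0.1 s apart
tau = time_to_collision(500 * 2 / 10, 500 * 2 / 9, 0.1)
print(f"TTC = {tau:.3f} s")
```

The subtraction in the denominator is also why sub-pixel quantization error matters: small size changes make the estimate very sensitive to measurement noise.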

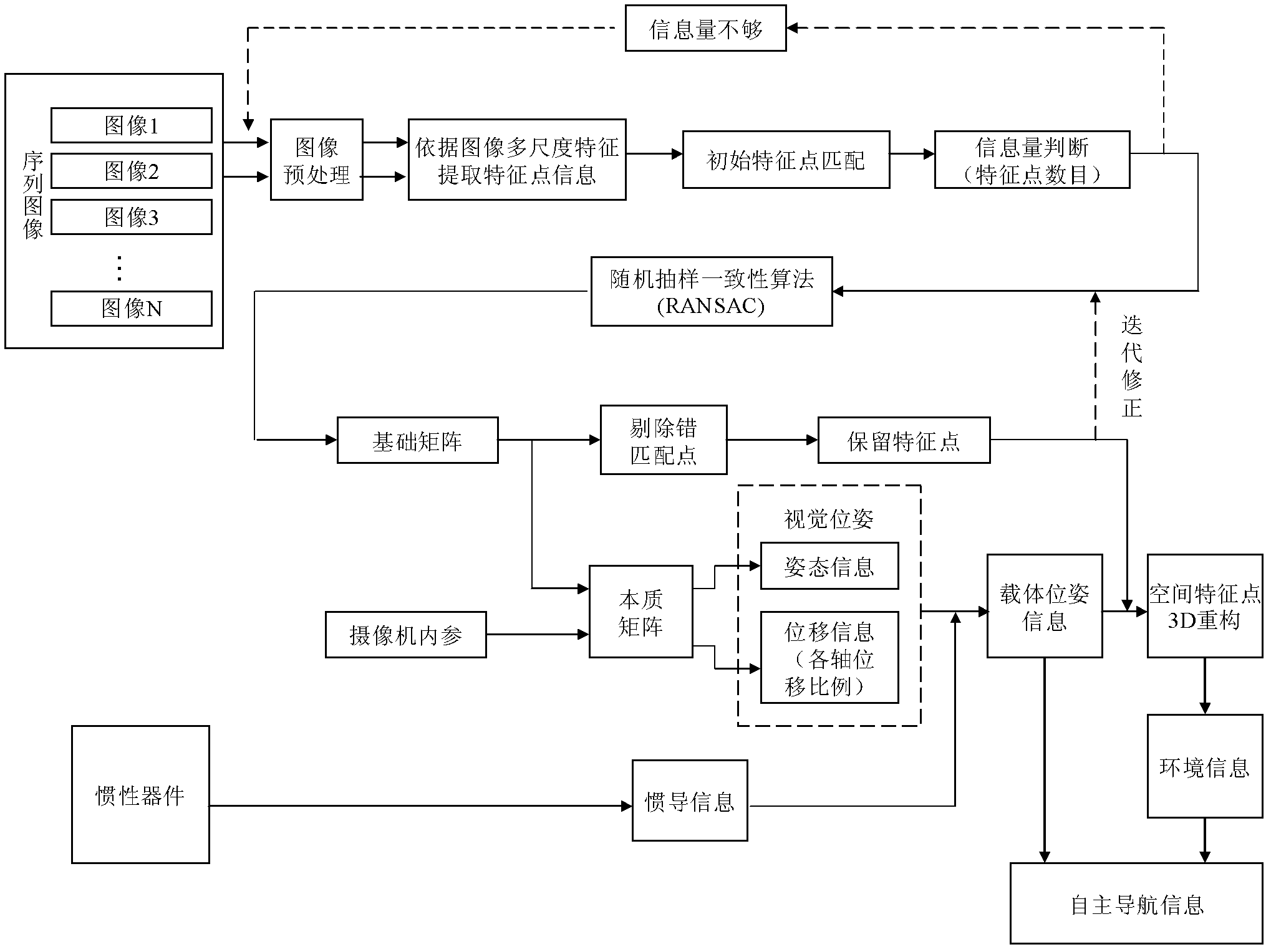

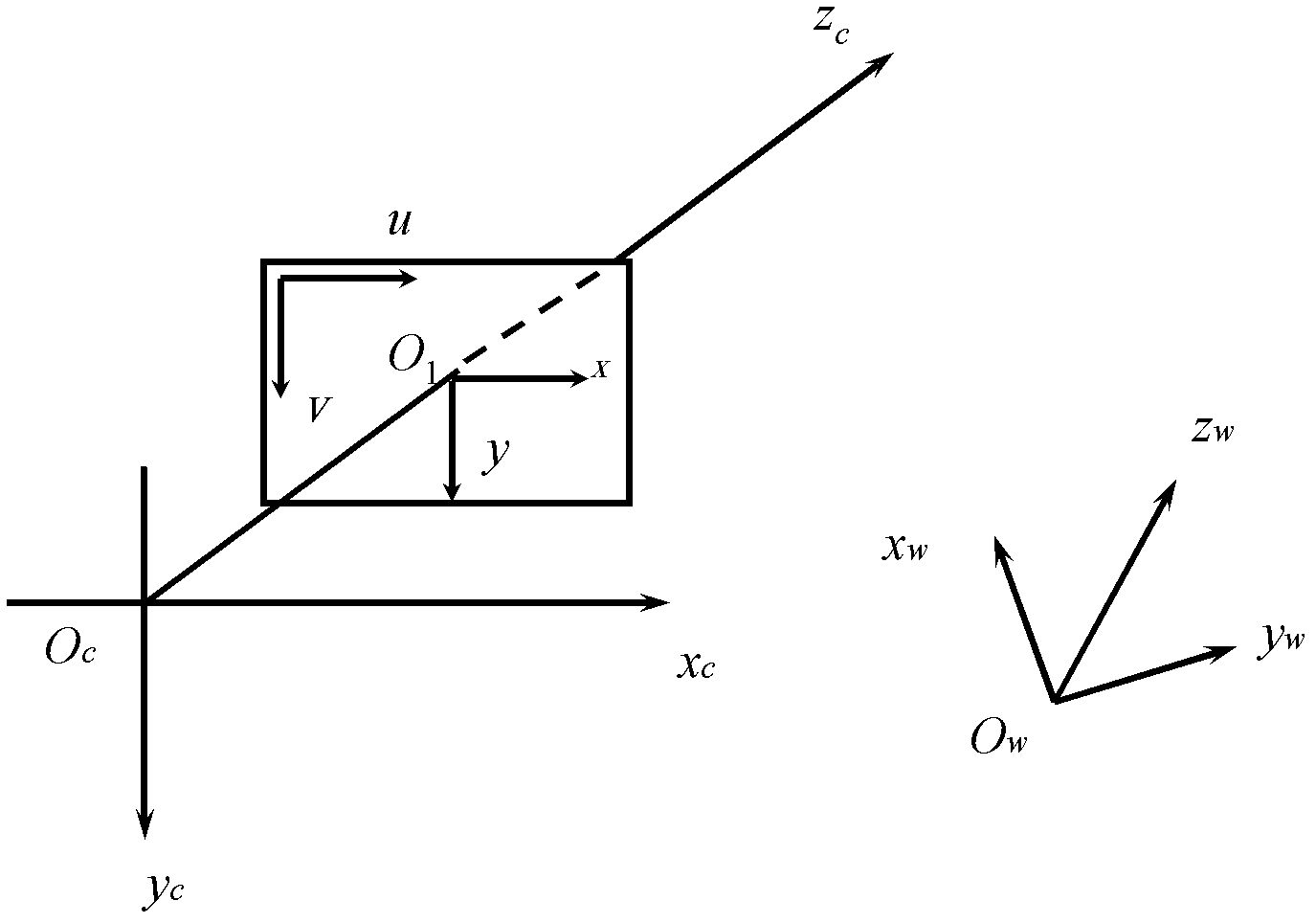

Monocular vision/inertia autonomous navigation method for indoor environment

Status: Active | Publication: CN102435188A | Tags: Low cost, Simple algorithm, Navigation by speed/acceleration measurements, Parallax, Essential matrix

The invention discloses a monocular vision/inertial autonomous navigation method for indoor environments, belonging to the fields of visual navigation and inertial navigation. The method comprises the following steps: acquiring feature point information based on local invariant features of the images; solving the fundamental matrix from the epipolar geometry formed by the parallax generated by camera motion; solving the essential matrix using the calibrated camera intrinsic parameters; acquiring camera position information from the essential matrix; combining the visual navigation information with the inertial navigation information to obtain accurate and reliable navigation information; and performing 3D reconstruction of spatial feature points to obtain an environment map, completing the autonomous navigation of the carrier. The invention realizes autonomous navigation of the carrier in an unfamiliar indoor environment without relying on a cooperative target, with high reliability and low implementation cost.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
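
The step from the fundamental matrix to the essential matrix uses the calibrated intrinsics: when both views share the intrinsic matrix K, E = Kᵀ F K. A minimal sketch with hypothetical intrinsics, checked by a round trip from a known essential matrix (pure translation along x, no rotation):

```python
def transpose(m):
    return [list(row) for row in zip(*m)]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def essential_from_fundamental(F, K):
    """E = K^T F K for two views sharing the intrinsic matrix K."""
    return matmul(matmul(transpose(K), F), K)

# Hypothetical intrinsics: focal length f (pixels) and principal point (cx, cy)
f, cx, cy = 800.0, 320.0, 240.0
K = [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]
K_inv = [[1 / f, 0.0, -cx / f], [0.0, 1 / f, -cy / f], [0.0, 0.0, 1.0]]

# Round trip: E_true = [t]_x R with t = (1, 0, 0), R = I; F = K^-T E K^-1
E_true = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
F = matmul(matmul(transpose(K_inv), E_true), K_inv)
E = essential_from_fundamental(F, K)
print(E)
```

The camera rotation and translation direction are then usually recovered by decomposing E (four candidate solutions, disambiguated by requiring points to lie in front of both cameras), which is not shown here.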

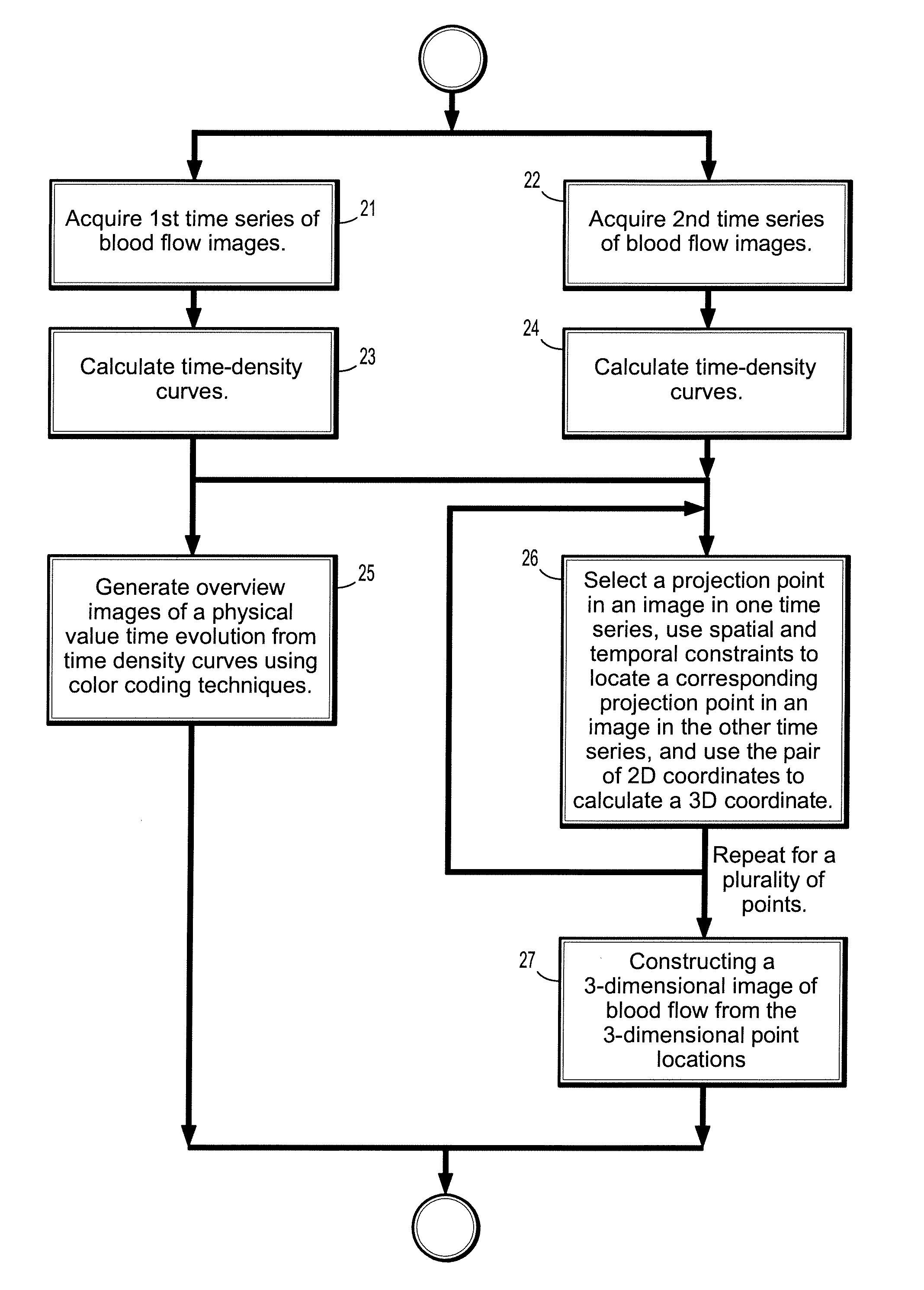

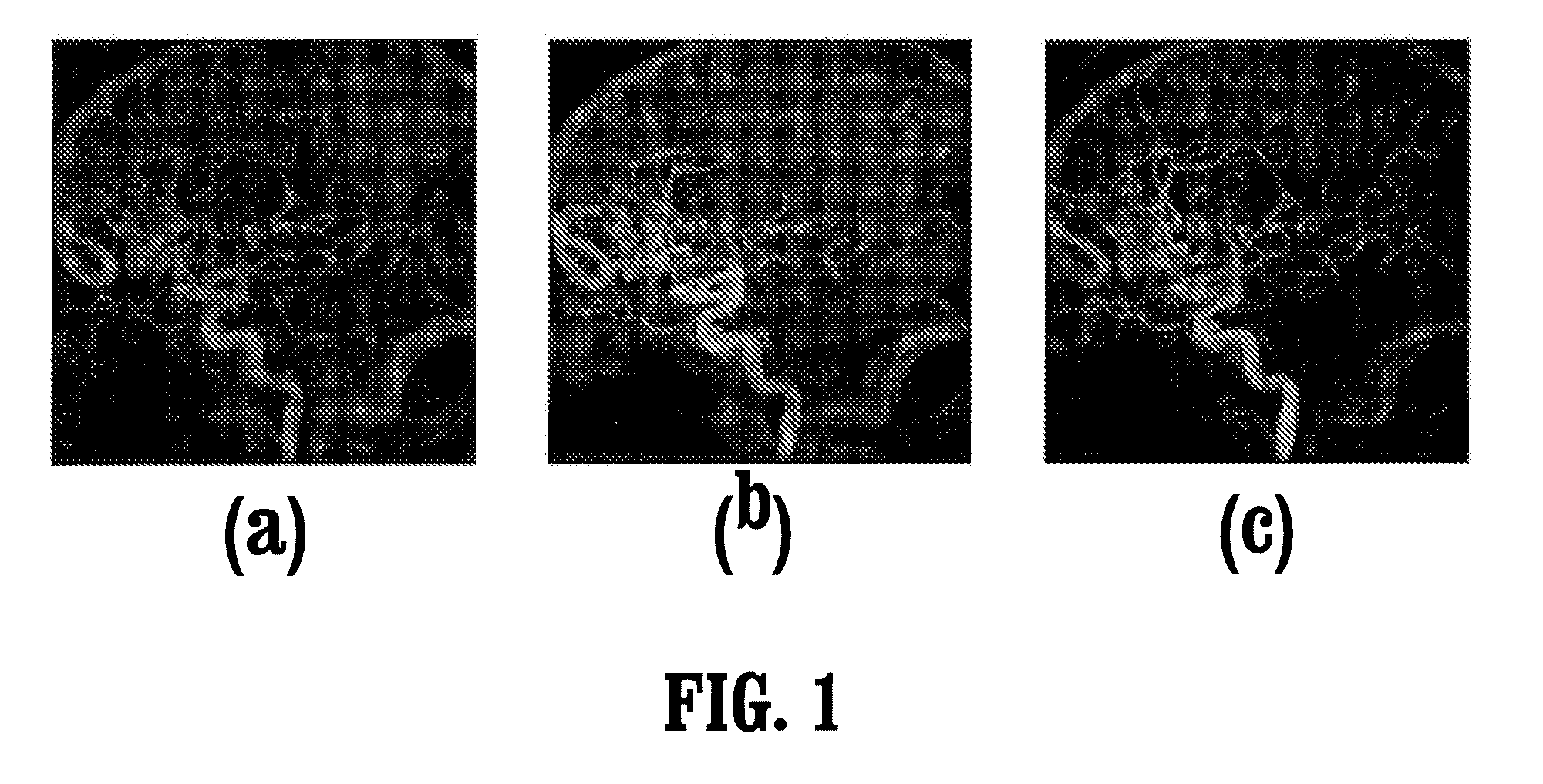

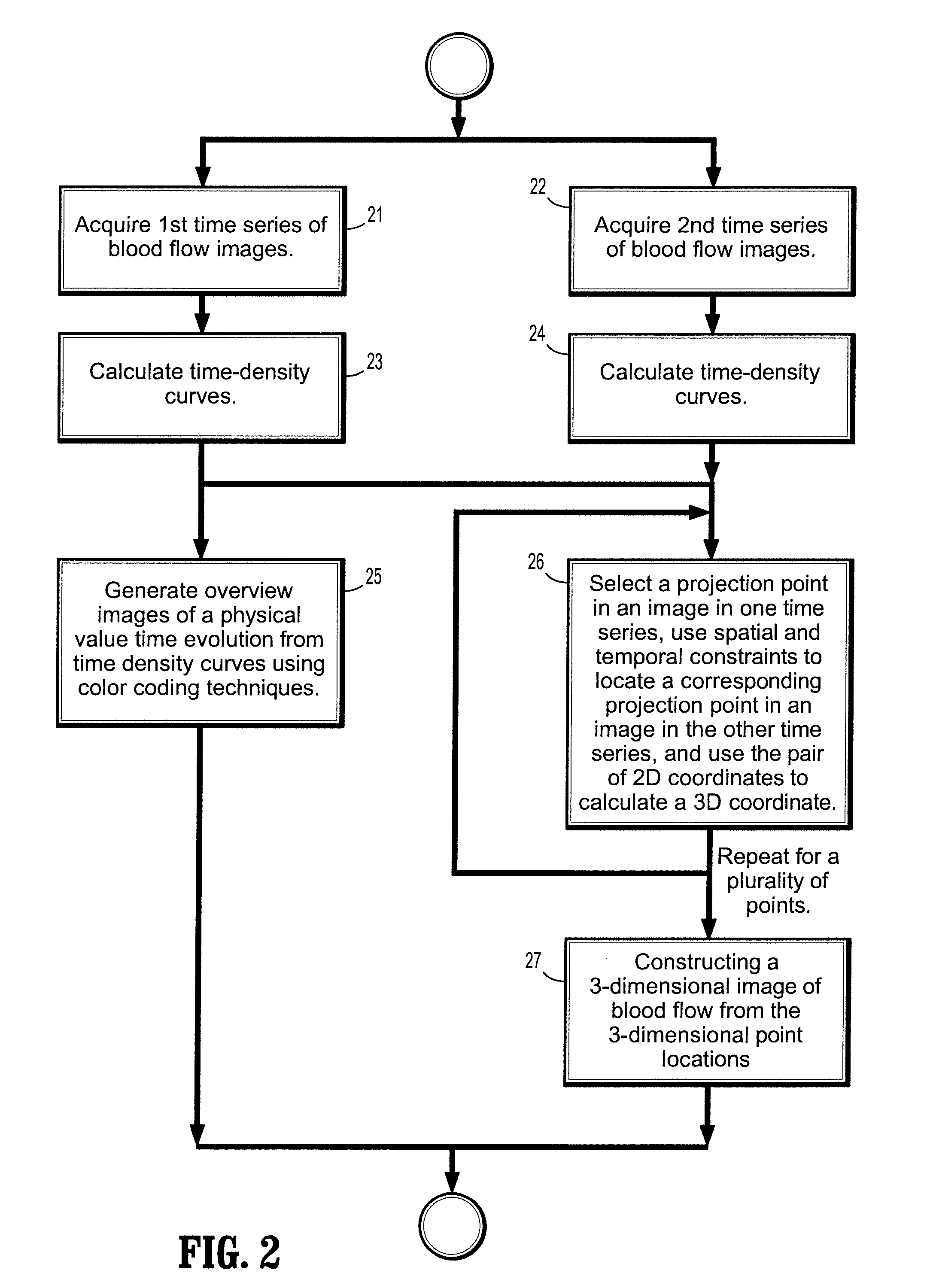

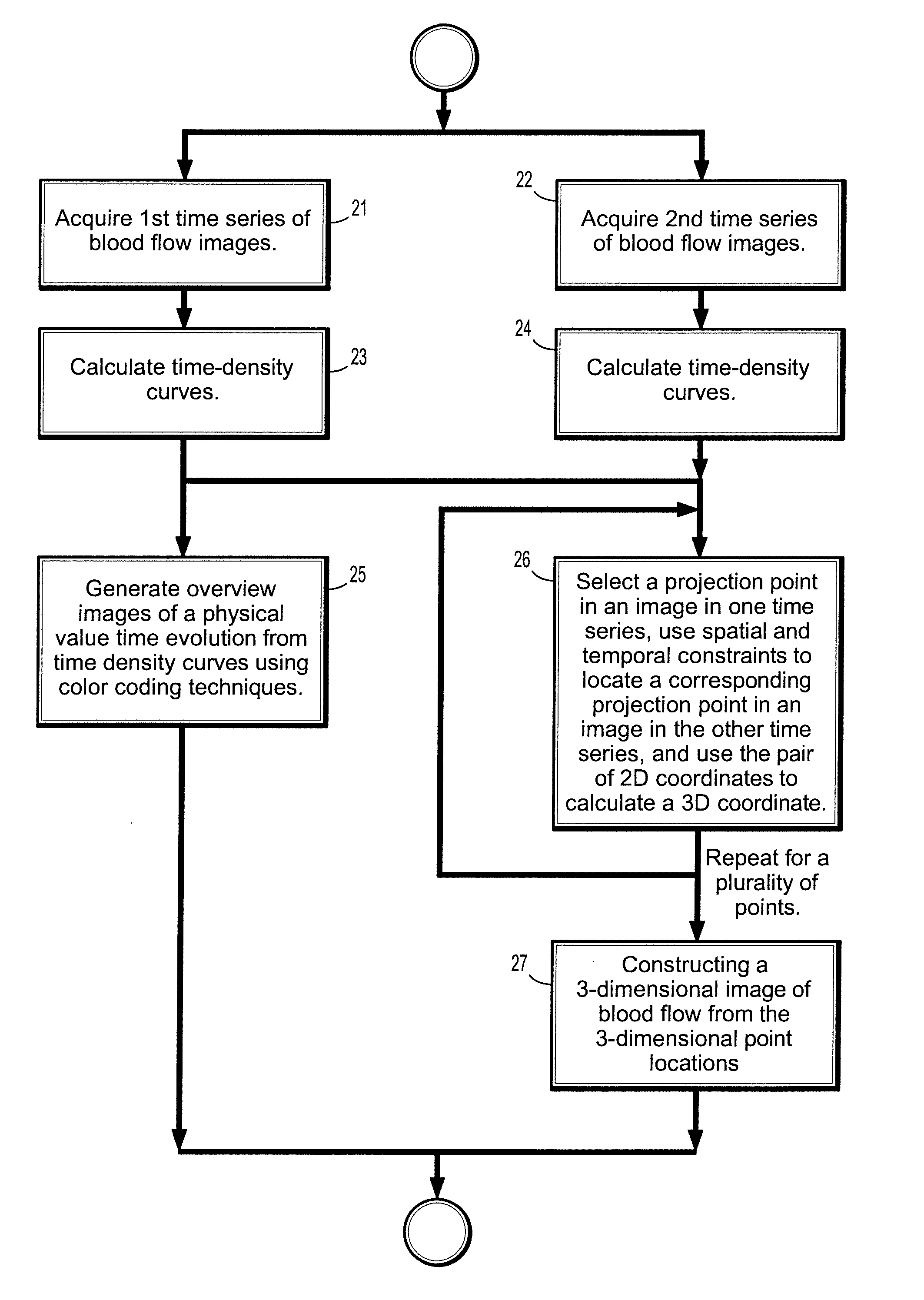

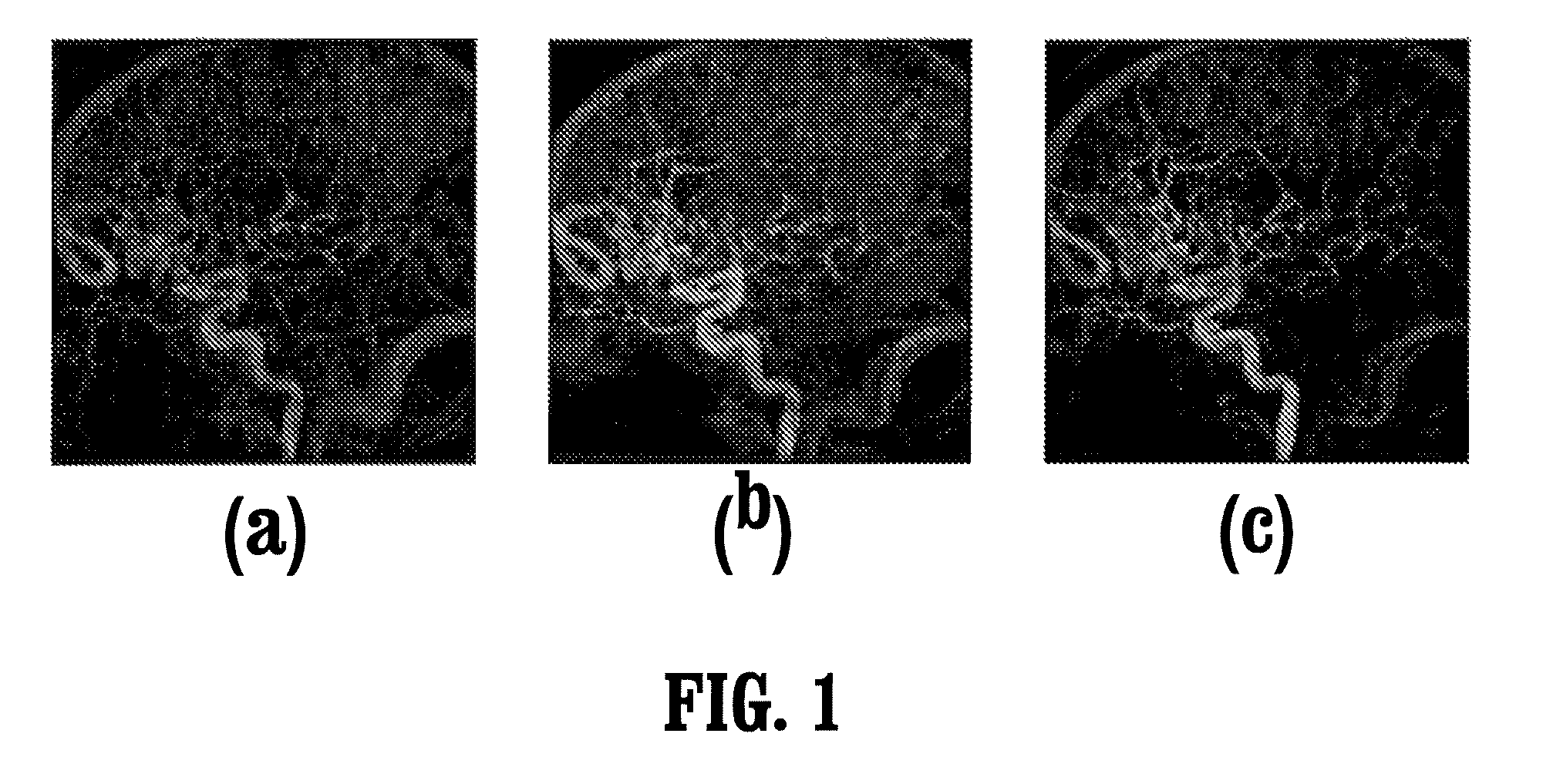

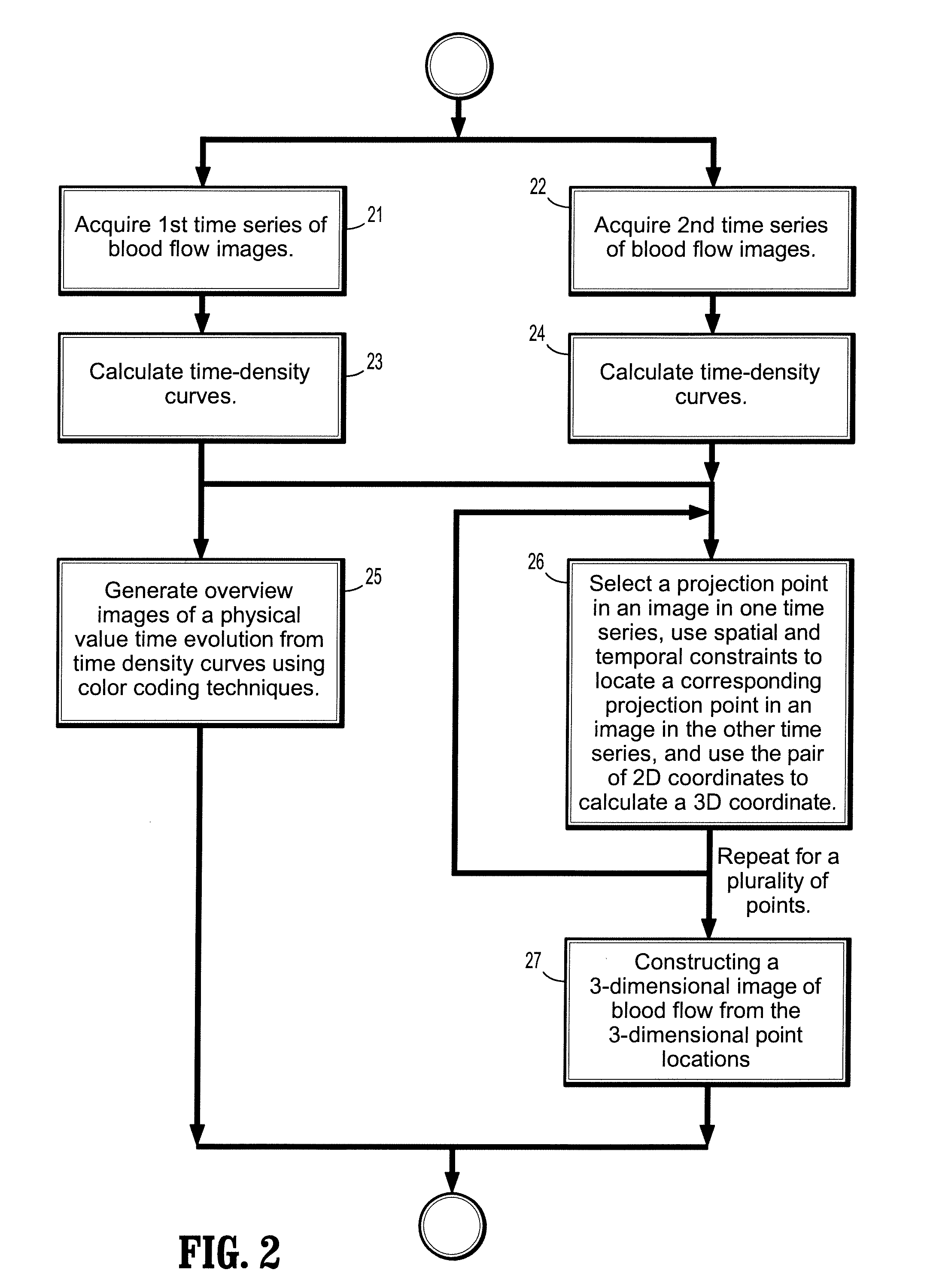

System and method for two-dimensional visualization of temporal phenomena and three dimensional vessel reconstruction

A method for visualizing temporal phenomena and constructing 3D views from a series of medical images includes providing a first time series of digital images of contrast-enhanced blood flow in a patient, each acquired from the same viewing point with a known epipolar geometry, each said image comprising a plurality of intensities associated with an N-dimensional grid of points; calculating one or more time-density curves from said first time series of digital images, each curve indicating how the intensity at corresponding points in successive images changes over time; and generating one or more overview images from said time-density curves using a color-coding technique, wherein each said overview image depicts how a physical property value of said blood flow changes at selected corresponding points in said first time series of images.

Owner:SIEMENS HEALTHCARE GMBH
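
The abstract does not say which physical property the overview image encodes. As an assumption for illustration, the sketch below colour-codes each pixel by the time to peak of its time-density curve (one common perfusion parameter), mapping early peaks to red and late peaks to blue with the standard-library `colorsys` module. Frames are assumed to be registered 2D lists sampled at a fixed interval.

```python
import colorsys

def time_to_peak(curve, frame_interval):
    """Time (s) at which a time-density curve reaches its maximum intensity."""
    peak_index = max(range(len(curve)), key=curve.__getitem__)
    return peak_index * frame_interval

def overview_image(series, frame_interval):
    """Colour-code each pixel by its time to peak.

    series: list of frames, each a 2D list of intensities on the same grid.
    Returns a 2D list of (r, g, b) tuples in [0, 1]: red = early, blue = late."""
    rows, cols = len(series[0]), len(series[0][0])
    t_max = (len(series) - 1) * frame_interval
    image = []
    for r in range(rows):
        row = []
        for c in range(cols):
            curve = [frame[r][c] for frame in series]  # time-density curve at (r, c)
            ttp = time_to_peak(curve, frame_interval)
            hue = 0.66 * ttp / t_max if t_max > 0 else 0.0
            row.append(colorsys.hsv_to_rgb(hue, 1.0, 1.0))
        image.append(row)
    return image

# Three 1x2 frames: the left pixel peaks first, the right pixel peaks last
series = [
    [[9, 1]],
    [[5, 4]],
    [[2, 8]],
]
overview = overview_image(series, frame_interval=0.5)
print(overview)
```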

Dynamic target position and attitude measurement method based on monocular vision at tail end of mechanical arm

Status: Active | Publication: CN103759716A | Tags: Simplified Calculation Process Method, Overcome deficiencies, Picture interpretation, Essential matrix, Feature point matching

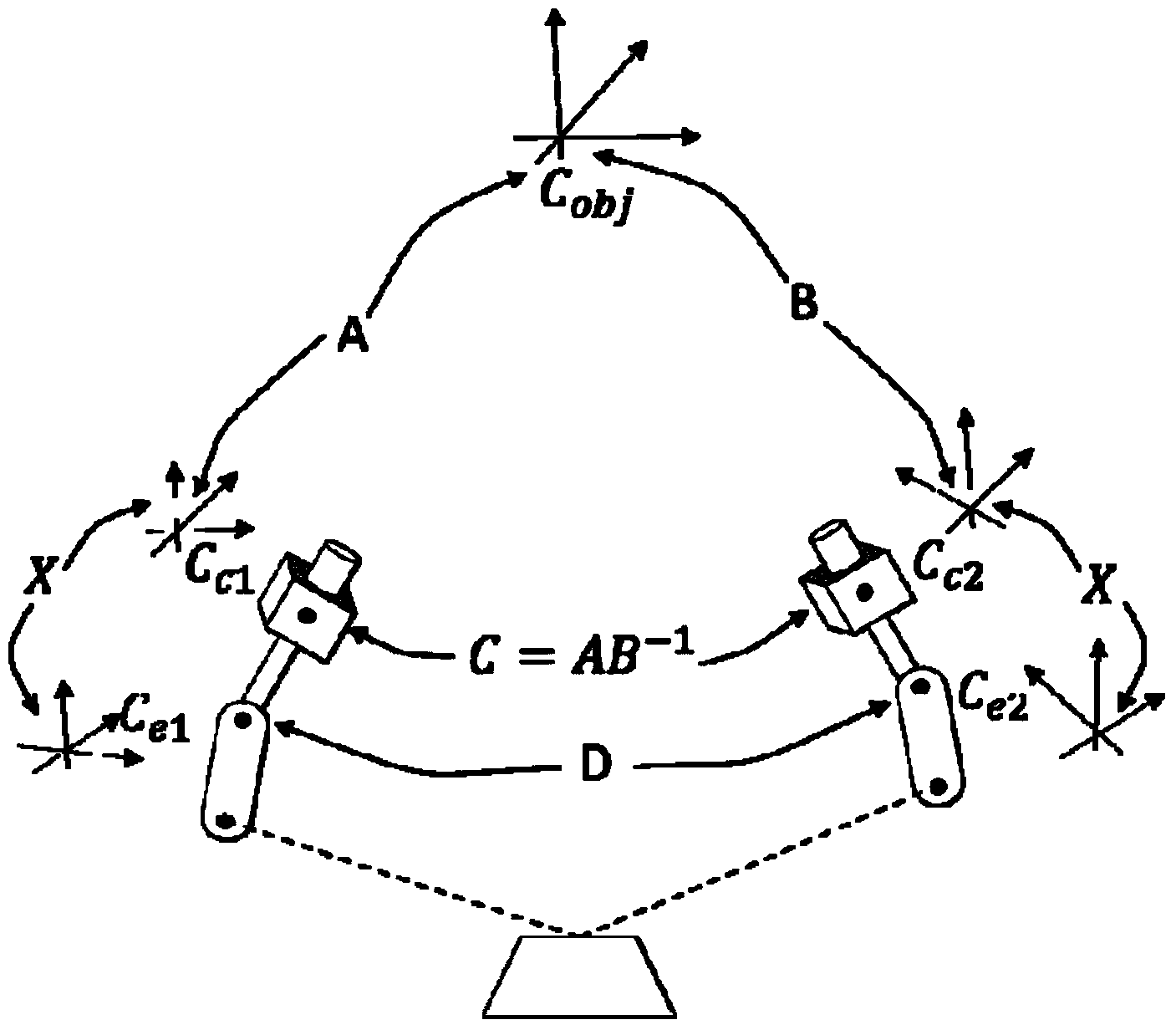

The invention relates to a method for measuring the position and attitude of a dynamic target based on monocular vision at the end of a robotic arm, and belongs to the field of vision measurement. The method comprises the following steps: first, performing camera calibration and hand-eye calibration; then taking two pictures with the camera, extracting spatial feature points in the target areas of the pictures with a scale-invariant feature extraction method, and matching the feature points; solving the fundamental matrix between the two pictures using the epipolar geometry constraint to obtain the essential matrix, and further solving the rotation matrix and translation vector of the camera; then performing three-dimensional reconstruction and scale correction on the feature points; and finally constructing a target coordinate system from the reconstructed feature points to obtain the position and attitude of the target relative to the camera. Because the method uses monocular vision, the calculation process is simplified, and the hand-eye calibration simplifies the elimination of erroneous solutions when determining the position and attitude of the camera. The method is suitable for measuring the relative positions and attitudes of stationary and low-dynamic targets.

Owner:TSINGHUA UNIV
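
The final step, building a target coordinate system from reconstructed feature points, can be sketched with three non-collinear points: an origin, a point on the desired x axis, and a point in the desired x-y plane. The choice of these three points and the axis convention are assumptions; the patent does not specify them.

```python
import math

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def target_frame(origin, on_x_axis, in_plane):
    """Orthonormal, right-handed target frame from three reconstructed points.

    Returns (R, t): the columns of R are the target x, y, z axes expressed in
    camera coordinates, and t is the target origin in camera coordinates, so
    a target-frame point P maps to camera coordinates as R @ P + t."""
    x_axis = normalize(sub(on_x_axis, origin))
    z_axis = normalize(cross(x_axis, sub(in_plane, origin)))
    y_axis = cross(z_axis, x_axis)  # unit length because z and x are orthonormal
    R = [[x_axis[i], y_axis[i], z_axis[i]] for i in range(3)]
    return R, list(origin)

R, t = target_frame([1.0, 2.0, 3.0], [2.0, 2.0, 4.0], [1.0, 3.0, 3.0])
print(R, t)
```

In practice more than three points would be fitted in a least-squares sense to reduce the effect of reconstruction noise.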

A 3D scene reconstruction method based on deep learning

Status: Active | Publication: CN109461180A | Tags: Deep structure optimization, Overcoming the inability to estimate size, Image enhancement, Image analysis, Color image, Point cloud

The invention relates to a three-dimensional scene reconstruction method based on deep learning, belonging to the technical fields of deep learning and machine vision. The depth structure of the scene is estimated by a convolutional neural network, and the dense structure is refined by a multi-view method. A fully convolutional residual neural network is trained to predict the depth map. Using color images taken from different angles, the depth map is optimized and the camera pose is estimated with epipolar geometry and dense optimization methods. Finally, the optimized depth map is projected into three-dimensional space and visualized as a point cloud. The method can effectively address outdoor 3D reconstruction and provide high-quality point cloud output; it can be used under any lighting conditions, and it overcomes the inability of monocular methods to estimate the actual size of objects.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
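
Projecting a depth map into 3D, the last step above, is a pinhole back-projection: X = (u − c_x)·Z/f_x and Y = (v − c_y)·Z/f_y for pixel (u, v) with depth Z. A minimal sketch, assuming metric depth measured along the optical axis, known intrinsics, and zero or negative values marking invalid pixels (the parameter names are ours):

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth map (2D list, row v, column u) into a list of
    (X, Y, Z) points in the camera frame, skipping invalid (<= 0) depths."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no valid depth at this pixel
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

cloud = depth_to_point_cloud([[2.0, 0.0], [4.0, 2.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(cloud)
```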

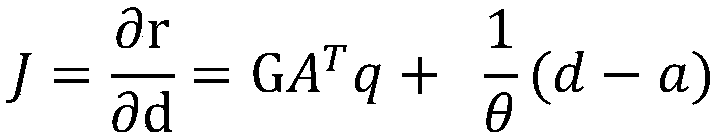

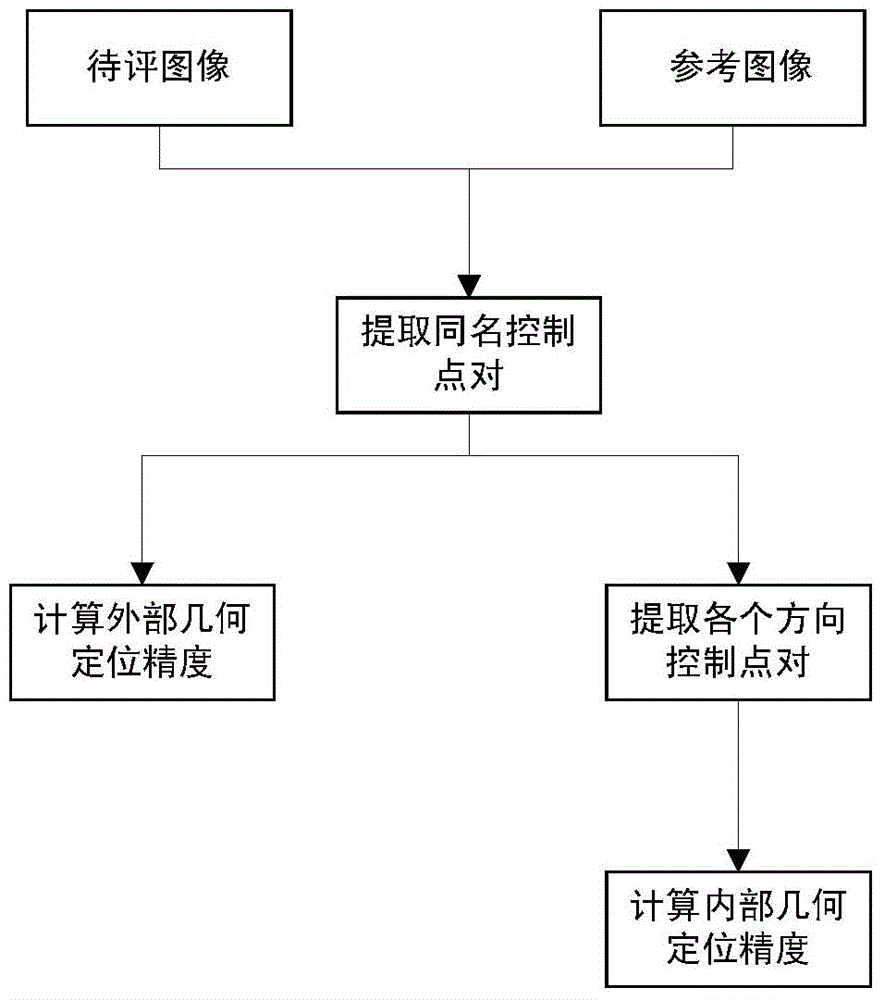

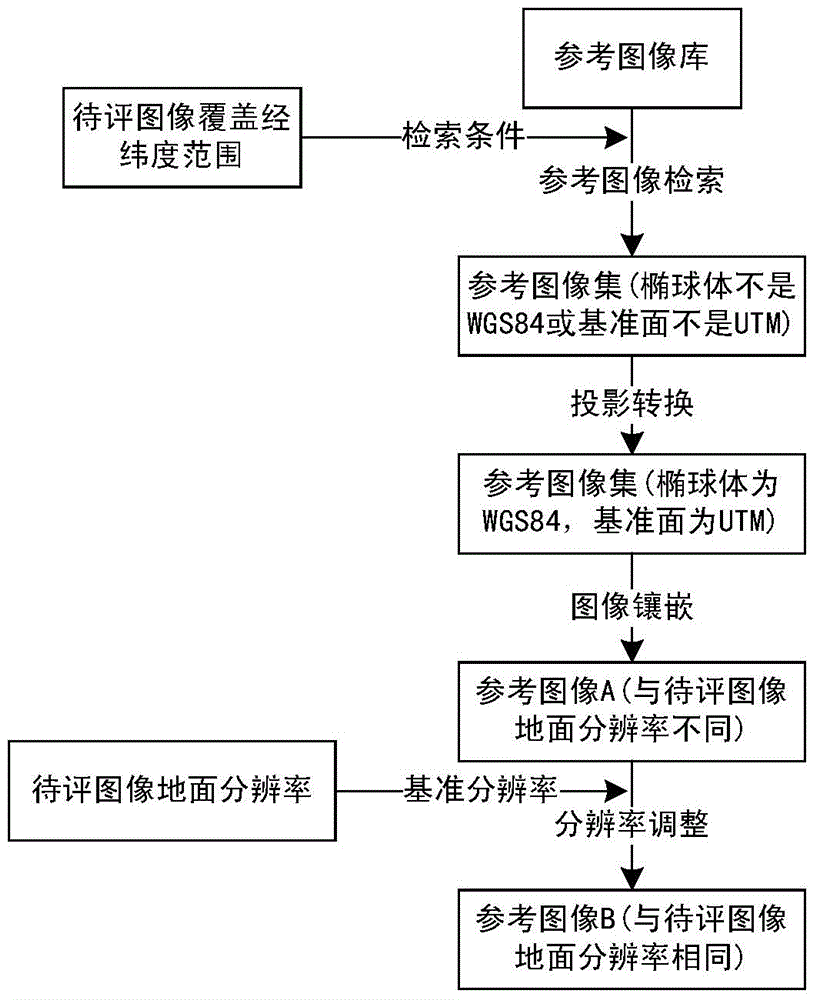

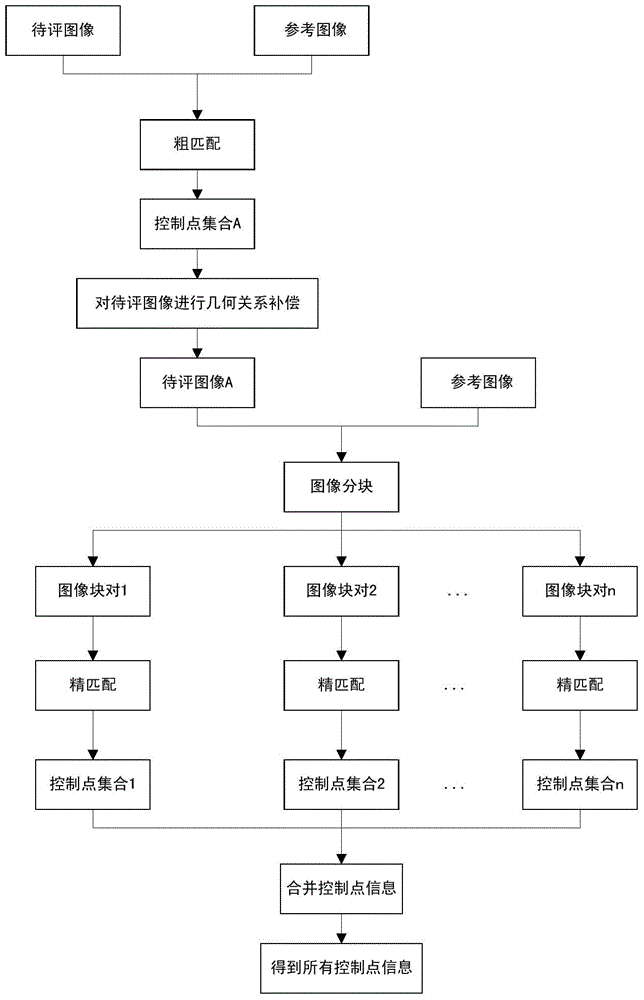

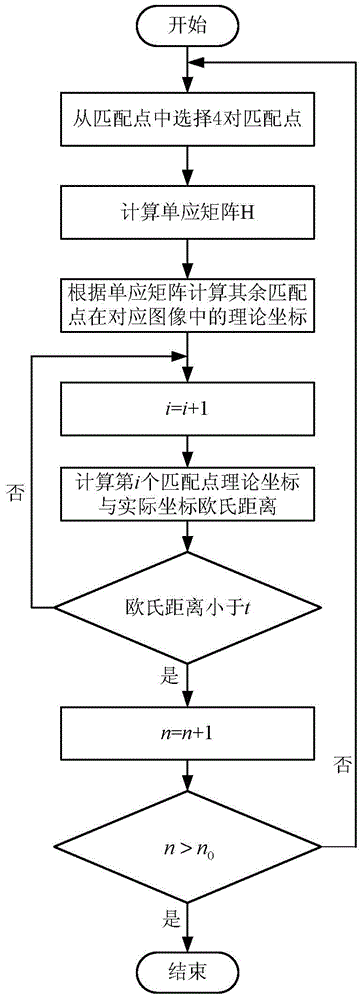

On-orbit satellite image geometric positioning accuracy evaluation method on basis of multi-source remote sensing data

Status: Active | Publication: CN104574347A | Tags: Calculating Geometric Positioning Accuracy, Evenly distributed, Image enhancement, Image analysis, Sensing data, Image resolution

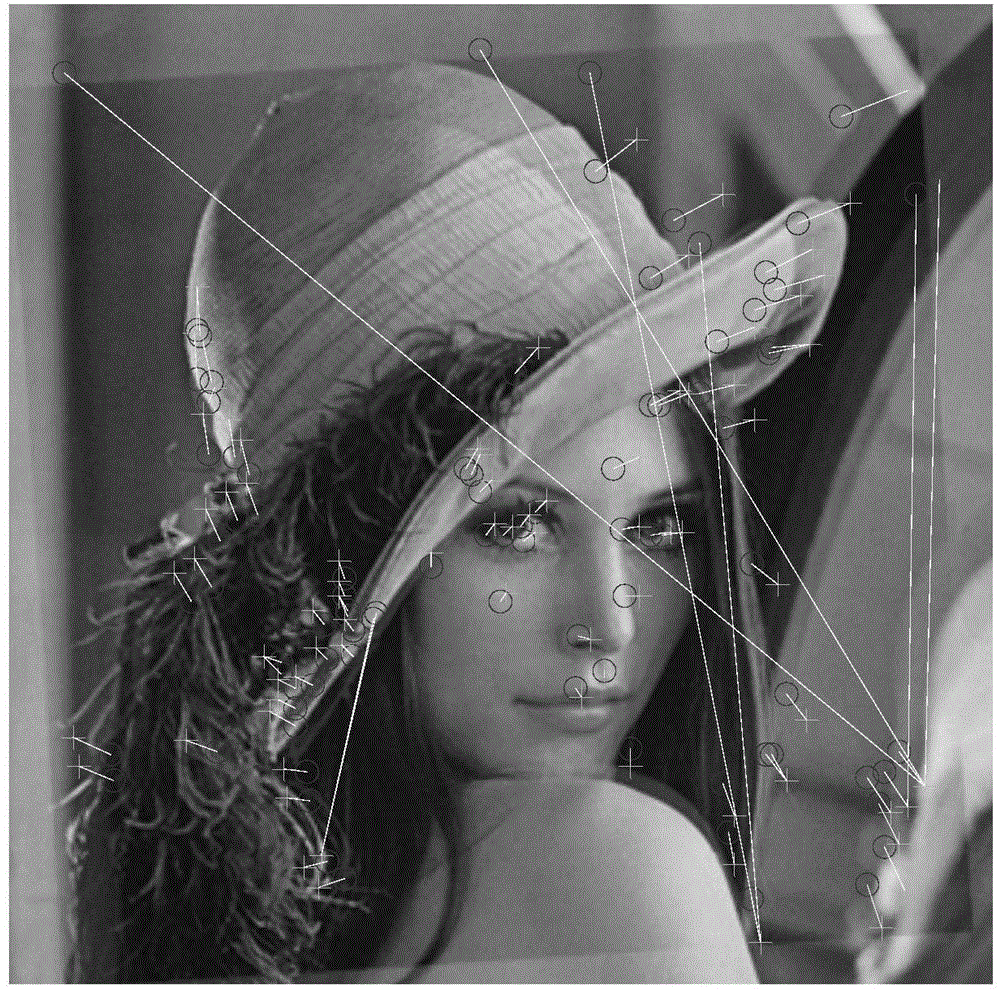

The invention discloses a method for evaluating the geometric positioning accuracy of on-orbit satellite images based on multi-source remote sensing data. The method comprises the following steps: Step 1, bringing the image to be evaluated and the reference image to the same ellipsoid, datum plane and resolution; Step 2, down-sampling the two images and applying radiometric enhancement; Step 3, coarsely matching the two images with the SURF (Speeded-Up Robust Features) algorithm and removing mismatched point pairs using epipolar geometry; Step 4, based on the coarse matching result, compensating the geometric relationship of the image to be evaluated, and partitioning the compensated image and the reference image into corresponding blocks; Step 5, for each pair of blocks from the image to be evaluated and the reference image, performing precise matching with the SURF algorithm and removing mismatched point pairs using epipolar geometry; Step 6, calculating the external and internal geospatial positioning accuracy from the screened control point pairs. The method enables automatic, rapid and accurate evaluation of multi-source, high-accuracy remote sensing images from different sensors, spectral ranges and acquisition times.

Owner:NANJING UNIV OF SCI & TECH
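
The abstract does not define its accuracy measures. Assuming that external (absolute) positioning accuracy is reported as the root-mean-square error of the planimetric offsets between matched control points, a common convention, the computation is:

```python
import math

def positioning_rmse(pairs):
    """pairs: list of ((x_eval, y_eval), (x_ref, y_ref)) ground coordinates
    in metres for matched control points.
    Returns (rmse_x, rmse_y, rmse_plane)."""
    n = len(pairs)
    mse_x = sum((e[0] - r[0]) ** 2 for e, r in pairs) / n
    mse_y = sum((e[1] - r[1]) ** 2 for e, r in pairs) / n
    return math.sqrt(mse_x), math.sqrt(mse_y), math.sqrt(mse_x + mse_y)

rmse = positioning_rmse([((103.0, 204.0), (100.0, 200.0)),
                         ((97.0, 196.0), (100.0, 200.0))])
print(rmse)
```

Internal (relative) accuracy would instead compare distances or offsets between control points within the same image; that variant is not shown.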

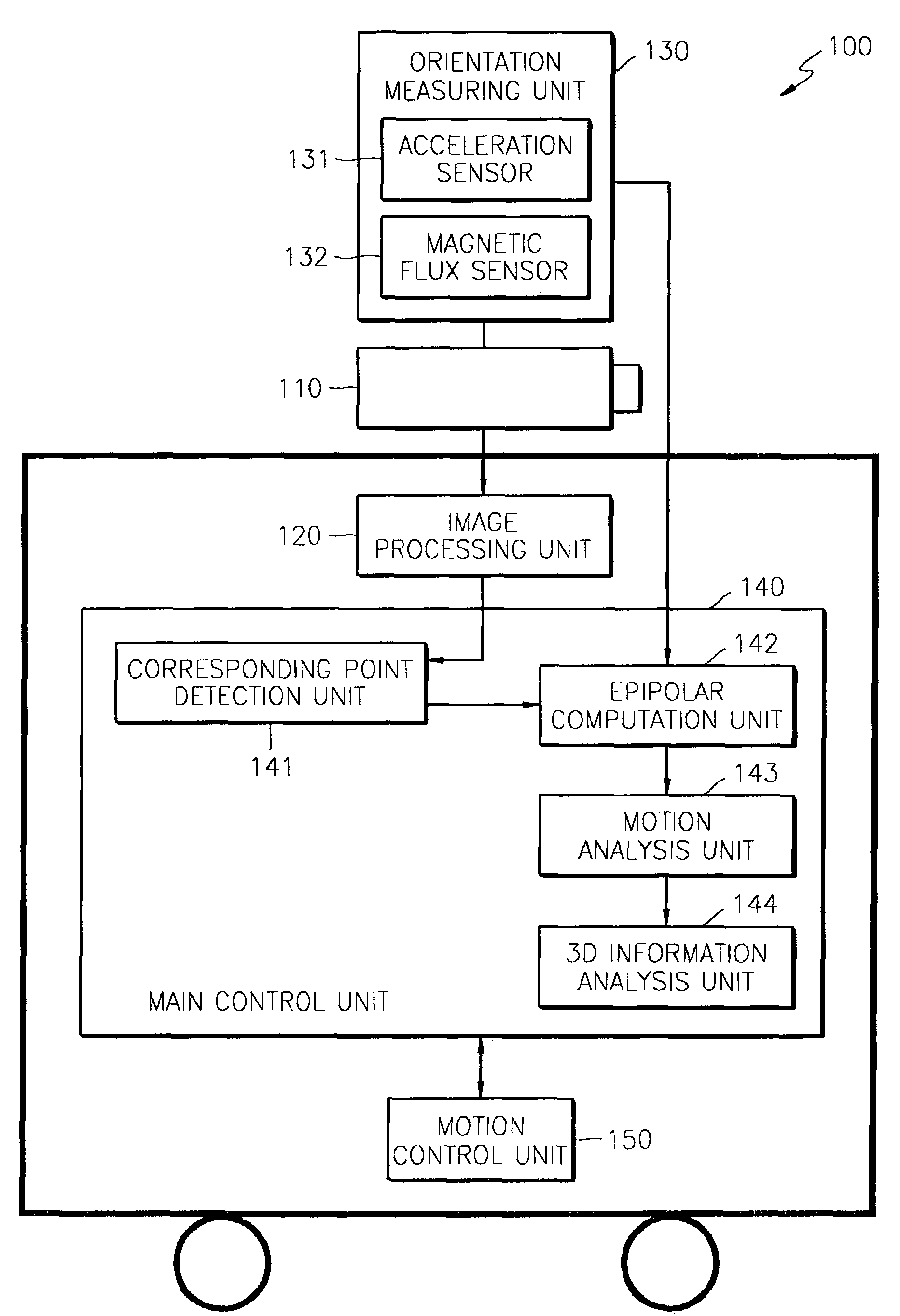

Autonomous vehicle and motion control therefor

There are provided an autonomous vehicle, and an apparatus and method for estimating the motion of the autonomous vehicle and detecting three-dimensional (3D) information of an object appearing in front of the moving autonomous vehicle. The autonomous vehicle measures its orientation using an acceleration sensor and a magnetic flux sensor, and extracts epipolar geometry information using the measured orientation information. Since the corresponding points between images required for extracting the epipolar geometry information can be reduced to two, it is possible to more easily and correctly obtain motion information of the autonomous vehicle and 3D information of an object in front of the autonomous vehicle.

Owner:SAMSUNG ELECTRONICS CO LTD
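
Why two correspondences suffice once the orientation is known: with R fixed, the epipolar constraint x2ᵀ[t]×R x1 = 0 can be rewritten as t · ((R x1) × x2) = 0, which is linear in t. Two correspondences give two vectors orthogonal to t, so t is their cross product, up to scale and sign. The sketch below assumes normalized image coordinates and the convention X2 = R·X1 + t; it illustrates the geometry and is not Samsung's implementation, and noise handling is omitted.

```python
import math

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]

def matvec(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

def unit(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]

def translation_from_two_points(R, pair_a, pair_b):
    """Unit translation direction (sign ambiguous) from a known rotation R and
    two correspondences (x1, x2) in normalized homogeneous coordinates."""
    n_a = cross(matvec(R, pair_a[0]), pair_a[1])  # t is orthogonal to n_a
    n_b = cross(matvec(R, pair_b[0]), pair_b[1])  # ... and to n_b
    return unit(cross(n_a, n_b))

# Synthetic check: orientation assumed known (e.g. from accelerometer + magnetometer)
angle = 0.1
R = [[math.cos(angle), 0.0, math.sin(angle)], [0.0, 1.0, 0.0],
     [-math.sin(angle), 0.0, math.cos(angle)]]
t_true = [0.3, 0.0, 1.0]

def observe(X1):
    """Normalized image points of scene point X1 in both views."""
    X2 = [a + b for a, b in zip(matvec(R, X1), t_true)]
    return [X1[0] / X1[2], X1[1] / X1[2], 1.0], [X2[0] / X2[2], X2[1] / X2[2], 1.0]

t_est = translation_from_two_points(R, observe([0.5, -0.4, 5.0]), observe([-1.0, 0.6, 7.0]))
alignment = abs(sum(a * b for a, b in zip(t_est, unit(t_true))))
print(f"|cos(angle between estimated and true t)| = {alignment:.12f}")
```

The method degenerates if both scene points lie in one plane with the translation direction, because the two normals then coincide.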

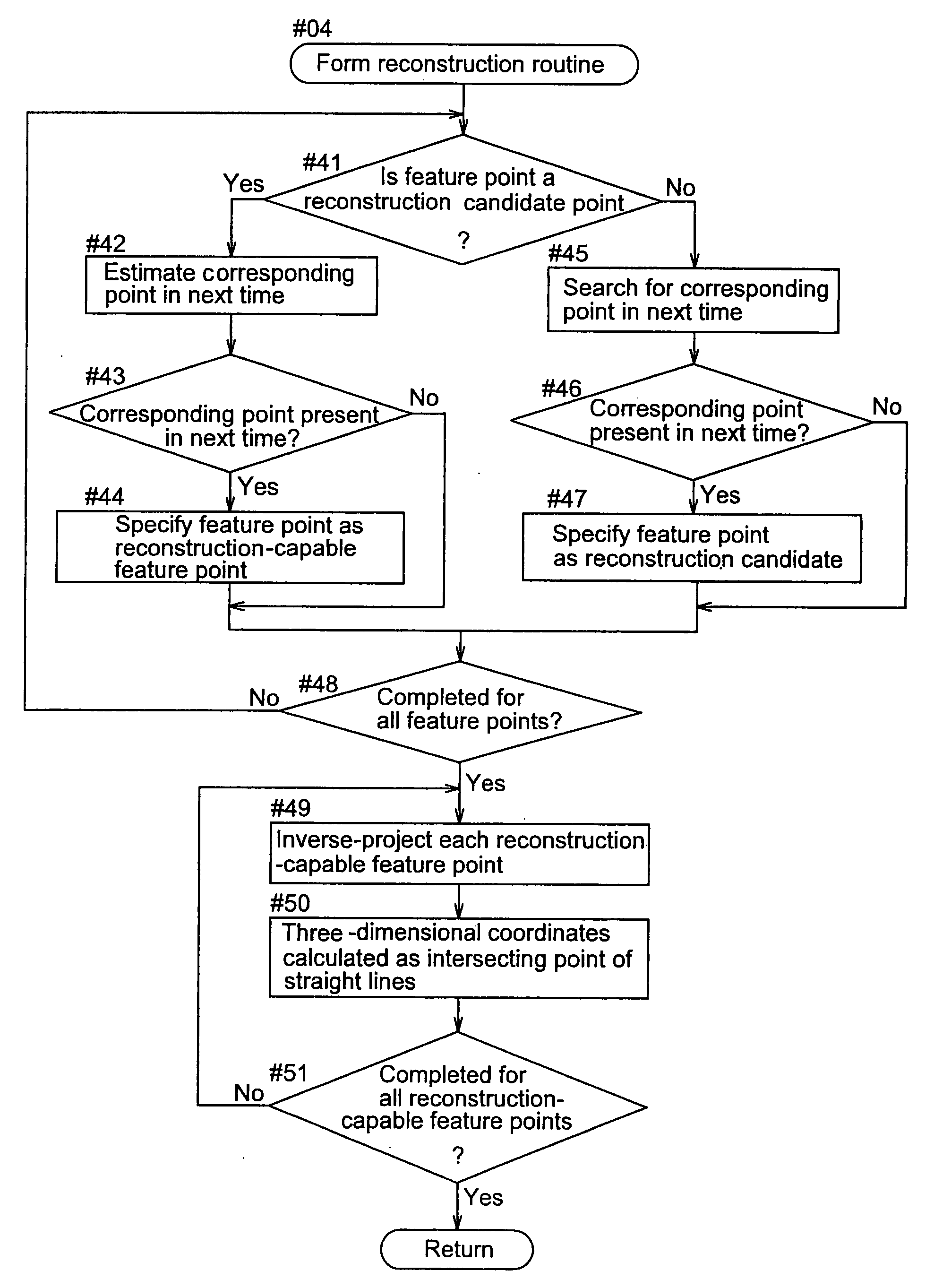

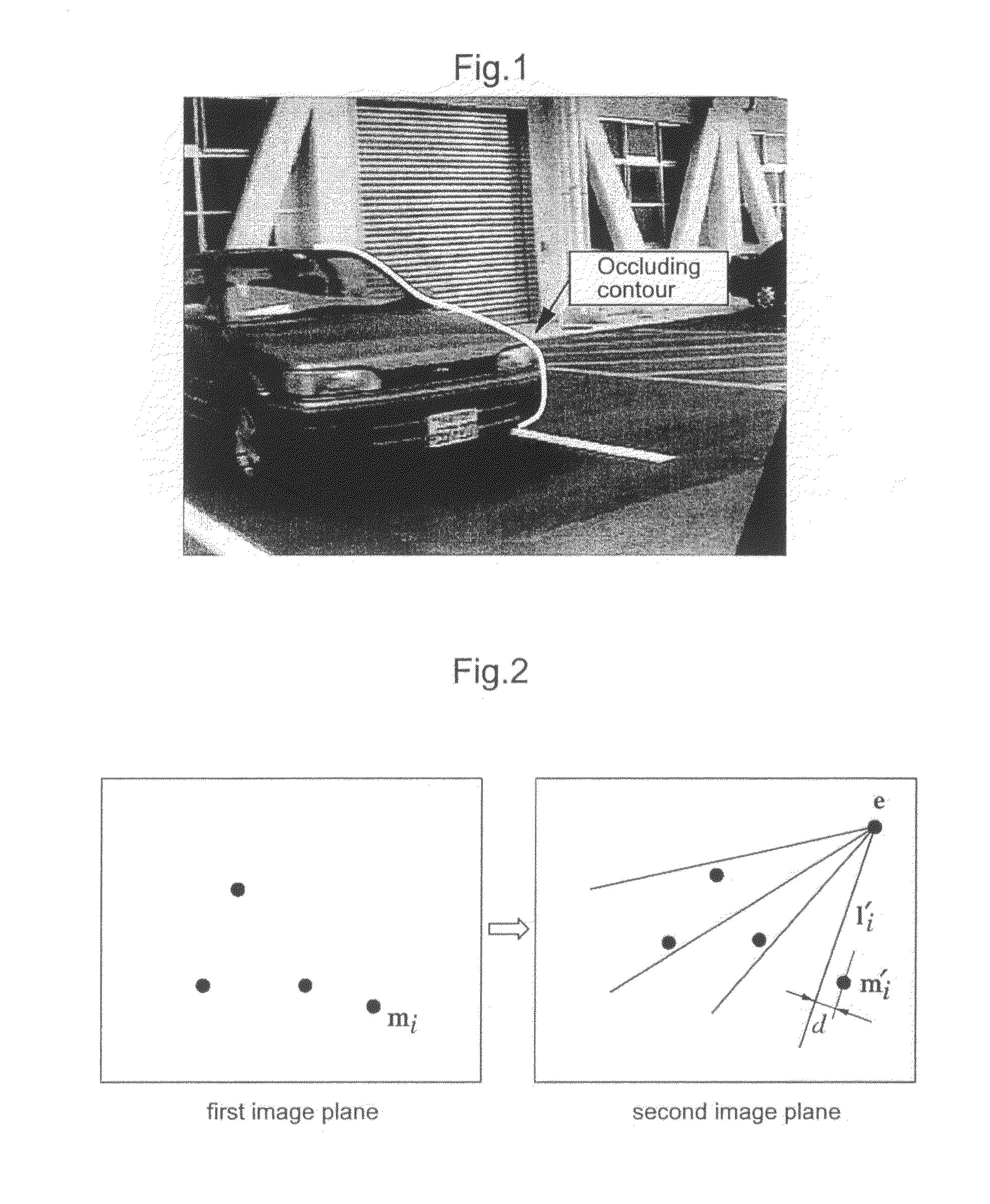

Object Recognition Apparatus and Object Recognition Method Using Epipolar Geometry

An object recognition apparatus that processes images, as acquired by an imaging means (10) mounted on a moving object, in a first image plane and a second image plane of different points of view, and recognizes an object in the vicinity of the moving object. The object recognition apparatus comprises: a feature point detection means (42) that detects feature points in first and second image planes of an object image; a fundamental matrix determination means (43a) that determines, based on calculation of an epipole through the auto-epipolar property, a fundamental matrix that expresses a geometrically corresponding relationship based on translational camera movement, with not less than two pairs of feature points corresponding between the first and second image planes; and a three-dimensional position calculation means (43b) that calculates a three-dimensional position of an object based on the coordinates of the object in the first and second image planes and the determined fundamental matrix.

Owner:AISIN SEIKI KK
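
For a purely translating camera with unchanged intrinsics, the fundamental matrix has the auto-epipolar form F = [e]×, and each pair of corresponding points lies on a line through the epipole e when the two images are overlaid. Two pairs therefore fix e as the intersection of two lines. A minimal sketch in homogeneous coordinates, with synthetic normalized coordinates and a translation chosen for illustration (not Aisin Seiki's implementation, and without the robust estimation a real system needs):

```python
def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]

def epipole_from_two_pairs(pair_a, pair_b):
    """Intersect the lines x1 x x2 of two correspondences (homogeneous points).
    Returns None when the epipole is (numerically) at infinity, i.e. the
    translation is parallel to the image plane."""
    line_a = cross(*pair_a)  # line through x1 and x2 of the first pair
    line_b = cross(*pair_b)
    e = cross(line_a, line_b)  # homogeneous intersection point
    if abs(e[2]) < 1e-12:
        return None
    return e[0] / e[2], e[1] / e[2]

def project(X):
    return [X[0] / X[2], X[1] / X[2], 1.0]

# Camera translates by t without rotating: X2 = X1 - t.
# The epipole is the projection of t, here (0.2, 0.1).
t = [0.2, 0.1, 1.0]

def observe(X1):
    return project(X1), project([a - b for a, b in zip(X1, t)])

epipole = epipole_from_two_pairs(observe([1.0, -0.5, 5.0]), observe([-1.0, 0.8, 6.0]))
print(epipole)
```

With the epipole known, F = [e]× follows directly, which is why so few correspondences are needed under the translational-motion assumption.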

System and method for two-dimensional visualization of temporal phenomena and three dimensional vessel reconstruction

A method for visualizing temporal phenomena and constructing 3D views from a series of medical images includes providing a first time series of digital images of contrast-enhanced blood flow in a patient, each acquired from a same viewing point with a known epipolar geometry, each said image comprising a plurality of intensities associated with an N-dimensional grid of points, calculating one or more time-density curves from said first time series of digital images, each curve indicative of how the intensity at corresponding points in successive images changes over time, and generating one or more overview images from said time density curves using a color coding technique, wherein said each overview image depict how a physical property value changes from said blood flow at selected corresponding points in said first time series of images.

Owner:SIEMENS HEALTHCARE GMBH

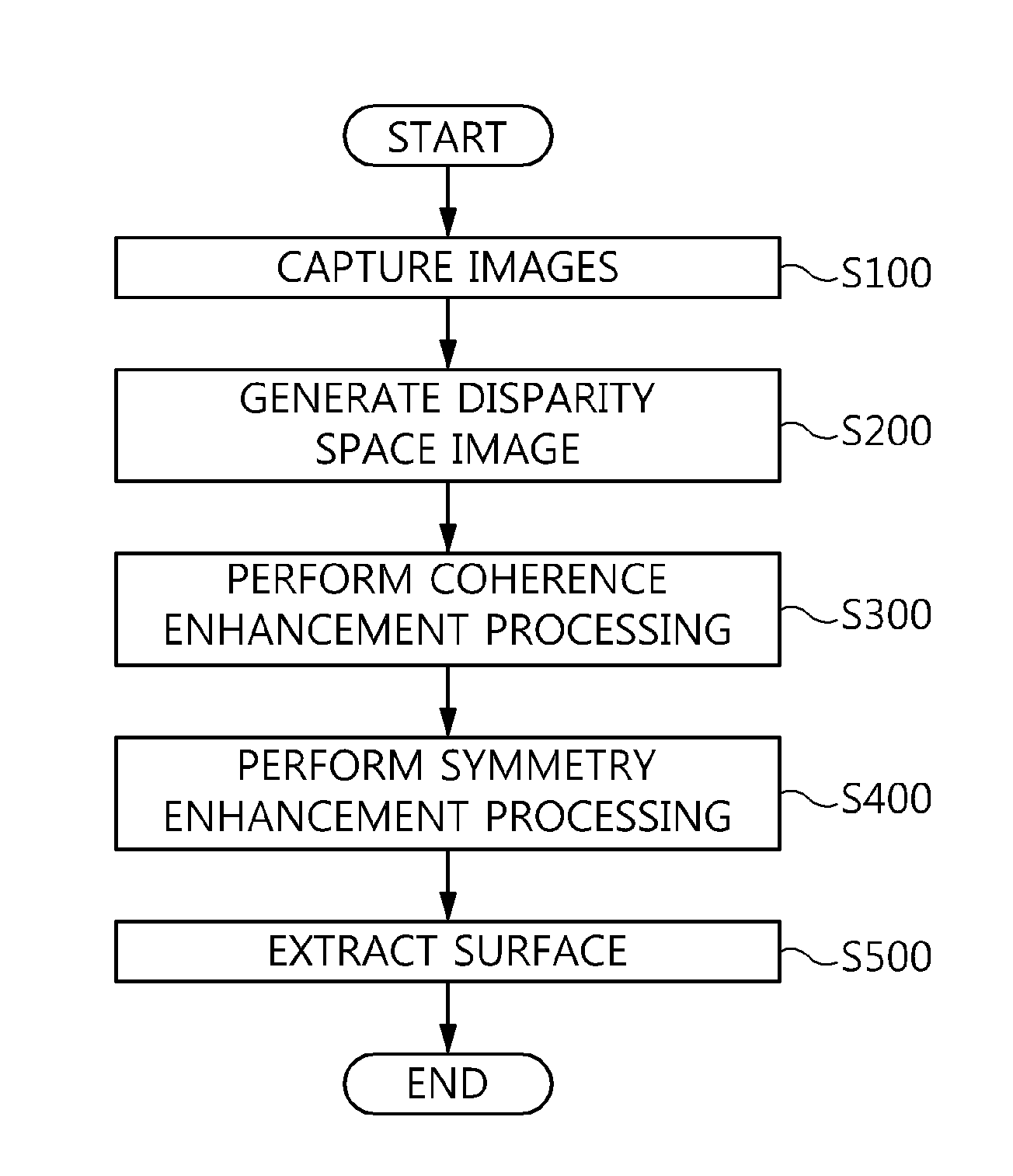

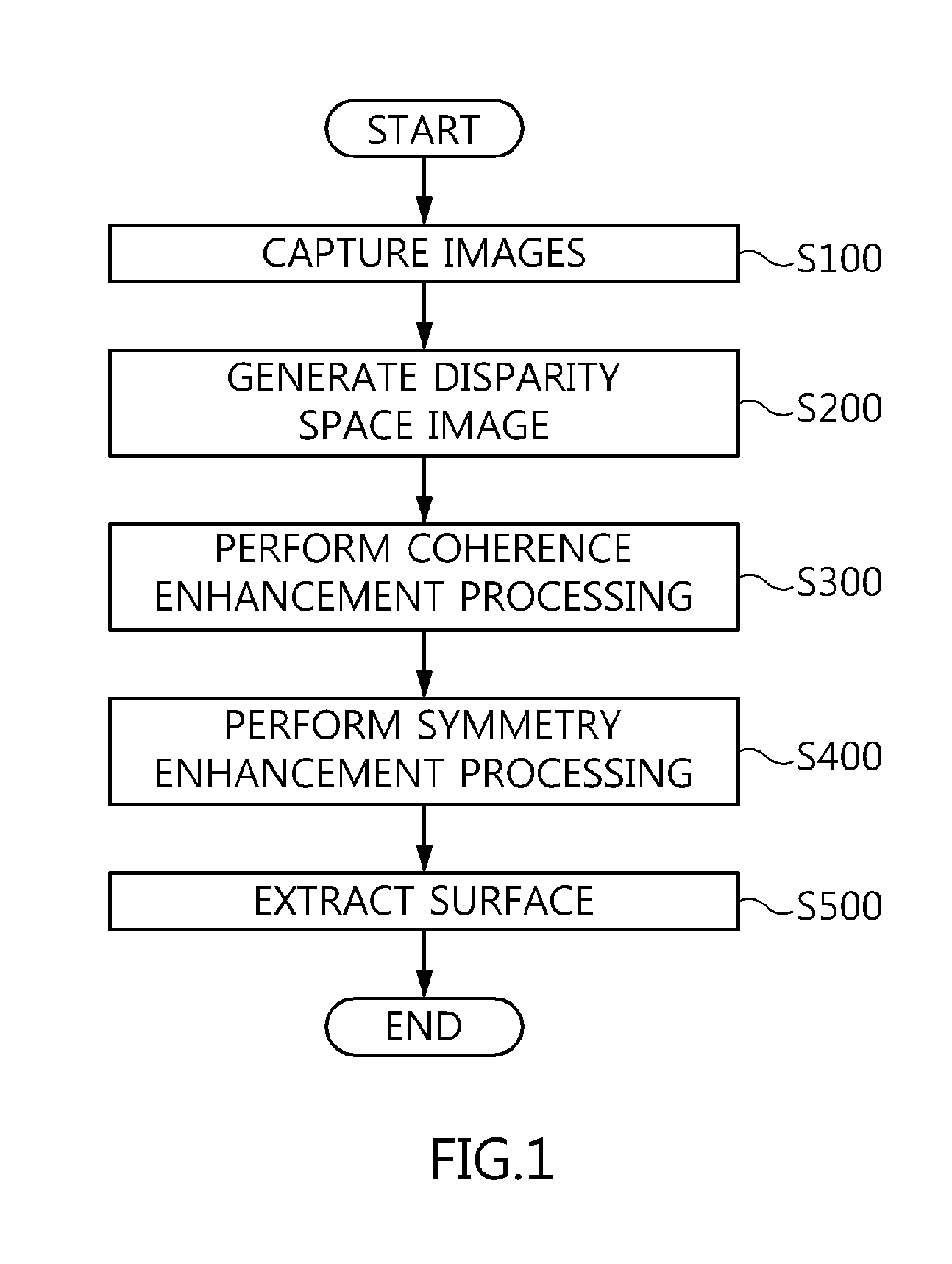

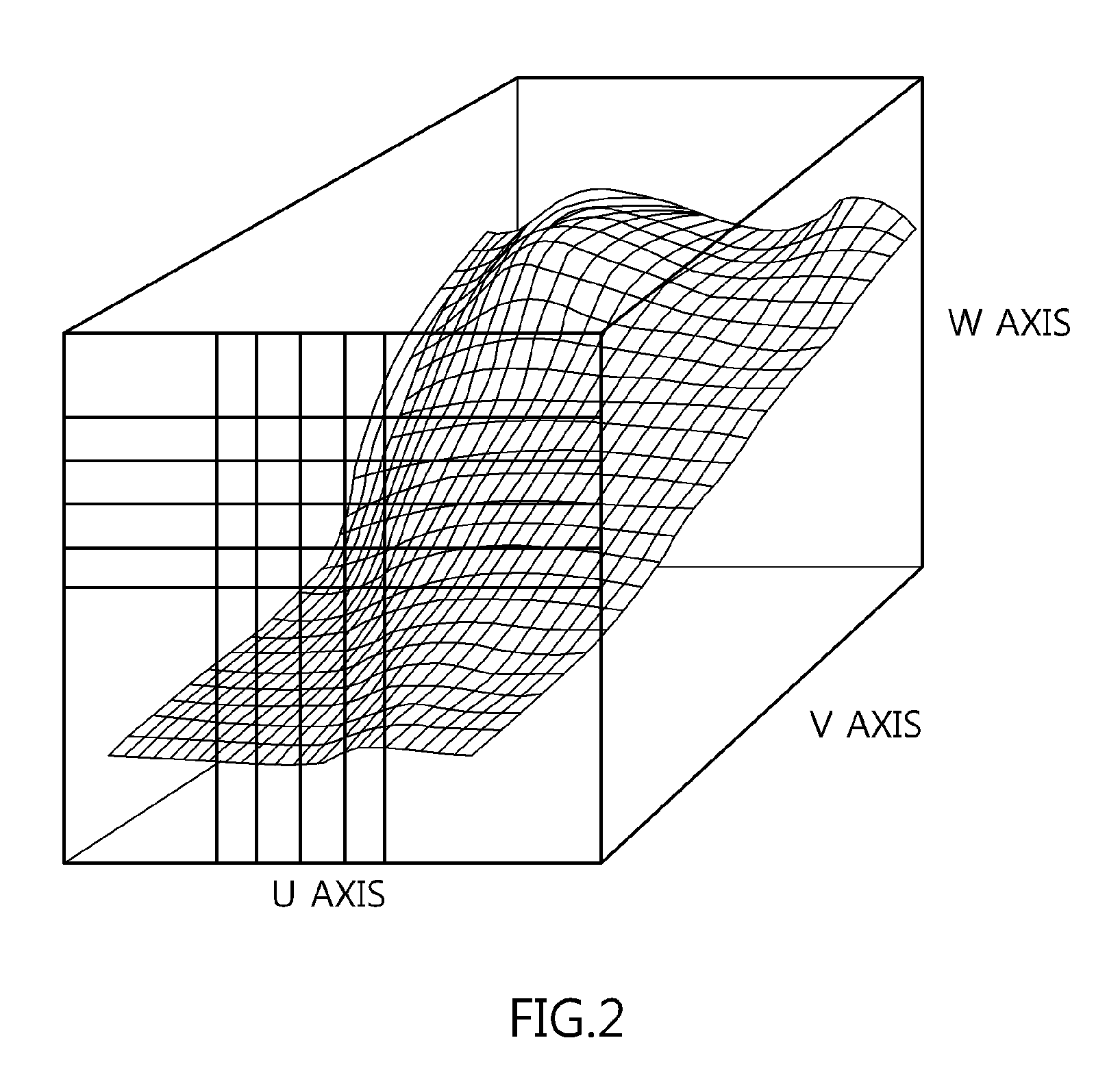

Method of processing disparity space image

The present invention relates to a processing method that emphasizes neighboring information around a disparity surface included in a source disparity space image by means of processing that emphasizes similarity at true matching points using inherent geometric information, that is, coherence and symmetry. The method of processing the disparity space image includes capturing stereo images satisfying epipolar geometry constraints using at least two cameras having parallax, generating pixels of a 3D disparity space image based on the captured images, reducing dispersion of luminance distribution of the disparity space image while keeping information included in the disparity space image, generating a symmetry-enhanced disparity space image by performing processing for emphasizing similarities of pixels arranged at reflective symmetric locations along a disparity-changing direction in the disparity space image, and extracting a disparity surface by connecting at least three matching points in the symmetry-enhanced disparity space image.

Owner:ELECTRONICS & TELECOMM RES INST
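
For orientation, a disparity space image for one rectified scanline can be built as a cost table C(x, d) = |L(x) − R(x − d)|, where the epipolar constraint reduces matching to a search along the same row. The sketch below builds that baseline DSI and reads off disparities with a simple winner-takes-all rule; it does not implement the patent's dispersion reduction, symmetry enhancement or surface extraction.

```python
def disparity_space_image(left_row, right_row, max_disp):
    """C[x][d] = |L(x) - R(x - d)| for one rectified scanline; inf where x - d < 0."""
    dsi = []
    for x, intensity in enumerate(left_row):
        costs = []
        for d in range(max_disp + 1):
            if x - d >= 0:
                costs.append(abs(intensity - right_row[x - d]))
            else:
                costs.append(float("inf"))  # candidate falls outside the right image
        dsi.append(costs)
    return dsi

def winner_takes_all(dsi):
    """Disparity with the lowest cost at each pixel."""
    return [min(range(len(costs)), key=costs.__getitem__) for costs in dsi]

right_row = [10, 20, 30, 40, 50, 60]
left_row = [5, 5, 10, 20, 30, 40]  # right row shifted by 2 px (first two pixels unmatched)
disparities = winner_takes_all(disparity_space_image(left_row, right_row, max_disp=2))
print(disparities)
```

Stacking such tables for every row gives the 3D (x, y, d) volume that methods like the one above post-process before extracting a disparity surface.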

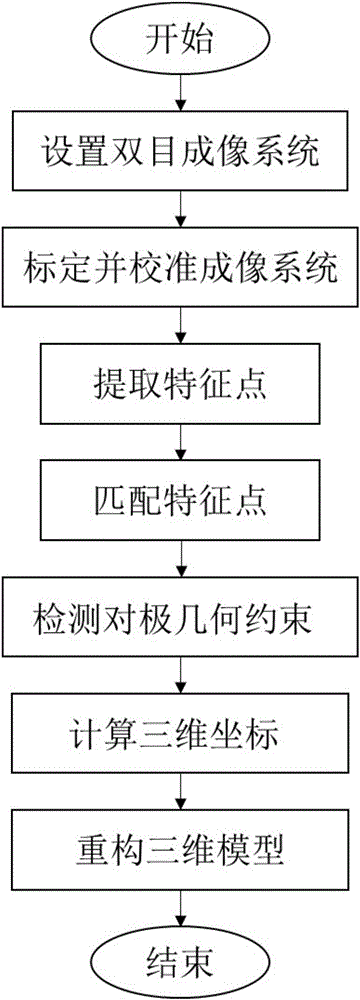

Binocular three-dimensional reconstruction method

Status: Active | Publication: CN105894574A | Tags: Reduce false match rate, Reduce outliers, Details involving processing steps, 3D modelling, Feature extraction, Reconstruction method

The invention discloses a binocular three-dimensional reconstruction method comprising the following steps: 1) acquiring images of an object to be reconstructed with two image acquisition devices of the same model to obtain a left image and a right image; 2) calibrating the image acquisition devices with a chessboard method, computing the internal and external parameters and the lens distortion coefficients, and processing the left and right images to remove distortion; 3) extracting features from the two images processed in step 2) to obtain their feature points; 4) matching the feature points of the two images from step 3) to obtain feature point pairs; 5) applying an epipolar geometric constraint check to the feature point pairs to remove mismatched pairs; and 6) computing, from the retained feature point pairs, the three-dimensional coordinates of the corresponding points in a world coordinate system. The method reduces outliers and yields an accurate three-dimensional reconstruction model.

Owner:HUIZHOU FRONT OPTOELECTRONIC TECH CO LTD
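
Steps 5 and 6 can be sketched for the common special case of rectified images, assumed here, where the epipolar constraint reduces to corresponding points lying on the same row. Pairs that violate it, or that have non-positive disparity, are dropped, and the rest are triangulated with Z = f·B/d. The focal length, baseline, principal point and row tolerance are illustrative parameters; the patent may apply the constraint through a fundamental matrix instead.

```python
def triangulate_rectified(pairs, f, baseline, cx, cy, y_tol=1.0):
    """pairs: ((xl, yl), (xr, yr)) pixel matches in rectified left/right images.
    Drops pairs violating the rectified epipolar constraint (|yl - yr| > y_tol)
    or with non-positive disparity; triangulates the rest in the left-camera frame."""
    points = []
    for (xl, yl), (xr, yr) in pairs:
        disparity = xl - xr
        if abs(yl - yr) > y_tol or disparity <= 0:
            continue  # likely mismatch
        z = f * baseline / disparity
        points.append(((xl - cx) * z / f, (yl - cy) * z / f, z))
    return points

matches = [
    ((340.0, 240.0), (330.0, 240.5)),  # consistent: kept
    ((300.0, 200.0), (290.0, 230.0)),  # 30 px row offset: rejected
    ((300.0, 240.0), (305.0, 240.0)),  # negative disparity: rejected
]
points = triangulate_rectified(matches, f=500.0, baseline=0.1, cx=320.0, cy=240.0)
print(points)
```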

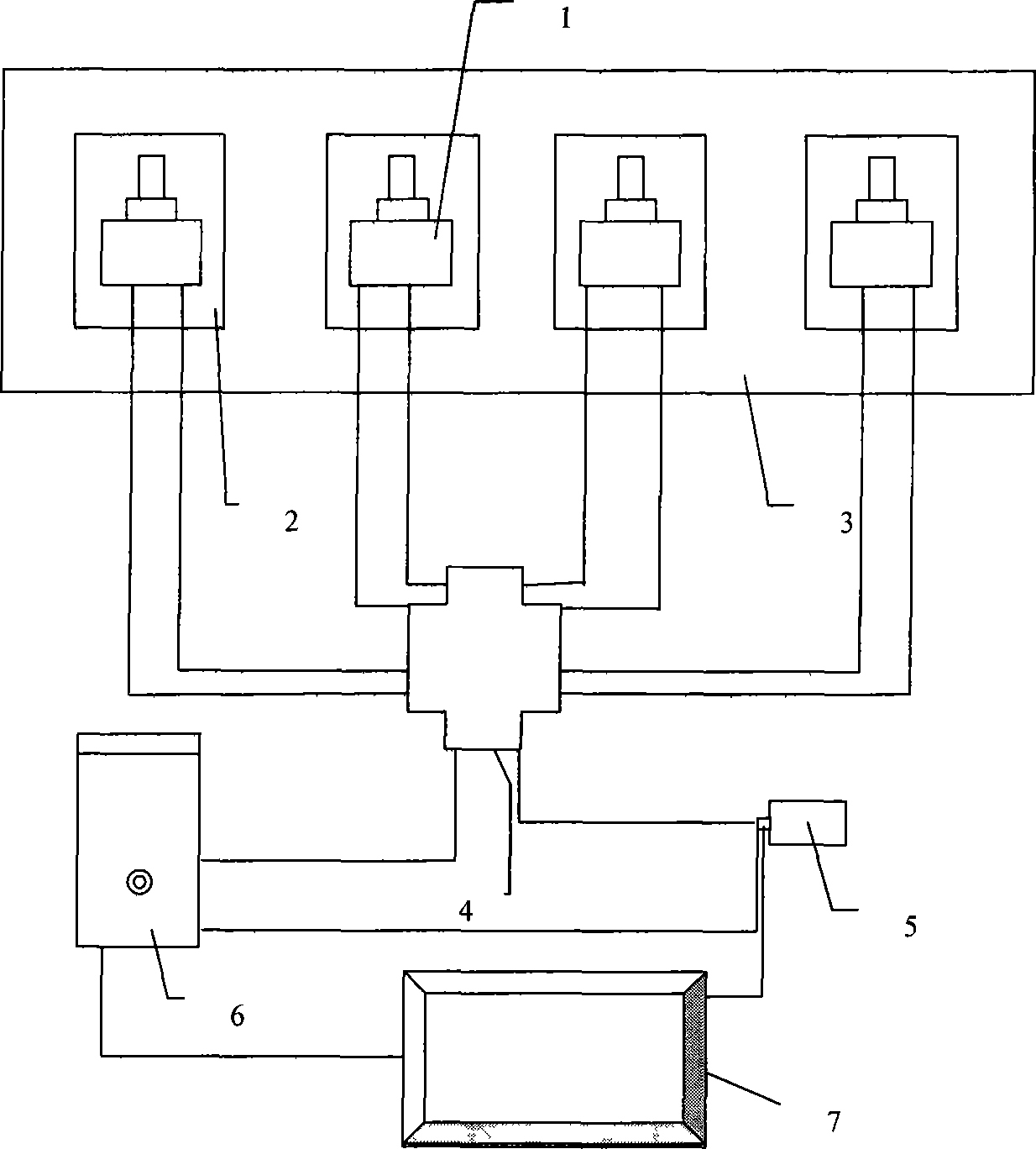

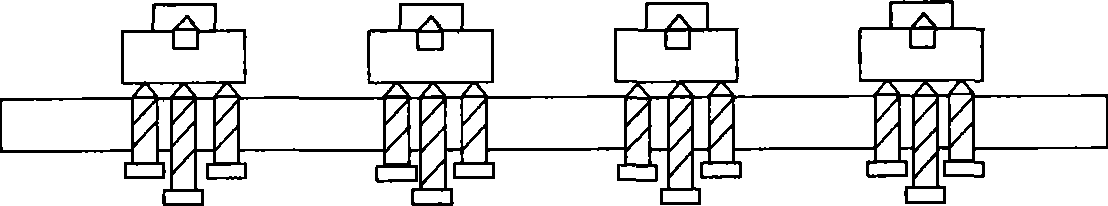

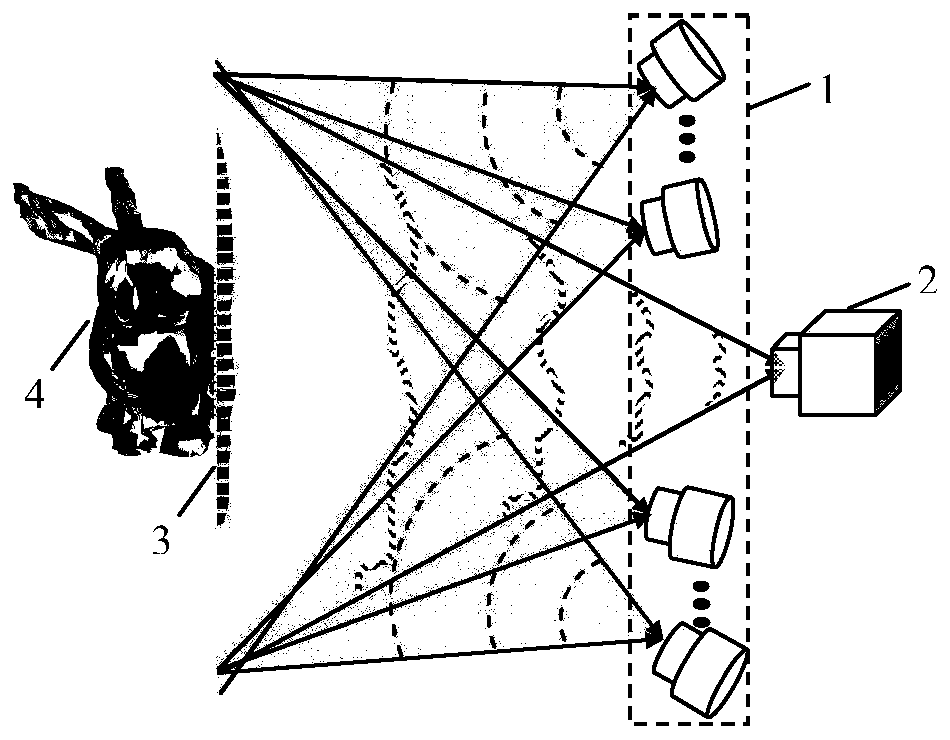

Real-time capture and display multi-lens digital stereo system

The invention provides a multi-lens digital stereoscopic system that displays images as soon as they are captured. The system consists of a stereoscopic capture subsystem and a stereoscopic display subsystem, and specifically comprises a plurality of CCD camera units (1), a platform (2) for fixing the CCD camera units, a bracket (3) supporting the platform, an image acquisition card (4), a power source (5), a PC (6), a cylindrical-lens-grating or slit-grating naked-eye (autostereoscopic) display (7), and the like. The system uses a camera calibration method to initially adjust the spatial positions of the CCD camera units and to calculate the lens distortion coefficients, corrects the geometric distortion of the images according to these coefficients, uses epipolar geometry to correct the keystone (trapezoidal) distortion caused by the camera layout, and then adjusts unreasonable parallax across the multiple acquisition channels. With this combination of methods, the system can capture both static and dynamic scenes, makes the capture process simpler, more convenient and more flexible, and can display images synchronously as they are captured.

Owner:SICHUAN UNIV
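
The abstract does not name its lens distortion model. Assuming the widely used two-coefficient radial model x_d = x_u·(1 + k1·r² + k2·r⁴) in normalized coordinates (r² = x_u² + y_u²), distortion correction amounts to inverting that mapping, which the sketch below does by fixed-point iteration; this is adequate for moderate distortion. The keystone correction via epipolar geometry is not shown.

```python
def distort(x, y, k1, k2):
    """Apply the two-coefficient radial distortion model to a normalized point."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale

def undistort(xd, yd, k1, k2, iterations=20):
    """Invert the radial model by fixed-point iteration: x_u = x_d / scale(x_u)."""
    x, y = xd, yd  # initial guess: the distorted point itself
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y

# Hypothetical coefficients from calibration (mild barrel distortion)
k1, k2 = -0.2, 0.05
xd, yd = distort(0.3, -0.2, k1, k2)
xu, yu = undistort(xd, yd, k1, k2)
print((xd, yd), (xu, yu))
```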

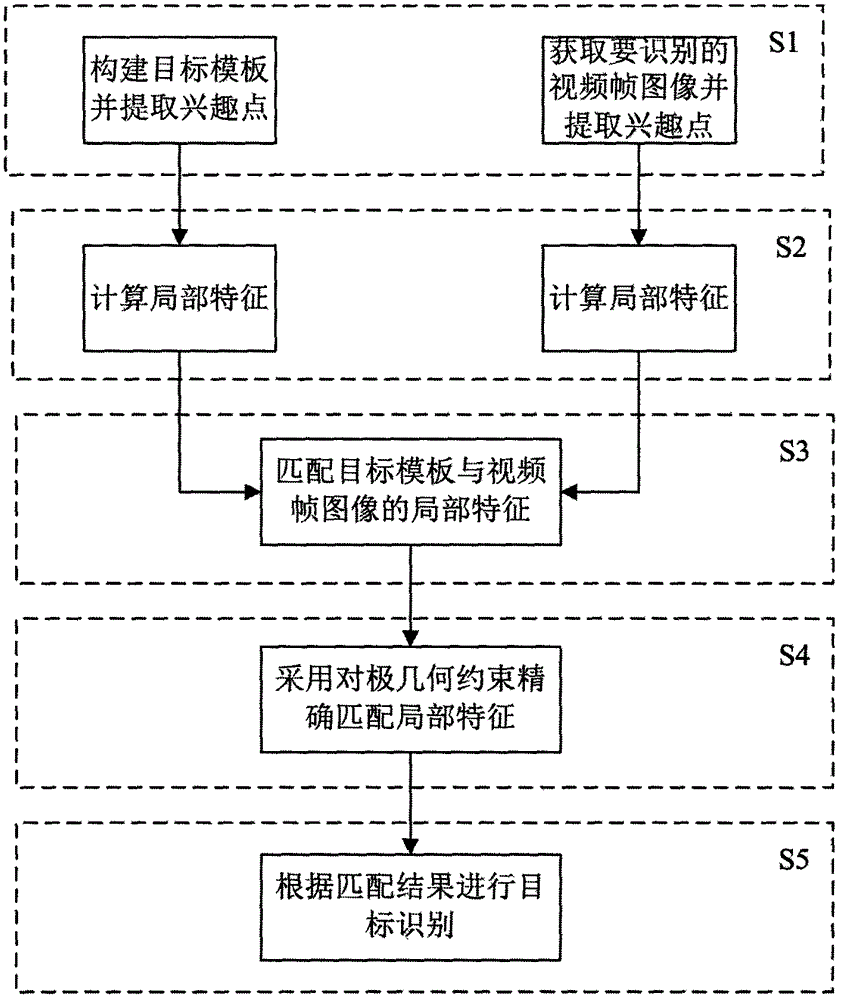

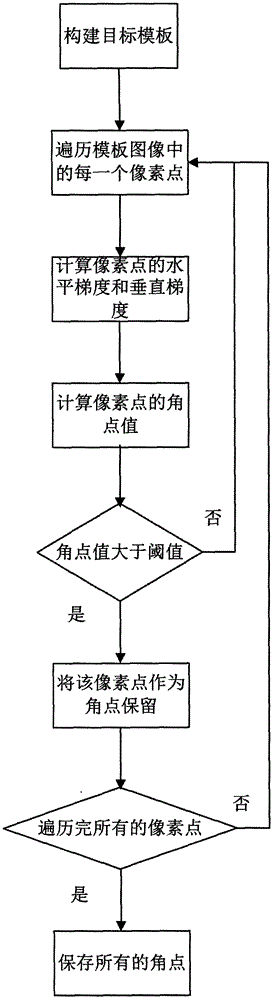

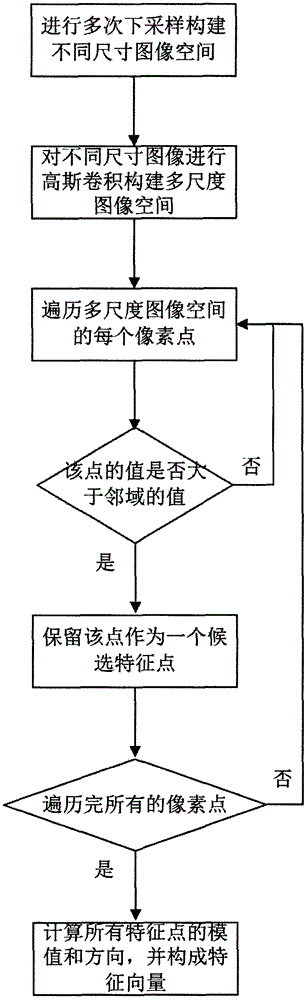

Target identification method based on multi-angle local feature matching

Status: Inactive | Publication: CN106203342A | Tags: Guaranteed accuracy, Character and pattern recognition, Pattern recognition, Goal recognition

The invention provides a target identification method based on multi-angle local feature matching. The method comprises the following steps: S1, acquiring a plurality of images of the target object at different angles as templates, and extracting interest points in the template images; S2, computing local features of the regions around the interest points in the template images with a feature description method; S3, extracting the local features of a target image to be identified and matching them with the local features of each template image to obtain roughly matched local feature pairs; S4, for the roughly matched pairs, computing the fundamental matrix between the target image and each template image with the random sample consensus (RANSAC) algorithm, and building an epipolar geometry constraint from the fundamental matrix to filter the local feature pairs, thus obtaining exactly matched local feature point pairs; and S5, counting the matched local feature points in the target image: if the number exceeds a set threshold, identification is successful; otherwise, the target object is determined not to match the templates.

Owner:GUANGDONG POLYTECHNIC NORMAL UNIV
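
Steps S4 and S5 can be sketched as an epipolar-distance filter followed by a count threshold. In this hypothetical version the fundamental matrix F is taken as given (in the patent it comes from RANSAC), matches are pixel pairs ((x1, y1), (x2, y2)), and `dist_tol` and `min_inliers` are illustrative parameters.

```python
import math

def epipolar_distance(F, p1, p2):
    """Distance (pixels) from p2 to the epipolar line F @ [p1, 1] in the second image."""
    x1 = (p1[0], p1[1], 1.0)
    a, b, c = (sum(F[i][k] * x1[k] for k in range(3)) for i in range(3))
    return abs(a * p2[0] + b * p2[1] + c) / math.hypot(a, b)

def is_recognised(F, matches, dist_tol=2.0, min_inliers=10):
    """Keep matches consistent with F, then apply the count threshold (step S5)."""
    inliers = [m for m in matches if epipolar_distance(F, *m) <= dist_tol]
    return len(inliers) >= min_inliers, inliers

# Illustrative F for a horizontally displaced, rectified pair: epipolar lines are rows
F = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
matches = [((10.0, 5.0), (4.0, 5.0)), ((10.0, 5.0), (4.0, 9.0))]
ok, inliers = is_recognised(F, matches, dist_tol=2.0, min_inliers=1)
print(ok, inliers)
```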

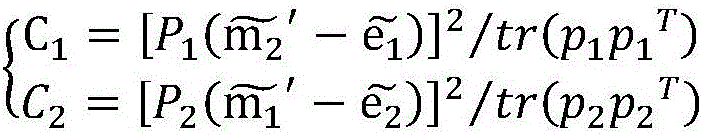

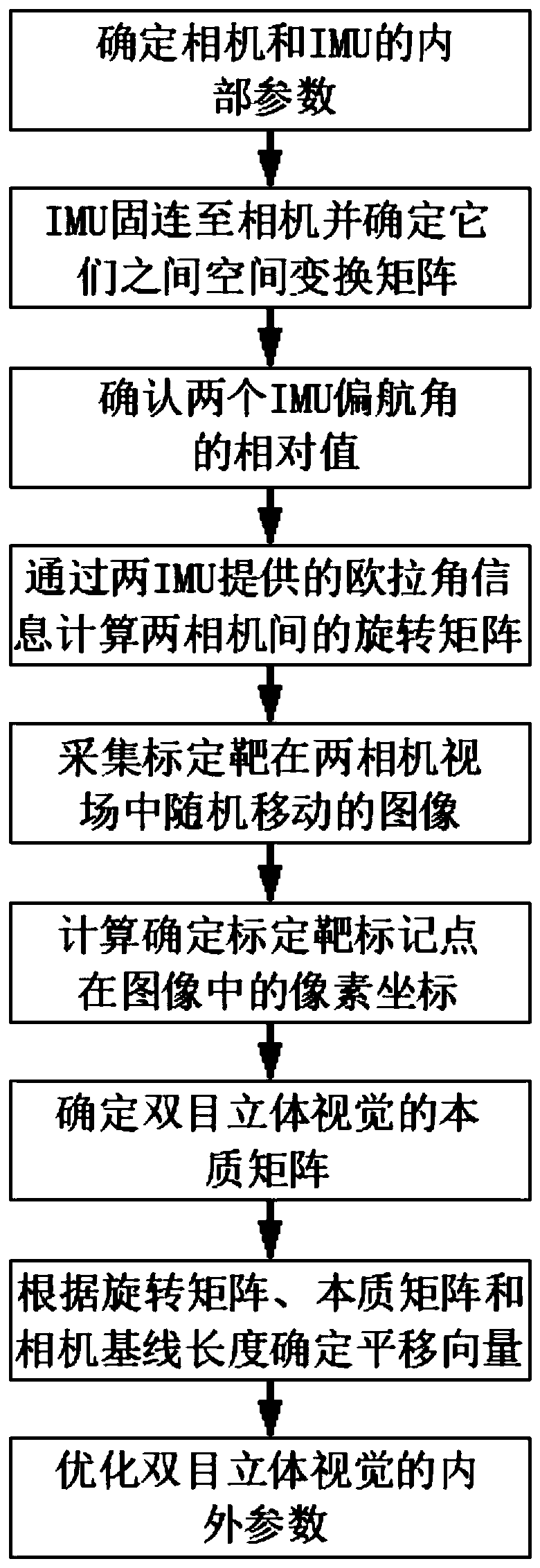

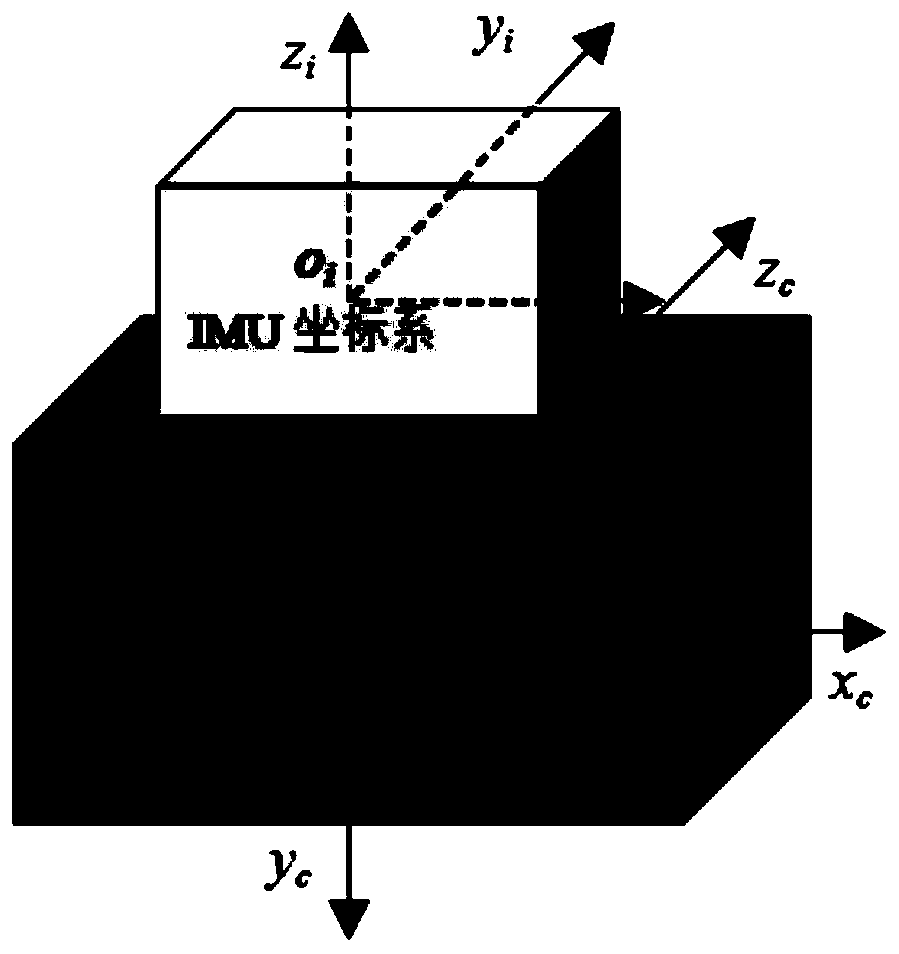

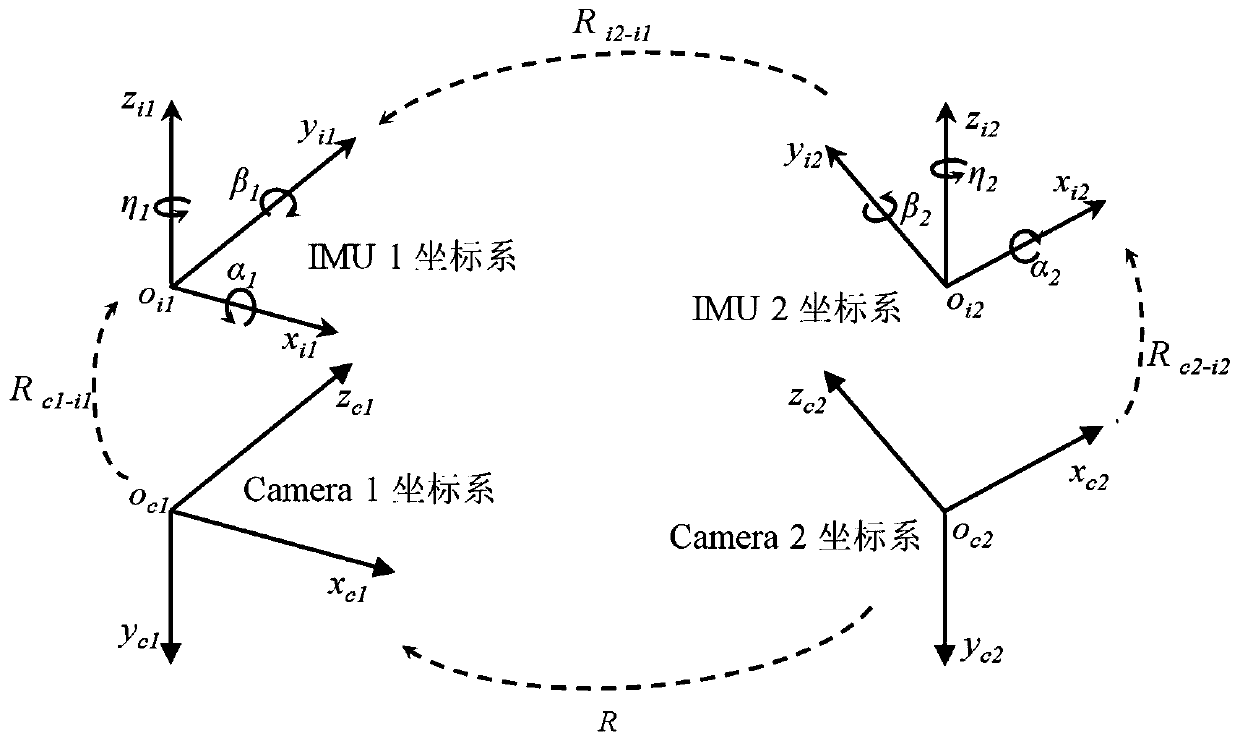

Binocular three-dimensional visual measurement method and system fused with IMU calibration

Status: Active | Publication: CN110296691A | Tags: Low price, Solve the defect of low precision, Image enhancement, Image analysis, Visual field loss, Light beam

The invention belongs to the field of photoelectric detection, and particularly relates to a binocular three-dimensional visual measurement method and system that incorporates IMUs for calibration. The method comprises: rigidly attaching an IMU to each of the two cameras and calculating the spatial transformation between each camera and its IMU; determining the rotation matrix between the two cameras from the z-y-x Euler angles of the IMUs using a yaw-angle difference method proposed by the invention; determining the translation vector from the epipolar geometry principle and the rotation matrix; and optimizing the camera intrinsic parameters, the rotation matrix and the translation vector by sparse bundle adjustment to obtain optimized camera parameters. The method does not require a large, precisely fabricated calibration plate: binocular three-dimensional calibration can be completed simply by measuring the length of the camera baseline. It overcomes the limitations that traditional calibration methods are suitable only for small indoor fields of view and that self-calibration methods have low accuracy. The method can be used in complex environments, such as outdoors and with large fields of view, and offers relatively high accuracy, robustness and flexibility.

Owner:SHANGHAI UNIV +1
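
A sketch of the geometry behind the IMU-aided calibration, under stated assumptions: each camera's orientation in a common world frame is taken as the z-y-x (yaw-pitch-roll) Euler rotation of its IMU, with the camera-IMU extrinsics already applied; the relative rotation from camera 1 to camera 2 is then R = R2ᵀ R1; and the unit translation direction obtained from the epipolar constraint is scaled by the measured baseline length. The patent's own yaw-angle difference method and the bundle adjustment are not reproduced.

```python
import math

def rot_zyx(yaw, pitch, roll):
    """R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cz, sz = math.cos(yaw), math.sin(yaw)
    cy, sy = math.cos(pitch), math.sin(pitch)
    cx, sx = math.cos(roll), math.sin(roll)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]

def transpose(m):
    return [list(r) for r in zip(*m)]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def relative_rotation(R_w1, R_w2):
    """Rotation taking camera-1 coordinates to camera-2 coordinates, given the
    camera-to-world rotations R_w1 and R_w2: R_21 = R_w2^T R_w1."""
    return matmul(transpose(R_w2), R_w1)

def metric_translation(t_direction, baseline_length):
    """Scale the up-to-scale epipolar translation to the measured baseline."""
    n = math.sqrt(sum(c * c for c in t_direction))
    return [baseline_length * c / n for c in t_direction]

# Hypothetical IMU readings: the cameras differ only by 0.2 rad of yaw
R_21 = relative_rotation(rot_zyx(0.3, 0.0, 0.0), rot_zyx(0.1, 0.0, 0.0))
t_21 = metric_translation([2.0, 0.0, 0.1], baseline_length=0.5)
print(R_21, t_21)
```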

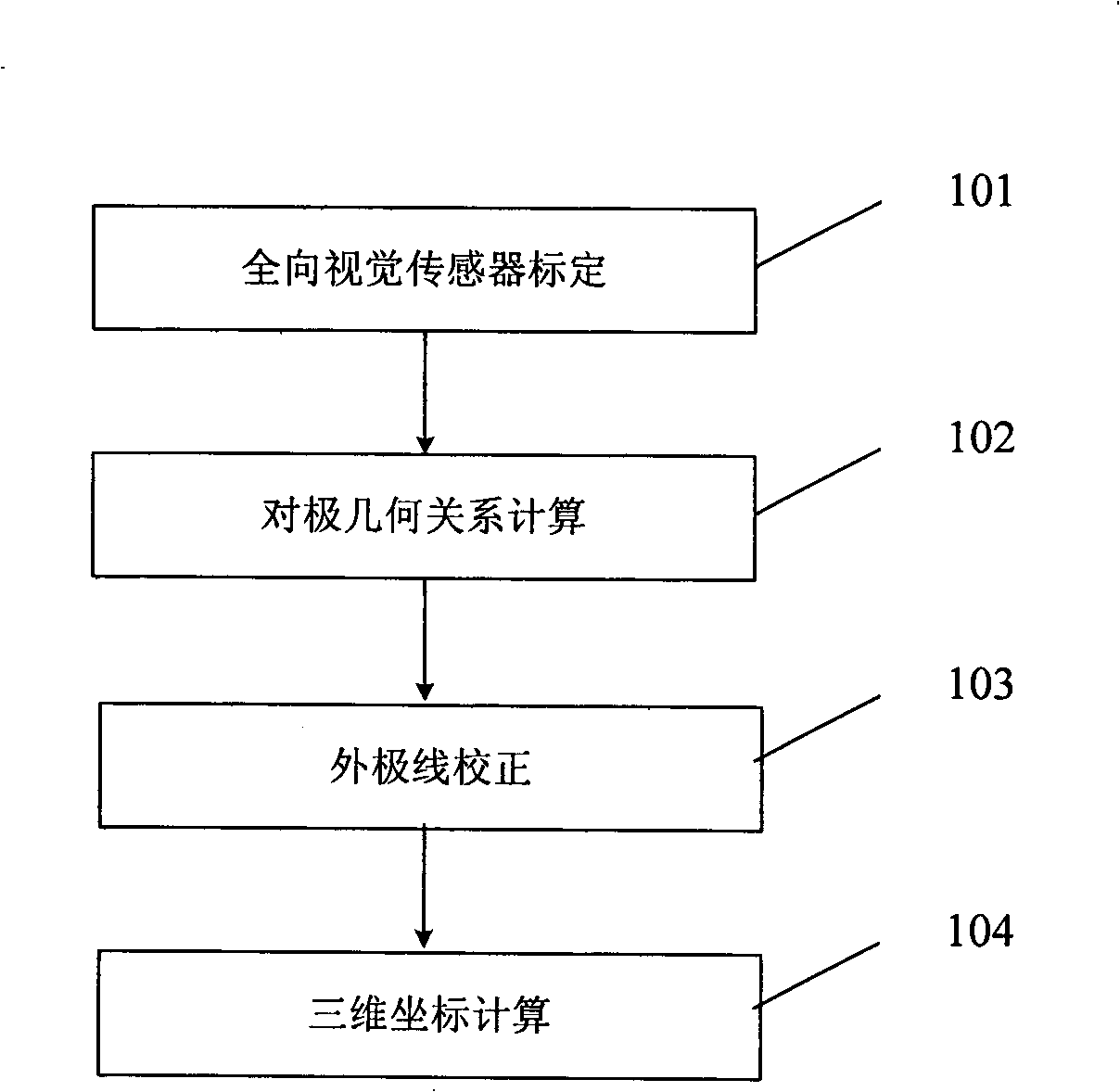

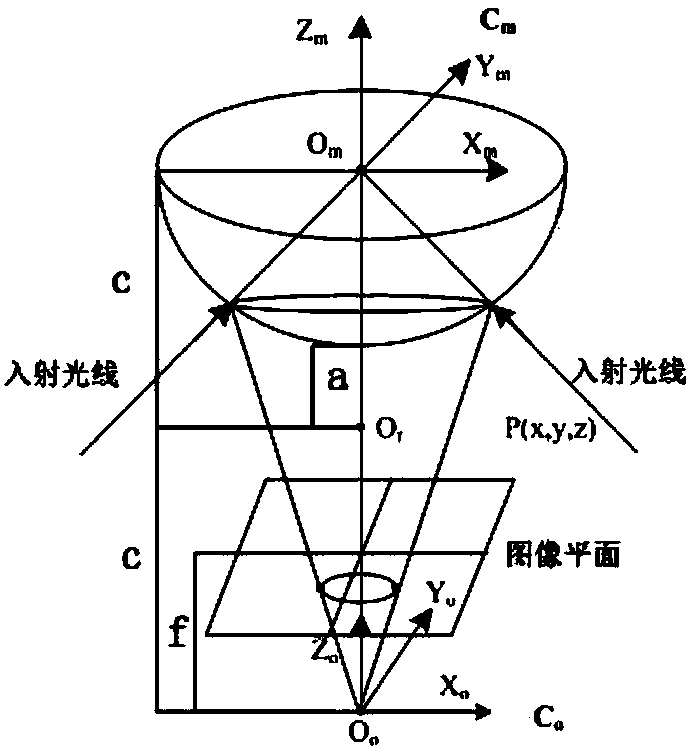

Omnidirectional stereo vision three-dimensional reconstruction method based on Taylor series model

Status: Inactive | Publication: CN101354796A | Tags: High precision, 3D reconstruction works, Image analysis, 3D modelling, Reconstruction method, Directional antenna

The invention discloses an omnidirectional stereo vision three-dimensional reconstruction method based on Taylor series models. The method comprises the following steps: camera calibration, in which a Taylor series model is used to calibrate the omnidirectional vision sensor and obtain the camera's intrinsic parameters; obtaining the epipolar geometric relation, which includes calculating the essential matrix between the binocular omnidirectional cameras and extracting the rotation and translation components of the cameras; epipolar rectification, in which the epipolar curves of the captured omnidirectional stereo image are rectified so that the rectified epipolar conic curves coincide with the image scan lines; and three-dimensional reconstruction, in which feature points are matched in the rectified stereo images and the three-dimensional coordinates of points are calculated from the matching results. The method is applicable to various omnidirectional vision sensors, has a wide range of application and high precision, and can perform effective three-dimensional reconstruction when the parameters of the omnidirectional vision sensors are unknown.

Owner:ZHEJIANG UNIV
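
In one common form of the Taylor-series (polynomial) omnidirectional camera model, as popularized by Scaramuzza's calibration toolbox, an image point (u, v) measured from the distortion centre is back-projected to the viewing ray (u, v, f(ρ)), with ρ = √(u² + v²) and f(ρ) = a0 + a1·ρ + a2·ρ² + …. Such rays can then be used directly in the essential-matrix constraint r2ᵀ E r1 = 0. The coefficients below are hypothetical, and sign conventions differ between toolboxes, so this is a sketch rather than a drop-in replacement.

```python
import math

def back_project(u, v, coeffs):
    """Unit viewing ray for image point (u, v), in pixels relative to the
    distortion centre, under the polynomial model f(rho) = sum(a_i * rho**i)."""
    rho = math.hypot(u, v)
    z = sum(a * rho ** i for i, a in enumerate(coeffs))
    norm = math.sqrt(u * u + v * v + z * z)
    return (u / norm, v / norm, z / norm)

# Hypothetical calibration result: a0, a1, a2, a3
coeffs = [300.0, 0.0, -6.0e-4, 2.0e-7]
ray_centre = back_project(0.0, 0.0, coeffs)  # along the optical axis
ray_edge = back_project(400.0, 0.0, coeffs)  # far off-axis point
print(ray_centre, ray_edge)
```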

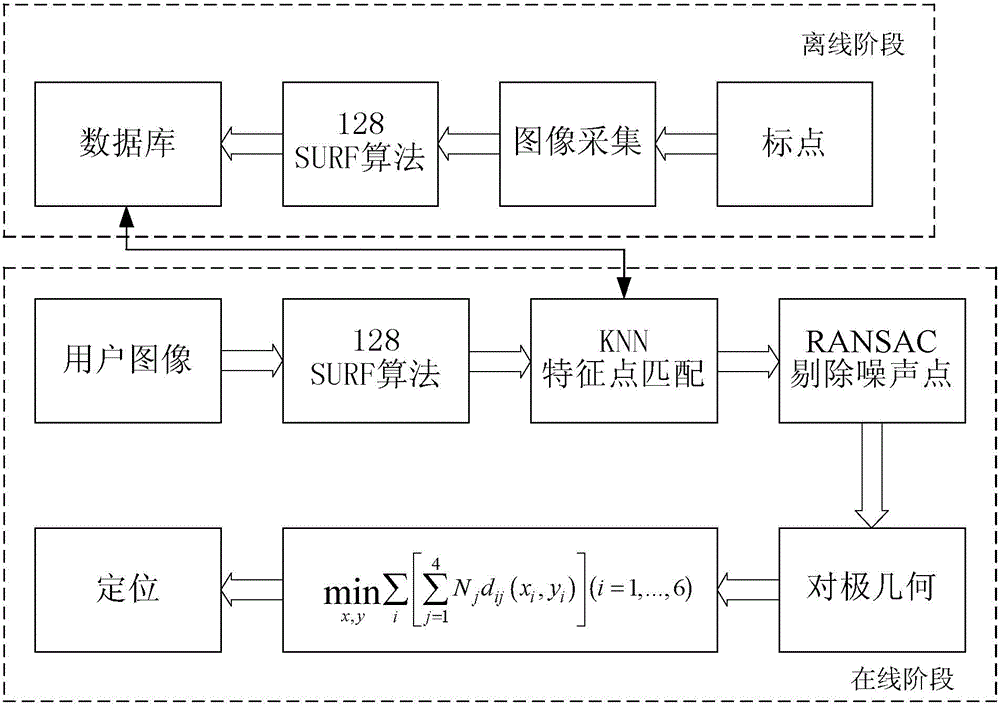

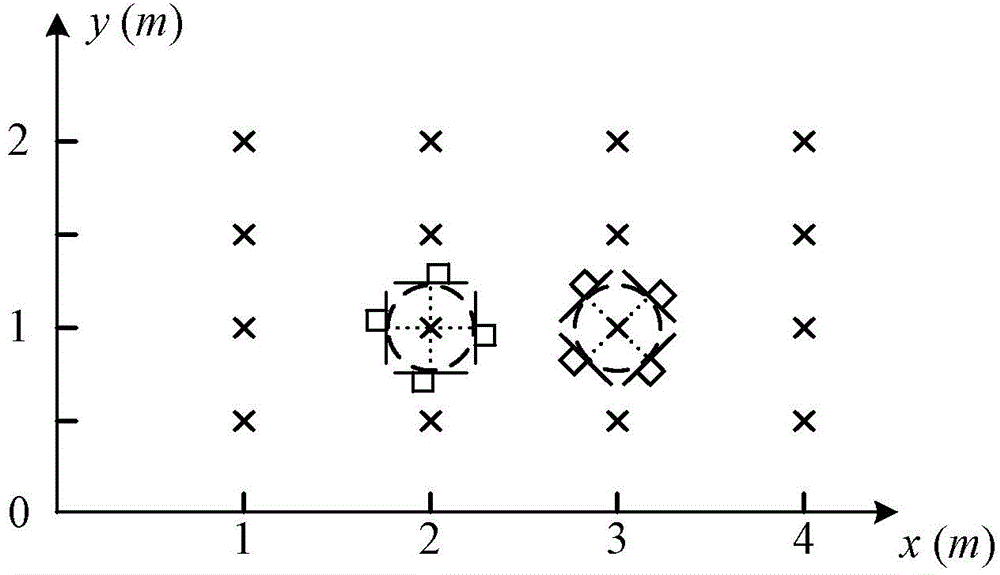

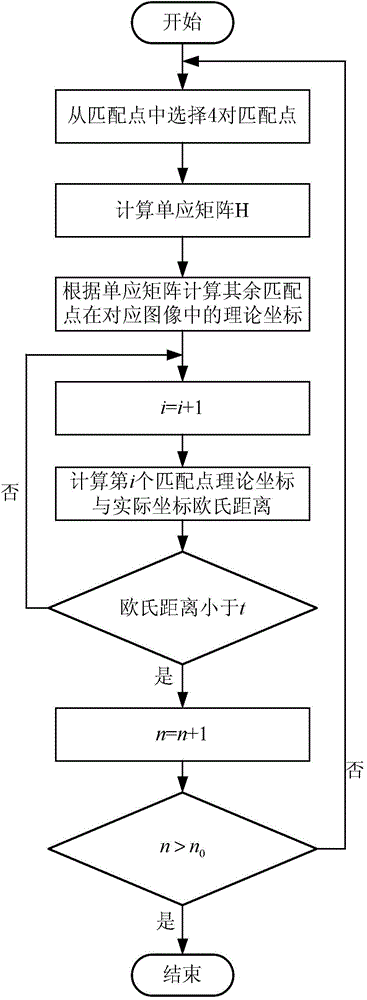

RANSAC algorithm-based visual localization method

Status: Active | Publication: CN104596519A | Tags: Reduce the number of iterations, Run fast, Navigational calculation instruments, Picture interpretation, Pattern recognition, Visual localization

The invention discloses a RANSAC algorithm-based visual localization method, which belongs to the field of visual localization. The traditional RANSAC algorithm requires many iterations, a large amount of computation and a long computation time, so visual localization methods built on it localize slowly. The method comprises the following steps: calculating, with the SURF algorithm, the feature points and feature point descriptors of the images uploaded by the user to be localized; selecting from a database the picture with the most matching points, performing SURF matching between the descriptors of the images and those of the pictures, treating each image-picture pair as a pair of matching images, and obtaining a group of matching points for each pair; eliminating mismatched points in each pair of matching images with a matching-quality-based RANSAC algorithm, and determining the four pairs of matching images with the most correct matching points; and calculating the user's position coordinates from these four pairs of matching images with an epipolar geometry algorithm, completing the indoor localization.

Owner:严格集团股份有限公司 (Yange Group Co., Ltd.)
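
The iteration cost mentioned above follows from the standard RANSAC sample-count formula N = log(1 − p) / log(1 − wˢ), where p is the desired probability of drawing at least one outlier-free sample, w the inlier ratio and s the minimal sample size. The sketch below shows why raising the inlier ratio, for example by pre-ranking matches by quality, cuts the iteration count sharply; the patent's specific matching-quality RANSAC is not reproduced, and s = 8 (an eight-point fundamental-matrix estimate) is an assumption.

```python
import math

def ransac_iterations(confidence, inlier_ratio, sample_size):
    """Number of random samples N such that, with probability `confidence`,
    at least one sample is outlier-free: N = log(1 - p) / log(1 - w**s)."""
    if inlier_ratio >= 1.0:
        return 1
    p_good_sample = inlier_ratio ** sample_size
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_good_sample))

for w in (0.5, 0.8):
    print(f"inlier ratio {w}: {ransac_iterations(0.99, w, 8)} iterations")
```

With p = 0.99 and s = 8, an inlier ratio of 0.5 requires 1177 samples, while 0.8 requires only a few dozen.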

360-degree three-dimensional reconstruction optimization method based on continuous phase dense matching

Status: Pending | Publication: CN111242990A | Tags: High precision, Achieve global optimization, Image enhancement, Image analysis, Point cloud, Algorithm

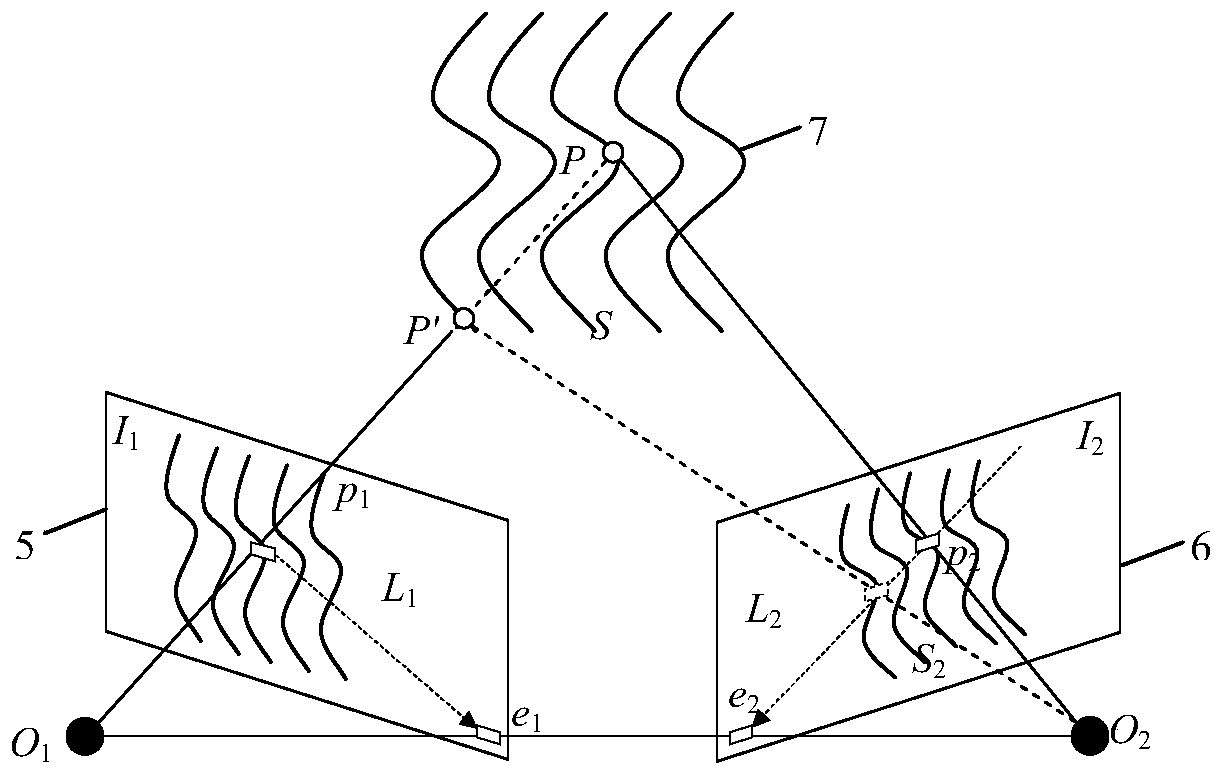

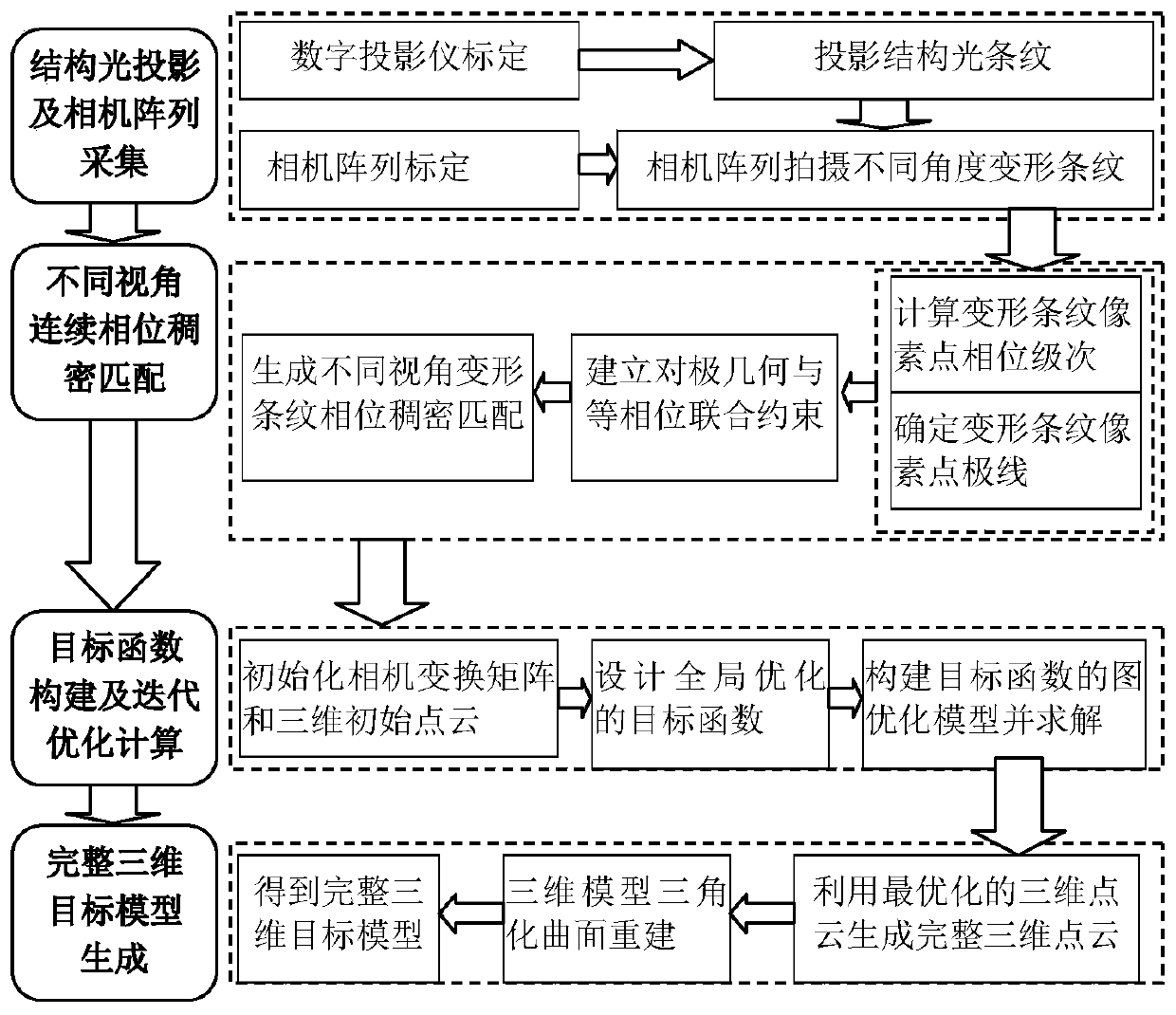

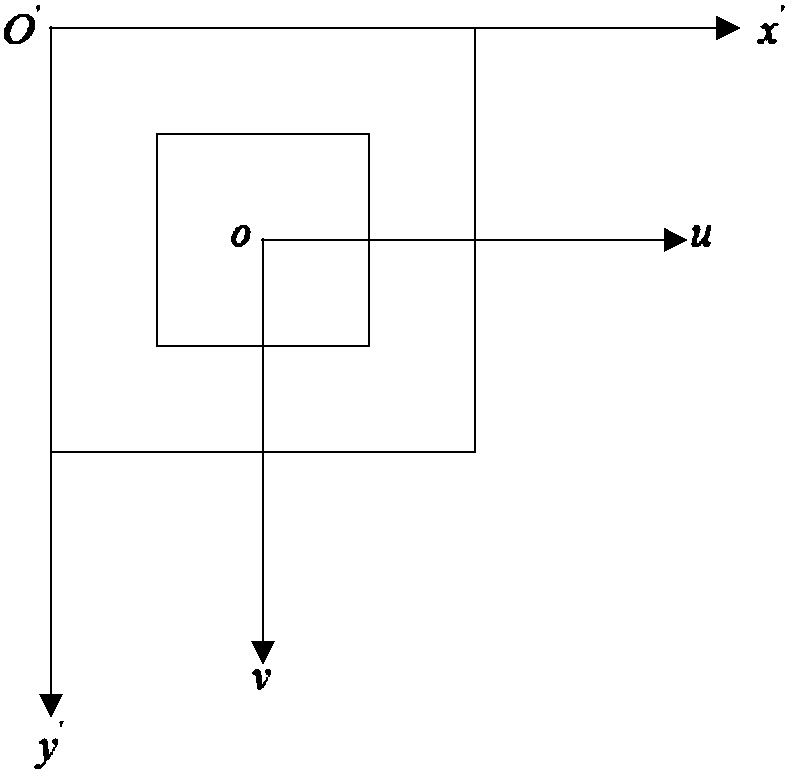

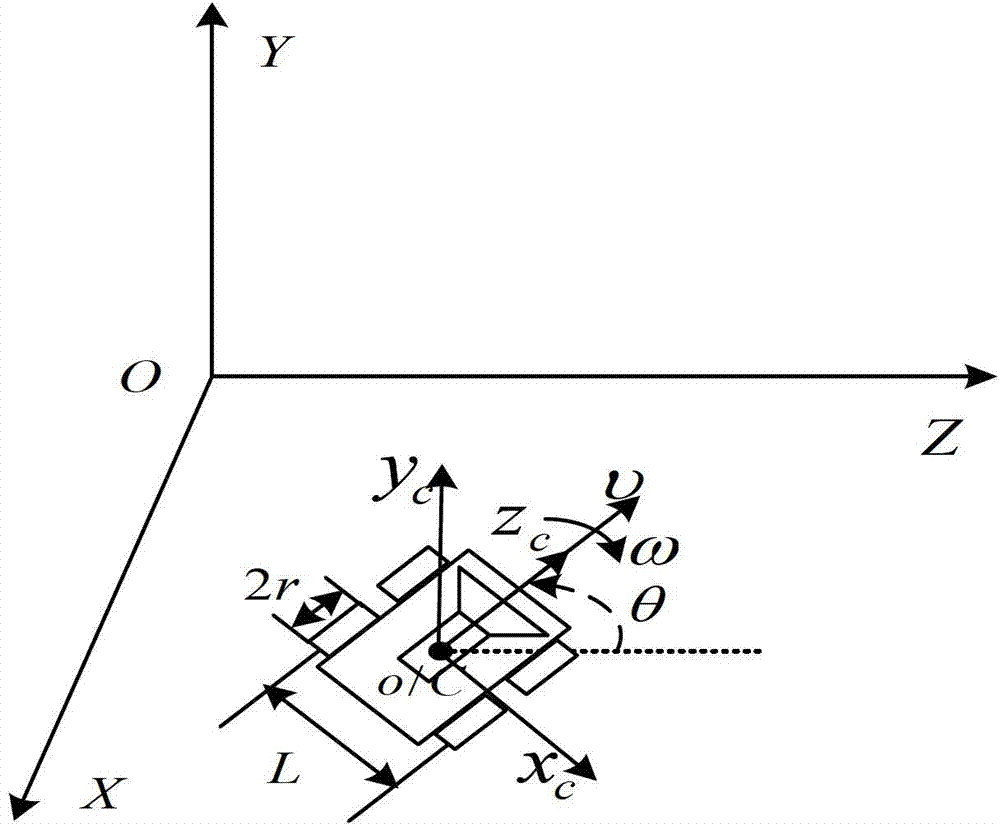

The invention discloses a 360-degree three-dimensional reconstruction optimization method based on continuous phase dense matching. The method rapidly reconstructs a 360-degree three-dimensional point cloud of the measured object and refines the result by nonlinear optimization. It comprises the following steps: first, calibrating a digital projector and the cameras; obtaining the corresponding deformed structured-light fringe images; calculating the phase order of the deformed fringe pixels and, at the same time, determining their epipolar lines in the imaging planes of the different cameras of the camera array, thereby establishing joint epipolar-geometry and equal-phase constraints; computing the dense matching of structured-light images at different viewing angles to generate a dense phase matching relationship between deformed fringe pixels at different angles; initializing the camera transformation matrices and the initial three-dimensional point cloud from the dense phase matching relationship by triangulation, then constructing an objective function and its graph optimization model and solving them; and performing triangulated surface reconstruction on the optimized three-dimensional point cloud to obtain a complete 360-degree three-dimensional reconstruction model of the measured target.

Owner:10TH RES INST OF CETC
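
The equal-phase constraint along an epipolar line can be sketched as follows: the absolute (unwrapped) phase of a pixel is its wrapped phase plus 2π times its fringe order, and its match in another camera is the position on the corresponding epipolar line where the absolute phase takes the same value. The sketch assumes the phase has already been sampled at ordered positions along that line and varies monotonically there; the linear interpolation for sub-pixel accuracy is our choice, not necessarily the patent's.

```python
import math

def absolute_phase(wrapped, fringe_order):
    """Unwrapped phase: wrapped phase in [0, 2*pi) plus 2*pi times the fringe order."""
    return wrapped + 2.0 * math.pi * fringe_order

def match_on_epipolar_line(target_phase, samples):
    """samples: list of (position, absolute_phase) in order along the epipolar
    line in the other camera. Returns the sub-pixel position where the phase
    equals target_phase (linear interpolation), or None if it is never reached."""
    for (p0, f0), (p1, f1) in zip(samples, samples[1:]):
        if (f0 - target_phase) * (f1 - target_phase) <= 0 and f0 != f1:
            return p0 + (target_phase - f0) / (f1 - f0) * (p1 - p0)
    return None

# Phase sampled at integer positions along the epipolar line
samples = [(0, 1.0), (1, 2.0), (2, 3.0)]
position = match_on_epipolar_line(2.5, samples)
print(position)
```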

Space non-cooperative target three-dimensional reconstruction method based on projection matrix

Status: Inactive | Publication: CN107680159A | Tags: Reduce cumbersome steps, Meet real-time requirements, Image enhancement, Image analysis, Hat matrix, Three-dimensional space

The invention provides a three-dimensional reconstruction method for space non-cooperative targets based on a projection matrix. First, feature points are extracted from the images taken by the left and right cameras; corresponding feature points are then matched according to an image matching principle; a rotation matrix R and a translation matrix T are then determined according to the epipolar geometry constraint; and finally the coordinates of the three-dimensional points are calculated from the relationship between the three-dimensional points and the image-plane projection matrices. Provided that the image feature points are matched correctly, the internal and external camera parameters can be solved through the epipolar geometry constraint alone, so the cumbersome camera calibration steps are omitted, computation is reduced, reconstruction time is shortened, and the real-time requirements of spacecraft operations can be met.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
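
Once R and T are known, each camera has a 3×4 projection matrix P = K[R | T], and a matched pair of image points fixes the 3D point by linear triangulation: each view contributes the equations u·(p3·X) − p1·X = 0 and v·(p3·X) − p2·X = 0, where p1, p2, p3 are the rows of P and X is homogeneous. The sketch below fixes the fourth coordinate to 1 and solves the four equations in least squares; it assumes the projection matrices are given and uses plain Python normal equations rather than an SVD-based DLT.

```python
def solve3(A, y):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    n = 3
    aug = [row[:] + [y[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (aug[i][n] - sum(aug[i][j] * x[j] for j in range(i + 1, n))) / aug[i][i]
    return x

def triangulate(P1, P2, pt1, pt2):
    """Least-squares 3D point from two 3x4 projection matrices and image points."""
    rows = []
    for P, (u, v) in ((P1, pt1), (P2, pt2)):
        rows.append([u * P[2][j] - P[0][j] for j in range(4)])
        rows.append([v * P[2][j] - P[1][j] for j in range(4)])
    M = [r[:3] for r in rows]  # coefficients of X, Y, Z
    b = [-r[3] for r in rows]  # move the W = 1 column to the right-hand side
    # Normal equations (M^T M) x = M^T b
    A = [[sum(M[k][i] * M[k][j] for k in range(4)) for j in range(3)] for i in range(3)]
    y = [sum(M[k][i] * b[k] for k in range(4)) for i in range(3)]
    return solve3(A, y)

# Normalized cameras: camera 2 is shifted by +1 along x (X2 = X1 - (1, 0, 0))
P1 = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
P2 = [[1.0, 0.0, 0.0, -1.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
X = triangulate(P1, P2, (0.125, 0.05), (-0.125, 0.05))
print(X)
```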

Vision-based pose stabilization control method of moving trolley

Status: Inactive | Publication: CN102736626A | Tags: Obvious advantage, Good effect, Position/course control in two dimensions, Kinematic controller, Visual servoing

The invention discloses a vision-based pose stabilization control method for a mobile trolley, which fully considers the kinematics model and dynamics model of the trolley as well as the camera model. The method comprises the following steps: obtaining, through a camera, an initial image at the starting pose and an expected image at the expected pose, and obtaining the current image in real time during motion; using the epipolar geometric relation and the trilinear constraint among the captured images, designing three independent, sequential kinematic controllers based on epipolar geometry and the 1D trifocal tensor under a three-step conversion control strategy; and finally designing a dynamic conversion control law, taking the outputs of the kinematic controllers as the inputs of the kinematics controllers and using a retrieval method, so that the trolley reaches the expected pose quickly and stably along the shortest path. The invention solves the problems of traditional visual servoing methods that the dynamic characteristics of the trolley are not considered in pose stabilization control and that the servo speed is slow; the method is practical and enables the trolley to reach the expected pose quickly and stably.

Owner:BEIJING UNIV OF CHEM TECH

Visual loop closure detection method based on semantic segmentation and image restoration in dynamic scenes

Status: Active | Publication: CN111696118A | Tags: Improve reliability, Prevent extraction, Image enhancement, Image analysis, Pattern recognition, RGB image

The invention discloses a visual loop closure detection method based on semantic segmentation and image restoration in dynamic scenes. The method comprises the following steps: 1) pre-training an offline ORB feature dictionary on a historical image library; 2) acquiring the current RGB image as the current frame and segmenting out the regions belonging to the dynamic scene with a DANet semantic segmentation network; 3) restoring the masked image regions with an image restoration network; 4) taking all historical database images as keyframes and performing loop closure detection between the current frame and each keyframe in turn; 5) judging whether a loop is formed according to the similarity of the bag-of-words vectors of the two frames and their epipolar geometry; and 6) making the final judgement. The method can be used for loop closure detection in visual SLAM in dynamic operating environments. It addresses two problems: dynamic targets in the scene, such as operators, vehicles and inspection robots, cause feature matching errors; and segmenting out dynamic regions can leave too few feature points for loops to be detected correctly.

Owner:SOUTHEAST UNIV
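
Step 5 compares bag-of-words vectors. One widely used score, the L1 score used in the DBoW2 library, is s(v1, v2) = 1 − ½·‖v1/|v1| − v2/|v2|‖₁, which is 1 for identical word distributions and 0 for disjoint ones. The patent does not state which score it uses, so this is an assumption. A minimal sketch with sparse vectors stored as dictionaries mapping word IDs to weights:

```python
def bow_l1_score(v1, v2):
    """Similarity in [0, 1] between two sparse bag-of-words vectors
    (dict word_id -> non-negative weight), after L1 normalisation."""
    n1 = sum(abs(w) for w in v1.values())
    n2 = sum(abs(w) for w in v2.values())
    words = set(v1) | set(v2)
    diff = sum(abs(v1.get(w, 0.0) / n1 - v2.get(w, 0.0) / n2) for w in words)
    return 1.0 - 0.5 * diff

current = {1: 1.0, 2: 1.0}
keyframe = {1: 1.0, 3: 1.0}
print(bow_l1_score(current, keyframe))
```

A candidate whose score exceeds a threshold would then still need geometric verification, for example by checking that enough feature matches are consistent with an estimated fundamental matrix.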

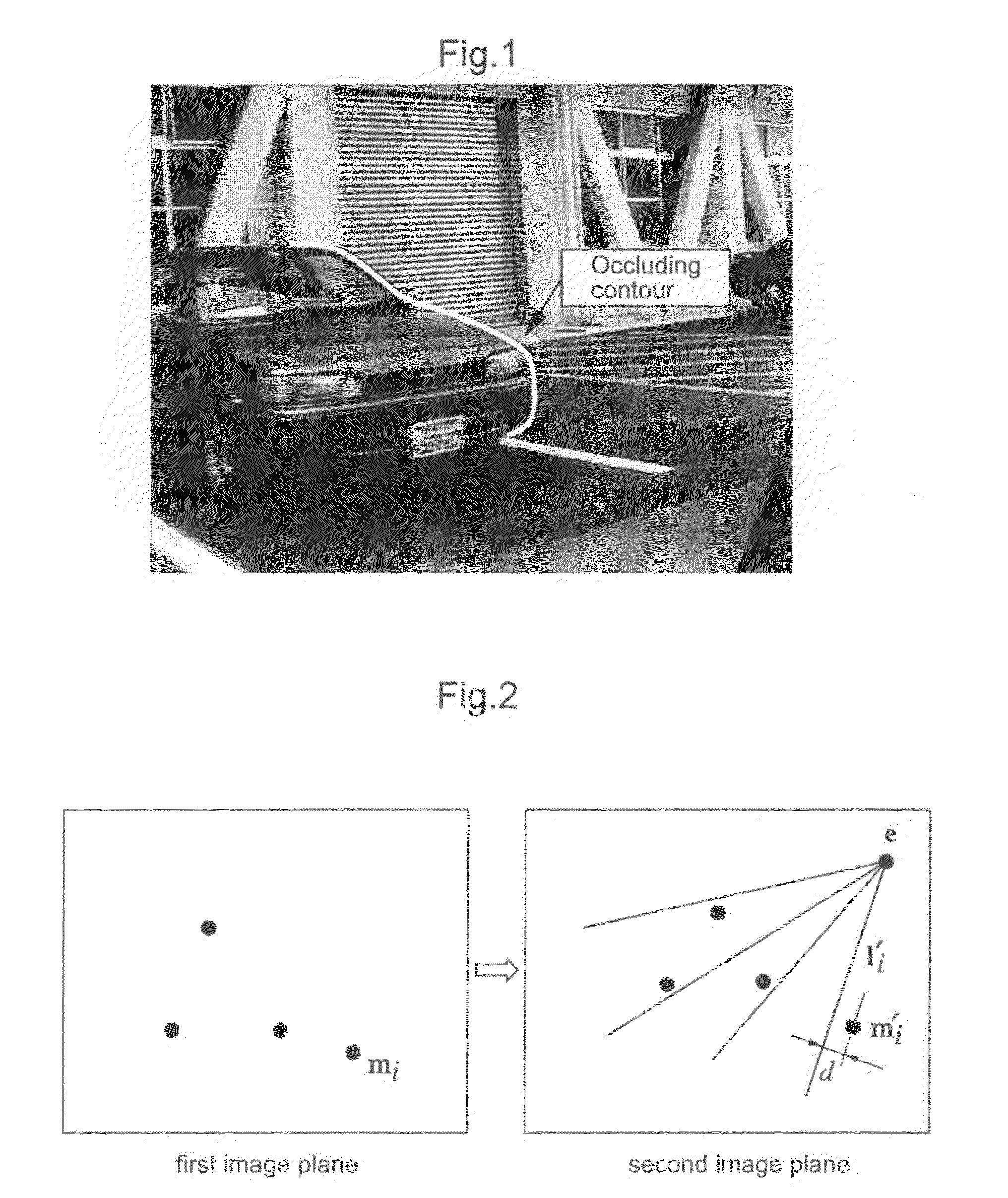

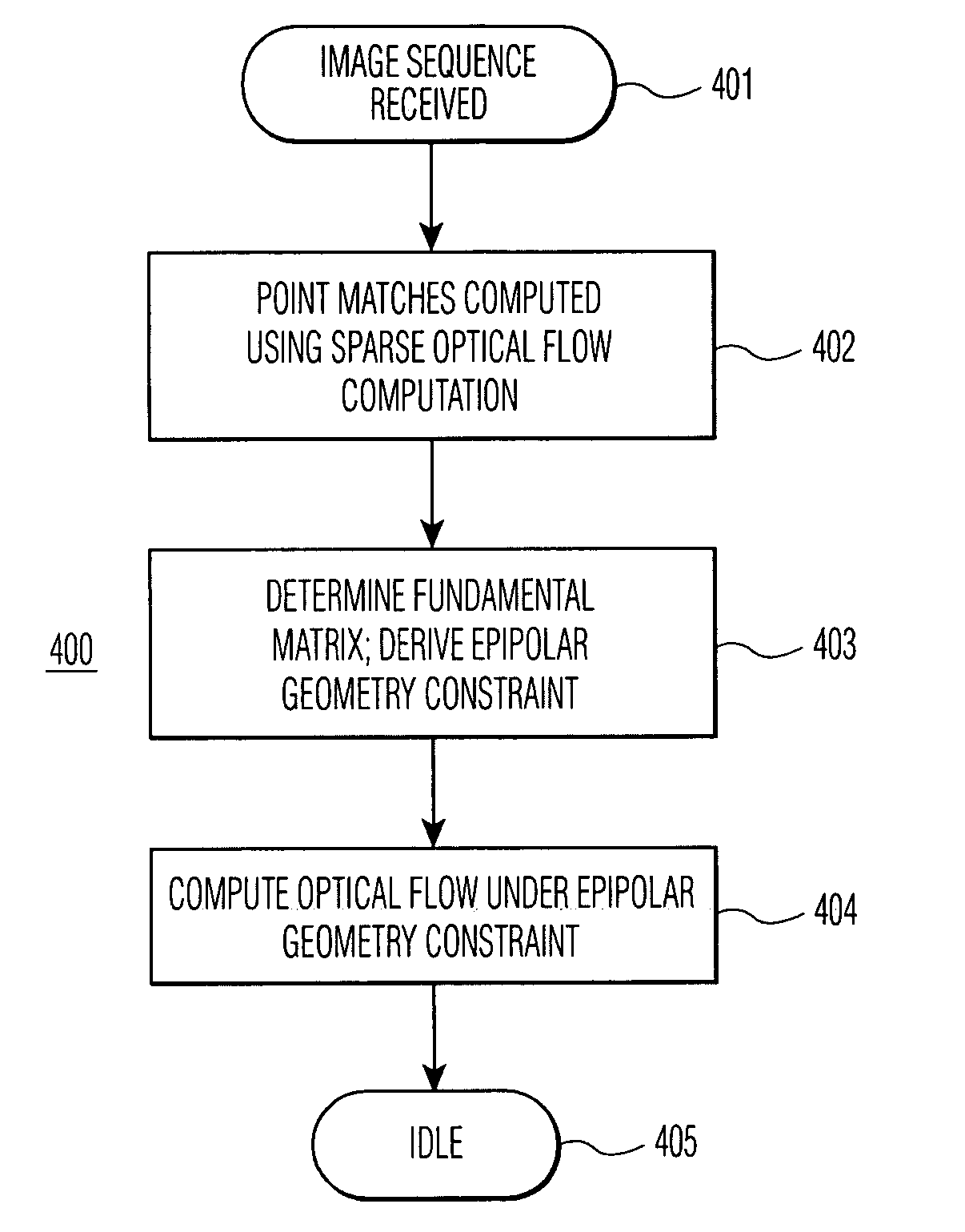

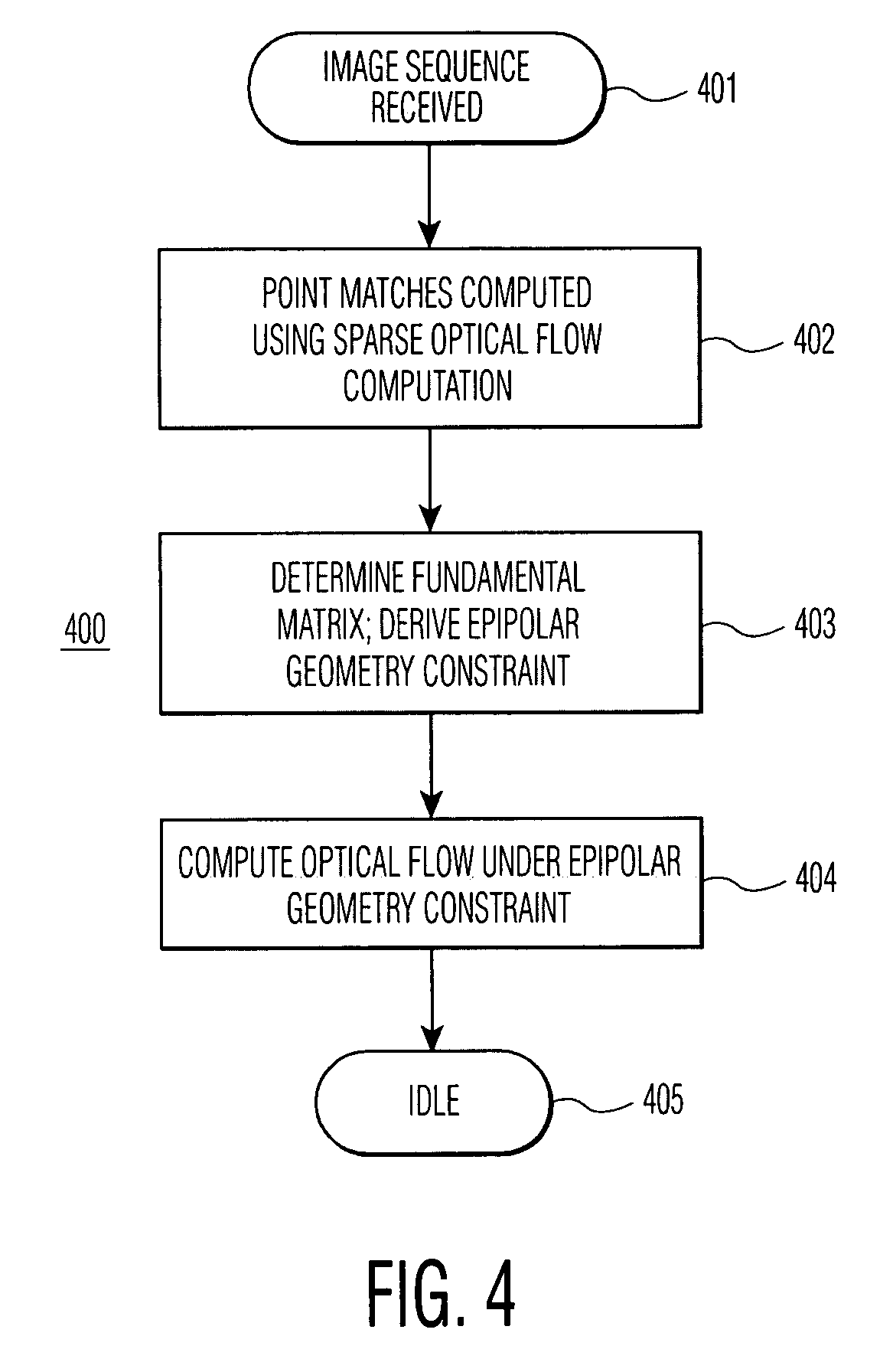

Method for computing optical flow under the epipolar constraint

Status: Inactive | Publication: US7031497B2 | Tags: Improve accuracy, Improve performance, Image enhancement, Image analysis, Essential matrix, Algorithm

Point matches between images within an image sequence are identified by sparse optical flow computation and employed to compute a fundamental matrix for the epipolar geometry, which in turn is employed to derive an epipolar geometry constraint for computing dense optical flow for the image sequence. The epipolar geometry constraint may further be combined with local, heuristic constraints or robust statistical methods. Improvements in both accuracy and performance in computing optical flow are achieved utilizing the epipolar geometry constraint.

Owner:UNILOC 2017 LLC
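
A minimal way to see how the epipolar constraint regularizes optical flow: the match of pixel (x, y) must lie on its epipolar line l = F·(x, y, 1)ᵀ in the next image, so an unconstrained flow estimate can be corrected by projecting its end point orthogonally onto that line. This post-hoc projection is an illustrative simplification; the patent instead uses the constraint while computing the dense flow.

```python
def epipolar_line(F, x, y):
    """Line (a, b, c), with a*u + b*v + c = 0, in the second image for pixel (x, y)."""
    p = (x, y, 1.0)
    return tuple(sum(F[i][k] * p[k] for k in range(3)) for i in range(3))

def constrain_flow(F, x, y, flow):
    """Project the end point of flow vector (dx, dy) at (x, y) onto the
    epipolar line and return the corrected flow vector."""
    a, b, c = epipolar_line(F, x, y)
    u, v = x + flow[0], y + flow[1]
    s = (a * u + b * v + c) / (a * a + b * b)  # signed offset along the line normal
    return (u - a * s - x, v - b * s - y)

# Illustrative F for purely horizontal camera motion: epipolar lines are image rows
F = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
corrected = constrain_flow(F, 10.0, 5.0, (3.0, 2.0))
print(corrected)
```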

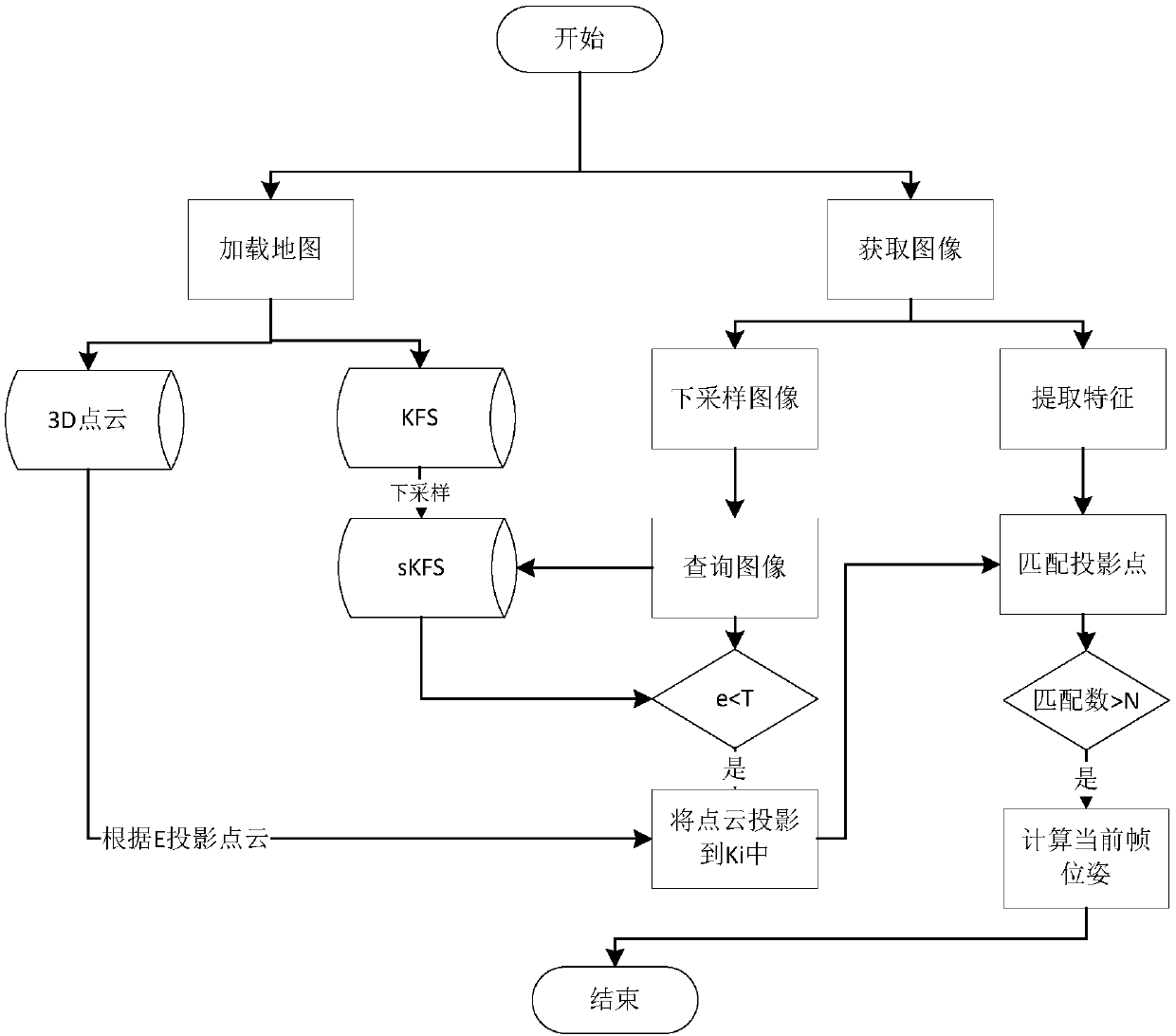

SLAM-based indoor positioning method

The invention provides a SLAM-based indoor positioning method. The method comprises the following steps:

- loading a SLAM map to obtain a key frame set KFS and a 3D point cloud, and down-sampling KFS to obtain sKFS;
- extracting feature points of an input image and down-sampling the image to obtain a thumbnail;
- solving a transformation matrix H between each key frame in sKFS and the input image;
- aligning each key frame in sKFS with the input image and calculating a minimum error e_i; if e_i is smaller than a threshold T, the input image is considered to match the i-th key frame K_i in KFS;
- projecting the 3D point cloud into K_i according to the pose E_i of K_i;
- mapping points in K_i into the input image according to H and searching for matching feature points in the input image;
- calculating the pose E of the input image from the matched feature points using epipolar geometry.

The method localizes a device against an indoor map built with SLAM and optimizes camera poses through matched feature points, so that more accurate results can be obtained.

Owner:NANJING WEIJING SHIKONG INFORMATION TECH CO LTD
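
The final step recovers the pose of the input image using epipolar geometry. One textbook route assumes the camera intrinsics K are known. It forms the essential matrix from a fundamental matrix, which is distinct from the pose E named in the abstract. It then decomposes the essential matrix into four candidate (R, t) pairs. The correct pair is the one that places triangulated points in front of both cameras (the cheirality check). That check is omitted from this sketch. The patent does not say which solver it uses. With this approach, t is only recovered up to scale.

```python
import numpy as np

def essential_from_fundamental(F, K1, K2):
    """Essential matrix E = K2^T F K1 for calibrated cameras."""
    return K2.T @ F @ K1

def decompose_essential(E):
    """Return the four (R, t) candidates encoded by an essential matrix."""
    U, _, Vt = np.linalg.svd(E)
    # Flip signs so both factors are proper rotations (det = +1);
    # this only changes E by a sign, which does not matter up to scale.
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0],
                  [1.0, 0.0, 0.0],
                  [0.0, 0.0, 1.0]])
    R1 = U @ W @ Vt
    R2 = U @ W.T @ Vt
    t = U[:, 2]   # unit translation direction; its sign is ambiguous
    return [(R1, t), (R1, -t), (R2, t), (R2, -t)]
```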

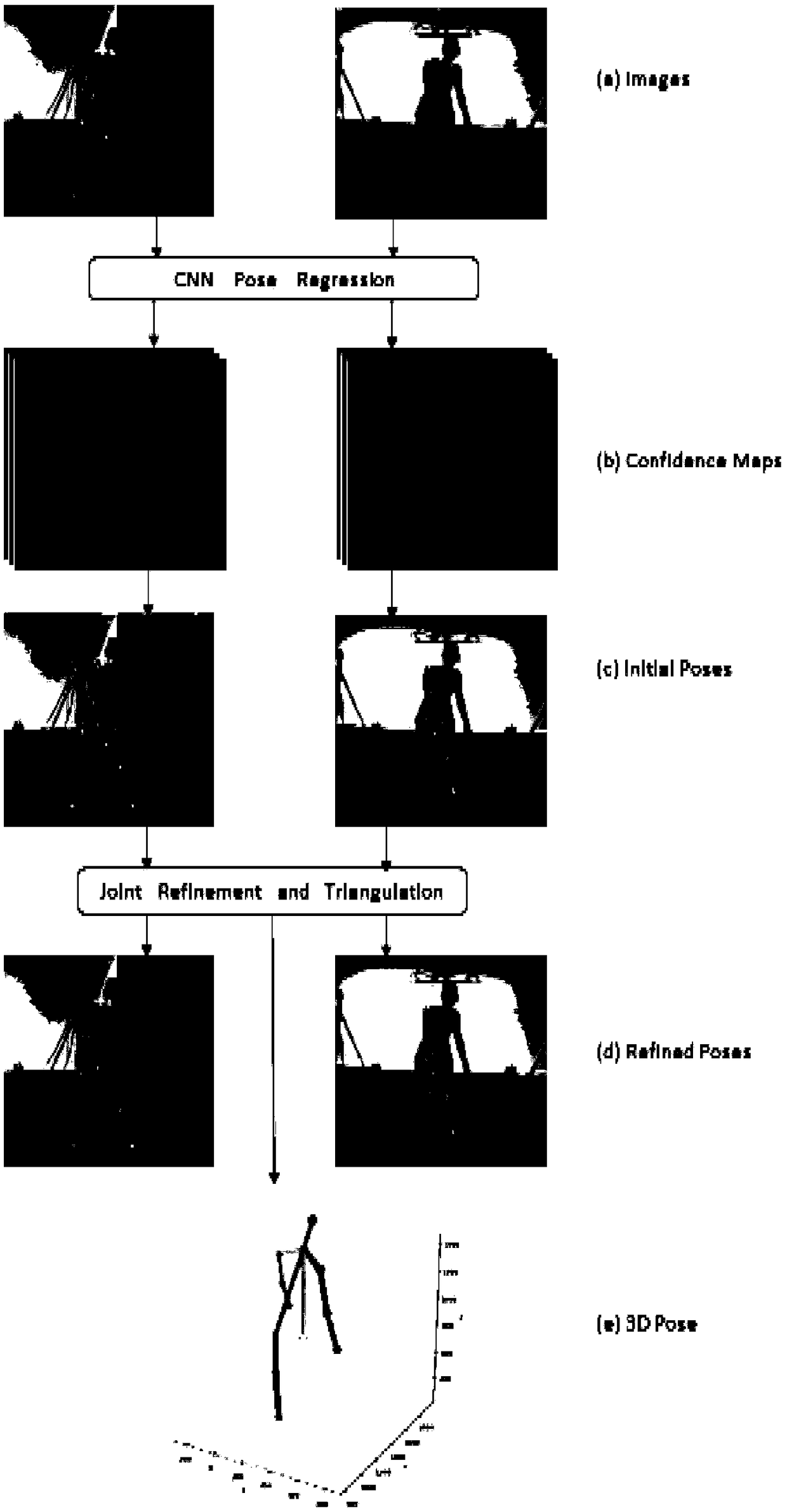

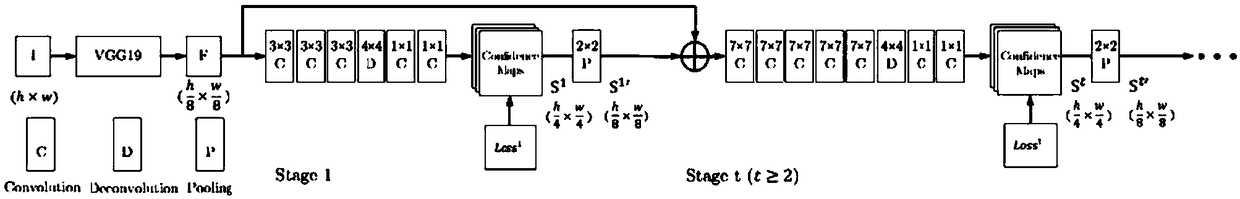

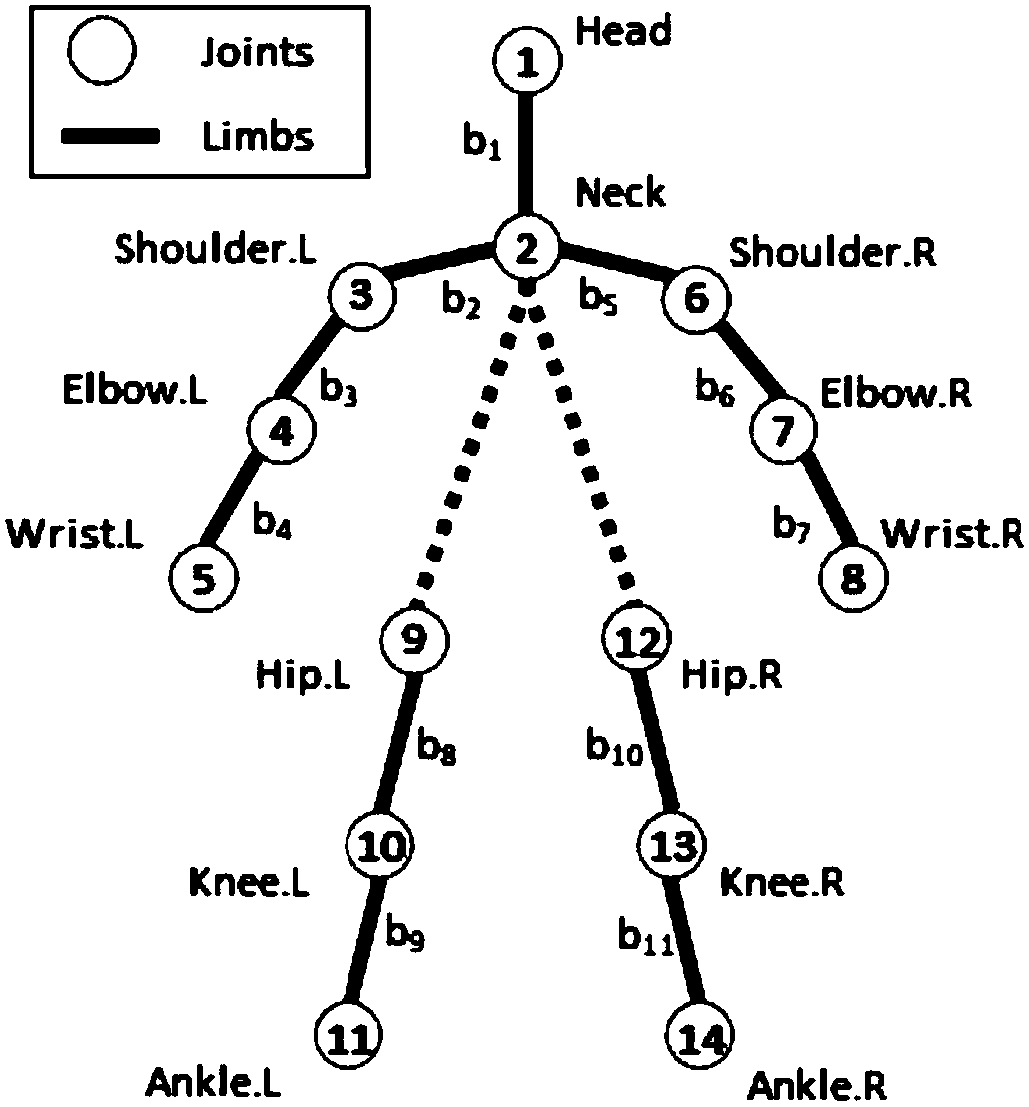

A two-view method for reliable estimation of global three-dimensional human posture

The invention provides a two-view method for reliable estimation of global three-dimensional human posture. It can be used for markerless human motion capture. The core innovations are as follows:

- First, unreliable joint points are detected using the epipolar geometry constraint and the human bone-length constraint across the two views.
- Second, unreliable joints are corrected using the epipolar constraint and the bone-length constraint, based on the joint confidence maps estimated by the network.
- Finally, the invention provides a simple and efficient technique for automatically calibrating the camera extrinsic parameters, together with a method for computing bone lengths.

The invention achieves stable and reliable two-dimensional and global three-dimensional human posture estimation for people of different body shapes. It does this without using a human body model or assuming any prior knowledge of the human body. The generated posture satisfies both the two-view epipolar geometry constraint and the bone-length constraint. Estimation remains robust under challenging conditions such as severe occlusion, symmetric ambiguity and motion blur.

Owner:ZHEJIANG UNIV +1
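
The first innovation point flags joints whose two-view detections violate either the epipolar constraint or the expected bone lengths. A minimal sketch of such a test follows. It assumes a known fundamental matrix `F` between the two views, 2D joints given as pixel arrays, triangulated 3D joints, and reference bone lengths. The skeleton edges and both tolerances (`max_epi_px`, `max_bone_ratio`) are hypothetical and do not come from the patent.

```python
import numpy as np

def point_line_distance(line, pt):
    """Perpendicular distance from pixel pt to the line a*u + b*v + c = 0."""
    a, b, c = line
    return abs(a * pt[0] + b * pt[1] + c) / np.hypot(a, b)

def unreliable_joints(F, joints1, joints2, joints3d, bones, ref_lengths,
                      max_epi_px=5.0, max_bone_ratio=0.2):
    """Return the set of joint indices that fail the epipolar or bone-length test.

    F satisfies x2^T F x1 = 0; joints1 and joints2 are (J, 2) pixel arrays;
    joints3d is (J, 3); bones is a list of (a, b) joint-index pairs with
    matching reference lengths in ref_lengths.
    """
    bad = set()
    for j, (p1, p2) in enumerate(zip(joints1, joints2)):
        h1 = np.array([p1[0], p1[1], 1.0])
        h2 = np.array([p2[0], p2[1], 1.0])
        # Symmetric epipolar distance: each point to the other's epipolar line.
        d = point_line_distance(F @ h1, p2) + point_line_distance(F.T @ h2, p1)
        if d > max_epi_px:
            bad.add(j)
    for (a, b), ref in zip(bones, ref_lengths):
        length = np.linalg.norm(joints3d[a] - joints3d[b])
        if abs(length - ref) > max_bone_ratio * ref:
            # A single bone cannot tell which end is wrong, so flag both.
            bad.update((a, b))
    return bad
```

Flagging both ends of an inconsistent bone is a deliberately conservative choice in this sketch. A correction step would then re-examine those joints using the network's confidence maps.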

Single-point calibration object-based multi-camera calibration

The invention relates to a method for calibrating the internal and external parameters of multiple cameras using a single-point calibration object, and to a corresponding calibration component. The calibration method comprises the following steps:

- using several infrared cameras fixed at different positions in a scene to acquire image points of a single, freely moving calibration point and of an L-shaped rigid body that defines the world coordinate system;
- uploading these image points to a host computer, which uses the image point data to calibrate the internal and external parameters of the cameras.

Cameras that share a common view can be calibrated in pairs. This pairwise calibration uses a pinhole camera model with distortion and the epipolar geometric constraint between corresponding image points. The calibration tool is simple to manufacture. The calibration object also does not need to stay within the common field of view of all the cameras, so the method is easy to operate. A multi-camera cascade path is determined from the common-field-of-view relationships, which lets more image points take part in the computation and makes the algorithm more robust. Through multi-step optimization, the calibrated parameters achieve sub-pixel re-projection errors, satisfying high-precision requirements.

Owner:Beijing Qingwei Technology Co., Ltd. (北京轻威科技有限责任公司)
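
The multi-camera cascade path chains pairwise calibrations through cameras that share a field of view. A minimal sketch of that idea follows. It assumes each calibrated pair (i, j) yields a relative pose (R_ij, t_ij) that maps points from camera i's frame into camera j's frame. A breadth-first search from a reference camera then composes these poses along the shortest co-visibility path. The data layout and the choice of BFS are illustrative assumptions. The patent's path selection and its multi-step optimization may differ.

```python
from collections import deque

import numpy as np

def chain_extrinsics(n_cams, pair_poses, ref=0):
    """Express every reachable camera's pose relative to camera `ref`.

    pair_poses maps (i, j) -> (R_ij, t_ij) with X_j = R_ij @ X_i + t_ij.
    Returns {cam: (R, t)} with X_cam = R @ X_ref + t; cameras with no
    co-visibility path to `ref` are absent from the result.
    """
    # Undirected co-visibility graph; store the inverse transform for the reverse edge.
    adj = {c: [] for c in range(n_cams)}
    for (i, j), (R, t) in pair_poses.items():
        adj[i].append((j, R, t))
        adj[j].append((i, R.T, -R.T @ t))
    poses = {ref: (np.eye(3), np.zeros(3))}
    queue = deque([ref])
    while queue:
        i = queue.popleft()
        R_i, t_i = poses[i]
        for j, R_ij, t_ij in adj[i]:
            if j not in poses:
                # X_j = R_ij (R_i X_ref + t_i) + t_ij
                poses[j] = (R_ij @ R_i, R_ij @ t_i + t_ij)
                queue.append(j)
    return poses
```

BFS picks paths with the fewest hops, which limits how many pairwise errors accumulate before a global refinement step.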

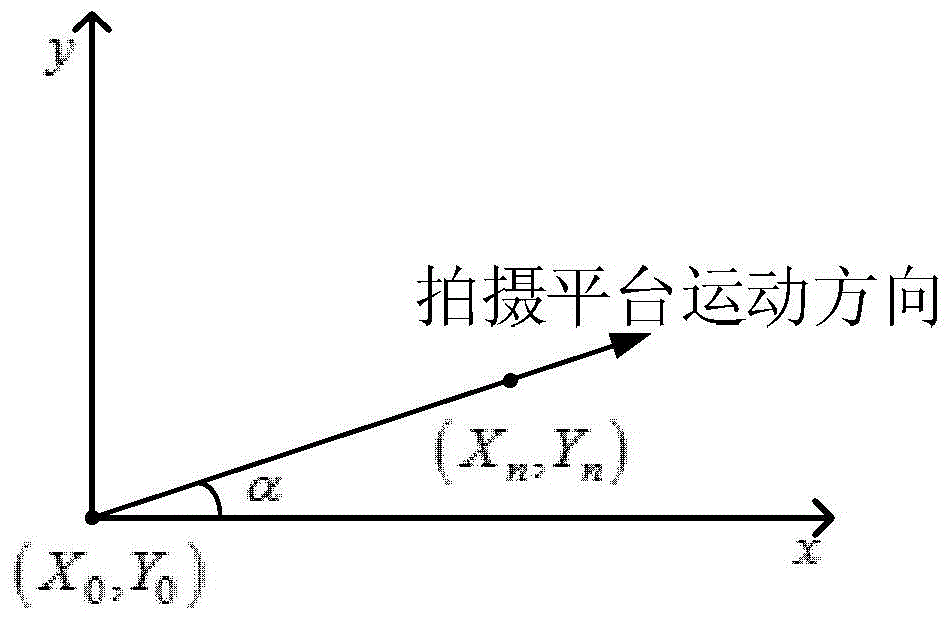

Video-acquisition-based Visual Map database establishing method and indoor visual positioning method using database

Active · CN104457758A · Reduce workload · High precision · Navigational calculation instruments · Special data processing applications · Linear motion · Visual positioning

The invention discloses a video-acquisition-based method for building a Visual Map database, and an indoor visual positioning method that uses the database. It relates to the field of indoor positioning and navigation. It aims to solve the low accuracy, high time cost and high labor cost of existing indoor visual positioning methods. The video-based Visual Map database is built quickly by recording video from a platform that carries a video acquisition device and moves in a straight line at constant speed. The recorded videos are processed to store the coordinate position and image matching information of each frame. In the online positioning stage, the system first coarsely matches the image uploaded by the user being located against the video-based Visual Map database. This coarse matching uses hash values computed with a perceptual hash algorithm. The system then completes visual indoor positioning from the coarsely matched frames and the uploaded image, using the SURF algorithm and a corresponding epipolar geometry algorithm. The method is intended for indoor visual positioning applications.

Owner:Harbin Institute of Technology High-Tech Development Corporation (哈尔滨工业大学高新技术开发总公司)
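
The online stage first narrows the search with a perceptual hash. The abstract does not say which variant is used. The sketch below implements one common DCT-based pHash in NumPy, assuming grayscale frames given as 2D arrays, and compares hashes by Hamming distance. The 32×32 working size, the 8×8 low-frequency block and the nearest-neighbour resampling are conventional or illustrative choices. They are not details from the patent.

```python
import numpy as np

def _dct_matrix(n):
    """Orthonormal DCT-II basis: C @ x is the DCT of a length-n vector x."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    C = np.cos(np.pi * (2 * i + 1) * k / (2 * n)) * np.sqrt(2.0 / n)
    C[0, :] /= np.sqrt(2.0)
    return C

def phash(gray, size=32, keep=8):
    """64-bit perceptual hash of a 2D grayscale image, returned as a bool array."""
    h, w = gray.shape
    rows = (np.arange(size) * h) // size    # nearest-neighbour downsampling
    cols = (np.arange(size) * w) // size
    small = gray[np.ix_(rows, cols)].astype(float)
    C = _dct_matrix(size)
    coeffs = C @ small @ C.T                 # separable 2D DCT
    low = coeffs[:keep, :keep].ravel()
    # The median is taken over the non-DC terms so the large DC value does not skew it.
    return low > np.median(low[1:])

def hamming(h1, h2):
    return int(np.count_nonzero(h1 != h2))

def coarse_match(query, frames, top_k=5):
    """Indices of the top_k database frames with the smallest hash distance."""
    q = phash(query)
    dists = [hamming(q, phash(f)) for f in frames]
    return list(np.argsort(dists)[:top_k])
```

In a real database the frame hashes would be computed once offline and stored with each frame. Only the query hash is computed online.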

Image capture-based Visual Map database construction method and indoor positioning method using database

Active · CN104484881A · High positioning accuracy · Improve match · Image enhancement · Image analysis · Computer science · Marine navigation

The invention discloses an image-capture-based method for building a Visual Map database, and an indoor positioning method that uses the database. It relates to the field of indoor positioning and navigation. It addresses the low precision of WLAN positioning, which is affected by many factors such as doors opening and closing, people walking, and walls blocking the signal. The indoor positioning method comprises the following steps:

- building an offline Visual Map;
- extracting feature points from a photo taken with the user's smartphone and matching them against the feature points of the images stored as position fingerprints in the Visual Map;
- precisely estimating the user's position using epipolar geometry.

Both methods are suitable for indoor positioning applications.

Owner:Yange Group Co., Ltd. (严格集团股份有限公司)
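
The final step estimates the user's position using epipolar geometry. The sketch below shows one way this can work, and it is purely an assumption. Relative-pose estimation between the query photo and a matched database image yields a direction from that image's known capture position toward the user. This is the translation, known only up to scale, rotated into map coordinates. With two or more such directions, the position is the least-squares intersection of the bearing lines. The function name and the 2D floor-plane setting are hypothetical and are not the patent's procedure.

```python
import numpy as np

def intersect_bearings(origins, directions):
    """Least-squares point closest to all lines origin_i + s * direction_i.

    Minimizes the sum of squared perpendicular distances; works in 2D or 3D.
    The directions must not all be parallel, or the system is singular.
    """
    dim = origins.shape[1]
    A = np.zeros((dim, dim))
    b = np.zeros(dim)
    for p, d in zip(origins, directions):
        d = d / np.linalg.norm(d)
        P = np.eye(dim) - np.outer(d, d)   # projector onto the line's normal space
        A += P
        b += P @ p
    return np.linalg.solve(A, b)
```

Because the recovered translation direction has a sign ambiguity, each bearing would in practice need to be disambiguated (for example, with a cheirality check) before the lines are intersected.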

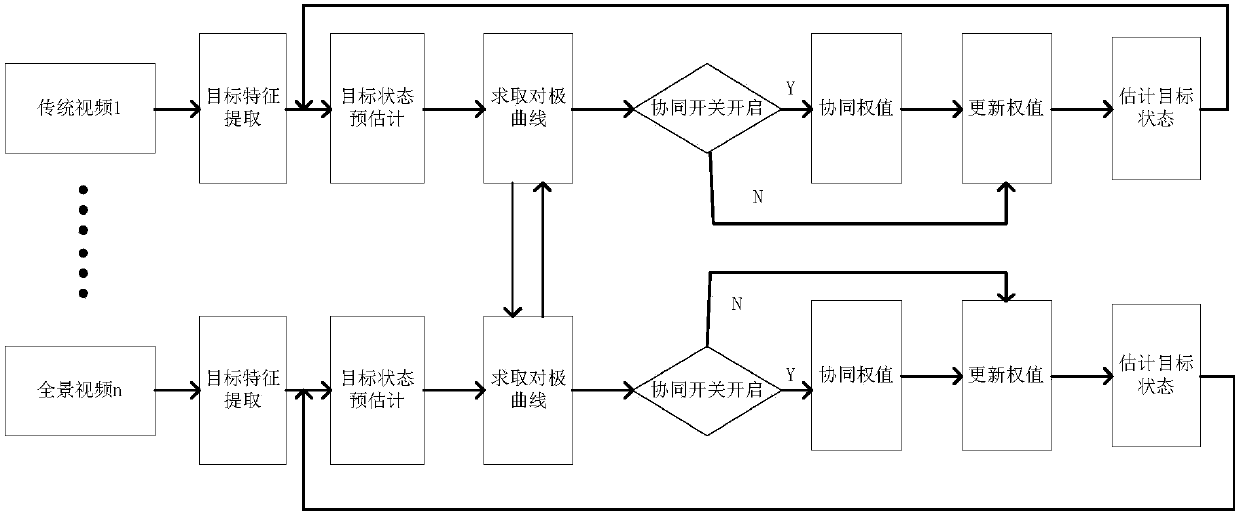

Multi-type vision sensing cooperative target tracking method

Inactive · CN107038714A · Expand the field of view · Low cost · Image enhancement · Image analysis · Ophthalmology · Field of view

Disclosed is a cooperative target tracking method using multiple types of vision sensors. It comprises the following steps:

- step 1, tracking the same target with a conventional camera C1 and a panoramic camera C2, and estimating the target's state at the next time step with a particle filter in C1 and C2 separately;
- step 2, establishing the epipolar geometric relationship between C1 and C2;
- step 3, establishing a cooperative switching model and deciding, from the target's positions in the fields of view of C1 and C2, whether to start the cooperative tracking mode;
- step 4, in the cooperative tracking mode, starting the cooperation mechanism and correcting and updating the state of an occluded target according to the epipolar geometric relationship;
- step 5, taking the target state obtained in step 4 as the target's state at the current time and returning to step 2, thereby achieving continuous cooperative tracking of the moving target between C1 and C2.

The method widens the narrow area in which multiple cameras can track a target cooperatively, and it achieves continuous cooperative tracking of moving targets.

Owner:XIAN UNIV OF TECH
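
Step 4 corrects the state of an occluded target in one camera using the epipolar relationship with the other camera. A minimal sketch of how that correction can enter a particle filter follows. It assumes, for simplicity, that the two views are related by a fundamental matrix `F`. This is a pinhole approximation: a real panoramic camera generally produces epipolar curves rather than straight lines. It also assumes that the target's image position in C1 is reliable. Particles in C2 are reweighted by a Gaussian of their distance to the epipolar line, and `sigma_px` is a hypothetical tuning parameter.

```python
import numpy as np

def epipolar_reweight(particles, weights, F, x_c1, sigma_px=5.0):
    """Reweight C2 particles (N x 2 pixel positions) by their distance to the
    epipolar line of the C1 target position x_c1; F satisfies x_c2^T F x_c1 = 0."""
    a, b, c = F @ np.array([x_c1[0], x_c1[1], 1.0])
    dist = np.abs(a * particles[:, 0] + b * particles[:, 1] + c) / np.hypot(a, b)
    w = weights * np.exp(-0.5 * (dist / sigma_px) ** 2)
    total = w.sum()
    if total == 0.0:   # every particle far from the line: fall back to uniform
        return np.full(len(w), 1.0 / len(w))
    return w / total

def systematic_resample(particles, weights, rng):
    """Standard systematic resampling; returns equally weighted particles."""
    n = len(weights)
    positions = (rng.random() + np.arange(n)) / n
    idx = np.searchsorted(np.cumsum(weights), positions)
    idx = np.minimum(idx, n - 1)   # guard against round-off in the cumulative sum
    return particles[idx], np.full(n, 1.0 / n)
```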

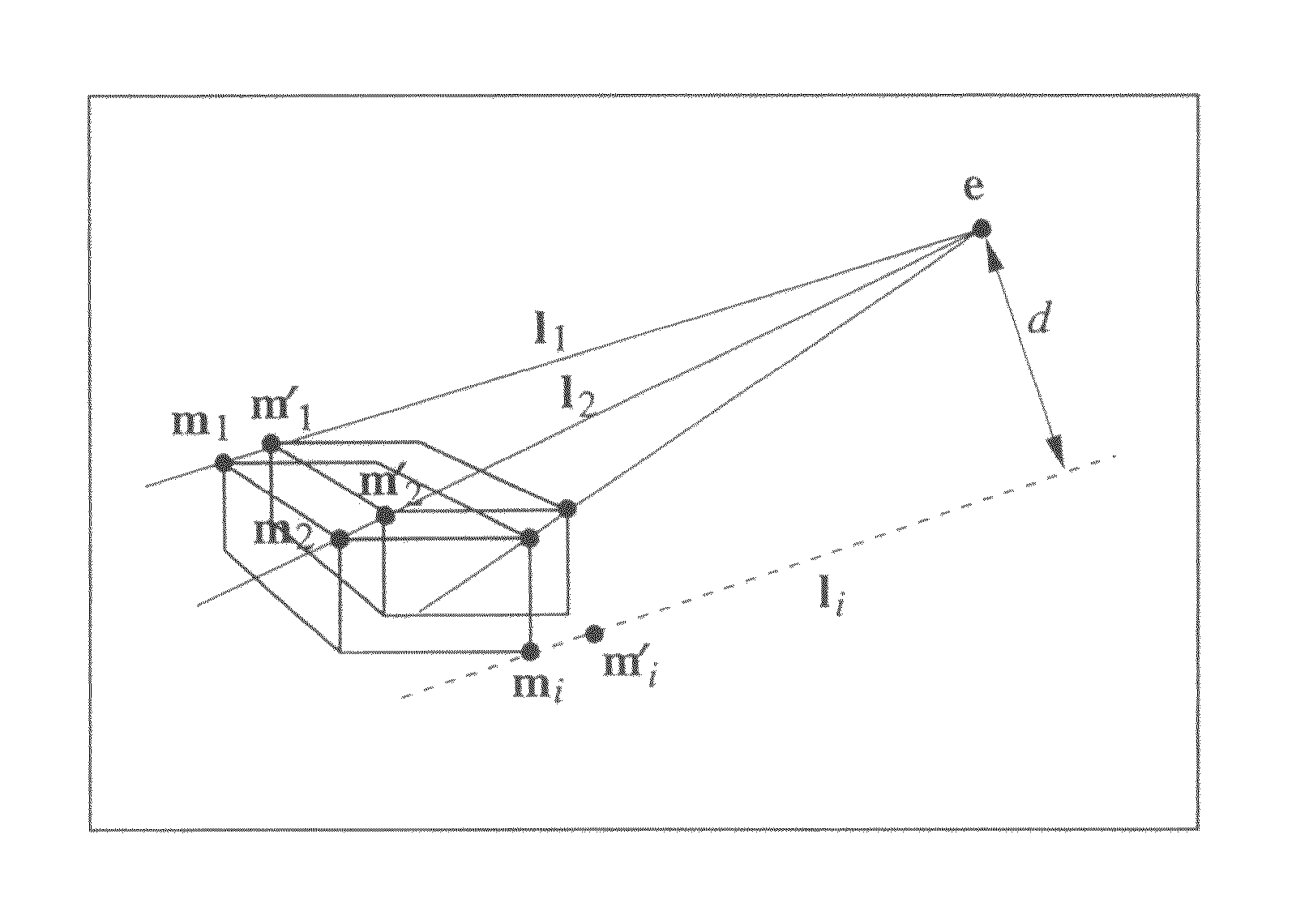

Object recognition apparatus and object recognition method using epipolar geometry

An object recognition apparatus that processes images acquired by an imaging means (10) mounted on a moving object, in a first image plane and a second image plane with different points of view, and recognizes objects in the vicinity of the moving object. The object recognition apparatus comprises:

- a feature point detection means (42) that detects feature points of an object image in the first and second image planes;
- a fundamental matrix determination means (43a) that determines a fundamental matrix expressing the geometric correspondence under translational camera movement, by calculating the epipole through the auto-epipolar property from at least two pairs of corresponding feature points in the first and second image planes;
- a three-dimensional position calculation means (43b) that calculates the three-dimensional position of an object from its coordinates in the first and second image planes and the determined fundamental matrix.

Owner:AISIN SEIKI KK
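
Under purely translational camera motion, the fundamental matrix reduces to the skew-symmetric form F = [e]×, where e is the epipole (the focus of expansion). This auto-epipolar property explains why two correspondences suffice. Each point x, its match x' and the epipole e are collinear, so e is the intersection of the lines x × x' built from the two pairs. The sketch below assumes exact, noise-free homogeneous correspondences. With real data one would use more pairs and a least-squares or robust estimate. It illustrates the textbook property and is not necessarily how the apparatus computes it.

```python
import numpy as np

def skew(v):
    """Matrix [v]x such that skew(v) @ u == np.cross(v, u)."""
    return np.array([[0.0, -v[2], v[1]],
                     [v[2], 0.0, -v[0]],
                     [-v[1], v[0], 0.0]])

def fundamental_pure_translation(x1, x1p, x2, x2p):
    """F = [e]x from two correspondences (homogeneous 3-vectors) under pure translation."""
    l1 = np.cross(x1, x1p)   # line through x1 and its match x1'
    l2 = np.cross(x2, x2p)   # line through x2 and its match x2'
    e = np.cross(l1, l2)     # epipole = intersection of the two lines
    return skew(e / np.linalg.norm(e)), e
```

Any further correspondence (x, x') consistent with the same translation then satisfies x'^T F x = 0. The reason is that x, x' and e are coplanar in homogeneous coordinates.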

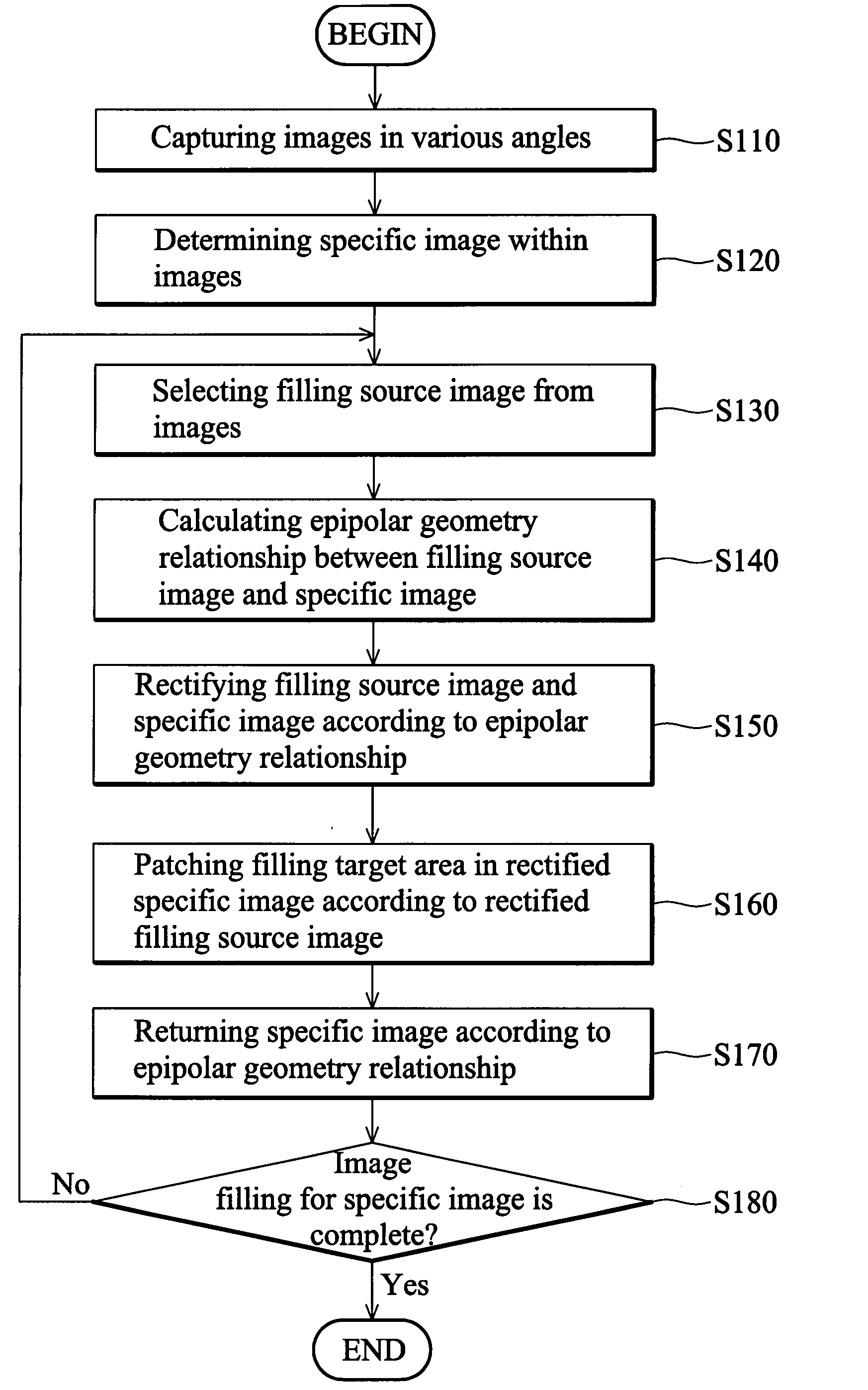

Image filling methods

Image filling methods are provided. Multiple images of a target object or scene are captured from various angles. The epipolar geometry relationship between a filling source image and a specific image among them is calculated. Both images are then rectified according to that relationship. Finally, at least one filling target area in the rectified specific image is patched using the rectified filling source image.

Owner:IND TECH RES INST
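
After rectification, corresponding epipolar lines become the same image rows. A pixel in a filling target area therefore only needs to look for its source along one row. The sketch below shows that final patching step under a strong simplifying assumption: a single known horizontal disparity `d` for the whole area. This is a hypothetical parameter. In practice, disparity varies with depth and would be estimated per pixel or per patch. Rectification itself is not shown.

```python
import numpy as np

def fill_from_rectified(target, source, mask, d):
    """Fill masked pixels of a rectified target image from a rectified source.

    target, source: (H, W) arrays rectified so that they share epipolar rows.
    mask: bool (H, W), True where the target needs filling.
    d: assumed constant integer disparity; target[y, x] corresponds to source[y, x - d].
    Pixels whose source column falls outside the image are left unchanged.
    """
    out = target.copy()
    ys, xs = np.nonzero(mask)
    src_x = xs - d
    ok = (src_x >= 0) & (src_x < source.shape[1])
    # Same row in both images: this is where the epipolar constraint is used.
    out[ys[ok], xs[ok]] = source[ys[ok], src_x[ok]]
    return out
```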

© 2025 PatSnap. All rights reserved.