
2314 results for "Visual positioning" patented technology


Assisted Guidance and Navigation Method in Intraoral Surgery

Inactive | US20140234804A1 | Shorten the construction period; Good curative effect; Radiation diagnostic image/data processing; Teeth filling; Visual positioning; Surgical department

An assisted guidance and navigation method for intraoral surgery uses computed tomography (CT) imaging and an optical positioning system to track medical instruments. The method includes: first providing an optically tracked treatment instrument and an optical positioning device; then obtaining CT image data of the intraoral tissue to be treated; precisely displaying the movements of the treatment instrument in the image data; and checking the image in real time for guidance and navigation. As a result, the surgeon's existing working habits are not disturbed during surgery, accurate and convenient auxiliary information is provided, and attention can stay on using the treatment instrument in the physical environment, such as the patient's teeth or a dental model.

Owner:HUANG JERRY T +1

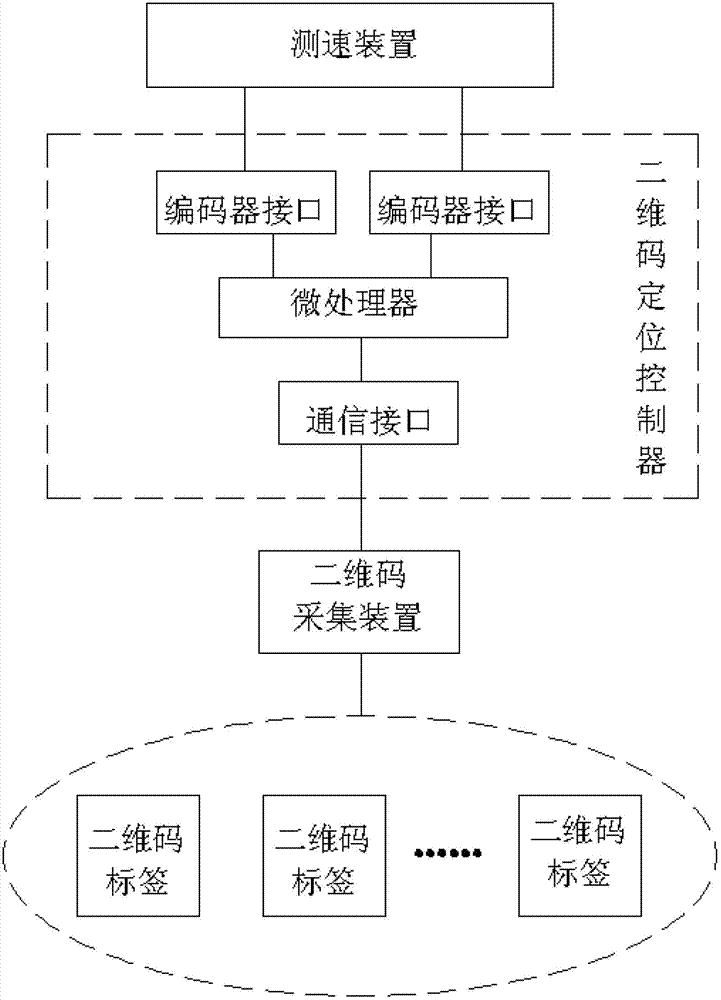
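To display the instrument inside the CT data, its tracked pose has to be chained with a tracker-to-CT registration. The sketch below shows that coordinate chain with 4x4 homogeneous transforms. It assumes the optical positioning device reports the tool pose as a matrix in tracker coordinates and that the registration matrix has already been computed; the function names and inputs are hypothetical and are not taken from the patent.

```python
# Hypothetical sketch: chain a tracked instrument pose with a CT registration.
Matrix = list[list[float]]
Point = tuple[float, float, float]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 homogeneous transforms (b is applied first, then a)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def apply(t: Matrix, p: Point) -> Point:
    """Apply a 4x4 homogeneous transform to a 3D point."""
    x, y, z = p
    return tuple(t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3] for i in range(3))


def translation(dx: float, dy: float, dz: float) -> Matrix:
    """Build a pure-translation homogeneous transform."""
    return [[1, 0, 0, dx], [0, 1, 0, dy], [0, 0, 1, dz], [0, 0, 0, 1]]


def tip_in_ct(ct_from_tracker: Matrix, tracker_from_tool: Matrix, tip_in_tool: Point) -> Point:
    """Express the instrument tip (given in tool coordinates) in CT image coordinates."""
    ct_from_tool = matmul(ct_from_tracker, tracker_from_tool)
    return apply(ct_from_tool, tip_in_tool)
```

For example, `tip_in_ct(translation(10, 0, 0), translation(0, 5, 0), (0, 0, 20))` places the tip at `(10, 5, 20)` in CT coordinates. Keeping the registration and the live tracking pose as separate matrices means only the tracked pose needs updating each frame.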

Indoor mobile robot positioning system and method based on two-dimensional code

Active | CN102735235A | Solve the speed problem; Fix the angle problem; Navigation instruments; Communication interface; Imaging processing

The present invention relates to an indoor mobile robot positioning system and method based on two-dimensional codes. The system includes a two-dimensional code positioning controller and a two-dimensional code acquisition device mounted on the mobile robot, and two-dimensional code labels distributed throughout the indoor environment. The positioning controller consists of a microprocessor connected to a communication interface. Through this interface, the microprocessor controls the acquisition device to capture two-dimensional code images, receives the captured images, and performs precise positioning. The method obtains the actual position of the mobile robot by photographing the indoor two-dimensional code labels, transforming coordinates, and mapping code values. It combines visual positioning, two-dimensional code positioning, and two-degree-of-freedom measurement to position the mobile robot precisely, solving the complex image processing and inaccurate positioning of traditional vision positioning systems.

Owner:爱泊科技(海南)有限公司 (Aibo Technology (Hainan) Co., Ltd.)

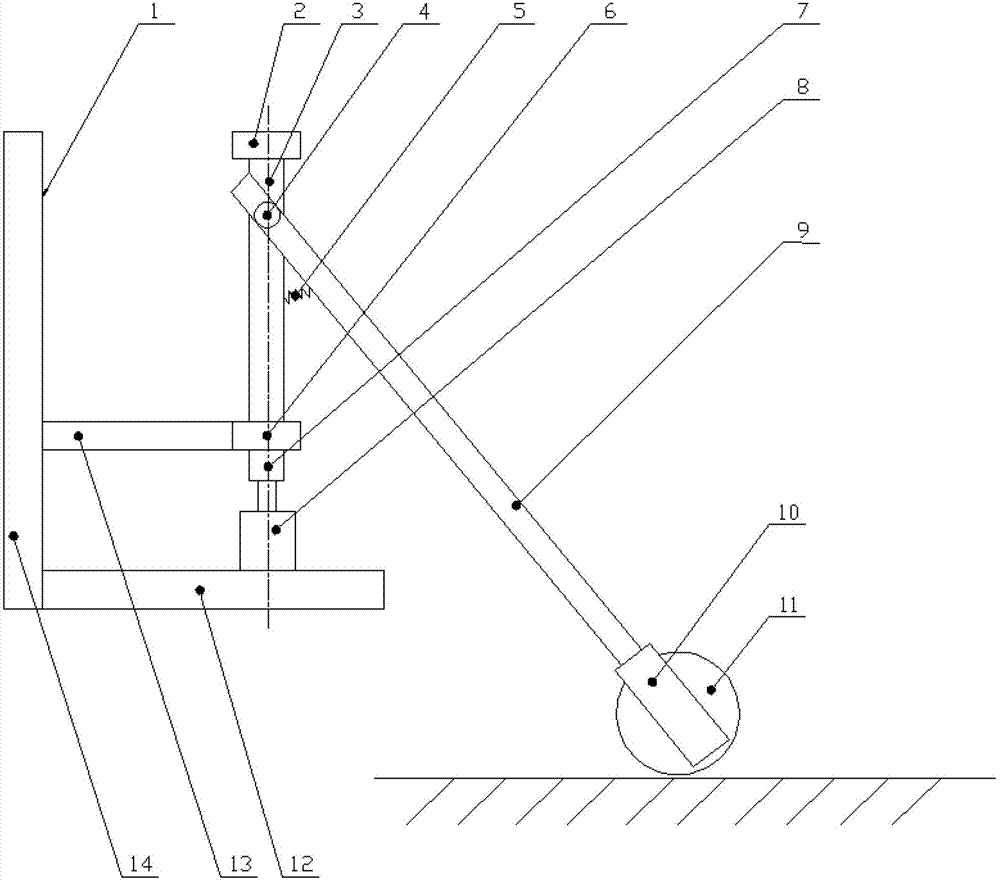

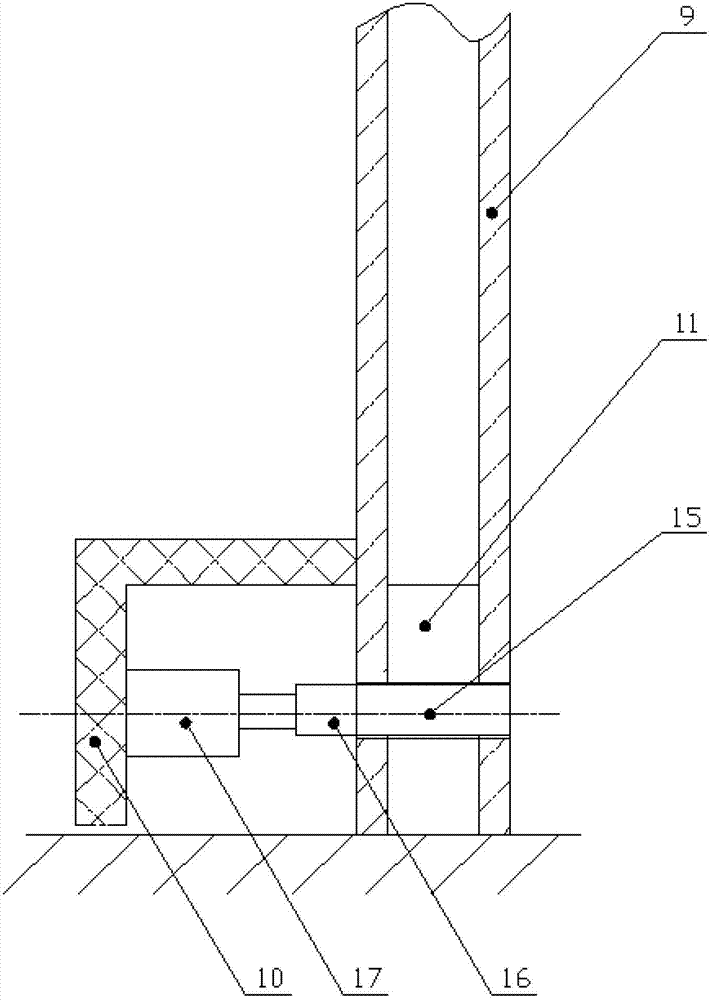

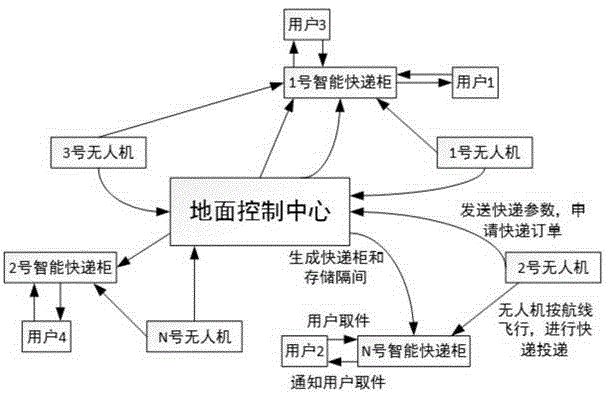

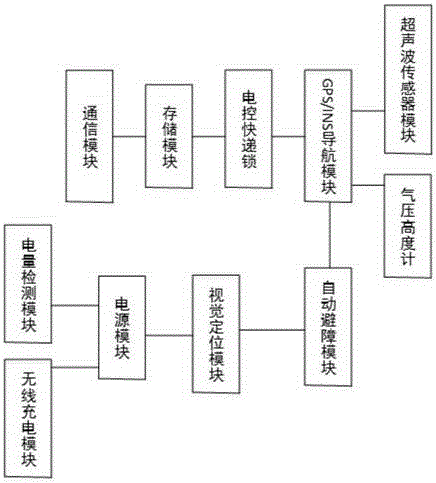
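The "transforming coordinates and mapping code values" step can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented algorithm: a hypothetical `LABEL_MAP` maps each decoded code value to a known label position, labels are assumed laid out parallel to the world axes, and the downward-facing camera is assumed centred on the robot with image axes aligned to the robot's x/y axes.

```python
import math

# Hypothetical lookup table: decoded code value -> label centre in world coordinates (metres).
LABEL_MAP = {"A01": (2.0, 3.0), "A02": (4.0, 3.0)}


def robot_pose(code: str, u_px: float, v_px: float, phi_rad: float, m_per_px: float):
    """Estimate the robot pose (x, y, heading) from one decoded floor label.

    u_px, v_px: label-centre offset from the image centre, in pixels.
    phi_rad: angle of the label's x-axis as seen in the image.
    m_per_px: ground distance per pixel (assumed constant for a fixed camera height).
    """
    lx, ly = LABEL_MAP[code]                     # code value -> known label position
    theta = -phi_rad                             # label axes assumed parallel to world axes
    dx, dy = u_px * m_per_px, v_px * m_per_px    # label offset in the robot frame (metres)
    c, s = math.cos(theta), math.sin(theta)
    # label = robot + R(theta) * offset  =>  robot = label - R(theta) * offset
    x = lx - (c * dx - s * dy)
    y = ly - (s * dx + c * dy)
    return x, y, theta
```

With `robot_pose("A01", 100, 0, 0.0, 0.001)` the label appears 0.1 m ahead of the robot, so the robot is placed at about `(1.9, 3.0)` with heading 0.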

Unmanned aerial vehicle-based express delivery delivering system and method

Active | CN106200677A | Improve delivery efficiency; Ensure safety; Position/course control in three dimensions; Uncrewed vehicle; Time cost

The invention discloses an unmanned aerial vehicle (UAV)-based express delivery system and method. The system comprises a ground control center, a UAV fleet, and a network of intelligent express delivery cabinets. During delivery, the express delivery company sends a delivery request to the ground control center, which assigns a specific intelligent cabinet to the order and generates a mission flight route for a UAV. After reaching the target position, the UAV requests communication verification from the cabinet. Once verification succeeds, and if the cabinet is unoccupied, the UAV switches to its visual positioning module. After positioning succeeds, the cabinet's electronic door opens automatically, and the UAV hovers or lands on the landing platform to hand over the parcel. The intelligent cabinet moves parcels into pre-allocated compartments, generates pick-up codes, and sends them to users, who collect their parcels with those codes. The system and method can greatly improve current express delivery efficiency, lower labor and time costs, and safeguard the security and privacy of deliveries.

Owner:CENT SOUTH UNIV

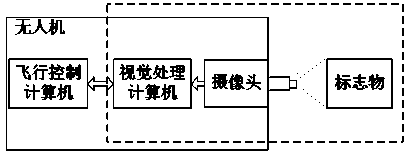

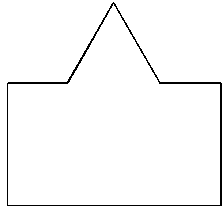

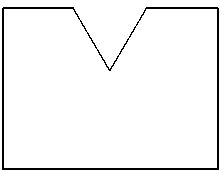

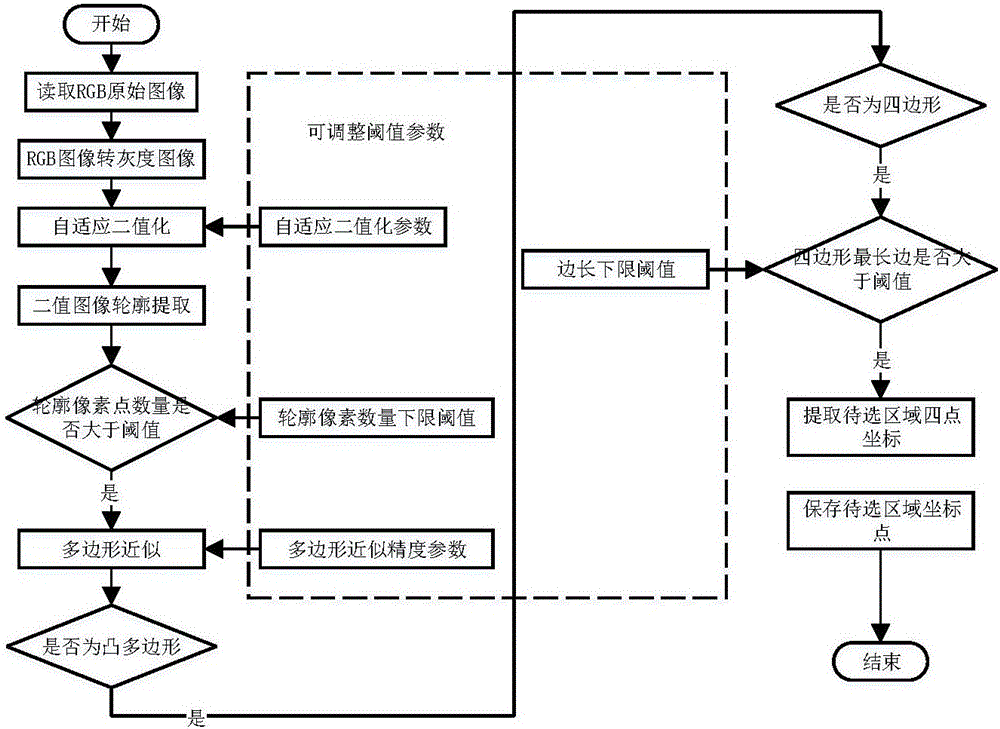
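The arrival-side workflow described above (verification, then visual positioning only if the cabinet is free, then door opening and hand-over) can be modelled as a small state machine. The stage names are hypothetical, and the `WAITING` stage for an occupied cabinet is an assumption, since the abstract does not say what the UAV does in that case.

```python
from enum import Enum, auto
from typing import Optional


class Stage(Enum):
    EN_ROUTE = auto()
    VERIFYING = auto()
    WAITING = auto()            # assumption: hold position while the cabinet is occupied
    VISUAL_POSITIONING = auto()
    DOOR_OPEN = auto()
    DELIVERED = auto()
    ABORTED = auto()


def next_stage(stage: Stage, *, arrived: bool = False, verified: Optional[bool] = None,
               cabinet_free: bool = False, positioned: bool = False,
               dropped: bool = False) -> Stage:
    """Advance one step of the hypothetical delivery workflow."""
    if stage is Stage.EN_ROUTE:
        return Stage.VERIFYING if arrived else stage
    if stage is Stage.VERIFYING:
        if verified is None:
            return stage                          # still waiting for the cabinet's reply
        if not verified:
            return Stage.ABORTED                  # verification failed
        return Stage.VISUAL_POSITIONING if cabinet_free else Stage.WAITING
    if stage is Stage.WAITING:
        return Stage.VISUAL_POSITIONING if cabinet_free else stage
    if stage is Stage.VISUAL_POSITIONING:
        return Stage.DOOR_OPEN if positioned else stage
    if stage is Stage.DOOR_OPEN:
        return Stage.DELIVERED if dropped else stage
    return stage                                  # DELIVERED and ABORTED are terminal
```

Making each transition depend on an explicit event flag keeps the visual positioning module idle until verification has passed, which mirrors the ordering in the abstract.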

Accurate visual positioning and orienting method for rotor wing unmanned aerial vehicle

Inactive | CN104298248A | Precision hover; Flexible and convenient hovering; Position/course control in three dimensions; Visual field loss; Visual recognition

The invention discloses an accurate visual positioning and orientation method for a rotary-wing unmanned aerial vehicle (UAV) based on an artificial marker. The method includes the following steps: a marker with a special pattern is installed on the surface of an artificial facility or a natural object; the camera is calibrated; a proportional mapping is established among the marker's actual size, the distance between the marker and the camera, and the marker's size in the camera image, and the distance the UAV should keep from the marker is set; the UAV is guided to the position where it is to hover and adjusted until the marker pattern enters the camera's field of view, and the visual recognition function is started; a vision processing computer compares the geometric features of the currently captured pattern with a standard pattern to obtain the difference and sends it to the flight control computer, which generates a control law that adjusts the UAV to eliminate position, height, and heading deviations, achieving precisely positioned and oriented hovering. The method is highly autonomous, stable, and reliable, and supports safe UAV operation near artificial facilities or natural objects.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

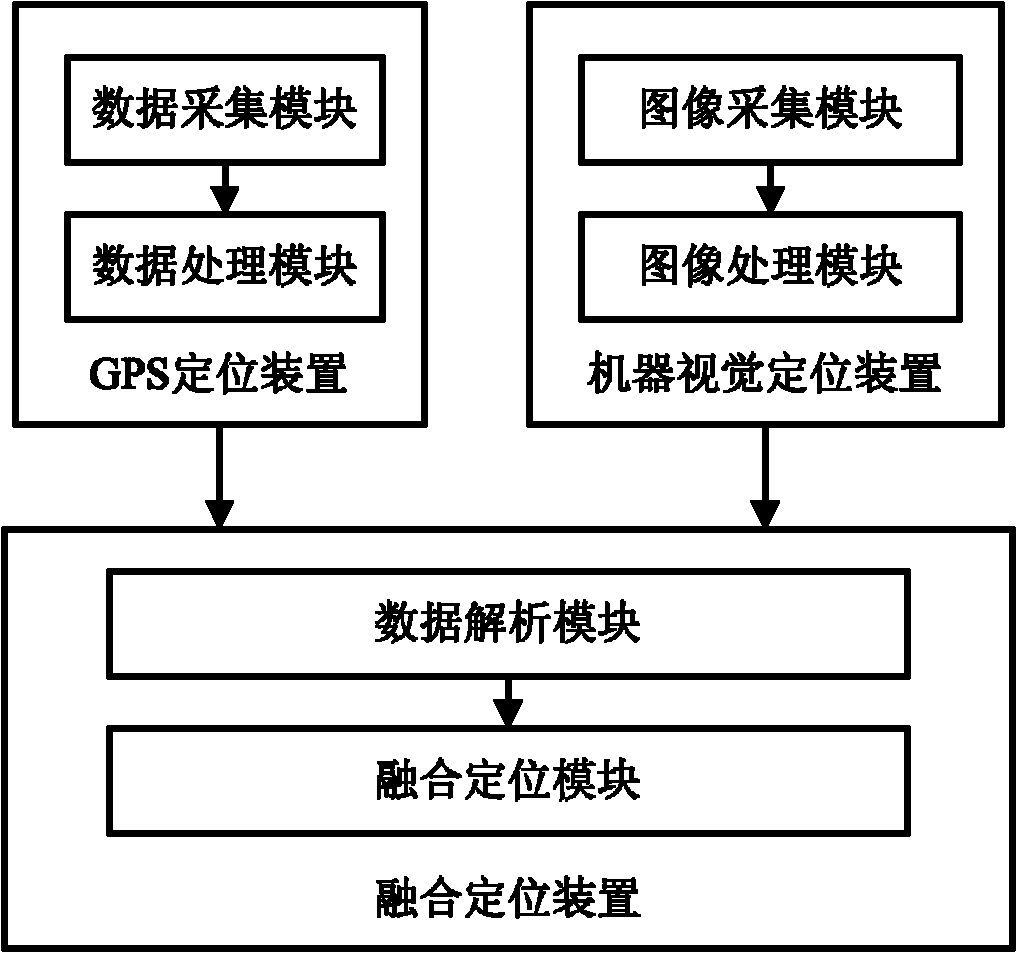

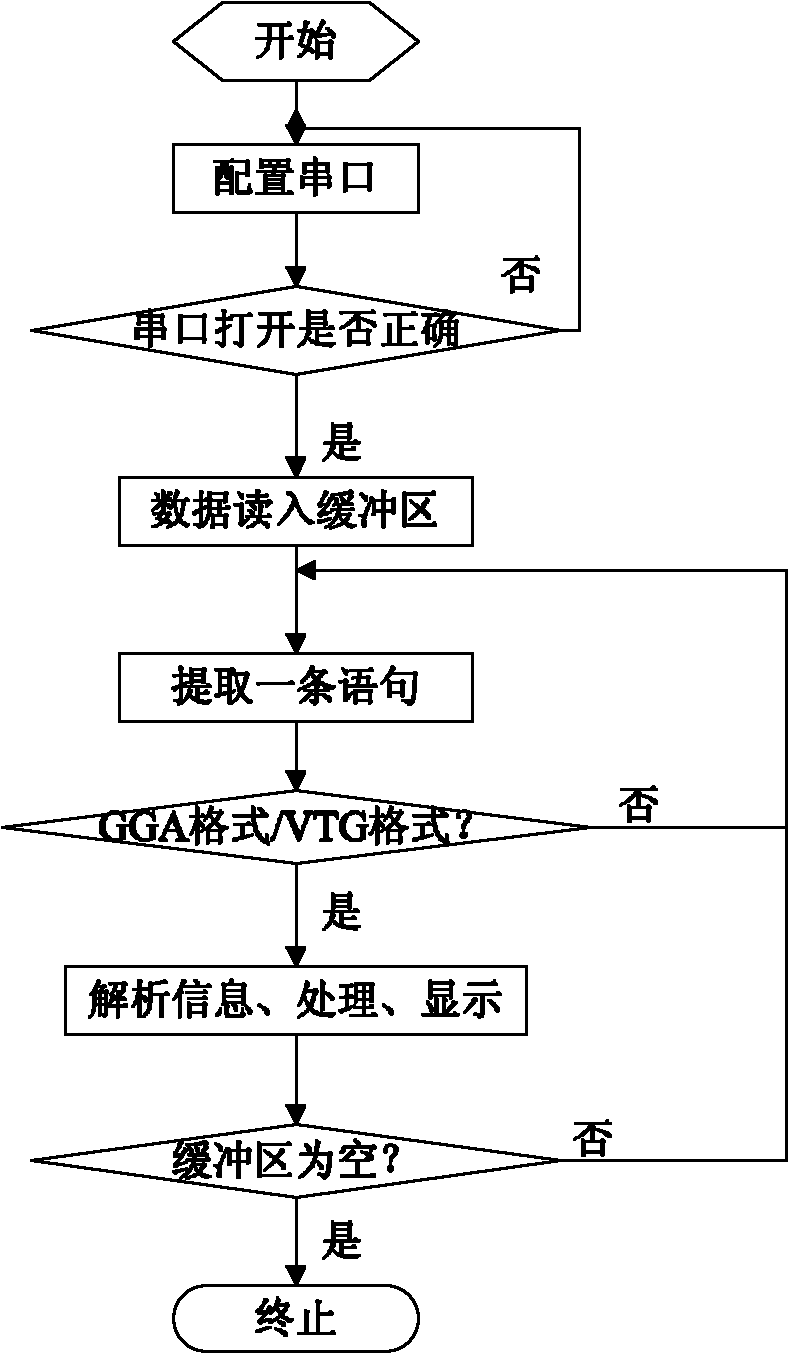

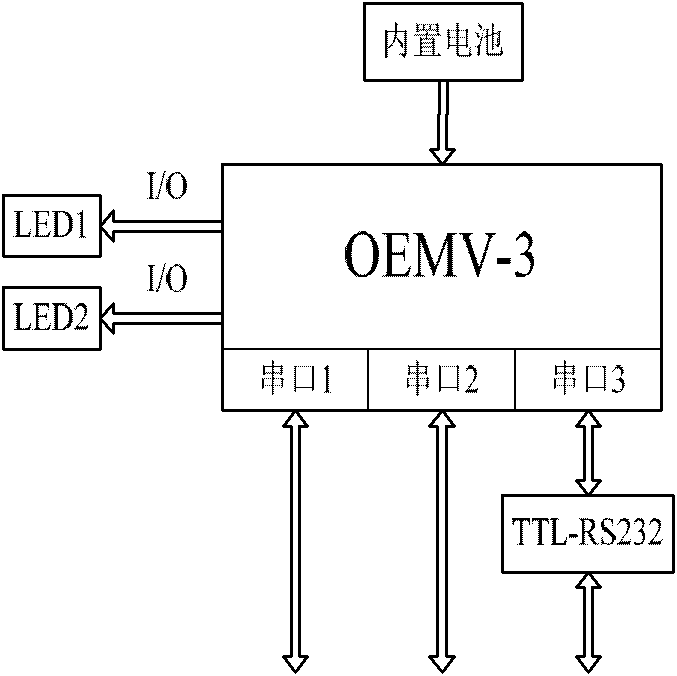
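The proportional mapping among marker size, distance, and image size follows from the pinhole camera model: a marker of real size S at distance Z appears with f·S/Z pixels, where f is the focal length in pixels. The sketch below uses that relation and feeds the resulting deviations into a simple proportional correction. The single gain `kp` and the camera-axis convention (x right, y down, z toward the marker) are assumptions; the patent does not specify its control law.

```python
def marker_distance(f_px: float, marker_size_m: float, marker_size_px: float) -> float:
    """Pinhole similar triangles: marker_size_px / f_px = marker_size_m / distance."""
    return f_px * marker_size_m / marker_size_px


def hover_correction(f_px: float, marker_size_m: float, marker_size_px: float,
                     offset_px: tuple[float, float], yaw_err_rad: float,
                     hold_distance_m: float, kp: float = 0.5):
    """Return proportional corrections (x, y, z, yaw) in the camera frame.

    offset_px: marker-centre offset from the image centre, in pixels.
    yaw_err_rad: marker rotation relative to the standard pattern.
    """
    d = marker_distance(f_px, marker_size_m, marker_size_px)
    lateral_x = offset_px[0] * d / f_px        # back-project pixel offsets to metres
    lateral_y = offset_px[1] * d / f_px
    return (kp * lateral_x, kp * lateral_y, kp * (d - hold_distance_m), kp * yaw_err_rad)
```

With an 800 px focal length, a 0.5 m marker that spans 100 px is 4.0 m away; if the hold distance is 3.0 m, the forward correction is `0.5 * (4.0 - 3.0) = 0.5`.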

Global positioning system (GPS) and machine vision-based integrated navigation and positioning system and method

Inactive | CN102252681A | Reduce difficulty; Improve real-time performance; Instruments for road network navigation; Satellite radio beaconing; Imaging processing; Machine vision

The invention discloses an integrated navigation and positioning system and method based on the global positioning system (GPS) and machine vision. The system comprises: a GPS positioning device that performs GPS positioning of the navigated vehicle, acquires its position coordinates, heading angle, and driving speed, and transmits this information to a fusion positioning device; a machine vision positioning device that captures images of the farmland along the navigation path, processes them to extract the navigation path and obtain the position coordinates of known points on it, and transmits these coordinates to the fusion positioning device; and the fusion positioning device, which spatially and temporally aligns the information from the GPS and machine vision positioning devices and filters it to obtain the final positioning information. The system and method offer high positioning accuracy, simple operation, and good suitability for real-time field operation.

Owner:CHINA AGRI UNIV
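The temporal alignment and filtering steps can be illustrated with two small functions: linear interpolation of GPS fixes to the timestamp of a vision measurement, followed by inverse-variance weighting, which is equivalent to a single Kalman measurement update of one estimate by the other. This is a sketch under assumptions: both sources are taken to be already expressed in the same local metric frame (the spatial alignment step), and the variances are hypothetical inputs rather than values from the patent.

```python
def interpolate(t: float, t0: float, p0: tuple[float, float],
                t1: float, p1: tuple[float, float]) -> tuple[float, float]:
    """Linearly interpolate a 2D GPS position to time t (temporal alignment)."""
    a = (t - t0) / (t1 - t0)
    return (p0[0] + a * (p1[0] - p0[0]), p0[1] + a * (p1[1] - p0[1]))


def fuse(p_gps: tuple[float, float], var_gps: float,
         p_vis: tuple[float, float], var_vis: float):
    """Inverse-variance weighting of two independent position estimates."""
    w = var_vis / (var_gps + var_vis)          # weight on GPS: larger when vision is noisier
    fused = tuple(w * g + (1 - w) * v for g, v in zip(p_gps, p_vis))
    fused_var = var_gps * var_vis / (var_gps + var_vis)
    return fused, fused_var
```

For instance, fusing a GPS fix with variance 4.0 and a vision estimate with variance 1.0 weights the vision estimate four times as heavily, and the fused variance (0.8) is smaller than either input.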

Visual positioning method for robot transport operation

Active | CN101637908A | Realize switching; Realize automated production; Programme-controlled manipulator; Using optical means; Visual positioning; Ultimate tensile strength

The invention provides a visual positioning method for robot transport operations, comprising a two-dimensional visual positioning method and a three-dimensional visual positioning method. Two-dimensional vision removes the need for precise mechanical positioning of the workpieces, because the robot automatically compensates its grasp; three-dimensional vision solves the problem that positional deviations of the workpieces' locating surfaces prevent automated production. By combining two-dimensional and three-dimensional visual positioning, the method addresses cases where the workpieces are unmachined blanks, the grasping positions are also unmachined blank surfaces, and the robot therefore cannot feed the grasped workpieces accurately. It improves production feasibility, offers high flexibility, saves labor cost, and reduces labor intensity.

Owner:SHANGHAI FANUC ROBOTICS

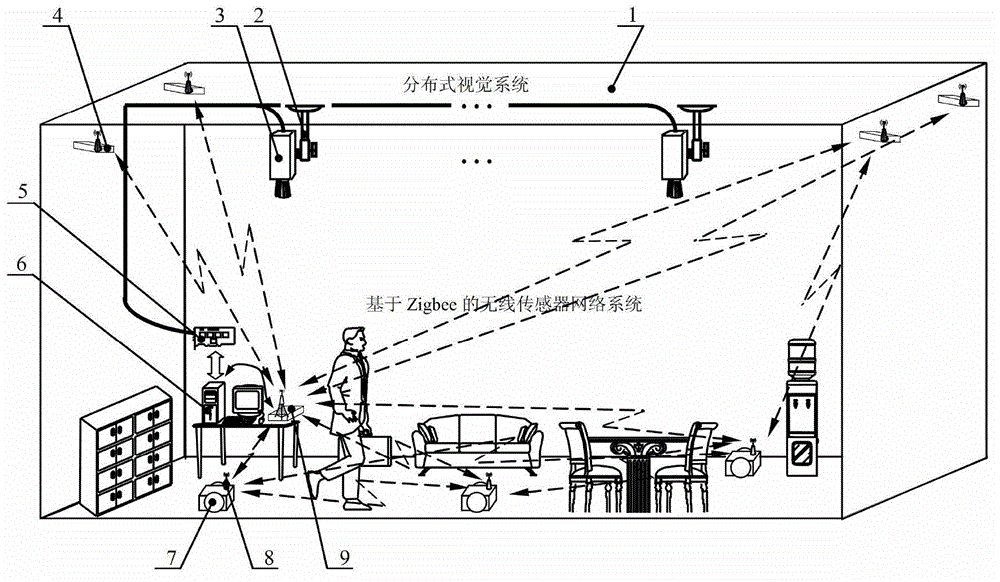

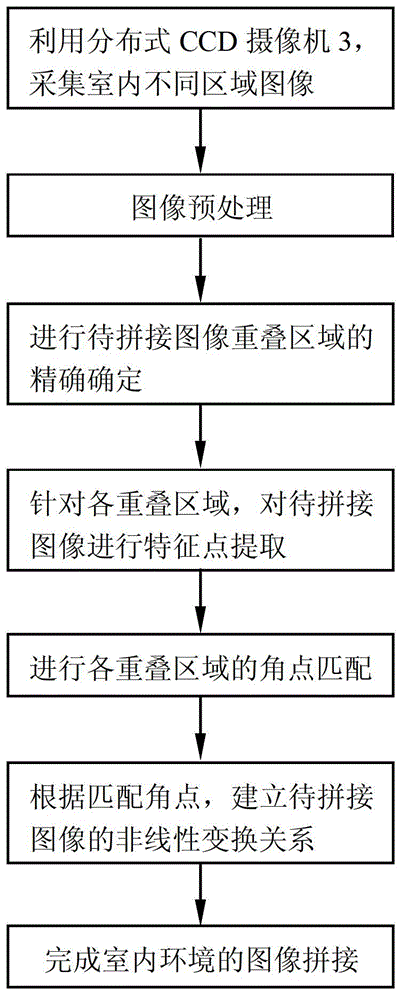
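The two-dimensional grasp compensation can be sketched with planar rigid transforms: the grasp taught on a reference workpiece is expressed in the workpiece's own frame, then re-applied at the workpiece pose measured by the camera. This covers only the planar (2D) case, and the pose convention `(x, y, theta)` and function names are assumptions for illustration.

```python
import math

Pose = tuple[float, float, float]   # (x, y, theta) in a common robot/camera frame


def compose(a: Pose, b: Pose) -> Pose:
    """Apply pose b expressed in the frame of pose a."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, at + bt)


def inverse(p: Pose) -> Pose:
    """Invert a planar rigid transform: (R, t) -> (R^T, -R^T t)."""
    x, y, t = p
    c, s = math.cos(t), math.sin(t)
    return (-(c * x + s * y), -(-s * x + c * y), -t)


def compensated_grasp(ref_part: Pose, taught_grasp: Pose, measured_part: Pose) -> Pose:
    """Shift a taught grasp so it follows the workpiece to its measured pose."""
    grasp_in_part = compose(inverse(ref_part), taught_grasp)
    return compose(measured_part, grasp_in_part)
```

If the camera reports the workpiece at its reference pose, the taught grasp is returned unchanged; if the workpiece is shifted to `(10, 5)` and rotated by 90 degrees, a grasp taught 1 unit along the part's x-axis moves to about `(10, 6)`.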

Navigation information acquisition method and intelligent space system with multiple mobile robots

Inactive | CN102914303A | Powerful; Easy to handle; Network topologies; Navigation instruments; Blind zone; Image segmentation

The invention discloses a navigation information acquisition method and an intelligent space system with multiple mobile robots. The intelligent space system comprises a distributed vision system and a wireless sensor network based on Zigbee technology. In the method, images are stitched using a maximum gradient similar curve and an affine transformation model, and the stitched image is then segmented using Otsu threshold segmentation and mathematical morphology to obtain an environmental map. During navigation, the mobile robots are positioned mainly by visual positioning, with combined Zigbee and dead reckoning (DR) positioning used as a supplement to cover blind zones in the visual positioning. Visual positioning locates each robot by processing images containing the robots' positions and direction markers, mainly based on the HIS color model and mathematical morphology. Combined positioning fuses Zigbee and DR information using a federated Kalman filter.

Owner:JIANGSU UNIV OF SCI & TECH

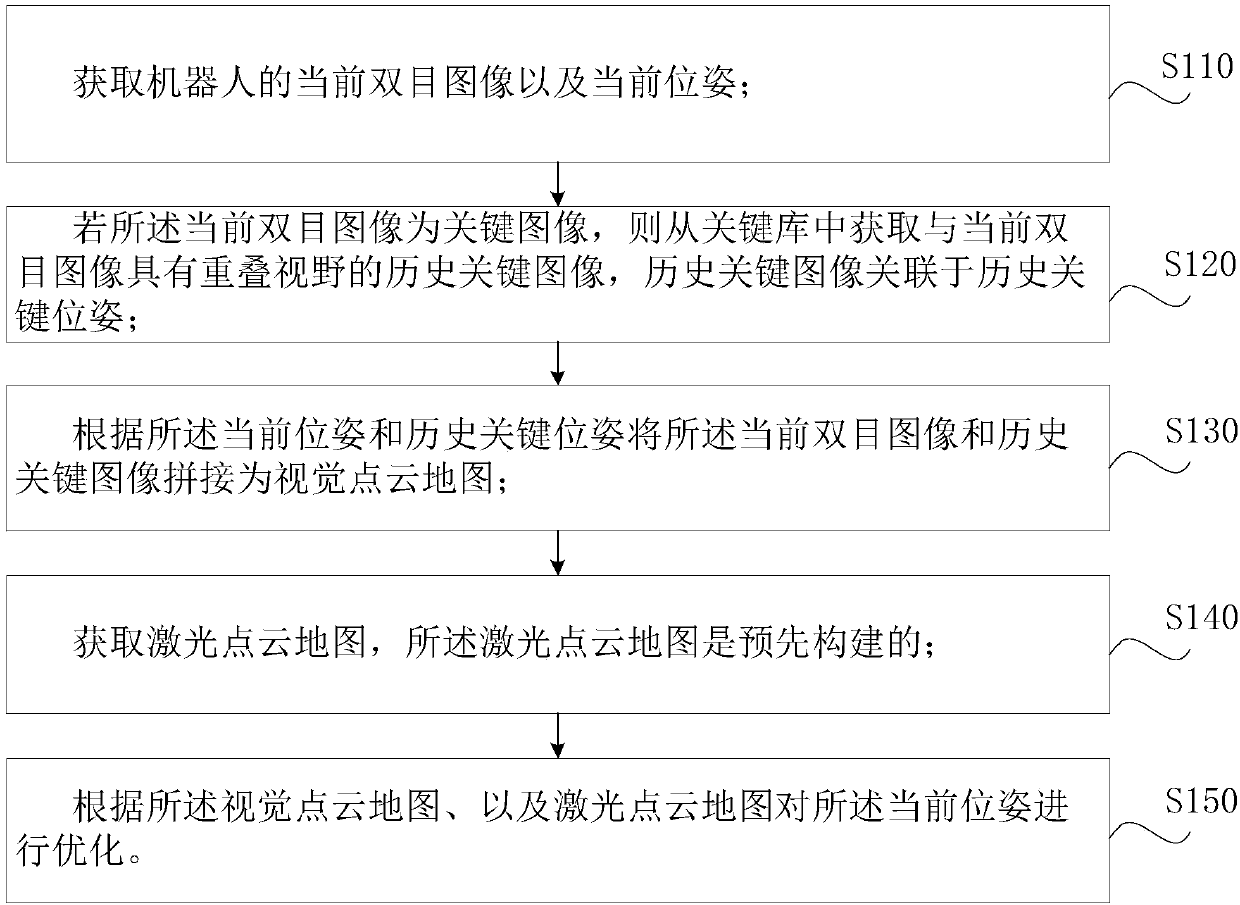

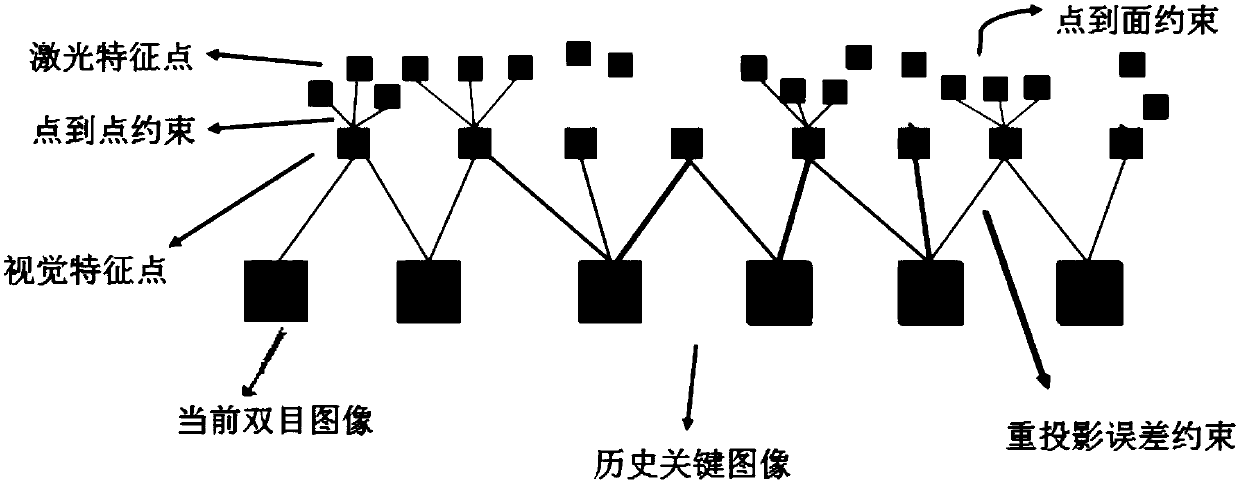

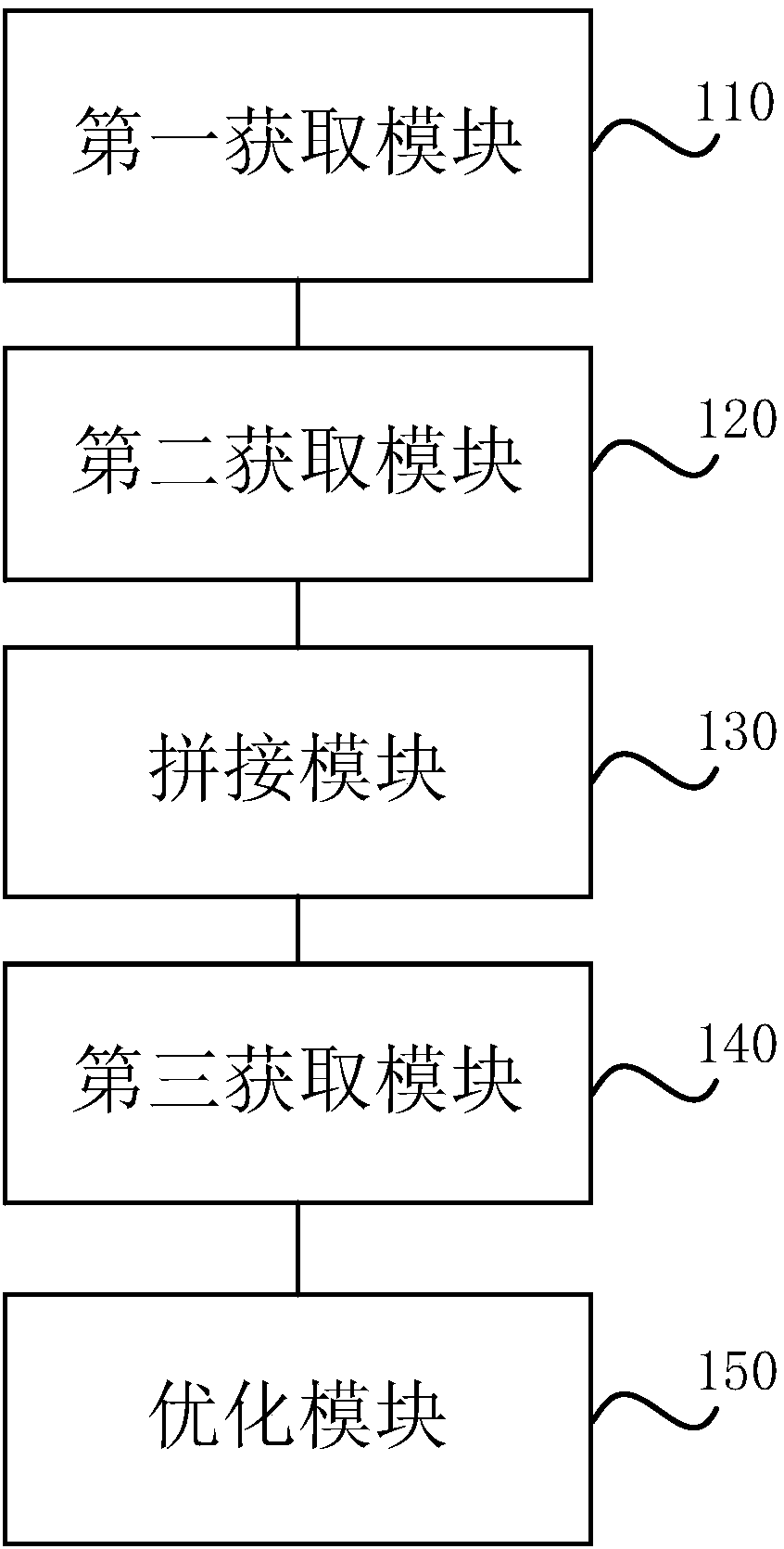
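The Otsu thresholding step named above picks the gray level that maximizes the between-class variance of the resulting foreground/background split. A minimal pure-Python version for 8-bit grayscale values is sketched below; it covers only the segmentation step, not the image stitching, morphology, or federated Kalman filter.

```python
def otsu_threshold(pixels: list[int]) -> int:
    """Return threshold t (pixels <= t form class 0) maximizing between-class variance."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    w0 = 0          # pixel count in class 0
    sum0 = 0        # intensity sum in class 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        w0 += hist[t]
        if w0 == 0:
            continue                    # class 0 still empty
        w1 = total - w0
        if w1 == 0:
            break                       # class 1 empty: no further split possible
        sum0 += t * hist[t]
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (mu0 - mu1) ** 2   # proportional to between-class variance
        if between_var > best_var:
            best_var, best_t = between_var, t
    return best_t
```

A binary mask then follows as `[p > t for p in pixels]`. For an image containing only the gray levels 10 and 200, any threshold from 10 to 199 separates them, and this loop returns the first one, 10.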

Binocular vision positioning method and binocular vision positioning device for robots, and storage medium

Active | CN107796397A | Lower requirement; High precision; Navigational calculation instruments; Picture taking arrangements; Visual field loss; Point cloud

The invention discloses a binocular vision positioning method and device for robots, and a storage medium. The method includes: acquiring the current binocular images and the robot's current position and attitude (pose); if the current binocular images are key images, acquiring historical key images from a key image library; stitching the current binocular images and the historical key images according to the current pose and the historical key poses to obtain a visual point cloud map; acquiring a previously built laser point cloud map; and optimizing the current pose using the visual point cloud map and the laser point cloud map. The historical key images acquired from the library have fields of view that overlap those of the current binocular images, and each is associated with a historical key pose. Because the pose estimates are optimized against the previously built laser point cloud map at the moments corresponding to key frames, cumulative pose estimation errors can be continuously corrected during long-term robot operation; and because accurate laser point cloud map information is introduced into the optimization, the method and device achieve high positioning accuracy.

Owner:HANGZHOU JIAZHI TECH CO LTD

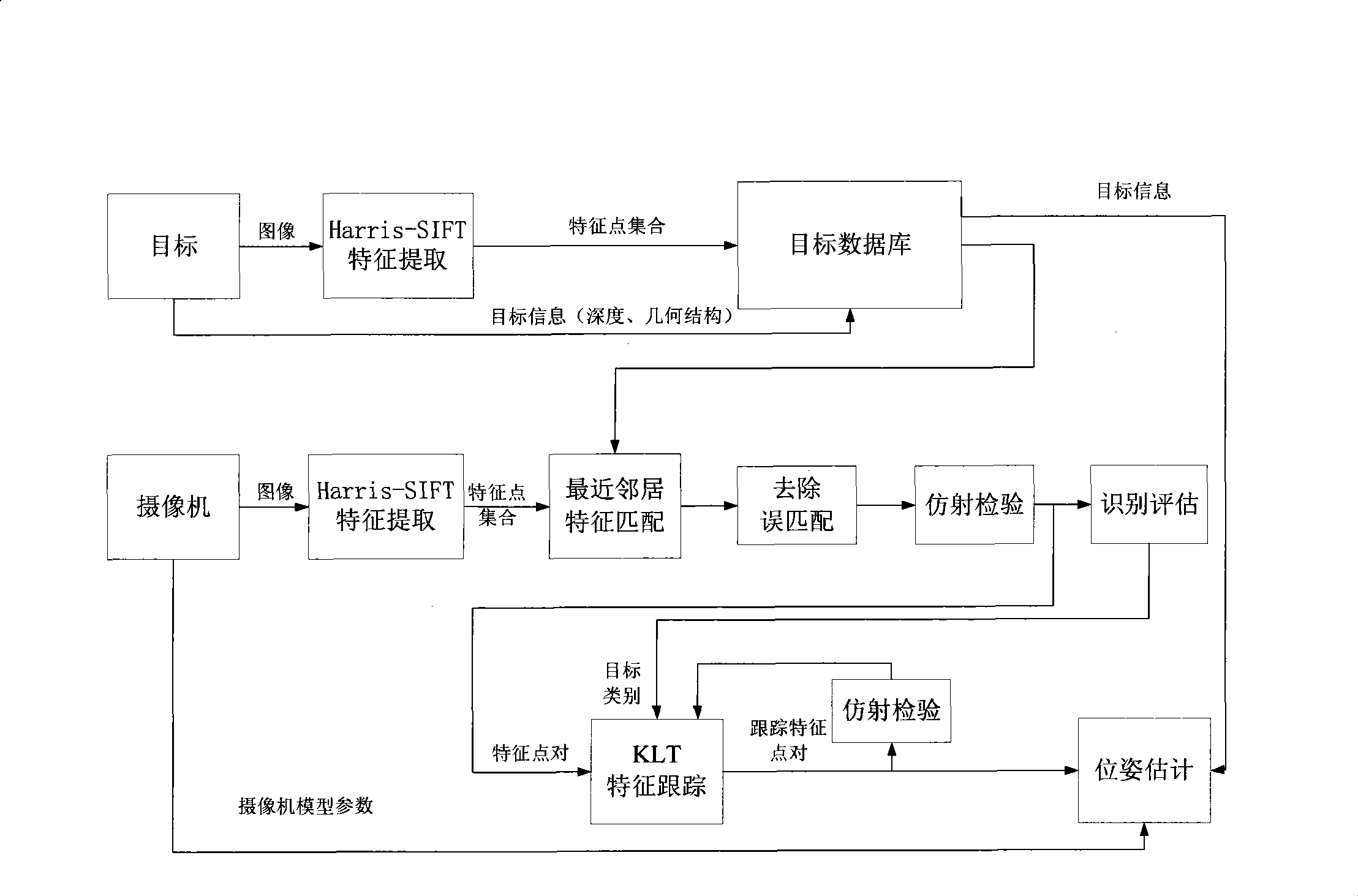

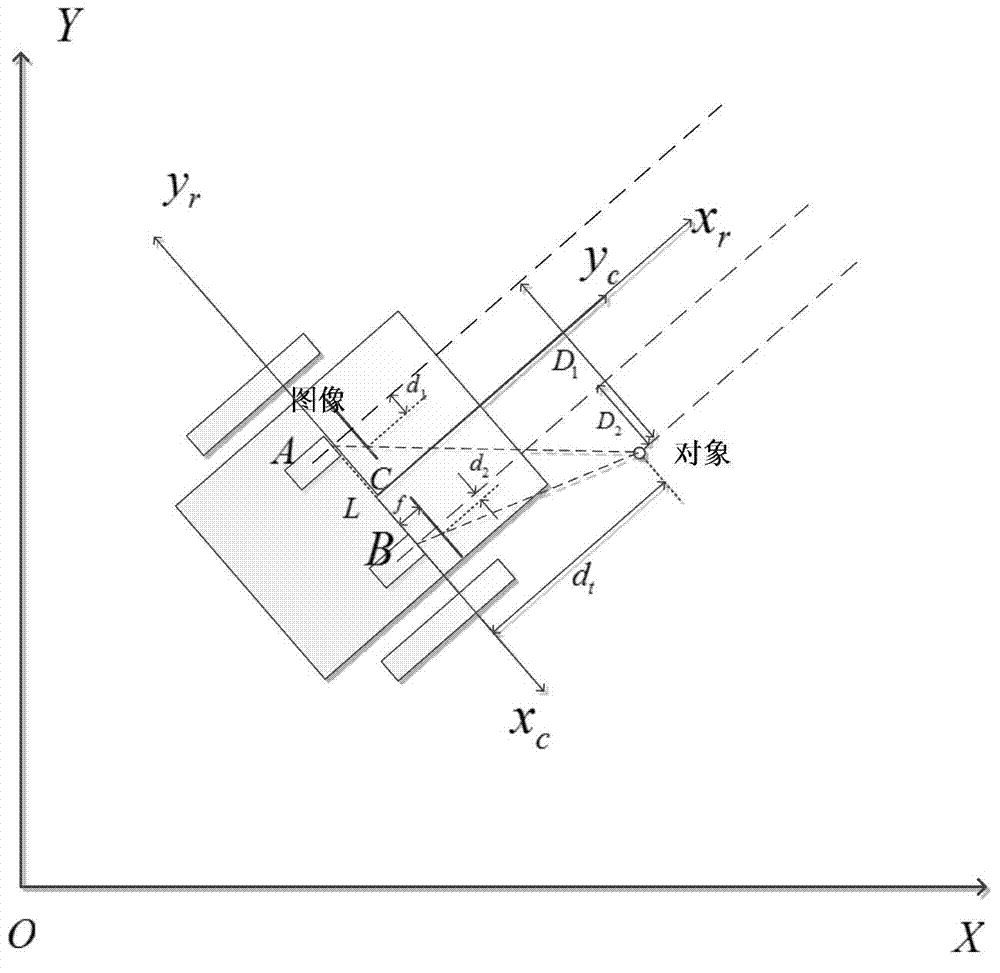

Real time vision positioning method of monocular camera

Inactive | CN101441769A | Reduce complexity; Low cost; Television system details; Image analysis; Model parameters; Visual positioning

The invention relates to a real-time vision positioning method for a monocular camera, in the field of computer vision. The method comprises the following steps: first, acquiring sets of object image feature points to build an object image database and perform real-time training; second, modeling the camera to obtain its model parameters; and third, extracting a set of feature points from the real-time image captured by the camera, matching them against the feature point sets in the object database, and removing mismatches and performing an affine check to obtain feature point pairs and object class information. The feature point pairs and object class information are used for feature tracking, and the accurately tracked feature points of the object image are combined with the camera model parameters to obtain the camera's three-dimensional pose. Because self-positioning and navigation require only a single camera, system complexity and cost are reduced.

Owner:SHANGHAI JIAO TONG UNIV

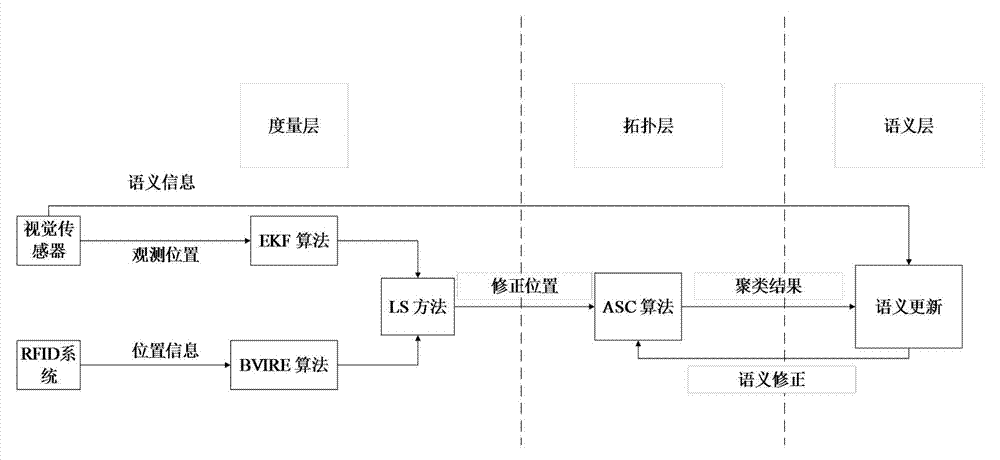

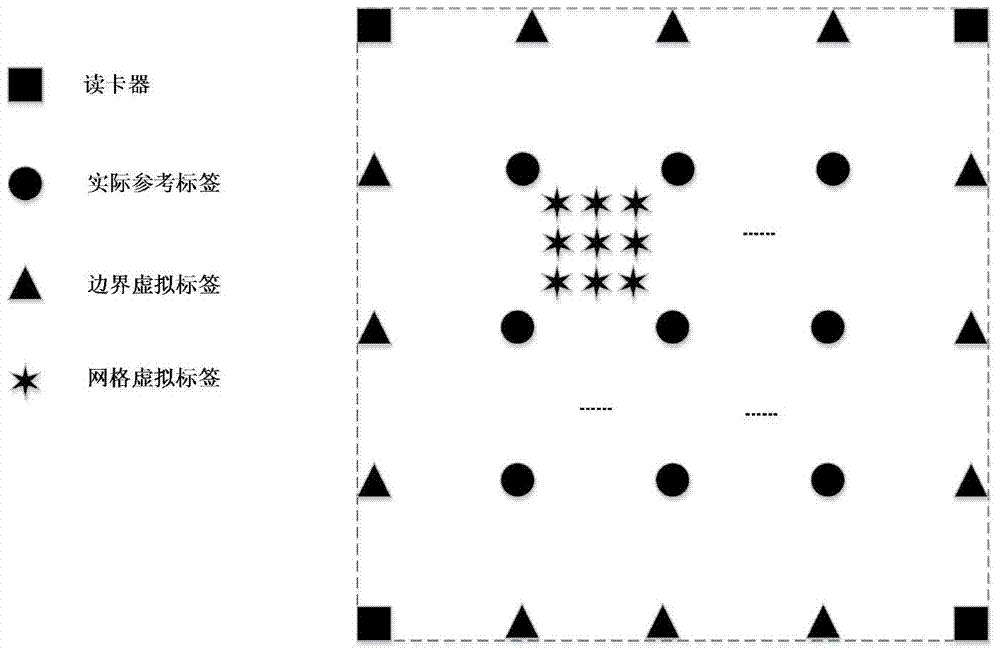
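One common way to remove mismatches before a geometric (e.g. affine) check is nearest-neighbour matching with a ratio test, which rejects a match when the second-best candidate is almost as close as the best. The sketch below uses that technique as a stand-in; the abstract does not say which mismatch-removal rule the patent uses, and the `ratio` value of 0.75 is an assumed default.

```python
import math


def match_features(query: list[tuple[float, ...]], database: list[tuple[float, ...]],
                   ratio: float = 0.75) -> list[tuple[int, int]]:
    """Nearest-neighbour descriptor matching with a ratio test.

    Returns (query_index, database_index) pairs whose best match is clearly
    closer than the second-best one.
    """
    matches = []
    for qi, q in enumerate(query):
        dists = sorted((math.dist(q, d), di) for di, d in enumerate(database))
        if len(dists) < 2:
            continue                      # ratio test needs two candidates
        (d1, best), (d2, _) = dists[0], dists[1]
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches
```

In the example below, the first query descriptor has one clear nearest neighbour, while the second is equidistant from two database entries and is discarded as ambiguous.

```python
database = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
query = [(0.5, 0.0), (5.0, 0.0)]
print(match_features(query, database))   # [(0, 0)]
```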

Robot distributed type representation intelligent semantic map establishment method

Inactive | CN104330090A | Address limitations; High precision; Instruments for road network navigation; Vehicle position/course/altitude control; Visual positioning; Visual perception

The invention discloses a method for building an intelligent semantic map with a distributed representation for a robot. First, the robot traverses the indoor environment: the robot is positioned by a visual positioning method based on the extended Kalman filter algorithm, artificial landmarks carrying quick response (QR) codes are positioned by a radio frequency identification (RFID) system based on a boundary virtual tag algorithm, and a metric layer is constructed. Next, the coordinates of the sampling points are optimized by the least squares method, the positioning results are classified by adaptive spectral clustering, and a topological layer is constructed. Finally, the semantic attributes of the map are updated according to the QR code semantic information quickly recognized by the camera, and a semantic layer is constructed. Because artificial landmarks with QR codes are used when detecting the state of objects in the indoor environment, semantic map building becomes much more efficient and less difficult; combining QR codes with RFID also improves robot positioning precision and map-building reliability.

Owner:BEIJING UNIV OF CHEM TECH

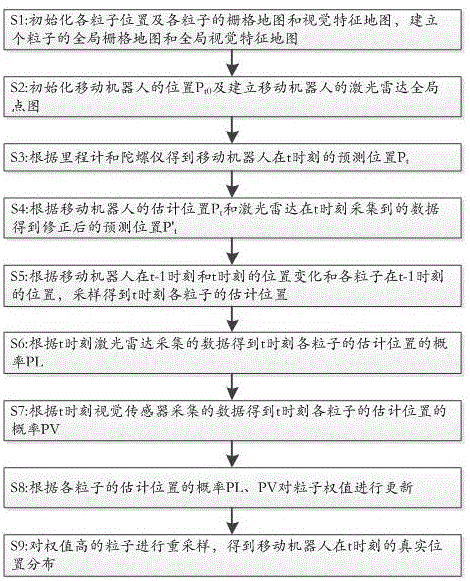

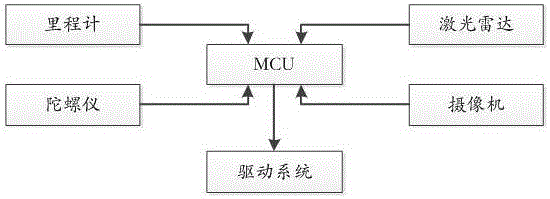

Laser and vision-based hybrid location method for mobile robot

Active | CN105865449A | Wide range of applications; Make up for the shortcomings of unstable visual positioning; Navigational calculation instruments; Radar; Vision based

The present invention discloses a laser and vision-based hybrid localization method for a mobile robot equipped with a laser radar and a vision sensor. The weight of each particle at a predicted position is updated using the data collected by both the laser radar and the vision sensor. Particles with higher weights are then resampled to obtain the true location distribution of the mobile robot at time t. Compared with the prior art, this approach combines the high accuracy of the laser radar with the information richness of the vision sensor, giving it a wider range of application, and it overcomes the instability of visual localization. In addition, in one embodiment, the conventional particle filter sampling model is improved to preserve particle diversity.

Owner:SHEN ZHEN 3IROBOTICS CO LTD

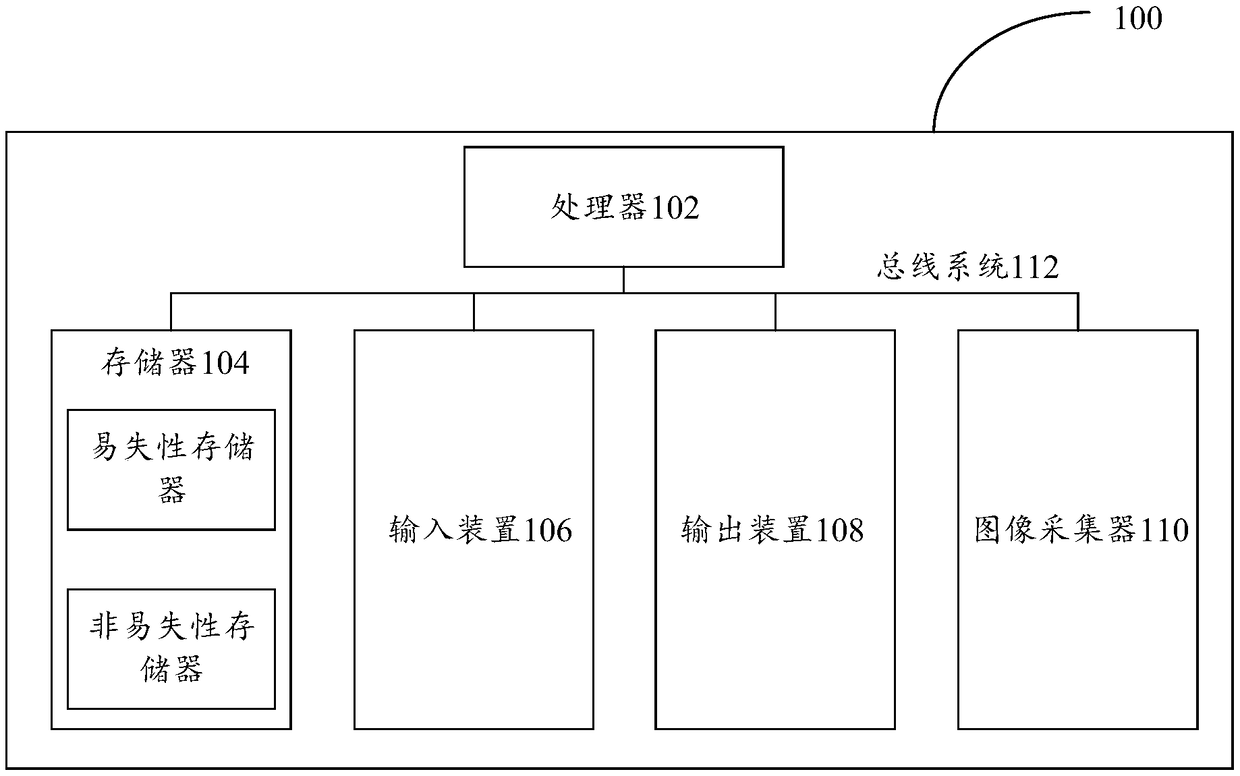

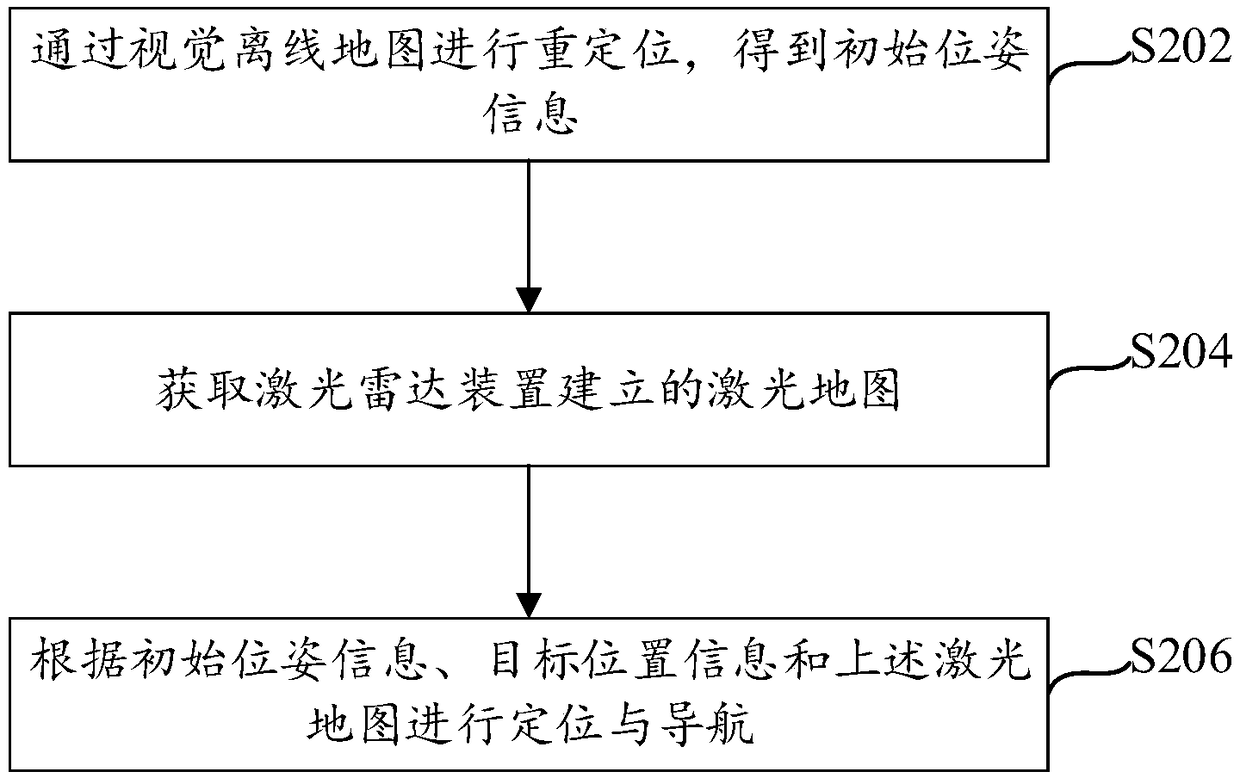
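The weight update and resampling steps can be sketched as follows. Each particle's weight is multiplied by a laser likelihood and a vision likelihood (treating the two sensors as conditionally independent, an assumption), normalized, and then resampled. Systematic resampling is used here as one standard choice; it is not necessarily the improved sampling model mentioned in the patent, and the likelihood values themselves would come from sensor models not shown.

```python
import random
from itertools import accumulate


def update_weights(weights: list[float], laser_lik: list[float],
                   vision_lik: list[float]) -> list[float]:
    """Multiply prior weights by both sensor likelihoods and normalize."""
    w = [p * lz * vz for p, lz, vz in zip(weights, laser_lik, vision_lik)]
    total = sum(w)
    if total == 0:
        return [1.0 / len(w)] * len(w)    # degenerate case: fall back to uniform
    return [x / total for x in w]


def systematic_resample(particles: list, weights: list[float], rng=random) -> list:
    """Draw len(particles) samples using one random offset and evenly spaced pointers."""
    n = len(particles)
    cumulative = list(accumulate(weights))
    cumulative[-1] = 1.0                  # guard against floating-point round-off
    start = rng.random() / n
    out, i = [], 0
    for k in range(n):
        position = start + k / n
        while cumulative[i] <= position:  # advance to the particle covering this pointer
            i += 1
        out.append(particles[i])
    return out
```

High-weight particles are duplicated and zero-weight particles disappear: with weights `[0, 0, 1, 0]`, every resampled particle is the third one.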

Positioning and navigating method and device and processing equipment

Active | CN109084732A | High precision; Improve robustness; Photogrammetry/videogrammetry; Navigation by speed/acceleration measurements; Radar; Vision based

The invention provides a positioning and navigation method and device and processing equipment, in the technical field of navigation. The method comprises the following steps: relocalizing against a visual offline map to obtain initial pose information, where the visual offline map is built by fused mapping from a visual device and an inertial measurement unit; obtaining a laser map built by a laser radar device; and positioning and navigating based on the initial pose information, the target position information, and the laser map. In the embodiments, the laser radar device and the visual device perform positioning and mapping simultaneously, and the strong relocalization performance of visual positioning supplies the initial position and attitude for the laser map. Positioning and mapping can therefore be performed in unknown environments, positioning and autonomous navigation are achieved in mapped scenes, and both accuracy and robustness are high.

Owner:BEIJING KUANGSHI TECH

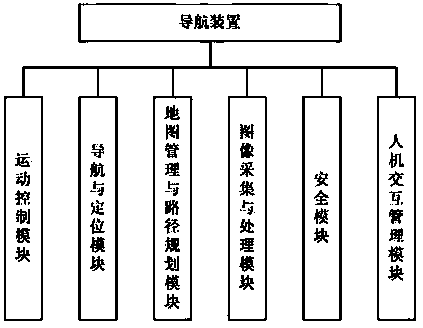

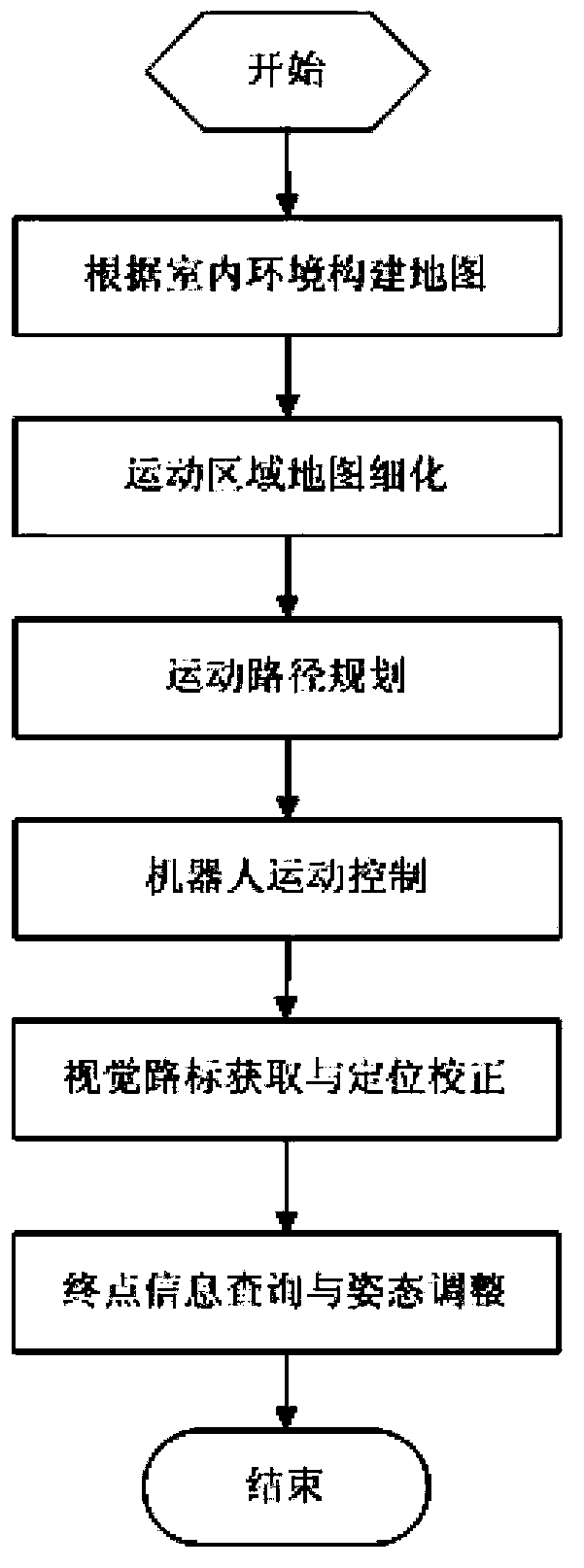

Indoor robot navigation device and navigation technology thereof

Active | CN103353758A | Overcoming the problem of poor positioning accuracy; Improve anti-interference ability; Position/course control in two dimensions; Interference resistance; Computer module

The invention discloses an indoor robot navigation device comprising a circuit board with a motion control module, a navigation and localization module, a map management and path planning module, an image acquisition and processing module, a safety module, and a human-machine interaction management module. The invention also provides an indoor robot navigation technique comprising the following steps: (a) building a map and planning the robot's motion path; (b) subdividing areas and setting visual signposts; (c) acquiring and processing the visual signposts, and performing motion localization and correction; and (d) storing and querying area and signpost information. The device offers autonomous navigation, strong interference resistance, trackless movement, and other advantages, and its visual positioning correction overcomes the low positioning precision of most conventional navigation methods.

Owner:苏州海通机器人系统有限公司 (Suzhou Haitong Robot System Co., Ltd.)

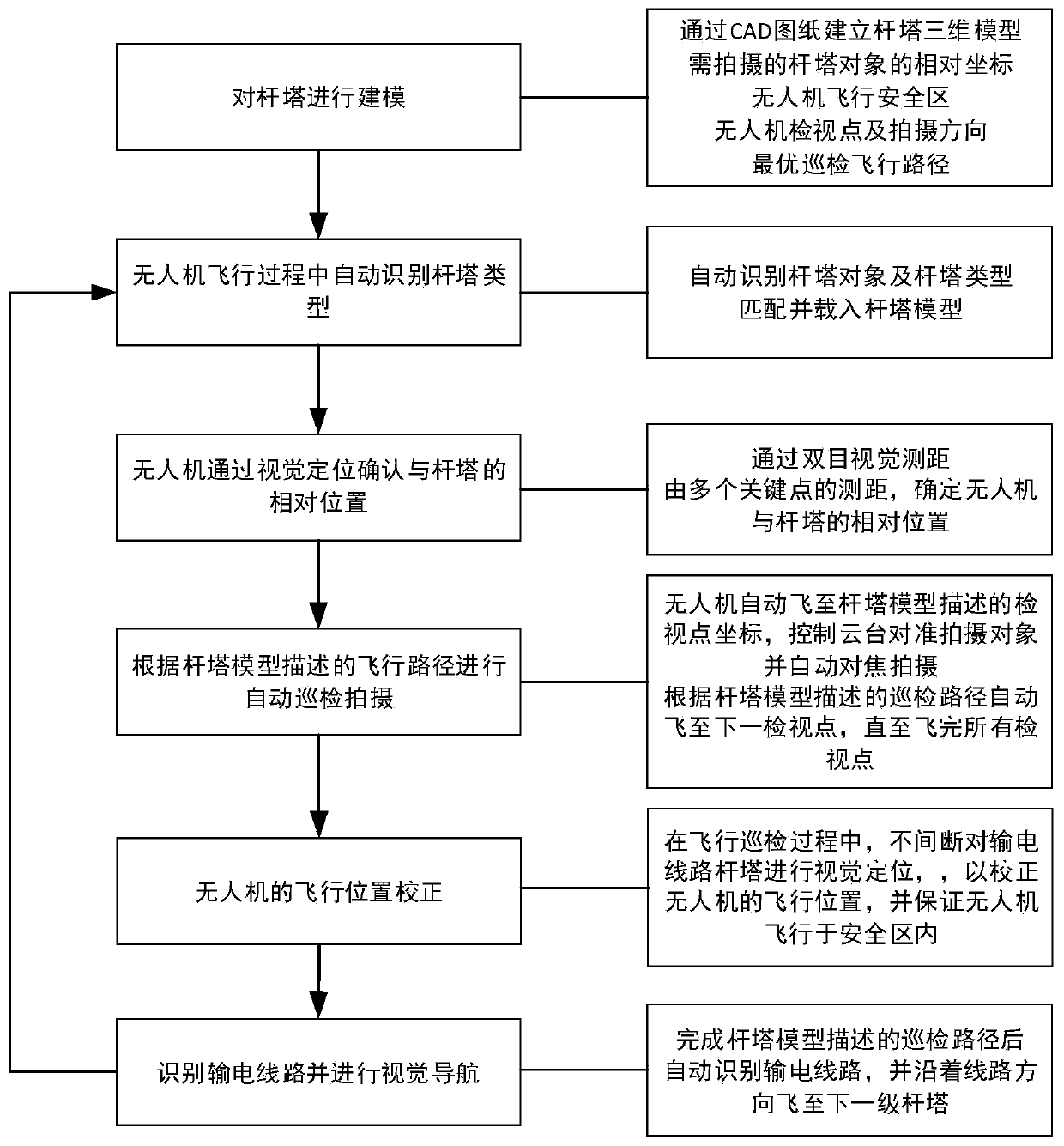

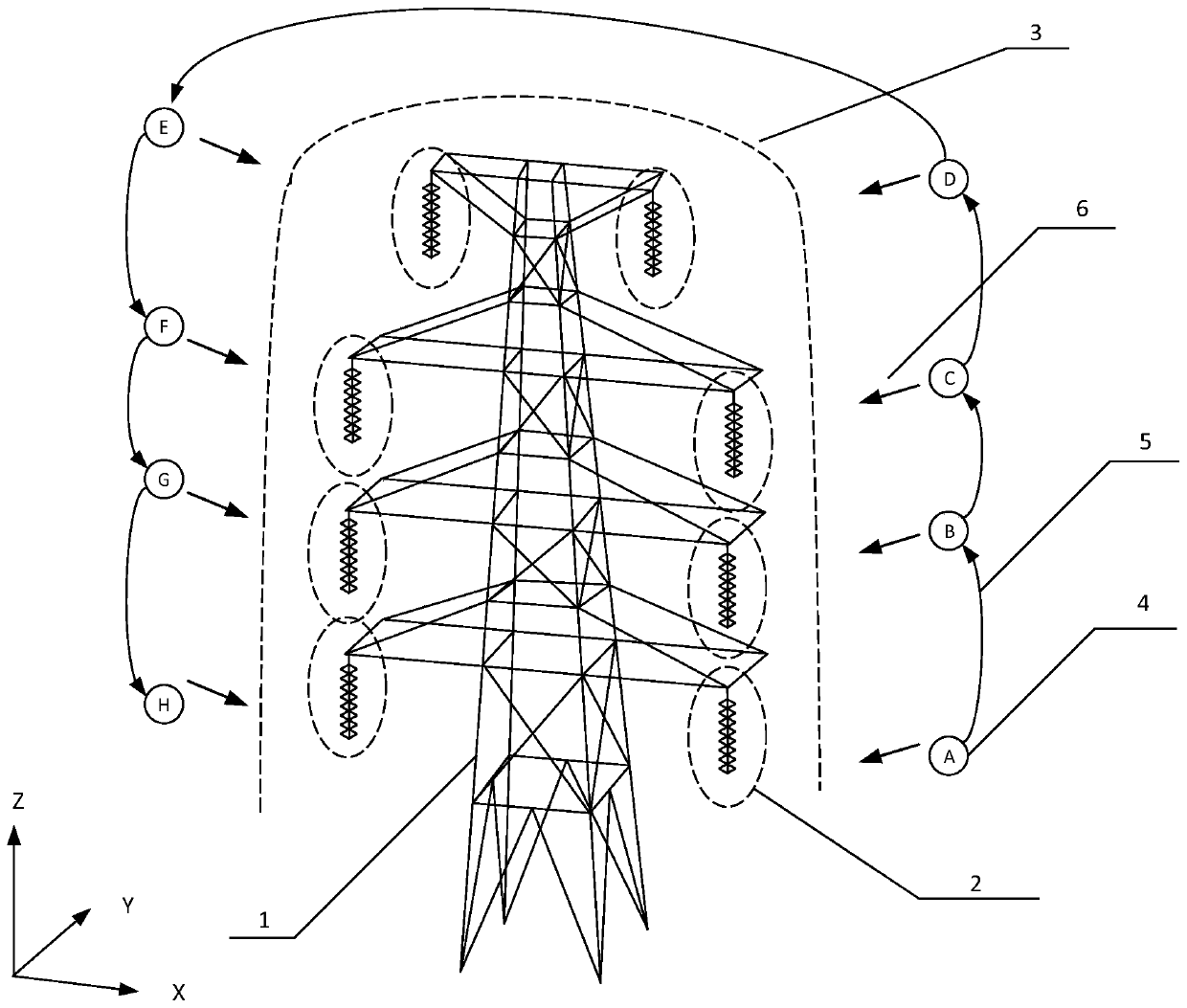

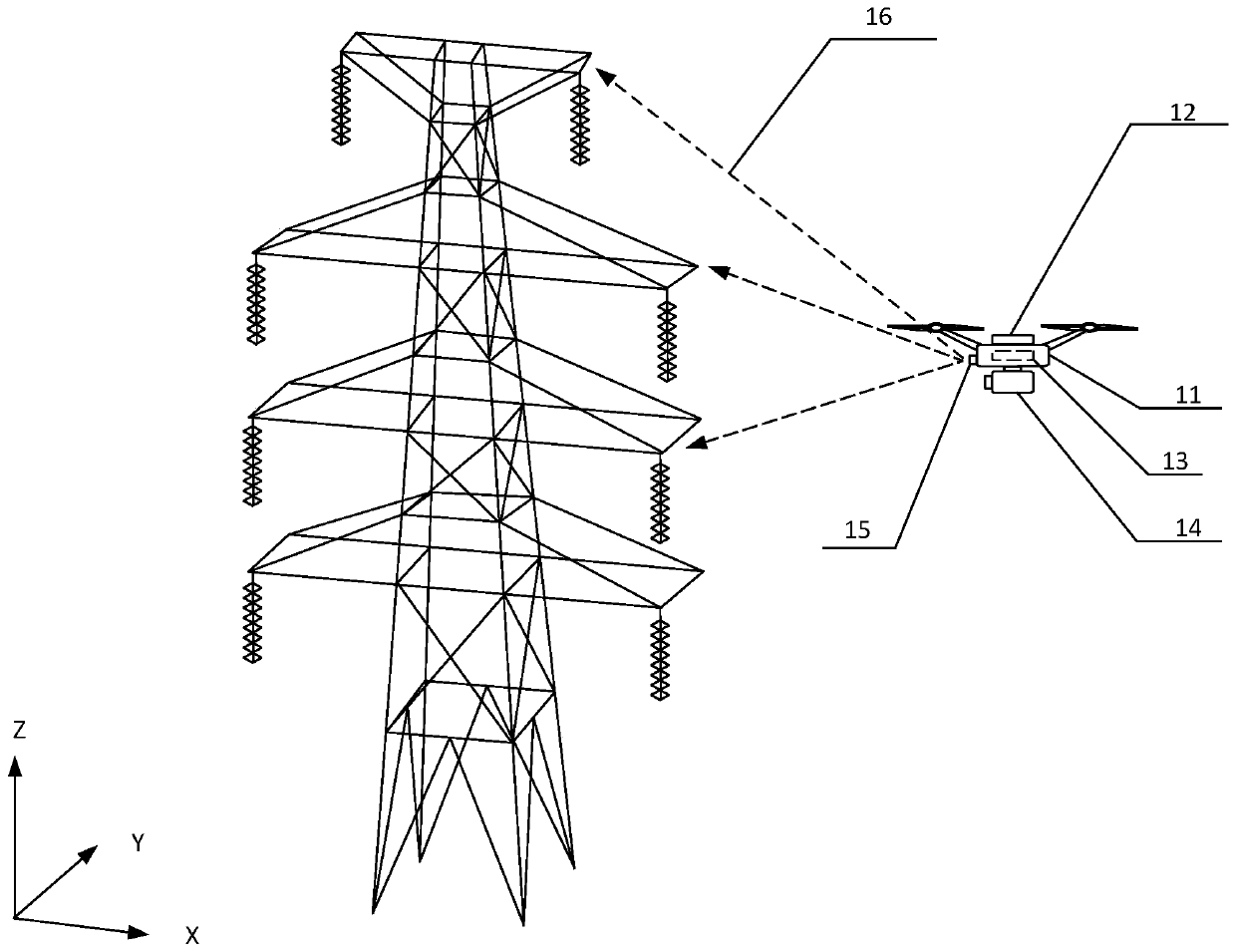

Pole tower model matching and visual navigation-based power unmanned aerial vehicle and inspection method

Active | CN110133440A | Achieve autonomous inspection; Improve inspection efficiency; Fault location by conductor types; Design optimisation/simulation; Uncrewed vehicle; Tower

The invention discloses a power-line inspection unmanned aerial vehicle (UAV) based on pole tower model matching and visual navigation, and an inspection method. On the UAV, a binocular vision sensor acquires a depth image of the scene in front of the UAV and measures the distance to objects ahead; a gimbal-mounted camera acquires images of the surroundings to identify objects; and the UAV's flight controller controls its flight attitude. The method comprises: building pole tower models for the different types of transmission line pole towers; automatically identifying transmission line pole towers and their types during flight, then matching and loading the corresponding pre-built pole tower model; visually positioning the UAV relative to the pole towers to obtain their relative positions; and flying the inspection along the optimal flight path. The approach greatly reduces the modeling workload and improves model universality. Because the inspection method does not depend on flying to absolute coordinates, it greatly improves flexibility, reduces cost, and improves the safety of power facilities.

Owner:NARI TECH CO LTD

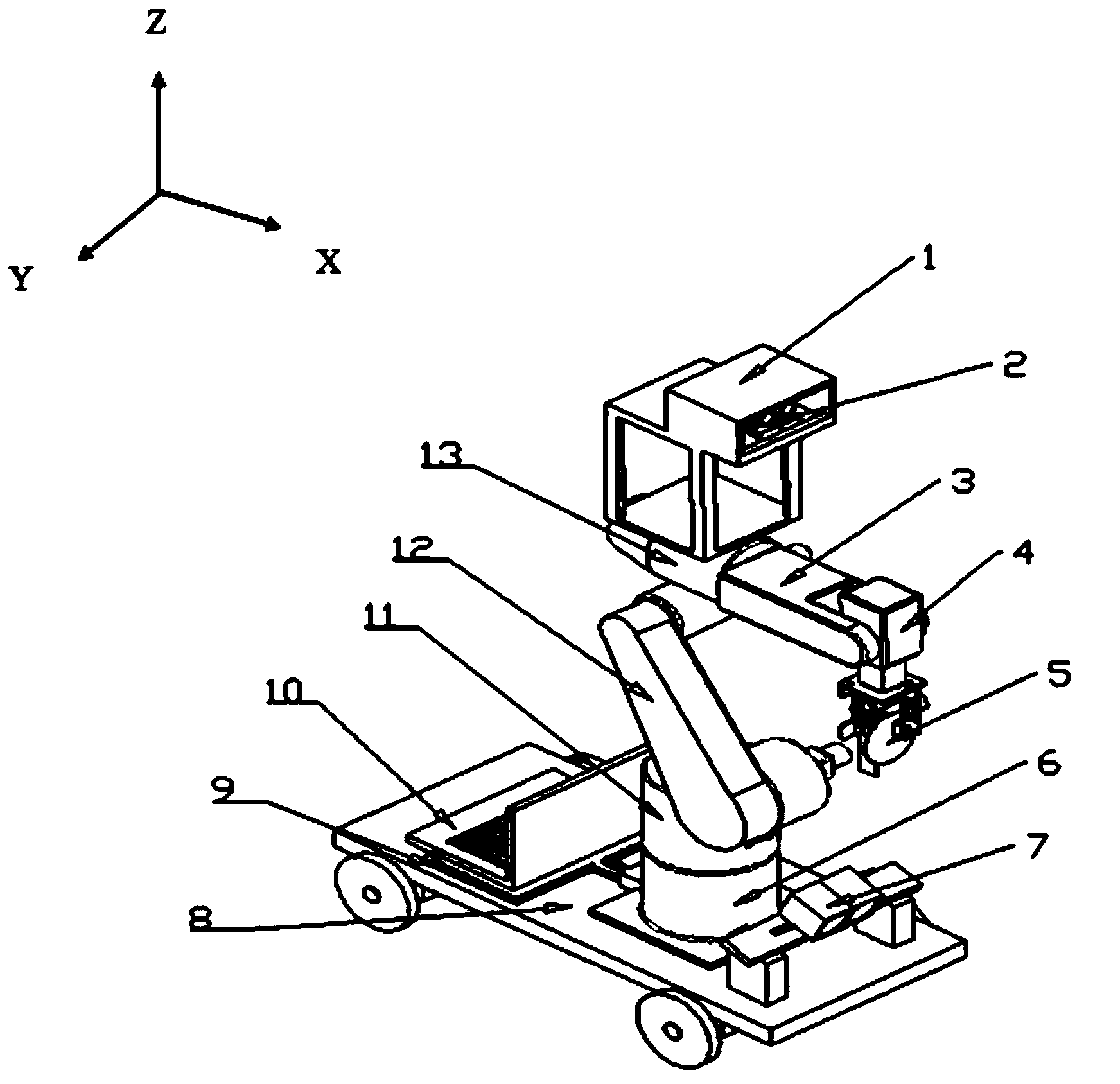

Virtual robot and real robot integration based picking system and method

Active | CN104067781A | Achieve precision; Achieve rationality; Picking devices; Machine vision; Real-time simulation

The invention discloses a picking system based on the integration of a virtual robot and a real robot. The system comprises a vision positioning system, a real robot, and a virtual robot simulation system. The vision positioning system locates the picking target and, once the target's three-dimensional spatial coordinates are obtained, guides the real robot to pick in real time; at the same time, these coordinates are transmitted over a data transmission line to the virtual robot simulation system, where a virtual robot simulates the picking process in real time. By combining virtual simulation with machine vision, the system and method drive the robot in the virtual environment and the real robot simultaneously, both from data calculated by a binocular vision system, to perform visual positioning and picking. This allows the accuracy of the vision positioning to be compared and the effectiveness of the picking behavior to be validated.

Owner:SOUTH CHINA AGRI UNIV

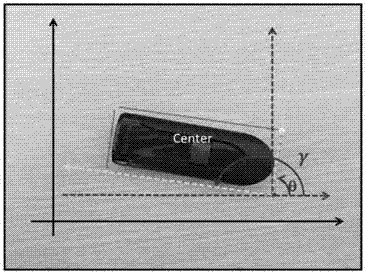

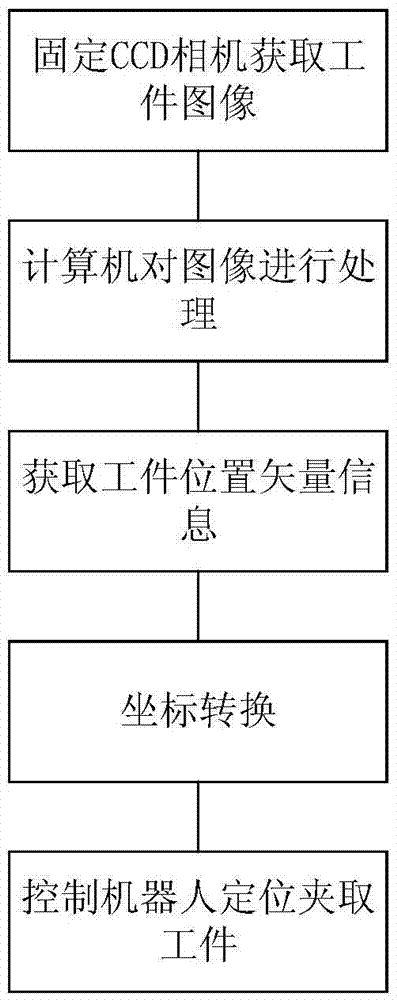

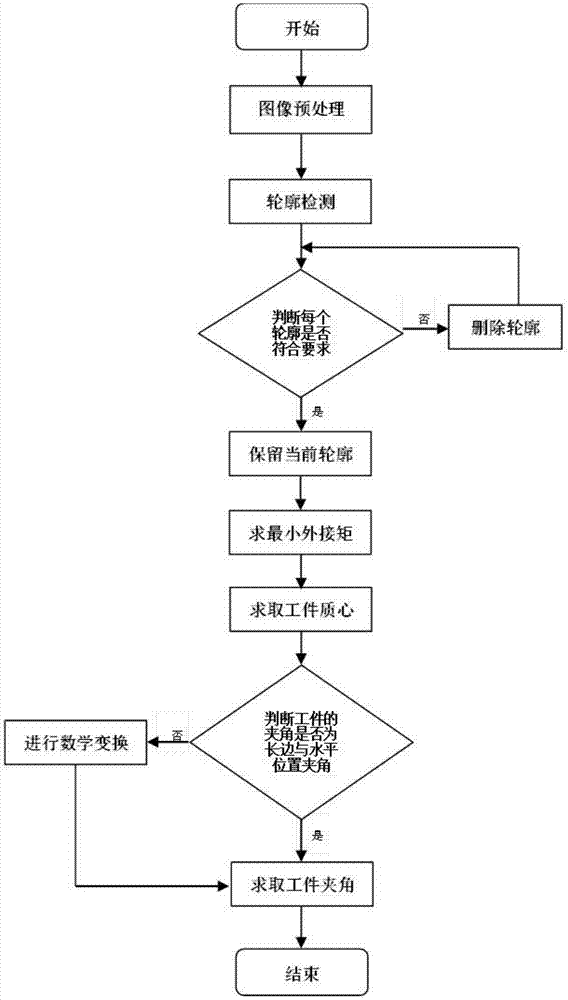

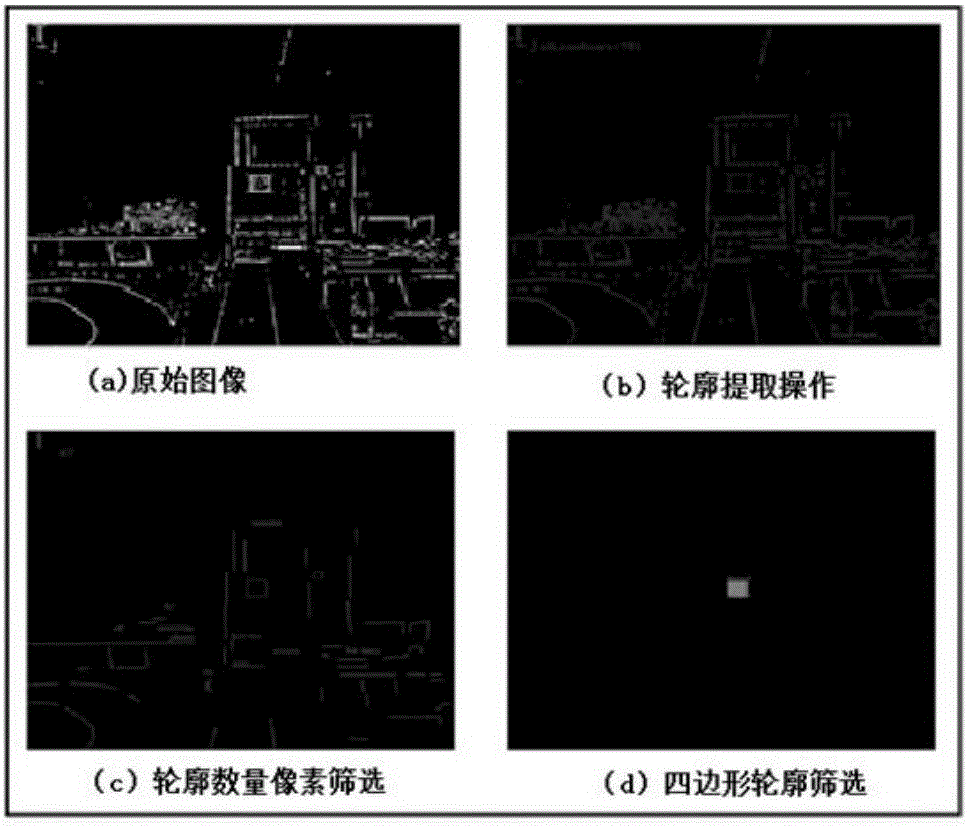
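For a rectified binocular camera pair, the three-dimensional coordinates of a target follow from its disparity: Z = f·B/d, where f is the focal length in pixels, B the baseline, and d = u_left − u_right; X and Y are then back-projected through the left camera. The sketch below assumes a rectified rig with shared intrinsics and a principal point `(cx, cy)`; these parameters are illustrative and not taken from the patent.

```python
def triangulate(u_left: float, u_right: float, v: float, f_px: float,
                baseline_m: float, cx: float, cy: float) -> tuple[float, float, float]:
    """Return (X, Y, Z) in metres in the left-camera frame of a rectified stereo pair."""
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = f_px * baseline_m / disparity      # depth from similar triangles
    x = (u_left - cx) * z / f_px           # back-project through the left camera
    y = (v - cy) * z / f_px
    return x, y, z
```

For example, with f = 700 px, B = 0.12 m, and a disparity of 70 px, the target lies 1.2 m from the cameras. Because depth is inversely proportional to disparity, a one-pixel disparity error matters more for distant targets than for nearby ones.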

Implementation method for workpiece grasping of industrial robot based on visual positioning

Inactive | CN106934813A | Improve recognition accuracy; Avoid ungraspable workpieces; Image enhancement; Image analysis; Joint coordinates; Control system

The invention relates to a method for implementing workpiece grasping by an industrial robot based on visual positioning. A fixed global CCD camera acquires an image of the workpiece and transmits the image information to the robot control system through an Ethernet interface; the robot control system processes the image and obtains the workpiece's position vector; and the robot performs the transformation between Cartesian and joint coordinates according to this position vector so that its end gripper can locate and grasp the workpiece. The invention also provides a method for calculating workpiece position information: during contour detection on the workpiece image, all detected contours are screened by region, isolated edges and short continuous edge segments are deleted, and non-target contours are removed, which improves the accuracy of target contour identification. In addition, when the workpiece position is calculated, the long side of the workpiece is identified and the robot is controlled to grasp it, which avoids grasp failures caused by the gripper clamping the short side and improves grasping efficiency.

Owner:SHENYANG GOLDING NC & INTELLIGENCE TECH CO LTD

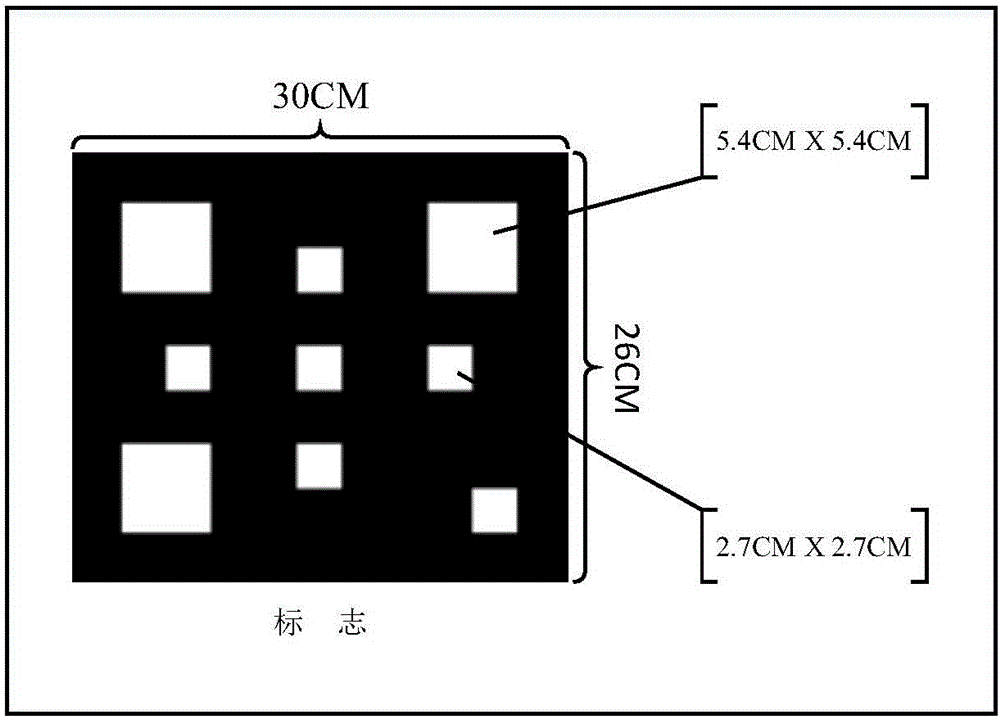
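The contour screening and long-side selection can be illustrated on polygonal contours. In this sketch, a minimum-area filter (shoelace formula) stands in for the patent's removal of isolated and short edge segments, and the long side of a four-cornered workpiece outline is found by comparing edge lengths. The `min_area` threshold and the function names are hypothetical.

```python
import math

Point = tuple[float, float]


def polygon_area(pts: list[Point]) -> float:
    """Shoelace formula for a simple polygon given as ordered vertices."""
    n = len(pts)
    s = 0.0
    for i in range(n):
        x0, y0 = pts[i]
        x1, y1 = pts[(i + 1) % n]
        s += x0 * y1 - x1 * y0
    return abs(s) / 2


def screen_contours(contours: list[list[Point]], min_area: float) -> list[list[Point]]:
    """Drop small contours that are unlikely to be the target workpiece."""
    return [c for c in contours if polygon_area(c) >= min_area]


def long_side_grasp(corners: list[Point]) -> tuple[Point, float]:
    """Return the midpoint and direction angle (radians) of the longest edge."""
    edges = [(corners[i], corners[(i + 1) % len(corners)]) for i in range(len(corners))]
    (x0, y0), (x1, y1) = max(edges, key=lambda e: math.dist(e[0], e[1]))
    return ((x0 + x1) / 2, (y0 + y1) / 2), math.atan2(y1 - y0, x1 - x0)
```

For a 4 x 1 rectangle, the longest edge runs along the x-axis, so the grasp is centred at `(2.0, 0.0)` along that edge with angle 0; the gripper orientation would then be derived from this angle.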

Unmanned aerial vehicle fixed-point flight control system based on visual positioning

Active | CN106054931A | Achieve the purpose of pinpointing; Guaranteed correctness; Target-seeking control; Vision processing; Information control

The invention provides a fixed-point flight control system for an unmanned aerial vehicle (UAV) based on visual positioning. The system comprises a UAV airborne module, a positioning marker, a ground monitoring module, and a communication module. Working together with a visual processing algorithm, the system achieves fixed-point UAV flight from the marker's identification information, compensating for the low precision of GPS-based fixed-point flight. The control system uses an open-source operating system and a general-purpose UAV communication protocol, giving it good scalability and compatibility. The system can be applied to UAV logistics, UAV monitoring, and other fields.

Owner:NORTH CHINA UNIVERSITY OF TECHNOLOGY

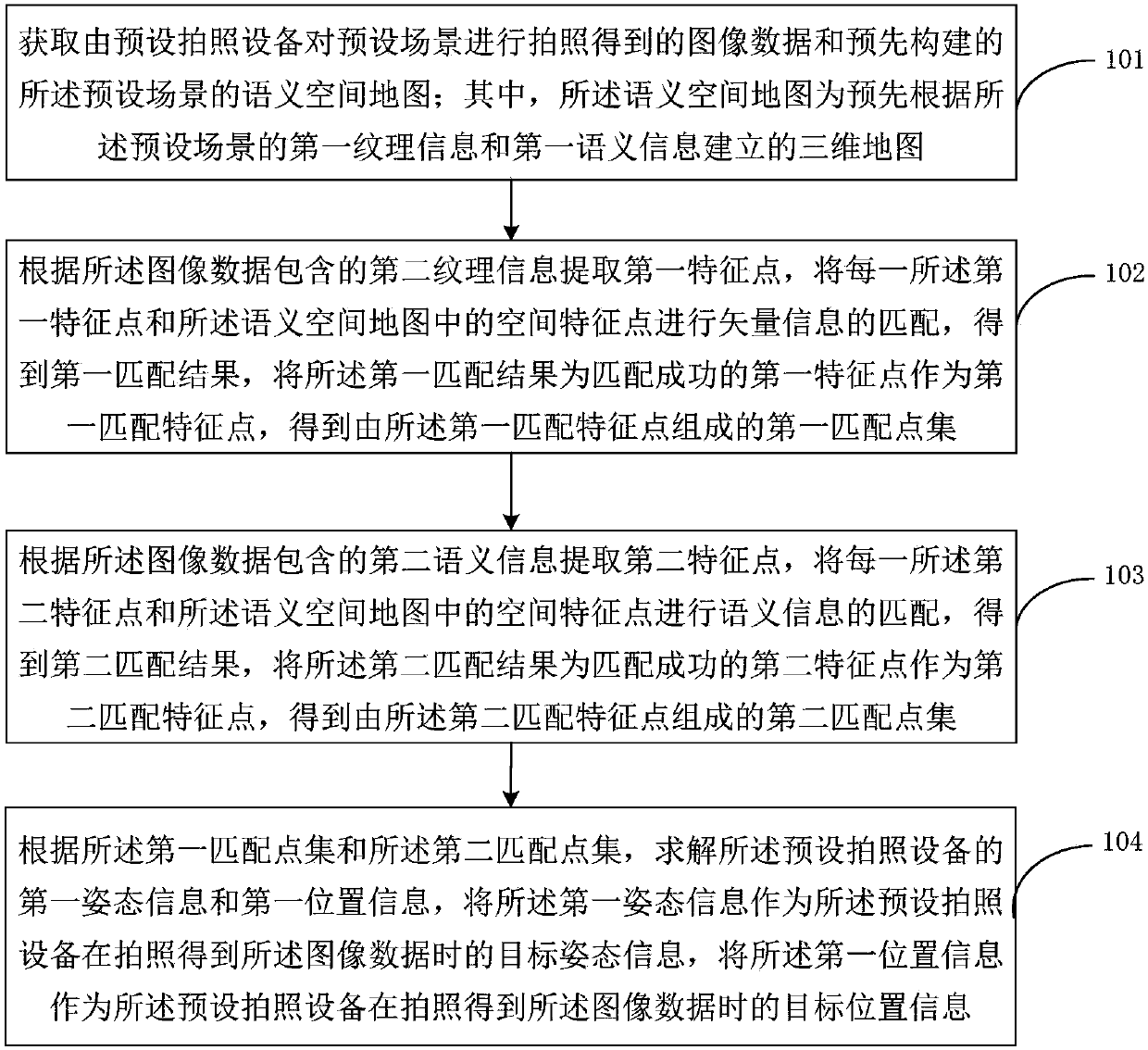

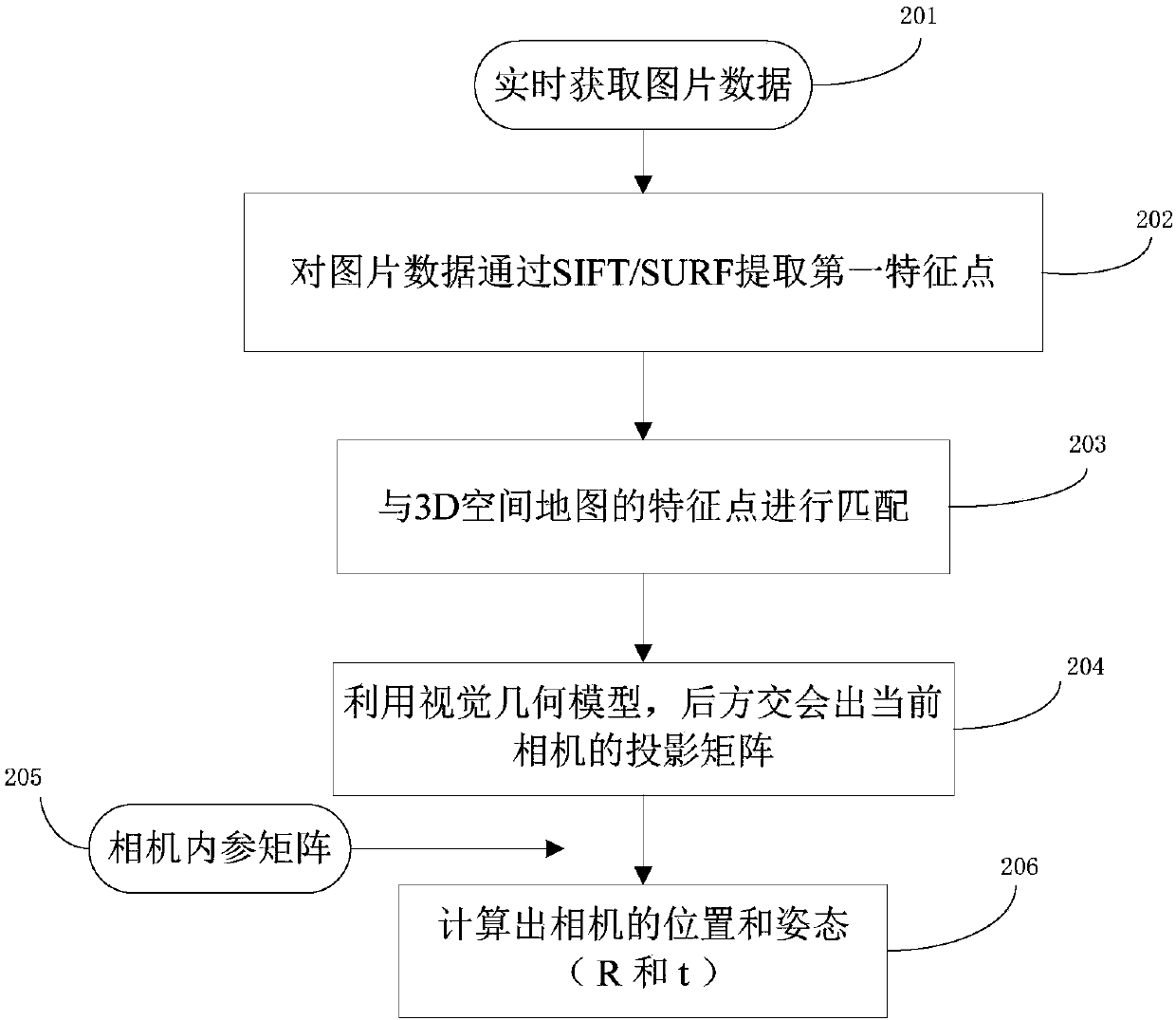

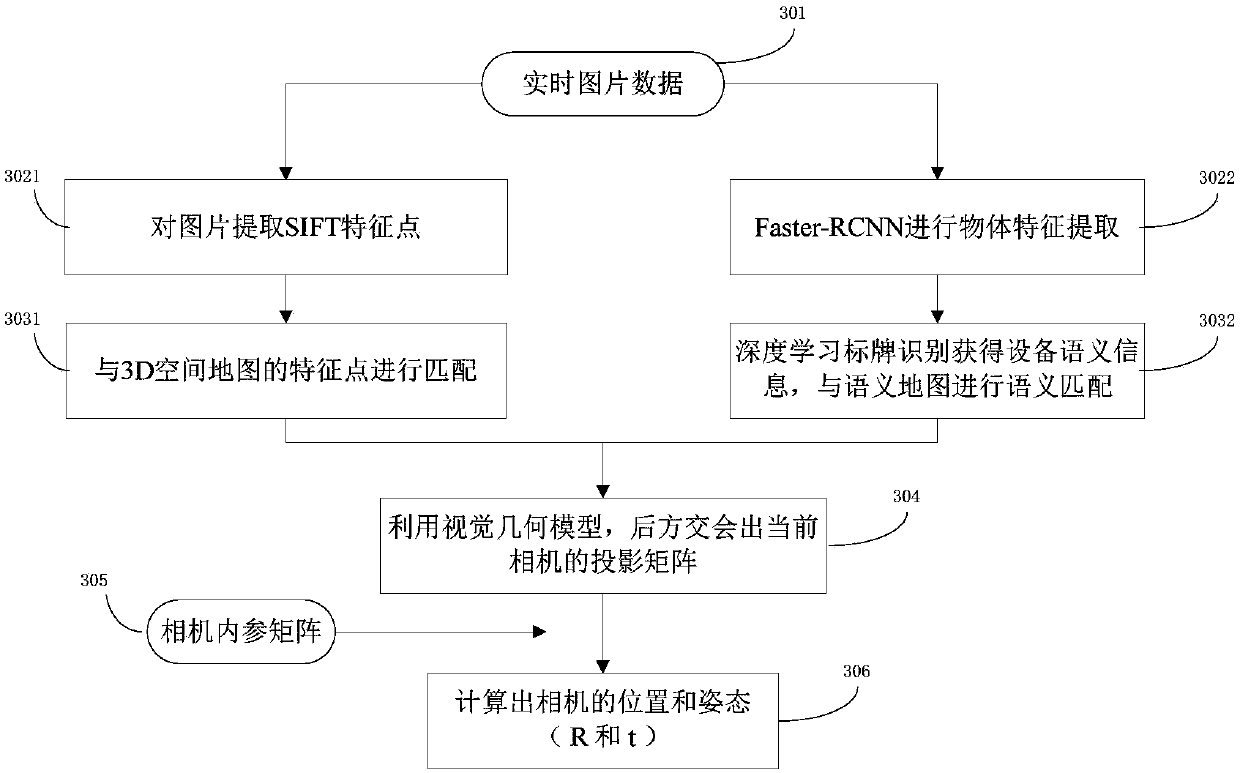

Vision localization method and device

Active | CN107742311A | Reliable data base; High positioning accuracy; Image analysis; Pattern recognition; Semantic space

The embodiment of the invention discloses a vision localization method and device. In the method, a preset camera device photographs a preset scene to obtain image data. On the one hand, first feature points of the image are matched by vector information against spatial feature points in a semantic space map to obtain a first matching point set. On the other hand, second feature points containing second semantic information are extracted from the image data and matched against the spatial feature points by semantic information to obtain a second matching point set. The target pose information and target position information of the preset camera device are then obtained from the first and second matching point sets. Because the method uses both the texture information and the semantic information of the image to extract feature points, more feature points that successfully match the spatial feature points in the semantic space map can be found in the image data. This provides a more reliable basis for calculating the camera device's target pose and position information, and yields higher localization precision.

Owner:北京易达图灵科技有限公司 (Beijing Yida Turing Technology Co., Ltd.)

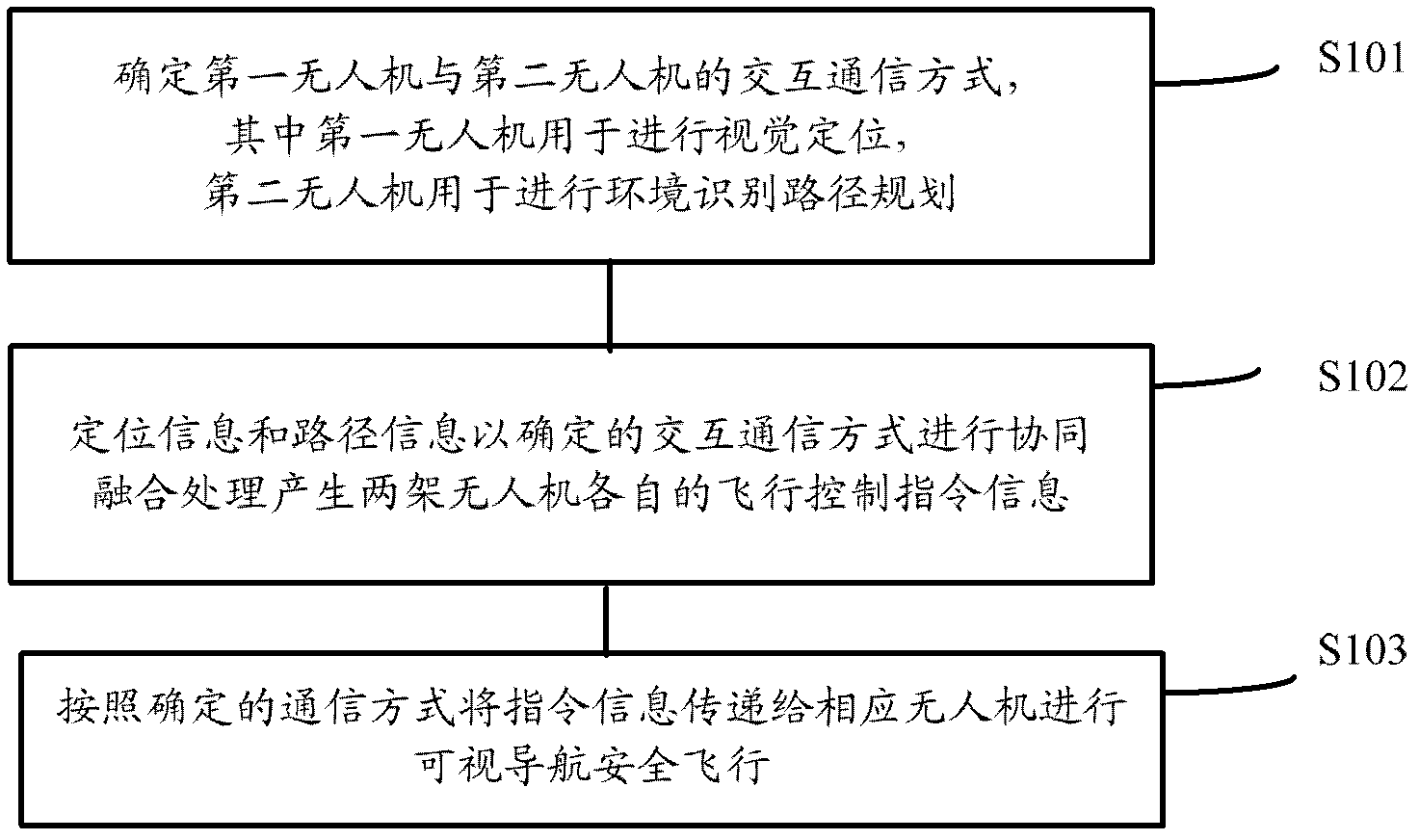

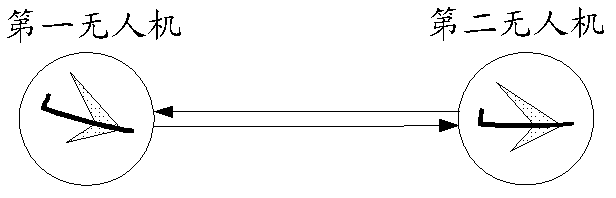

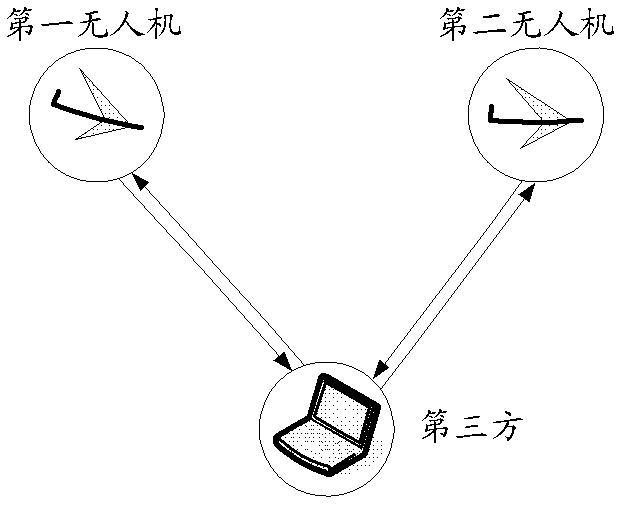

Task collaborative visual navigation method of two unmanned aerial vehicles

Active | CN102628690A | Control the amount of information transmitted; Improve match; Navigation instruments; Information transmission; Uncrewed vehicle

The invention provides a task-collaborative visual navigation method for two unmanned aerial vehicles (UAVs). The method comprises the following steps: determining an interactive communication mode between a first UAV and a second UAV, where the first UAV performs visual positioning and the second UAV performs environment recognition and route planning; fusing the visual positioning information generated by the first UAV with the route information generated by the second UAV to generate flight control instructions for each UAV at every moment; and transmitting those instructions to the corresponding UAVs through the interactive communication mode so that they fly safely under visual navigation. The method effectively controls the volume of real-time video and image data transmitted during cooperative visual navigation, offers good matching capability and reliability, and is an effective technique for cooperative visual navigation of UAV swarms to avoid risks, obstacles, and the like.

Owner:TSINGHUA UNIV

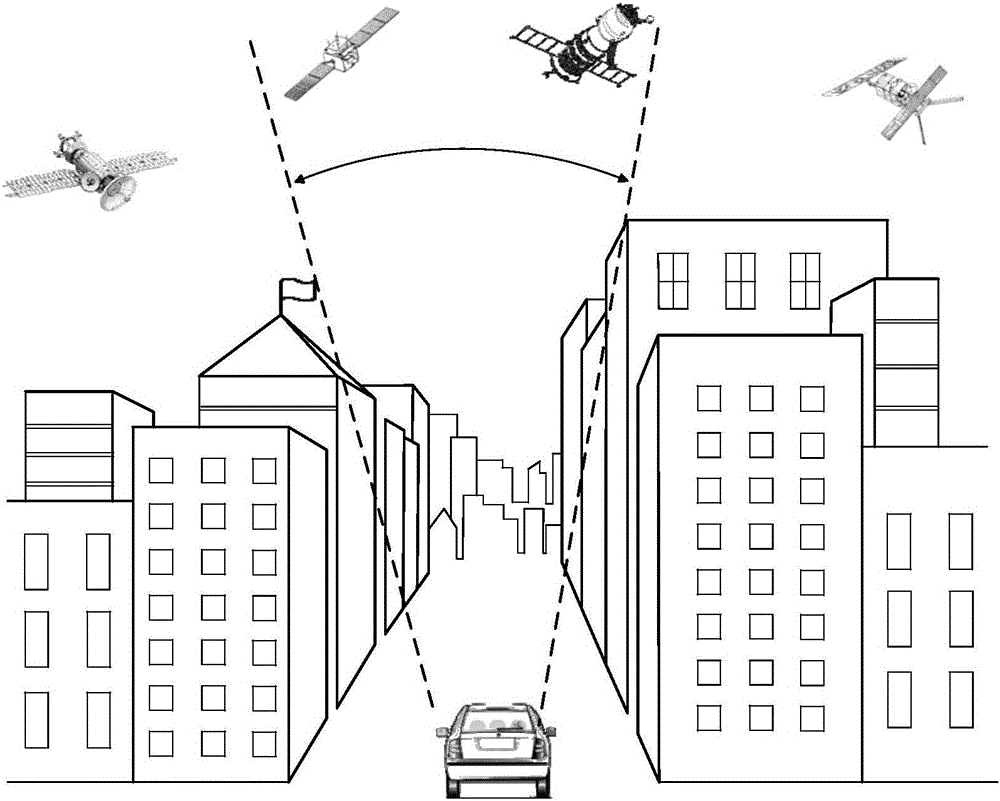

Urban area and indoor high-precision visual positioning system and method

Inactive | CN106447585A | Avoid multipath; Avoid occlusion; Data processing applications; Information repository; Vision based

The invention provides a high-precision visual positioning system and method for urban areas and indoor environments, in which image information of the surroundings acquired by a visual sensor is used to achieve high-precision urban and indoor positioning. The method comprises the following steps: after the visual sensor captures scene images, distinctive feature information is computed and extracted from each image; this feature information is recognized by similarity and matched against the feature information base of a digital three-dimensional model; from the matched coordinates recorded during image feature matching, the geometric mapping from the three-dimensional scene to the two-dimensional image is recovered, and a camera intersection model from two-dimensional image coordinates to three-dimensional spatial coordinates is established to determine the three-dimensional position and attitude of the visual sensor and the dynamic user; multi-source image information captured by the visual sensor is received; the digital three-dimensional model of the real scene is reconstructed or updated; and the feature information base is updated. The invention thus provides robust, sustainable positioning when the surrounding environment changes.

Owner:WUHAN UNIV

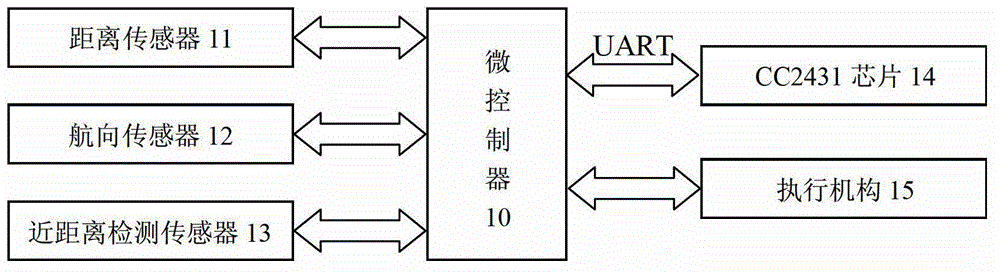

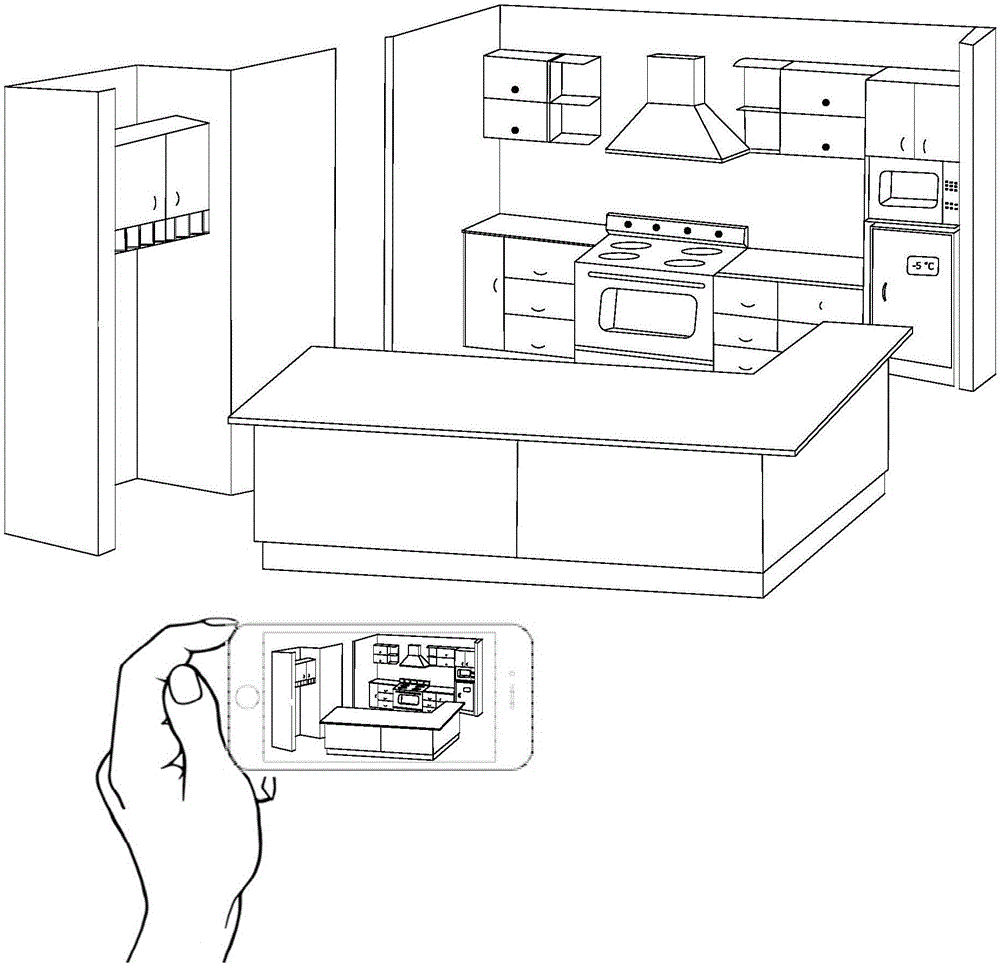

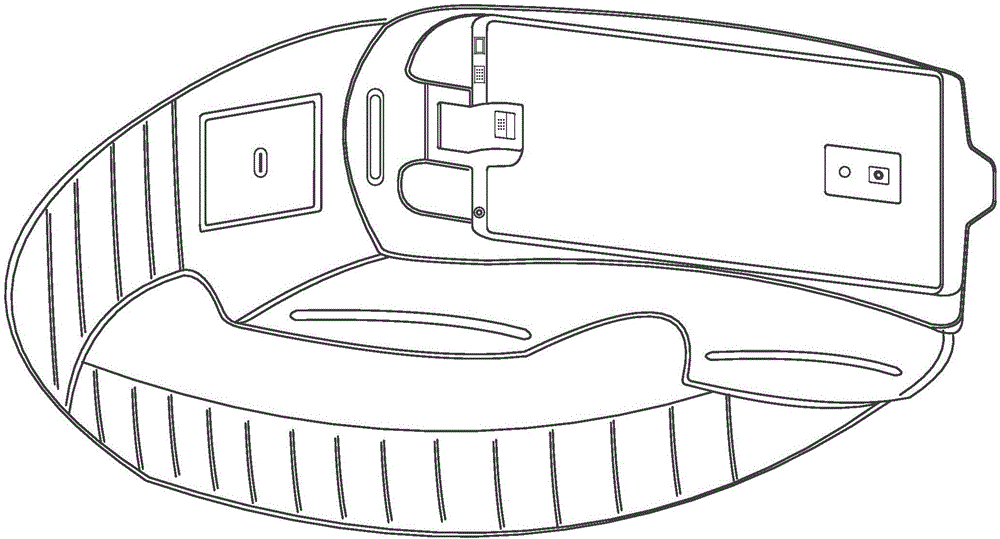

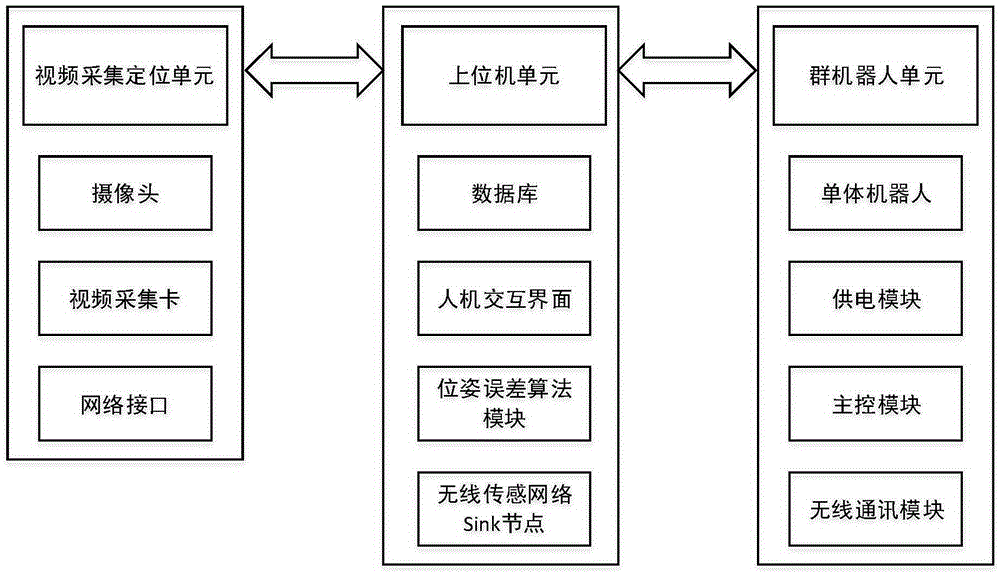

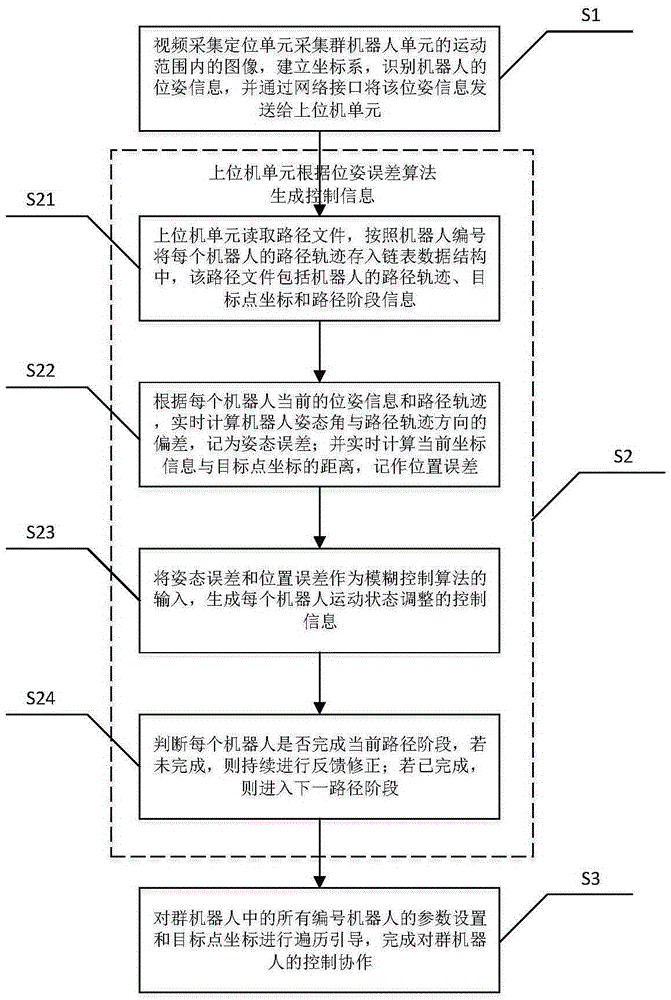

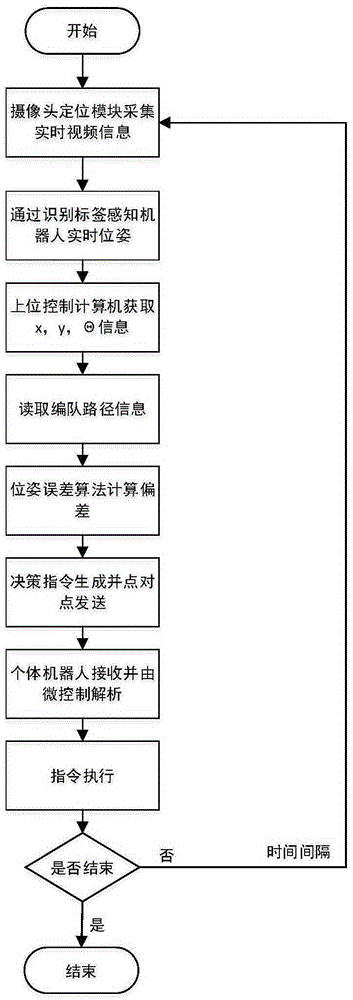
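The "geometric mapping from the three-dimensional scene to the two-dimensional image" is typically the pinhole projection u = fx·Xc/Zc + cx, v = fy·Yc/Zc + cy, where (Xc, Yc, Zc) = R·Xw + t. The sketch below implements that forward model and the mean reprojection error used to judge a candidate camera pose against matched 2D-3D points. It does not solve for the pose itself (a PnP solver would do that), and the intrinsic parameters are assumed inputs.

```python
import math

Vec3 = tuple[float, float, float]


def project(point_w: Vec3, R: list[list[float]], t: Vec3,
            fx: float, fy: float, cx: float, cy: float) -> tuple[float, float]:
    """Project a world point to pixel coordinates with a pinhole camera (R, t, K)."""
    xc = [sum(R[i][j] * point_w[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    return fx * xc[0] / xc[2] + cx, fy * xc[1] / xc[2] + cy


def mean_reprojection_error(points_w: list[Vec3], pixels: list[tuple[float, float]],
                            R: list[list[float]], t: Vec3,
                            fx: float, fy: float, cx: float, cy: float) -> float:
    """Average pixel distance between projected model points and matched image points."""
    errs = [math.dist(project(p, R, t, fx, fy, cx, cy), uv)
            for p, uv in zip(points_w, pixels)]
    return sum(errs) / len(errs)
```

A correct pose yields a small reprojection error for the matched feature points, while a wrong pose or a bad match inflates it. This is also why the error is a natural criterion for rejecting outlier matches before the final pose estimate.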

Swarm robot control system and method based on visual positioning

Active | CN105425791A | Ensure collaborative control operation; Improve individual work ability; Position/course control in two dimensions; Vehicles; Range of motion; Engineering

The invention discloses a swarm robot control system and method based on visual positioning. The system comprises a video acquisition and positioning unit, a host computer unit, and a swarm robot unit. The video acquisition and positioning unit captures images of the swarm robots' range of motion, establishes a coordinate system, recognizes the pose of each robot in the swarm, and transmits the pose information to the host computer unit. The host computer unit generates control information to correct the robots' motion postures in real time and sends it to the robots over a wireless sensor network. The swarm robot unit consists of multiple robots that receive and parse the control information, adjust their walking strategies, and carry out path-following control and group dispatching tasks. Through visual positioning, the system and method achieve accurate coordinated control of the swarm robots' motion states and execute individual and group tasks simply, conveniently, and quickly.

Owner:WUHAN UNIV OF TECH

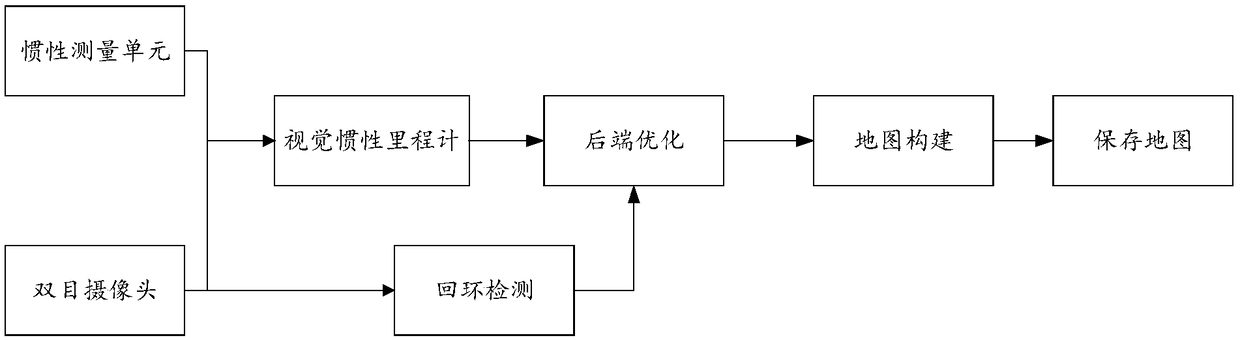

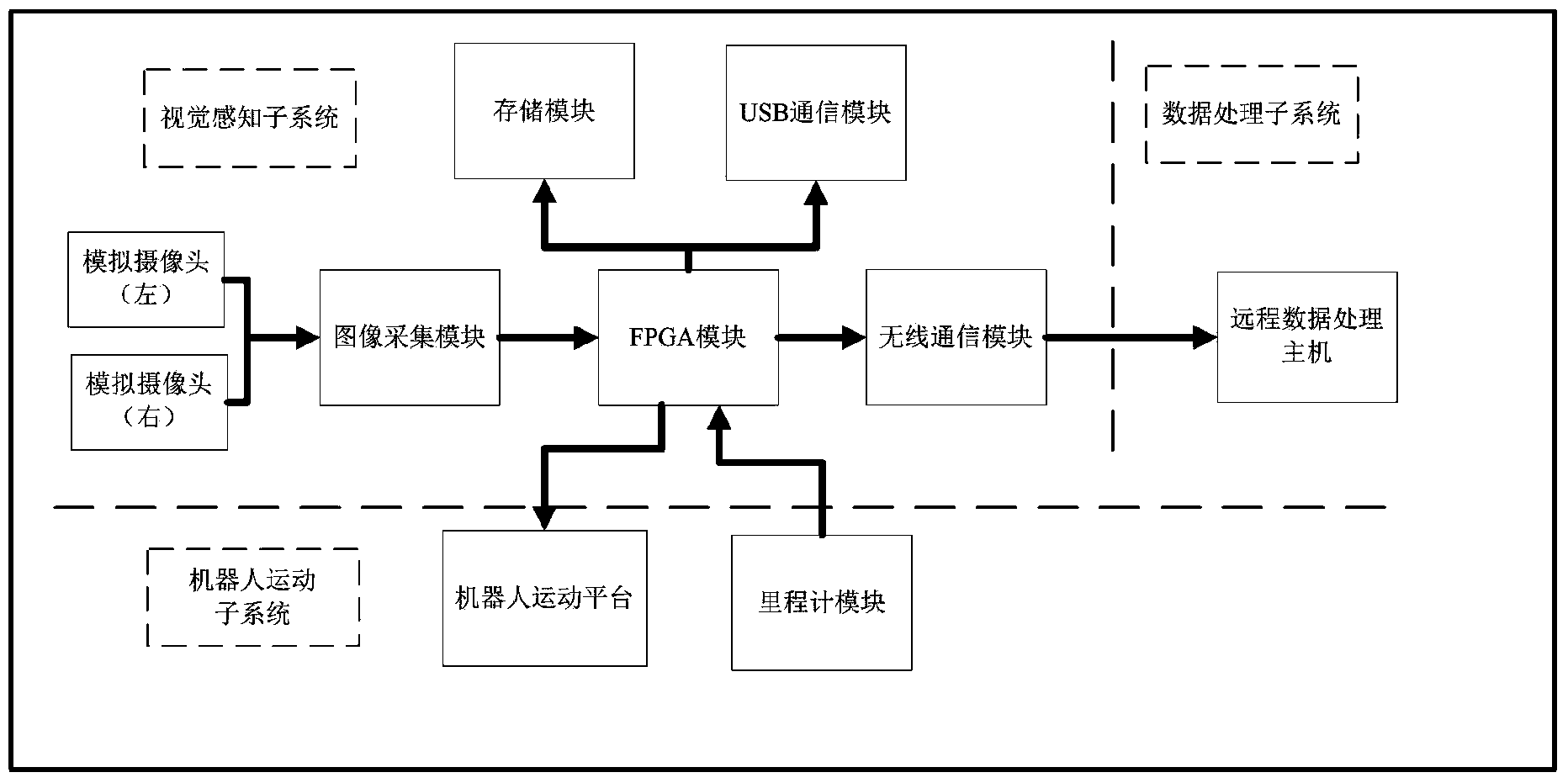

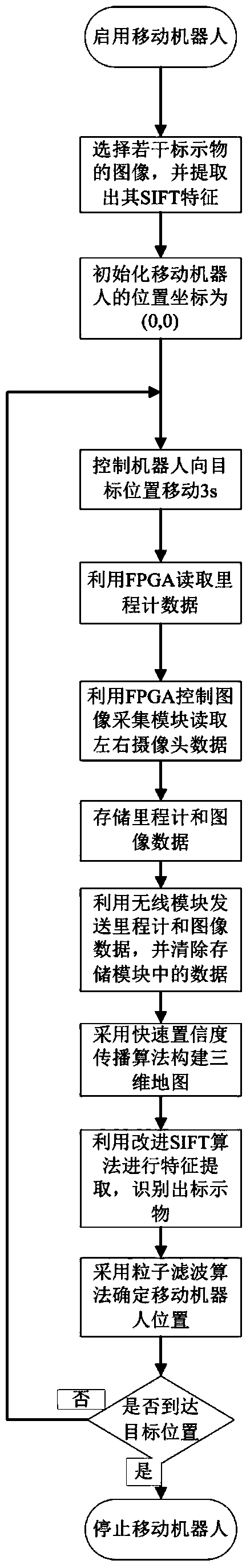
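
For the swarm robot control system above, the host computer's real-time posture correction can be sketched as a proportional go-to-goal controller. It is applied to each robot pose reported by the overhead camera. This is an illustrative assumption: the abstract does not specify the control law. The gains `k_v`, `k_w` and `v_max`, the `Pose` type and the function names are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Pose:
    x: float      # metres, in the camera-defined world frame
    y: float
    theta: float  # heading in radians


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def correction_command(
    pose: Pose,
    target: Tuple[float, float],
    k_v: float = 0.5,
    k_w: float = 2.0,
    v_max: float = 0.3,
) -> Tuple[float, float]:
    """Return (linear_velocity, angular_velocity) steering one robot to target."""
    dx, dy = target[0] - pose.x, target[1] - pose.y
    distance = math.hypot(dx, dy)
    heading_error = wrap_angle(math.atan2(dy, dx) - pose.theta)
    # Drive forward only when roughly facing the target; slow down when misaligned.
    v = min(k_v * distance, v_max) * max(0.0, math.cos(heading_error))
    w = k_w * heading_error
    return v, w


def swarm_commands(
    poses: Dict[str, Pose], targets: Dict[str, Tuple[float, float]]
) -> Dict[str, Tuple[float, float]]:
    """Compute one correction command per robot that has both a pose and a target."""
    return {
        rid: correction_command(pose, targets[rid])
        for rid, pose in poses.items()
        if rid in targets
    }
```

In this sketch each robot only needs to apply the (v, w) pair it receives. That matches the abstract's split, in which pose recognition and control generation run on the host computer and the robots simply execute the commands.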

Binocular vision positioning and three-dimensional mapping method for indoor mobile robot

Inactive | CN103926927A | Strong ability to express the environment | Solve the shortcomings of high complexity and poor real-time performance | Position/course control in two dimensions | 3D modelling | Odometer | Visual positioning

The invention discloses a binocular vision positioning and three-dimensional mapping method for an indoor mobile robot. The corresponding system mainly consists of the following components:

- a two-wheeled mobile robot platform;
- an odometer module;
- an analog camera;
- an FPGA core module;
- an image acquisition module;
- a wireless communication module;
- a storage module;
- a USB module;
- a remote data-processing host computer.

The FPGA core module controls the image acquisition module to capture left and right images and sends them to the remote host computer. The host computer then performs the following processing:

- it obtains the distance between scene points and the robot from the left and right images using a fast belief propagation algorithm;
- it builds a three-dimensional environment map;
- it recognizes a specific marker using an improved SIFT algorithm;
- it determines the position of the mobile robot by fusing this information with a particle filter algorithm.

The system is compact and can be connected to an intelligent space system. This allows the indoor mobile robot to sense its environment in real time and, at the same time, provide more accurate services based on its position information.

Owner:CHONGQING UNIV

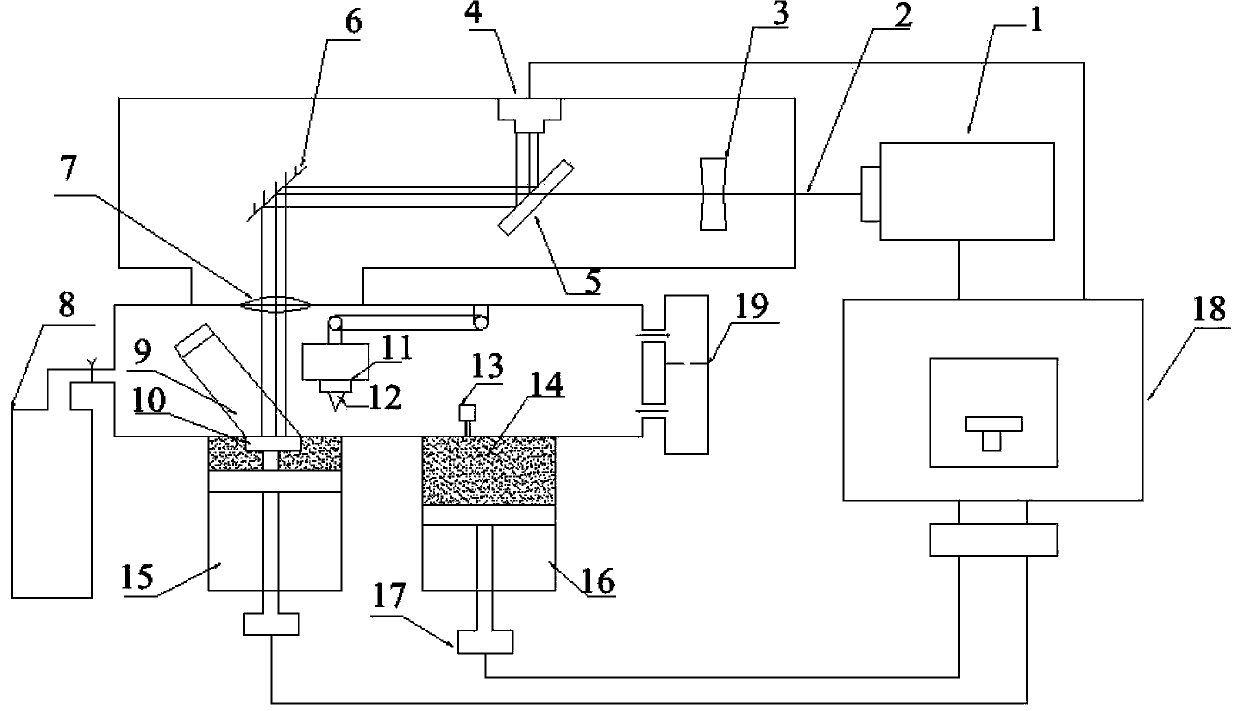

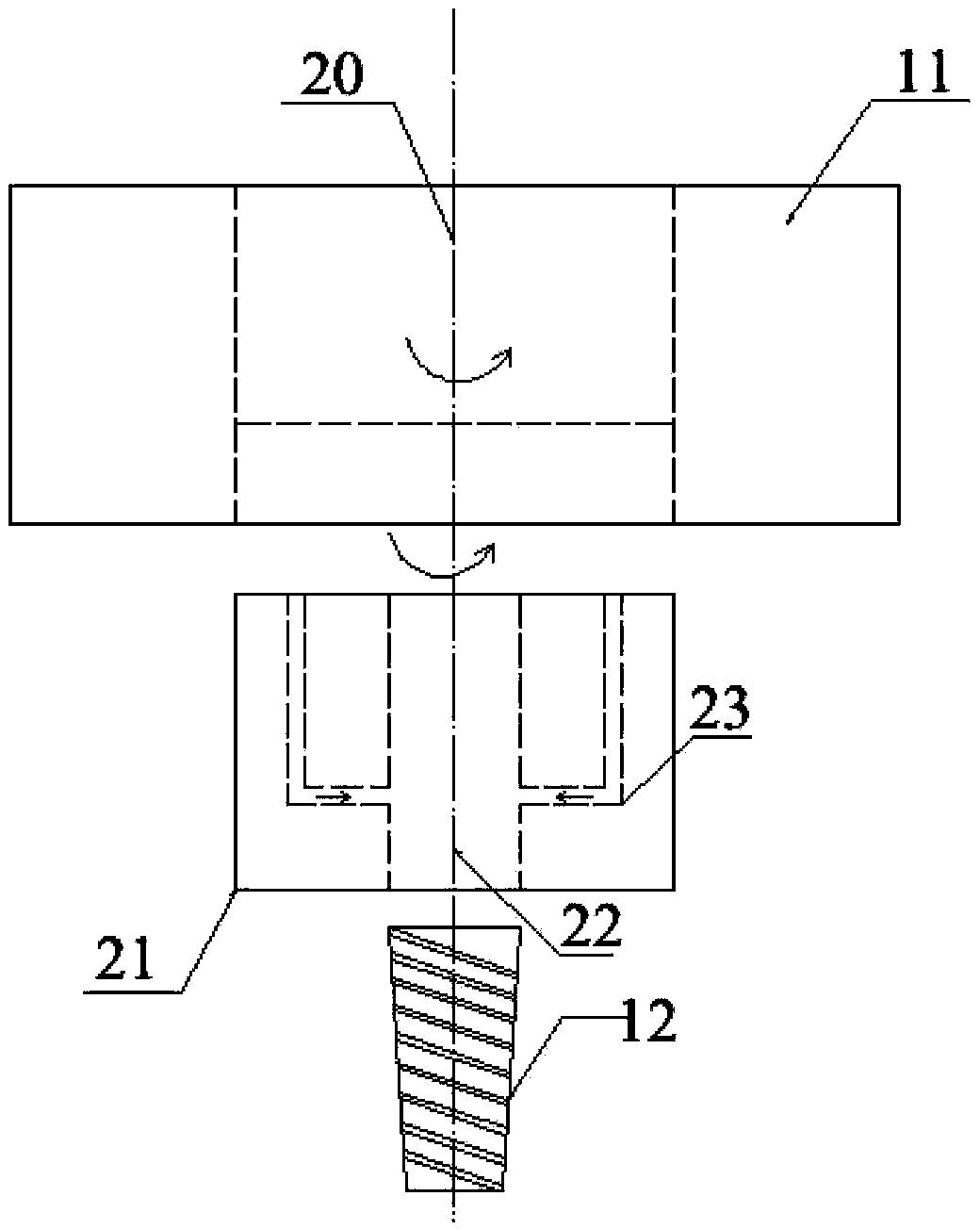
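
For the binocular vision method above, the belief propagation step yields a disparity for each matched pixel. Converting that disparity into distance follows standard stereo geometry. The sketch assumes a rectified stereo pair with a shared focal length in pixels, a known baseline and a known principal point, none of which the abstract states. The function name and parameters are hypothetical. It shows only the geometry, not the belief propagation matcher itself.

```python
from typing import Tuple


def triangulate_rectified(
    u: float, v: float, disparity: float,
    focal_px: float, baseline_m: float, cx: float, cy: float,
) -> Tuple[float, float, float]:
    """Back-project a left-image pixel with known disparity to camera coordinates.

    Assumes a rectified stereo pair with equal focal length focal_px (pixels),
    principal point (cx, cy) and baseline baseline_m (metres).
    Returns (X, Y, Z) in metres in the left camera frame.
    """
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = focal_px * baseline_m / disparity   # depth: Z = f * B / d
    x = (u - cx) * z / focal_px             # lateral offset from the optical axis
    y = (v - cy) * z / focal_px             # vertical offset from the optical axis
    return x, y, z
```

For example, with f = 700 px, B = 0.12 m and a disparity of 42 px, the depth is 700 × 0.12 / 42 = 2 m. Depth resolution degrades as disparity shrinks, which is why distant points are the least precise.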

Combination manufacturing method and device for injection mold with conformal cooling water path

Active | CN103418985A | Easy to manufacture | Avoid defects | Increasing energy efficiency | Manufacturing technology | Light beam

The invention discloses a combined manufacturing method and device for an injection mold with a conformal cooling water channel. The device comprises a beam focusing system, a close-wavelength coaxial vision positioning system, a powder spreading system and a gas protection system. The gas protection system comprises a sealed forming chamber, a shielding gas device and a powder purification device. The shielding gas device is connected to one side of the sealed forming chamber and the powder purification device to the other side. The method combines selective laser melting with precision machining. It retains the flexibility of selective laser melting while exploiting the high precision of high-speed cutting. During selective laser melting, laser surface remelting is applied to each layer, which improves the density and surface quality of the mold. A variable-density rapid manufacturing technique is adopted to improve manufacturing efficiency. Precision mold components with internal conformal cooling water channels and complex inner cavity structures can be produced in a single integrated process.

Owner:SOUTH CHINA UNIV OF TECH

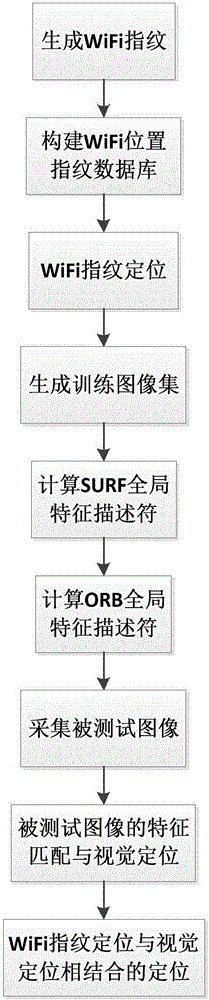

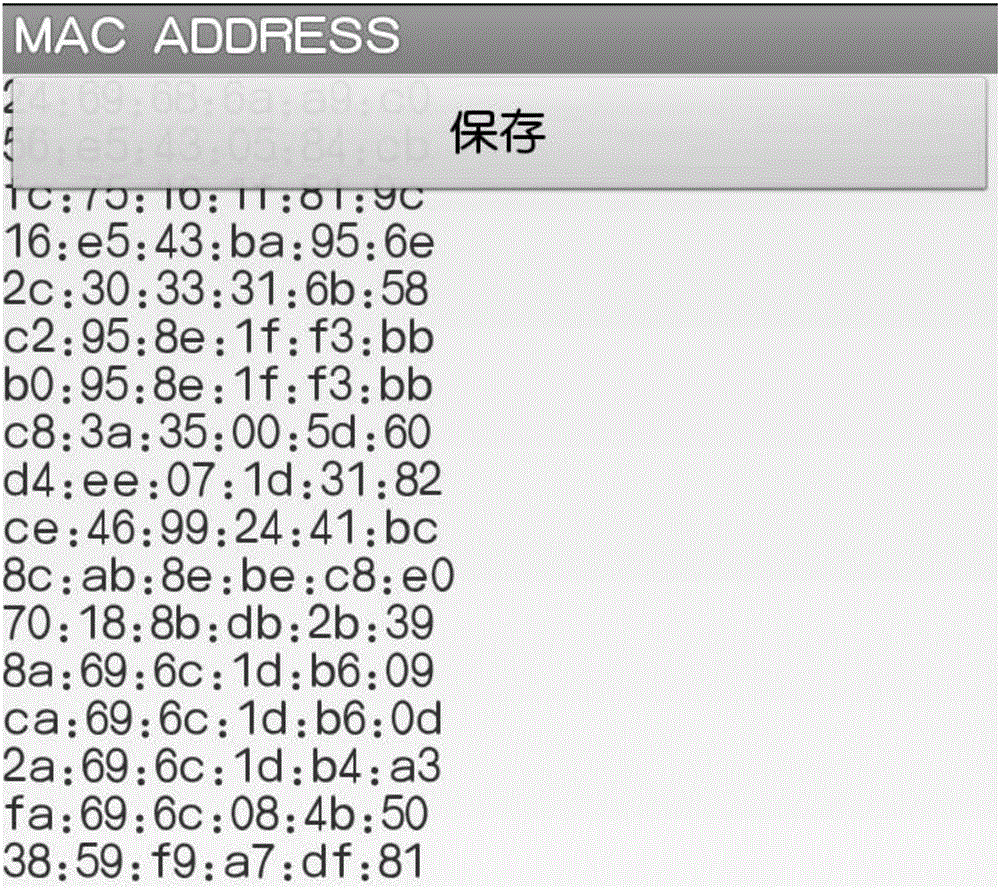

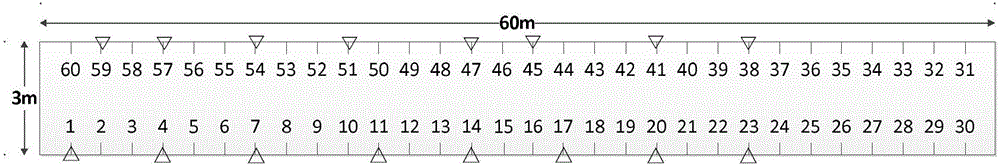

Indoor positioning method

Inactive | CN106793086A | Highlight substantive features | Improve accuracy | Navigational calculation instruments | Character and pattern recognition | Pattern recognition | Network management

The invention provides an indoor positioning method and relates to wireless communication network technology for network management. The method combines WiFi fingerprint positioning with label-based visual positioning in the following steps:

1. A WiFi location-fingerprint positioning algorithm yields a WiFi positioning range and a WiFi positioning coordinate.
2. Feature matching and visual positioning on a test image yield a visual positioning coordinate.
3. WiFi fingerprint positioning and visual positioning are combined to achieve high-accuracy indoor positioning.

The method overcomes two weaknesses: conventional WiFi fingerprint positioning has low accuracy, and conventional single visual positioning methods are unsuitable for indoor positioning.

Owner:HEBEI UNIV OF TECH

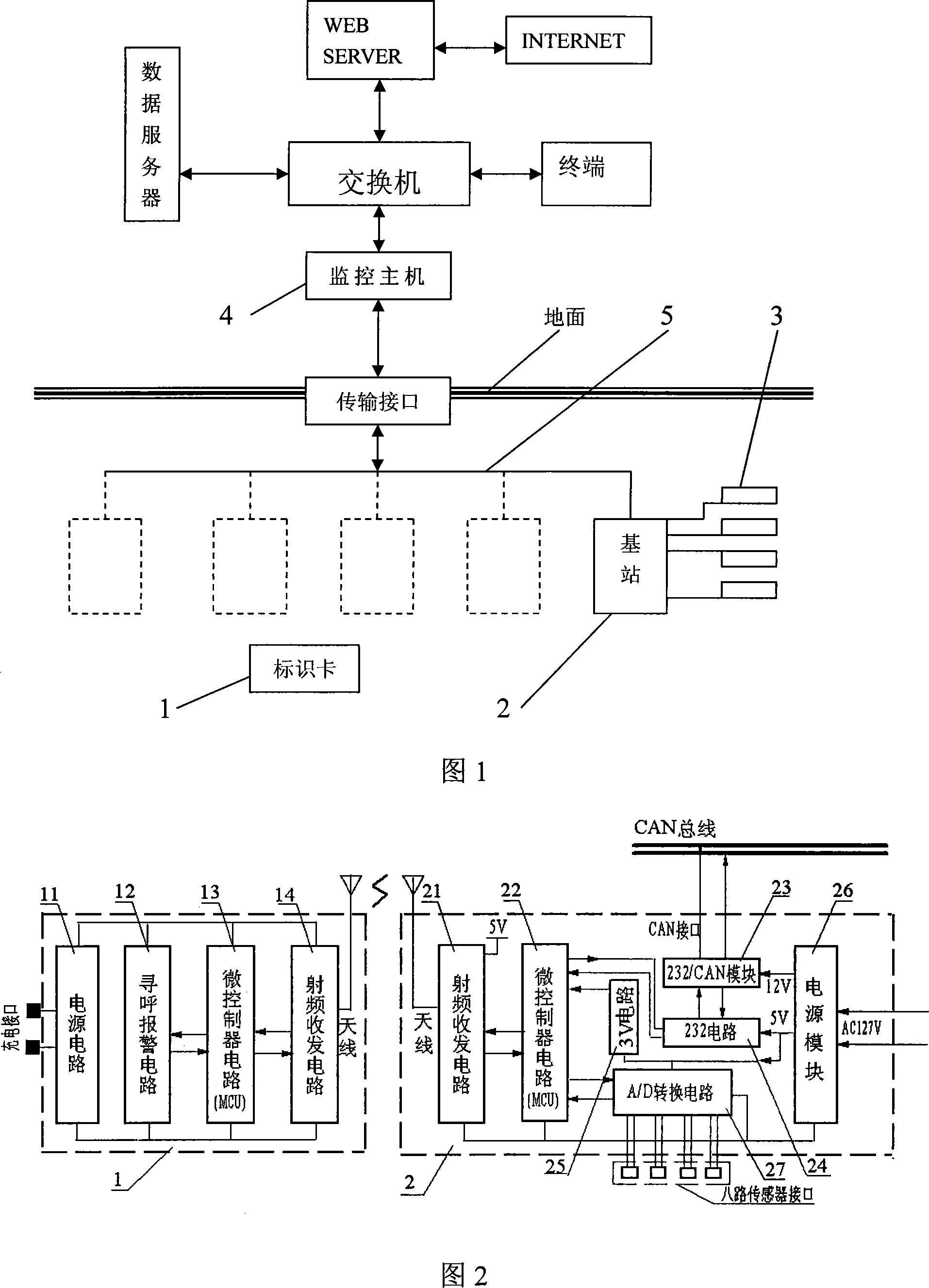

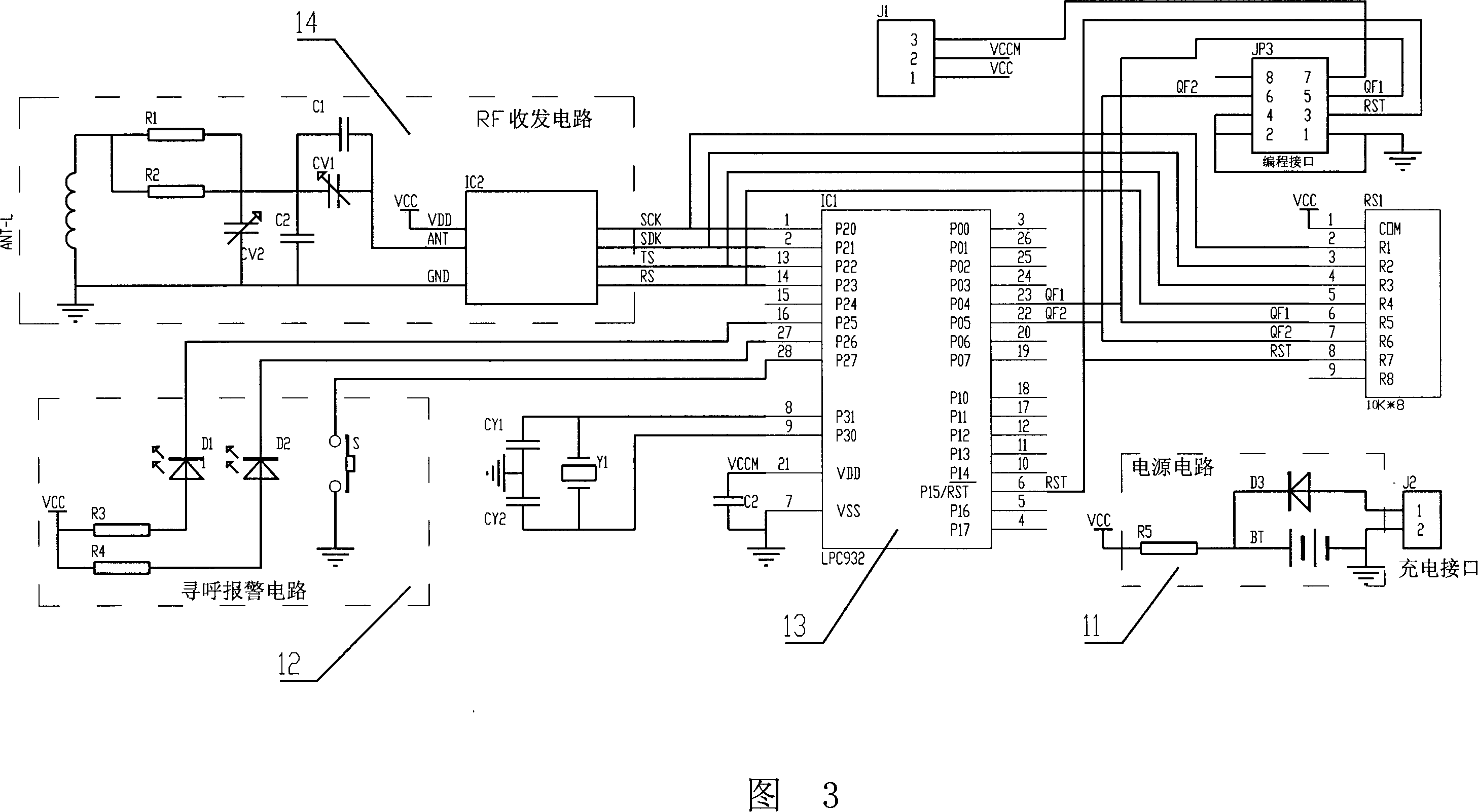

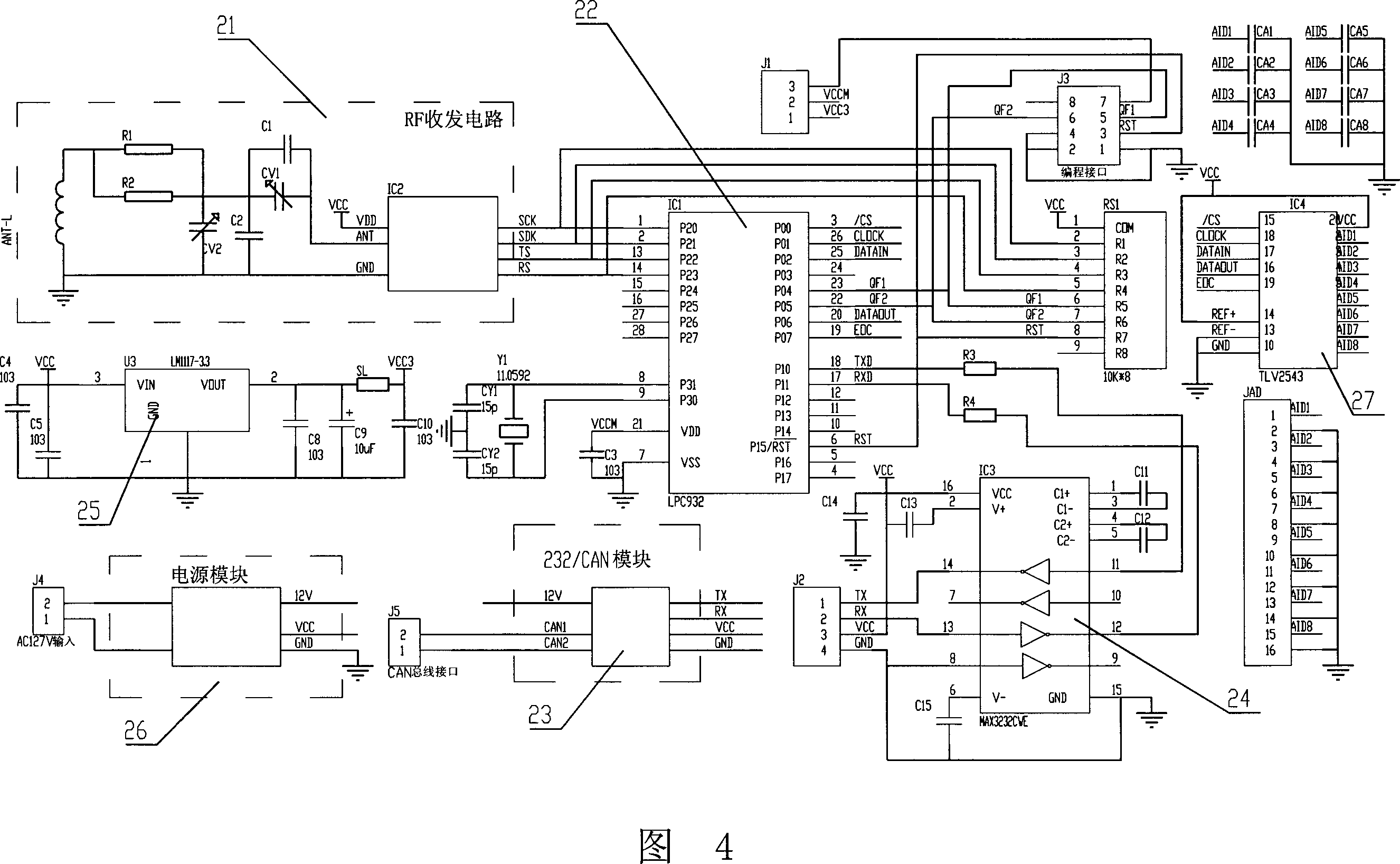
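
For the WiFi-plus-visual indoor positioning method above, the two stages can be sketched as follows. Weighted k-nearest-neighbour (WKNN) matching over RSSI fingerprints produces the WiFi coordinate. Inverse-variance fusion then combines it with the visual coordinate, and a visual fix falling outside the WiFi range is treated as implausible. The abstract does not name the fingerprint algorithm or the fusion rule, so WKNN, inverse-variance weighting and the range gate are all assumptions. The function names, `missing_dbm` and `wifi_radius` are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Fingerprint = Tuple[Point, Dict[str, float]]  # (reference position, {AP id: RSSI dBm})


def wknn_position(
    rssi: Dict[str, float],
    fingerprints: List[Fingerprint],
    k: int = 3,
    missing_dbm: float = -100.0,
) -> Point:
    """Weighted k-nearest-neighbour WiFi fingerprint positioning."""
    if not fingerprints:
        raise ValueError("fingerprint database is empty")
    scored = []
    for pos, ref in fingerprints:
        aps = set(rssi) | set(ref)
        # An AP not heard on one side is treated as a very weak signal.
        d = math.sqrt(sum((rssi.get(a, missing_dbm) - ref.get(a, missing_dbm)) ** 2 for a in aps))
        scored.append((d, pos))
    scored.sort(key=lambda item: item[0])
    nearest = scored[:k]
    weights = [1.0 / (d + 1e-6) for d, _ in nearest]  # closer fingerprints weigh more
    total = sum(weights)
    x = sum(w * p[0] for w, (_, p) in zip(weights, nearest)) / total
    y = sum(w * p[1] for w, (_, p) in zip(weights, nearest)) / total
    return x, y


def fuse(wifi: Point, wifi_var: float, visual: Point, visual_var: float, wifi_radius: float) -> Point:
    """Inverse-variance fusion, ignoring a visual fix outside the WiFi range."""
    if math.dist(wifi, visual) > wifi_radius:
        return wifi  # visual match is implausible given the WiFi range
    w_wifi = visual_var / (wifi_var + visual_var)  # the noisier source gets less weight
    return (
        w_wifi * wifi[0] + (1.0 - w_wifi) * visual[0],
        w_wifi * wifi[1] + (1.0 - w_wifi) * visual[1],
    )
```

Inverse-variance weighting is a simple choice when each source's error can be summarised by a single variance. A Kalman filter would be the natural extension if the user is tracked over time.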

Mine down-hole visual positioning and multifunctional safety monitoring network system

Inactive | CN101122242A | Reliable transmission | Practical and convenient | Mining devices | Data switching by path configuration | Operating point | Engineering

A visual positioning and multifunctional safety monitoring network system for underground mines uses radio frequency identification (RFID) positioning. Every worker and every piece of mobile equipment underground carries an RFID card. Single-stage or multi-stage information acquisition base stations are arranged underground according to the distribution of operating districts. Sensors are connected to the base stations' sensor ports as required by the mine's monitoring items. Each person carries an identification card on entering the mine, and base stations are arranged from the shaft entrance to every operating point. As a result, the card is read whenever its carrier passes a base station. The station then transmits the positioning information read from the card, together with all monitoring parameters acquired by the sensors, to a monitoring host computer on the surface. A set of multifunctional integrated monitoring application software provides dynamic management and comprehensive safety monitoring of underground personnel.

Owner:株洲大成测控技术有限公司 (Zhuzhou Dacheng Measurement and Control Technology Co., Ltd.)

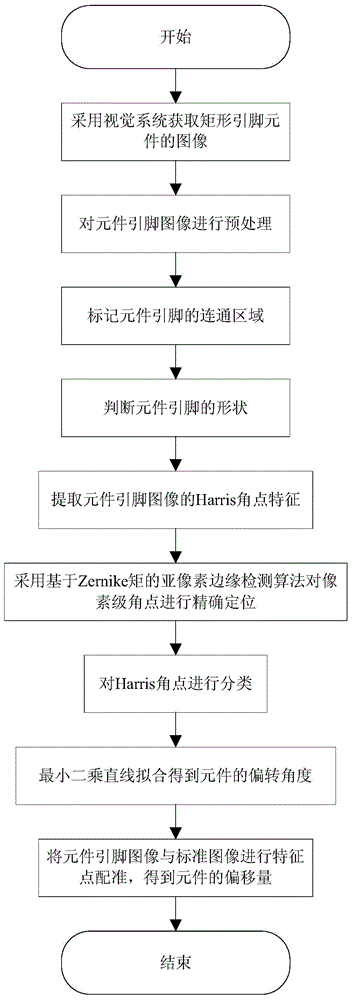

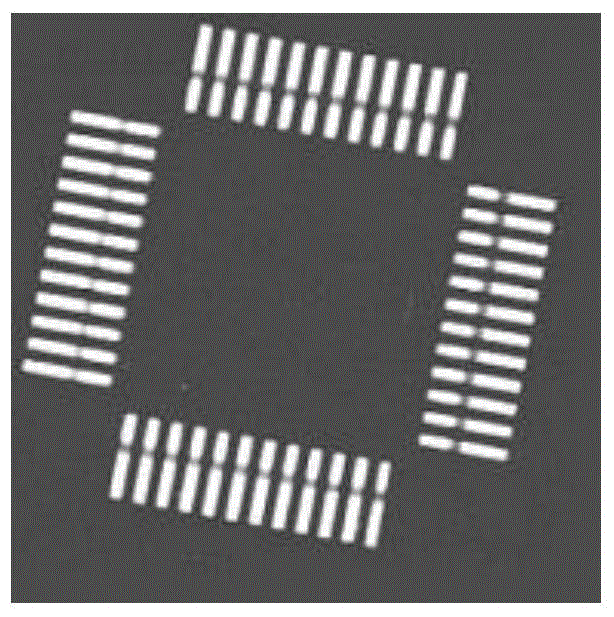
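
For the mine monitoring system above, positioning amounts to remembering which base station last read each card. The surface host's bookkeeping can be sketched as a small tracker. It keeps each card's latest read, the district that station covers, and the sequence of stations passed. The class and method names are hypothetical, and the rule of ignoring out-of-order reports is an assumption, not something the abstract specifies.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class CardRead:
    card_id: str
    station_id: str
    timestamp: float  # seconds, as reported by the base station


class PersonnelTracker:
    """Track each card's last reading station as its coarse underground position."""

    def __init__(self, station_zone: Dict[str, str]) -> None:
        self.station_zone = station_zone          # station id -> operating district
        self.last_read: Dict[str, CardRead] = {}  # card id -> most recent read
        self.route: Dict[str, List[str]] = {}     # card id -> stations passed, in order

    def ingest(self, read: CardRead) -> None:
        prev = self.last_read.get(read.card_id)
        if prev is not None and read.timestamp < prev.timestamp:
            return  # ignore a delayed, out-of-order report
        self.last_read[read.card_id] = read
        path = self.route.setdefault(read.card_id, [])
        if not path or path[-1] != read.station_id:
            path.append(read.station_id)  # record only station changes

    def zone_of(self, card_id: str) -> Optional[str]:
        read = self.last_read.get(card_id)
        return None if read is None else self.station_zone.get(read.station_id)

    def cards_in_zone(self, zone: str) -> List[str]:
        return sorted(
            cid for cid, read in self.last_read.items()
            if self.station_zone.get(read.station_id) == zone
        )
```

With this design, position resolution is bounded by base-station spacing. A person between two stations is reported at the last one passed, which is the trade-off implied by checkpoint-style RFID positioning.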

Detection method for rectangular pin component visual positioning

Inactive | CN104359402A | Accurate detection | High precision | Using optical means | Angular point | Visual positioning

The invention provides a detection method for the visual positioning of rectangular-pin components, in the field of visual positioning and inspection of rectangular-pin components. It addresses three problems of traditional rectangular-pin component detection: low positioning precision, pins appearing broken in images, and offset and deviation angle being detected separately. The method proceeds as follows:

1. A measured component image is obtained with the chip mounter's vision system.
2. A binary image is obtained by threshold segmentation.
3. The connected regions of the component pins are labeled and the pin shape is determined.
4. Pixel-level Harris corner coordinates of the pin image are extracted, refined to sub-pixel precision and classified.
5. The deviation angle of the component is calculated.
6. The pin image is matched against a standard image to calculate the component offset.

The method is mainly used for pin detection, deviation angle detection and offset detection of rectangular-pin components.

Owner:NANJING UNIV OF TECH

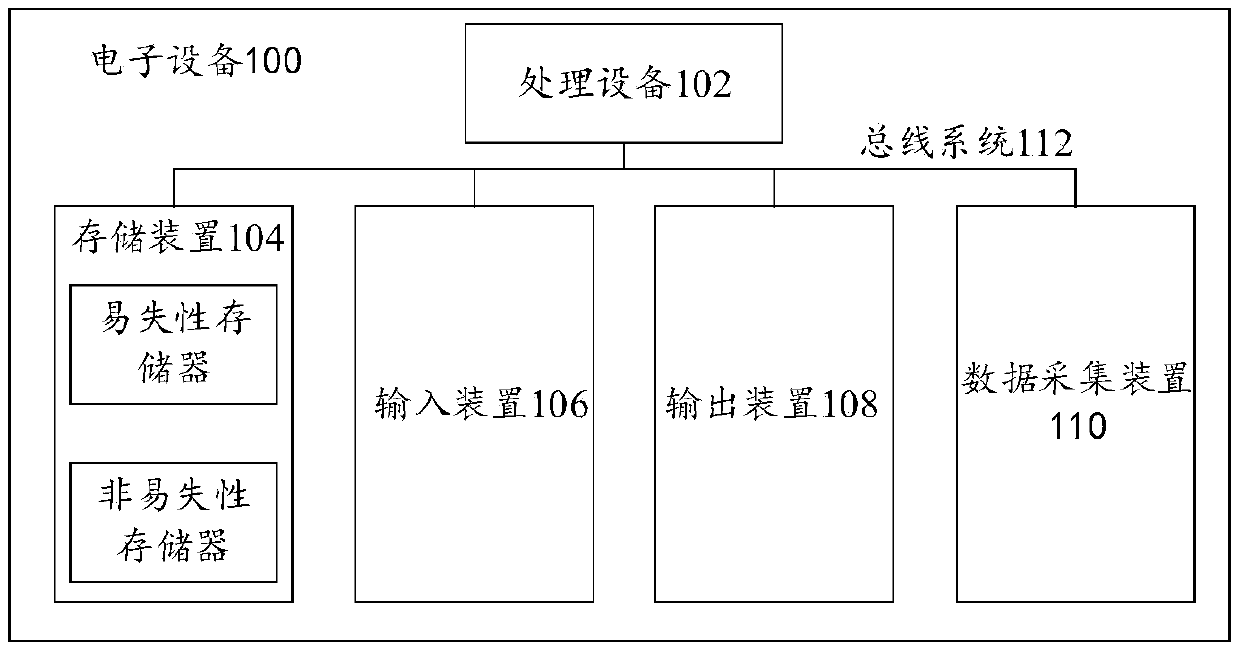

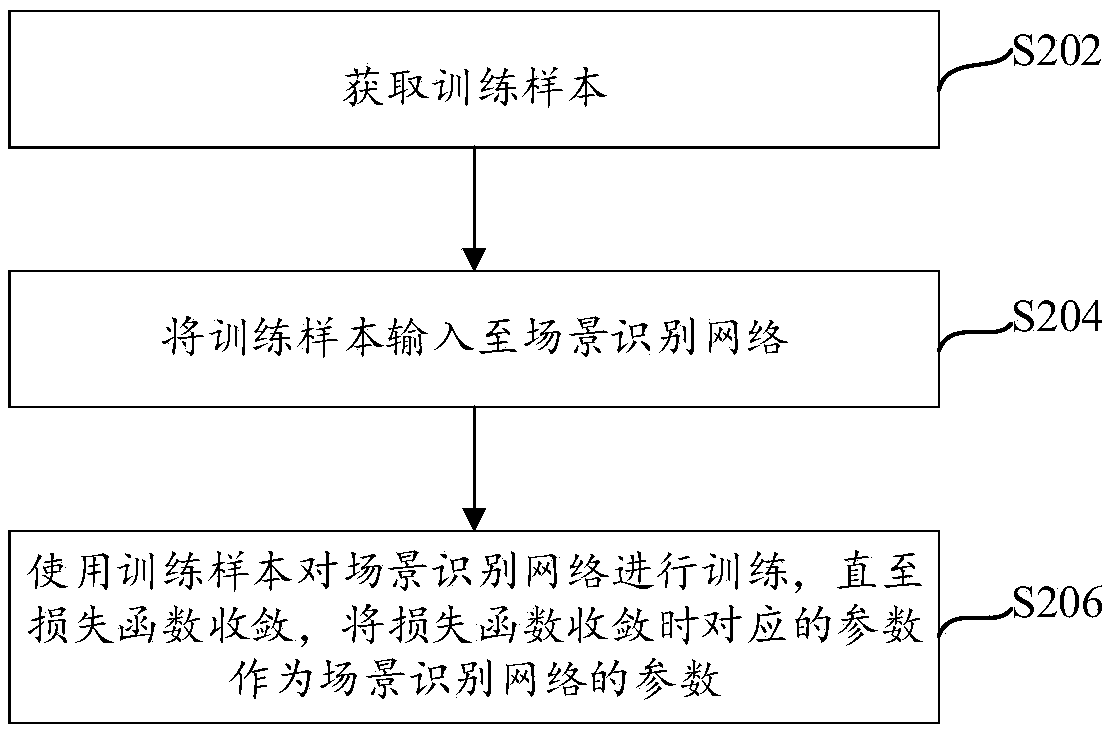

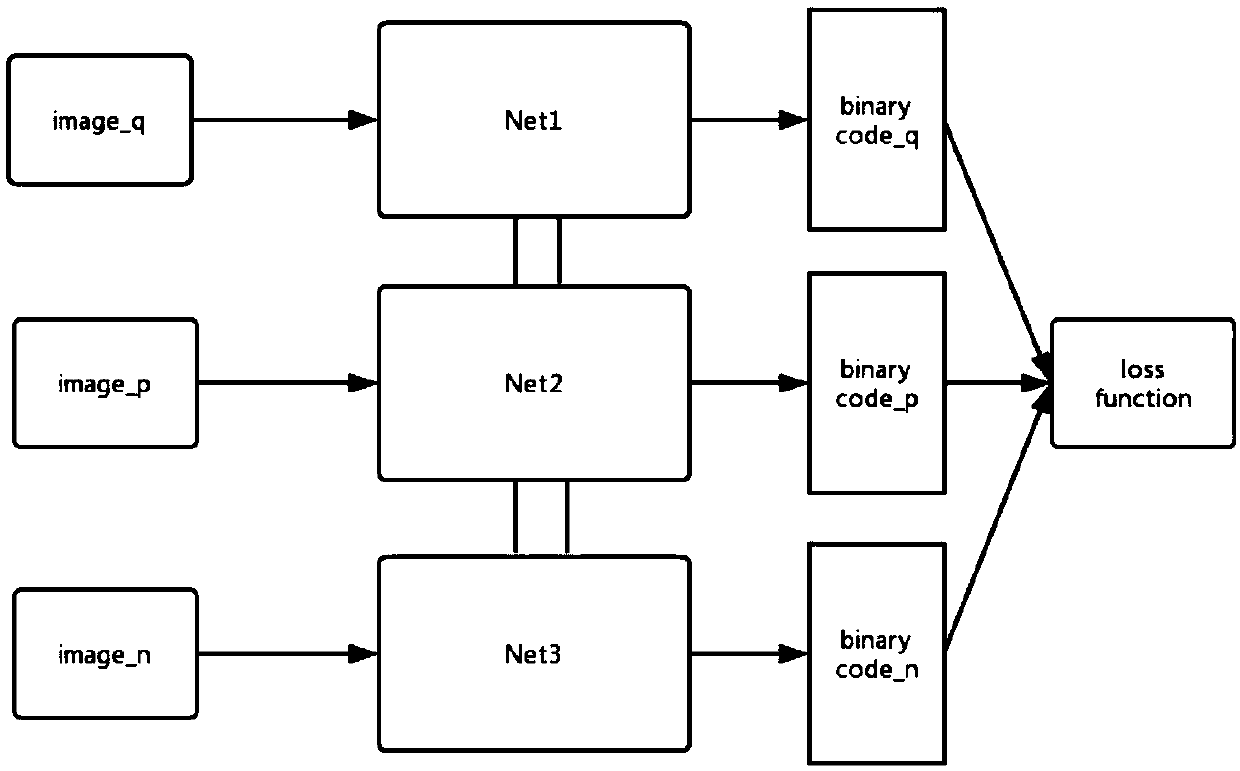

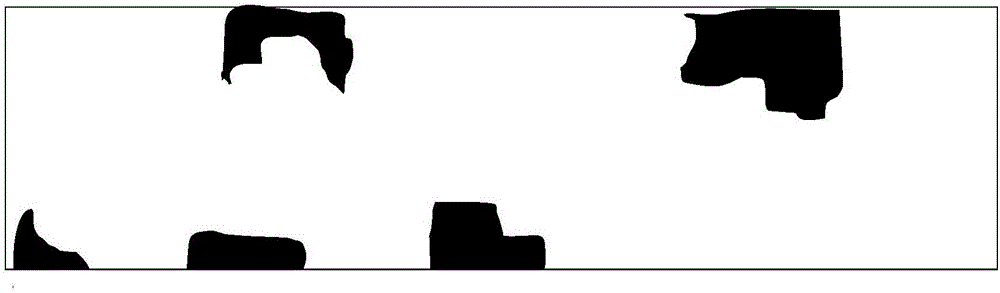

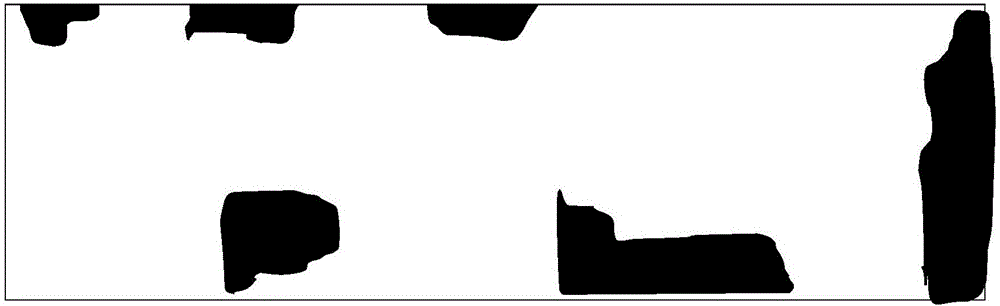

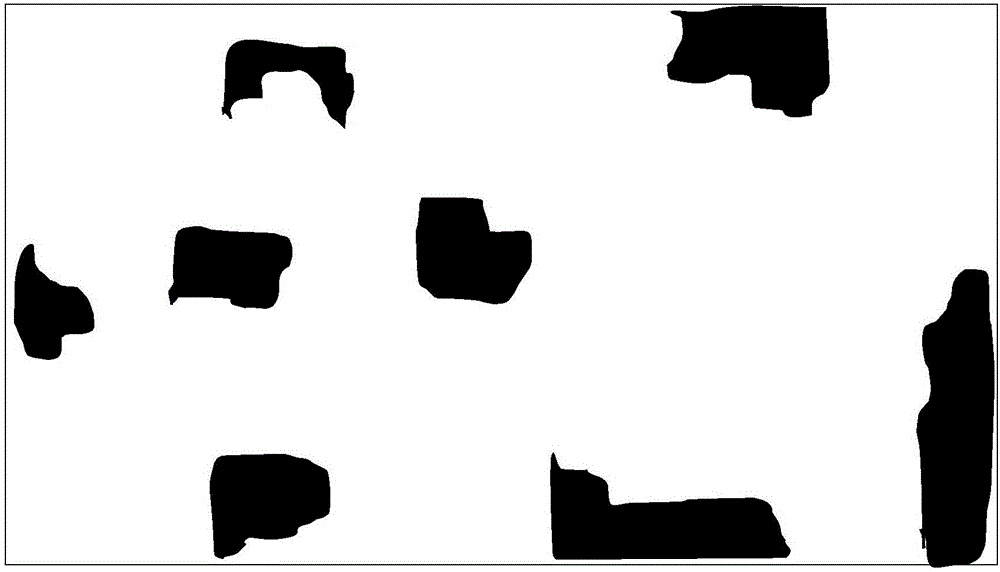
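
For the rectangular-pin detection method above, the early stages can be sketched as threshold segmentation, connected-region labeling and a deviation angle. This is a simplified sketch, not the patented method. The patent derives the angle from sub-pixel Harris corners, whereas this sketch substitutes pin-region centroids, a simpler swapped-in feature, and measures the angle of the line through two pins relative to the image x-axis. Images are plain nested lists of grey levels, and all function names are hypothetical.

```python
import math
from collections import deque
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int]  # (row, col)


def threshold(image: Sequence[Sequence[int]], t: int) -> List[List[int]]:
    """Binary segmentation: 1 where intensity >= t, else 0."""
    return [[1 if p >= t else 0 for p in row] for row in image]


def label_components(binary: Sequence[Sequence[int]]) -> List[List[Pixel]]:
    """4-connected component labeling by breadth-first search."""
    h = len(binary)
    w = len(binary[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    components = []
    for r in range(h):
        for c in range(w):
            if not binary[r][c] or seen[r][c]:
                continue
            queue, pixels = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < h and 0 <= nx < w and binary[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            components.append(pixels)
    return components


def centroid(pixels: List[Pixel]) -> Tuple[float, float]:
    """Return the (x, y) centroid of a component."""
    n = len(pixels)
    return sum(c for _, c in pixels) / n, sum(r for r, _ in pixels) / n


def deviation_angle_deg(p1: Tuple[float, float], p2: Tuple[float, float]) -> float:
    """Angle of the line p1 -> p2 relative to the image x-axis, in degrees."""
    return math.degrees(math.atan2(p2[1] - p1[1], p2[0] - p1[0]))
```

Centroids average over the whole pin region, so they tolerate a broken pin edge better than a single corner does. Corners, however, give the sharper geometric reference that sub-pixel refinement is designed to exploit.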

Network training method, incremental mapping method, positioning method, device and equipment

Inactive | CN109658445A | Reduce dependence | Reduce dependency | Image analysis | Character and pattern recognition | Pattern recognition | Visual positioning

The invention provides a network training method, an incremental mapping method, a positioning method, and a corresponding device and equipment, and relates to the technical field of visual positioning. The training method comprises the following steps:

1. Obtain a training sample in which the images in a first image set are similar to those in a second image set and dissimilar to those in a third image set.
2. Input the training sample into a scene recognition network composed of three deep-hashing-based lightweight neural networks that have the same structure and share parameters.
3. Train the scene recognition network with the training sample until the loss function converges, and take the parameters at convergence as the parameters of the scene recognition network.

The methods, device and equipment provided by the embodiments of the invention can run in real time on a low-end processor, reducing dependence on hardware.

Owner:BEIJING KUANGSHI TECH
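
For the network training method above, a training sample of anchor, similar and dissimilar images fed through three parameter-sharing branches is the classic triplet setup. The abstract does not name its loss, so the triplet margin loss below is an assumption. The toy `embed` function (tanh of a linear map) is a hypothetical stand-in for the lightweight network. Sign binarization and Hamming distance illustrate the deep-hashing side. No training loop or gradients are shown, and all names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def embed(weights: List[List[float]], x: Vector) -> List[float]:
    """Toy stand-in for the lightweight network: tanh(W x), outputs in (-1, 1)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in weights]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((p - q) ** 2 for p, q in zip(a, b))


def triplet_loss(anchor: Vector, positive: Vector, negative: Vector, margin: float = 1.0) -> float:
    """Hinge loss: the similar pair must be closer than the dissimilar pair by `margin`."""
    return max(0.0, sq_dist(anchor, positive) - sq_dist(anchor, negative) + margin)


def shared_triplet_loss(weights, a_img, p_img, n_img, margin=1.0) -> float:
    """All three branches use the same `weights`, i.e. they share parameters."""
    return triplet_loss(embed(weights, a_img), embed(weights, p_img), embed(weights, n_img), margin)


def to_hash(embedding: Vector) -> int:
    """Binarize by sign: bit i is set when component i is positive."""
    code = 0
    for i, value in enumerate(embedding):
        if value > 0:
            code |= 1 << i
    return code


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hash codes."""
    return bin(a ^ b).count("1")
```

Sharing one set of weights across the three branches means the network learns a single embedding function. Comparing compact binary codes by Hamming distance (an XOR plus a bit count) is what makes scene lookup cheap enough for a low-end processor.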

Transformer substation inspection robot path planning navigation method

Active | CN106525025A | Improve detection accuracy | Easy to draw | Navigation instruments | Transformer | Robot path planning

The invention relates to a path planning and navigation method for a transformer substation inspection robot. The method comprises the following steps:

1. The robot makes one full circuit of the substation and generates a two-dimensional grid map of it.
2. Image information of the substation equipment is scanned, and feature images are selected as the basis for identifying roads and equipment.
3. An optimal inspection path is planned.
4. The surrounding environment is scanned to generate a two-dimensional grid map of the surroundings. The robot's position is identified by comparing this map with the substation map, which achieves rough positioning.
5. Image information of nearby equipment is acquired, and the equipment position is identified by comparing it with the equipment feature images. This corrects errors from the map-matching step and achieves higher-precision positioning.
6. The method checks whether the map-matching and visual positioning results lie in the same area. If they do, the positioning is taken as accurate. If not, the positioning is treated as wrong, and both map-matching positioning and visual positioning are performed again.

This greatly improves the reliability and accuracy of positioning and navigation.

Owner:WUHAN UNIV

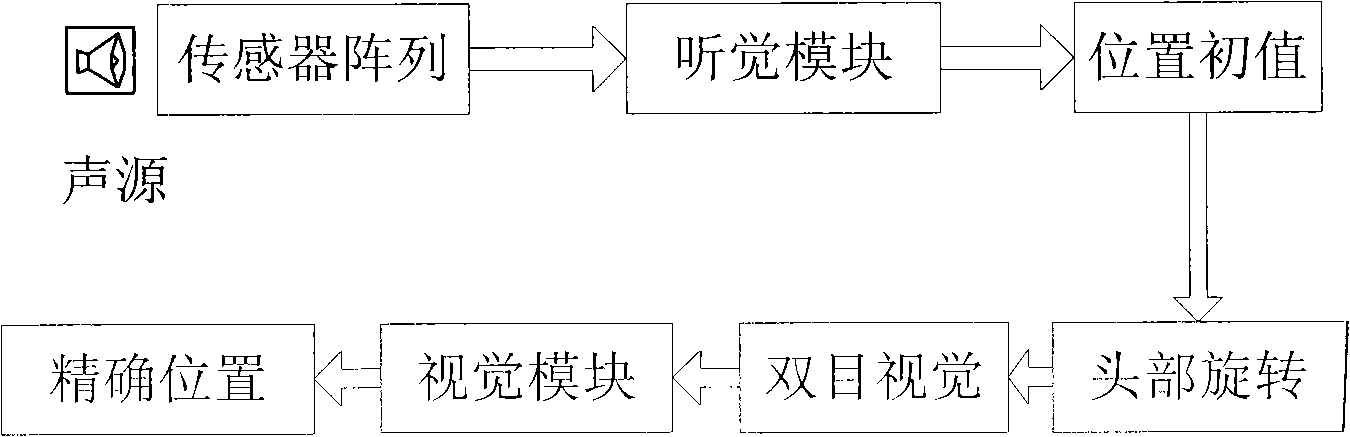

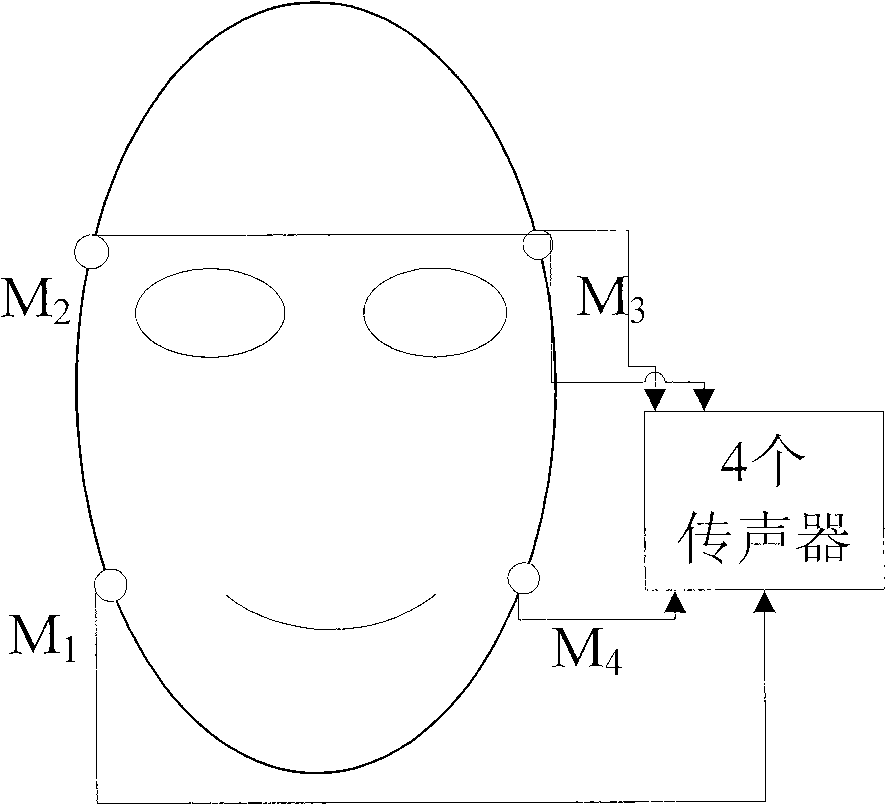

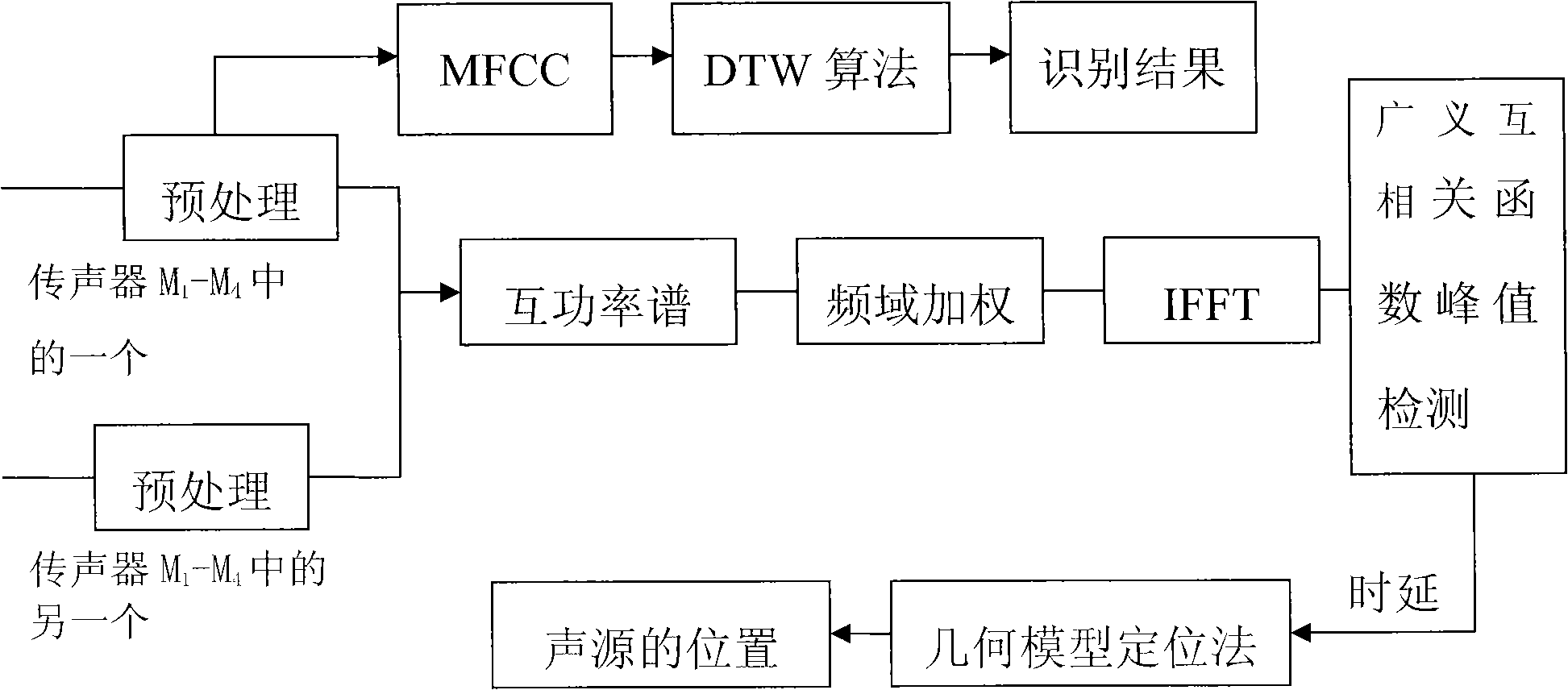
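
For the substation inspection method above, the final consistency check can be sketched as a retry loop over two position estimators. The abstract does not define "same area", so this sketch assumes it means the two estimates lie within a fixed radius. The retry limit, the callable interfaces and the function name are also hypothetical.

```python
import math
from typing import Callable, Optional, Tuple

Point = Tuple[float, float]


def verified_position(
    map_match: Callable[[], Point],
    visual: Callable[[], Point],
    radius: float = 1.0,
    max_retries: int = 3,
) -> Optional[Point]:
    """Accept the visual fix only when it agrees with the map-matching fix.

    'Same area' is modelled as the two estimates lying within `radius` metres.
    Both localizers are re-run on disagreement, up to `max_retries` attempts.
    Returns the visual (higher-precision) estimate, or None if they never agree.
    """
    for _ in range(max_retries):
        coarse = map_match()
        fine = visual()
        if math.dist(coarse, fine) <= radius:
            return fine
    return None
```

A radius test avoids the edge case of a grid-cell comparison, where two nearly identical estimates can straddle a cell boundary and be wrongly rejected.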

Sound source independent searching and locating method

Inactive | CN101295016A | High precision | Precise positioning | Photogrammetry/videogrammetry | Position fixation | Visual field loss | Sound sources

Owner:SHAANXI JIULI ROBOT MFG

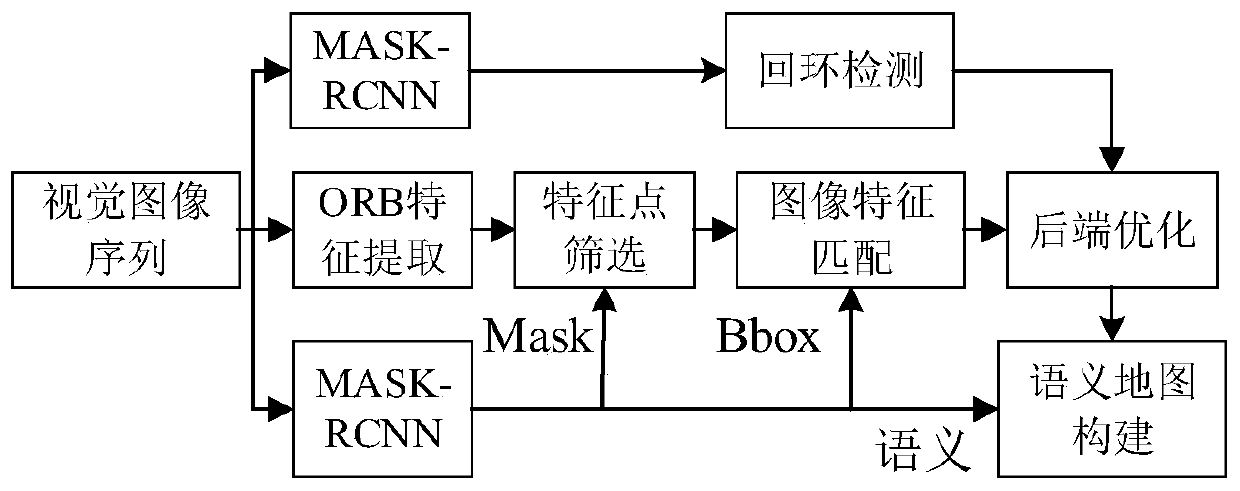

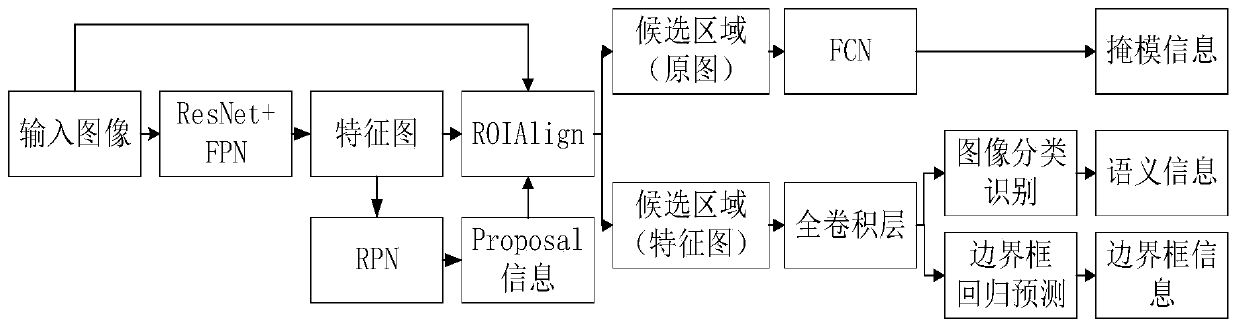

Visual SLAM method based on instance segmentation

Inactive | CN110738673A | Realize autonomous positioning and navigation | High precision | Image enhancement | Image analysis | Data set | Vision algorithms

The invention provides a visual SLAM algorithm based on instance segmentation. The algorithm works in three stages:

1. Feature points are extracted from the input image, and the image is instance-segmented with a convolutional neural network.
2. The instance segmentation information assists positioning: feature points prone to causing mismatches are removed, and the feature matching area is reduced.
3. A semantic map is constructed from the semantic information of the segmented instances, so that the robot can reuse the map and support human-machine interaction.

Image instance segmentation, visual positioning and semantic map construction were verified experimentally on the TUM dataset. The results show that combining image instance segmentation with visual SLAM improves the robustness of feature matching, speeds up feature matching, and improves the positioning accuracy of the mobile robot. The algorithm also generates an accurate semantic map, meeting the robot's requirements for executing advanced tasks.

Owner:HARBIN UNIV OF SCI & TECH
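
For the instance-segmentation SLAM method above, using segmentation to discard mismatch-prone feature points and to shrink the matching area can be sketched with an instance-ID mask. The sketch assumes three things the abstract does not state: the mask stores one instance ID per pixel (0 for background), each instance has a class label, and certain classes (for example people) are treated as potentially moving. The function names and the notion of a `dynamic_classes` set are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Set, Tuple

Keypoint = Tuple[float, float]  # (x, y) in pixel coordinates


def filter_keypoints(
    keypoints: Sequence[Keypoint],
    instance_mask: Sequence[Sequence[int]],
    instance_class: Dict[int, str],
    dynamic_classes: Set[str],
) -> List[Keypoint]:
    """Drop keypoints that fall on instances of potentially moving classes.

    instance_mask[row][col] holds an instance id (0 = background).
    """
    h = len(instance_mask)
    w = len(instance_mask[0]) if h else 0
    kept = []
    for x, y in keypoints:
        if not (0 <= x < w and 0 <= y < h):
            continue  # outside the image
        inst = instance_mask[int(y)][int(x)]
        if inst != 0 and instance_class.get(inst) in dynamic_classes:
            continue  # likely to move, so likely to cause mismatches
        kept.append((x, y))
    return kept


def group_by_instance(
    keypoints: Sequence[Keypoint], instance_mask: Sequence[Sequence[int]]
) -> Dict[int, List[Keypoint]]:
    """Bucket in-image keypoints by instance id so matching can be restricted per instance.

    Assumes every keypoint lies inside the image (e.g. output of filter_keypoints).
    """
    groups: Dict[int, List[Keypoint]] = defaultdict(list)
    for x, y in keypoints:
        groups[instance_mask[int(y)][int(x)]].append((x, y))
    return dict(groups)
```

Restricting candidate matches to keypoints with the same instance ID is one simple way to realise the "reduced feature matching area" the abstract describes. It cuts both the search cost and the chance of cross-object mismatches.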

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com