Positioning and navigation method, device, and processing equipment

A positioning and navigation method based on relocation technology, applied in the field of navigation. It addresses the problems of poor relocation ability and the resulting poor navigation effect, for which no effective solution has previously been proposed.


Examples

Embodiment 1

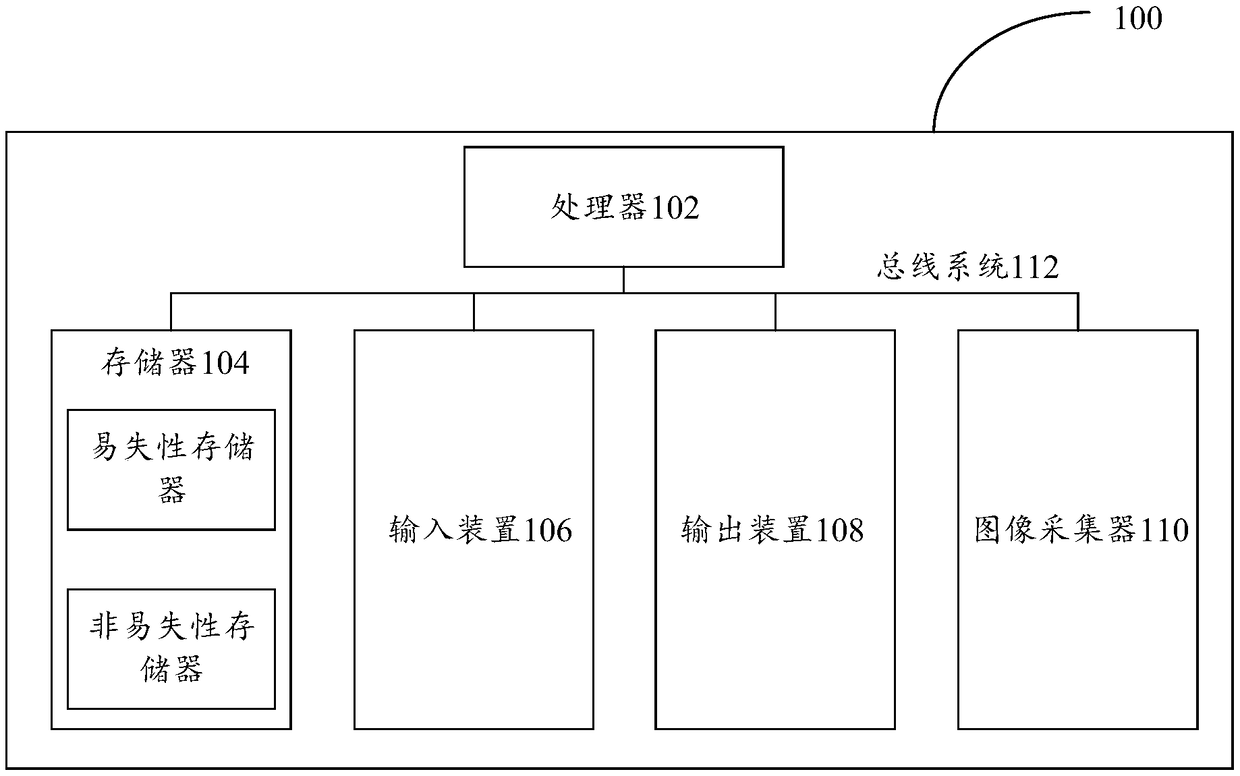

[0033] First, the processing device 100 for implementing the embodiments of the present invention is described with reference to Figure 1. The processing device can be used to run the methods of the various embodiments of the present invention.

[0034] As shown in Figure 1, the processing device 100 includes one or more processors 102, one or more memories 104, an input device 106, an output device 108, and a data collector 110. These components are interconnected through a bus system 112 and/or other forms of connection mechanism (not shown). It should be noted that the components and structure of the processing device 100 shown in Figure 1 are only exemplary and not limiting, and the processing device may also have other components and structures as required.

[0035] The processor 102 may be implemented in at least one hardware form of a digital signal processor (DSP), a field programmable gate array (FPGA), a programmable logic array (PLA) and an ASIC (Application Specific I...

Embodiment 2

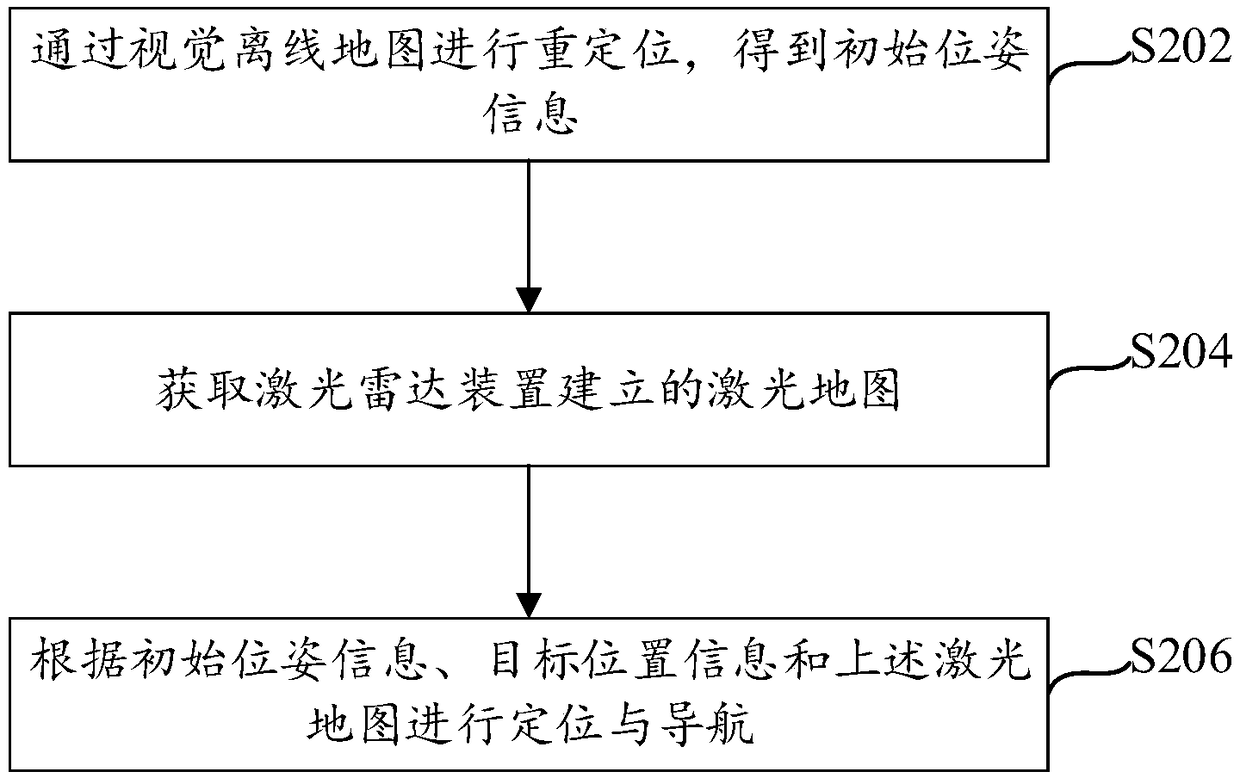

[0042] Figure 2 shows a flow chart of a positioning and navigation method. The method can be executed by the processing device provided in the foregoing embodiment, and specifically includes the following steps:

[0043] Step S202: relocating through the visual offline map to obtain initial pose information. The visual offline map is obtained by fusing a visual device with an inertial measurement unit (IMU).

[0044] In advance, the visual device is fused with the IMU for positioning and mapping, which yields the visual offline map. Fusing IMU data into visual positioning improves its accuracy and robustness, because the IMU supplies acceleration and angular velocity information.
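To illustrate how acceleration and angular velocity can contribute to a pose estimate, here is a minimal Python sketch of planar IMU dead reckoning between two camera frames. It is not the fusion method of this embodiment, which the text does not detail. The names `PlanarState`, `propagate`, and `predict_between_frames` are hypothetical, and the sketch assumes gravity-compensated, bias-free measurements and simple Euler integration.

```python
import math
from dataclasses import dataclass


@dataclass
class PlanarState:
    """Hypothetical 2D state: position and velocity in the world frame."""
    x: float = 0.0    # metres
    y: float = 0.0    # metres
    yaw: float = 0.0  # heading in radians
    vx: float = 0.0   # metres per second
    vy: float = 0.0   # metres per second


def propagate(state, gyro_z, accel_body, dt):
    """Advance the state by one IMU sample using Euler integration.

    gyro_z is the angular velocity about the vertical axis (rad/s);
    accel_body is (ax, ay) in the body frame (m/s^2), assumed to be
    gravity-compensated and bias-free.
    """
    ax_b, ay_b = accel_body
    # Rotate the body-frame acceleration into the world frame.
    c, s = math.cos(state.yaw), math.sin(state.yaw)
    ax_w = c * ax_b - s * ay_b
    ay_w = s * ax_b + c * ay_b
    return PlanarState(
        x=state.x + state.vx * dt + 0.5 * ax_w * dt * dt,
        y=state.y + state.vy * dt + 0.5 * ay_w * dt * dt,
        yaw=state.yaw + gyro_z * dt,
        vx=state.vx + ax_w * dt,
        vy=state.vy + ay_w * dt,
    )


def predict_between_frames(state, imu_samples, dt):
    """Integrate all IMU samples received between two camera frames."""
    for gyro_z, accel_body in imu_samples:
        state = propagate(state, gyro_z, accel_body, dt)
    return state
```

In a fused system, a prediction of this kind would typically serve as a motion prior that the visual measurements then correct. Integrated on its own, it drifts quickly because of sensor noise and bias.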

[0045] In this embodiment, an image captured by a binocular camera is taken as an example for description. Real-time image acquisition is performed through the binocular camera, and real-time angul...

Embodiment 3

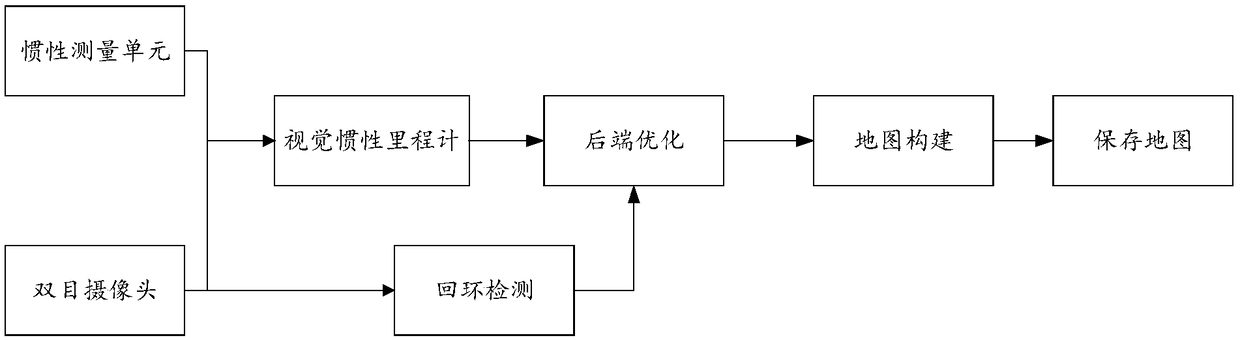

[0067] An embodiment of the present invention provides a multi-sensor fusion positioning and mapping system, including: an image acquisition module, a LiDAR data acquisition module, an IMU measurement module, and a data processing module.

[0068] The image acquisition module, a binocular camera in this embodiment, acquires scene information in real time. Image feature points, such as ORB features, are matched; after false matches are deleted, the fundamental matrix can be used to obtain the pose of the camera.
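The matching and false-match removal step can be sketched as follows. ORB descriptors are binary strings compared by Hamming distance; the text does not say how false matches are deleted, so this Python sketch assumes a mutual nearest-neighbour cross-check plus a distance threshold, which is one common choice. The function names and the `max_distance` default are hypothetical, and descriptors are represented as plain integers for brevity.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def best_match(query, candidates):
    """Return (index, distance) of the candidate nearest to query."""
    dists = [hamming(query, c) for c in candidates]
    idx = min(range(len(dists)), key=dists.__getitem__)
    return idx, dists[idx]


def match_descriptors(left, right, max_distance=64):
    """Match binary descriptors from two images.

    A pair (i, j) is kept only if right[j] is the nearest neighbour of
    left[i], left[i] is in turn the nearest neighbour of right[j]
    (cross-check), and their distance does not exceed max_distance.
    """
    matches = []
    if not left or not right:
        return matches
    for i, desc in enumerate(left):
        j, dist = best_match(desc, right)
        back, _ = best_match(right[j], left)
        if back == i and dist <= max_distance:
            matches.append((i, j, dist))
    return matches
```

The surviving pairs would then feed the fundamental-matrix estimation. In practice a robust estimator such as RANSAC is often applied at that stage to reject the outliers that remain.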

[0069] The LiDAR data acquisition module is used to build a two-dimensional grid map. Usually, a particle filter method constructs the two-dimensional grid map from the odometry data obtained by the wheel encoder (code disc) and the laser scanning data, and positioning is performed by the Monte Carlo algorithm.
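As a rough illustration of Monte Carlo positioning, the Python sketch below runs a particle filter in a one-dimensional corridor: odometry moves the particles, a single range reading to a wall weights them, and resampling concentrates them around the likely position. This is a deliberately simplified toy. The corridor, the noise parameters, and the names `mcl_step` and `localize` are all assumptions for illustration, and a real system would compare full laser scans against the two-dimensional grid map.

```python
import math
import random


def mcl_step(particles, control, measured_range, wall_x, rng,
             motion_sigma=0.05, range_sigma=0.2):
    """One predict / weight / resample cycle of Monte Carlo localization."""
    # Predict: apply the odometry increment with added motion noise.
    moved = [p + control + rng.gauss(0.0, motion_sigma) for p in particles]
    # Weight: Gaussian likelihood of the range reading for each particle.
    weights = [
        math.exp(-0.5 * ((measured_range - (wall_x - p)) / range_sigma) ** 2)
        for p in moved
    ]
    if sum(weights) == 0.0:
        # No particle explains the reading; fall back to uniform weights.
        weights = [1.0] * len(moved)
    # Resample: draw particles in proportion to their weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def localize(n_particles=500, steps=10, seed=0):
    """Simulate a robot driving toward a wall; return (estimate, truth)."""
    rng = random.Random(seed)
    wall_x, true_x, control = 10.0, 2.0, 0.5
    # The initial position is unknown, so spread particles over the corridor.
    particles = [rng.uniform(0.0, wall_x) for _ in range(n_particles)]
    for _ in range(steps):
        true_x += control
        measured = wall_x - true_x  # noise-free reading, for simplicity
        particles = mcl_step(particles, control, measured, wall_x, rng)
    return sum(particles) / len(particles), true_x
```

Calling `localize()` returns the mean of the particle set alongside the simulated true position, so the two can be compared directly.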

[0070] The IMU measurement module is used to measure the angular velocity and acceleration of the camera, and obtain the r...
