9382 results about "Machine vision" patented technology

Machine vision (MV) is the technology and methods used to provide imaging-based automatic inspection and analysis for such applications as automatic inspection, process control, and robot guidance, usually in industry. Machine vision refers to many technologies, software and hardware products, integrated systems, actions, methods and expertise. Machine vision as a systems engineering discipline can be considered distinct from computer vision, a form of computer science. It attempts to integrate existing technologies in new ways and apply them to solve real world problems. The term is the prevalent one for these functions in industrial automation environments but is also used for these functions in other environments such as security and vehicle guidance.

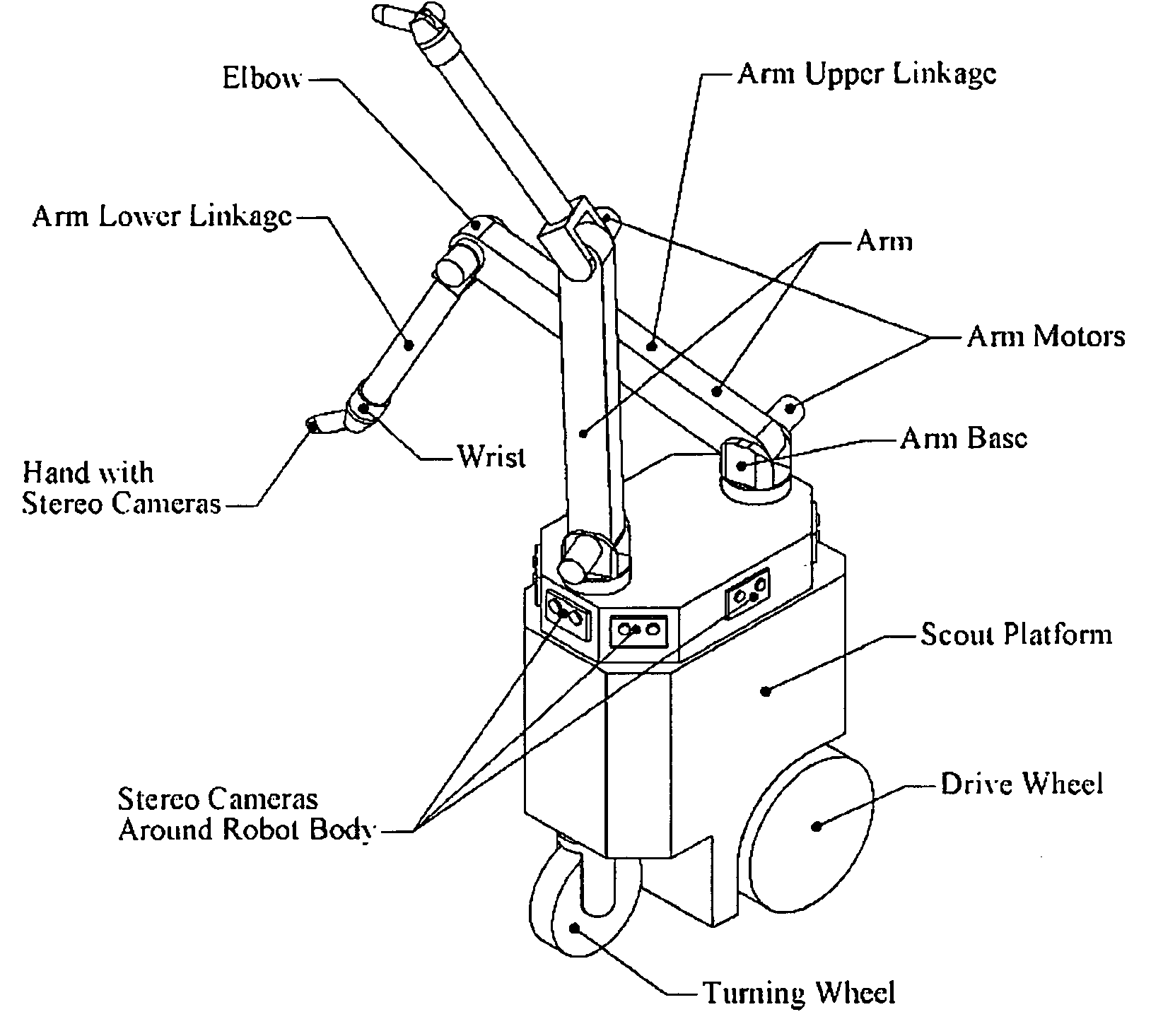

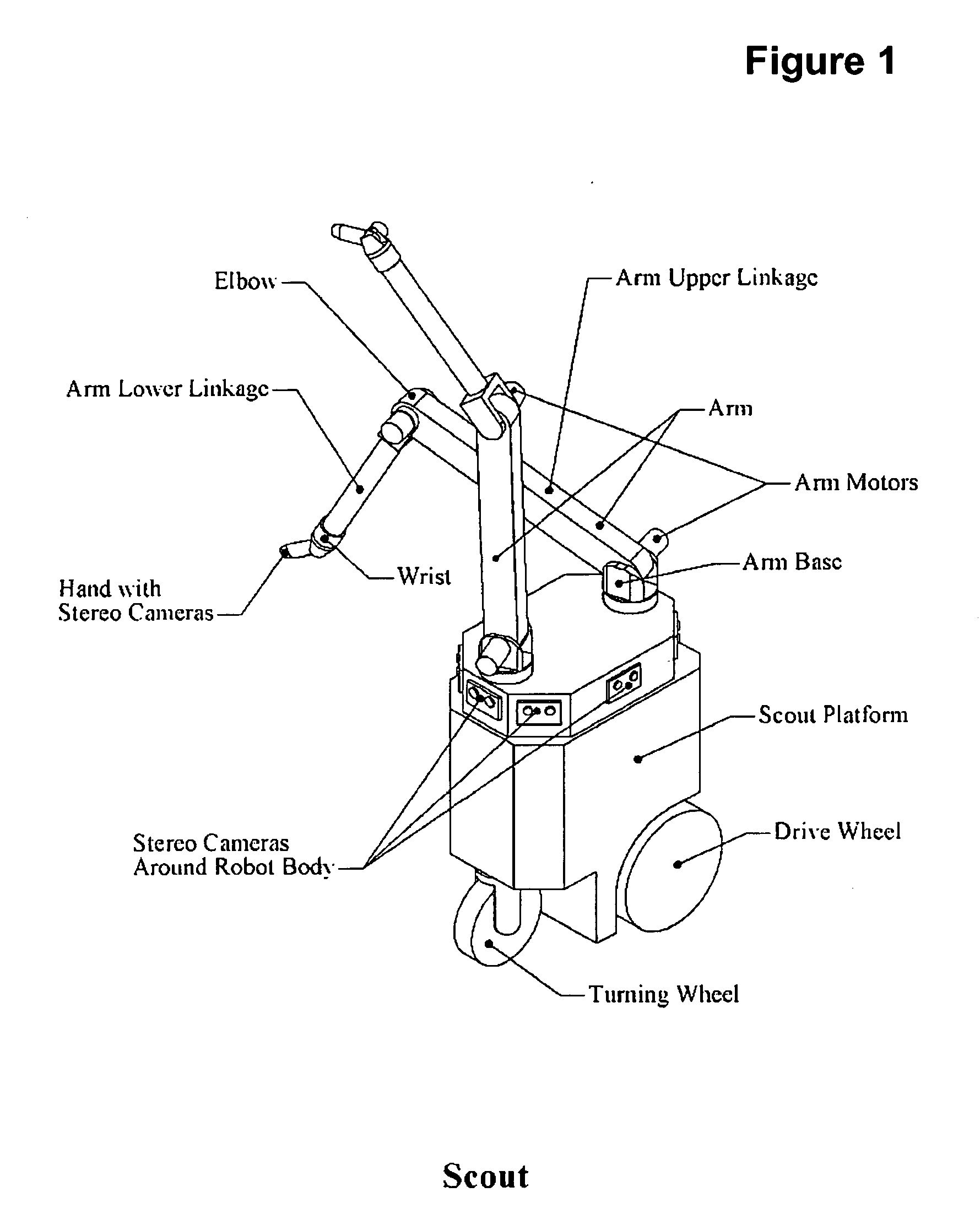

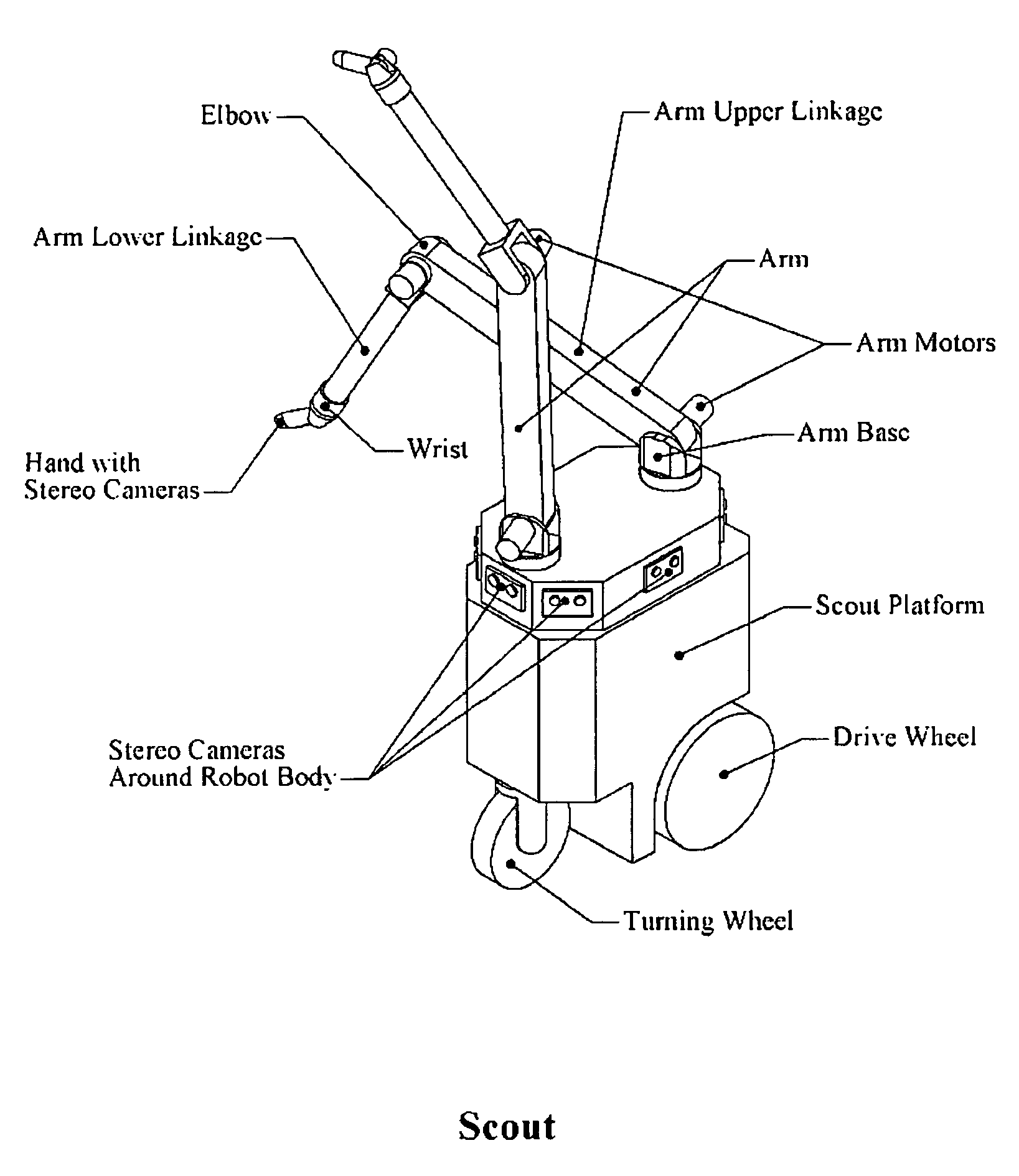

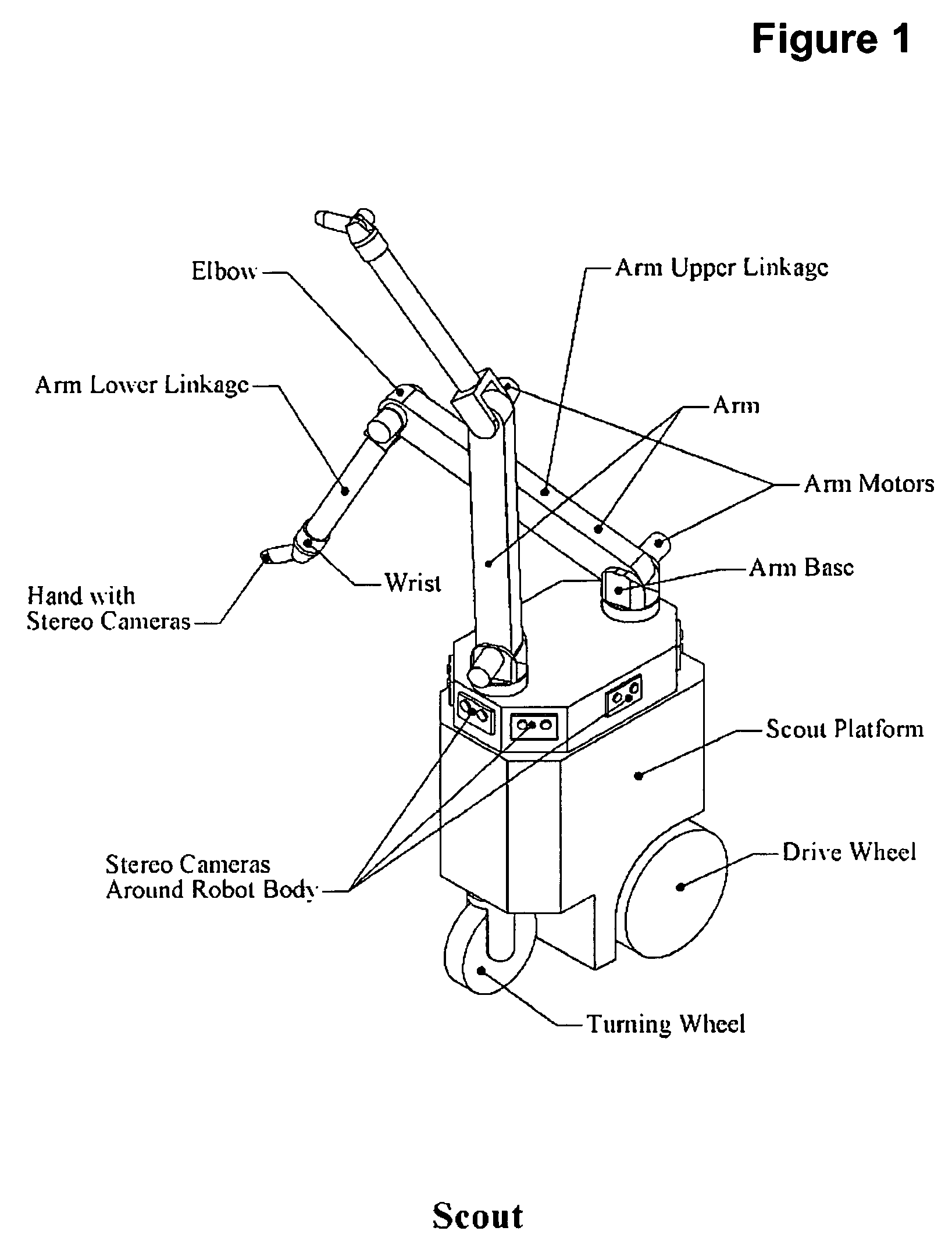

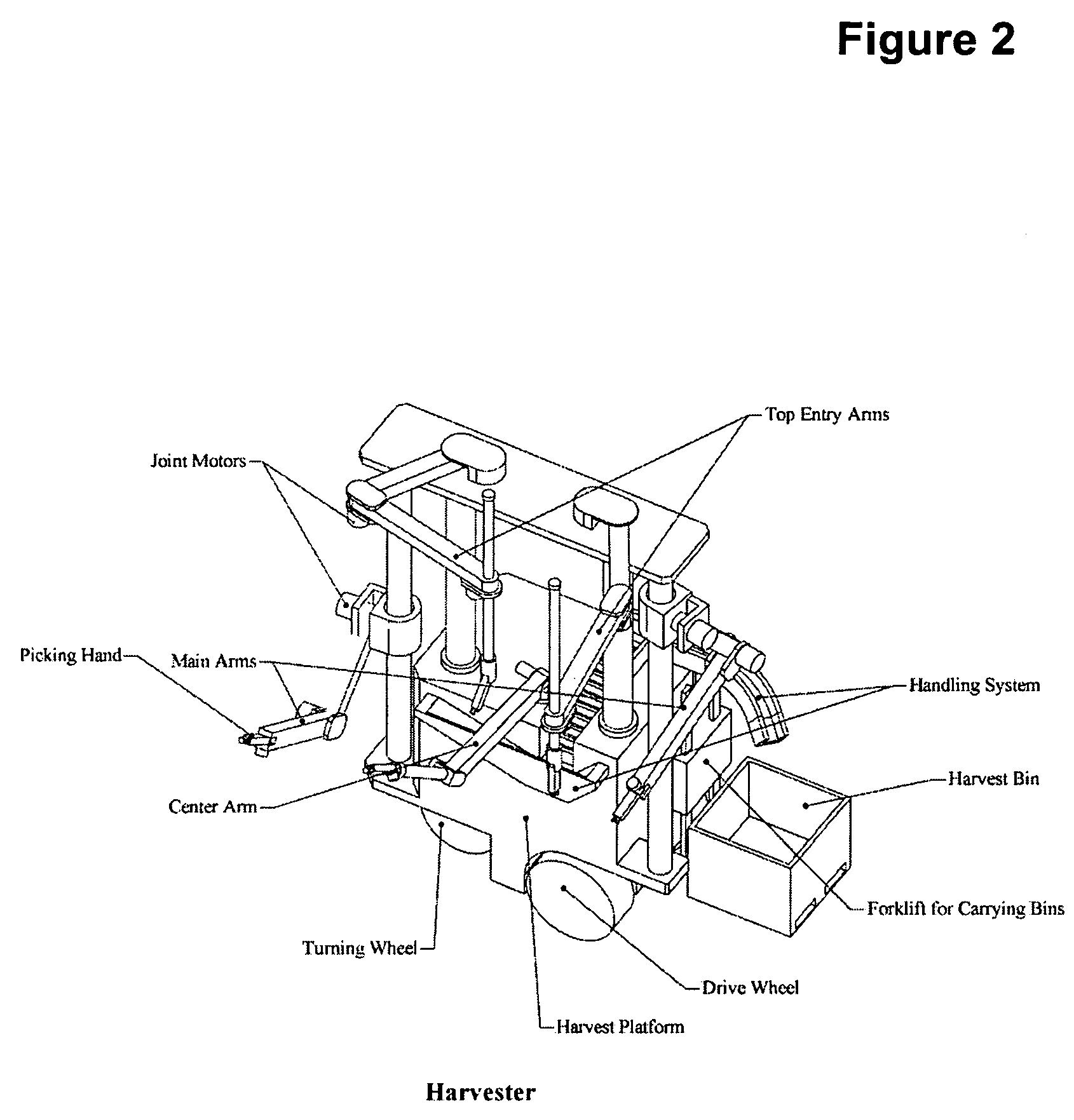

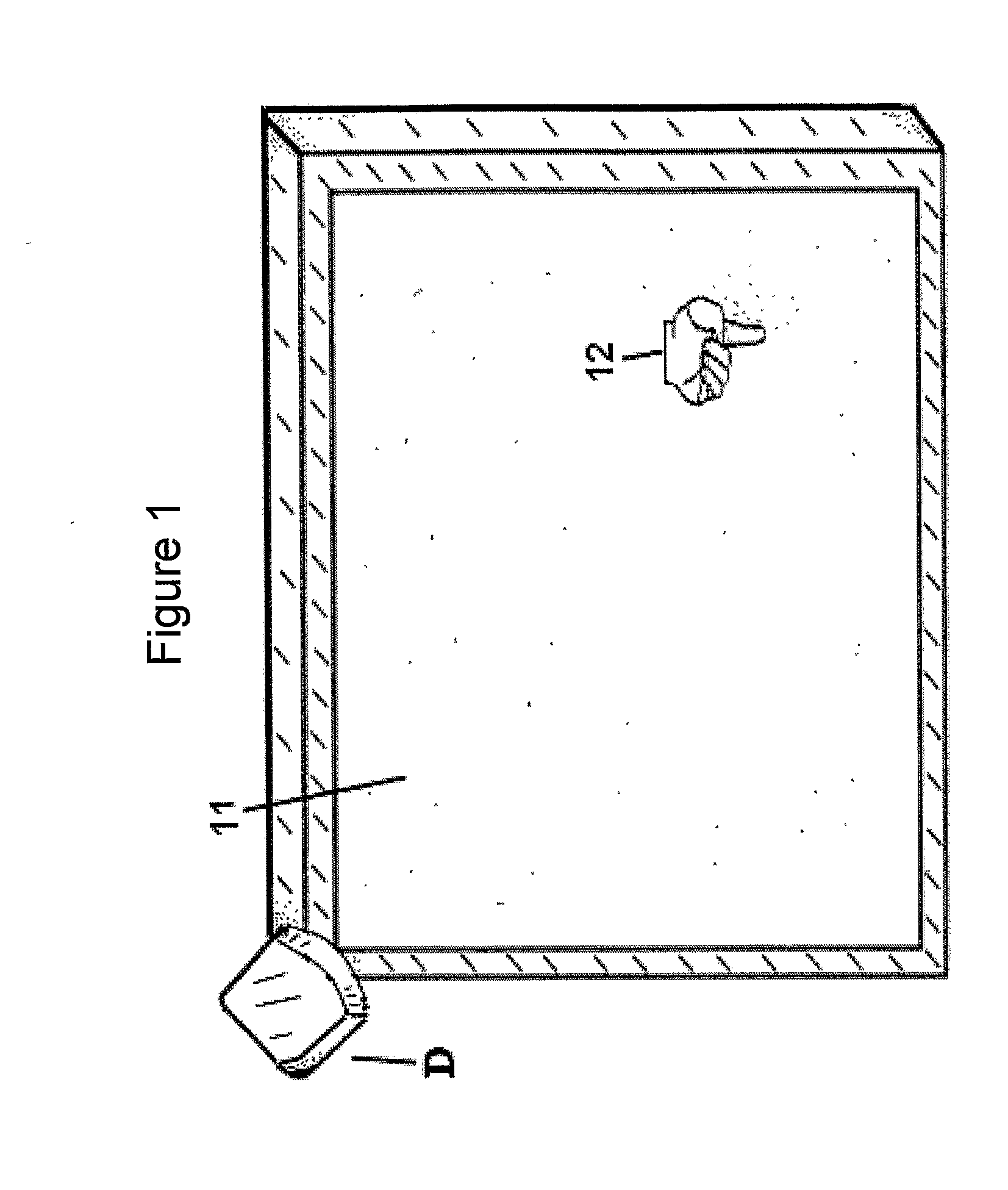

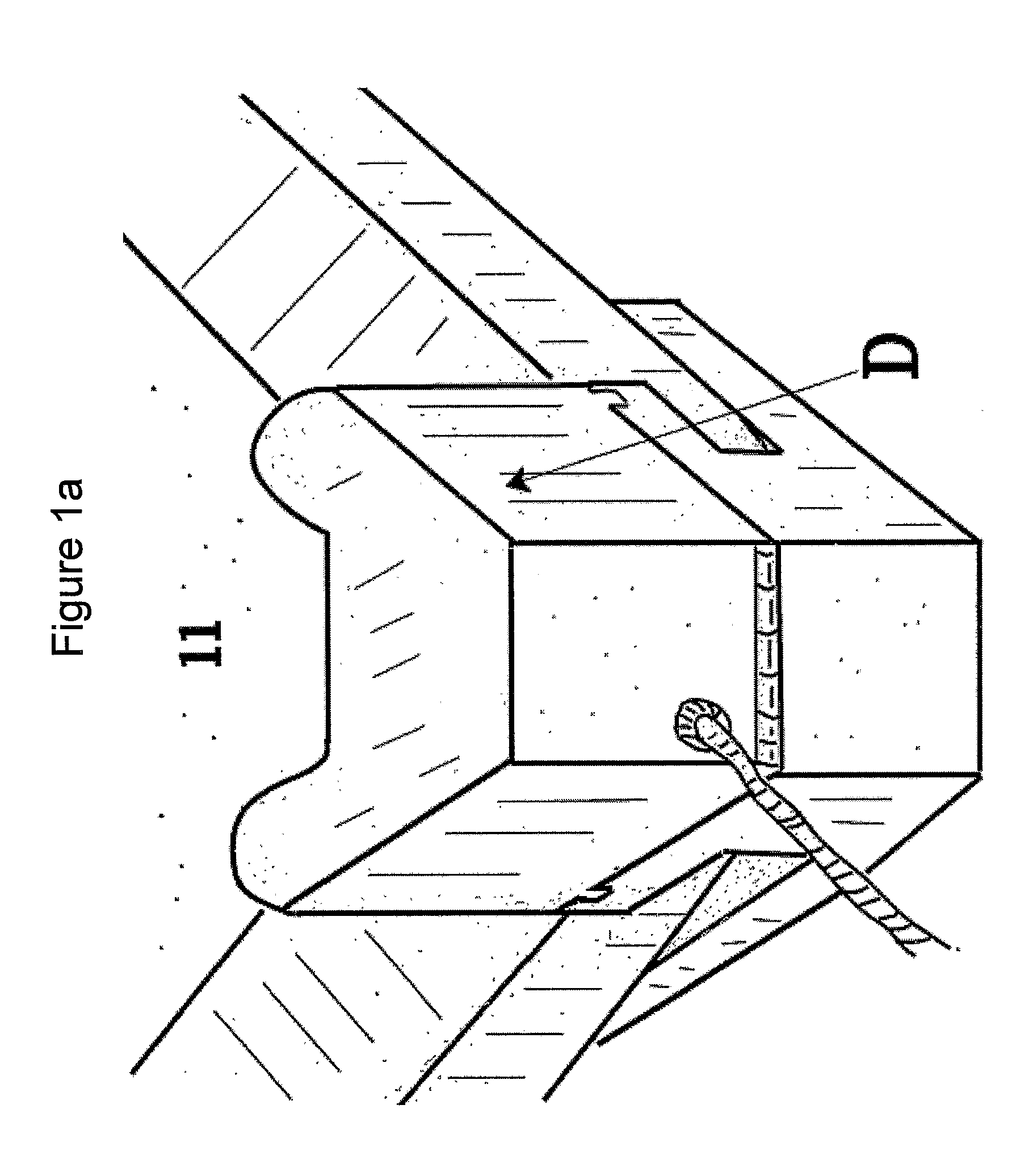

Agricultural robot system and method

Inactive · US20060213167A1 · Maximize efficiency · Maximizing cost-effectiveness · Analogue computers for traffic · Mowers · Machine vision · Action plan

An agricultural robot system and method of harvesting, pruning, culling, weeding, measuring and managing of agricultural crops. Uses autonomous and semi-autonomous robot(s) comprising machine-vision using cameras that identify and locate the fruit on each tree, points on a vine to prune, etc., or may be utilized in measuring agricultural parameters or aid in managing agricultural resources. The cameras may be coupled with an arm or other implement to allow views from inside the plant when performing the desired agricultural function. A robot moves through a field first to “map” the plant locations, number and size of fruit and approximate positions of fruit or map the cordons and canes of grape vines. Once the map is complete, a robot or server can create an action plan that a robot may implement. An action plan may comprise operations and data specifying the agricultural function to perform.

Owner:VISION ROBOTICS

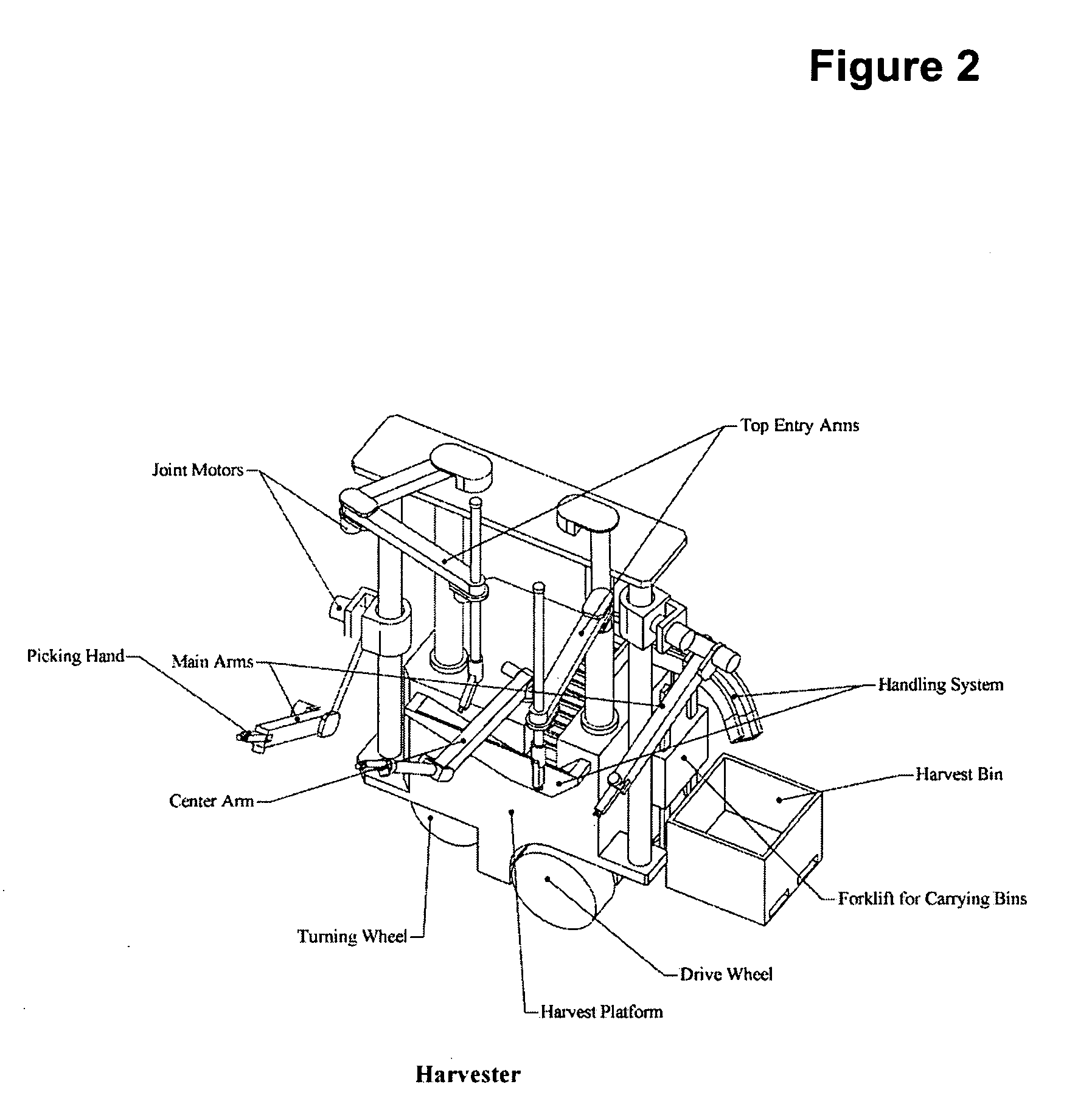

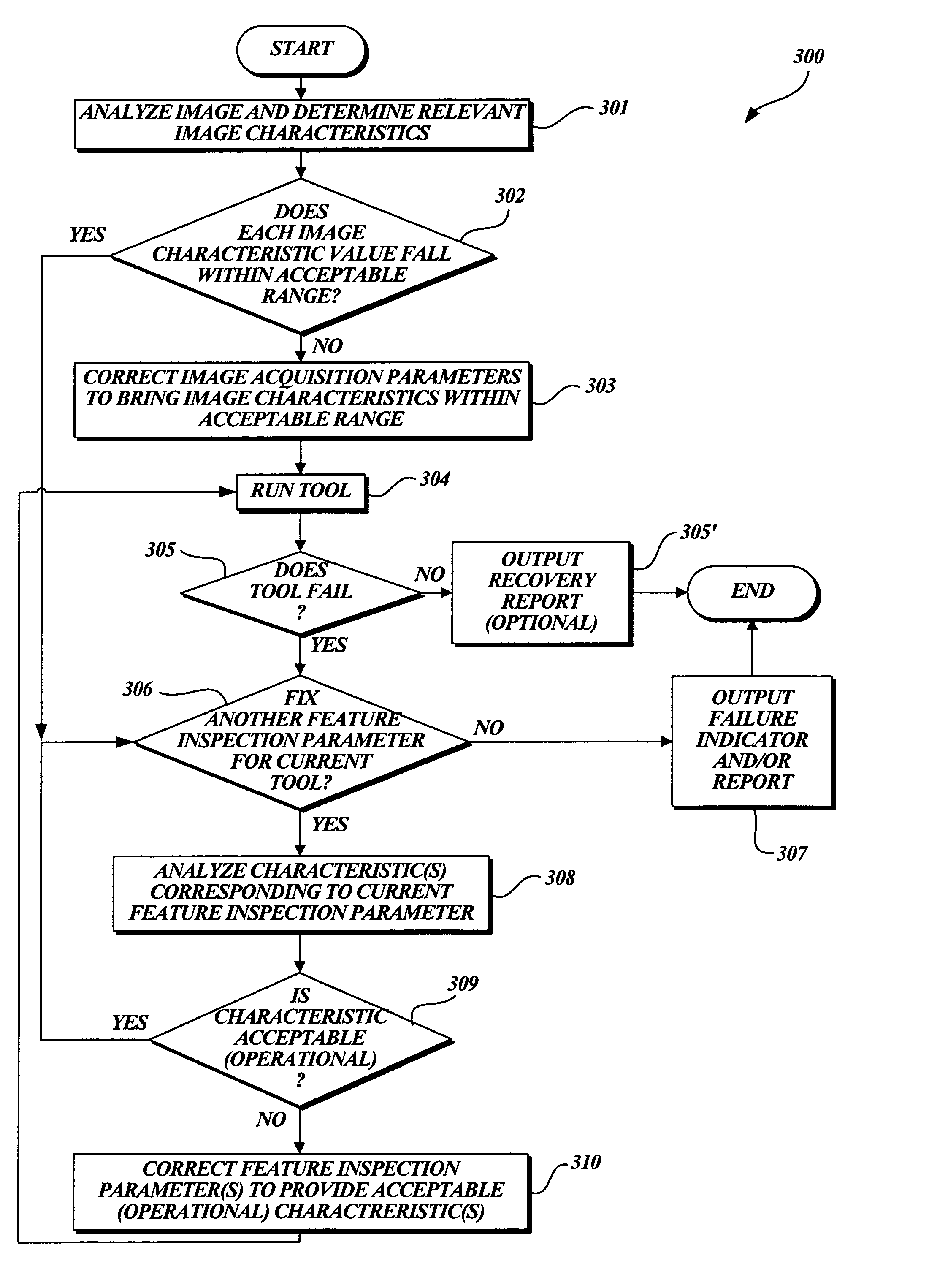

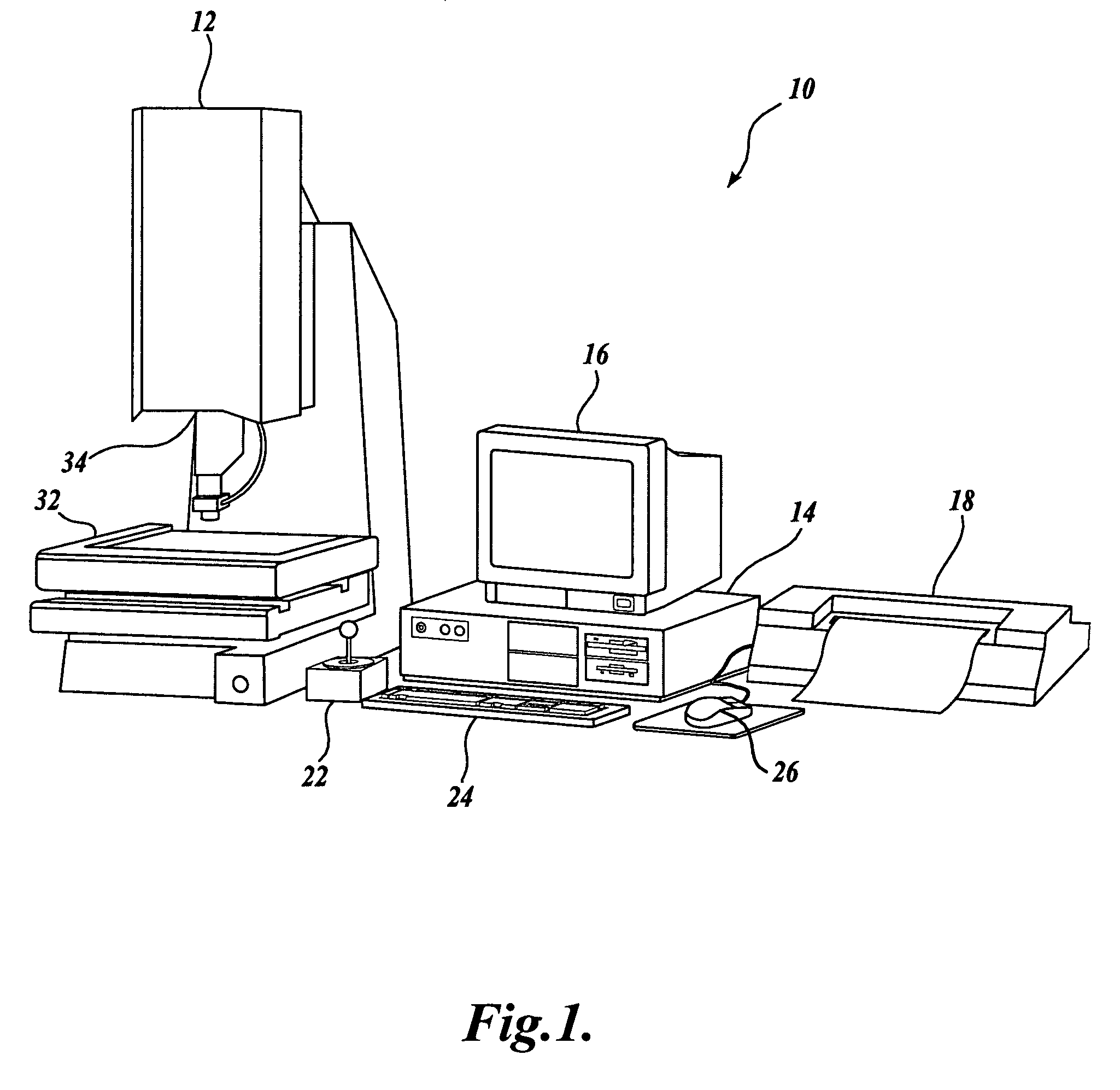

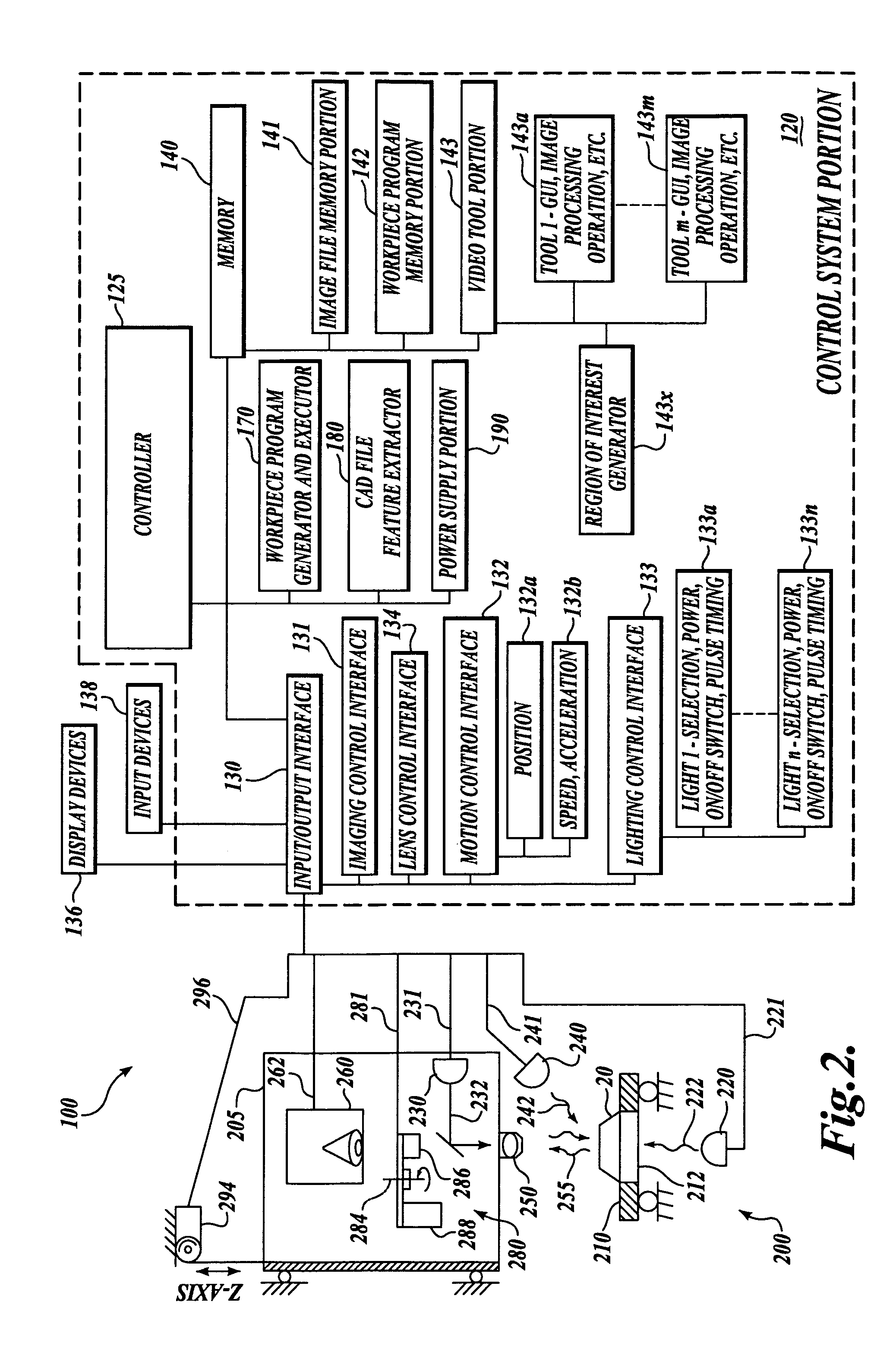

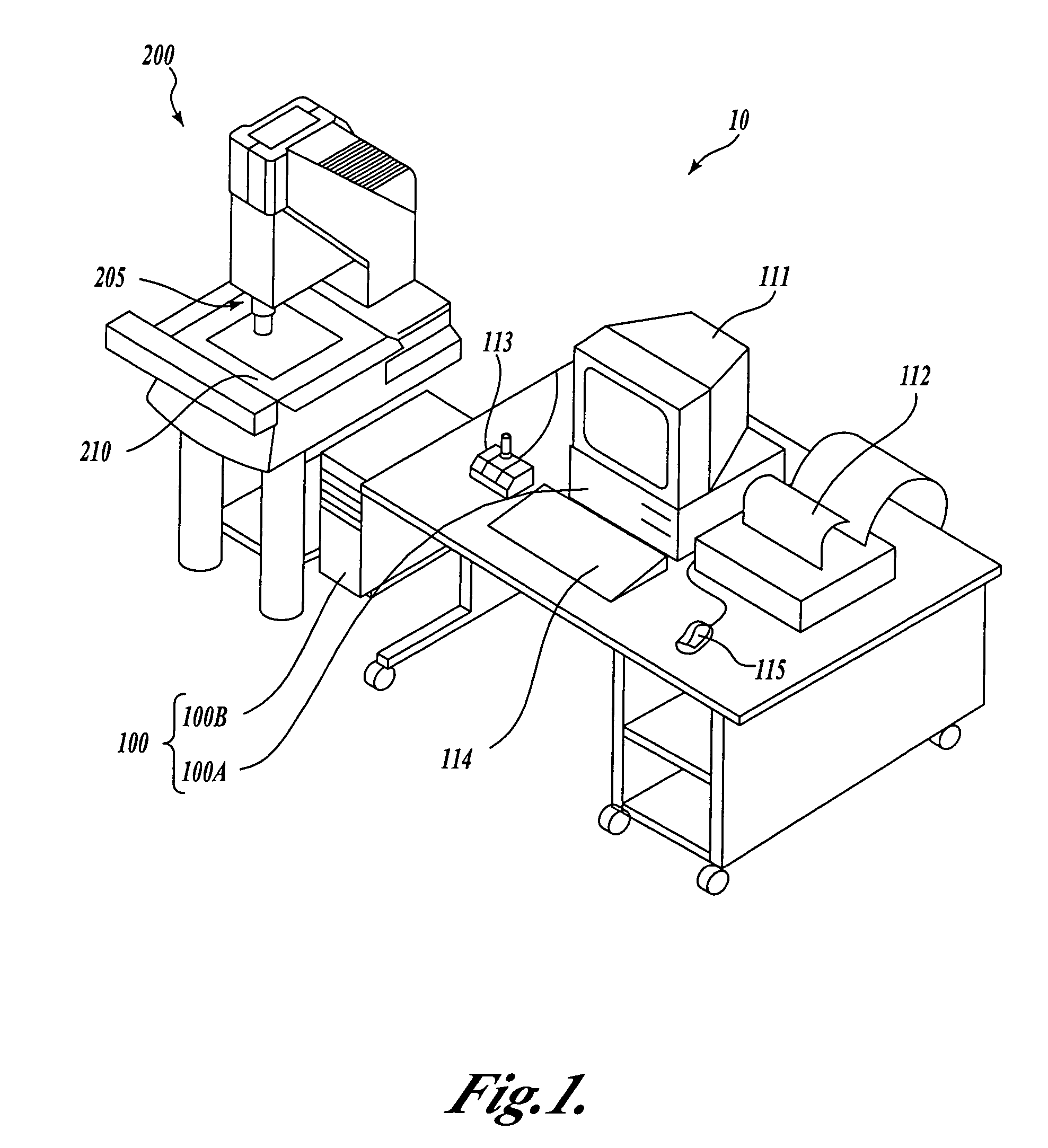

System and method for automatically recovering video tools in a vision system

Active · US7454053B2 · Reduce detection · Reduce failure · Image enhancement · Image analysis · Machine vision · Relevant feature

Methods and systems for automatically recovering a failed video inspection tool in a precision machine vision inspection system are described. A set of recovery instructions may be associated or merged with a video tool to allow the tool to automatically recover and proceed to provide an inspection result after an initial failure. The recovery instructions include operations that evaluate and modify feature inspection parameters that govern acquiring an image of a workpiece feature and inspecting the feature. The set of instructions may include an initial phase of recovery that adjusts image acquisition parameters. If adjusting image acquisition parameters does not result in proper tool operation, additional feature inspection parameters, such as the tool position, may be adjusted. The order in which the multiple feature inspection parameters and their related characteristics are considered may be predefined so as to most efficiently complete the automatic tool recovery process.

Owner:MITUTOYO CORP

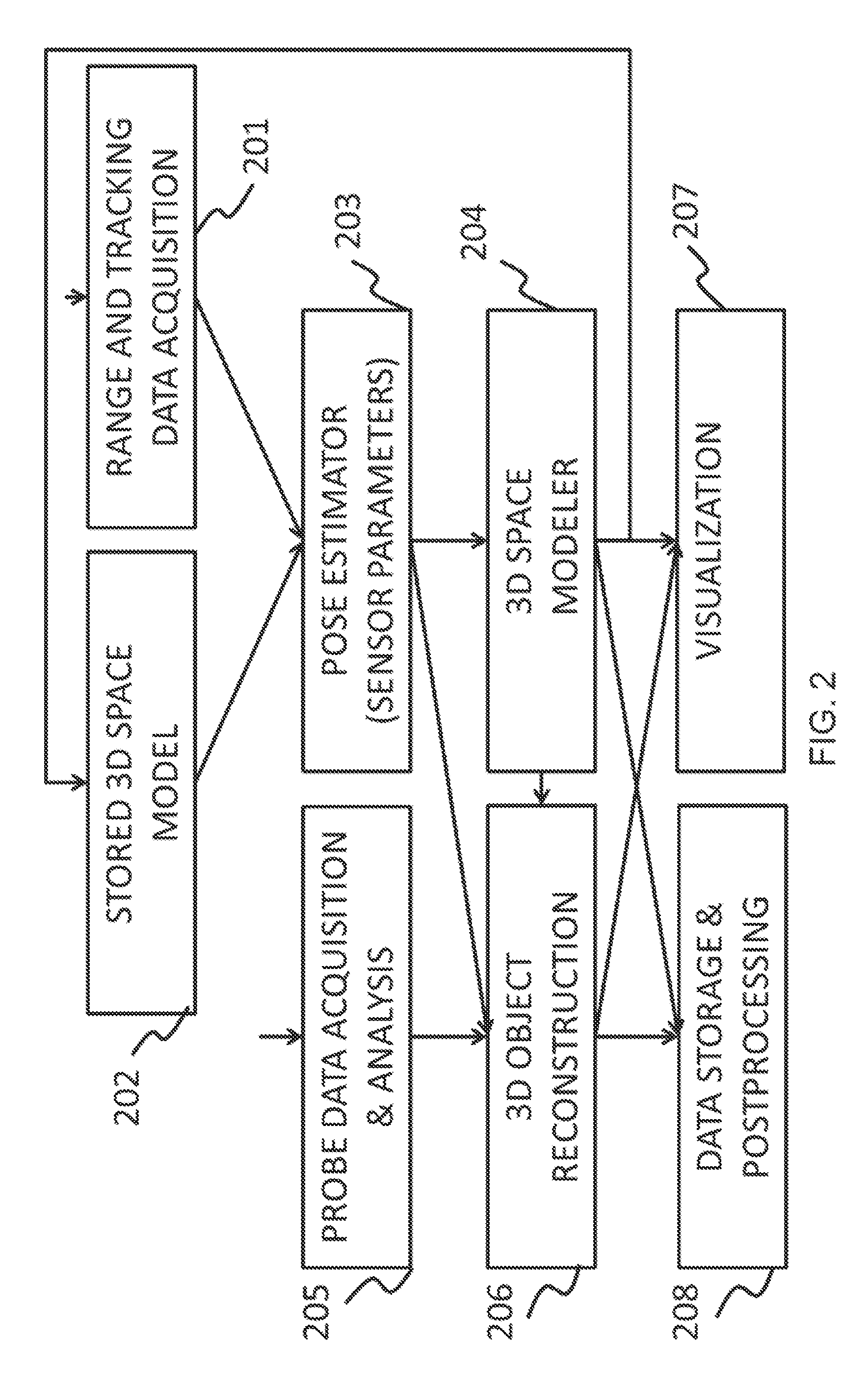
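The staged recovery described in this abstract (adjust image acquisition parameters first, then other parameters such as tool position, in a predefined order) can be sketched roughly as below. This is only an illustration under assumptions: `run_tool`, the parameter names and the candidate values are hypothetical and do not come from the patent, and each trial here changes a single parameter relative to the original settings.

```python
from typing import Callable, Dict, List, Optional, Tuple

Params = Dict[str, float]


def recover_tool(
    run_tool: Callable[[Params], bool],
    params: Params,
    recovery_plan: List[Tuple[str, List[float]]],
) -> Optional[Params]:
    """Try parameter adjustments in a predefined order until the tool succeeds.

    run_tool      -- hypothetical callback: returns True if the video tool
                     produces a valid inspection result with these parameters
    recovery_plan -- ordered list of (parameter name, candidate values);
                     earlier entries are tried first
    """
    if run_tool(params):
        return dict(params)  # no recovery needed
    for name, candidates in recovery_plan:
        for value in candidates:
            trial = dict(params)   # start again from the original settings
            trial[name] = value    # change exactly one parameter
            if run_tool(trial):
                return trial       # first working configuration wins
    return None                    # recovery failed


def fake_tool(p: Params) -> bool:
    """Stand-in for a real video tool: works only with enough exposure."""
    return p["exposure"] >= 2.0
```

Calling `recover_tool(fake_tool, {"exposure": 1.0, "x_offset": 0.0}, [("exposure", [1.5, 2.0]), ("x_offset", [1.0])])` tries the acquisition parameter before the position parameter, mirroring the ordering idea in the abstract.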

Methods and systems for tracking and guiding sensors and instruments

Active · US20130237811A1 · Reduce ultrasound artifact · Speckle reduction · Medical devices · Diagnostic recording/measuring · Machine vision · Ultrasonic sensor

Owner:ZITEO INC

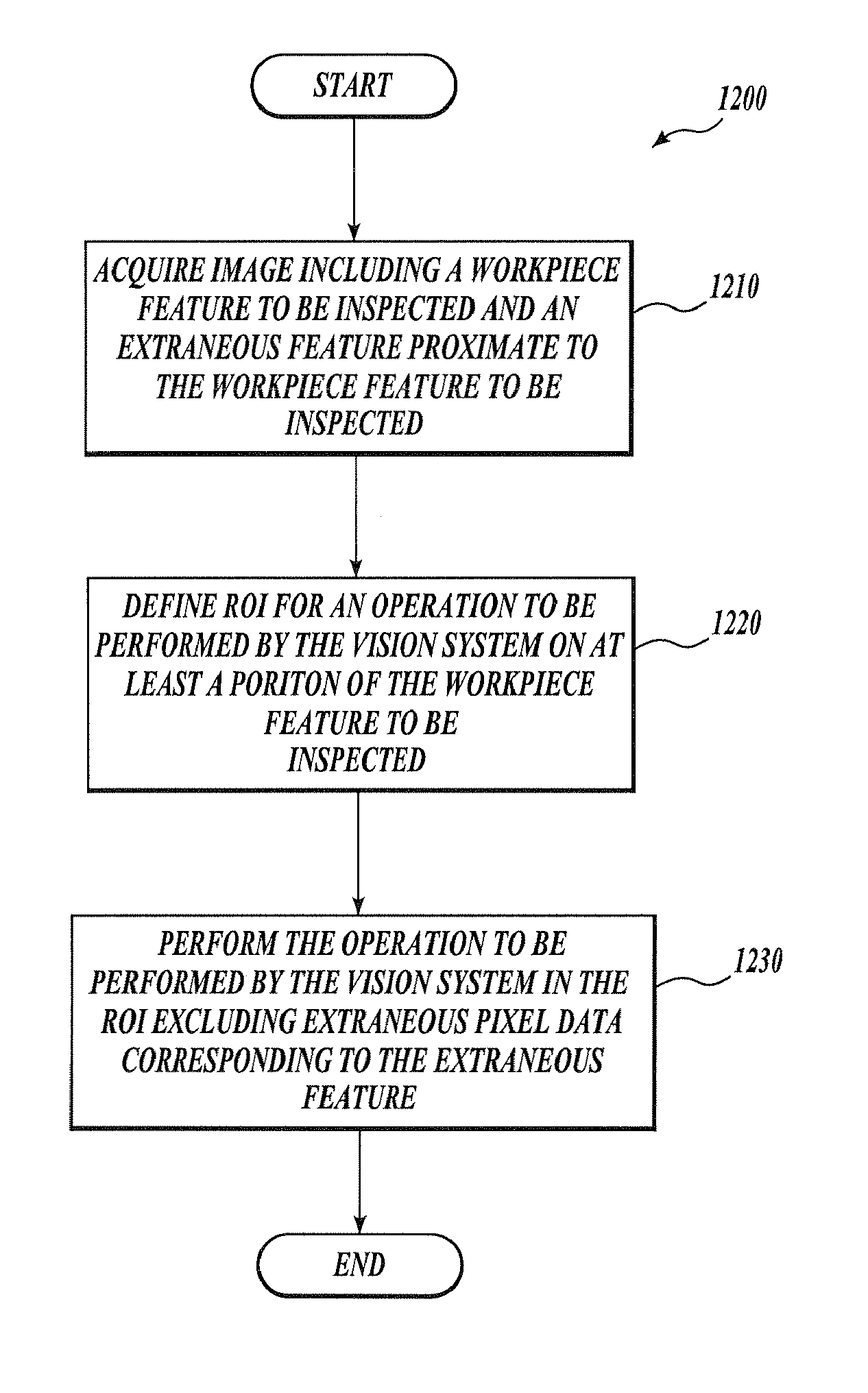

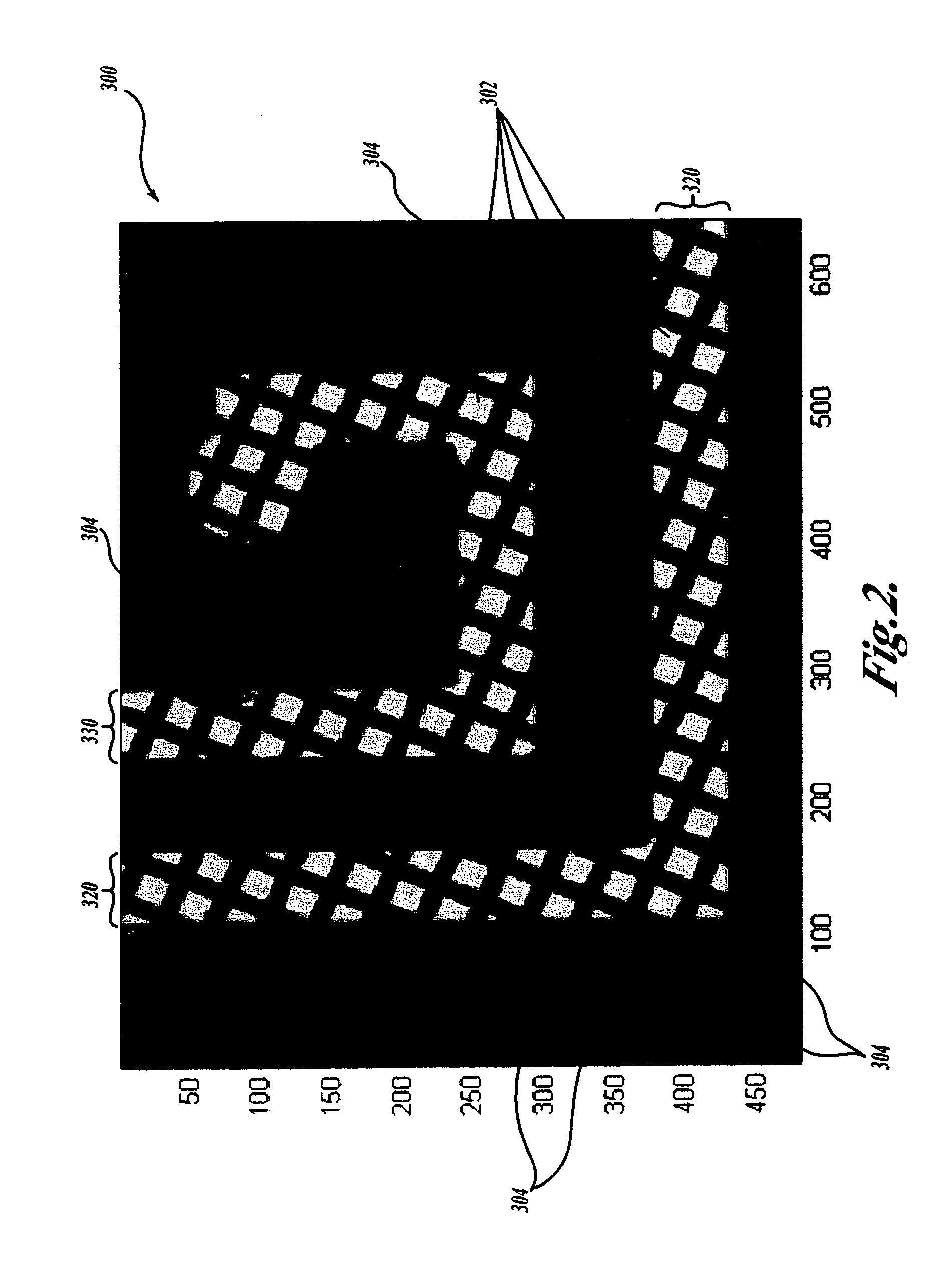

System and method for excluding extraneous features from inspection operations performed by a machine vision inspection system

Active · US7324682B2 · Easy to use · Variation in spacing · Image enhancement · Image analysis · Machine vision · Imaging Feature

Systems and methods are provided for excluding extraneous image features from inspection operations in a machine vision inspection system. The method identifies extraneous features that are close to image features to be inspected. No image modifications are performed on the “non-excluded” image features to be inspected. A video tool region of interest provided by a user interface of the vision system can encompass both the feature to be inspected and the extraneous features, making the video tool easy to use. The extraneous feature excluding operations are concentrated in the region of interest. The user interface for the video tool may operate similarly whether there are extraneous features in the region of interest, or not. The invention is of particular use when inspecting flat panel display screen masks having occluded features that are to be inspected.

Owner:MITUTOYO CORP

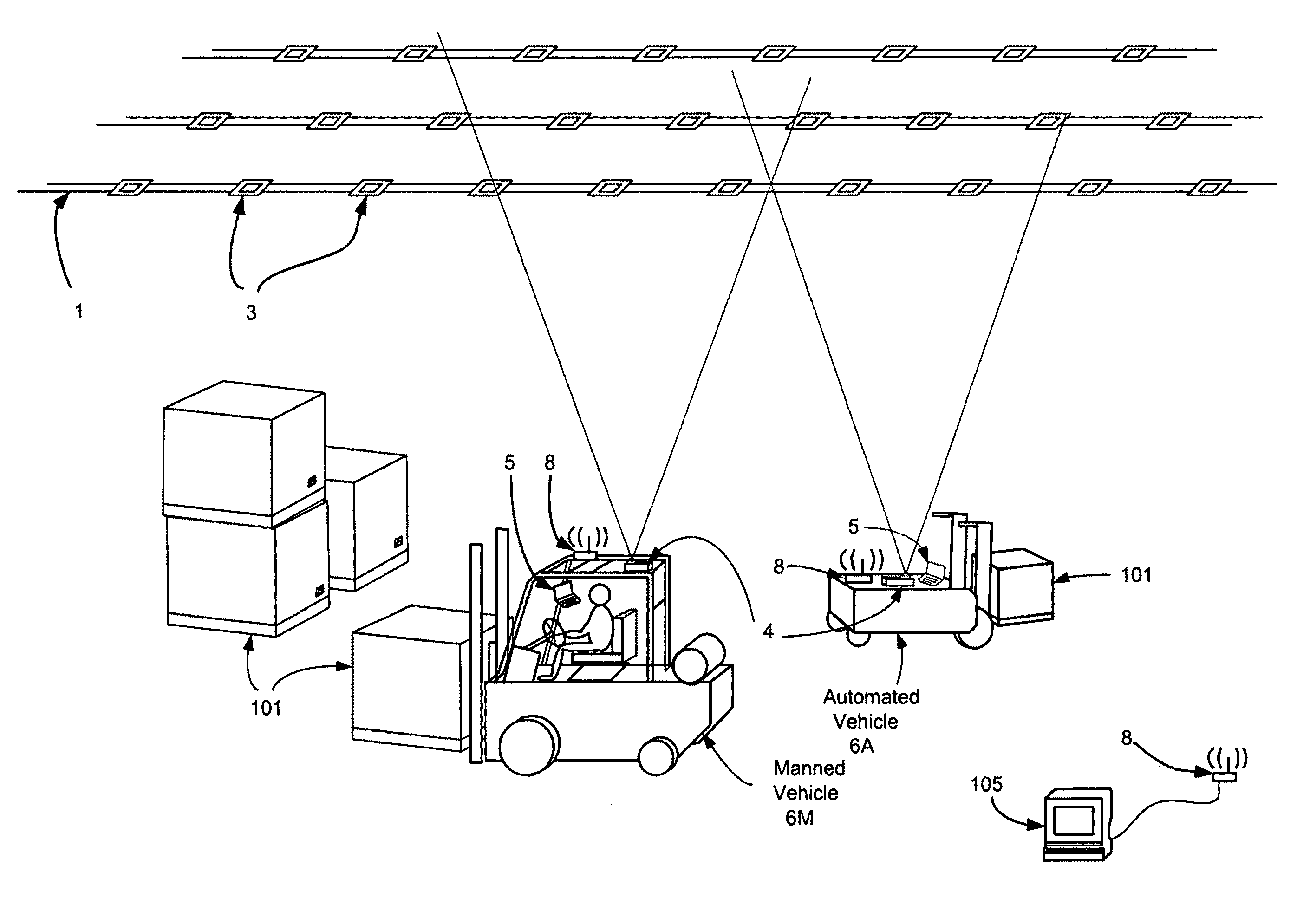

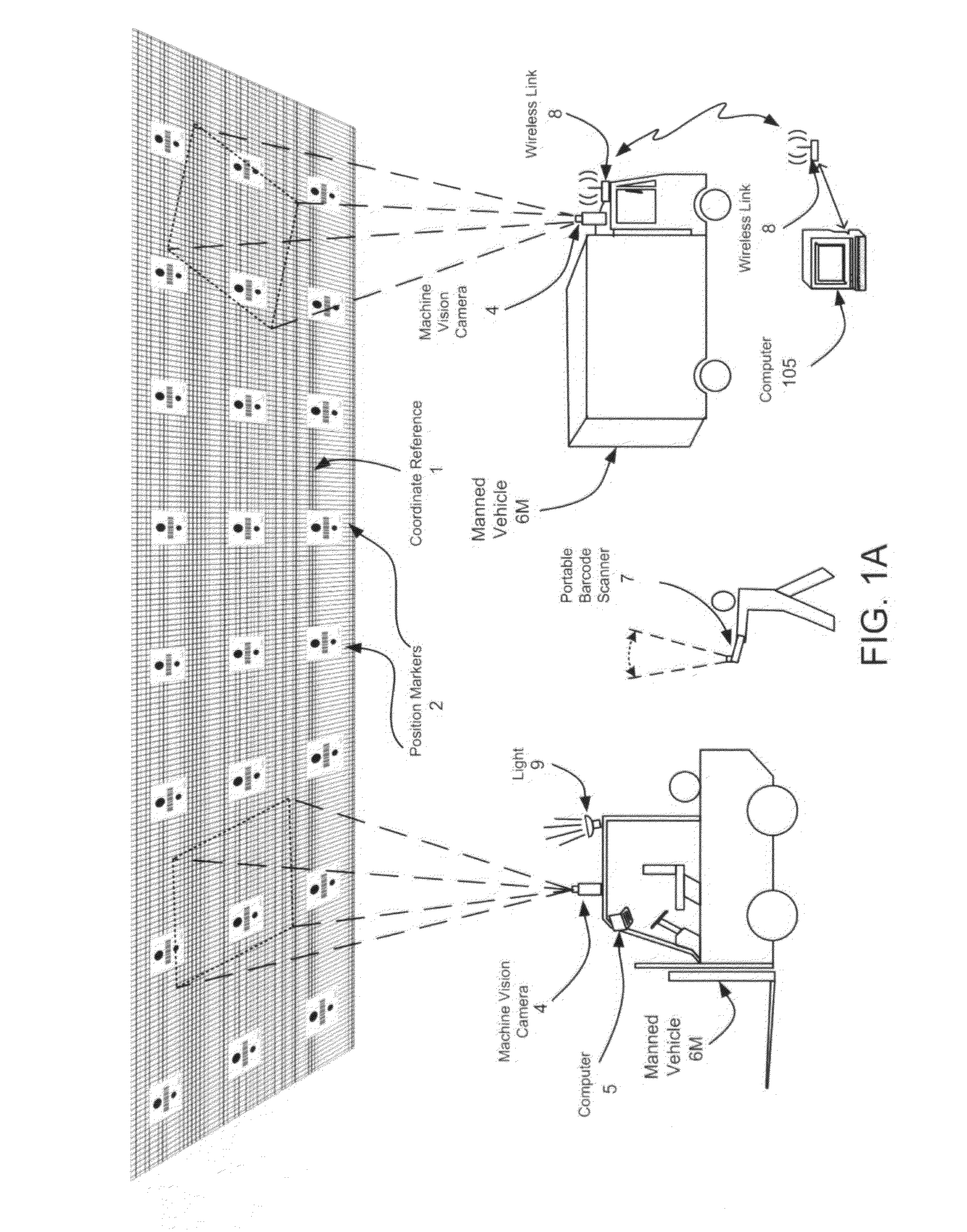

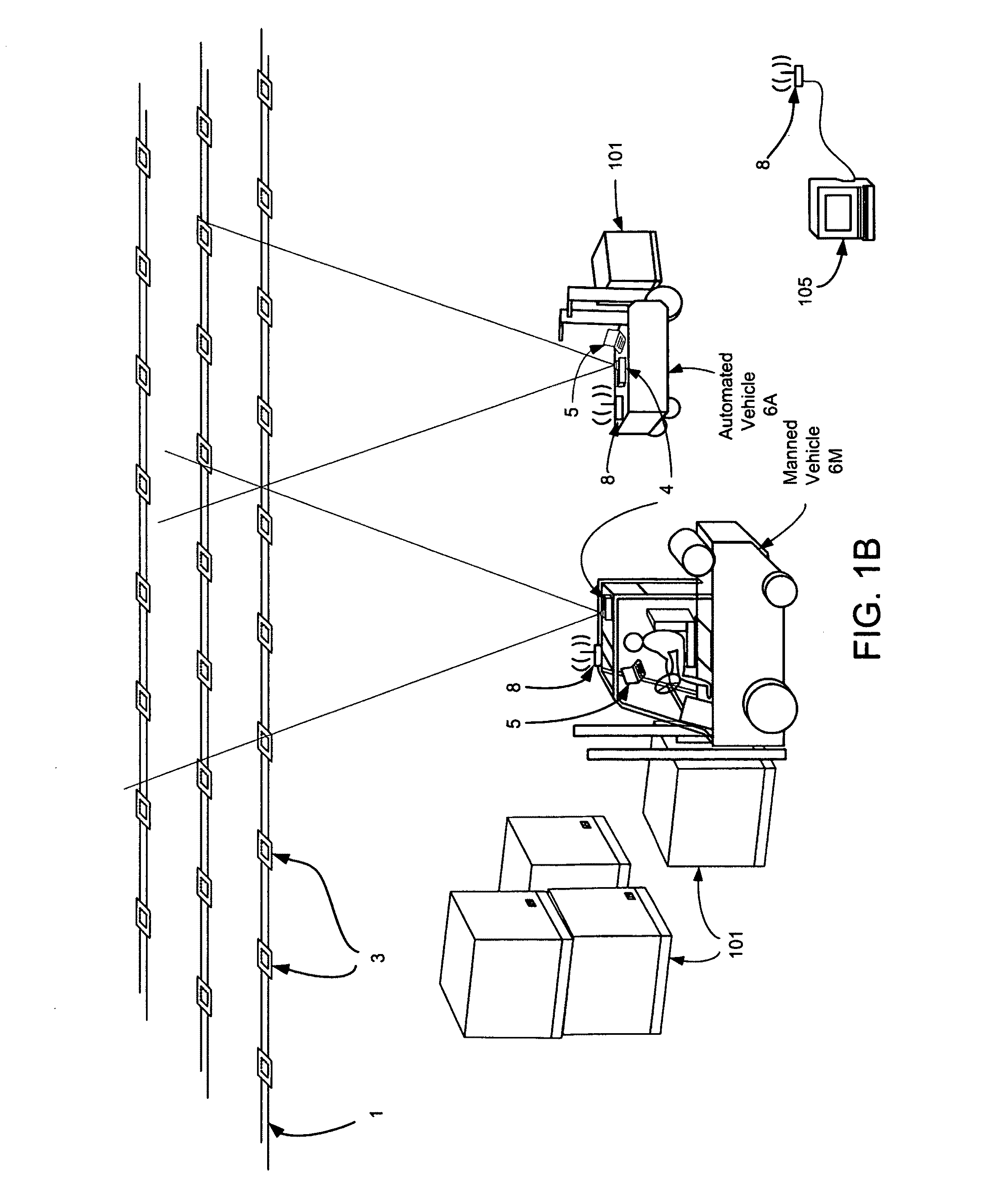

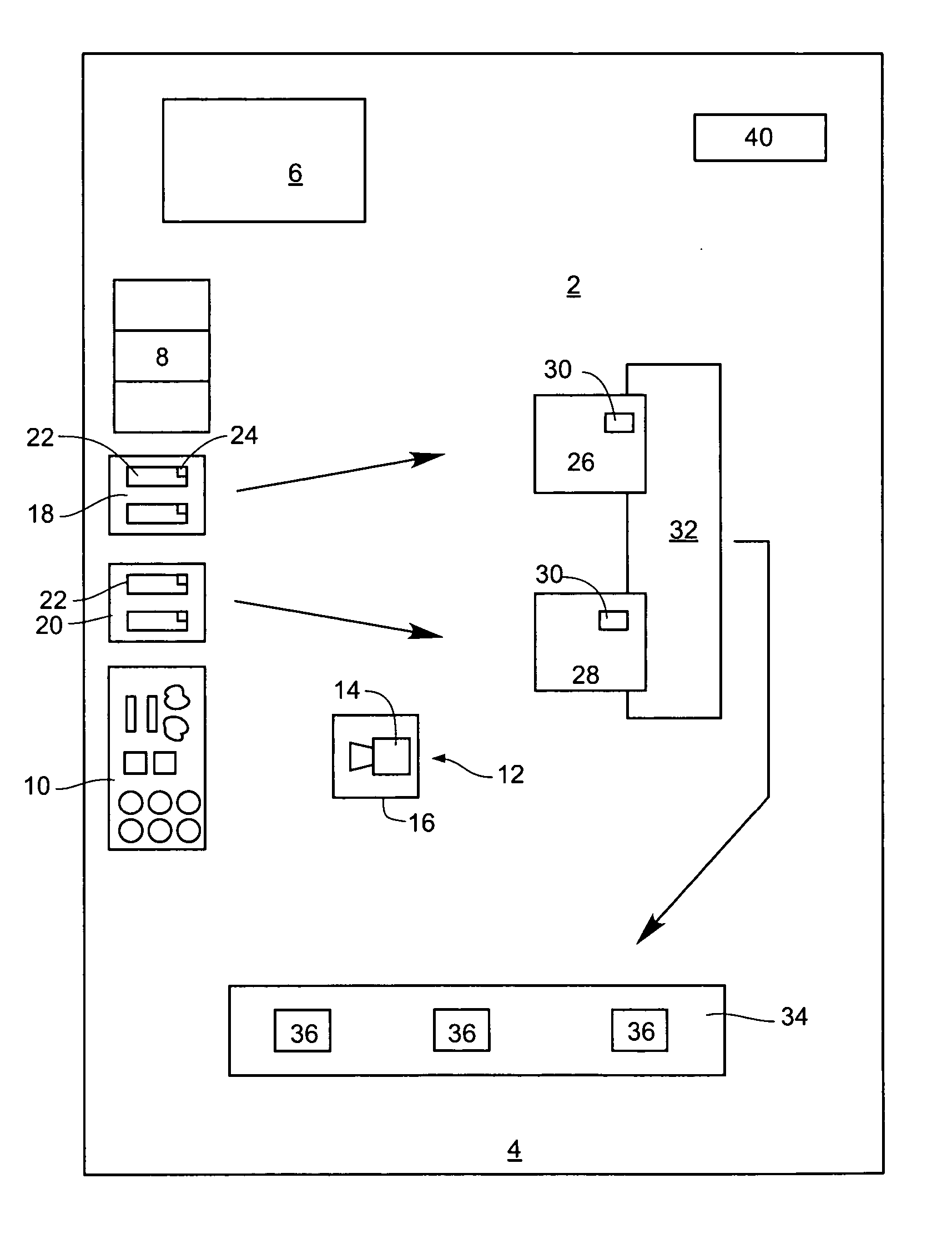

Method and apparatus for managing and controlling manned and automated utility vehicles

Active · US20110010023A1 · Vehicle testing · Registering/indicating working of vehicles · Machine vision · Improved method

A method and apparatus for managing manned and automated utility vehicles, and for picking up and delivering objects by automated vehicles. A machine vision image acquisition apparatus determines the position and the rotational orientation of vehicles in a predefined coordinate space by acquiring an image of one or more position markers and processing the acquired image to calculate the vehicle's position and rotational orientation based on processed image data. The position of the vehicle is determined in two dimensions. Rotational orientation (heading) is determined in the plane of motion. An improved method of position and rotational orientation is presented. Based upon the determined position and rotational orientation of the vehicles stored in a map of the coordinate space, a vehicle controller, implemented as part of a computer, controls the automated vehicles through motion and steering commands, and communicates with the manned vehicle operators by transmitting control messages to each operator.

Owner:SHENZHEN INVENTION DISCOVERY CO LTD

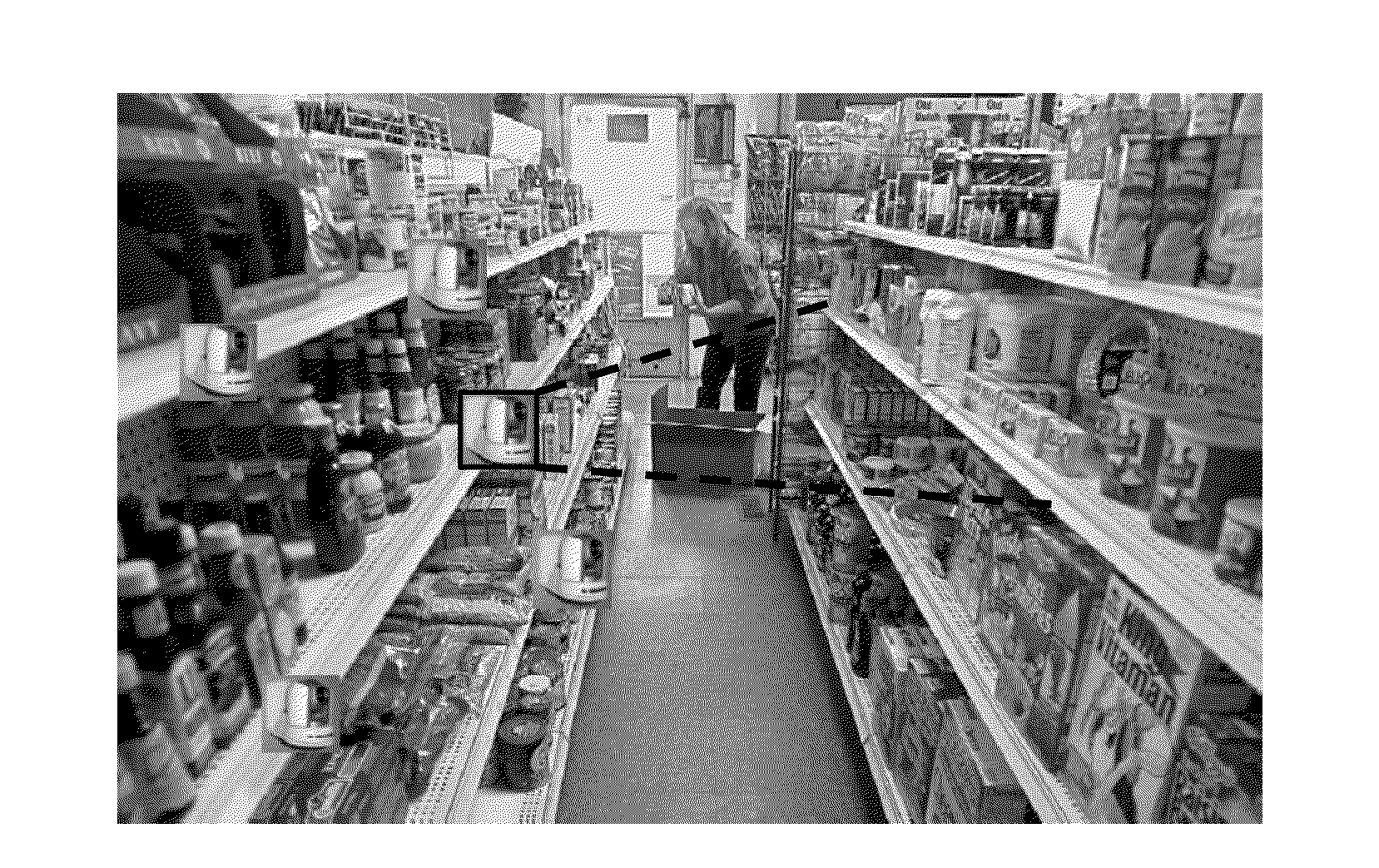

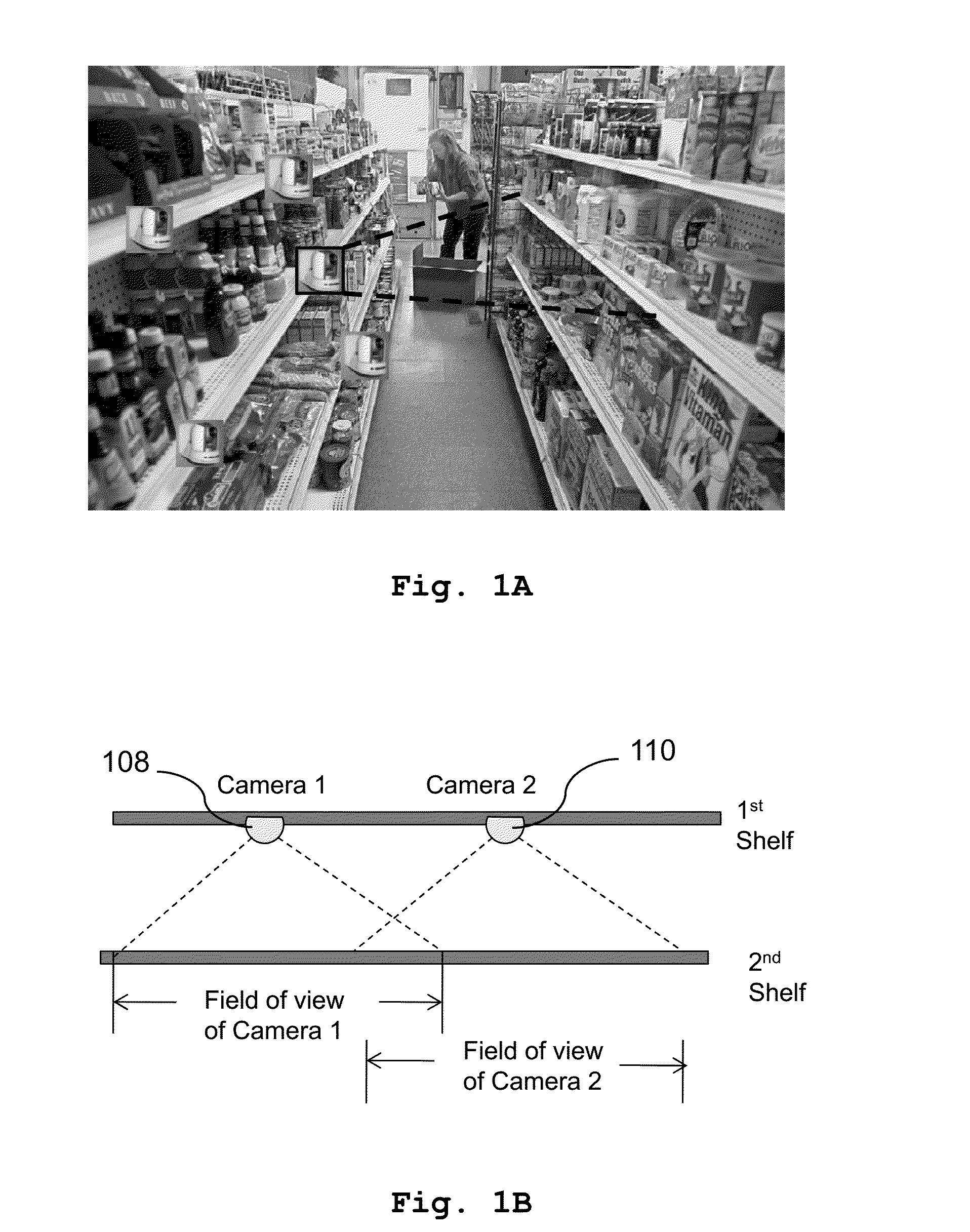

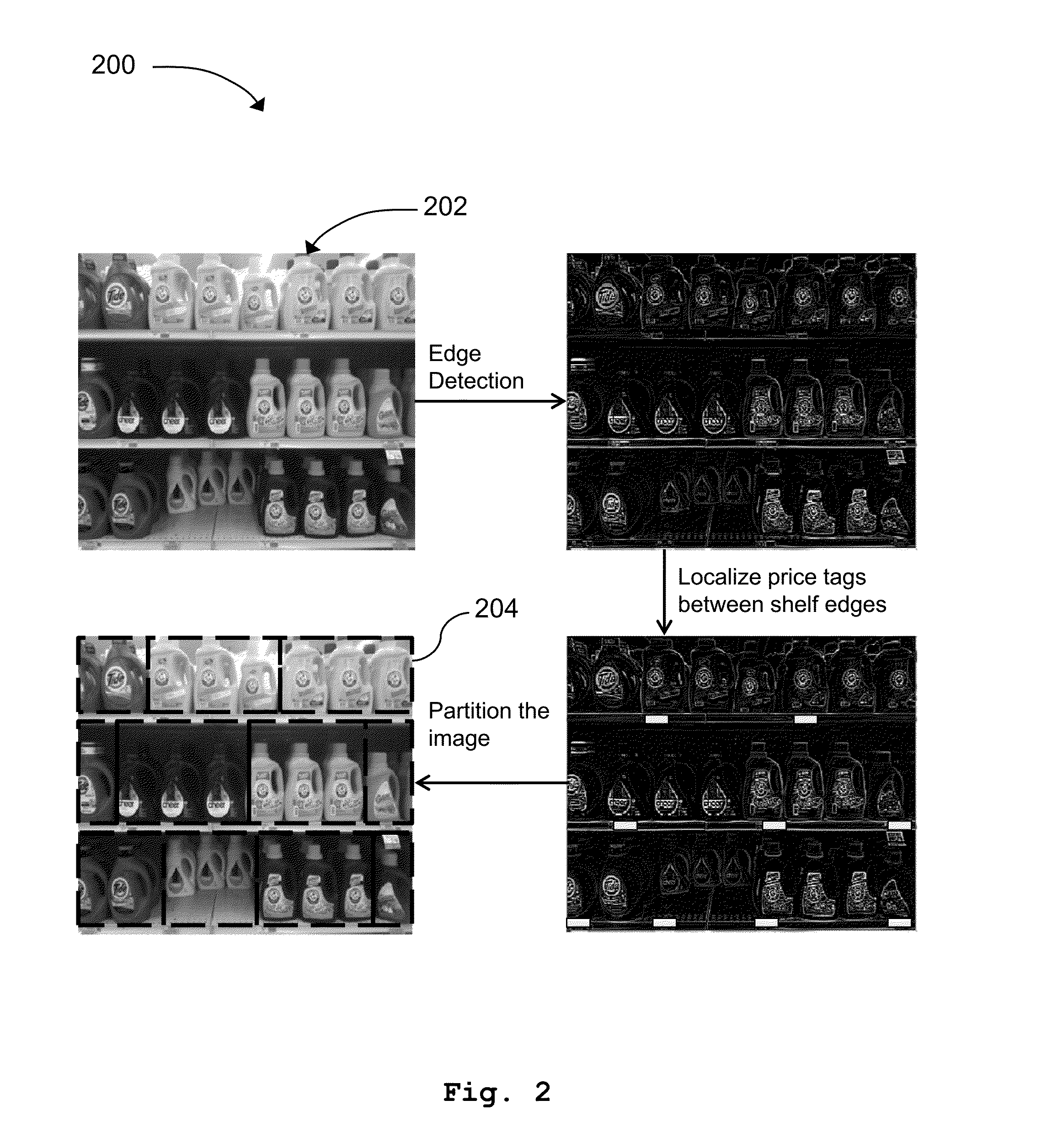

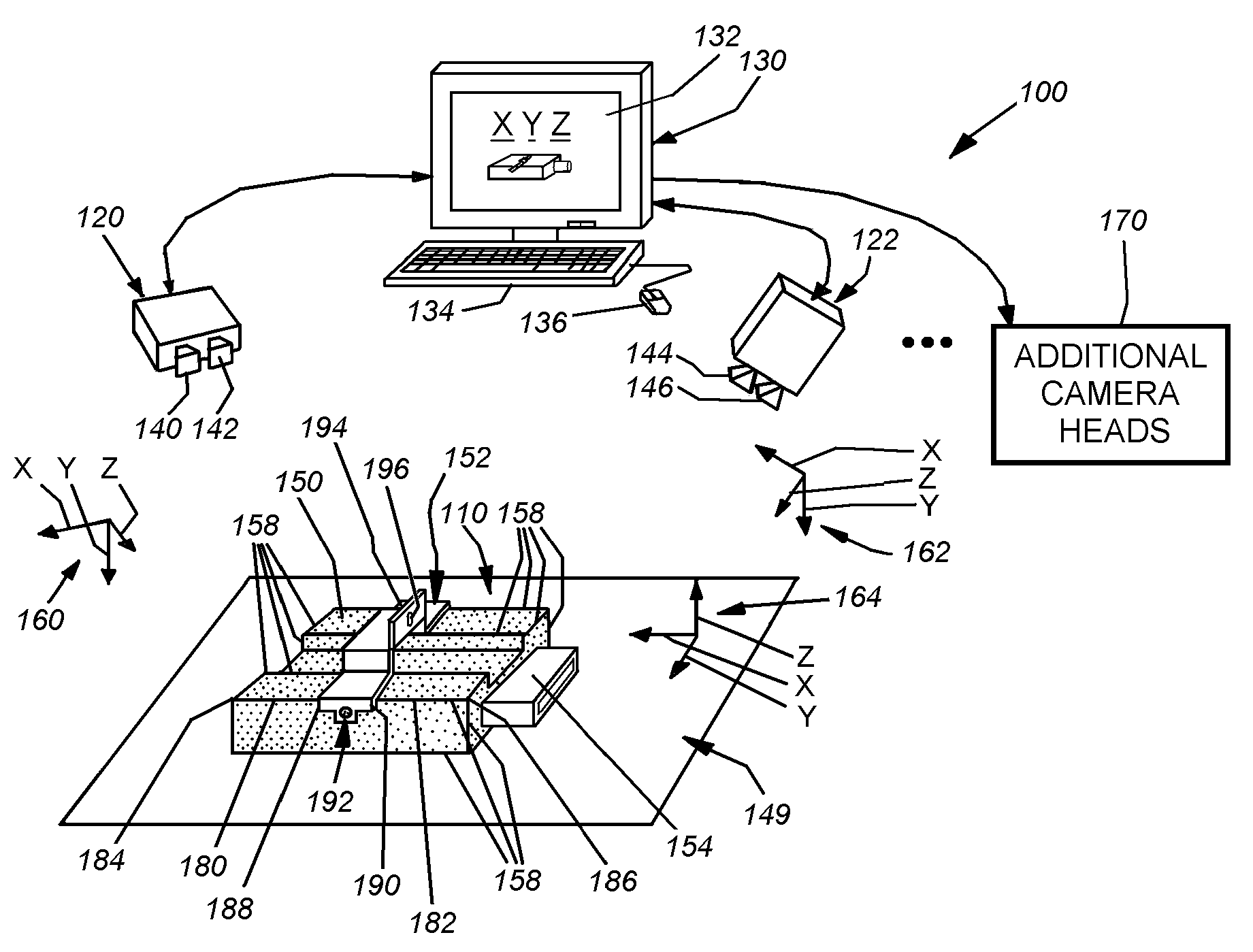

Machine vision technology for shelf inventory management

Inactive · US20150262116A1 · Character and pattern recognition · Service system furniture · Machine vision · Barcode

A system, method and computer program product for maintaining shelf inventory data on a shelf. The system includes a camera for capturing shelf images of items on the shelf. An inventory database stores a product name, type, barcode, image and inventory data. An image-count correlation database stores historical product inventory images and product shelf inventory counts associated with the products in the historical images which are read from the product inventory database. A computer processor segments the shelf images into product inventory images, matches the inventory images with the historical images, and updates the shelf inventory data in the inventory database based on the product shelf inventory counts associated with the matched historical images in the image-count correlation database.

Owner:IBM CORP

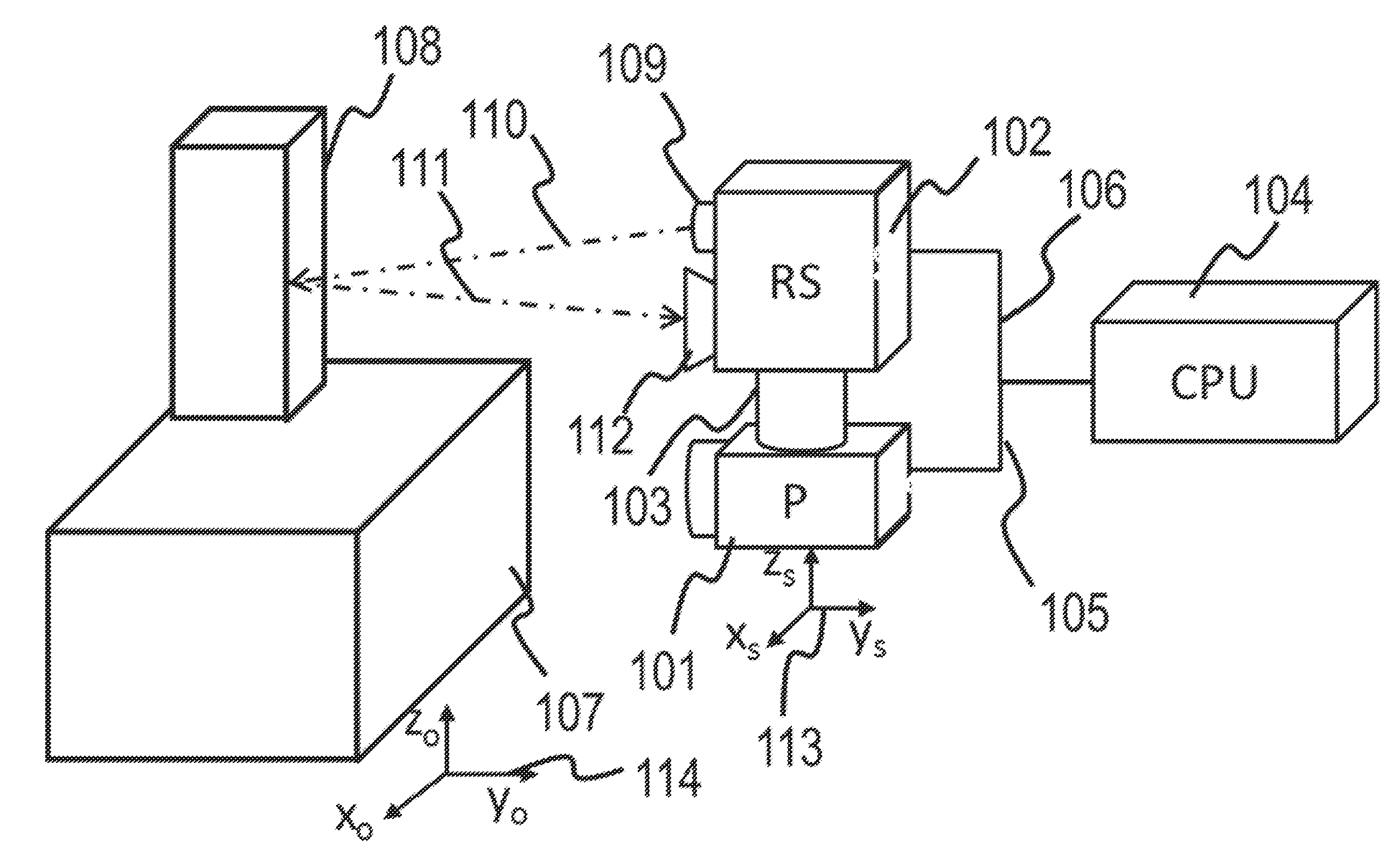

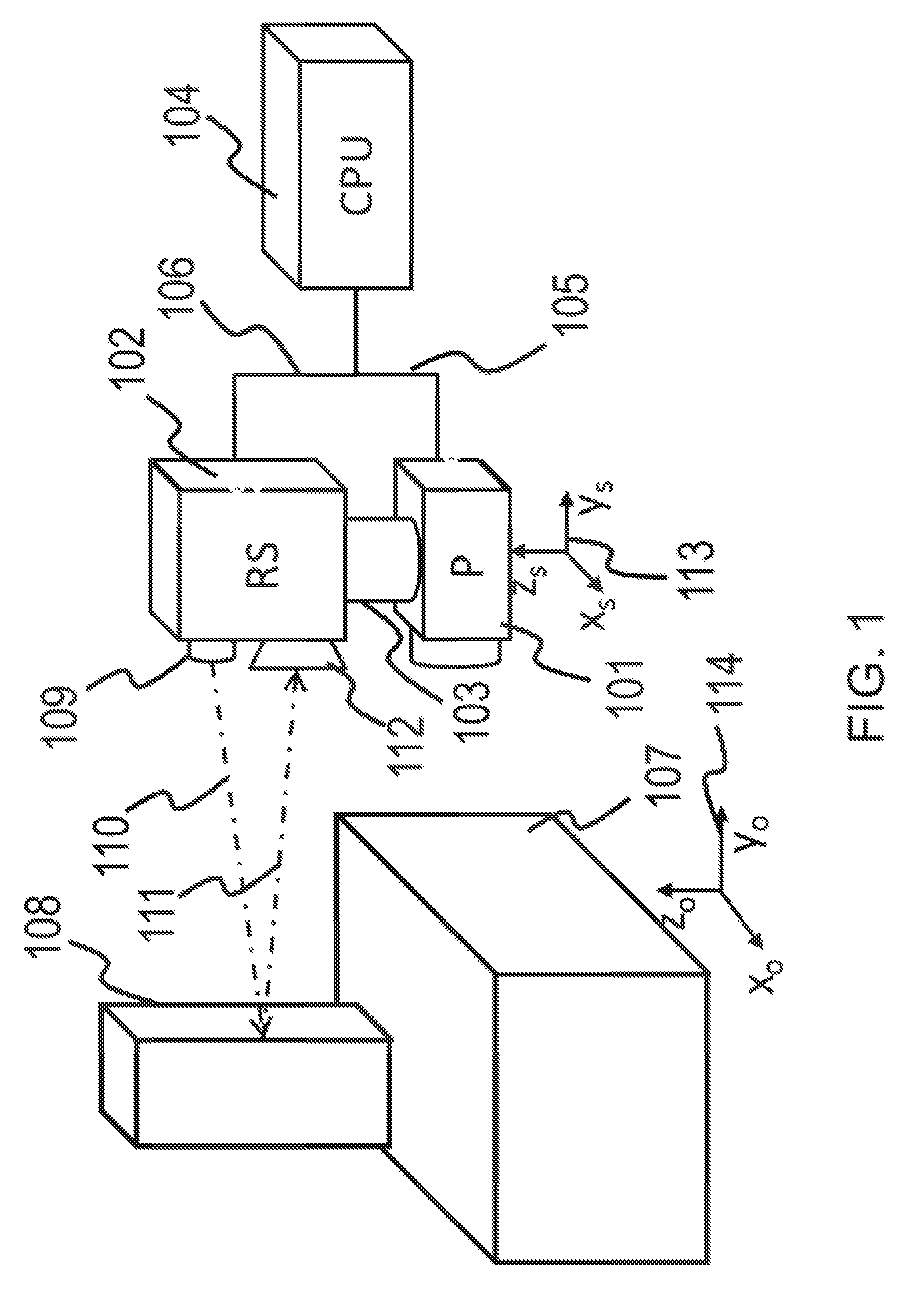

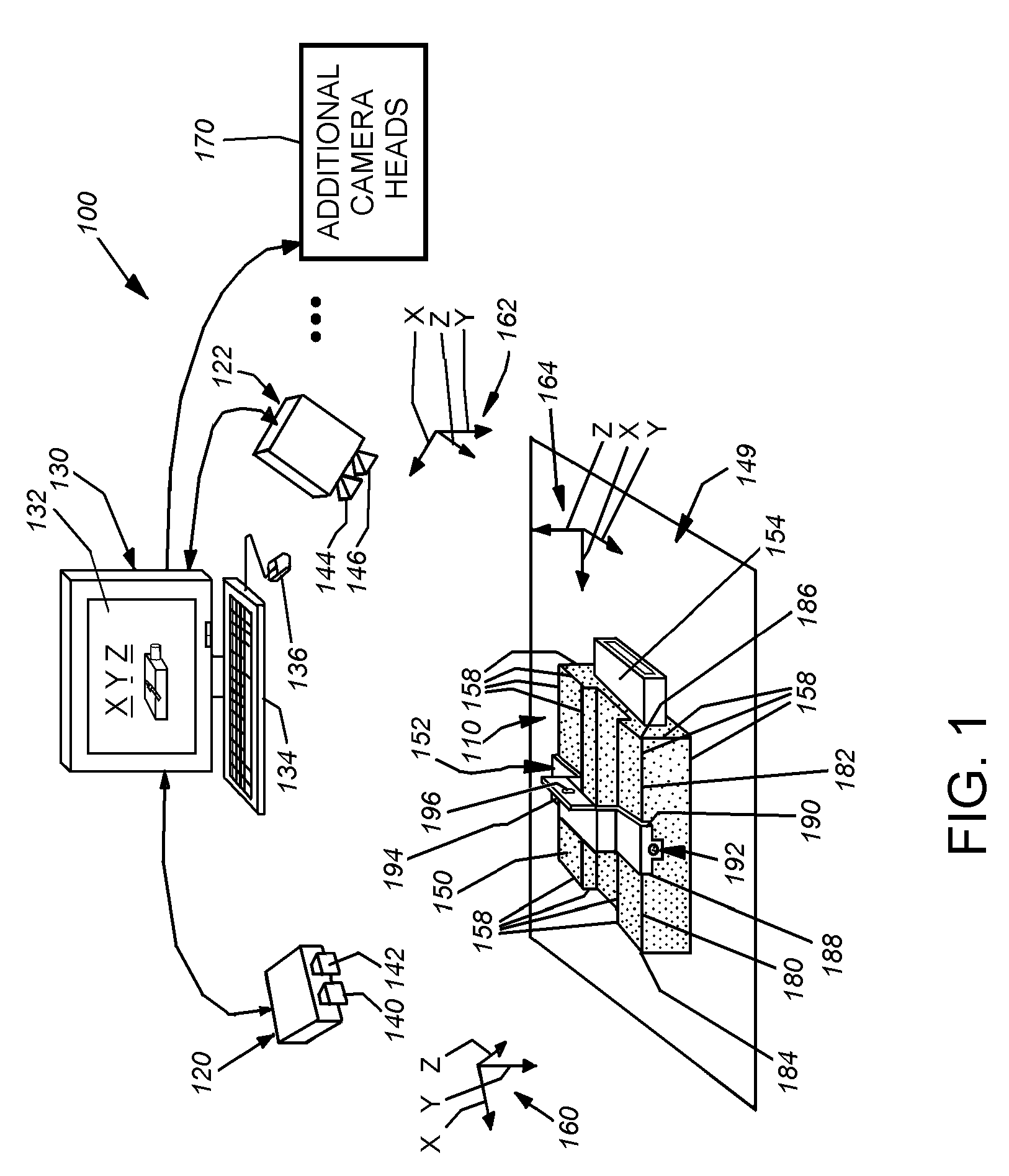

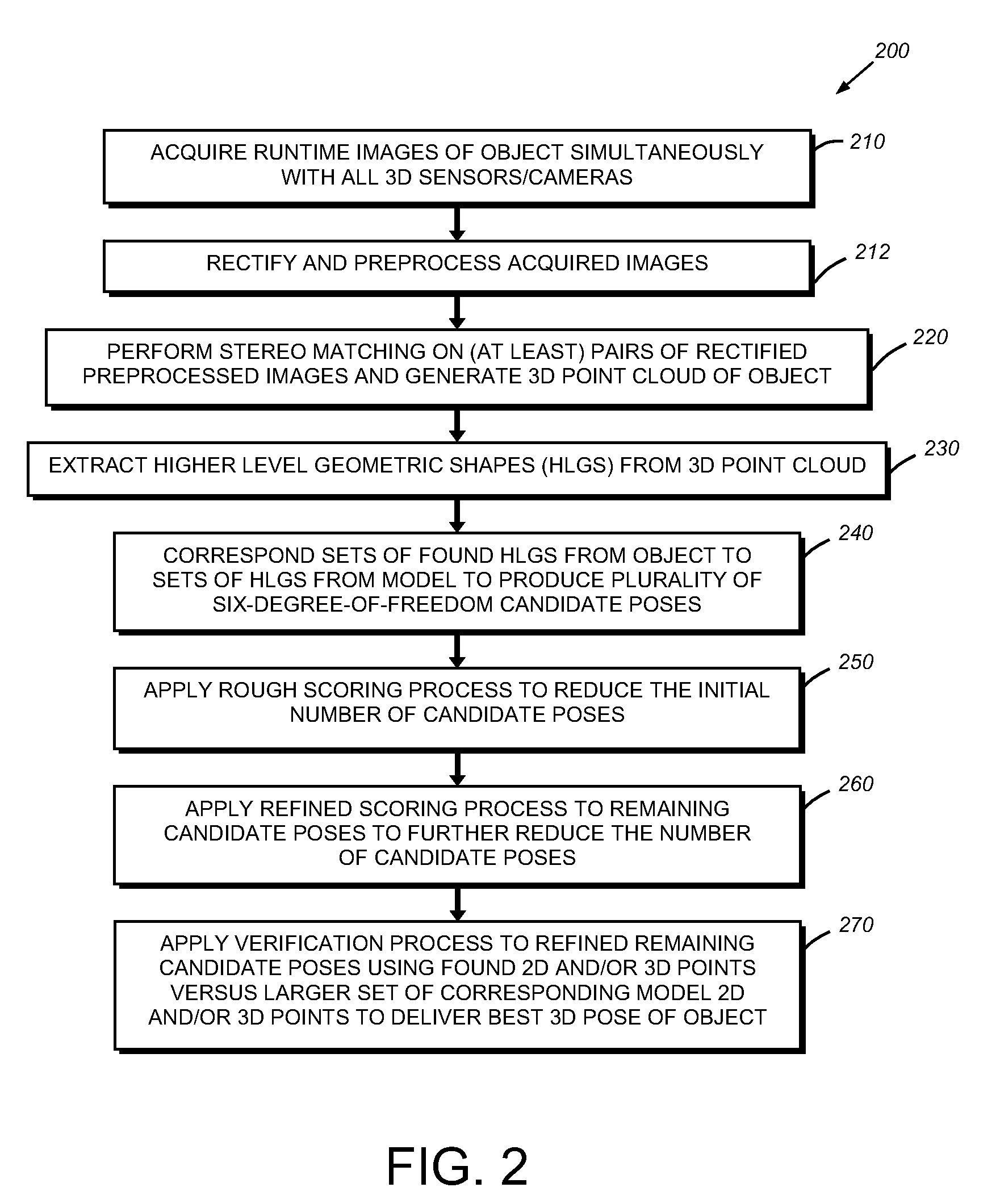

System and method for three-dimensional alignment of objects using machine vision

This invention provides a system and method for determining the three-dimensional alignment of a modeled object or scene. After calibration, a 3D (stereo) sensor system views the object to derive a runtime 3D representation of the scene containing the object. Rectified images from each stereo head are preprocessed to enhance their edge features. A stereo matching process is then performed on at least two (a pair) of the rectified preprocessed images at a time by locating a predetermined feature on a first image and then locating the same feature in the other image. 3D points are computed for each pair of cameras to derive a 3D point cloud. The 3D point cloud is generated by transforming the 3D points of each camera pair into the world 3D space from the world calibration. The amount of 3D data from the point cloud is reduced by extracting higher-level geometric shapes (HLGS), such as line segments. Found HLGS from runtime are corresponded to HLGS on the model to produce candidate 3D poses. A coarse scoring process prunes the number of poses. The remaining candidate poses are then subjected to a further more-refined scoring process. These surviving candidate poses are then verified by, for example, fitting found 3D or 2D points of the candidate poses to a larger set of corresponding three-dimensional or two-dimensional model points, whereby the closest match is the best refined three-dimensional pose.

Owner:COGNEX CORP

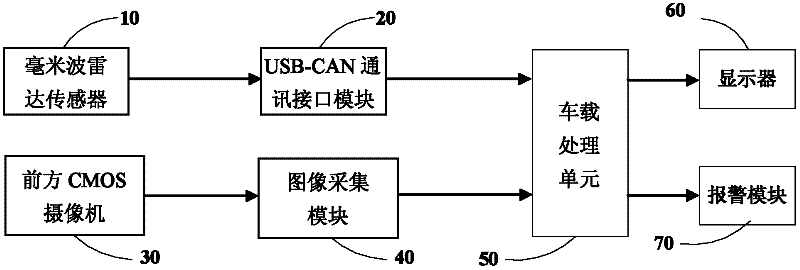
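The step in which a feature located in both rectified images yields a 3D point can be illustrated with the standard rectified-stereo relation Z = f·B/d. The sketch below is a generic textbook triangulation, not the method claimed in the patent; the function name, the pinhole parameters (`focal_px`, `cx`, `cy`) and the baseline value are assumptions for illustration.

```python
def triangulate(u_left, u_right, v, focal_px, baseline_m, cx, cy):
    """Return (X, Y, Z) in metres, in the left camera frame, for one feature
    matched between two rectified images.

    u_left, u_right -- column of the feature in the left / right image (pixels)
    v               -- row of the feature (identical in both rectified images)
    focal_px        -- focal length in pixels (assumed equal for both cameras)
    baseline_m      -- distance between the two camera centres in metres
    cx, cy          -- principal point in pixels
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = focal_px * baseline_m / disparity   # depth from disparity
    x = (u_left - cx) * z / focal_px        # back-project the pixel column
    y = (v - cy) * z / focal_px             # back-project the pixel row
    return (x, y, z)
```

Repeating this for every matched feature of a camera pair gives the per-pair 3D points that the abstract then transforms into a common world frame.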

Multi-sensor information fusion-based collision and departure pre-warning device and method

Inactive · CN102303605A · Improve accuracy · Avoid or Mitigate Collision Accidents · Closed circuit television systems · Radio wave reradiation/reflection · Visual perception · Imaging data

The invention discloses a forward collision and lane departure pre-warning device based on multi-sensor information fusion. The device comprises a millimeter-wave radar, a communication interface module, a video camera, an image acquisition module, a display module, a warning module and a vehicle-mounted processing unit; the processing unit fuses the radar signals received through the communication interface module with the machine vision information received from the image acquisition module. The device warns of forward collision and lane departure of a vehicle, and the pre-warning method comprises the steps of: driving the devices; receiving and processing radar data and image data; fusing the information; and finally displaying the fused information. The radar and the video camera together detect potential collision risks while the vehicle is running, so that warning information can be given to the driver. Because the method combines machine vision with radar, the accuracy of collision and lane departure prevention is improved, by 5-20 percent according to the reported experiments.

Owner:CHINA AUTOMOTIVE TECH & RES CENT

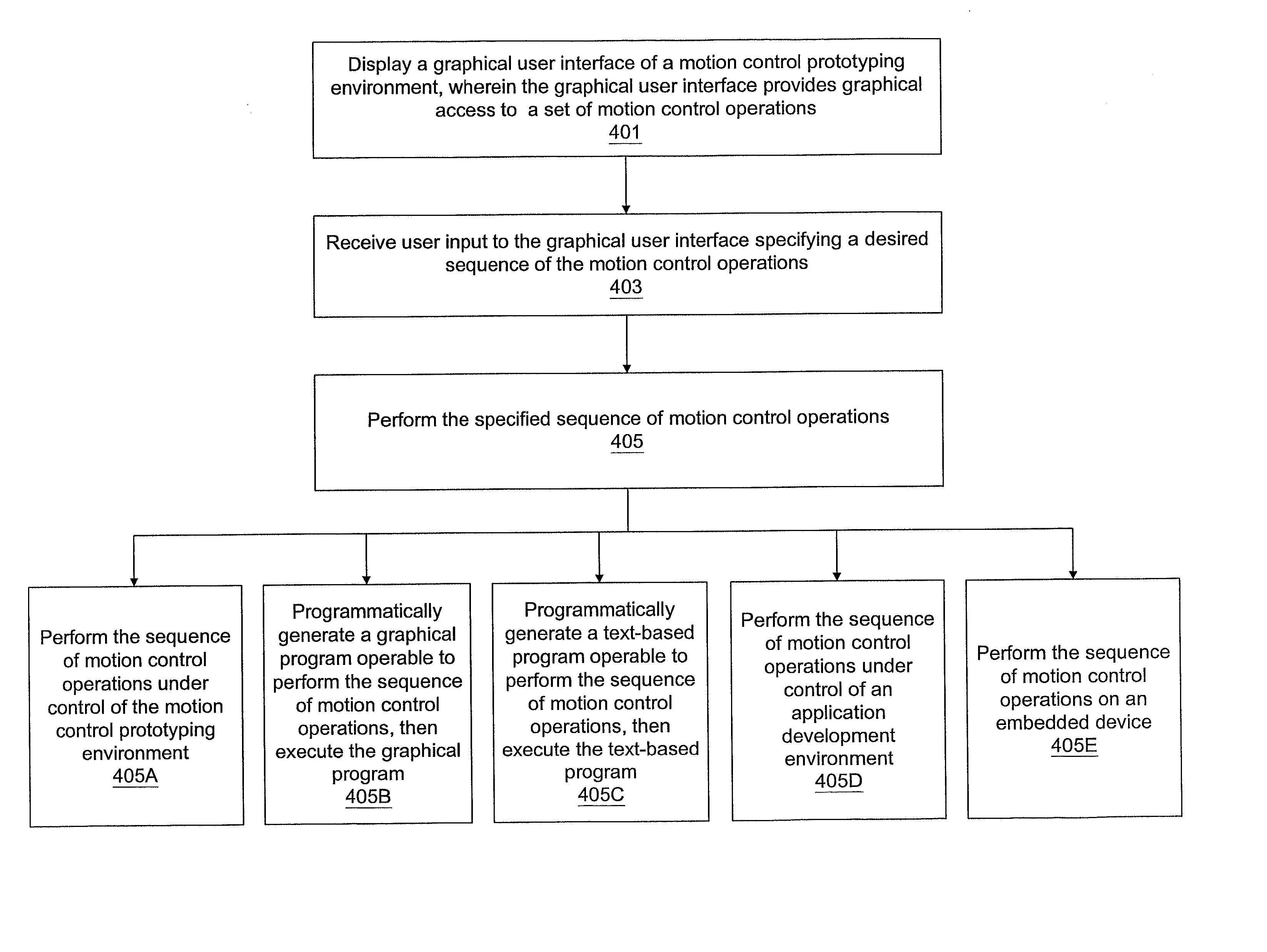

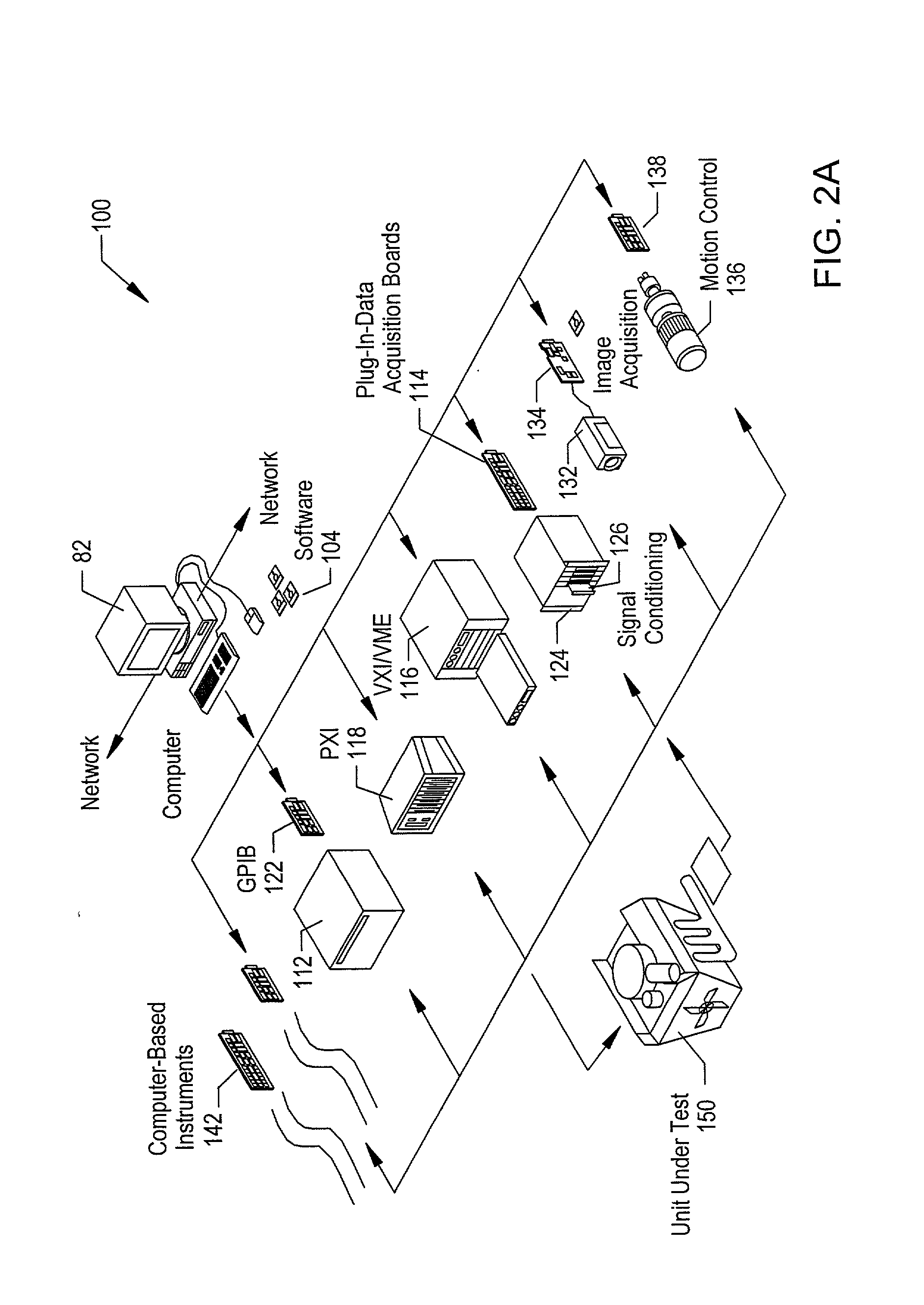

System and method for programmatically generating a graphical program based on a sequence of motion control, machine vision, and data acquisition (DAQ) operations

Active · US20020129333A1 · Easily and efficiently develop/prototype · Visual/graphical programming · Specific program execution arrangements · Graphical user interface · Machine vision

A user may utilize a prototyping environment to create a sequence of motion control, machine vision, and / or data acquisition (DAQ) operations, e.g., without needing to write or construct code in any programming language. For example, the environment may provide a graphical user interface (GUI) enabling the user to develop / prototype the sequence at a high level, by selecting from and configuring a sequence of operations using the GUI. The prototyping environment application may then be operable to automatically, i.e., programmatically, generate graphical program code implementing the sequence. For example, the environment may generate a standalone graphical program operable to perform the sequence of operations.

Owner:NATIONAL INSTRUMENTS

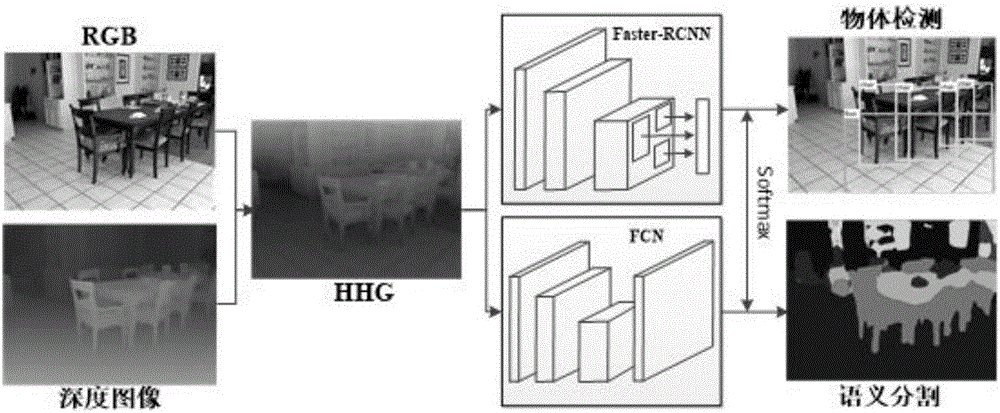

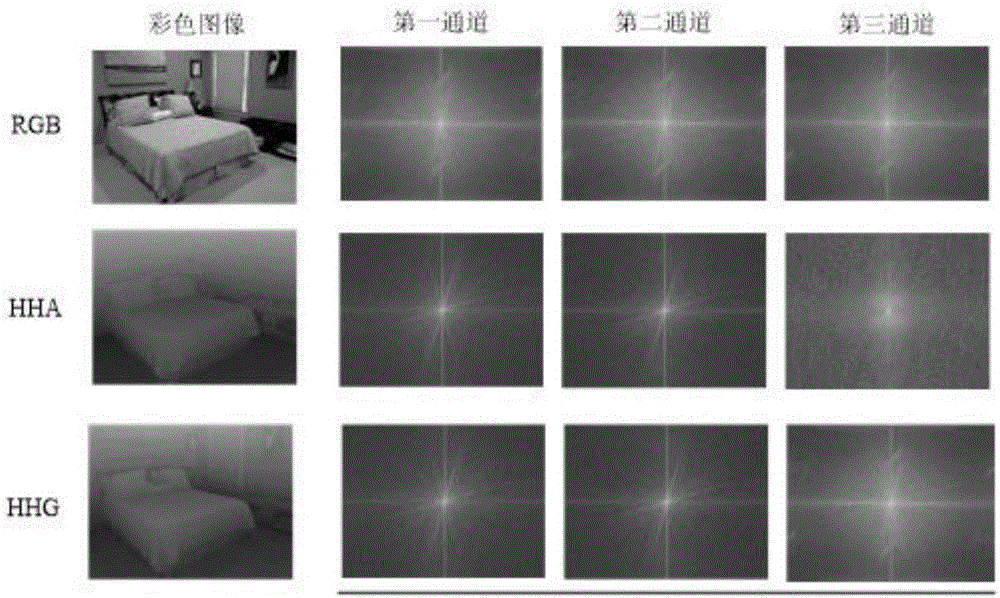

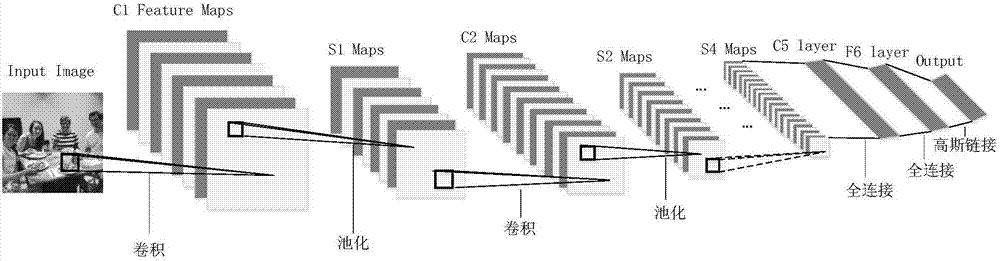

RGB-D image object detection and semantic segmentation method based on deep convolution network

Active · CN106709568A · Character and pattern recognition · Neural architectures · Machine vision · Visual perception

The invention discloses an RGB-D image object detection and semantic segmentation method based on a deep convolutional network, which belongs to the field of deep learning and machine vision. In the proposed scheme, Faster-RCNN replaces the original, slower RCNN; Faster-RCNN runs on a GPU, which makes feature extraction fast, and generates region proposals inside the network; the whole training process is end to end. FCN is used to carry out RGB-D image semantic segmentation; FCN uses a GPU and the deep convolutional network to rapidly extract the deep features of an image; deconvolution is used to fuse the deep and shallow convolutional features of the image; and the local semantic information of the image is integrated into the global semantic information.

Owner:深圳市小枫科技有限公司

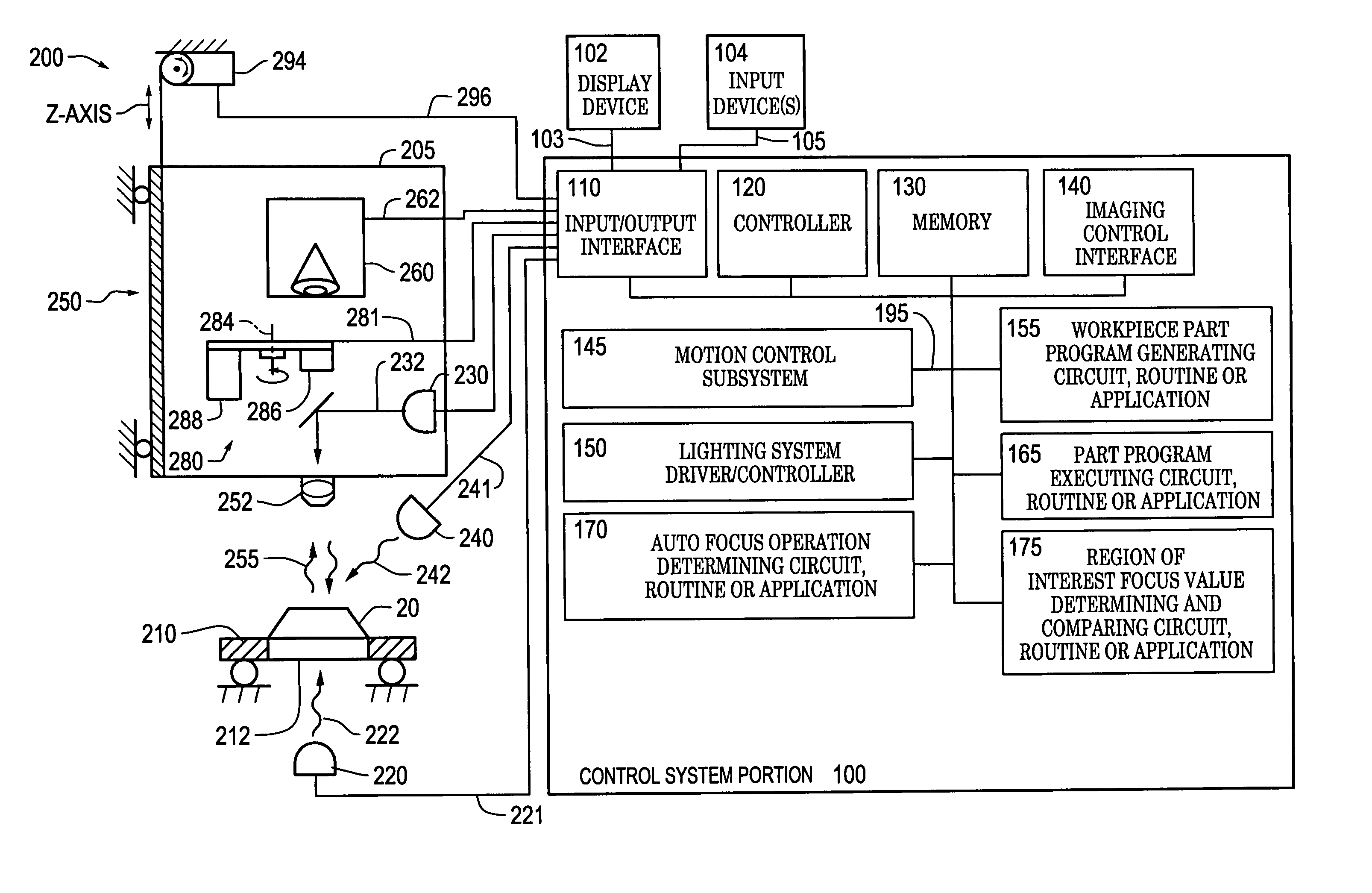

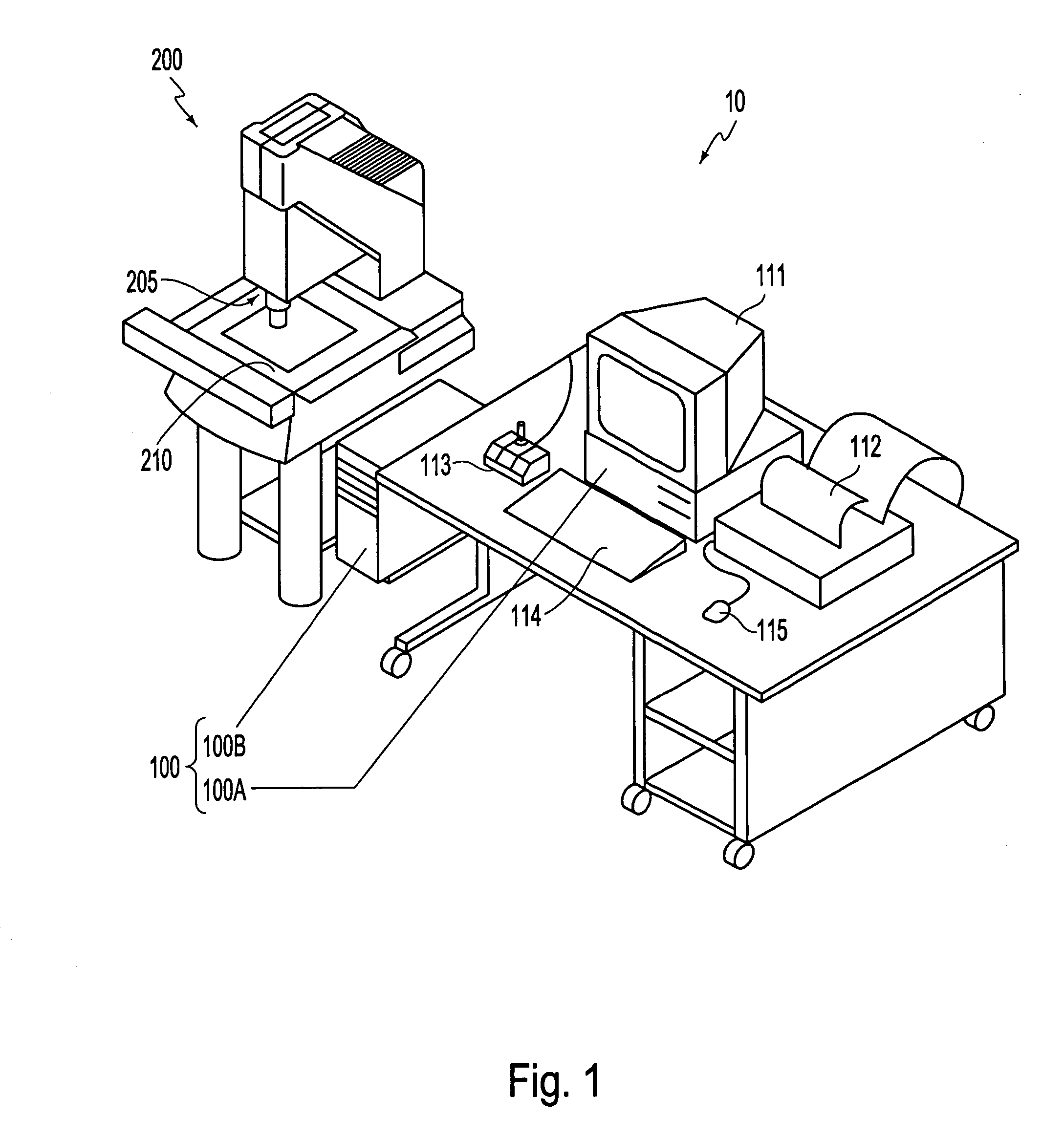

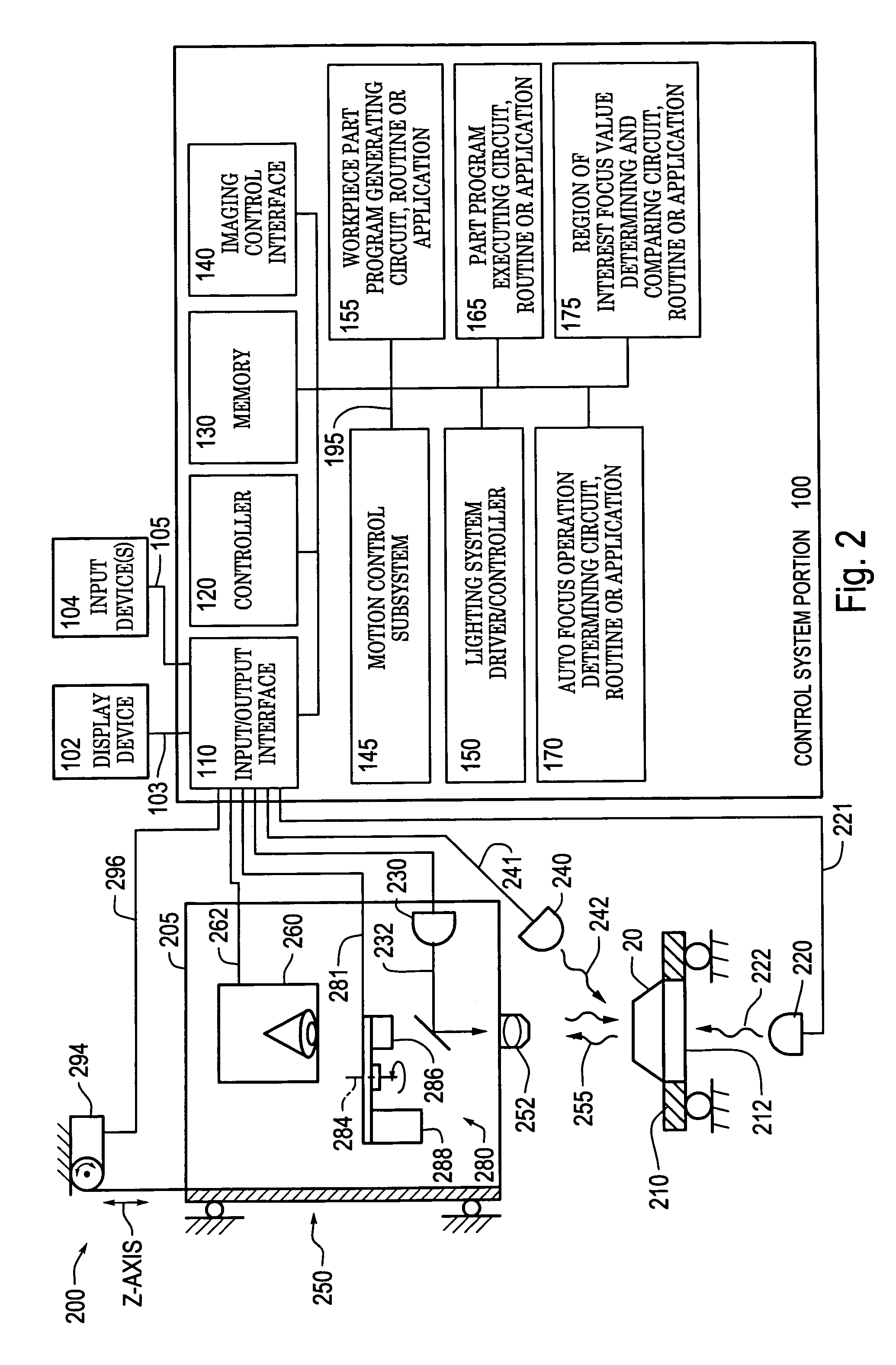

Systems and methods for rapidly automatically focusing a machine vision inspection system

Active · US7030351B2 · Increase speed · Accurate autofocus · Television system details · Solid-state devices · Machine vision · Metrology

Owner:MITUTOYO CORP

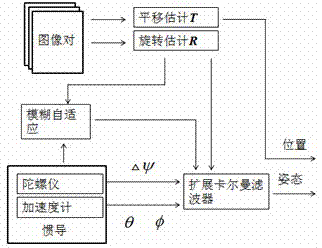

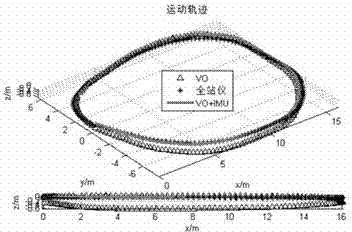

Machine vision and inertial navigation fusion-based mobile robot motion attitude estimation method

Inactive · CN102538781A · Reduce cumulative error · High positioning accuracy · Navigation by speed/acceleration measurements · Visual perception · Inertial navigation system

The invention discloses a mobile robot motion attitude estimation method based on the fusion of machine vision and inertial navigation, which comprises the following steps: synchronously acquiring binocular camera images and triaxial inertial navigation data from the mobile robot; extracting features from the previous and current frames and matching them to estimate the motion attitude; computing the pitch angle and roll angle from inertial navigation; building a Kalman filter model to fuse the vision and inertial navigation attitude estimates; adaptively adjusting the filter parameters according to the estimation variance; and carrying out cumulative dead reckoning with attitude correction. The method provides a real-time extended Kalman filter attitude estimation model in which the combination of inertial navigation and the direction of gravitational acceleration serves as a supplement, the three-axis attitude estimation of the visual odometer is decoupled, and the accumulated error of the attitude estimation is corrected. The filter parameters are adjusted by fuzzy logic according to the motion state, which realizes adaptive filtering, reduces the influence of acceleration noise, and effectively improves the positioning precision and robustness of the visual odometer.

Owner:ZHEJIANG UNIV

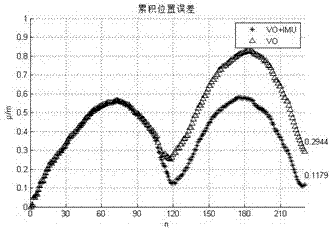

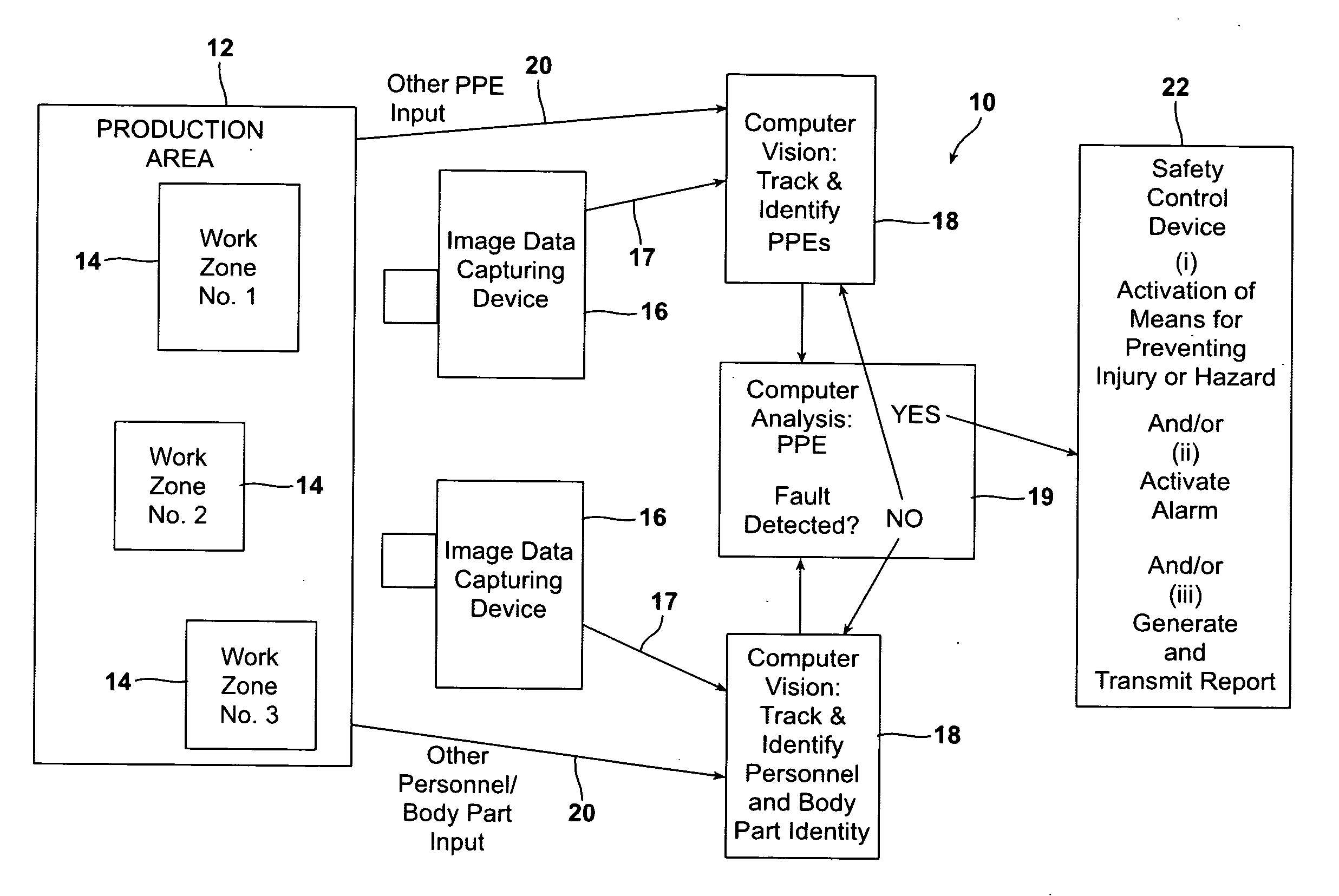

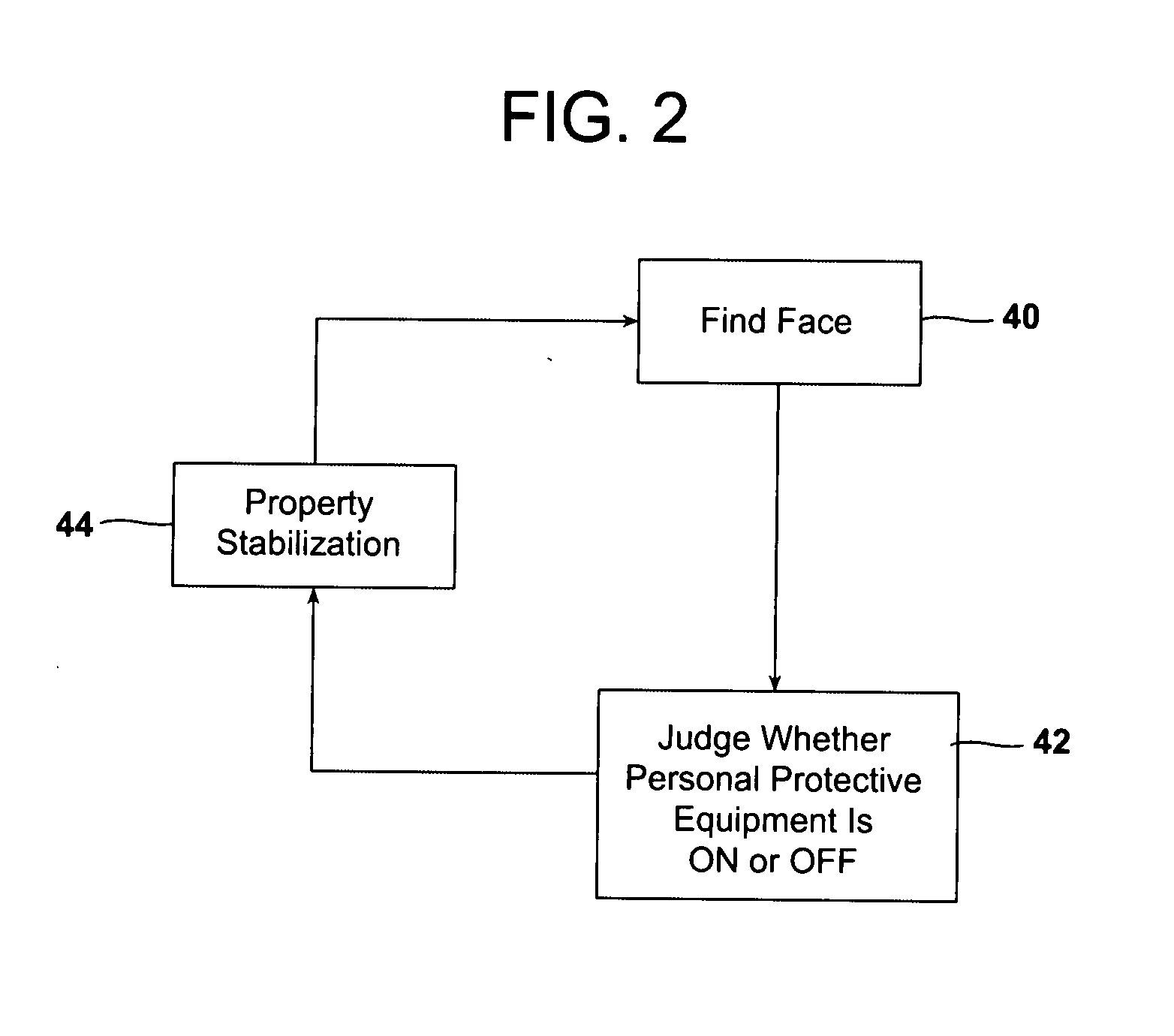
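The idea of fusing an inertial estimate with a vision estimate through a Kalman filter can be shown with a one-dimensional linear filter on a single angle. This is a deliberately simplified sketch, not the extended Kalman filter or the fuzzy-logic parameter adaptation described in the abstract; the choice of which sensor drives the prediction step, and all names and noise values, are assumptions.

```python
def kalman_fuse(angle, var, gyro_rate, dt, process_var, vision_angle, vision_var):
    """One predict/update cycle of a scalar Kalman filter.

    angle, var    -- current angle estimate (rad) and its variance
    gyro_rate, dt -- inertial angular rate (rad/s) and time step (s), used to predict
    process_var   -- variance added by the prediction step
    vision_angle  -- angle measured by the vision pipeline (rad)
    vision_var    -- variance of the vision measurement
    """
    # Predict: integrate the inertial rate and grow the uncertainty.
    angle_pred = angle + gyro_rate * dt
    var_pred = var + process_var

    # Update: blend in the vision measurement, weighted by the Kalman gain.
    gain = var_pred / (var_pred + vision_var)
    angle_new = angle_pred + gain * (vision_angle - angle_pred)
    var_new = (1.0 - gain) * var_pred
    return angle_new, var_new
```

Raising `vision_var` when the visual estimate is judged unreliable is one simple way to make the filter adaptive; the abstract's variance-based and fuzzy-logic adjustment would replace that manual choice.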

Automated monitoring and control of safety in a production area

Active · US20120146789A1 · Avoid injury · Avoid damage · Television system scanning details · Wireless architecture usage · Machine vision · Personal protective equipment

A machine vision process monitors and controls safe working practice in a production area by capturing and processing image data relative to personal protective equipment (PPE) worn by individuals, movement of various articles, and movement-related conformations of individuals and other objects in the production area. The data is analyzed to determine whether there is a violation of a predetermined minimum threshold image, movement, or conformation value for a predetermined threshold period of time. The determination of a safety violation triggers computer activation of a safety control device. The process is carried out using a system including an image data capturing device, a computer and computer-readable program code, and a safety control device.

Owner:SEALED AIR U S

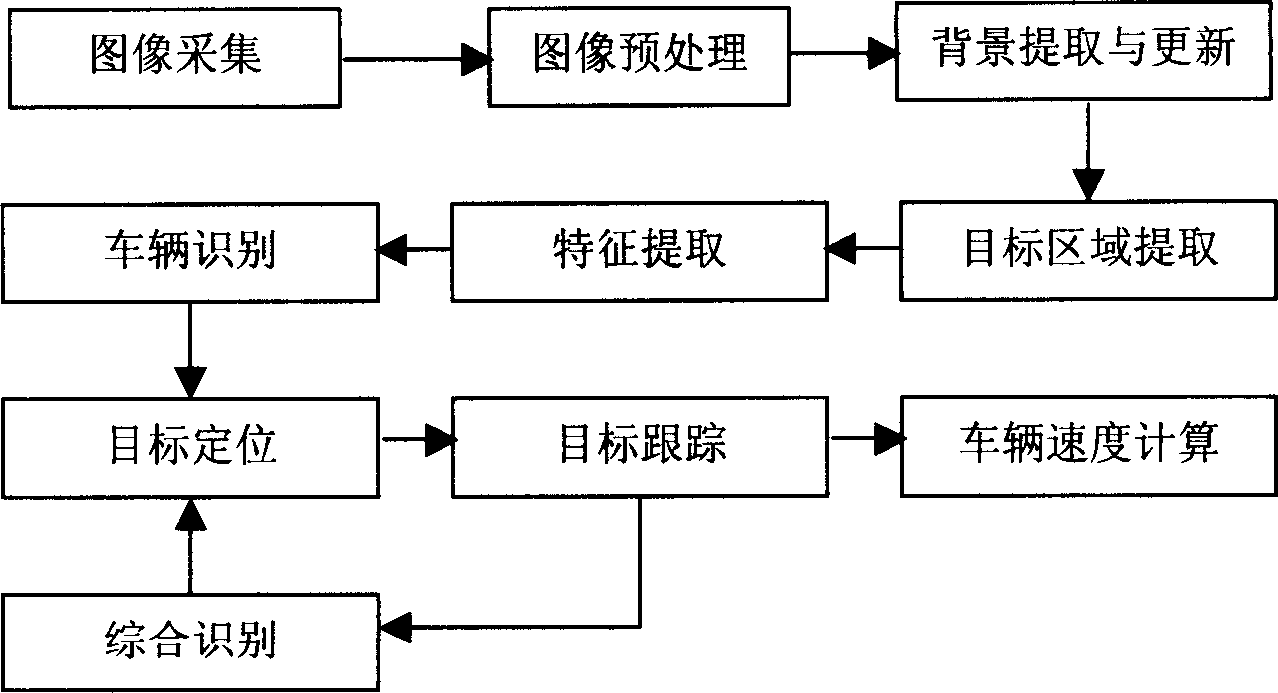

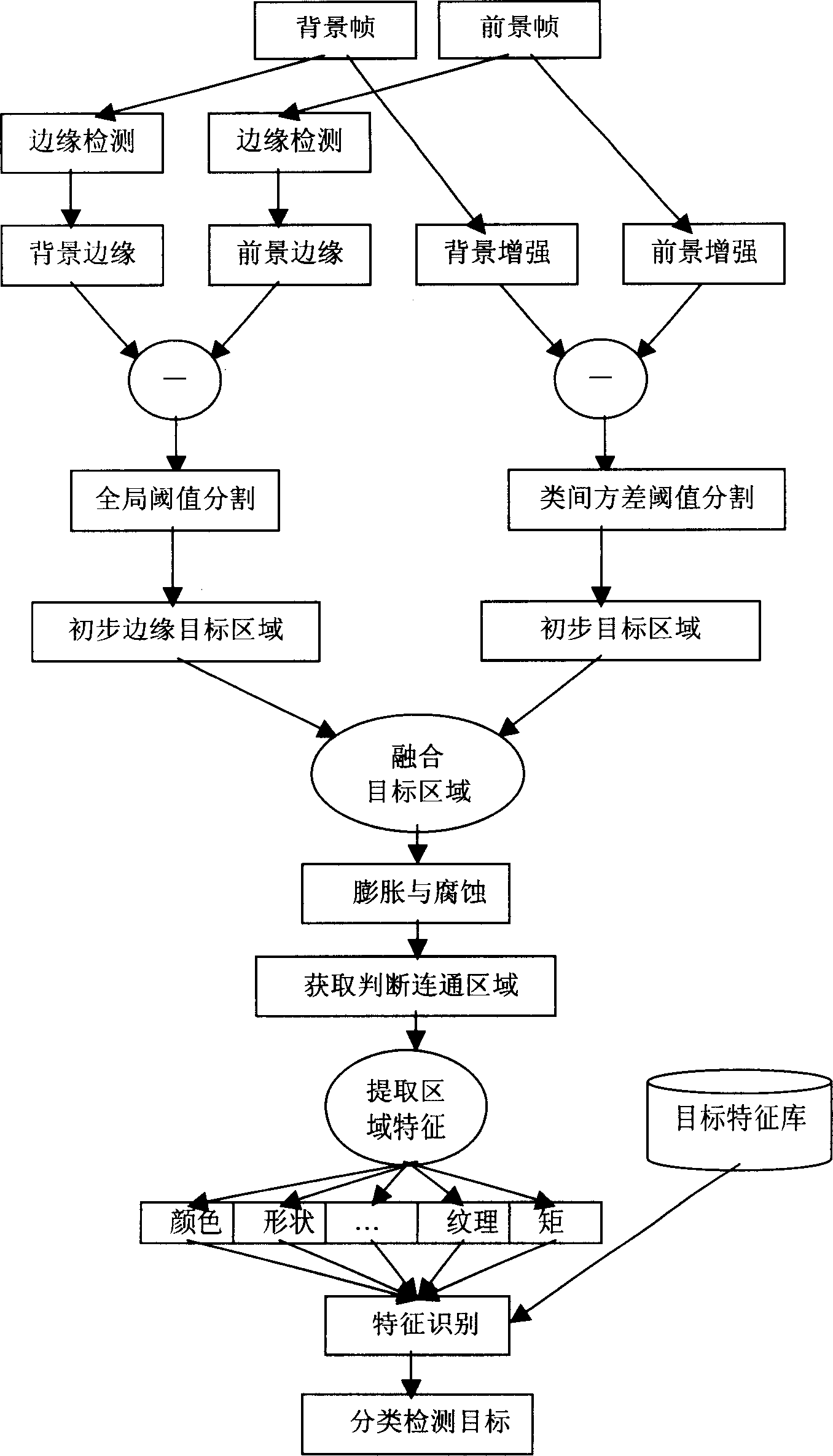

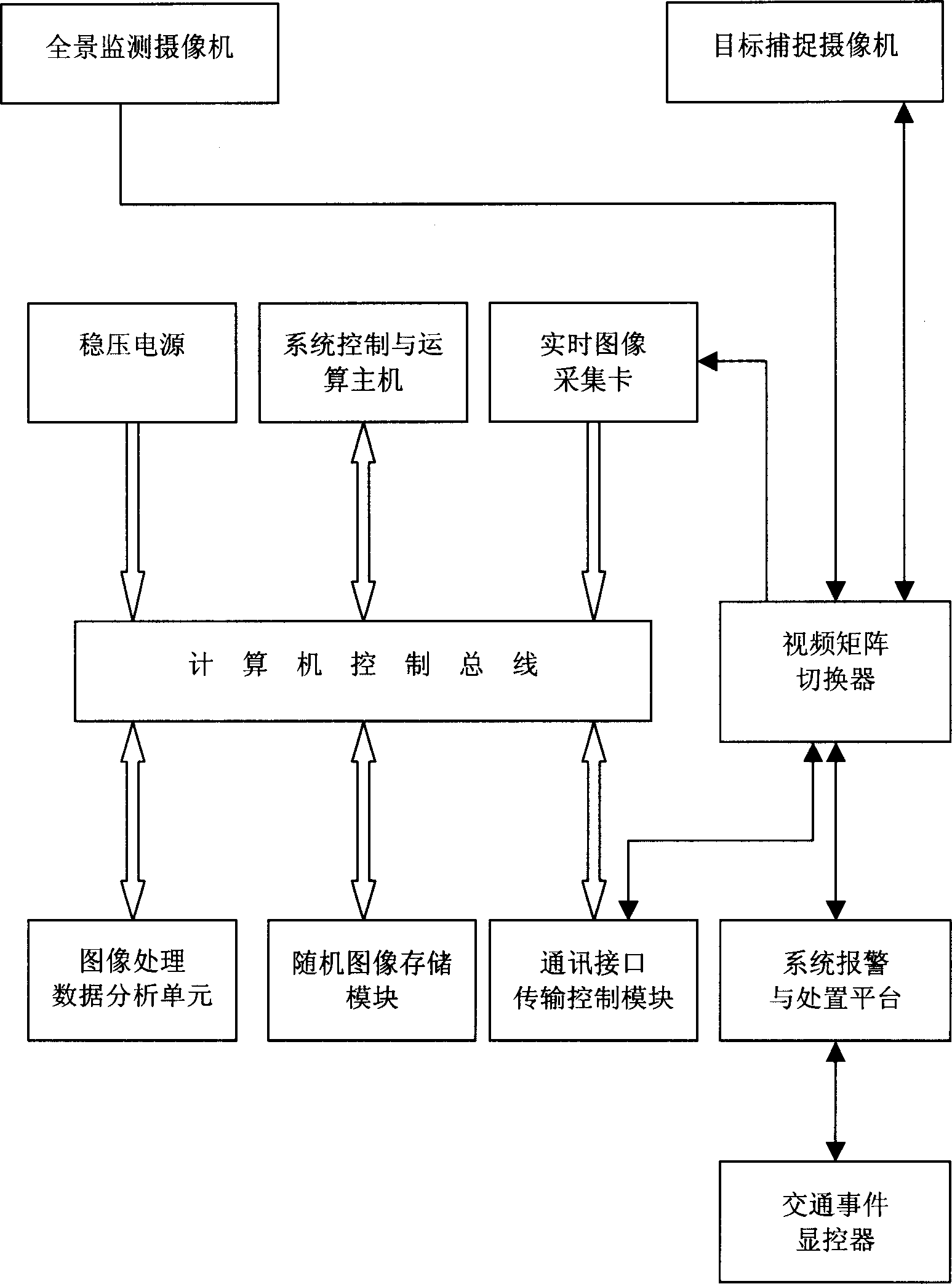

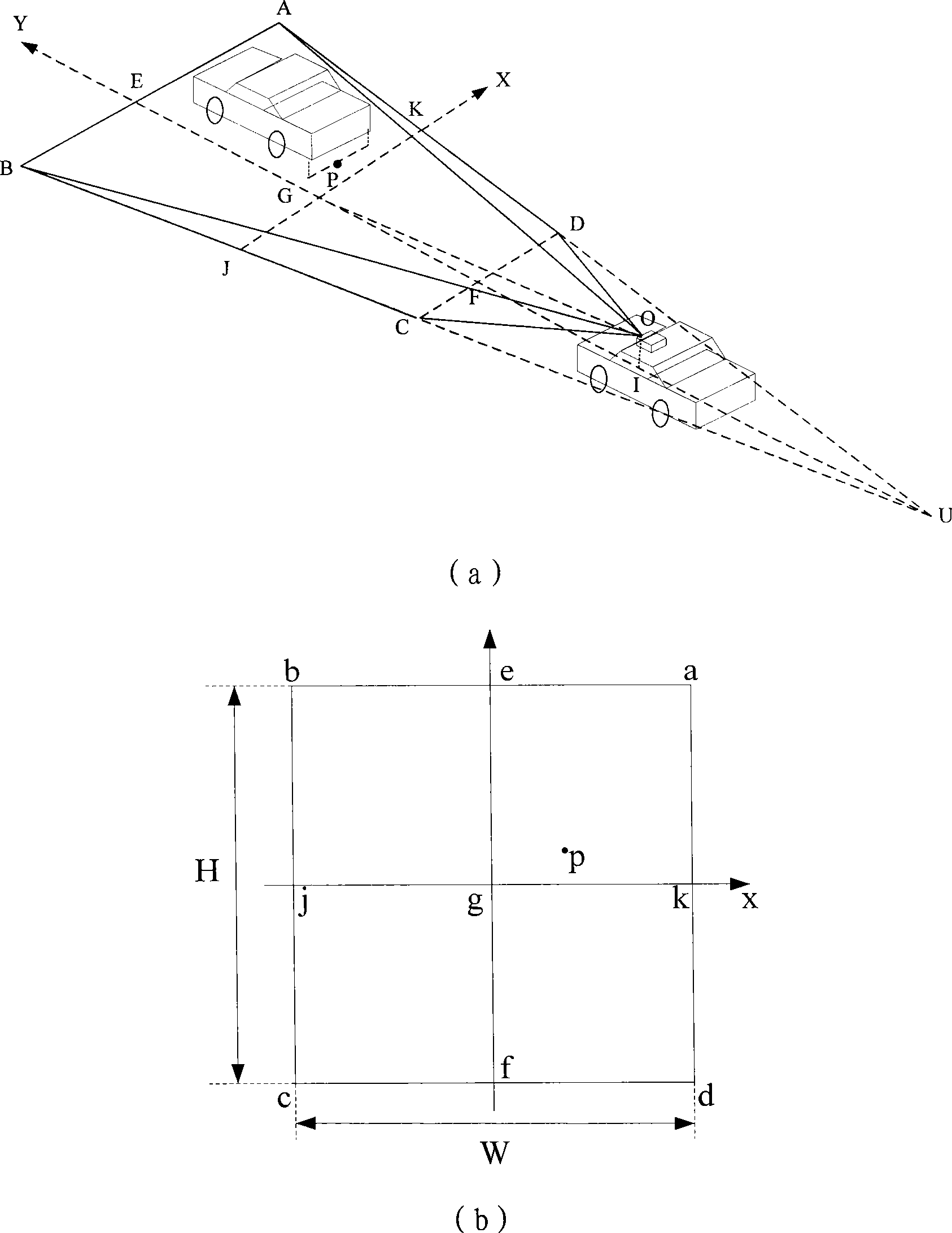
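The rule that a violation is declared only when a value stays below a minimum threshold for a threshold period of time can be sketched as a simple persistence check over per-frame scores. The per-frame score, the threshold values and the function name below are hypothetical; the abstract does not specify how the values are computed.

```python
def first_violation_frame(values, min_value, min_frames):
    """Return the index of the frame at which a violation is declared, or None.

    values     -- per-frame compliance scores (for example, a PPE detection score)
    min_value  -- scores below this count as non-compliant
    min_frames -- number of consecutive non-compliant frames required
    """
    run = 0  # length of the current streak of non-compliant frames
    for index, value in enumerate(values):
        run = run + 1 if value < min_value else 0
        if run >= min_frames:
            return index  # this is where a safety control device would be triggered
    return None
```

Requiring a streak rather than a single low frame keeps a momentary occlusion or a missed detection from triggering the safety device.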

Method and system for inspecting and tracking vehicle based on machine vision

Inactive · CN1897015A · Efficient and reliable target recognition · Image analysis · Detection of traffic movement · Machine vision · Road traffic management

A method for detecting and tracking vehicles based on a video camera and a computer system includes: applying global threshold segmentation to the difference image of the background edges and foreground edges to obtain a preliminary edge object region; applying between-class variance threshold segmentation to the enhanced difference image to obtain a preliminary object region; combining these results to form the object region; applying dilation and erosion to the object region to extract object features; and identifying the object, then locking onto and tracking it in real time to form an object trajectory.

Owner:王海燕

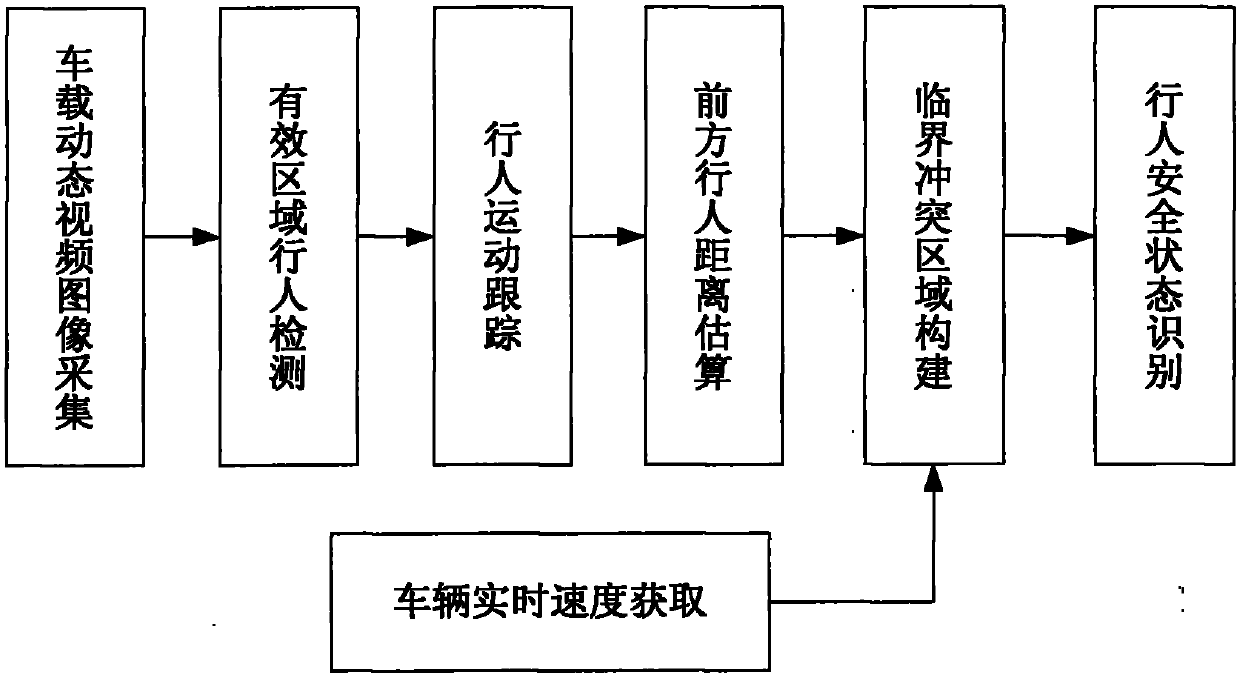

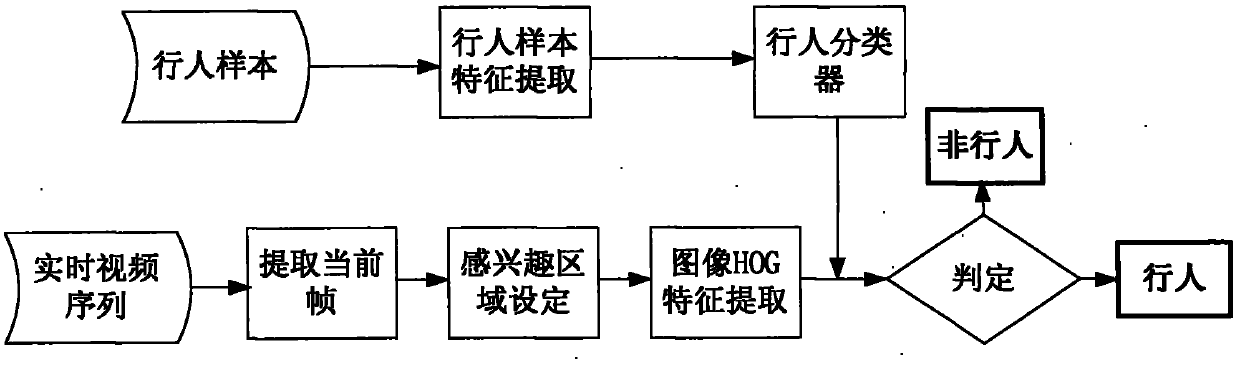

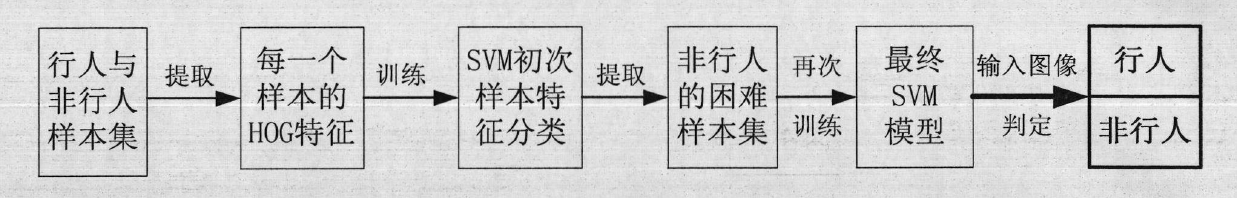
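Threshold selection by between-class variance is commonly implemented as Otsu's method. The sketch below shows that generic technique in pure Python on a flat list of 8-bit pixel values; it is an assumption that the abstract refers to this or a close variant, and the function name is illustrative.

```python
def otsu_threshold(pixels):
    """Return the threshold t (0-255) that maximises between-class variance.

    pixels -- iterable of integer grey levels in 0..255
    Pixels <= t form one class, pixels > t the other.
    """
    hist = [0] * 256
    total = 0
    for p in pixels:
        hist[p] += 1
        total += 1
    sum_all = sum(level * count for level, count in enumerate(hist))

    weight_bg = 0        # number of pixels at or below the candidate threshold
    sum_bg = 0           # sum of their grey levels
    best_t, best_var = 0, -1.0
    for t in range(256):
        weight_bg += hist[t]
        sum_bg += t * hist[t]
        if weight_bg == 0:
            continue                 # no background pixels yet
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break                    # every pixel is already background
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        # Between-class variance, up to a constant factor of 1 / total**2.
        var_between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t
```

Applied to a difference image, pixels above the returned threshold would be kept as candidate object pixels before the dilation and erosion steps.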

Safe state recognition system for people on basis of machine vision

Inactive · CN102096803A · Ensure safety · High technology content · Image analysis · Anti-collision systems · Driver/operator · Secure state

The invention discloses a machine vision-based system for recognizing the safety state of people, aiming to solve the problem that, in the prior art, intelligent control decisions for vehicle driving behaviour cannot be formulated according to the safety state of people. The method comprises the following steps: collecting a vehicle-mounted dynamic video image; detecting and recognizing pedestrians in a region of interest in front of the vehicle; tracking moving pedestrians; detecting and calculating the distance to pedestrians in front of the vehicle; obtaining the real-time vehicle speed; and recognizing the safety state of the pedestrian. Recognizing the safety state of the pedestrian comprises the following steps: building a critical conflict area; judging the safety state when the pedestrian is outside the conflict area during relative motion; and judging the safety state when the pedestrian is inside the conflict area during relative motion. Whether the pedestrian will enter a dangerous area can be predicted from the relative speed and relative position of the motor vehicle and the pedestrian, which are obtained by a vision sensor in the above steps. The system can assist drivers in taking measures to avoid colliding with pedestrians.

Owner:JILIN UNIV

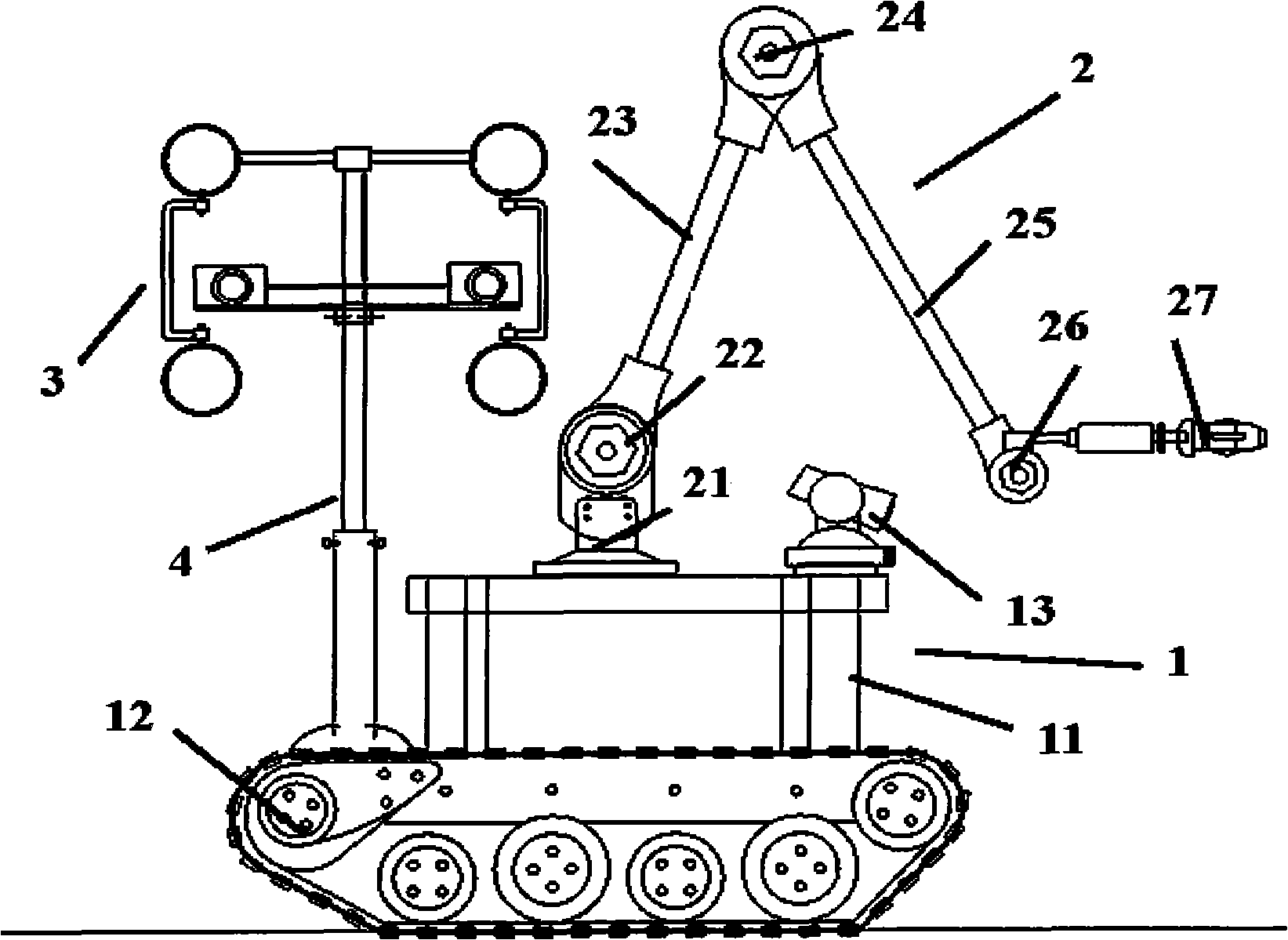

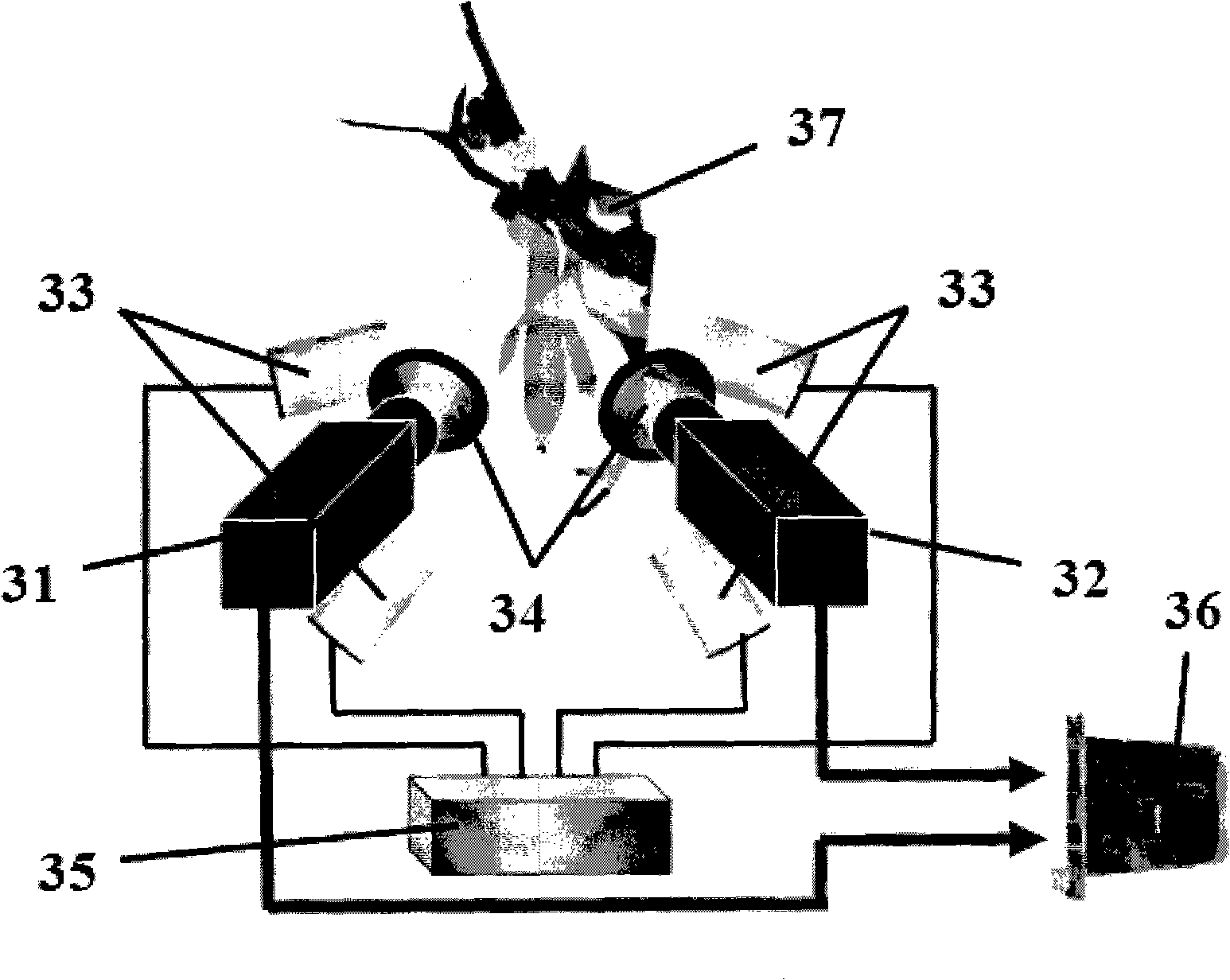

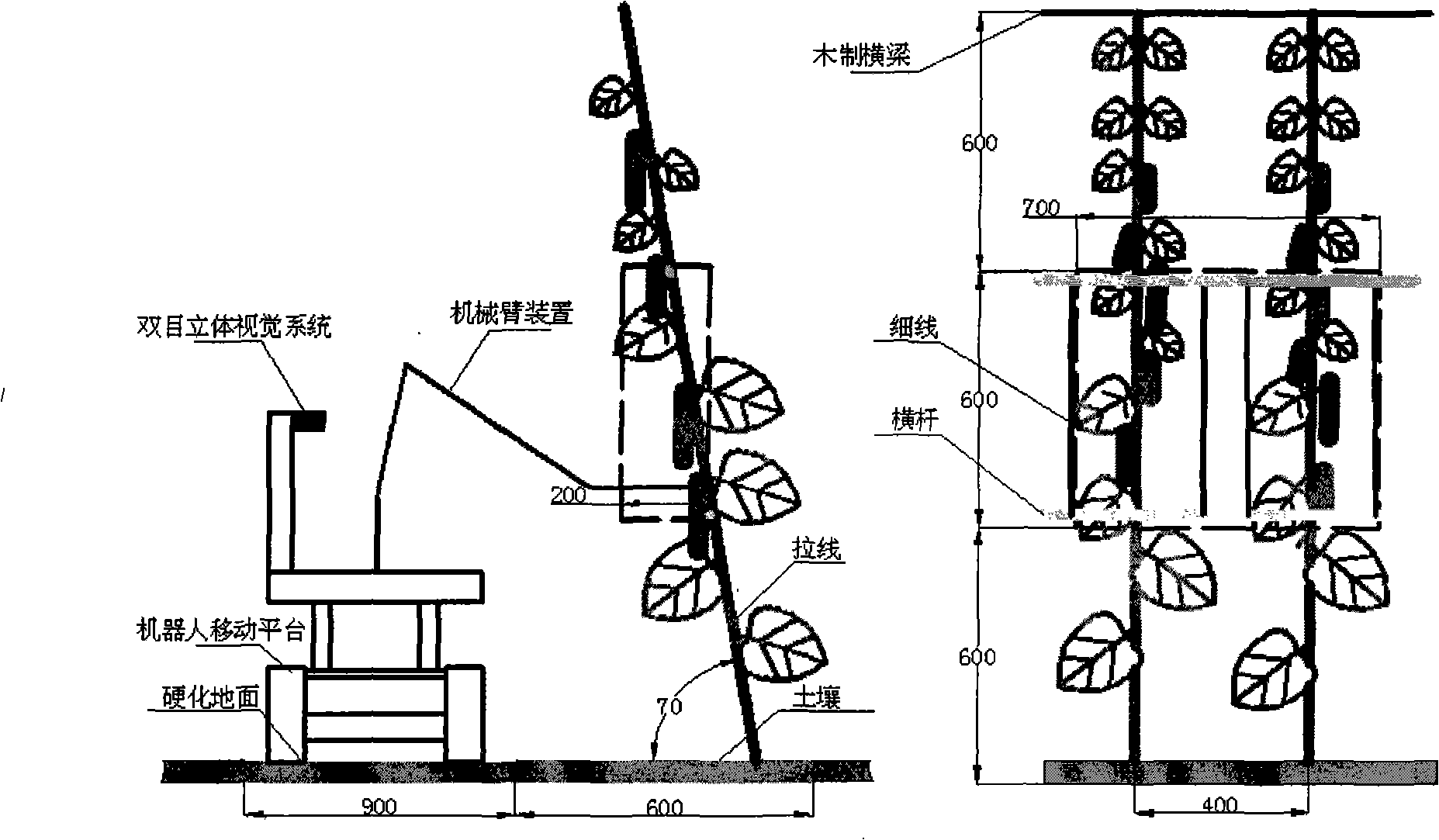
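One simple way to turn relative distance and relative speed into a safety state is a time-to-collision check. The sketch below is a heavily simplified stand-in for the conflict-area logic in the abstract: the state labels, the 3-second threshold and the rule that a pedestrian outside the conflict area is treated as safe are all assumptions, not details from the patent.

```python
def pedestrian_state(distance_m, closing_speed_mps, in_conflict_area, ttc_warn_s=3.0):
    """Classify a tracked pedestrian as "safe", "caution" or "danger".

    distance_m        -- distance from the vehicle to the pedestrian (metres)
    closing_speed_mps -- rate at which that distance shrinks (m/s); <= 0 means
                         the gap is constant or growing
    in_conflict_area  -- True if the pedestrian is inside the critical conflict area
    ttc_warn_s        -- hypothetical time-to-collision threshold (seconds)
    """
    if not in_conflict_area:
        return "safe"              # simplification: ignore pedestrians outside the area
    if closing_speed_mps <= 0:
        return "safe"              # not approaching
    time_to_collision = distance_m / closing_speed_mps
    return "danger" if time_to_collision < ttc_warn_s else "caution"
```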

Cucumber picking robot system and picking method in greenhouse

The invention discloses a cucumber picking robot system in a greenhouse environment. The robot system comprises a binocular stereo vision system, a mechanical arm device and a robot mobile platform; the binocular stereo vision system is used for acquiring cucumber images, processing the images in real time and acquiring the position information of the acquired targets; the mechanical arm device is used for capturing and separating the acquired targets according to the position information of the acquired targets; and the robot mobile platform is used for independently moving in the greenhouse environment; wherein, the binocular stereo vision system comprises two black and white cameras, a dual-channel vision real-time processor, a lighting device and an optical filtering device; the mechanical arm device comprises an actuator, a motion control card and a joint actuator; and the robot mobile platform comprises a running mechanism, a motor actuator, a tripod head camera, a processor and a motion controller. The invention also discloses a cucumber picking method in the greenhouse environment. The method of combining machine vision and agricultural machinery is adopted to construct the cucumber picking robot system which is suitable for the greenhouse environment, thus realizing automatic robot navigation and automatic cucumber reaping, and reducing the human labor intensity.

Owner:SUZHOU AGRIBOT AUTOMATION TECH

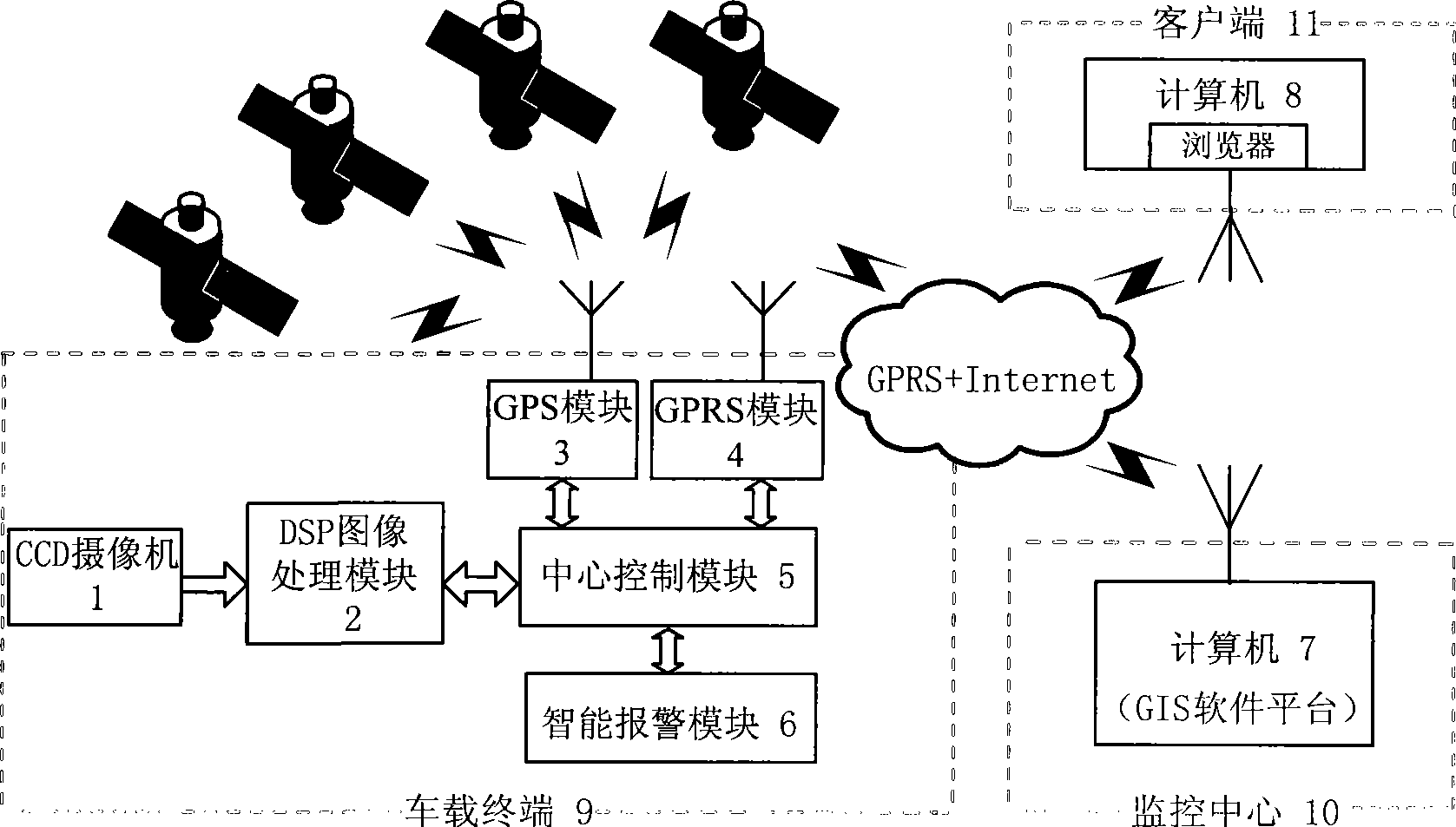

Vehicle intelligent alarming method and device

Inactive · CN101391589A · Improve security · Efficient detection · Pedestrian/occupant safety arrangement · Signalling/lighting devices · Mobile vehicle · Machine vision

The invention relates to an on-board intelligent alarming method and device. The method combines a longitudinal anti-collision pre-warning method based on monocular vision with an automatic accident help-calling method; it helps prevent rear-end accidents, gains time for rescue, reduces collisions and the injuries and deaths they cause, and improves the running safety of the automobile. The device mainly comprises three parts: an on-board terminal, a monitoring center and a user end. The method and the device use machine vision to distinguish the moving vehicles ahead, offer high precision and a wide visible range, can effectively detect obstacles, and reduce the likelihood of false alarms. The method and the device can improve the safety of the vehicle and therefore have great application value and prospects.

Owner:SHANGHAI UNIV

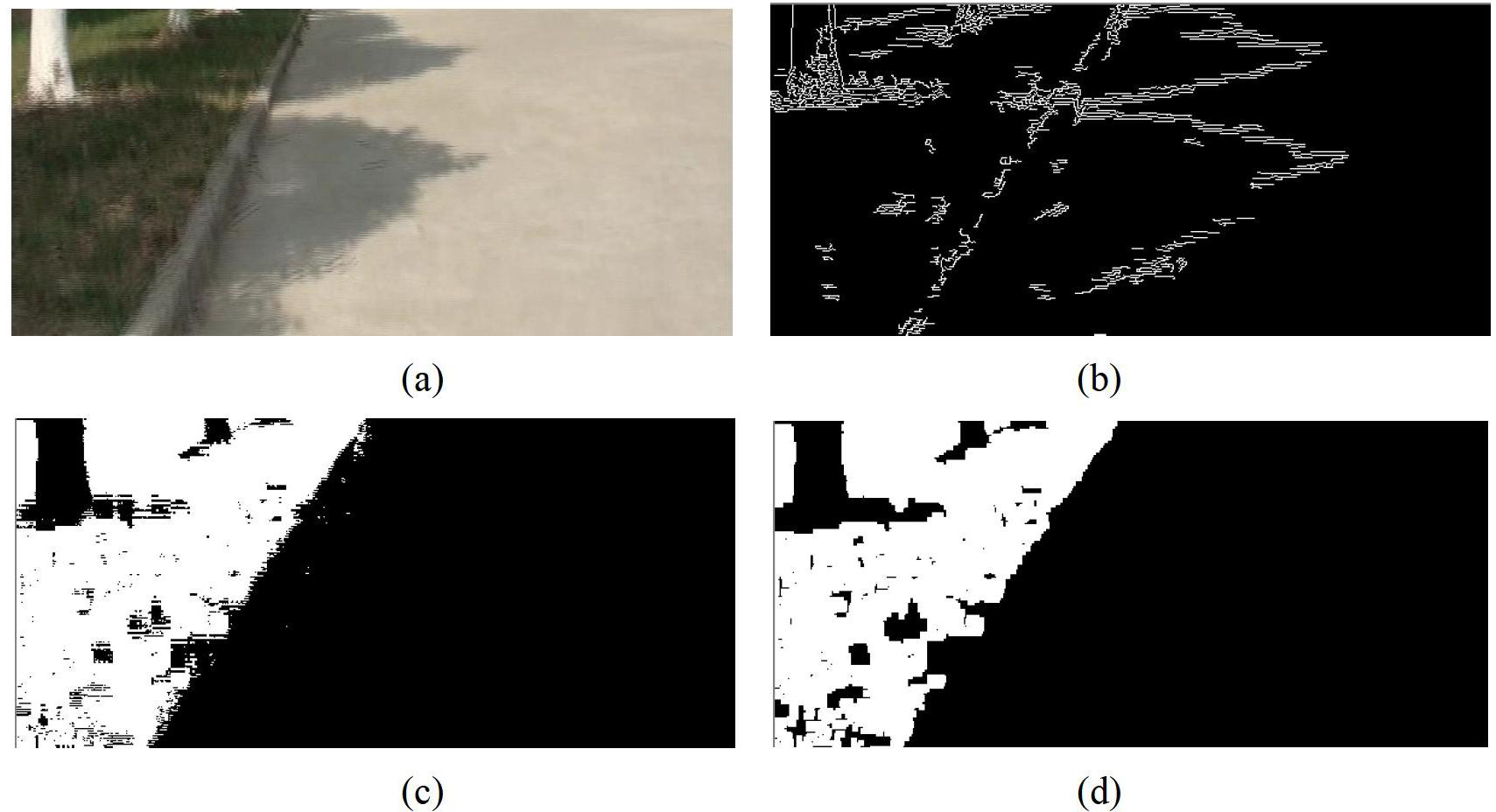

Method based on monocular vision for detecting and roughly positioning edge of road

Inactive · CN102682292A · Adaptable · Improve versatility · Character and pattern recognition · Navigation instruments · Machine vision · Visual perception

The invention discloses a method based on monocular vision for detecting and roughly positioning the edge of a road, and relates to the field of machine vision and intelligent control. For continuous roads with different edge characteristics, two road edge detection methods are provided: one based on color and one based on threshold segmentation. Both are suitable for semi-structured and unstructured roads and can be applied to the vision navigation and intelligent control of a robot. Once the road edge has been obtained, the image undergoes an inverse perspective projection transformation, so that the front view is transformed into a top view; then, using the linear correspondence between pixels in the image and actual distance, the perpendicular distance from the current position of the robot to the edge of the road and the course angle of the robot can be calculated. The method is easy to implement, resistant to interference, performs well in real time, and is suitable for semi-structured and unstructured roads.

Owner:TSINGHUA UNIV

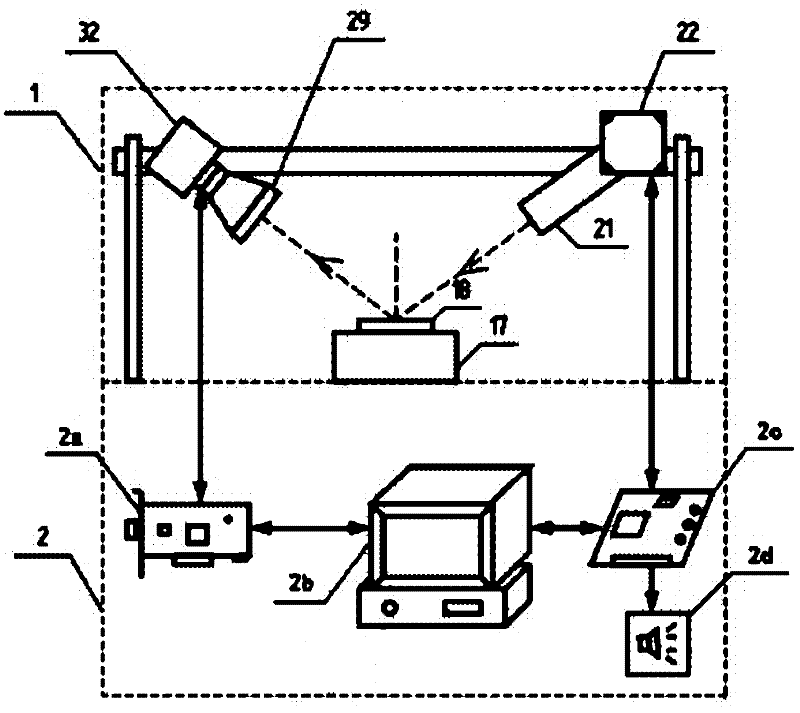

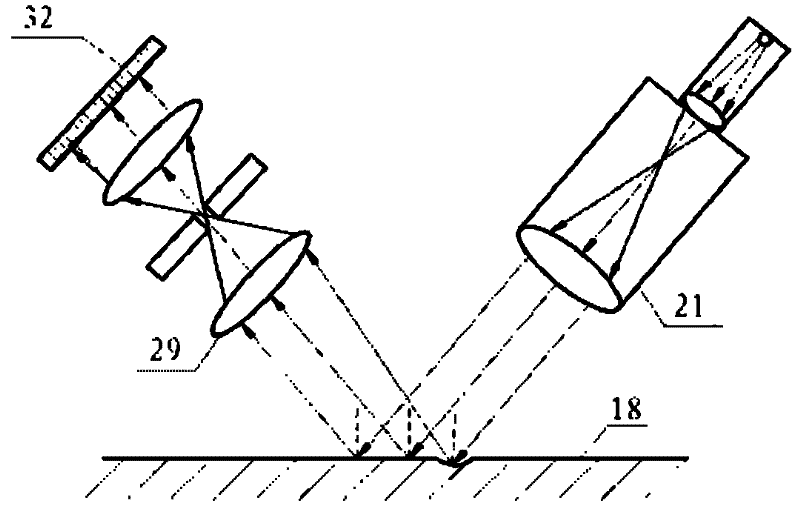

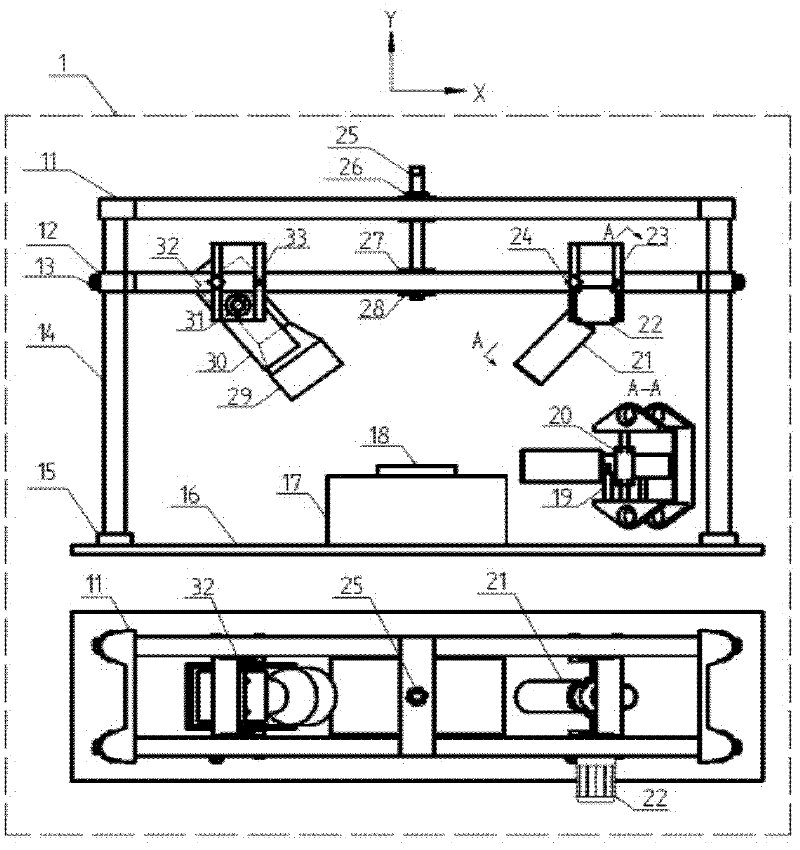

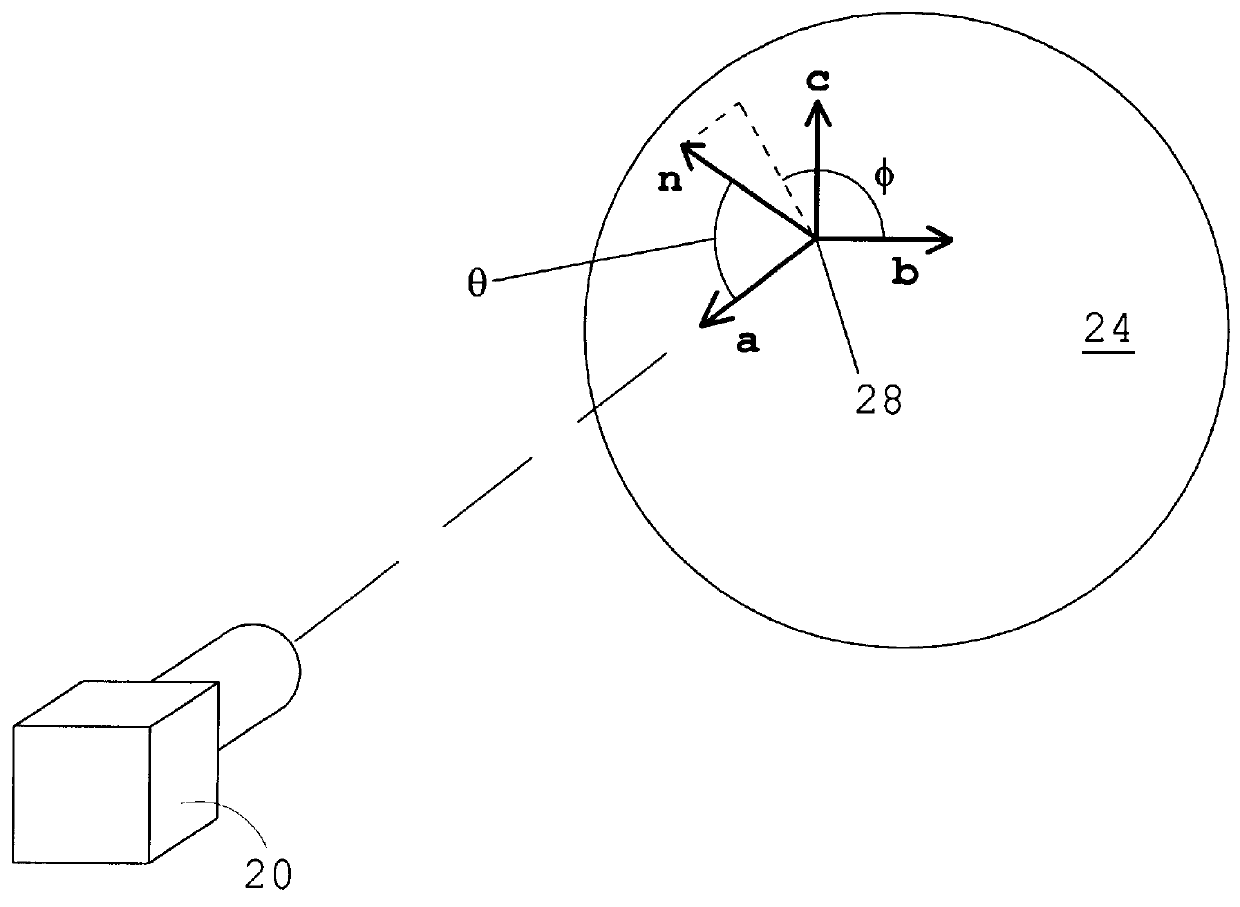
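Once the road edge is available in the top view, the perpendicular distance and course angle follow from plane geometry. The sketch below assumes a top-view coordinate frame with x to the right and y pointing forward, a uniform `metres_per_pixel` scale, and an edge given by two points ordered from near to far; these conventions and the function name are illustrative assumptions.

```python
import math


def edge_distance_and_heading(p1, p2, robot, metres_per_pixel):
    """Return (perpendicular distance in metres, course angle in radians).

    p1, p2           -- two points on the road edge in top-view pixels, p1 nearer
    robot            -- robot position in the same top-view pixel frame
    metres_per_pixel -- linear scale of the top view
    The course angle is the angle from the robot's forward axis (+y) to the
    edge direction; 0 means the robot is driving parallel to the edge.
    """
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("edge points must be distinct")
    # Area of the parallelogram spanned by the edge and the robot offset,
    # divided by the edge length, gives the point-to-line distance.
    cross = dx * (robot[1] - p1[1]) - dy * (robot[0] - p1[0])
    distance_m = abs(cross) / length * metres_per_pixel
    heading_rad = math.atan2(dx, dy)
    return distance_m, heading_rad
```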

Device and method for detecting micro defects on bright and clean surface of metal part based on machine vision

Active · CN102590218A · Realize installation positioning · Easy to operate · Optically investigating flaws/contamination · Effect light · Ccd camera

The invention relates to a device and method for detecting micro defects on the bright and clean surface of a metal part based on machine vision. The device comprises an imaging, positioning and adjusting mechanism and a processing unit. The imaging, positioning and adjusting mechanism comprises a base plate, a guide rod, a fixed support, a sliding support, a stepping motor, a CCD (Charge Coupled Device) camera, a telecentric lens and parallel light sources, and is primarily used to adjust the imaging and coaxial lighting of the CCD camera. In the processing unit, an image acquisition card, an industrial personal computer, an equipment control card and an alarm are electrically connected and are used to acquire, transmit, store, process and display images and to raise alarms. The method comprises coaxial lighting adjustment and image processing. Coaxial lighting adjustment comprises triggering the equipment control card via software on the industrial personal computer to drive the stepping motor, and adjusting the rotation angles of the parallel light sources until the coaxial lighting condition is satisfied. Image processing comprises detecting defects on the internal surface of the inspected part, separately detecting large and small defects on the outer edge of the surface of the inspected part, displaying the processed images in real time and judging the results.

Owner:安徽中科智能高技术有限责任公司

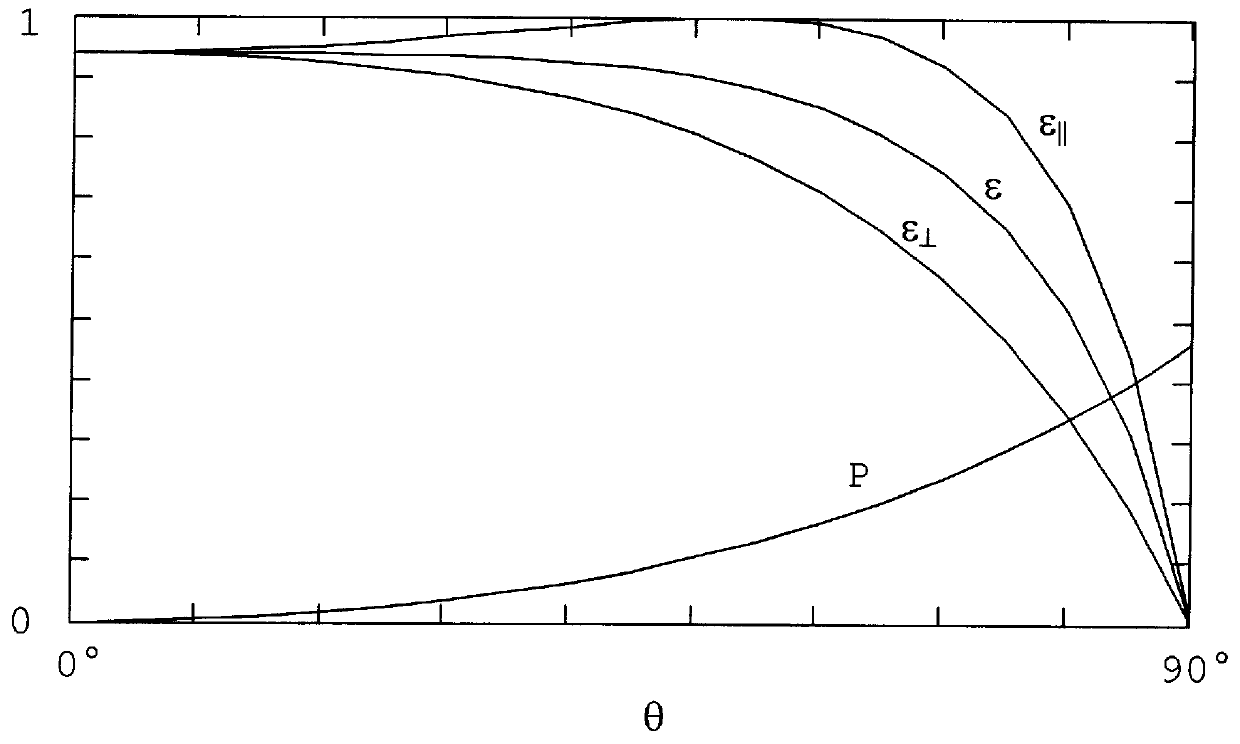

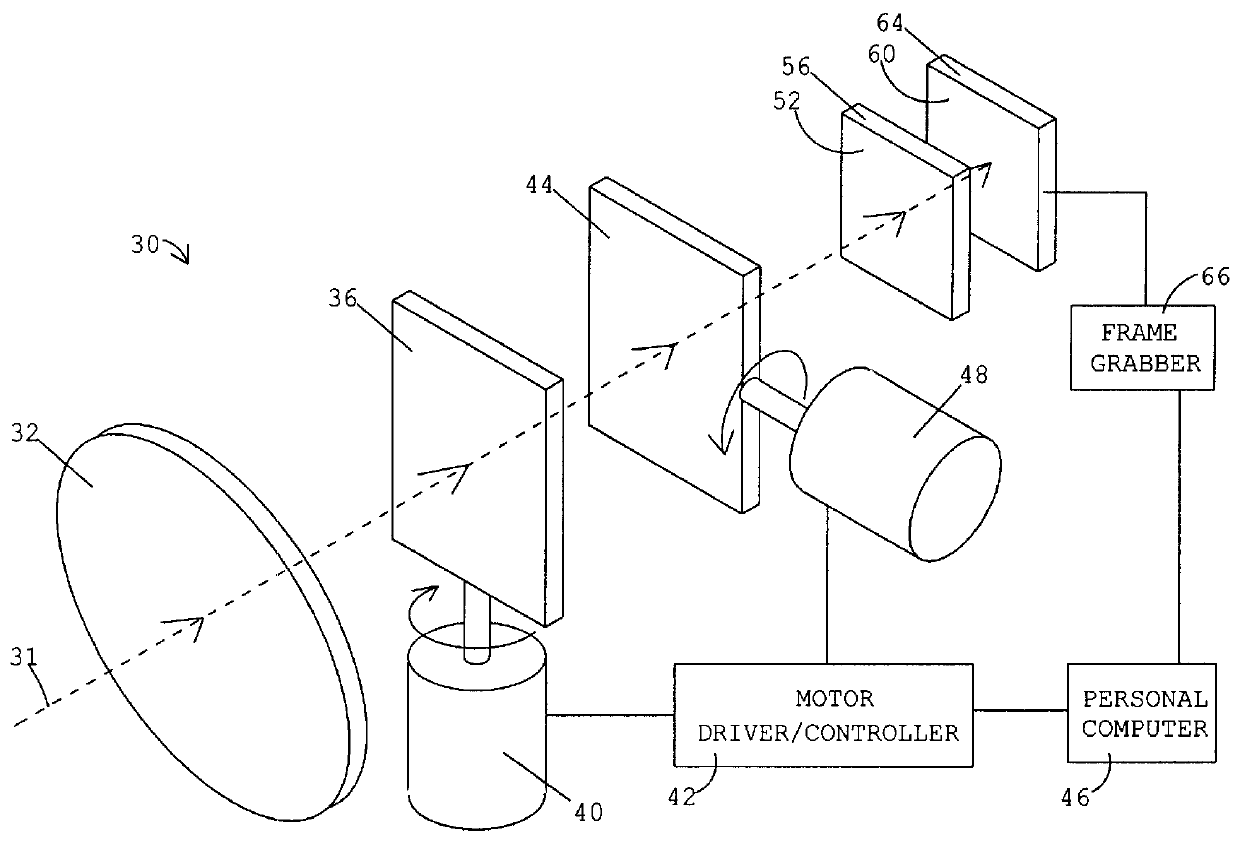

High-resolution polarization-sensitive imaging sensors

An apparatus and method to determine the surface orientation of objects in a field of view is provided by utilizing an array of polarizers and a means for microscanning an image of the objects over the polarizer array. In the preferred embodiment, a sequence of three image frames is captured using a focal plane array of photodetectors. Between frames the image is displaced by a distance equal to a polarizer array element. By combining the signals recorded in the three image frames, the intensity, percent of linear polarization, and angle of the polarization plane can be determined for radiation from each point on the object. The intensity can be used to determine the temperature at a corresponding point on the object. The percent of linear polarization and angle of the polarization plane can be used to determine the surface orientation at a corresponding point on the object. Surface orientation data from different points on the object can be combined to determine the object's shape and pose. Images of the Stokes parameters can be captured and viewed at video frequency. In an alternative embodiment, multi-spectral images can be captured for objects with point source resolution. Potential applications are in robotic vision, machine vision, computer vision, remote sensing, and infrared missile seekers. Other applications are detection and recognition of objects, automatic object recognition, and surveillance. This method of sensing is potentially useful in autonomous navigation and obstacle avoidance systems in automobiles and automated manufacturing and quality control systems.

Owner:THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SECRETARY OF THE NAVY

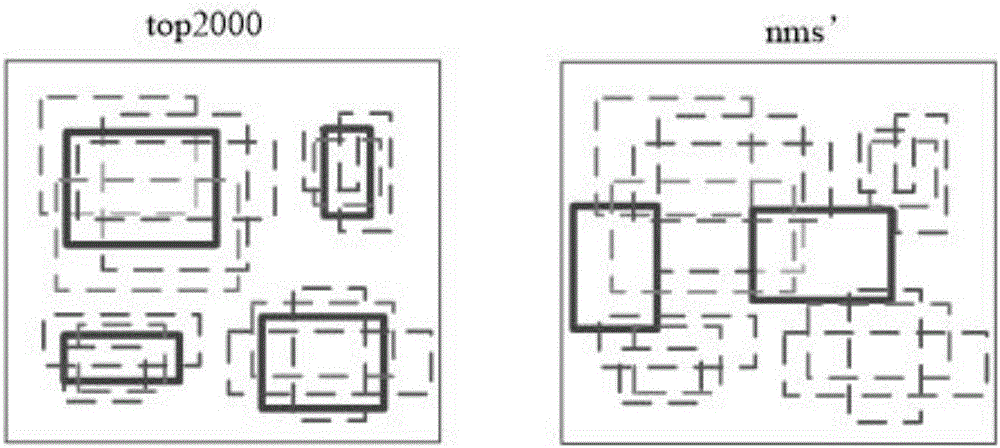

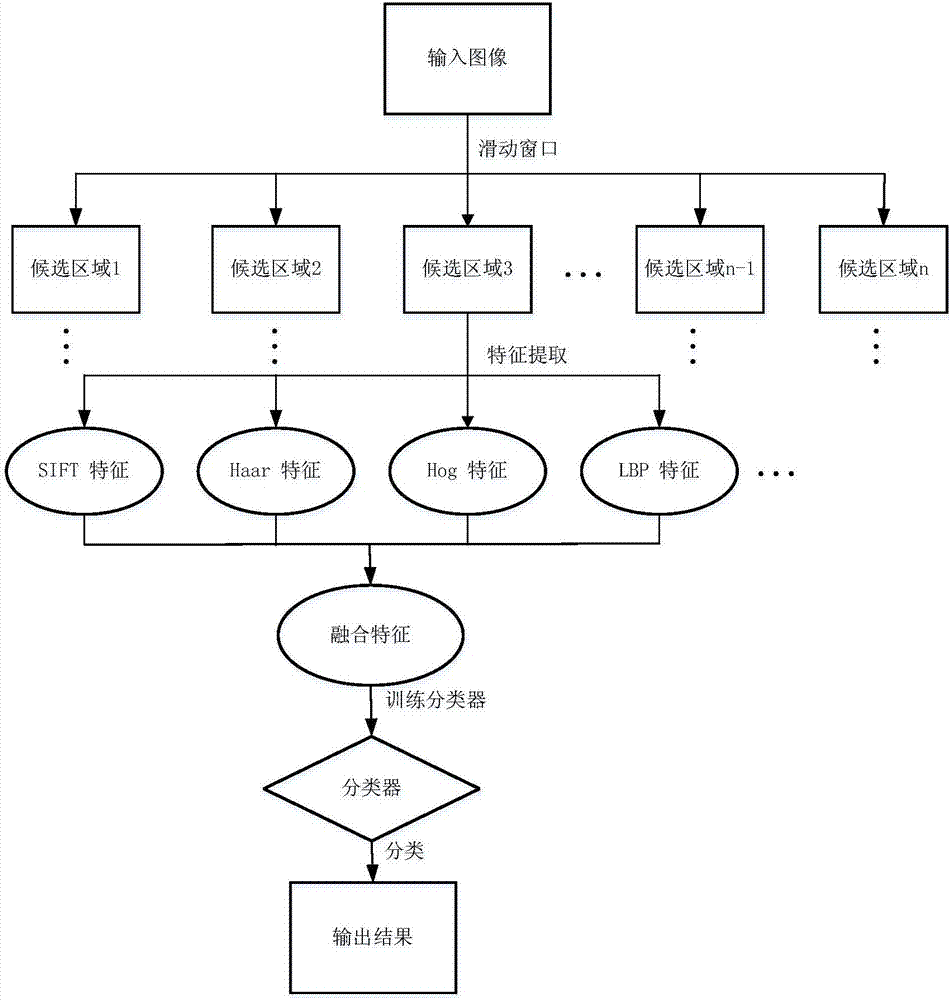

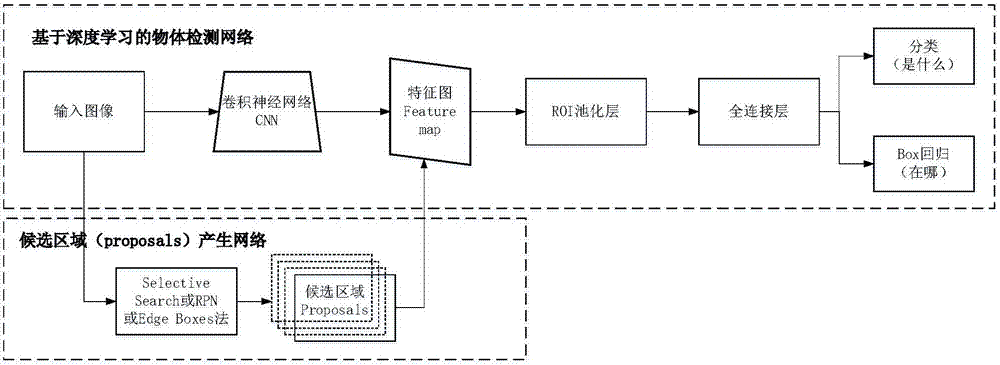
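Combining three frames into intensity, percent of linear polarization and polarization angle can be illustrated with the linear Stokes parameters. The sketch assumes ideal linear polarizers at 0°, 60° and 120°, so that each frame measures I(θ) = ½(S0 + S1·cos 2θ + S2·sin 2θ); the abstract does not state the polarizer orientations, so these angles and the function names are assumptions.

```python
import math


def stokes_from_three(i0, i60, i120):
    """Recover the linear Stokes parameters (S0, S1, S2) for one pixel from
    intensities measured through polarizers at 0, 60 and 120 degrees."""
    s0 = (2.0 / 3.0) * (i0 + i60 + i120)
    s1 = (2.0 / 3.0) * (2.0 * i0 - i60 - i120)
    s2 = (2.0 / math.sqrt(3.0)) * (i60 - i120)
    return s0, s1, s2


def polarization_state(i0, i60, i120):
    """Return (intensity, degree of linear polarization, angle in radians)."""
    s0, s1, s2 = stokes_from_three(i0, i60, i120)
    if s0 <= 0:
        raise ValueError("total intensity must be positive")
    dolp = math.hypot(s1, s2) / s0      # fraction of linearly polarized light
    angle = 0.5 * math.atan2(s2, s1)    # orientation of the polarization plane
    return s0, dolp, angle
```

In a microscanned sensor each of the three inputs would come from a different frame, after the image has been shifted by one polarizer element so that the same scene point is seen through each orientation.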

Multi-scale small object detection method based on deep-learning hierarchical feature fusion

Active · CN107341517A · Realize detection · Easy to identify · Character and pattern recognition · Machine vision · Research Object

The invention relates to object detection technology in the machine vision field, and in particular to a multi-scale small object detection method based on deep-learning hierarchical feature fusion. It addresses the shortcomings of existing object detection, which has low detection precision in real scenes, is constrained by object scale, and has difficulty detecting small objects. The detection method comprises the following steps: taking an image of a real scene as the research object; extracting features of the input image by constructing a convolutional neural network; producing fewer candidate regions with a candidate region generation network; mapping each candidate region onto the feature map generated by the convolutional neural network to obtain the features of each candidate region; and passing these through a pooling layer to obtain features of fixed size and fixed dimension, which are input to the fully connected layer, after which two branches respectively output the recognized class and the regressed position. The method is suitable for object detection in the machine vision field.

Owner:HARBIN INST OF TECH

Optical coordinate input device comprising few elements

Inactive · US20080062149A1 · Low cost · Save valuable space · Photometry using reference value · Material analysis by optical means · Manufacturing cost reduction · Machine vision

The present invention is an optical electronic input device for identifying the coordinates of an object in a given area. The device may capture two-dimensional or three-dimensional input information, using a minimal number of optical units (one for two dimensional coordinates and two for three dimensional coordinates). The invention requires only basic optical elements such as a photo sensing unit, lenses, a light source, filters and shutters which may reduce manufacturing costs significantly. Since this device enables inputting the coordinates of an object in a set area, it may not only be used in systems such as optical touch screens, but also in virtual keyboard applications and in implementation for machine vision.

Owner:BARUCH ITZHAK

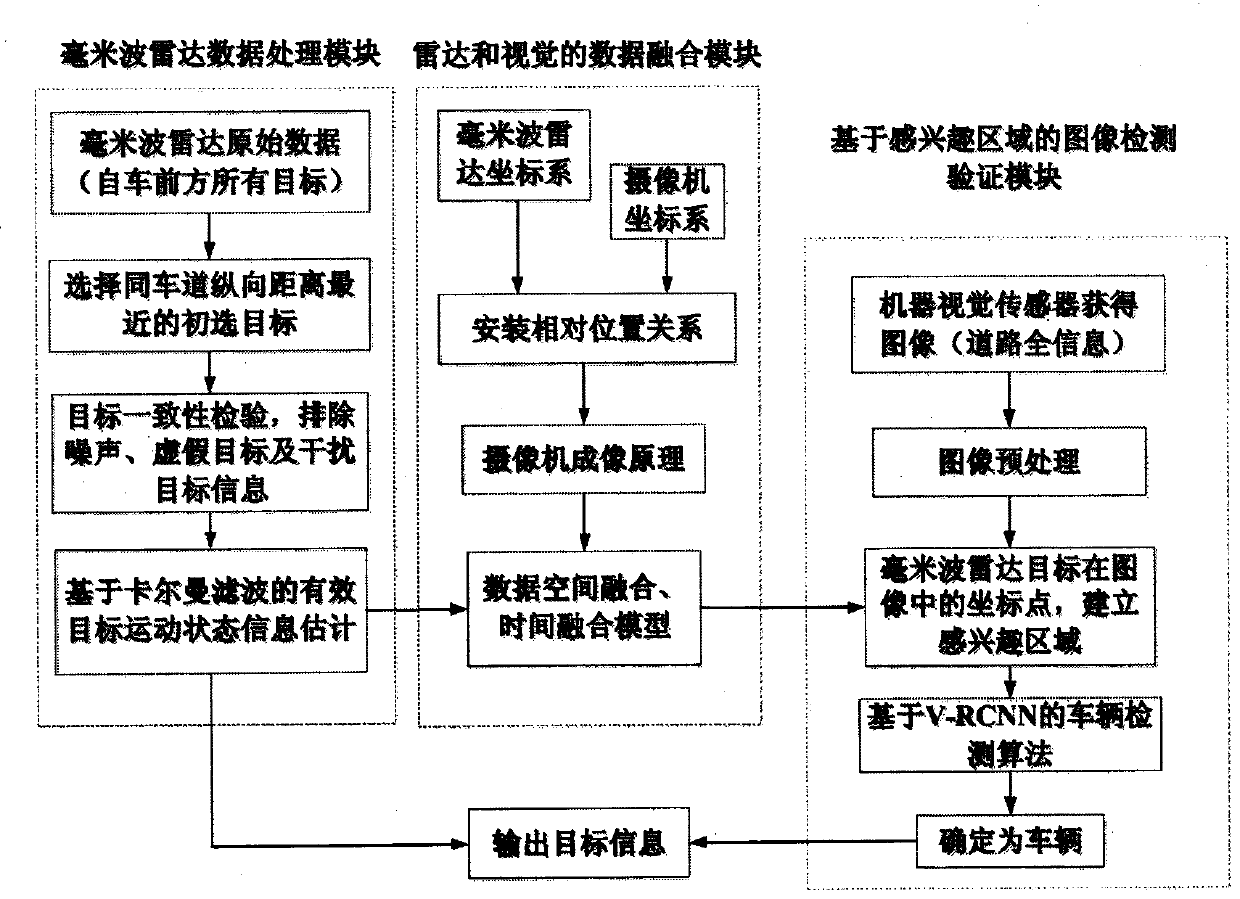

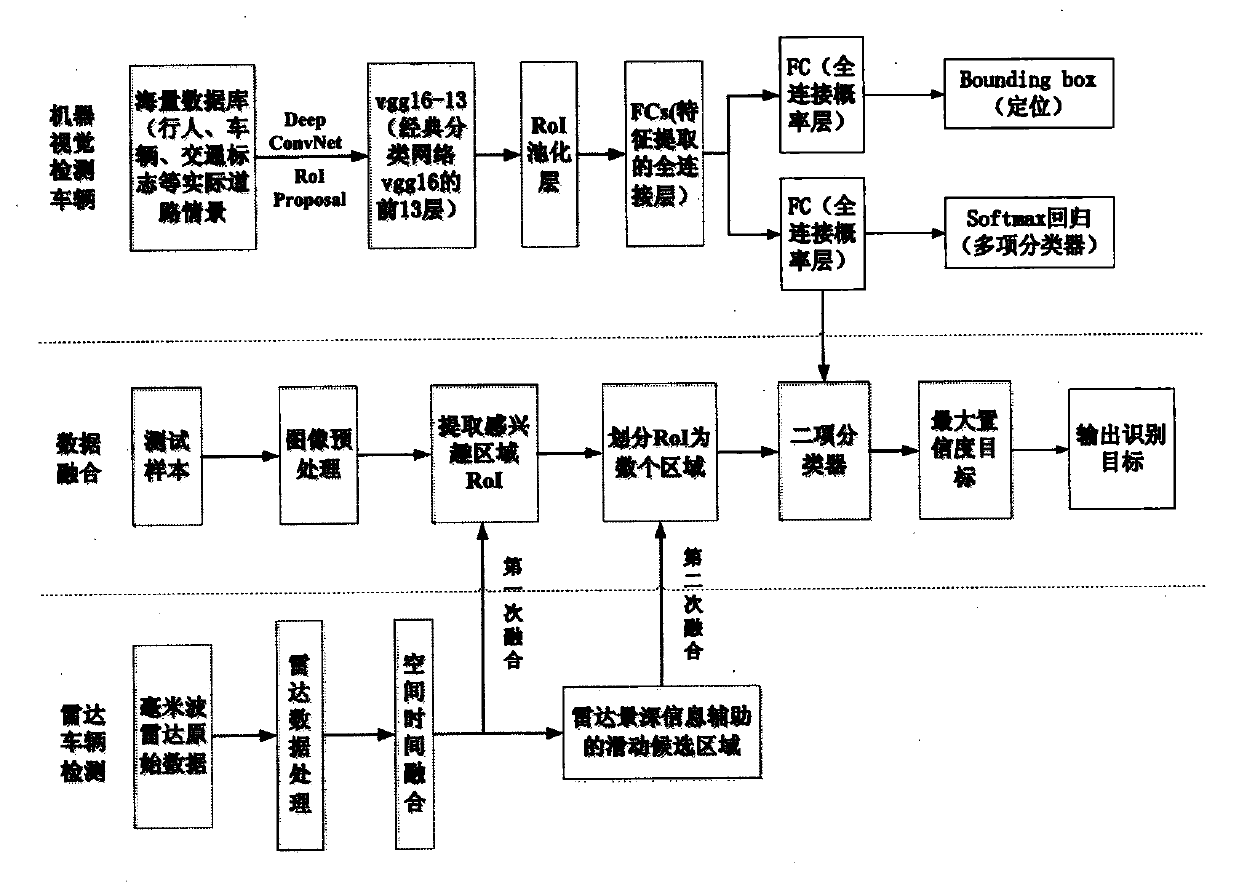

Laser radar and machine vision-based information fusion and vehicle detection system

Active · CN107609522A · Improve accuracy · Strong real-time · Character and pattern recognition · Machine vision · Radar

The invention relates to a laser radar and machine vision-based information fusion and vehicle detection system. The system comprises a millimeter-wave radar data processing module, a radar and vision data fusion module and an area of interest-based image detection and verification module. The millimeter-wave radar data processing module obtains relatively reliable and accurate effective targets, together with their movement state information, to serve as input to the multi-sensor data fusion module. The radar and vision data fusion module obtains the projection point, on the machine vision image pixel plane, of a front vehicle detected by the millimeter-wave radar, establishes an area of interest around the projection point and completes the spatial fusion of the multi-sensor data. The image detection and verification module correctly locates the size and position of the imaging area of the front vehicle and verifies whether the imaging area is a vehicle image. By analyzing and processing the data acquired by the sensors, the system can effectively detect obstacles in front of vehicles and correctly, stably and reliably screen effective tracking targets.

Owner:DONGHUA UNIV

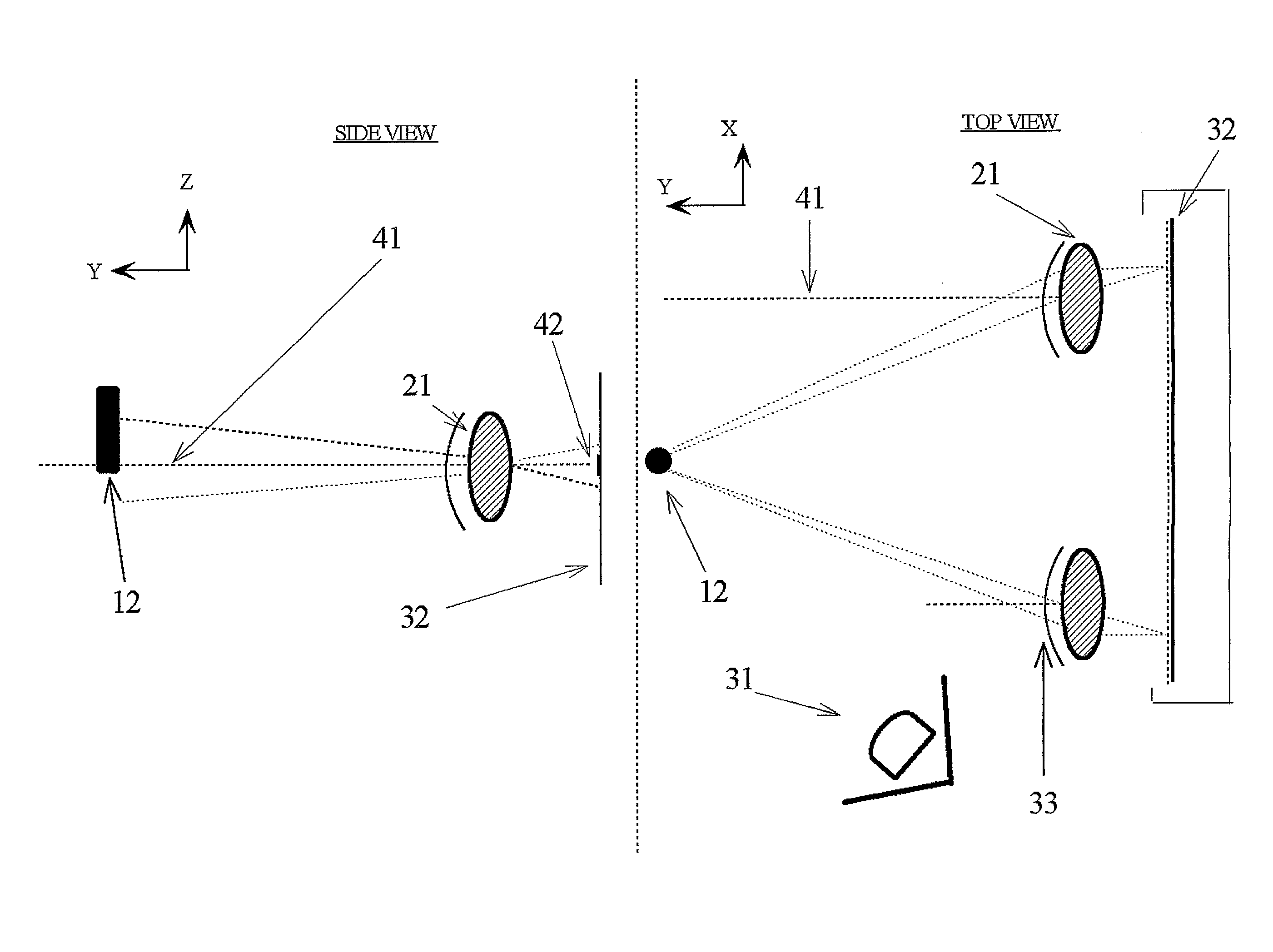

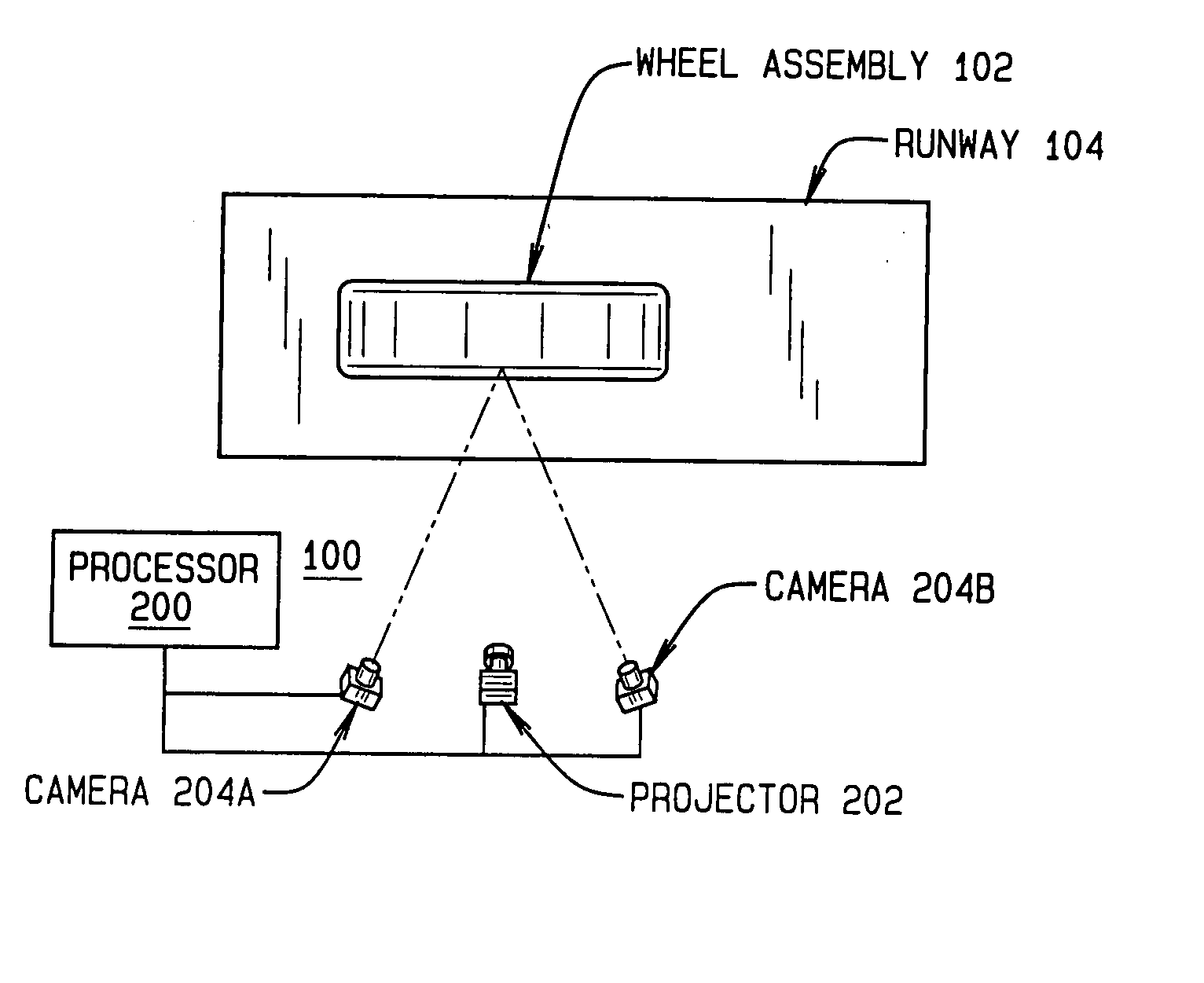

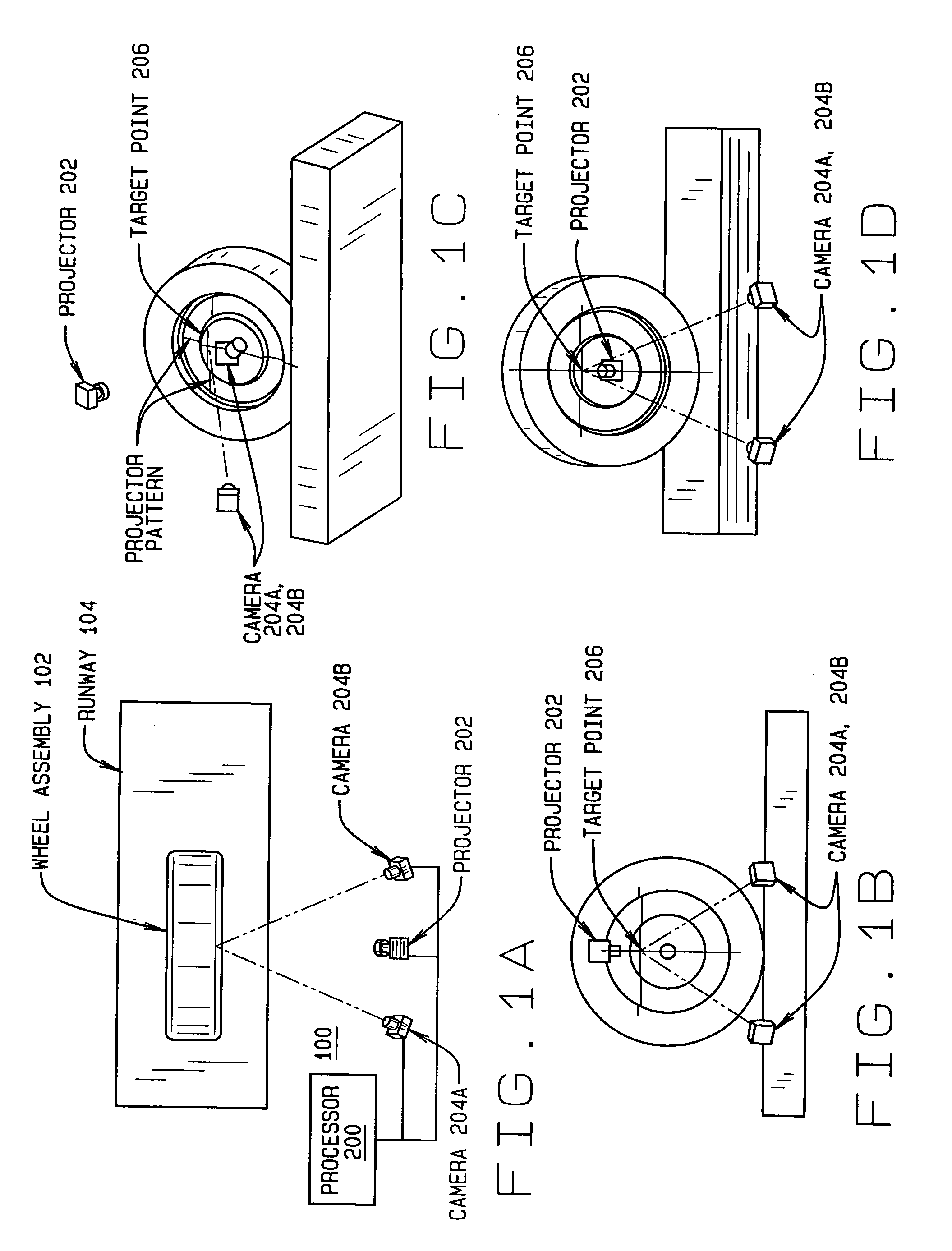

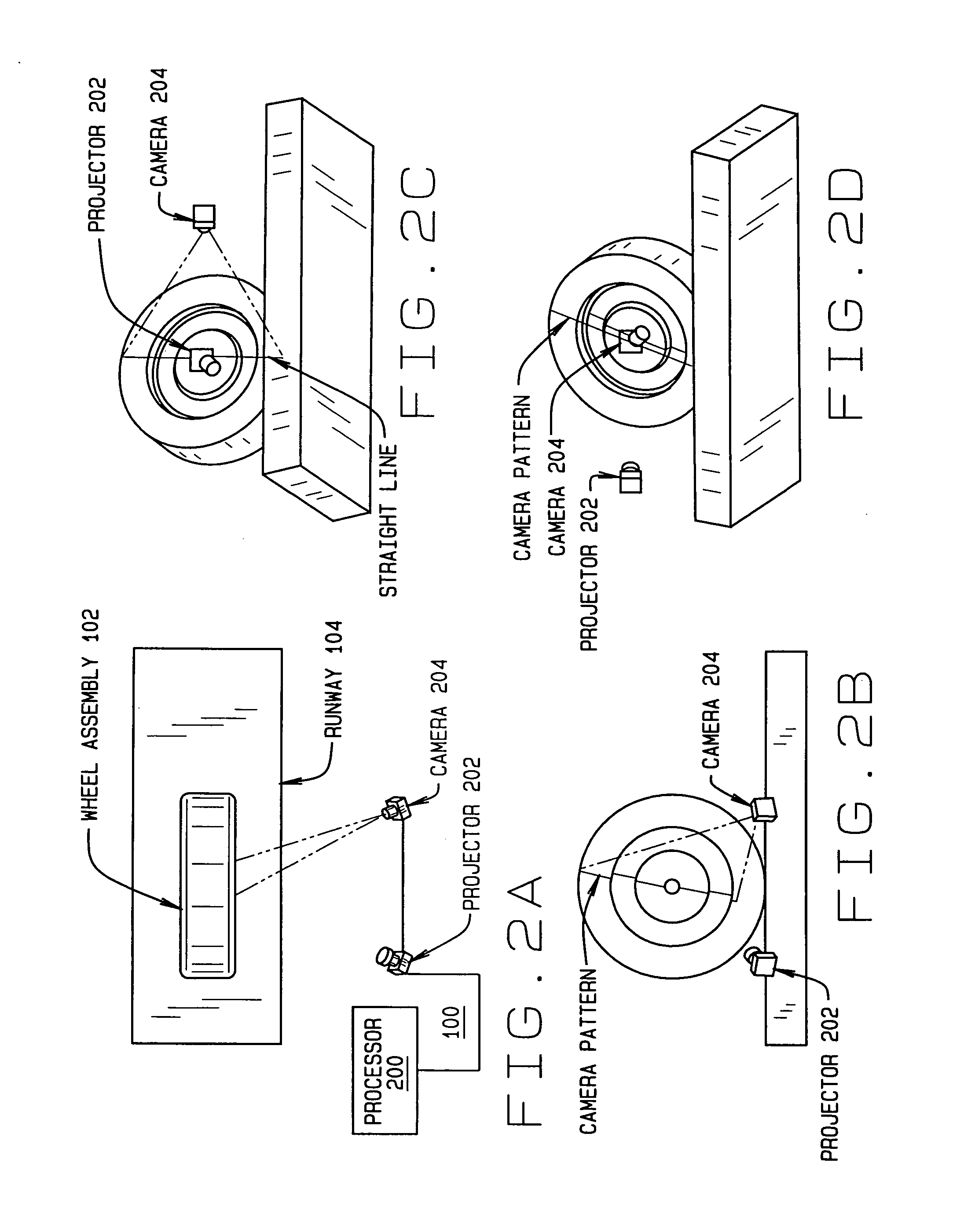
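Projecting a radar target onto the image pixel plane and building an area of interest around the projection point can be sketched with a pinhole camera model. The sketch assumes the radar target has already been transformed into the camera frame (X right, Y down, Z forward); the intrinsics, the physical half-size of the area of interest and the function name are hypothetical.

```python
def radar_target_roi(x_m, y_m, z_m, fx, fy, cx, cy,
                     half_width_m, half_height_m, image_w, image_h):
    """Project a radar target into the image and return
    ((u, v), (left, top, right, bottom)) in pixels.

    x_m, y_m, z_m -- target position in the camera frame (metres)
    fx, fy, cx, cy -- pinhole intrinsics in pixels
    half_width_m, half_height_m -- half-size of the area of interest in metres,
                                   chosen to cover a typical vehicle
    image_w, image_h -- image size, used to clamp the rectangle
    """
    if z_m <= 0:
        raise ValueError("target must be in front of the camera")
    u = fx * x_m / z_m + cx                 # projection point, column
    v = fy * y_m / z_m + cy                 # projection point, row
    half_w_px = fx * half_width_m / z_m     # the rectangle shrinks with distance
    half_h_px = fy * half_height_m / z_m
    left = max(0, round(u - half_w_px))
    top = max(0, round(v - half_h_px))
    right = min(image_w - 1, round(u + half_w_px))
    bottom = min(image_h - 1, round(v + half_h_px))
    return (u, v), (left, top, right, bottom)
```

An image classifier then only needs to examine the returned rectangle to confirm whether the radar target is a vehicle, which is the verification role the abstract assigns to the third module.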

Method and Apparatus for Wheel Alignment System Target Projection and Illumination

ActiveUS20070124949A1Angles/taper measurementsUsing electrical meansMachine visionThree-dimensional space

A machine vision vehicle wheel alignment system configured with at least one camera for acquiring images of the wheels of a vehicle, and an associated light projector configured to project a pattern image onto the surfaces of vehicle components such as vehicle wheel assemblies. Images of the projected patterns acquired by the camera are processed by the vehicle wheel alignment system to facilitate a determination of the relative orientation and position of surfaces such as wheel assemblies in three-dimensional space, from which vehicle parameters such as wheel alignment measurements can subsequently be determined.

Owner:HUNTER ENG

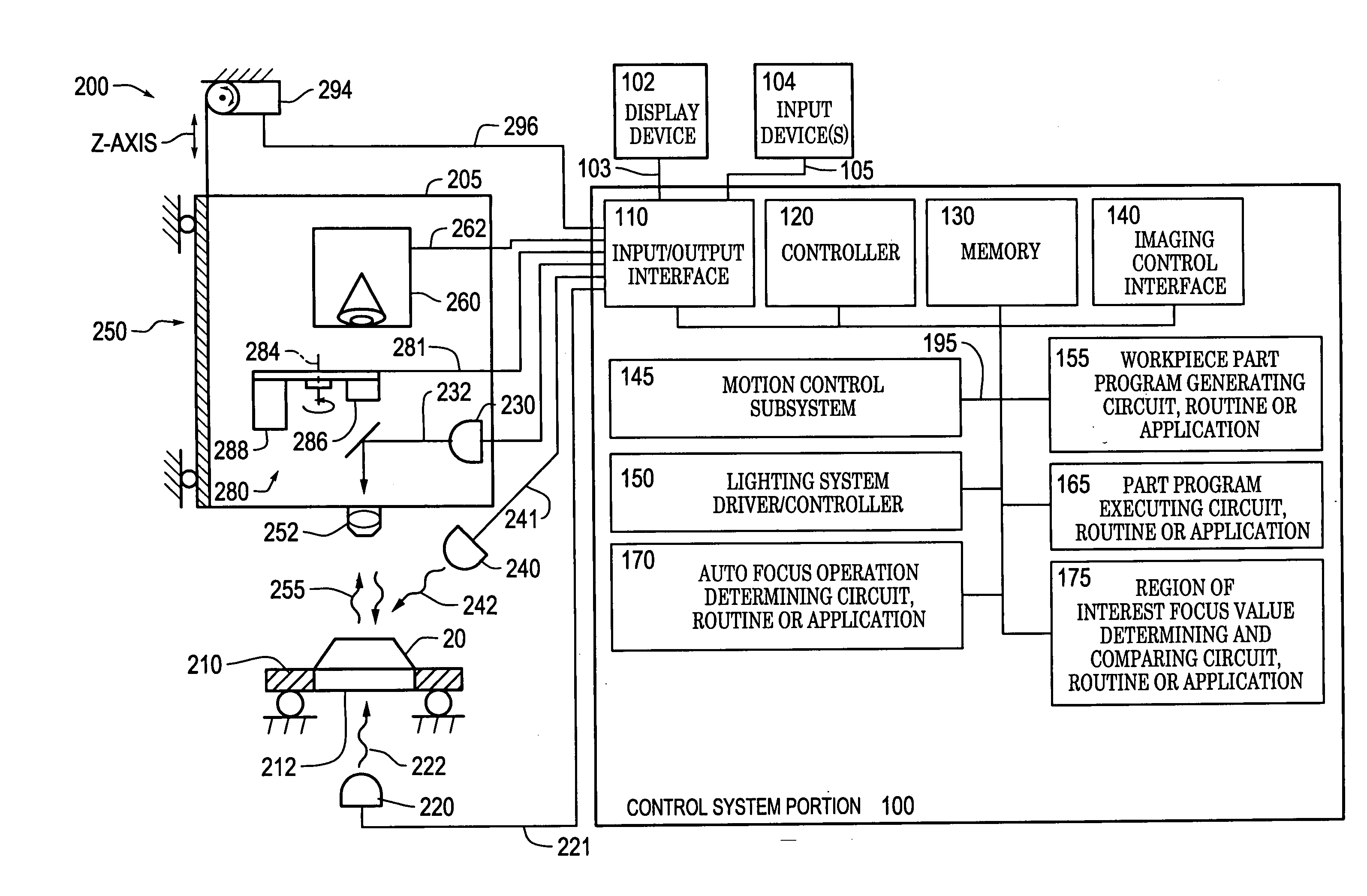

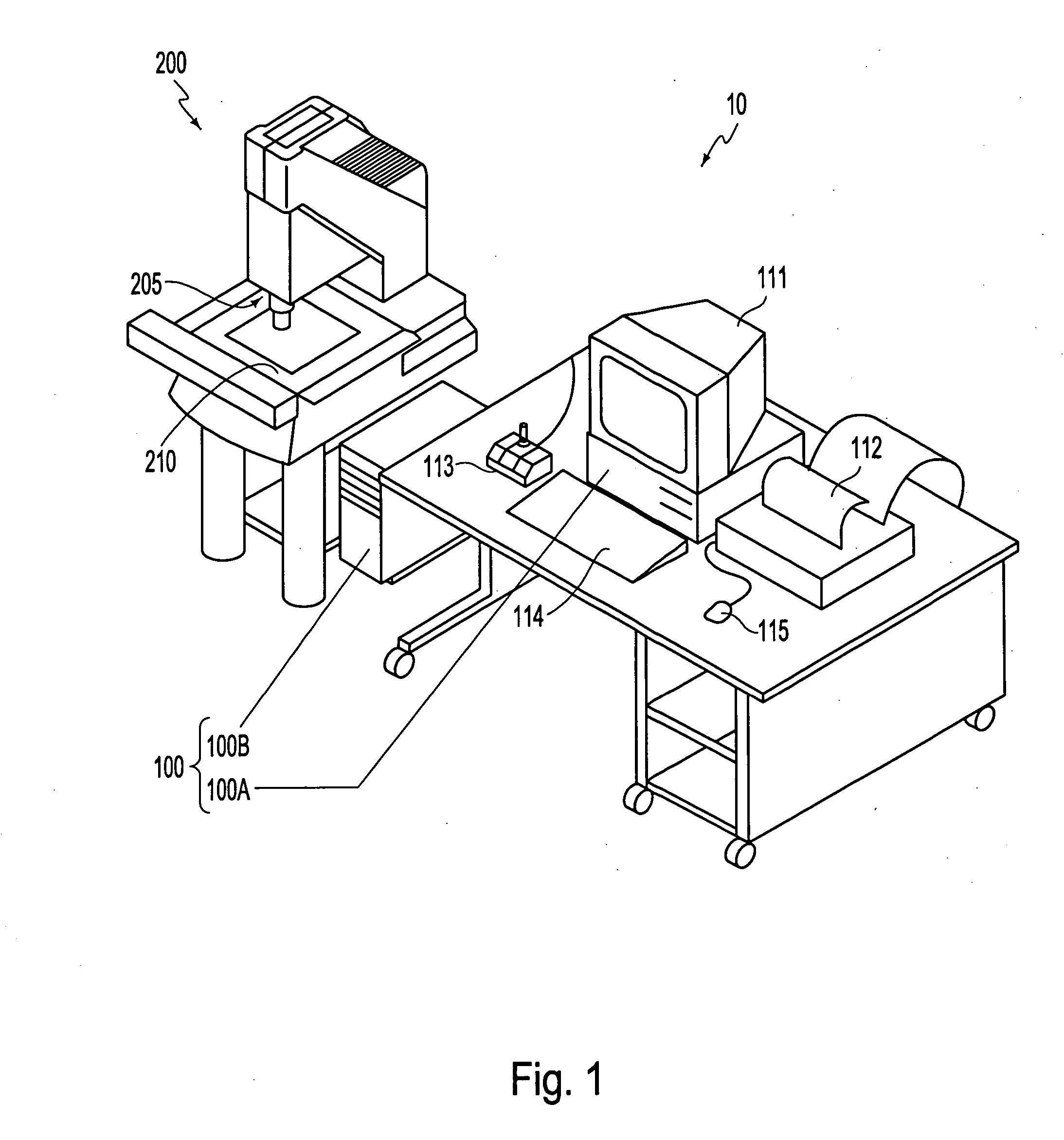

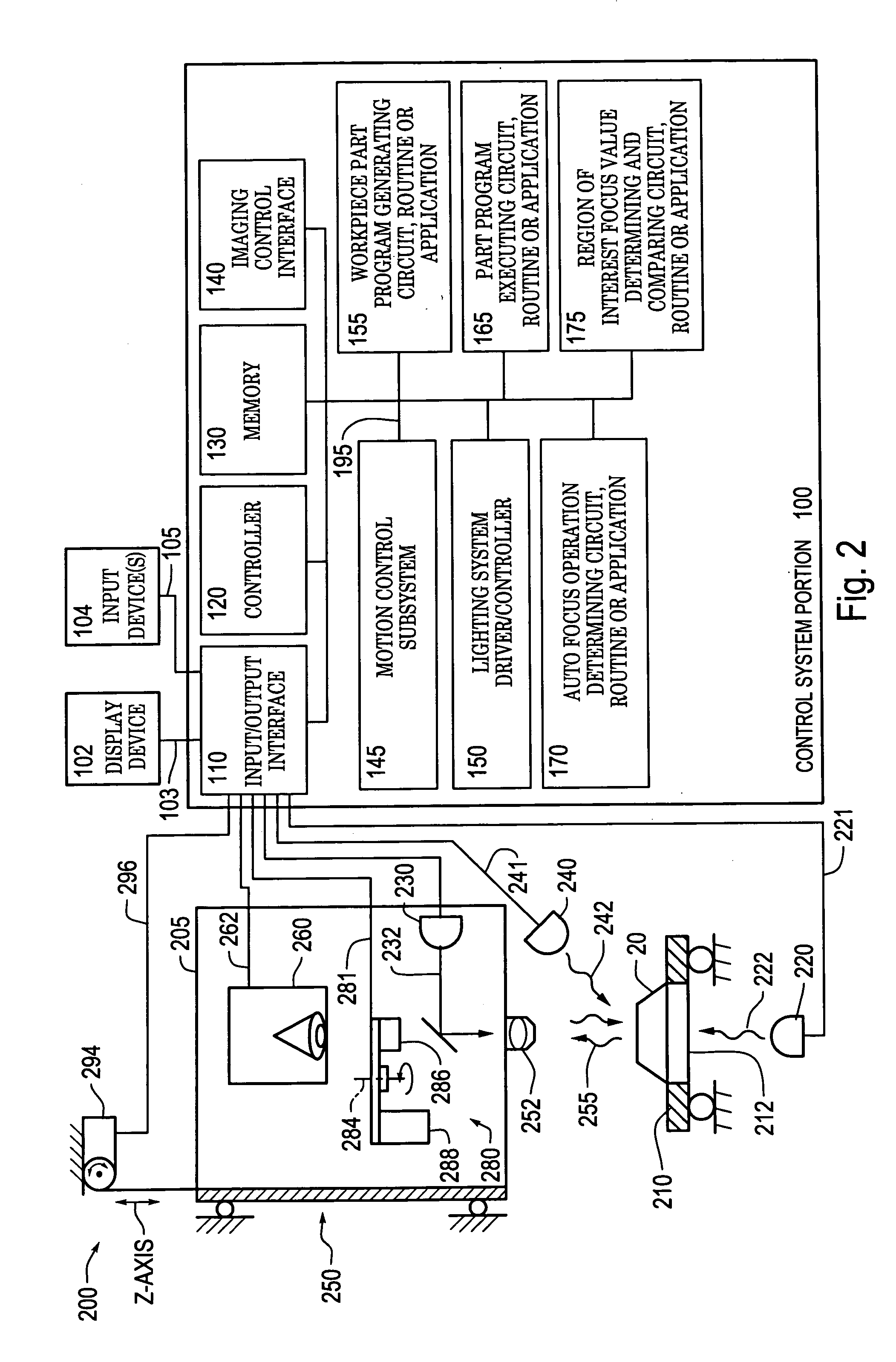

Systems and methods for rapidly automatically focusing a machine vision inspection system

ActiveUS20050109959A1Increase speedAccurate autofocusTelevision system detailsSolid-state devicesMachine visionMetrology

Auto focus systems and methods for a machine vision metrology and inspection system provide high speed and high precision auto focusing, while using relatively low-cost and flexible hardware. One aspect of various embodiments of the invention is that the portion of an image frame that is output by a camera is minimized for auto focus images, based on a reduced readout pixel set determined in conjunction with a desired region of interest. The reduced readout pixel set allows a maximized image acquisition rate, which in turn allows faster motion between auto focus image acquisition positions to achieve a desired auto focus precision at a corresponding auto focus execution speed that is approximately optimized in relation to a particular region of interest. In various embodiments, strobe illumination is used to further improve auto focus speed and accuracy. A method is provided for adapting and programming the various associated auto focus control parameters.

Owner:MITUTOYO CORP
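The idea of evaluating focus only on a reduced pixel set around a region of interest can be sketched as follows. This is an illustration under stated assumptions, not the patented method: the sharpness measure (sum of squared differences between adjacent pixels) is a common generic choice that the abstract does not specify, and `crop`, `sharpness` and `best_focus` are hypothetical names.

```python
def crop(image, roi):
    """Return the sub-image for roi = (top, left, height, width)."""
    top, left, height, width = roi
    return [row[left:left + width] for row in image[top:top + height]]


def sharpness(pixels):
    """Sum of squared differences between horizontally and vertically adjacent pixels."""
    total = 0
    for r, row in enumerate(pixels):
        for c, value in enumerate(row):
            if c + 1 < len(row):
                total += (row[c + 1] - value) ** 2
            if r + 1 < len(pixels):
                total += (pixels[r + 1][c] - value) ** 2
    return total


def best_focus(stack, roi):
    """Pick the Z position whose image is sharpest inside the region of interest.

    stack is a list of (z_position, image) pairs, where each image is a
    list of rows of grayscale values.
    """
    return max(stack, key=lambda item: sharpness(crop(item[1], roi)))[0]


if __name__ == "__main__":
    blurry = [[128] * 4 for _ in range(4)]
    sharp = [[0, 255, 0, 255], [255, 0, 255, 0]] * 2
    print(best_focus([(0.0, blurry), (0.5, sharp)], roi=(1, 1, 2, 2)))
```

Because only the cropped pixels are scored, the cost per focus position scales with the size of the region of interest rather than the full frame, which mirrors the motivation given in the abstract.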

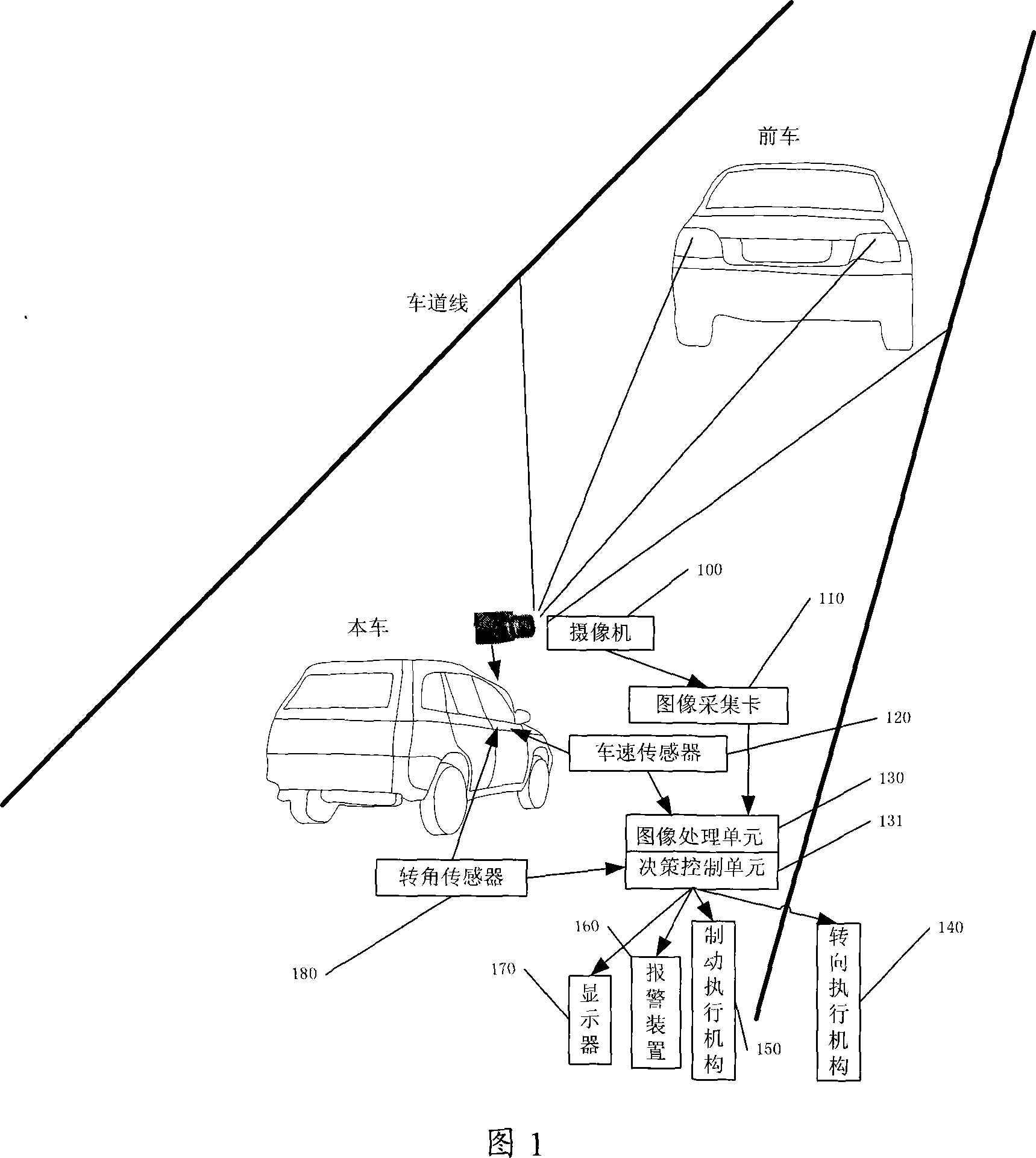

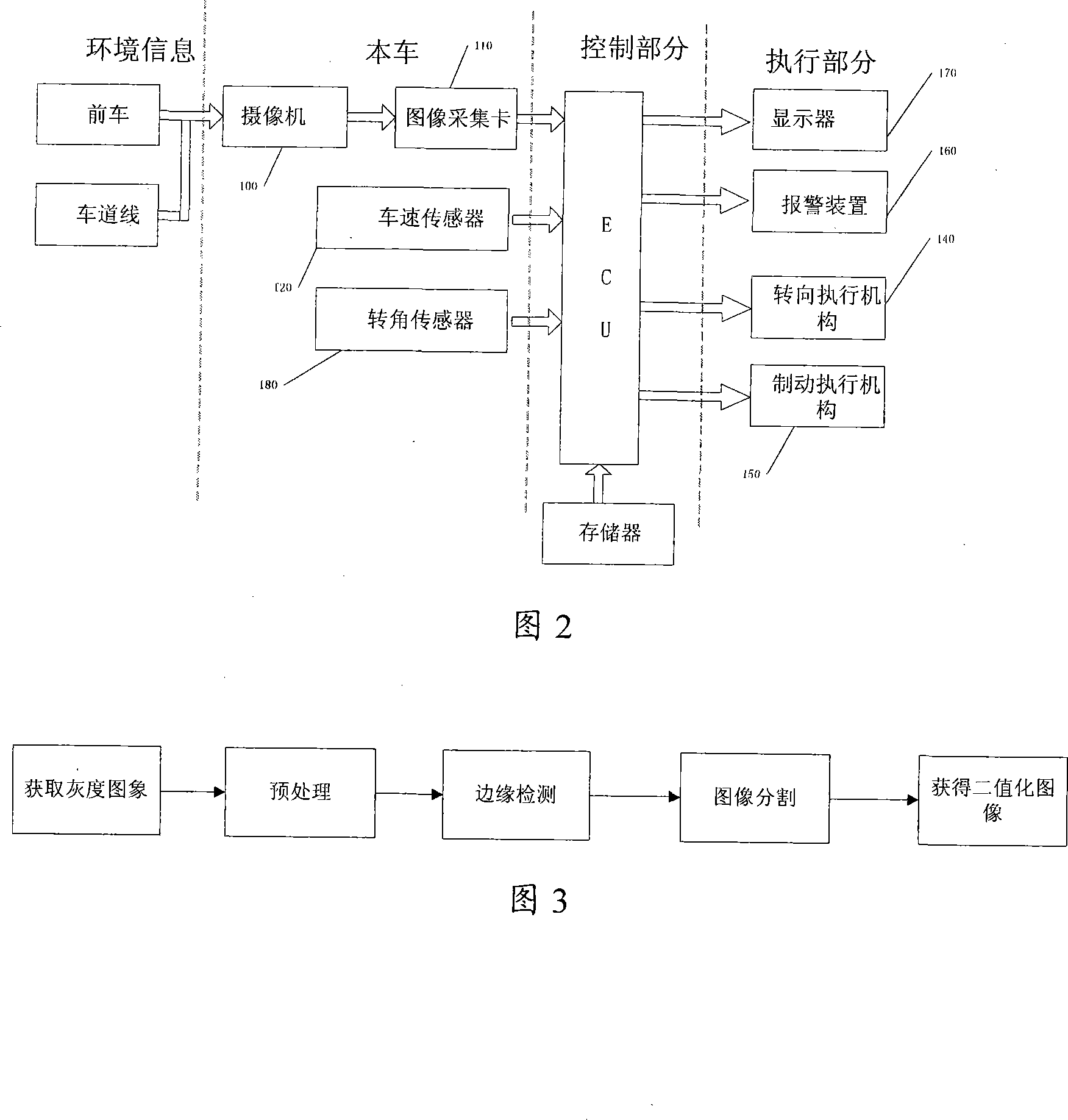

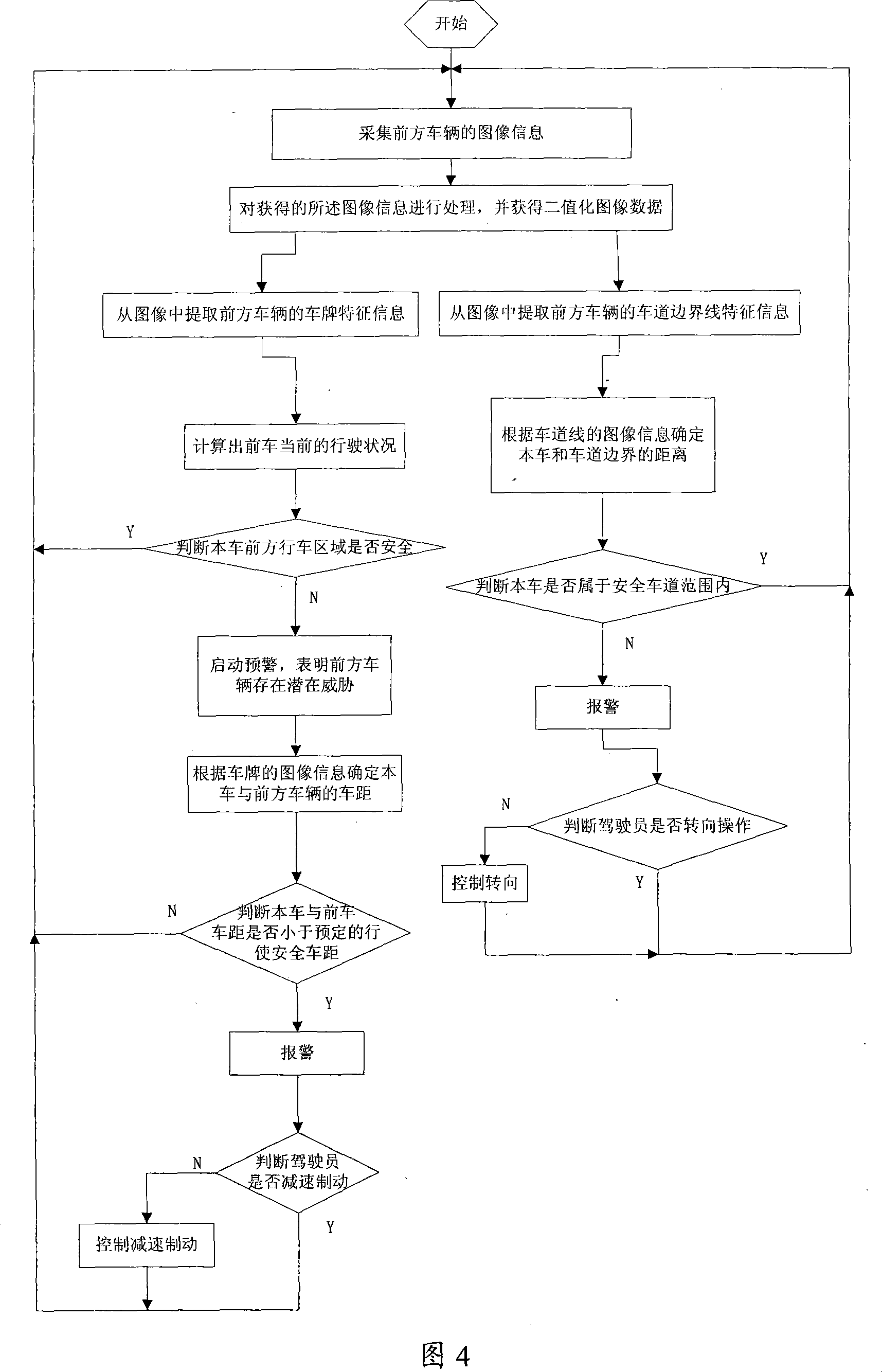

Vehicle anti-collision early warning method and apparatus based on machine vision

InactiveCN101135558AAvoid collisionImprove real-time performanceElectromagnetic wave reradiationMachine visionVisual perception

The method comprises: using a machine vision method to collect feature information about the license plate of the vehicle ahead, together with traffic lane information; calculating the distance to the vehicle ahead according to the size and number of image pixels it occupies in the machine vision image; and, combining this with the speed and direction of the current vehicle, deciding whether the current vehicle is being driven within the safe traffic lane range.

Owner:SHANGHAI ZHONGKE SHENJIANG ELECTRIC VEHICLE
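The distance calculation described above can be approximated with a pinhole camera relation: an object of known real width appears narrower in pixels the farther away it is. The sketch below is an assumption-laden illustration, not the method claimed in the patent. The 0.44 m plate width, the two-second headway rule and both function names are assumptions introduced here.

```python
def distance_from_plate_width(plate_width_px, focal_length_px, plate_width_m=0.44):
    """Estimate range (metres) from the apparent width of a license plate.

    Pinhole model: distance = focal_length_px * real_width / pixel_width.
    The default real width of 0.44 m is an assumed plate size.
    """
    if plate_width_px <= 0:
        raise ValueError("plate width in pixels must be positive")
    return focal_length_px * plate_width_m / plate_width_px


def is_following_too_closely(distance_m, speed_mps, headway_s=2.0):
    """Warn when the gap is shorter than the distance covered in headway_s seconds.

    The headway threshold is a hypothetical rule chosen for illustration.
    """
    return distance_m < speed_mps * headway_s


if __name__ == "__main__":
    gap = distance_from_plate_width(plate_width_px=44, focal_length_px=1000)
    print(round(gap, 2), is_following_too_closely(gap, speed_mps=20.0))
```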

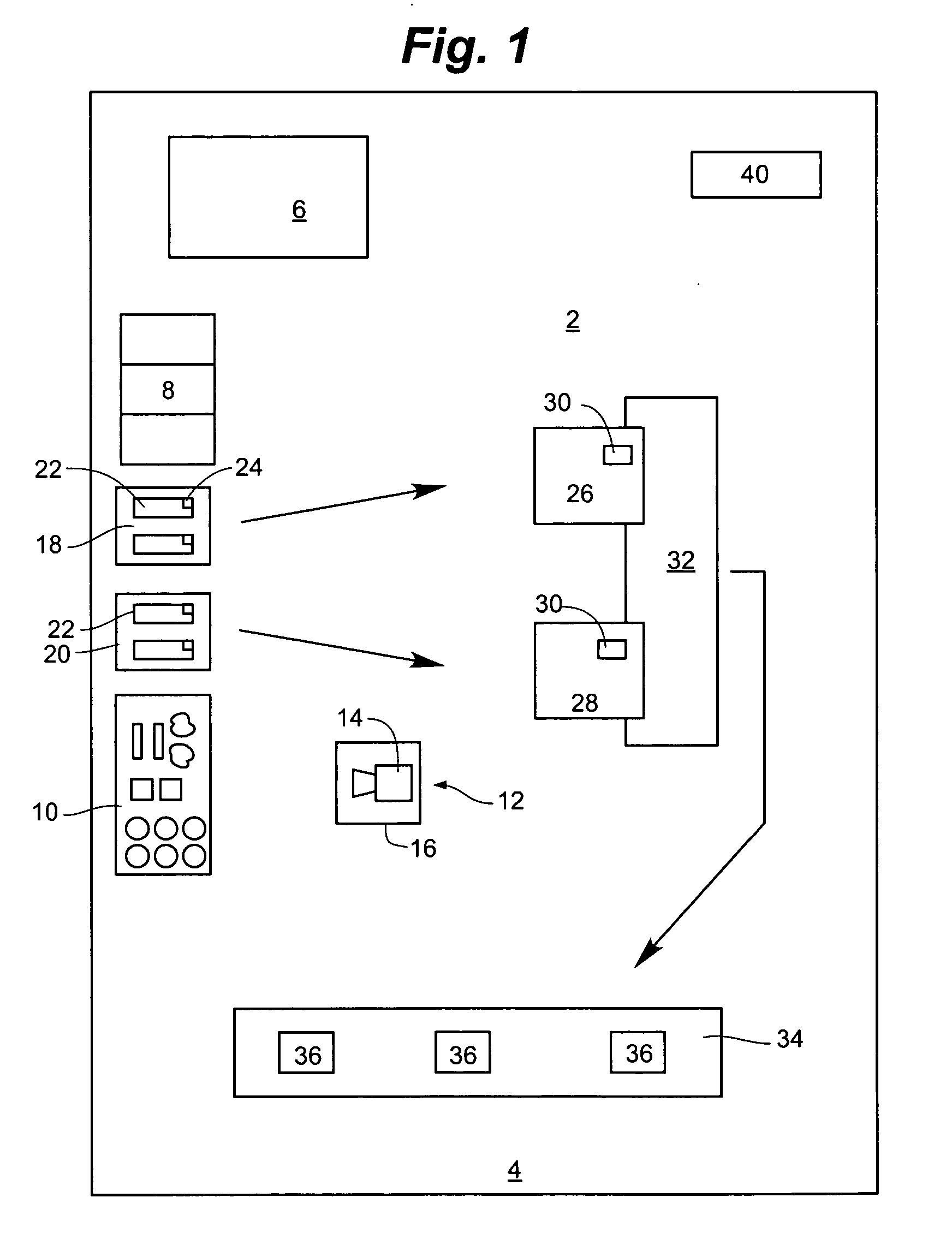

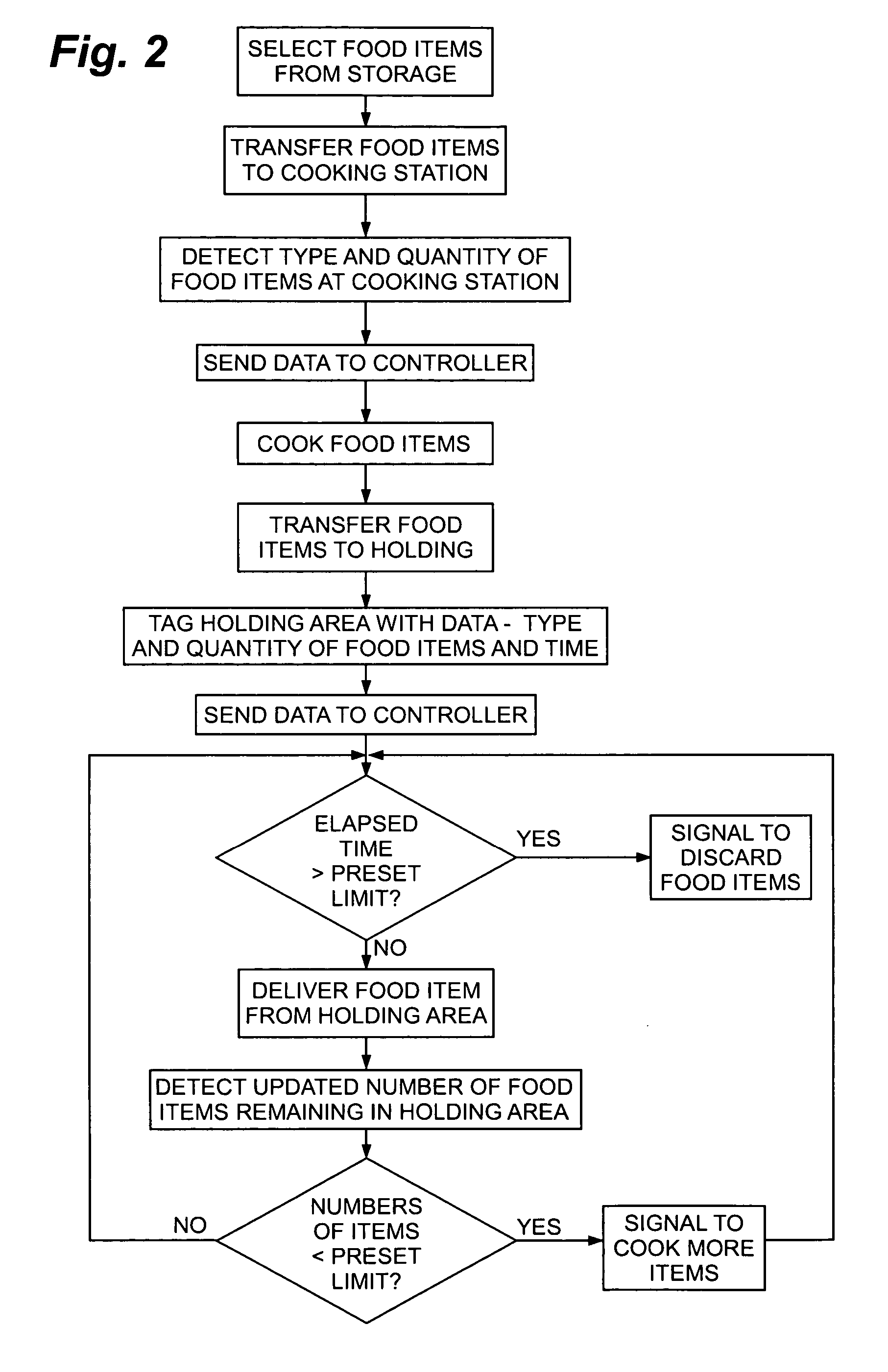

RFID food production, inventory and delivery management method for a restaurant

InactiveUS20070254080A1Electric signal transmission systemsDigital data processing detailsMachine visionSystems management

A system and method for managing food production, inventory and delivery in a restaurant by automatically monitoring the types and quantities of food items that have been cooked and are in a cooked food holding area. Food holding trays are equipped with radio frequency identification (RFID) tags, and holding cabinets are equipped with RFID interrogators. The type and quantity of food items are determined manually or by machine vision or weighing systems, and the data is stored on the RFID tags and in a controller. The system manages the use of food items on a first-in, first-out basis, alerts operators when the inventory of an item is nearing exhaustion, and alerts operators when food items in the holding area must be discarded. The system manages movable trays of food no matter where in the facility they are located.

Owner:RESTAURANT TECH
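The three behaviours named in the abstract above (first-in, first-out use, low-inventory alerts and discard alerts) can be sketched with a small in-memory model. Everything here is hypothetical: the `Tray` and `HoldingArea` types, the single shared hold time and the low-stock threshold are assumptions for illustration, and no RFID hardware interface is modelled.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Tray:
    tag_id: str       # hypothetical RFID tag identifier
    item: str
    quantity: int
    cooked_at: float  # seconds since an arbitrary epoch


class HoldingArea:
    def __init__(self, hold_time_s, low_stock_threshold):
        self.hold_time_s = hold_time_s
        self.low_stock_threshold = low_stock_threshold
        # Oldest tray first, assuming trays are added in cooking order.
        self.trays = deque()

    def add(self, tray):
        self.trays.append(tray)

    def is_expired(self, tray, now):
        return now - tray.cooked_at > self.hold_time_s

    def expired(self, now):
        """Trays that have exceeded the hold time and should be discarded."""
        return [t for t in self.trays if self.is_expired(t, now)]

    def next_tray(self, item, now):
        """Oldest usable tray of the item (first-in, first-out), or None."""
        for t in self.trays:
            if t.item == item and t.quantity > 0 and not self.is_expired(t, now):
                return t
        return None

    def is_low(self, item, now):
        """True when the usable quantity of the item is below the threshold."""
        usable = sum(t.quantity for t in self.trays
                     if t.item == item and not self.is_expired(t, now))
        return usable < self.low_stock_threshold


if __name__ == "__main__":
    area = HoldingArea(hold_time_s=600, low_stock_threshold=5)
    area.add(Tray("A1", "fries", 4, cooked_at=0.0))
    area.add(Tray("A2", "fries", 6, cooked_at=300.0))
    print(area.next_tray("fries", now=500.0).tag_id)
    print([t.tag_id for t in area.expired(now=700.0)])
```

A real deployment would likely need per-item hold times and persistence in the controller; the single shared hold time keeps the sketch short.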

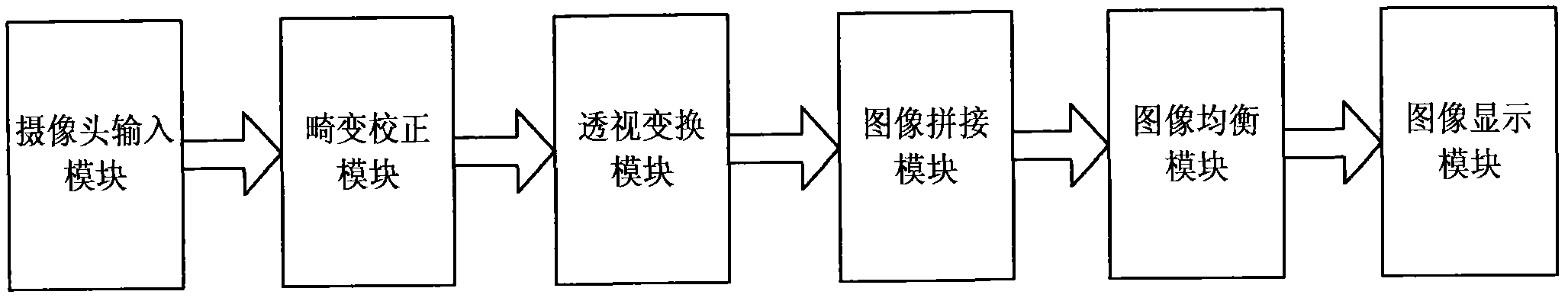

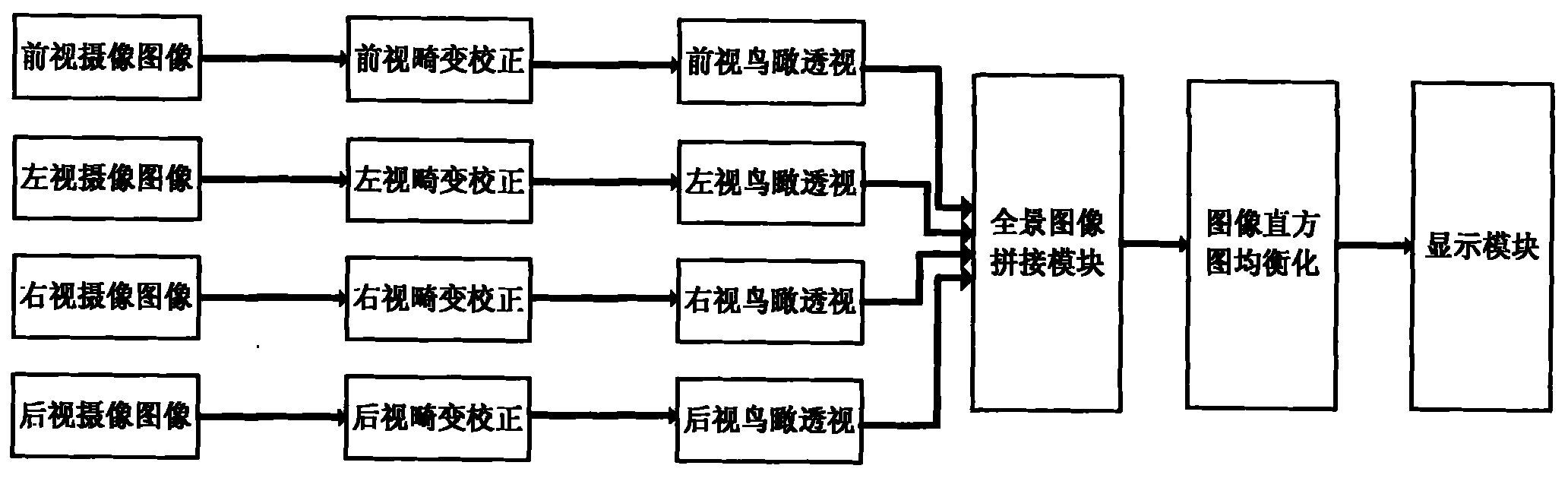

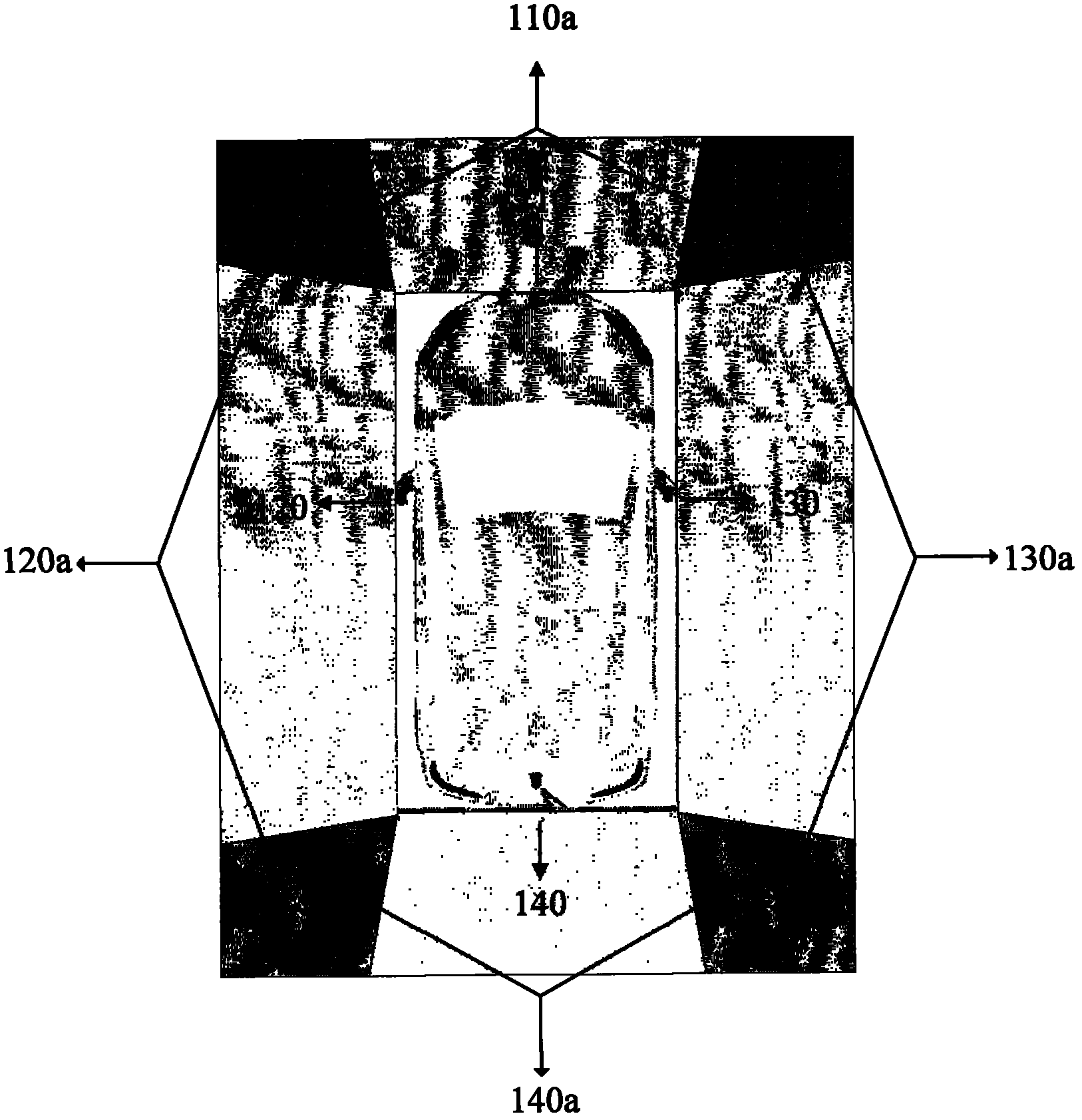

Panoramic parking assist system

The invention discloses a panoramic parking assist system, a technique applied in the fields of automotive electronics and safe-driving assistance. The system comprises a camera input module, an image correction module, a bird's-eye perspective module, an image mosaicking module, a histogram equalization module and an image display module. The system effectively addresses collisions caused by the driver being unable to fully observe the surroundings of the car while parking. With the system, a real-time 360-degree top view around the car body can be shown to the driver, so that the driver can clearly see the relative positions of obstacles around the car body and the distances between the obstacles and the car body; blind areas around the car body are eliminated, improving the safety and stability of the car while parking. By integrating a number of machine vision and digital image processing algorithms and fusing several advanced techniques in the field of machine vision, and with advantages such as low cost, high performance and strong portability, the system is convenient to popularize and apply widely.

Owner:GUANGZHOU ZHIYUAN ELECTRONICS CO LTD
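One of the modules listed above, histogram equalization, is a standard image processing step that can be shown compactly. The sketch below applies the textbook cumulative-histogram mapping to an 8-bit grayscale image stored as a list of rows. It is a generic illustration; the abstract does not say which variant the system uses, and the `equalize` function name is an assumption.

```python
def equalize(image, levels=256):
    """Histogram-equalize a grayscale image given as a list of rows of ints."""
    # Count how often each gray level occurs.
    hist = [0] * levels
    for row in image:
        for value in row:
            hist[value] += 1

    # Cumulative distribution of gray levels.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)
    denom = total - cdf_min
    if denom == 0:
        # Constant image: nothing to stretch, return a copy.
        return [list(row) for row in image]

    # Map each level so the output levels spread over the full range.
    lut = [max(0, round((c - cdf_min) * (levels - 1) / denom)) for c in cdf]
    return [[lut[value] for value in row] for row in image]


if __name__ == "__main__":
    print(equalize([[10, 20], [30, 40]]))
```

In a surround-view pipeline this kind of step is typically used to even out brightness differences between cameras before or after the images are stitched.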

Methods and apparatus for practical 3D vision system

ActiveUS20070081714A1Facilitates finding patternsHigh match scoreCharacter and pattern recognitionCamera lensMachine vision

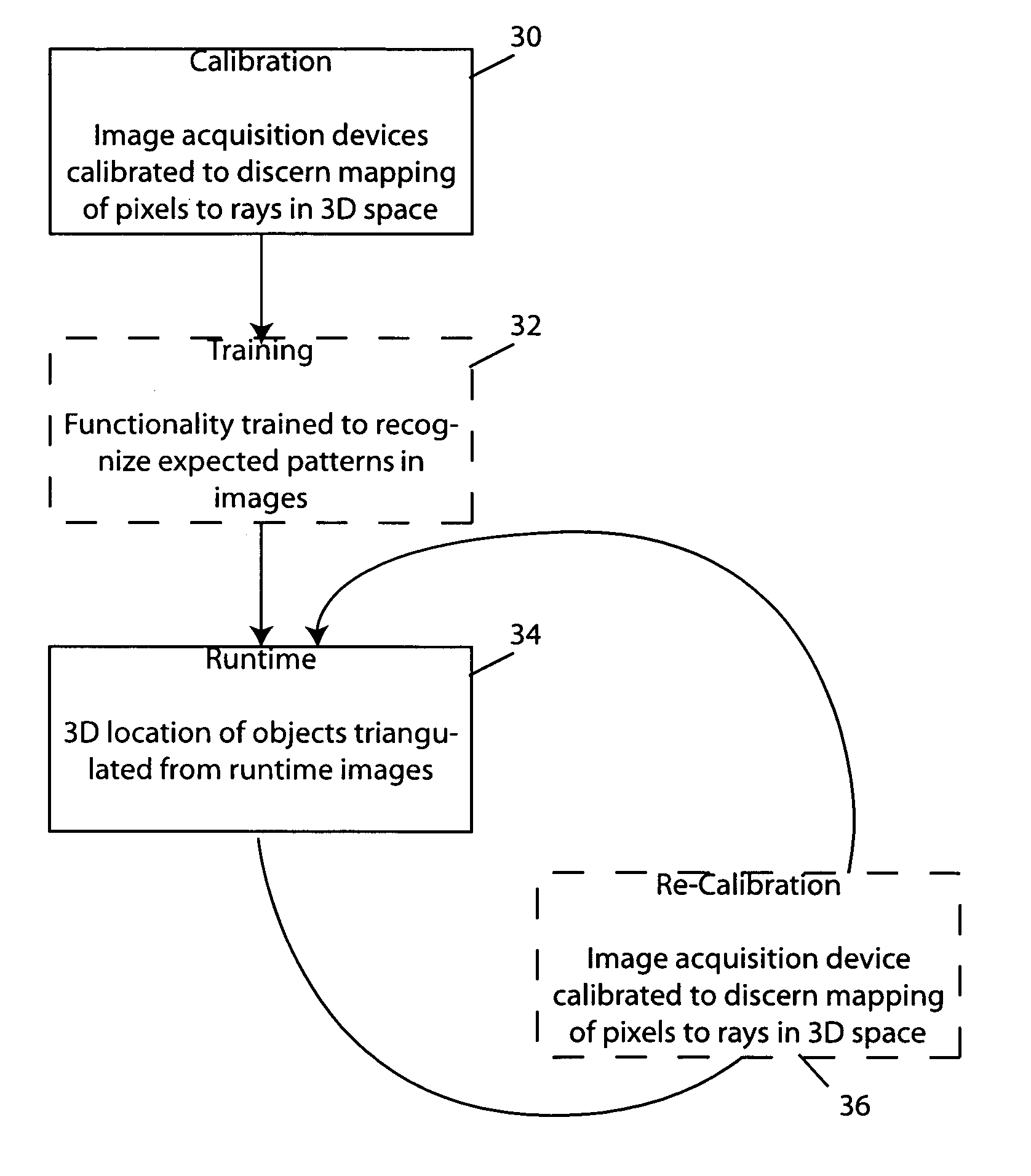

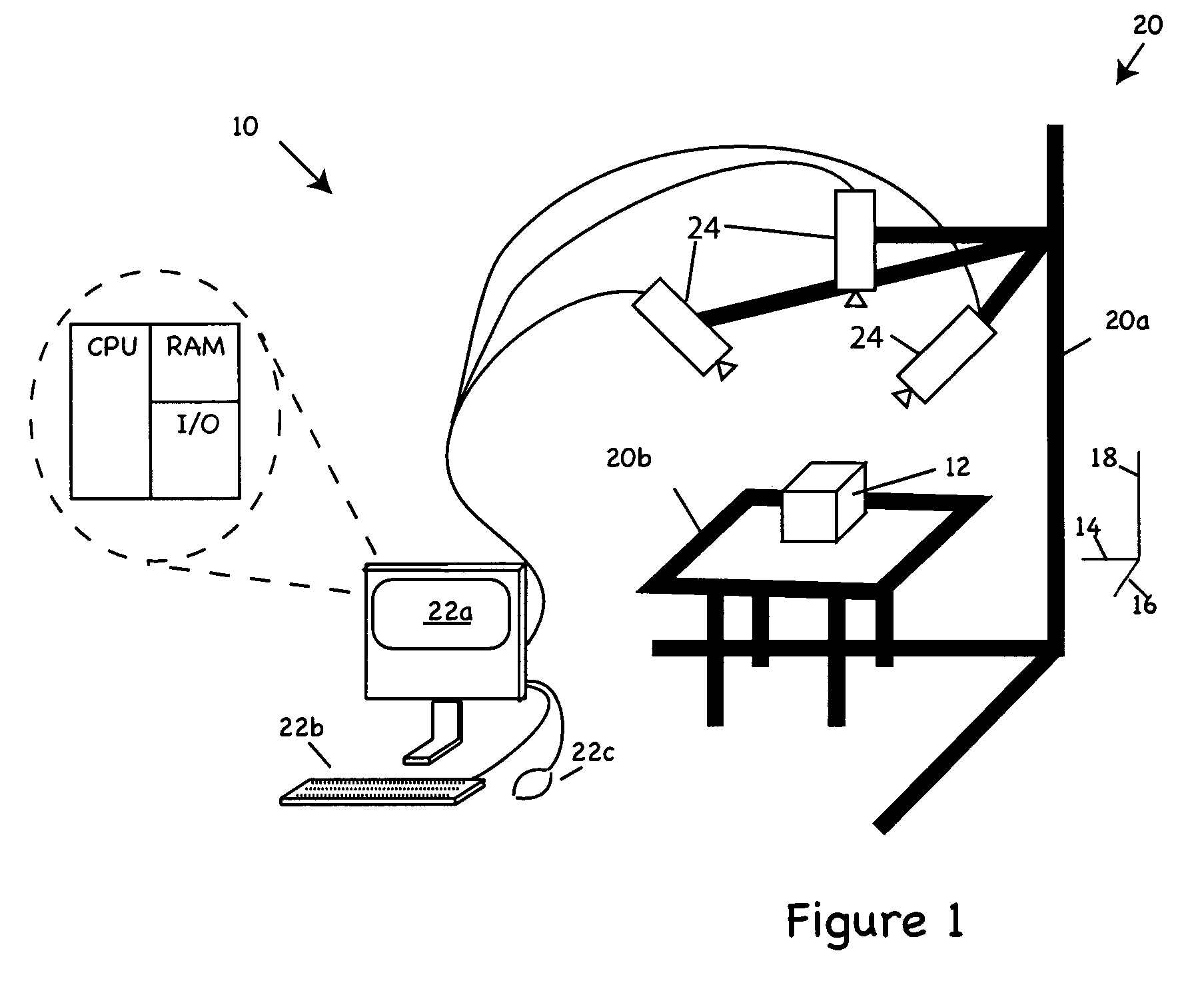

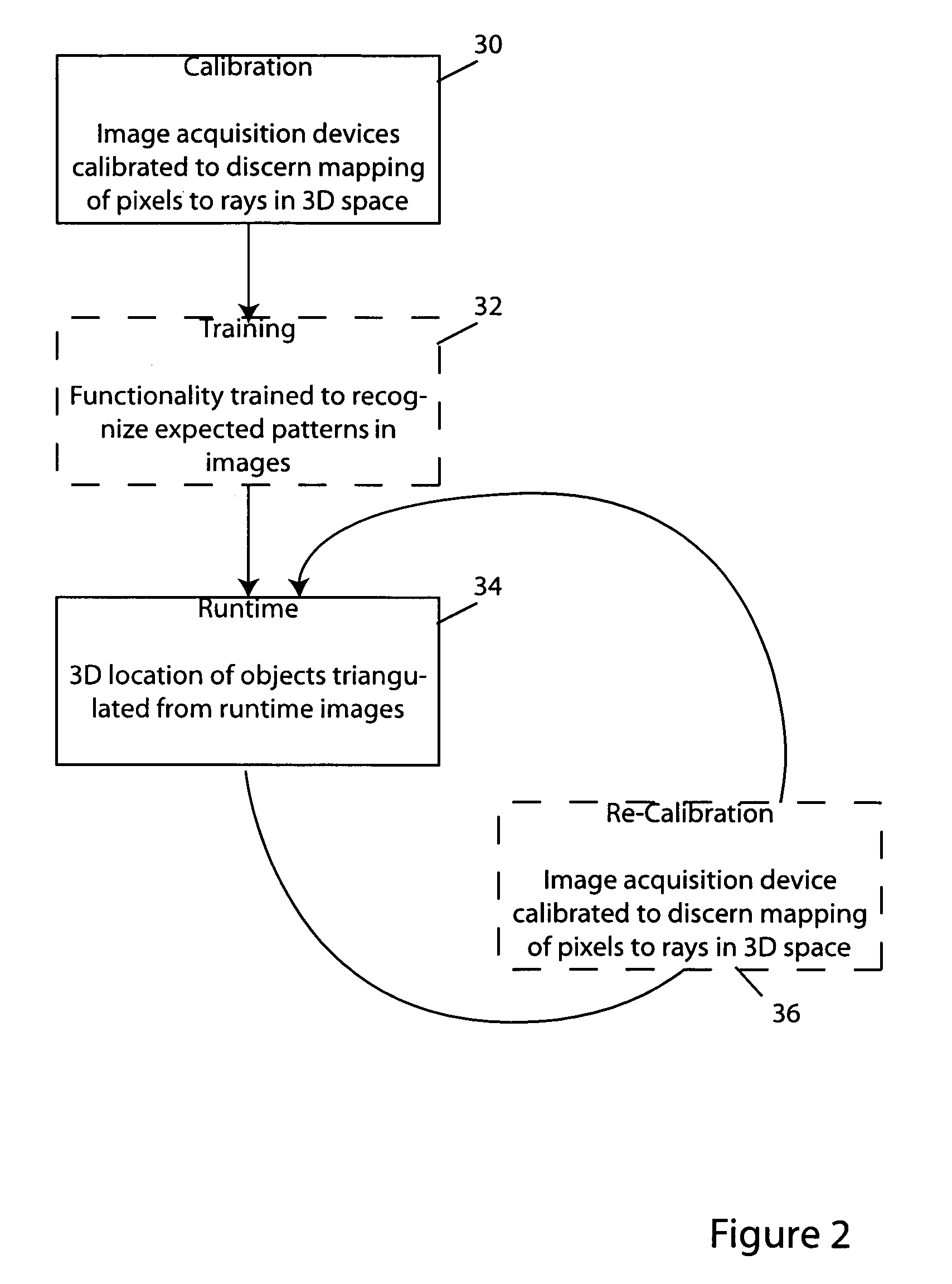

The invention provides, inter alia, methods and apparatus for determining the pose, e.g., position along x-, y- and z-axes, pitch, roll and yaw (or one or more characteristics of that pose) of an object in three dimensions by triangulation of data gleaned from multiple images of the object. Thus, for example, in one aspect, the invention provides a method for 3D machine vision in which, during a calibration step, multiple cameras disposed to acquire images of the object from different respective viewpoints are calibrated to discern a mapping function that identifies rays in 3D space emanating from each respective camera's lens that correspond to pixel locations in that camera's field of view. In a training step, functionality associated with the cameras is trained to recognize expected patterns in images to be acquired of the object. A runtime step triangulates locations in 3D space of one or more of those patterns from pixel-wise positions of those patterns in images of the object and from the mappings discerned during the calibration step.

Owner:COGNEX TECH & INVESTMENT
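The runtime triangulation step described above can be illustrated with two rays, one per camera, each given by an origin and a direction. Because measured rays rarely intersect exactly, the sketch returns the midpoint of the shortest segment joining them. This is a generic closest-point construction used here as an assumption; the patent may use a different formulation, and `triangulate_rays` is a hypothetical name.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_rays(origin1, dir1, origin2, dir2):
    """Return the 3D point midway between the closest points of two rays.

    Each ray is origin + s * direction. Directions need not be normalized.
    Raises ValueError if the rays are (nearly) parallel.
    """
    w = tuple(p - q for p, q in zip(origin1, origin2))
    a = dot(dir1, dir1)
    b = dot(dir1, dir2)
    c = dot(dir2, dir2)
    d = dot(dir1, w)
    e = dot(dir2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique closest point")
    s = (b * e - c * d) / denom  # parameter along ray 1
    t = (a * e - b * d) / denom  # parameter along ray 2
    p1 = tuple(o + s * k for o, k in zip(origin1, dir1))
    p2 = tuple(o + t * k for o, k in zip(origin2, dir2))
    return tuple((u + v) / 2 for u, v in zip(p1, p2))


if __name__ == "__main__":
    # Two cameras one metre apart, both looking at a pattern at (0.5, 0, 2).
    print(triangulate_rays((0, 0, 0), (0.5, 0, 2), (1, 0, 0), (-0.5, 0, 2)))
```

In the calibrated setup the abstract describes, each ray would come from the mapping function for the pixel where a trained pattern was found; with more than two cameras, a least-squares solution over all rays is the usual generalization.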
