2934 results about "Camera image" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

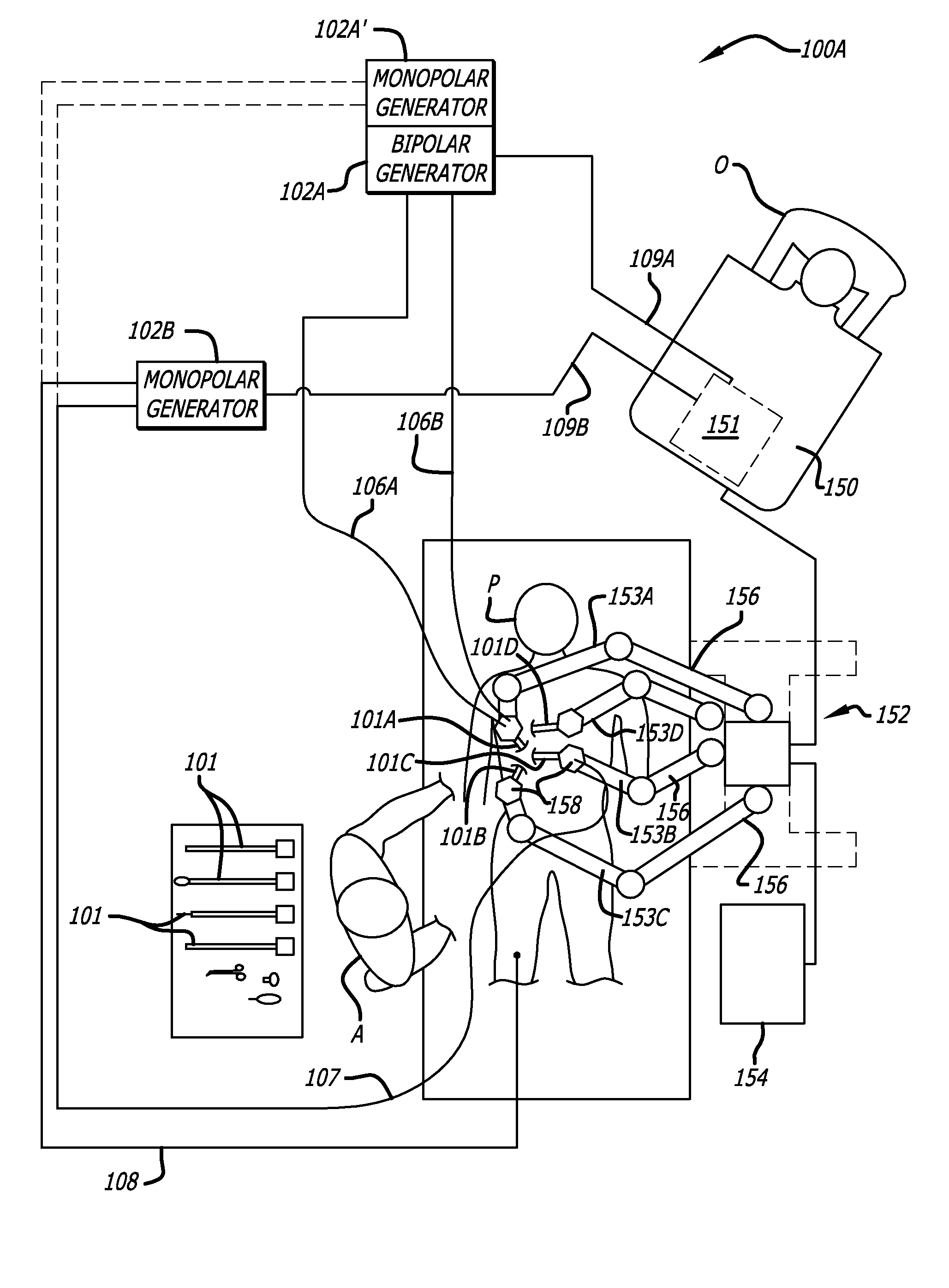

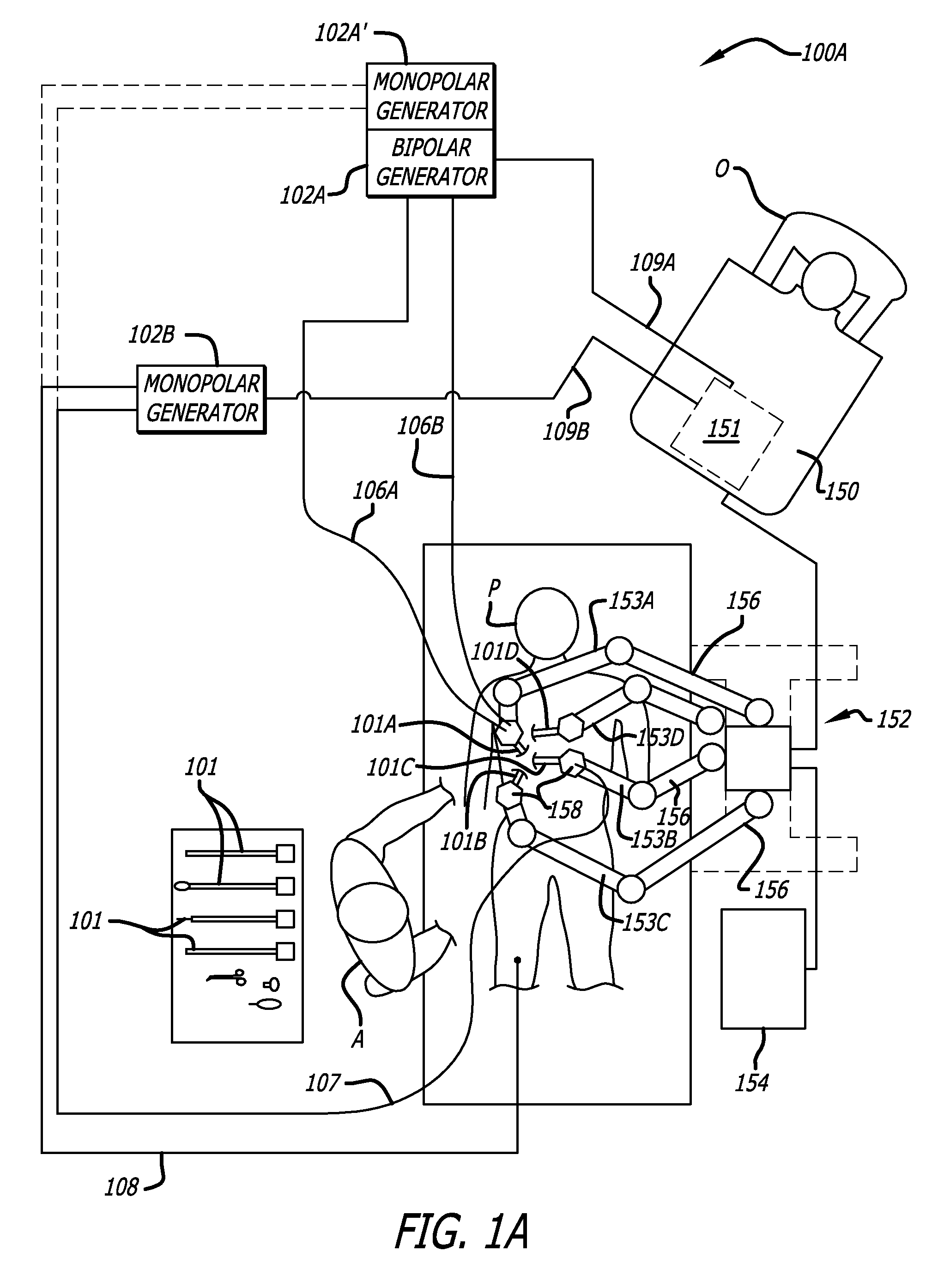

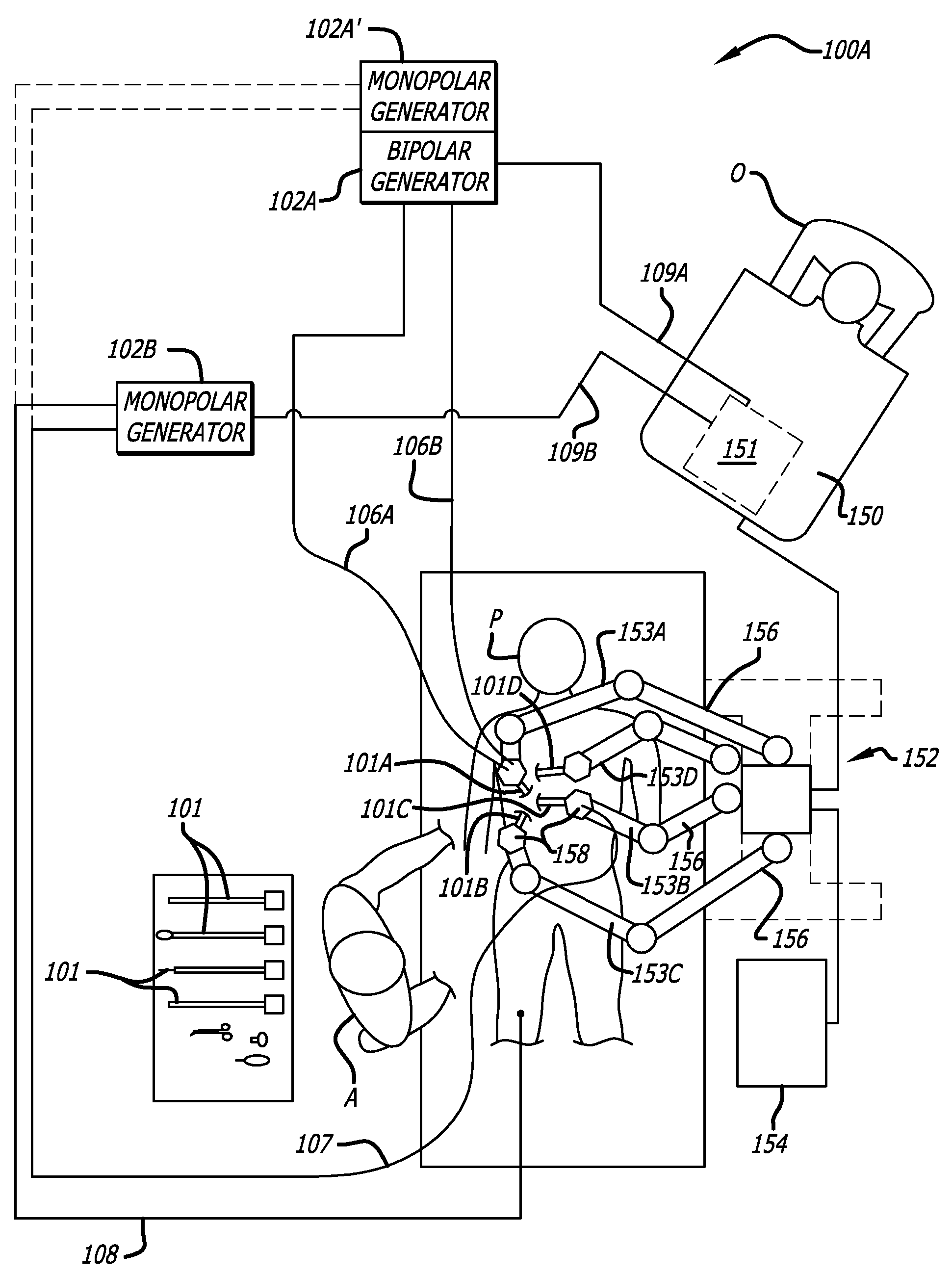

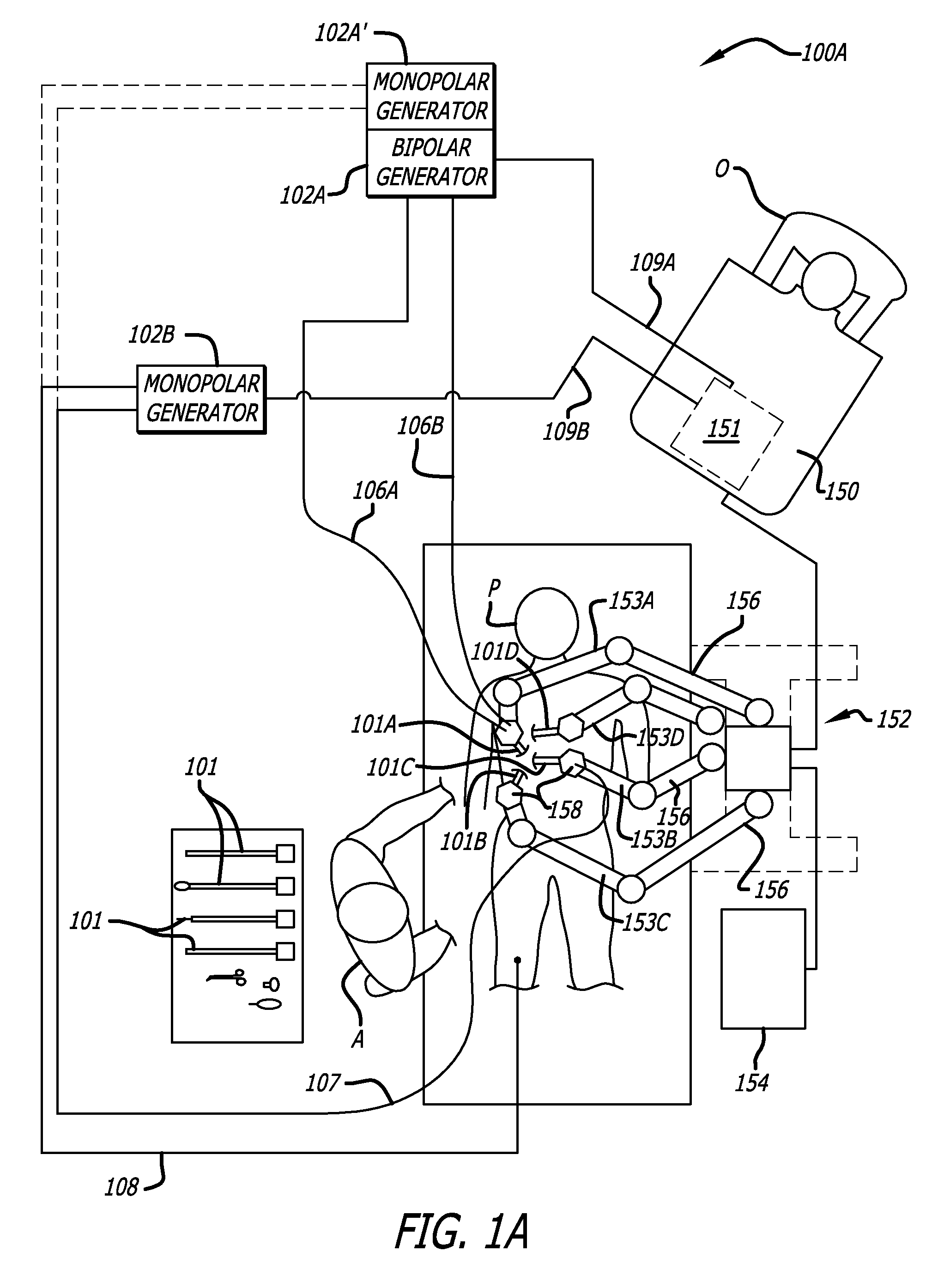

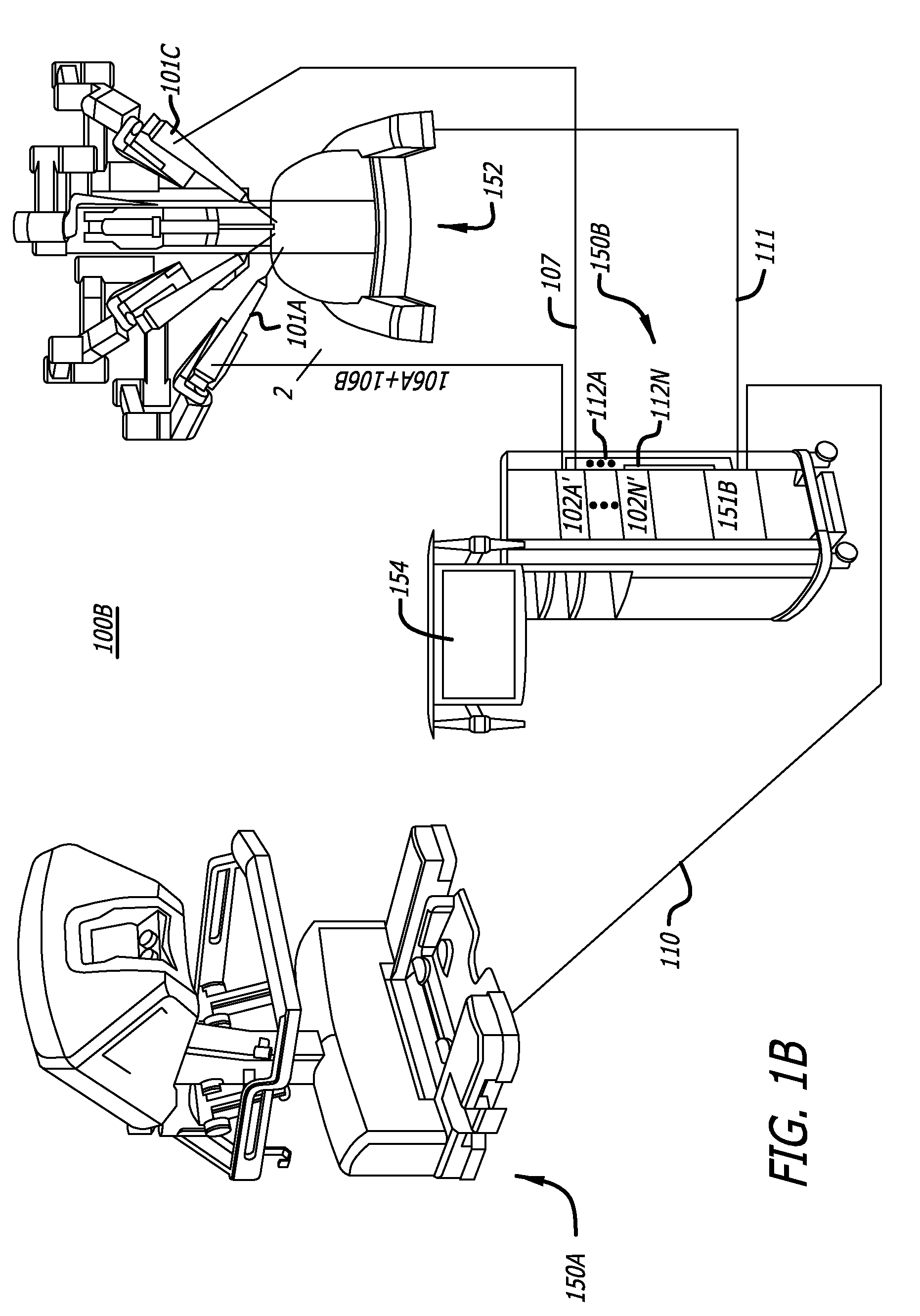

User interfaces for electrosurgical tools in robotic surgical systems

A method for a minimally invasive surgical system is disclosed, including capturing camera images of a surgical site; generating a graphical user interface (GUI) including a first colored border portion on a first side and a second colored border portion on a second side opposite the first side; and overlaying the GUI onto the captured camera images of the surgical site for display on a display device of a surgeon console. The GUI provides information to a user about a first electrosurgical tool and a second tool in the surgical site, which are concurrently displayed in the captured camera images. The first colored border portion indicates that the first electrosurgical tool is controlled by a first master grip of the surgeon console, and the second colored border portion indicates the tool type of the second tool, which is controlled by a second master grip of the surgeon console.

Owner:INTUITIVE SURGICAL OPERATIONS INC

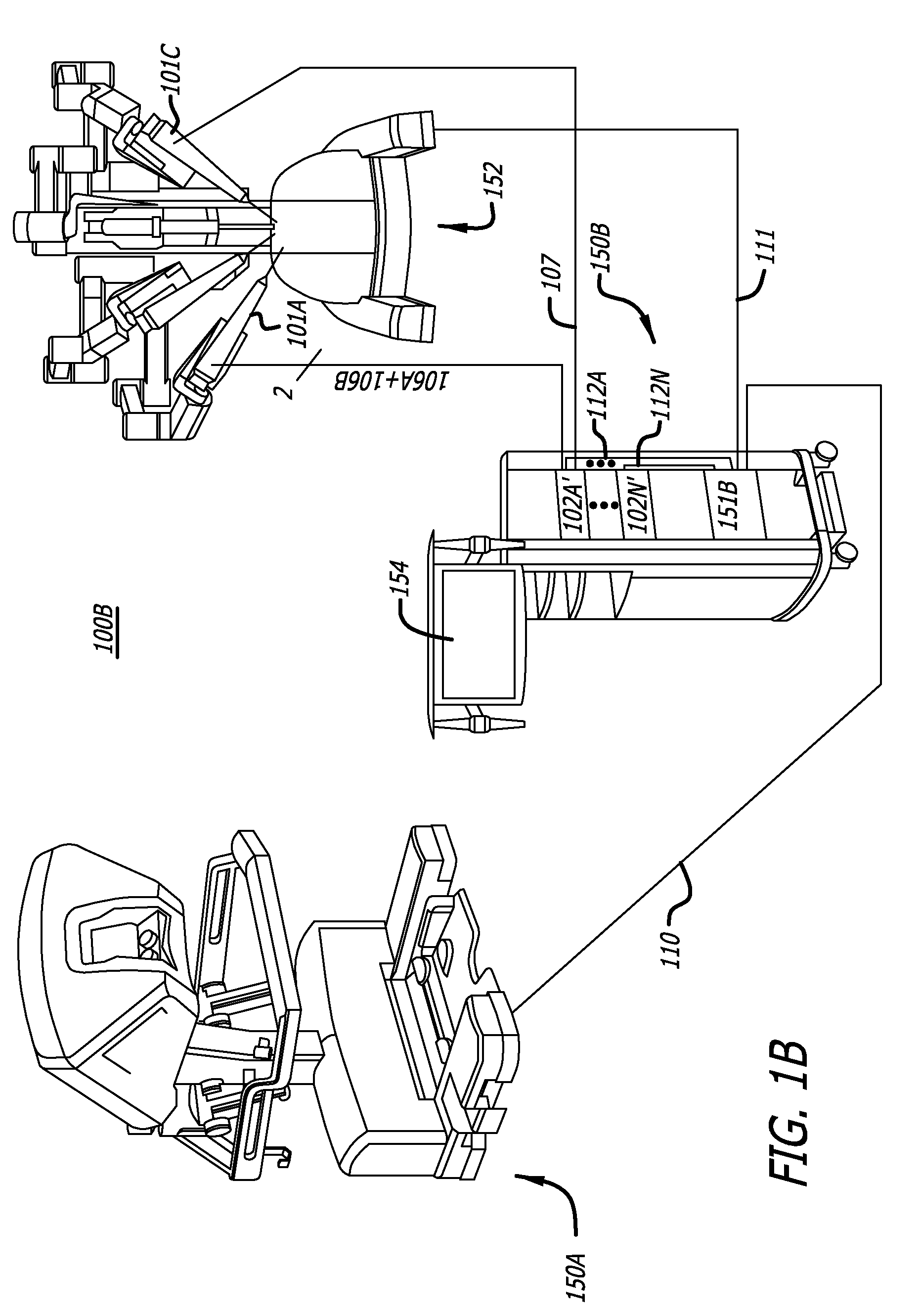

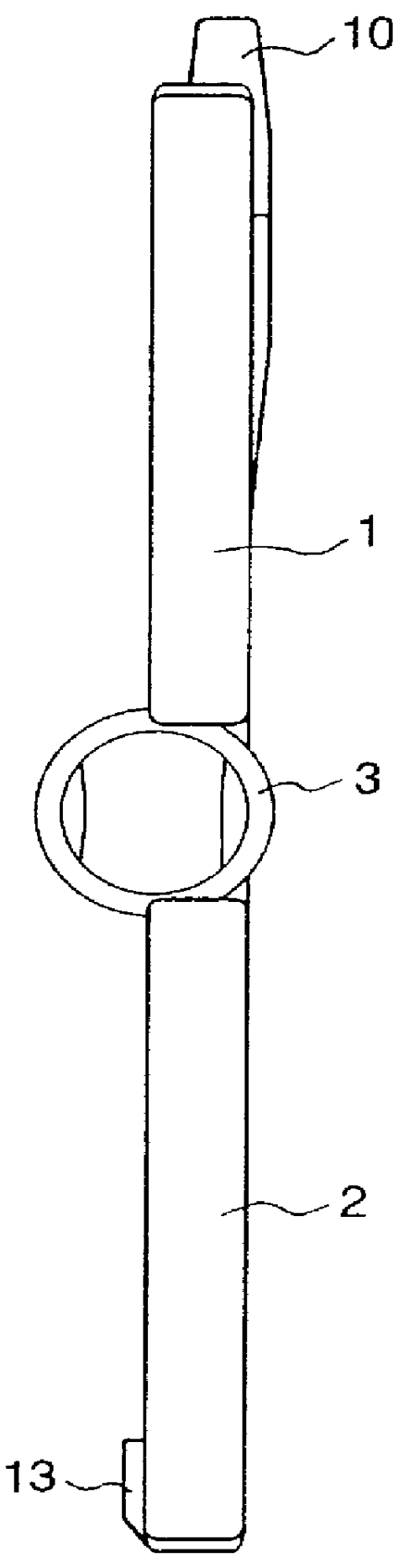

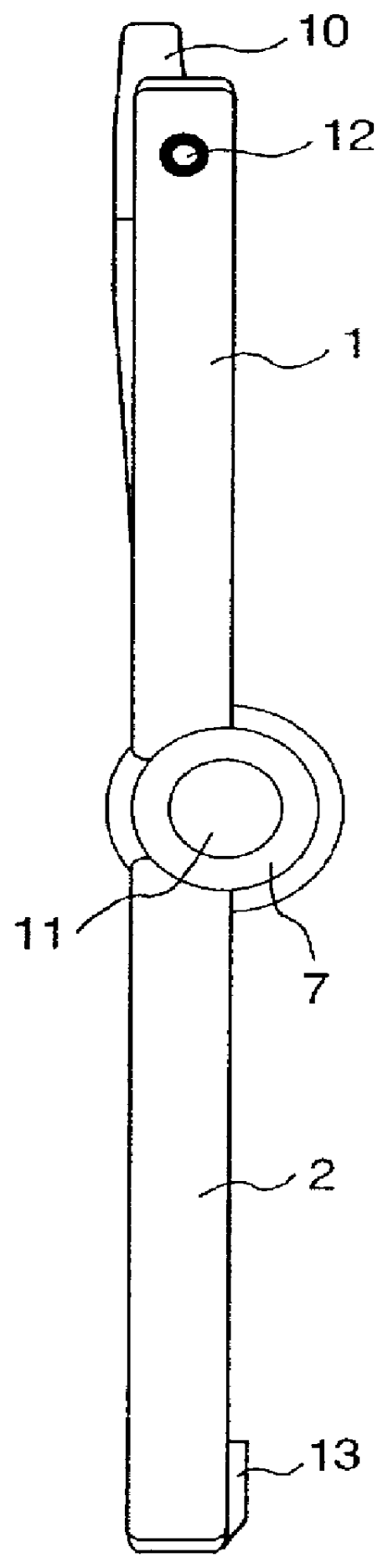

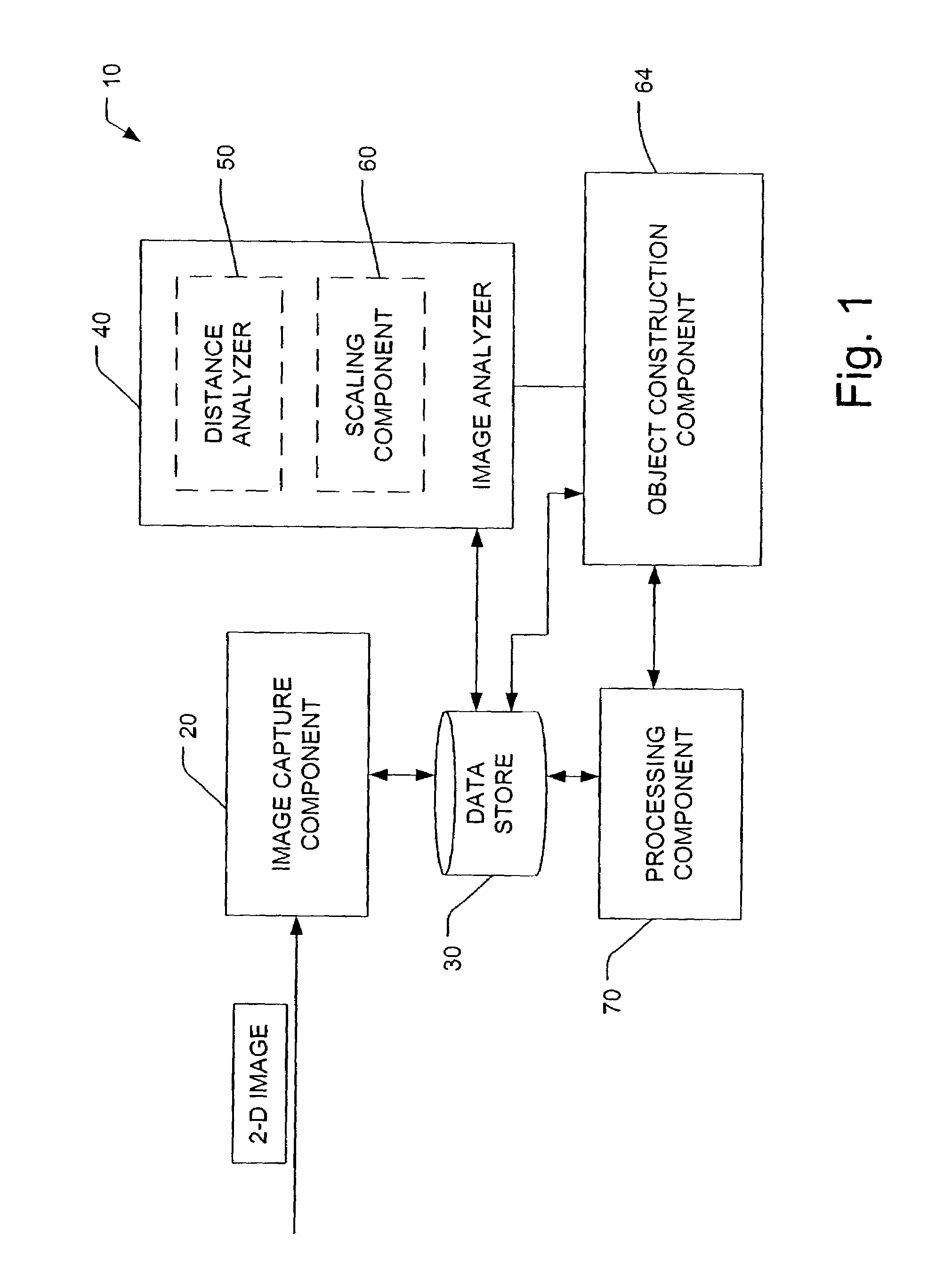

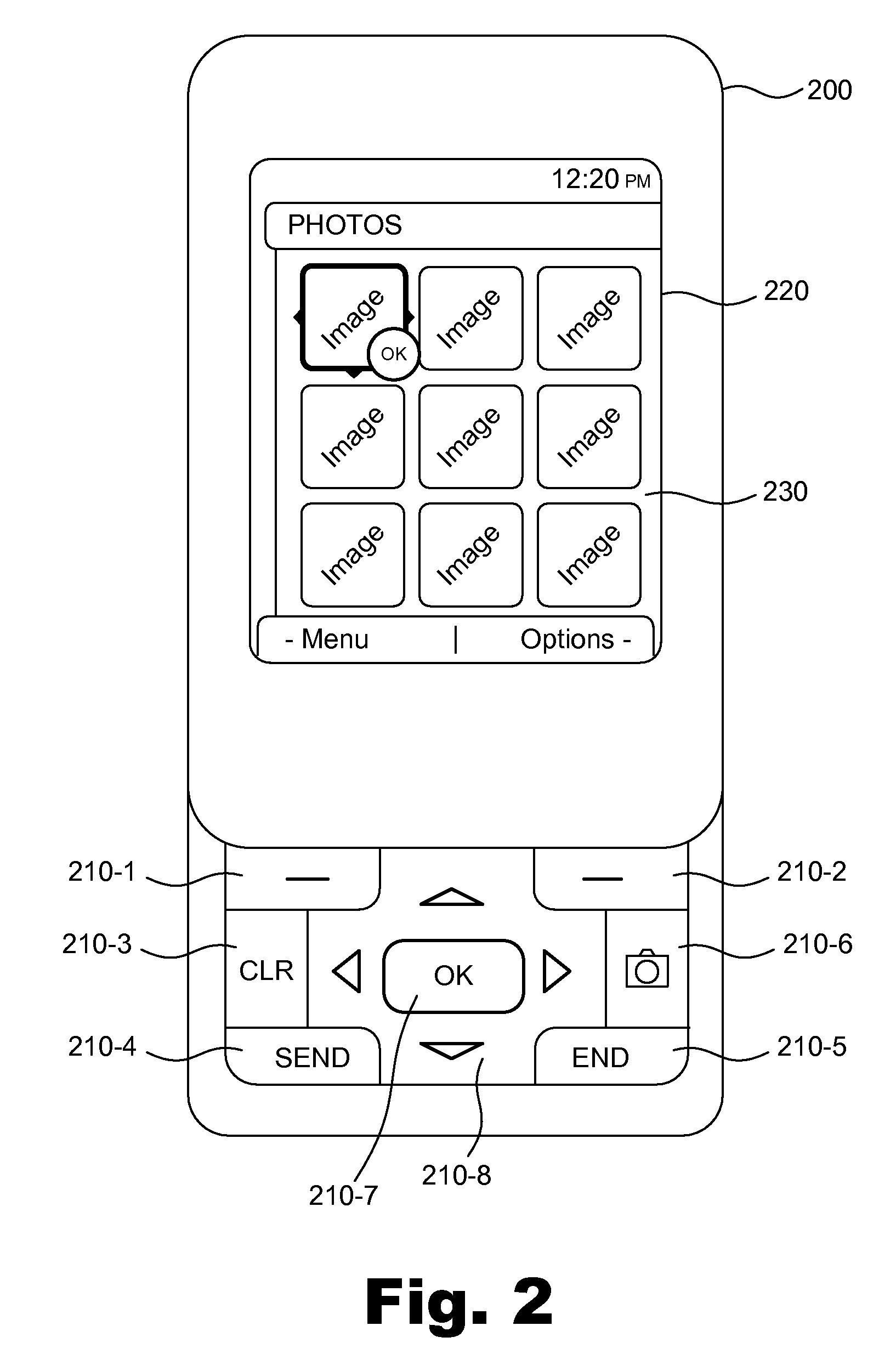

Information communication terminal device

Status: Inactive | Publication: US6069648A | Tags: Improve portability, Solve the real problem, Cordless telephones, Television system details, Camera lens, Camera image

An upper case and a lower case are rotatably connected at a connection part. The connection part consists of three elements. The first is a rotary shaft supporting part integrated in the lower case. The second is a rotary shaft integrated in the upper case, part of which fits rotatably into the rotary shaft supporting part. The third is a housing member, part of which also fits rotatably into the rotary shaft supporting part. A video camera and a camera lens are housed in the housing member. Display/operation parts occupy almost the whole of the upper case and almost the whole of the lower case. These display/operation parts show camera images from the video camera, received images, and various data, together with touch-type operation buttons, so they serve as both the operation part and the display part. A "recording" mode, a "transmission/reception" mode, and an "information acquisition" mode can be selected, and the device is used according to the selected mode.

Owner:MAXELL HLDG LTD

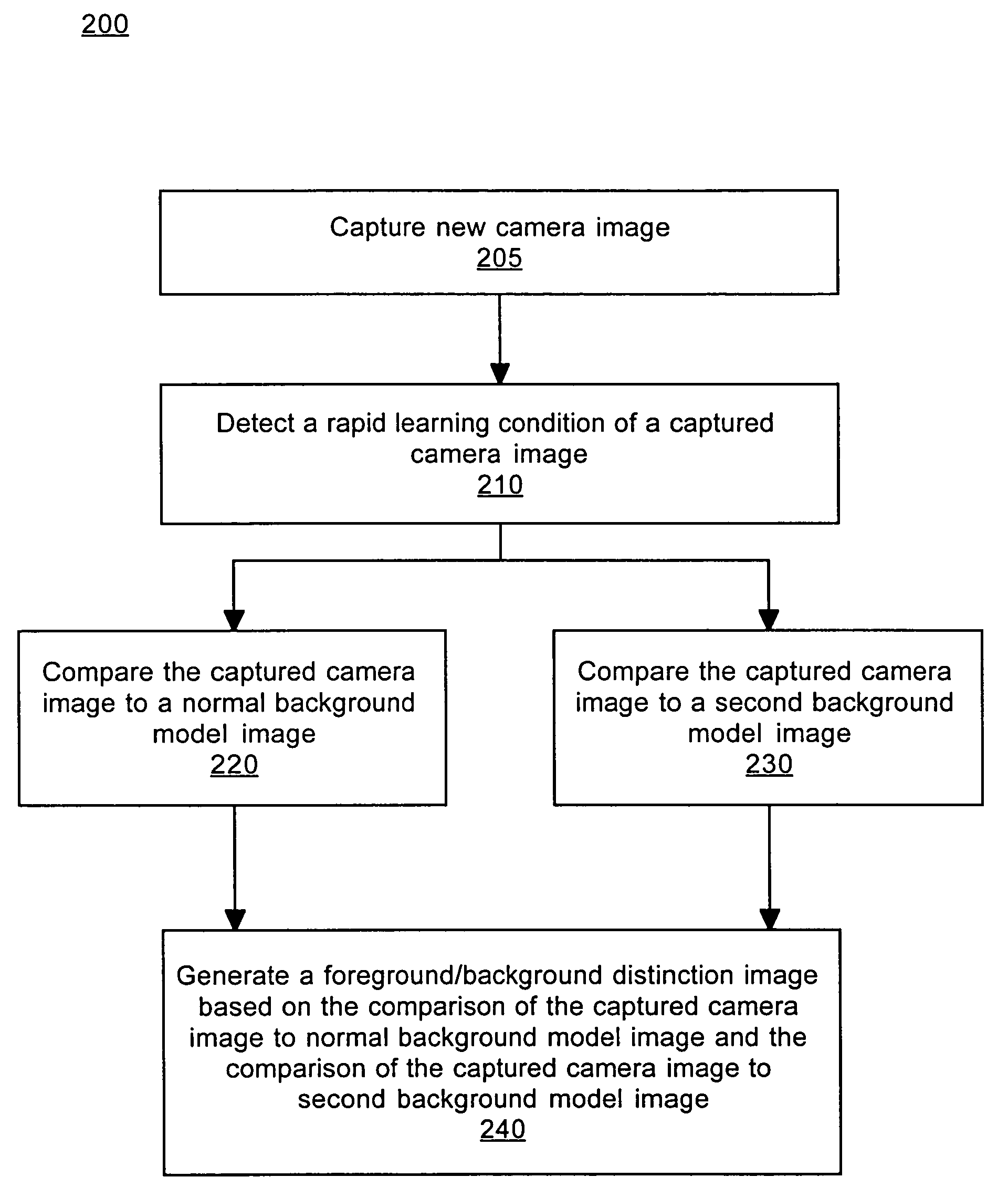

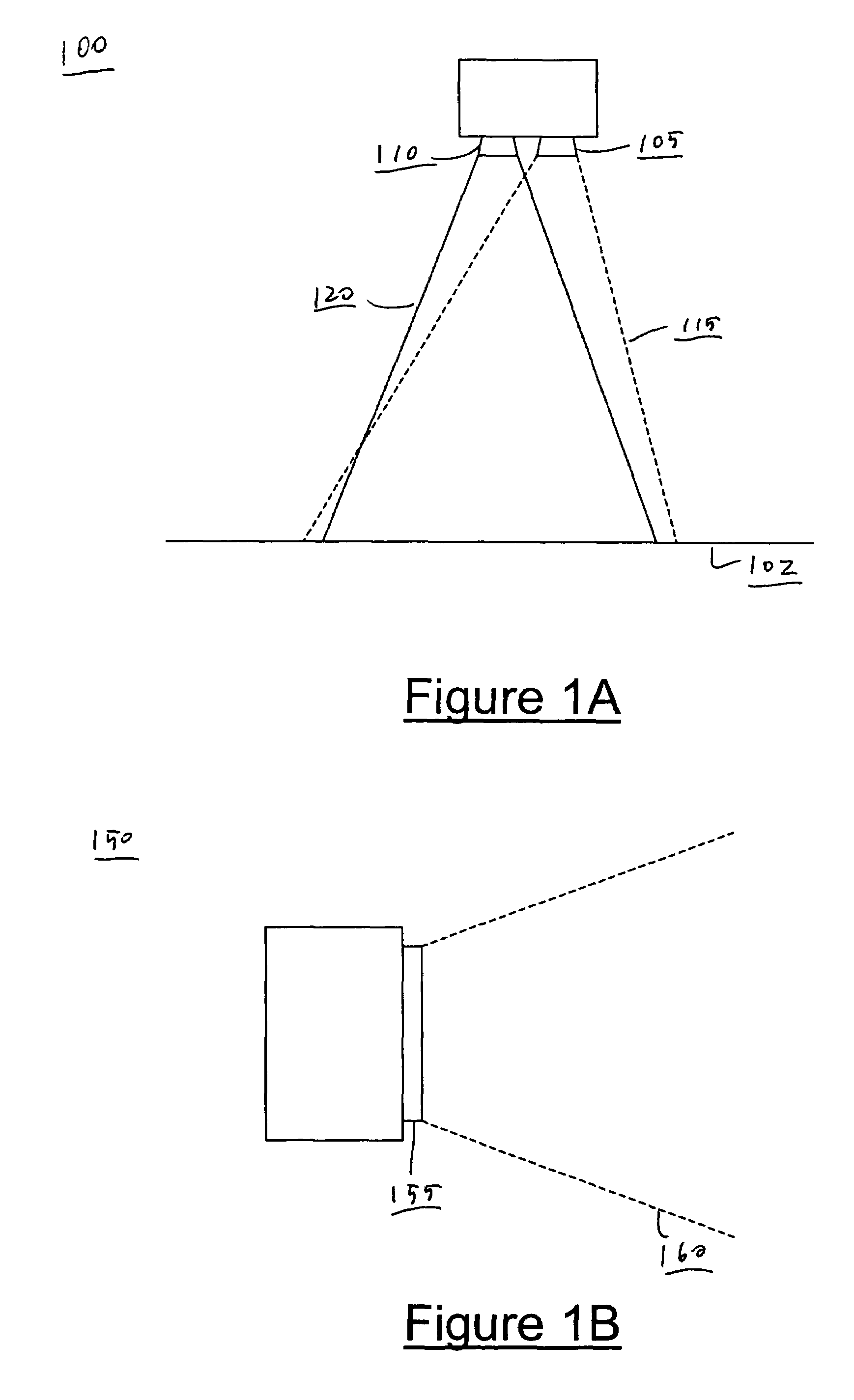

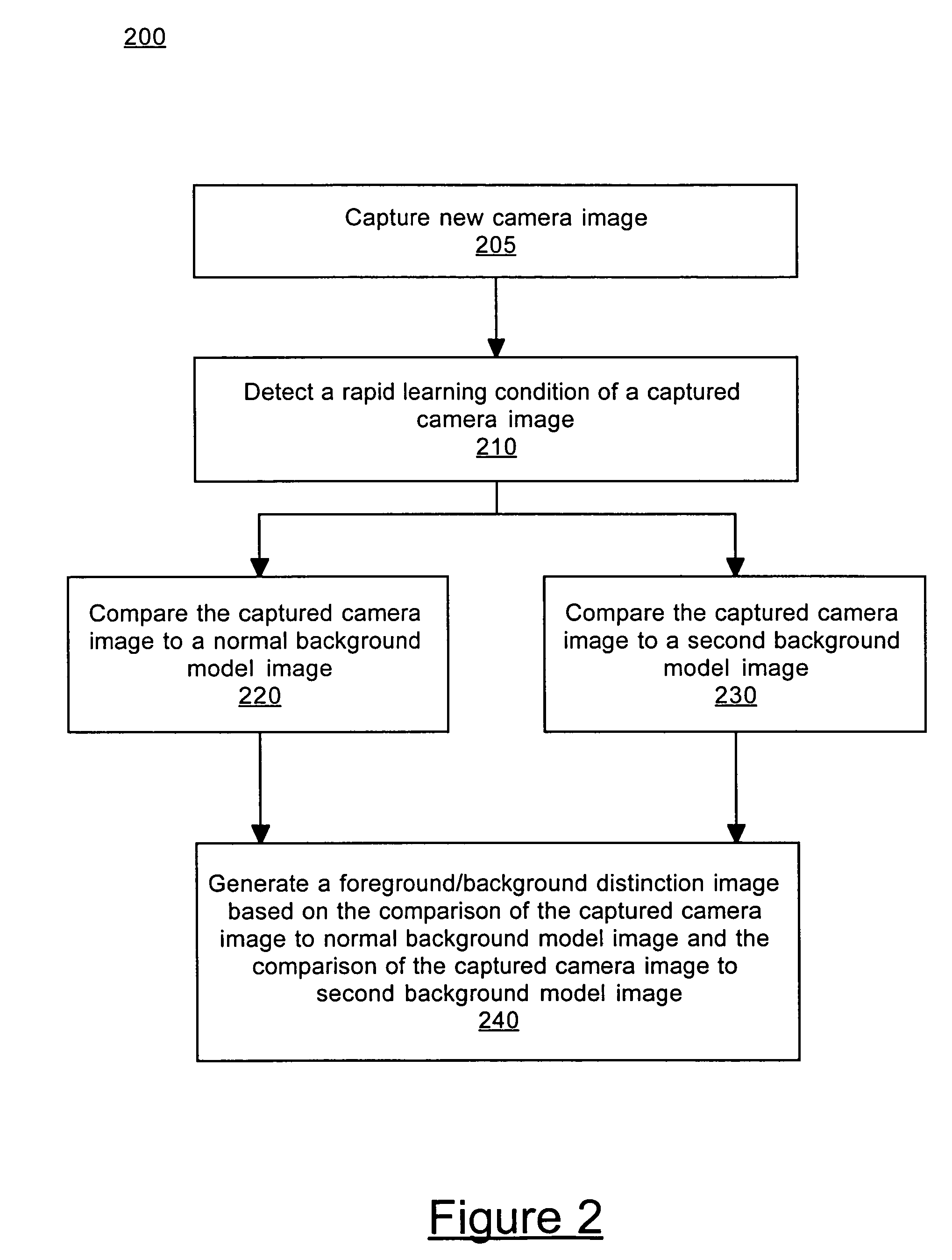

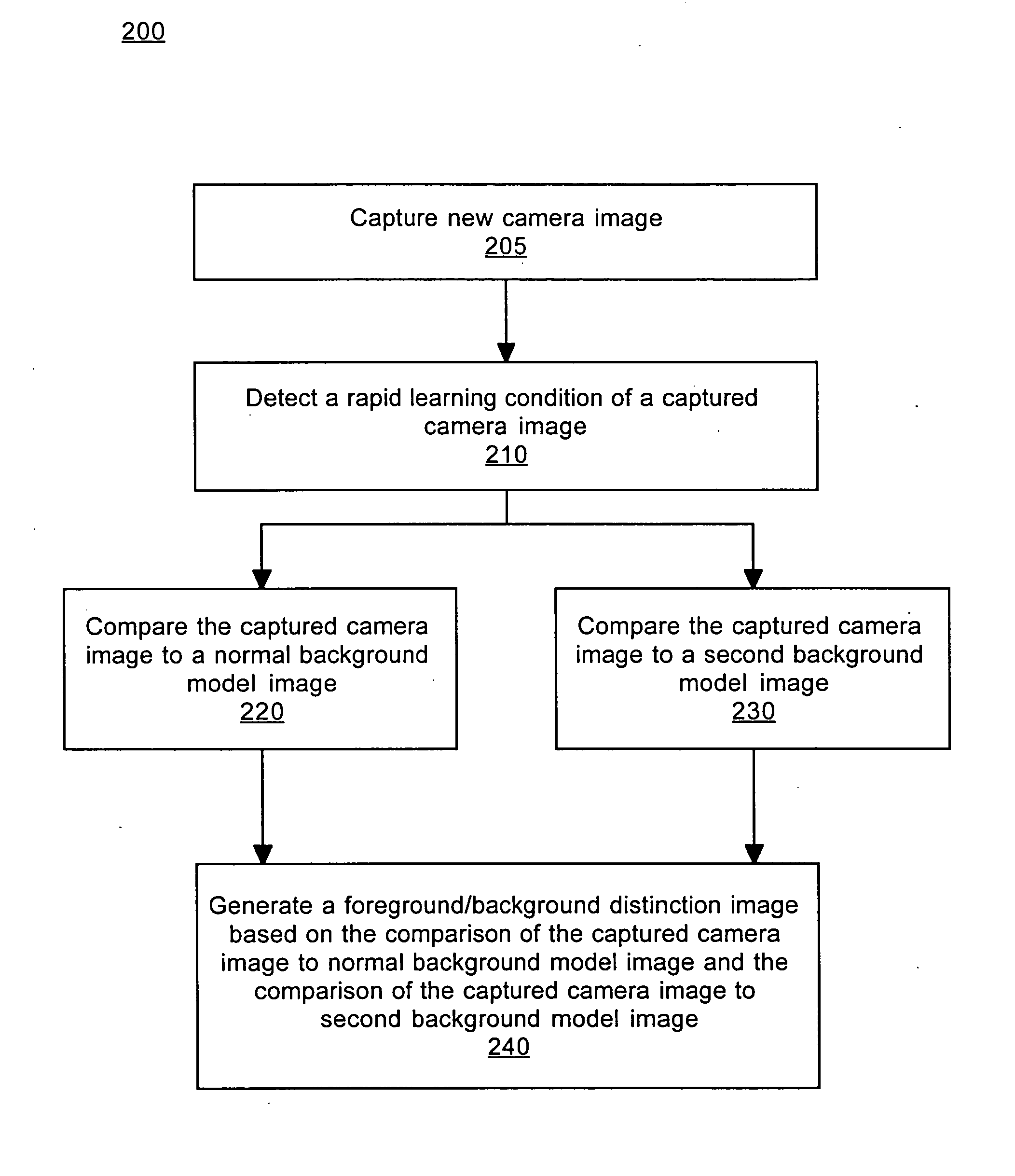

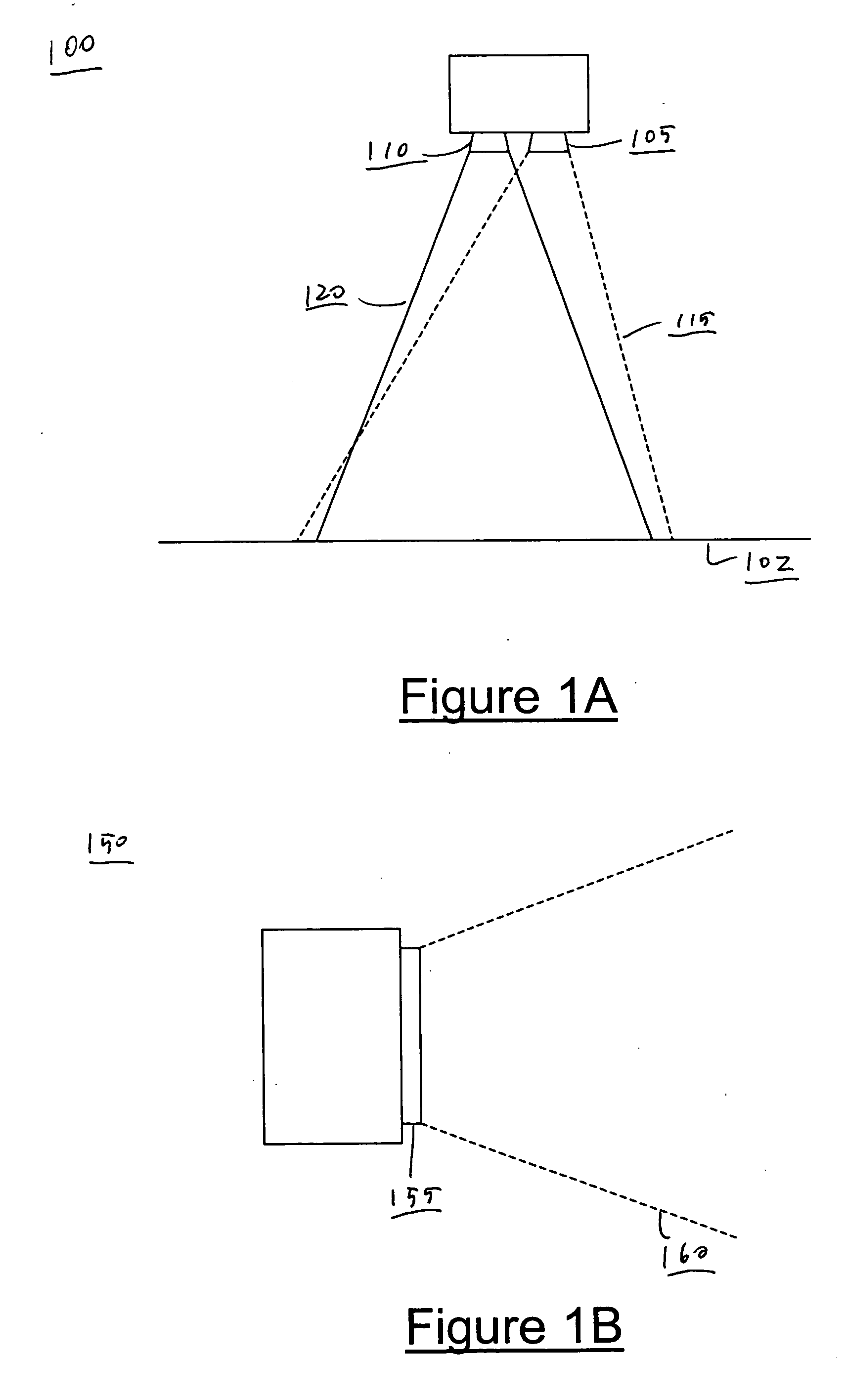

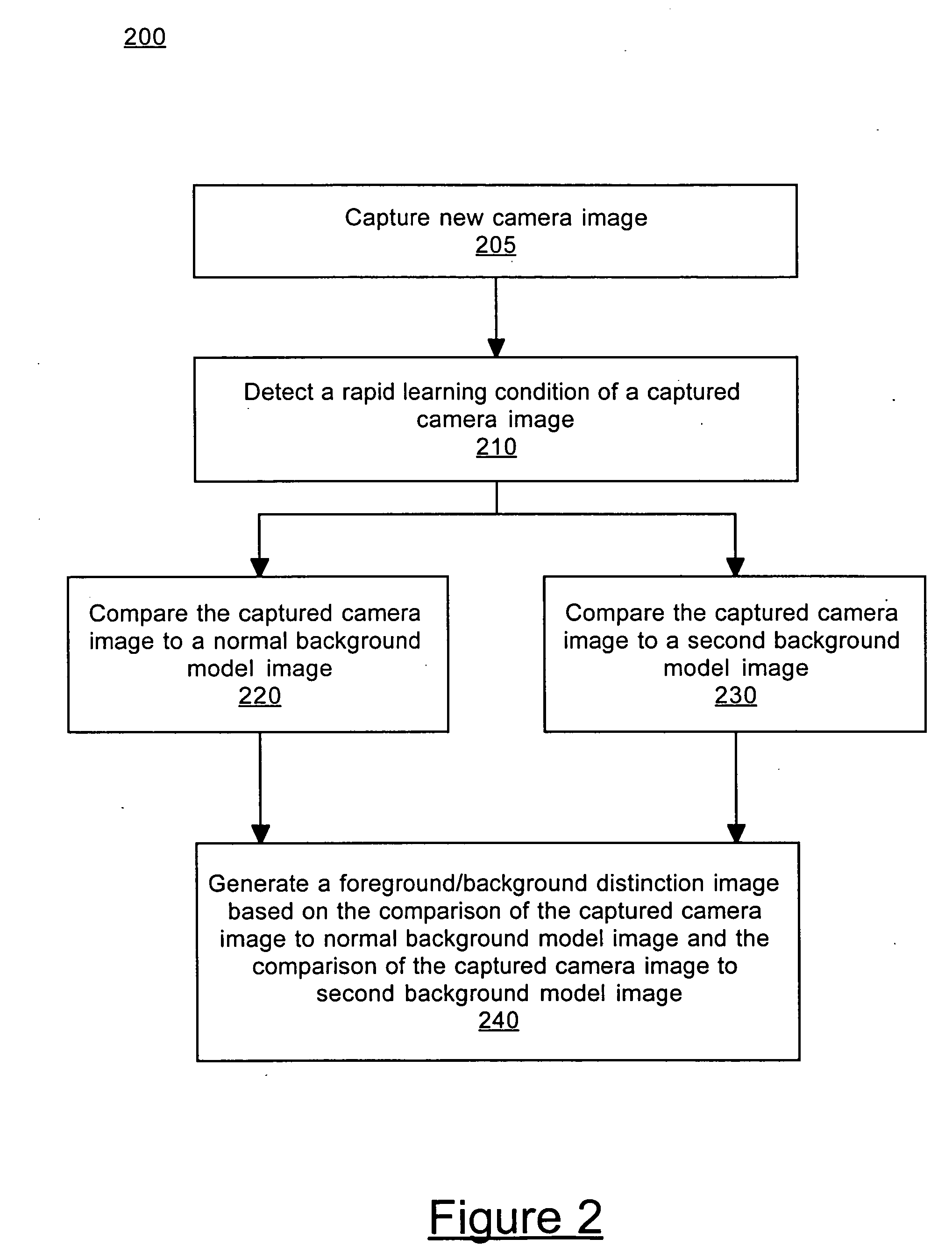

Method and system for processing captured image information in an interactive video display system

A method and system for processing captured image information in an interactive video display system. In one embodiment, a special learning condition of a captured camera image is detected. The captured camera image is compared to a normal background model image and to a second background model image, wherein the second background model is learned at a faster rate than the normal background model. A vision image is generated based on the comparisons. In another embodiment, an object in the captured image information that does not move for a predetermined time period is detected. A burn-in image comprising the object is generated, wherein the burn-in image is operable to allow a vision system of the interactive video display system to classify the object as background.

Owner:MICROSOFT TECH LICENSING LLC
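The two-model comparison in the abstract above can be illustrated with a Python sketch. It keeps a slow ("normal") background model and a fast one as exponential moving averages over grayscale pixel lists. The learning rates, the threshold, the rule that a pixel is foreground only when it differs from both models, and the burn-in helper are all assumptions for illustration, not the patented method.

```python
def update_background(model, frame, rate):
    """Blend a frame into a background model (exponential moving average).

    A larger rate makes the model adapt faster, as for the second, fast model."""
    return [(1.0 - rate) * b + rate * f for b, f in zip(model, frame)]


def differs(frame, model, threshold):
    """Per-pixel flag: True where the frame departs from the model by more than threshold."""
    return [abs(f - b) > threshold for f, b in zip(frame, model)]


def vision_image(frame, normal_bg, fast_bg, threshold=20.0):
    """Assumed combination rule: a pixel is foreground only if it differs from BOTH models.

    During a special learning condition (e.g. a sudden lighting change) the fast model
    has already absorbed the change, so such pixels are not reported as foreground."""
    slow = differs(frame, normal_bg, threshold)
    fast = differs(frame, fast_bg, threshold)
    return [s and f for s, f in zip(slow, fast)]


def burn_in(model, frame, still_frames, min_still):
    """Copy pixels that have stayed unchanged for at least min_still frames into the model,
    so that a stationary object ends up classified as background."""
    return [f if n >= min_still else b for b, f, n in zip(model, frame, still_frames)]


# The normal model still expects 100, but the fast model has adapted to a lighting
# change (130); only the genuinely new object in the last pixel is foreground.
normal = [100.0] * 4
fast = [130.0] * 4
print(vision_image([130, 130, 130, 180], normal, fast))  # [False, False, False, True]
```

Requiring disagreement with both models is one simple way to suppress false detections after global lighting changes. A real system would likely combine the comparisons in a more nuanced way.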

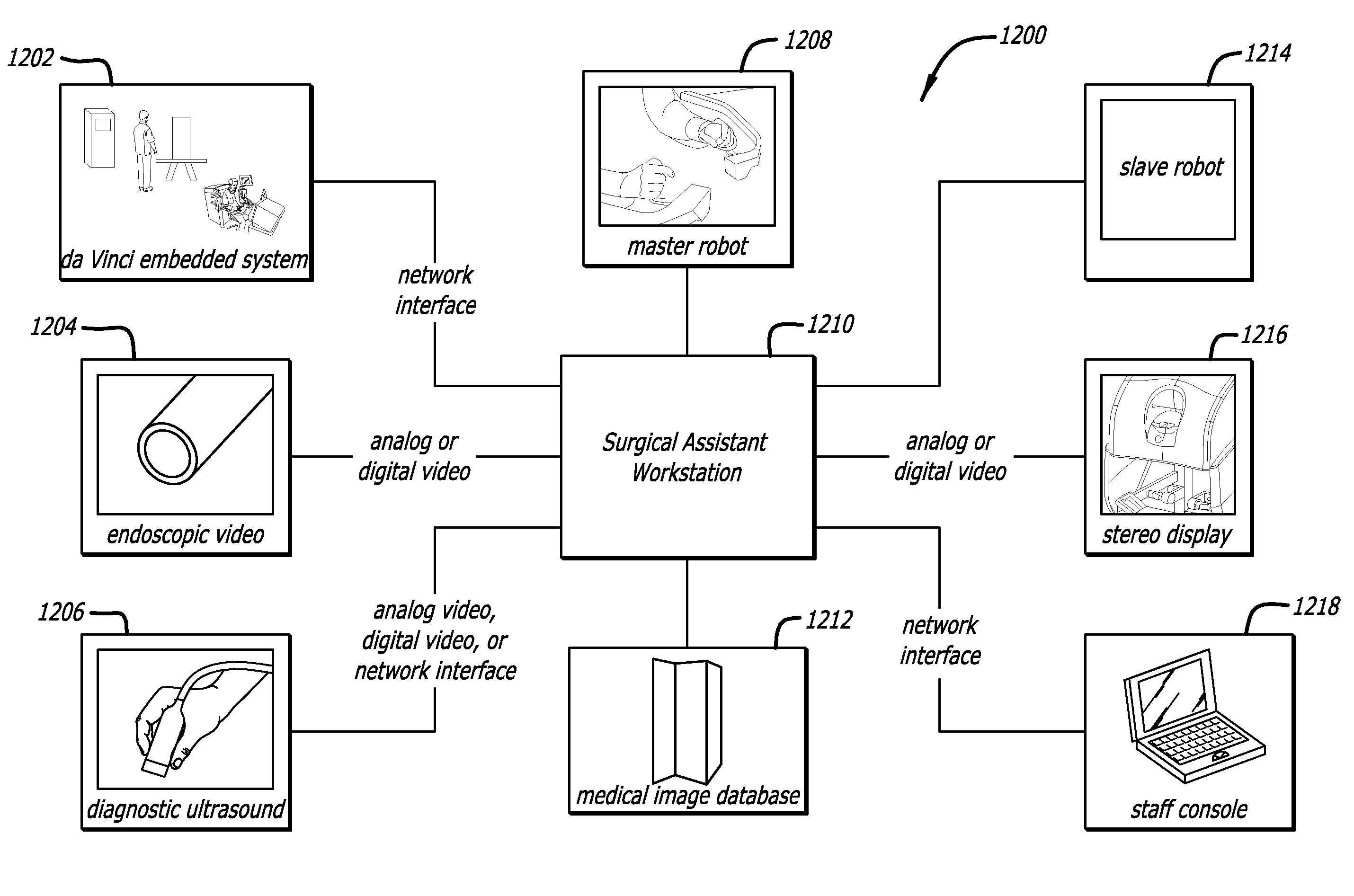

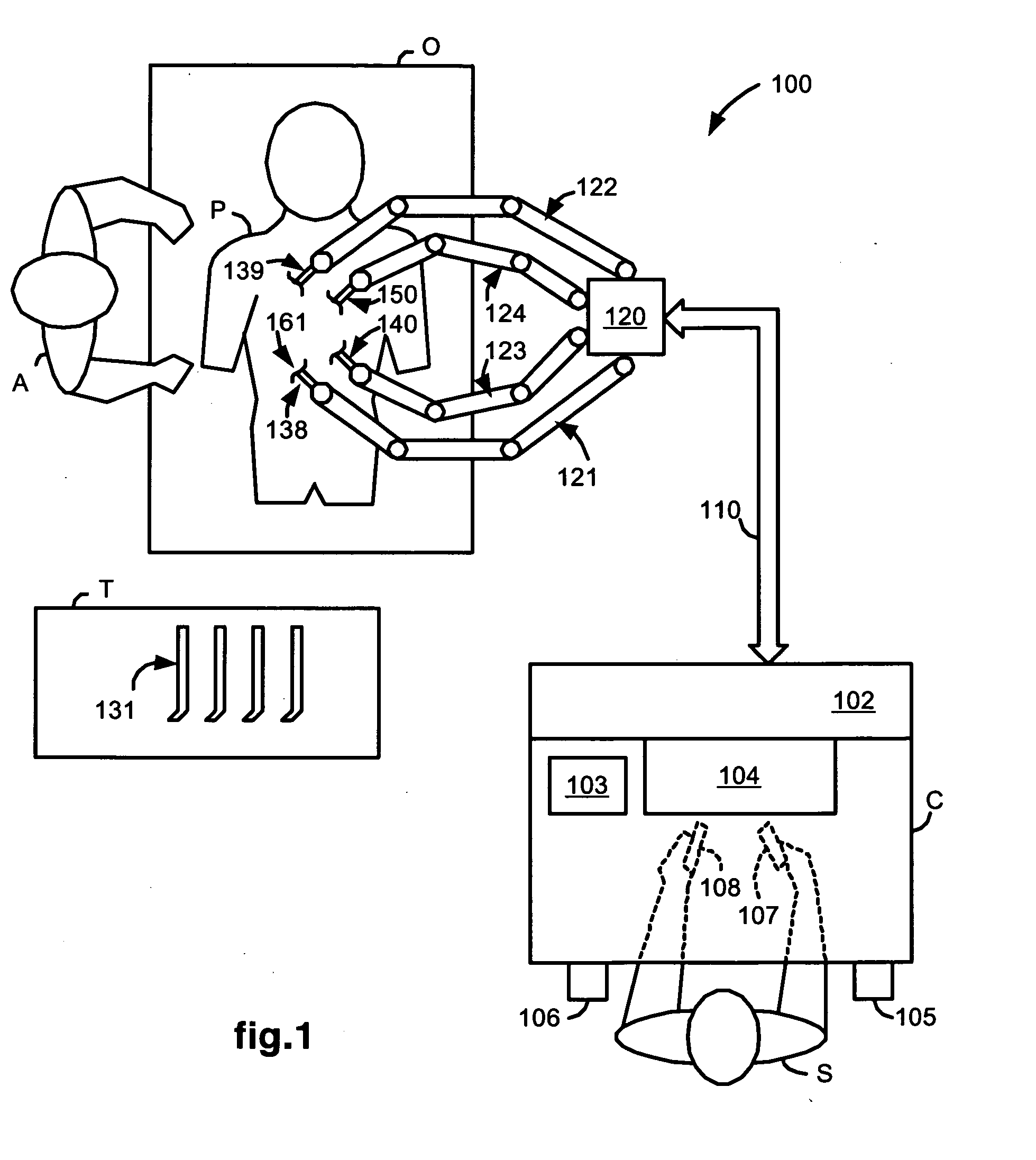

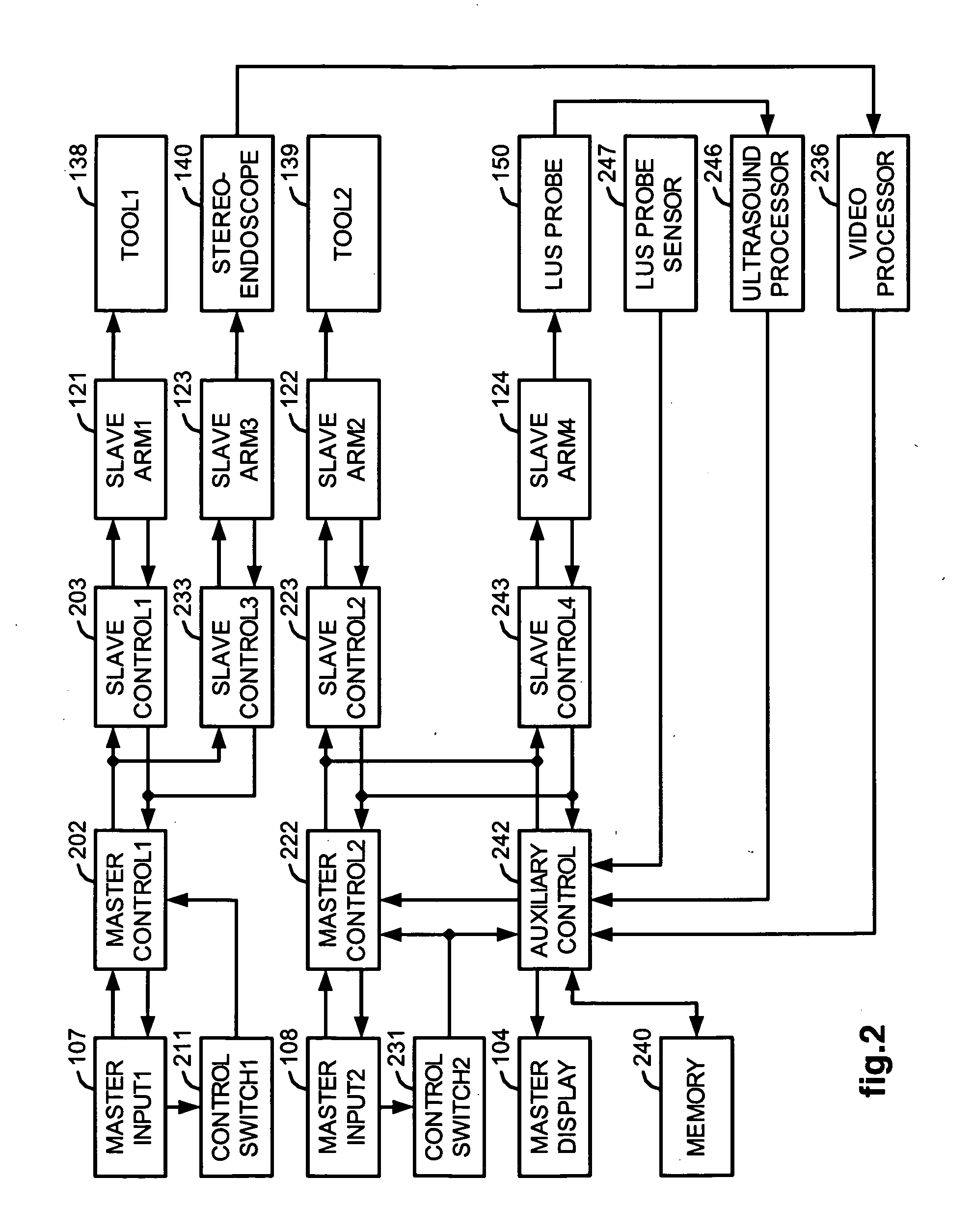

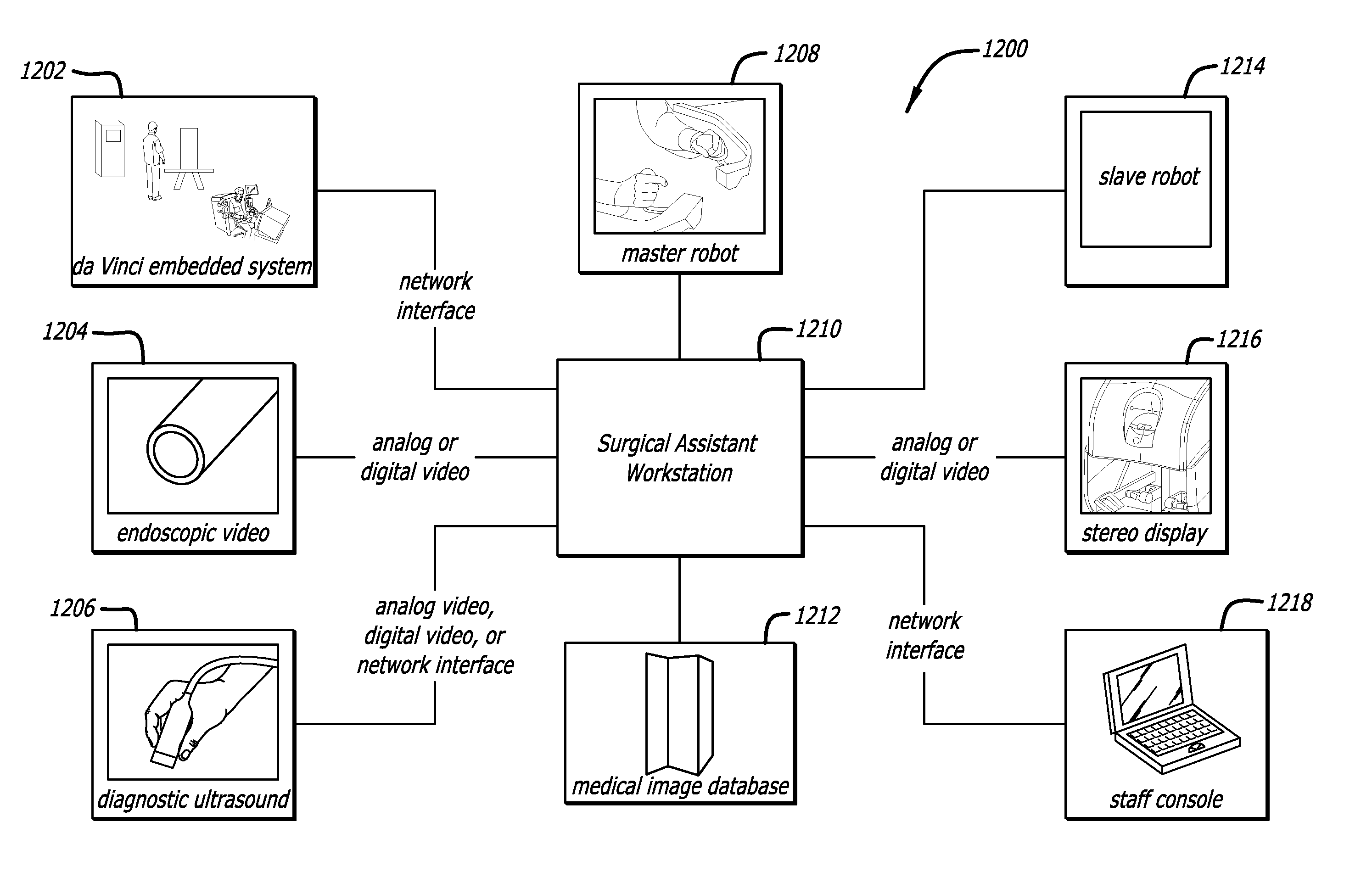

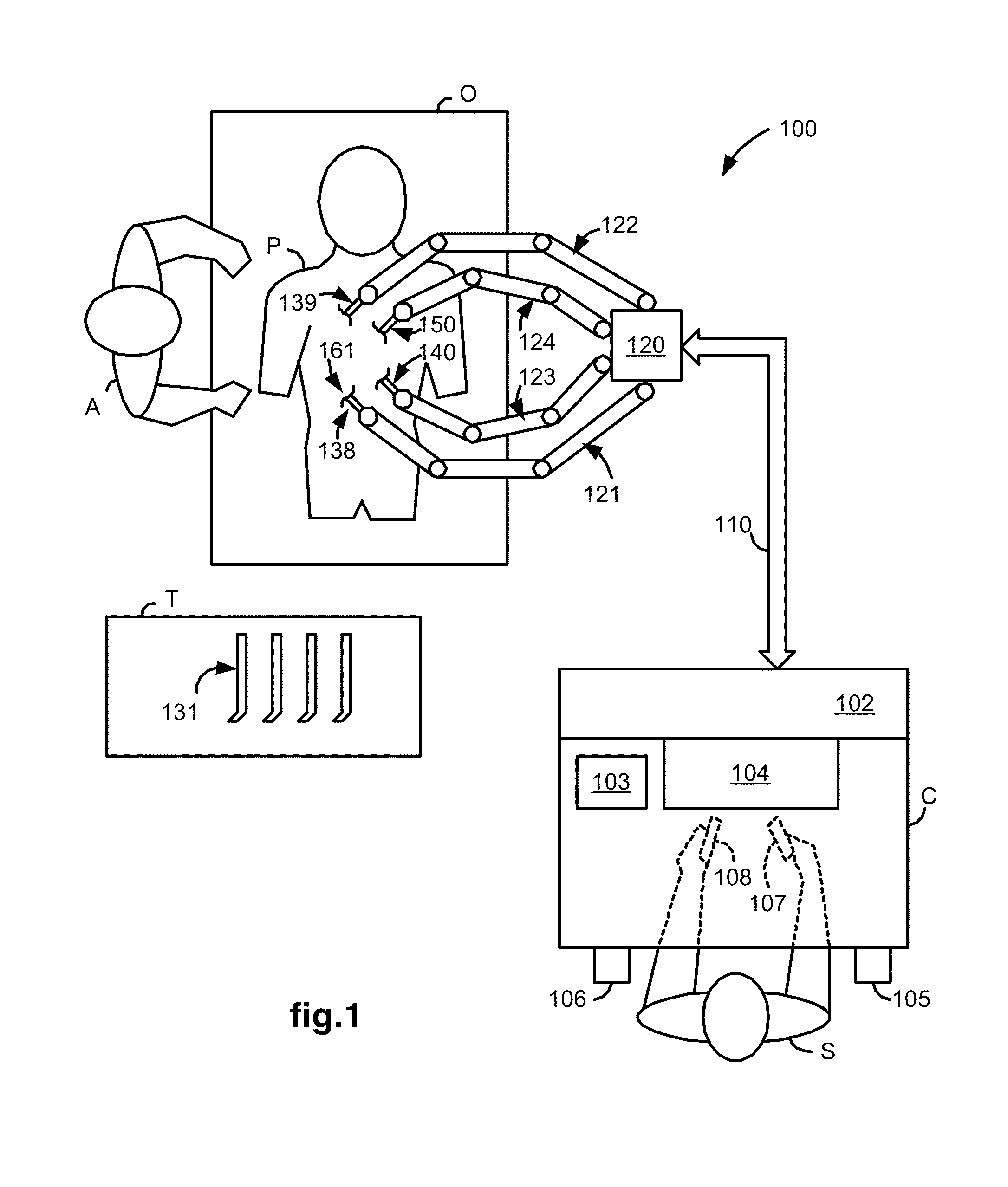

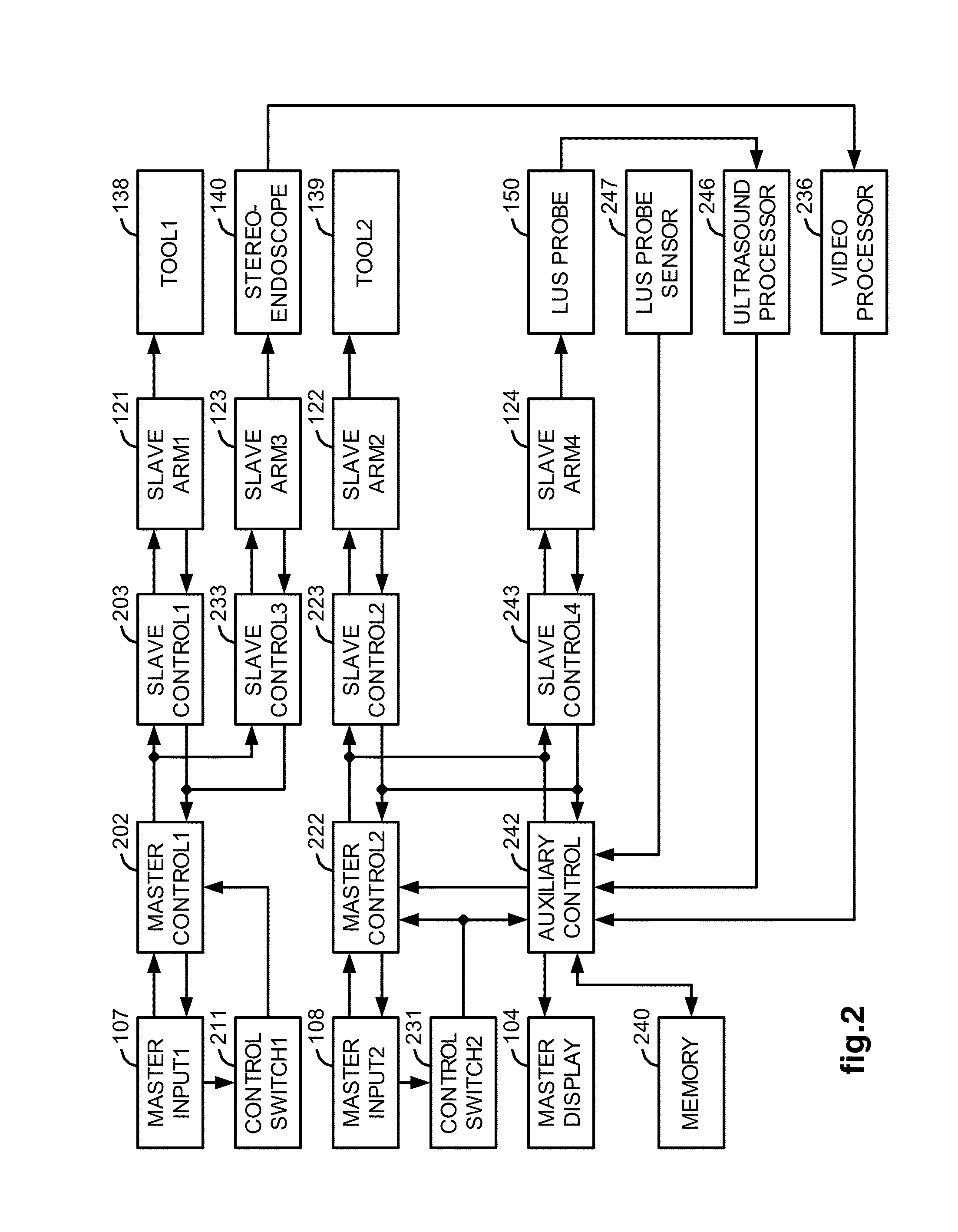

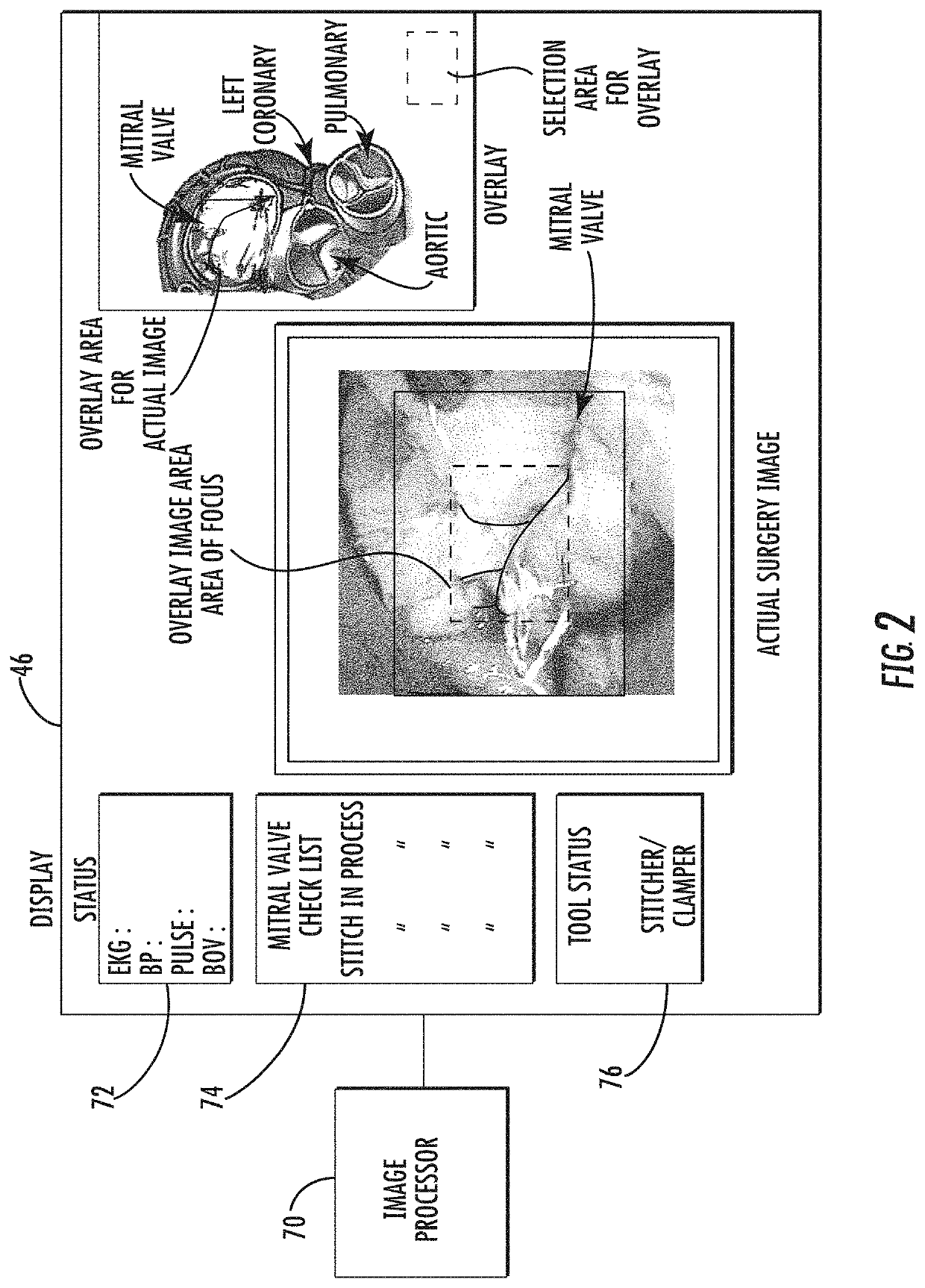

Interactive user interfaces for robotic minimally invasive surgical systems

Status: Active | Publication: US20090036902A1 | Tags: Ultrasonic/sonic/infrasonic diagnostics, Mechanical/radiation/invasive therapies, Display device, Surgical site

In one embodiment of the invention, a method for a minimally invasive surgical system is disclosed. The method includes capturing and displaying camera images of a surgical site on at least one display device at a surgeon console; switching out of a following mode and into a masters-as-mice (MaM) mode; overlaying a graphical user interface (GUI) including an interactive graphical object onto the camera images; and rendering a pointer within the camera images for user interactive control. In the following mode, the input devices of the surgeon console may couple motion into surgical instruments. In the MaM mode, the input devices interact with the GUI and interactive graphical objects. The pointer is manipulated in three dimensions by input devices having at least three degrees of freedom. Interactive graphical objects are related to physical objects in the surgical site or a function thereof and are manipulatable by the input devices.

Owner:THE JOHNS HOPKINS UNIV SCHOOL OF MEDICINE +1

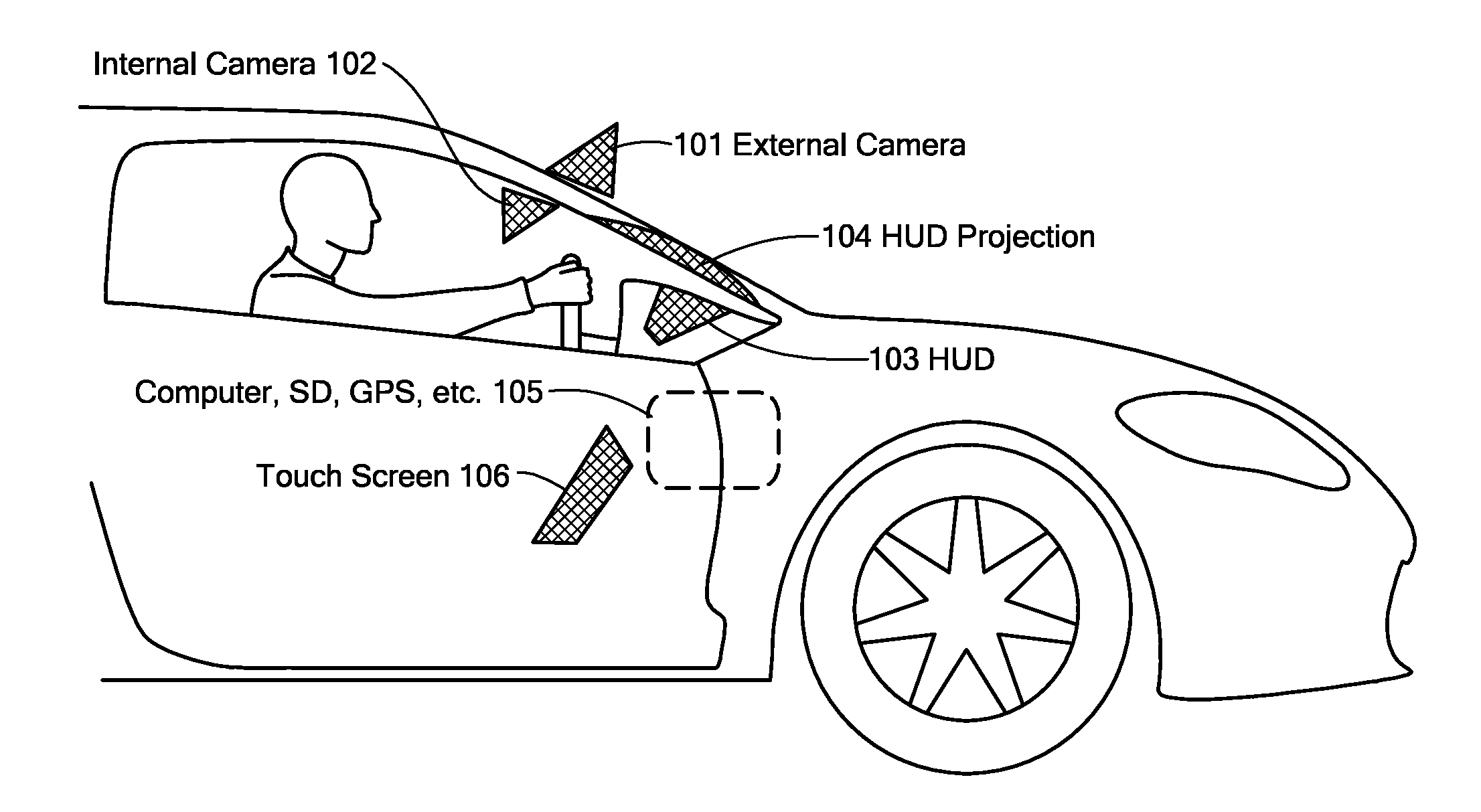

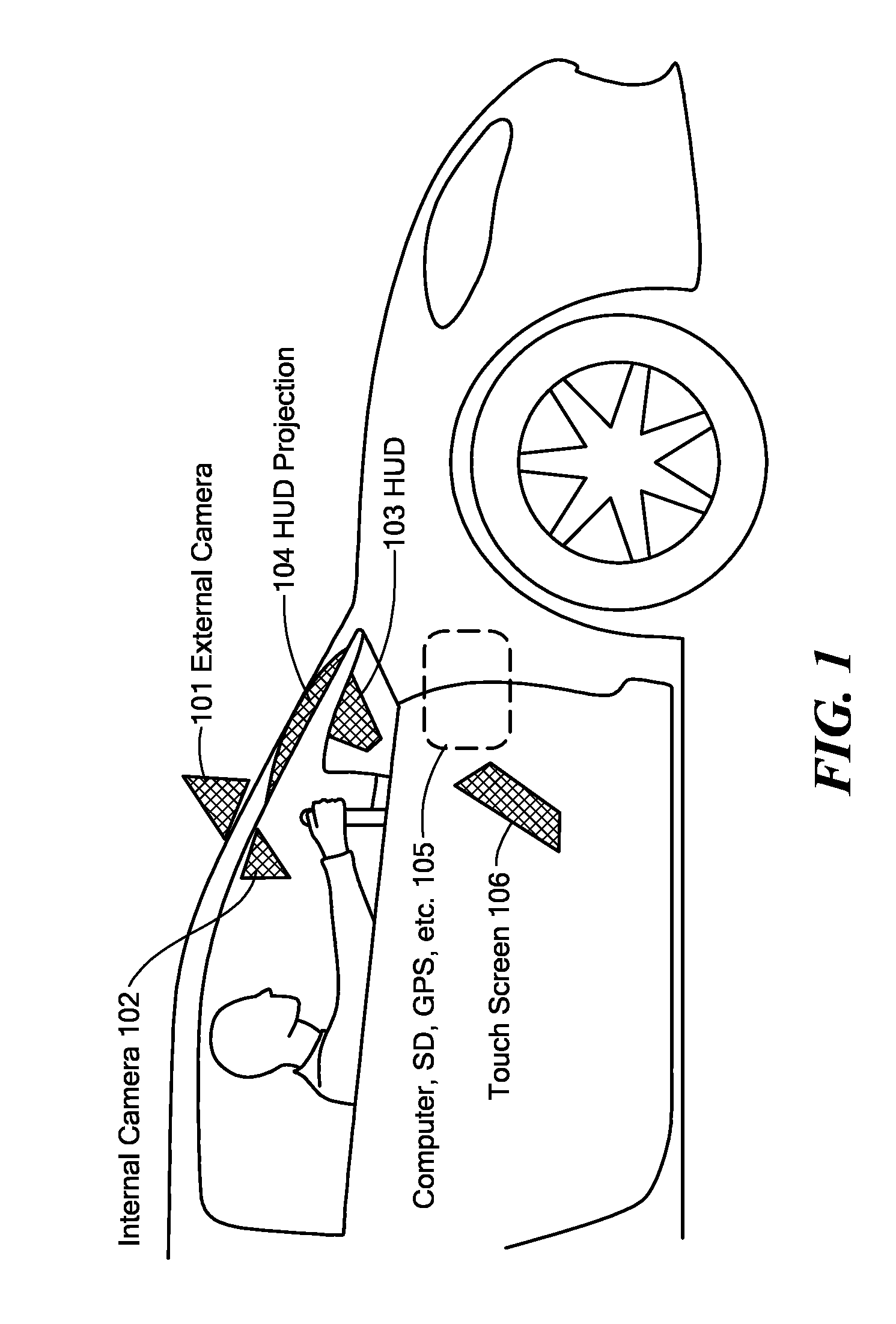

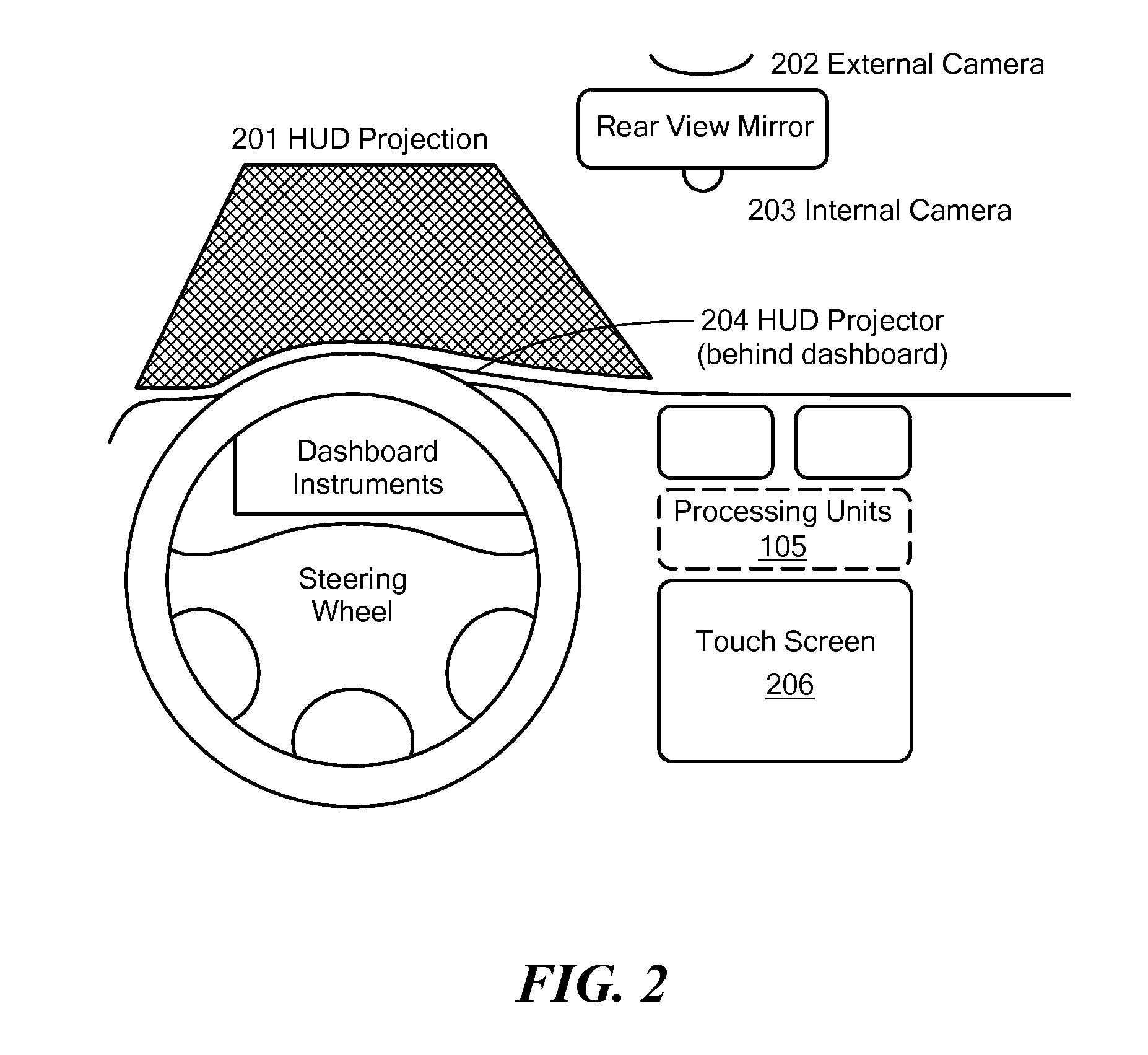

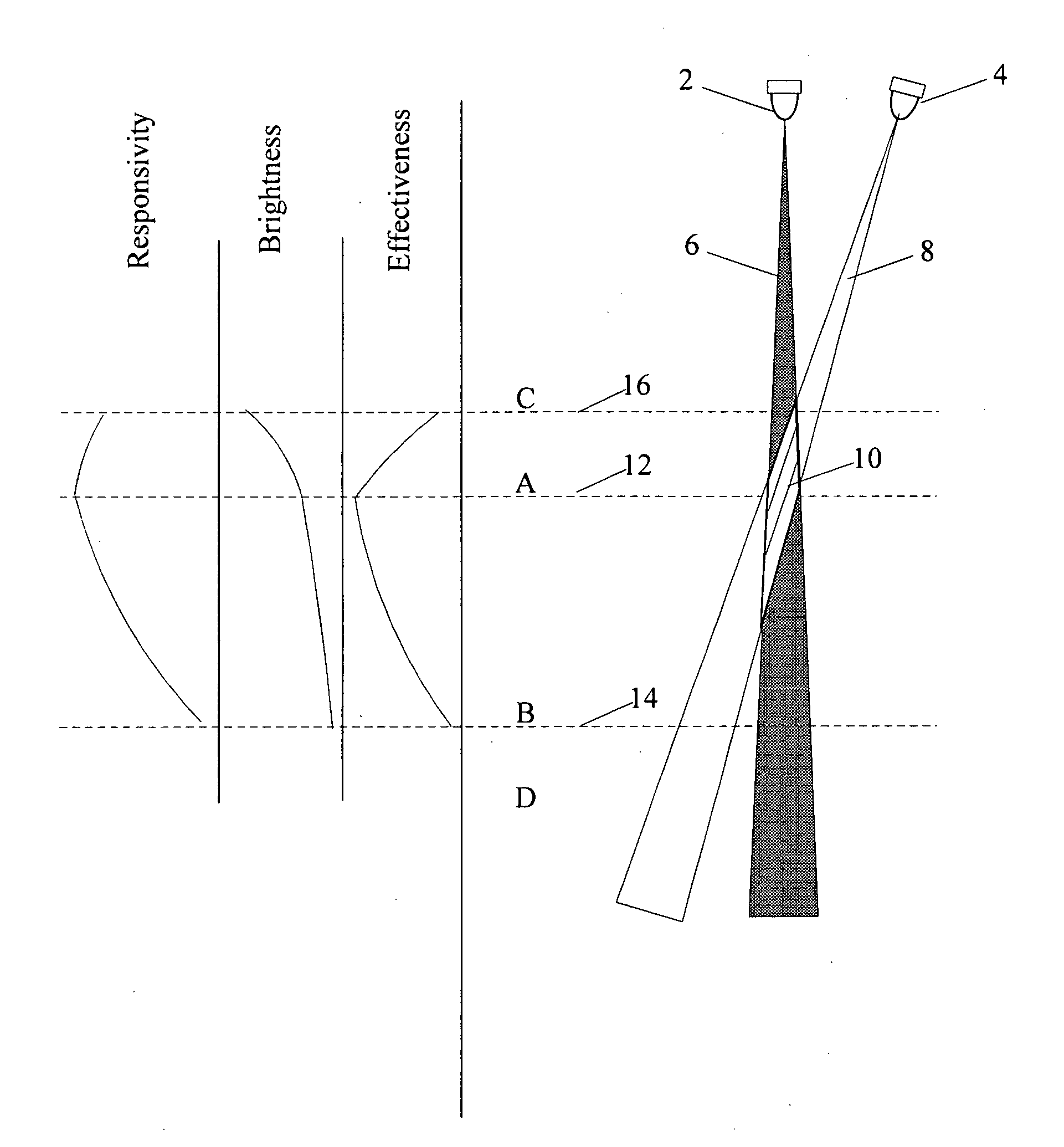

Reducing Driver Distraction Using a Heads-Up Display

Status: Inactive | Publication: US20120224060A1 | Tags: Reduce driver distraction, Reduce distractions, Instrument arrangements/adaptations, Color television details, Head-up display, Camera image

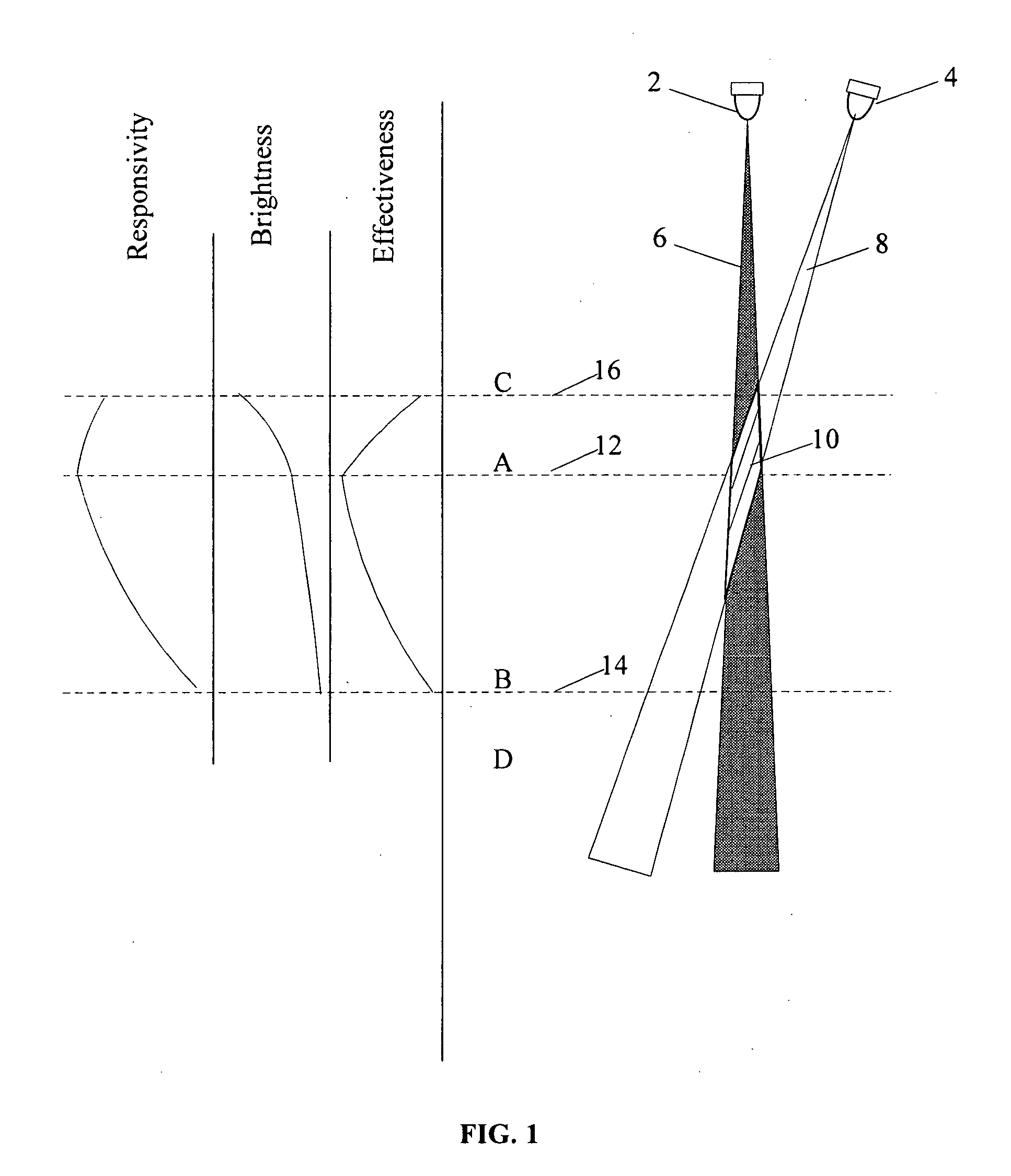

Driver distraction is reduced by providing information only when it is needed to assist the driver, and in a visually pleasing manner. Obstacles such as other vehicles, pedestrians, and road defects are detected by analyzing image data from a forward-facing camera system. An internal camera images the driver to determine the driver's line of sight. Navigational information, such as a line with an arrow, is displayed on the windshield so that it appears to overlay and follow the road along the line of sight. The brightness of the information may be adjusted for lighting conditions, so that the overlay appears brighter during daylight hours and dimmer at night. A full augmented-reality model is built and navigational hints are provided accordingly, so that the navigational information shows the driver how to steer around obstacles. Obstacles may also be visually highlighted.

Owner:INTEGRATED NIGHT VISION SYST

Interactive user interfaces for robotic minimally invasive surgical systems

Status: Active | Publication: US8398541B2 | Tags: Ultrasonic/sonic/infrasonic diagnostics, Mechanical/radiation/invasive therapies, Mini invasive surgery, Display device

Abstract identical to that of US20090036902A1 above.

Owner:THE JOHNS HOPKINS UNIV SCHOOL OF MEDICINE +1

User interfaces for electrosurgical tools in robotic surgical systems

A method for a minimally invasive surgical system is disclosed including capturing camera images of a surgical site; generating a graphical user interface (GUI) including a first colored border portion in a first side and a second colored border in a second side opposite the first side; and overlaying the GUI onto the captured camera images of the surgical site for display on a display device of a surgeon console. The GUI provides information to a user regarding the first electrosurgical tool and the second tool in the surgical site that is concurrently displayed by the captured camera images. The first colored border portion in the GUI indicates that the first electrosurgical tool is controlled by a first master grip of the surgeon console and the second colored border portion indicates the tool type of the second tool controlled by a second master grip of the surgeon console.

Owner:INTUITIVE SURGICAL OPERATIONS INC

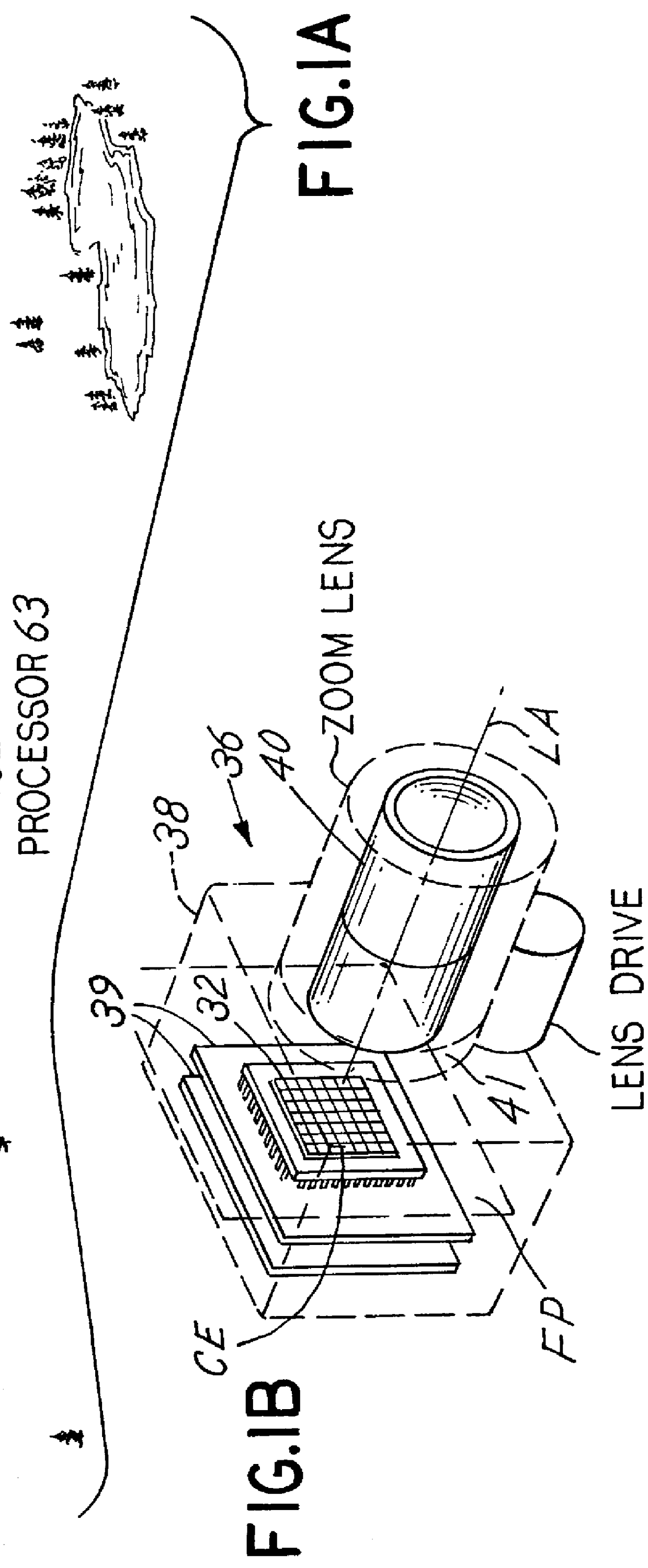

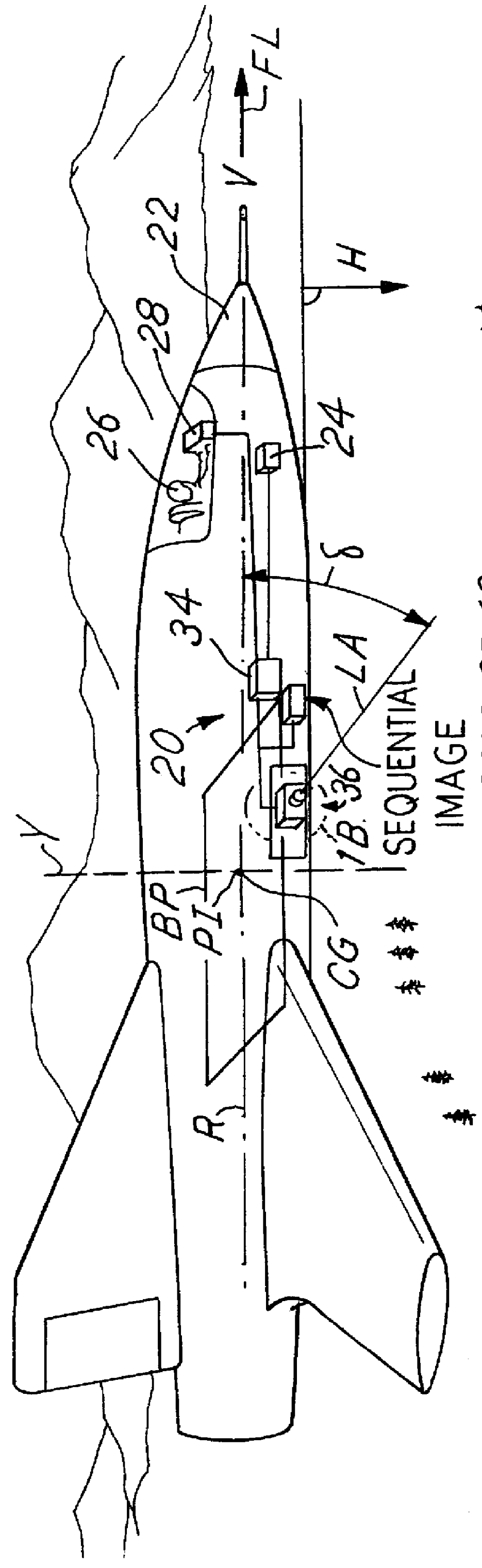

Autonomous electro-optical framing camera system with constant ground resolution, unmanned airborne vehicle therefor, and methods of use

Status: Inactive | Publication: US6130705A | Tags: Reduce vibration, Increase flexibility, Television system details, Optical rangefinders, Camera image, Image resolution

An aerial reconnaissance system automatically and autonomously generates imagery of a scene that meets resolution or field-of-view objectives. In one embodiment, a passive method automatically calculates the range to the target from a sequence of airborne reconnaissance camera images. The range information is used to control a zoom lens, so that frame-to-frame target imagery keeps a desired (e.g., constant) ground resolution or field of view at the center of the image. This holds despite rapid and significant changes in aircraft altitude and attitude. Image-to-image digital correlation is used to determine the displacement of the target at the focal plane. The camera frame rate and aircraft INS/GPS information are used to accurately determine the frame-to-frame distance (baseline). The calculated range to the target then drives a zoom lens servo mechanism to the focal length that yields the desired resolution or field of view for the next image. The method may also be performed based on parameters other than range, such as aircraft height and stand-off distance.

Owner:THE BF GOODRICH CO
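The ranging and zoom steps in the abstract above can be sketched with a simple pinhole model. This Python sketch assumes two things: the frame-to-frame baseline is roughly perpendicular to the line of sight, and camera rotation between frames has been compensated. Range then follows from triangulation as R = f * B / d, and holding a ground sample distance GSD requires a focal length f = R * p / GSD for pixel pitch p. The function names and parameters are hypothetical, and the patent may use a more complete model.

```python
def frame_baseline(ground_speed_mps, frame_rate_hz):
    """Distance flown between consecutive frames (m), from INS/GPS speed and the frame rate."""
    return ground_speed_mps / frame_rate_hz


def passive_range(focal_length_m, baseline_m, displacement_m):
    """Triangulated range (m) from the target's displacement at the focal plane (m).

    Assumes a baseline perpendicular to the line of sight and compensated camera rotation."""
    if displacement_m <= 0:
        raise ValueError("focal-plane displacement must be positive")
    return focal_length_m * baseline_m / displacement_m


def focal_length_for_gsd(range_m, pixel_pitch_m, target_gsd_m):
    """Focal length (m) at which one pixel covers target_gsd_m on the ground at range_m."""
    return range_m * pixel_pitch_m / target_gsd_m


# 200 m/s at 10 frames/s gives a 20 m baseline; a 1 mm shift seen through a 0.5 m lens
# puts the target about 10 km away. Holding 0.2 m ground resolution with 10 um pixels
# then calls for a focal length of about 0.5 m for the next frame.
baseline = frame_baseline(200.0, 10.0)
rng = passive_range(0.5, baseline, 0.001)
next_focal = focal_length_for_gsd(rng, 10e-6, 0.2)
```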

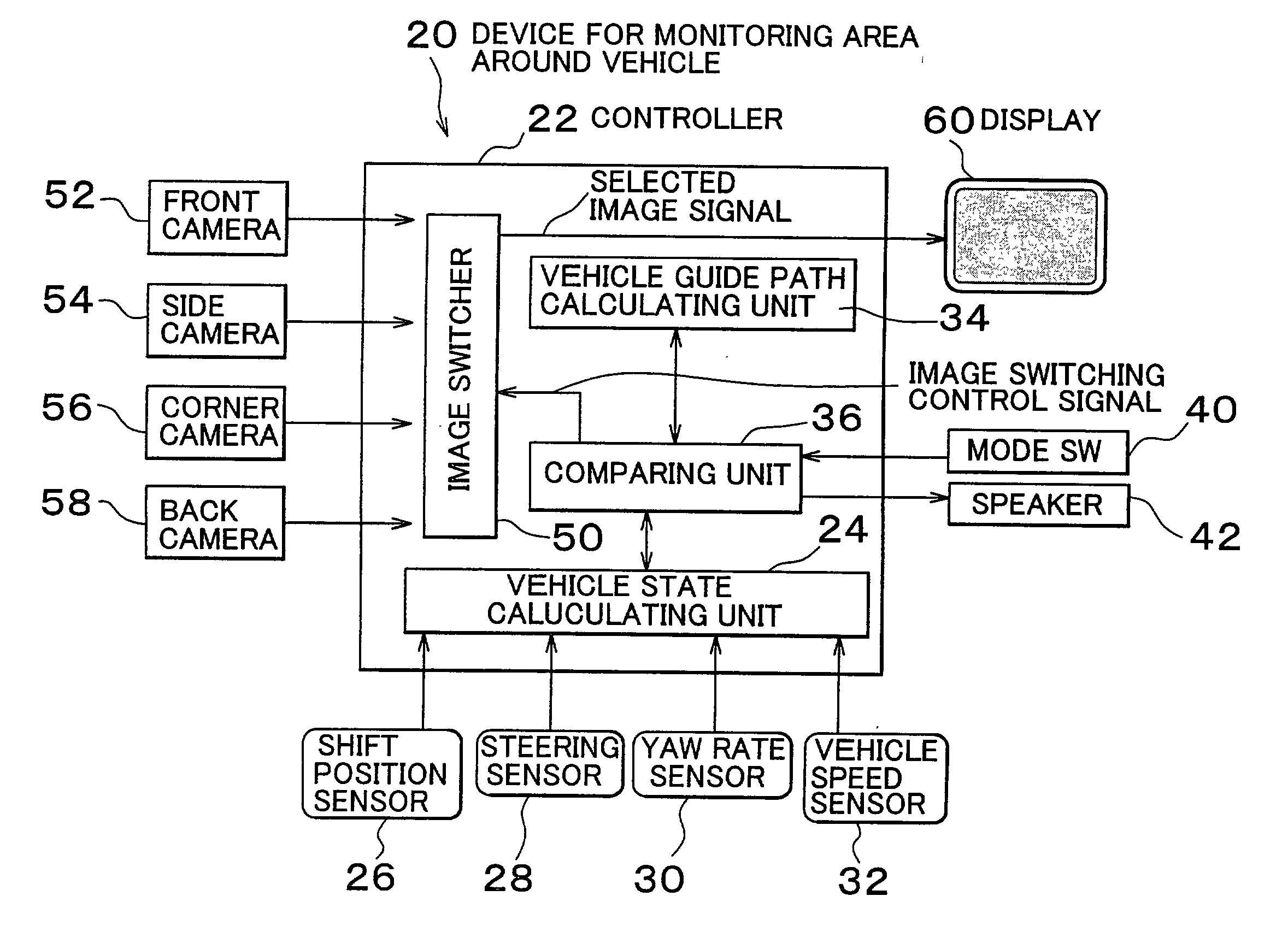

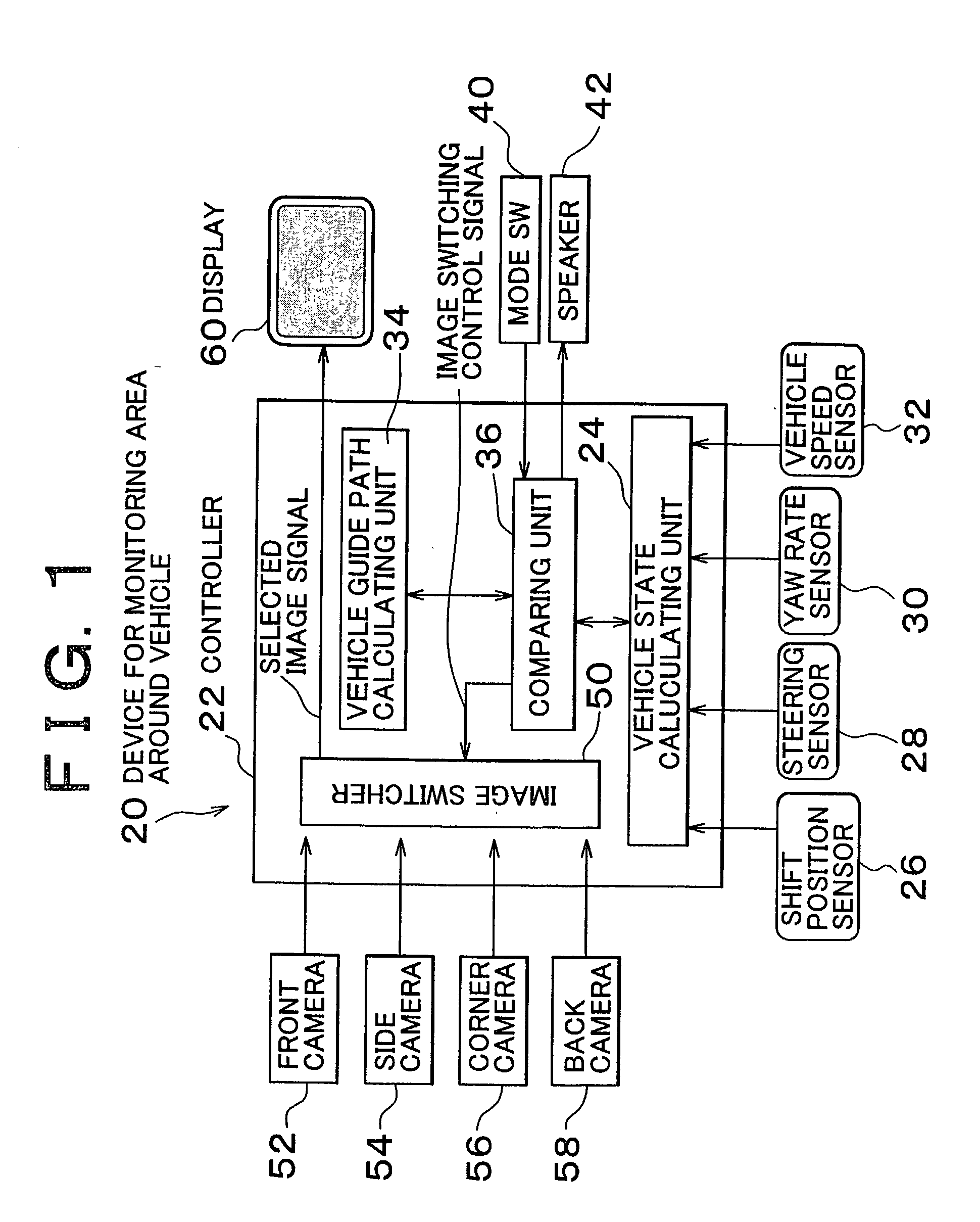

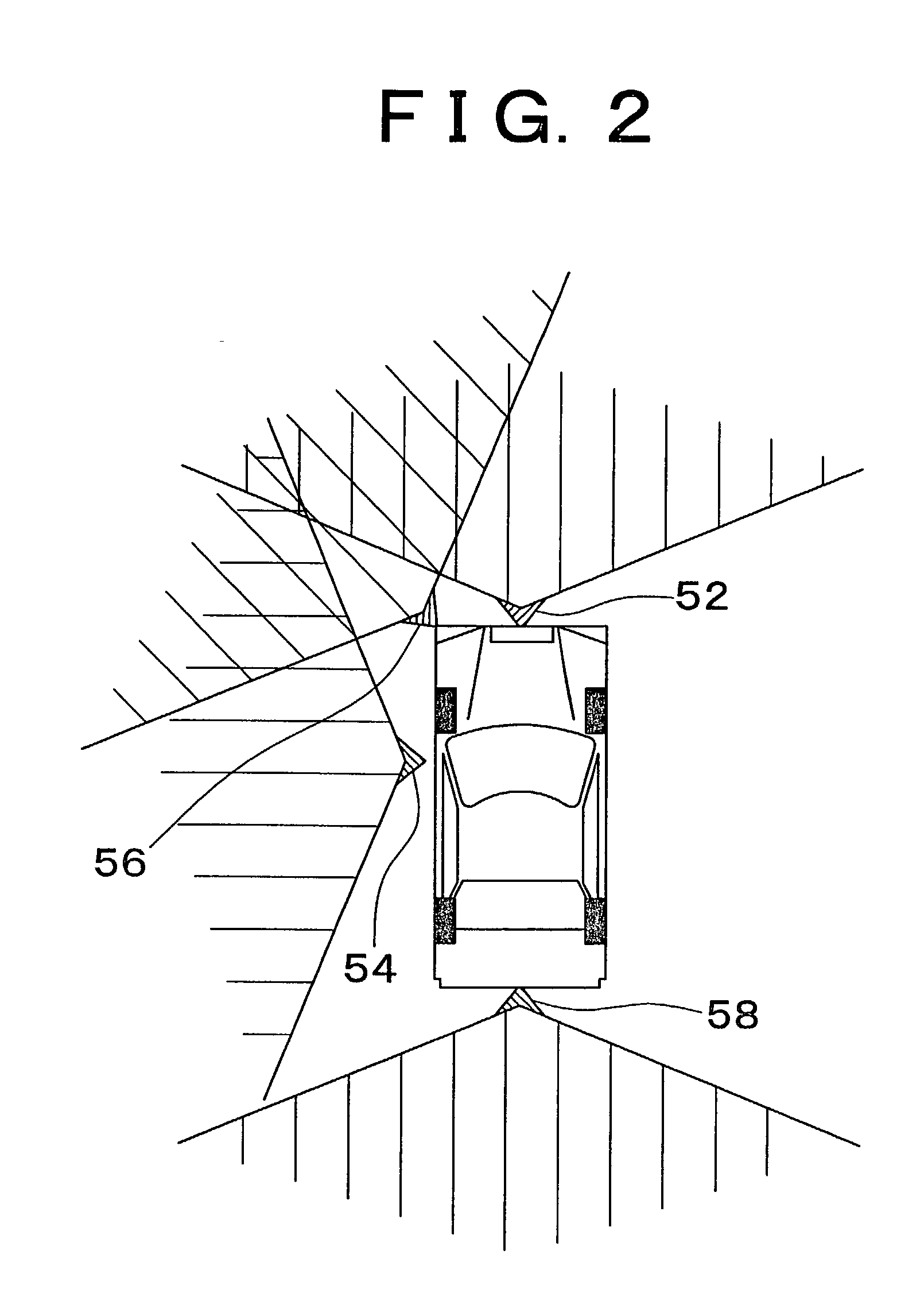

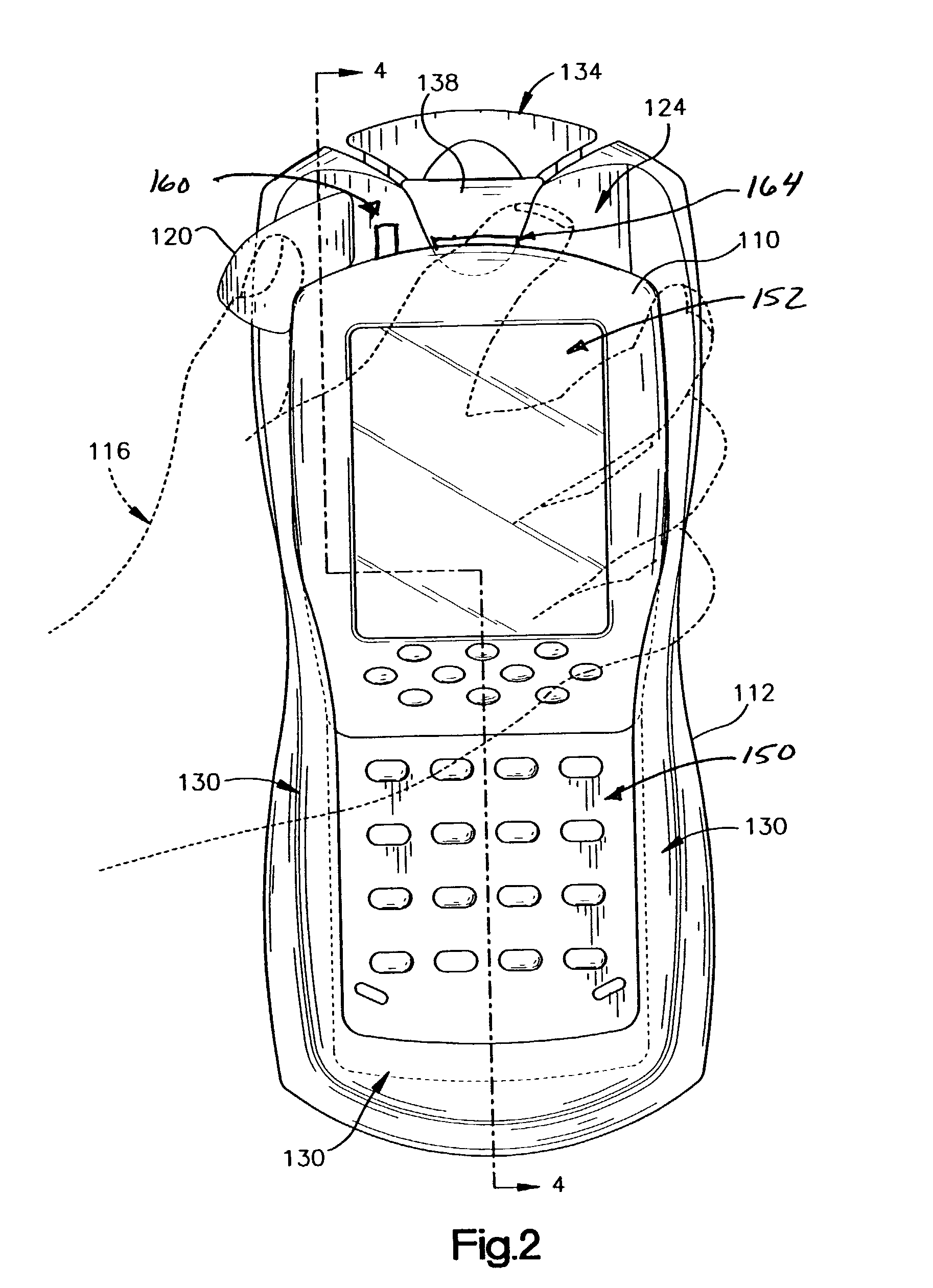

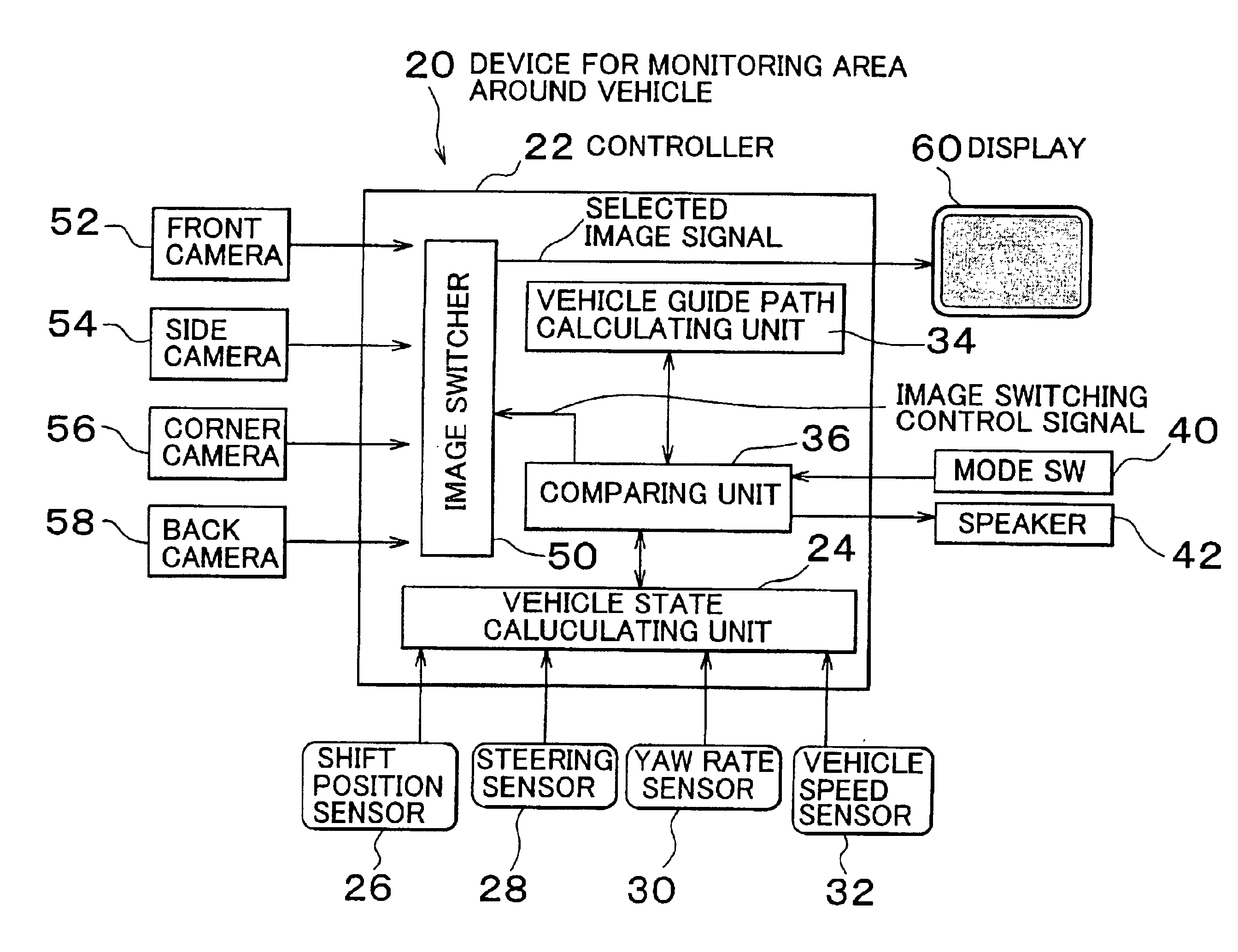

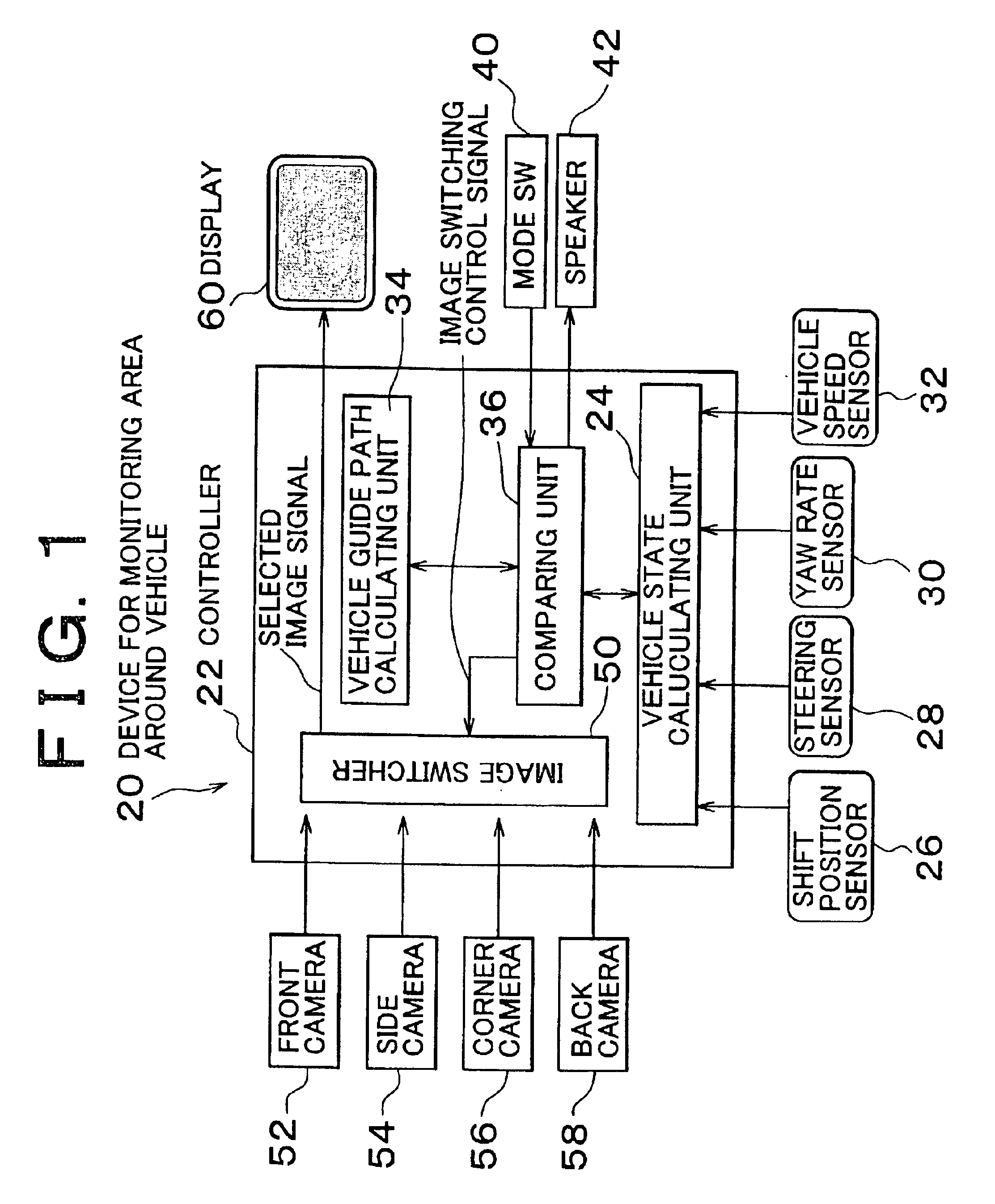

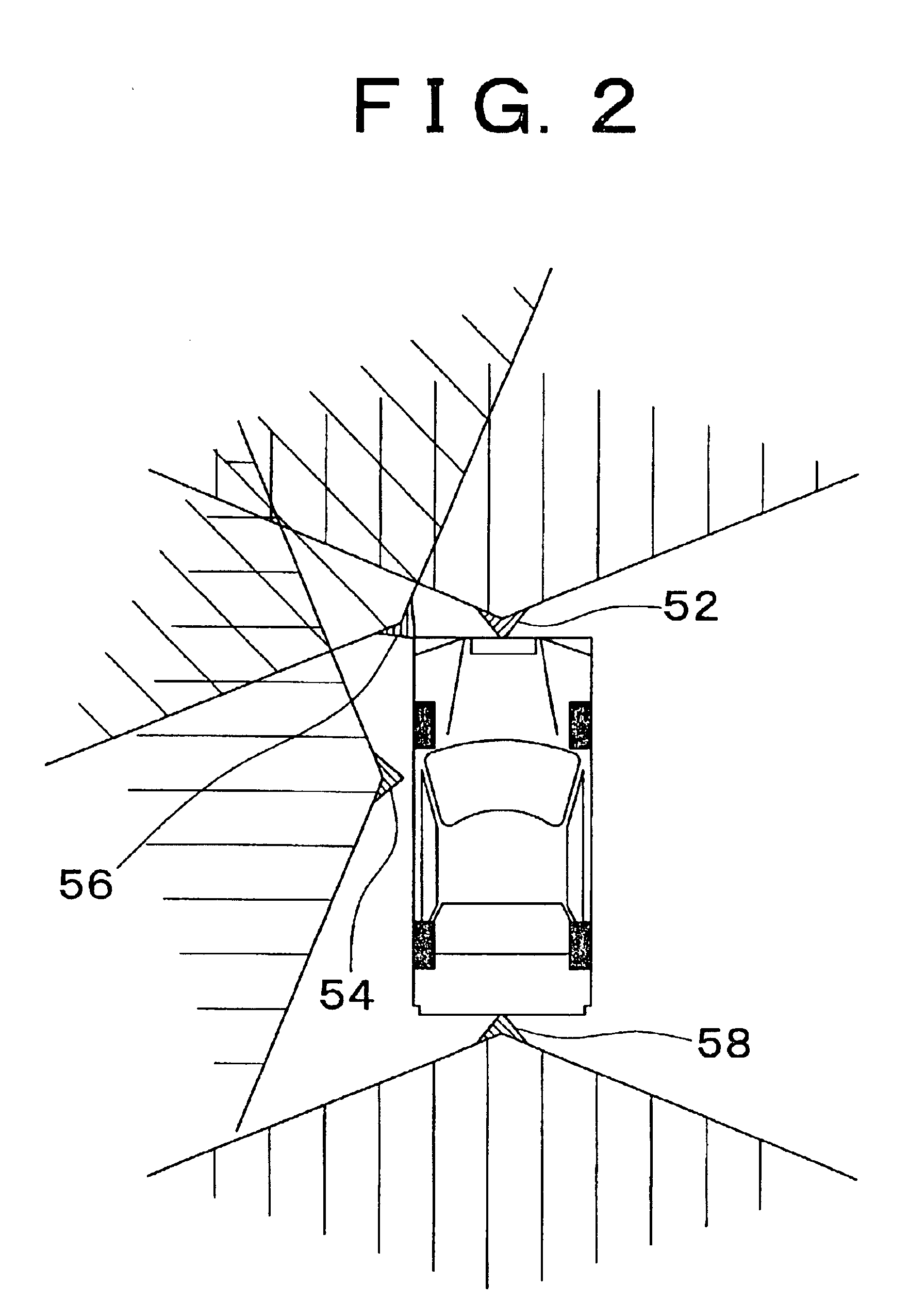

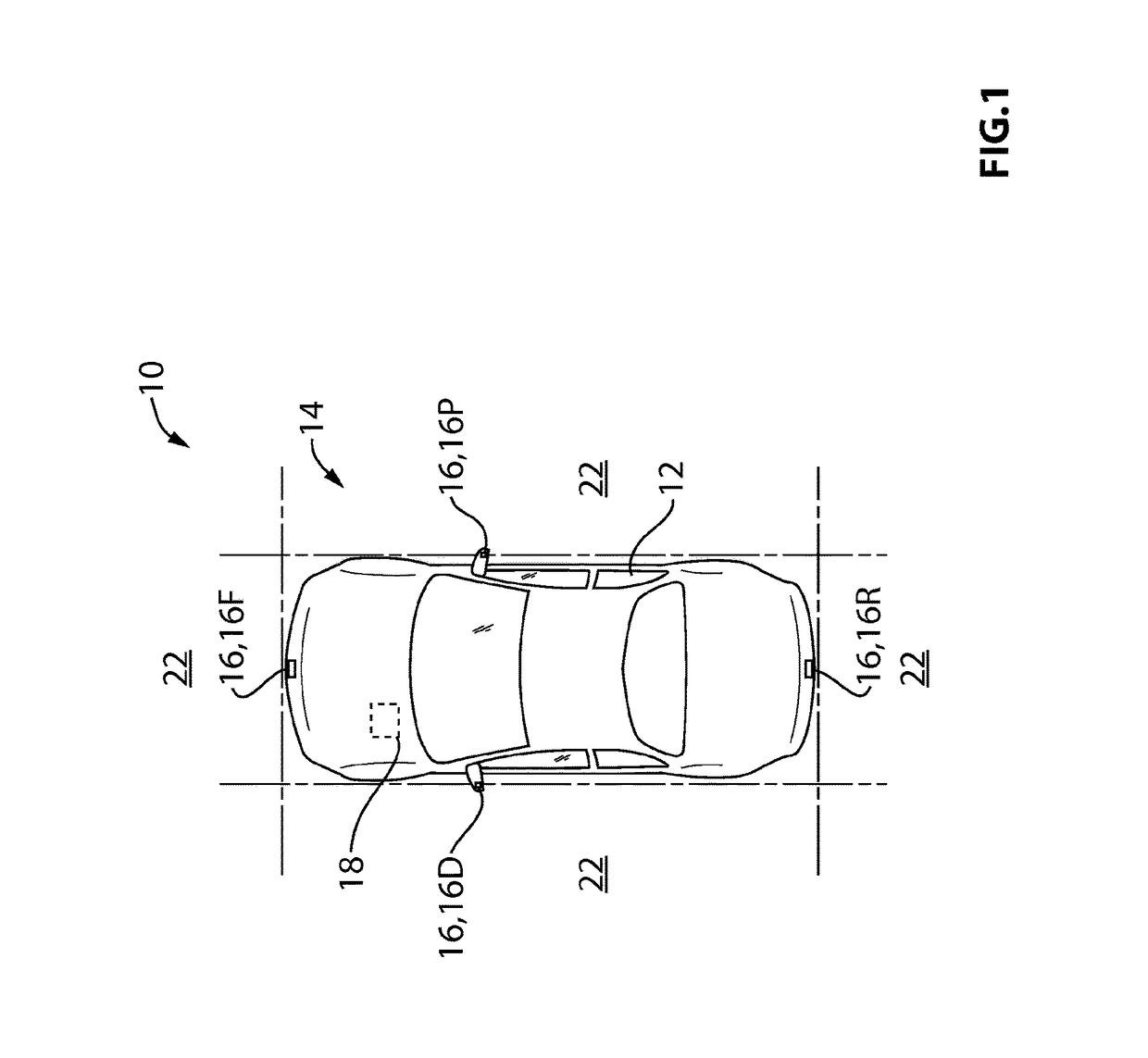

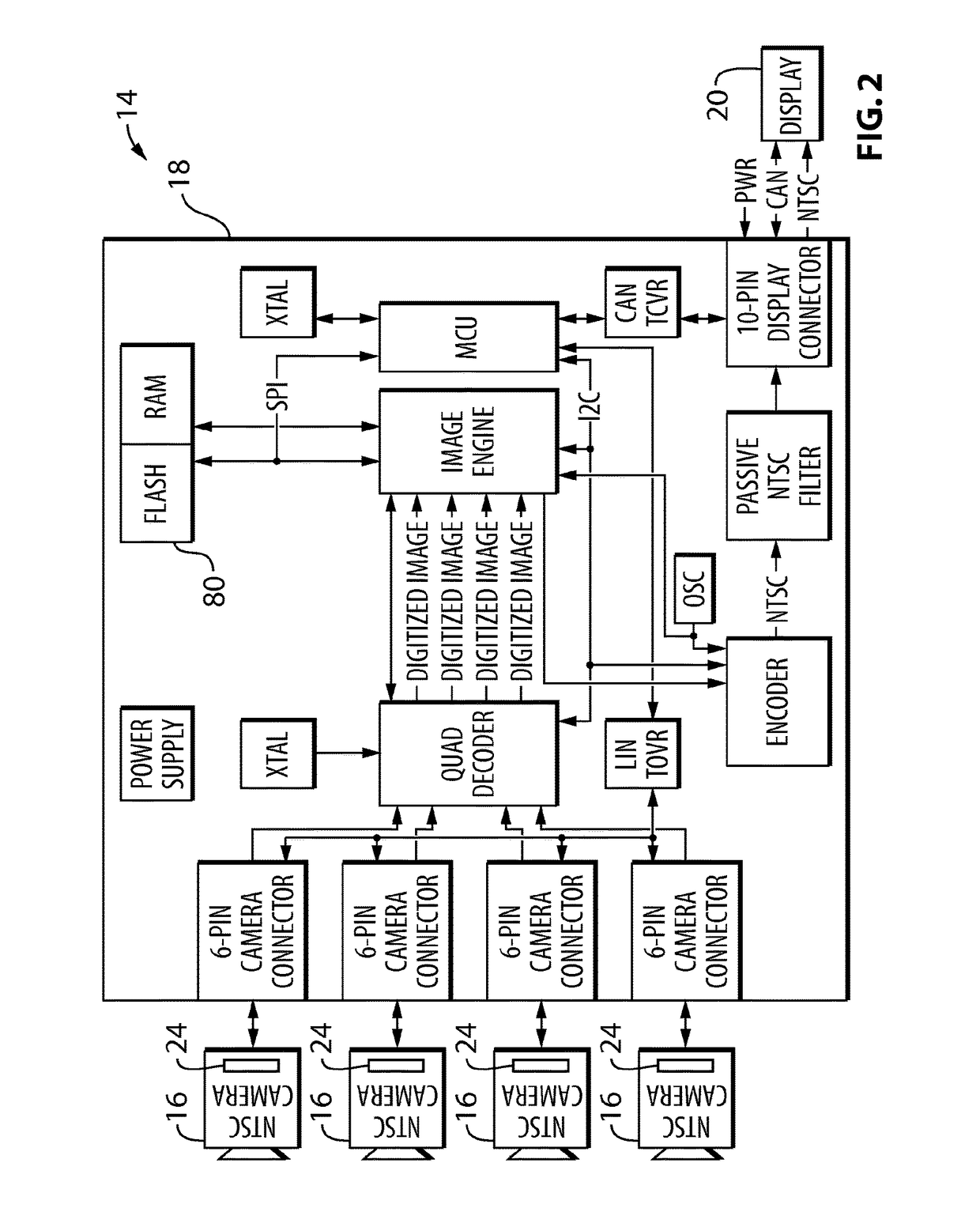

Device for monitoring area around vehicle

Status: Inactive | Publication: US20030080877A1 | Tags: Raise the possibility, Digital data processing details, Indication of parking free spaces, Camera image, Display device

A back camera image from a back camera mounted on a rear portion of a vehicle body is displayed on a display mounted on the console inside the vehicle cabin when the vehicle is parallel parking. After the vehicle passes a steering direction reversal point in this state, a corner camera image from a corner camera mounted on a corner portion of a front portion of the vehicle body is displayed on the display when the vehicle nears a vehicle parked in front, or, more specifically, when an angle of the vehicle after it has reached the steering direction reversal point has reached a predetermined value. Guidance images may be overlaid upon the camera images during some or all of the parallel parking maneuver.

Owner:TOYOTA JIDOSHA KK +1

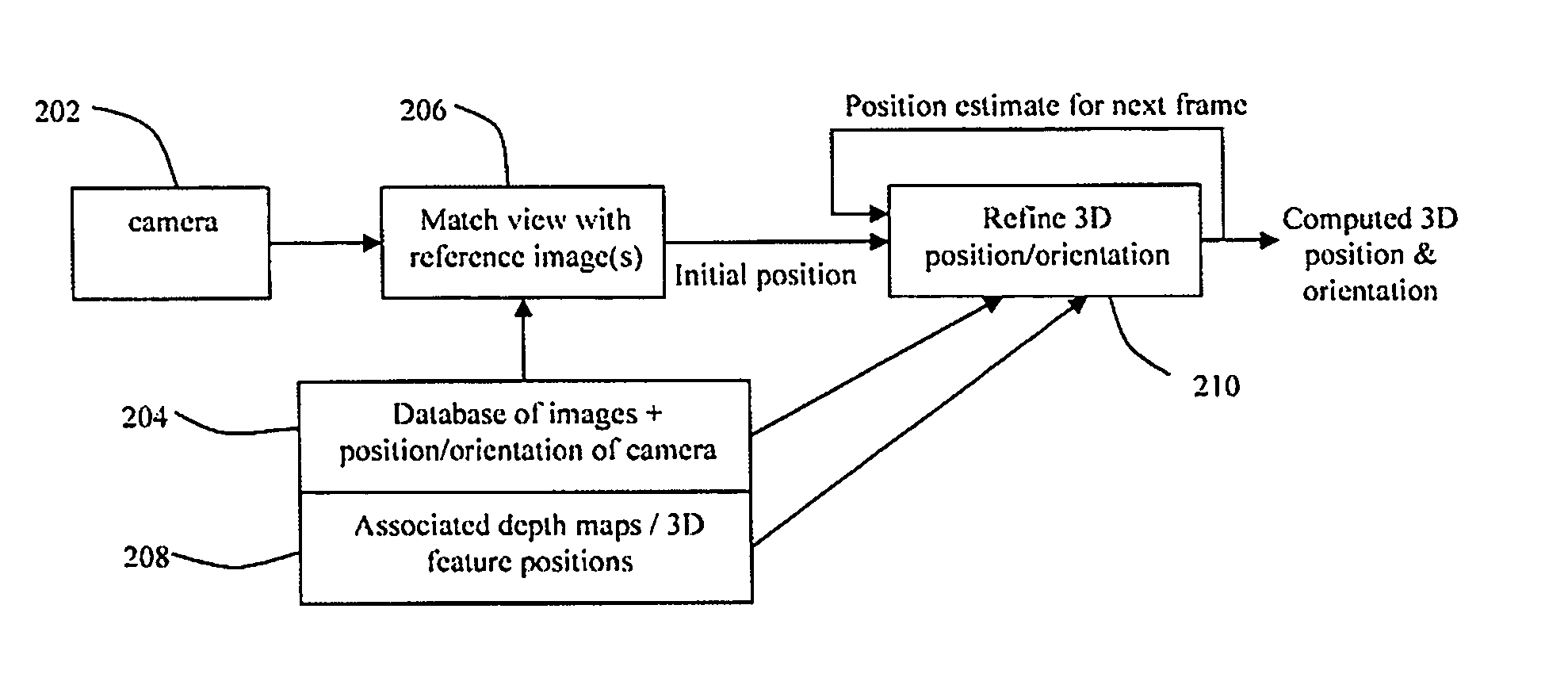

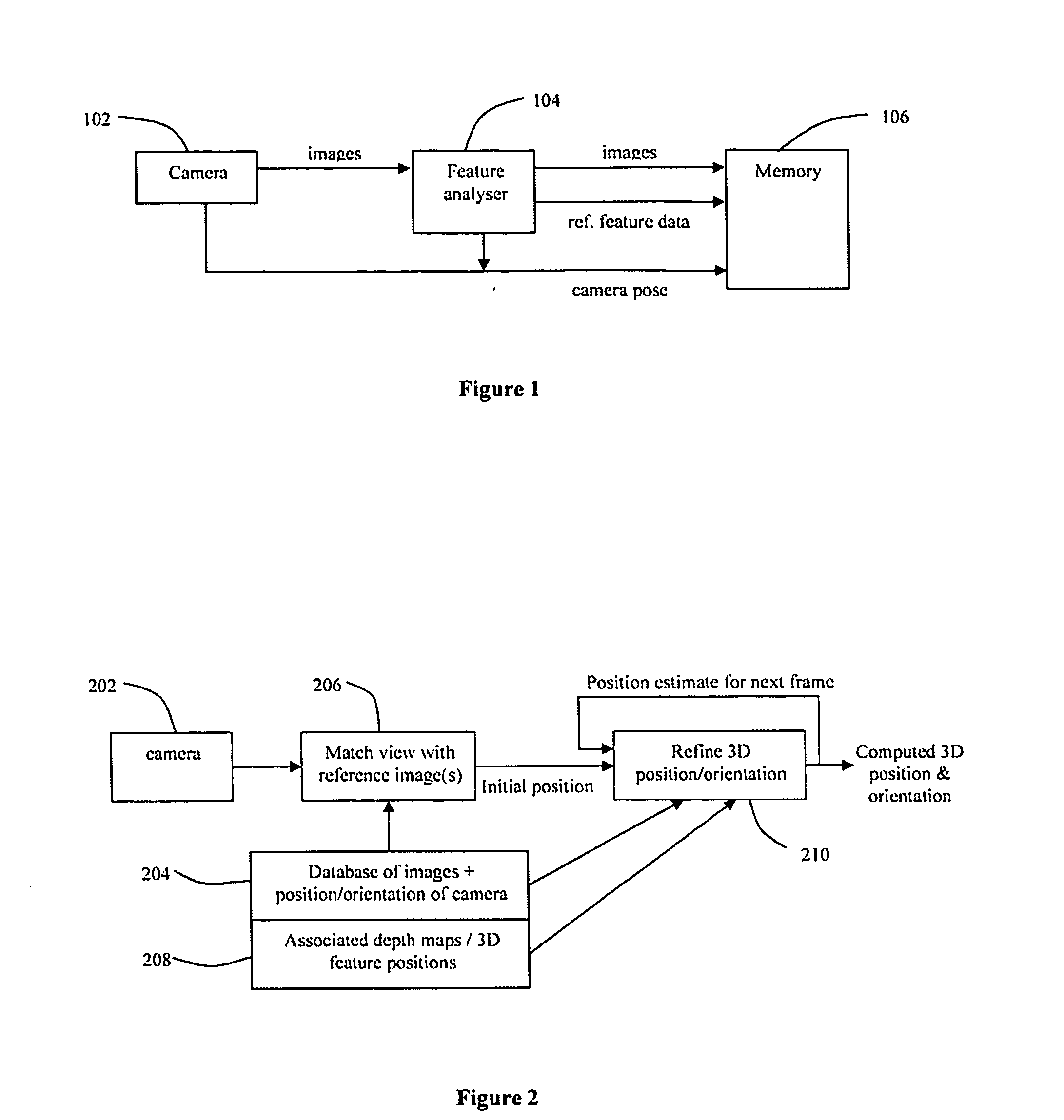

System and method for position determination

A method for determining the position and orientation of a camera that need not rely on special markers. A set of reference images may be stored, together with the camera pose and feature information for each image. A first estimate of the camera position is obtained by comparing the current camera image with the set of reference images. A refined estimate can then be obtained using features from the current image that are matched in a subset of similar reference images, in particular the 3D positions of those features. A consistent 3D model of all stored feature information need not be provided.

Owner:BRITISH BROADCASTING CORP
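The first, coarse step described above compares the current image with stored reference images, and it can be illustrated as a nearest-neighbour lookup. In this Python sketch each image is assumed to be reduced to a small descriptor vector (for example, a downsampled grayscale thumbnail) and compared by sum of squared differences. The descriptor choice and function names are hypothetical, and the feature-based refinement step is not shown.

```python
def descriptor_distance(a, b):
    """Sum of squared differences between two equally sized descriptor vectors."""
    if len(a) != len(b):
        raise ValueError("descriptors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def coarse_poses(current, references, k=1):
    """Return the stored poses of the k reference images most similar to the current one.

    references is a list of (descriptor, pose) pairs; the best match gives the first
    estimate, and the k matches form the subset used for refinement."""
    ranked = sorted(references, key=lambda ref: descriptor_distance(current, ref[0]))
    return [pose for _, pose in ranked[:k]]


references = [
    ([0, 0, 0], "pose A"),
    ([10, 10, 10], "pose B"),
    ([1, 1, 0], "pose C"),
]
print(coarse_poses([1, 1, 1], references, k=2))  # ['pose C', 'pose A']
```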

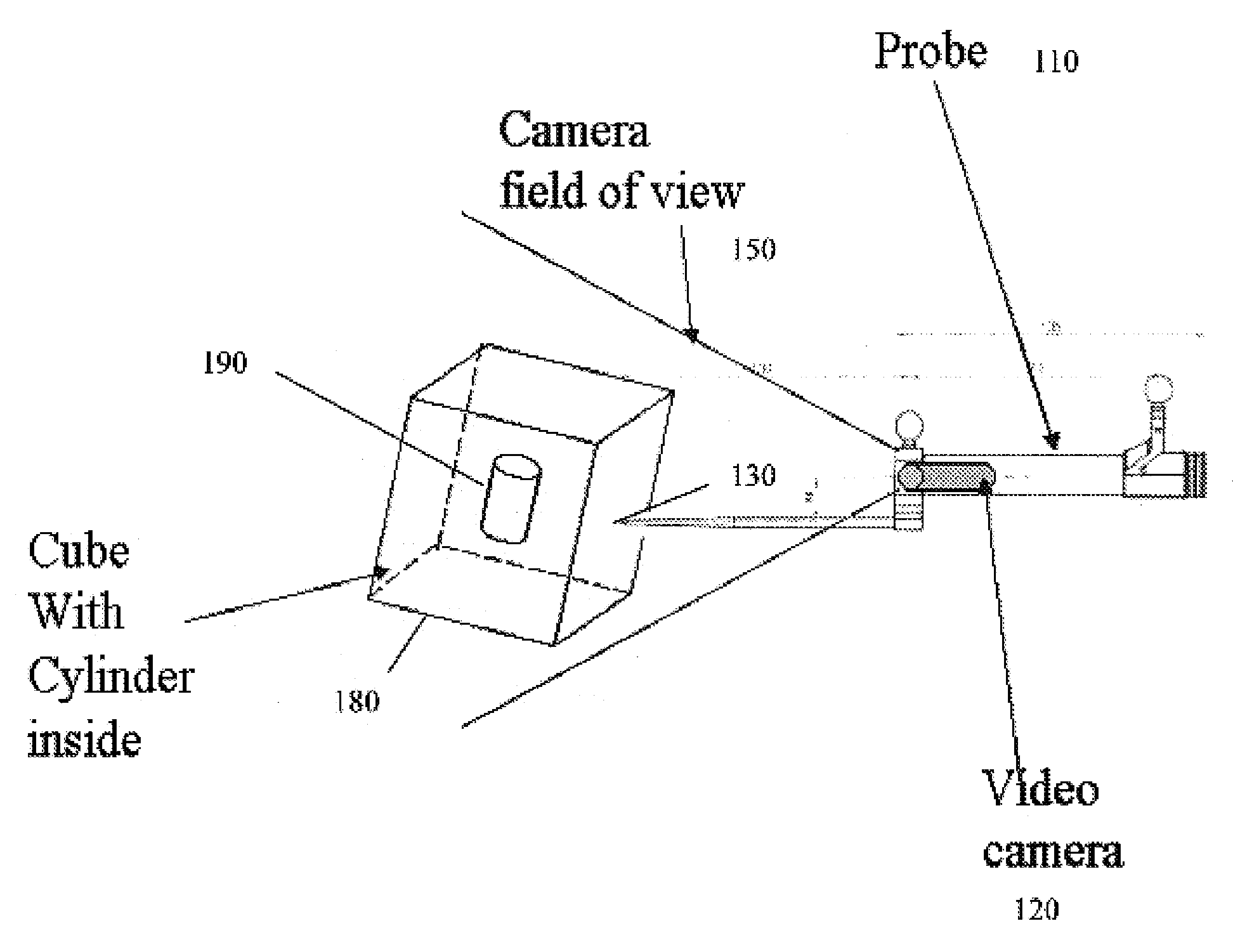

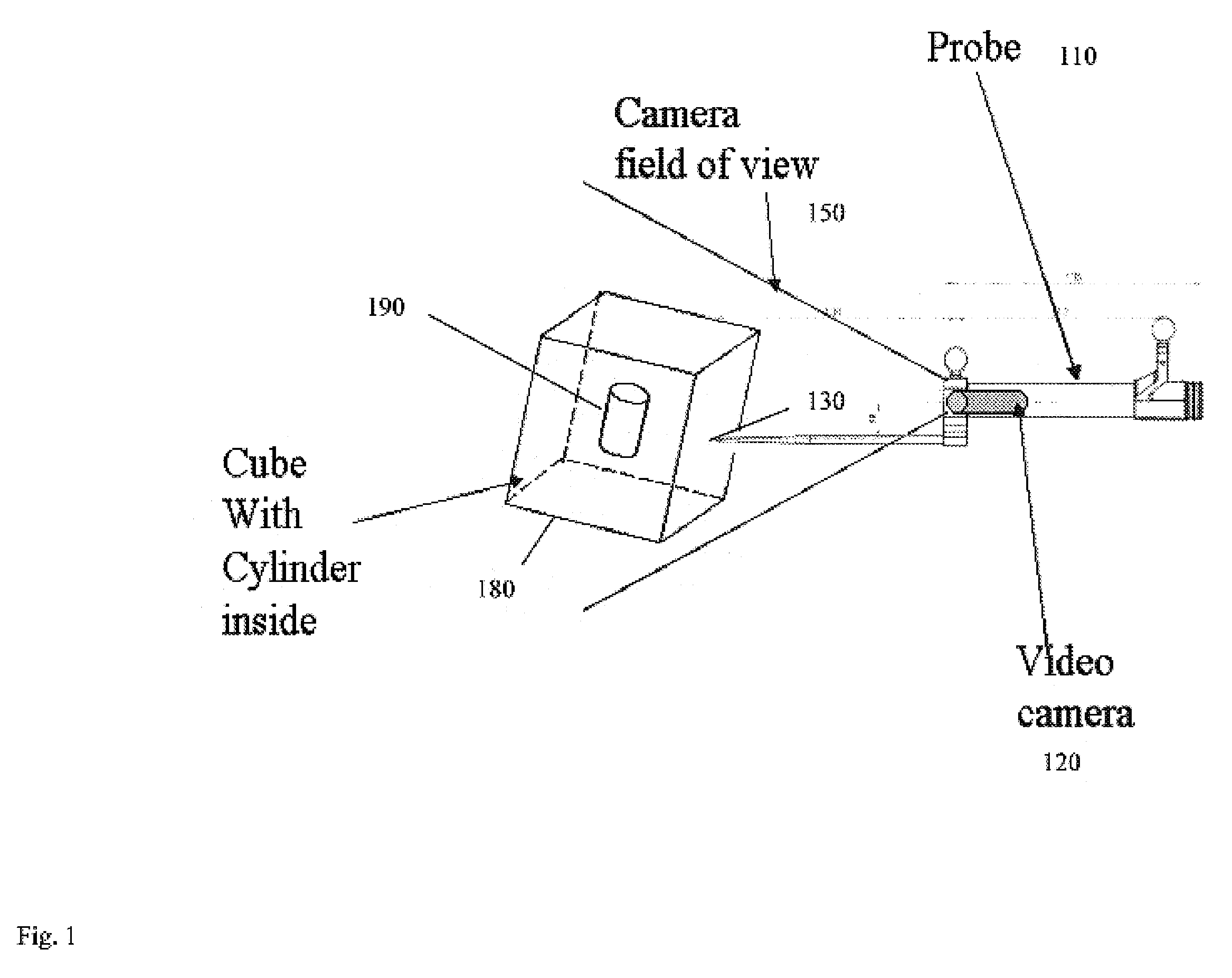

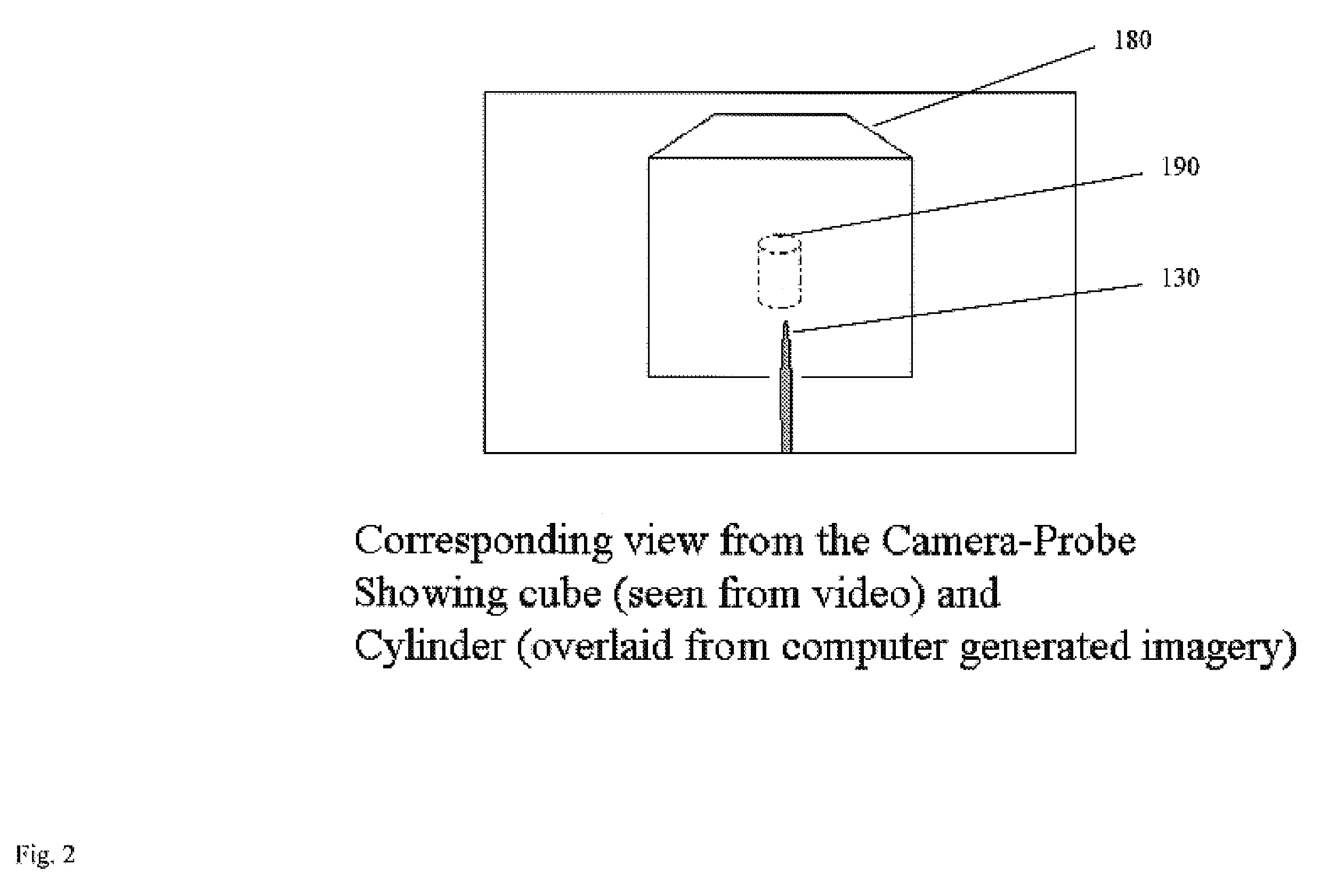

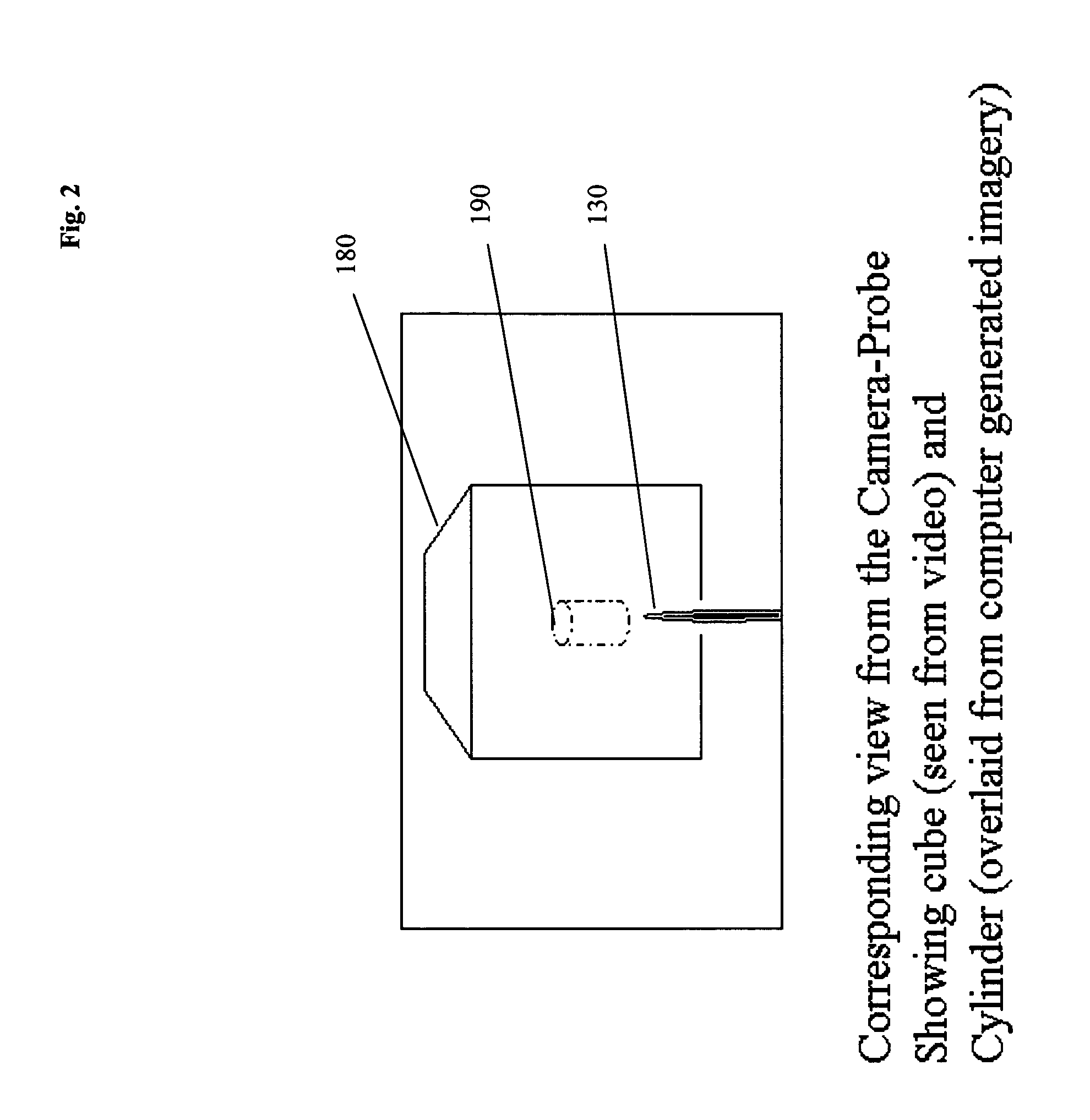

Computer enhanced surgical navigation imaging system (camera probe)

Status: Active | Publication: US7491198B2 | Tags: Enhanced interaction, Easy to navigate, Surgical navigation systems, Endoscopes, Viewpoints, Surgical approach

A system and method for navigation within a surgical field are presented. In exemplary embodiments according to the present invention a micro-camera can be provided in a hand-held navigation probe tracked by a tracking system. This enables navigation within an operative scene by viewing real-time images from the viewpoint of the micro-camera within the navigation probe, which are overlaid with computer generated 3D graphics depicting structures of interest generated from pre-operative scans. Various transparency settings of the camera image and the superimposed 3D graphics can enhance the depth perception, and distances between a tip of the probe and any of the superimposed 3D structures along a virtual ray extending from the probe tip can be dynamically displayed in the combined image. In exemplary embodiments of the invention a virtual interface can be displayed adjacent to the combined image on a system display, thus facilitating interaction with various navigation related functions. In exemplary embodiments according to the present invention virtual reality systems can be used to plan surgical approaches with multi-modal CT and MRI data. This allows for generating 3D structures as well as marking ideal surgical paths. The system and method presented thus enable transfer of a surgical planning scenario to a real-time view of an actual surgical field, thus enhancing navigation.

Owner:BRACCO IMAGING SPA

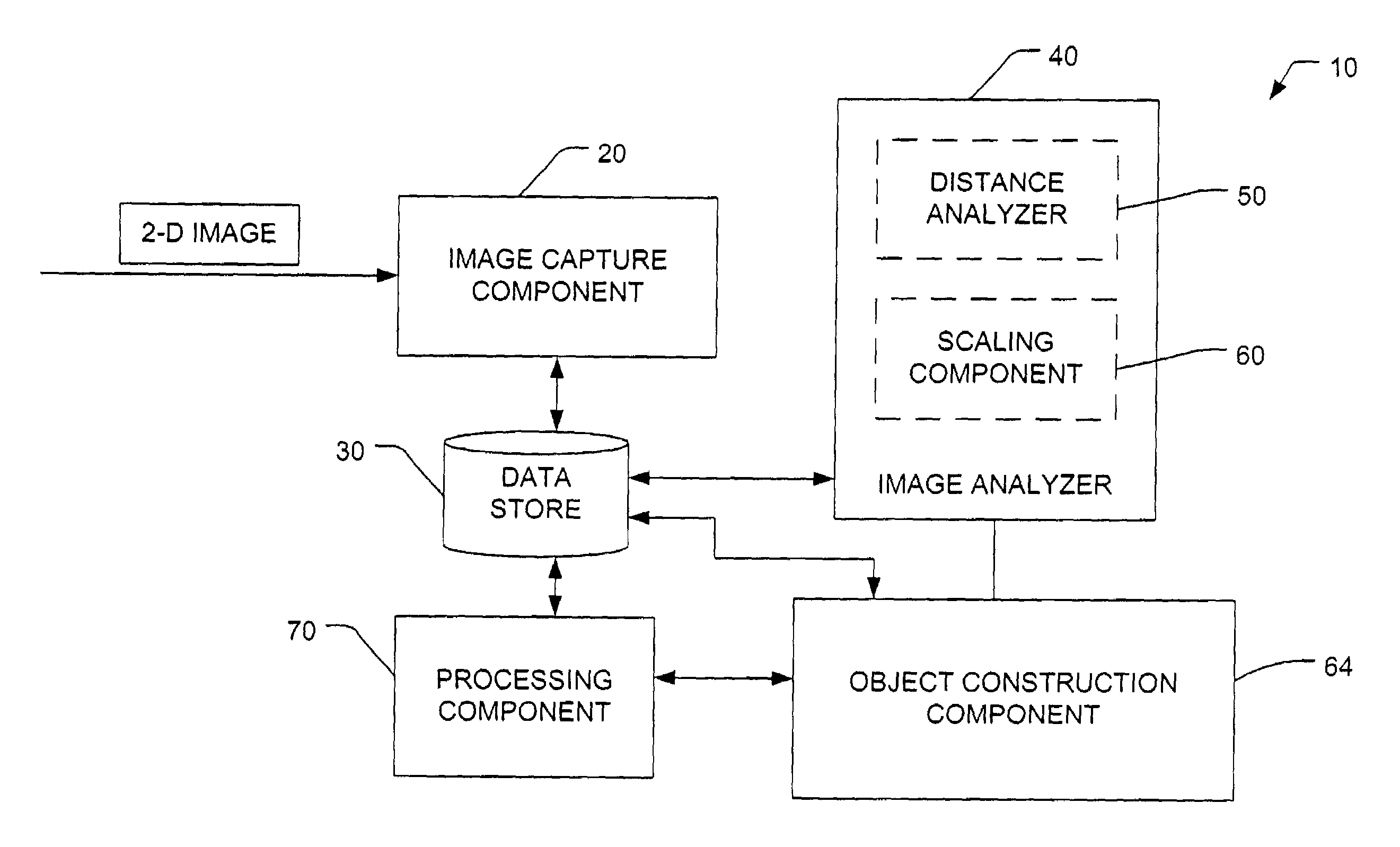

Measurement of dimensions of solid objects from two-dimensional image(s)

The present invention facilitates solid object reconstruction from a two-dimensional image. If an object is of known and regular shape, information about the object can be extracted from at least one view by utilizing appropriate constraints and measuring a distance between a camera and the object and / or by estimating a scale factor between a camera image and a real world image. The same device can perform both the image capture and the distance measurement or the scaling factor estimation. The following processes can be performed for object identification: parameter estimation; image enhancement; detection of line segments; aggregation of short line segments into segments; detection of proximity clusters of segments; estimation of a convex hull of at least one cluster; derivation of an object outline from the convex hull; combination of the object outline, shape constraints, and distance value.

Owner:SYMBOL TECH LLC
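One step in the list above, estimating the convex hull of a cluster of segments, has a standard solution. This Python sketch uses Andrew's monotone chain algorithm, which is our choice of hull algorithm rather than one named in the abstract. Points are assumed to be (x, y) pixel coordinates of segment endpoints.

```python
def cross(o, a, b):
    """z-component of (a - o) x (b - o); positive when o -> a -> b turns counter-clockwise."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain: hull vertices in counter-clockwise order, collinear points dropped."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:  # build the lower hull left to right
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):  # build the upper hull right to left
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other, so drop it.
    return lower[:-1] + upper[:-1]


# Endpoints of a cluster of short line segments; (1, 1) and (1, 0) are not hull vertices.
cluster = [(0, 0), (2, 0), (2, 2), (0, 2), (1, 1), (1, 0)]
print(convex_hull(cluster))  # [(0, 0), (2, 0), (2, 2), (0, 2)]
```

The resulting polygon could then serve as the starting point for deriving the object outline.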

Device for monitoring area around vehicle

Status: Inactive | Publication: US6940423B2 | Tags: Raise the possibility, Digital data processing details, Indication of parking free spaces, Camera image, Display device

Owner:TOYOTA JIDOSHA KK +1

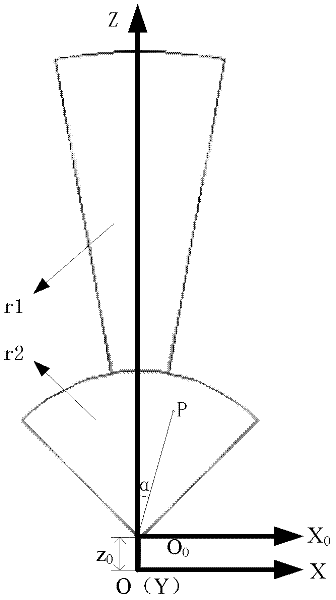

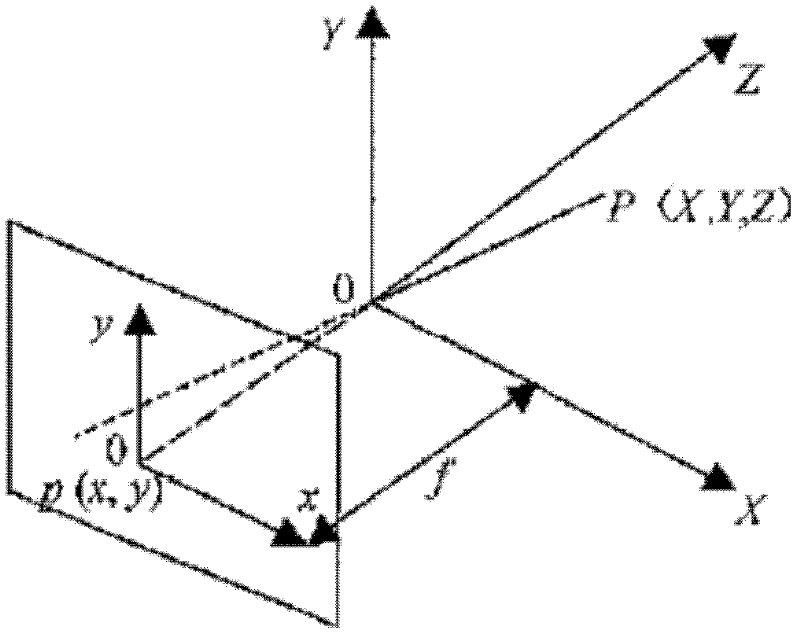

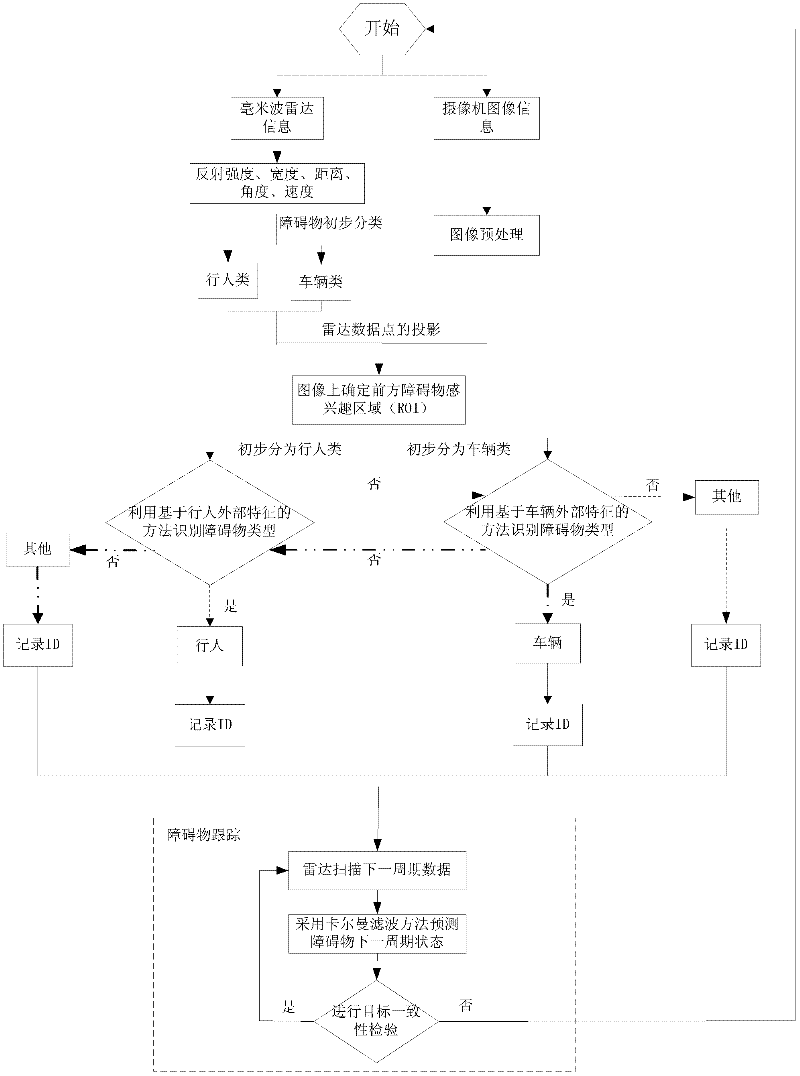

Method for detecting and tracking obstacles in front of vehicle

Status: Inactive | Publication: CN102508246A | Tags: Solve problems that are difficult to classify and identify at the same time, Solve elusive problems, Photogrammetry/videogrammetry, Character and pattern recognition, Camera image, Imaging processing

The invention discloses a method for detecting and tracking obstacles in front of a vehicle. It aims to overcome the shortcomings of detecting and tracking such obstacles with a single type of sensor. The method comprises the following steps: 1. establishing a transformation between the millimeter-wave radar coordinate system and the camera coordinate system; 2. receiving, parsing, and processing the millimeter-wave radar data, and preliminarily classifying the obstacles; 3. synchronously acquiring camera images and millimeter-wave radar data; 4. classifying the obstacles in front of the vehicle: 1) combining millimeter-wave radar and monocular vision, projecting the radar scan points into the camera coordinate system, and establishing a region of interest (ROI) for each obstacle on the image; 2) preliminarily classifying the different obstacles in the ROIs established on the image, and confirming their types with different image processing algorithms; and 5. tracking the obstacles in front of the vehicle.

Owner:JILIN UNIV
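Steps 1 and 4.1) above project radar scan points into the image and build an ROI. This can be sketched with a rigid extrinsic transform and a pinhole camera model. The following are assumptions for illustration: the axis conventions (radar x forward, y left, z up; camera x right, y down, z forward), the parameter names, and the fixed-size ROI. A real system would obtain R, t, and the intrinsics from the calibration in step 1.

```python
import math


def radar_to_pixel(range_m, azimuth_rad, R, t, fx, fy, cx, cy):
    """Project one radar return to (u, v, depth), or return None if it is behind the camera.

    The return is assumed to lie in the radar's horizontal plane (z = 0), with positive
    azimuth to the left. R (3x3, list of rows) and t map radar to camera coordinates."""
    p = (range_m * math.cos(azimuth_rad), range_m * math.sin(azimuth_rad), 0.0)
    xc, yc, zc = (sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3))
    if zc <= 0.0:
        return None
    return fx * xc / zc + cx, fy * yc / zc + cy, zc


def roi_box(u, v, depth_m, fx, fy, width_m=1.8, height_m=1.6):
    """Axis-aligned ROI (u_min, v_min, u_max, v_max) sized for an assumed obstacle size."""
    half_w = fx * width_m / depth_m / 2.0
    half_h = fy * height_m / depth_m / 2.0
    return u - half_w, v - half_h, u + half_w, v + half_h


# Radar axes -> camera axes: camera x = -radar y, camera y = -radar z, camera z = radar x.
R_RADAR_TO_CAM = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]
# Hypothetical mounting: the radar plane sits 1 m below the camera (camera y points down).
T_RADAR_TO_CAM = [0.0, 1.0, 0.0]

u, v, z = radar_to_pixel(20.0, 0.0, R_RADAR_TO_CAM, T_RADAR_TO_CAM, 800, 800, 640, 360)
print(u, v)  # 640.0 400.0
print(roi_box(u, v, z, 800, 800))
```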

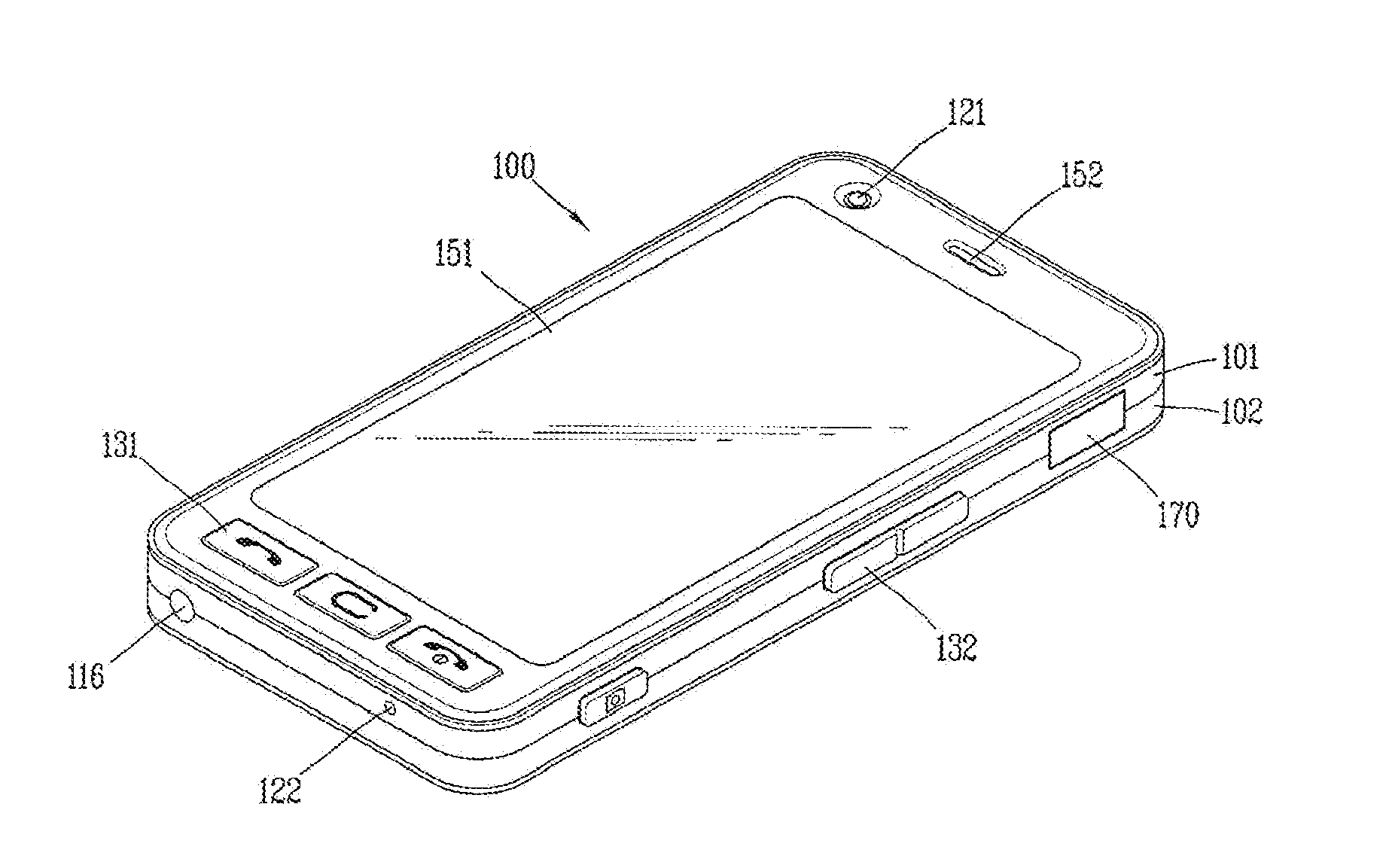

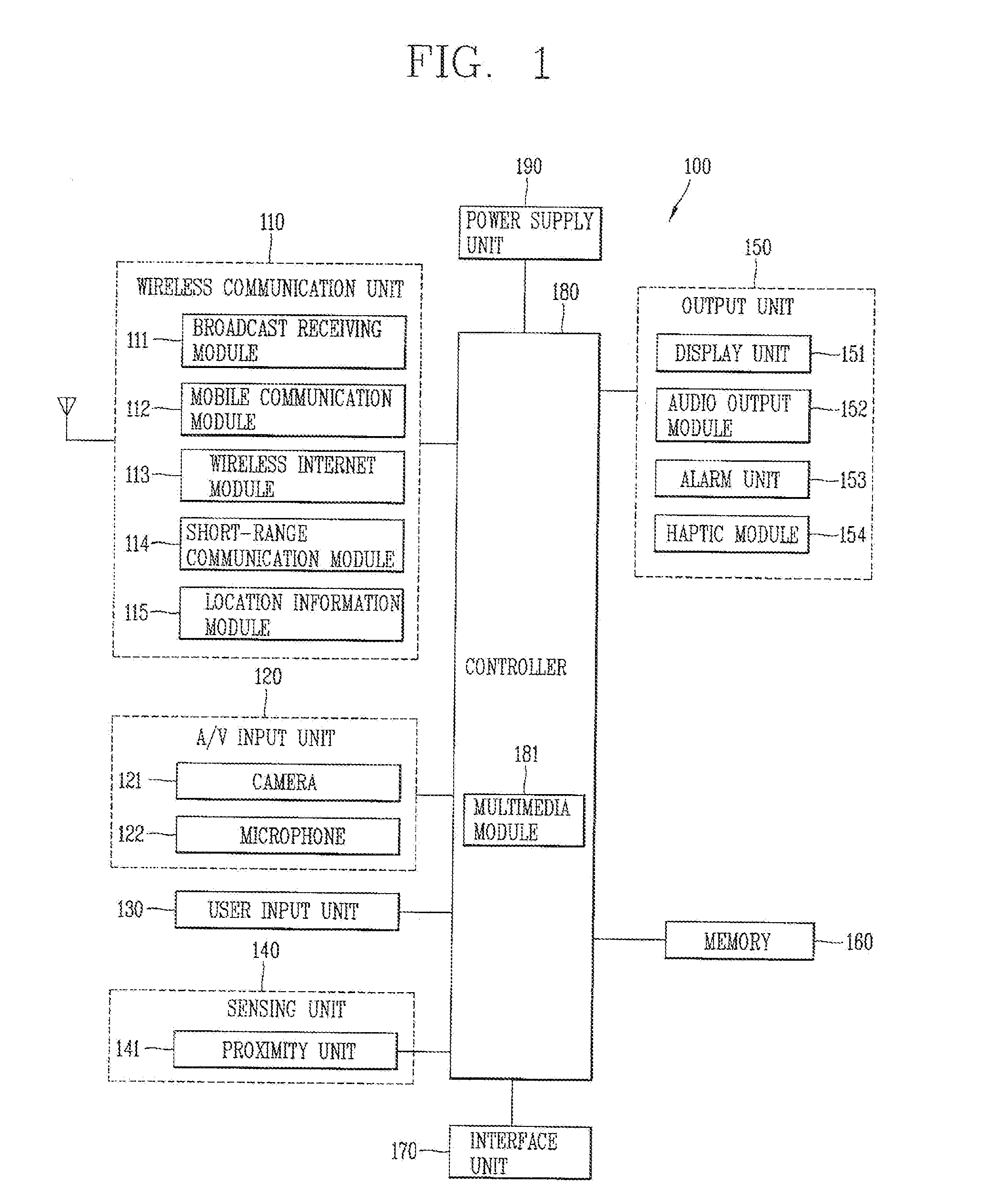

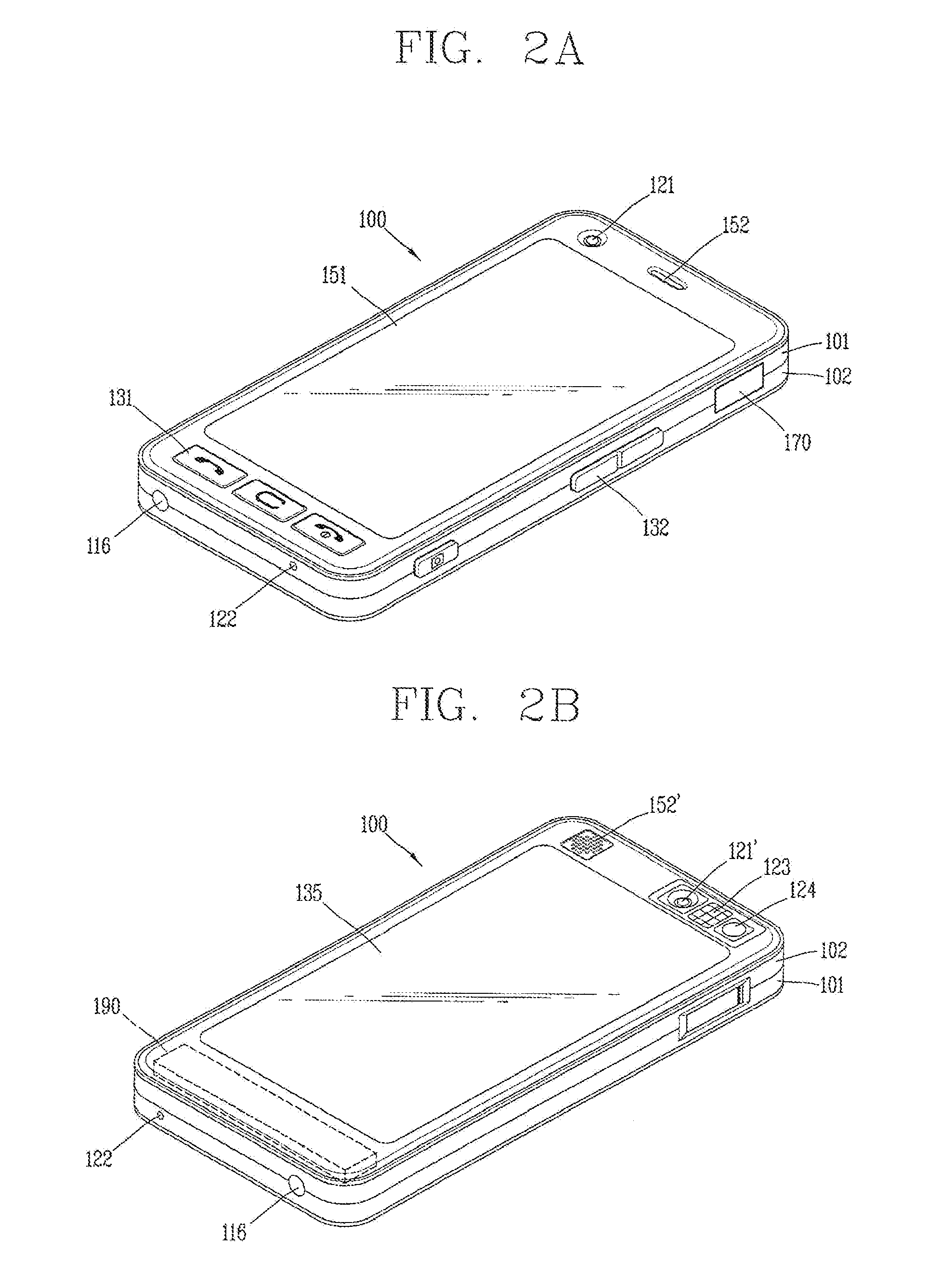

Mobile terminal and camera image control method thereof

Status: Active | Publication: US20100173678A1 | Tags: Easy to use, Effective display, Television system details, Speech analysis, Camera image, Computer graphics (images)

A method of controlling a mobile terminal includes the following steps: displaying, via a display of the mobile terminal, a captured or preview image in a first display portion; displaying, via the display, the same captured or preview image in a second display portion; zooming, via a controller of the mobile terminal, the captured or preview image displayed in the first display portion; and displaying, via the display, a zoom guide on the image in the second display portion that identifies the zoomed portion of the image displayed in the first display portion.

Owner:LG ELECTRONICS INC
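The zoom guide described above amounts to mapping the visible window of the zoomed image back onto the unzoomed image in the second display portion. The following minimal Python sketch makes three hypothetical choices not taken from the patent: the zoom factor is at least 1, the zoom centre is given in full-image pixel coordinates, and the window is clamped to stay inside the image.

```python
def zoom_guide_rect(img_w, img_h, zoom, center_x, center_y, thumb_w, thumb_h):
    """Return (left, top, width, height) of the zoom guide in thumbnail coordinates.

    The first display portion shows an img_w/zoom x img_h/zoom window of the full image;
    the guide marks that same window on the thumbnail in the second display portion."""
    if zoom < 1.0:
        raise ValueError("zoom factor must be >= 1")
    view_w, view_h = img_w / zoom, img_h / zoom
    # Clamp the window so it never extends past the image borders.
    left = min(max(center_x - view_w / 2.0, 0.0), img_w - view_w)
    top = min(max(center_y - view_h / 2.0, 0.0), img_h - view_h)
    sx, sy = thumb_w / img_w, thumb_h / img_h
    return left * sx, top * sy, view_w * sx, view_h * sy


# 2x zoom on the centre of a 1600x1200 image, guide drawn on a 320x240 thumbnail.
print(zoom_guide_rect(1600, 1200, 2.0, 800, 600, 320, 240))
```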

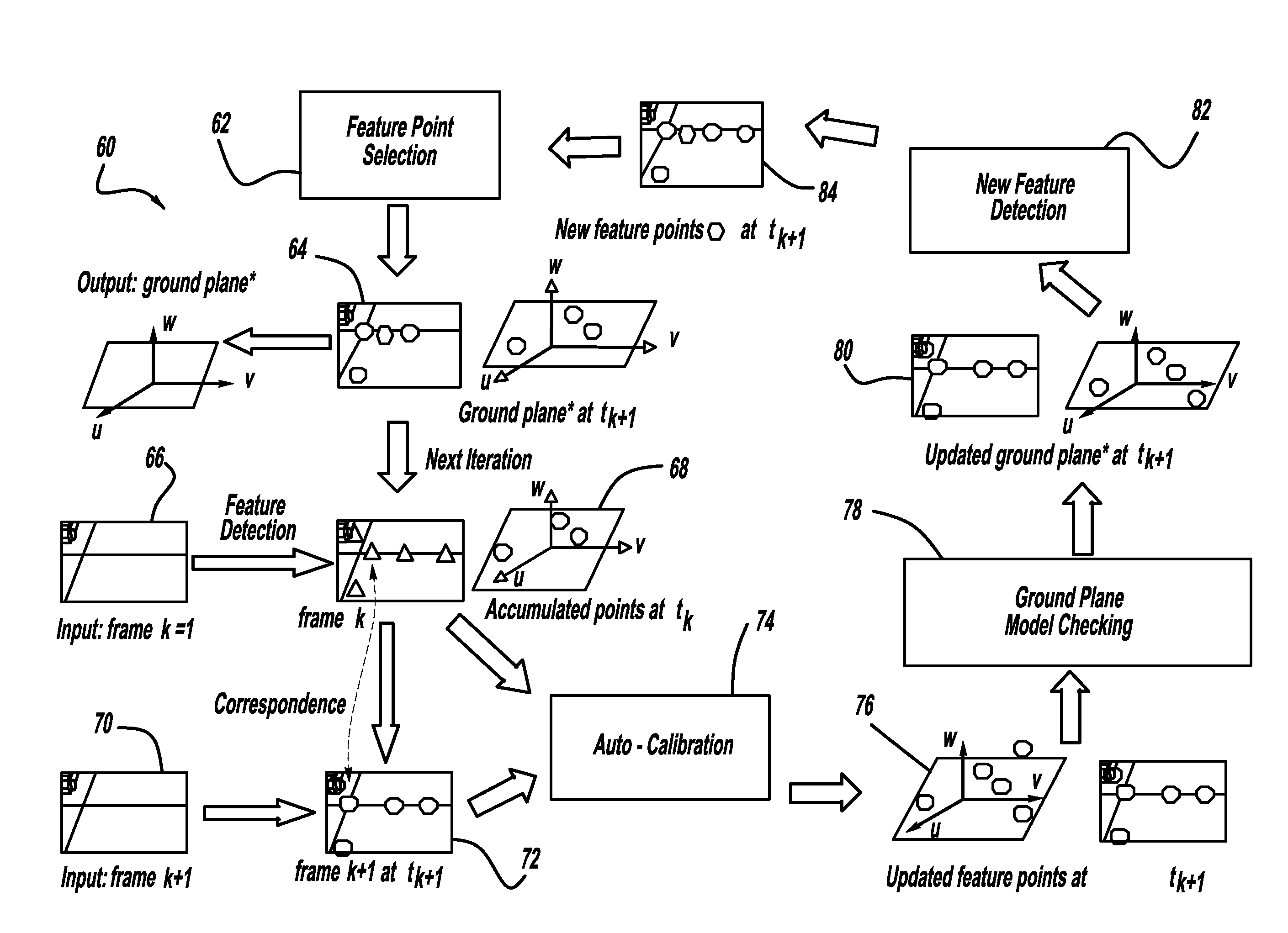

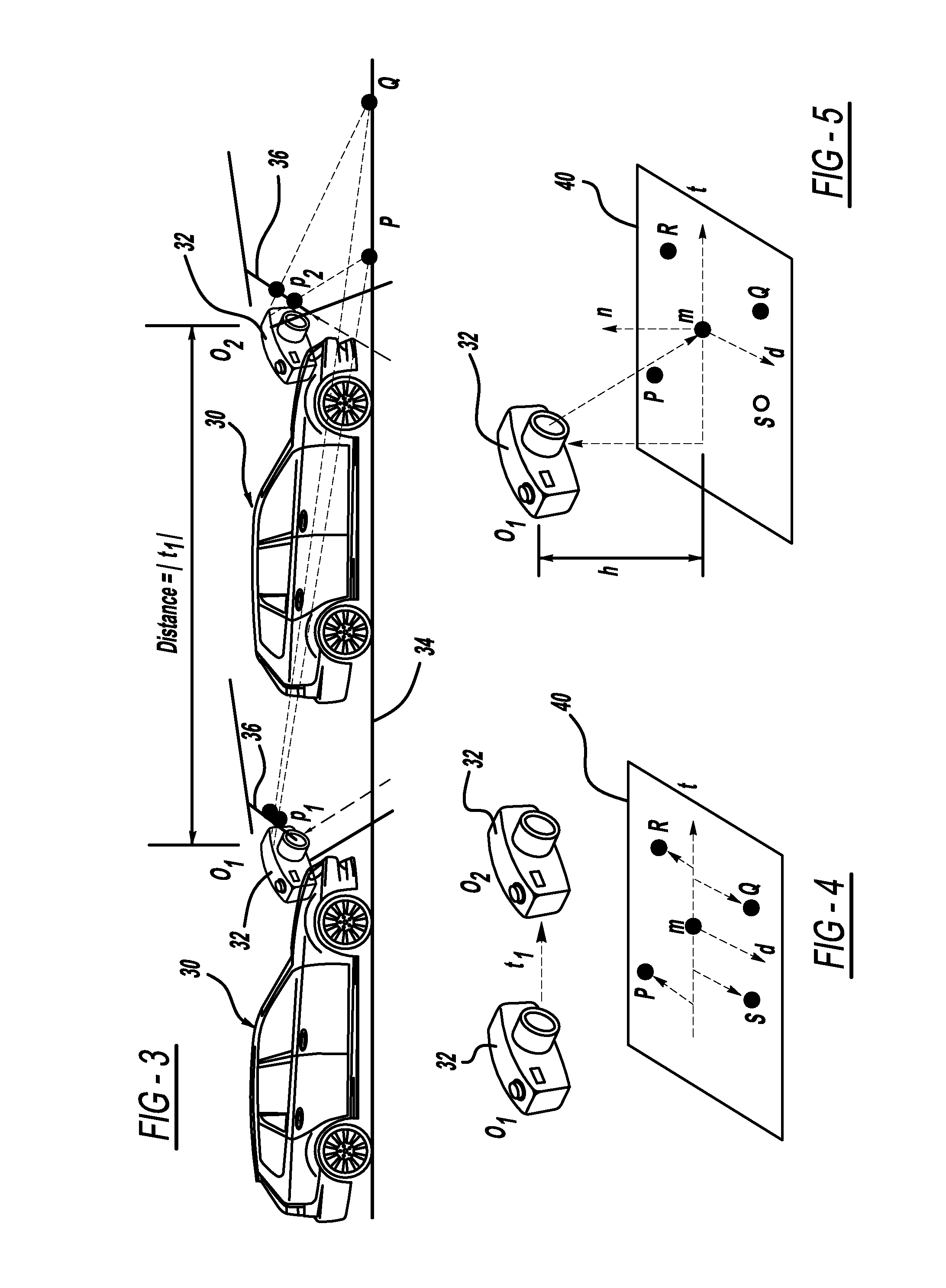

Self calibration of extrinsic camera parameters for a vehicle camera

A system and method for calibrating a camera on a vehicle as the vehicle is being driven. The method includes identifying at least two feature points in at least two camera images from a vehicle that has moved between taking the images. The method then determines a camera translation direction between two camera positions. Following this, the method determines a ground plane in camera coordinates based on the corresponding feature points from the images and the camera translation direction. The method then determines a height of the camera above the ground and a rotation of the camera in vehicle coordinates.

Owner:GM GLOBAL TECH OPERATIONS LLC
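Once the ground plane has been estimated in camera coordinates, the camera height in the last step is simply the distance from the camera centre to that plane. This Python sketch fits the plane through three reconstructed ground points and assumes camera axes x right, y down, z forward. The helper names are hypothetical, and the feature-matching and translation-direction steps are not shown.

```python
import math


def _sub(a, b):
    return [x - y for x, y in zip(a, b)]


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]


def ground_plane(p0, p1, p2):
    """Plane n.X + d = 0 (unit normal n) through three ground points in camera coordinates."""
    n = _cross(_sub(p1, p0), _sub(p2, p0))
    norm = math.sqrt(_dot(n, n))
    if norm == 0.0:
        raise ValueError("ground points are collinear")
    n = [c / norm for c in n]
    return n, -_dot(n, p0)


def camera_height(d):
    """With a unit normal, |d| is the distance from the camera centre (origin) to the plane."""
    return abs(d)


def optical_axis_tilt(n):
    """Angle (rad) between the optical axis (0, 0, 1) and the ground plane."""
    return math.asin(min(1.0, abs(n[2])))


# Three ground points seen by a level camera mounted 1.5 m above the road.
n, d = ground_plane([0.0, 1.5, 5.0], [1.0, 1.5, 5.0], [0.0, 1.5, 10.0])
print(camera_height(d), optical_axis_tilt(n))  # 1.5 0.0
```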

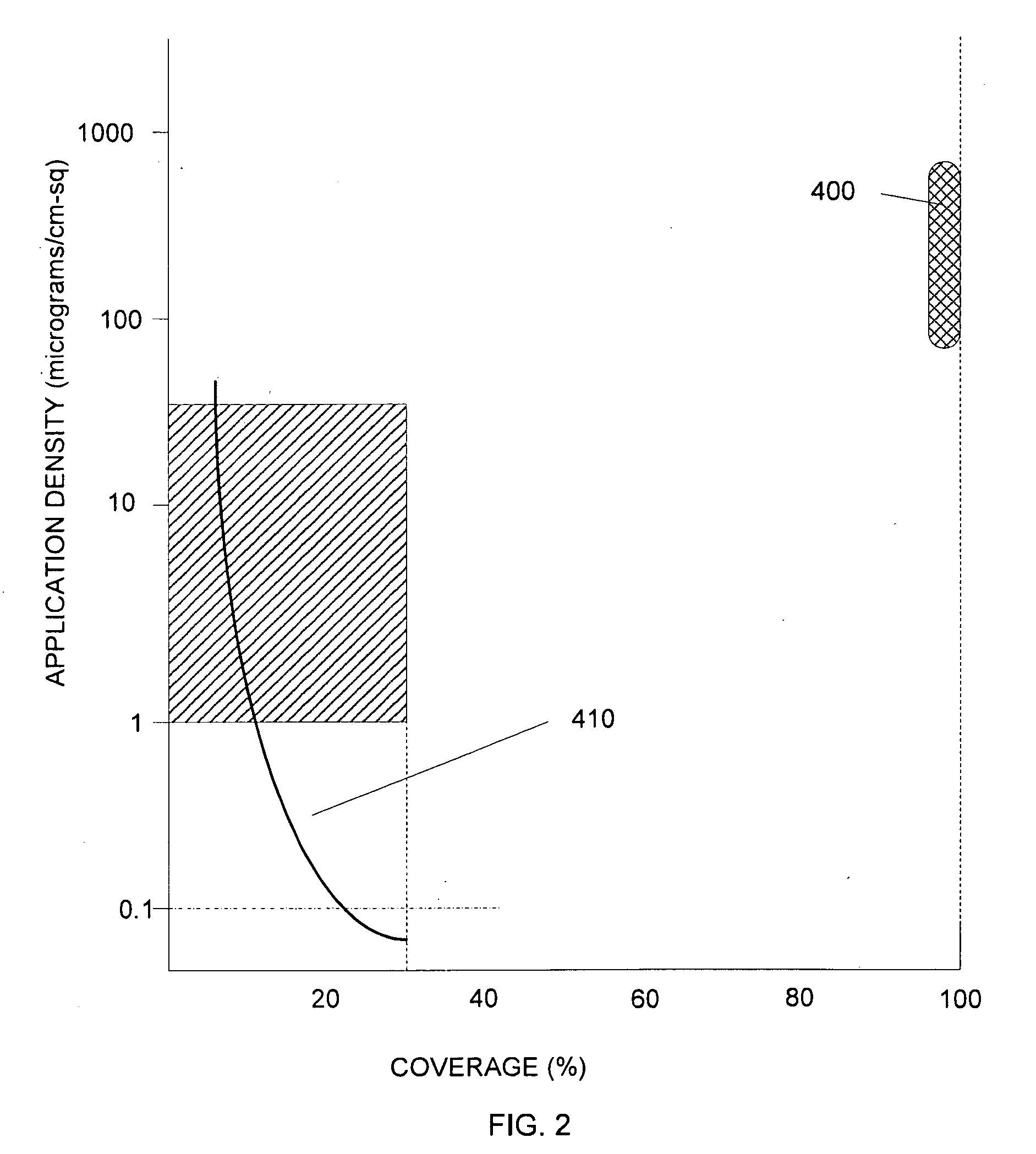

Apparatus and method for the precision application of cosmetics

Status: Active | Publication: US20090025747A1 | Tags: Easy to move, Area covered, Typewriters, Packaging toiletries, Camera image, Volumetric Mass Density

One or more reflectance modifying agents (RMAs), such as a pigmented cosmetic agent, are applied selectively and precisely to human skin with a controlled spray according to local skin reflectance or texture attributes. One embodiment uses digital control based on the analysis of camera images. Another embodiment uses a calibrated scanning device comprising a plurality of LEDs and photodiode sensors, which corrects reflectance readings to compensate for device distance and orientation relative to the skin. The desired ranges of RMA application parameters each differ significantly from conventional cosmetic practice: high-luminance RMA, applied selectively to middle-spatial-frequency features, at low opacity or application density. The ranges are complementary, and using all three techniques in combination provides a surprisingly effective result that preserves natural beauty while applying a minimum amount of cosmetic agent.

Owner:TCMS TRANSPARENT BEAUTY LLC

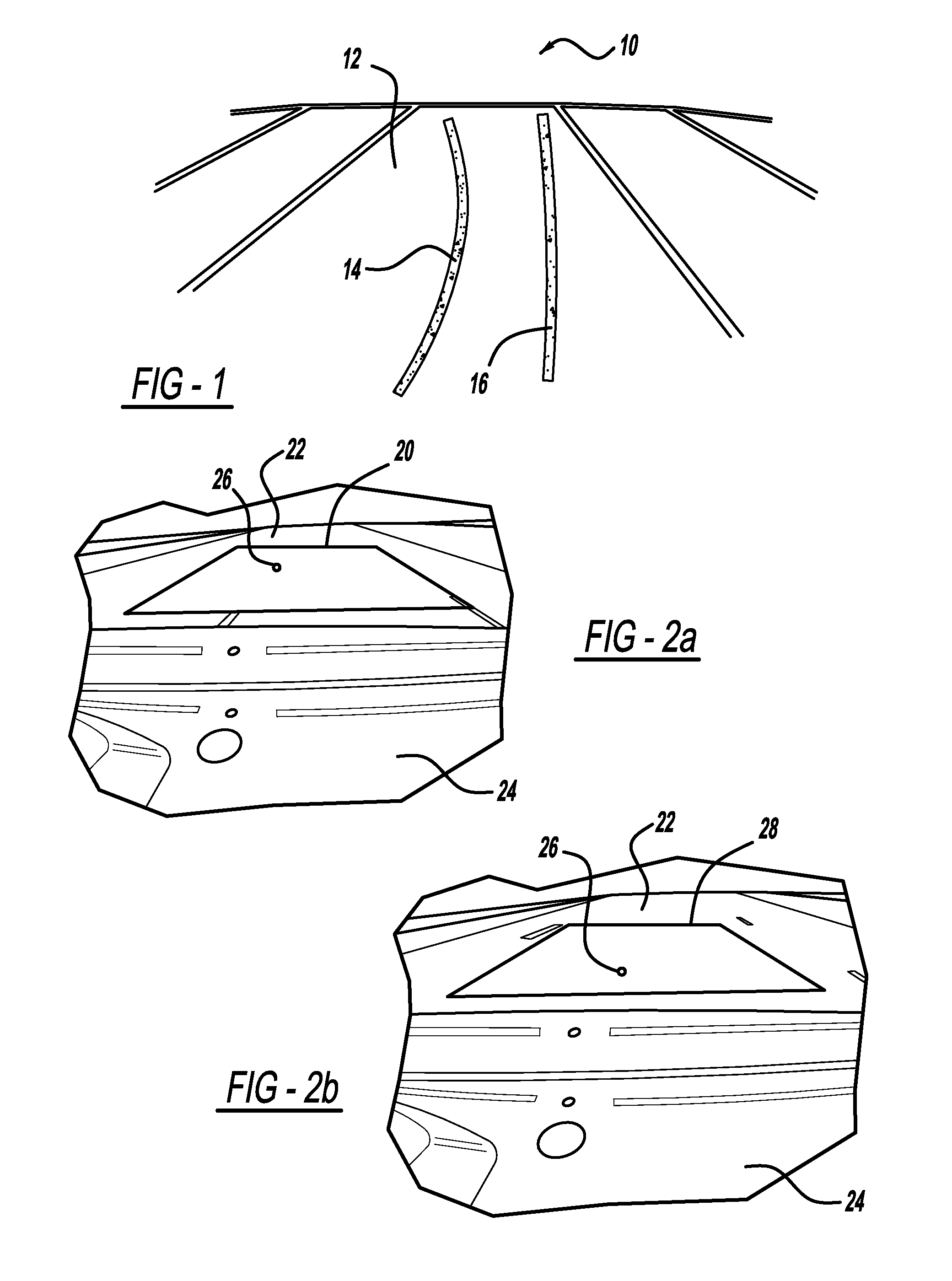

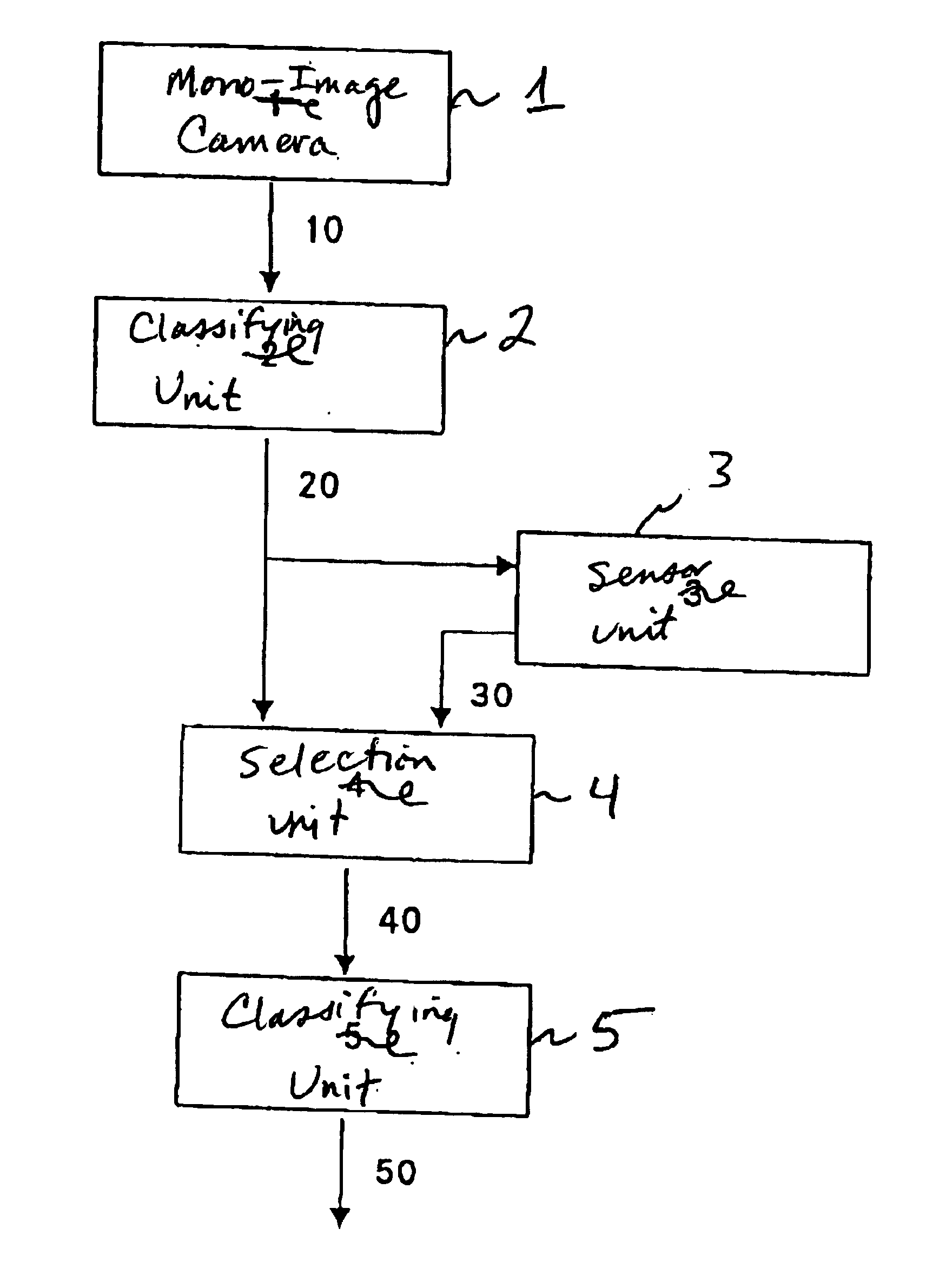

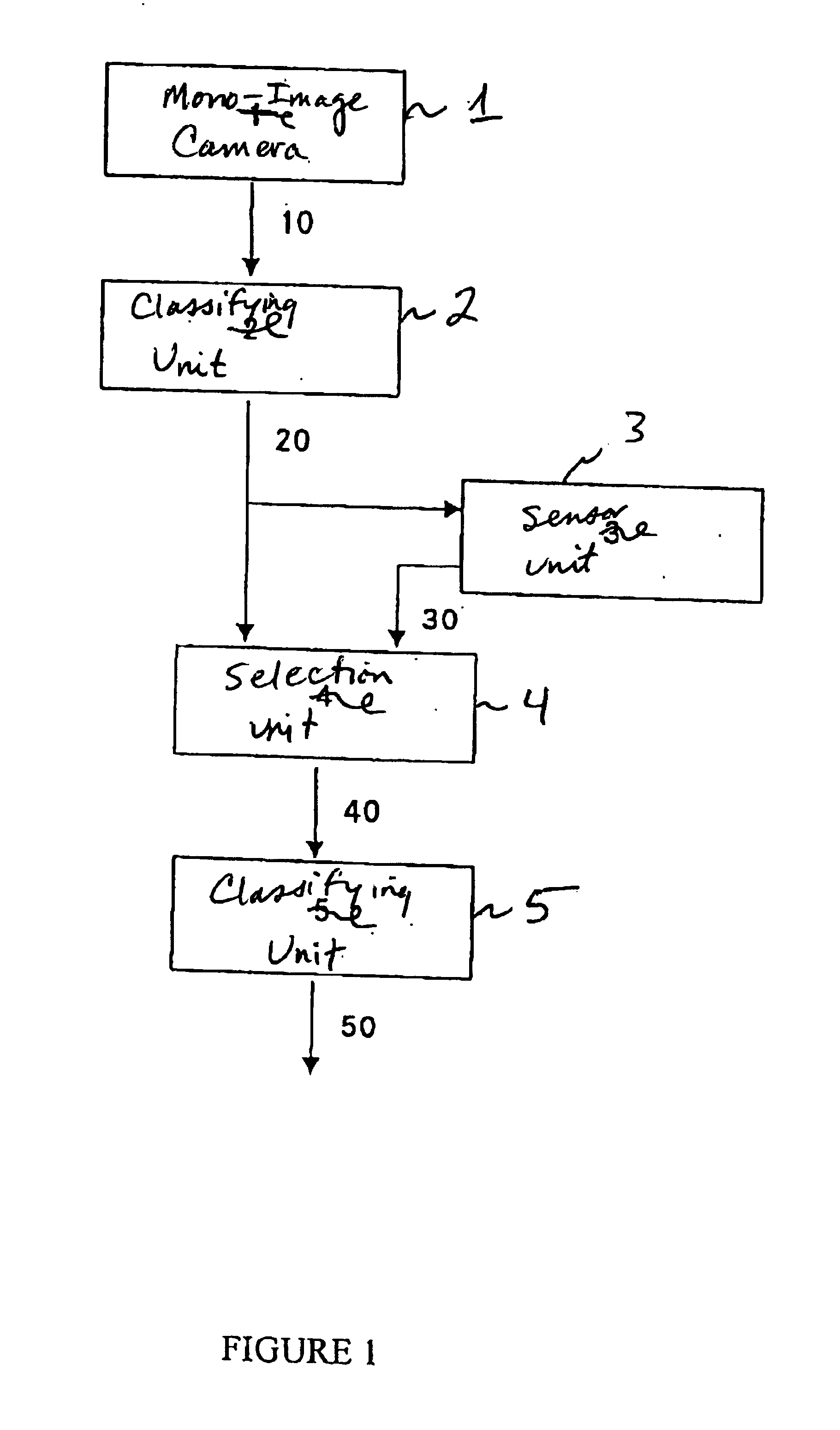

Camera-based precrash detection system

Status: Inactive | Publication: US6838980B2 | Tags: Determine distance, Easy to use, Pedestrian/occupant safety arrangement, Anti-collision systems, Camera image, Typing Classification

A method and a device for detecting road users and obstacles on the basis of camera images, in order to determine their distance from the observer and to classify them. In a two-step classification, potential other parties involved in a collision are detected and identified. In so doing, in a first step, potential other parties involved in a collision are marked in the image data of a mono-image camera; their distance and relative velocity are subsequently determined so that endangering objects can be selectively subjected to a type classification in real time. By breaking down the detection activity into a plurality of steps, the real-time capability of the system is also rendered possible using conventional sensors already present in the vehicle.

Owner:DAIMLER AG

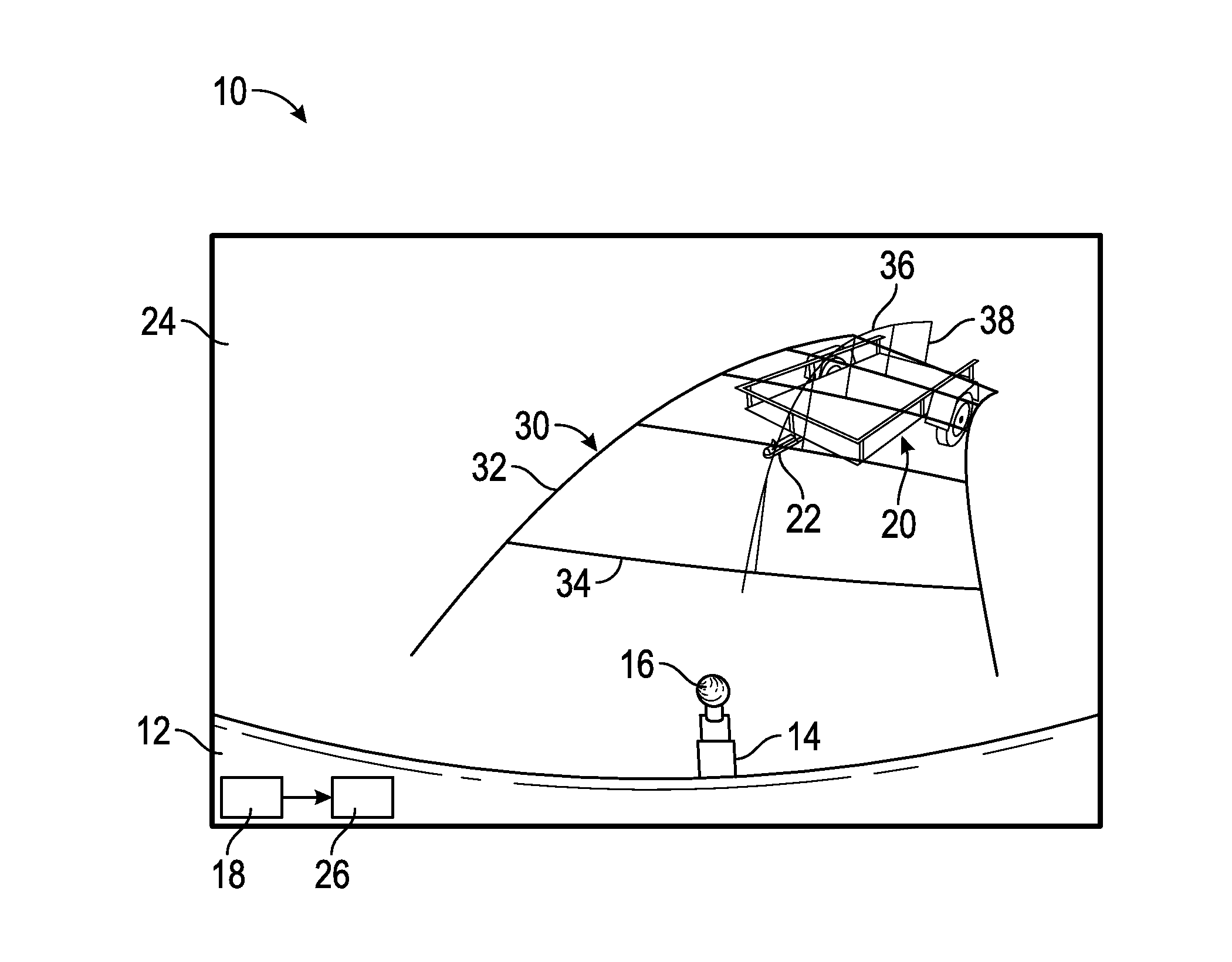

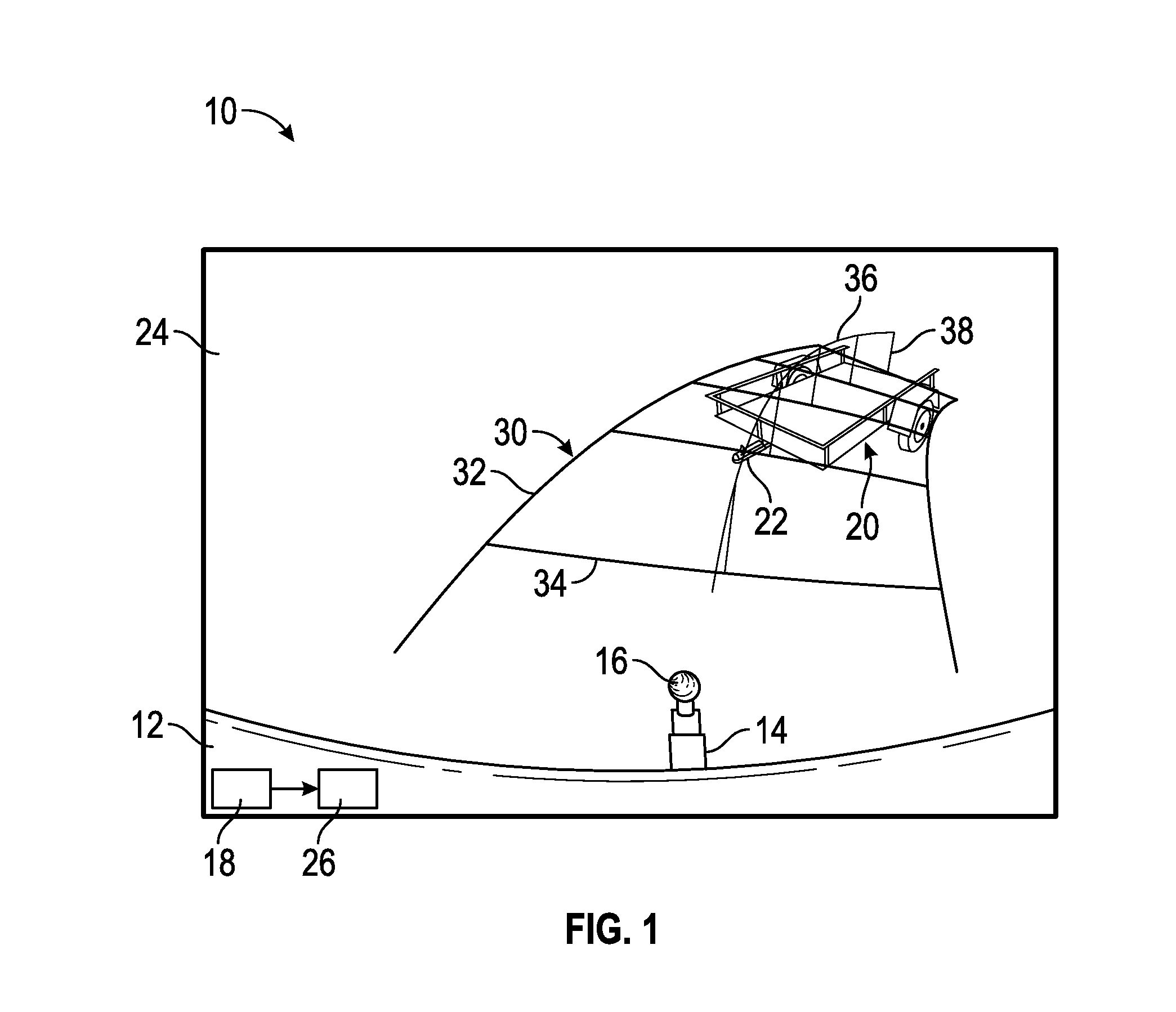

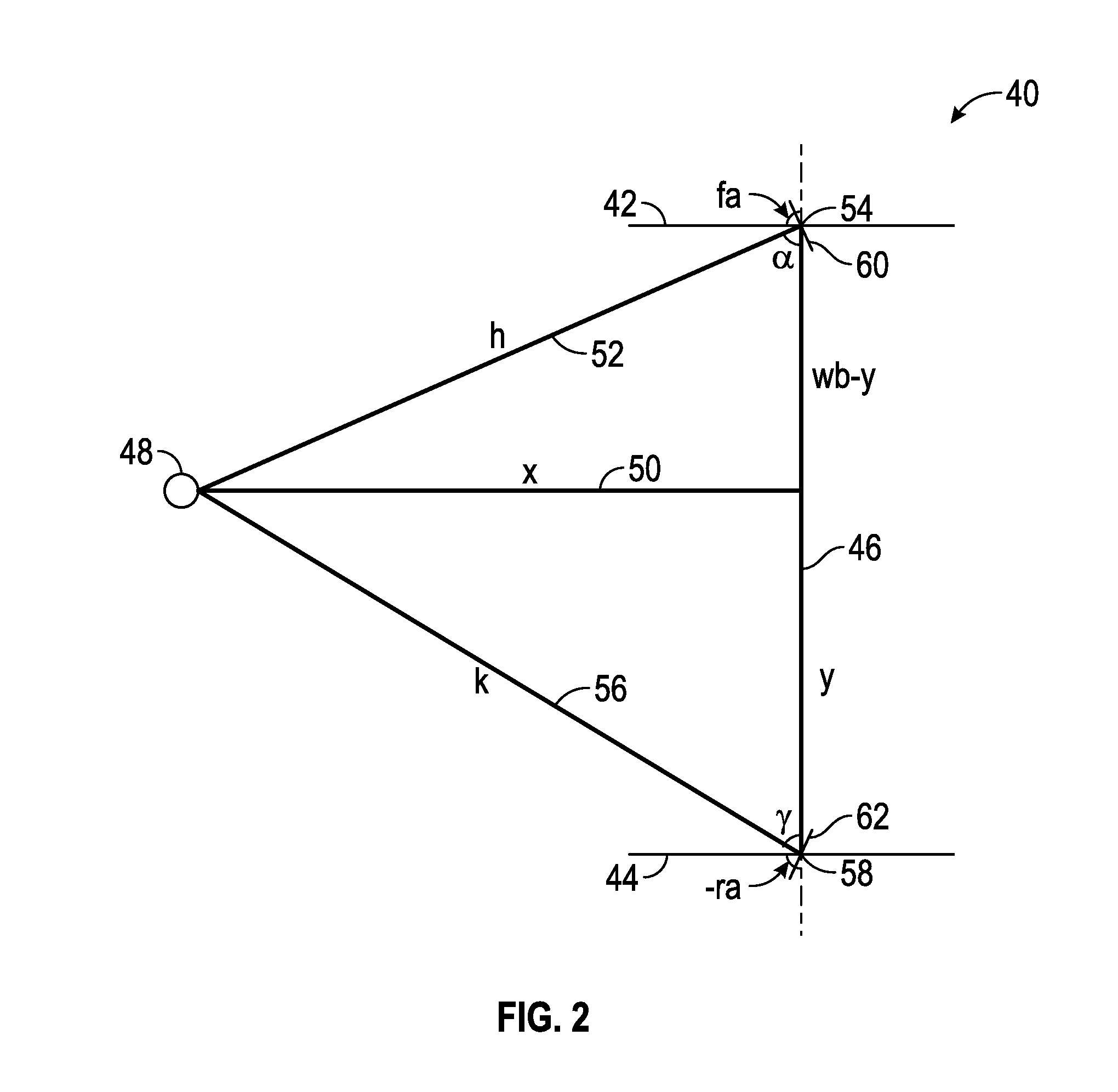

Smart tow

Status: Inactive | Publication: US20150115571A1 | Tags: Character and pattern recognition, Closed circuit television systems, Vehicle dynamics, Camera image

A system and method for providing visual assistance through a graphic overlay superimposed on a back-up camera image, helping a vehicle operator align a tow ball with a trailer tongue when backing up. The method includes providing camera modeling to correlate the camera image in vehicle coordinates with world coordinates. The camera modeling provides the graphic overlay with a tow line whose height in the camera image is determined by an estimated height of the trailer tongue. The method also includes providing vehicle dynamic modeling to identify the motion of the vehicle as it moves around a center of rotation. The method then predicts the path of the vehicle as it is steered, which includes calculating the center of rotation.

Owner:GM GLOBAL TECH OPERATIONS LLC
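The center-of-rotation and path-prediction steps can be approximated with a kinematic bicycle model. This model is our assumption here, not the vehicle dynamic model of the patent. Vehicle coordinates have their origin at the rear-axle centre, with x forward and y left. The tow ball sits hitch_offset_m behind the axle, and positive steering turns left. All names are hypothetical.

```python
import math


def center_of_rotation(wheelbase_m, steer_rad):
    """Bicycle-model turning centre on the rear-axle line, or None when driving straight."""
    if abs(steer_rad) < 1e-9:
        return None
    return 0.0, wheelbase_m / math.tan(steer_rad)


def predict_hitch_path(wheelbase_m, steer_rad, hitch_offset_m, reverse_distances_m):
    """Tow-ball positions after backing up each given distance (measured at the rear axle)."""
    hx, hy = -hitch_offset_m, 0.0
    center = center_of_rotation(wheelbase_m, steer_rad)
    if center is None:
        return [(hx - s, hy) for s in reverse_distances_m]
    cx, cy = center
    path = []
    for s in reverse_distances_m:
        theta = -s / cy  # yaw change while reversing (cy is the signed turning radius)
        dx, dy = hx - cx, hy - cy
        path.append((cx + dx * math.cos(theta) - dy * math.sin(theta),
                     cy + dx * math.sin(theta) + dy * math.cos(theta)))
    return path


# 3 m wheelbase, 0.3 rad of left steer, tow ball 1 m behind the rear axle.
for x, y in predict_hitch_path(3.0, 0.3, 1.0, [0.0, 1.0, 2.0]):
    print(f"{x:.2f} {y:.2f}")
```

Every predicted tow-ball position stays on a circle about the center of rotation, which is what lets such an overlay be drawn as an arc.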

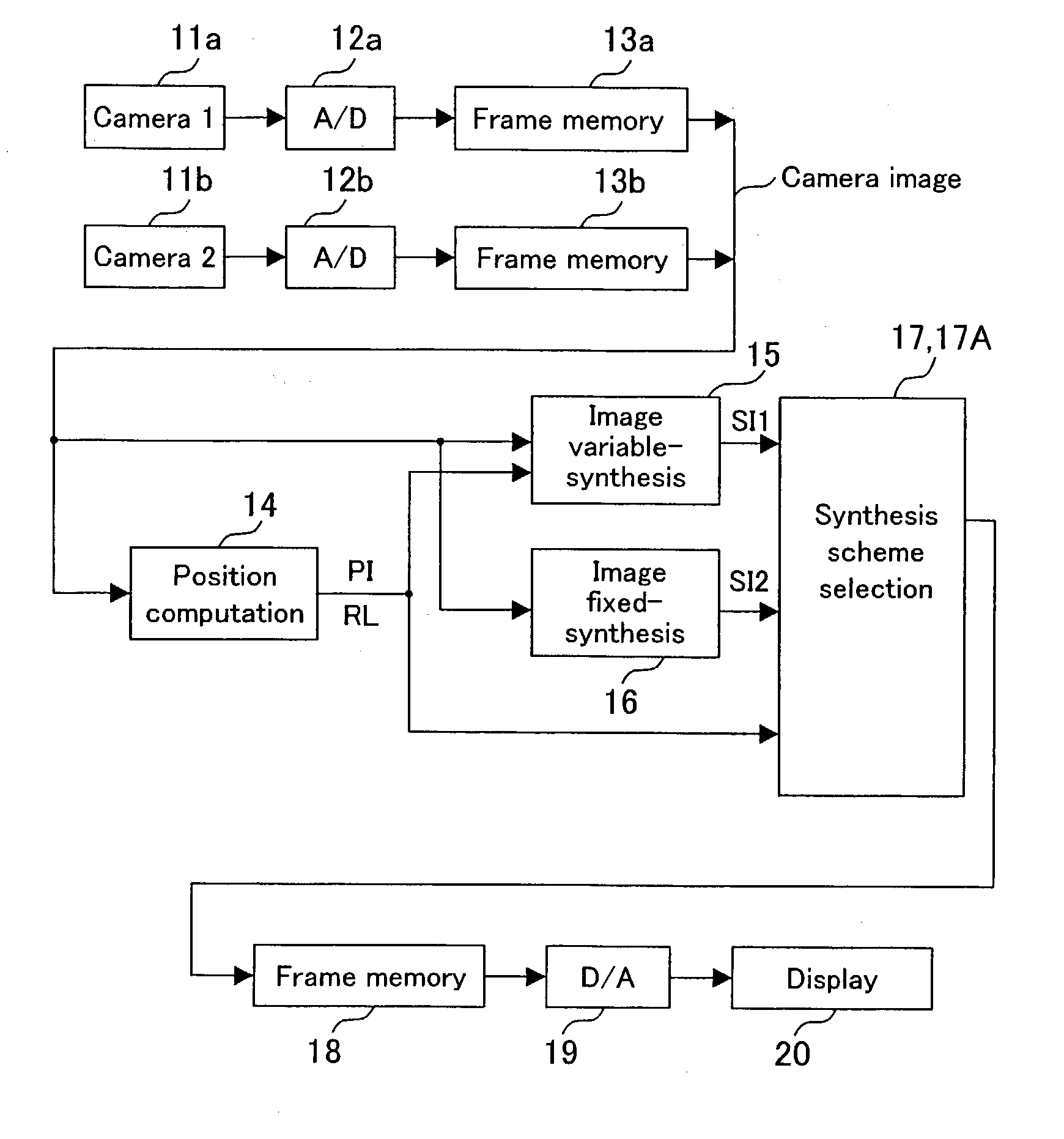

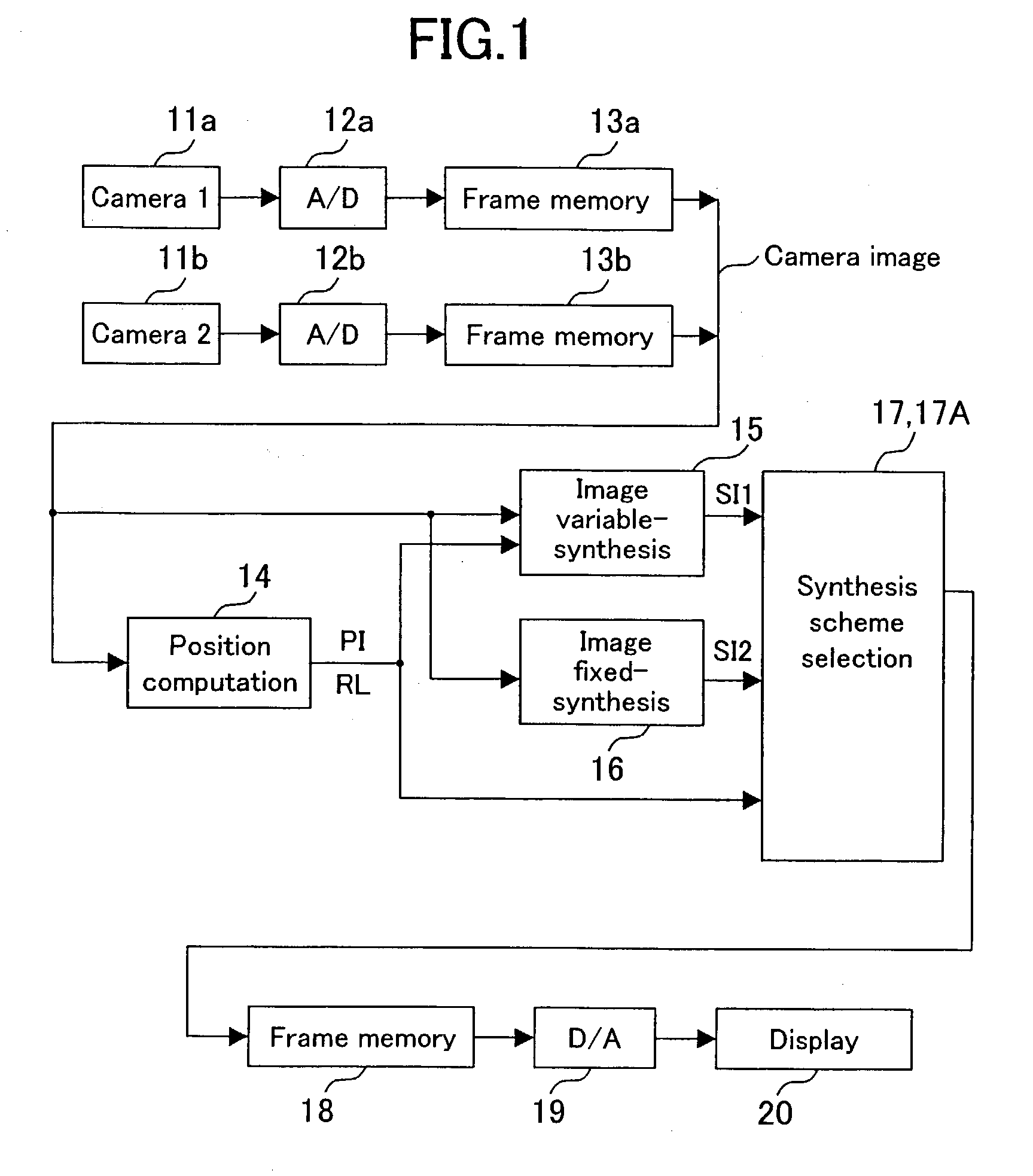

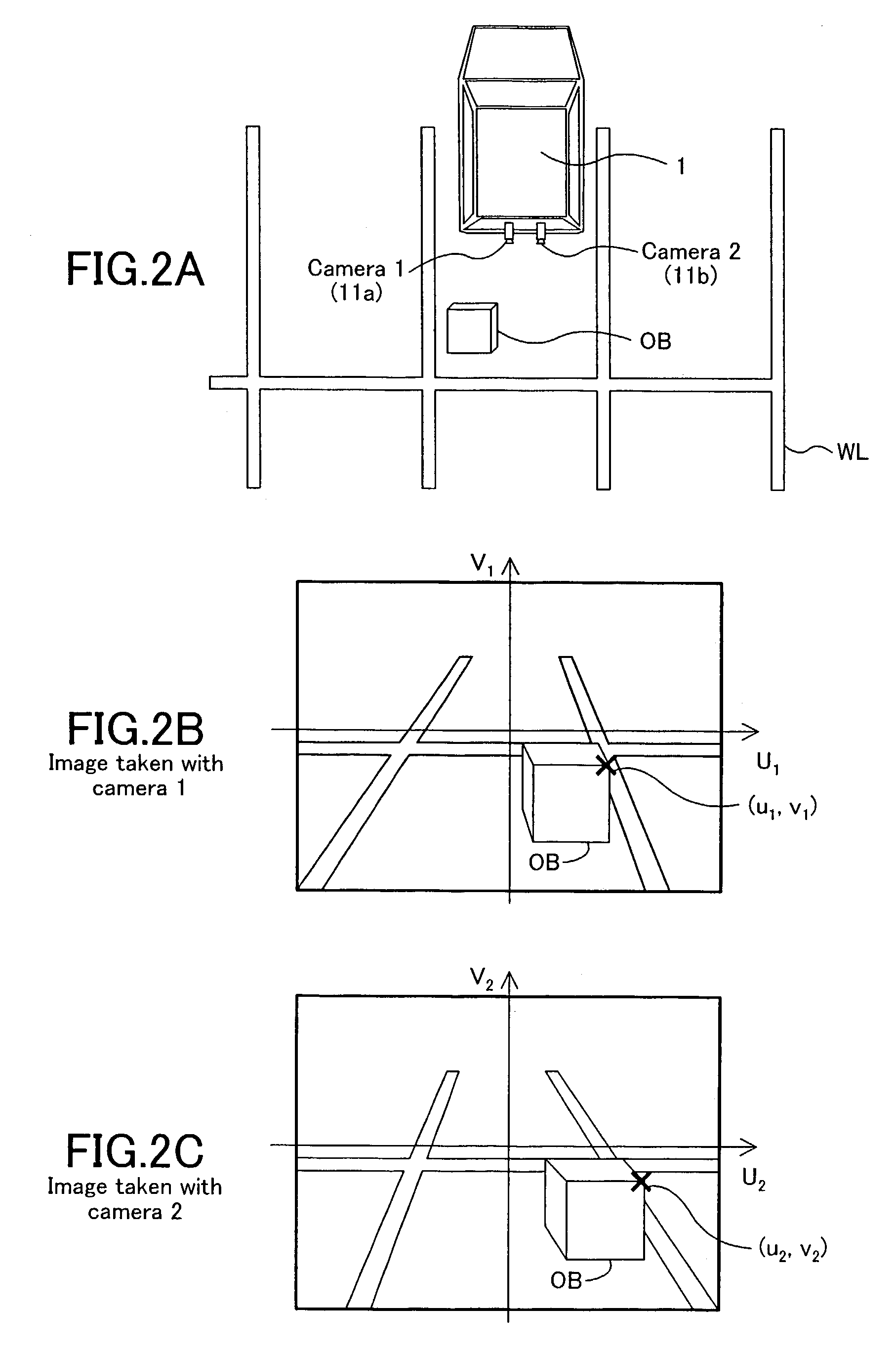

Vehicle surroundings monitoring device, and image production method/program

Status: Inactive | Publication: US7110021B2 | Tags: Small distortion, Image analysis, Position fixation, Camera image, Computer graphics (images)

A synthesized image showing the situation around a vehicle is produced from images taken with cameras capturing the surroundings of the vehicle and presented to a display. A position computation section computes position information and the reliability of the position information, for a plurality of points in the camera images. An image variable-synthesis section produces a first synthesized image from the camera images using the position information. An image fixed-synthesis section produces a second synthesized image by road surface projection, for example, without use of the position information. A synthesis scheme selection section selects either one of the first and second synthesized images according to the reliability as the synthesized image to be presented.

Owner:PANASONIC CORP

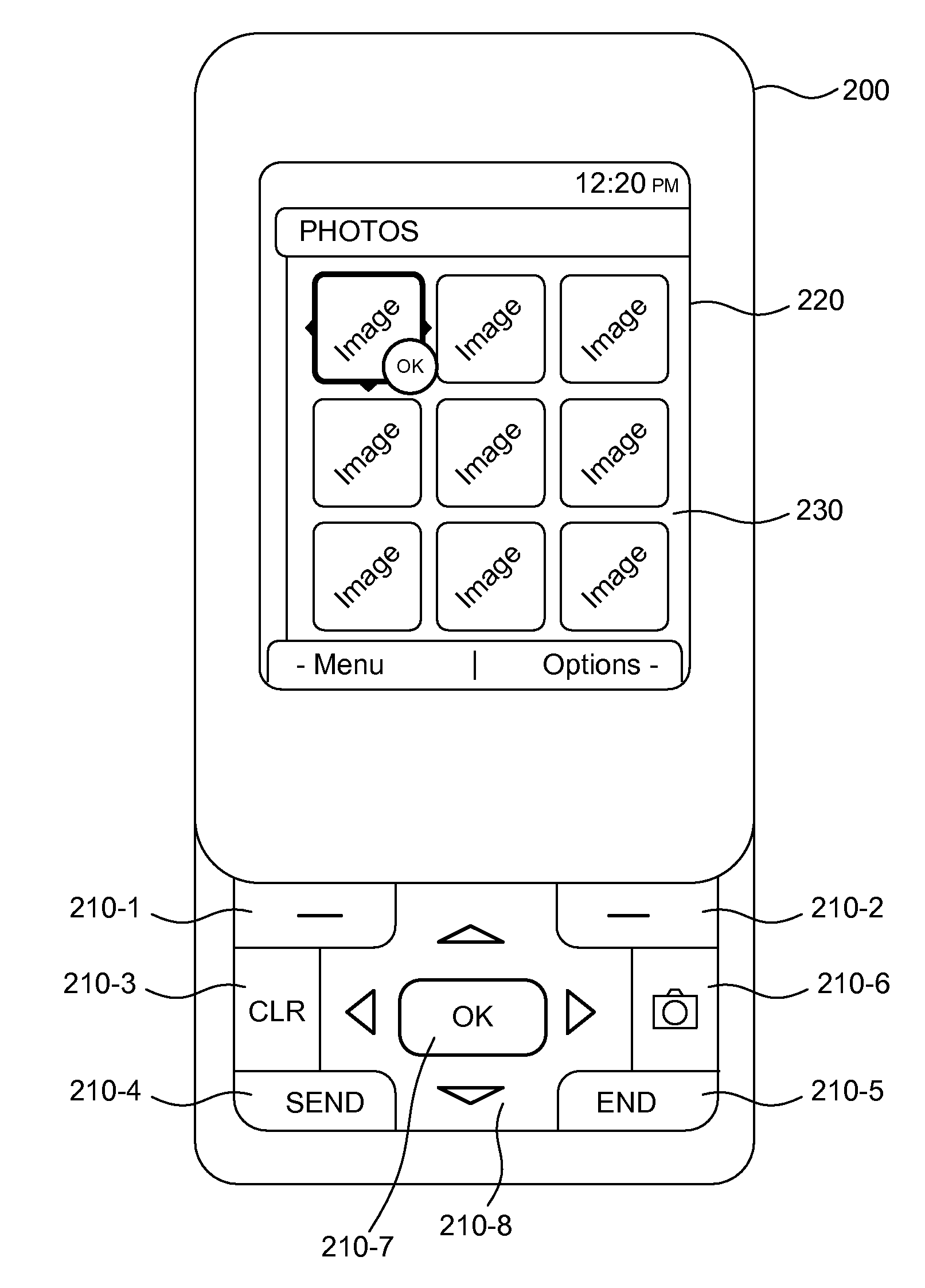

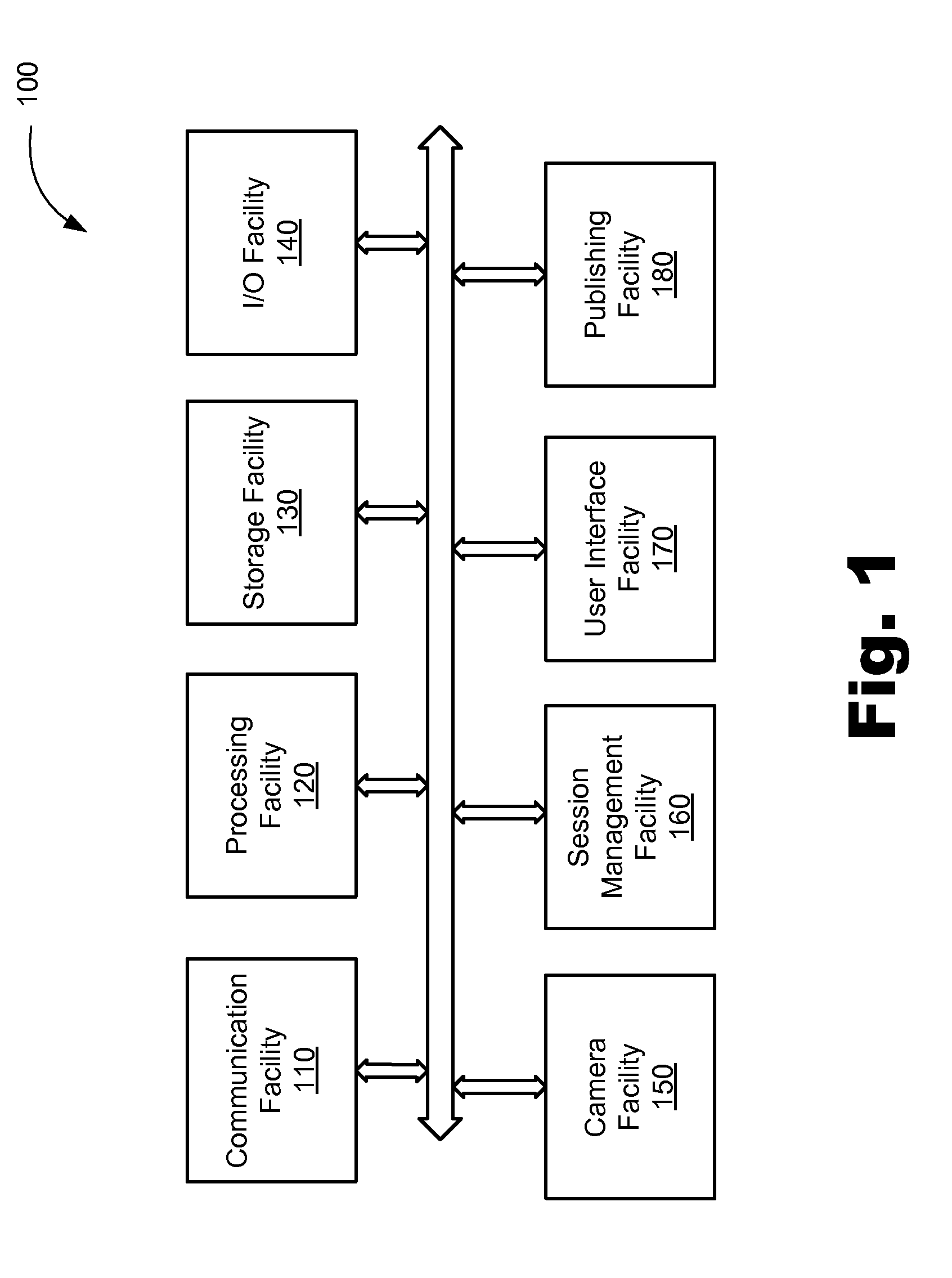

Camera data management and user interface apparatuses, systems, and methods

In certain embodiments, a graphical user interface (“GUI”) including a live camera sensor view is displayed and, in response to the capture of a camera image, an image manager pane is displayed together with the live camera sensor view in the graphical user interface. The image manager pane includes a visual indicator representative of the captured camera image. In certain embodiments, a camera image is captured and automatically assigned to a session based on a predefined session grouping heuristic. In certain embodiments, data representative of a captured camera image is provided to a content distribution subsystem over a network, and the content distribution subsystem is configured to distribute data representative of the camera image to a plurality of predefined destinations.

Owner:VERIZON PATENT & LICENSING INC
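The abstract does not specify the "predefined session grouping heuristic". One plausible heuristic, shown here purely as a hypothetical Python sketch, starts a new session whenever the gap since the previous capture exceeds a threshold. The 10-minute default is arbitrary.

```python
def assign_sessions(capture_times_s, max_gap_s=600.0):
    """Group capture timestamps (seconds) into sessions split at gaps longer than max_gap_s."""
    sessions = []
    previous = None
    for t in sorted(capture_times_s):
        if previous is None or t - previous > max_gap_s:
            sessions.append([])  # first image, or gap too long: open a new session
        sessions[-1].append(t)
        previous = t
    return sessions


print(assign_sessions([0, 30, 100, 2000, 2100, 5000]))  # [[0, 30, 100], [2000, 2100], [5000]]
```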

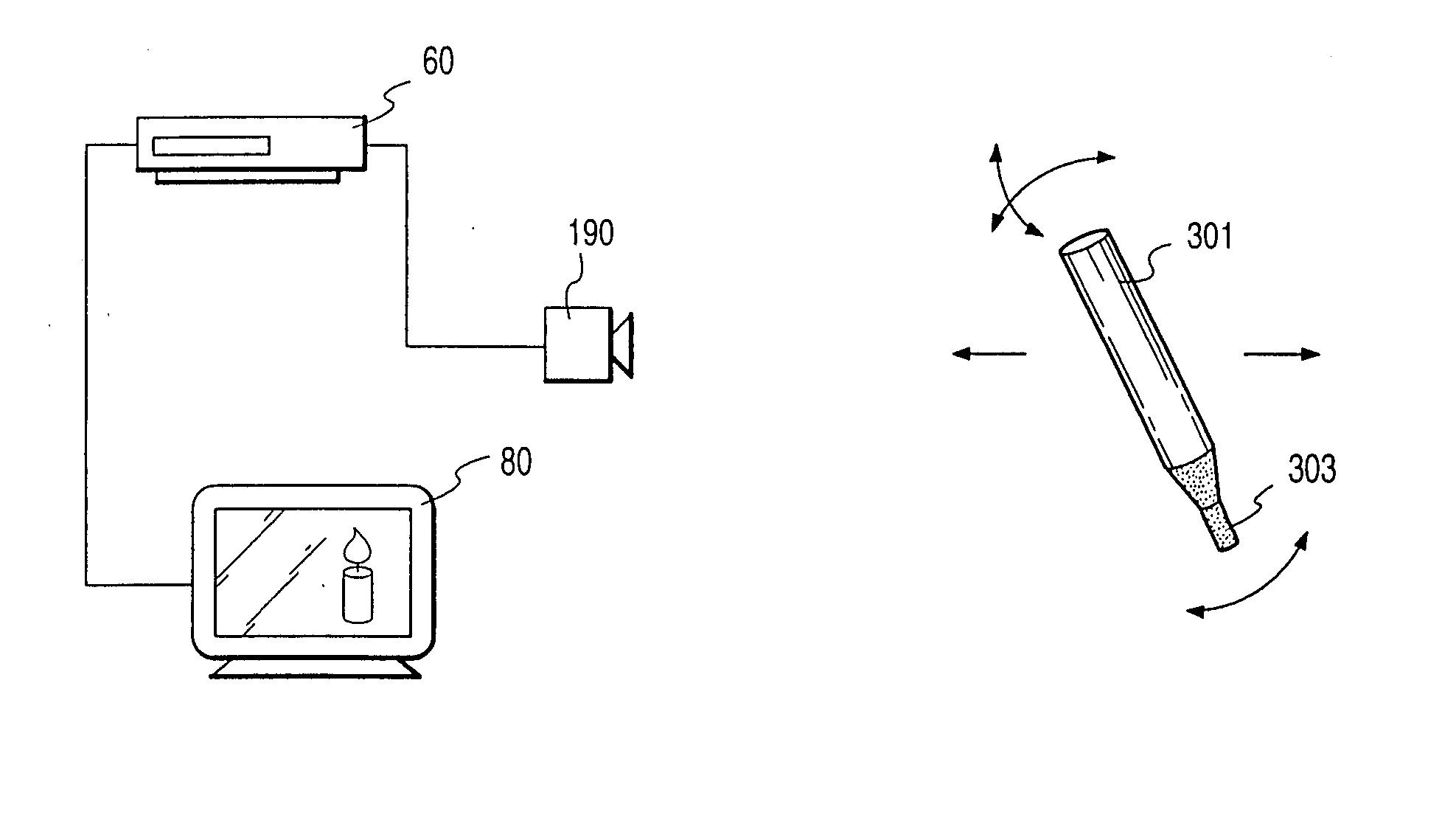

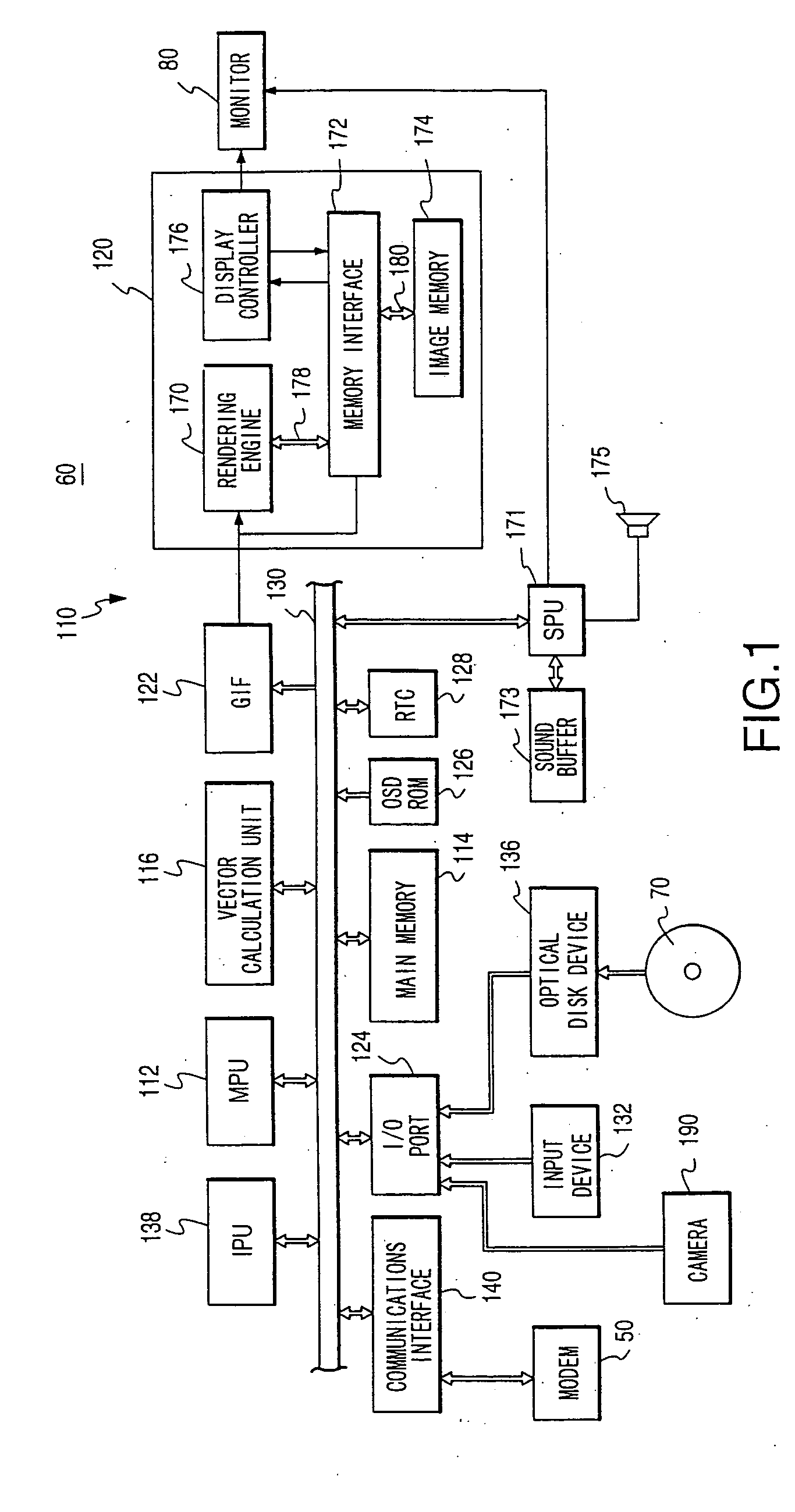

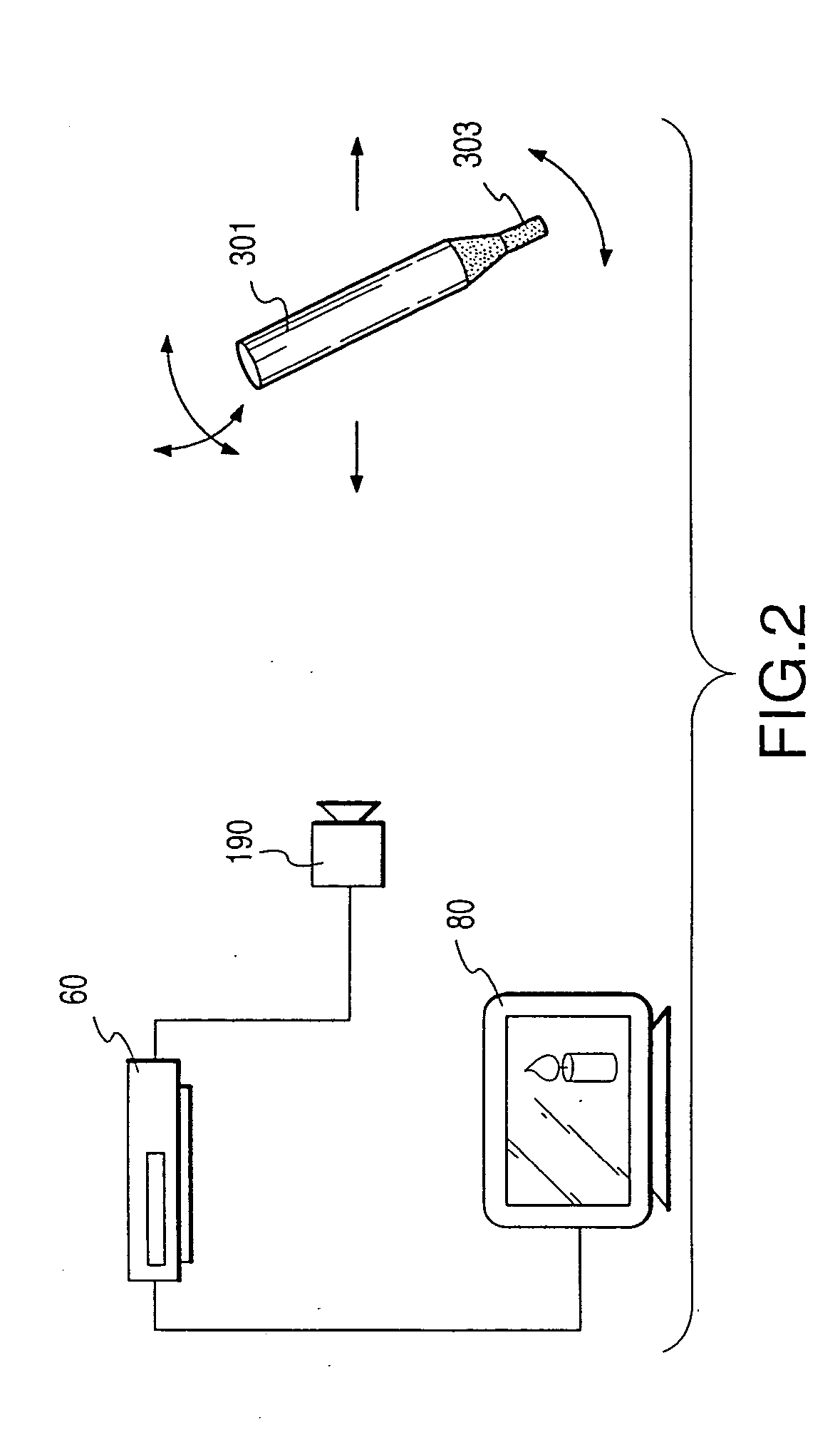

System and method for object tracking

Status: Inactive | Publication: US20050026689A1 | Tags: Maximizing ability, Image analysis, Image data processing details, Camera image, Color transformation

A hand-manipulated prop is picked up by a single video camera, and the camera image is analyzed to isolate the part of the image pertaining to the object. This allows the object's position and orientation to be mapped into a three-dimensional space. The three-dimensional description of the object is stored in memory and used to control action in a game program, such as rendering a corresponding virtual object in a scene on a video display. Algorithms for deriving three-dimensional descriptions of various props employ geometry processing, including area statistics, edge detection, and/or color transition localization, to find the position and orientation of the prop from two-dimensional pixel data. Criteria are proposed for selecting stripe colors on the props that maximize separation in the two-dimensional chrominance color space, so that significant color transitions, rather than absolute colors, are detected. This avoids the need to calibrate the system for lighting conditions, which tend to affect apparent colors.

Owner:SONY COMPUTER ENTERTAINMENT INC

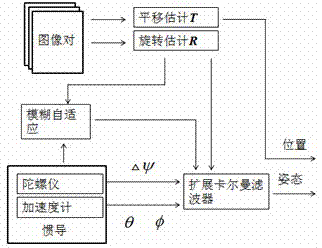

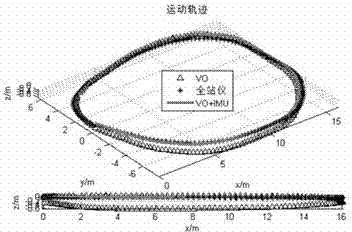

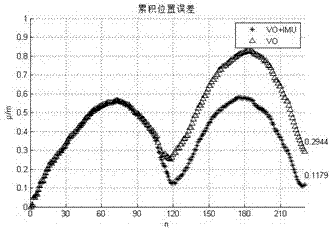

Machine vision and inertial navigation fusion-based mobile robot motion attitude estimation method

Status: Inactive | Publication: CN102538781A | Tags: Reduce cumulative error, High positioning accuracy, Navigation by speed/acceleration measurements, Visual perception, Inertial navigation system

The invention discloses a mobile robot motion attitude estimation method based on the fusion of machine vision and inertial navigation. It comprises the following steps: synchronously acquiring binocular camera images and triaxial inertial navigation data from the mobile robot; extracting and matching image features between consecutive frames to estimate the motion attitude; computing the pitch and roll angles from inertial navigation; building a Kalman filter model to fuse the visual and inertial attitude estimates; adaptively adjusting the filter parameters according to the estimation variance; and performing attitude-corrected cumulative dead reckoning. The method provides a real-time extended Kalman filter attitude estimation model. It uses inertial navigation together with the direction of gravitational acceleration as a supplement, decouples the three-axis attitude estimation of the visual odometry, and corrects the accumulated attitude error. The filter parameters are adjusted by fuzzy logic according to the motion state. This achieves adaptive filtering, reduces the influence of acceleration noise, and effectively improves the positioning accuracy and robustness of the visual odometry.

Owner:ZHEJIANG UNIV
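The vision/inertial fusion above can be illustrated, in much simplified form, by a scalar Kalman filter on the pitch angle. The visual-odometry increment drives the prediction, and the pitch implied by the accelerometer's gravity reading is the measurement. The patent describes an extended Kalman filter with fuzzy-logic parameter tuning. This Python sketch instead uses a linear one-state filter and a simple rule that inflates the measurement noise when the measured acceleration departs from gravity. The axis convention (x forward, z up, nose-up positive) and all noise values are assumptions.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2)


def accel_pitch(ax, ay, az):
    """Pitch (rad) implied by the gravity direction; x forward, z up, nose-up positive."""
    return math.atan2(ax, math.hypot(ay, az))


class PitchFilter:
    """One-state Kalman filter fusing visual pitch increments with accelerometer pitch."""

    def __init__(self, pitch=0.0, variance=1.0, q=1e-4, r_base=1e-2, r_gain=10.0):
        self.pitch = pitch        # state estimate (rad)
        self.variance = variance  # estimate variance P
        self.q = q                # process noise added per visual-odometry step
        self.r_base = r_base      # accelerometer noise while the robot is not accelerating
        self.r_gain = r_gain      # how fast R grows with non-gravitational acceleration

    def predict(self, visual_delta_pitch):
        """Propagate the estimate with the pitch change measured by visual odometry."""
        self.pitch += visual_delta_pitch
        self.variance += self.q

    def update(self, ax, ay, az):
        """Correct the estimate with the accelerometer; trust it less while accelerating."""
        deviation = abs(math.sqrt(ax * ax + ay * ay + az * az) - G)
        r = self.r_base * (1.0 + self.r_gain * deviation)
        gain = self.variance / (self.variance + r)
        self.pitch += gain * (accel_pitch(ax, ay, az) - self.pitch)
        self.variance *= 1.0 - gain
        return self.pitch


# A filter started with a 0.2 rad error on a level, stationary robot converges towards 0.
f = PitchFilter(pitch=0.2)
for _ in range(20):
    f.predict(0.0)
    f.update(0.0, 0.0, G)
print(round(f.pitch, 4))
```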

Computer enhanced surgical navigation imaging system (camera probe)

Status: Active | Publication: US20050015005A1 | Tags: Enhanced interaction, Easy to navigate, Surgical navigation systems, Endoscopes, Viewpoints, Surgical approach

Abstract identical to that of US7491198B2 above.

Owner:BRACCO IMAGING SPA

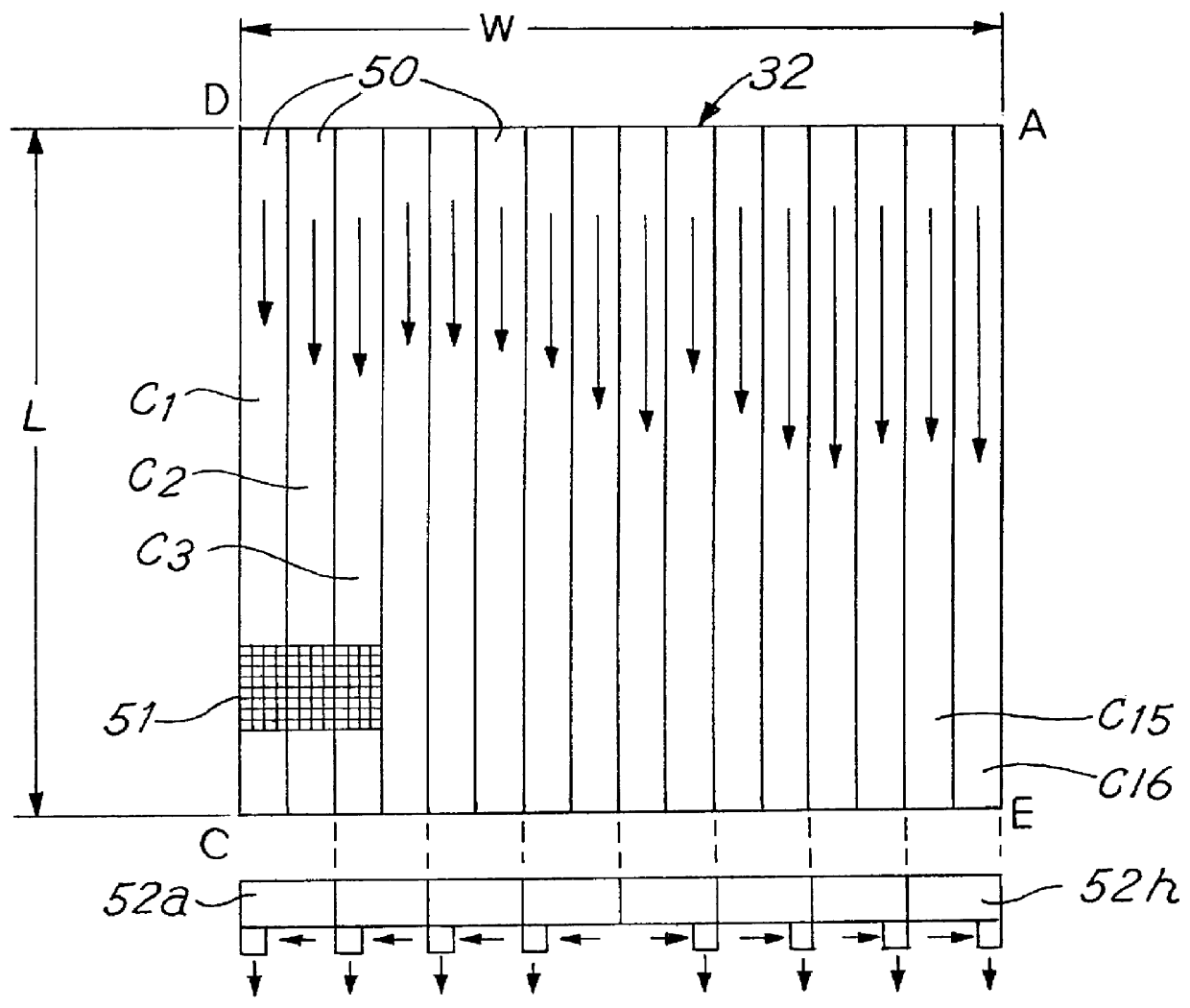

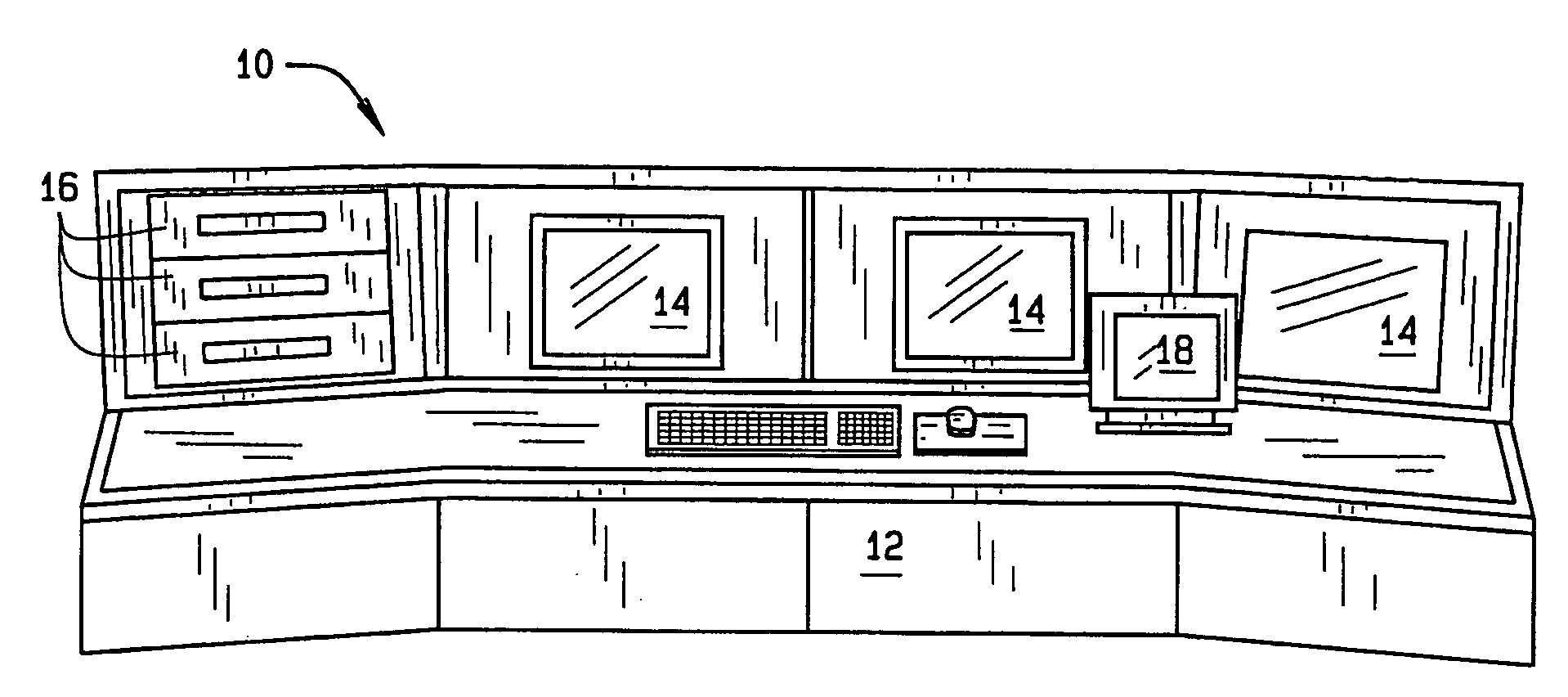

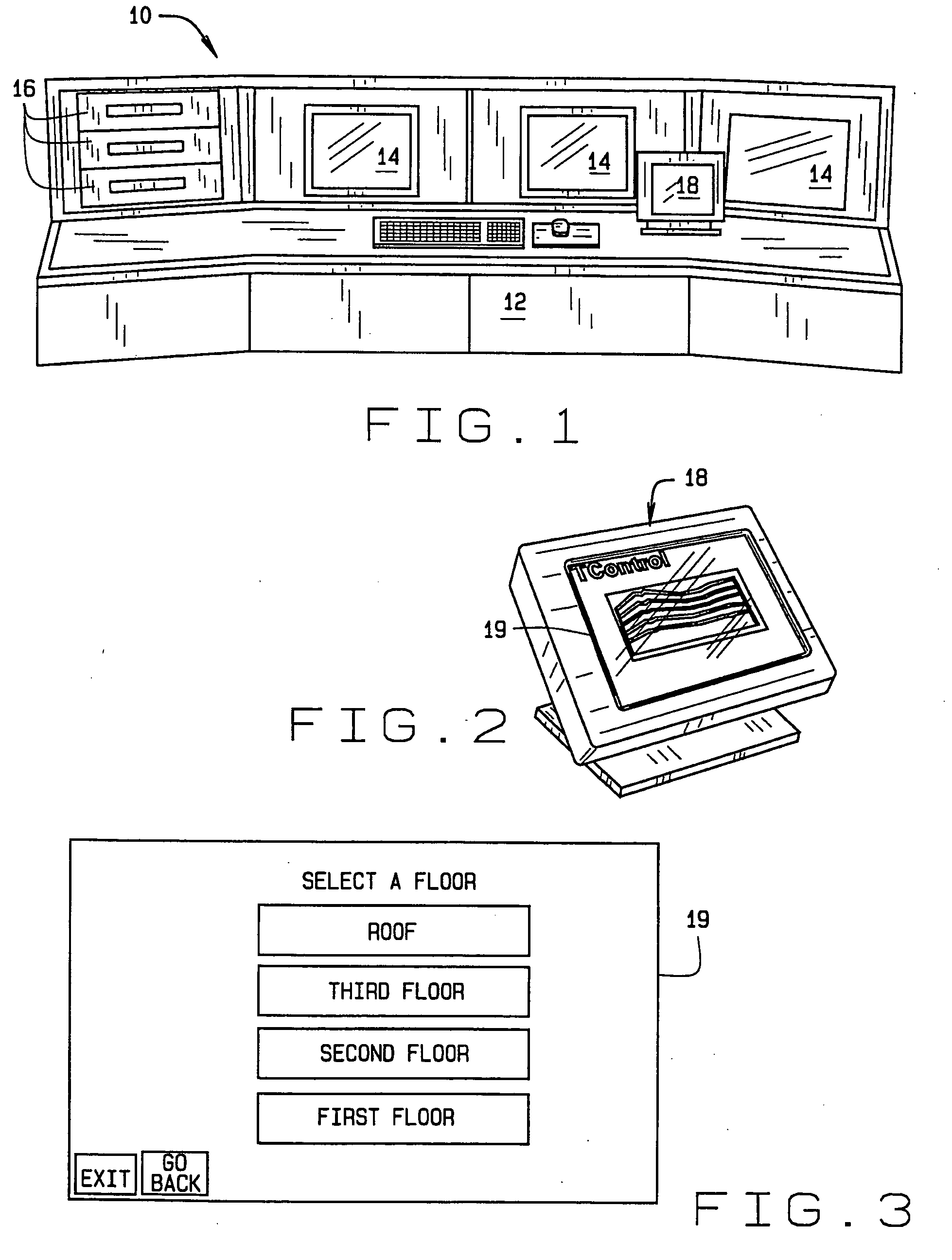

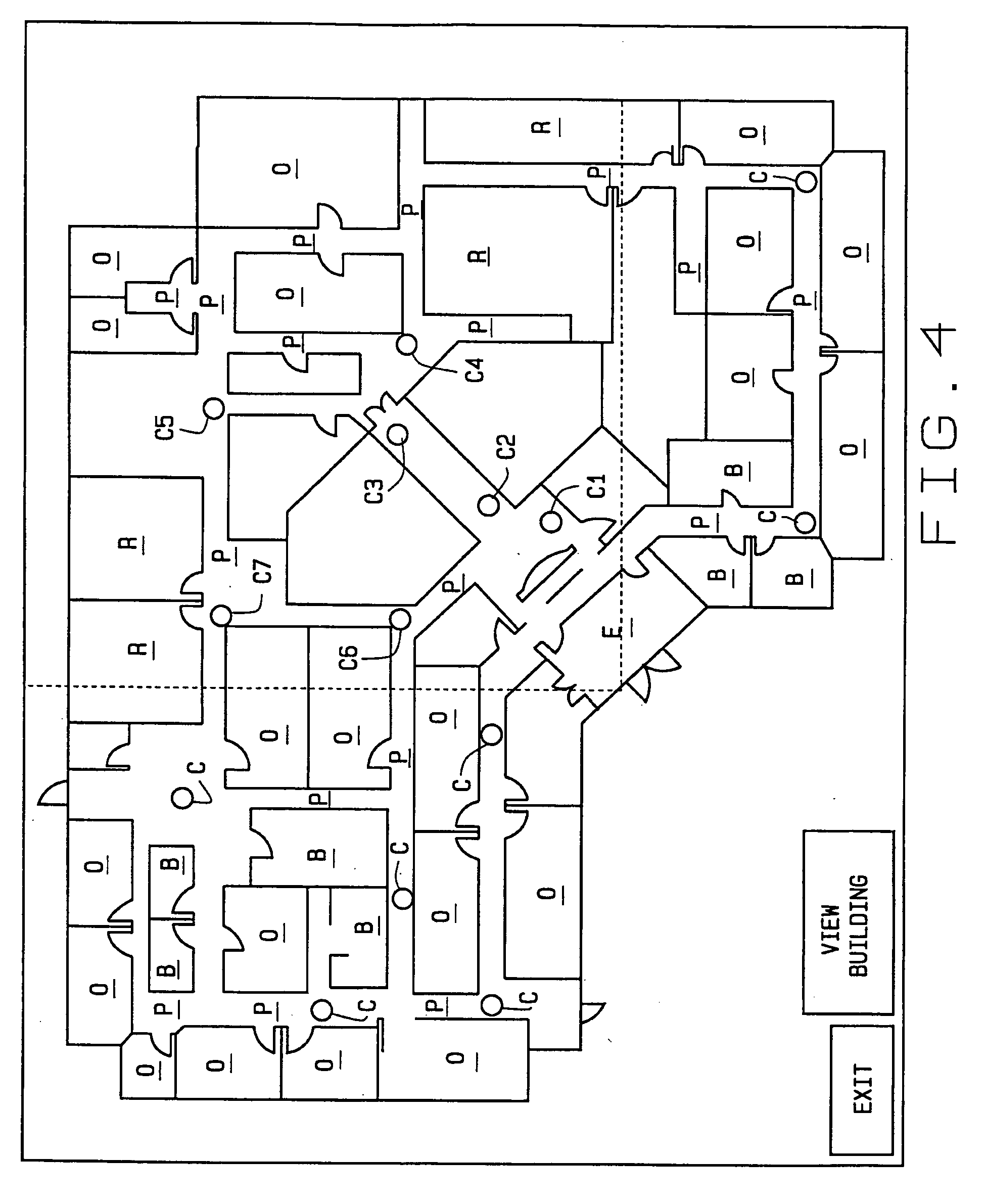

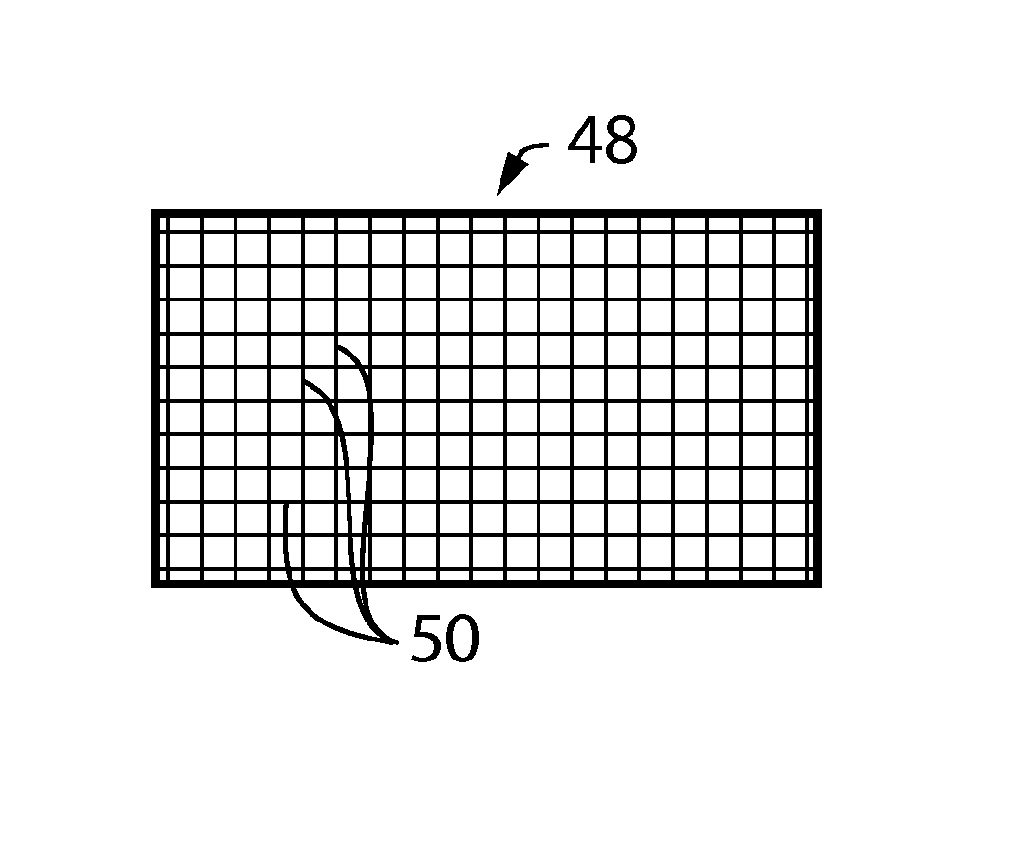

Closed circuit TV security system

Status: Inactive | Publication: US20050225634A1 | Tags: Small size, Color television details, Closed circuit television systems, Television system, Camera image

A closed circuit television system (10) includes a plurality of cameras (C1-Cn) installed throughout an area along routes (R1-Rn) that someone passing through the area might follow. A touch screen monitor (18) displays camera images (SC). An operator at a console (12) selects which camera's image to display; the selected camera is one observing a person or object moving through the area along a path. In response to the operator, a tracking system (30) selects one or more additional cameras positioned along the routes the person or object would follow from their current location. The monitor also displays images from each of these cameras. The tracking system allows the operator to change which cameras' images are displayed in response to the route and to changes in route, and to initiate recording of the camera imagery.

Owner:ADT SECURITY SERVICES INC

System and method of establishing a multi-camera image using pixel remapping

Status: Active | Publication: US9900522B2 | Tags: Eliminate misalignment, Television system details, Geometric image transformation, Camera image, Computer graphics (images)

Owner:MAGNA ELECTRONICS INC

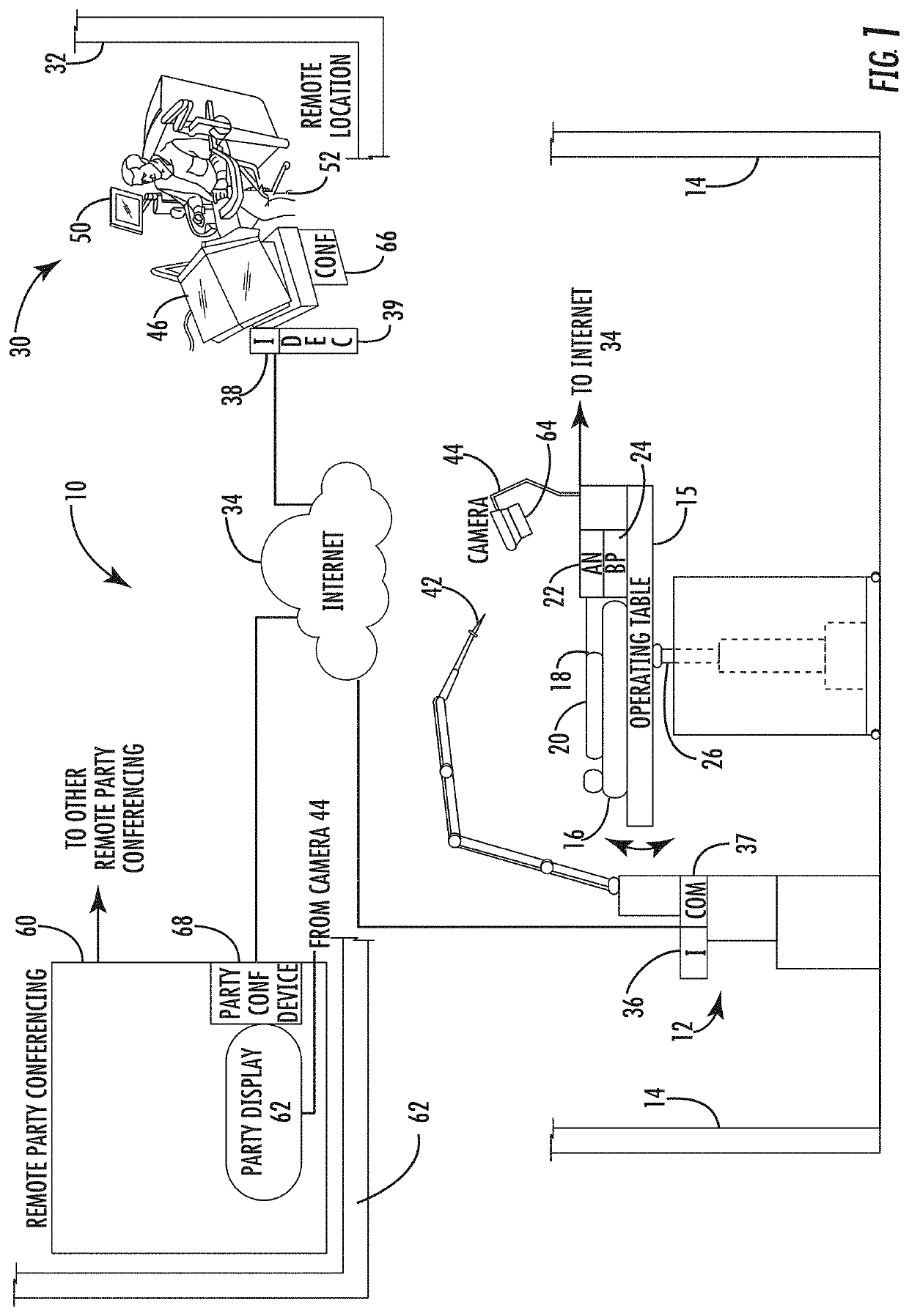

Surgical simulation system using force sensing and optical tracking and robotic surgery system

A surgical simulation device includes a support structure and animal tissue carried in a tray. A simulated human skeleton is carried by the support structure above the animal tissue and includes simulated human skin. A camera images the animal tissue and an image processor receives images of markers positioned on the ribs and animal tissue and forms a three-dimensional wireframe image. An operating table is adjacent a local robotic surgery station as part of a robotic surgery station and includes at least one patient support configured to support the patient during robotic surgery. At least one patient force / torque sensor is coupled to the at least one patient support and configured to sense at least one of force and torque experienced by the patient during robotic surgery.

Owner:INTUITIVE SURGICAL OPERATIONS INC

Method and system for processing captured image information in an interactive video display system

A method and system for processing captured image information in an interactive video display system. In one embodiment, a special learning condition of a captured camera image is detected. The captured camera image is compared to a normal background model image and to a second background model image, wherein the second background model is learned at a faster rate than the normal background model. A vision image is generated based on the comparisons. In another embodiment, an object in the captured image information that does not move for a predetermined time period is detected. A burn-in image comprising the object is generated, wherein the burn-in image is operable to allow a vision system of the interactive video display system to classify the object as background.

Owner:MICROSOFT TECH LICENSING LLC

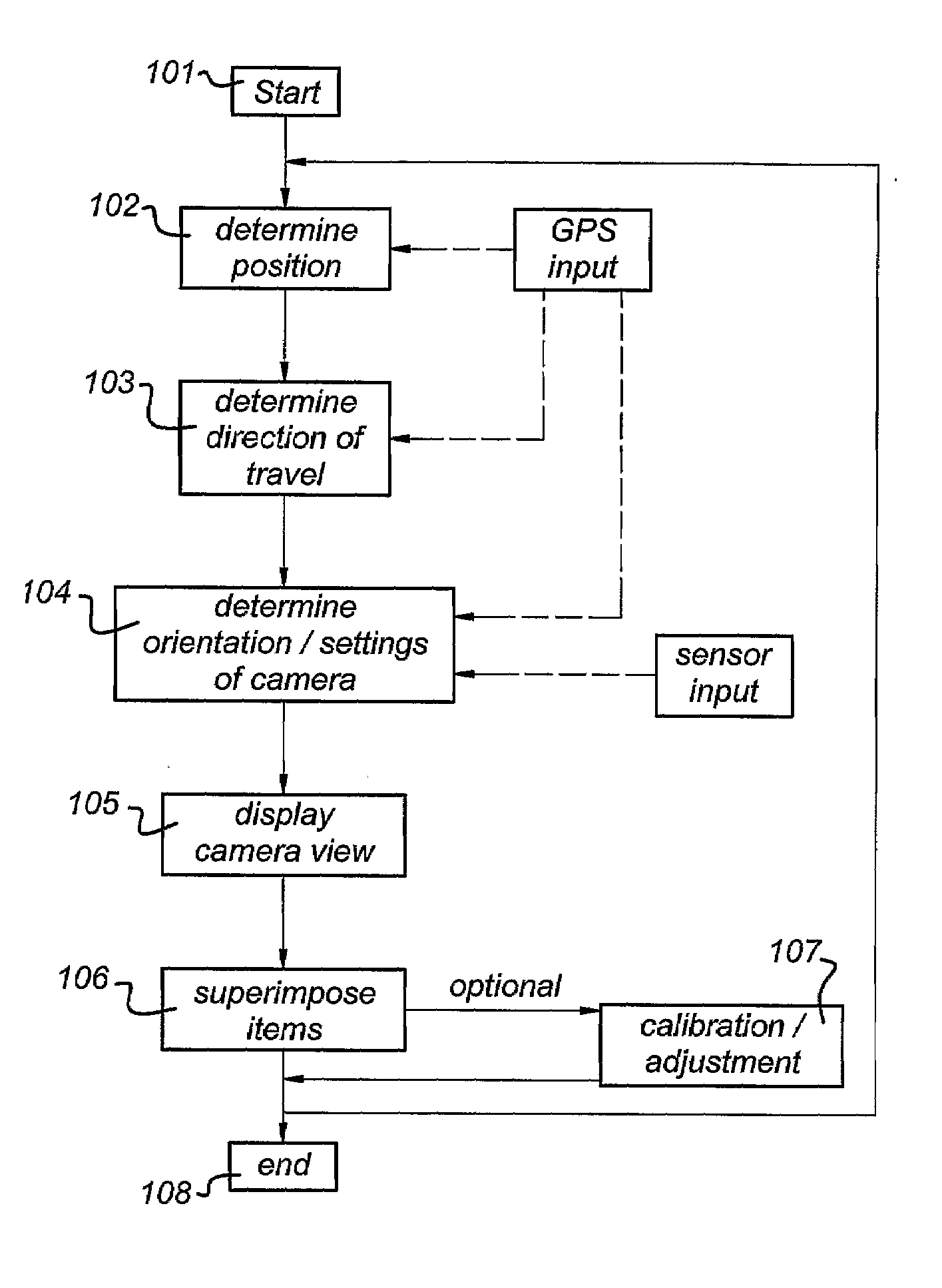

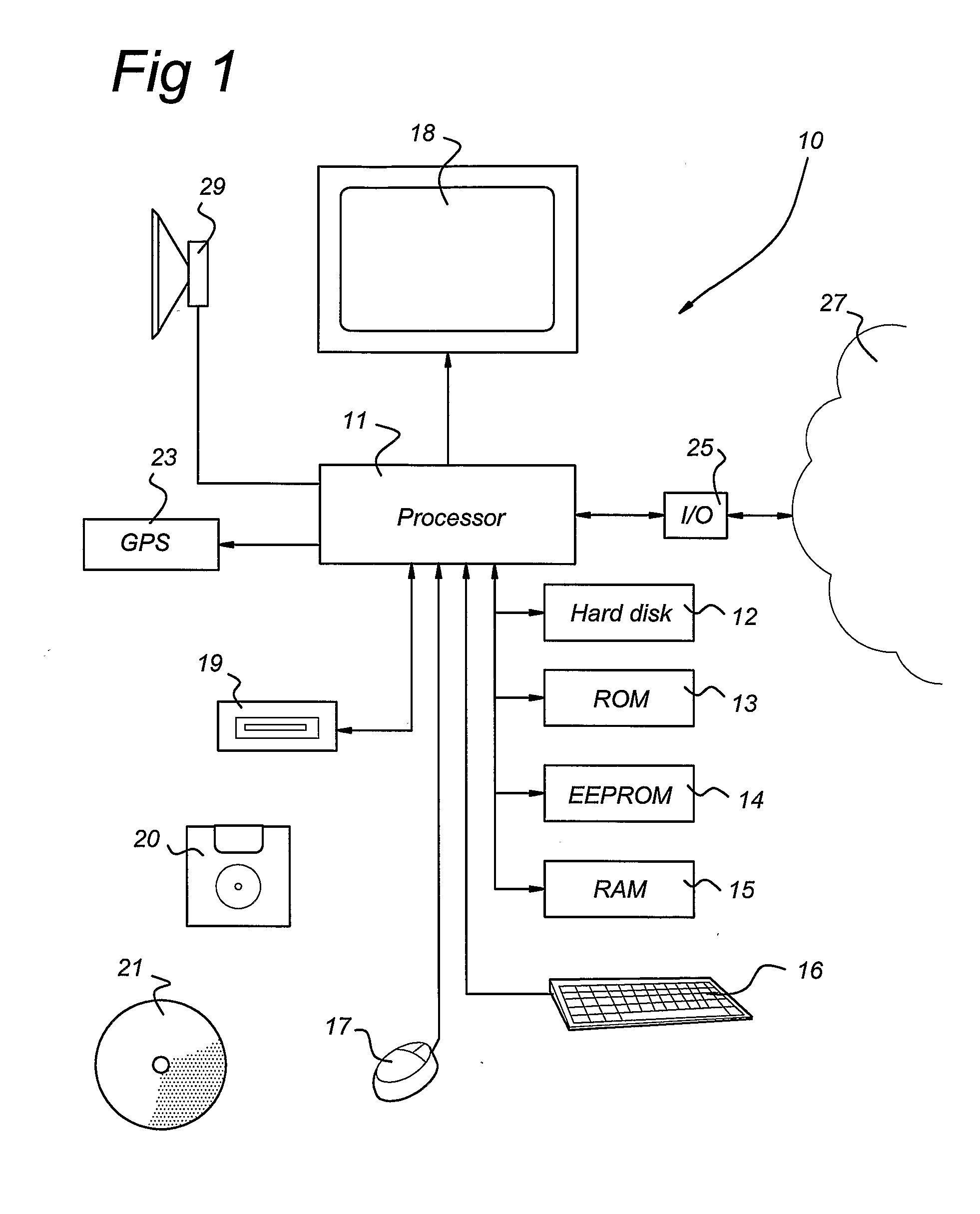

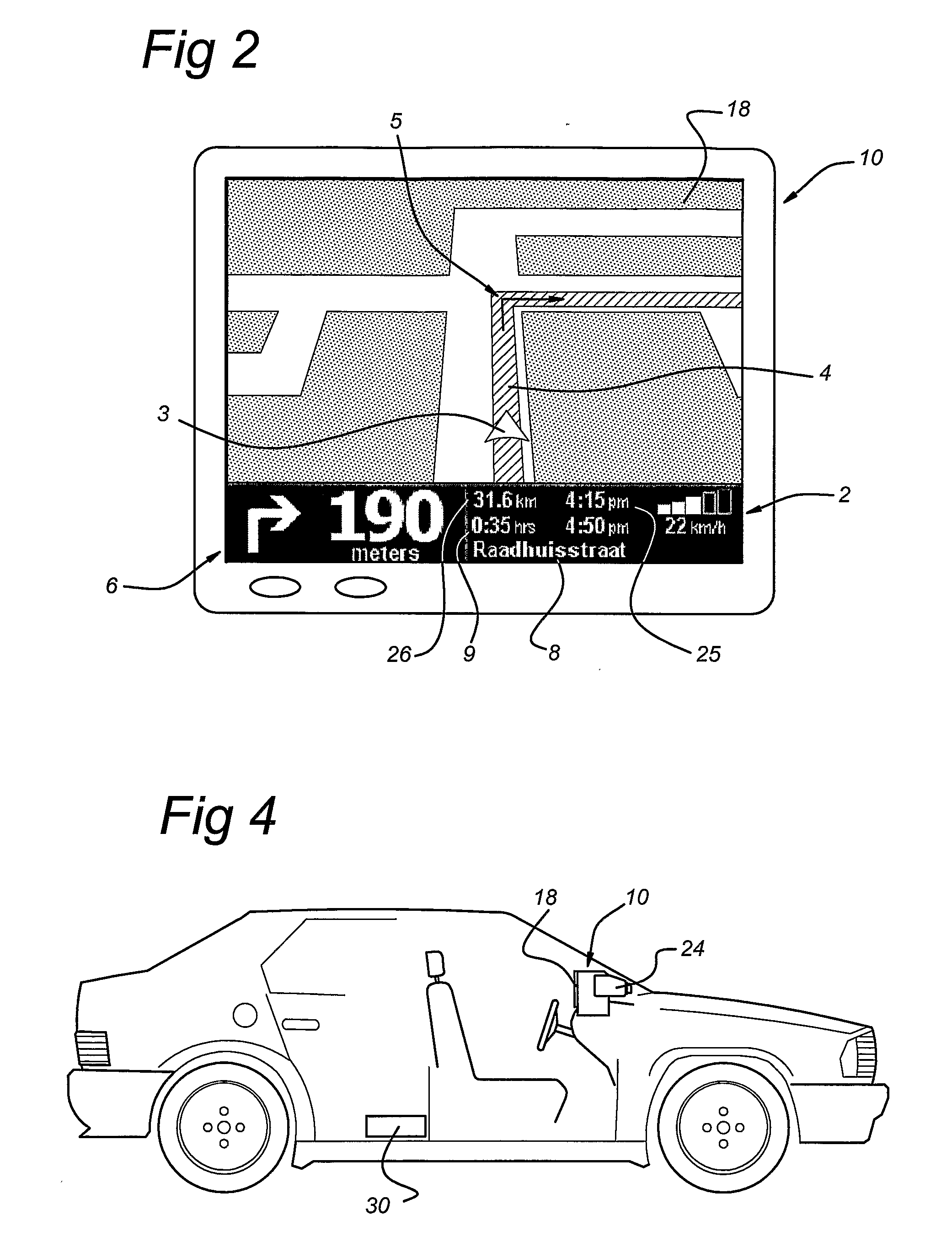

Navigation Device with Camera-Info

Status: Active | Publication: US20090125234A1 | Tags: Easy to explain, Instruments for road network navigation, Road vehicles traffic control, Camera image, Display device

The present invention relates to a navigation device (10). The navigation device (10) is arranged to display navigation directions (3, 4, 5) on a display (18). The navigation device (10) is further arranged to receive a feed from a camera (24). The navigation device (10) is further arranged to display a combination of a camera image from the feed from the camera (24) and the navigation directions (3, 4, 5) on the display (18).

Owner:TOMTOM NAVIGATION BV

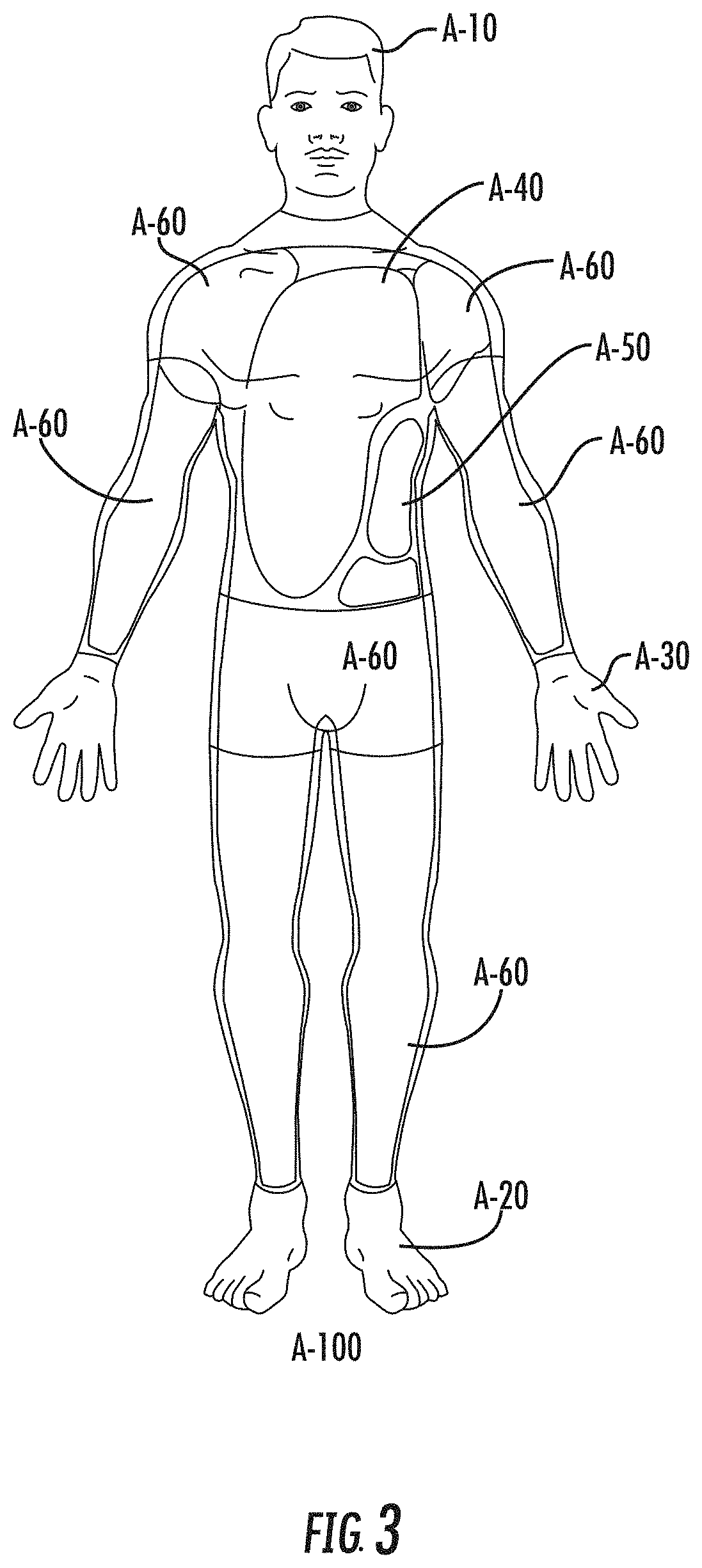

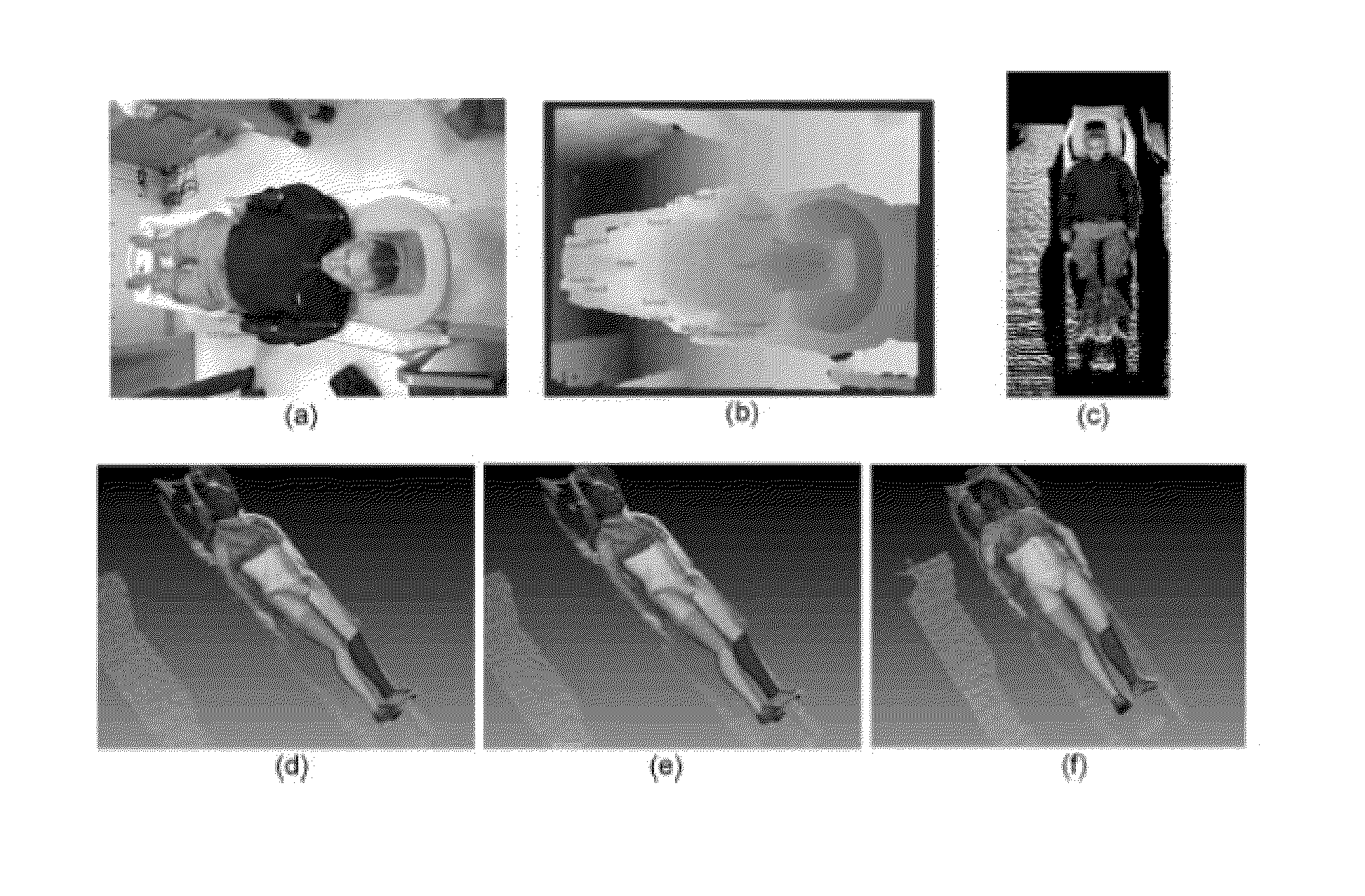

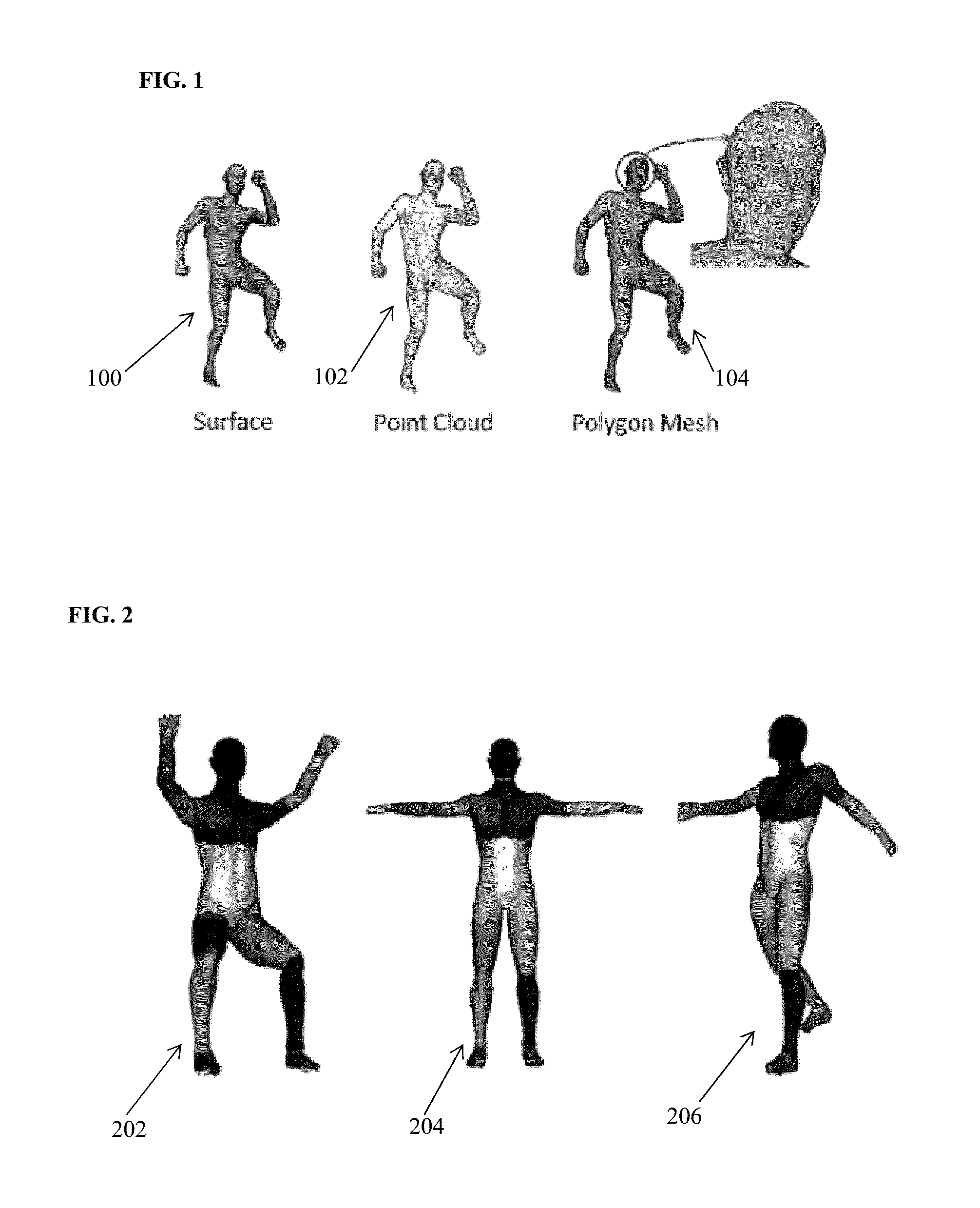

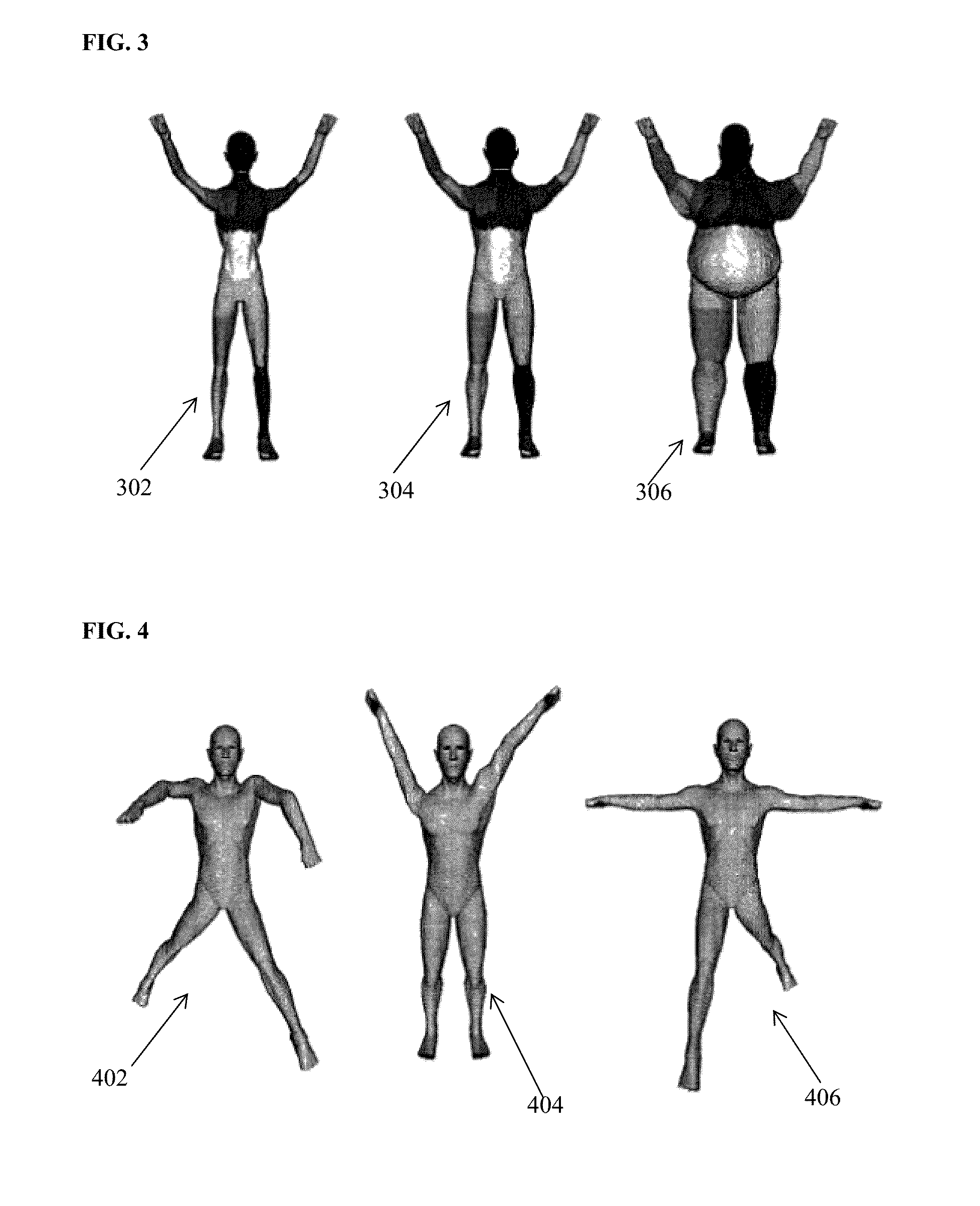

Method and System for Constructing Personalized Avatars Using a Parameterized Deformable Mesh

A method and apparatus for generating a 3D personalized mesh of a person from a depth camera image for medical imaging scan planning is disclosed. A depth camera image of a subject is converted to a 3D point cloud. A plurality of anatomical landmarks are detected in the 3D point cloud. A 3D avatar mesh is initialized by aligning a template mesh to the 3D point cloud based on the detected anatomical landmarks. A personalized 3D avatar mesh of the subject is generated by optimizing the 3D avatar mesh using a trained parametric deformable model (PDM). The optimization is subject to constraints that take into account clothing worn by the subject and the presence of a table on which the subject is lying.

Owner:SIEMENS HEALTHCARE GMBH

© 2025 PatSnap. All rights reserved.