3118 results about "Optical flow" patented technology

Optical flow or optic flow is the pattern of apparent motion of objects, surfaces, and edges in a visual scene caused by the relative motion between an observer and a scene. Optical flow can also be defined as the distribution of apparent velocities of moving brightness patterns in an image. The concept of optical flow was introduced by the American psychologist James J. Gibson in the 1940s to describe the visual stimulus provided to animals moving through the world. Gibson stressed the importance of optic flow for affordance perception, the ability to discern possibilities for action within the environment. Followers of Gibson and his ecological approach to psychology have further demonstrated the role of the optical flow stimulus in the observer's perception of their own movement in the world; in the perception of the shape, distance and movement of objects in the world; and in the control of locomotion.
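The "apparent velocities of brightness patterns" are usually recovered through the brightness-constancy constraint Ix*u + Iy*v + It = 0, where Ix, Iy are spatial image derivatives, It is the temporal derivative, and (u, v) is the flow. One equation cannot fix two unknowns, so the classic Lucas-Kanade approach solves it in the least-squares sense over a small window. The sketch below is illustrative only and is not taken from any patent listed here; it assumes frames are nested lists indexed `frame[y][x]`, uses central differences averaged over both frames, and `lucas_kanade` is a hypothetical helper name.

```python
def lucas_kanade(frame0, frame1, x0, y0, half=2):
    """Estimate the flow (u, v) at pixel (x0, y0) from a (2*half+1)^2 window.

    Frames are nested lists indexed frame[y][x]; the window plus a one-pixel
    border must lie inside both frames.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(y0 - half, y0 + half + 1):
        for x in range(x0 - half, x0 + half + 1):
            # Spatial gradients: central differences averaged over both frames.
            ix = (frame0[y][x + 1] - frame0[y][x - 1]
                  + frame1[y][x + 1] - frame1[y][x - 1]) / 4.0
            iy = (frame0[y + 1][x] - frame0[y - 1][x]
                  + frame1[y + 1][x] - frame1[y - 1][x]) / 4.0
            it = frame1[y][x] - frame0[y][x]  # temporal derivative
            # Accumulate the normal equations of Ix*u + Iy*v = -It.
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        # All gradients point one way (e.g. a straight edge): the aperture problem.
        raise ValueError("flow is not uniquely determined in this window")
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


# Usage: a smooth pattern shifted by (0.7, -0.4) pixels between frames.
f0 = [[float(x * x + y * y) for x in range(12)] for y in range(12)]
f1 = [[(x - 0.7) ** 2 + (y + 0.4) ** 2 for x in range(12)] for y in range(12)]
u, v = lucas_kanade(f0, f1, 6, 6)
print(round(u, 6), round(v, 6))  # 0.7 -0.4
```

On a linear brightness ramp every gradient is parallel, the 2×2 system is singular, and the function raises instead of guessing; that is the aperture problem in its simplest form.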

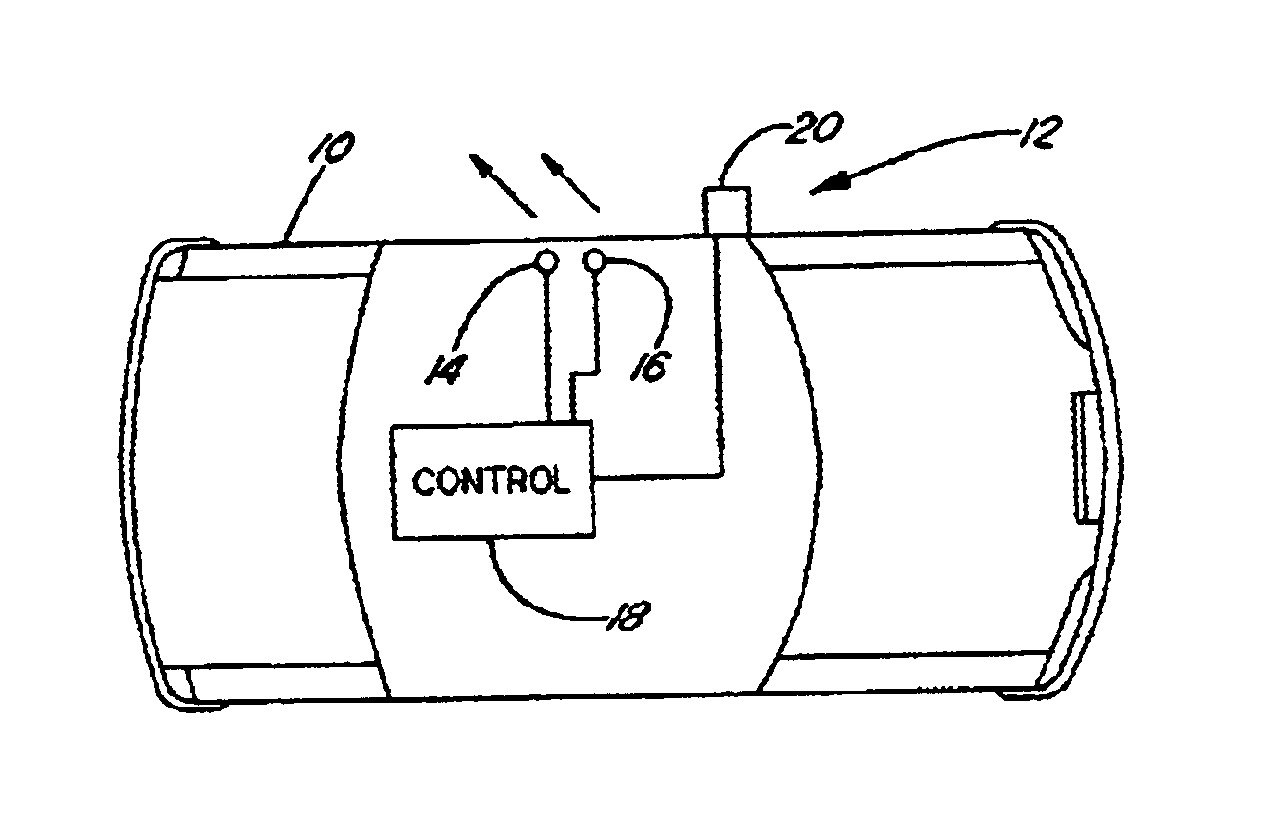

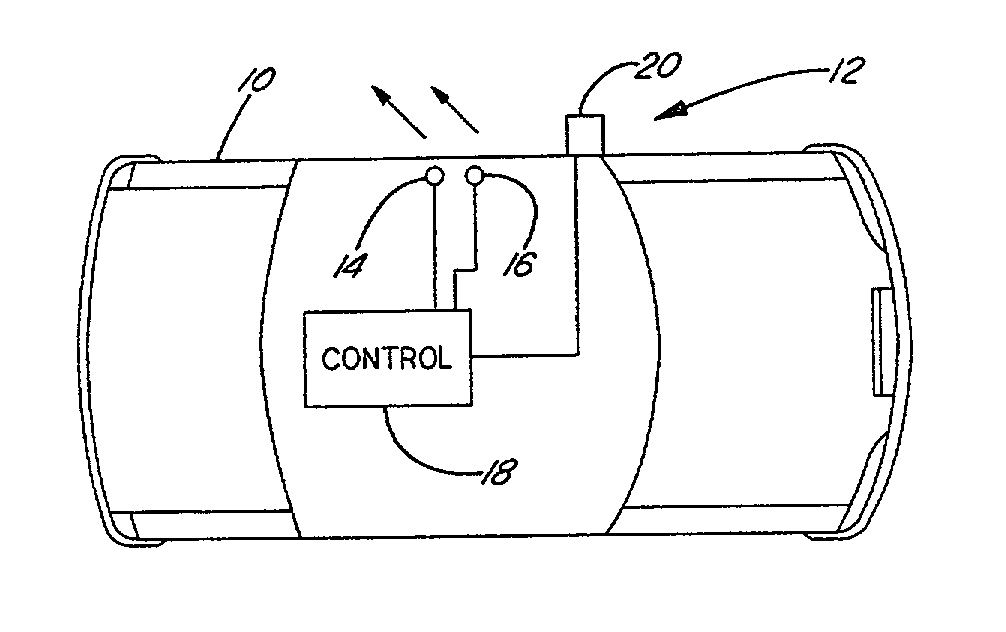

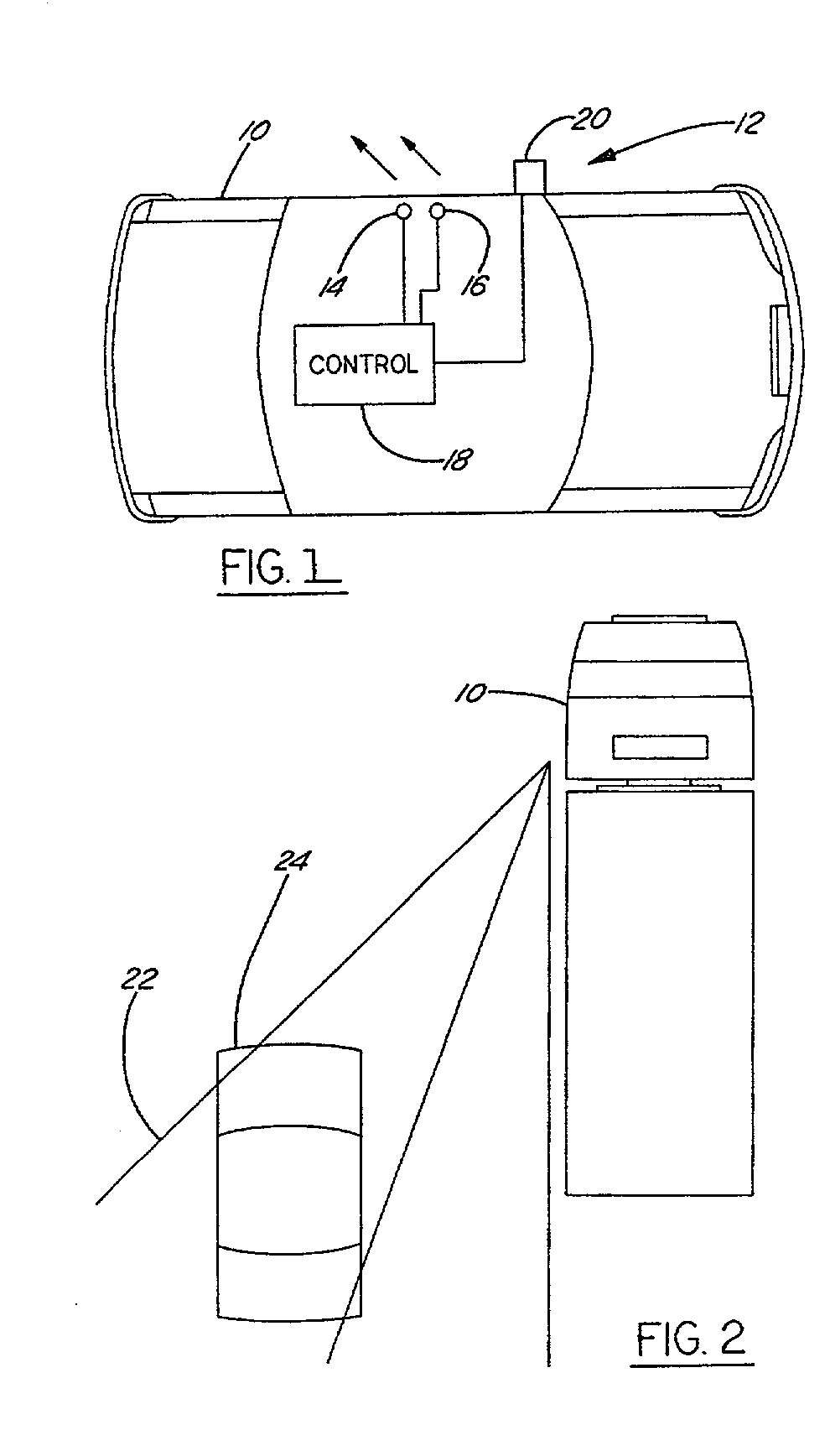

Vehicle blind spot monitoring system

Inactive | US6737964B2 | Improve security | Anti-collision systems | Character and pattern recognition | Monitoring system | Display device

A blind spot monitoring system for a vehicle includes two pairs of stereo cameras, two displays and a controller. The stereo cameras monitor the vehicle blind spots, and each pair generates a corresponding pair of digital signals. Each display shows a rearward vehicle view and may replace, or work in tandem with, a side-view mirror. The controller is located in the vehicle and receives the two pairs of digital signals. Its control logic analyzes the stereopsis effect between each pair of stereo cameras, together with the optical flow over time, to control the displays. The displays show an expanded rearward view when a hazard is detected in the vehicle blind spot and a normal rearward view when no hazard is detected there.

Owner:FORD GLOBAL TECH LLC
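The abstract does not spell out the hazard test. A minimal sketch, assuming each stereo pair yields a per-frame disparity for a tracked object (disparity grows as the object gets closer, because depth = focal length × baseline / disparity) and that a "hazard" means the object is already near or is closing in over time. `select_view` and both thresholds are hypothetical placeholders, not values from the patent.

```python
def select_view(disparities, near_disparity_px=20.0, min_growth_px=0.5):
    """Choose the rearward view for one blind spot: "expanded" or "normal".

    disparities: stereo disparity (pixels) of an object tracked in one blind
    spot over consecutive frames, oldest first; larger disparity = closer.
    Both thresholds are hypothetical placeholders.
    """
    if not disparities:
        return "normal"  # nothing tracked in this blind spot
    latest = disparities[-1]
    growth = latest - disparities[0]  # positive when the object is closing in
    if latest >= near_disparity_px or growth >= min_growth_px:
        return "expanded"
    return "normal"


# Usage: evaluated separately for the camera pair on each side of the vehicle.
print(select_view([5.0, 5.5, 6.0]))  # expanded (approaching)
print(select_view([8.0, 7.5, 7.0]))  # normal (falling behind)
```

Using disparity growth as the "flow over time" cue keeps the sketch free of camera calibration; a real system would convert to metric range and filter noisy measurements.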

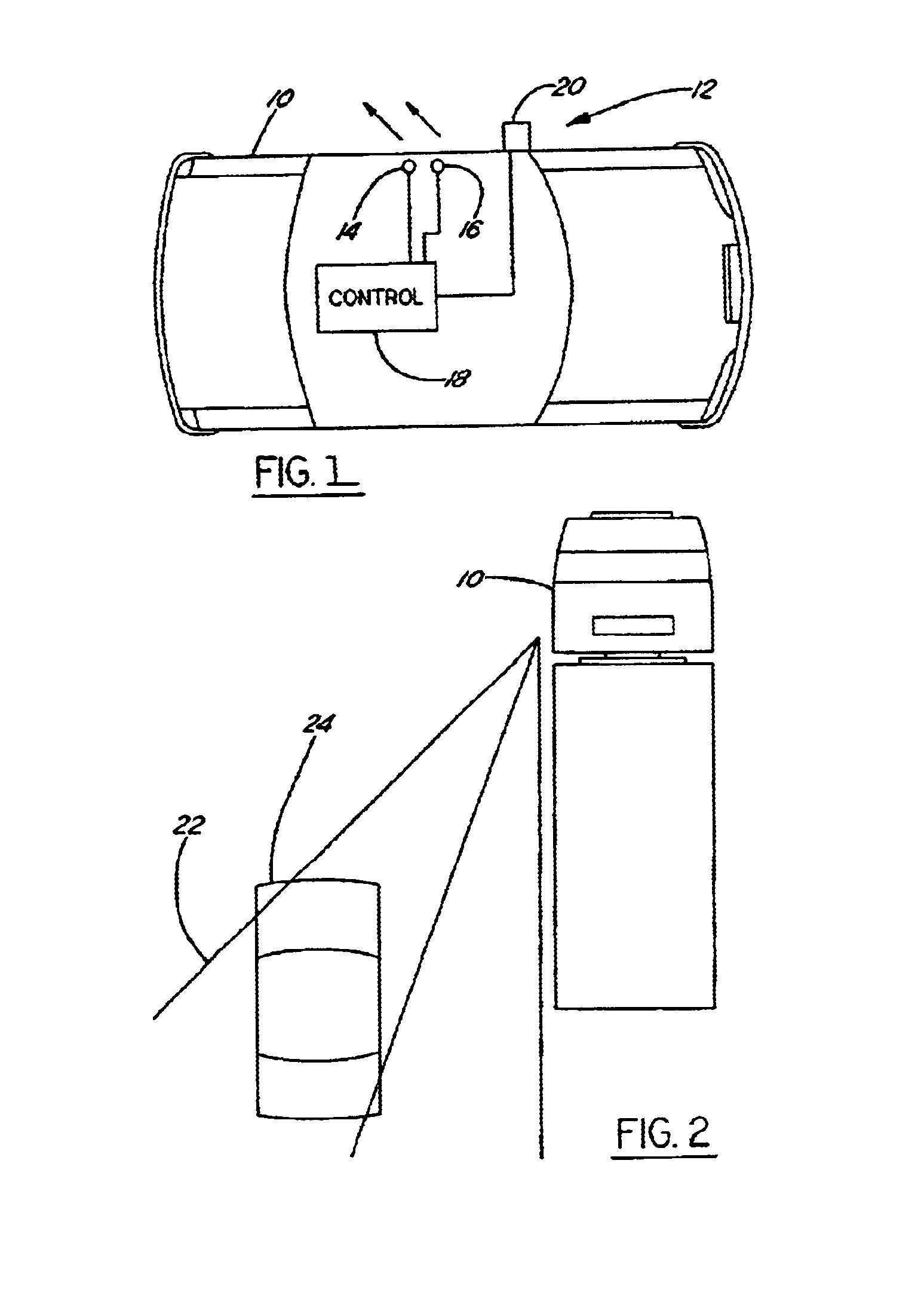

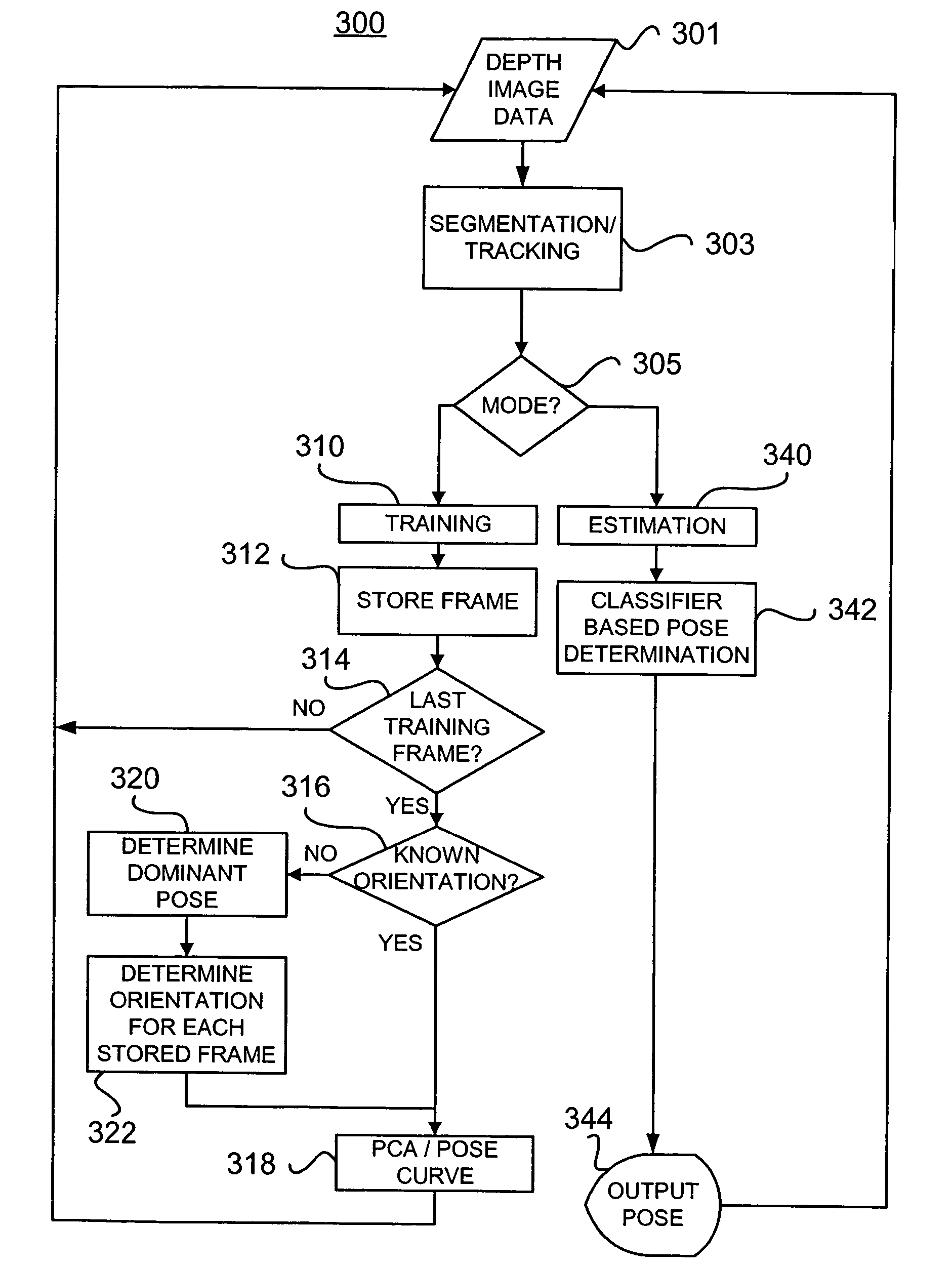

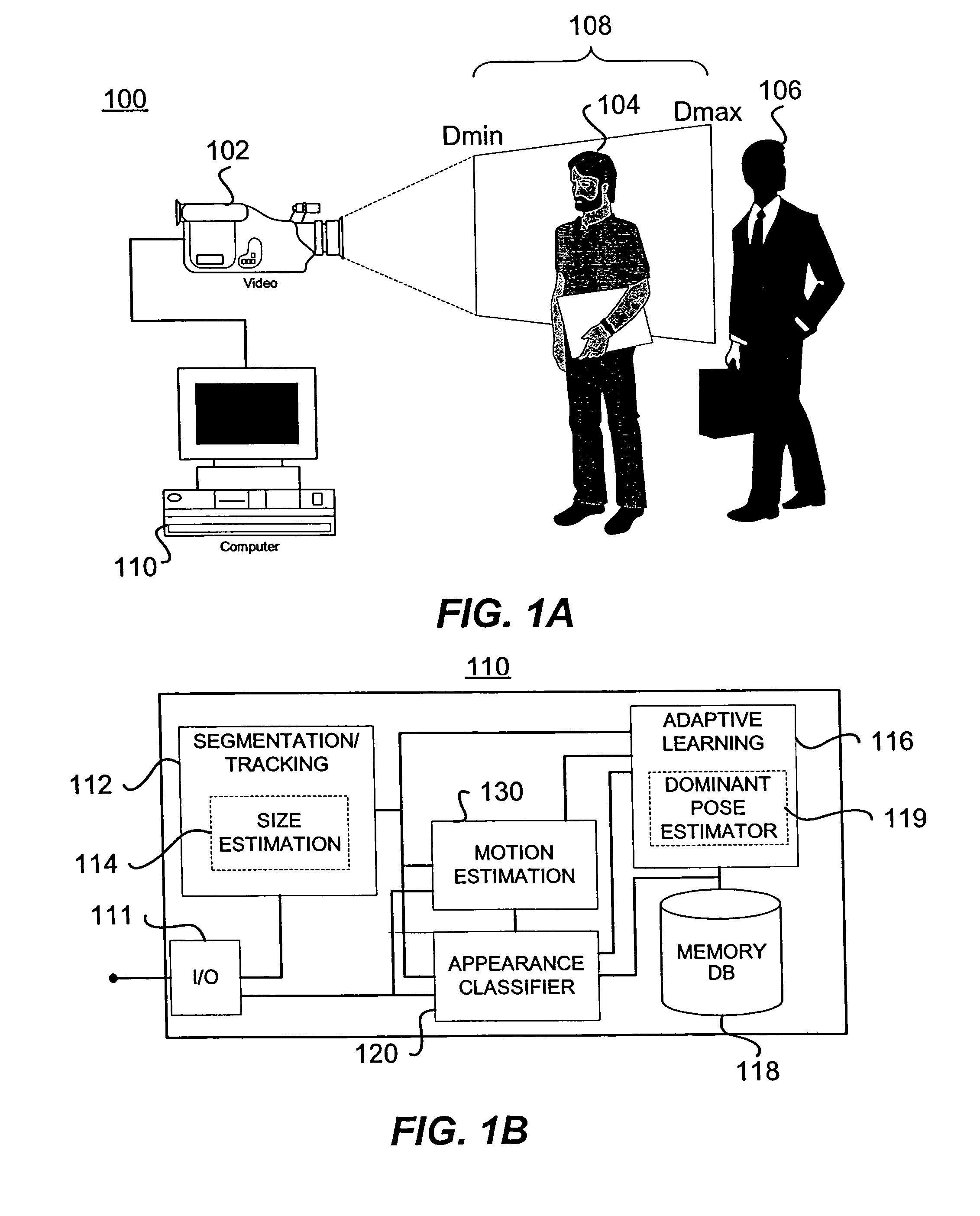

Target orientation estimation using depth sensing

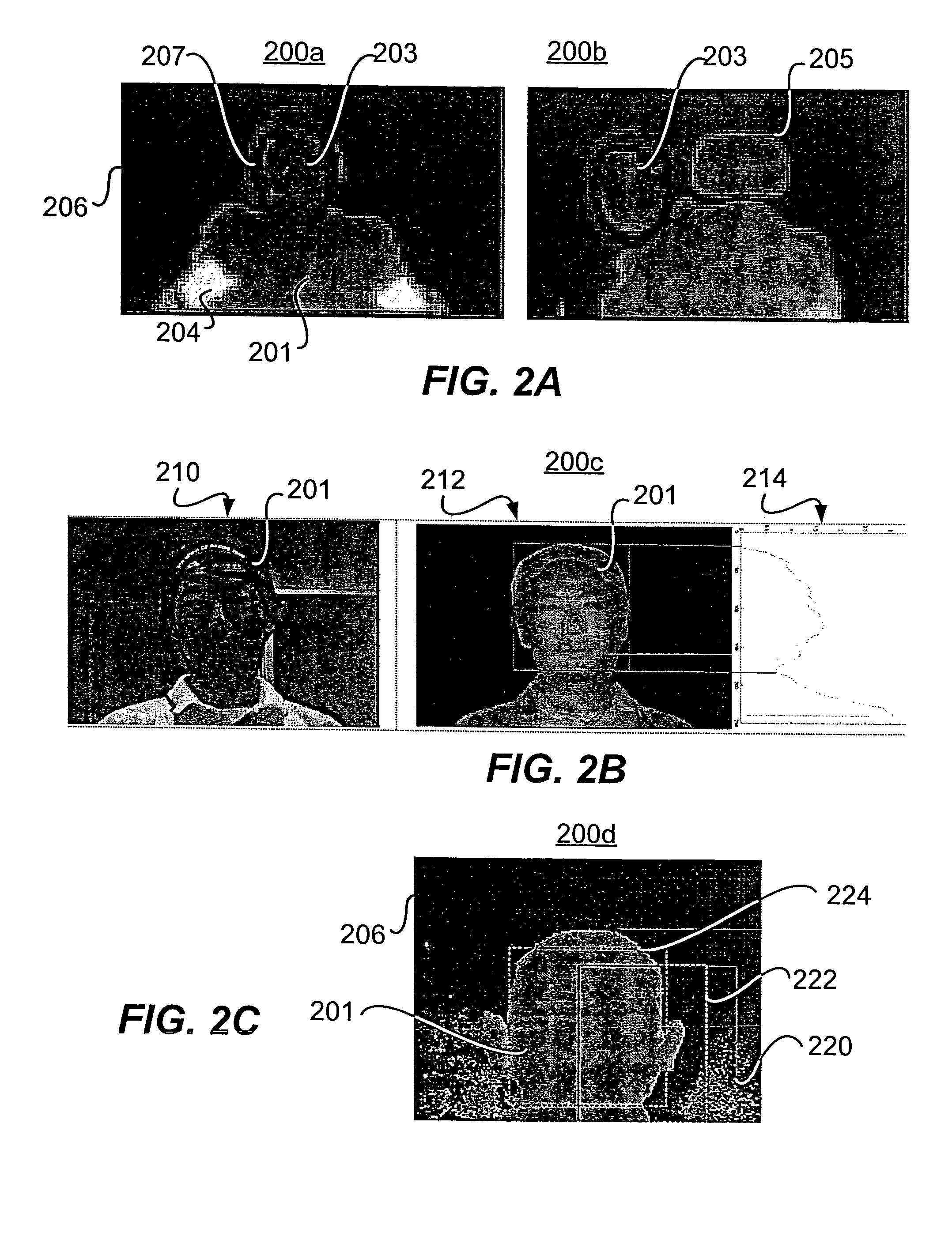

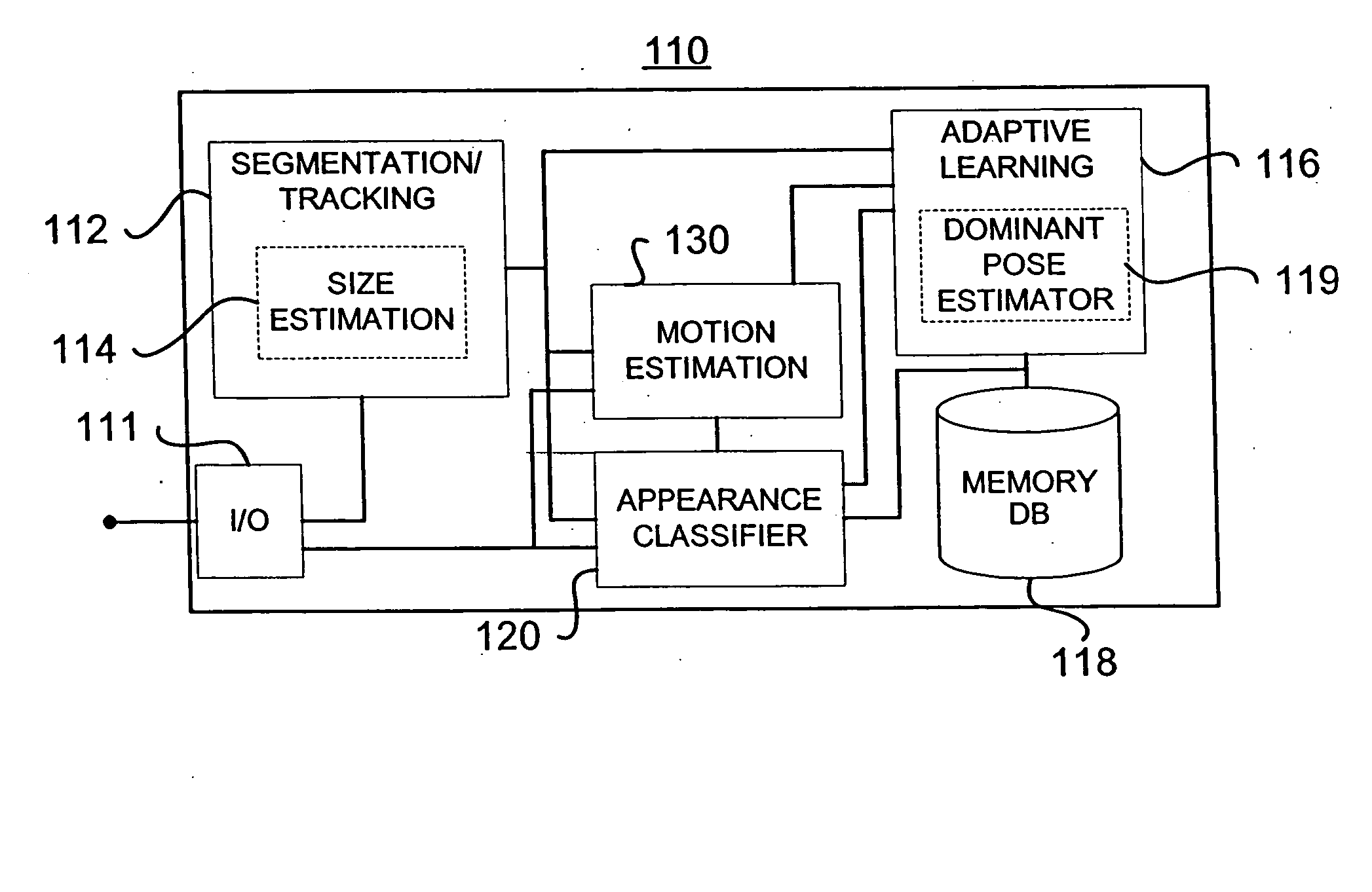

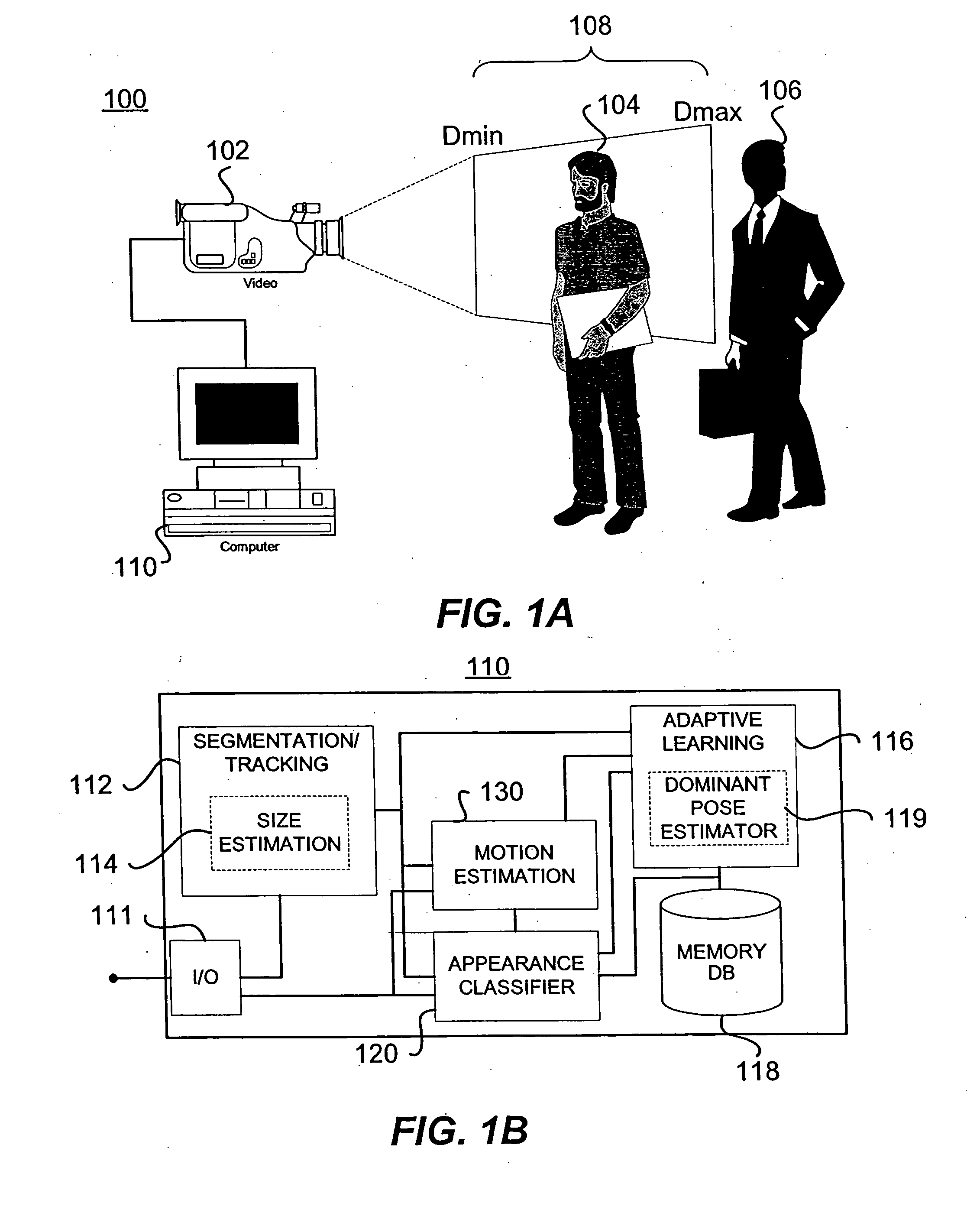

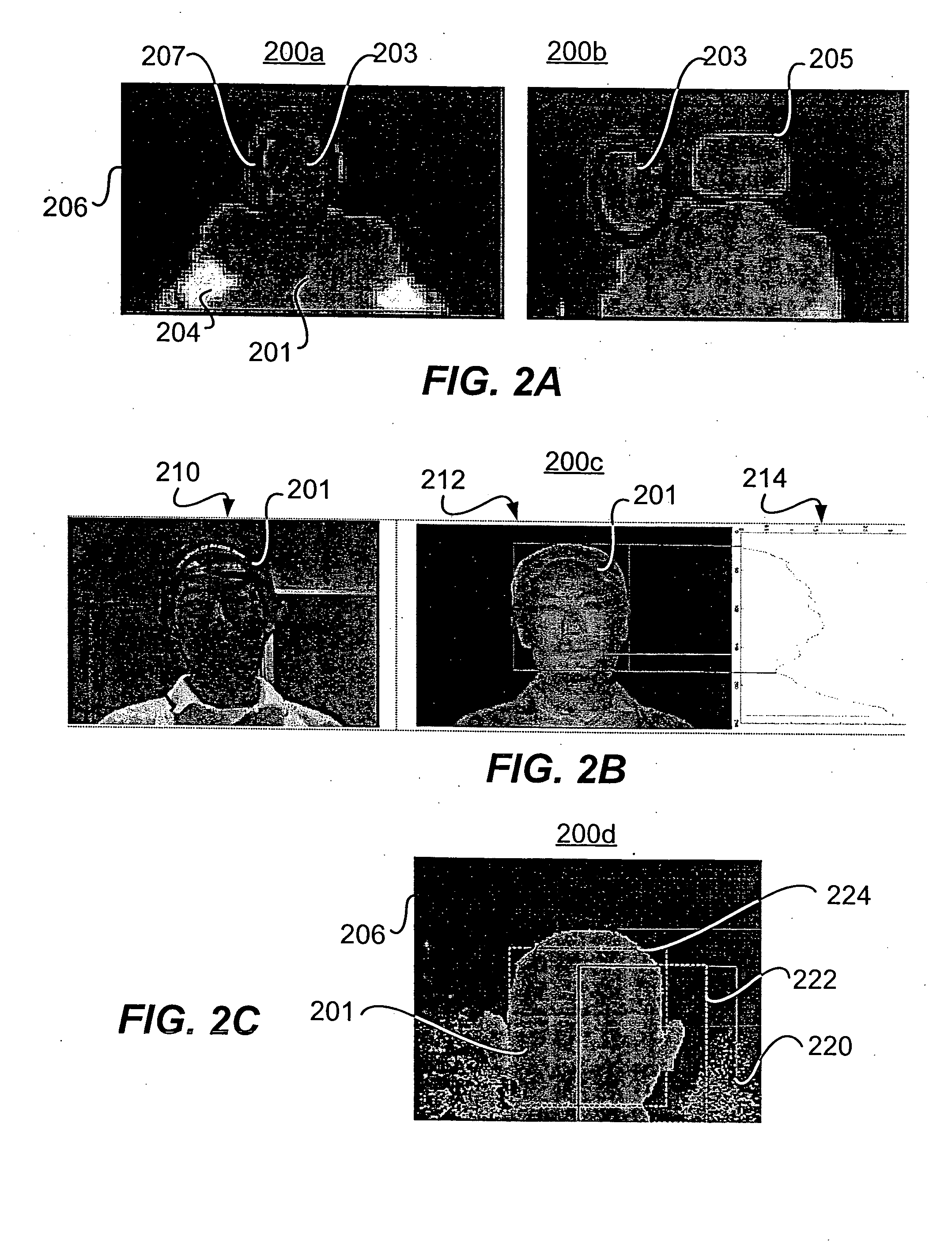

A system for estimating the orientation of a target from real-time video data uses depth data included in the video to determine the estimated orientation. The system includes a time-of-flight camera capable of depth sensing within a depth window. The camera outputs hybrid image data (color and depth). Segmentation is performed to determine the location of the target within the image, and tracking is used to follow the target location from frame to frame. During a training mode, a target-specific training image set is collected, with a corresponding orientation associated with each frame. During an estimation mode, a classifier compares new images with the stored training set to determine an estimated orientation. A motion estimation approach accumulates rotation/translation parameters calculated from optical flow and depth constraints. The parameters are reset to a reference value each time the image corresponds to a dominant orientation.

Owner:HONDA MOTOR CO LTD +1
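The reset rule in the last sentence is what keeps an accumulated estimate from drifting. A minimal sketch, reducing the rotation/translation parameters to a single yaw angle in degrees and assuming one reference value for every dominant orientation; `track_orientation` is a hypothetical name, and the per-frame increments stand in for the flow-and-depth motion estimate, which is not shown.

```python
def track_orientation(yaw_increments, is_dominant, reference_deg=0.0):
    """Integrate per-frame yaw changes, resetting at dominant orientations.

    yaw_increments: per-frame rotation estimates in degrees (e.g. from
    optical flow plus depth); is_dominant: per-frame flags from the
    classifier. Returns the estimated yaw after each frame.
    """
    yaw = reference_deg
    estimates = []
    for delta, dominant in zip(yaw_increments, is_dominant):
        if dominant:
            yaw = reference_deg  # reset discards the drift accumulated so far
        else:
            yaw += delta  # dead-reckoning from frame-to-frame motion
        estimates.append(yaw)
    return estimates


print(track_orientation([5, 5, 5, 5], [False, False, True, False]))
# [5.0, 10.0, 0.0, 5.0]
```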

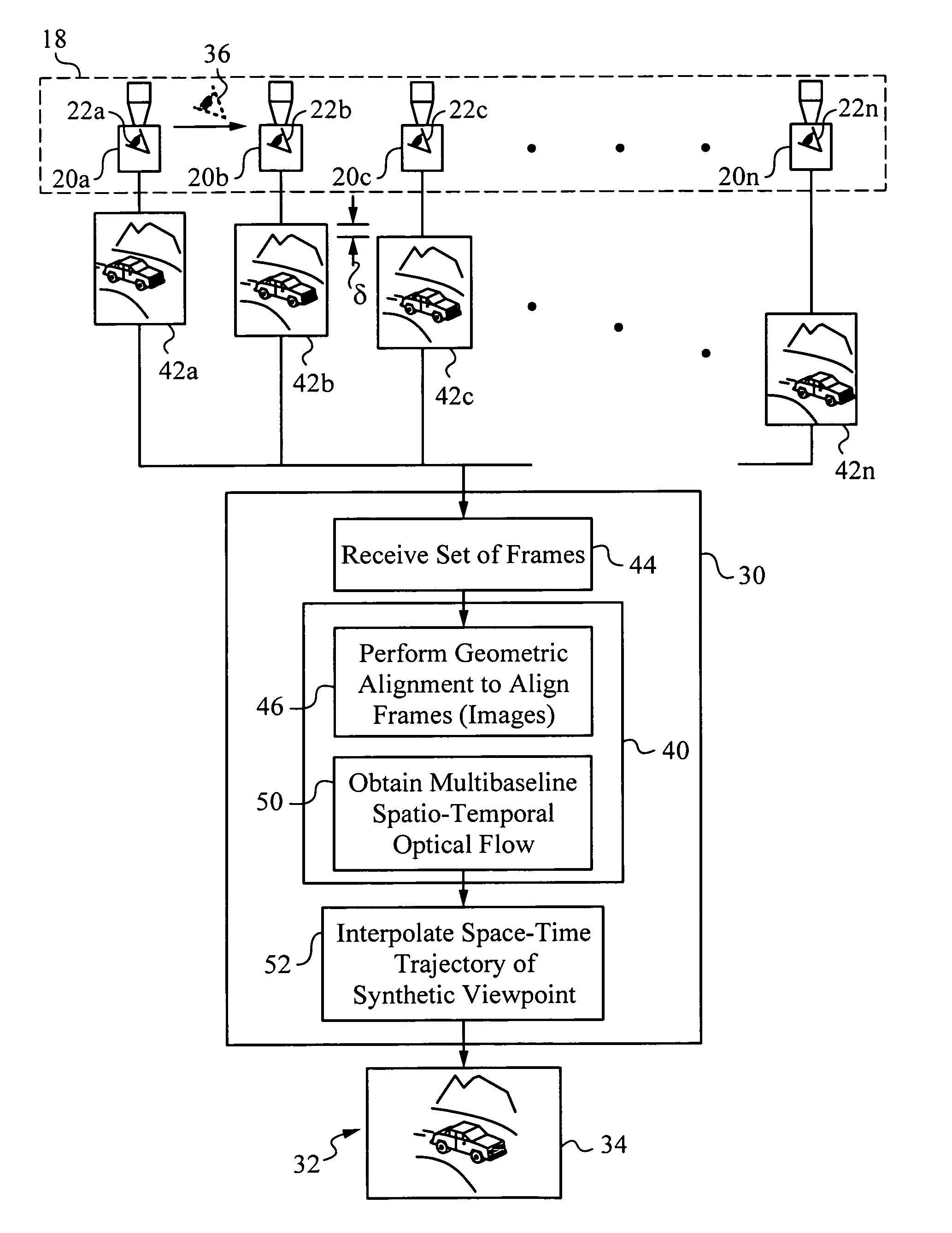

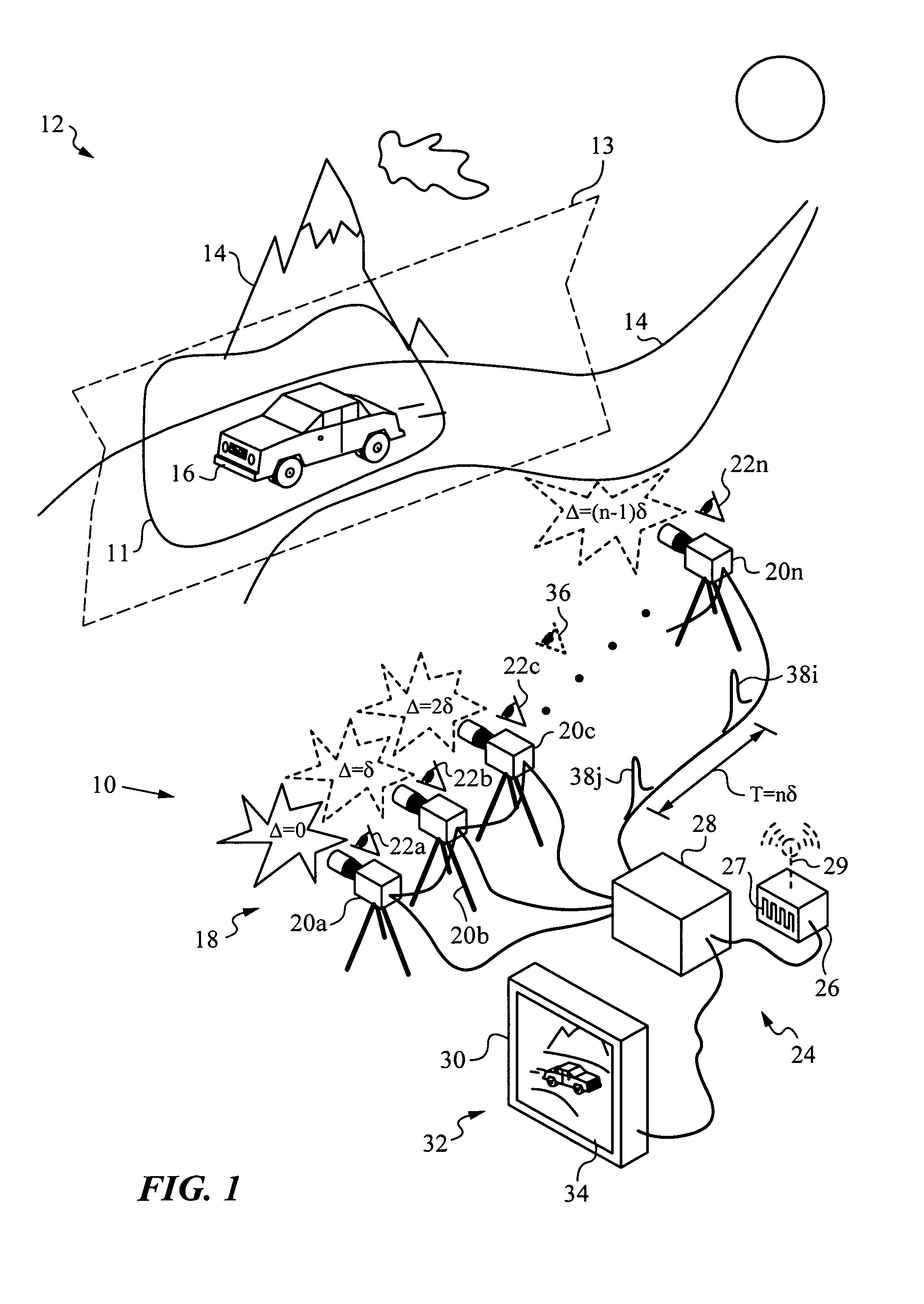

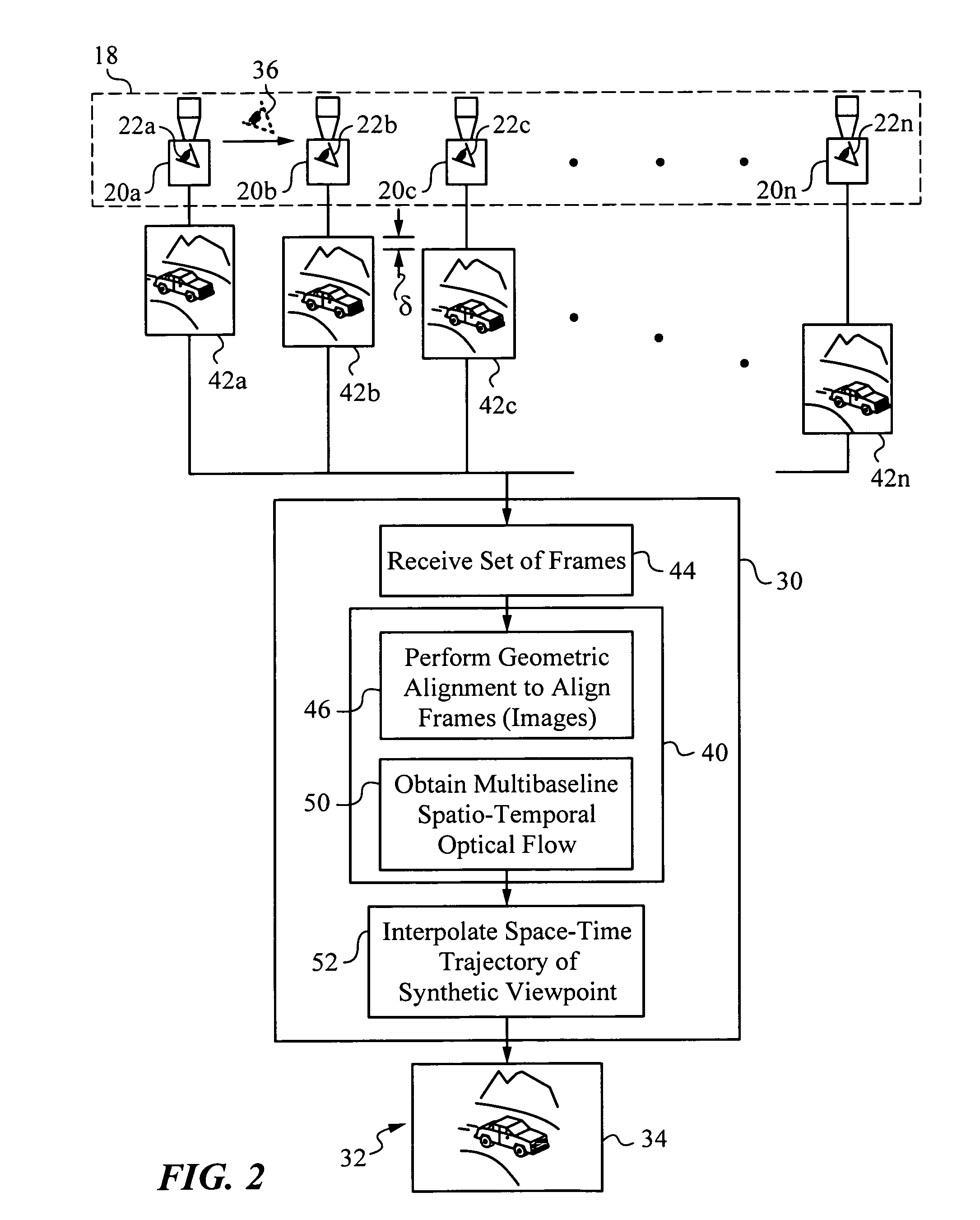

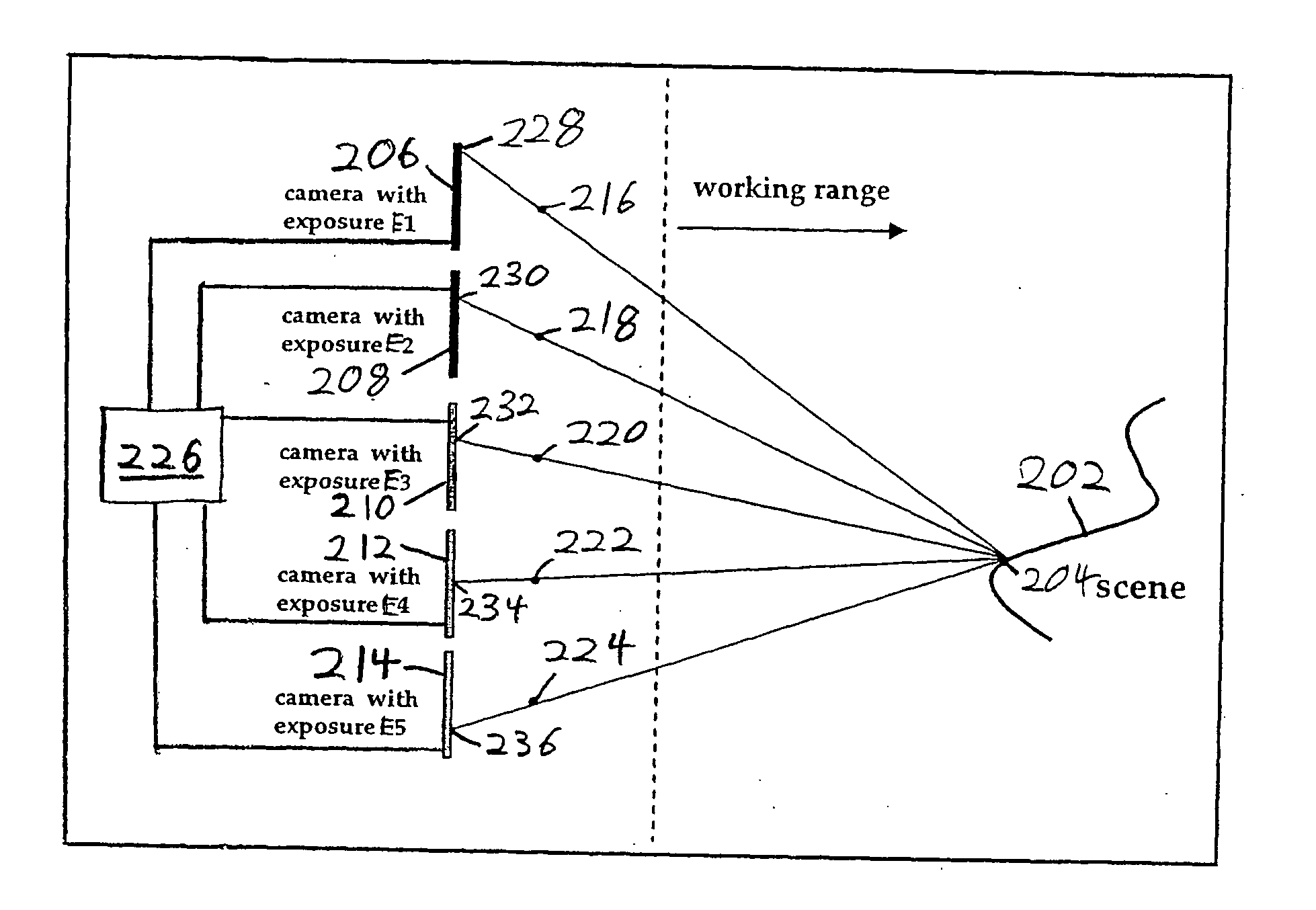

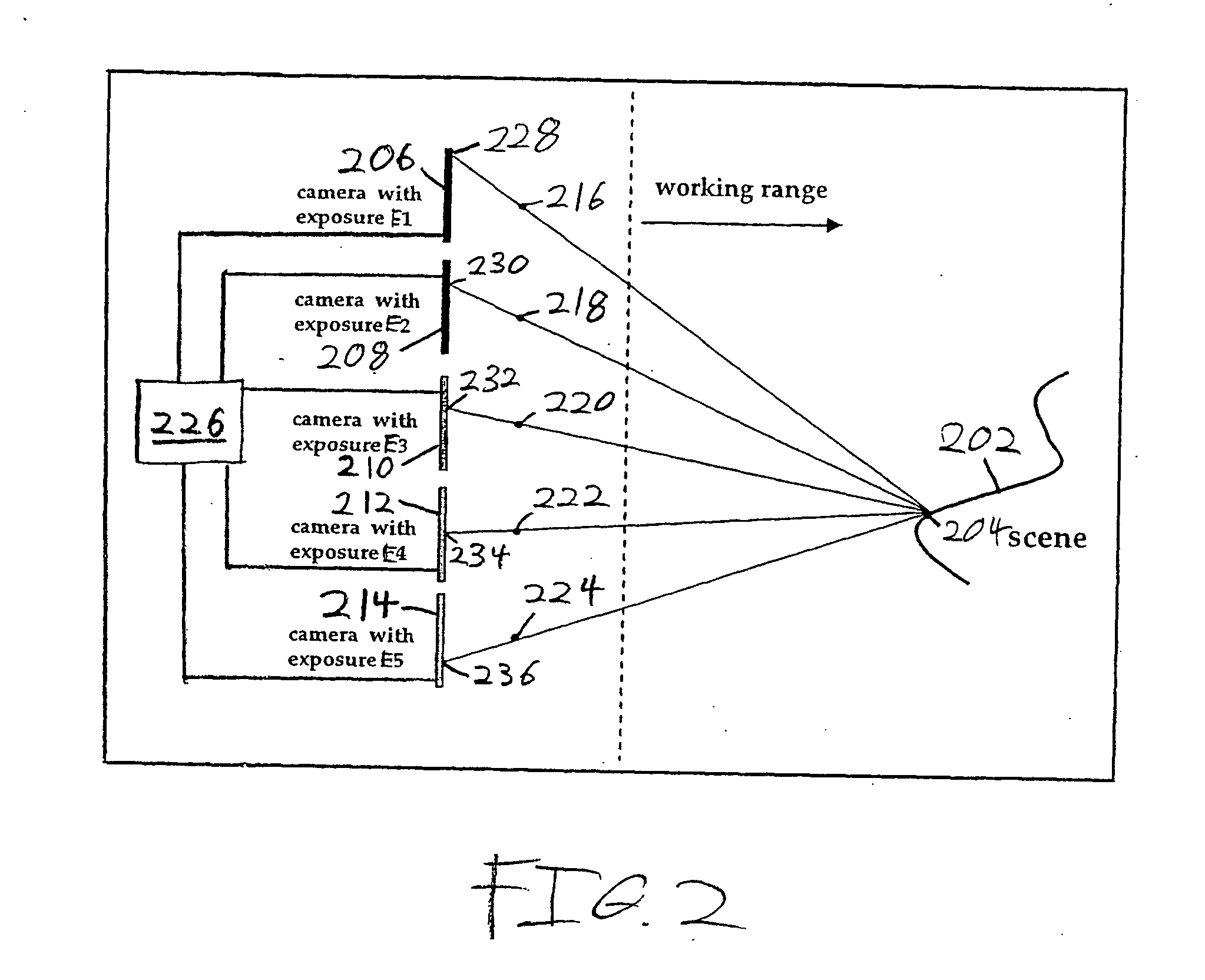

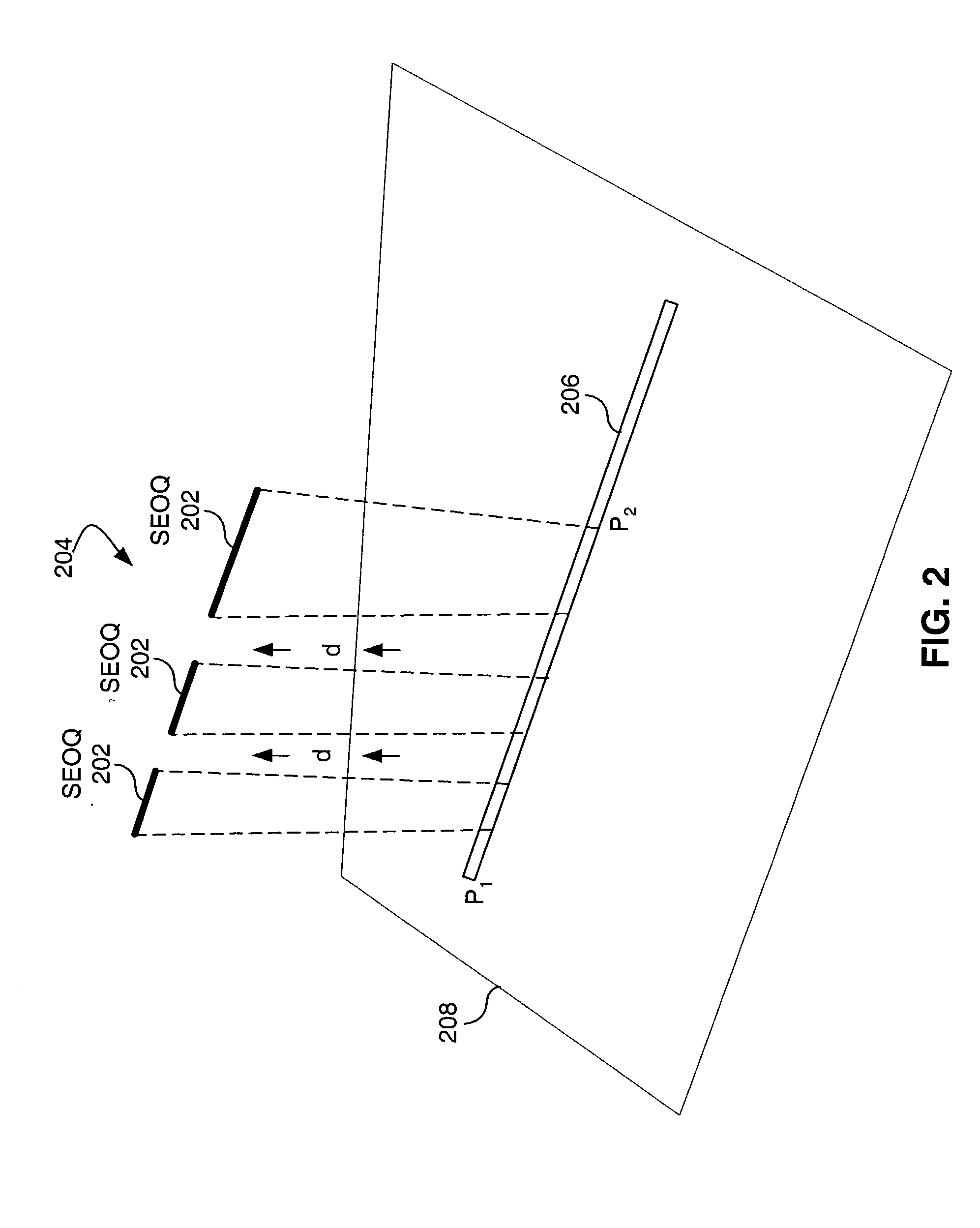

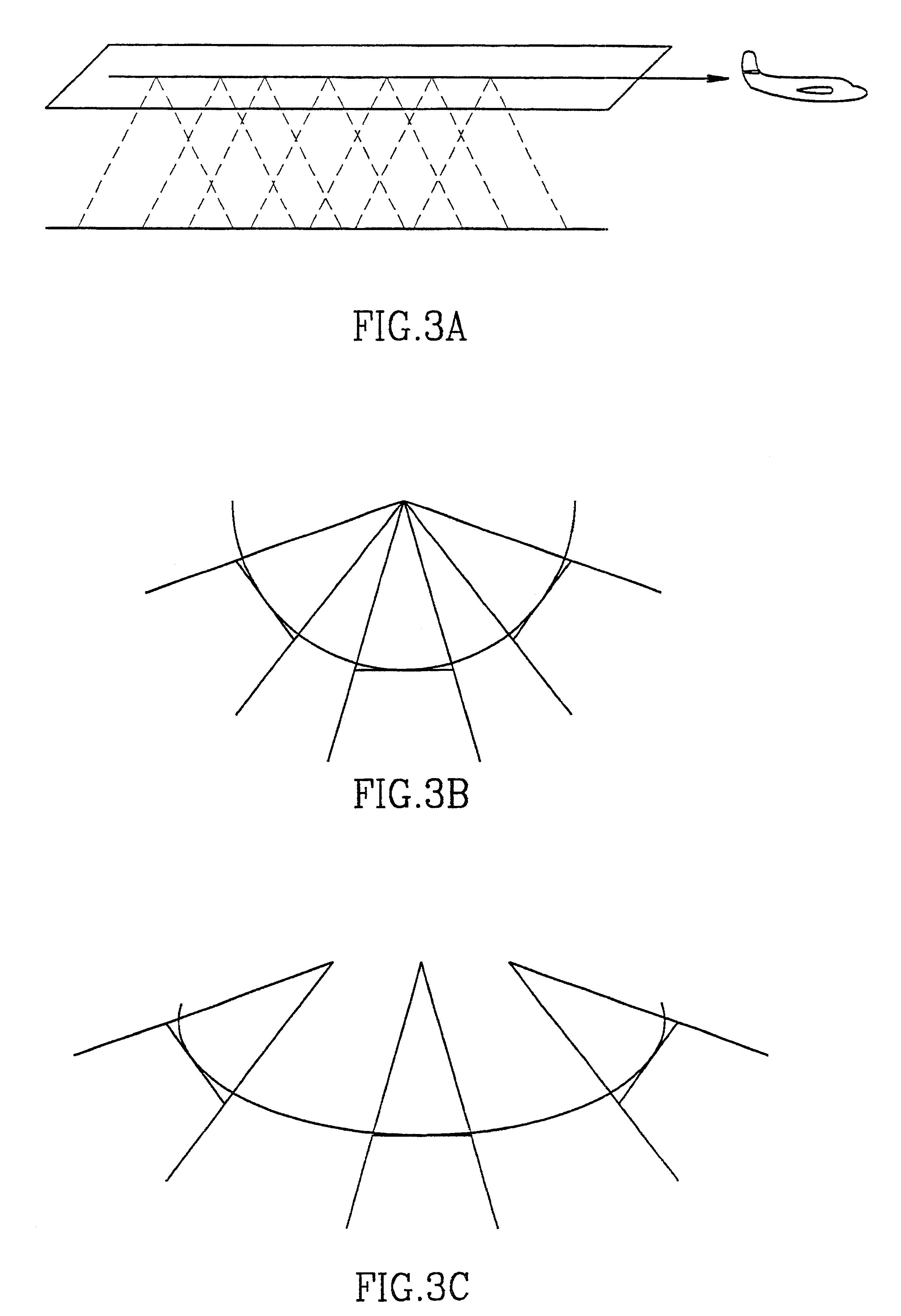

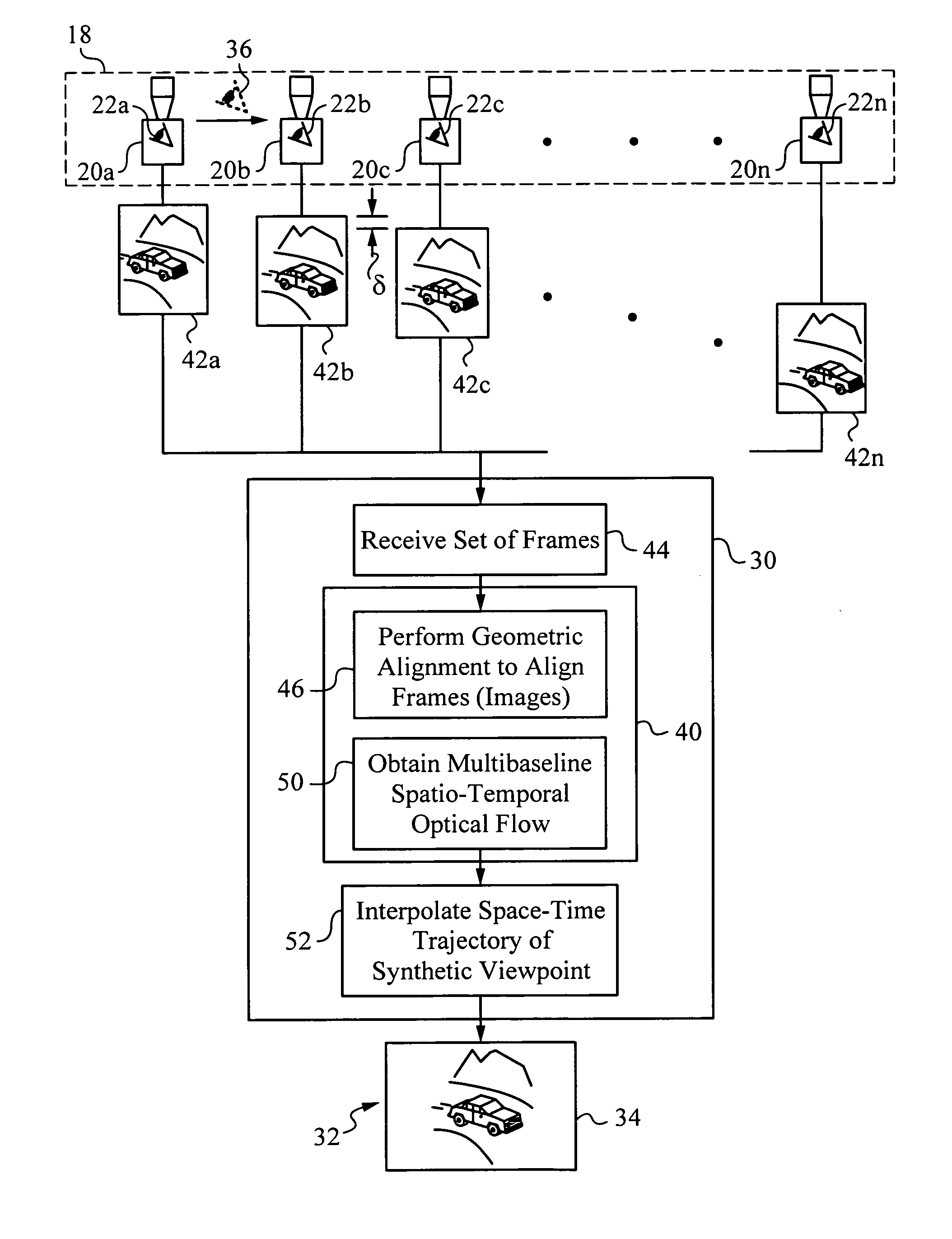

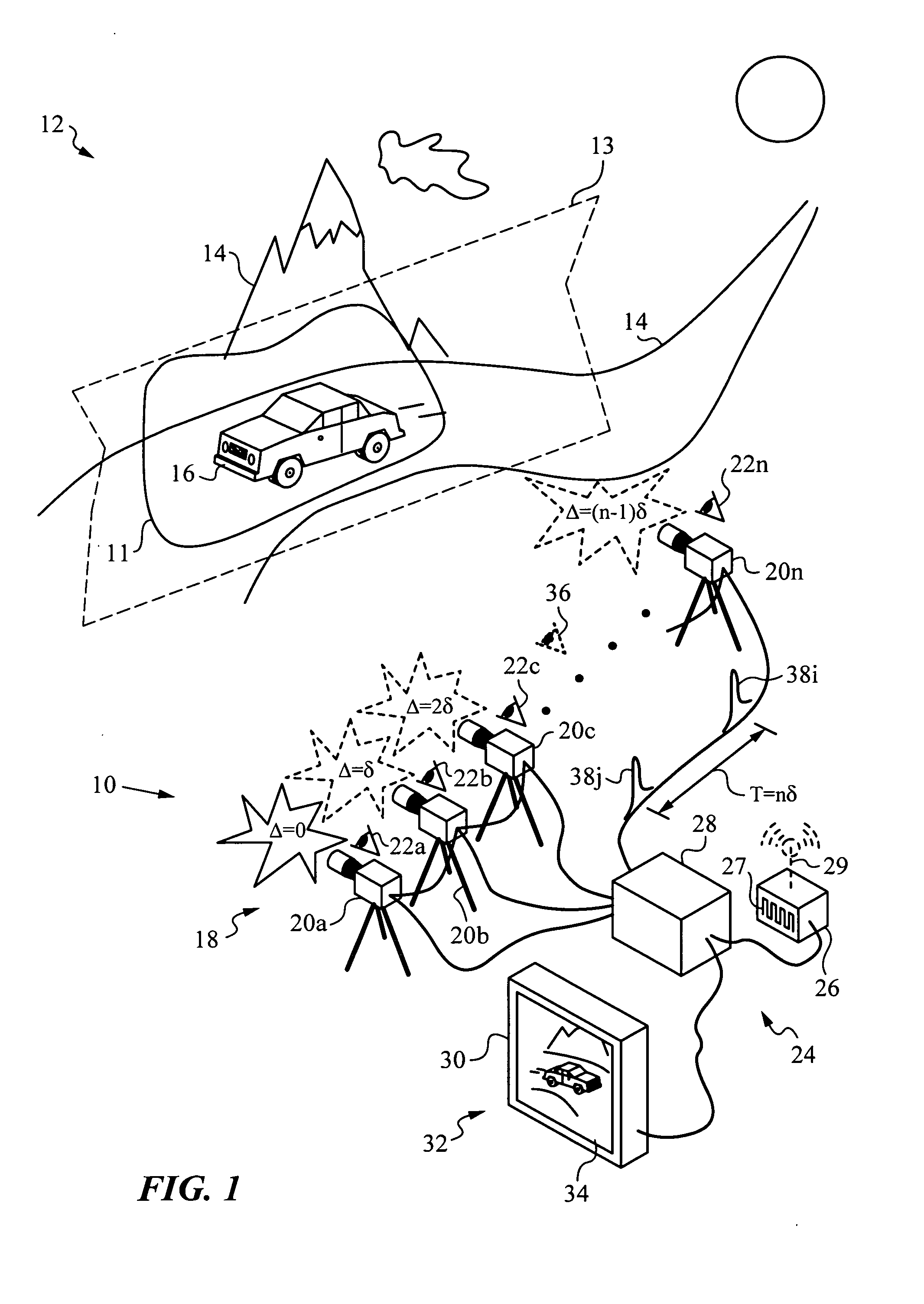

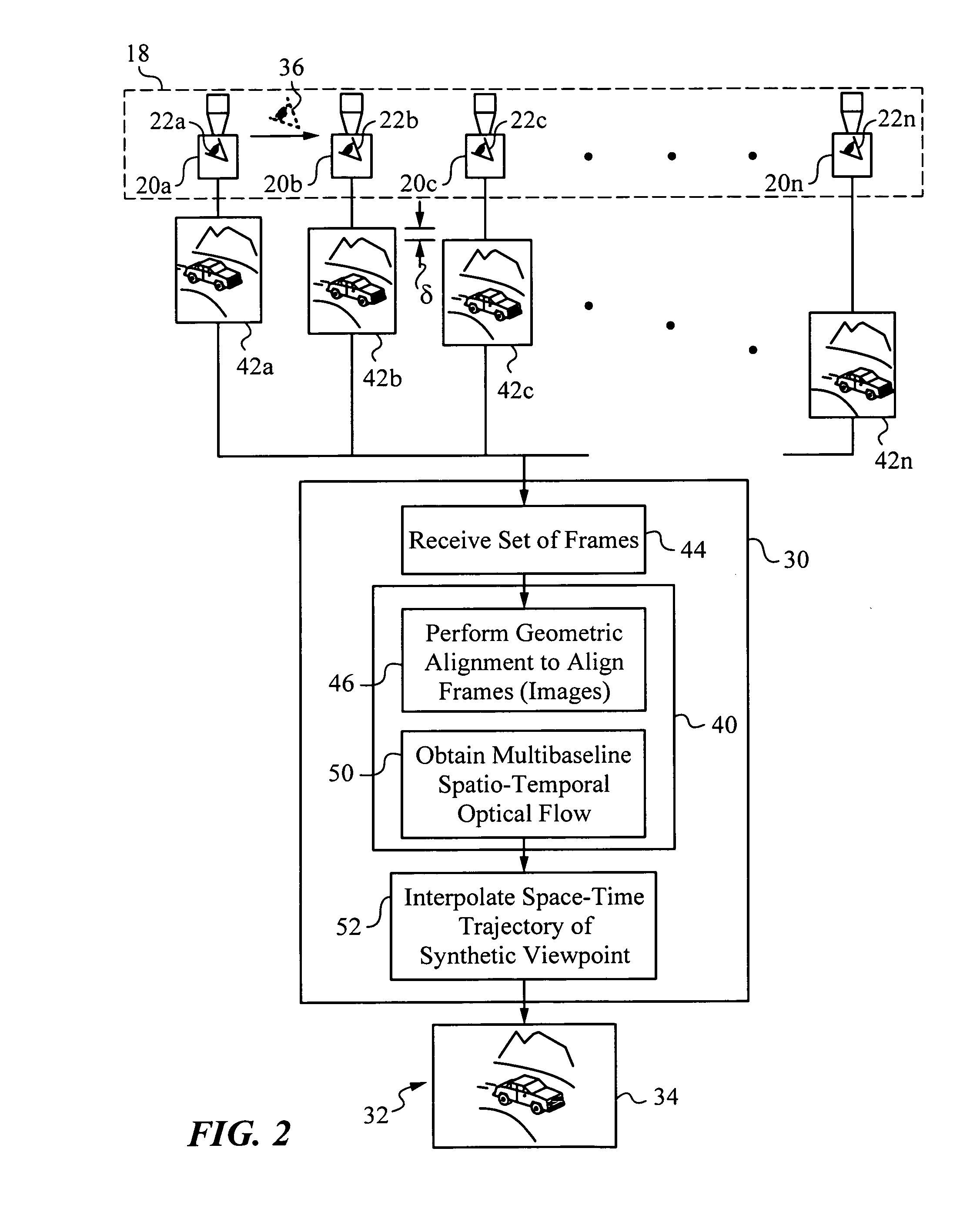

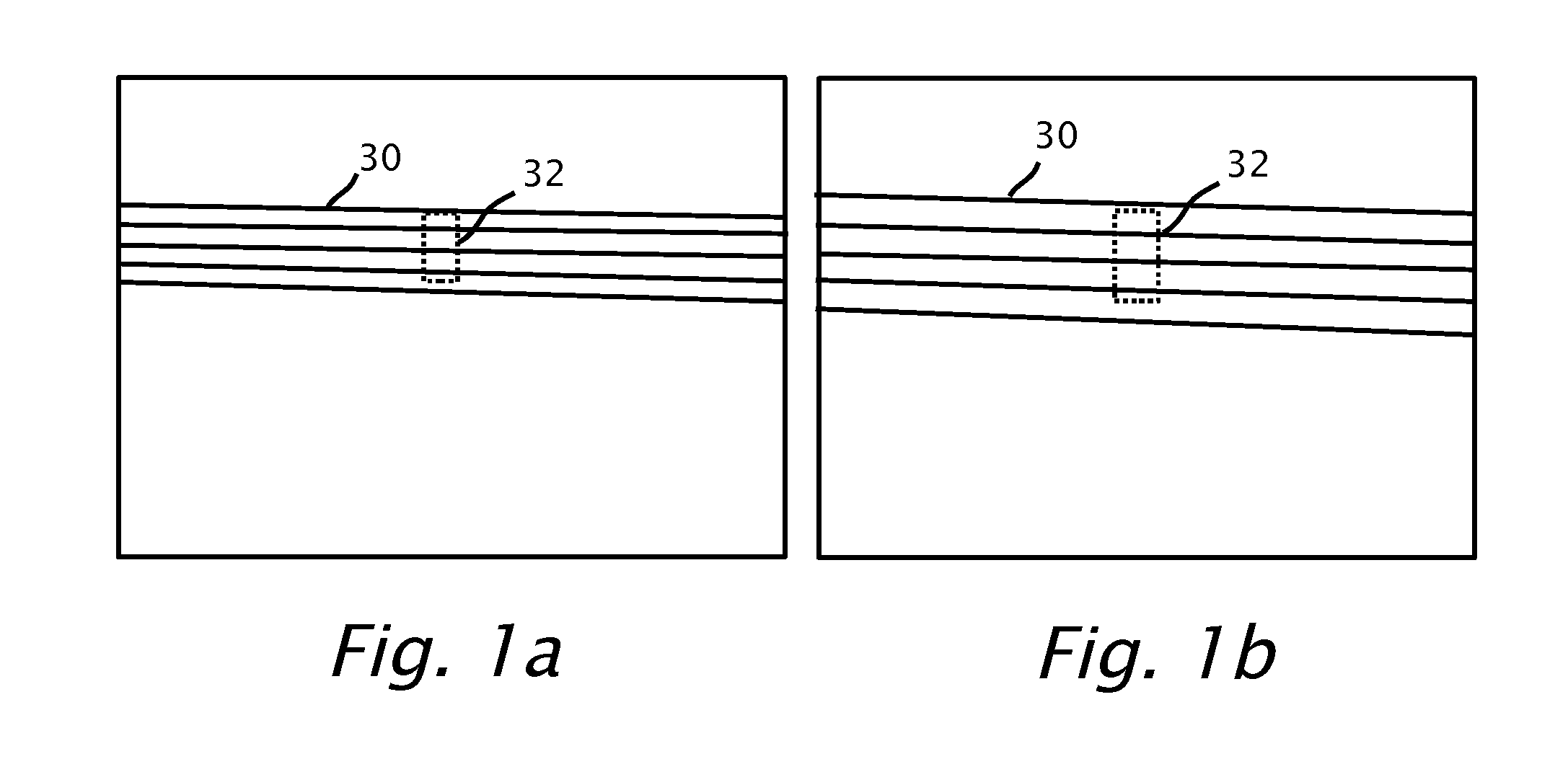

Pedestrian collision warning system

Active | US20120314071A1 | Avoid collision | Eliminate and reduce false collision warning | Image enhancement | Image analysis | Simulation | Optical flow

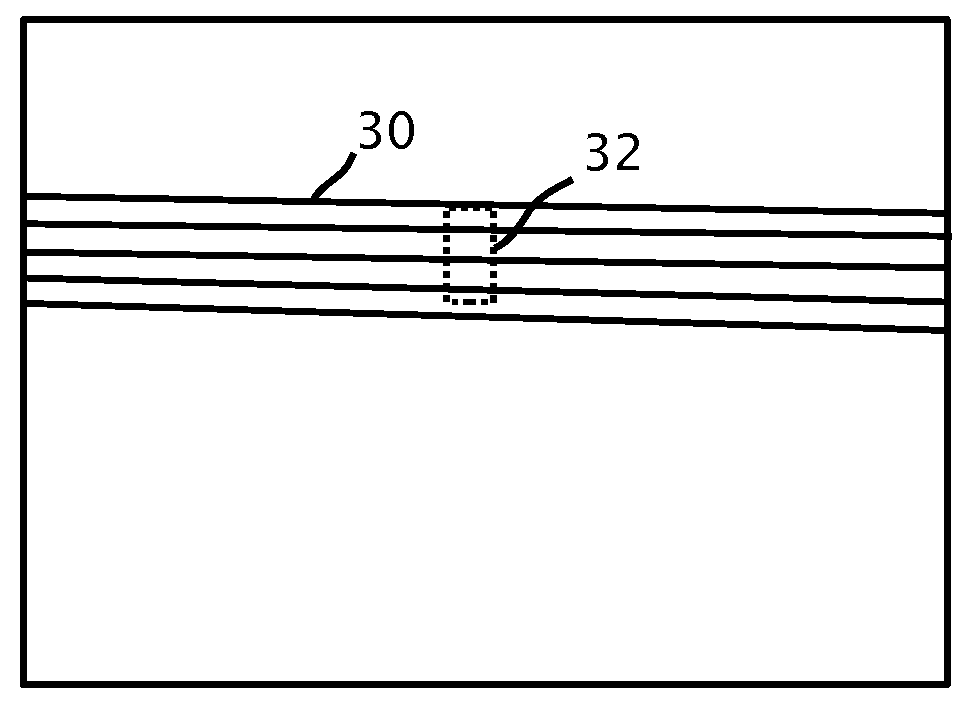

A method is provided for preventing a collision between a motor vehicle and a pedestrian. The method uses a camera and a processor mountable in the motor vehicle. A candidate image is detected. Based on a change of scale of the candidate image, it may be determined that the motor vehicle and the pedestrian are expected to collide, thereby producing a potential collision warning. Further information from the image frames may be used to validate the potential collision warning. The validation may include an analysis of the optical flow of the candidate image, a check of whether the lane markings predict a straight road, a calculation of the lateral motion of the pedestrian, and/or checks of whether the pedestrian is crossing a lane mark or curb and whether the vehicle is changing lanes.

Owner:MOBILEYE VISION TECH LTD
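The change-of-scale criterion can be turned into a time to collision (TTC). Assuming a constant closing speed v and an image width inversely proportional to the distance Z, the scale ratio between frames taken Δt apart is s = Z(t-Δt)/Z(t) = 1 + vΔt/Z(t), so TTC = Z/v = Δt/(s-1). A minimal sketch of that relation; `time_to_collision` is a hypothetical name and this is not the patented implementation.

```python
def time_to_collision(width_prev_px, width_curr_px, dt_s):
    """Time to collision (s) from the change in a candidate's image width.

    Assumes constant closing speed and image width inversely proportional
    to distance. Returns None if the candidate is not growing.
    """
    scale = width_curr_px / width_prev_px  # s = Z_prev / Z_curr
    if scale <= 1.0:
        return None  # same size or shrinking: not closing in
    return dt_s / (scale - 1.0)


# A candidate growing from 20 px to 22 px wide in 0.1 s: s = 1.1, TTC about 1 s.
print(round(time_to_collision(20.0, 22.0, 0.1), 6))  # 1.0
```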

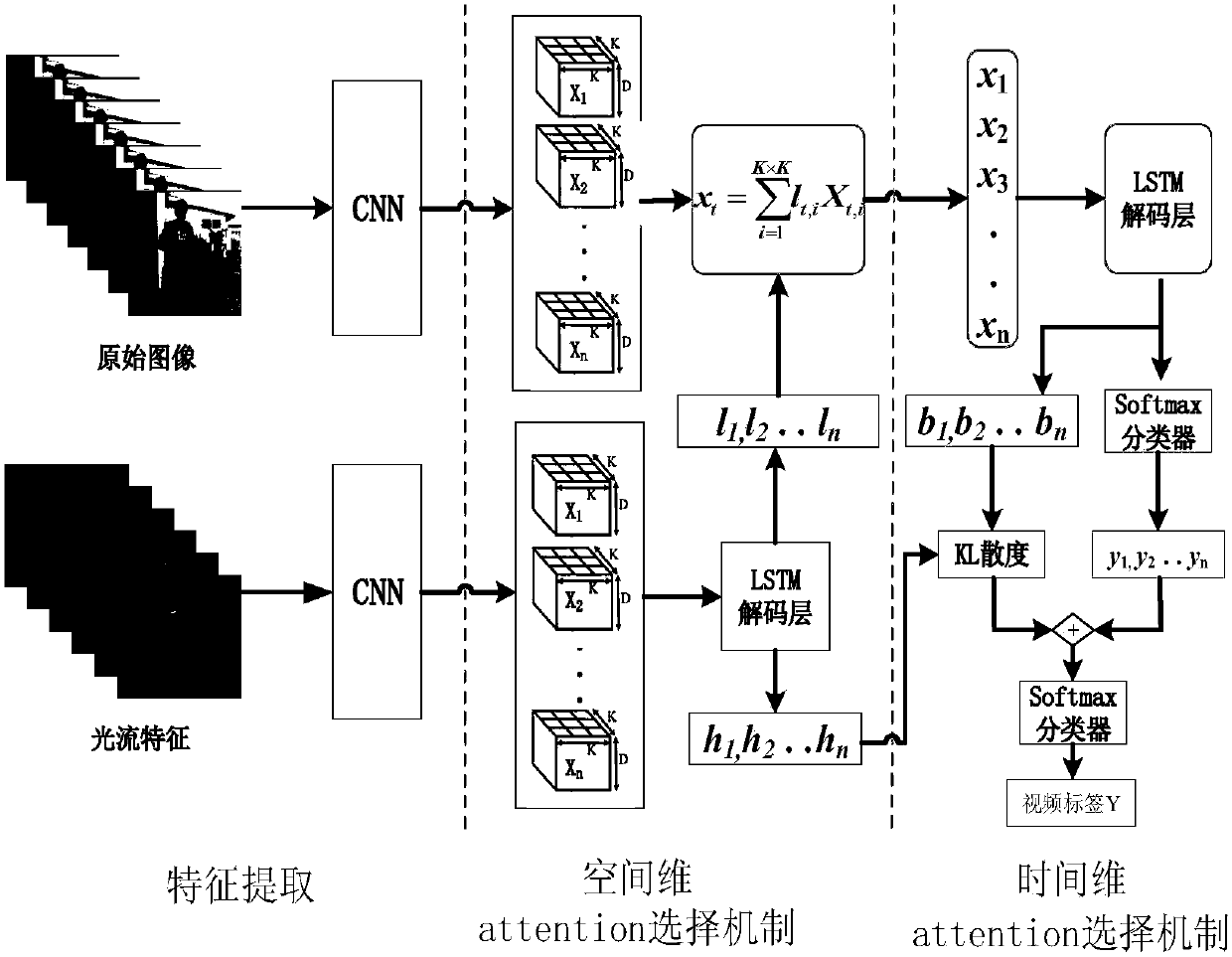

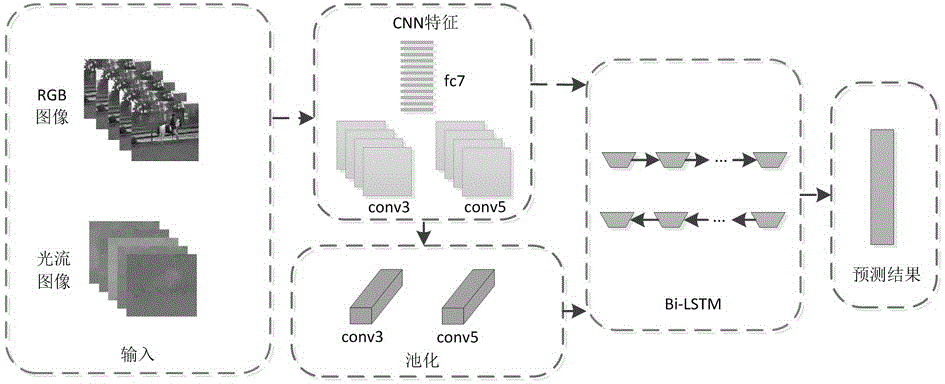

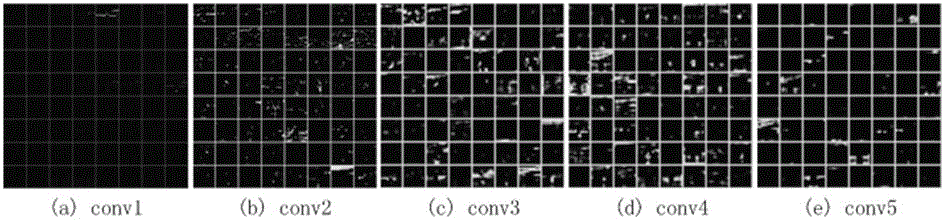

Human behavior recognition method integrating space-time dual-network flow and attention mechanism

Active | CN107609460A | Improve accuracy | Eliminate distractions | Character and pattern recognition | Neural architectures | Human behavior | Pattern recognition

The invention discloses a human behavior recognition method that integrates a spatio-temporal two-stream network with an attention mechanism. The method includes the steps of: extracting motion optical flow features and generating optical flow feature images; constructing independent temporal-stream and spatial-stream networks to generate two high-level semantic feature sequences with significant structural properties; decoding the high-level semantic feature sequence of the temporal stream to output a temporal-stream visual feature descriptor and an attention saliency feature sequence, while also outputting a spatial-stream visual feature descriptor and the label probability distribution of each frame of a video window; calculating an attention confidence score coefficient for each frame along the time dimension, using it to weight the label probability distribution of each frame of the spatial stream's video window, and selecting a key frame of the video window; and using a softmax classifier to decide the human behavior category of the video window. Compared with the prior art, the method can effectively focus on the key frames of the appearance images in the original video and, at the same time, select and obtain the spatially salient region features of those key frames, with high recognition accuracy.

Owner:NANJING UNIV OF POSTS & TELECOMM
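The weighting and key-frame steps can be illustrated with plain numbers. A minimal sketch, assuming the attention confidence coefficients are a softmax over raw per-frame attention scores and that the window's label is the argmax of the attention-weighted average of per-frame label distributions; `fuse_window` is a hypothetical name, and the networks that produce these inputs are not shown.

```python
import math


def fuse_window(frame_probs, attention_scores):
    """Attention-weighted fusion of per-frame label distributions.

    frame_probs: one probability list per frame (spatial-stream output).
    attention_scores: one raw score per frame (temporal-stream attention).
    Returns (predicted label index, key frame index).
    """
    # Softmax turns raw scores into per-frame confidence weights summing to 1.
    m = max(attention_scores)
    exps = [math.exp(s - m) for s in attention_scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    n_labels = len(frame_probs[0])
    fused = [sum(w * p[k] for w, p in zip(weights, frame_probs))
             for k in range(n_labels)]
    label = max(range(n_labels), key=lambda k: fused[k])
    key_frame = max(range(len(weights)), key=lambda i: weights[i])
    return label, key_frame


probs = [[0.9, 0.1], [0.9, 0.1], [0.2, 0.8]]
print(fuse_window(probs, [0.0, 0.0, 4.0]))  # (1, 2): attention picks frame 2
print(fuse_window(probs, [0.0, 0.0, 0.0]))  # (0, 0): plain averaging
```

The two calls show why the weighting matters: with uniform attention the two "distracting" frames outvote the informative one, while a strong attention score on frame 2 flips the decision.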

Method and system for forming a panoramic image of a scene having minimal aspect distortion

Inactive | US20110043604A1 | Improve robustness | Television system details | Geometric image transformation | Optical flow | Distortion

Owner:JOLLY SEVEN SERIES 70 OF ALLIED SECURITY TRUST I

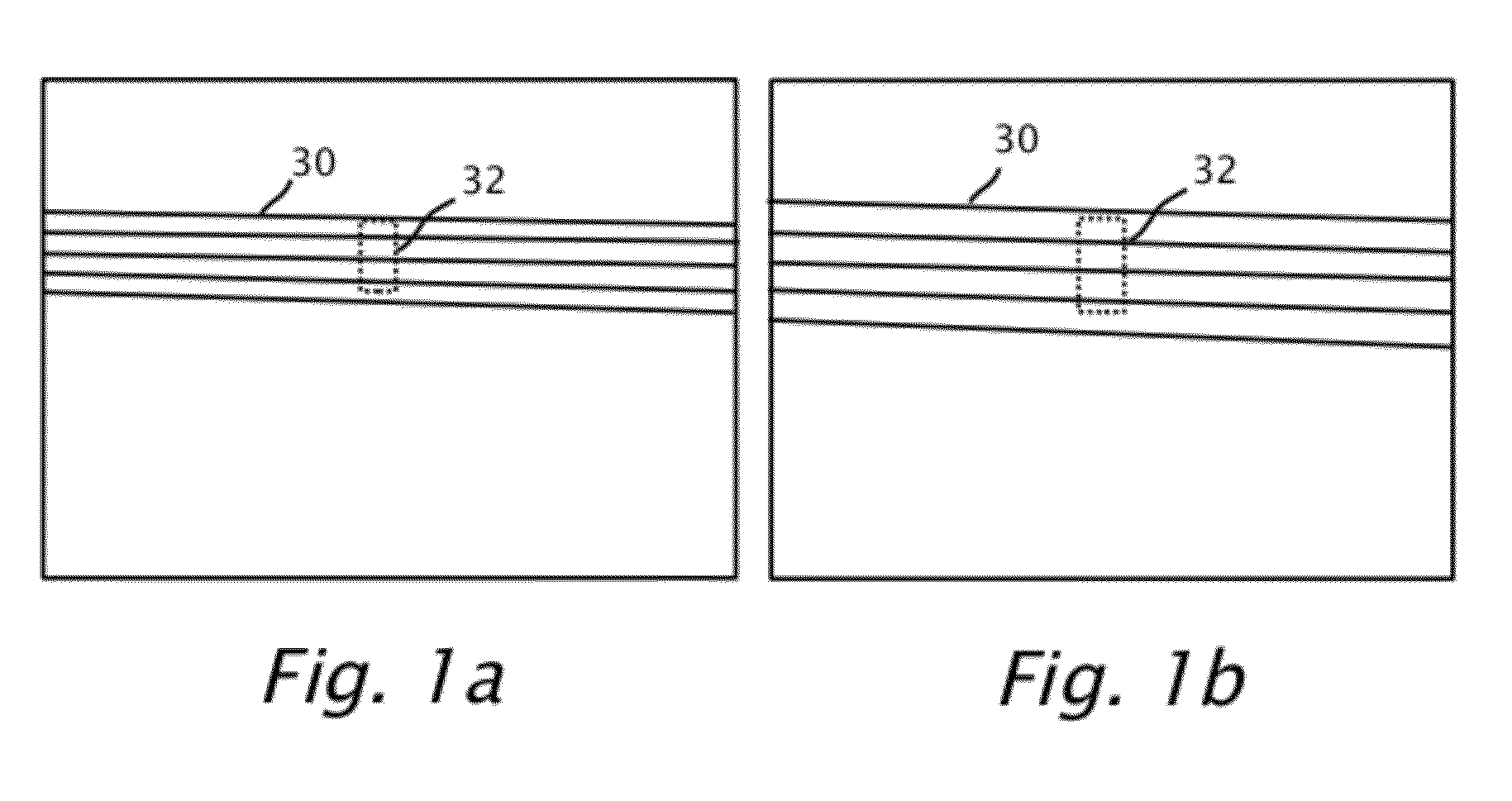

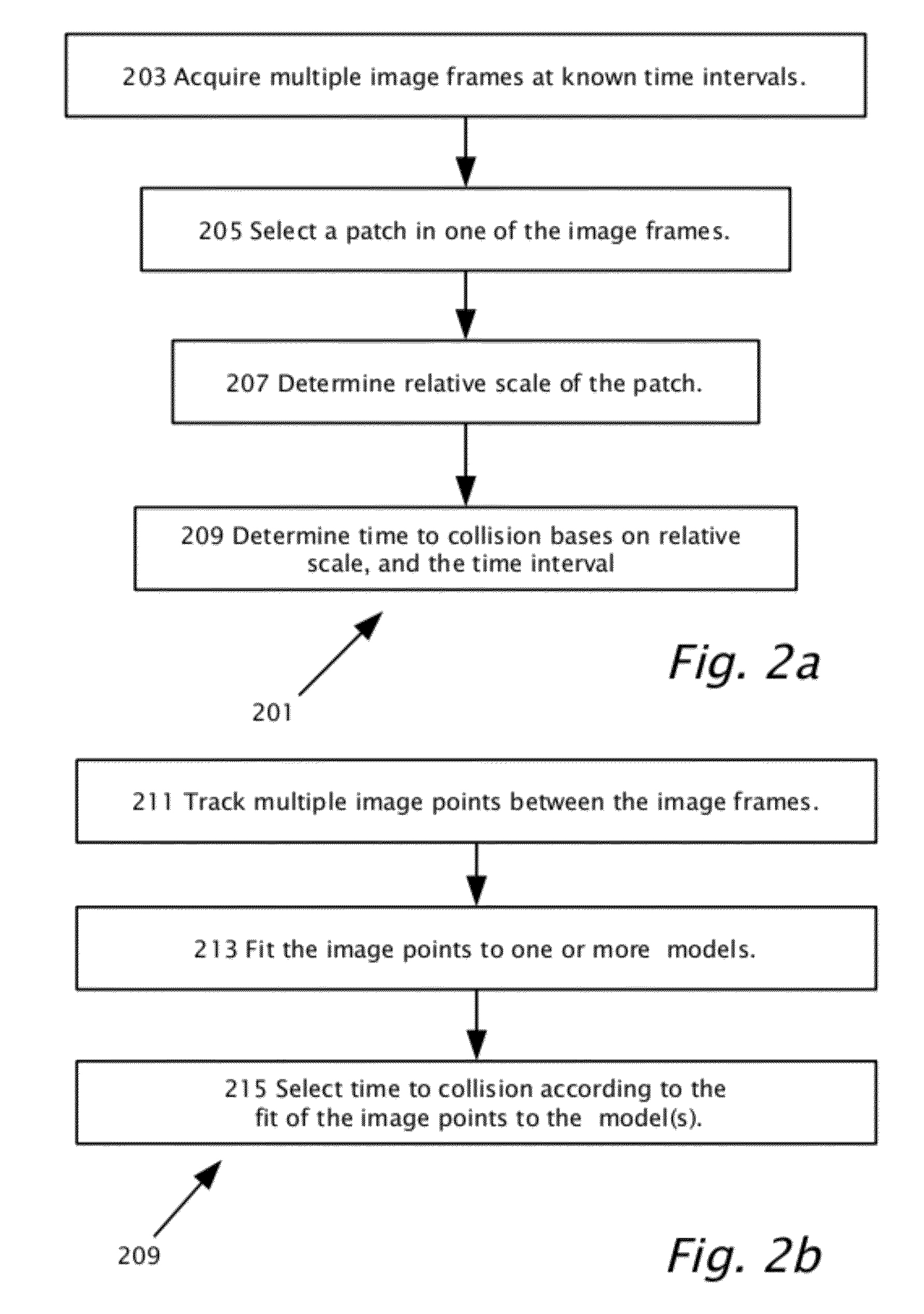

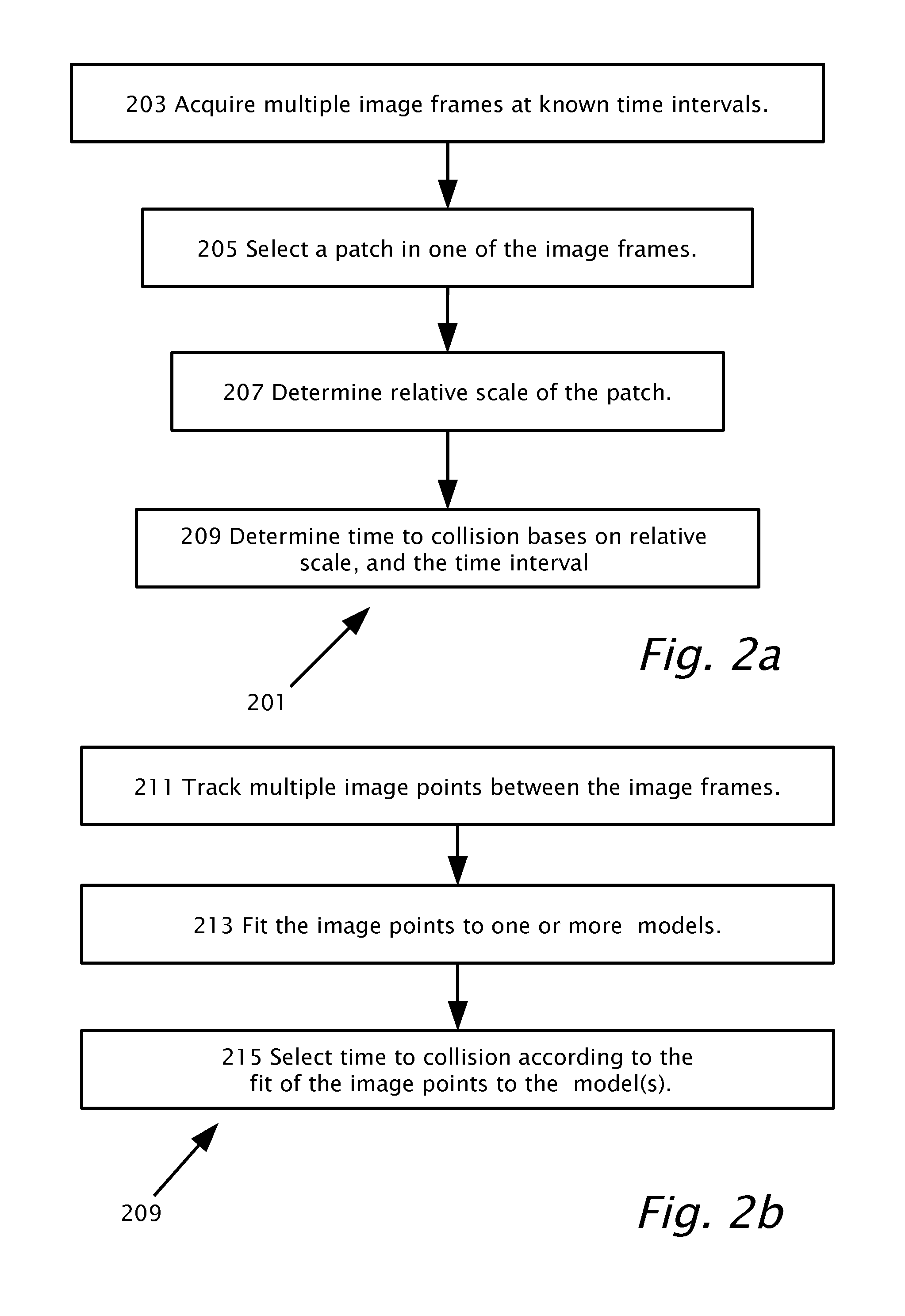

Forward collision warning trap and pedestrian advanced warning system

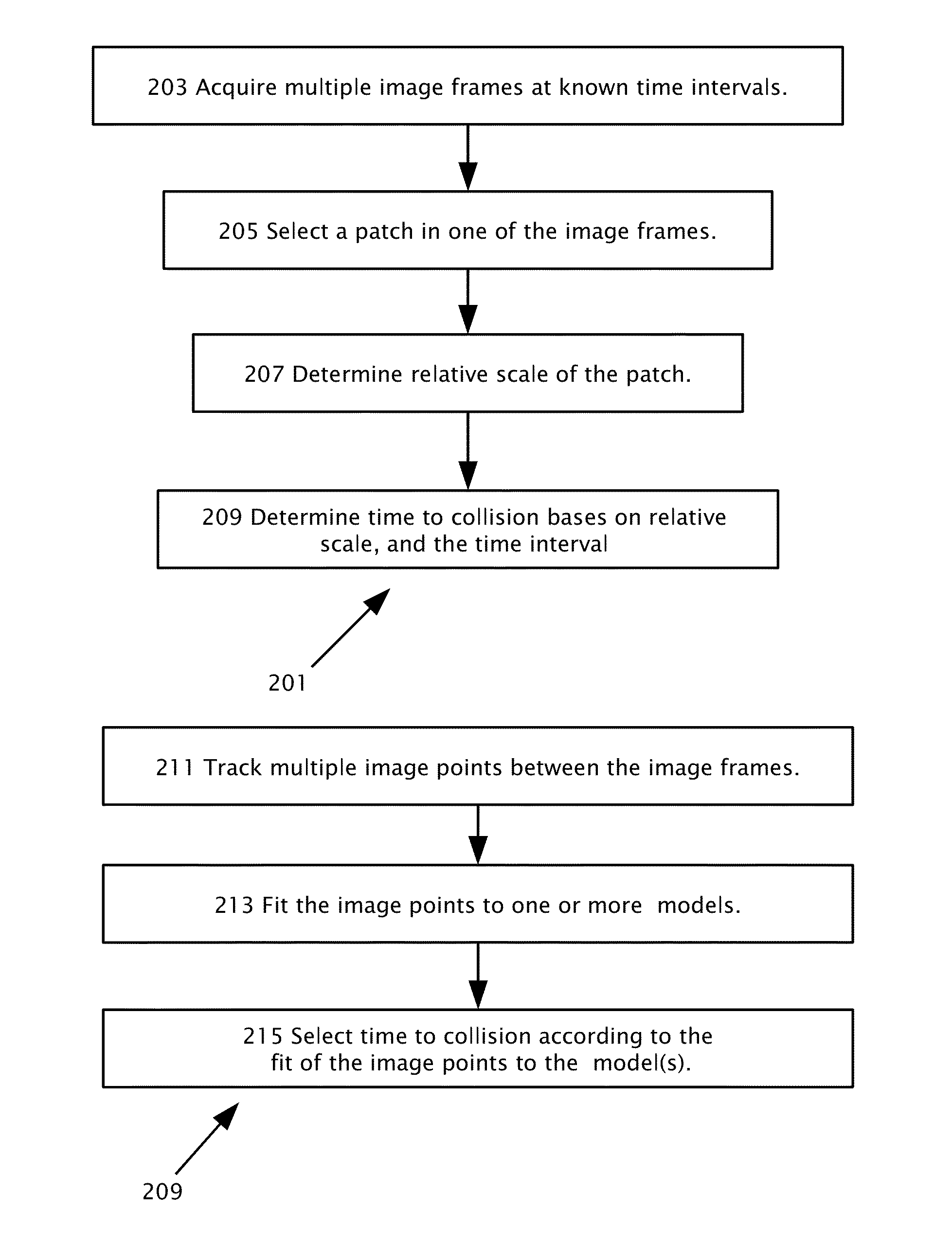

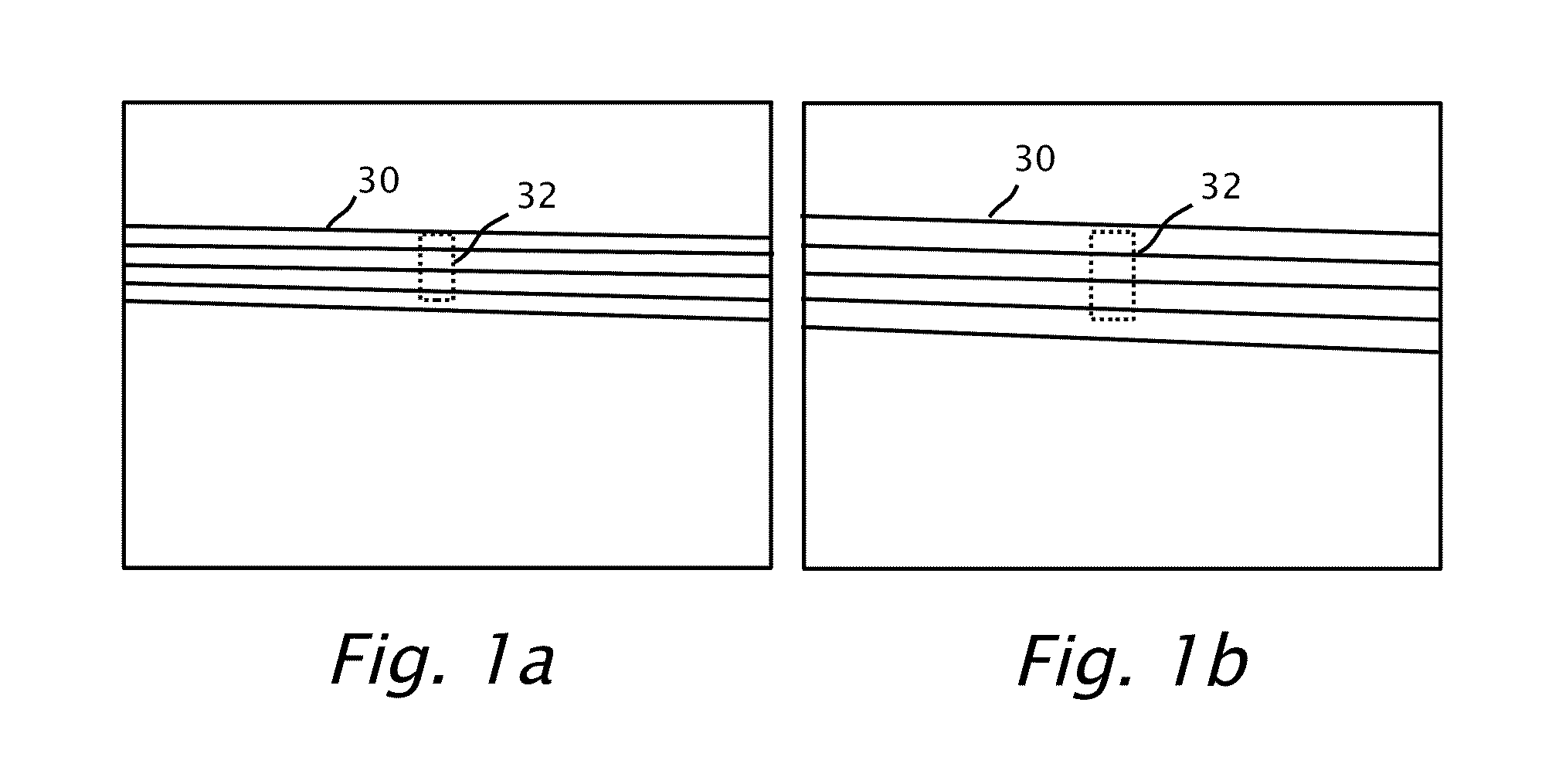

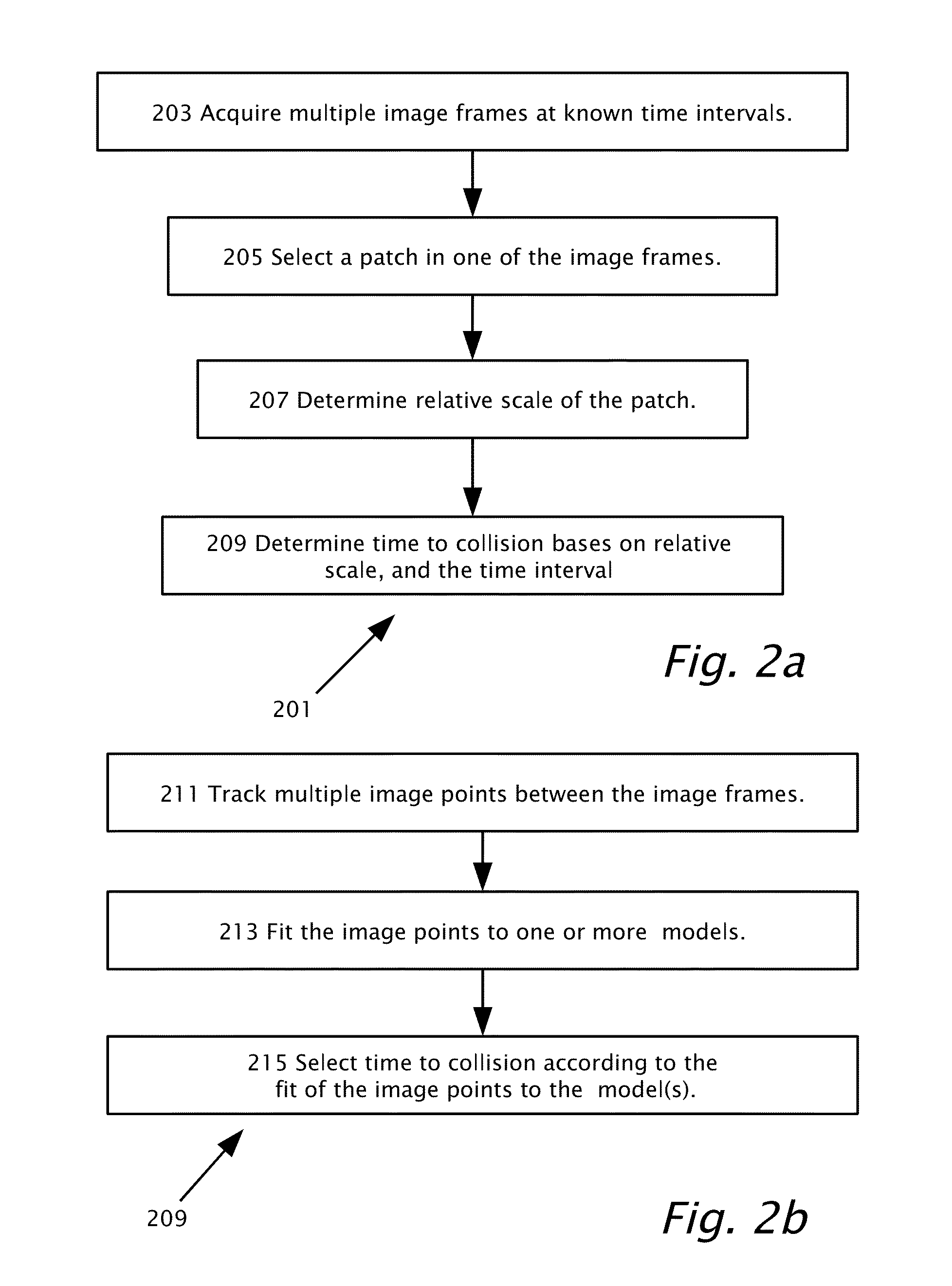

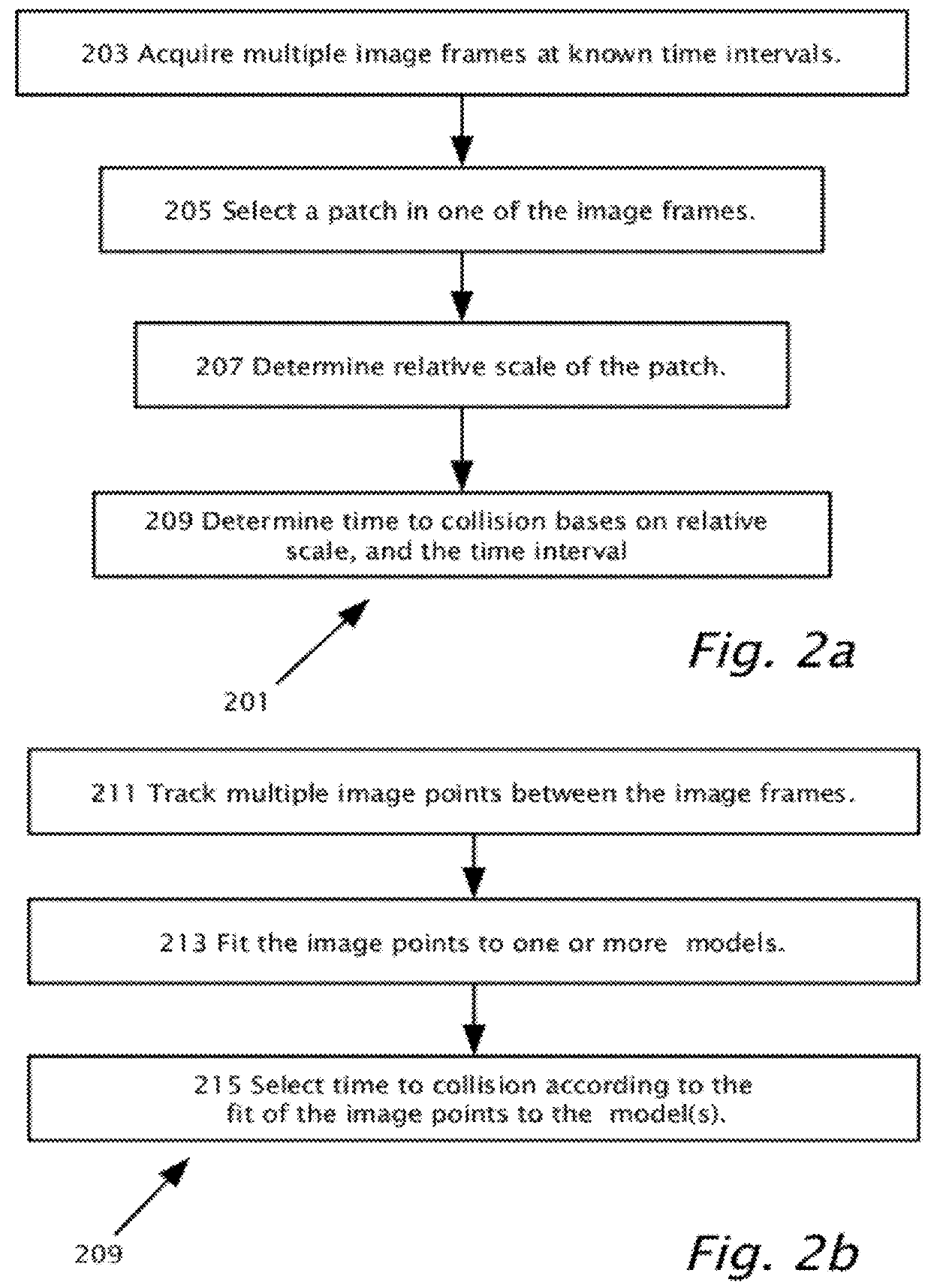

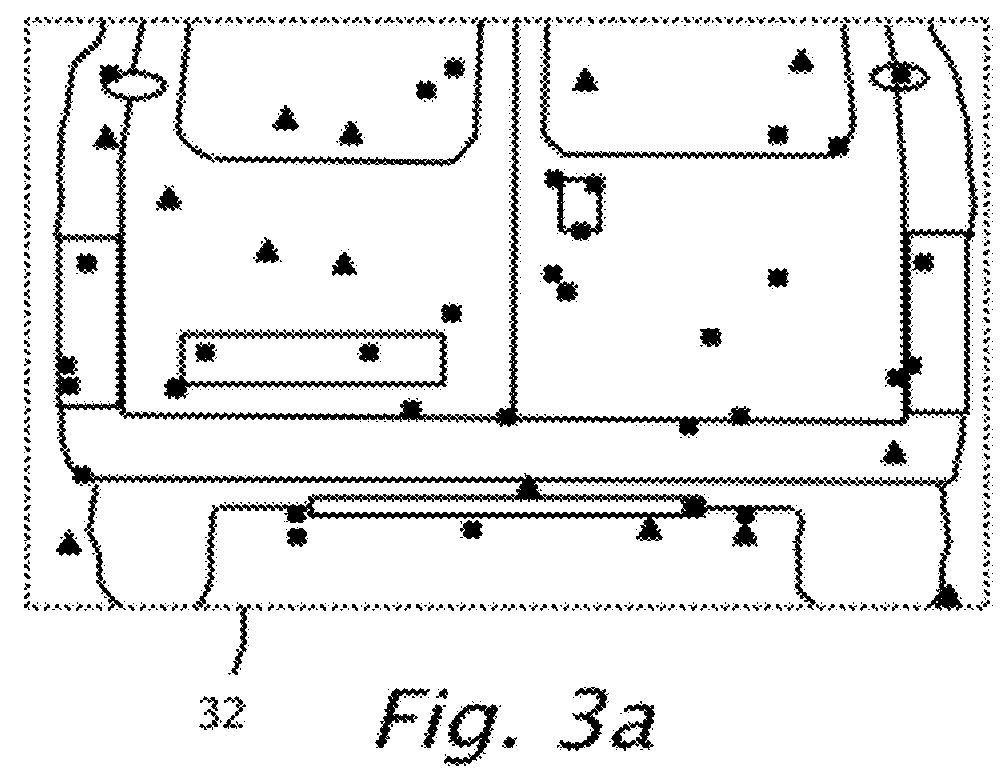

A method is provided for issuing a forward collision warning using a camera mountable in a motor vehicle. The method acquires multiple image frames at known time intervals. A patch may be selected in at least one of the image frames, and the optical flow of multiple image points of the patch may be tracked between the image frames. Based on the fit of the image points to a model, a time to collision (TTC) may be determined if a collision is expected. The image points may also be fit to a road surface model, in which a portion of the image points is modeled as imaged from the road surface; based on the fit to the road surface model, it may be determined that a collision is not expected.

Owner:MOBILEYE VISION TECH LTD
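The "fit of the image points to a model" can be illustrated with the simplest model for an upright obstacle: relative to the focus of expansion (FOE), every tracked point moves outward by the same scale factor s, and then TTC = Δt/(s-1). A minimal sketch, assuming a known FOE and tracked point pairs; the residual only tests this uniform-scaling hypothesis, the road surface model is not implemented, and both function names are hypothetical.

```python
import math


def fit_patch_scale(points_prev, points_curr, foe=(0.0, 0.0)):
    """Least-squares s with (p_curr - foe) ~= s * (p_prev - foe).

    Returns (s, rms_residual_px); a small residual means the patch expands
    like an upright surface facing the camera.
    """
    num = den = 0.0
    pairs = []
    for (x0, y0), (x1, y1) in zip(points_prev, points_curr):
        a = (x0 - foe[0], y0 - foe[1])
        b = (x1 - foe[0], y1 - foe[1])
        pairs.append((a, b))
        num += a[0] * b[0] + a[1] * b[1]
        den += a[0] * a[0] + a[1] * a[1]
    s = num / den
    sq = sum((b[0] - s * a[0]) ** 2 + (b[1] - s * a[1]) ** 2 for a, b in pairs)
    return s, math.sqrt(sq / len(pairs))


def ttc_from_scale(s, dt_s):
    """TTC = dt / (s - 1) for an expanding patch, else None."""
    return dt_s / (s - 1.0) if s > 1.0 else None


prev = [(10.0, 5.0), (-8.0, 4.0), (6.0, -12.0), (-3.0, -7.0)]
curr = [(1.25 * x, 1.25 * y) for x, y in prev]  # uniform expansion
s, rms = fit_patch_scale(prev, curr)
print(s, rms, ttc_from_scale(s, 0.05))  # 1.25 0.0 0.2
```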

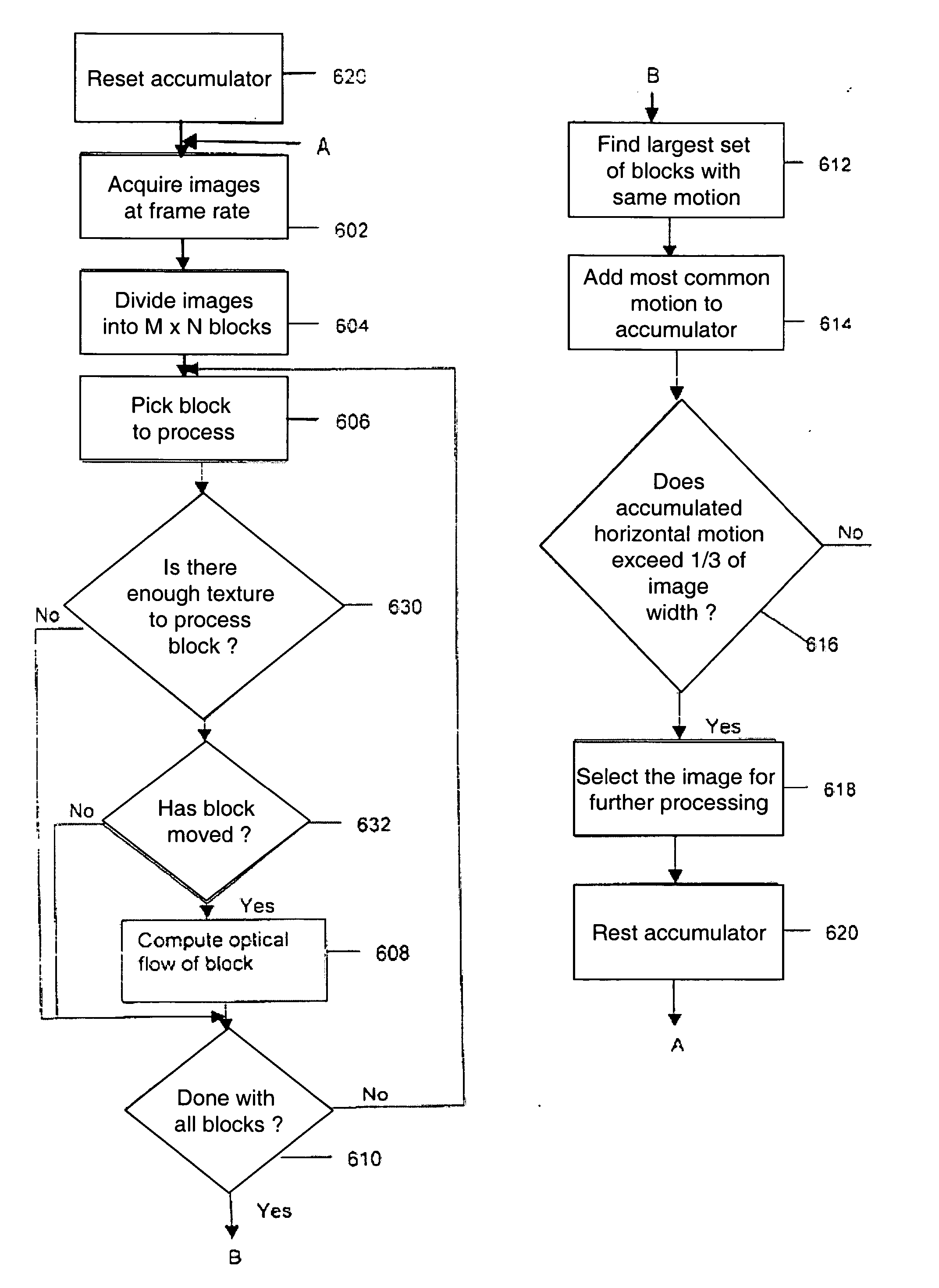

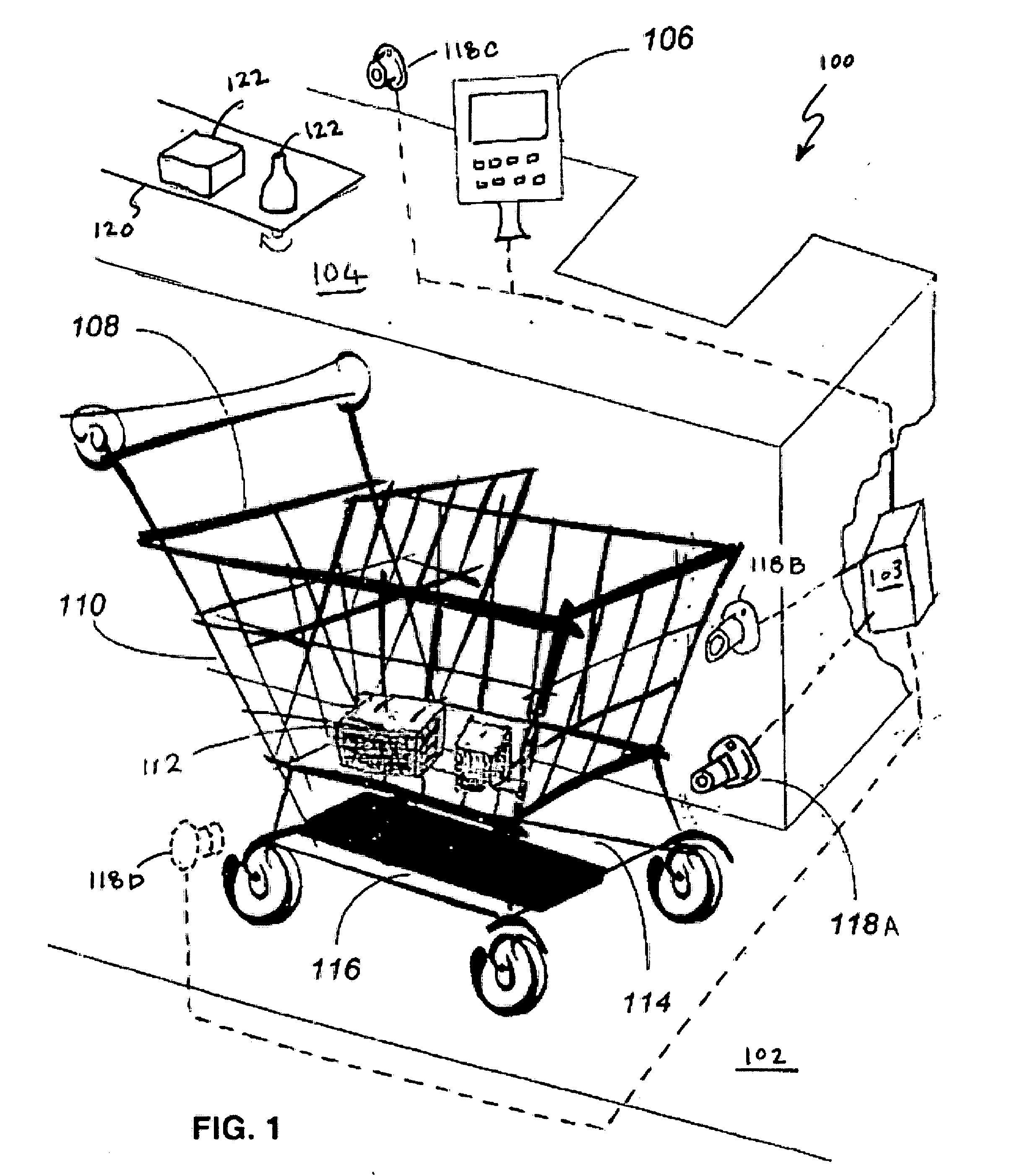

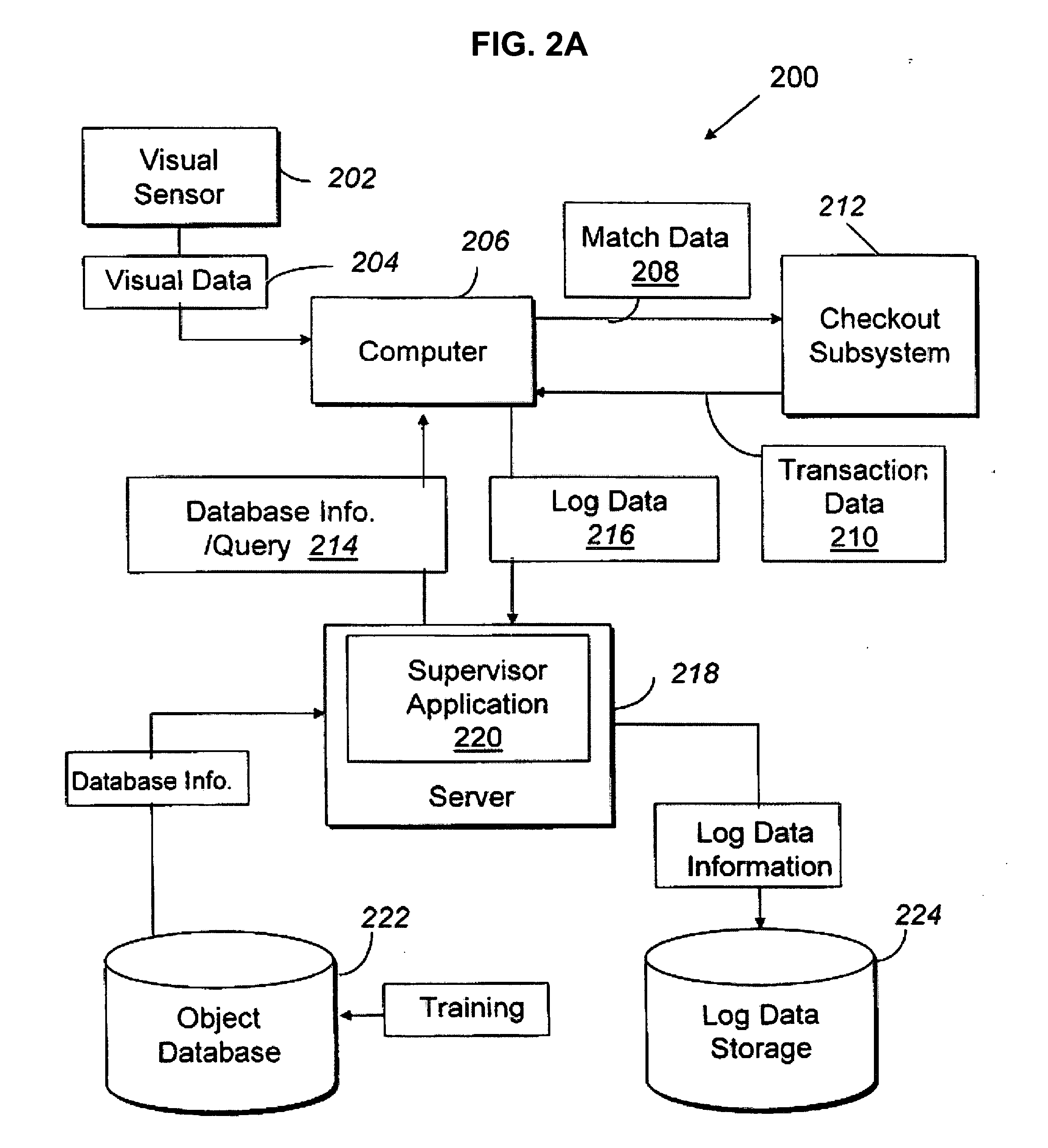

Optical flow for object recognition

Disclosed is a system and method for using optical flow to detect objects moving past a camera and to select images of the moving objects. A shopping cart, for example, may be detected by subdividing an image into a plurality of image blocks, comparing the blocks to a preceding image to determine the motion of the portion of the object pictured in each block, and associating the most common motion with the shopping cart. The motion of the cart may also be integrated over time to track the cart and to select a subset of the captured images for object recognition processing. Detecting the cart and selecting images in this way improves computational efficiency and increases merchandise throughput.

Owner:DATALOGIC ADC
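The block-by-block steps (per-block motion, then the most common motion) can be sketched with exhaustive block matching. Assumptions: grayscale frames as nested lists, a sum-of-absolute-differences (SAD) match cost, and a small search radius; `block_motion` and `dominant_motion` are hypothetical names, and block matching is only one of several ways to obtain per-block motion.

```python
from collections import Counter


def block_motion(prev, curr, block=4, search=3):
    """Motion (dx, dy) of each block of `curr` relative to `prev`, by SAD matching."""
    h, w = len(curr), len(curr[0])
    vectors = []
    for by in range(0, h - block + 1, block):
        for bx in range(0, w - block + 1, block):
            best = None
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    sy, sx = by - dy, bx - dx  # where the block was in `prev`
                    if sy < 0 or sx < 0 or sy + block > h or sx + block > w:
                        continue
                    sad = sum(abs(curr[by + j][bx + i] - prev[sy + j][sx + i])
                              for j in range(block) for i in range(block))
                    if best is None or sad < best[0]:
                        best = (sad, (dx, dy))
            if best is not None:
                vectors.append(best[1])
    return vectors


def dominant_motion(vectors):
    """The most common block motion, attributed to the large moving object."""
    return Counter(vectors).most_common(1)[0][0]


# Usage: a textured frame whose content moves 2 px to the right.
def texture(x, y):
    return (31 * x * x + 17 * y * y + 7 * x * y + 3 * x + 5 * y) % 101


prev = [[texture(x, y) for x in range(16)] for y in range(16)]
curr = [[texture(x - 2, y) for x in range(16)] for y in range(16)]
print(dominant_motion(block_motion(prev, curr)))  # (2, 0)
```

Blocks at the left edge cannot see their true source and pick a wrong vector, which is exactly why taking the most common motion, rather than trusting every block, is robust here.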

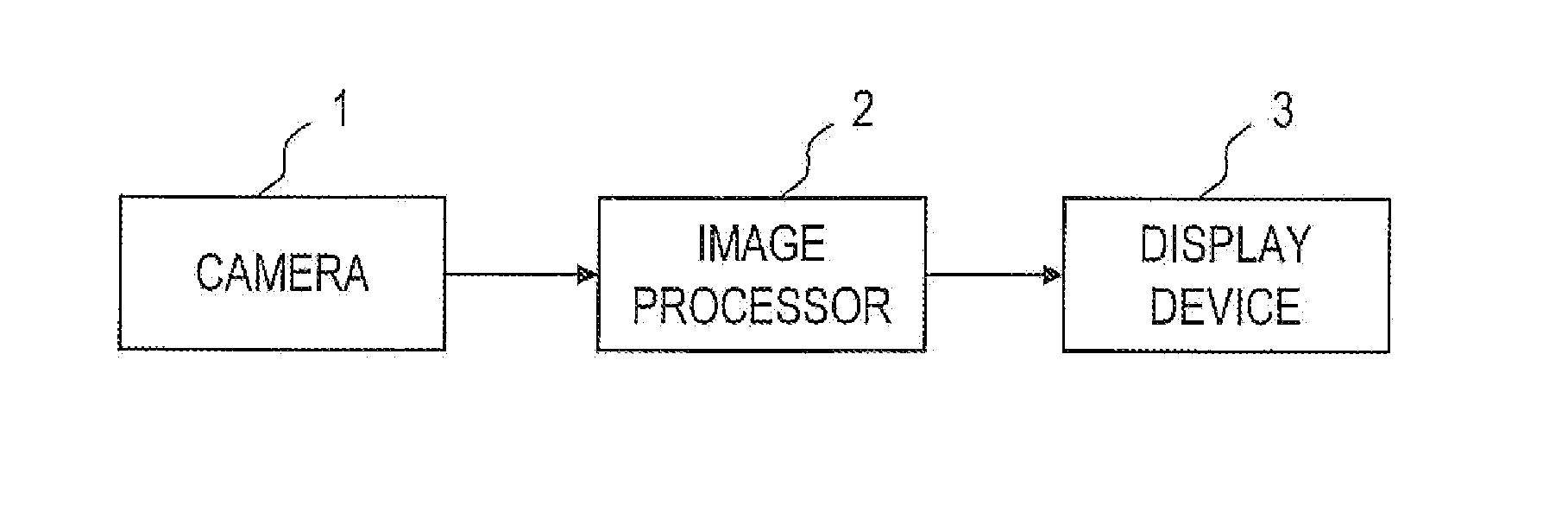

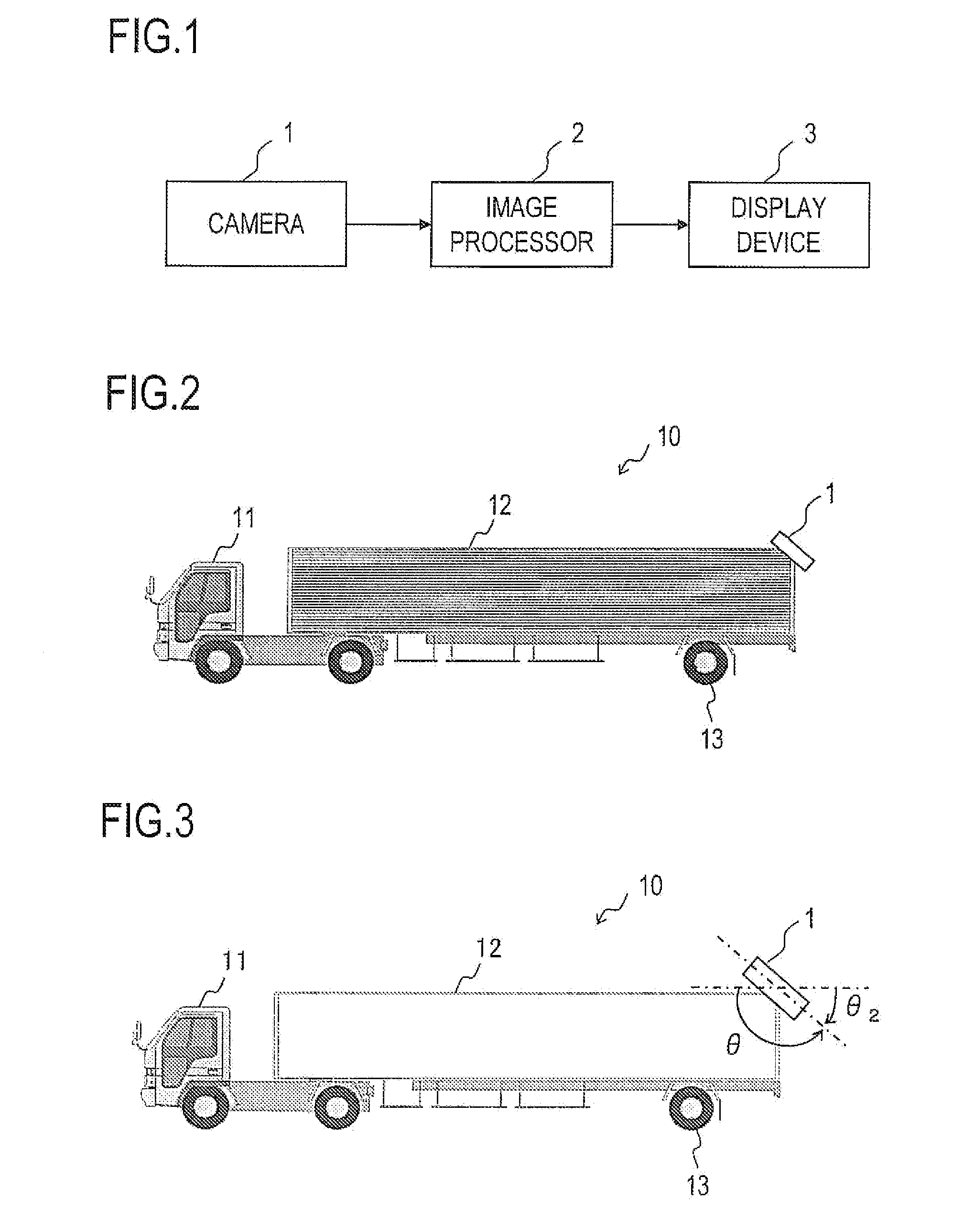

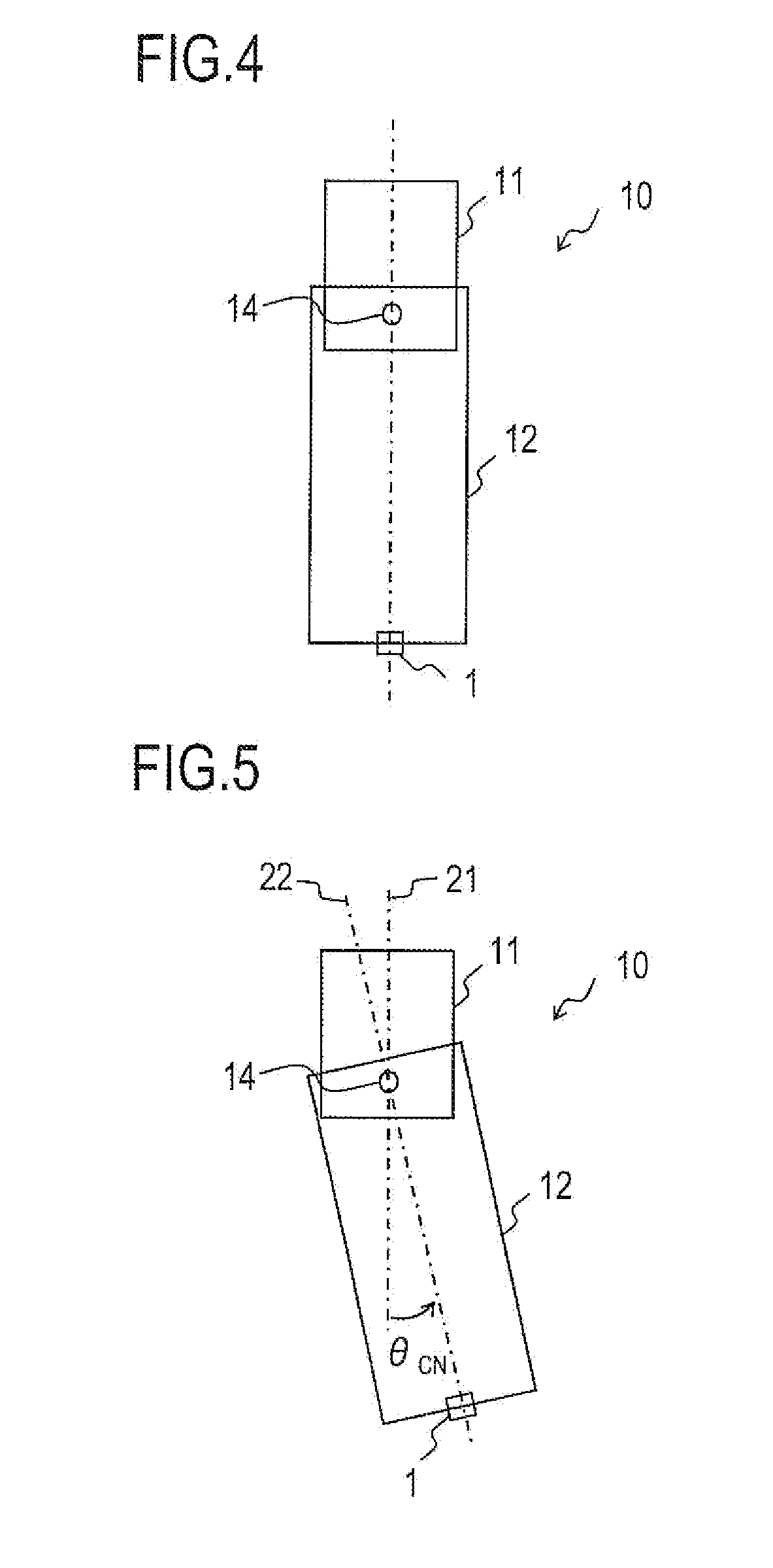

Driving Assistance System And Connected Vehicles

Inactive | US20100171828A1 | Assist driving inexpensively and satisfactorily | Significance and benefits of the invention | Image enhancement | Image analysis | Display device | Optical flow

A tractor and a trailer are connected together, and a camera installed on the trailer side of the connected vehicles captures images behind the trailer. A driving assistance system projects the captured images onto bird's-eye view coordinates parallel to the road surface, converting them into bird's-eye view images, and obtains, in those coordinates, the optical flow of the moving image composed of the captured images. The connection angle between the tractor and the trailer is estimated from the optical flow and from movement information of the tractor, and a predicted movement trajectory of the trailer is then obtained from the connection angle and the tractor's movement information. The predicted trajectory is overlaid on the bird's-eye view images, and the resulting image is output to a display device.

Owner:SANYO ELECTRIC CO LTD

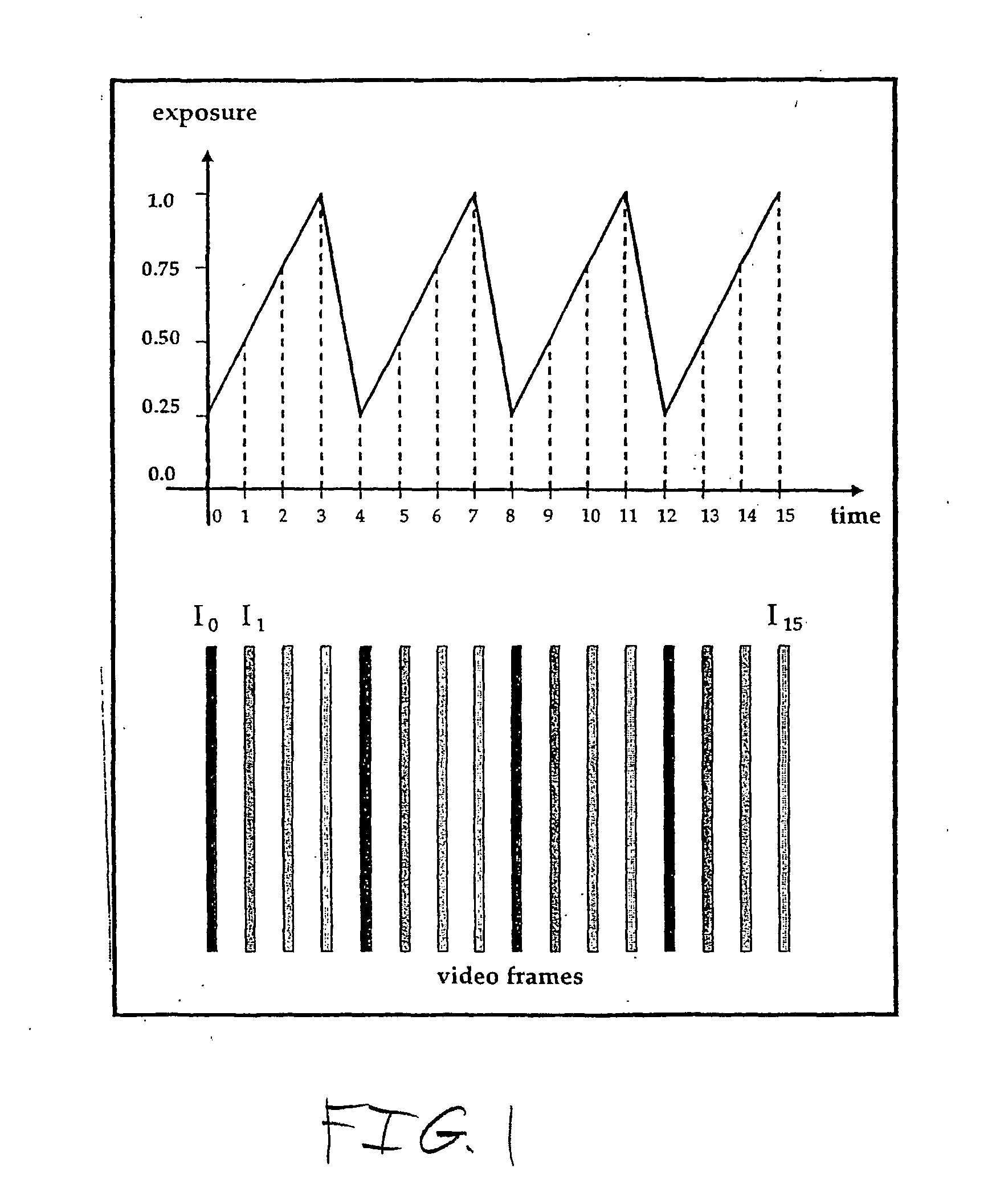

Imaging method and system

Active | US20050275747A1 | Improve dynamic range | Increase frame rate | Television system details | Color television details | High-dynamic-range imaging | Viewpoints

A method and system for accurately imaging scenes having large brightness variations. If a particular object in the scene is of interest, the imager exposure setting is adjusted based on the brightness of that object. For high dynamic range imaging of an entire scene, two imagers with different viewpoints and exposure settings are used, or the exposure setting of a single imager is varied as multiple images are captured. An optical flow technique can be used to track and image moving objects, or a video sequence can be generated by selectively updating only those pixels whose brightnesses are within the preferred brightness range of the imager.

Owner:THE TRUSTEES OF COLUMBIA UNIV IN THE CITY OF NEW YORK
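The last sentence describes refreshing only well-exposed pixels. A minimal sketch of that update rule, assuming 8-bit grayscale frames as nested lists; the [16, 239] "preferred range" and the function name are hypothetical placeholders, and the optical-flow tracking alternative is not shown.

```python
def update_video_frame(displayed, captured, low=16, high=239):
    """Refresh only pixels whose new brightness lies in [low, high].

    Pixels outside the imager's preferred range (too dark or saturated)
    keep their previously displayed value.
    """
    return [[new if low <= new <= high else old
             for old, new in zip(old_row, new_row)]
            for old_row, new_row in zip(displayed, captured)]


print(update_video_frame([[100, 100, 100]], [[5, 150, 255]]))  # [[100, 150, 100]]
```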

Vehicle blind spot monitoring system

Inactive | US20030085806A1 | Enhance driver safety | Improved and reliable | Anti-collision systems | Character and pattern recognition | Monitoring system | Display device

A blind spot monitoring system for a vehicle includes two pairs of stereo cameras, two displays and a controller. The stereo cameras monitor the vehicle blind spots, and each pair generates a corresponding pair of digital signals. Each display shows a rearward vehicle view and may replace, or work in tandem with, a side-view mirror. The controller is located in the vehicle and receives the two pairs of digital signals. Its control logic analyzes the stereopsis effect between each pair of stereo cameras, together with the optical flow over time, to control the displays. The displays show an expanded rearward view when a hazard is detected in the vehicle blind spot and a normal rearward view when no hazard is detected there.

Owner:FORD GLOBAL TECH LLC

Method and apparatus for matching portions of input images

Inactive | US20070185946A1 | Overcome difficulties | Linear complexity | Image enhancement | Television system details | Ambiguity | Image segmentation

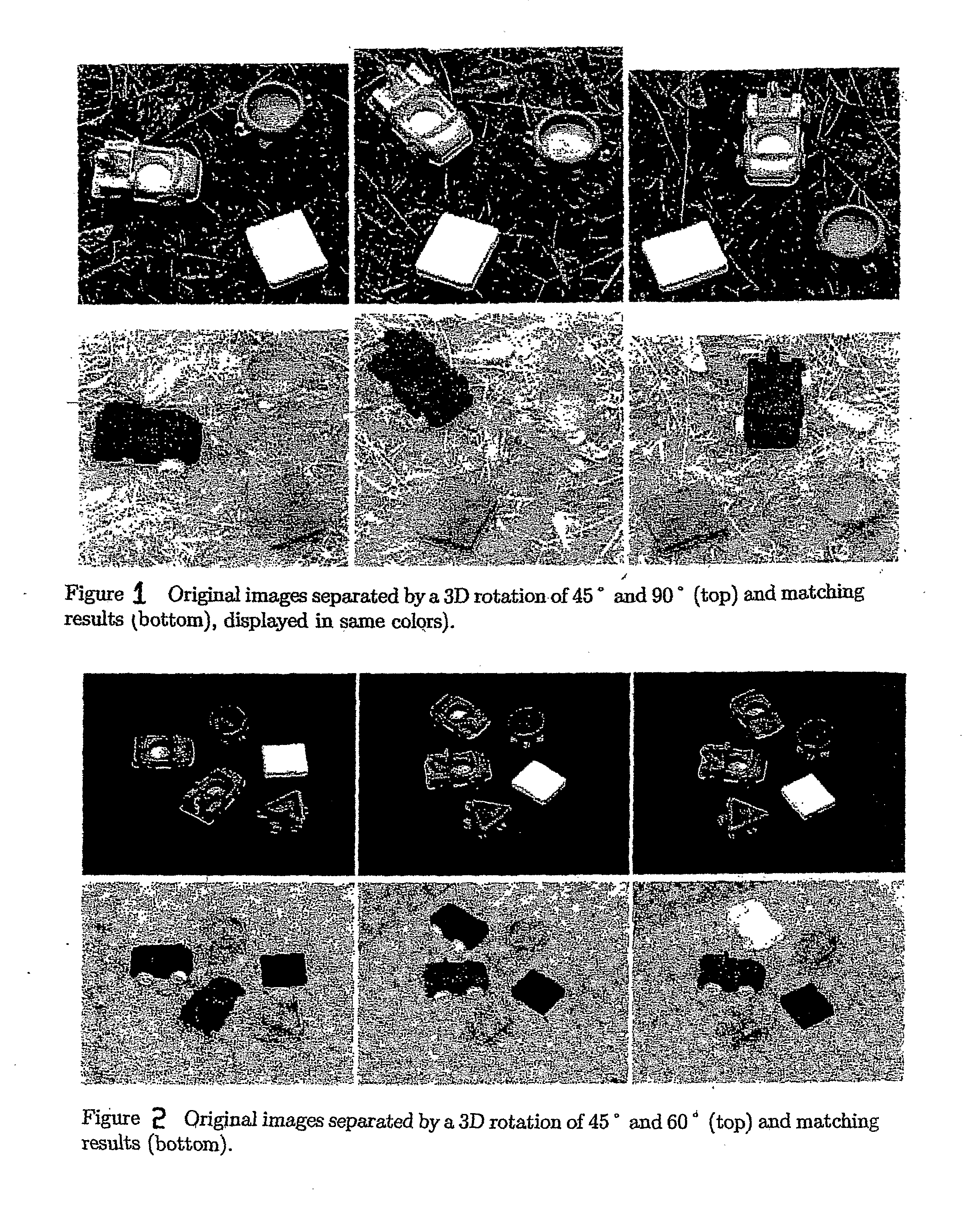

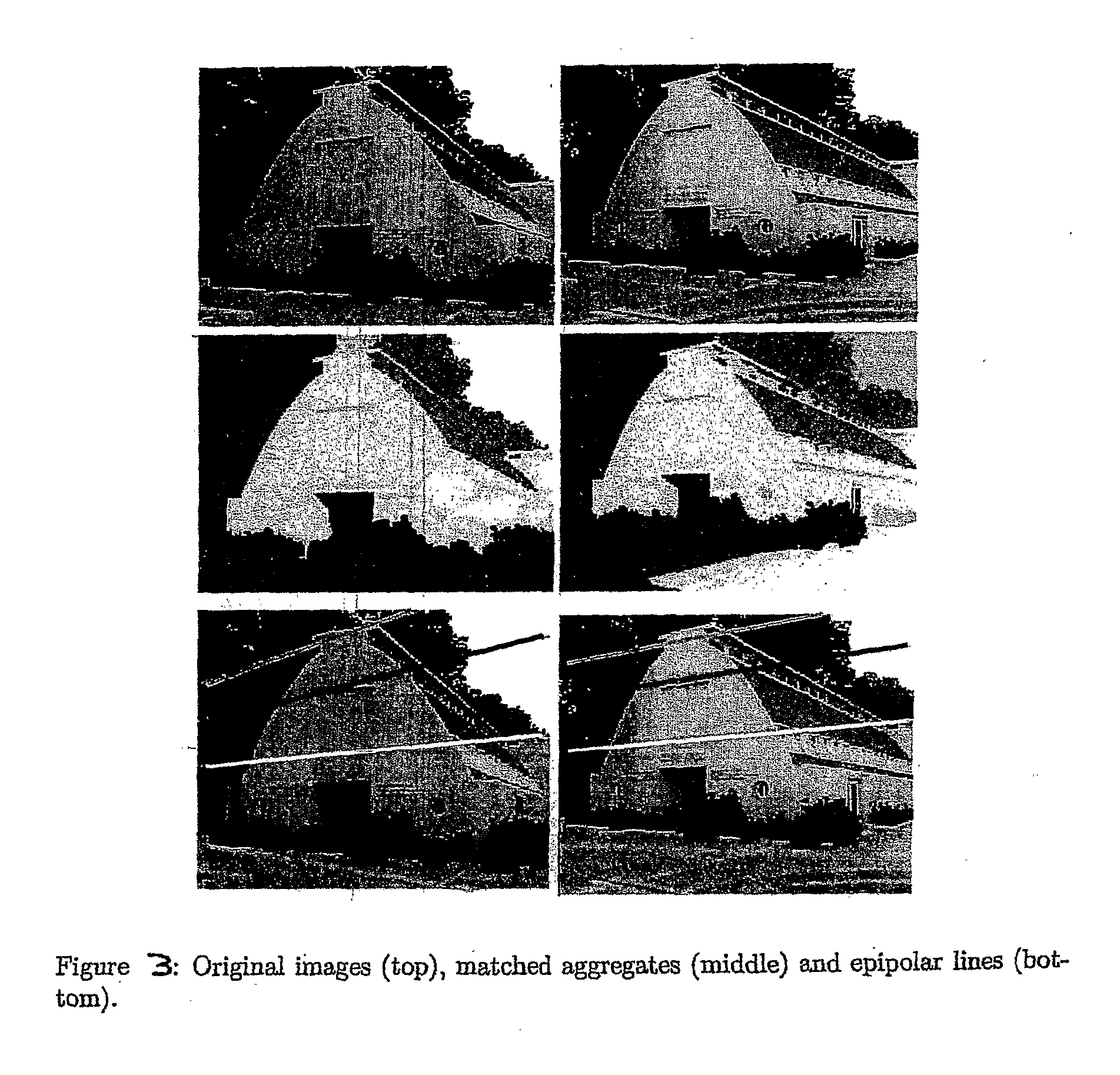

A method and apparatus for finding correspondence between portions of two images first subjects the two images to segmentation by weighted aggregation (10), then constructs directed acyclic graphs (16,18) from the output of the segmentation by weighted aggregation to obtain hierarchical graphs of aggregates (20,22), and finally applies a maximally weighted subgraph isomorphism to the hierarchical graphs of aggregates to find matches between them (24). Two algorithms are described: one seeks a one-to-one matching between regions, and the other computes a soft matching, in which an aggregate may have more than one corresponding aggregate. A method and apparatus for image segmentation based on motion cues are also described. Motion provides a strong cue for segmentation. The method begins with local, ambiguous optical flow measurements and uses a process of aggregation to resolve the ambiguities and reach reliable estimates of the motion. In addition, as the aggregation proceeds and larger aggregates are identified, it employs a progressively more complex model to describe the motion. In particular, the method proceeds from translational motion at fine levels, through affine transformation at intermediate levels, to 3D motion (described by a fundamental matrix) at the coarsest levels. Finally, the method is integrated with a segmentation method that uses intensity cues. The utility of the method is demonstrated on both random-dot and real motion sequences.

Owner:YEDA RES & DEV CO LTD

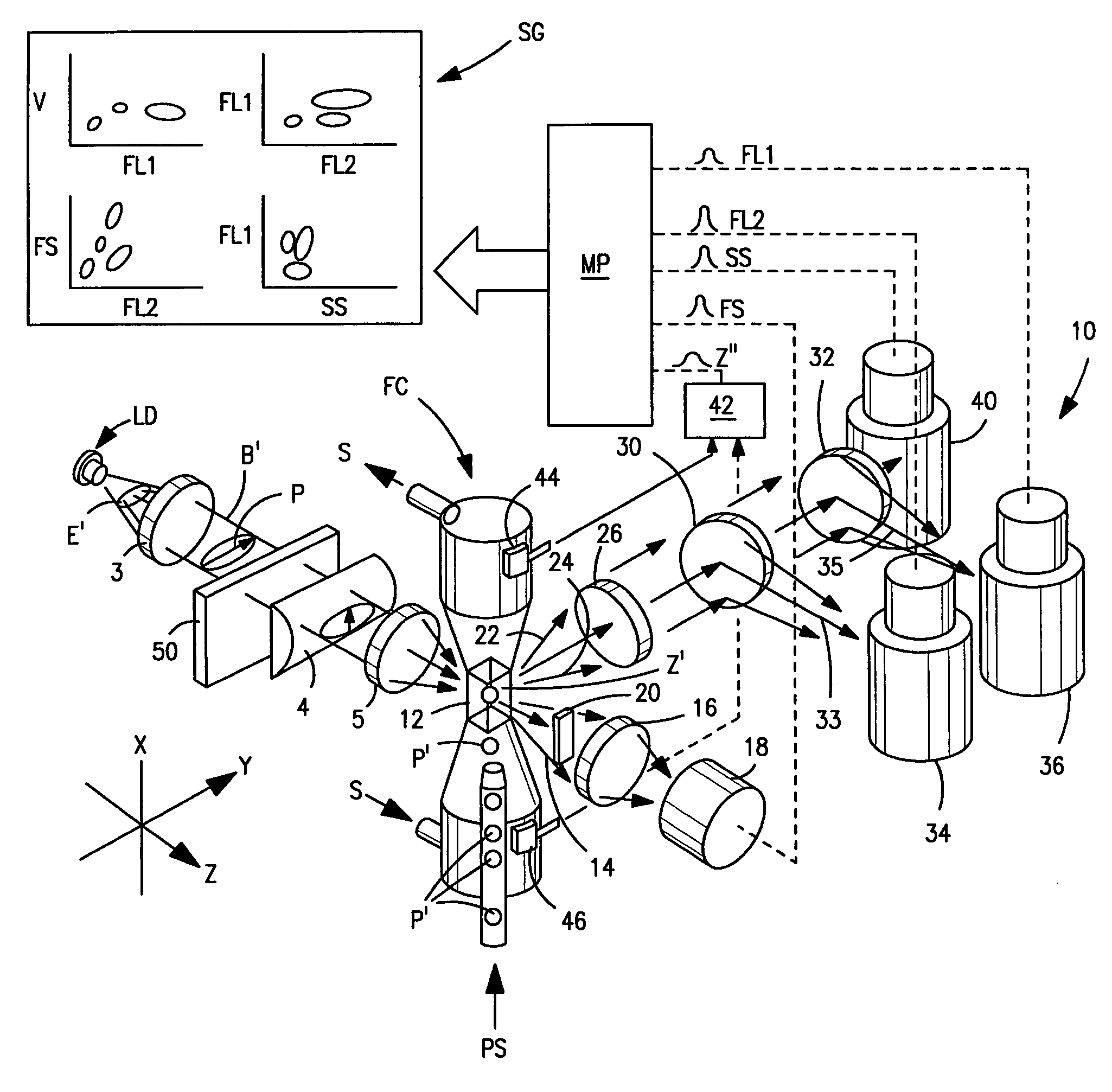

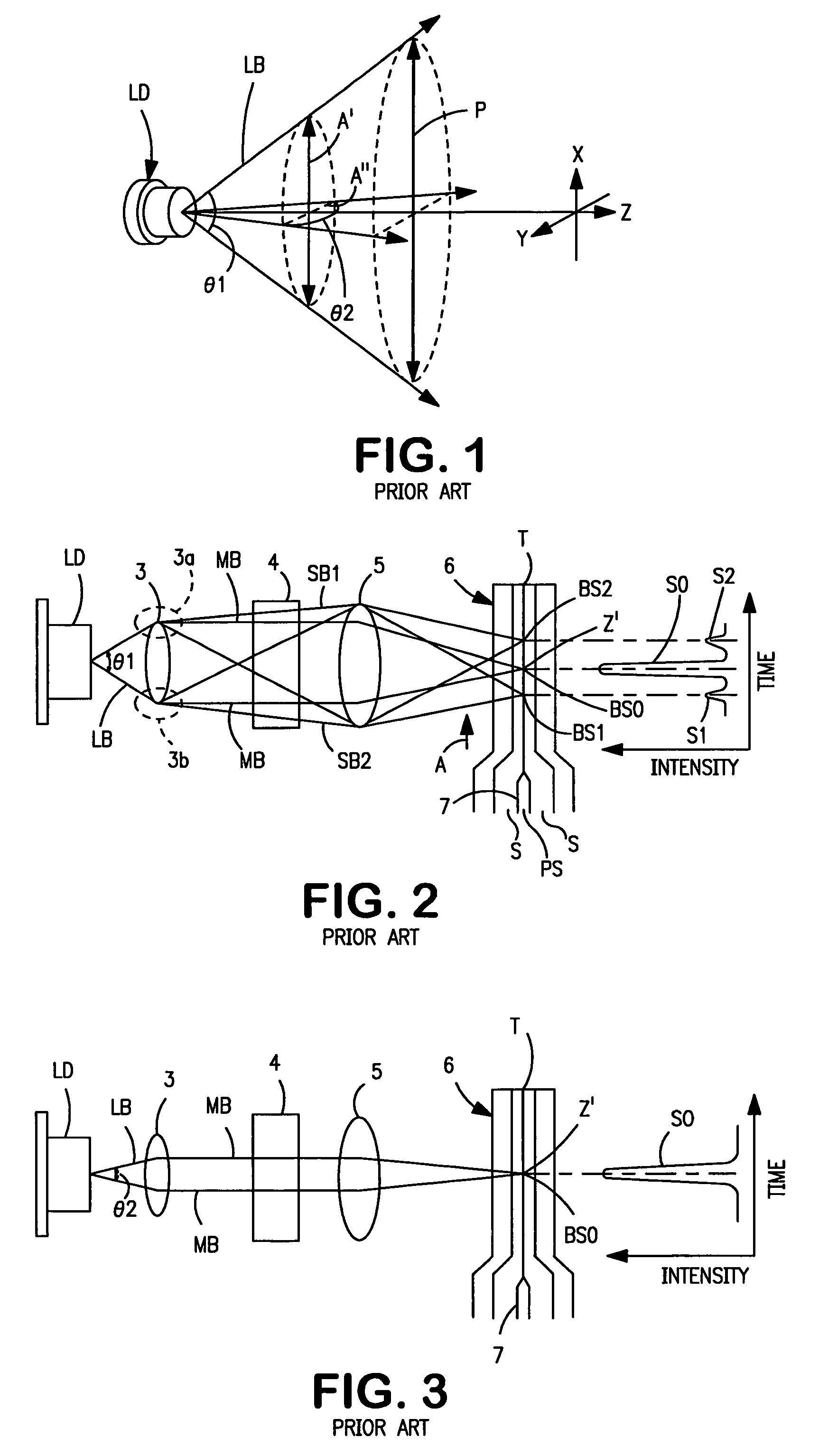

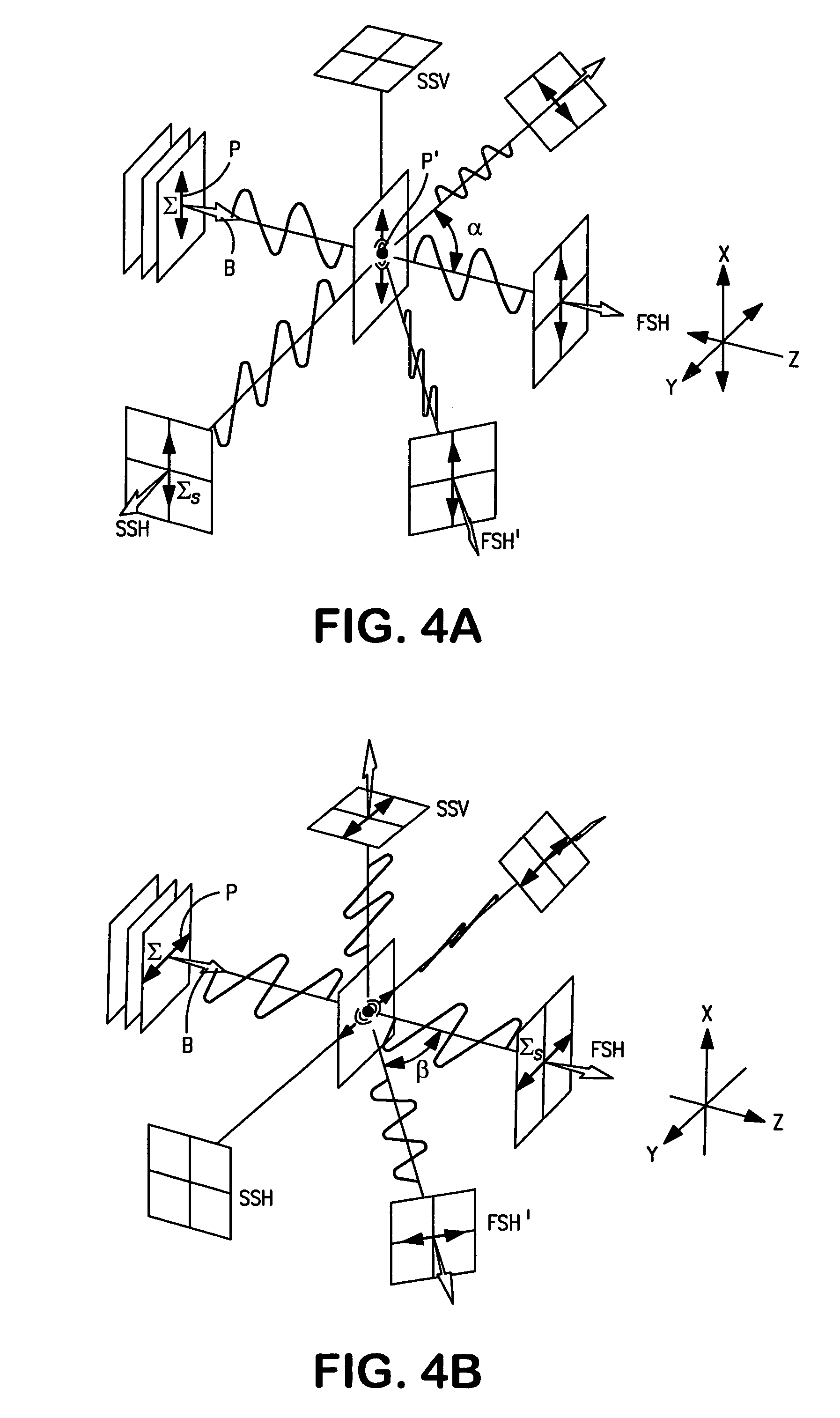

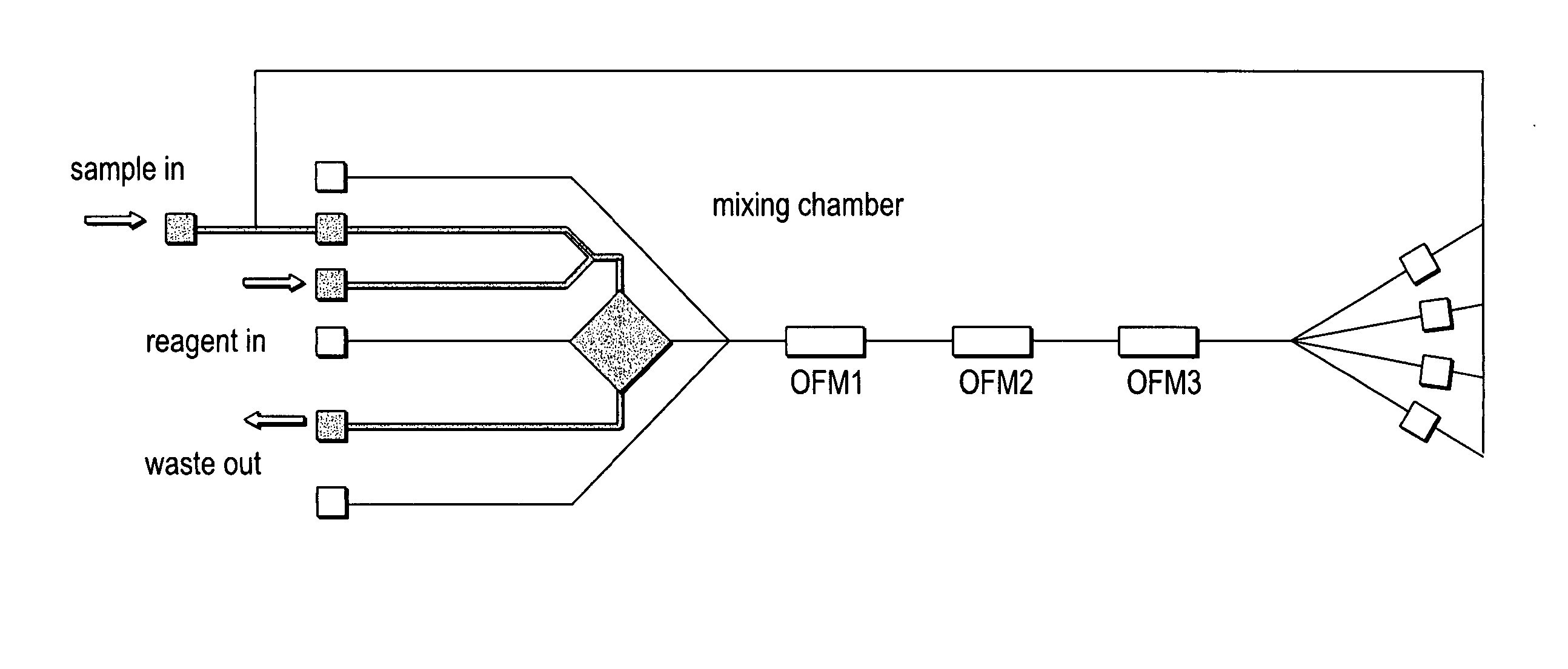

Flow cytometer for differentiating small particles in suspension

A flow cytometer includes an optical flow cell through which particles, to be characterized on the basis of at least their respective side-scatter characteristics, are caused to flow seriatim. A plane-polarized laser beam produced by a laser diode irradiates the particles as they pass through a focused elliptical spot whose minor axis is oriented parallel to the particle flow path. Initially, the plane of polarization of the laser beam is perpendicular to the path of the particles through the flow cell. A half-wave plate or the like is positioned in the laser beam path to rotate the plane of polarization so that it is aligned with the particle path before the beam irradiates the particles moving along that path.

Owner:BECKMAN COULTER INC

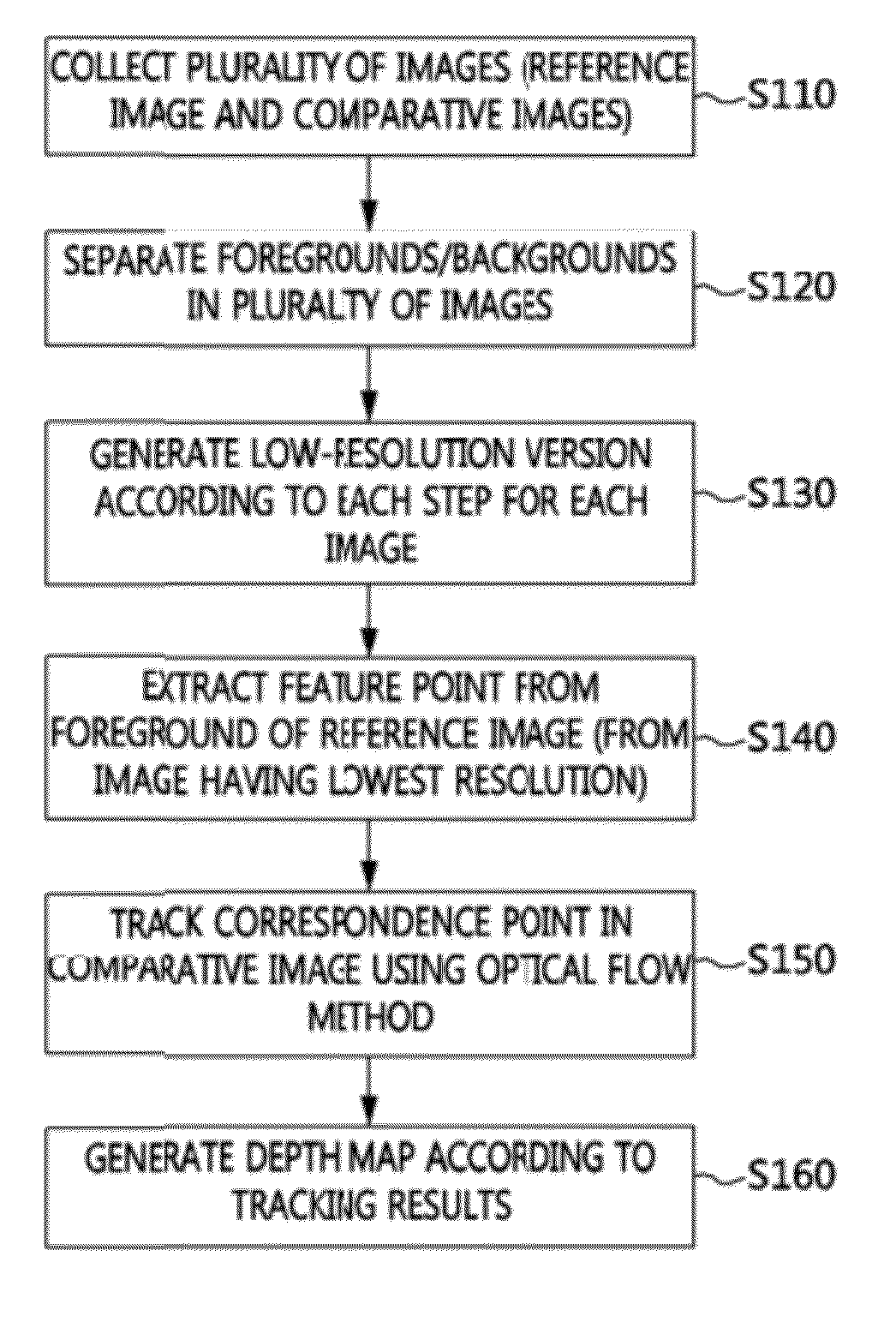

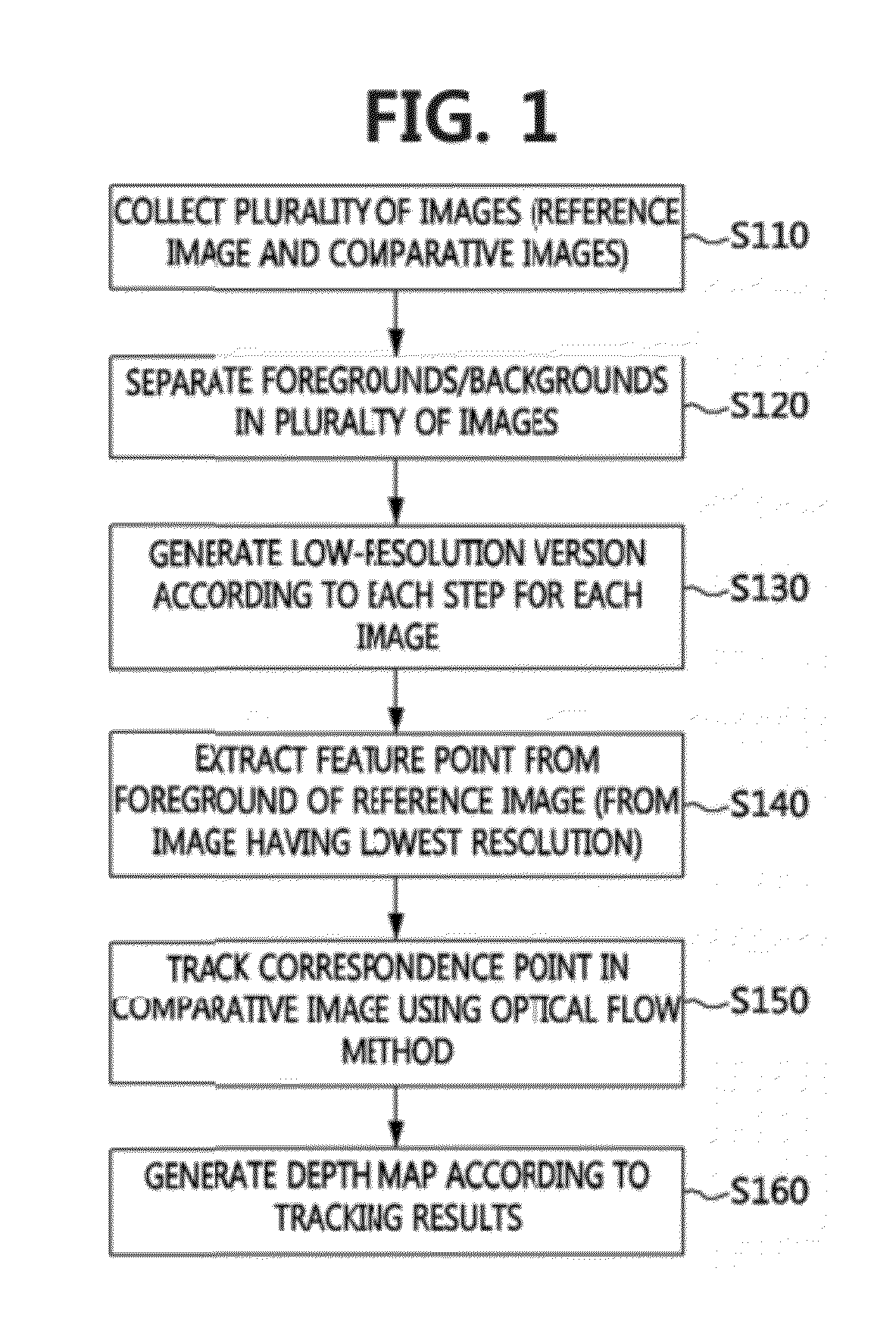

Method and apparatus for feature-based stereo matching

Disclosed are a method and apparatus for feature-based stereo matching. A method for stereo matching of a reference image and at least one comparative image captured by at least two cameras from different points of view using a computer device includes collecting the reference image and the at least one comparative image, extracting feature points from the reference image, tracking points corresponding to the feature points in the at least one comparative image using an optical flow technique, and generating a depth map according to correspondence-point tracking results.

Owner:ELECTRONICS & TELECOMM RES INST
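The final step, turning tracked correspondences into a depth map, can be sketched for the simplest geometry. Assumptions: a rectified horizontal stereo pair, so each tracked point differs from its reference point only in x; disparity d = x_ref - x_cmp; and depth Z = f·B/d with focal length f in pixels and baseline B in metres. The function name and the `None` convention for failed tracks are hypothetical; the feature extraction and optical-flow tracking are not shown.

```python
def depth_from_tracks(ref_points, tracked_points, focal_px, baseline_m):
    """Sparse depth map {(x, y): Z in metres} from tracked feature points.

    tracked_points[i] is the location of ref_points[i] in the comparative
    image, or None if tracking failed. Non-positive disparities are skipped.
    """
    depth = {}
    for (xr, yr), match in zip(ref_points, tracked_points):
        if match is None:
            continue
        disparity = xr - match[0]
        if disparity > 0:
            depth[(xr, yr)] = focal_px * baseline_m / disparity
    return depth


print(depth_from_tracks([(320, 240), (100, 50), (10, 10)],
                        [(306, 240), None, (12, 10)],
                        focal_px=700.0, baseline_m=0.12))
# {(320, 240): 6.0}
```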

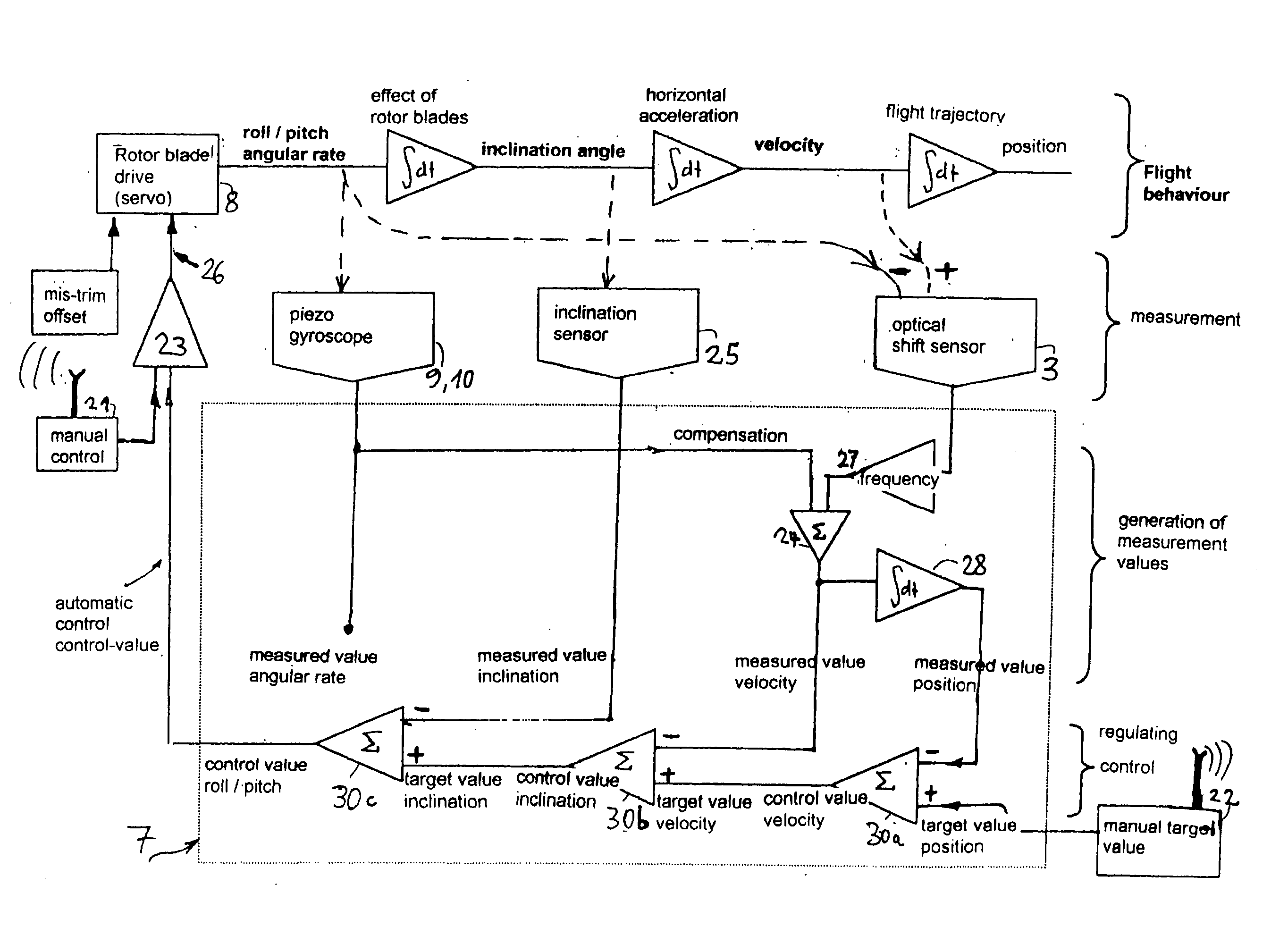

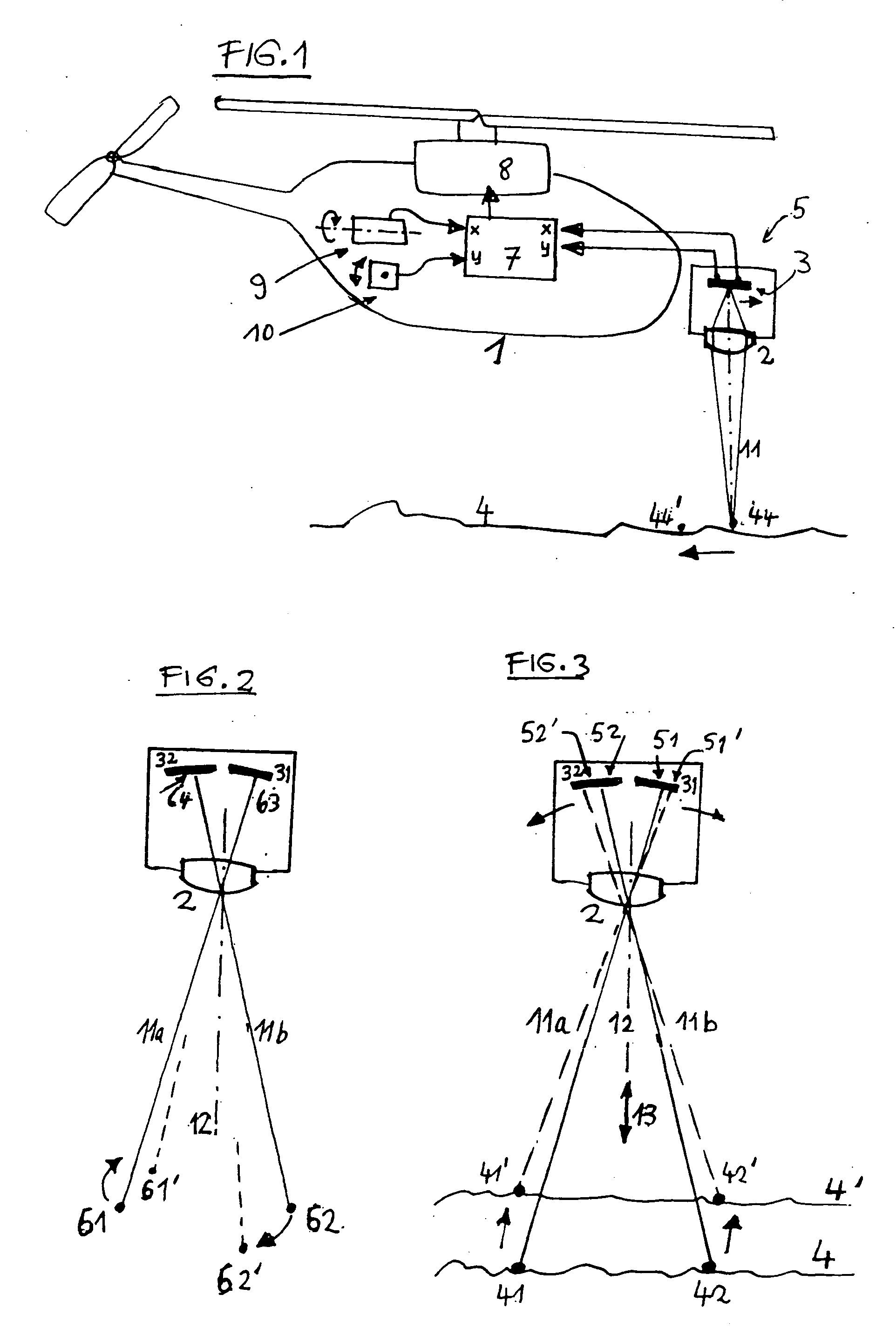

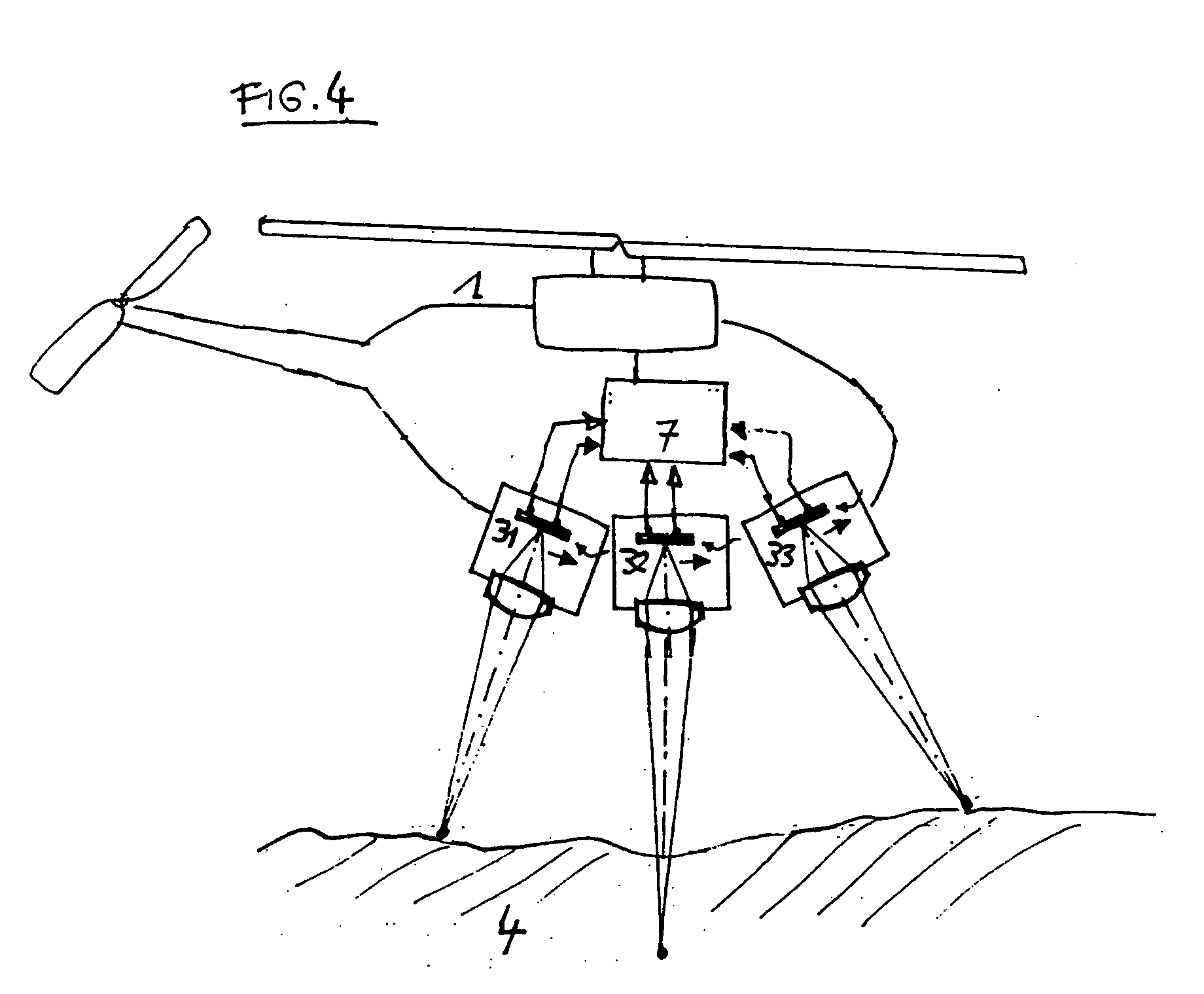

Optical sensing system and system for stabilizing machine-controllable vehicles

Active | US20050165517A1 | Stabilising flight movement | Avoiding undesirable fluctuation | Digital data processing details | Position fixation | Automatic control | Engineering

To measure the movement of a vehicle, especially an aircraft, an imaging optical system 2 positioned on the vehicle detects an image of the environment 4, and an optoelectronic shift sensor chip 3 with a built-in evaluation unit measures any shift of the image from the image's structures. The shift sensor is identical or similar to the sensor used in an optical mouse. The sensor is positioned so that objects at infinity are in focus. The measurement signal is evaluated to indicate the movements and/or the position of the aircraft. The system can also be used to measure distances, e.g. to control the flight altitude. The invention further relates to methods for automatically controlling, in particular, hovering flight by means of a control loop using optical flow measurement.

Owner:IRON BIRD LLC
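For a downward-looking sensor over flat ground, the measured image shift links speed and height: the translational optical flow rate (radians per second) equals ground speed divided by height, once the flow caused by the aircraft's own rotation is removed. A minimal sketch, assuming the sensor reports a shift in pixels per sample interval, a known angle per pixel, and a gyro rate expressed in the same sign convention as the flow; all names and parameters are hypothetical, not the patent's interfaces.

```python
def ground_speed(shift_px, dt_s, rad_per_px, height_m, gyro_rate_rad_s=0.0):
    """Ground speed (m/s) from a downward-looking optical shift sensor.

    flow_rate = shift_px * rad_per_px / dt_s; subtracting the body rotation
    rate leaves the translational part, and v = flow_rate * h.
    """
    flow_rate = shift_px * rad_per_px / dt_s
    return (flow_rate - gyro_rate_rad_s) * height_m


def height_from_flow(speed_m_s, shift_px, dt_s, rad_per_px):
    """Inverse relation for altitude measurement: h = v / flow_rate."""
    return speed_m_s * dt_s / (shift_px * rad_per_px)


# 10 px per 10 ms at 1 mrad/px is a flow of 1 rad/s; at 2 m height that is 2 m/s.
print(round(ground_speed(10, 0.01, 0.001, 2.0), 6))  # 2.0
```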

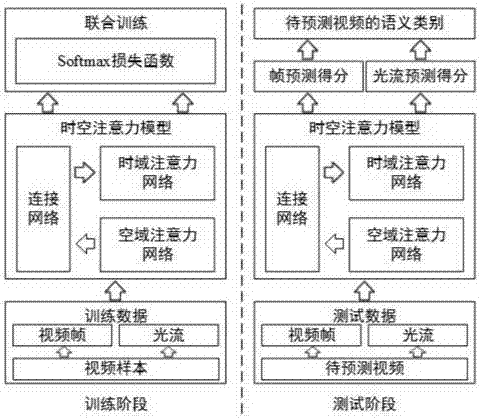

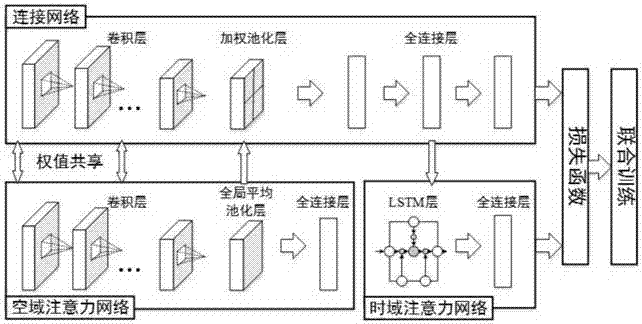

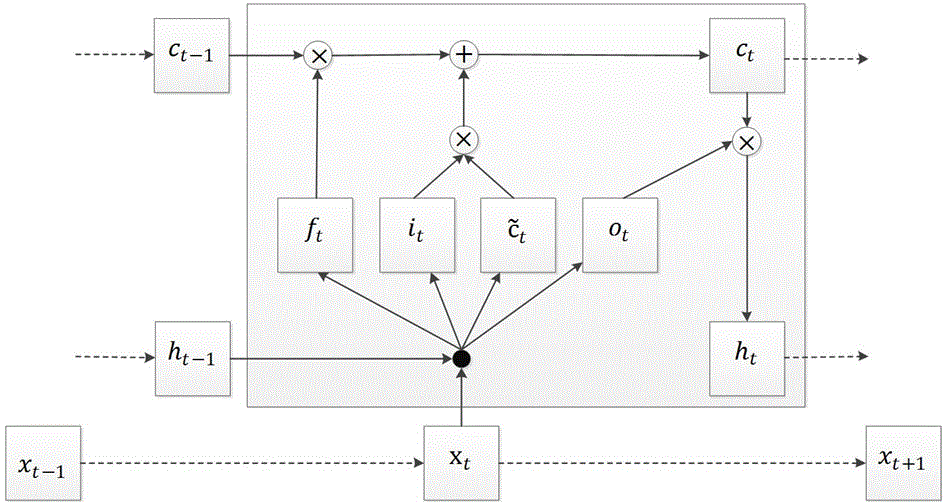

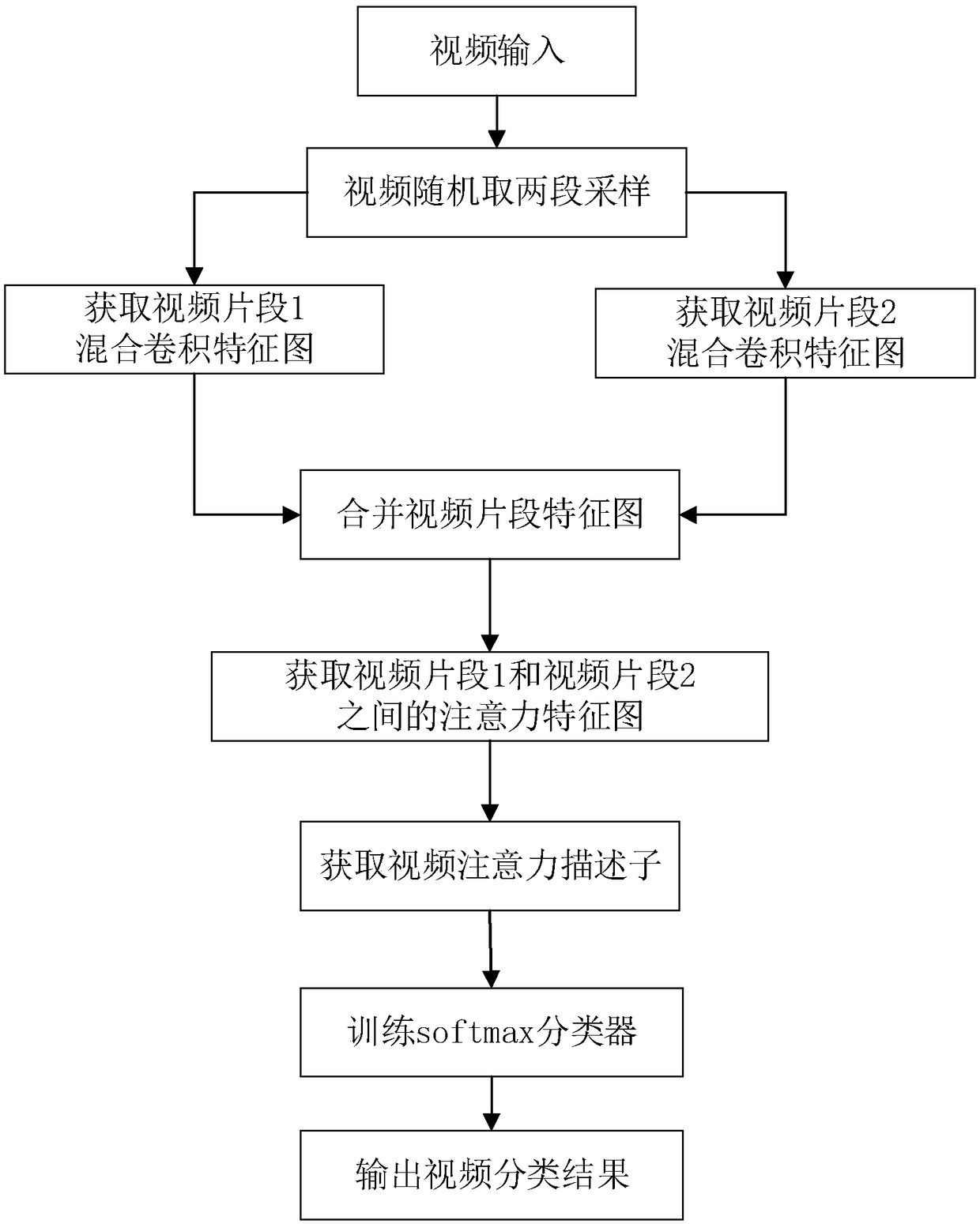

Space-time attention based video classification method

Active | CN107330362A | Improve classification performance | Time-domain saliency information is accurate | Character and pattern recognition | Attention model | Time domain

The invention relates to a space-time attention based video classification method comprising the steps of: extracting frames and optical flows from the training videos and from the video to be predicted, and stacking several optical flows into a multi-channel image; building a space-time attention model consisting of a spatial-domain attention network, a temporal-domain attention network and a connection network; training the three components of the model jointly, so that spatial-domain and temporal-domain attention improve simultaneously and the resulting model accurately captures spatial-domain and temporal-domain saliency and is suitable for video classification; and using the learned model to extract spatial-domain and temporal-domain saliency from the frames and optical flows of the video to be predicted, performing prediction, and fusing the prediction scores of the frames and optical flows to obtain the final semantic category of the video. The method models spatial-domain and temporal-domain attention simultaneously and, through joint training, fully exploits their cooperation, thereby learning more accurate spatial-domain and temporal-domain saliency and improving the accuracy of video classification.

Owner:PEKING UNIV
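The first step stacks several optical flow fields into one multi-channel image, and the last fuses frame and flow prediction scores. A minimal sketch of both, assuming each flow field is a nested list of (u, v) tuples and that fusion is a weighted average; the channel ordering, `flow_weight`, and the function names are hypothetical, and the attention networks are not shown.

```python
def stack_flows(flows):
    """Stack L dense flow fields into one 2L-channel image.

    flows: list of L fields, each a nested list [y][x] of (u, v) tuples.
    Returns channels[c][y][x], ordered u1, v1, u2, v2, ...
    """
    channels = []
    for field in flows:
        channels.append([[u for (u, _v) in row] for row in field])
        channels.append([[v for (_u, v) in row] for row in field])
    return channels


def fuse_scores(frame_scores, flow_scores, flow_weight=0.5):
    """Late fusion of per-class scores from the frame and flow branches."""
    return [(1 - flow_weight) * a + flow_weight * b
            for a, b in zip(frame_scores, flow_scores)]


print(stack_flows([[[(1, 2), (3, 4)]], [[(5, 6), (7, 8)]]]))
# [[[1, 3]], [[2, 4]], [[5, 7]], [[6, 8]]]
```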

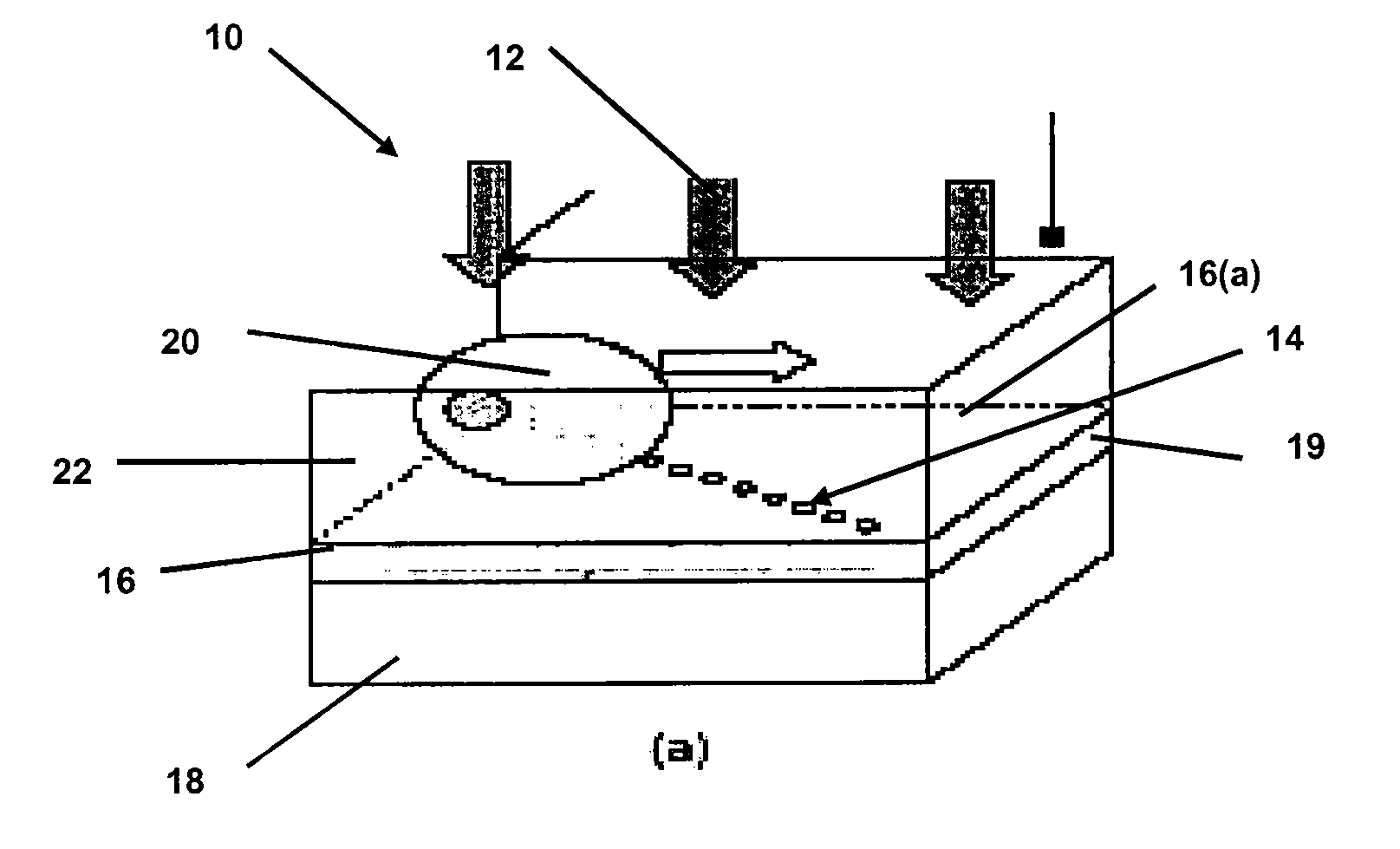

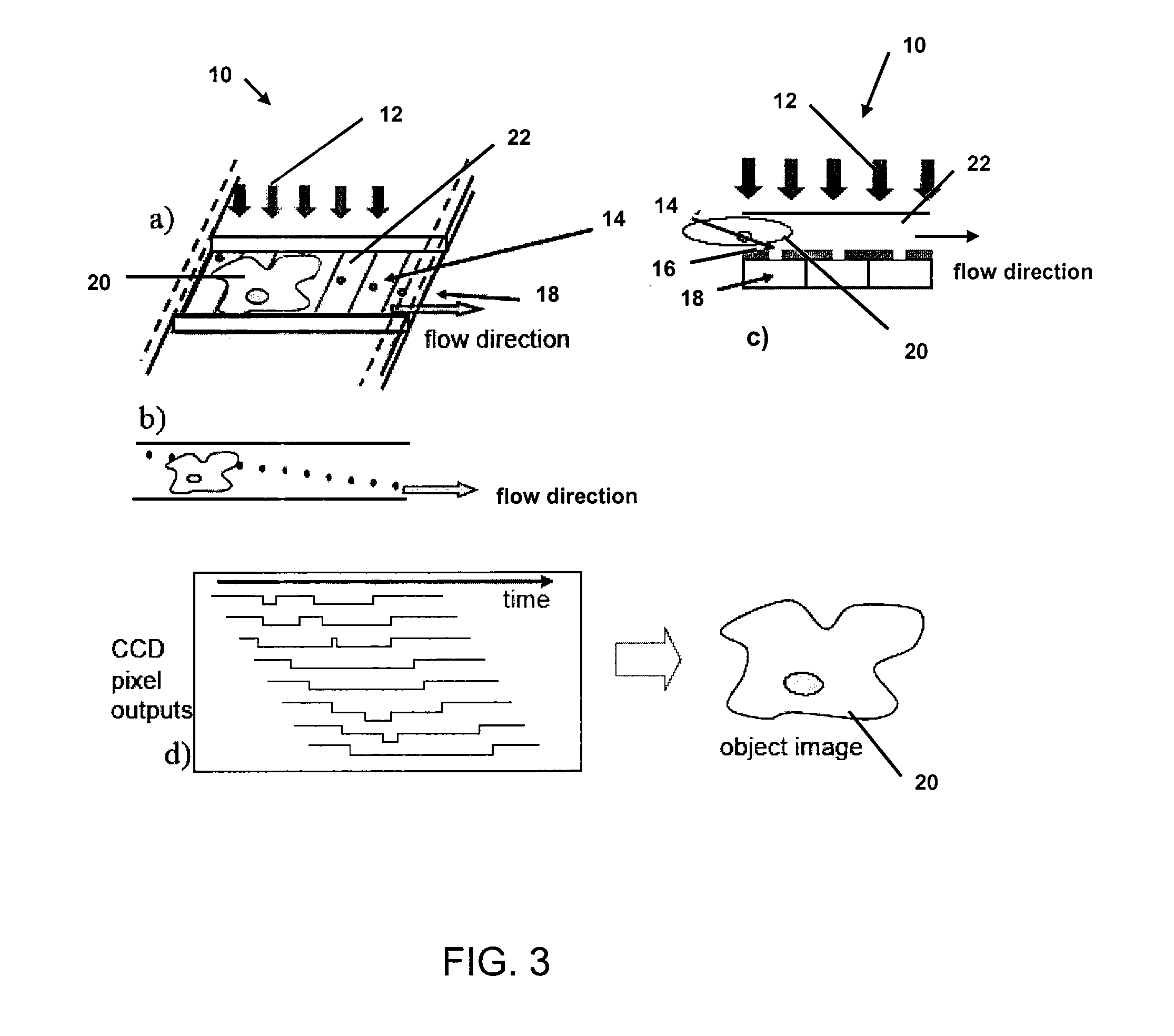

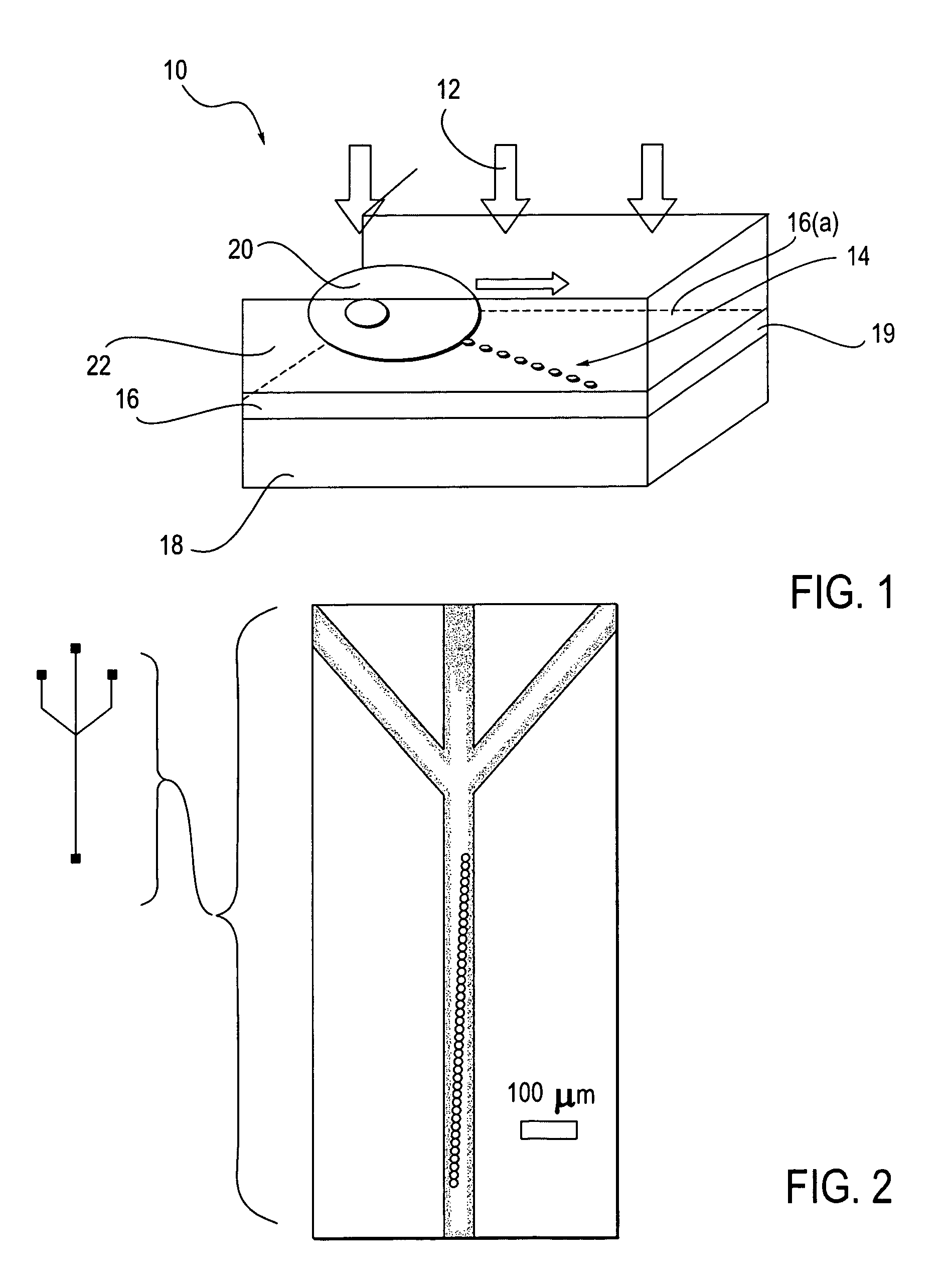

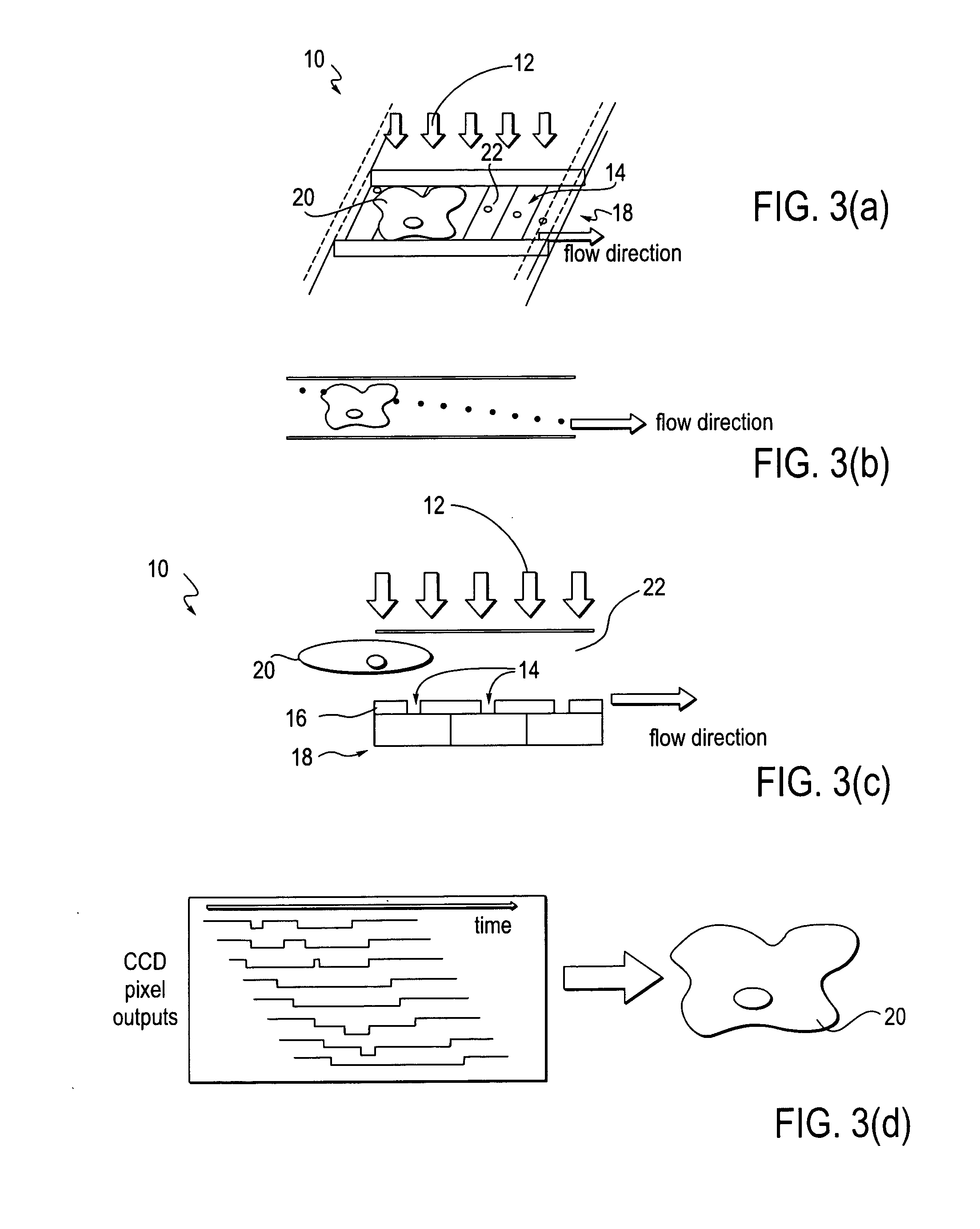

Optofluidic microscope device

Inactive | US20070207061A1 | High imaging throughput rate | Easy to handle | Bioreactor/fermenter combinations | Material nanotechnology | Optical flow | Microscope

An optofluidic microscope device is disclosed. The device includes a fluid channel having a surface and an object such as a bacterium or virus may flow through the fluid channel. Light transmissive regions of different sizes may be used to image the object.

Owner:CALIFORNIA INST OF TECH

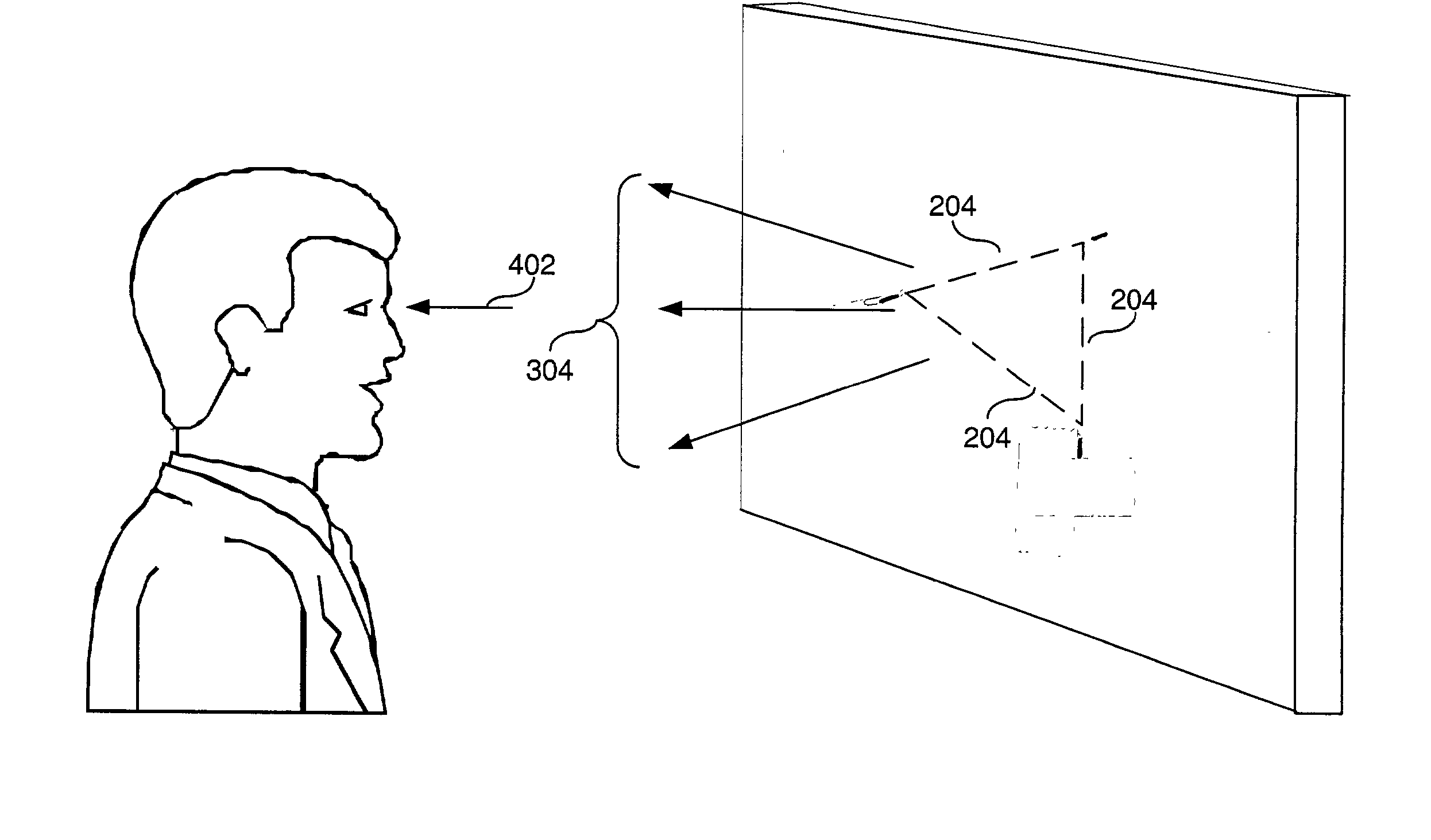

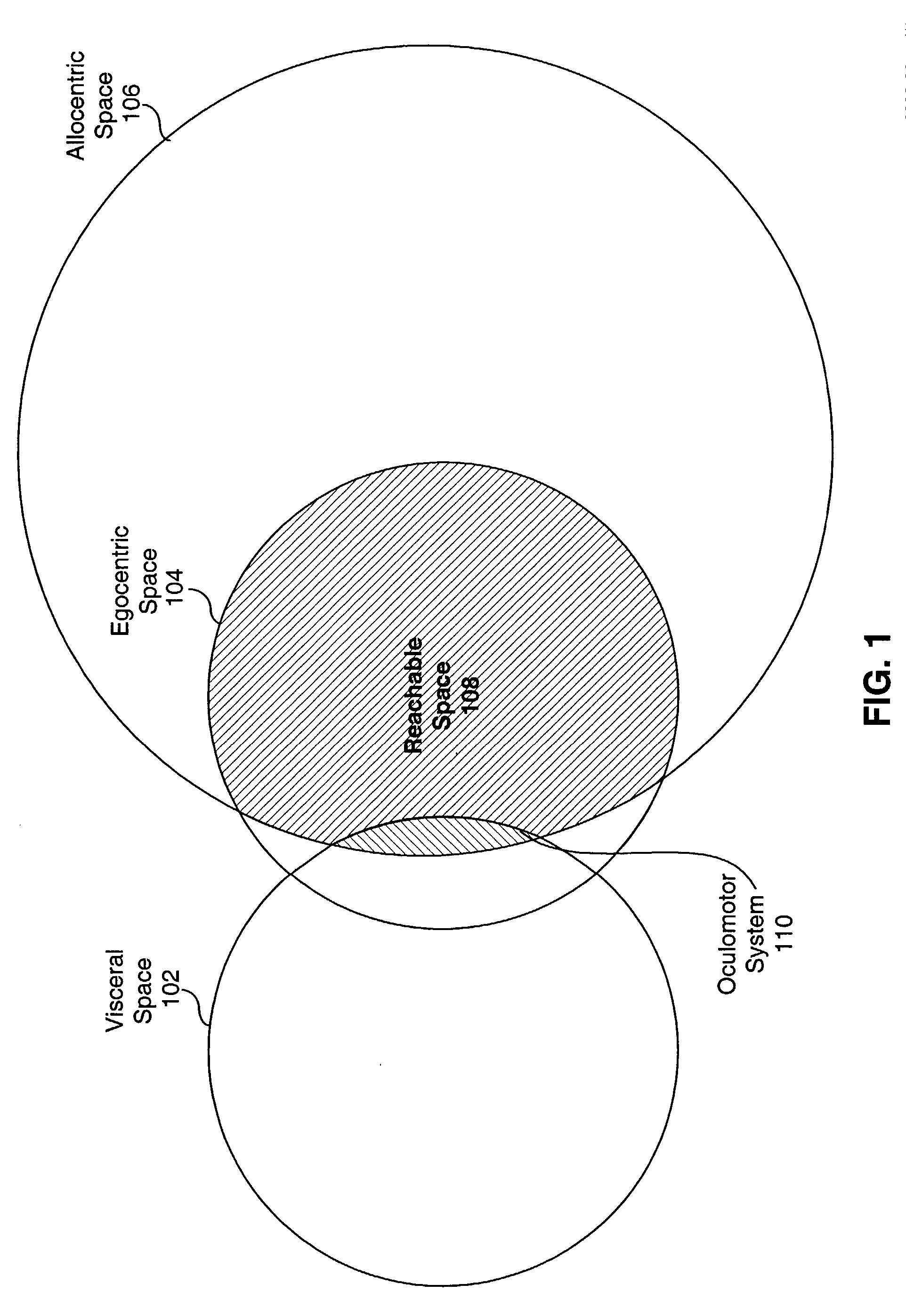

Apparatus, method and computer program product to facilitate ordinary visual perception via an early perceptual-motor extraction of relational information from a light stimuli array to trigger an overall visual-sensory motor integration in a subject

Active | US20040049124A1 | Increase probability | Easy extraction | Surgery | Vaccination/ovulation diagnostics | Optical flow | Sensorimotor integration

An apparatus, method and computer program product are presented that address early visual-sensory motor perception in a subject. The method comprises the steps of: (1) controlling photic energetic parameters and/or photic perceptual attributes to trigger pre-attentive cuing or to increase magnocellular reactivity to transient visual stimuli in the subject; and (2) generating an optical field comprising optical events based on said photic energetic parameters and photic perceptual attributes, wherein the optical field transforms into a simple optical flow in the perceptual visual field of the subject. The photic energetic parameters may comprise light-array energetic features, including one or more of wavelength, amplitude, intensity, phase, polarization, coherence, hue, brightness and saturation.

Owner:BRIGHTSTAR LEARNING LTD

Optofluidic microscope device

Inactive | US20050271548A1 | High imaging throughput rate | Easy to handle | Bioreactor/fermenter combinations | Biological substance pretreatments | Optical flow | Performed Imaging

An optofluidic microscope device is disclosed. The device includes a fluid channel having a surface and an object such as a bacterium or virus may flow through the fluid channel. Light imaging elements in the bottom of the fluid channel may be used to image the object.

Owner:CALIFORNIA INST OF TECH

Bidirectional long short-term memory unit-based behavior identification method for video

Inactive | CN106845351A | Improve accuracy | Guaranteed accuracy | Character and pattern recognition | Neural architectures | Time domain | Temporal information

Owner:SUZHOU UNIV

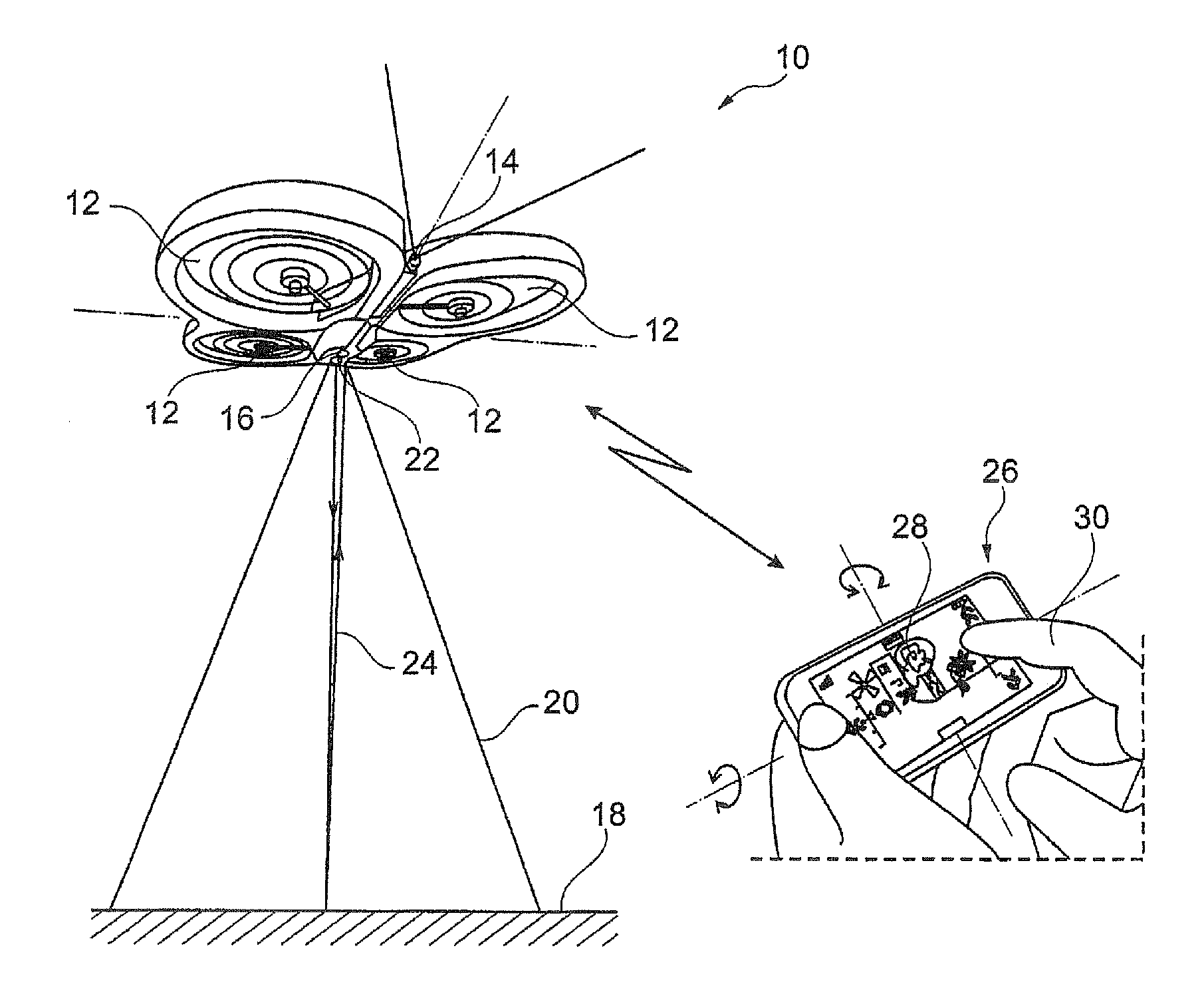

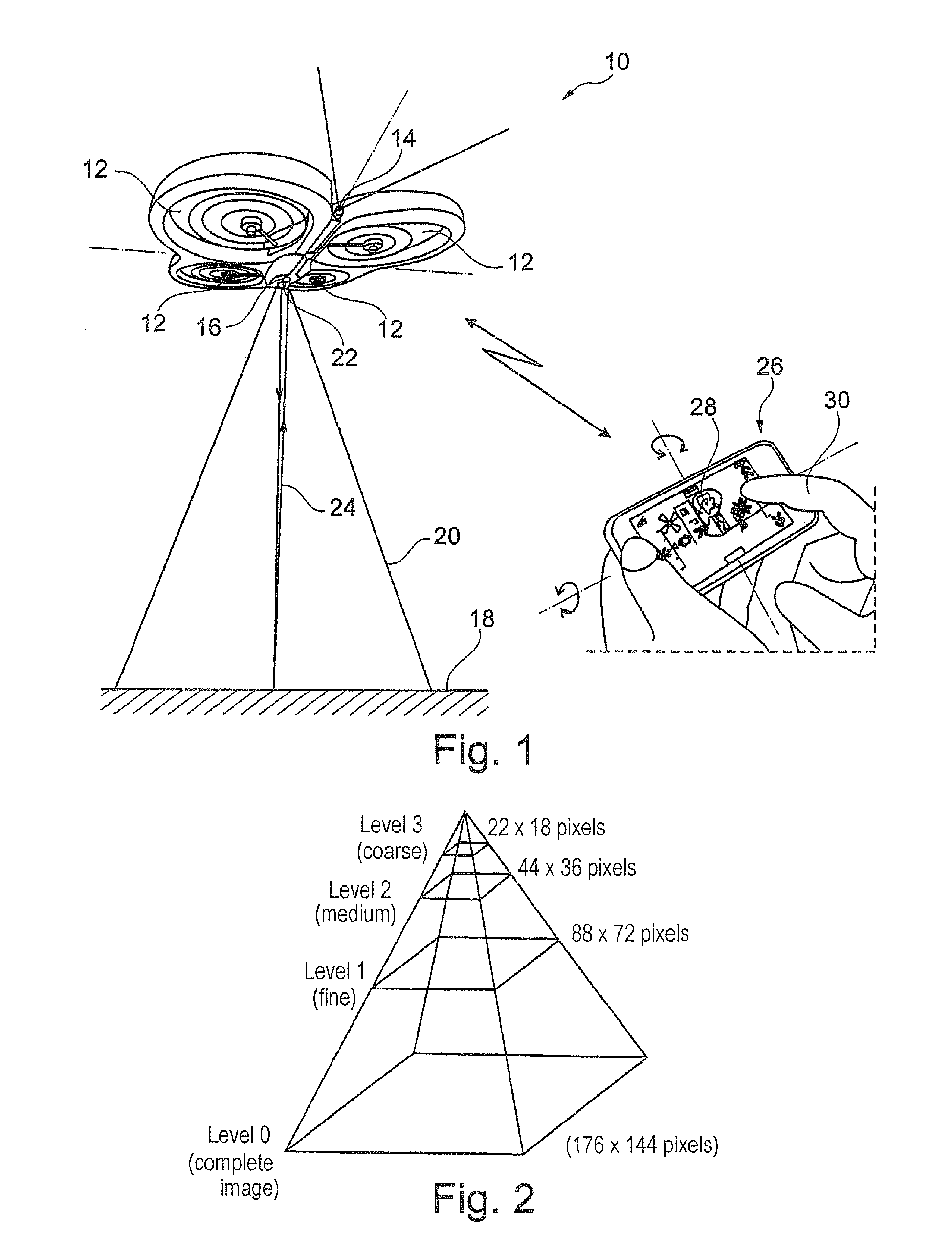

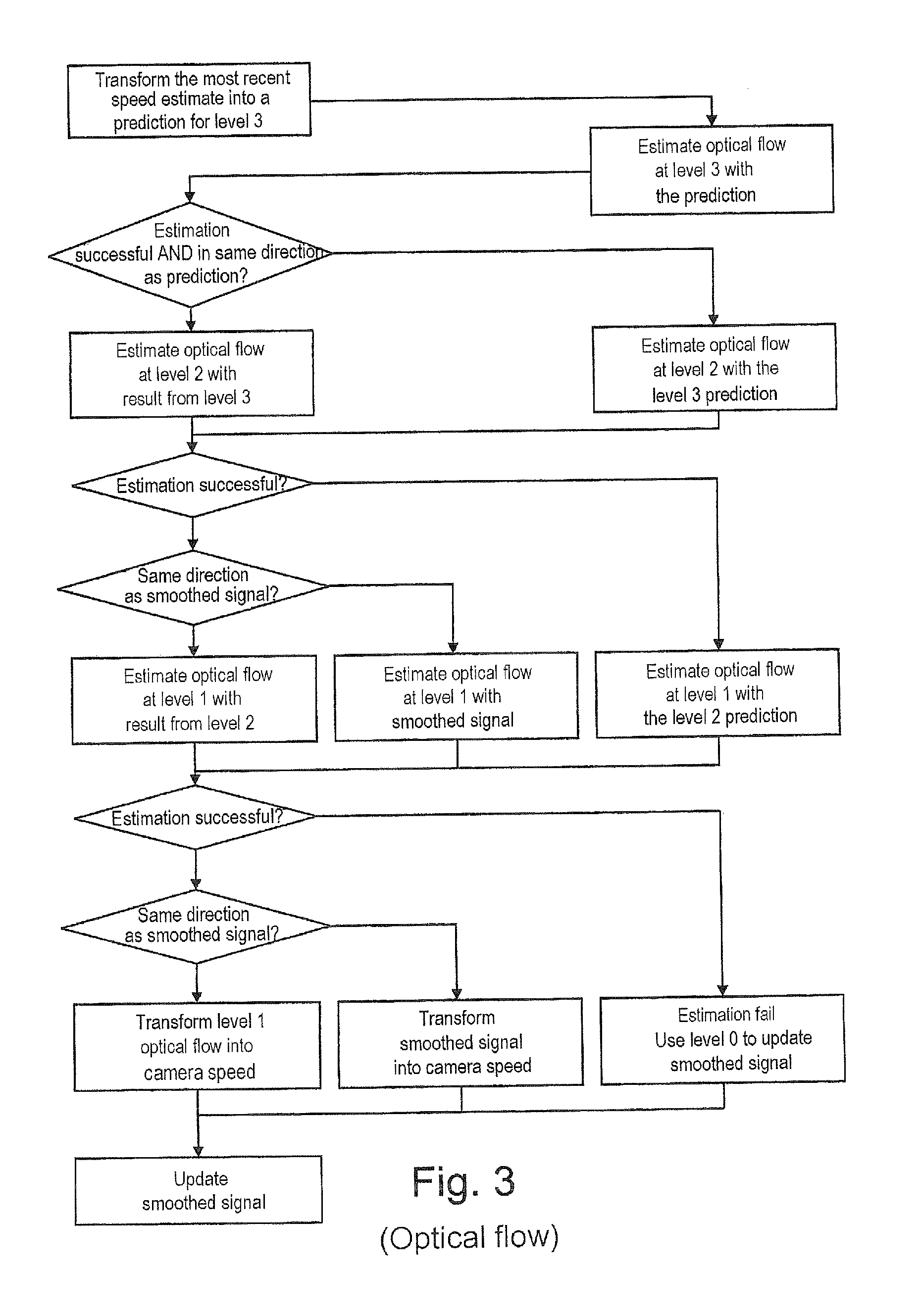

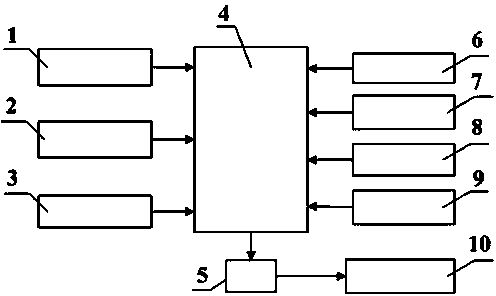

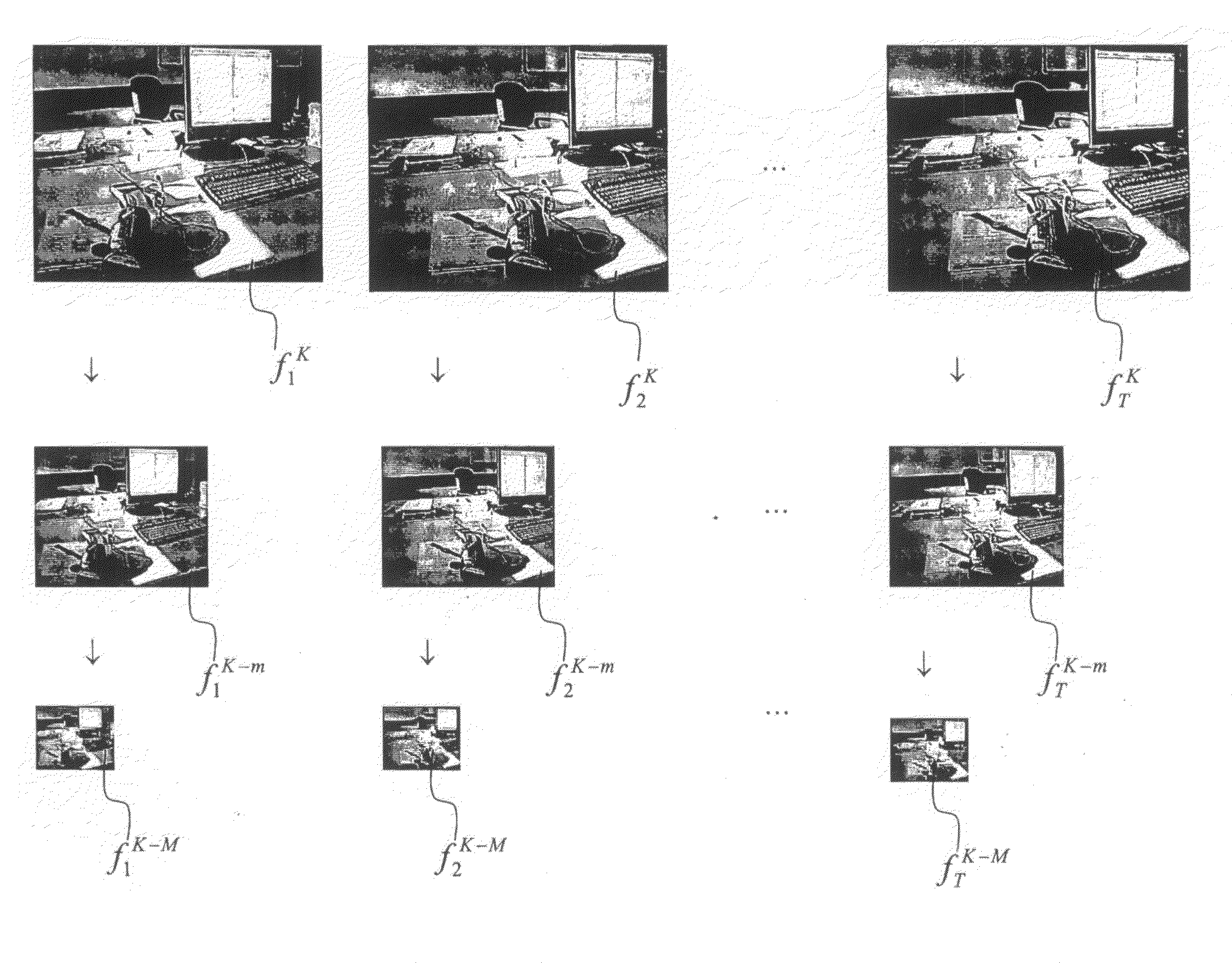

Method of evaluating the horizontal speed of a drone, in particular a drone capable of performing hovering flight under autopilot

Active | US20110311099A1 | Reduce contrast | Improve noise | Image enhancement | Image analysis | Image resolution | Uncrewed vehicle

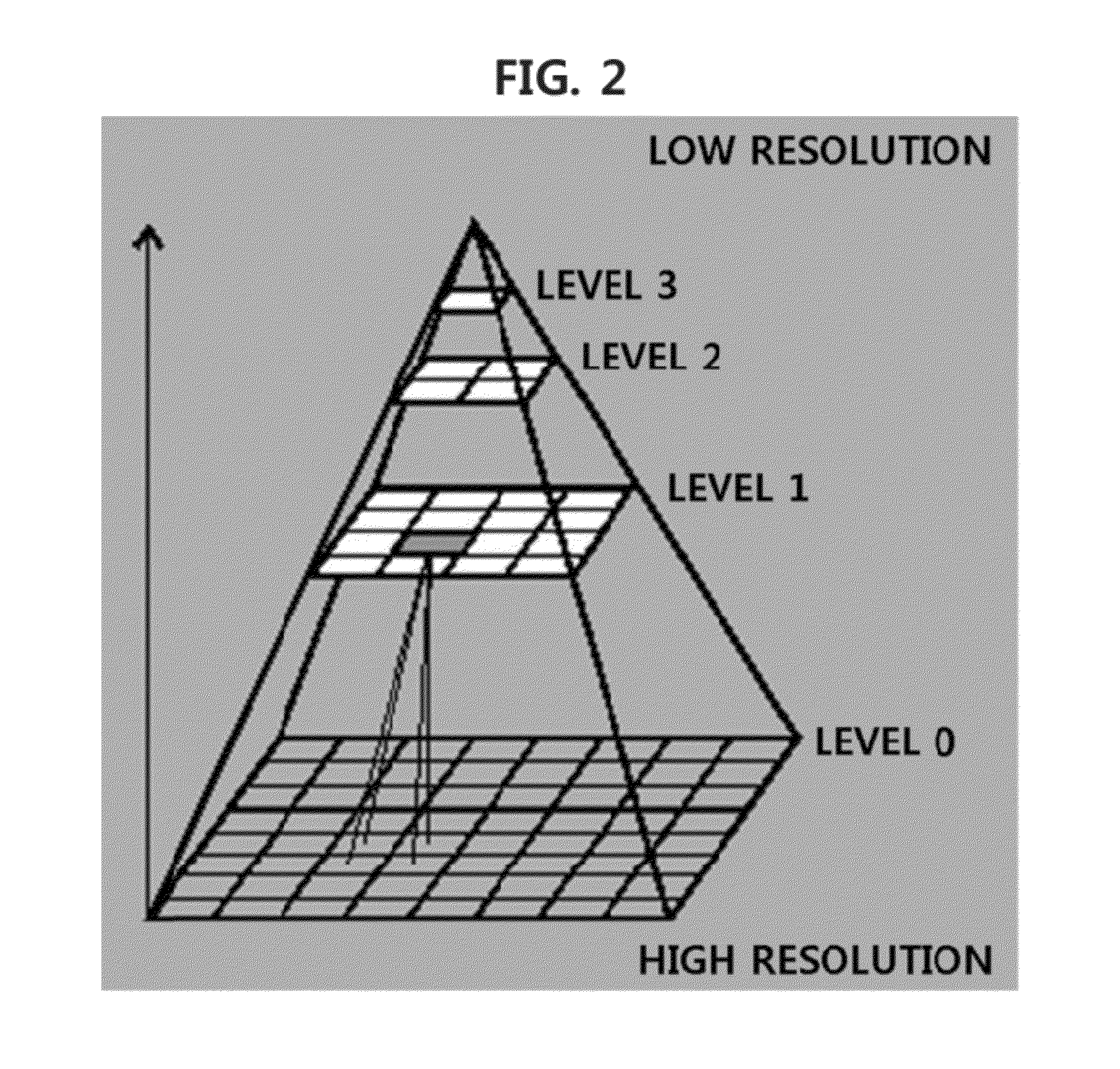

The method operates by estimating the differential movement of the scene picked up by a vertically oriented camera. Estimation includes periodically and continuously updating a multiresolution representation of the image-pyramid type, which models a given picked-up image of the scene at successively decreasing resolutions. For each new picked-up image, an iterative optical-flow algorithm is applied to this representation. The method also uses the data produced by the optical-flow algorithm to obtain at least one texturing parameter, representative of the level of microcontrasts in the picked-up scene, and an approximation of the speed; a battery of predetermined criteria is then applied to these parameters. If the battery of criteria is satisfied, the system switches from the optical-flow algorithm to a corner-detector algorithm.

Owner:PARROT
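The image-pyramid representation supports the usual coarse-to-fine scheme: estimate the flow at the lowest resolution, double it, and refine it at the next finer level. A minimal sketch, assuming 2×2 block averaging for each pyramid level and a caller-supplied single-level `refine` step (hypothetical, e.g. one Lucas-Kanade iteration); the texture criteria and the switch to a corner detector are not shown.

```python
def build_pyramid(image, levels):
    """Return [full, half, quarter, ...] resolutions by 2x2 averaging."""
    pyramid = [image]
    for _ in range(levels - 1):
        prev = pyramid[-1]
        h, w = len(prev) // 2, len(prev[0]) // 2
        if h == 0 or w == 0:
            break
        pyramid.append([[(prev[2 * y][2 * x] + prev[2 * y][2 * x + 1]
                          + prev[2 * y + 1][2 * x] + prev[2 * y + 1][2 * x + 1]) / 4.0
                         for x in range(w)] for y in range(h)])
    return pyramid


def coarse_to_fine(pyramid0, pyramid1, refine):
    """Refine a flow estimate from the coarsest pyramid level to the finest.

    refine(img0, img1, (u, v)) -> (u, v) is a hypothetical single-level step.
    """
    u, v = 0.0, 0.0
    for level in range(len(pyramid0) - 1, -1, -1):
        u, v = refine(pyramid0[level], pyramid1[level], (u, v))
        if level > 0:
            u, v = 2.0 * u, 2.0 * v  # displacements double at the finer level
    return u, v


img = [[float(4 * y + x) for x in range(4)] for y in range(4)]
print(build_pyramid(img, 3)[1:])  # [[[2.5, 4.5], [10.5, 12.5]], [[7.5]]]
```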

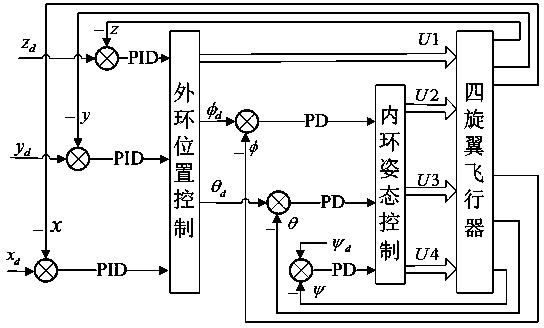

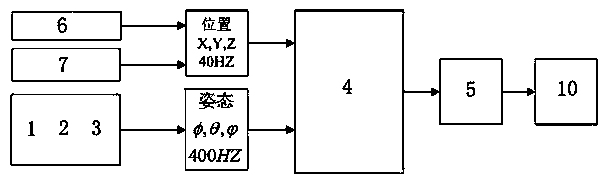

Small four-rotor aircraft control system and method based on airborne sensor

Active | CN103853156A | Low cost | Realize autonomous vertical take-off and landing | Attitude control | Position/course control in three dimensions | Brushless motors | Ultrasonic sensor

The invention relates to the technical field of four-rotor aircraft, in particular to a small four-rotor aircraft control system and method based on airborne sensors. The control system comprises an inertial measurement unit module, a microprocessor, an electronic speed controller, an ultrasonic sensor, an optical flow sensor, a camera, a wireless module and DC brushless motors. By fusing information from a lightweight, low-cost airborne sensor system, the six-DOF flight attitude of the aircraft is estimated in real time, and a closed-loop control strategy comprising inner-loop attitude control and outer-loop position control is designed. In environments without GPS or an indoor positioning system, the airborne sensor system and the microprocessor achieve flight path control and leader-follower formation control of the rotorcraft, where flight path control includes autonomous vertical take-off and landing, accurate indoor positioning, autonomous hovering and autonomous waypoint tracking. The system and method provide a reliable, accurate and low-cost control strategy for autonomous rotorcraft flight.

Owner:SUN YAT SEN UNIV

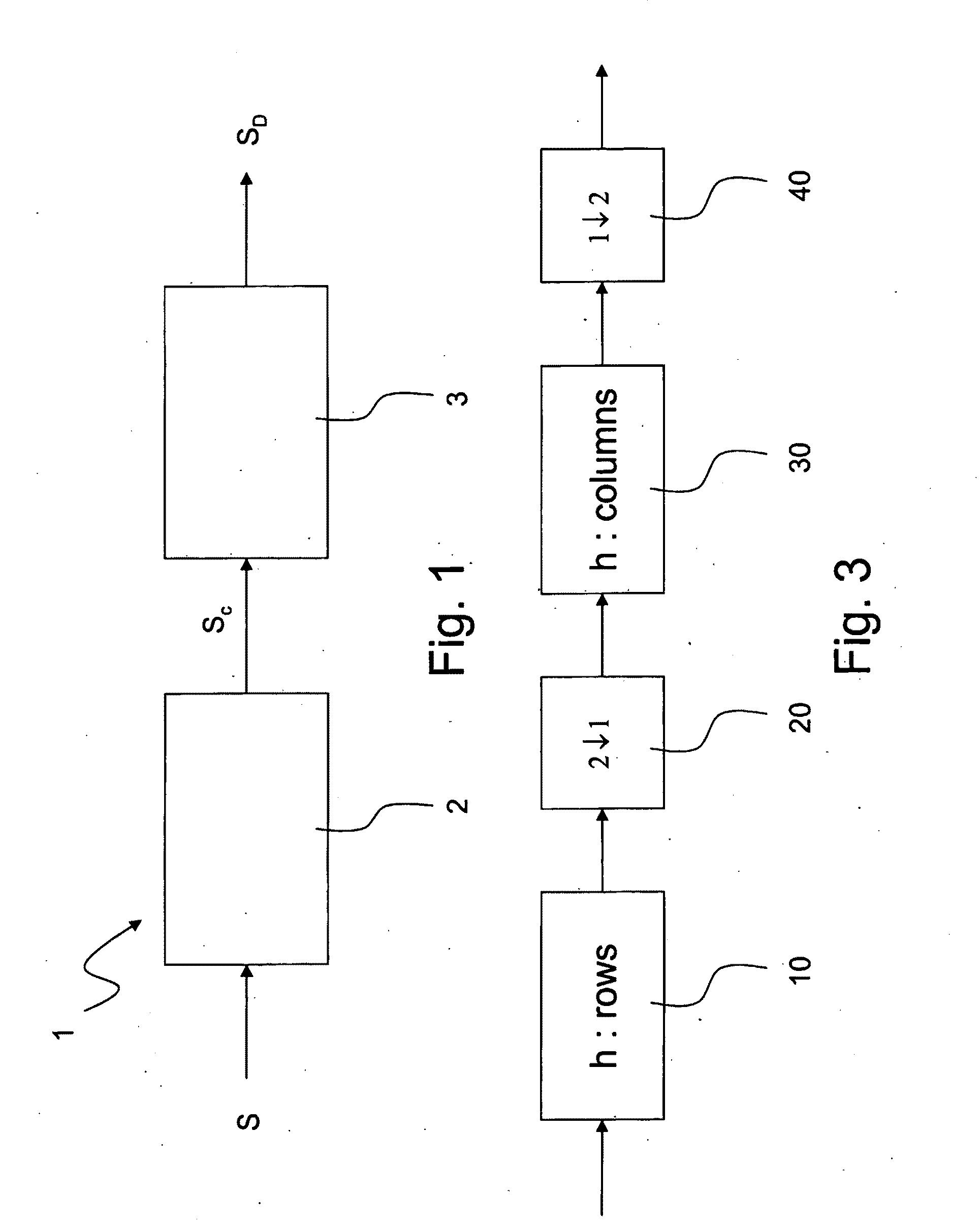

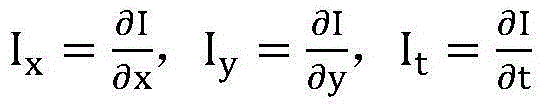

Method for Scalable Video Coding

Active | US20090304090A1 | Improve device performance | Color television with pulse code modulation | Color television with bandwidth reduction | Motion field | Image resolution

A method for estimating motion for scalable video coding includes estimating the motion field of a sequence of frames (photograms) that can be represented at a plurality of spatial resolution levels. The motion field is first computed at the minimum resolution level; then, until the maximum resolution level is reached, the following steps are repeated: moving up one resolution level, extracting the frames at that level, and computing the motion field at that level. The motion field is computed through an optical flow equation that, for every level above the minimum, contains a regularization factor between levels expressing the difference between the solution at the current level and the solution at the next lower resolution level. The value of the regularization factor determines how much the motion field component at the current resolution can change during the subsequent iterations of the process.

Owner:TELECOM ITALIA SPA

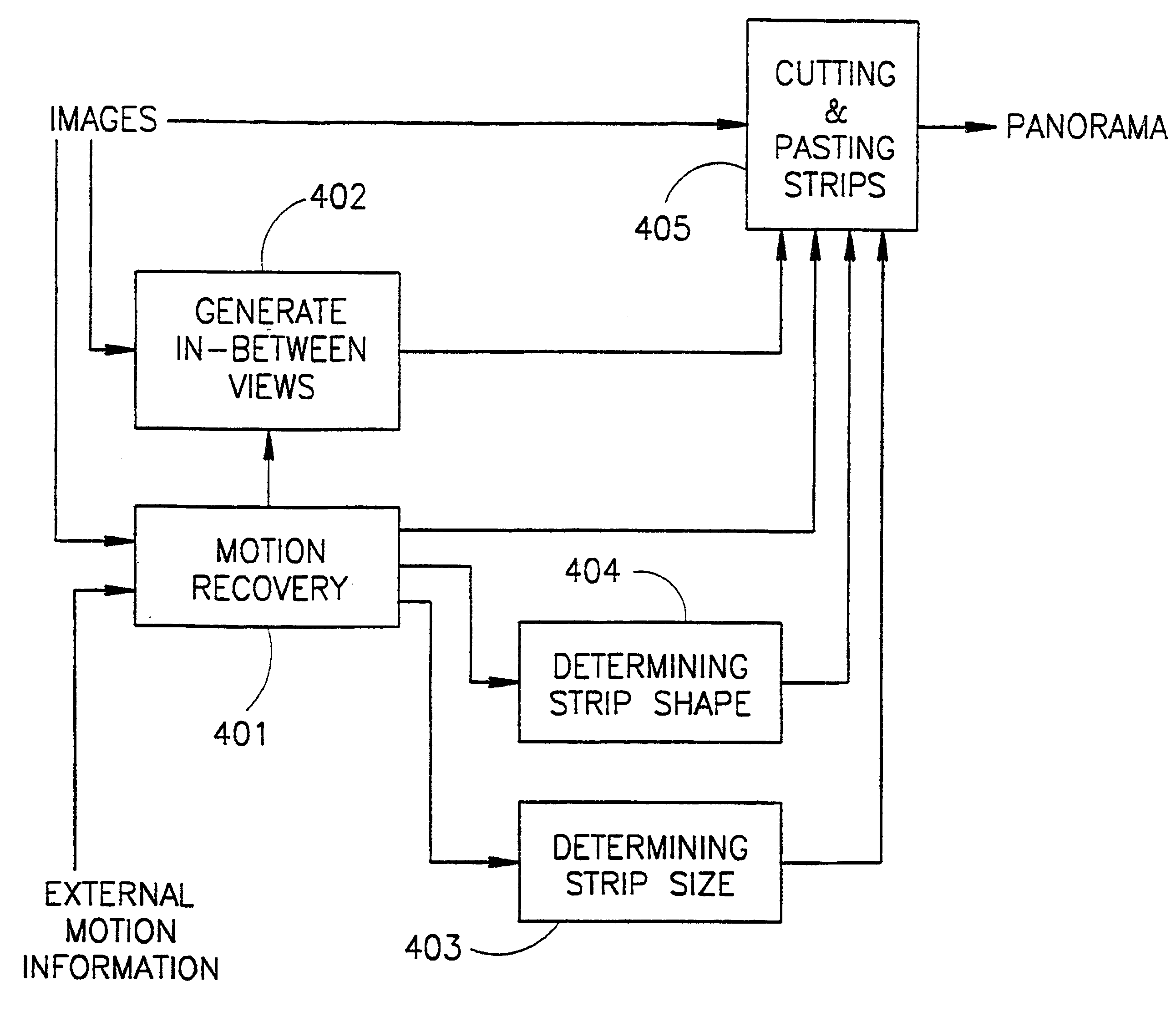

Generalized panoramic mosaic

Inactive | US6532036B1 | Television system details | Geometric image transformation | Optical flow | Relative motion

A method for combining a sequence of two-dimensional images of a scene to construct a panoramic mosaic of the scene has a sequence of images acquired by a camera with relative motion to the scene. The relative motion gives rise to an optical flow between the images. The images are warped so that the optical flow becomes substantially parallel to a direction in which the mosaic is constructed and pasted so that the sequence of the images is continuous for the scene to construct the panoramic mosaic of the scene. For this, the images are projected onto a three-dimensional cylinder having a major axis that approximates the path of an optical center of the camera. The combination of the images is achieved by translating the projected two-dimensional images substantially along the cylindrical surface of the three-dimensional cylinder.

Owner:YISSUM RES DEV CO OF THE HEBREW UNIVERSITY OF JERUSALEM LTD
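The cylinder construction can be sketched for the simplest case, assumed here: a camera translating along its own optical axis, with the cylinder's axis lying on that path. A ray through image point (x, y) at focal length f meets a cylinder of radius r at axial distance f·r/ρ, where ρ = √(x² + y²); for a scene point lying on the cylinder this equals its depth, so forward camera motion shifts every such point along the axis by the same amount and the flow becomes parallel to the axis. `project_to_pipe` is a hypothetical name, and the patent's general case (arbitrary camera paths) is not covered.

```python
import math


def project_to_pipe(x, y, focal_px, radius):
    """Map an image point onto a cylinder around the optical axis.

    (x, y) are relative to the principal point and must not both be zero.
    Returns (arc, axial): arc length around the cylinder and position
    along its axis.
    """
    rho = math.hypot(x, y)
    if rho == 0.0:
        raise ValueError("the focus of expansion has no cylinder projection")
    arc = radius * math.atan2(y, x)
    axial = focal_px * radius / rho
    return arc, axial


# Scene point (X, Y) = (3, 4) on a radius-5 cylinder, seen at depth 20 and,
# after the camera moves 5 units forward, at depth 15 (focal length 100 px).
a1, z1 = project_to_pipe(100 * 3 / 20, 100 * 4 / 20, 100.0, 5.0)
a2, z2 = project_to_pipe(100 * 3 / 15, 100 * 4 / 15, 100.0, 5.0)
print(round(z1, 6), round(z2, 6))  # 20.0 15.0
```

The arc coordinate stays fixed while the axial coordinate drops by exactly the camera's 5-unit advance, which is the "optical flow parallel to the mosaic direction" property described in the abstract.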

Apparatus and method for capturing a scene using staggered triggering of dense camera arrays

Active | US20070030342A1 | Minimal computational load | Easy to sample | Television system details | Character and pattern recognition | Viewpoints | Optical flow

This invention relates to an apparatus and a method for video capture of a three-dimensional region of interest in a scene using an array of video cameras. The video cameras of the array are positioned for viewing the three-dimensional region of interest in the scene from their respective viewpoints. A triggering mechanism is provided for staggering the capture of a set of frames by the video cameras of the array. The apparatus has a processing unit for combining and operating on the set of frames captured by the array of cameras to generate a new visual output, such as high-speed video or spatio-temporal structure and motion models, that has a synthetic viewpoint of the three-dimensional region of interest. The processing involves spatio-temporal interpolation for determining the synthetic viewpoint space-time trajectory. In some embodiments, the apparatus computes a multibaseline spatio-temporal optical flow.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV

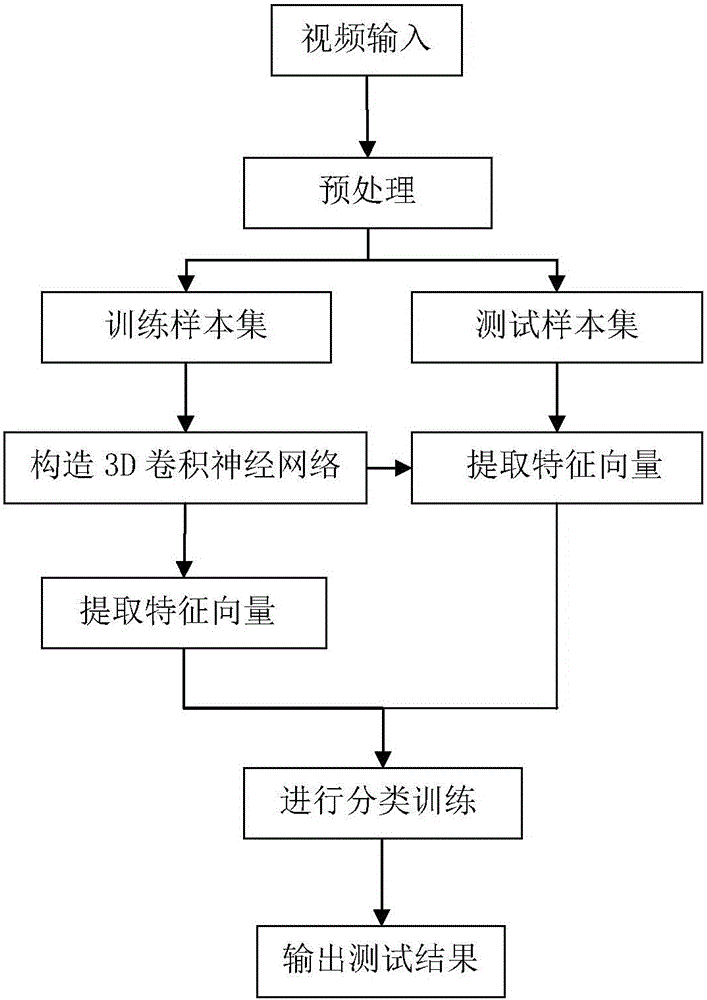

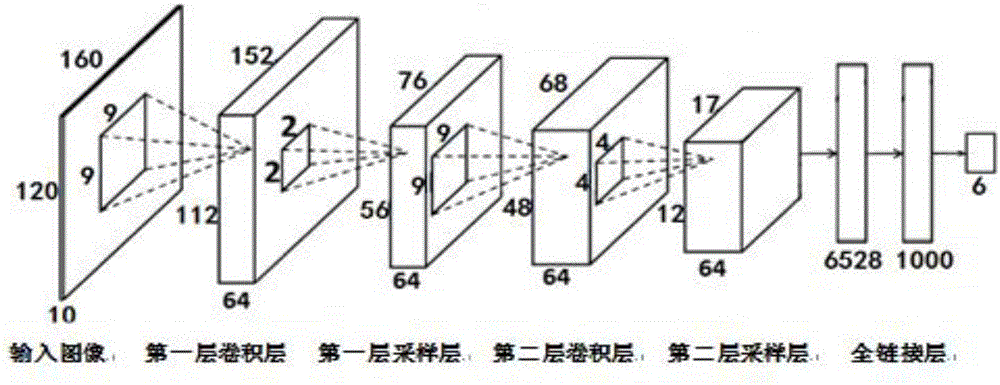

3D (three-dimensional) convolutional neural network based human body behavior recognition method

Inactive | CN105160310A | The extracted features are highly representative | Fast extraction | Character and pattern recognition | Human body | Feature vector

The present invention discloses a human body behavior recognition method based on a 3D (three-dimensional) convolutional neural network, mainly intended to solve the problem of recognizing specific human body behaviors in computer vision and pattern recognition. The method is implemented in the following steps: (1) video input; (2) preprocessing to obtain a training sample set and a test sample set; (3) constructing a 3D convolutional neural network; (4) extracting feature vectors; (5) classification training; and (6) outputting the test result. Human body detection and motion estimation are implemented with an optical flow method, so a moving object can be detected without any prior information about the scene. The method performs particularly well when the network input is a multi-dimensional image, and it allows images to be used directly as network input, which avoids the complex feature extraction and data reconstruction of conventional recognition algorithms and makes human body behavior recognition more accurate.

Owner:XIDIAN UNIV

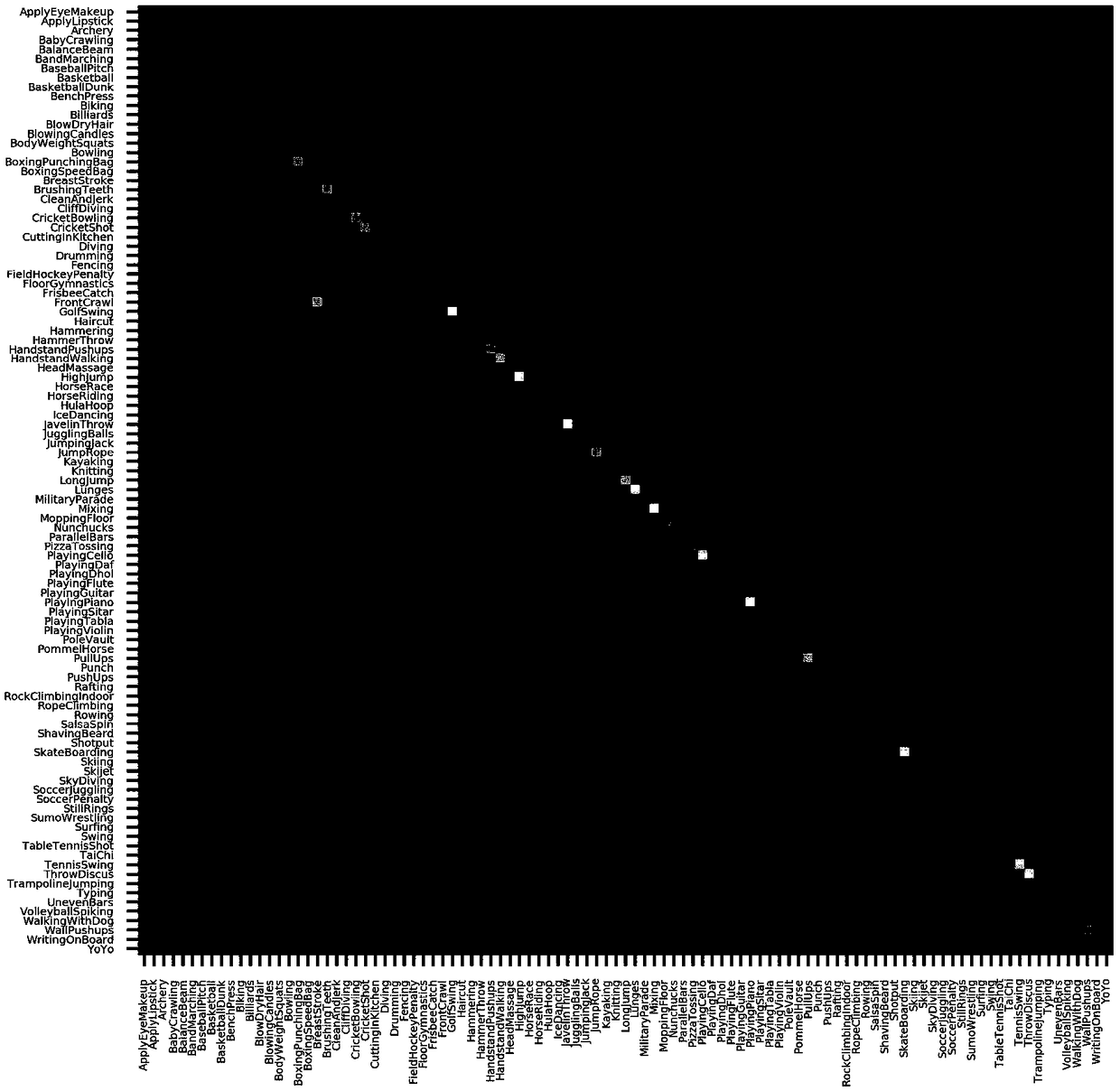

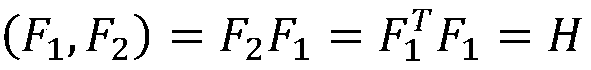

Video classification based on hybrid convolution and attention mechanism

Active | CN109389055A | Small amount of calculation | Improve accuracy | Character and pattern recognition | Neural architectures | Video retrieval | Data set

The invention discloses a video classification method based on hybrid convolution and an attention mechanism, which addresses the high computational cost and low accuracy of the prior art. The method comprises the following steps: selecting a video classification data set; sampling the input video in segments; preprocessing two video segments; constructing a hybrid convolutional neural network model; obtaining the hybrid convolution feature map of the video along the temporal dimension; obtaining a video attention feature map through the attention mechanism; obtaining a video attention descriptor; training the entire video classification model end to end; and testing the video to be classified. The invention obtains hybrid convolution feature maps directly for different video segments; compared with methods that extract optical flow features, this reduces the computational burden and improves speed. It also introduces an attention mechanism between different video segments that describes the relationships among them, improving accuracy and robustness. The method can be used for video retrieval, video tagging, human-computer interaction, behavior recognition, event detection and anomaly detection.

Owner:XIDIAN UNIV

© 2025 PatSnap. All rights reserved.