175 results for "Time-of-flight camera" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

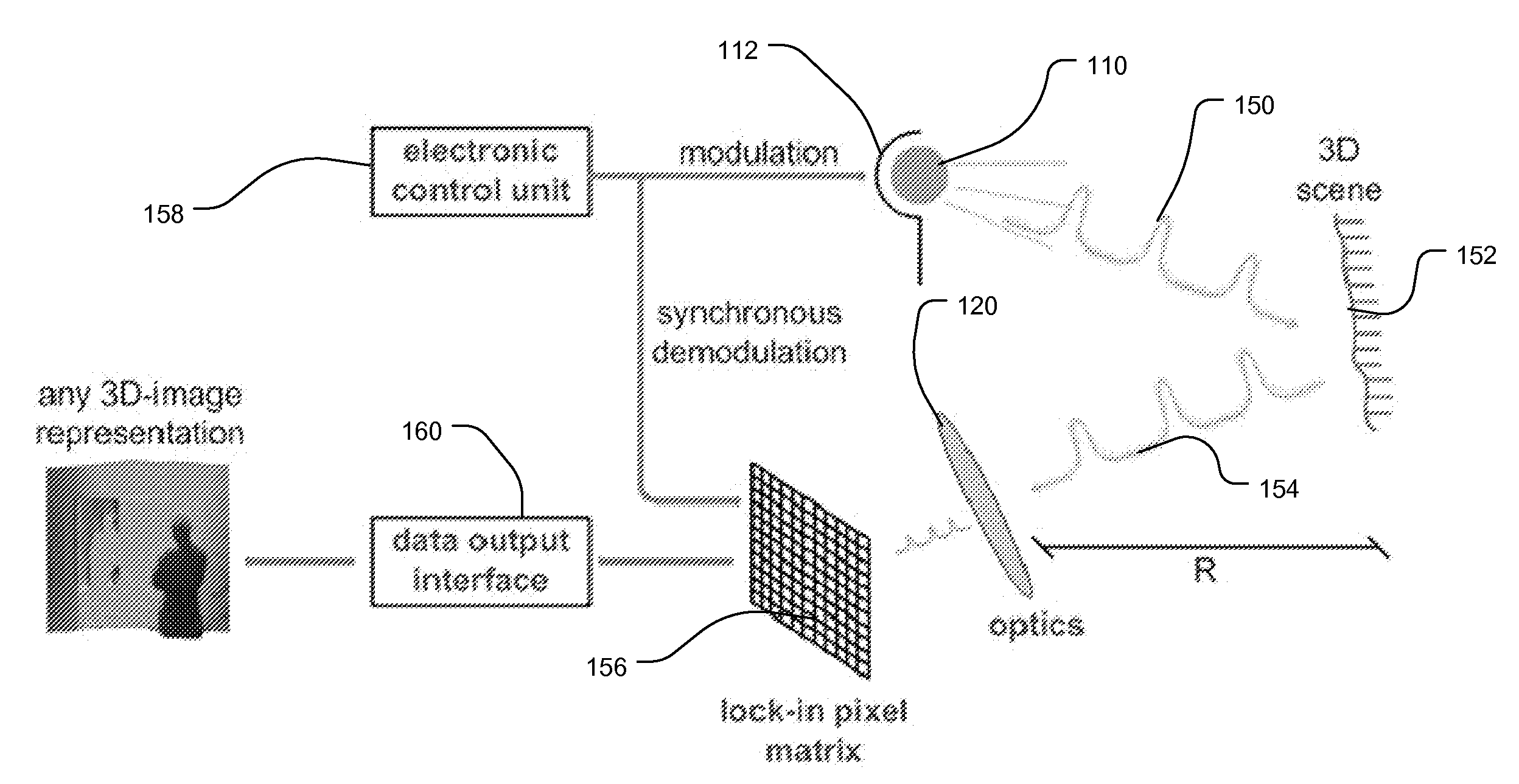

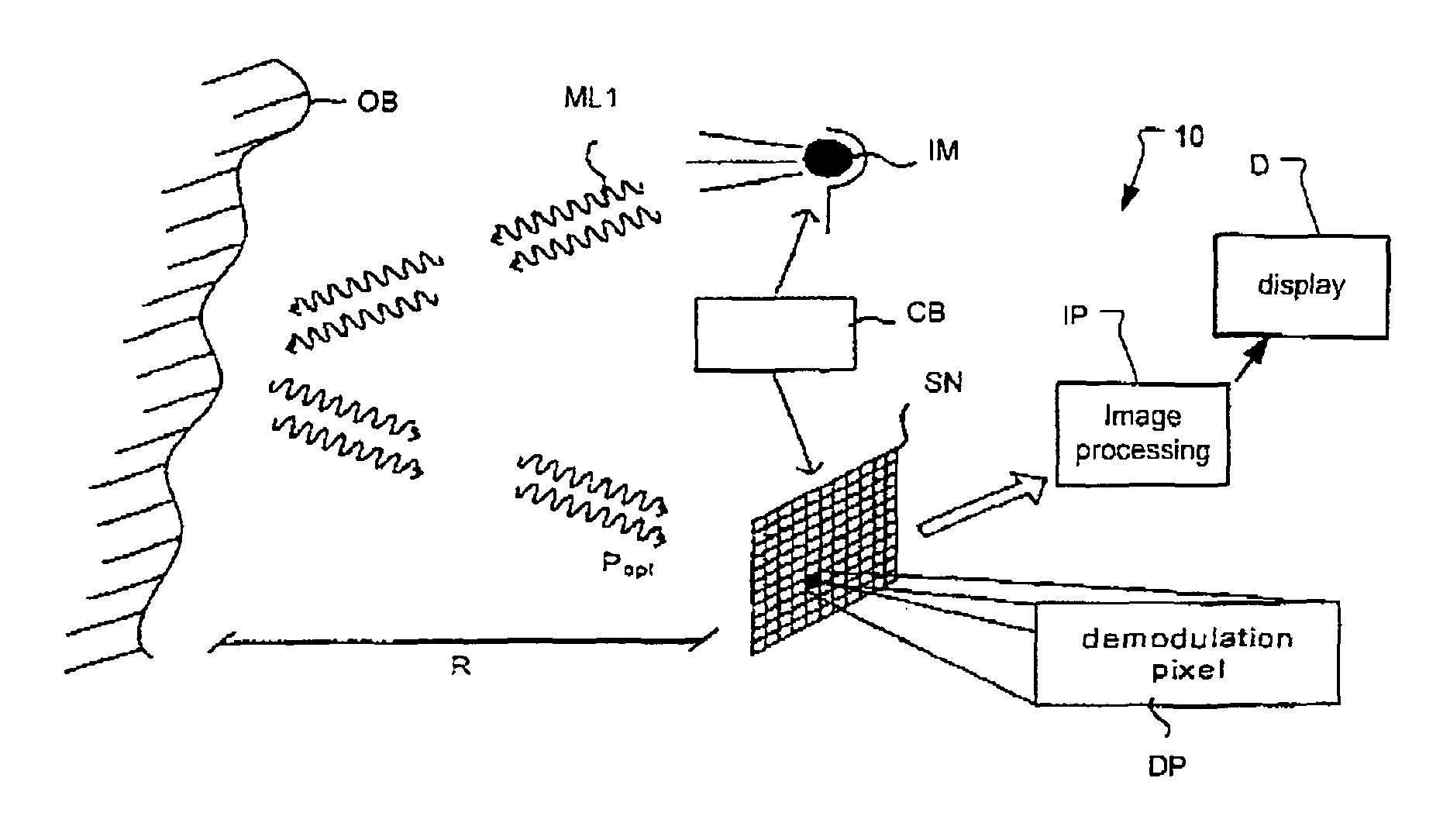

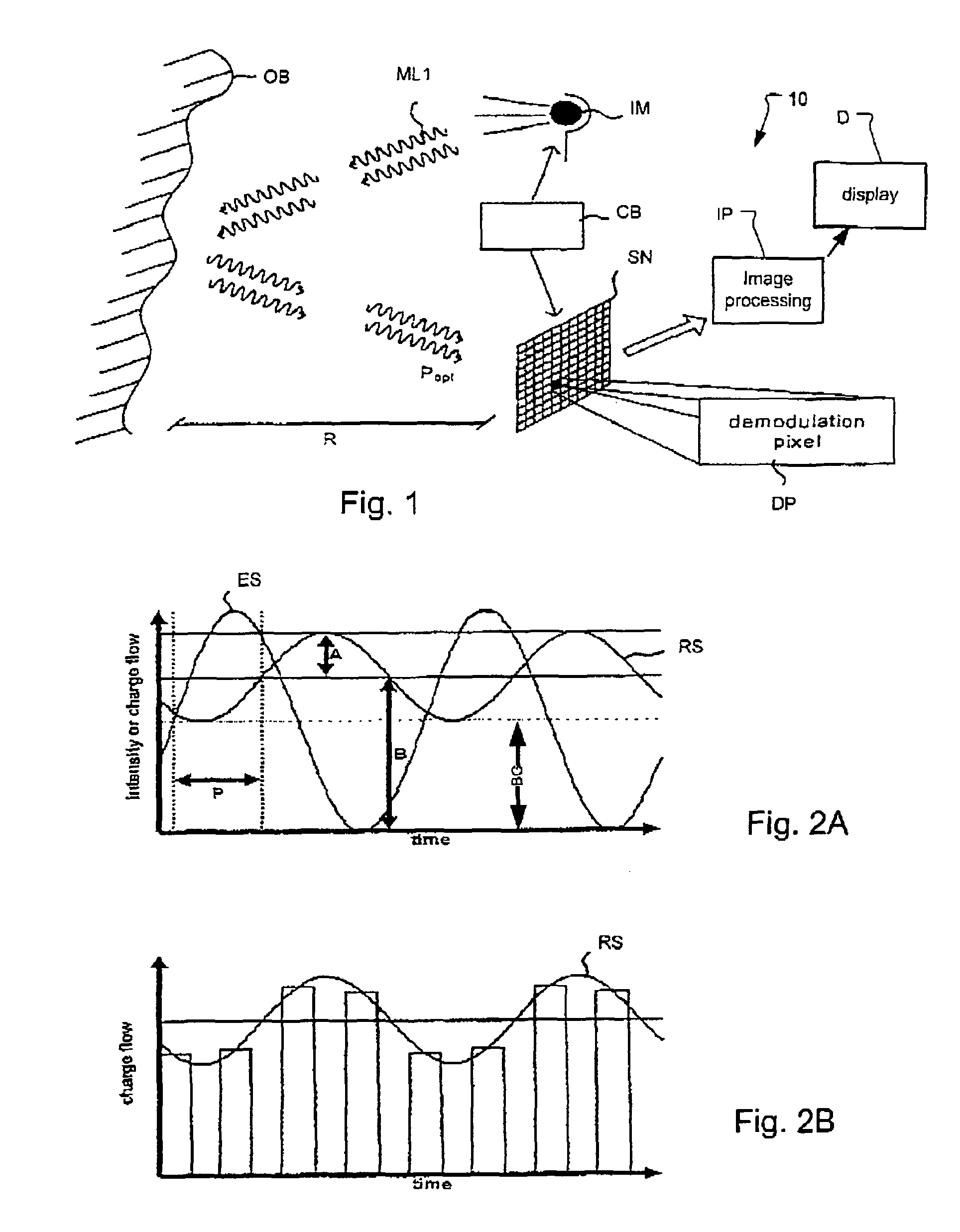

A time-of-flight camera (ToF camera) is a range imaging camera system that employs time-of-flight techniques to resolve distance between the camera and the subject for each point of the image, by measuring the round trip time of an artificial light signal provided by a laser or an LED. Laser-based time-of-flight cameras are part of a broader class of scannerless LIDAR, in which the entire scene is captured with each laser pulse, as opposed to point-by-point with a laser beam such as in scanning LIDAR systems.
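
The round-trip principle reduces to d = c·t/2, because the light covers the camera-to-subject distance twice. Many ToF cameras (including several continuous-wave designs listed below) measure the phase shift φ of modulated light rather than t directly. For a modulation frequency f the equivalent relation is d = c·φ/(4πf), which is unambiguous only up to c/(2f). The minimal Python sketch below evaluates these relations; the function names are illustrative and do not come from any listed patent.

```python
import math

# Speed of light in vacuum in m/s (exact by SI definition).
C = 299_792_458.0


def distance_from_round_trip(t_round_trip_s: float) -> float:
    """One-way distance for a measured round-trip time (light goes out and back)."""
    return C * t_round_trip_s / 2.0


def distance_from_phase(phase_rad: float, f_mod_hz: float) -> float:
    """One-way distance for a continuous-wave phase shift at modulation frequency f."""
    return C * phase_rad / (4.0 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz: float) -> float:
    """Largest distance before the measured phase wraps past 2*pi."""
    return C / (2.0 * f_mod_hz)


print(round(distance_from_round_trip(10e-9), 4))  # 10 ns round trip -> 1.499 (m)
print(round(unambiguous_range(20e6), 3))          # 20 MHz modulation -> 7.495 (m)
```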

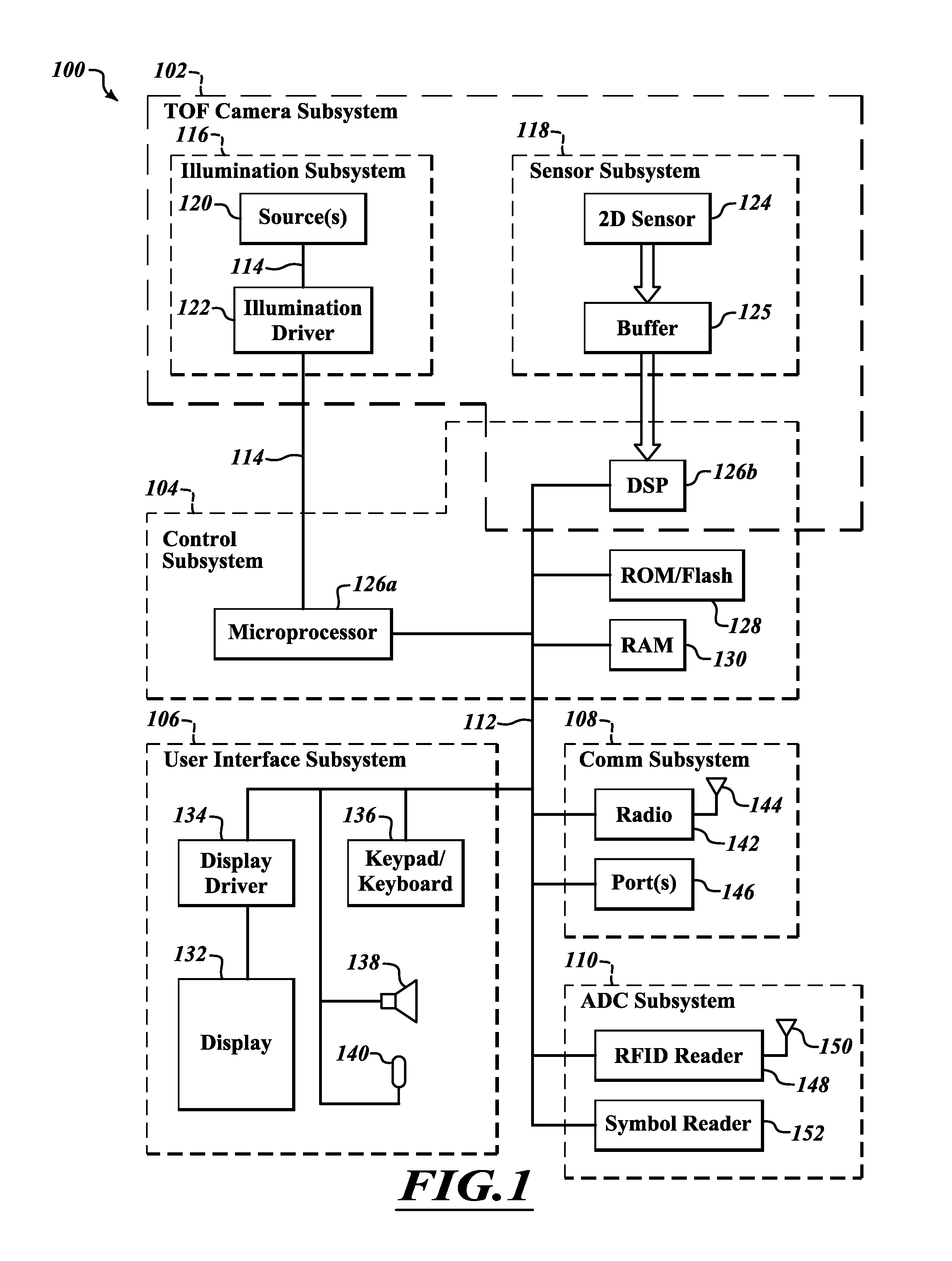

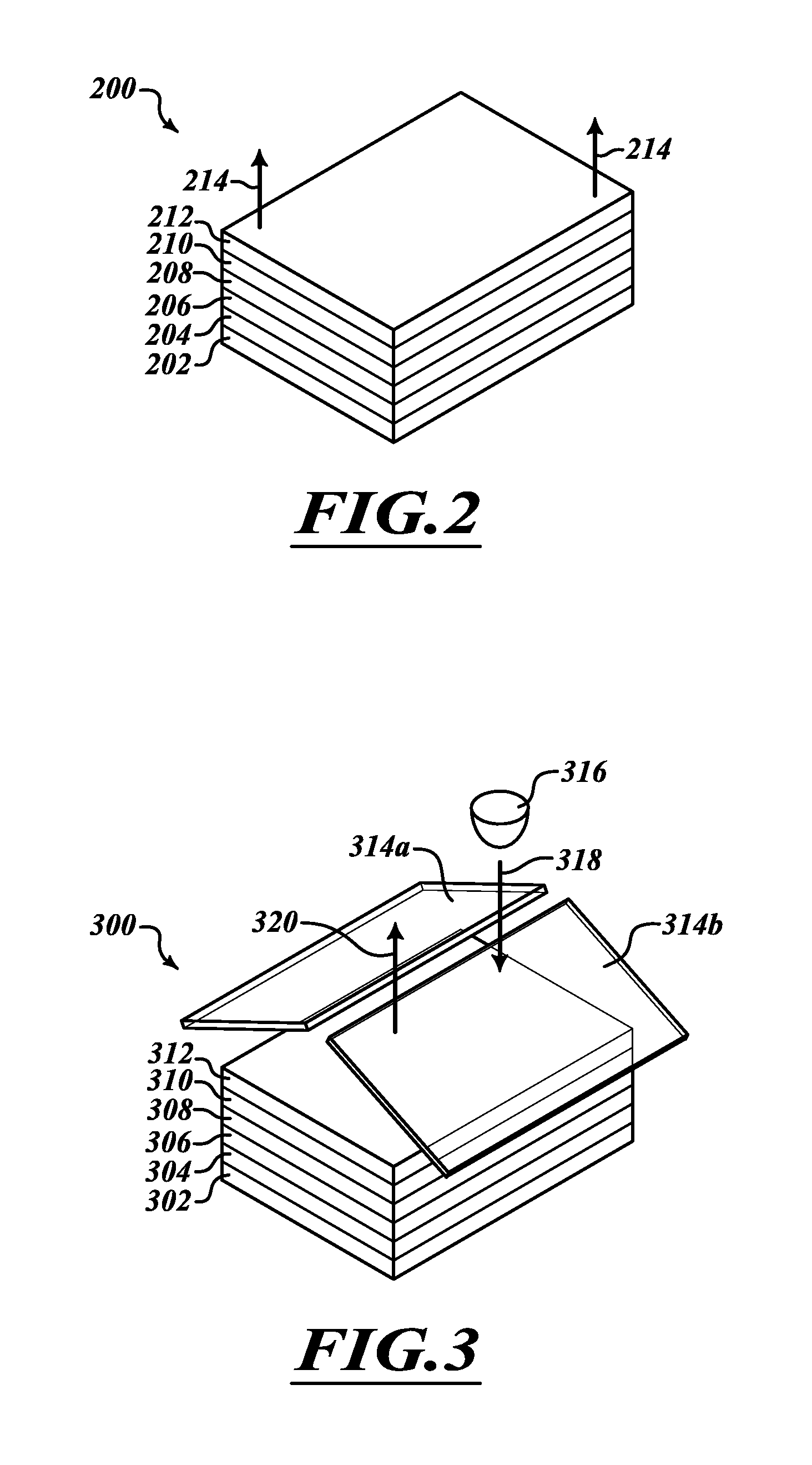

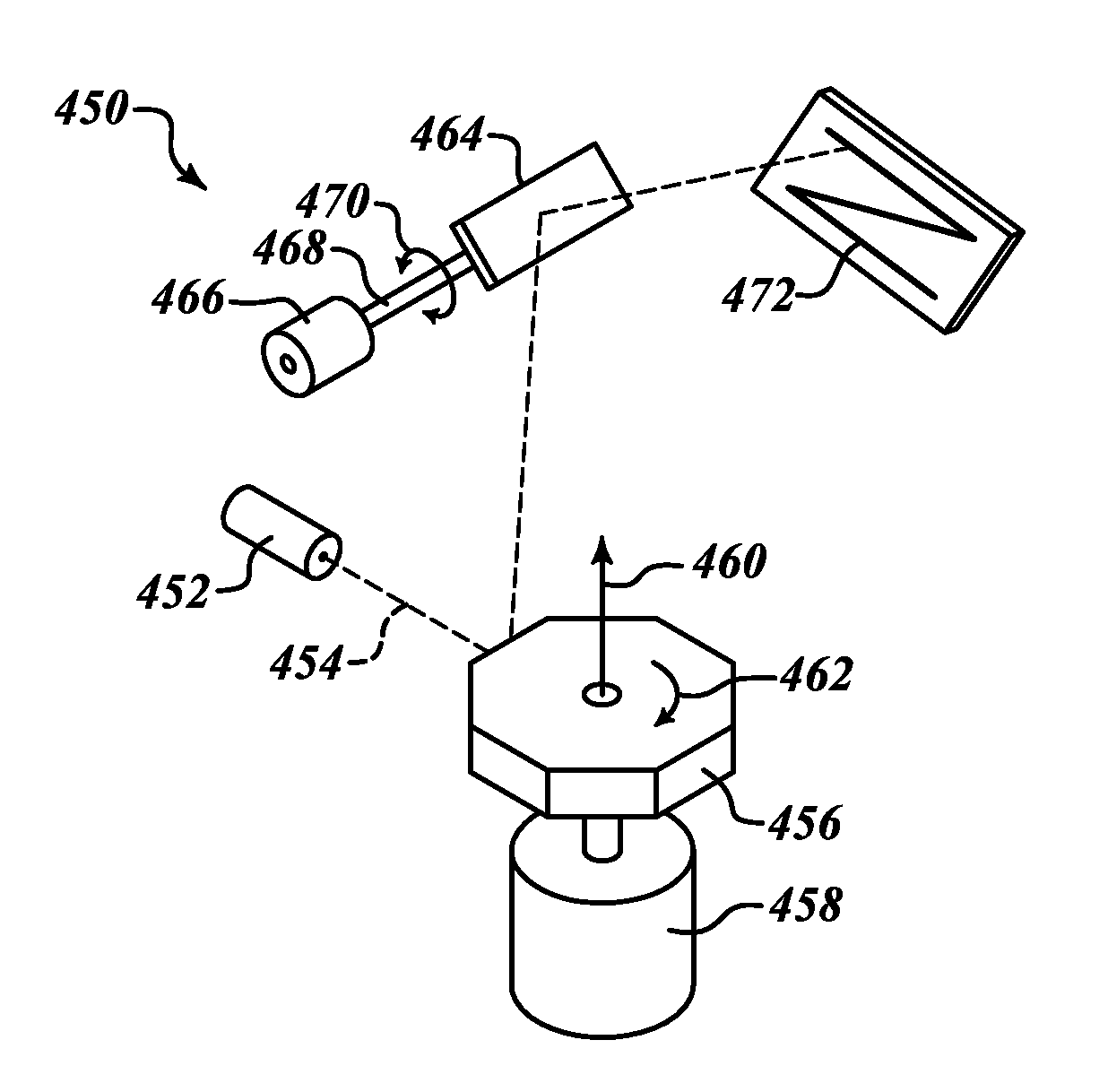

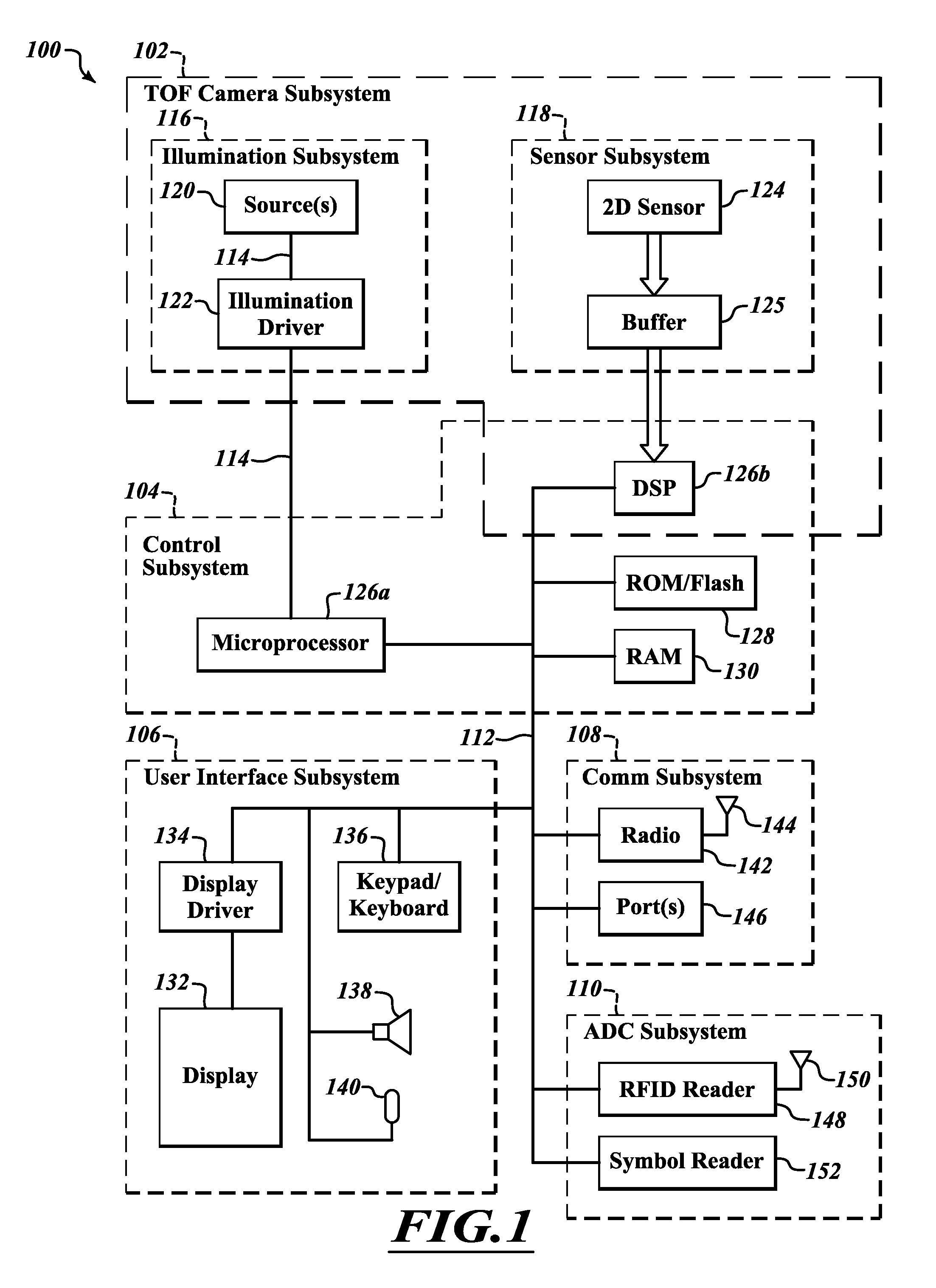

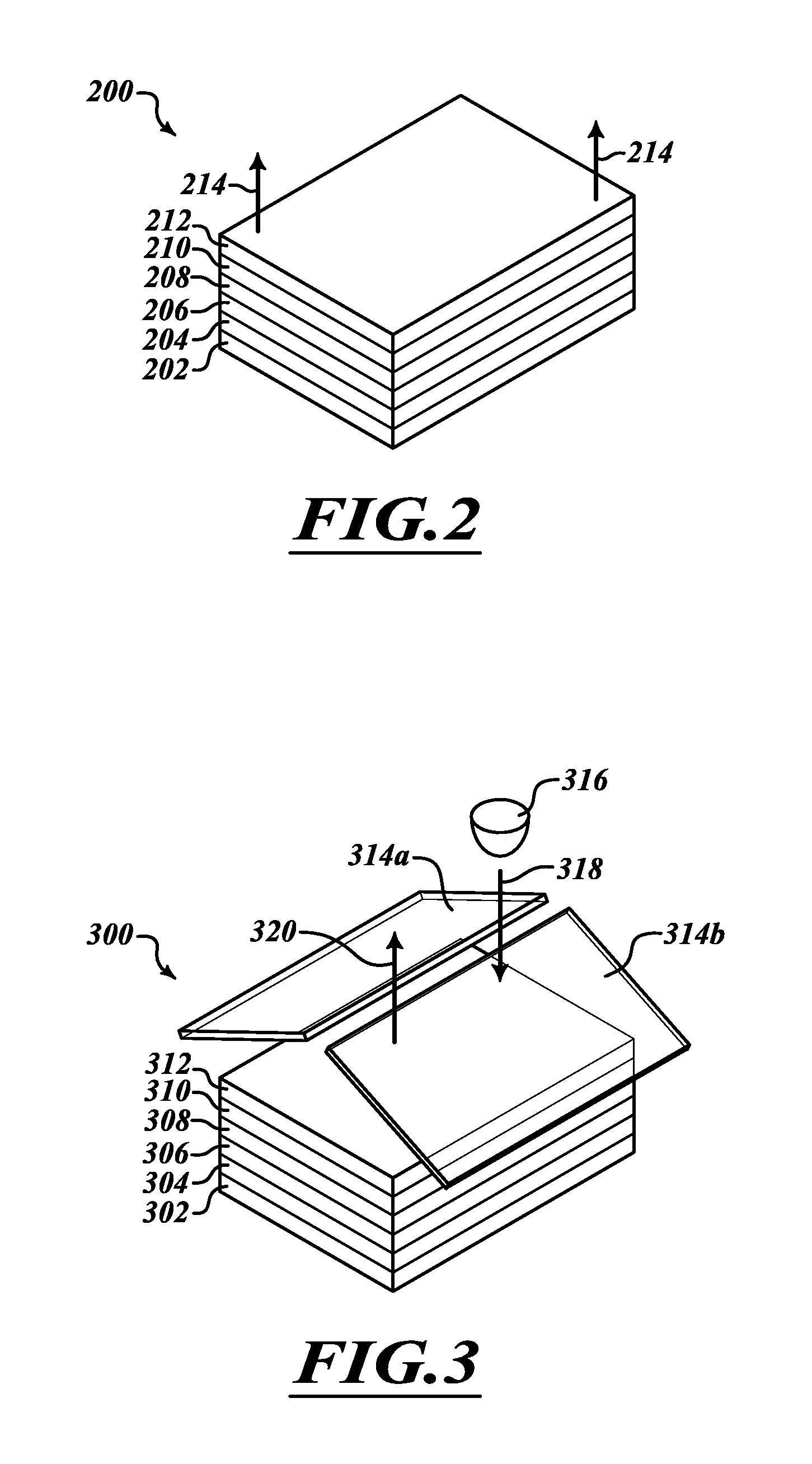

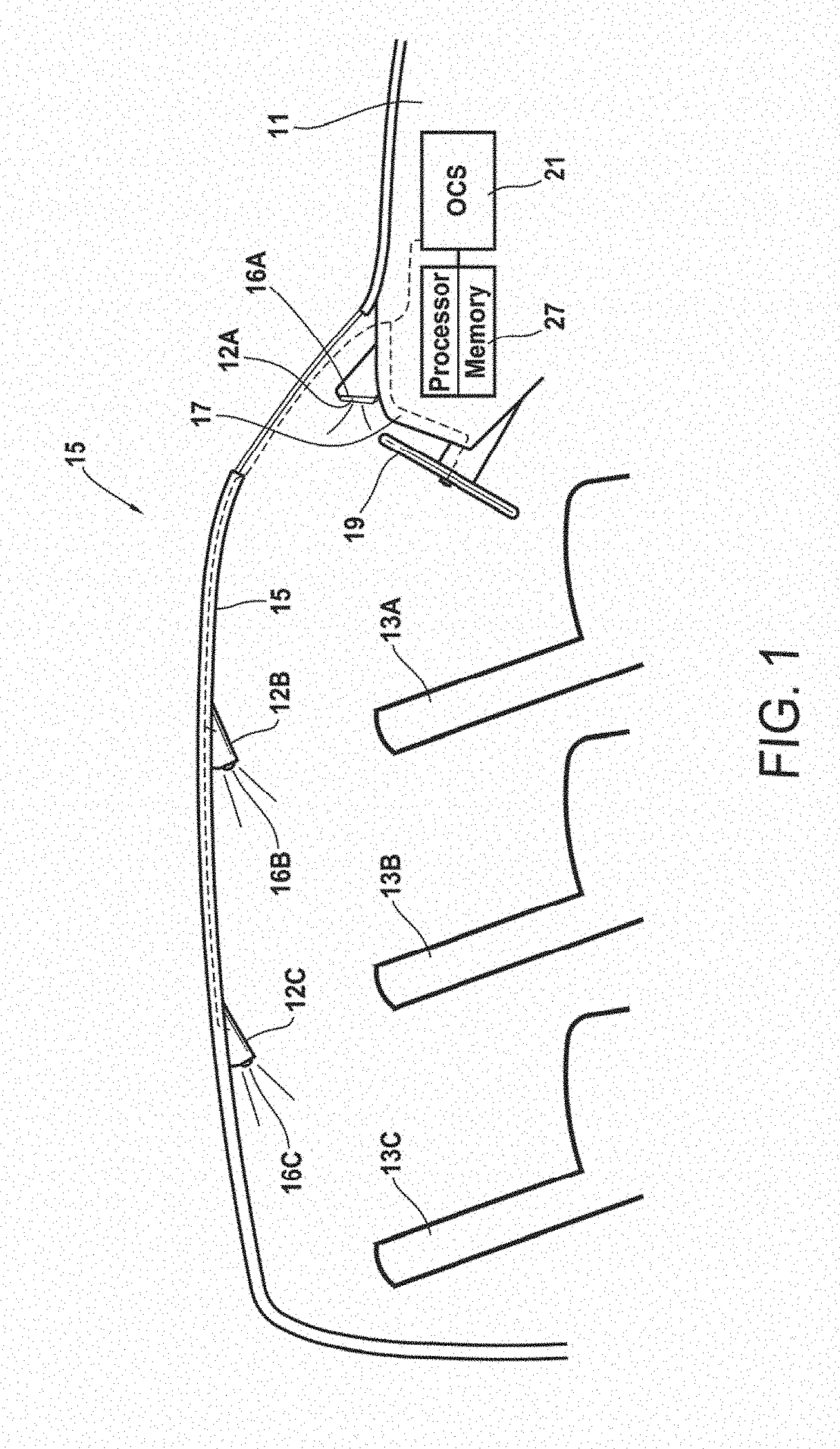

Volume dimensioning system and method employing time-of-flight camera

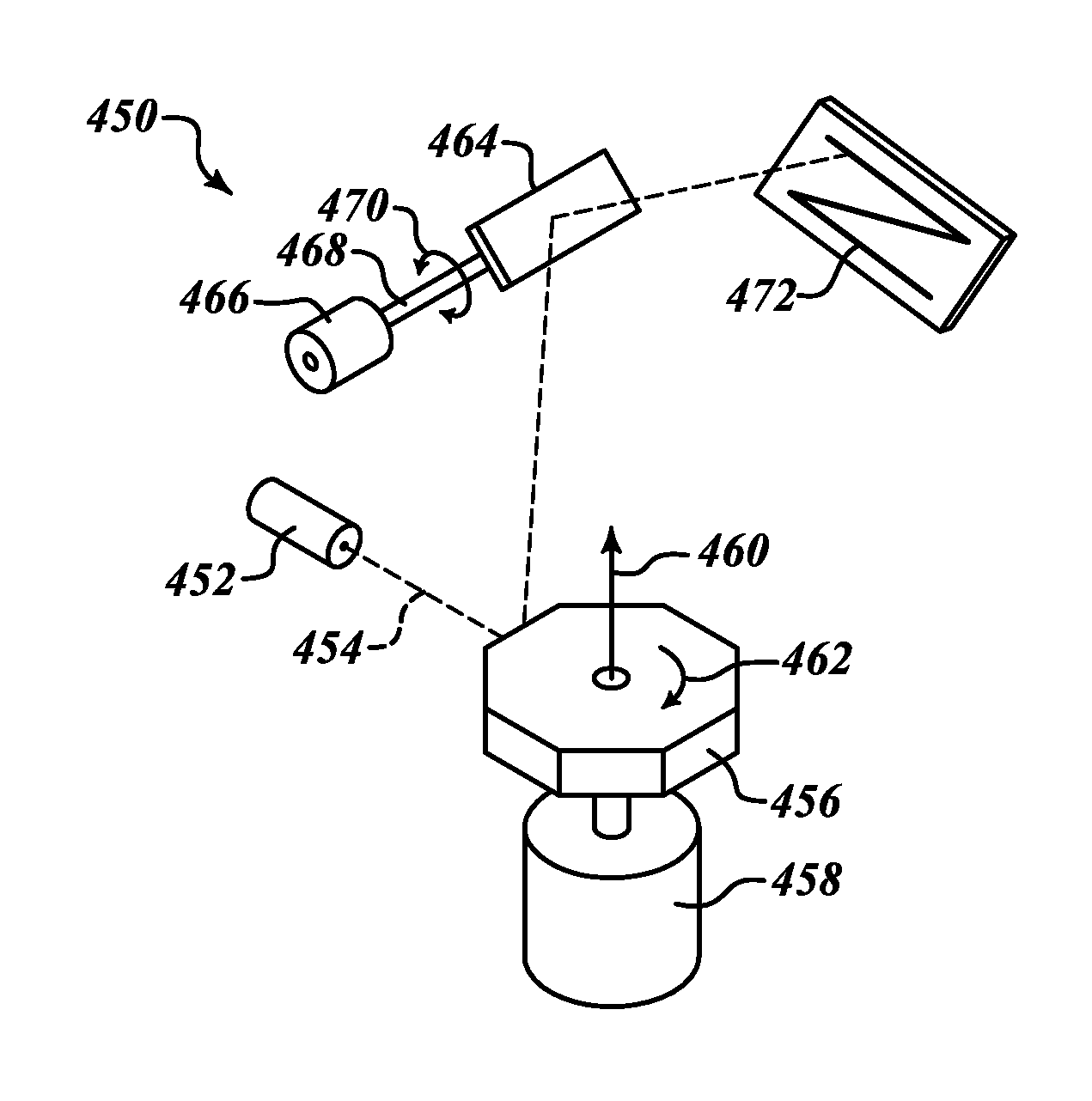

Active · US8854633B2 · Tags: Cost effective, Less space, Investigating moving sheets, Using optical means, Pattern recognition, Time-of-flight camera

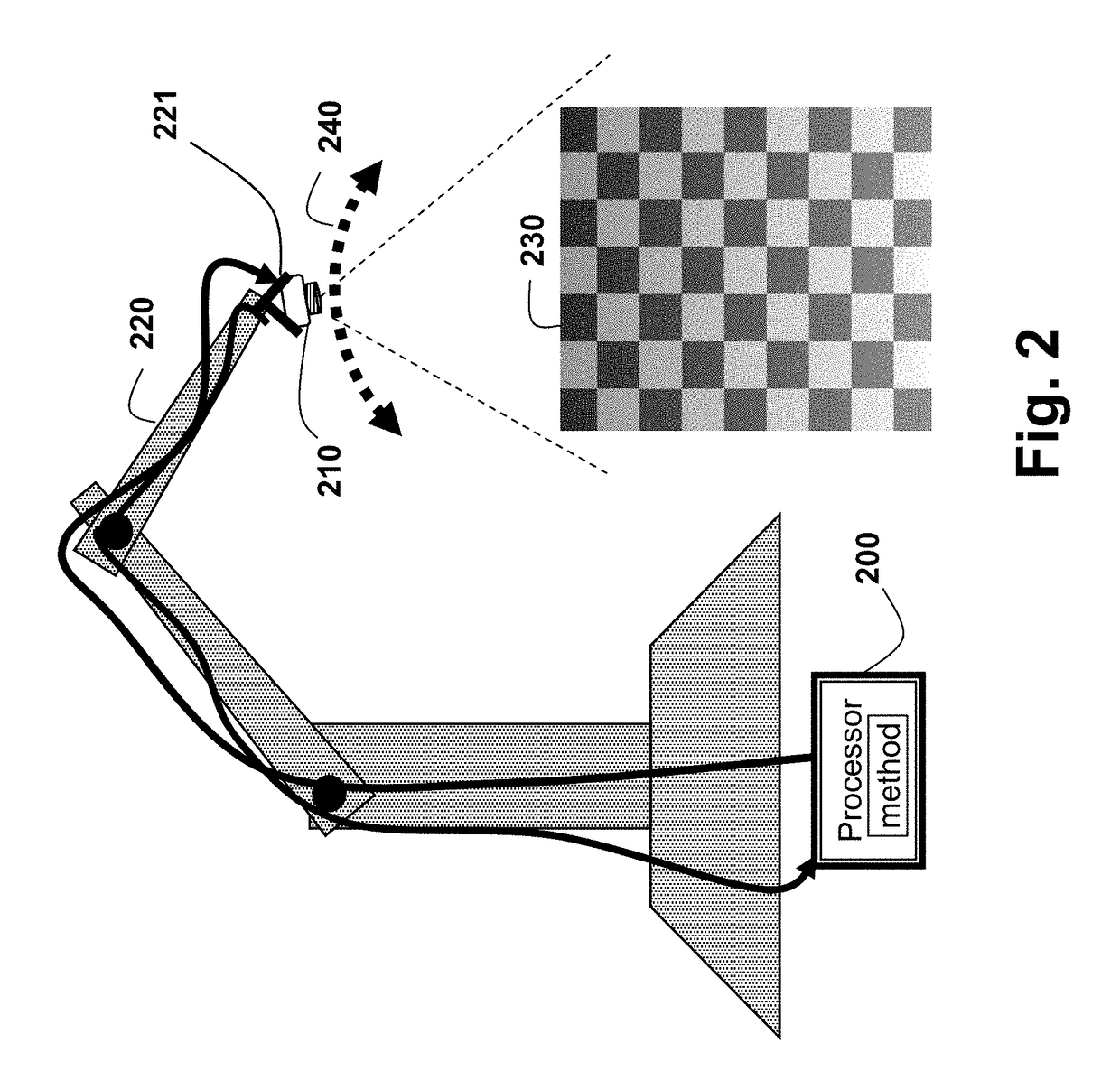

Volume dimensioning employs techniques to reduce multipath reflection or return of illumination, and hence distortion. Volume dimensioning for any given target object includes a sequence of one or more illuminations and respective detections of returned illumination. A sequence typically includes illumination with at least one initial spatial illumination pattern and with one or more refined spatial illumination patterns. Refined spatial illumination patterns are generated based on previous illumination in order to reduce distortion. The number of refined spatial illumination patterns in a sequence may be fixed, or may vary based on the results of prior illumination(s) in the sequence. Refined spatial illumination patterns may avoid illuminating background areas that contribute to distortion. Sometimes, illumination with the initial spatial illumination pattern may produce sufficiently acceptable results, in which case the refined spatial illumination patterns in the sequence may be omitted.

Owner:INTERMEC IP CORP
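
The illumination sequence described above can be sketched as a loop that refines the pattern from the previous measurement and stops early when refinement no longer changes anything. This is a minimal sketch under stated assumptions: the abstract does not say how background areas are identified, so the depth-window test in `refine_pattern` is a hypothetical criterion, and `measure` is a hypothetical stand-in for "illuminate with this pattern and detect the return".

```python
from typing import Callable, List, Tuple

Grid = List[List[float]]   # per-pixel depth in metres
Pattern = List[List[int]]  # 1 = illuminate this pixel, 0 = leave dark


def refine_pattern(depth: Grid, near_m: float, far_m: float) -> Pattern:
    """Hypothetical refinement: light (1) only pixels whose last measured depth
    lies in [near_m, far_m]; switch off (0) background pixels."""
    return [[1 if near_m <= d <= far_m else 0 for d in row] for row in depth]


def illumination_sequence(measure: Callable[[Pattern], Grid], initial: Pattern,
                          near_m: float, far_m: float,
                          max_refinements: int = 3) -> Tuple[Grid, List[Pattern]]:
    """Illuminate with an initial pattern, then with up to max_refinements
    refined patterns. Stops early when refinement would not change the
    pattern, a stand-in for 'the result is already acceptable'."""
    pattern = initial
    depth = measure(pattern)
    used = [pattern]
    for _ in range(max_refinements):
        refined = refine_pattern(depth, near_m, far_m)
        if refined == pattern:
            break  # no further refinement needed
        pattern = refined
        used.append(pattern)
        depth = measure(pattern)
    return depth, used
```

Capping the loop at `max_refinements` mirrors the abstract's point that the number of refined patterns may be fixed or may vary with prior results.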

Volume dimensioning system and method employing time-of-flight camera

Active · US20140002828A1 · Tags: Reduce reflection, Reduce the impact, Using optical means, Container/cavity capacity measurement, Pattern recognition, Time-of-flight camera

Volume dimensioning employs techniques to reduce multipath reflection or return of illumination, and hence distortion. Volume dimensioning for any given target object includes a sequence of one or more illuminations and respective detections of returned illumination. A sequence typically includes illumination with at least one initial spatial illumination pattern and with one or more refined spatial illumination patterns. Refined spatial illumination patterns are generated based on previous illumination in order to reduce distortion. The number of refined spatial illumination patterns in a sequence may be fixed, or may vary based on the results of prior illumination(s) in the sequence. Refined spatial illumination patterns may avoid illuminating background areas that contribute to distortion. Sometimes, illumination with the initial spatial illumination pattern may produce sufficiently acceptable results, in which case the refined spatial illumination patterns in the sequence may be omitted.

Owner:INTERMEC IP

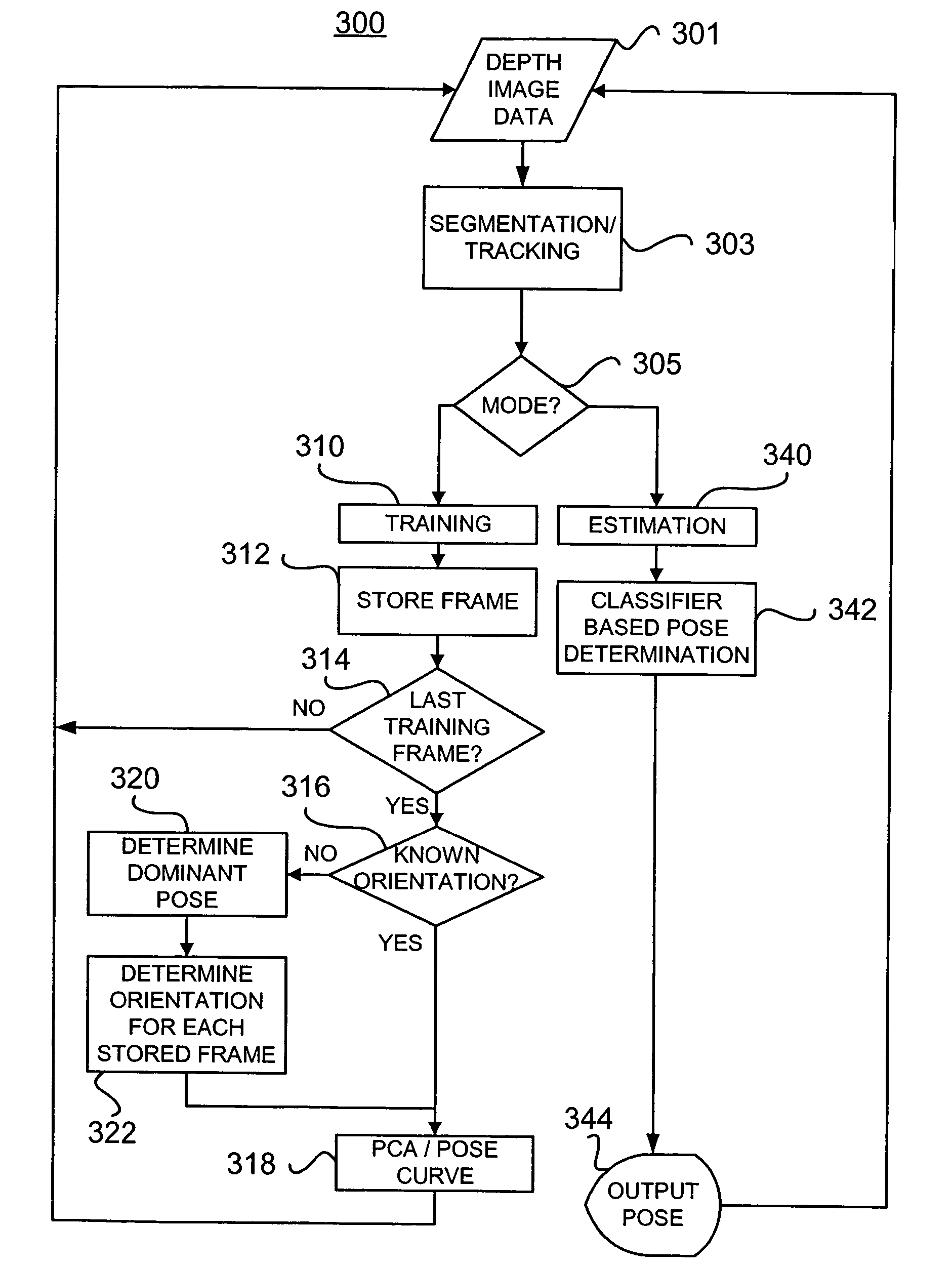

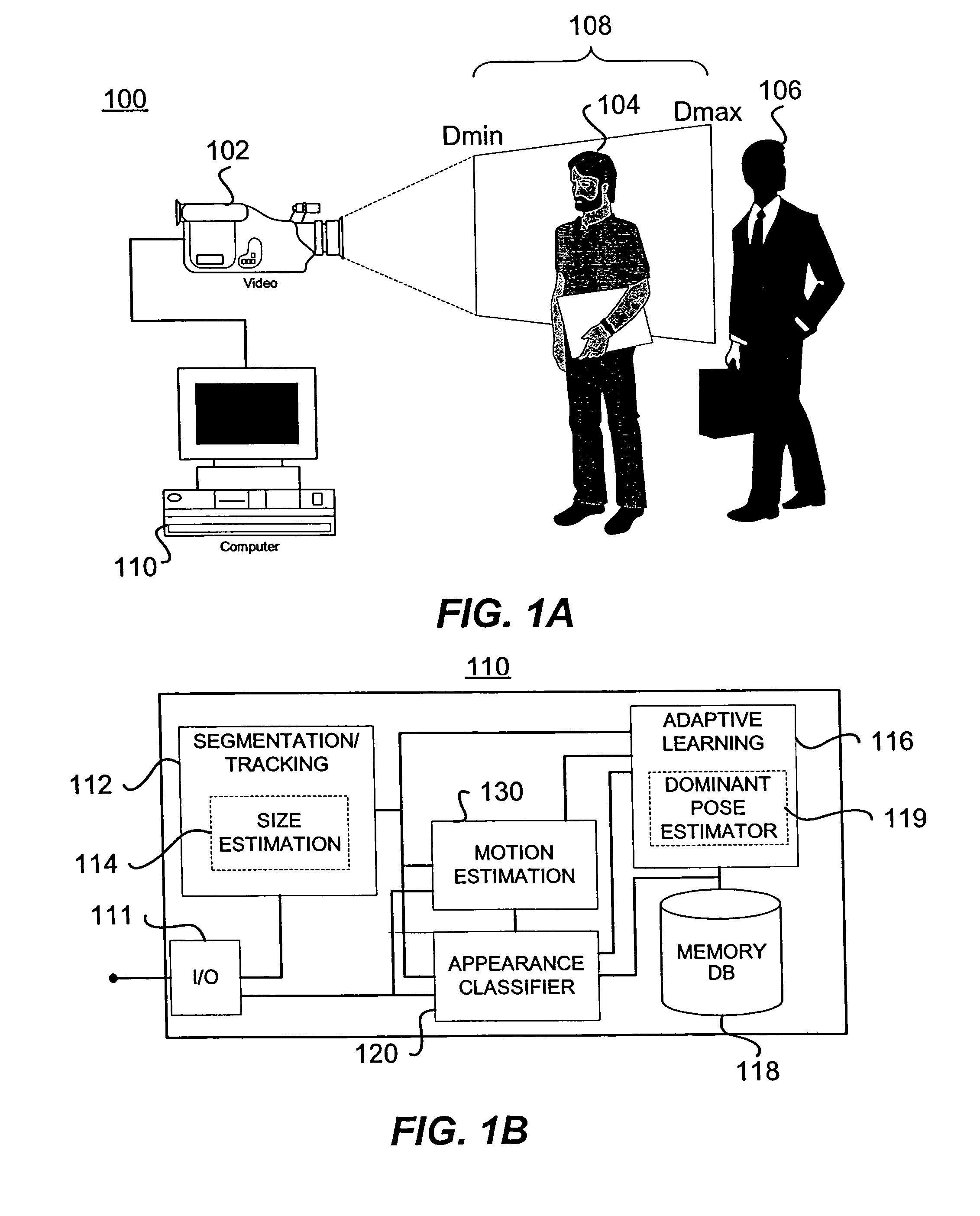

Target orientation estimation using depth sensing

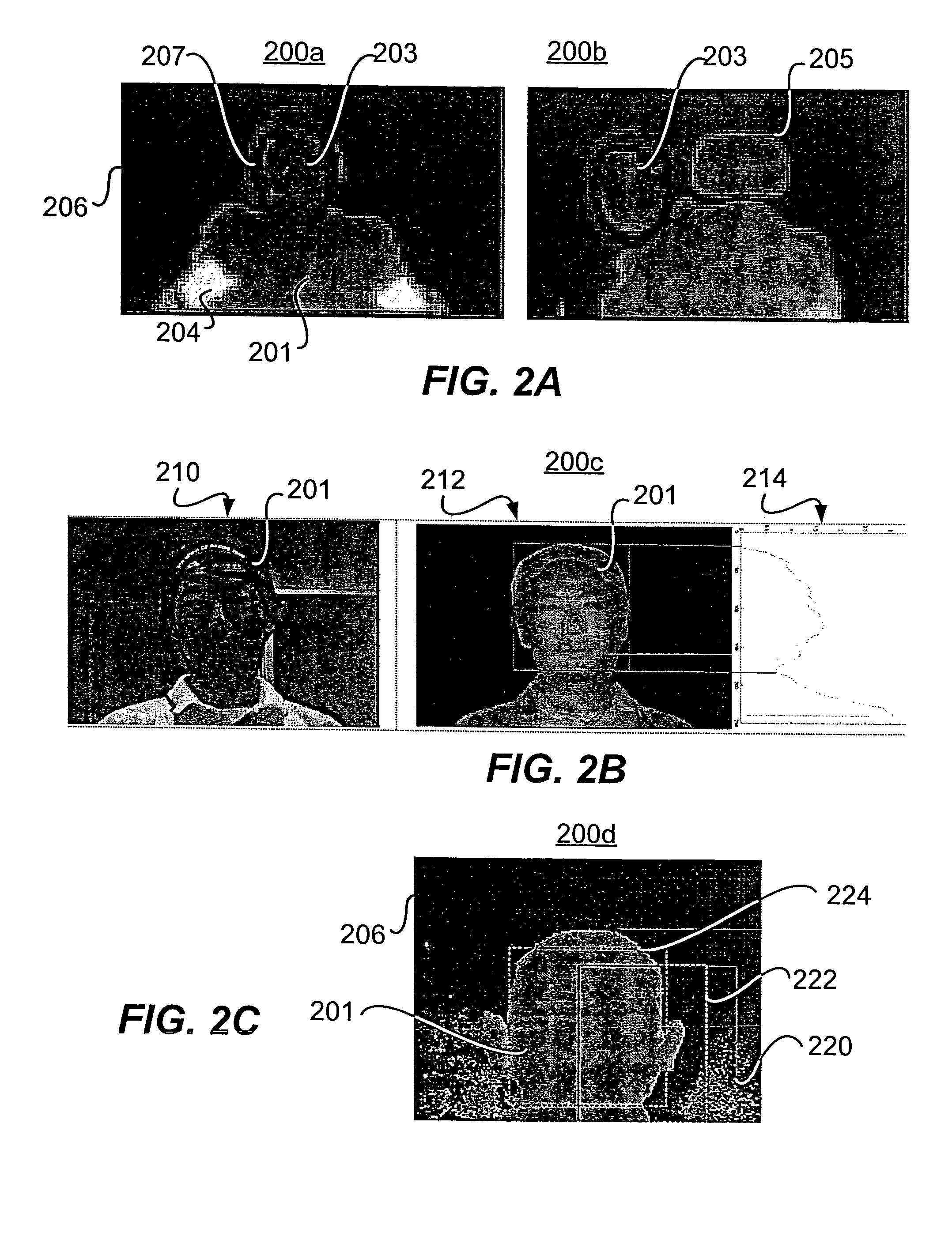

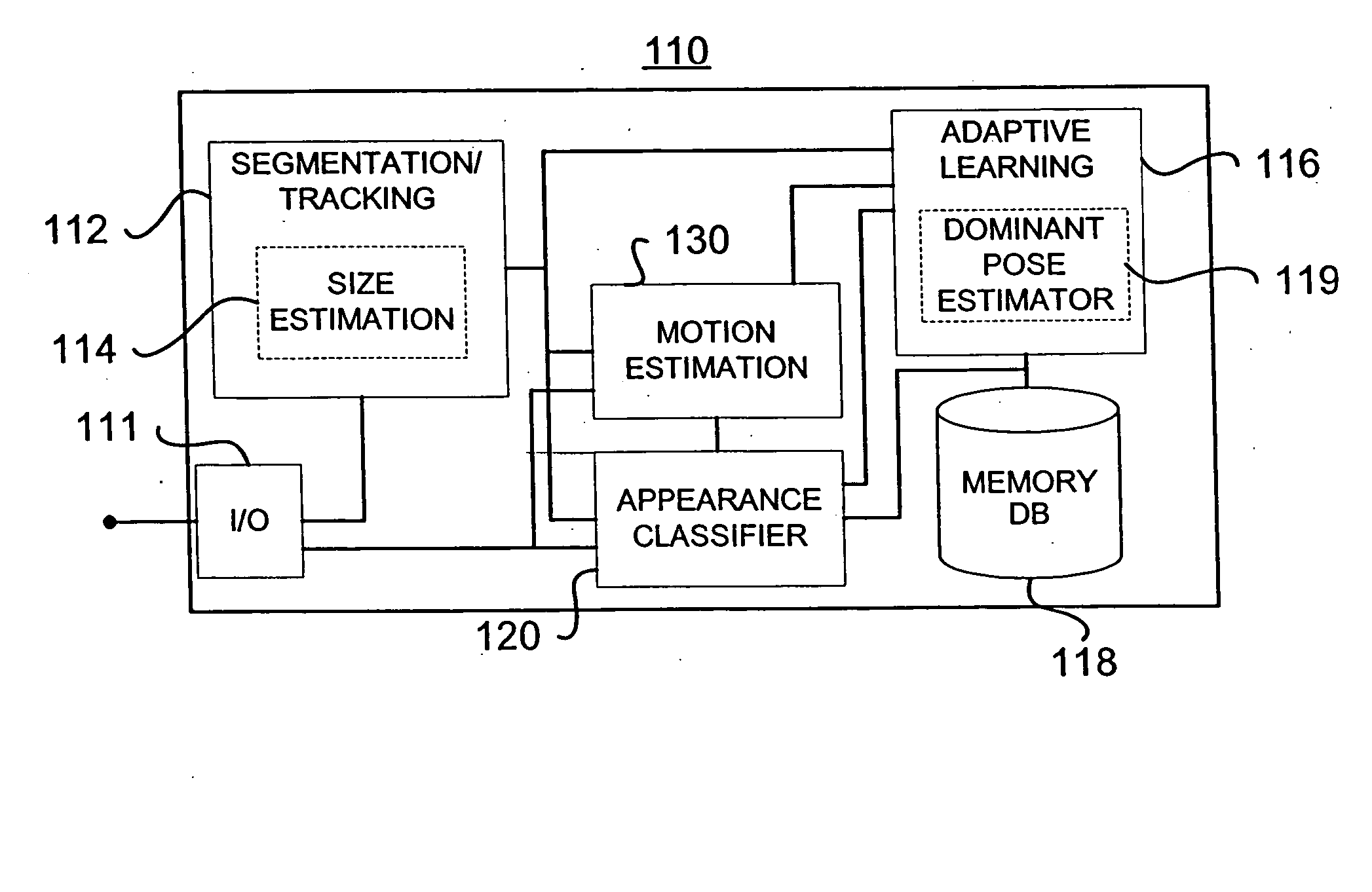

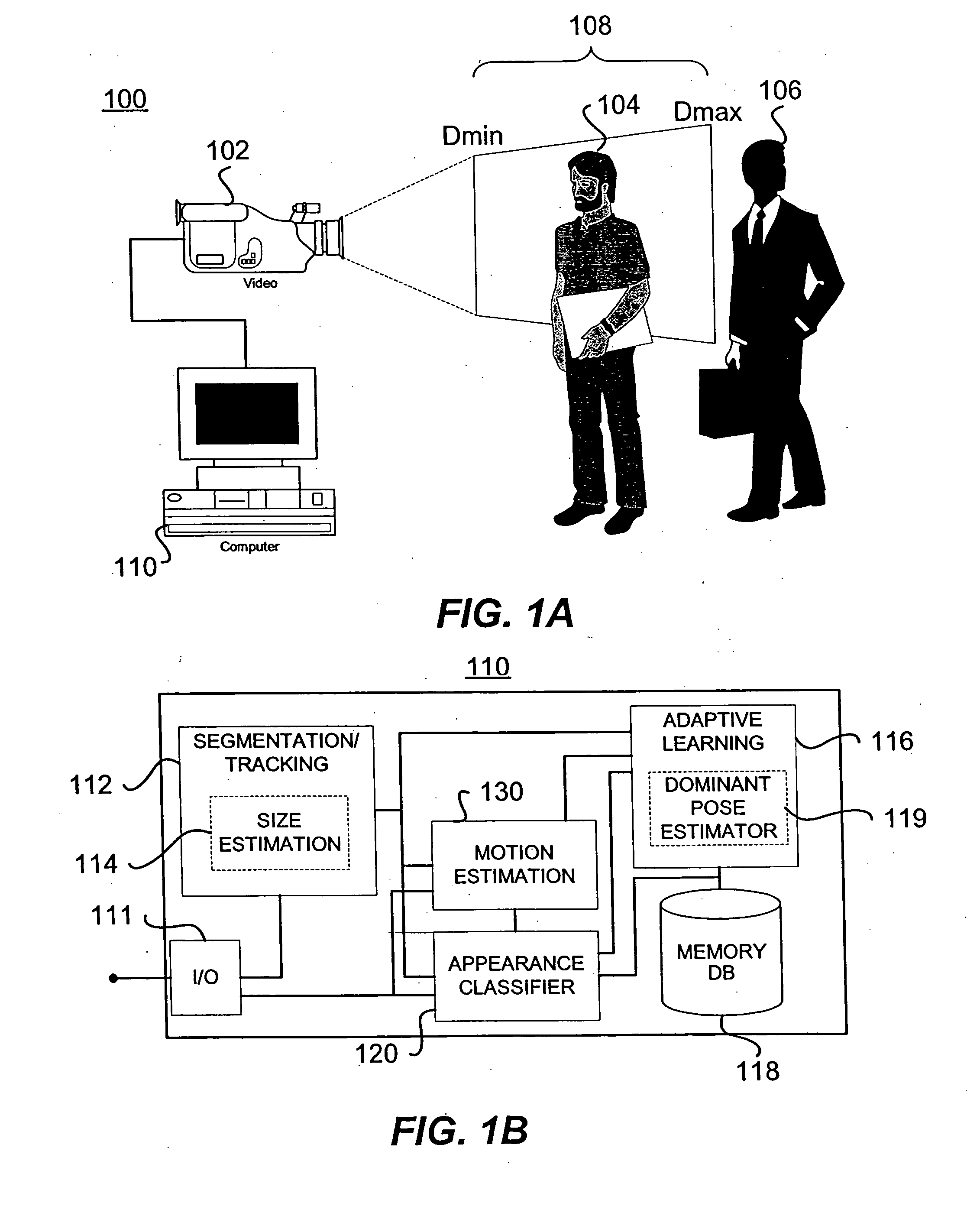

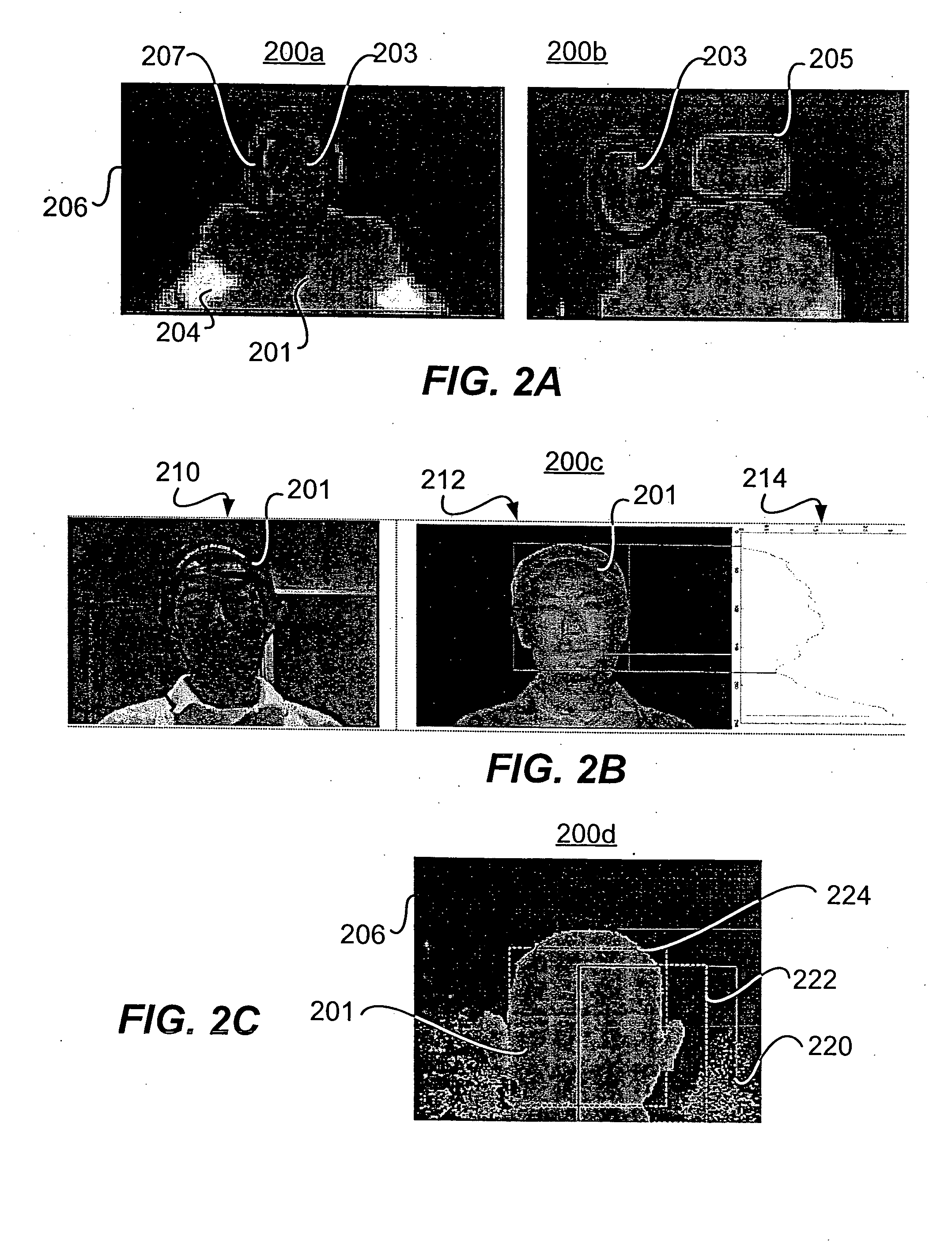

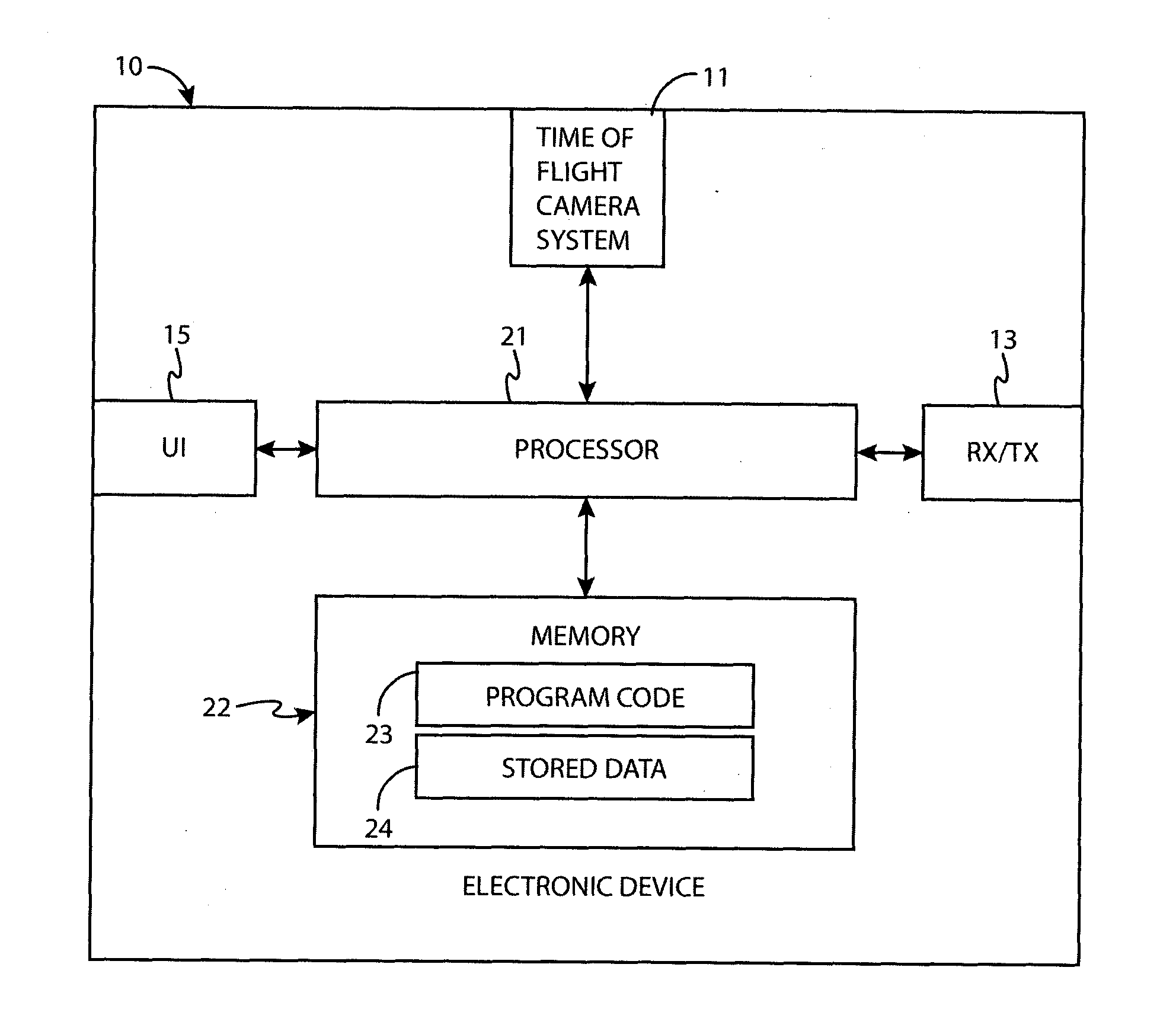

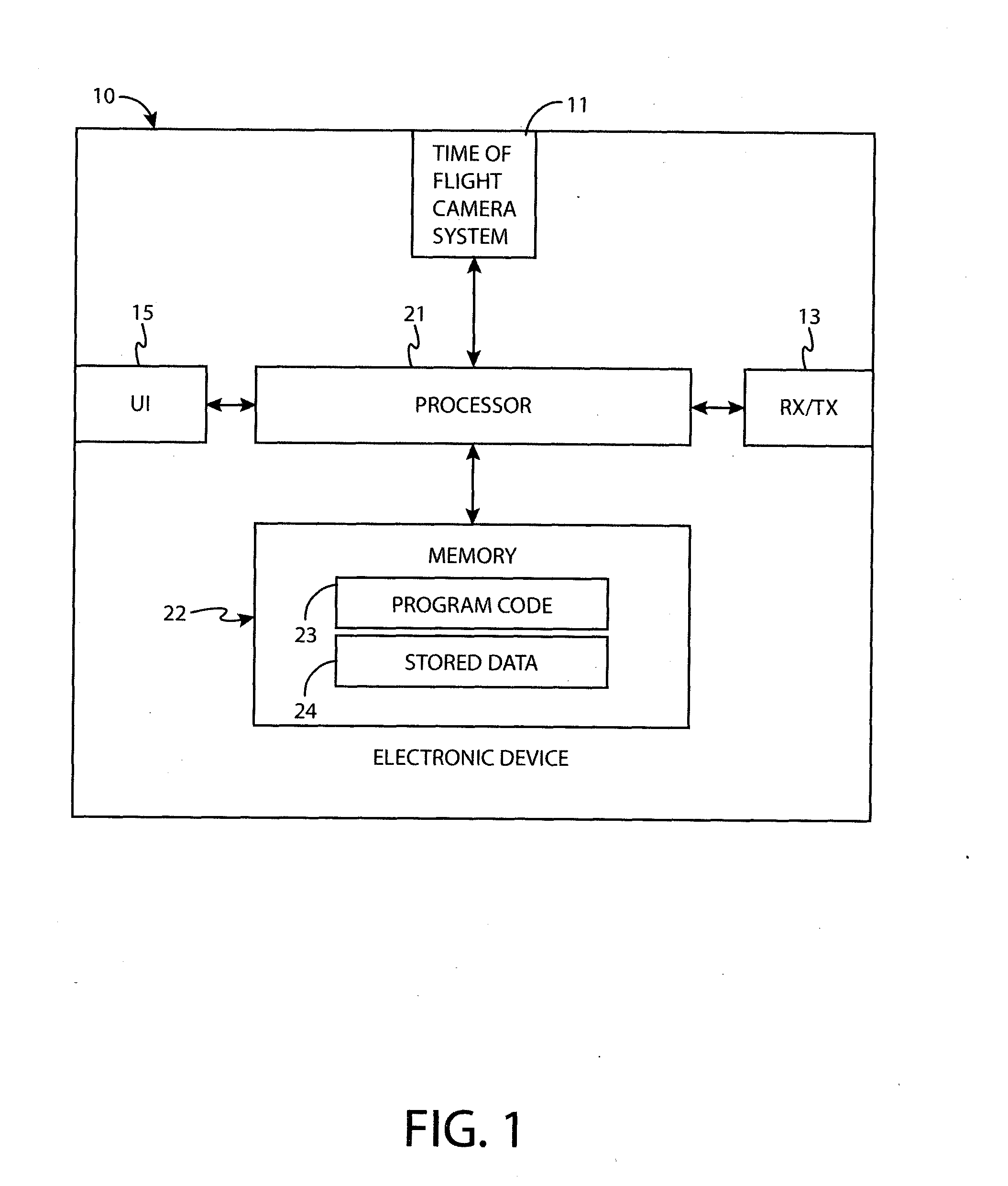

A system for estimating the orientation of a target based on real-time video data uses depth data included in the video to determine the estimated orientation. The system includes a time-of-flight camera capable of depth sensing within a depth window. The camera outputs hybrid image data (color and depth). Segmentation is performed to determine the location of the target within the image. Tracking is used to follow the target location from frame to frame. During a training mode, a target-specific training image set is collected, with a corresponding orientation associated with each frame. During an estimation mode, a classifier compares new images with the stored training set to determine an estimated orientation. A motion estimation approach uses an accumulated rotation/translation parameter calculation based on optical flow and depth constraints. The parameters are reset to a reference value each time the image corresponds to a dominant orientation.

Owner:HONDA MOTOR CO LTD +1

Target orientation estimation using depth sensing

A system for estimating orientation of a target based on real-time video data uses depth data included in the video to determine the estimated orientation. The system includes a time-of-flight camera capable of depth sensing within a depth window. The camera outputs hybrid image data (color and depth). Segmentation is performed to determine the location of the target within the image. Tracking is used to follow the target location from frame to frame. During a training mode, a target-specific training image set is collected with a corresponding orientation associated with each frame. During an estimation mode, a classifier compares new images with the stored training set to determine an estimated orientation. A motion estimation approach uses an accumulated rotation / translation parameter calculation based on optical flow and depth constrains. The parameters are reset to a reference value each time the image corresponds to a dominant orientation.

Owner:HONDA MOTOR CO LTD +1

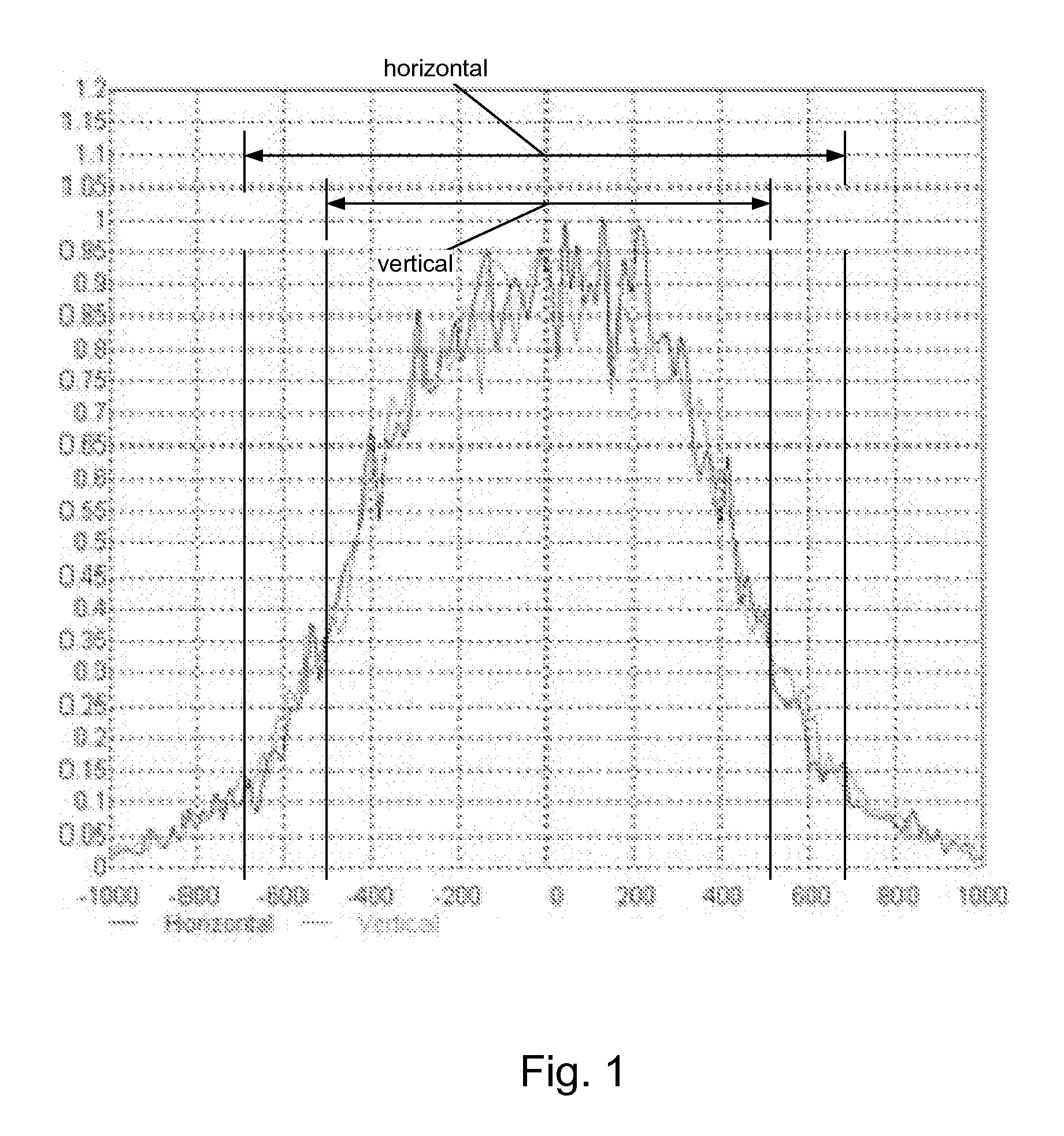

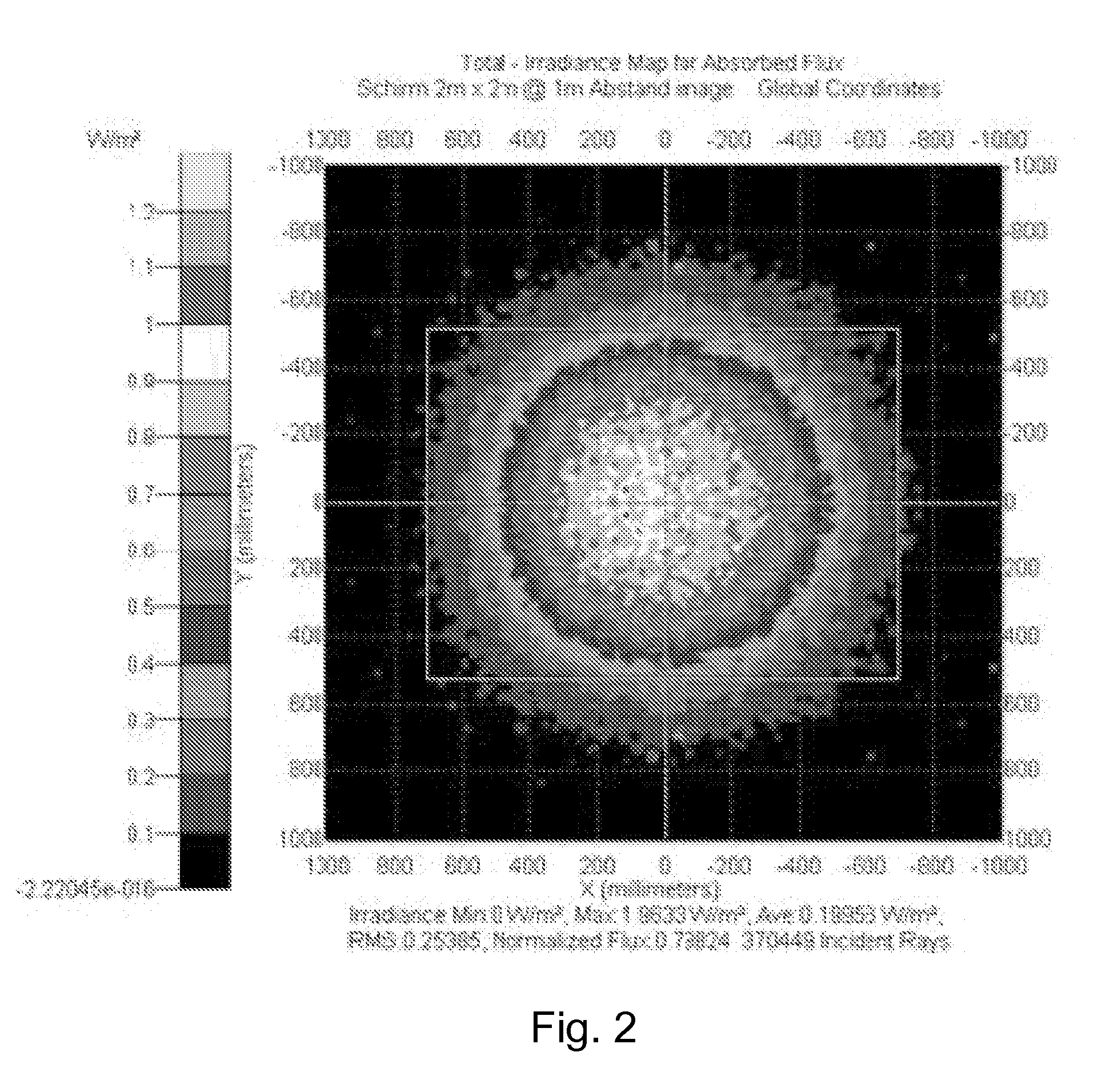

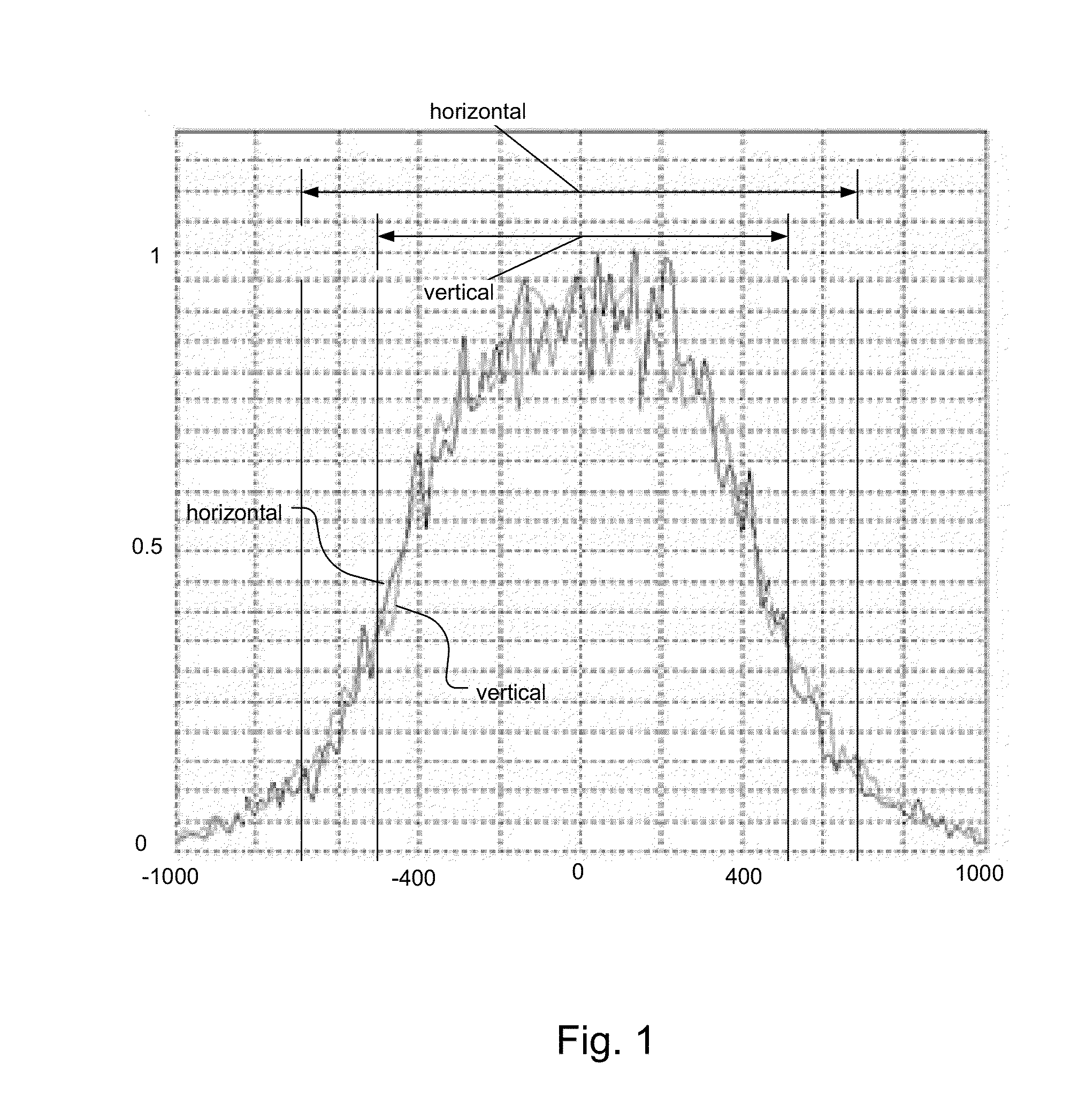

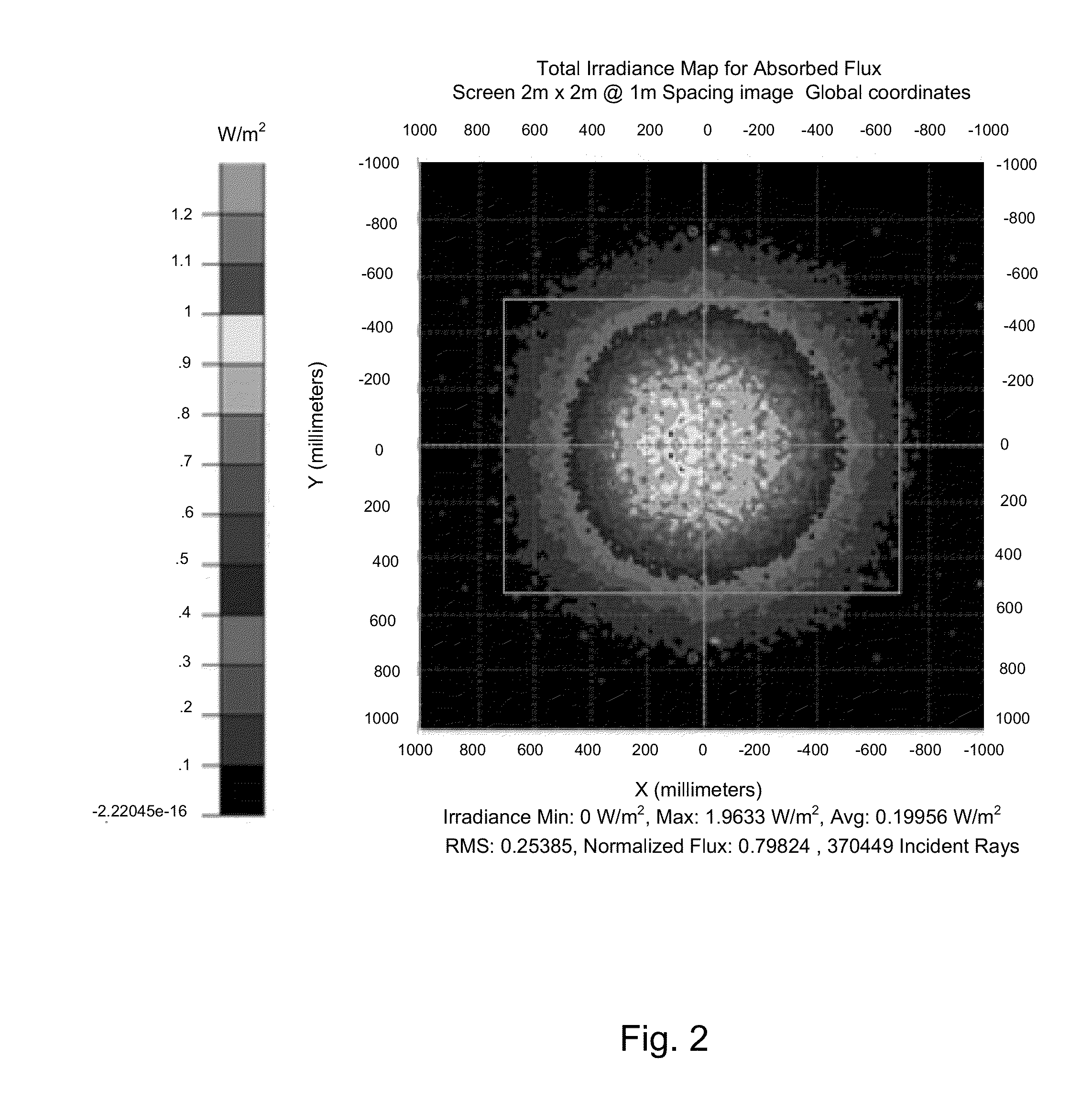

Time of Flight Camera with Rectangular Field of Illumination

Owner:AMS SENSORS SINGAPORE PTE LTD

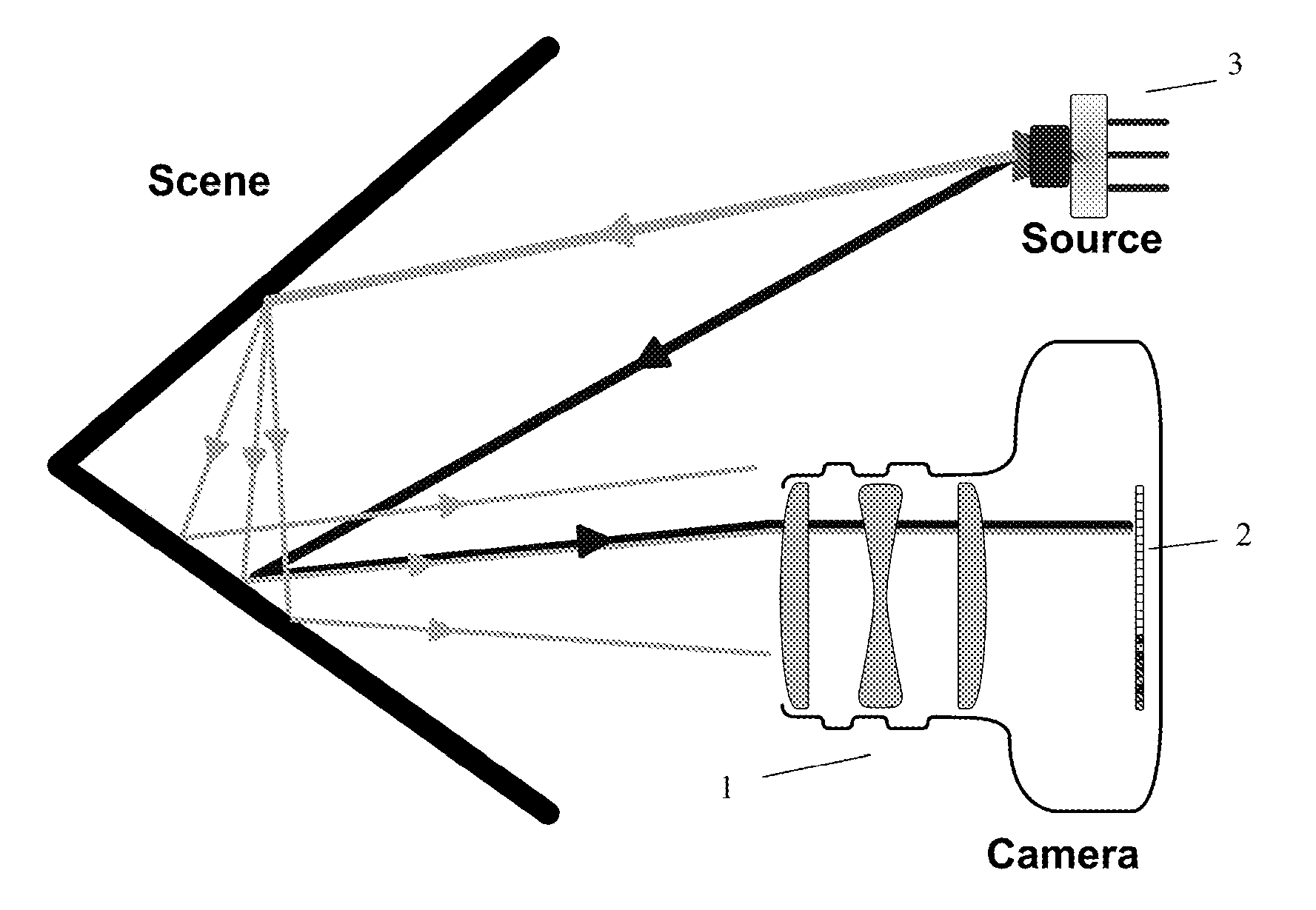

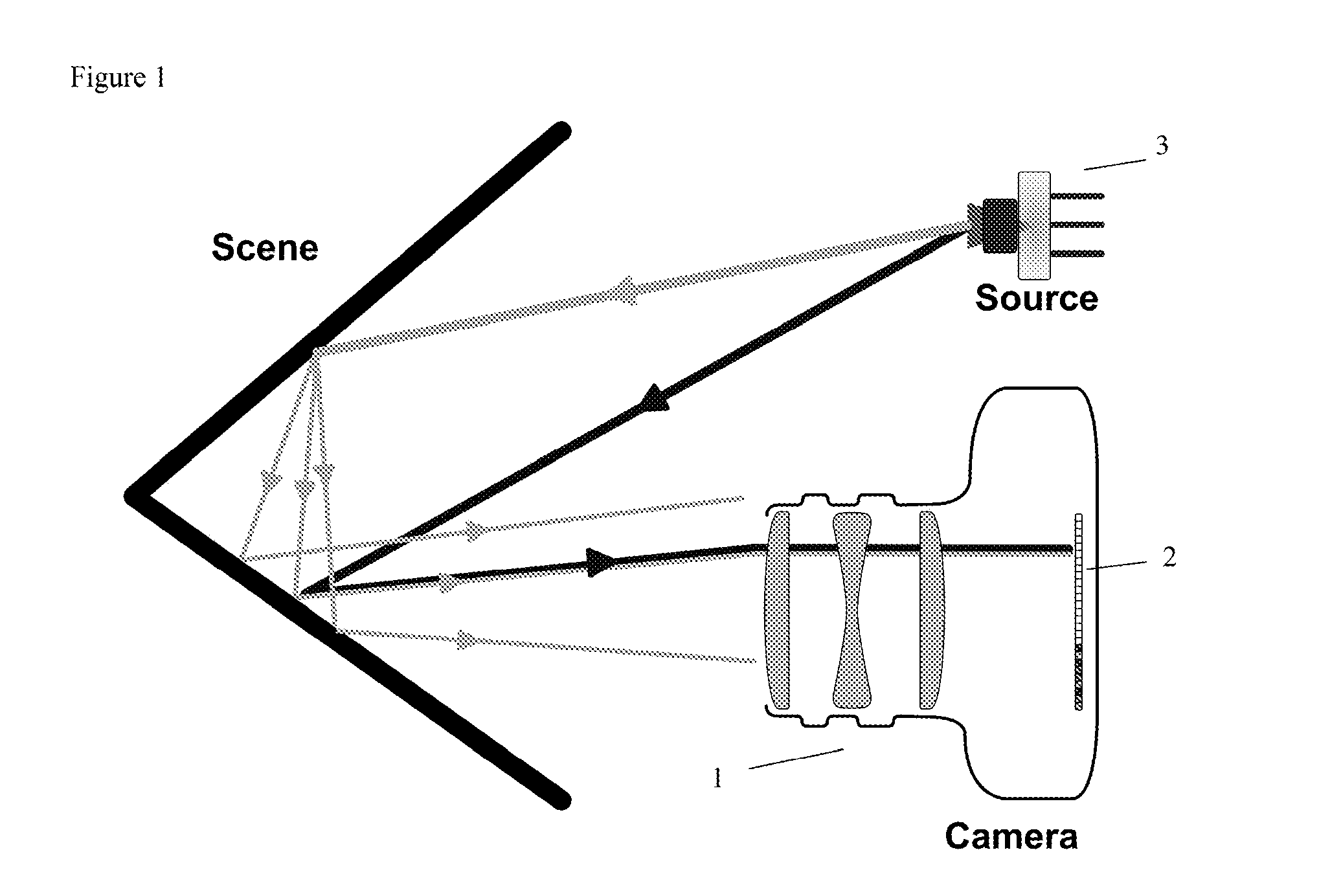

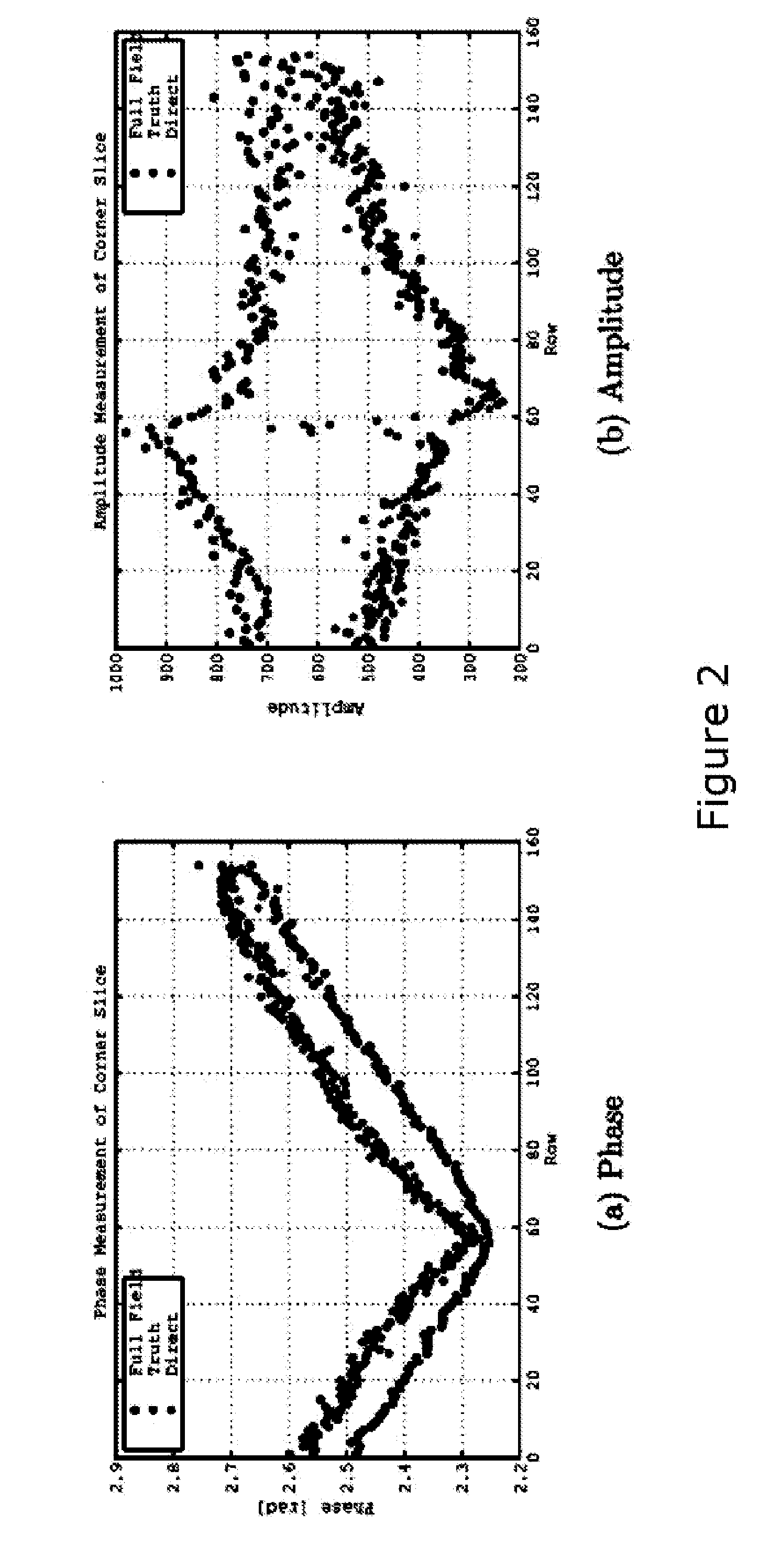

Time of flight camera system which resolves direct and multi-path radiation components

Active · US20150253429A1 · Tags: Simplify image processing technique, Eliminate measurement errors, Optical rangefinders, Electromagnetic wave reradiation, Time-of-flight camera, Direct path

A time of flight camera system resolves the direct path component or the multi-path component of modulated radiation reflected from a target. The camera system includes a time of flight transmitter arranged to transmit modulated radiation at a target; at least one pattern application structure operating between the transmitter and the target, which applies at least one structured light pattern to the modulated transmitter radiation; and an image sensor configured to measure radiation reflected from the target. The time of flight camera is arranged to resolve, from the received measurements, the contribution of direct source reflection radiation reflected from the target.

Owner:CHRONOPTICS LTD

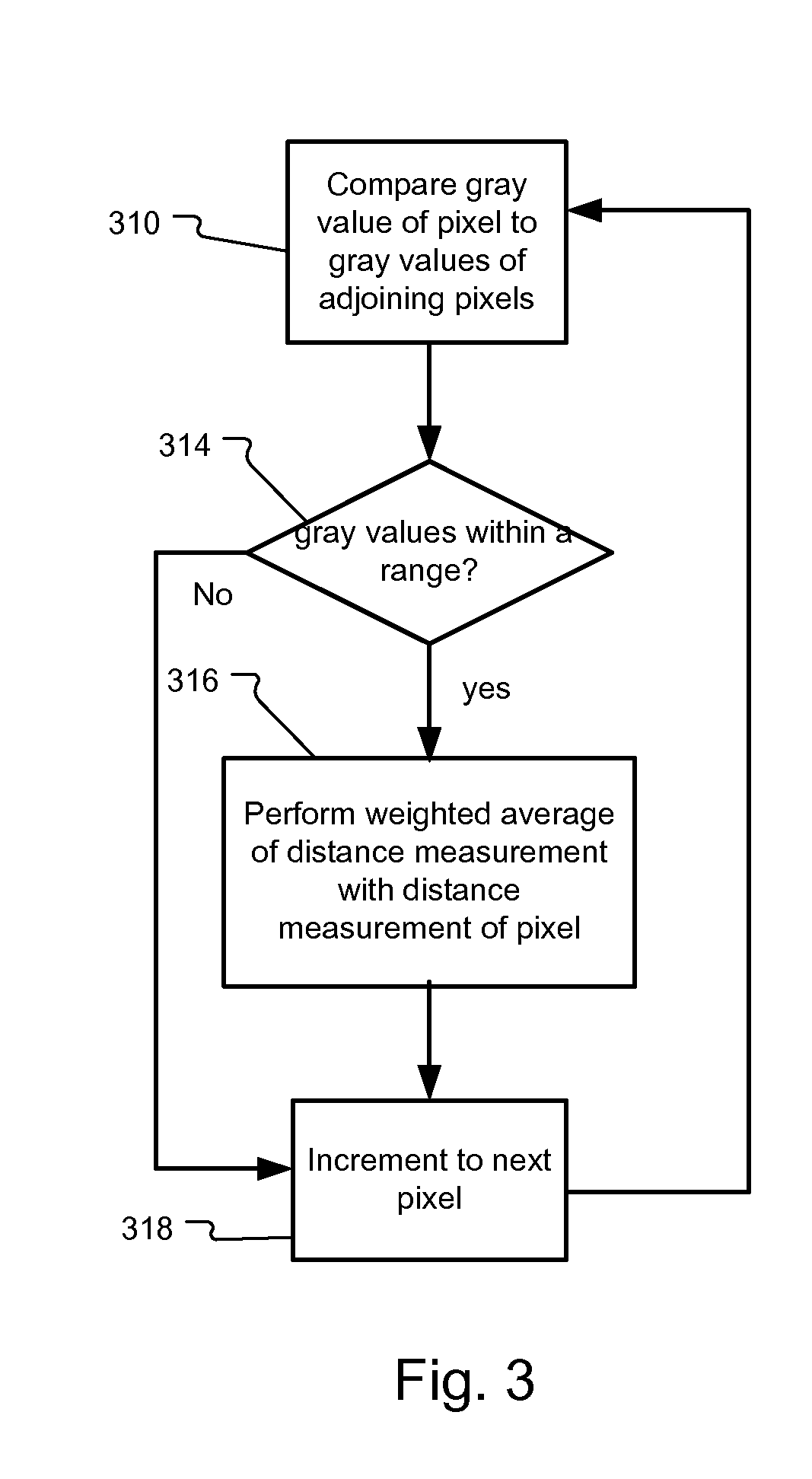

Adaptive Neighborhood Filtering (ANF) System and Method for 3D Time of Flight Cameras

A method for filtering distance information from a 3D-measurement camera system comprises comparing amplitude and/or distance information for pixels to adjacent pixels, and averaging distance information for the pixels with the adjacent pixels when the amplitude and/or distance information for the pixels is within a range of the amplitudes and/or distances of the adjacent pixels. In addition, the range of distances may optionally be defined as a function of the amplitudes.

Owner:AMS SENSORS SINGAPORE PTE LTD
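
The neighbourhood-averaging rule above can be illustrated with a small sketch. It assumes a 3×3 neighbourhood and requires both the amplitude and the distance of a neighbour to be within tolerance; the abstract allows "and/or", so this is one of several possible variants. The `dist_tol` callable is a hypothetical hook for the optional amplitude-dependent distance range.

```python
from typing import Callable, List

Grid = List[List[float]]


def anf_filter(dist: Grid, amp: Grid, amp_tol: float,
               dist_tol: Callable[[float], float]) -> Grid:
    """Average each pixel's distance with those 3x3 neighbours whose amplitude
    and distance are both within tolerance of the centre pixel.

    dist_tol maps the centre pixel's amplitude to a distance tolerance, so the
    tolerance can be constant or amplitude-dependent. Both dist_tol(...) and
    amp_tol must be >= 0 so that the centre pixel always qualifies.
    """
    h, w = len(dist), len(dist[0])
    out = [row[:] for row in dist]
    for y in range(h):
        for x in range(w):
            d0, a0 = dist[y][x], amp[y][x]
            tol = dist_tol(a0)
            total, count = 0.0, 0
            for yy in range(max(0, y - 1), min(h, y + 2)):
                for xx in range(max(0, x - 1), min(w, x + 2)):
                    if (abs(dist[yy][xx] - d0) <= tol
                            and abs(amp[yy][xx] - a0) <= amp_tol):
                        total += dist[yy][xx]
                        count += 1
            # The centre pixel always qualifies, so count >= 1.
            out[y][x] = total / count
    return out
```

Because pixels across a depth edge fail the distance test, they are not blended together, so edges stay sharp while flat regions are smoothed. An amplitude-dependent tolerance (for instance one that shrinks as amplitude grows, under an assumed noise model) would be passed in as `dist_tol`.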

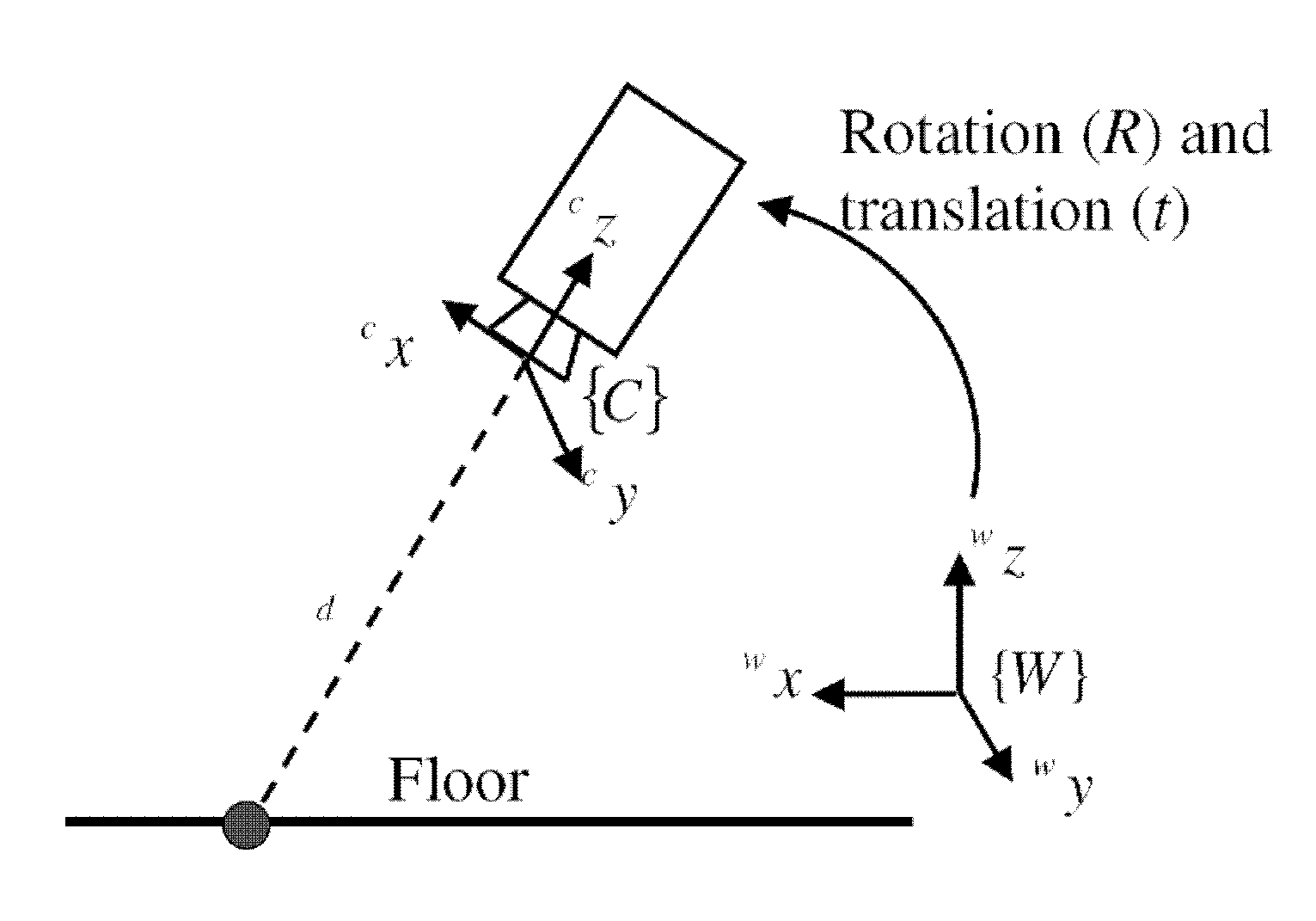

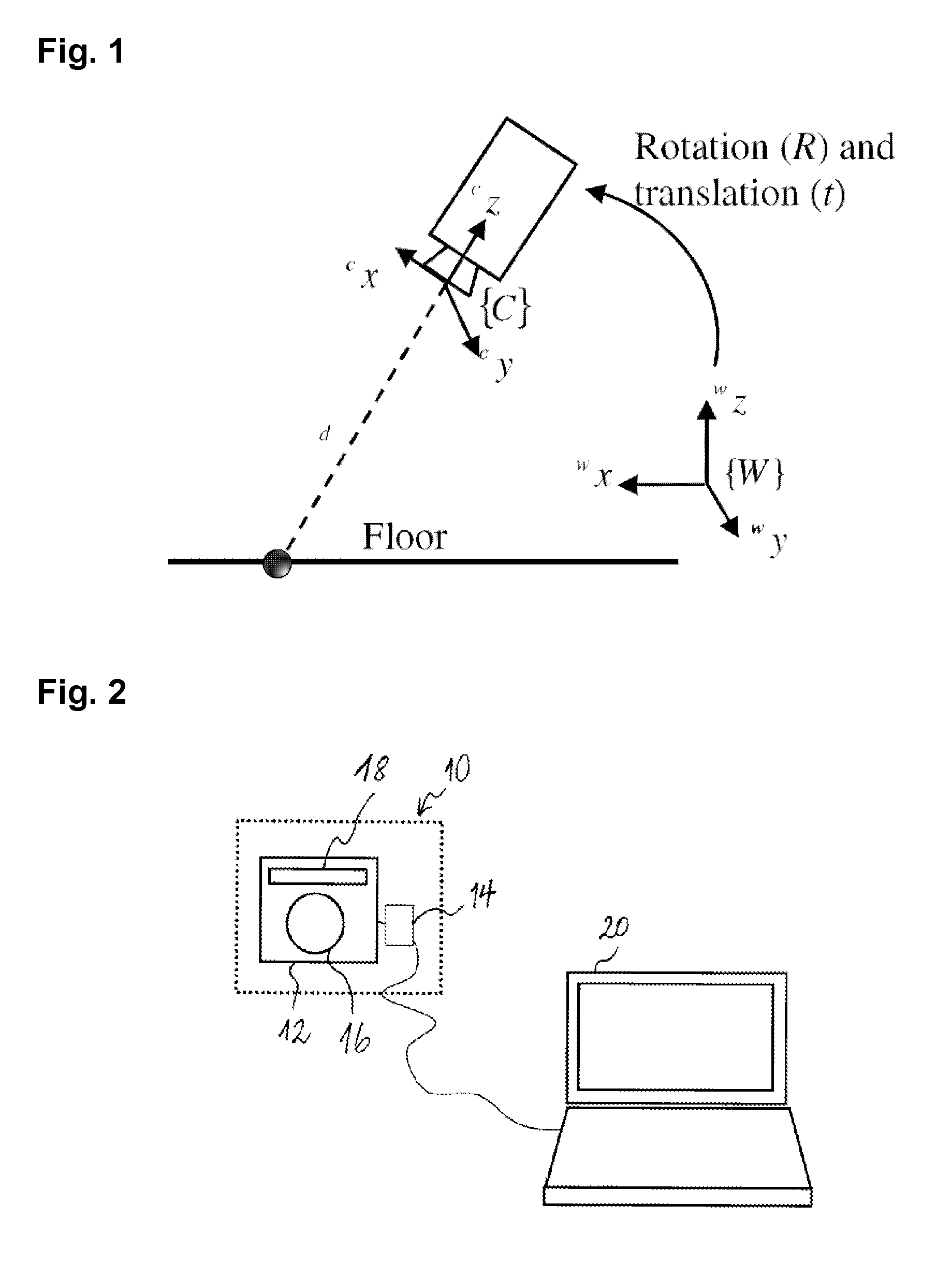

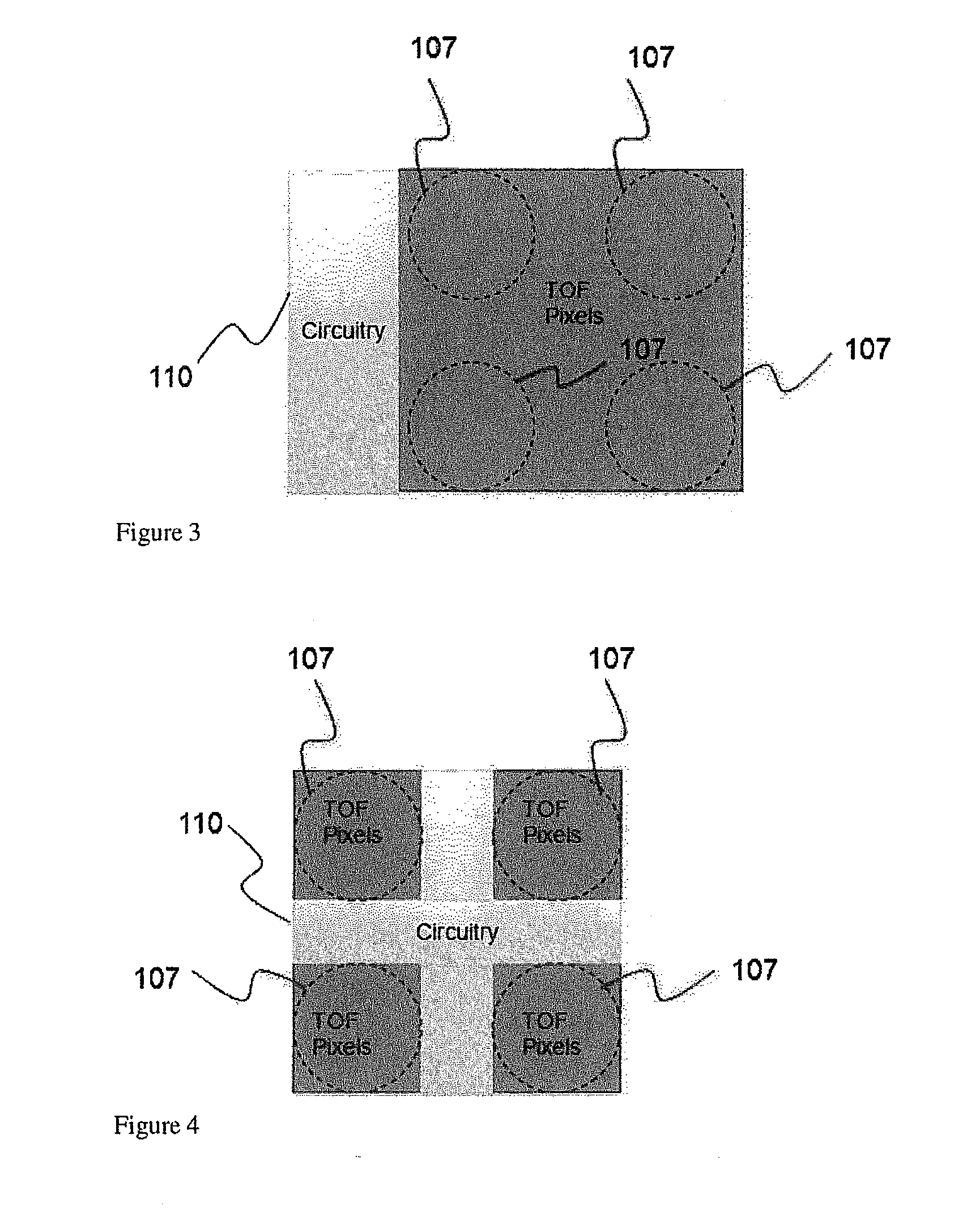

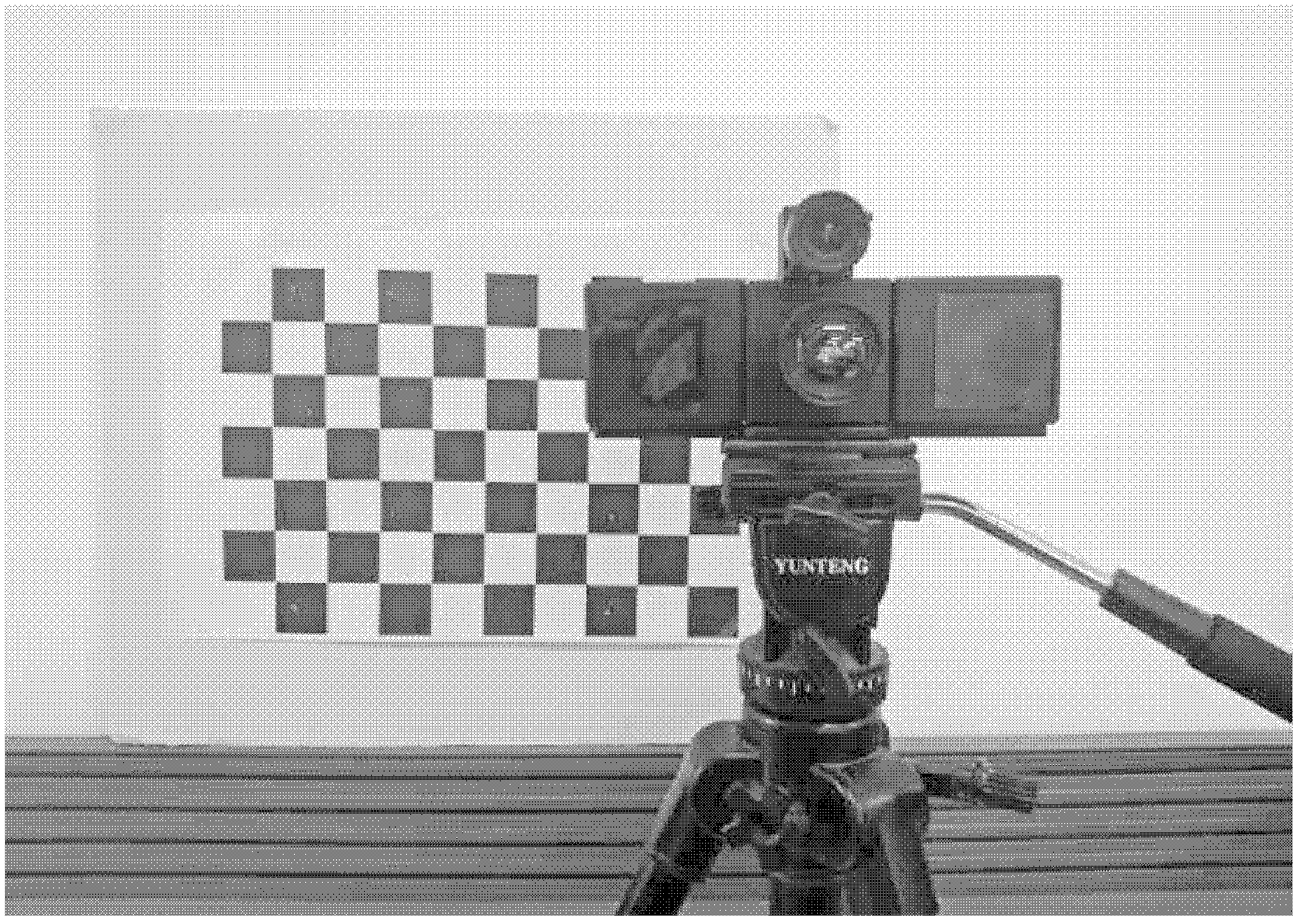

3D time-of-flight camera system and position/orientation calibration method therefor

Active · US20110205340A1 · Tags: Easy to install, Image enhancement, Image analysis, Time-of-flight camera, Angle of view

A camera system comprises a 3D TOF camera for acquiring a camera-perspective range image of a scene and an image processor for processing the range image. The image processor contains a position and orientation calibration routine implemented in hardware and/or software. When executed by the image processor, the routine detects one or more planes within a range image acquired by the 3D TOF camera, selects a reference plane among the detected planes, and computes position and orientation parameters of the 3D TOF camera with respect to the reference plane, such as elevation above the reference plane, camera roll angle and/or camera pitch angle.

Owner:IEE INT ELECTRONICS & ENG SA
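
Once a reference plane has been detected, the elevation, pitch and roll follow from its equation. The sketch below assumes the plane is already available as a·x + b·y + c·z + d = 0 in camera coordinates (plane detection itself, e.g. by a robust fit, is not shown) and assumes a camera frame with x right, y down and z forward. The sign conventions for pitch and roll are assumptions, not taken from the patent.

```python
import math
from typing import Tuple


def pose_from_reference_plane(a: float, b: float, c: float,
                              d: float) -> Tuple[float, float, float]:
    """Camera elevation (m), pitch (rad) and roll (rad) relative to the plane
    a*x + b*y + c*z + d = 0 given in camera coordinates.

    Assumed convention: x right, y down, z forward; positive pitch means the
    camera is tilted down towards the plane.
    """
    norm = math.sqrt(a * a + b * b + c * c)
    nx, ny, nz, dd = a / norm, b / norm, c / norm, d / norm
    # Orient the unit normal towards the camera (origin on the positive side).
    if dd < 0:
        nx, ny, nz, dd = -nx, -ny, -nz, -dd
    elevation = dd                     # distance from the camera centre to the plane
    pitch = math.atan2(-nz, -ny)       # tilt about the camera x axis
    roll = math.atan2(nx, -ny)         # tilt about the camera z axis
    return elevation, pitch, roll
```

For a level camera 1.5 m above a floor, the floor is y = 1.5 in this frame, so `pose_from_reference_plane(0.0, 1.0, 0.0, -1.5)` yields an elevation of 1.5 m with zero pitch and roll.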

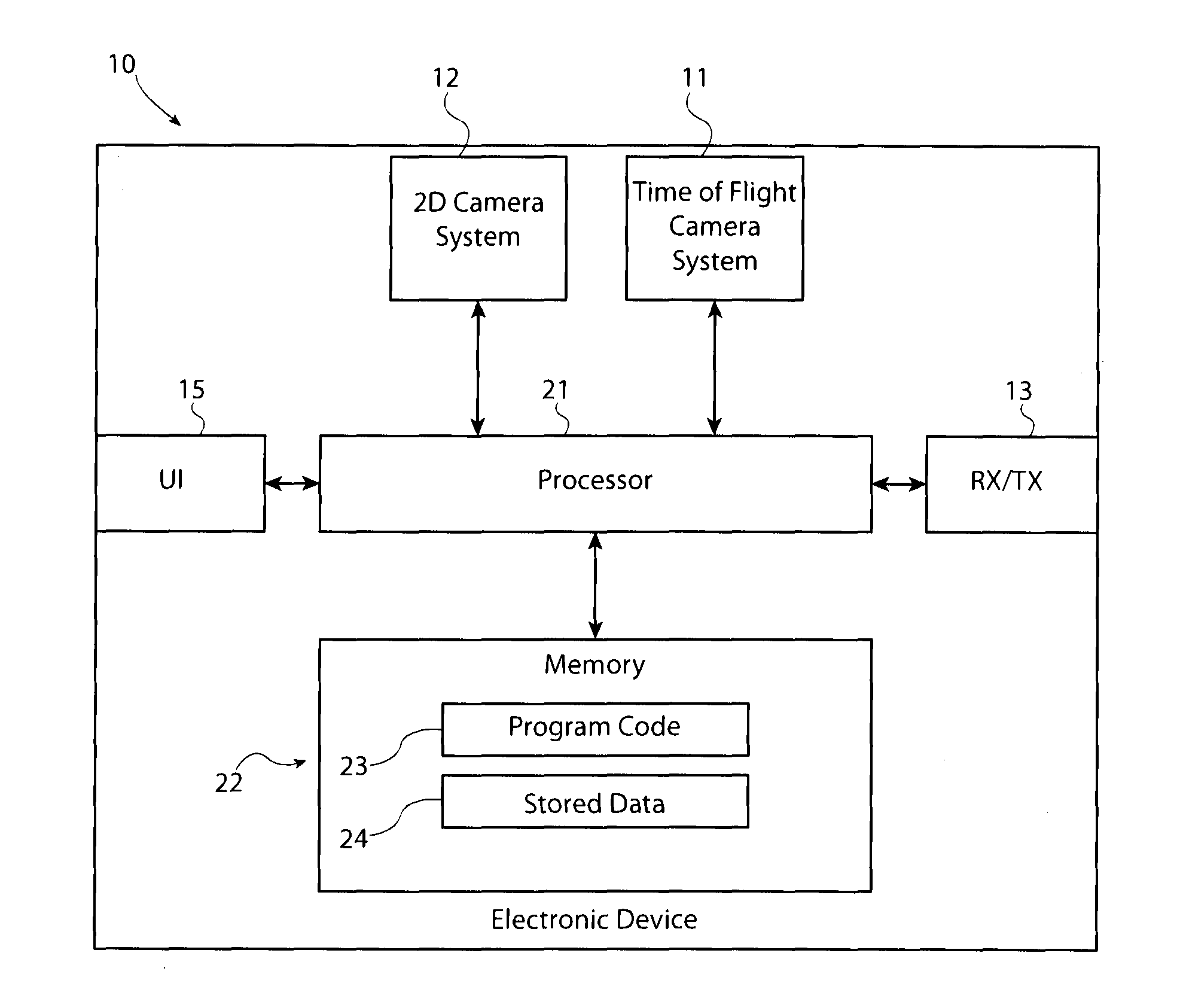

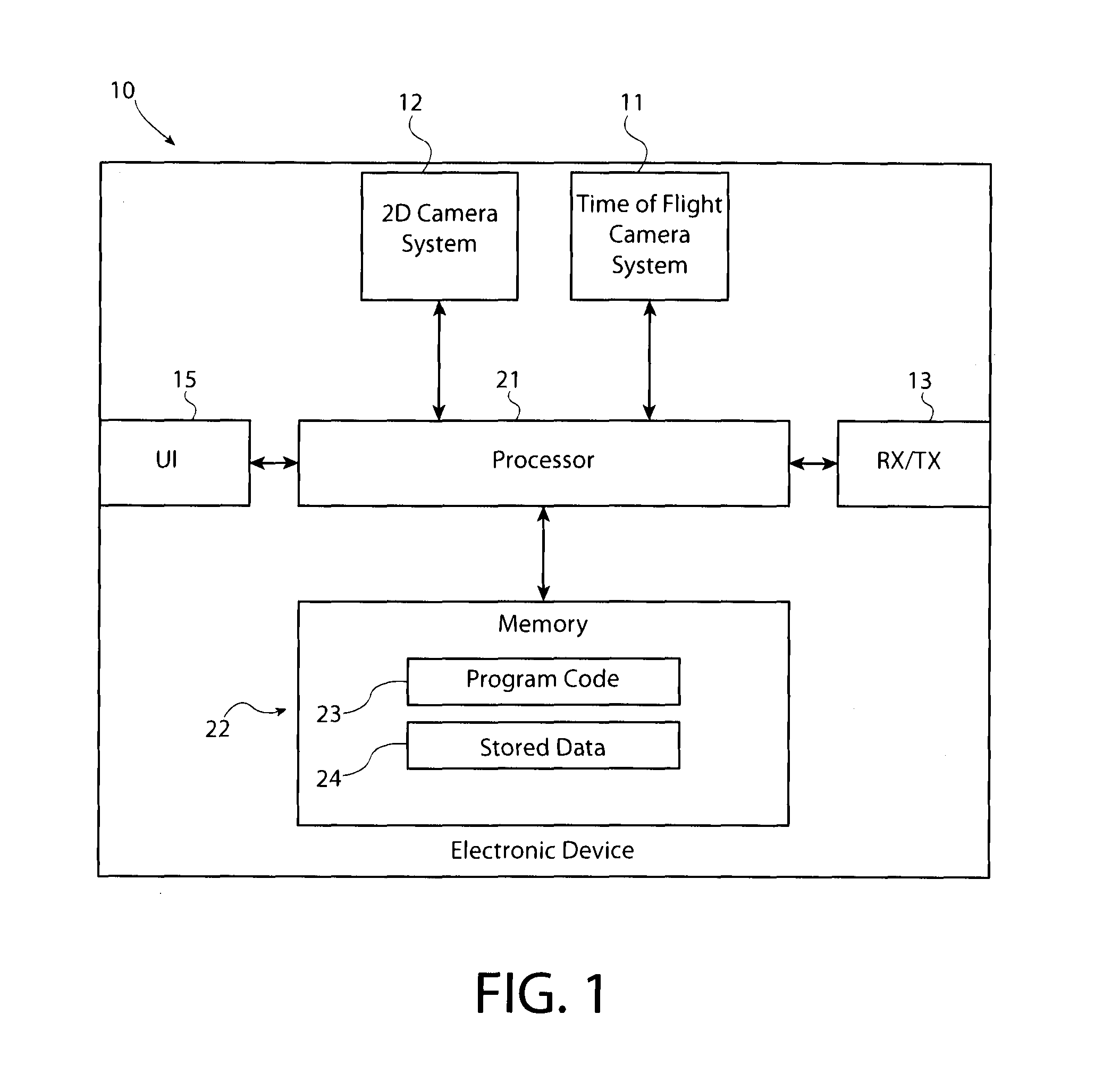

Method and apparatus for fusing distance data from a distance sensing camera with an image

Disclosed, inter alia, is a method comprising: projecting a distance value and position associated with each of a plurality of pixels of an array of pixel sensors in an image sensor of a time of flight camera system onto a three-dimensional world coordinate space as a plurality of depth sample points; and merging pixels from a two-dimensional camera image with the plurality of depth sample points of the three-dimensional world coordinate space to produce a fused two-dimensional depth-mapped image.

Owner:NOKIA TECHNOLOGIES OY
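
The projection-and-merge step above can be sketched with a pinhole camera model: each ToF pixel is back-projected to a 3D point, then re-projected into the 2D camera to give a depth layer aligned with its pixels. This is a sketch under assumptions not in the abstract: both cameras are ideal pinholes with hypothetical intrinsics (fx, fy, cx, cy), the ToF camera frame serves as the world frame, and the two cameras differ by a pure translation with no rotation.

```python
import math
from typing import Dict, Iterable, Tuple

# Pinhole intrinsics (fx, fy, cx, cy) in pixels; values used below are made up.
Intrinsics = Tuple[float, float, float, float]


def back_project(u: float, v: float, radial_m: float,
                 k: Intrinsics) -> Tuple[float, float, float]:
    """Turn a ToF pixel (u, v) and its measured distance into a 3D point.

    A ToF pixel reports distance along its viewing ray, so the ray is scaled
    to that length rather than treating the distance as the z coordinate.
    """
    fx, fy, cx, cy = k
    x, y = (u - cx) / fx, (v - cy) / fy
    z = radial_m / math.sqrt(x * x + y * y + 1.0)
    return x * z, y * z, z


def fuse(samples: Iterable[Tuple[int, int, float]], k_tof: Intrinsics,
         k_rgb: Intrinsics, t_rgb: Tuple[float, float, float],
         width: int, height: int) -> Dict[Tuple[int, int], float]:
    """Project ToF samples (u, v, distance) into the 2D camera image.

    Returns {(u, v): depth_z} for the 2D-camera pixels that received a sample.
    """
    fx, fy, cx, cy = k_rgb
    depth: Dict[Tuple[int, int], float] = {}
    for u, v, r in samples:
        X, Y, Z = back_project(u, v, r, k_tof)
        # Assumed extrinsics: the 2D camera is offset by a pure translation.
        X, Y, Z = X + t_rgb[0], Y + t_rgb[1], Z + t_rgb[2]
        if Z <= 0:
            continue  # point lies behind the 2D camera
        uc, vc = round(fx * X / Z + cx), round(fy * Y / Z + cy)
        if 0 <= uc < width and 0 <= vc < height:
            # Keep the nearest surface when several samples hit one pixel.
            if (uc, vc) not in depth or Z < depth[(uc, vc)]:
                depth[(uc, vc)] = Z
    return depth
```

The returned dictionary is a sparse depth layer indexed like the color image, so each depth sample can be paired with the color at the same pixel. Densifying it is a separate step that is not shown.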

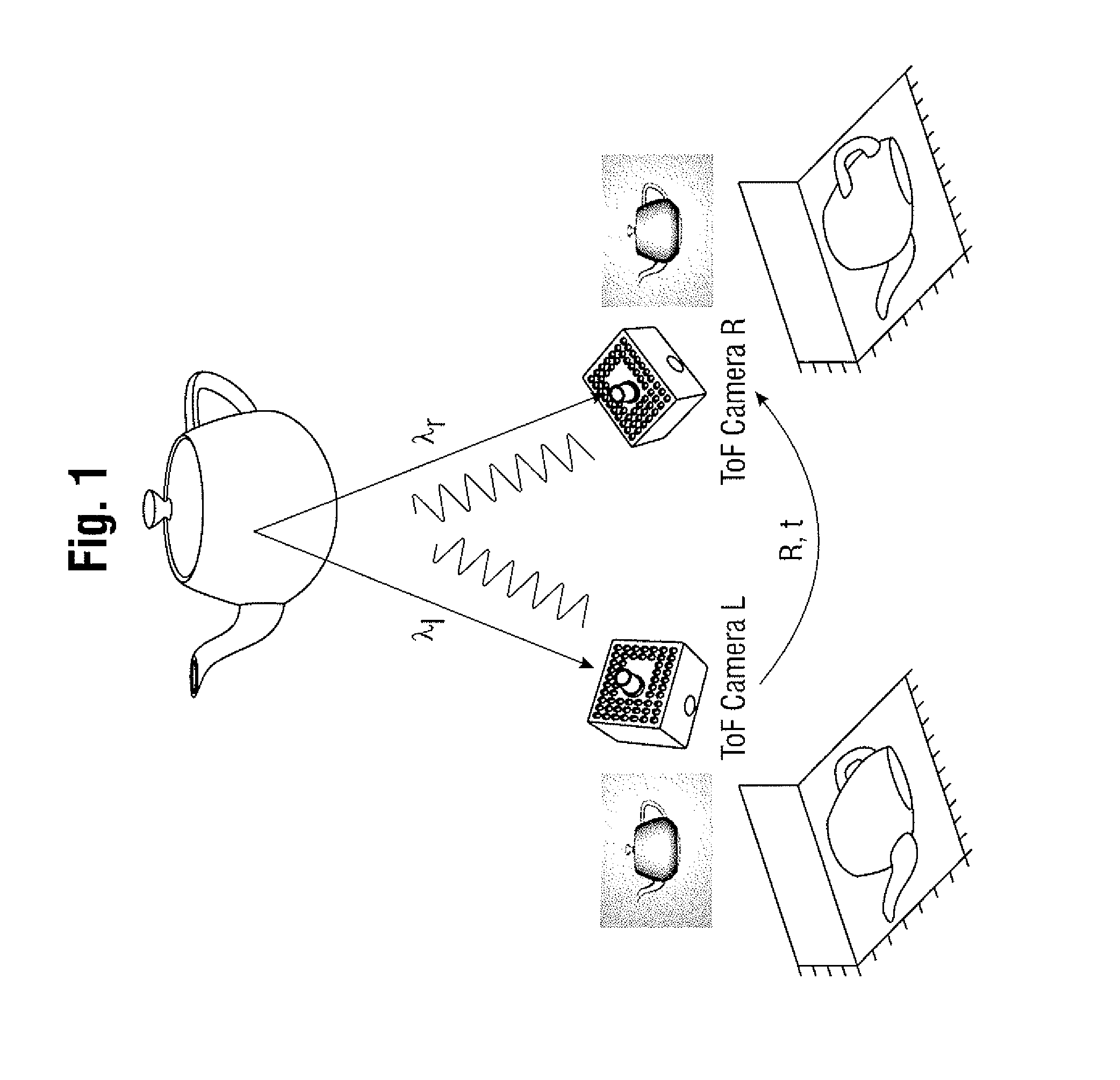

Synthesis system of time-of-flight camera and stereo camera for reliable wide range depth acquisition and method therefor

Active · US20130222550A1 · Tags: Removing noise through median filtering, Television system details, Image analysis, Color image, Stereo cameras

Provided is a synthesis system of a time-of-flight (ToF) camera and a stereo camera for reliable wide range depth acquisition and a method therefor. The synthesis system may estimate an error per pixel of a depth image, may calculate a value of a maximum distance multiple per pixel of the depth image using the error per pixel of the depth image, a left color image, and a right color image, and may generate a reconstructed depth image by conducting phase unwrapping on the depth image using the value of the maximum distance multiple per pixel of the depth image.

Owner:SAMSUNG ELECTRONICS CO LTD
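
A continuous-wave ToF depth repeats every c/(2f), so the true depth is the measured (wrapped) depth plus some whole multiple k of that range. The sketch below is a simplified stand-in for the per-pixel "maximum distance multiple" described above. It picks k by matching a coarse stereo depth estimate, whereas the patent also uses a per-pixel error estimate, which is not modelled here. The `k_max` bound is an assumed parameter.

```python
C = 299_792_458.0  # speed of light, m/s


def max_unambiguous_range(f_mod_hz: float) -> float:
    """Distance at which a continuous-wave ToF phase measurement wraps around."""
    return C / (2.0 * f_mod_hz)


def unwrap_with_stereo(d_wrapped: float, d_stereo: float, f_mod_hz: float,
                       k_max: int = 5) -> tuple:
    """Choose the wrap count k so that d_wrapped + k * d_max best matches a
    coarse stereo depth estimate; returns (unwrapped depth, k)."""
    d_max = max_unambiguous_range(f_mod_hz)
    k = min(range(k_max + 1), key=lambda n: abs(d_wrapped + n * d_max - d_stereo))
    return d_wrapped + k * d_max, k
```

At 30 MHz the ToF range wraps roughly every 5 m. A wrapped reading of 1.0 m with a stereo estimate near 6.2 m is therefore resolved to k = 1, about 6.0 m. The stereo estimate only has to be accurate to within half a wrap interval for this to pick the right k.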

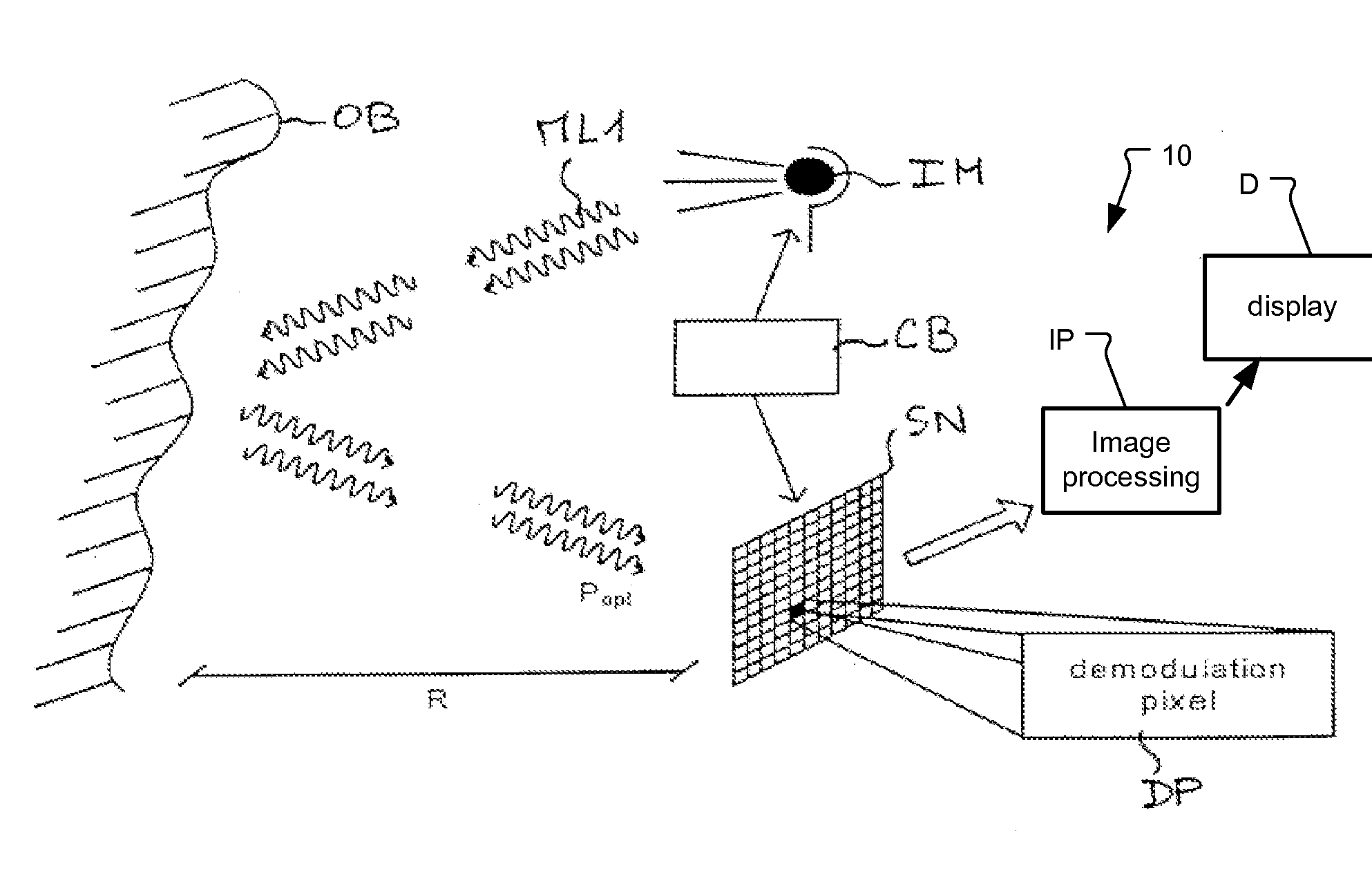

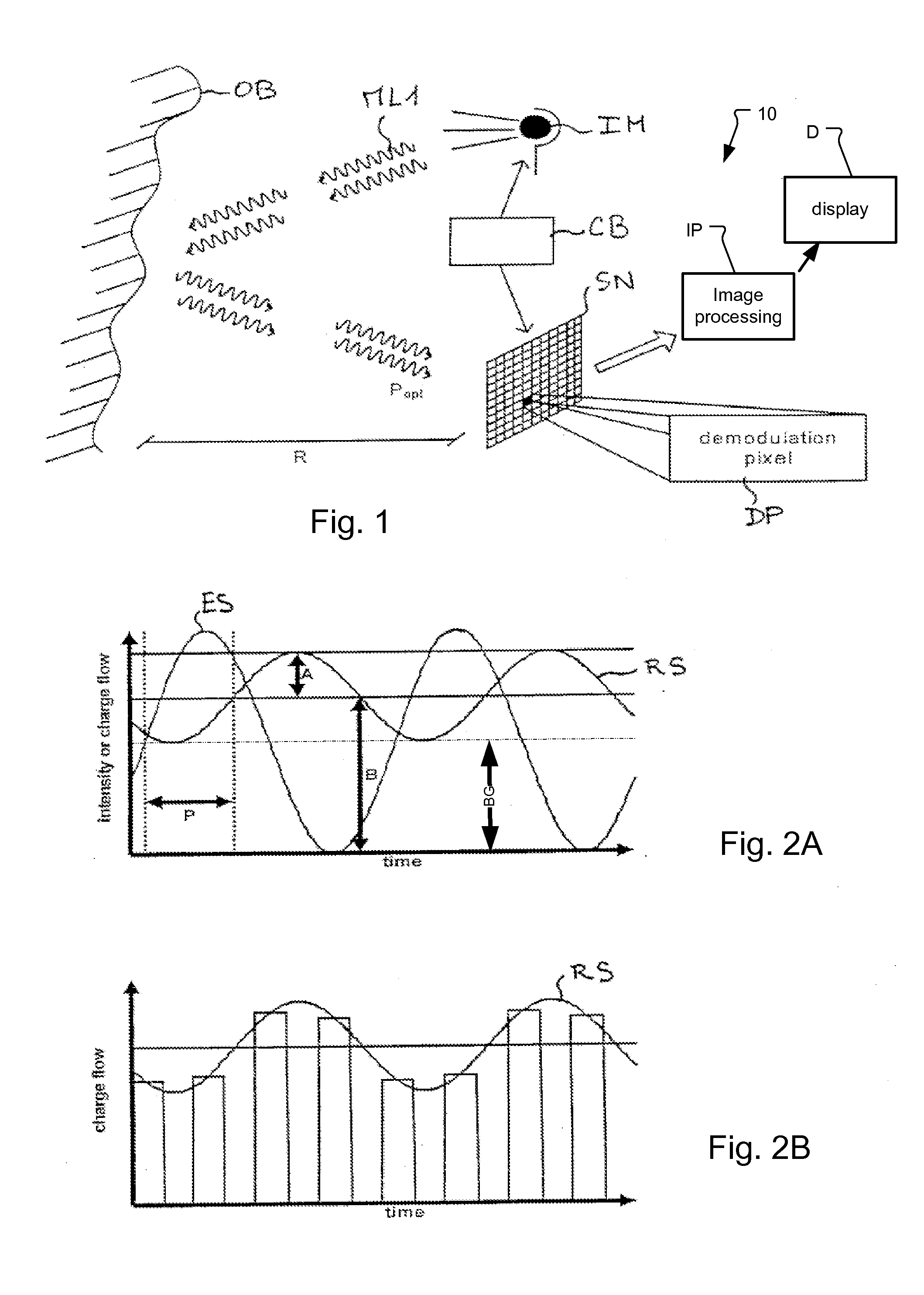

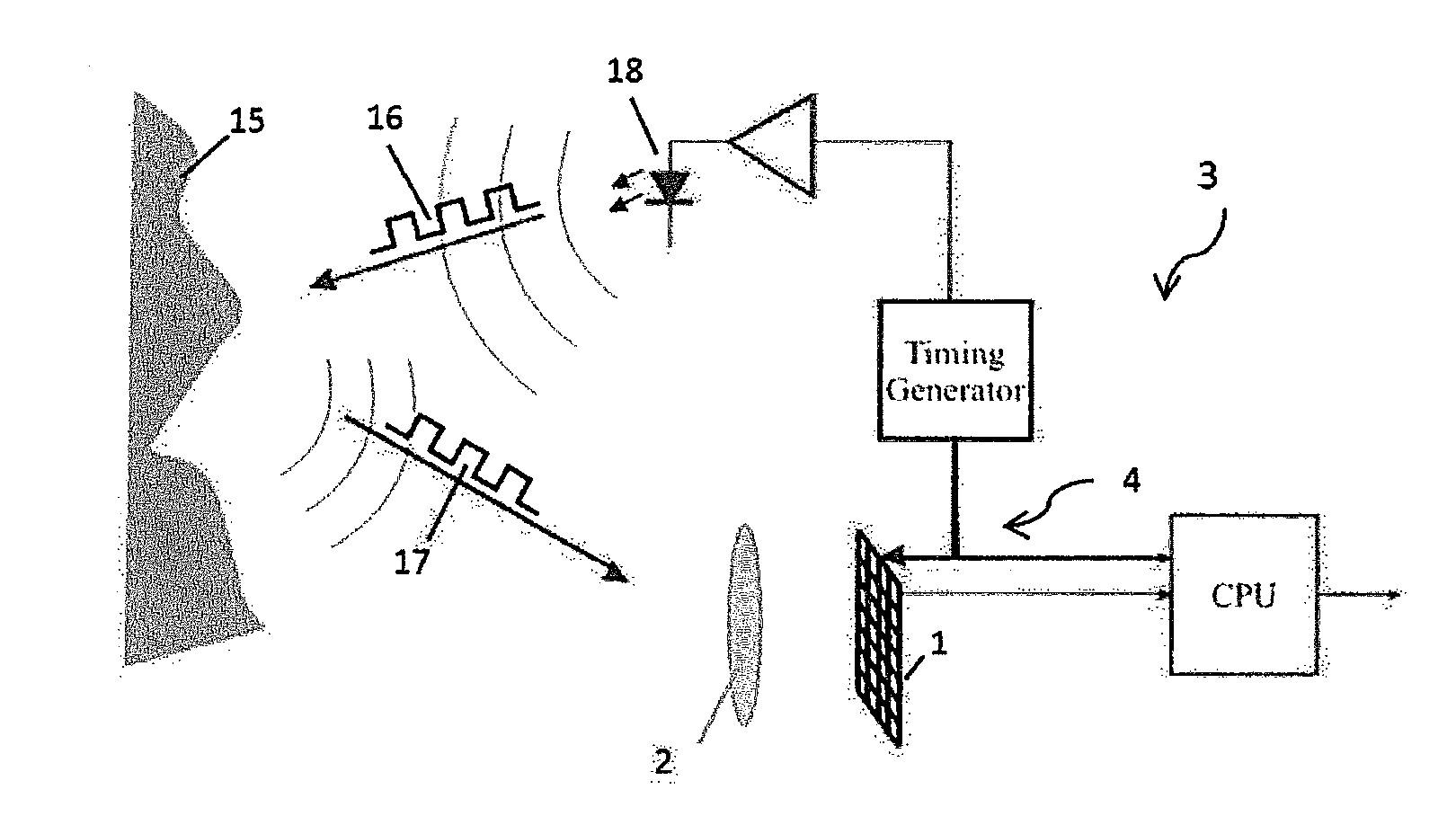

3D time-of-flight camera and method

Active · US20120098935A1 · Tags: Effectively detect, Effectively compensate, Electromagnetic wave reradiation, Stereoscopic systems, Phase shifted, Original data

The present invention relates to a 3D time-of-flight camera for acquiring information about a scene, in particular for acquiring depth images of a scene, information about phase shifts of a scene or environmental information about the scene. The proposed camera particularly compensates motion artifacts by real-time identification of affected pixels and, preferably, corrects its data before actually calculating the desired scene-related information values from the raw data values obtained from radiation reflected by the scene.

Owner:SONY CORP

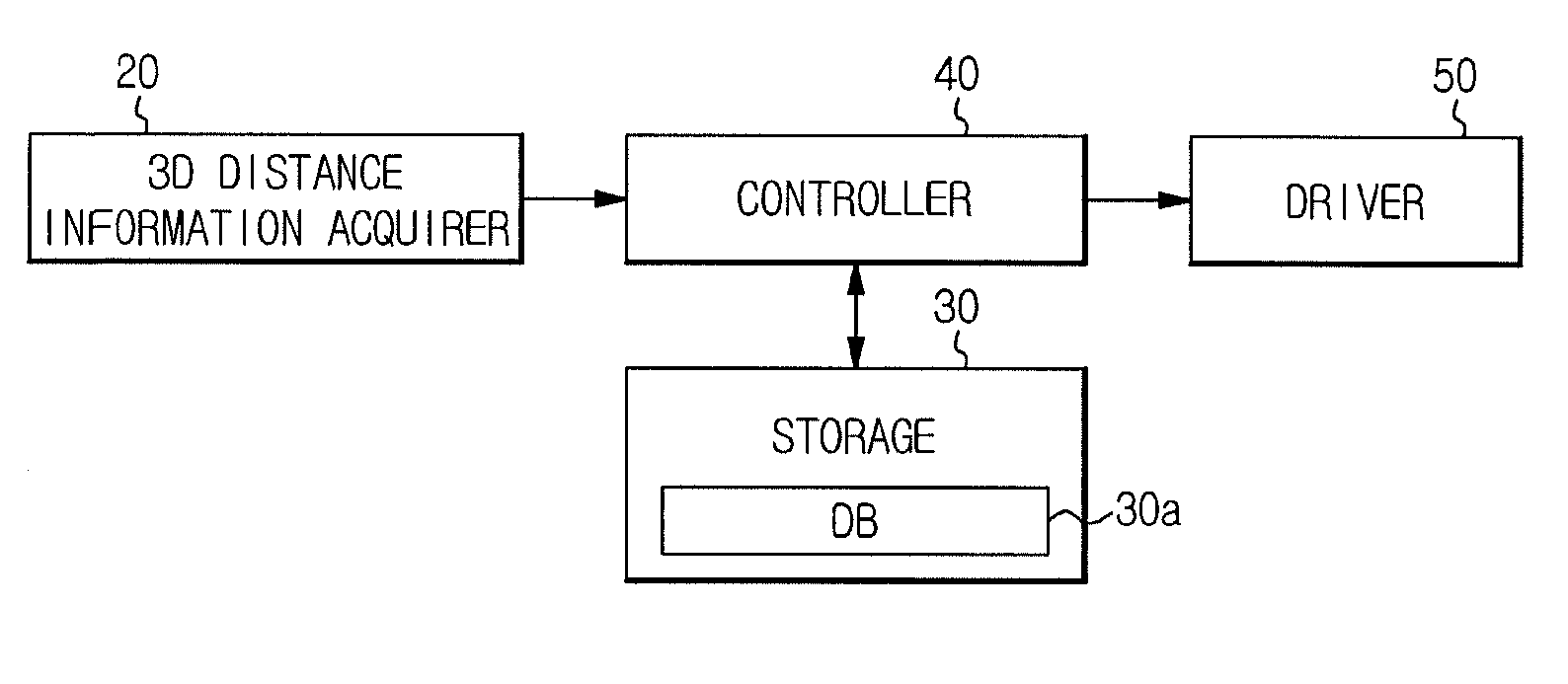

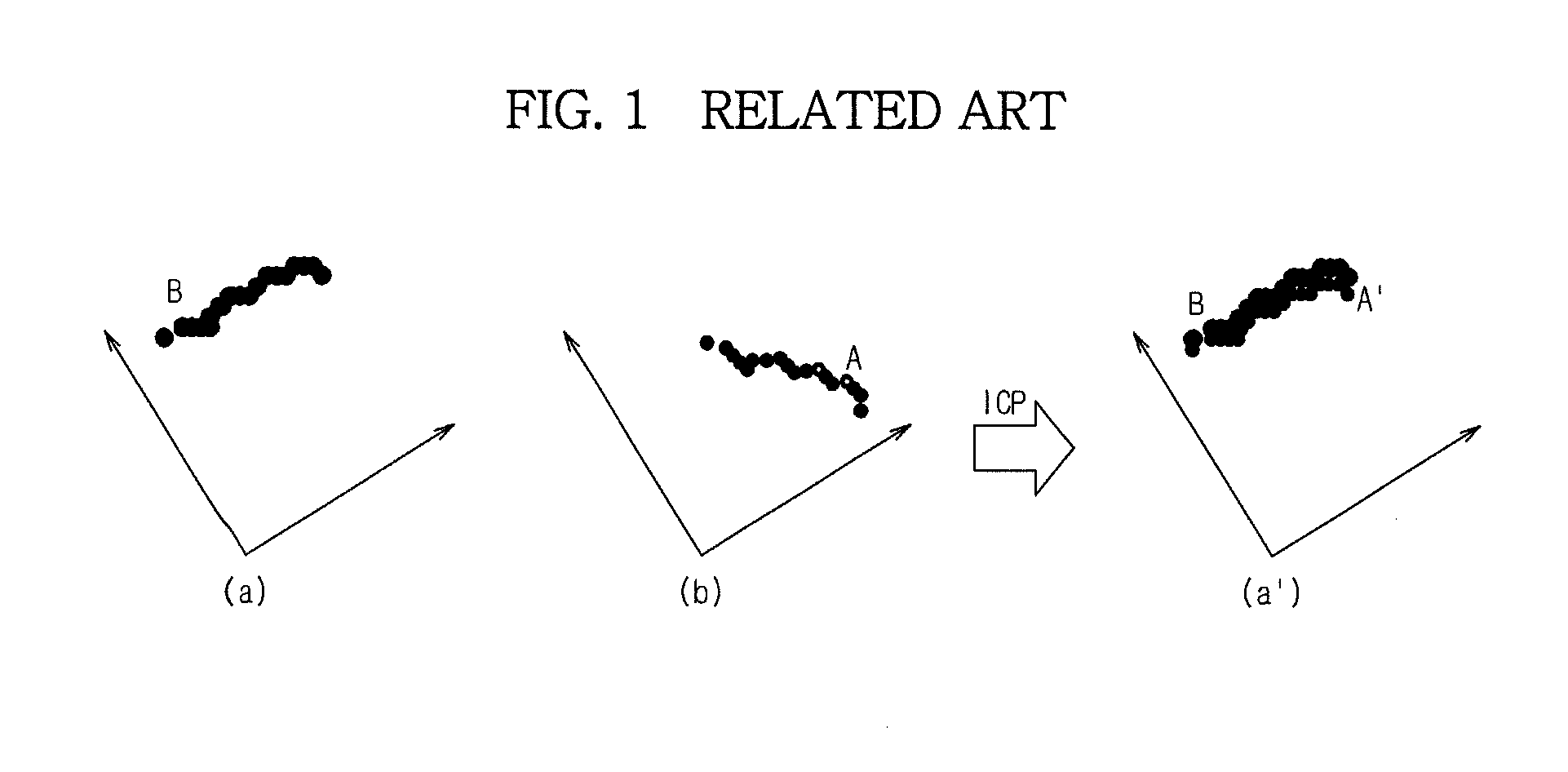

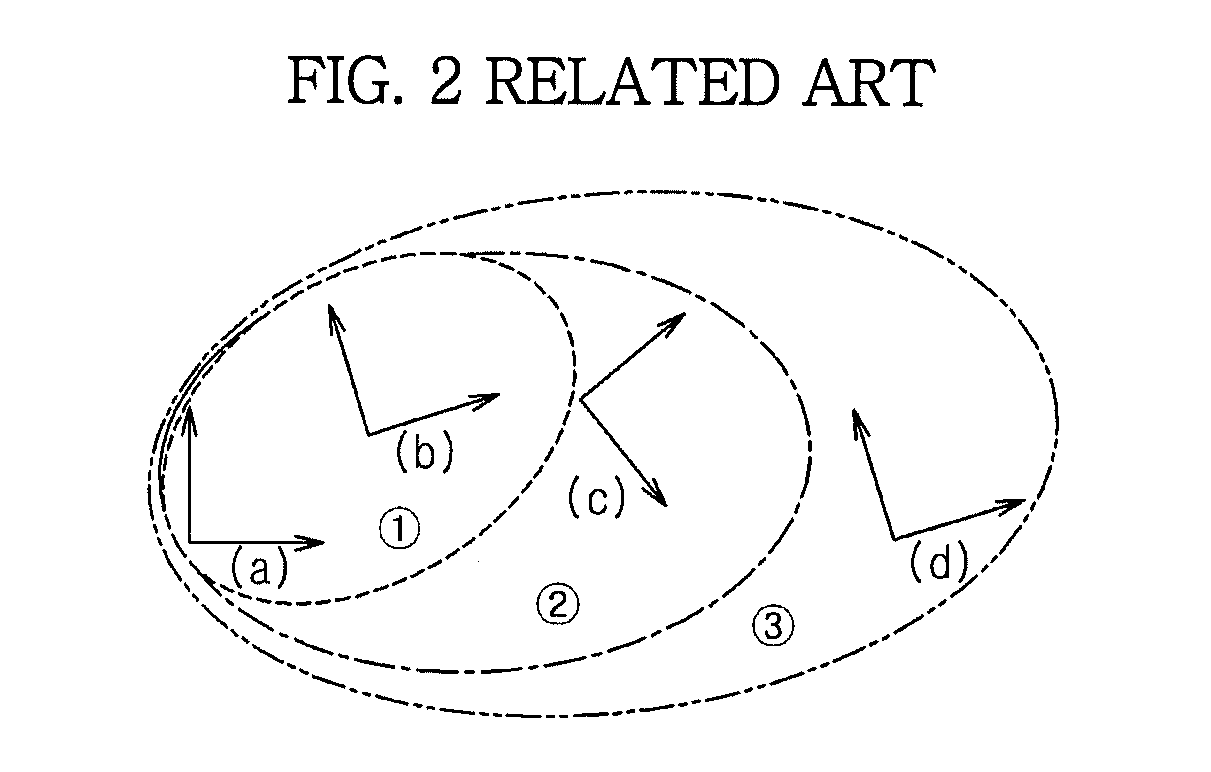

Moving robot and method to build map for the same

Active · US20120089295A1 · Tags: Programme-controlled manipulator, Distance measurement, Computer graphics (images), Time-of-flight camera

A moving robot and a method to build a map for the same, wherein a 3D map for an ambient environment of the moving robot may be built using a Time of Flight (TOF) camera that may acquire 3D distance information in real time. The method acquires 3D distance information of an object present in a path along which the moving robot moves, accumulates the acquired 3D distance information to construct a map of a specific level and stores the map in a database, and then hierarchically matches maps stored in the database to build a 3D map for a set space. This method may quickly and accurately build a 3D map for an ambient environment of the moving robot.

Owner:SAMSUNG ELECTRONICS CO LTD

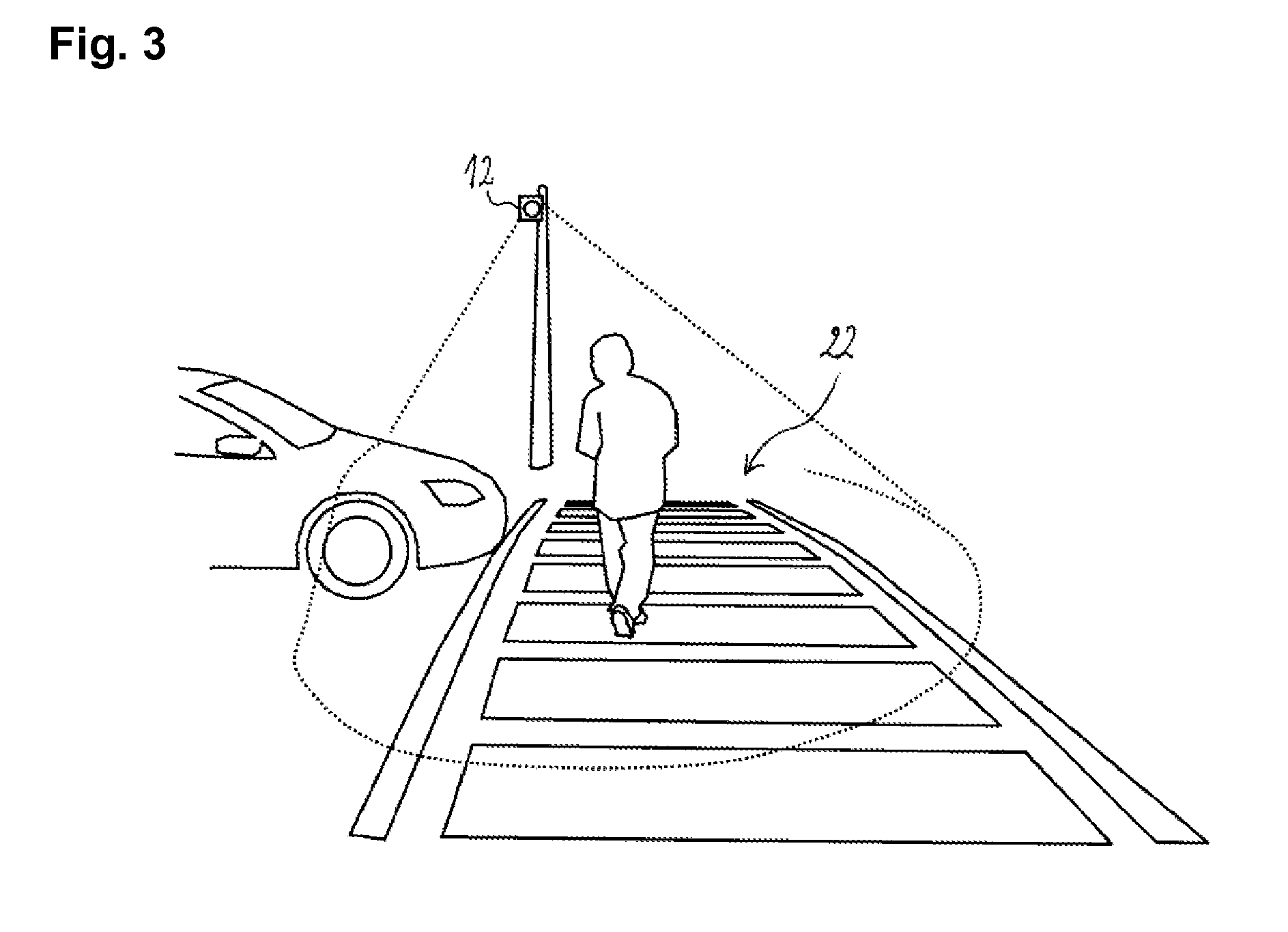

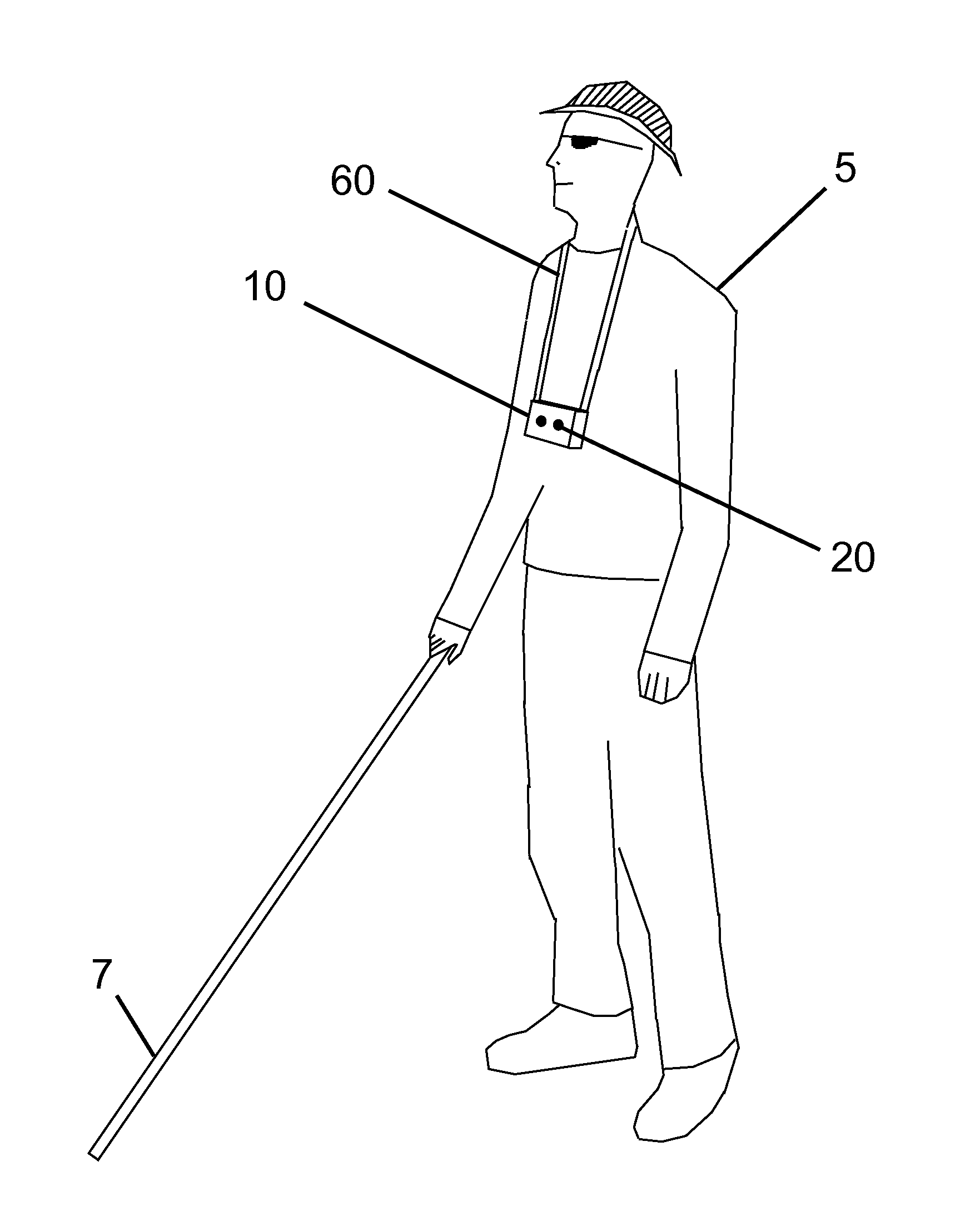

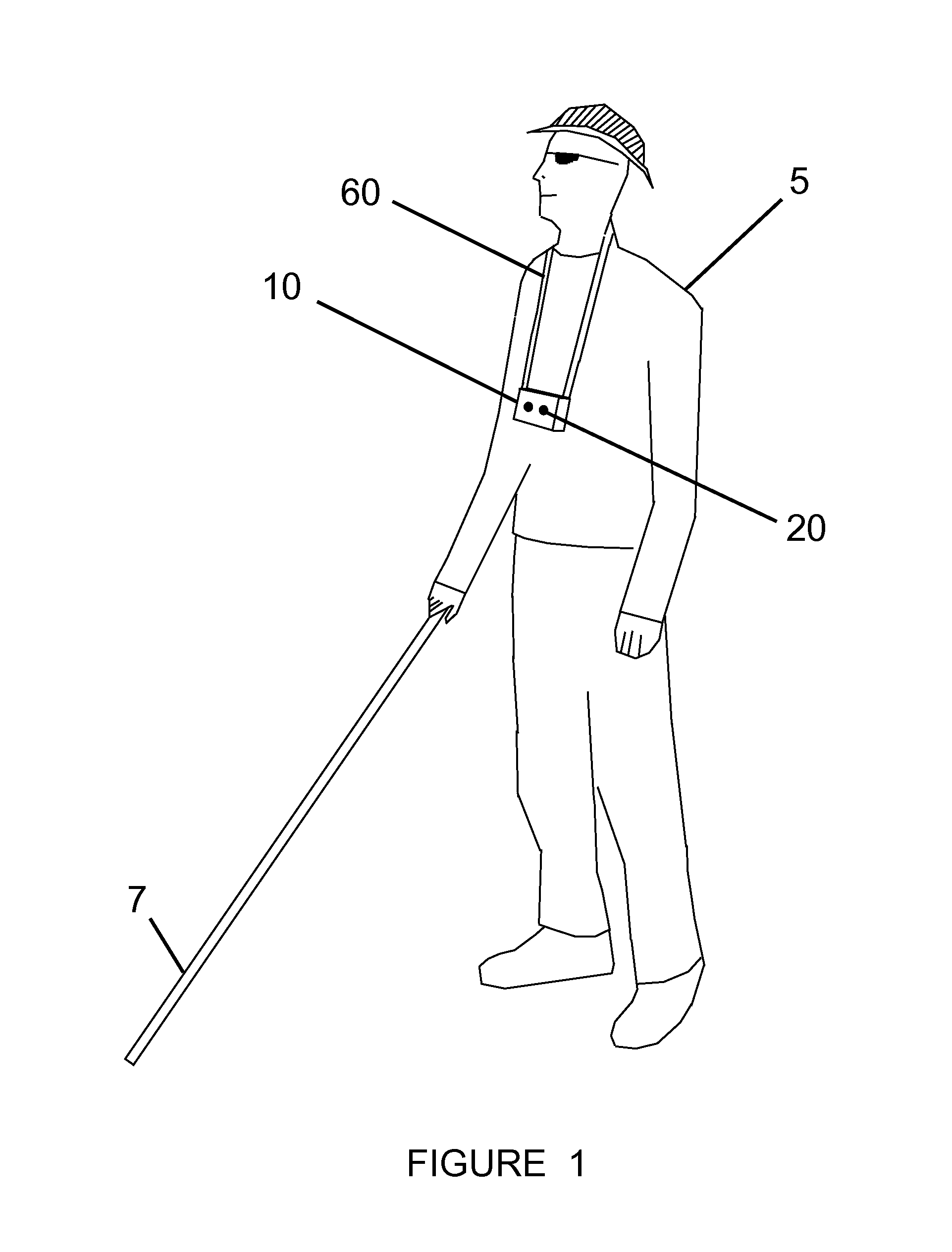

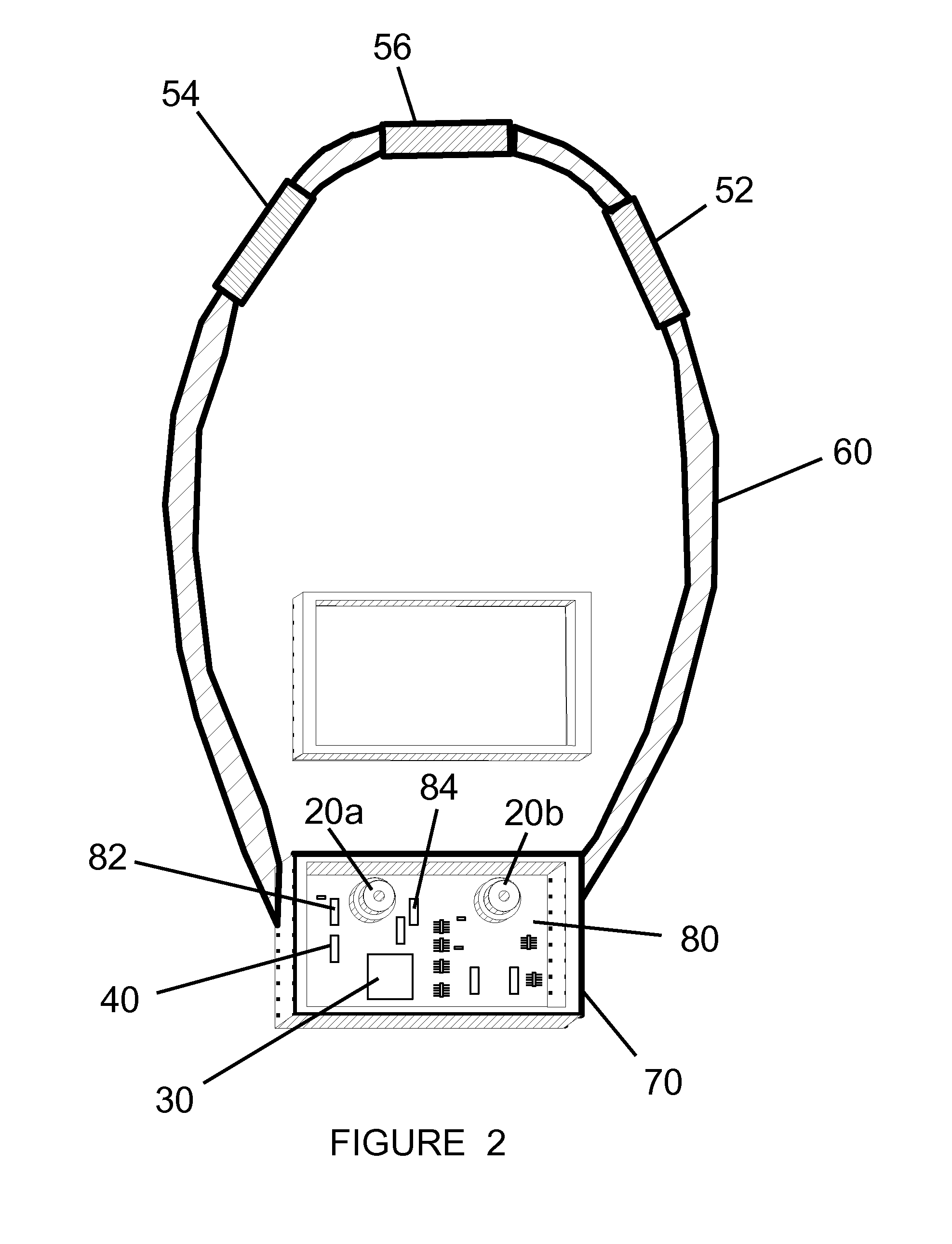

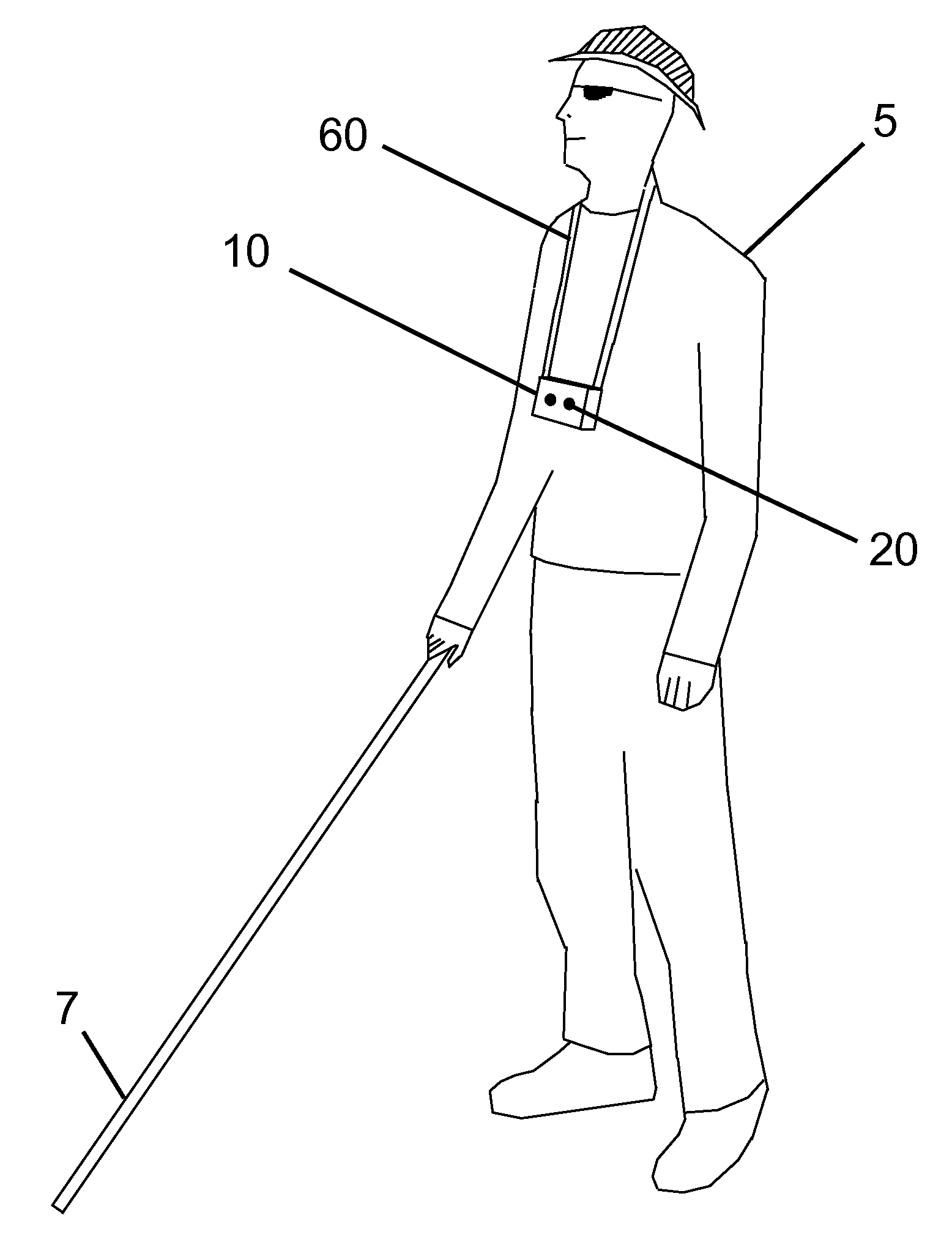

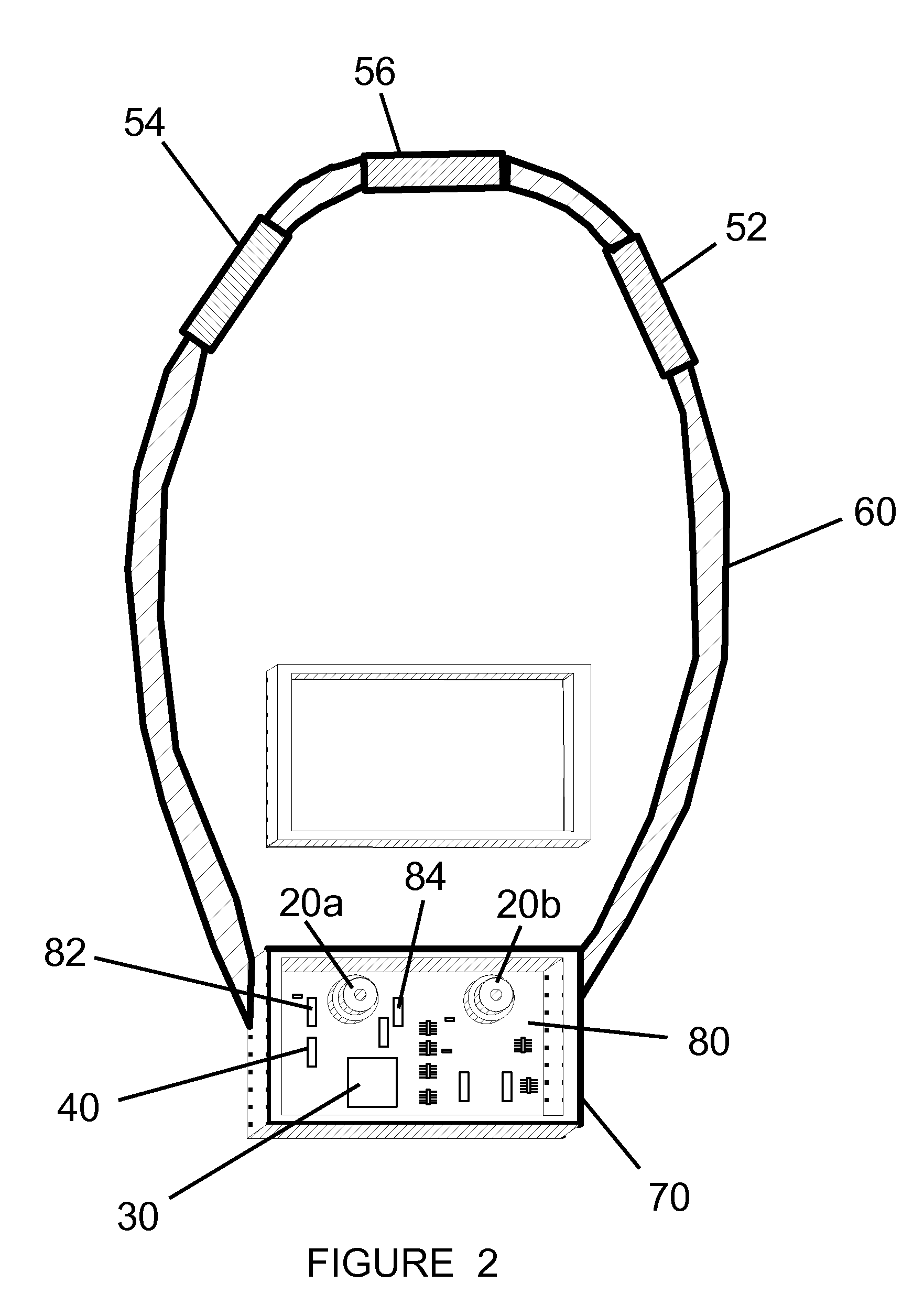

System And Method For Alerting Visually Impaired Users Of Nearby Objects

Active · US20120092460A1 · Tags: Remove distortion, Walking aids, Character and pattern recognition, Visually impaired, Computer graphics (images)

A system and method for assisting a visually impaired user including a time of flight camera, a processing unit for receiving images from the time of flight camera and converting the images into signals for use by one or more controllers, and one or more vibro-tactile devices, wherein the one or more controllers activate one or more of the vibro-tactile devices in response to the signals received from the processing unit. The system preferably includes a lanyard means on which the one or more vibro-tactile devices are mounted. The vibro-tactile devices are activated depending on the determined position of an object in front of the user and the distance from the user to the object.

Owner:MAHONEY ANDREW
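
The abstract says actuation depends on where the object is in front of the user and how far away it is, but it does not give the mapping. The sketch below shows one hypothetical mapping, chosen purely for illustration. The depth image is split into vertical strips, one per actuator. Each actuator's intensity rises as the nearest object in its strip gets closer. `max_range_m` and the treatment of zero depth as "no return" are assumptions.

```python
from typing import List


def actuator_levels(depth: List[List[float]], n_actuators: int,
                    max_range_m: float) -> List[float]:
    """Hypothetical mapping from a ToF depth image to vibration intensities.

    The image is split into n_actuators vertical strips (left to right, one
    per actuator). Each intensity lies in [0, 1] and grows as the nearest
    object in the strip gets closer; 0 means nothing within max_range_m.
    Depth values <= 0 are treated as invalid (no return).
    """
    width = len(depth[0])
    levels = []
    for i in range(n_actuators):
        x0, x1 = i * width // n_actuators, (i + 1) * width // n_actuators
        nearest = min((row[x] for row in depth for x in range(x0, x1) if row[x] > 0),
                      default=float("inf"))
        levels.append(0.0 if nearest >= max_range_m else 1.0 - nearest / max_range_m)
    return levels


print(actuator_levels([[3.0, 3.0, 1.0, 4.0], [3.0, 0.0, 1.5, 4.0]], 2, 4.0))
# [0.25, 0.75]
```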

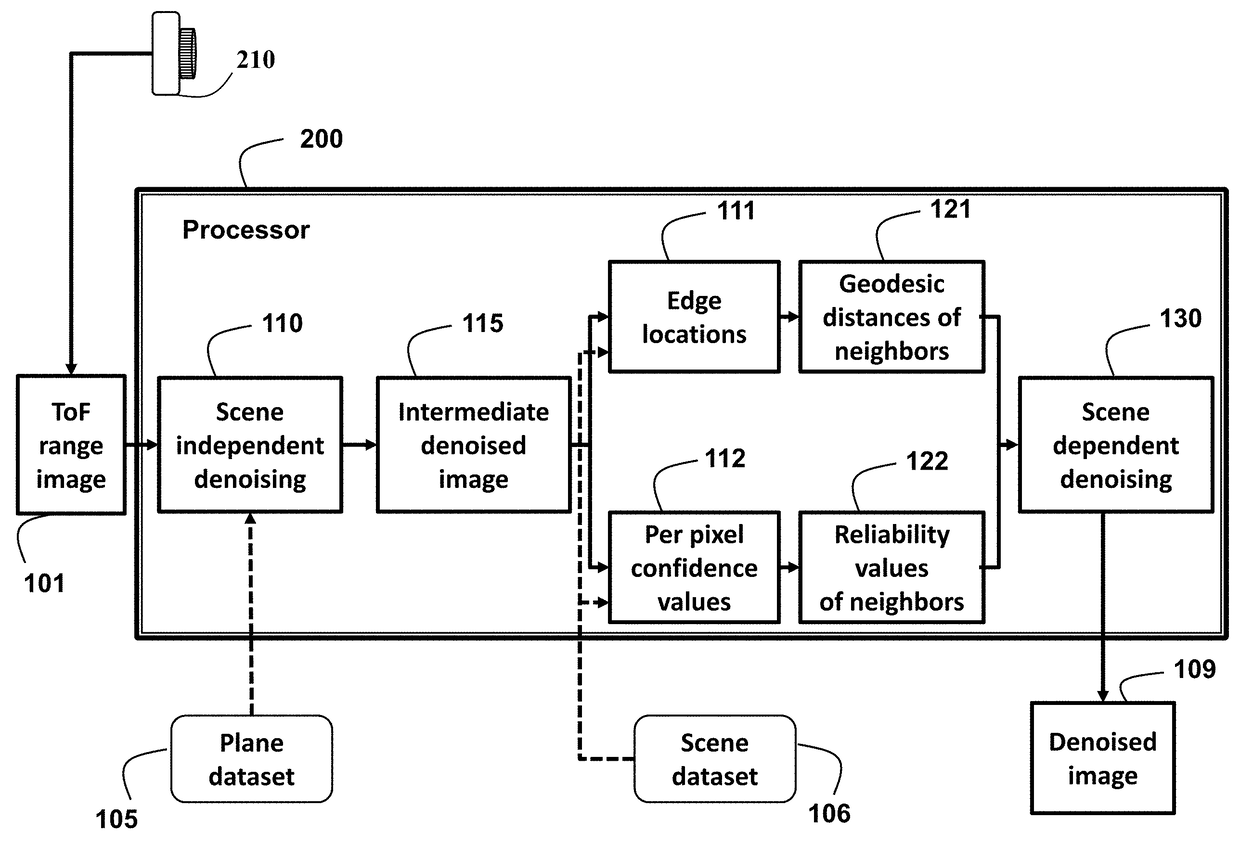

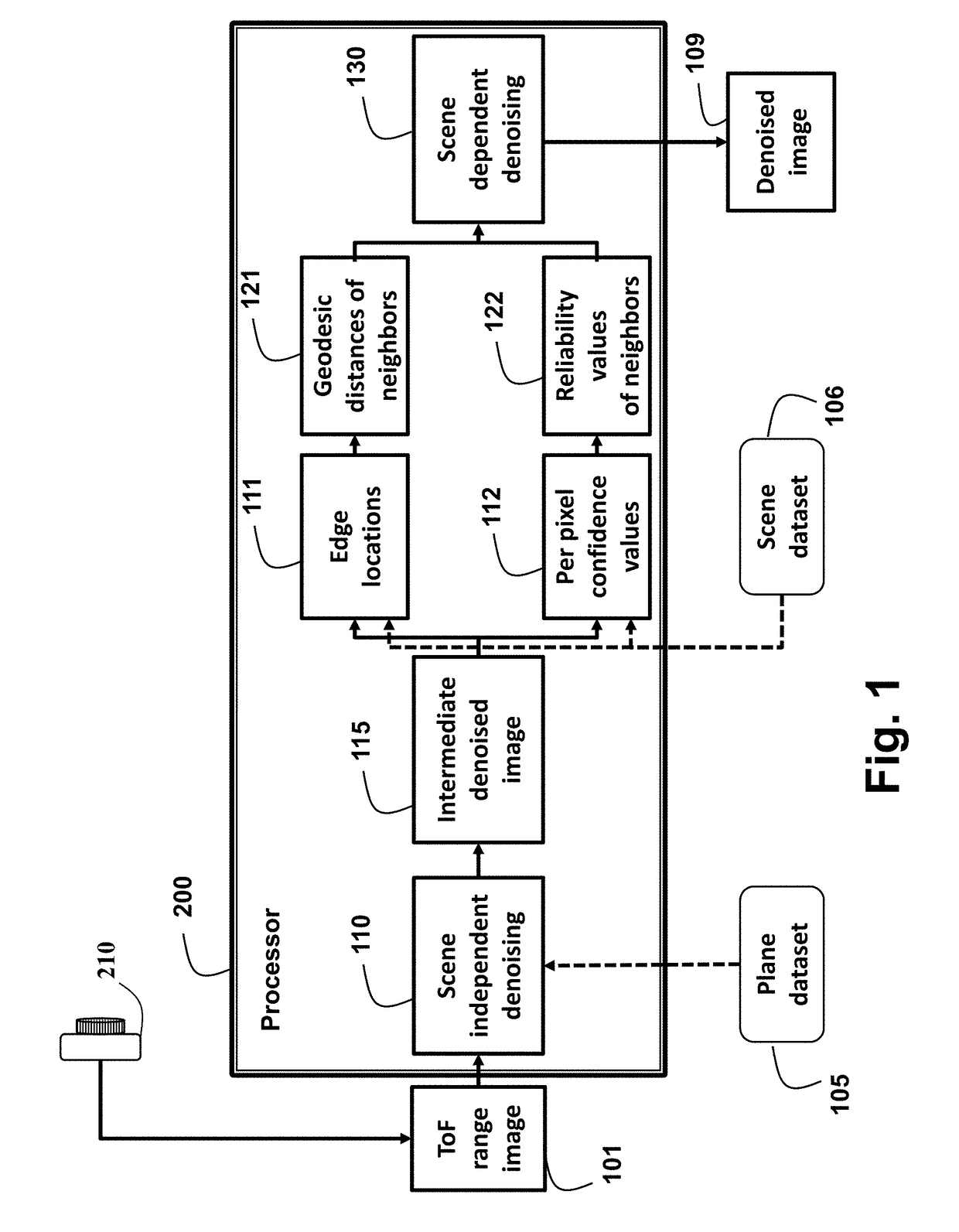

Method for denoising time-of-flight range images

A range image acquired by a time-of-flight (ToF) camera is denoised by first determining the locations of edges and a confidence value for each pixel, and then, based on the locations of the edges, determining geodesic distances of neighboring pixels. Based on the confidence values, the reliabilities of the neighboring pixels are determined, and scene-dependent noise is reduced using a filter.

Owner:MITSUBISHI ELECTRIC RES LAB INC

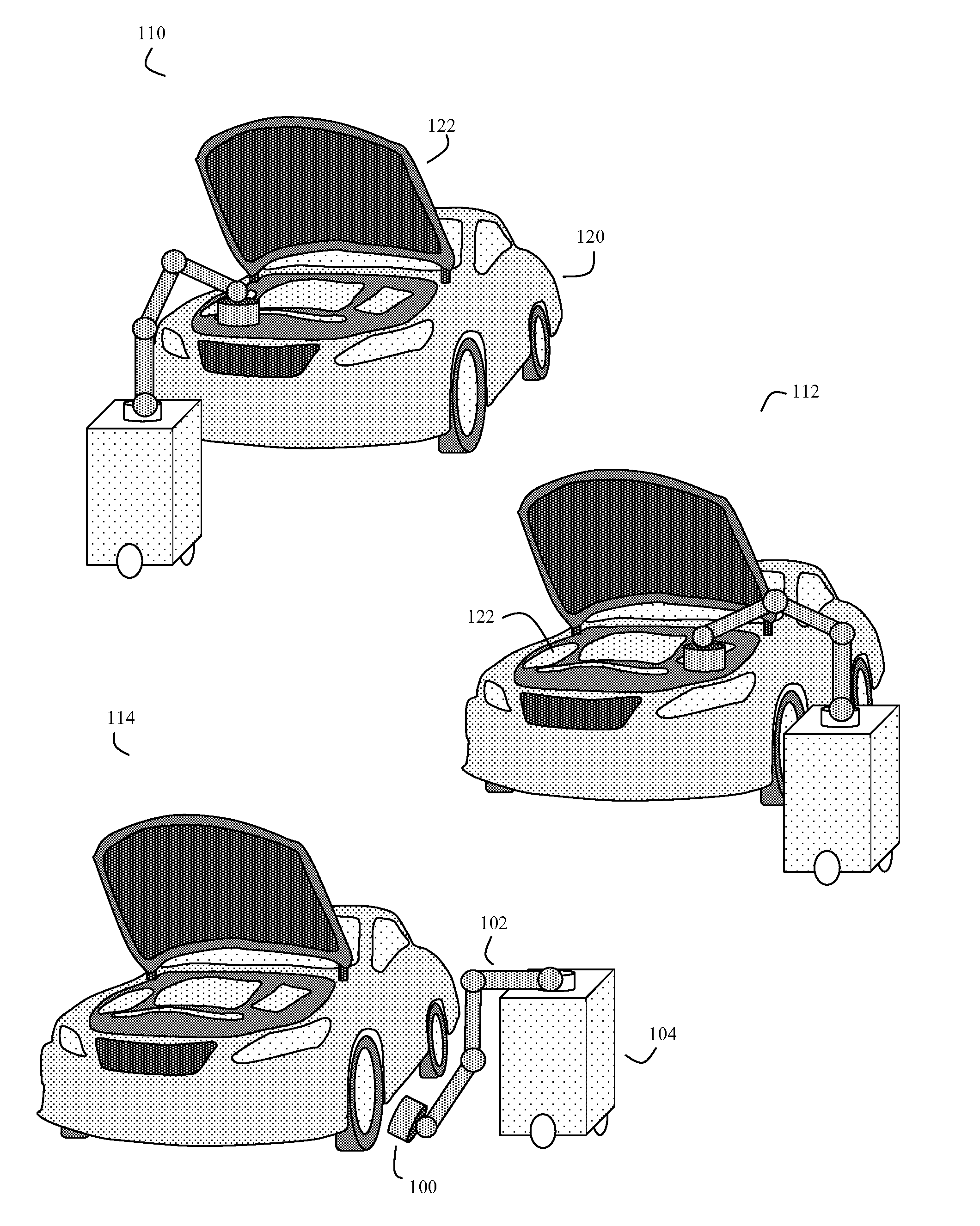

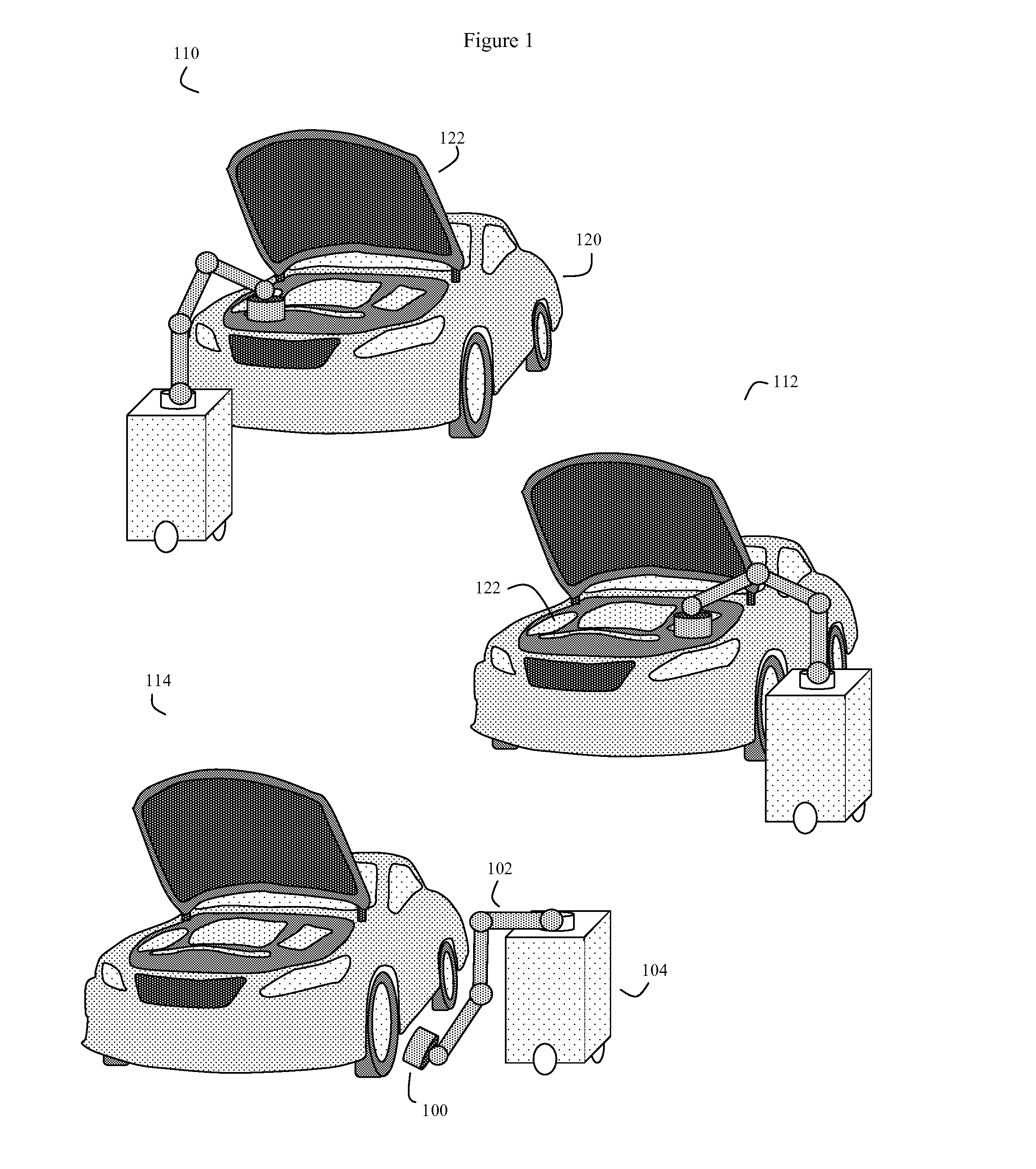

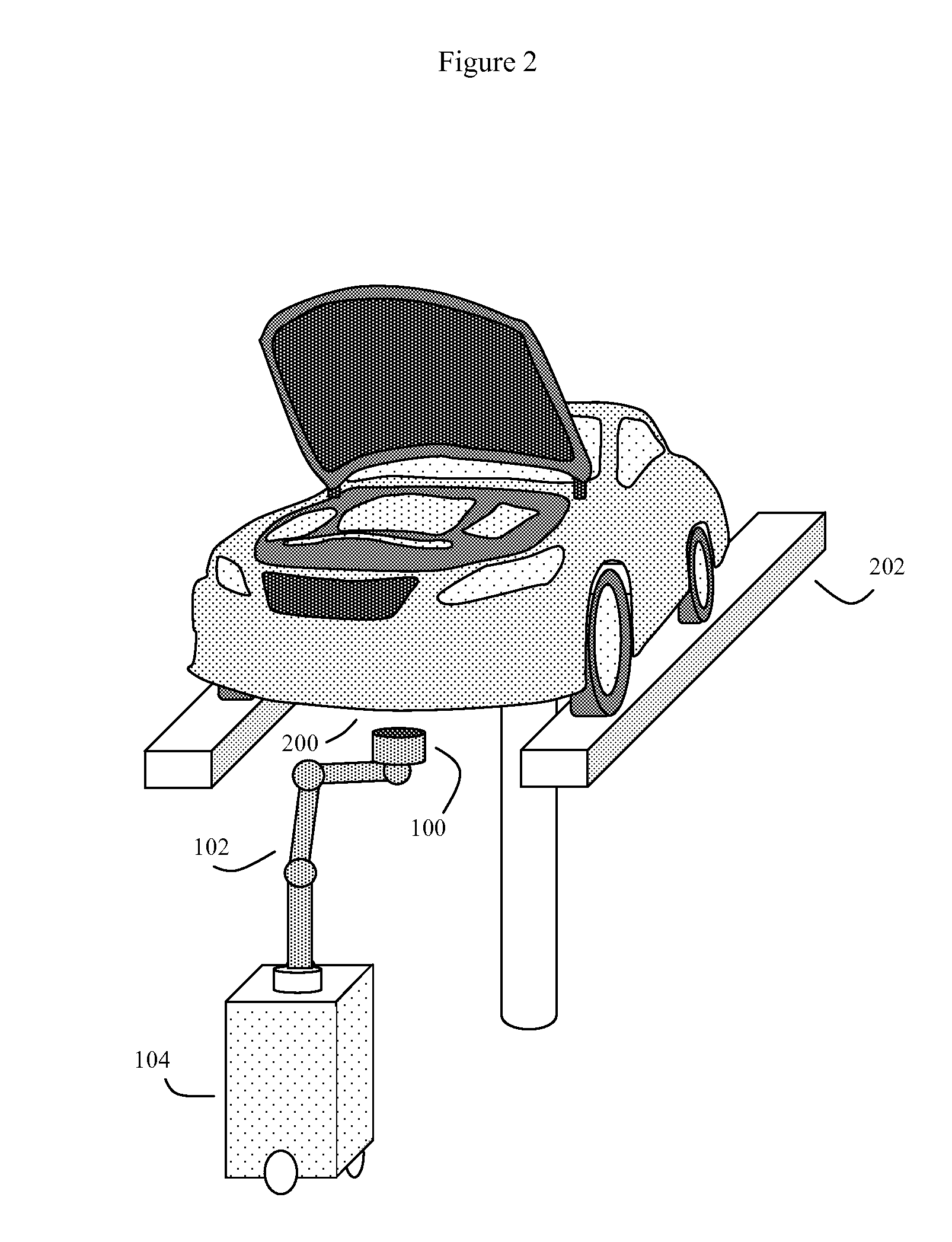

System and method of automated 3D scanning for vehicle maintenance

System and method for using automated 3D scans to diagnose the mechanical status of substantially intact vehicles. One or more processor controlled 3D scanners utilize optical and other methods to assess the exposed surfaces of various vehicle components. Computer vision and other computerized pattern recognition techniques then compare the 3D scanner output versus a reference computer database of these various vehicle components in various normal and malfunctioning states. Those components judged to be aberrant are flagged. These flagged components can be reported to the vehicle users, as well as various insurance or repair entities. In some embodiments, the 3D scans can be performed using time-of-flight cameras, and optionally infrared, stereoscopic, and even audio sensors attached to the processor controlled arm of a mobile robot. Much of the subsequent data analysis and management can be done using remote Internet servers.

Owner:WOOD ROBERT BRUCE

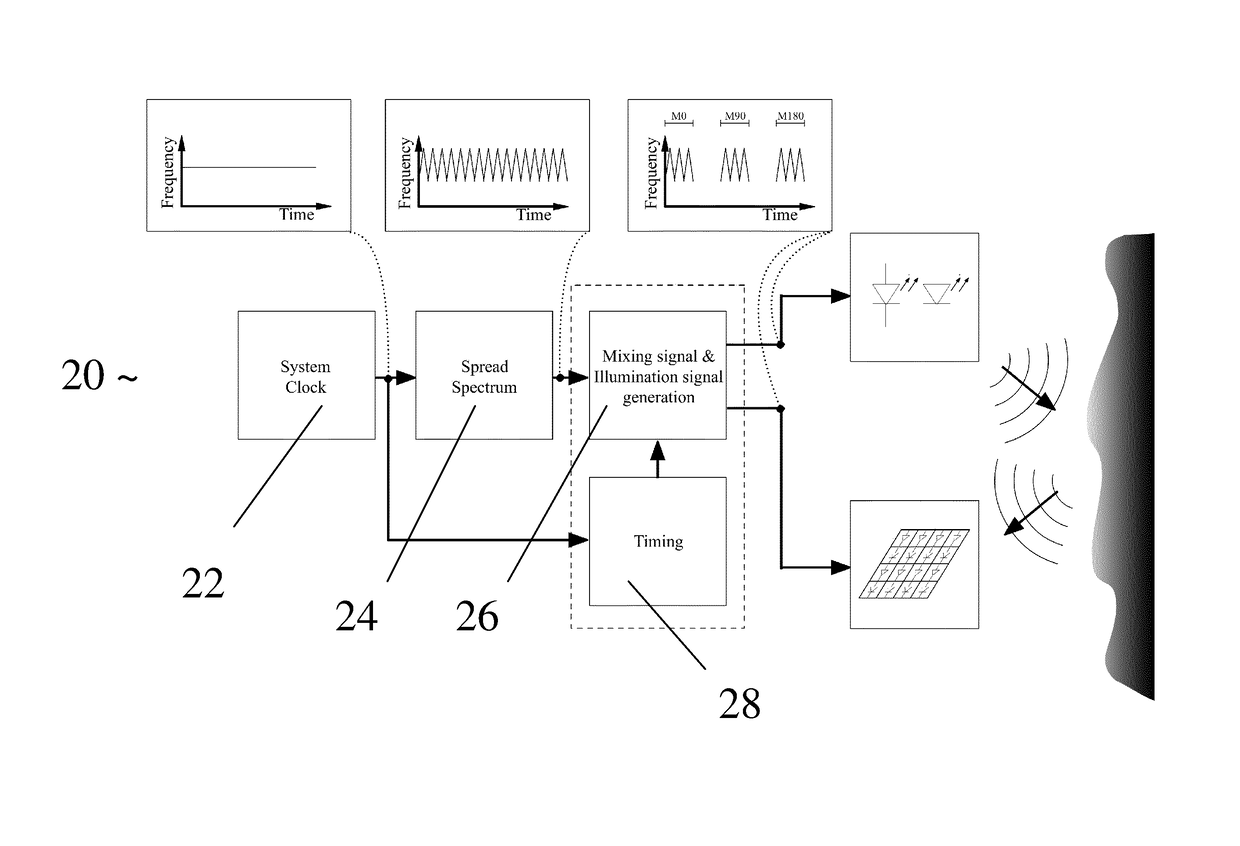

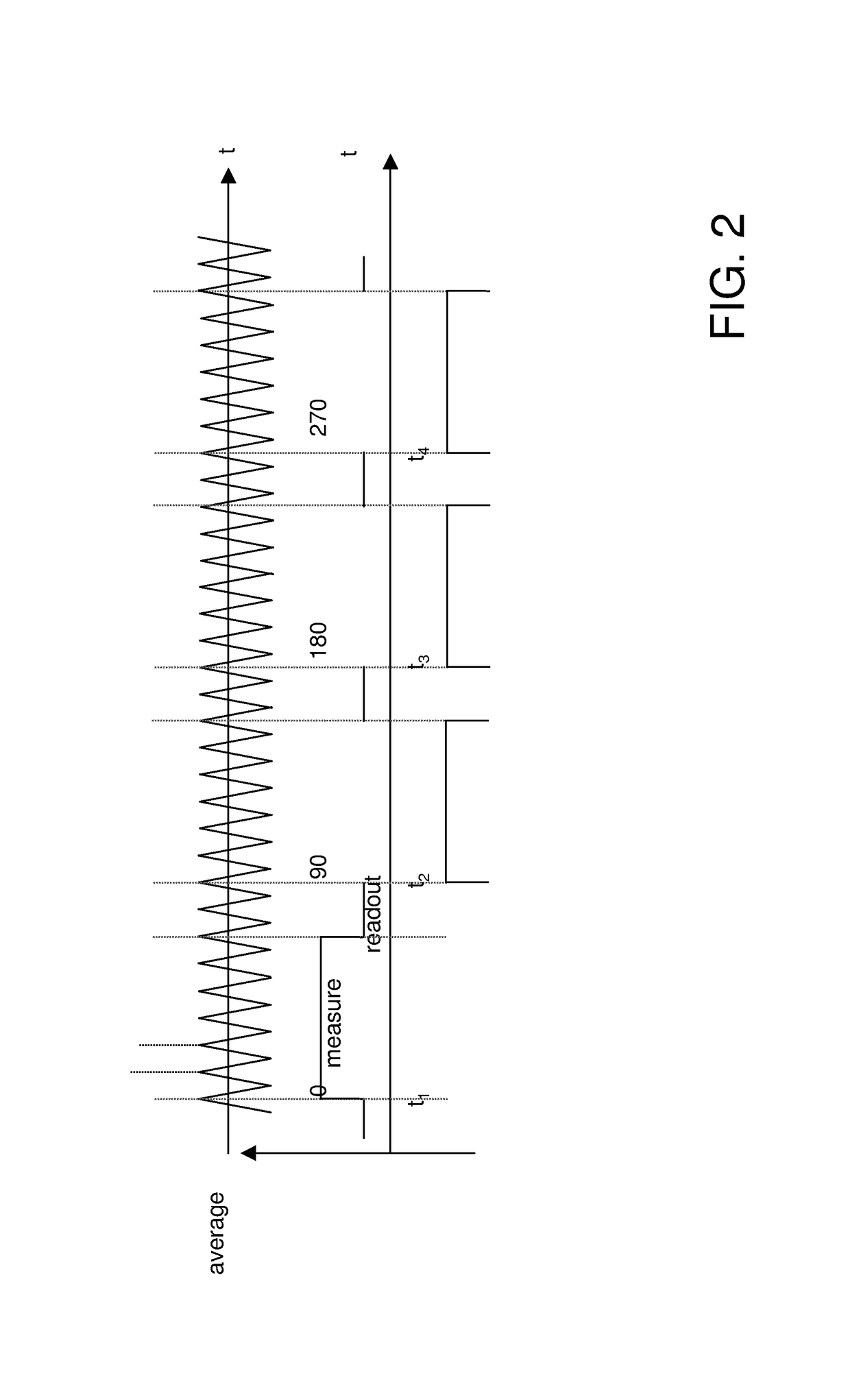

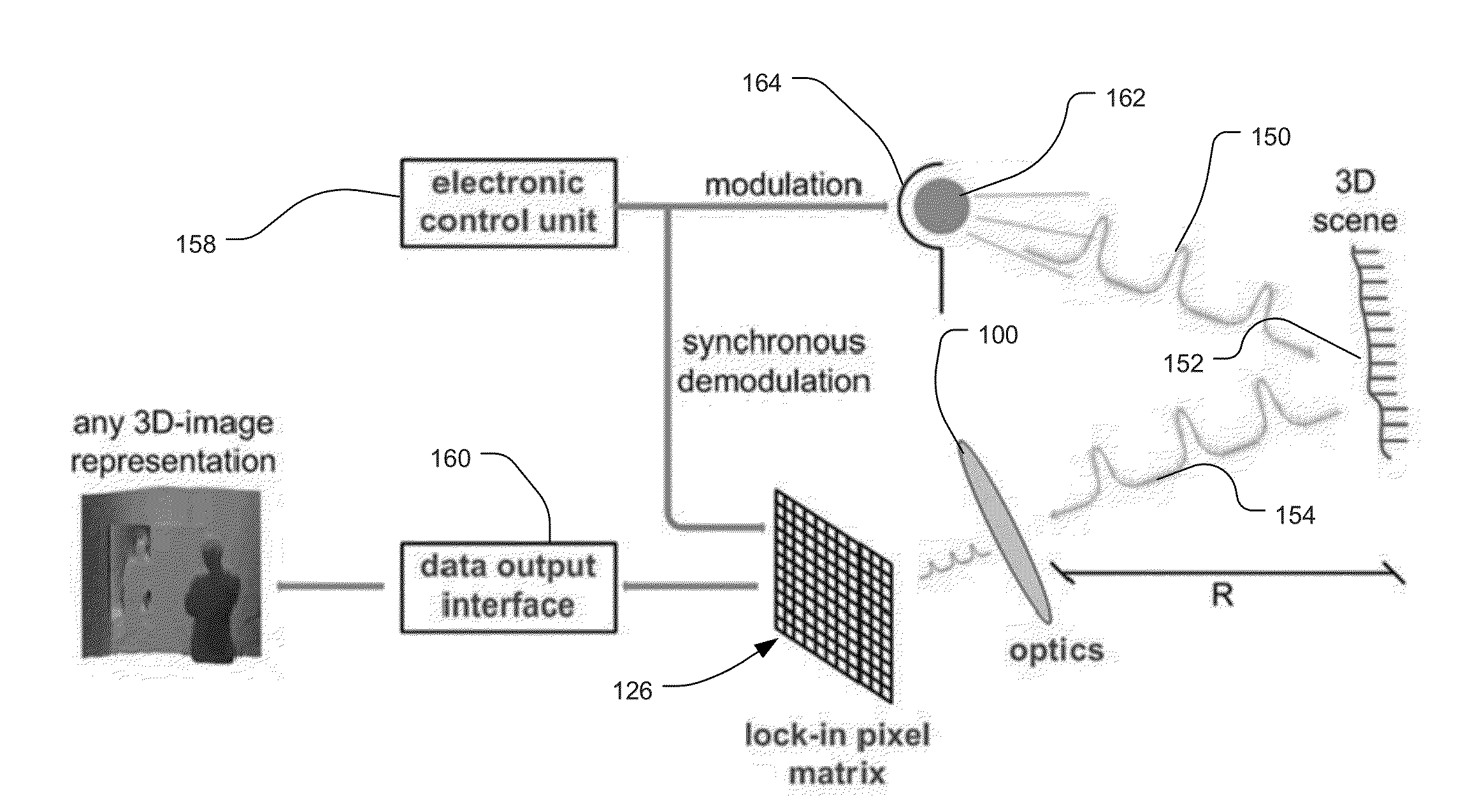

Method and time-of-flight camera for providing distance information

Active · US9599712B2 · Tags: Improve accuracy, Reduce electromagnetic interference, Optical rangefinders, Transmission noise suppression, Optoelectronics, Time-of-flight camera

The invention relates to a method for providing distance information of a scene with a time-of-flight camera (1), comprising the steps of emitting a modulated light pulse towards the scene, receiving reflections of the modulated light pulse from the scene, evaluating time-of-flight information for the received reflections of the modulated light pulse, and deriving distance information from the time-of-flight information for the received reflections, whereby a spread spectrum signal is applied to a base frequency of the modulation of the light pulse, and the time-of-flight information is evaluated taking into account the spread spectrum signal applied to the base frequency of the modulation of the light pulse. The invention further relates to a time-of-flight camera (1) for providing distance information from a scene, whereby the time-of-flight camera (1) performs the above method.

Owner:SOFTKINETIC SENSORS

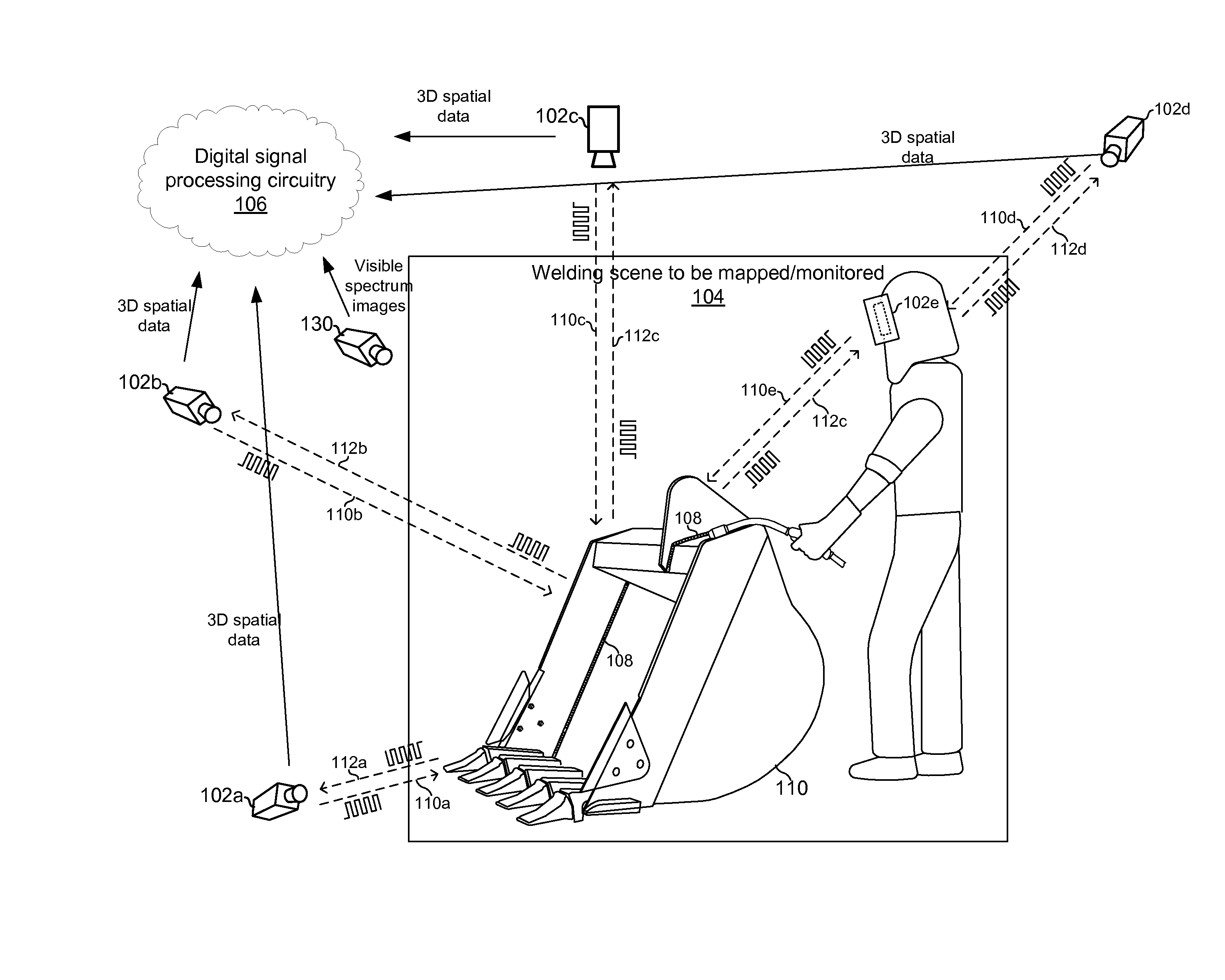

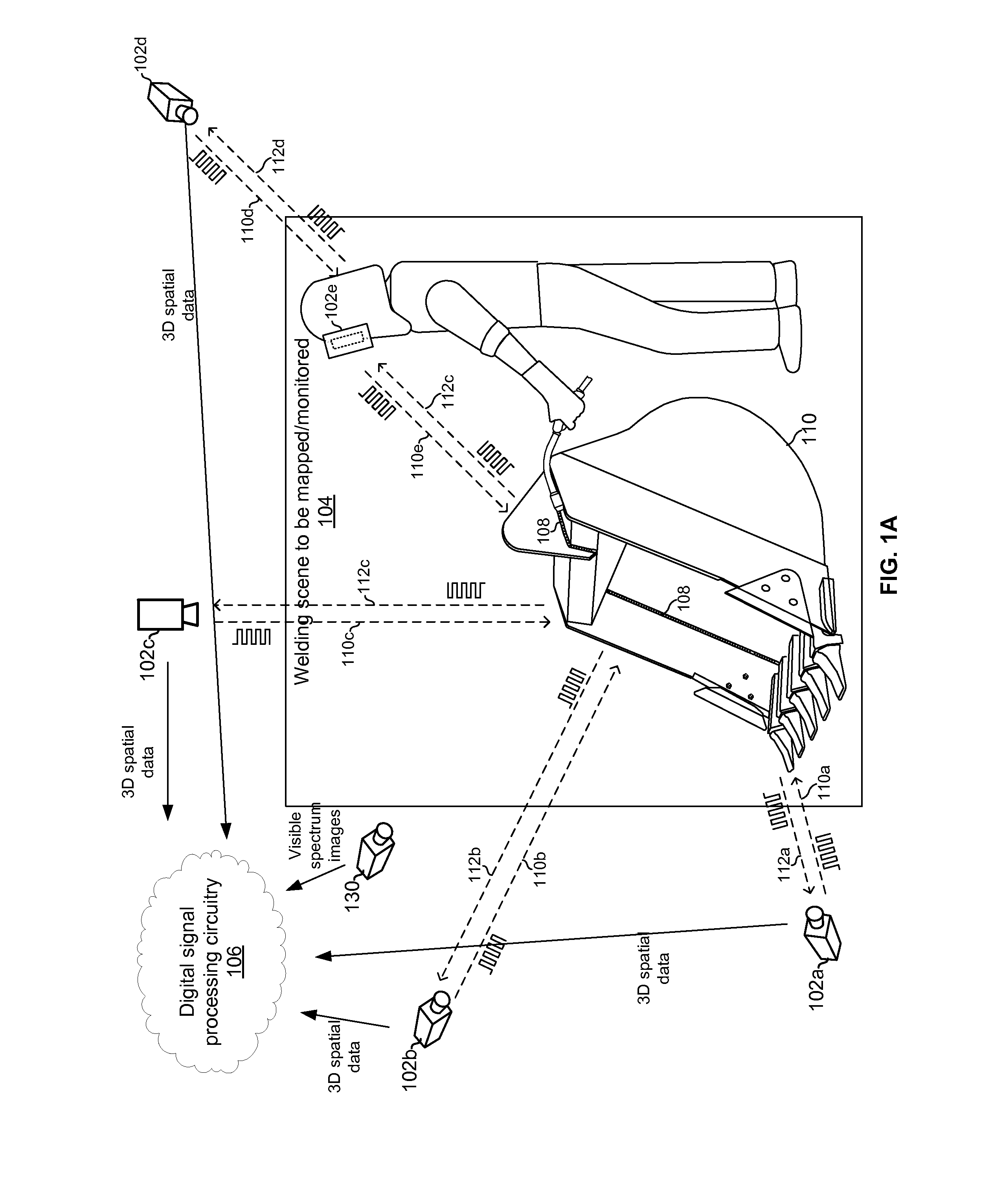

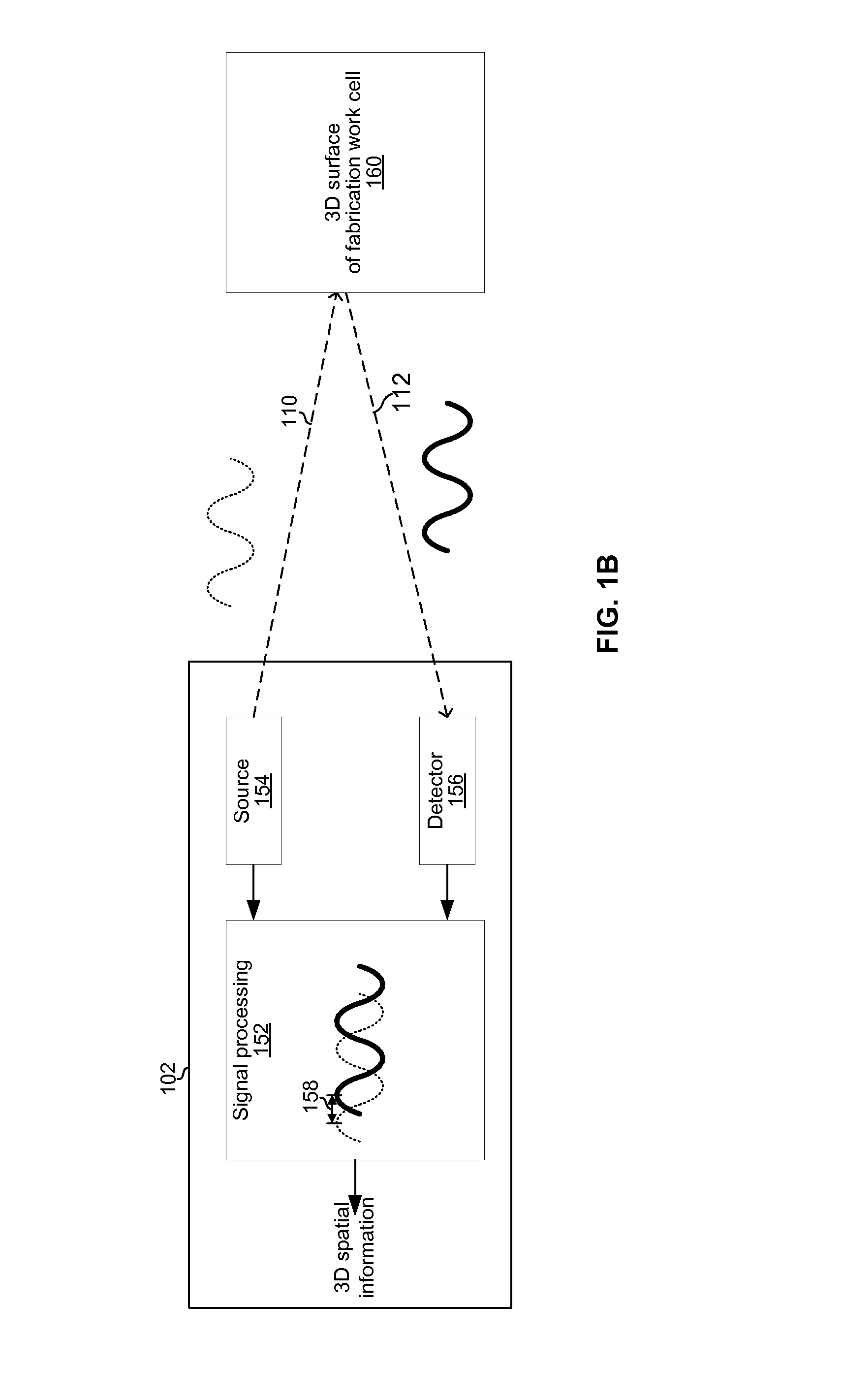

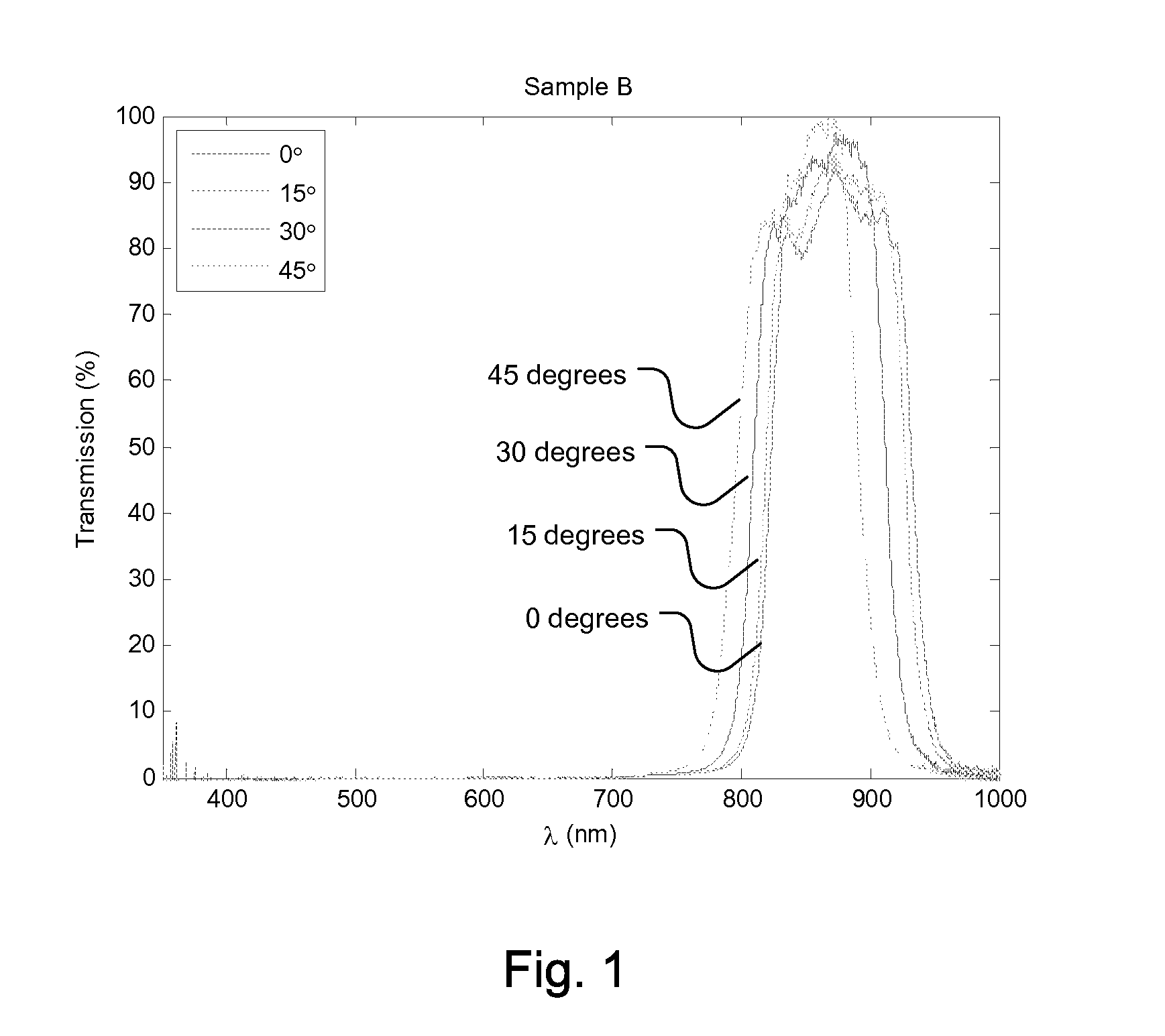

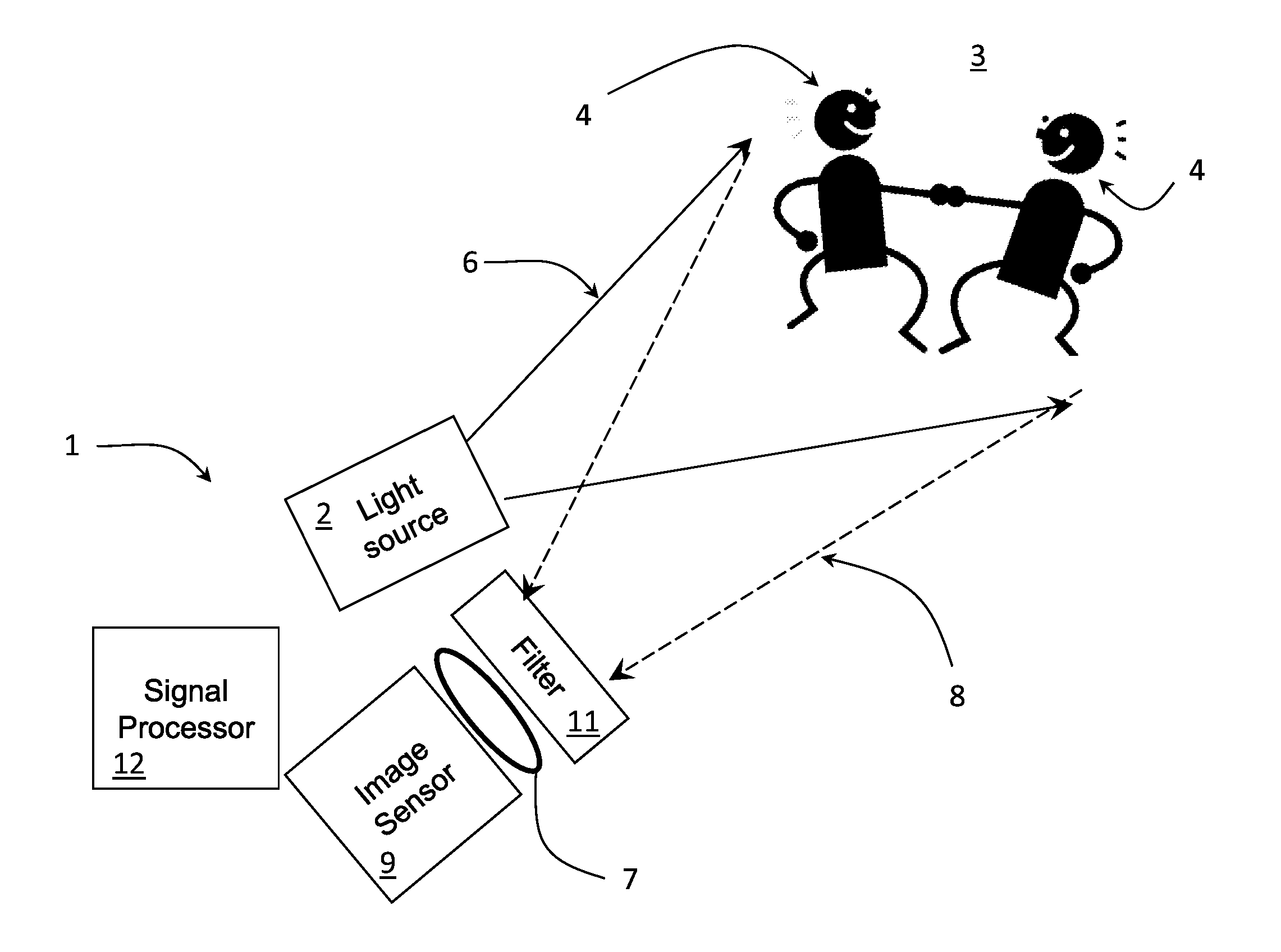

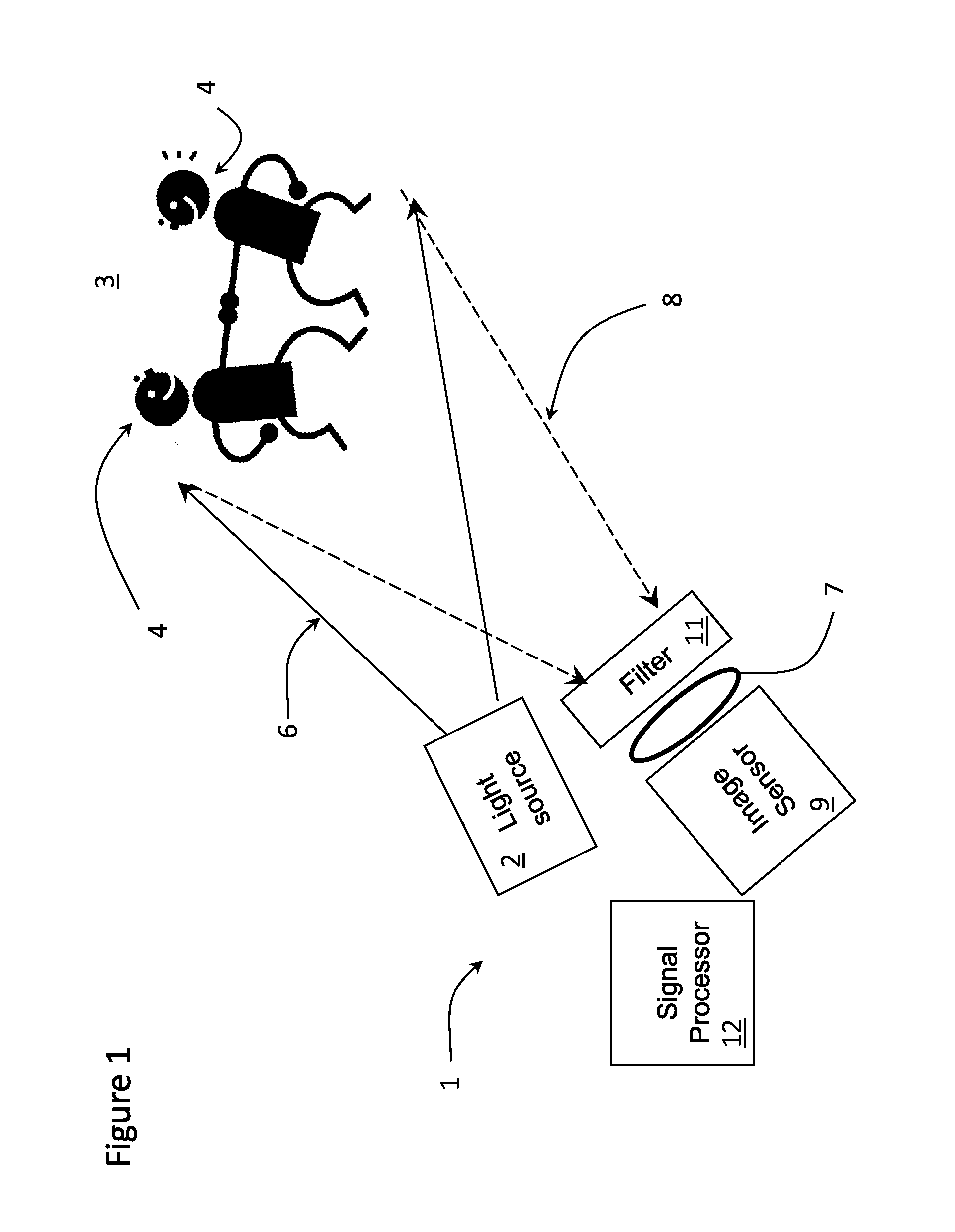

Time of flight camera for welding machine vision

Active · US20160375524A1 · Tags: Automatic control devices, Precision positioning equipment, Machine vision, Engineering

A machine-vision-assisted welding system comprises welding equipment, a time-of-flight (ToF) camera operable to generate a three-dimensional depth map of a welding scene, digital image processing circuitry operable to extract welding information from the 3D depth map, and circuitry operable to control a function of the welding equipment based on the extracted welding information. The welding equipment may comprise, for example, arc welding equipment that forms an arc during a welding operation, and a light source of the ToF camera may emit light whose spectrum has a peak centered at a first wavelength, where the first wavelength is selected such that the power of the peak is at least a threshold amount above the power of light from the arc at that wavelength.

Owner:ILLINOIS TOOL WORKS INC

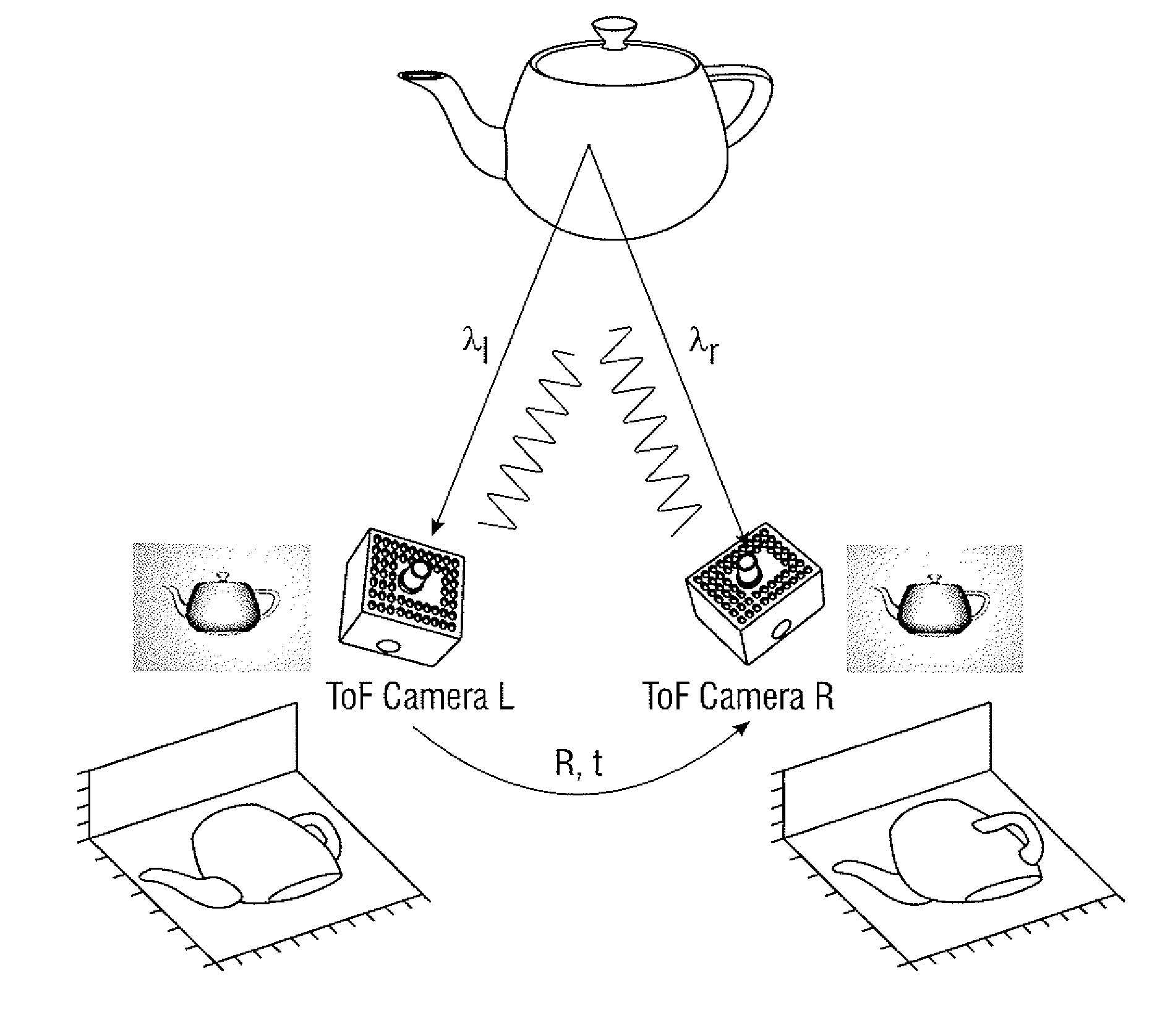

Method of Enhanced Depth Image Acquisition

Inactive · US20140333728A1 · Tags: Optimise depth image formed, Reduce noise, Electromagnetic wave reradiation, Stereoscopic systems, Time-of-flight camera, Light signal

The present invention provides a method and apparatus for depth image acquisition of an object wherein at least two time-of-flight cameras are provided and the object is illuminated with a plurality of different light emission states by emitting light signals from each camera. Measurements of the light reflected by the object during each light emission state are obtained at all the cameras wherein the measurements may then be used to optimise the depth images at each of the cameras.

Owner:TECHN UNIV MUNCHEN FORSCHUNGSFORDERUNG & TECHTRANSFER

System and method for alerting visually impaired users of nearby objects

Active · US9370459B2 · Tags: Remove distortion, Walking aids, Character and pattern recognition, Visually impaired, Physical medicine and rehabilitation

A system and method for assisting a visually impaired user including a time of flight camera, a processing unit for receiving images from the time of flight camera and converting the images into signals for use by one or more controllers, and one or more vibro-tactile devices, wherein the one or more controllers activate one or more of the vibro-tactile devices in response to the signals received from the processing unit. The system preferably includes a lanyard means on which the one or more vibro-tactile devices are mounted. The vibro-tactile devices are activated depending on the determined position of an object in front of the user and the distance from the user to the object.

Owner:MAHONEY ANDREW

3D time-of-flight camera and method

Inactive · CN102622745A · Tags: Increase frame rate, Image analysis, Electromagnetic wave reradiation, Phase shifted, Time-of-flight camera

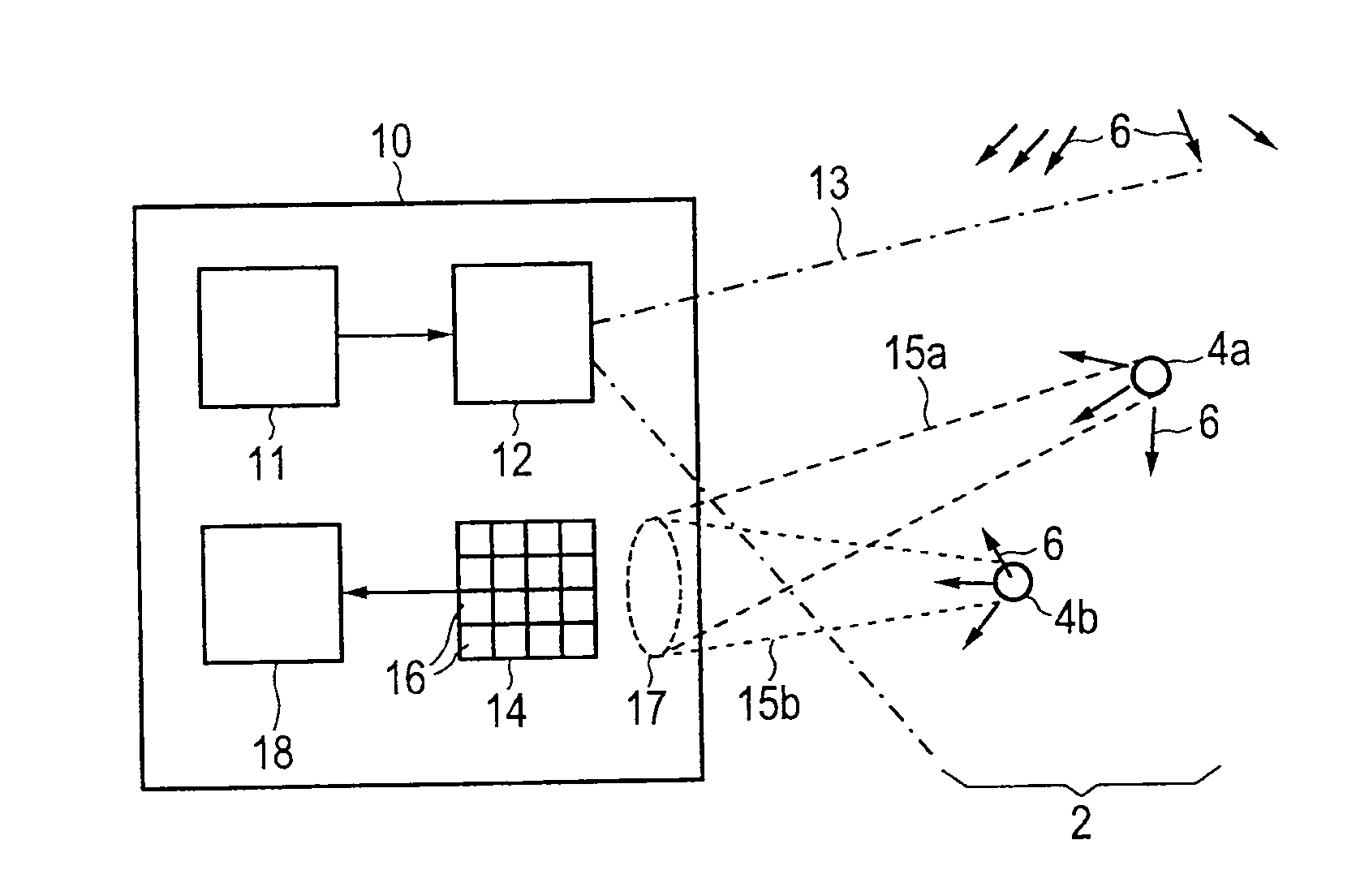

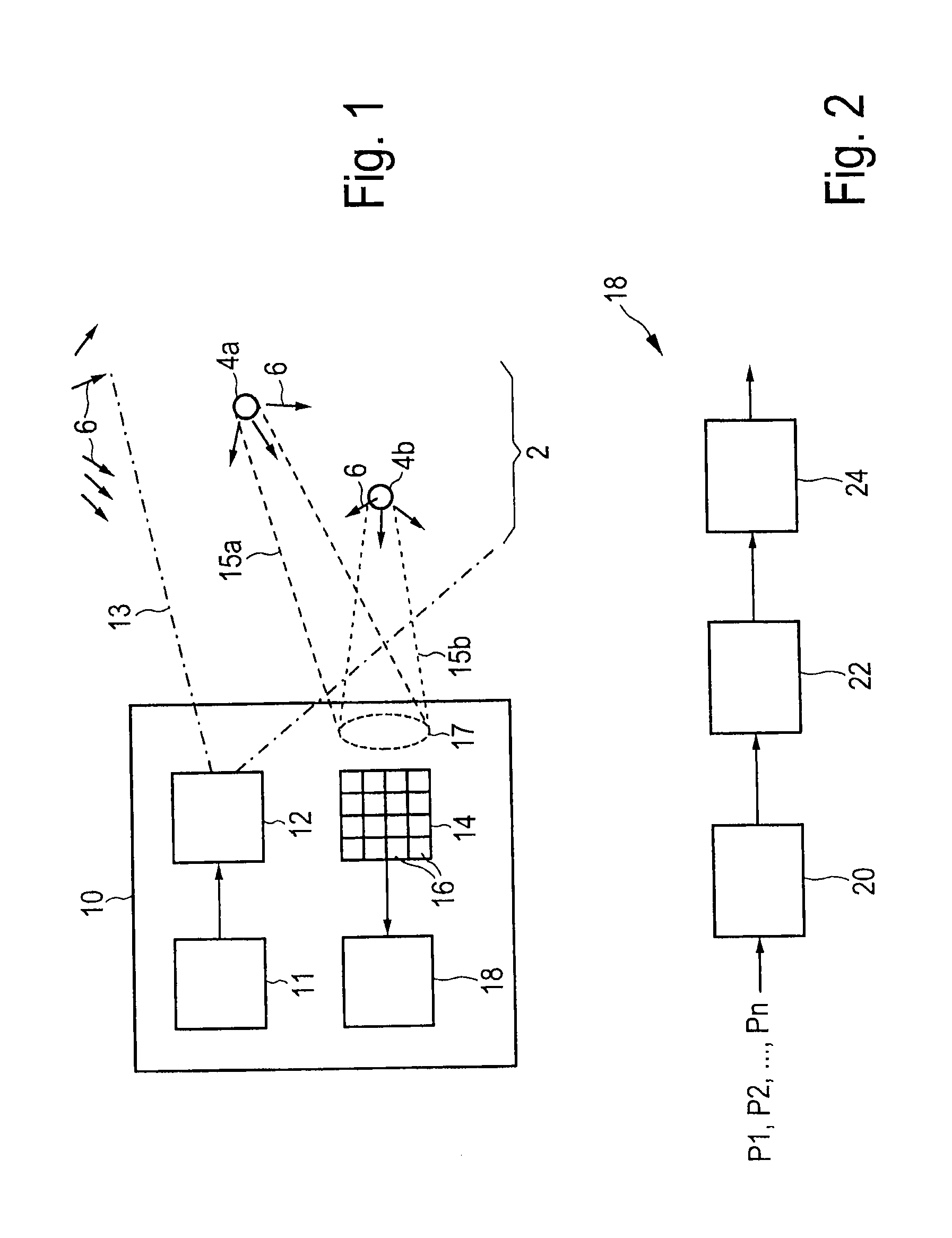

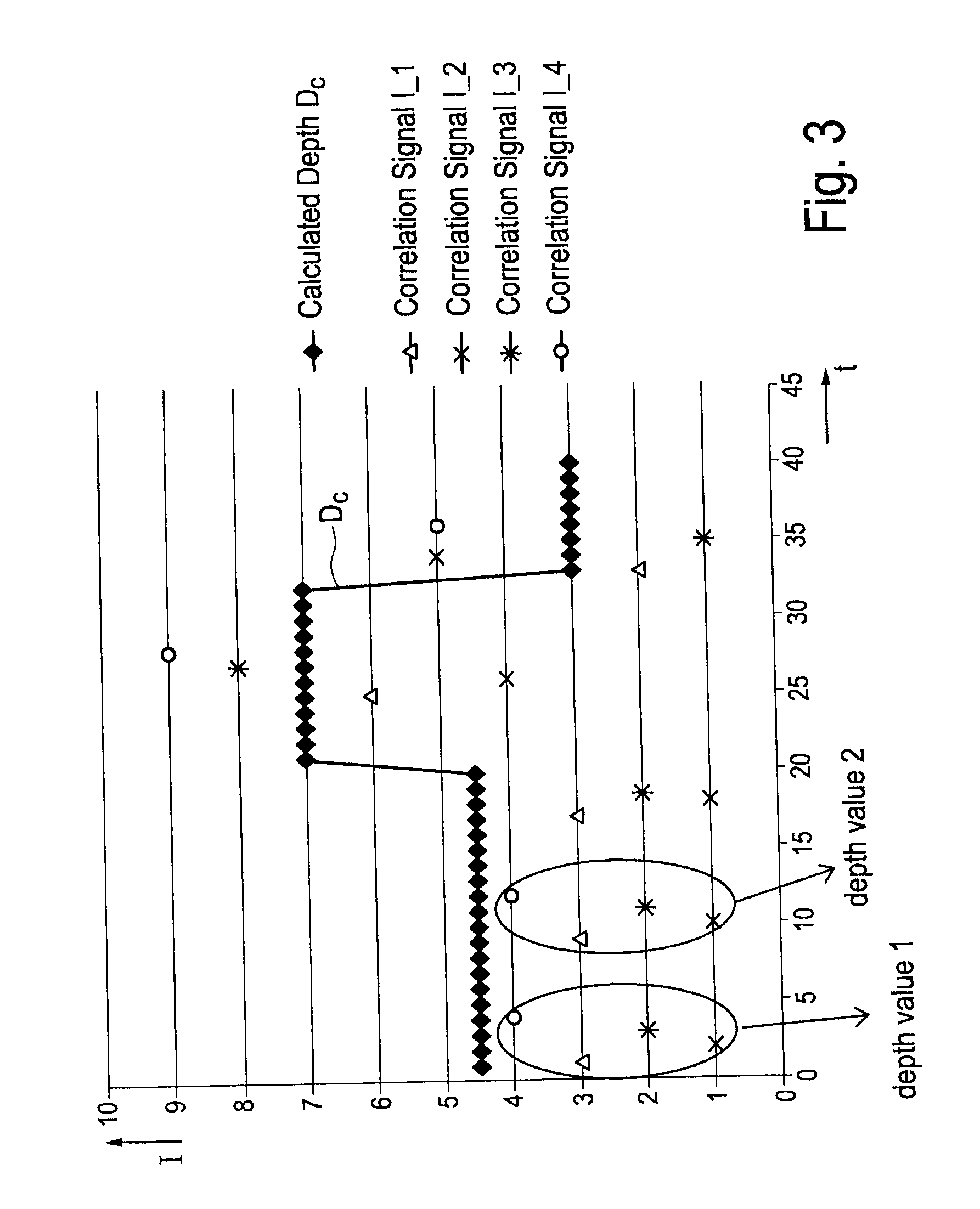

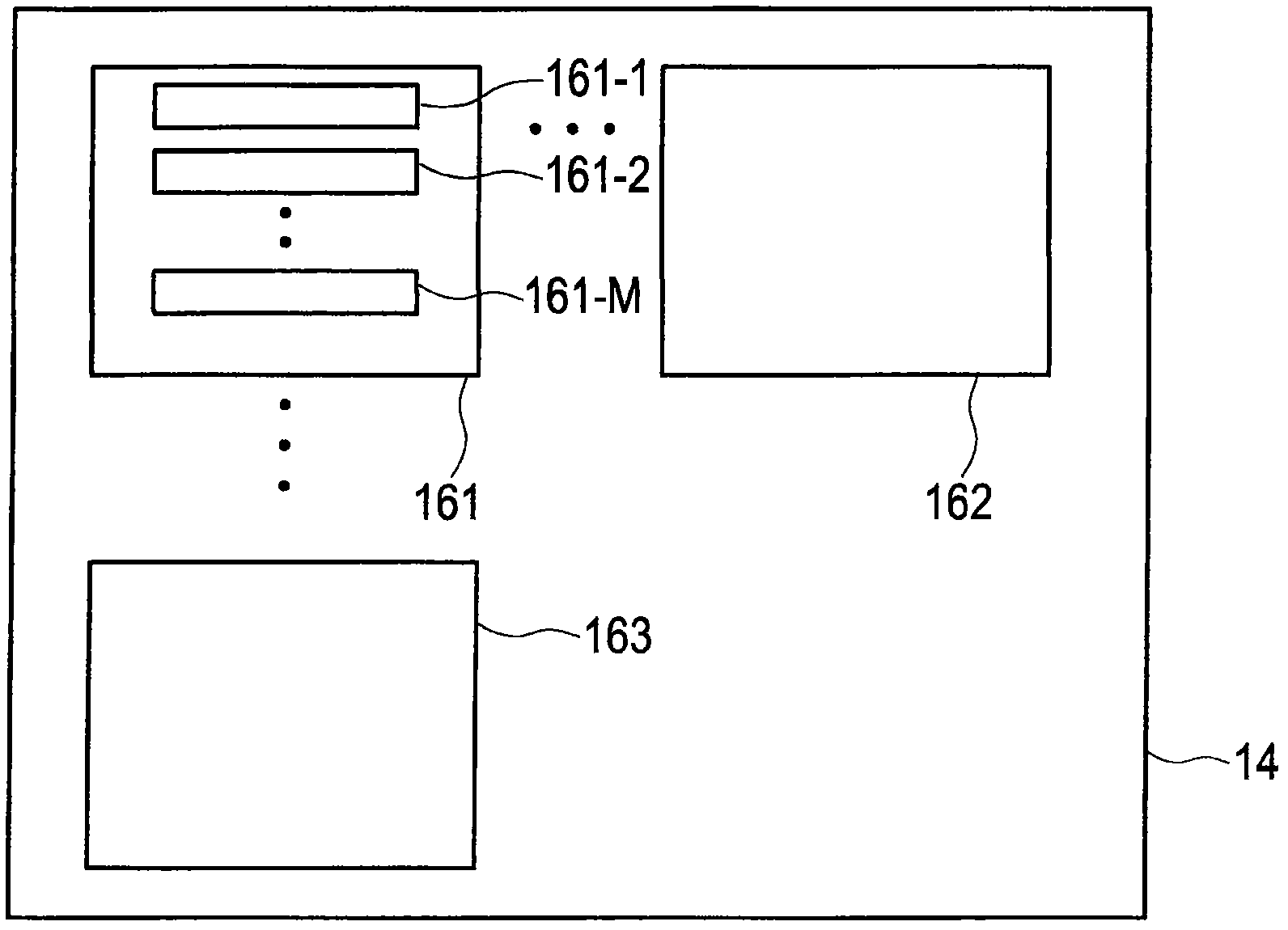

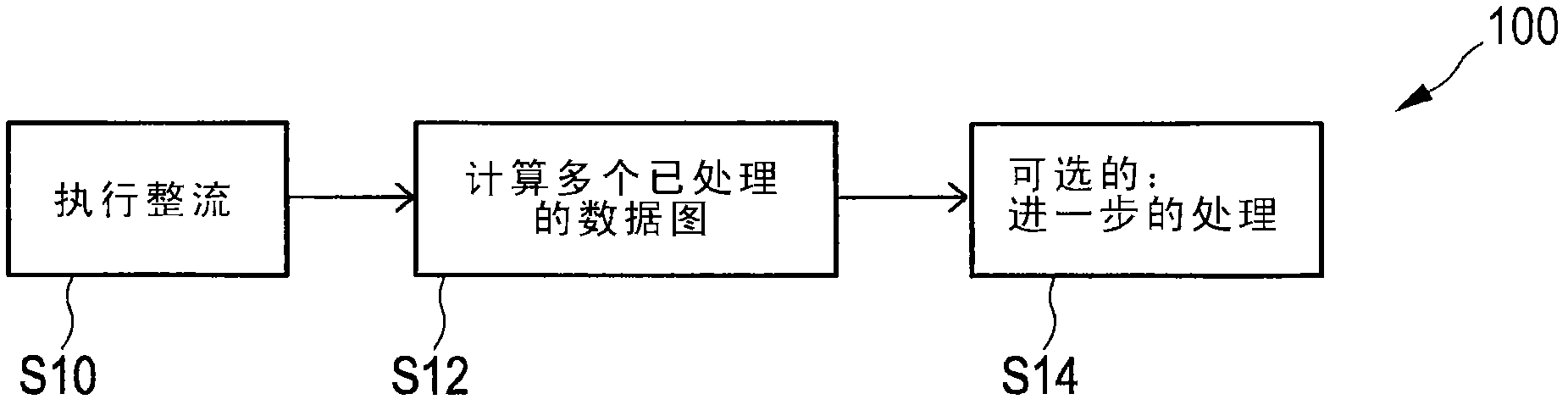

The present invention relates to a 3D time-of-flight camera and a corresponding method for acquiring information about a scene, in particular for acquiring depth images of a scene, information about phase shifts between a reference signal and incident radiation of a scene or environmental information about the scene. To increase the frame rate, the proposed camera comprises a radiation source (12) that generates and emits electromagnetic radiation (13) for illuminating said scene (2), a radiation detector (14) that detects electromagnetic radiation (15a, 15b) reflected from said scene (2), said radiation detector (14) comprising one or more pixels (16), in particular an array of pixels, wherein said one or more pixels individually detect electromagnetic radiation reflected from said scene, wherein a pixel comprises two or more detection units (161-1, 161-2, ...) each detecting samples of a sample set of two or more samples and an evaluation unit (18) that evaluates said sample sets of said two or more detection units and generates scene-related information from said sample sets, wherein said evaluation unit comprises a rectification unit (20) that rectifies a subset of samples of said sample sets by use of a predetermined rectification operator defining a correlation between samples detected by two different detection units of a particular pixel, and an information value calculator (22) that determines an information value of said scene-related information from said subset of rectified samples and the remaining samples of the sample sets.

Owner:SONY CORP
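
As background for the multi-tap pixel above, the sketch below shows a common four-sample demodulation. Correlation samples taken at 0°, 90°, 180° and 270° reference offsets give the phase and amplitude of the returned signal. The rectification step is a hypothetical linear gain/offset correction that maps samples from the second detection unit onto the response of the first, so that samples from both units can be combined. The patent's actual rectification operator is not specified in the abstract, and the sign convention used here is only one of several in use.

```python
import math


def rectify(sample: float, gain: float, offset: float) -> float:
    """Hypothetical linear rectification operator: maps a sample taken by the
    second detection unit onto the response of the first detection unit."""
    return gain * sample + offset


def phase_and_amplitude(a0: float, a90: float, a180: float, a270: float):
    """Four-sample demodulation under the convention
    c(theta) = B + A*cos(phi - theta), sampled at theta = 0/90/180/270 deg.
    Returns (phi in [0, 2*pi), A)."""
    i = a0 - a180
    q = a90 - a270
    phase = math.atan2(q, i) % (2.0 * math.pi)
    amplitude = math.hypot(i, q) / 2.0
    return phase, amplitude


# Usage: unit 1 supplies the 0/90 degree samples, unit 2 the 180/270 degree
# samples but with a slightly different gain and offset (made-up numbers).
phi_true, A, B = 1.0, 0.5, 2.0
a0 = B + A * math.cos(phi_true)
a90 = B + A * math.cos(phi_true - math.pi / 2)
raw180 = (B + A * math.cos(phi_true - math.pi) - 0.05) / 0.9
raw270 = (B + A * math.cos(phi_true - 3 * math.pi / 2) - 0.05) / 0.9
phase, amp = phase_and_amplitude(a0, a90, rectify(raw180, 0.9, 0.05),
                                 rectify(raw270, 0.9, 0.05))
```

Without the correction, the gain mismatch between the two units would leak into both the I and Q differences and bias the recovered phase.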

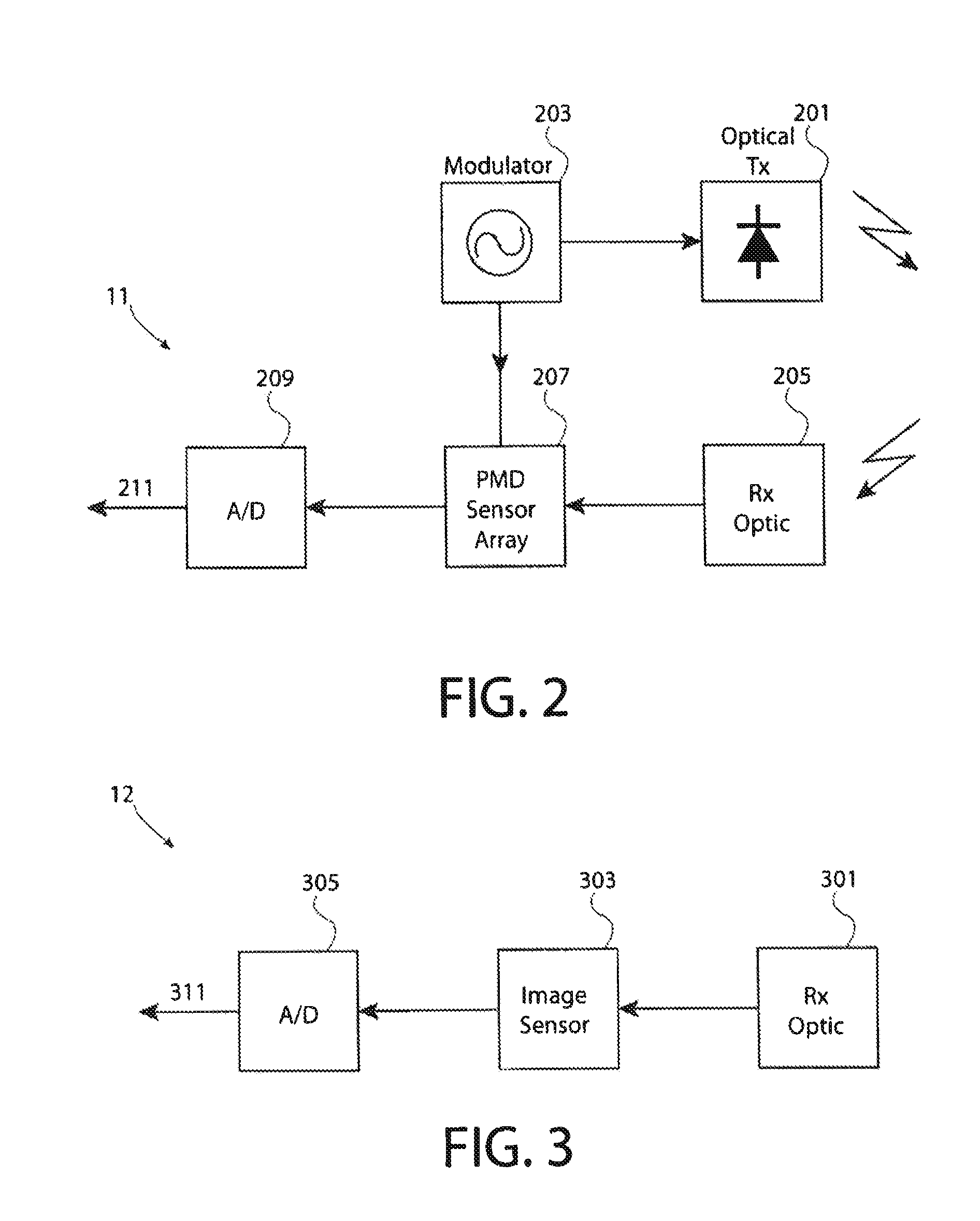

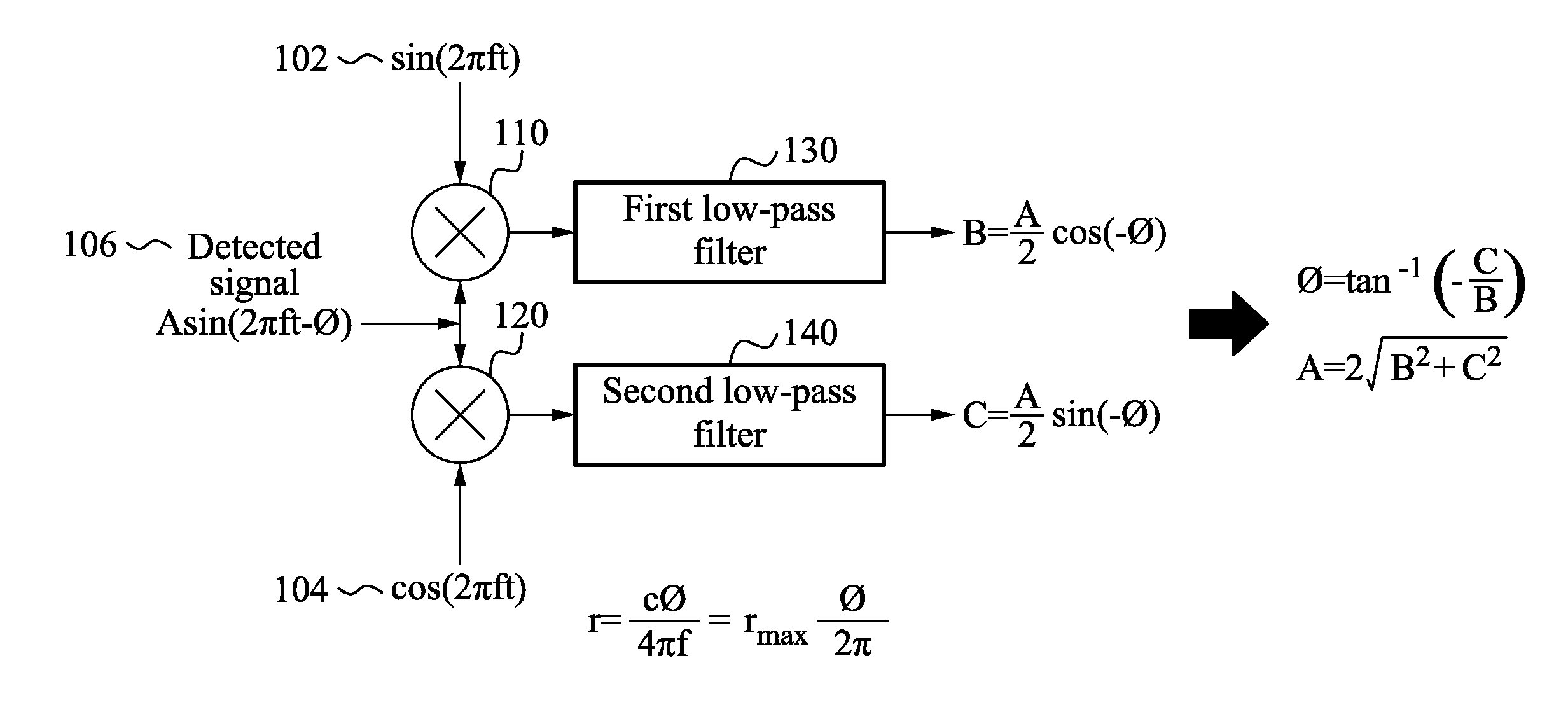

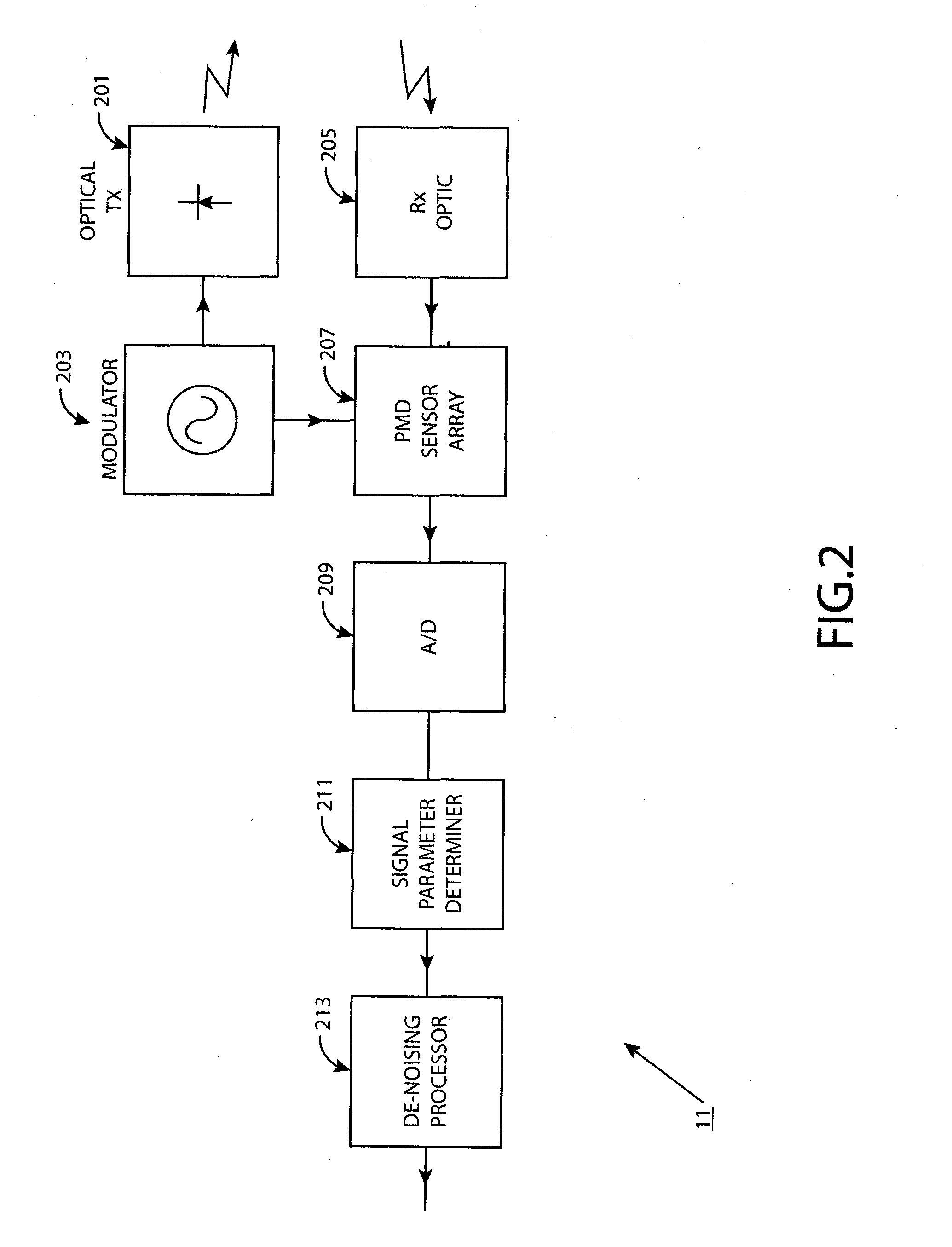

A method and apparatus for de-noising data from a distance sensing camera

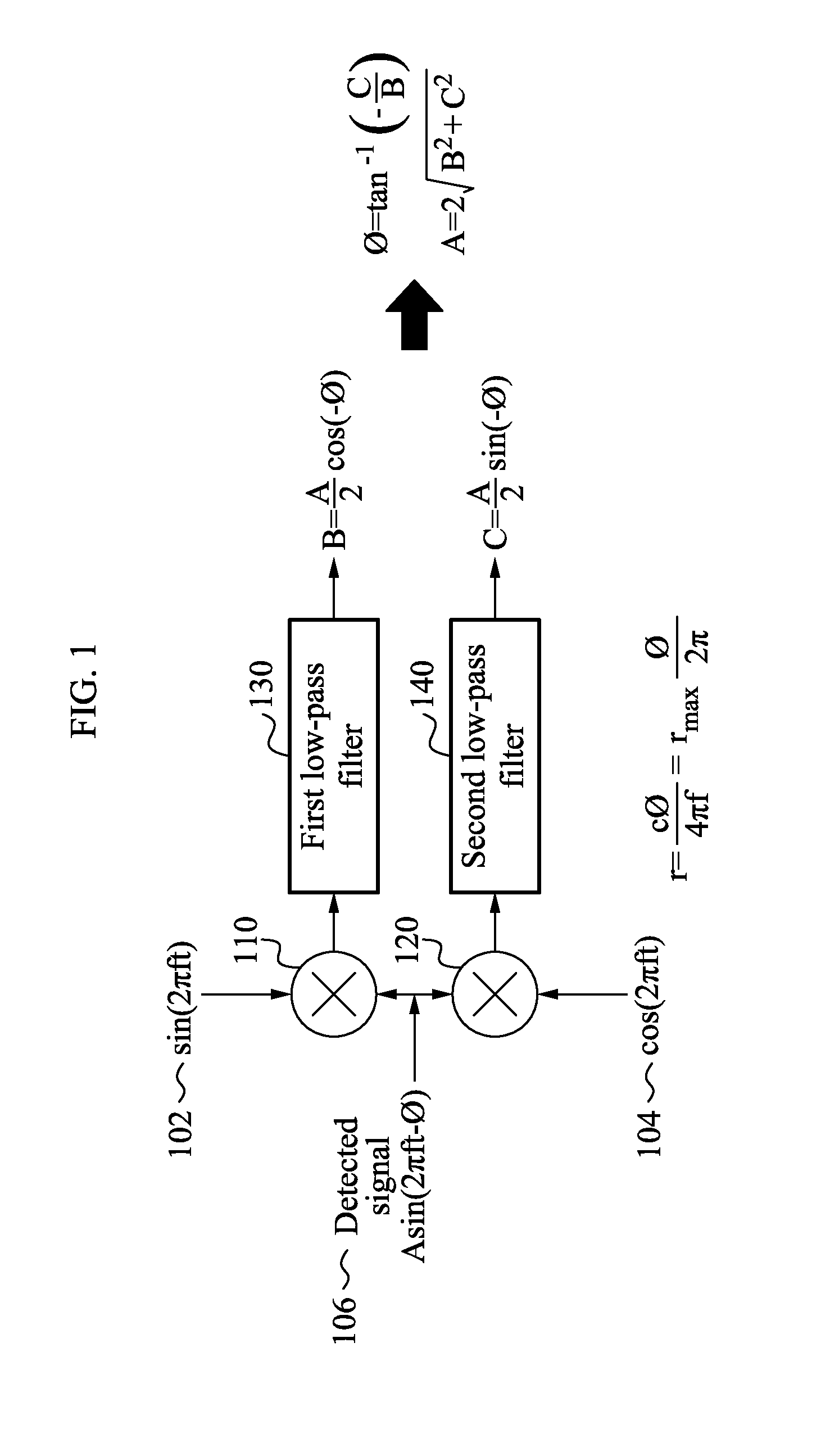

Disclosed, inter alia, are the following operations: determining a phase difference between a light signal transmitted by a time of flight camera system and a reflected light signal received by at least one pixel sensor of an array of pixel sensors in an image sensor of the time of flight camera system, wherein the reflected light signal received by the at least one pixel sensor is reflected from an object illuminated by the transmitted light signal (301); determining an amplitude of the reflected light signal received by the at least one pixel sensor (301); combining the amplitude and phase difference for the at least one pixel sensor into a combined signal parameter for the at least one pixel sensor (307); and de-noising the combined signal parameter for the at least one pixel sensor by filtering it with a filter (309).

Owner:NOKIA TECHNOLOGIES OY
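
One natural way to combine amplitude and phase into a single signal parameter is the complex value z = A·e^(iφ). The sketch below assumes that combination and uses a simple box filter as a stand-in for the unspecified filter. It then splits the filtered result back into amplitude and phase. Averaging in the complex plane weights each neighbour by its amplitude and handles the 2π wrap-around. Averaging raw phases of 6.2 and 0.05 rad, by contrast, would give about 3.1 rad, which is the opposite side of the circle.

```python
import cmath
import math
from typing import List, Tuple

Grid = List[List[float]]


def denoise_combined(amp: Grid, phase: Grid, radius: int = 1) -> Tuple[Grid, Grid]:
    """Combine amplitude and phase into z = A*exp(i*phase), box-filter z over a
    (2*radius+1)^2 window clipped at the borders, and split the result back
    into (amplitude, phase in [0, 2*pi))."""
    h, w = len(amp), len(amp[0])
    z = [[cmath.rect(amp[y][x], phase[y][x]) for x in range(w)] for y in range(h)]
    out_amp = [[0.0] * w for _ in range(h)]
    out_phase = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc, n = 0j, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    acc += z[yy][xx]
                    n += 1
            mean = acc / n
            out_amp[y][x] = abs(mean)
            out_phase[y][x] = cmath.phase(mean) % (2.0 * math.pi)
    return out_amp, out_phase
```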

Time-of-flight camera system

Active · US20160295193A1 · Tags: Refining and enhancing quality, High quality depthmap, Electromagnetic wave reradiation, Stereoscopic systems, Time-of-flight camera, Video camera

The invention relates to a TOF camera system comprising several cameras, at least one of the cameras being a TOF camera, wherein the cameras are assembled on a common substrate and are imaging the same scene simultaneously and wherein at least two cameras are driven by different driving parameters.

Owner:SONY DEPTHSENSING SOLUTIONS SA NV

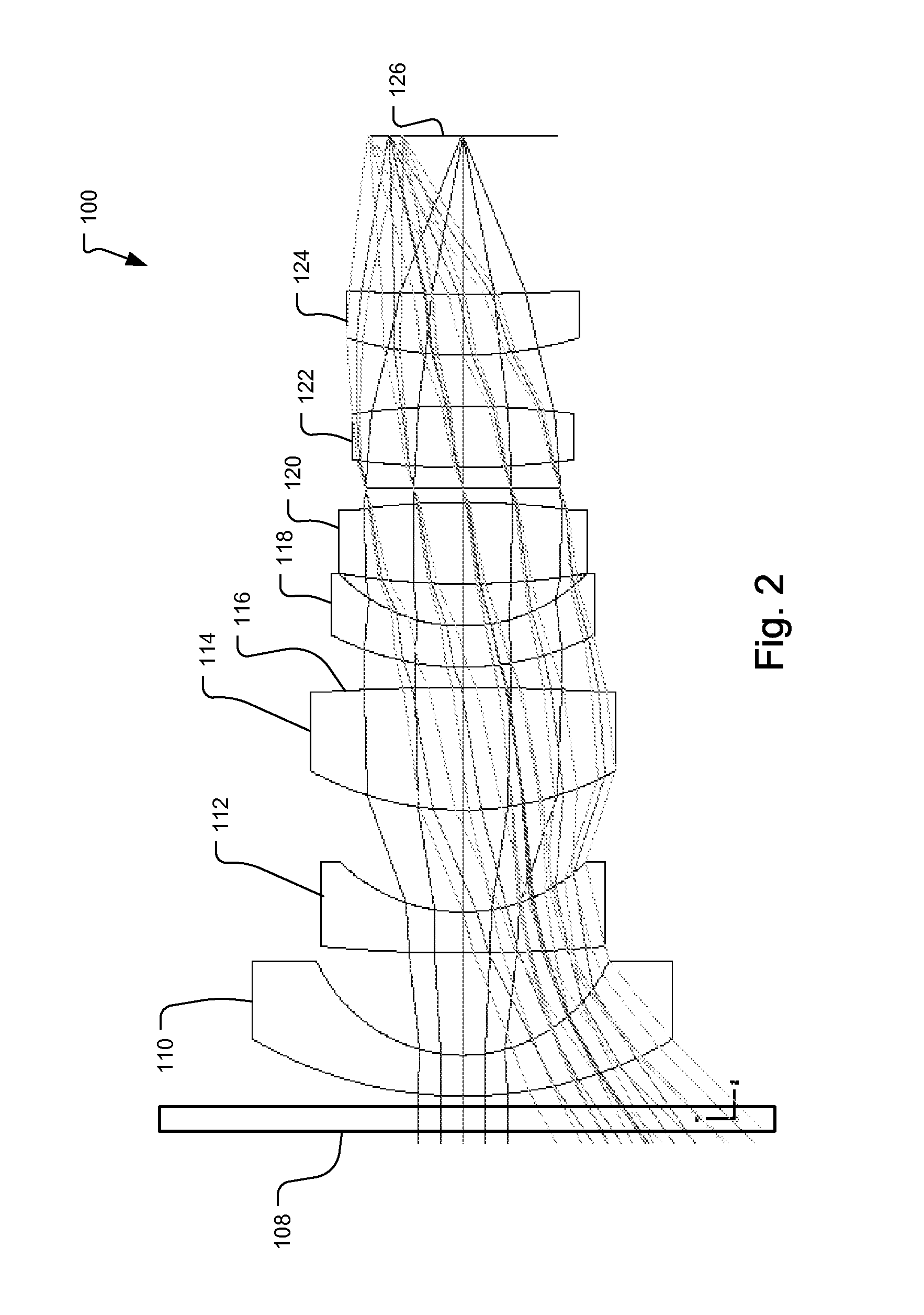

Optical Filter on Objective Lens for 3D Cameras

Inactive · US20150358601A1 · Tags: Broaden the spectral width, Reduce spectral width, Optical filters, Camera filters, Bandpass filtering, Time-of-flight camera

An optical bandpass filter for background light suppression in a three-dimensional time of flight camera is added on one of the lens surfaces inside the objective lens system.

Owner:HEPTAGON MICRO OPTICS

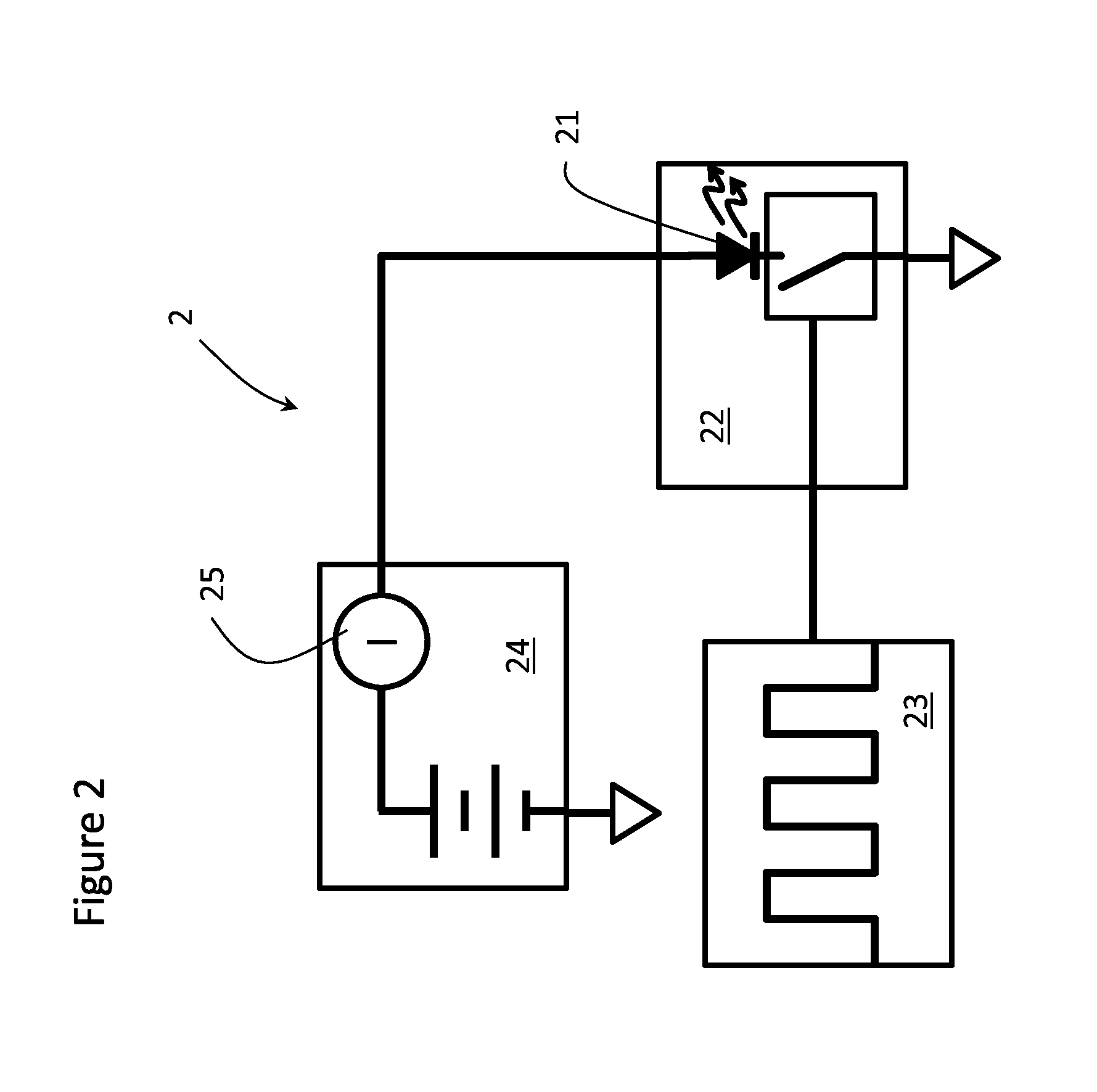

Power efficient pulsed laser driver for time of flight cameras

Active · US20140104592A1 · Tags: Optical rangefinders, Electromagnetic wave reradiation, Time-of-flight camera, Electric equipment

A time of flight camera device comprises a light source for illuminating an environment including an object with light of a first wavelength; an image sensor for measuring the time the light has taken to travel from the light source to the object and back; optics for gathering reflected light from the object and imaging the environment onto the image sensor; driver electronics for controlling the light source with a high-speed signal at a clock frequency; and a controller for calculating the distance between the object and the illumination unit. To minimize power consumption and the resulting heat dissipation requirements, the light source/driver electronics are operated at their resonant frequency. Ideally, the driver electronics include a reactance adjuster for changing the resonant frequency of the illumination unit and driver electronics system.

Owner:LUMENTUM OPERATIONS LLC
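
The resonance condition above can be made concrete by modelling the light source and driver as an ideal LC resonator with f0 = 1/(2π√(LC)). This model is an assumption, since the abstract does not describe the circuit topology. In that model, a reactance adjuster that tunes the resonance to the modulation clock must set C = 1/((2πf)²L). At resonance the reactive energy is exchanged between the inductance and capacitance rather than being supplied afresh by the driver on every cycle, which is the usual motivation for resonant drivers. The component values below are illustrative only.

```python
import math


def resonant_frequency_hz(l_henry: float, c_farad: float) -> float:
    """Resonant frequency of an ideal LC circuit: f0 = 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(l_henry * c_farad))


def capacitance_for(f_target_hz: float, l_henry: float) -> float:
    """Capacitance a reactance adjuster would need to place f0 at f_target."""
    return 1.0 / ((2.0 * math.pi * f_target_hz) ** 2 * l_henry)


# Illustrative values: 10 nH of loop inductance, 100 MHz modulation clock.
L = 10e-9
C_needed = capacitance_for(100e6, L)
print(f"{C_needed * 1e12:.1f} pF")  # -> 253.3 pF
```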

Depth calculation imaging method based on a time-of-flight (TOF) camera

Active · CN102663712A · Tags: Avoid change, Implementing the super-resolution process, Image enhancement, Image analysis, Computer vision, High resolution

The invention belongs to the field of computer vision. To balance general quantization error against overload error so that the signal-to-noise ratio of the quantized output is optimal, the invention adopts a depth calculation imaging method based on a time-of-flight (TOF) camera, comprising the following steps: first, calibrating the TOF camera and a color camera to obtain their respective intrinsic parameters (focal lengths and optical centers) and extrinsic parameters (rotation and translation), and obtaining a set of scattered depth points on the high-resolution image; second, building the autoregression term of an energy function; third, building the basic data term of the energy function from the initial scattered-depth-point map, and combining the data term and the autoregression term, weighted by a factor λ (lambda), into a single final equation to be solved via a Lagrangian formulation; and fourth, solving the optimization equation with a linear function optimization method. The method is mainly applied to digital image processing.

Owner:TIANJIN UNIV

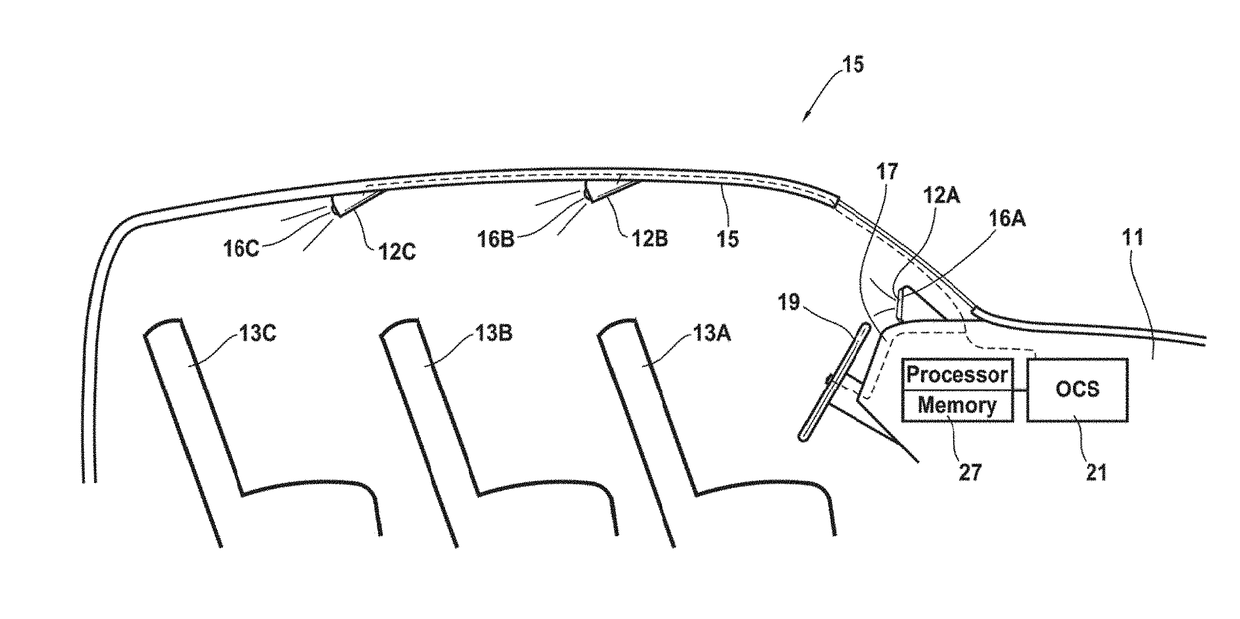

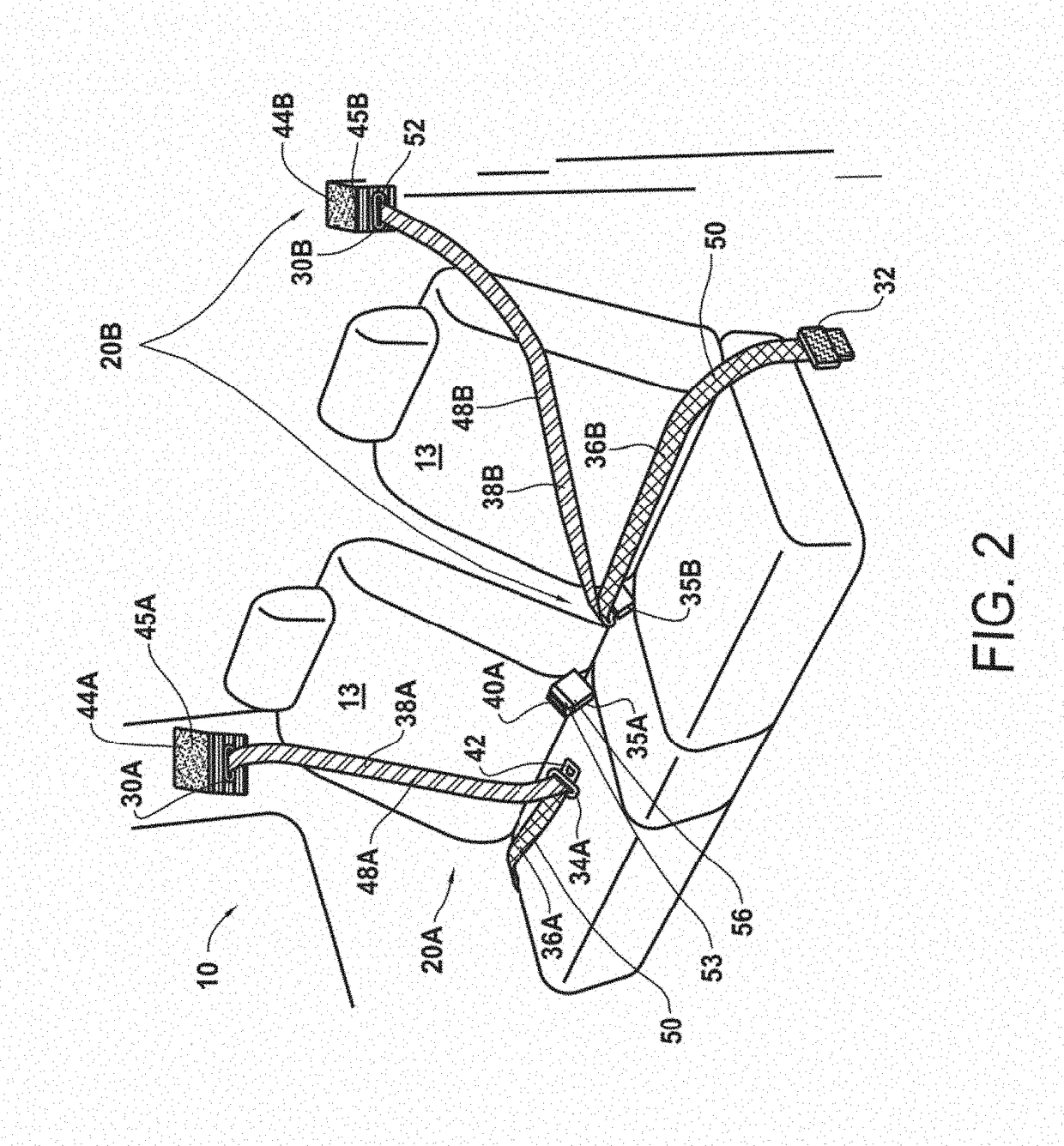

Detection and Monitoring of Occupant Seat Belt

In one embodiment, a system of detecting seat belt operation in a vehicle includes at least one light source configured to emit a predetermined wavelength of light onto structures within the vehicle, wherein at least one of the structures is a passenger seat belt assembly having a pattern that reflects the predetermined wavelength at a preferred luminance. At least one 3-D time of flight camera is positioned in the vehicle to receive reflected light from the structures in the vehicle and provide images of the structures that distinguish the preferred luminance of the pattern from other structures in the vehicle. A computer processor connected to computer memory and the camera includes computer readable instructions causing the processor to reconstruct 3-D information in regard to respective images of the structures and calculate a depth measurement of the distance of the reflective pattern on the passenger seat belt assembly from the camera.

Owner:JOYSON SAFETY SYST ACQUISITION LLC

Time of flight camera with rectangular field of illumination

Owner:AMS SENSORS SINGAPORE PTE LTD

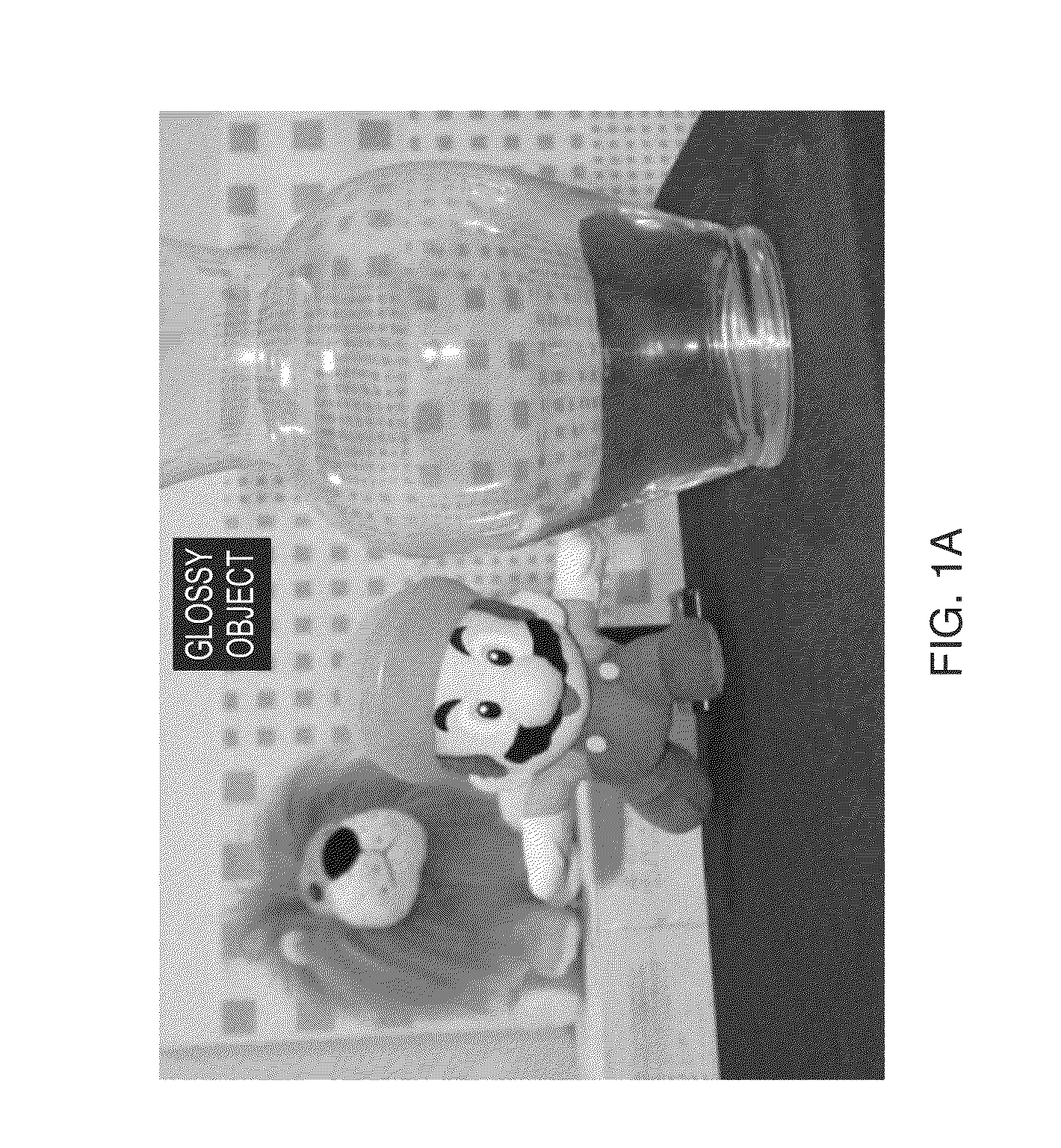

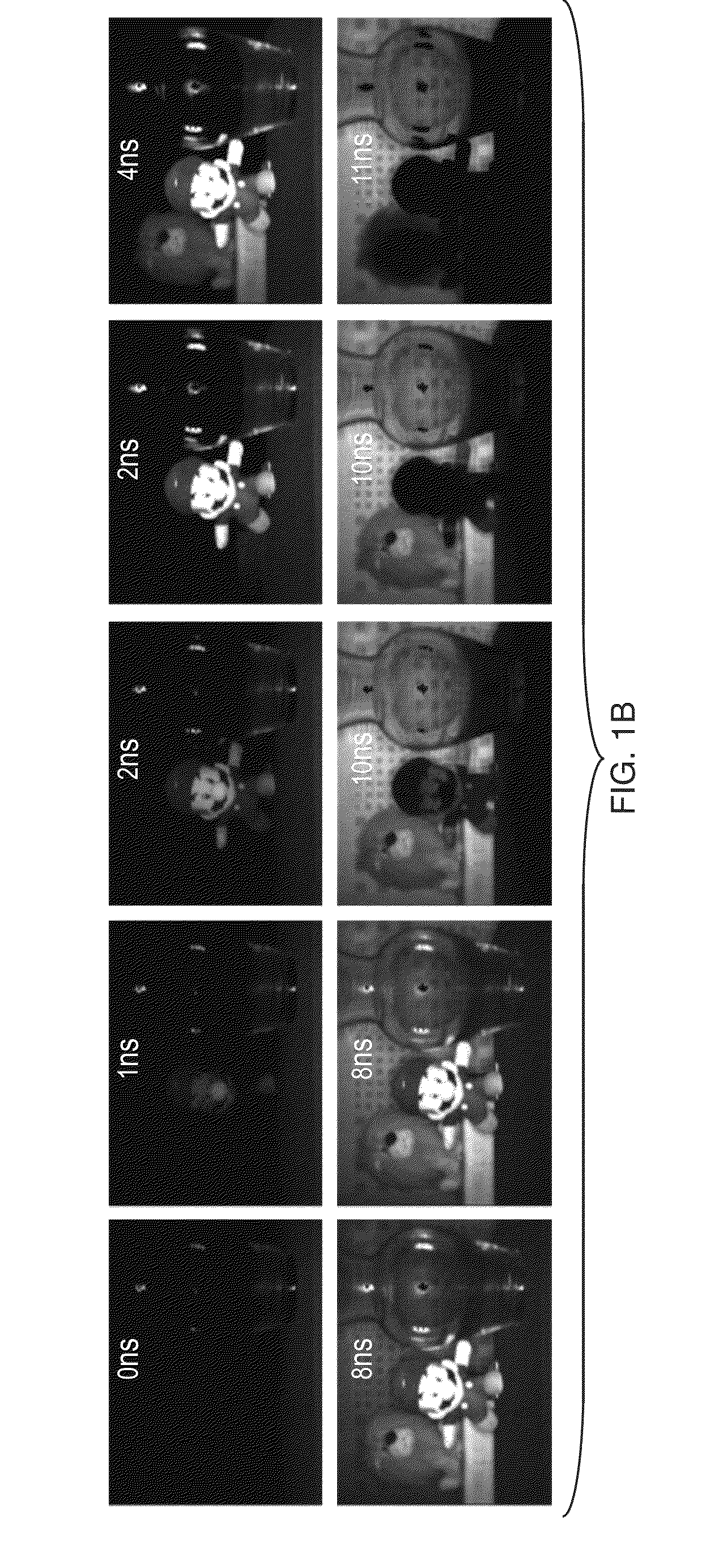

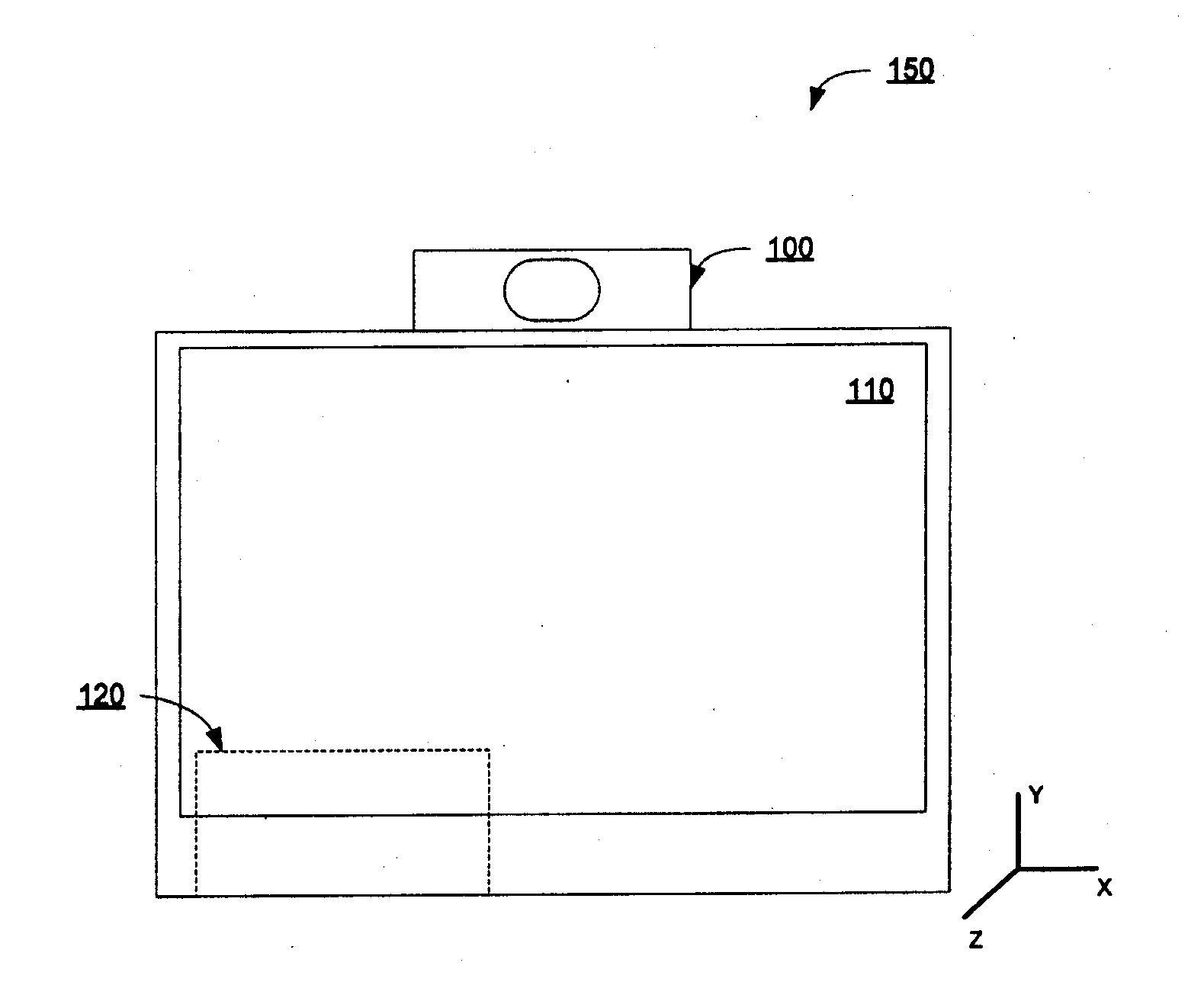

Methods and Apparatus for Coded Time-of-Flight Camera

Active · US20150120241A1 · Tags: Accurate measurement, Depth accurate, Optical rangefinders, Mechanical depth measurements, Wiener deconvolution, Multipath interference

In illustrative implementations, a time-of-flight camera robustly measures scene depths despite multipath interference. The camera emits amplitude-modulated light. An FPGA sends at least two electrical signals: the first controls modulation of the radiant power of a light source, and the second is a reference signal that controls modulation of pixel gain in a light sensor. These signals are identical except for time delays. They comprise binary codes that are m-sequences or other broadband codes. The correlation waveform is not sinusoidal. During measurements, only one fundamental modulation frequency is used. One or more computer processors solve a linear system by deconvolution in order to recover an environmental function. Sparse deconvolution is used if the scene has only a few objects at a finite depth. Another algorithm, such as Wiener deconvolution, is used if the scene has global illumination or scattering media.

Owner:MASSACHUSETTS INST OF TECH
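
The abstract names m-sequences as one choice of broadband code. Their useful property is a two-valued circular autocorrelation: N at zero lag and −1 at every other lag (with bits mapped to ±1). That near-impulse correlation keeps the deconvolution step well conditioned. The sketch below generates an m-sequence from a linear recurrence over GF(2) and checks this property. It does not reproduce the FPGA signal generation or the deconvolution, and the tap sets shown are standard primitive polynomials chosen for illustration.

```python
from typing import List, Sequence


def m_sequence(taps: Sequence[int], degree: int) -> List[int]:
    """Bits from the recurrence a[k+degree] = XOR of a[k+t] for t in taps,
    seeded with 1, 0, ..., 0 and truncated to one period of 2**degree - 1.

    This is a maximal-length (m-)sequence only if the taps correspond to a
    primitive polynomial, e.g. taps=(0, 1), degree=3 for x^3 + x + 1.
    """
    seq = [1] + [0] * (degree - 1)
    length = 2 ** degree - 1
    while len(seq) < length:
        k = len(seq) - degree
        bit = 0
        for t in taps:
            bit ^= seq[k + t]
        seq.append(bit)
    return seq[:length]


def circular_autocorrelation(bits: Sequence[int]) -> List[int]:
    """Circular autocorrelation after mapping bits {0, 1} to {-1, +1}."""
    s = [1 if b else -1 for b in bits]
    n = len(s)
    return [sum(s[i] * s[(i + lag) % n] for i in range(n)) for lag in range(n)]


print(m_sequence((0, 1), 3))                            # [1, 0, 0, 1, 0, 1, 1]
print(circular_autocorrelation(m_sequence((0, 1), 3)))  # [7, -1, -1, -1, -1, -1, -1]
```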

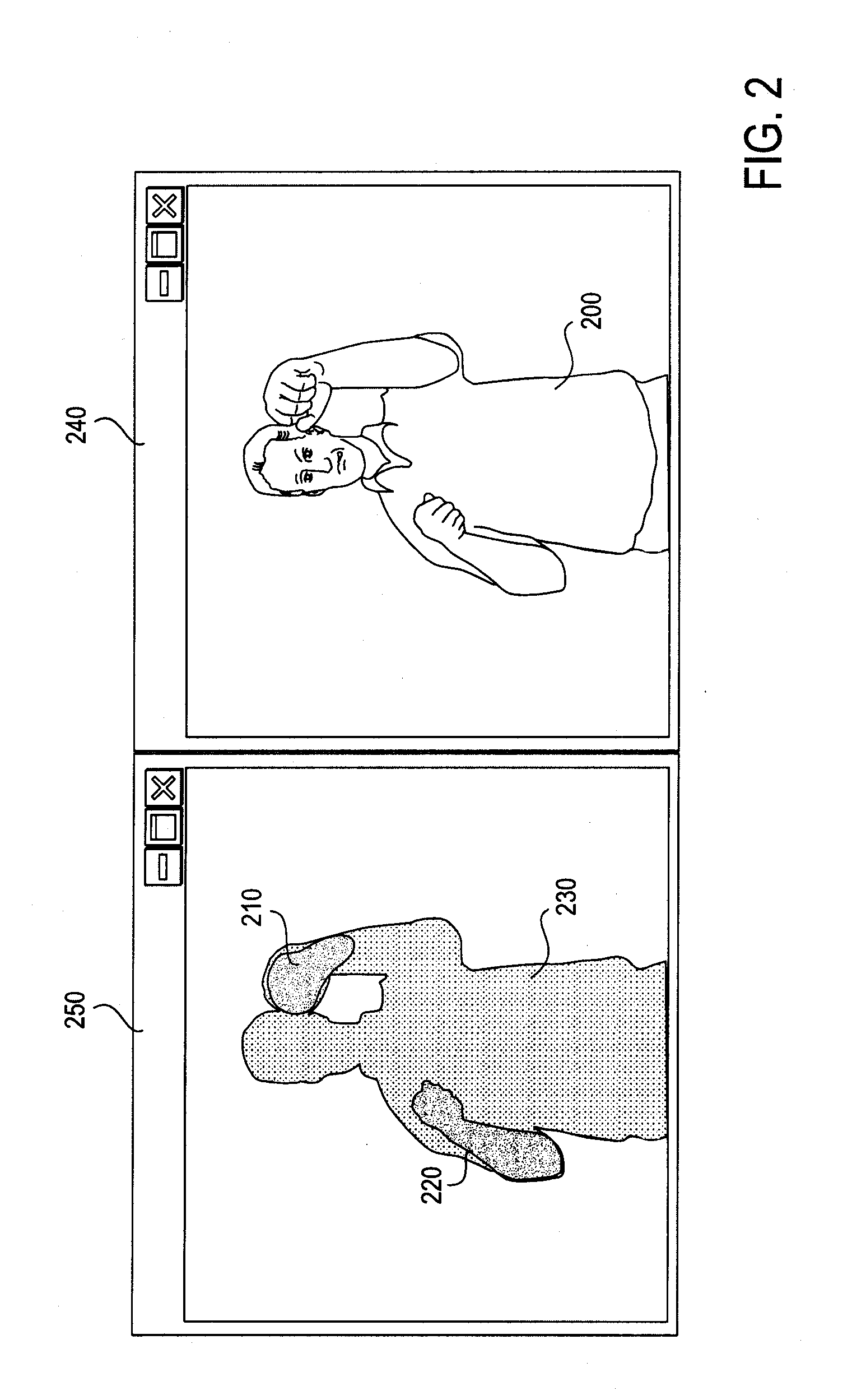

Method and system for vision-based interaction in a virtual environment

Active · US20120162378A1 · Tags: Wing accessories, Cathode-ray tube indicators, Computer graphics (images), Vision based

Owner:MICROSOFT TECH LICENSING LLC

Adaptive neighborhood filtering (ANF) system and method for 3D time of flight cameras

A method for filtering distance information from a 3D-measurement camera system comprises comparing amplitude and/or distance information for pixels to adjacent pixels, and averaging distance information for the pixels with the adjacent pixels when the amplitude and/or distance information for the pixels is within a range of the amplitudes and/or distances of the adjacent pixels. In addition, the range of distances may optionally be defined as a function of the amplitudes.

Owner:AMS SENSORS SINGAPORE PTE LTD

© 2025 PatSnap. All rights reserved.