Visual recognition and positioning method for robot intelligent capture application

A robot intelligence and visual recognition technology, applied in the field of intelligent robots, that addresses problems such as poor robustness, a large amount of computation, and slow detection speed.


Embodiment Construction

[0034] The present invention is described in detail below with reference to the accompanying drawings and specific embodiments. The illustrative embodiments and their descriptions serve only to explain the present invention and are not intended to limit it.

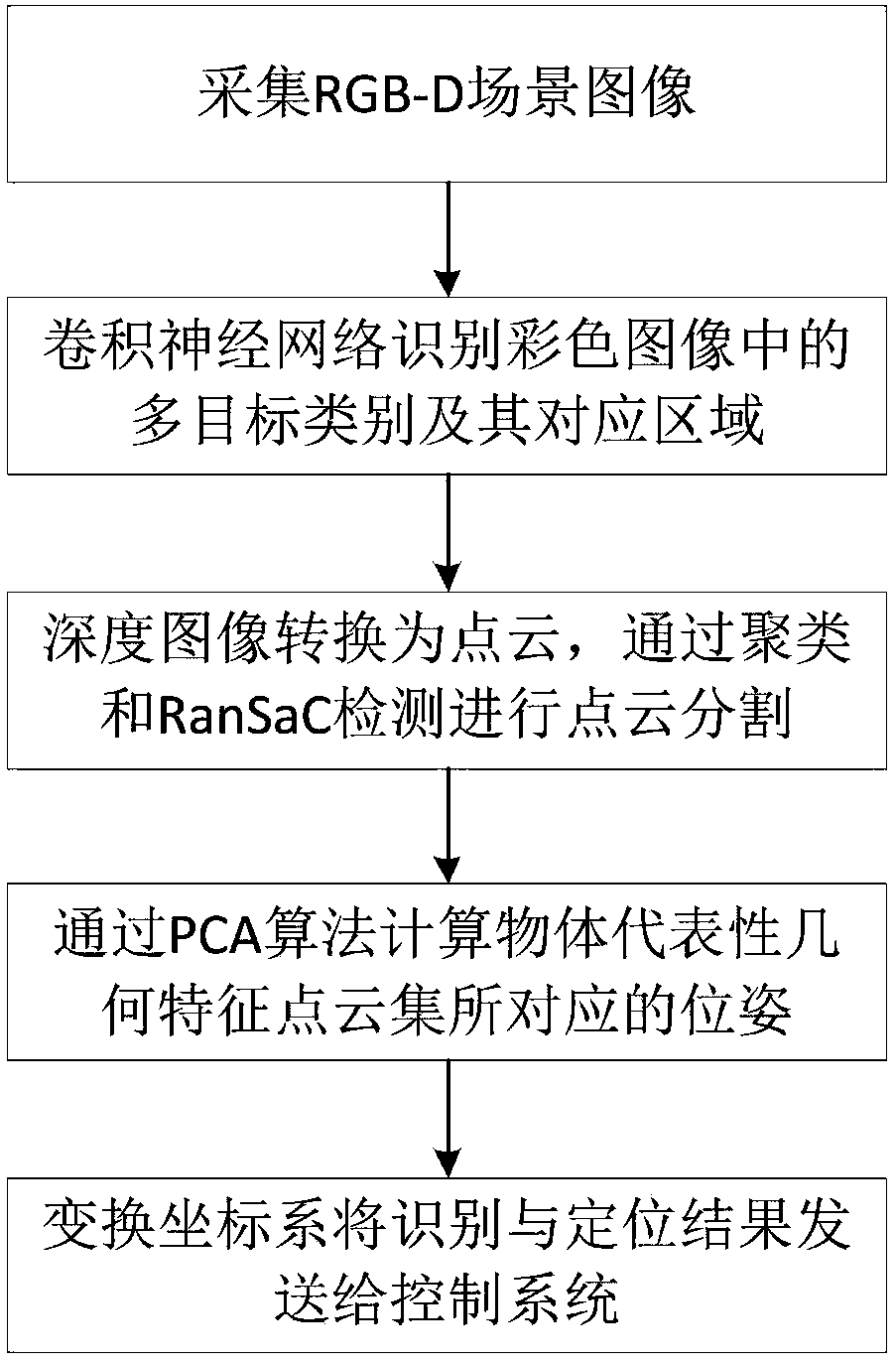

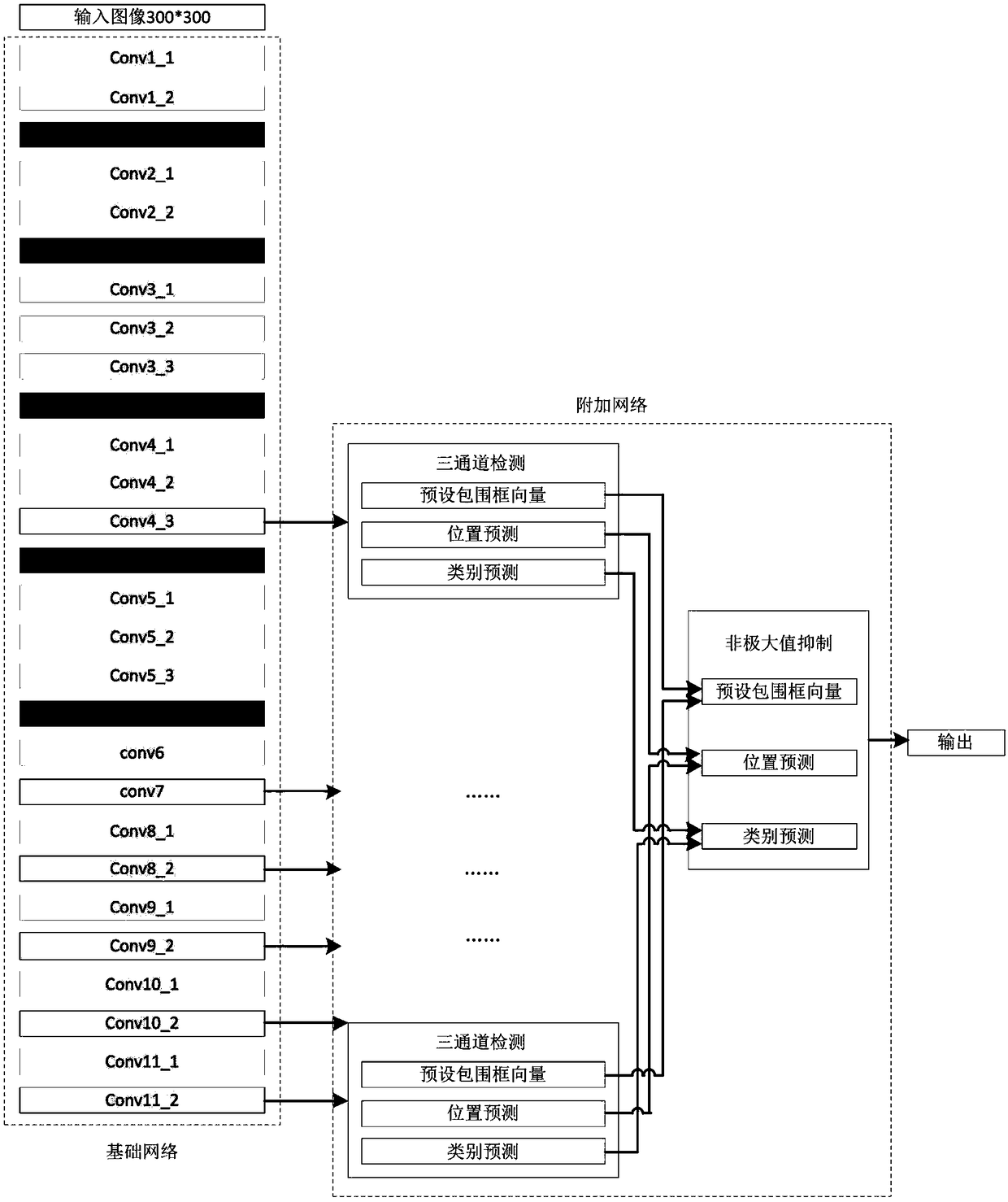

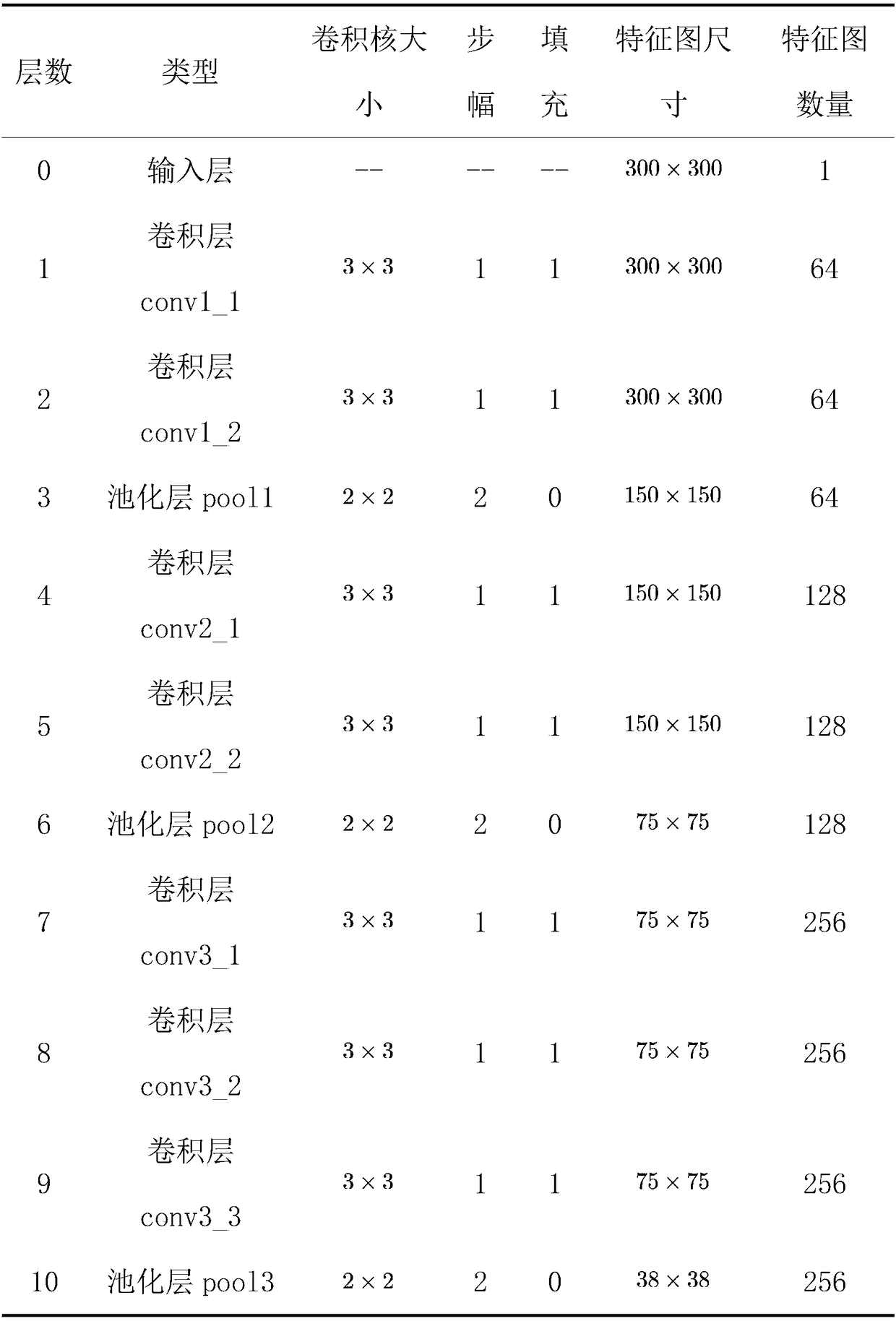

[0035] First, the present invention constructs and trains a deep convolutional neural network for the objects that the robot must visually recognize and locate. This comprises three steps: constructing a deep learning dataset, building the deep convolutional neural network, and training the network offline, detailed as follows:

[0036] (A) Deep learning dataset construction: sample images are collected in the corresponding scene according to the detection objects and task requirements, and are manually labeled using open-source tools. The label information includes the category of the target object in the scene and its co...
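Step (A) can be illustrated with a minimal Python sketch under stated assumptions; it is not the patent's implementation. The excerpt does not name the open-source labeling tool, and its description of the label fields is cut off after the object category, so the sketch assumes the Pascal VOC XML format (which the open-source tool LabelImg writes by default) with one axis-aligned bounding box per object. The functions `parse_voc`, `build_class_map`, and `train_val_split`, the 20% validation ratio, the file name, and the category names `bolt` and `nut` are all hypothetical, and the train/validation split is an added illustrative step rather than something the source describes.

```python
# Hypothetical sketch of step (A): load manually labelled samples and split
# them for offline training. ASSUMPTIONS (not stated in the patent excerpt):
# Pascal VOC-style XML labels, one axis-aligned bounding box per object,
# and a random train/validation split.
import random
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


@dataclass
class BoxLabel:
    category: str  # object class name as entered by the annotator
    xmin: int
    ymin: int
    xmax: int
    ymax: int


@dataclass
class Sample:
    filename: str
    width: int
    height: int
    boxes: List[BoxLabel]


def parse_voc(xml_text: str) -> Sample:
    """Parse one Pascal VOC annotation and reject degenerate boxes."""
    root = ET.fromstring(xml_text)
    filename = root.findtext("filename", default="")
    size = root.find("size")
    width = int(size.findtext("width"))
    height = int(size.findtext("height"))
    boxes: List[BoxLabel] = []
    for obj in root.findall("object"):
        bb = obj.find("bndbox")
        box = BoxLabel(
            category=obj.findtext("name").strip(),
            # Some tools write fractional pixel coordinates, so go via float.
            xmin=int(float(bb.findtext("xmin"))),
            ymin=int(float(bb.findtext("ymin"))),
            xmax=int(float(bb.findtext("xmax"))),
            ymax=int(float(bb.findtext("ymax"))),
        )
        # Catch common labelling mistakes: empty or out-of-image boxes.
        if not (0 <= box.xmin < box.xmax <= width
                and 0 <= box.ymin < box.ymax <= height):
            raise ValueError(f"invalid box {box} in {filename}")
        boxes.append(box)
    return Sample(filename, width, height, boxes)


def build_class_map(samples: Sequence[Sample]) -> Dict[str, int]:
    """Assign each category name a stable integer id (sorted alphabetically)."""
    names = sorted({b.category for s in samples for b in s.boxes})
    return {name: idx for idx, name in enumerate(names)}


def train_val_split(items: Sequence[T], val_ratio: float = 0.2,
                    seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle reproducibly and hold out val_ratio of the items for validation."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = int(round(len(shuffled) * val_ratio))
    return shuffled[n_val:], shuffled[:n_val]


# Hypothetical annotation for one sample image (file and class names invented).
EXAMPLE_XML = """<annotation>
  <filename>part_0001.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>bolt</name>
    <bndbox><xmin>120</xmin><ymin>80</ymin><xmax>200</xmax><ymax>160</ymax></bndbox>
  </object>
  <object>
    <name>nut</name>
    <bndbox><xmin>300</xmin><ymin>210</ymin><xmax>350</xmax><ymax>260</ymax></bndbox>
  </object>
</annotation>"""

if __name__ == "__main__":
    sample = parse_voc(EXAMPLE_XML)
    print(build_class_map([sample]))  # {'bolt': 0, 'nut': 1}
```

Sorting the category names keeps the integer ids stable across runs, and a fixed shuffle seed makes the split reproducible when the network is retrained offline.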
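The second step named in [0035], building the deep convolutional neural network, is not detailed in this excerpt. As a hedged illustration of what a single convolutional stage of such a network computes, the following pure-Python sketch chains a stride-1 "valid" convolution (implemented as cross-correlation, as most deep learning frameworks do), a ReLU activation, and non-overlapping 2x2 max pooling. The function names, kernel, and input are hypothetical and say nothing about the layer count, kernel sizes, or trained weights of the patented network.

```python
# Illustrative building blocks of one convolutional stage (hypothetical names).
# Not the patented architecture: the excerpt gives no layer details.
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


def relu(fmap: Matrix) -> Matrix:
    """Zero out negative responses."""
    return [[max(0.0, x) for x in row] for row in fmap]


def max_pool2x2(fmap: Matrix) -> Matrix:
    """Non-overlapping 2x2 max pooling; a trailing odd row/column is dropped."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


def conv_stage(image: Matrix, kernel: Matrix) -> Matrix:
    """Convolution -> ReLU -> max pooling, the usual order within one stage."""
    return max_pool2x2(relu(conv2d_valid(image, kernel)))


if __name__ == "__main__":
    # Dark left half, bright right half: a vertical dark-to-bright edge.
    img = [[0, 0, 1, 1, 1] for _ in range(5)]
    kernel = [[-1, 1], [-1, 1]]  # responds to dark-to-bright vertical edges
    print(conv_stage(img, kernel))  # [[2.0, 0.0], [2.0, 0.0]]
```

The 5x5 input yields a 4x4 feature map that pooling reduces to 2x2; only the pooled cells covering the edge respond. A deep network stacks many such stages with learned kernels, which is what offline training adjusts.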
