1,629 results for "Facial expression" patented technology

A facial expression is one or more motions or positions of the muscles beneath the skin of the face. According to one set of controversial theories, these movements convey the emotional state of an individual to observers. Facial expressions are a form of nonverbal communication. They are a primary means of conveying social information between humans, but they also occur in most other mammals and some other animal species. (For a discussion of the controversies surrounding these claims, see Fridlund and Russell & Fernandez Dols.)

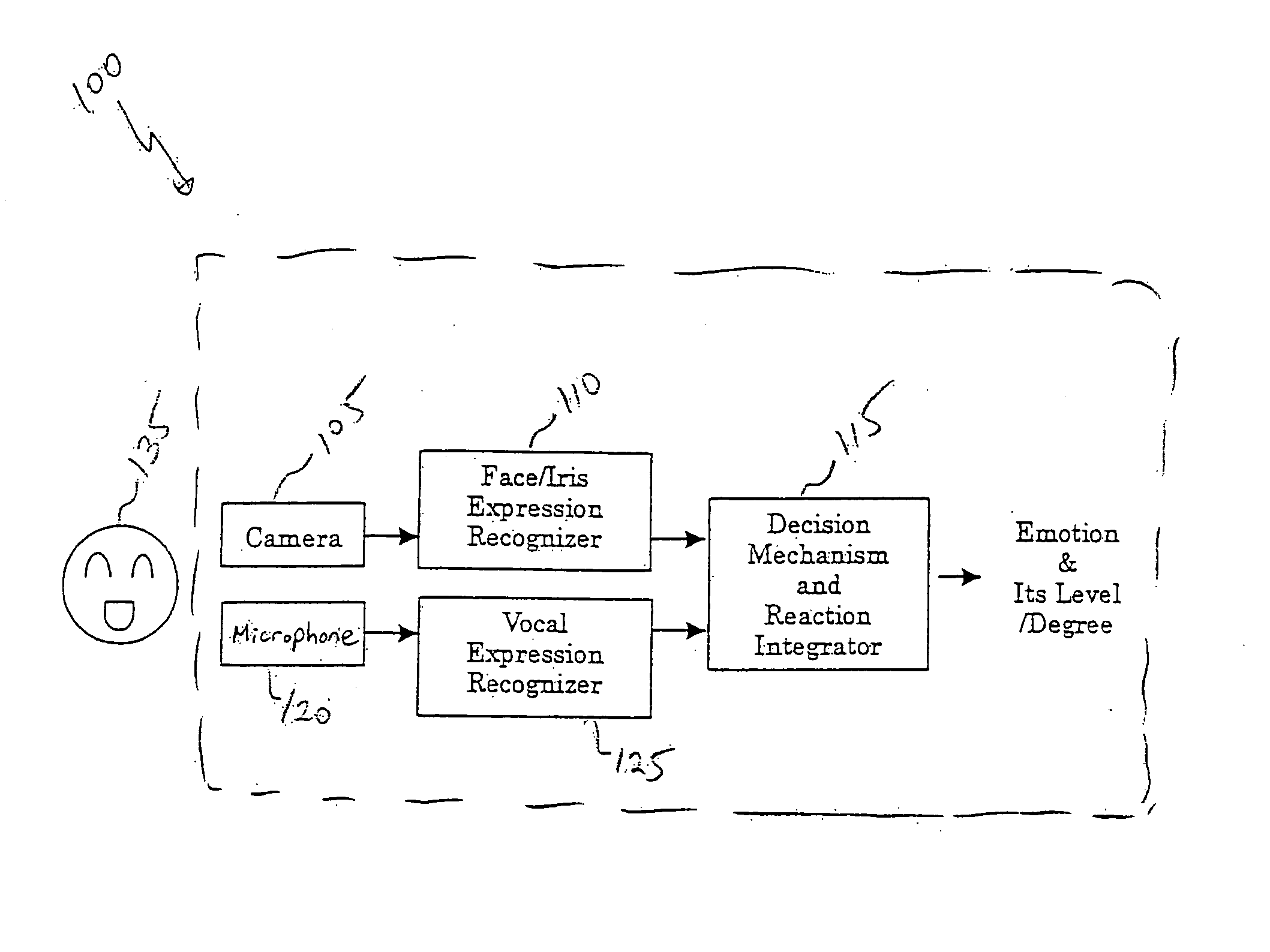

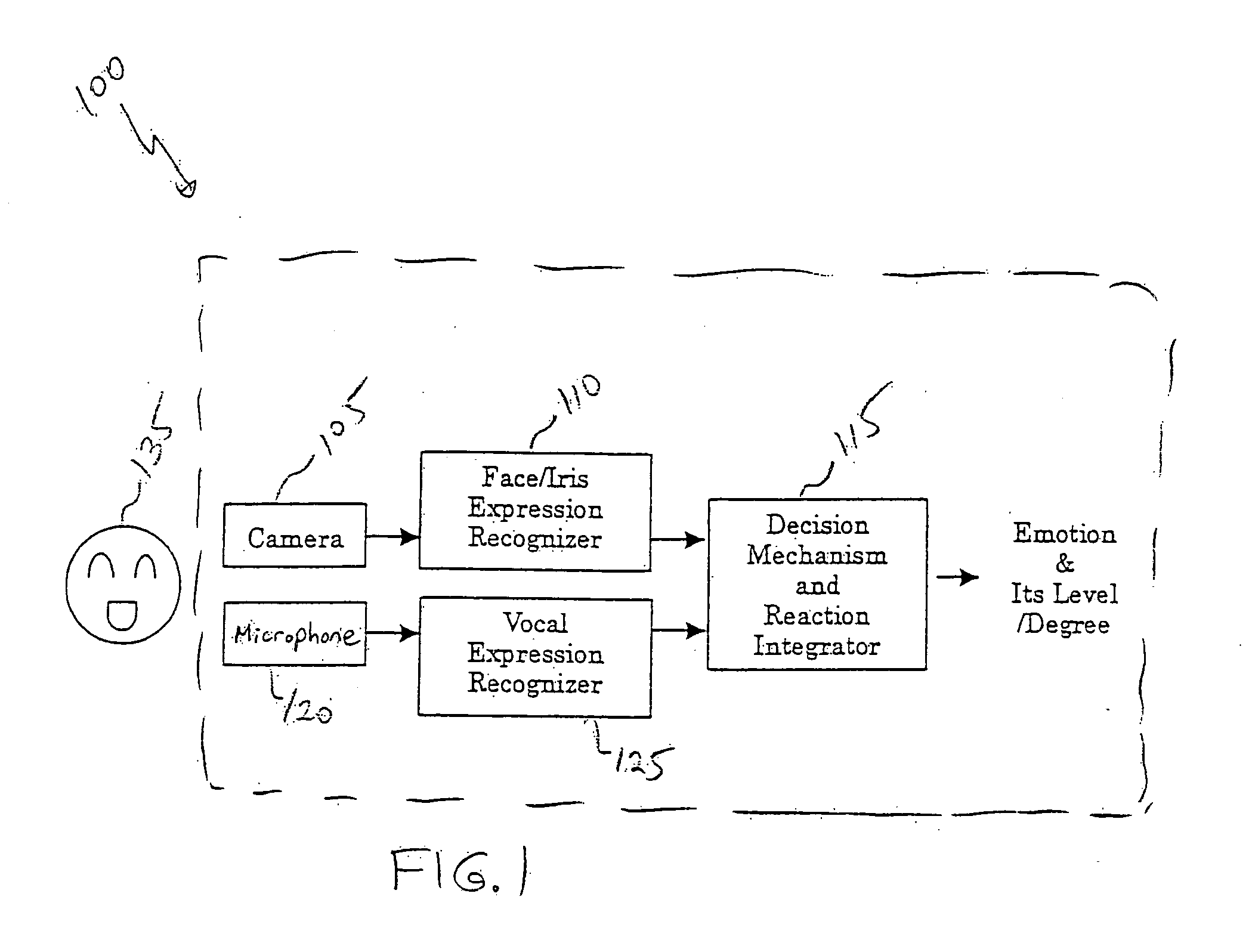

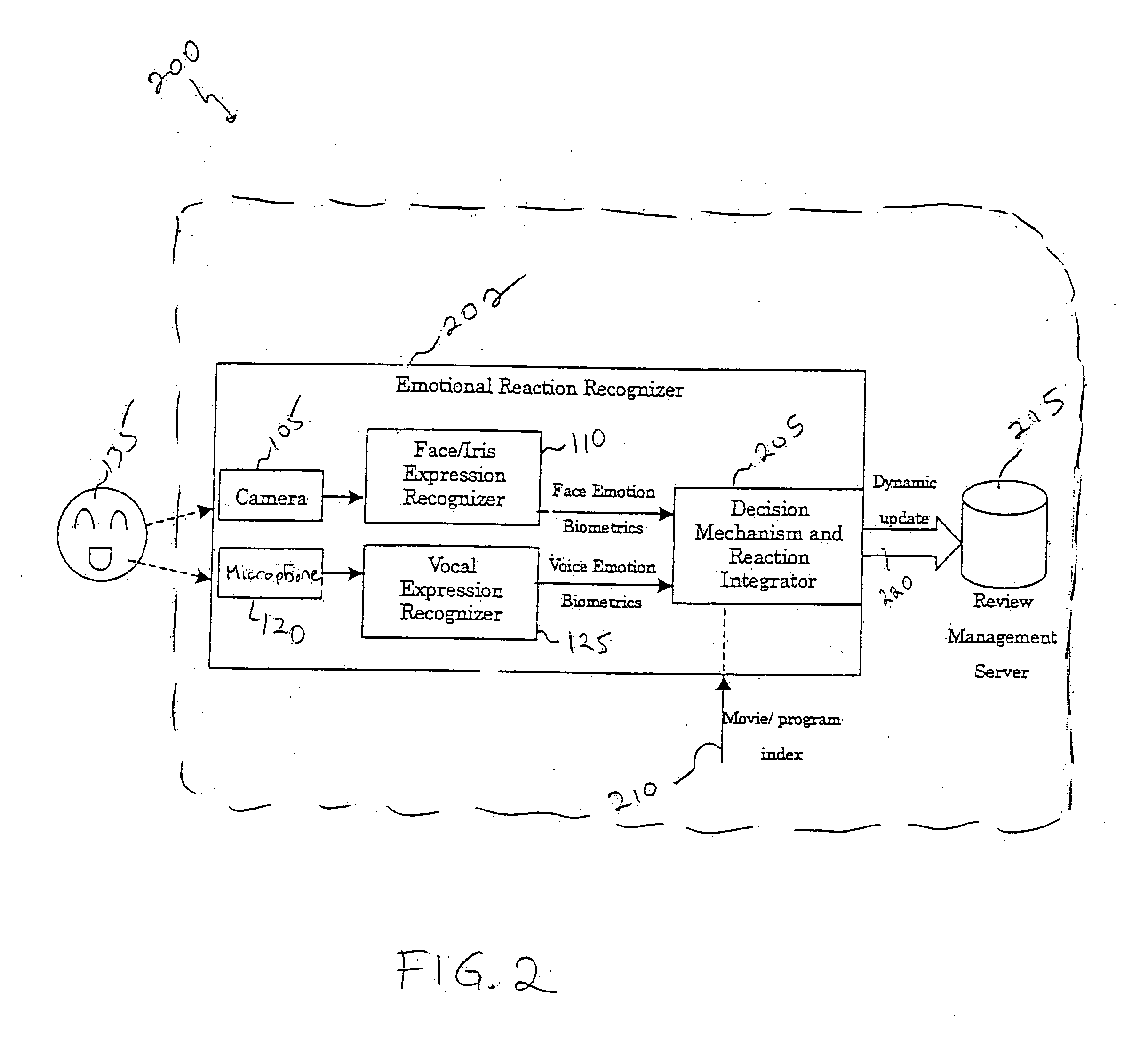

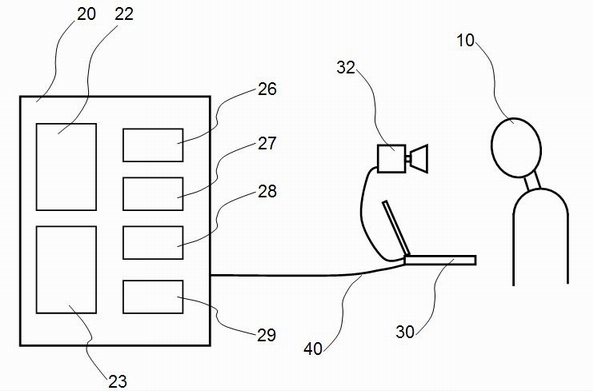

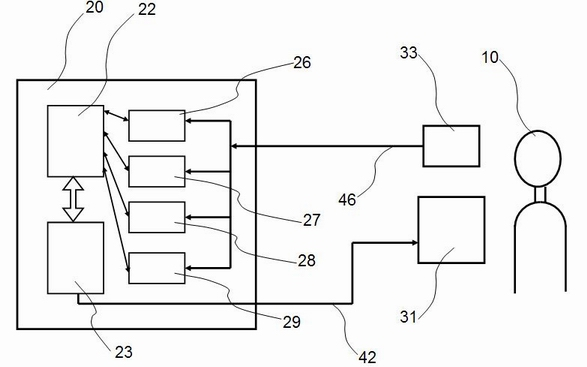

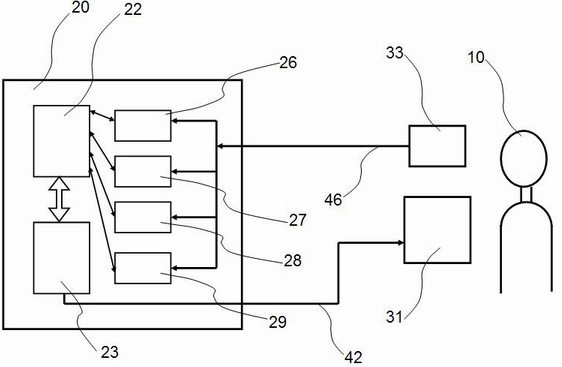

System and method for capturing and using biometrics to review a product, service, creative work or thing

Inactive | US20050289582A1 | Tags: Efficient use of; Evaluate public opinion more accurately; Person identification; Analogue secrecy/subscription systems; Facial expression; Crying

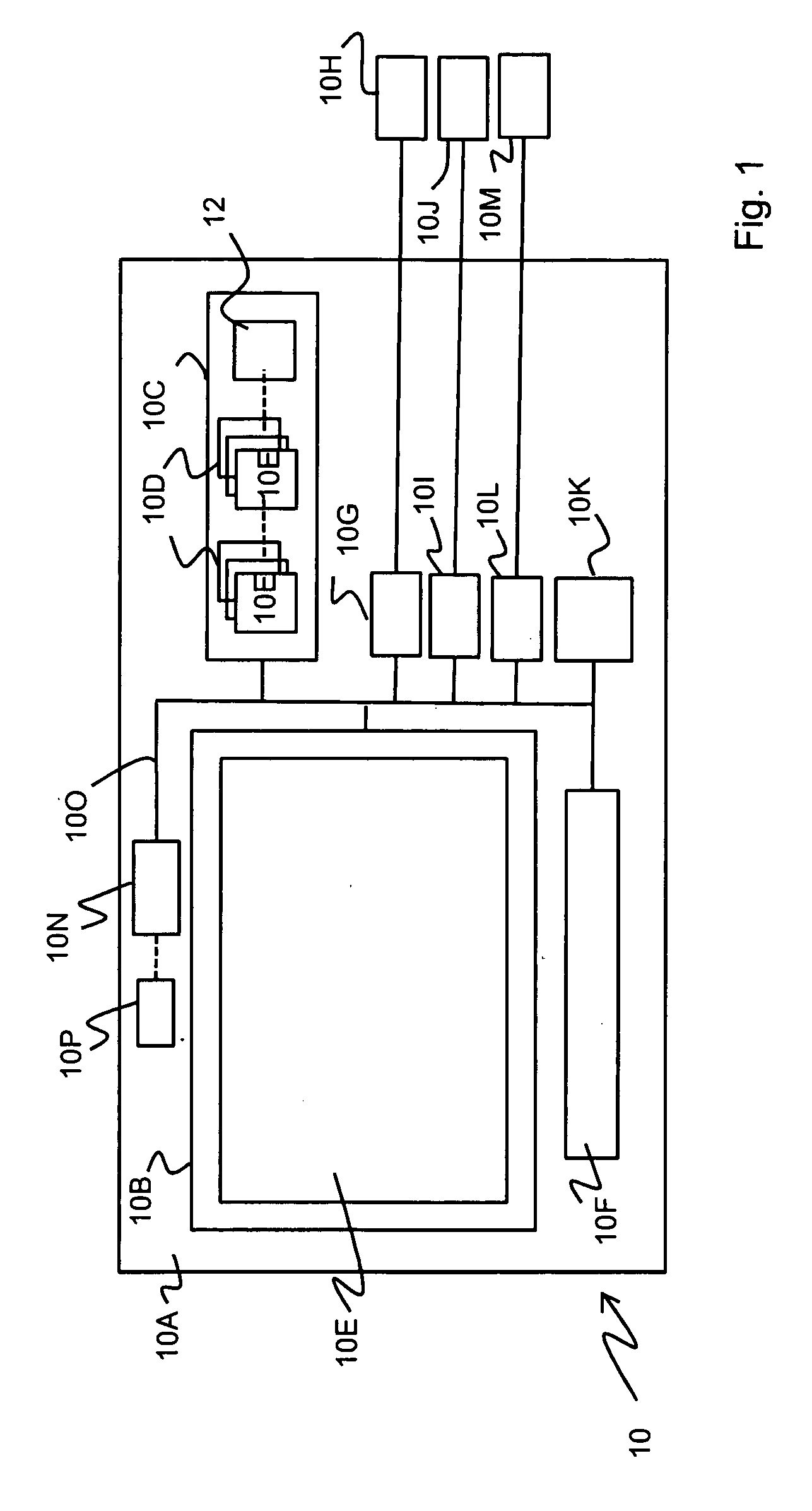

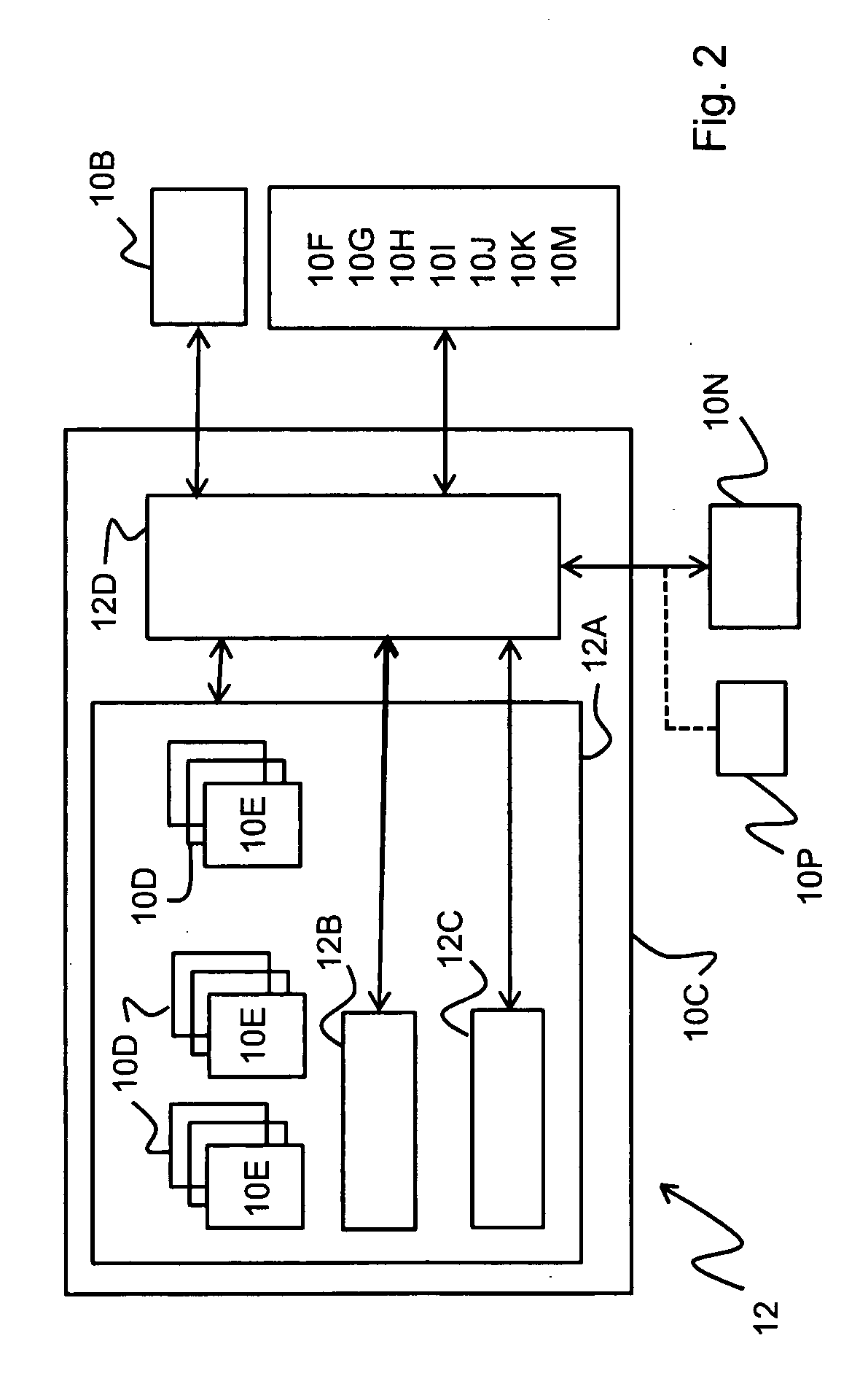

A system enables capturing biometric information while a user is perceiving a particular product, service, creative work or thing. For example, while moviegoers watch a movie, the system can capture and recognize the facial expressions, vocal expressions, and/or eye expressions (e.g., iris information) of one or more persons in the audience to determine the audience's reaction to movie content. Alternatively, the system could be used to evaluate an audience's reaction to a public spokesman, e.g., a political figure. The system could also be useful for evaluating consumer products or storyboards before substantial investment in movie development occurs. Because these biometric expressions (laughing, crying, etc.) are generally universal, the system is largely language-independent and can easily be applied to global-use products and applications. The system can store the biometric information and/or the results of any analysis of it as the generally true opinion of the particular product, service, creative work or thing, and can then let other potential users of the product review the information when evaluating the product.

Owner: HITACHI LTD

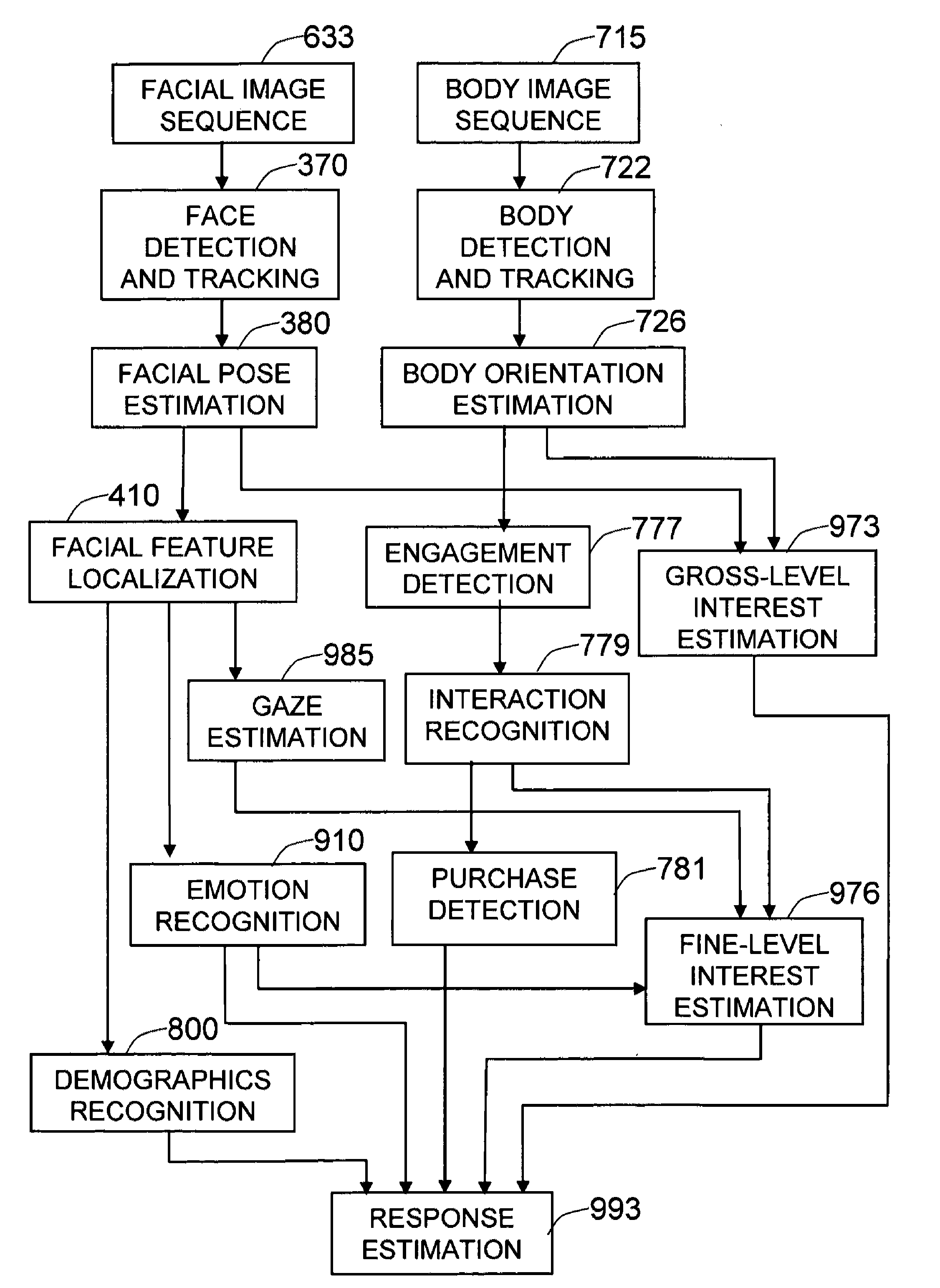

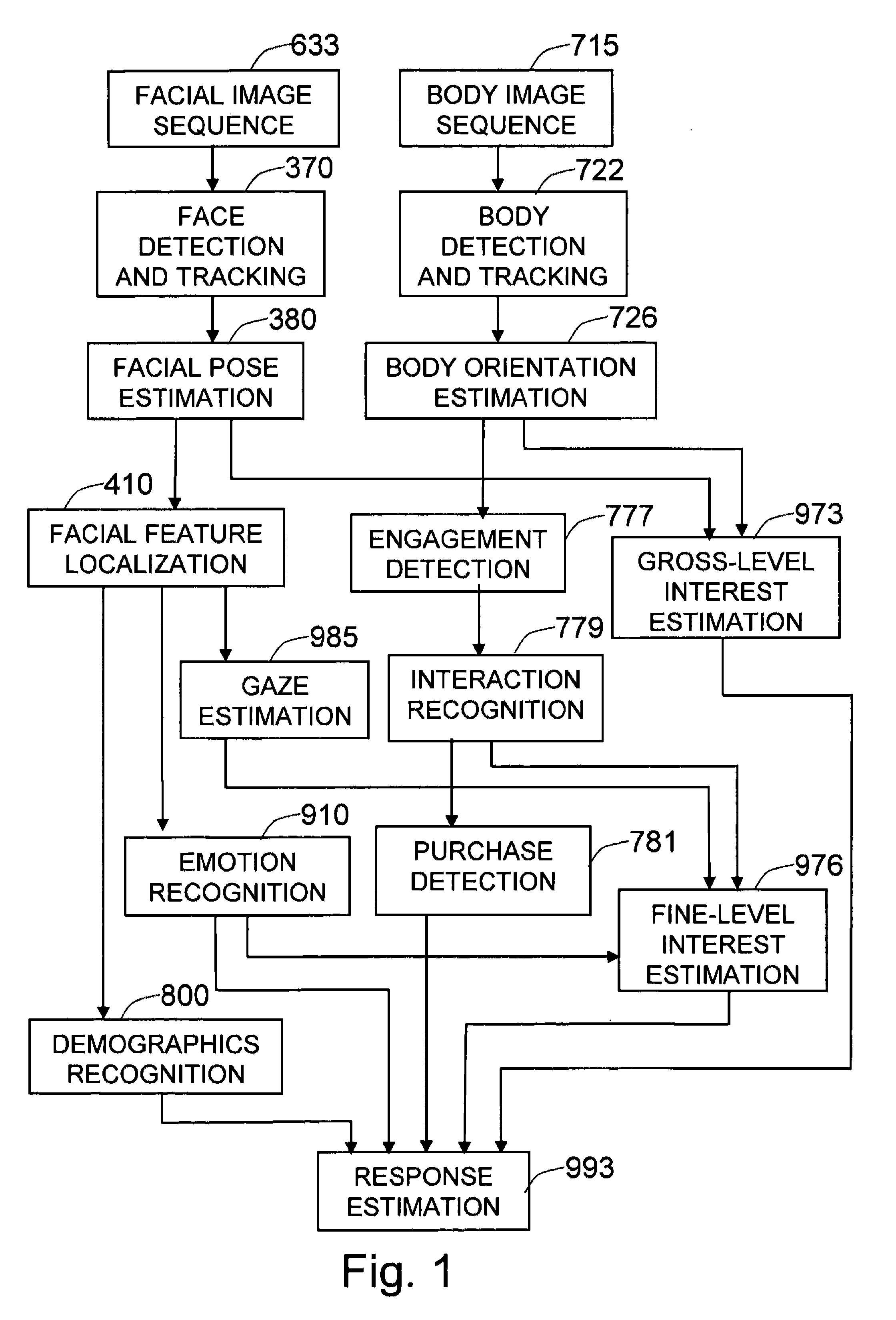

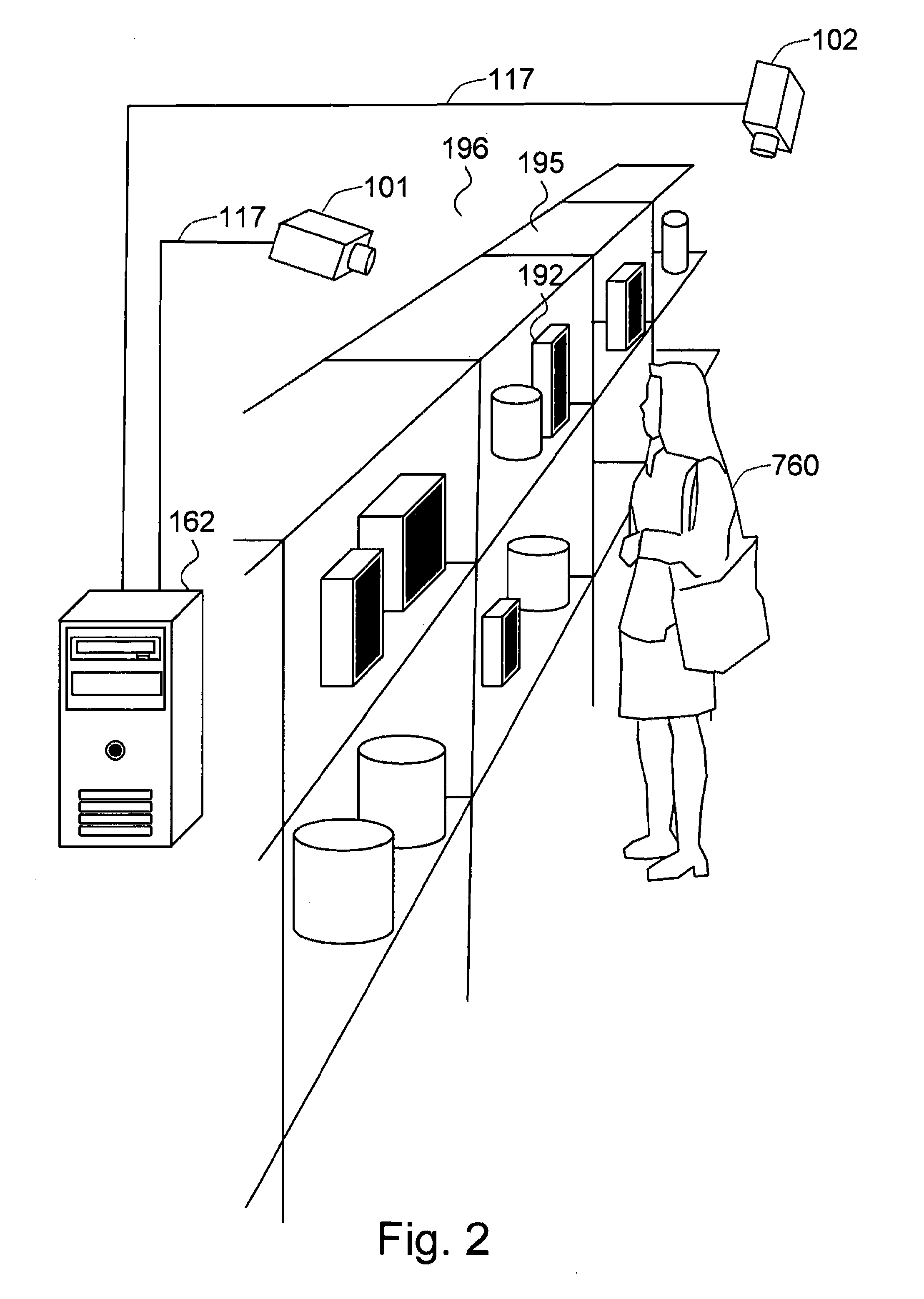

Method and system for measuring shopper response to products based on behavior and facial expression

Active | US8219438B1 | Tags: Reliable information; Accurate location; Market predictions; Acquiring/recognising eyes; Pattern recognition; Product base

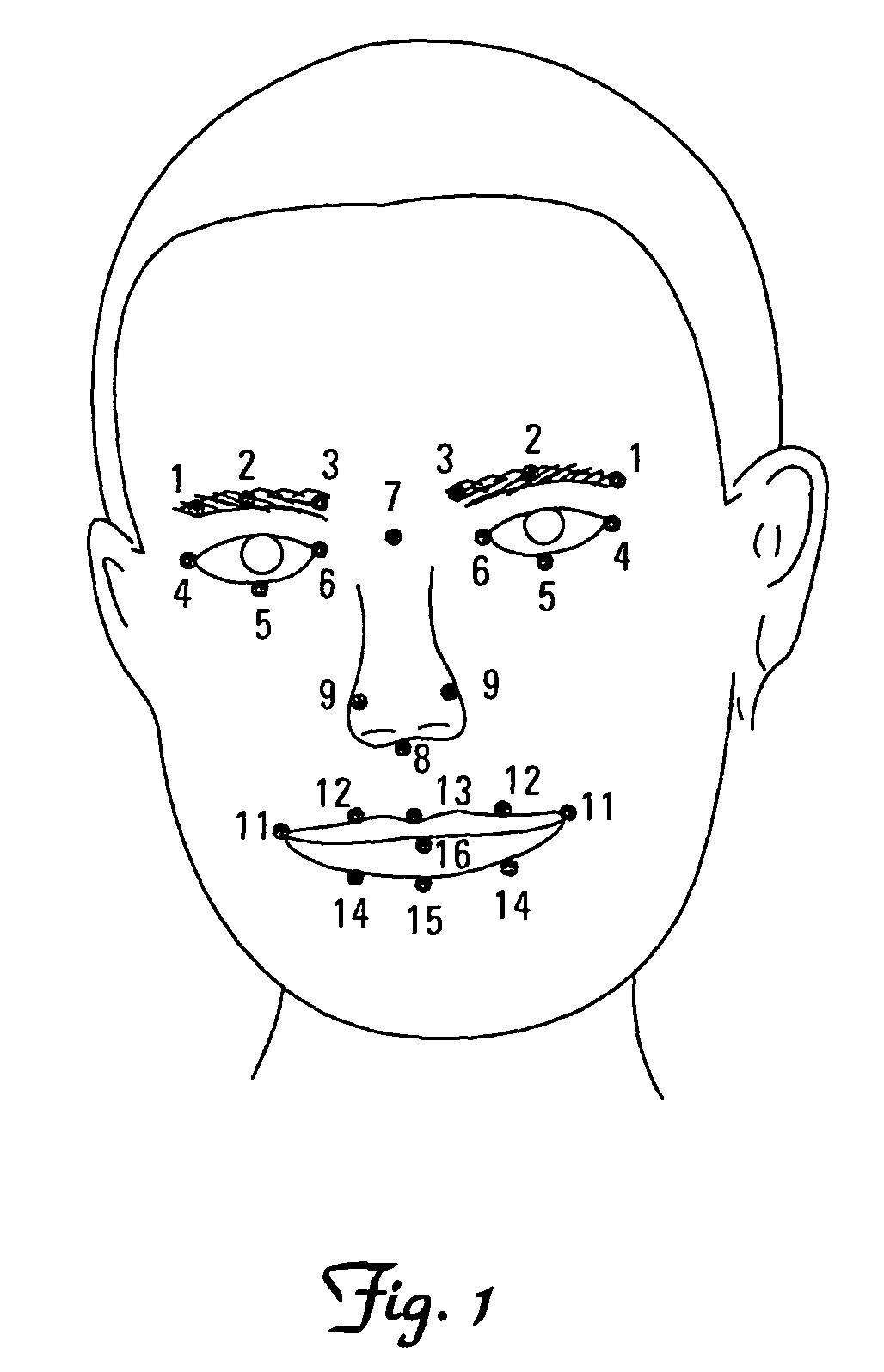

The present invention is a method and system for measuring human response to retail elements, based on the shopper's facial expressions and behaviors. From a facial image sequence, the facial geometry (facial pose and facial feature positions) is estimated to facilitate the recognition of facial expressions, gaze, and demographic categories. The recognized facial expression is translated into an affective state of the shopper, and the gaze is translated into the target and level of the shopper's interest. The body image sequence is processed to identify the shopper's interaction with a given retail element, such as a product, a brand, or a category. The dynamic changes in the affective state and in the interest toward the retail element, measured from the facial image sequence, are analyzed in the context of the recognized shopper's interaction with the retail element and the demographic categories to estimate both the shopper's changes in attitude toward the retail element and the end response, such as a purchase decision or a product rating.

Owner: PARMER GEORGE A

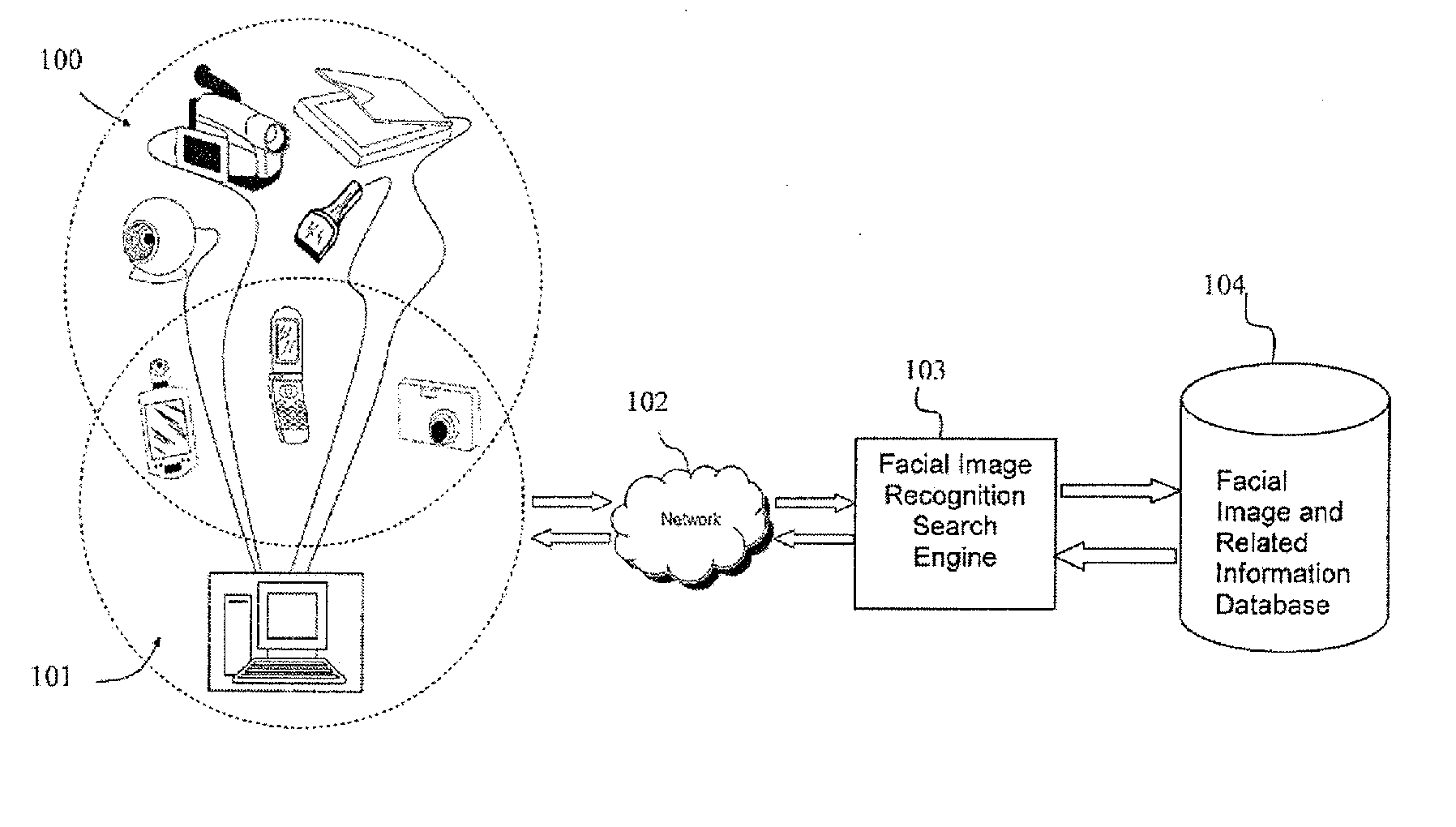

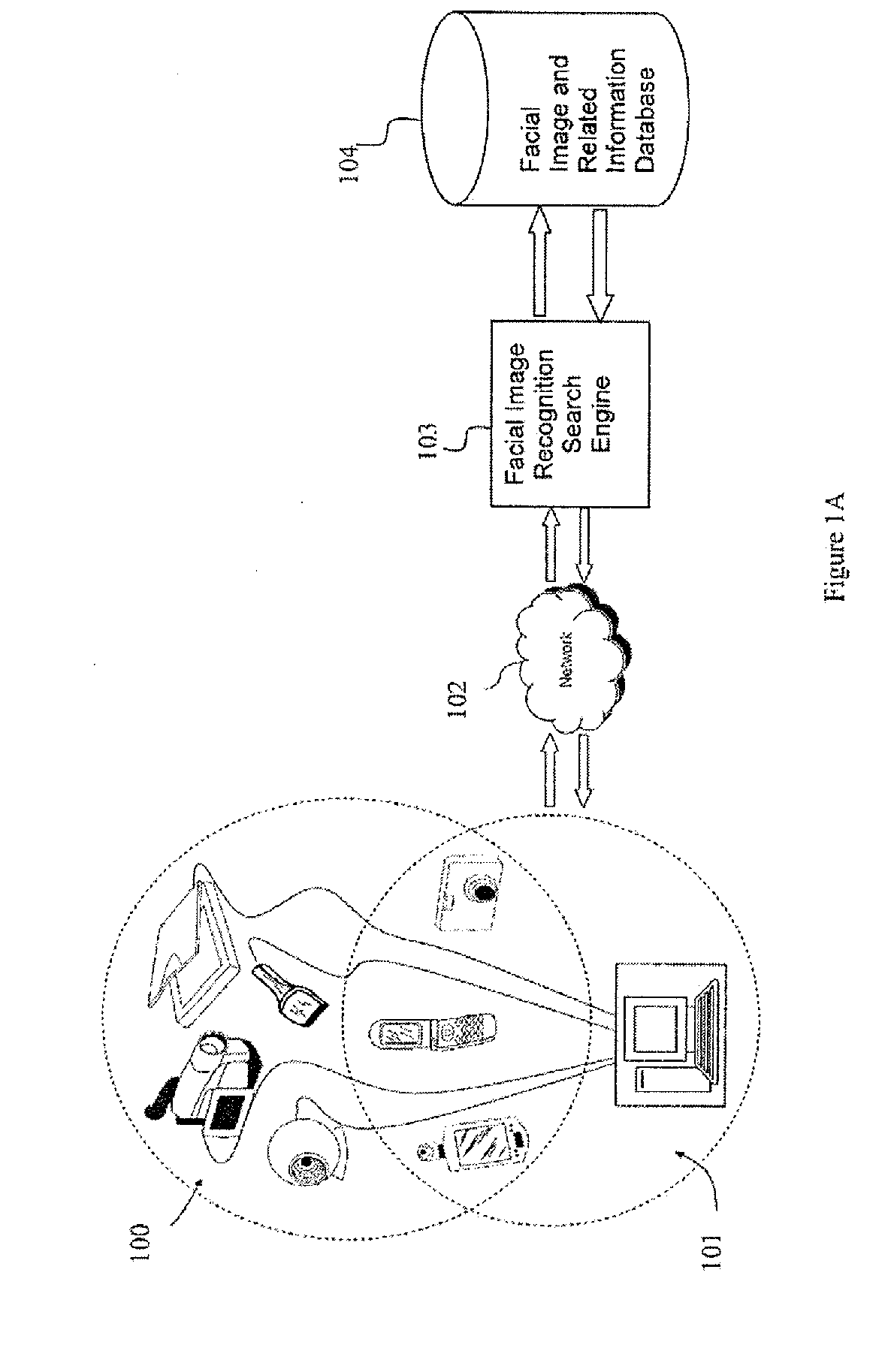

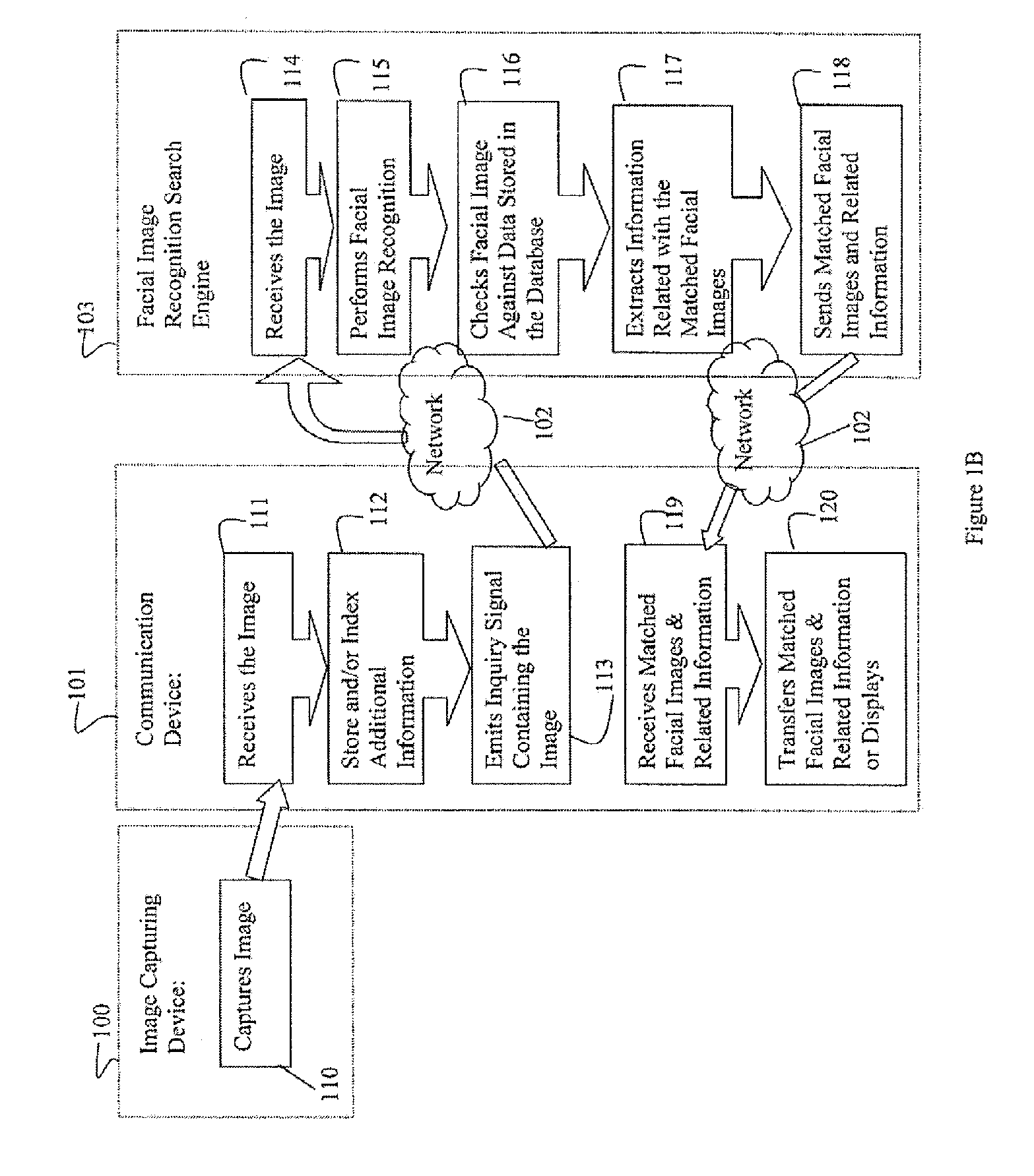

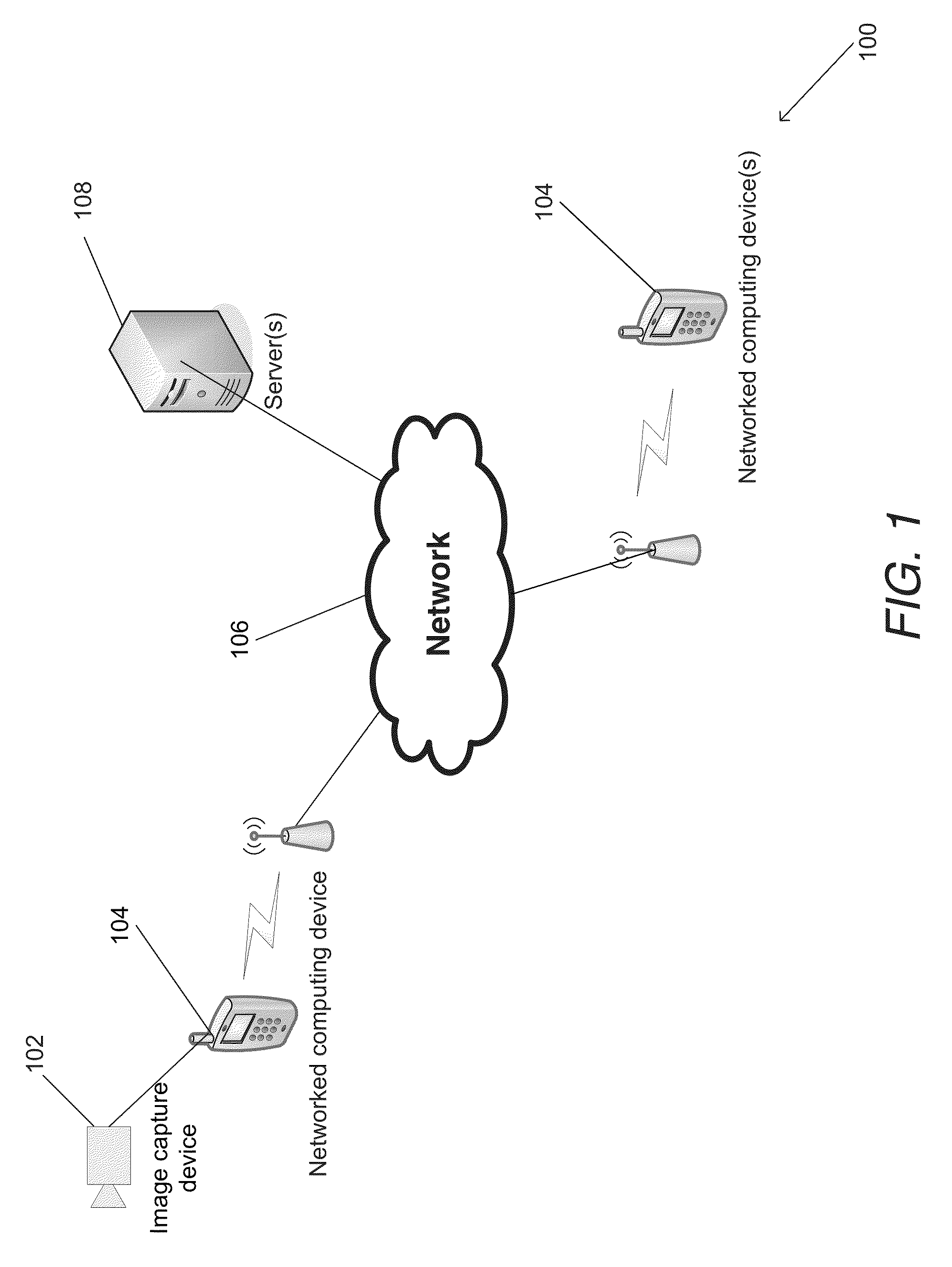

Photo Automatic Linking System and method for accessing, linking, and visualizing "key-face" and/or multiple similar facial images along with associated electronic data via a facial image recognition search engine

Active | US20070172155A1 | Tags: Quick search; Enhanced and improved organization, classification, and fast sorts and retrieval; Digital data information retrieval; Character and pattern recognition; Health professionals; Web crawler

The present invention provides a system and method for inputting images containing faces in order to access, link, and/or visualize multiple similar facial images and associated electronic data for innovative new online commercialization, medical, and training uses. The system uses various image-capturing devices and communication devices to capture images and enter them into a facial image recognition search engine. Facial image recognition techniques embedded in the search engine extract facial images and encode them in a computer-readable format. The processed facial images are then compared against at least one database populated with facial images and associated information. Once the newly captured facial images are matched with similar “best-fit match” facial images in the search engine's database, the “best-fit” matching images and each image's associated information are returned to the user. Additionally, the newly captured facial image can be automatically linked to the “best-fit” matching facial images, with comparisons calculated and/or visualized.
Key new uses of the system include, but are not limited to: input of user-selected facial images to find multiple similar celebrity look-alikes, with automatic linking that returns the look-alike celebrities' similar images, associated electronic information, and convenient opportunities to purchase fashion, jewelry, products, and services to better mimic those celebrity look-alikes; health monitoring and diagnostic use, by organizing and superimposing periodically captured patient images so that health professionals can view patients' progress; entirely new classes of semi-transparent superimposed training in which users train their faces to mimic other similar faces, such as celebrity look-alike cosmetic applications and/or facial expressions; intuitive automatic linking of similar facial images for enhanced and improved organization, classification, and fast retrieval of information; and an improved method of facial-image-based indexing and retrieval of information from the Web, USENET, and other resources searched by web crawlers or spiders, providing new types of intuitive, easy-to-use searching, alone or combined with current keyword searching for optimized results.

Owner: VR REHAB INC +2
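The “best-fit match” retrieval in the Photo Automatic Linking abstract amounts to a nearest-neighbour search over face encodings. The Python sketch below assumes each image has already been encoded as a fixed-length numeric vector and ranks stored encodings by Euclidean distance; the encoder, the distance measure, and the names `best_fit_matches` and `db` are illustrative assumptions, not the patented system.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def best_fit_matches(
    query: Sequence[float],
    database: Dict[str, Sequence[float]],
    k: int = 3,
) -> List[Tuple[str, float]]:
    """Return up to k (image_id, distance) pairs, closest first.

    Encodings are assumed to be fixed-length numeric vectors from some
    (hypothetical) face encoder; math.dist raises ValueError if a stored
    encoding has a different length than the query.
    """
    if k <= 0:
        return []
    distances = ((image_id, math.dist(query, enc)) for image_id, enc in database.items())
    # nsmallest keeps only k candidates instead of sorting the whole database.
    return heapq.nsmallest(k, distances, key=lambda pair: pair[1])


# Toy 2-D "encodings" (hypothetical); real face encodings have many more dimensions.
db = {"celebrity_a": [0.0, 0.0], "celebrity_b": [3.0, 4.0], "celebrity_c": [1.0, 0.0]}
print(best_fit_matches([0.0, 0.0], db, k=2))  # [('celebrity_a', 0.0), ('celebrity_c', 1.0)]
```

A system indexing millions of faces would more likely use an approximate nearest-neighbour index; the exact search here only shows the idea.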

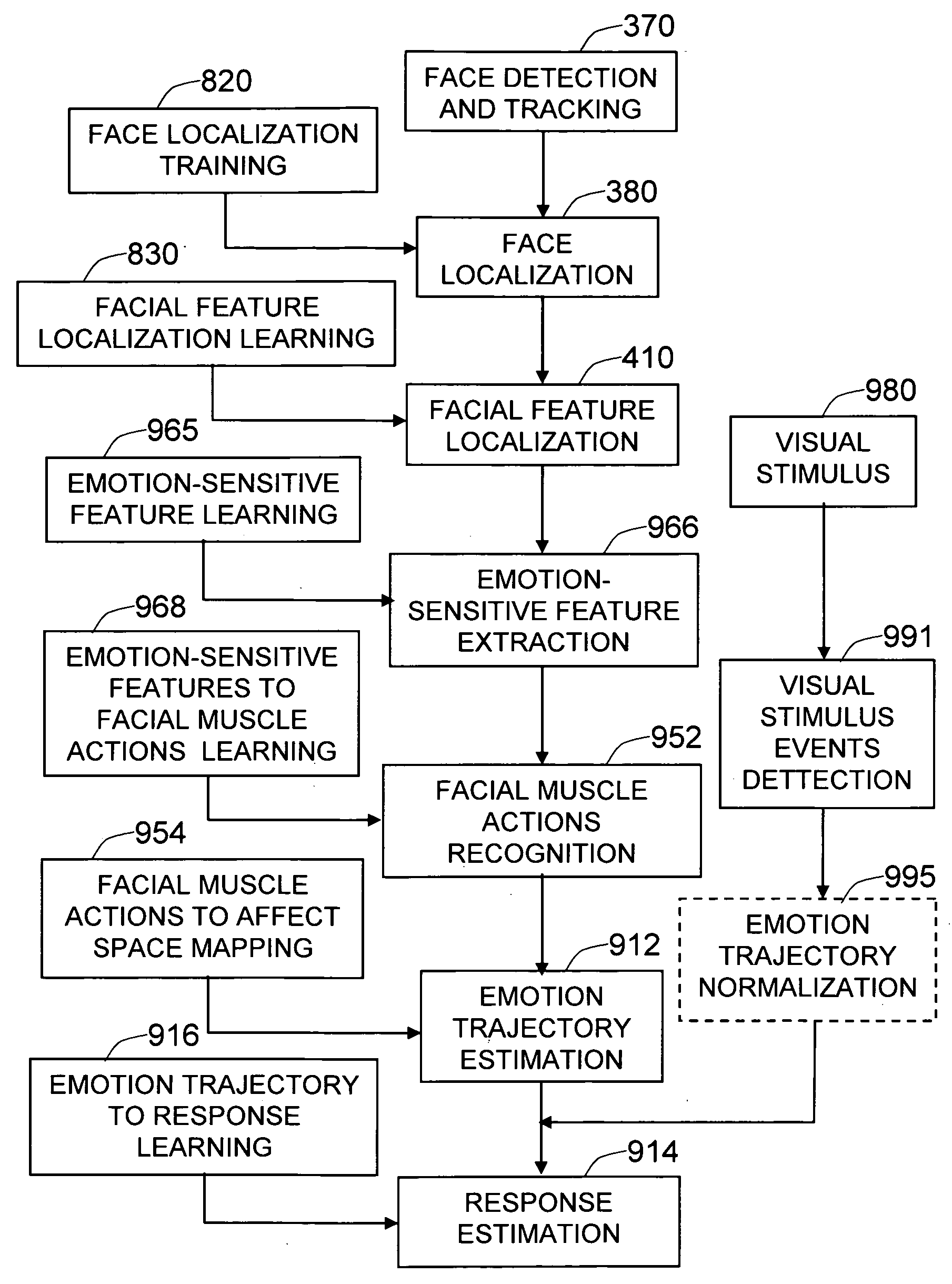

Method and system for measuring human response to visual stimulus based on changes in facial expression

Active | US20090285456A1 | Tags: Improve response; Character and pattern recognition; Eye diagnostics; Wrinkle skin; Learning machine

The present invention is a method and system for measuring human emotional response to visual stimulus, based on the person's facial expressions. A detected and tracked human face is accurately localized so that its facial features are correctly identified and localized; the face and facial features are localized using geometrically specialized learning machines. Then the emotion-sensitive features, such as the shapes of the facial features or facial wrinkles, are extracted. The facial muscle actions are estimated using a learning machine trained on the emotion-sensitive features. The instantaneous facial muscle actions are projected to a point in affect space, using the relation between the facial muscle actions and the affective state (arousal, valence, and stance). The series of estimated emotional changes traces a trajectory in affect space, which is further analyzed in relation to the temporal changes in the visual stimulus to determine the response.

Owner: MOTOROLA SOLUTIONS INC
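The projection of facial muscle actions into affect space described in the abstract above can be illustrated with a linear map. In this minimal Python sketch, the choice of four FACS action units, the weight table, and the use of a linear projection are all assumptions made for illustration; the abstract does not say how the muscle-action-to-affect relation is represented.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (arousal, valence, stance)

# Hypothetical choice of FACS action units and made-up weights; a real system
# would learn the AU-to-affect relation from labelled data.
AU_NAMES = ("AU4 brow lowerer", "AU5 upper lid raiser", "AU6 cheek raiser", "AU12 lip corner puller")
AU_TO_AFFECT: Tuple[Point, ...] = (
    (0.4, -0.7, -0.3),  # AU4: frown, negative valence
    (0.8, 0.0, 0.1),    # AU5: widened eyes, high arousal
    (0.2, 0.6, 0.3),    # AU6: cheek raise, positive valence
    (0.3, 0.8, 0.4),    # AU12: lip corner pull (smile), positive valence
)


def project_to_affect(au_intensities: Sequence[float]) -> Point:
    """Linearly project one frame of AU intensities to a point in affect space."""
    if len(au_intensities) != len(AU_TO_AFFECT):
        raise ValueError("expected one intensity per action unit")
    arousal, valence, stance = (
        sum(x * w[axis] for x, w in zip(au_intensities, AU_TO_AFFECT))
        for axis in range(3)
    )
    return (arousal, valence, stance)


def emotion_trajectory(frames: Sequence[Sequence[float]]) -> List[Point]:
    """One affect-space point per video frame, in time order."""
    return [project_to_affect(frame) for frame in frames]


def valence_shift(trajectory: Sequence[Point]) -> float:
    """Net valence change over the stimulus: last point minus first point."""
    return trajectory[-1][1] - trajectory[0][1]


# Frame 1: frowning only; frame 2: smiling with raised cheeks.
traj = emotion_trajectory([[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]])
print(traj, valence_shift(traj))
```

Relating the trajectory to the stimulus timeline would then mean indexing `traj` by frame time, which this sketch leaves out.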

Device-driven non-intermediated blockchain system over a social integrity network

Active | US20170091397A1 | Tags: Enabling social interaction; Data processing applications; Patient personal data management; Facial expression; Authorization

A blockchain-configured, device-driven, disintermediated distributed system for facilitating multi-faceted communication over a network. The system includes entities connected to a communications network. Each of the entities, along with its associated devices, sensors, and networks, serves as a source of data records. The system includes a blockchain-configured data bank accessible by each of the plurality of entities, based on the entities' rules and preferences, upon authorization by the blockchain-configured data bank. The blockchain-configured data bank includes a processing component for executing stored instructions to process the entities' data records over the communications network. The system includes a blockchain-configured component communicatively coupled to the blockchain-configured data bank and adapted to be accessible by each of the plurality of entities. The system includes a validation device comprising a facial expression-based validation device and a geo-tagging-based validation device.

Owner: INTELLECTUAL FRONTIERS LLC

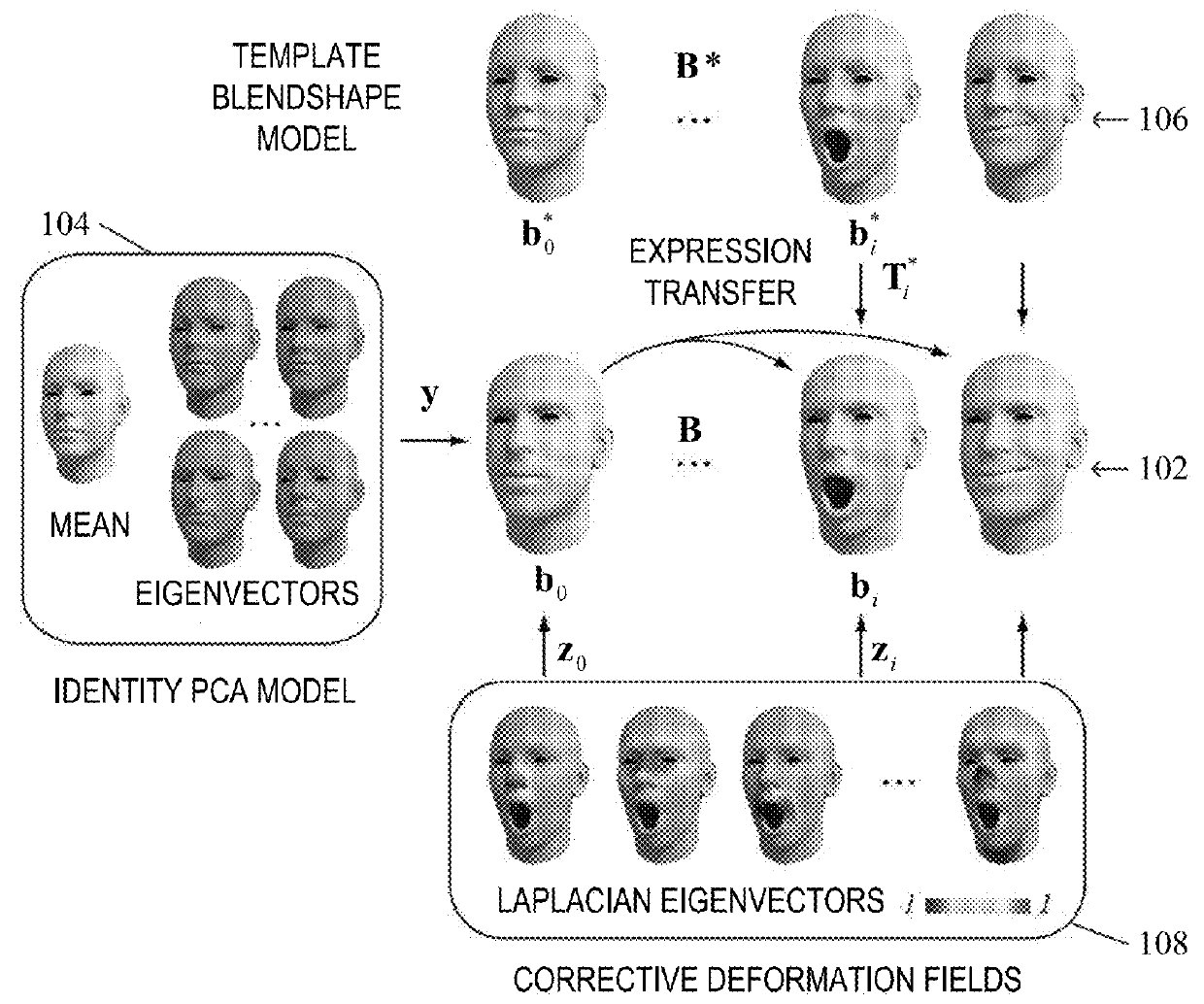

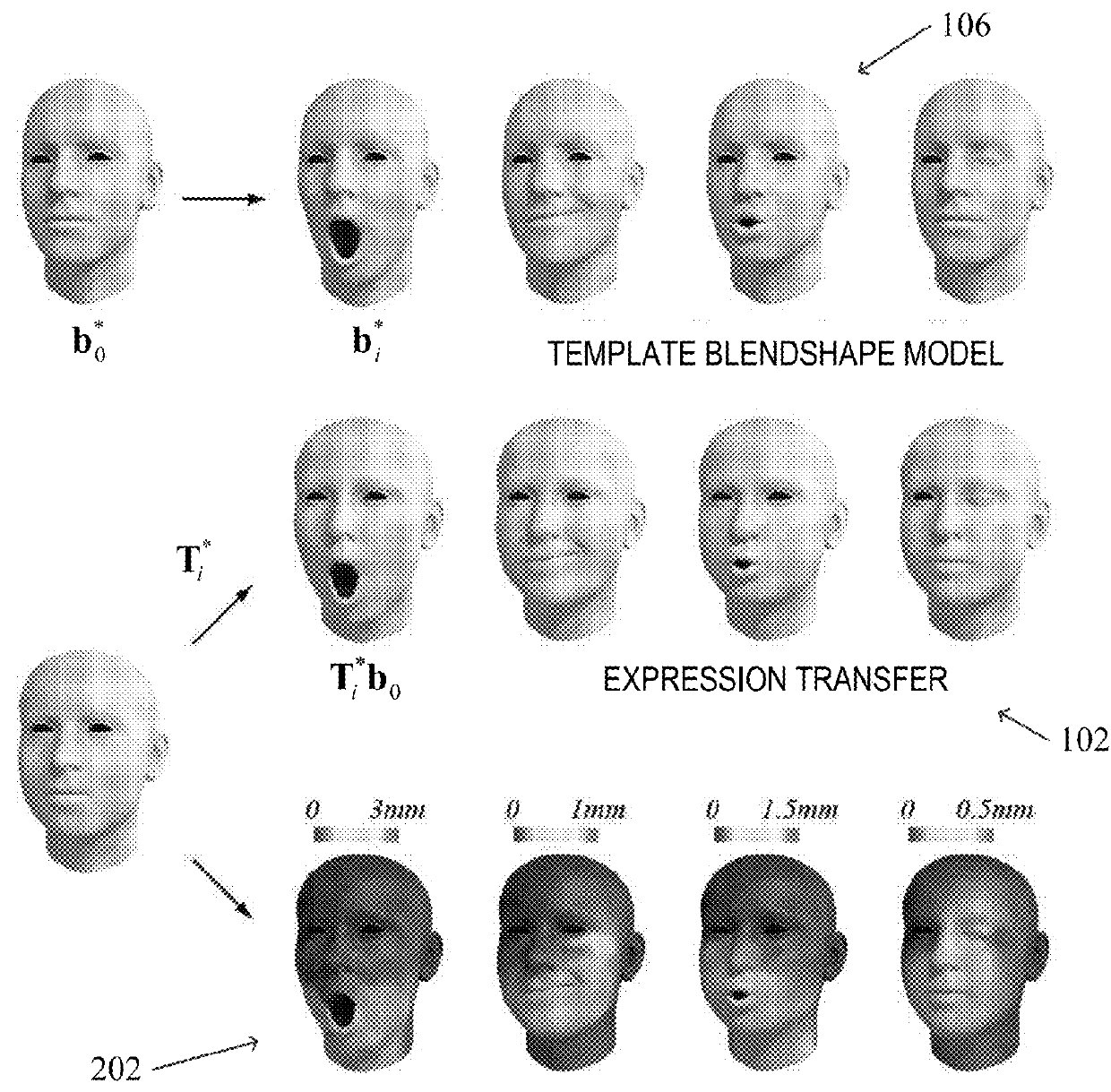

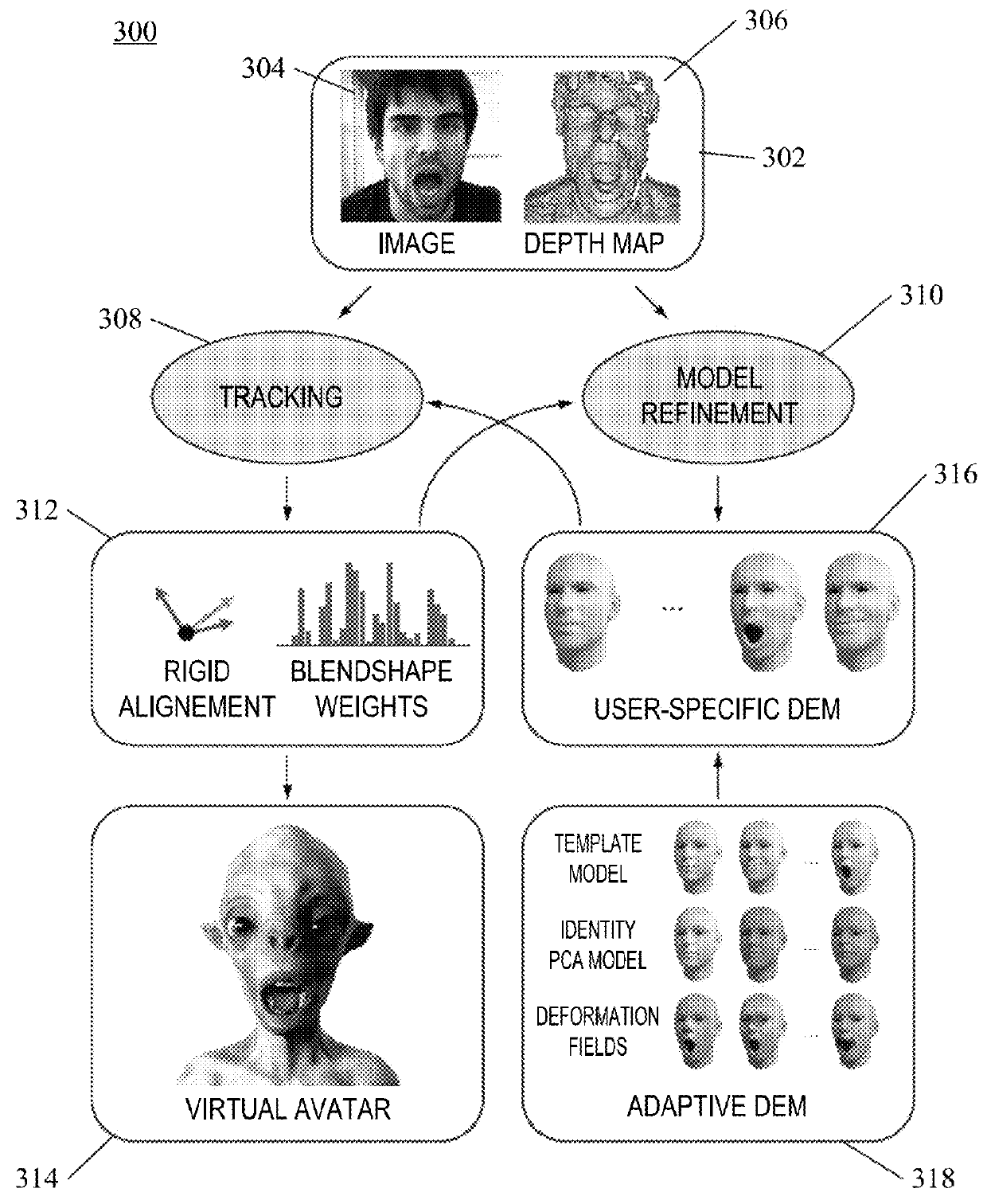

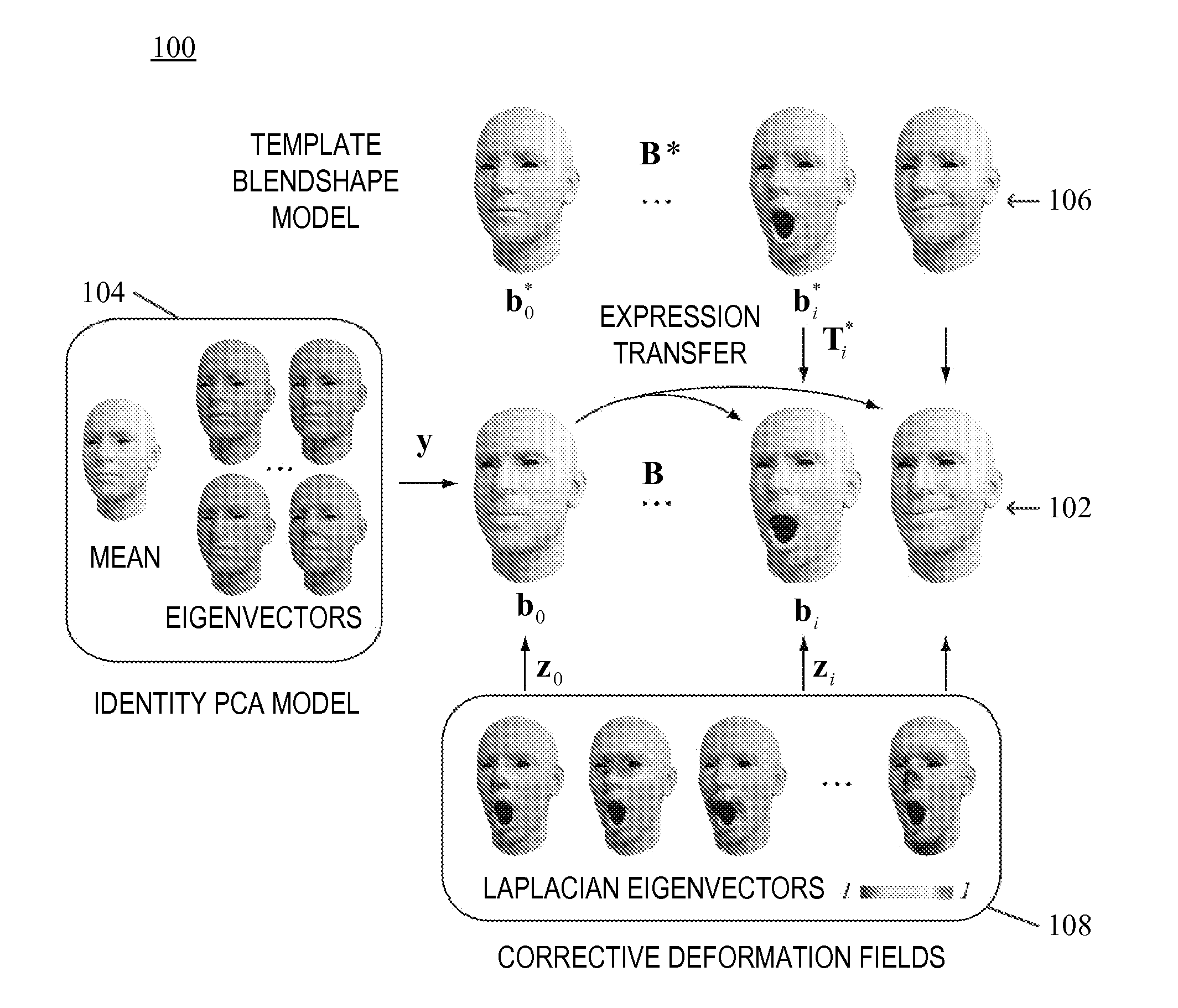

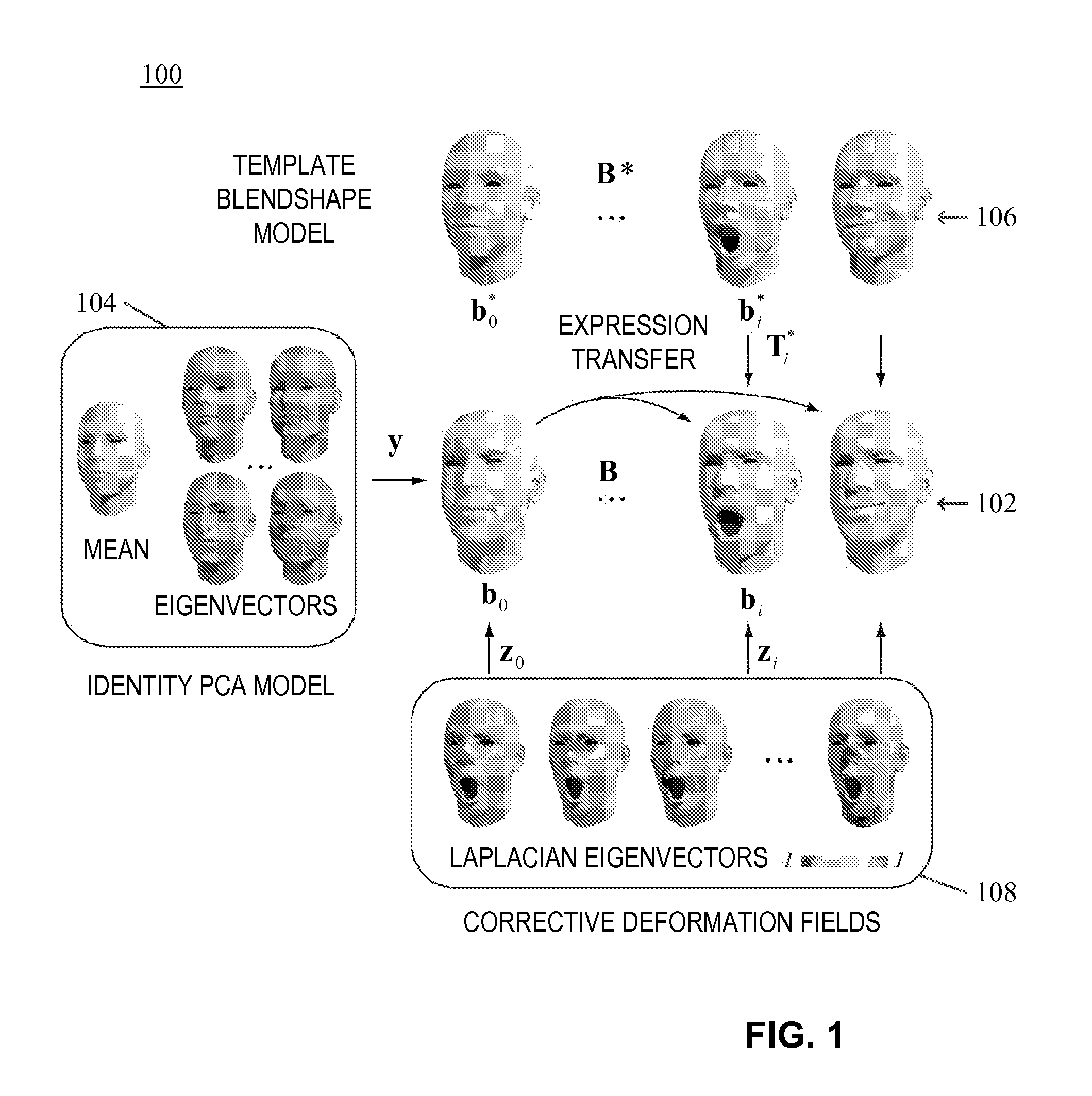

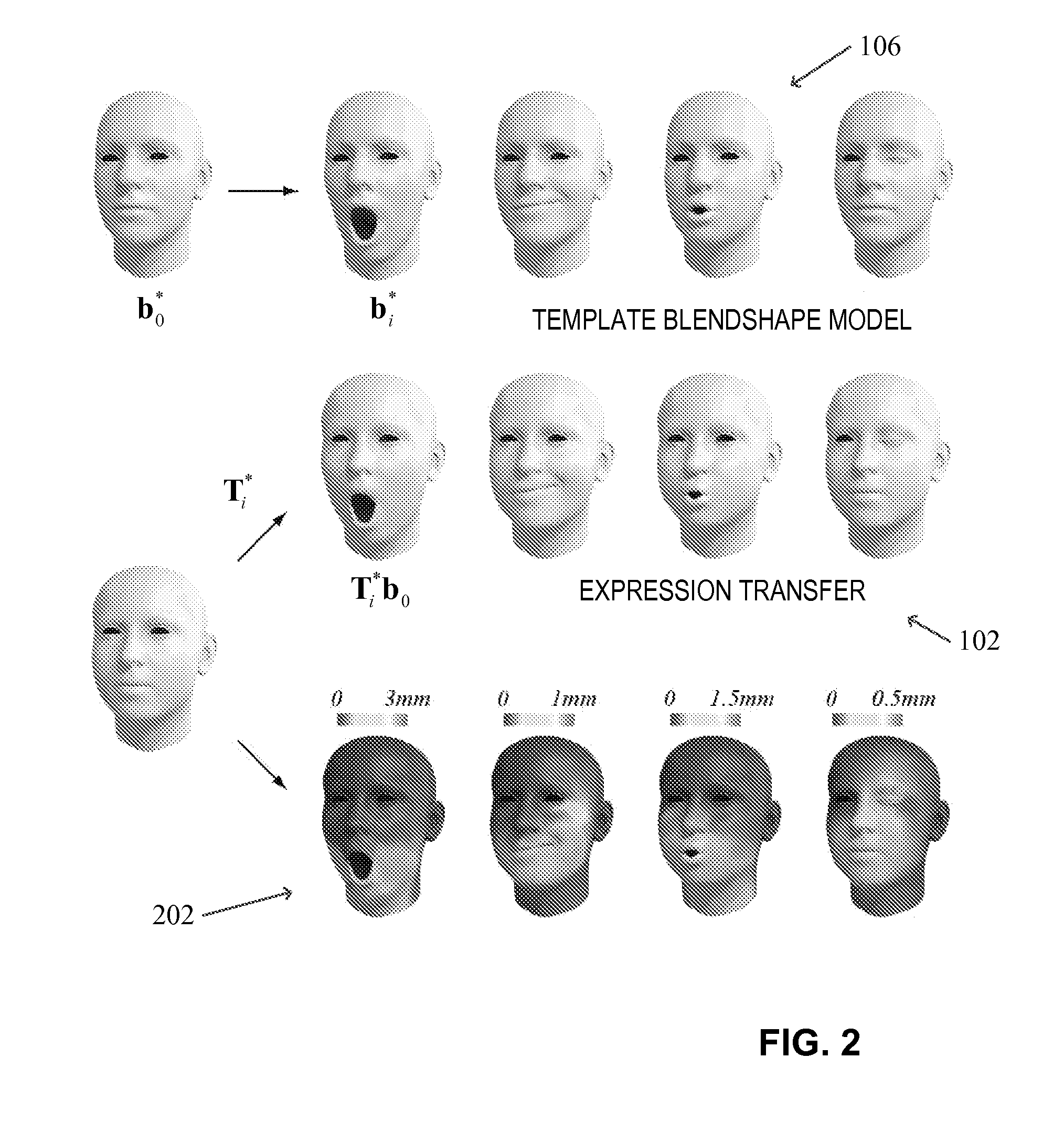

Online modeling for real-time facial animation

Active | US9378576B2 | Tags: Shorten the track; Improve tracking performance; Image analysis; Character and pattern recognition; Graphics; Pattern recognition

Embodiments relate to a method for real-time facial animation, and a processing device for real-time facial animation. The method includes providing a dynamic expression model, receiving tracking data corresponding to a facial expression of a user, estimating tracking parameters based on the dynamic expression model and the tracking data, and refining the dynamic expression model based on the tracking data and estimated tracking parameters. The method may further include generating a graphical representation corresponding to the facial expression of the user based on the tracking parameters. Embodiments pertain to a real-time facial animation system.

Owner: APPLE INC

Laugh detector and system and method for tracking an emotional response to a media presentation

Information in the form of emotional responses to a media presentation may be passively collected, for example by a microphone and/or a camera. This information may be tied to metadata at a time-reference level in the media presentation and used to examine the content of the media presentation, to assess the quality of the content or the user's emotional response to it, and/or to project the information onto a demographic. Passively collected emotional responses may be used to add emotion as an element of speech or facial expression detection, so that the information can be put to use, for example to judge the quality of content or to judge the nature of various individuals for future content to be provided to them or to others in similar demographics. Thus, the invention asks and answers such questions as: What makes people happy? What makes them laugh? What do they find interesting? Boring? Exciting?

Owner: SONY INTERACTIVE ENTERTAINMENT LLC

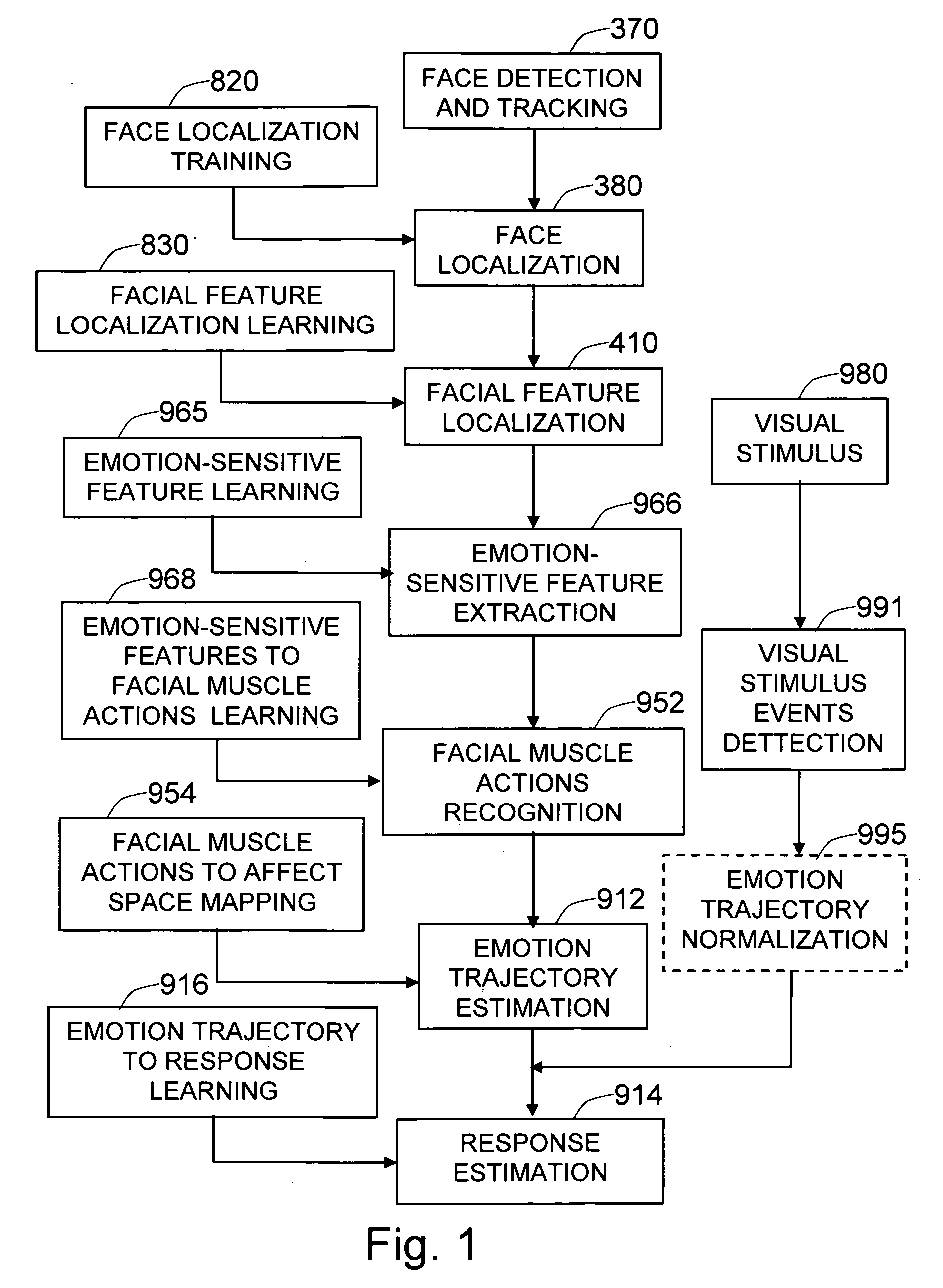

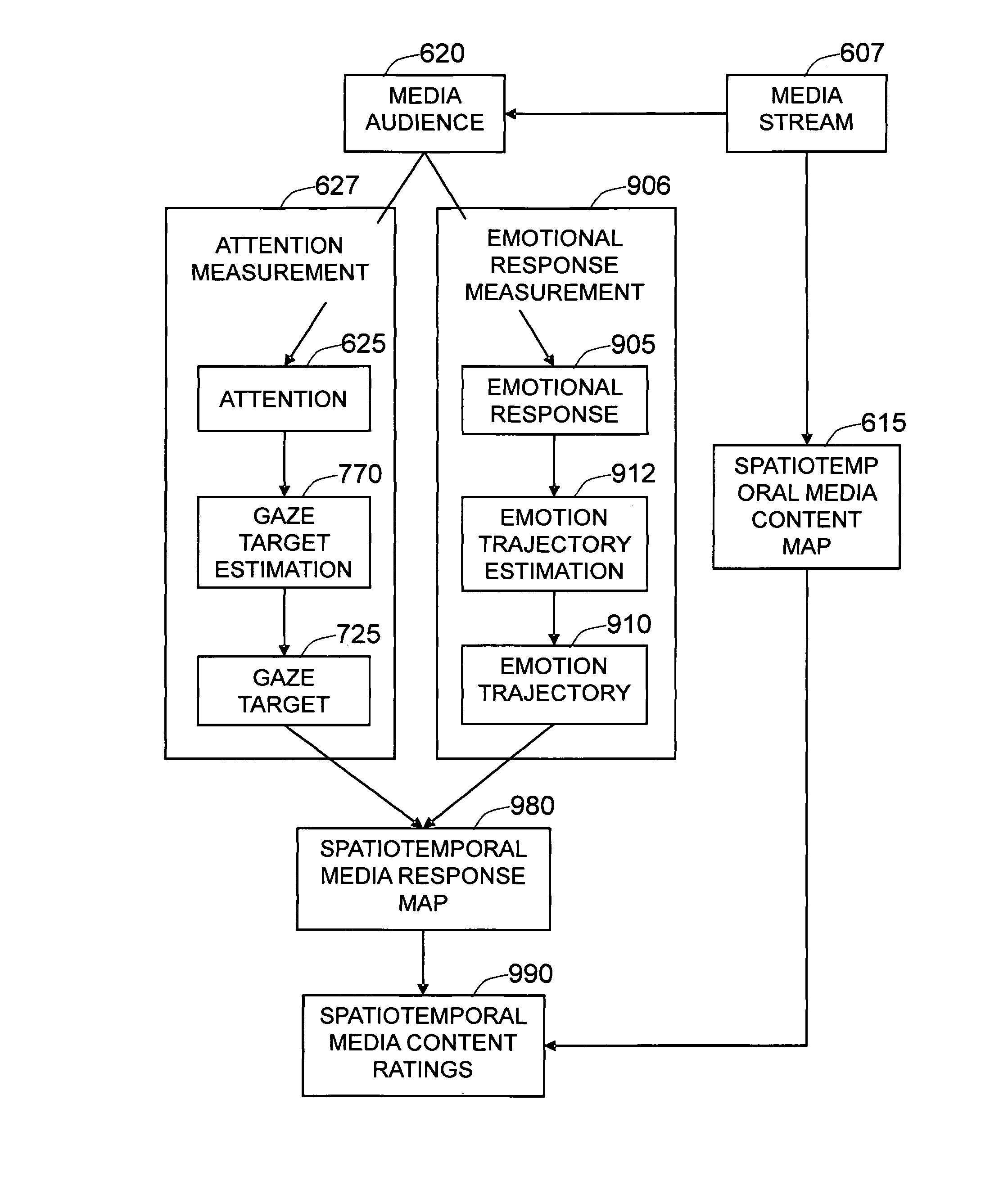

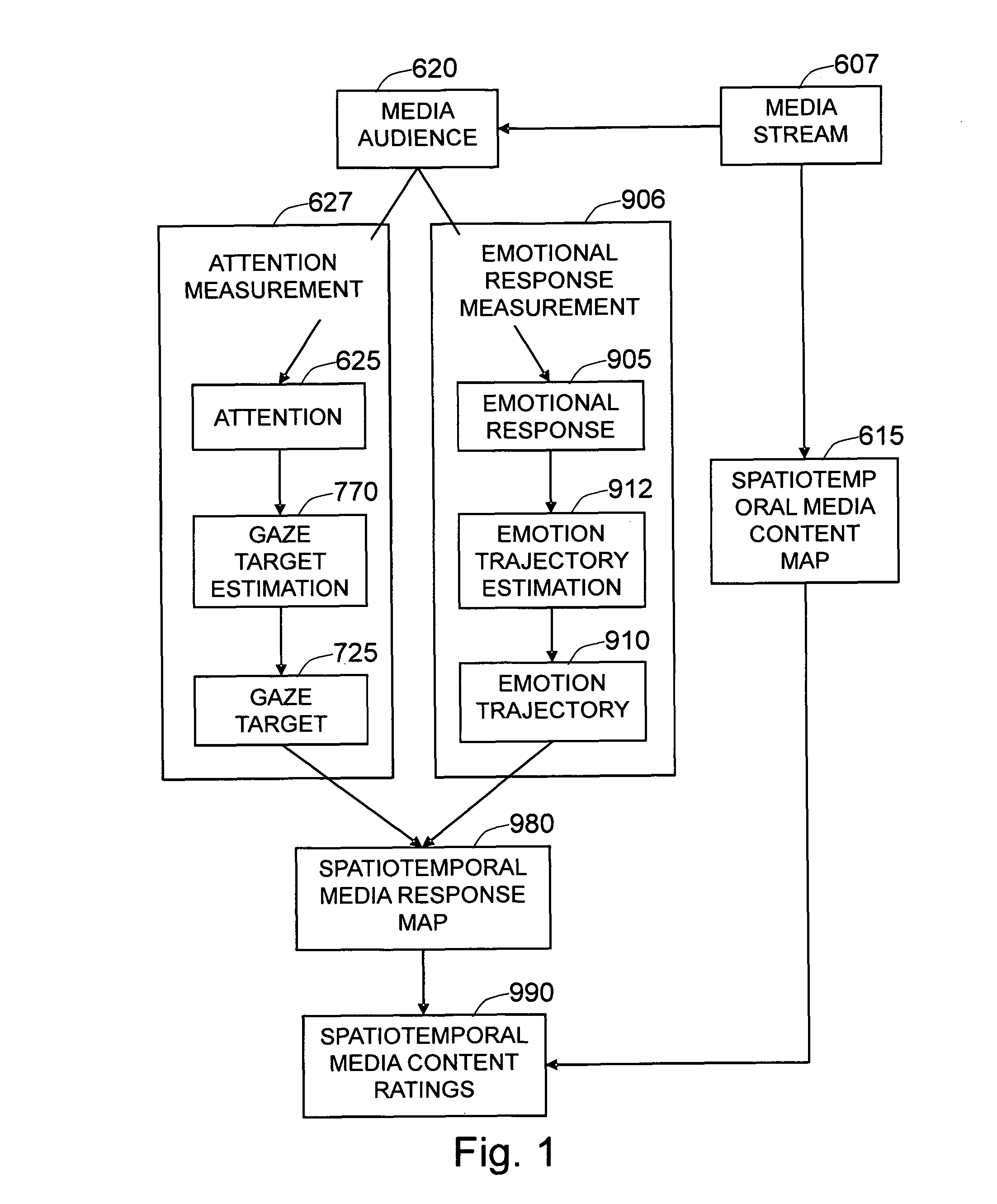

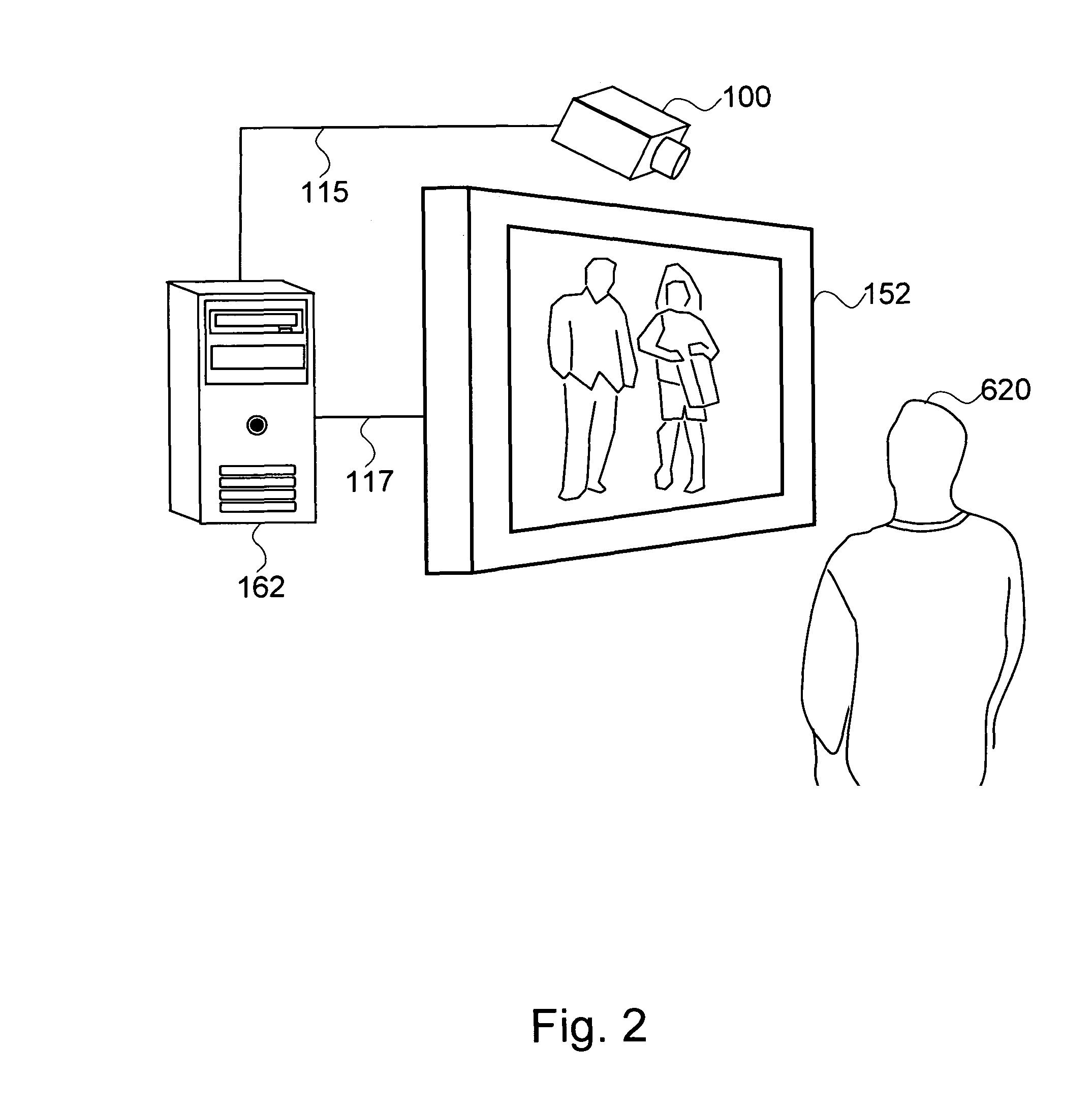

Method and system for measuring emotional and attentional response to dynamic digital media content

The present invention is a method and system for automatically measuring people's responses to dynamic digital media, based on changes in their facial expressions and their attention to specific content. First, the method detects and tracks faces in the audience. It then localizes each face and its facial features and extracts emotion-sensitive features by applying emotion-sensitive feature filters, from which it determines the facial muscle actions of the face. The changes in facial muscle actions are then converted into changes in affective state, called an emotion trajectory. Separately, the method estimates eye gaze from extracted eye images and the three-dimensional pose of the face from localized facial images. The person's gaze direction is estimated from the estimated eye gaze and the three-dimensional facial pose, and the gaze target on the media display is then estimated from the gaze direction and the person's position. Finally, the person's response to the dynamic digital media content is determined by analyzing the emotion trajectory in relation to the time and screen positions of the specific digital media sub-content that the person is watching.

Owner: MOTOROLA SOLUTIONS INC
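The gaze-target step in the abstract above (combining the gaze direction with the viewer's position to find which on-screen sub-content is being watched) can be sketched as a ray-plane intersection. This Python sketch assumes the display lies in the plane z = 0 with the viewer in front of it at z > 0, and that sub-content occupies axis-aligned rectangles on the screen; the layout and function names are hypothetical.

```python
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) on the screen


def gaze_point_on_screen(position: Vec3, direction: Vec3) -> Optional[Tuple[float, float]]:
    """Intersect the gaze ray position + t * direction (t > 0) with the plane z = 0.

    Assumes the screen lies in z = 0, the viewer stands at z > 0, and all
    coordinates share one unit (e.g. metres). direction need not be normalised.
    """
    px, py, pz = position
    dx, dy, dz = direction
    if pz <= 0 or dz >= 0:
        return None  # viewer behind the screen, or gaze parallel to / away from it
    t = -pz / dz
    return (px + t * dx, py + t * dy)


def watched_sub_content(point: Optional[Tuple[float, float]], regions: Dict[str, Rect]) -> Optional[str]:
    """Return the name of the first on-screen region containing the gaze point."""
    if point is None:
        return None
    x, y = point
    for name, (x0, y0, x1, y1) in regions.items():
        if x0 <= x <= x1 and y0 <= y <= y1:
            return name
    return None


# Hypothetical layout (metres) for a 1.2 m wide display.
layout = {"headline": (0.0, 0.6, 1.2, 0.8), "product_shot": (0.0, 0.0, 1.2, 0.6)}
hit = gaze_point_on_screen((0.5, 0.3, 2.0), (0.1, 0.0, -1.0))
print(hit, watched_sub_content(hit, layout))
```

Pairing the returned region name with a timestamp per frame would give the "time and screen position" context against which the emotion trajectory is analyzed.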

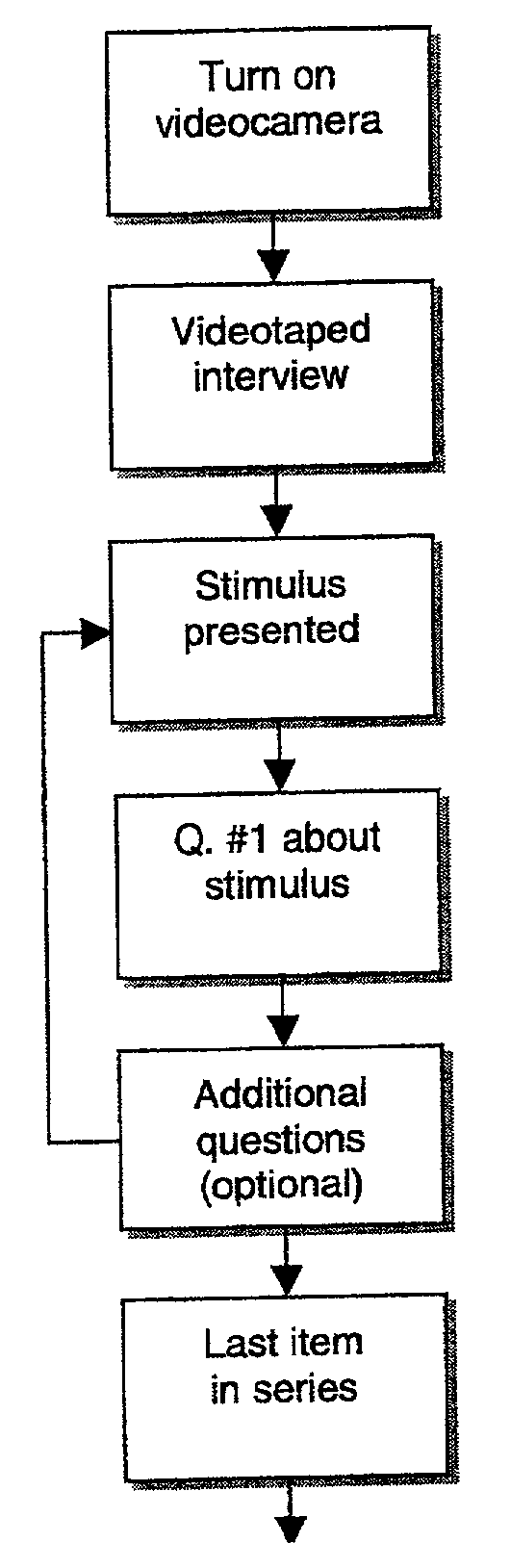

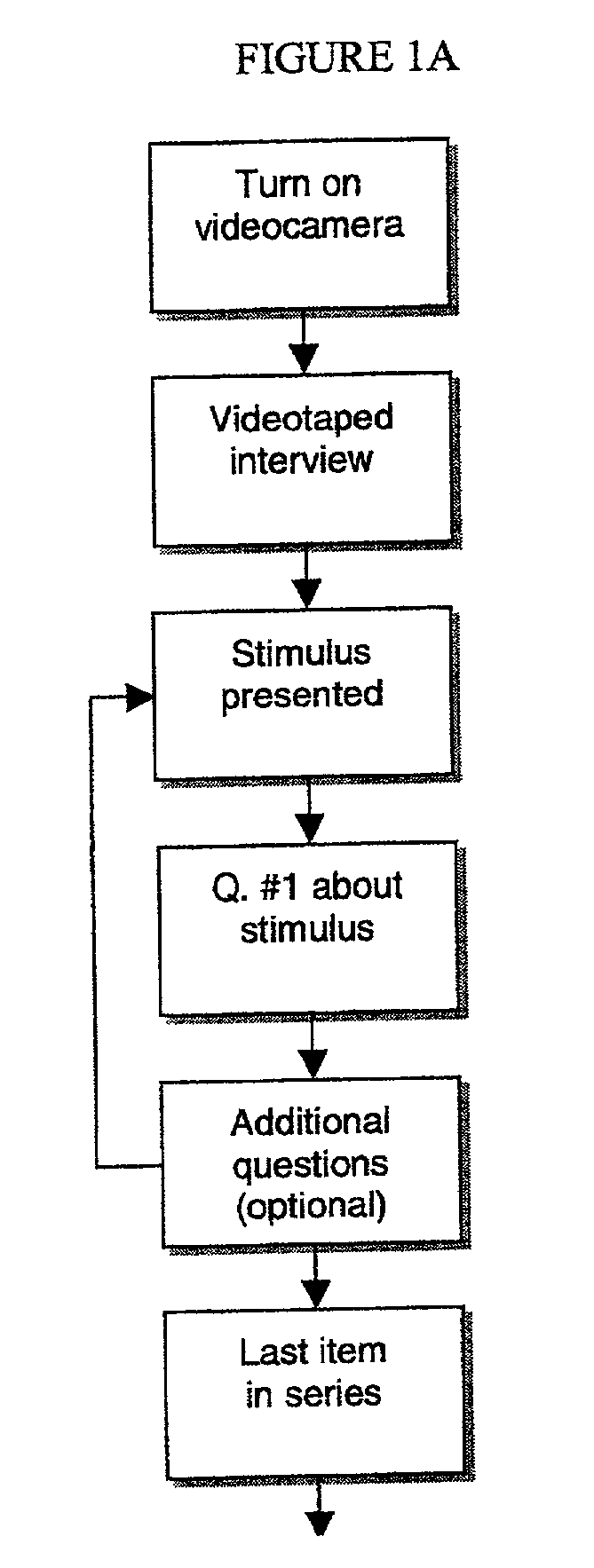

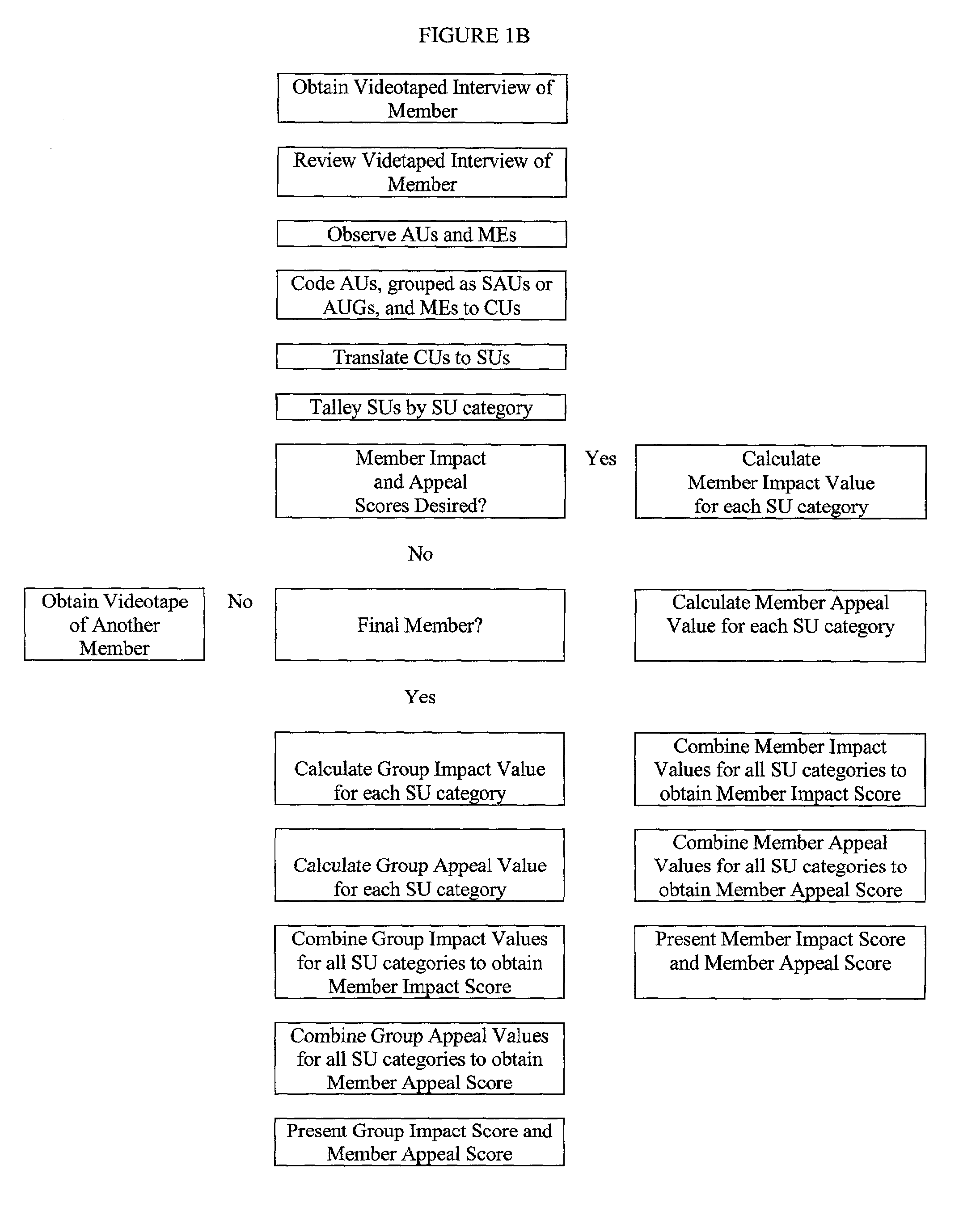

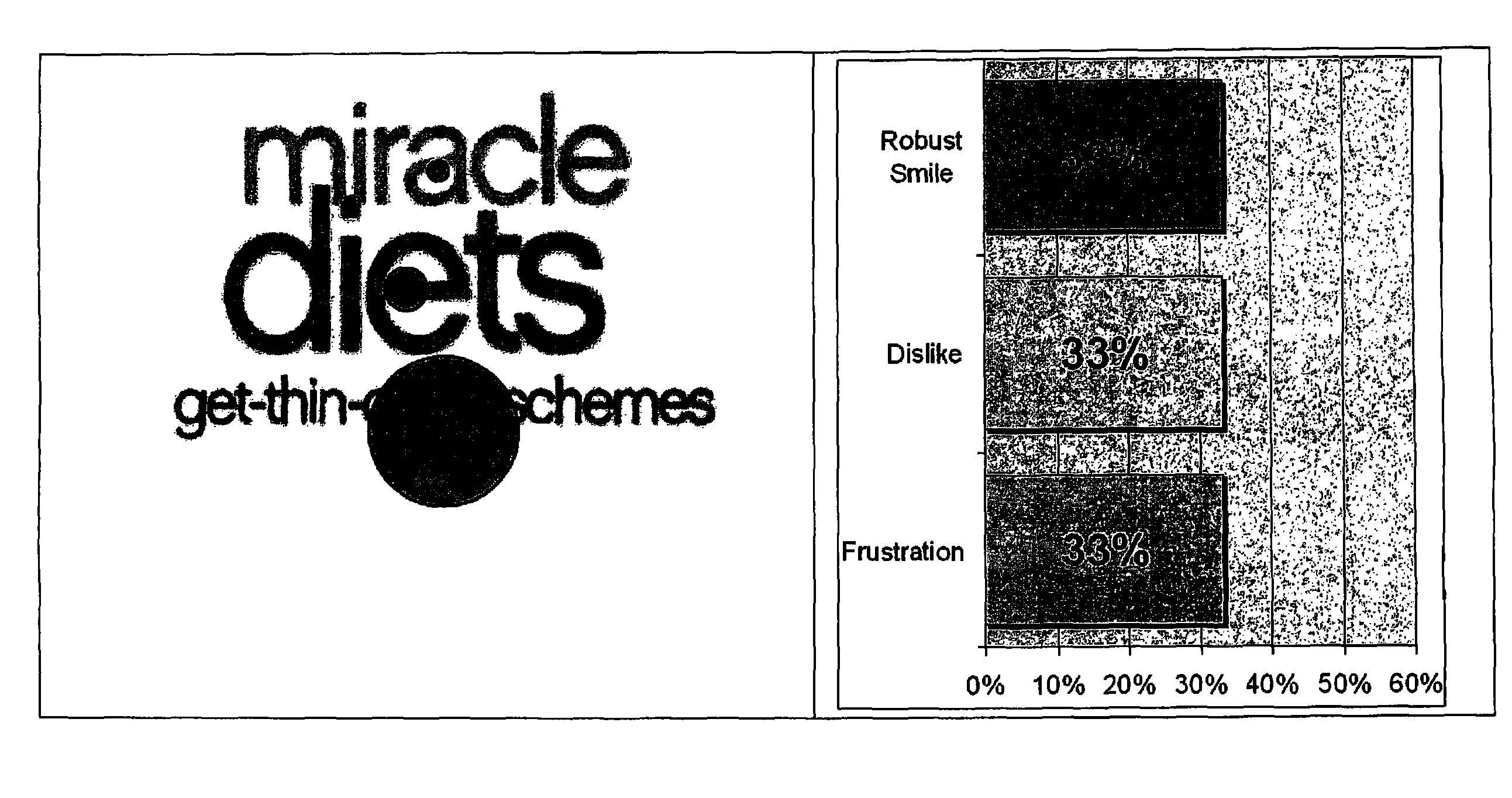

Method of facial coding monitoring for the purpose of gauging the impact and appeal of commercially-related stimuli

A method of assessing consumer reaction to a marketing stimulus, involving the steps of:

- (a) exposing a sample population to a marketing stimulus for a period of time;
- (b) interviewing members of the sample population immediately after their exposure to the marketing stimulus;
- (c) videotaping any facial expressions and associated verbal comments of individual members of the sample population during the exposure period and interview;
- (d) reviewing the videotaped facial expressions and associated verbal comments of individual members of the sample population to (1) detect the occurrence of action units, (2) detect the occurrence of a smile, (3) categorize any detected smile as a Duchenne or social smile, (4) detect the occurrence of any verbal comment associated with a detected smile, and (5) categorize any associated verbal comment as positive, neutral, or negative;
- (e) coding a single action unit or a combination of action units to a coded unit;
- (f) associating coded units with any contemporaneously detected smile;
- (g) translating the coded unit to a scored unit;
- (h) tallying the scored unit by scoring unit category;
- (i) repeating steps (d) through (h) throughout the exposure period;
- (j) repeating steps (d) through (h) for a plurality of the members of the sample population;
- (k) calculating an impact value for each scoring unit category by multiplying the tallied number of scored units for that category by a predetermined impact factor for that category;
- (l) calculating an appeal value for each scoring unit category by multiplying the tallied number of scored units for that category by a predetermined appeal factor for that category;
- (m) combining the impact values obtained for each scoring unit category to obtain an impact score;
- (n) combining the appeal values obtained for each scoring unit category to obtain an appeal score; and
- (o) representing the appeal and impact scores with an identification of the corresponding marketing stimulus to which the members were exposed.

Owner: SENSORY LOGIC
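Steps (h) and (k) through (n) of the Sensory Logic method reduce to a weighted tally. The Python sketch below assumes that "combining" means summing, and the category names and factor values are invented placeholders, since the abstract only says the factors are predetermined.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical scoring-unit categories with (impact factor, appeal factor).
# The abstract says the factors are predetermined but does not give them.
FACTORS: Dict[str, Tuple[float, float]] = {
    "duchenne_smile": (1.0, 1.0),
    "social_smile": (0.5, 0.25),
    "disgust": (1.5, -1.0),
    "surprise": (1.0, 0.0),
}


def impact_and_appeal(scored_units: Iterable[str]) -> Tuple[float, float]:
    """Tally scored units per category, weight them, and sum into two scores."""
    tallies = Counter(scored_units)  # step (h): tally by scoring-unit category
    unknown = set(tallies) - set(FACTORS)
    if unknown:
        raise ValueError(f"no factors defined for {sorted(unknown)}")
    # Steps (k)/(l): tally x factor per category; steps (m)/(n): combine by summing (assumed).
    impact = sum(n * FACTORS[cat][0] for cat, n in tallies.items())
    appeal = sum(n * FACTORS[cat][1] for cat, n in tallies.items())
    return impact, appeal


# Scored units pooled over the exposure period and several panel members (steps (i)/(j)).
units = ["duchenne_smile", "duchenne_smile", "social_smile", "disgust"]
print(impact_and_appeal(units))  # (4.0, 1.25)
```

Keeping impact and appeal factors separate lets one category (here the hypothetical "disgust") raise impact while lowering appeal.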

Online modeling for real-time facial animation

Active | US20140362091A1 | Tags: Improve tracking performance; Shorten the track; Image analysis; Character and pattern recognition; Graphics; Pattern recognition

Embodiments relate to a method for real-time facial animation, and a processing device for real-time facial animation. The method includes providing a dynamic expression model, receiving tracking data corresponding to a facial expression of a user, estimating tracking parameters based on the dynamic expression model and the tracking data, and refining the dynamic expression model based on the tracking data and estimated tracking parameters. The method may further include generating a graphical representation corresponding to the facial expression of the user based on the tracking parameters. Embodiments pertain to a real-time facial animation system.

Owner: APPLE INC

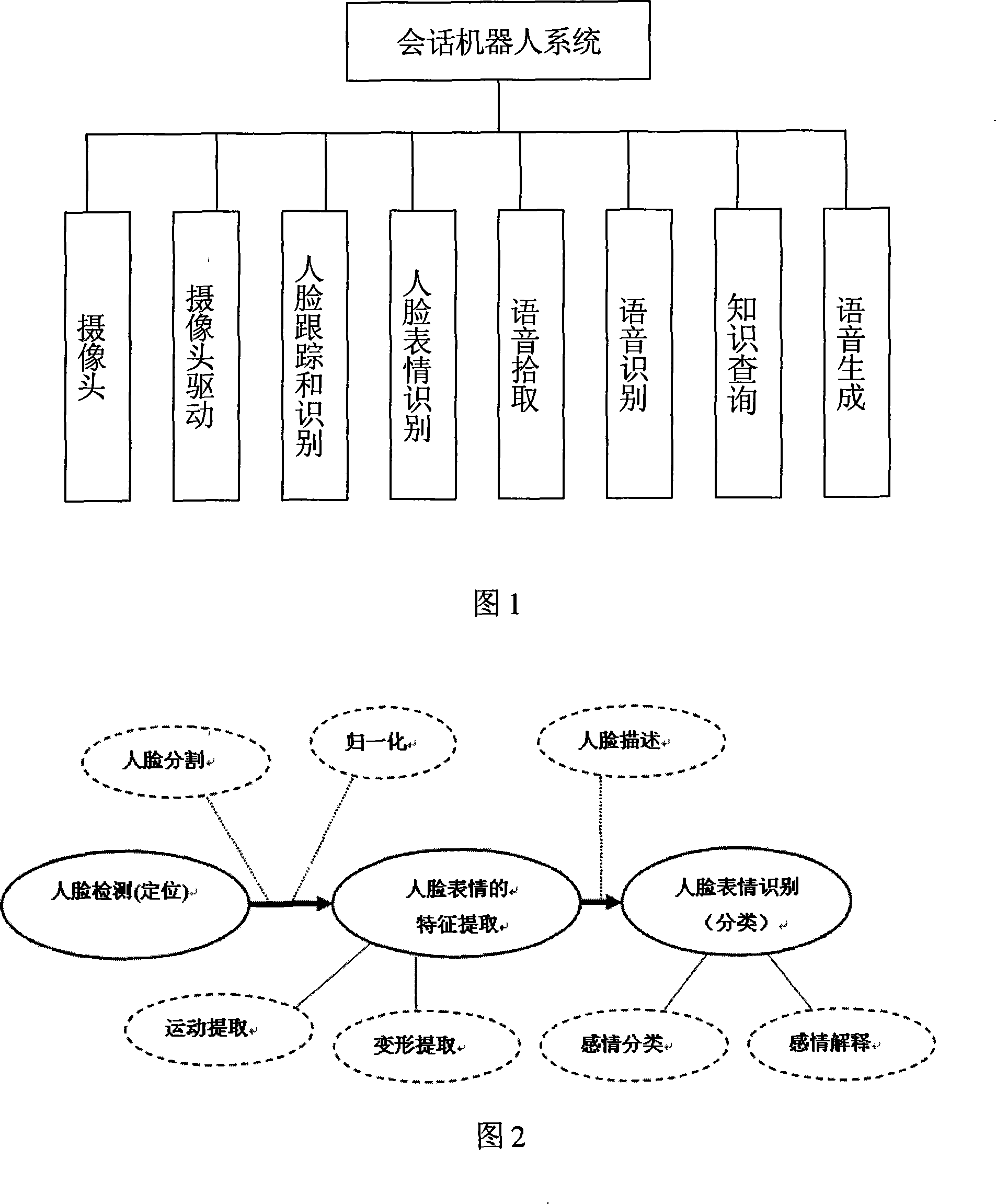

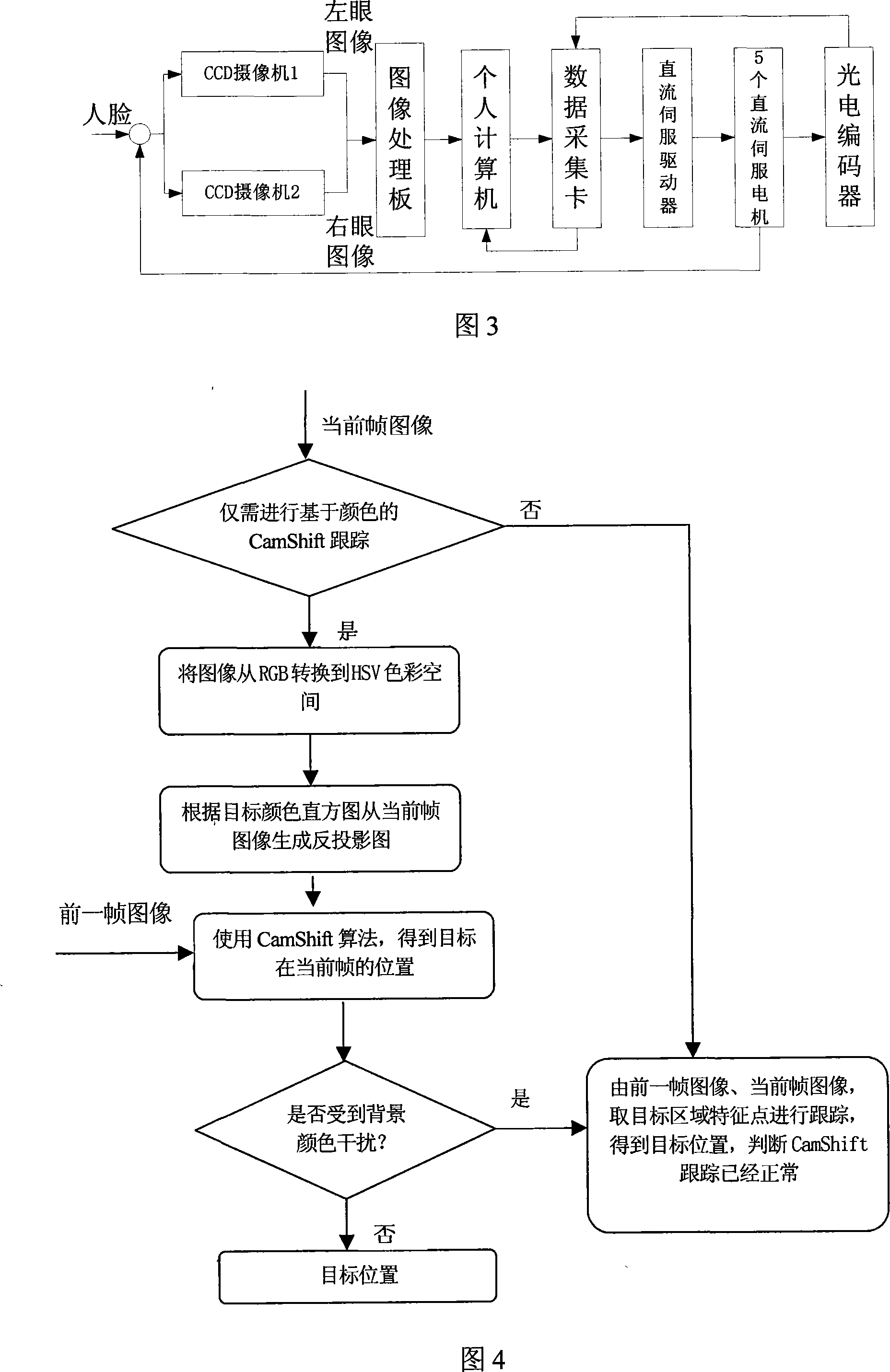

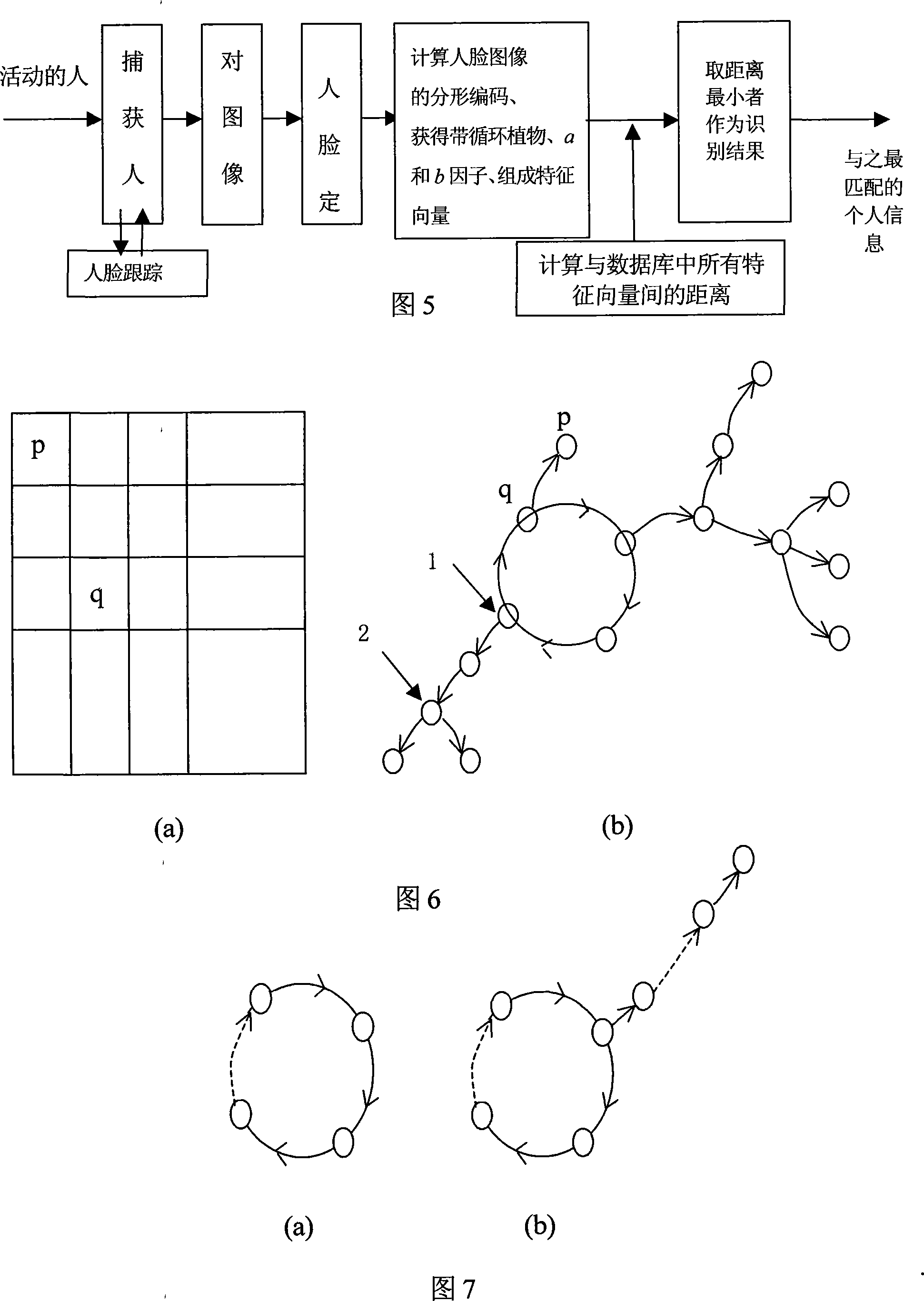

Conversational robot system

Inactive | CN101187990A | Tags: Recognizable; Have the ability to understand; Input/output for user-computer interaction; Biological models; Robotic systems; Speech identification

The invention discloses a conversational robot system. A face tracking and recognition module tracks and recognizes face images captured by a camera; a facial expression recognition module recognizes the expression; and the semantic meaning of speech is recognized after voice signals pass through a voice pickup module and a voice recognition module. The robot system understands human needs from facial expressions and/or speech, then forms conversational statements through a knowledge inquiry module and generates speech for communicating with humans through a voice generation module. The conversational robot system has speech recognition and understanding abilities and can understand users' commands. The invention can be applied in schools, homes, hotels, companies, airports, bus stations, docks, meeting rooms, and so on for education, chat, conversation, consultation, etc. In addition, it can help users with publicity and introductions, guest reception, business inquiries, secretarial services, foreign language interpretation, etc.

Owner: SOUTH CHINA UNIV OF TECH

Systems and methods for creating and distributing modifiable animated video messages

Systems and methods in accordance with embodiments of the invention enable collaborative creation, transmission, sharing, non-linear exploration, and modification of animated video messages. One embodiment includes a video camera, a processor, a network interface, and storage containing an animated message application, and a 3D character model. In addition, the animated message application configures the processor to: capture a video sequence using the video camera; detect a human face within a sequence of video frames; track changes in human facial expression of a human face detected within a sequence of video frames; map tracked changes in human facial expression to motion data, where the motion data is generated to animate the 3D character model; apply motion data to animate the 3D character model; render an animation of the 3D character model into a file as encoded video; and transmit the encoded video to a remote device via the network interface.

Owner: ADOBE INC

Information processing apparatus and method

Inactive | US20050187437A1 | Tags: Condition can be detected; Surgery; Vaccination/ovulation diagnostics; Pattern recognition; Information processing

An information processing apparatus detects the facial expression and body action of a person in image information and determines the user's physical/mental condition based on the detection results. The presentation of information by a presentation unit, which presents information visually and/or audibly, is controlled according to the determined physical/mental condition of the user.

Owner: CANON KK

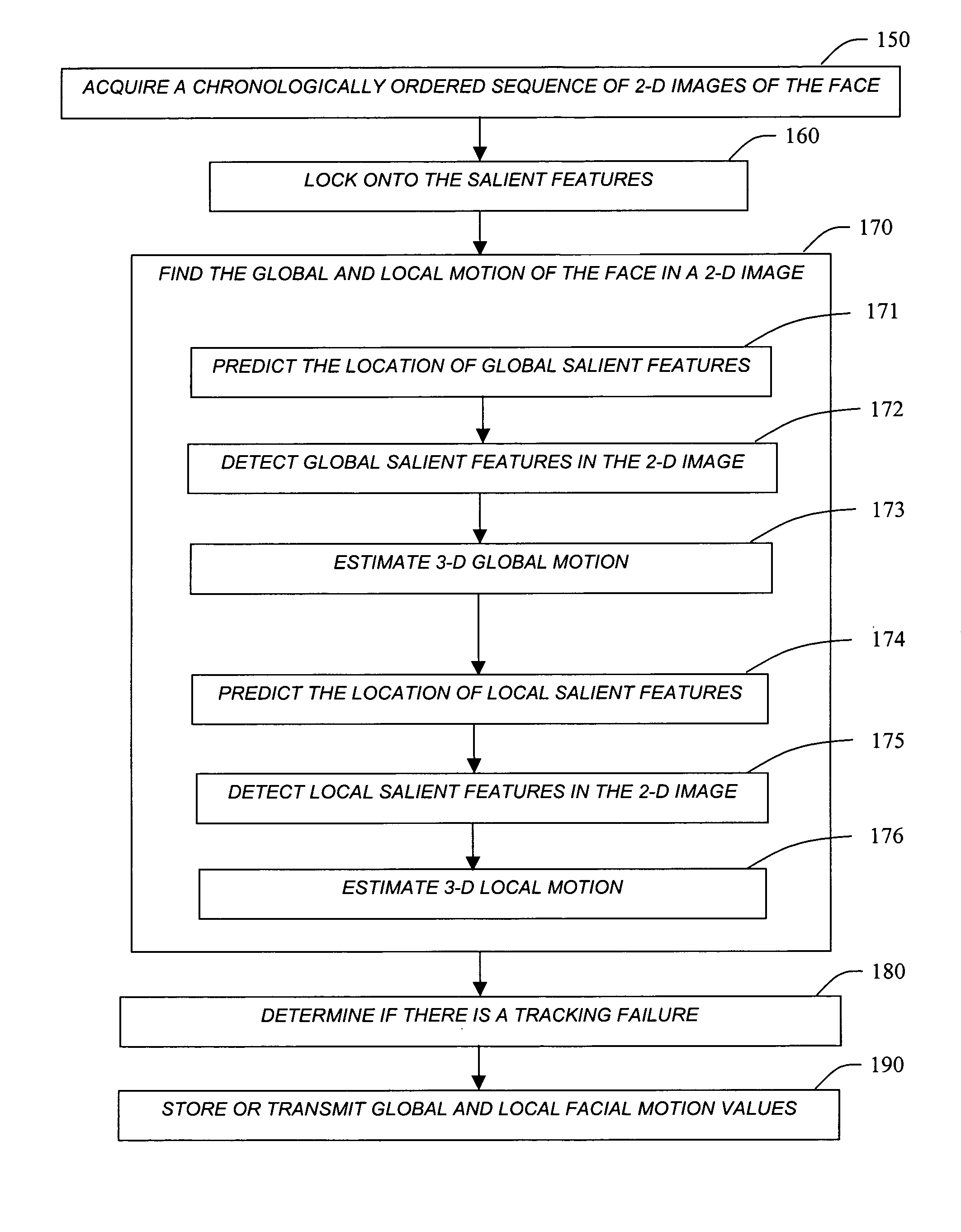

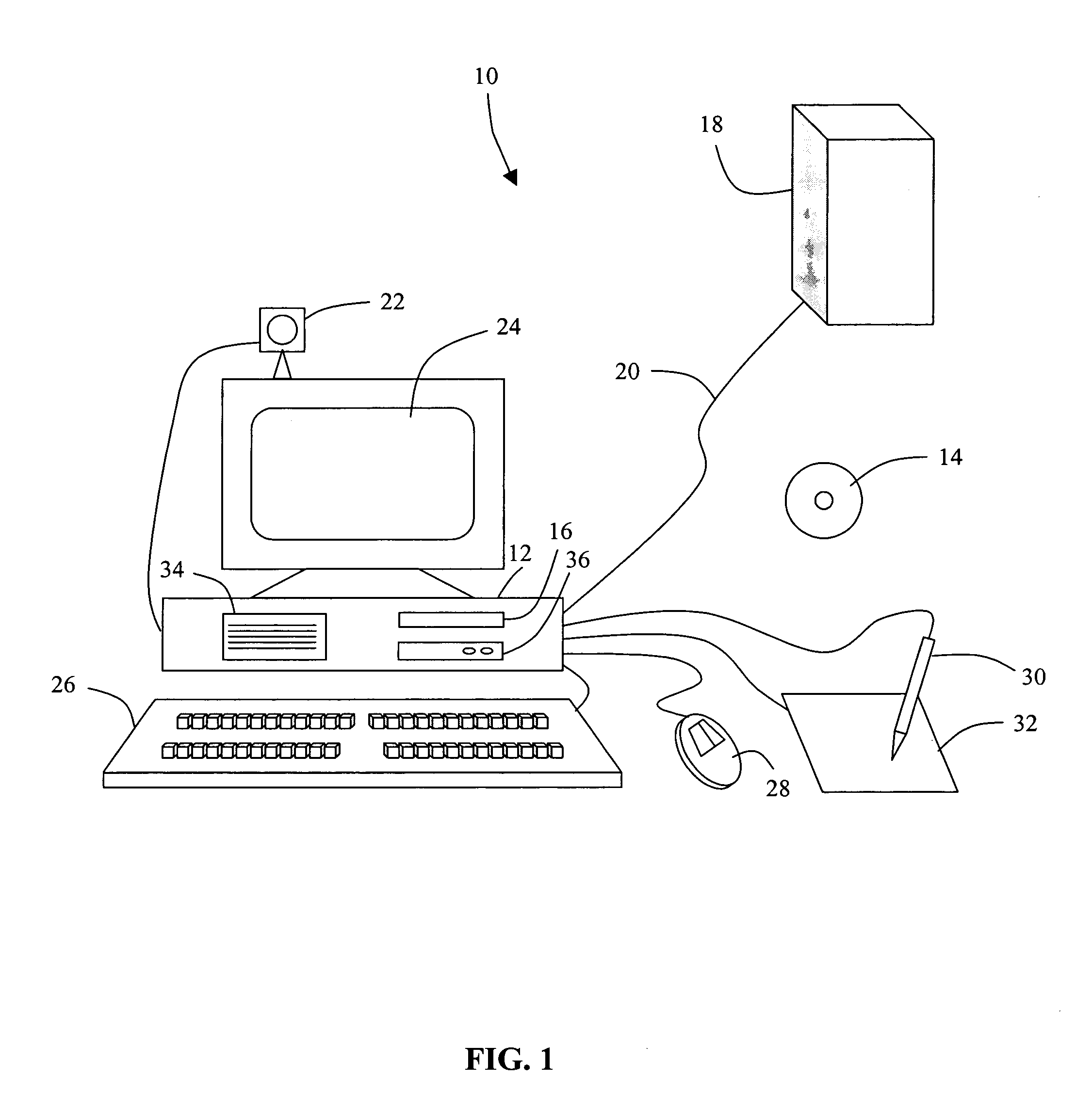

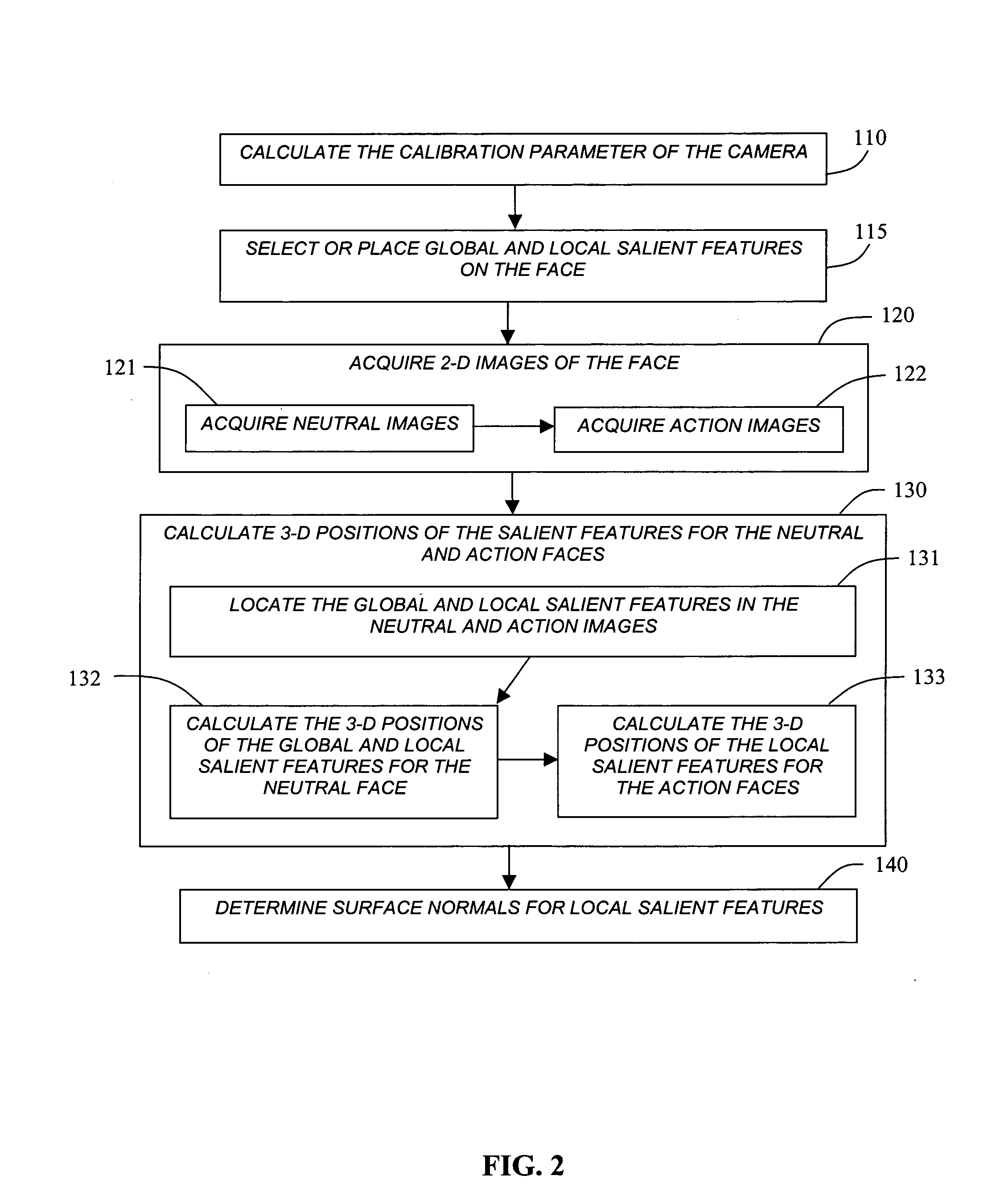

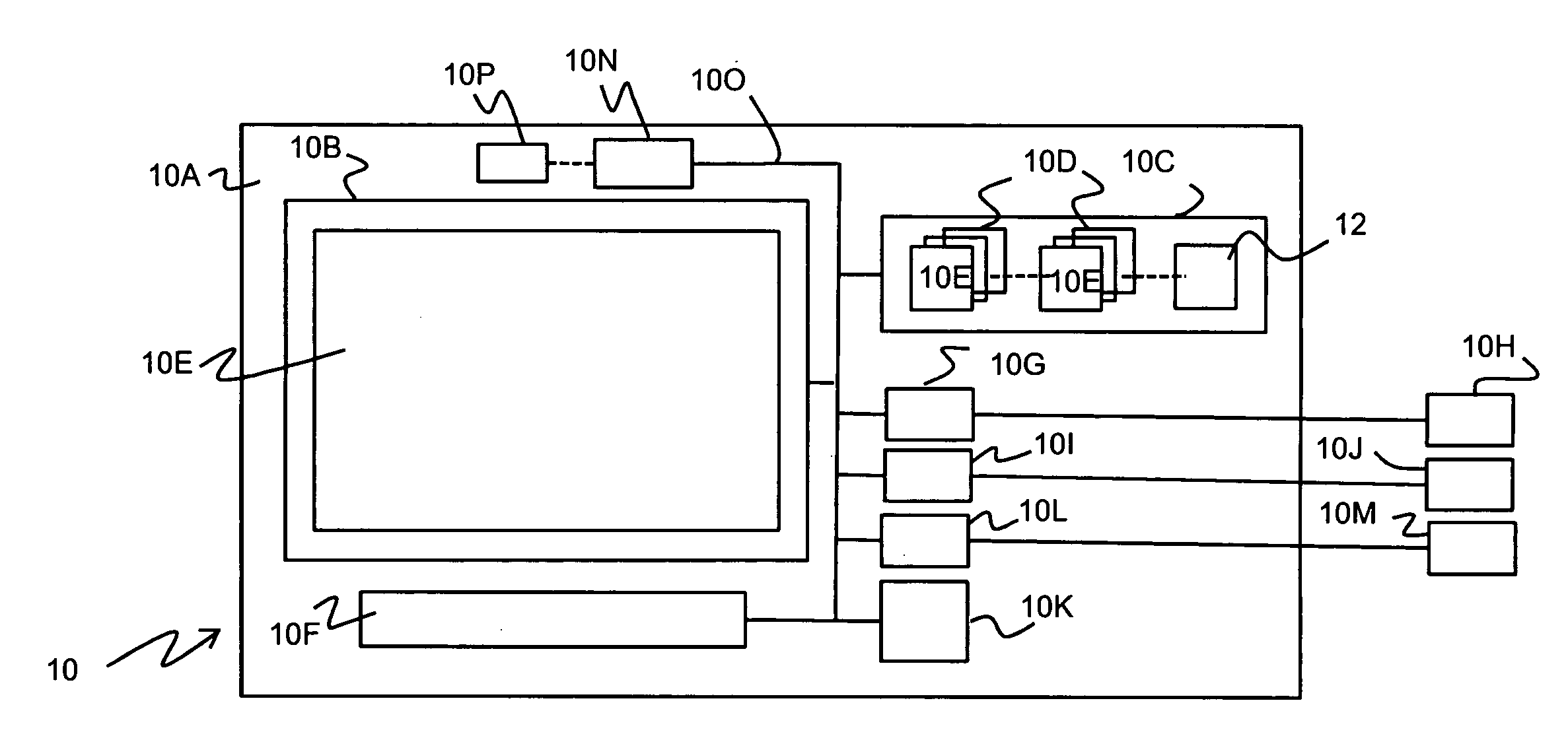

Method for tracking motion of a face

Inactive | US7127081B1 | Tags: Satisfies need; Television system details; Image analysis; Pattern recognition; Animation

Owner: MOMENTUM AS +1

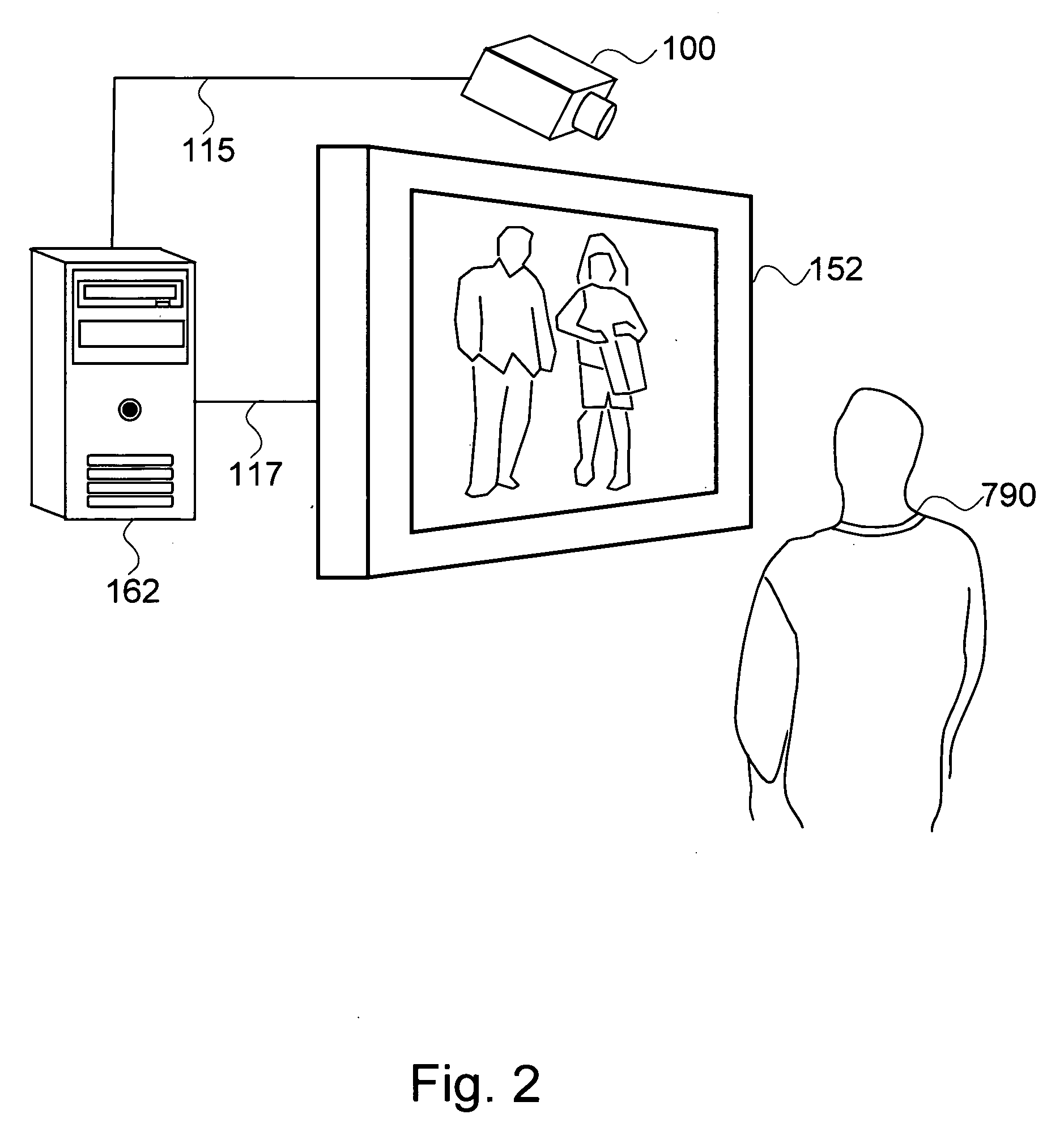

Method and apparatus for image display control according to viewer factors and responses

Active | US20100328492A1 | Tags: Television system details; Character and pattern recognition; Facial expression; Image capture

A device, system, and method for selecting and displaying images in accordance with the attention responses of an image viewer. An image capture device captures a viewer image of a spatial region potentially containing a viewer of the image display device, and a viewer detection mechanism analyzes the viewer image and determines whether a potential viewer is observing the display screen. A gaze pattern analysis mechanism identifies the viewer's response to the currently displayed image by analyzing the viewer's emotional response, as revealed by the viewer's facial expression and other sensory inputs, and the selection of images to be displayed is then modified in accordance with the viewer's indicated attention response.

Owner: MONUMENT PEAK VENTURES LLC

Method and report assessing consumer reaction to a stimulus by matching eye position with facial coding

Inactive | US7930199B1 | Tags: Digital data information retrieval; Character and pattern recognition; Facial expression; Eye position

A method of reporting consumer reaction to a stimulus and resultant report generated by (i) recording facial expressions and eye positions of a human subject while exposed to a stimulus throughout a time period, (ii) coding recorded facial expressions to emotions, and (iii) reporting recorded eye positions and coded emotions, along with an identification of the stimulus.

Owner: SENSORY LOGIC
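Matching eye position with facial coding, as in the report described above, requires aligning two time-stamped streams. One simple way to do this, assumed here rather than taken from the patent, is to pair each eye-position sample with the most recent coded emotion at or before its timestamp. The tuple layouts and function name below are hypothetical.

```python
from bisect import bisect_right
from typing import List, Optional, Sequence, Tuple

EyeSample = Tuple[float, float, float]  # (time_s, x, y) gaze position on the stimulus
CodedEmotion = Tuple[float, str]        # (time_s, emotion coded from facial expression)


def match_eye_to_emotion(
    eye_samples: Sequence[EyeSample],
    coded_emotions: Sequence[CodedEmotion],
) -> List[Tuple[float, float, float, Optional[str]]]:
    """Pair each eye sample with the most recent coded emotion at or before it."""
    coded = sorted(coded_emotions)
    times = [t for t, _ in coded]
    report = []
    for t, x, y in eye_samples:
        i = bisect_right(times, t) - 1  # index of the last emotion with time <= t
        report.append((t, x, y, coded[i][1] if i >= 0 else None))
    return report


rows = match_eye_to_emotion(
    eye_samples=[(0.5, 0.2, 0.1), (1.0, 0.4, 0.5), (2.5, 0.8, 0.5)],
    coded_emotions=[(0.8, "neutral"), (2.0, "joy")],
)
for row in rows:
    print(row)
```

Samples recorded before the first coded emotion get `None`, so a report can show where gaze was tracked but no expression had yet been coded.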

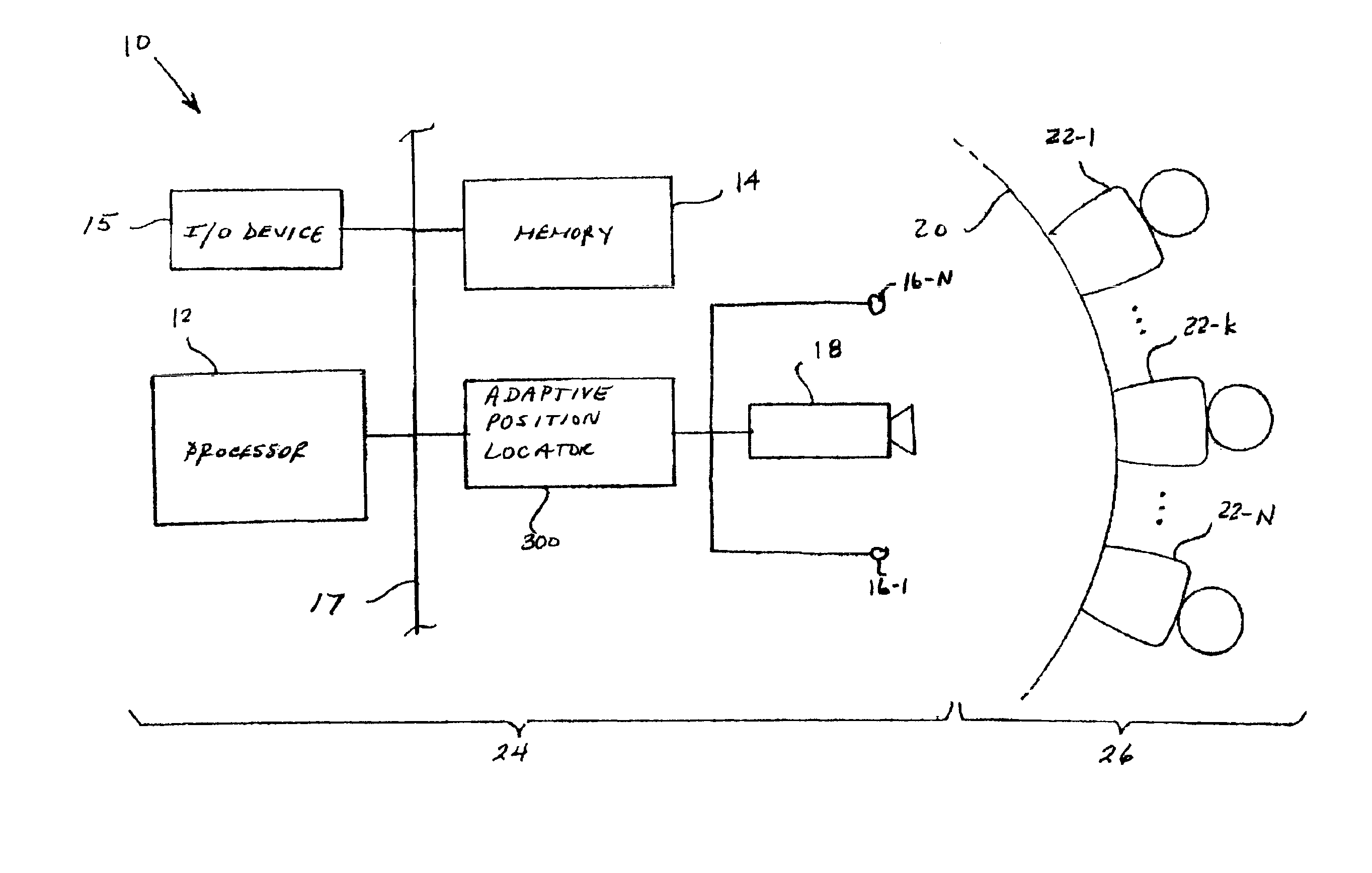

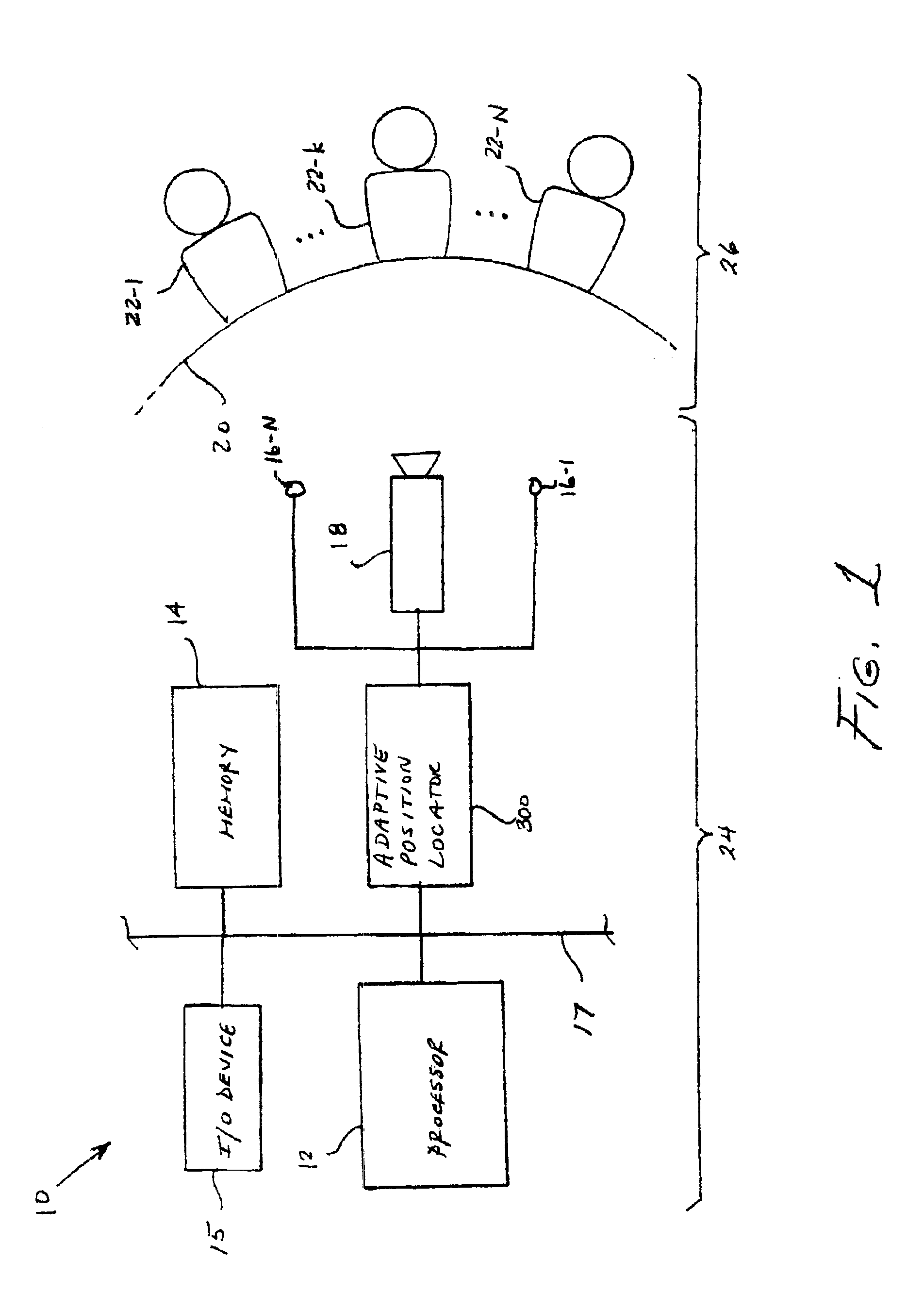

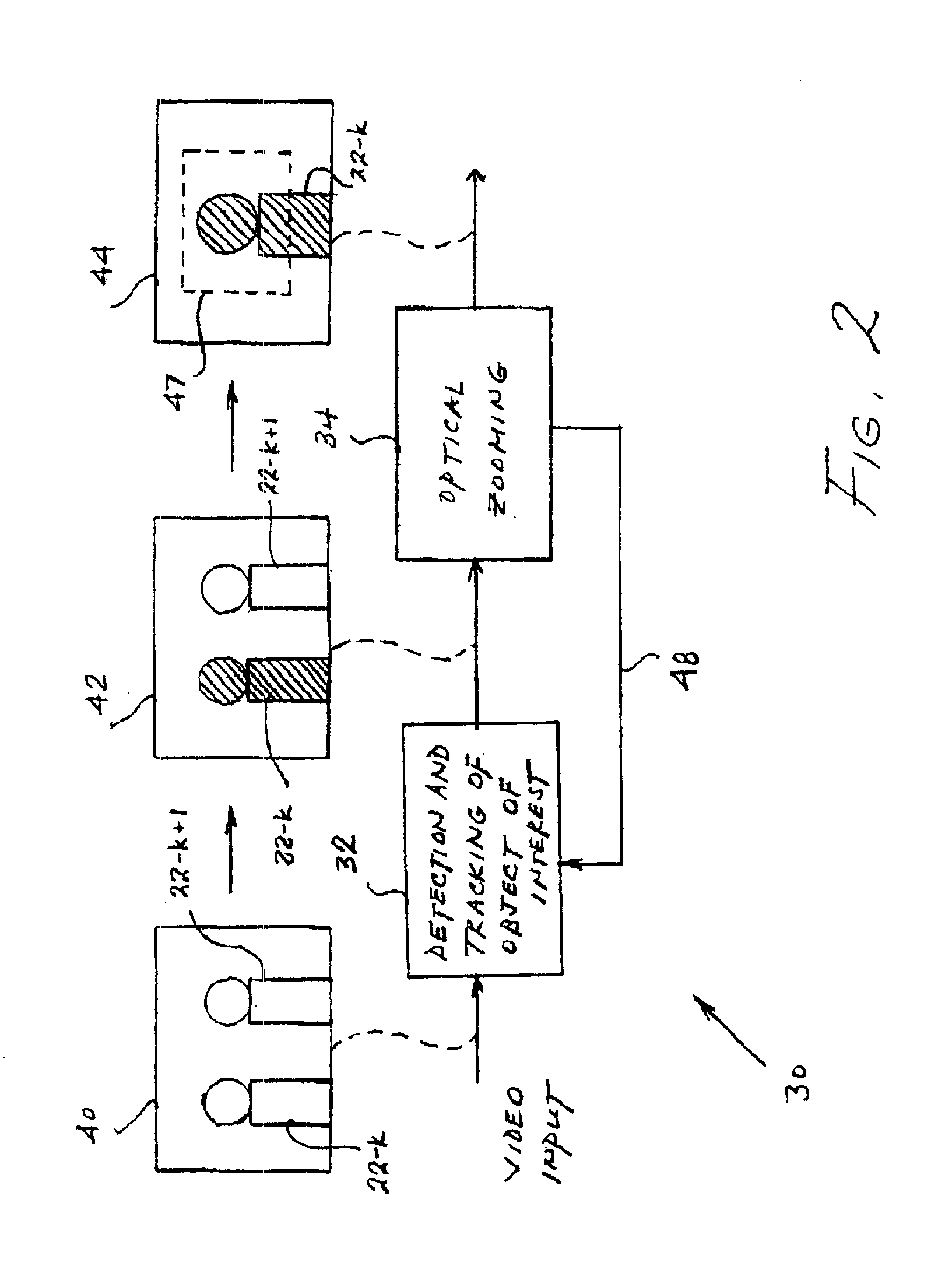

Method and apparatus for predicting events in video conferencing and other applications

Inactive | US6894714B2 | Tags: Television system details; Television conference systems; Pattern recognition; Video processing

Methods and apparatus are disclosed for predicting events using acoustic and visual cues. The present invention processes audio and video information to identify one or more (i) acoustic cues, such as intonation patterns, pitch and loudness, (ii) visual cues, such as gaze, facial pose, body postures, hand gestures and facial expressions, or (iii) a combination of the foregoing, that are typically associated with an event, such as behavior exhibited by a video conference participant before he or she speaks. In this manner, the present invention allows the video processing system to predict events, such as the identity of the next speaker. The predictive speaker identifier operates in a learning mode to learn the characteristic profile of each participant in terms of the concept that the participant “will speak” or “will not speak” under the presence or absence of one or more predefined visual or acoustic cues. The predictive speaker identifier operates in a predictive mode to compare the learned characteristics embodied in the characteristic profile to the audio and video information and thereby predict the next speaker.

Owner: PENDRAGON WIRELESS LLC
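The learning and predictive modes of the predictive speaker identifier can be illustrated with a per-participant classifier over binary cues. This Python sketch swaps in a Bernoulli naive Bayes model with Laplace smoothing as a stand-in; the abstract does not name a classifier, and the cue names and the `SpeakerProfile` class are hypothetical.

```python
from collections import Counter
from typing import Dict, FrozenSet, Iterable, Optional


class SpeakerProfile:
    """Per-participant counts of which cues preceded 'will speak' vs 'will not speak'."""

    def __init__(self) -> None:
        self.cue_counts: Dict[bool, Counter] = {True: Counter(), False: Counter()}
        self.totals: Dict[bool, int] = {True: 0, False: 0}

    def learn(self, cues: FrozenSet[str], spoke: bool) -> None:
        """Learning mode: record one observation window and whether the participant spoke next."""
        self.totals[spoke] += 1
        self.cue_counts[spoke].update(cues)

    def _joint(self, cues: FrozenSet[str], vocabulary: Iterable[str], spoke: bool) -> float:
        n = self.totals[spoke]
        p = (n + 1) / (self.totals[True] + self.totals[False] + 2)  # smoothed class prior
        for cue in vocabulary:
            p_cue = (self.cue_counts[spoke][cue] + 1) / (n + 2)  # Laplace-smoothed P(cue | class)
            p *= p_cue if cue in cues else 1.0 - p_cue
        return p

    def speak_probability(self, cues: FrozenSet[str], vocabulary: Iterable[str]) -> float:
        """Predictive mode: Bernoulli naive Bayes estimate of P(will speak | cues)."""
        vocab = list(vocabulary)
        yes = self._joint(cues, vocab, True)
        no = self._joint(cues, vocab, False)
        return yes / (yes + no)


def predict_next_speaker(
    profiles: Dict[str, SpeakerProfile],
    observed: Dict[str, FrozenSet[str]],
    vocabulary: Iterable[str],
) -> Optional[str]:
    """Return the participant whose current cues give the highest speak probability."""
    vocab = list(vocabulary)
    if not profiles:
        return None
    return max(
        profiles,
        key=lambda name: profiles[name].speak_probability(observed.get(name, frozenset()), vocab),
    )


VOCAB = ["inhale", "gaze_shift"]  # hypothetical acoustic/visual cue names
profiles = {"alice": SpeakerProfile(), "bob": SpeakerProfile()}
for profile in profiles.values():
    for _ in range(3):
        profile.learn(frozenset({"inhale", "gaze_shift"}), spoke=True)
        profile.learn(frozenset(), spoke=False)

now = {"alice": frozenset({"inhale", "gaze_shift"}), "bob": frozenset()}
print(predict_next_speaker(profiles, now, VOCAB))  # alice
```

Because each participant has their own profile, the same cue can be a strong predictor for one person and a weak one for another, which matches the per-participant "characteristic profile" idea in the abstract.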

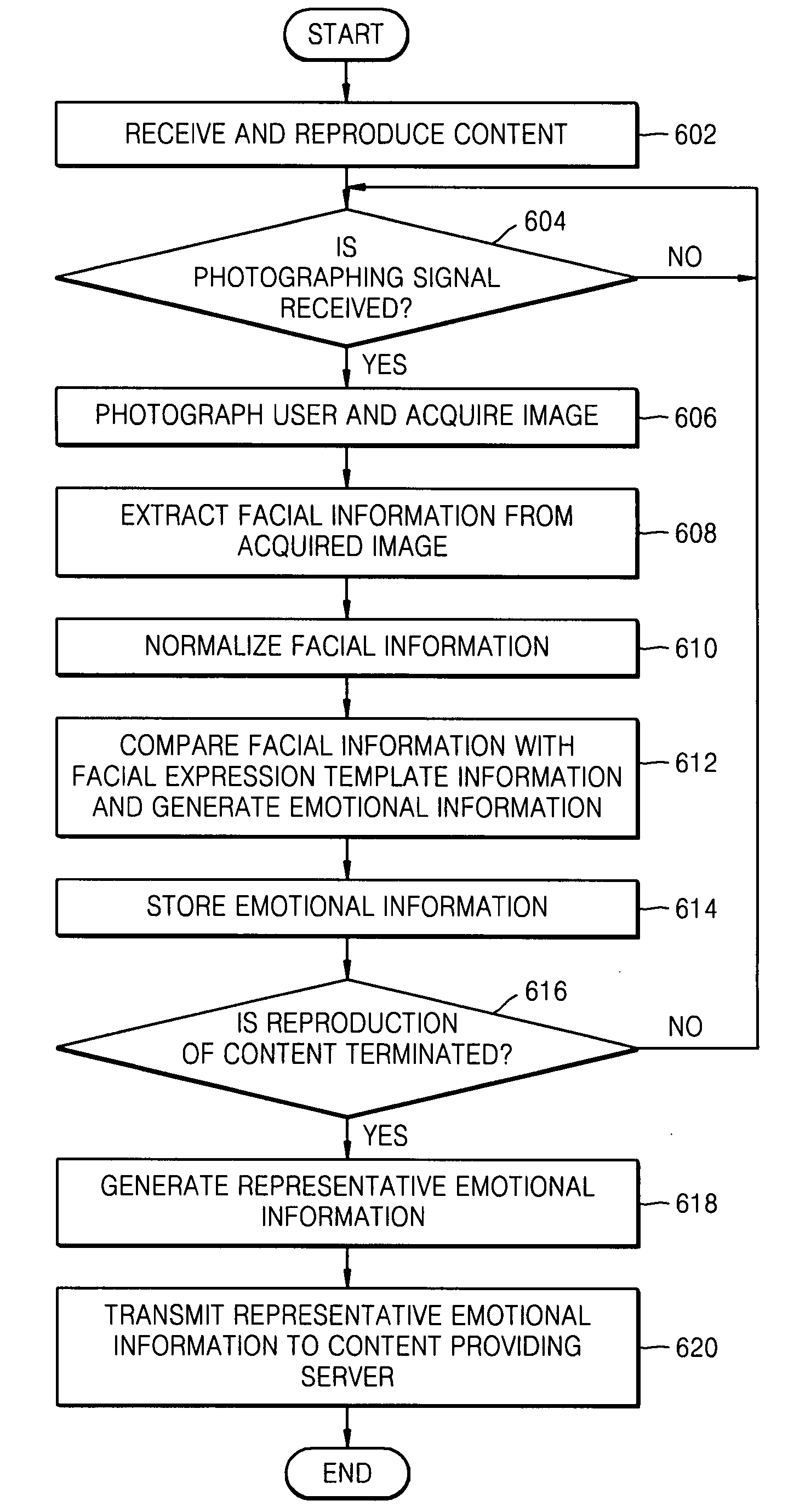

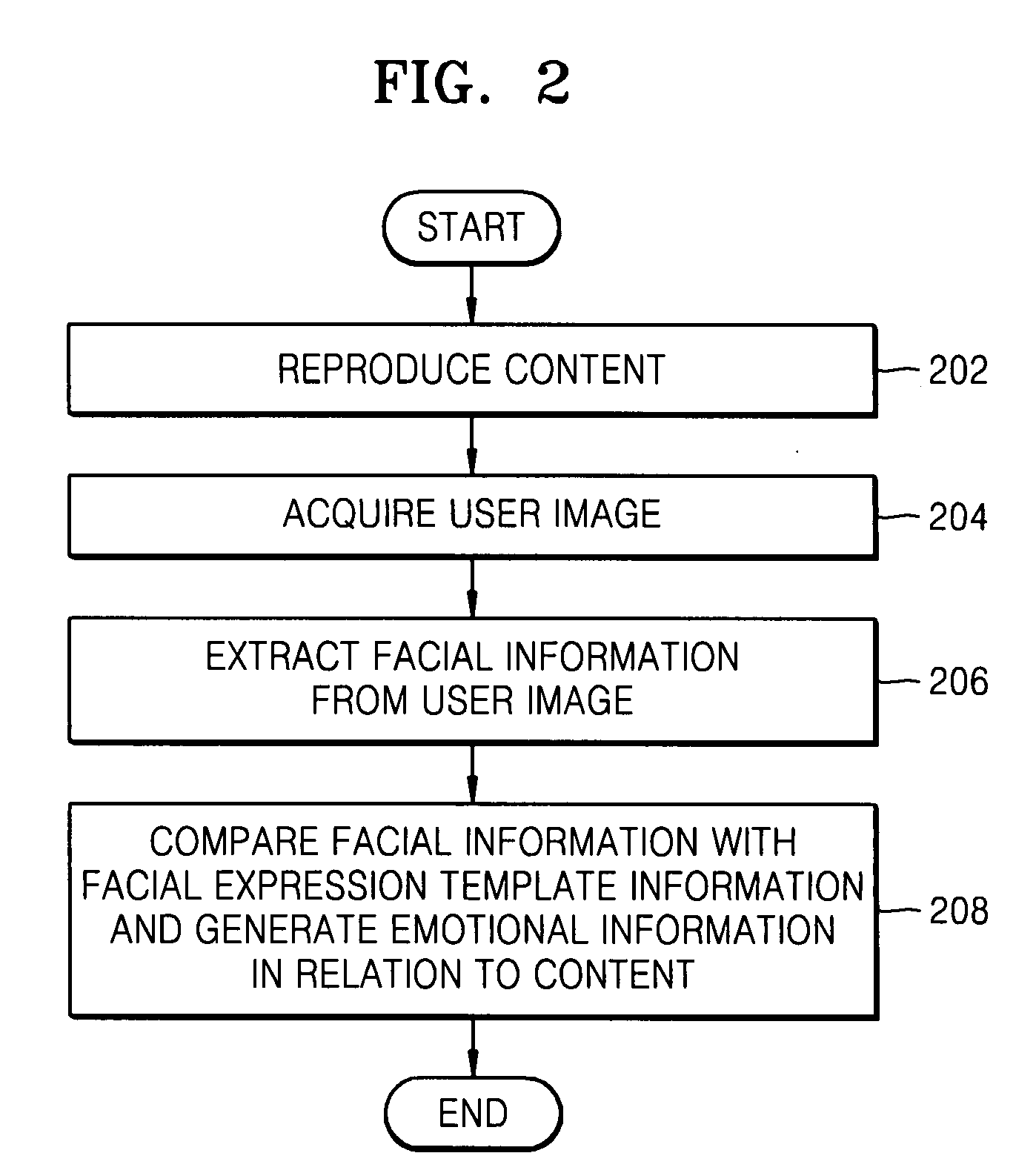

Method and apparatus for generating meta data of content

Inactive | US20080101660A1 | Tags: Data processing applications; Digital data information retrieval; Facial expression; Client-side

A method and apparatus are provided for generating emotional information, including a user's impressions of multimedia content, or meta data regarding the emotional information, along with a computer-readable recording medium storing the method. The meta data generating method includes receiving emotional information about the content from at least one client system that receives and reproduces the content; generating meta data for an emotion using the emotional information; and coupling the meta data for the emotion to the content. Accordingly, emotional information can be acquired automatically from the facial expression of a user viewing multimedia content and used as meta data.

Owner: SAMSUNG ELECTRONICS CO LTD

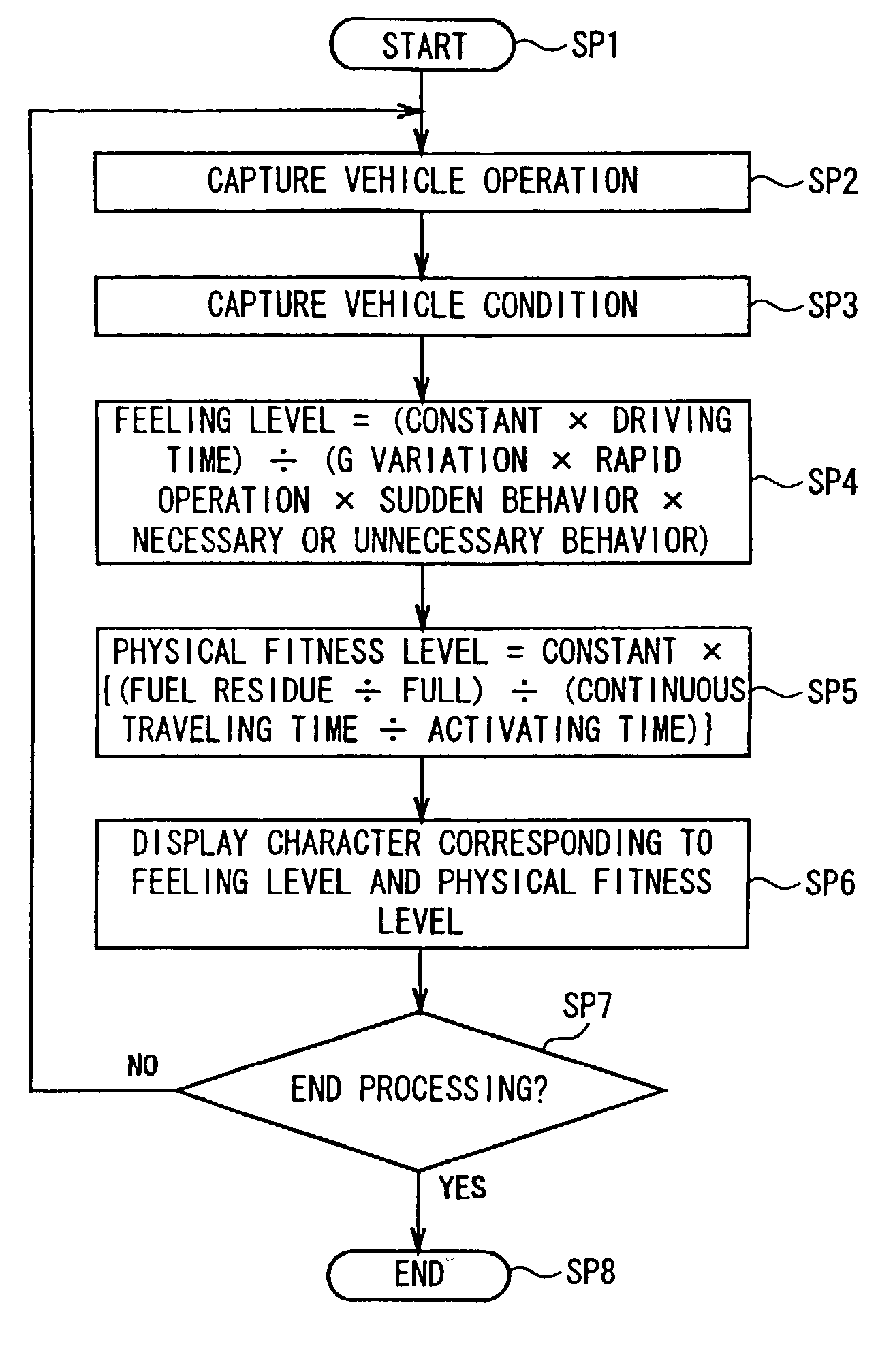

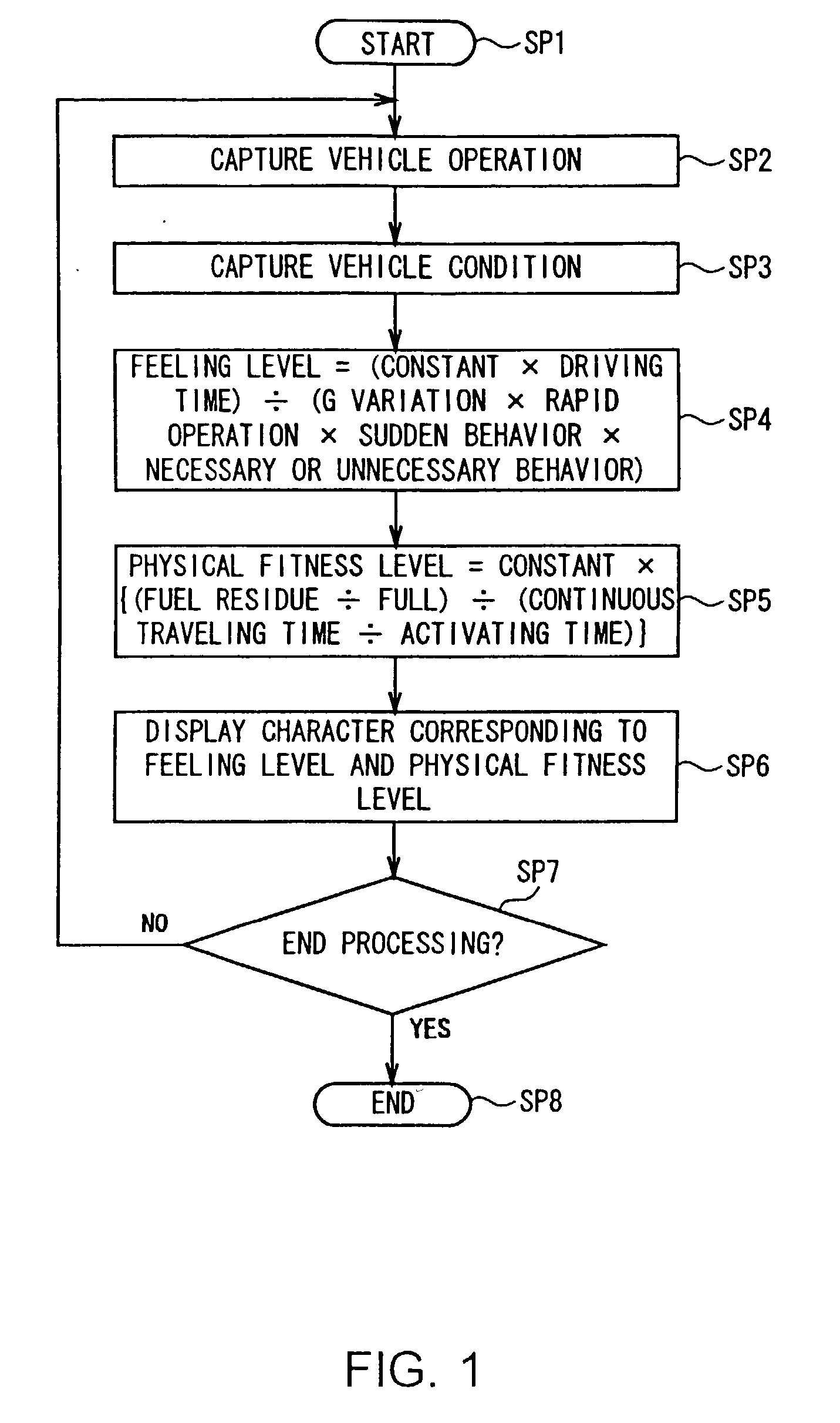

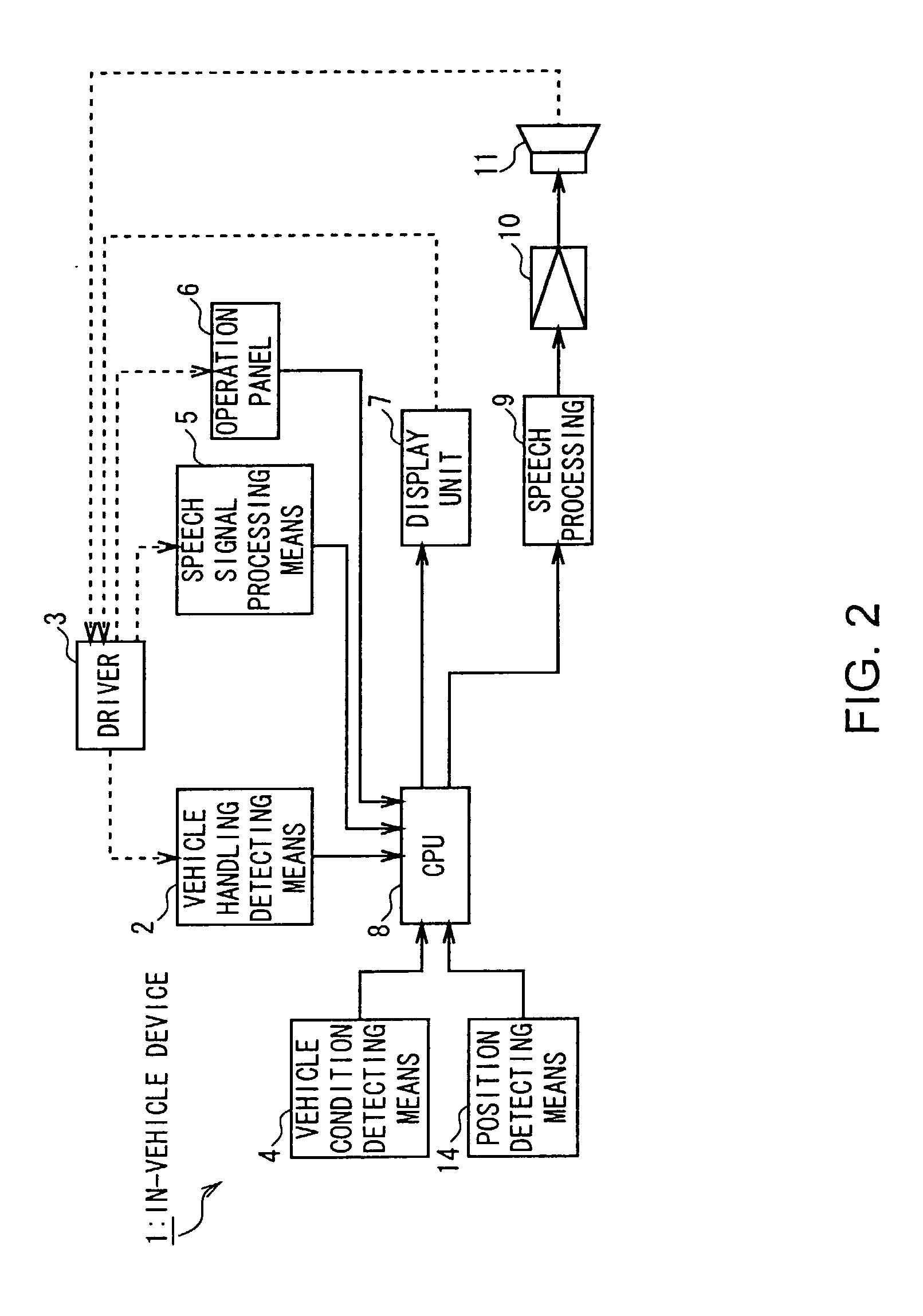

Vehicle information processing device, vehicle, and vehicle information processing method

Inactive | US20030060937A1 | Tags: Accurate communication; Instruments for road network navigation; Digital data processing details; Information processing; Driver/operator

The present invention relates to an in-vehicle device, a vehicle, and a vehicle information processing method that are used, for example, to assist in driving an automobile and can properly communicate the vehicle's situation to the driver. Assuming the vehicle has a personality, the invention expresses the user's handling of the vehicle as virtual feelings and displays those feelings through the facial expressions of a predetermined character.

Owner: SONY CORP +1

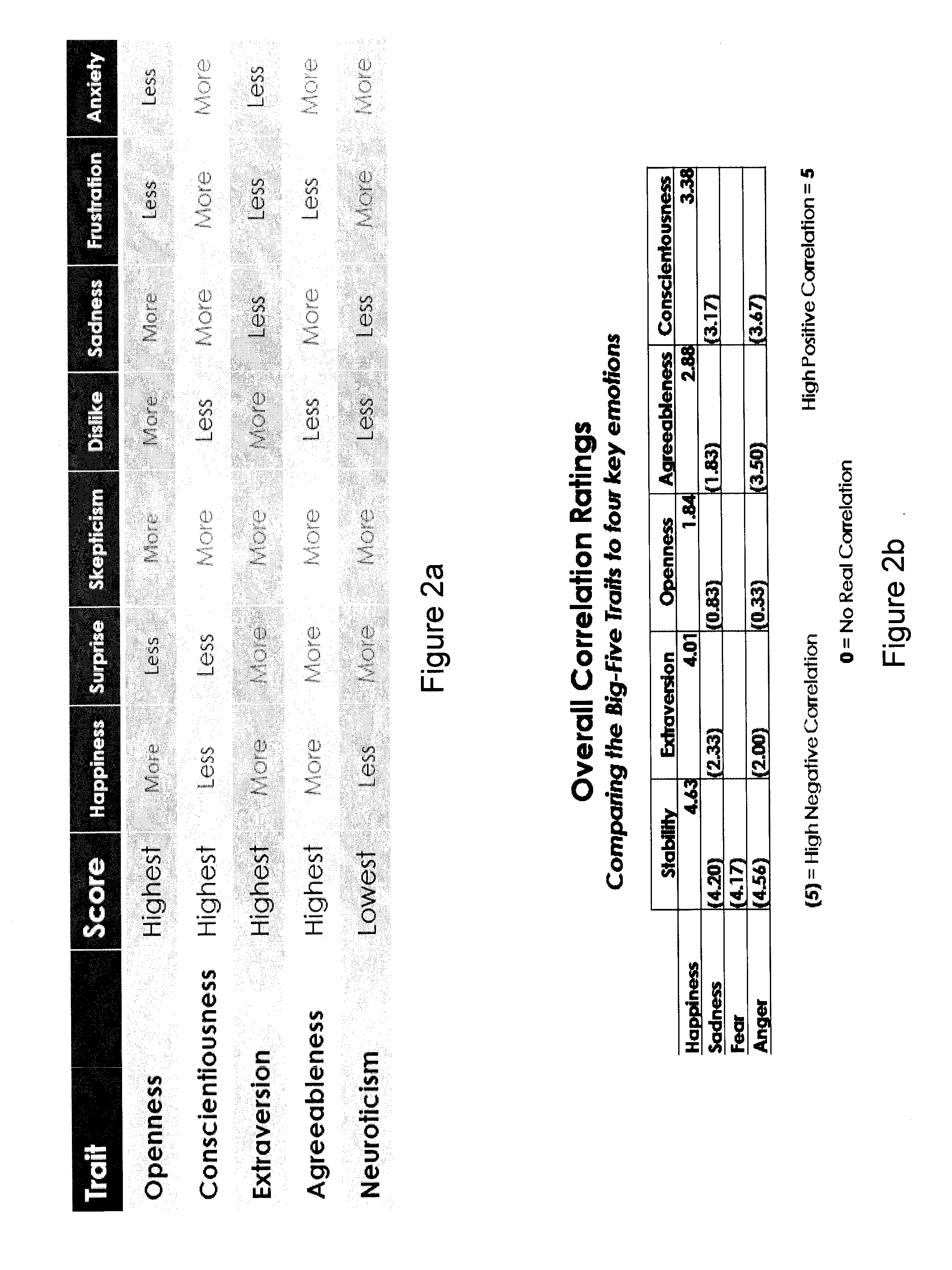

Method of assessing people's self-presentation and actions to evaluate personality type, behavioral tendencies, credibility, motivations and other insights through facial muscle activity and expressions

A method of assessing an individual through facial muscle activity and expressions includes receiving a visual recording, stored on a computer-readable medium, of an individual's non-verbal responses to a stimulus, the non-verbal responses comprising facial expressions of the individual. The recording is accessed to automatically detect and record the expressional repositioning of each of a plurality of selected facial features by conducting a computerized comparison of the facial position of each selected facial feature across sequential facial images. The contemporaneously detected and recorded expressional repositionings are automatically coded to an action unit, a combination of action units, and/or at least one emotion. The action unit, combination of action units, and/or at least one emotion are analyzed to assess one or more characteristics of the individual and develop a profile of the individual's personality in relation to the objective for which the individual is being assessed.

Owner: SENSORY LOGIC

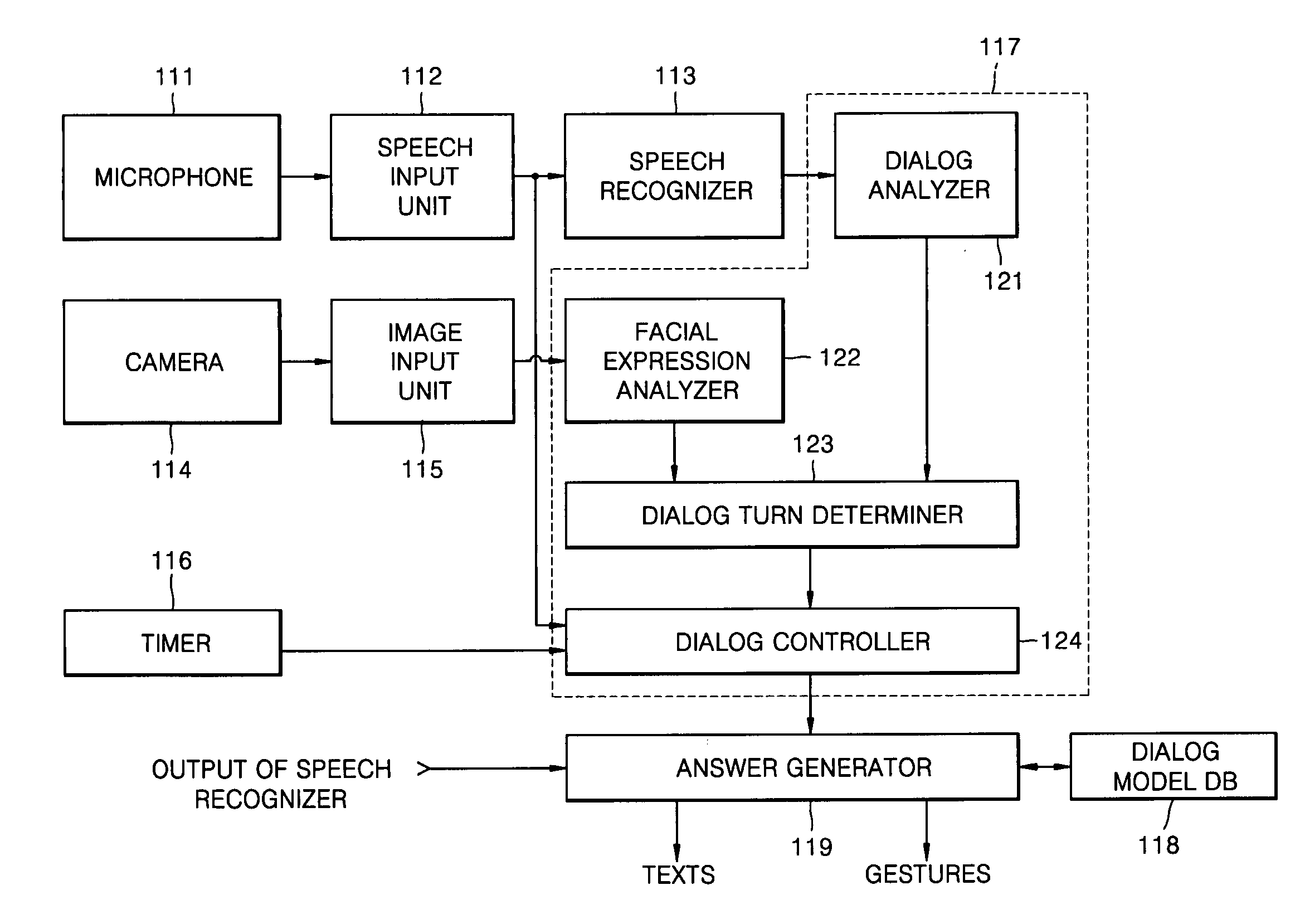

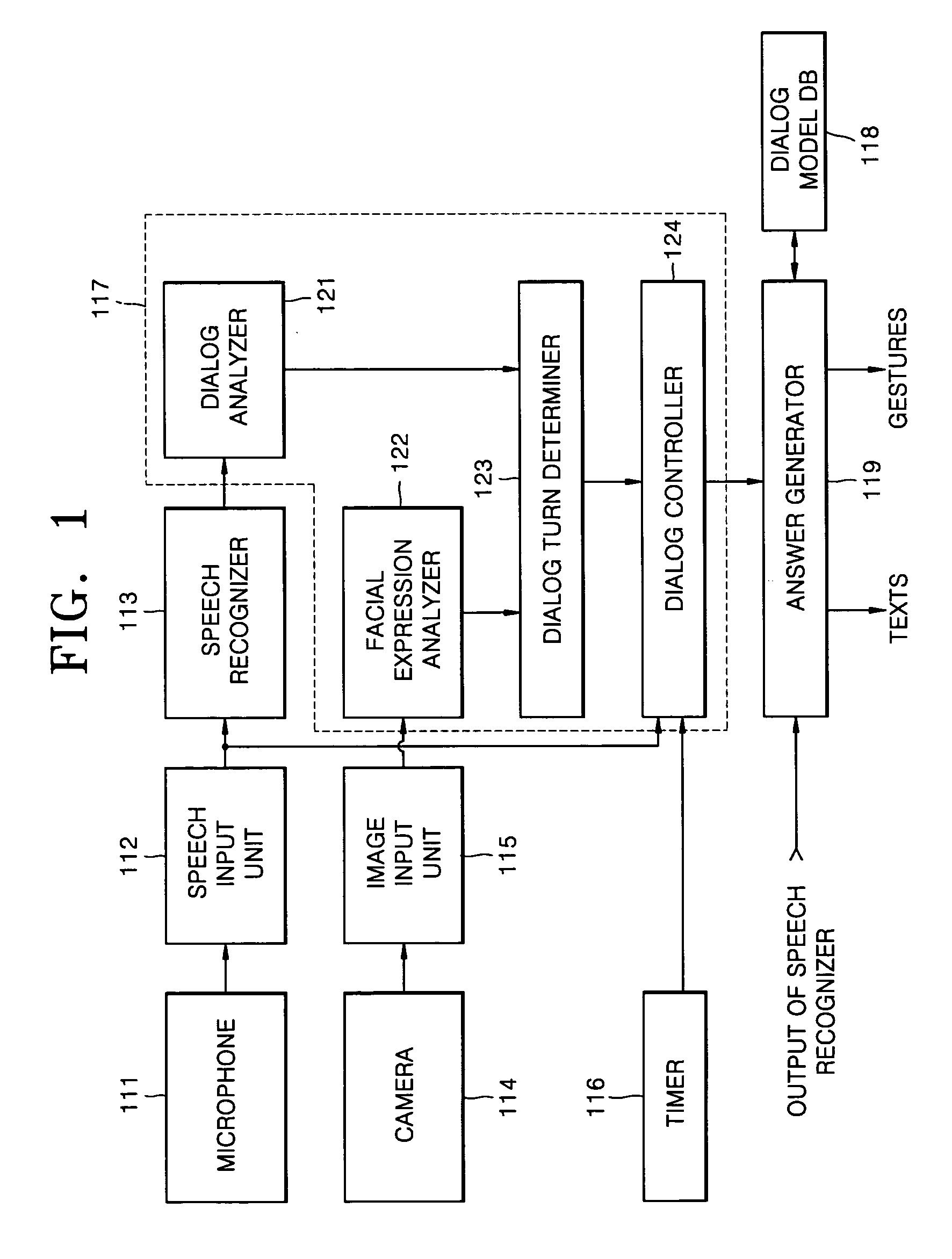

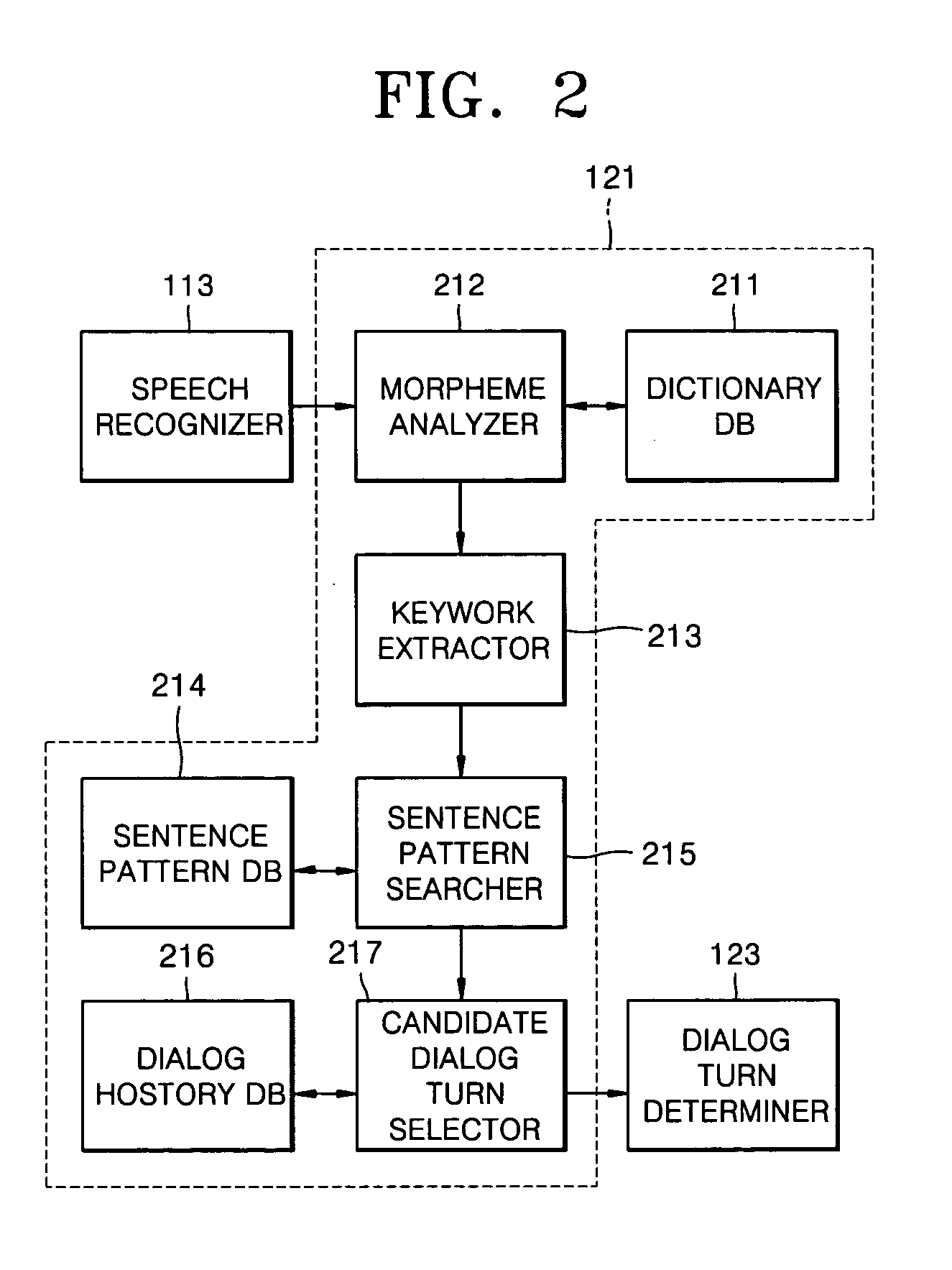

Method of and apparatus for managing dialog between user and agent

Inactive | US20040122673A1 | Tags: Input/output for user-computer interaction; Image analysis; Dialog system; Delayed time

Owner: SAMSUNG ELECTRONICS CO LTD

Systems and methods for creating and distributing modifiable animated video messages

Owner: ADOBE SYST INC

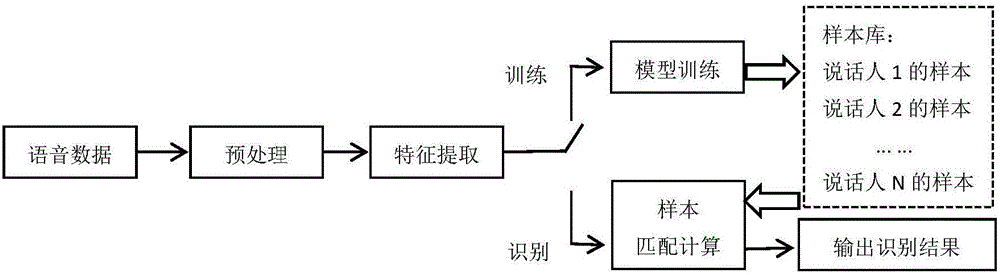

Classroom behavior monitoring system and method based on face and voice recognition

Active | CN106851216A | Tags: Improve development; Improve learning effect; Speech analysis; Closed circuit television systems; Facial expression; Speech sound

Owner: SHANDONG NORMAL UNIV

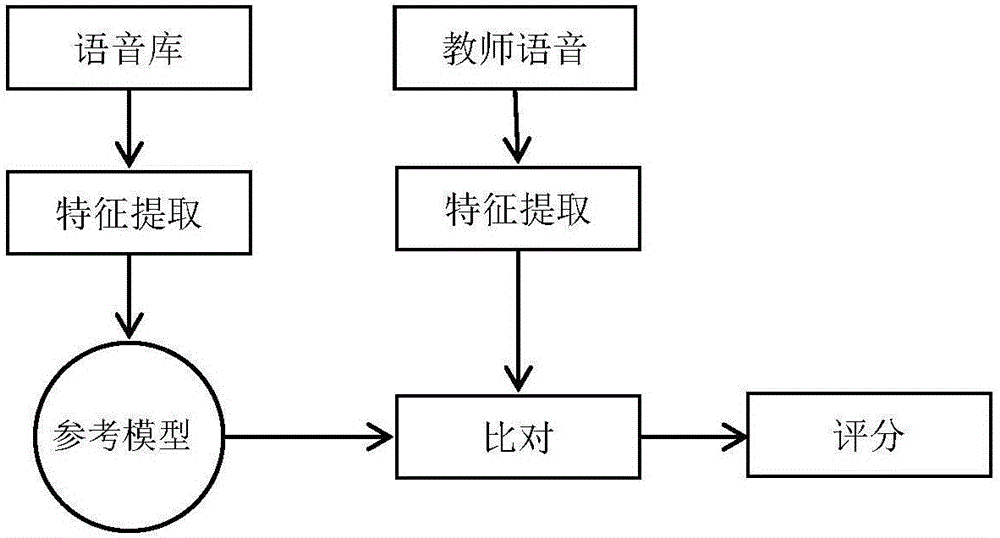

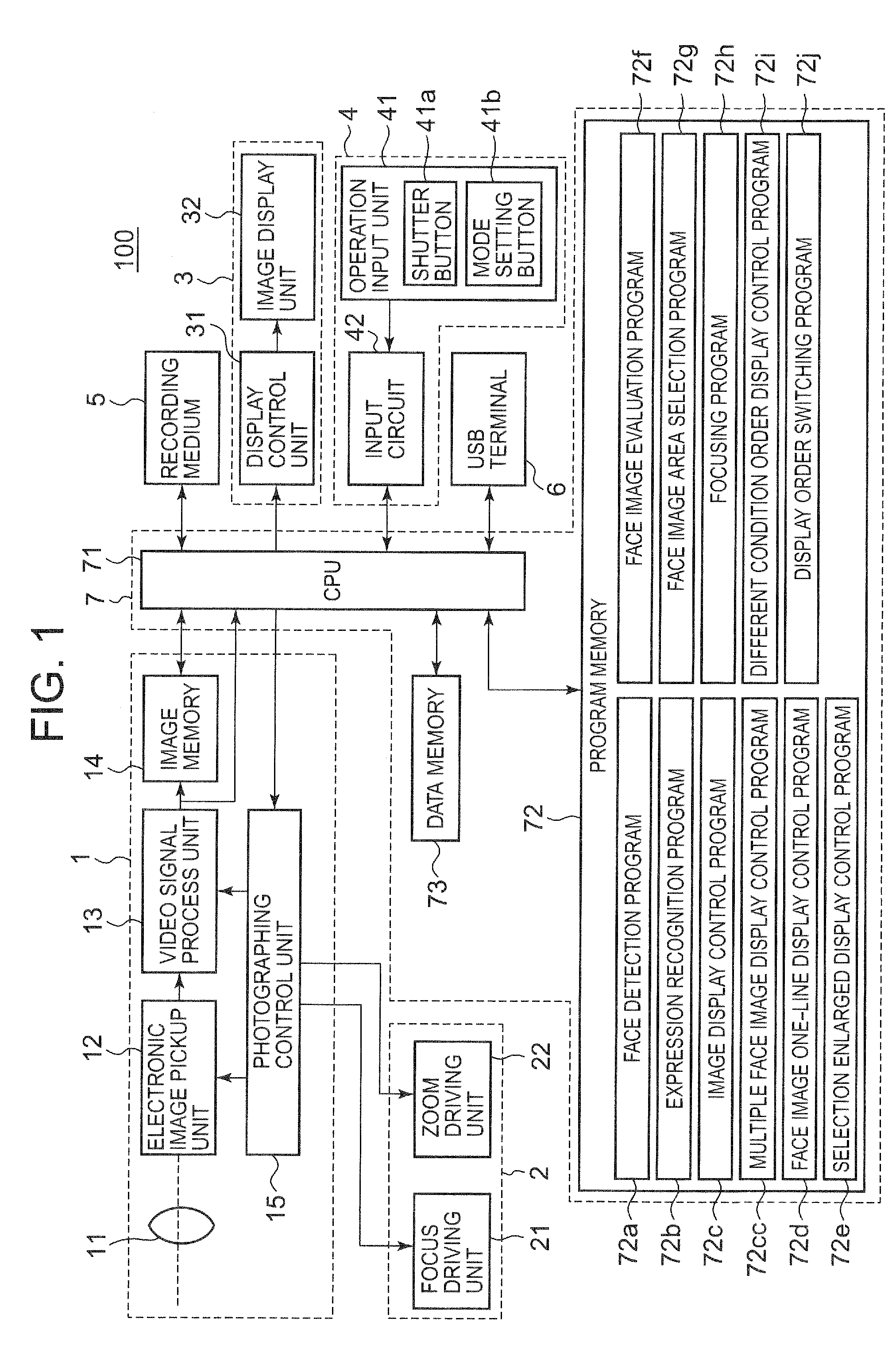

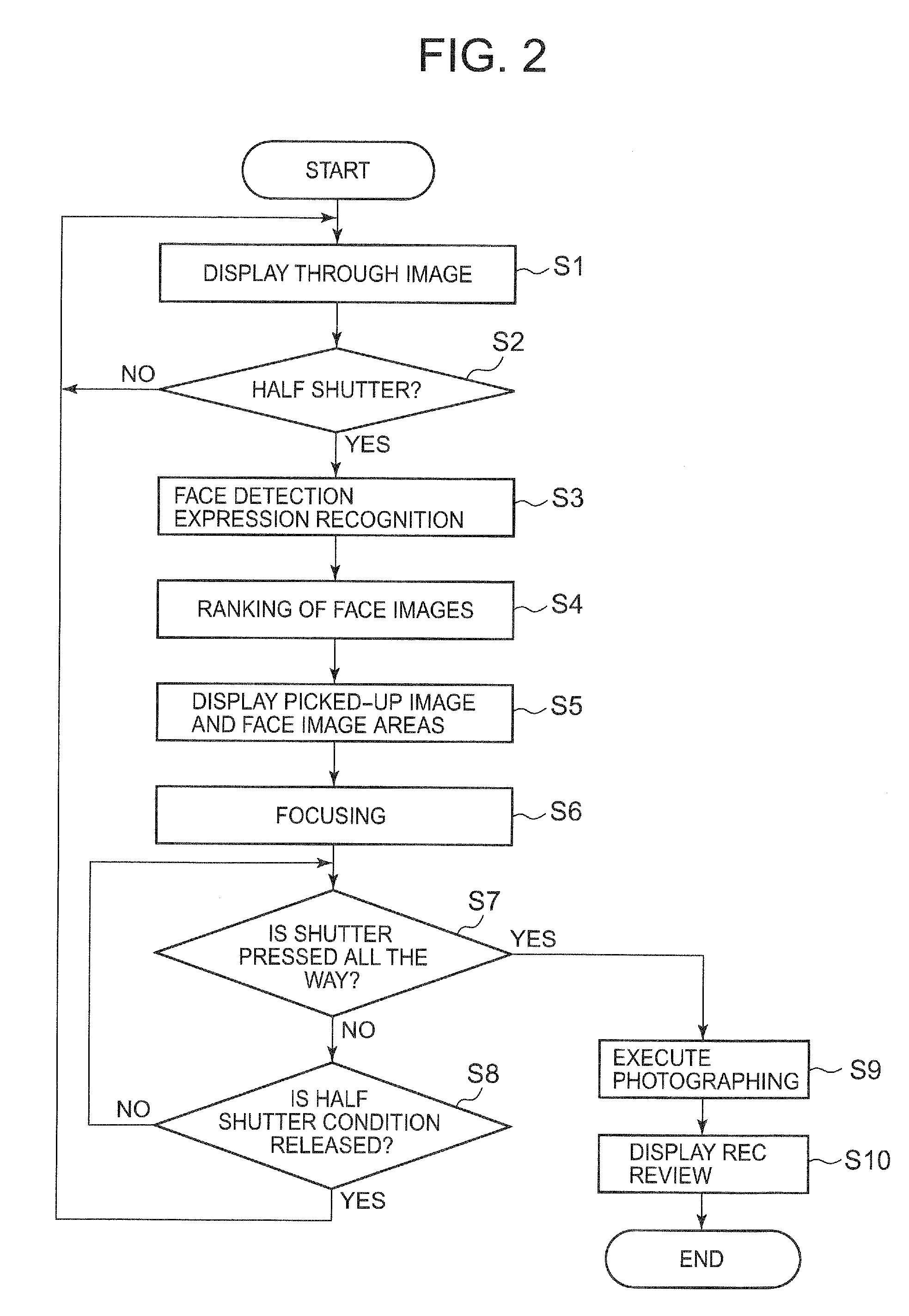

Image pickup apparatus equipped with face-recognition function

Inactive | US20080240563A1 | Tags: Easy to confirm; Television system details; 2D-image generation; Ranking; Facial expression

The image pickup apparatus 100 comprises an image pickup unit 1 that picks up an image of the subject the user desires. Based on the image information of the picked-up image, the apparatus detects face image areas containing the faces of subject persons, recognizes the facial expression in each detected face image area, ranks the face image areas in order of how good a smile each recognized expression shows, and displays the face image areas F, arranged in ranking order, on the same screen as the entire picked-up image G.

Owner: CASIO COMPUTER CO LTD

Computerized method of assessing consumer reaction to a business stimulus employing facial coding

Owner: SENSORY LOGIC

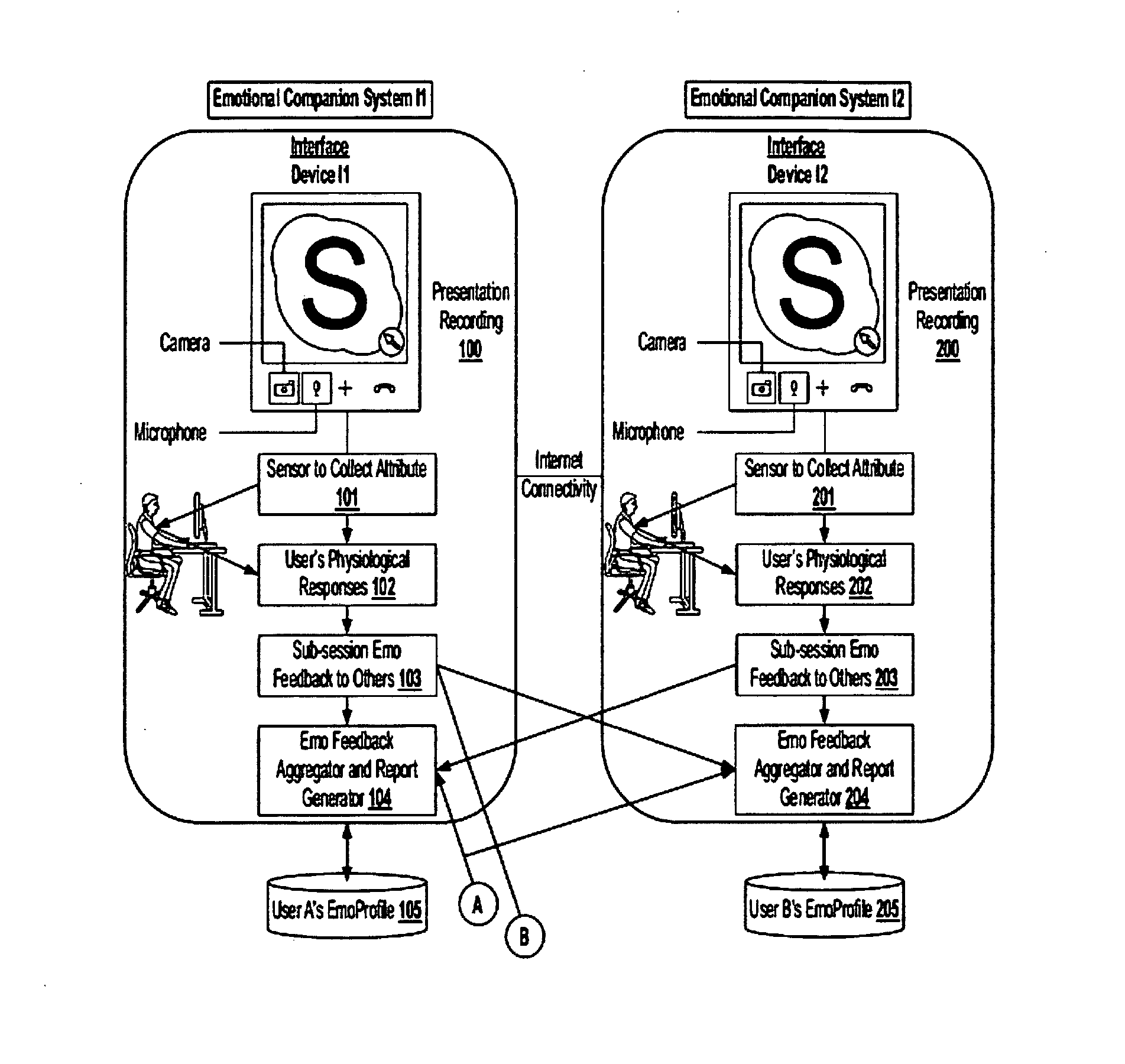

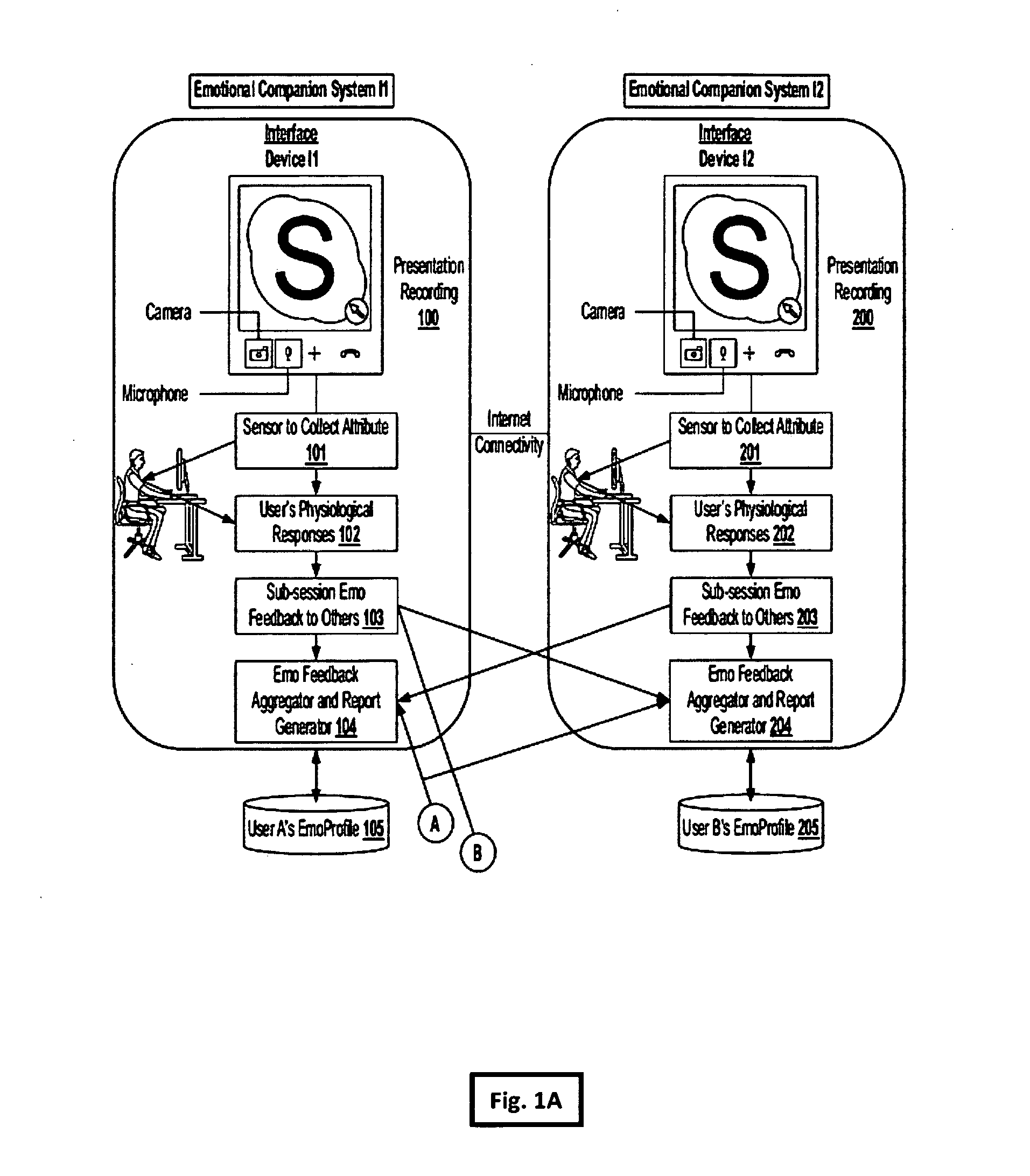

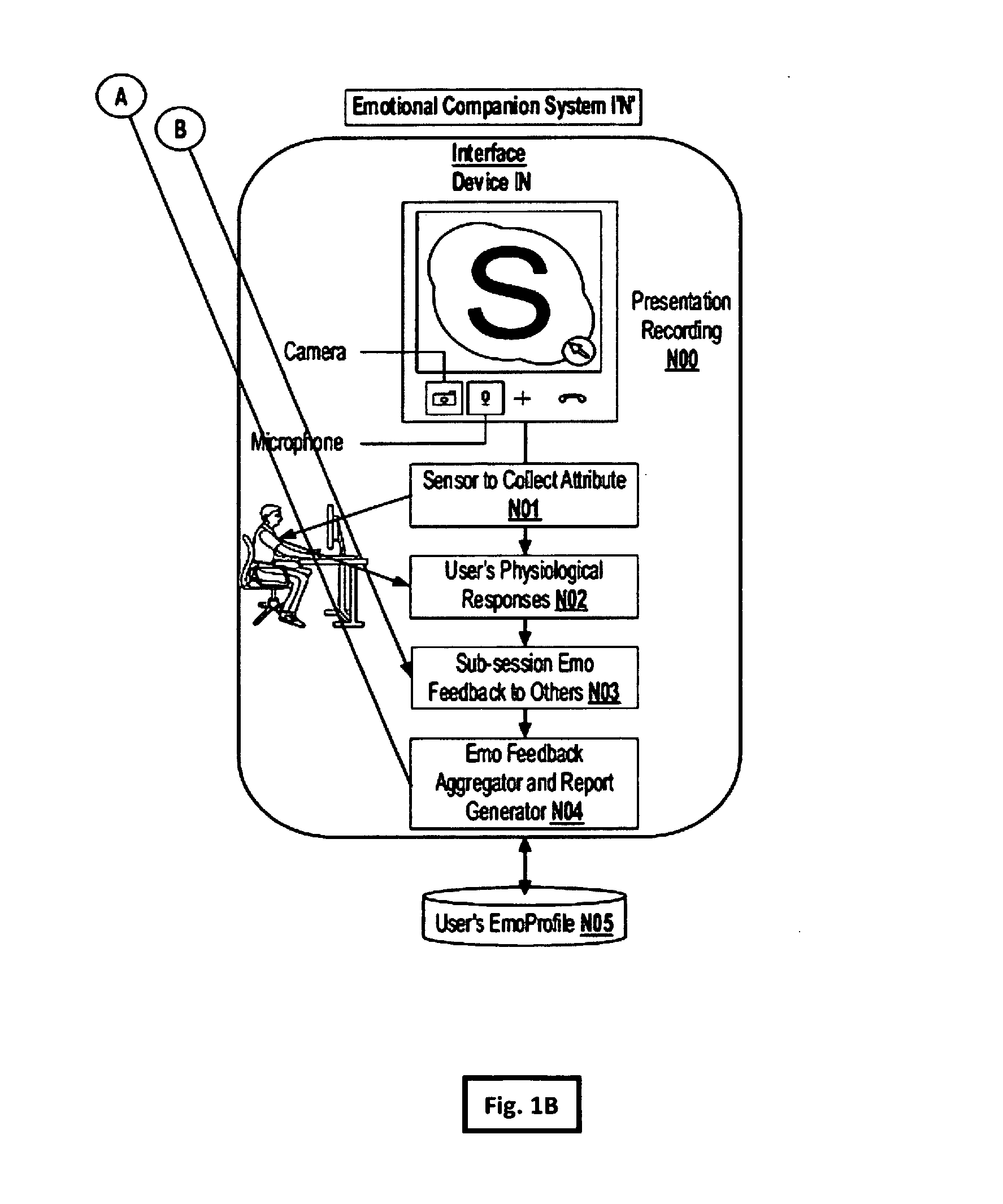

Emotion feedback based training and personalization system for aiding user performance in interactive presentations

Inactive | US20160042648A1 | Tags: Weakened area; Improve realism; Input/output for user-computer interaction; Video games; Personalization; Facial expression

The present invention relates to a system and method for implementing an assistive emotional companion for a user, in which the system captures emotional and performance feedback from a participant in an interactive session, held either with the system or with a presenter, and uses that feedback to adaptively customize subsequent parts of the session in an iterative manner. The interactive presentation can be a live person talking and/or presenting in person, or a streaming video in an interactive chat session, and an interactive session can be a video gaming activity, an interactive simulation, entertainment software, an adaptive educational training system, or the like. The physiological responses measured are a combination of facial expression analysis and voice expression analysis. Optionally, other signals, such as camera-based heart rate and/or touch-based skin conductance, may be included in certain embodiments.

Owner: KOTHURI RAVIKANTH V

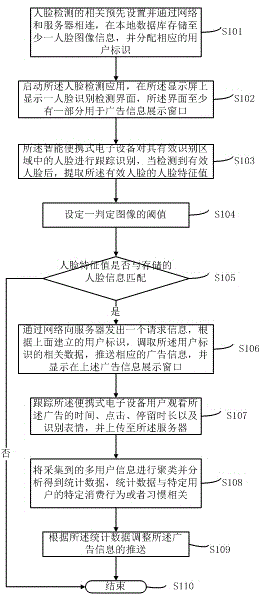

Method and system for analyzing big data of intelligent advertisements based on face identification

The invention relates to the field of intelligent advertisement placement and data analysis, in particular to a method and system for analyzing big data of intelligent advertisements based on face identification. The method comprises the following steps: presetting and storing face image information in a local database and assigning corresponding user identifiers; starting the application and displaying a face identification detection interface, at least part of which displays advertisement information; tracking and identifying faces and extracting their feature values; setting image-matching thresholds and judging whether the images match; retrieving the data associated with the matched identifiers and pushing personalized media information; recording advertisement viewing time, clicks, dwell time, and identified facial expressions, and uploading them to a server; clustering and analyzing the collected multi-user information to obtain statistical data; and adjusting the pushing of advertisement information according to the statistical data. The method and system add an advertisement-pushing window to existing face detection equipment, and the consumption habits or behaviors of groups can be obtained through data analysis, thereby providing guidance for commercial activities.

Owner: 宋柏君
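Two steps of the advertisement analytics method above, threshold matching of extracted face features and per-advertisement statistics over viewing time, clicks, and expressions, can be sketched in Python. The sketch assumes cosine similarity as the matching measure, a 0.8 threshold, and a flat record layout; these are illustrative choices, not the patented method, and the clustering step is omitted.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(features: Sequence[float], templates: Dict[str, Sequence[float]], threshold: float = 0.8) -> Optional[str]:
    """Return the user identifier of the most similar stored face, or None if none reaches the (assumed) threshold."""
    best_id, best_sim = None, threshold
    for user_id, template in templates.items():
        sim = cosine_similarity(features, template)
        if sim >= best_sim:
            best_id, best_sim = user_id, sim
    return best_id


def ad_statistics(records: Iterable[Tuple[str, float, bool, str]]) -> Dict[str, dict]:
    """Aggregate hypothetical (ad_id, watch_seconds, clicked, expression) records per advertisement."""
    acc: Dict[str, dict] = {}
    for ad_id, watch_s, clicked, expression in records:
        s = acc.setdefault(ad_id, {"views": 0, "watch_s": 0.0, "clicks": 0, "expressions": Counter()})
        s["views"] += 1
        s["watch_s"] += watch_s
        s["clicks"] += int(clicked)
        s["expressions"][expression] += 1
    return {
        ad_id: {
            "views": s["views"],
            "mean_watch_s": s["watch_s"] / s["views"],
            "click_rate": s["clicks"] / s["views"],
            "expressions": dict(s["expressions"]),
        }
        for ad_id, s in acc.items()
    }


templates = {"user_1": [1.0, 0.1], "user_2": [0.0, 1.0]}  # hypothetical face feature vectors
print(identify([1.0, 0.0], templates), identify([1.0, -1.0], templates))
print(ad_statistics([
    ("ad_1", 4.0, True, "smile"),
    ("ad_1", 2.0, False, "neutral"),
    ("ad_2", 1.0, False, "neutral"),
]))
```

Returning `None` below the threshold keeps unknown viewers from being attributed to a stored user, at the cost of missing some true matches; the threshold value trades these off.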

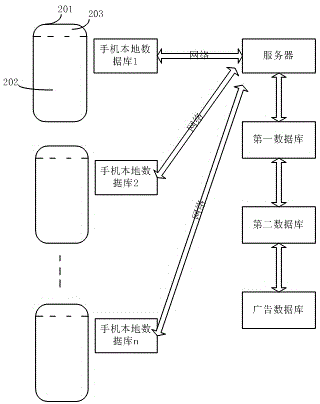

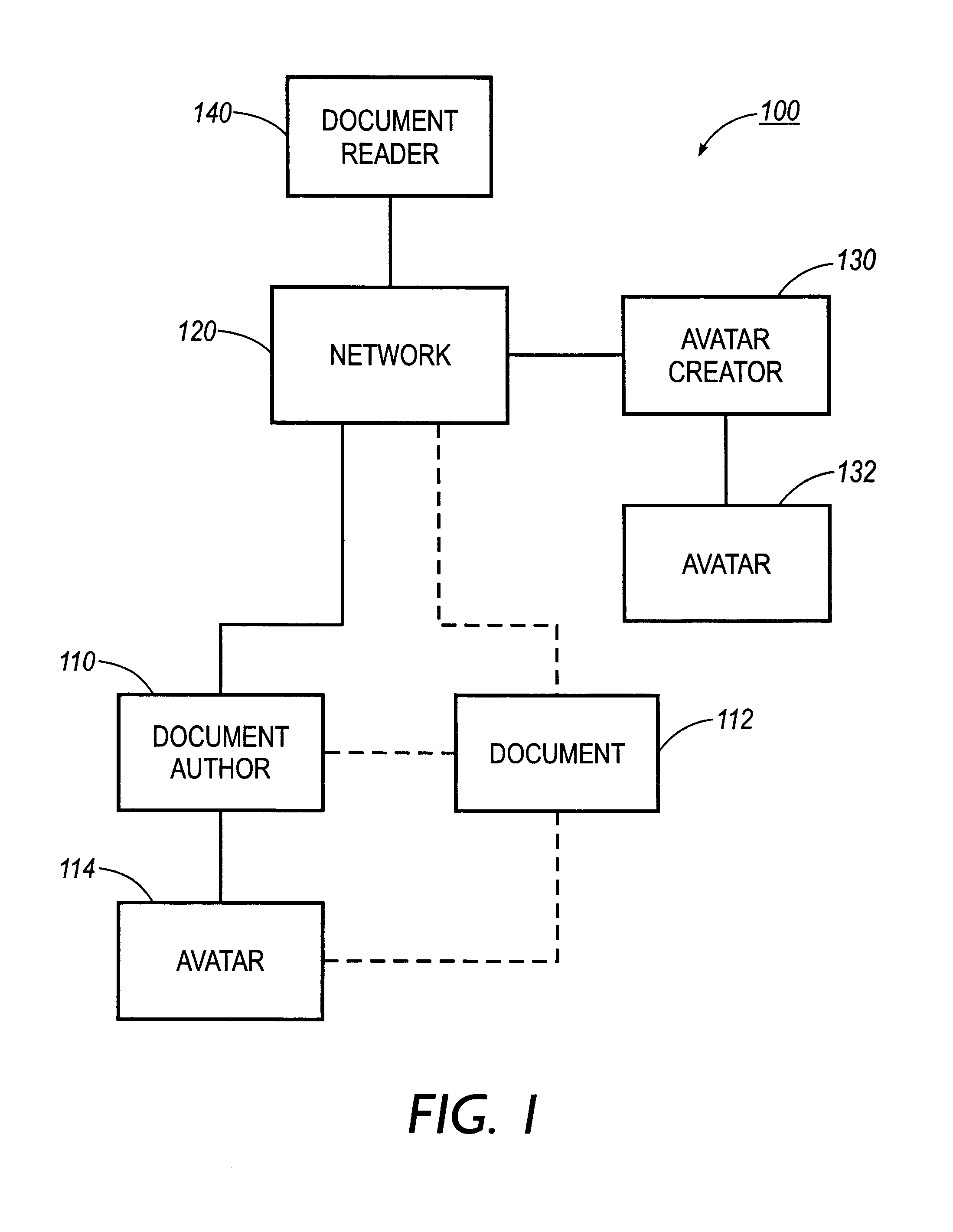

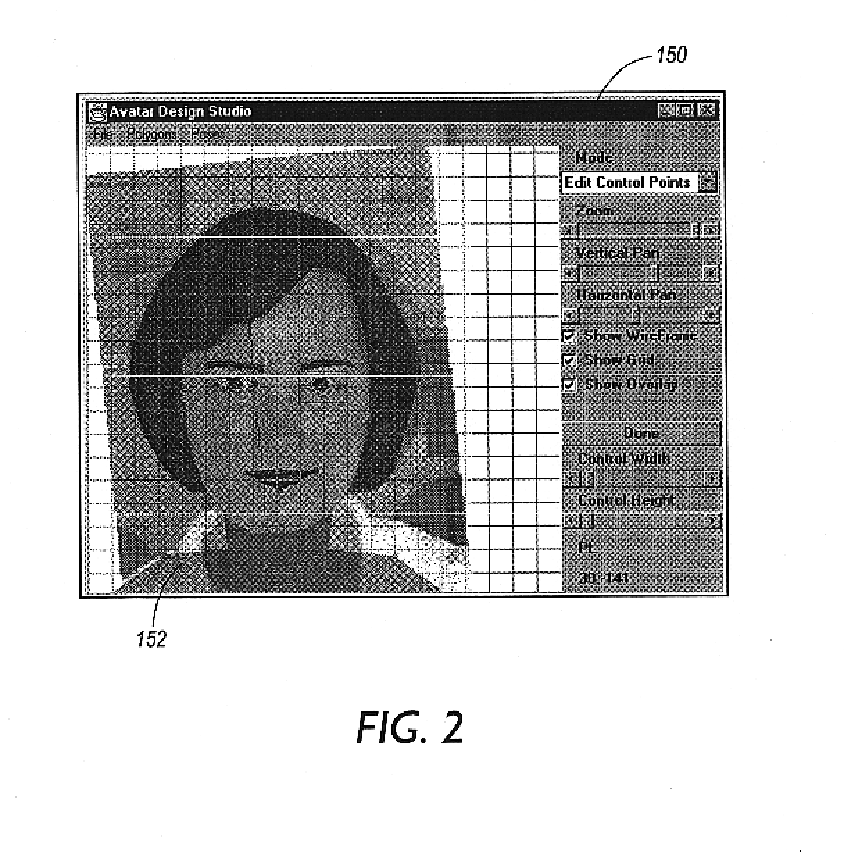

Method and apparatus for creating personal autonomous avatars

Inactive | US7006098B2 | Tags: Quality improvement; Increase volume; Animation; Speech recognition; Scripting language

A method and apparatus for facilitating communication about a document between two users creates autonomous, animated computer characters, or avatars, which are then attached to the document under discussion. The avatar is created by one user, who need not be the author of the document, and is attached to the document to represent a point of view. The avatar represents the physical likeness of its creator. The avatar is animated, using an avatar scripting language, to perform specified behaviors including pointing, walking and changing facial expressions. The avatar includes audio files that are synchronized with movement of the avatar's mouth to provide an audio message.

Owner: MAJANDRO LLC

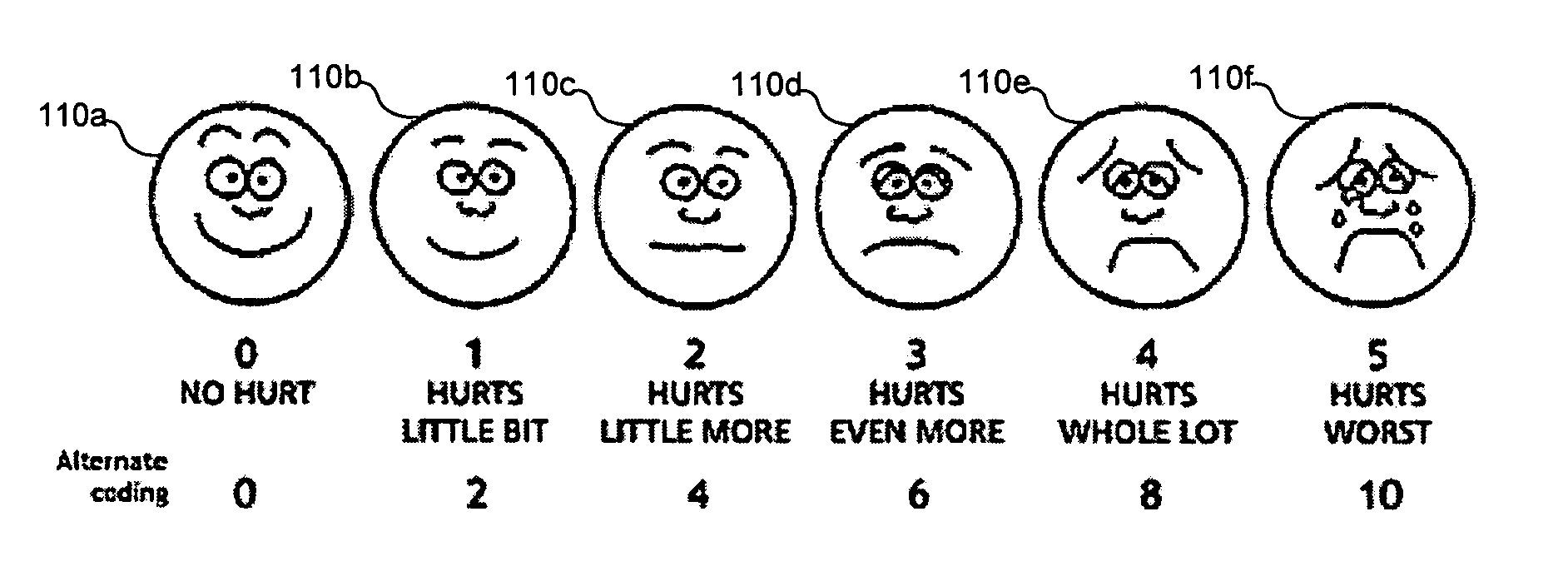

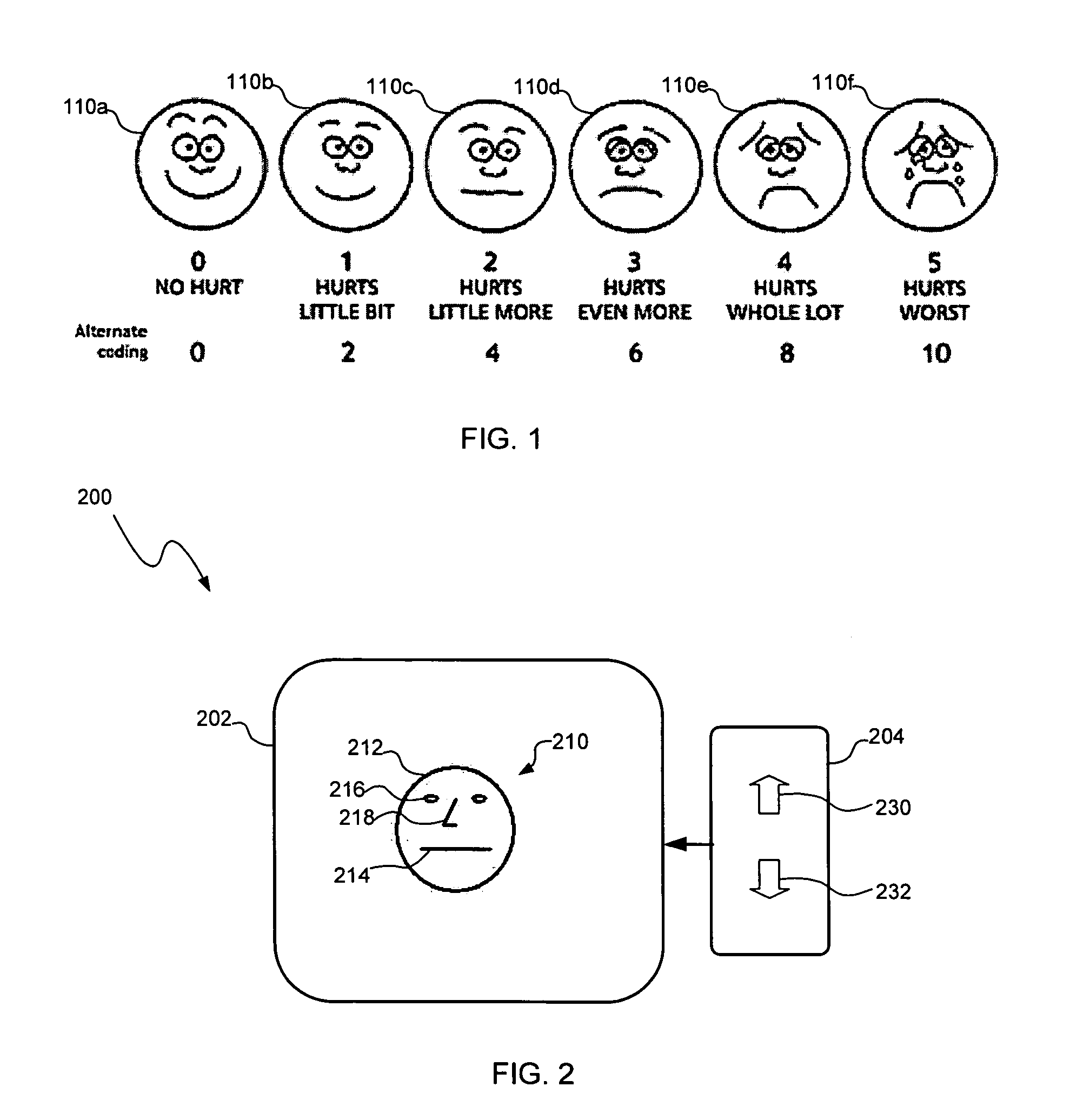

Computerized assessment system and method for assessing opinions or feelings

A computerized assessment system and method may be used to assess opinions or feelings of a subject (e.g., a child patient). The system and method may display a computer-generated face image having a variable facial expression (e.g., changing mouth and eyes) capable of changing to correspond to opinions or feelings of the subject (e.g., smiling or frowning). The system and method may receive a user input signal in accordance with the opinions or feelings of the subject and may display the changes in the variable facial expression in response to the user input signal. The system and method may also prompt the subject to express an opinion or feeling about a matter to be assessed.

Owner: PSYCHOLOGICAL APPL

Online learning system

The invention discloses an online learning system comprising a courseware database module, a management module, and a student learning-situation monitoring module. The monitoring module comprises a face recognition module, a facial expression analysis module, a video scene analysis module, a sound recognition module, a monitoring video storage module, a video acquisition module, and the like. It can monitor students' learning and exams in real time, thereby preventing other people from studying or taking tests on a student's behalf and improving students' learning efficiency.

Owner: NANJING MEIXIAOJIA TECH CO LTD

© 2025 PatSnap. All rights reserved.