
1365 results about "Gaze" patented technology


In critical theory, sociology, and psychoanalysis, the philosophic term the gaze (French: le regard) describes the act of seeing and the act of being seen. The concept and the social applications of the gaze have been defined and explained by existentialist and phenomenologist philosophers. Jean-Paul Sartre described the gaze in Being and Nothingness (1943); Michel Foucault, in Discipline and Punish: The Birth of the Prison (1975), developed the concept of the gaze to illustrate the dynamics of socio-political power relations and the social dynamics of society's mechanisms of discipline; and Jacques Derrida, in The Animal that Therefore I Am (More to Come) (1997), elaborated upon the inter-species relations that exist among animals and human beings, which are established by way of the gaze.

Methods and arrangements for identifying objects

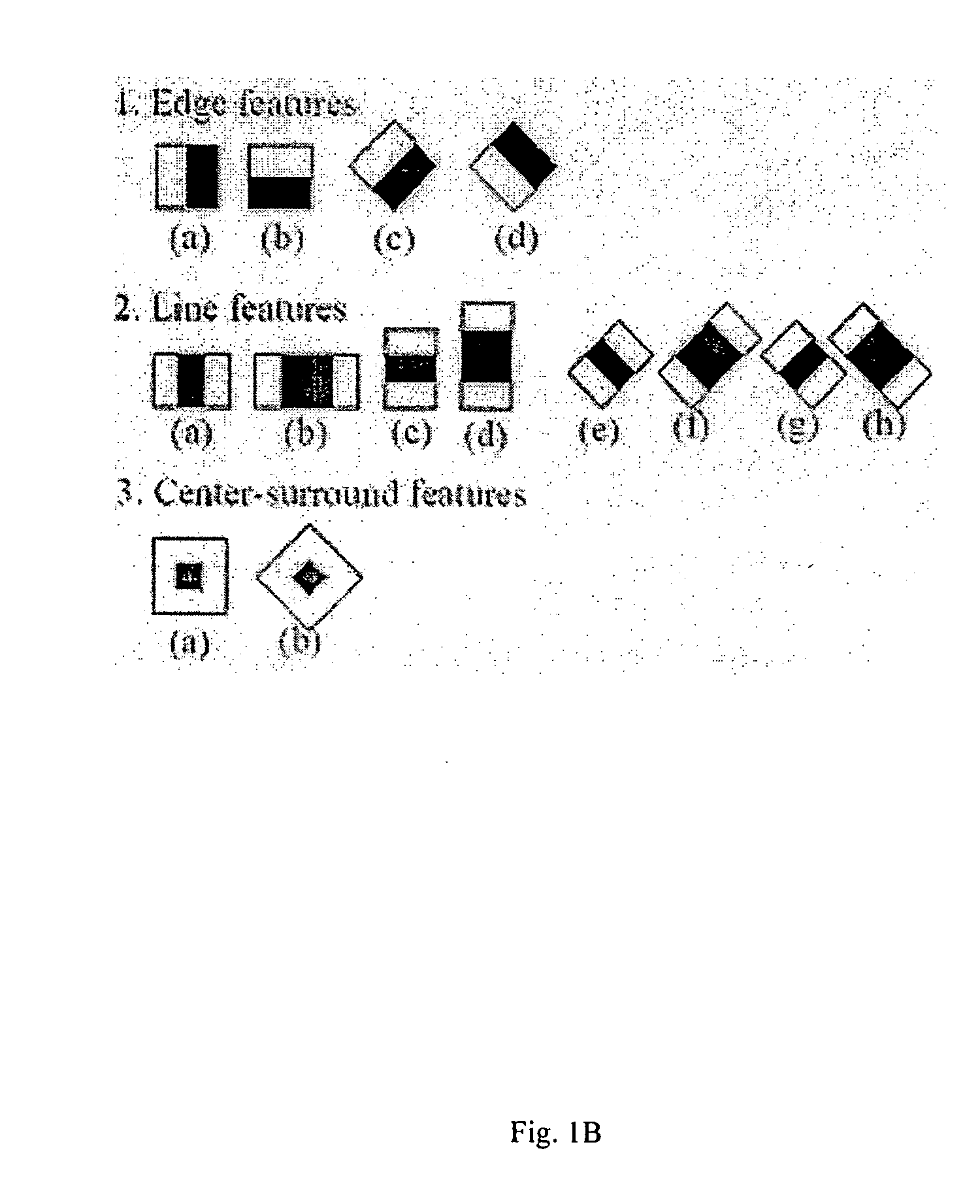

Active · US20130223673A1 · Increase check-out speed · Improve accuracy · Static indicating devices · Cash registers · Pattern recognition · Geometric primitive

In some arrangements, product packaging is digitally watermarked over most of its extent to facilitate high-throughput item identification at retail checkouts. Imagery captured by conventional or plenoptic cameras can be processed (e.g., by GPUs) to derive several different perspective-transformed views—further minimizing the need to manually reposition items for identification. Crinkles and other deformations in product packaging can be optically sensed, allowing such surfaces to be virtually flattened to aid identification. Piles of items can be 3D-modelled and virtually segmented into geometric primitives to aid identification, and to discover locations of obscured items. Other data (e.g., including data from sensors in aisles, shelves and carts, and gaze tracking for clues about visual saliency) can be used in assessing identification hypotheses about an item. A great variety of other features and arrangements are also detailed.

Owner:DIGIMARC CORP

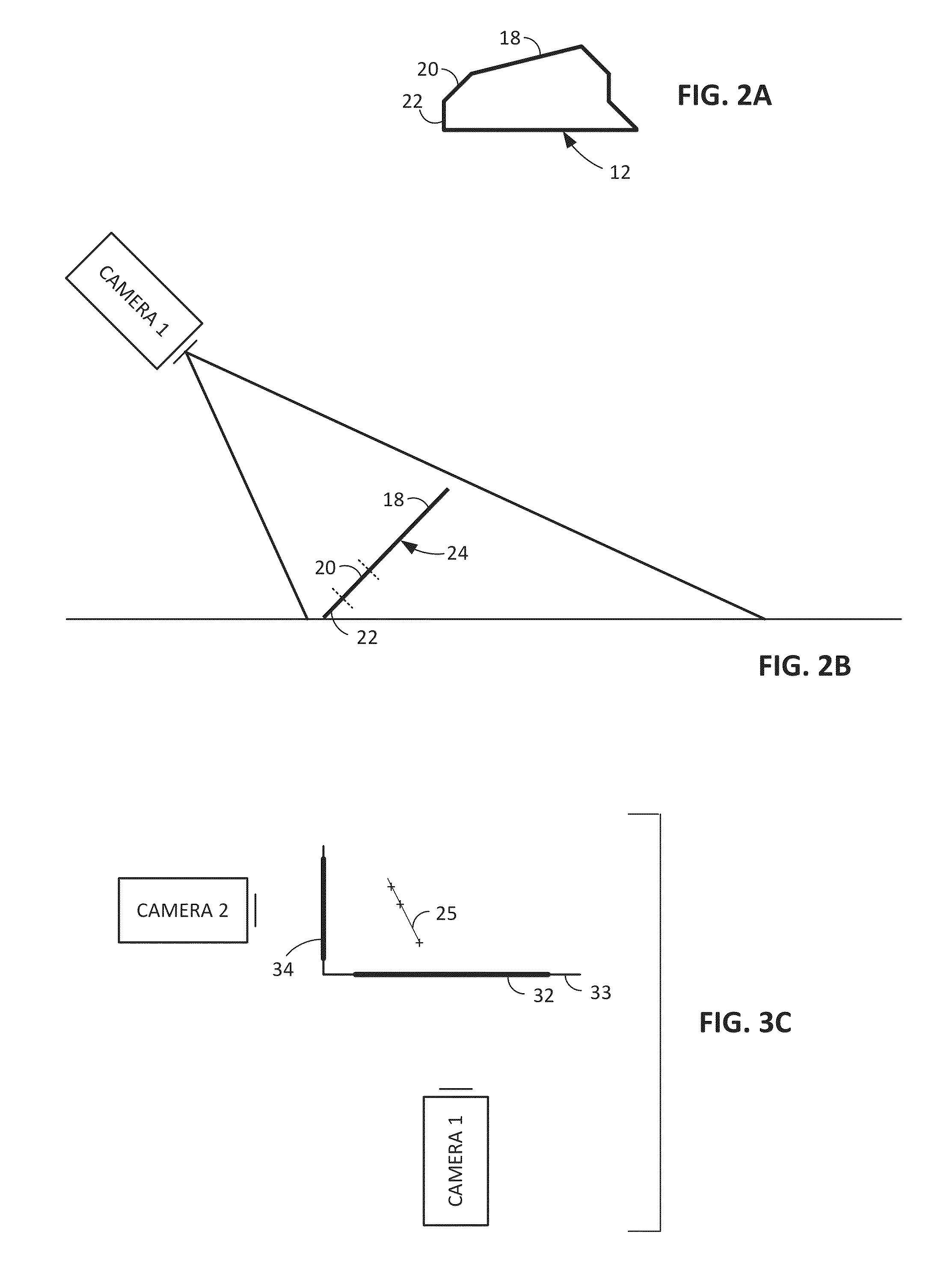

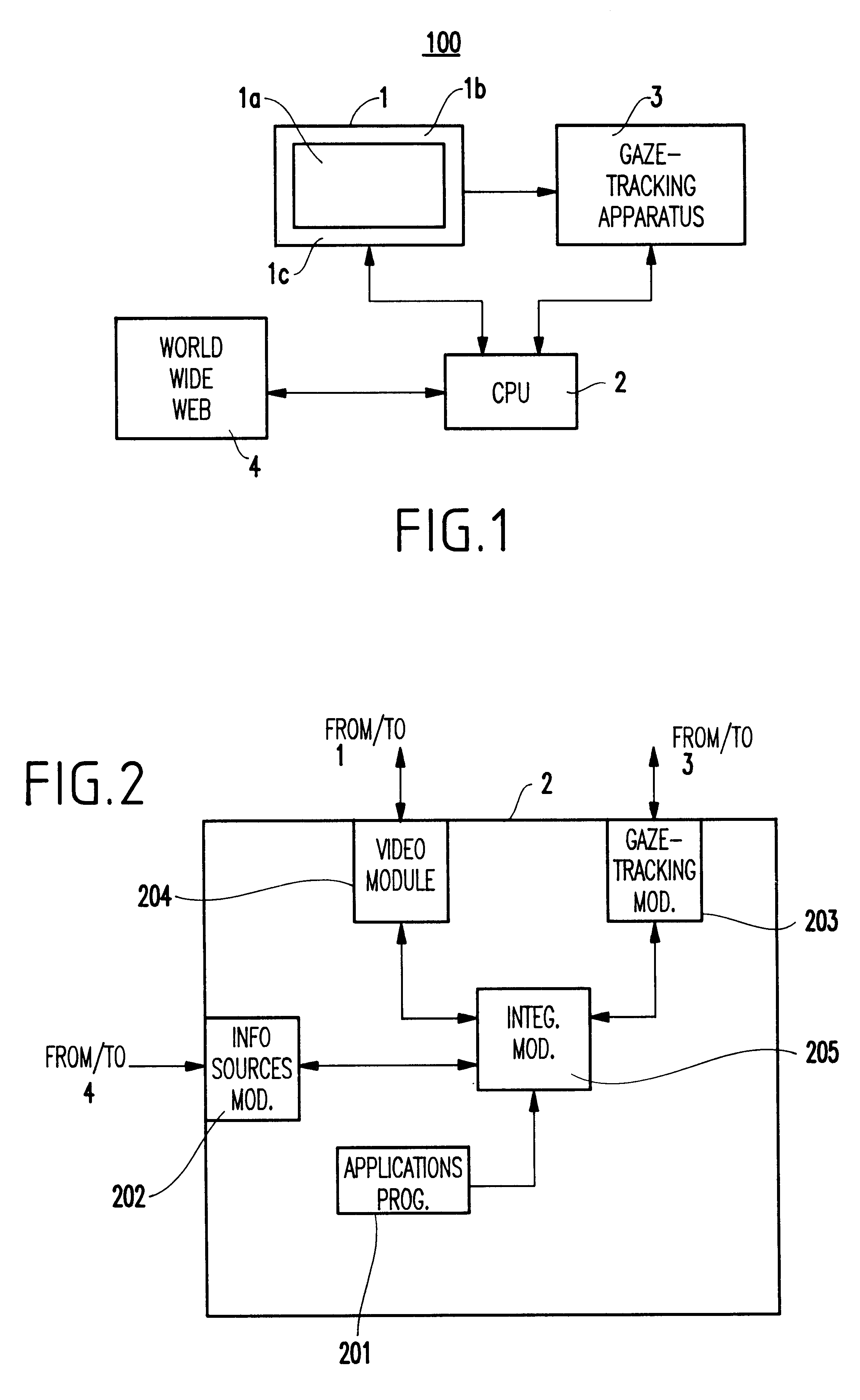

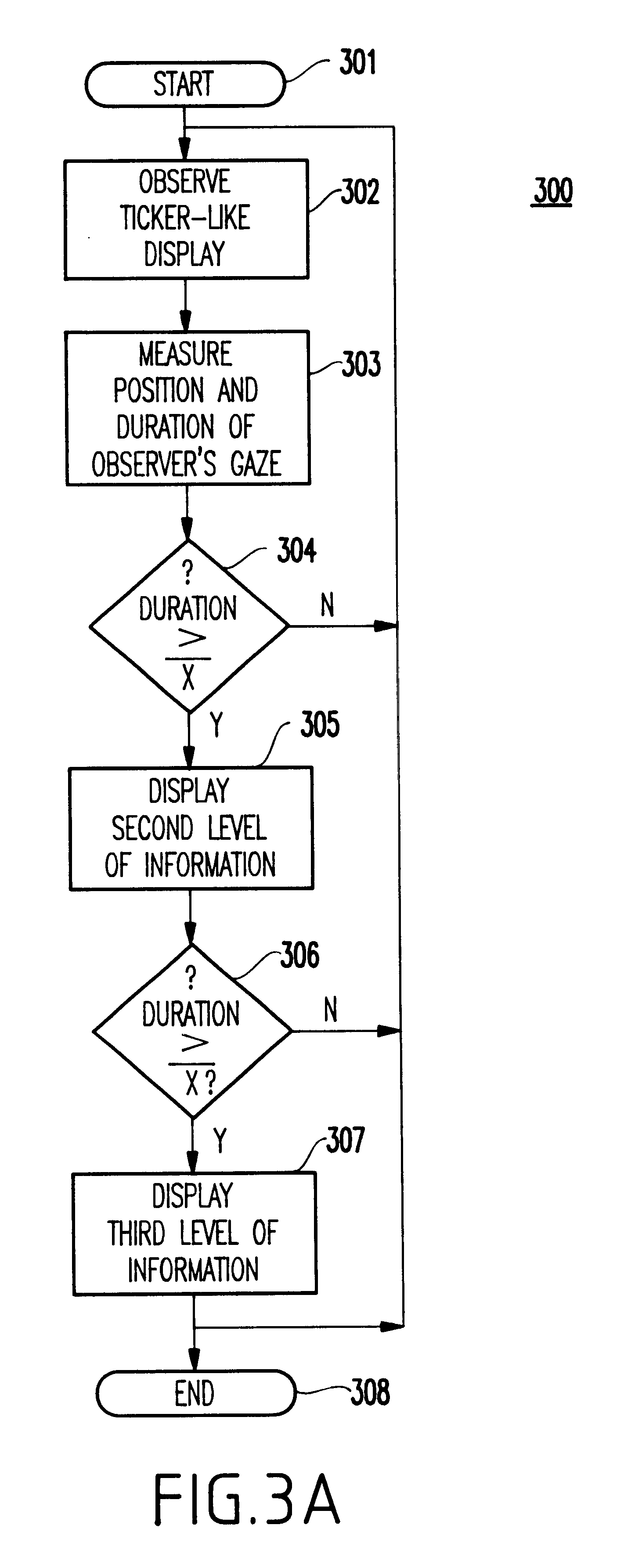

Method and system for relevance feedback through gaze tracking and ticker interfaces

Inactive · US6577329B1 · Input/output for user-computer interaction · Cathode-ray tube indicators · Display device · Human–computer interaction

A system and method (and signal medium) for interactively displaying information include a ticker display for displaying items having different views, a tracker for tracking a user's eye movements while observing a first view of information on the ticker display, and a mechanism, based on an output from the tracker, for determining whether a current view has relevance to the user.

Owner:TOBII TECH AB

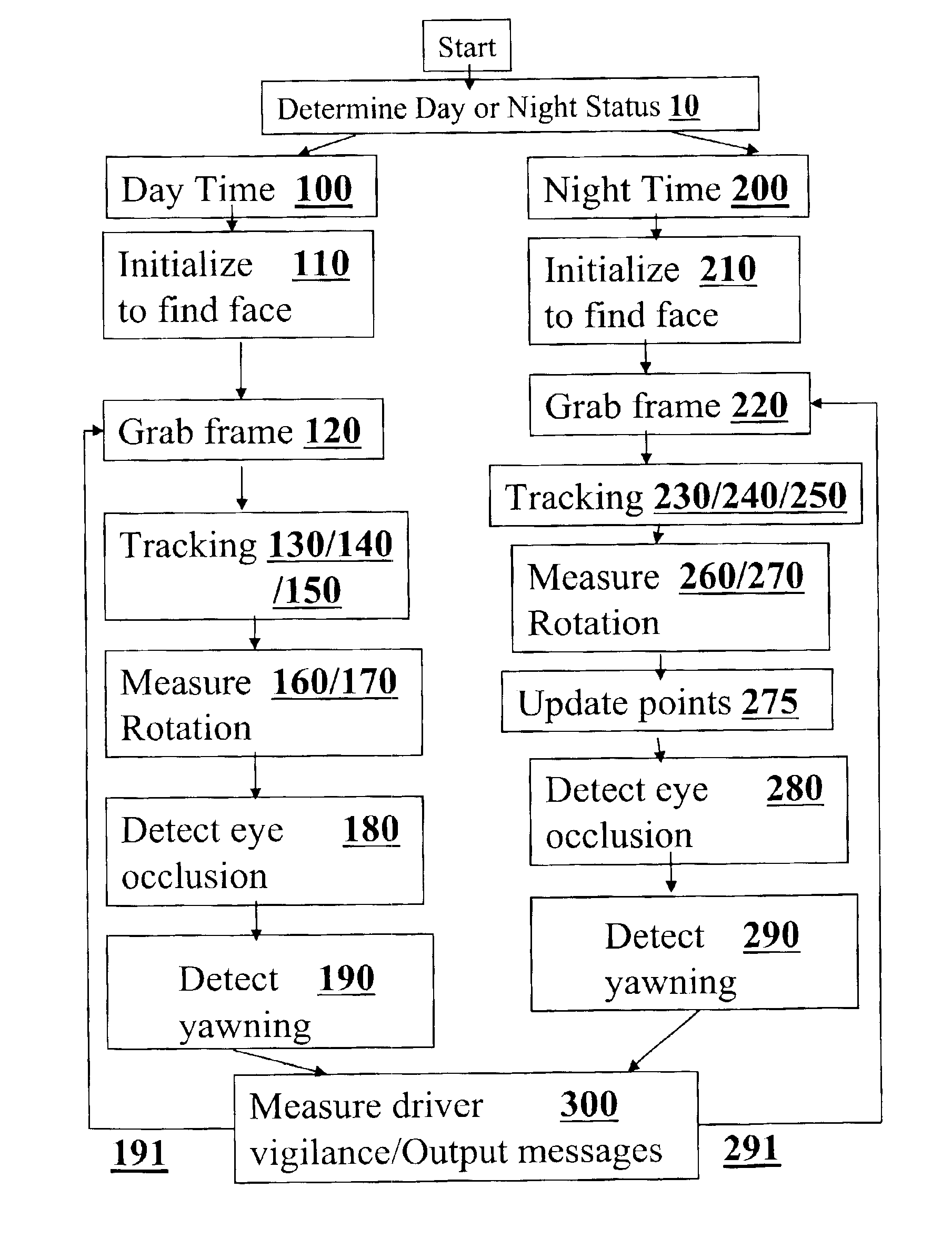

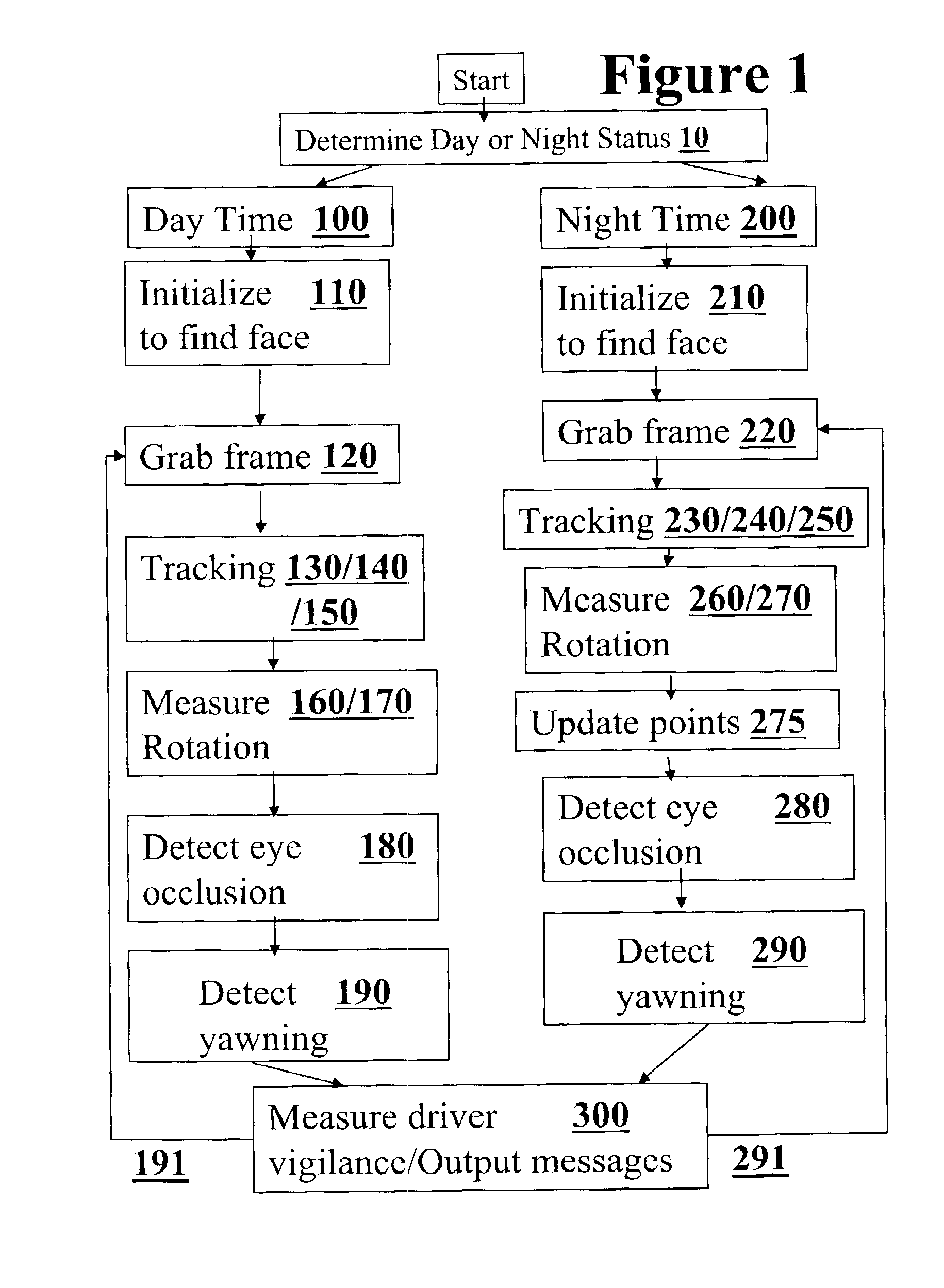

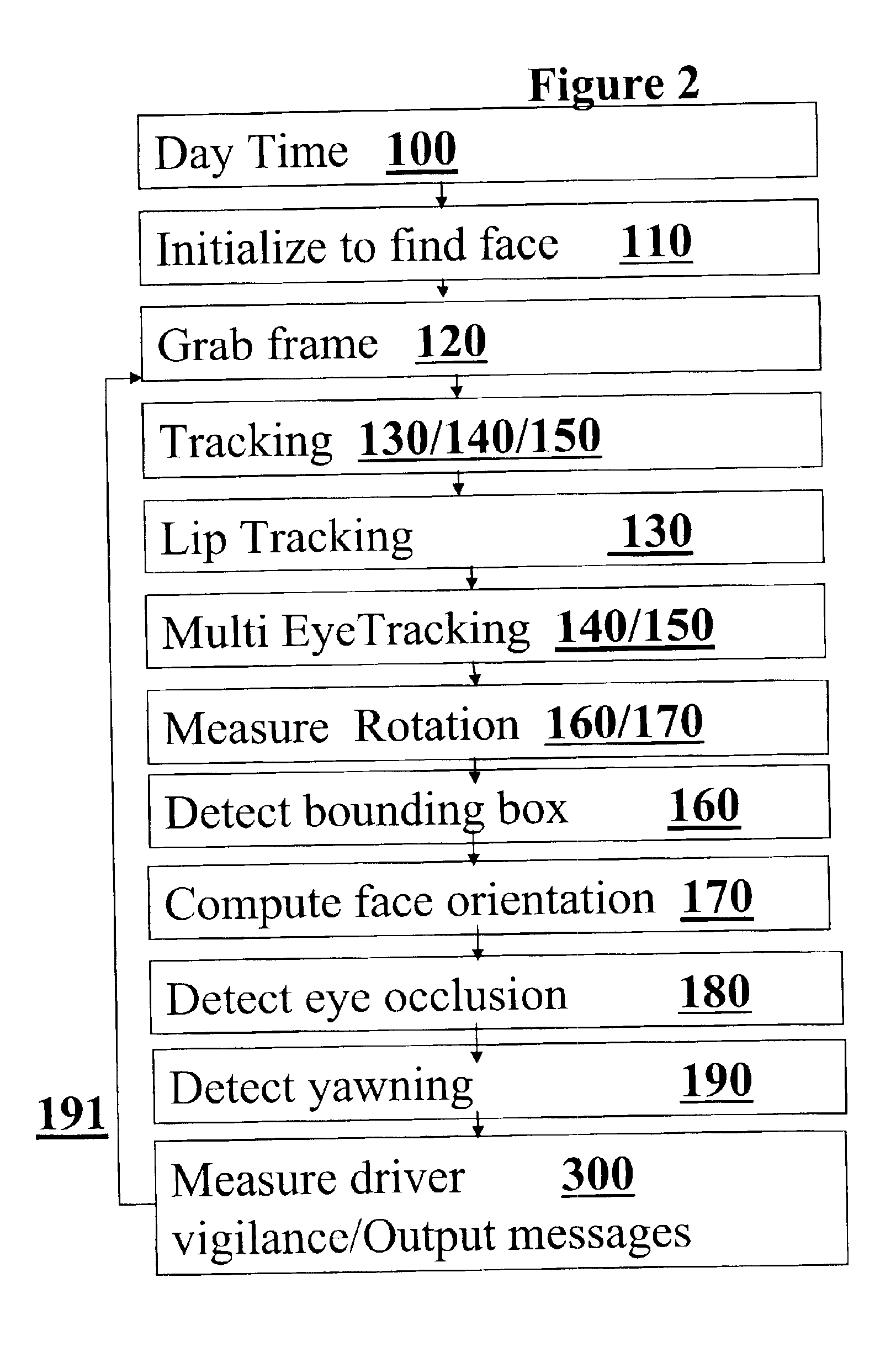

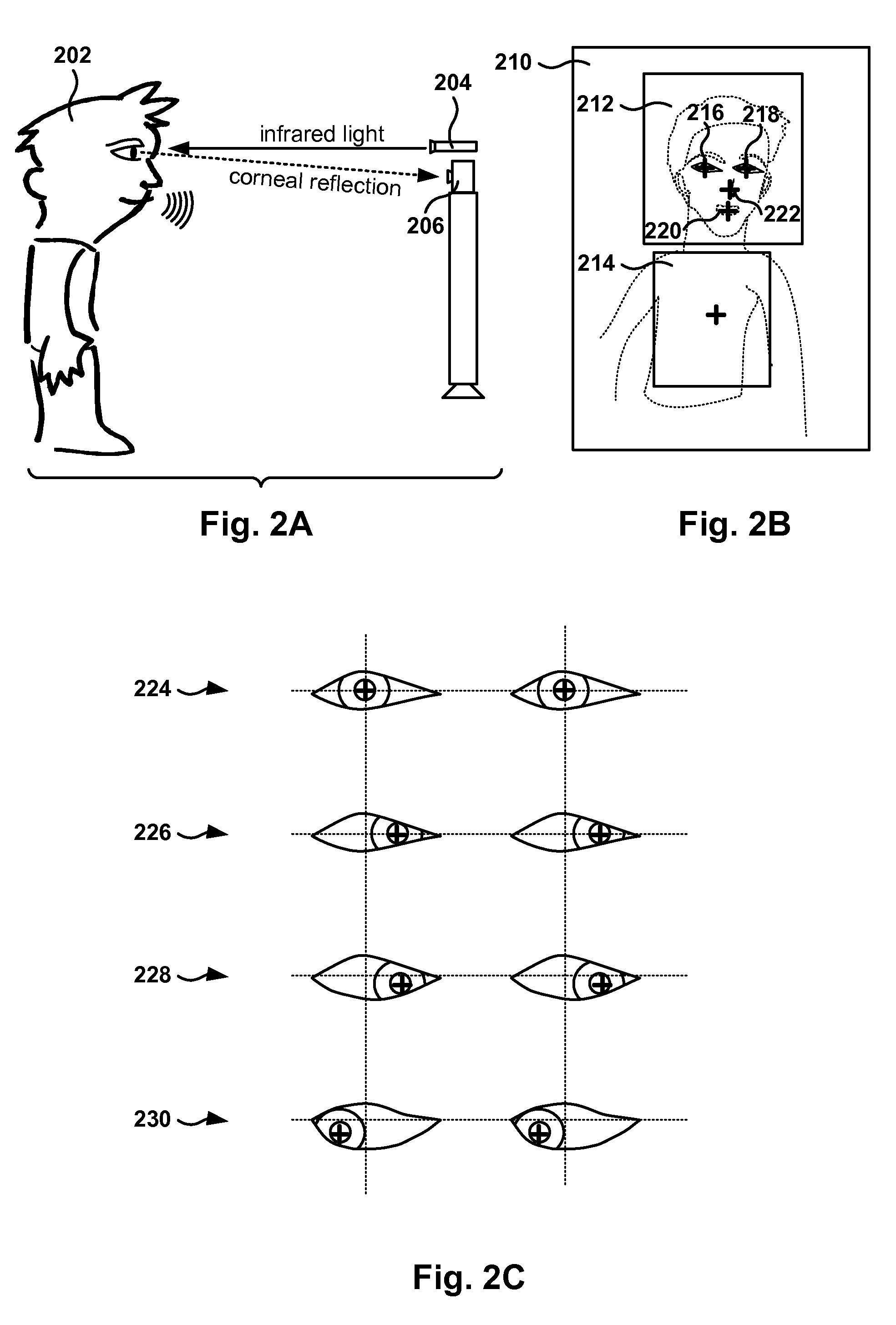

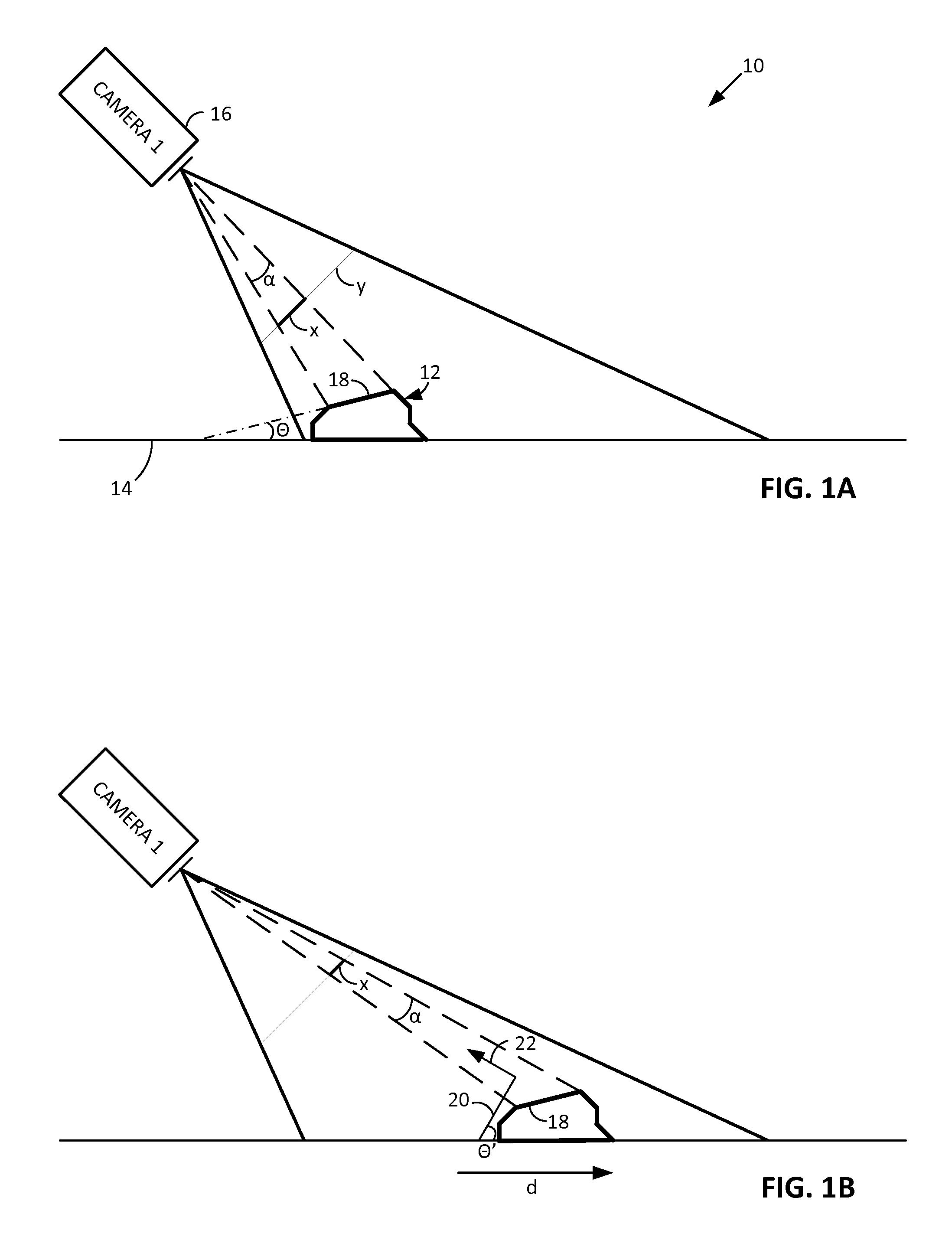

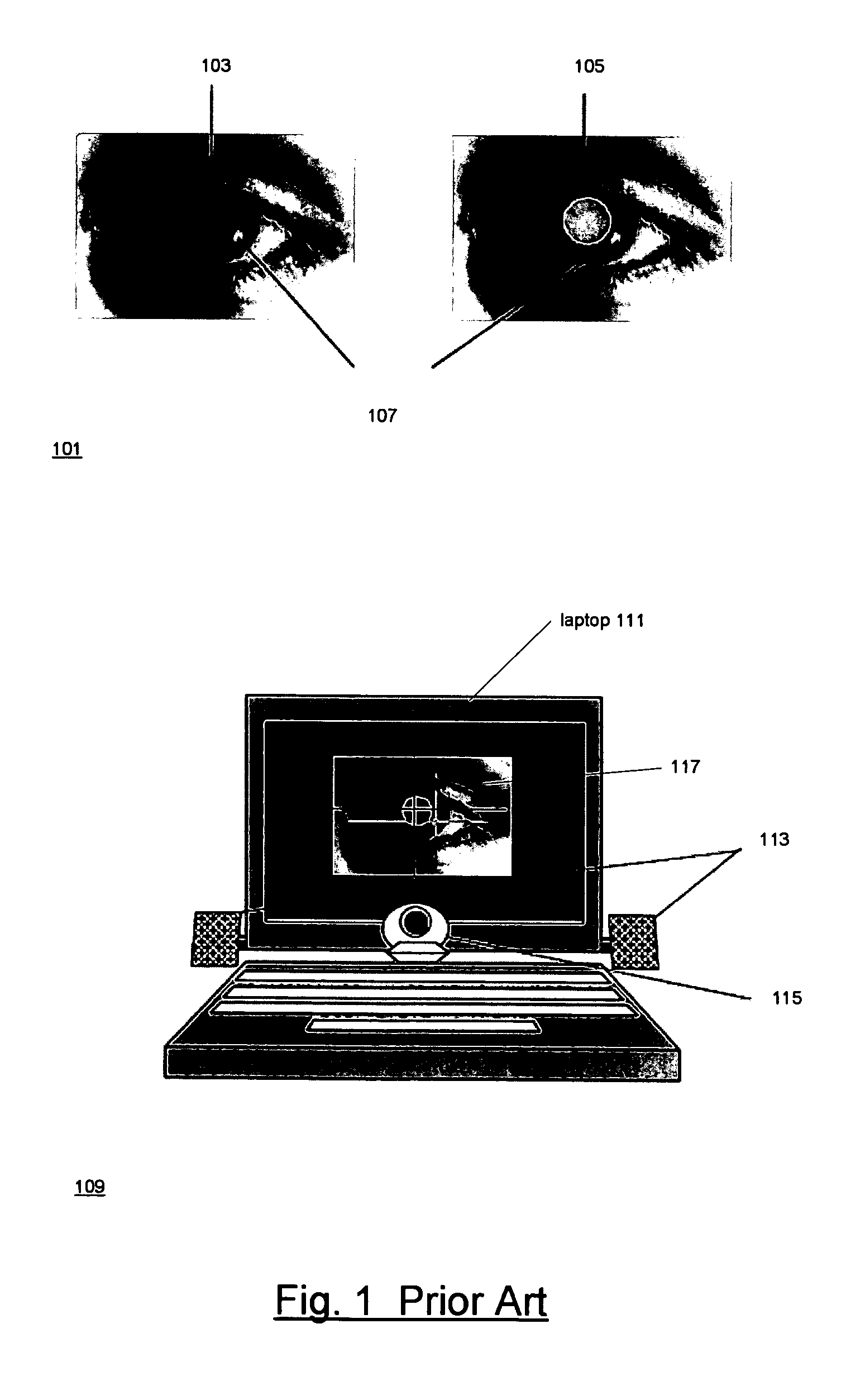

Algorithm for monitoring head/eye motion for driver alertness with one camera

Inactive · US6927694B1 · Easy to use · Applicability to detecting driver fatigue · Color television details · Closed circuit television systems · Equipment Operator · Driver/operator

Visual methods and systems are described for detecting alertness and vigilance of persons under conditions of fatigue, lack of sleep, and exposure to mind-altering substances such as alcohol and drugs. In particular, the invention can have particular applications for truck drivers, bus drivers, train operators, pilots, watercraft controllers, stationary heavy equipment operators, and students and employees during either daytime or nighttime conditions. The invention robustly tracks a person's head and facial features with a single on-board camera in a fully automatic system that can initialize automatically, reinitialize when it needs to, and provide outputs in real time. The system can classify rotation in all viewing directions, detects eye/mouth occlusion, detects eye blinking, and recovers the 3D (three-dimensional) gaze of the eyes. In addition, the system is able to track both through occlusion like eye blinking and through occlusion like rotation. Outputs can be visual and sound alarms to the driver directly. Additional outputs can slow down the vehicle and/or cause the vehicle to come to a full stop. Further outputs can send data on driver, operator, student and employee vigilance to remote locales as needed for alarms and initiating other actions.

Owner:UNIV OF CENT FLORIDA RES FOUND INC +1

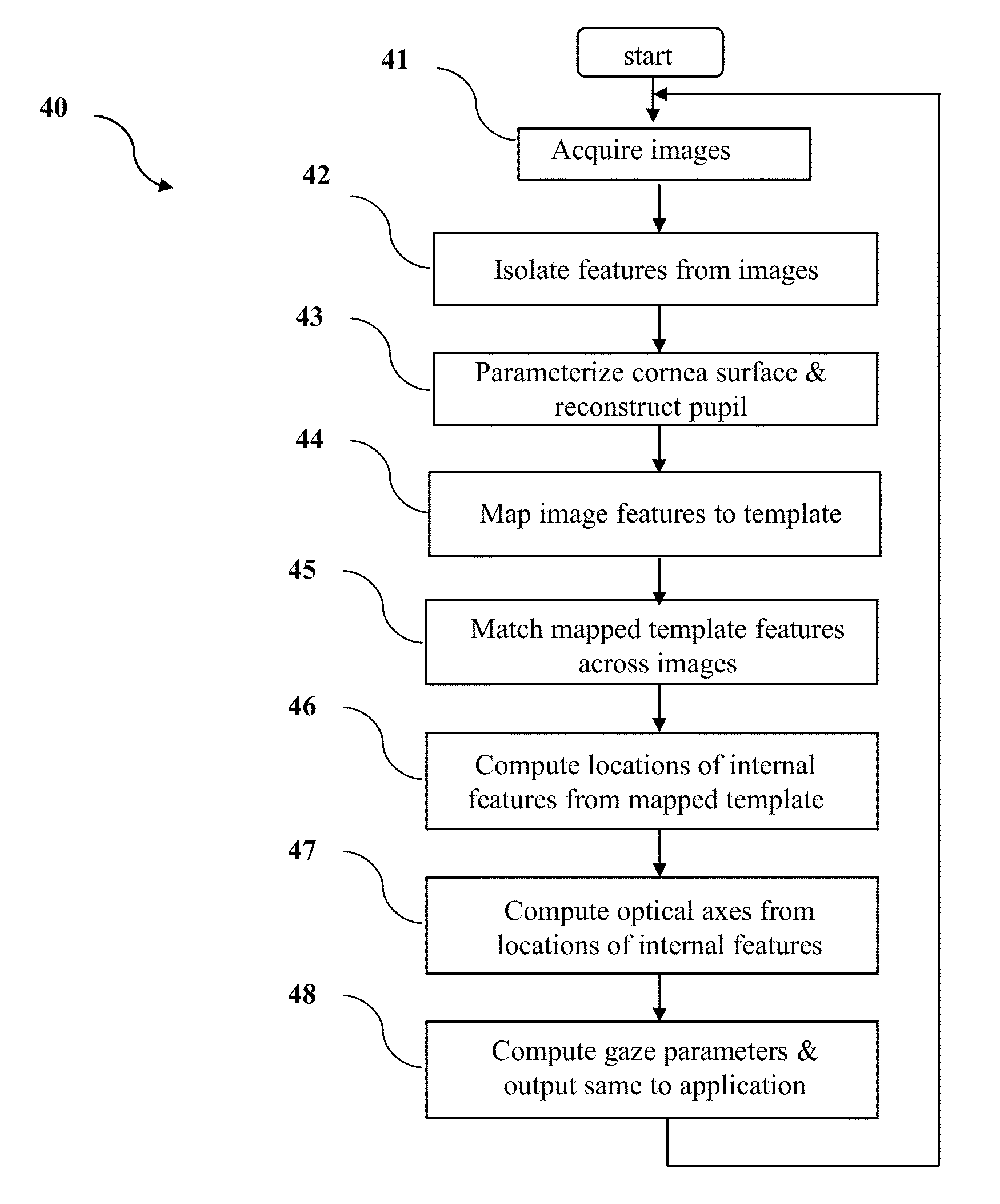

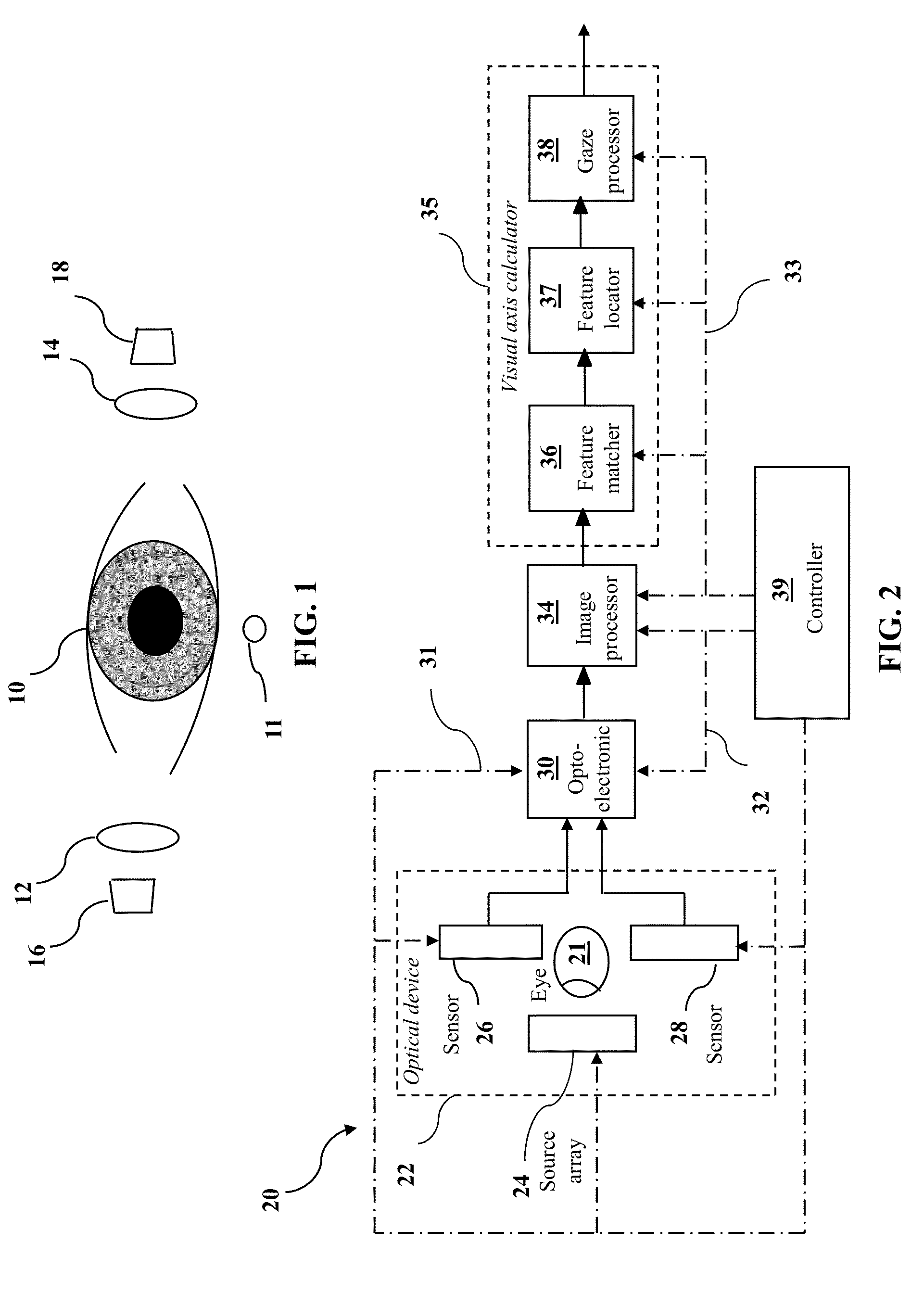

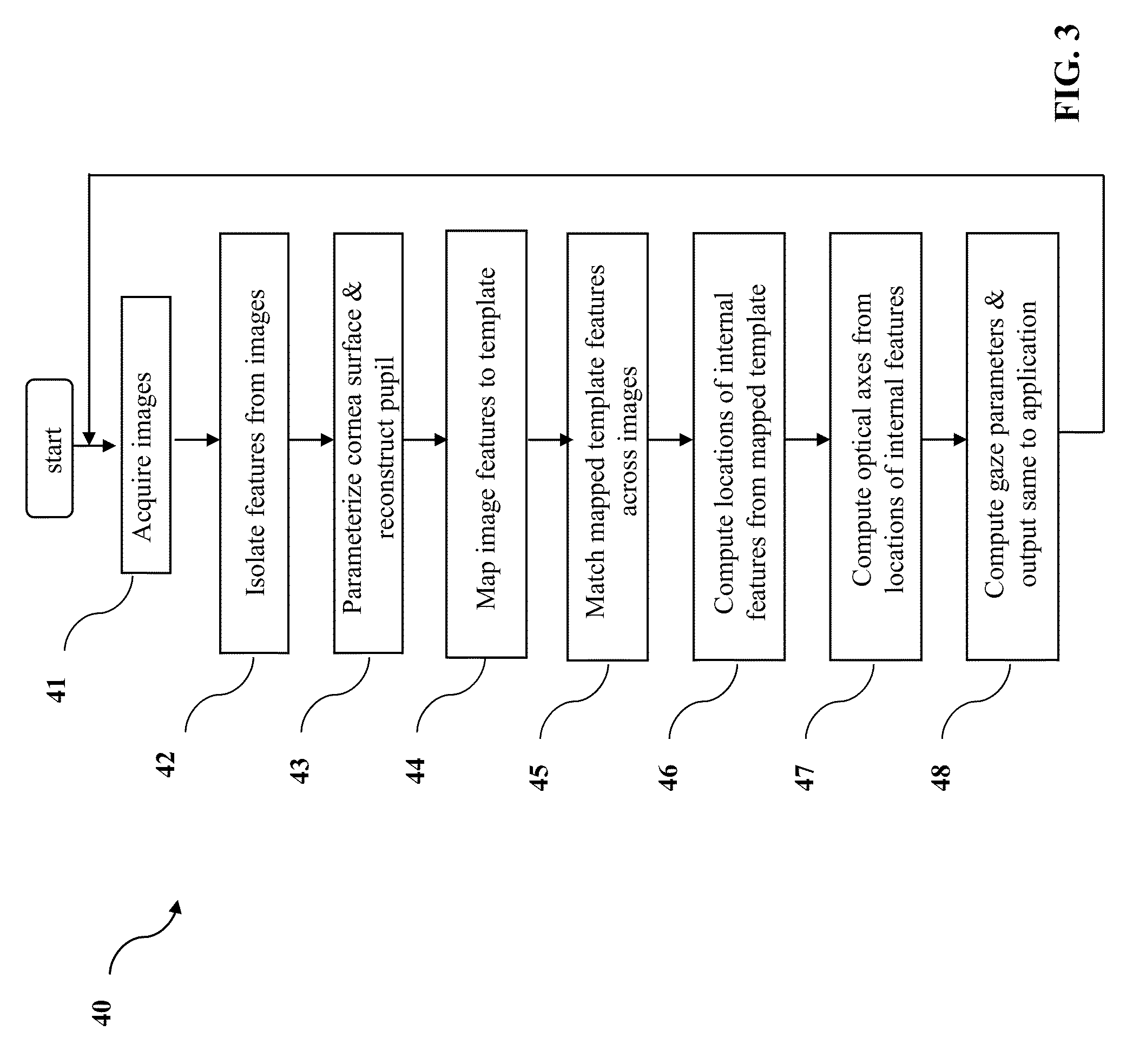

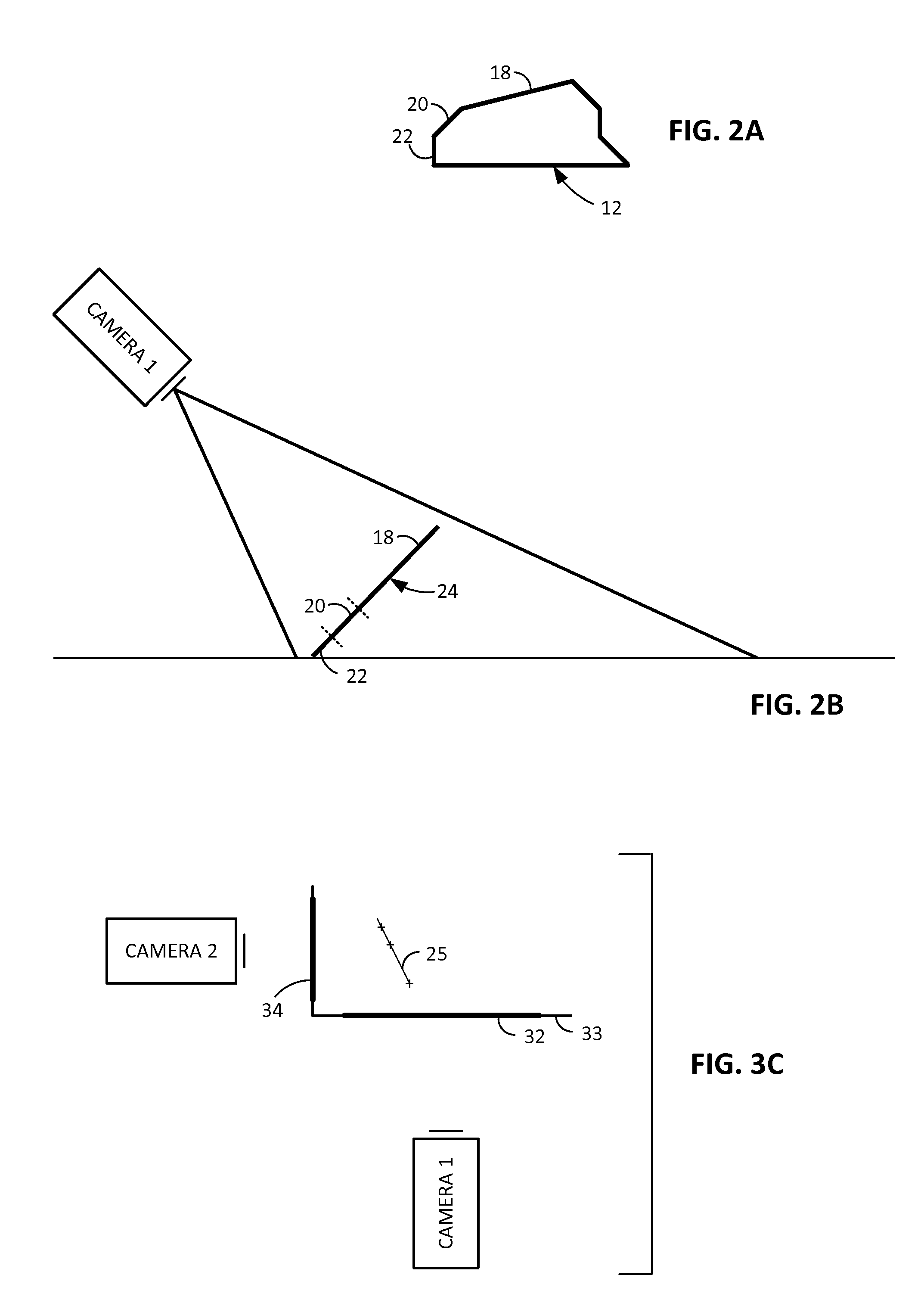

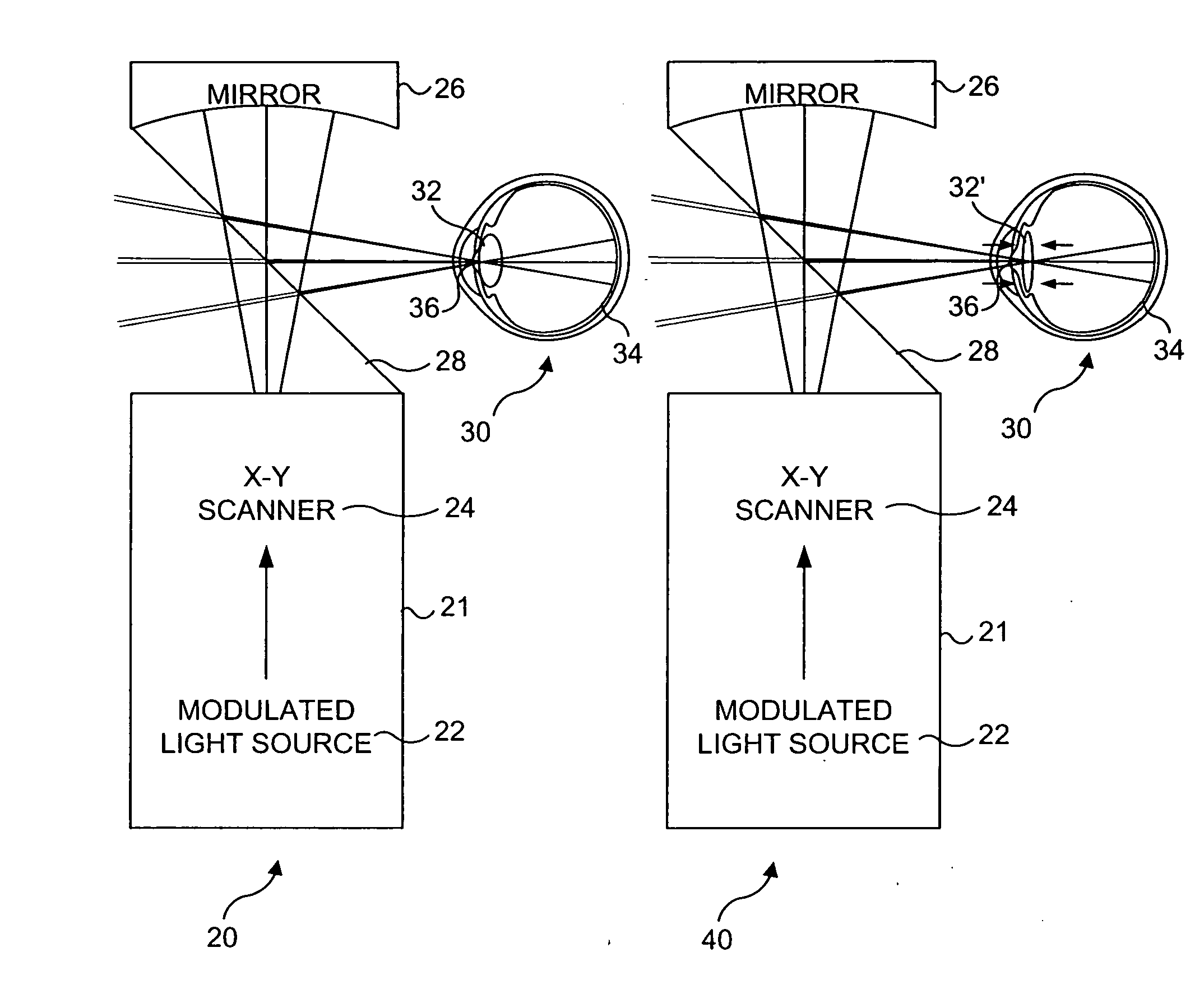

Apparatus and method for determining eye gaze from stereo-optic views

Inactive · US8824779B1 · Improve accuracy · Improve image processing capabilities · Image enhancement · Image analysis · Wide field · Optical axis

The invention, exemplified as a single lens stereo optics design with a stepped mirror system for tracking the eye, isolates landmark features in the separate images, locates the pupil in the eye, matches landmarks to a template centered on the pupil, mathematically traces refracted rays back from the matched image points through the cornea to the inner structure, and locates these structures from the intersection of the rays for the separate stereo views. Having located in this way structures of the eye in the coordinate system of the optical unit, the invention computes the optical axes and from that the line of sight and the torsion roll in vision. Along with providing a wider field of view, this invention has an additional advantage since the stereo images tend to be offset from each other and for this reason the reconstructed pupil is more accurately aligned and centered.

Owner:CORTICAL DIMENSIONS LLC

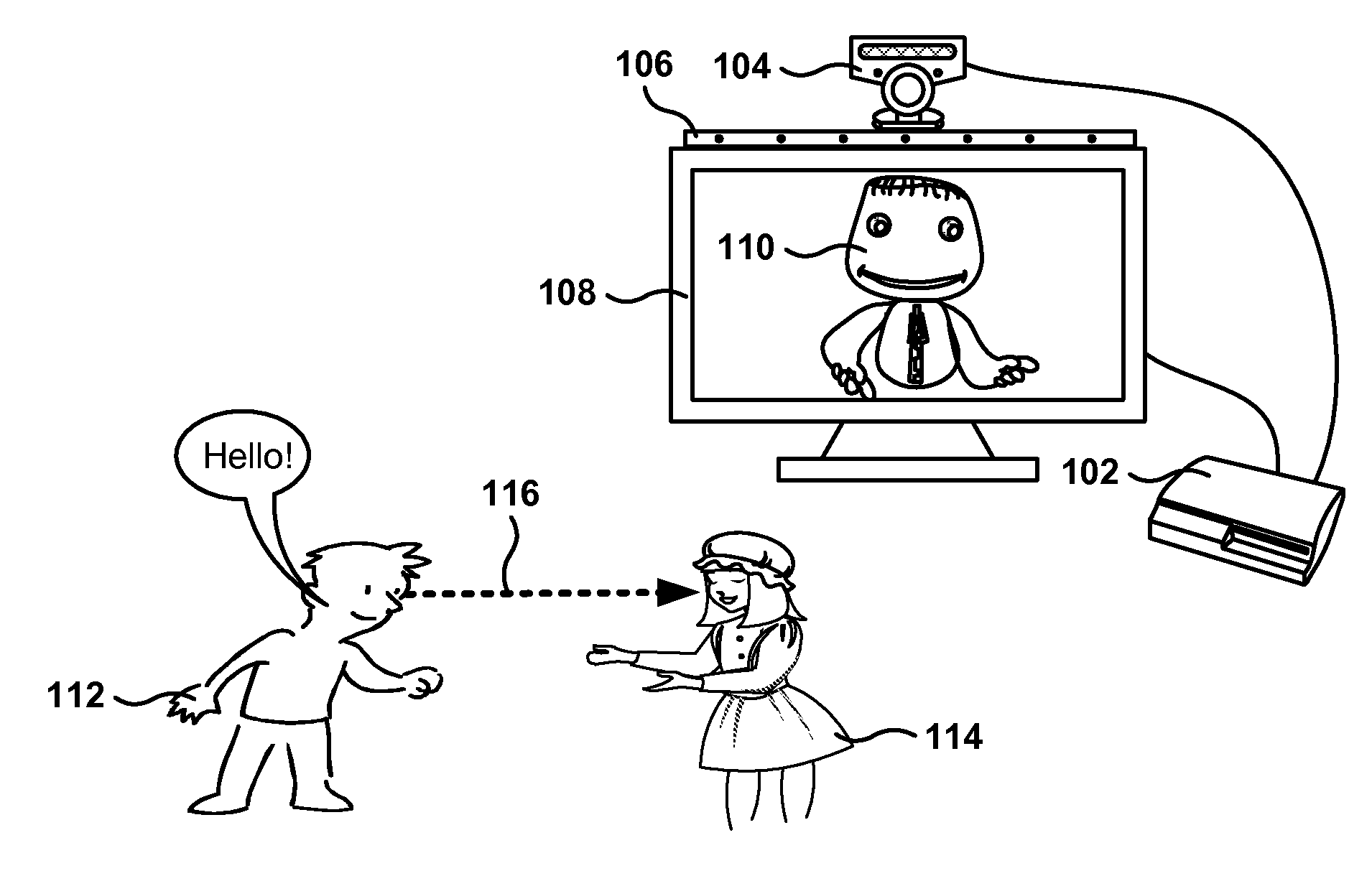

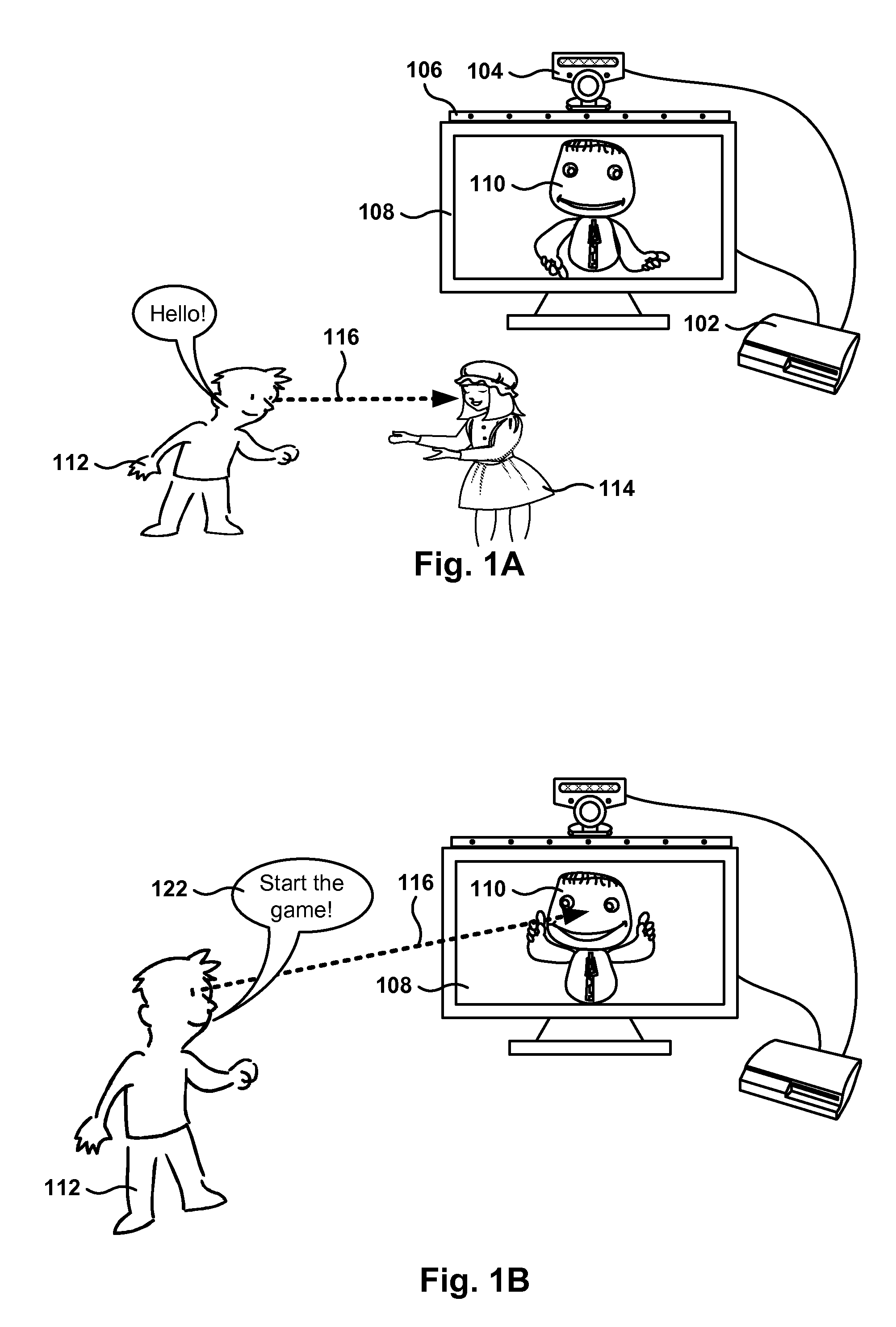

Interface with Gaze Detection and Voice Input

Active · US20120295708A1 · Dashboard fitting arrangements · Instrument arrangements/adaptations · Speech input · Human–computer interaction

Methods, computer programs, and systems for interfacing a user with a computer program, utilizing gaze detection and voice recognition, are provided. One method includes an operation for determining if a gaze of a user is directed towards a target associated with the computer program. The computer program is set to operate in a first state when the gaze is determined to be on the target, and set to operate in a second state when the gaze is determined to be away from the target. When operating in the first state, the computer program processes voice commands from the user, and, when operating in the second state, the computer program omits processing of voice commands.

Owner:SONY COMPUTER ENTERTAINMENT INC
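
The two-state behavior described in this abstract can be illustrated with a minimal sketch. It is not the patented implementation: the class name `GazeGatedVoiceInterface`, its methods, and the boolean `gaze_on_target` input are all hypothetical, and the gaze detector and speech recognizer are assumed to exist elsewhere.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GazeGatedVoiceInterface:
    """Hypothetical sketch: voice commands are processed only while gaze is on the target."""

    listening: bool = False  # True = first state, False = second state
    handled: List[str] = field(default_factory=list)

    def update_gaze(self, gaze_on_target: bool) -> None:
        # First state when the gaze is on the target, second state otherwise.
        self.listening = gaze_on_target

    def on_voice_command(self, command: str) -> bool:
        # In the second state the command is dropped without processing.
        if not self.listening:
            return False
        self.handled.append(command)
        return True


ui = GazeGatedVoiceInterface()
ui.update_gaze(True)
ui.on_voice_command("open map")   # gaze on target: processed
ui.update_gaze(False)
ui.on_voice_command("close map")  # gaze away: ignored
print(ui.handled)
```

Keeping the gaze state separate from command handling means the same gate could sit in front of any recognizer; that separation is a design choice of this sketch, not something the abstract specifies.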

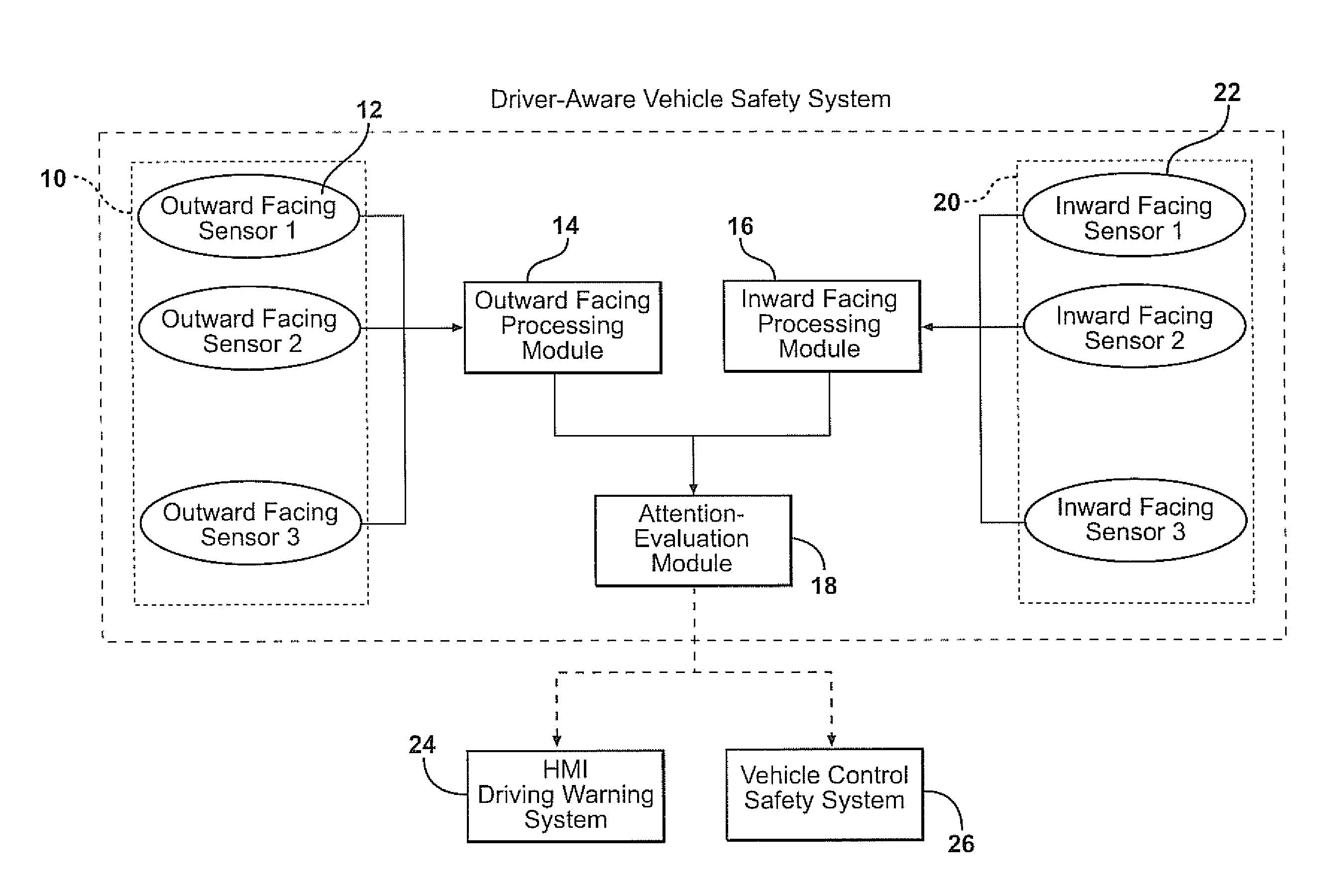

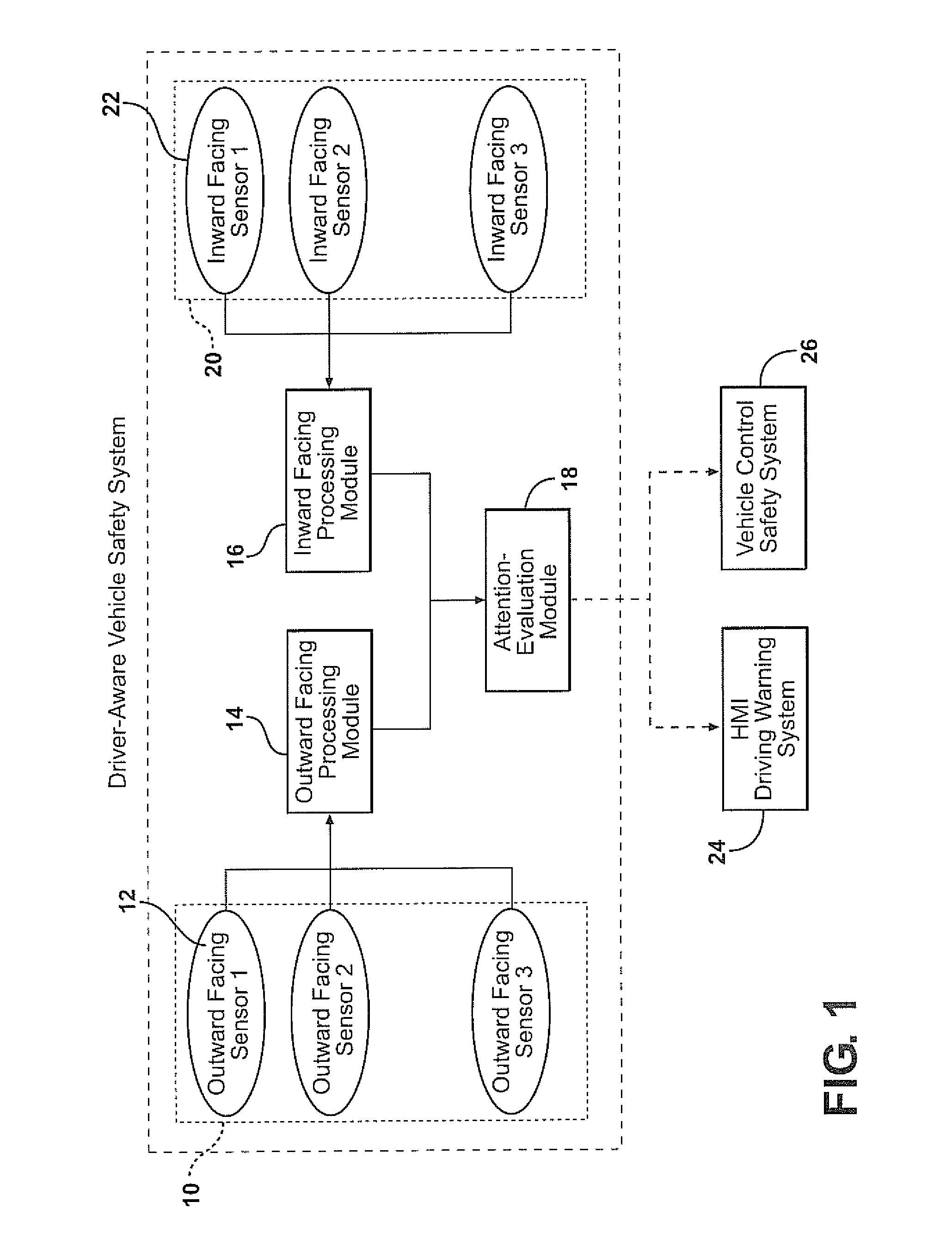

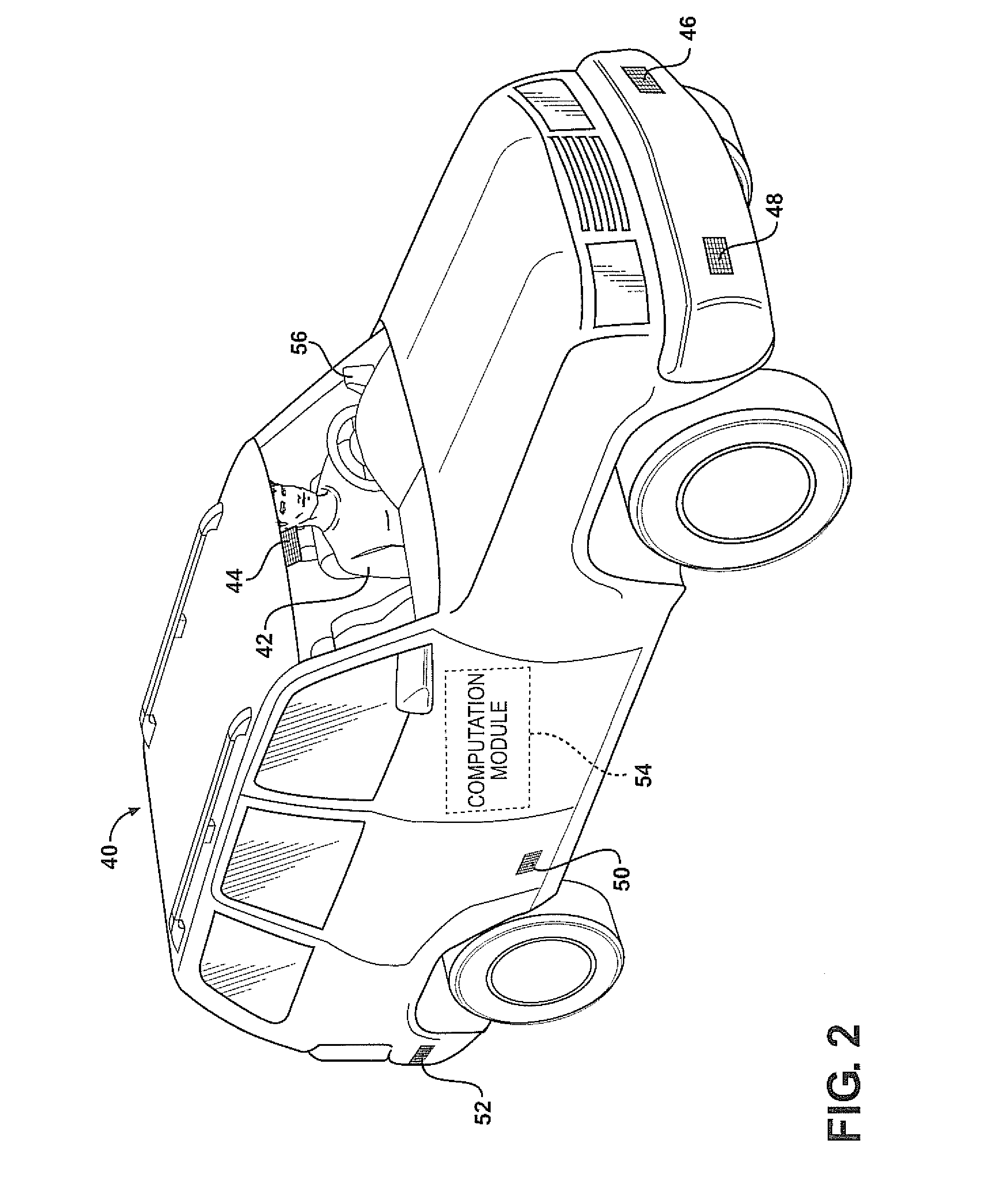

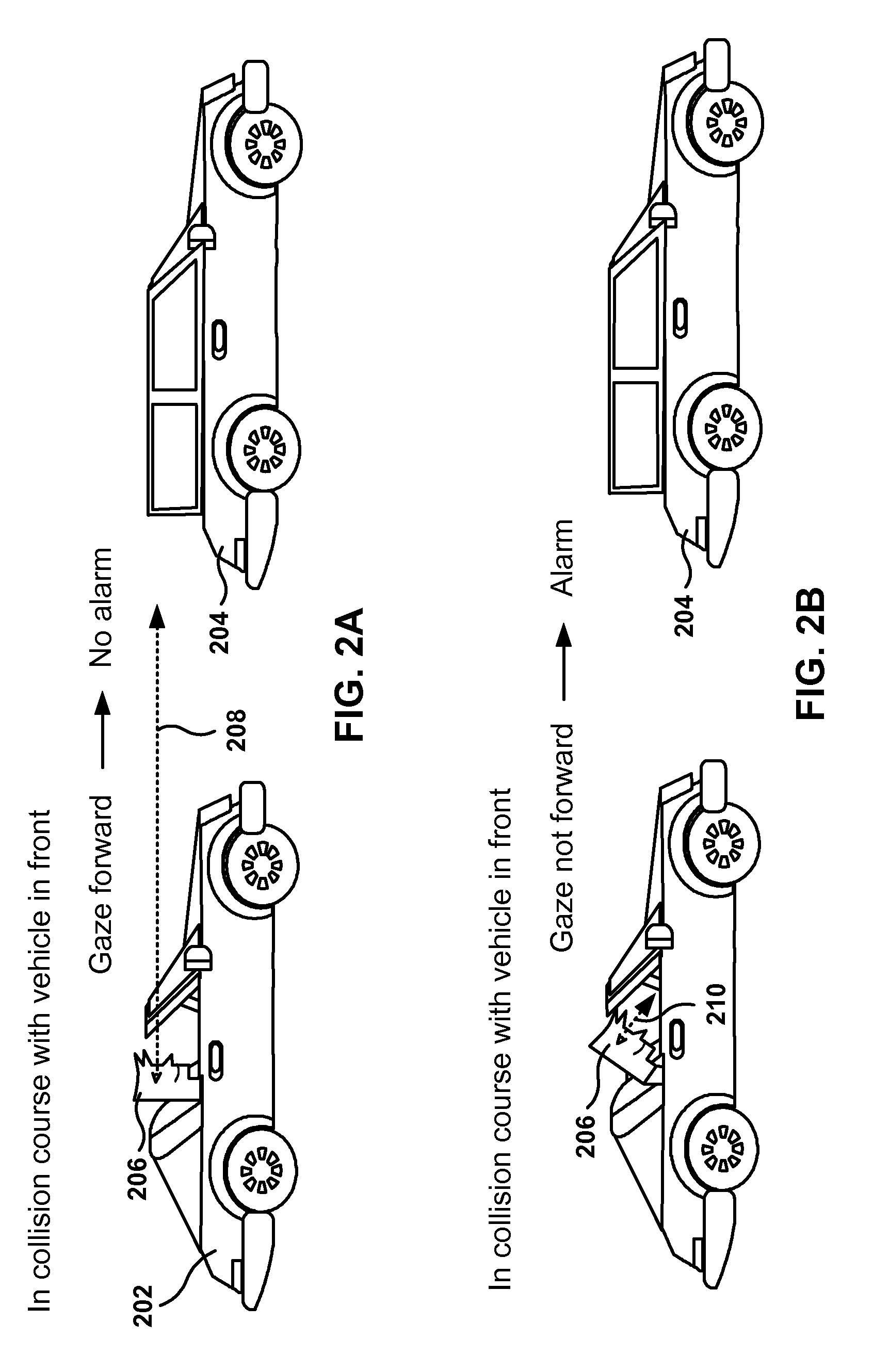

Combining driver and environment sensing for vehicular safety systems

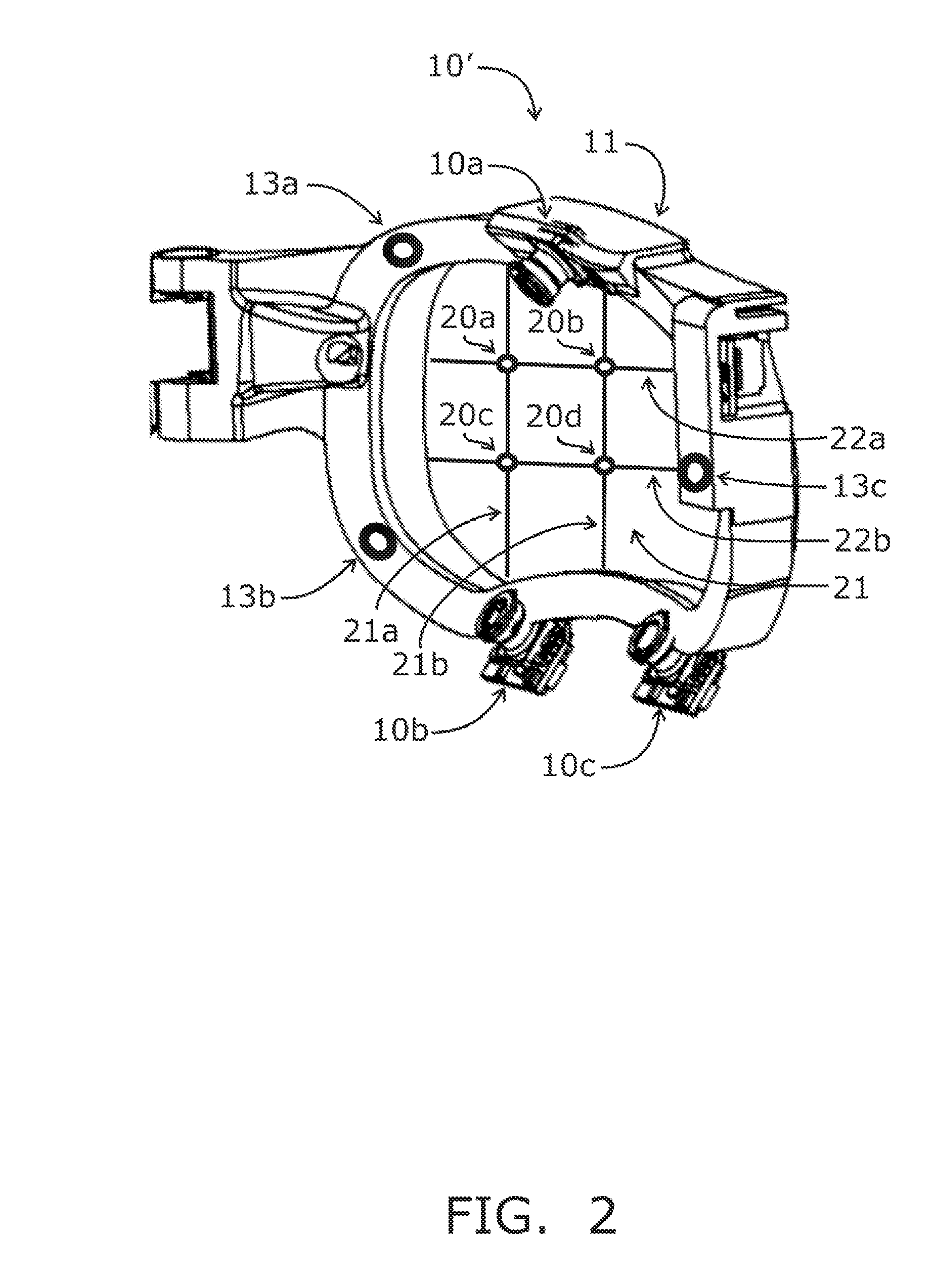

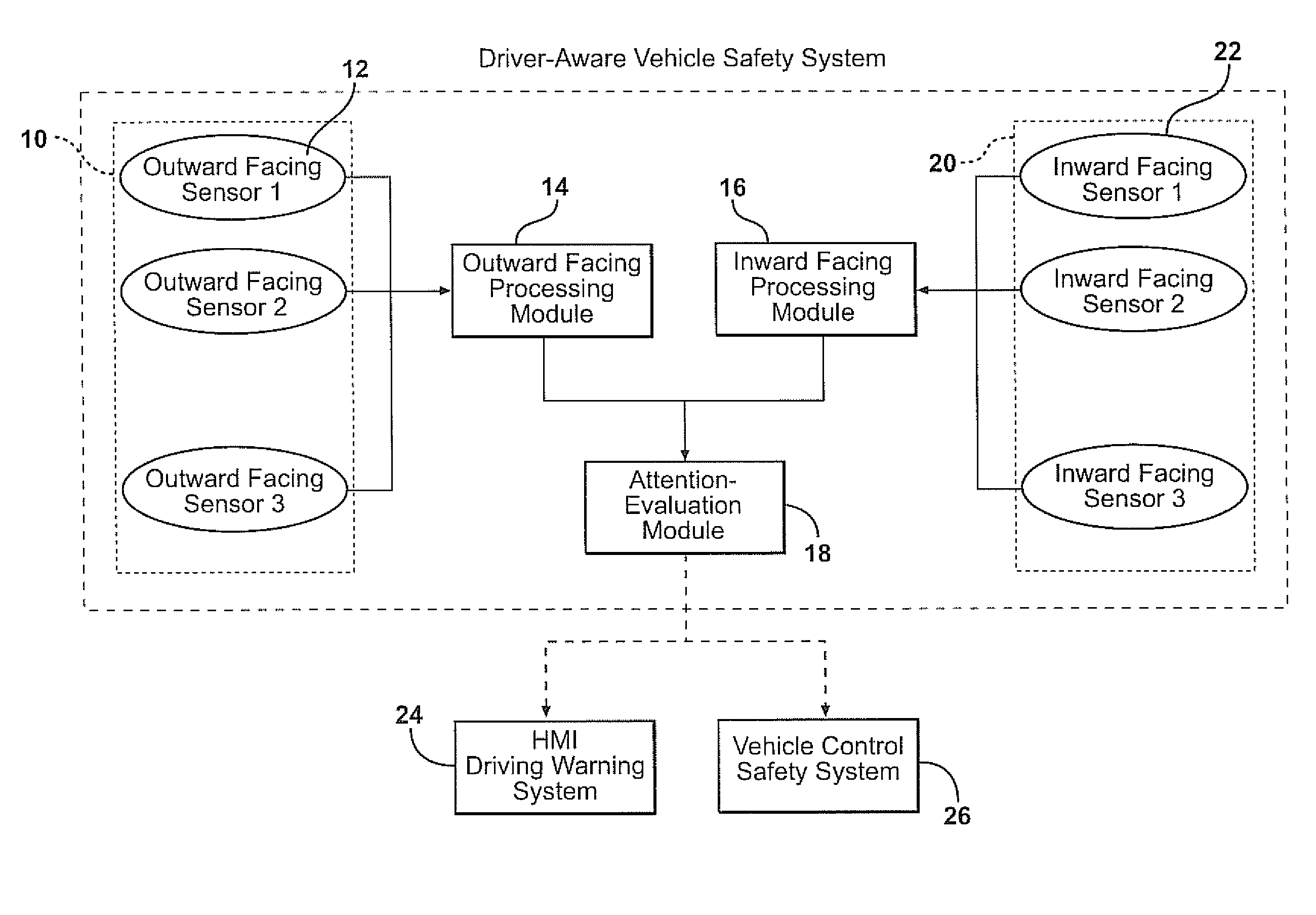

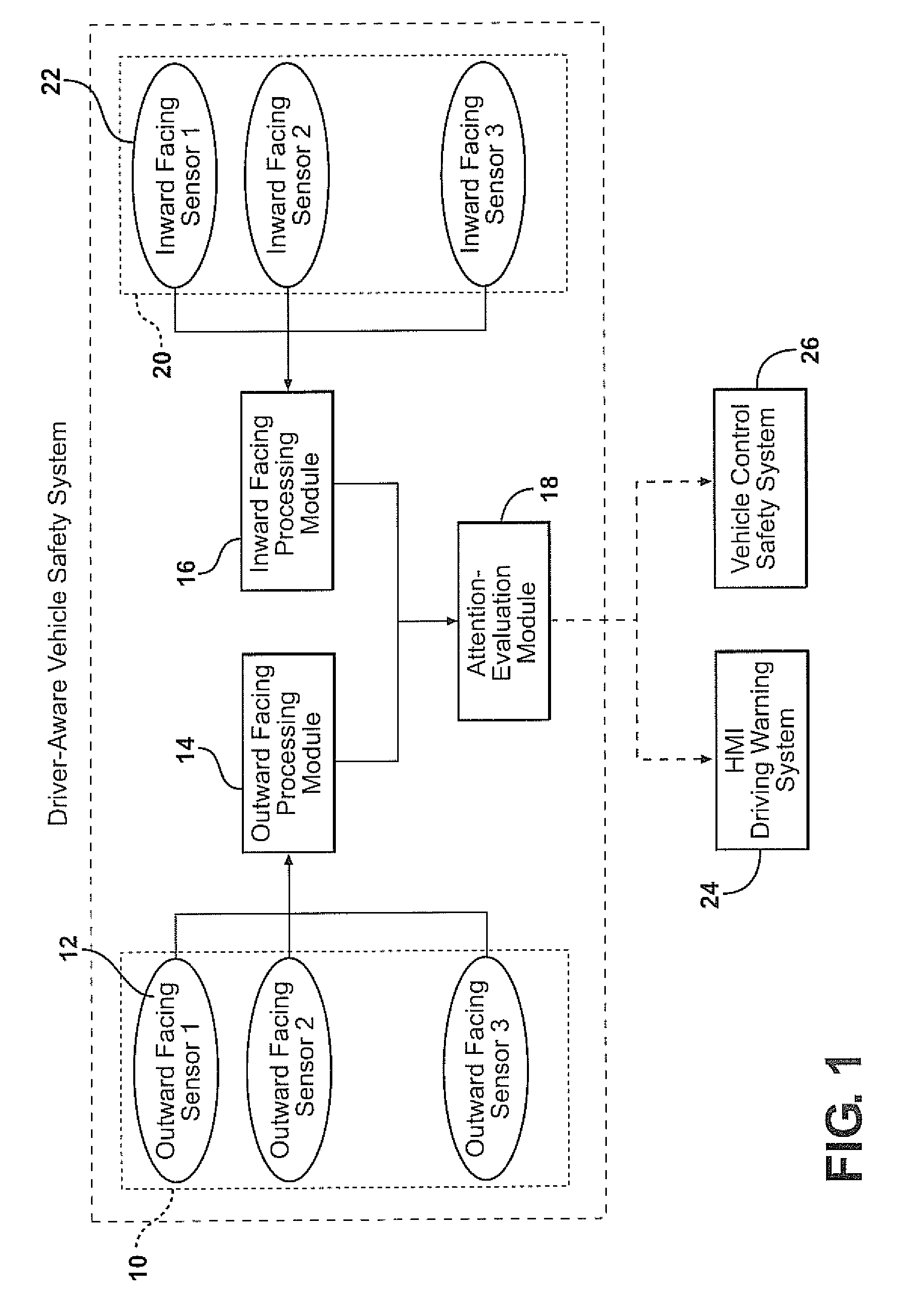

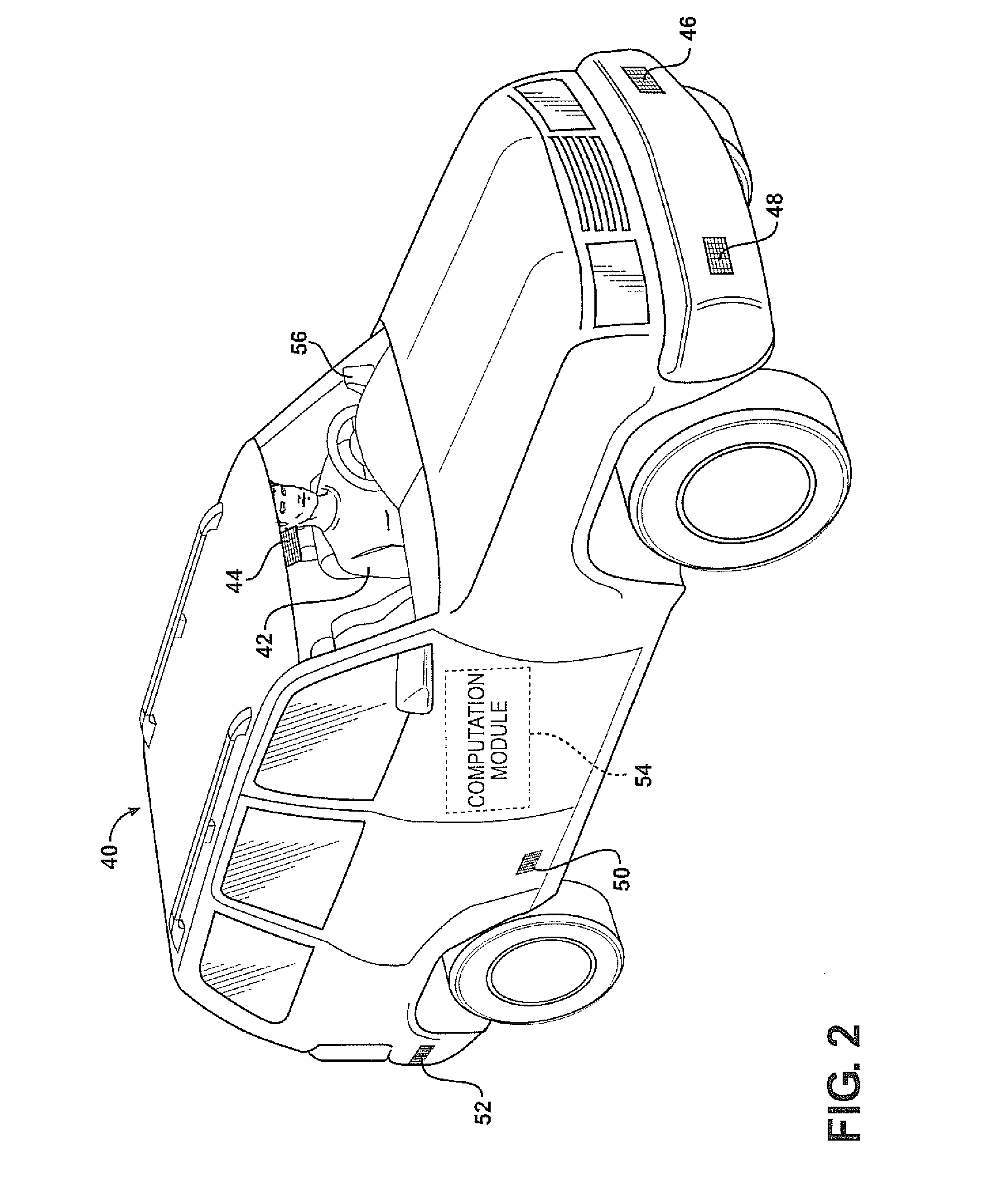

Active · US20110169625A1 · Improve security · Increase awareness · Anti-collision systems · Character and pattern recognition · Driver/operator · Engineering

An apparatus for assisting safe operation of a vehicle includes an environment sensor system detecting hazards within the vehicle environment, a driver monitor providing driver awareness data (such as a gaze track), and an attention-evaluation module identifying hazards as sufficiently or insufficiently sensed by the driver by comparing the hazard data and the gaze track. An alert signal relating to the unperceived hazards can be provided.

Owner:TOYOTA MOTOR CO LTD
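
One way to picture the comparison of hazard data against a gaze track is the sketch below. It makes strong simplifying assumptions that are not in the abstract: hazards and gaze samples are reduced to a single azimuth angle, and a hazard counts as "sensed" if any gaze sample within a recent time window falls within an angular tolerance. The function name, `window_s`, and `tolerance_deg` are hypothetical.

```python
from typing import Dict, List, Tuple

# (timestamp_s, azimuth_deg) samples of where the driver was looking.
GazeTrack = List[Tuple[float, float]]


def unperceived_hazards(
    hazards: Dict[str, float],     # hazard id -> azimuth_deg from the environment sensors
    gaze_track: GazeTrack,
    now_s: float,
    window_s: float = 2.0,         # assumed: how far back a glance still counts
    tolerance_deg: float = 5.0,    # assumed: angular radius that counts as "looked at"
) -> List[str]:
    """Return the hazards with no recent gaze sample close to their direction."""
    recent = [az for t, az in gaze_track if now_s - window_s <= t <= now_s]
    missed = []
    for hazard_id, hazard_az in hazards.items():
        seen = any(abs(az - hazard_az) <= tolerance_deg for az in recent)
        if not seen:
            missed.append(hazard_id)
    return missed


hazards = {"pedestrian": -20.0, "lead_car": 1.0}
track = [(7.0, -19.0), (9.0, 0.0), (9.5, 2.0)]
alerts = unperceived_hazards(hazards, track, now_s=10.0)
print(alerts)
```

An alert signal would then be raised for each returned hazard id; how the alert is presented is outside this sketch.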

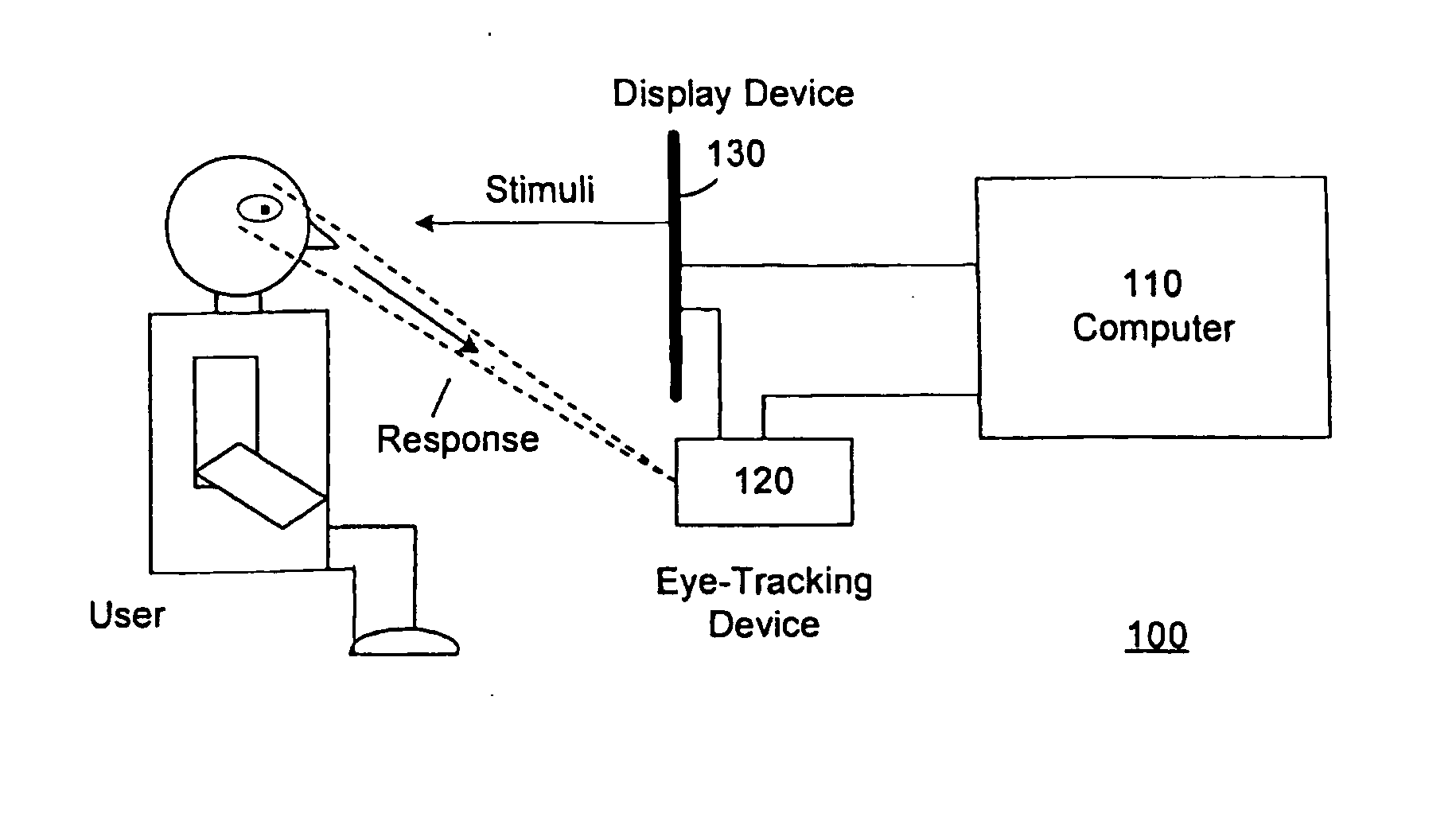

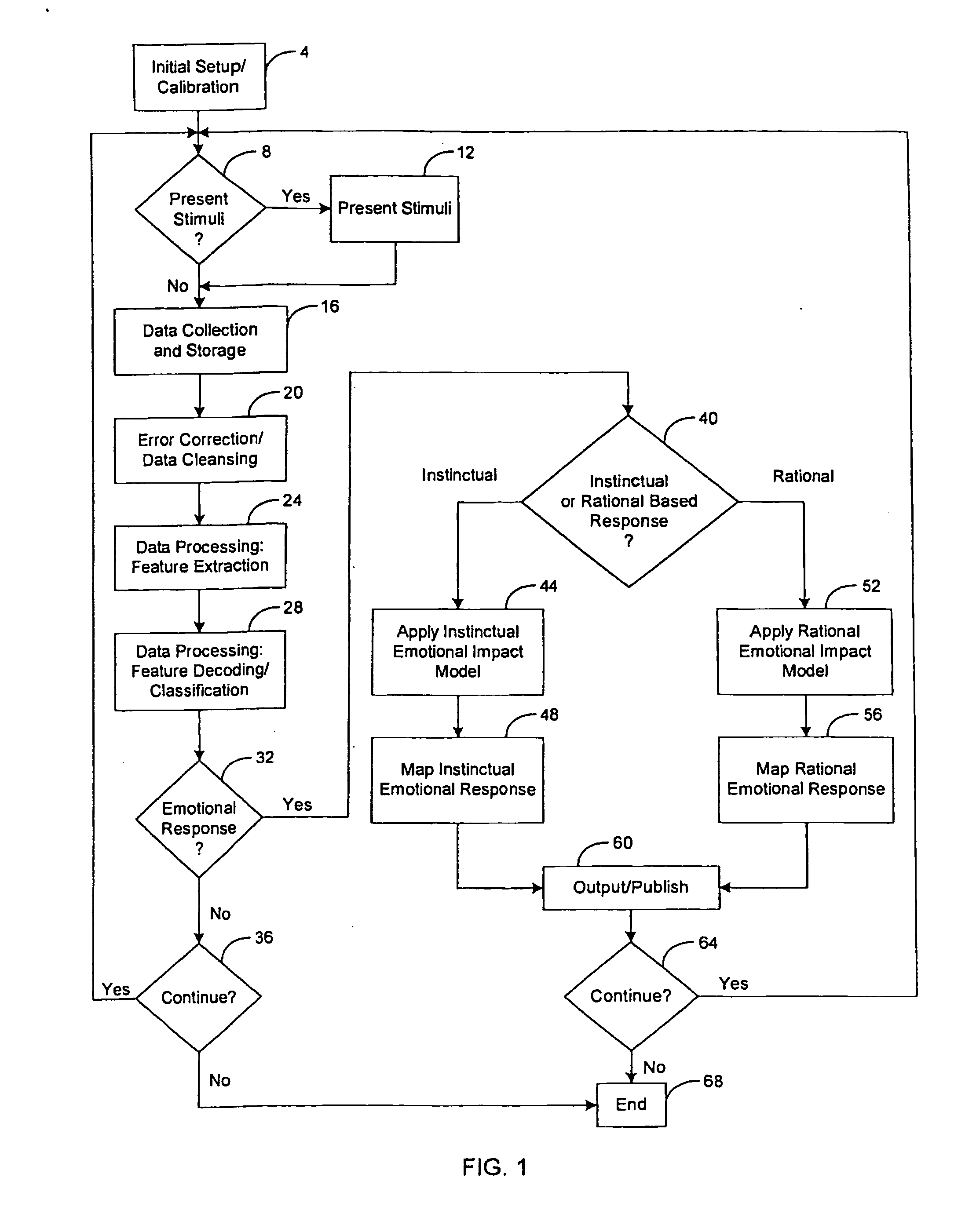

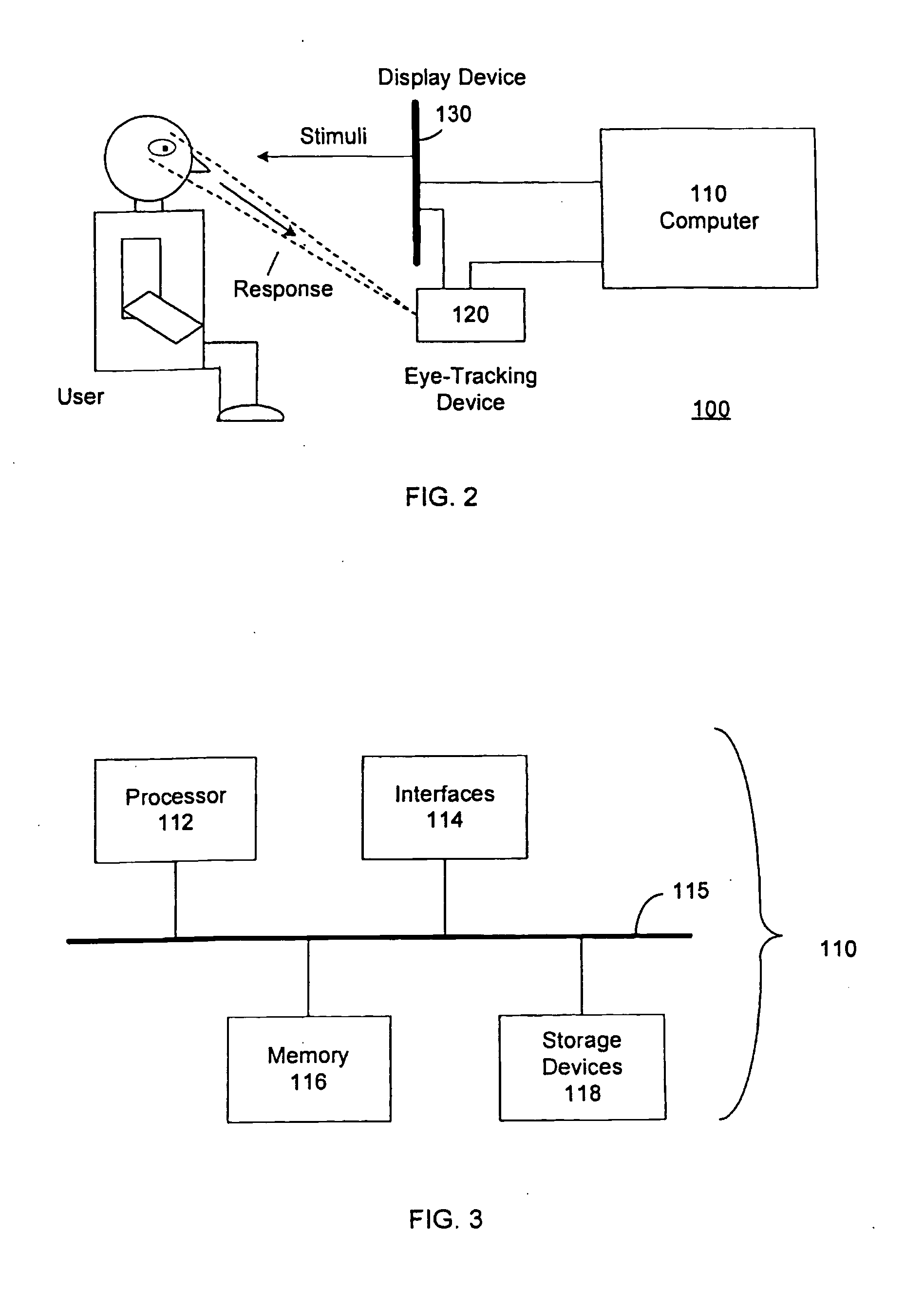

System and method for determining human emotion by analyzing eye properties

Inactive · US20070066916A1 · Cancel noise · Easy to explain · Local control/monitoring · Computer-assisted medical data acquisition · Pupil · Computer science

The invention relates to a system and method for determining human emotion by analyzing a combination of eye properties of a user including, for example, pupil size, blink properties, eye position (or gaze) properties, or other properties. The system and method may be configured to measure the emotional impact of various stimuli presented to users by analyzing, among other data, the eye properties of the users while perceiving the stimuli. Measured eye properties may be used to distinguish between positive emotional responses (e.g., pleasant or “like”), neutral emotional responses, and negative emotional responses (e.g., unpleasant or “dislike”), as well as to determine the intensity of emotional responses.

Owner:IMOTIONS EMOTION TECH

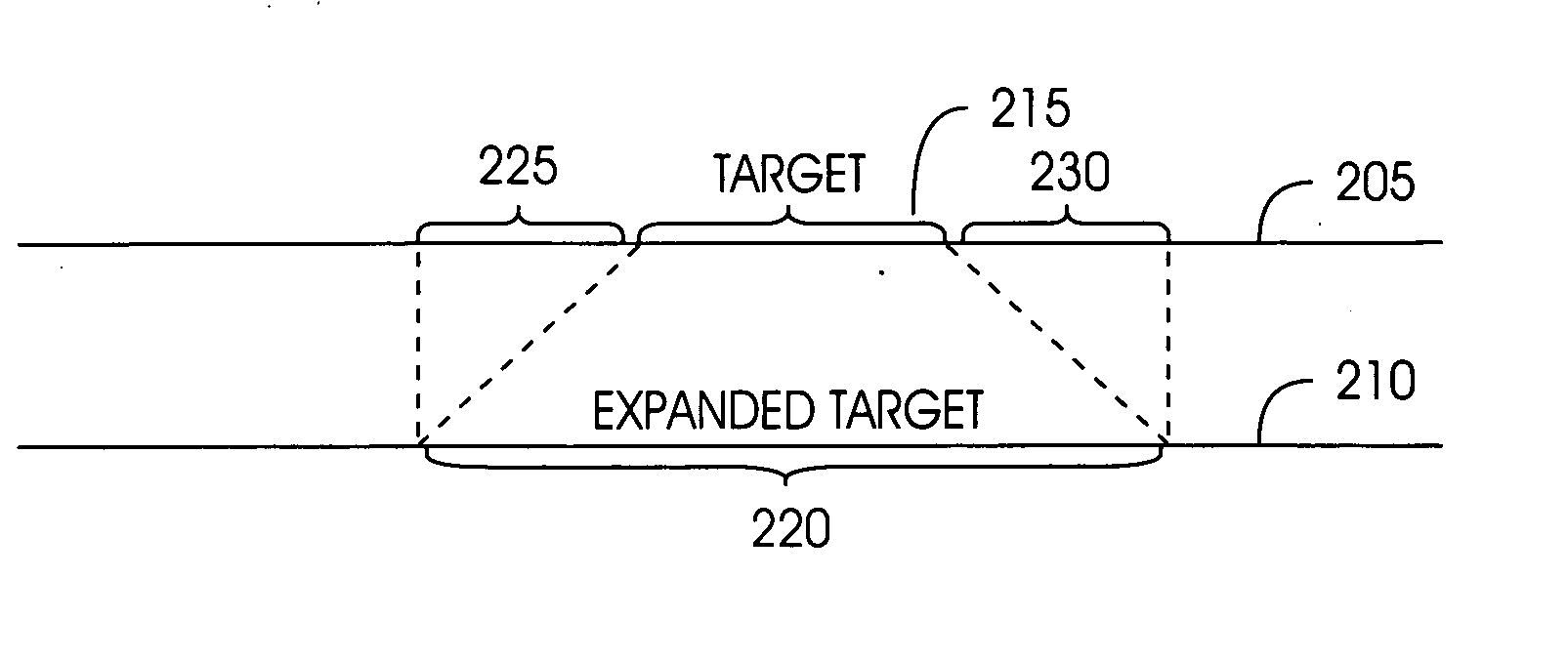

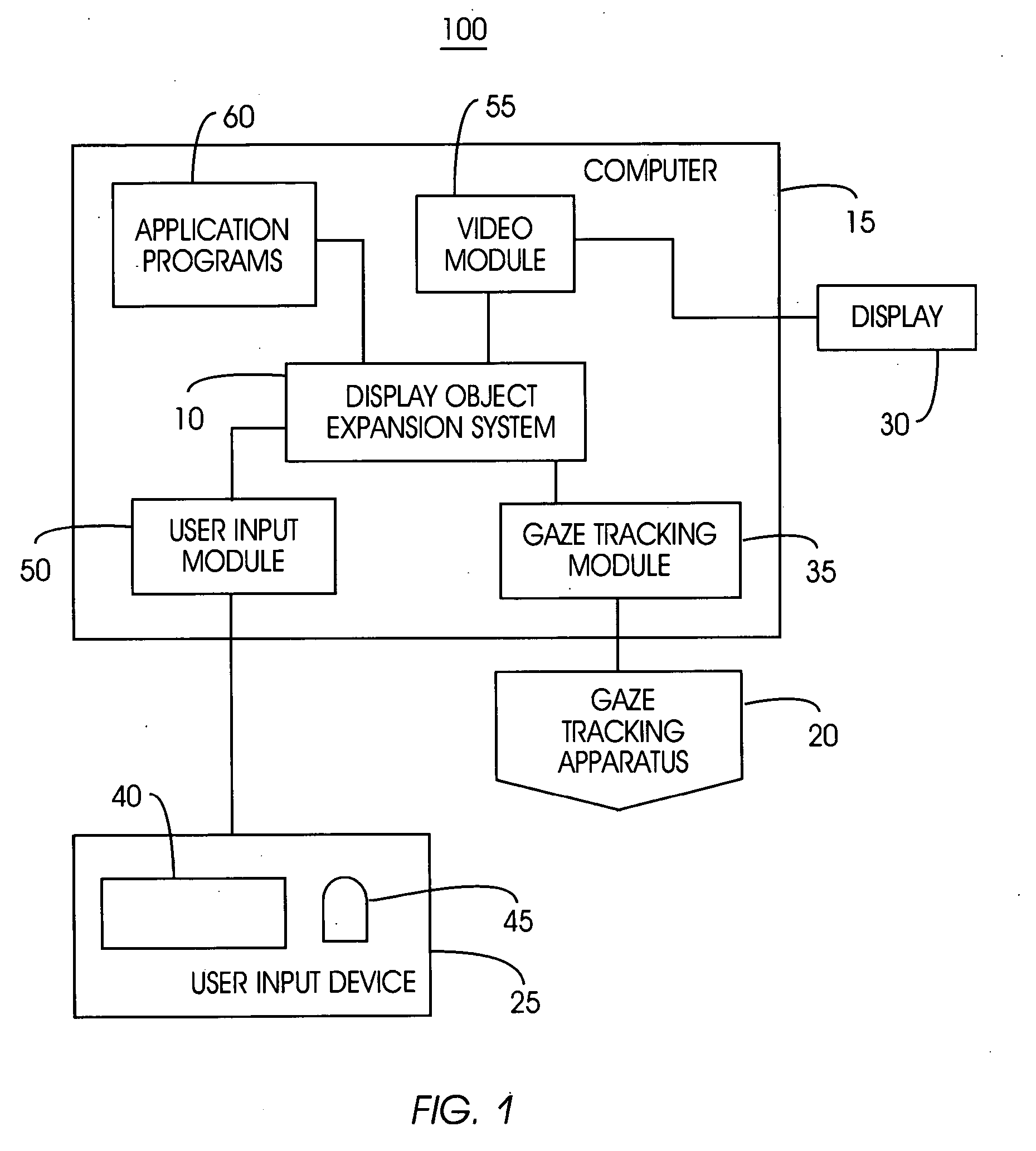

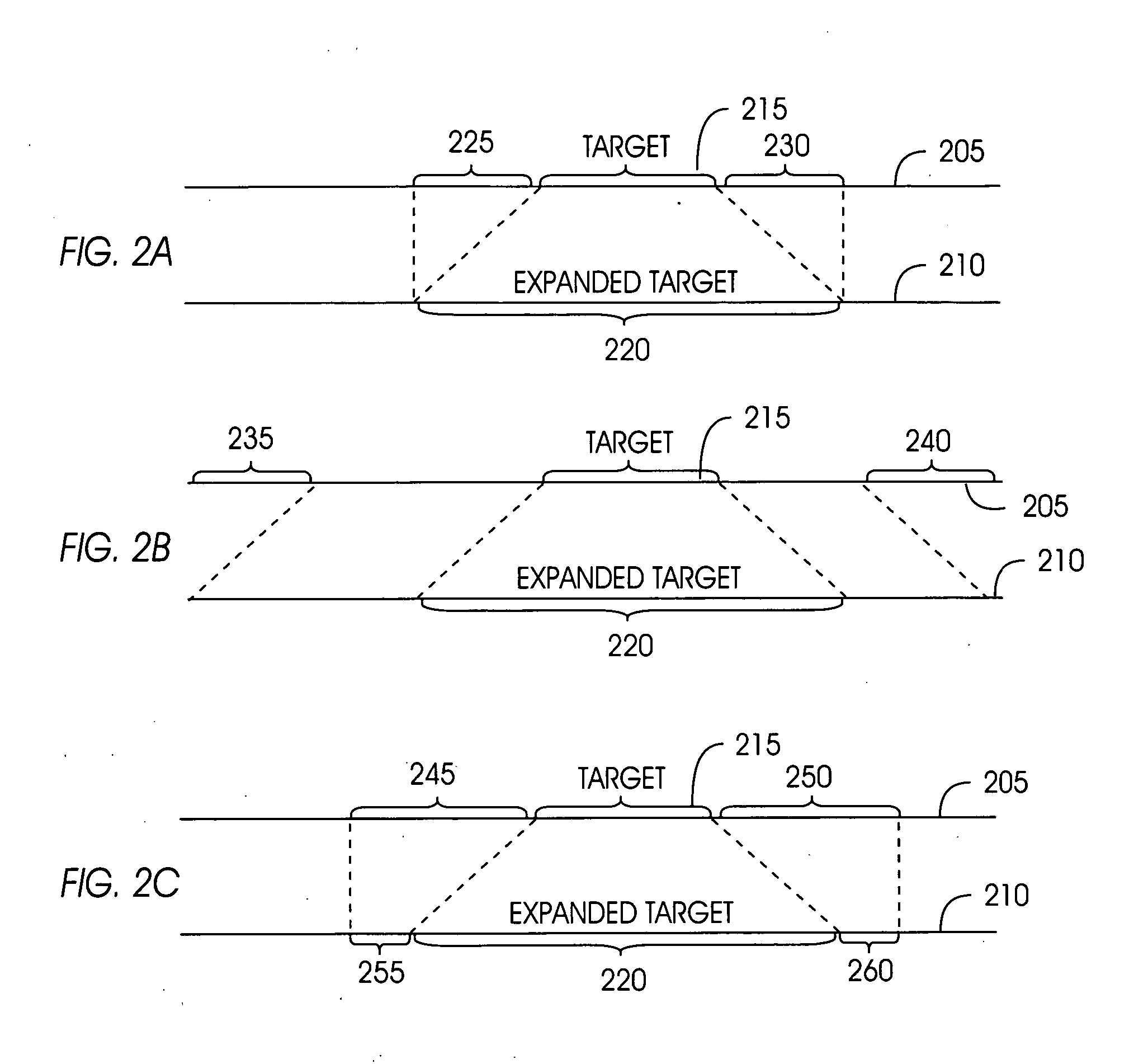

System and method for selectively expanding or contracting a portion of a display using eye-gaze tracking

Inactive · US20050047629A1 · Improve abilities · Minimum of function · Input/output for user-computer interaction · Character and pattern recognition · Graphics · Visibility

A computer-driven system amplifies a target region based on integrating eye gaze and manual operator input, thus reducing pointing time and operator fatigue. A gaze tracking apparatus monitors operator eye orientation while the operator views a video screen. Concurrently, the computer monitors an input indicator for mechanical activation or activity by the operator. According to the operator's eye orientation, the computer calculates the operator's gaze position. Also computed is a gaze area, comprising a sub-region of the video screen that includes the gaze position. The system determines a region of the screen to expand within the current gaze area when mechanical activation of the operator input device is detected. The graphical components contained are expanded, while components immediately outside of this radius may be contracted and/or translated, in order to preserve visibility of all the graphical components at all times.

Owner:IBM CORP

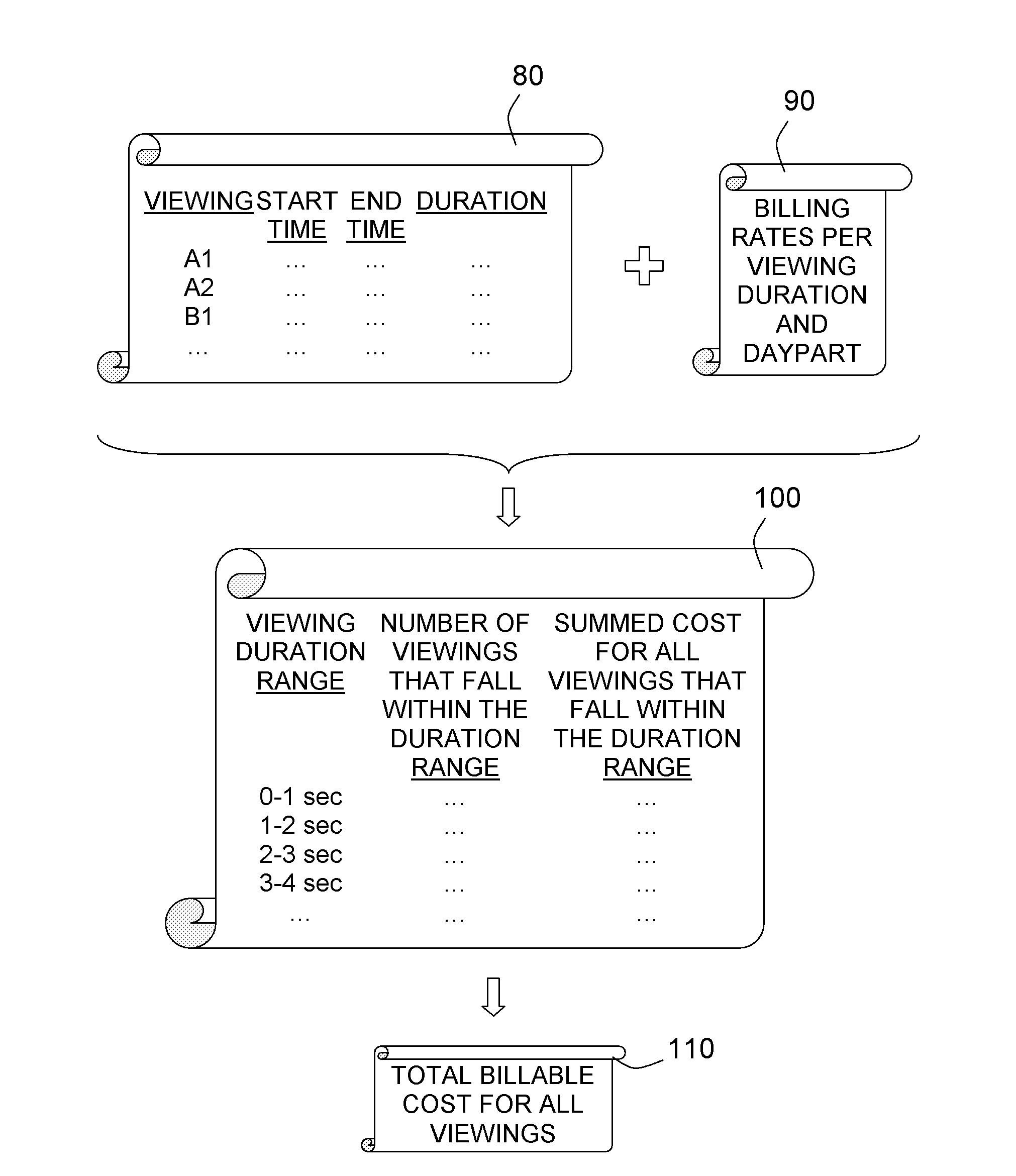

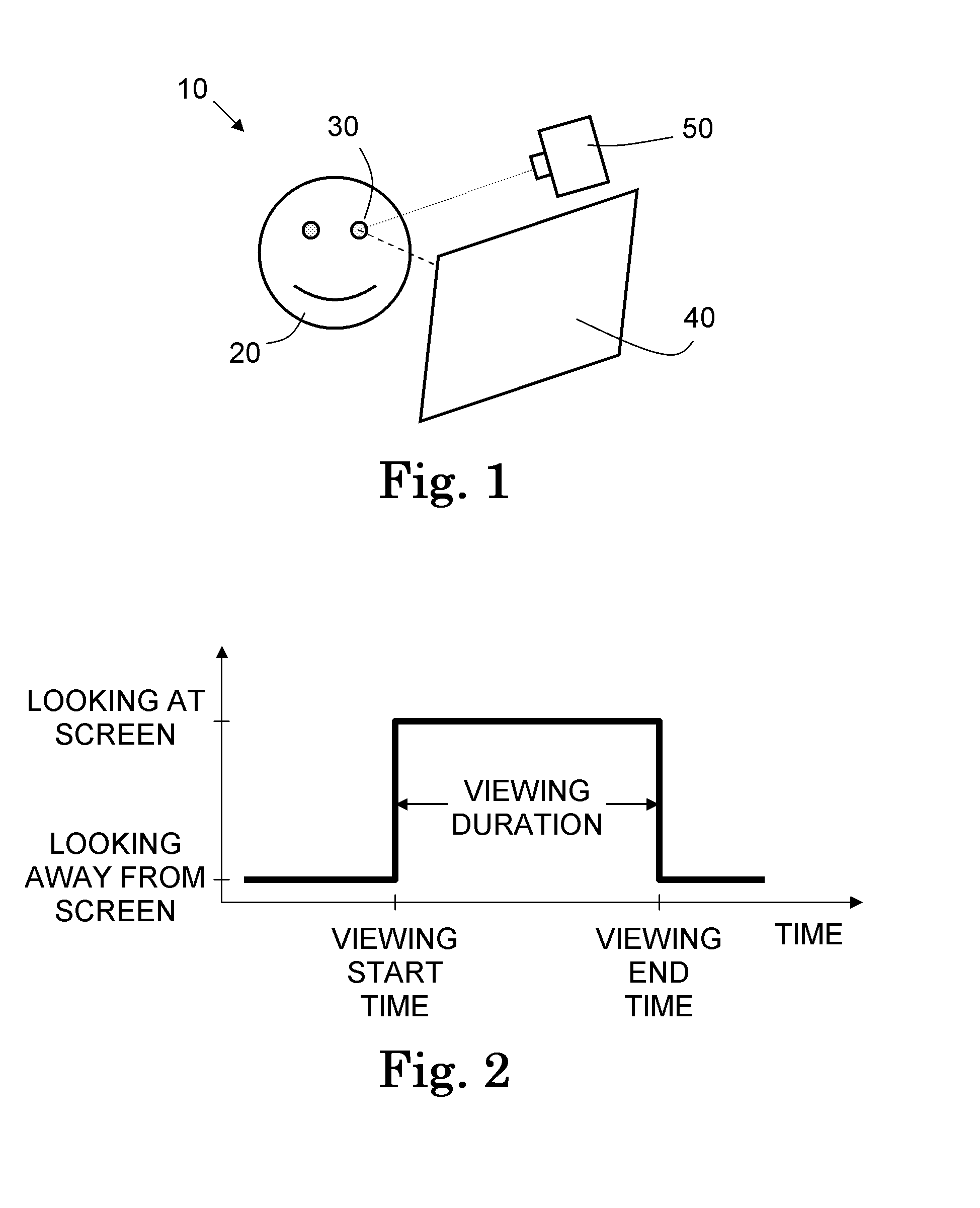

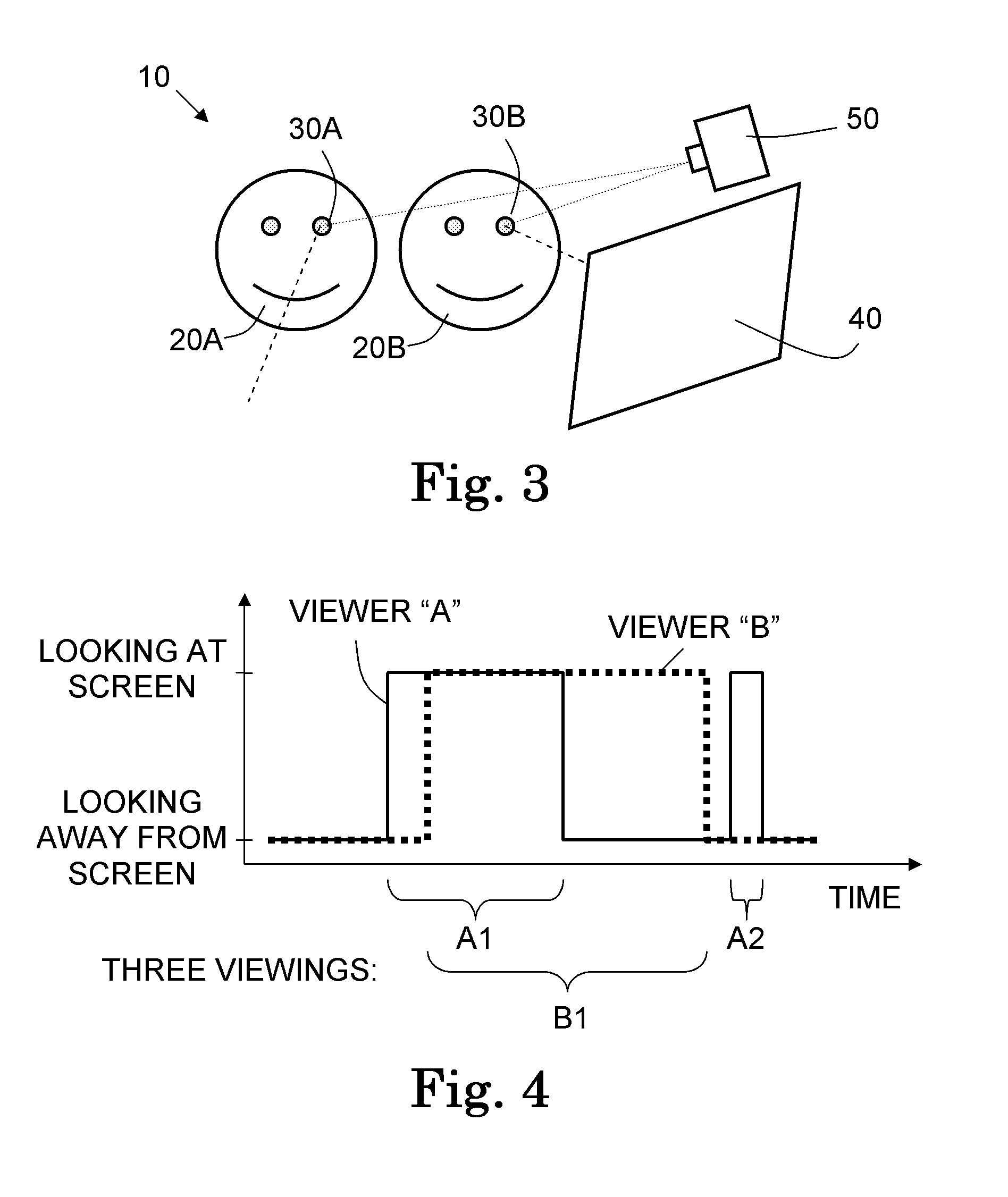

Quantitative media valuation method, system and computer program

Inactive · US20100191631A1 · Complete banking machines · Television system details · Data translation · Display device

A method and system are disclosed for transforming accessing of a displayed content by at least one person into an overall billed value. An exemplary embodiment comprises providing a display device that displays content, tracking the respective gazes of people near and in front of the displayed content, determining if, when and how long each person views, i.e., acquires, the display, transforming the acquisition data into a billing value by, e.g., determining a billing value for each acquisition based on the numbers of acquisitions and length of each acquisition, summing the billing values for all the respective viewings, and billing the client for the summed billing values.

Owner:WEIDMANN ADRIAN
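
The step of turning acquisition data into a summed billing value can be sketched as follows. The pricing model is purely an assumption for illustration (a flat fee per acquisition plus a per-second fee, with very short glances ignored); the abstract only says the value depends on the number and length of acquisitions. The function name and all rates are hypothetical.

```python
from typing import Iterable, Tuple

# One acquisition = one viewer's continuous look at the display: (start_s, end_s).
Acquisition = Tuple[float, float]


def billed_value(
    acquisitions: Iterable[Acquisition],
    base_rate: float = 0.01,         # assumed fee per acquisition
    rate_per_second: float = 0.002,  # assumed fee per second of viewing
    min_duration_s: float = 0.5,     # assumed: shorter glances are not billed
) -> float:
    """Sum a per-acquisition value over all viewings of the display."""
    total = 0.0
    for start_s, end_s in acquisitions:
        duration = end_s - start_s
        if duration < min_duration_s:
            continue
        total += base_rate + rate_per_second * duration
    return round(total, 4)


# Two billable viewings (2 s and 3 s) and one glance that is too short to count.
invoice_total = billed_value([(0.0, 2.0), (5.0, 5.2), (10.0, 13.0)])
print(invoice_total)
```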

Eye tracking head mounted display

Inactive · US7542210B2 · Not salient · Improve matching characteristics · Cathode-ray tube indicators · Optical elements · Beam splitter · Centre of rotation

A head mounted display device has a mount which attaches the device to a user's head, a beam-splitter attached to the mount with movement devices, an image projector which projects images onto the beam-splitter, an eye-tracker which tracks a user's eye's gaze, and one or more processors. The device uses the eye tracker and movement devices, along with an optional head-tracker, to move the beam-splitter about the center of the eye's rotation, keeping the beam-splitter in the eye's direct line-of-sight. The user simultaneously views the image and the environment behind the image. A second beam-splitter, eye-tracker, and projector can be used on the user's other eye to create a stereoptic, virtual environment. The display can correspond to the resolving power of the human eye. The invention presents a high-resolution image wherever the user looks.

Owner:TEDDER DONALD RAY

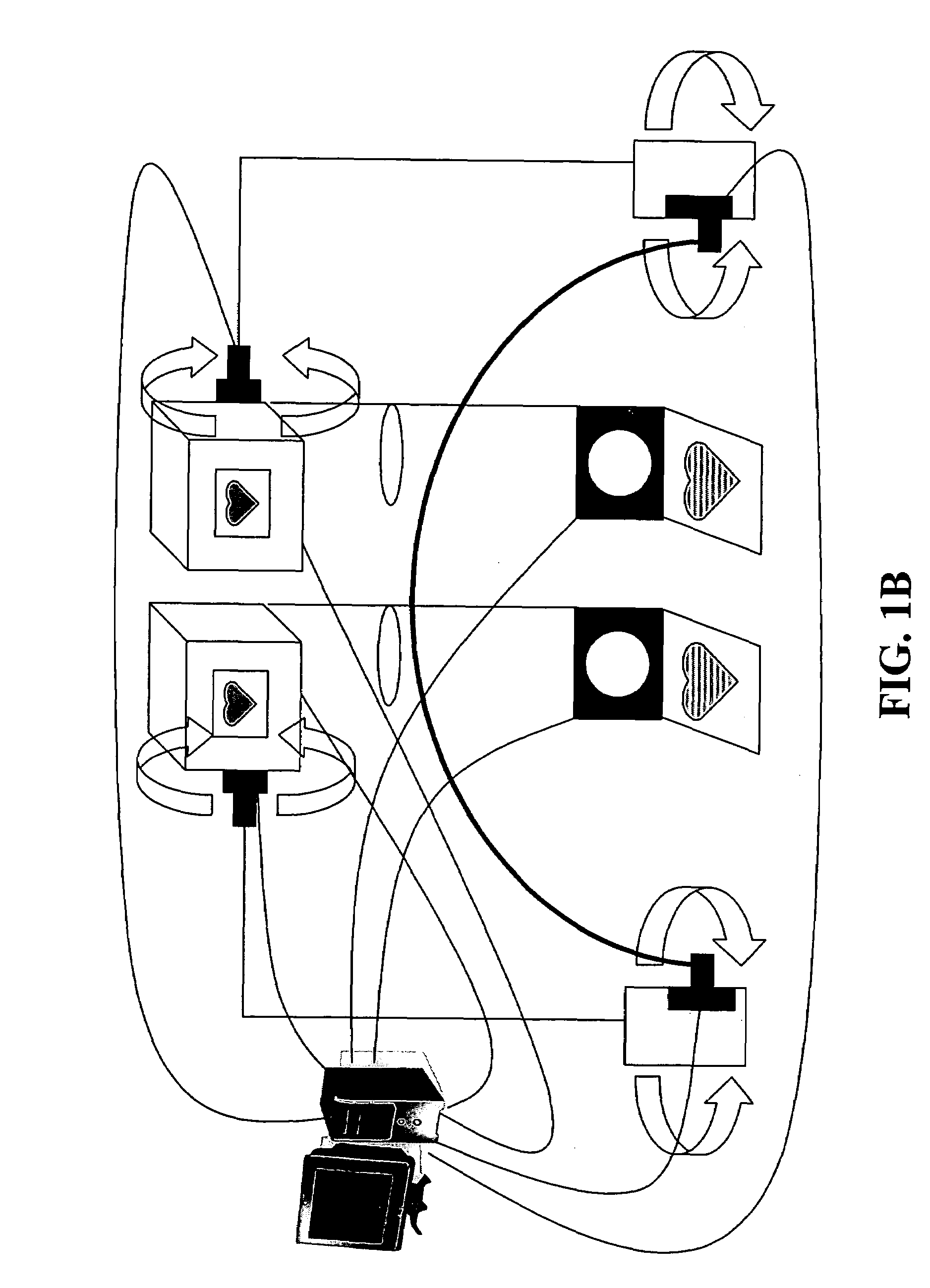

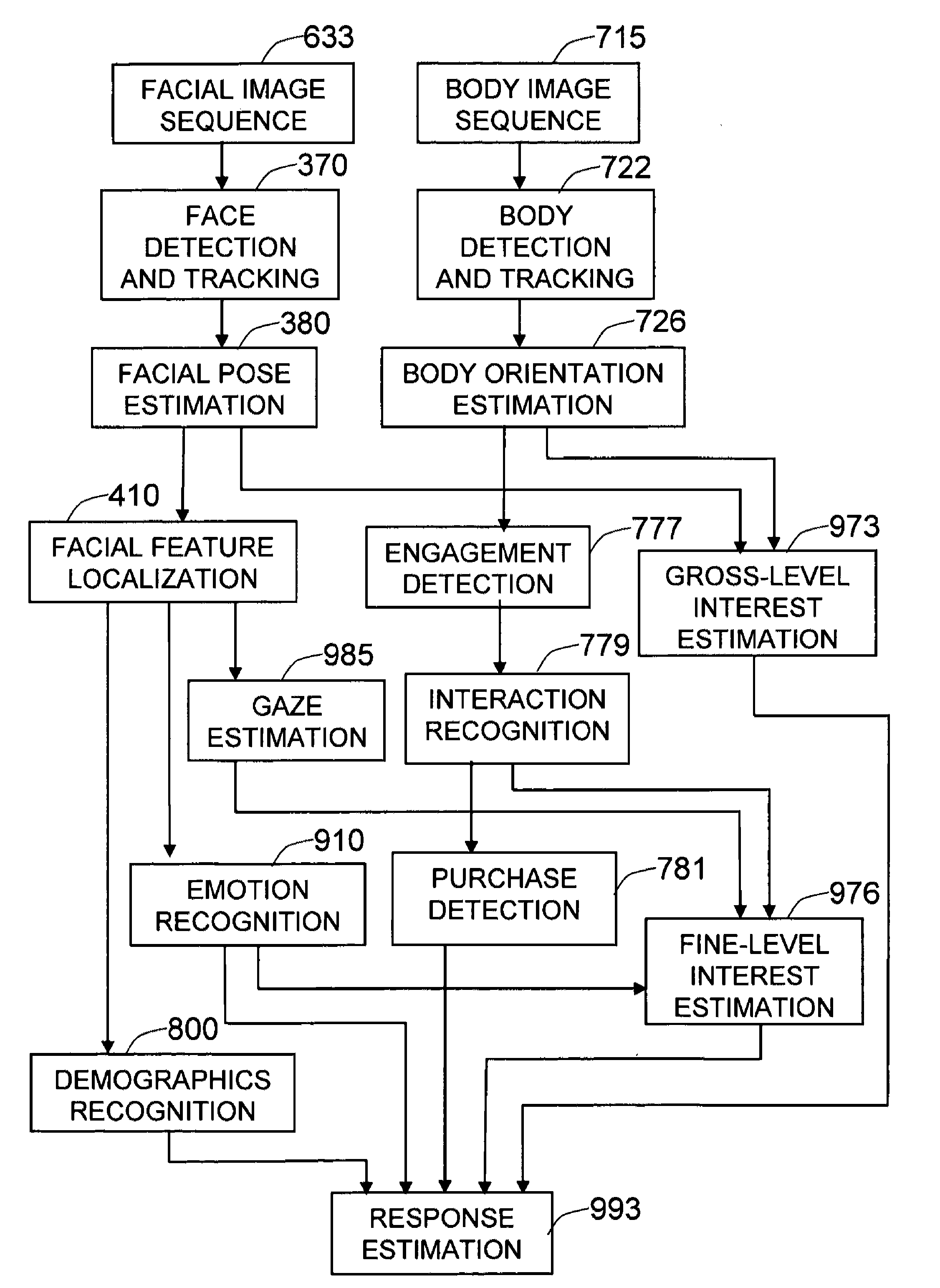

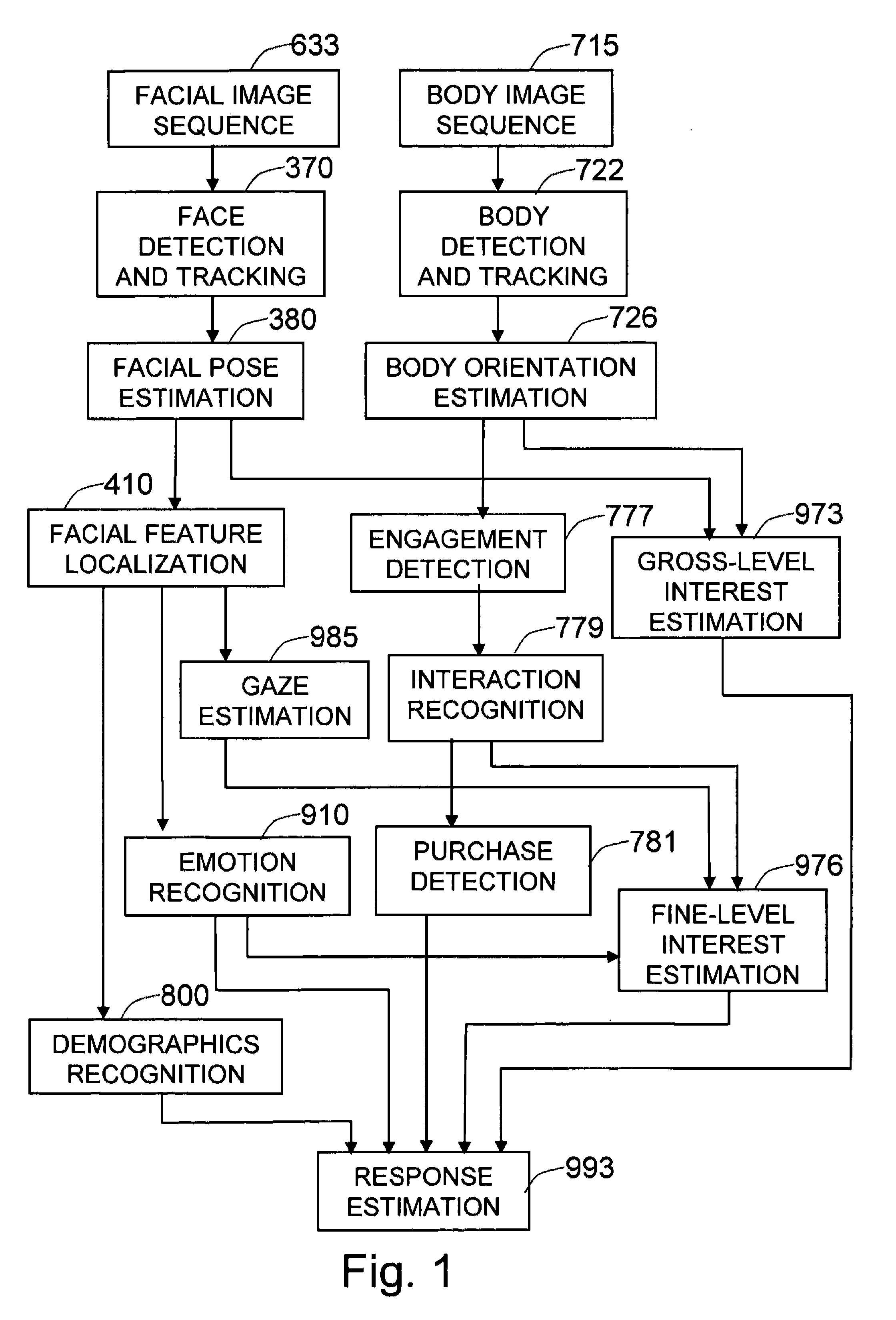

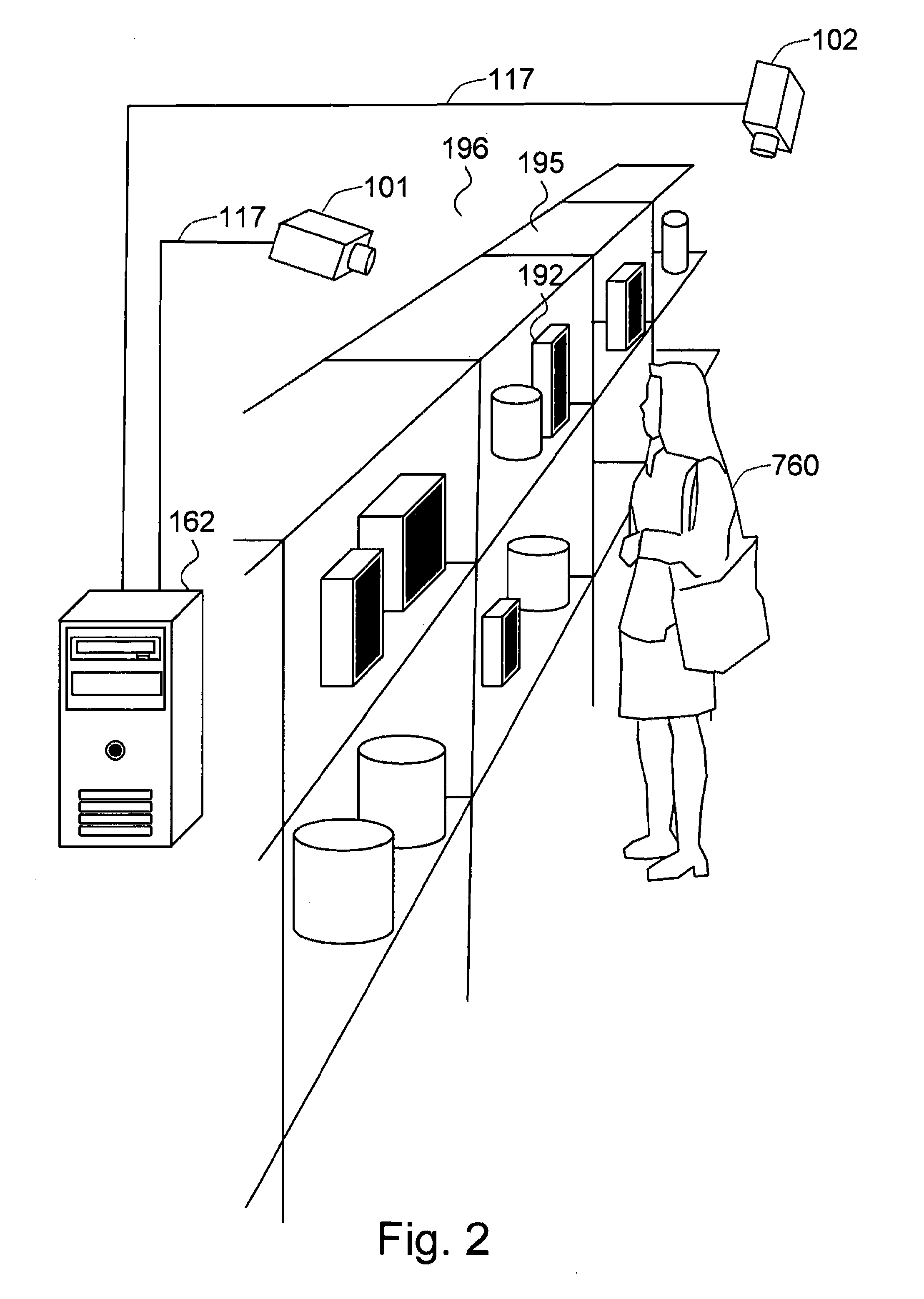

Method and system for measuring shopper response to products based on behavior and facial expression

Active · US8219438B1 · Reliable information · Accurate location · Market predictions · Acquiring/recognising eyes · Pattern recognition · Product base

The present invention is a method and system for measuring human response to retail elements, based on the shopper's facial expressions and behaviors. From a facial image sequence, the facial geometry (facial pose and facial feature positions) is estimated to facilitate the recognition of facial expressions, gaze, and demographic categories. The recognized facial expression is translated into an affective state of the shopper, and the gaze is translated into the target and the level of interest of the shopper. The body image sequence is processed to identify the shopper's interaction with a given retail element, such as a product, a brand, or a category. The dynamic changes of the affective state and the interest toward the retail element, measured from the facial image sequence, are analyzed in the context of the recognized shopper's interaction with the retail element and the demographic categories, to estimate both the shopper's changes in attitude toward the retail element and the end response, such as a purchase decision or a product rating.

Owner:PARMER GEORGE A

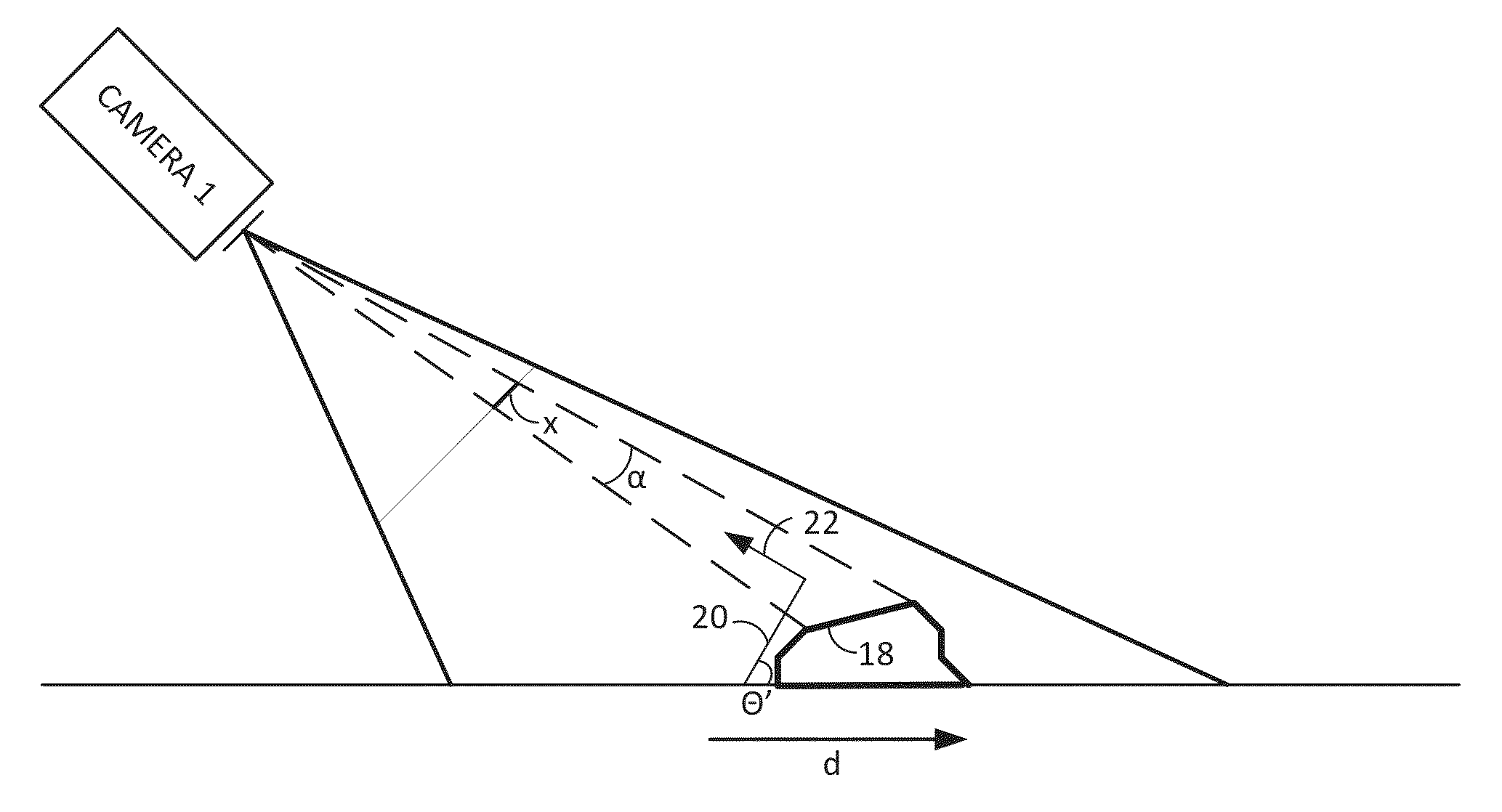

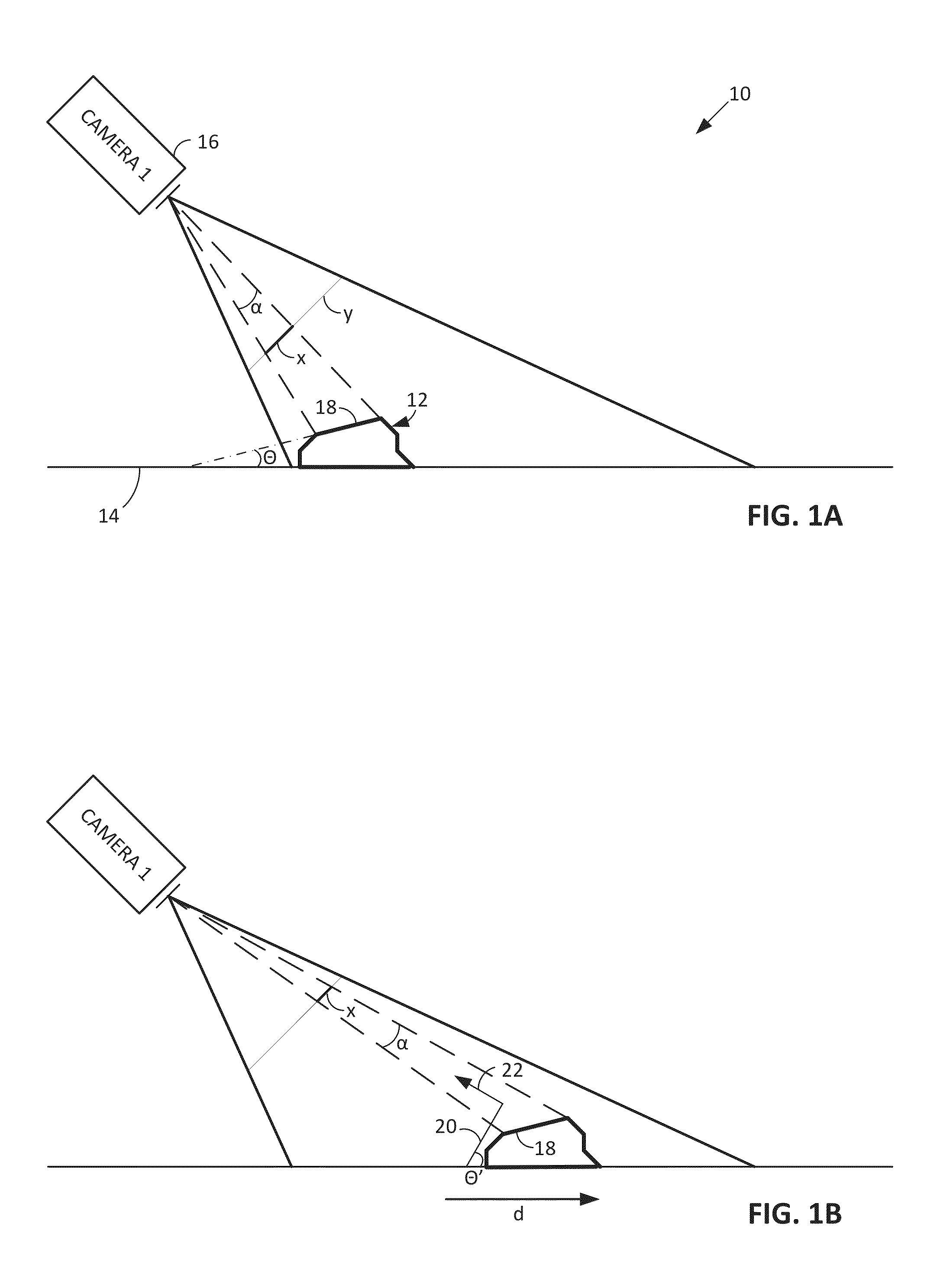

Methods and arrangements for identifying objects

Active · US20140052555A1 · Increase check-out speed · Improve accuracy · Image enhancement · Image analysis · Geometric primitive · Visual saliency

In some arrangements, product packaging is digitally watermarked over most of its extent to facilitate high-throughput item identification at retail checkouts. Imagery captured by conventional or plenoptic cameras can be processed (e.g., by GPUs) to derive several different perspective-transformed views—further minimizing the need to manually reposition items for identification. Crinkles and other deformations in product packaging can be optically sensed, allowing such surfaces to be virtually flattened to aid identification. Piles of items can be 3D-modelled and virtually segmented into geometric primitives to aid identification, and to discover locations of obscured items. Other data (e.g., including data from sensors in aisles, shelves and carts, and gaze tracking for clues about visual saliency) can be used in assessing identification hypotheses about an item. Logos may be identified and used—or ignored—in product identification. A great variety of other features and arrangements are also detailed.

Owner:DIGIMARC CORP

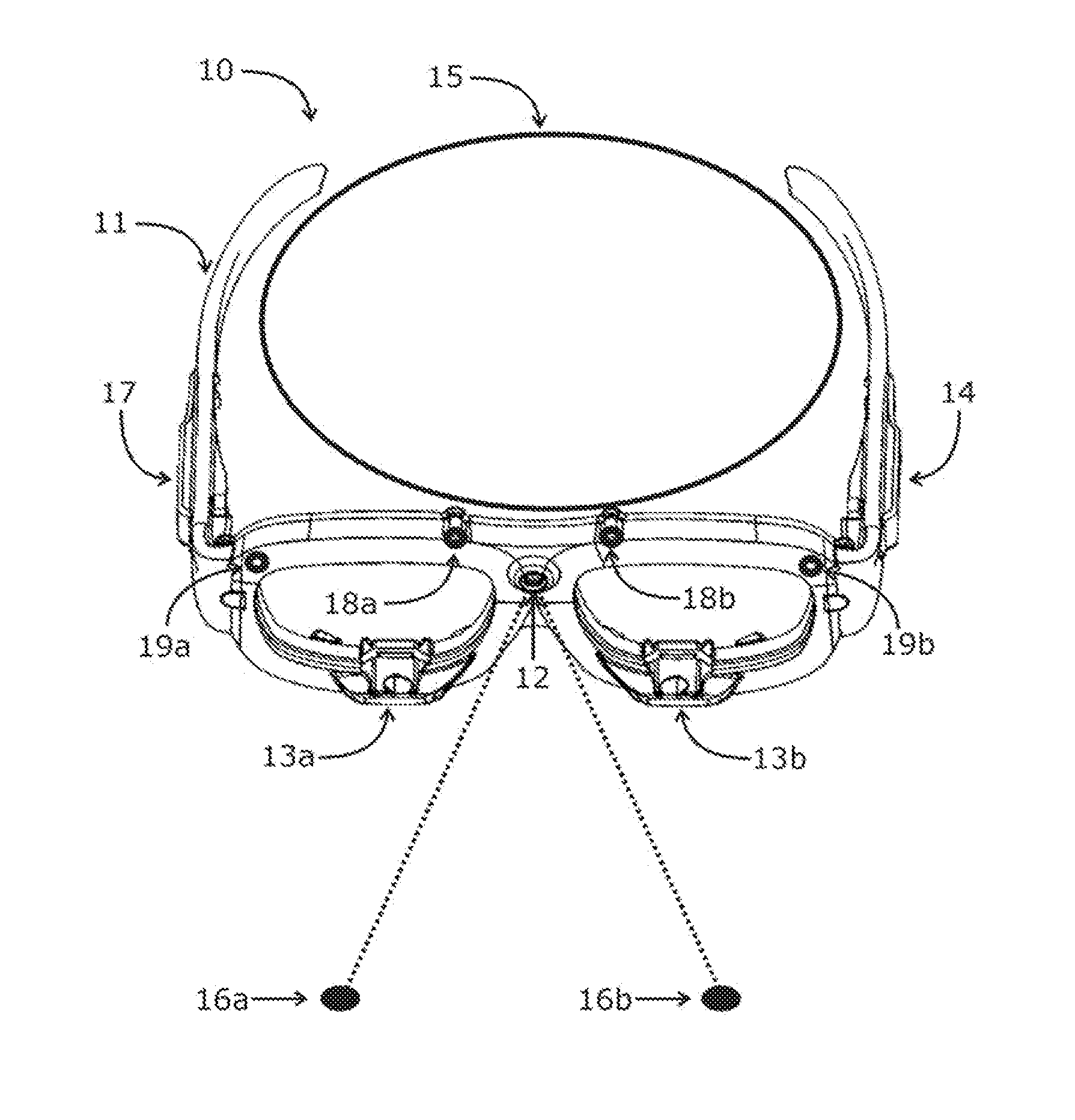

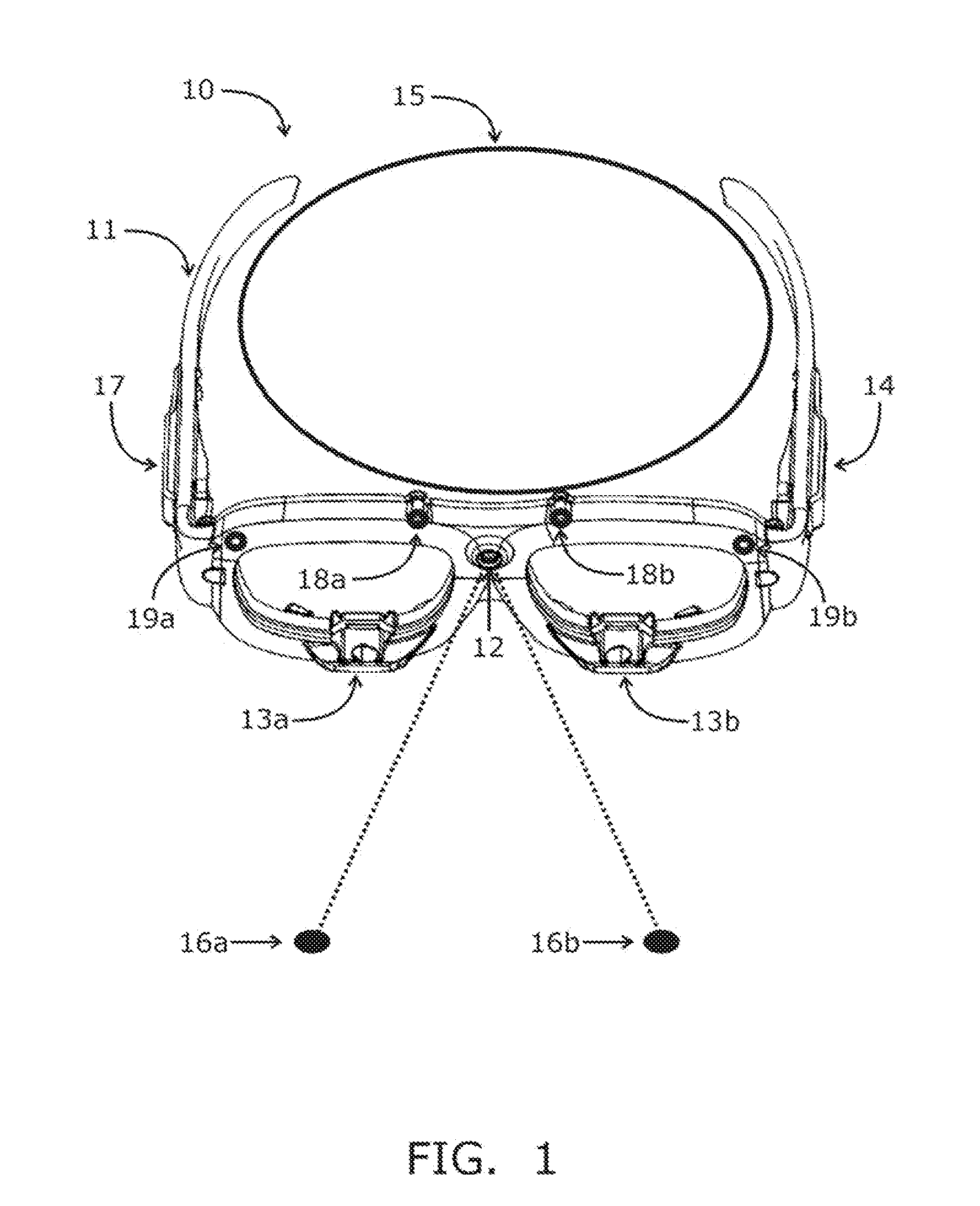

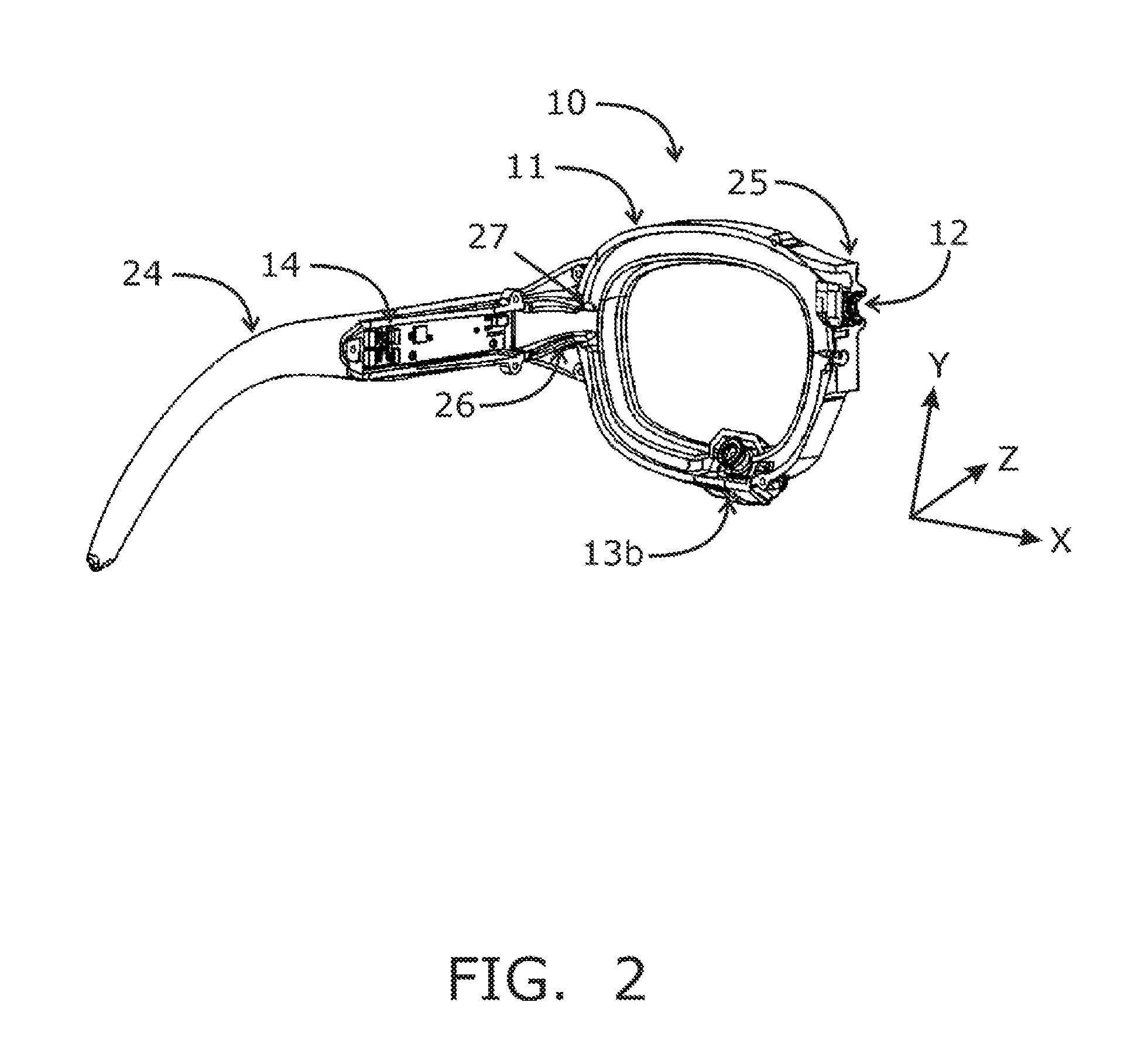

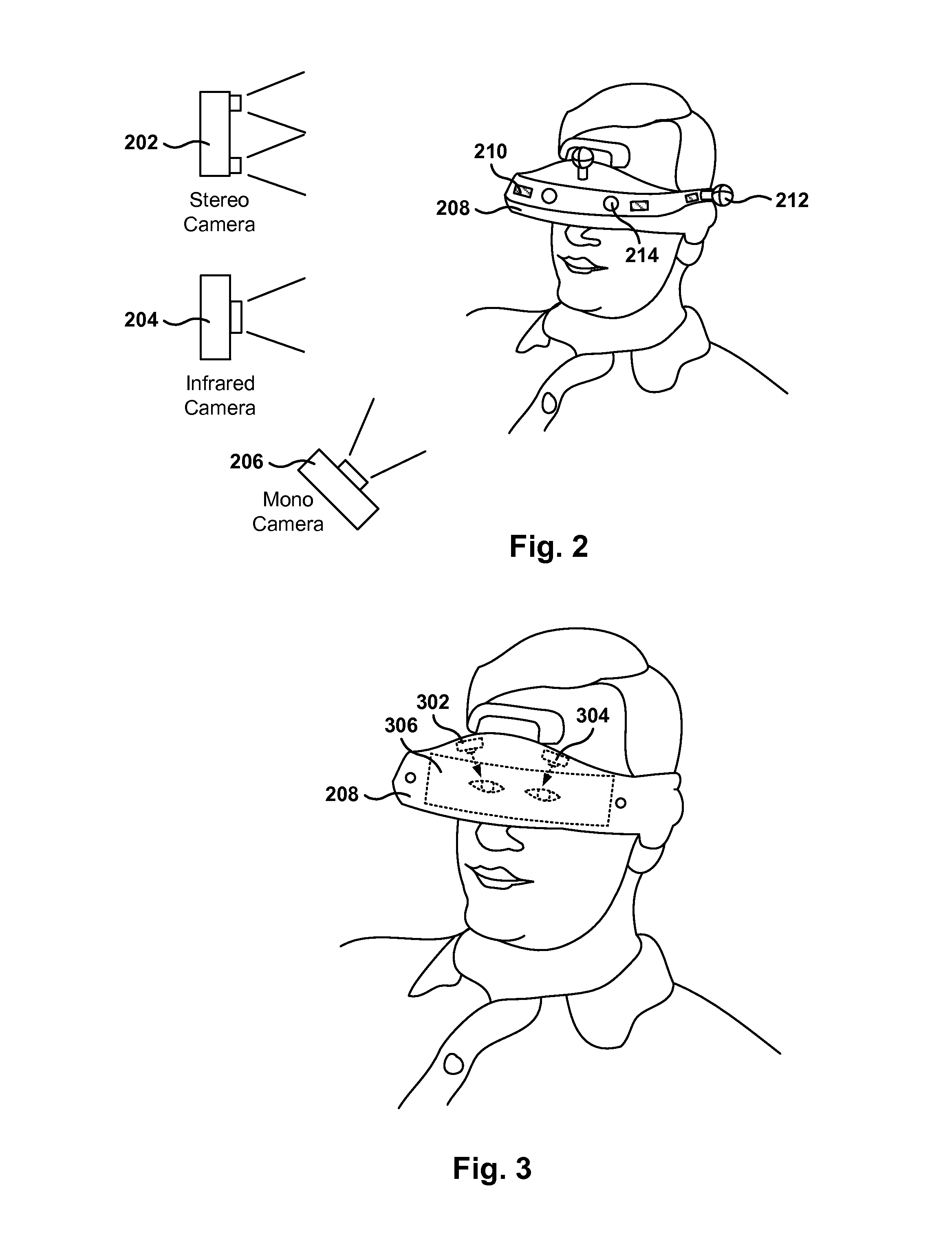

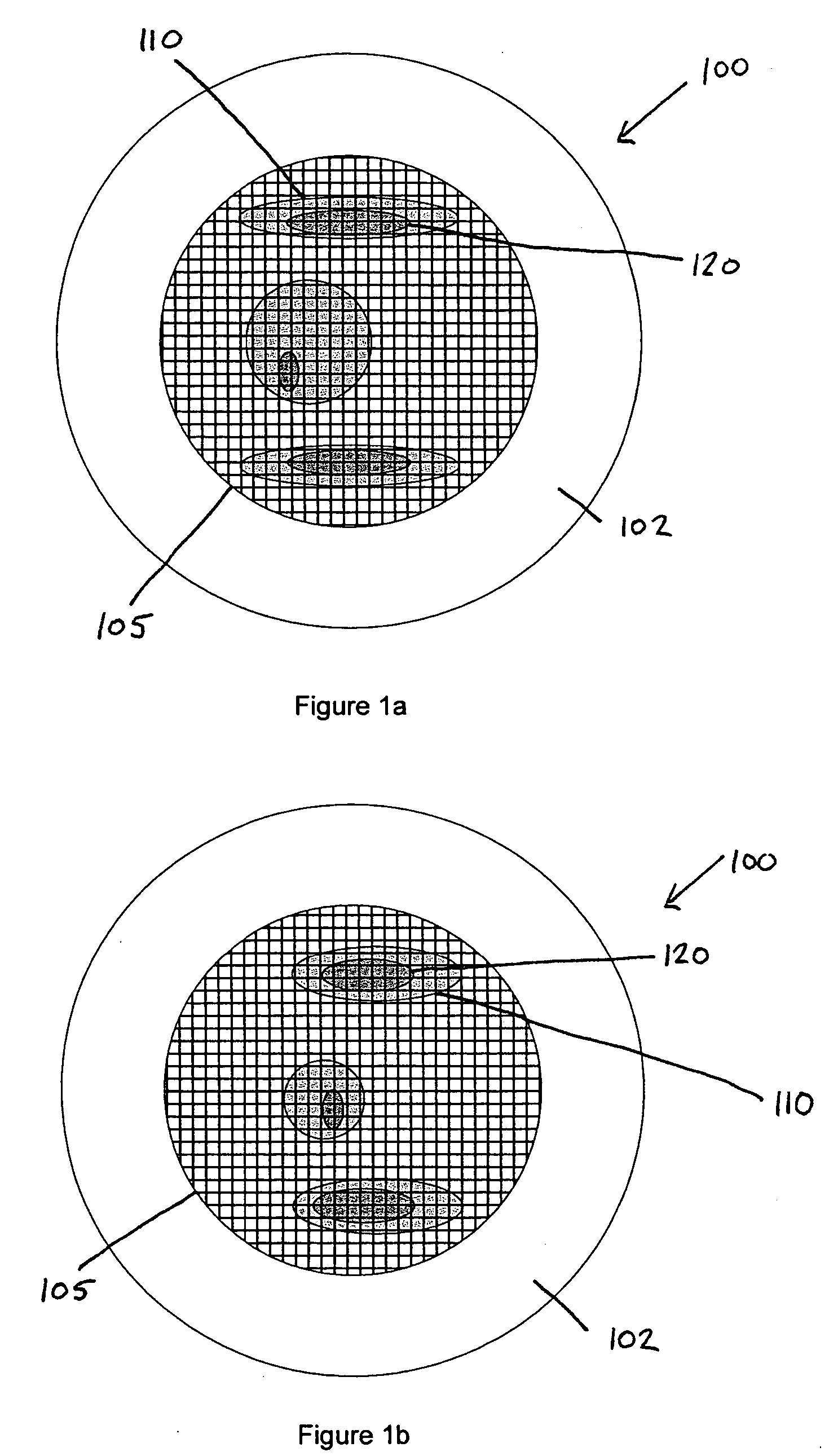

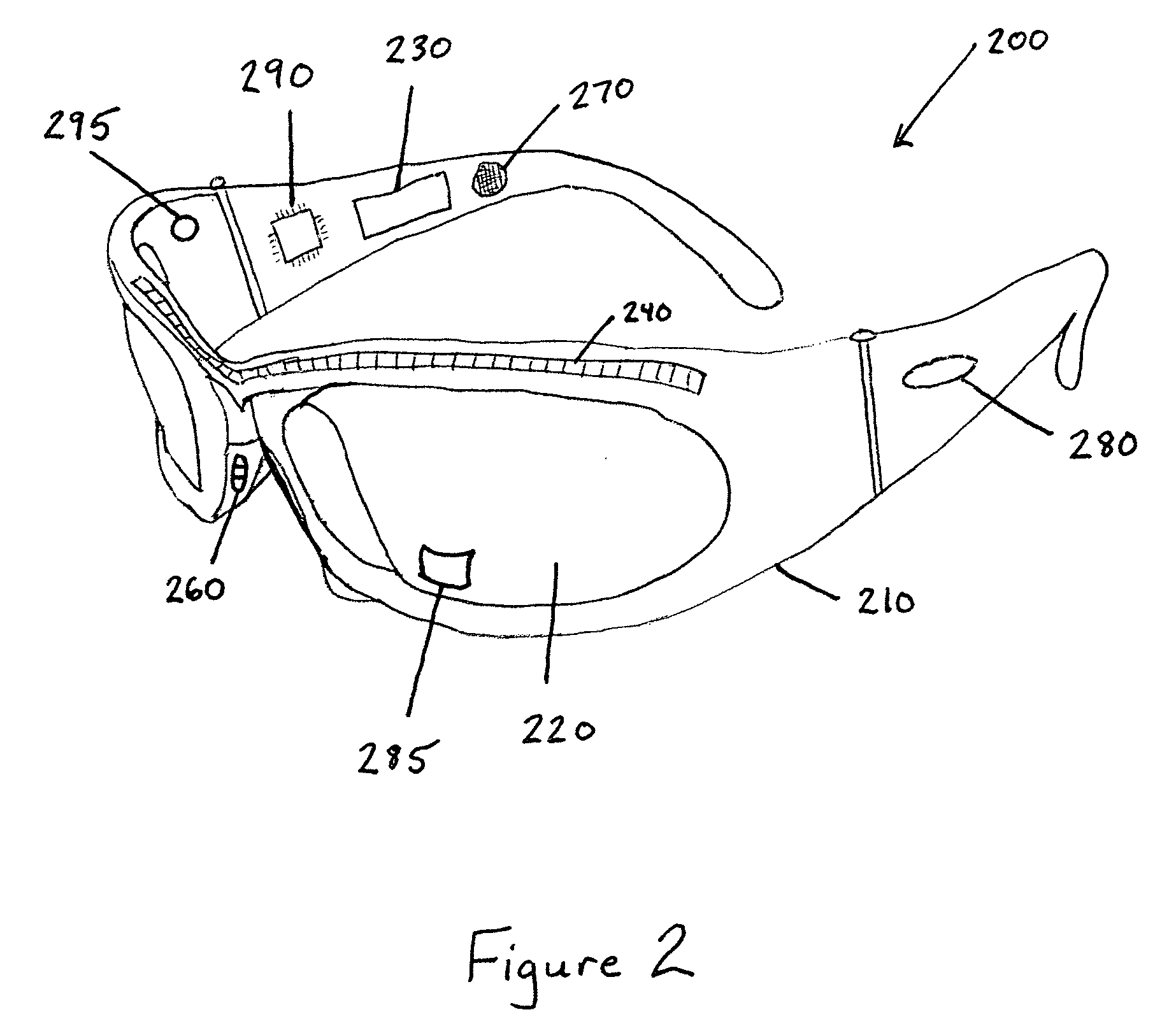

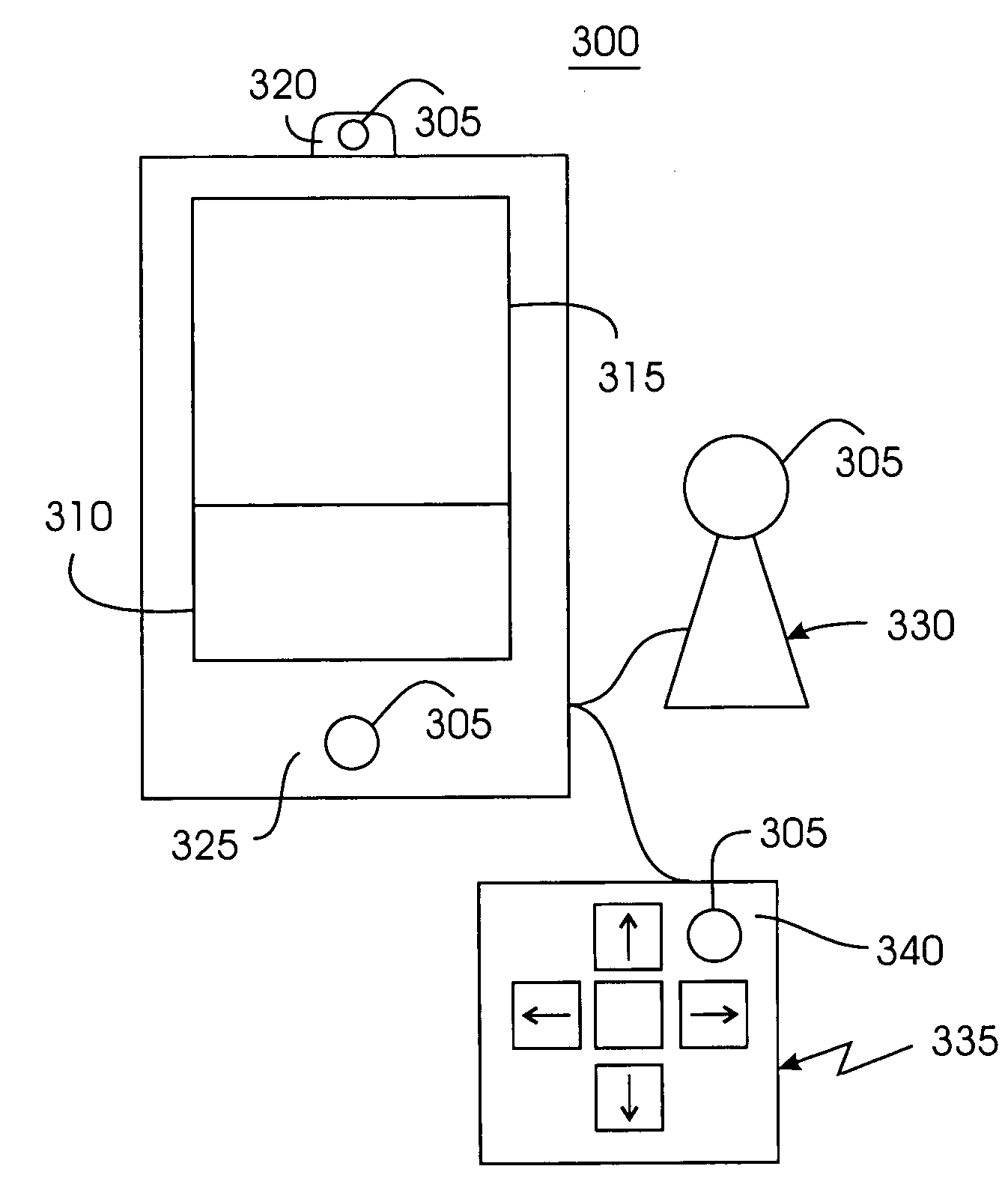

Systems and methods for identifying gaze tracking scene reference locations

A system is provided for identifying reference locations within the environment of a device wearer. The system includes a scene camera mounted on eyewear or headwear coupled to a processing unit. The system may recognize objects with known geometries that occur naturally within the wearer's environment or objects that have been intentionally placed at known locations within the wearer's environment. One or more light sources may be mounted on the headwear that illuminate reflective surfaces at selected times and wavelengths to help identify scene reference locations and glints projected from known locations onto the surface of the eye. The processing unit may control light sources to adjust illumination levels in order to help identify reference locations within the environment and corresponding glints on the surface of the eye. Objects may be identified substantially continuously within video images from scene cameras to provide a continuous data stream of reference locations.

Owner:GOOGLE LLC

Apparatus and Method for a Dynamic "Region of Interest" in a Display System

Active · US20110043644A1 · Improve usability · Lower latency · Television system details · Image analysis · Image resolution · Native resolution

A method and apparatus of displaying a magnified image comprising obtaining an image of a scene using a camera with greater resolution than the display, and capturing the image in the native resolution of the display by either grouping pixels together, or by capturing a smaller region of interest whose pixel resolution matches that of the display. The invention also relates to a method whereby the location of the captured region of interest may be determined by external inputs such as the location of a person's gaze in the displayed unmagnified image, or coordinates from a computer mouse. The invention further relates to a method whereby a modified image can be superimposed on an unmodified image, in order to maintain the peripheral information or context from which the modified region of interest has been captured.

Owner:ESIGHT CORP
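
The idea of capturing a display-sized region of interest located by the viewer's gaze can be sketched as a small coordinate calculation. This is an illustration only: it assumes the unmagnified view is a uniform downscale of the full sensor image, and the function name `roi_for_gaze` and its parameters are hypothetical.

```python
from typing import Tuple


def roi_for_gaze(
    gaze_xy: Tuple[float, float],  # gaze position in display pixels
    display_wh: Tuple[int, int],
    sensor_wh: Tuple[int, int],
) -> Tuple[int, int, int, int]:
    """Return (left, top, width, height) of a display-sized window on the sensor."""
    dw, dh = display_wh
    sw, sh = sensor_wh
    if sw < dw or sh < dh:
        raise ValueError("sensor must be at least as large as the display")
    # Map the gaze point from the downscaled (unmagnified) view to sensor pixels.
    cx = gaze_xy[0] * sw / dw
    cy = gaze_xy[1] * sh / dh
    # Centre the window on the gaze point, then clamp it to the sensor bounds.
    left = min(max(int(round(cx - dw / 2)), 0), sw - dw)
    top = min(max(int(round(cy - dh / 2)), 0), sh - dh)
    return left, top, dw, dh


# A 1280x720 display fed by a 3840x2160 sensor, with the viewer looking at the centre.
centre_roi = roi_for_gaze((640, 360), (1280, 720), (3840, 2160))
print(centre_roi)
```

Because the window has the display's native pixel dimensions, the cropped region can be shown without rescaling, which is the magnification effect the abstract describes.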

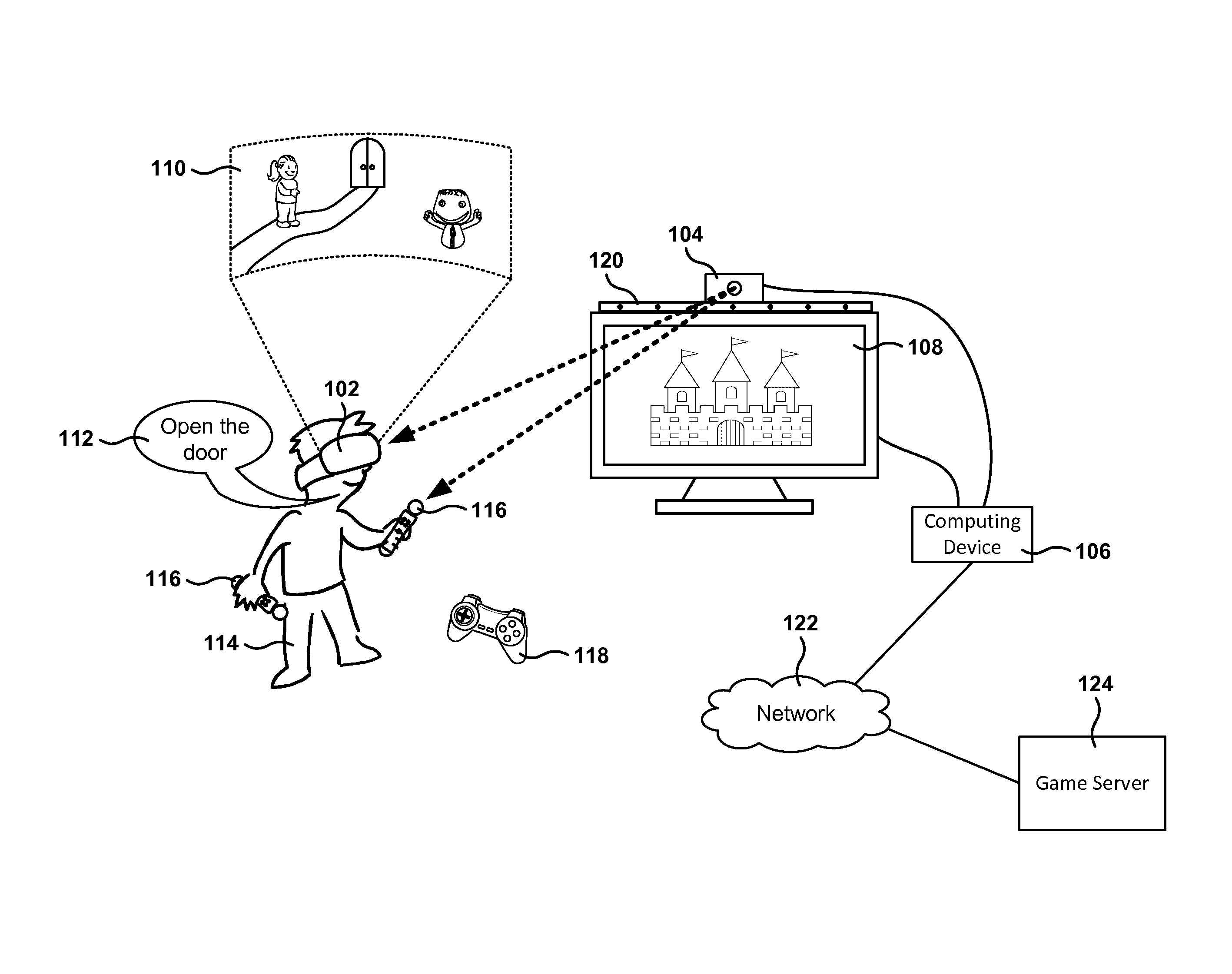

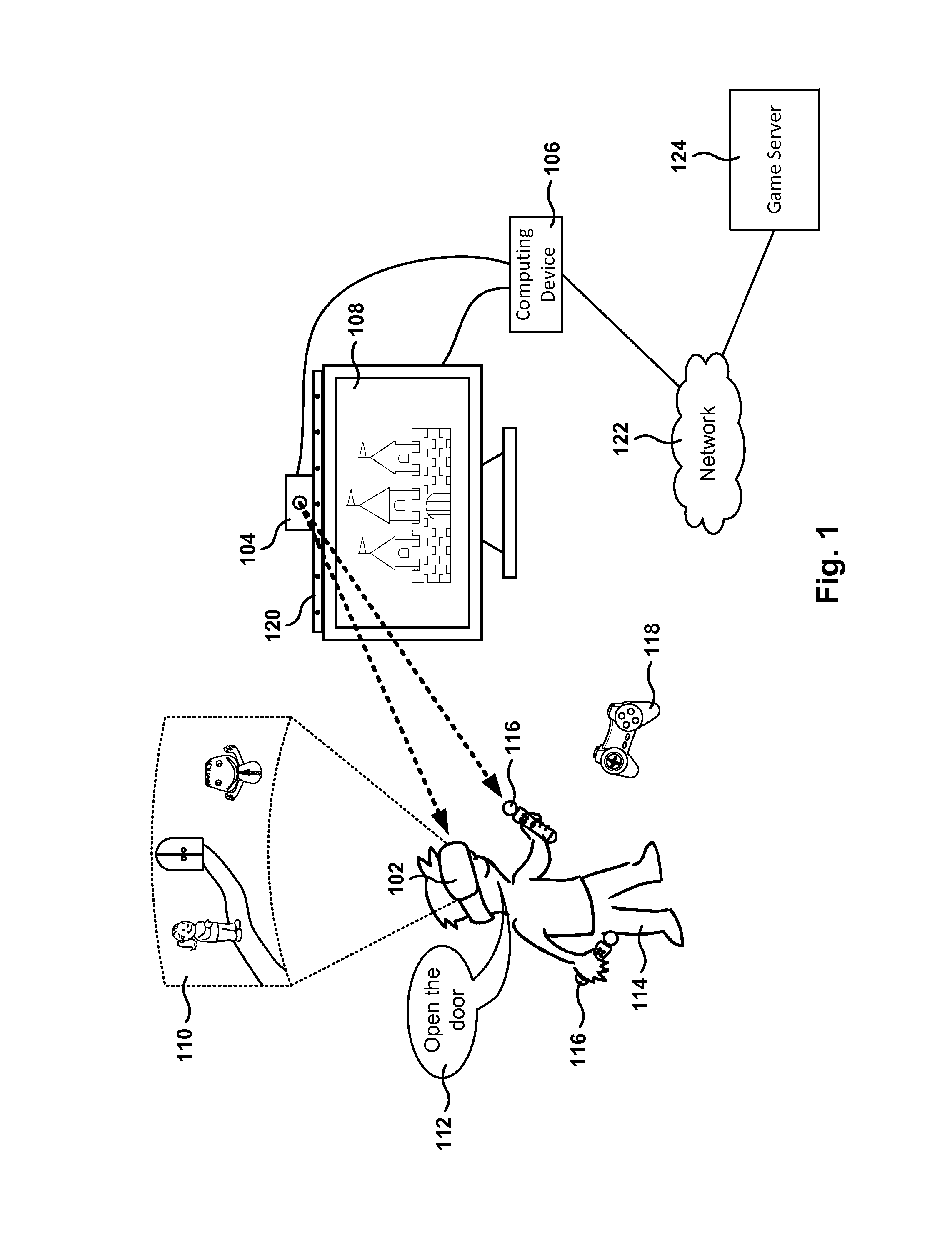

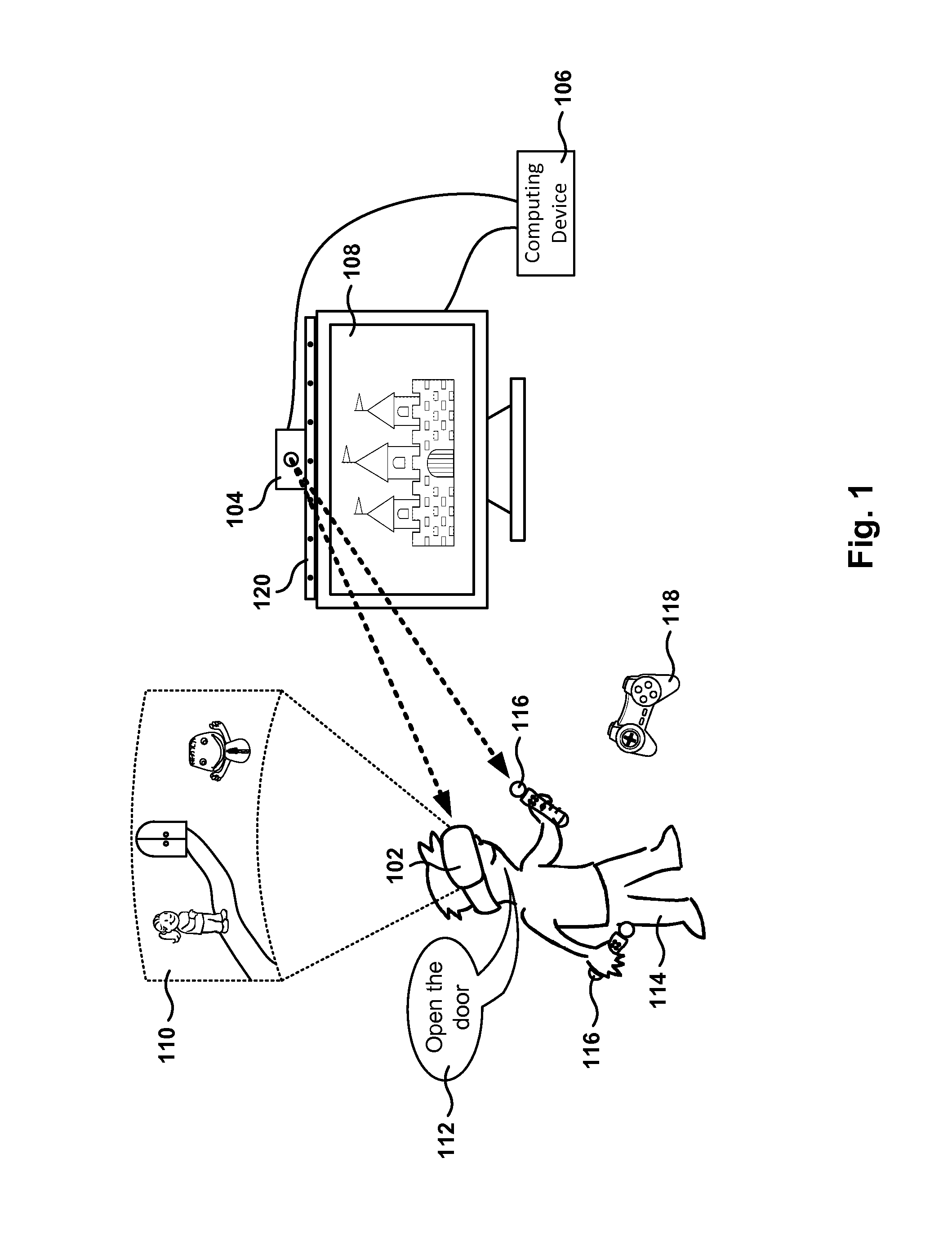

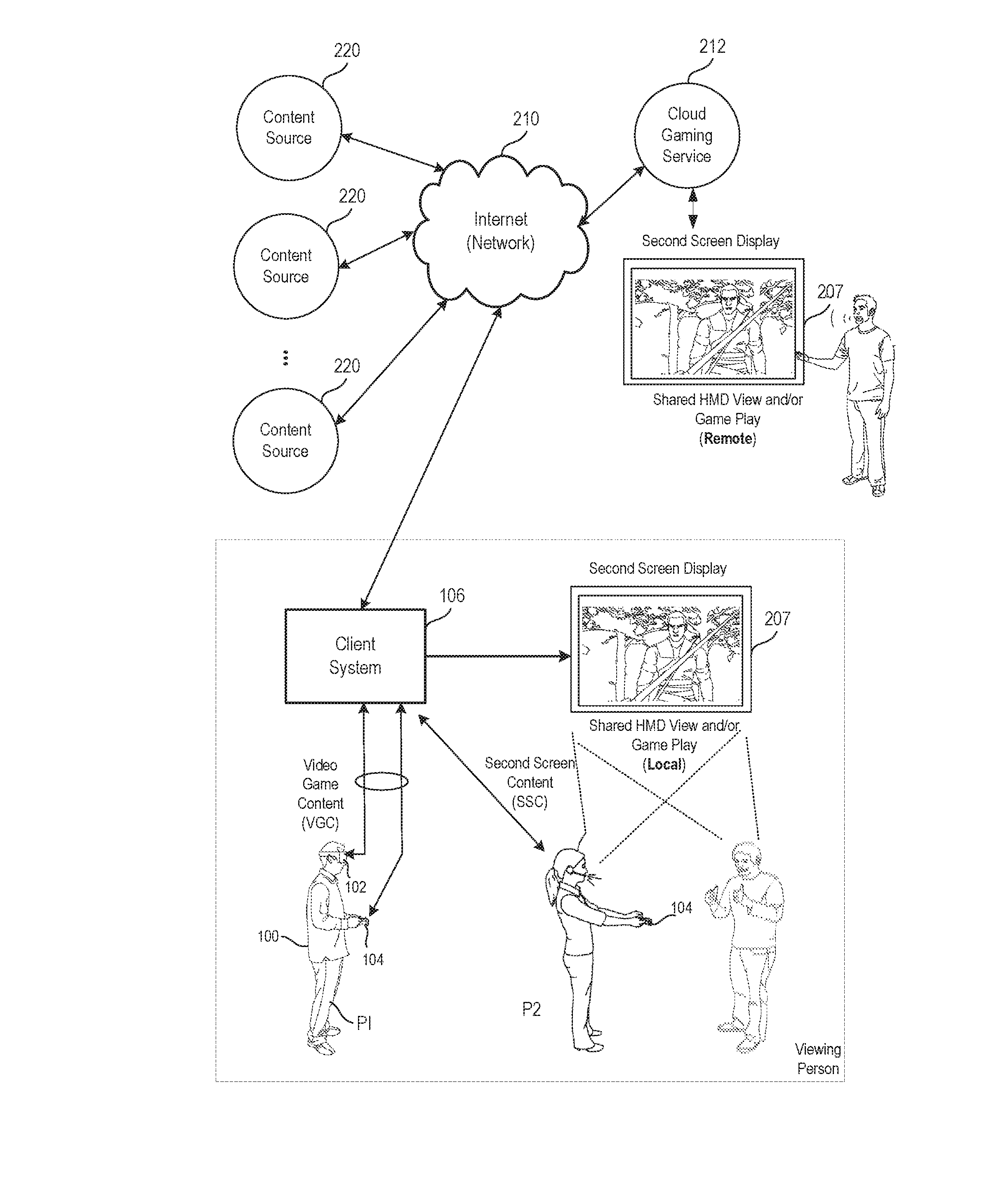

Image rendering responsive to user actions in head mounted display

Active · US20140361977A1 · Input/output for user-computer interaction · Cathode-ray tube indicators · View based · Display device

Methods, systems, and computer programs are presented for rendering images on a head mounted display (HMD). One method includes operations for tracking, with one or more first cameras inside the HMD, the gaze of a user and for tracking motion of the HMD. The motion of the HMD is tracked by analyzing images of the HMD taken with a second camera that is not in the HMD. Further, the method includes an operation for predicting the motion of the gaze of the user based on the gaze and the motion of the HMD. Rendering policies for a plurality of regions, defined on a view rendered by the HMD, are determined based on the predicted motion of the gaze. The images are rendered on the view based on the rendering policies.

Owner:SONY COMPUTER ENTERTAINMENT INC

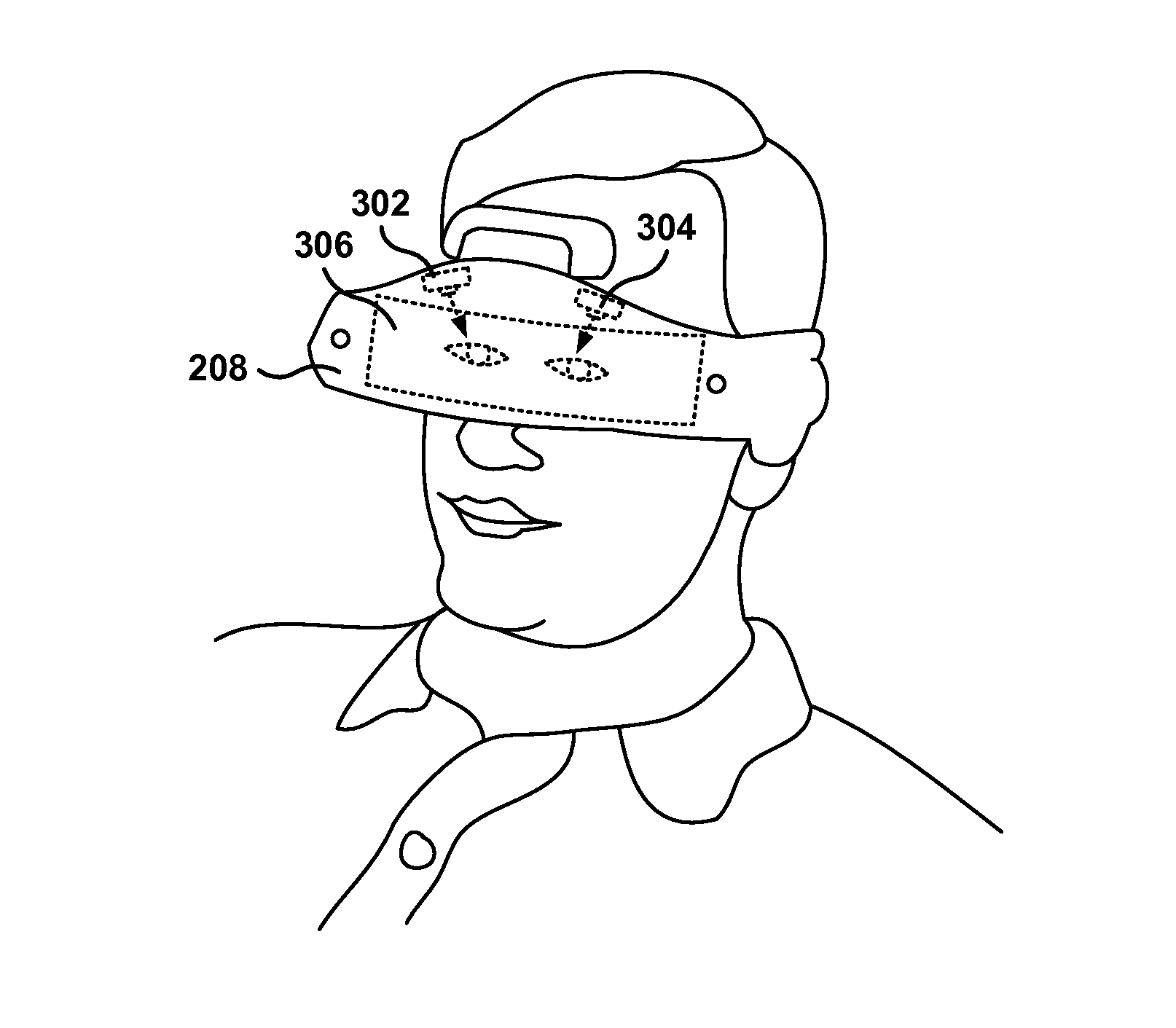

Switching mode of operation in a head mounted display

Active · US20140361976A1 · Fade out · Input/output for user-computer interaction · Cathode-ray tube indicators · Display device · Virtual world

Methods, systems, and computer programs are presented for managing the display of images on a head mounted device (HMD). One method includes an operation for tracking the gaze of a user wearing the HMD, where the HMD is displaying a scene of a virtual world. In addition, the method includes an operation for detecting that the gaze of the user is fixed on a predetermined area for a predetermined amount of time. In response to the detecting, the method fades out a region of the display in the HMD, while maintaining the scene of the virtual world in an area of the display outside the region. Additionally, the method includes an operation for fading in a view of the real world in the region as if the HMD were transparent to the user while the user is looking through the region. The fading in of the view of the real world includes maintaining the scene of the virtual world outside the region.

Owner:SONY COMPUTER ENTERTAINMENT INC
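
Detecting that the gaze stays fixed on a predetermined area for a predetermined time is a dwell-time check, which can be sketched as below. The sample format, the rectangular area, and the function name `dwell_trigger_time` are assumptions made for illustration; the fade-out and fade-in rendering that would follow the trigger is not shown.

```python
from typing import Iterable, Optional, Tuple

Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Sample = Tuple[float, float, float]       # (timestamp_s, x, y)


def dwell_trigger_time(samples: Iterable[Sample], area: Rect, dwell_s: float) -> Optional[float]:
    """Return the timestamp at which gaze has stayed inside `area` for `dwell_s`, else None."""
    x_min, y_min, x_max, y_max = area
    entered_at = None
    for t, x, y in samples:
        inside = x_min <= x <= x_max and y_min <= y <= y_max
        if not inside:
            entered_at = None  # leaving the area resets the timer
            continue
        if entered_at is None:
            entered_at = t
        if t - entered_at >= dwell_s:
            return t
    return None


gaze_samples = [
    (0.0, 5, 5), (0.5, 5, 5), (1.0, 50, 50),  # brief look, then away
    (1.5, 5, 5), (2.0, 6, 6), (2.5, 5, 5), (3.0, 5, 5),
]
trigger = dwell_trigger_time(gaze_samples, area=(0, 0, 10, 10), dwell_s=1.0)
print(trigger)
```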

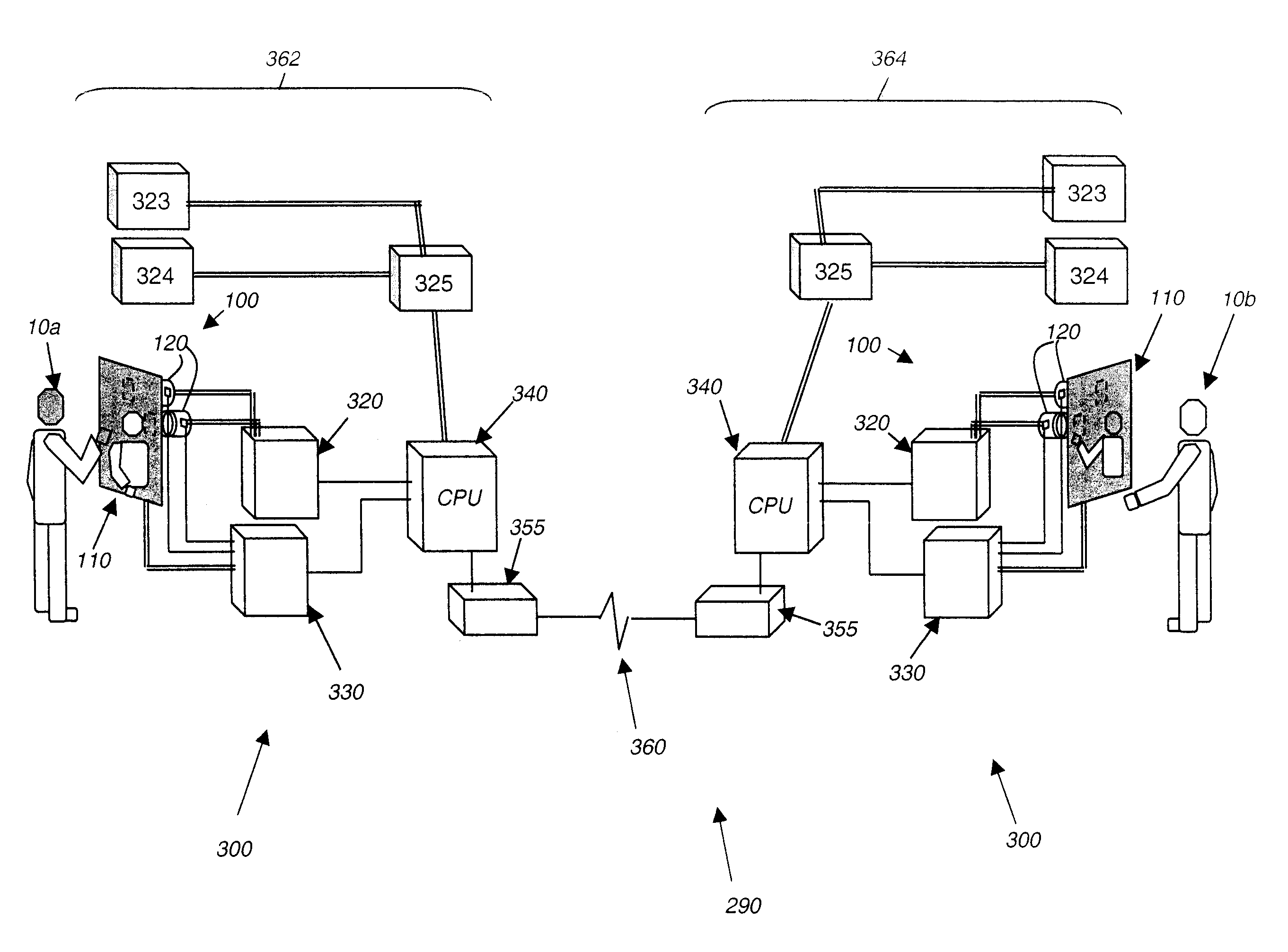

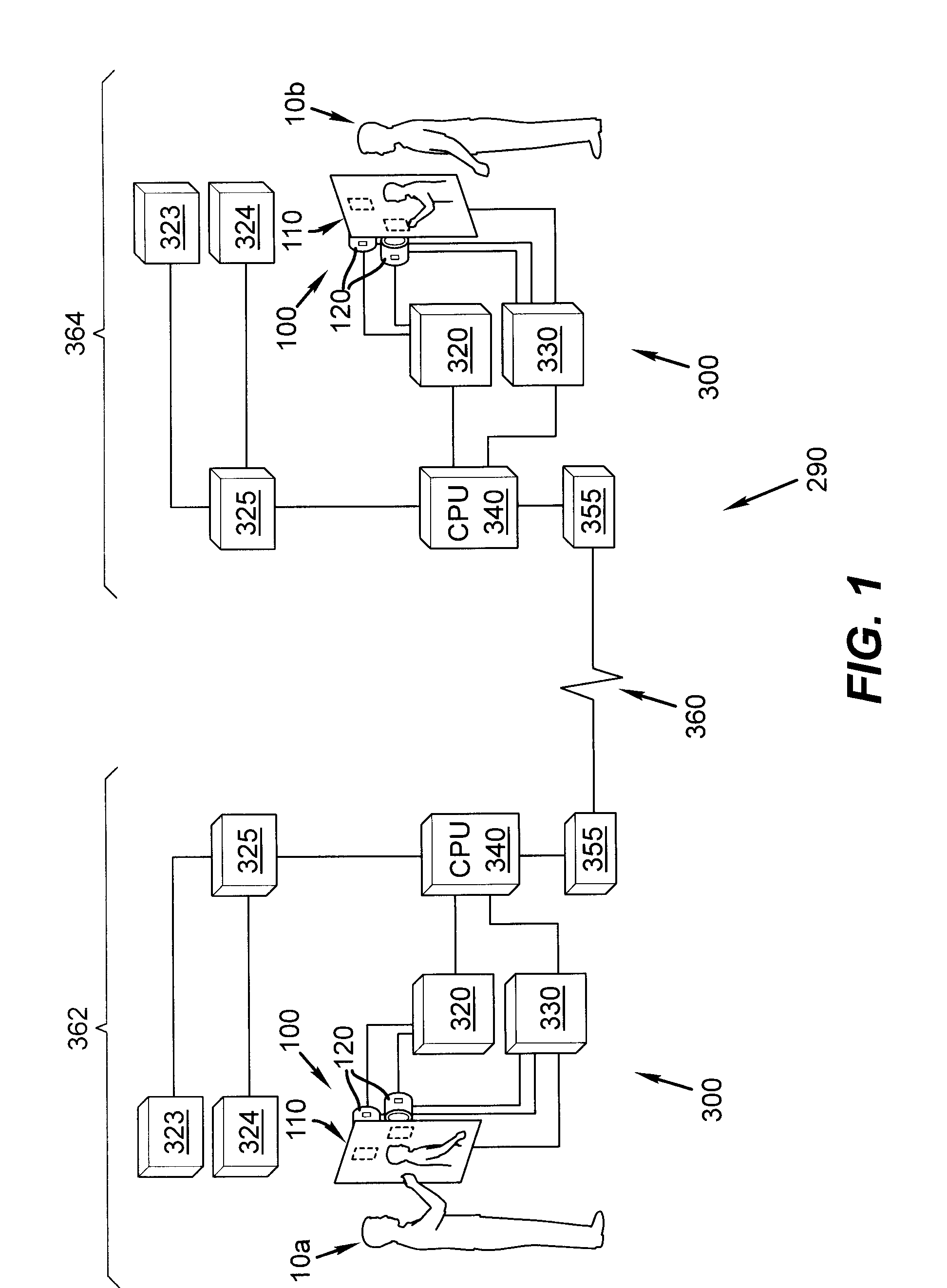

Eye gazing imaging for video communications

Active · US20080297589A1 · Television conference systems · Two-way working systems · Communications system · Computer graphics (images)

Video communication systems and methods for communicating between an individual in a local environment and a remote viewer in a remote environment are provided. The system has an image display device; at least one image capture device which acquires video images for fields of view of a local environment, and any individuals therein; an audio system having an audio emission device and an audio capture device; a computer, which includes a contextual interface having a gaze adapting process, and an image processor; and a communication controller which transmits and receives video images of the local environment and the remote environment, and data regarding video scene characteristics thereof, across a network between the local environment and the remote environment; wherein the gaze adapting process identifies video scene characteristics of the local environment indicative of eye gaze image capture and alters the video images when the characteristics are so indicative.

Owner:APPLE INC

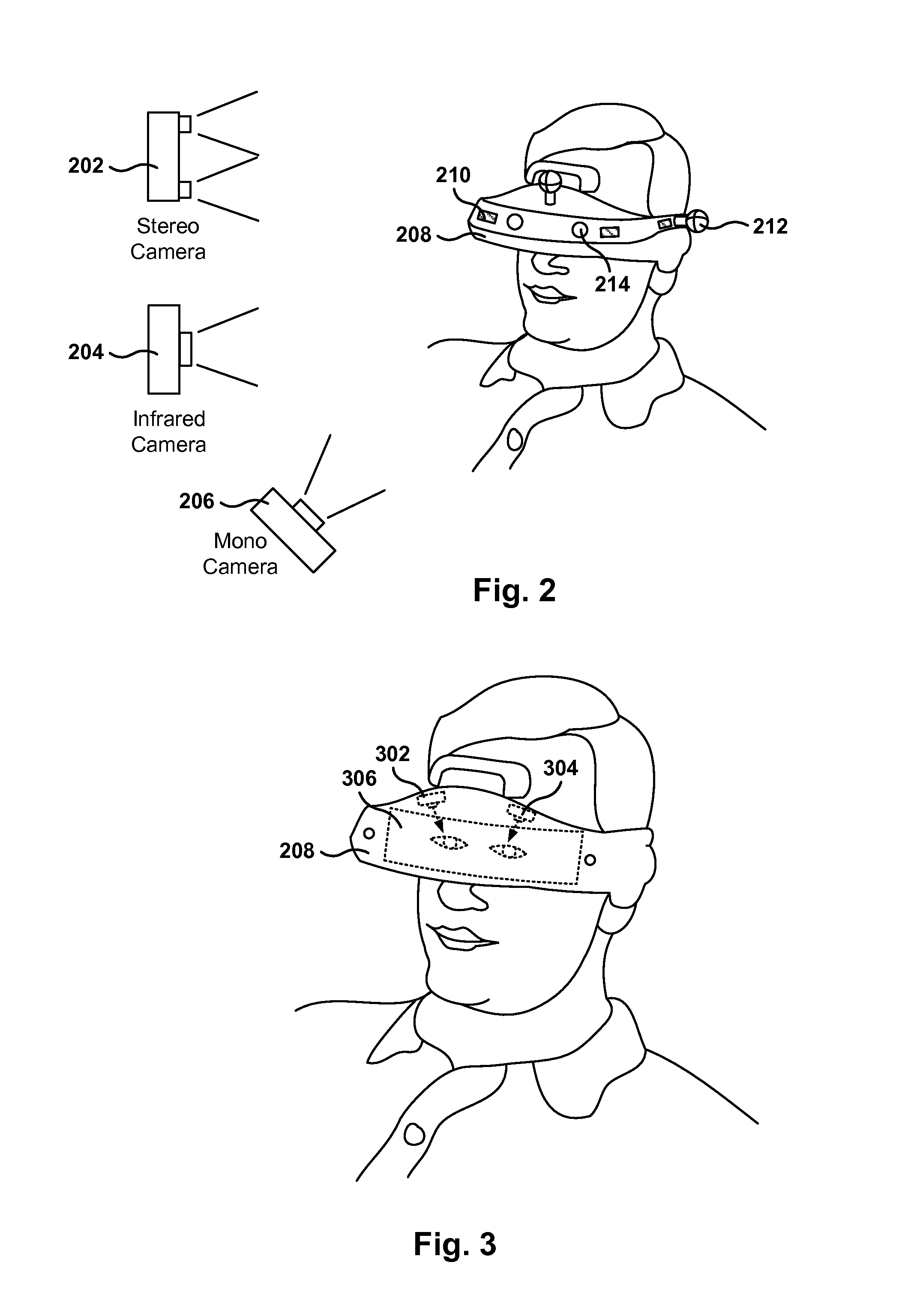

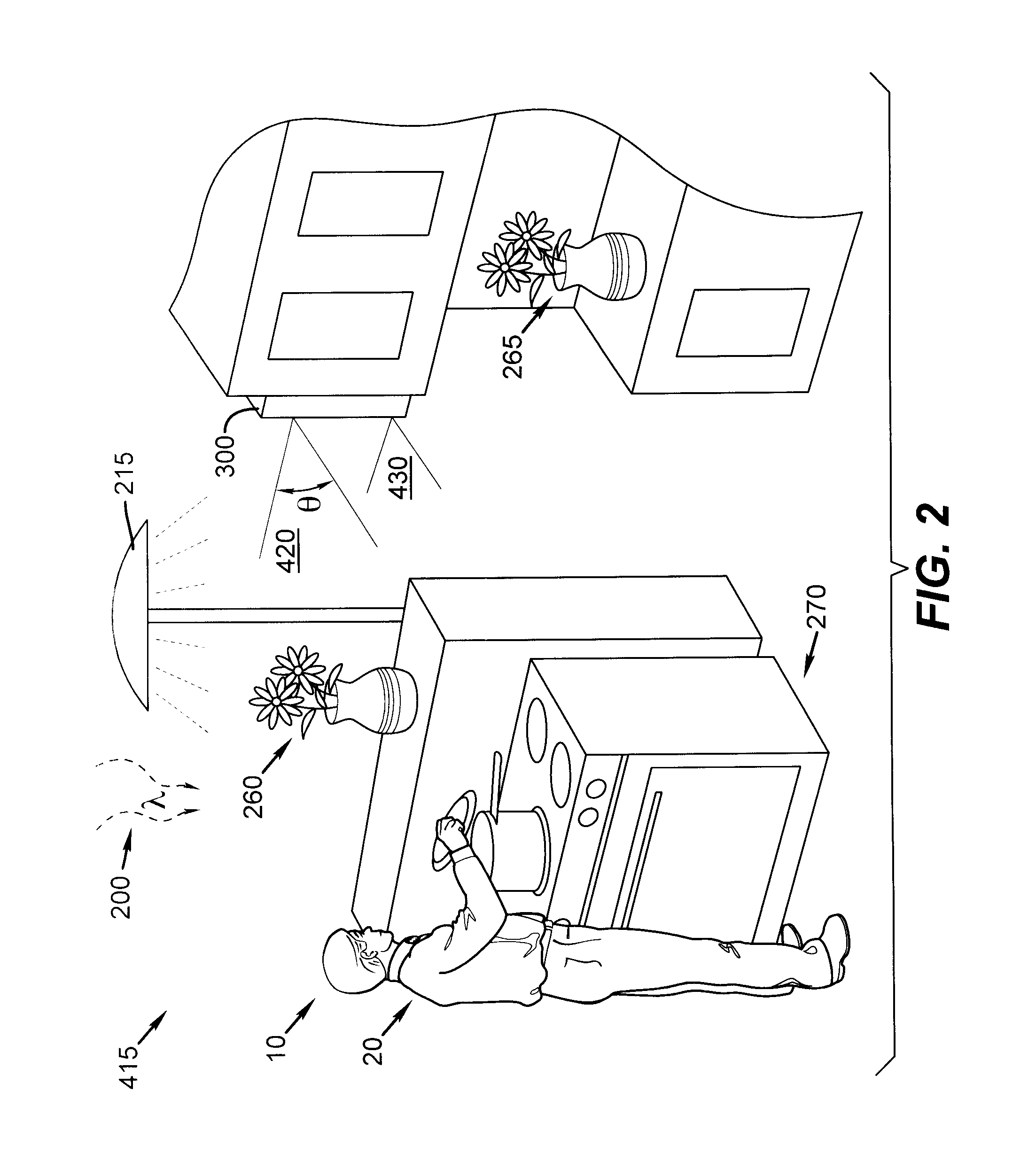

Systems and methods for high-resolution gaze tracking

Active · US20130114850A1 · Accurate tracking · Sure easy · Image enhancement · Image analysis · Reference vector · Eyewear

A system is mounted within eyewear or headwear to unobtrusively produce and track reference locations on the surface of one or both eyes of an observer. The system utilizes multiple illumination sources and/or multiple cameras to generate and observe glints from multiple directions. The use of multiple illumination sources and cameras can compensate for the complex, three-dimensional geometry of the head and anatomical variations of the head and eye region that occur among individuals. The system continuously tracks the initial placement and any slippage of eyewear or headwear. In addition, the use of multiple illumination sources and cameras can maintain high-precision, dynamic eye tracking as an eye moves through its full physiological range. Furthermore, illumination sources placed in the normal line-of-sight of the device wearer increase the accuracy of gaze tracking by producing reference vectors that are close to the visual axis of the device wearer.

Owner:GOOGLE LLC

Combining driver and environment sensing for vehicular safety systems

Active · US8384534B2 · Improve security · Increase awareness · Anti-collision systems · Character and pattern recognition · Engineering · Safe operation

Owner:TOYOTA MOTOR CO LTD

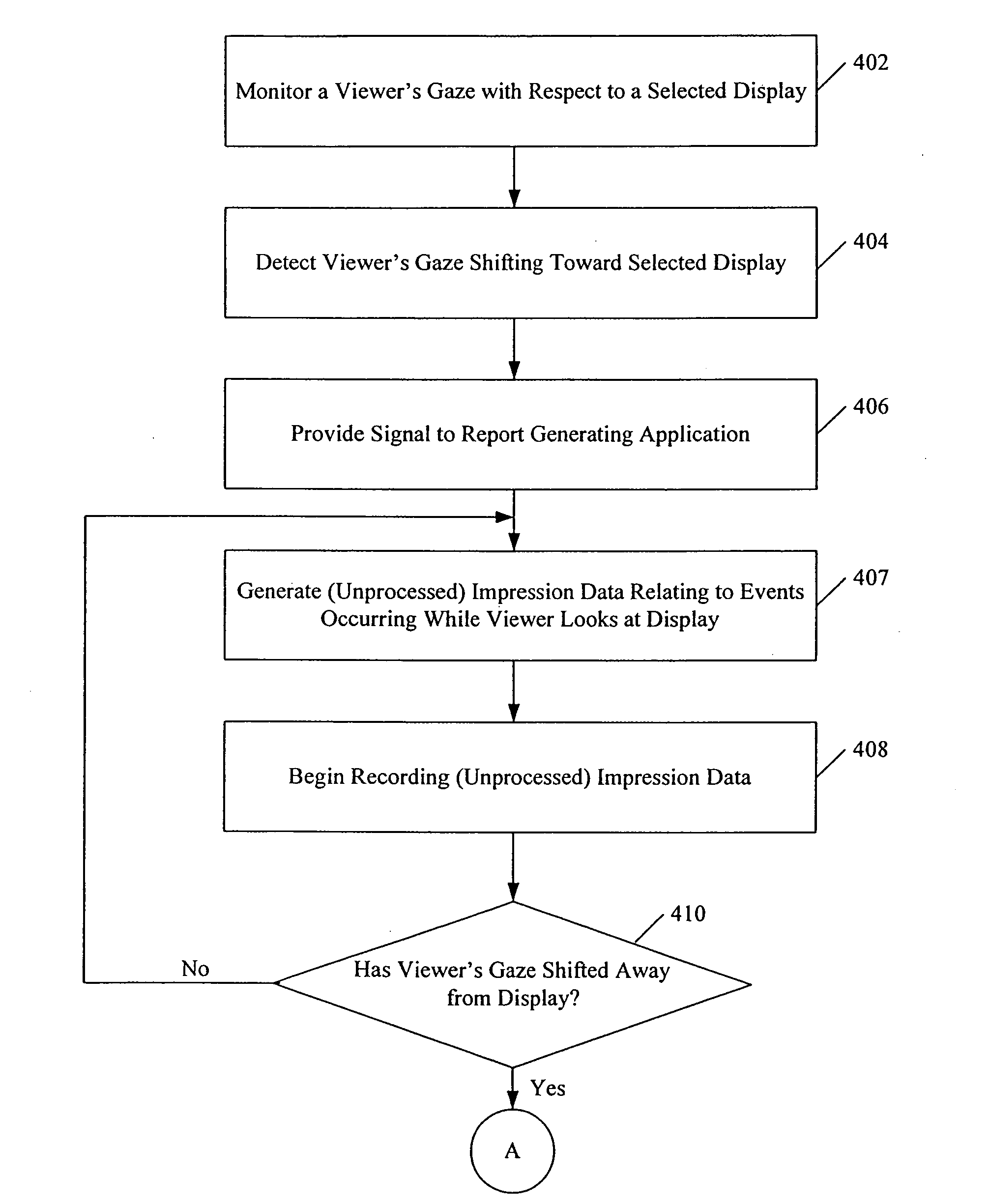

System and method for monitoring viewer attention with respect to a display and determining associated charges

Inactive · US20080147488A1 · Enhance advertising campaign · Increase sales · Complete banking machines · Advertisements · Invoice · Display device

In an example of an embodiment of the invention, data relating to at least one impression of at least one person with respect to a display is detected, and a party associated with the display is charged an amount based at least in part on the data. The at least one impression may include an action of the person with respect to the display. The action may comprise a gaze, for example, and the method may comprise detecting the gaze of the person directed toward the display. The person's gaze may be detected by a sensor, for example, which may comprise a video camera. An invoice may be generated based at least in part on the data, and sent to a selected party. The display may comprise one or more advertisements, for example. A face monitoring update method is also disclosed. Systems are also disclosed.

Owner:STUDIO IMC

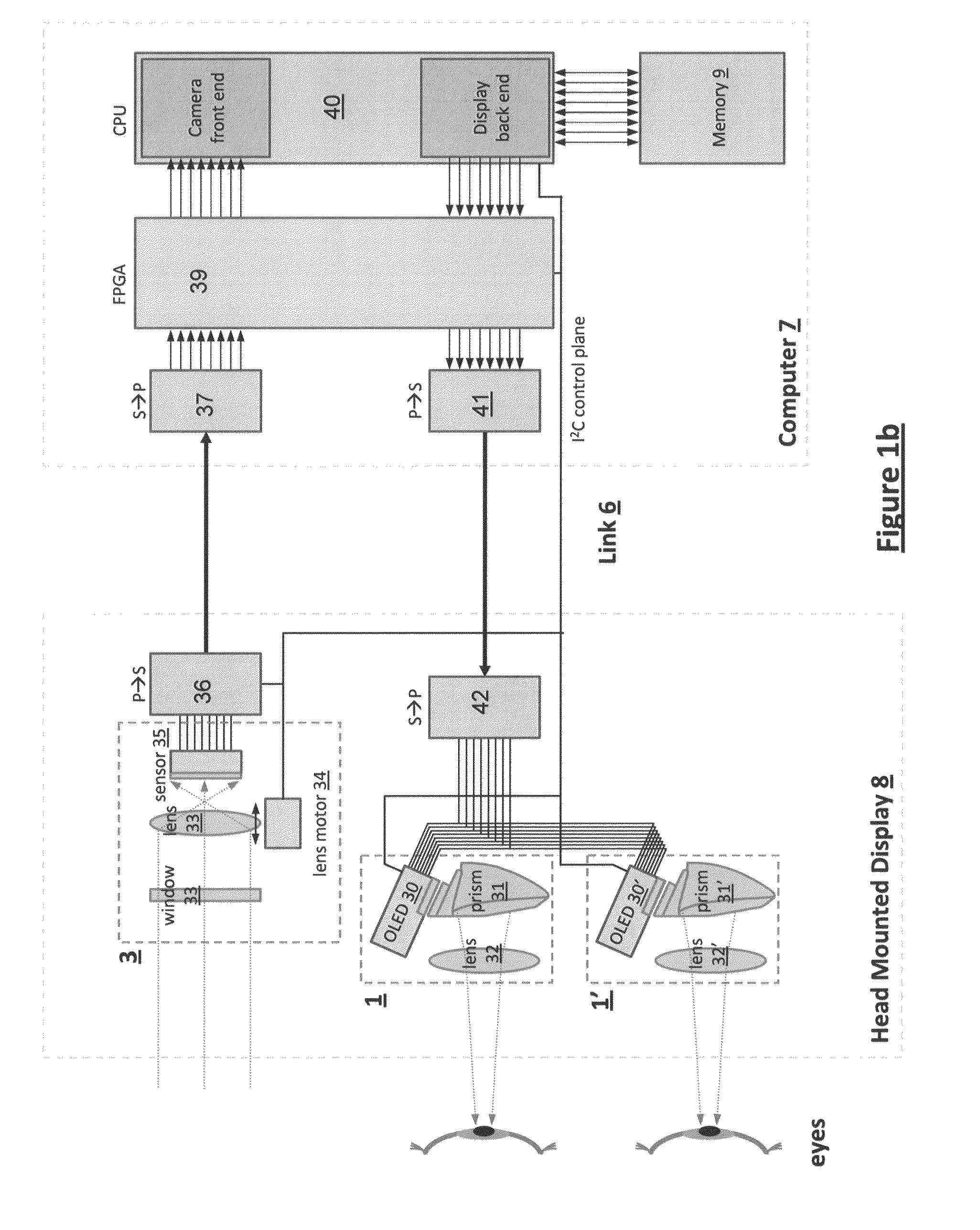

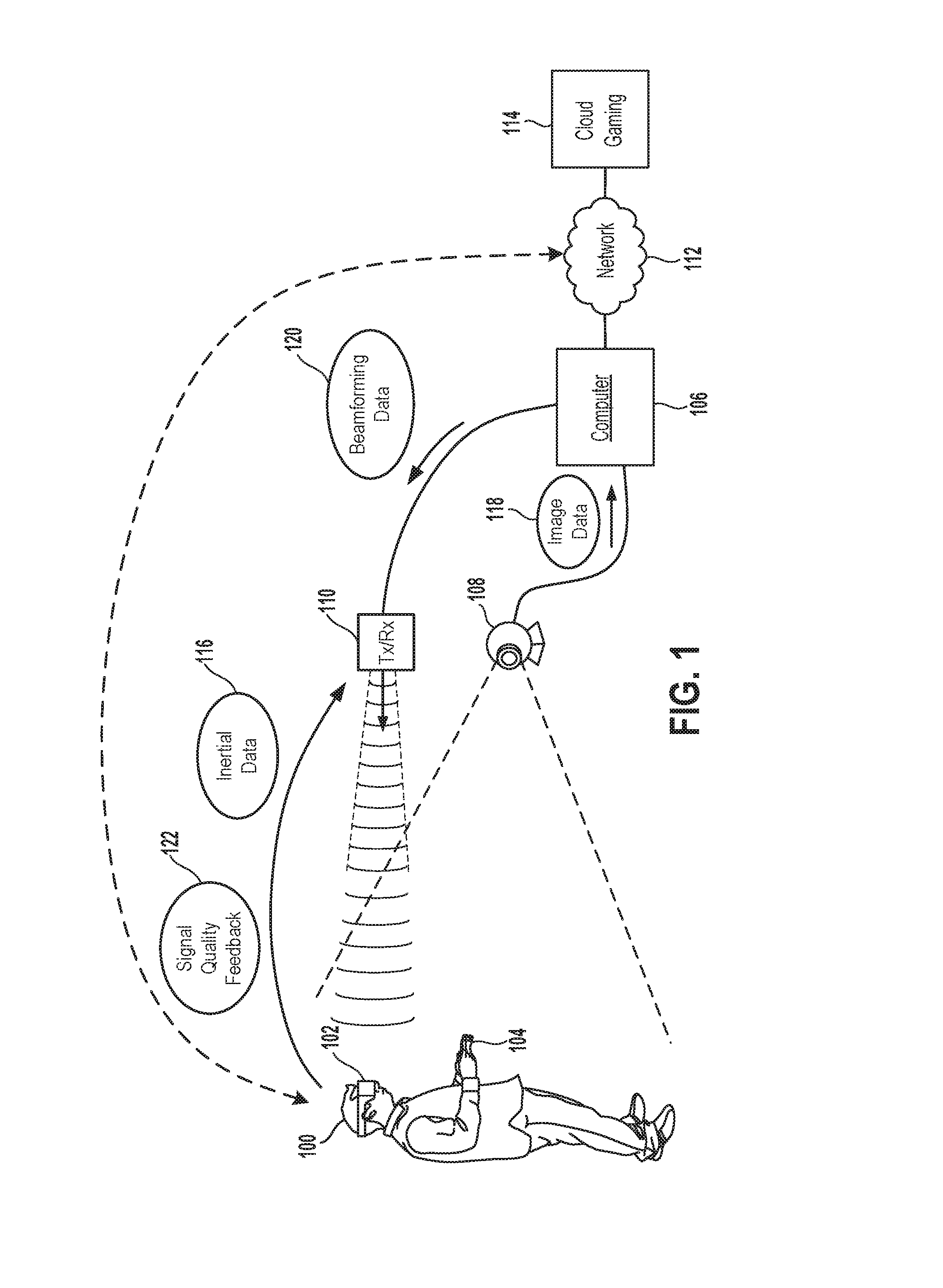

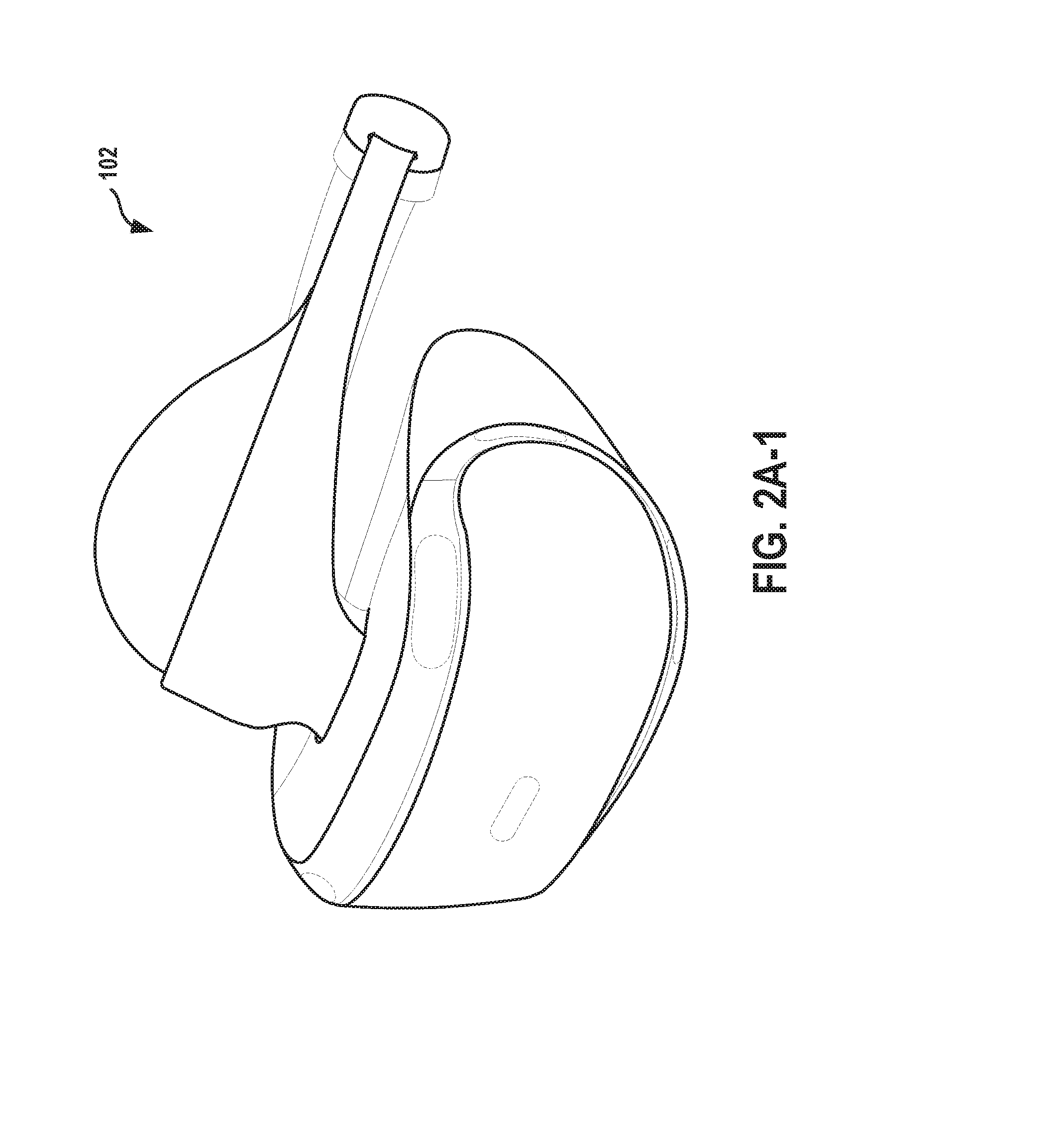

Wireless Head Mounted Display with Differential Rendering and Sound Localization

Active · US20170045941A1 · Small size · Improve image quality · Input/output for user-computer interaction · Image analysis · Transceiver · Computer graphics (images)

A method is provided, including the following method operations: receiving captured images of an interactive environment in which a head-mounted display (HMD) is disposed; receiving inertial data processed from at least one inertial sensor of the HMD; analyzing the captured images and the inertial data to determine a current and predicted future location of the HMD; using the predicted future location of the HMD to adjust a beamforming direction of an RF transceiver towards the predicted future location of the HMD; tracking a gaze of a user of the HMD; generating image data depicting a view of a virtual environment for the HMD, wherein regions of the view are differentially rendered; generating audio data depicting sounds from the virtual environment, the audio data being configured to enable localization of the sounds by the user; transmitting the image data and the audio data via the RF transceiver to the HMD.

Owner:SONY COMPUTER ENTERTAINMENT INC

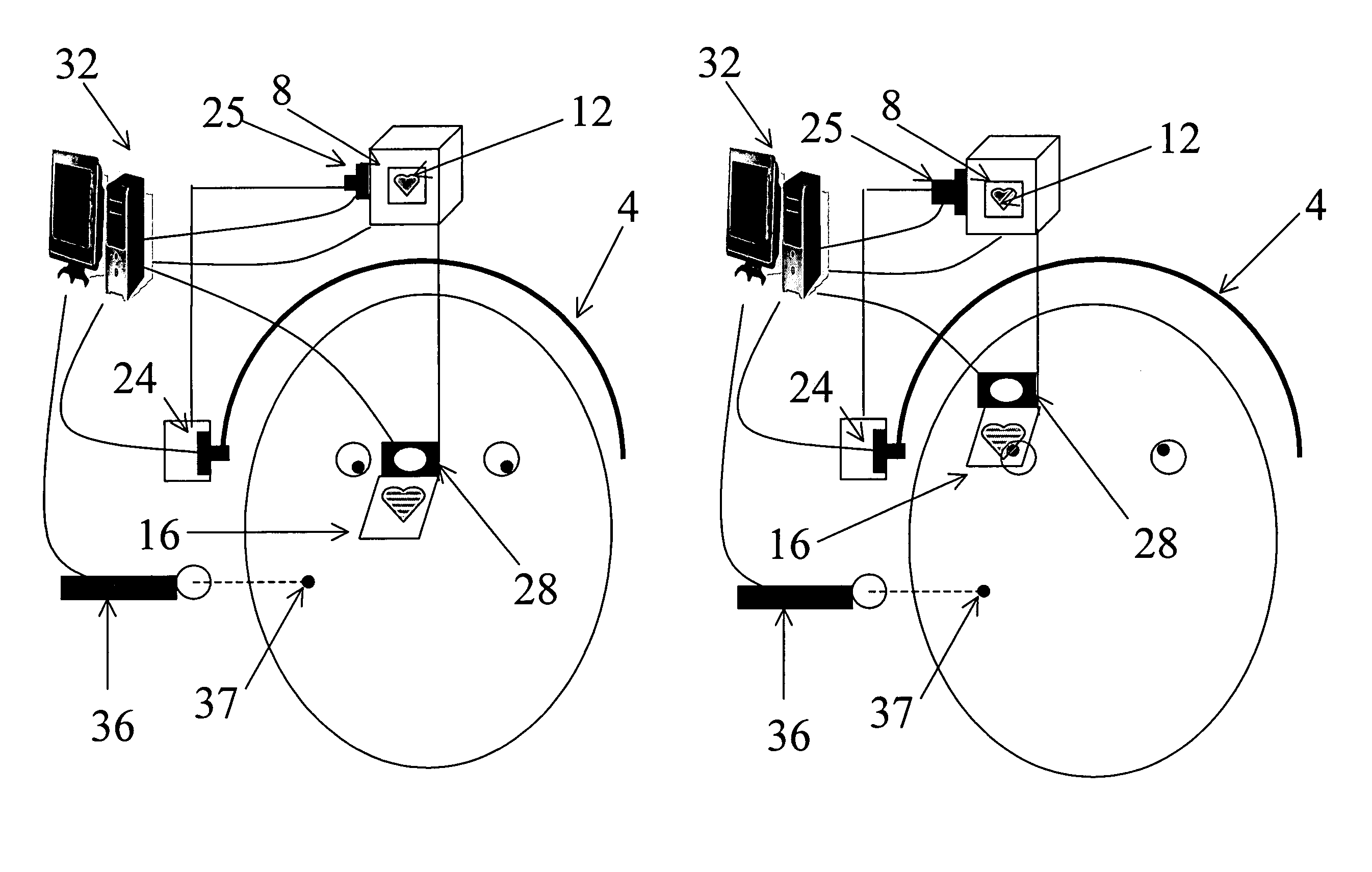

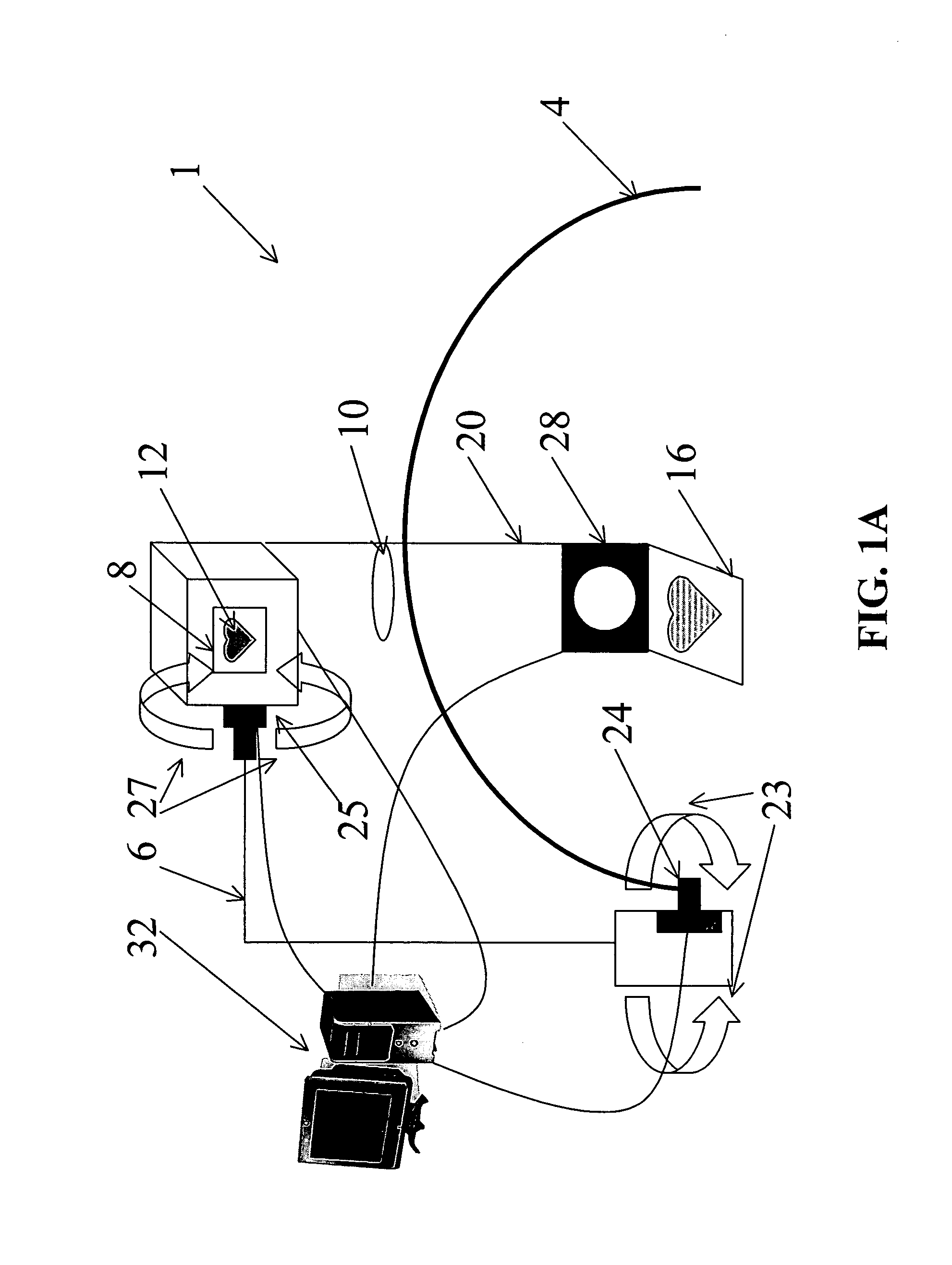

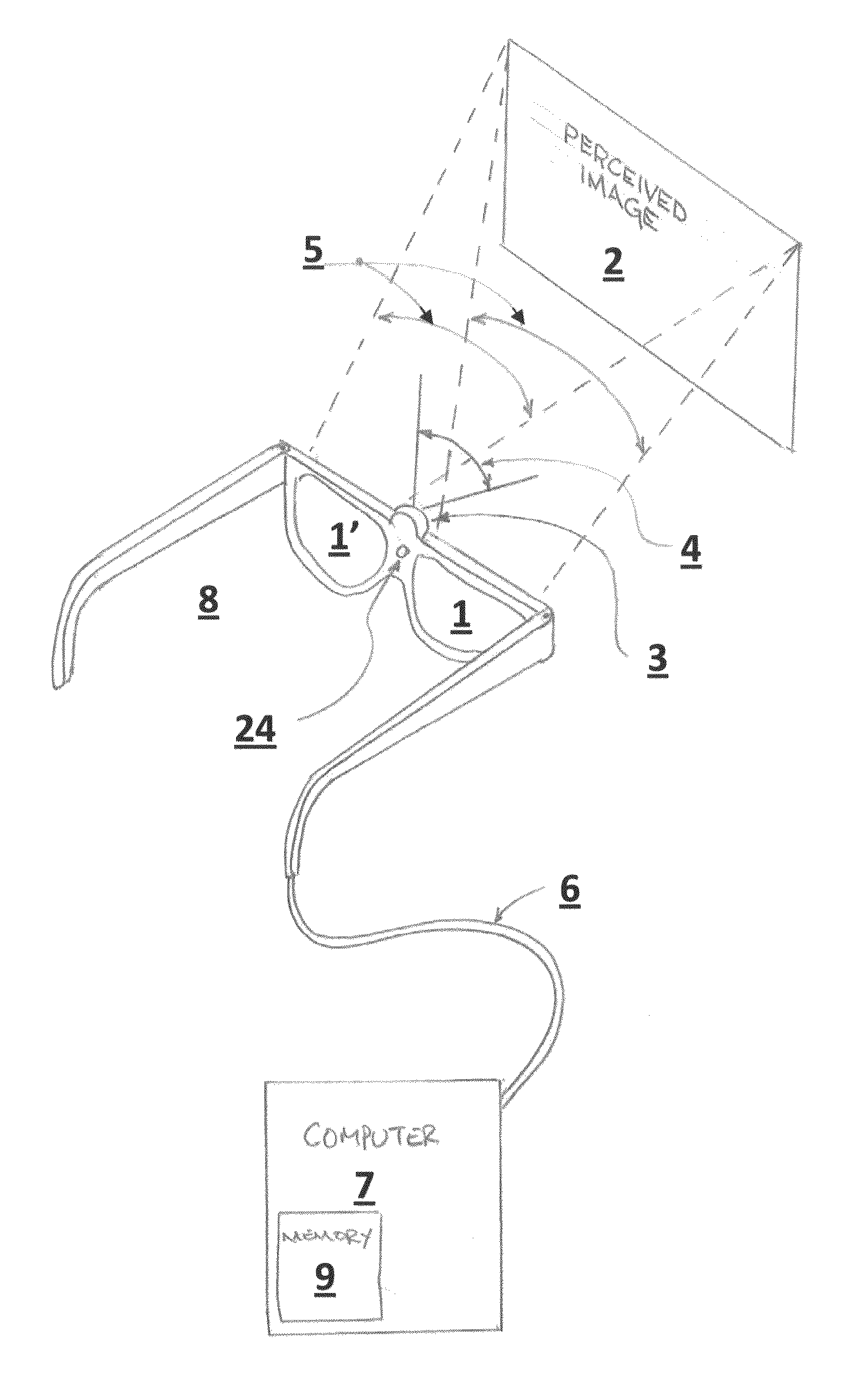

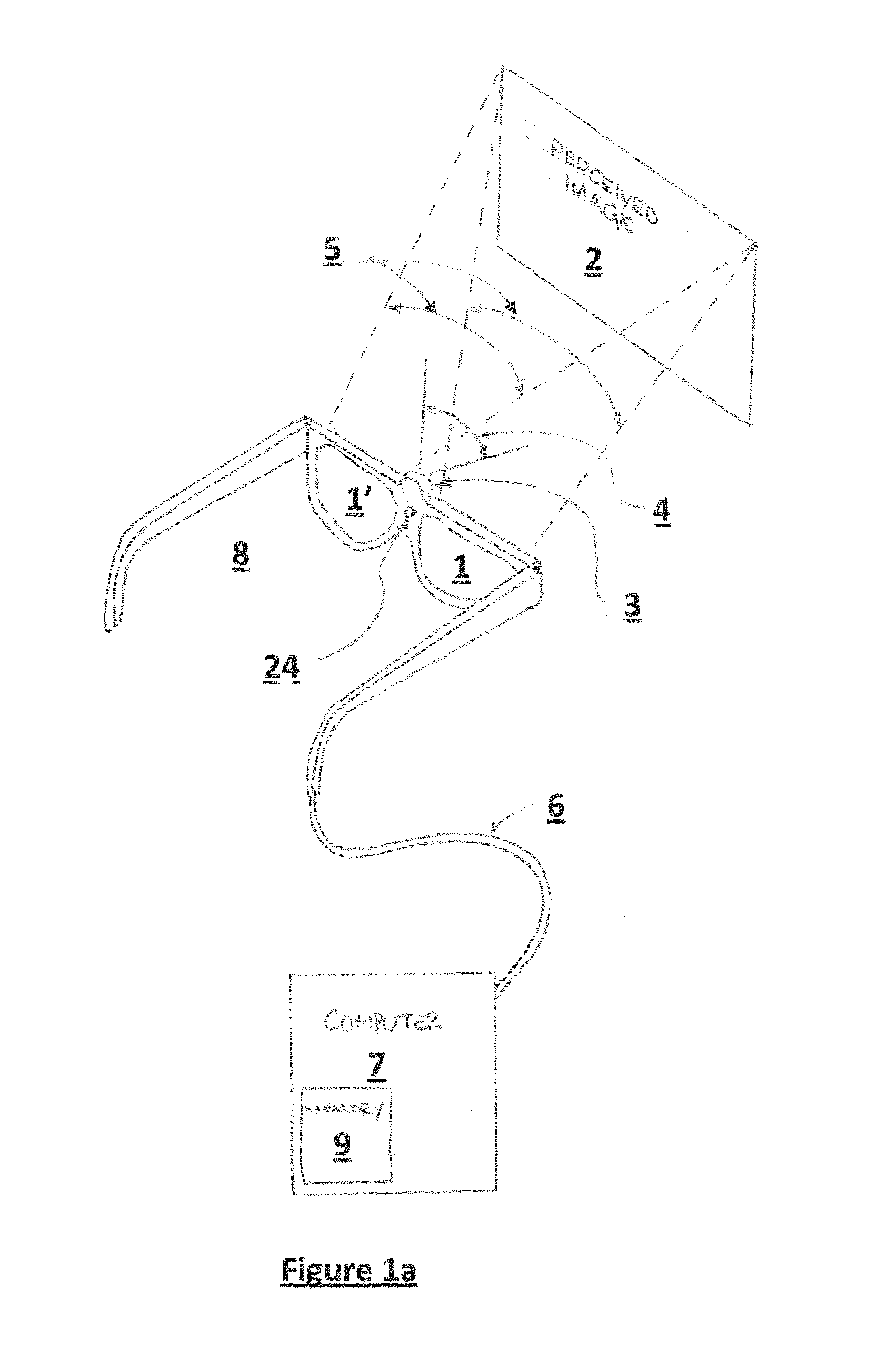

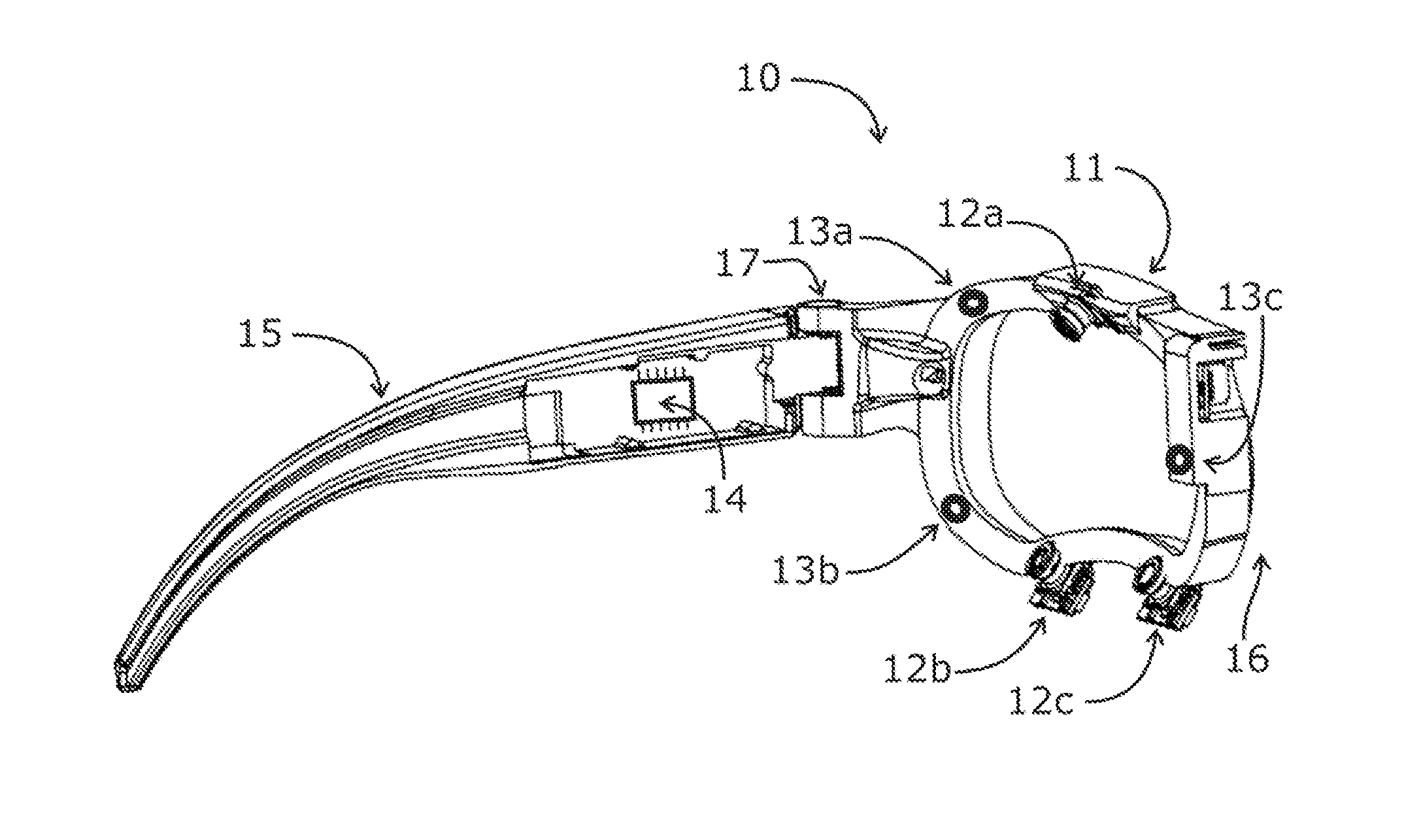

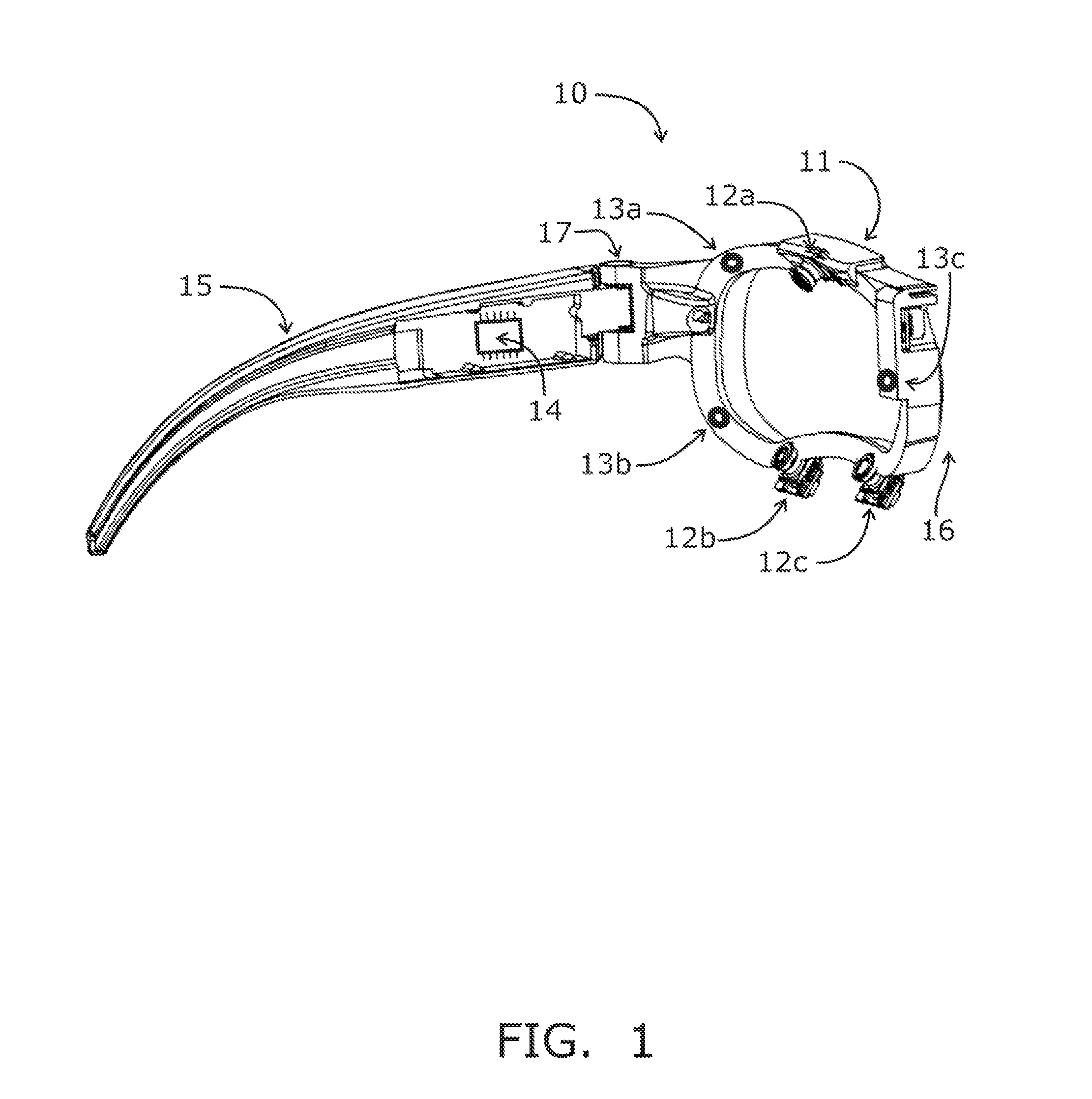

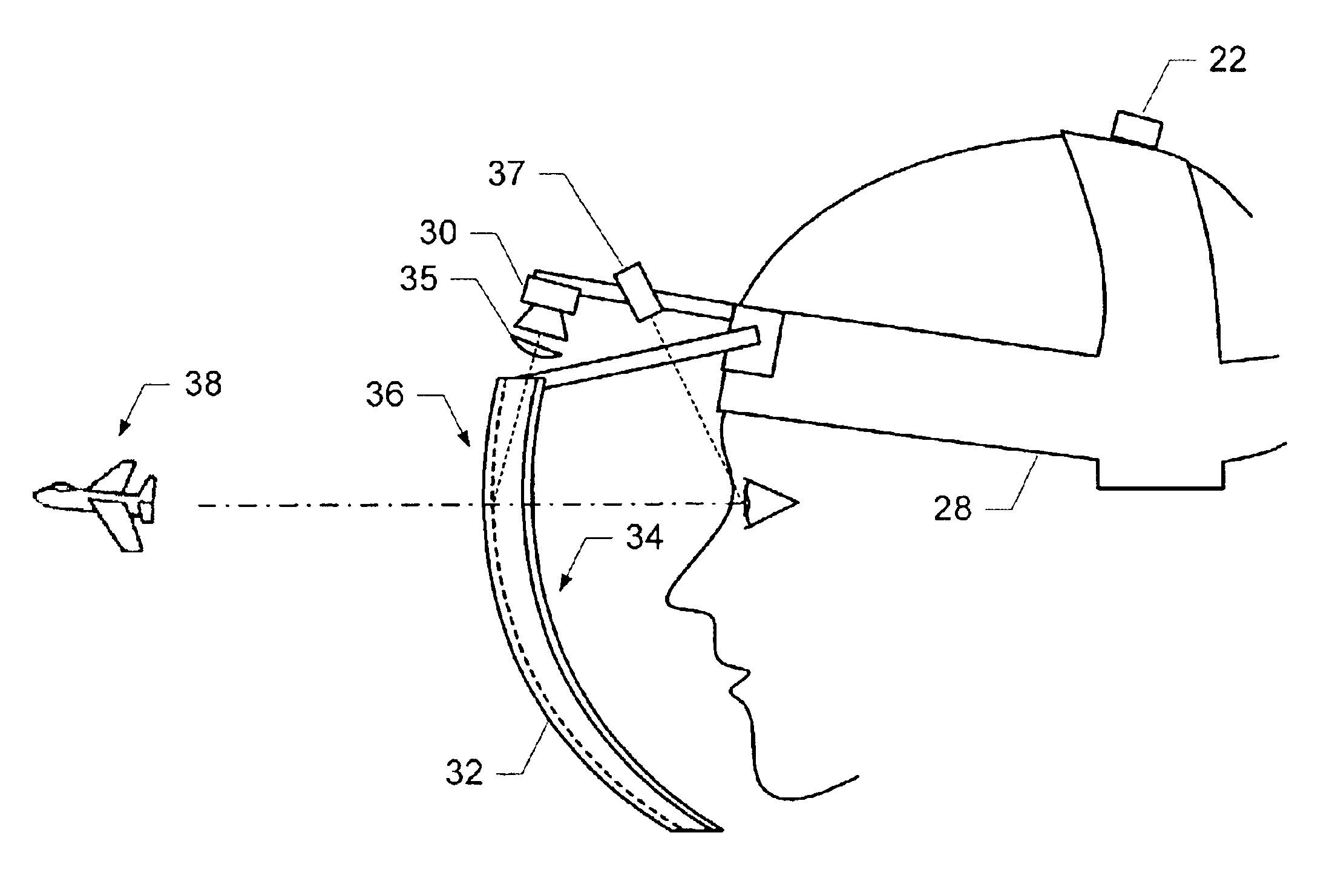

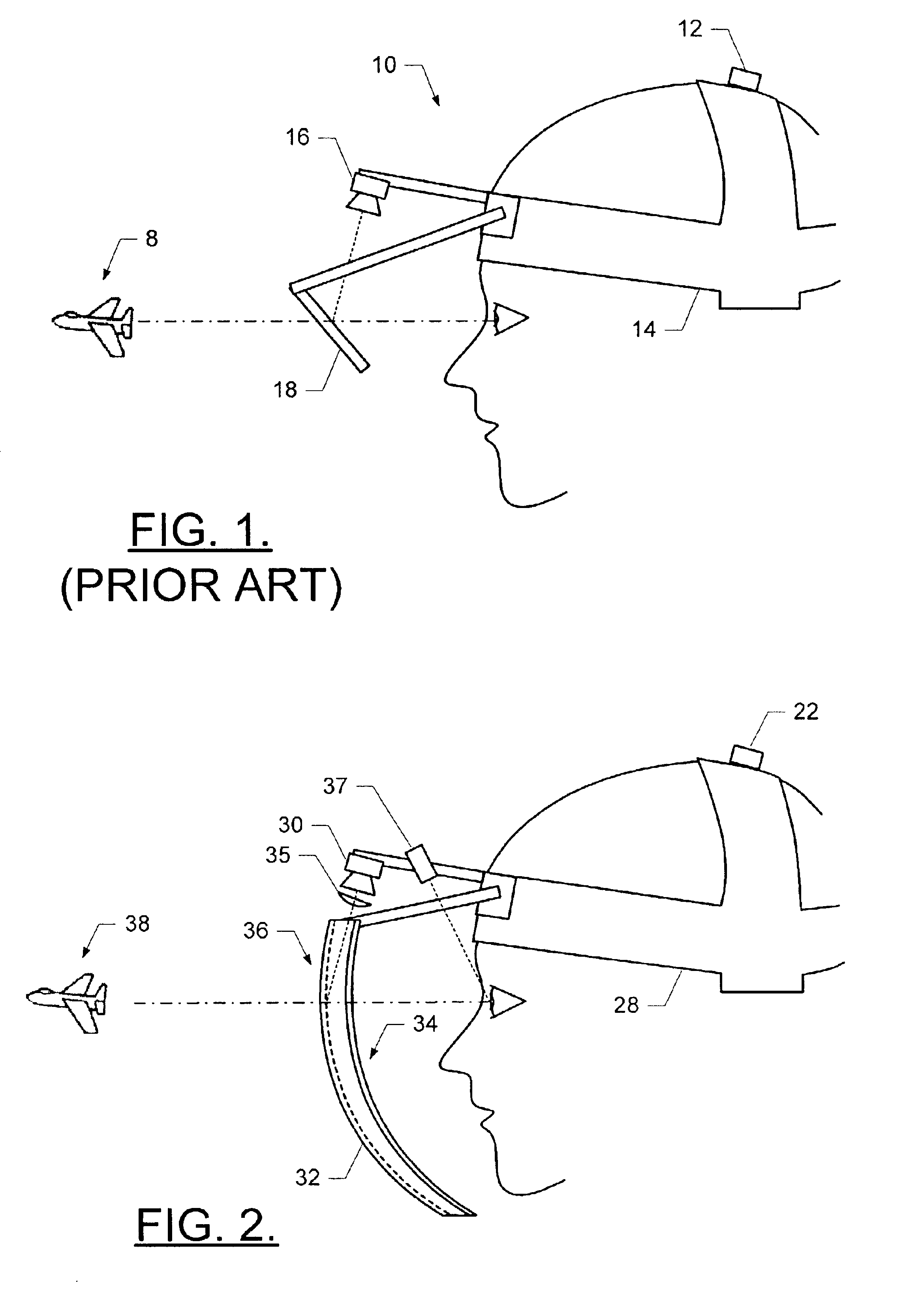

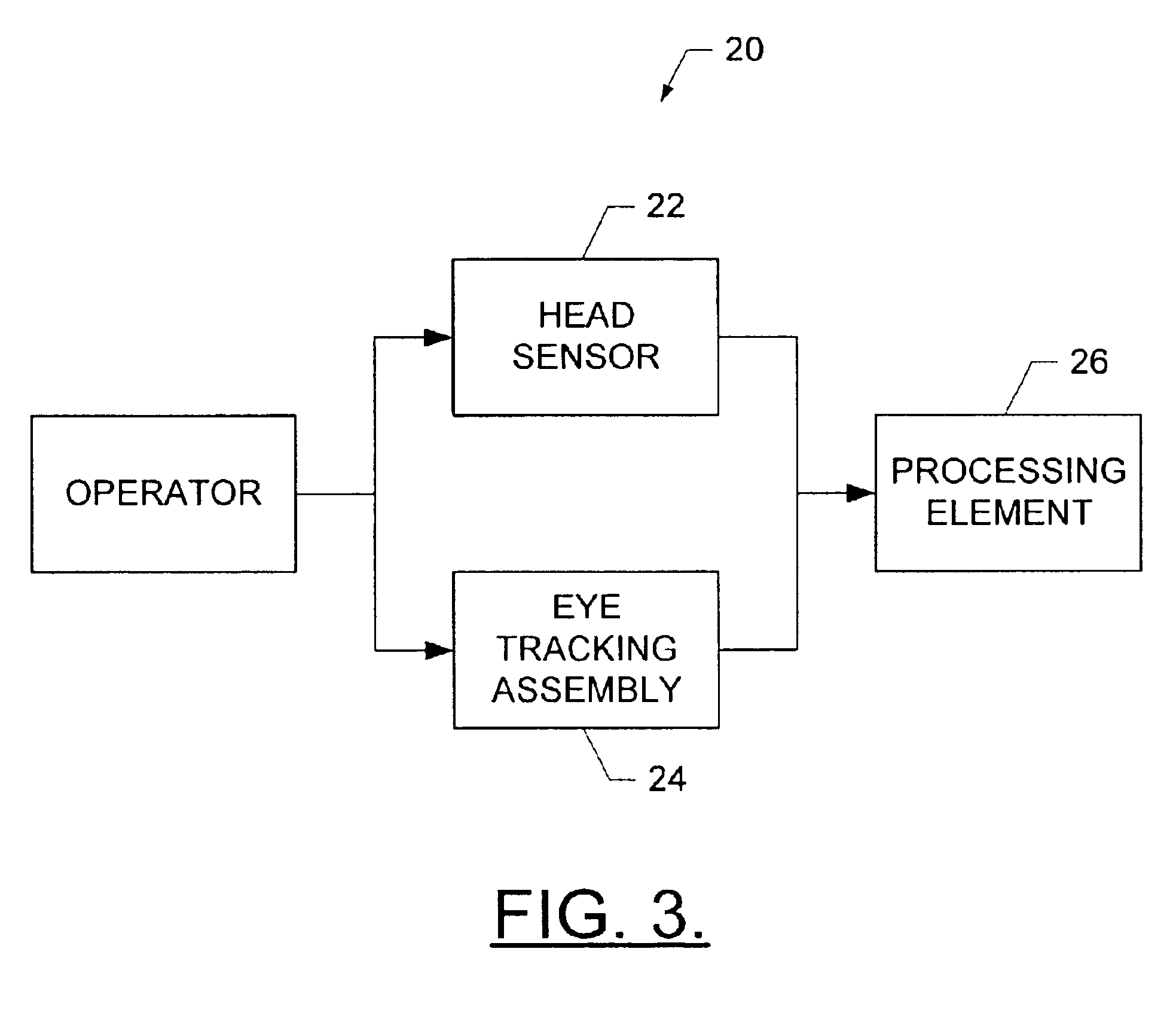

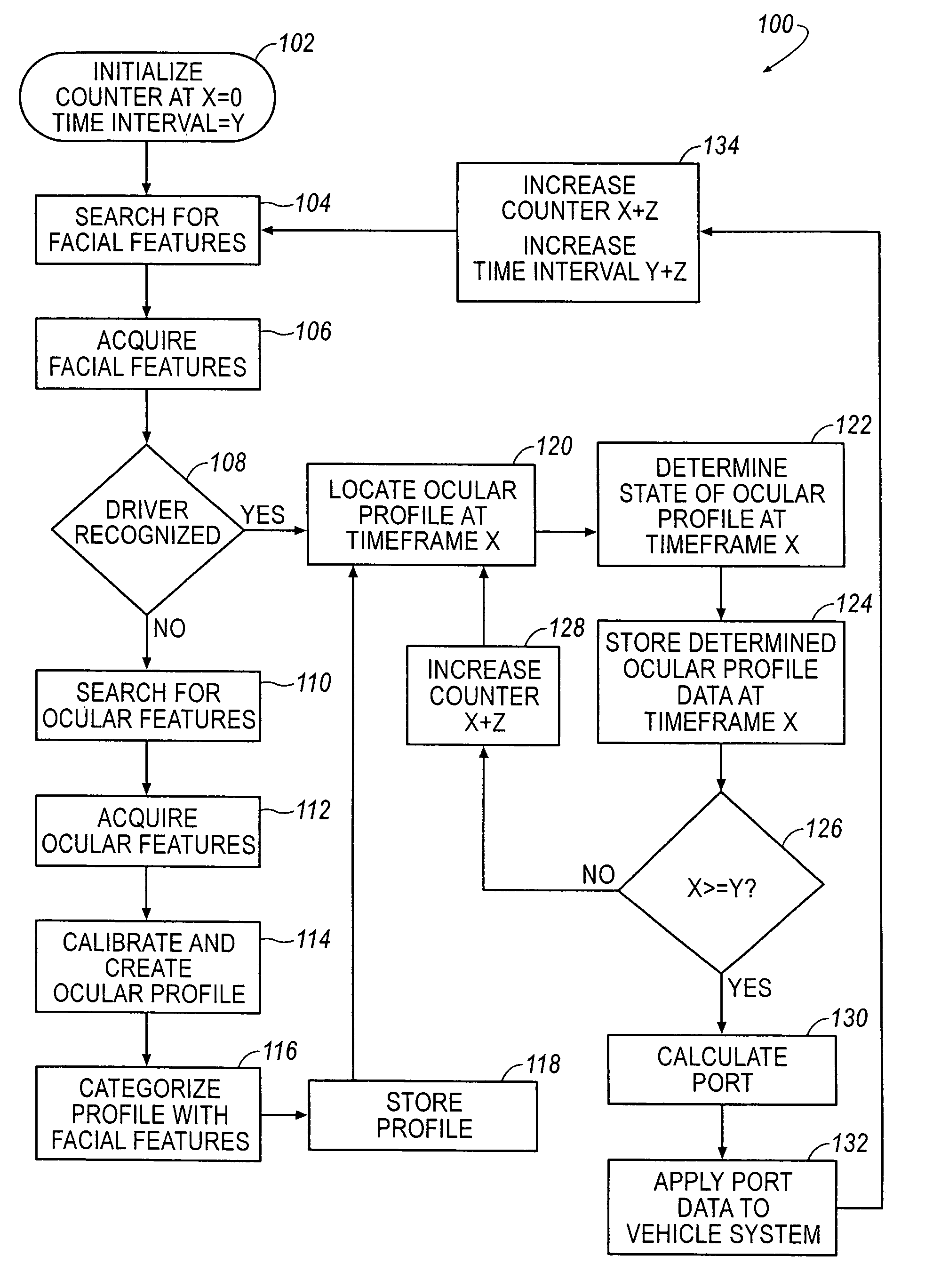

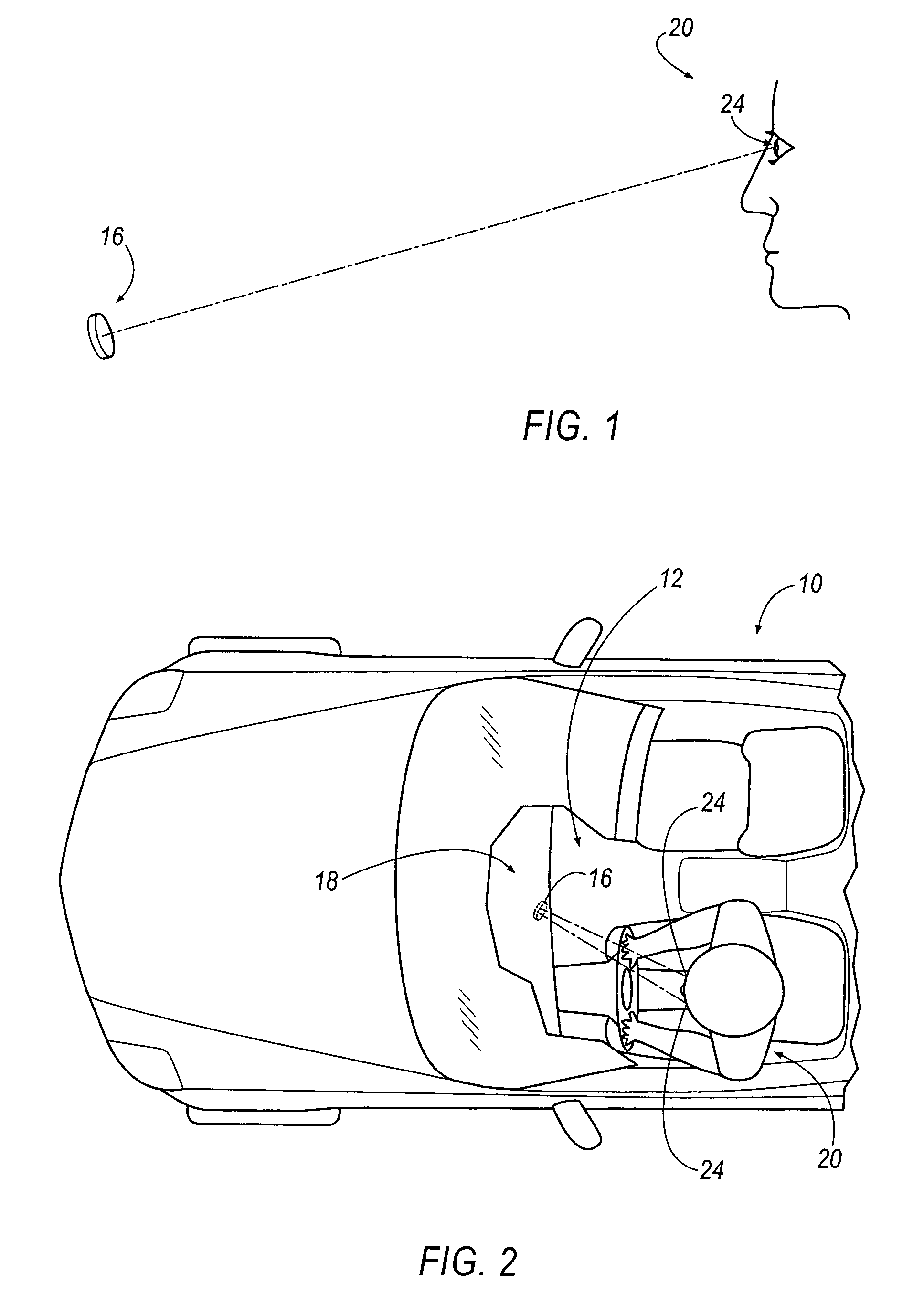

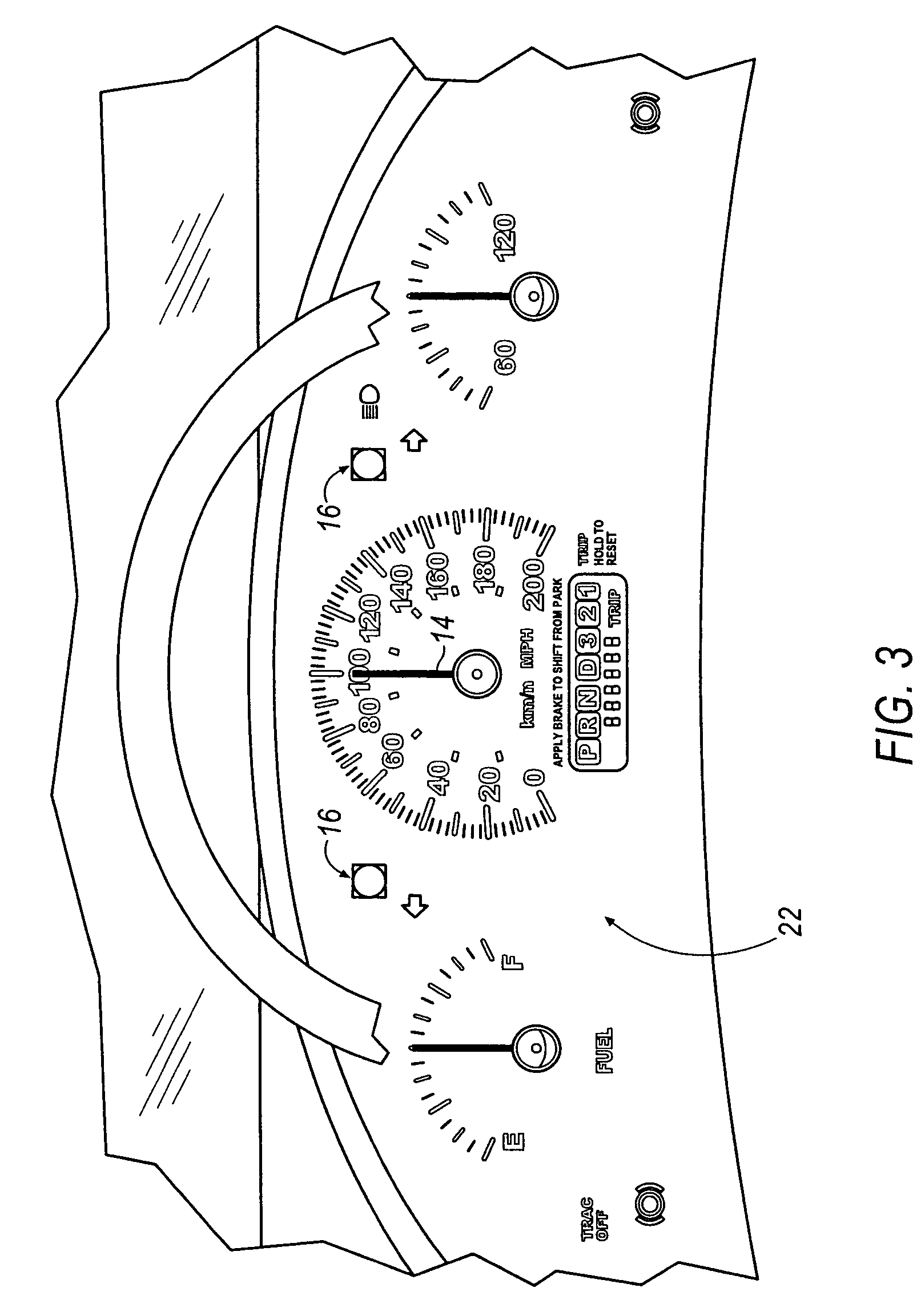

Gaze tracking system, eye-tracking assembly and an associated method of calibration

Inactive · US6943754B2 · Natural environment · Accurate for calibrating gaze tracking system · Input/output for user-computer interaction · Cosmonautic condition simulations · Position dependent · Processing element

A system for tracking a gaze of an operator includes a head-mounted eye tracking assembly, a head-mounted head tracking assembly and a processing element. The head-mounted eye tracking assembly comprises a visor having an arcuate shape including a concave surface and an opposed convex surface. The visor is capable of being disposed such that at least a portion of the visor is located outside a field of view of the operator. The head-mounted head tracking sensor is capable of repeatedly determining a position of the head to thereby track movement of the head. In this regard, each position of the head is associated with a position of the at least one eye. Thus, the processing element can repeatedly determine the gaze of the operator, based upon each position of the head and the associated position of the eyes, thereby tracking the gaze of the operator.

Owner:THE BOEING CO

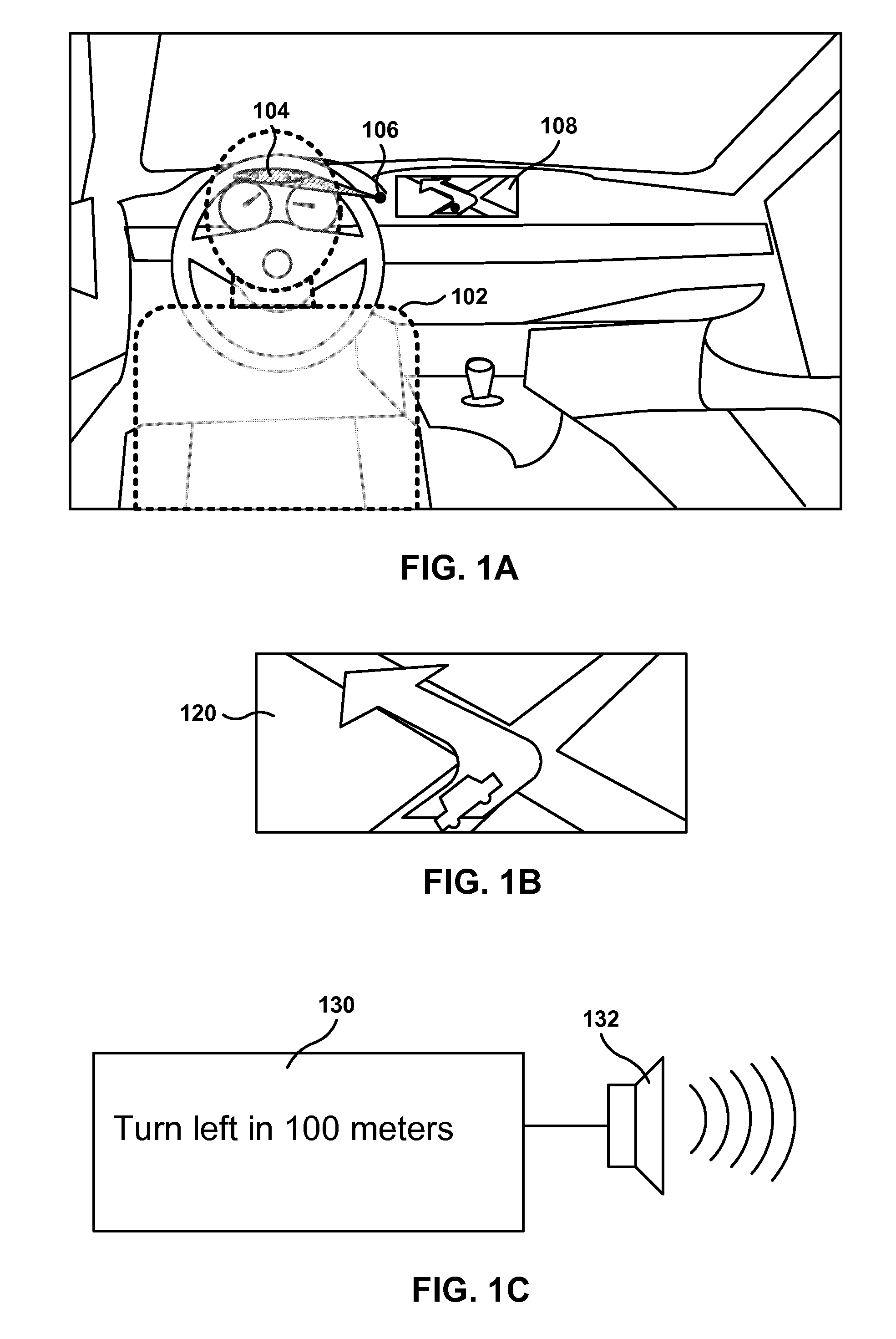

Method of mitigating driver distraction

Active · US7835834B2 · Reduce distractions · Digital data processing details · Acquiring/recognising eyes · Driver/operator · In vehicle

A driver alert for mitigating driver distraction is issued based on a proportion of off-road gaze time and the duration of a current off-road gaze. The driver alert is ordinarily issued when the proportion of off-road gaze exceeds a threshold, but is not issued if the driver's gaze has been off-road for at least a reference time. In vehicles equipped with forward-looking object detection, the driver alert is also not issued if the closing speed of an in-path object exceeds a calibrated closing rate.

Owner:APTIV TECH LTD

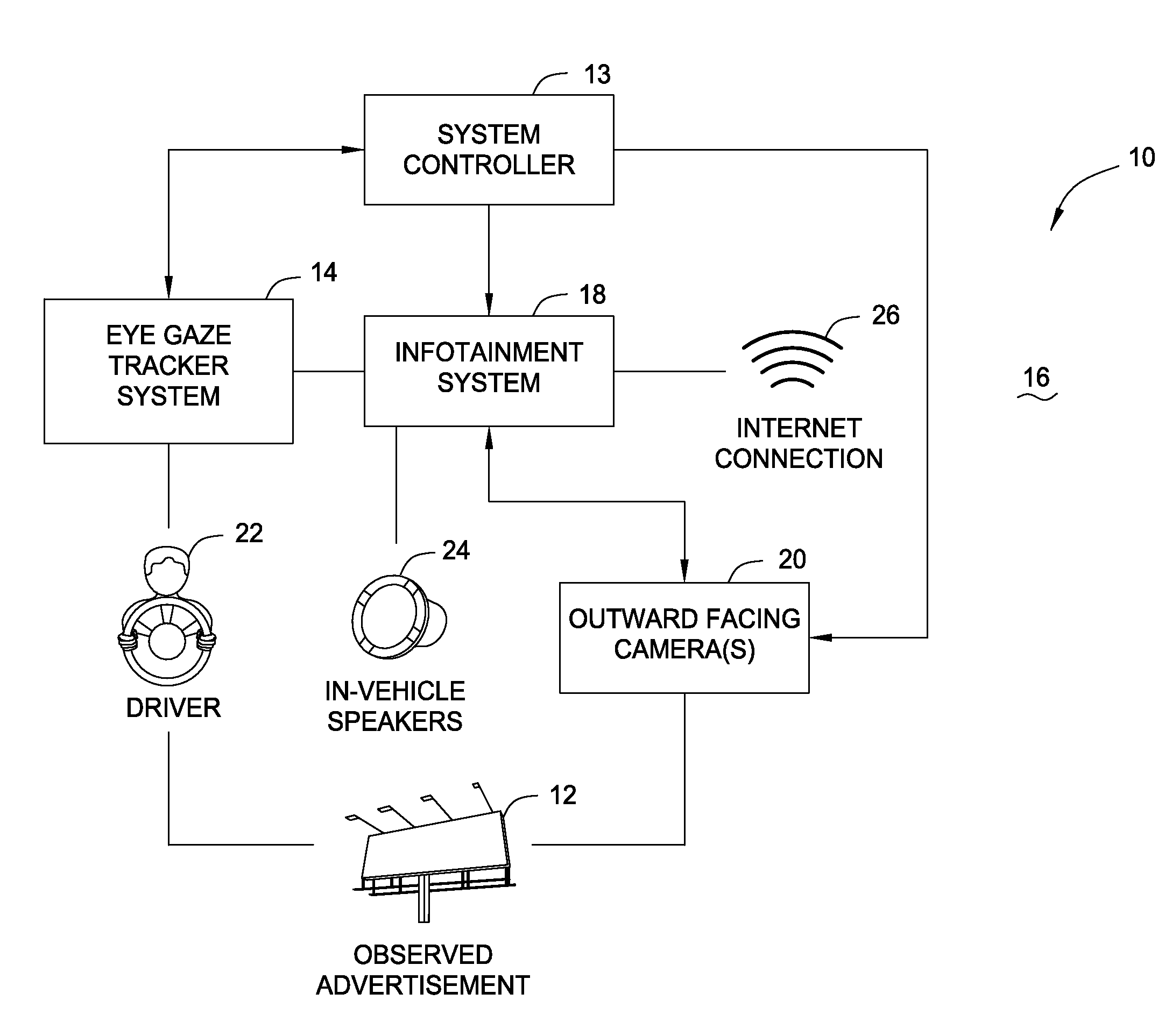

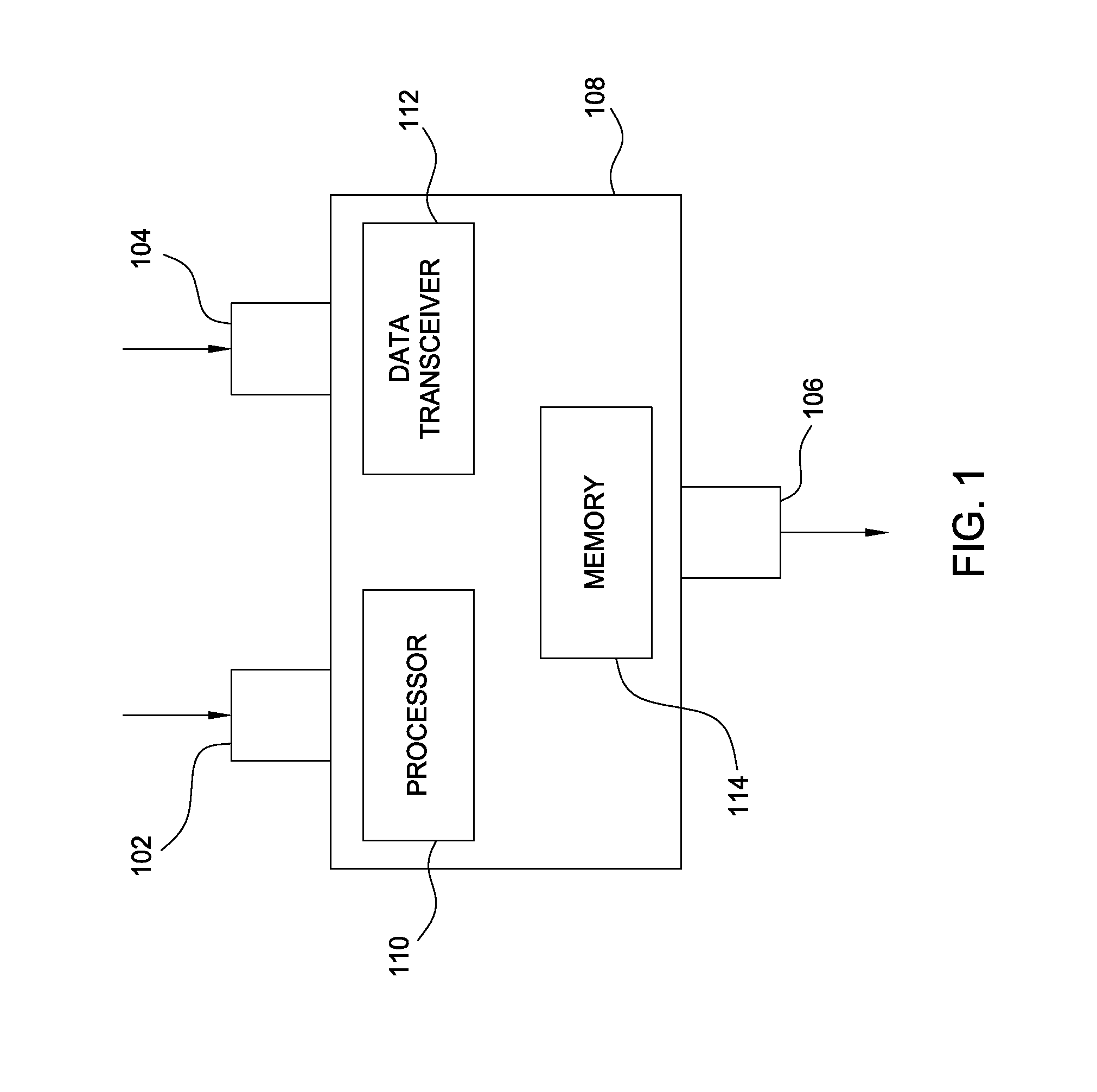

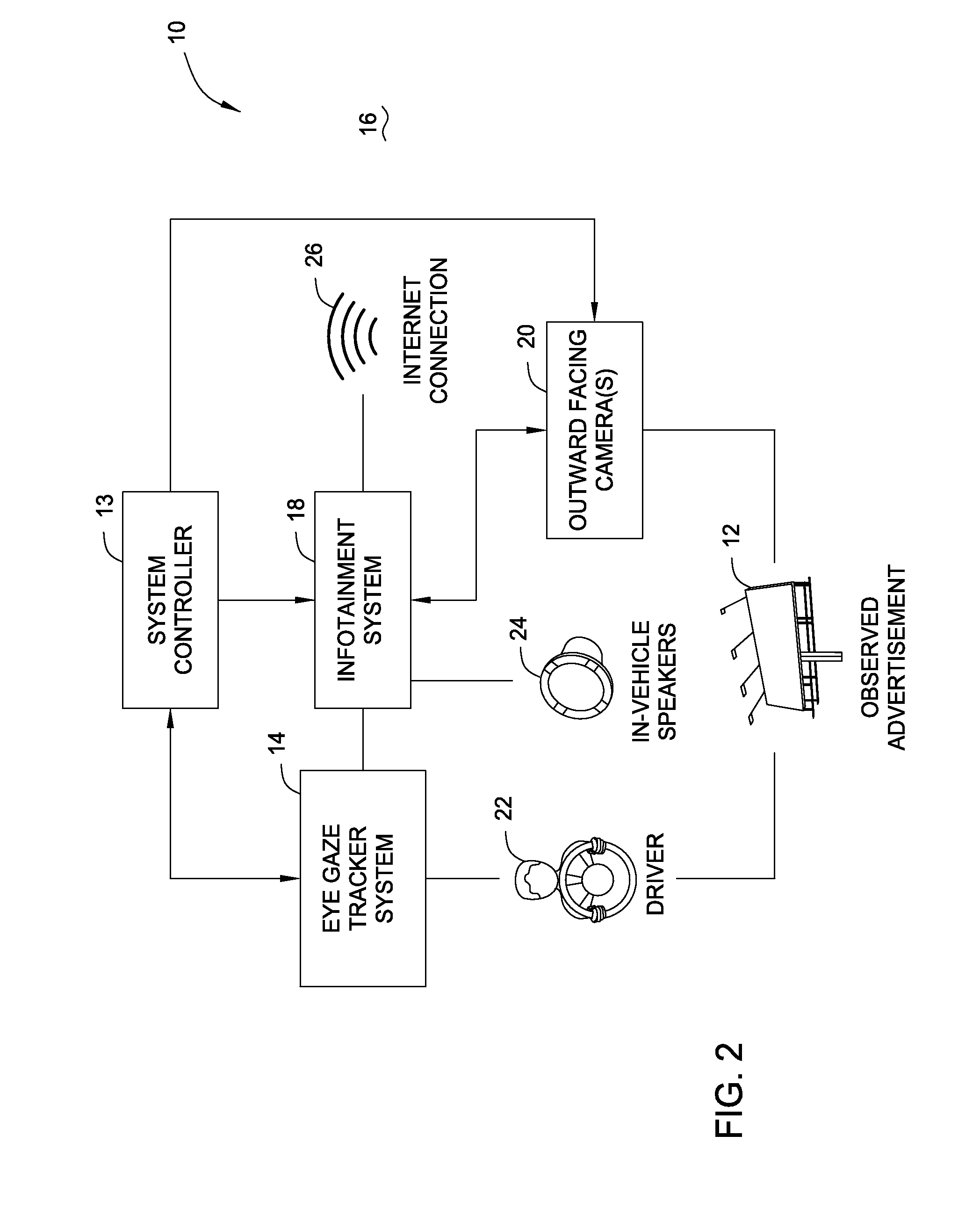

Apparatus and method for detecting a driver's interest in an advertisement by tracking driver eye gaze

Inactive · US20150006278A1 · Character and pattern recognition · Marketing · Transducer · Logic in computer science

A controller for providing advertisements to a vehicle or a wearable housing, and a computer readable medium, when executed by one or more processors, performs an operation to provide an audio advertisement to the vehicle or wearable housing. A first signal input receives a first camera signal, a second signal input receives a second camera signal, and at least one signal output transmits to at least one acoustic transducer, which provides the audio advertisement to the user. The computer logic that may be arranged within the controller determines whether the direction of the captured images of the advertisements and the direction of the user's eye gaze correspond to one another, and, if so, the computer logic outputs the audio advertisement to the audio transducer.

Owner:HARMAN INT IND INC

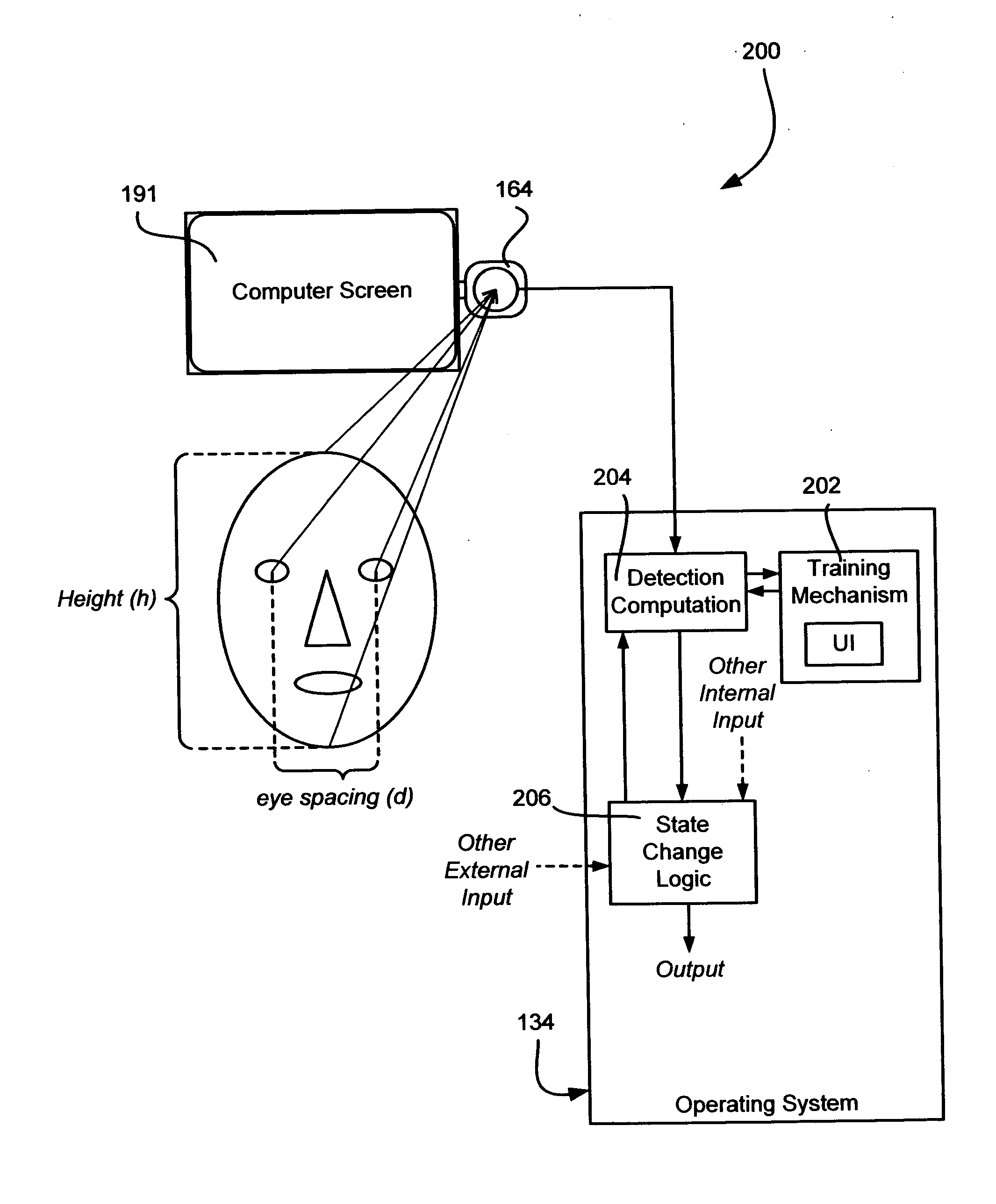

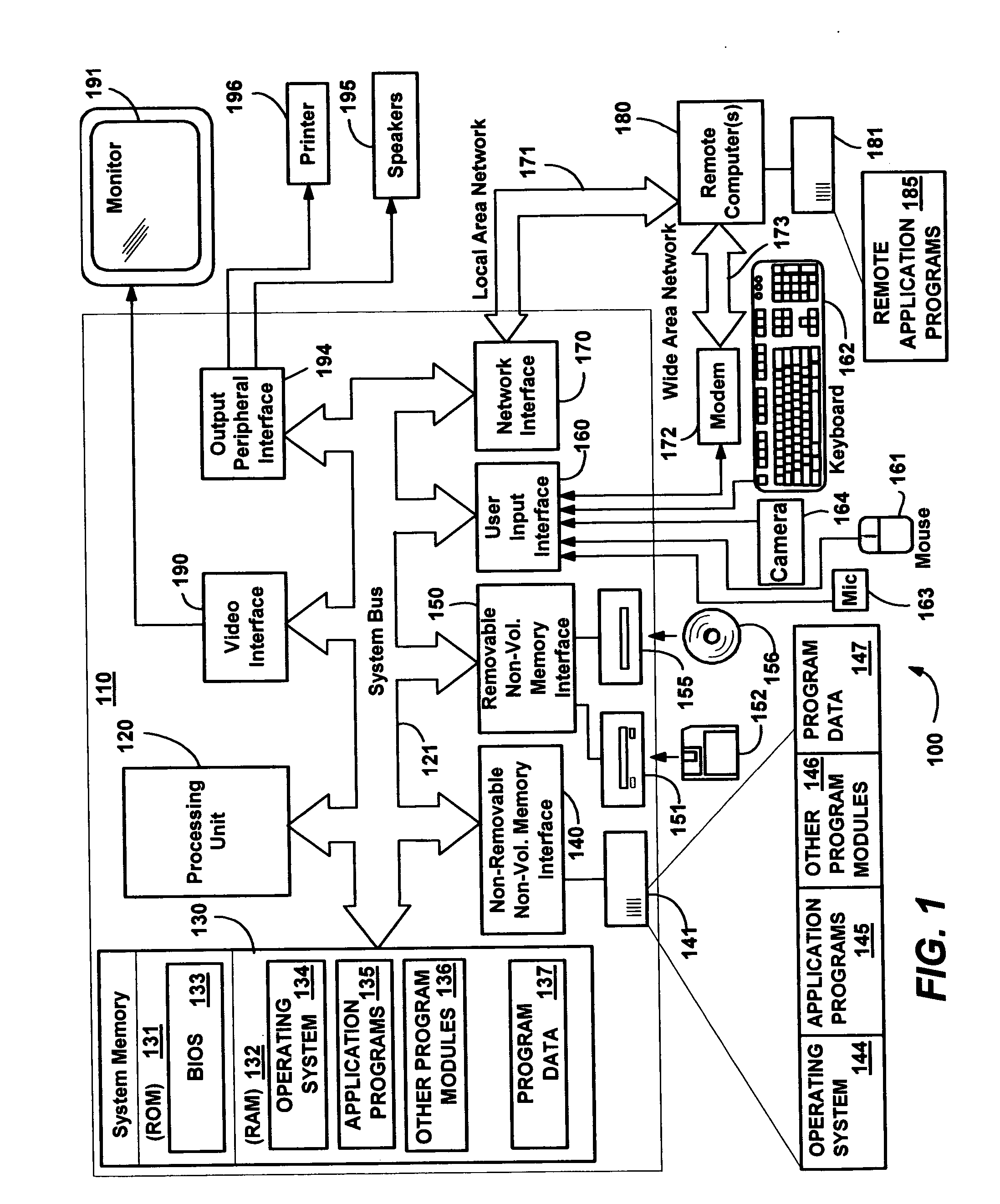

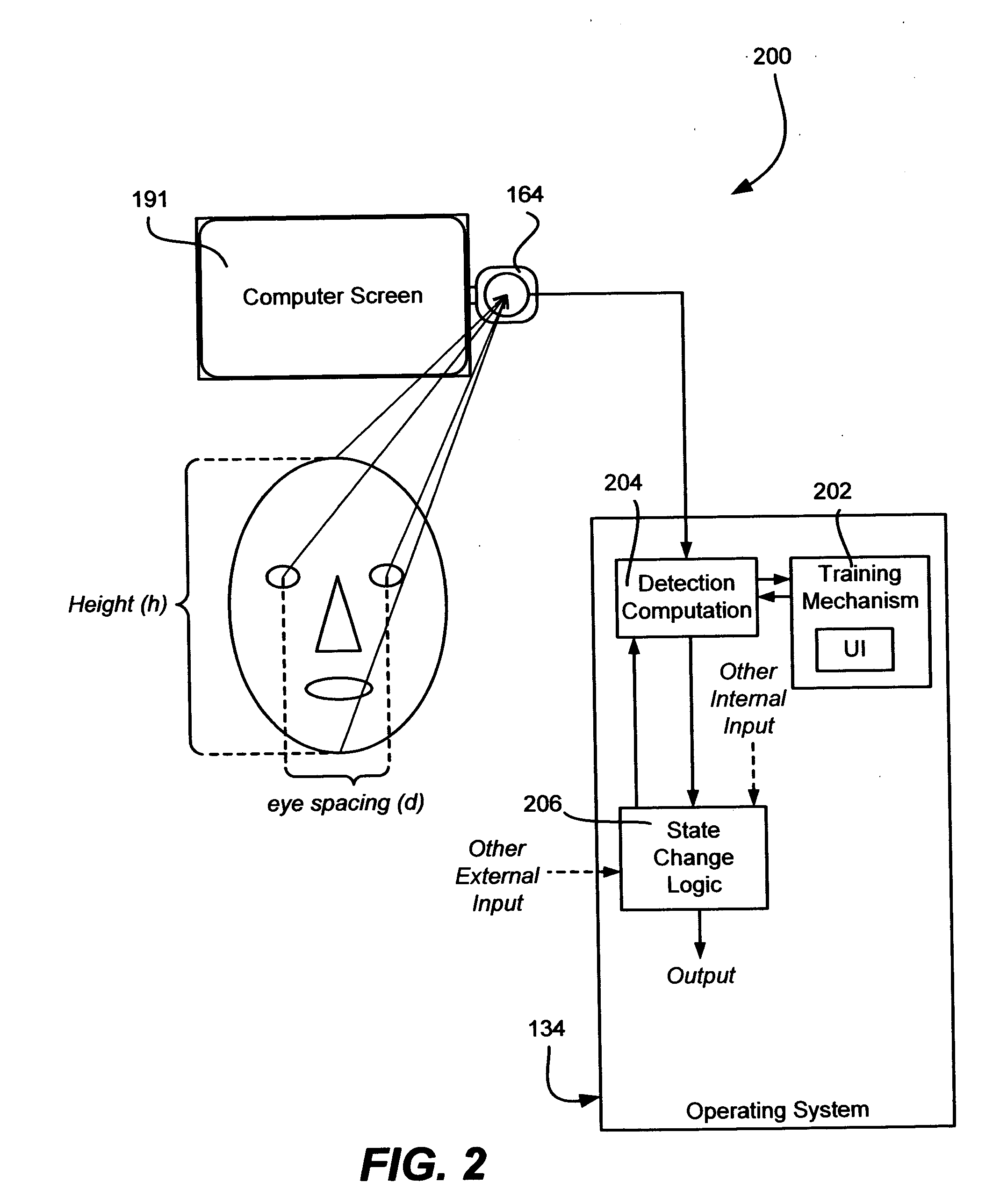

Using detected visual cues to change computer system operating states

Inactive · US20060192775A1 · Improve computing experience · Easy to manage · Energy efficient ICT · Digital data processing details · Command and control · Display device

Described is a method and system that uses visual cues from a computer camera (e.g., webcam) based on presence detection, pose detection and/or gaze detection, to improve a user's computing experience. For example, by determining whether a user is looking at the display or not, better power management is achieved, such as by reducing power consumed by the display when the user is not looking. Voice recognition such as for command and control may be turned on and off based on where the user is looking when speaking. Visual cues may be used alone or in conjunction with other criteria, such as mouse or keyboard input, the current operating context and possibly other data, to make an operating state decision. Interaction detection is improved by determining when the user is interacting by viewing the display, even when not physically interacting via an input device.

Owner:MICROSOFT TECH LICENSING LLC

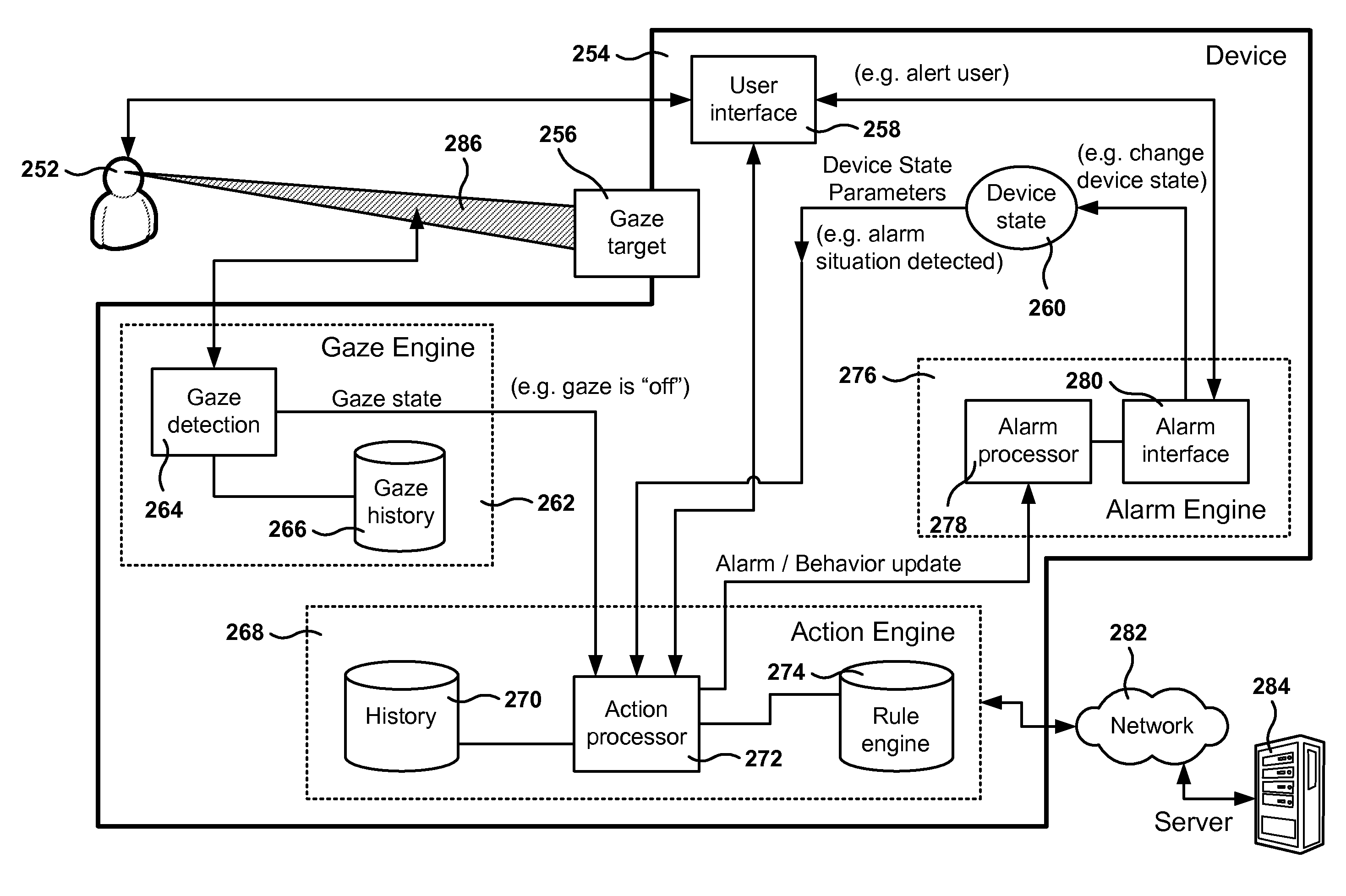

Eye Gaze to Alter Device Behavior

Active · US20120300061A1 · Energy efficient ICT · Television system details · Operation mode · Human–computer interaction

Methods, devices, and computer programs for controlling behavior of an electronic device are presented. A method includes an operation for operating the electronic device in a first mode of operation, and an operation for tracking the gaze of a user interfacing with the electronic device. The electronic device is maintained in a first mode of operation as long as the gaze is directed towards a predetermined target. In another operation, when the gaze is not detected to be directed towards the predetermined target, the electronic device is operated in a second mode of operation, which is different from the first mode of operation.

Owner:SONY COMPUTER ENTERTAINMENT INC

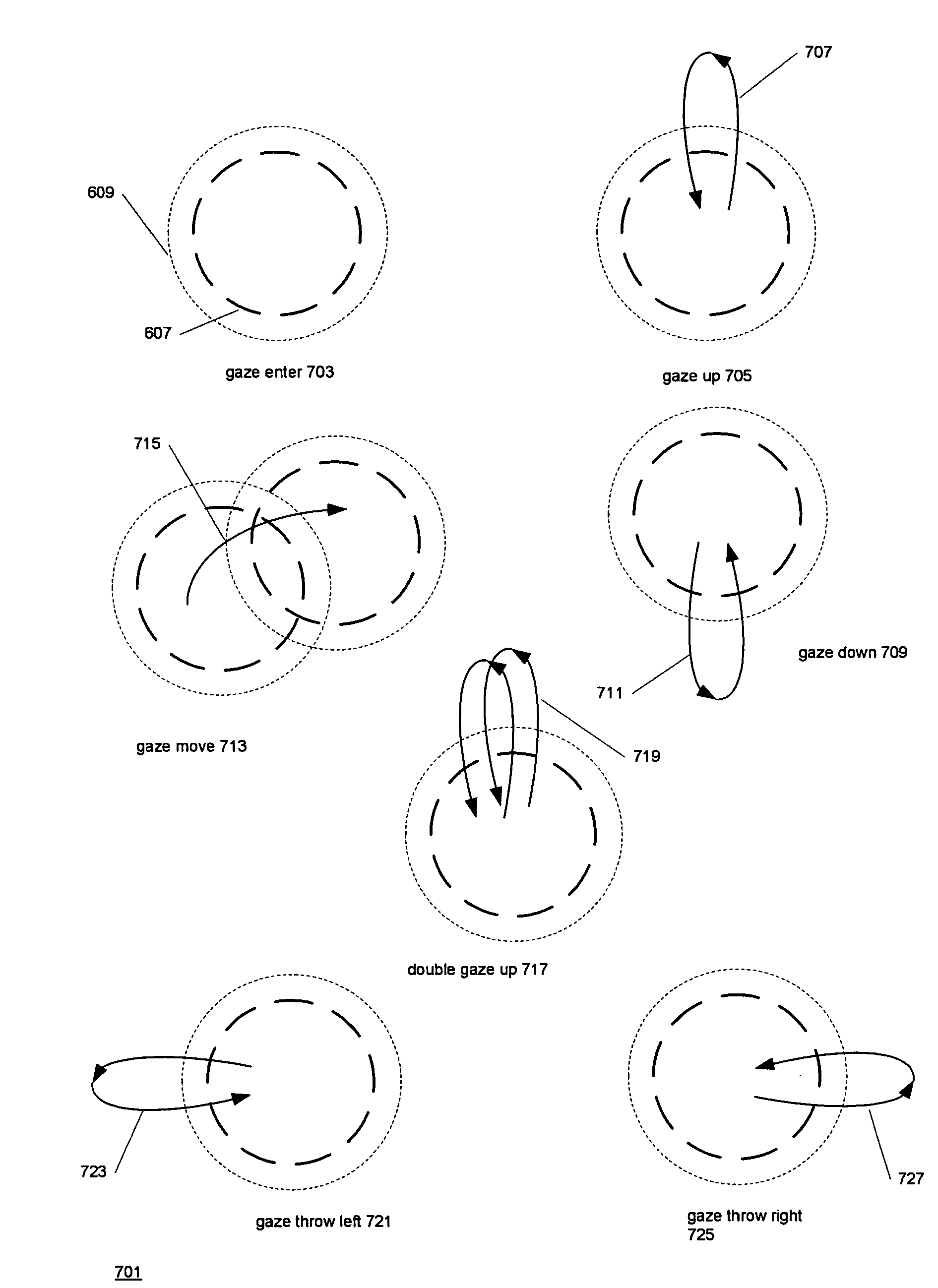

Using gaze actions to interact with a display

Active · US7561143B1 · Cathode-ray tube indicators · Input/output processes for data processing · Graphics · Graphical user interface

Techniques for using gaze actions to interact with interactive displays. A pointing device includes an eye movement tracker that tracks eye movements and an eye movement analyzer. The eye movement analyzer analyzes the eye movements for a sequence of gaze movements that indicate a gaze action which specifies an operation on the display. A gaze movement may have a location, a direction, a length, and a velocity. A processor receives an indication of the gaze action and performs the operation specified by the gaze action on the display. The interactive display may be digital or may involve real objects. Gaze actions may correspond to mouse events and may be used with standard graphical user interfaces.

Owner:SAMSUNG ELECTRONICS CO LTD

Materials and methods for simulating focal shifts in viewers using large depth of focus displays

Active · US20060232665A1 · Improve interactivity · Enhances perceived realism · Television system details · Stereoscopic systems · Display device · Depth of field

A large depth of focus (DOF) display provides an image in which the apparent focus plane is adjusted to track an accommodation (focus) of a viewer's eye(s) to more effectively convey depth in the image. A device is employed to repeatedly determine accommodation as a viewer's gaze within the image changes. In response, an image that includes an apparent focus plane corresponding to the level of accommodation of the viewer is provided on the large DOF display. Objects that are not at the apparent focus plane are made to appear blurred. The images can be rendered in real-time, or can be pre-rendered and stored in an array. The dimensions of the array can each correspond to a different variable. The images can alternatively be provided by a computer controlled, adjustable focus video camera in real-time.

Owner:UNIV OF WASHINGTON

Enhanced electro-active lens system

A lens system and optical devices that provide enhanced vision correction are disclosed. The lens system includes an electro-active layer that provides correction of at least one higher order aberration. The higher order correction changes dynamically based on the needs of a user of the lens system, such as a change in the user's gaze distance, pupil size, or changes in tear film following blinking, among others. Optical devices are also described that use these and other lens systems to provide correction of higher order aberrations. An optical guide is also described that guides the user's line of sight to see through a lens region having a correction for a higher order aberration.

Owner:E VISION LLC

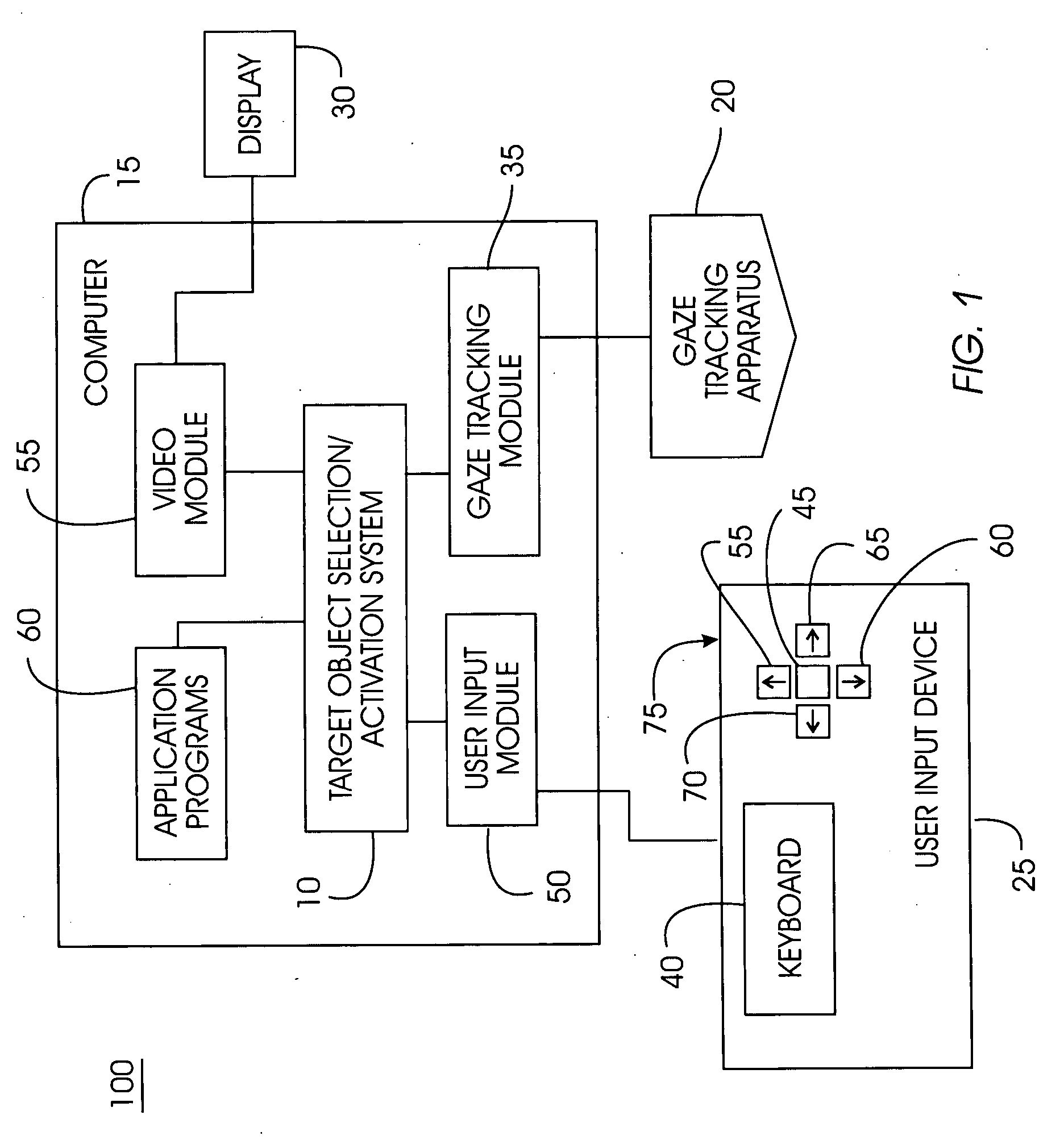

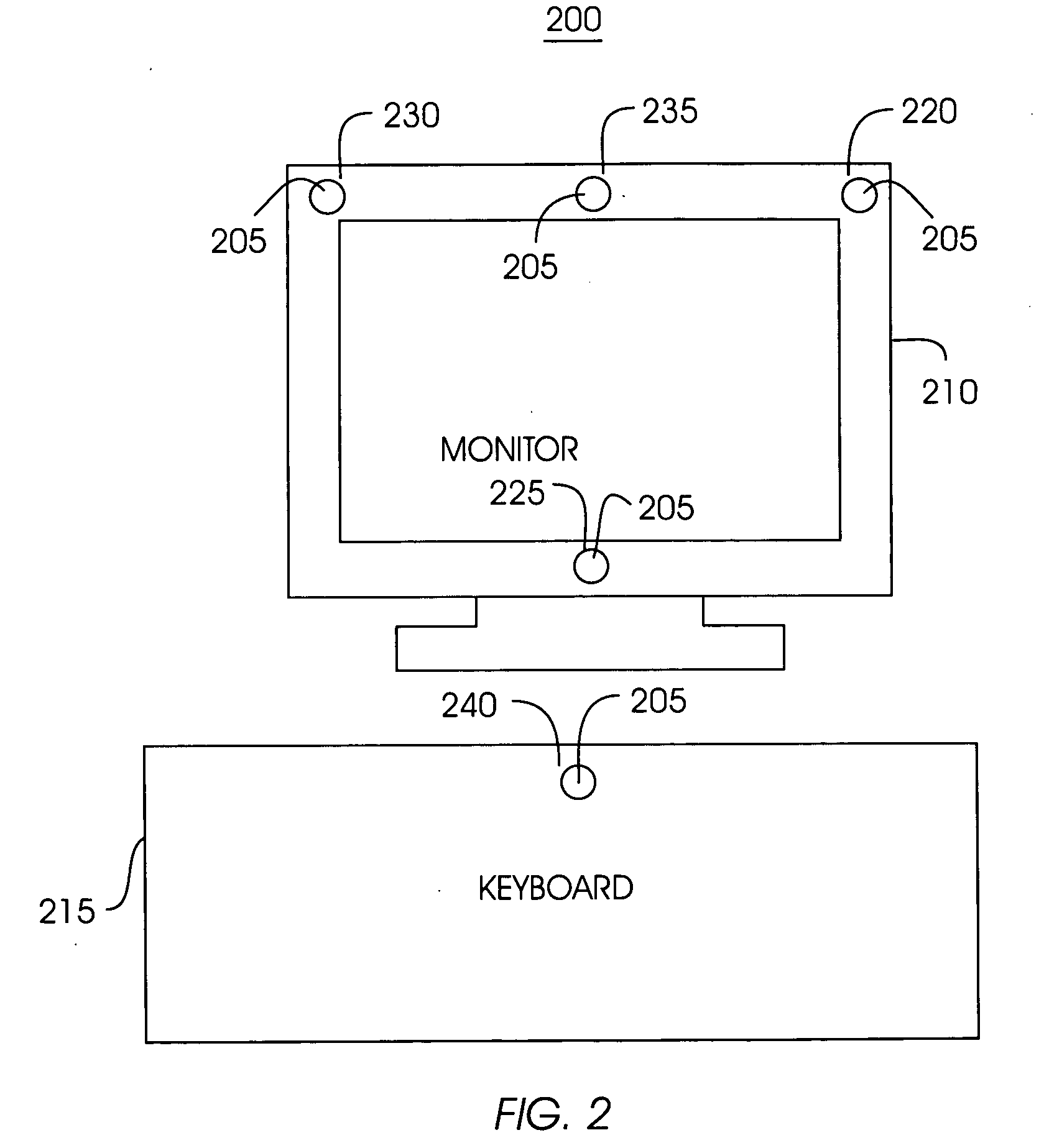

System and method for selecting and activating a target object using a combination of eye gaze and key presses

Active · US20050243054A1 · Reduce dependence · Efficient and enjoyable user interface · Input/output for user-computer interaction · Character and pattern recognition · Graphics · Present method

A pointing method uses eye-gaze as an implicit control channel. A user looks in a natural fashion at a target object such as a button on a graphical user interface and then presses a manual selection key. Once the selection key is pressed, the present method uses probability analysis to determine a most probable target object and to highlight it. If the highlighted object is the target object, the user can select it by pressing a key such as the selection key once again. If the highlighted object is not the target object, the user can select another target object using additional keys to navigate to the intended target object.

Owner:IBM CORP
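
The abstract does not say which probability analysis is used to pick the most probable target. As a stand-in, the sketch below ranks candidate objects with an isotropic Gaussian centred on the measured gaze point and normalizes the scores; the Gaussian model, the `sigma_px` value, and the function name are all assumptions, not the patented method.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def most_probable_target(
    gaze: Point,
    targets: Dict[str, Point],  # object name -> centre position in pixels
    sigma_px: float = 40.0,     # assumed spread of gaze measurement error
) -> Tuple[str, Dict[str, float]]:
    """Return the likeliest target and the normalized probability of each candidate."""
    if not targets:
        raise ValueError("no targets to choose from")
    d2 = {name: (x - gaze[0]) ** 2 + (y - gaze[1]) ** 2 for name, (x, y) in targets.items()}
    # Subtract the smallest squared distance so the nearest target has weight 1,
    # which avoids dividing by zero when every target is far from the gaze point.
    d2_min = min(d2.values())
    weights = {name: math.exp(-(v - d2_min) / (2.0 * sigma_px ** 2)) for name, v in d2.items()}
    total = sum(weights.values())
    probs = {name: w / total for name, w in weights.items()}
    best = max(probs, key=probs.get)
    return best, probs


# Called when the selection key is pressed; the returned object would be highlighted.
best, probs = most_probable_target((100, 100), {"OK": (110, 105), "Cancel": (200, 100)})
print(best, probs)
```

If the highlighted object is wrong, the remaining entries of `probs` give a natural order in which navigation keys could step through the alternatives.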
