437 results about "Visual saliency" patented technology

Visual salience (or visual saliency) is the distinct subjective perceptual quality which makes some items in the world stand out from their neighbors and immediately grab our attention.

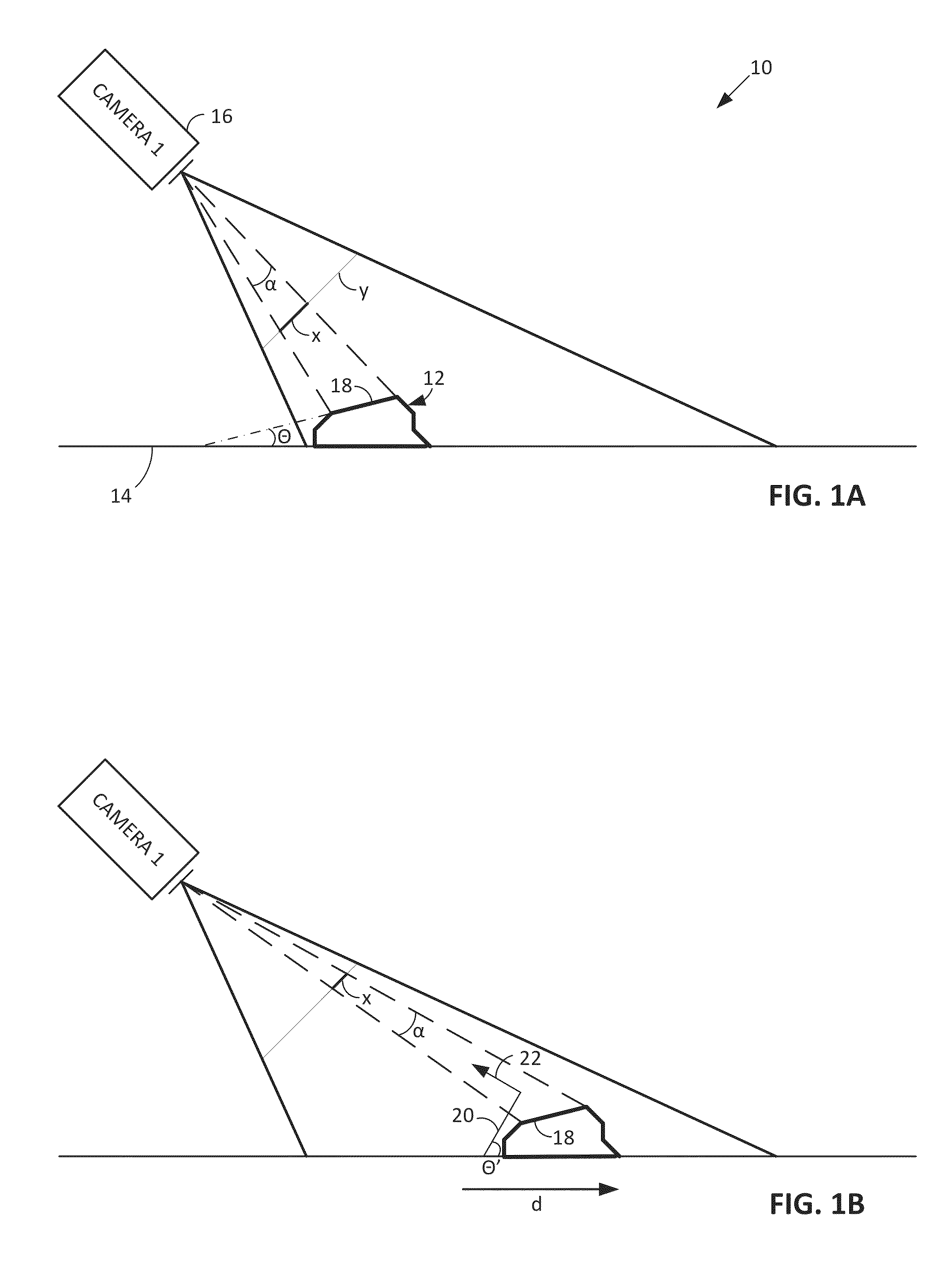

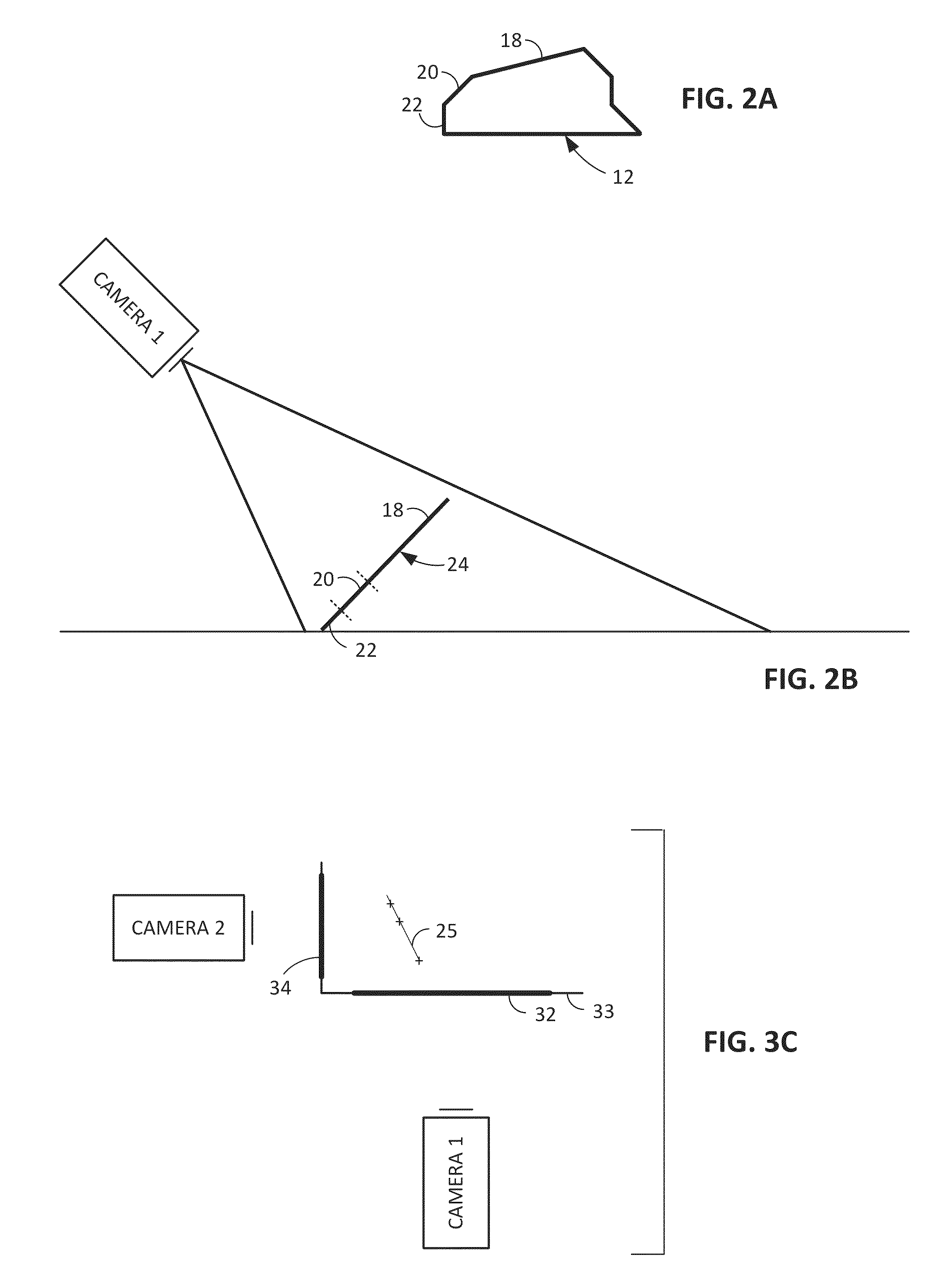

Methods and arrangements for identifying objects

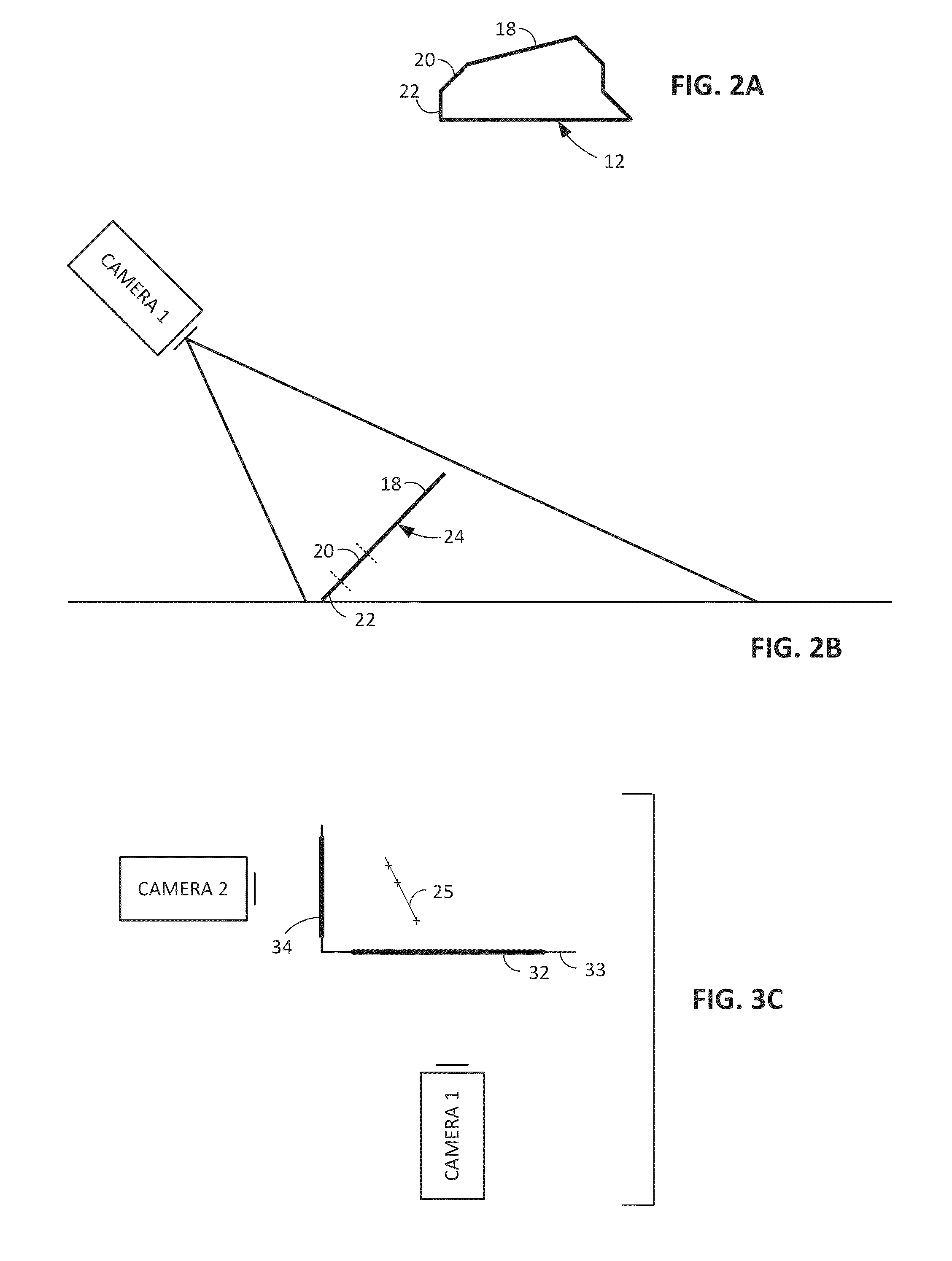

Active | US20130223673A1 | Increase check-out speed | Improve accuracy | Static indicating devices | Cash registers | Pattern recognition | Geometric primitive

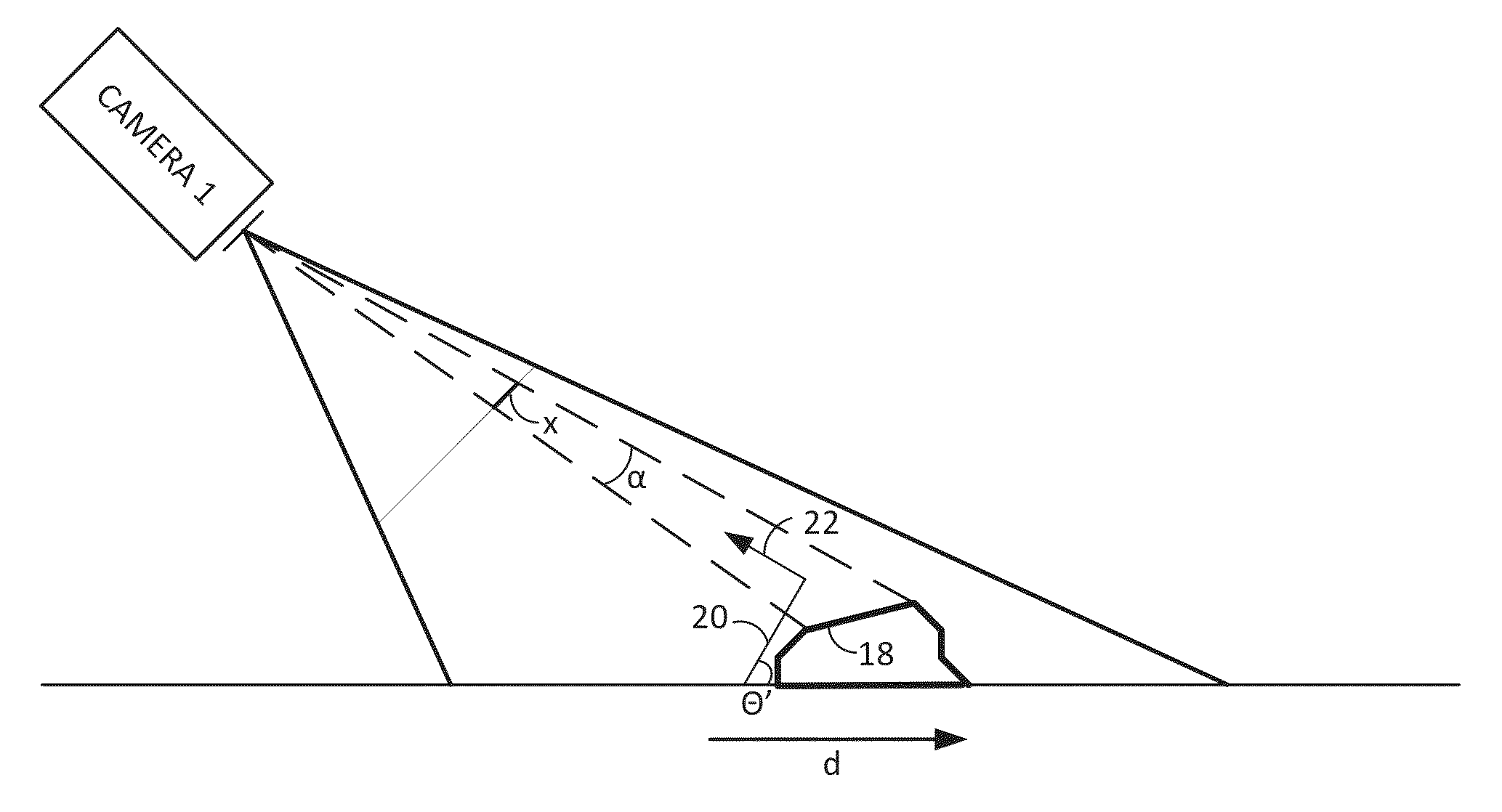

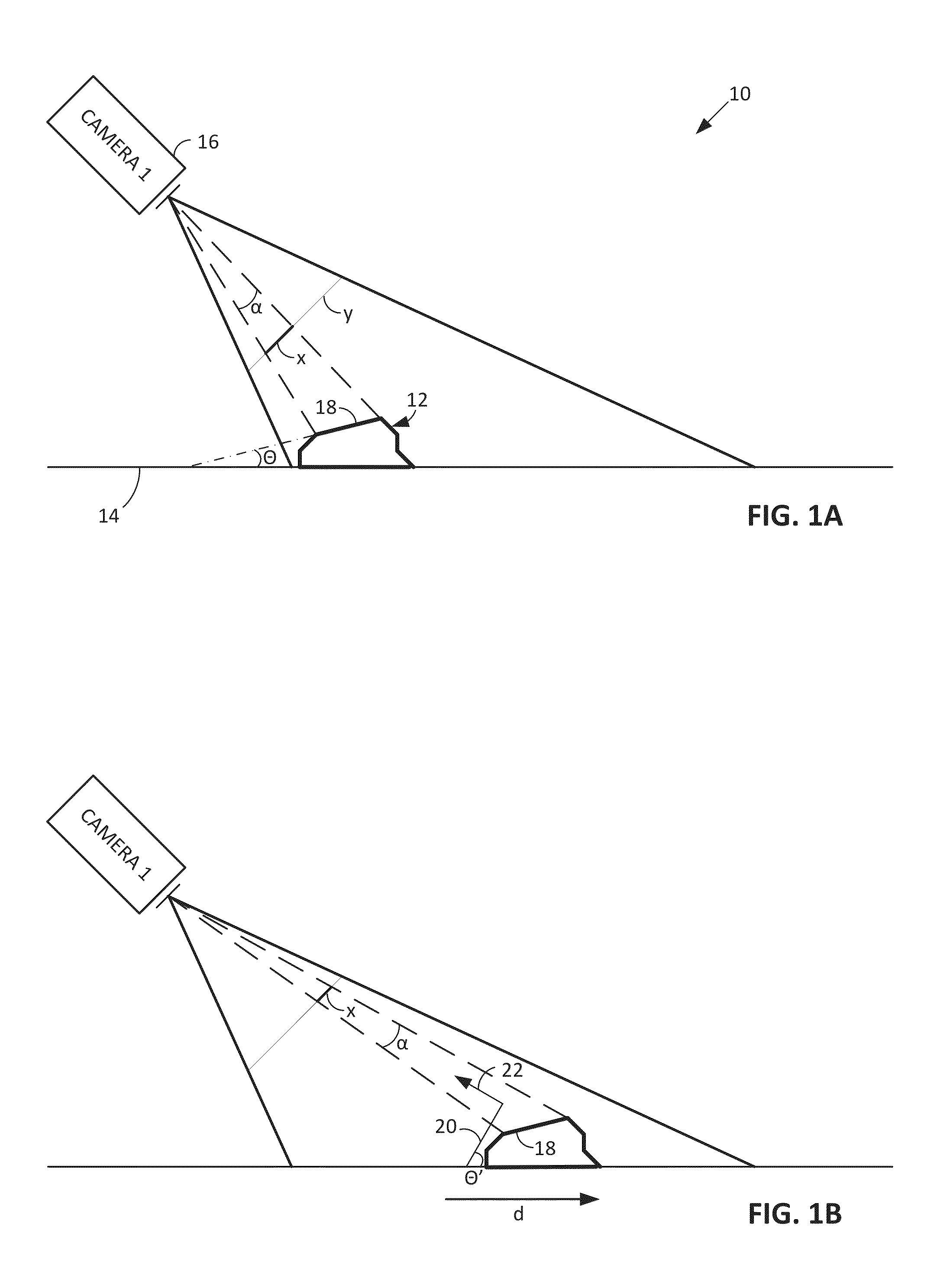

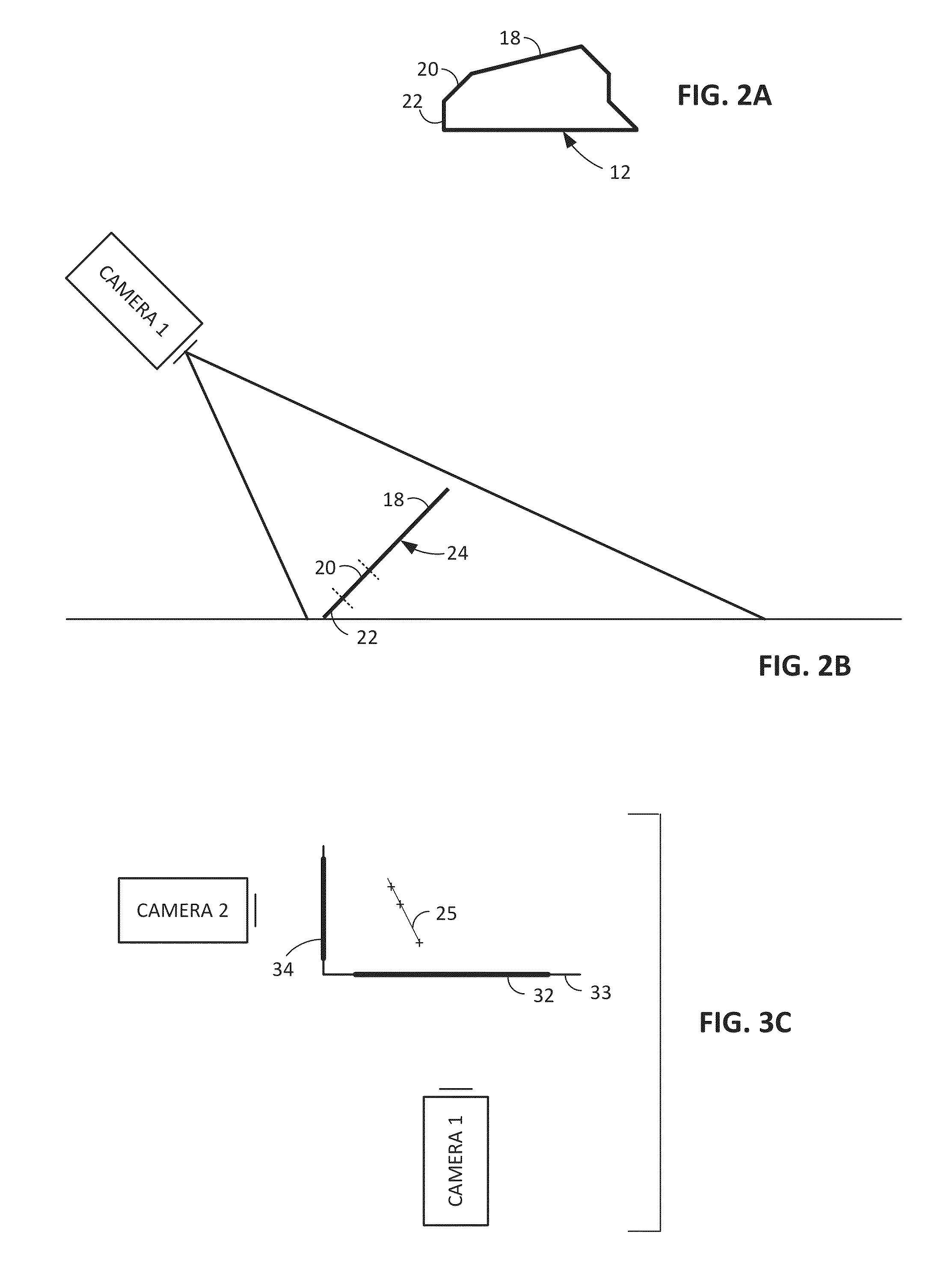

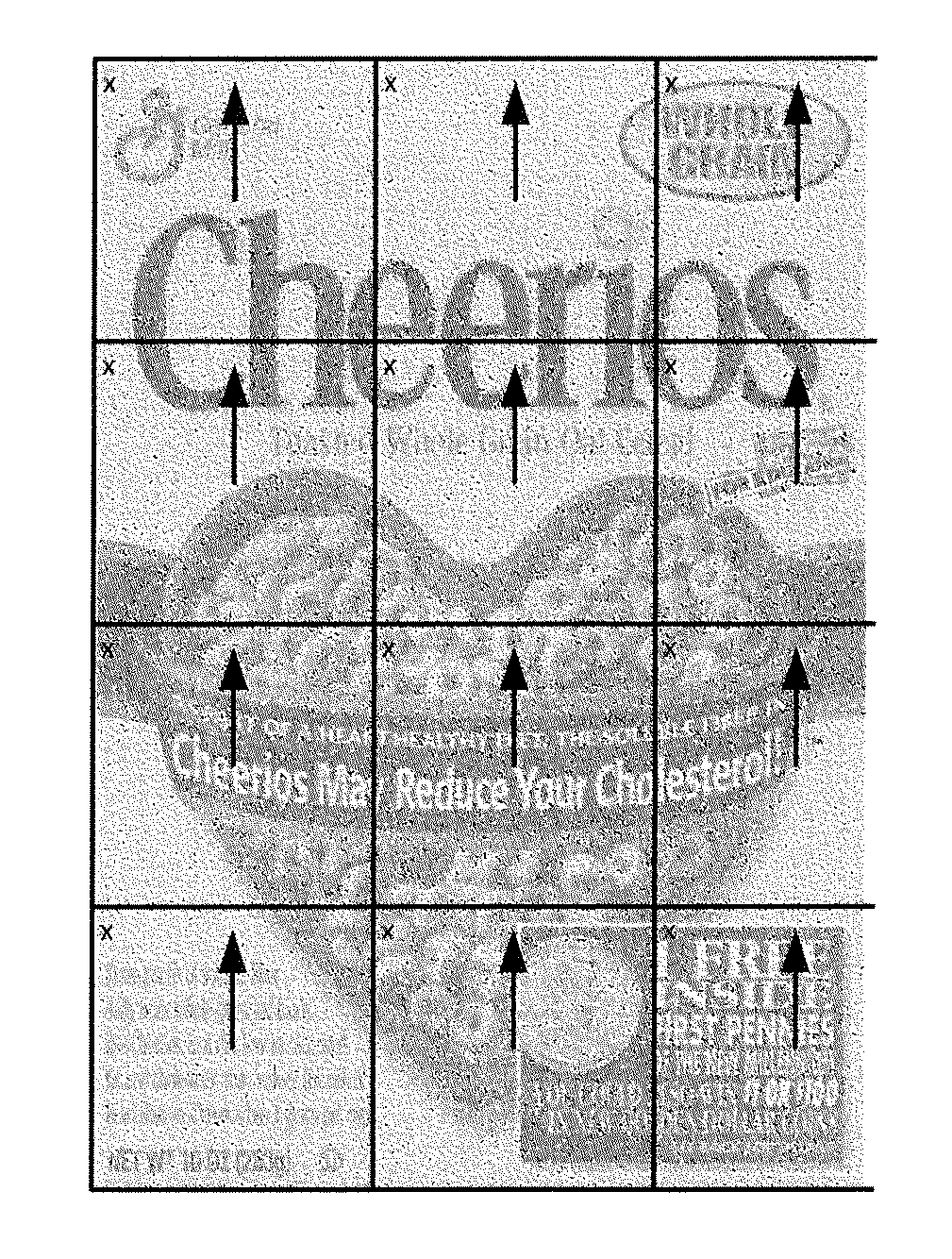

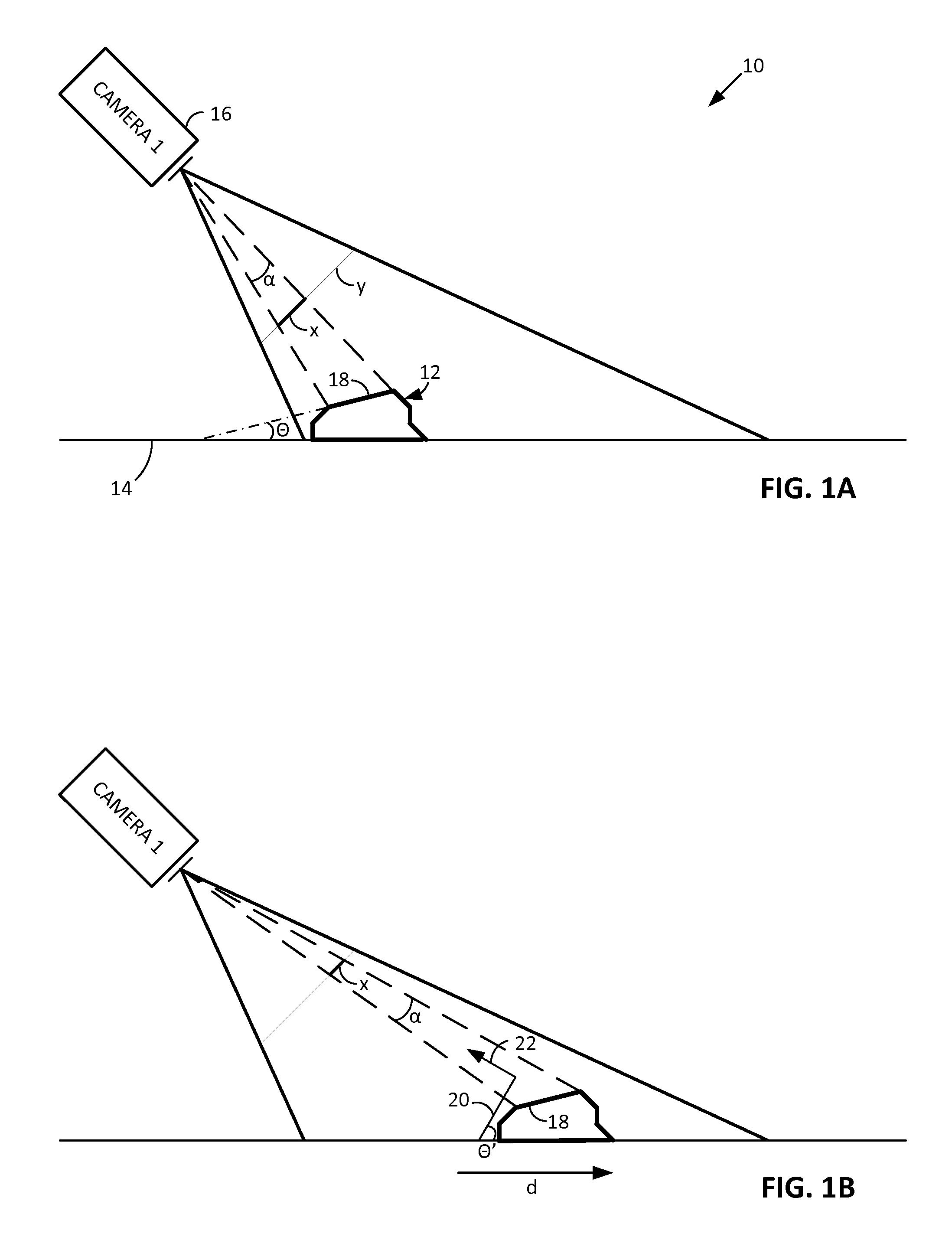

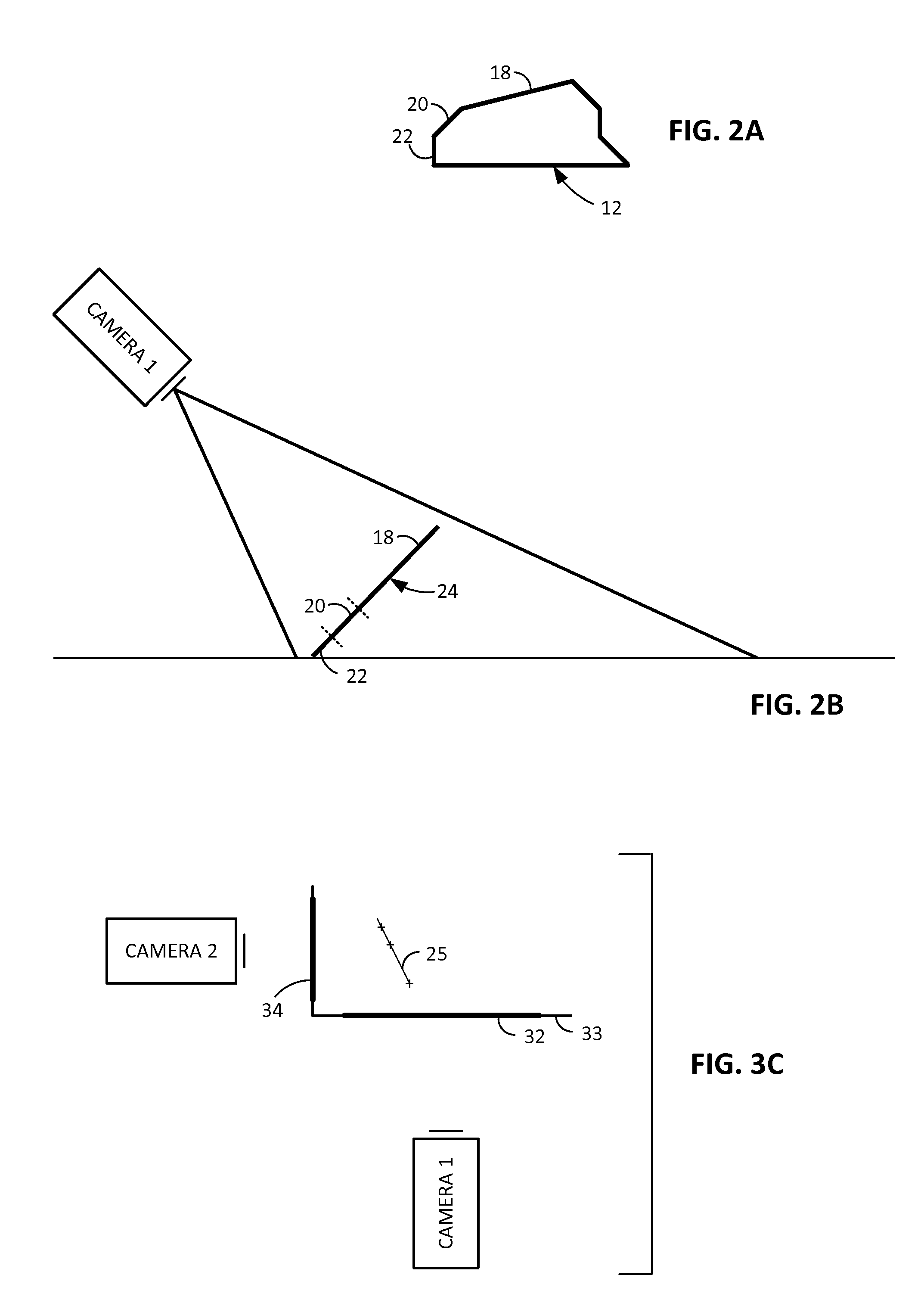

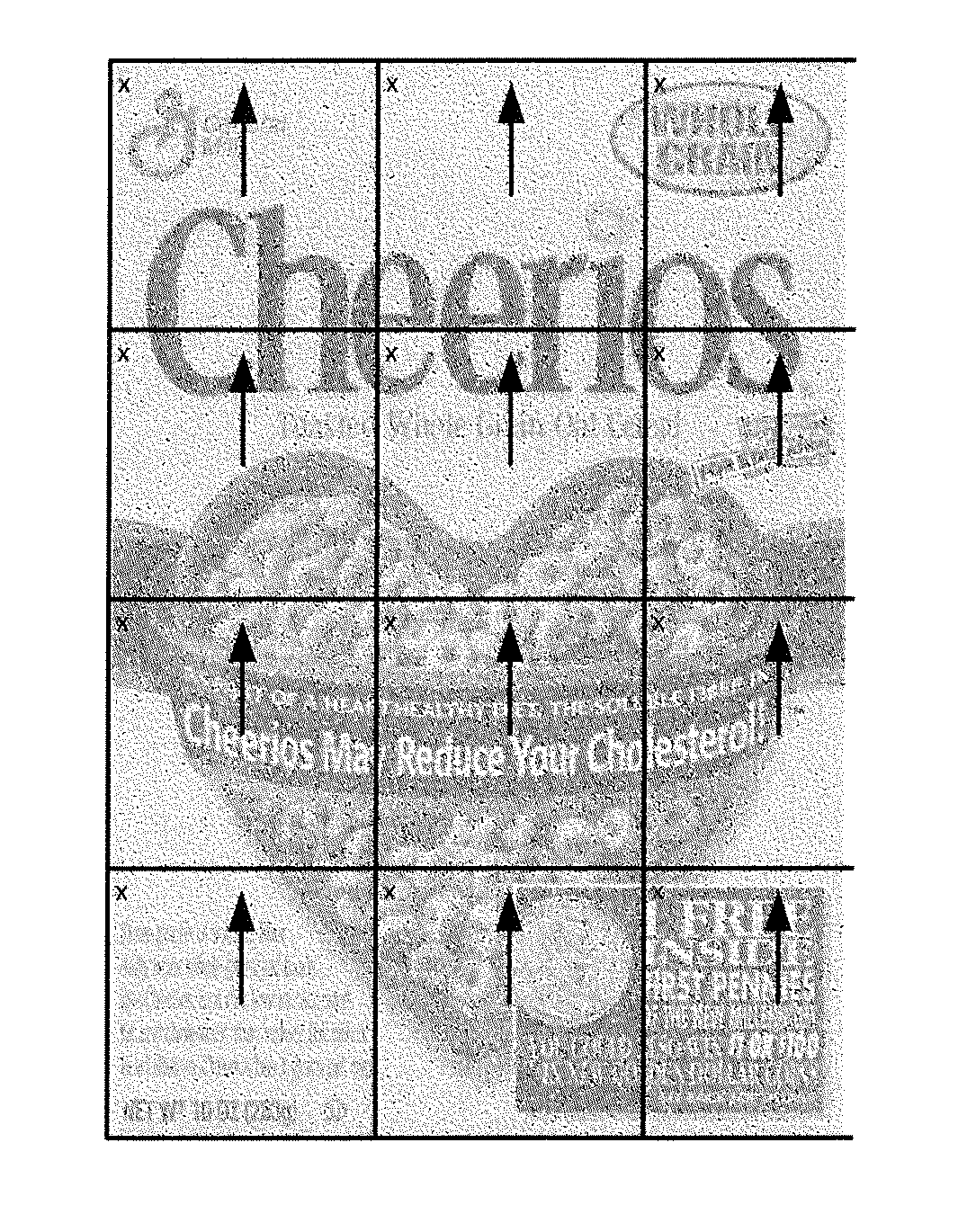

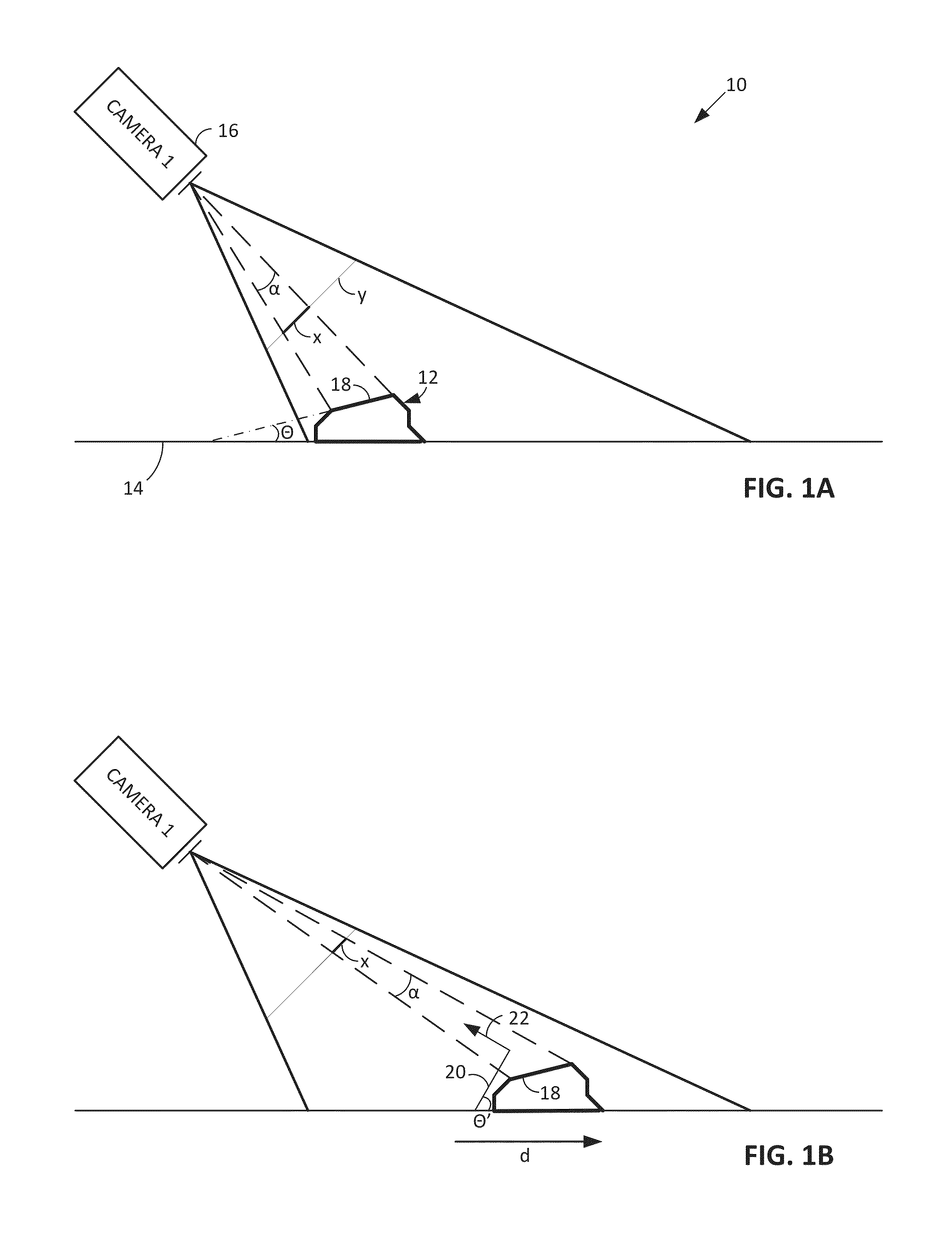

In some arrangements, product packaging is digitally watermarked over most of its extent to facilitate high-throughput item identification at retail checkouts. Imagery captured by conventional or plenoptic cameras can be processed (e.g., by GPUs) to derive several different perspective-transformed views—further minimizing the need to manually reposition items for identification. Crinkles and other deformations in product packaging can be optically sensed, allowing such surfaces to be virtually flattened to aid identification. Piles of items can be 3D-modelled and virtually segmented into geometric primitives to aid identification, and to discover locations of obscured items. Other data (e.g., including data from sensors in aisles, shelves and carts, and gaze tracking for clues about visual saliency) can be used in assessing identification hypotheses about an item. A great variety of other features and arrangements are also detailed.

Owner:DIGIMARC CORP

Methods and arrangements for identifying objects

Active | US20140052555A1 | Increase check-out speed | Improve accuracy | Image enhancement | Image analysis | Geometric primitive | Visual saliency

In some arrangements, product packaging is digitally watermarked over most of its extent to facilitate high-throughput item identification at retail checkouts. Imagery captured by conventional or plenoptic cameras can be processed (e.g., by GPUs) to derive several different perspective-transformed views—further minimizing the need to manually reposition items for identification. Crinkles and other deformations in product packaging can be optically sensed, allowing such surfaces to be virtually flattened to aid identification. Piles of items can be 3D-modelled and virtually segmented into geometric primitives to aid identification, and to discover locations of obscured items. Other data (e.g., including data from sensors in aisles, shelves and carts, and gaze tracking for clues about visual saliency) can be used in assessing identification hypotheses about an item. Logos may be identified and used—or ignored—in product identification. A great variety of other features and arrangements are also detailed.

Owner:DIGIMARC CORP

Methods and arrangements for identifying objects

Active | US9129277B2 | Improve accuracy | Increase speed | Image enhancement | Image analysis | Geometric primitive | Visual saliency

In some arrangements, product packaging is digitally watermarked over most of its extent to facilitate high-throughput item identification at retail checkouts. Imagery captured by conventional or plenoptic cameras can be processed (e.g., by GPUs) to derive several different perspective-transformed views—further minimizing the need to manually reposition items for identification. Crinkles and other deformations in product packaging can be optically sensed, allowing such surfaces to be virtually flattened to aid identification. Piles of items can be 3D-modelled and virtually segmented into geometric primitives to aid identification, and to discover locations of obscured items. Other data (e.g., including data from sensors in aisles, shelves and carts, and gaze tracking for clues about visual saliency) can be used in assessing identification hypotheses about an item. Logos may be identified and used—or ignored—in product identification. A great variety of other features and arrangements are also detailed.

Owner:DIGIMARC CORP

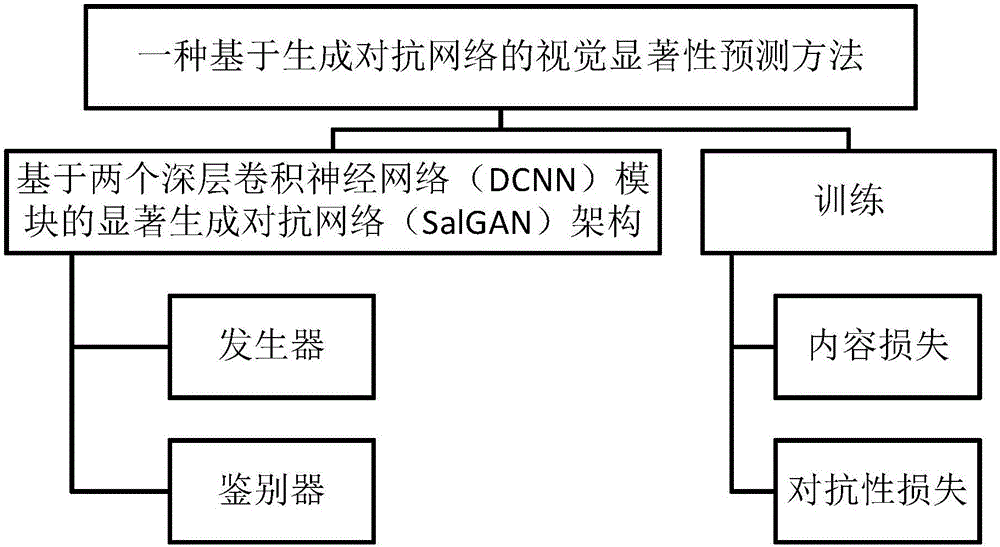

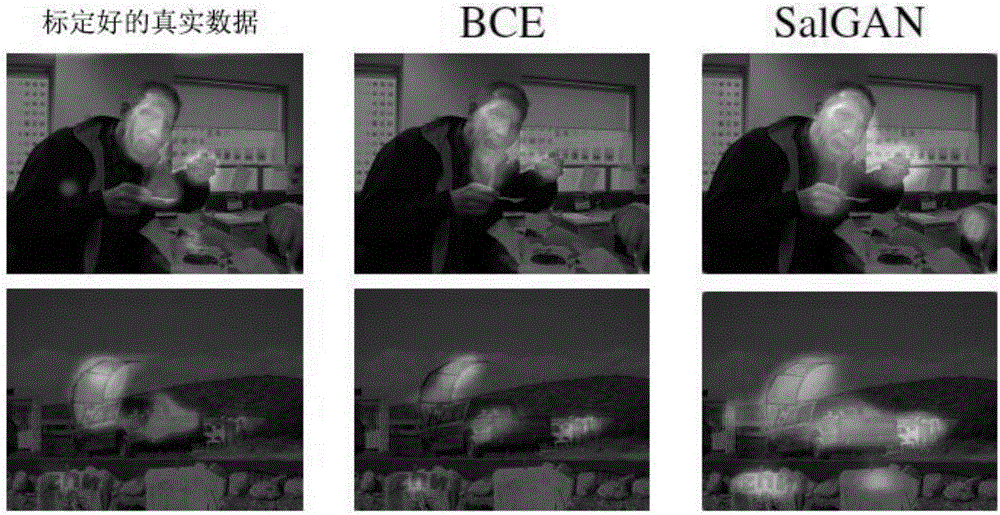

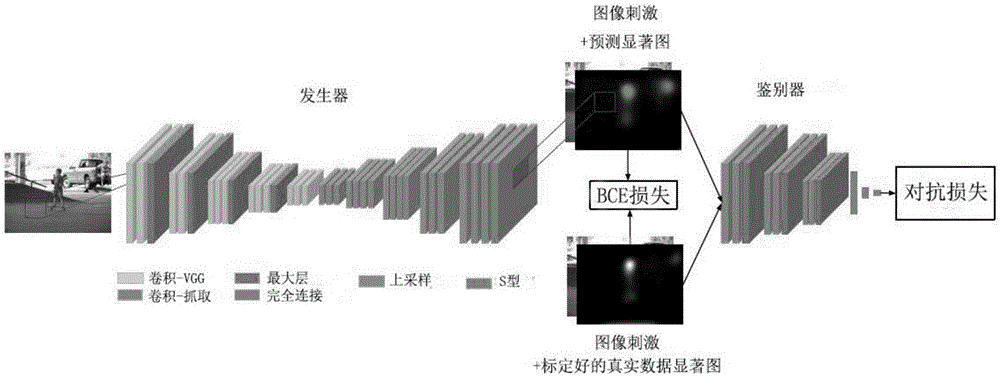

Visual significance prediction method based on generative adversarial network

Inactive | CN106845471A | Character and pattern recognition | Neural learning methods | Discriminator | Visual saliency

The invention provides a visual saliency prediction method based on a generative adversarial network. The method mainly comprises constructing a saliency generative adversarial network (SalGAN) from two deep convolutional neural network (DCNN) modules and training it. Specifically, the SalGAN comprises a generator and a discriminator, which are combined to predict a visual saliency map for a given input image. The filter weights in the SalGAN are trained with a perceptual loss that combines a content loss and an adversarial loss. The loss function combines the error from the discriminator with the cross entropy relative to the ground-truth data, which improves stability and convergence rate in adversarial training. Compared with further training on cross entropy alone, adversarial training improves performance, giving higher speed and efficiency.

Owner:SHENZHEN WEITESHI TECH
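
The combined loss described in this abstract can be sketched as follows. This is a minimal illustration, not the patented implementation: the function names, the flat-list representation of a saliency map, and the weighting factor `alpha` are all assumptions made for the example.

```python
import math

def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between predicted and ground-truth
    saliency values, both given as flat lists of numbers in [0, 1]."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
    return total / len(pred)

def generator_loss(pred, target, d_score_fake, alpha=1.0):
    """Content loss (cross entropy) plus adversarial loss.

    d_score_fake is the discriminator's probability that the generated
    map is real; alpha is a hypothetical weighting factor.
    """
    adversarial = -math.log(min(max(d_score_fake, 1e-7), 1.0))
    return alpha * bce(pred, target) + adversarial

# A prediction close to the ground truth that only half-fools the discriminator.
loss = generator_loss([0.9, 0.1], [1.0, 0.0], d_score_fake=0.5)
```

The adversarial term shrinks as the discriminator is fooled more often, while the cross-entropy term keeps the generated map tied to the ground truth.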

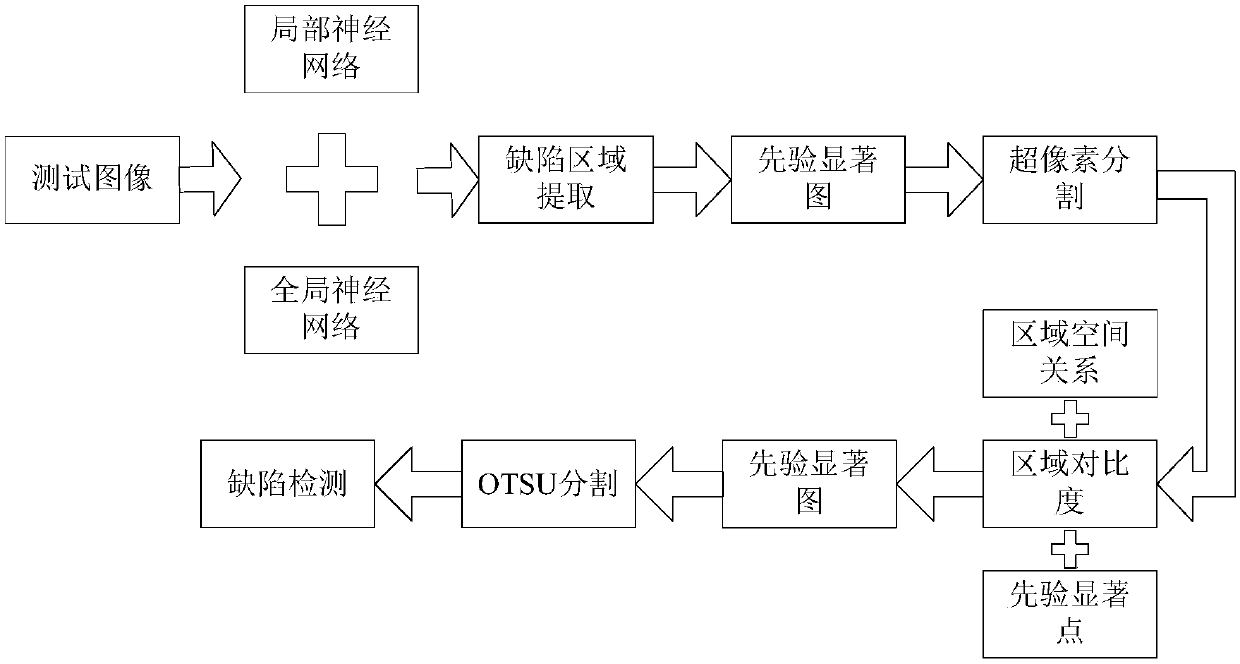

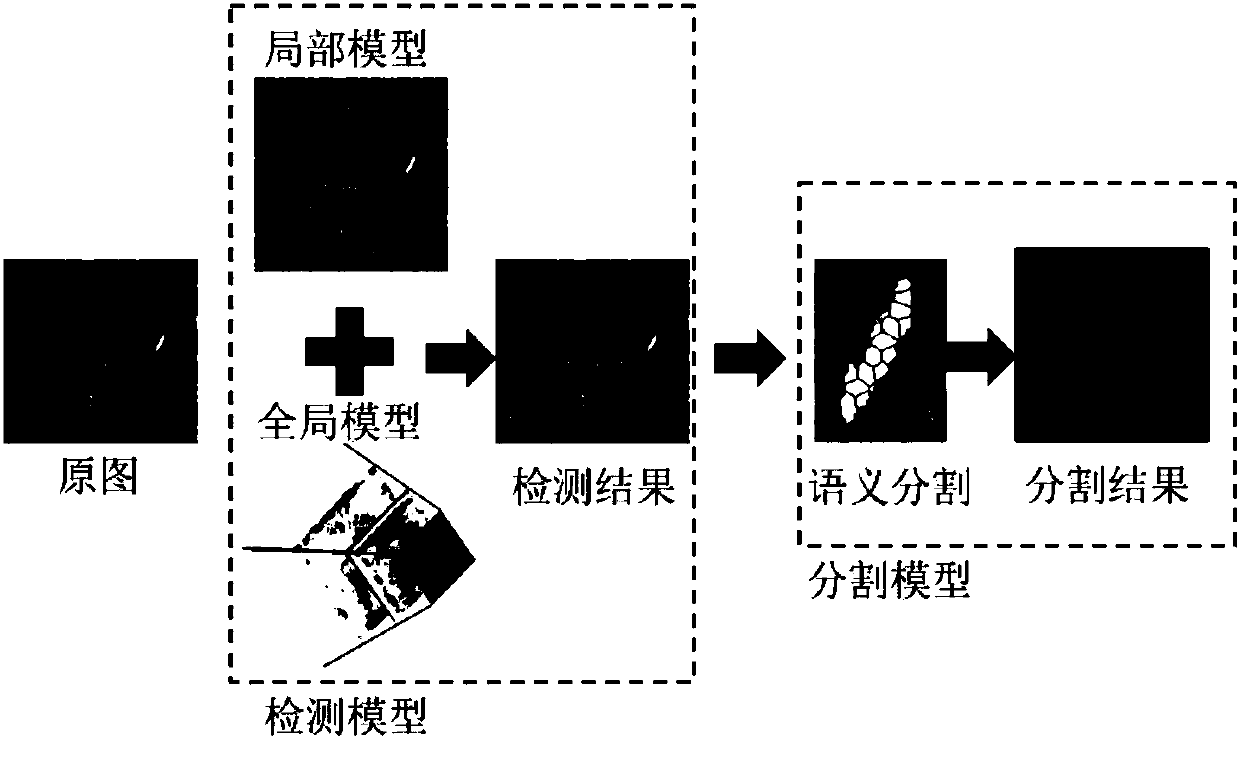

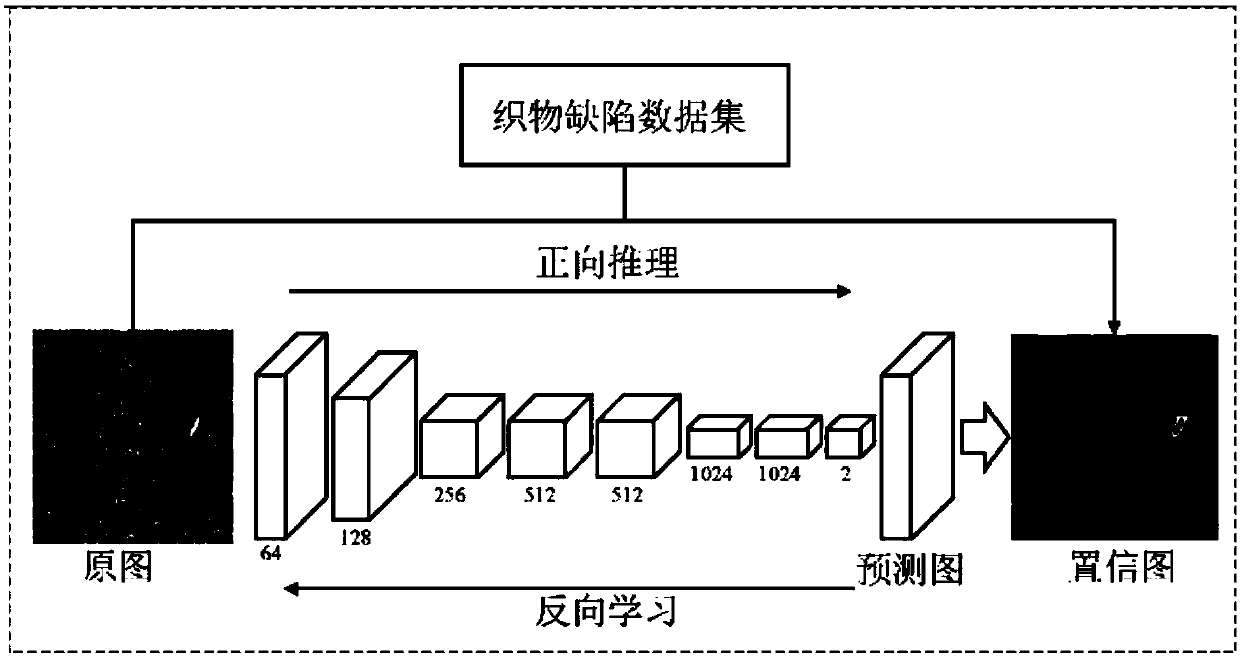

Fabric defect detection method based on deep convolution neural network and visual saliency

Active | CN107833220A | Improve robustness | Strong real-time | Image analysis | Neural architectures | Imaging processing | Visual saliency

The invention discloses a fabric defect detection method based on a deep convolutional neural network and visual saliency, which belongs to the technical field of image processing. The method includes a defect region positioning module and a defect semantic segmentation module. The defect region positioning module fuses two deep learning models, a local convolutional neural network and a global convolutional neural network, to automatically extract high-level features of a fabric defect from the defect image and precisely locate the defect region. The defect semantic segmentation module combines the positioning result with a superpixel image segmentation method based on visual saliency to acquire prior foreground points of the defect and precisely segment the defect target, which completes the detection. The fabric defect positioning network, which fuses multiple deep learning models, and the improved fabric defect segmentation network based on visual saliency adapt well to fabric images, achieve high precision, and can effectively detect defects in fabric images with complex backgrounds and noise interference.

Owner:HOHAI UNIV CHANGZHOU

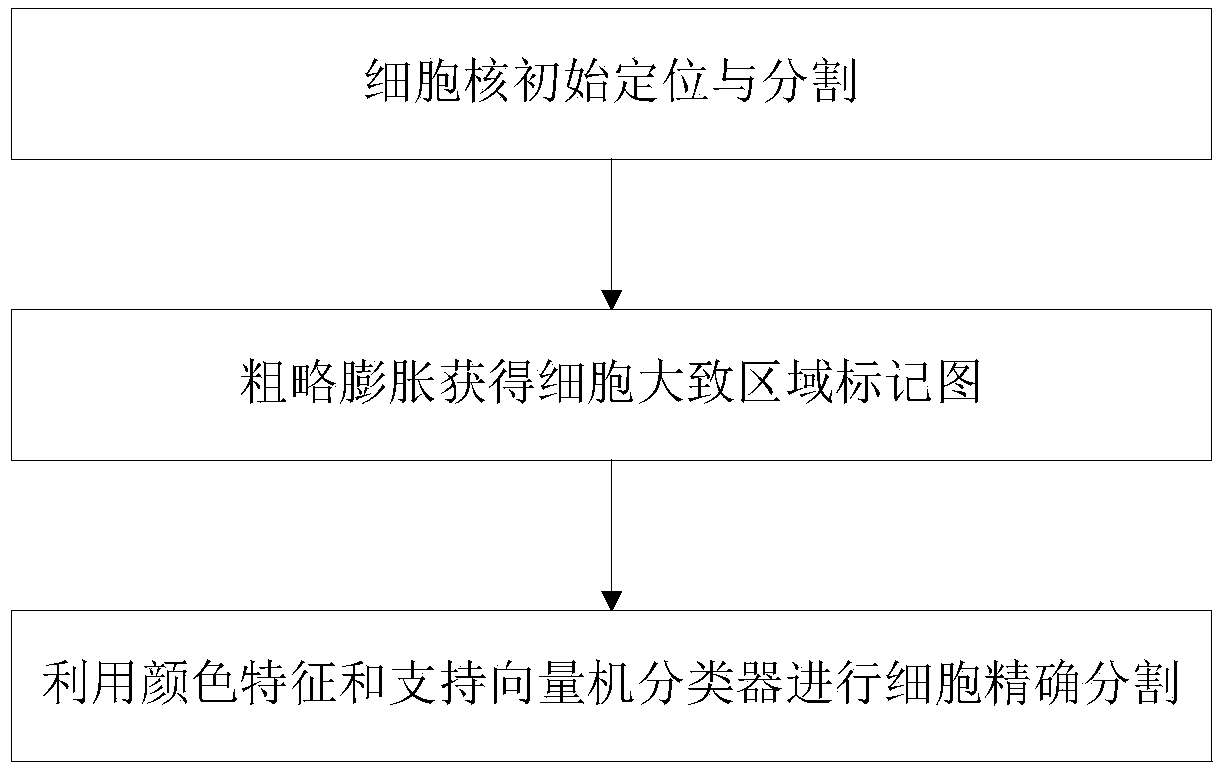

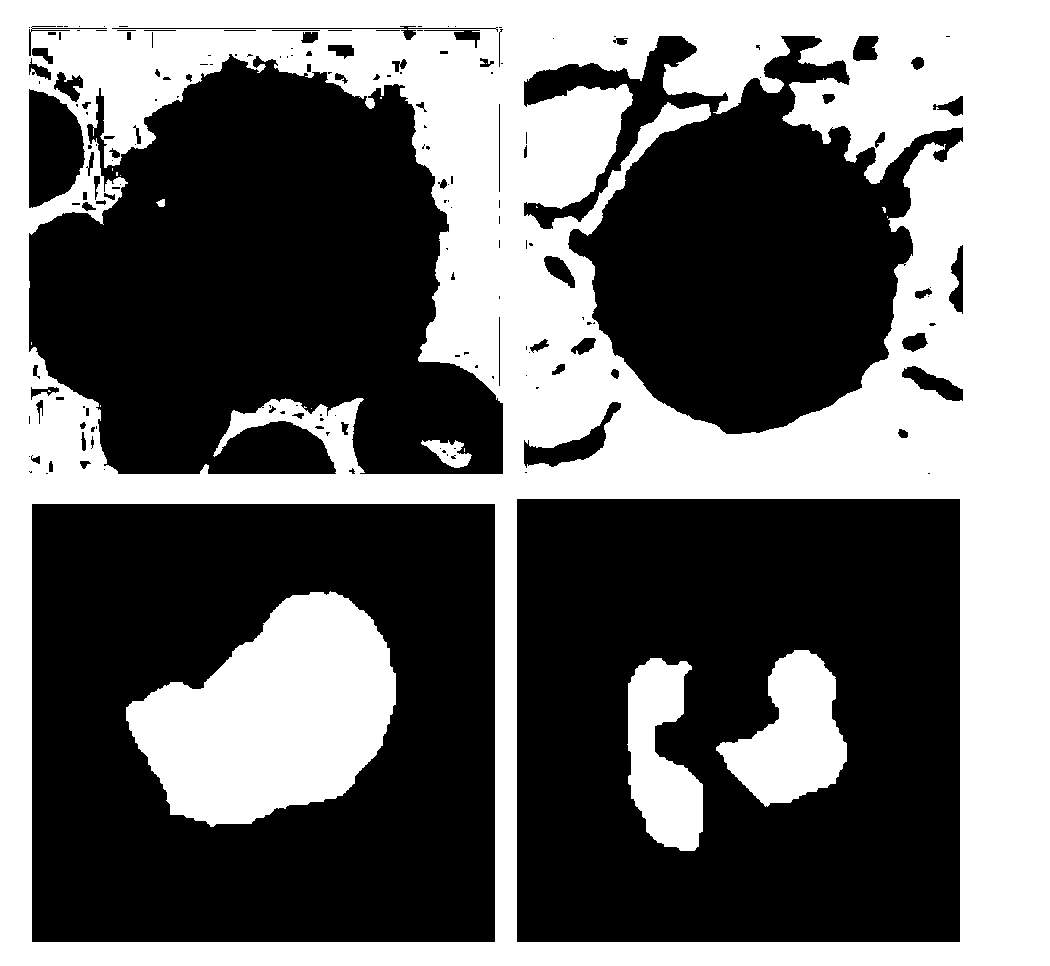

White blood cell image accurate segmentation method and system based on support vector machine

Inactive | CN103473739A | Accurate segmentation | Improve stability | Image enhancement | Image analysis | White blood cell | Visual saliency

The invention discloses a white blood cell image accurate segmentation method and system based on a support vector machine. The method comprises initially locating and segmenting the nucleus, performing a rough expansion to obtain a labeled map of the approximate cell area, and accurately segmenting the cells using color features and a support vector machine classifier. On the one hand, following the human visual saliency attention mechanism, the method simulates the sensitivity of human eyes to changes at image edges, so that the nucleus area can be segmented accurately and rapidly by clustering edge-color pairs. On the other hand, the support vector machine classifier has excellent stability and anti-interference performance, and the spatial relationship between color information and pixels is fully exploited to improve how training samples for the classifier are drawn, so that white blood cells in a small cell image can be segmented accurately.

Owner:HUAZHONG UNIV OF SCI & TECH

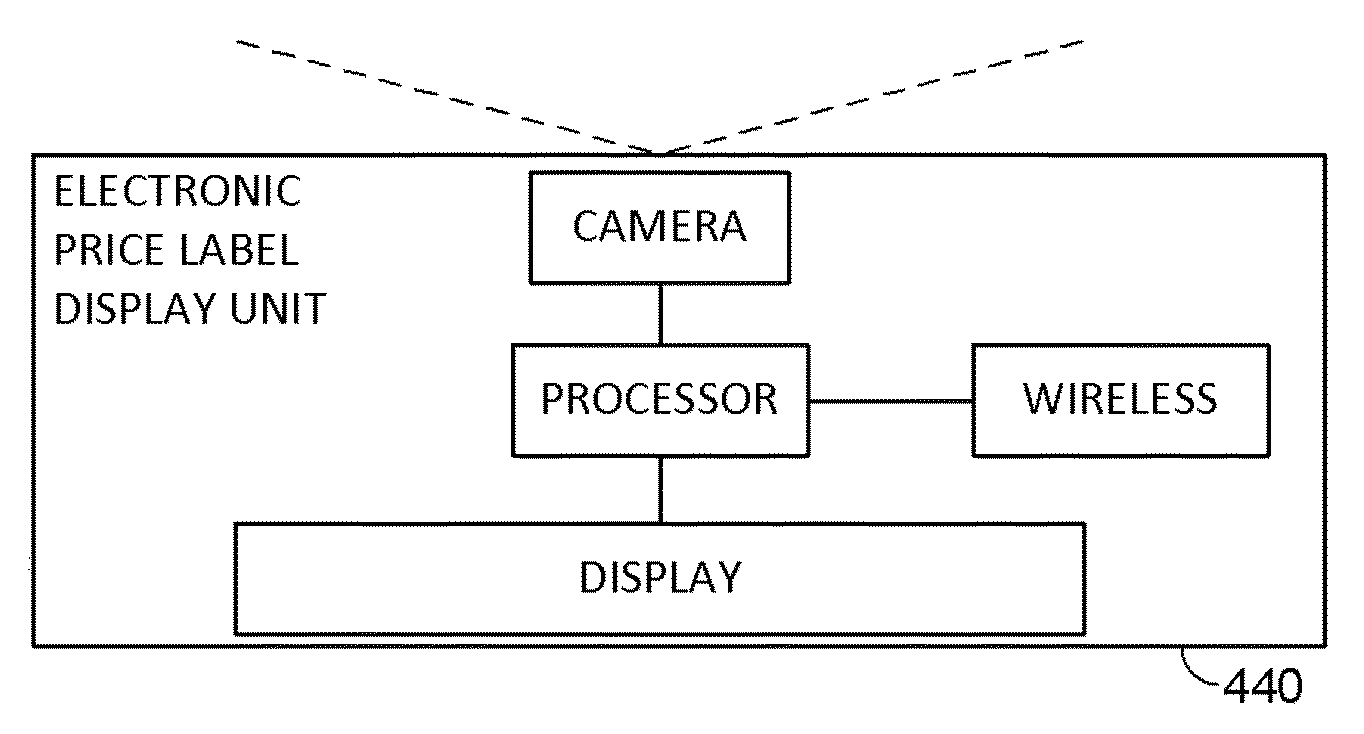

Methods and arrangements for identifying objects

Active | US9367770B2 | Improve accuracy | Increase speed | Static indicating devices | Co-operative working arrangements | Pattern recognition | Geometric primitive

In some arrangements, product packaging is digitally watermarked over most of its extent to facilitate high-throughput item identification at retail checkouts. Imagery captured by conventional or plenoptic cameras can be processed (e.g., by GPUs) to derive several different perspective-transformed views—further minimizing the need to manually reposition items for identification. Crinkles and other deformations in product packaging can be optically sensed, allowing such surfaces to be virtually flattened to aid identification. Piles of items can be 3D-modelled and virtually segmented into geometric primitives to aid identification, and to discover locations of obscured items. Other data (e.g., including data from sensors in aisles, shelves and carts, and gaze tracking for clues about visual saliency) can be used in assessing identification hypotheses about an item. A great variety of other features and arrangements are also detailed.

Owner:DIGIMARC CORP

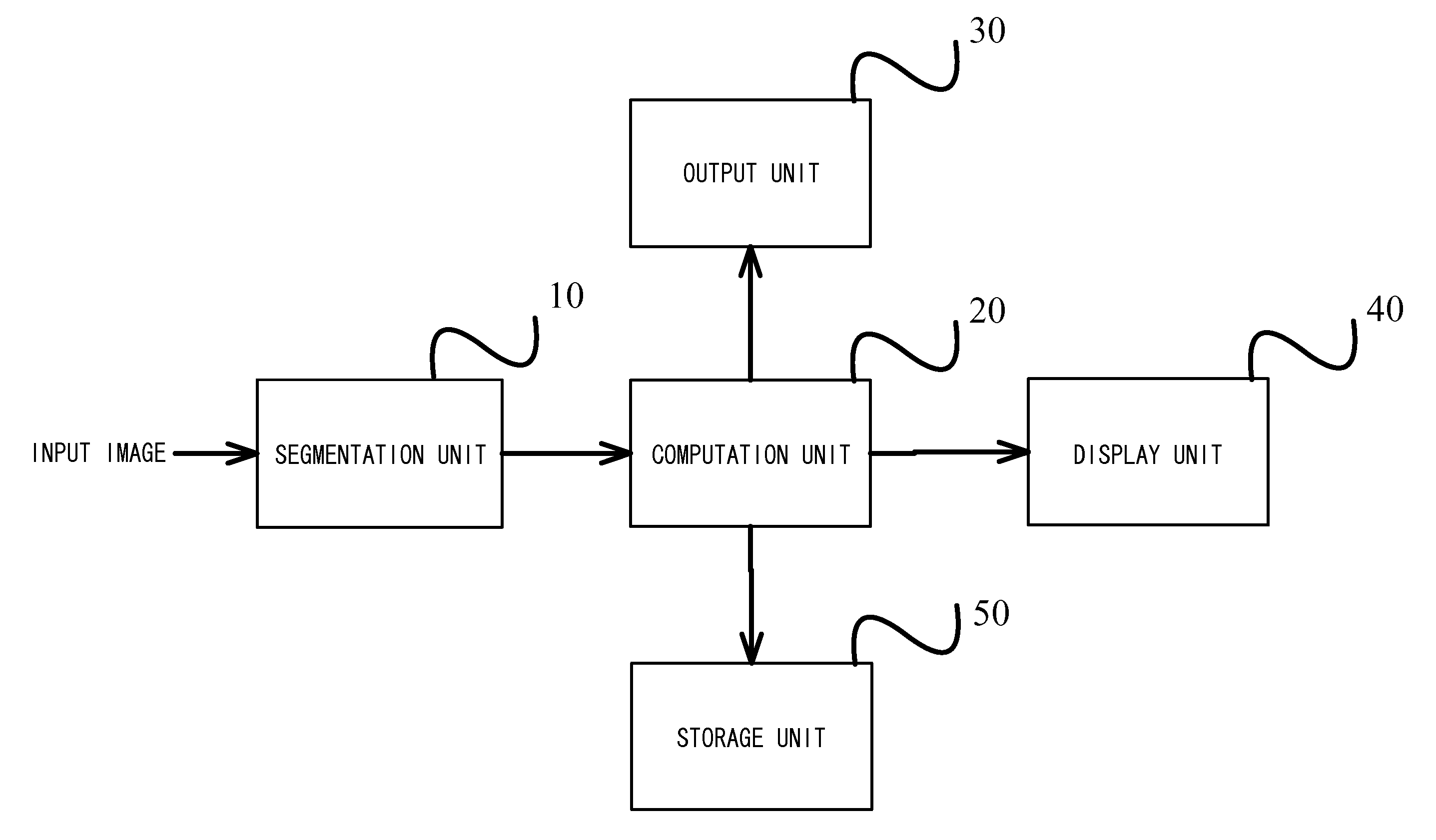

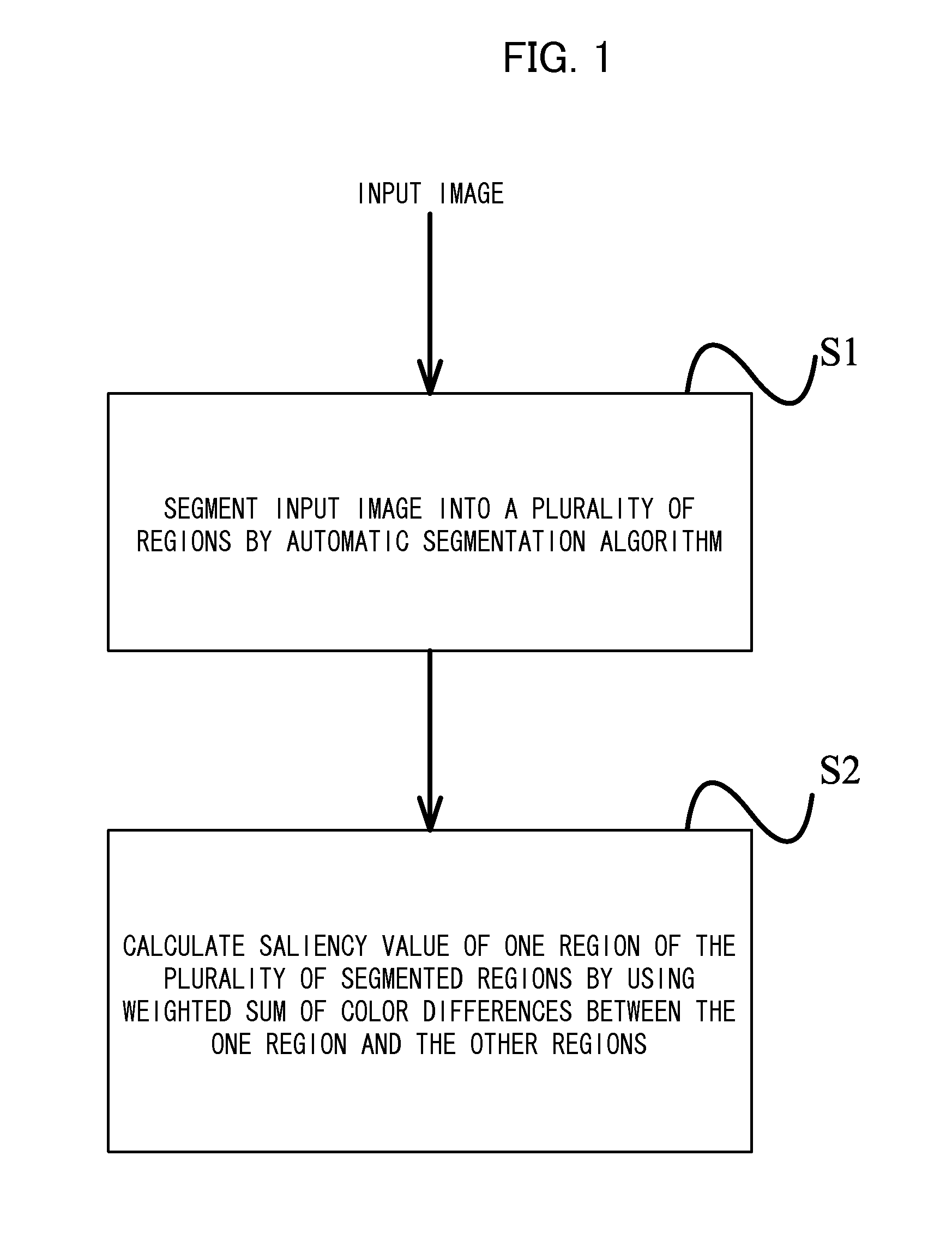

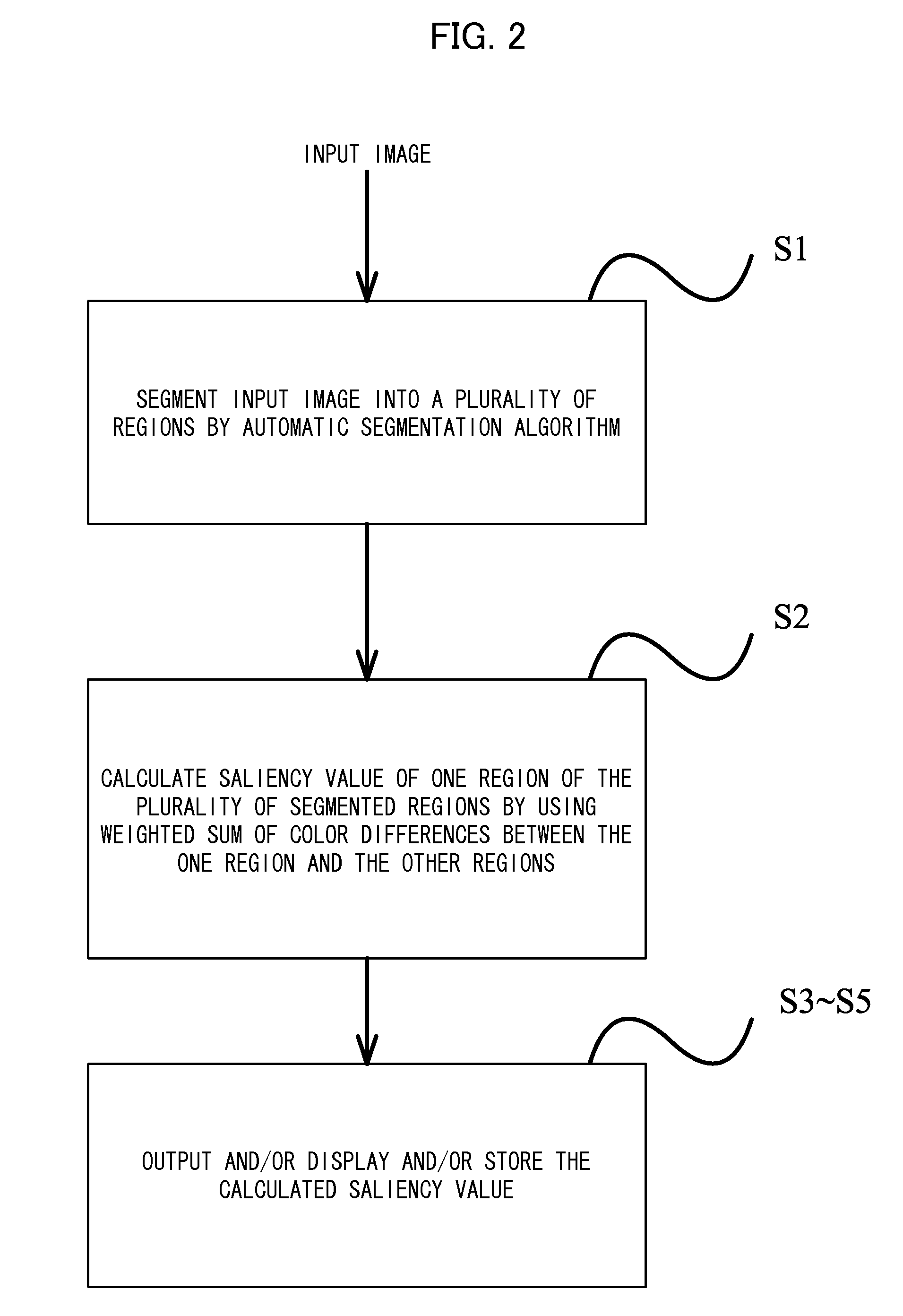

Image processing method and image processing device

Active | US20120288189A1 | Rapidly and efficiently analyze | Visual saliency | Image enhancement | Image analysis | Automatic segmentation | Imaging processing

An image processing method includes a segmentation step that segments an input image into a plurality of regions by using an automatic segmentation algorithm, and a computation step that calculates a saliency value of one region of the plurality of segmented regions by using a weighted sum of color differences between the one region and all other regions. Accordingly, it is possible to automatically analyze visual saliency regions in an image, and a result of analysis can be used in application areas including significant object segmentation, object recognition, adaptive image compression, content-aware image resizing, and image retrieval.

Owner:OMRON CORP +1
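
The computation step in this abstract (a weighted sum of color differences between one region and all other regions) can be sketched as below. The abstract does not say what the weights are; weighting each color difference by the other region's pixel count is an assumption, as are the function name and the normalization to [0, 1].

```python
import math

def region_saliency(colors, sizes):
    """Per-region saliency from region-level colour contrast.

    colors: mean colour of each segmented region, e.g. (L, a, b) tuples.
    sizes:  pixel count of each region, used here as the weight
            (an assumption; the abstract only says "weighted sum").
    Returns saliency values scaled so the most salient region is 1.0.
    """
    raw = []
    for i, ci in enumerate(colors):
        score = 0.0
        for j, cj in enumerate(colors):
            if i != j:
                score += sizes[j] * math.dist(ci, cj)
        raw.append(score)
    peak = max(raw) or 1.0  # guard against an all-zero result
    return [v / peak for v in raw]

# Two large dark regions and one small, differently coloured region.
sal = region_saliency([(0, 0, 0), (0, 0, 0), (10, 0, 0)], [50, 40, 10])
```

In this toy input the small region that differs in color from the two large ones receives the highest saliency, which matches the intuition behind contrast-based saliency.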

Non-reference image quality evaluation method based on high-quality natural image statistical magnitude model

Active | CN103996192A | Determine the quality | Overcoming the problem of weak generalization ability | Image analysis | Character and pattern recognition | Imaging quality | Visual saliency

The invention discloses a no-reference image quality evaluation method based on a statistical model of high-quality natural images. First, the parameters of a multivariate Gaussian model are learned from first image blocks taken from high-quality natural images. A test image is then divided into second image blocks of equal size, and a multivariate Gaussian model is extracted for each second image block. The distance between the multivariate Gaussian models is measured with the Bhattacharyya distance, which determines the quality of each distorted image block. Finally, the quality values of all distorted image blocks are linearly weighted by visual saliency to obtain the objective evaluation score of the test image. The method addresses the weak generalization ability of existing evaluation methods and meets the requirements of practical applications for no-reference image quality evaluation.

Owner:TONGJI UNIV
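
For reference, the Bhattacharyya distance between two multivariate Gaussians and a saliency-weighted pooling of block scores can be written as below. The distance formula is the standard closed form; the pooling expression, including the normalization by the sum of the weights and the symbols $q_i$, $w_i$ and $Q$, is an assumption about how "linearly weighted through visual saliency" might be realized.

```latex
D_B = \frac{1}{8}(\mu_1-\mu_2)^{\top}\Sigma^{-1}(\mu_1-\mu_2)
      + \frac{1}{2}\ln\frac{\det\Sigma}{\sqrt{\det\Sigma_1\,\det\Sigma_2}},
\qquad \Sigma = \frac{\Sigma_1+\Sigma_2}{2}

Q = \frac{\sum_i w_i\, q_i}{\sum_i w_i}
```

Here $(\mu_1, \Sigma_1)$ would be the model learned from high-quality images, $(\mu_2, \Sigma_2)$ the model of one test block, $q_i$ the resulting quality of block $i$, and $w_i$ its visual saliency.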

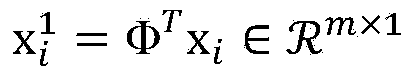

HEVC (High Efficiency Video Coding) code rate control method based on region-of-interest

Active | CN105049850A | Improve coding | Improve subjective quality | Digital video signal modification | Time domain | Pattern recognition

The invention provides an HEVC (High Efficiency Video Coding) bit rate control method based on regions of interest. The method comprises the steps of: generating a spatial-domain saliency map of the current frame according to a GBVS (Graph Based Visual Saliency) model; generating a time-domain saliency map of the current frame from motion vector information; fusing the time-domain and spatial-domain saliency maps using a consistency normalization method to obtain the final saliency map; dividing the current frame into a region of interest and a non-region of interest using the saliency map; allocating bits to the region of interest and the non-region of interest respectively; allocating bits to each LCU in the current frame according to its saliency; calculating lambda and a QP (Quantization Parameter) value from the allocated bit rate, then clipping and correcting them; and encoding with the resulting lambda and QP value. The method can improve the subjective quality of video coding while accurately controlling the output bits.

Owner:SHANGHAI UNIV
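
The region division and per-LCU bit allocation steps above can be illustrated with a short sketch. The proportional allocation rule, the fixed threshold and the function names are assumptions for illustration; the abstract does not give the actual allocation formula, and the lambda and QP computation is not shown.

```python
def split_roi(lcu_saliency, threshold):
    """Mark each LCU as region of interest (True) or not (False)
    by comparing its mean saliency with a hypothetical threshold."""
    return [s >= threshold for s in lcu_saliency]

def allocate_bits(frame_bits, lcu_saliency):
    """Distribute a frame's bit budget over its LCUs in proportion
    to each LCU's saliency (an assumed, simplified rule)."""
    total = sum(lcu_saliency)
    if total <= 0:
        # No saliency information: fall back to a uniform split.
        return [frame_bits / len(lcu_saliency)] * len(lcu_saliency)
    return [frame_bits * s / total for s in lcu_saliency]

saliency = [1.0, 1.0, 2.0]
roi = split_roi(saliency, threshold=1.5)
bits = allocate_bits(1000, saliency)
```

A real encoder would additionally bound each LCU's share so that non-salient areas keep a minimum quality; that refinement is omitted here.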

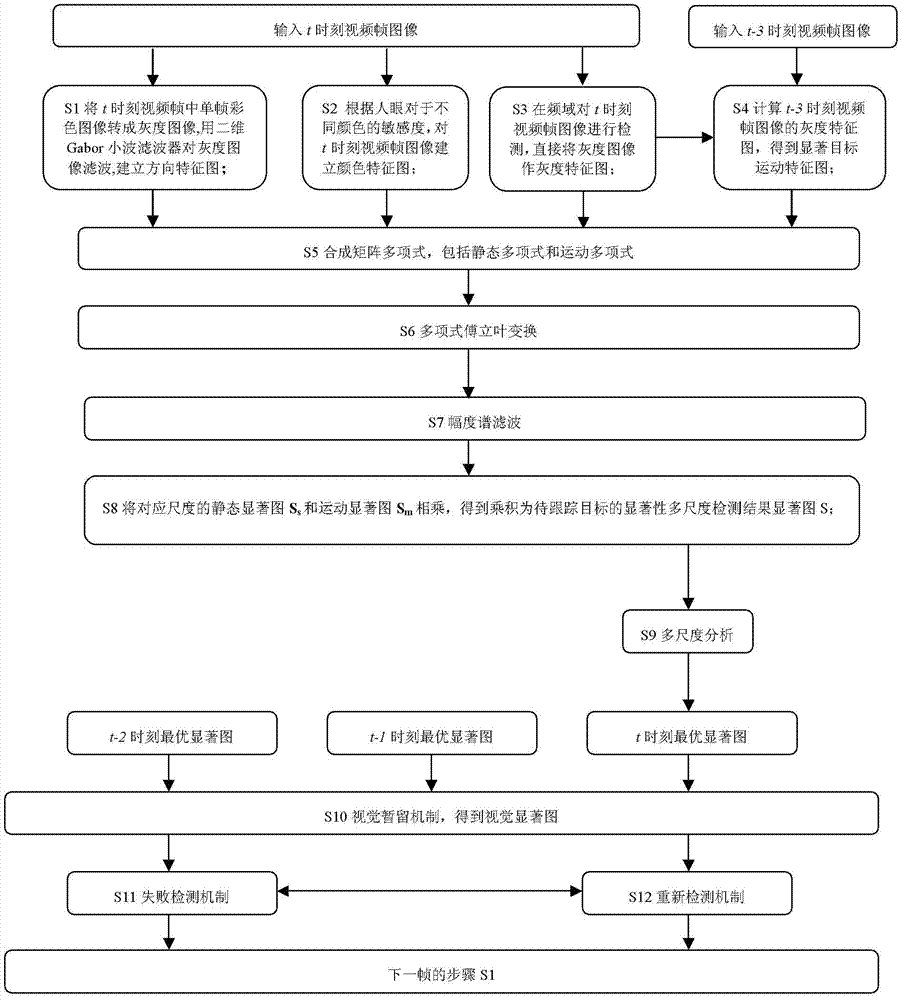

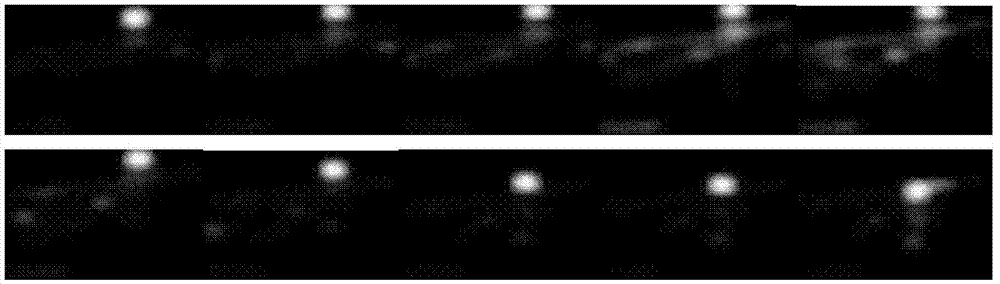

Target tracking method based on frequency domain saliency

Inactive | CN103400129A | Improve tracking accuracy | Tracking effect is stable | Character and pattern recognition | Time domain | Visual field loss

The invention relates to a target tracking method based on frequency-domain saliency, comprising the following steps: S1-S4, establishing direction, color, gray and motion feature maps; S5-S6, establishing static and moving polynomials and applying the Fourier transform to them; S7, applying Gaussian low-pass filtering and the inverse Fourier transform to the magnitude spectra to obtain static and moving saliency maps; S8, multiplying each moving saliency map by the static saliency map of the corresponding scale to obtain multi-scale saliency detection results; S9, calculating the one-dimensional entropy function of the histogram of each saliency map and taking the time-domain saliency map with the minimum information entropy as the optimal saliency map at time t; S10, using the product of the weighted average of the saliency maps of frames t-1 and t-2 and the optimal saliency map at time t as the visual saliency map; S11, calculating the difference between the center positions of the visual saliency maps of adjacent frames, judging whether tracking has failed, and recording a failure saliency map; and S12, comparing the visual saliency map of the current frame with the failure saliency map and judging whether the target has returned to the field of view.

Owner:INST OF OPTICS & ELECTRONICS - CHINESE ACAD OF SCI
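
Step S9 (choosing the candidate saliency map whose histogram has minimum entropy) can be sketched as follows. The bin count, the flat-list representation of a map and the function names are assumptions; saliency values are assumed to be normalized to [0, 1].

```python
import math

def histogram_entropy(values, bins=16):
    """Shannon entropy, in bits, of the histogram of saliency values."""
    counts = [0] * bins
    for v in values:
        idx = min(max(int(v * bins), 0), bins - 1)  # clamp to a valid bin
        counts[idx] += 1
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in counts if c)

def pick_min_entropy(maps):
    """Index of the candidate map with the lowest histogram entropy."""
    return min(range(len(maps)), key=lambda i: histogram_entropy(maps[i]))

candidates = [
    [0.0, 0.25, 0.5, 0.75],  # values spread over many bins
    [0.0, 0.0, 0.0, 1.0],    # mostly background with one strong peak
]
best = pick_min_entropy(candidates)
```

A low-entropy map concentrates its values in a few histogram bins, which typically corresponds to a compact salient target on a quiet background.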

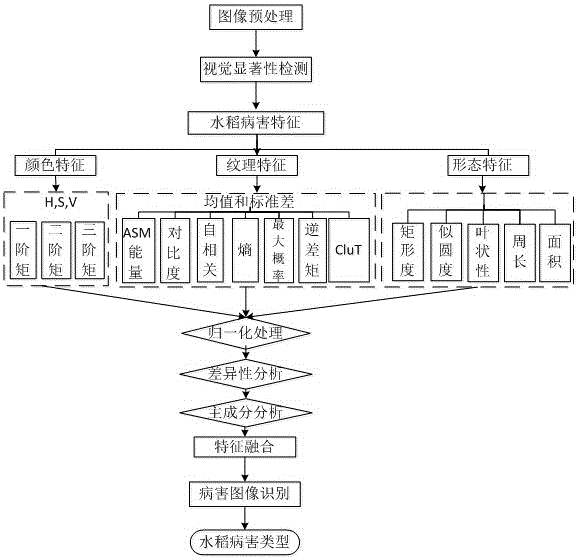

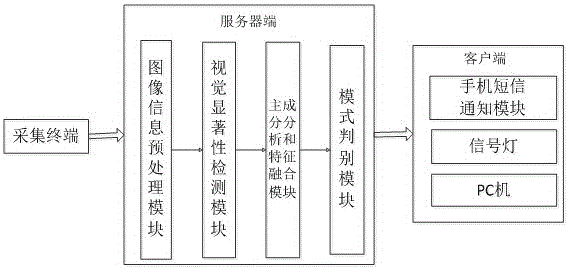

Rice disease recognition method based on principal component analysis and neural network and rice disease recognition system thereof

Active | CN105938564A | Efficient detection | Biological neural network models | Character and pattern recognition | Disease | Principal component analysis

The invention relates to a rice disease recognition method based on principal component analysis and a neural network. The method comprises the steps of: acquiring rice disease image data and preprocessing the images; performing visual saliency detection and searching the saliency map sequences for rice disease images with ideal disease spot outlines; extracting color, shape and texture features from the rice disease images and performing difference analysis and principal component analysis to find different feature combinations; and building a machine learning model on the different feature combinations and returning the prediction result to a client. The invention also discloses a rice disease recognition system based on principal component analysis and the neural network. Image information is acquired and the images are transmitted to a server over the network. The server preprocesses the acquired tissue culturing images and detects disease spots, and management personnel are alerted according to the detection result through a mobile phone short message, a signal lamp and a PC client.

Owner:WUXI CAS INTELLIGENT AGRI DEV

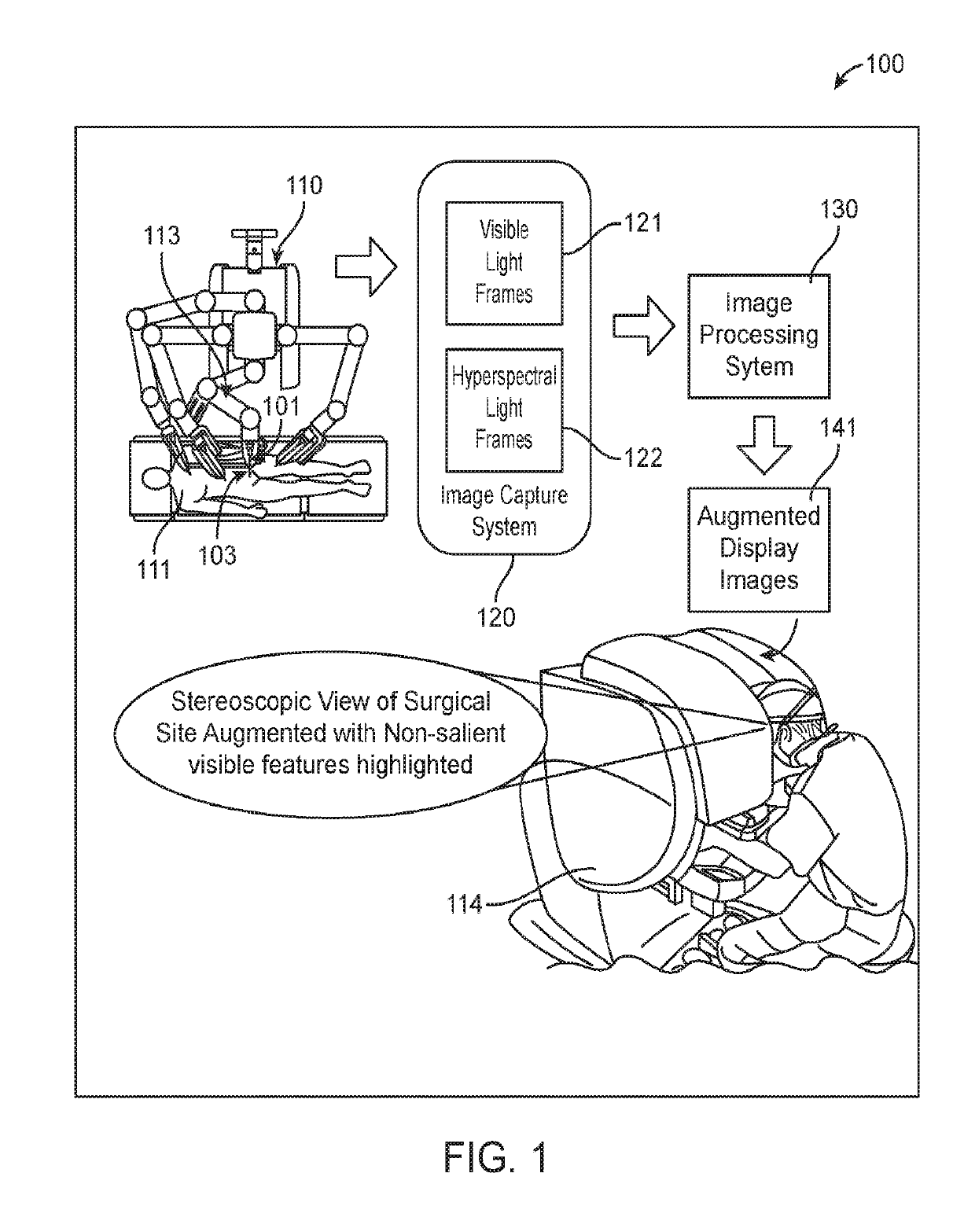

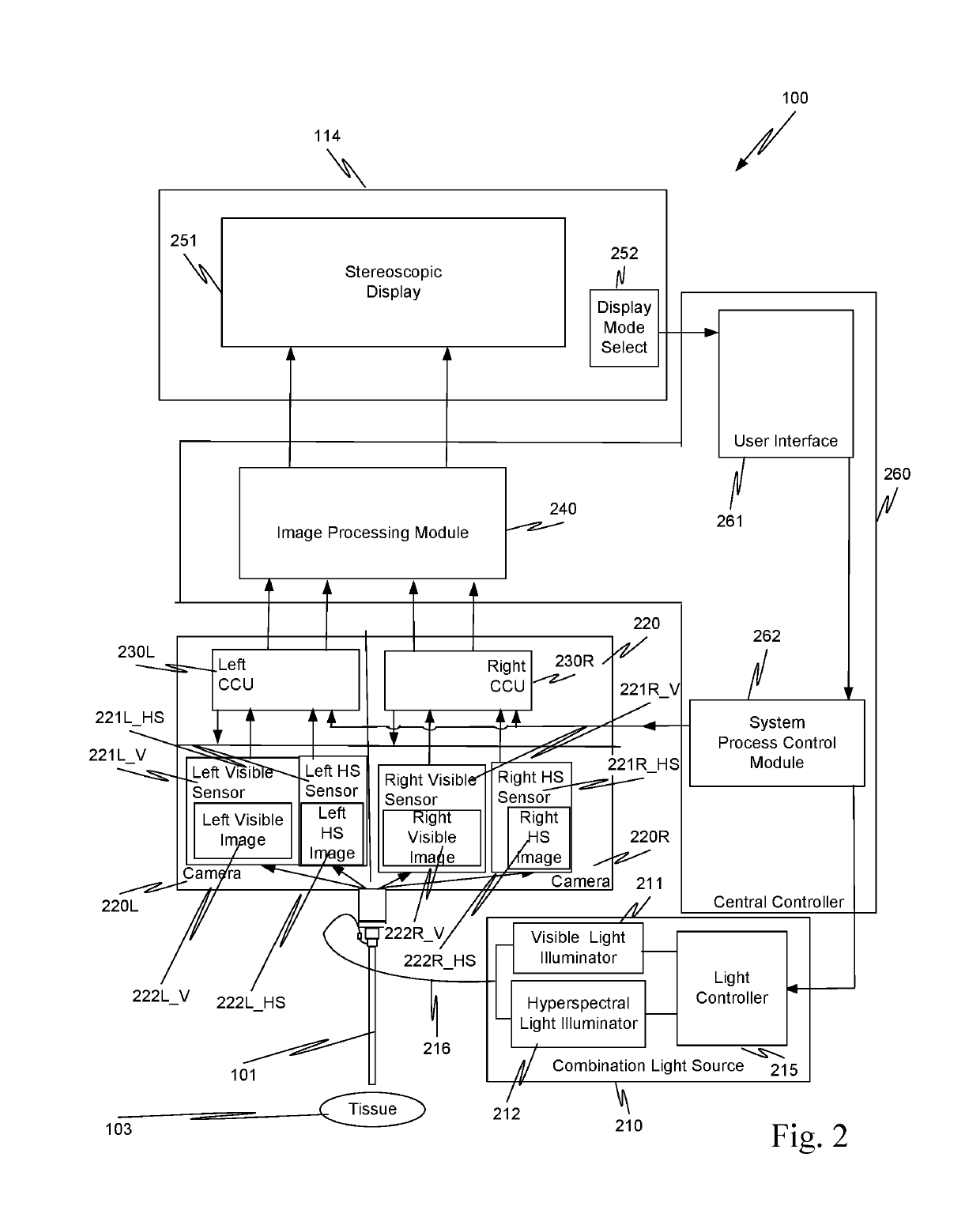

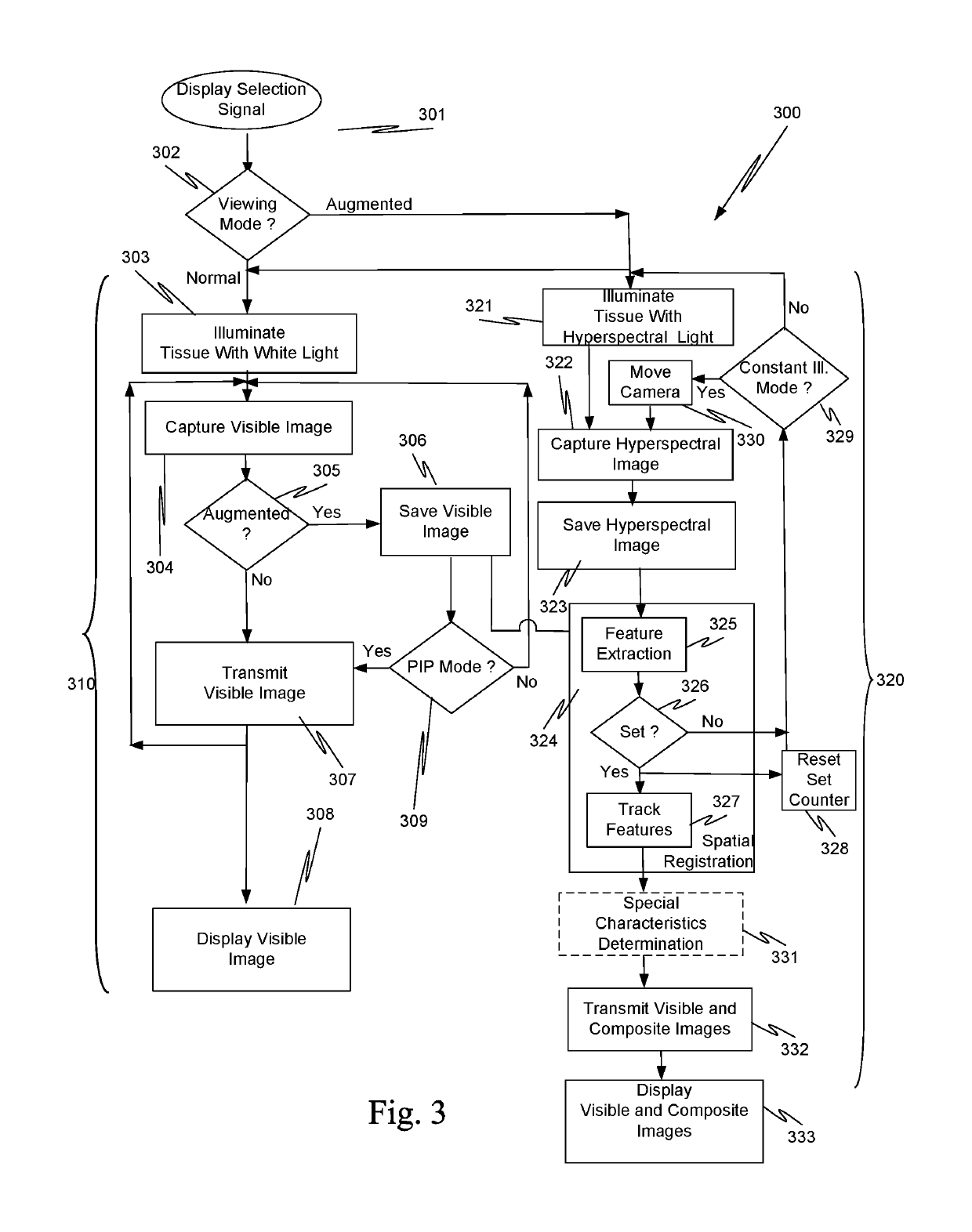

Simultaneous white light and hyperspectral light imaging systems

Pending | US20190200848A1 | Increase awareness | Surgical navigation systems | Diagnostics using spectroscopy | Diagnostic Radiology Modality | Visual perception

A computer-assisted surgical system simultaneously provides visible light and alternate modality images that identify tissue or increase the visual salience of features of clinical interest that a surgeon normally uses when performing a surgical intervention using the computer-assisted surgical system. Hyperspectral light from tissue of interest is used to safely and efficiently image that tissue even though the tissue may be obscured in the normal visible image of the surgical field. The combination of visible and hyperspectral images is analyzed to provide details and information about the tissue or other bodily function that were not previously available.

Owner:INTUITIVE SURGICAL OPERATIONS INC

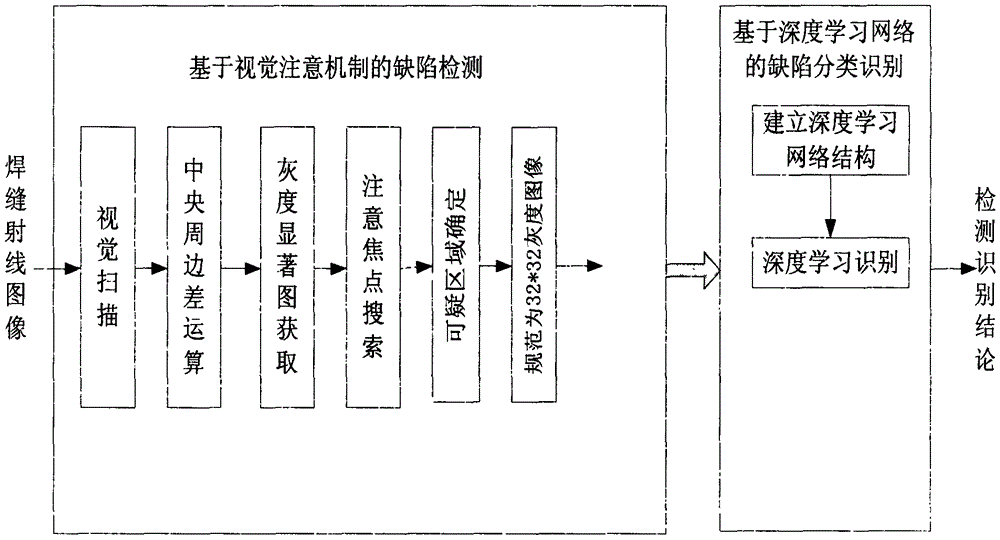

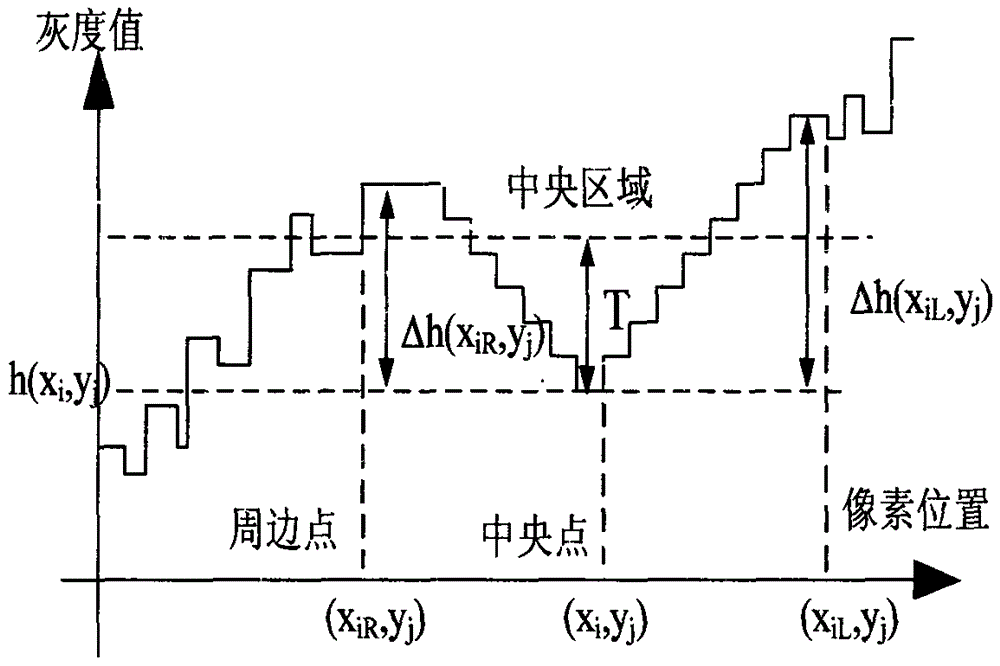

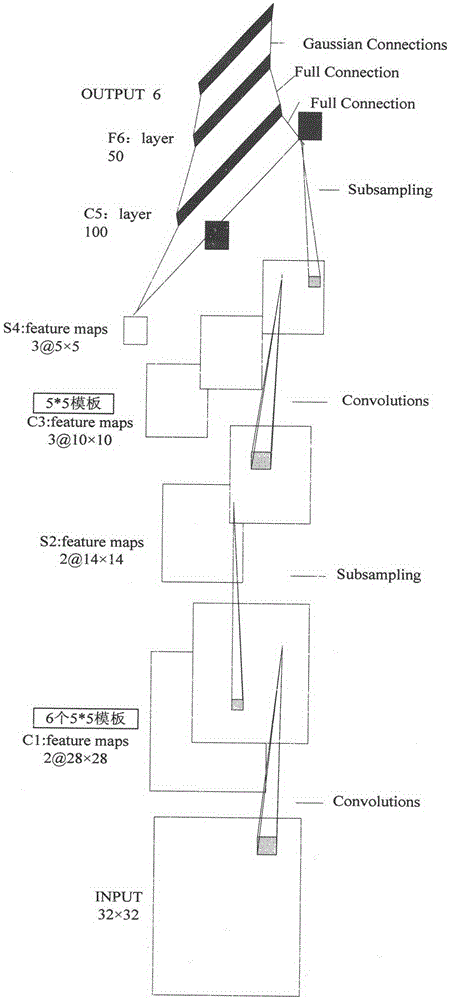

Method and device for detecting and identifying X-ray image defects of welding seam

Inactive | CN104977313A | Reduce processing | Reduce distractions | Neural learning methods | Electric digital data processing | Pattern recognition | Saliency map

The invention provides a method and a device for detecting and identifying X-ray image defects of a welding seam. The method comprises the following steps: visually scanning the image, performing a center-surround difference operation, obtaining a gray saliency map, searching for the focus of attention, and determining a suspect region; then, using deep learning, passing the pixel gray-scale signal of the suspect region directly through a trained layered deep network model and identifying the defect directly from the essential features of the suspect defect region. According to the invention, image objects are selected and identified one after another in order of decreasing visual saliency, which increases the efficiency and accuracy of image analysis and identification; the method is highly adaptable and generalizes well.

Owner:四川省特种设备检验研究院 +1

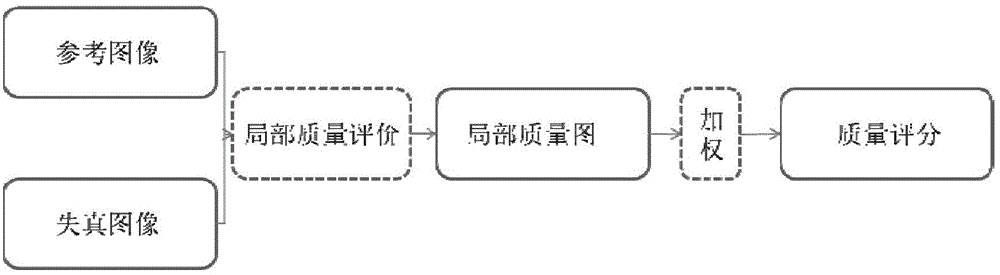

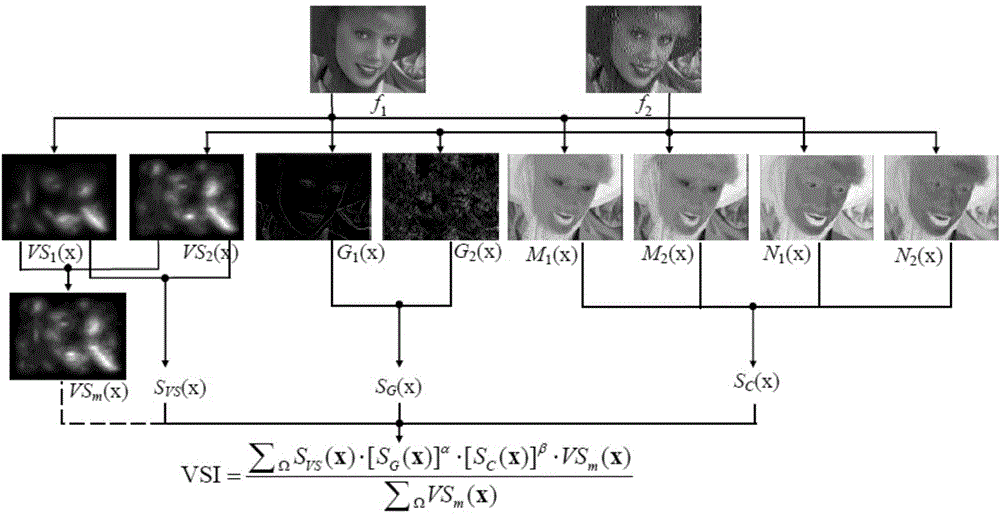

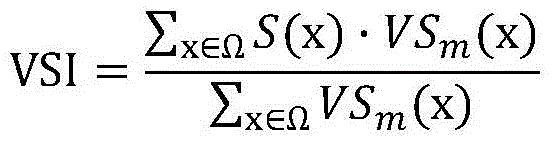

Full-reference color image quality evaluation method based on visual saliency

The invention discloses a full-reference color image quality evaluation method based on visual saliency. The method comprises the steps of (1) determining visual saliency maps VS1(x) and VS2(x), gradient maps G1(x) and G2(x), yellow and blue contrast chromaticity components M1(x) and M2(x), and red and green contrast chromaticity components N1(x) and N2(x) of a reference image f(1) and a distorted image f(2) respectively; (2) determining a local quality map S(x) according to the VS1(x), VS2(x), G1(x), G2(x), M1(x), M2(x), N1(x) and N2(x); (3) obtaining the final quality VXI of f(2) with the larger one of VS1(x) and VS2(x) as the weight function. During local quality evaluation, the color image chromaticity components are introduced by means of the relationship between visual saliency and image quality. During distorted image quality score determination, visual saliency serves as the weight function, the objectively evaluated quality of the distorted image is obtained, and the accuracy of the full-reference image quality evaluation method is improved.

Owner:TONGJI UNIV
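
Step (3) of this abstract, pooling the local quality map with the larger of the two saliency maps as the weight, can be written compactly as below. Normalizing by the sum of the weights, and the symbols $\mathrm{VS}_m$ and $\Omega$ (the set of pixel positions), are assumptions; the abstract does not give the exact expression or the form of $S(x)$.

```latex
\mathrm{VS}_m(x) = \max\bigl(\mathrm{VS}_1(x),\, \mathrm{VS}_2(x)\bigr),
\qquad
\mathrm{VXI} = \frac{\sum_{x \in \Omega} S(x)\,\mathrm{VS}_m(x)}{\sum_{x \in \Omega} \mathrm{VS}_m(x)}
```

Under this form, positions that are salient in either the reference or the distorted image contribute most to the final score.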

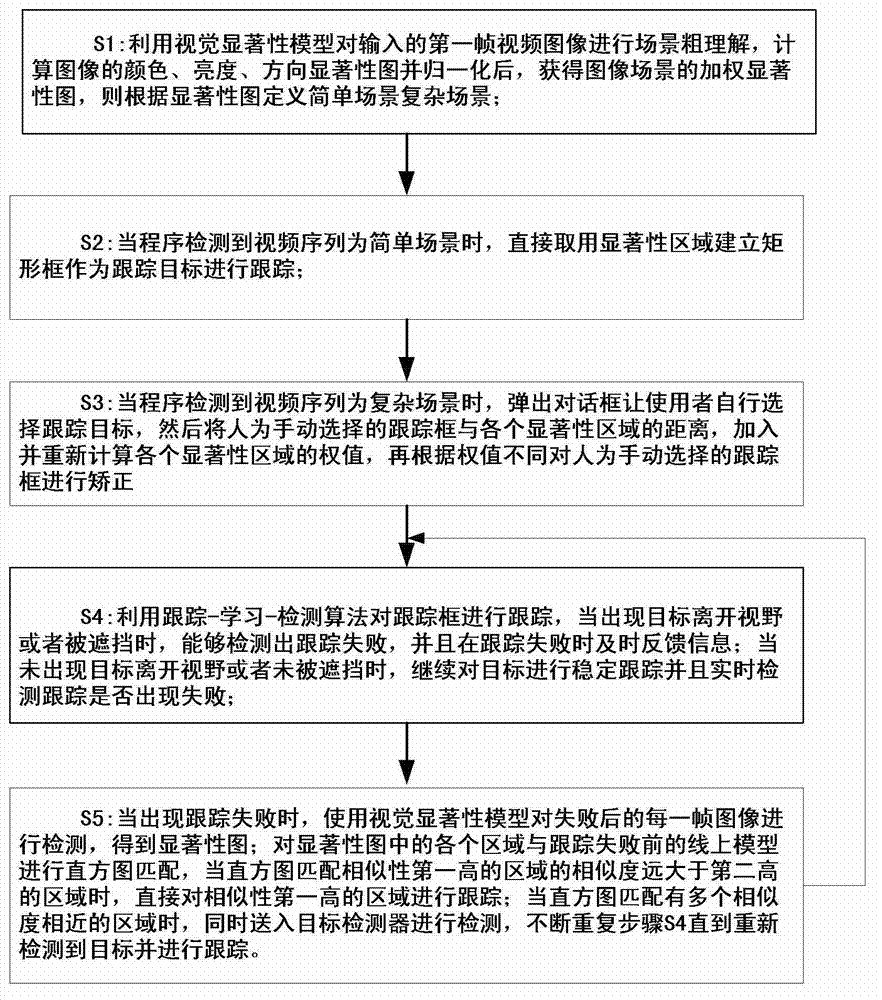

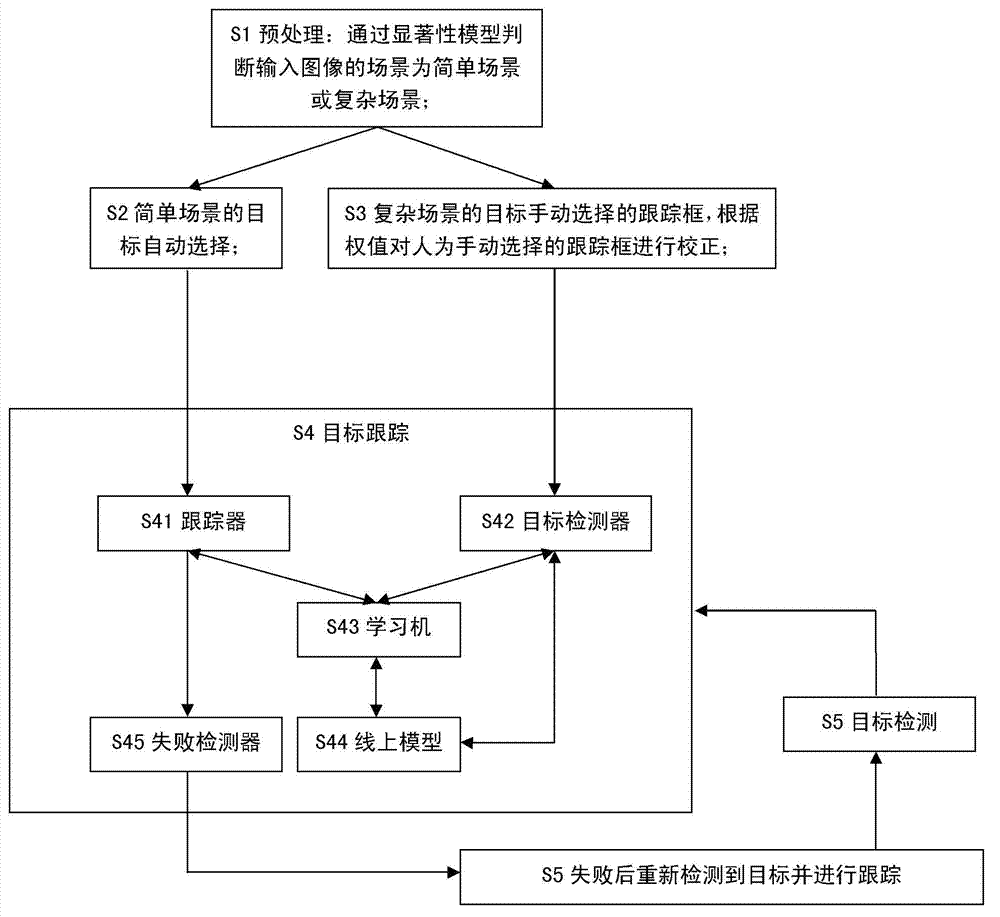

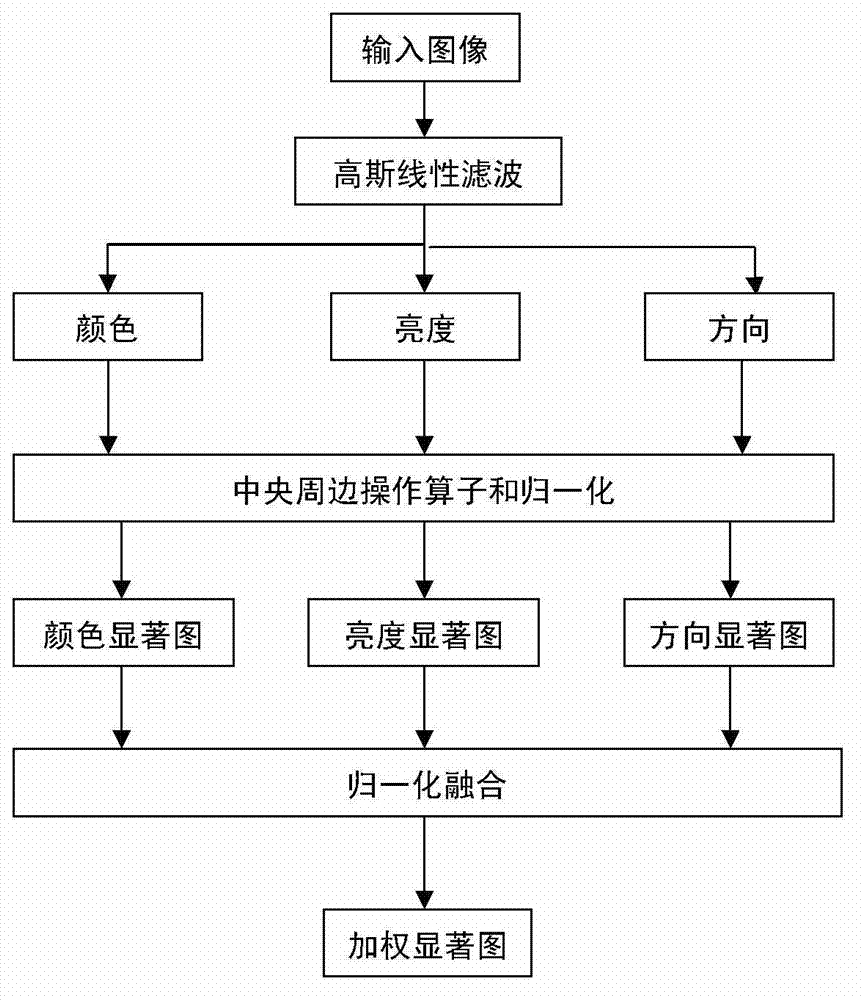

Visual saliency model based automatic detecting and tracking method

The invention discloses an automatic detecting and tracking method based on a visual saliency model. The method comprises the steps of: calculating color, brightness and direction saliency maps of an input video image using a visual saliency model, and classifying the scene as simple or complex based on the weighted saliency map; when a simple scene is detected, using the saliency region to establish a rectangular frame as the target to be tracked; when a complex scene is detected, correcting a manually selected tracking frame based on different weights; tracking the tracking frame with a tracking-learning-detection algorithm and detecting when tracking has failed; after a failure, processing each frame with the visual saliency model, matching the histogram of each region in the saliency map against the online model from before the failure, and tracking the region with the highest similarity; and, when several regions have comparable similarity, sending them to a target detector at the same time, then repeating the tracking, detection and histogram comparison steps on the next frame until the target is detected again and tracked.

Owner:INST OF OPTICS & ELECTRONICS - CHINESE ACAD OF SCI

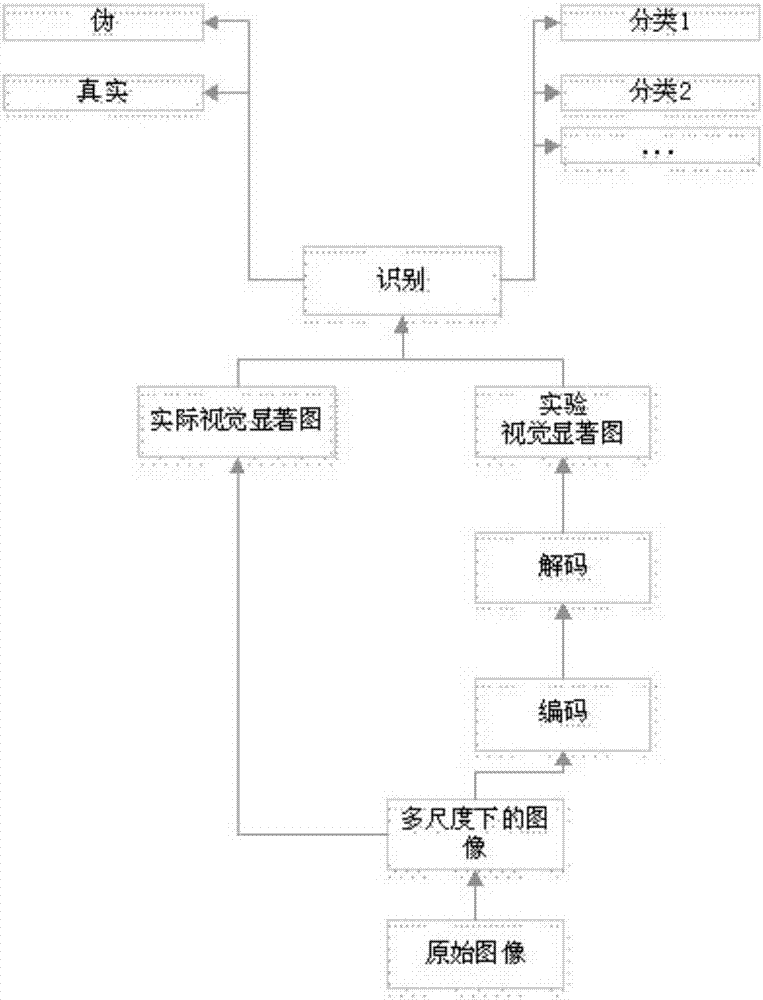

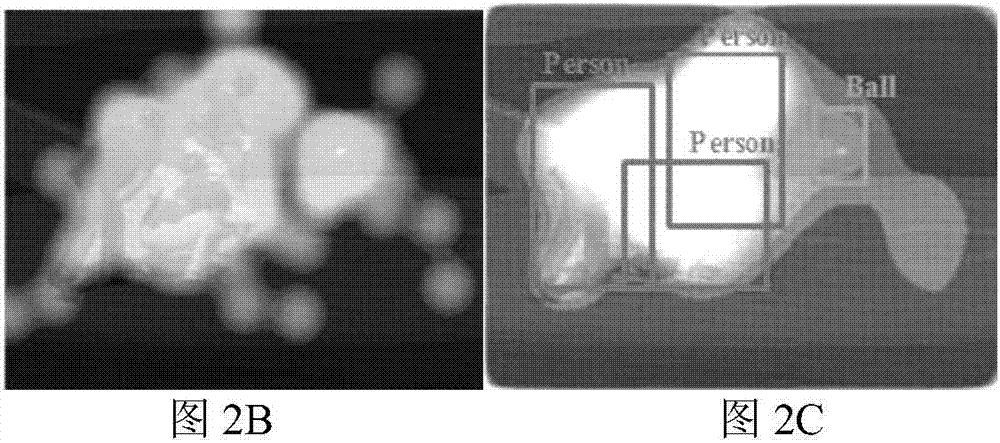

Visual saliency detection method combined with image classification

Active | CN107346436A | Robust | Efficient analysis | Image enhancement | Image analysis | Pattern recognition | Saliency map

The invention provides a visual saliency detection method combined with image classification. The method uses a visual saliency detection model comprising an image coding network, an image decoding network and an image identification network. A multidirectional image is used as the input of the image coding network, and image features extracted at multiple resolutions form a coding feature vector F. All weights in the image coding network except those of the last two layers are fixed, and the network parameters are trained to obtain a visual saliency map of the original image. F is used as the input of the image decoding network, and the saliency map corresponding to the original image is normalized; from the input F, the decoding network produces a generated visual saliency map through an upsampling layer and a nonlinear sigmoid layer. The image identification network takes the visual saliency map of the original image and the generated visual saliency map as input, extracts features with convolutional layers that use small kernels, applies pooling, and finally outputs the probability distribution of the generated map and the probability distribution of the classification labels through three fully connected layers. The method enables quick and effective image analysis and judgment, saves manpower and material costs, and markedly improves accuracy in applications such as image annotation, surveillance and behavior prediction.

Owner:以萨技术股份有限公司

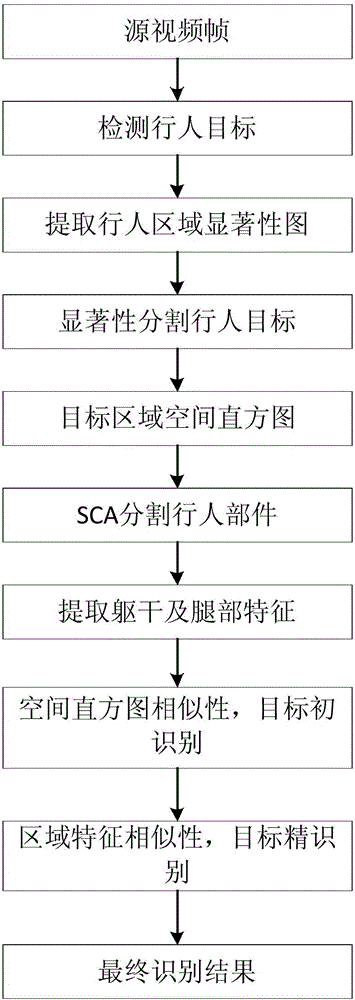

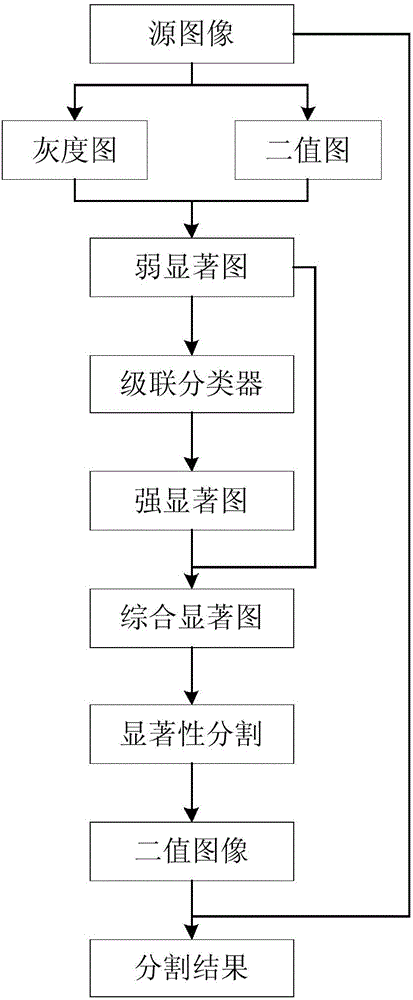

Visual saliency and multiple characteristics-based pedestrian re-recognition method

Active | CN105023008A | Improve accuracy | Fully describe | Character and pattern recognition | Human body | Multi camera

The invention discloses a pedestrian re-recognition method based on visual saliency and multiple characteristics. The method comprises: quickly detecting a pedestrian target in a video; segmenting the pedestrian region with a saliency detection algorithm and extracting a spatial histogram of the target region; dividing the human body into three parts (head, trunk and legs) with an SCA method and extracting color, position, shape and texture characteristics of the trunk and the legs; calculating the similarity of the spatial histograms with an improved JSD similarity measurement criterion for primary recognition of the target; and calculating the similarity of the regional characteristic sets with a Gaussian function to obtain the final recognition result. The method can be used for long-term tracking and monitoring of specific pedestrians in a multi-camera network across different background environments and camera settings. It enables intelligent processing of surveillance videos and immediate response to unusual conditions in the videos, and it can save considerable manpower and material resources.

Owner:江苏睿世力科技有限公司
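
The histogram comparison step can be illustrated with the standard Jensen-Shannon divergence (JSD). The patent refers to an "improved" JSD criterion whose details are not given in the abstract, so the plain textbook form is used here as a stand-in; the function names are hypothetical.

```python
import math

def _normalize(hist):
    """Scale a histogram so that its bins sum to 1."""
    total = sum(hist)
    return [v / total for v in hist]

def js_divergence(p, q):
    """Jensen-Shannon divergence between two histograms, using log base 2
    so the result lies in [0, 1] (0 = identical, 1 = disjoint)."""
    p, q = _normalize(p), _normalize(q)
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(a, b):
        # Bins where a is zero contribute nothing to the sum.
        return sum(x * math.log2(x / y) for x, y in zip(a, b) if x > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

same = js_divergence([3, 1, 0], [6, 2, 0])
disjoint = js_divergence([1, 0], [0, 1])
```

A similarity score for primary recognition could then be taken as `1 - js_divergence(p, q)`, though that mapping is also an assumption.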

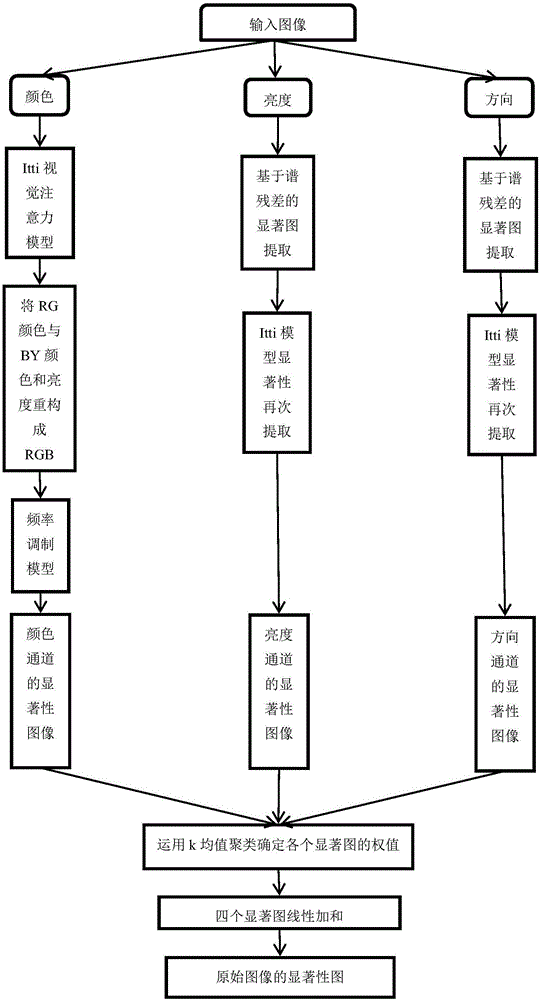

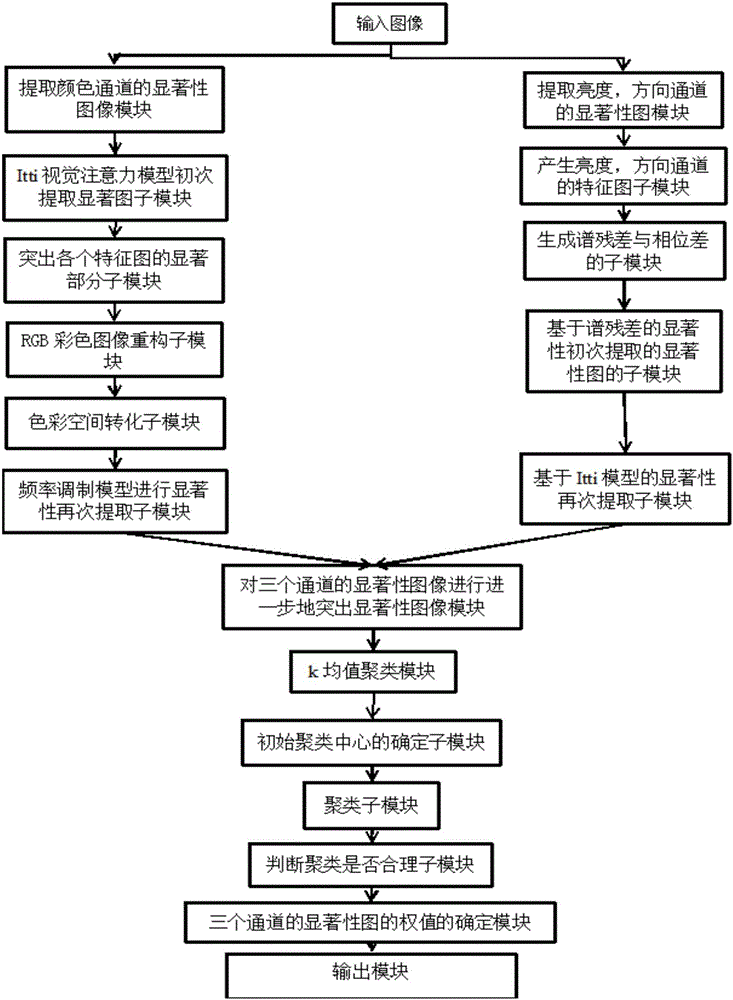

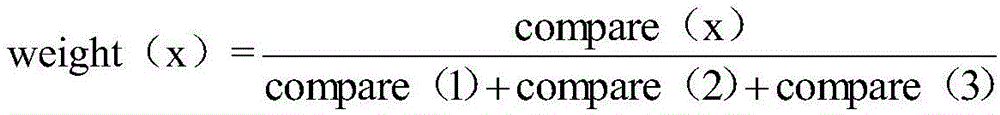

Visual saliency object detection method

Active | CN105825238A | Precise positioning | Improve accuracy | Image enhancement | Image analysis | Saliency map | Visual saliency

The present invention discloses a visual saliency object detection method. The method comprises the steps of: (1) inputting an image to be detected and, on the basis of the Itti visual attention model, extracting improved color, brightness and direction saliency maps using the spectral residual and frequency-tuned models; (2) obtaining, through k-means clustering, the contrast between the salient area and the non-salient area in each channel saliency map, and from it the optimal weight of each channel saliency map; and (3) weighting the saliency map of each channel to obtain the saliency map of the original image, whose salient area is the object area. The method remedies deficiencies of the visual attention model, effectively highlights the salient area and suppresses the non-salient area, and thereby simulates where human visual attention falls on a target in a natural scene.

Owner:JIANGSU UNIV
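
Steps (2) and (3) can be sketched as follows. The abstract does not state how the weight is derived from the clustering result, so this example assumes the weight of each channel is proportional to the gap between its two cluster centers; the function names and the flat-list representation of a map are also assumptions.

```python
def two_means(values, iters=20):
    """1-D k-means with k = 2. Returns (low_center, high_center)."""
    lo, hi = min(values), max(values)
    for _ in range(iters):
        mid = (lo + hi) / 2  # nearest-center boundary in one dimension
        low = [v for v in values if v <= mid]
        high = [v for v in values if v > mid]
        if not low or not high:
            break
        new_lo, new_hi = sum(low) / len(low), sum(high) / len(high)
        if (new_lo, new_hi) == (lo, hi):
            break
        lo, hi = new_lo, new_hi
    return lo, hi

def fuse(channels):
    """Weight each channel map by its salient/non-salient contrast
    and return (weights, fused_map)."""
    contrasts = [hi - lo for lo, hi in map(two_means, channels)]
    total = sum(contrasts)
    n = len(channels)
    weights = [c / total for c in contrasts] if total > 0 else [1.0 / n] * n
    fused = [sum(w * ch[i] for w, ch in zip(weights, channels))
             for i in range(len(channels[0]))]
    return weights, fused

# One high-contrast channel and one low-contrast channel.
weights, fused = fuse([[0.0, 0.0, 1.0, 1.0], [0.4, 0.4, 0.6, 0.6]])
```

The channel that separates salient from non-salient pixels more clearly dominates the fused map, which is the effect the weighting step is meant to achieve.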

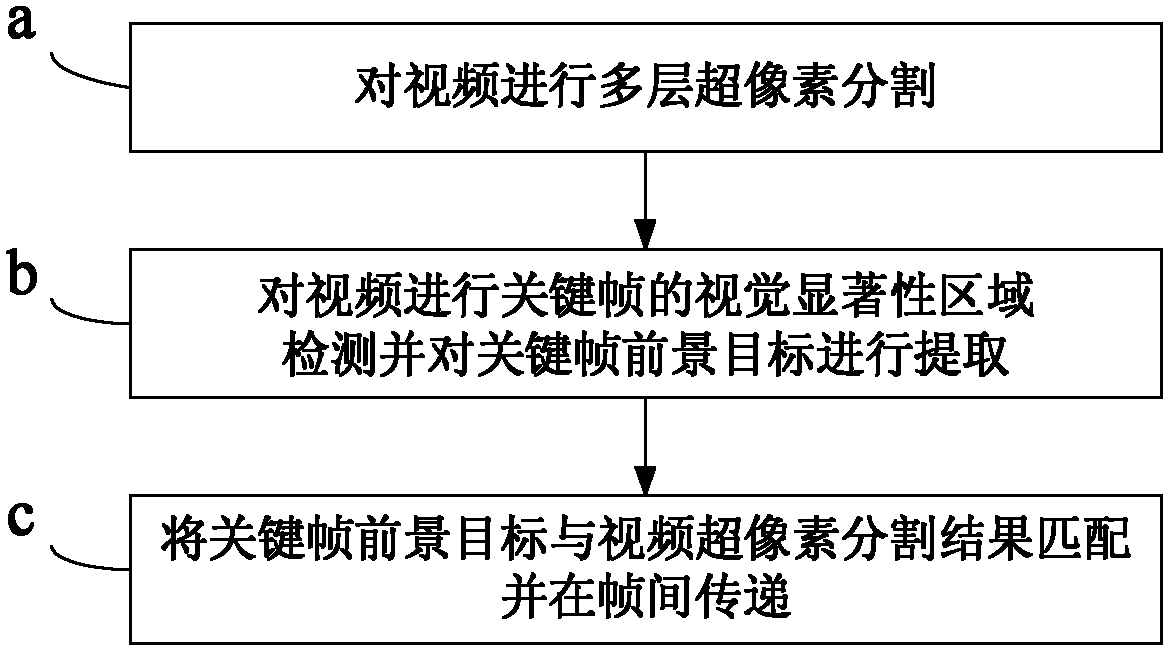

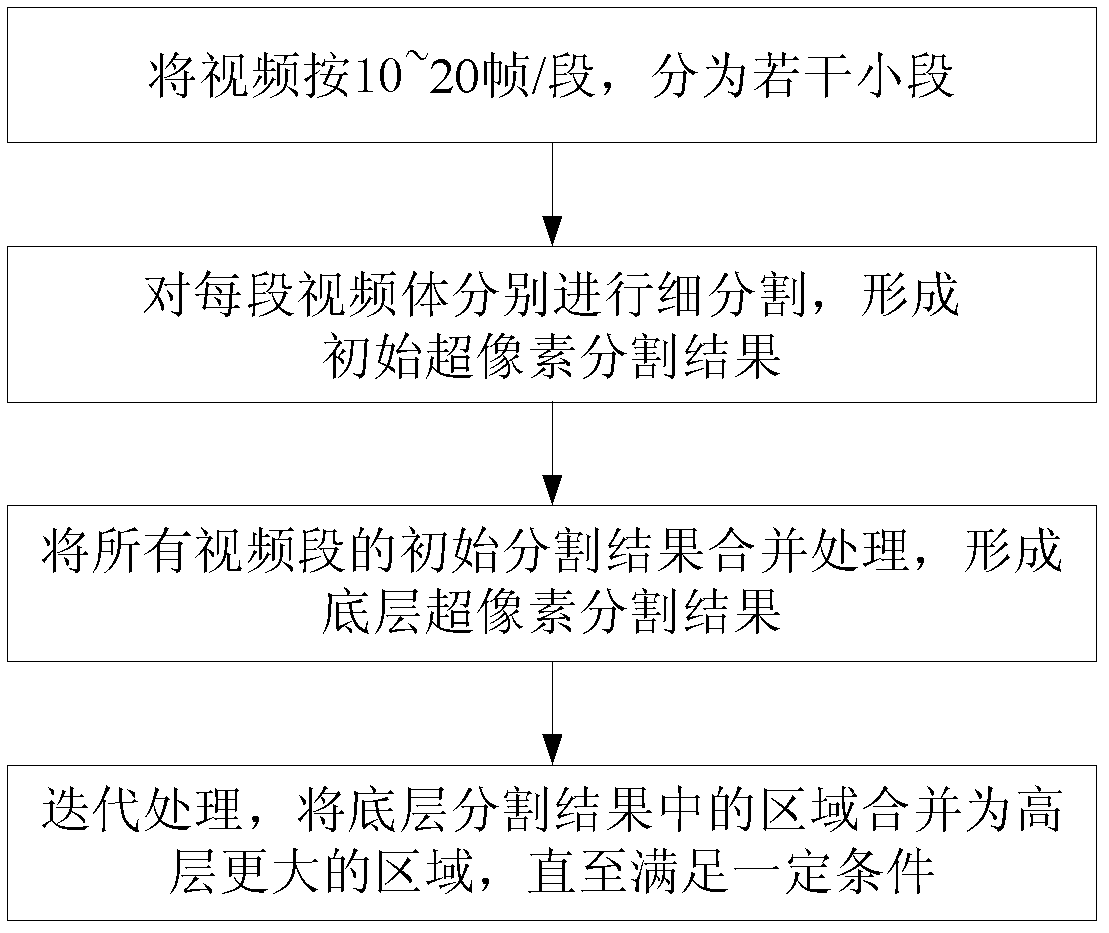

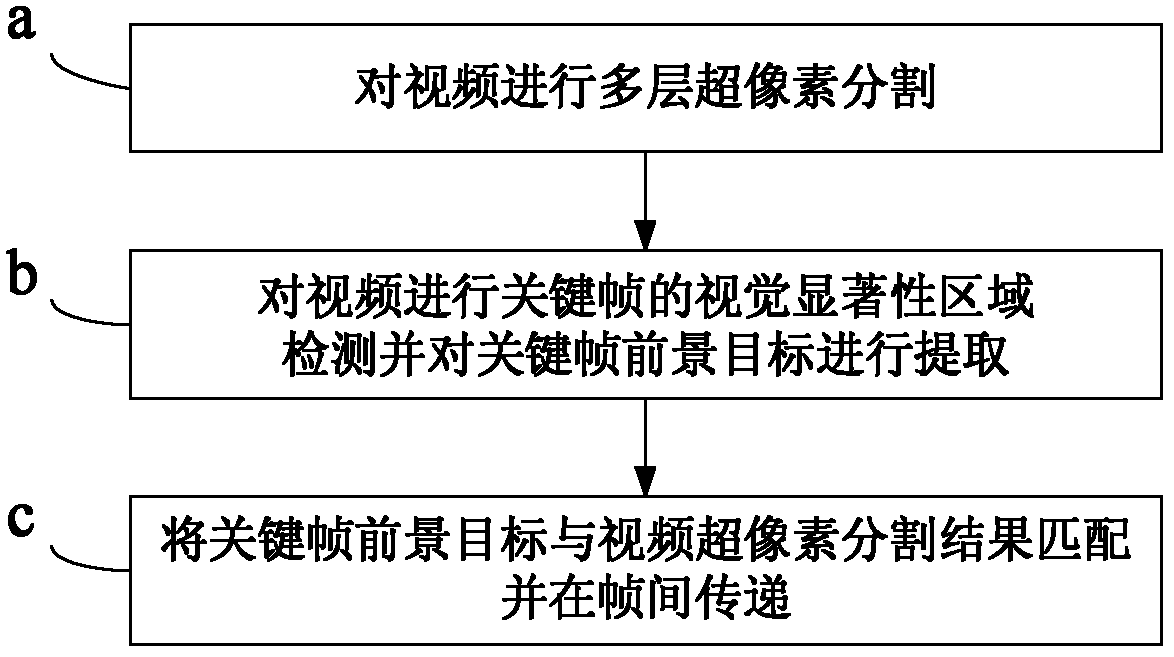

Video foreground object extracting method based on visual saliency and superpixel division

Active | CN102637253A | Improve efficiency | The result is accurate | Character and pattern recognition | Pattern recognition | Visual saliency

The invention discloses a video foreground object extracting method based on visual saliency and superpixel division. The video foreground object extracting method includes steps: a, dividing multiple layers of superpixels of video: dividing the superpixels of the video used as a three-dimensional video body, and grouping elements of the video body into body areas; b, detecting visual saliency areas of key frames of the video and extracting foreground objects of the key frames: analyzing the visual saliency areas in images of the key frames of the video by a visual saliency detecting method, then using the visual saliency areas as initial values and obtaining the foreground objects of the key frames by an image foreground extracting method; and c, matching the foreground objects of the key frames with a dividing result of the superpixels of the video and transmitting foreground object extracting results of the key frames among the frames: diffusing areas, covered by the foreground objects of the key frames, of the video body, and further continuously transmitting the foreground object extracting results among the frames. The video foreground object extracting method is high in efficiency, accurate in result and little in manual intervention and is robust.

Owner:TSINGHUA UNIV +1

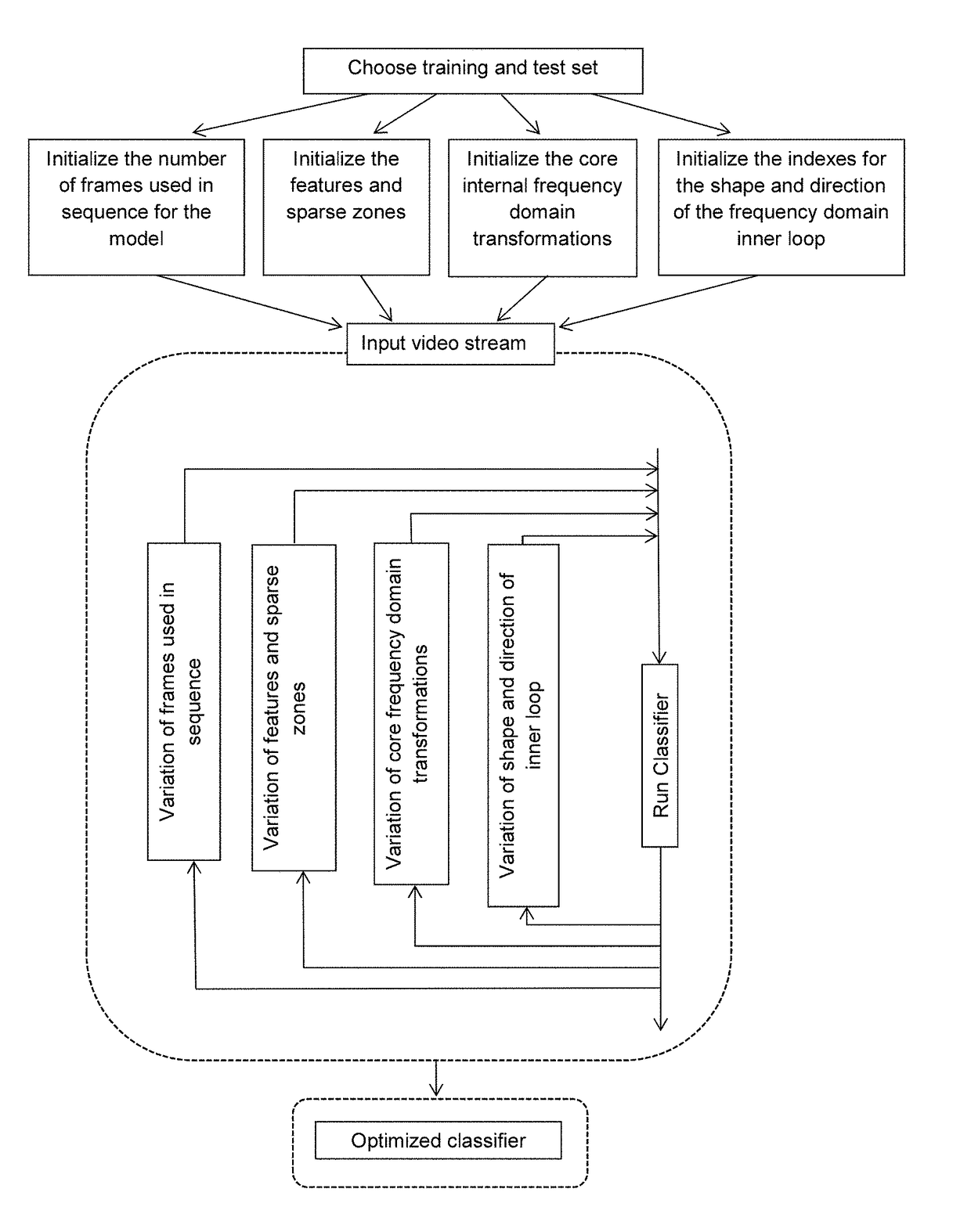

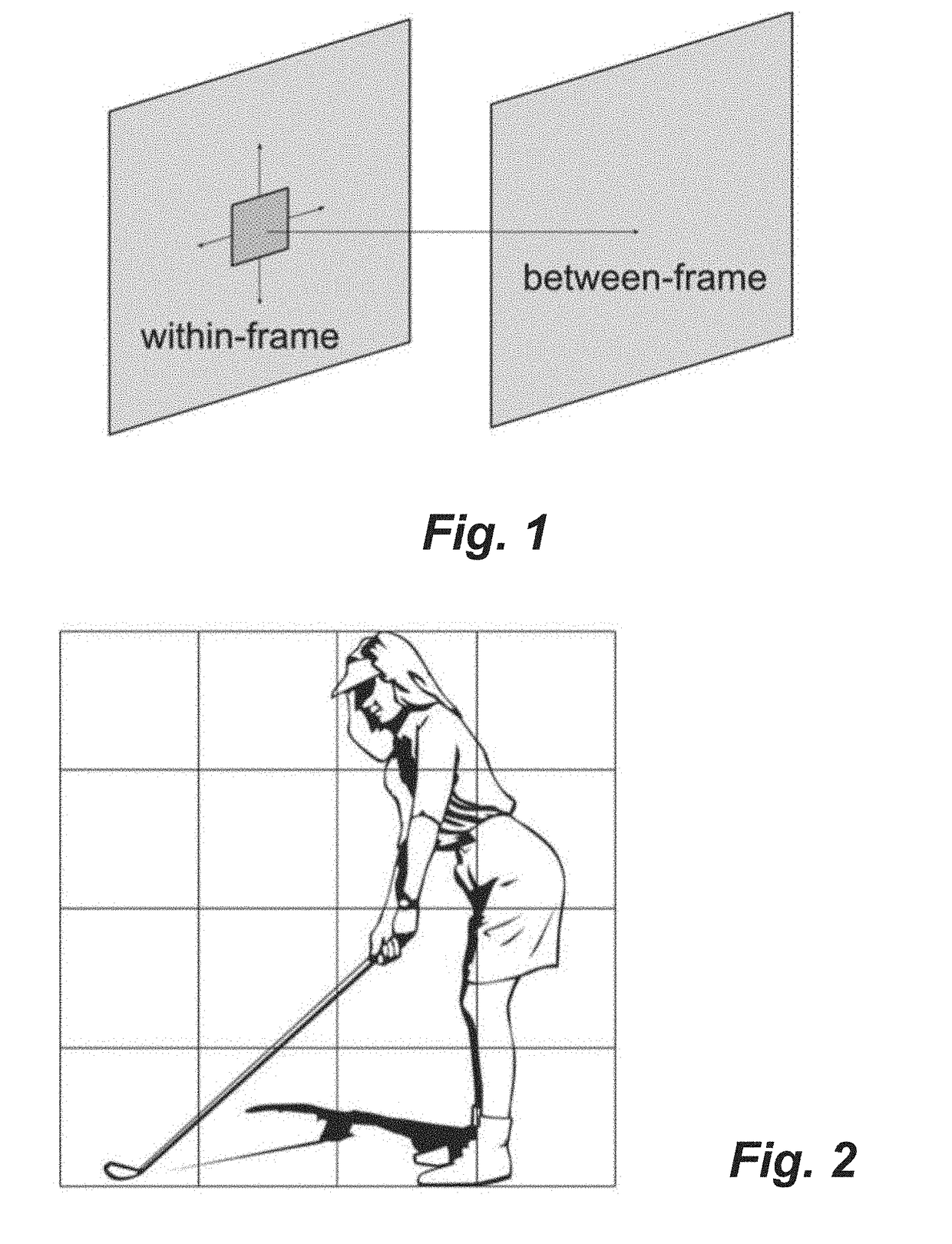

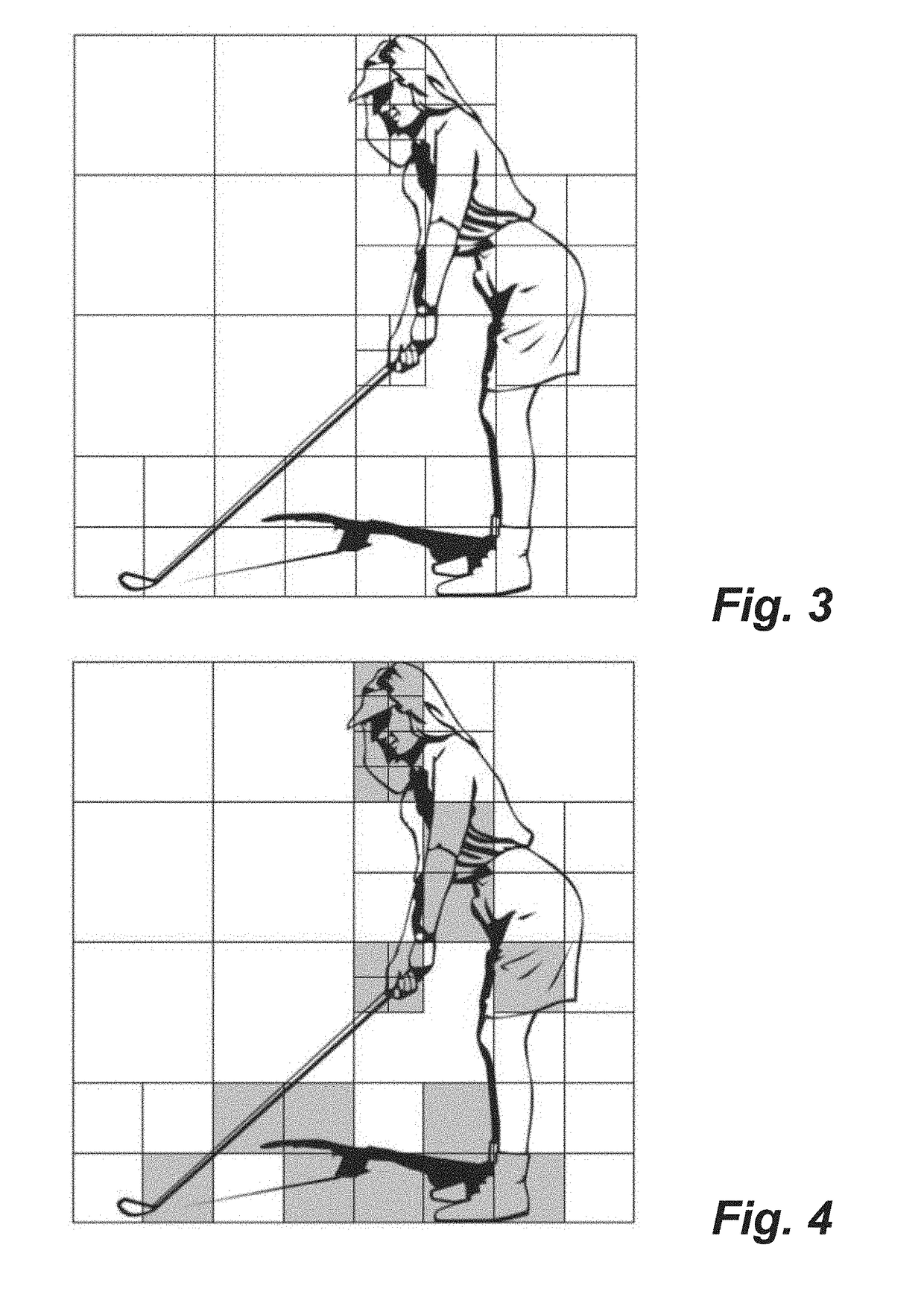

Method for image processing and video compression

A method for video compression through image processing and object detection, to be carried out by an electronic processing unit, based either on images or on a digital video stream of images, the images being defined by a single frame or by sequences of frames of said video stream, with the aim of enhancing and then isolating the frequency domain signals representing a content to be identified, and decreasing or ignoring the frequency domain noise with respect to the content within the images or the video stream, comprises the steps of: obtaining a digital image or a sequence of digital images from either a corresponding single frame or a corresponding sequence of frames of said video stream, all the digital images being defined in a spatial domain; selecting one or more pairs of sparse zones, each covering at least a portion of said single frame or at least two frames of said sequence of frames, each pair of sparse zones generating a selected feature, each zone being defined by two sequences of spatial data; transforming the selected features into frequency domain data by combining, for each zone, said two sequences of spatial data through a 2D variation of an L-transformation, varying the transfer function, shape and direction of the frequency domain data for each zone, thus generating a normalized complex vector for each of said selected features; combining all said normalized complex vectors to define a model of the content to be identified; and inputting that model from said selected features in a classifier, therefore obtaining the data for object detection or visual saliency to use for video compression.

Owner:INTEL CORP

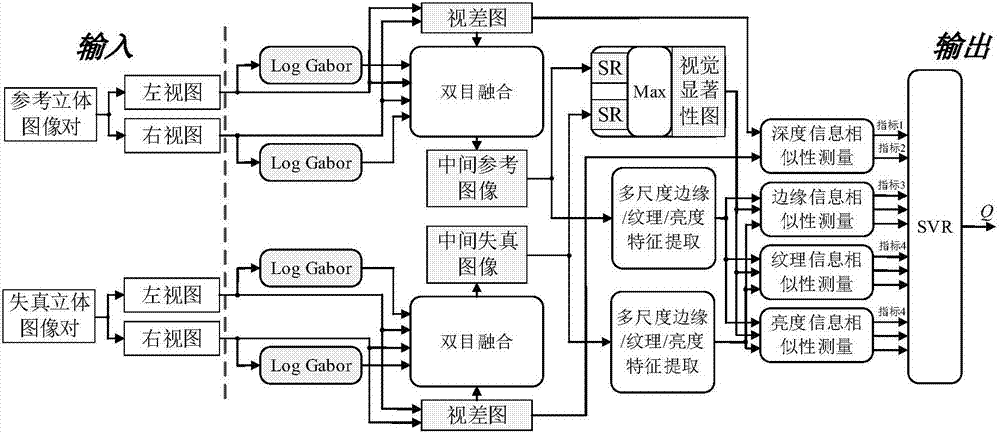

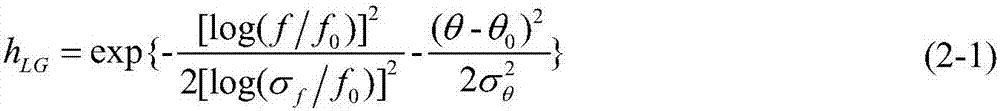

All-reference three-dimensional image quality objective evaluation method based on visual salient feature extraction

Active | CN107578404A | Achieve objective evaluation | Improve consistency | Image enhancement | Image analysis | Objective quality | Imaging quality

The invention discloses a full-reference three-dimensional image quality objective evaluation method based on visual salient feature extraction. According to the method, the left view and the right view of a three-dimensional image pair are processed to obtain a corresponding disparity map; image fusion is performed on the left and right views to obtain an intermediate reference image and an intermediate distortion image; a spectral residual visual saliency model is used to obtain a reference saliency map and a distortion saliency map, which are integrated into a visual saliency map; visual information features are extracted from the intermediate reference image and the intermediate distortion image, and depth information features are extracted from the disparity map of the three-dimensional image pair; similarity measurement is performed to obtain saliency-enhanced measurement indexes for all the visual information features; and support vector machine training and prediction are performed to obtain an objective quality score, which maps to three-dimensional image quality and completes its measurement and evaluation. With this method, objective and subjective evaluations of image quality are highly consistent, and performance is superior to that of existing three-dimensional image quality evaluation methods.

Owner:ZHEJIANG UNIV

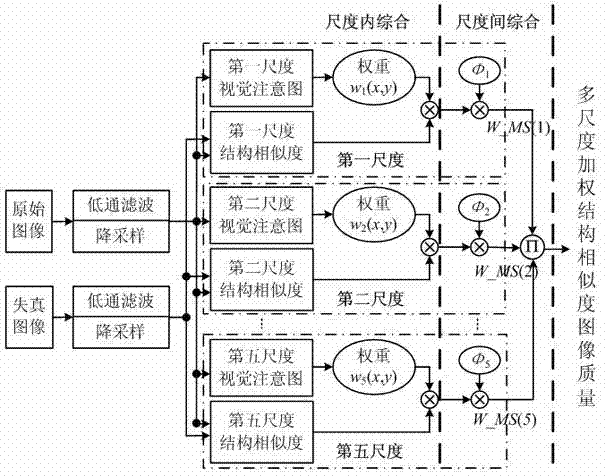

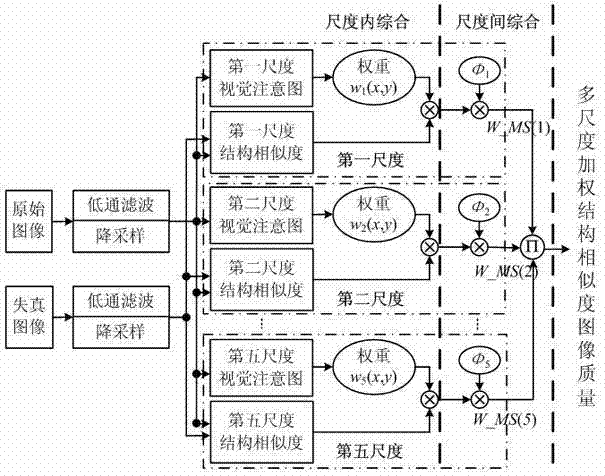

Image quality evaluating method based on multi-scale structure similarity weighted aggregate

Inactive · CN102421007A · Improve the problem of low prediction accuracy · Improve performance · Television systems · Pattern recognition · Imaging quality

The invention discloses an image quality evaluating method based on multi-scale structure similarity weighted aggregate. Traditional methods based on structure similarity have defects in many aspects. The disclosed method fully considers the visual attention characteristics and multilayer visual characteristics of the human visual system to realize weighted aggregation of structure similarity both within and across scales, and performs objective evaluation of a full-reference image. The method mainly comprises two steps: within each scale, generating a weight coefficient for each image block based on visual saliency and performing intra-scale weighted aggregation of the structure similarity; and across scales, performing inter-scale weighted aggregation of the structure similarity using weight coefficients obtained through training or from experience.

Owner:ZHEJIANG UNIV
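
The two-level aggregation described in the abstract above can be sketched as follows. This is a minimal illustration, not the patented procedure: the abstract does not say whether scales are combined by a weighted sum or a weighted product, so a normalized weighted sum is assumed here, and the function names and sample numbers are hypothetical.

```python
def intra_scale_score(ssim_blocks, saliency_blocks):
    """Saliency-weighted mean of per-block SSIM values within one scale."""
    total = sum(saliency_blocks)
    if total == 0:
        # No salient block at all: fall back to a plain average.
        return sum(ssim_blocks) / len(ssim_blocks)
    return sum(w * s for w, s in zip(saliency_blocks, ssim_blocks)) / total


def multi_scale_score(per_scale_ssim, per_scale_saliency, scale_weights):
    """Combine the per-scale scores with scale weights (assumed: weighted sum)."""
    scores = [intra_scale_score(s, w)
              for s, w in zip(per_scale_ssim, per_scale_saliency)]
    return sum(a * q for a, q in zip(scale_weights, scores)) / sum(scale_weights)


# Hypothetical per-block SSIM and saliency values for two scales.
ssim = [[0.9, 0.5, 0.8, 0.7], [0.85, 0.6]]
saliency = [[1.0, 3.0, 1.0, 1.0], [1.0, 1.0]]
scale_weights = [0.6, 0.4]  # assumed; the abstract says trained or empirical

score = multi_scale_score(ssim, saliency, scale_weights)
```

In the first scale the low-quality block (SSIM 0.5) carries three times the saliency of its neighbours, so it pulls that scale's score further down than an unweighted mean would.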

Fabric defect detection method based on local statistical characteristics and overall significance analysis

Inactive · CN103729842A · Effective positioning · Adaptable · Image enhancement · Image analysis · Saliency map · Feature extraction

The invention discloses a fabric defect detection method based on local statistical characteristics and overall significance analysis. The method includes local texture and gray statistical characteristic extraction, visual saliency map generation and visual saliency map segmentation. Firstly, the image is divided into blocks, and the local texture and gray statistical characteristics of each image block are extracted; then, for each current image block, K other image blocks are randomly selected, the contrast between the statistical characteristics of the current block and those of the selected blocks is calculated, and visual saliency maps are generated based on overall significance analysis; finally, the saliency maps are segmented with an optimal-threshold iterative segmentation algorithm to obtain the fabric defect detection result. The method takes both fabric texture statistical characteristics and gray statistical characteristics into account and achieves high detection precision; it needs no training samples and adapts well; and its calculation speed is high, which facilitates on-line detection.

Owner:ZHONGYUAN ENGINEERING COLLEGE
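
The three stages of the fabric defect abstract above (block statistics, contrast against K random blocks, iterative threshold segmentation) might look roughly like this. It is a sketch under stated assumptions: block mean and standard deviation stand in for the unspecified texture and gray statistics, the contrast is an L1 feature distance, and the threshold iteration starts from the midpoint of the saliency range. All function names are hypothetical.

```python
import random
from statistics import mean, pstdev


def block_features(image, size):
    """Split a grayscale image (list of rows) into size x size blocks and
    return one (mean, std) pair per block, in row-major order."""
    feats = []
    for r in range(0, len(image) - size + 1, size):
        for c in range(0, len(image[0]) - size + 1, size):
            px = [image[r + i][c + j] for i in range(size) for j in range(size)]
            feats.append((mean(px), pstdev(px)))
    return feats


def block_saliency(feats, k, rng):
    """Saliency of a block: mean L1 feature distance to k random other blocks."""
    sal = []
    for i, f in enumerate(feats):
        others = rng.sample([j for j in range(len(feats)) if j != i], k)
        sal.append(mean(abs(f[0] - feats[j][0]) + abs(f[1] - feats[j][1])
                        for j in others))
    return sal


def iterative_threshold(values, tol=1e-6, max_iter=100):
    """Repeat t = (mean of values <= t + mean of values > t) / 2 until stable."""
    t = (min(values) + max(values)) / 2
    for _ in range(max_iter):
        low = [v for v in values if v <= t]
        high = [v for v in values if v > t]
        if not low or not high:
            break
        new_t = (mean(low) + mean(high)) / 2
        if abs(new_t - t) < tol:
            return new_t
        t = new_t
    return t


# Synthetic 8x8 "fabric": uniform gray with one brighter 2x2 defect.
image = [[100] * 8 for _ in range(8)]
for r in (2, 3):
    for c in (2, 3):
        image[r][c] = 200

feats = block_features(image, 2)
sal = block_saliency(feats, k=5, rng=random.Random(0))
t = iterative_threshold(sal)
defects = [i for i, s in enumerate(sal) if s > t]  # block indices above threshold
```

Because the comparison blocks are sampled at random, a normal block that happens to draw the defective one also receives a small non-zero saliency; the threshold step is what separates the two groups.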

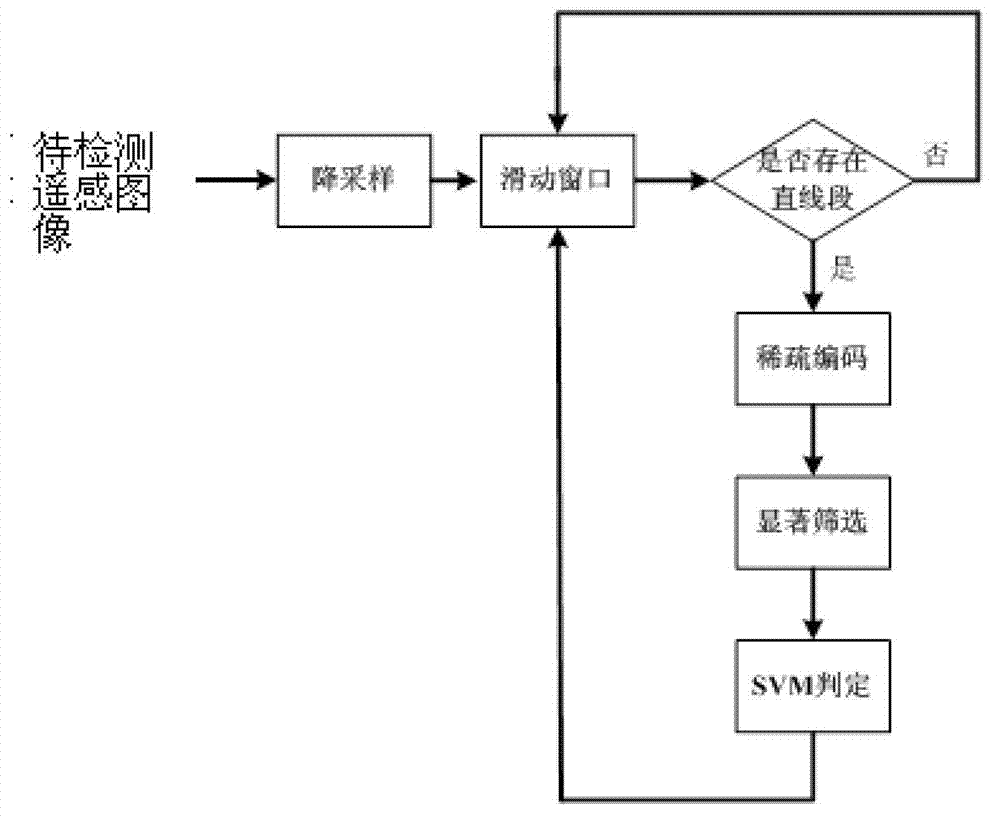

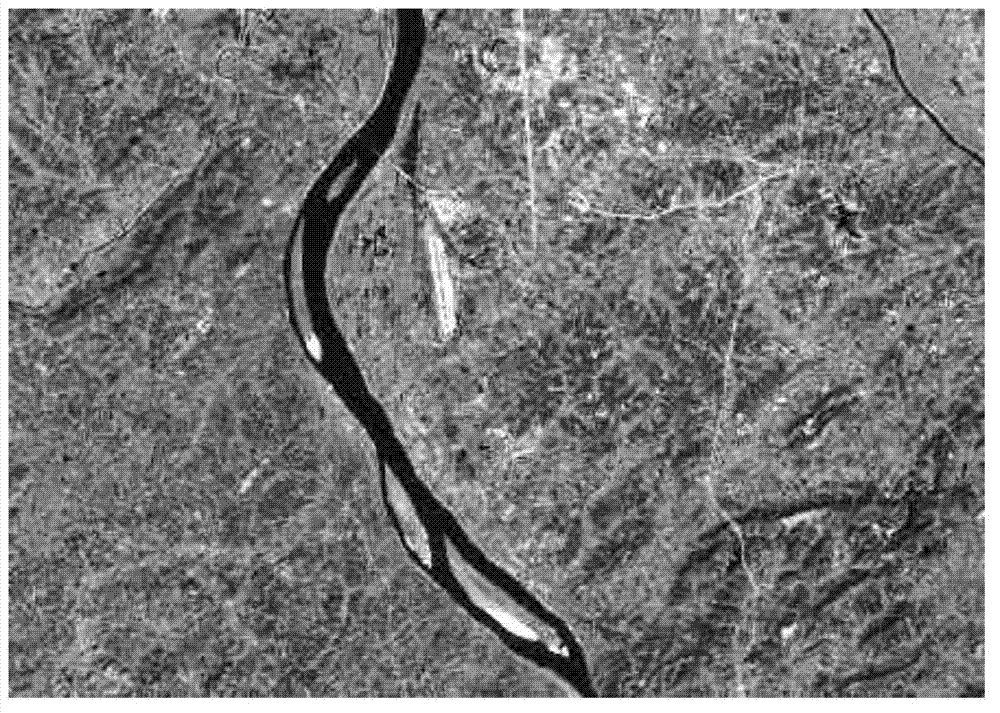

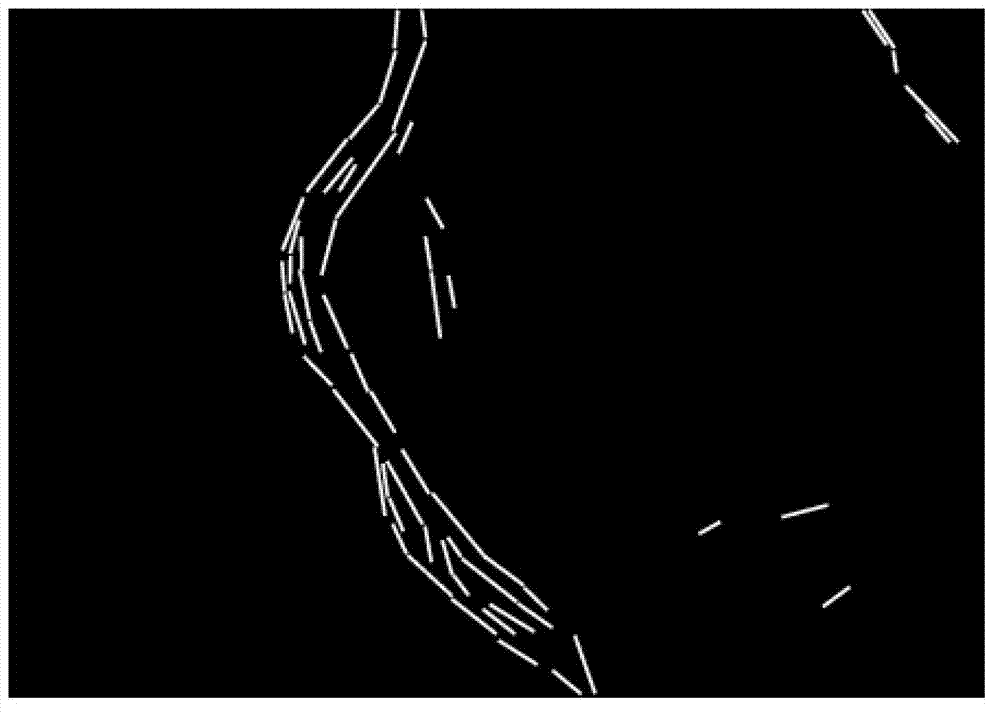

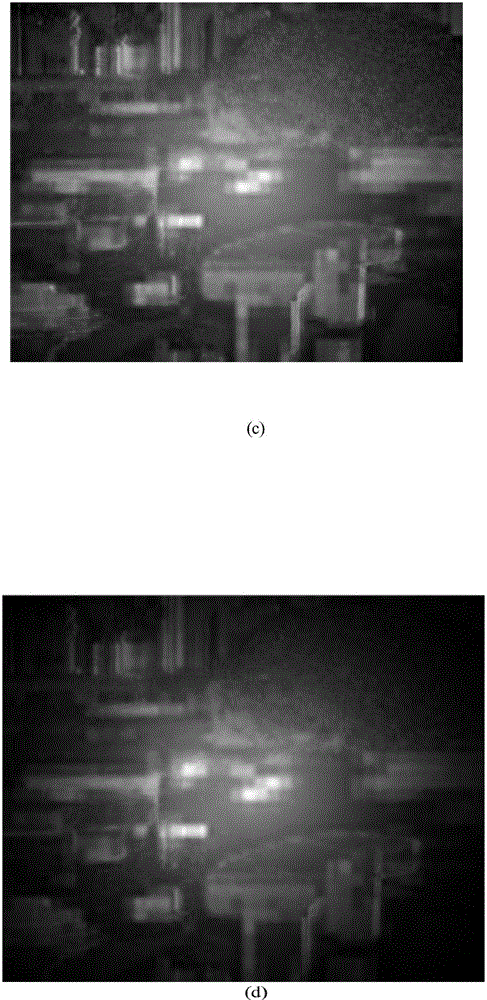

Sparse coding and visual saliency-based method for detecting airport through infrared remote sensing image

Active · CN102831402A · Add screening strategy · Efficient capture · Character and pattern recognition · Support vector machine · Least significant difference

The invention relates to a sparse coding and visual saliency-based method for detecting an airport in an infrared remote sensing image. The method comprises the following steps: first, down-sampling the original remote sensing image, detecting line segments in the down-sampled image using the LSD (Line Segment Detector) algorithm, and calculating the saliency of the image using the FT algorithm; then, detecting the airport with a sliding-window target detector by judging whether a line segment exists in the sliding window: if not, the window continues to slide; if so, the window is sparse-coded using a dictionary constructed from airport target images, and the sparse codes are screened by combining them with the saliency values of the window to obtain the sparse representation features of the window; finally, classifying the sparse code features of the sliding window with an SVM (Support Vector Machine) binary classifier to judge whether an airport exists in the window, thereby detecting the airport target. Compared with other existing techniques, the method offers high airport detection accuracy and a low false alarm rate.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
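
As an illustration of the saliency step only, the sketch below assumes that "FT" in the abstract above refers to frequency-tuned saliency, where each pixel's saliency is the distance between the global mean feature and a slightly blurred copy of the image. The usual formulation works on Lab color vectors with a Gaussian blur; this simplified version uses a single gray channel and a 3x3 binomial kernel, and the function names are hypothetical.

```python
def blur3x3(img):
    """Smooth with a 3x3 binomial kernel, clamping coordinates at the borders."""
    h, w = len(img), len(img[0])
    k = [1, 2, 1]
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += k[dy + 1] * k[dx + 1] * img[yy][xx]
            out[y][x] = acc / 16  # kernel weights sum to 16
    return out


def ft_saliency(img):
    """Per-pixel saliency: |global mean - blurred pixel value|."""
    h, w = len(img), len(img[0])
    mean_val = sum(map(sum, img)) / (h * w)
    blurred = blur3x3(img)
    return [[abs(mean_val - blurred[y][x]) for x in range(w)] for y in range(h)]


# Dark 5x5 scene with one bright pixel in the middle.
image = [[0] * 5 for _ in range(5)]
image[2][2] = 90
sal = ft_saliency(image)
```

The bright pixel ends up with the highest saliency because its blurred value is the farthest from the image-wide mean.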

Three-dimensional image quality objective evaluation method based on three-dimensional visual saliency

Inactive · CN104994375A · Avoid equally weighted flaws · High simulation · Television systems · Stereoscopic systems · Absolute difference · Visual saliency

The invention discloses a three-dimensional image quality objective evaluation method based on three-dimensional visual saliency. The method comprises the following steps: optimizing a three-dimensional visual saliency map by simulating the center bias and foveal characteristics of human vision, and extracting the optimized three-dimensional visual saliency map; acquiring a comprehensive three-dimensional image quality map from the quality map of the distorted three-dimensional image and the quality map of the absolute difference map; and performing a weighted summation of the comprehensive quality map, using the three-dimensional visual saliency map as weights, to obtain an objective quality evaluation value for the distorted three-dimensional image. The overall performance of the method in objective three-dimensional image quality evaluation is superior to that of prior-art algorithms; the method is practically feasible, yields a high objective evaluation index, and meets a range of practical application demands.

Owner:TIANJIN UNIV
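
The weighting idea in the abstract above can be sketched in a few lines. The abstract does not give the center-bias model or say whether the weighted sum is normalized, so this example assumes a Gaussian falloff from the image center and divides by the total weight; `center_bias`, `pooled_quality` and `sigma_frac` are hypothetical names.

```python
import math


def center_bias(height, width, sigma_frac=0.5):
    """Gaussian weights peaking at the image center (assumed bias model)."""
    cy, cx = (height - 1) / 2, (width - 1) / 2
    sy, sx = sigma_frac * height, sigma_frac * width
    return [[math.exp(-(((y - cy) / sy) ** 2 + ((x - cx) / sx) ** 2) / 2)
             for x in range(width)] for y in range(height)]


def pooled_quality(quality, saliency):
    """Weighted sum of a quality map, weights = saliency x center bias."""
    h, w = len(quality), len(quality[0])
    bias = center_bias(h, w)
    weights = [[saliency[y][x] * bias[y][x] for x in range(w)] for y in range(h)]
    total = sum(map(sum, weights))
    return sum(weights[y][x] * quality[y][x]
               for y in range(h) for x in range(w)) / total


# Quality map whose only distortion sits at the center; uniform saliency.
quality = [[1.0, 1.0, 1.0],
           [1.0, 0.2, 1.0],
           [1.0, 1.0, 1.0]]
saliency = [[1.0] * 3 for _ in range(3)]

plain_mean = sum(map(sum, quality)) / 9
pooled = pooled_quality(quality, saliency)
```

Here the pooled score falls below the plain mean, which is the stated point of avoiding equal weighting: a distortion where viewers tend to look counts for more.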

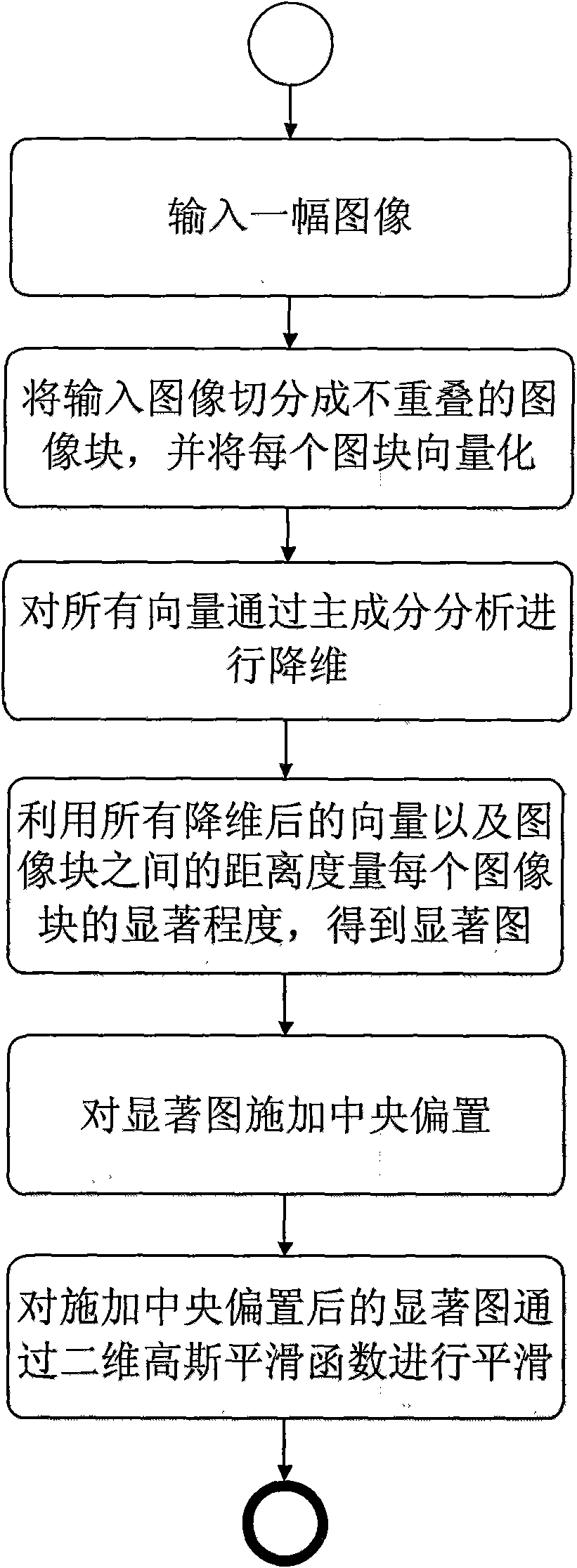

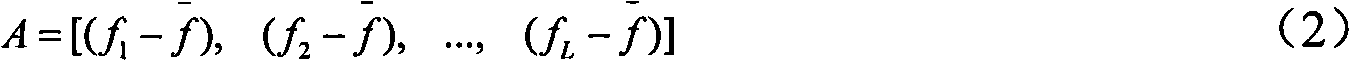

Method for detecting degree of visual saliency of image in different regions

Active · CN101984464A · Steps to Avoid Feature Selection · Improve execution efficiency · Image enhancement · Image analysis · Saliency map · Visual saliency

The invention discloses a method for detecting the degree of visual saliency of an image in different regions, which comprises the following steps: segmenting the input image into non-overlapping image blocks and vectorizing each image block; reducing the dimensionality of all vectors obtained in the previous step through principal component analysis (PCA) to reduce noise and redundant information in the image; calculating the dissimilarity between each image block and all the other image blocks using the dimension-reduced vectors, and calculating the degree of visual saliency of each image block by further combining the dissimilarity with the distance between the image blocks, thereby obtaining a saliency map; imposing a central bias on the saliency map; and smoothing the center-biased saliency map with a two-dimensional Gaussian smoothing operator to obtain a final result image that reflects the degree of saliency of every region of the image. Compared with the prior art, the method does not need to extract visual features such as color, orientation and texture, and so avoids the feature selection step. The method is simple and efficient.

Owner:BEIJING UNIV OF TECH
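
A rough sketch of the block dissimilarity step from the abstract above follows. It skips the PCA reduction, central bias and Gaussian smoothing, and it assumes one plausible way to combine dissimilarity with distance (L1 dissimilarity divided by one plus the Euclidean distance between block centers); the abstract does not give the actual formula, and the function names are hypothetical.

```python
import math


def blocks_as_vectors(image, size):
    """Cut a grayscale image into non-overlapping size x size blocks.
    Returns the flattened block vectors and the block center coordinates."""
    vecs, centers = [], []
    for r in range(0, len(image) - size + 1, size):
        for c in range(0, len(image[0]) - size + 1, size):
            vecs.append([image[r + i][c + j]
                         for i in range(size) for j in range(size)])
            centers.append((r + size / 2, c + size / 2))
    return vecs, centers


def dissimilarity_saliency(vecs, centers):
    """Saliency of block i: sum over other blocks j of
    L1(v_i, v_j) / (1 + distance(i, j))  (assumed combination rule)."""
    sal = []
    for i, (v, p) in enumerate(zip(vecs, centers)):
        s = 0.0
        for j, (u, q) in enumerate(zip(vecs, centers)):
            if i == j:
                continue
            dissim = sum(abs(a - b) for a, b in zip(v, u))
            s += dissim / (1 + math.dist(p, q))
        sal.append(s)
    return sal


# 6x6 dark image with one bright 2x2 block in the middle (block index 4).
image = [[0] * 6 for _ in range(6)]
for r in (2, 3):
    for c in (2, 3):
        image[r][c] = 9

vecs, centers = blocks_as_vectors(image, 2)
sal = dissimilarity_saliency(vecs, centers)
most_salient = sal.index(max(sal))
```

The bright block differs from every other block, so its saliency accumulates eight non-zero terms, while each background block differs from only one neighbour.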

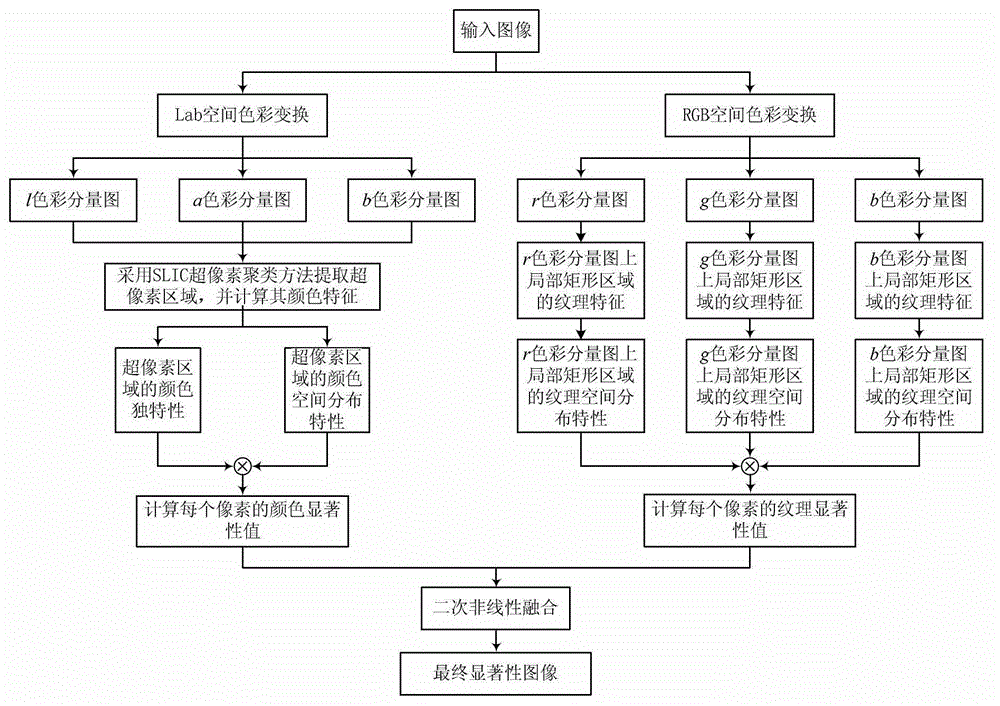

Visual saliency detection method with fusion of region color and HoG (histogram of oriented gradient) features

Inactive · CN102867313A · Improve the ability to distinguish · Image analysis · Visual saliency · Component diagram

The invention relates to a visual saliency detection method with fusion of region color and HoG (histogram of oriented gradient) features. Existing methods are generally pure computational models based on region color features and are insensitive to salient differences in texture. The disclosed method comprises the following steps: first, calculating a color saliency value for each pixel by analyzing the color contrast and distribution features of superpixel regions on the CIELAB (CIE 1976 L*, a*, b*) color component maps of the original image; then, extracting HoG-based texture features of local rectangular regions on the RGB (red, green and blue) color component maps of the original image, and calculating a texture saliency value for each pixel by analyzing the texture contrast and distribution features of the local rectangular regions; and finally, fusing the color saliency value and the texture saliency value of each pixel into a final saliency value using a secondary non-linear fusion method. The method yields a full-resolution saliency map that is consistent with human visual perception and distinguishes salient objects more effectively.

Owner:海宁鼎丞智能设备有限公司

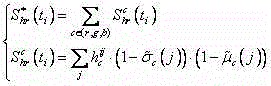

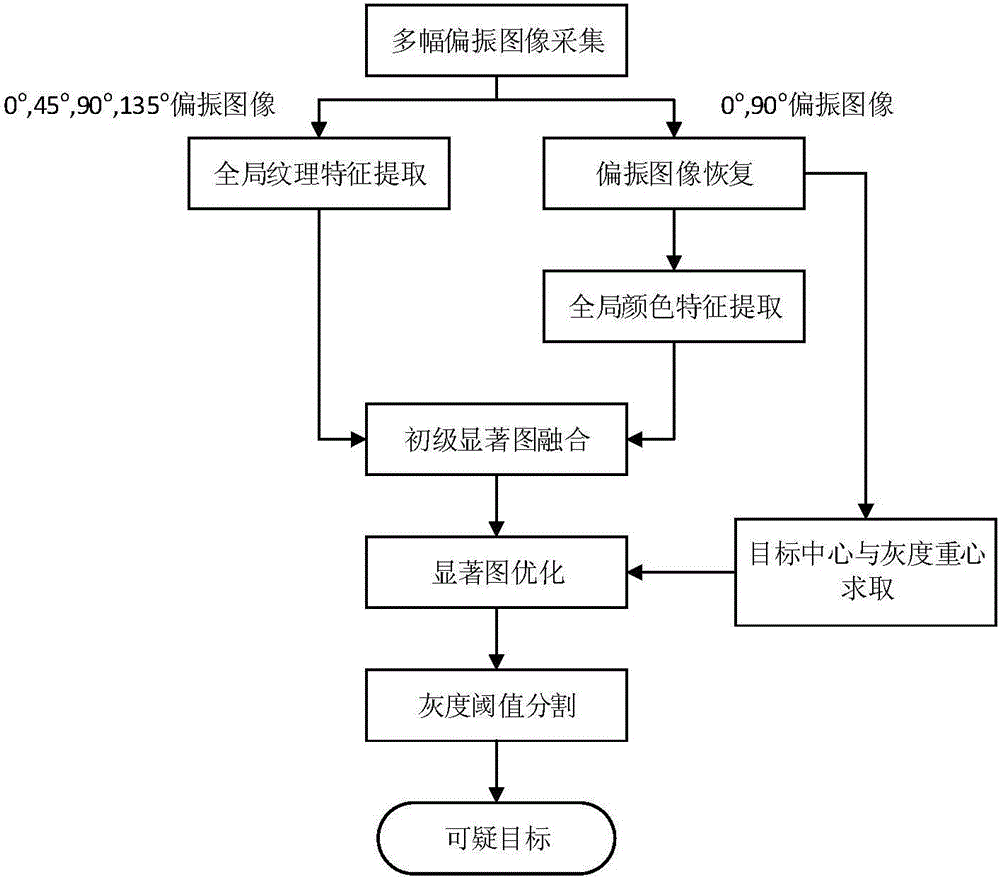

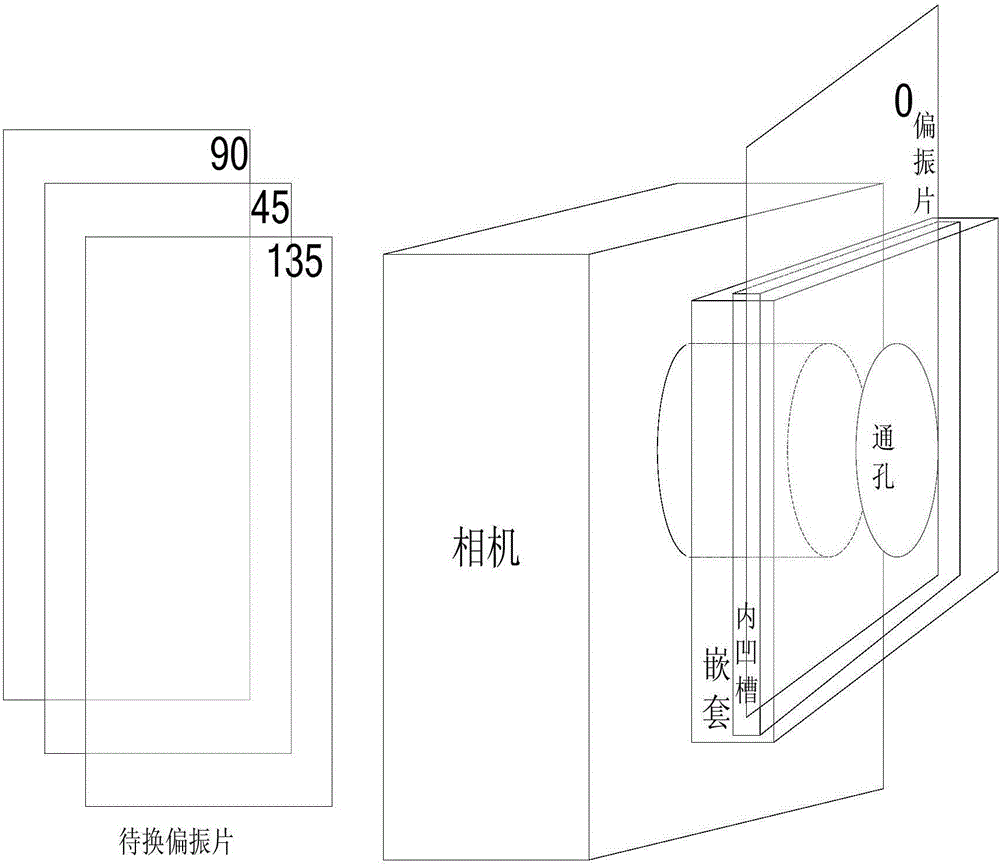

Salient visual method based on polarization imaging and applicable to underwater target detection

Active · CN106407927A · Improve visibility · Target radiation weakened · Character and pattern recognition · Gravity center · Visual perception

The invention discloses a salient visual method based on polarization imaging and applicable to underwater target detection, comprising the following steps: (A) acquiring auto-registered polarization images of the same underwater position at multiple angles; (B) performing underwater image restoration based on polarization information; (C) extracting global texture features; (D) extracting color features based on global contrast; (E) fusing visual saliency features; (F) performing saliency map optimization and target extraction based on the target center and the gray gravity center; and (G) performing threshold segmentation on the final saliency map to realize underwater target detection. Saliency optimization is realized using the target center probability, the image gray gravity center and spatial smoothness, so that the background is further suppressed and the foreground is highlighted. The method achieves a high detection rate and a high identification rate for target detection in complicated water environments, satisfies real-time requirements, and has good application prospects.

Owner:HOHAI UNIV CHANGZHOU
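
Two of the simpler pieces in steps (F) and (G) above can be illustrated directly: the gray gravity center, taken here to mean the intensity-weighted centroid of the saliency map, and a fixed-threshold segmentation. How the patent uses the centroid to optimize the map is not described in the abstract, so that part is left out; the function names and the threshold value are hypothetical.

```python
def gray_gravity_center(saliency):
    """Intensity-weighted centroid (row, column) of a 2D saliency map."""
    total = sum(map(sum, saliency))
    if total == 0:
        raise ValueError("saliency map is all zeros")
    cy = sum(y * v for y, row in enumerate(saliency) for v in row) / total
    cx = sum(x * v for row in saliency for x, v in enumerate(row)) / total
    return cy, cx


def threshold_mask(saliency, t):
    """Binary target mask: 1 where saliency exceeds the threshold t."""
    return [[1 if v > t else 0 for v in row] for row in saliency]


# Toy saliency map with a 2x2 salient target in the middle.
sal_map = [
    [0, 0, 0, 0],
    [0, 2, 2, 0],
    [0, 2, 2, 0],
    [0, 0, 0, 0],
]

center = gray_gravity_center(sal_map)
mask = threshold_mask(sal_map, 1)
```

For this symmetric target the gravity center lands in the middle of the four salient pixels, and the mask keeps exactly those pixels.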

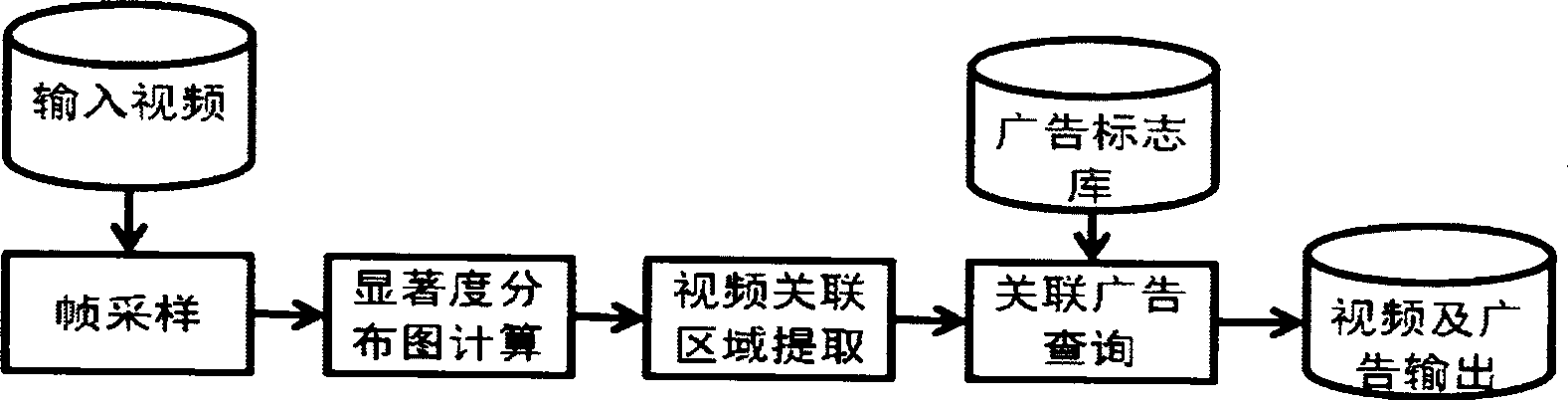

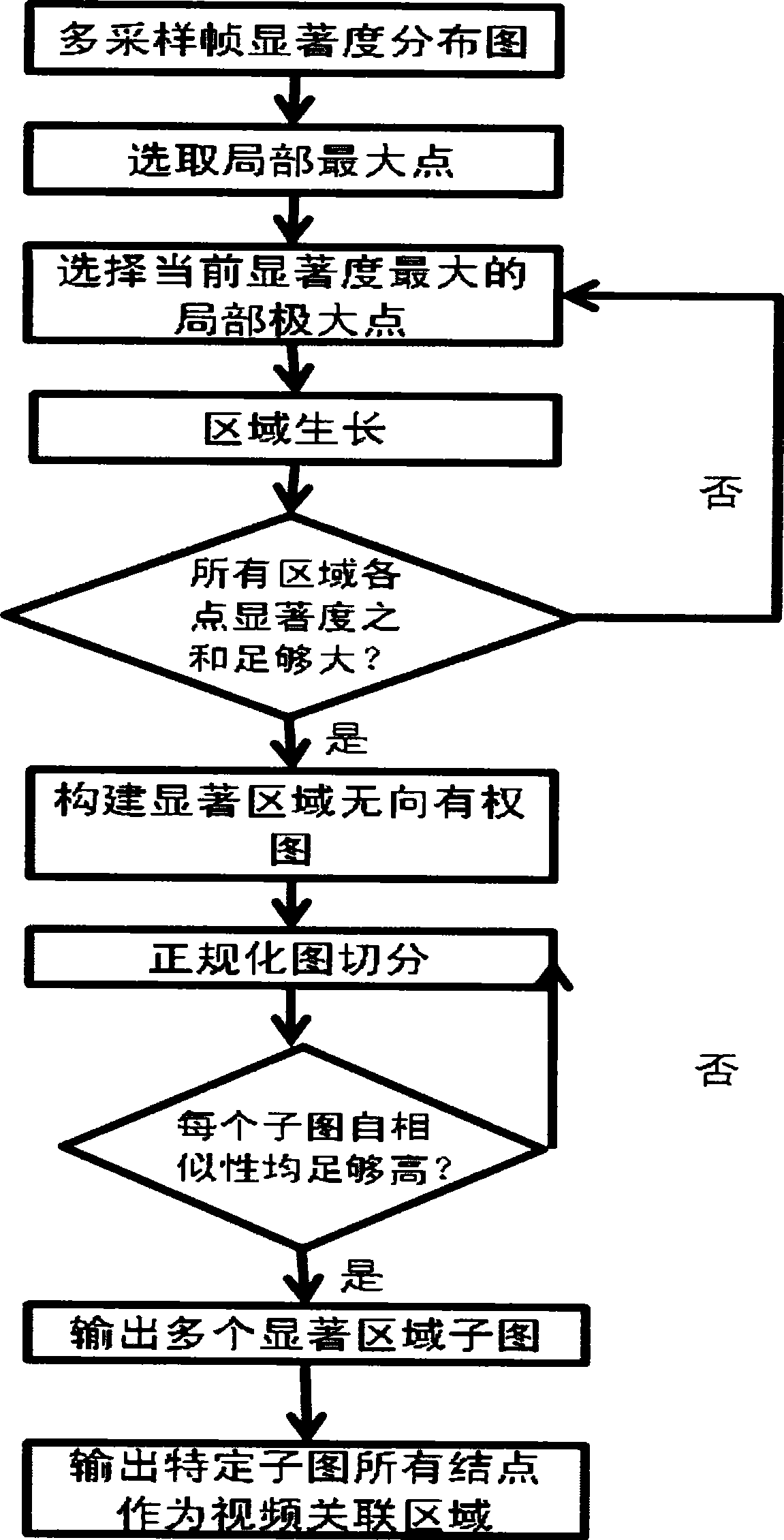

Video advertisement correlation method and system based on visual saliency

Inactive · CN101489139A · Easy to understand · Easy to explore · Pulse modulation television signal transmission · Advertising · Pattern recognition · Relevant information

The invention relates to an image and video processing method, in particular to a video advertisement correlation method based on visual saliency. The method automatically selects the most attention-grabbing salient region in a video by calculating a saliency distribution map of a sampled frame. Based on the extracted salient region, it automatically retrieves the advertisements associated with that region from an advertisement database through several retrieval methods. Finally, it plays the video clip and the retrieved advertisement information to the user synchronously. The invention thus associates relevant advertisement information with the salient region the user is paying attention to, helping the user understand and further explore that region.

Owner:PEKING UNIV
