1224 results about "Saliency map" patented technology

In computer vision, a saliency map is an image in which each pixel's value indicates how strongly that pixel stands out from its surroundings. The goal of a saliency map is to simplify or change the representation of an image into something more meaningful and easier to analyze. For example, if a pixel has a high grey level or another distinctive color quality in a color image, that quality shows up prominently in the saliency map. Saliency estimation can be viewed as a kind of image segmentation.

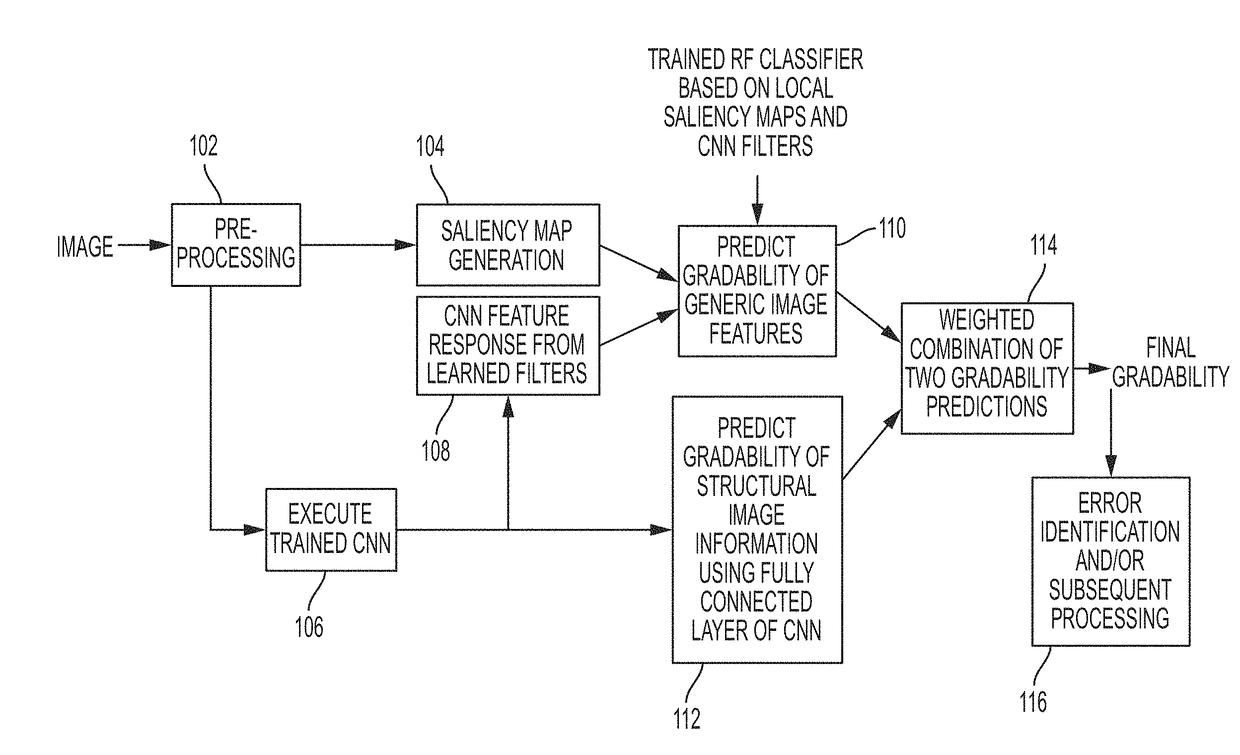

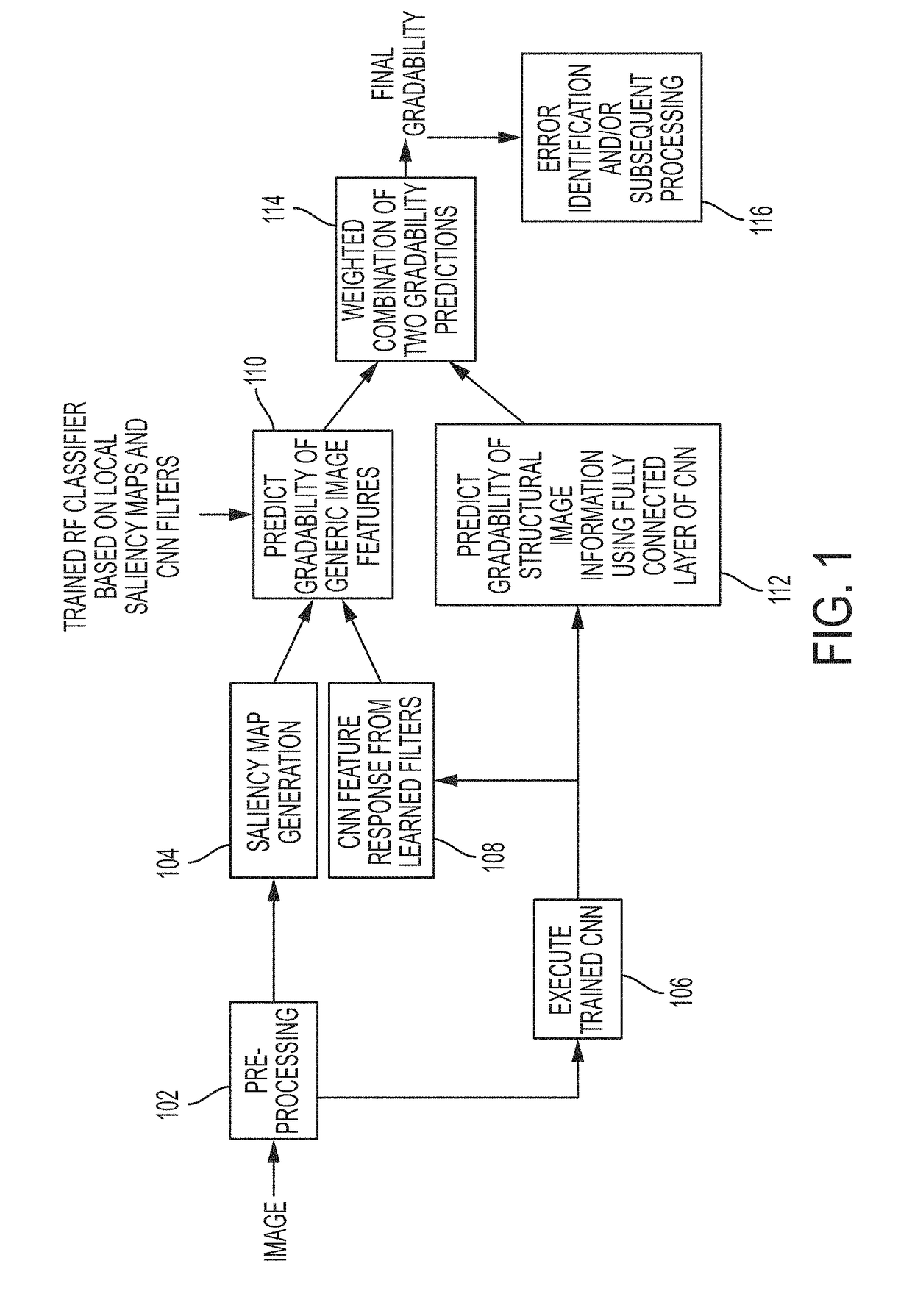

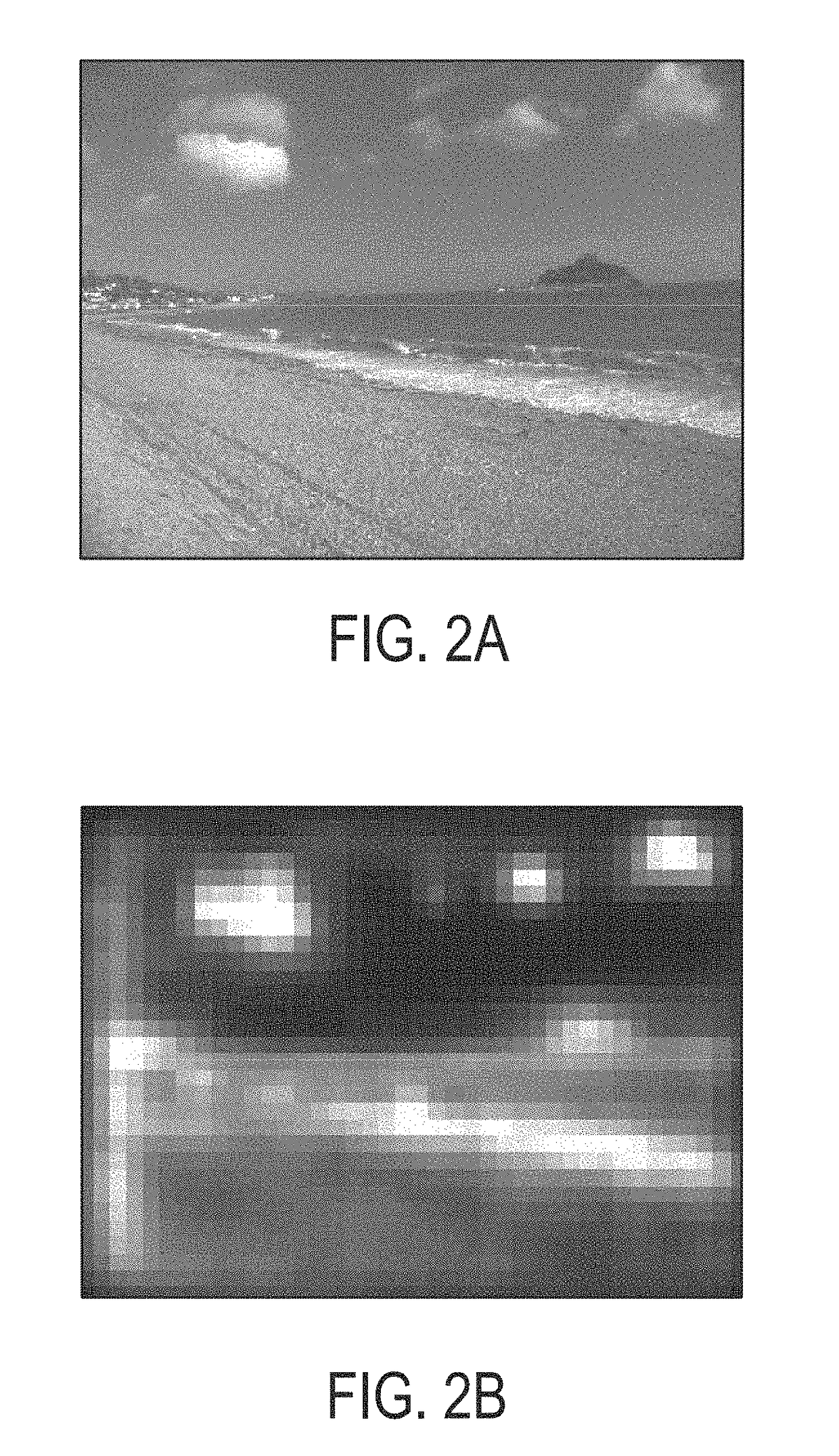

Retinal image quality assessment, error identification and automatic quality correction

To automatically determine the image quality of a machine-generated image, a local saliency map of the image may be generated to obtain a set of unsupervised features. The image is run through a trained convolutional neural network (CNN) to extract a set of supervised features from a fully connected layer of the CNN, and the image is convolved with a set of learned kernels from the CNN to obtain a complementary set of supervised features. The set of unsupervised features and the complementary set of supervised features are combined, and a first decision on gradability of the image is predicted. A second decision on gradability of the image is predicted based on the set of supervised features. Whether the image is gradable is determined based on a weighted combination of the first decision and the second decision.

Owner:IBM CORP
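
The last step of the abstract above, a weighted combination of two gradability decisions, can be sketched as follows. This is a minimal illustration and not the patented method: it assumes each decision is a probability in [0, 1], and the names `combine_gradability`, `weight_first` and `threshold` are hypothetical.

```python
def combine_gradability(p_first: float, p_second: float,
                        weight_first: float = 0.5,
                        threshold: float = 0.5) -> bool:
    """Return True when the weighted combination of two decisions says 'gradable'.

    p_first and p_second are assumed to be probabilities in [0, 1];
    weight_first and threshold are illustrative parameters only.
    """
    if not 0.0 <= weight_first <= 1.0:
        raise ValueError("weight_first must lie in [0, 1]")
    # Convex combination of the two decisions.
    combined = weight_first * p_first + (1.0 - weight_first) * p_second
    return combined >= threshold
```

With equal weights, a confident first decision can outvote a doubtful second one; lowering `weight_first` shifts the outcome toward the second decision.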

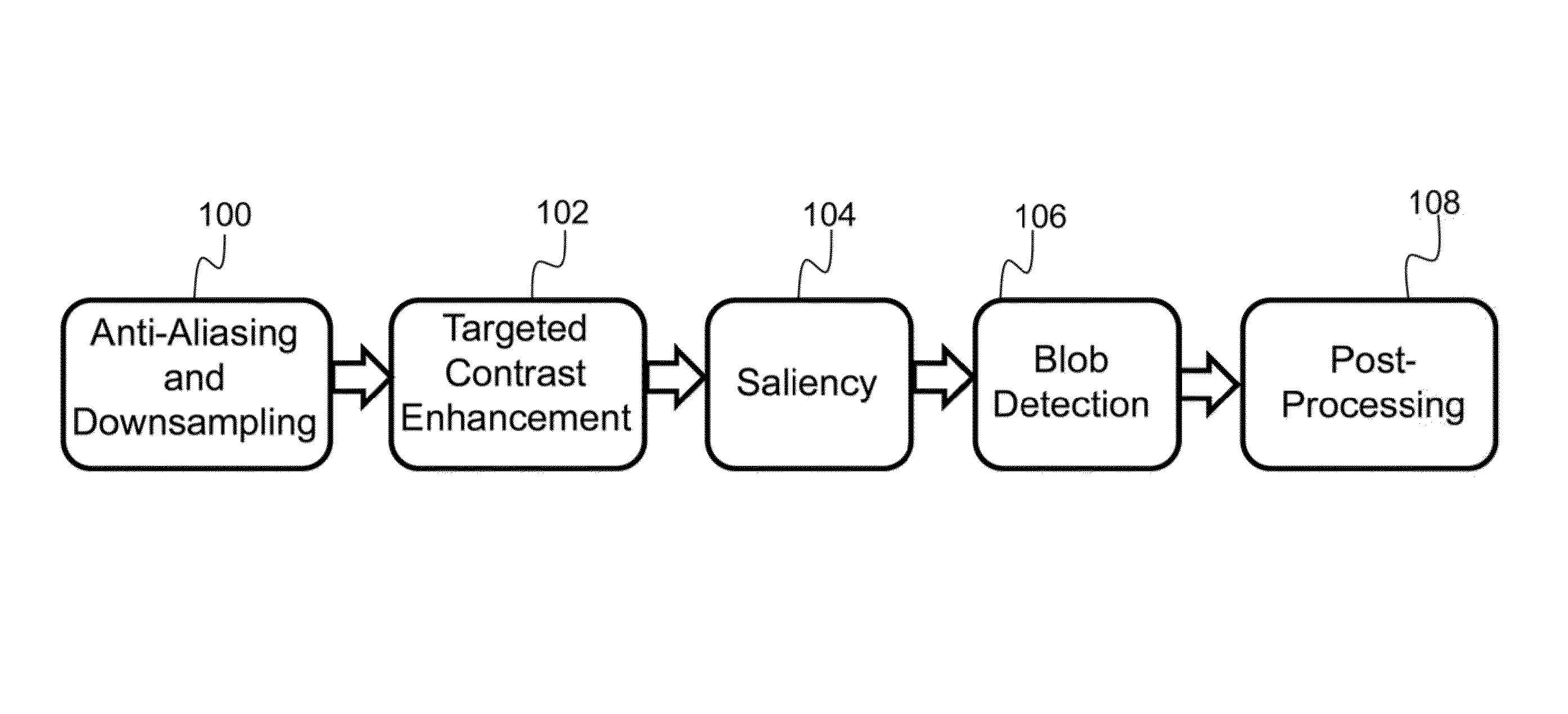

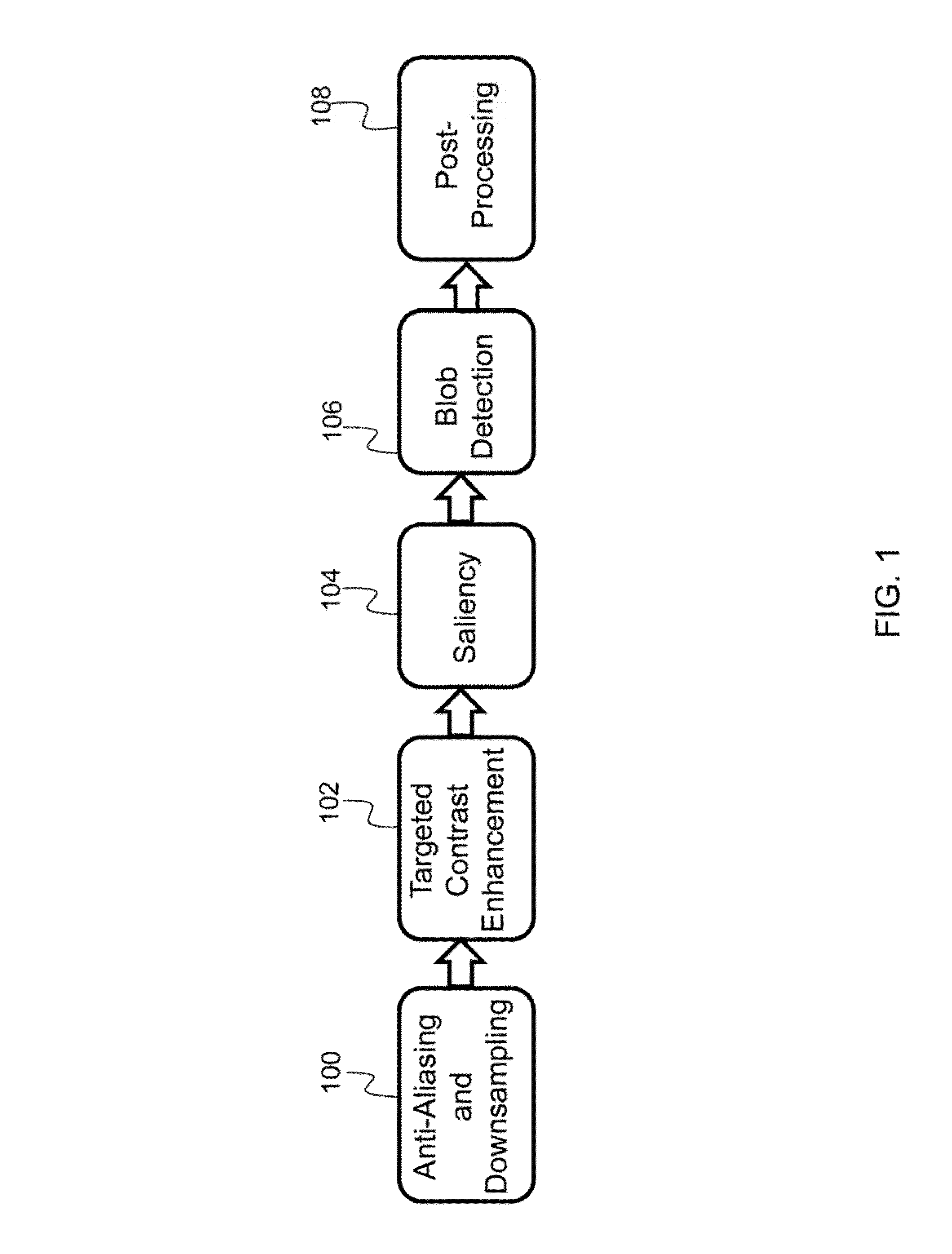

Robust static and moving object detection system via attentional mechanisms

Described is a system for object detection via multi-scale attentional mechanisms. The system receives a multi-band image as input. Anti-aliasing and downsampling processes are performed to reduce the size of the multi-band image. Targeted contrast enhancement is performed on the multi-band image to enhance a target color of interest. A response map for each target color of interest is generated, and each response map is independently processed to generate a saliency map. The saliency map is converted into a set of detections representing potential objects of interest, wherein each detection is associated with parameters, such as position parameters, size parameters, an orientation parameter, and a score parameter. A post-processing step is applied to filter out false alarm detections in the set of detections, resulting in a final set of detections. Finally, the final set of detections and their associated parameters representing objects of interest is output.

Owner:HRL LAB

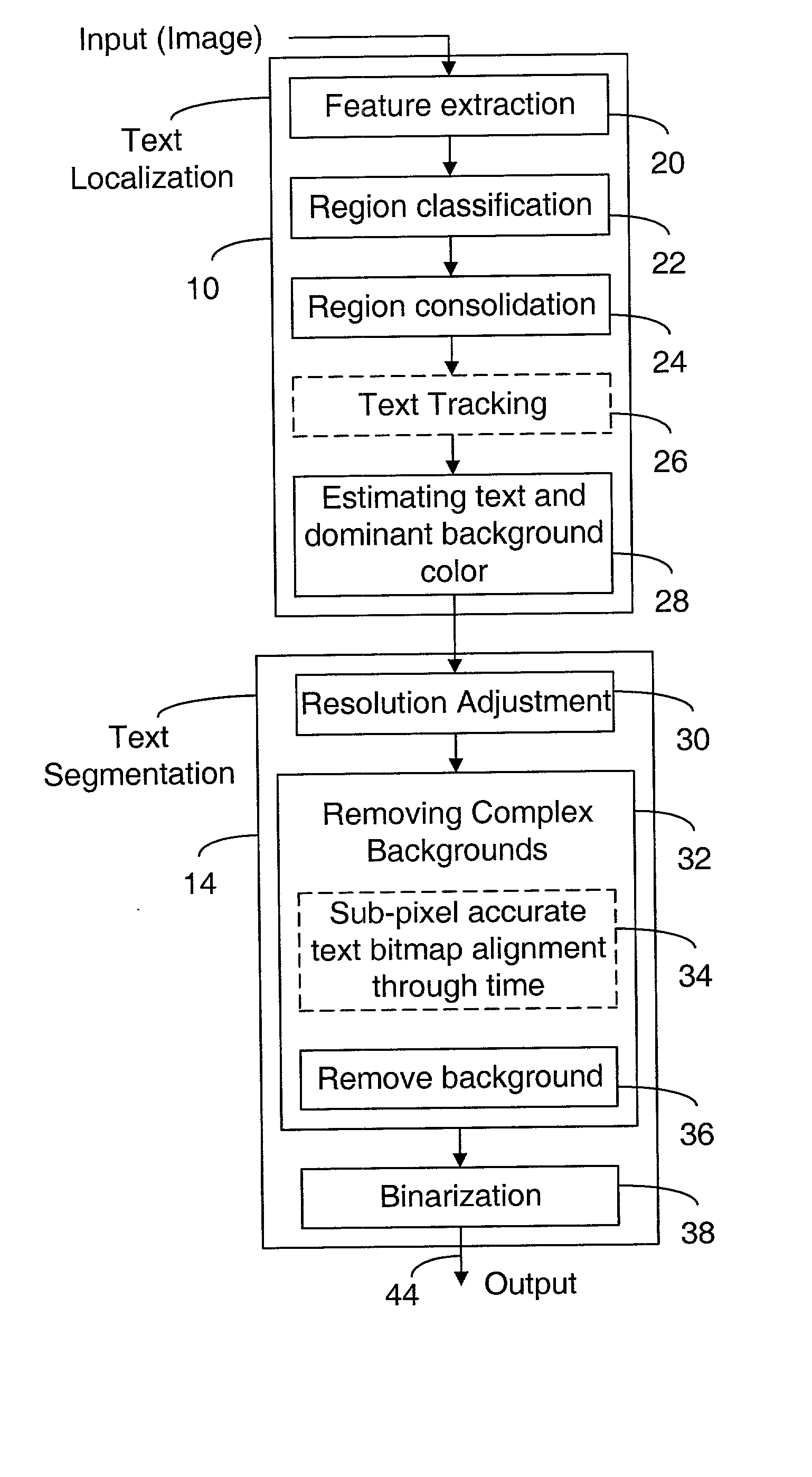

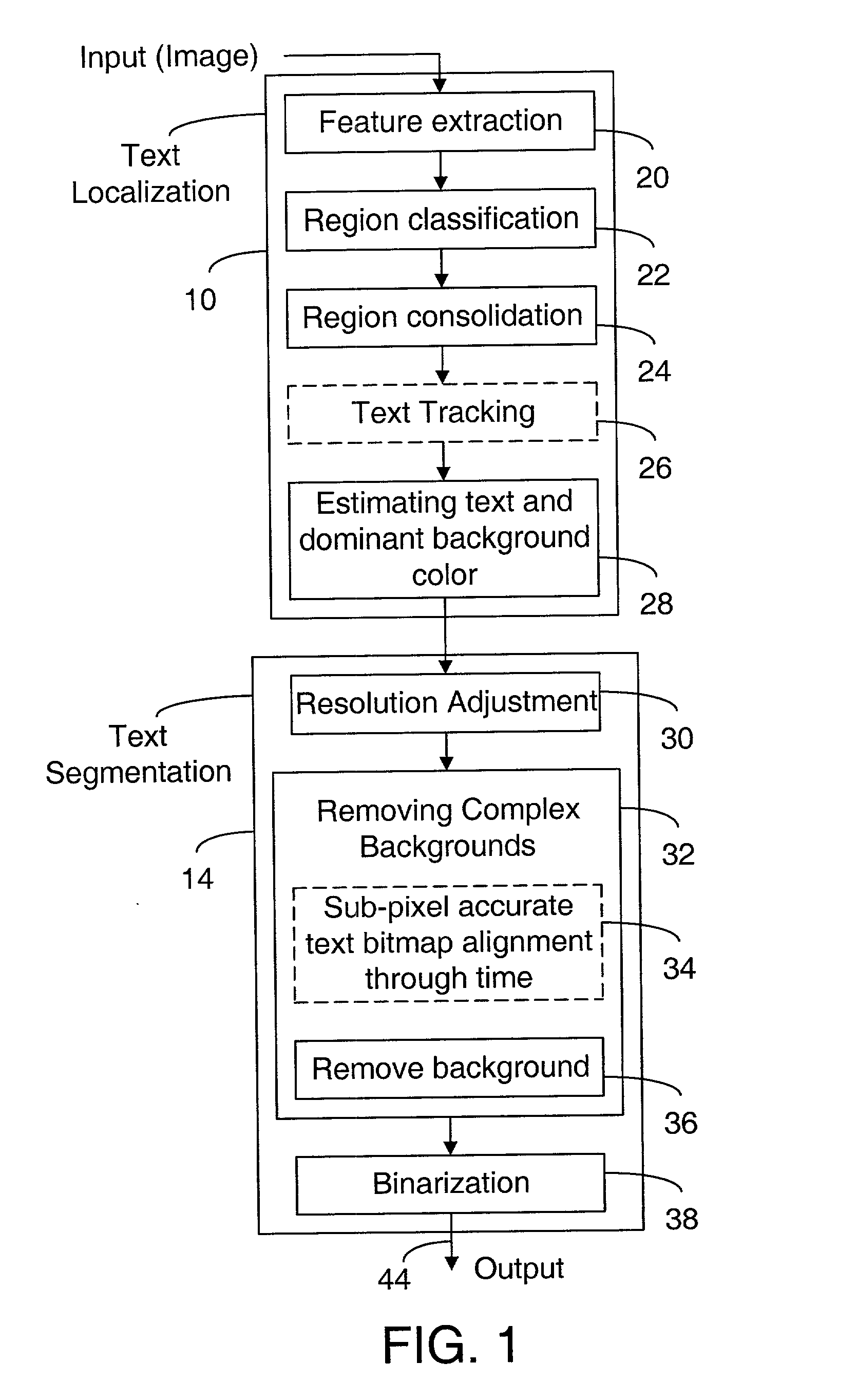

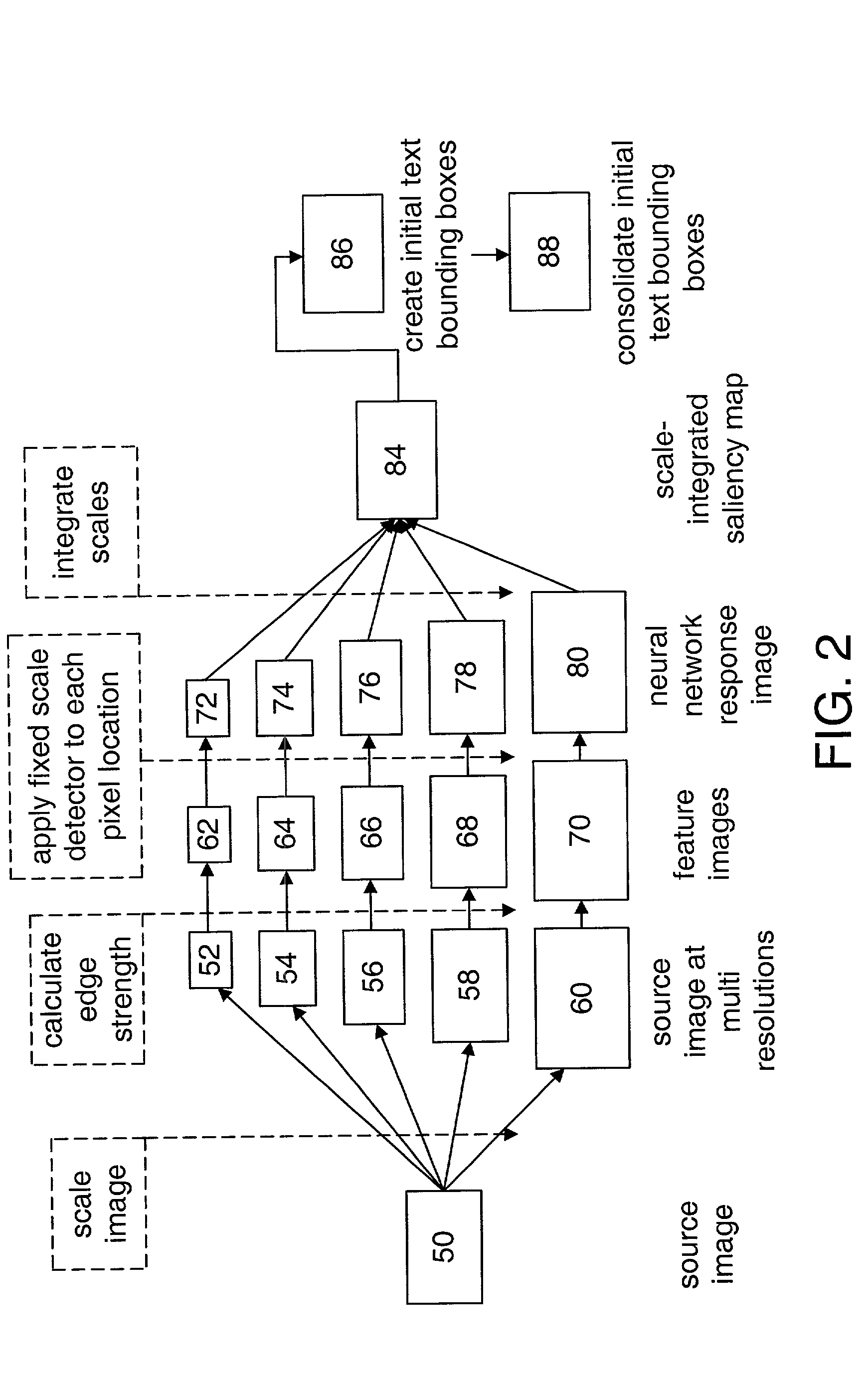

Generalized text localization in images

In some embodiments, the invention includes a method for locating text in digital images. The method includes scaling a digital image into images of multiple resolutions and classifying whether pixels in the multiple resolutions are part of a text region. The method also includes integrating scales to create a scale integration saliency map and using the saliency map to create initial text bounding boxes through expanding the boxes from rectangles of pixels including at least one pixel to include groups of at least one pixel adjacent to the rectangles, wherein the groups have a particular relationship to a first threshold. The initial text bounding boxes are consolidated. In other embodiments, a method includes classifying whether pixels are part of a text region, creating initial text bounding boxes, and consolidating the initial text bounding boxes, wherein the consolidating includes creating horizontal projection profiles having adaptive thresholds and vertical projection profiles having adaptive thresholds.

Owner:INTEL CORP

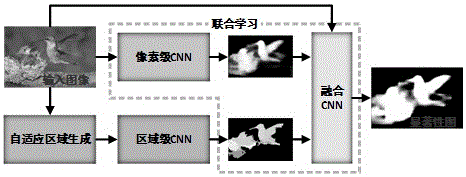

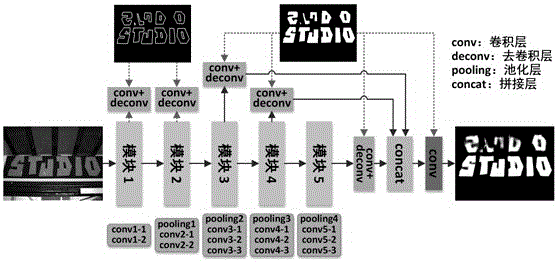

Region-level and pixel-level fusion saliency detection method based on convolutional neural networks (CNN)

Active · CN106157319A · Good saliency detection performance · Guaranteed edge · Image enhancement · Image analysis · Saliency map · Research Object

The invention discloses a region-level and pixel-level fusion saliency detection method based on convolutional neural networks (CNN). The method operates on a static image with arbitrary content, and its goal is to find the targets in the image that attract a person's attention and assign different saliency values to them. The main contribution is an adaptive region generation technique, together with two CNN structures designed for pixel-level saliency prediction and saliency fusion respectively. The two CNN models are trained with the image as input and the ground-truth result of the image as the supervisory signal, and they output a saliency map of the same size as the input image. With this method, region-level saliency estimation and pixel-level saliency prediction can be carried out effectively to obtain two saliency maps, and finally the two saliency maps and the original image are fused through the saliency fusion CNN to obtain the final saliency map.

Owner:HARBIN INST OF TECH

Method of identifying motion sickness

Inactive · US20120134543A1 · Precise process · Easy to optimize · Character and pattern recognition · Stereoscopic systems · Head movements · Motion sickness

A method to determine the propensity of an image sequence to induce motion sickness in a viewer includes using a processor to analyze the image-sequence information to extract salient static and dynamic visual features, evaluating the distribution of the salient static and dynamic features in the saliency map to estimate a probability of the image sequence causing the viewer to make eye and head movements, and using the estimated probability to determine the propensity that the image sequence would induce motion sickness in the viewer as a consequence of the eye and head movements.

Owner:EASTMAN KODAK CO

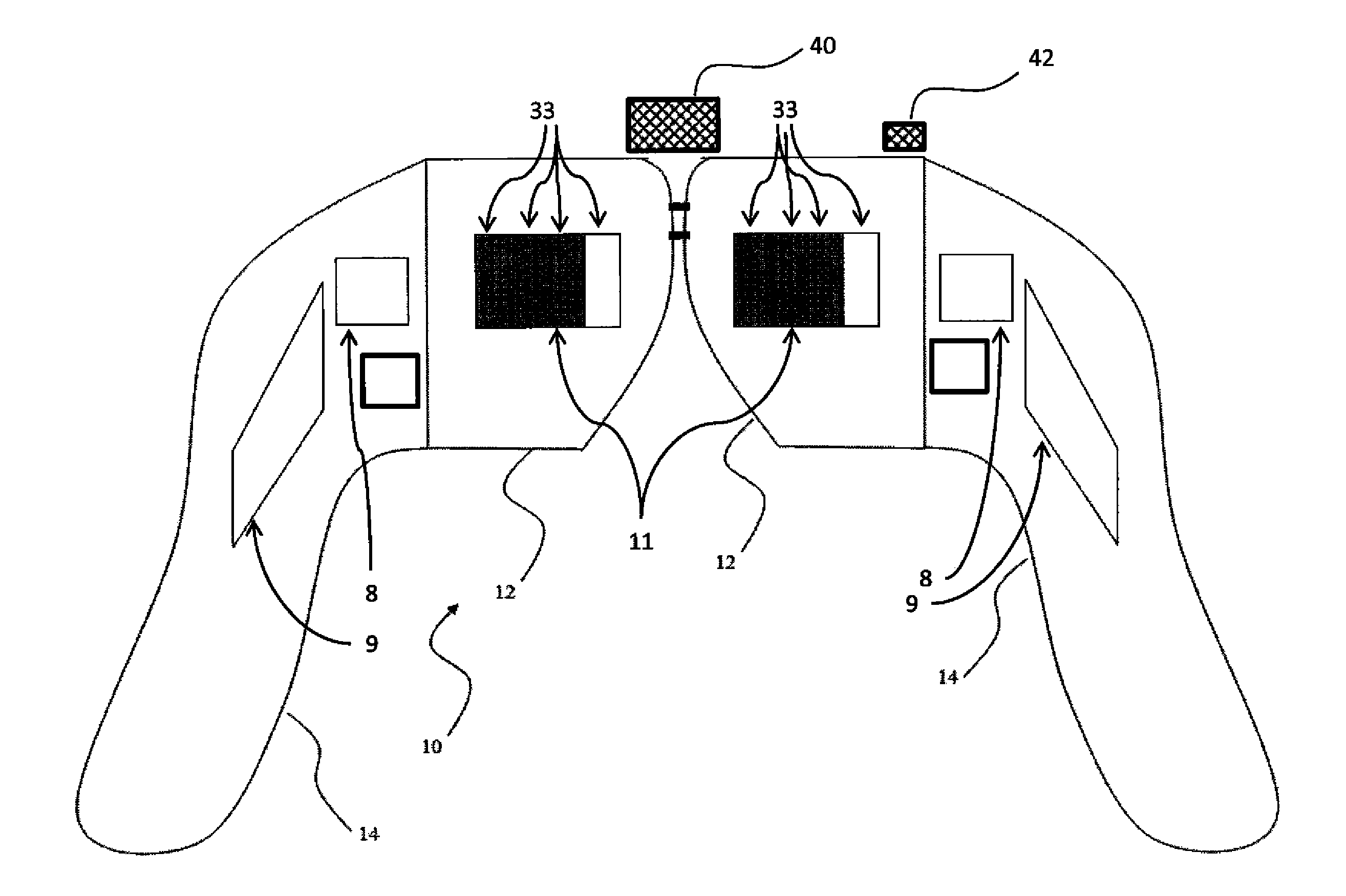

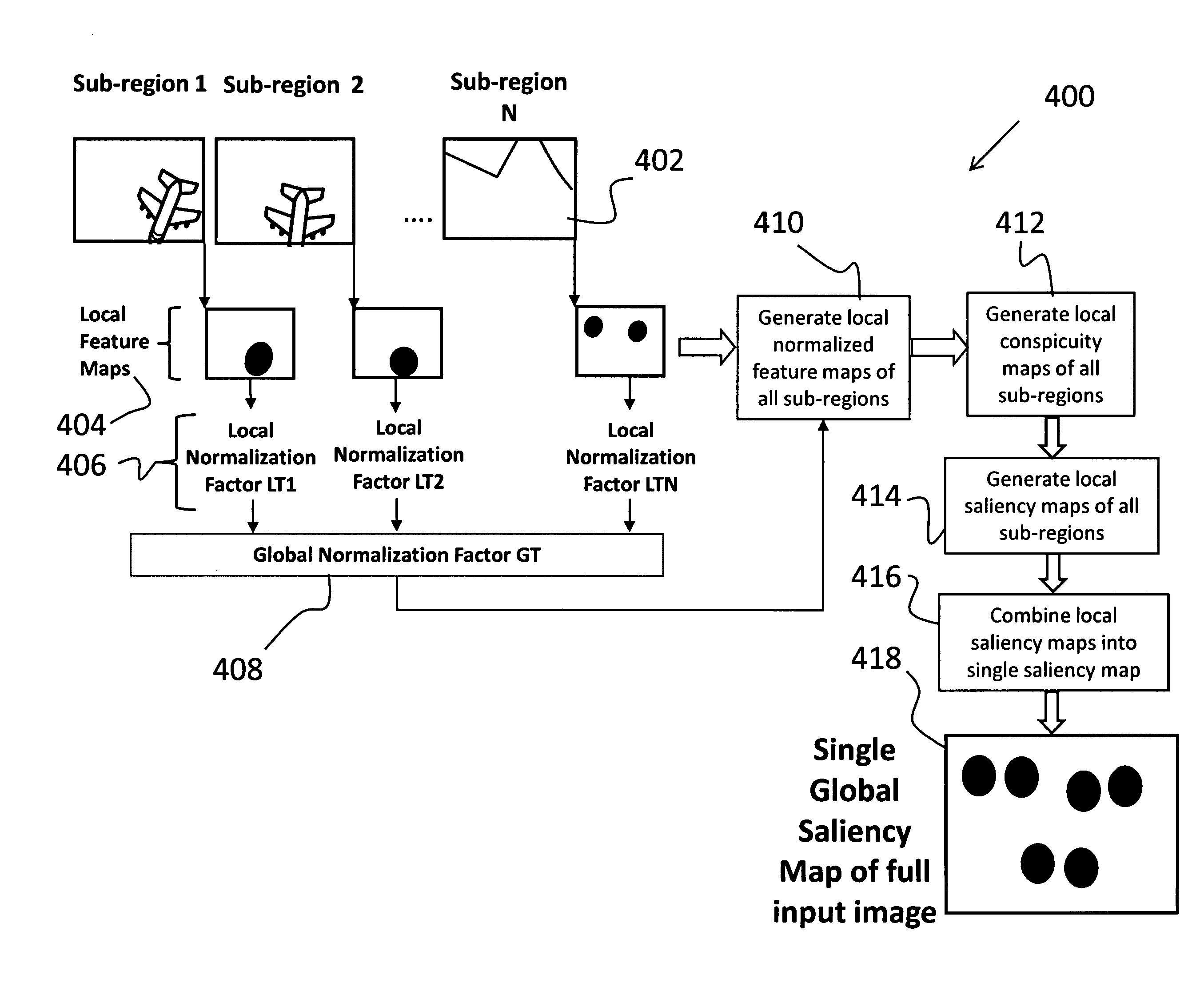

Visual attention system for salient regions in imagery

Described is a system for finding salient regions in imagery. The system improves upon the prior art by receiving an input image of a scene and dividing the image into a plurality of image sub-regions. Each sub-region is assigned a coordinate position within the image such that the sub-regions collectively form the input image. A plurality of local saliency maps are generated, where each local saliency map is based on a corresponding sub-region and a coordinate position representative of the corresponding sub-region. Finally, the plurality of local saliency maps is combined according to their coordinate positions to generate a single global saliency map of the input image of the scene.

Owner:HRL LAB
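
The divide-and-recombine idea in the abstract above can be sketched in a few lines. This is only an illustration under stated assumptions: `local_saliency` is a hypothetical placeholder (absolute deviation from the sub-region mean), the sub-regions are square tiles of a hypothetical size `tile`, and the abstract does not say which saliency estimator or tiling the system actually uses.

```python
import numpy as np

def local_saliency(sub_region: np.ndarray) -> np.ndarray:
    """Placeholder estimator (an assumption): deviation from the sub-region mean."""
    return np.abs(sub_region - sub_region.mean())

def global_saliency(image: np.ndarray, tile: int) -> np.ndarray:
    """Compute a local map per tile and paste it back at the tile's coordinates."""
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    for r in range(0, h, tile):          # (r, c) is the coordinate position
        for c in range(0, w, tile):      # assigned to each sub-region
            sub = image[r:r + tile, c:c + tile].astype(float)
            out[r:r + tile, c:c + tile] = local_saliency(sub)
    return out
```

Because every tile keeps its coordinate position, the local maps tile the output exactly and the result has the same shape as the input image.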

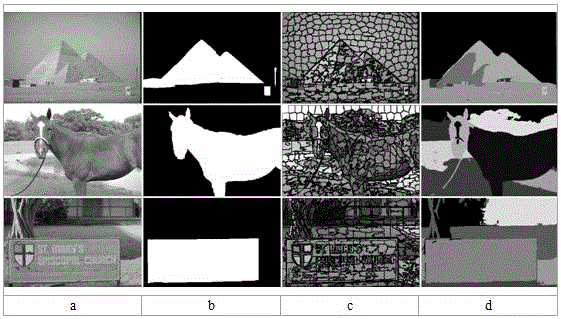

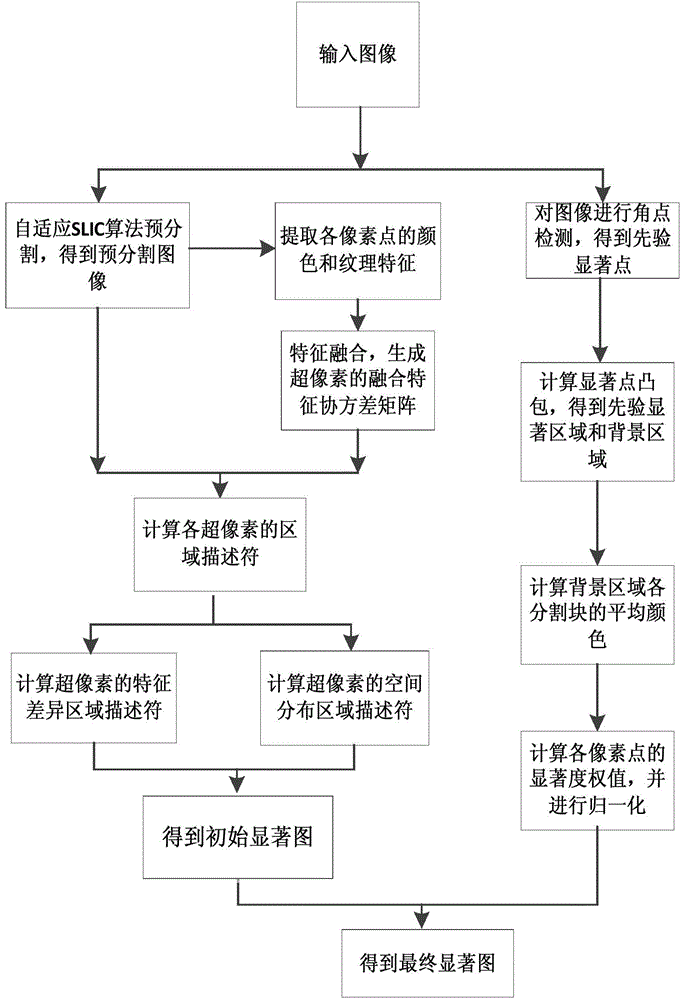

Image saliency detection method based on region description and priori knowledge

Inactive · CN104103082A · Preserve edge detail · Significance detection is good · Image analysis · Pattern recognition · Saliency map

The invention discloses an image saliency detection method based on region description and priori knowledge. The method comprises the following steps that: (1) an image to be detected is subjected to pre-segmentation, superpixels are generated, and a pre-segmentation image is obtained; (2) a fusion feature covariance matrix of the superpixels is generated; (3) feature different region descriptors and space distribution region descriptors of each superpixel are calculated; (4) the initial saliency value of each pixel point of the image to be detected is calculated; (5) a priori saliency region and a background region of the image are obtained; (6) the saliency weight of each pixel point of the image to be detected is calculated; and (7) the final saliency value of each pixel point is calculated. The image saliency detection method has the advantages that the saliency region can be uniformly highlighted in an obtained final saliency map; the background noise interference is inhibited; a good saliency detection effect can be achieved in ordinary images, and the processing on the saliency detection of complicated images can also be realized; and the processing on subsequent image key region extraction and the like can also be favorably carried out.

Owner:SOUTH CHINA UNIV OF TECH

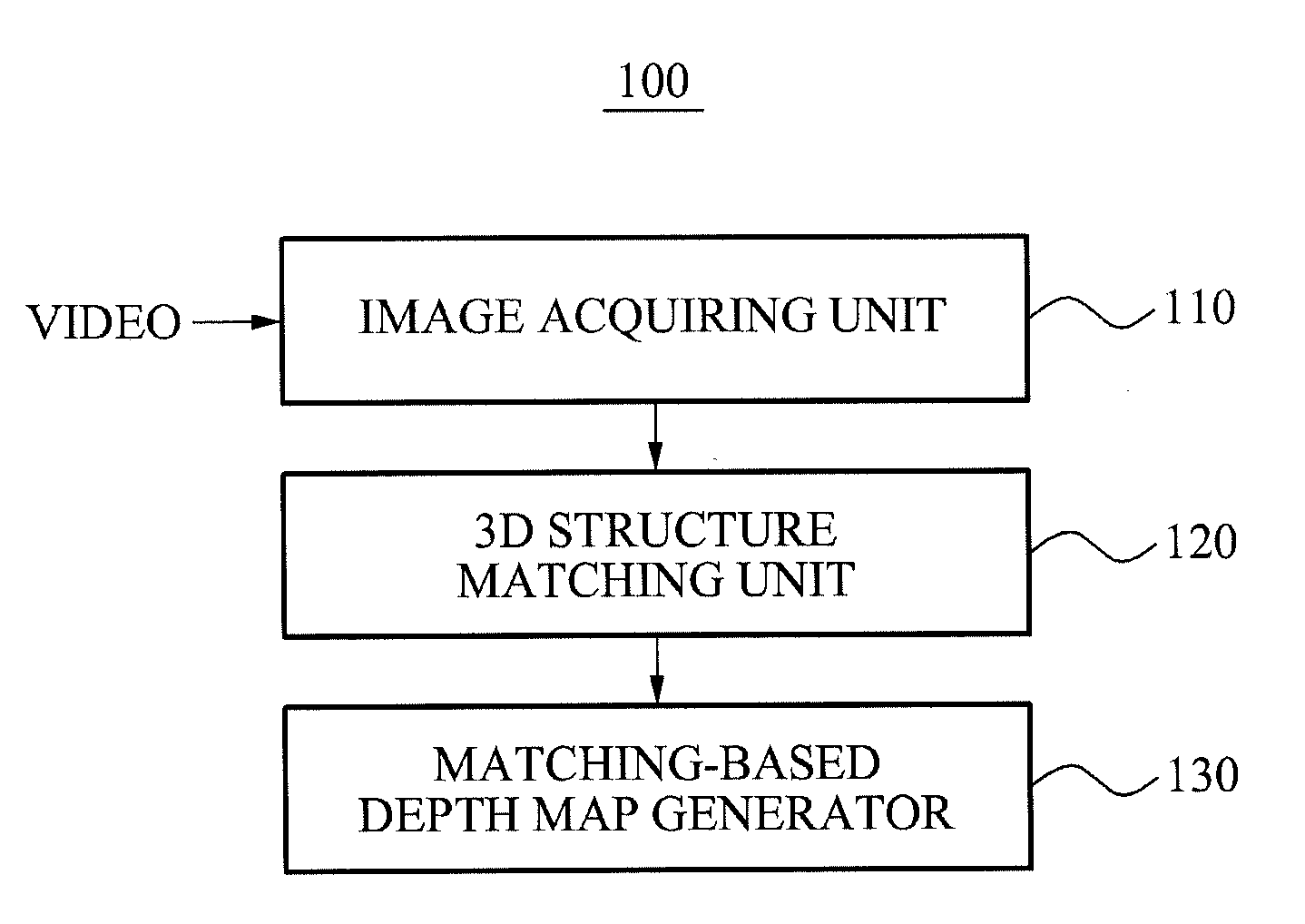

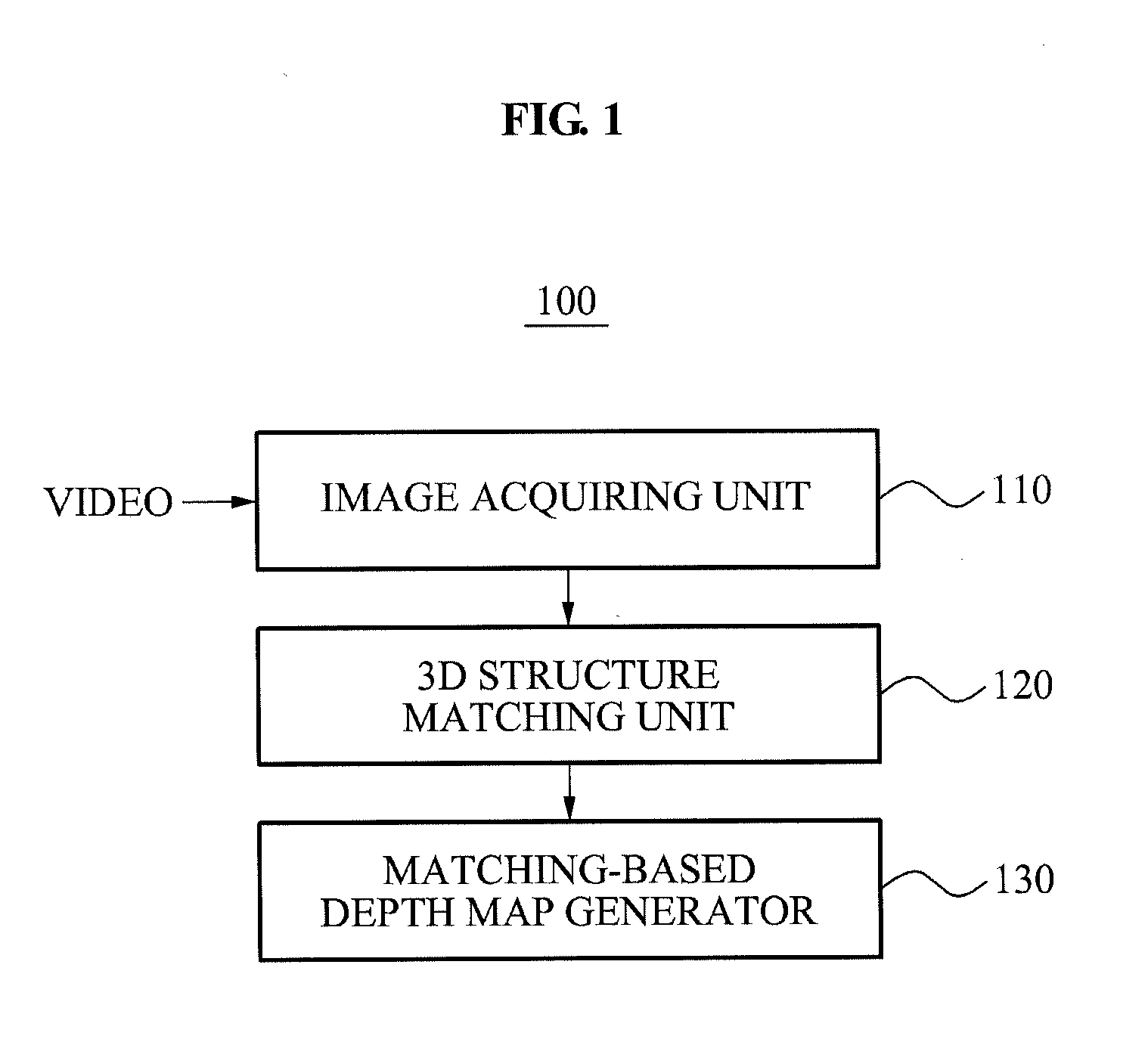

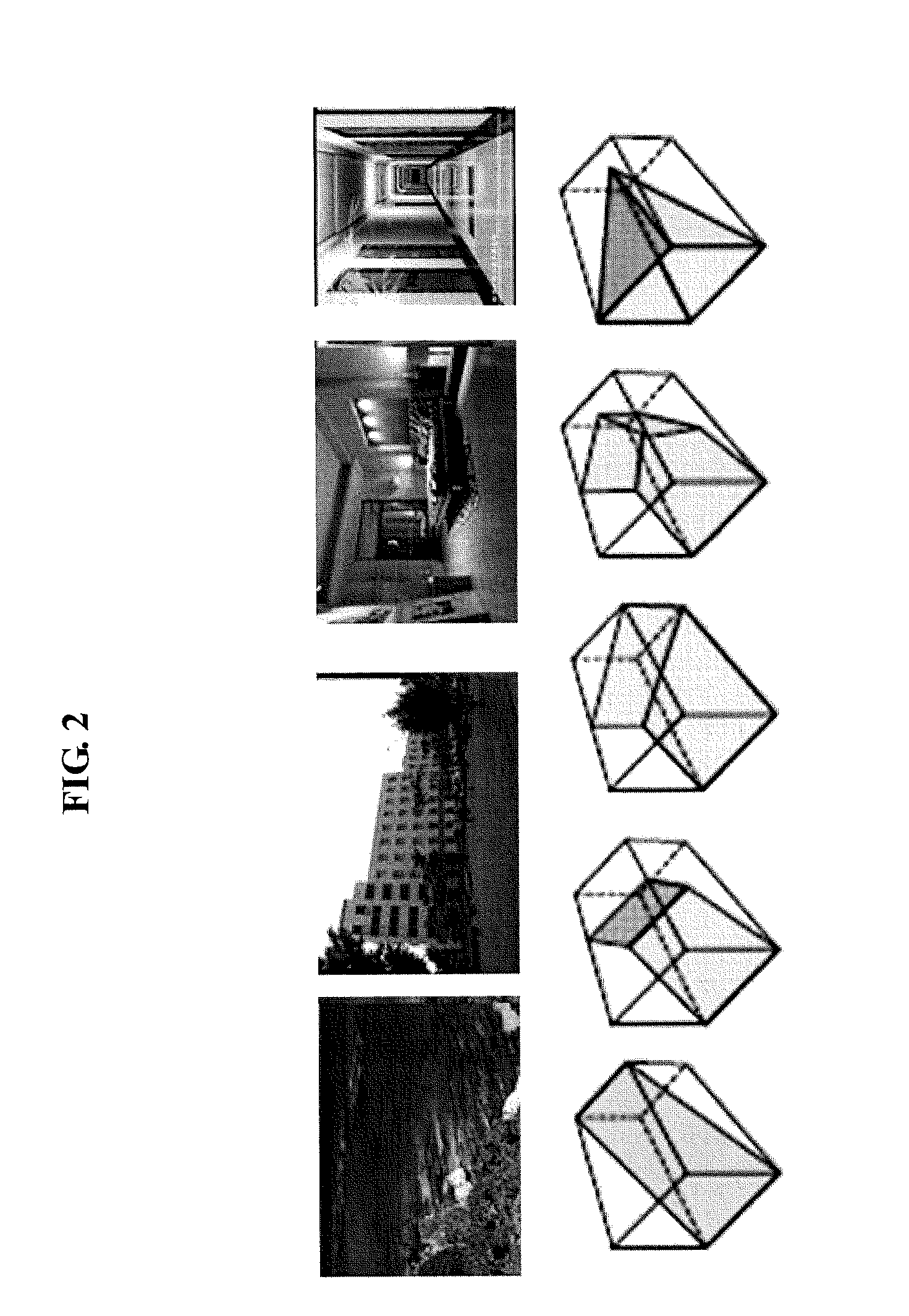

Apparatus, method and computer-readable medium generating depth map

Disclosed are an apparatus, a method and a computer-readable medium automatically generating a depth map corresponding to each two-dimensional (2D) image in a video. The apparatus includes an image acquiring unit to acquire a plurality of 2D images that are temporally consecutive in an input video, a saliency map generator to generate at least one saliency map corresponding to a current 2D image among the plurality of 2D images based on a Human Visual Perception (HVP) model, a saliency-based depth map generator, a three-dimensional (3D) structure matching unit to calculate matching scores between the current 2D image and a plurality of 3D typical structures that are stored in advance, and to determine a 3D typical structure having a highest matching score among the plurality of 3D typical structures to be a 3D structure of the current 2D image, a matching-based depth map generator, a combined depth map generator to combine the saliency-based depth map and the matching-based depth map and to generate a combined depth map, and a spatial and temporal smoothing unit to spatially and temporally smooth the combined depth map.

Owner:SAMSUNG ELECTRONICS CO LTD

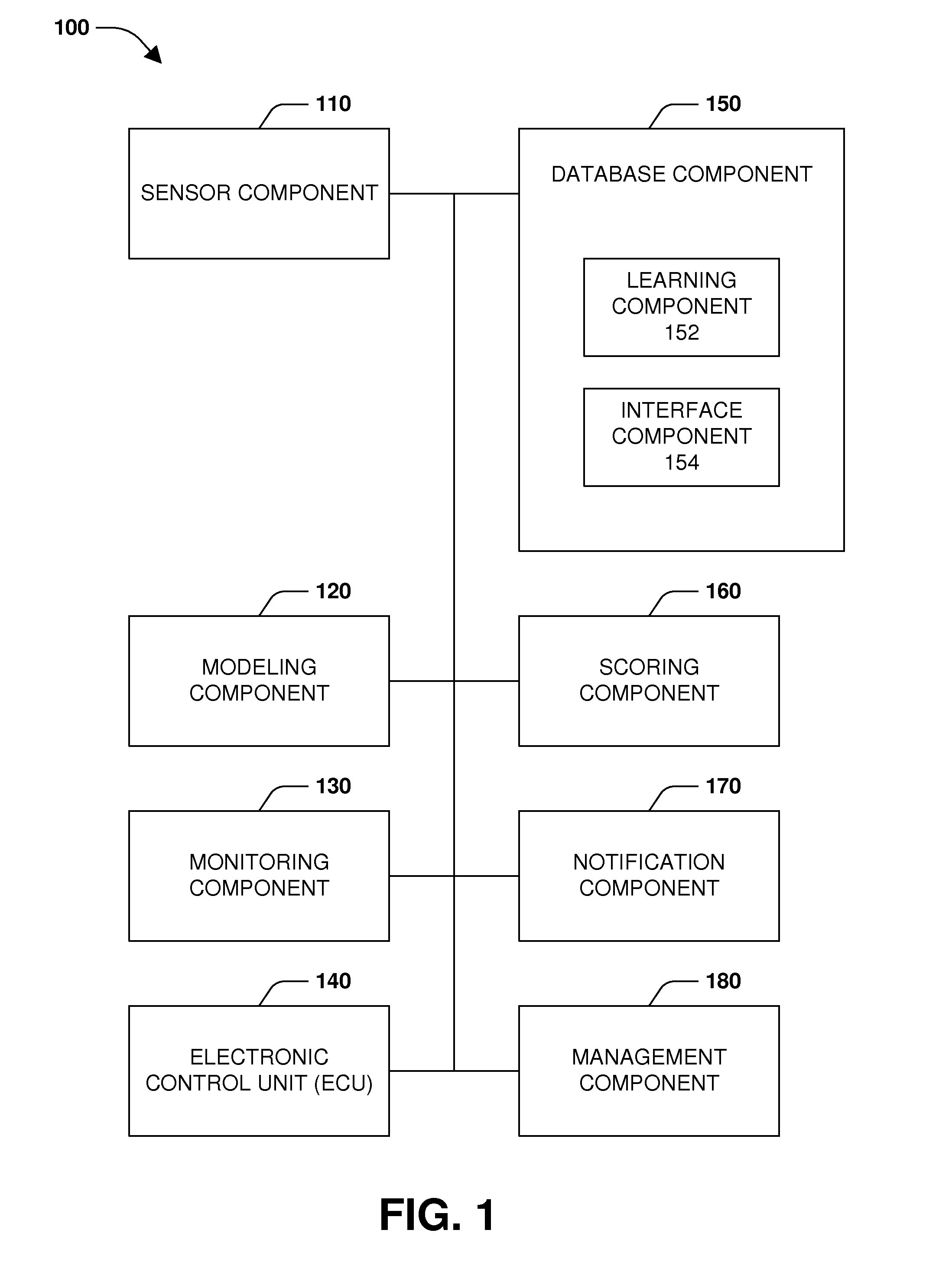

Saliency based awareness modeling

Active · US20160117947A1 · Reduce the amount required · Effective attention · Cosmonautic condition simulations · Simulators · Pattern recognition · Driver/operator

In one or more embodiments, driver awareness may be calculated, inferred, or estimated utilizing a saliency model, a predictive model, or an operating environment model. An awareness model including one or more awareness scores for one or more objects may be constructed based on the saliency model or one or more saliency parameters associated therewith. A variety of sensors or components may detect one or more object attributes, saliency, operator attributes, operator behavior, operator responses, etc. and construct one or more models accordingly. Examples of object attributes associated with saliency or saliency parameters may include visual characteristics, visual stimuli, optical flow, velocity, movement, color, color differences, contrast, contrast differences, color saturation, brightness, edge strength, luminance, a quick transient (e.g., a flashing light, an abrupt onset of a change in intensity, brightness, etc.).

Owner:HONDA MOTOR CO LTD

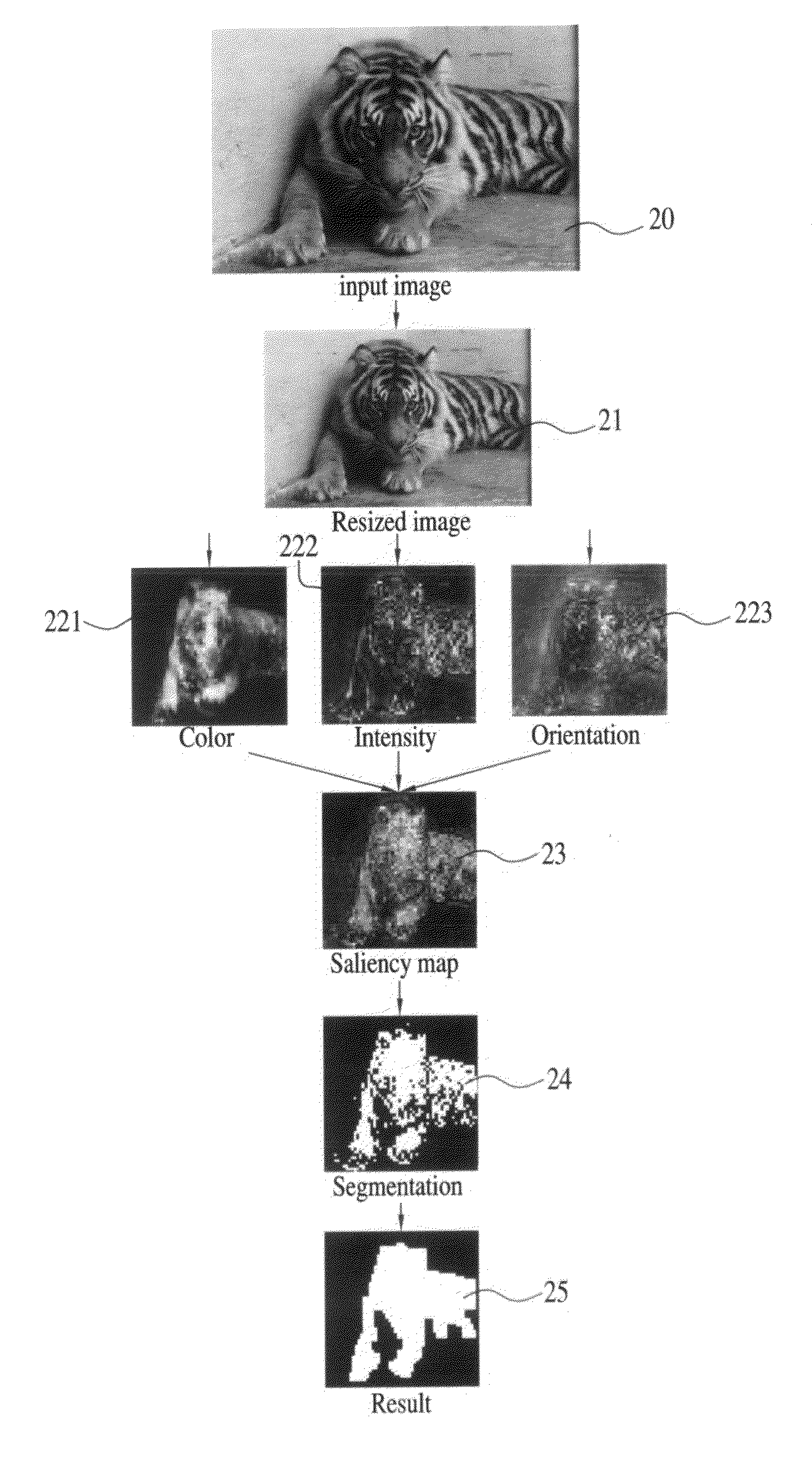

Method of automatically detecting and tracking successive frames in a region of interesting by an electronic imaging device

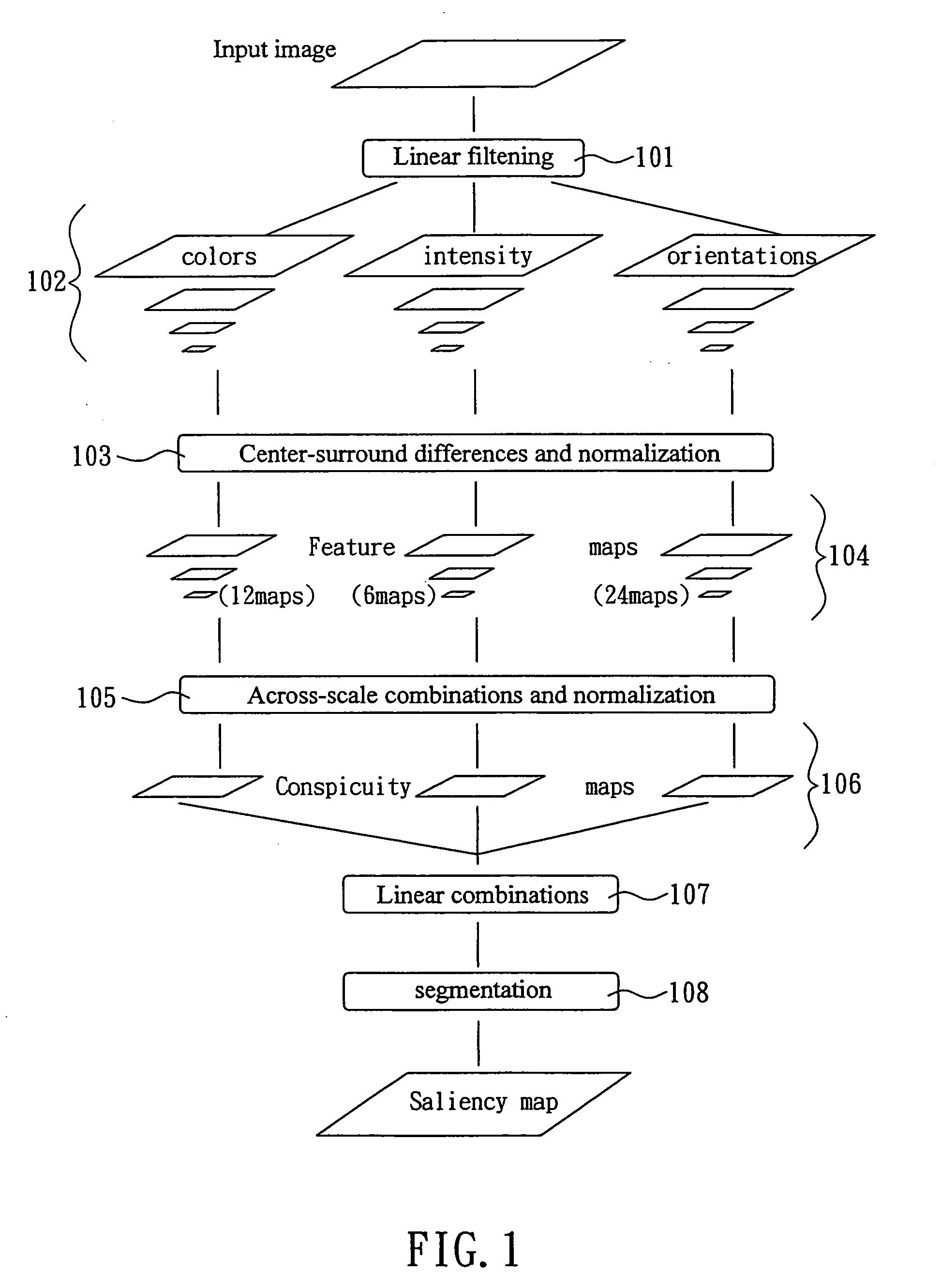

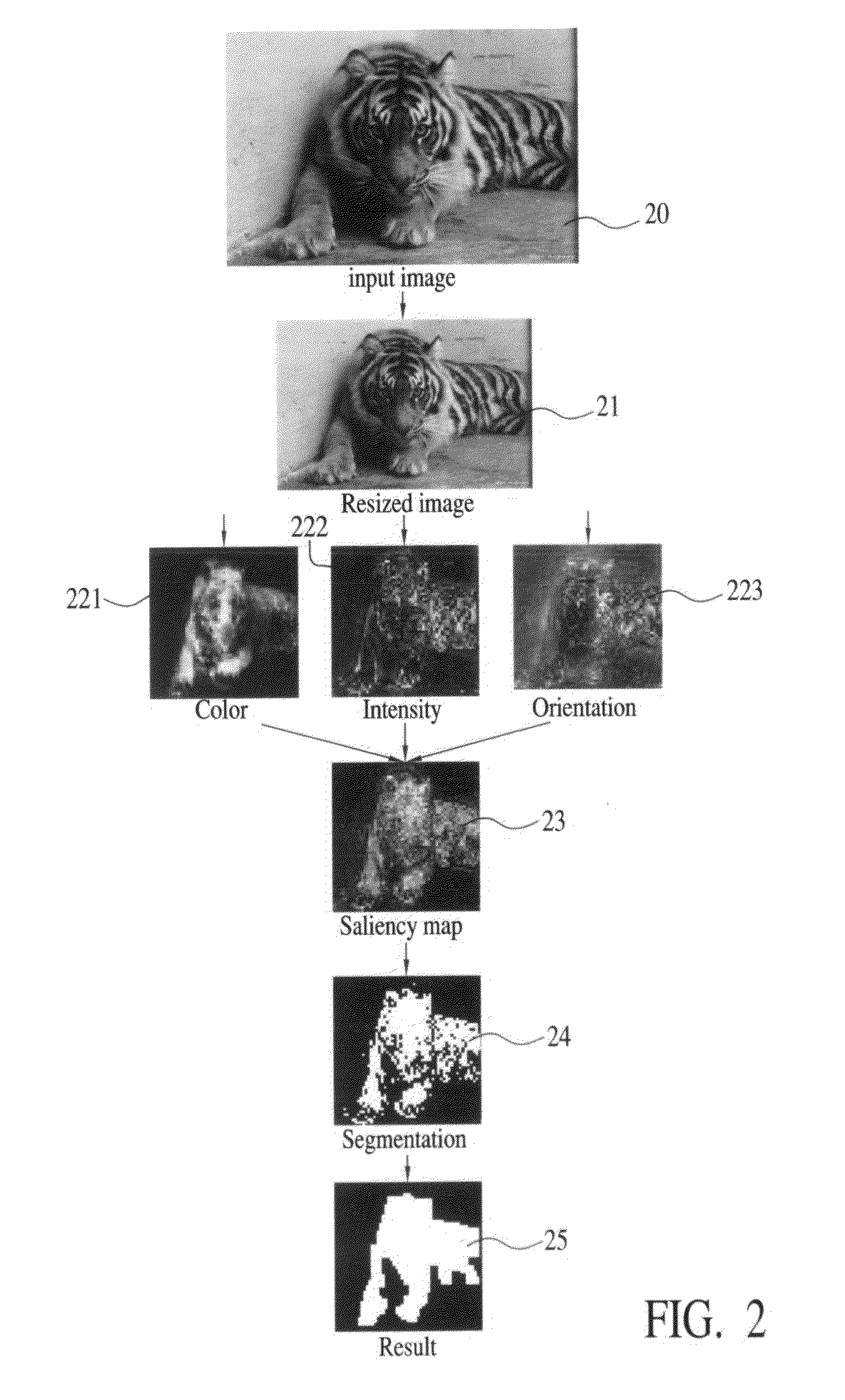

Active · US20090304231A1 · Improvement of capability of identifying · Improve computing speed · Character and pattern recognition · Saliency map · Pattern perception

A method of automatically detecting and tracking successive frames in a region of interesting by an electronic imaging device includes: decomposing a frame into intensity, color and direction features according to human perception; filtering an input image with a Gaussian pyramid to obtain pyramid representation levels by downsampling; calculating the features of the pyramid representations; using a linear center-surround operator, similar to biological perception, to expedite the calculation of a mean value of the peripheral region; using the difference of each feature between a small central region and the peripheral region as a measured value; overlaying the pyramid feature maps to obtain a conspicuity map for each feature and unifying the conspicuity maps of the three features; obtaining a saliency map of the frames by linear combination; and using the saliency map for segmentation to mark a region of interest of a frame in the large region of the conspicuity maps.

Owner:ARCSOFT
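
The pyramid, center-surround and linear-combination steps in the abstract above can be sketched roughly as below. Several simplifications are assumptions of this sketch, not details from the patent: the pyramid uses 2x2 block averaging instead of a Gaussian filter, the surround is upsampled by nearest-neighbour lookup, and all function names and the `weights` argument are hypothetical.

```python
import numpy as np

def downsample(img: np.ndarray) -> np.ndarray:
    """Halve resolution by 2x2 block averaging (stand-in for a Gaussian pyramid)."""
    h, w = img.shape
    even = img[:h - h % 2, :w - w % 2]
    return even.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def upsample_to(img: np.ndarray, shape: tuple) -> np.ndarray:
    """Nearest-neighbour upsampling back to the given shape."""
    rows = np.arange(shape[0]) * img.shape[0] // shape[0]
    cols = np.arange(shape[1]) * img.shape[1] // shape[1]
    return img[np.ix_(rows, cols)]

def conspicuity(feature: np.ndarray, levels: int = 3) -> np.ndarray:
    """Sum of center-surround differences across pyramid levels, scaled to [0, 1]."""
    pyramid = [feature.astype(float)]
    for _ in range(levels):
        pyramid.append(downsample(pyramid[-1]))
    center = pyramid[0]
    total = np.zeros_like(center)
    for surround in pyramid[1:]:
        # Fine-scale value minus the coarser local mean around it.
        total += np.abs(center - upsample_to(surround, center.shape))
    peak = total.max()
    return total / peak if peak > 0 else total

def saliency(feature_maps, weights) -> np.ndarray:
    """Linear combination of per-feature conspicuity maps."""
    return sum(w * conspicuity(f) for f, w in zip(feature_maps, weights))
```

In use, `feature_maps` would hold the intensity, color and direction features of a frame; an isolated bright pixel should dominate the resulting map because it differs most from its coarse-scale surround.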

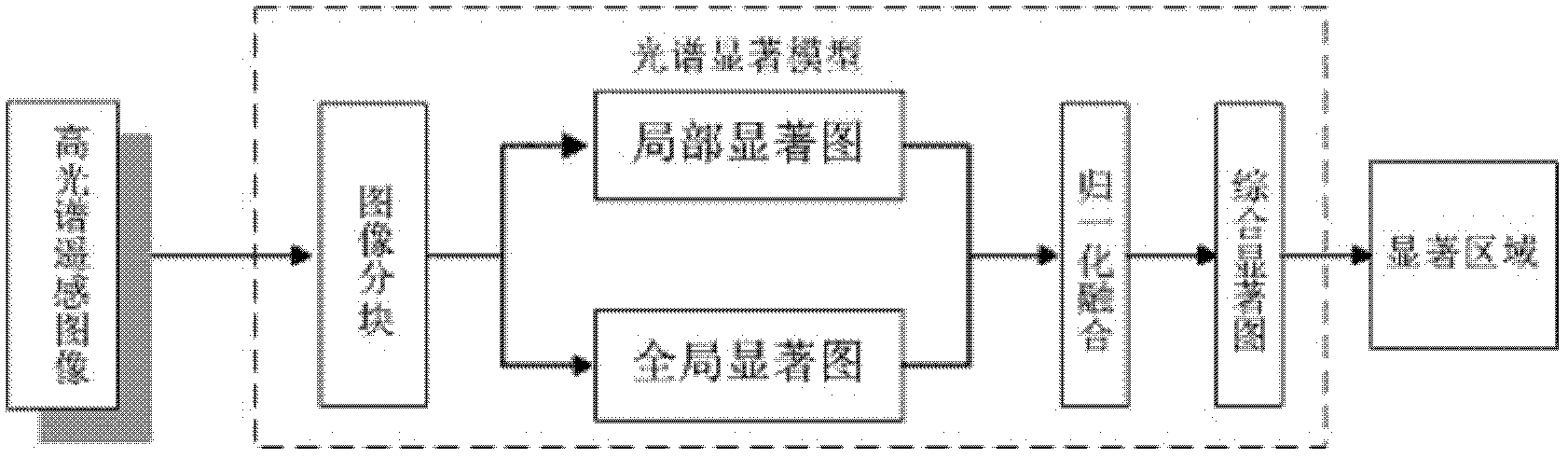

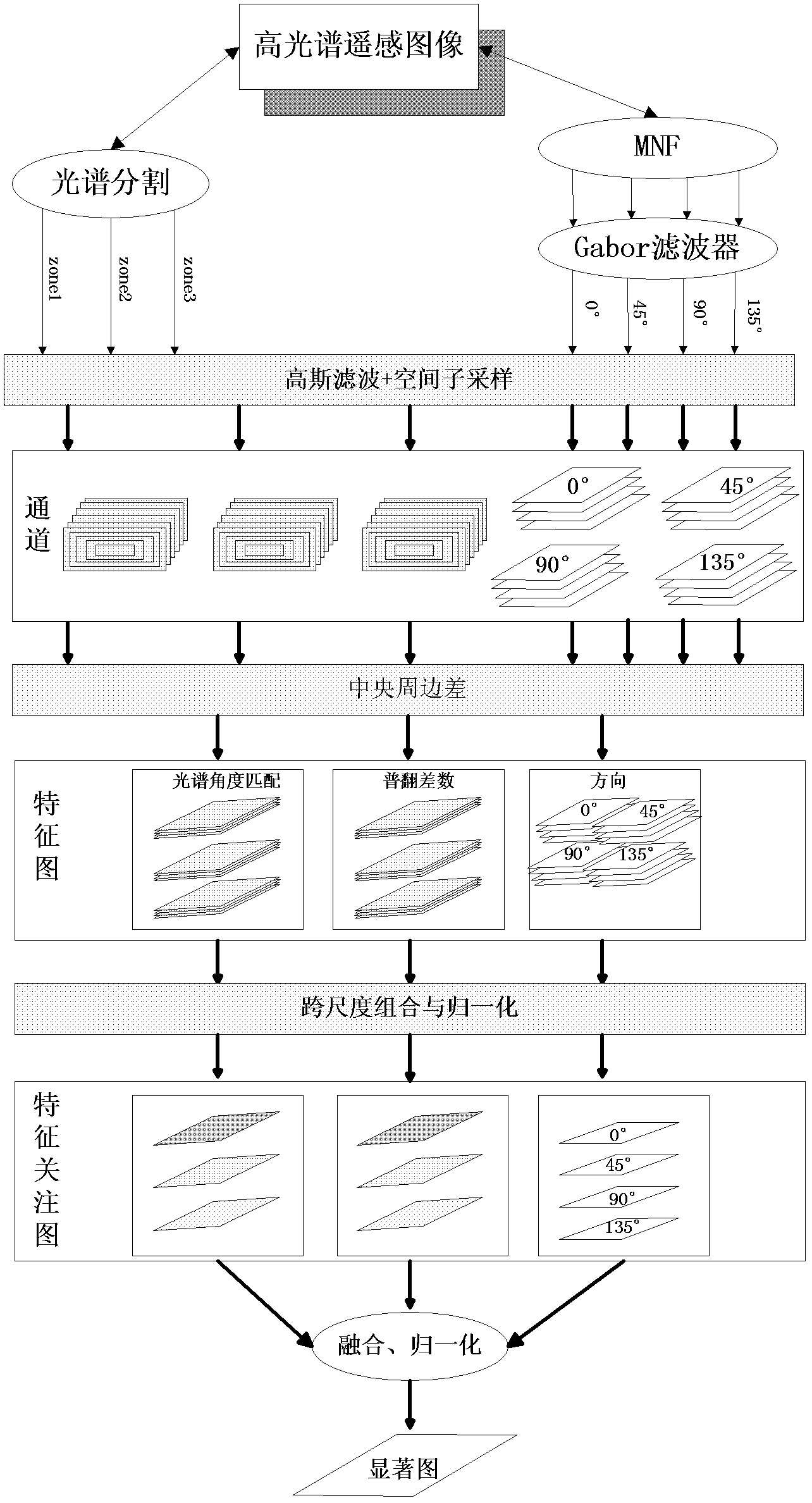

Hyperspectral remote sensing image small target detection method based on spectrum saliency

Active · CN103729848A · Improve accuracy · Achieving small target detection · Image enhancement · Image analysis · Saliency map · Imaging processing

The invention discloses a hyperspectral remote sensing image small target detection method based on spectrum saliency and belongs to the field of hyperspectral remote sensing images. When the method is used for target detection, local saliency is calculated with an improved Itti model by means of spectrum information and spatial information extracted from a hyperspectral image, and a local saliency map is constructed; then global saliency is calculated with an improved evolutionary programming method, and a global saliency map is constructed; finally, the local saliency map and the global saliency map are combined in a normalized mode to obtain an overall vision saliency map which is taken as the final target detection result. According to the method, a saliency model suitable for the hyperspectral image is established according to the spectrum saliency, image interested target detection is achieved based on comprehensive analysis of the spectral signature and spatial signature of the hyperspectral image, main contents of the image are highlighted, and image processing and analyzing complexity is reduced.

Owner:BEIJING UNIV OF TECH

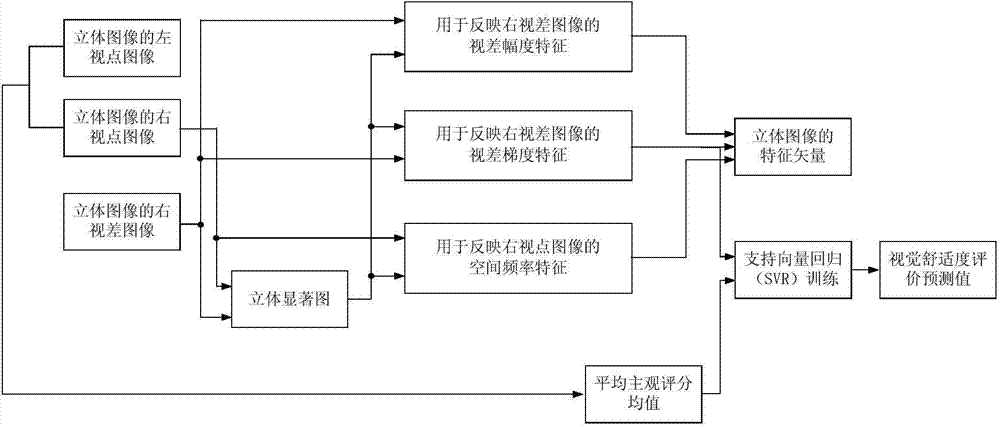

Method for evaluating visual comfort degree of three-dimensional image

Active · CN103581661A · Improve relevance · Improve stability · Television systems · Stereoscopic systems · Parallax · Viewpoints

The invention discloses a method for evaluating the visual comfort degree of a three-dimensional image. First, a three-dimensional saliency map of a right viewpoint image is obtained by extracting a saliency map of the right viewpoint image and a depth saliency map of a right parallax image. Then parallax amplitude, parallax gradient and spatial frequency characteristics are extracted with weighting by the three-dimensional saliency map to obtain a characteristic vector of the three-dimensional image. The characteristic vectors of all the three-dimensional images in a three-dimensional image set are used to train a support vector regression model. Finally, each three-dimensional image in the set is tested with the trained support vector regression model to obtain an objective predicted value of the visual comfort degree. The advantages of the method are that the characteristic vector information of the three-dimensional images is highly stable and reflects changes in their visual comfort degree well, so the correlation between the objective evaluation result and subjective perception is effectively improved.

Owner:贤珹(上海)信息科技有限公司

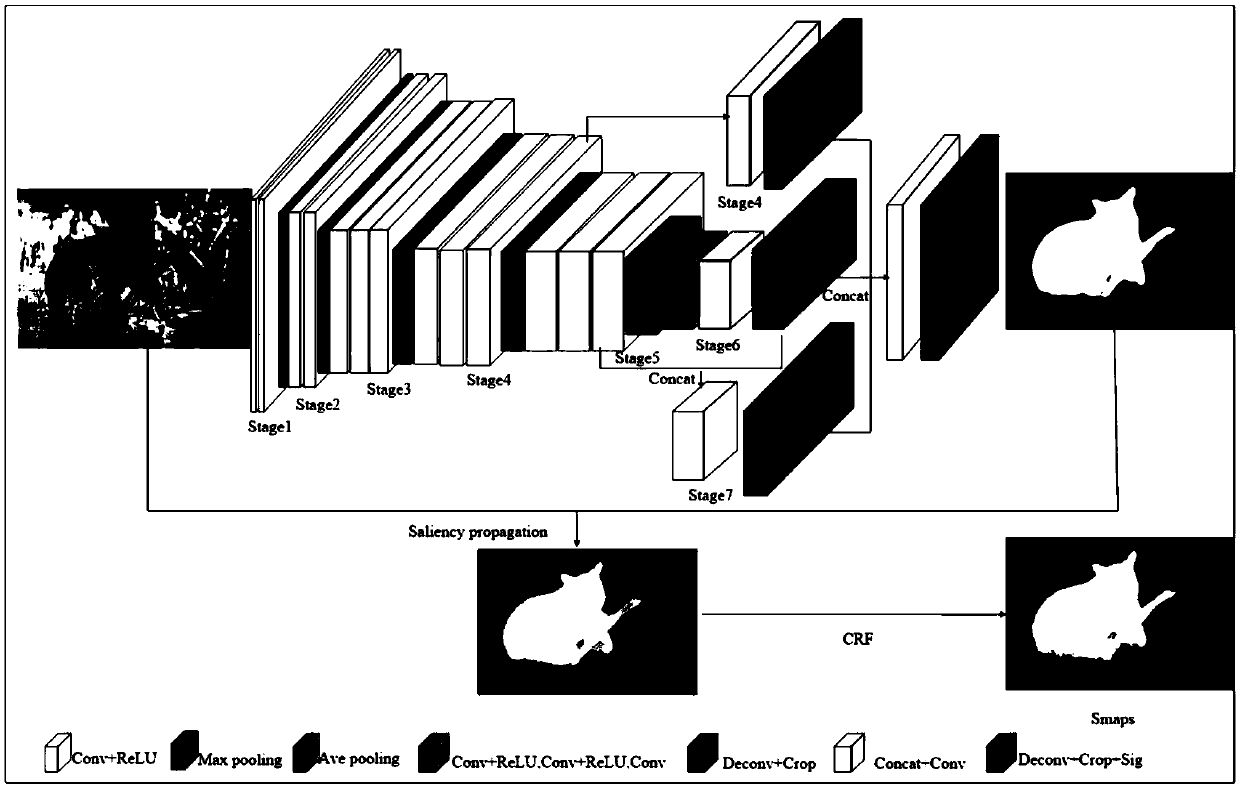

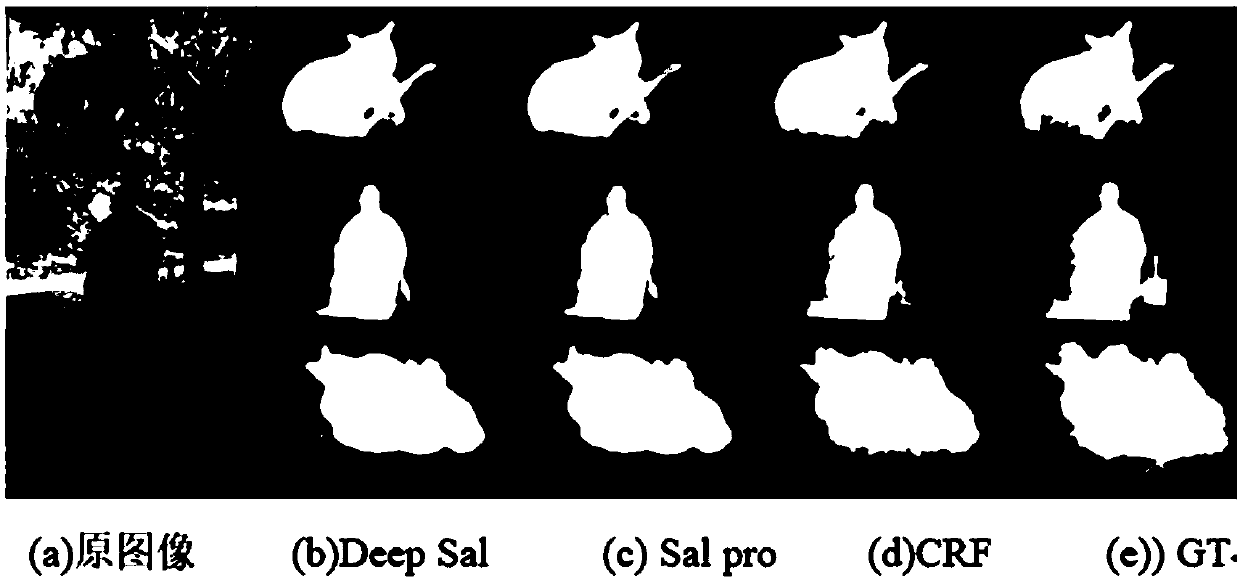

Image salient target detection method combined with deep learning

Inactive · CN108898145A · Improve robustness · Easy to integrate · Character and pattern recognition · Conditional random field · Saliency map

The invention provides an image salient target detection method combined with deep learning. The method is based on an improved RFCN deep convolutional neural network with cross-level feature fusion, and the network model comprises two parts: basic feature extraction and cross-level feature fusion. The method comprises: firstly, using an improved deep convolutional network model to extract features of an input image, and using a cross-level fusion framework for feature fusion, to generate a preliminary saliency map of high-level semantic features; then, fusing the preliminary saliency map with low-level image features to perform saliency propagation and obtain structure information; finally, using a conditional random field (CRF) to optimize the saliency propagation result to obtain a final saliency map. In the PR curve graph obtained by the method, the F value and MAE are better than those obtained by nine other algorithms. The method can improve the completeness of salient target detection, and is characterized by less background noise and high algorithm robustness.

Owner:SOUTHWEST JIAOTONG UNIV

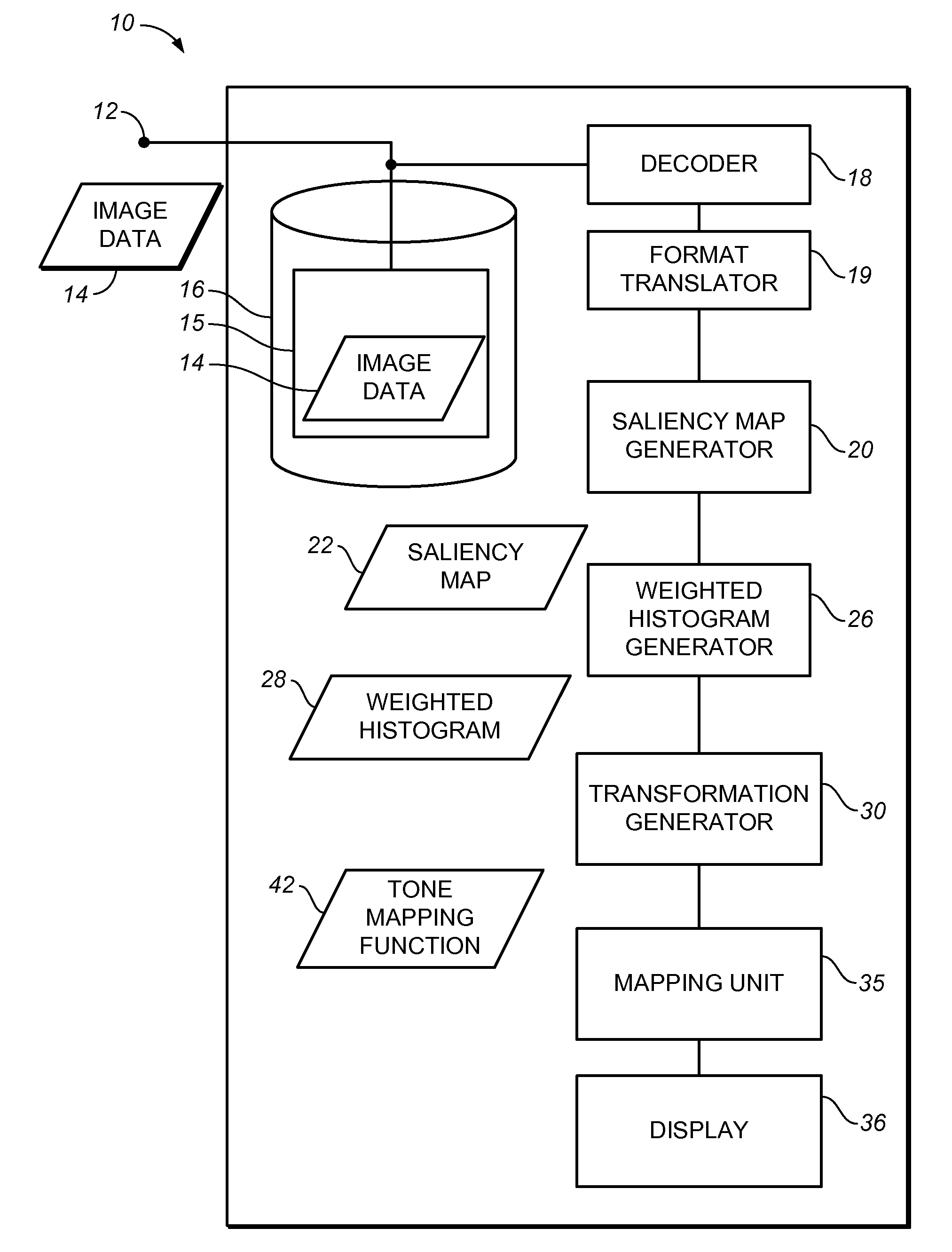

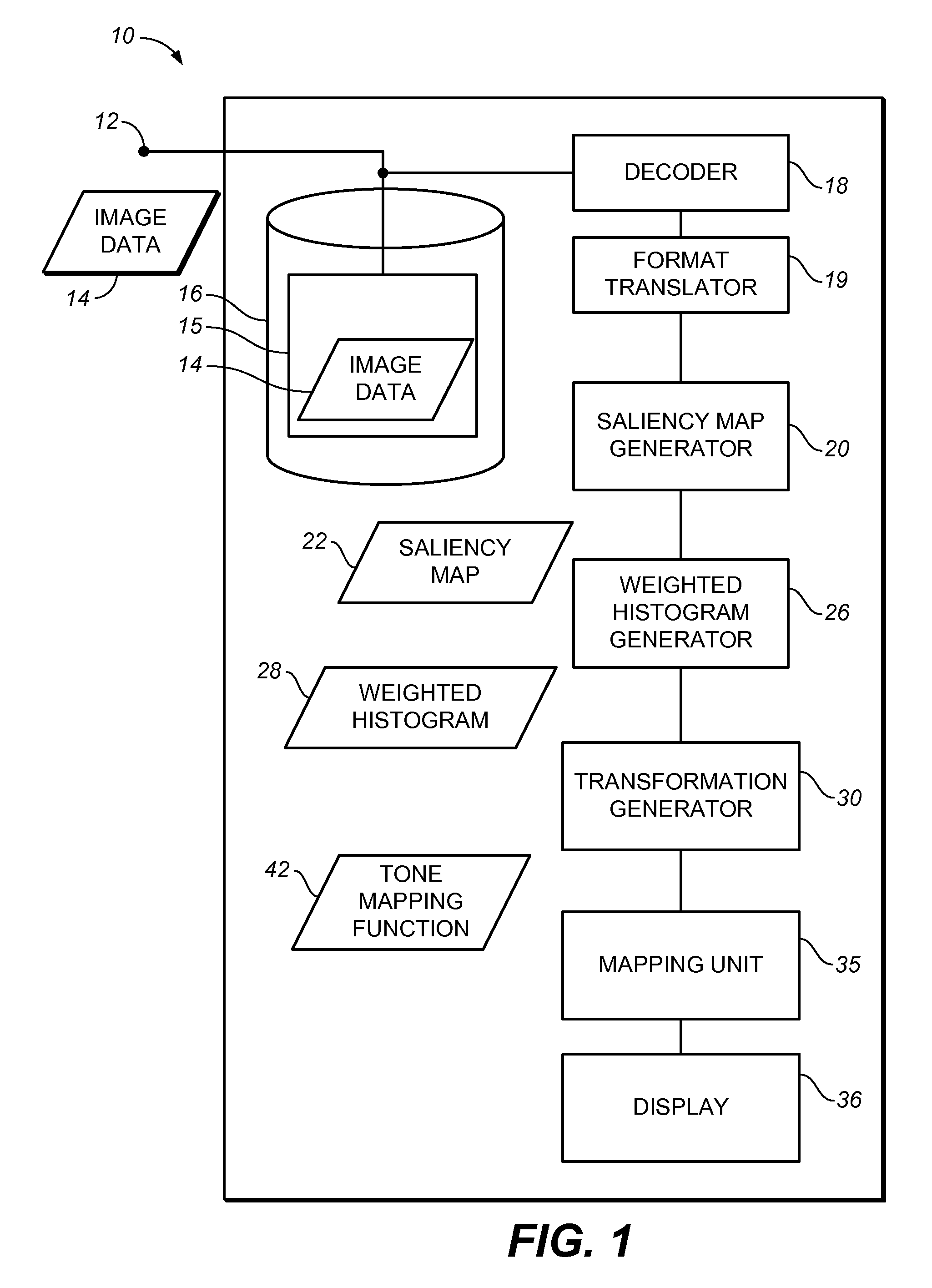

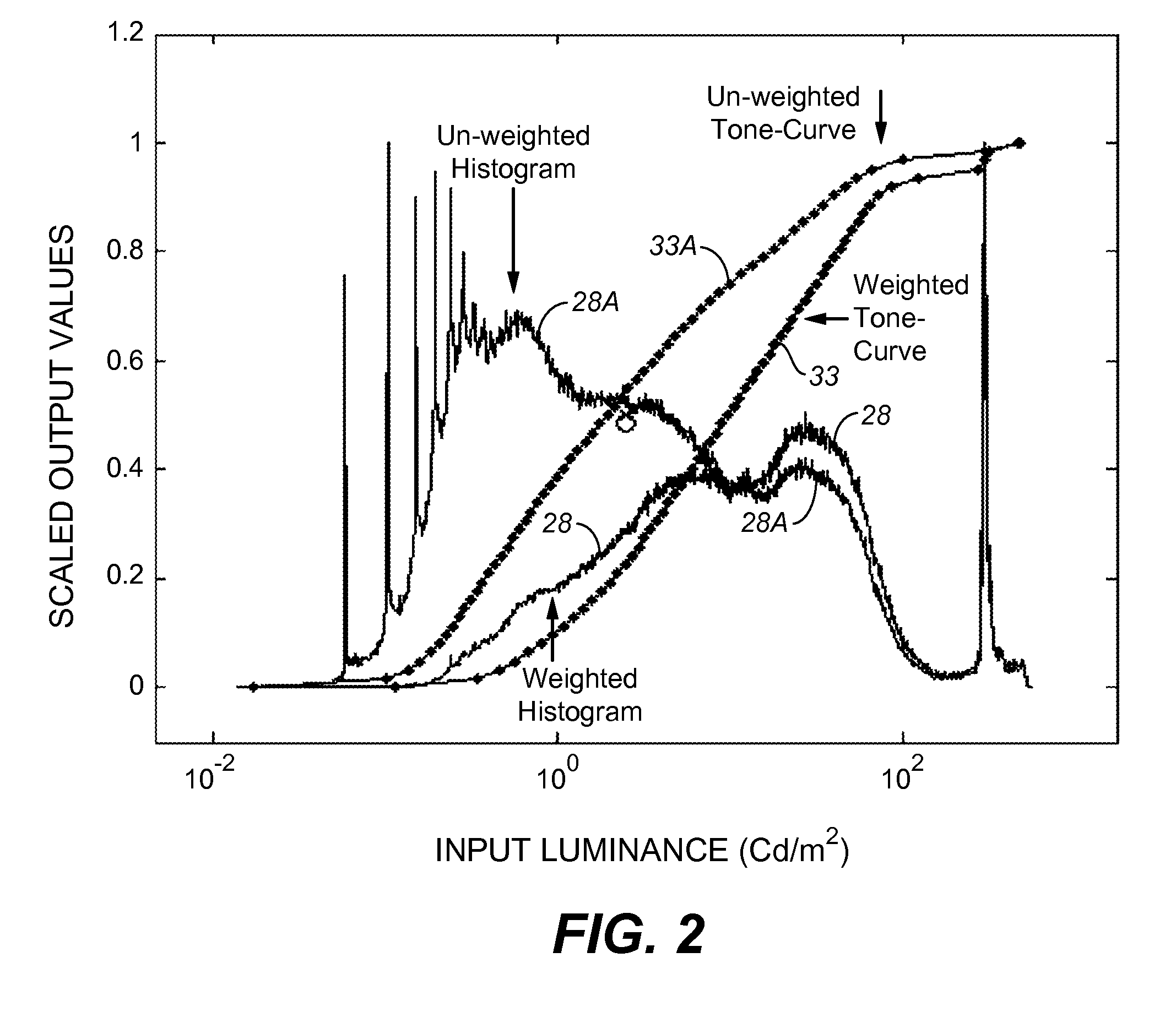

Local Definition of Global Image Transformations

A global image adjustment, such as dynamic range adjustment, is established based on image characteristics. The image adjustment is based more heavily on pixel values in image areas identified as being important by one or more saliency mapping algorithms. In one application to dynamic range adjustment, a saliency map is applied to create a weighted histogram, and a transformation is determined from the weighted histogram. Artifacts typical of local adjustment schemes may be avoided.

Owner:DOLBY LAB LICENSING CORP
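
One way to picture a transformation derived from a saliency-weighted histogram is sketched below. The abstract does not say how the transformation is computed, so this sketch swaps in plain histogram equalization on the weighted histogram as an assumption; it also assumes an 8-bit integer image, and the function names are hypothetical.

```python
import numpy as np

def saliency_weighted_tone_curve(image: np.ndarray, saliency: np.ndarray,
                                 bins: int = 256) -> np.ndarray:
    """Build one global lookup table from a saliency-weighted histogram.

    Each pixel contributes its saliency value, not 1, to its histogram bin,
    so salient areas shape the curve more strongly.
    """
    hist, _ = np.histogram(image, bins=bins, range=(0, bins), weights=saliency)
    cdf = np.cumsum(hist)
    if cdf[-1] == 0:
        # No salient pixels at all: fall back to the identity curve.
        return np.arange(bins, dtype=float)
    return (bins - 1) * cdf / cdf[-1]

def apply_curve(image: np.ndarray, curve: np.ndarray) -> np.ndarray:
    """Apply the same curve to every pixel (a global, not local, adjustment)."""
    return curve[image]
```

Because a single curve is applied to the whole image, the adjustment stays global even though its shape was driven by local saliency, which matches the abstract's point about avoiding local-adjustment artifacts.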

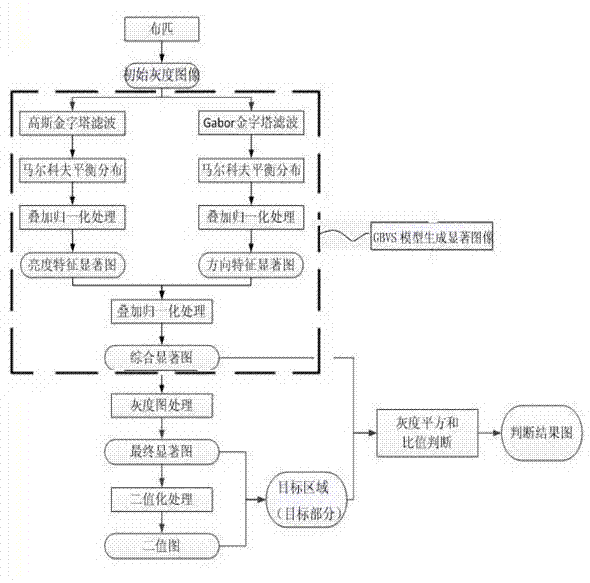

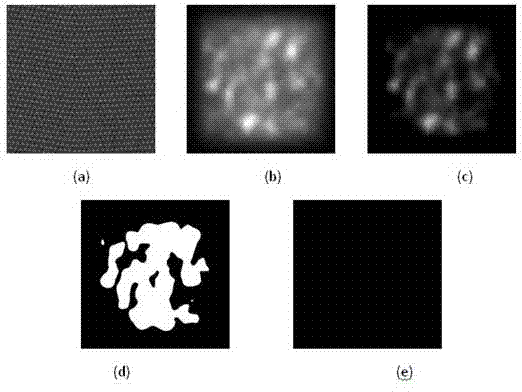

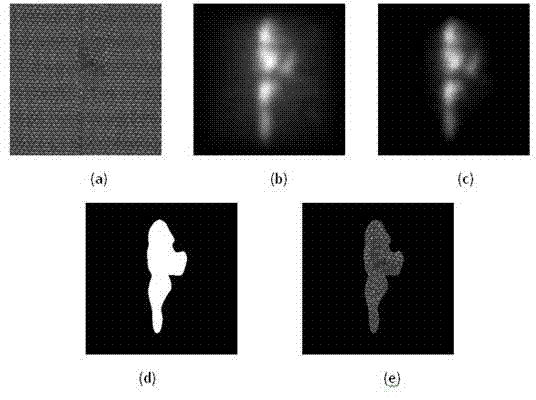

Vision conspicuousness-based cloth flaw detection method

The invention discloses a vision conspicuousness-based cloth flaw detection method which comprises the following steps: (1) collecting an image; (2) processing brightness features; (3) processing direction features; (4) performing multichannel superposition and normalization; (5) processing a grey-scale map; (6) performing binarization; (7) judging the flaw area. Compared with traditional cloth flaw detection methods, the method reduces computational complexity, increases the recognition rate, and allows accurate positioning. It avoids the false detections that readily occur when the gray value of the saliency image of a defect-free cloth image is higher than the gray value of the defect-free part of a flawed image, effectively reduces background interference during detection, and reduces the cases in which a target area obtained by adaptive threshold segmentation of a defect-free cloth image is mistakenly judged to be a flaw area.

Owner:SUZHOU UNIV

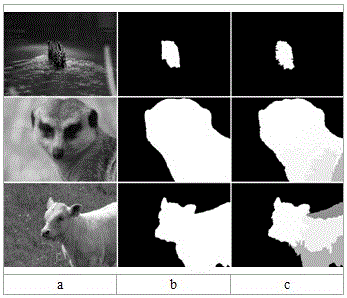

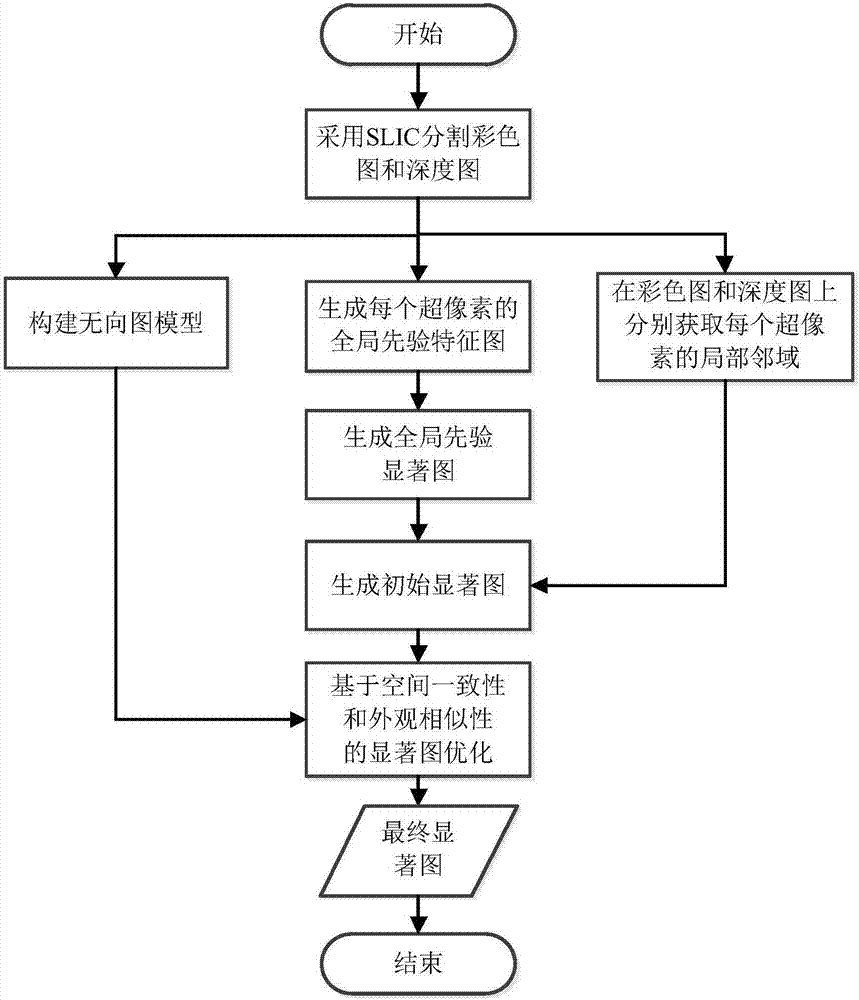

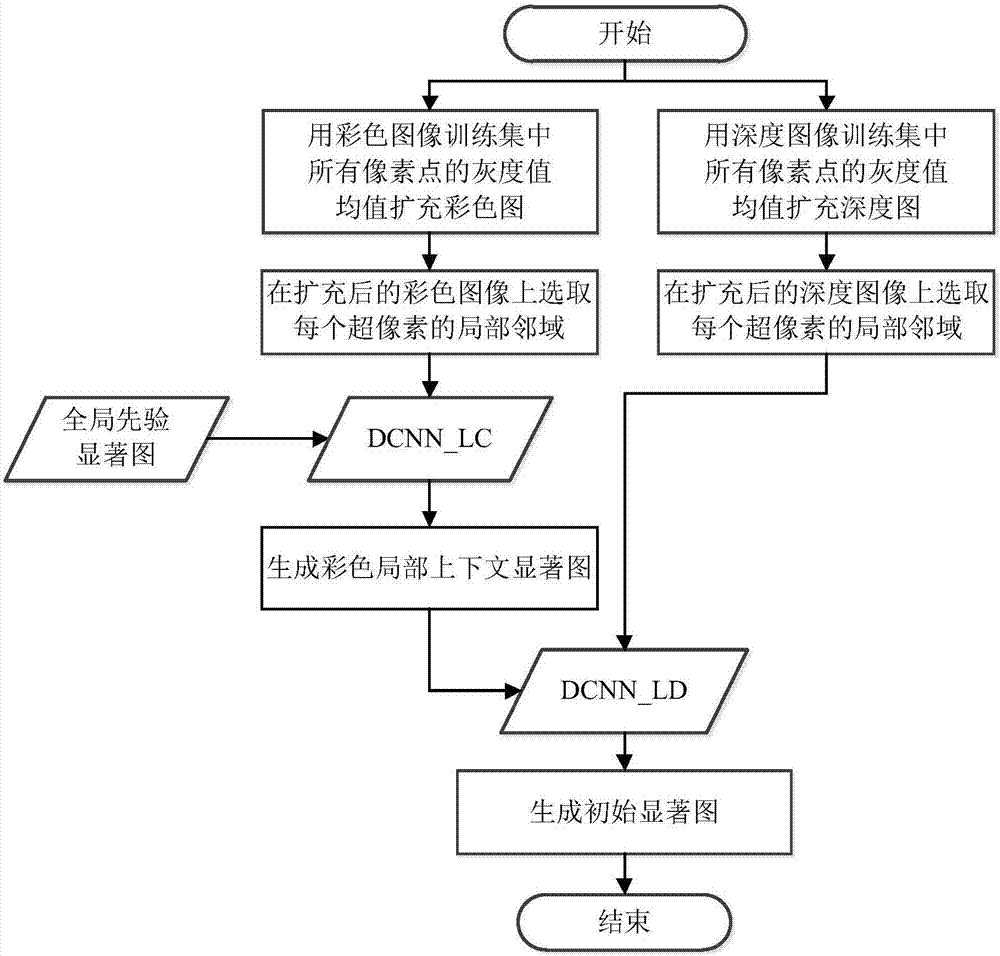

Deep learning saliency detection method based on global a priori and local context

Active · CN107274419A · Solve the problem of false detection · Improve robustness · Image enhancement · Image analysis · Color image · Saliency map

The invention discloses a deep learning saliency detection method based on the global a priori and local context. The method includes the steps of firstly, performing superpixel segmentation for a color image and a depth image, obtaining a global a priori feature map of each superpixel based on middle-level features such as compactness, uniqueness and background of each superpixel, and further obtaining a global a priori saliency map through a deep learning model; then, combining the global a priori saliency map and the local context information in the color image and the depth image, and obtaining an initial saliency map through the deep learning model; and finally, optimizing the initial saliency map based on spatial consistency and appearance similarity to obtain a final saliency map. The method of the invention can be used for solving the problem that a traditional saliency detection method cannot effectively detect a salient object in a complex background image and also for solving the problem that a conventional saliency detection method based on deep learning leads to false detection due to the presence of noise in the extracted high-level features.

Owner:BEIJING UNIV OF TECH

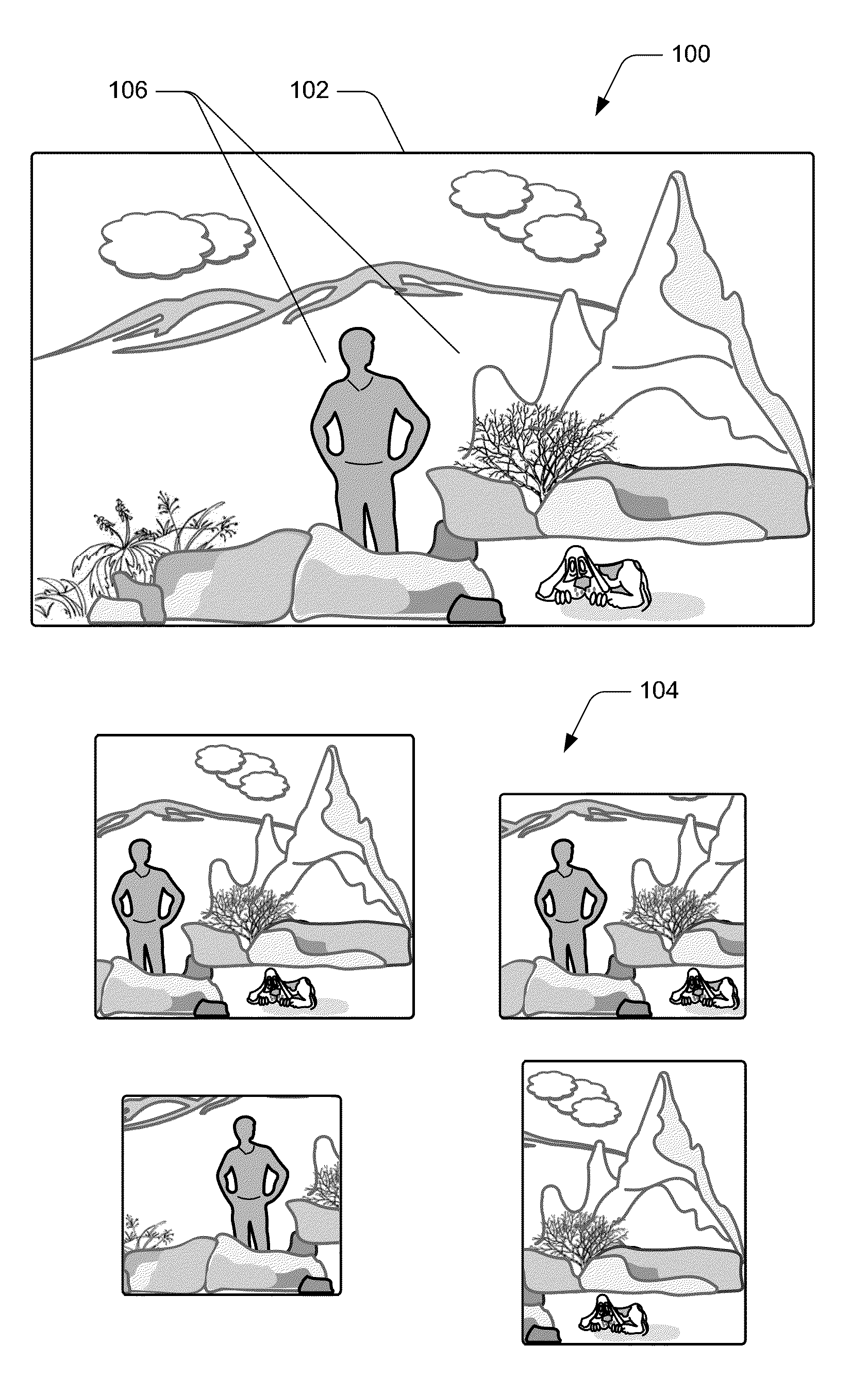

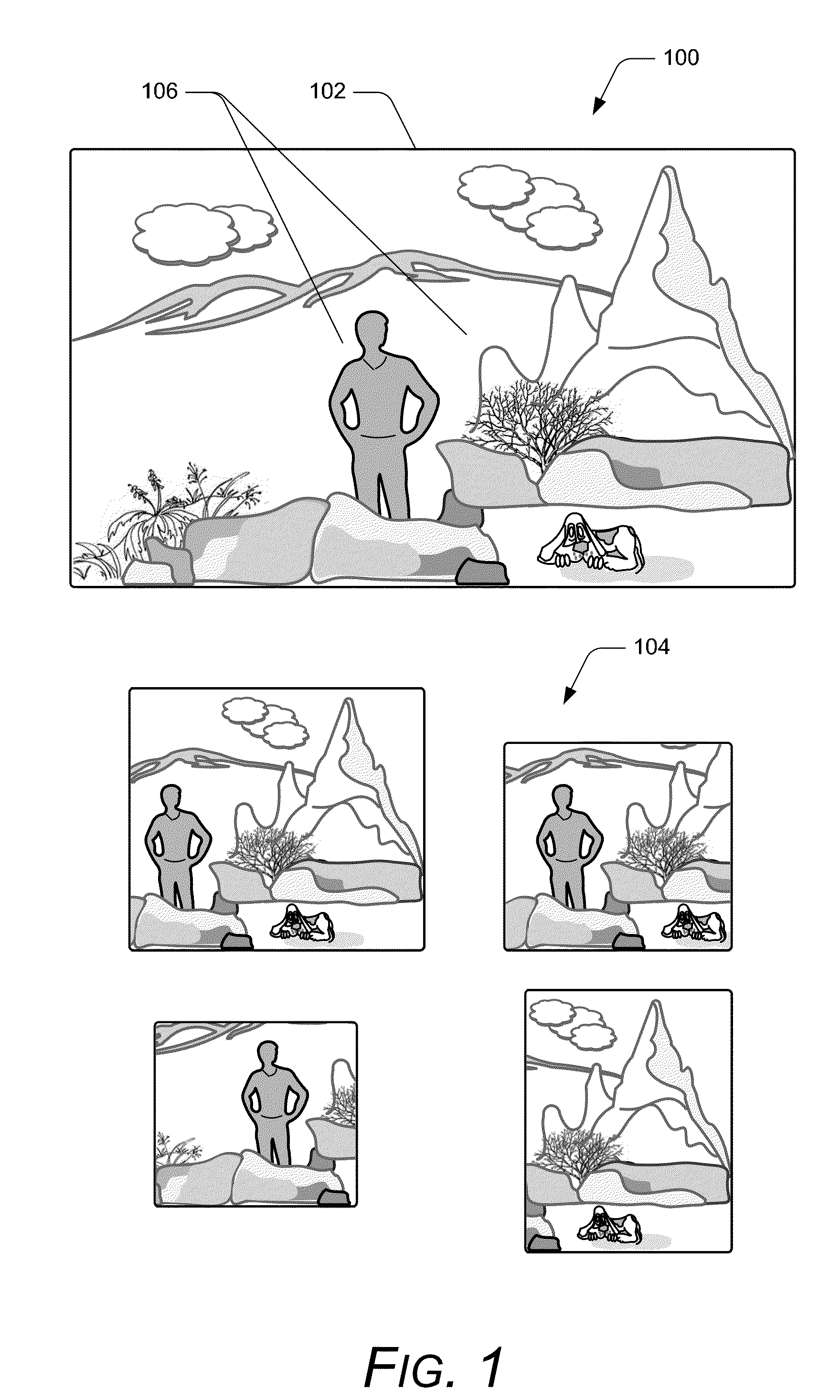

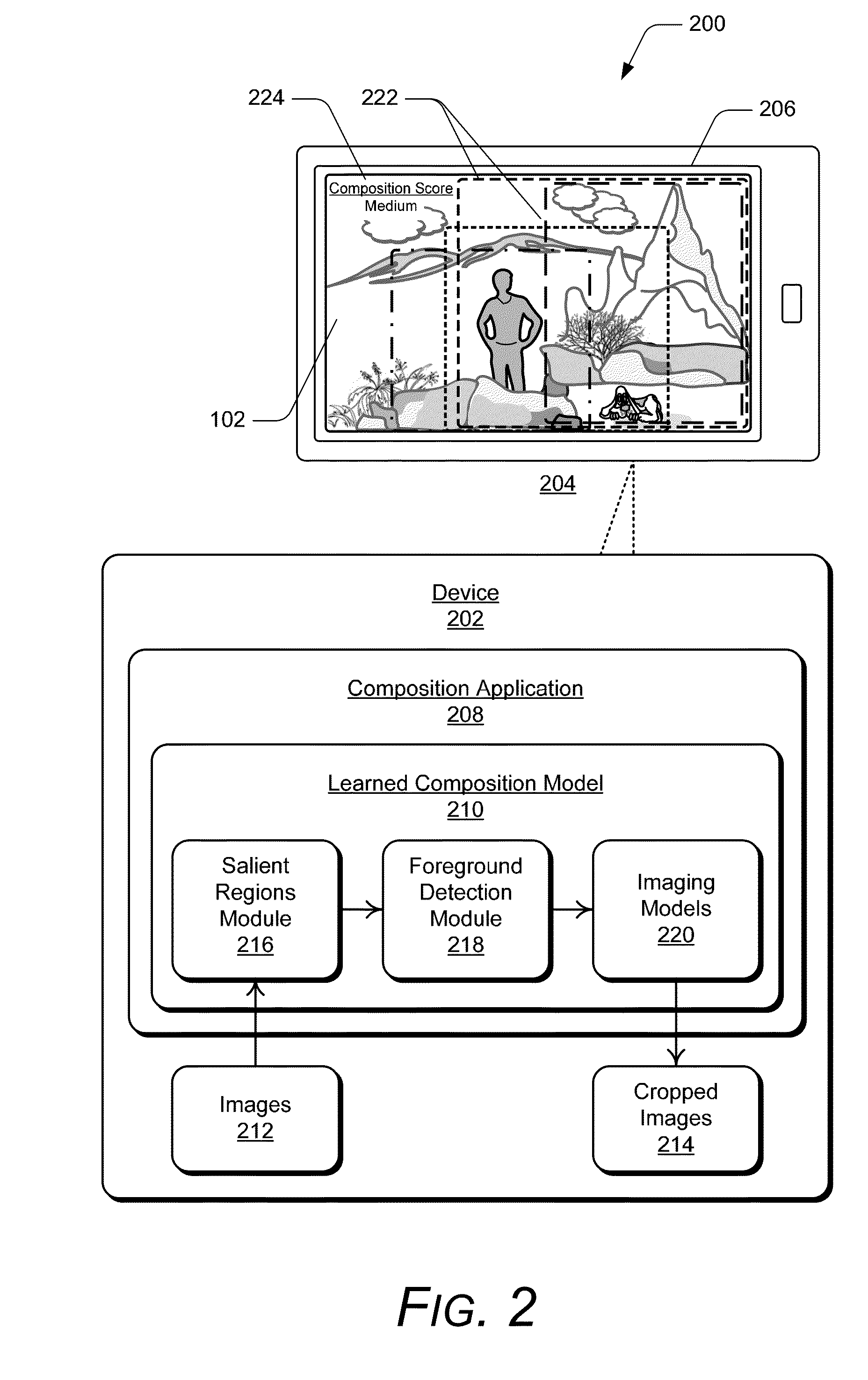

Iterative saliency map estimation

In techniques for iterative saliency map estimation, a salient regions module applies a saliency estimation technique to compute a saliency map of an image that includes image regions. A salient image region of the image is determined from the saliency map, and an image region that corresponds to the salient image region is removed from the image. The salient regions module then iteratively determines subsequent salient image regions of the image utilizing the saliency estimation technique to recompute the saliency map of the image with the image region removed, and removes the image regions that correspond to the subsequent salient image regions from the image. The salient image regions of the image are iteratively determined until no salient image regions are detected in the image, and a salient features map is generated that includes each of the salient image regions determined iteratively and combined to generate the final saliency map.

Owner:ADOBE INC
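
The remove-and-recompute loop described in the abstract above can be sketched as follows. The estimator is a hypothetical placeholder (deviation from the image mean), "removing" a region is approximated by filling it with the median of the remaining pixels, and `threshold` and `max_iters` are illustrative parameters; none of these choices come from the patent.

```python
import numpy as np

def estimate_saliency(image: np.ndarray) -> np.ndarray:
    """Placeholder estimator (an assumption): deviation from the mean, in [0, 1]."""
    dev = np.abs(image - image.mean())
    peak = dev.max()
    return dev / peak if peak > 0 else dev

def iterative_salient_regions(image: np.ndarray, threshold: float = 0.5,
                              max_iters: int = 10) -> np.ndarray:
    """Return a boolean map combining the salient regions found in each pass."""
    work = image.astype(float).copy()
    combined = np.zeros(work.shape, dtype=bool)
    for _ in range(max_iters):
        sal = estimate_saliency(work)            # recompute on the reduced image
        region = (sal > threshold) & ~combined
        if not region.any():                     # nothing salient remains
            break
        combined |= region
        # "Remove" found regions by filling them with the background median.
        rest = work[~combined]
        work[combined] = np.median(rest) if rest.size else 0.0
    return combined
```

The point of iterating is that a strong region can mask a weaker one in a single pass; once the strong region is removed, the weaker one becomes the most salient content and is picked up in the next pass.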

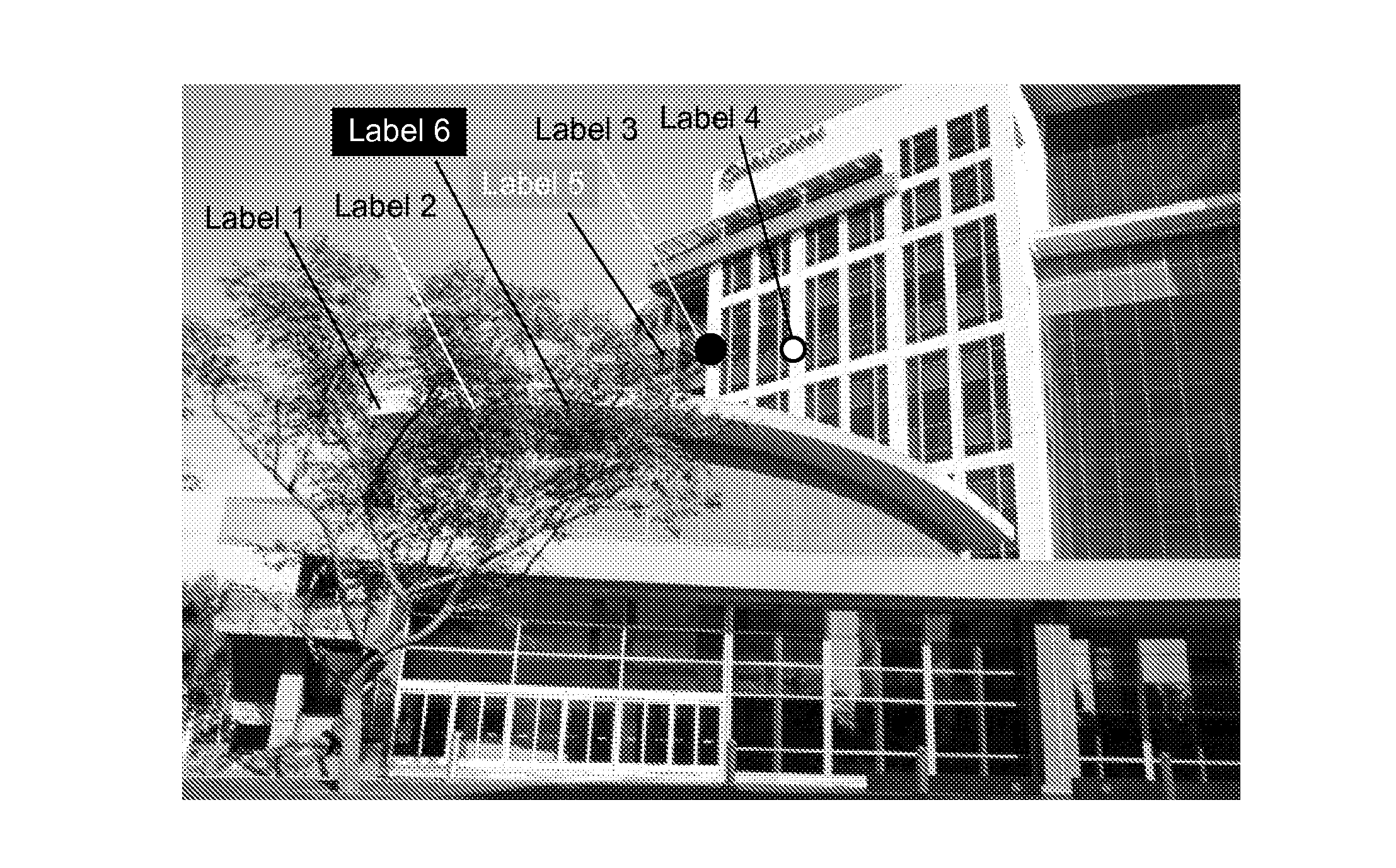

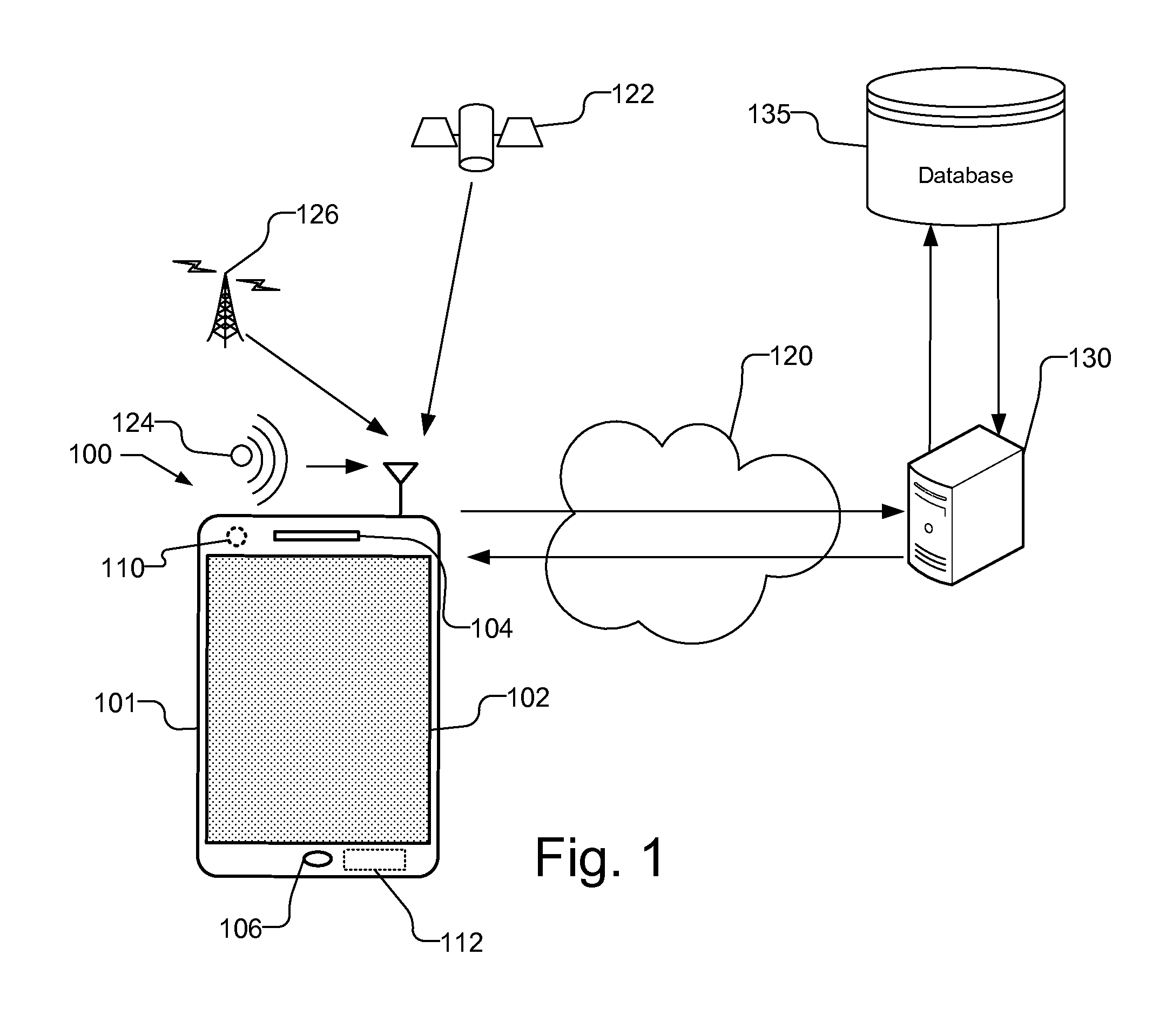

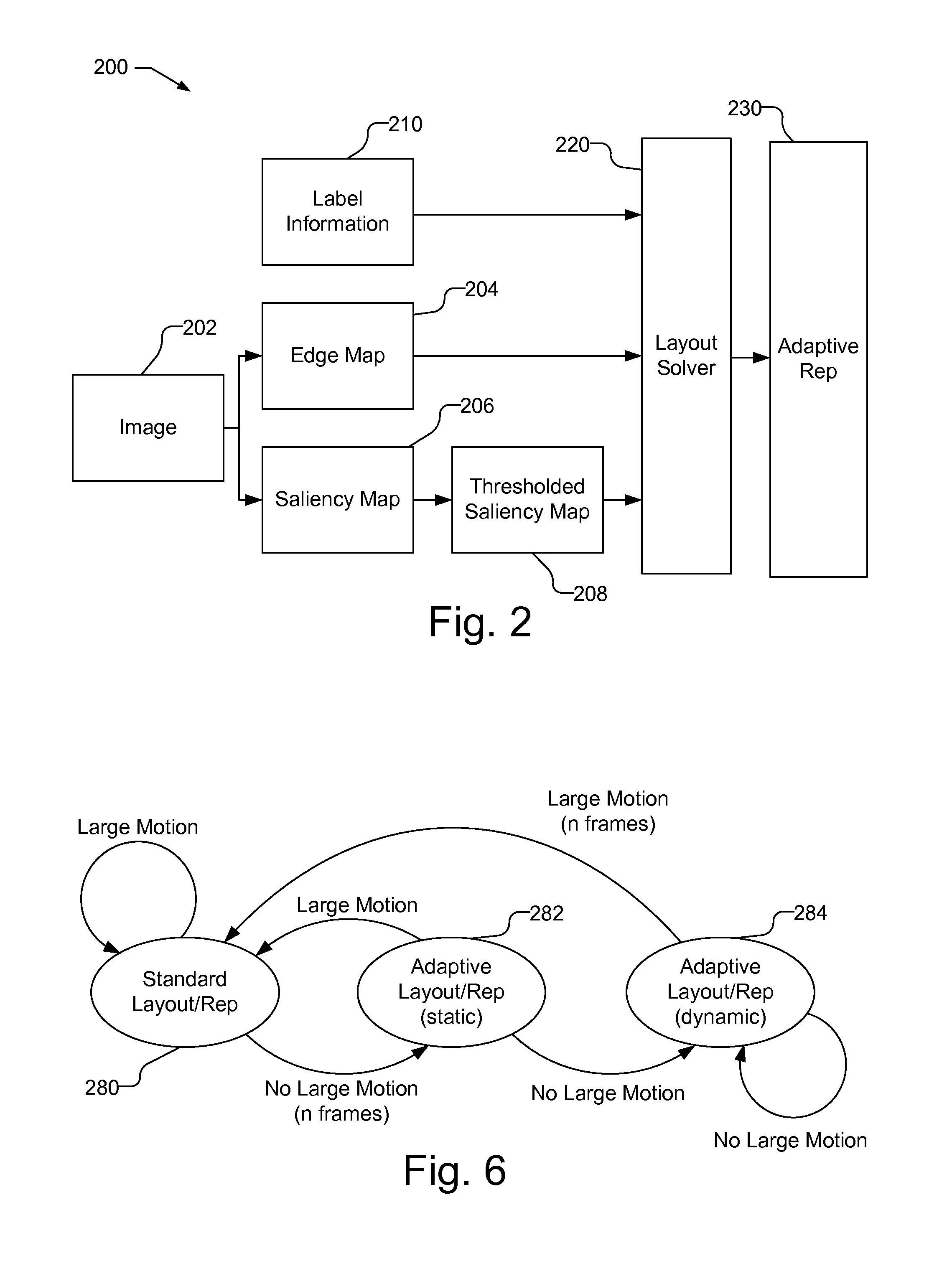

Image-driven view management for annotations

Active · US20130314441A1 · Image analysis · Cathode-ray tube indicators · Saliency map · Computer graphics (images)

A mobile device uses an image-driven view management approach for annotating images in real-time. An image-based layout process used by the mobile device computes a saliency map and generates an edge map from a frame of a video stream. The saliency map may be further processed by applying thresholds to reduce the number of saliency levels. The saliency map and edge map are used together to determine a layout position of labels to be rendered over the video stream. The labels are displayed in the layout position until a change of orientation of the camera that exceeds a threshold is detected. Additionally, the representation of the label may be adjusted, e.g., based on a plurality of pixels bounded by an area that is coincident with a layout position for a label in the video frame.

Owner:QUALCOMM INC
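
As a rough illustration of the abstract above, the sketch below reduces a saliency map to a few levels with thresholds and then picks a label position from the saliency and edge maps together. The threshold values, the additive cost, and the exhaustive window search are assumptions of this sketch; the abstract only states that thresholds reduce the number of levels and that both maps are used to determine the layout.

```python
import numpy as np

def quantize_saliency(saliency: np.ndarray, thresholds=(0.33, 0.66)) -> np.ndarray:
    """Map saliency in [0, 1] to integer levels 0..len(thresholds)."""
    return np.digitize(saliency, bins=np.asarray(thresholds))

def best_label_position(levels: np.ndarray, edges: np.ndarray,
                        label_h: int, label_w: int) -> tuple:
    """Return the (row, col) of the window covering the least saliency and edges."""
    cost = levels + edges            # hypothetical combined cost per pixel
    best_cost, best_pos = None, None
    for r in range(cost.shape[0] - label_h + 1):
        for c in range(cost.shape[1] - label_w + 1):
            window_cost = cost[r:r + label_h, c:c + label_w].sum()
            if best_cost is None or window_cost < best_cost:
                best_cost, best_pos = window_cost, (r, c)
    return best_pos
```

A label placed at the returned position covers the least important image content under this cost, which is the intuition behind image-driven label layout.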

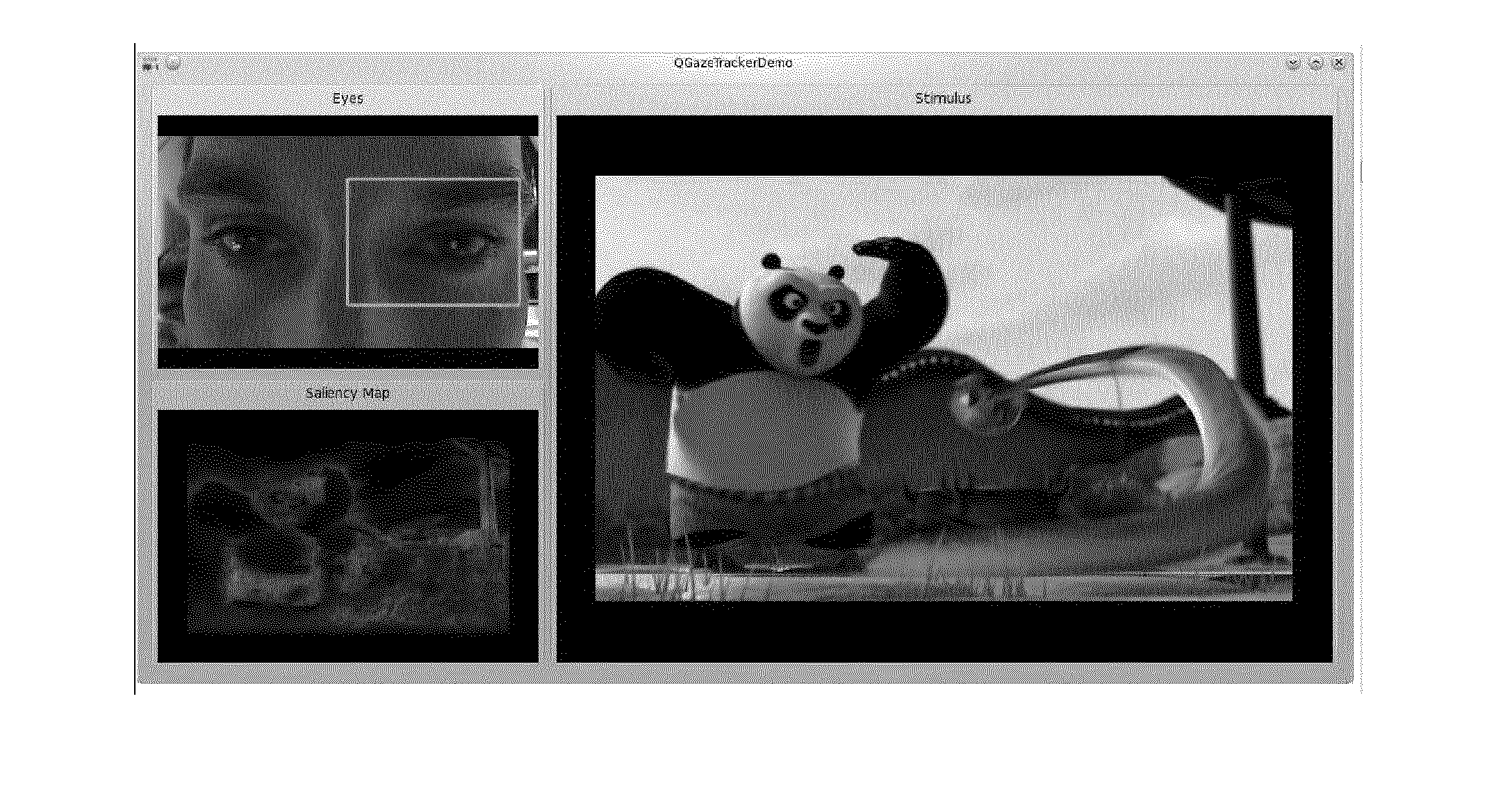

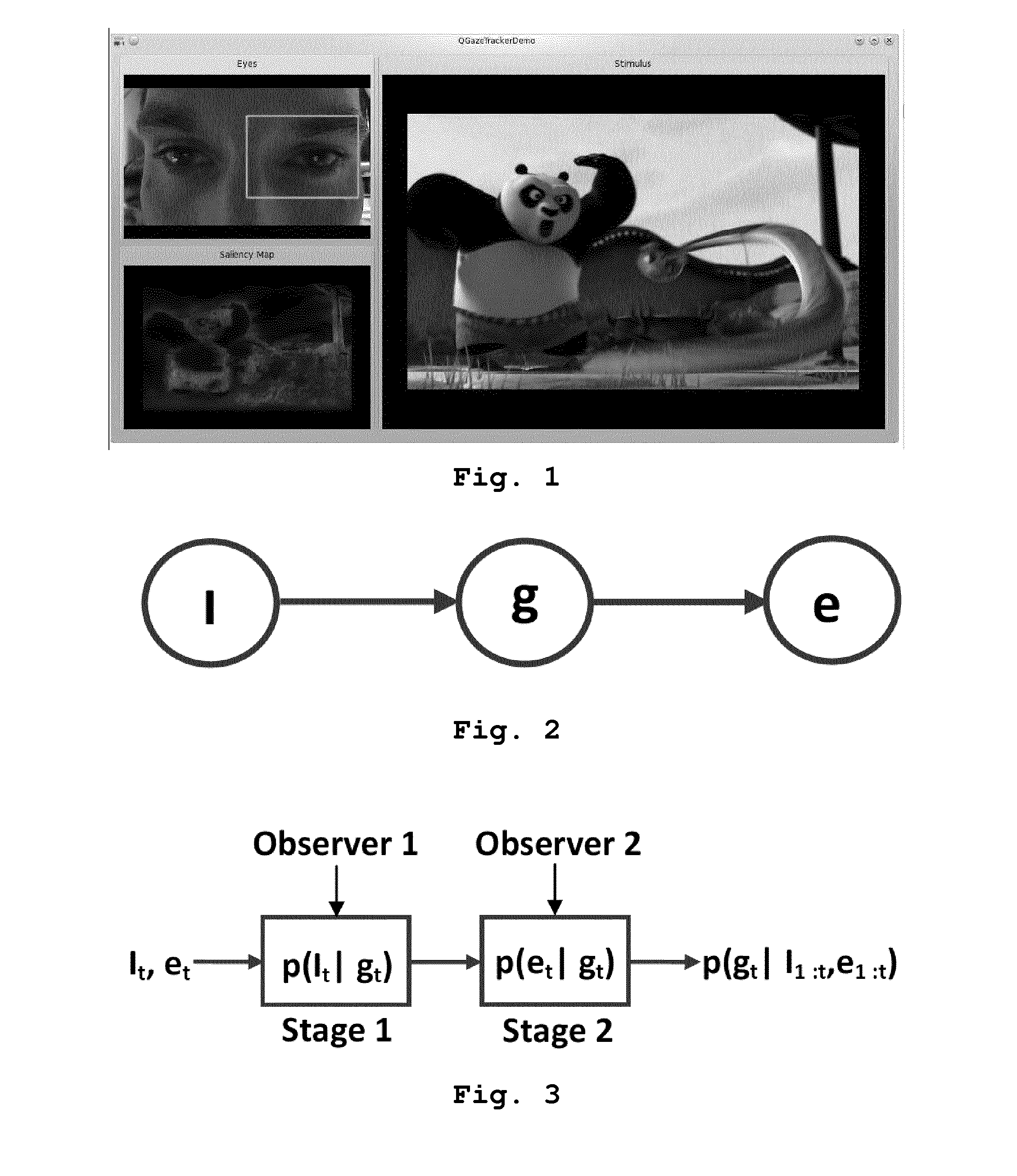

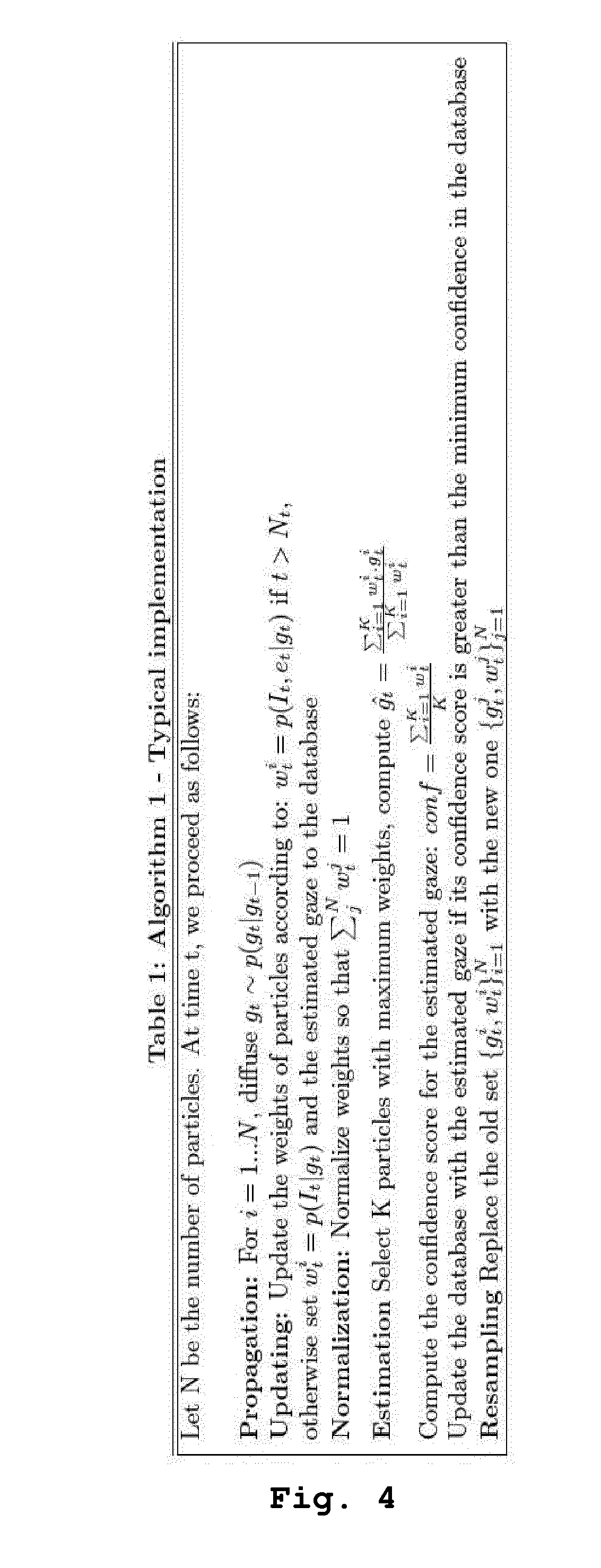

Method for calibration free gaze tracking using low cost camera

Inactive · US20150092983A1 · Maintain accuracy · Computational cost is addressed · Image enhancement · Image analysis · Saliency map · Video image

A method and device for eye gaze estimation with regard to a sequence of images. The method comprises receiving a sequence of first video images and a corresponding sequence of first eye images of a user watching the first video images; determining first saliency maps associated with at least a part of the first video images; estimating associated first gaze points from the first saliency maps associated with the video images associated with the first eye images; storing pairs of first eye images and first gaze points in a database; for a new eye image, called the second eye image, estimating an associated second gaze point from the estimated first gaze points and from a second saliency map associated with a second video image associated with the second eye image; and storing the second eye image and its associated second gaze point in the database.

Owner:MAGNOLIA LICENSING LLC

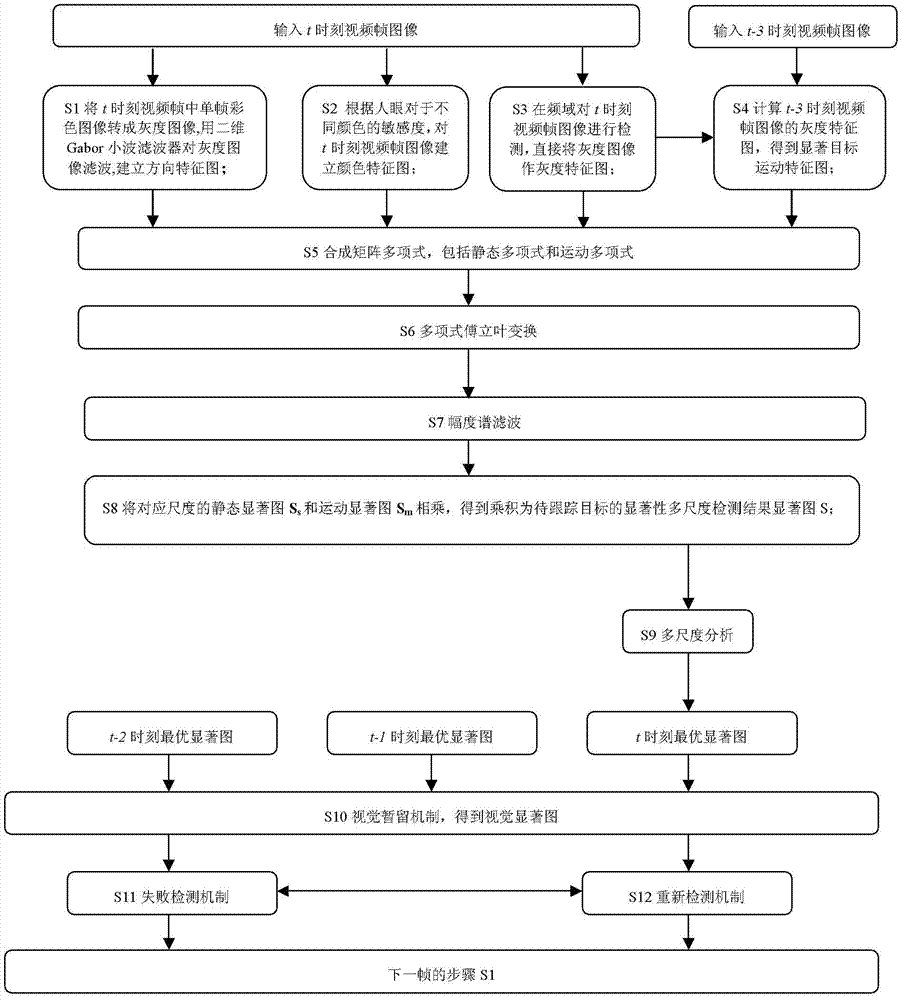

Target tracking method based on frequency domain saliency

Inactive · CN103400129A · Improve tracking accuracy · Tracking effect is stable · Character and pattern recognition · Time domain · Visual field loss

The invention relates to a target tracking method based on frequency domain saliency, which comprises the steps of: S1-S4, establishing direction feature maps, color feature maps, gray feature maps and motion feature maps; S5-S6, establishing static and moving polynomials and applying a Fourier transform to them; S7, applying Gaussian low-pass filtering and an inverse Fourier transform to the magnitude spectra to obtain static saliency maps and moving saliency maps; S8, multiplying the moving saliency maps by the static saliency maps of the corresponding scales to obtain multi-scale detection result saliency maps; S9, calculating the one-dimensional entropy function of the histogram of each saliency map and extracting the time domain saliency map corresponding to the minimum information entropy as the optimal saliency map at moment t; S10, using the product of the average weight of the saliency maps of frames t-1 and t-2 and the optimal saliency map at moment t as the visual saliency map; S11, calculating the difference between the central positions of the visual saliency maps of adjacent frames, judging whether tracking has failed, and recording a failure saliency map; and S12, comparing the visual saliency map of the current frame with the failure saliency map and judging whether the target has returned to the visual field.

Owner:INST OF OPTICS & ELECTRONICS - CHINESE ACAD OF SCI
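
Step S9 above, choosing the saliency map whose histogram has the minimum information entropy, can be sketched as follows. This is only an illustration: it assumes the candidate maps are already scaled to [0, 1], and the bin count and function names are hypothetical.

```python
import numpy as np

def histogram_entropy(saliency: np.ndarray, bins: int = 16) -> float:
    """Shannon entropy (in bits) of the histogram of a saliency map in [0, 1]."""
    hist, _ = np.histogram(saliency, bins=bins, range=(0.0, 1.0))
    p = hist[hist > 0] / hist.sum()      # drop empty bins to avoid log(0)
    return float(-(p * np.log2(p)).sum())

def select_optimal_map(candidate_maps):
    """Pick the candidate whose histogram has the lowest entropy."""
    return min(candidate_maps, key=histogram_entropy)
```

A low-entropy histogram means most pixels share a few saliency values, which typically corresponds to a map with a compact highlighted target on a quiet background.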

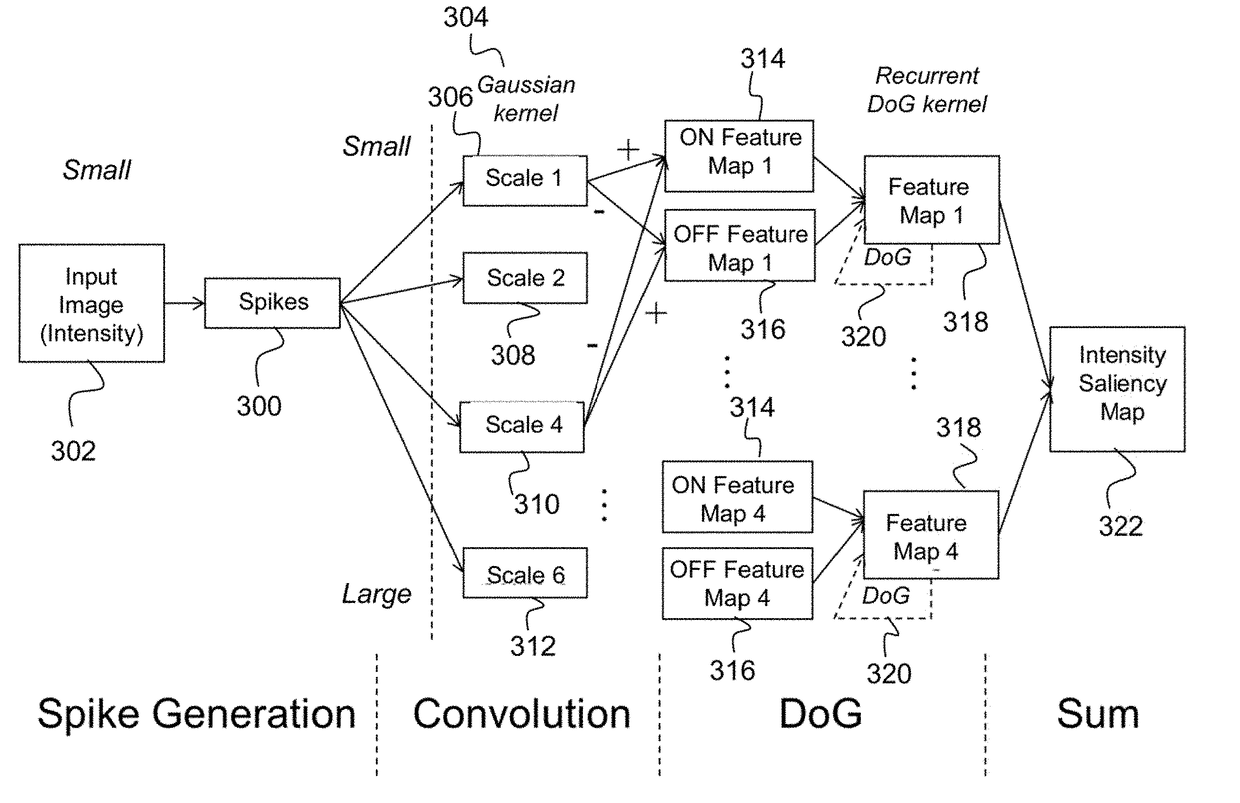

Method for object detection in digital image and video using spiking neural networks

Active · US20170300788A1 · Increasing spike activity · Reducing spike activity · Character and pattern recognition · Neural architectures · Saliency map · Spiking neural network

Described is a system for object detection in images or videos using spiking neural networks. An intensity saliency map is generated from an intensity of an input image having color components using a spiking neural network. Additionally, a color saliency map is generated from a plurality of colors in the input image using a spiking neural network. An object detection model is generated by combining the intensity saliency map and multiple color saliency maps. The object detection model is used to detect multiple objects of interest in the input image.

Owner:HRL LAB

Magnetic tile surface defect detection method based on improved machine vision attention mechanism

Active · CN106093066A · Implement automatic detection · Reduce computation · Image enhancement · Image analysis · Machine vision · Saliency map

The invention discloses a magnetic tile surface defect detection method based on an improved machine vision attention mechanism. The method comprises the following steps: I, inputting a magnetic tile image, and enhancing the overall gray contrast of the image by combining morphological top-hat and bottom-hat transforms; II, uniformly dividing the obtained image into a*b image blocks, and distinguishing defect image blocks from non-defect image blocks according to the gray characteristic quantities of the blocks; III, calculating the saliency of each obtained image block by using an improved Itti visual attention model, and selecting primary features to form a comprehensive saliency map; and IV, thresholding the comprehensive saliency map by using the Otsu threshold method, and extracting the defect area. By combining morphological processing, image blocking and the visual attention mechanism, problems such as non-uniform brightness, relatively small defect areas and texture interference on the magnetic tile can be effectively overcome, and various magnetic tile defects can be extracted rapidly and effectively, so the method adapts well to different conditions.

Owner:ANHUI UNIVERSITY OF TECHNOLOGY
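
Step IV above names the Otsu threshold method. A minimal NumPy sketch of Otsu's method is given below, assuming the saliency map has been scaled to integer gray levels 0..255; the function name and the `bins` parameter are choices of this sketch.

```python
import numpy as np

def otsu_threshold(gray: np.ndarray, bins: int = 256) -> int:
    """Return the gray level that maximizes the between-class variance."""
    hist, _ = np.histogram(gray, bins=bins, range=(0, bins))
    p = hist / hist.sum()                  # probability of each gray level
    levels = np.arange(bins)
    w0 = np.cumsum(p)                      # weight of the class at or below each level
    mu = np.cumsum(p * levels)             # cumulative mean up to each level
    mu_total = mu[-1]
    w1 = 1.0 - w0                          # weight of the class above each level
    valid = (w0 > 0) & (w1 > 0)            # skip levels that leave a class empty
    between = np.zeros(bins)
    between[valid] = (mu_total * w0[valid] - mu[valid]) ** 2 / (w0[valid] * w1[valid])
    return int(np.argmax(between))
```

Pixels with `gray > otsu_threshold(gray)` would then form the candidate defect area.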

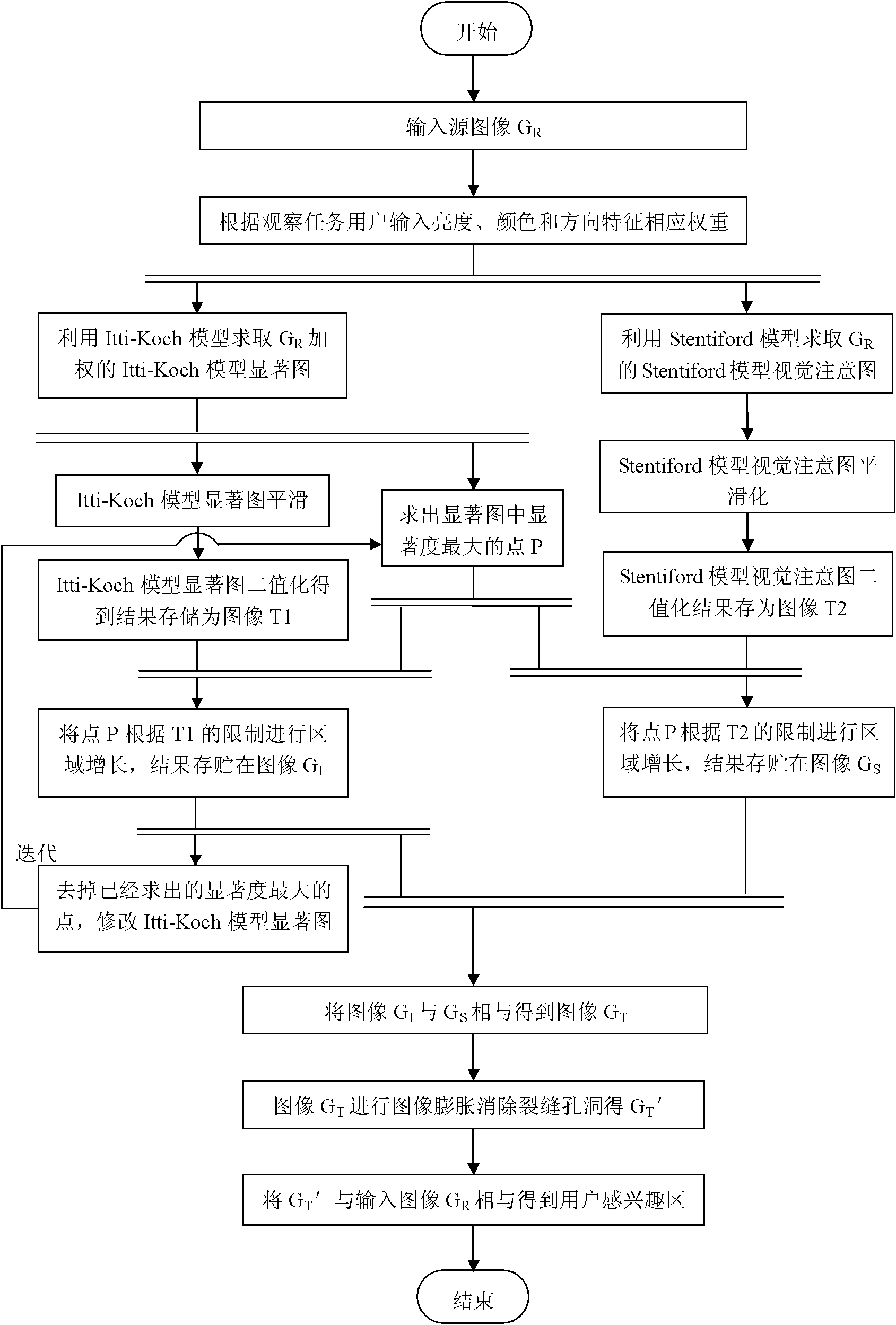

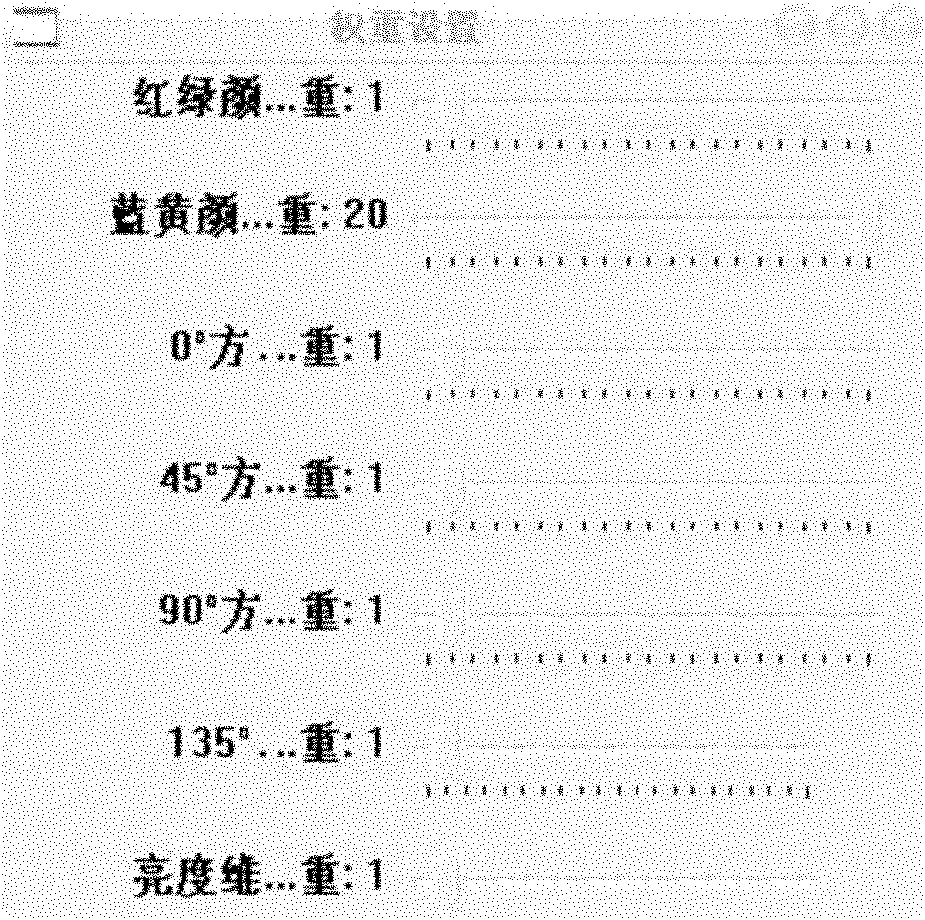

Method for extracting image region of interest by combining bottom-up and top-down ways

Inactive · CN102063623A · Quick extraction · Increase brightness · Image analysis · Character and pattern recognition · Pattern recognition · Saliency map

The invention provides a method for extracting an image region of interest by combining bottom-up and top-down ways. The method is interactive: the user converts the top-down information of the observation task into different weight values that are applied to the low-level image features. An Itti-Koch model and a Stentiford model are combined to draw on the advantages of both, yielding a region-of-interest extraction method that combines the user's task with the visual stimulation of the image. The user's query intention and understanding of the image are converted into weights of the saliency map; that is, corresponding weights are applied to the low-level features to influence how the saliency map is computed. This narrows the gap between the user's query intention and the low-level features of the image, so the extracted region of interest is more consistent with the user's requirements. Processing results on multiple images show that the method gives better results when extracting the region of interest for the user.

Owner:CENT SOUTH UNIV

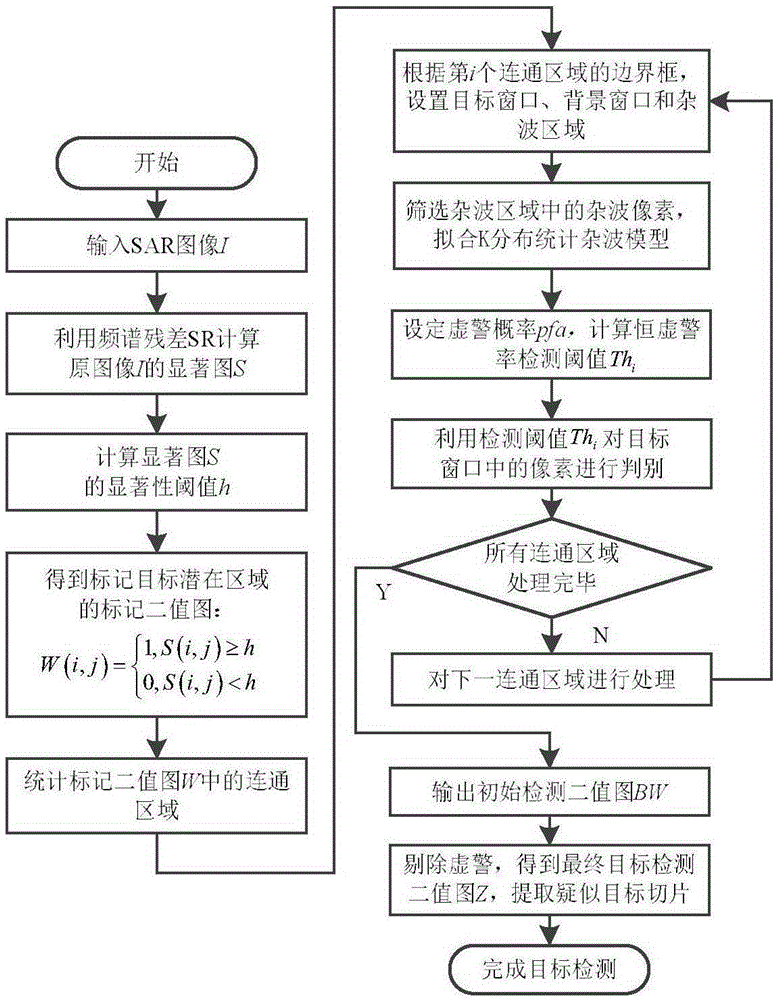

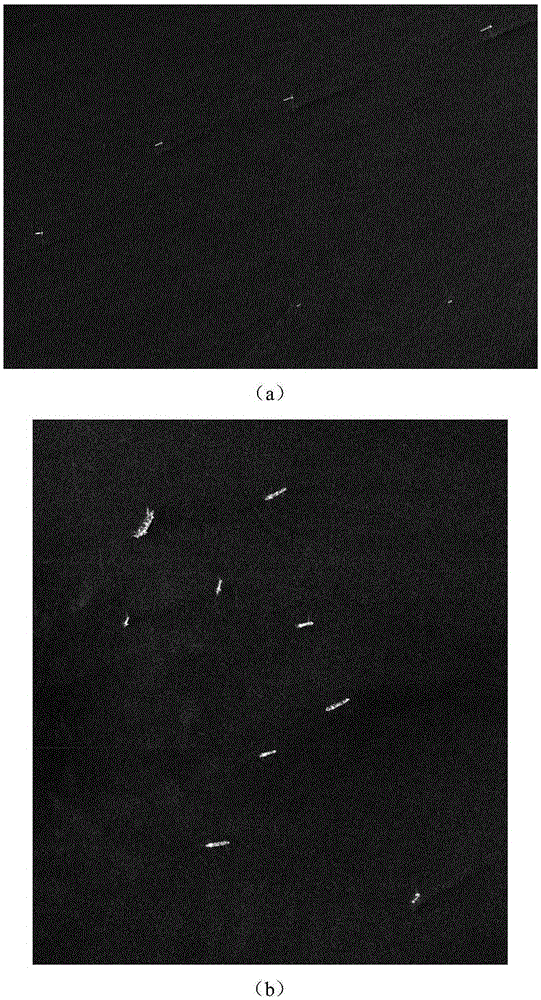

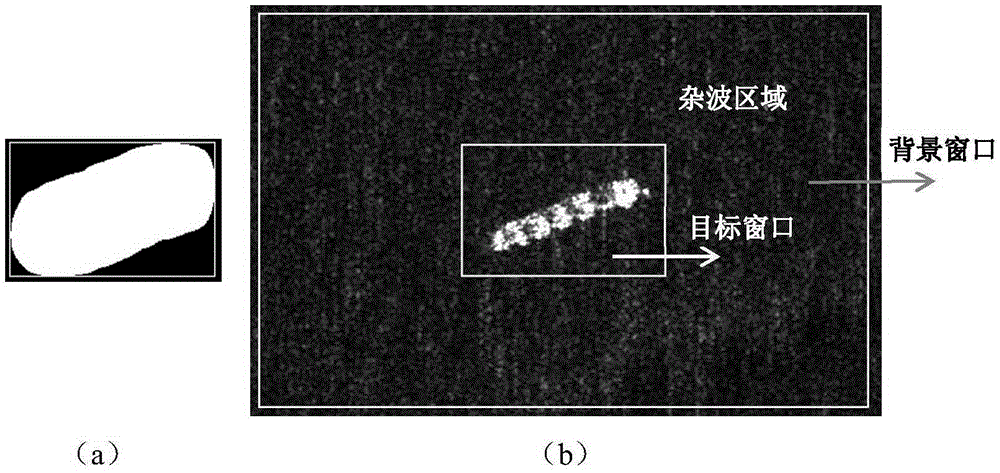

SAR (Synthetic Aperture Radar) image target detection method based on visual attention model and constant false alarm rate

Active · CN105354541A · Accurate acquisition · Reduce false alarms · Character and pattern recognition · Pattern recognition · Frequency spectrum

The present invention discloses an SAR (Synthetic Aperture Radar) image target detection method based on a visual attention model and a constant false alarm rate, which mainly solves the problems of low detection speed and a high clutter false alarm rate in existing SAR image marine ship target detection technology. The implementation steps of the method are as follows: extracting a saliency map corresponding to an SAR image according to Fourier spectral residual information; calculating a saliency threshold, so as to select a potential target area on the saliency map; detecting the potential target area by adopting an adaptive sliding window constant false alarm rate method, and obtaining an initial detection result; and obtaining a final detection result after removing false alarms from the initial detection result, and extracting suspected ship target slices, so as to complete the target detection process. The method has the advantages of high calculation speed, a high target detection rate and a low false alarm rate; it is also simple and easy to implement and can be used for marine ship target detection.

Owner:XIDIAN UNIV
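
The first two steps of the abstract above can be sketched with the well-known spectral residual approach. Treating the patent's "Fourier spectrum residual" as that approach is an assumption of this sketch, as are the 3x3 mean filter used for smoothing, the wrap-around borders, and the threshold rule in `potential_targets` (a multiple `k` of the mean saliency).

```python
import numpy as np

def box_filter3(a: np.ndarray) -> np.ndarray:
    """3x3 mean filter with wrap-around borders."""
    out = np.zeros_like(a)
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            out += np.roll(np.roll(a, dr, axis=0), dc, axis=1)
    return out / 9.0

def spectral_residual_saliency(image: np.ndarray) -> np.ndarray:
    """Saliency from the residual of the log-amplitude Fourier spectrum."""
    spectrum = np.fft.fft2(image.astype(float))
    log_amp = np.log(np.abs(spectrum) + 1e-8)     # small offset avoids log(0)
    phase = np.angle(spectrum)
    residual = log_amp - box_filter3(log_amp)     # remove the smooth spectral trend
    sal = np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2
    sal = box_filter3(sal)                        # light smoothing of the map
    peak = sal.max()
    return sal / peak if peak > 0 else sal

def potential_targets(saliency: np.ndarray, k: float = 3.0) -> np.ndarray:
    """Hypothetical threshold rule: keep pixels above k times the mean saliency."""
    return saliency > k * saliency.mean()
```

The boolean mask from `potential_targets` would mark the candidate areas that a later stage, such as the sliding window constant false alarm rate detector named in the abstract, examines in detail.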

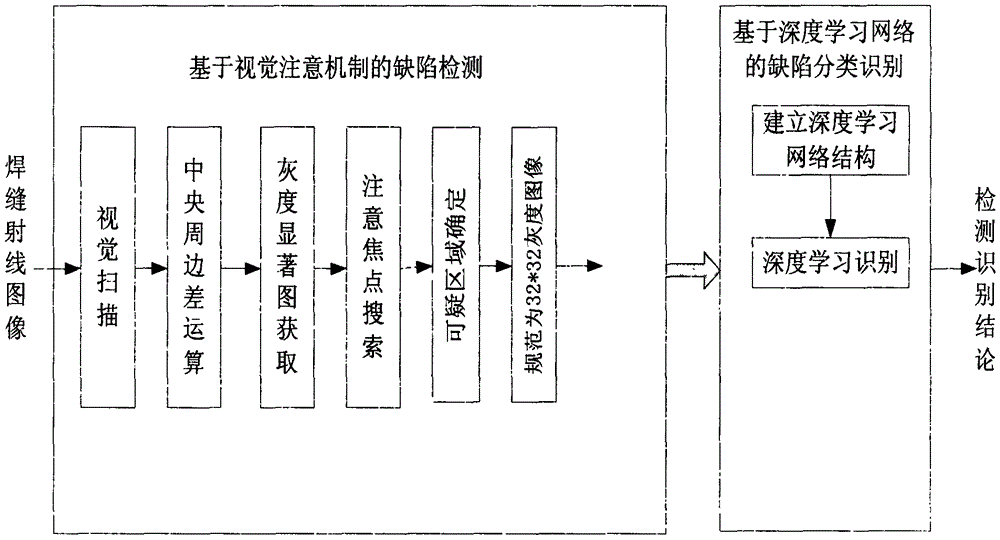

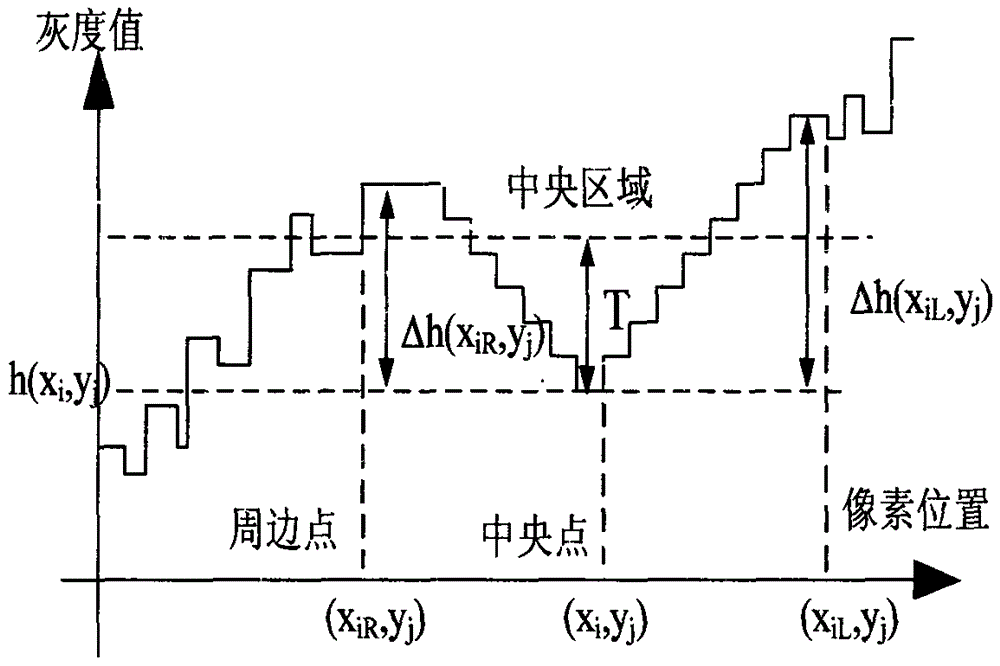

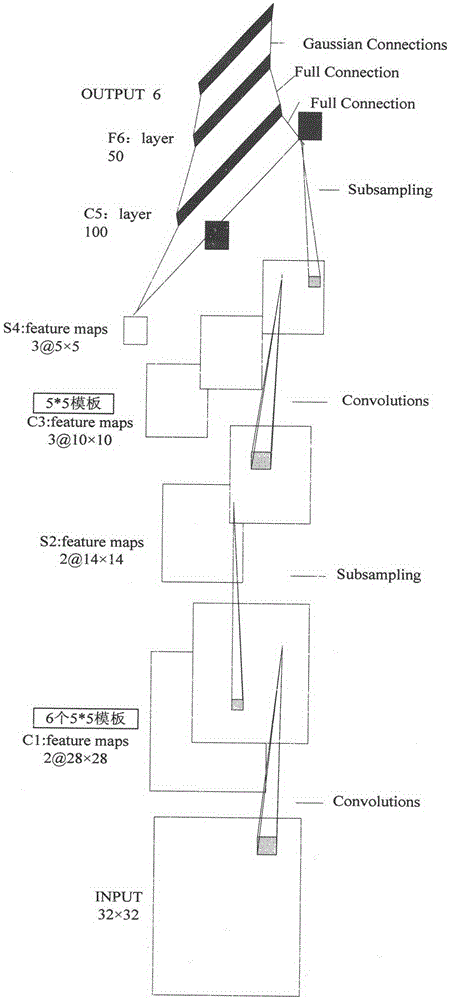

Method and device for detecting and identifying X-ray image defects of welding seam

Inactive · CN104977313A · Reduce processing · Reduce distractions · Neural learning methods · Electric digital data processing · Pattern recognition · Saliency map

The invention provides a method and a device for detecting and identifying X-ray image defects of a welding seam. The method comprises the following steps: visually scanning the image, performing a center-surround difference operation, obtaining a grey saliency map, searching for the focus of attention, and determining a suspect area; and, using deep learning, passing the pixel grey-scale signal of the suspect area directly through a trained layered deep network model, so that defects are identified directly from the essential characteristics of the suspected defect area. According to the invention, image objects are selected and identified serially in order of decreasing visual saliency; the efficiency and accuracy of image analysis and identification are increased; and the method is highly adaptable and widely applicable.

Owner:四川省特种设备检验研究院 +1

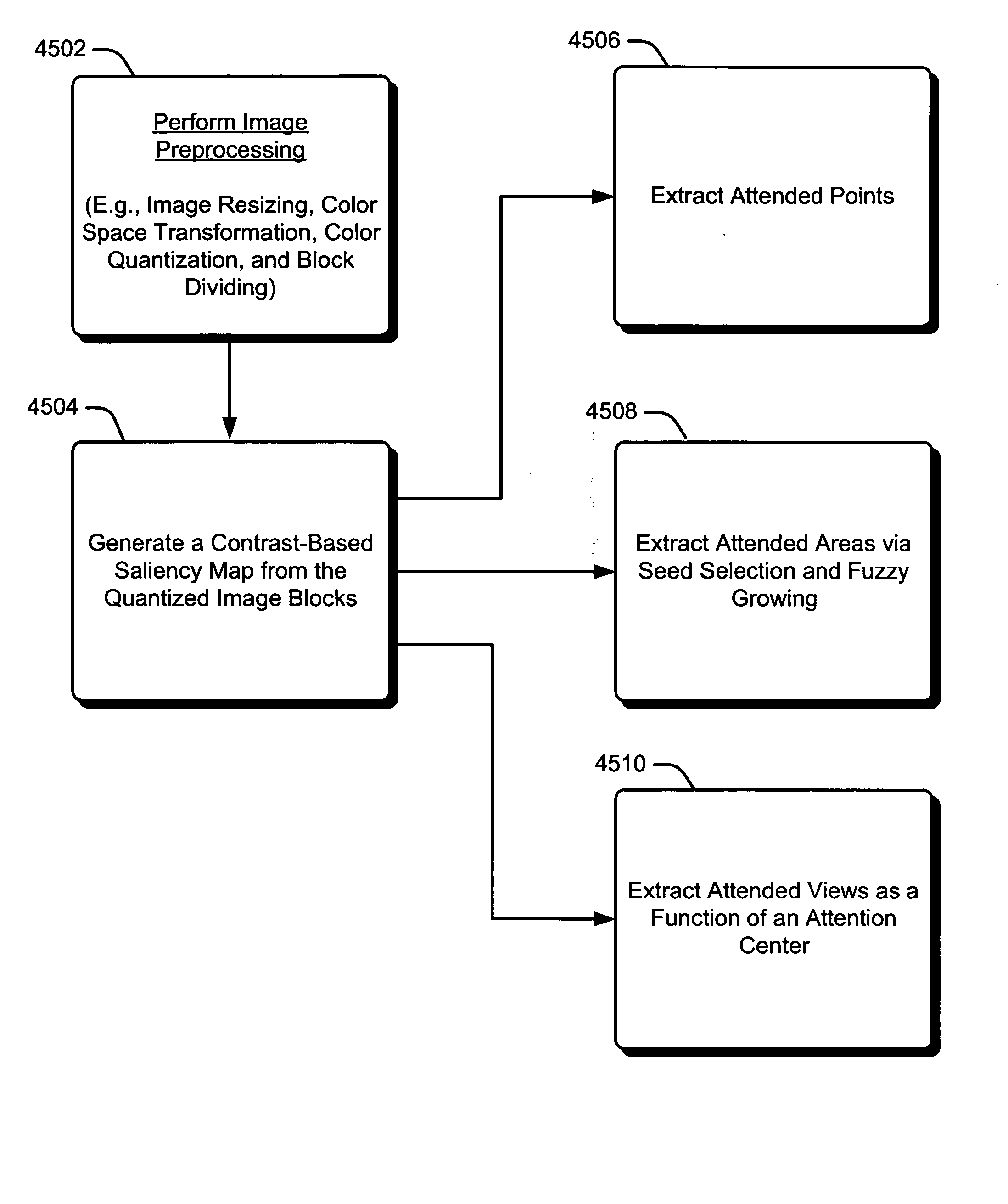

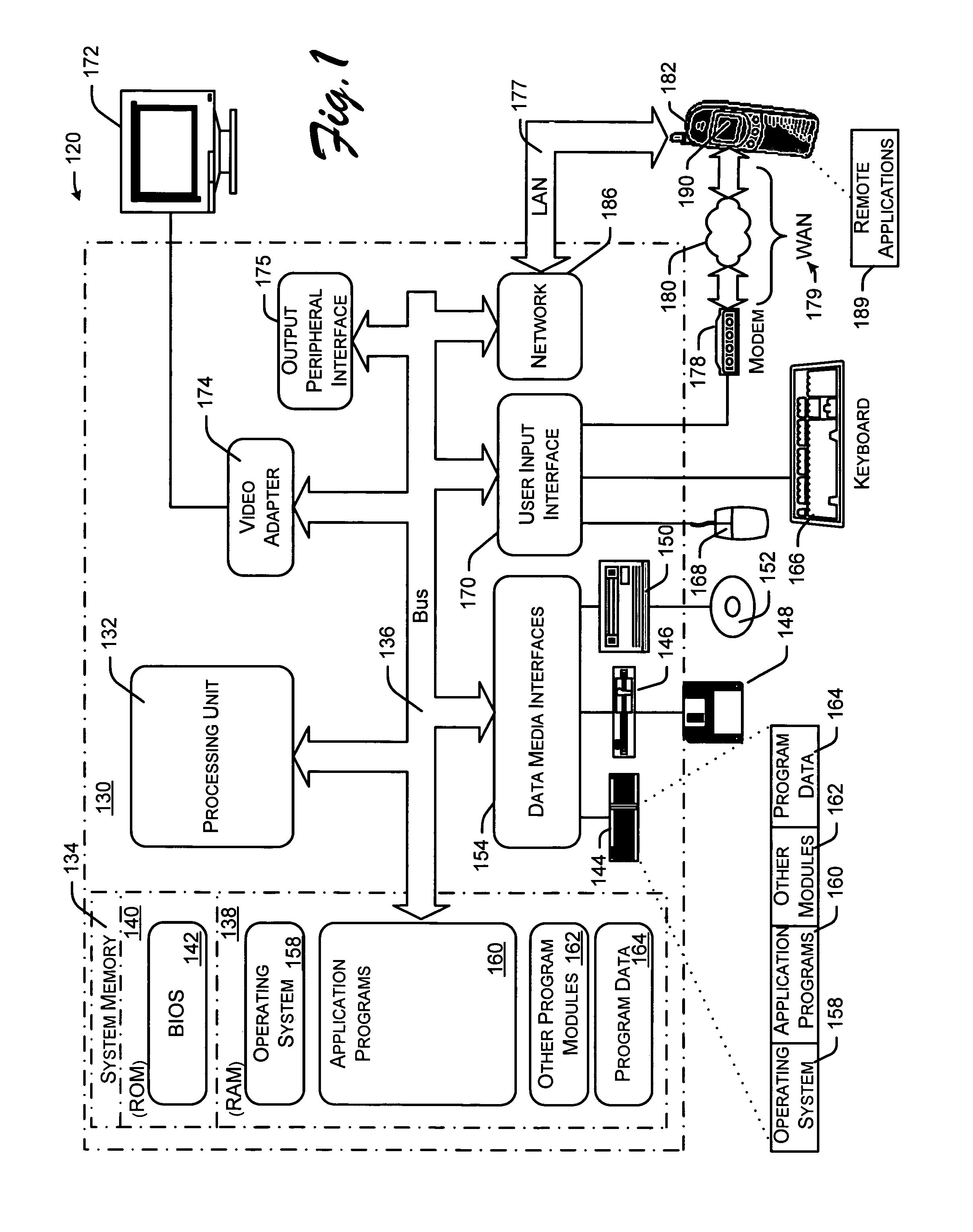

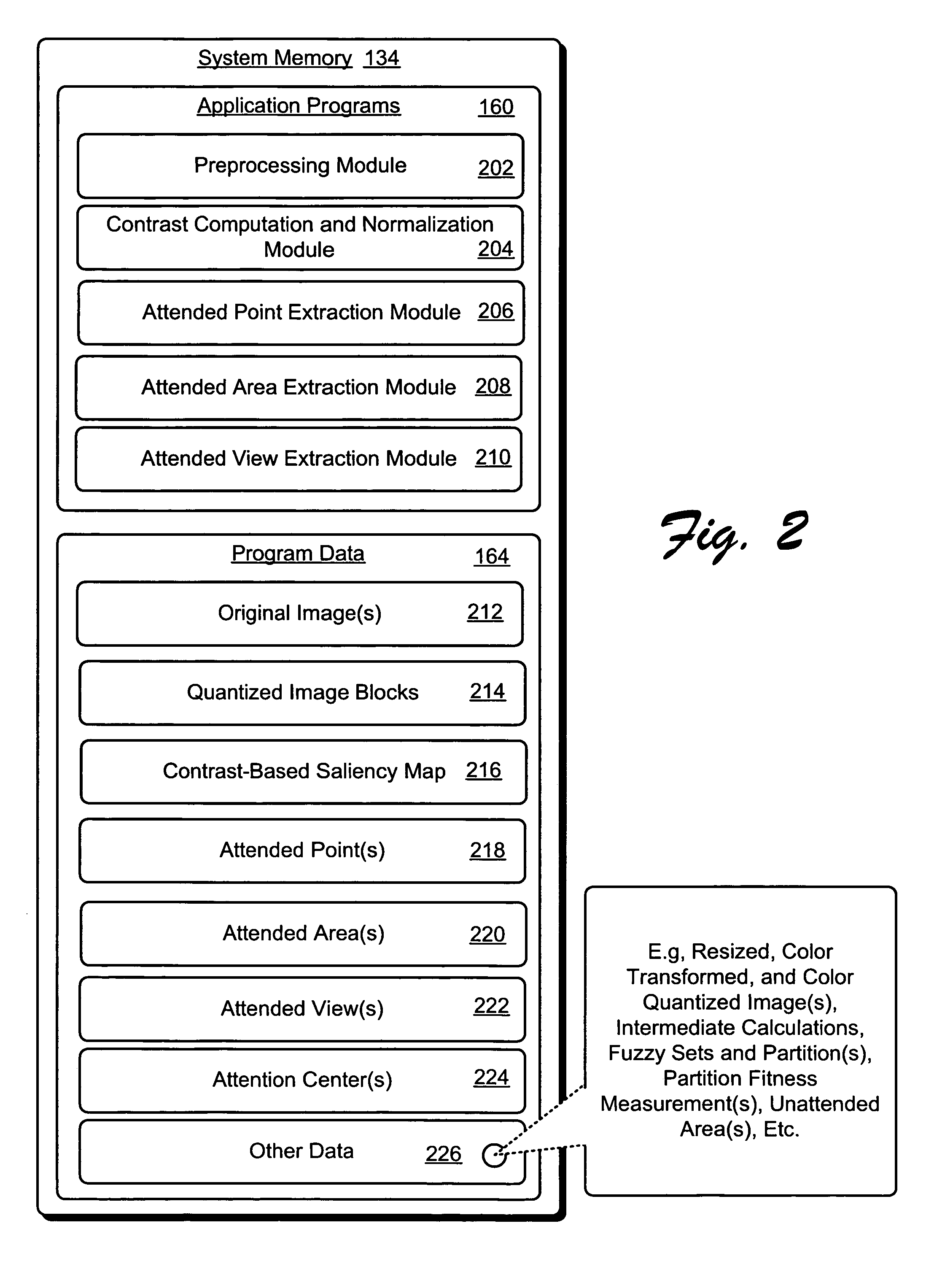

Contrast-based image attention analysis framework

Systems and methods for image attention analysis are described. In one aspect, image attention is modeled by preprocessing an image to generate a quantized set of image blocks. A contrast-based saliency map for modeling one-to-three levels of image attention is then generated from the quantized image blocks.

Owner:MICROSOFT TECH LICENSING LLC
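
One way to picture a contrast-based saliency map built from quantized image blocks is sketched below. The abstract does not give the block size, the quantization, or the contrast measure, so `block`, `levels`, `radius`, and the sum-of-absolute-differences contrast are all assumptions chosen for illustration.

```python
import numpy as np

def quantized_blocks(gray, block=8, levels=16):
    """Average each block x block tile, then quantize the averages to `levels` bins."""
    h, w = gray.shape
    h, w = h - h % block, w - w % block  # drop any partial tiles at the border
    g = gray[:h, :w].astype(float)
    means = g.reshape(h // block, block, w // block, block).mean(axis=(1, 3))
    lo, hi = means.min(), means.max()
    q = np.floor((means - lo) / (hi - lo + 1e-12) * levels)
    return np.clip(q, 0, levels - 1).astype(int)

def contrast_saliency(q, radius=1):
    """Per-block contrast: summed absolute difference to neighbors within `radius`."""
    h, w = q.shape
    p = np.pad(q, radius, mode="edge").astype(float)
    sal = np.zeros((h, w))
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            sal += np.abs(q - p[dy:dy + h, dx:dx + w])
    return sal / (sal.max() + 1e-12)

# Usage: a dark image with one bright 8 x 8 tile.
picture = np.zeros((32, 32))
picture[8:16, 16:24] = 1.0
blocks = quantized_blocks(picture)
block_saliency = contrast_saliency(blocks)
peak = np.unravel_index(np.argmax(block_saliency), block_saliency.shape)
```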

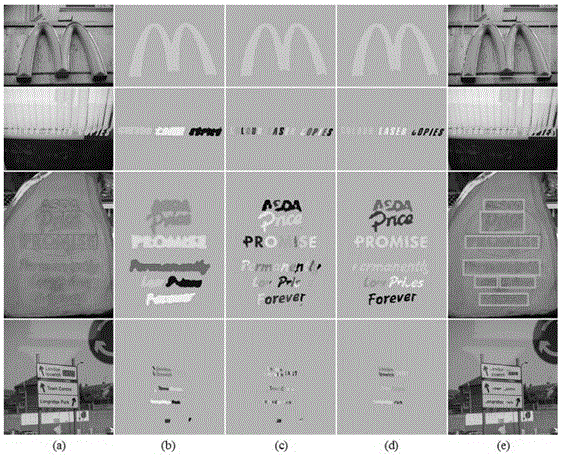

Text saliency-based scene text detection method

Active | CN106778757A | Improve robustness | Improve the ability to distinguish | Character and pattern recognition | Neural learning methods | Saliency map | Text detection

The invention discloses a text saliency-based scene text detection method comprising three stages: initial text saliency detection, text saliency refinement, and text saliency region classification. In the initial detection stage, a CNN model designed for text saliency detection automatically learns features that represent the intrinsic attributes of text from an image and produces a text-aware saliency map. In the refinement stage, a text saliency refinement CNN model performs further text saliency detection on the coarse text saliency regions. In the classification stage, a text saliency region classification CNN model filters out non-text regions to yield the final text detection result. Introducing saliency detection into the scene text detection process allows text regions in a scene to be detected effectively, improving the performance of scene text detection.

Owner:HARBIN INST OF TECH

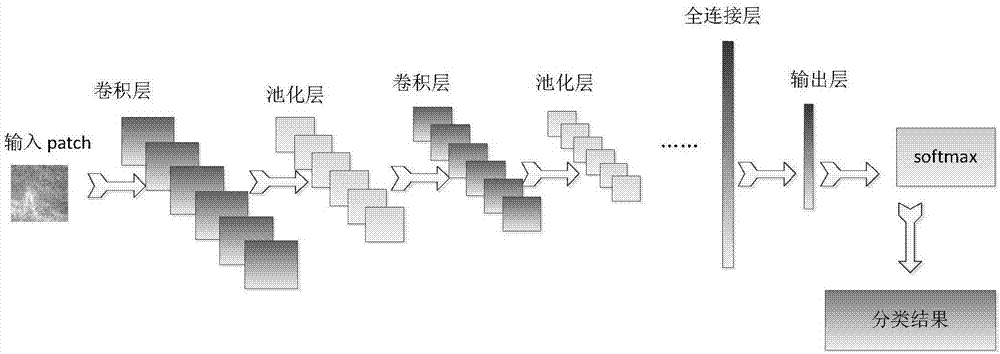

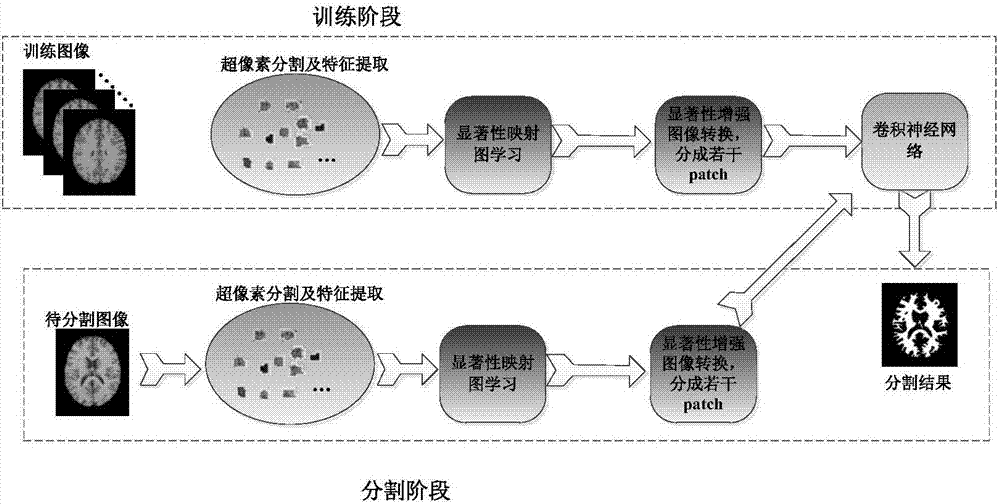

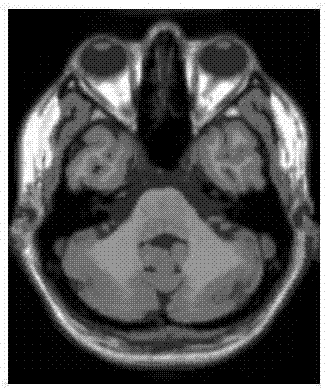

Brain image segmentation method and system based on saliency learning convolution nerve network

Active | CN107506761A | Improve classification performance | Good segmentation result | Image enhancement | Image analysis | Nerve network | Saliency map

The invention discloses a brain image segmentation method and system based on a saliency learning convolutional neural network. The method comprises the following steps: first, a saliency learning method is used to obtain a saliency map of the MR image; a saliency-enhancing transformation is then applied according to the saliency map to obtain a saliency-enhanced image; finally, the saliency-enhanced image is split into a plurality of image blocks, which are used to train a convolutional neural network that serves as the final segmentation model. The saliency map produced by the saliency learning model carries class information that is derived from the spatial position of the target and is independent of image gray-level information. This saliency information markedly enhances the target, which improves discrimination between the target class and the background class and provides some robustness to gray-level inhomogeneity. A convolutional neural network trained on saliency-enhanced images can learn their discriminative information and thus deal more effectively with gray-level inhomogeneity in brain MR images.

Owner:SHANDONG UNIV

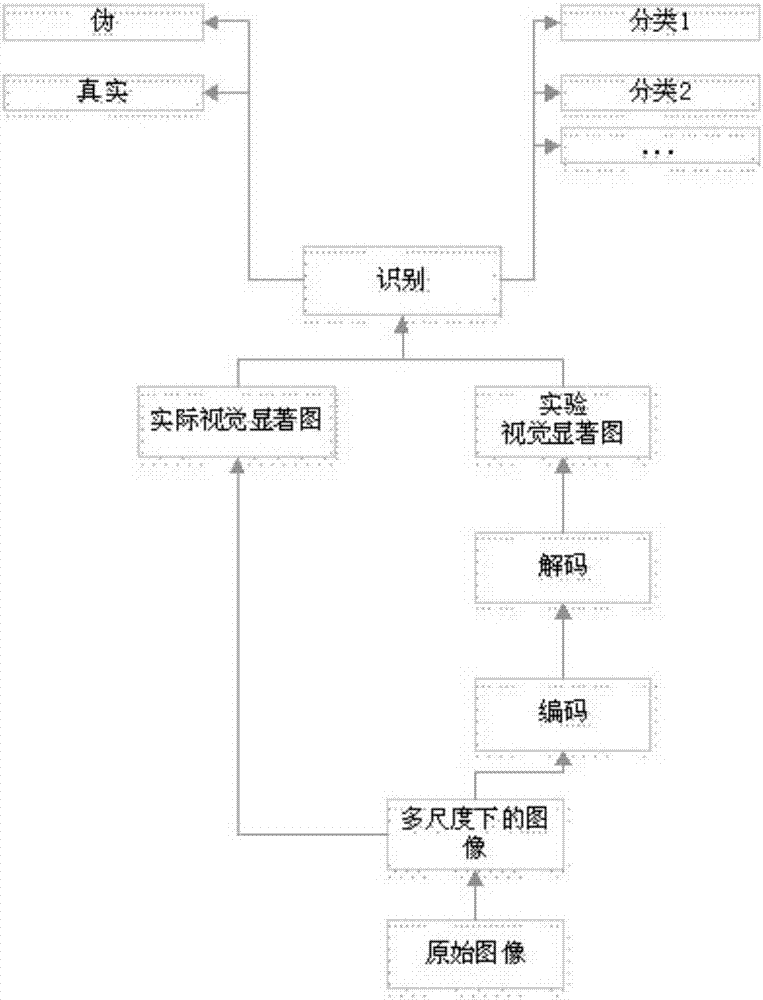

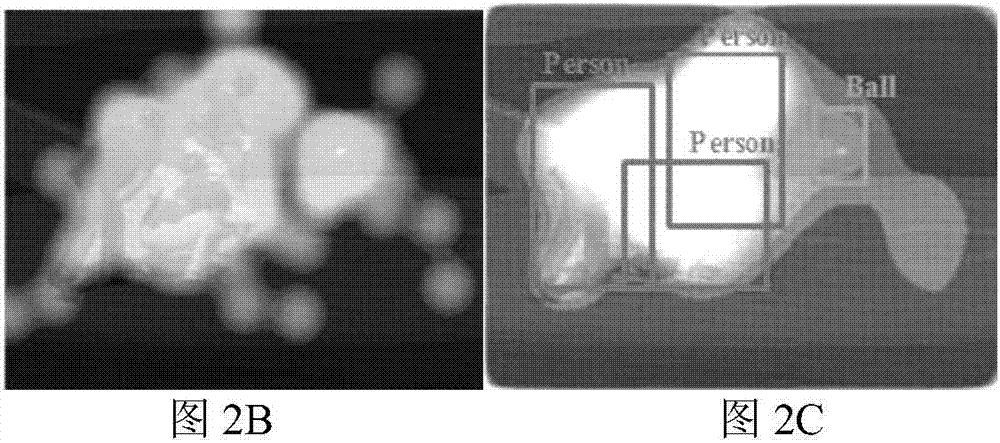

Visual saliency detection method combined with image classification

Active | CN107346436A | Robust | Efficient analysis | Image enhancement | Image analysis | Pattern recognition | Saliency map

The invention provides a visual saliency detection method combined with image classification. The method uses a visual saliency detection model comprising an image coding network, an image decoding network, and an image identification network. A multidirectional image is input to the image coding network, and image features extracted at multiple resolutions form a coding feature vector F. The weights of all but the last two layers of the image coding network are fixed, and the network parameters are trained to obtain a visual saliency map of the original image. F is then used as the input of the image decoding network, and the saliency map corresponding to the original image is normalized; from F, the decoding network produces a generated visual saliency map through an upsampling layer and a nonlinear sigmoid layer. The image identification network takes the visual saliency map of the original image and the generated visual saliency map as input, extracts features with convolutional layers that use small kernels, applies pooling, and finally outputs, through three fully connected layers, the probability distribution of the generated map and the probability distribution of the classification labels. The method enables fast and effective image analysis and judgment, saves labor and material costs, and markedly improves accuracy in practical tasks such as image annotation, monitoring, and behavior prediction.

Owner:以萨技术股份有限公司

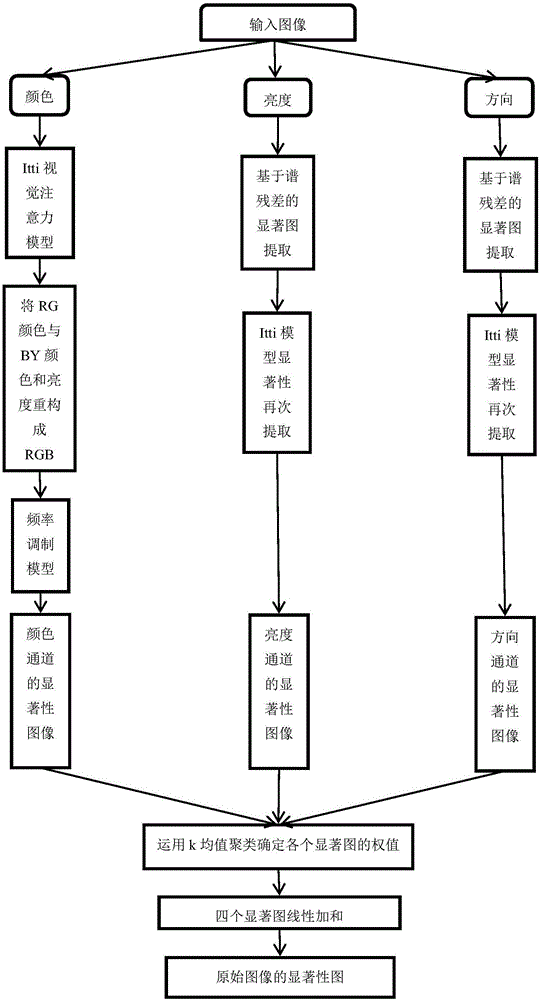

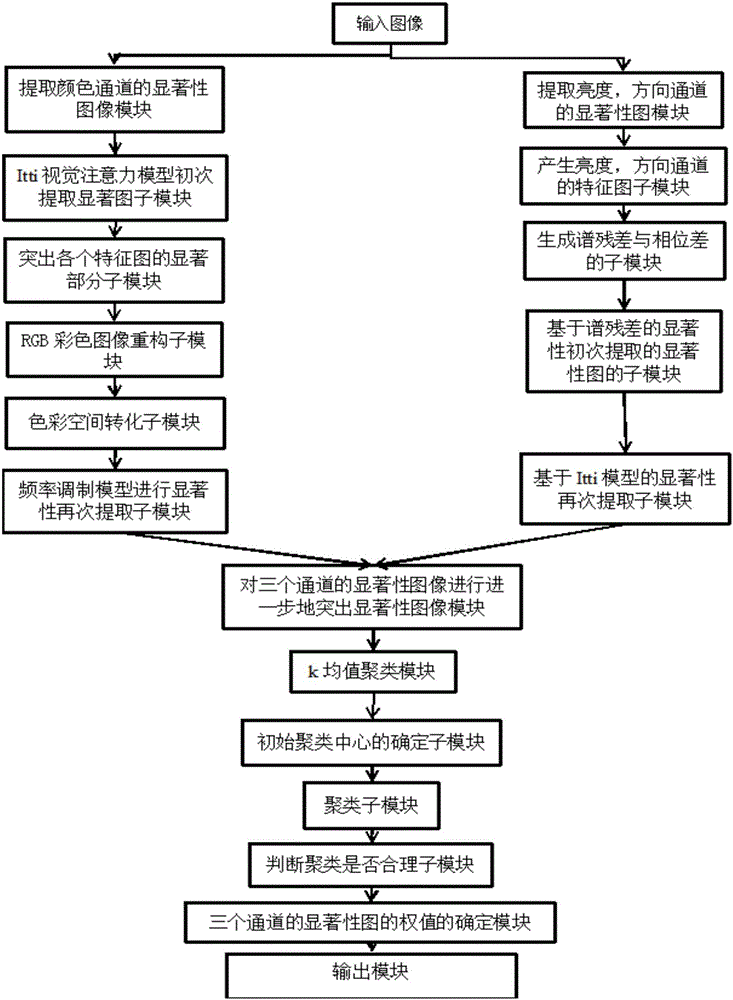

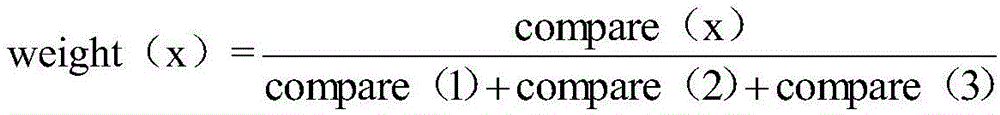

Visual saliency object detection method

Active | CN105825238A | Precise positioning | Improve accuracy | Image enhancement | Image analysis | Saliency map | Visual saliency

The present invention discloses a visual saliency object detection method. The method comprises the following steps: (1) inputting an image to be detected and, on the basis of the Itti visual attention model, extracting improved color, brightness, and orientation saliency maps by means of the spectral residual and frequency modulation models; (2) using k-means clustering to obtain, for each channel's saliency map, the contrast between its salient and non-salient areas, and from this the optimal weight of each channel's saliency map; and (3) weighting the saliency maps of the channels to obtain the saliency map of the original image, in which the salient area is the object area. The method remedies shortcomings of the visual attention model, effectively highlighting salient areas and suppressing non-salient ones so as to simulate where human visual attention falls on targets in a natural scene.

Owner:JIANGSU UNIV
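
Steps (2) and (3) of the abstract above, clustering each channel's saliency map and fusing the channels by weight, might look roughly like the sketch below. The abstract does not state how the weights follow from the clustering, so the rule used here (weight proportional to the gap between the two cluster means) is an assumption, and the function names are hypothetical.

```python
import numpy as np

def two_means_contrast(sal, iters=20):
    """1-D k-means with k=2 on pixel values; returns the gap between cluster means."""
    v = sal.ravel().astype(float)
    lo, hi = v.min(), v.max()
    for _ in range(iters):
        is_hi = np.abs(v - hi) < np.abs(v - lo)
        if is_hi.all() or not is_hi.any():
            break  # a flat map has no second cluster
        lo, hi = v[~is_hi].mean(), v[is_hi].mean()
    return hi - lo

def fuse_channels(maps):
    """Weight each channel map by its salient/non-salient contrast, then sum."""
    contrasts = np.array([two_means_contrast(m) for m in maps])
    weights = contrasts / (contrasts.sum() + 1e-12)
    fused = sum(w * m for w, m in zip(weights, maps))
    return fused, weights

# Usage: one informative channel and one flat, uninformative channel.
channel_a = np.zeros((16, 16))
channel_a[4:8, 4:8] = 1.0
channel_b = np.full((16, 16), 0.5)
fused_map, channel_weights = fuse_channels([channel_a, channel_b])
```

In this toy case the flat channel receives a weight of zero, so the fused map is essentially the informative channel.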
