Deep learning saliency detection method based on global prior and local context

A deep learning saliency detection method applied in the fields of image processing and computer vision. It addresses two problems: false detections when relying on high-level features, and the failure to effectively detect salient objects in images with complex backgrounds. The method achieves good robustness, reduces learning ambiguity, and produces accurate detection results.

Embodiment Construction

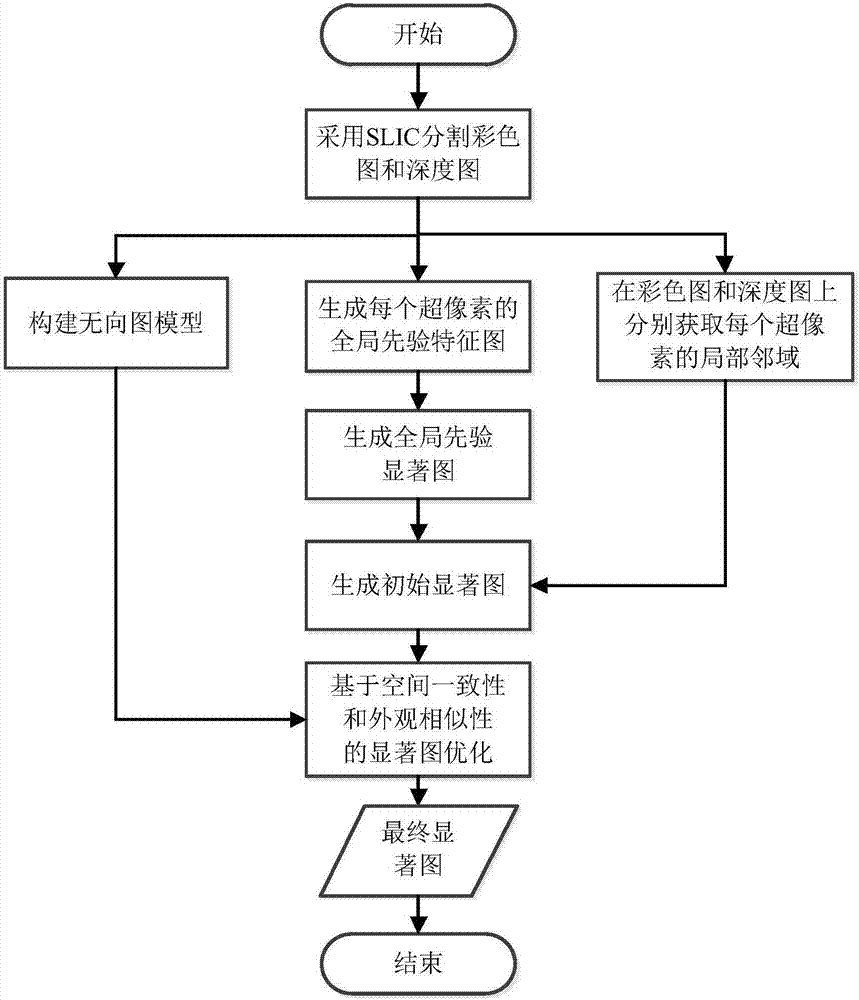

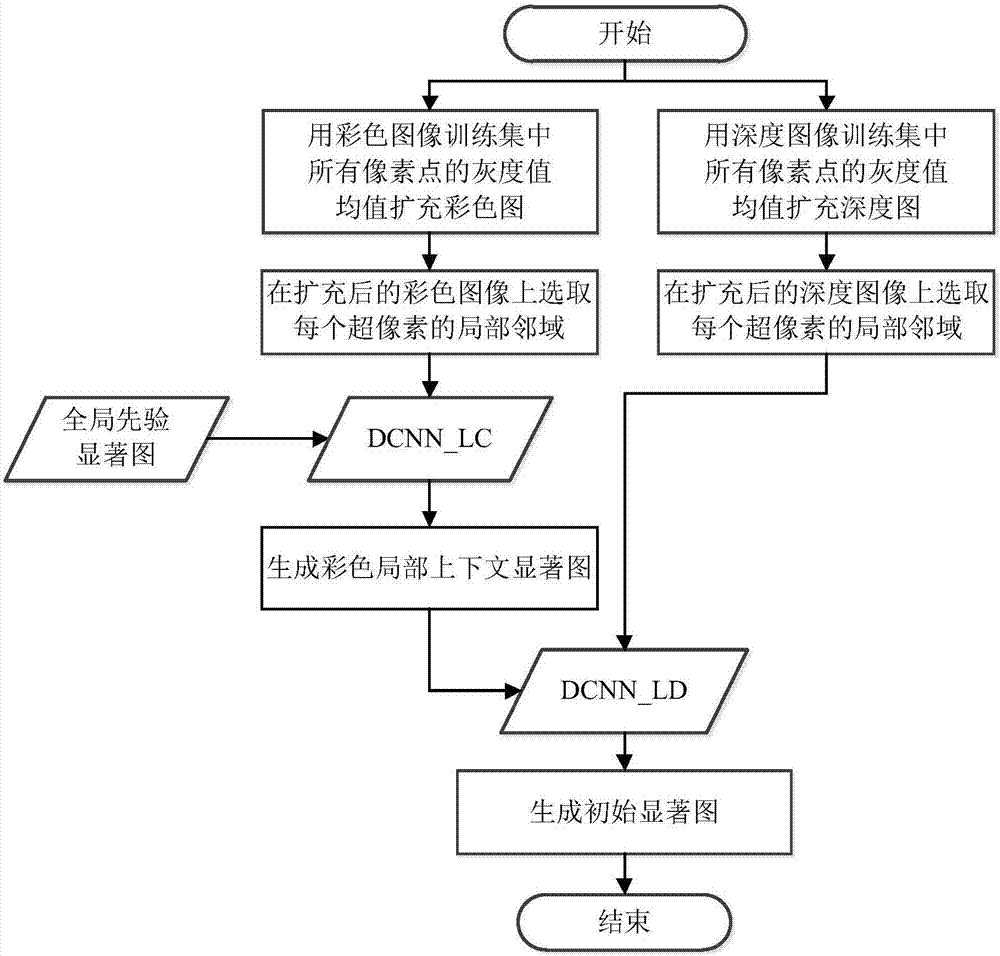

[0033] The invention provides a deep learning saliency detection method based on a global prior and local context. The method first performs superpixel segmentation on the color image and the depth image. Based on mid-level features of the superpixels, such as compactness, uniqueness and backgroundness, a global-prior saliency map is computed with the global-prior deep learning model. The global-prior saliency map is then combined with local context information from the color and depth images, and an initial saliency map is obtained through a deep learning model. Finally, the initial saliency map is optimized according to spatial consistency and appearance similarity to obtain the final saliency map. The invention is suitable for image saliency detection, is robust, and yields accurate detection results.
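The paragraph above names three mid-level cues (compactness, uniqueness and backgroundness) and a final refinement based on spatial consistency and appearance similarity, but this excerpt gives no formulas, and in the patent the cues feed a deep learning model rather than being combined by hand. The sketch below is therefore only an illustration, assuming common hand-crafted definitions from the saliency literature: uniqueness as spatially weighted color contrast, compactness as the inverse spatial spread of similar colors, and a background contrast cue measuring appearance distance from border superpixels. It also shows one possible form of the refinement, a normalized weighted average over superpixels that are close in both position and appearance. The function names `global_prior_cues` and `refine` and the bandwidths `sigma_p` and `sigma_c` are hypothetical.

```python
import numpy as np


def _normalize(x):
    """Rescale a vector to [0, 1]; a constant vector maps to zeros."""
    rng = x.max() - x.min()
    return (x - x.min()) / rng if rng > 0 else np.zeros_like(x)


def global_prior_cues(color, pos, on_border, sigma_p=0.25, sigma_c=0.2):
    """Hypothetical hand-crafted mid-level cues per superpixel.

    color:     (N, C) mean appearance per superpixel (e.g. color, optionally with depth appended)
    pos:       (N, 2) normalized centroids in [0, 1]
    on_border: (N,) bool, True for superpixels touching the image border
    Returns (uniqueness, compactness, background_contrast), each (N,) in [0, 1].
    """
    dc = np.linalg.norm(color[:, None, :] - color[None, :, :], axis=-1)  # appearance distance
    dp2 = np.sum((pos[:, None, :] - pos[None, :, :]) ** 2, axis=-1)      # squared spatial distance

    # Uniqueness: color contrast, weighted toward spatially nearby superpixels.
    wp = np.exp(-dp2 / (2 * sigma_p ** 2))
    uniqueness = (wp * dc).sum(1) / wp.sum(1)

    # Compactness: colors spread widely across the image score low.
    wc = np.exp(-dc ** 2 / (2 * sigma_c ** 2))
    wc /= wc.sum(1, keepdims=True)
    mu = wc @ pos  # color-weighted mean position for each superpixel
    spread = (wc * np.sum((pos[None, :, :] - mu[:, None, :]) ** 2, axis=-1)).sum(1)
    compactness = 1.0 - _normalize(spread)

    # Background contrast: high when a superpixel looks unlike the image border.
    if on_border.any():
        background_contrast = dc[:, on_border].mean(1)
    else:
        background_contrast = np.zeros(len(color))
    return _normalize(uniqueness), compactness, _normalize(background_contrast)


def refine(saliency, color, pos, sigma_p=0.25, sigma_c=0.2):
    """Smooth saliency over superpixels that are close in position and appearance."""
    dc2 = np.sum((color[:, None, :] - color[None, :, :]) ** 2, axis=-1)
    dp2 = np.sum((pos[:, None, :] - pos[None, :, :]) ** 2, axis=-1)
    w = np.exp(-dc2 / (2 * sigma_c ** 2) - dp2 / (2 * sigma_p ** 2))
    return (w @ saliency) / w.sum(1)  # convex combination, so values stay in range
```

Because each refined value is a convex combination of the input values, the refinement cannot push saliency outside its original range; it only pulls each superpixel toward similar, nearby superpixels.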

[0034] As shown in Figure 1, the present invention comprises the following steps:

[0035] 1) Use the SLIC superpixel segmen...
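Step 1 segments the color and depth images into superpixels; the rest of that step is truncated in this excerpt. As a hedged illustration of what typically follows segmentation, the sketch below assumes a SLIC label map is already available (for example from `skimage.segmentation.slic`) and pools, per superpixel, the mean color, mean depth and normalized centroid, and flags superpixels that touch the image border. The function name `superpixel_stats` and the choice of statistics are assumptions, not the patent's specification.

```python
import numpy as np


def superpixel_stats(labels, color, depth):
    """Pool per-superpixel statistics from a label map (hypothetical helper).

    labels: (H, W) int array of superpixel ids 0..N-1 (e.g. SLIC output)
    color:  (H, W, C) float array (e.g. Lab or RGB)
    depth:  (H, W) float array
    Returns mean color (N, C), mean depth (N,), centroid (N, 2) in [0, 1]
    as (row, col), and a bool (N,) flag for superpixels on the image border.
    """
    h, w = labels.shape
    flat = labels.ravel()
    n = int(flat.max()) + 1
    counts = np.bincount(flat, minlength=n).astype(float)
    counts[counts == 0] = 1.0  # guard against unused ids

    # Per-channel sums divided by pixel counts give mean appearance.
    mean_color = np.stack(
        [np.bincount(flat, weights=color[..., k].ravel(), minlength=n)
         for k in range(color.shape[-1])],
        axis=1,
    ) / counts[:, None]
    mean_depth = np.bincount(flat, weights=depth.ravel(), minlength=n) / counts

    # Centroids in normalized image coordinates.
    rows, cols = np.indices((h, w))
    cy = np.bincount(flat, weights=rows.ravel() / max(h - 1, 1), minlength=n) / counts
    cx = np.bincount(flat, weights=cols.ravel() / max(w - 1, 1), minlength=n) / counts
    centroid = np.stack([cy, cx], axis=1)

    # Superpixels appearing in the first/last row or column touch the border.
    border_ids = np.unique(np.concatenate([labels[0], labels[-1], labels[:, 0], labels[:, -1]]))
    on_border = np.zeros(n, dtype=bool)
    on_border[border_ids] = True
    return mean_color, mean_depth, centroid, on_border
```

Using `np.bincount` with weights keeps the pooling vectorized, so cost grows linearly with the number of pixels rather than with pixels times superpixels.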
