1484 results about "Interactive displays" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

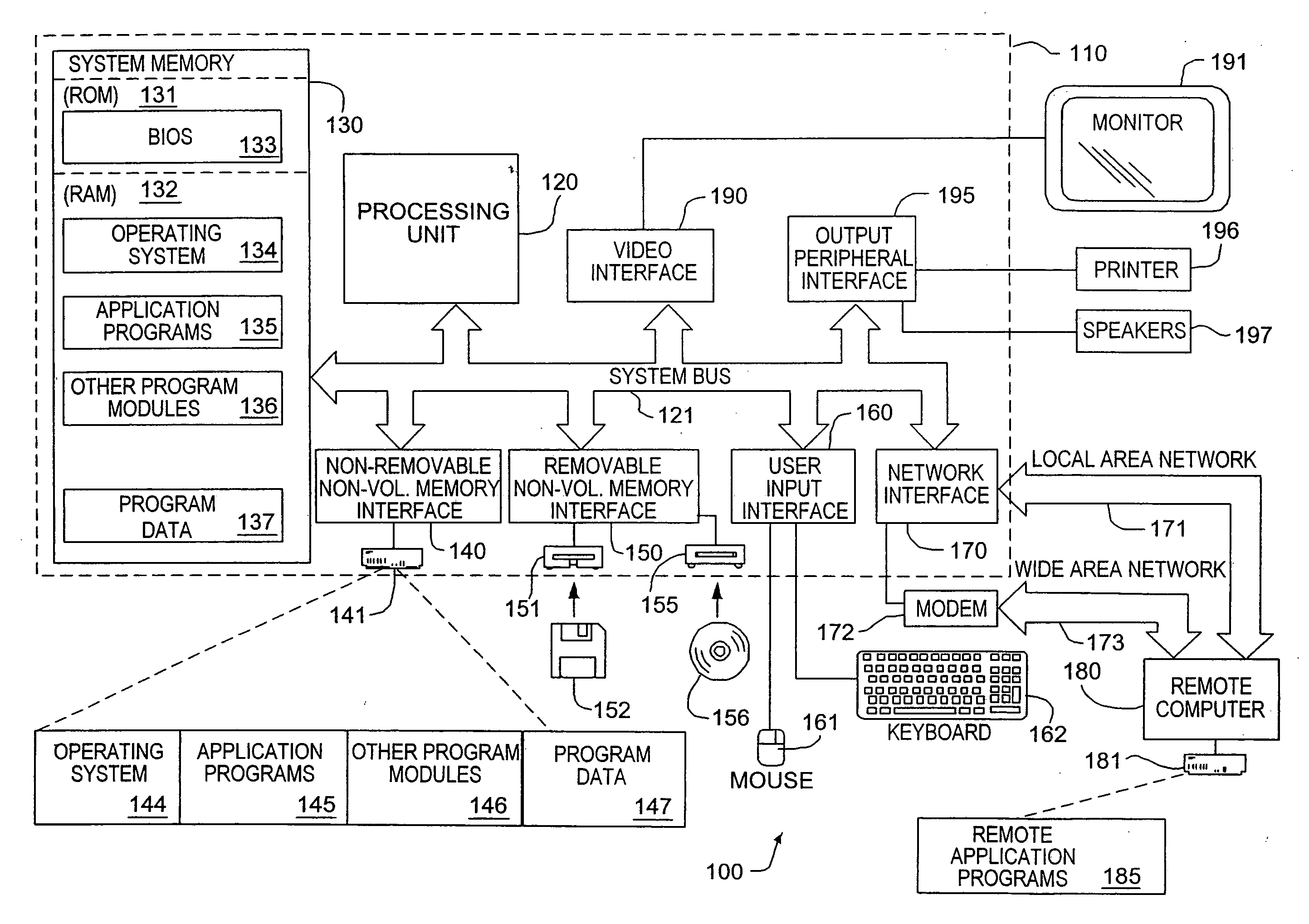

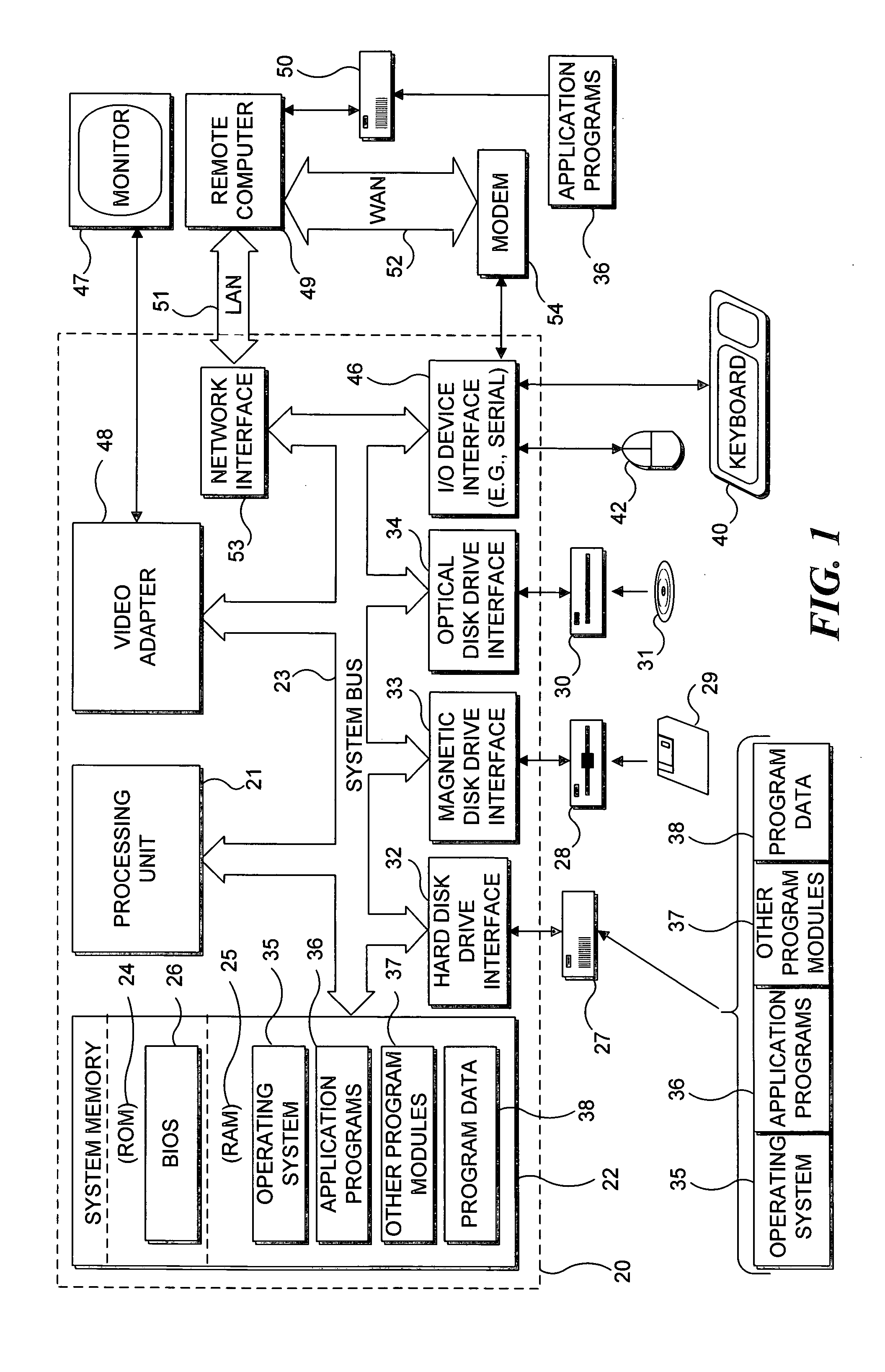

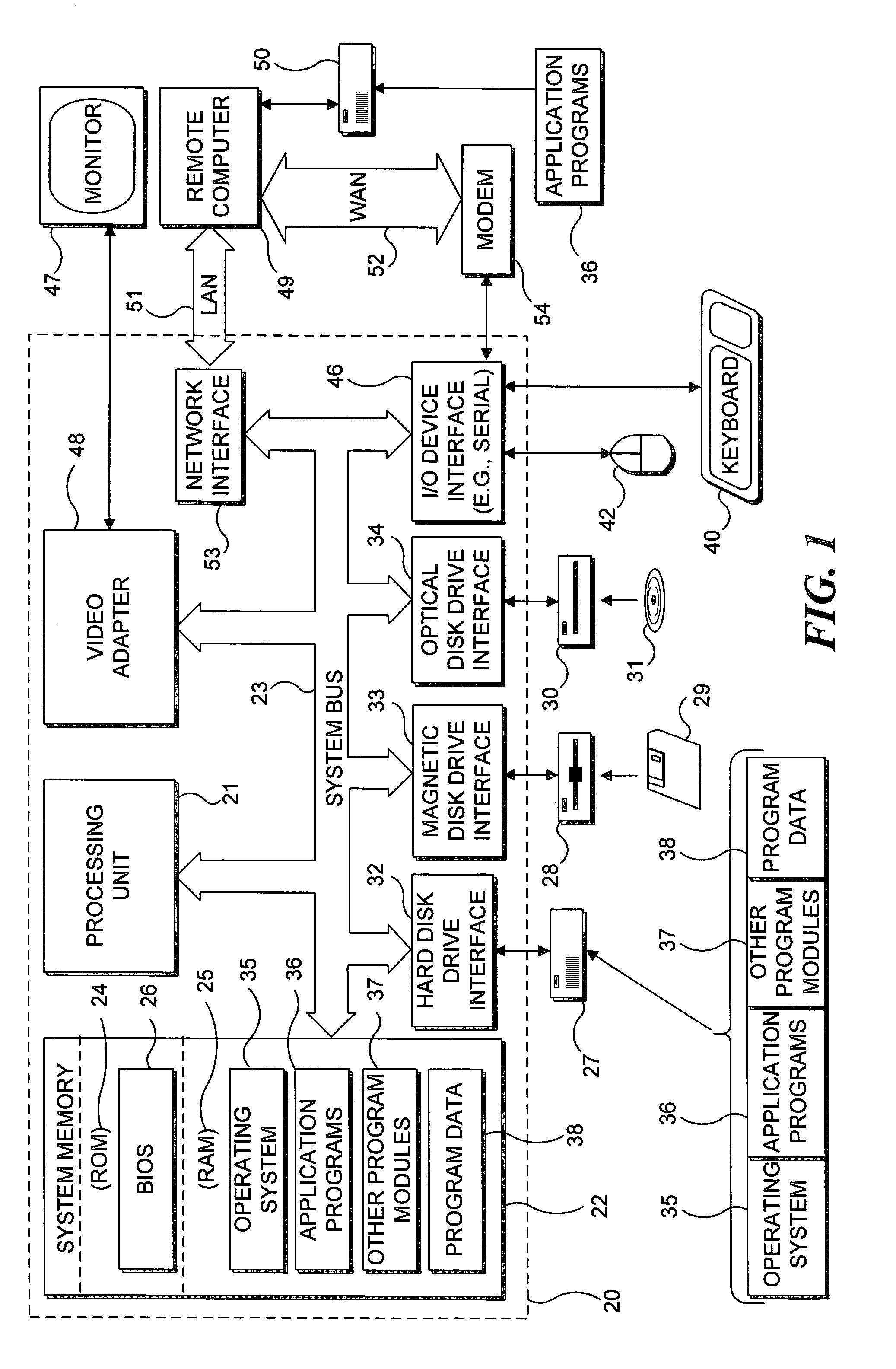

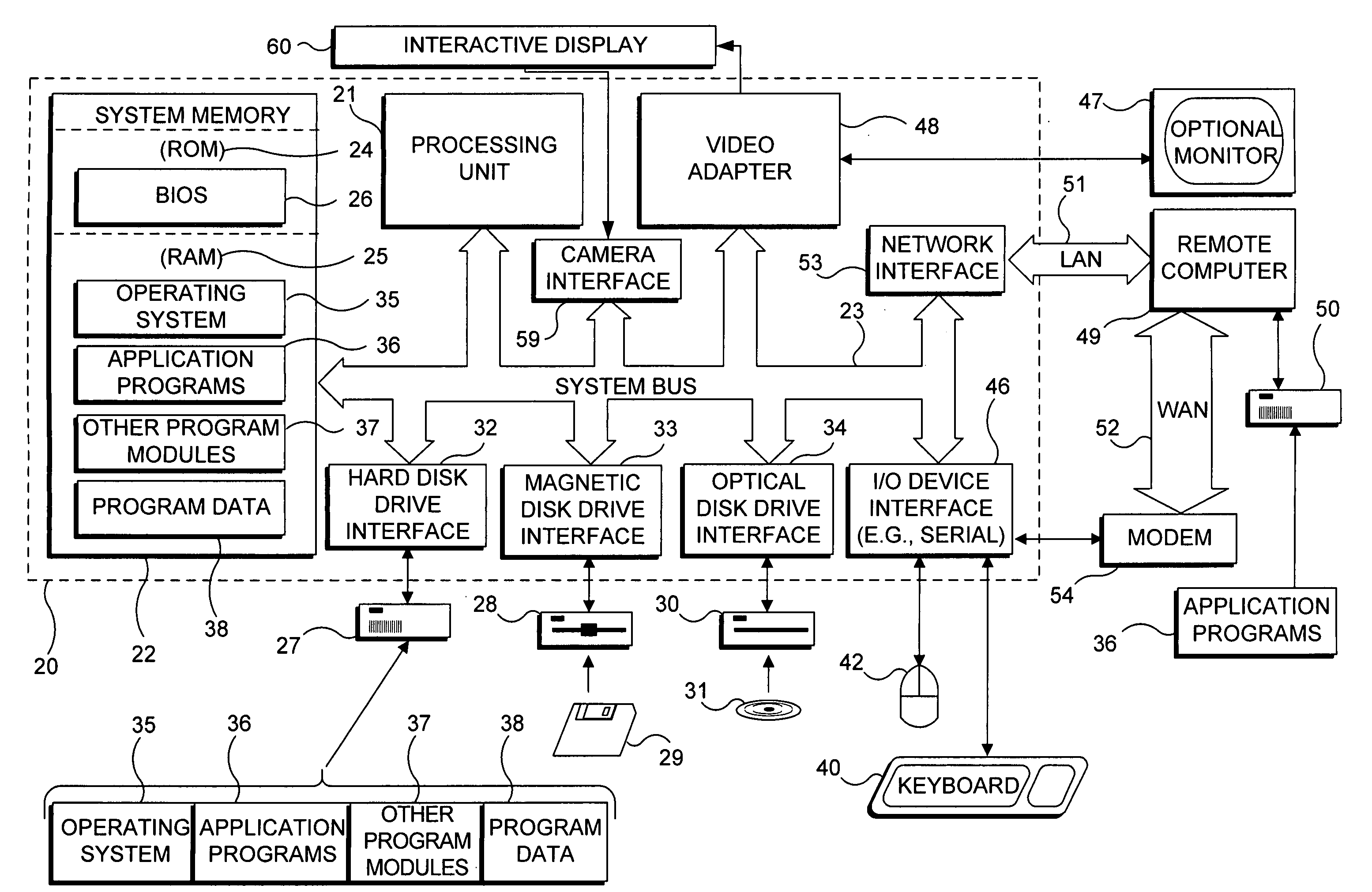

Recognizing gestures and using gestures for interacting with software applications

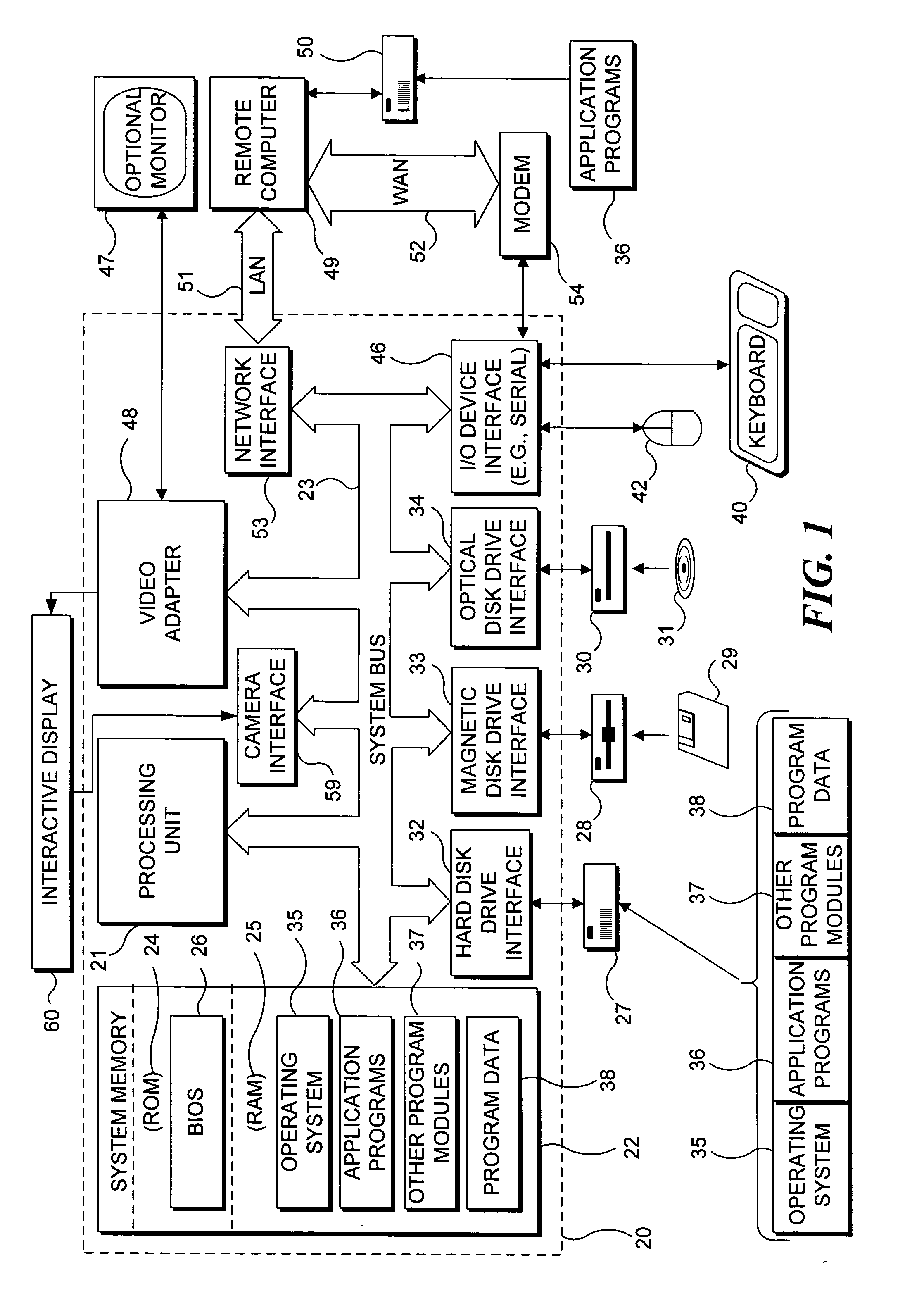

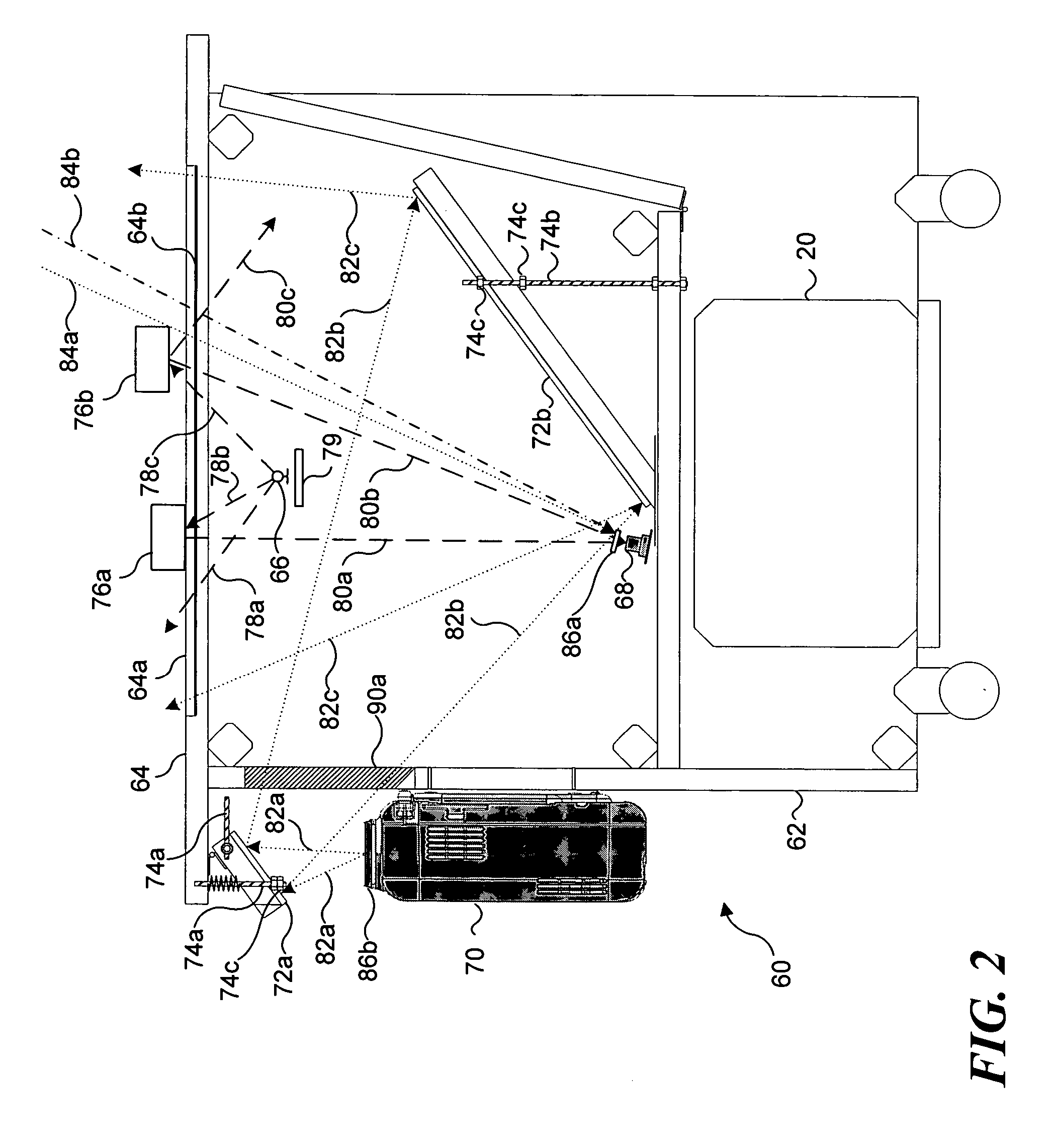

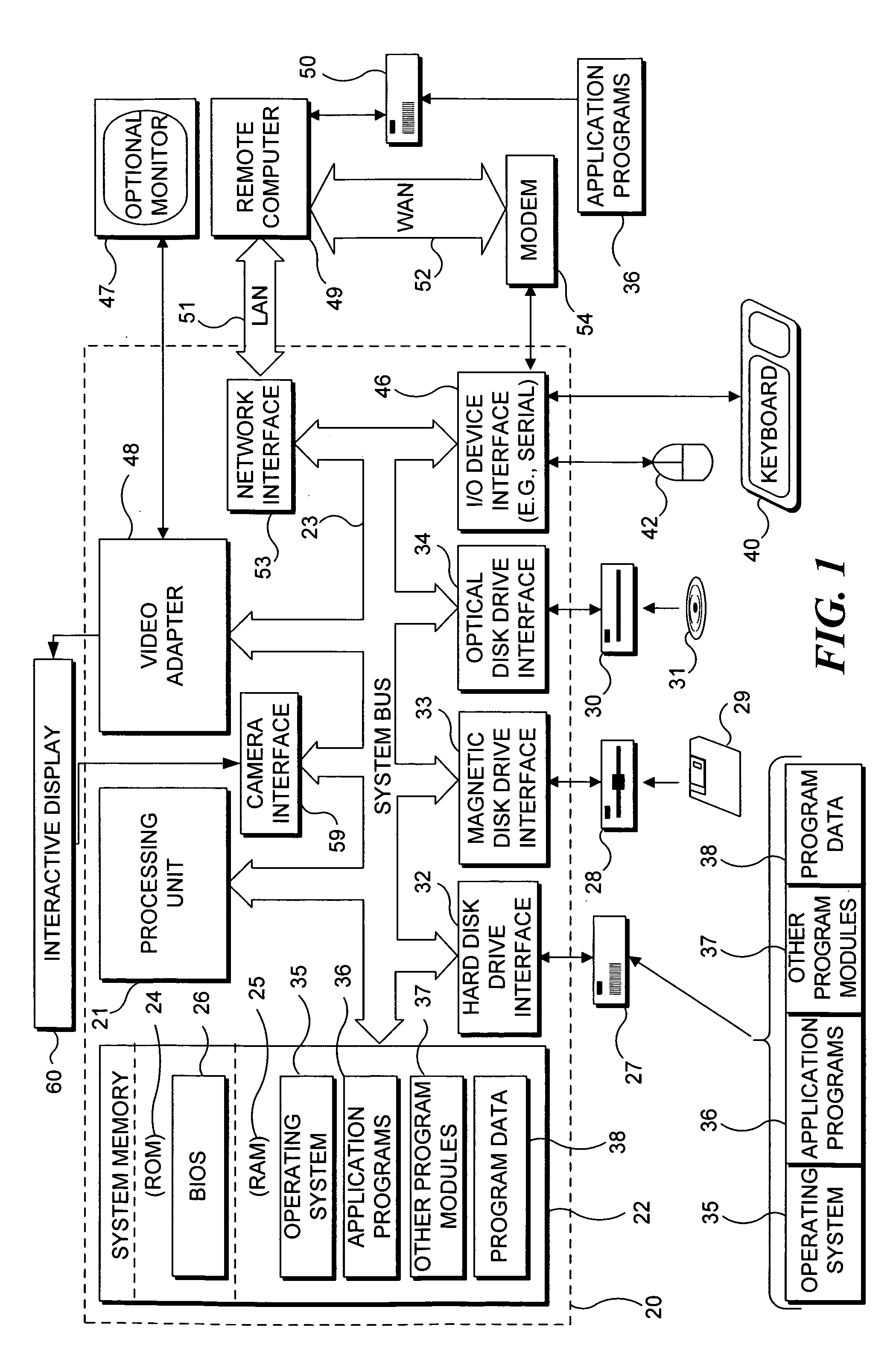

Inactive | US20060010400A1 | Character and pattern recognition | Color television details | Interactive displays | Human–computer interaction

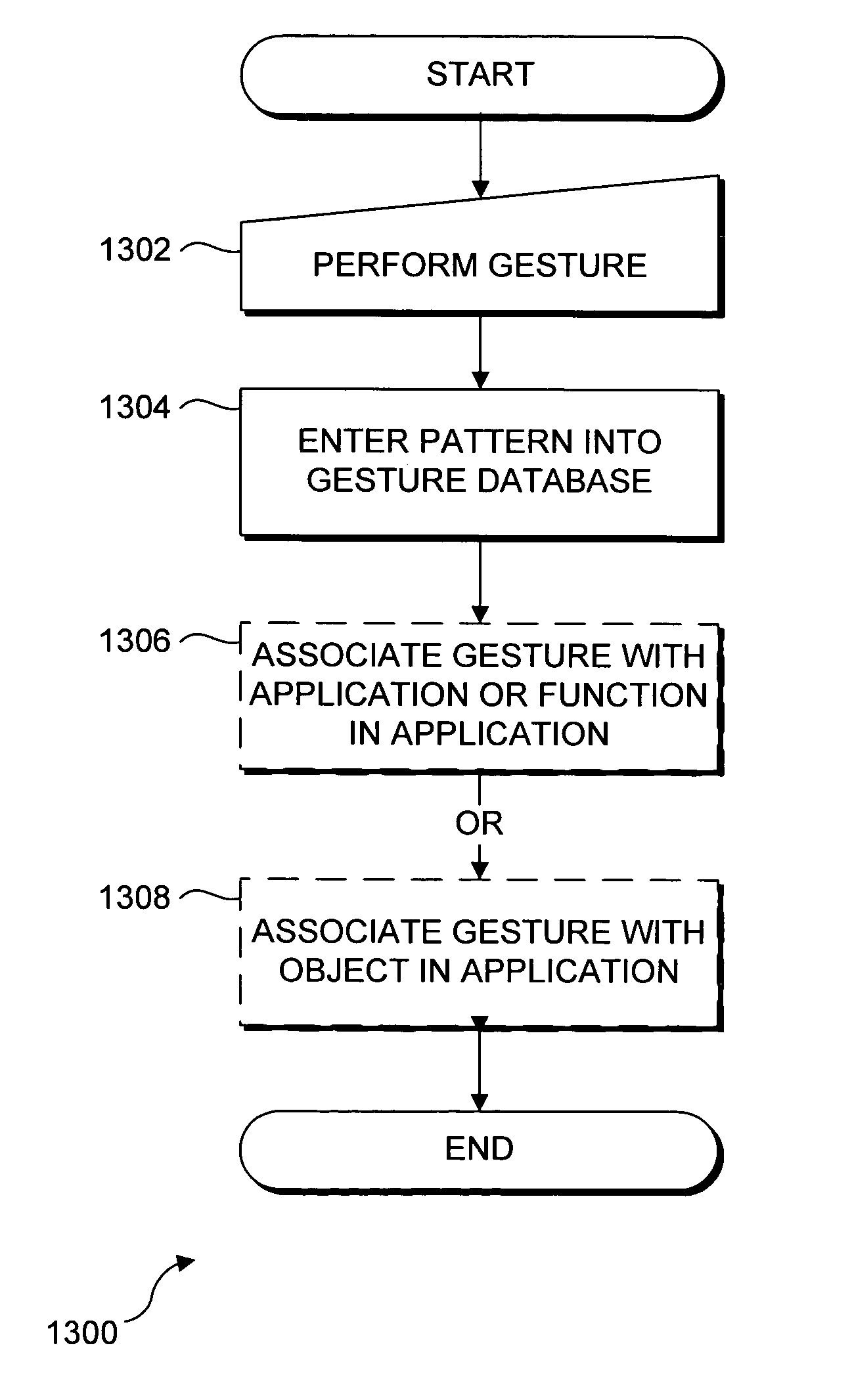

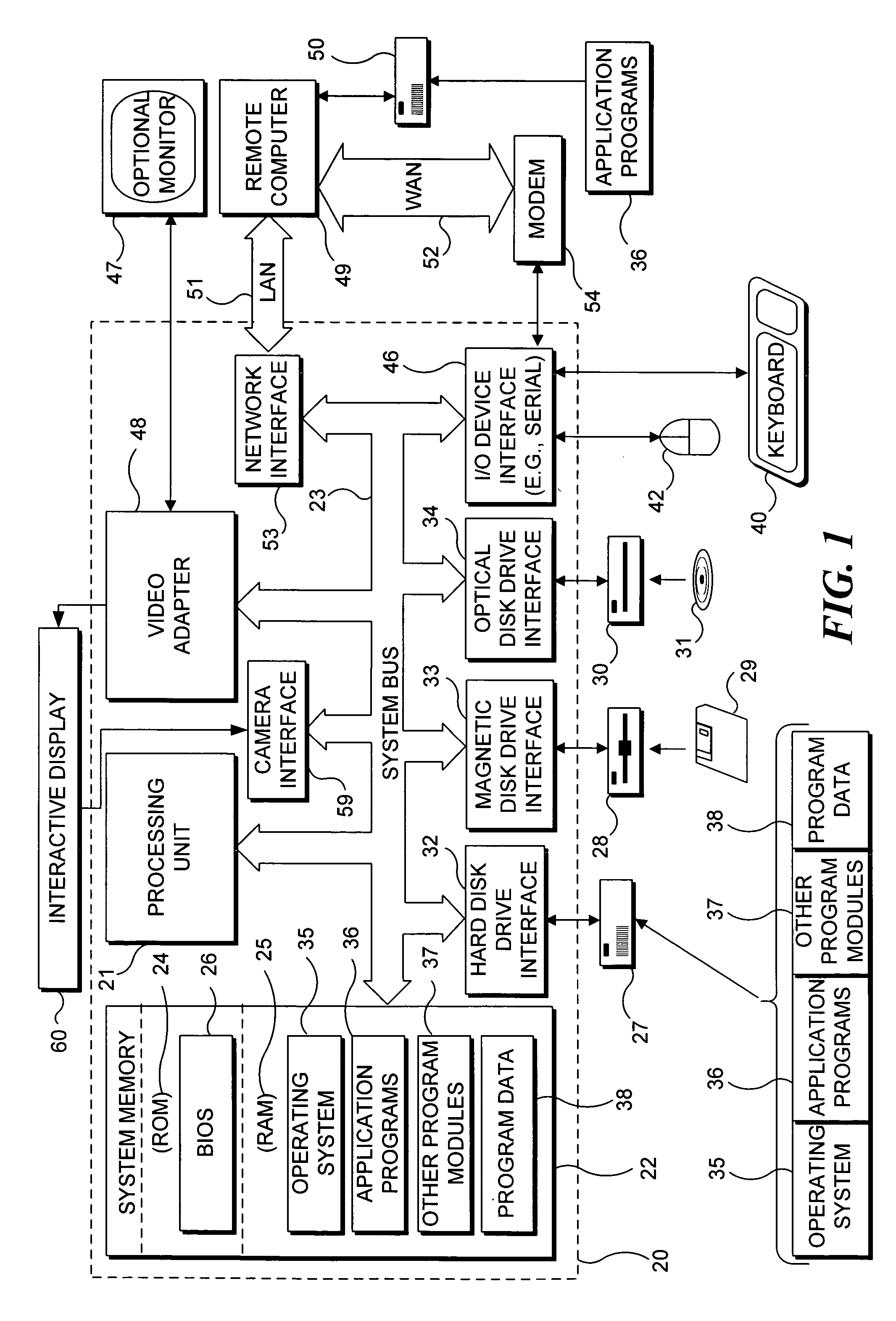

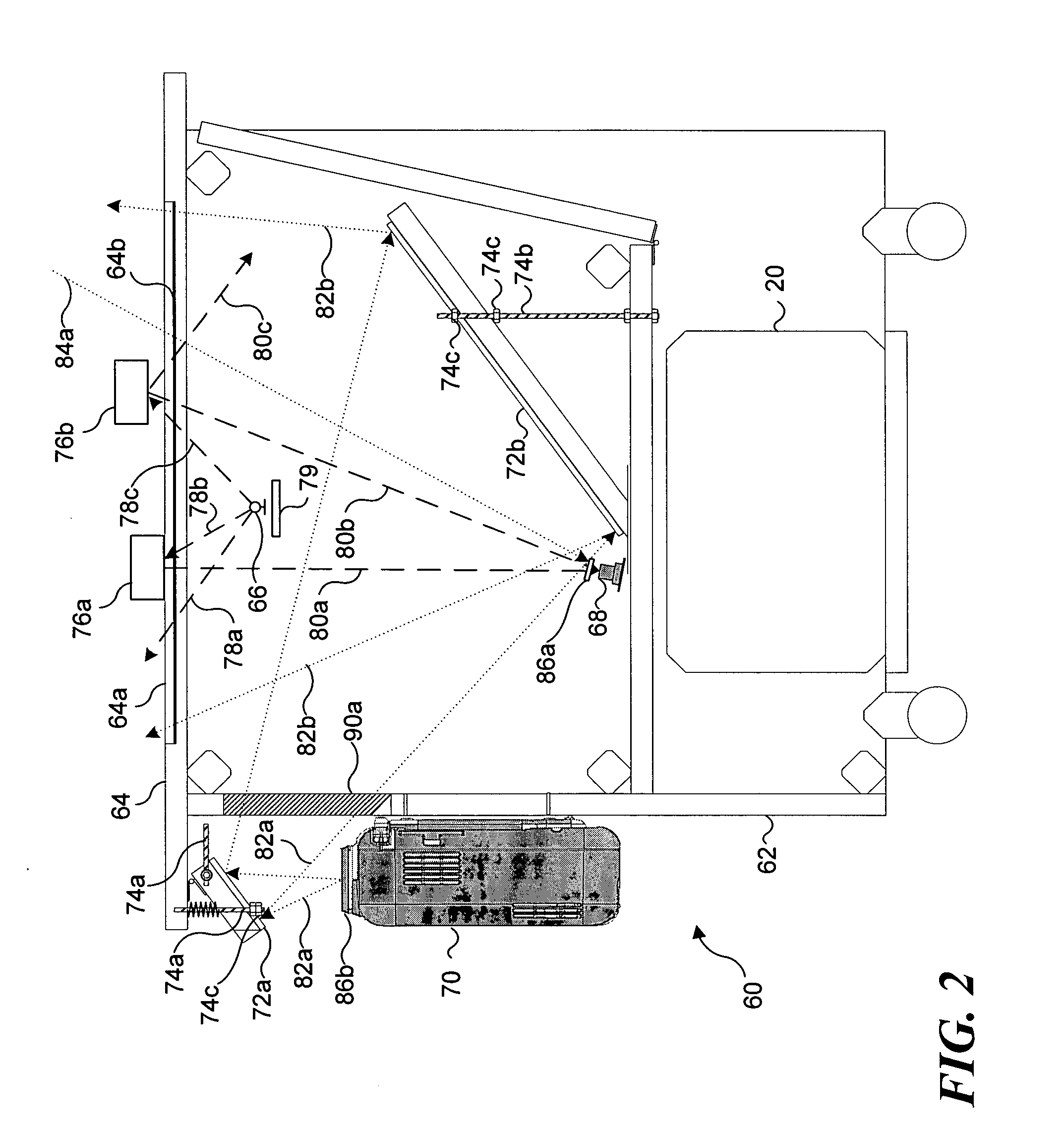

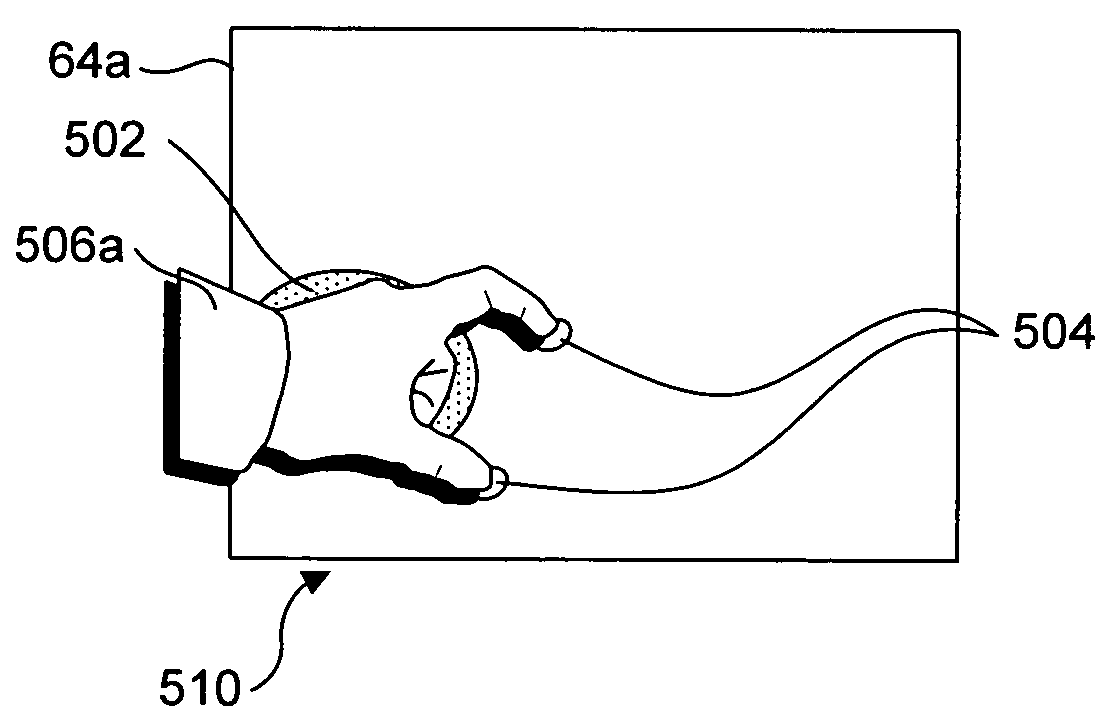

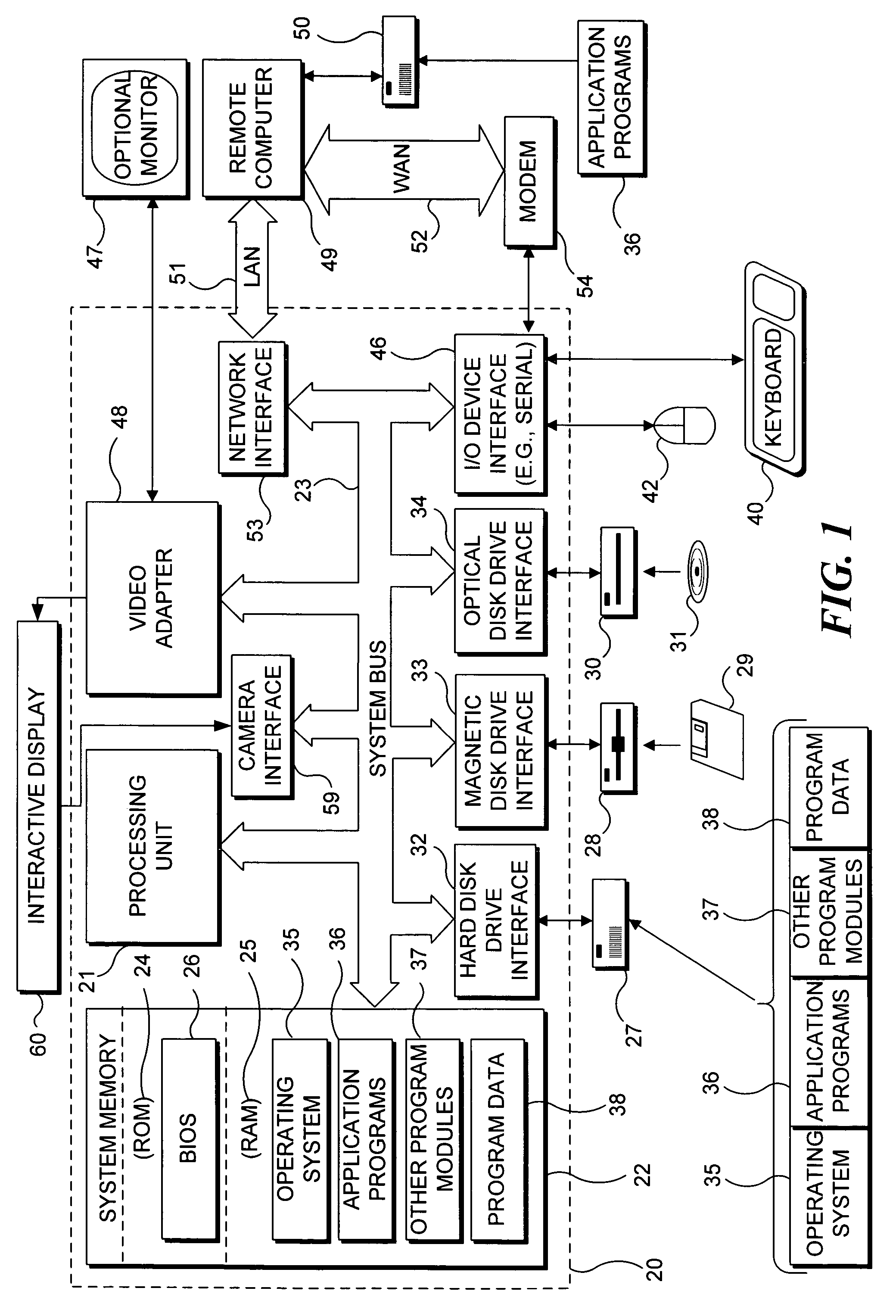

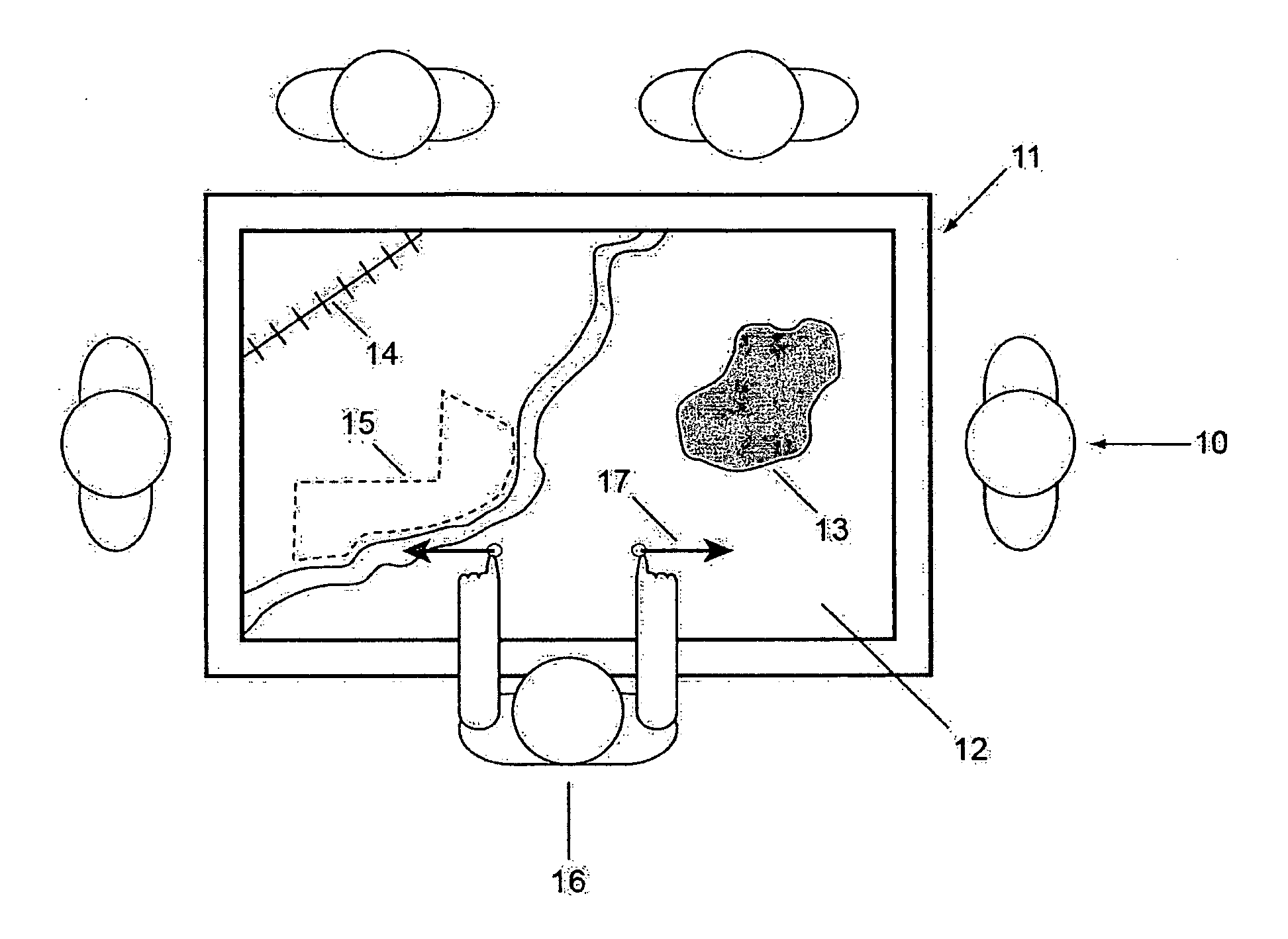

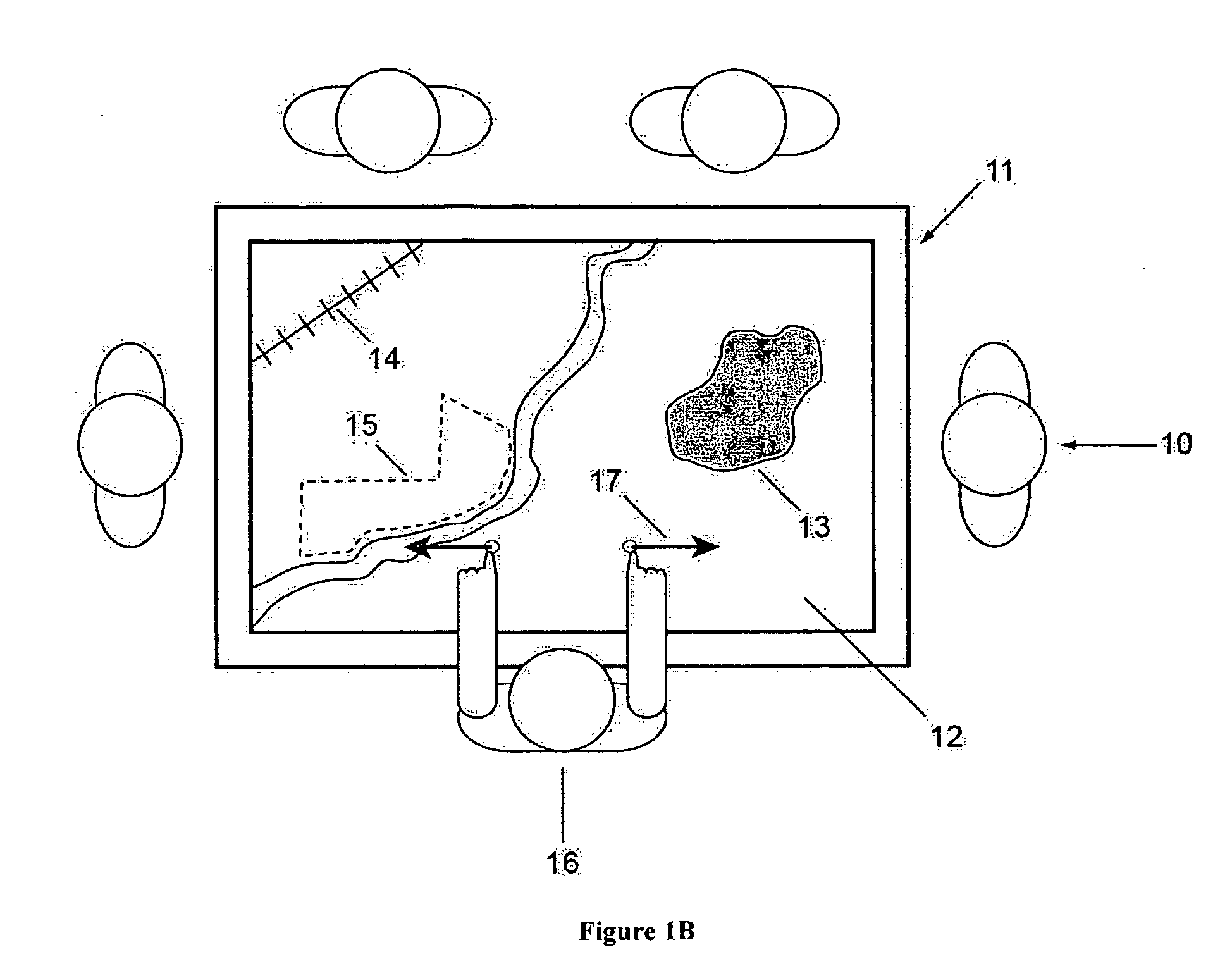

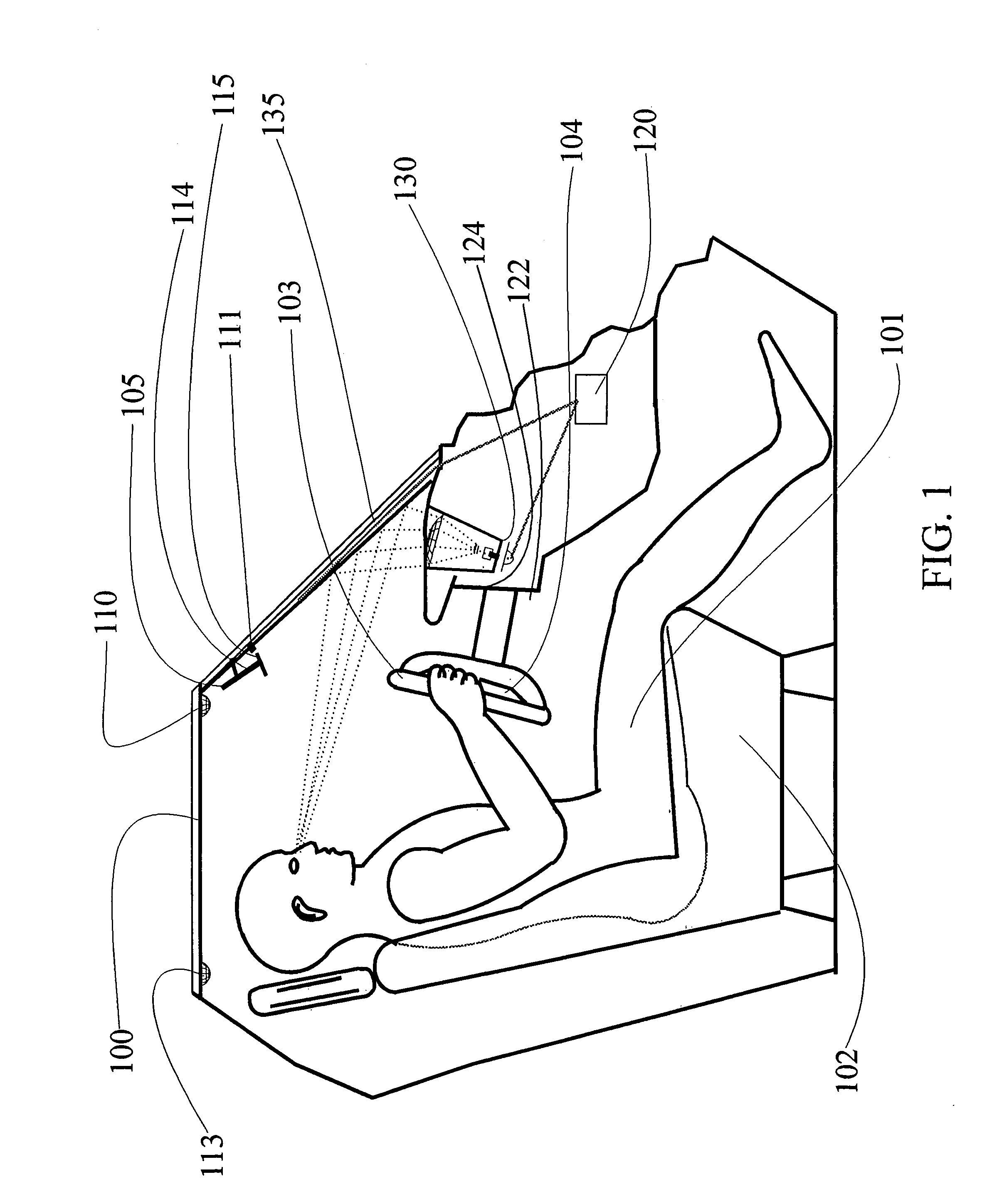

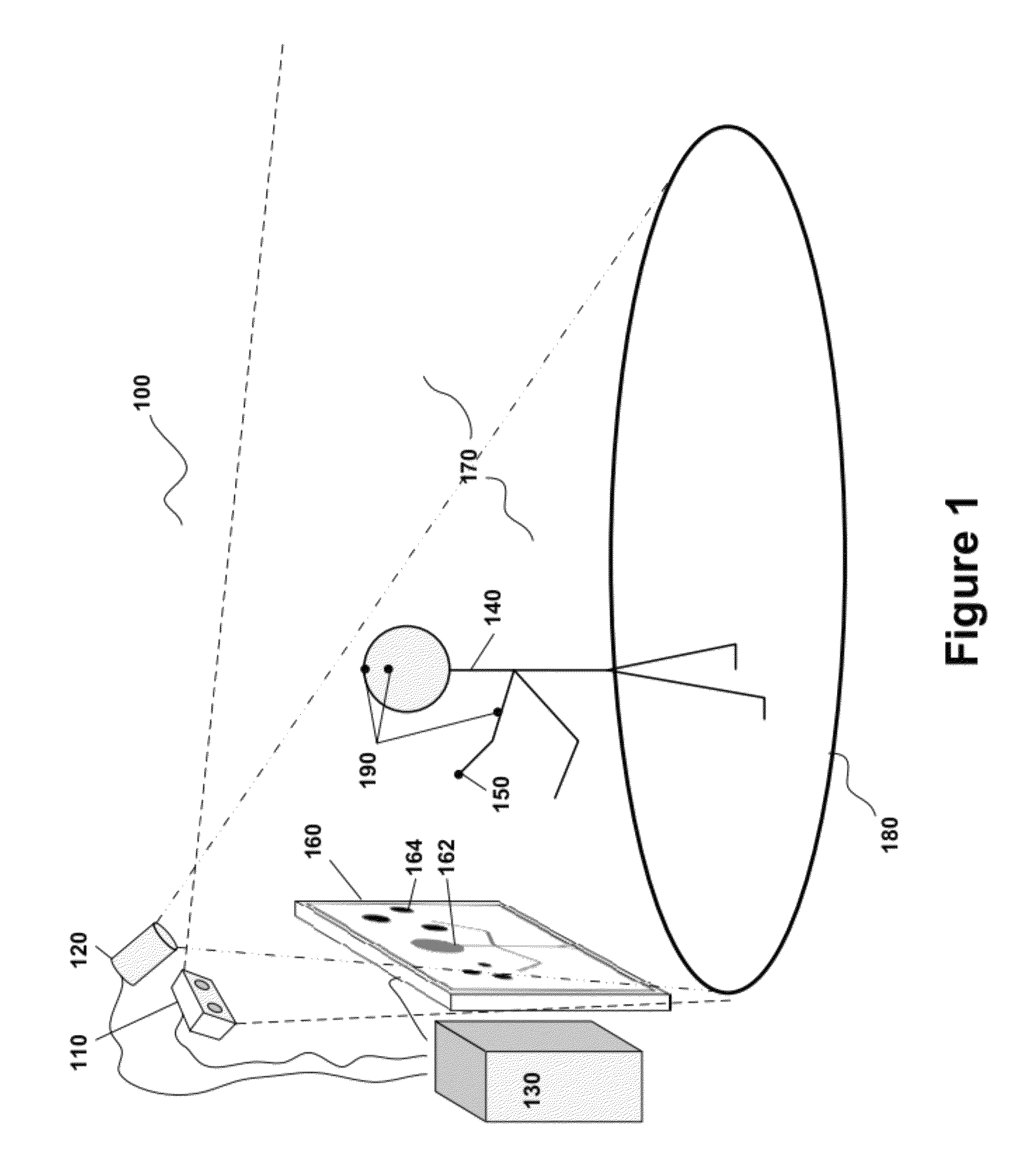

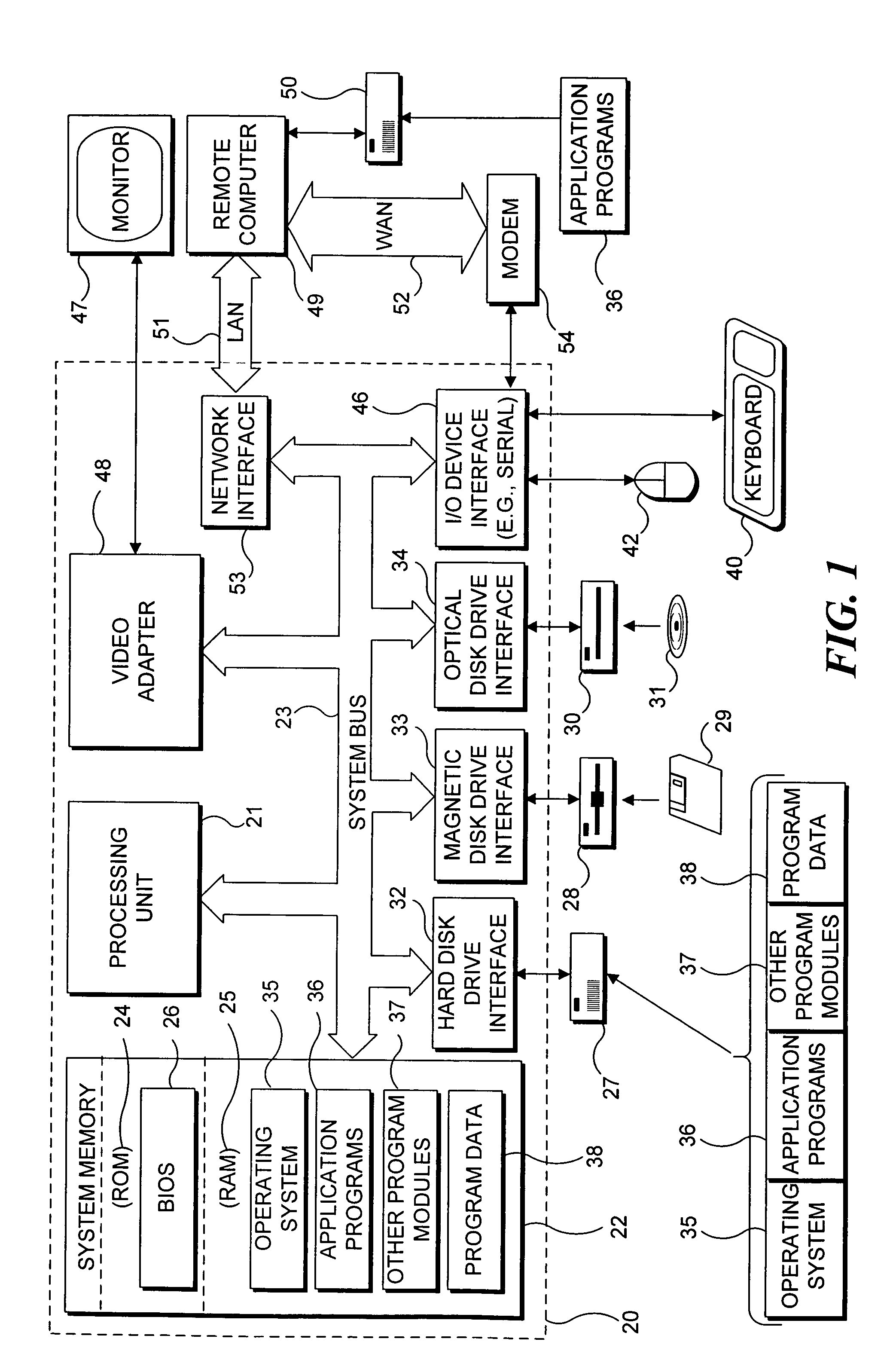

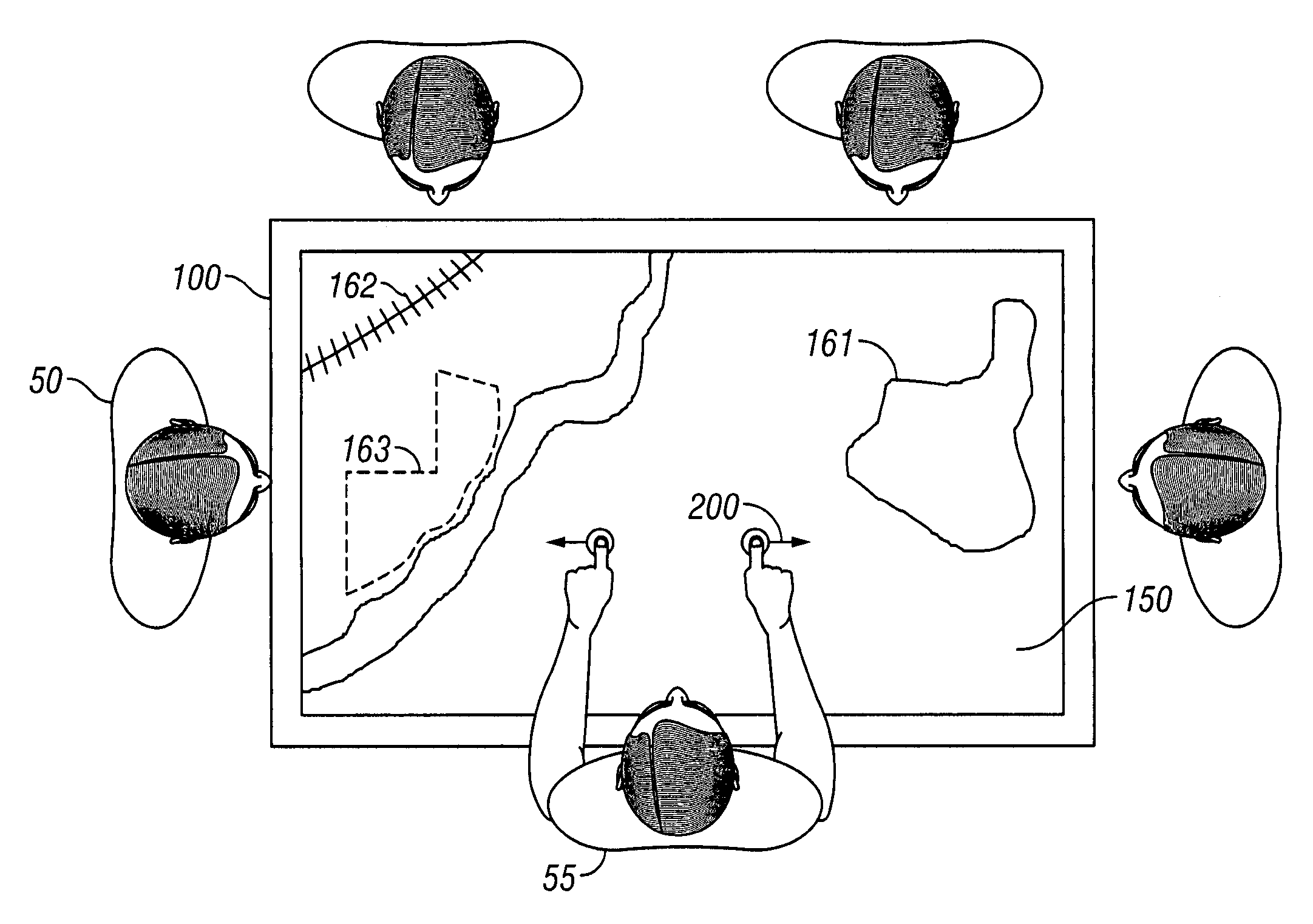

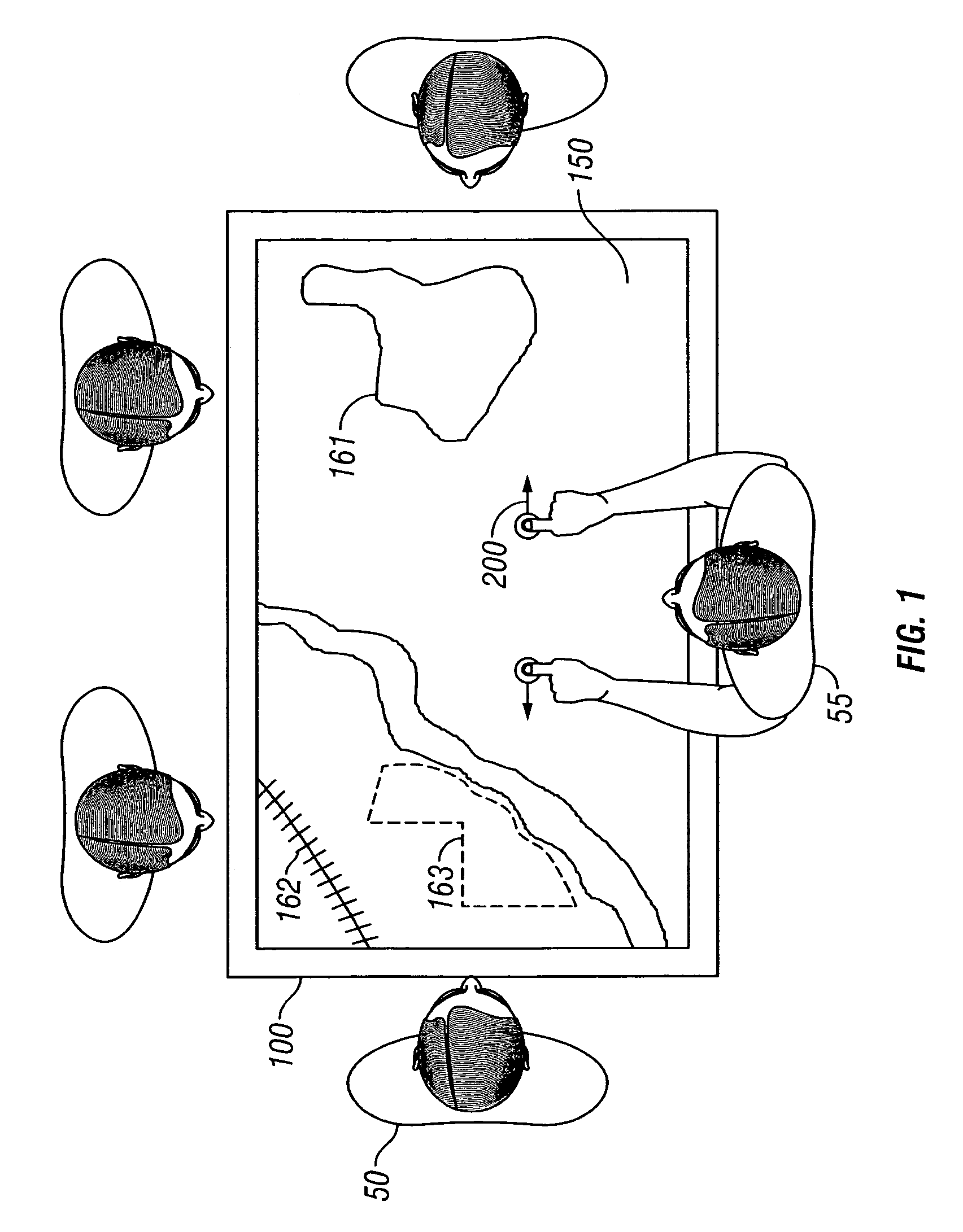

An interactive display table has a display surface for displaying images and upon or adjacent to which various objects, including a user's hand(s) and finger(s), can be detected. A video camera within the interactive display table responds to infrared (IR) light reflected from the objects to detect any connected components. Connected components correspond to portions of the object(s) that are either in contact with, or proximate to, the display surface. Using these connected components, the interactive display table senses and infers natural hand or finger positions, or movement of an object, to detect gestures. Specific gestures are used to execute applications, carry out functions in an application, create a virtual object, or do other interactions, each of which is associated with a different gesture. A gesture can be a static pose, or a more complex configuration, and/or movement made with one or both hands or other objects.

Owner:MICROSOFT TECH LICENSING LLC

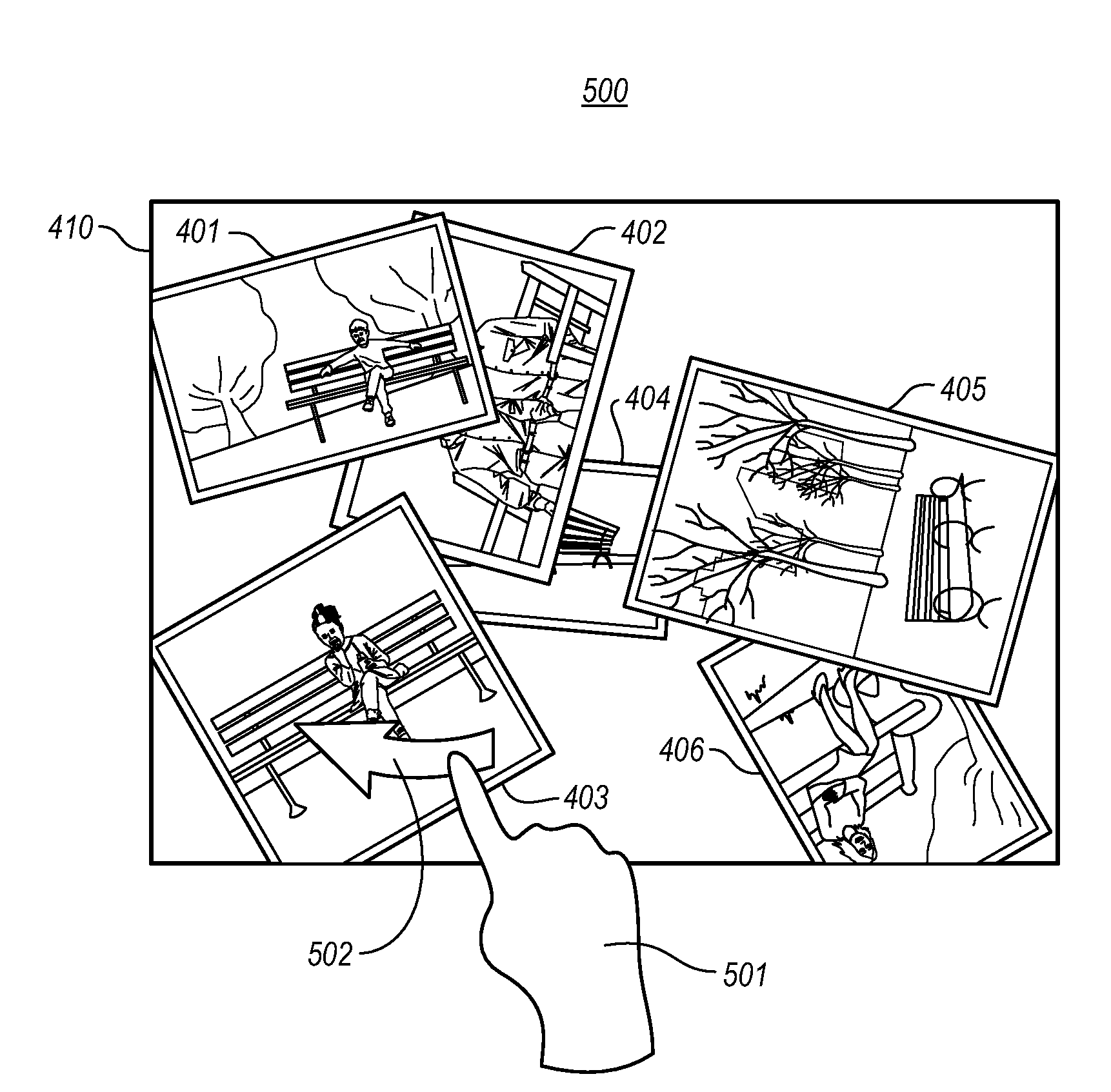

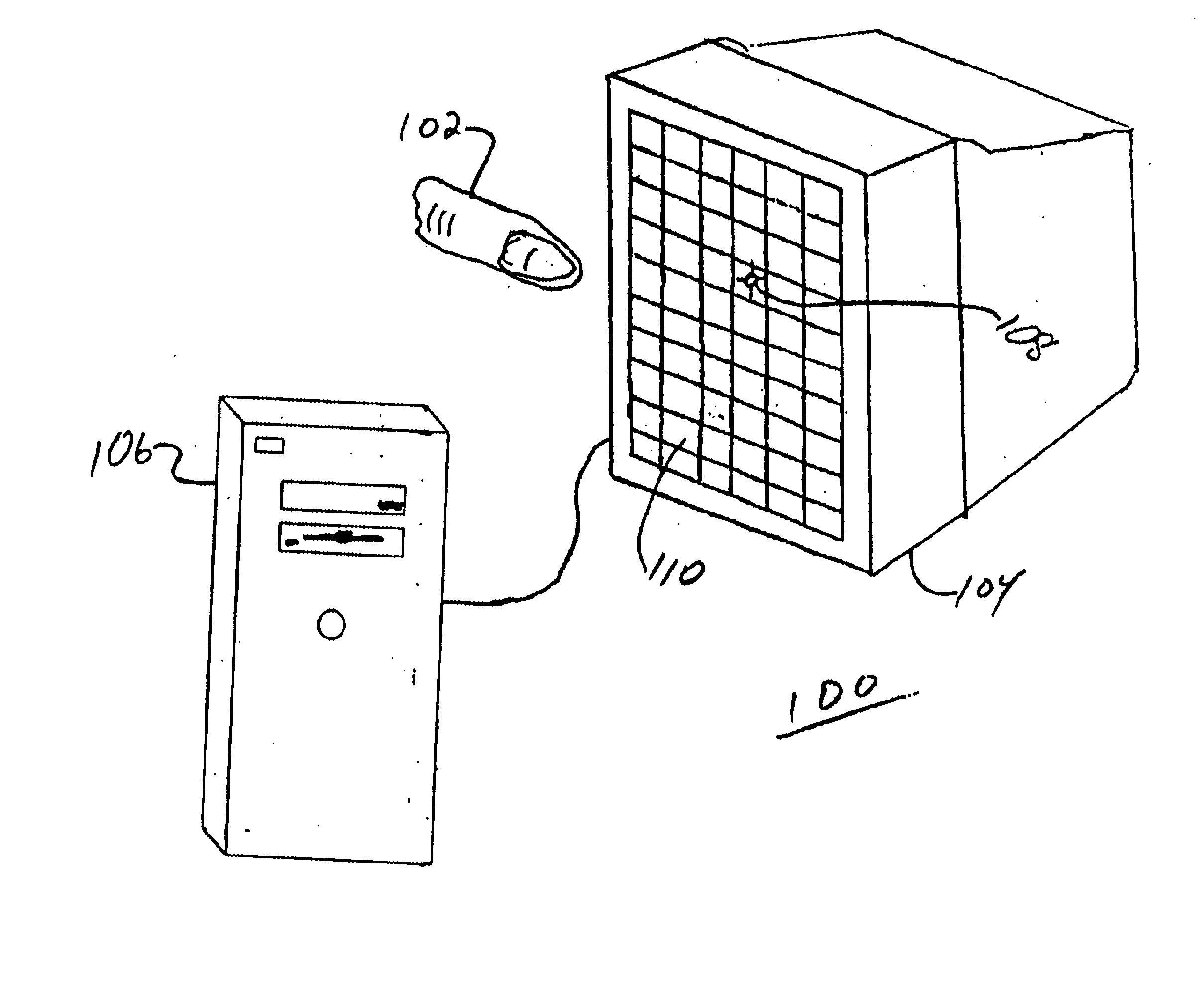

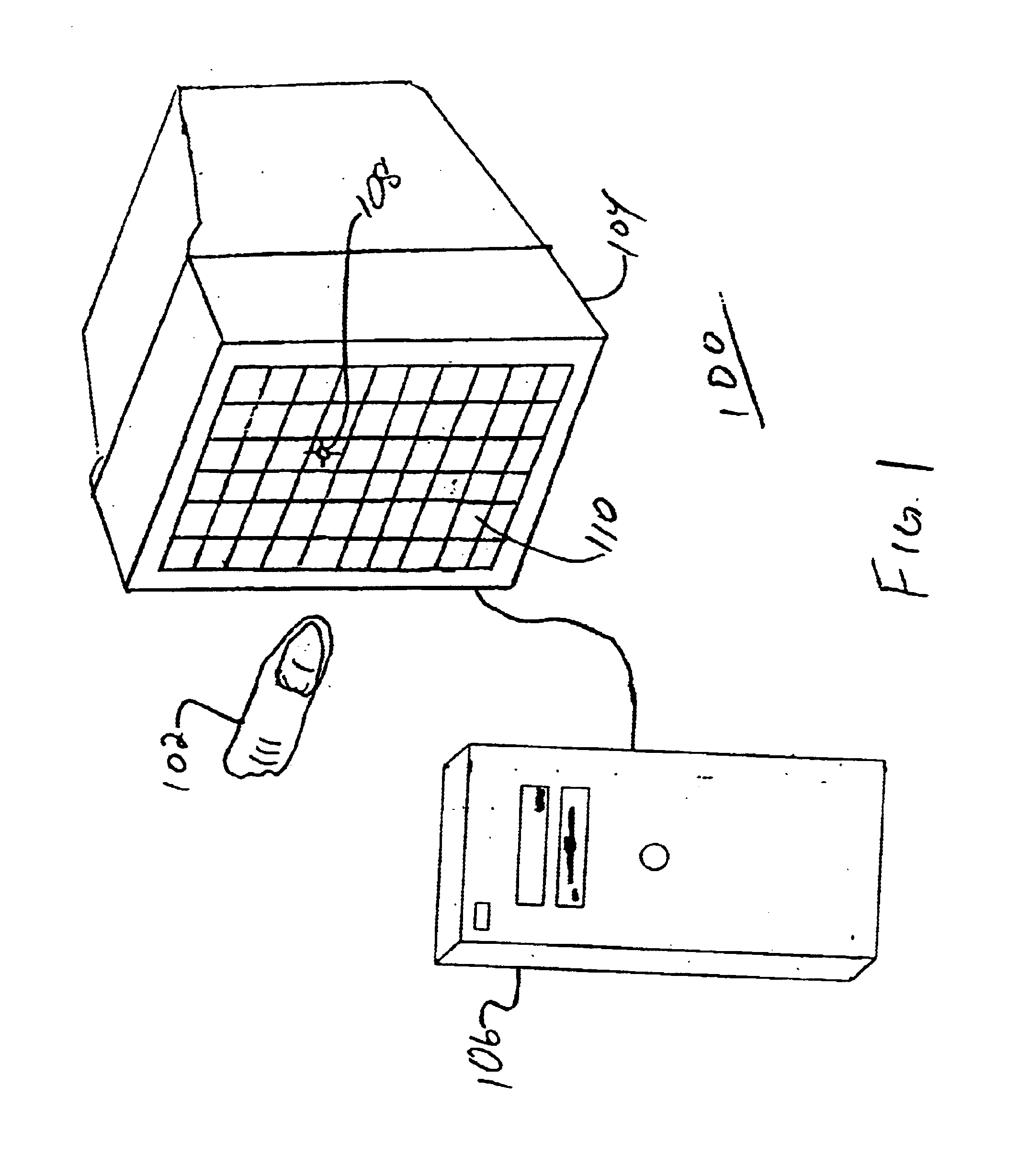

Using physical objects to adjust attributes of an interactive display application

Inactive | US20060001650A1 | Cathode-ray tube indicators | Input/output processes for data processing | Interactive displays | Human–computer interaction

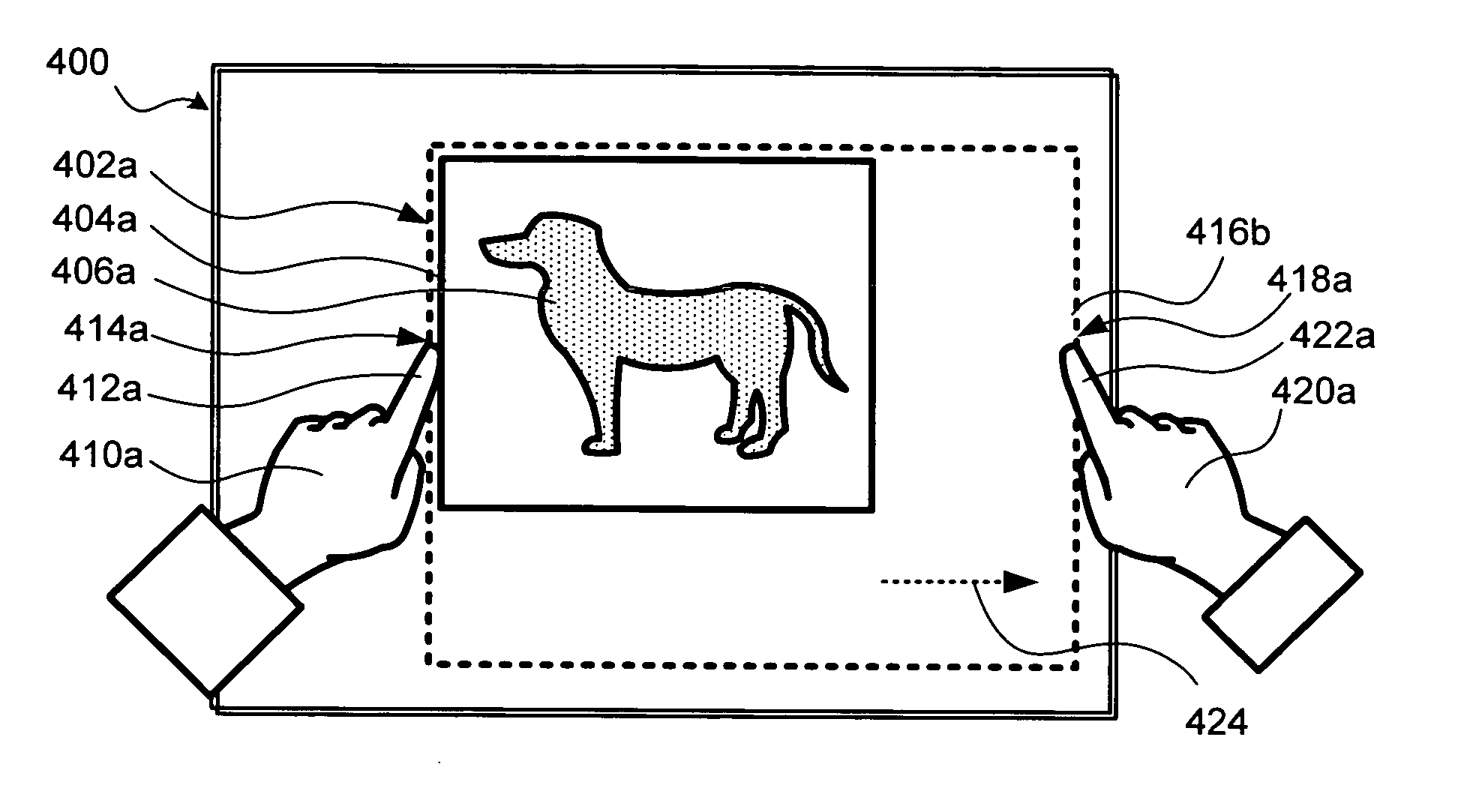

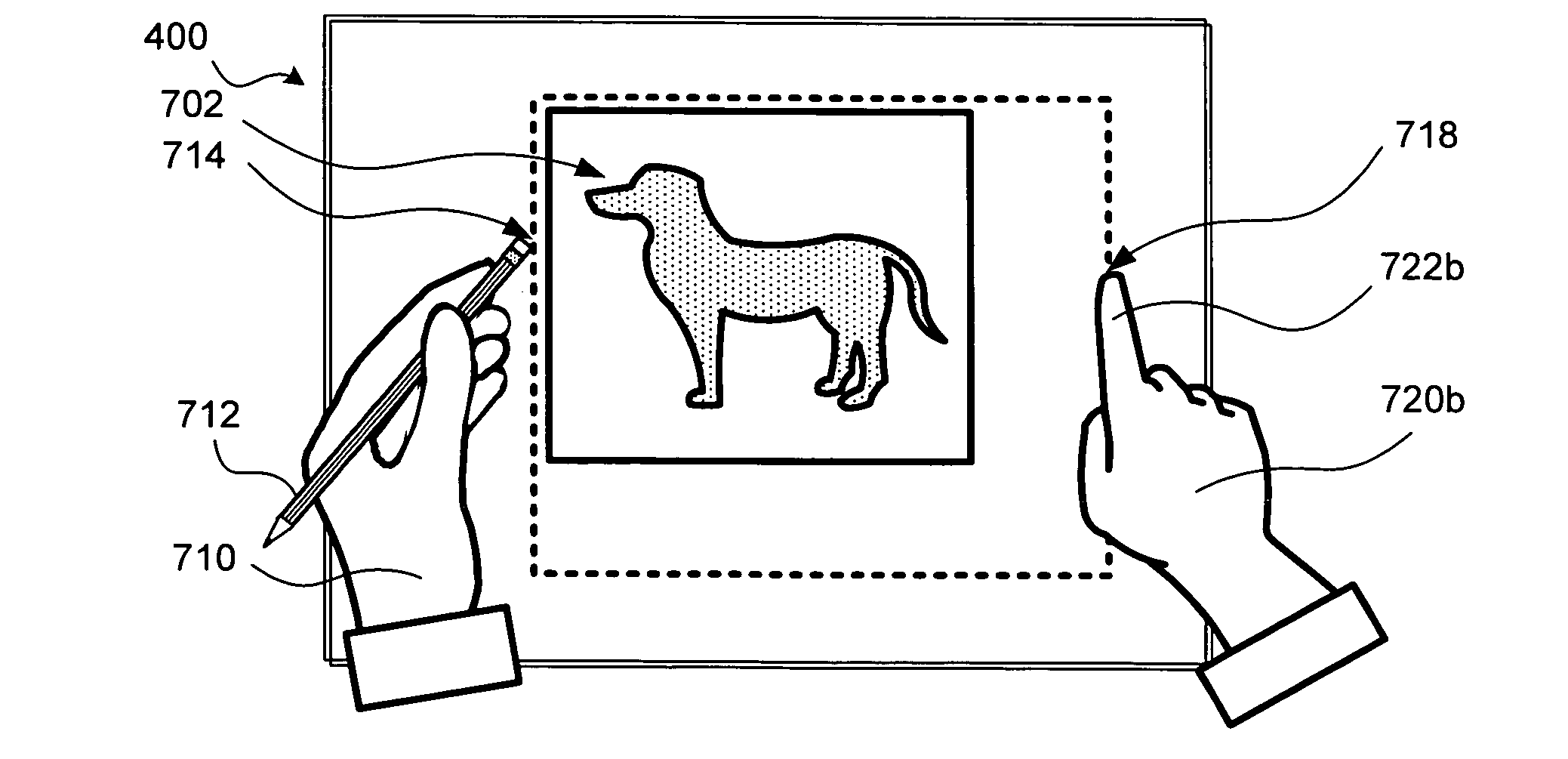

Input is provided to an application using a plurality of physical objects disposed adjacent to an interactive display surface. A primary location is determined where a primary physical object, e.g., a finger or thumb of the user, is positioned adjacent to the interactive display surface. An additional location is determined where an additional physical object is positioned adjacent to the interactive display surface. The attribute might be a size of an image or selected portion of the image that will be retained after cropping. A change in position of at least one of the objects is detected, and the attribute is adjusted based on the change in position of one or both objects. A range of selectable options of the application can also be displayed by touching the interactive display surface with one's fingers or other objects, and one of the options can be selected with another object.

Owner:MICROSOFT TECH LICENSING LLC
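
The abstract above says an attribute is adjusted from the change in position of two tracked objects but does not give a formula. As a minimal sketch, assuming the attribute scales with the ratio of the new to the old separation between the objects (the function names and the `min_value` floor are illustrative, not taken from the patent):

```python
import math

def distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])

def adjust_attribute(value, primary_before, additional_before,
                     primary_after, additional_after, min_value=1.0):
    """Scale `value` by the ratio of the new to the old separation
    between the primary and additional objects (assumed rule)."""
    old = distance(primary_before, additional_before)
    new = distance(primary_after, additional_after)
    if old == 0:
        return value  # degenerate start: leave the attribute unchanged
    return max(min_value, value * new / old)

# Two fingers move from 100 px apart to 150 px apart,
# so a 200 px crop width grows by the same factor.
crop_width = adjust_attribute(200.0, (0, 0), (100, 0), (0, 0), (150, 0))
print(crop_width)  # 300.0
```

Moving only one object changes the separation too, which matches the "one or both objects" wording; other mappings (for example, using the displacement directly) would be equally plausible.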

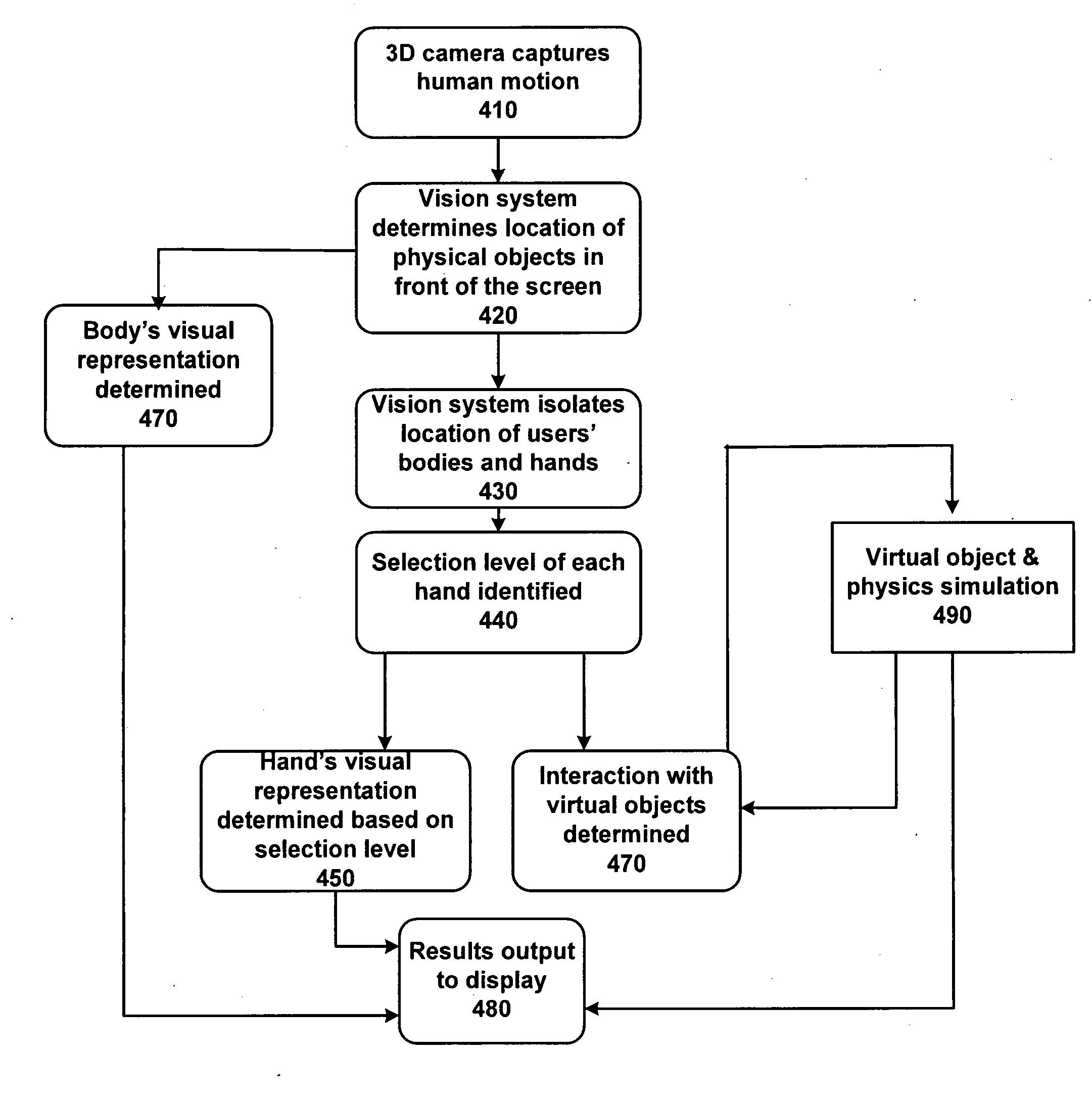

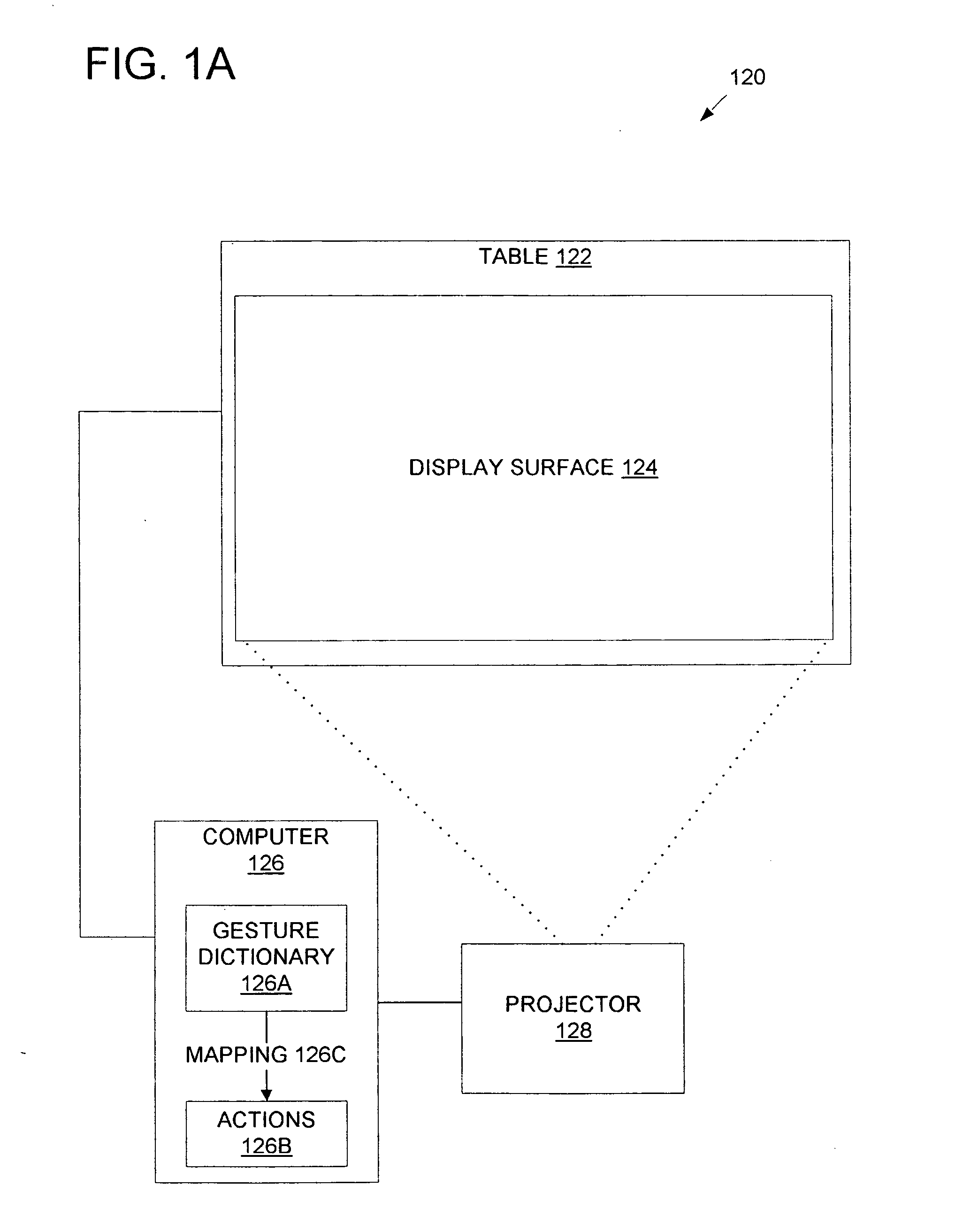

Processing of Gesture-Based User Interactions

Active | US20090077504A1 | Character and pattern recognition | Using optical means | Interaction systems | Display device

Systems and methods for processing gesture-based user interactions with an interactive display are provided.

Owner:META PLATFORMS INC

Recognizing gestures and using gestures for interacting with software applications

Inactive | US7519223B2 | Character and pattern recognition | Color television details | Display device | Application software

An interactive display table has a display surface for displaying images and upon or adjacent to which various objects, including a user's hand(s) and finger(s), can be detected. A video camera within the interactive display table responds to infrared (IR) light reflected from the objects to detect any connected components. Connected components correspond to portions of the object(s) that are either in contact with, or proximate to, the display surface. Using these connected components, the interactive display table senses and infers natural hand or finger positions, or movement of an object, to detect gestures. Specific gestures are used to execute applications, carry out functions in an application, create a virtual object, or do other interactions, each of which is associated with a different gesture. A gesture can be a static pose, or a more complex configuration, and/or movement made with one or both hands or other objects.

Owner:MICROSOFT TECH LICENSING LLC

Method and apparatus continuing action of user gestures performed upon a touch sensitive interactive display in simulation of inertia

A method and apparatus are provided for operating a multi-user interactive display system including a display having a touch-sensitive display surface. A position is detected for each contact site at which the display surface experiences external physical contact. Each contact site's position history is used to compute velocity data for the respective contact site. At least one of the following is used to identify occurrence of one or more user gestures from a predetermined set of user gestures: the position history, the velocity data. Each user gesture corresponds to at least one predetermined action for updating imagery presented by the display as a whole. Action is commenced corresponding to the identified gesture. Responsive to a user gesture terminating with a nonzero velocity across the display surface, action corresponding to the gesture is continued so as to simulate inertia imparted by said gesture.

Owner:QUALCOMM INC
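
The two computational steps in this abstract, velocity from a position history and continued motion after release, can be sketched as follows. This is an illustration under stated assumptions, not the patented method: the history format `(t, x, y)`, the geometric `friction` decay, and the `min_speed` cutoff are all hypothetical choices.

```python
def velocity(history):
    """Velocity (vx, vy) from the last two samples of a position
    history given as [(t, x, y), ...]."""
    if len(history) < 2:
        return (0.0, 0.0)
    (t0, x0, y0), (t1, x1, y1) = history[-2], history[-1]
    dt = t1 - t0
    if dt <= 0:
        return (0.0, 0.0)
    return ((x1 - x0) / dt, (y1 - y0) / dt)

def coast(position, vel, friction=0.5, dt=0.1, min_speed=1.0):
    """Yield successive positions after contact ends, decaying the
    velocity each step until the speed drops below min_speed."""
    x, y = position
    vx, vy = vel
    while (vx * vx + vy * vy) ** 0.5 >= min_speed:
        x += vx * dt
        y += vy * dt
        vx *= friction
        vy *= friction
        yield (x, y)

# A drag that ends while moving right at 100 px/s keeps gliding.
history = [(0.0, 0.0, 0.0), (0.5, 50.0, 0.0)]
release_velocity = velocity(history)
for point in coast((50.0, 0.0), release_velocity):
    print(point)
```

With these parameters the glide can never travel more than `vx * dt / (1 - friction)` = 20 px past the release point, which is the usual appeal of a geometric decay: the stopping distance is bounded and easy to tune.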

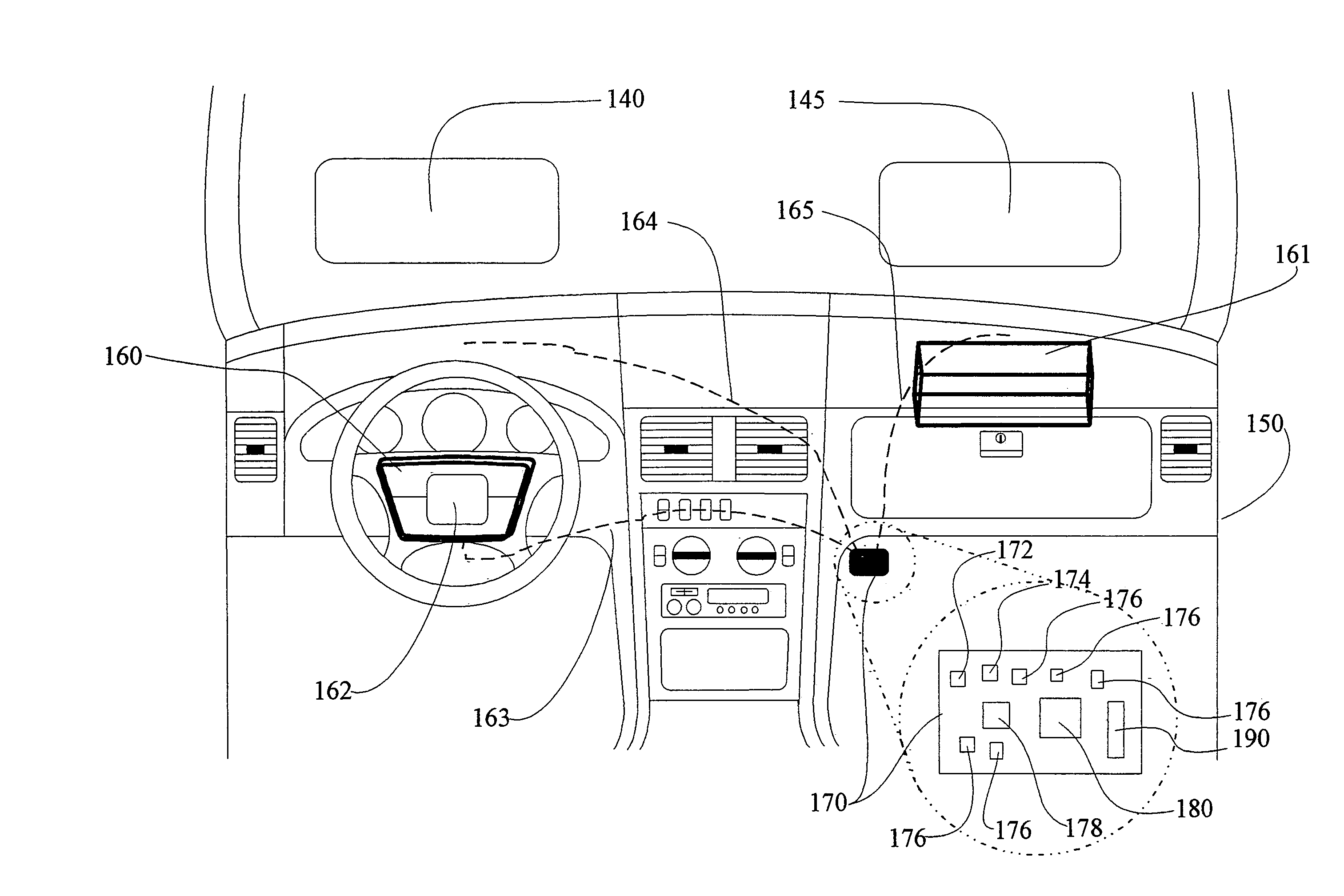

Interactive vehicle display system

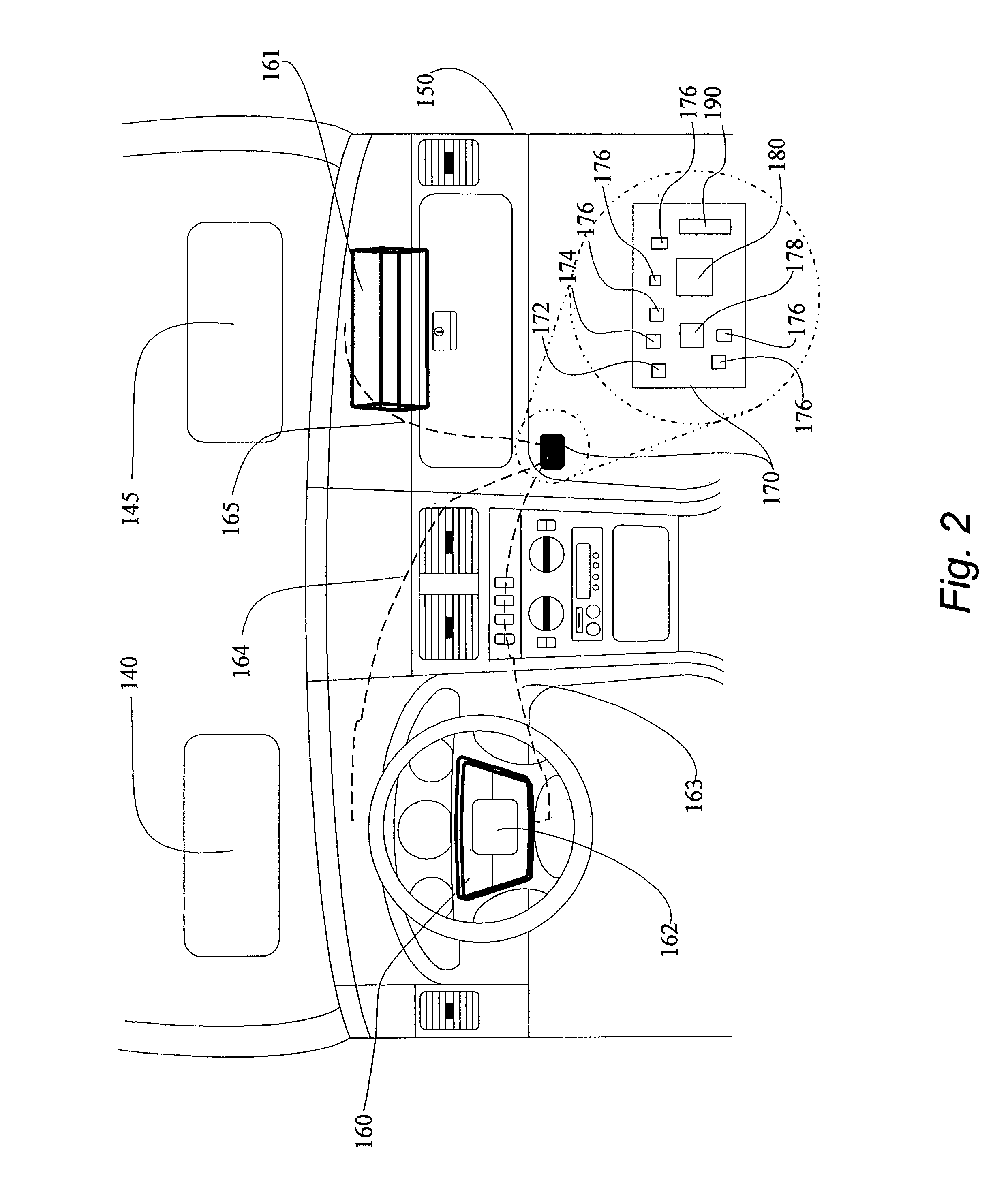

Inactive | US7126583B1 | Improve viewing effect | Easy to view | Dashboard fitting arrangements | Instrument arrangements/adaptations | Graphics | Head-up display

An interactive display system for a vehicle includes a heads-up display system for projecting text and/or graphics into a field of view of a forward-facing occupant of the vehicle and an occupant-controllable device enabling the occupant to interact with the heads-up display system to change the text and/or graphics projected by the heads-up display system or direct another vehicular system to perform an operation. The device may be a touch pad arranged on a steering wheel of the vehicle (possibly over a cover of an airbag module in the steering wheel) or at another location accessible to the occupant of the vehicle. A processor and associated electrical architecture are provided for correlating a location on the touch pad which has been touched by the occupant to the projected text and/or graphics. The device may also be a microphone.

Owner:AMERICAN VEHICULAR SCI

Using physical objects to adjust attributes of an interactive display application

Inactive | US7743348B2 | Input/output for user-computer interaction | Graph reading | Interactive displays | Human–computer interaction

Input is provided to an application using a plurality of physical objects disposed adjacent to an interactive display surface. A primary location is determined where a primary physical object, e.g., a finger or thumb of the user, is positioned adjacent to the interactive display surface. An additional location is determined where an additional physical object is positioned adjacent to the interactive display surface. The attribute might be a size of an image or selected portion of the image that will be retained after cropping. A change in position of at least one of the objects is detected, and the attribute is adjusted based on the change in position of one or both objects. A range of selectable options of the application can also be displayed by touching the interactive display surface with one's fingers or other objects, and one of the options can be selected with another object.

Owner:MICROSOFT TECH LICENSING LLC

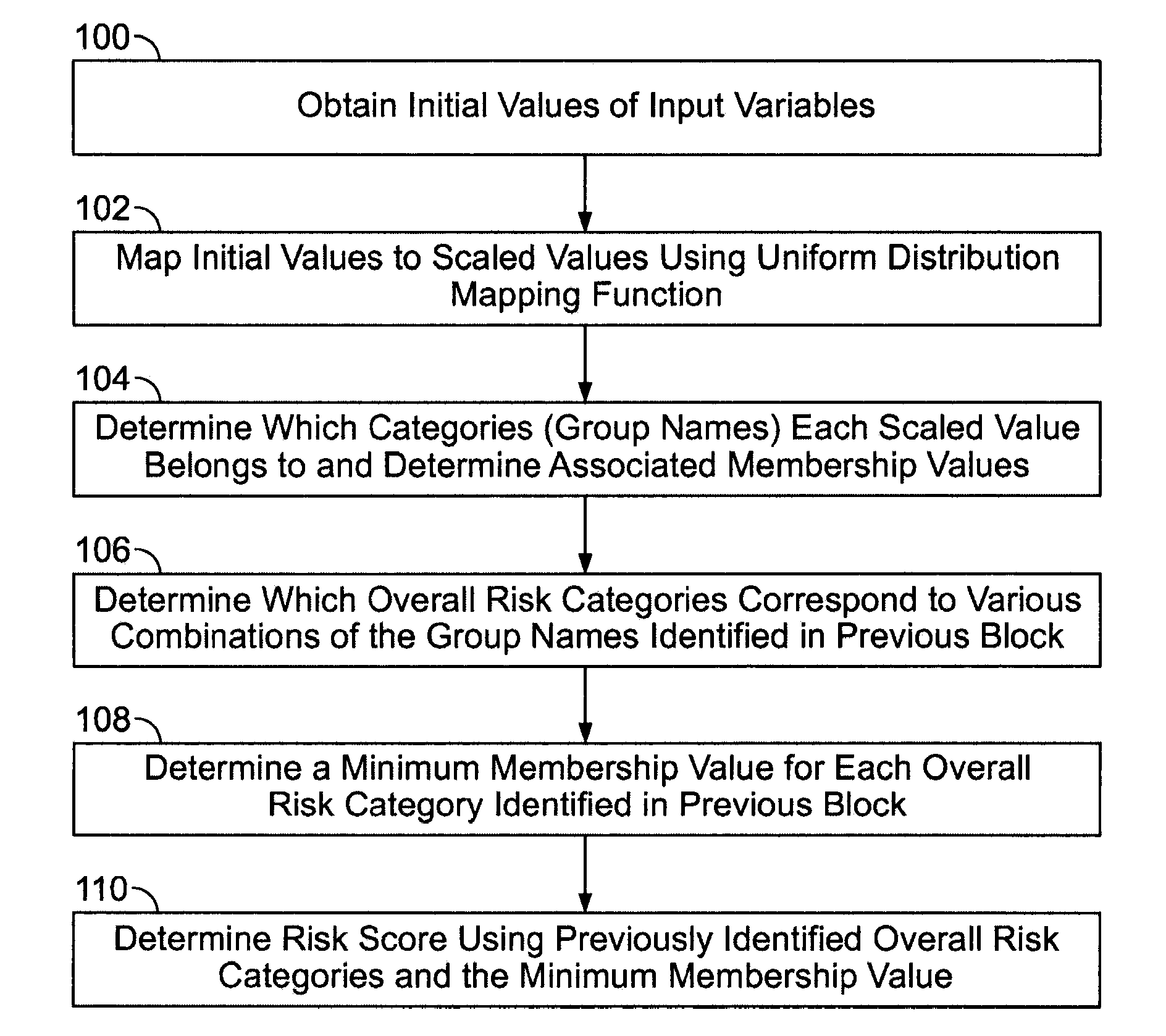

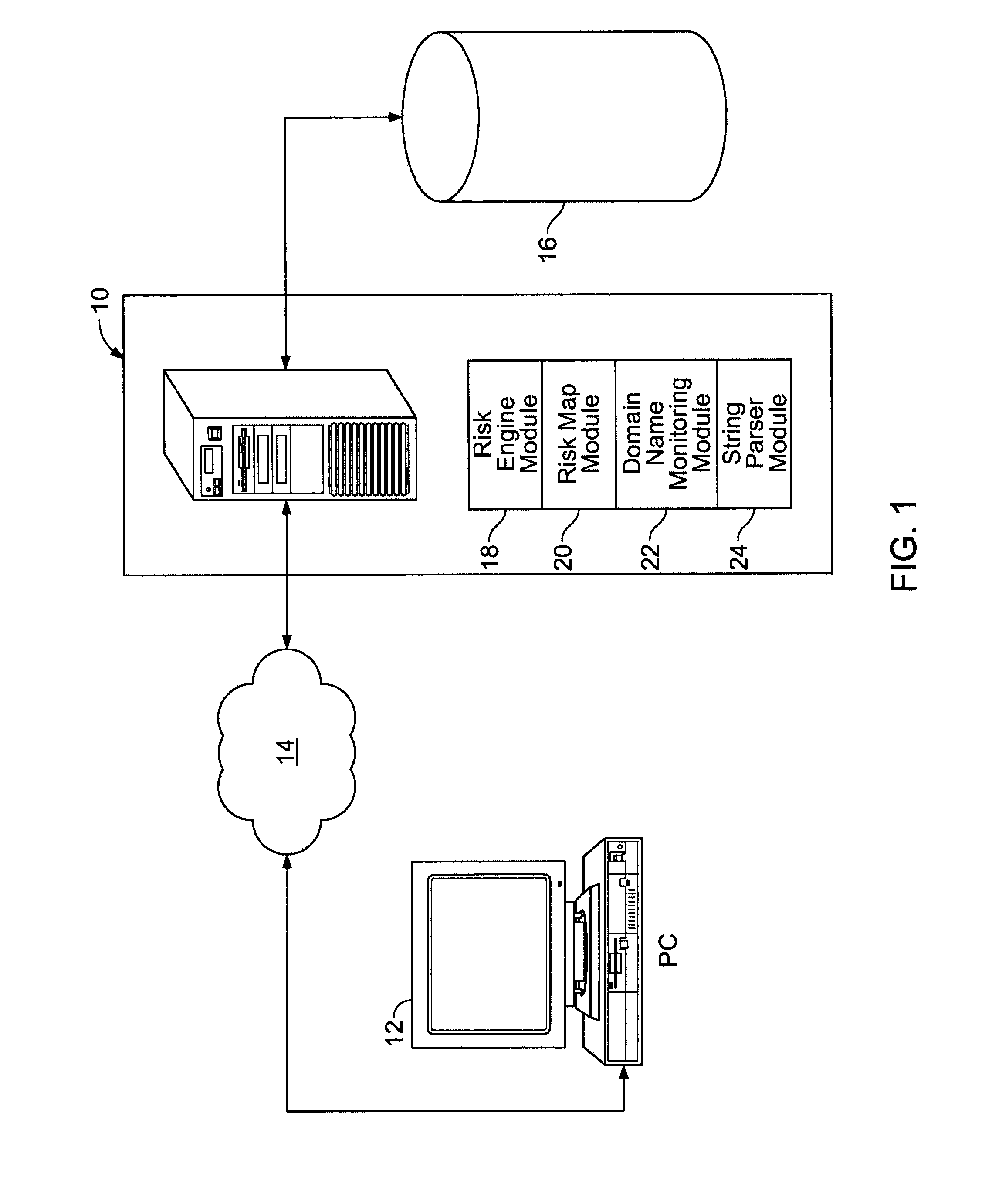

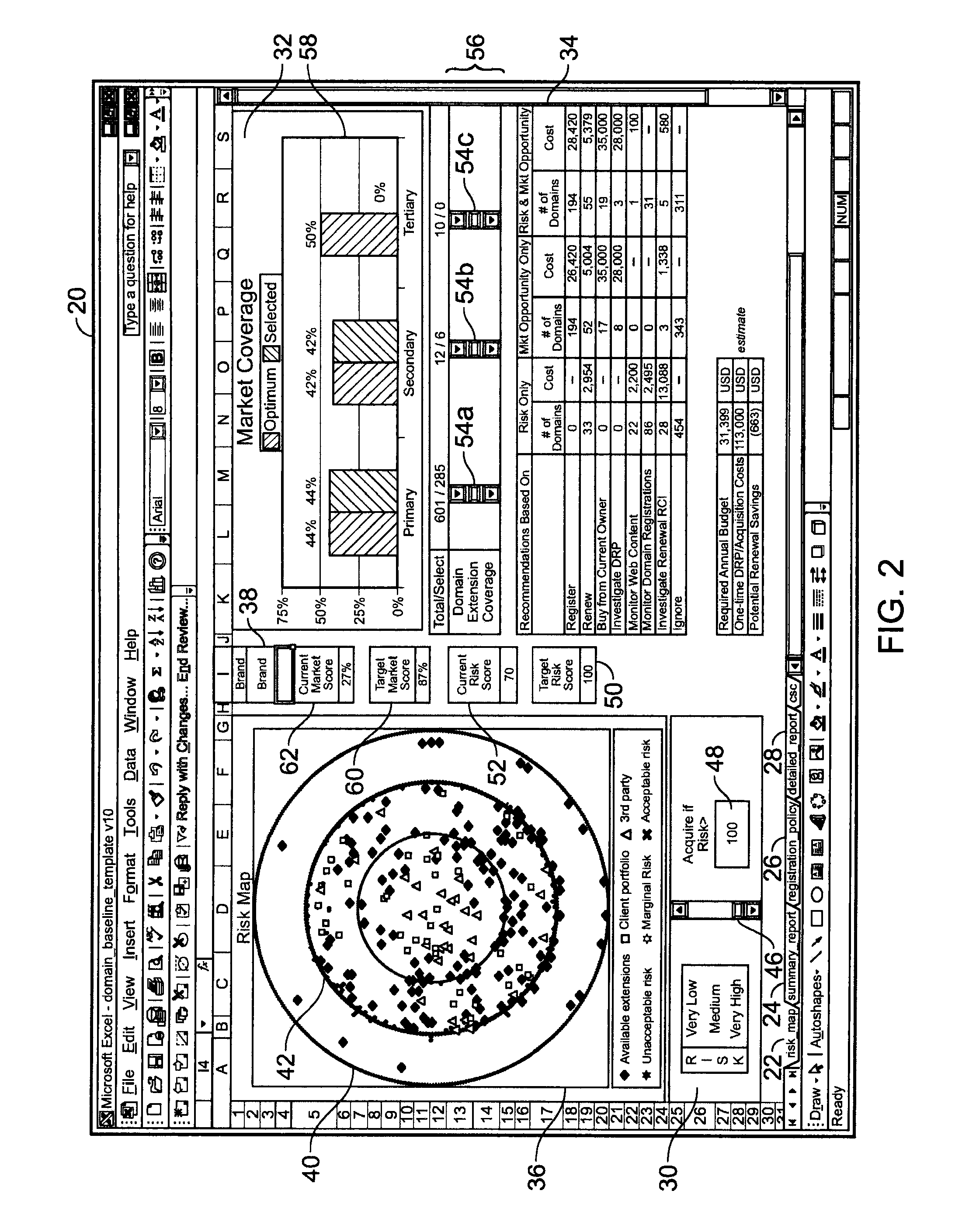

Assessment of Risk to Domain Names, Brand Names and the Like

Inactive | US20080270203A1 | Eliminate unacceptable risk | Mitigate marginal risk | Finance | Forecasting | Third party | Domain name

Assessment of risk to a specified name that represents at least one of a brand name, a domain name, a trademark, a service mark or a business entity name includes acquiring data relating to the specified name, and quantifying risks to the specified name in the event a third party has obtained, or were to obtain, a registration to the same name or a variant of the name. Risk scores are associated with the potential and actual registrations. An interactive display showing the risk scores is provided. Monitoring domain names and parsing domain names also are disclosed.

Owner:CORPORATION SERVICE COMPANY

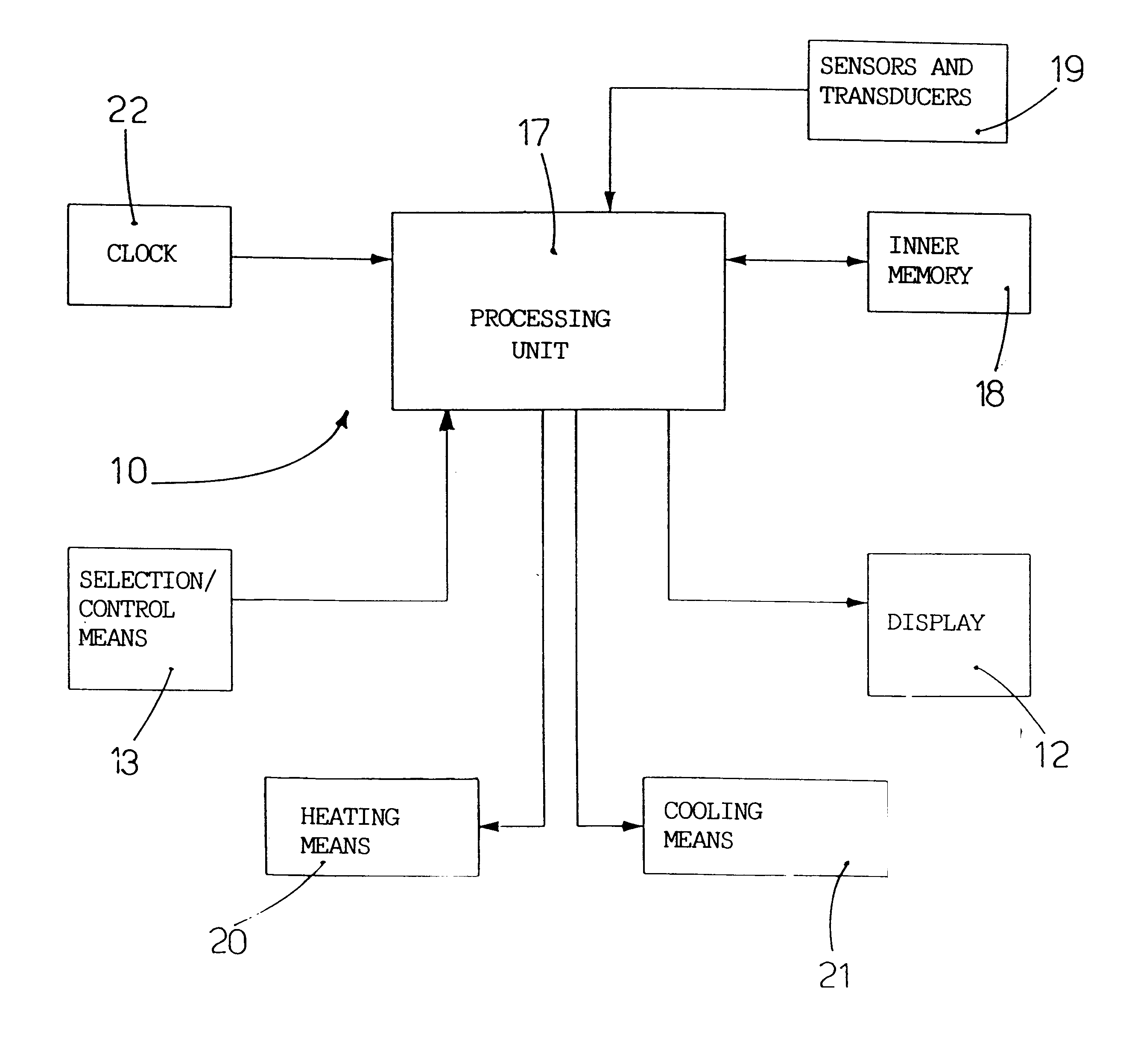

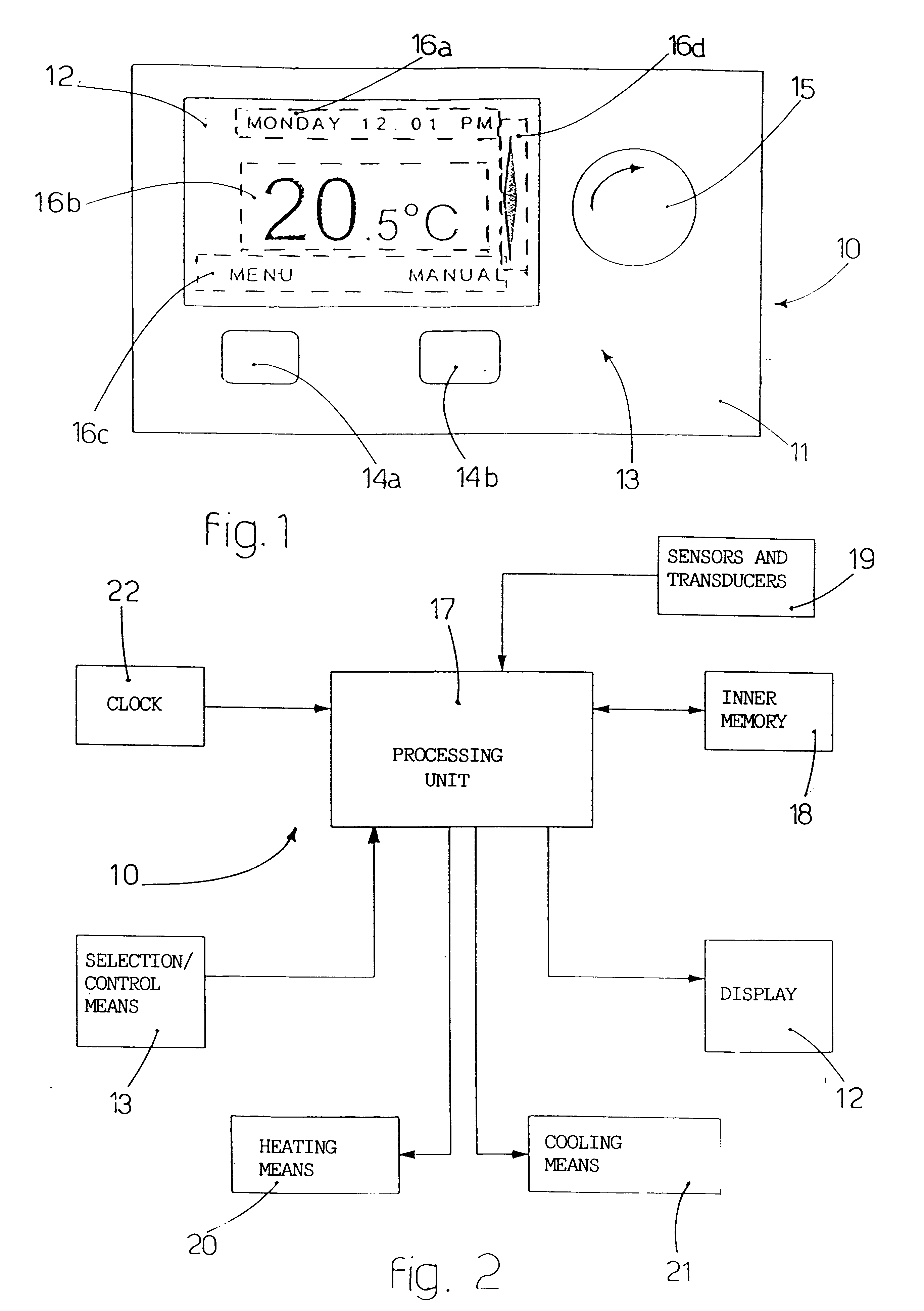

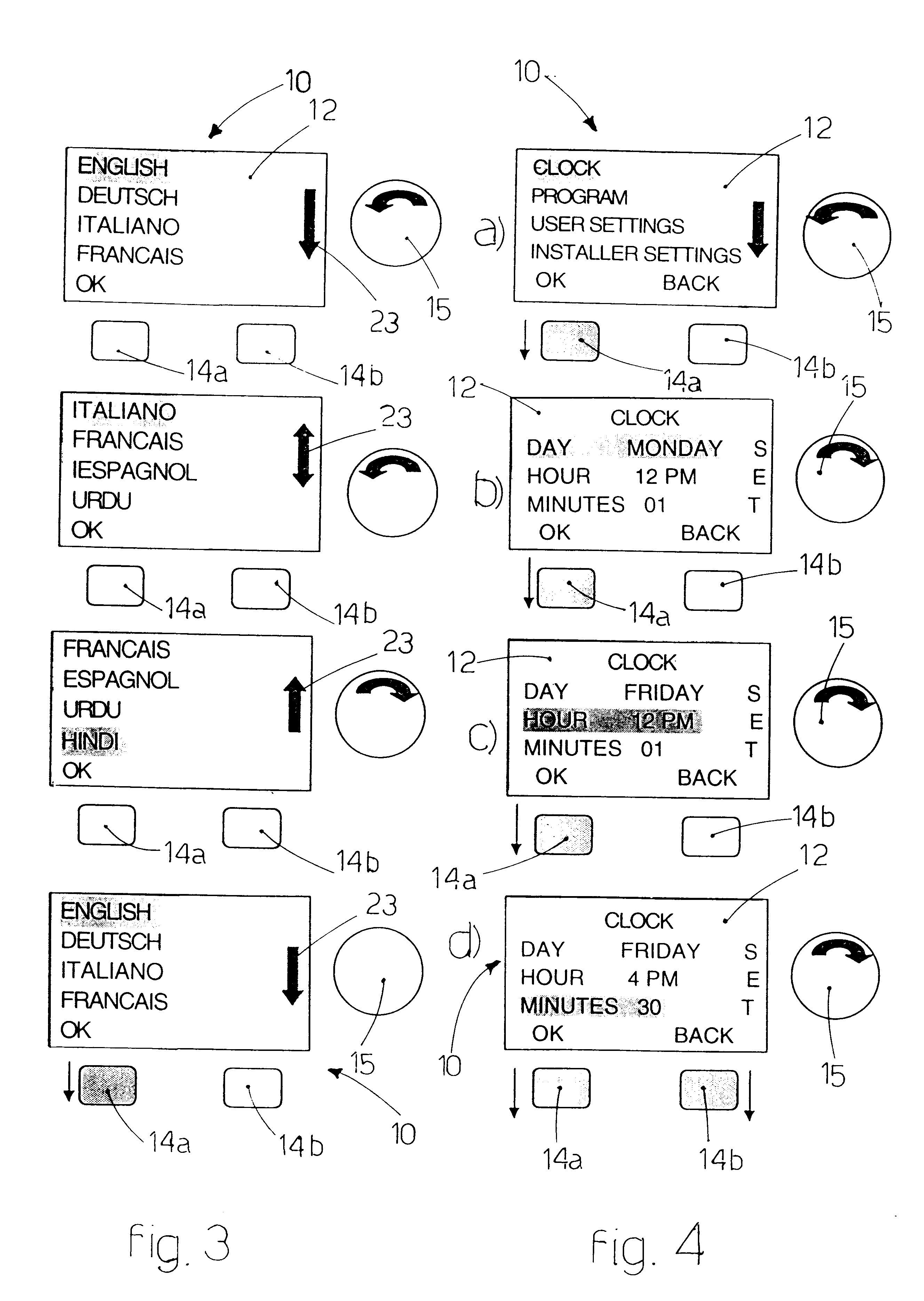

Electronic device for regulating and controlling ambient temperatures, and relative setting method

Inactive | US6502758B2 | Easy to use | Quick understanding | Domestic cooling apparatus | Temperature control using electric means | Dot matrix | Display device

Electronic device operable by a user for regulating and controlling ambient parameters, such as ambient temperature, comprising a processing unit, a display device for displaying selectable control functions and setting parameters related to a set of desired ambient conditions, and a selection/control unit for selecting by the user at least one control function or setting parameter from among a set of displayed control functions or setting parameters. The device comprises an interactive display of a dot matrix type able to display to a user variable indications comprising at least the specific control function associated with each said selection/control unit according to the specific selection made by the user.

Owner:INVENSYS CONTROLS ITAL

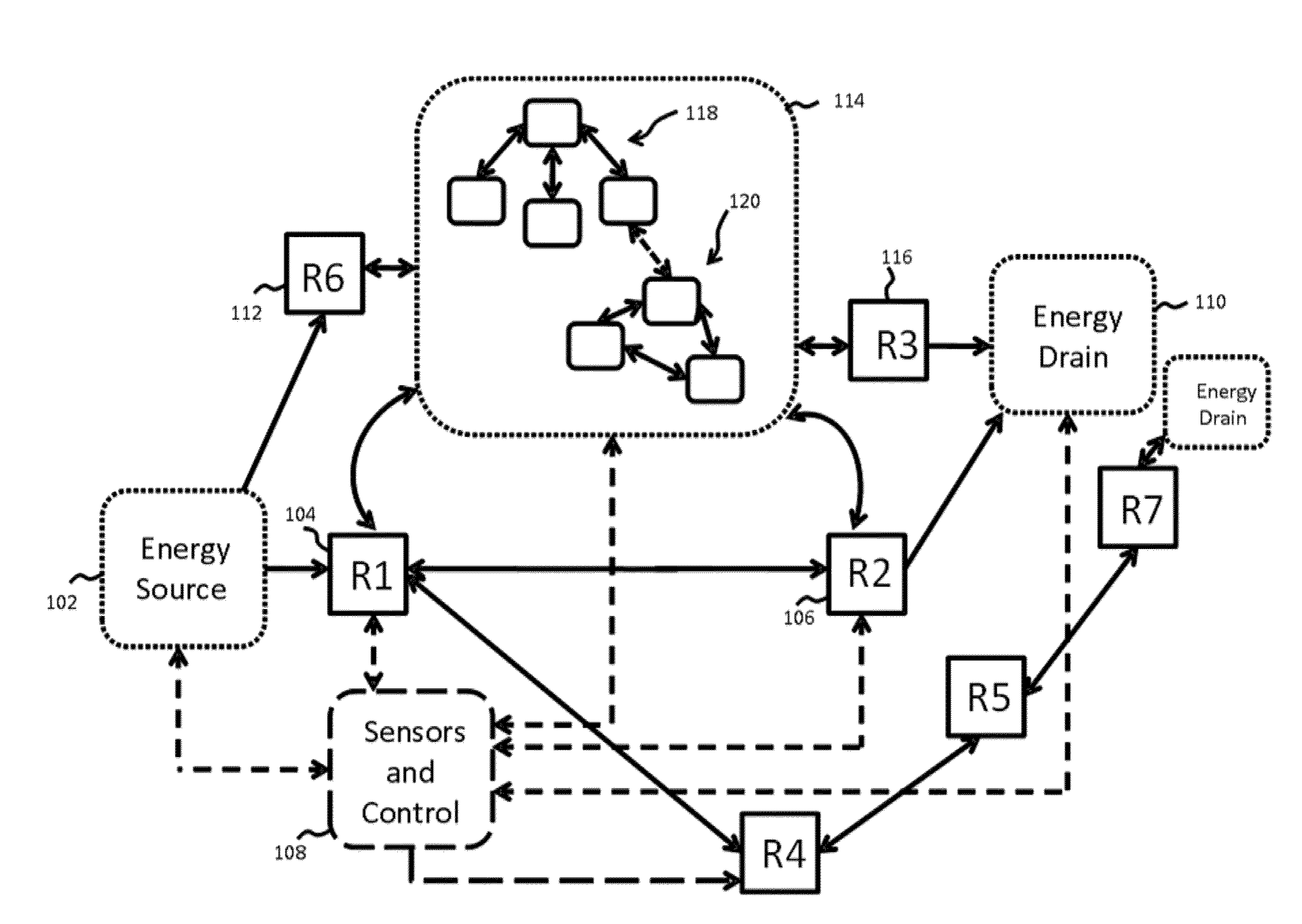

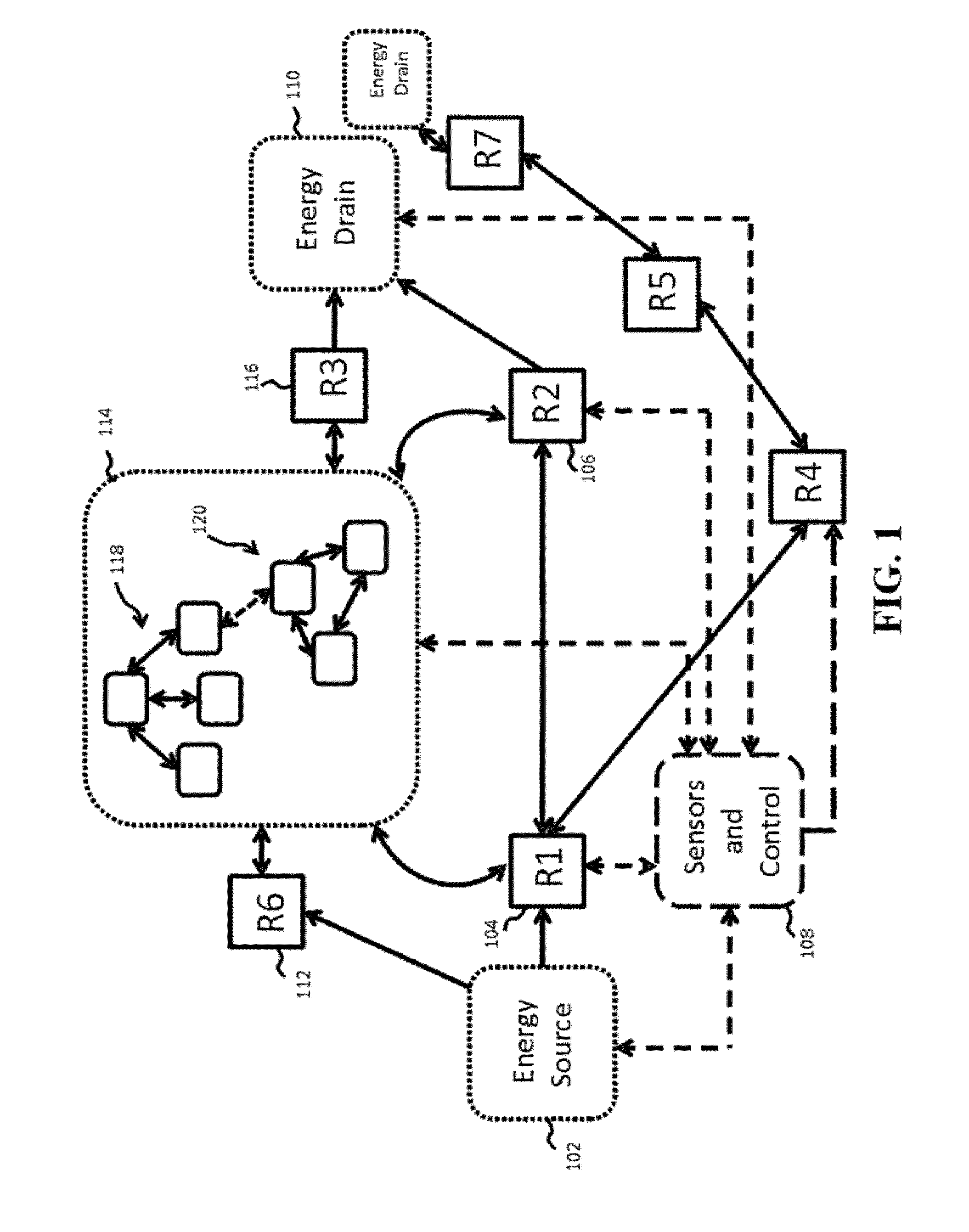

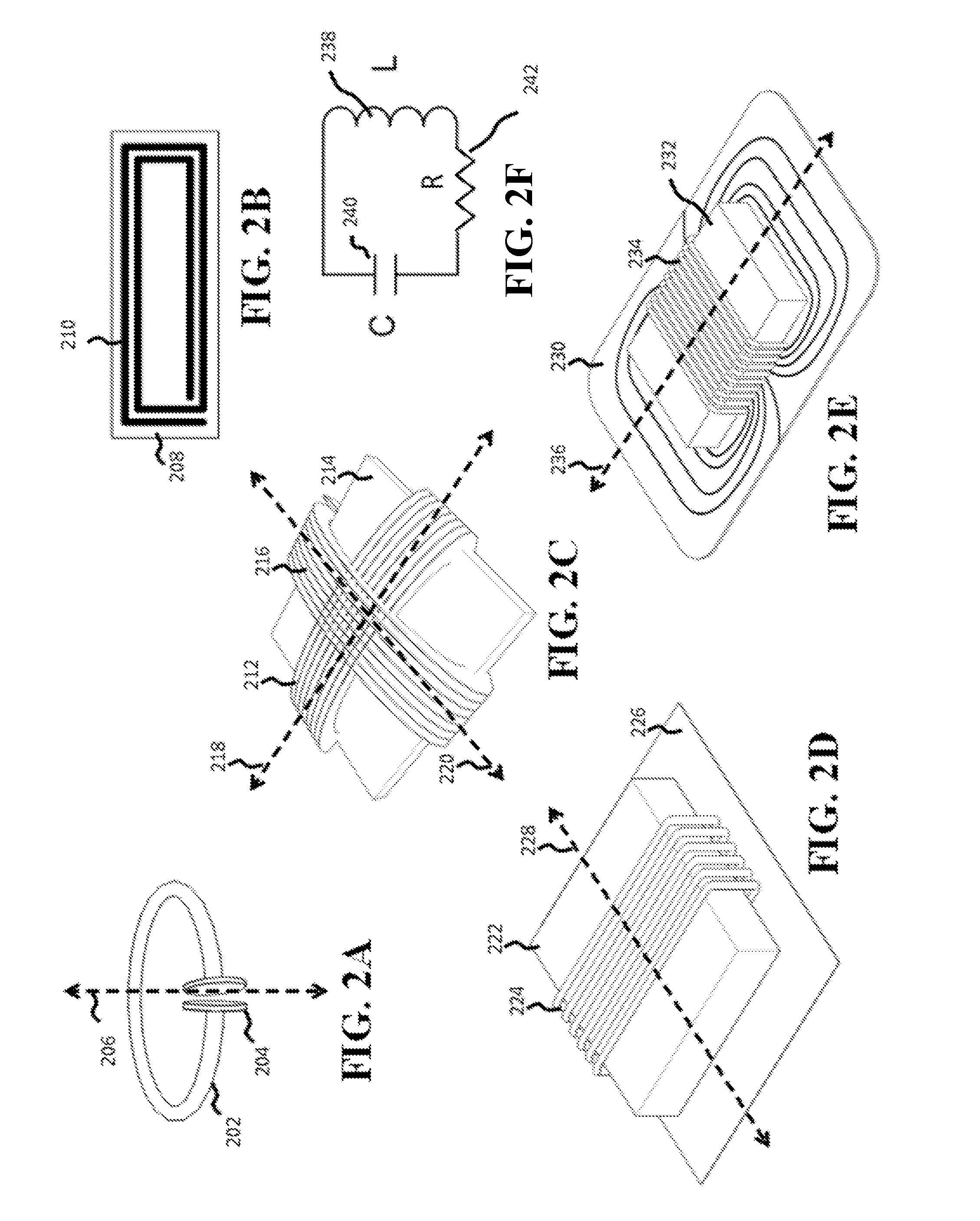

Wireless energy transfer

Inactive | US20140327320A1 | Increase heating capacity | Increase temperature | Near-field transmission | Batteries circuit arrangements | Thermal energy | Energy transfer

A wireless energy transfer system includes wirelessly powered footwear. Device resonators in footwear may capture energy from source resonators. Captured energy may be used to generate thermal energy in the footwear. Wireless energy may be generated by wireless warming installations. Installations may be located in public locations and may activate when a user is near the installation. In some cases, the warming installations may include interactive displays and may require user input to activate energy transfer.

Owner:WITRICITY CORP

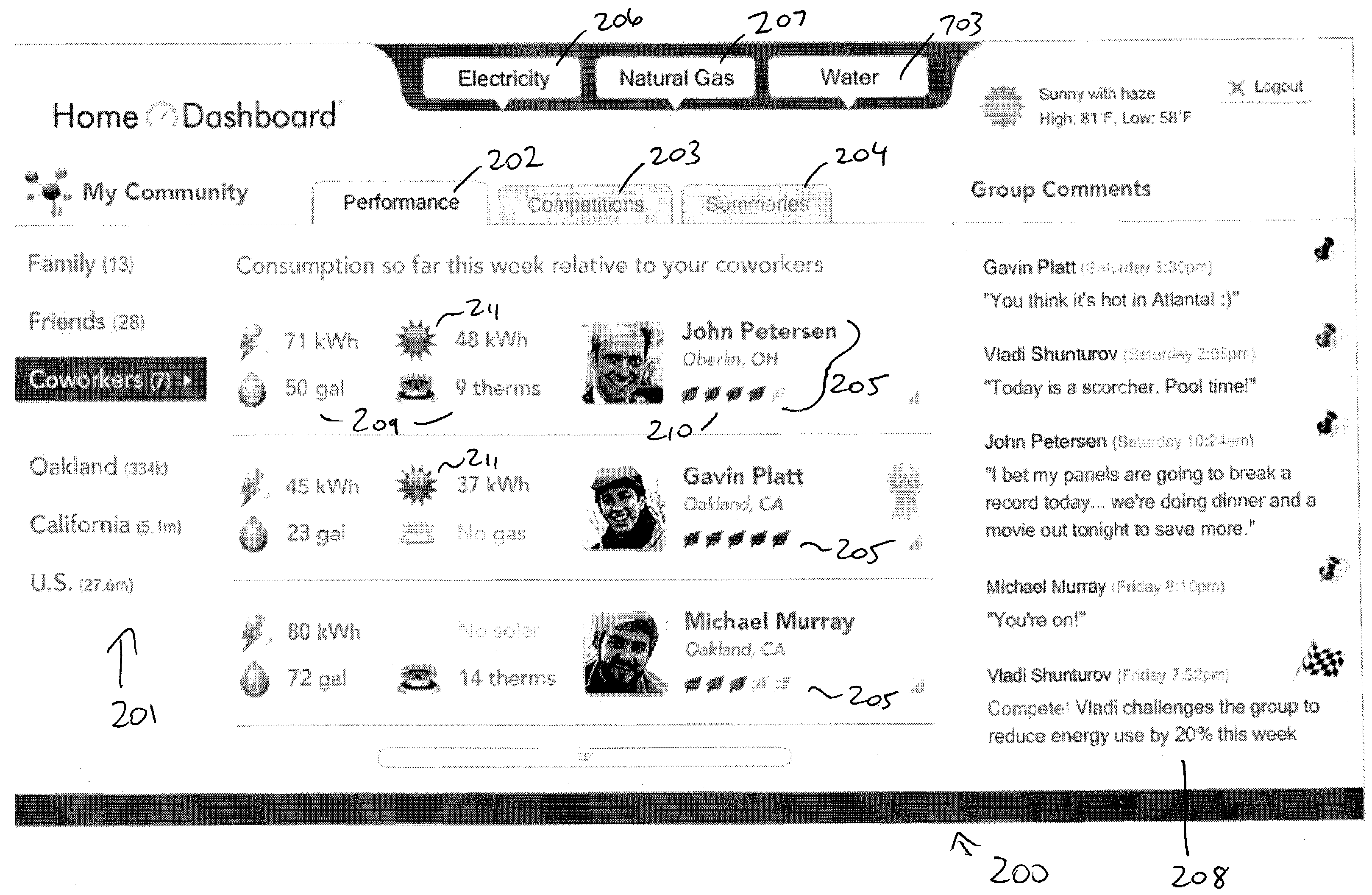

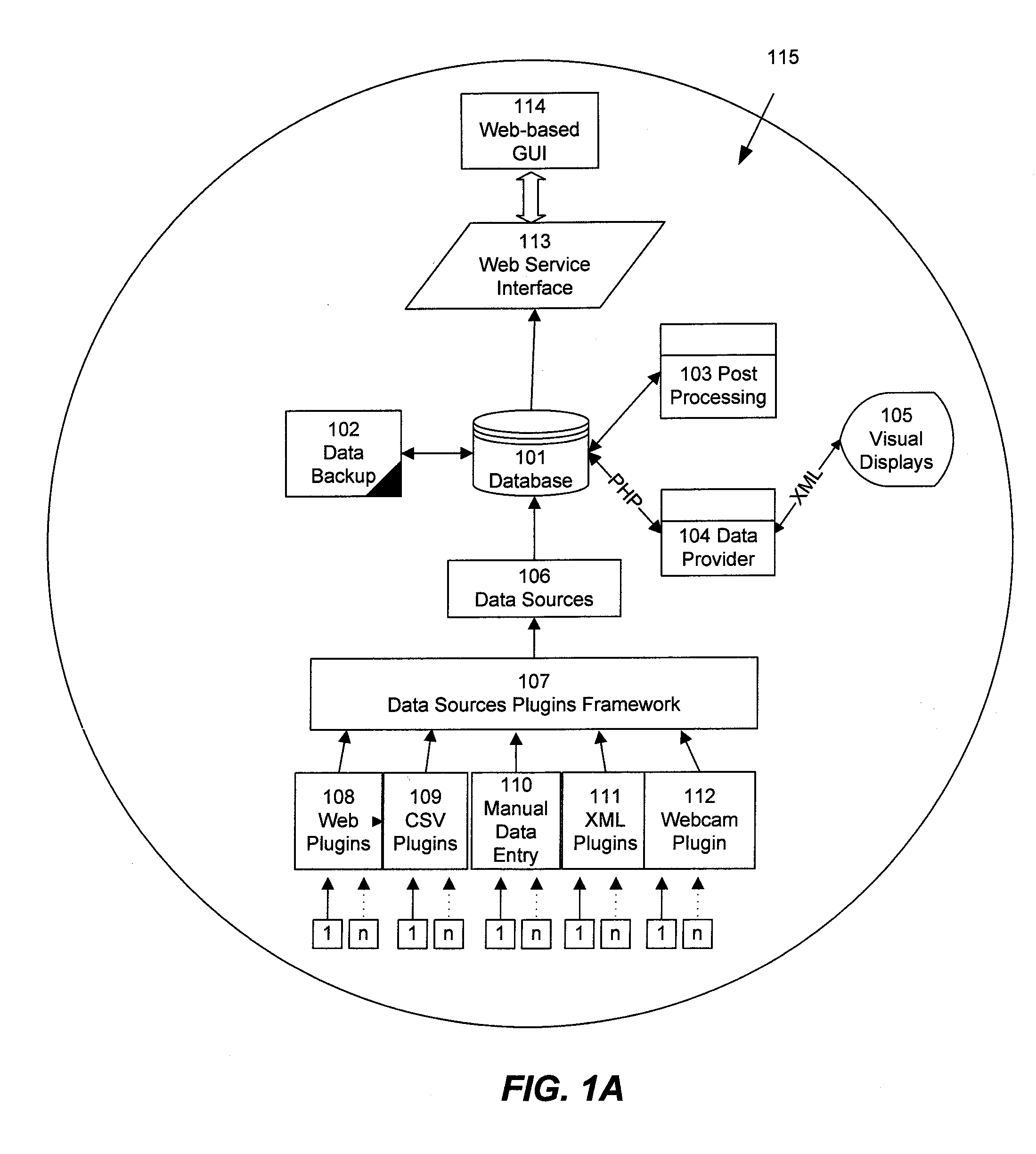

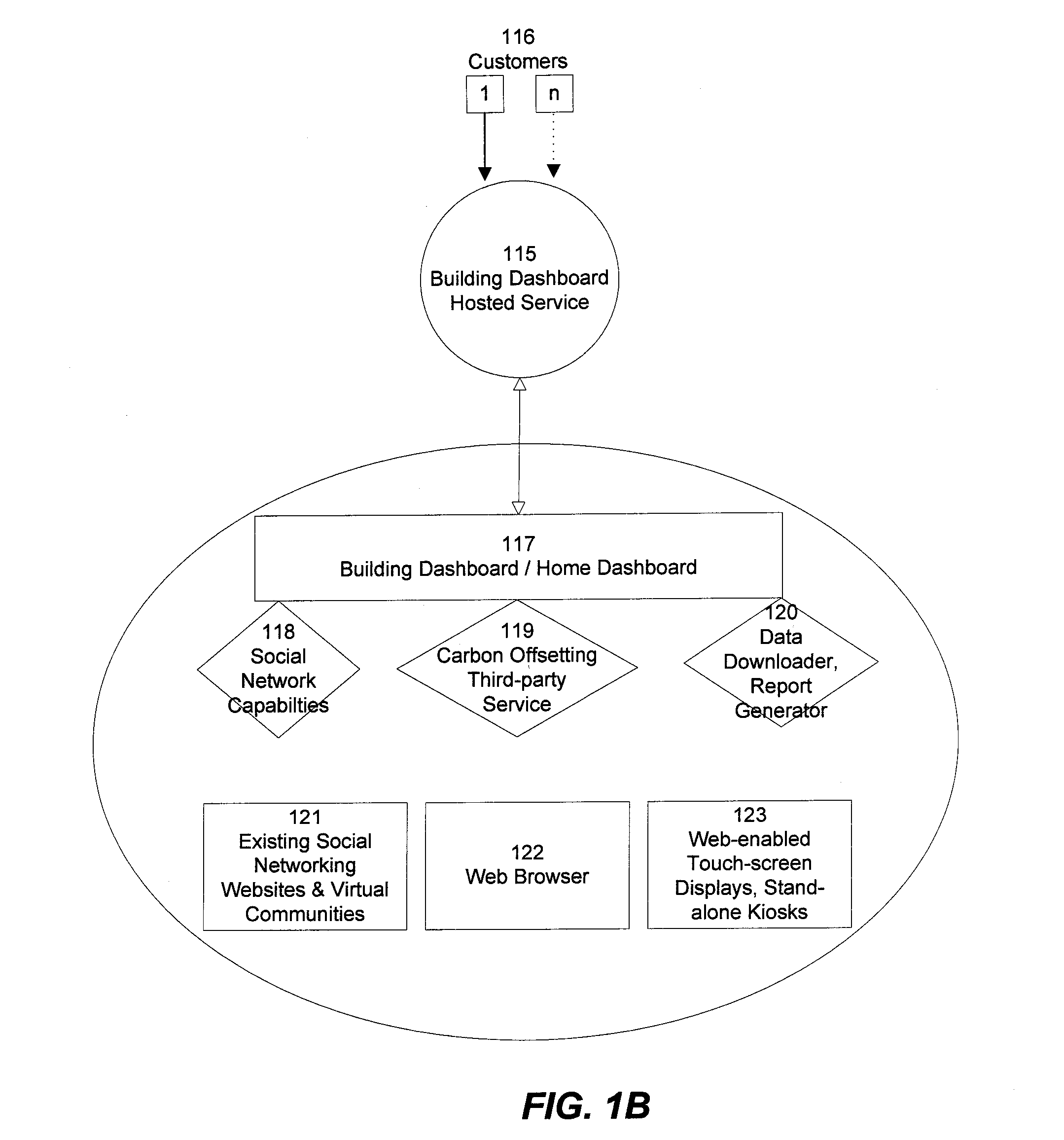

Collecting, sharing, comparing, and displaying resource usage data

Active | US20080306985A1 | Easy to compare | The effect is highlighted | Office automation | Commerce | Graphics | Interactive displays

Resource usage data is automatically collected for an individual, household, family, organization, or other entity. The collected data is transmitted to a central repository, where it is stored and compared with real-time and/or historical usage data by that same entity and/or with data from other sources. Graphical, interactive displays and reports of resource usage data are then made available. These displays can include comparisons with data representing any or all of community averages, specific entities, historical use, representative similarly-situated entities, and the like. Resource usage data can be made available within a social networking context, published, and/or selectively shared with other entities.

Owner:ABL IP HLDG

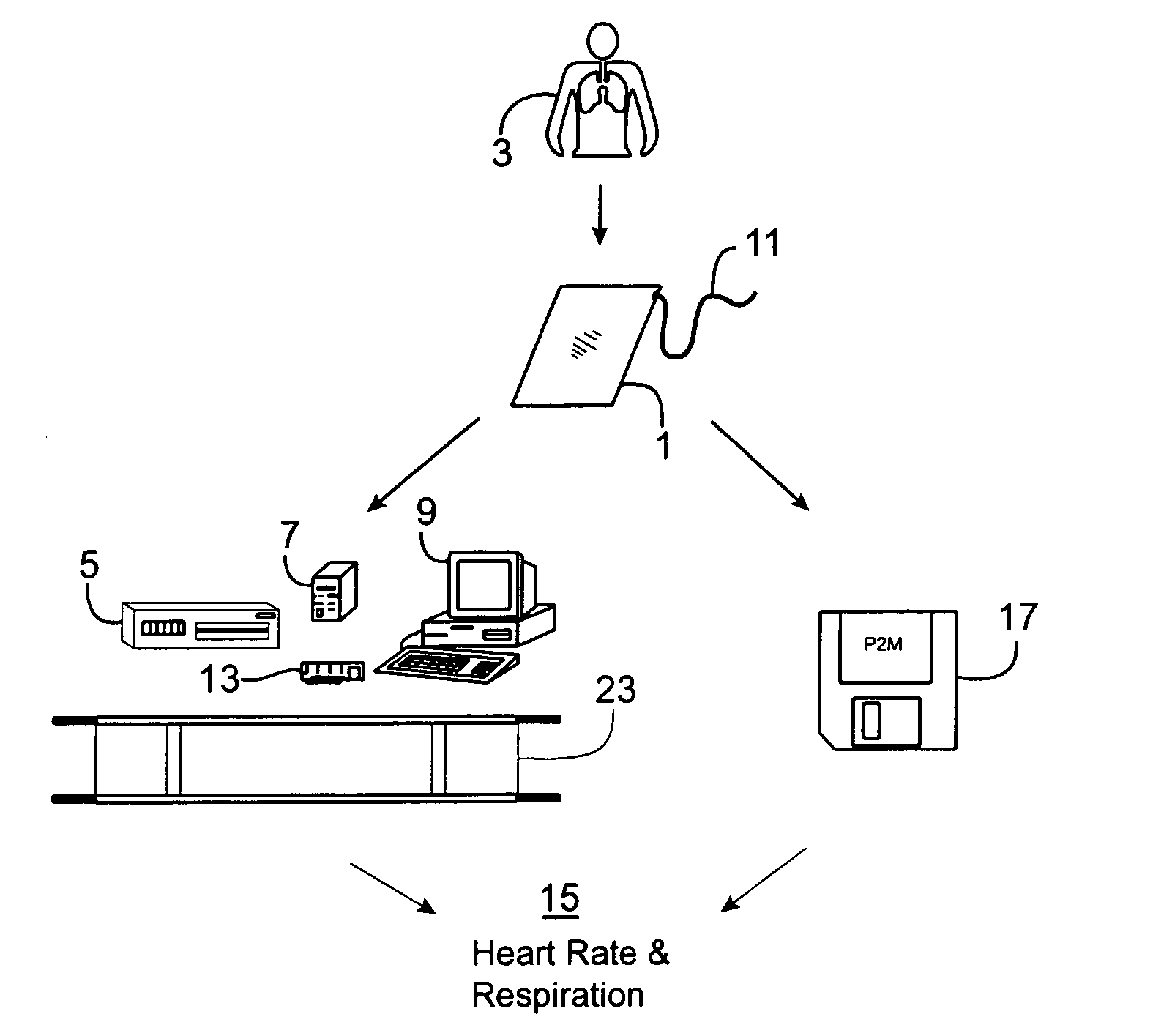

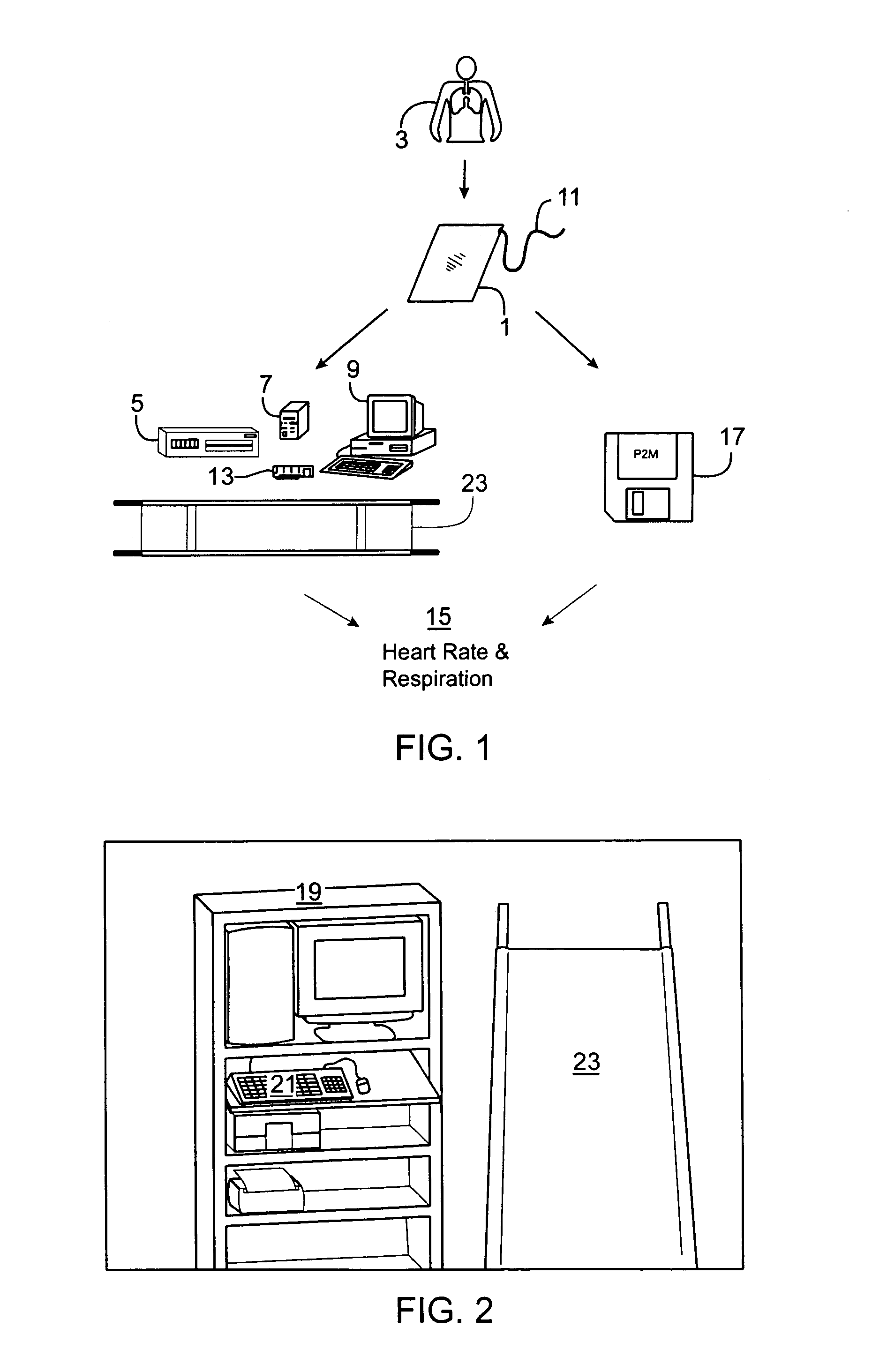

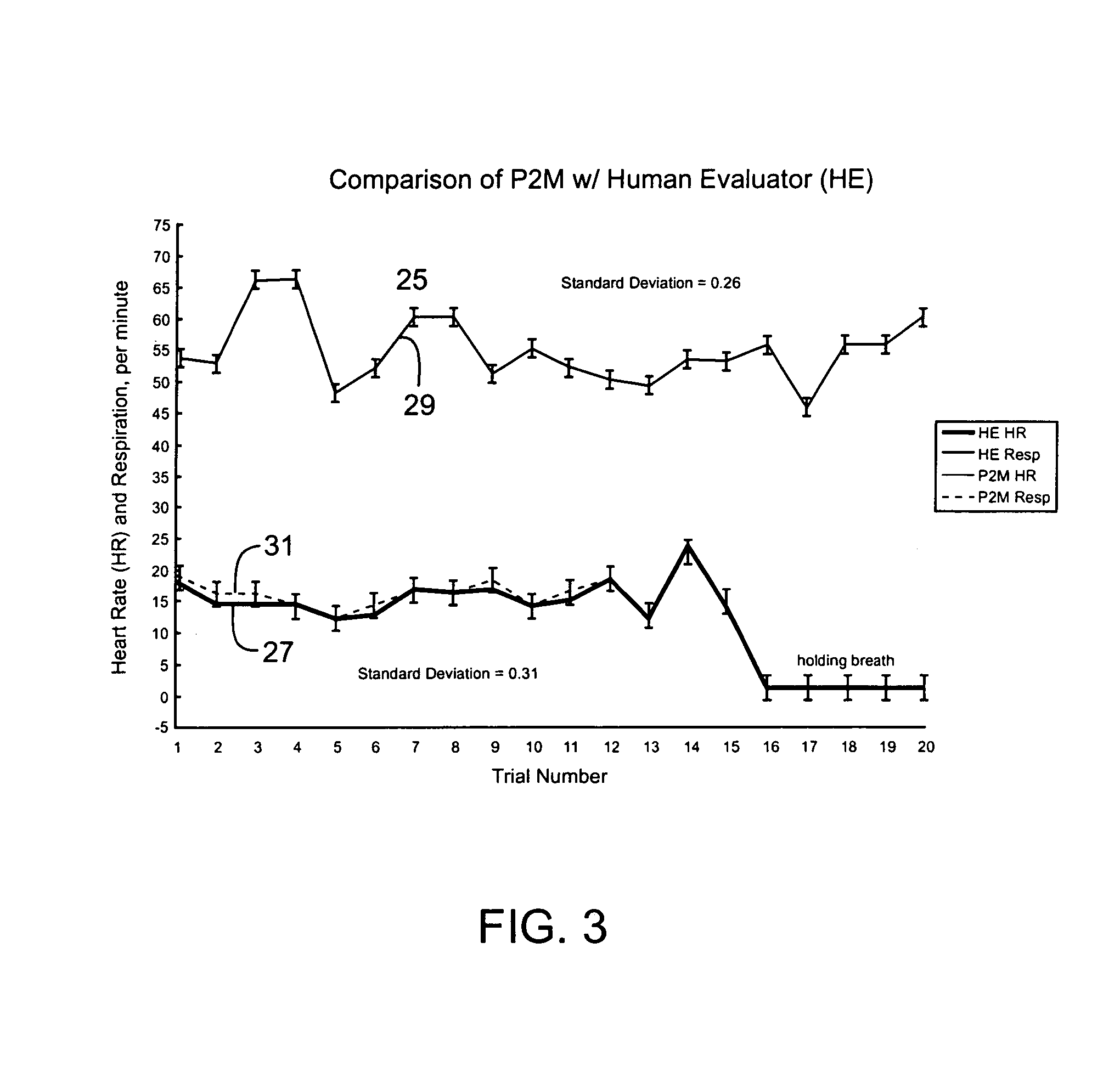

Passive physiological monitoring (P2M) system

Inactive | US6984207B1 | Easy to deploy | Evaluation of blood vessels | Catheter | Internal bleeding | Band-pass filter

A passive physiological monitoring apparatus and method use a sensor for sensing physiological phenomena. A converter converts sensed data into electrical signals and a computer receives and computes the signals, and outputs computed data for real-time interactive display. The sensor is a piezoelectric film of polyvinylidene fluoride (PVDF). A band-pass filter filters out noise and isolates the signals to reflect data from the body. A pre-amplifier amplifies signals. Signals detected include mechanical, thermal and acoustic signatures reflecting cardiac output, cardiac function, internal bleeding, respiration, pulse, apnea, and temperature. A pad may incorporate the PVDF film and may be fluid-filled. The film converts mechanical energy into analog voltage signals. Analog signals are fed through the band-pass filter and the amplifier. A converter converts the analog signals to digital signals. A Fourier transform routine is used to transform into the frequency domain. A microcomputer is used for recording, analyzing and displaying data for on-line assessment and for providing real-time response. A radio-frequency filter may be connected to a cable and the film for transferring signals from the film through the cable. The sensor may be an array provided in a MEDEVAC litter or other device for measuring acoustic and hydraulic signals from the body of a patient for field monitoring, hospital monitoring, transport monitoring, and home or remote monitoring.

Owner:HOANA MEDICAL
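
To illustrate the "band-pass, then Fourier transform" chain in the abstract above, the sketch below finds the dominant frequency of a digitized signal inside a chosen pass band. It is a simplified stand-in: the band restriction is applied to frequency bins rather than with an analog filter, the DFT is a naive pure-Python one, and the sample rate, band limits, and synthetic signal are all assumptions made for the example.

```python
import cmath
import math

def dft(samples):
    """Naive discrete Fourier transform, O(n^2); fine for short windows."""
    n = len(samples)
    return [sum(samples[k] * cmath.exp(-2j * math.pi * f * k / n)
                for k in range(n))
            for f in range(n)]

def dominant_frequency(samples, sample_rate, low_hz, high_hz):
    """Return the frequency (Hz) with the largest magnitude inside
    [low_hz, high_hz]; bins outside the band are ignored."""
    n = len(samples)
    spectrum = dft(samples)
    best_freq, best_mag = None, 0.0
    for f in range(1, n // 2 + 1):
        freq = f * sample_rate / n
        if low_hz <= freq <= high_hz and abs(spectrum[f]) > best_mag:
            best_freq, best_mag = freq, abs(spectrum[f])
    return best_freq

# Synthetic 10 s recording at 20 Hz: a 1.2 Hz "pulse" component
# plus a weaker 0.3 Hz "respiration" component.
RATE = 20
signal = [math.sin(2 * math.pi * 1.2 * k / RATE)
          + 0.5 * math.sin(2 * math.pi * 0.3 * k / RATE)
          for k in range(200)]

pulse_hz = dominant_frequency(signal, RATE, 0.8, 3.0)
breath_hz = dominant_frequency(signal, RATE, 0.1, 0.5)
print(round(pulse_hz * 60), "beats/min,", round(breath_hz * 60), "breaths/min")
```

Choosing a different pass band for each physiological signal is what lets one sensor stream yield several readings; a production system would use an FFT library and windowing rather than this quadratic loop.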

Gesture-based user interactions with status indicators for acceptable inputs in volumetric zones

Owner:META PLATFORMS INC

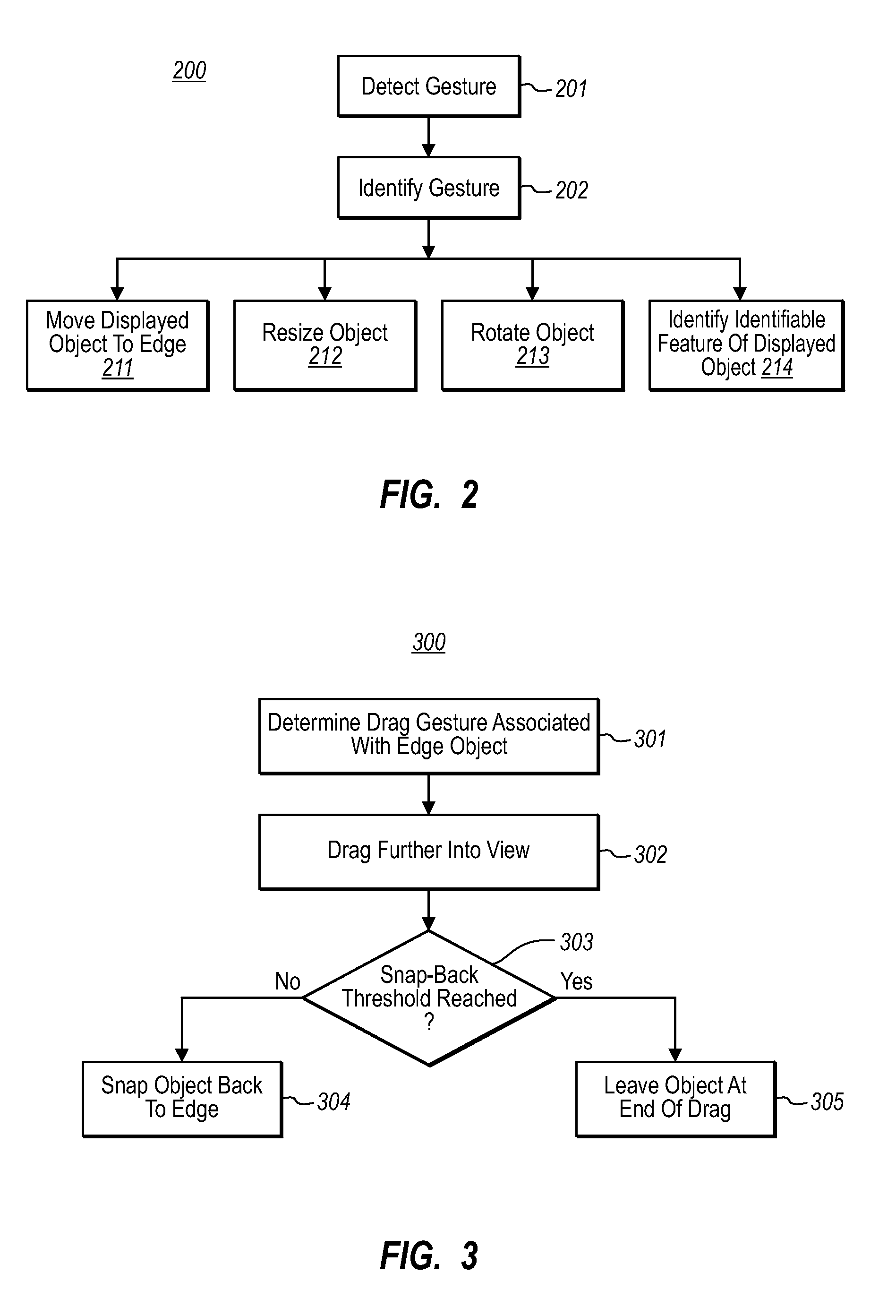

Gestured movement of object to display edge

Inactive | US20080282202A1 | Character and pattern recognition | Cathode-ray tube indicators | Display device | Interactive displays

Owner:MICROSOFT TECH LICENSING LLC

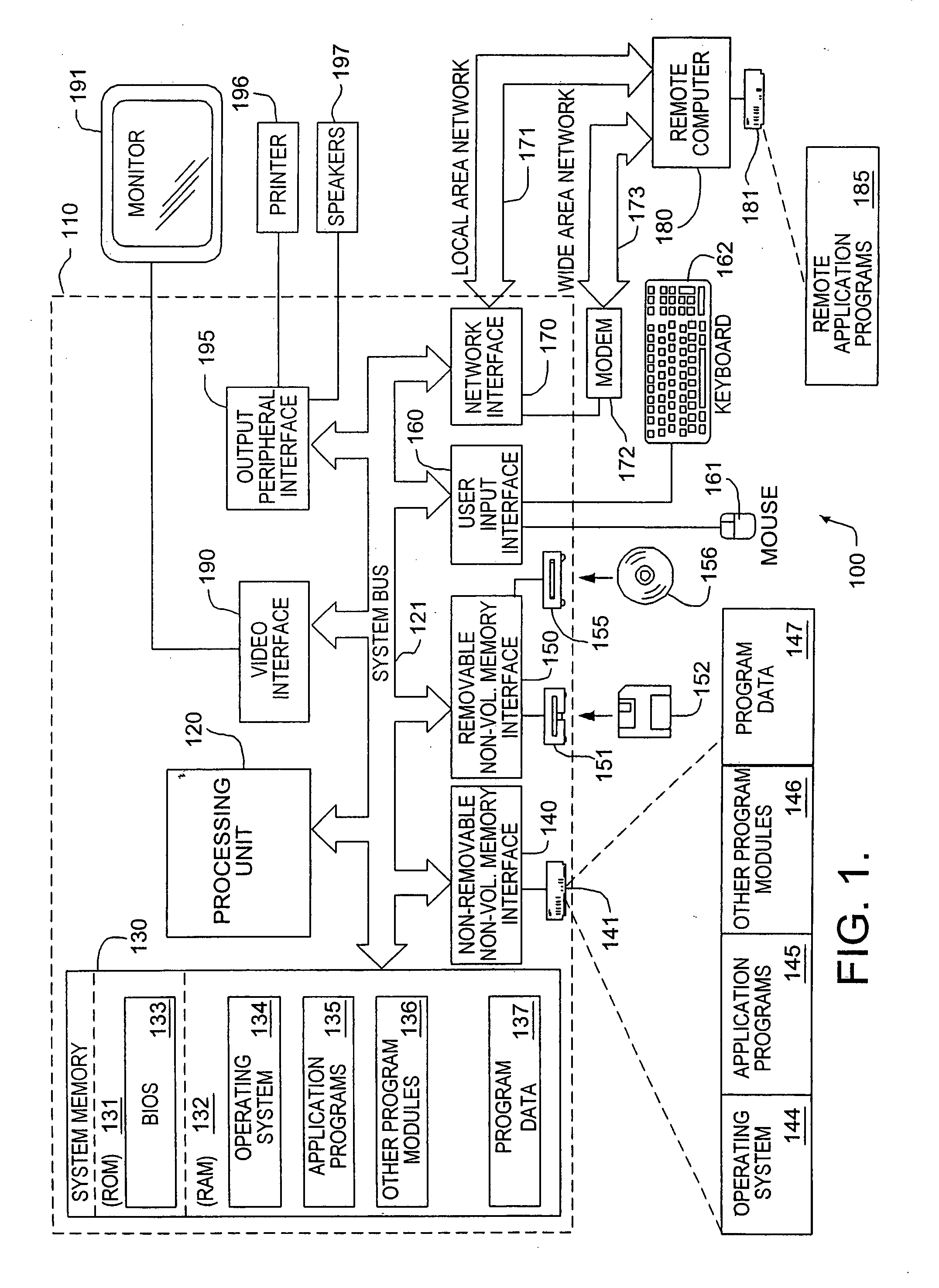

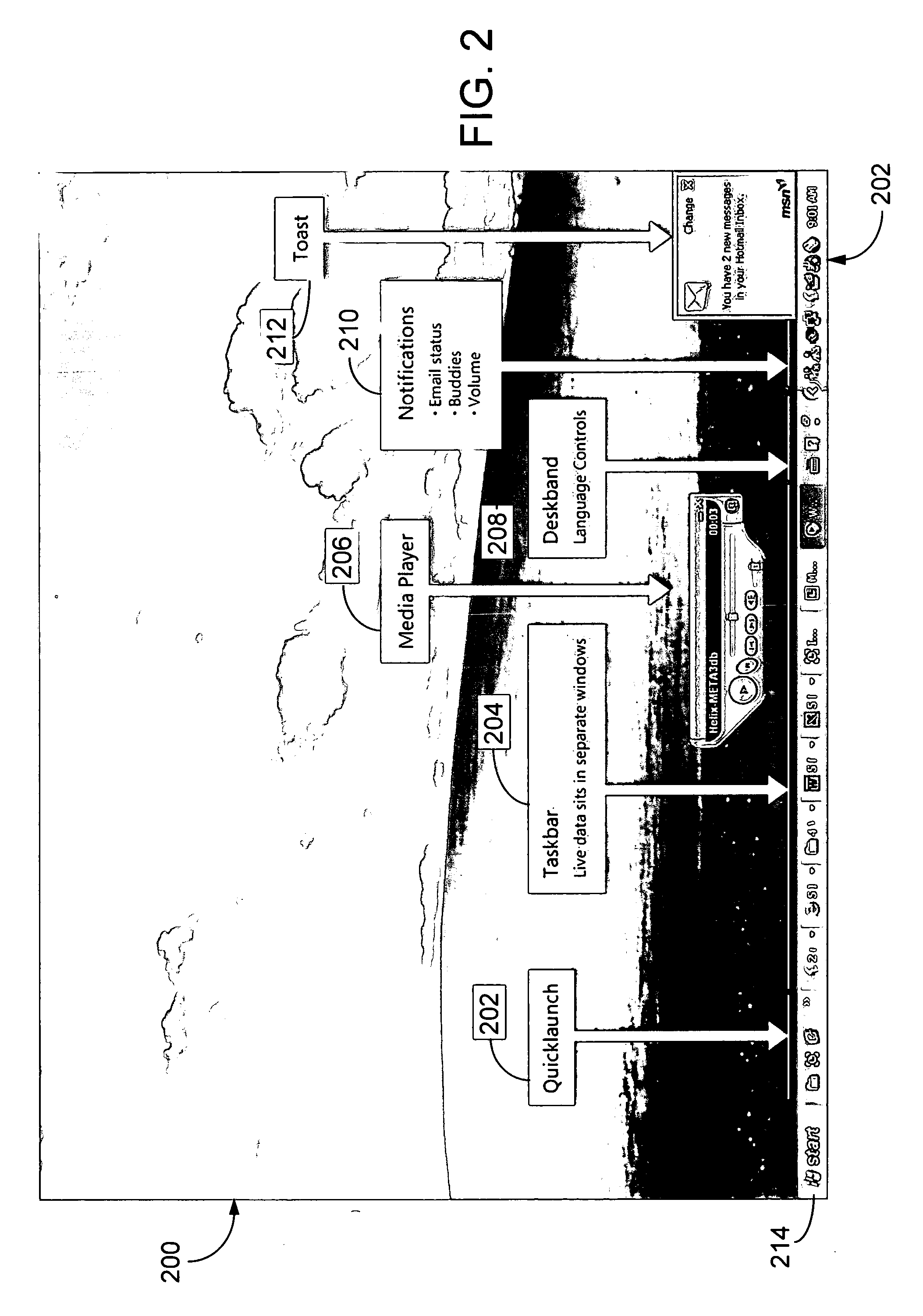

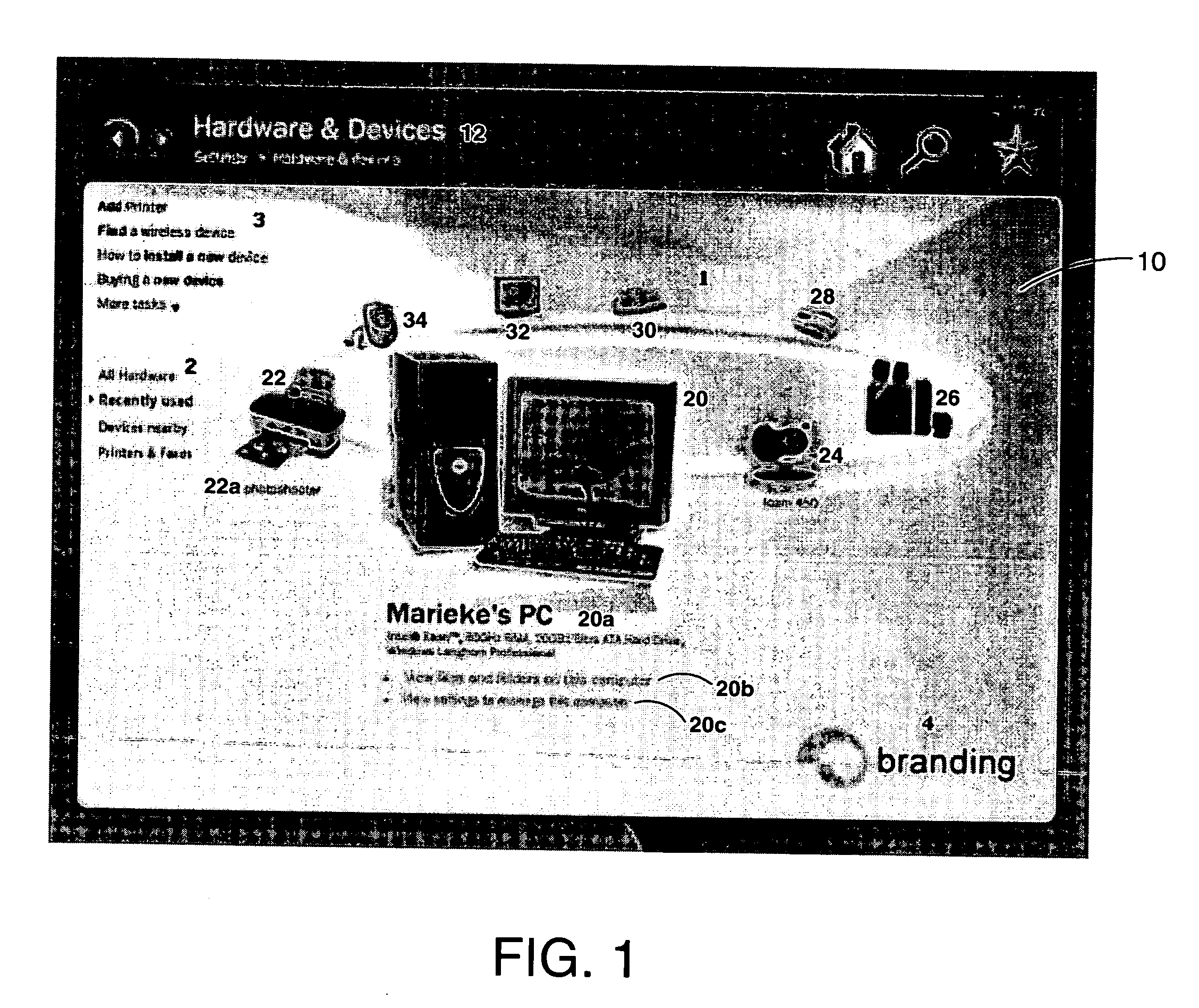

System and method for providing dynamic user information in an interactive display

Inactive | US20080120569A1 | Quick and convenient and consistent | Cathode-ray tube indicators | Input/output processes for data processing | Display device | Interactive displays

The present invention is directed to a method and system for use in a computing environment to present and provide access to information that a user cares about. A scheme is provided for presenting frequently used controls and information in tiles within a sidebar. Tiles are hosted individually or in groups, within a sidebar, for interaction by a user. Tiles can be added or removed from the sidebar automatically or by user request. The present invention is further directed to a method for providing a scalable and usable preview of tiles within a sidebar. Further still, the present invention is directed to maintaining an overflow area of icons for tiles that would not fit within the sidebar. The sidebar has content that dynamically adjusts in response to the addition, expansion, squishing or removal of tiles. Even further, a user can customize the sidebar of the present invention.

Owner:MICROSOFT TECH LICENSING LLC

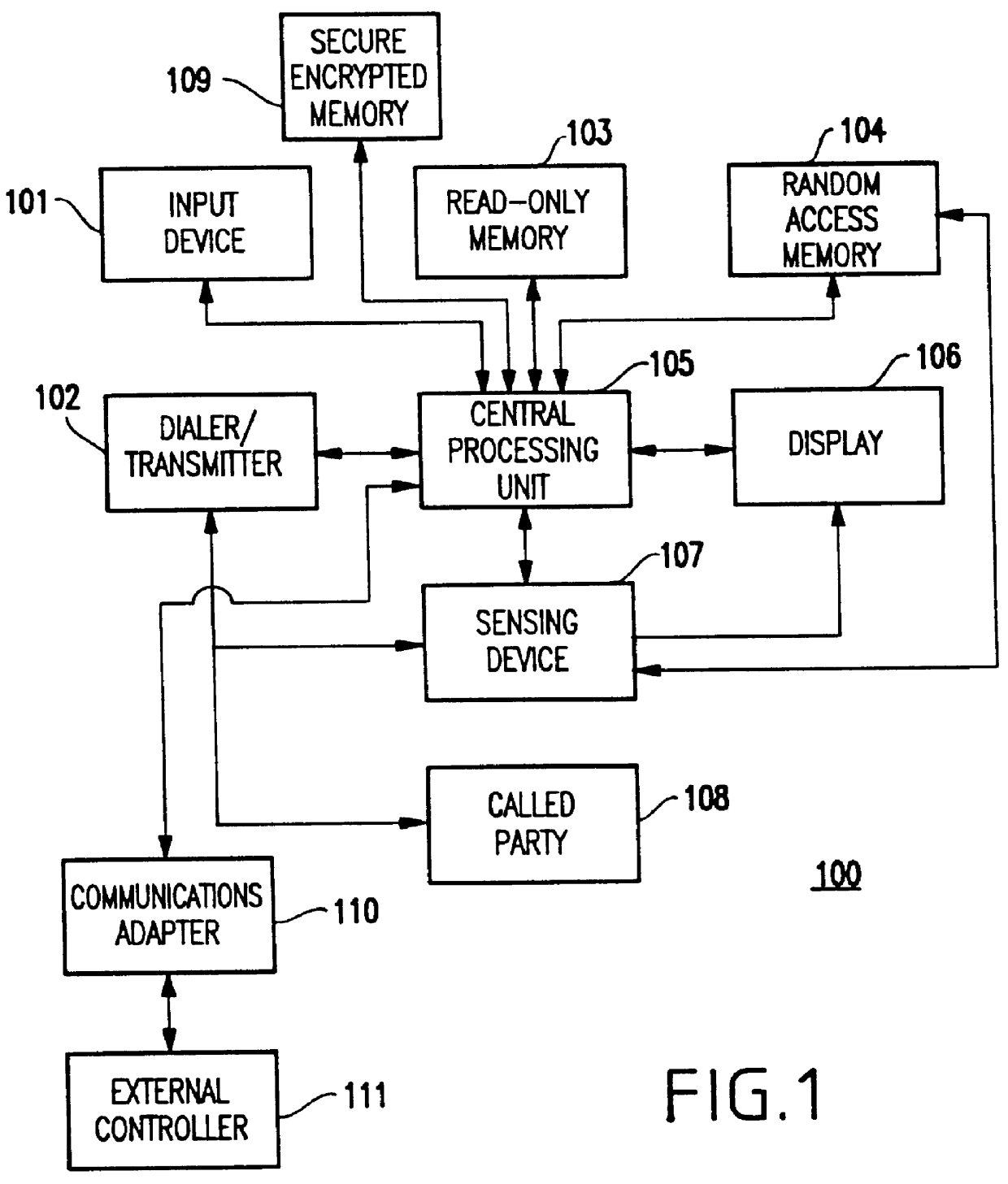

Graphical voice response system and method therefor

Inactive | US6104790A | Shorten the time | Time spent | Input/output for user-computer interaction | Subscriber signalling identity devices | Graphics | Dialer

A method and system for communicating between a calling party and a called party includes a dialer for dialing a communication device of a called party, a sensor for sensing whether a voice menu file is associated with the communication device of the called party, and an interactive display for displaying the voice menu file when the sensor senses the voice menu file.

Owner:IBM CORP

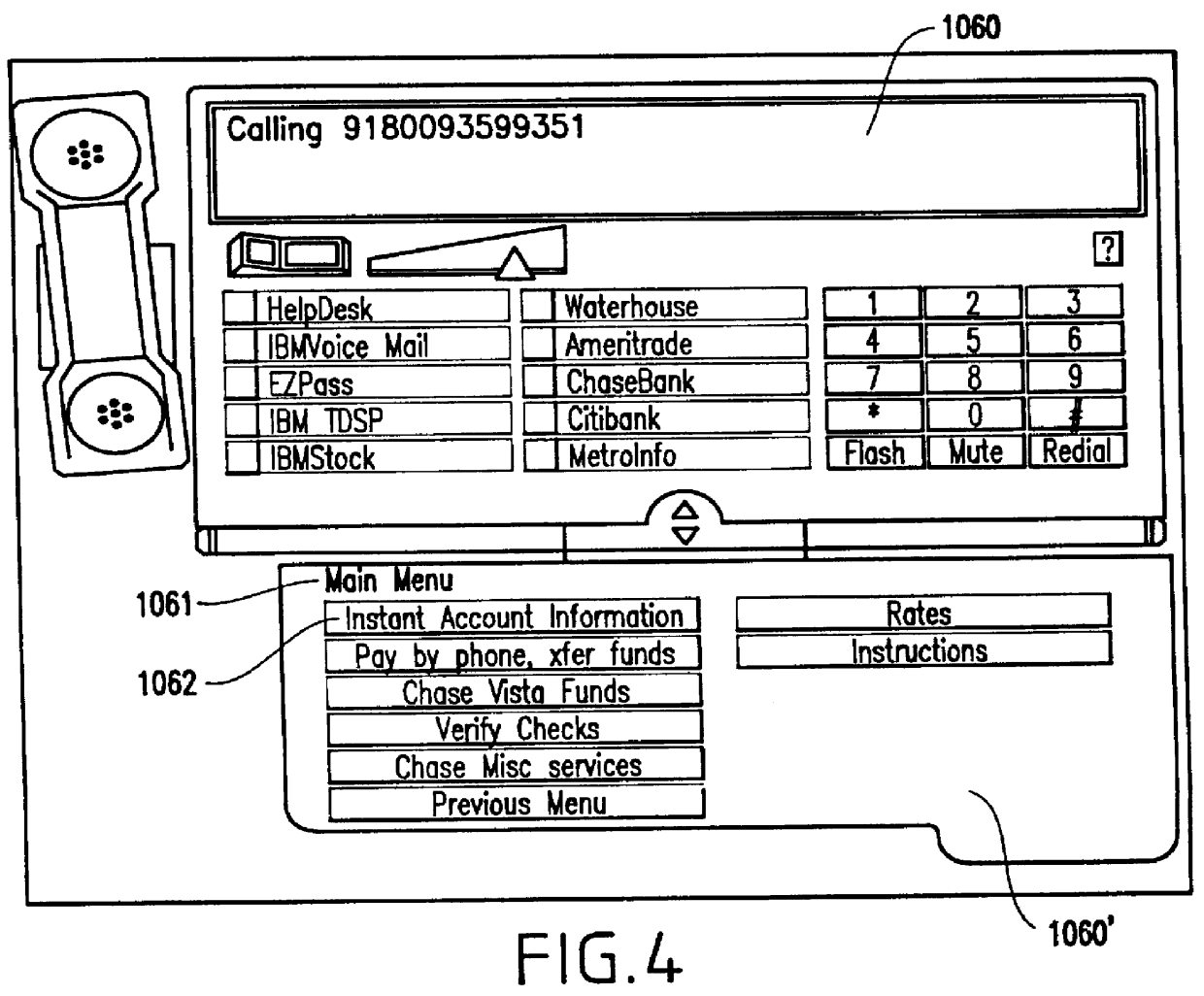

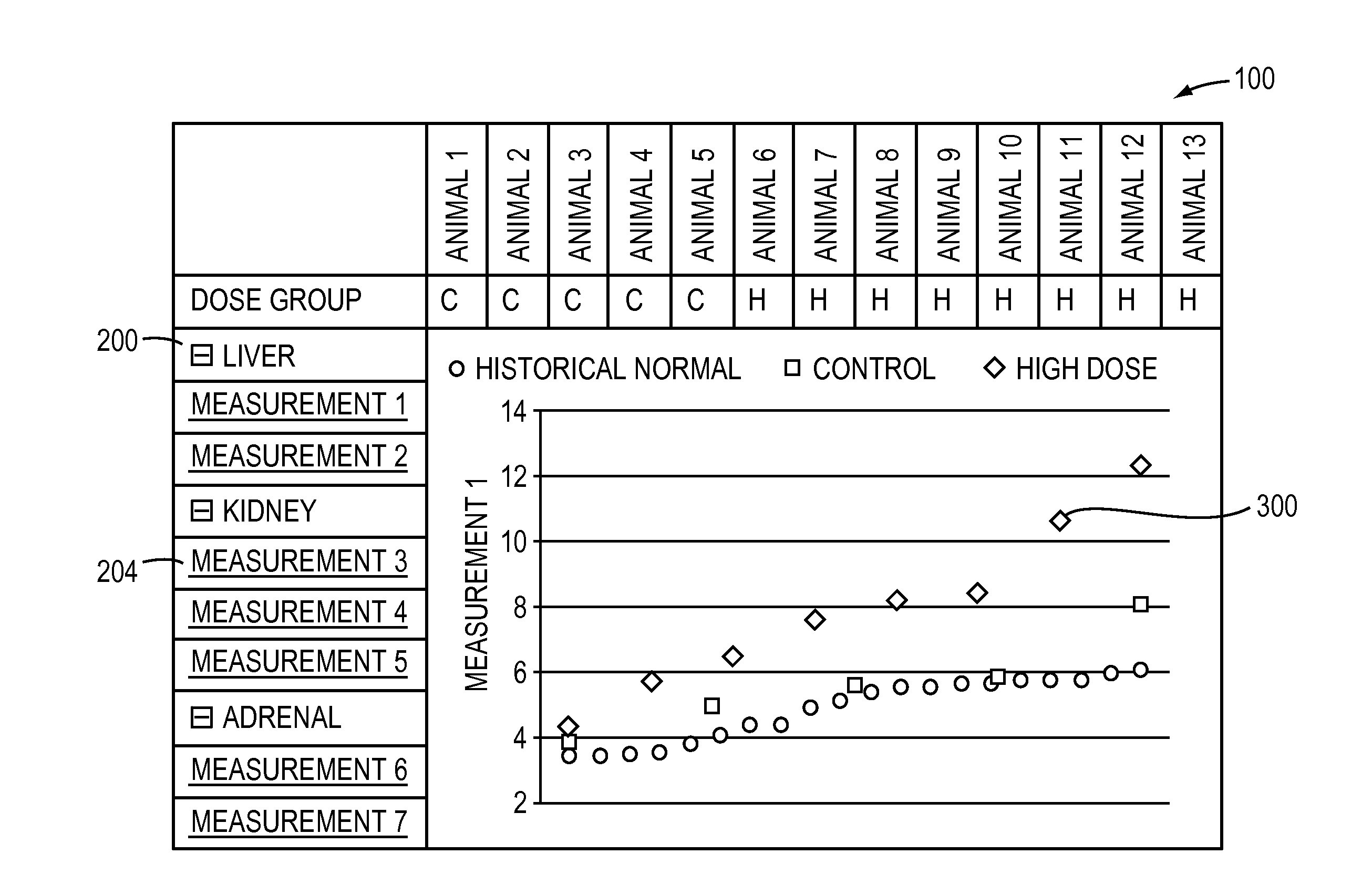

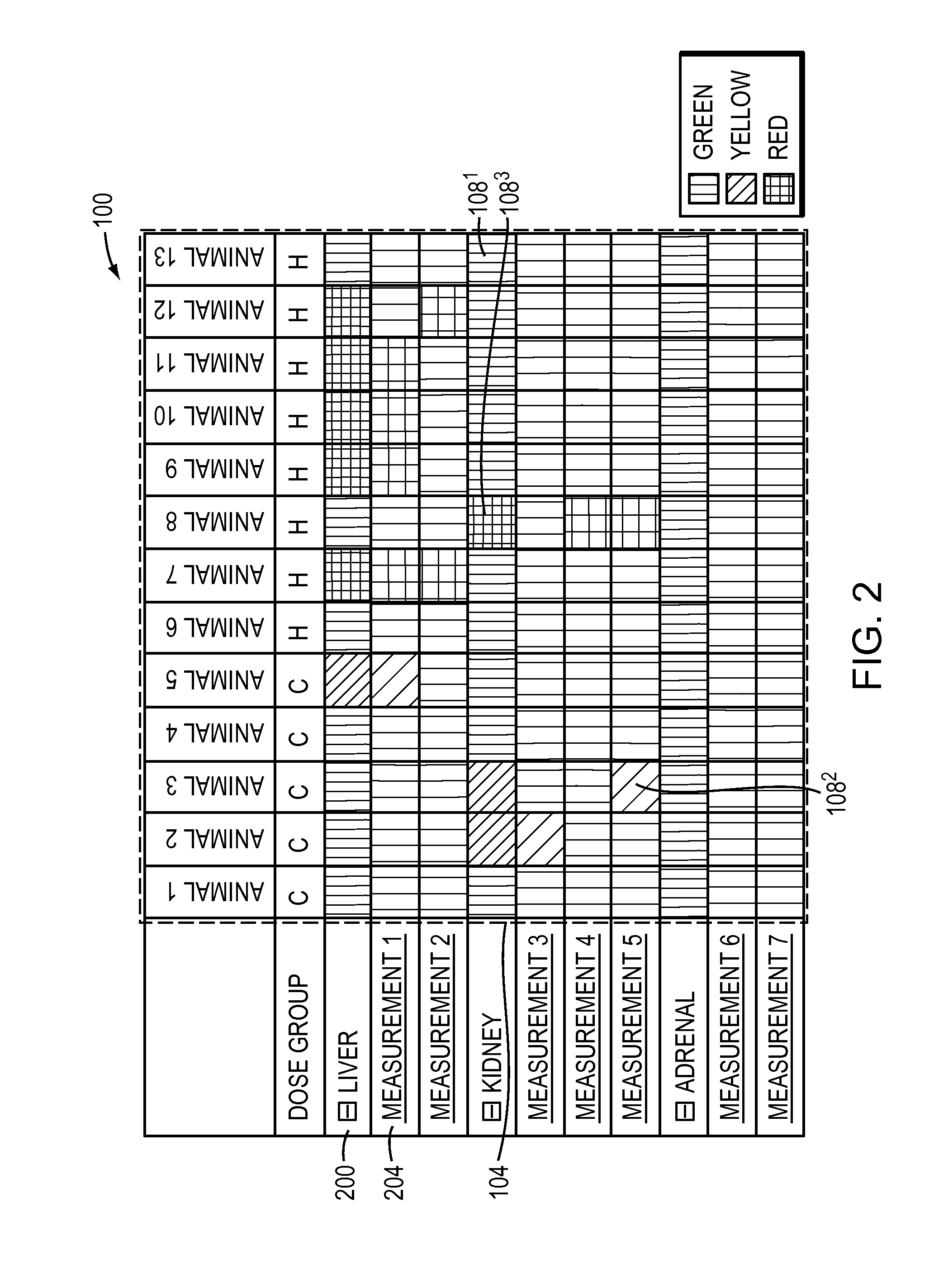

Methods and Apparatus for Interactive Display of Images and Measurements

Inactive | US20120019559A1 | Medical data mining | Drawing from basic elements | Interactive displays | Human–computer interaction

Owner:CHARLES RIVER LAB INC

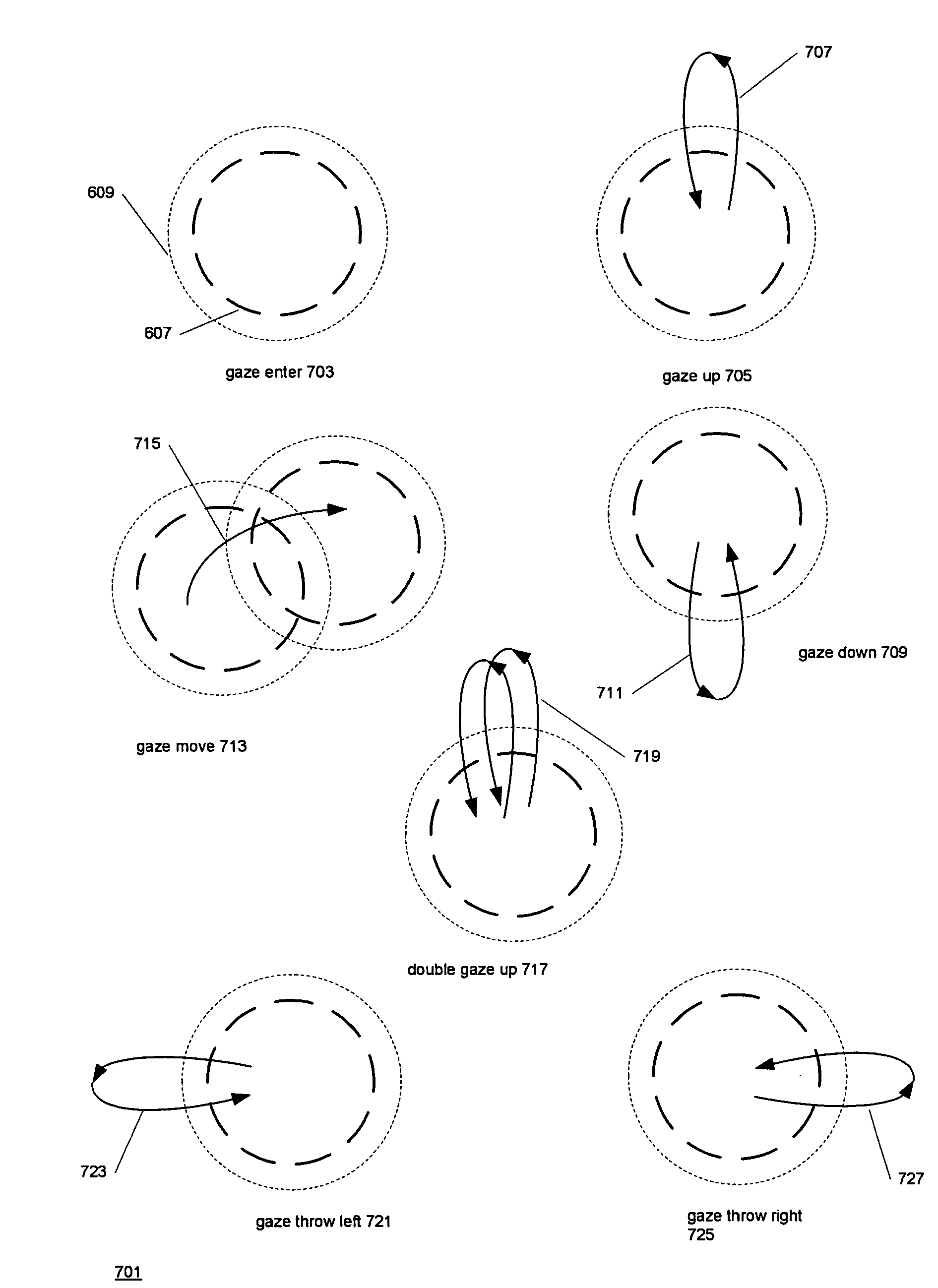

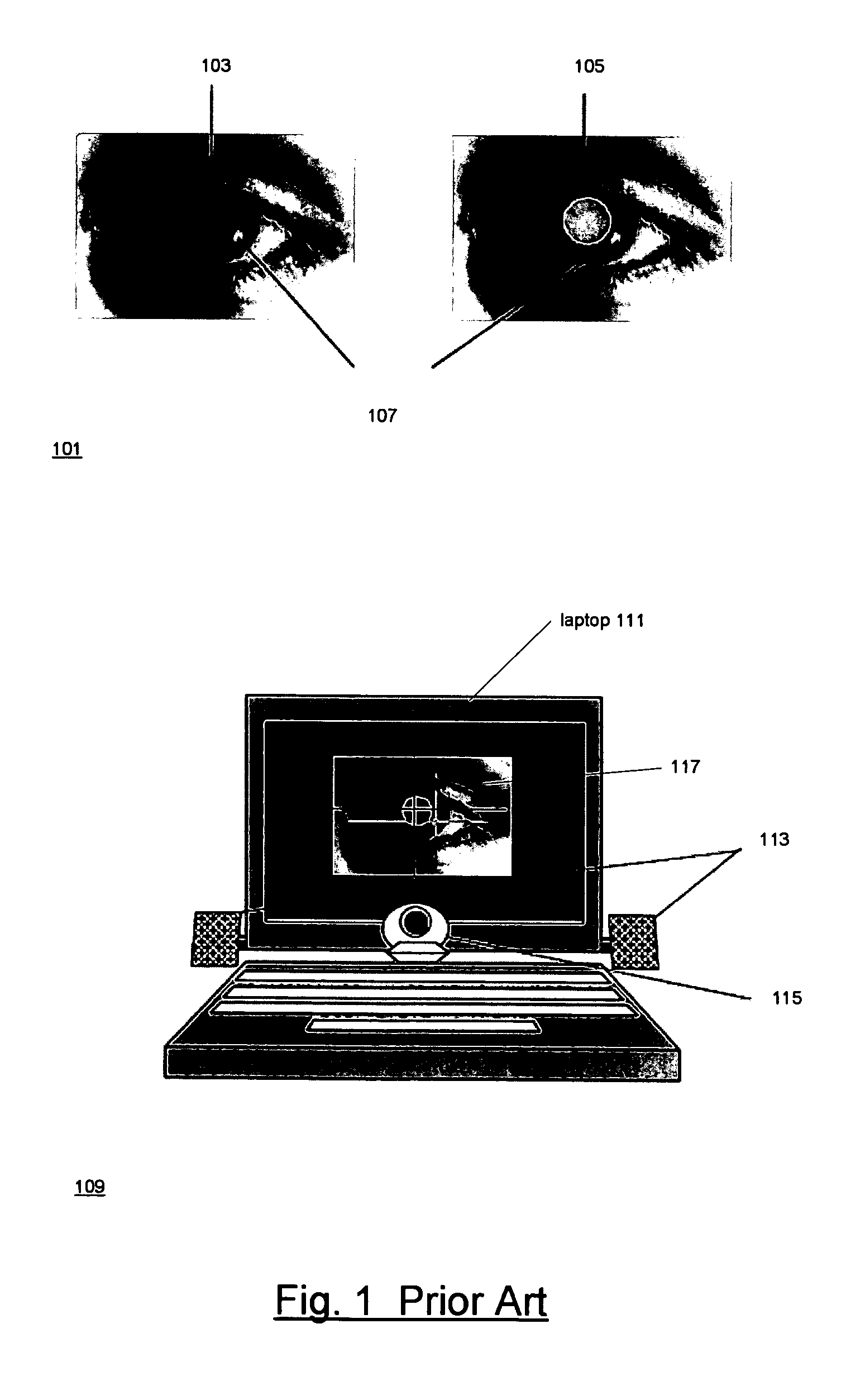

Using gaze actions to interact with a display

Active | US7561143B1 | Cathode-ray tube indicators | Input/output processes for data processing | Graphics | Graphical user interface

Techniques for using gaze actions to interact with interactive displays. A pointing device includes an eye movement tracker that tracks eye movements and an eye movement analyzer. The eye movement analyzer analyzes the eye movements for a sequence of gaze movements that indicate a gaze action which specifies an operation on the display. A gaze movement may have a location, a direction, a length, and a velocity. A processor receives an indication of the gaze action and performs the operation specified by the gaze action on the display. The interactive display may be digital or may involve real objects. Gaze actions may correspond to mouse events and may be used with standard graphical user interfaces.

Owner:SAMSUNG ELECTRONICS CO LTD
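
The abstract above describes gaze movements with a location, direction, length, and velocity, and sequences of such movements that indicate a gaze action. A minimal sketch of that idea follows; the four-way direction quantization, the `min_length` filter, and the `ACTIONS` table are hypothetical and chosen only to make the example concrete.

```python
import math
from dataclasses import dataclass

@dataclass
class GazeMovement:
    location: tuple   # start point (x, y) in screen coordinates
    direction: str    # "left", "right", "up", or "down"
    length: float     # distance travelled
    velocity: float   # length divided by duration

def to_movement(start, end, duration):
    """Summarize an eye movement from start to end (duration > 0 s)."""
    dx, dy = end[0] - start[0], end[1] - start[1]
    if abs(dx) >= abs(dy):
        direction = "right" if dx >= 0 else "left"
    else:
        direction = "down" if dy > 0 else "up"  # screen y grows downward
    length = math.hypot(dx, dy)
    return GazeMovement(start, direction, length, length / duration)

# Hypothetical table mapping direction sequences to operations.
ACTIONS = {("right", "left"): "click", ("down", "up"): "scroll"}

def recognize(movements, min_length=50.0):
    """Return the action named by the sequence of sufficiently long
    movements, or None if the sequence is not a known gaze action."""
    key = tuple(m.direction for m in movements if m.length >= min_length)
    return ACTIONS.get(key)

glance = [to_movement((0, 0), (100, 5), 0.1),
          to_movement((100, 5), (0, 0), 0.1)]
print(recognize(glance))  # click
```

Filtering out short movements is one simple way to keep ordinary reading or drifting from being interpreted as a command; a velocity threshold could serve the same purpose.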

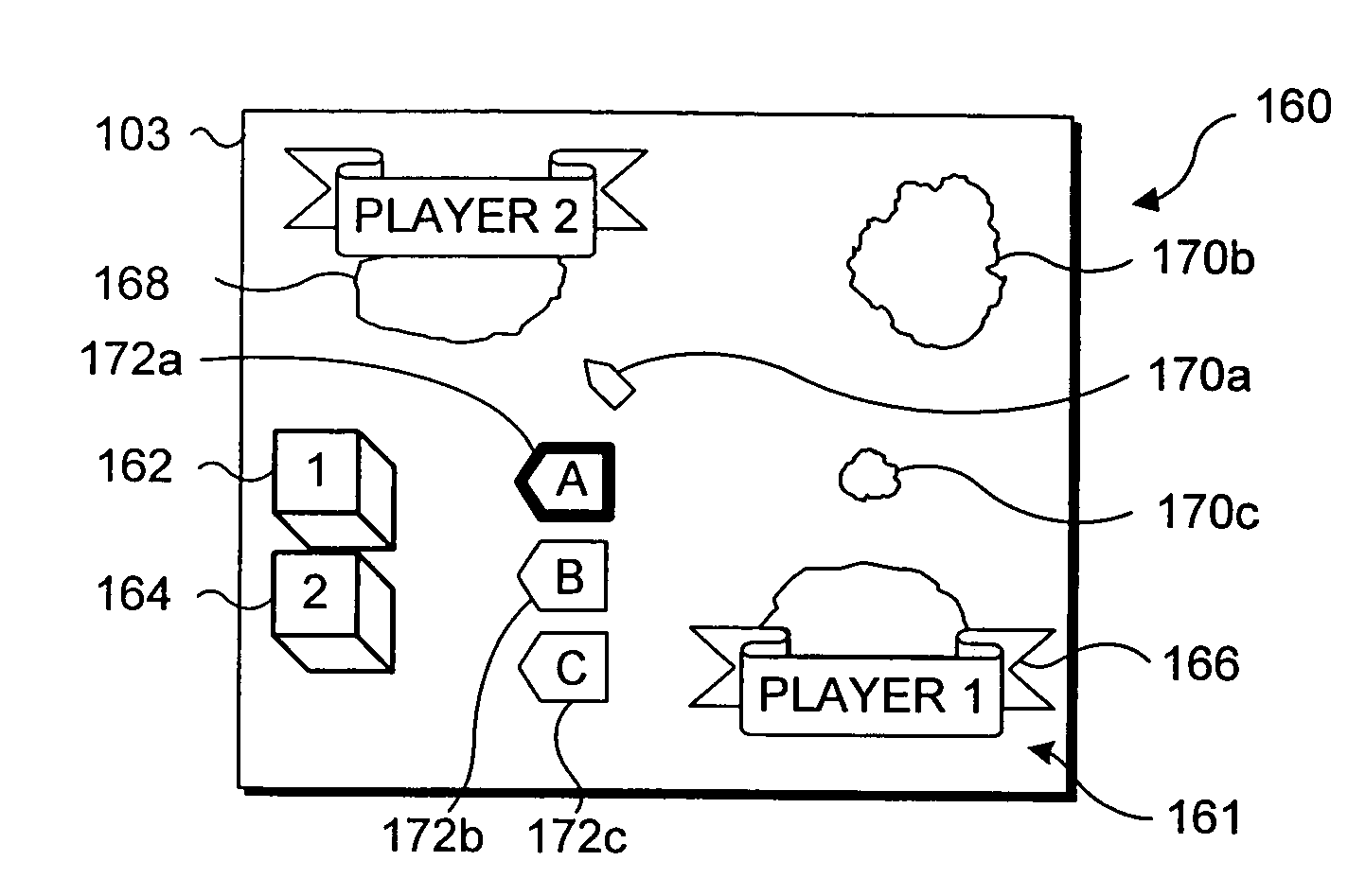

Associating application states with a physical object

Inactive | US7397464B1 | Simple method | Cathode-ray tube indicators | Input/output processes for data processing | Application software | Interactive displays

An application state of a computer program is stored and associated with a physical object and can be subsequently retrieved when the physical object is detected adjacent to an interactive display surface. An identifying characteristic presented by the physical object, such as a reflective pattern applied to the object, is detected when the physical object is positioned on the interactive display surface. The user or the system can initiate a save of the application state. For example, the state of an electronic game using the interactive display surface can be saved. Attributes representative of the state are stored and associated with the identifying characteristic of the physical object. When the physical object is again placed on the interactive display surface, the physical object is detected based on its identifying characteristic, and the attributes representative of the state can be selectively retrieved and used to recreate the state of the application.

Owner:MICROSOFT TECH LICENSING LLC
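
The save-and-restore flow in this abstract amounts to keying stored state on the object's identifying characteristic. A minimal in-memory sketch, assuming the detected pattern has already been decoded to a hashable identifier (the `StateStore` class and the `"tag-42"` identifier are illustrative names, not from the patent):

```python
class StateStore:
    """Associates application state with a physical object's identifier."""

    def __init__(self):
        self._states = {}

    def save(self, object_id, attributes):
        # Copy so later changes in the application do not alter the save.
        self._states[object_id] = dict(attributes)

    def restore(self, object_id):
        """Return the saved attributes, or None for an unknown object."""
        state = self._states.get(object_id)
        return dict(state) if state is not None else None

store = StateStore()
store.save("tag-42", {"game": "chess", "move": 17})

# Later, the same object is placed on the surface and detected again.
print(store.restore("tag-42"))   # {'game': 'chess', 'move': 17}
print(store.restore("tag-99"))   # None
```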

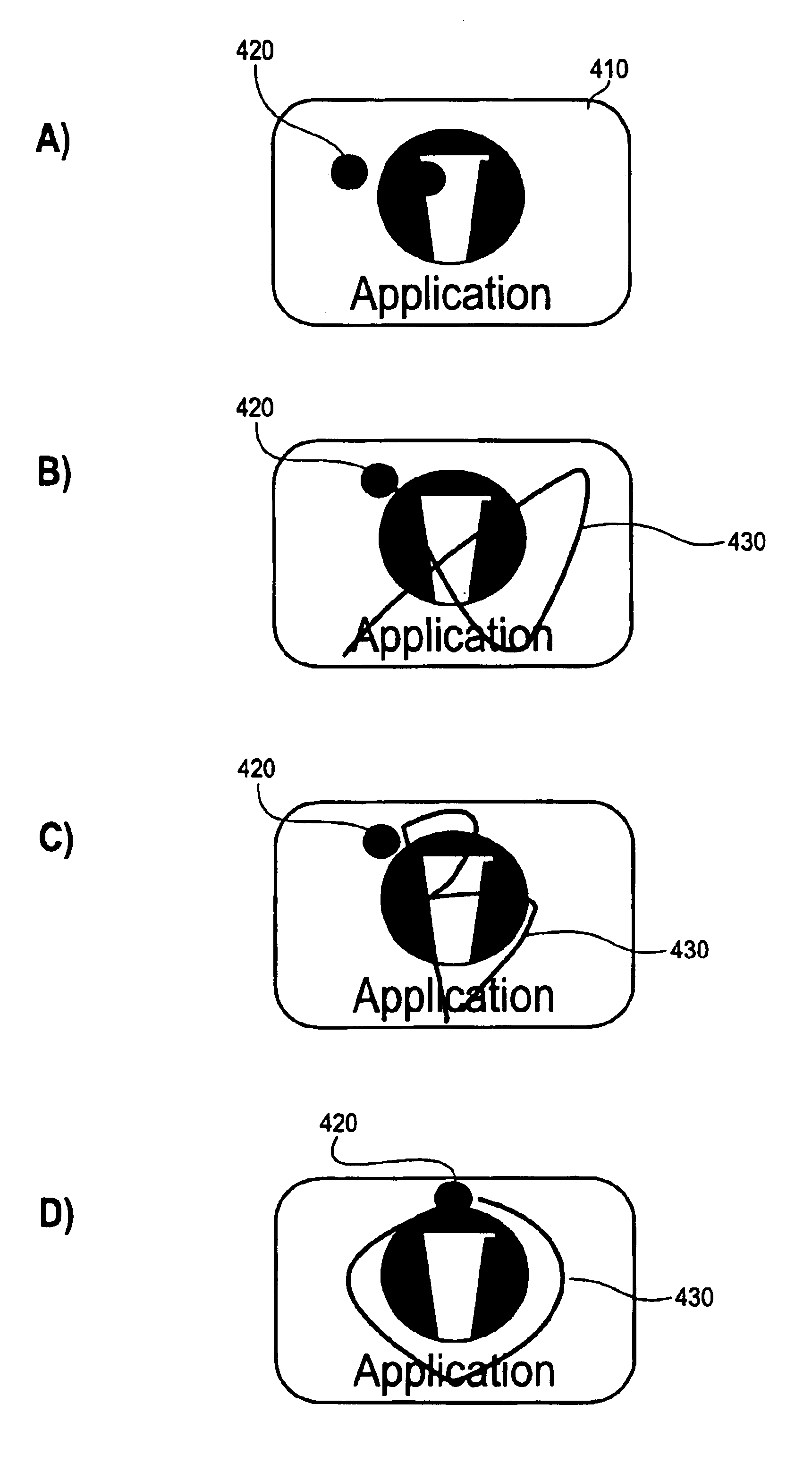

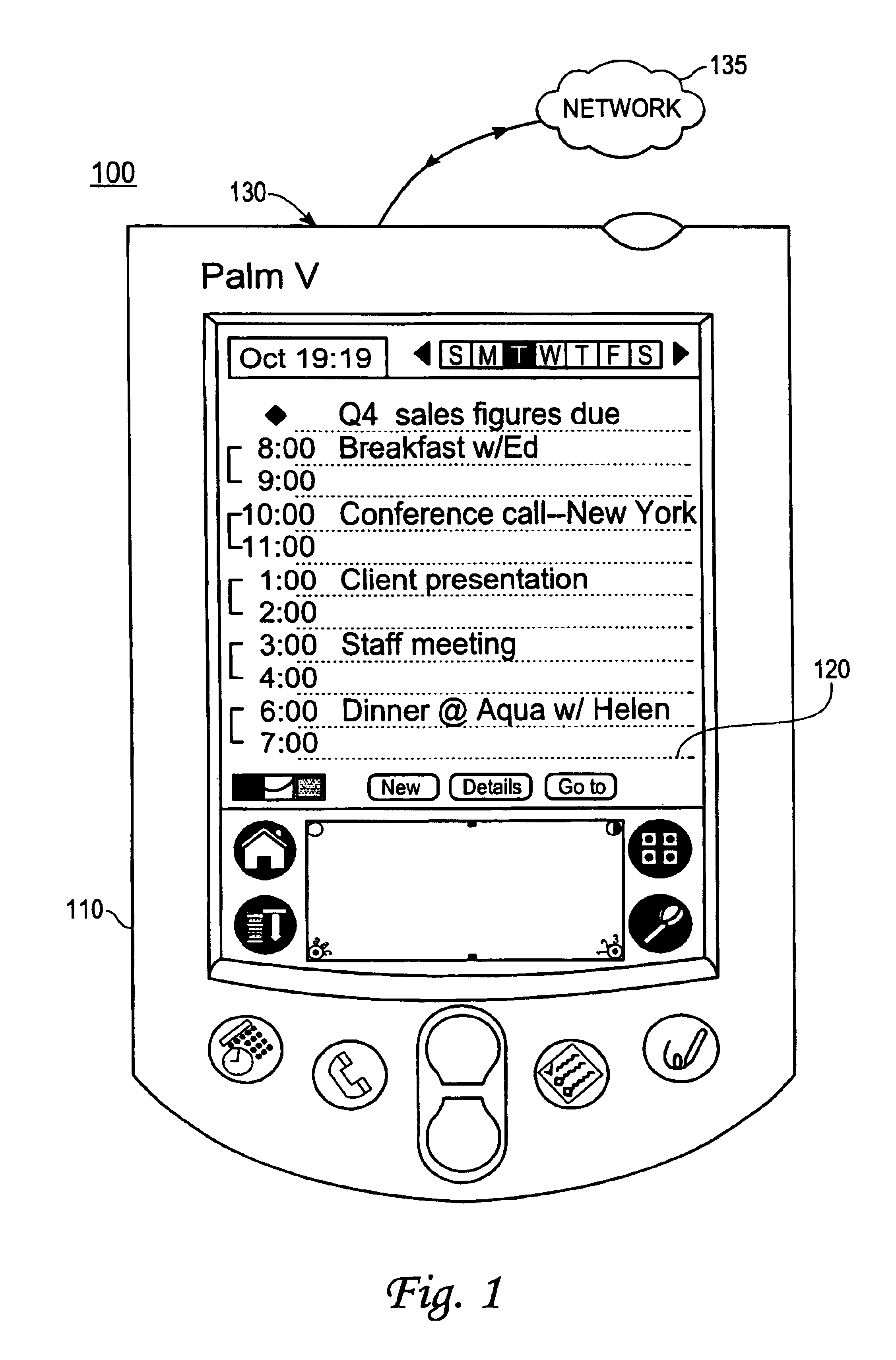

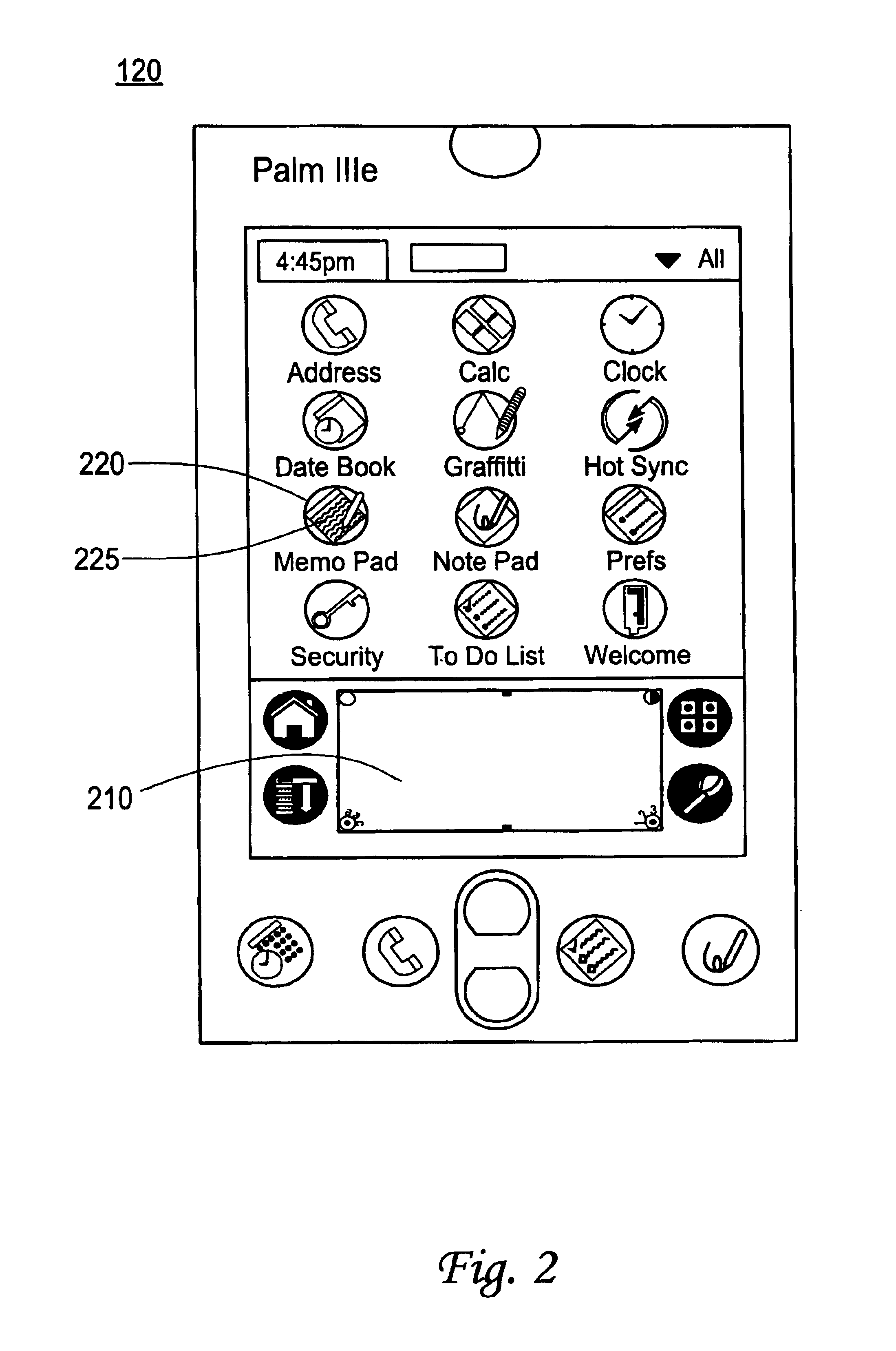

Method for controlling a handheld computer by entering commands onto a displayed feature of the handheld computer

Inactive | US6956562B1 | Cathode-ray tube indicators | Digital output to display device | Graphics | Operational system

A method for software control using a user-interactive display screen feature is disclosed that reduces stylus or other manipulations necessary to invoke software functionality from the display screen. According to the method, a graphical feature having a surface area is displayed on a touch-sensitive screen. The touch-sensitive screen is coupled to at least one processor and the graphical feature is generated by an operating system and uniquely associated with a particular software program by the operating system. To control software executing on the processor, a user-supplied writing on the surface area is received and the software is controlled responsive to the writing. In alternate embodiments, the method further controls data stored in a memory device responsive to the writing or further controls transmission of data from a radiation emitter, which may be coupled to voice and data networks.

Owner:ACCESS

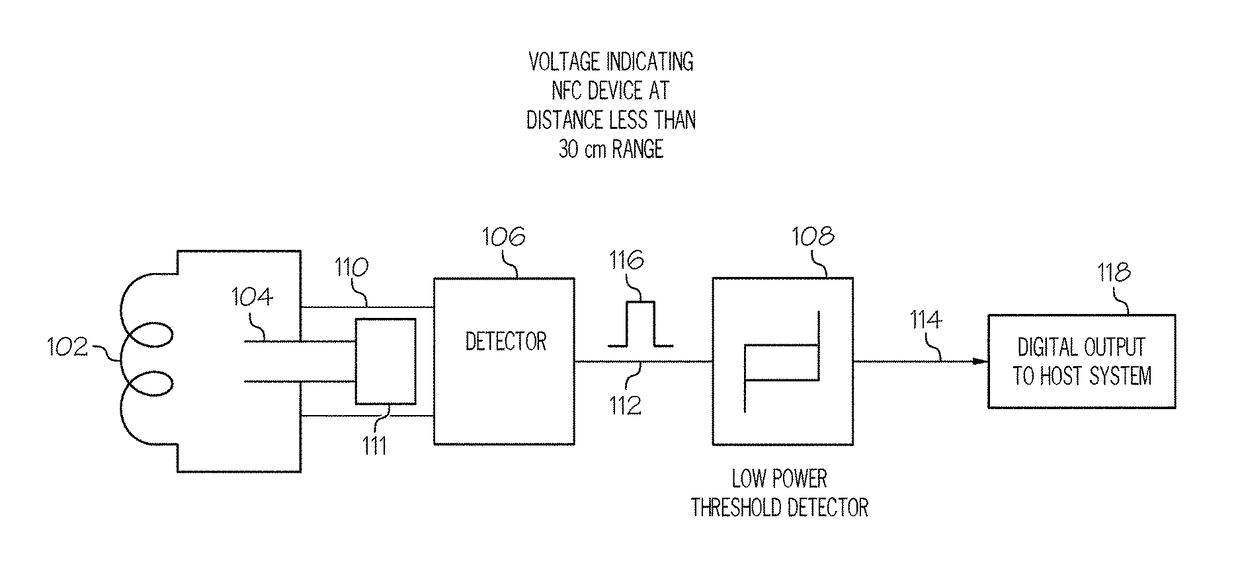

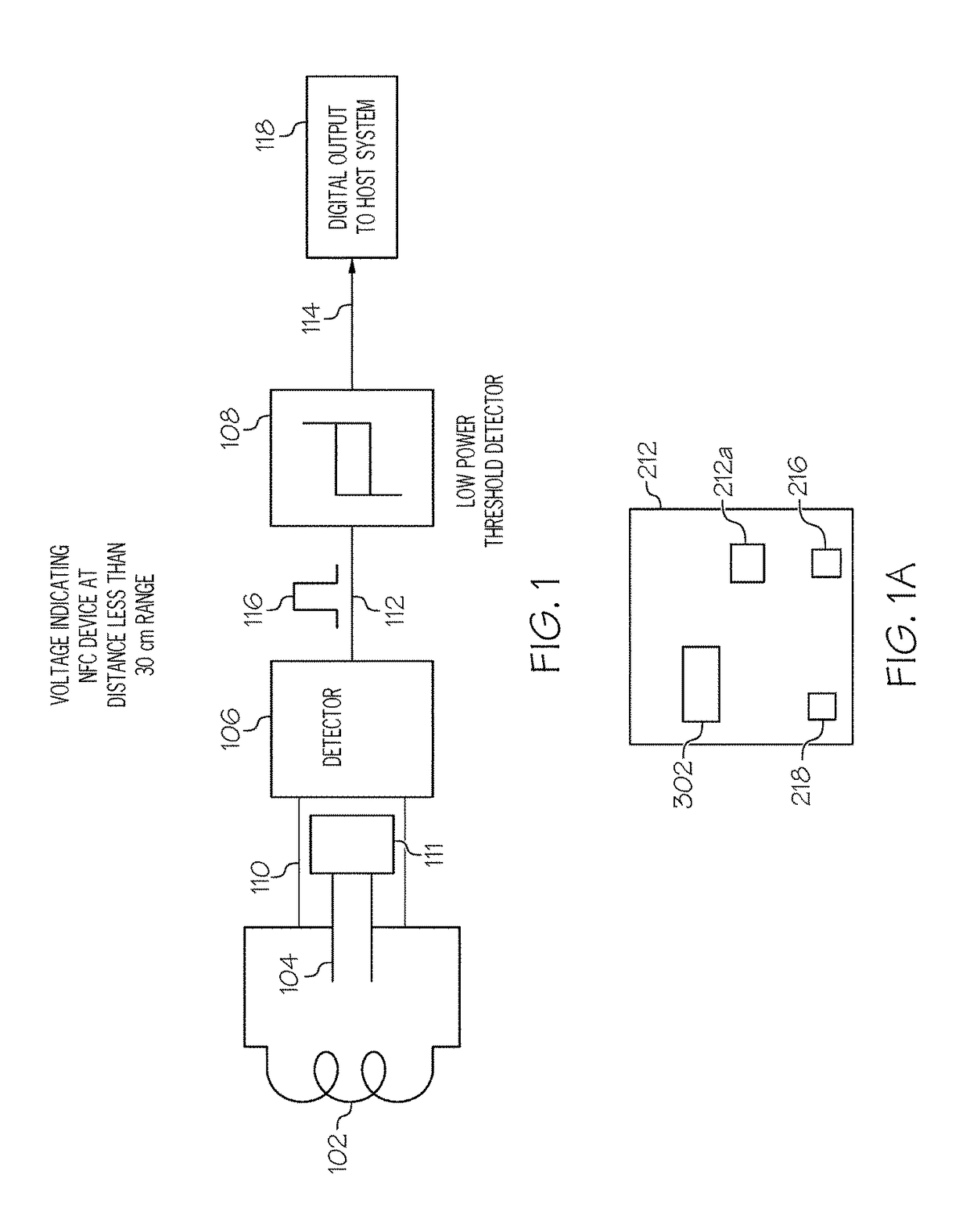

NFC tags with proximity detection

Owner:AVERY DENNISON CORP

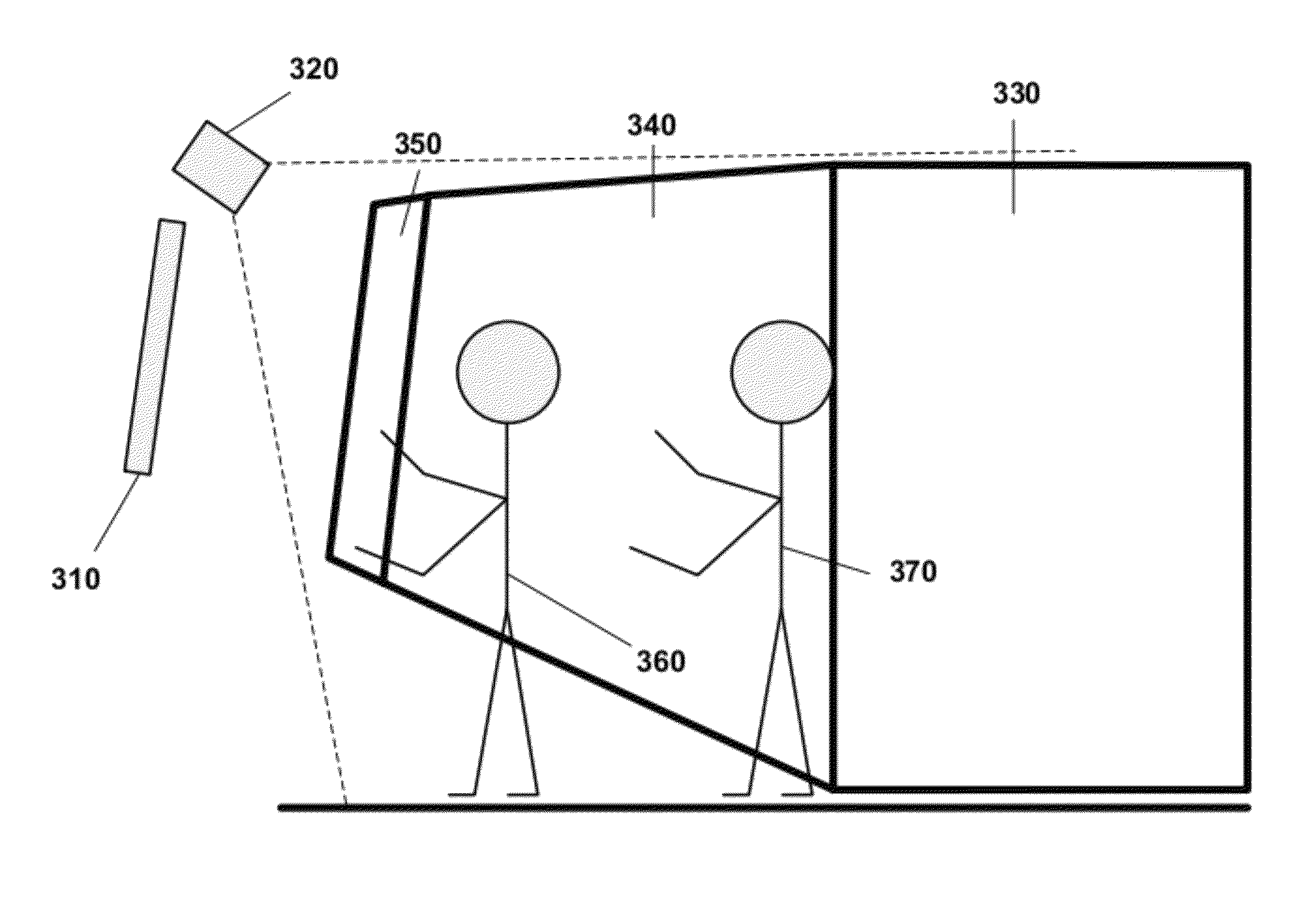

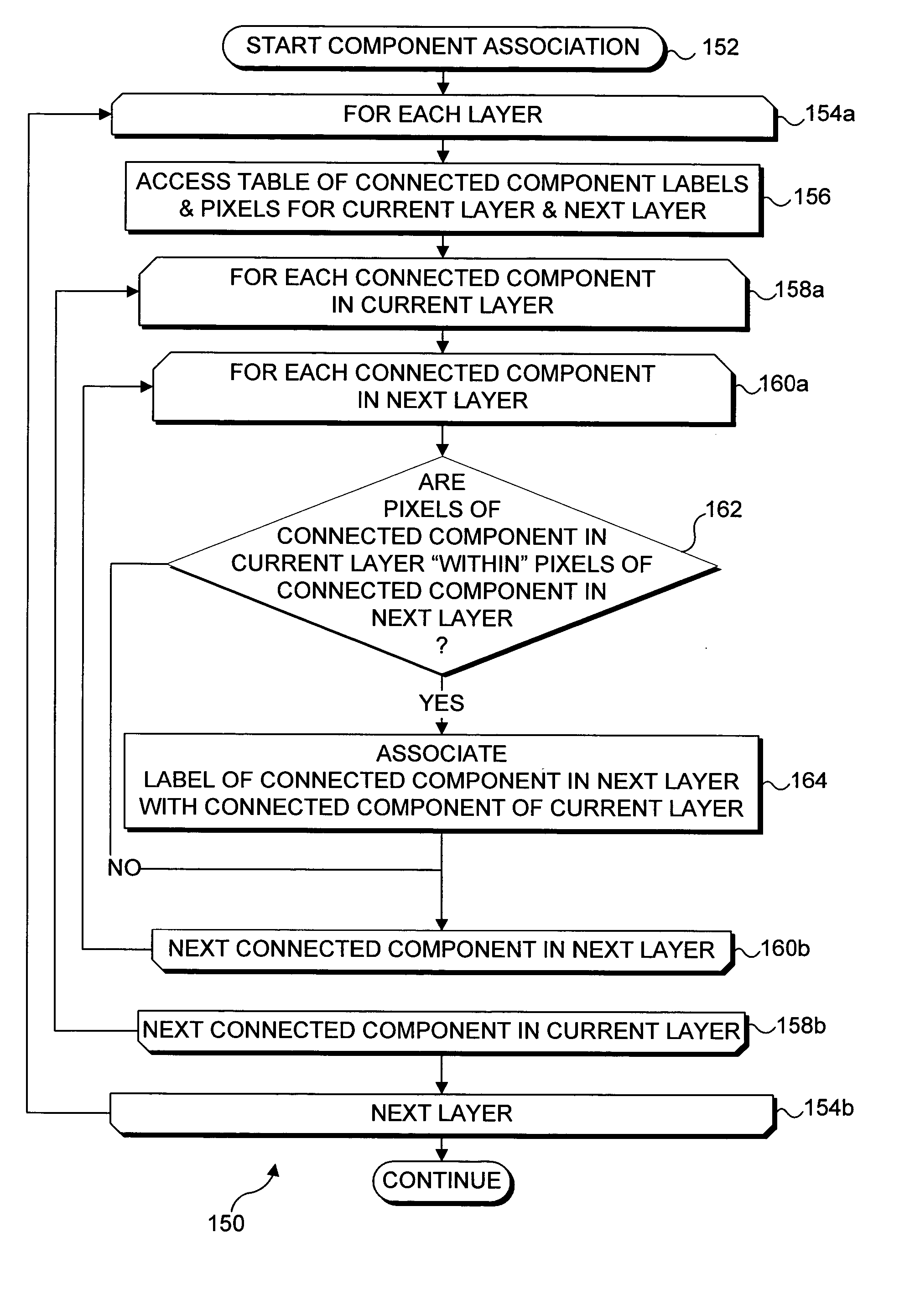

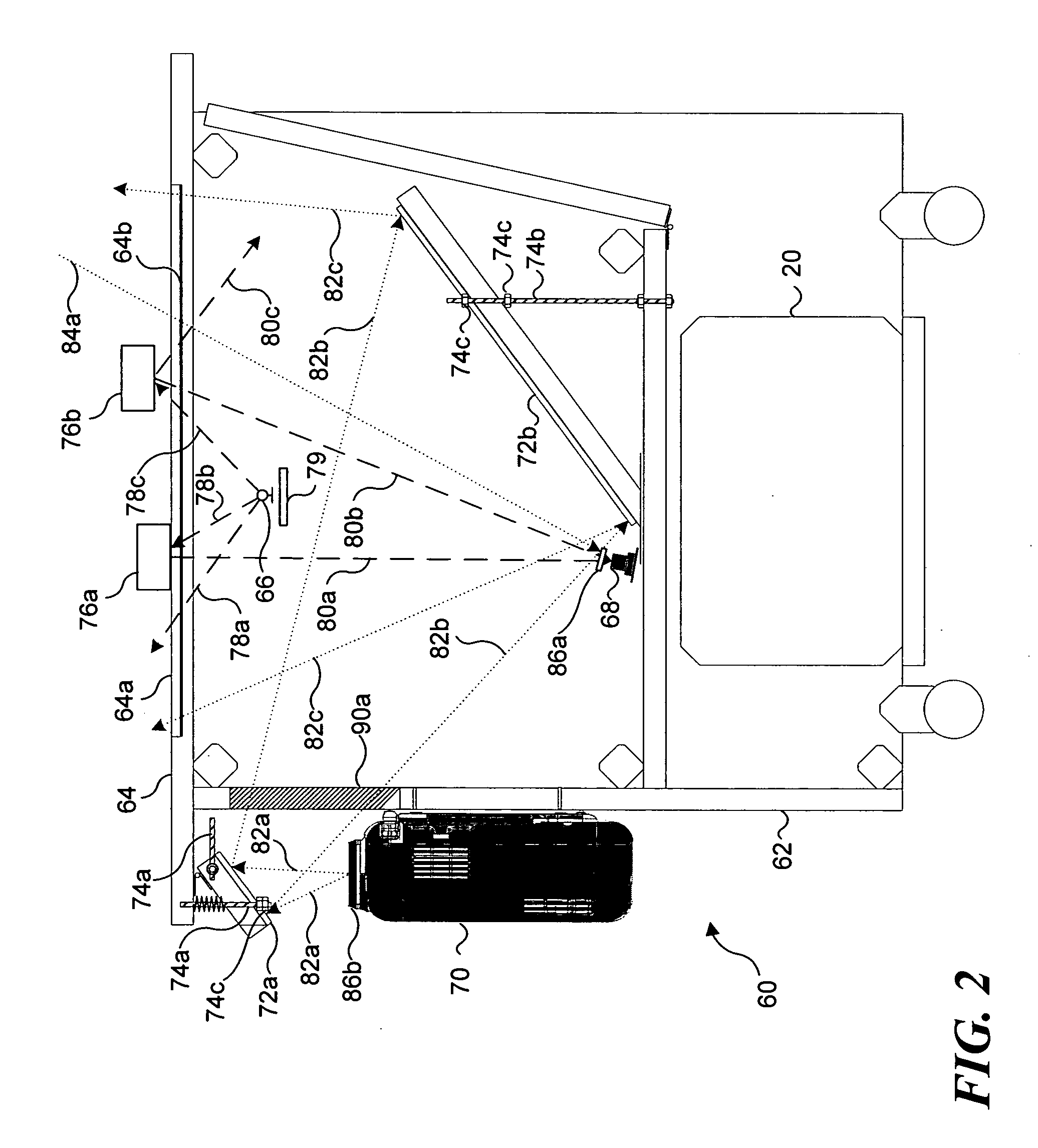

Determining connectedness and offset of 3D objects relative to an interactive surface

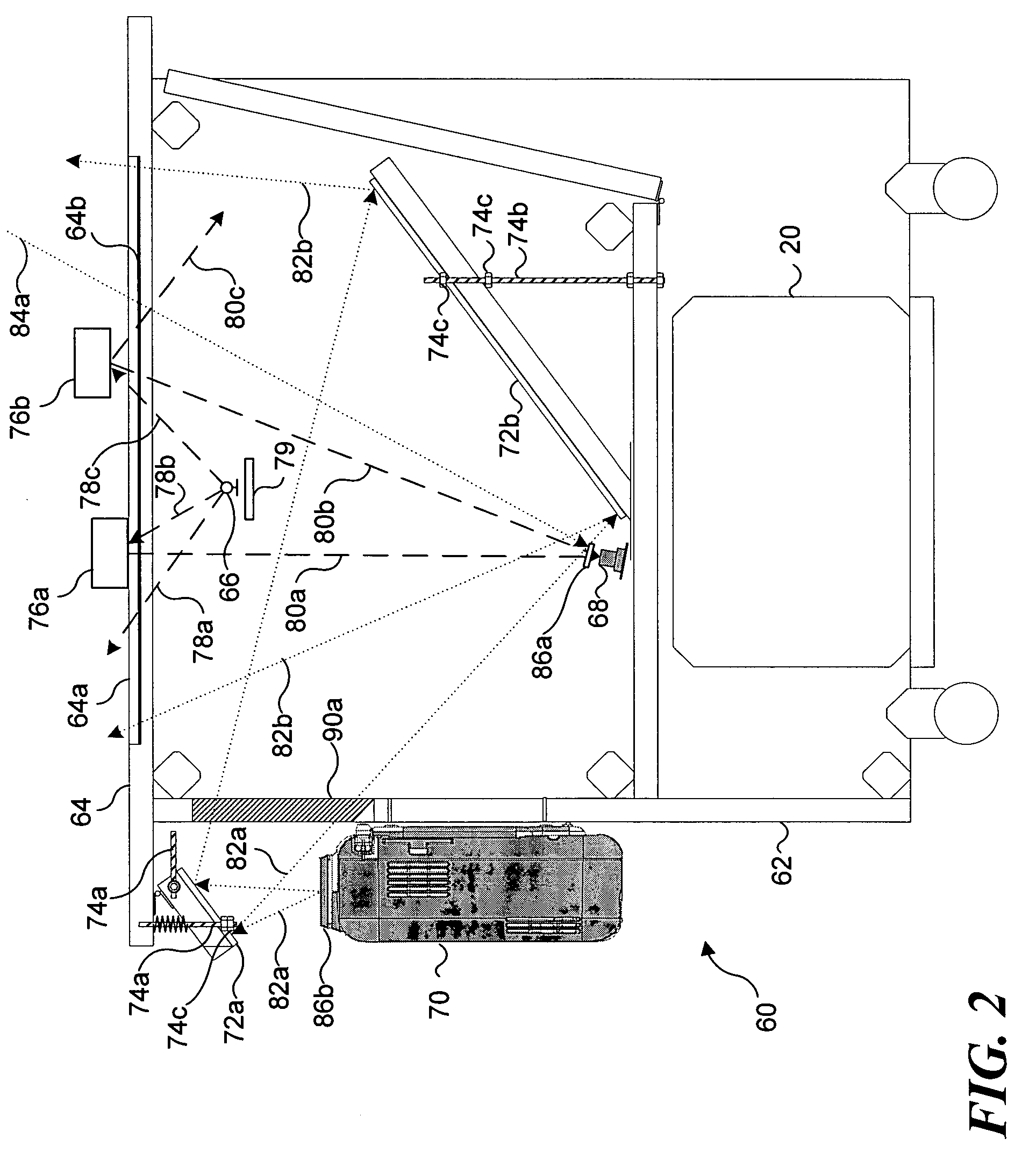

Inactive | US20050226505A1 | Lower latency | Character and pattern recognition | Input/output processes for data processing | Interactive displays | Video camera

A position of a three-dimensional (3D) object relative to a display surface of an interactive display system is detected based upon the intensity of infrared (IR) light reflected from the object and received by an IR video camera disposed under the display surface. As the object approaches the display surface, a “hover” connected component is defined by pixels in the image produced by the IR video camera that have an intensity greater than a predefined hover threshold and are immediately adjacent to another pixel also having an intensity greater than the hover threshold. When the object contacts the display surface, a “touch” connected component is defined by pixels in the image having an intensity greater than a touch threshold, which is greater than the hover threshold. Connected components determined for an object at different heights above the surface are associated with a common label if their bounding areas overlap.

Owner:MICROSOFT TECH LICENSING LLC
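
The hover and touch components described above can be sketched with a threshold plus flood fill over an intensity image. This is an illustration only: 4-connectivity is assumed as the meaning of "immediately adjacent", the threshold values are made up, and the overlap test is applied to the hover and touch components of a single frame.

```python
def connected_components(image, threshold):
    """Group 4-connected pixels whose intensity exceeds `threshold`.
    Returns one bounding box (min_row, min_col, max_row, max_col)
    per component."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] <= threshold:
                continue
            stack = [(r, c)]
            seen[r][c] = True
            r0, c0, r1, c1 = r, c, r, c
            while stack:
                y, x = stack.pop()
                r0, c0 = min(r0, y), min(c0, x)
                r1, c1 = max(r1, y), max(c1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and image[ny][nx] > threshold):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            boxes.append((r0, c0, r1, c1))
    return boxes

def overlaps(a, b):
    """True if two bounding boxes share at least one pixel."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]

HOVER_THRESHOLD, TOUCH_THRESHOLD = 50, 80   # assumed values
image = [
    [0,  0,  0,  0, 0],
    [0, 60, 60, 60, 0],
    [0, 60, 90, 60, 0],
    [0, 60, 60, 60, 0],
    [0,  0,  0,  0, 0],
]
hover = connected_components(image, HOVER_THRESHOLD)
touch = connected_components(image, TOUCH_THRESHOLD)
print(hover, touch, overlaps(hover[0], touch[0]))
```

Here the fingertip pixel (intensity 90) forms a touch component inside the larger hover component, and because their bounding boxes overlap the two would share a label.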

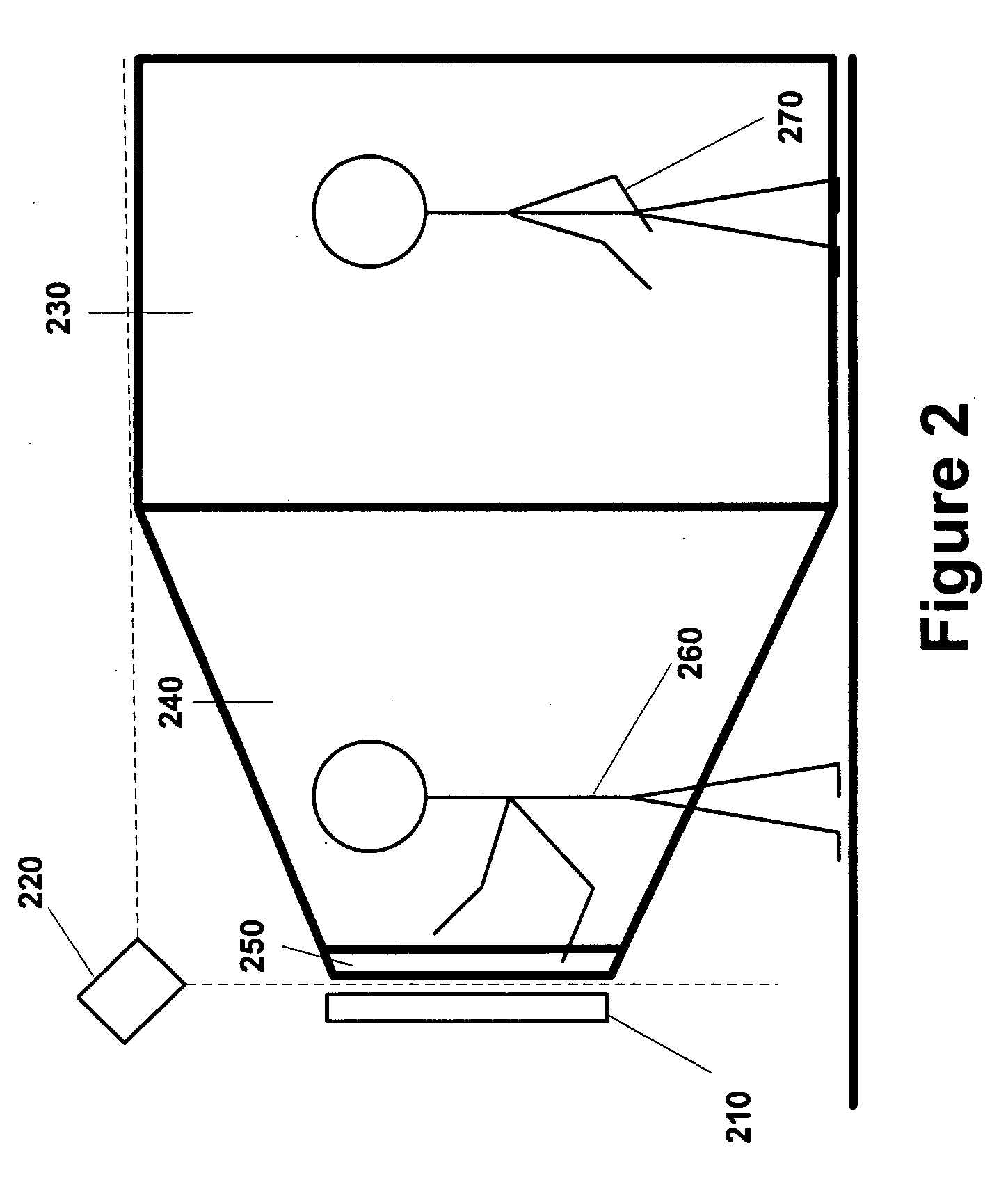

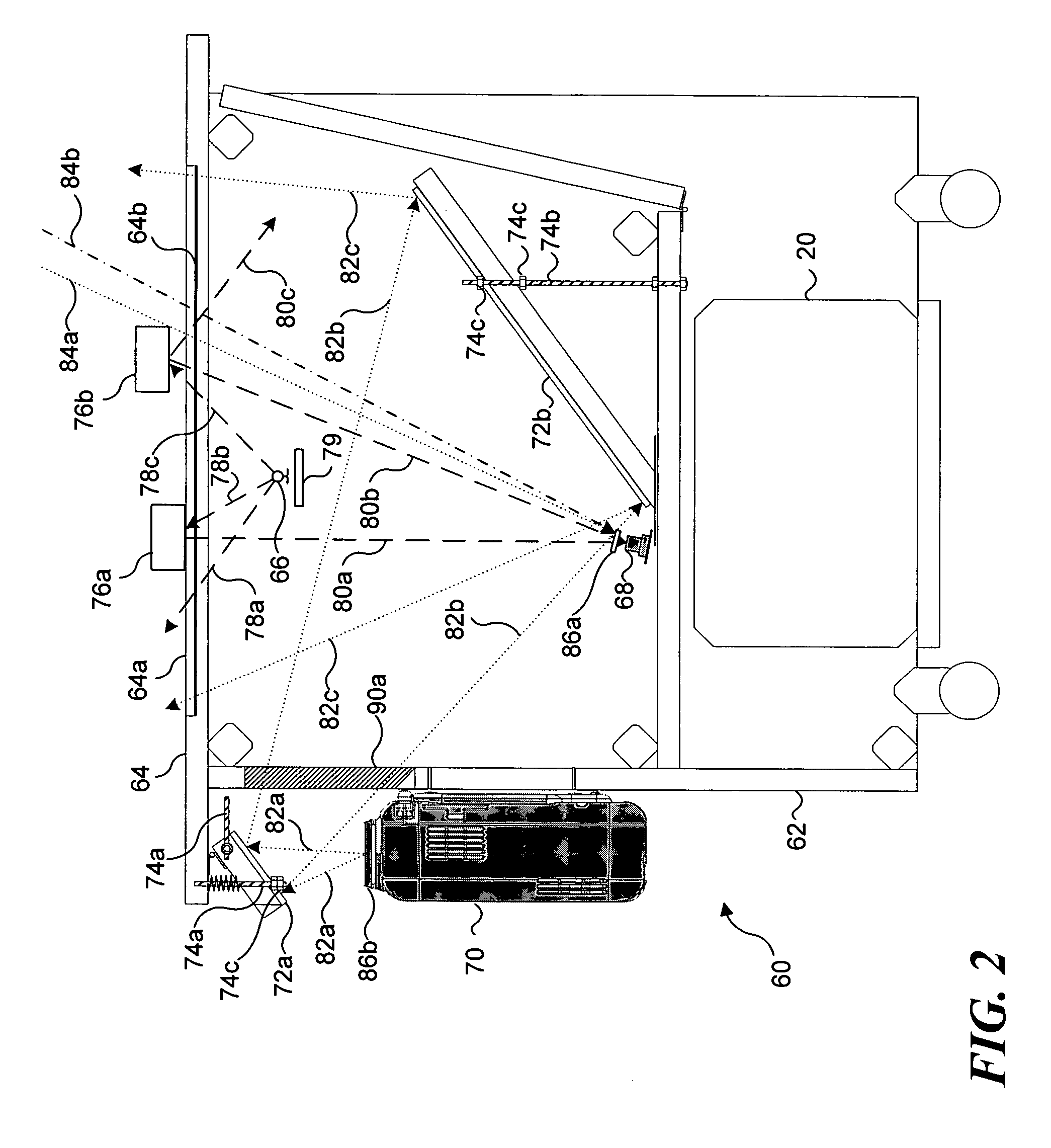

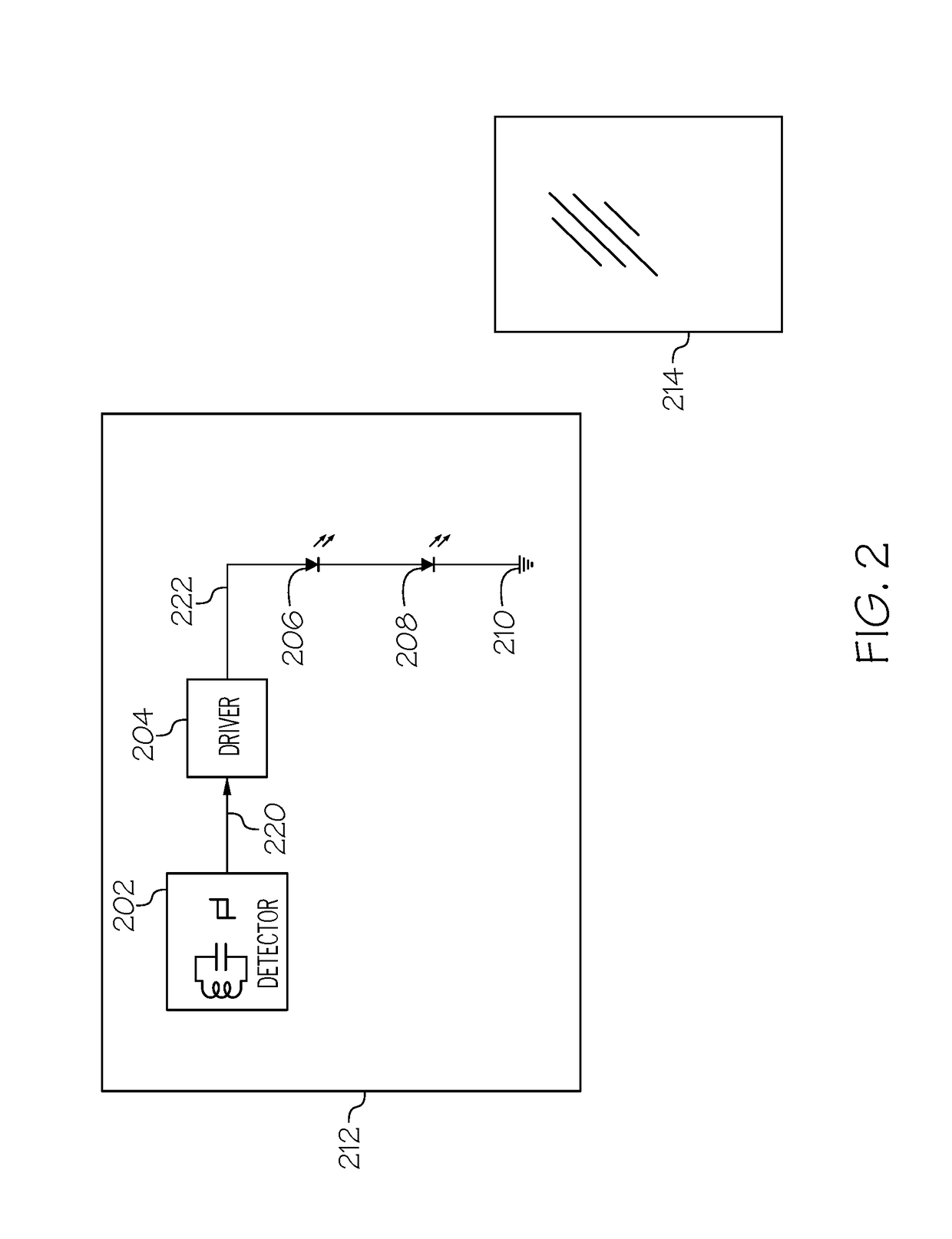

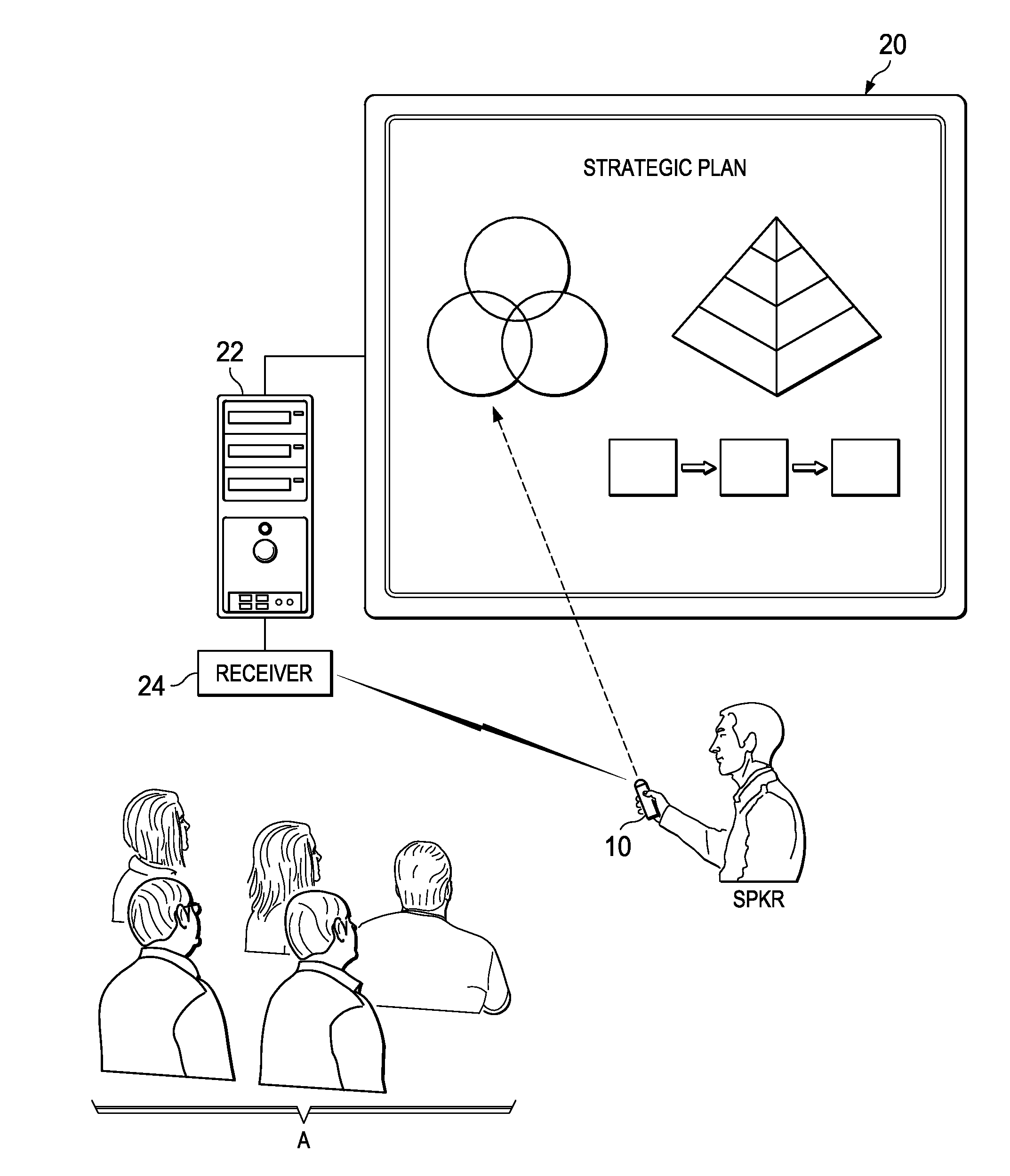

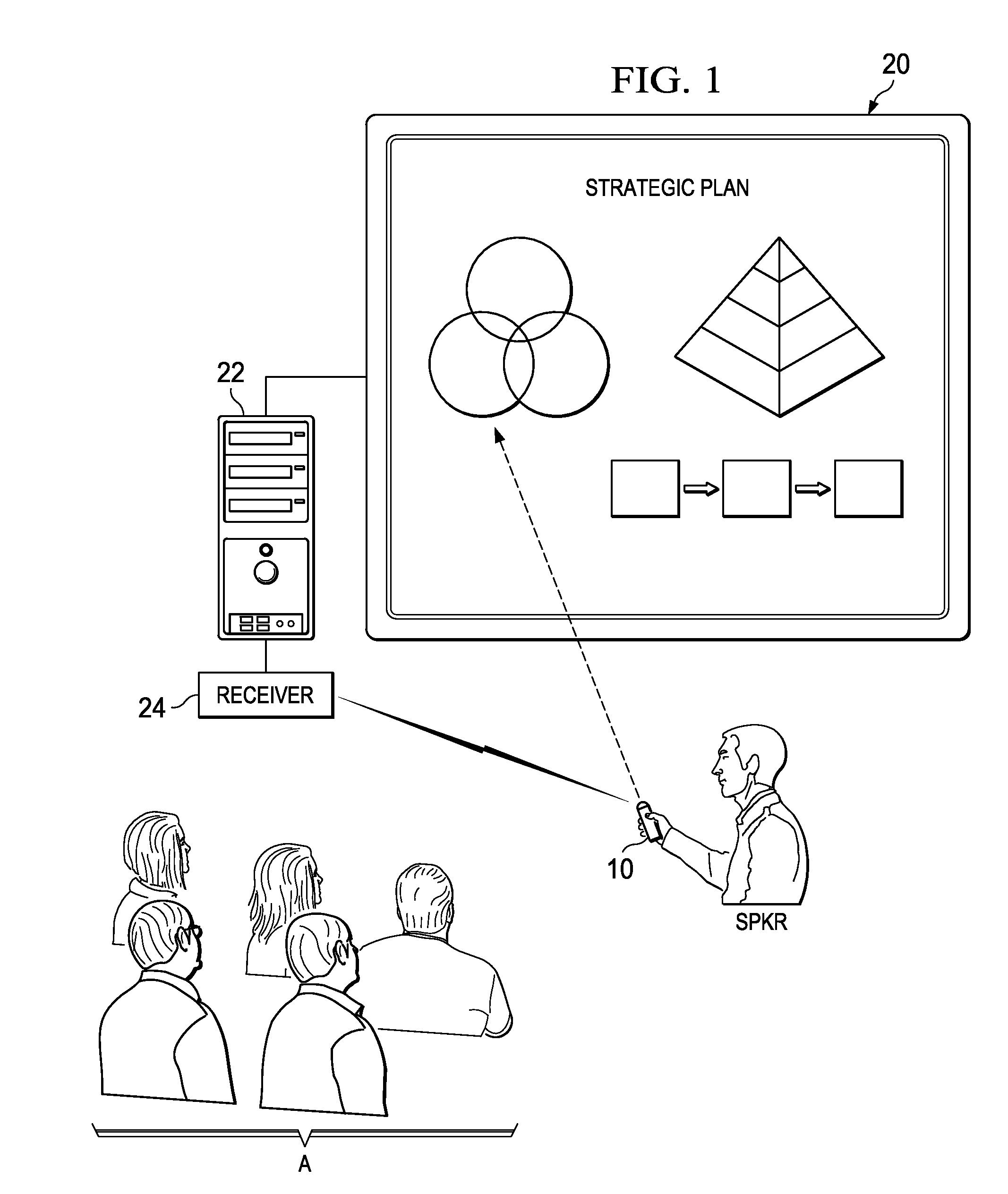

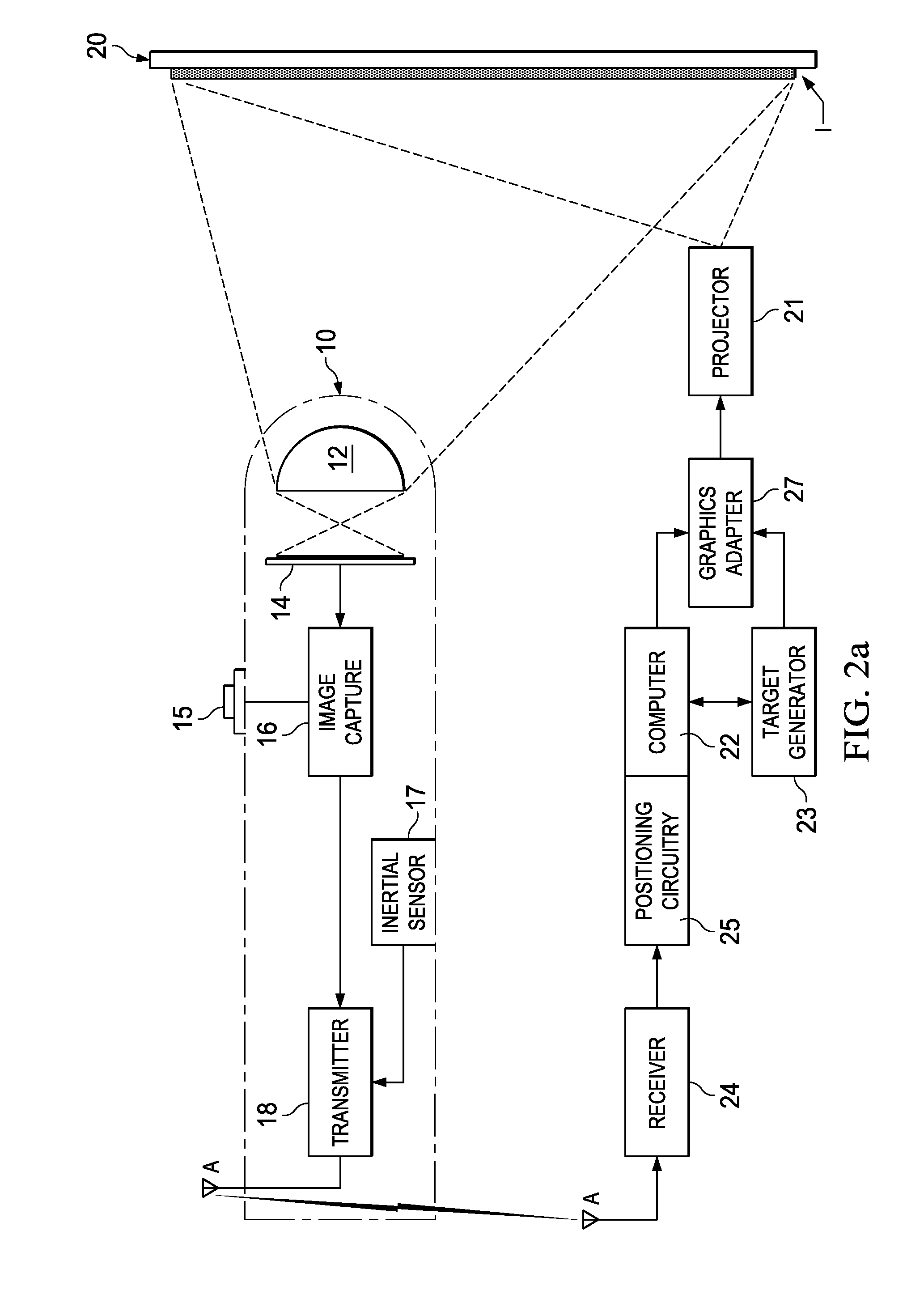

Interactive Display System

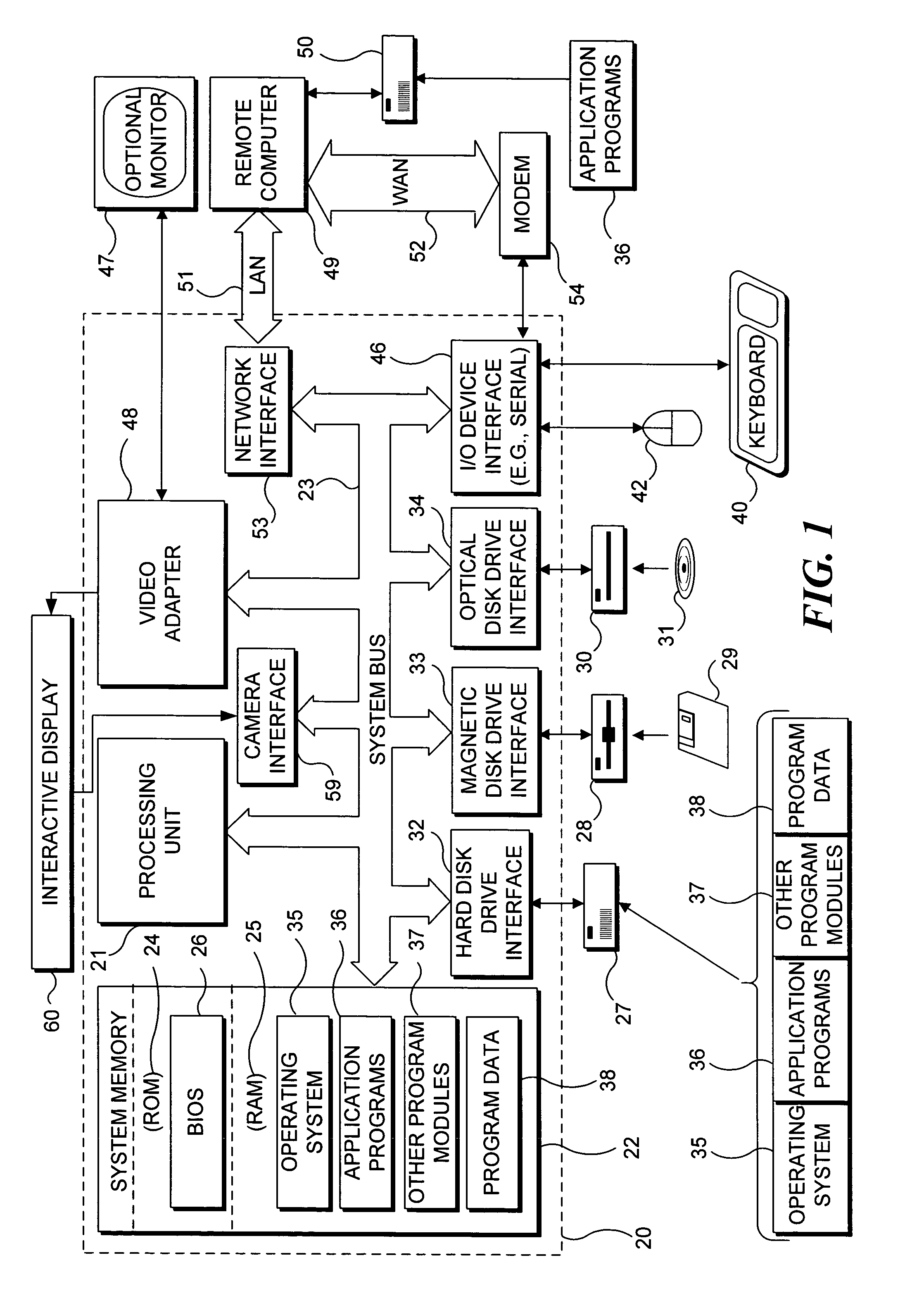

Inactive | US20110227827A1 | Reduce human perceptibility | Positioning can be rapidly and efficiently | Input/output for user-computer interaction | Cathode-ray tube indicators | Display device | Consecutive frame

An interactive display system including a wireless pointing device including a camera or other video capture system. The pointing device captures images displayed by the computer, including one or more human-imperceptible positioning targets. The positioning targets are presented as patterned modulation of the intensity (e.g., variation in pixel intensity) in a display frame of the visual payload, followed by the opposite modulation in a successive frame. At least two captured image frames are subtracted from one another to recover the positioning target in the captured visual data and to remove the displayed image payload. The location, size, and orientation of the recovered positioning target identify the aiming point of the remote pointing device relative to the display. Another embodiment uses temporal sequencing of positioning targets (either human-perceptible or human-imperceptible) to position the pointing device.

Owner:INTERPHASE CORP
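
The frame-subtraction step in this abstract has a simple arithmetic core: if one frame shows payload plus target and the next shows payload minus target, their difference cancels the payload. The sketch below demonstrates this on tiny intensity grids; it assumes a static payload, perfectly aligned captures, and no clipping, none of which the abstract guarantees.

```python
def modulate(payload, target, sign):
    """Displayed frame: payload with the target added (+1) or
    subtracted (-1) as a small intensity modulation."""
    return [[p + sign * t for p, t in zip(p_row, t_row)]
            for p_row, t_row in zip(payload, target)]

def recover_target(frame_a, frame_b):
    """Half the difference of two consecutive captured frames.
    The payload cancels, leaving only the positioning target."""
    return [[(a - b) / 2 for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(frame_a, frame_b)]

payload = [[100, 120], [140, 160]]   # visible image content
target = [[0, 2], [2, 0]]            # faint positioning pattern

frame_a = modulate(payload, target, +1)
frame_b = modulate(payload, target, -1)
print(recover_target(frame_a, frame_b))  # [[0.0, 2.0], [2.0, 0.0]]
```

Because a viewer's eye averages the two frames, the modulation averages out to the payload alone, which is consistent with the abstract's description of the targets as human-imperceptible.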

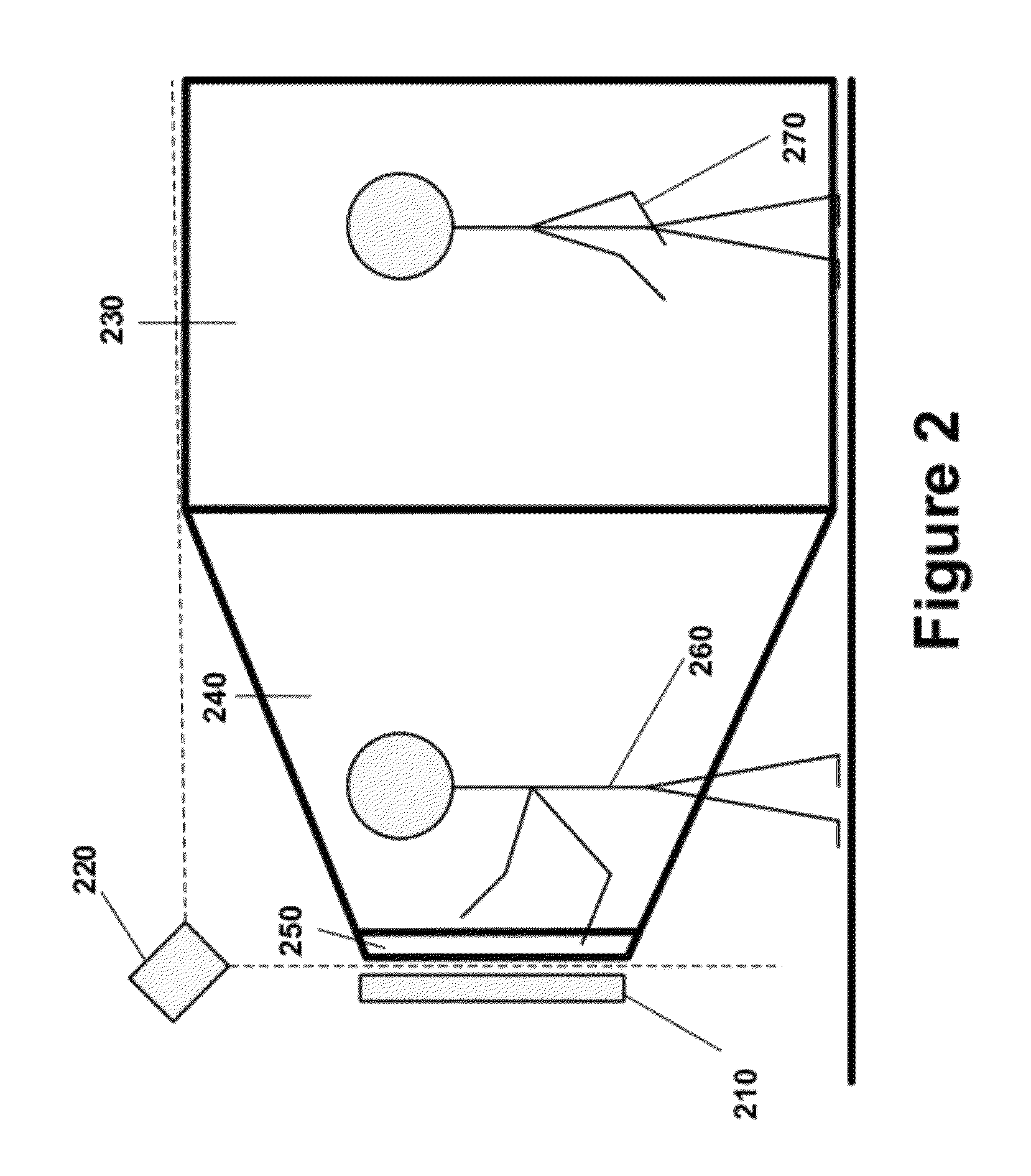

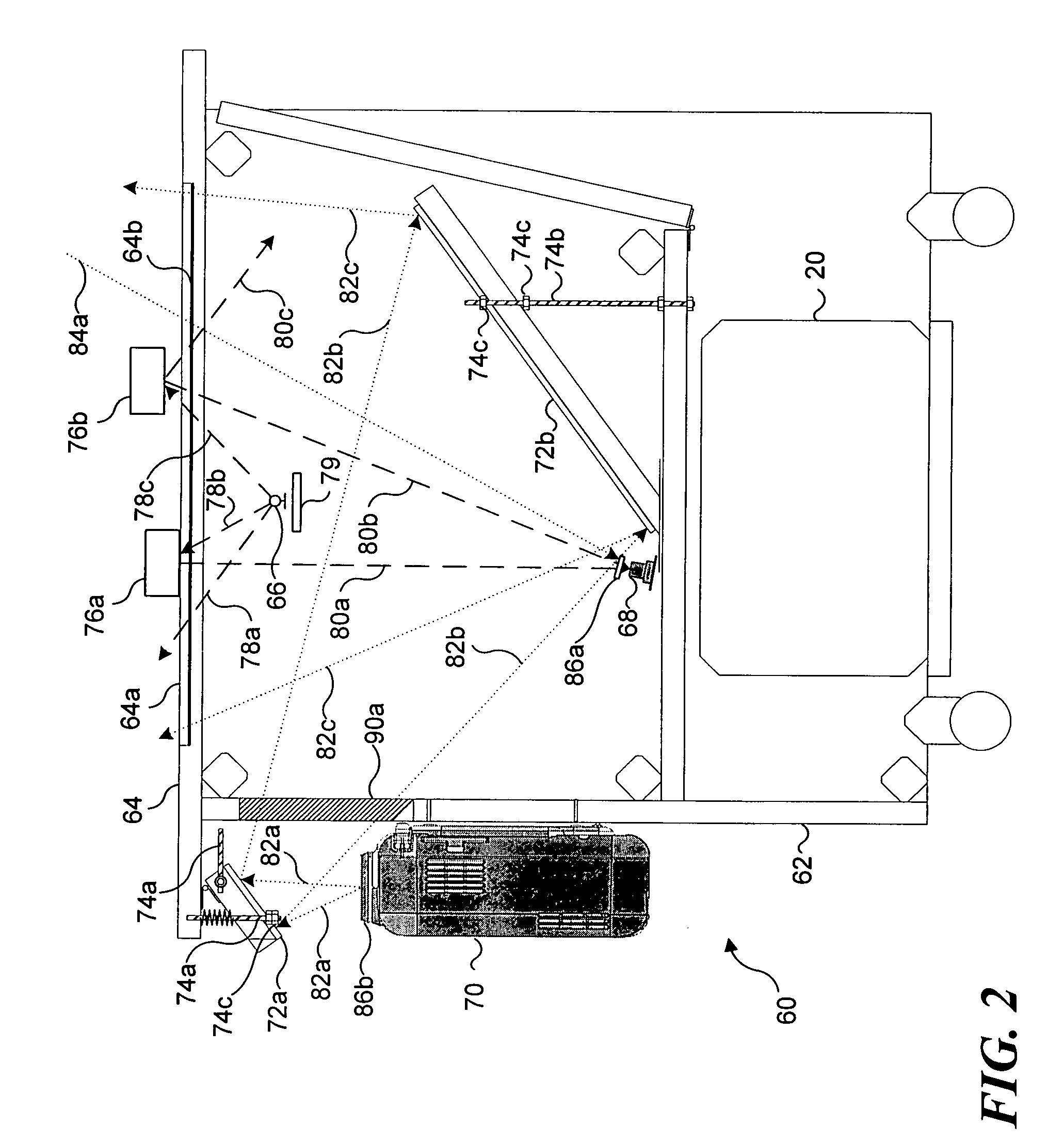

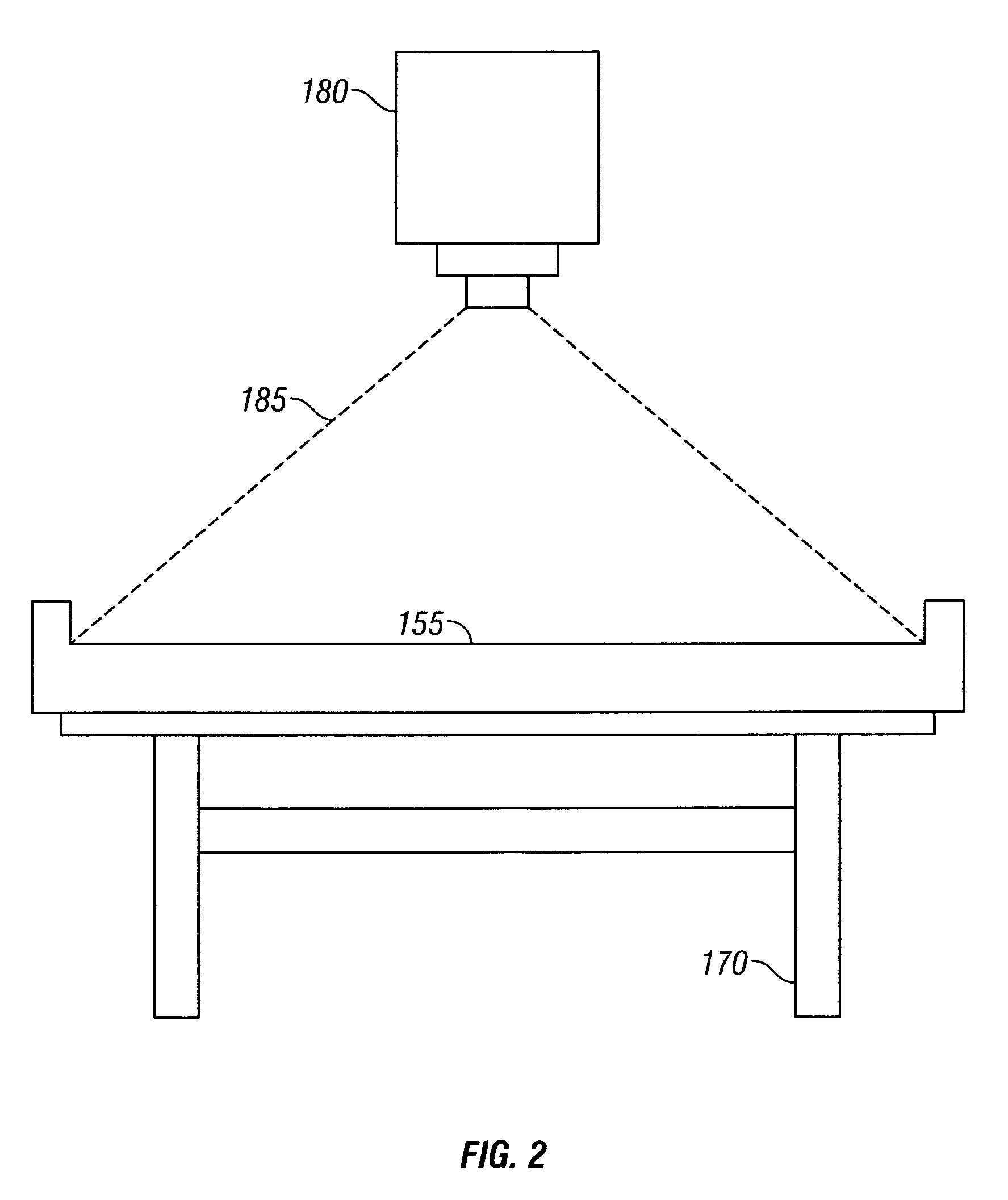

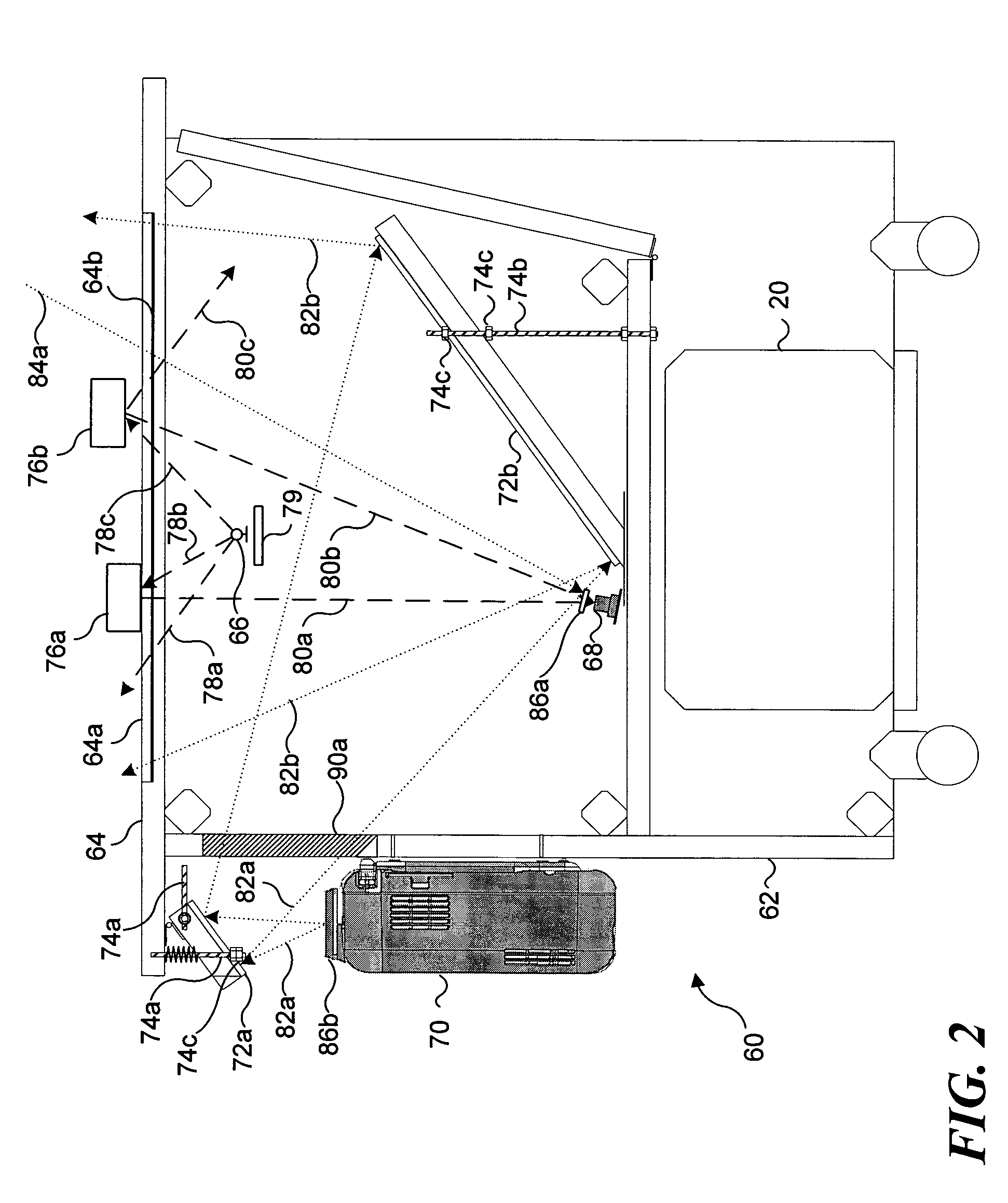

Touch detecting interactive display

The invention provides an interactive display that is controlled by user gestures identified on a touch detecting display surface. In the preferred embodiment of the invention, imagery is projected onto a horizontal projection surface from a projector located above the projection surface. The locations where a user contacts the projection surface are detected using a set of infrared emitters and receivers arrayed around the perimeter of the projection surface. For each contact location, a computer software application stores a history of contact position information and, from the position history, determines a velocity for each contact location. Based upon the position history and the velocity information, gestures are identified. The identified gestures are associated with display commands that are executed to update the displayed imagery accordingly. Thus, the invention enables users to control the display through direct physical interaction with the imagery.

Owner:QUALCOMM INC
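
As an illustration of identifying gestures from position history and velocity and then mapping them to display commands, the sketch below classifies a two-contact gesture. The classification rule (velocities pointing the same way mean a pan, otherwise a zoom), the `min_speed` cutoff, and the `COMMANDS` table are assumptions for the example, not details from the patent.

```python
import math

def contact_velocity(history):
    """Average velocity over a contact's history [(t, x, y), ...]."""
    (t0, x0, y0), (t1, x1, y1) = history[0], history[-1]
    dt = t1 - t0
    if dt <= 0:
        return (0.0, 0.0)
    return ((x1 - x0) / dt, (y1 - y0) / dt)

def separation(sample_a, sample_b):
    """Distance between two (t, x, y) samples."""
    return math.hypot(sample_a[1] - sample_b[1], sample_a[2] - sample_b[2])

def identify_gesture(hist_a, hist_b, min_speed=20.0):
    """Classify a two-contact gesture as 'pan', 'zoom in', 'zoom out',
    or None when both contacts are nearly stationary."""
    va, vb = contact_velocity(hist_a), contact_velocity(hist_b)
    if math.hypot(*va) < min_speed and math.hypot(*vb) < min_speed:
        return None
    if va[0] * vb[0] + va[1] * vb[1] > 0:
        return "pan"  # both contacts move in roughly the same direction
    start = separation(hist_a[0], hist_b[0])
    end = separation(hist_a[-1], hist_b[-1])
    return "zoom in" if end > start else "zoom out"

# Hypothetical display commands associated with each gesture.
COMMANDS = {"pan": "translate view", "zoom in": "scale up",
            "zoom out": "scale down"}

spread = identify_gesture([(0, 100, 100), (1, 60, 100)],
                          [(0, 200, 100), (1, 240, 100)])
print(spread, "->", COMMANDS[spread])  # zoom in -> scale up
```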

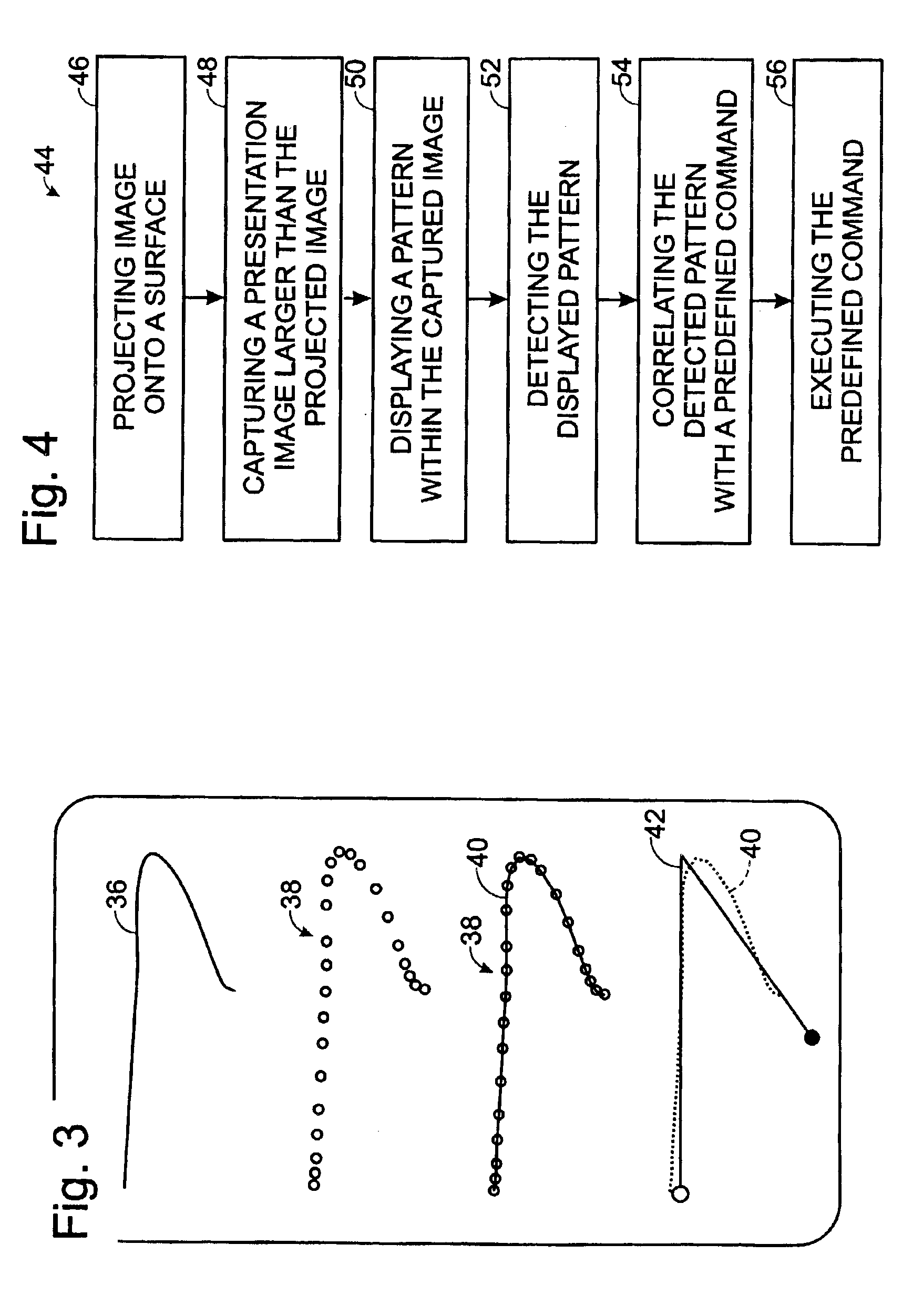

Interactive display device

Inactive | US6840627B2 | Input/output for user-computer interaction | Television system details | Display device | Interactive displays

A display device including a light engine configured to project an image onto a display surface, an image sensor configured to capture a presentation image larger than the projected image, and a processor, coupled to the image sensor, that is configured to modify the projected image based on the captured image.

Owner:HEWLETT PACKARD DEV CO LP

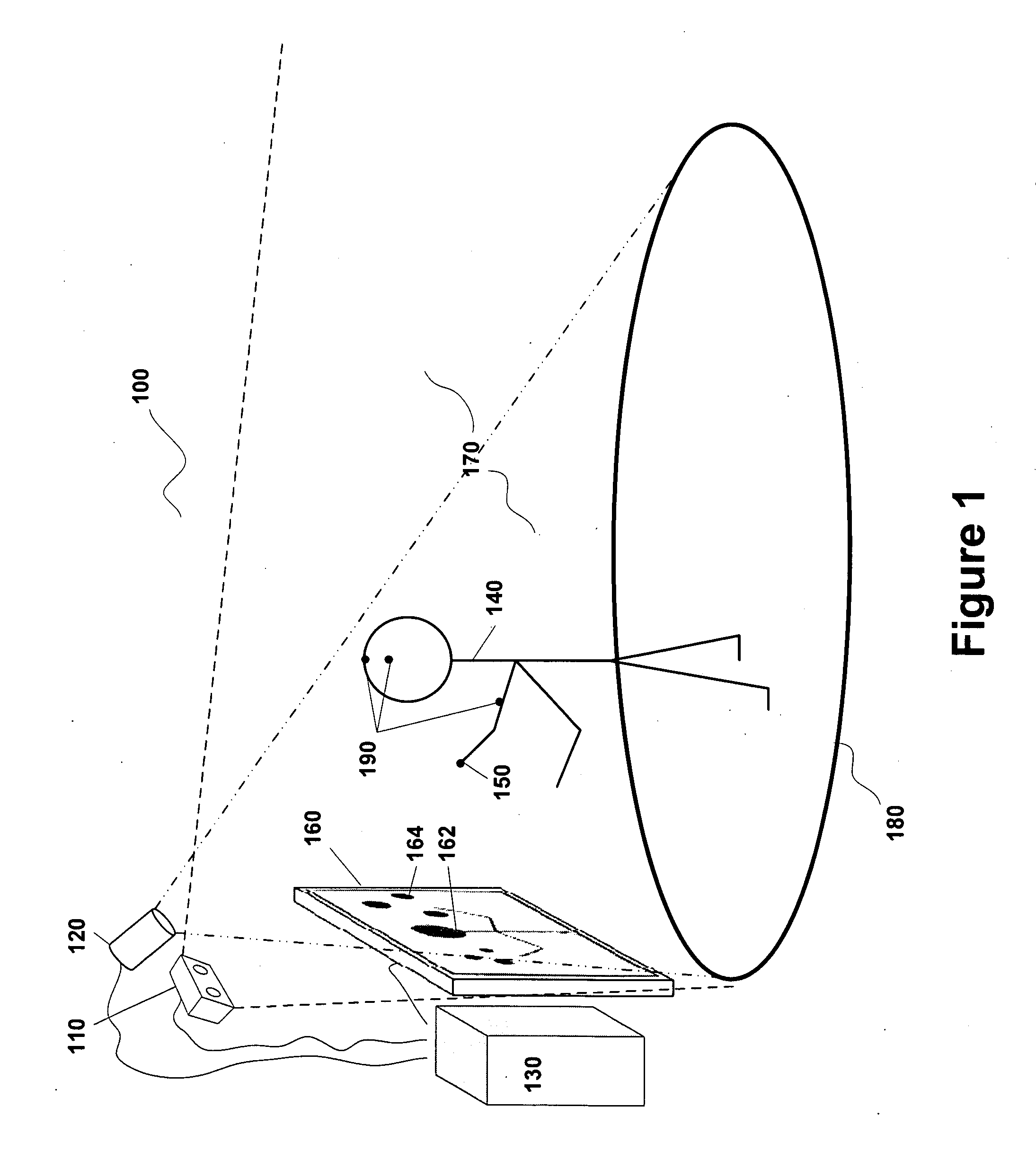

System and method for providing an interactive display

The present invention is directed to a method and system for organizing and displaying items for a user interface. The method includes providing a plurality of three-dimensional items, each three-dimensional item representing user information, and arranging the three-dimensional items around a perimeter, wherein the perimeter forms a portion of a closed area. The closed area may be an ellipse, circle, or other geometric shape. The three-dimensional items include at least one item in a focus position. Typically the three-dimensional items will also include at least one item in a peripheral position and may also include background items. The items are capable of rotating around the perimeter. Additionally, the method and system may scale the items in a manner appropriate to a position along the perimeter. The method and system additionally provide for rotation of the items around the perimeter upon receiving a user request.

Owner:MICROSOFT TECH LICENSING LLC
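
The arrangement described above (items placed around an elliptical perimeter, scaled by position, and rotated on request) can be sketched with a little trigonometry. The radii, the choice of the bottom of the ellipse as the focus position, and the linear scale rule are all assumptions made for this example.

```python
import math

def layout(n_items, focus_index, rx=300.0, ry=80.0, min_scale=0.4):
    """Place n_items evenly around an ellipse. The focus item sits at
    the front (angle pi/2) at full scale; items shrink toward the back.
    Returns a list of (x, y, scale) tuples, one per item."""
    placed = []
    for i in range(n_items):
        angle = math.pi / 2 + 2 * math.pi * (i - focus_index) / n_items
        x = rx * math.cos(angle)
        y = ry * math.sin(angle)
        depth = (math.sin(angle) + 1) / 2        # 1 at front, 0 at back
        scale = min_scale + (1 - min_scale) * depth
        placed.append((x, y, scale))
    return placed

# Four items with item 0 in focus, then "rotate" so item 1 takes focus.
for focus in (0, 1):
    print([round(s, 2) for _, _, s in layout(4, focus)])
```

Rotation needs no separate code path: changing `focus_index` (or animating it through fractional values) moves every item along the perimeter and rescales it for its new position.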

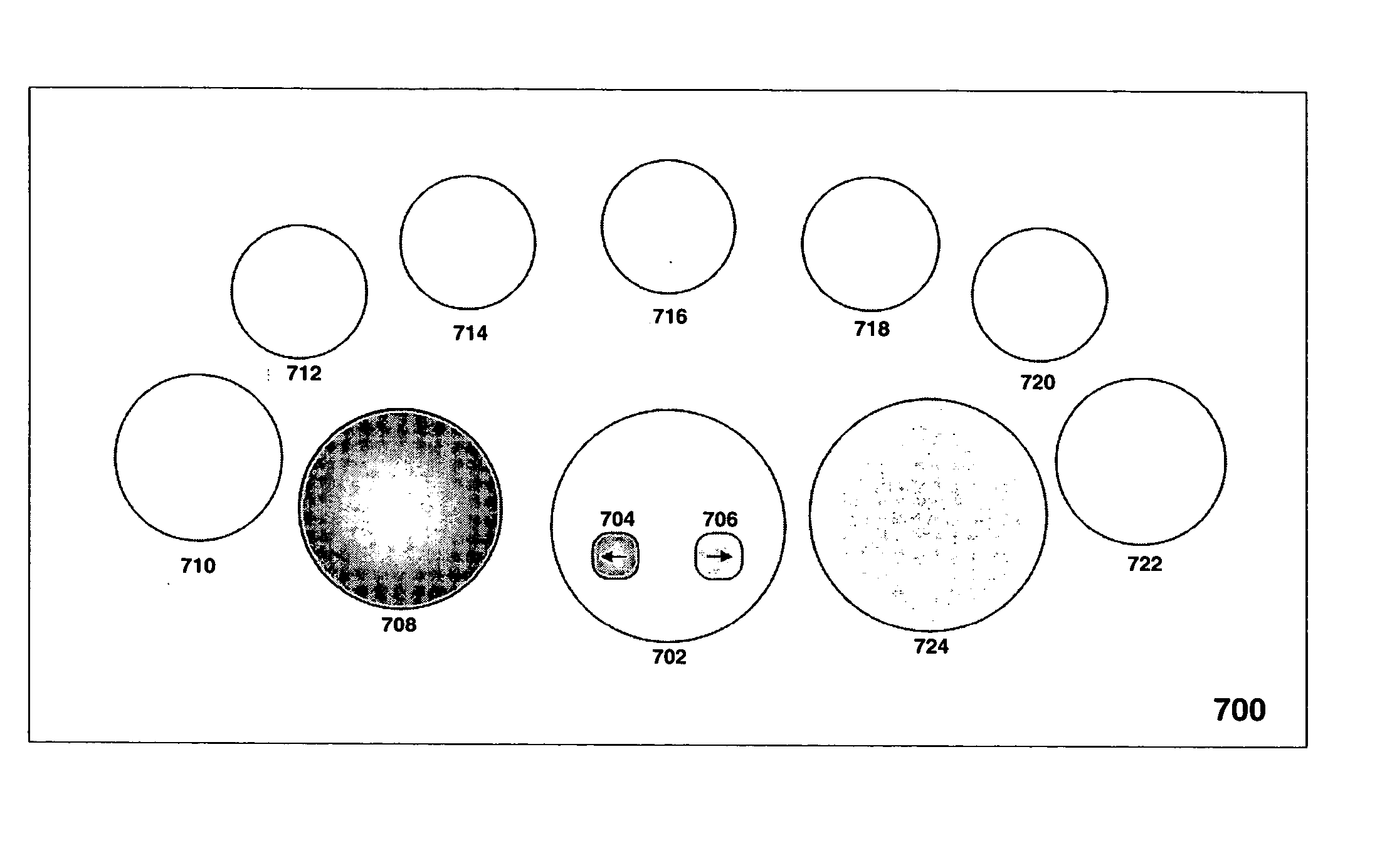

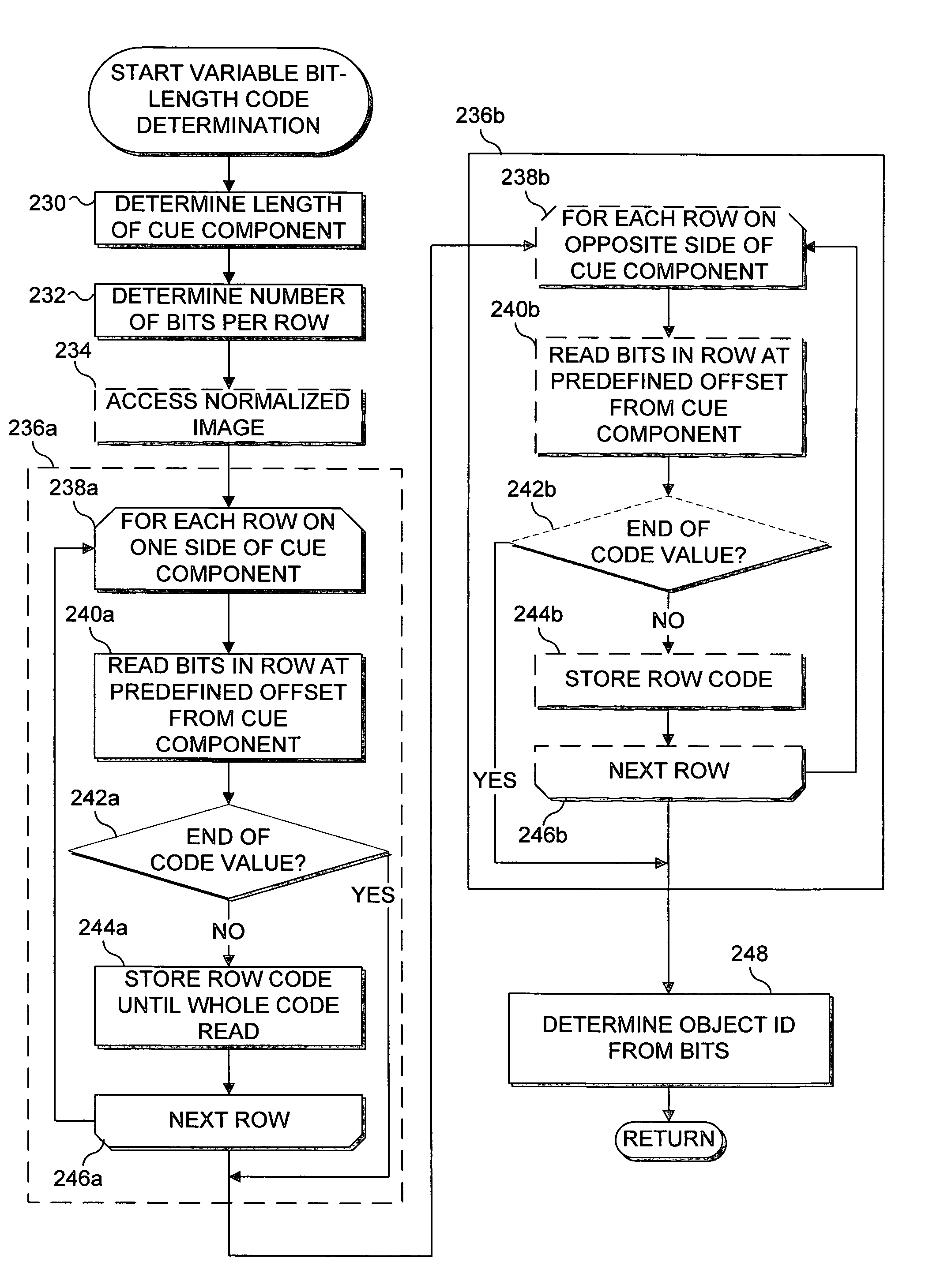

Identification of object on interactive display surface by identifying coded pattern

Inactive | US7204428B2 | Noise minimization | Character and pattern recognition | Record carriers used with machines | Display device | Interactive displays

A coded pattern applied to an object is identified when the object is placed on a display surface of an interactive display. The coded pattern is detected in an image of the display surface produced in response to reflected infrared (IR) light received from the coded pattern by an IR video camera disposed on an opposite side of the display surface from the object. The coded pattern can be either a circular, linear, matrix, variable bit length matrix, multi-level matrix, black / white (binary), or gray scale pattern. The coded pattern serves as an identifier of the object and includes a cue component and a code portion disposed in a predefined location relative to the cue component. A border region encompasses the cue component and the code portion and masks undesired noise that might interfere with decoding the code portion.

Owner:MICROSOFT TECH LICENSING LLC

Using clear-coded, see-through objects to manipulate virtual objects

Active | US20060092170A1 | Improve usability | Cathode-ray tube indicators | Video games | Computer vision | Interactive displays

An object placed on an interactive display surface is detected and its position and orientation are determined in response to IR light that is reflected from an encoded marking on the object. Upon detecting the object on an interactive display surface, a software program produces a virtual entity or image visible through the object to perform a predefined function. For example, the object may appear to magnify text visible through the object, or to translate a word or phrase from one language to another, so that the translated word or phrase is visible through the object. When the object is moved, the virtual entity or image that is visible through the object may move with it, or can control the function being performed. A plurality of such objects can each display a portion of an image, and when correctly positioned, together will display the entire image, like a jigsaw puzzle.

Owner:ZHIGU HLDG

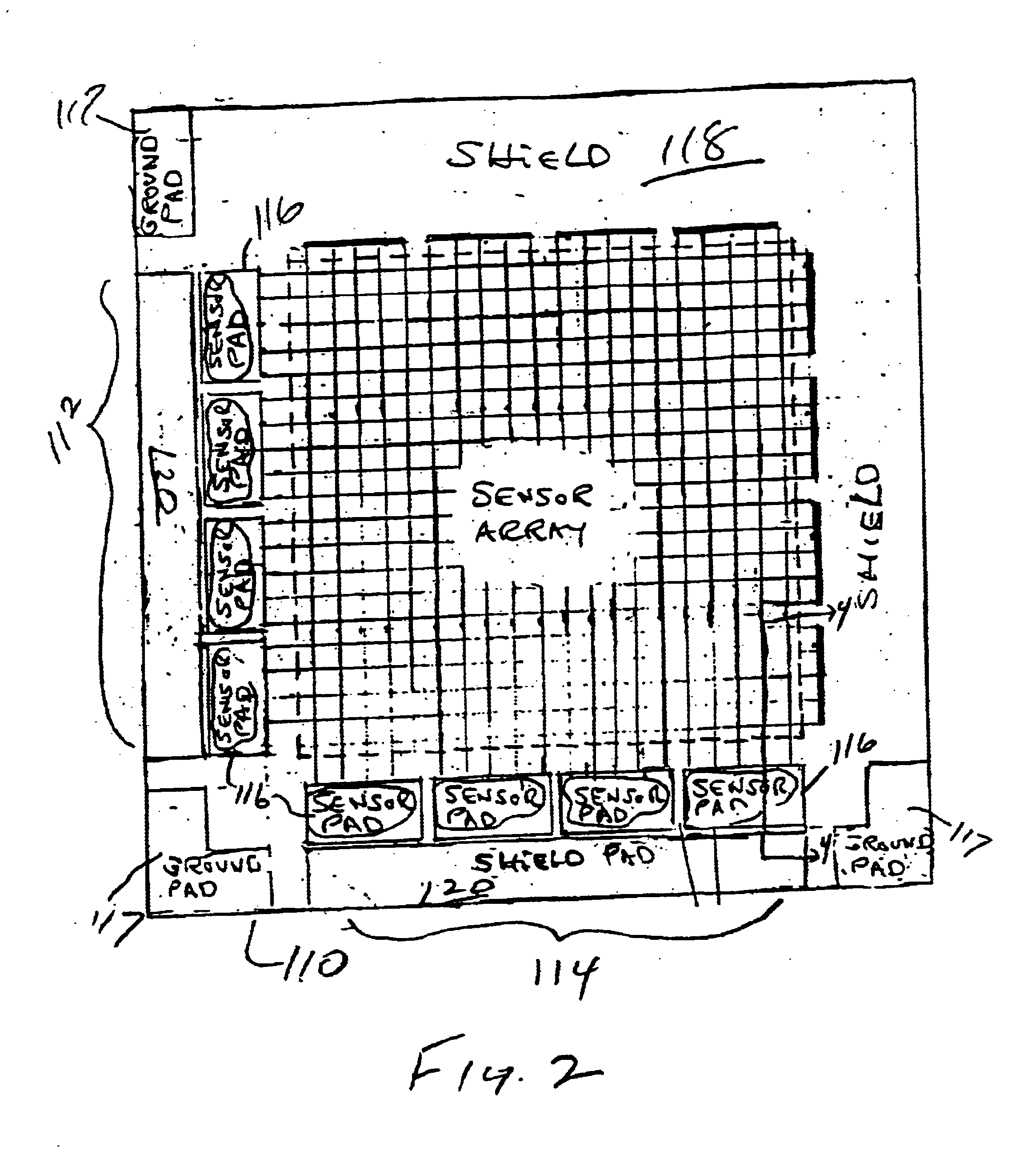

Three dimensional interactive display

Inactive | US6847354B2 | Improve manual input to displayed response coordination | Increase input | Input/output for user-computer interaction | Cathode-ray tube indicators | Audio power amplifier | Display device

A three-dimensional (3-D) interactive display and method of forming the same, includes a transparent capaciflector (TC) camera formed on a transparent shield layer on the screen surface. A first dielectric layer is formed on the shield layer. A first wire layer is formed on the first dielectric layer. A second dielectric layer is formed on the first wire layer. A second wire layer is formed on the second dielectric layer. Wires on the first wire layer and second wire layer are grouped into groups of parallel wires with a turnaround at one end of each group and a sensor pad at the opposite end. An operational amplifier is connected to each of the sensor pads and the shield pad biases the pads and receives a signal from connected sensor pads in response to intrusion of a probe. The signal is proportional to probe location with respect to the monitor screen.

Owner:NASA

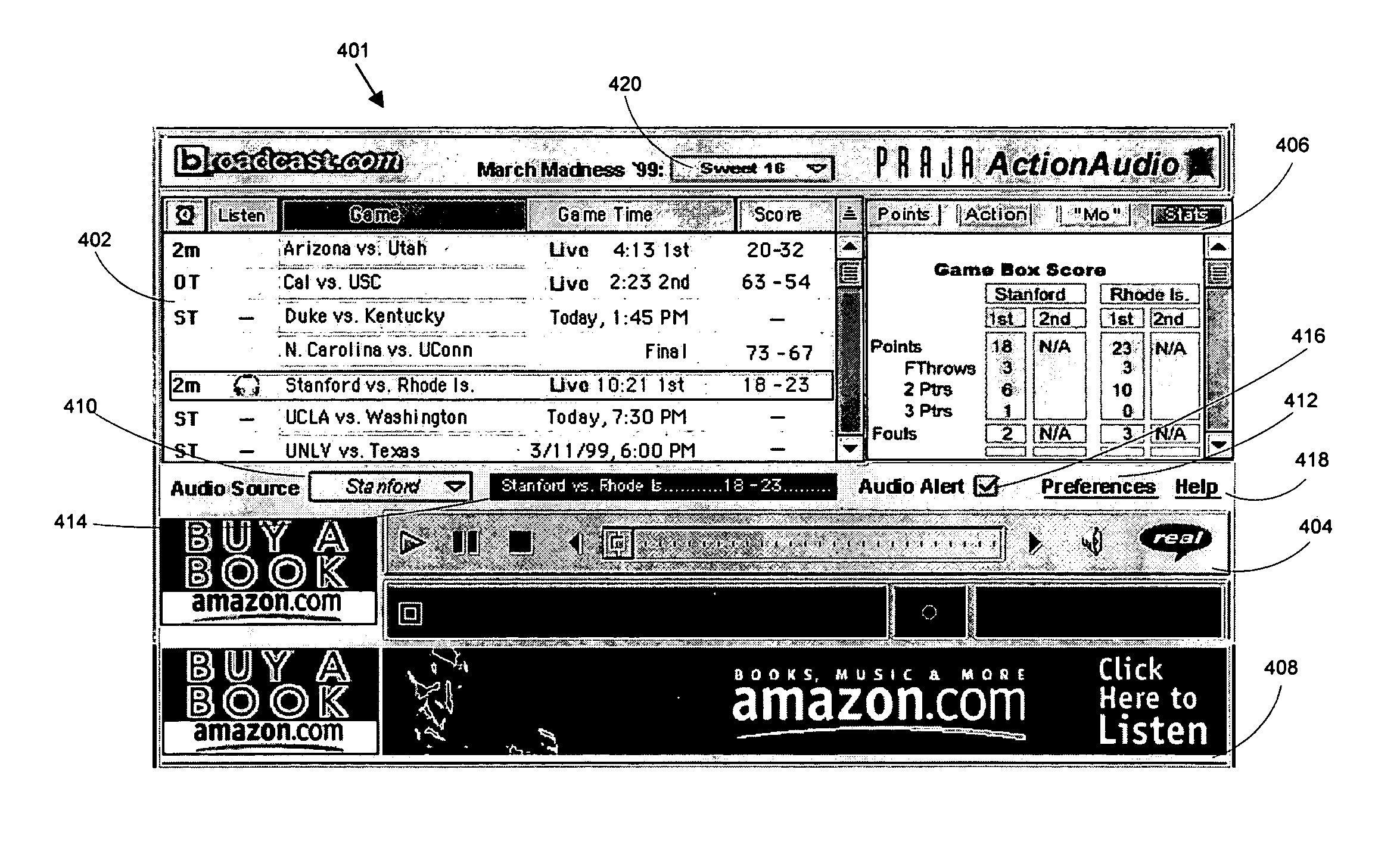

Intelligent console for content-based interactivity

The intelligent console method and apparatus of the present invention includes a powerful, intuitive, yet highly flexible means for accessing a multi-media system having multiple multi-media data types. The present intelligent console provides an interactive display of linked multi-media events based on a user's personal taste. The intelligent console includes a graph / data display that can provide several graphical representations of the events that satisfy user queries. The user can access an event simply by selecting the time of interest on the timeline of the graph / data display. Because the system links together all of the multi-media data types associated with a selected event, the intelligent console synchronizes and displays the multiple media data when a user selects the event. Complex queries can be made using the present intelligent console. The user is alerted to the events satisfying the complex queries and if the user chooses, the corresponding and associated multi-media data is displayed.

Owner:CLOUD SOFTWARE GRP INC

© 2025 PatSnap. All rights reserved.