3317 results for "Interaction systems" patented technology

The Interaction System is the interface between the player and the game environment in a video game. In the Splinter Cell series, it lets the player interact with the environment, cooperate with other characters, and perform actions that are not mapped to a basic control.

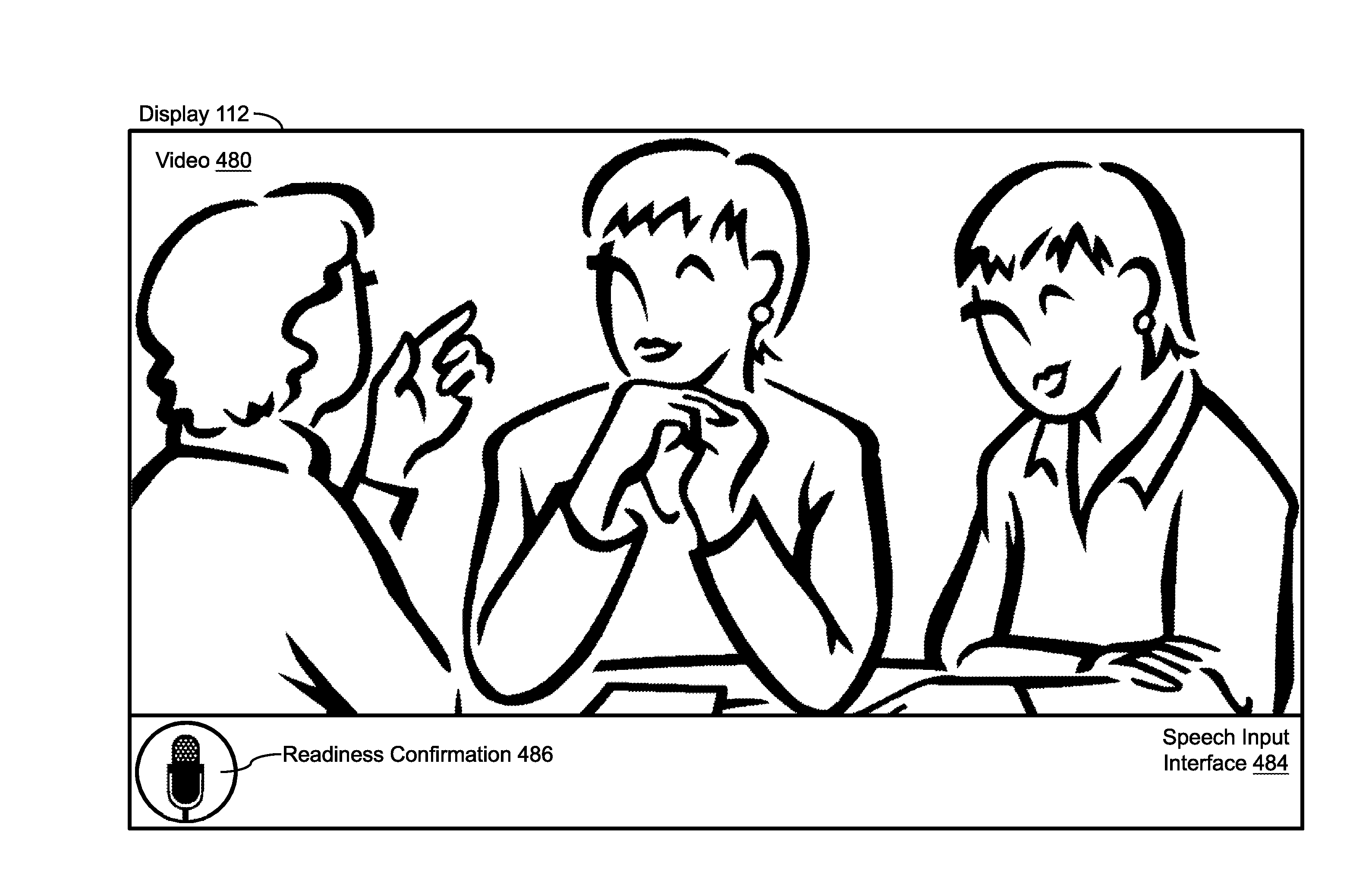

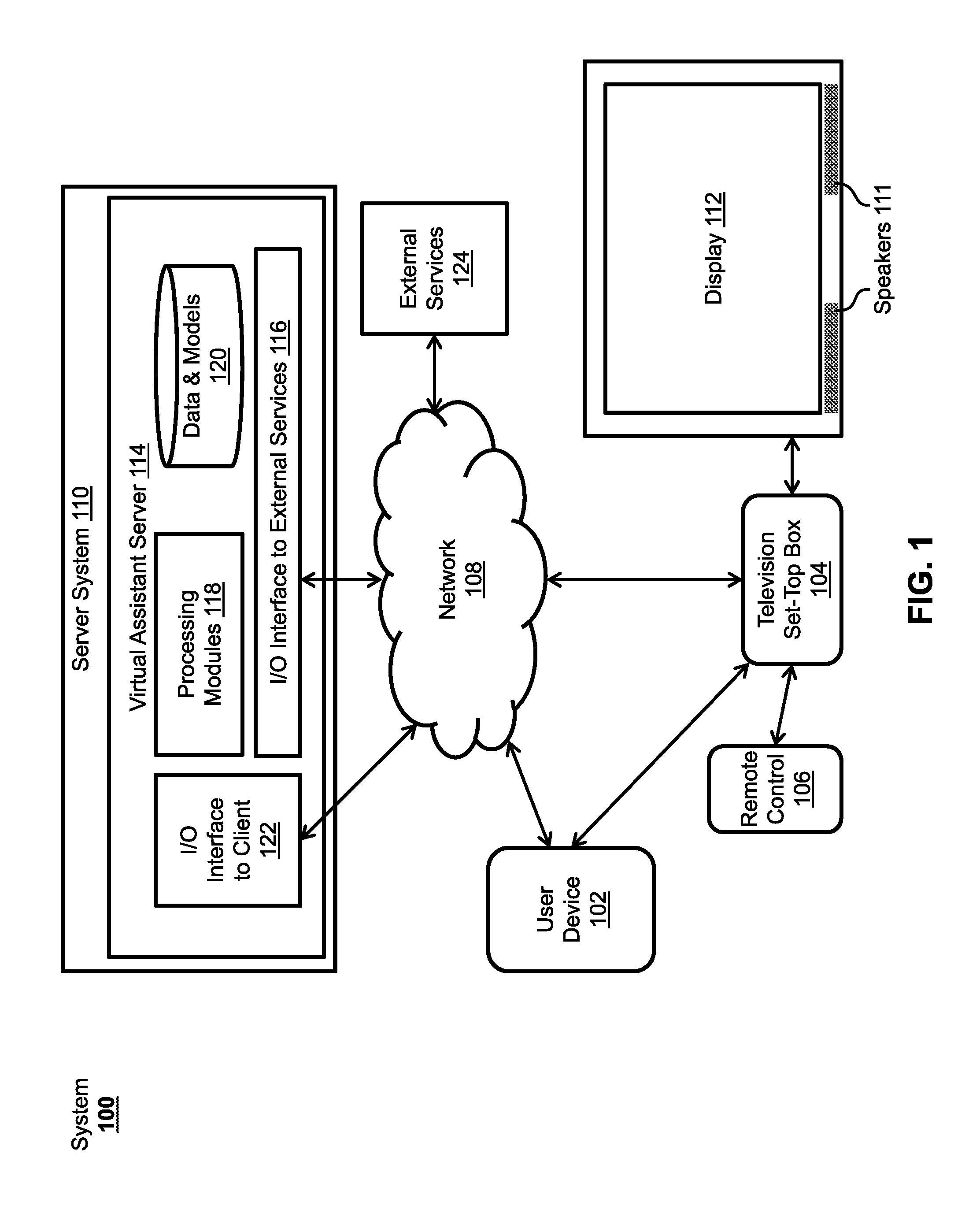

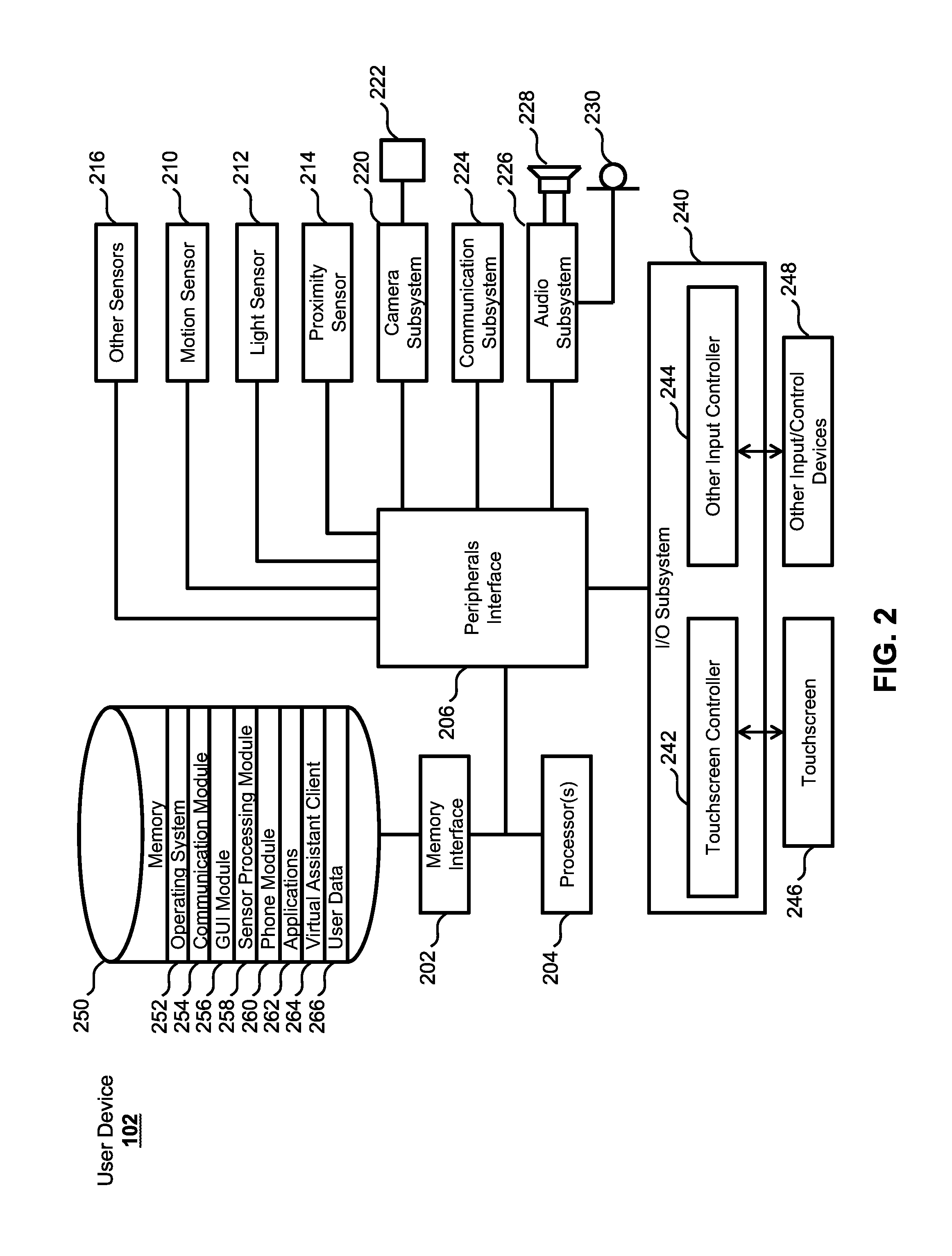

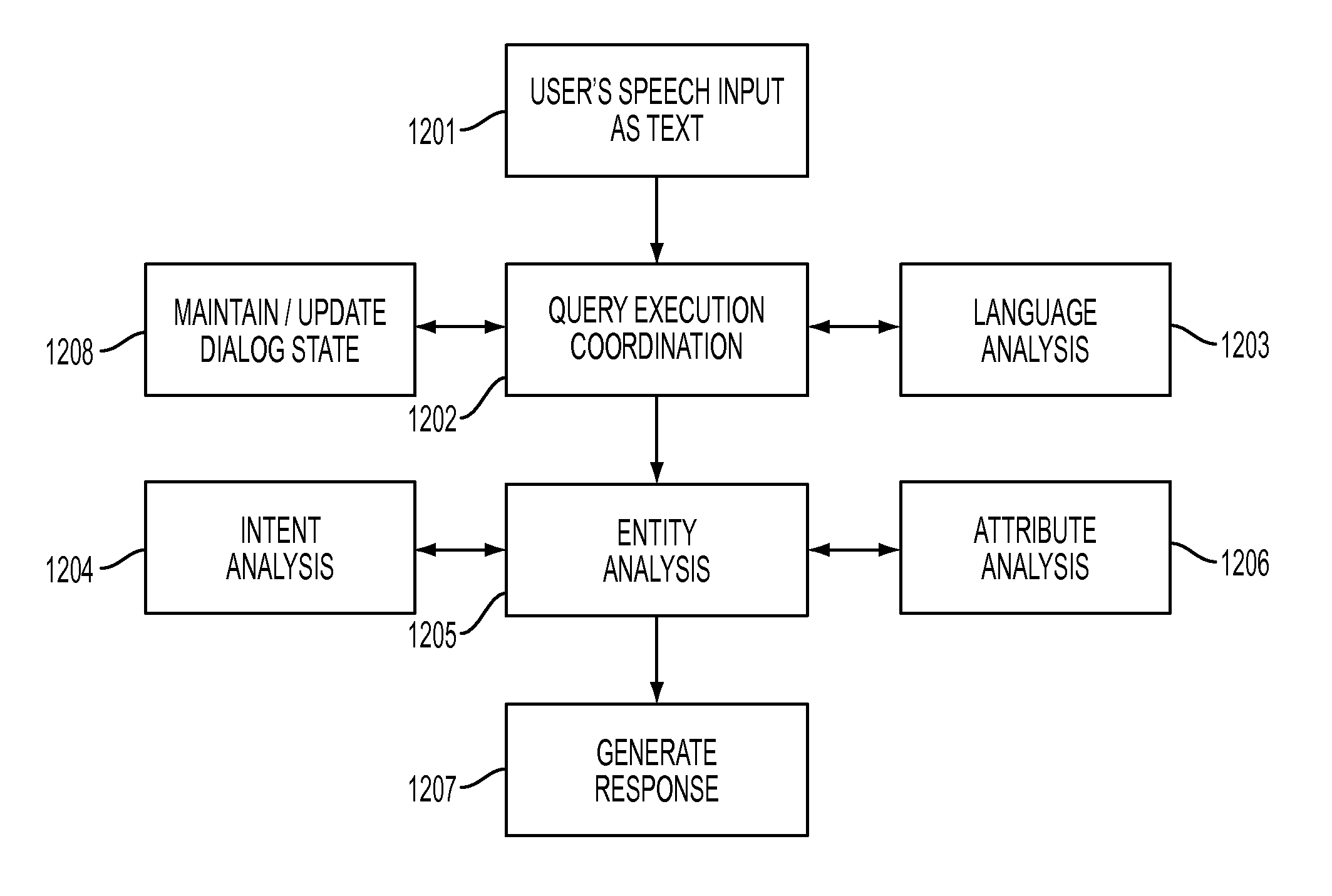

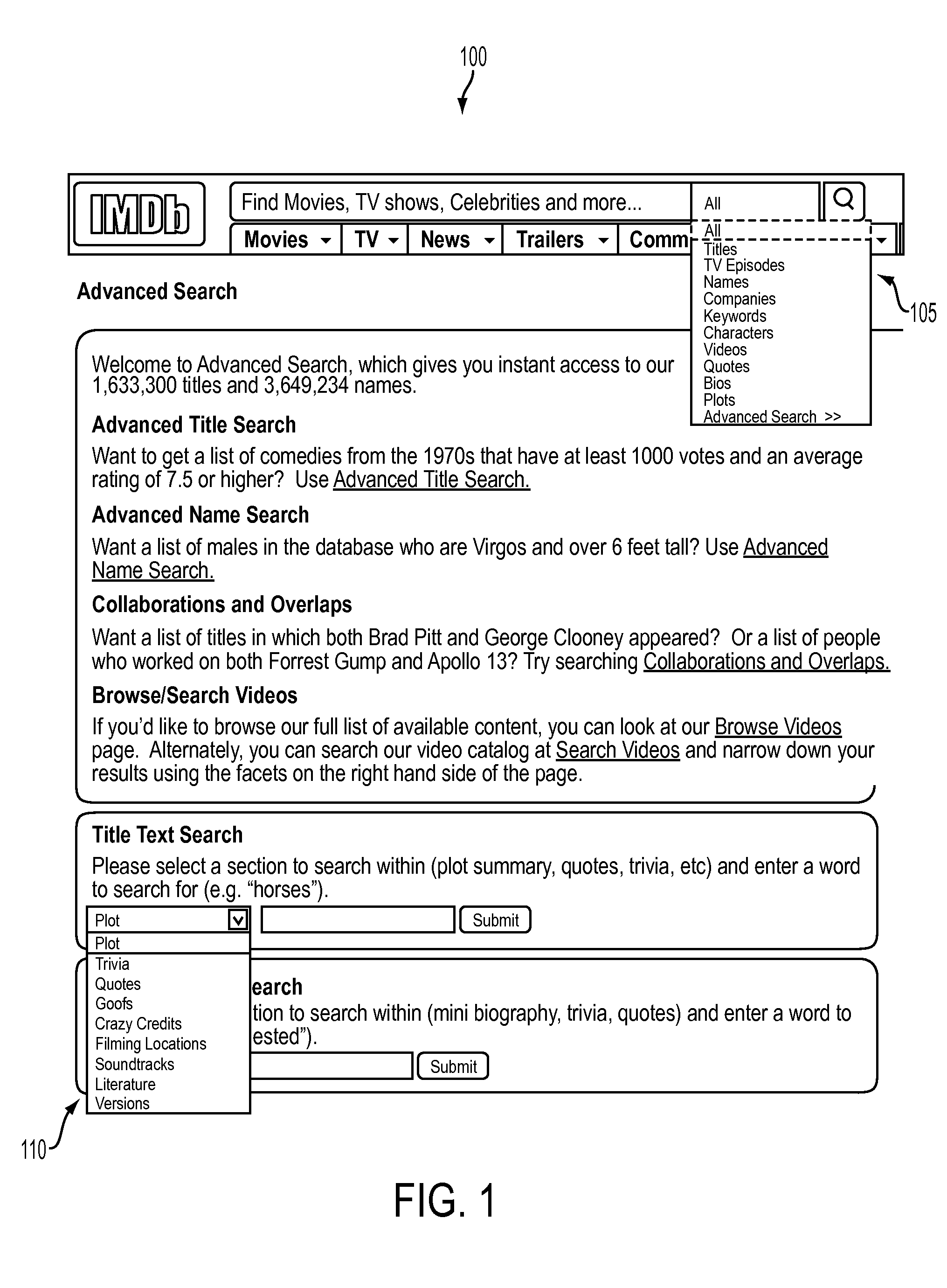

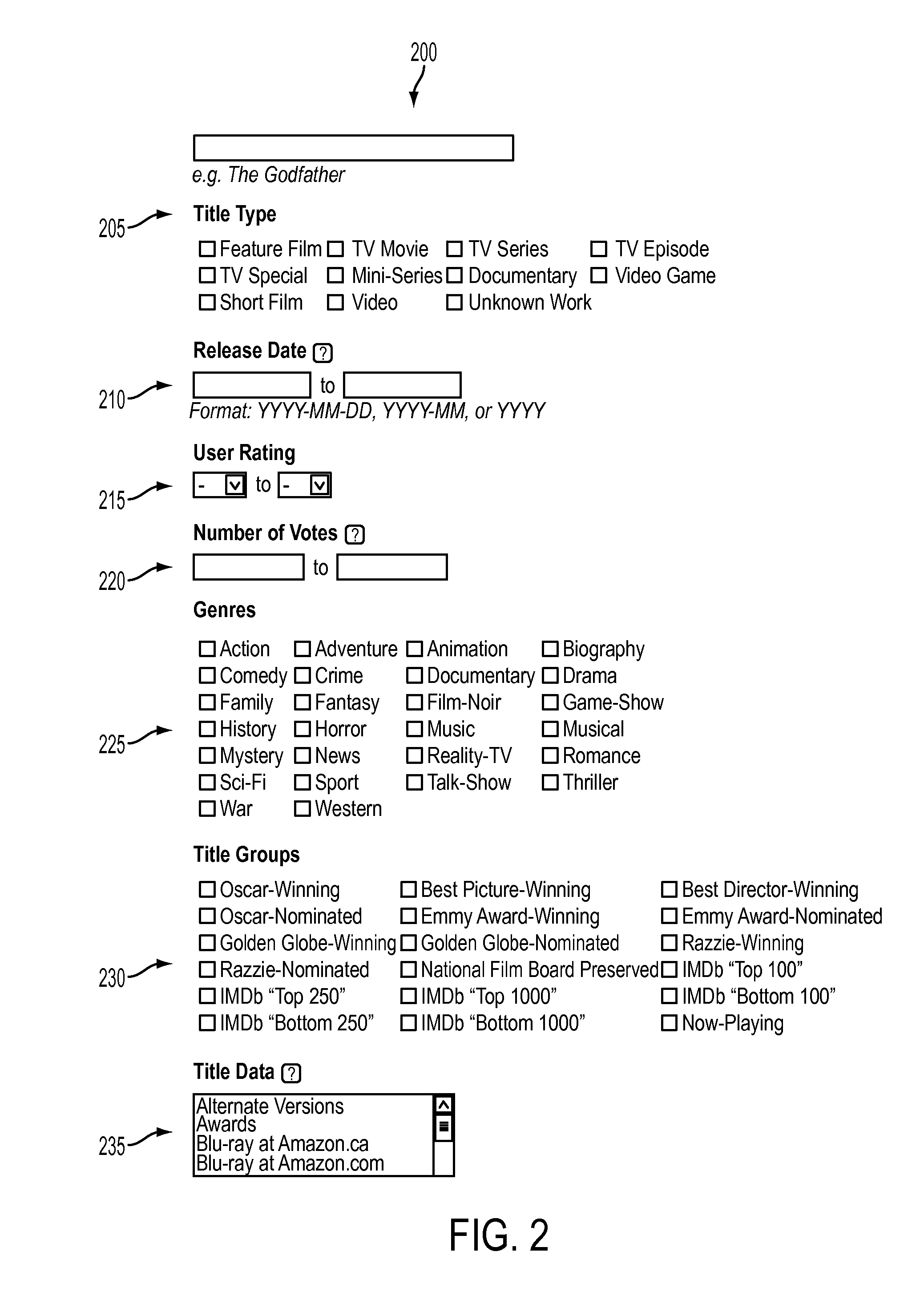

Intelligent automated assistant for TV user interactions

Systems and processes are disclosed for controlling television user interactions using a virtual assistant. A virtual assistant can interact with a television set-top box to control content shown on a television. Speech input for the virtual assistant can be received from a device with a microphone. User intent can be determined from the speech input, and the virtual assistant can execute tasks according to the user's intent, including causing playback of media on the television. Virtual assistant interactions can be shown on the television in interfaces that expand or contract to occupy a minimal amount of space while conveying desired information. Multiple devices associated with multiple displays can be used to determine user intent from speech input as well as to convey information to users. In some examples, virtual assistant query suggestions can be provided to the user based on media content shown on a display.

Owner:APPLE INC

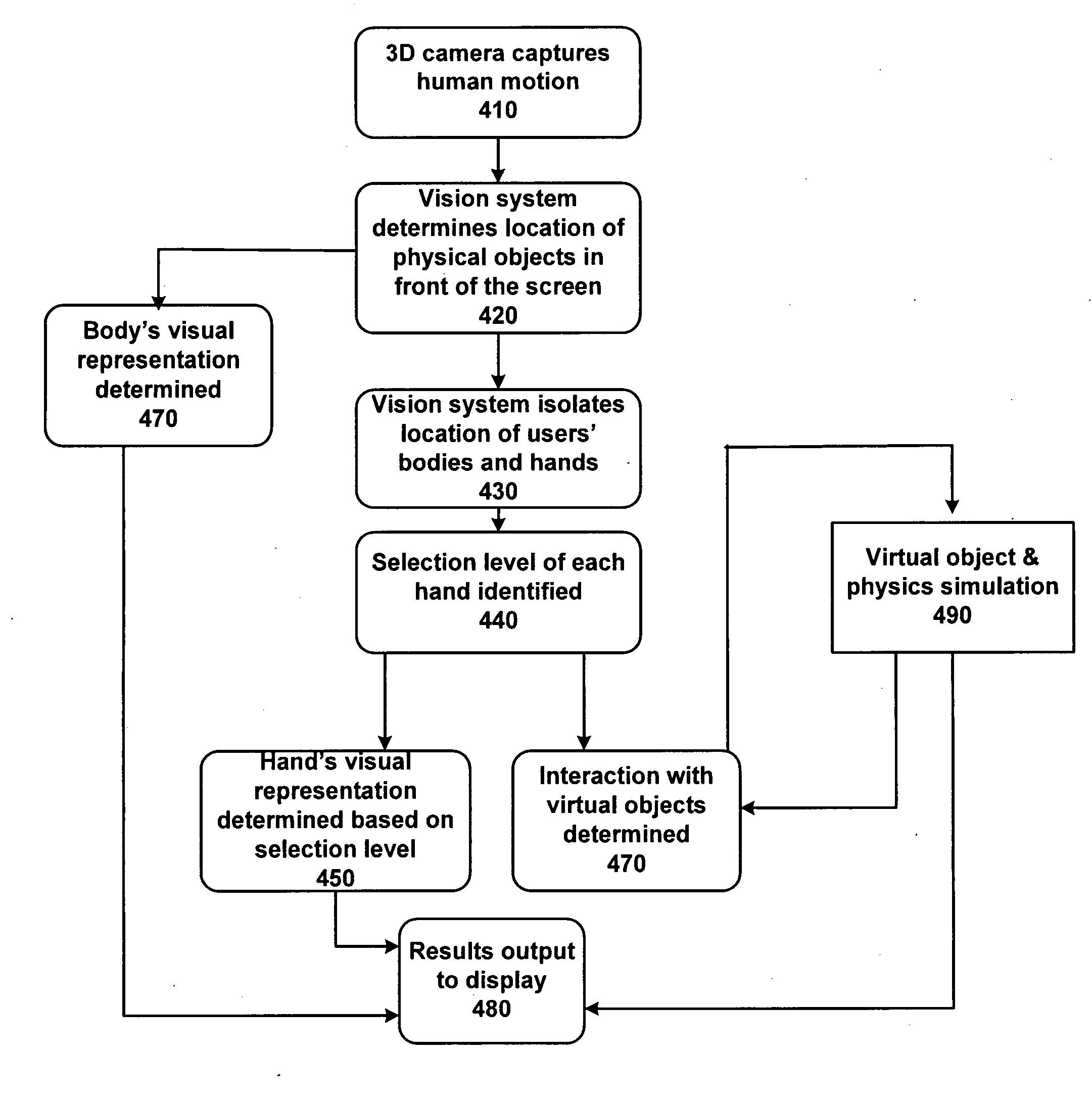

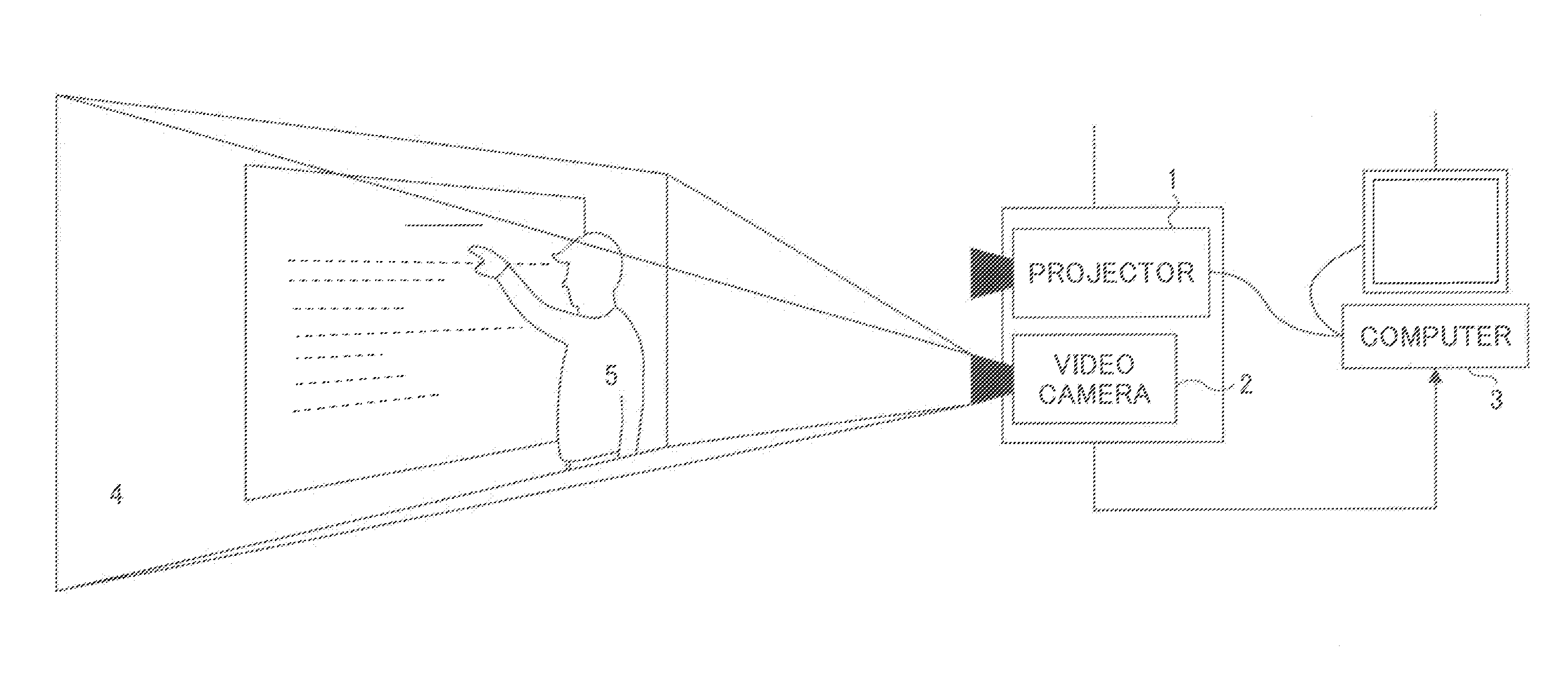

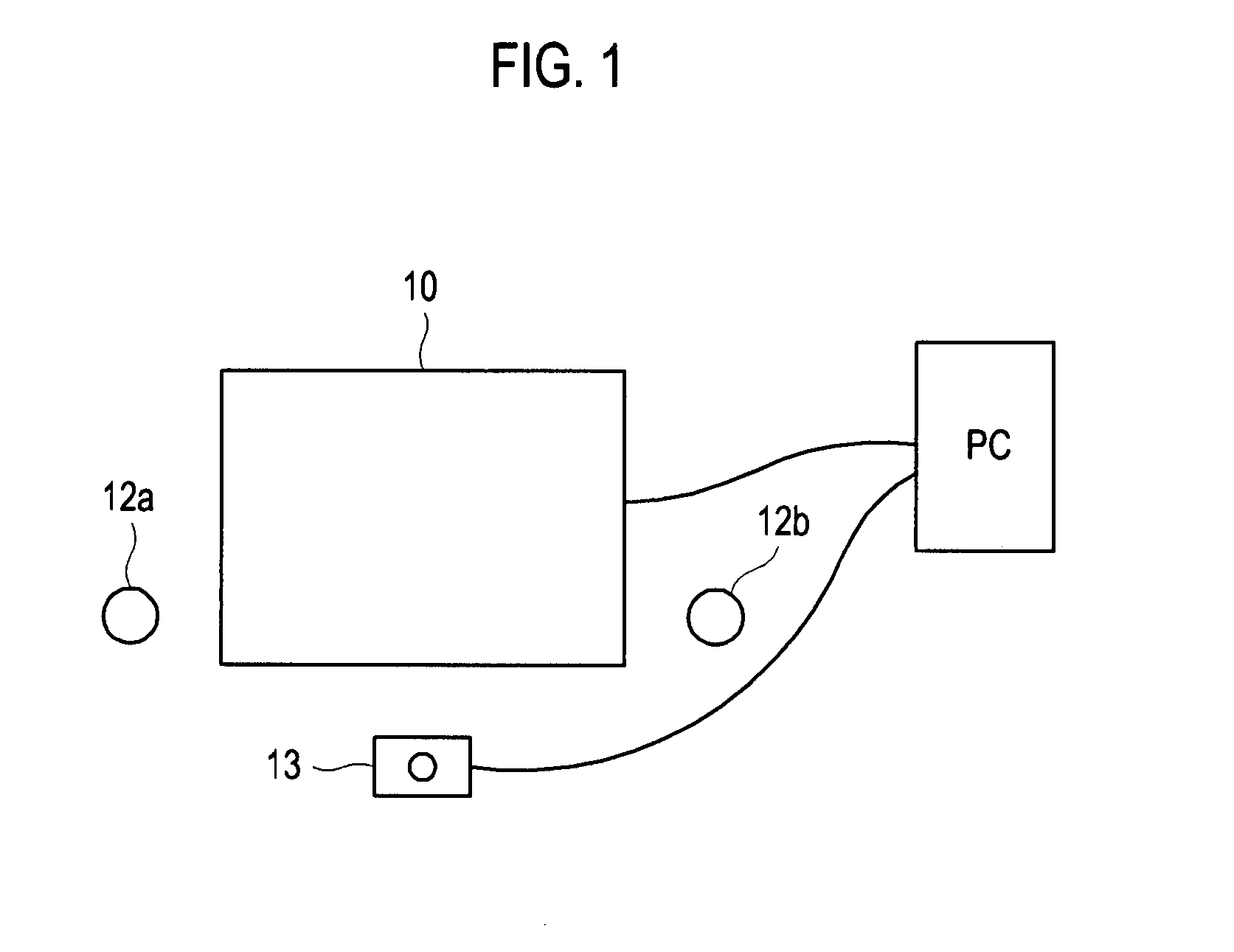

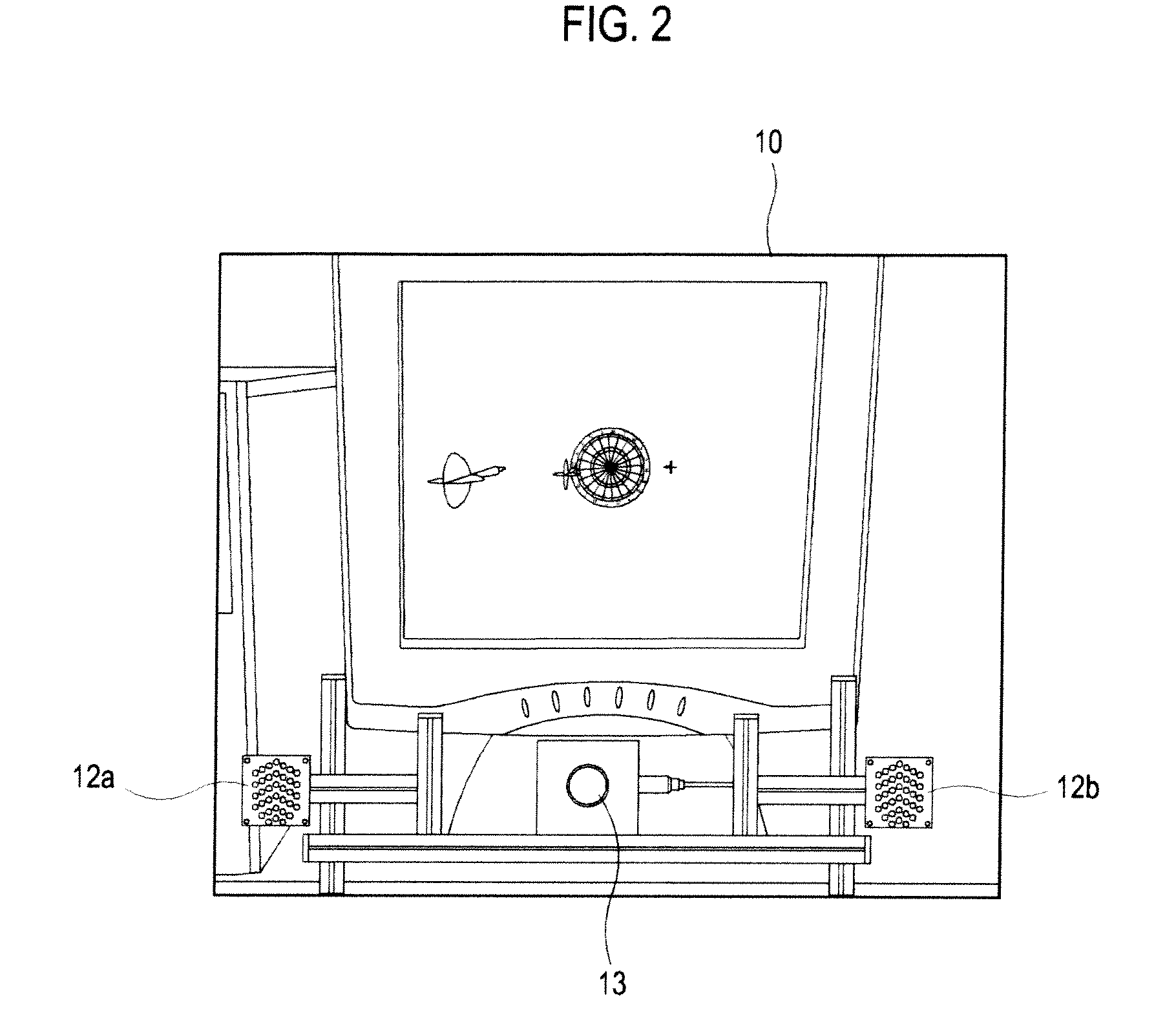

Processing of Gesture-Based User Interactions

Active | US20090077504A1 | Character and pattern recognition; Using optical means; Interaction systems; Display device

Systems and methods for processing gesture-based user interactions with an interactive display are provided.

Owner:META PLATFORMS INC

Multi-participant, mixed-initiative voice interaction system

Active | US20090248420A1 | Devices with voice recognition; Calling subscriber number recording/indication; Interaction systems; Human–computer interaction

A voice interaction system includes one or more independent, concurrent state charts, which are used to model the behavior of each of a plurality of participants. The model simplifies the notation and provides a clear description of the interactions between multiple participants. These state charts capture the flow of voice prompts and the impact of externally initiated events and voice commands, and they track the progress of audio through each prompt. The system enables a method that prioritizes conflicting and concurrent events by leveraging historical patterns and the progress of prompts that are already playing.

Owner:VALUE8 CO LTD
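
The abstract describes one independent state chart per participant plus a way to rank concurrent events. The following is a minimal Python sketch of that idea. The class and function names, the transition tables and the scoring rule are illustrative assumptions, not the patented method. In particular, the scoring rule discounts an event's historical weight by how much of the participant's current prompt has already played.

```python
# Hypothetical sketch: per-participant state charts with an assumed priority rule.
from dataclasses import dataclass


@dataclass
class ParticipantChart:
    """One independent state chart per participant (hypothetical minimal model)."""
    name: str
    transitions: dict  # maps (state, event) -> next state
    state: str = "idle"
    prompt_progress: float = 0.0  # fraction of the current prompt already played, 0..1

    def accepts(self, event: str) -> bool:
        return (self.state, event) in self.transitions

    def fire(self, event: str) -> None:
        self.state = self.transitions[(self.state, event)]


def prioritize(events, charts, history):
    """Order concurrent (participant, event) pairs, dropping ones no chart accepts.

    Assumed score: historical weight of the event type, discounted by how far the
    participant's current prompt has already played, so an almost finished prompt
    is less likely to be interrupted.
    """
    def score(pair):
        who, event = pair
        return history.get(event, 0.0) * (1.0 - charts[who].prompt_progress)

    accepted = [p for p in events if charts[p[0]].accepts(p[1])]
    return sorted(accepted, key=score, reverse=True)


charts = {
    "caller": ParticipantChart("caller", {("prompting", "barge_in"): "listening"},
                               state="prompting", prompt_progress=0.9),
    "agent": ParticipantChart("agent", {("idle", "dial"): "ringing"}),
}
events = [("caller", "barge_in"), ("agent", "dial"), ("agent", "hangup")]
order = prioritize(events, charts, history={"barge_in": 0.8, "dial": 0.5})
for who, event in order:
    charts[who].fire(event)
```

Here the agent's `dial` event outranks the caller's `barge_in` because the caller's prompt is 90% played. The `hangup` event is dropped because the agent's chart has no transition for it.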

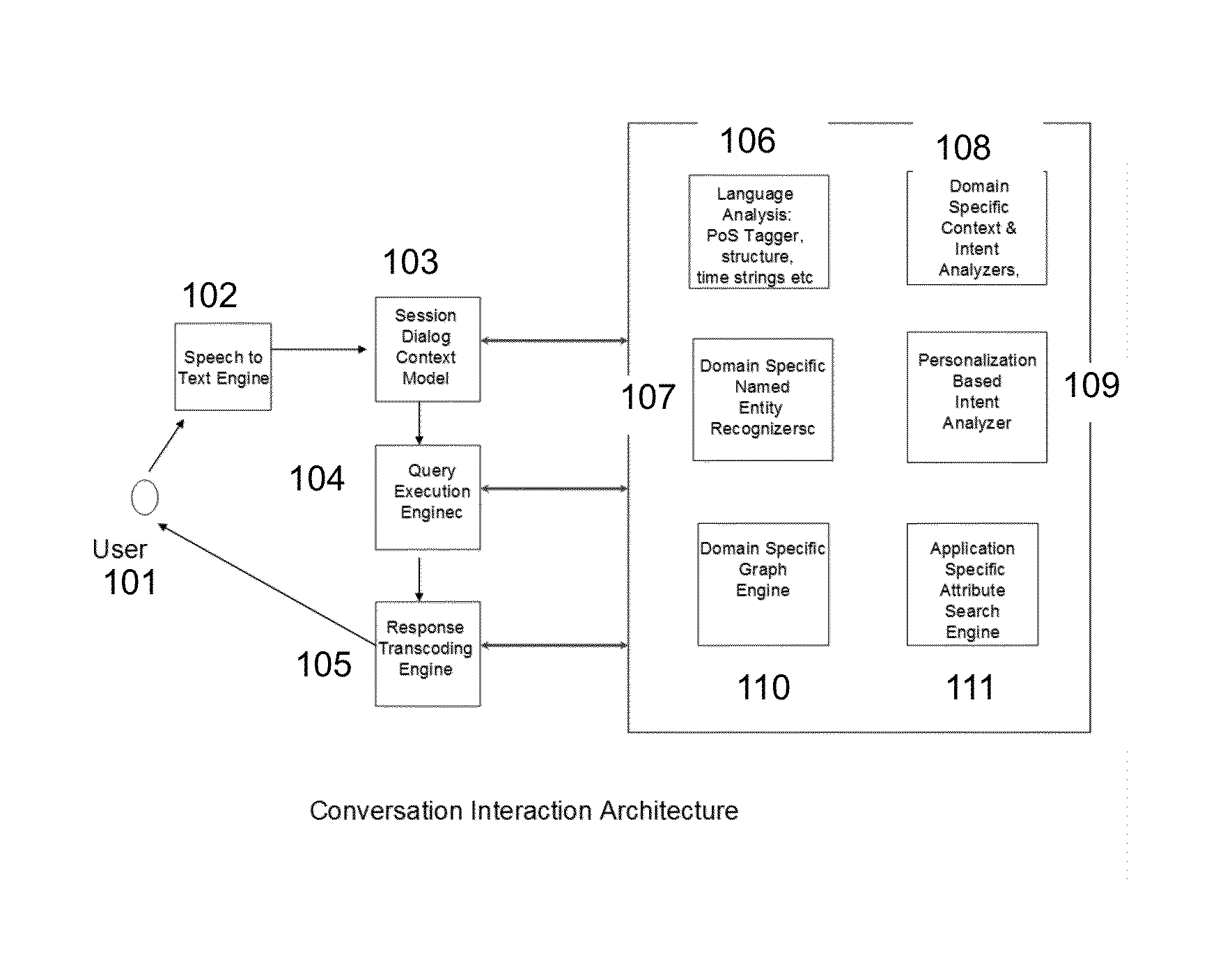

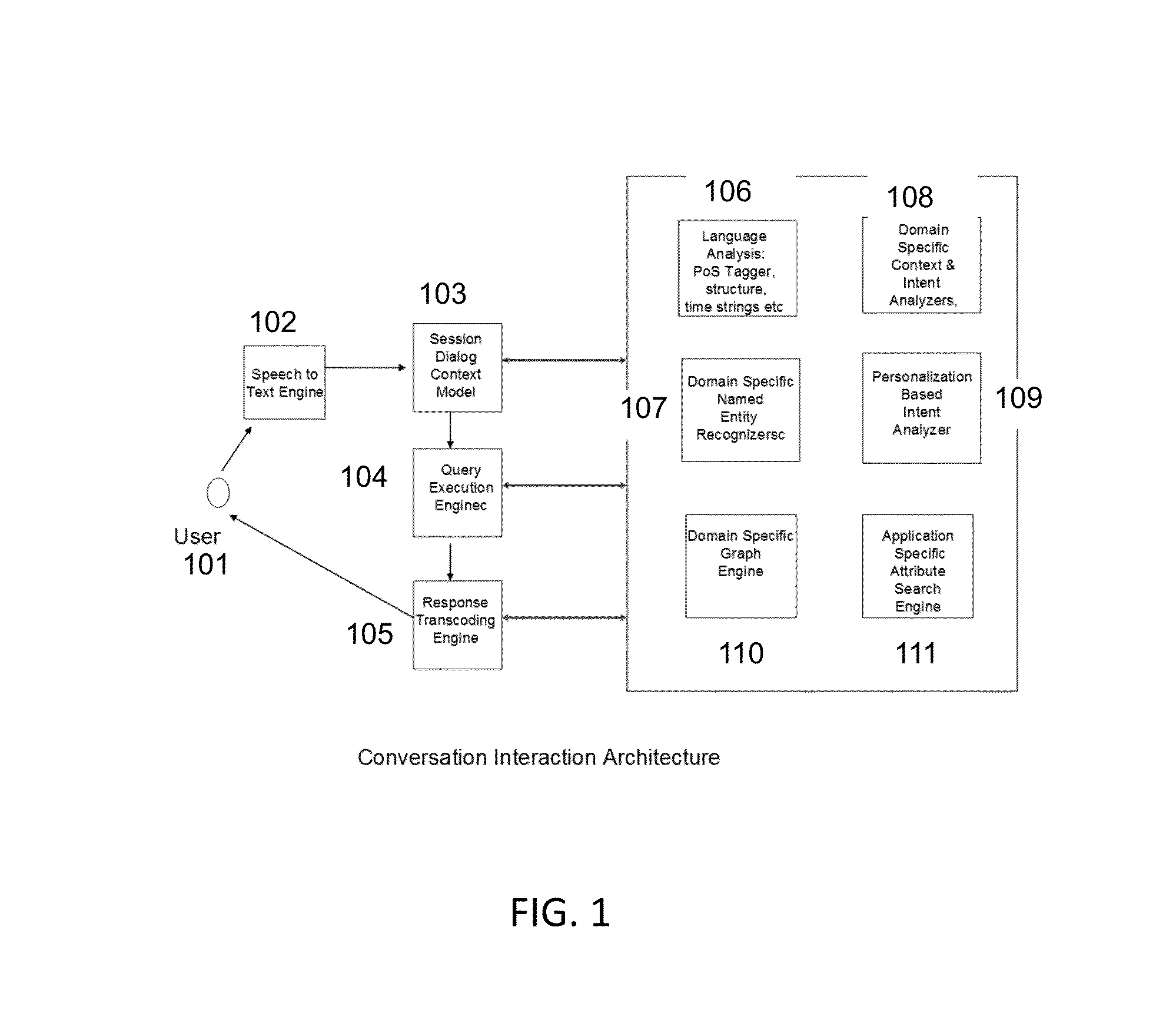

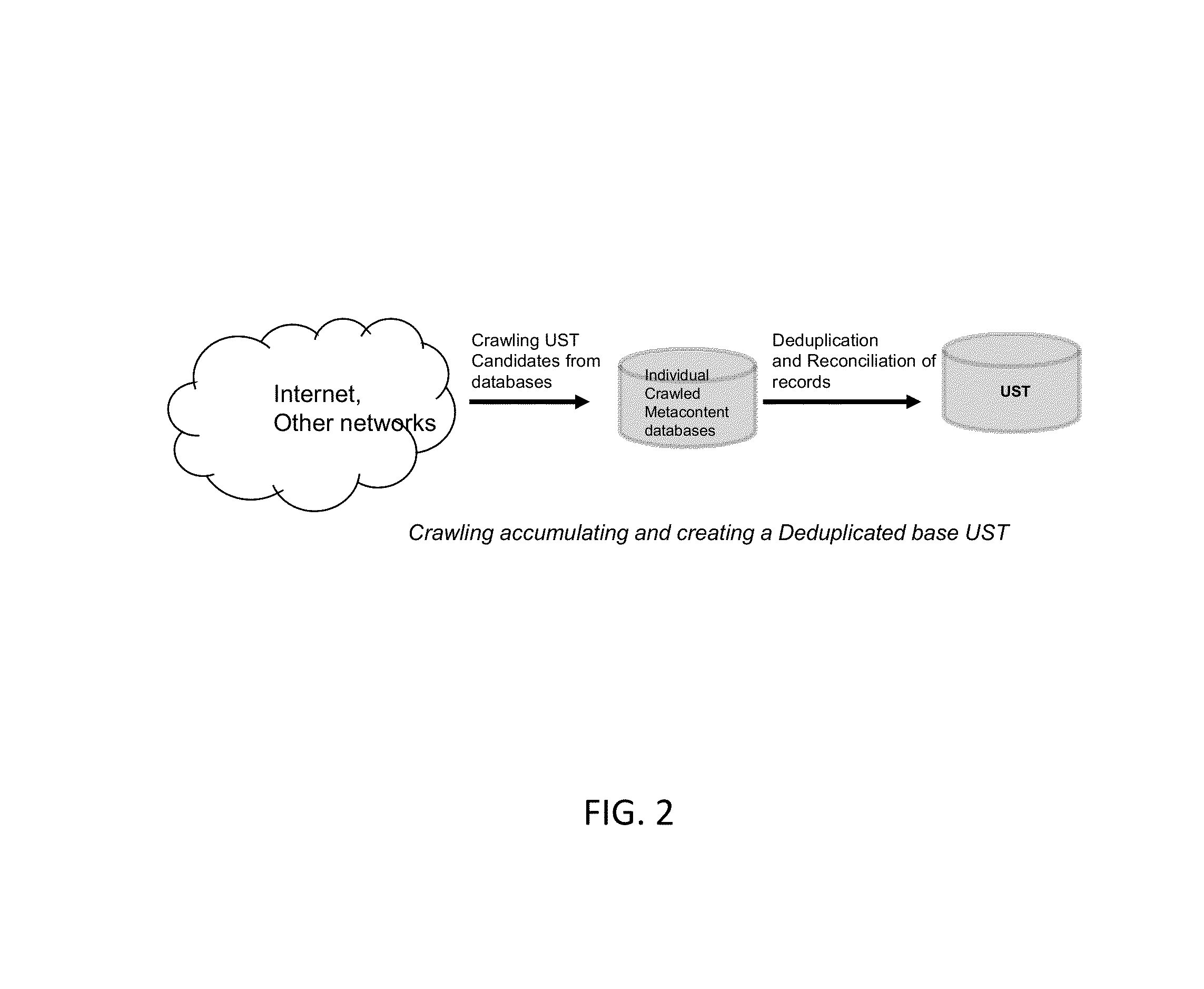

Disambiguating user intent in conversational interaction system for large corpus information retrieval

A method of disambiguating user intent in conversational interactions for information retrieval is disclosed. The method includes providing access to a set of content items with metadata describing the content items and providing access to structural knowledge showing semantic relationships and links among the content items. The method further includes providing a user preference signature, receiving a first input from the user that is intended by the user to identify at least one desired content item, and determining an ambiguity index of the first input. If the ambiguity index is high, the method determines a query input based on the first input and at least one of the structural knowledge, the user preference signature, a location of the user, and the time of the first input. It then selects a content item by comparing the query input with the metadata associated with the content item.

Owner:VEVEO INC
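
The branch on the ambiguity index can be illustrated with a toy catalog. This sketch makes three simplifying assumptions:

- The index is defined as how many extra items fully match the input.
- The user preference signature is modelled as a list of extra query terms.
- Structural knowledge, location and time are left out.

All names are hypothetical.

```python
# Hypothetical sketch of ambiguity-index-driven query expansion.

def match_score(terms, item):
    """Fraction of query terms found in the item's metadata (hypothetical metric)."""
    if not terms:
        return 0.0
    meta = {m.lower() for m in item["metadata"]}
    return sum(t.lower() in meta for t in terms) / len(terms)


def ambiguity_index(first_input, catalog):
    """Assumed definition: how many extra items fully match the input (0 = unambiguous)."""
    terms = first_input.split()
    full = [item for item in catalog if match_score(terms, item) == 1.0]
    return max(len(full) - 1, 0)


def select(first_input, catalog, preference_terms, threshold=1):
    terms = first_input.split()
    if ambiguity_index(first_input, catalog) >= threshold:
        # Ambiguous input: fold in the user preference signature (modelled as extra terms).
        terms = terms + preference_terms
    return max(catalog, key=lambda item: match_score(terms, item))


catalog = [
    {"title": "Jaguar (car review)", "metadata": ["jaguar", "cars", "review"]},
    {"title": "Jaguar (wildlife documentary)", "metadata": ["jaguar", "wildlife", "documentary"]},
]
```

For a user whose preferences include "wildlife", the bare input "jaguar" is ambiguous, so the preference term decides the selection. "jaguar review" already identifies a single item, so the preferences are not consulted.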

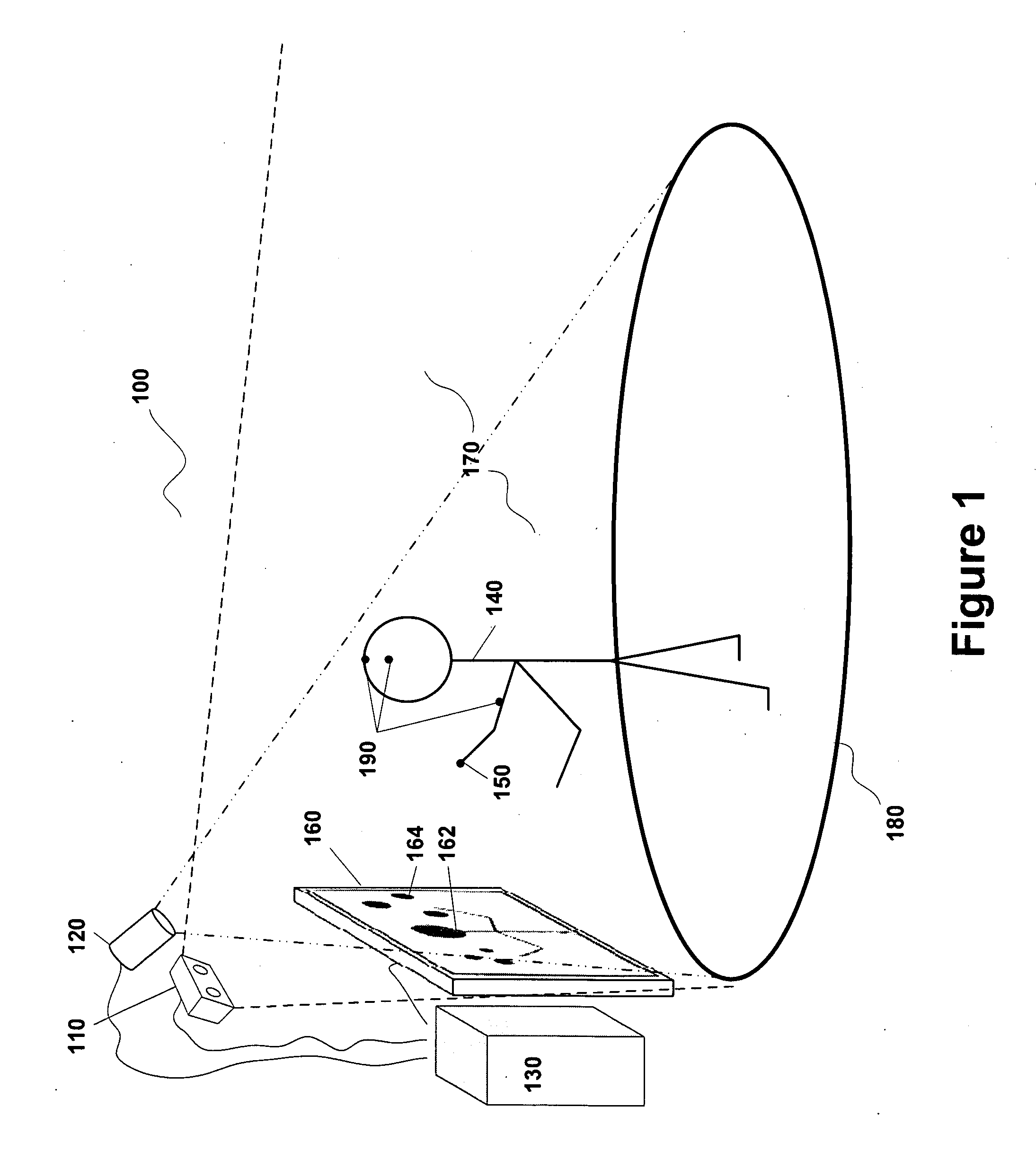

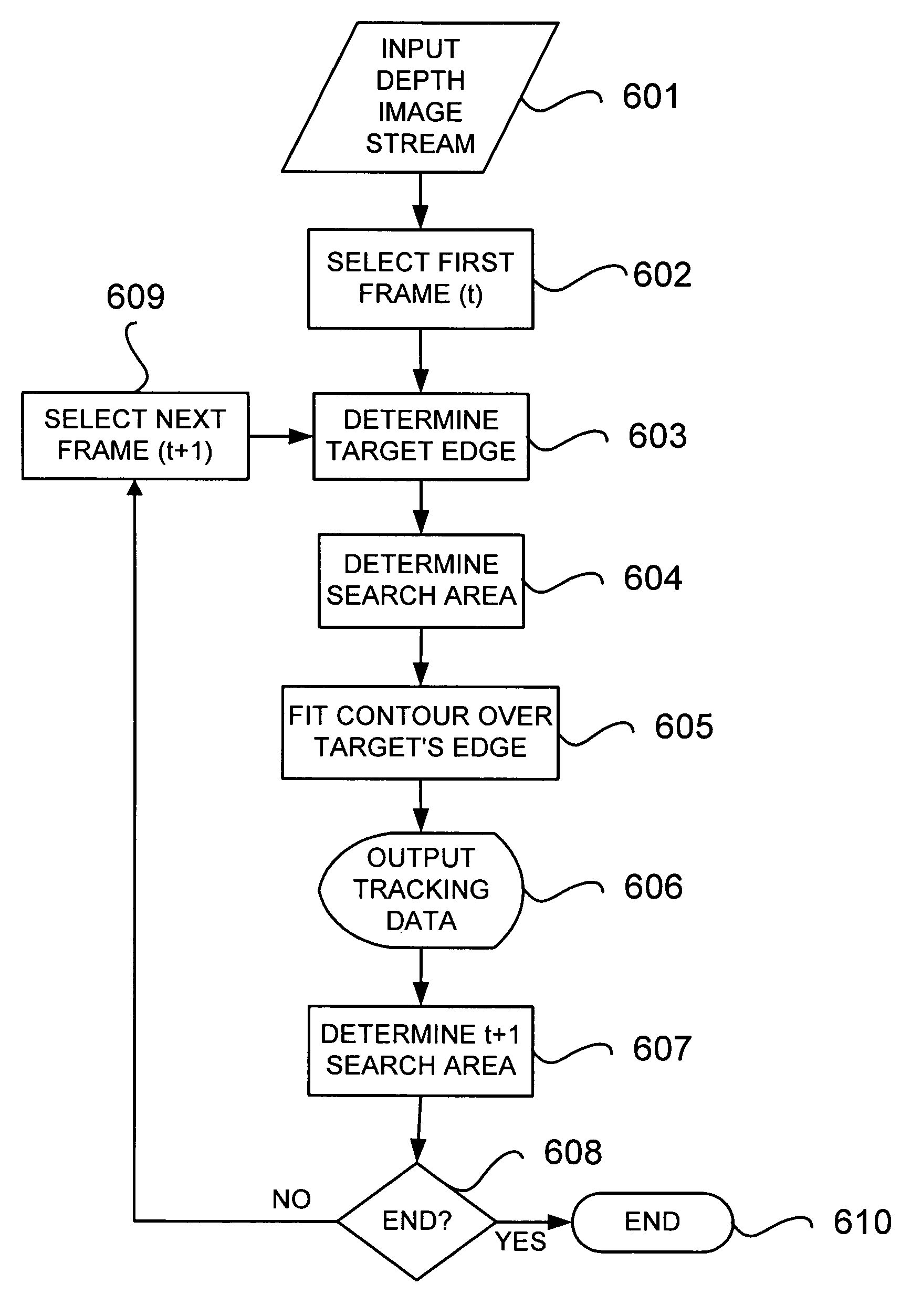

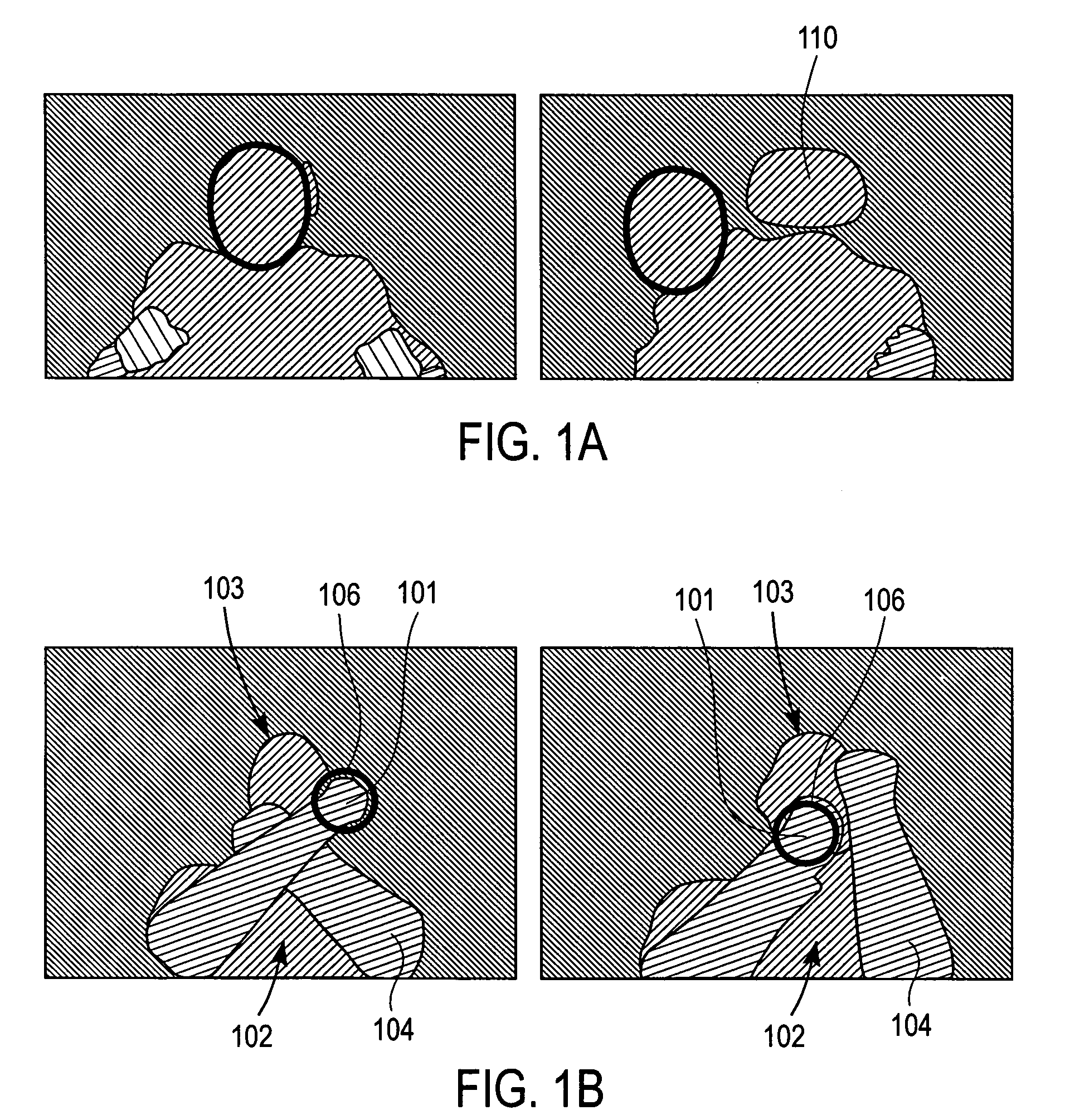

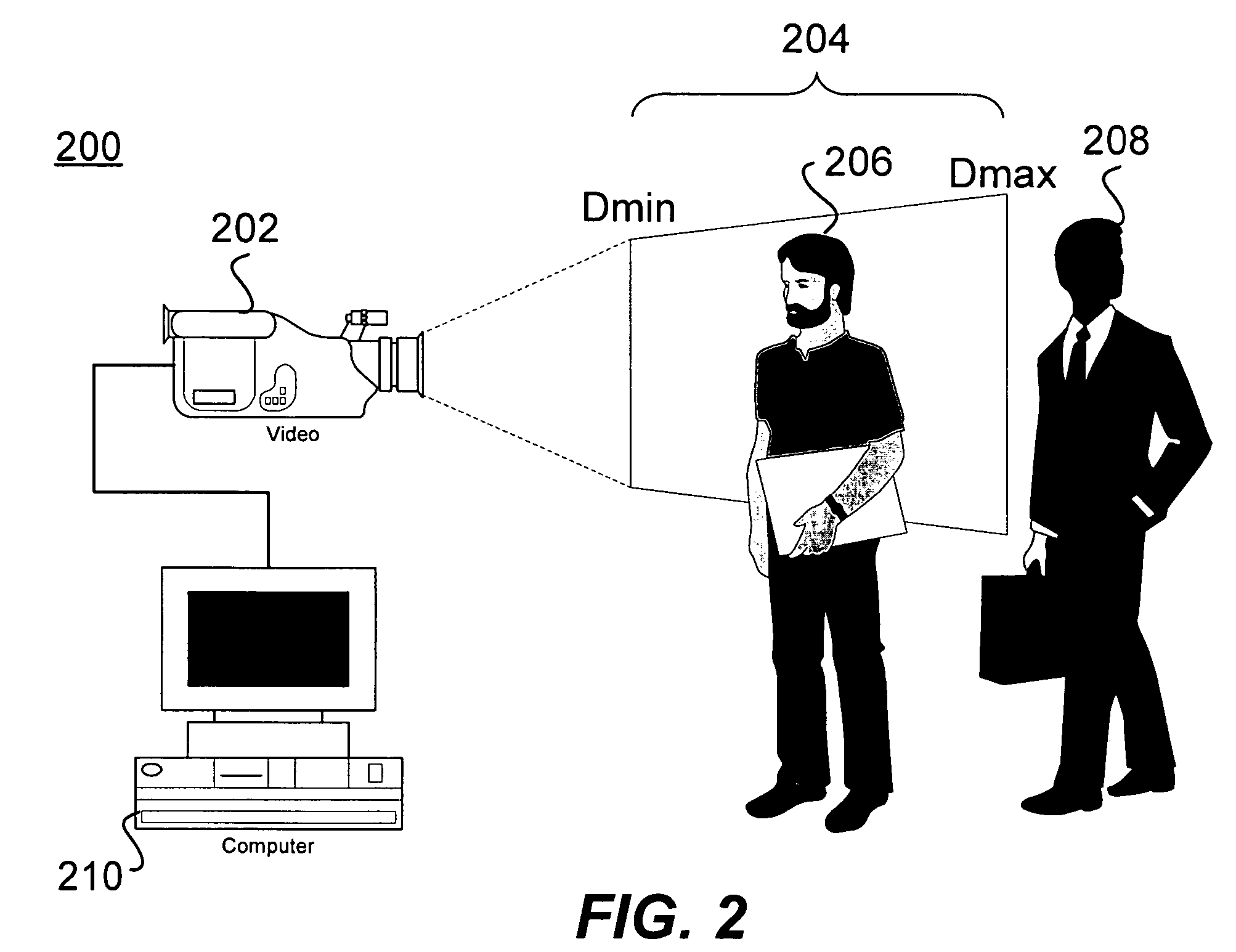

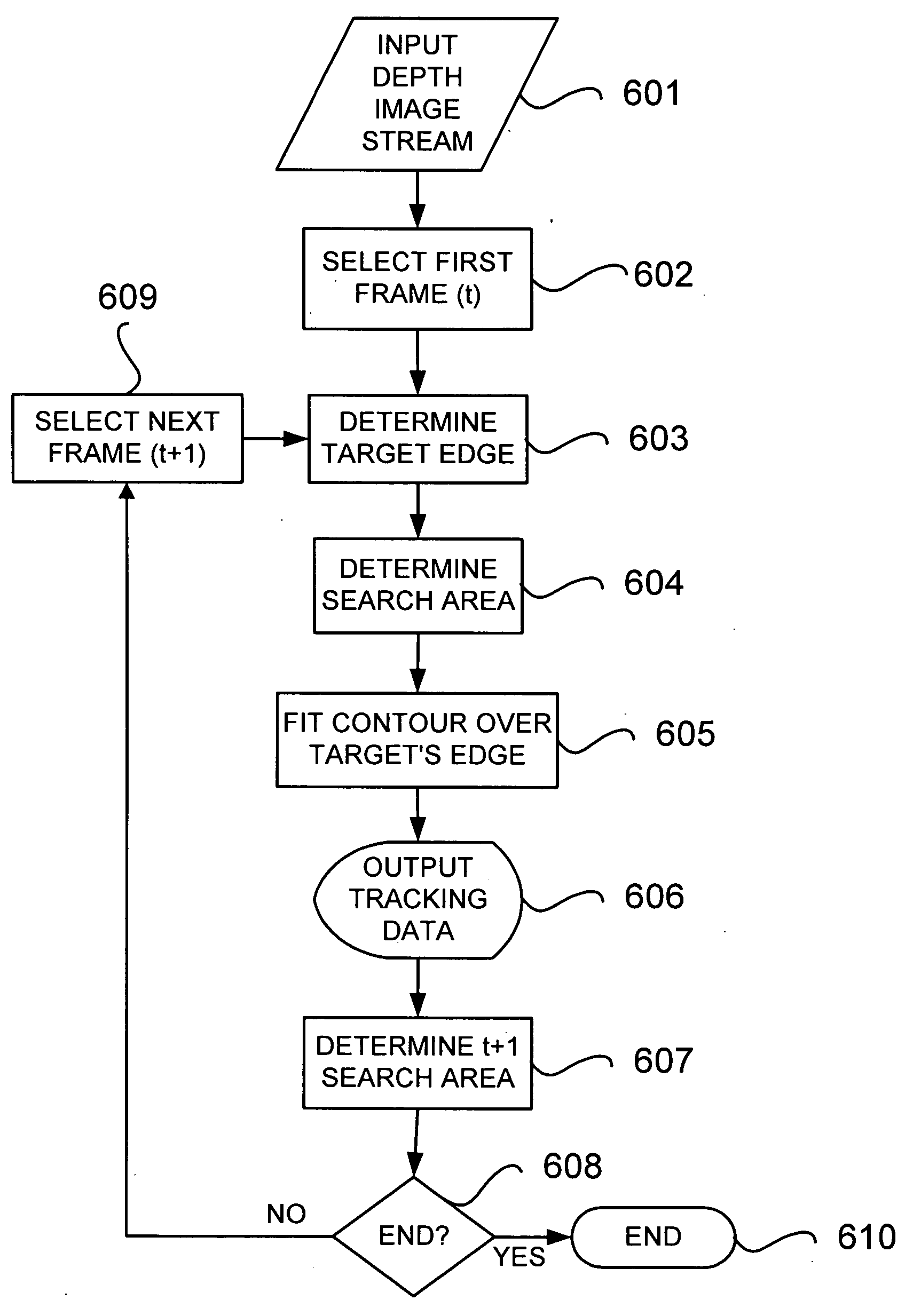

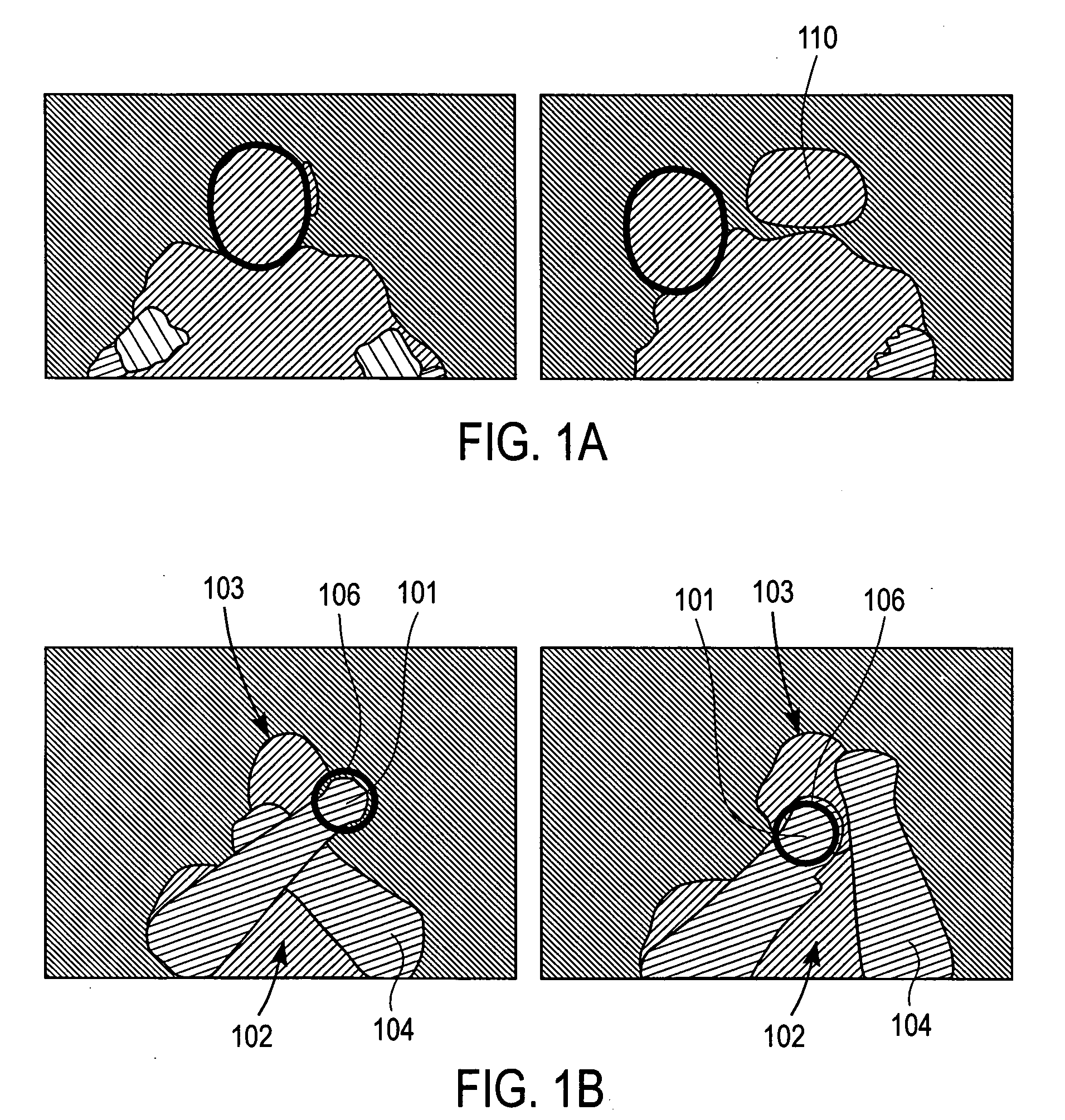

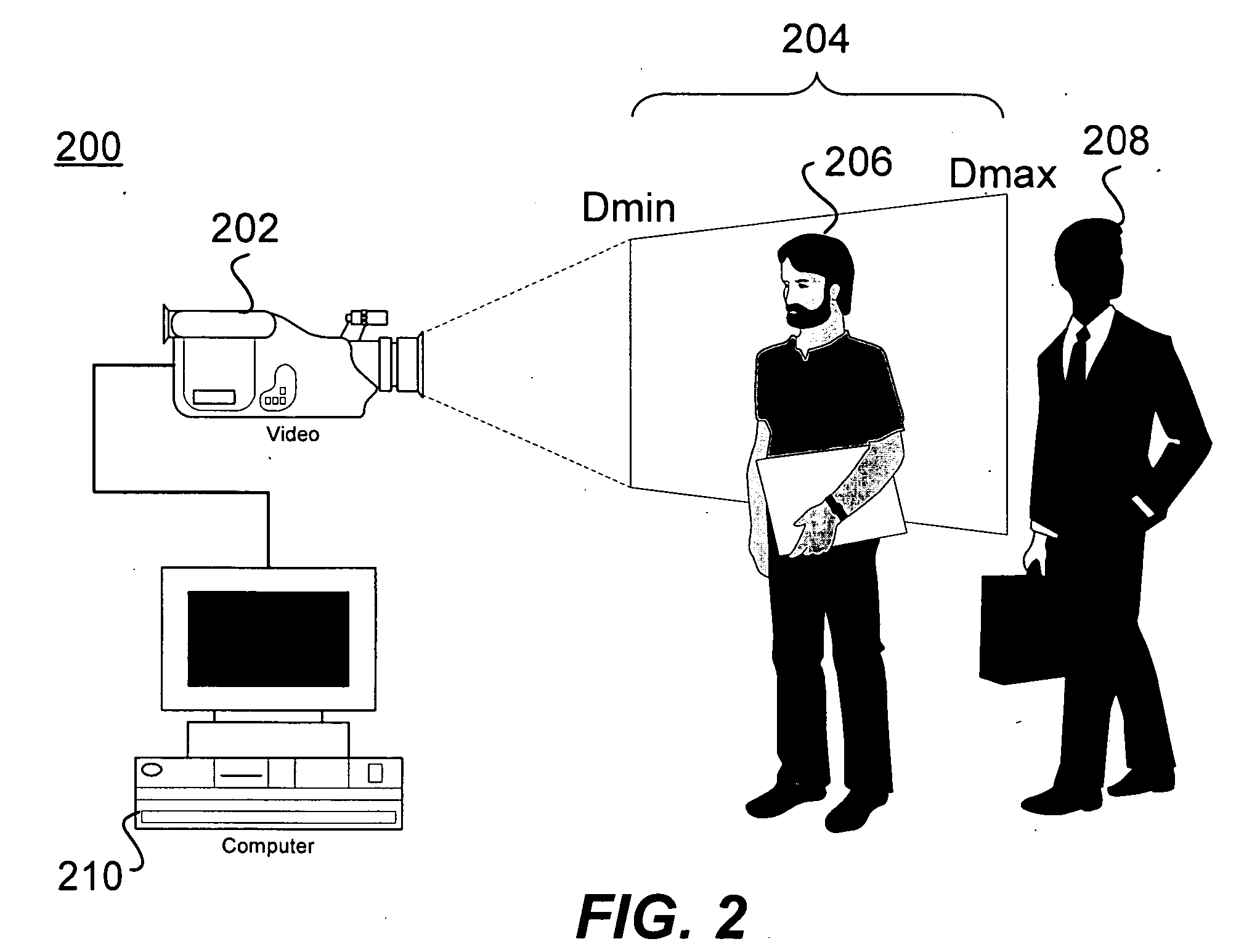

Visual tracking using depth data

Real-time visual tracking using depth-sensing camera technology yields illumination-invariant tracking performance. Depth-sensing (time-of-flight) cameras provide real-time depth and color images of the same scene. Depth windows regulate the tracked area by controlling shutter speed. A potential field is derived from the depth image data to provide edge information for the tracked target, and a mathematically representable contour models the target. Determining the best fit between the contour and the target's edge, based on the depth data, provides position information for tracking. Applications of depth-sensor-based visual tracking include head tracking, hand tracking, body-pose estimation, robotic command determination, and other human-computer interaction systems.

Owner:HONDA MOTOR CO LTD
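
The depth-window step can be illustrated in software on a tiny depth image. In the abstract, the window is enforced in hardware through shutter speed. Here it is simply a depth-range filter. The centroid is a crude stand-in for the potential-field and contour-fitting stage, which this sketch does not implement. Function names and depth values are hypothetical.

```python
# Hypothetical sketch: software depth window plus a centroid position estimate.

def depth_window(depth, near, far):
    """Mask of pixels whose depth (metres) lies inside the window [near, far]."""
    return [[near <= d <= far for d in row] for row in depth]


def centroid(mask):
    """Mean (row, col) of in-window pixels, or None if the window is empty."""
    pts = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not pts:
        return None
    n = len(pts)
    return (sum(r for r, _ in pts) / n, sum(c for _, c in pts) / n)


depth = [
    [3.0, 3.0, 3.0, 3.0],  # background wall about 3 m away
    [3.0, 1.2, 1.1, 3.0],  # target about 1.1 to 1.3 m away
    [3.0, 1.3, 1.2, 3.0],
]
mask = depth_window(depth, near=1.0, far=1.5)
```

Because the window keeps only the target's depth band, the result does not depend on scene lighting. This illustrates why depth data gives illumination-invariant tracking.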

Visual tracking using depth data

Real-time visual tracking using depth sensing camera technology, results in illumination-invariant tracking performance. Depth sensing (time-of-flight) cameras provide real-time depth and color images of the same scene. Depth windows regulate the tracked area by controlling shutter speed. A potential field is derived from the depth image data to provide edge information of the tracked target. A mathematically representable contour can model the tracked target. Based on the depth data, determining a best fit between the contour and the edge of the tracked target provides position information for tracking. Applications using depth sensor based visual tracking include head tracking, hand tracking, body-pose estimation, robotic command determination, and other human-computer interaction systems.

Owner:HONDA MOTOR CO LTD

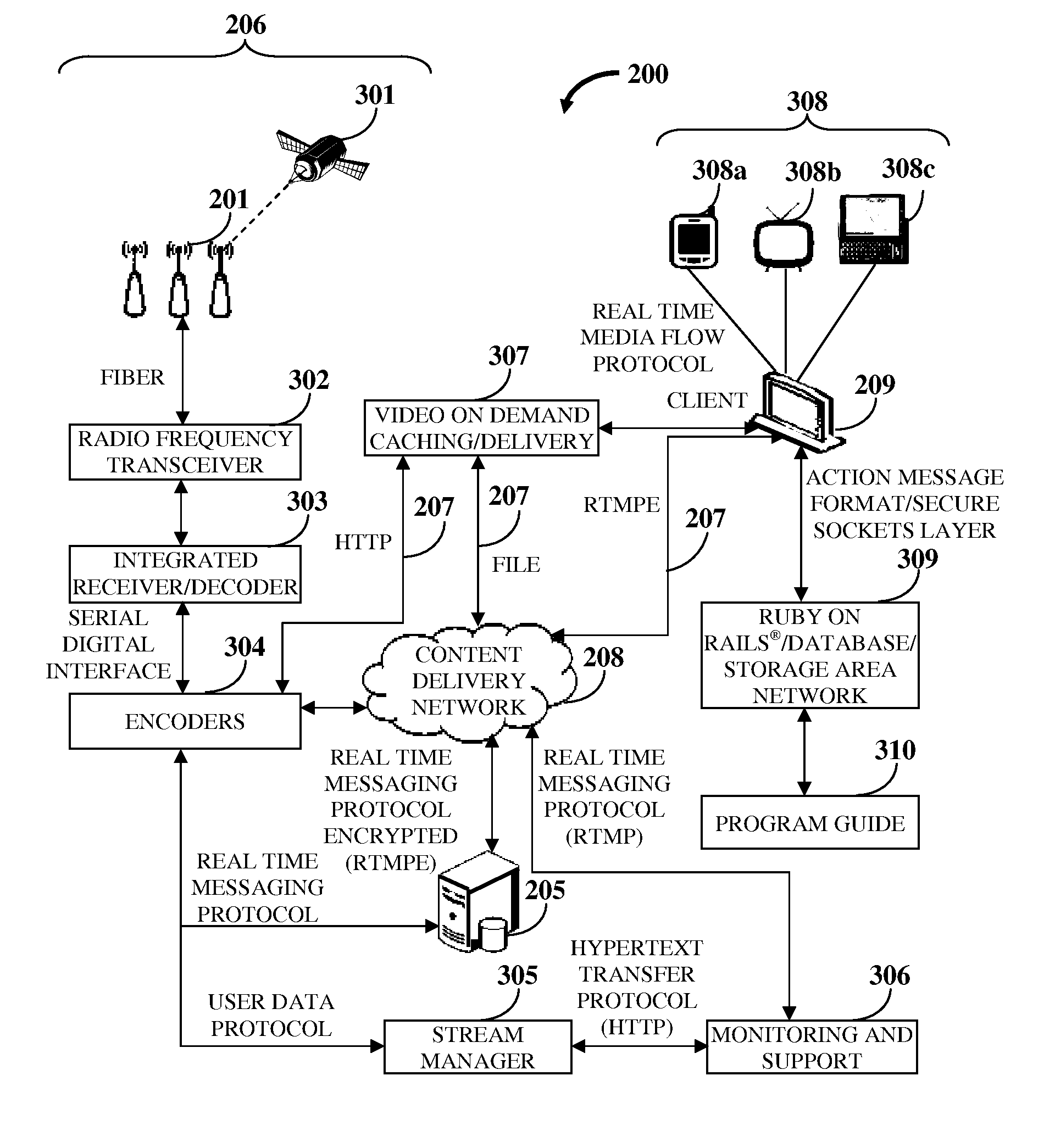

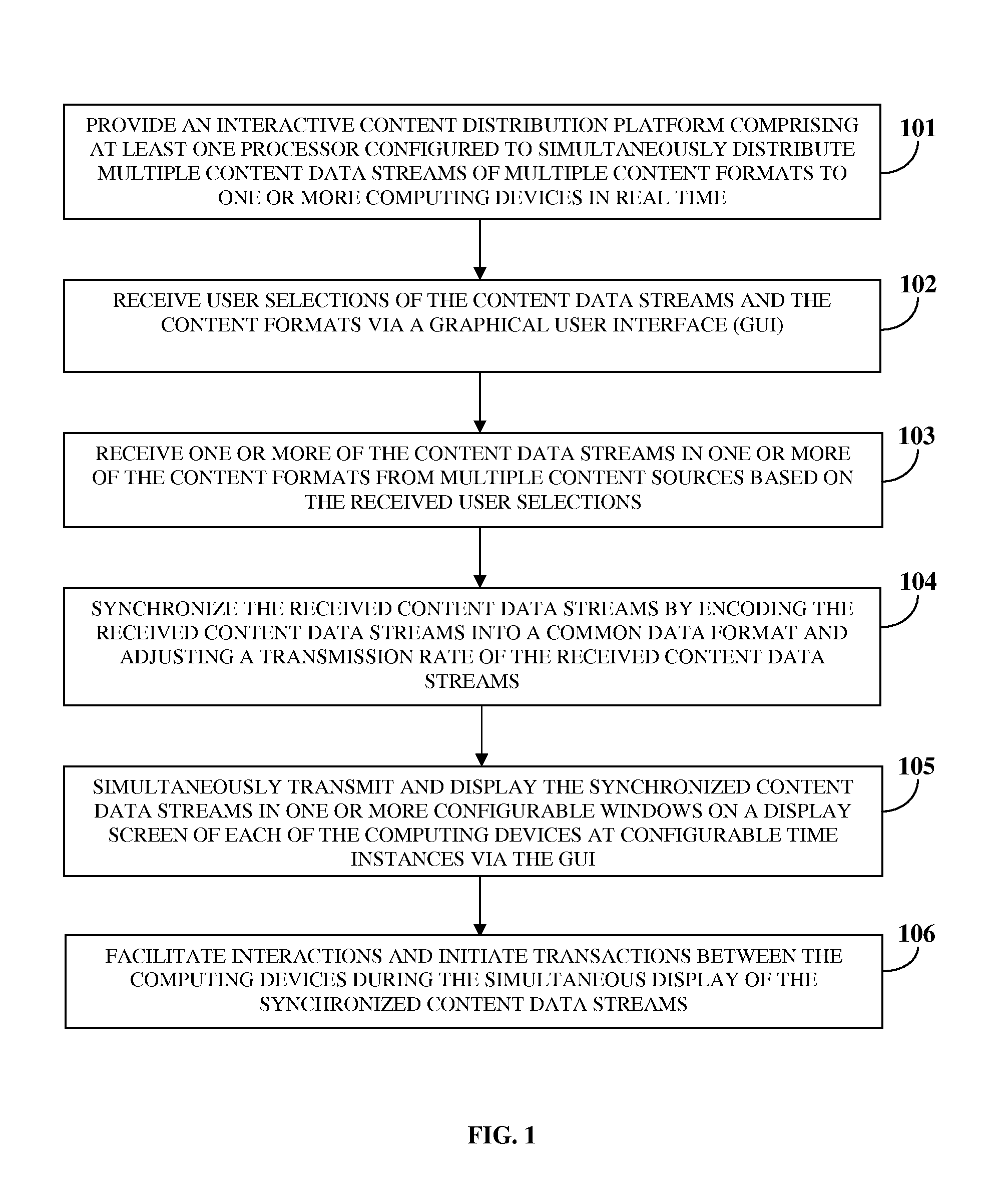

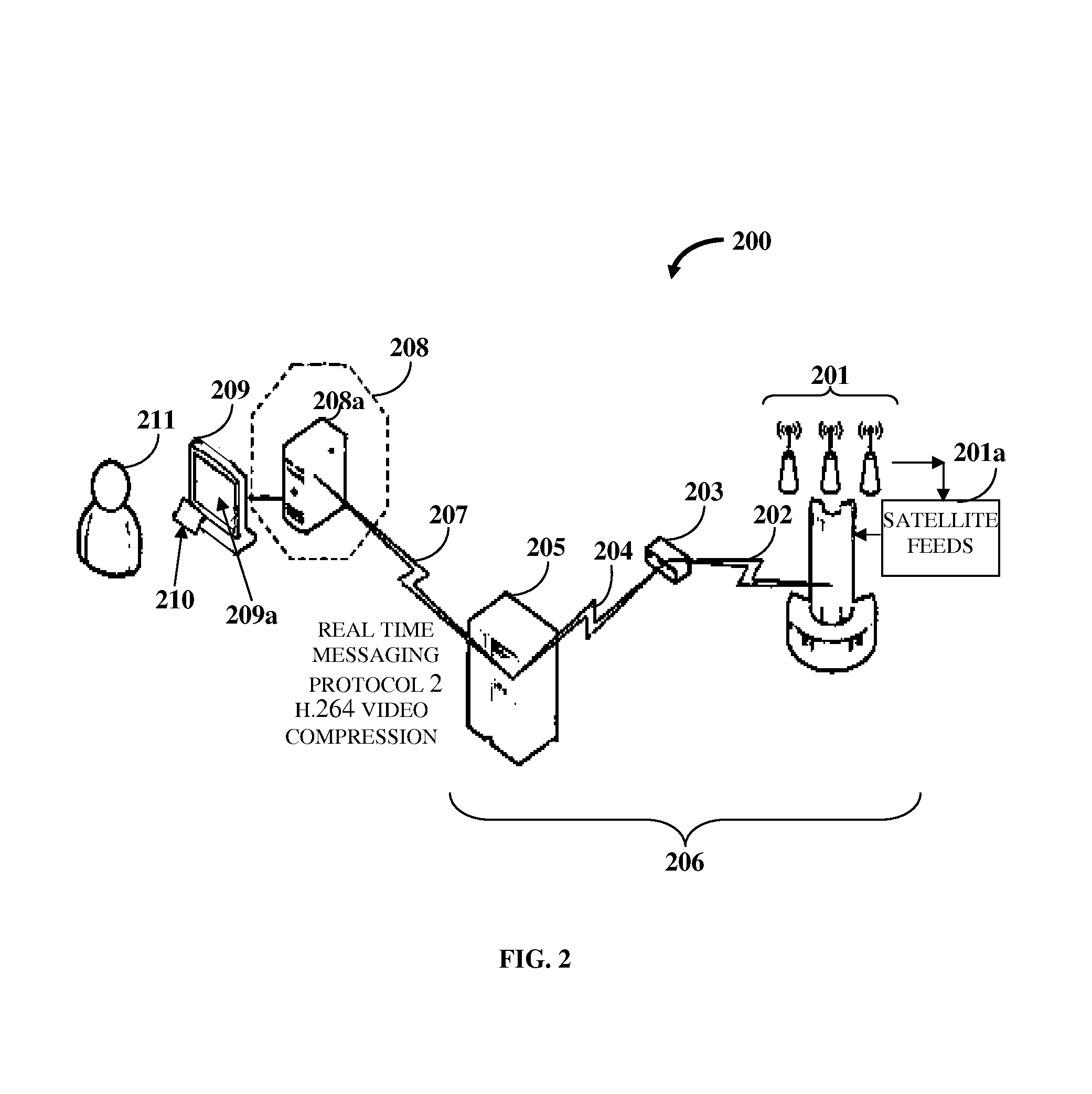

Simultaneous Content Data Streaming And Interaction System

Inactive | US20140195675A1 | Enhanced interaction; Simple way; Synchronisation arrangement; Broadcast transmission systems; Interaction systems; Content distribution

A computer implemented method and system simultaneously distributes content data streams (CDSs) of multiple content formats, for example, live cable television content, gaming content, social media content, user generated content, etc., to one or more computing devices. An interactive content distribution platform (ICDP) receives user selections of the CDSs and the content formats via a graphical user interface (GUI) and receives one or more CDSs in one or more content formats from multiple content sources based on the user selections. The ICDP synchronizes the CDSs by encoding the CDSs into a common data format and adjusting a transmission rate of the CDSs. The ICDP simultaneously transmits and displays the synchronized CDSs in one or more configurable windows on a display screen of each computing device at configurable time instances via the GUI. The ICDP facilitates interactions and initiates transactions between computing devices during the simultaneous display of the synchronized CDSs.

Owner:GIGA ENTERTAINMENT MEDIA

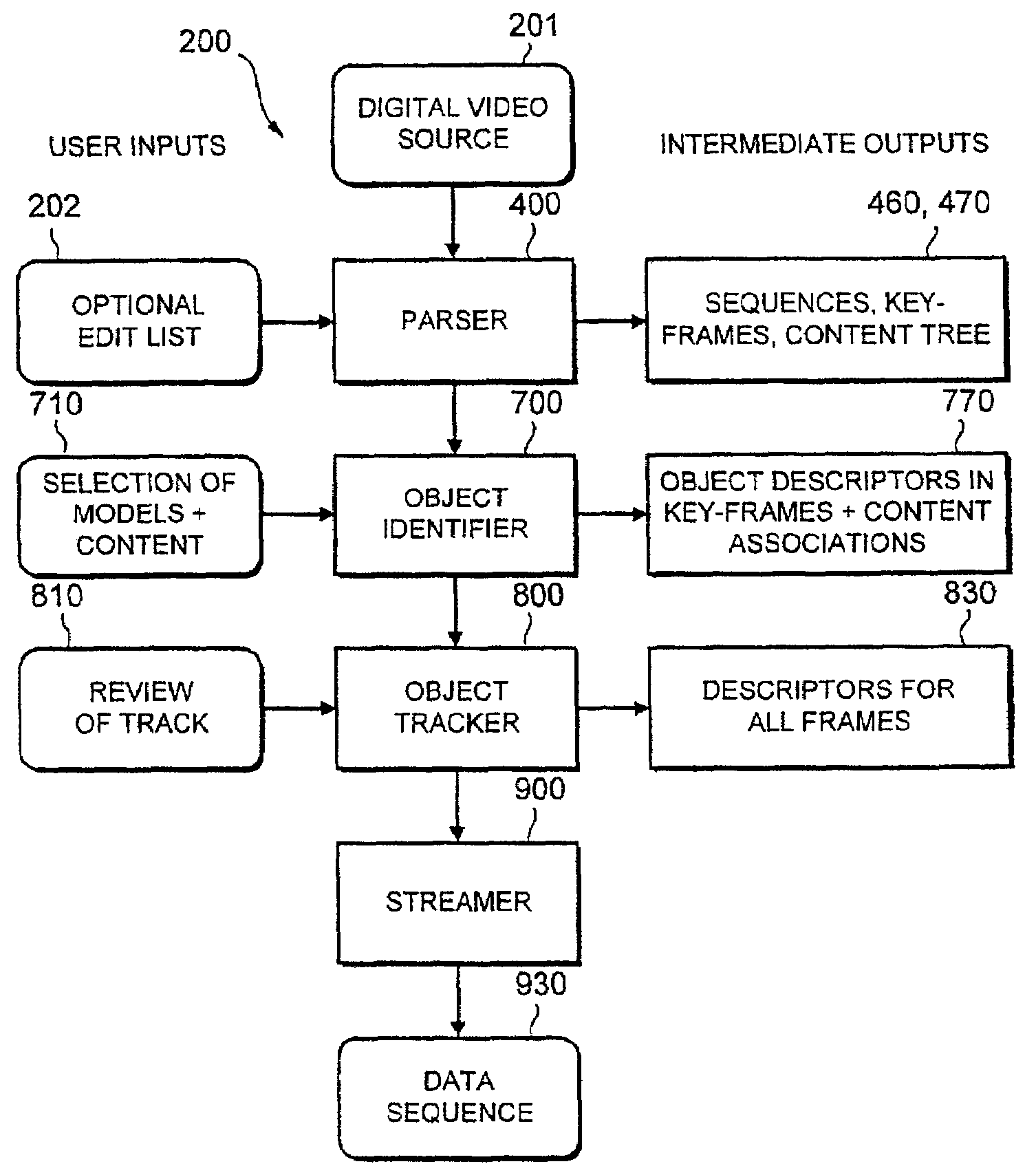

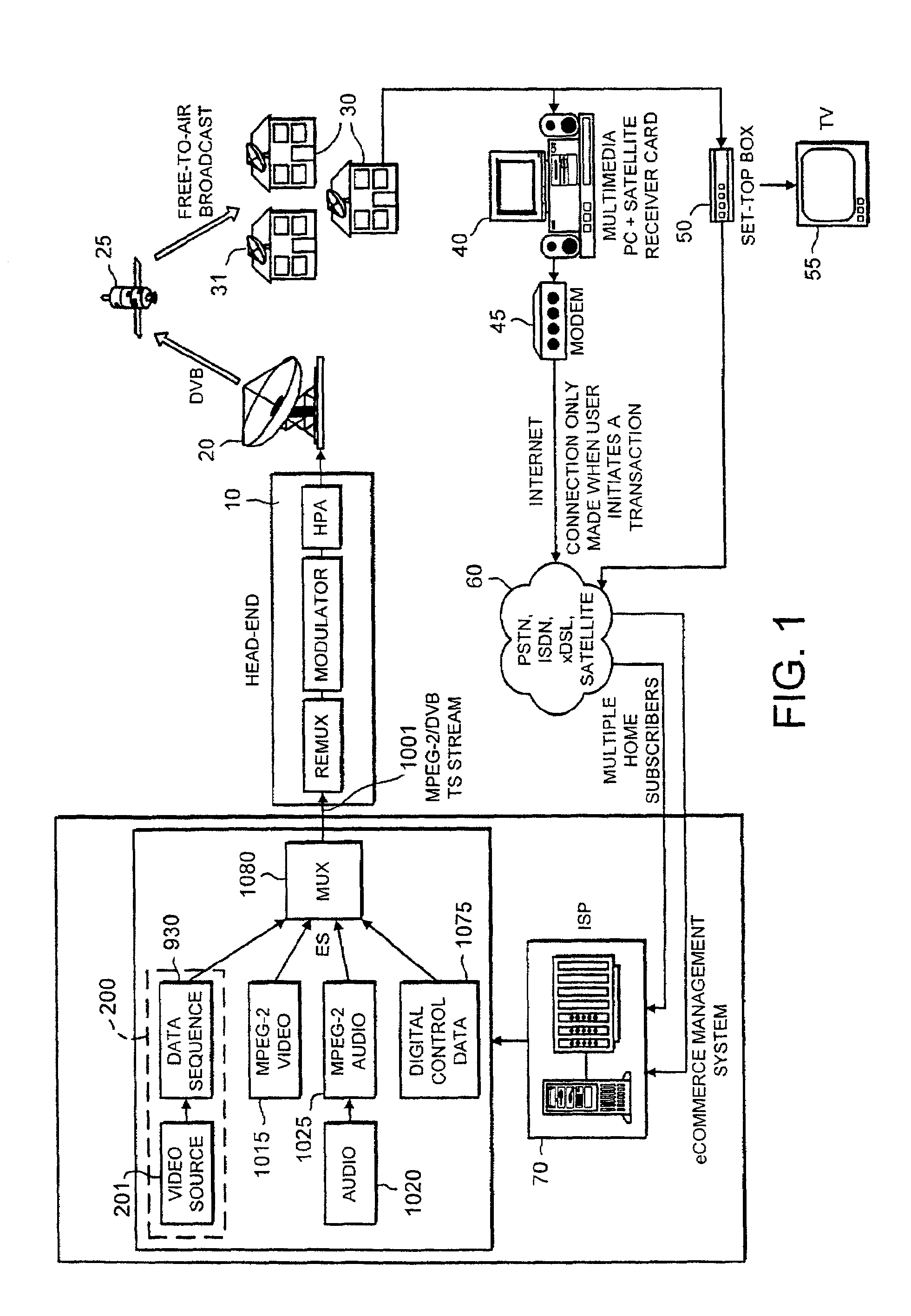

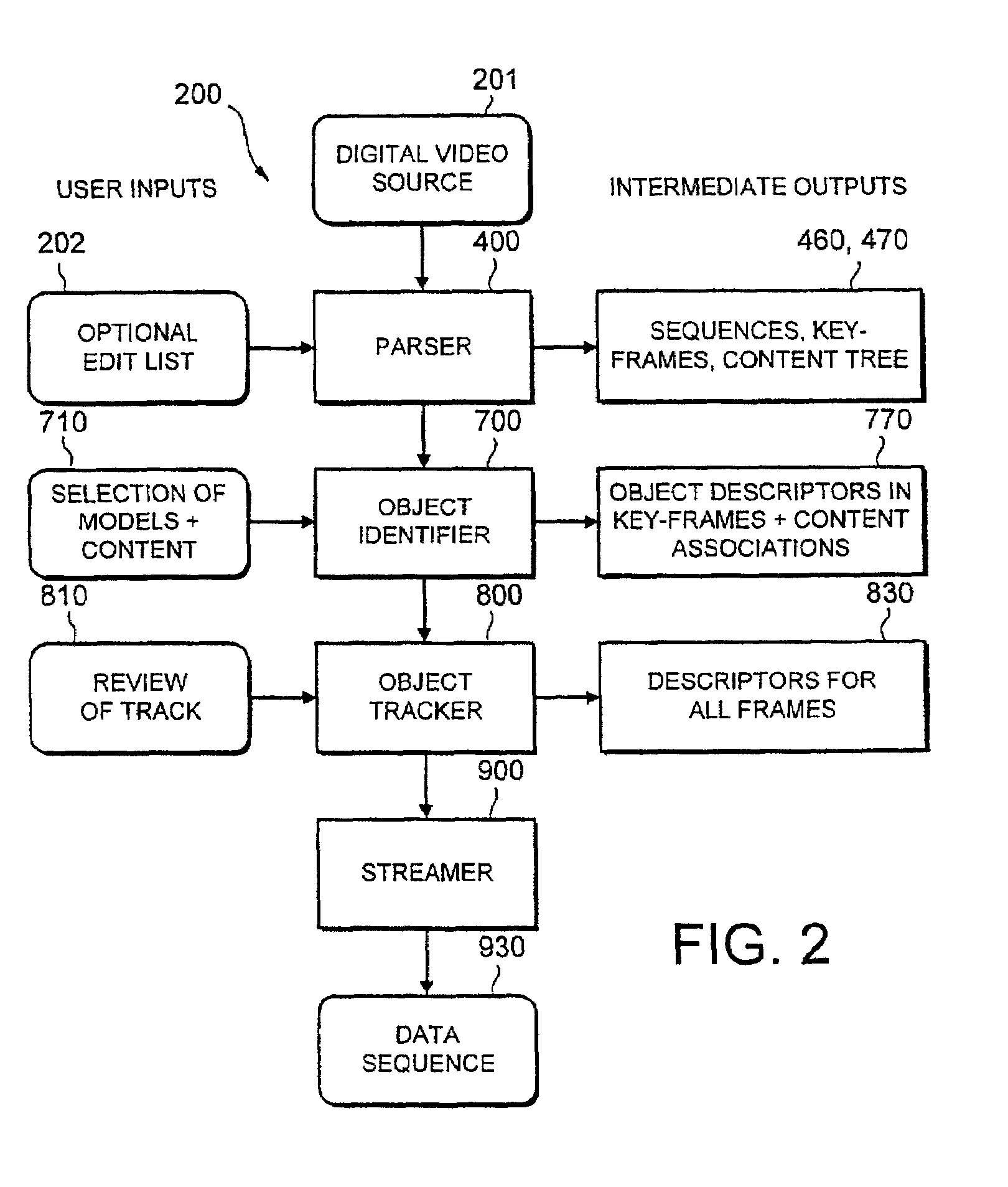

Interactive system

Inactive | US7158676B1 | Save on advertising; Maintaining and increasing advertising revenue; Television system details; Recording carrier details; Interaction systems; Interactive content

An interactive system provides a video program signal and generates interactive content data to be associated with at least one object within a frame of the video program. The interactive content data is embedded with the object and the object is tracked through a sequence of frames and the interactive content data is embedded into each one of the frames. The program frames with the embedded data are multiplexed with video and audio signals and may be broadcast. A receiver identifies an object of interest and the embedded data associated with the object is retrieved. The embedded data may be used for e-commerce.

Owner:EMUSE CORP

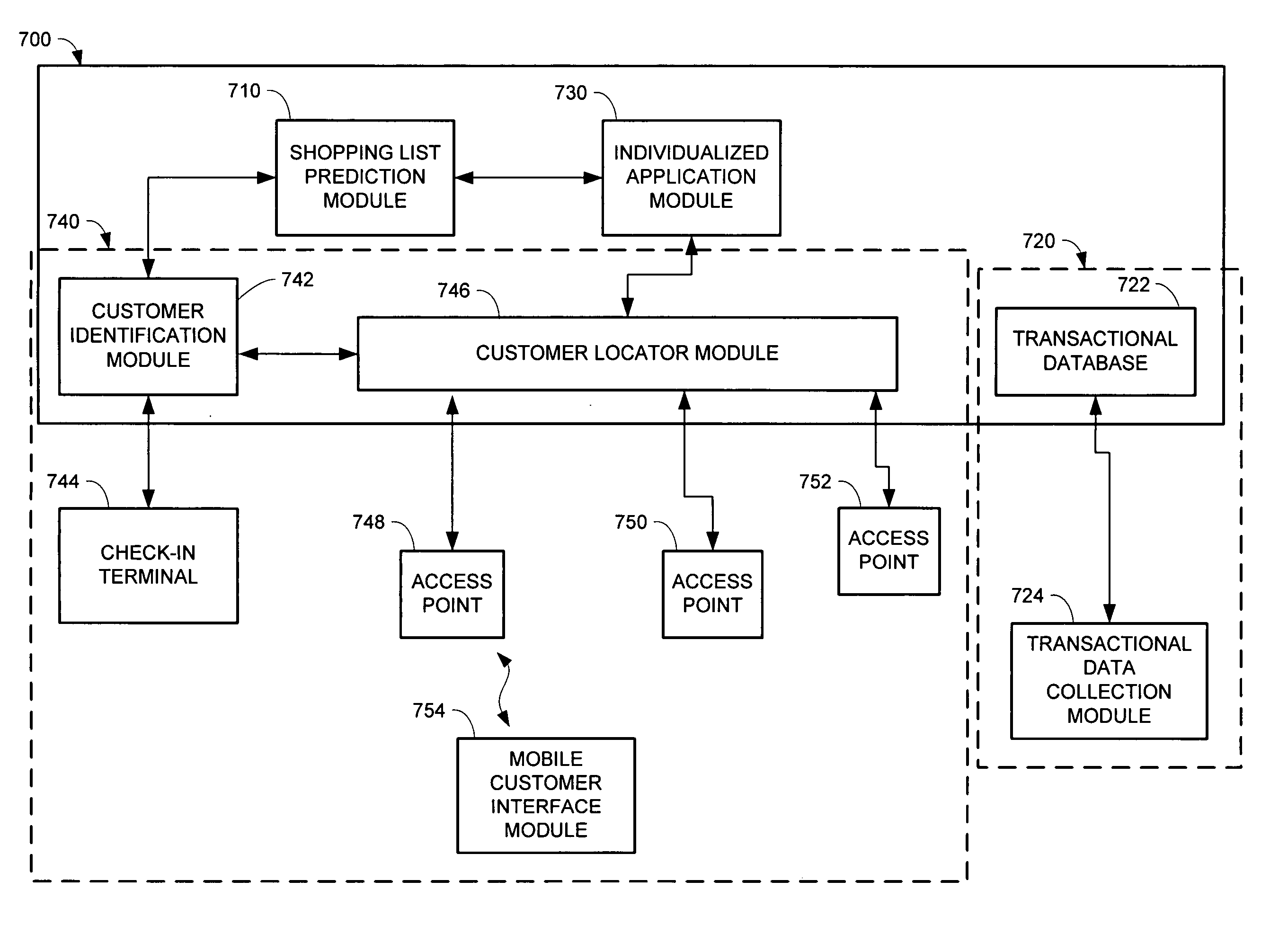

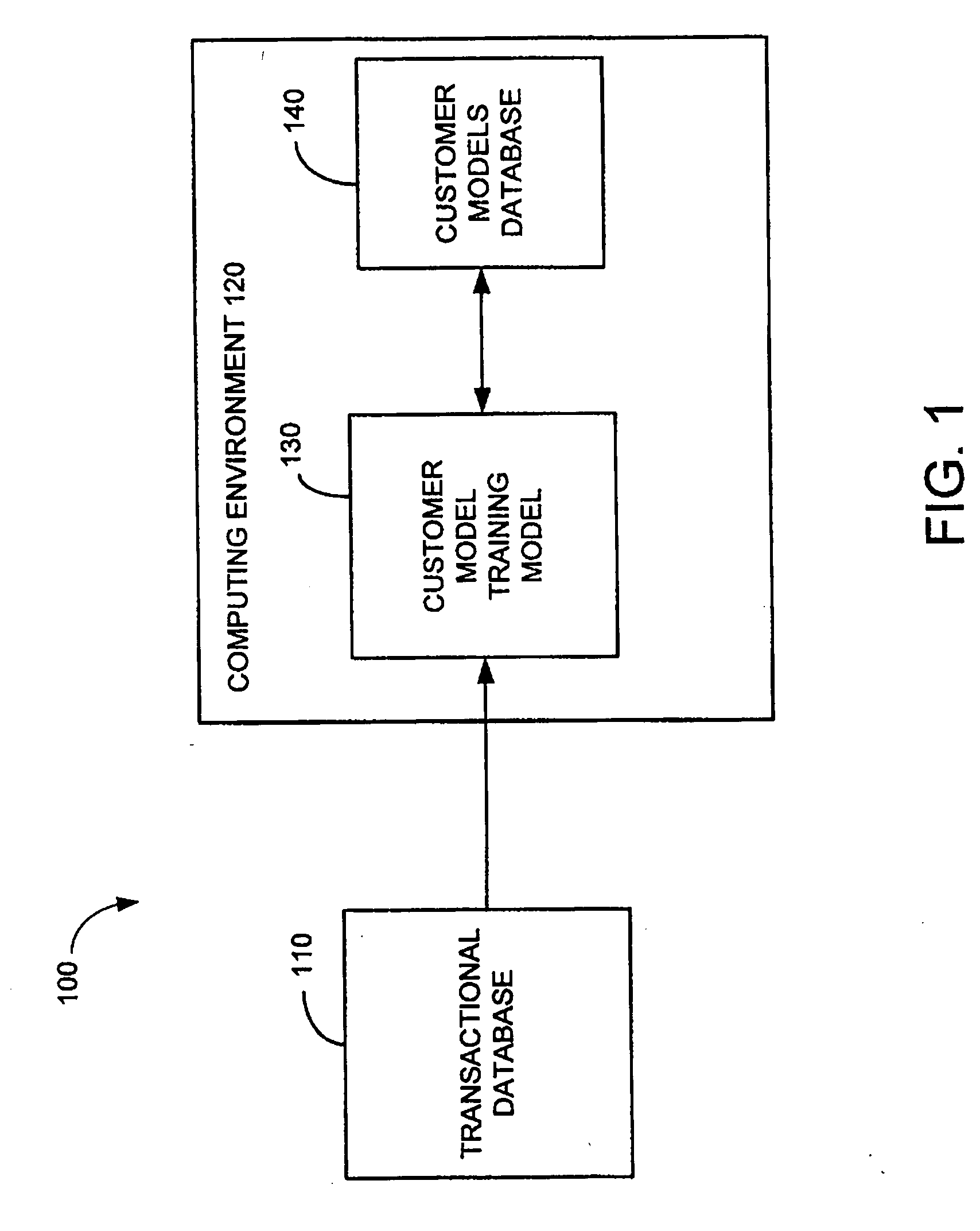

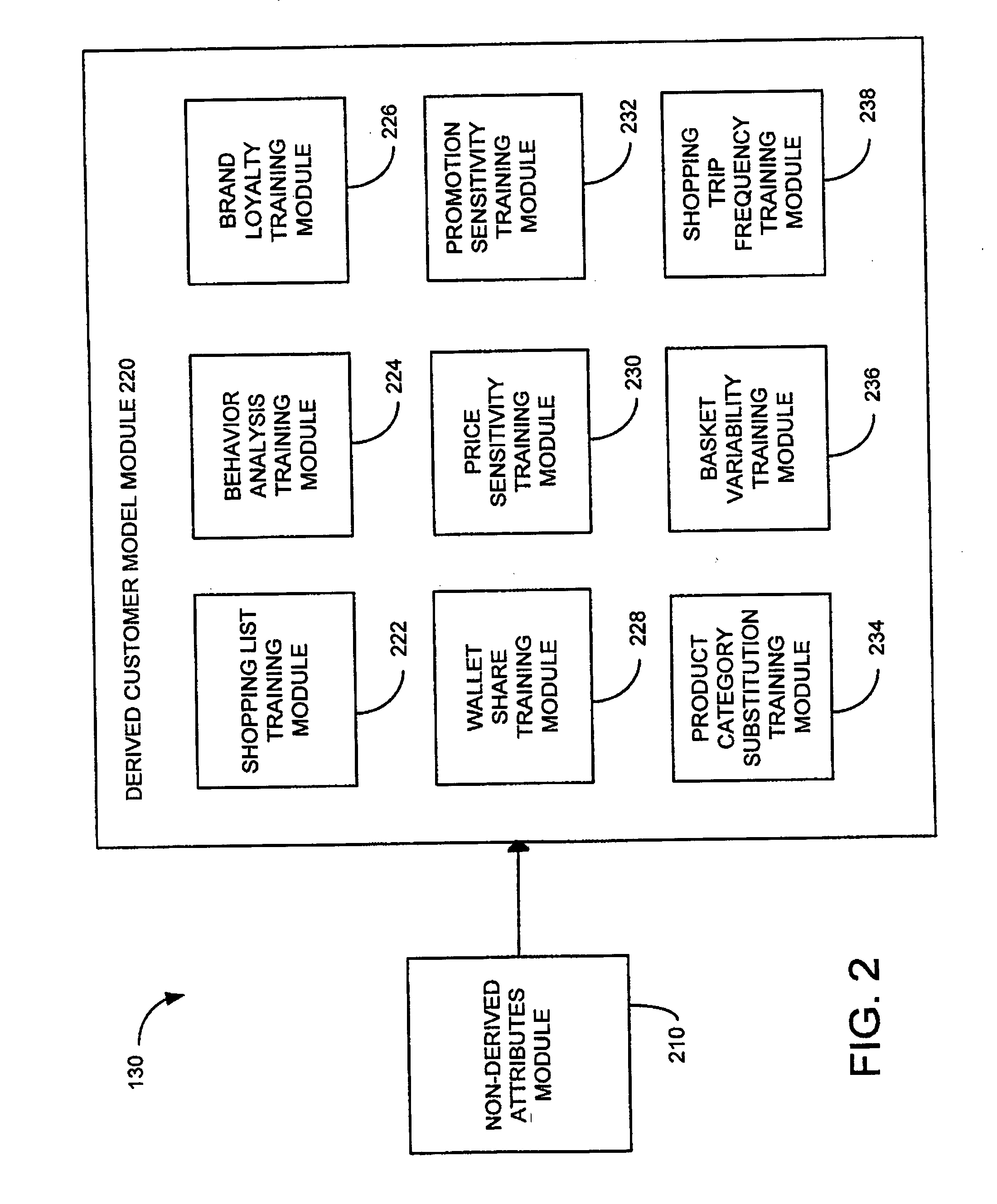

System for individualized customer interaction

Active | US20050189415A1 | Satisfies need; Discounts/incentives; Advertisements; Personalization; Interaction systems

A method and system for using individualized customer models when operating a retail establishment is provided. The individualized customer models may be generated using statistical analysis of transaction data for the customer, thereby generating sub-models and attributes tailored to the customer. The individualized customer models may be used in any aspect of a retail establishment's operations, ranging from supply chain management, inventory control, and promotion planning (such as selecting parameters for a promotion or simulating results of a promotion) to customer interaction (such as providing a shopping list or providing individualized promotions).

Owner:ACCENTURE GLOBAL SERVICES LTD

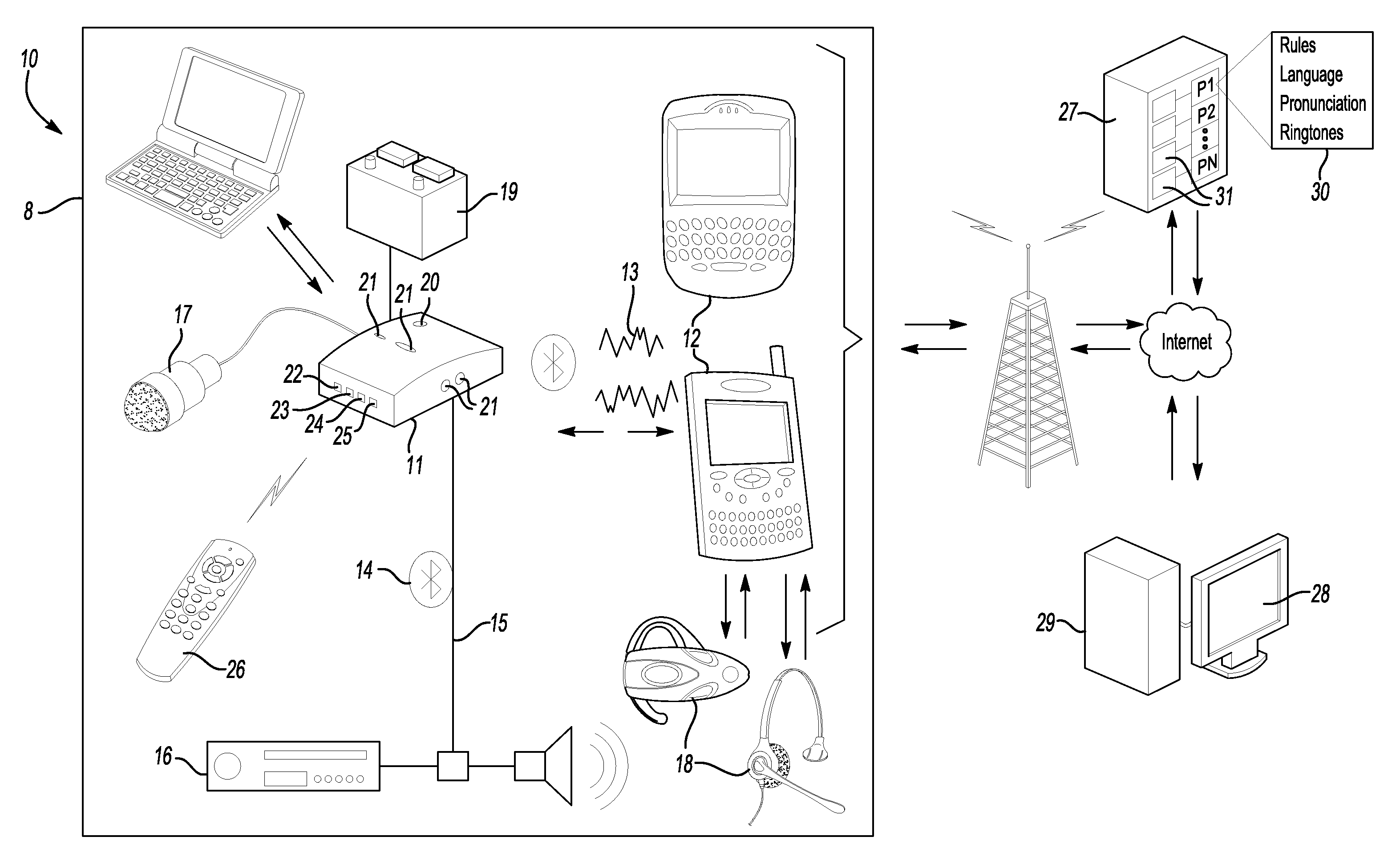

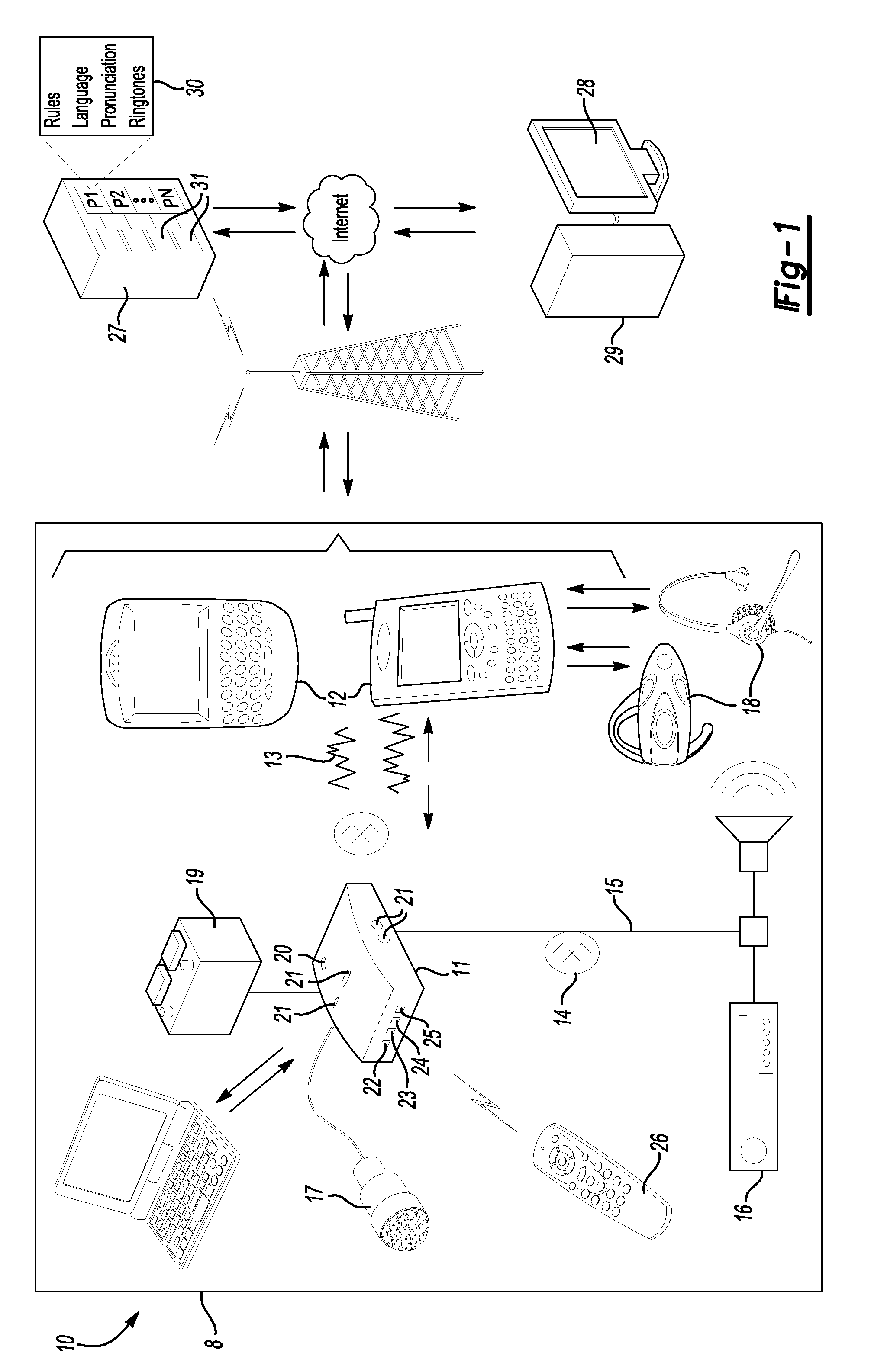

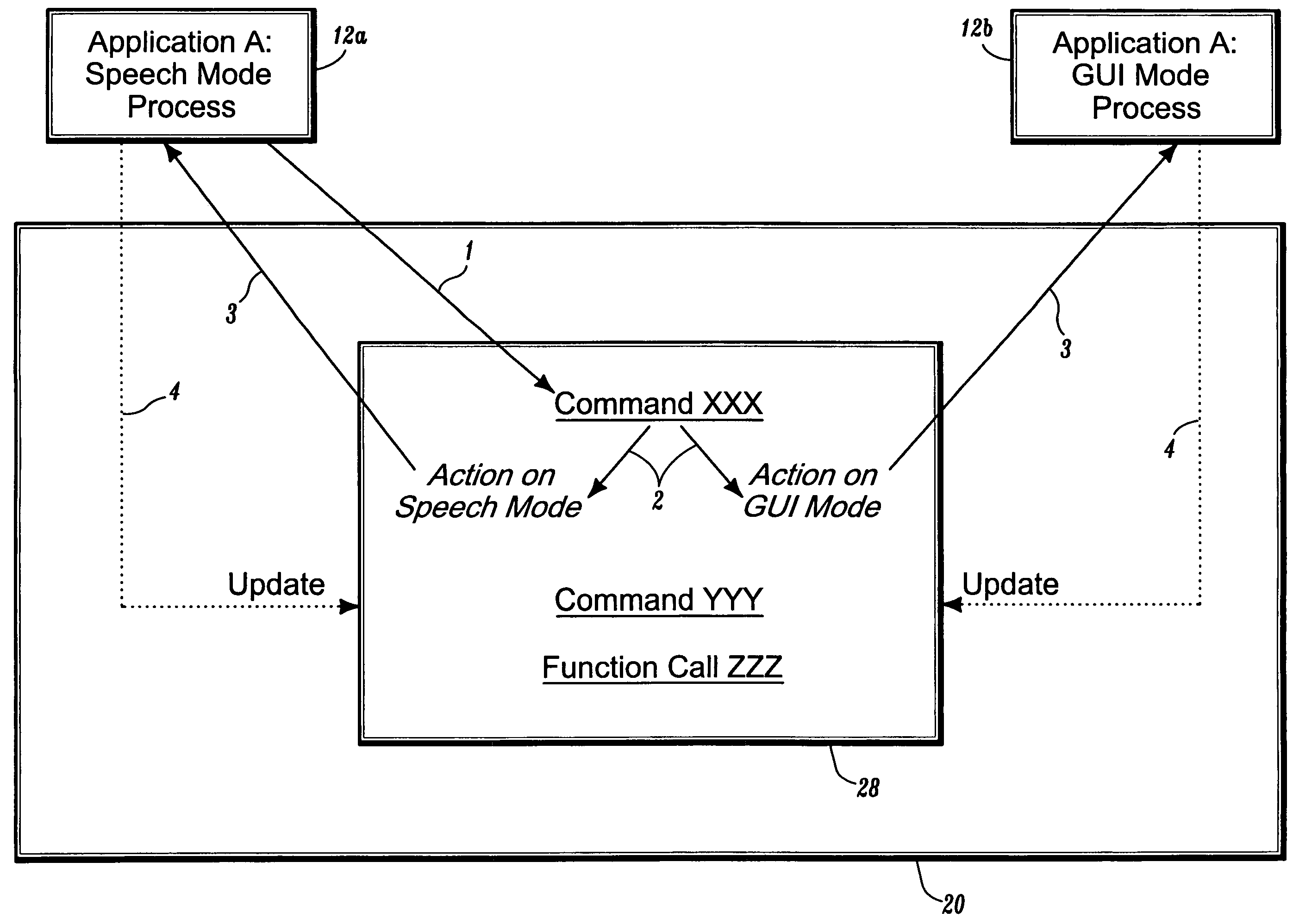

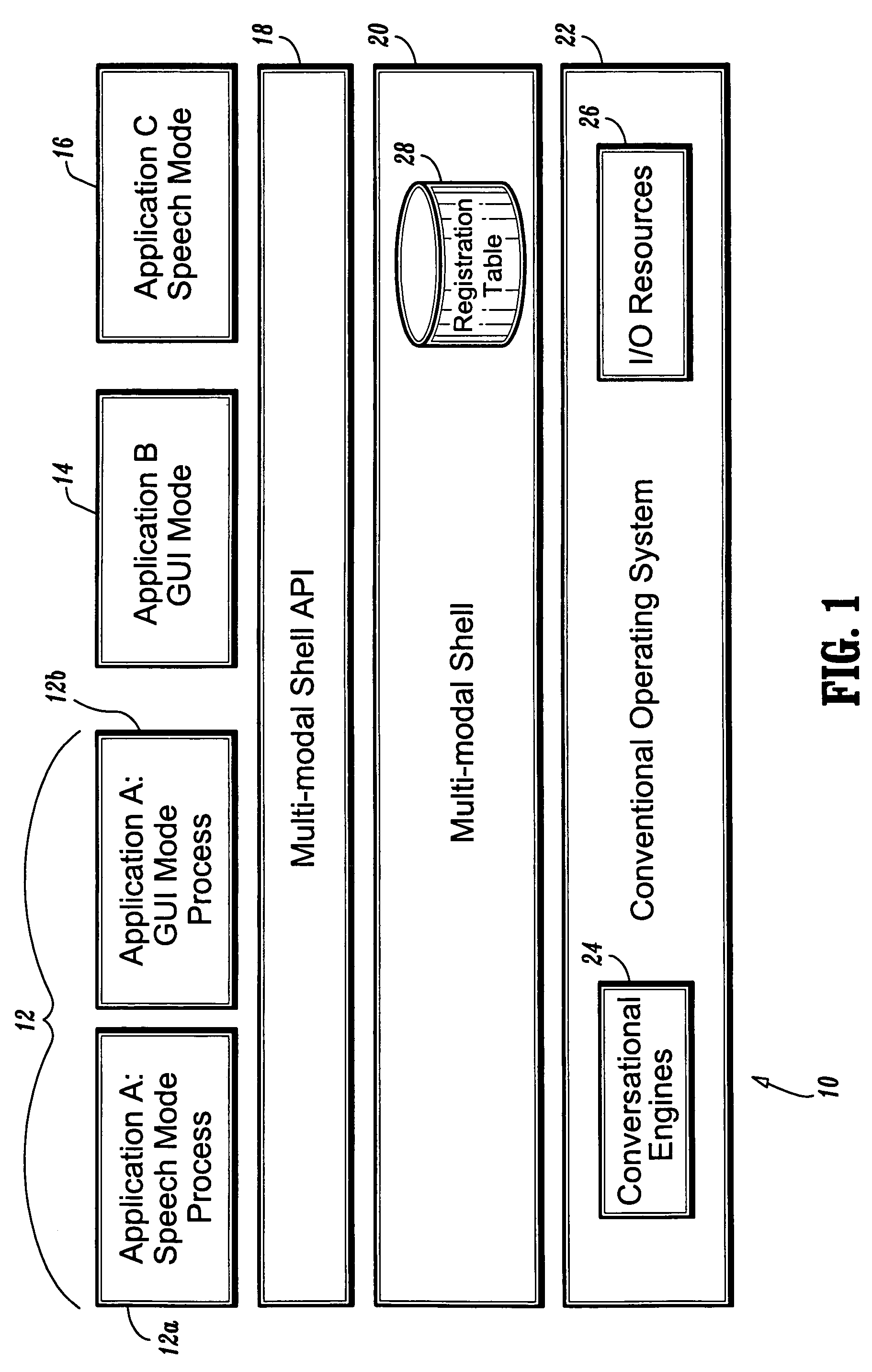

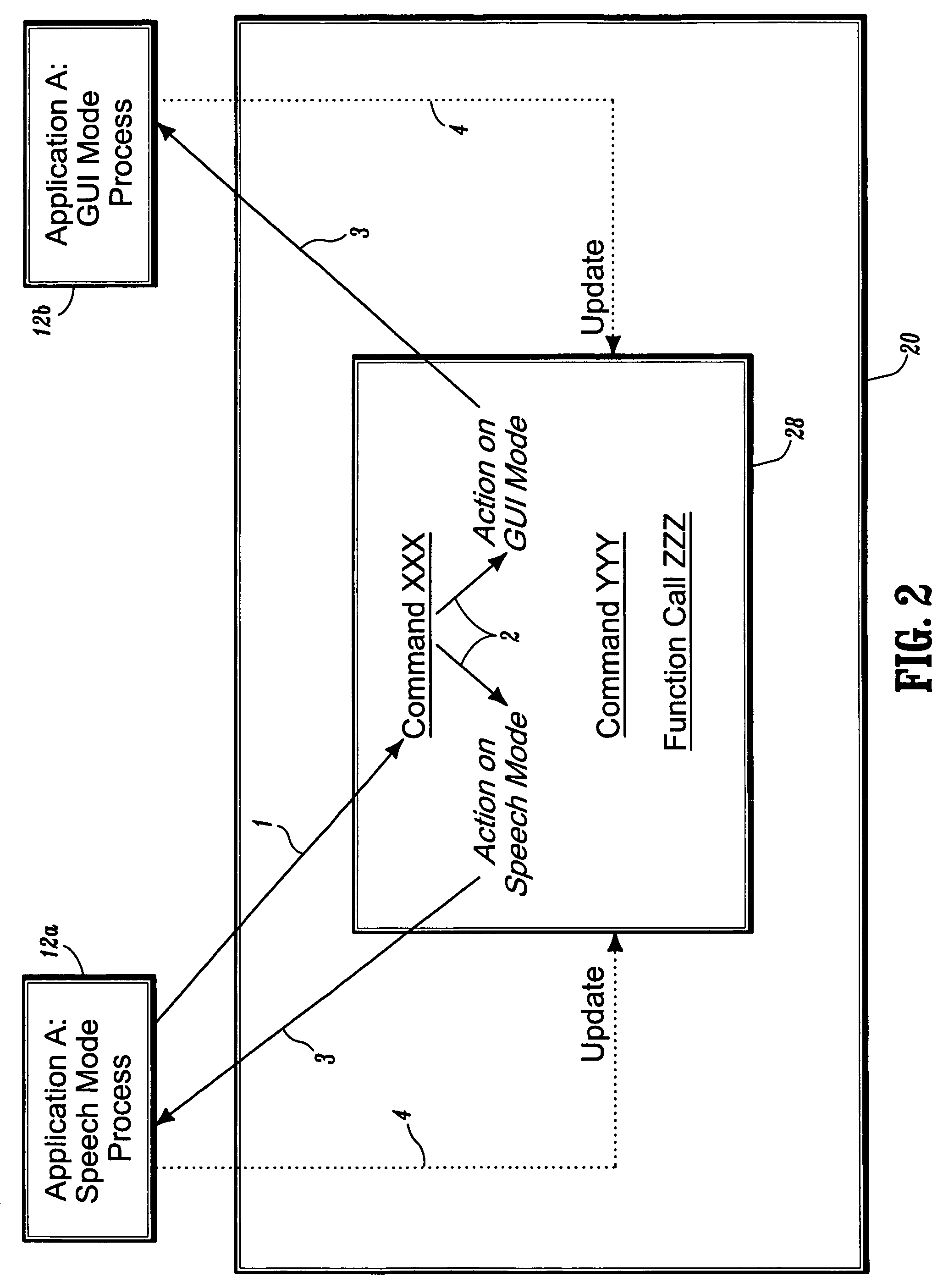

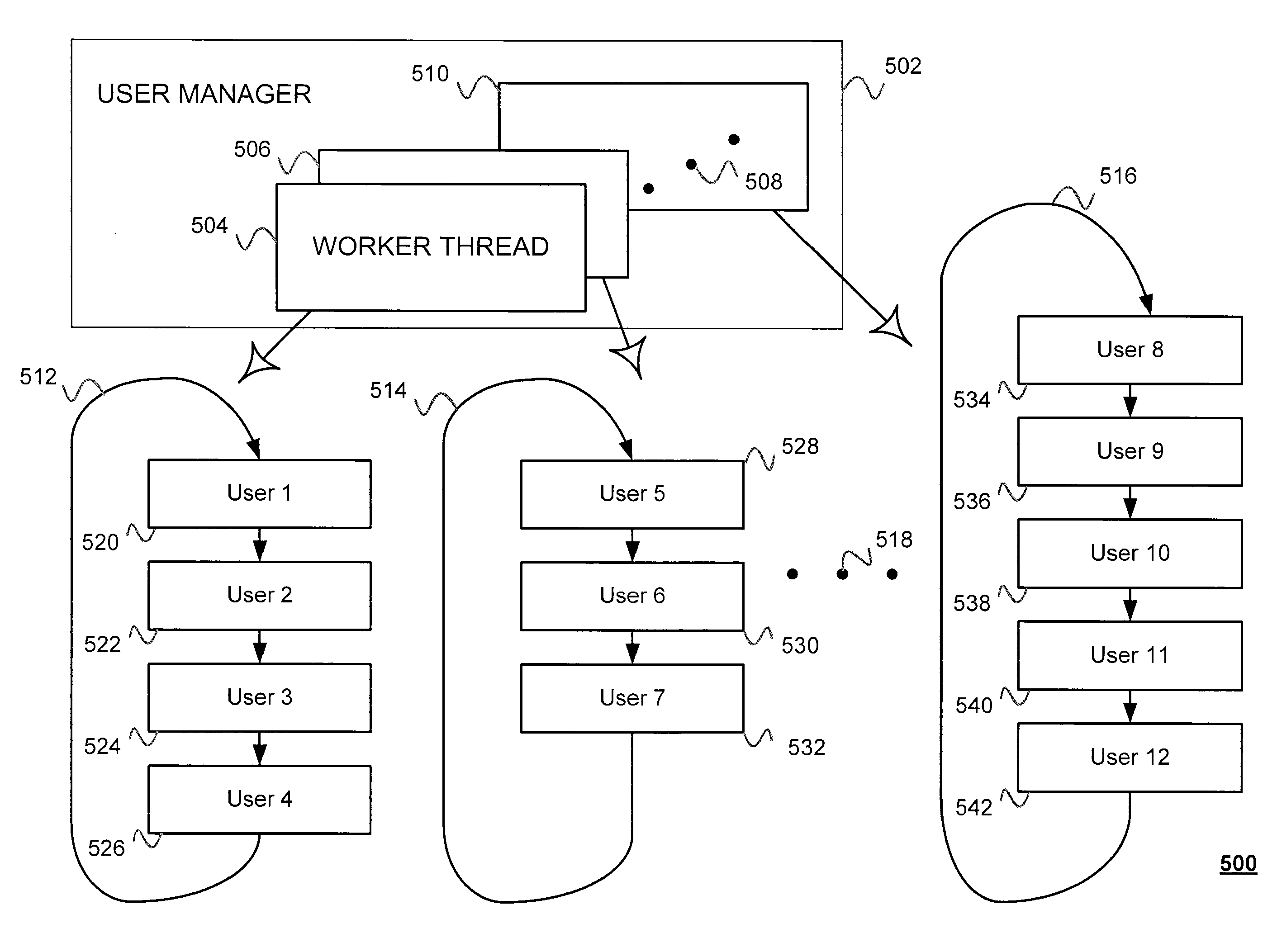

Systems and methods for synchronizing multi-modal interactions

Systems and methods are provided for synchronizing interactions between mono-mode applications, different modes of a multi-modal application, and devices having different UI modalities. In one aspect, a multi-modal shell coordinates multiple mode processes (i.e., modalities) of the same application or of multiple applications through API calls. Each mode process registers its active commands and the corresponding actions in each of the registered modalities. The multi-modal shell comprises a registry implemented as a command-to-action table. When a registered command is executed, each of the corresponding actions is triggered to update each mode process accordingly. The registry may also be updated to support new commands, based on the change in state of the dialog or application. In another aspect, separate applications (with UIs of different modalities) are coordinated via threads (e.g., applets) connected by socket connections (or virtual socket connections implemented differently). Any command in one mode triggers the corresponding thread to communicate the action to the thread of the other application, and that second thread modifies the state of the second mode process accordingly. The threads are updated or replaced by new threads.

Owner:UNILOC 2017 LLC
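
A command-to-action registry of the kind described can be sketched as a mapping from each command to the actions registered by each modality. The `MultiModalShell` class and its methods are hypothetical names chosen for illustration. The threaded, socket-based variant is not covered.

```python
# Hypothetical sketch of a command-to-action table shared by mode processes.
from collections import defaultdict
from typing import Callable


class MultiModalShell:
    """Registry mapping command -> {modality: action} (illustrative only)."""

    def __init__(self) -> None:
        self.registry: dict[str, dict[str, Callable[[], None]]] = defaultdict(dict)

    def register(self, modality: str, command: str, action: Callable[[], None]) -> None:
        """A mode process registers the action it performs for a command."""
        self.registry[command][modality] = action

    def execute(self, command: str) -> list[str]:
        """Trigger every action registered for the command; return the modalities updated."""
        updated = []
        for modality, action in self.registry.get(command, {}).items():
            action()
            updated.append(modality)
        return updated


log = []
shell = MultiModalShell()
shell.register("gui", "next_page", lambda: log.append("gui: scrolled"))
shell.register("voice", "next_page", lambda: log.append("voice: said 'page two'"))
updated = shell.execute("next_page")
```

One command issued in any modality keeps both the GUI and the voice process in step. Unknown commands update nothing.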

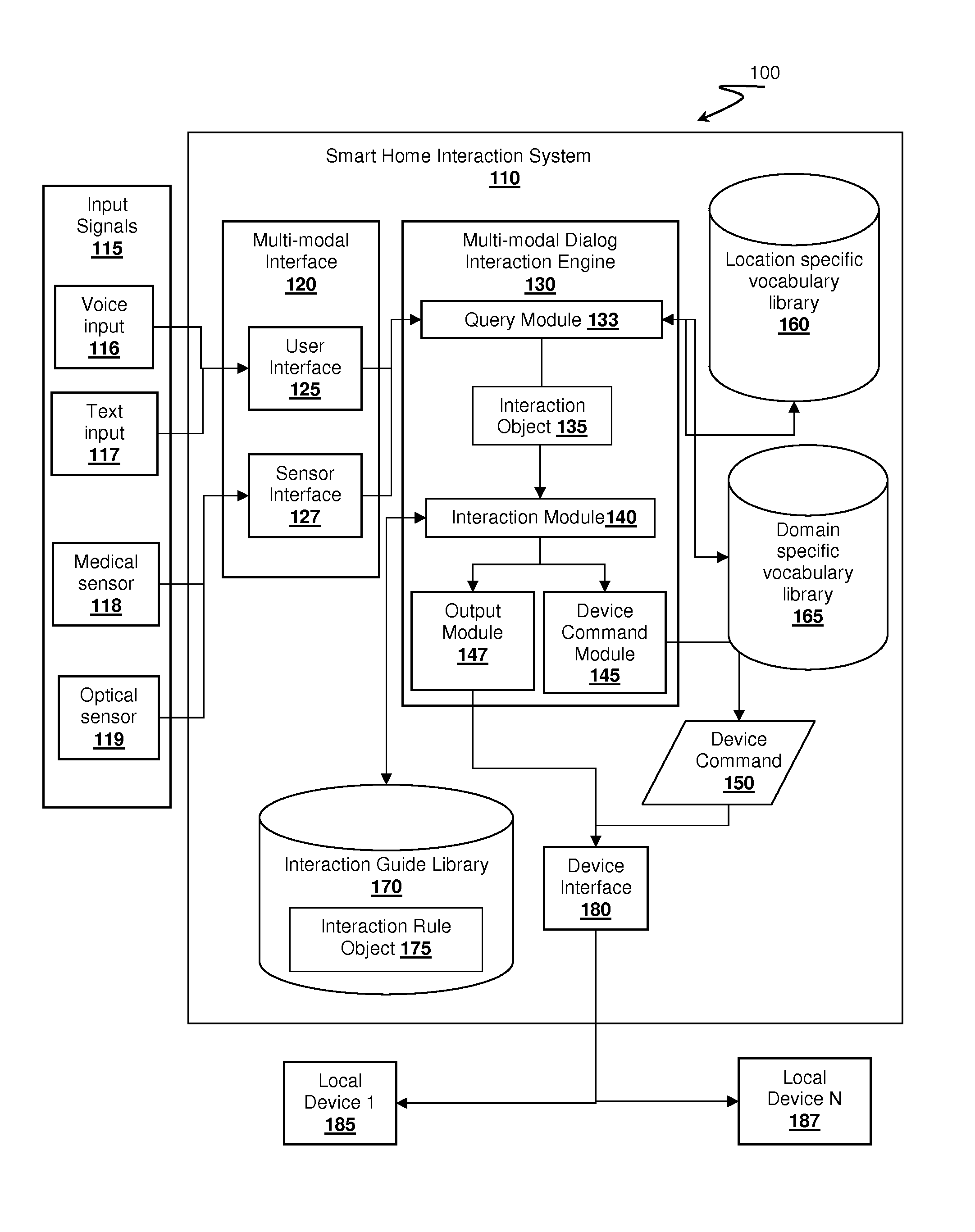

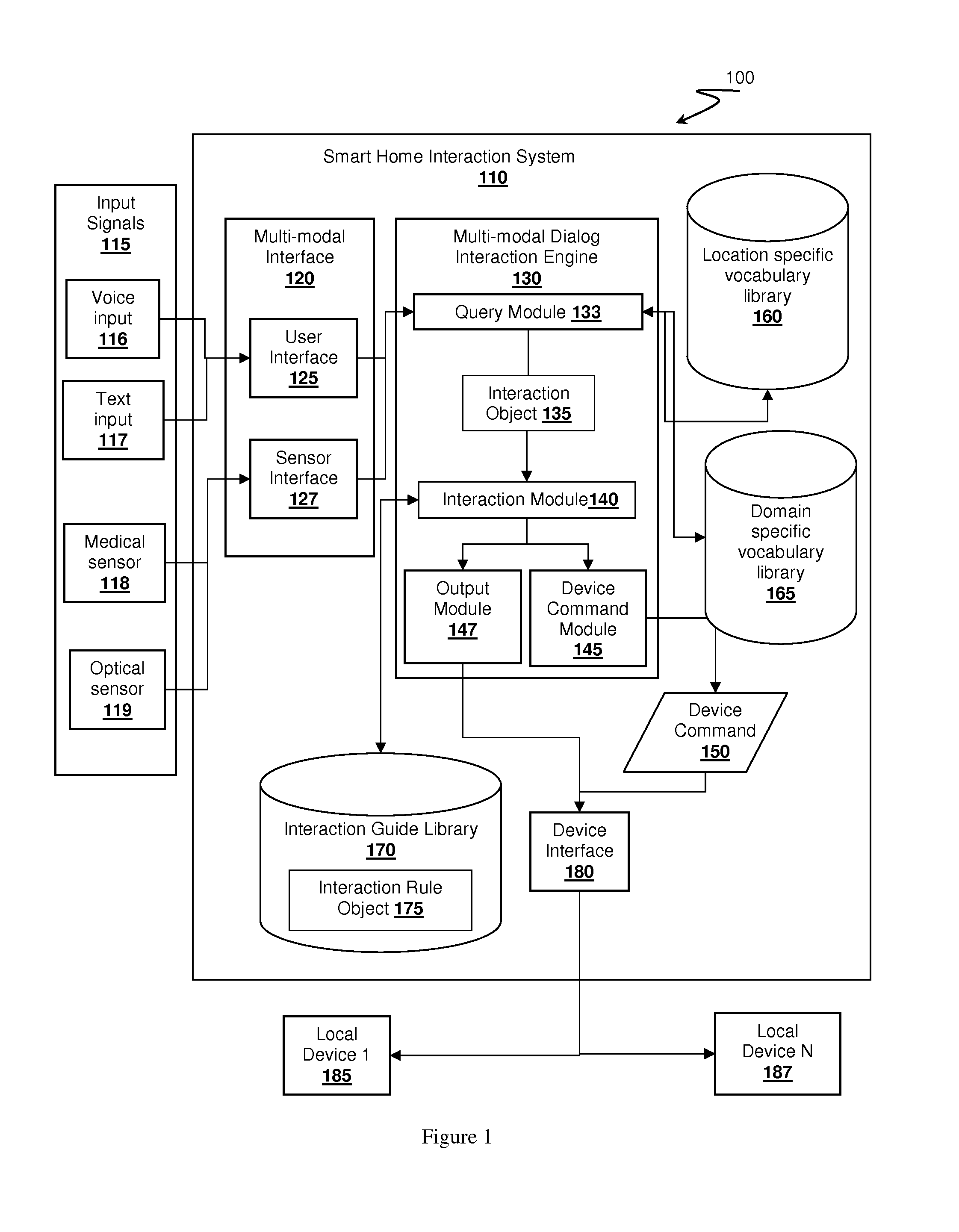

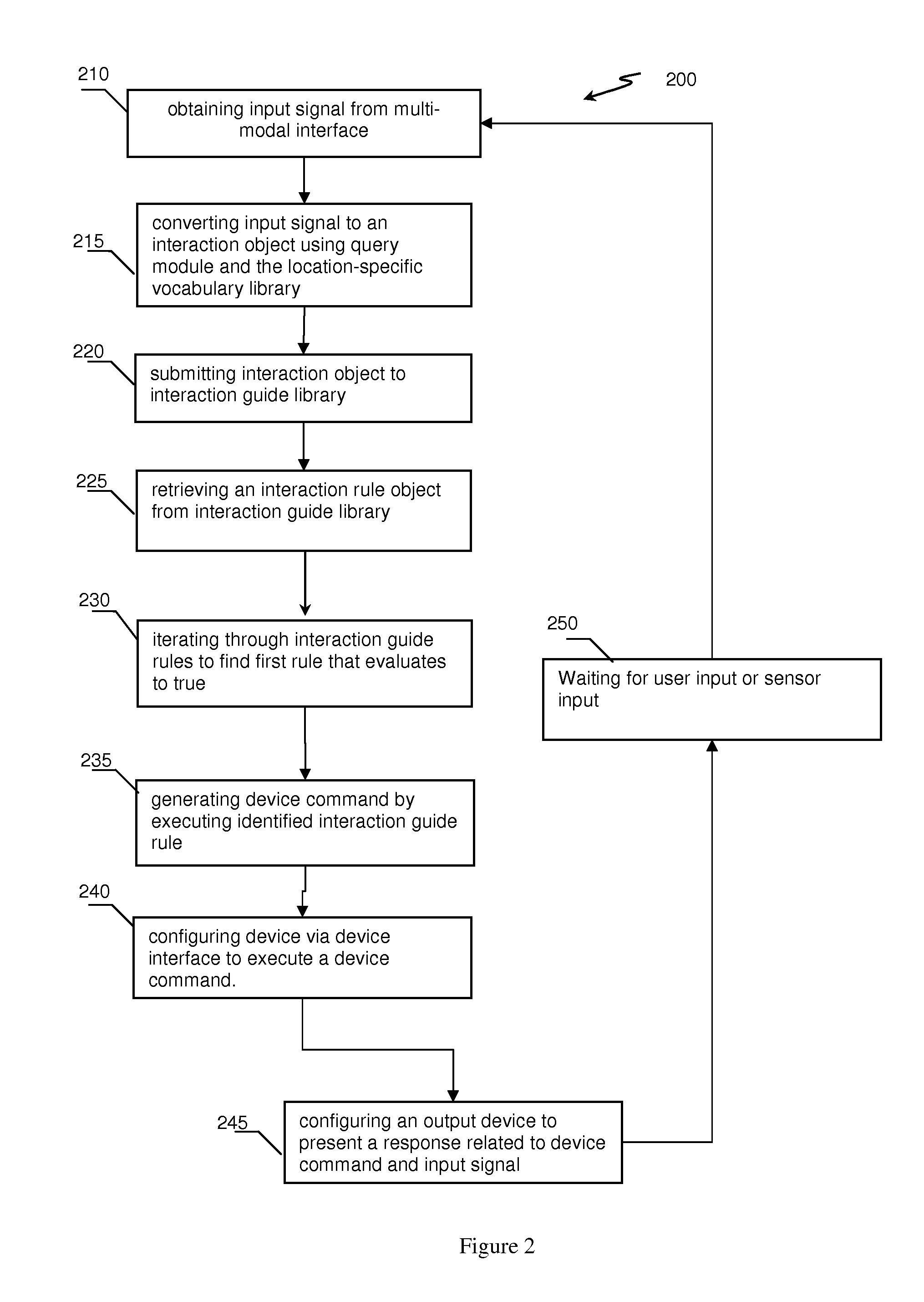

Smart Home Automation Systems and Methods

Active | US20140108019A1 | Accurate and more fluid interaction; Improve human-computer interaction; Programme control; Computer control; Tablet computer; Learning based

A smart home interaction system is presented. It is built on a multi-modal, multithreaded conversational dialog engine. The system provides a natural language user interface for the control of household devices, appliances or household functionality. The smart home automation agent can receive input from users through sensing devices such as a smart phone, a tablet computer or a laptop computer. Users interact with the system from within the household or from remote locations. The smart home system can receive input from sensors or any other machines with which it is interfaced. The system employs interaction guide rules to process reactions to both user and sensor input and to drive the conversational interactions that result from such input. The system adaptively learns from both user and sensor input and can learn the preferences and practices of its users.

Owner:NANT HLDG IP LLC

Method of and system for using conversation state information in a conversational interaction system

Active | US8577671B1 | Natural language translation; Semantic analysis; Interaction systems; Interactive content

A method of using conversation state information in a conversational interaction system is disclosed. A method of inferring a change of conversation session during continuous user interaction with an interactive content providing system includes three steps. First, it receives input from the user that includes linguistic elements intended by the user to identify an item. Second, it associates a linguistic element of the input with a first conversation session. Third, it provides a response based on the input. The method also includes receiving additional input from the user and inferring whether or not the additional input is related to the linguistic element associated with the conversation session. If it is related, the method provides a response based on the additional input and the linguistic element associated with the first conversation session. Otherwise, the method provides a response based on the additional input without regard for the linguistic element associated with the first conversation session.

Owner:VEVEO INC
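
The related-or-not decision is the crux of the method. As a rough illustration, the sketch below assumes a very simple relatedness test: the follow-up contains a pronoun, or it repeats an element from the current session. A real system would need much richer linguistic analysis. Function names and the pronoun list are hypothetical.

```python
# Hypothetical sketch of inferring whether a follow-up continues the current session.

ANAPHORA = {"he", "she", "it", "they", "him", "her", "them", "his", "its", "their"}


def tokens(text):
    """Lower-cased words with trailing punctuation stripped."""
    return {t.strip("?.,!").lower() for t in text.split()}


def is_related(additional_input, session_elements):
    """Assumed heuristic: related if it uses a pronoun or repeats a session element."""
    toks = tokens(additional_input)
    return bool(toks & ANAPHORA) or bool(toks & {e.lower() for e in session_elements})


def response_basis(additional_input, session_elements):
    """Return (input, context) that the response should be based on."""
    if is_related(additional_input, session_elements):
        return additional_input, set(session_elements)
    return additional_input, set()  # new session: ignore the earlier context
```

After a question about "Inception", the follow-up "Who directed it?" keeps the session context. "What is the weather in Paris?" is treated as the start of a new session.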

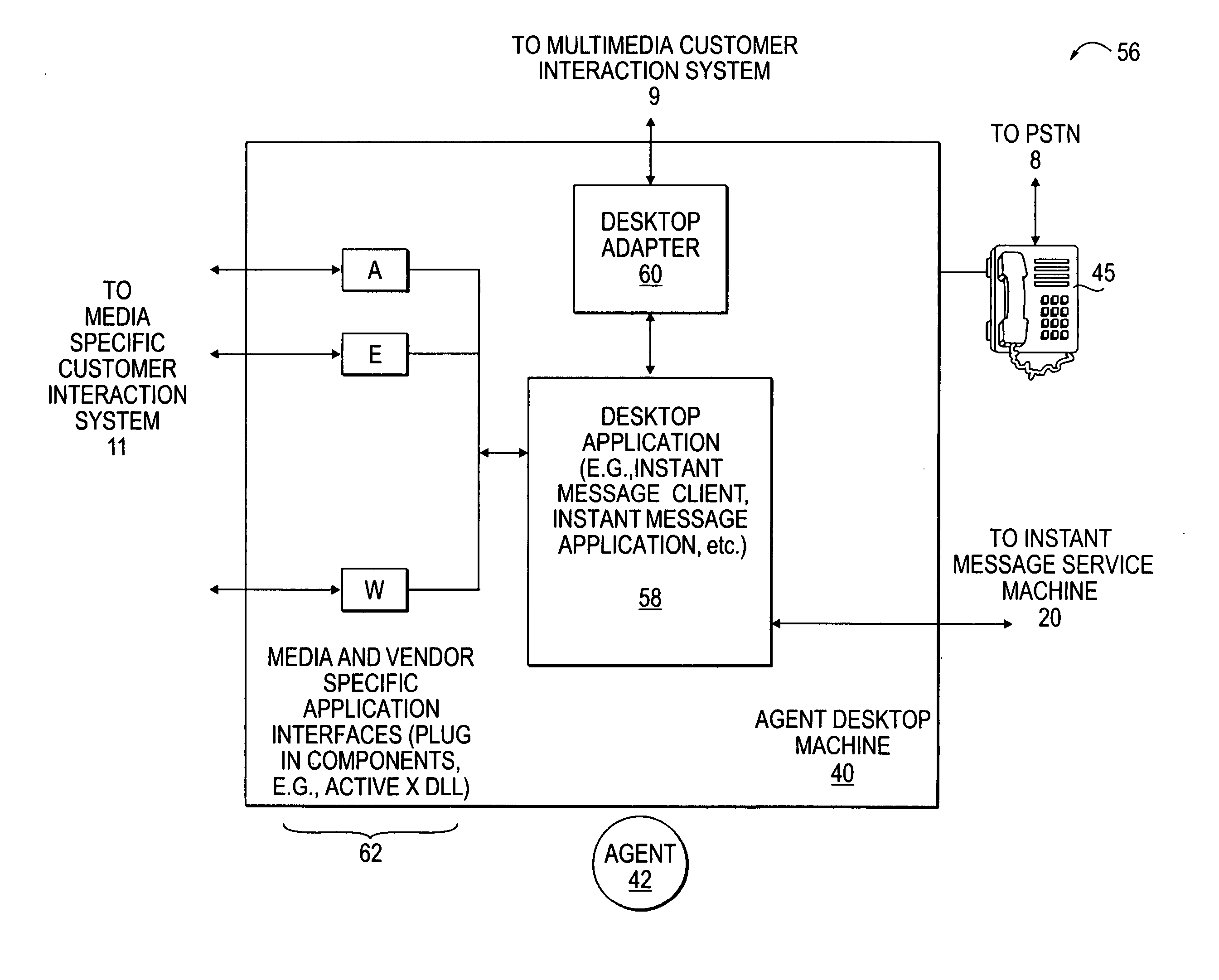

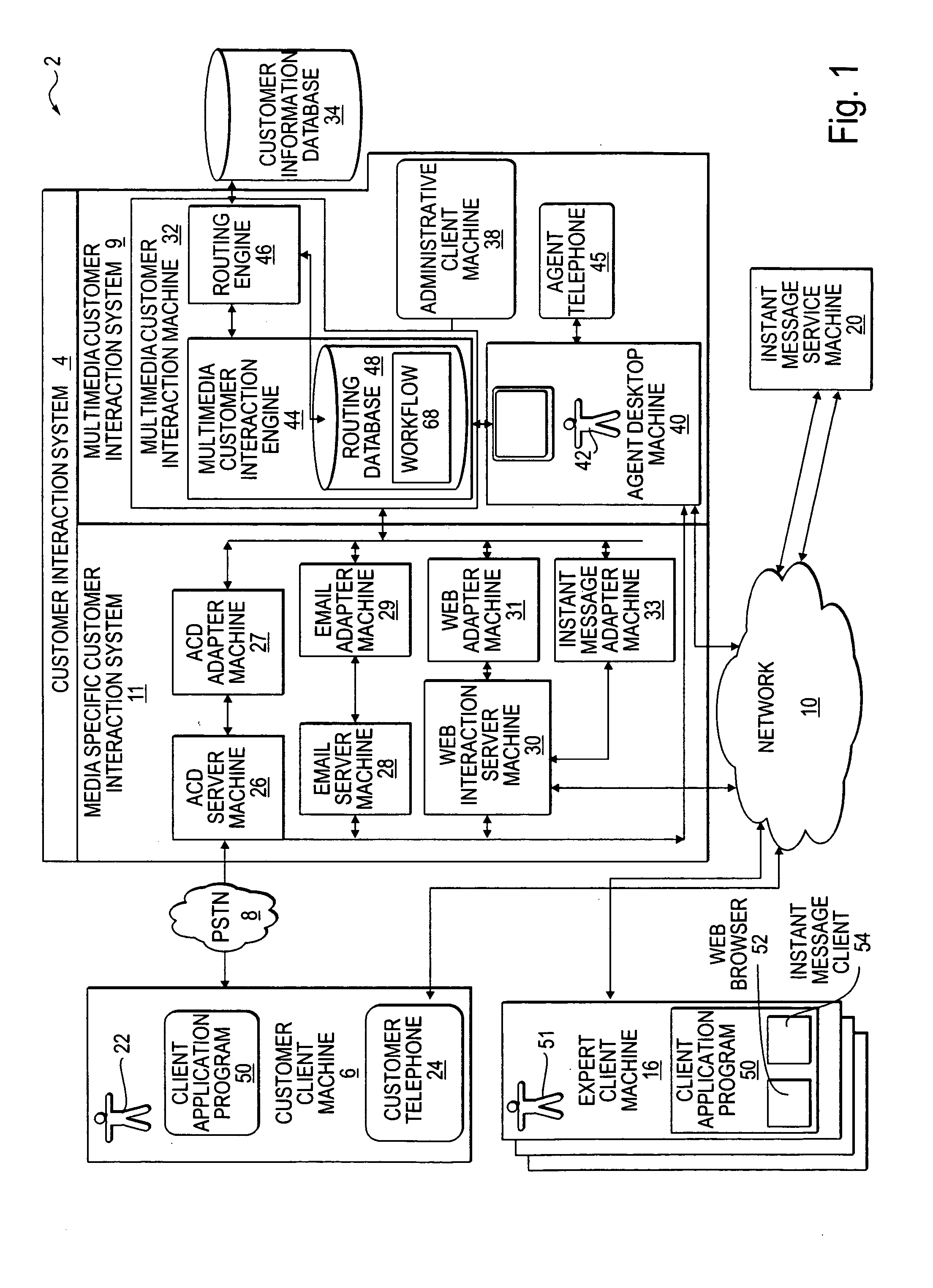

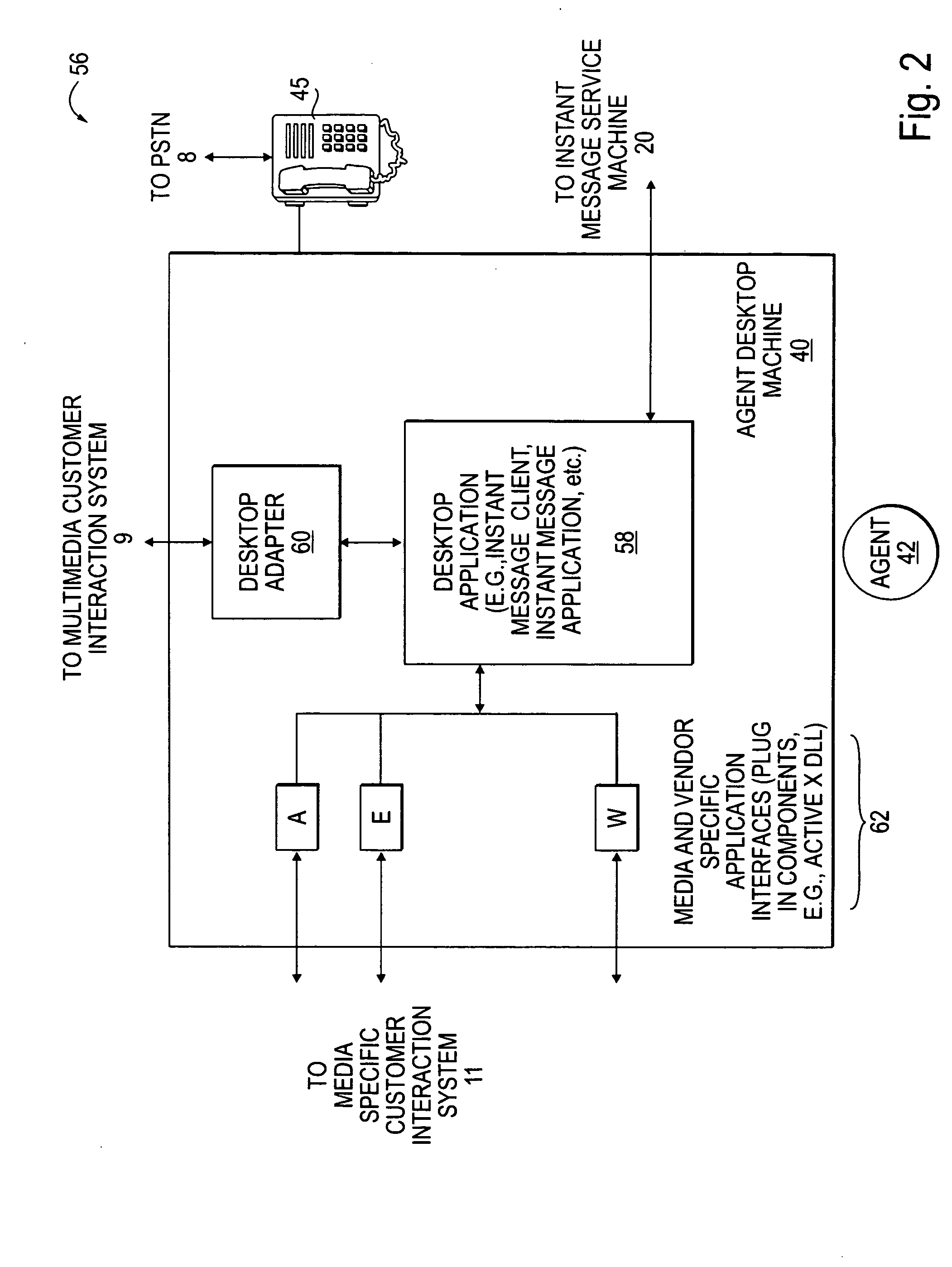

Method and system to provide expert support with a customer interaction system

Inactive | US20050086290A1 | Interconnection arrangements; Multiple digital computer combinations; Interaction systems; Human–computer interaction

A method and apparatus are provided to respond to a customer query received at a customer interaction system. The method includes:

- communicating at least one expert group to an agent;
- receiving a selection from the agent that identifies an expert group from the at least one expert group, the selection triggering a first immediate message that includes a request for assistance from the expert group;
- identifying at least one expert who is associated with the expert group; and
- establishing an immediate message connection between the at least one expert and the agent, which enables the exchange of immediate messages between them so the agent can respond to the customer query.

Owner:ASPECT COMM

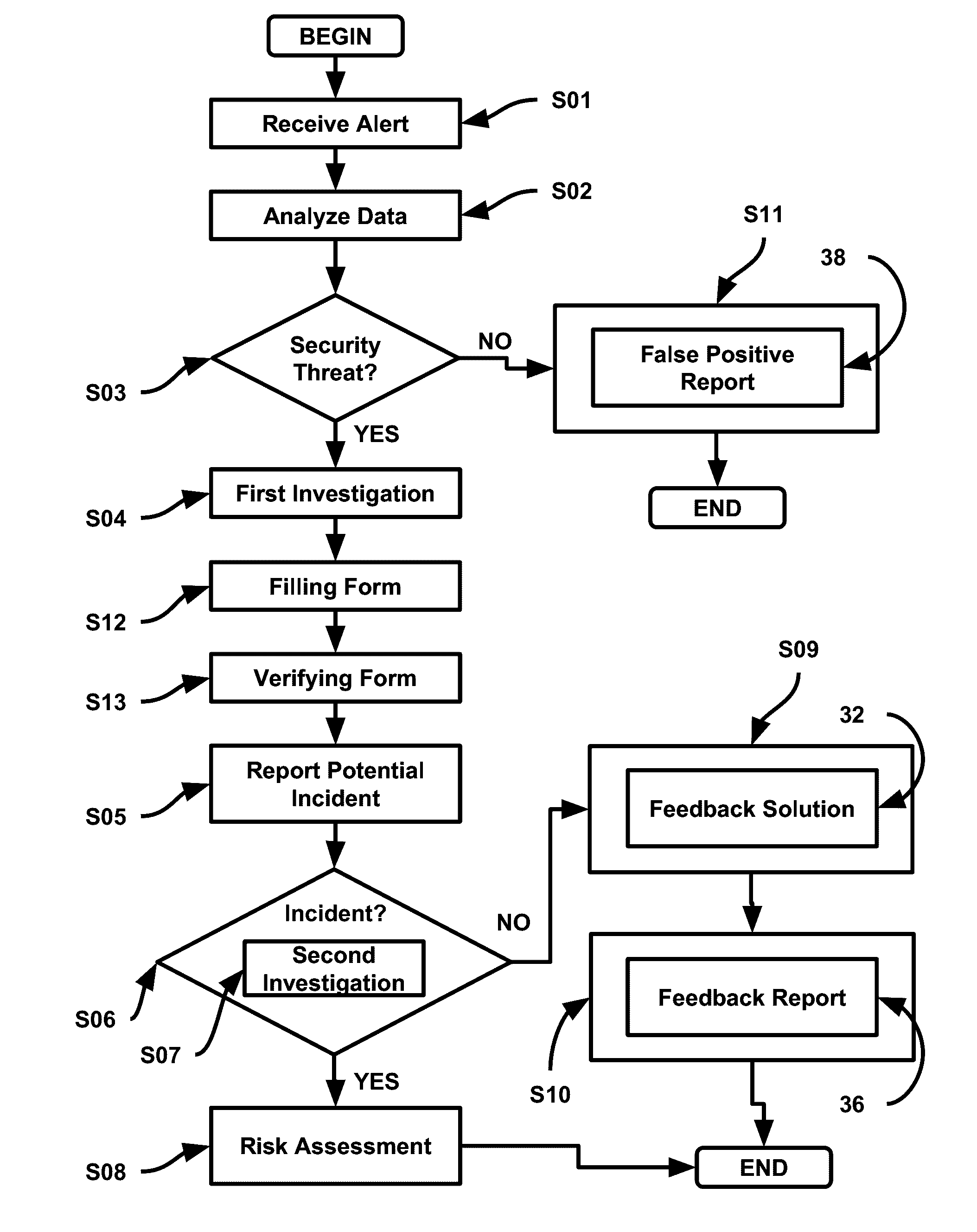

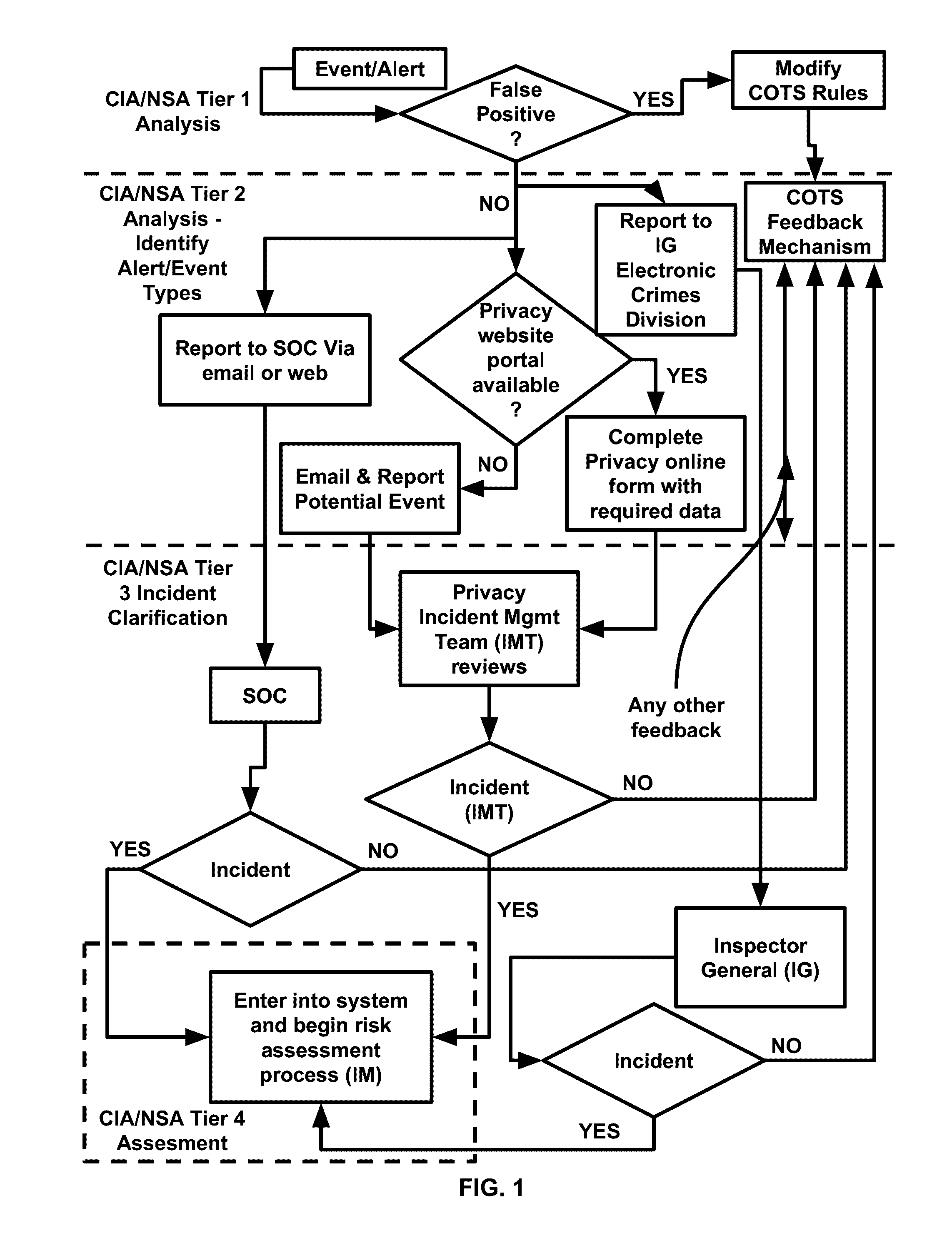

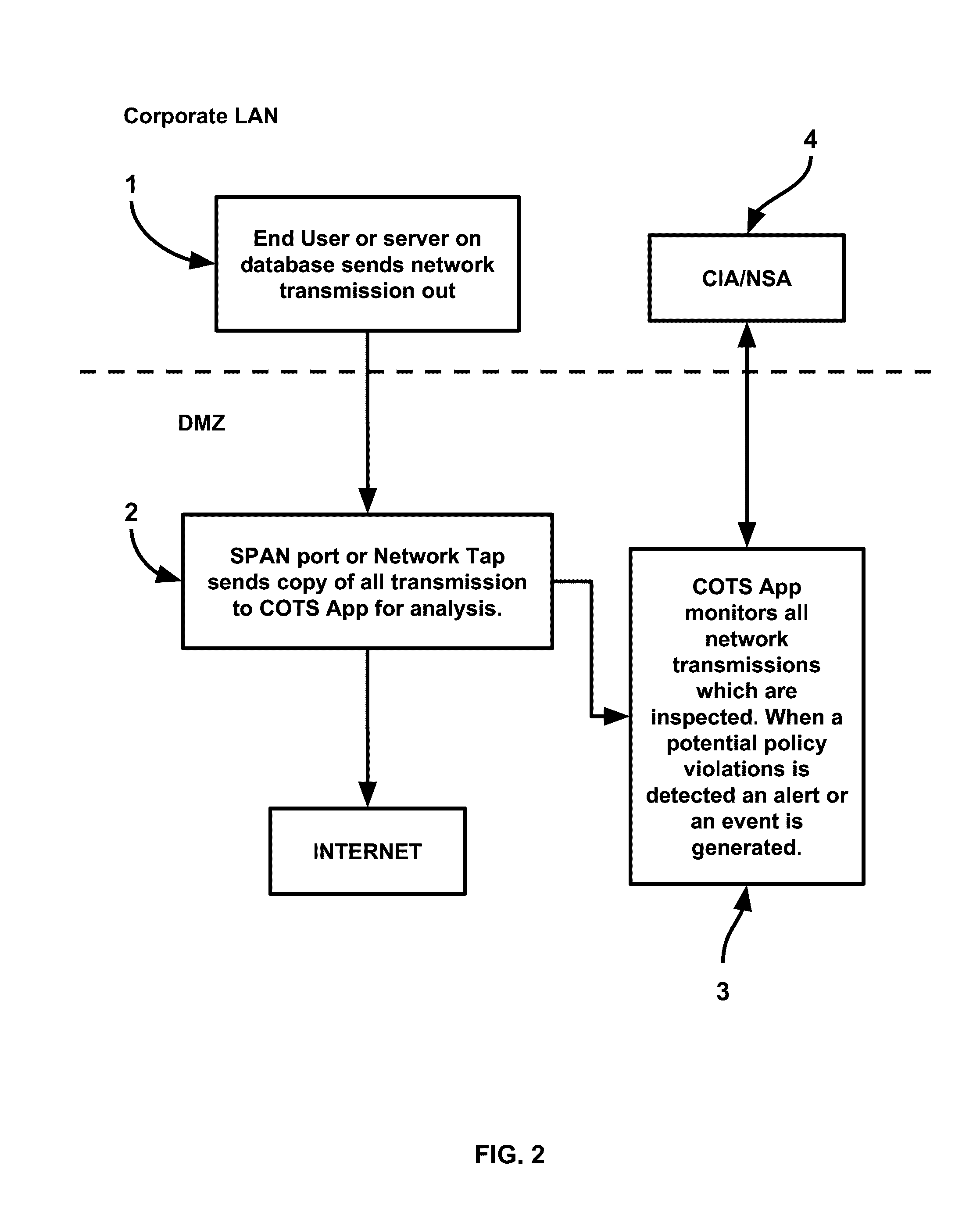

Method and device for managing security in a computer network

Inactive | US20160330219A1 | Speed up iteration; Shorten the time; Navigation instruments; Knowledge representation; Interaction systems; Information type

Method and device for managing security in a computer network include algorithms of iterative intelligence growth, iterative evolution, and evolution pathways; sub-algorithms of information type identifier, conspiracy detection, media scanner, privilege isolation analysis, user risk management and foreign entities management; and modules of security behavior, creativity, artificial threat, automated growth guidance, response / generic parser, security review module and monitoring interaction system. Applications include malware predictive tracking, clandestine machine intelligence retribution through covert operations in cyberspace, logically inferred zero-database a-priori realtime defense, critical infrastructure protection & retribution through cloud & tiered information security, and critical thinking memory & perception.

Owner:HASAN SYED KAMRAN

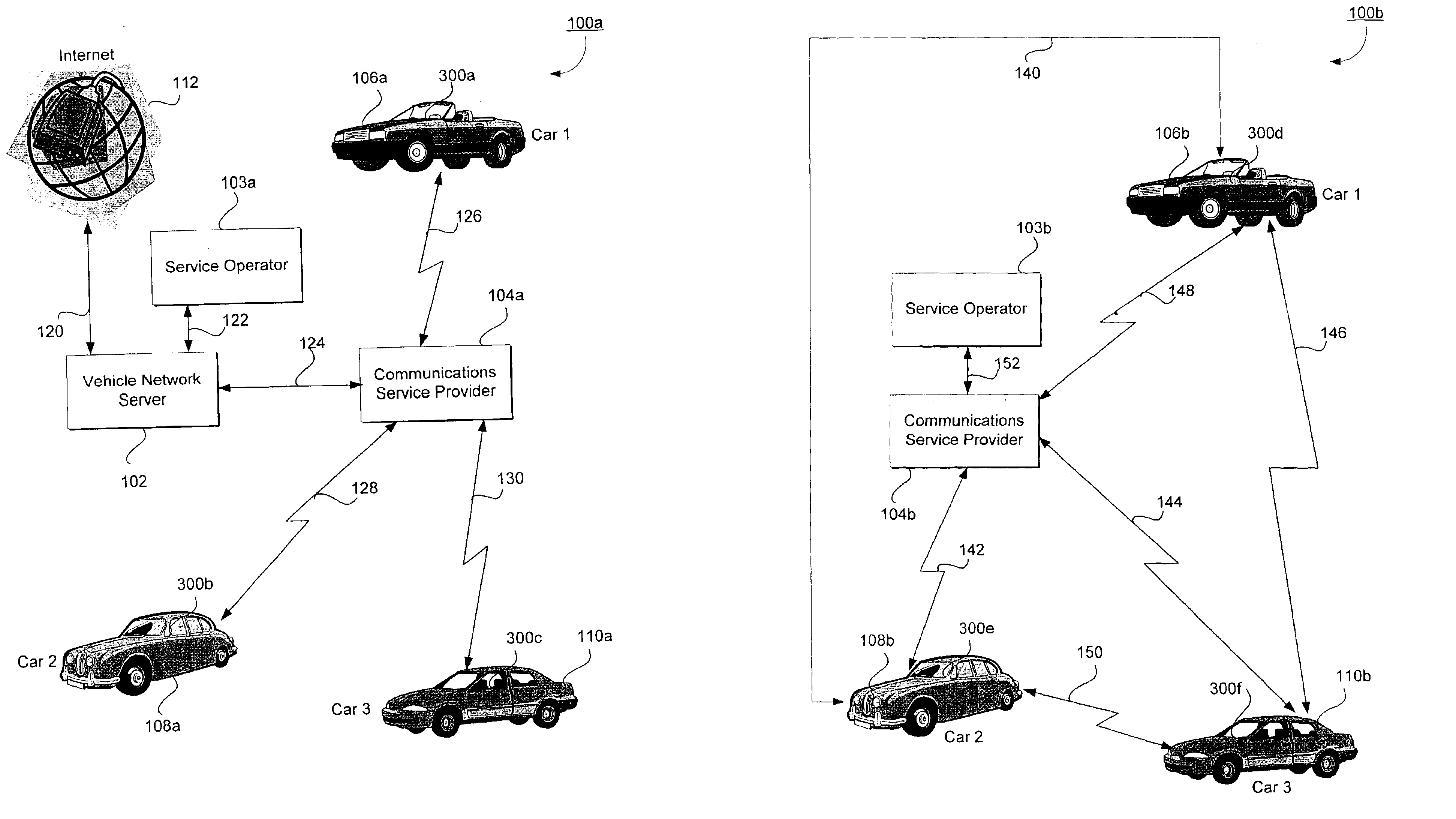

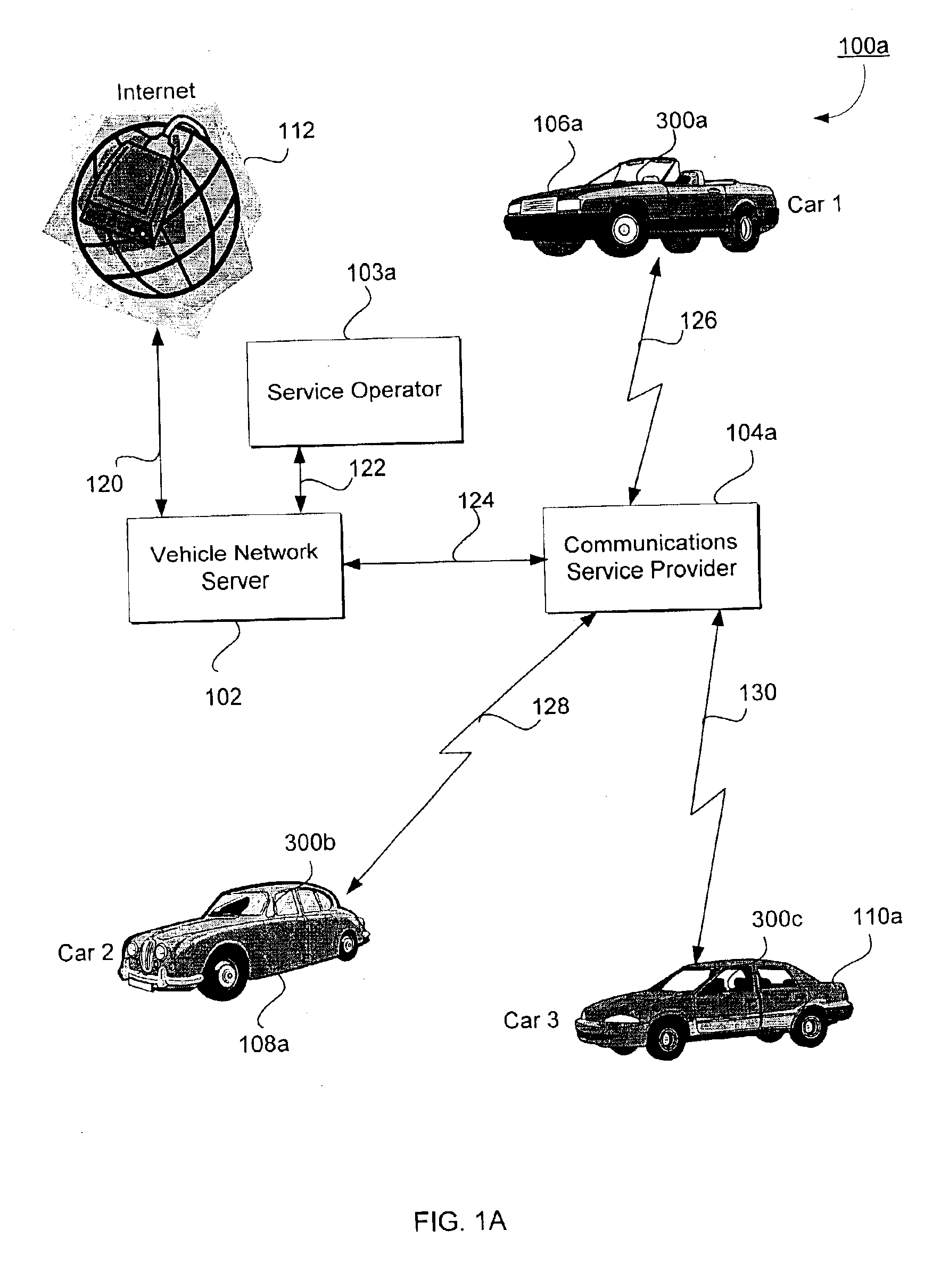

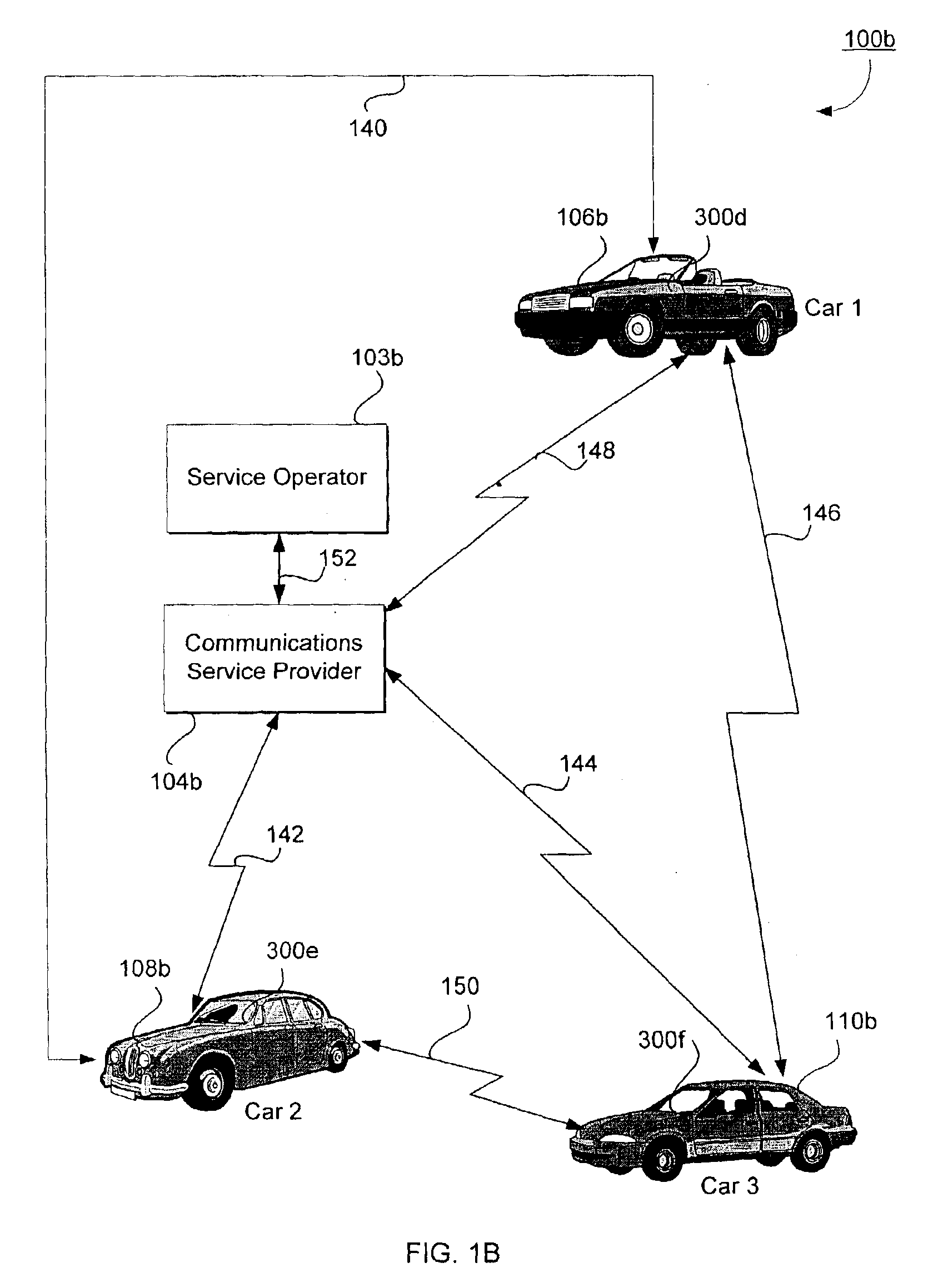

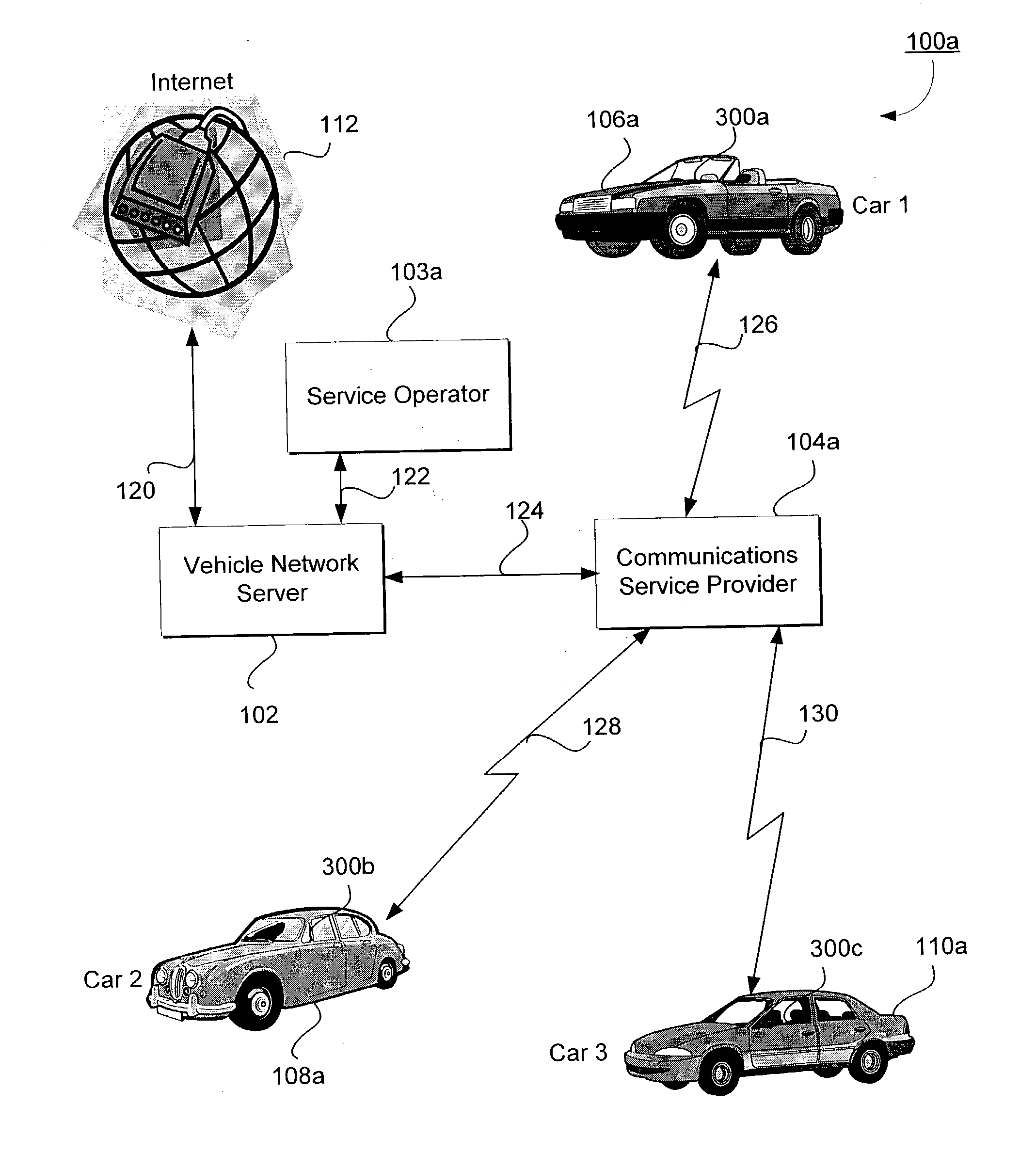

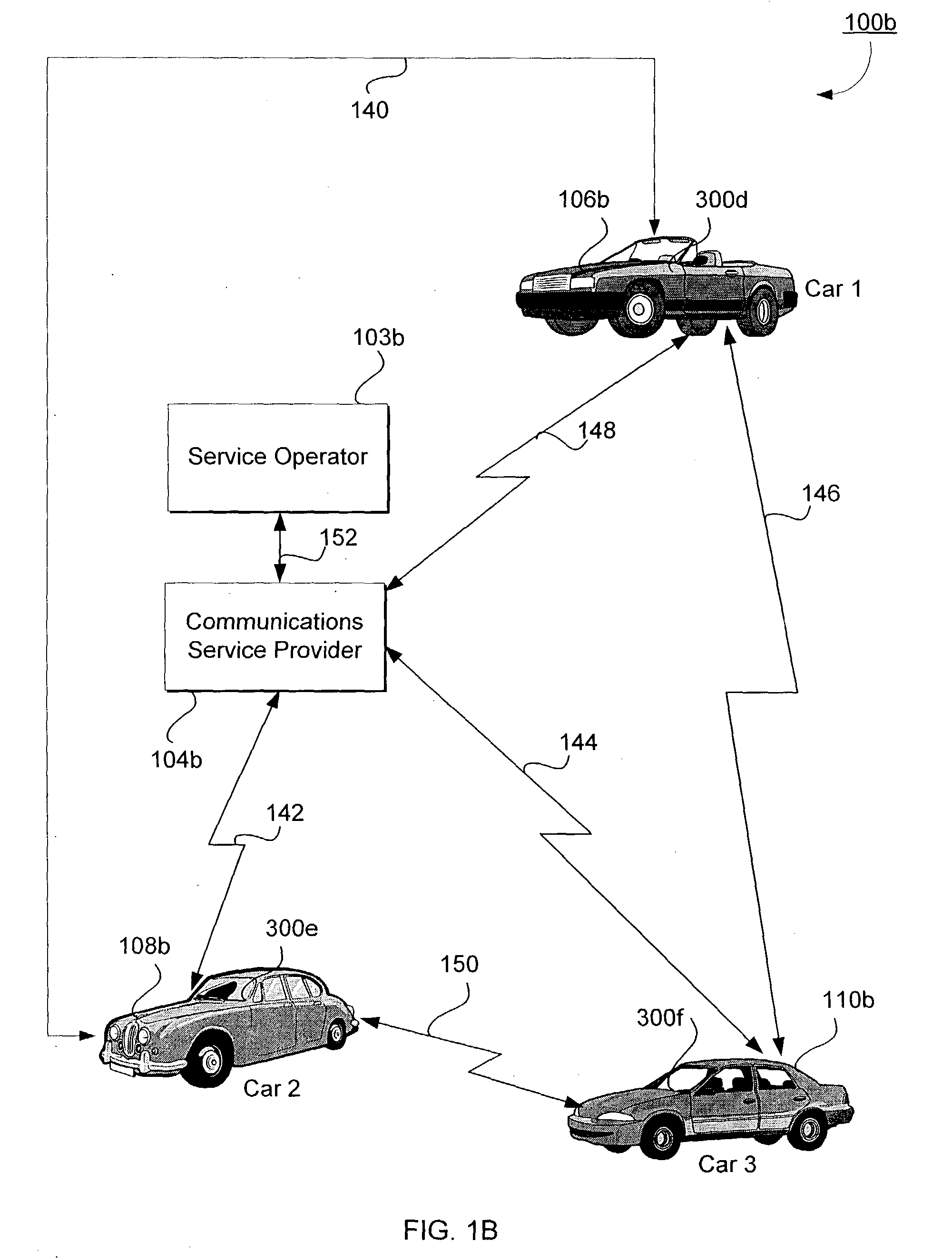

Group interaction system for interaction with other vehicles of a group

Inactive | US6868333B2 | Easy to optimize; Good choice; Instruments for road network navigation; Arrangements for variable traffic instructions; Interaction systems; Engineering

The group interaction system comprises a plurality of vehicle navigation systems. These systems can communicate with one another, display the location of other vehicle navigation systems in a group, and receive a selection of certain vehicle navigation systems in the group. They can also receive a selection of an application for interaction among the selected systems. A group of vehicle navigation systems to interact with is established. One or more other vehicle navigation systems are selected to interact with the vehicle navigation system. An application is also selected on the vehicle navigation system for interaction with the selected other vehicle navigation systems. In response, the vehicle navigation system runs the selected application with respect to the selected other navigation systems.

Owner:TOYOTA INFOTECHNOLOGY CENT CO LTD

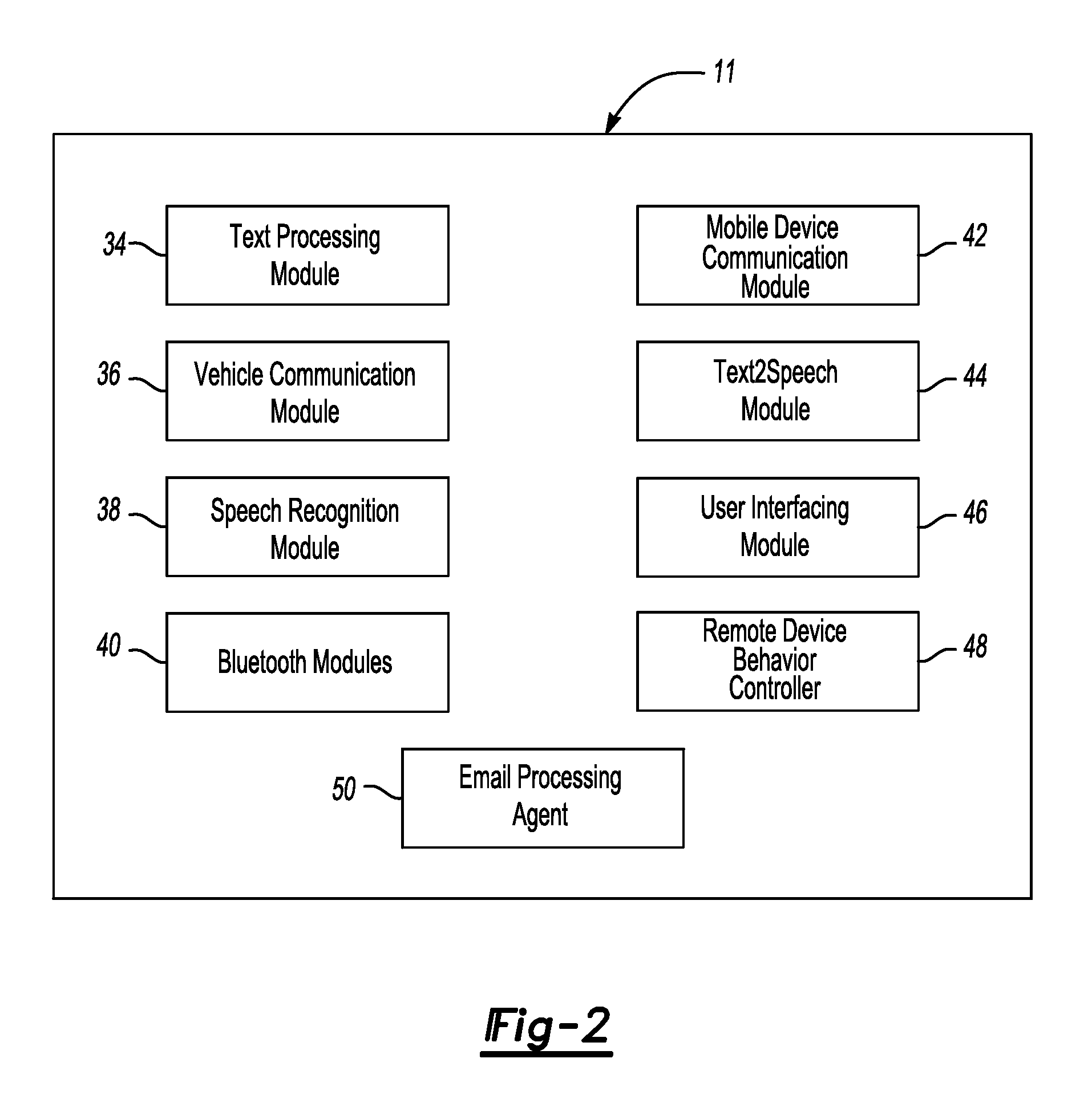

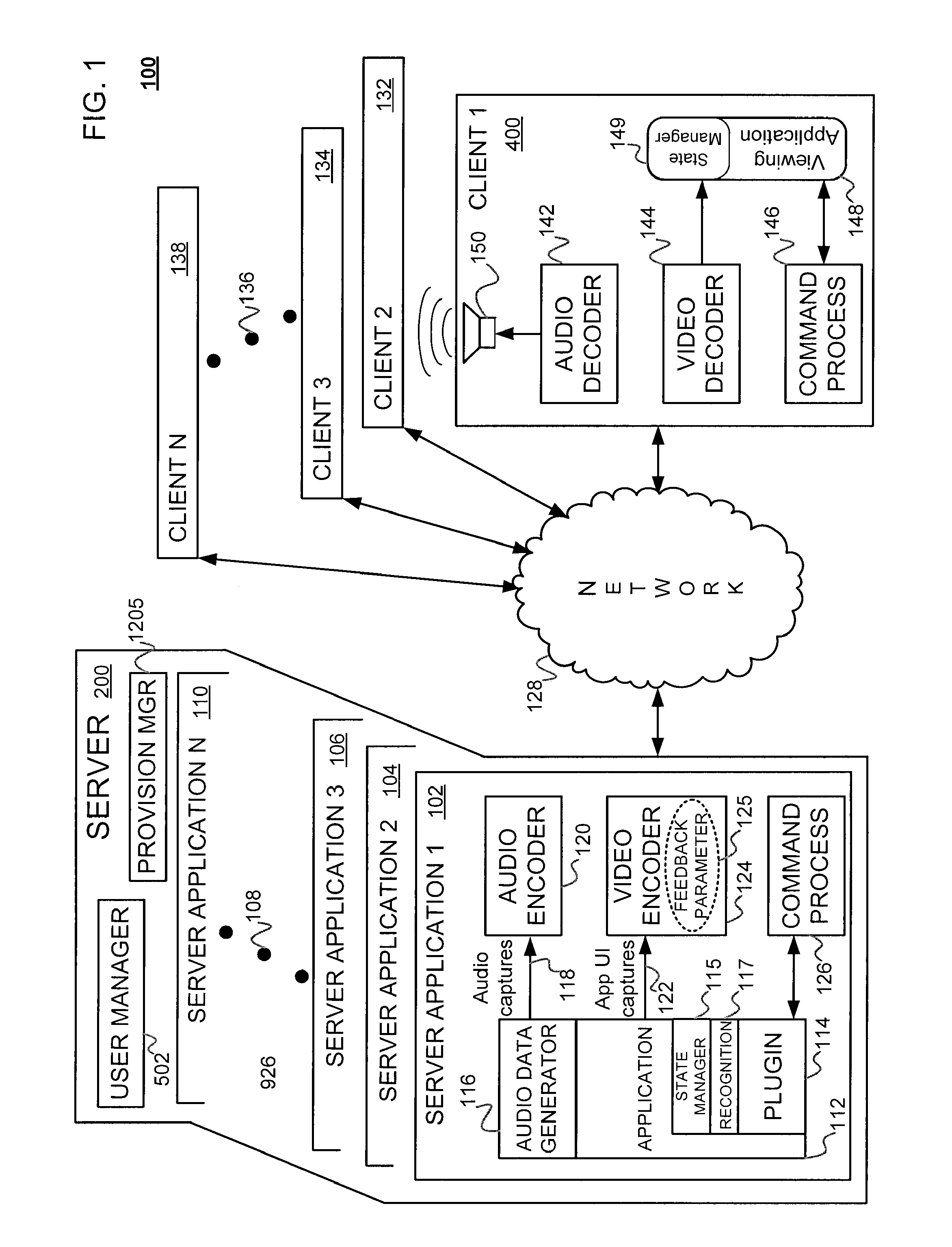

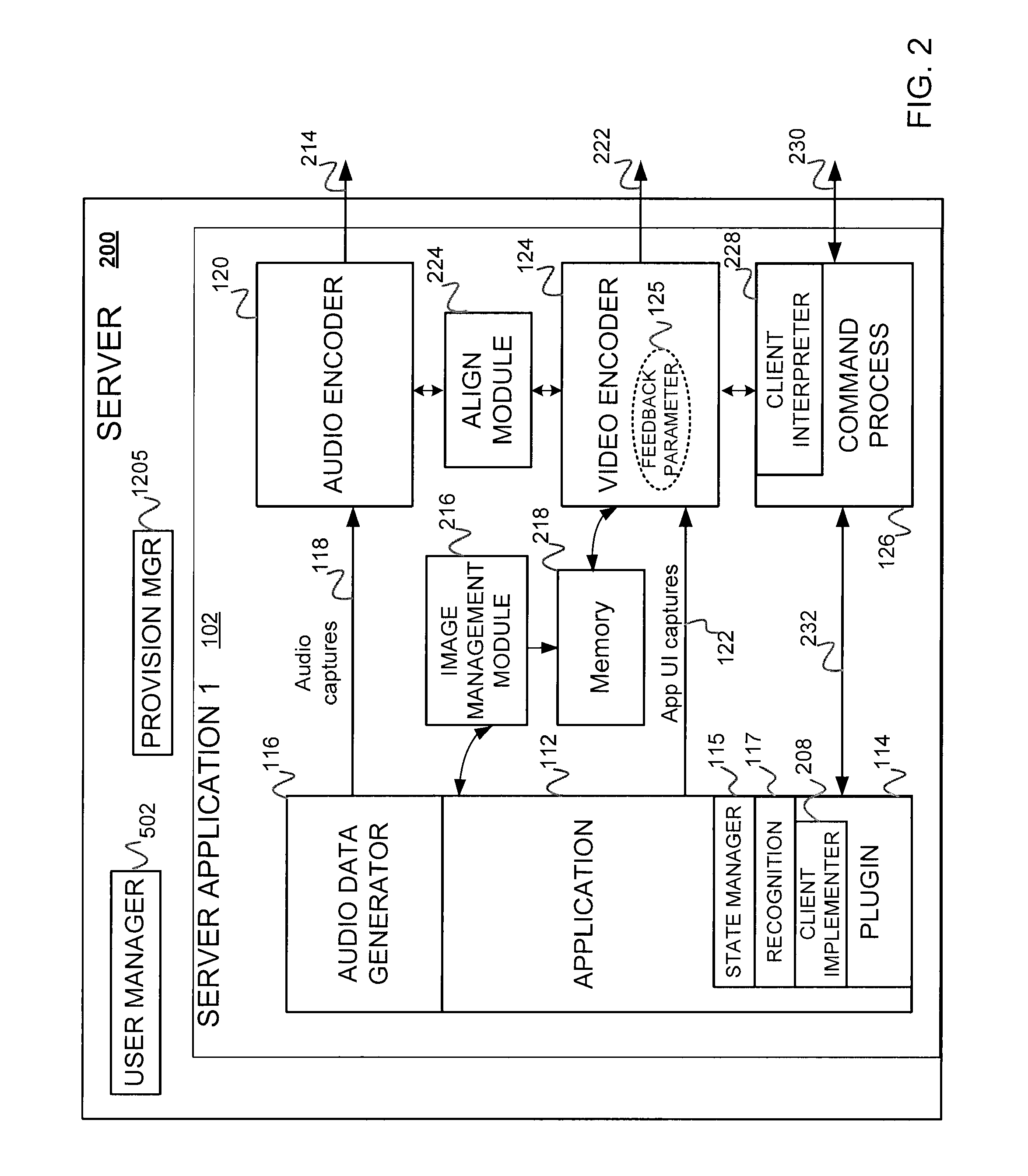

Mobile device user interface for remote interaction

Inactive | US20080184128A1 | Cathode-ray tube indicators; Digital video signal modification; Interaction systems; Graphics

Systems and methods pertaining to displaying a web browsing session are disclosed. In one embodiment, a system includes a web browsing engine residing on a first device, with a viewing application residing on a second device and operatively coupled to the web browsing engine, where the viewing application is adapted to display a portion of a webpage rendered by the web browsing engine and an overlay graphical component. In the same embodiment, the system also includes a recognition engine adapted to identify an element on the webpage and communicate information regarding the element to the viewing application.

Owner:OTELLO CORP ASA

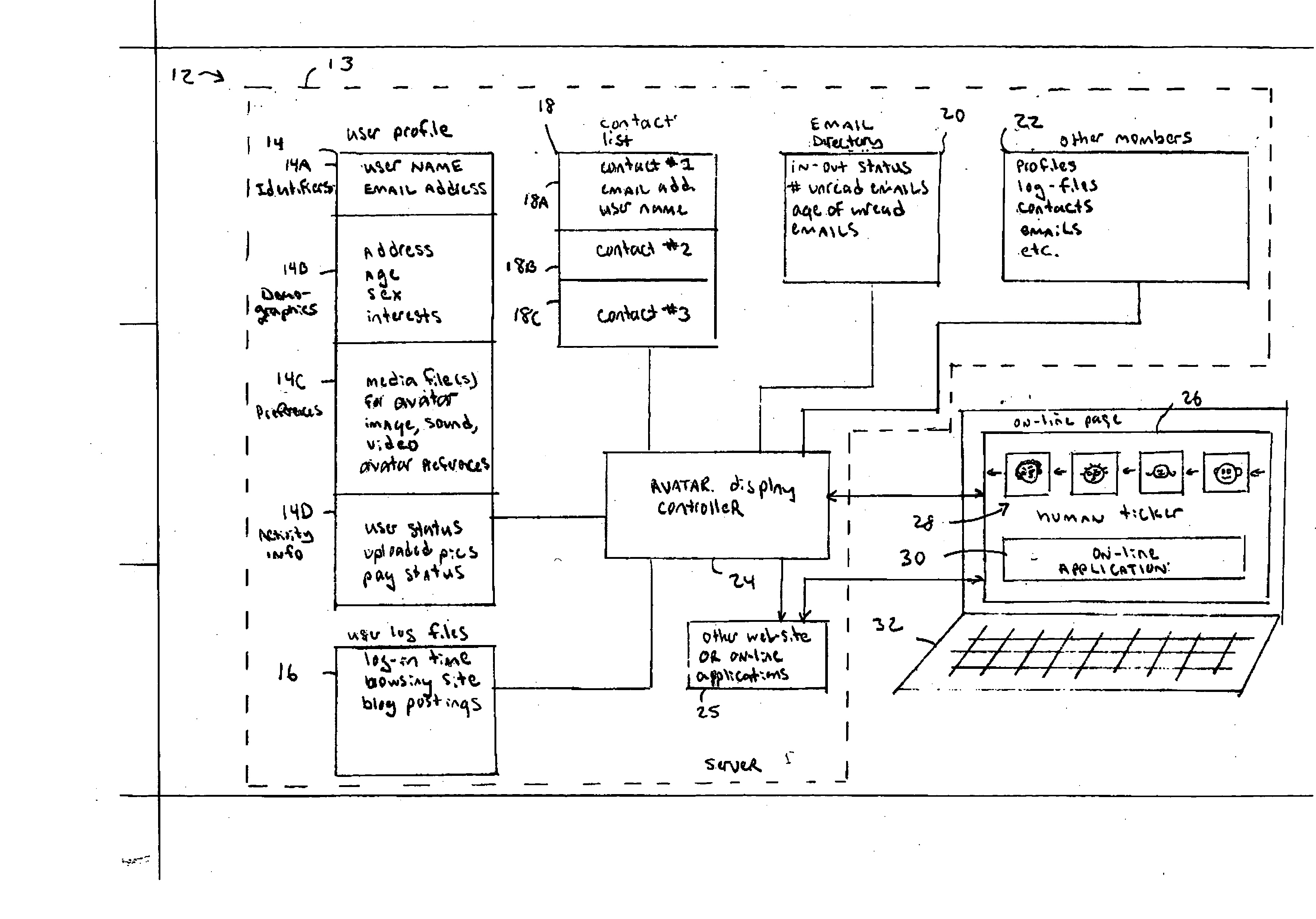

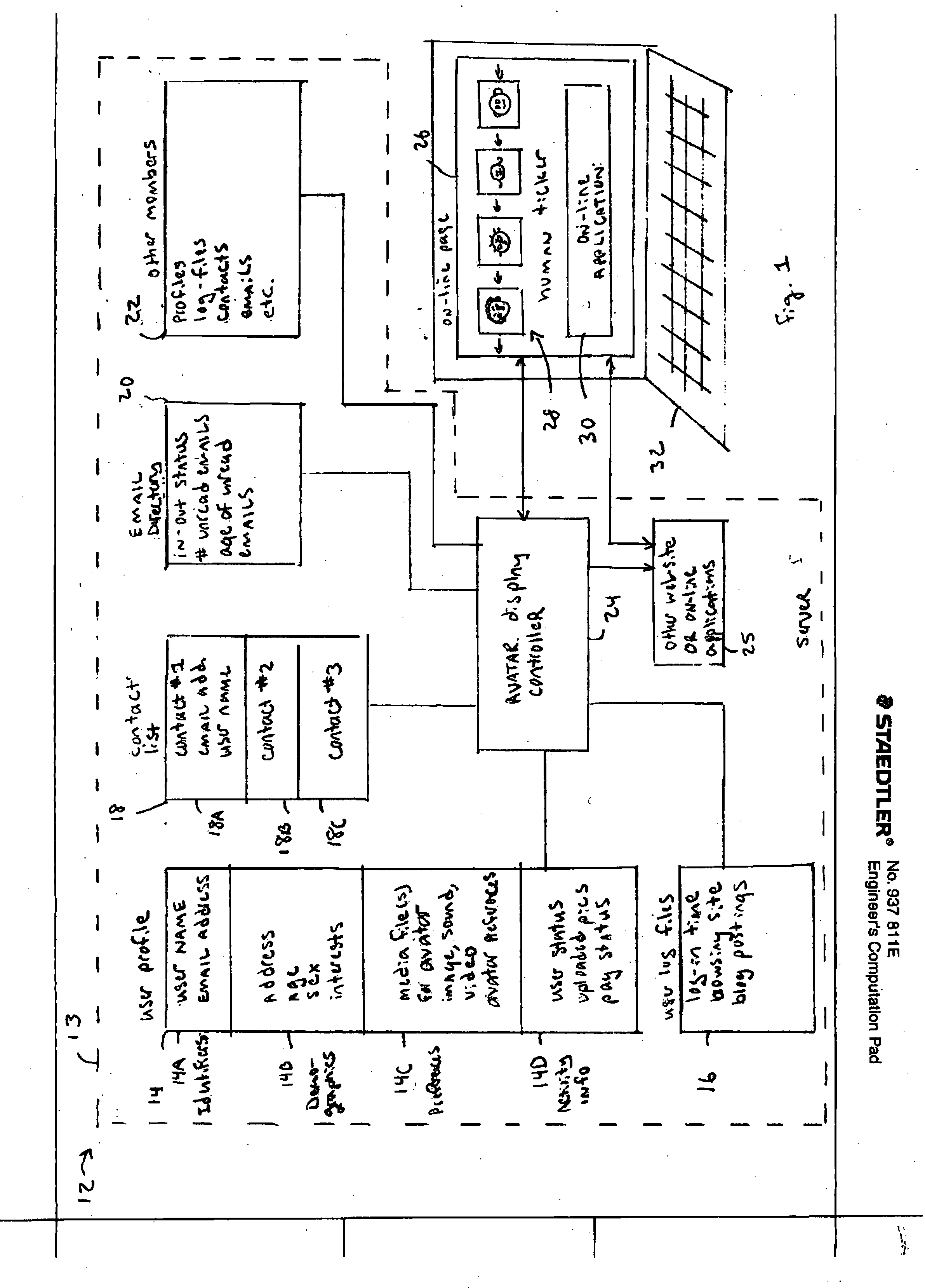

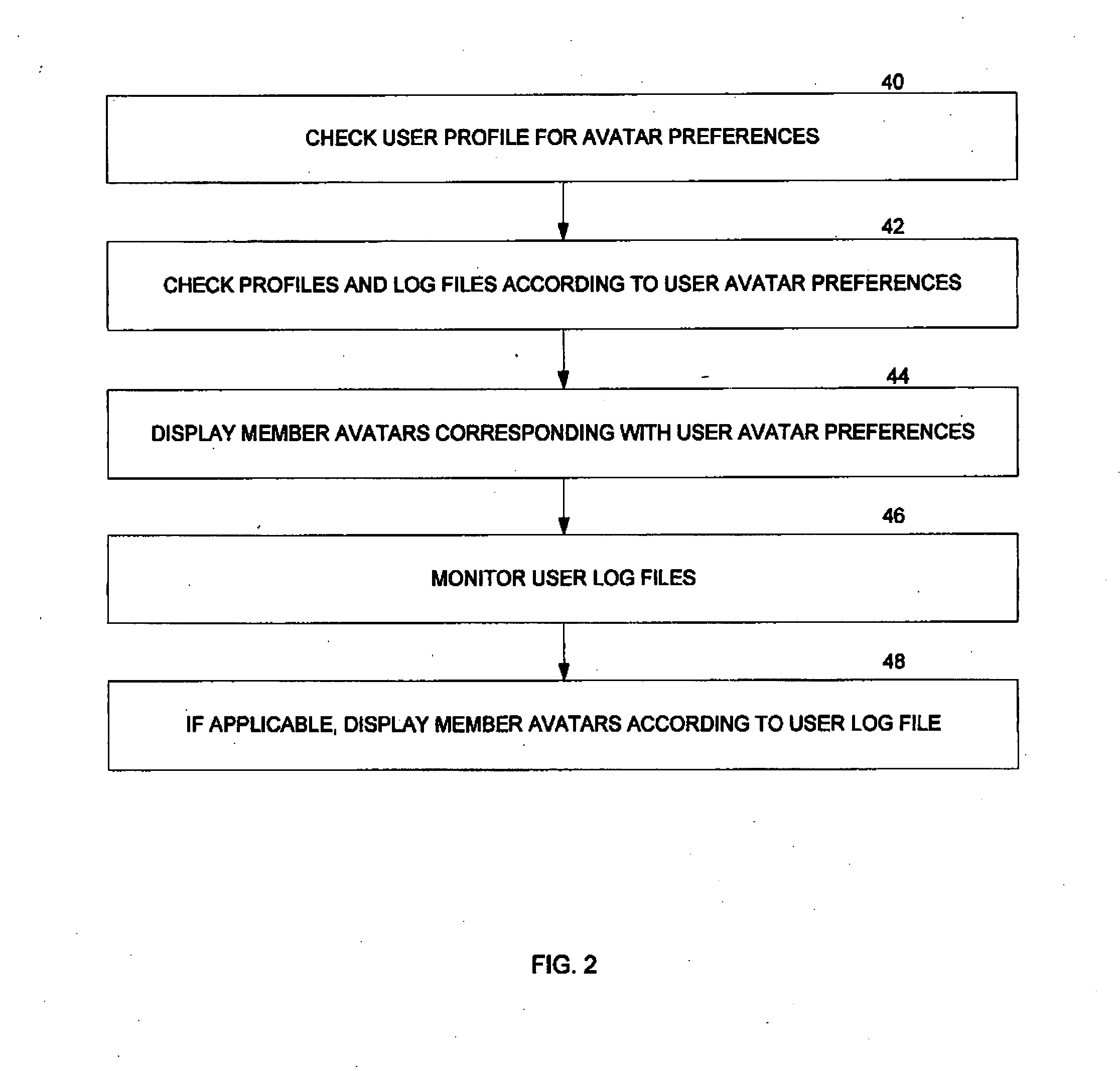

On-line interaction system

An avatar display system monitors the activities or status of different members on a network site. Avatars representing the different members are displayed in conjunction with an on-line application according to the different identified member activities or status. Different avatar display techniques and filtering schemes are used to both promote and improve interactions between different members of the on-line application or website.

Owner:SOCIAL CONCEPTS

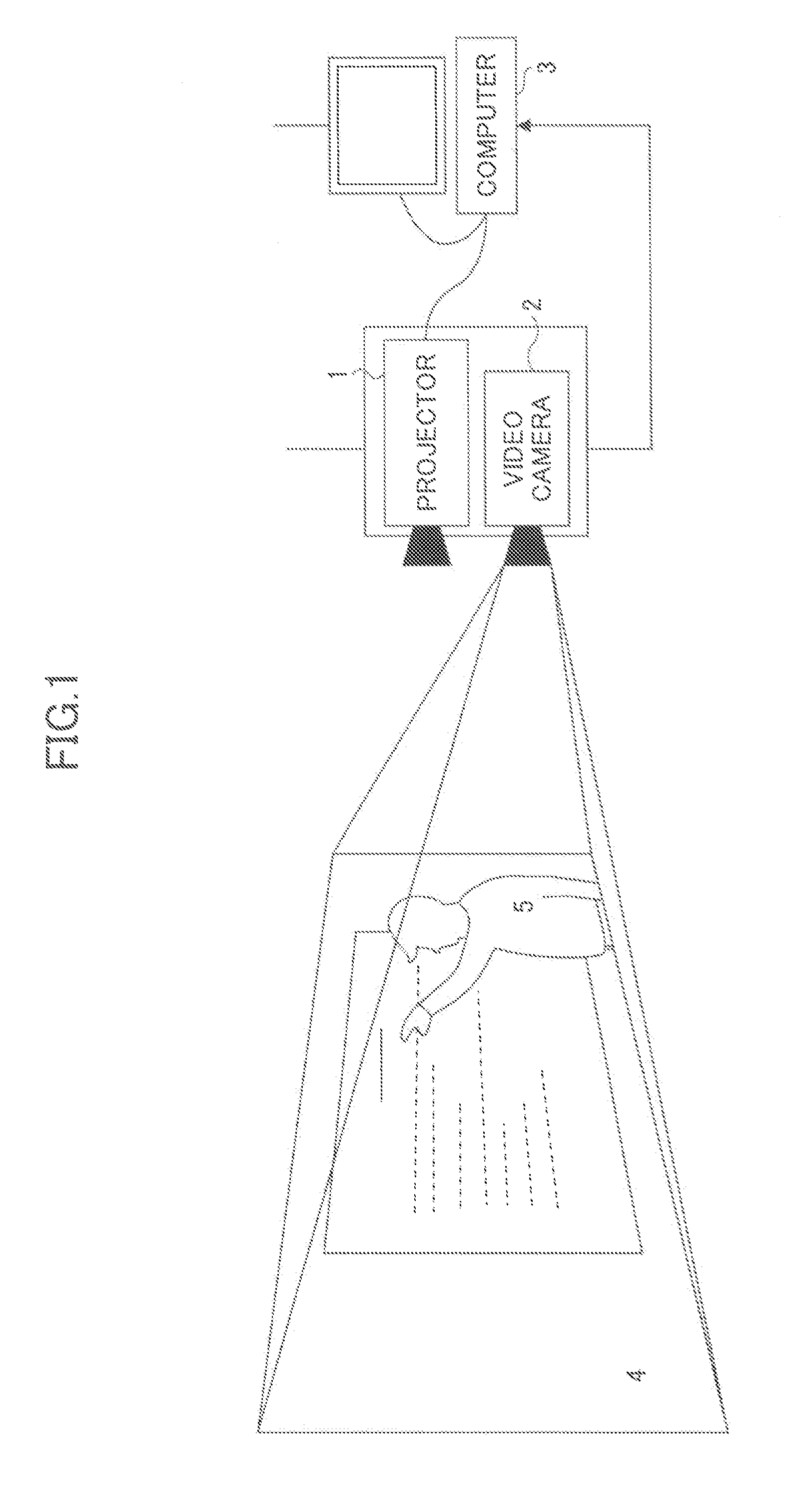

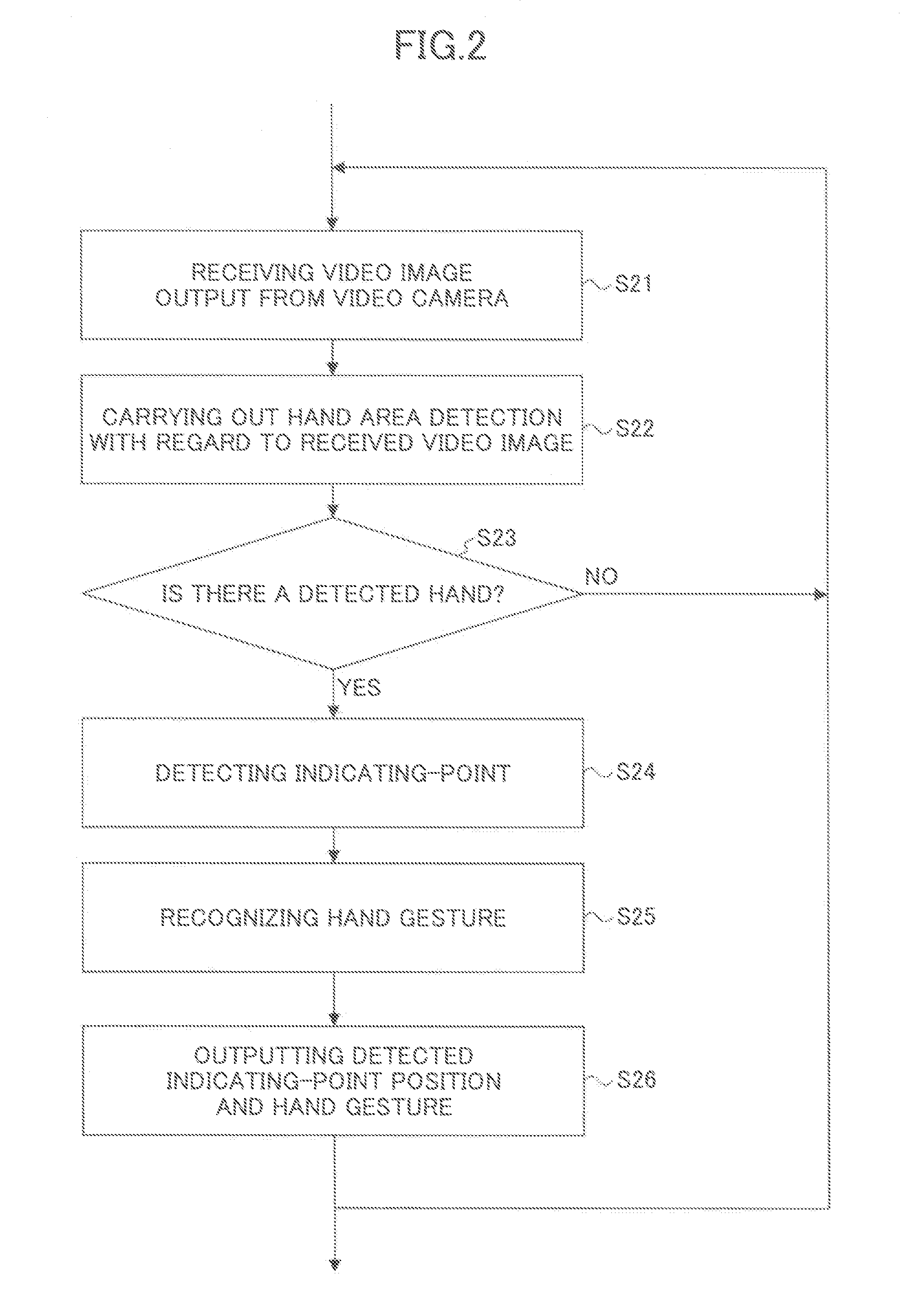

Hand and indicating-point positioning method and hand gesture determining method used in human-computer interaction system

Inactive | US20120062736A1 | Rapid positioning; Character and pattern recognition; Color television details; Interaction systems; Pattern recognition

Disclosed are a hand positioning method and a human-computer interaction system. The method comprises a step of continuously capturing a current image so as to obtain a sequence of video images; a step of extracting a foreground image from each of the captured video images, and then carrying out binary processing so as to obtain a binary foreground image; a step of obtaining a vertex set of a minimum convex hull of the binary foreground image, and then creating areas of concern serving as candidate hand areas; and a step of extracting hand imaging features from the respective created areas of concern, and then determining a hand area from the candidate hand areas by carrying out pattern recognition based on the extracted hand imaging features.

Owner:RICOH KK
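
The first steps of the hand-positioning pipeline can be sketched in plain Python: binary foreground, convex hull vertices, then areas of concern. Three choices here are assumptions made for illustration: background subtraction as the foreground extractor, Andrew's monotone chain for the hull, and square areas around each hull vertex. The final feature-extraction and pattern-recognition step is not shown. All function names are hypothetical.

```python
# Hypothetical sketch: binary foreground -> convex hull vertices -> candidate hand areas.

def binary_foreground(frame, background, threshold):
    """Foreground mask by background subtraction (assumed extraction method)."""
    return [[abs(f - b) > threshold for f, b in zip(fr, br)]
            for fr, br in zip(frame, background)]


def convex_hull(points):
    """Andrew's monotone chain; returns the hull vertices in order around the hull."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def candidate_hand_areas(mask, half=1):
    """One square area of concern, clipped to the image, around each hull vertex."""
    h, w = len(mask), len(mask[0])
    pts = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    return [(max(r - half, 0), max(c - half, 0), min(r + half, h - 1), min(c + half, w - 1))
            for r, c in convex_hull(pts)]


background = [[0] * 4 for _ in range(4)]
frame = [
    [0, 9, 0, 0],
    [9, 9, 9, 0],
    [0, 9, 0, 0],
    [0, 0, 0, 0],
]
mask = binary_foreground(frame, background, threshold=5)
areas = candidate_hand_areas(mask)
```

Using hull vertices keeps the number of candidate areas small. Extremities such as fingertips tend to lie on the hull, while interior foreground pixels are discarded.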

Group interaction system for interaction with other vehicles of a group

Inactive | US20040148090A1 | Easy to optimize; Good choice; Instruments for road network navigation; Arrangements for variable traffic instructions; Interaction systems; Engineering

The group interaction system comprises a plurality of vehicle navigation systems. These systems can communicate with one another, display the location of other vehicle navigation systems in a group, and receive a selection of certain vehicle navigation systems in the group. They can also receive a selection of an application for interaction among the selected systems. A group of vehicle navigation systems to interact with is established. One or more other vehicle navigation systems are selected to interact with the vehicle navigation system. An application is also selected on the vehicle navigation system for interaction with the selected other vehicle navigation systems. In response, the vehicle navigation system runs the selected application with respect to the selected other navigation systems.

Owner:TOYOTA INFOTECHNOLOGY CENT CO LTD

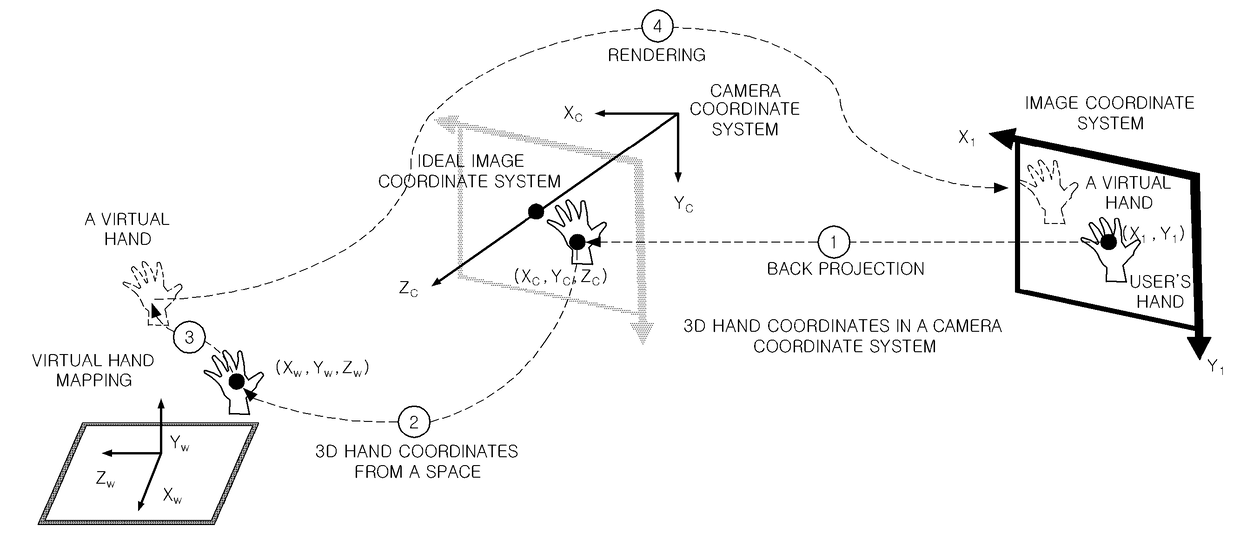

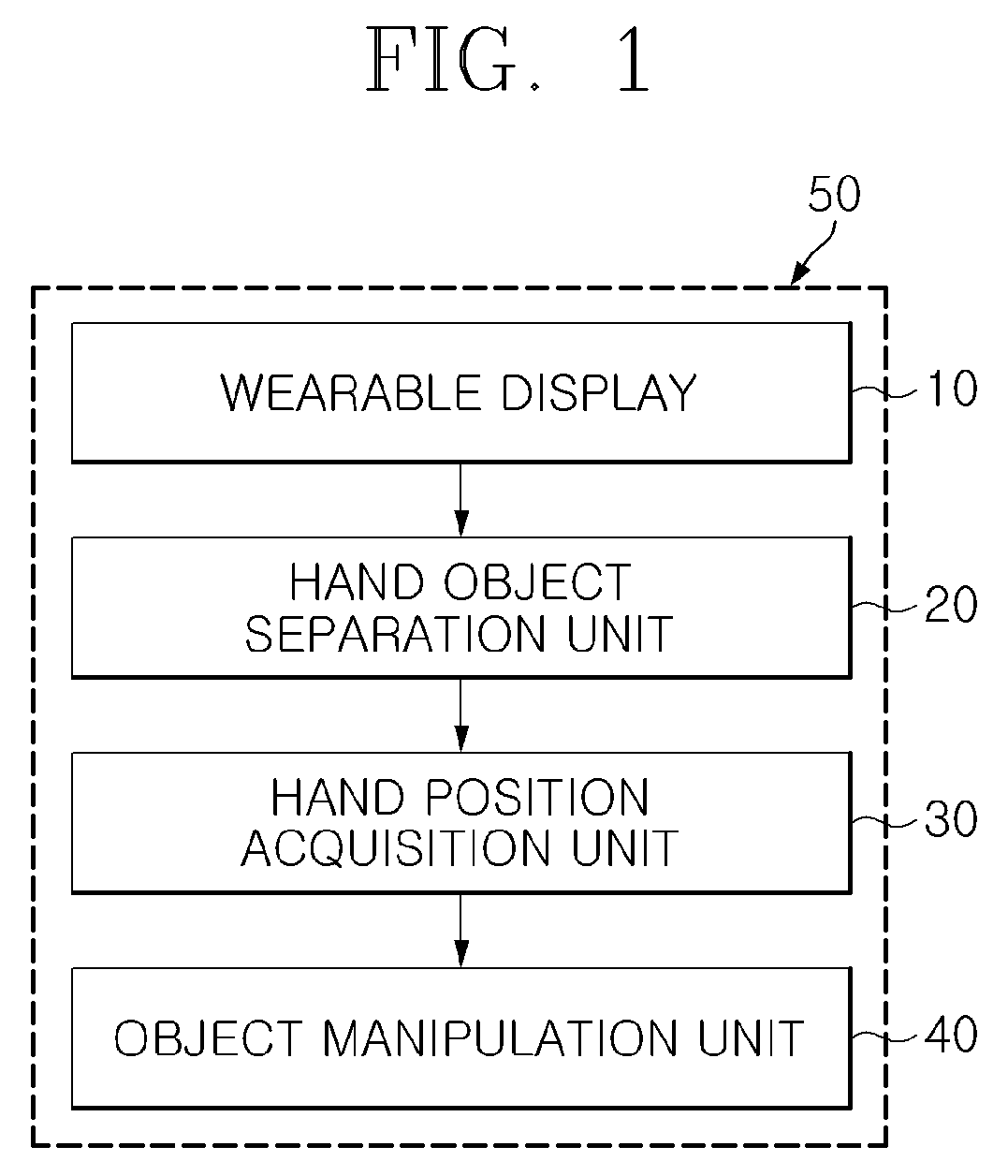

Apparatus and method for estimating hand position utilizing head mounted color depth camera, and bare hand interaction system using same

Inactive | US20170140552A1 | Increase distance; Recognition distance; Input/output for user-computer interaction; Image enhancement; Interaction systems; Color depth

The present invention relates to a technology that allows a user to manipulate a virtual three-dimensional (3D) object with his or her bare hand in a wearable augmented reality (AR) environment, and more particularly, to a technology that is capable of detecting 3D positions of a pair of cameras mounted on a wearable display and a 3D position of a user's hand in a space by using distance input data of an RGB-Depth (RGB-D) camera, without separate hand and camera tracking devices installed in the space (environment) and enabling a user's bare hand interaction based on the detected 3D positions.

Owner:KOREA ADVANCED INST OF SCI & TECH

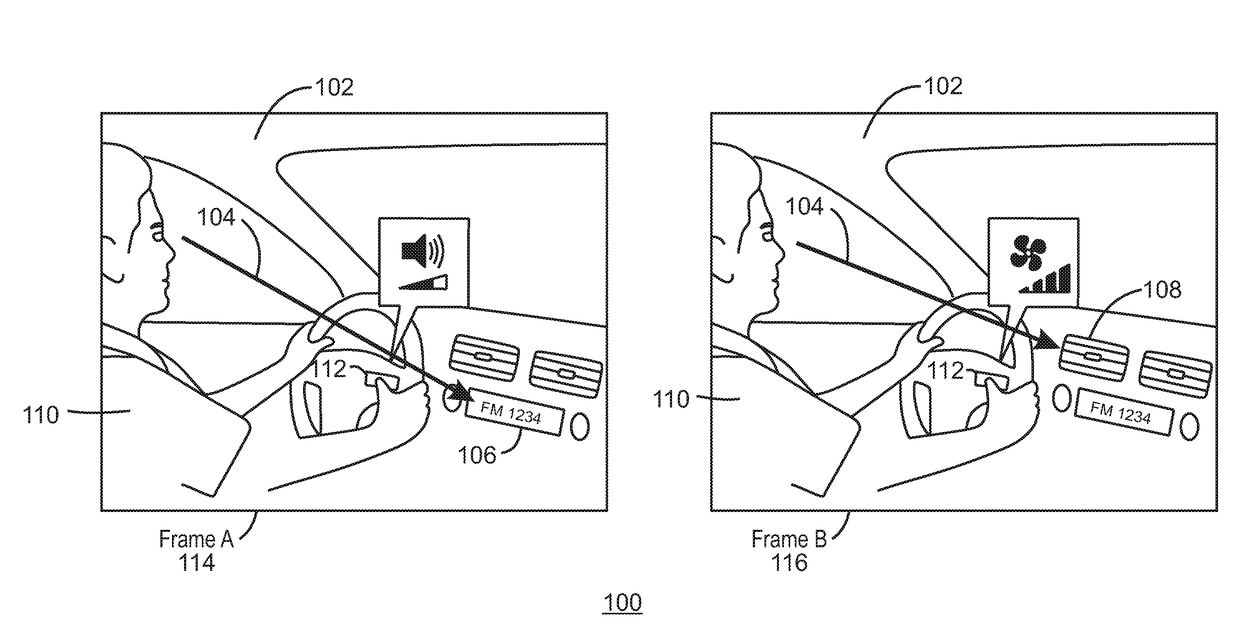

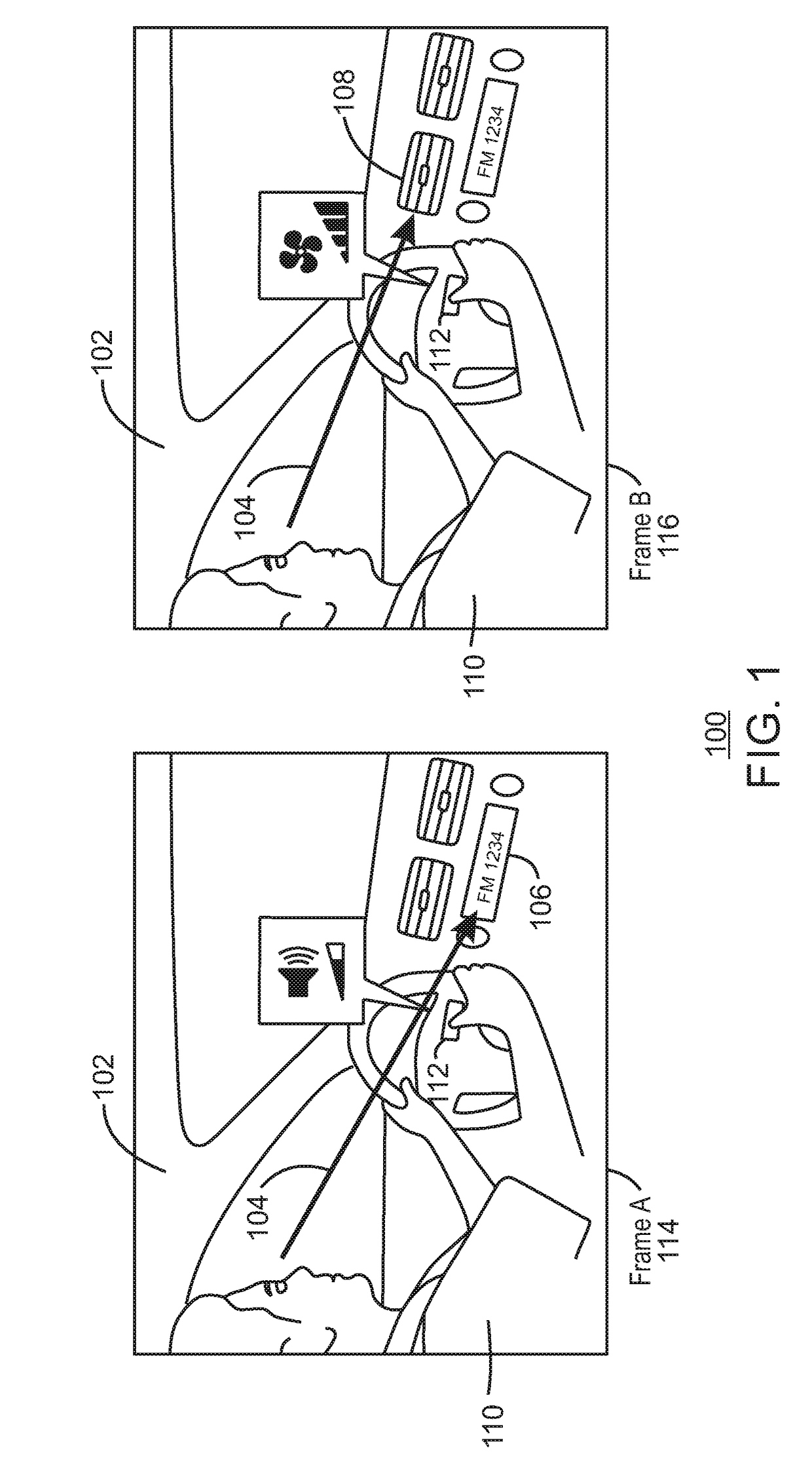

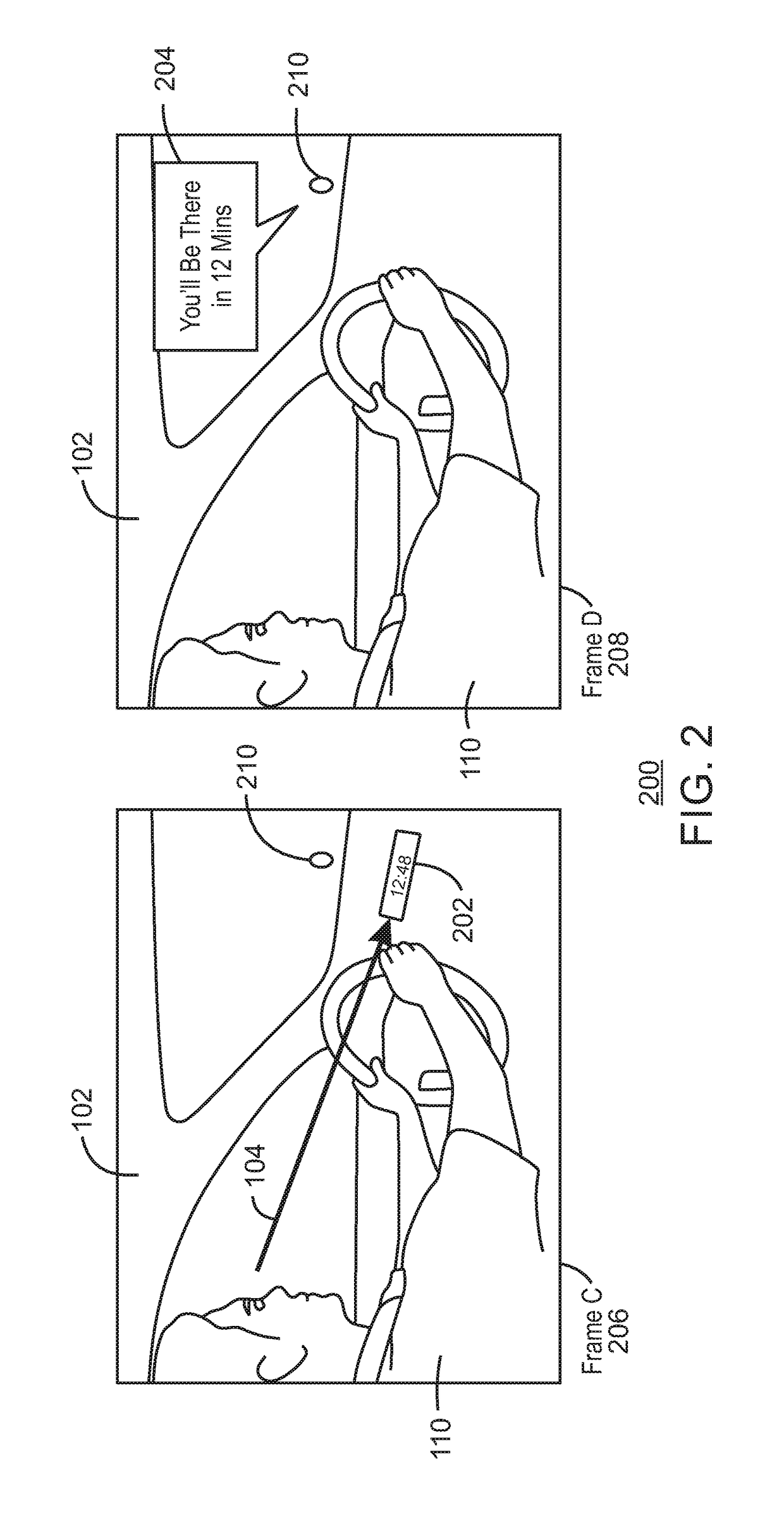

Interaction based on capturing user intent via eye gaze

Inactive | US20170235361A1 | Input/output for user-computer interaction; Sound input/output; Interaction systems; Computer science

Exemplary embodiments of the present invention relate to an interaction system for a vehicle that can be configured by a user. For example, an interaction system for a vehicle can include an image capture resource to receive eye image data of a user. The interaction system for a vehicle can also include a processor to identify a direction of an eye gaze of the user based on the eye image data. The processor can correlate the eye gaze to a driving experience function (DEF), and the processor can transmit a DEF communication.

Owner:PANASONIC AUTOMOTIVE SYST OF AMERICA

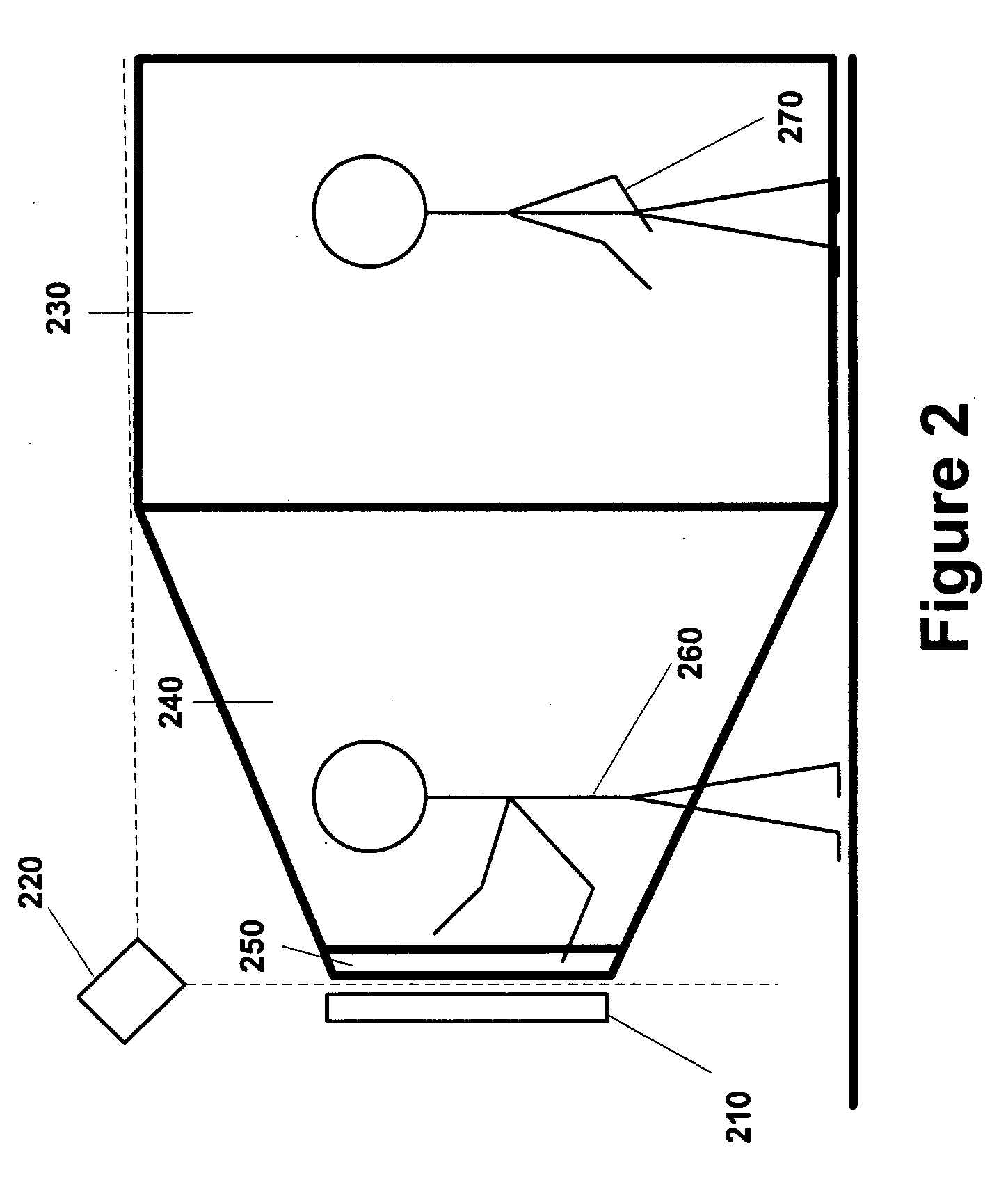

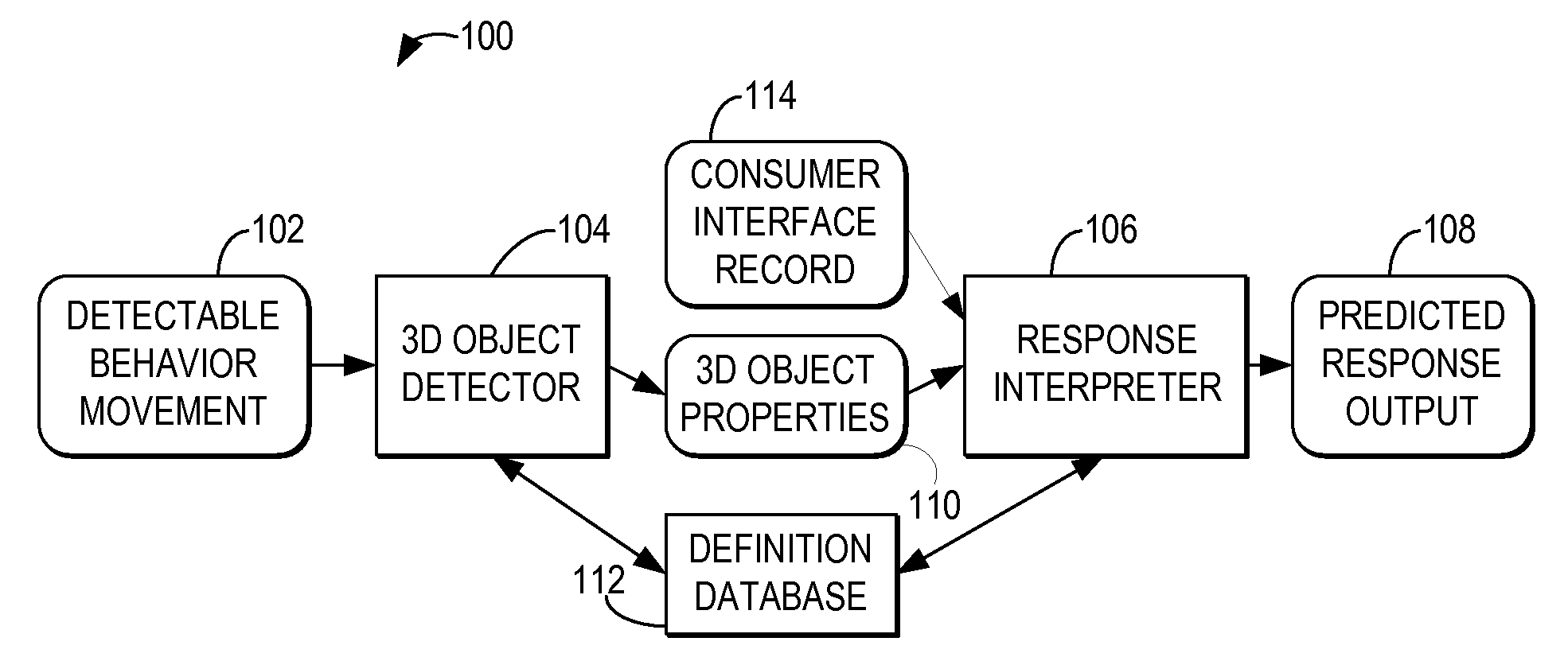

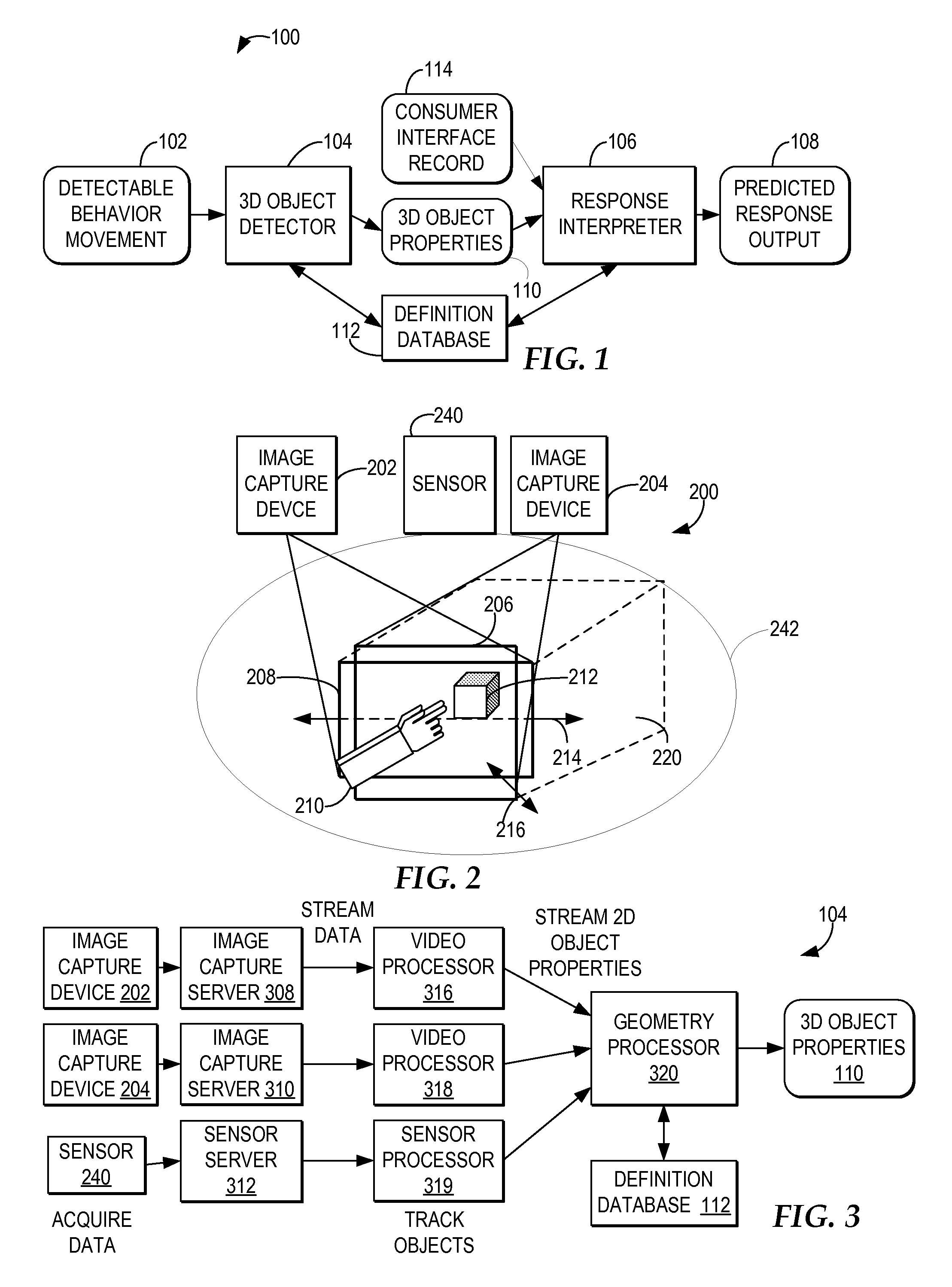

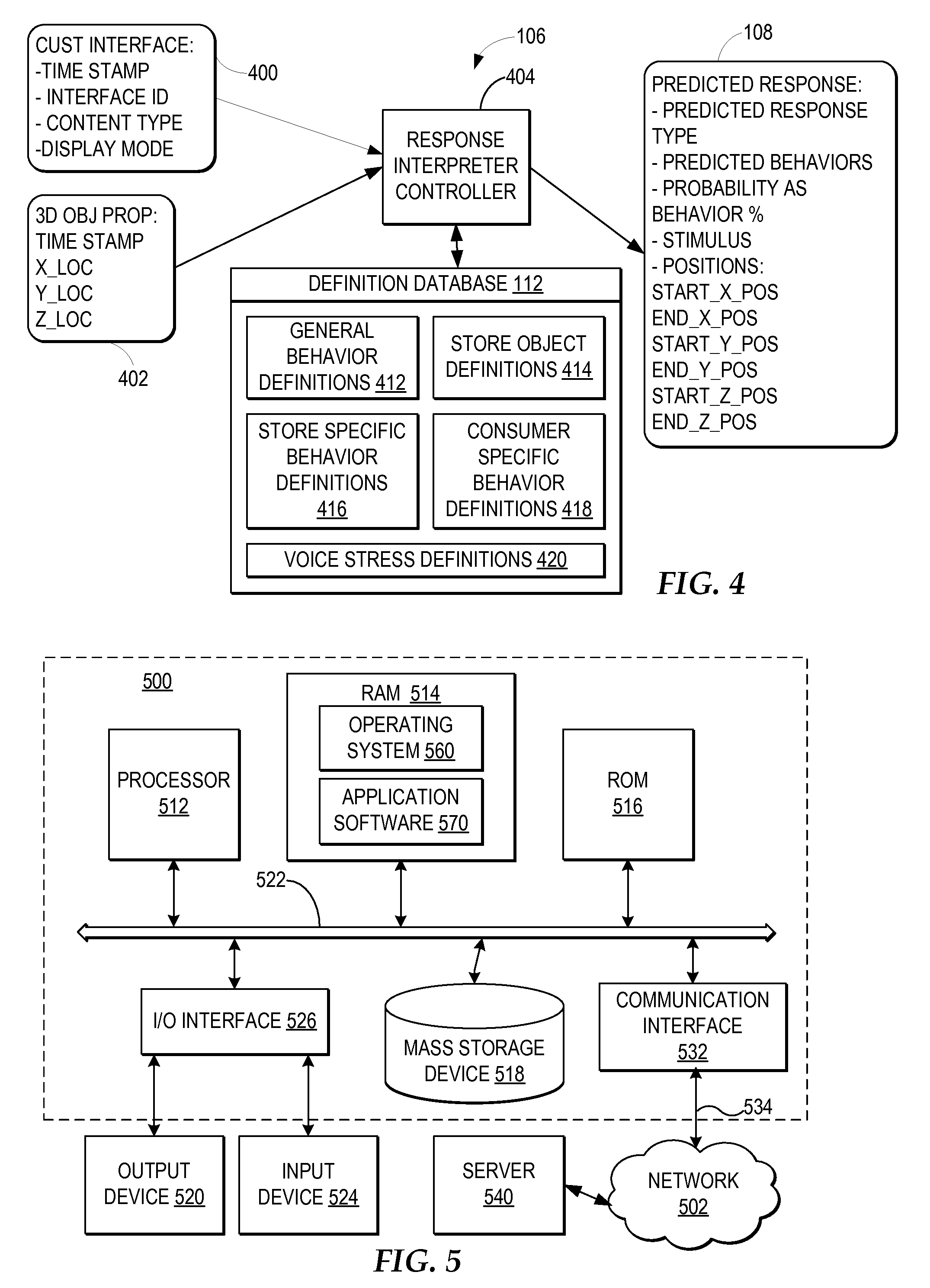

Adjusting a consumer experience based on a 3D captured image stream of a consumer response

Inactive | US20080172261A1 | Improved behavior identification; Easy to identify; Character and pattern recognition; Interaction systems; Control signal

A response processing system captures a three-dimensional movement of the consumer within a consumer environment, wherein the three-dimensional movement is determined using at least one image capture device aimed at the consumer. The response processing system identifies at least one behavior of the consumer in response to at least one stimulus within the consumer environment from a three-dimensional object properties stream of the captured movement. A consumer interaction system detects whether the at least one behavior of the consumer indicates a type of response to the at least one stimulus requiring adjustment of the consumer environment. Responsive to detecting that the behavior of the consumer indicates a type of response to the at least one stimulus requiring adjustment of the consumer environment, the consumer interaction system generates a control signal to trigger at least one change of the at least one stimulus within the consumer environment.

Owner:IBM CORP

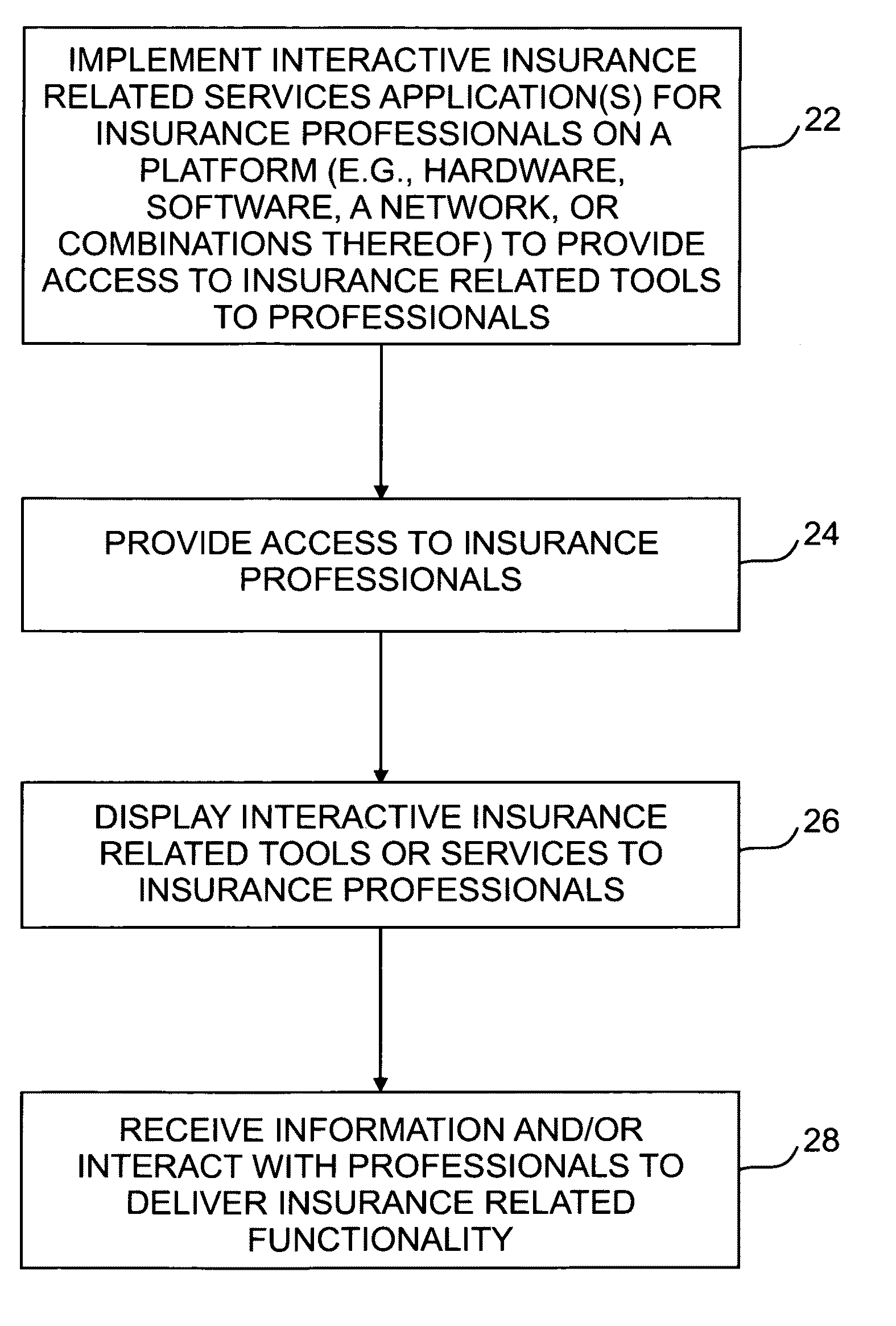

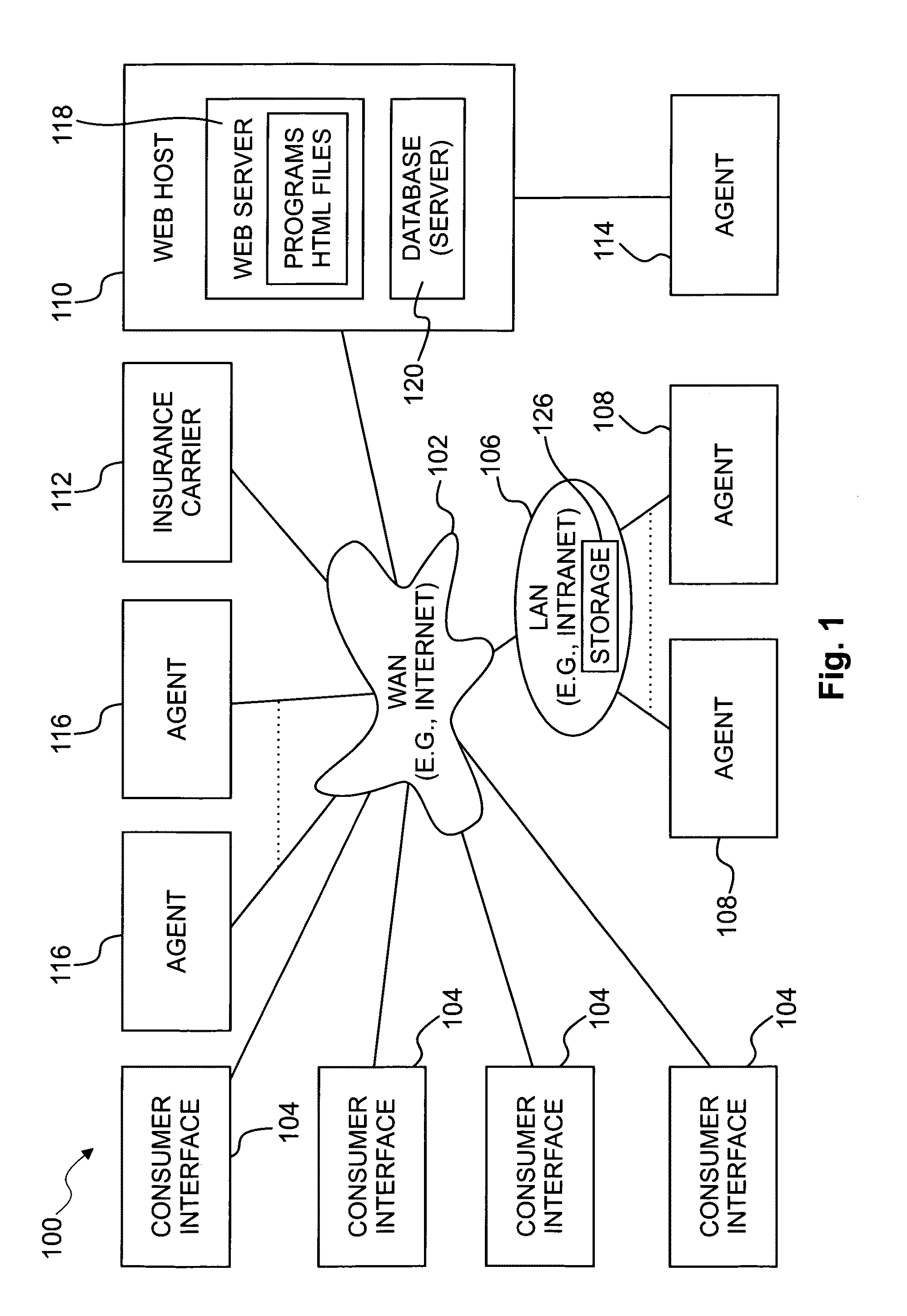

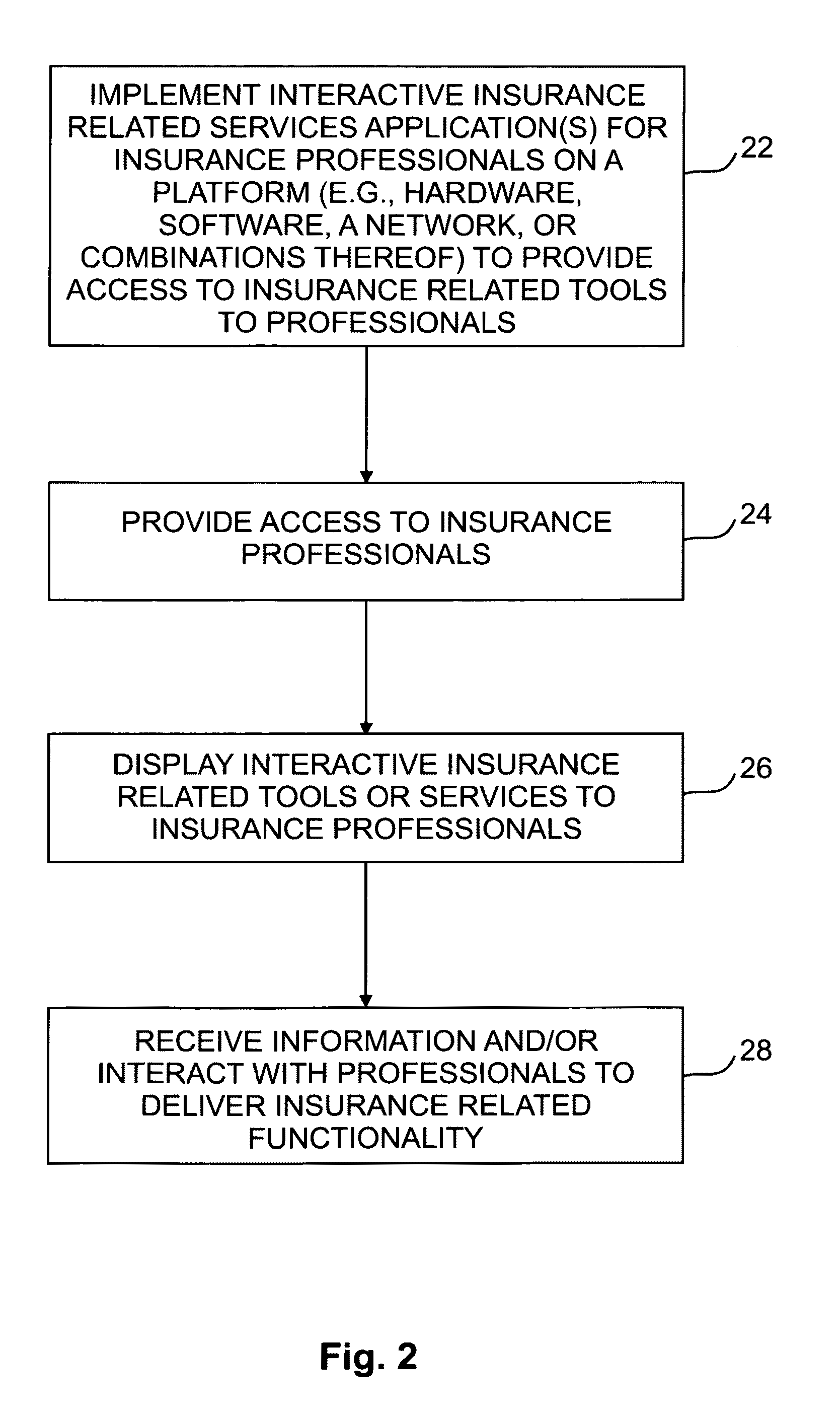

Interactive systems and methods for insurance-related activities

Active | US8185463B1 | Increase reflection; Easy to analyze; Finance; Office automation; Interaction systems; Web browser

Systems and methods for performing insurance related activities are provided. Software can be implemented to provide an application that includes an interactive interface for use by insurance professionals in managing clients, marketing insurance, and storing information. For example, a network application can be implemented that a user can access via a web browser and which is intuitive for quick comprehension and interaction by users. The application can include multiple layers directed to particular stages of the insurance-client relationship. The application can include a Workflow Wizard® to aid the user in managing and maintaining client information and tracking progress. Aggregation services can also be incorporated into the application. Interactive insurance-and-client specific display pages can be incorporated to aid in understanding a client's current insurance information and to generate presentations. “Value” calculators may be implemented to illustrate a comparison of a client's current level of protection to a client's current financial state. Interactive tools for evaluating a customer's financial condition during retirement and how life insurance affects a customer's financial condition are also provided.

Owner:GUARDIAN LIFE INSURANCE COMPANY OF AMERICA

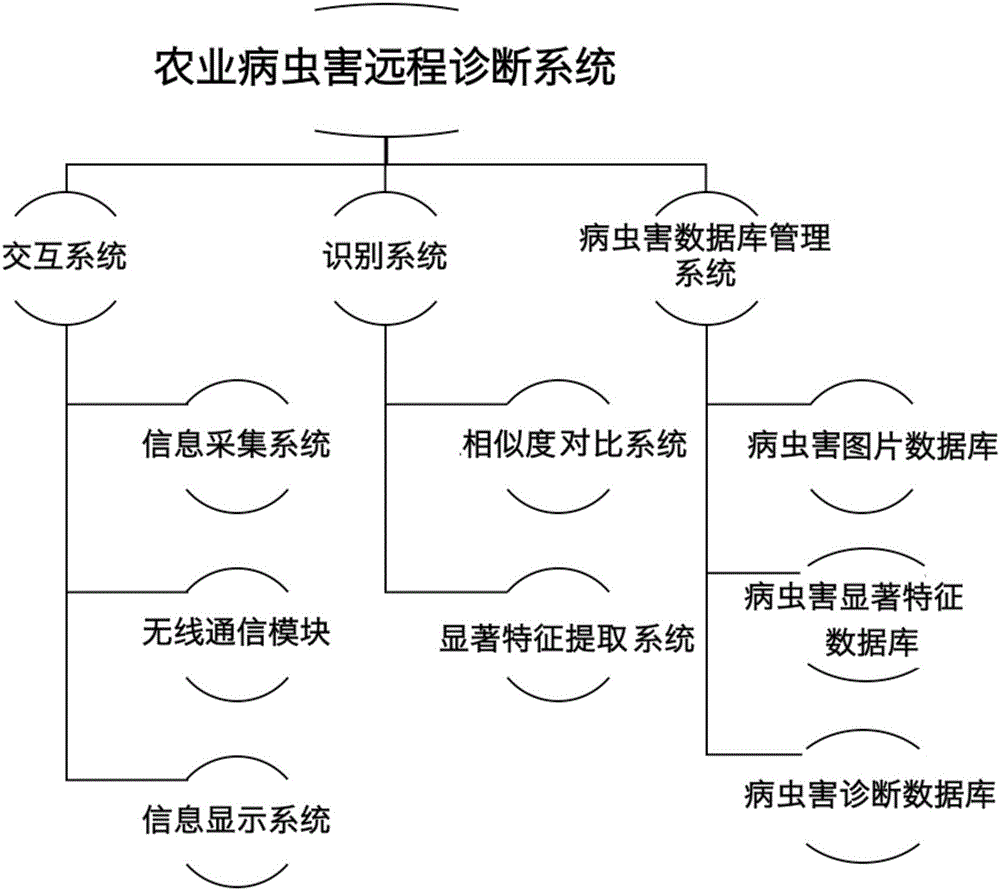

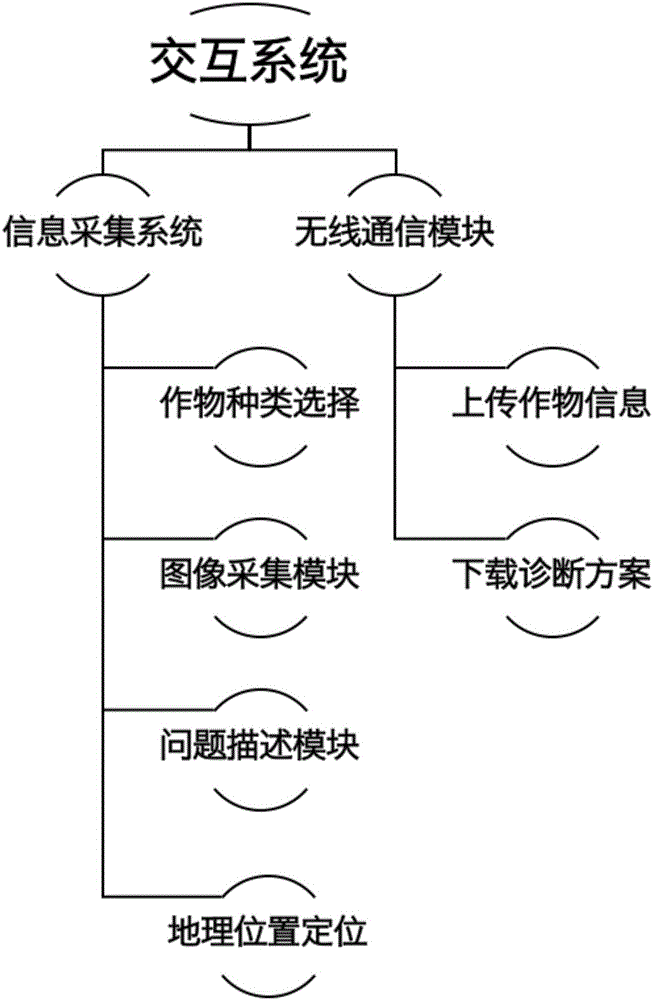

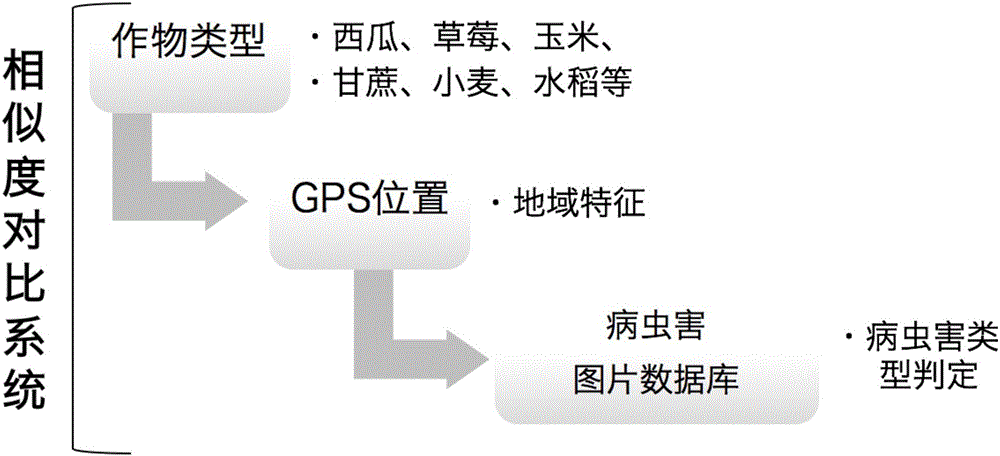

Smart agricultural insect disease remote automatic diagnosis system

Inactive | CN105787446A | Improve accuracy; Comprehensive response; Data processing applications; Character and pattern recognition; Interaction systems; Biotechnology

The invention relates to a smart agricultural insect disease remote automatic diagnosis system. The system comprises an interaction system, an identification system and an insect disease database management system:

- The interaction system comprises an information acquisition system, a wireless communication system and an information display system.
- The identification system comprises a similarity comparison system and a salient feature extraction system.
- The insect disease database management system comprises an insect disease picture database, an insect disease salient feature database and an insect disease diagnosis database.

The system works from images of crop growth conditions. Its identification system combines image similarity comparison, salient feature extraction and a machine learning algorithm, which can effectively improve the accuracy of identifying the insect disease type. Based on the identified type, the system provides a comprehensive diagnosis scheme to improve the survival rate and quality of crops.

Owner:上海劲牛信息技术有限公司

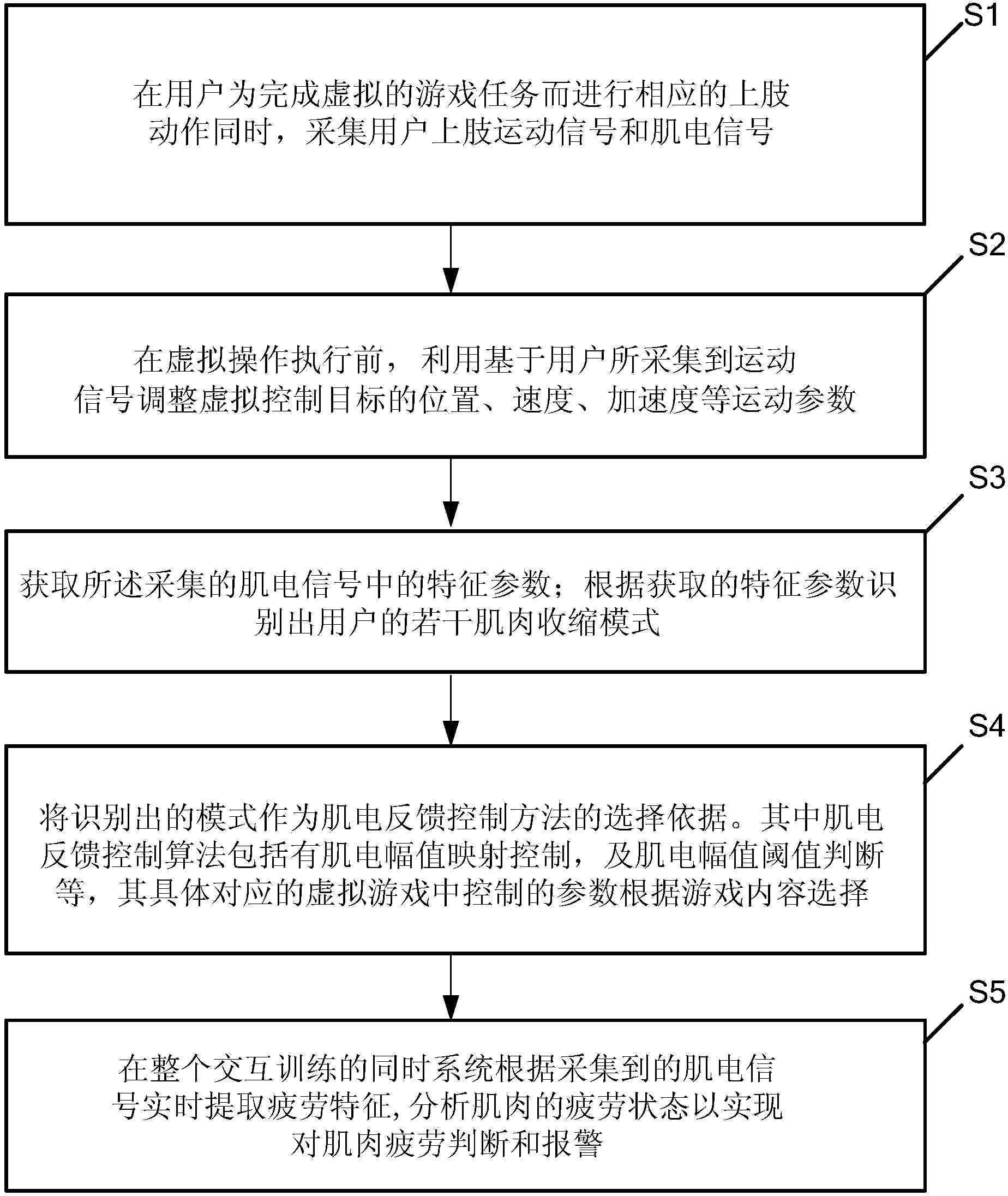

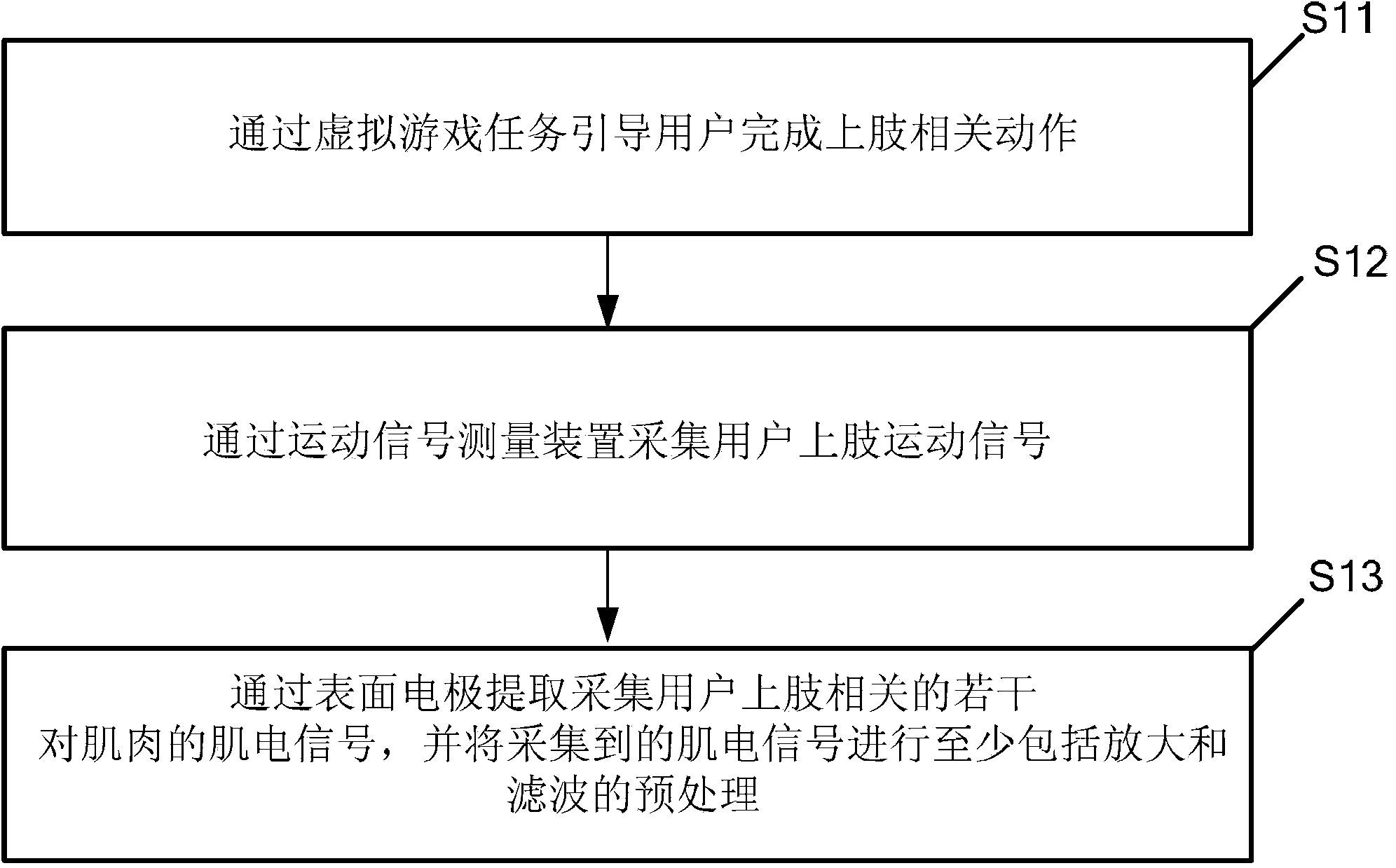

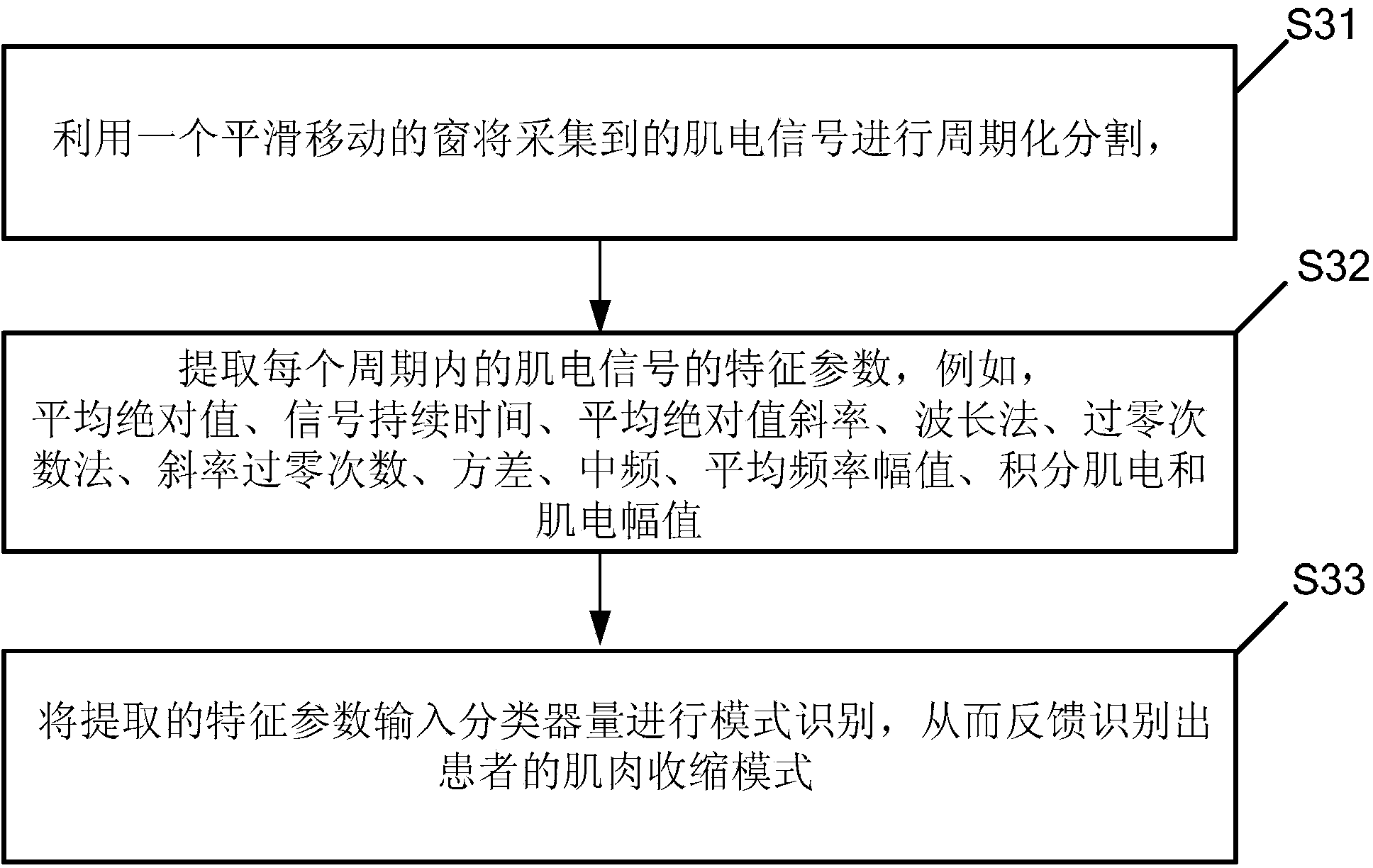

Myoelectricity feedback based upper limb training method and system

Active | CN104107134A | Control reached; Reasonable adjustment; Gymnastic exercising; Chiropractic devices; Interaction systems; Muscle contraction

The invention discloses a myoelectricity-feedback-based upper limb training method and system. The system combines, in sequence, signal acquisition, pattern recognition, biofeedback and real-time fatigue evaluation to help a user train the motor function of the upper limb. The training method includes the following steps:

- acquiring movement signals and myoelectric signals from the user's upper-limb joints while the user performs actions such as forearm rotation and wrist flexion/extension to complete virtual game tasks;
- adjusting the movement parameters of virtual control targets based on the acquired movement signals;
- identifying several of the user's muscle contraction patterns from characteristic parameters of the myoelectric signals; and
- using the identified patterns as the basis for selecting a myoelectric feedback control method.

In addition, the system extracts fatigue characteristics from the acquired myoelectric signals in real time and analyzes the fatigue state of the muscles. This lets it detect muscle fatigue and raise an alarm at any point during the interaction.

Owner:SUN YAT SEN UNIV

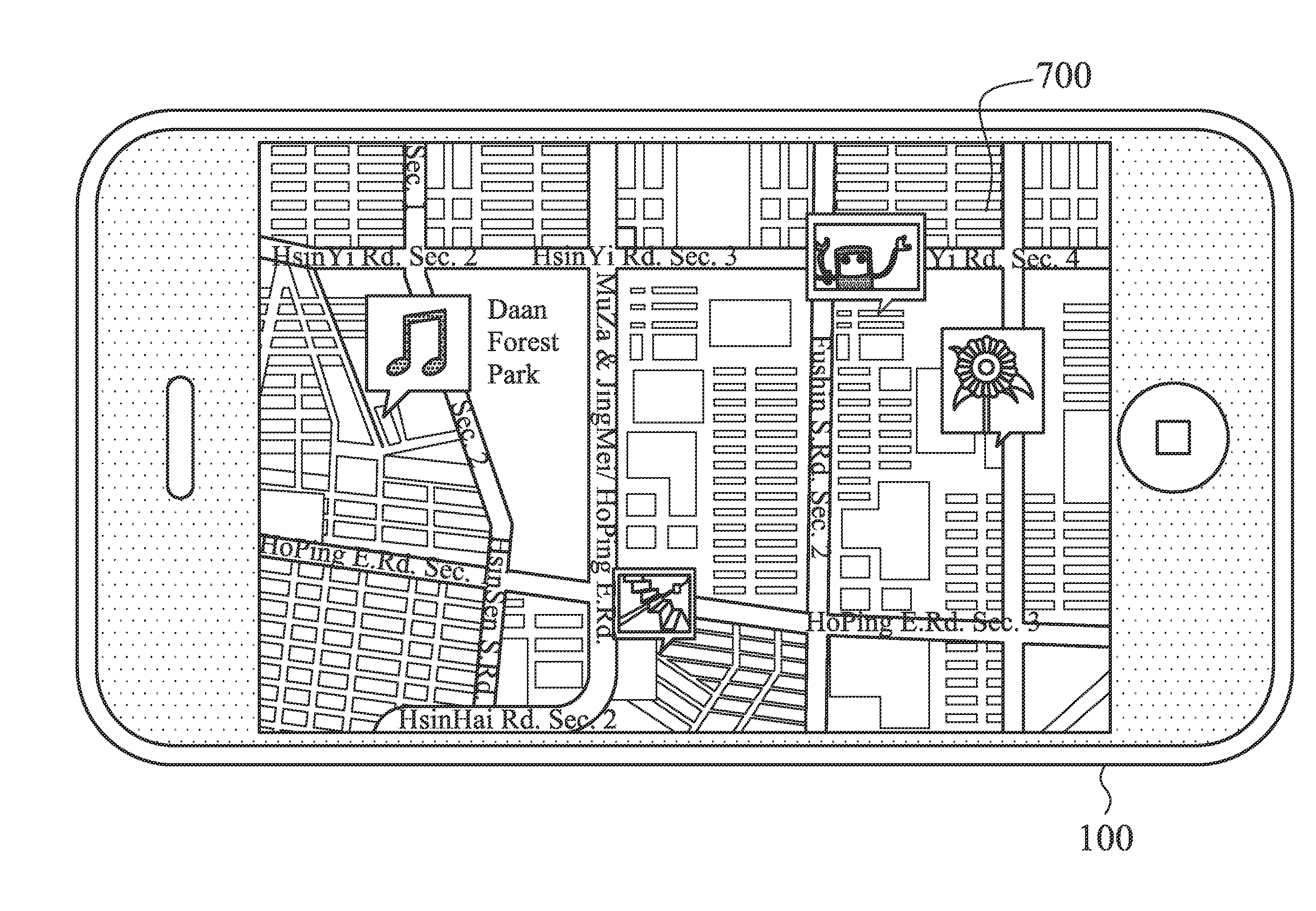

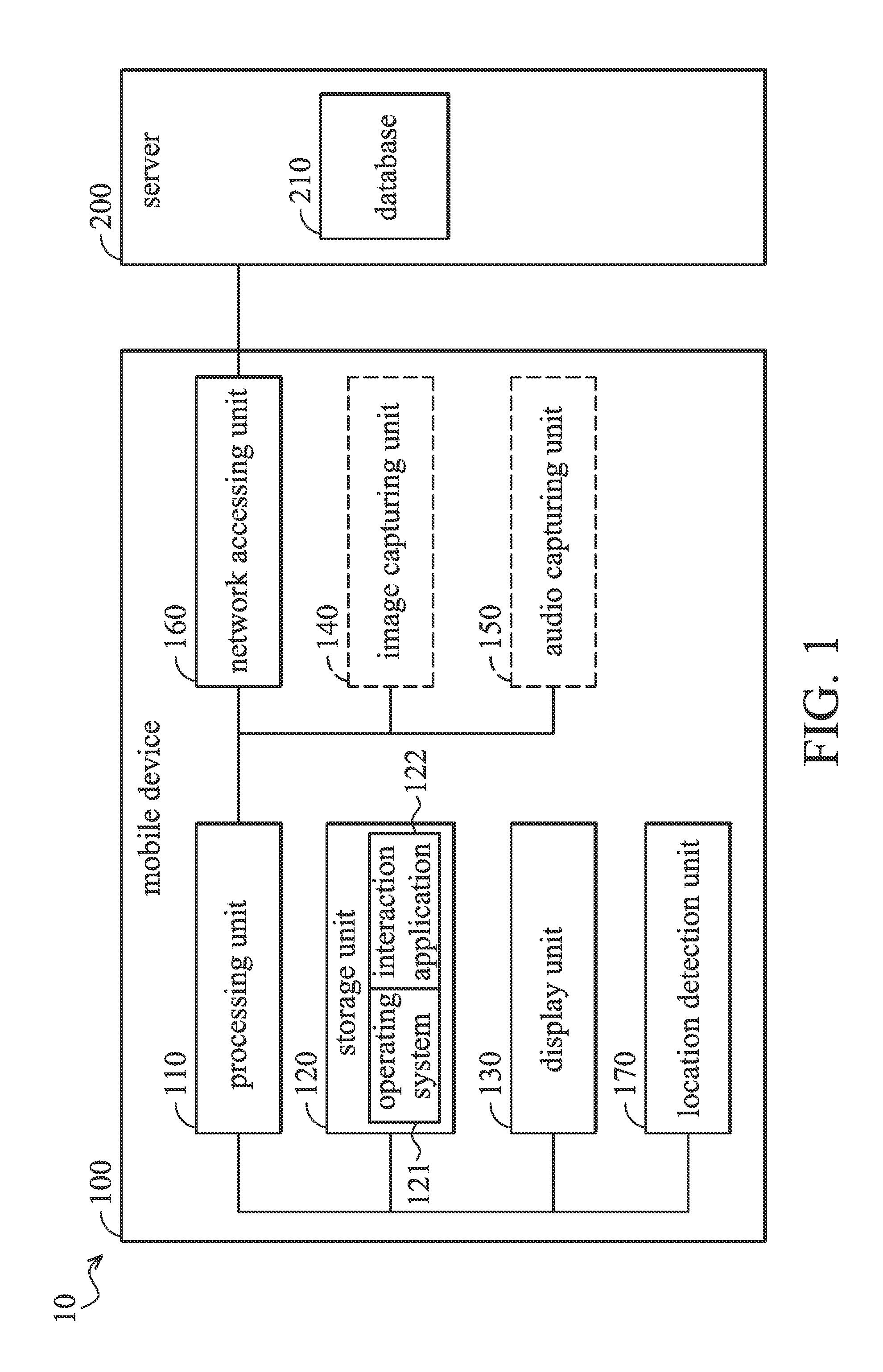

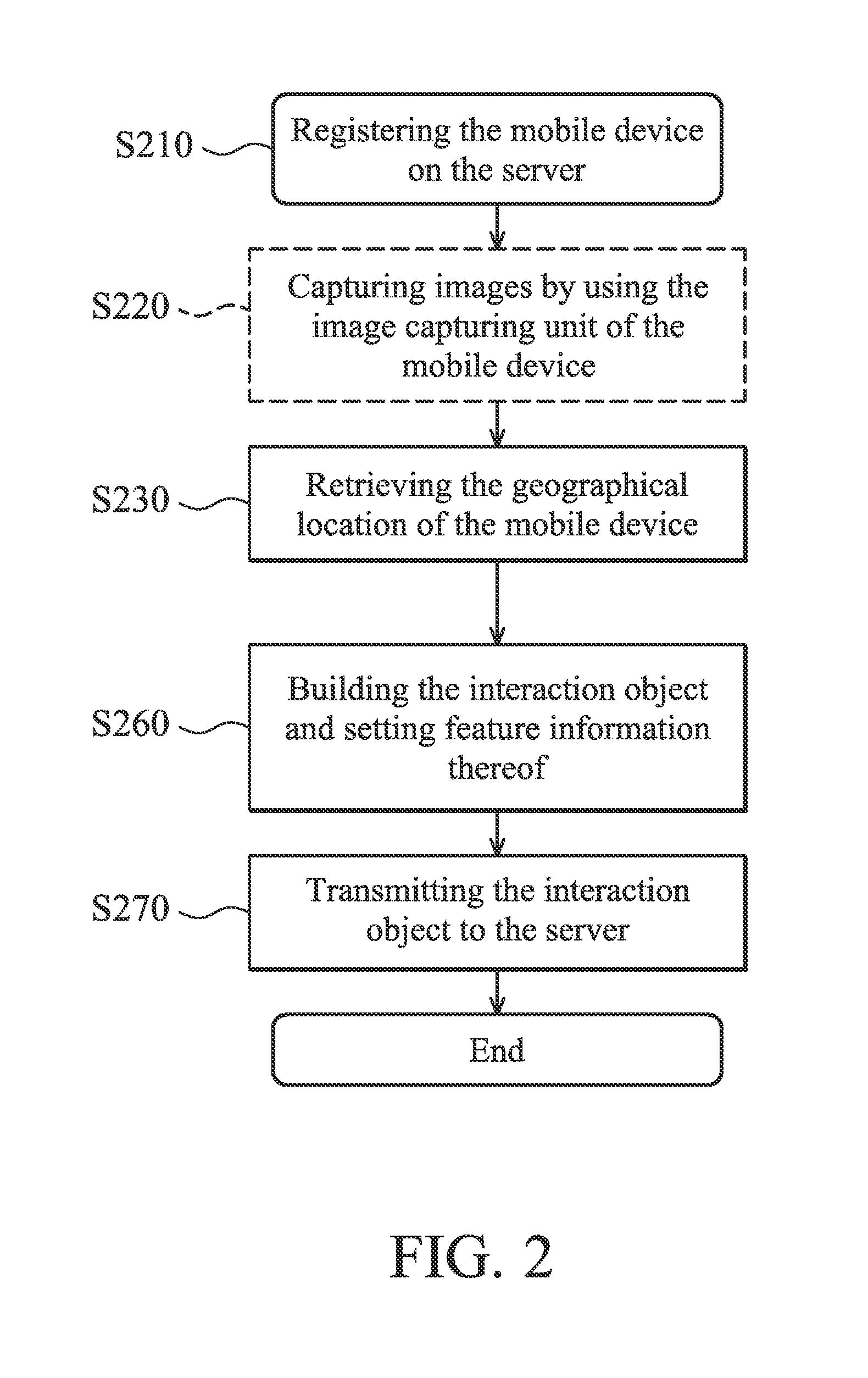

Interaction system

Inactive | US20140004884A1 | Improve interactive experience; Location information based service; Interaction systems; Location detection

An interaction system is provided. The interaction system has: a mobile device having: a location detection unit configured to retrieve a geographical location of the mobile device; and a server configured to retrieve the geographical location of the mobile device, wherein the server has a database configured to store at least one interaction object and location information associated with the interaction object, and the server further determines whether the location information of the interaction object corresponds to the geographical location of the mobile device, wherein when the location information of the interaction object corresponds to the geographical location of the mobile device, the server further transmits the interaction object to the mobile device, so that the mobile device executes the at least one interaction object.

Owner:QUANTA COMPUTER INC
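
The server-side check of whether an interaction object's location corresponds to the device's location can be sketched with a great-circle distance test. Two assumptions are made for illustration: "corresponds" is treated as "within a fixed radius", and the objects are held in an in-memory `db` dictionary. The object names and coordinates are made up.

```python
# Hypothetical sketch: match stored interaction objects to a device location.
import math


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres on a spherical Earth."""
    r = 6_371_000.0  # mean Earth radius in metres
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def objects_for_device(device_lat, device_lon, db, radius_m=50.0):
    """Interaction objects whose stored location 'corresponds' to the device.

    Correspondence is assumed to mean 'within radius_m metres'.
    """
    return [name for name, (lat, lon) in db.items()
            if haversine_m(device_lat, device_lon, lat, lon) <= radius_m]


db = {  # hypothetical interaction objects: name -> (lat, lon)
    "cafe_coupon": (25.0330, 121.5654),
    "museum_quiz": (25.1024, 121.5485),
}
```

A device about 11 m from the cafe would be sent only `cafe_coupon`. A production server would likely use a spatial index rather than scanning every stored object.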

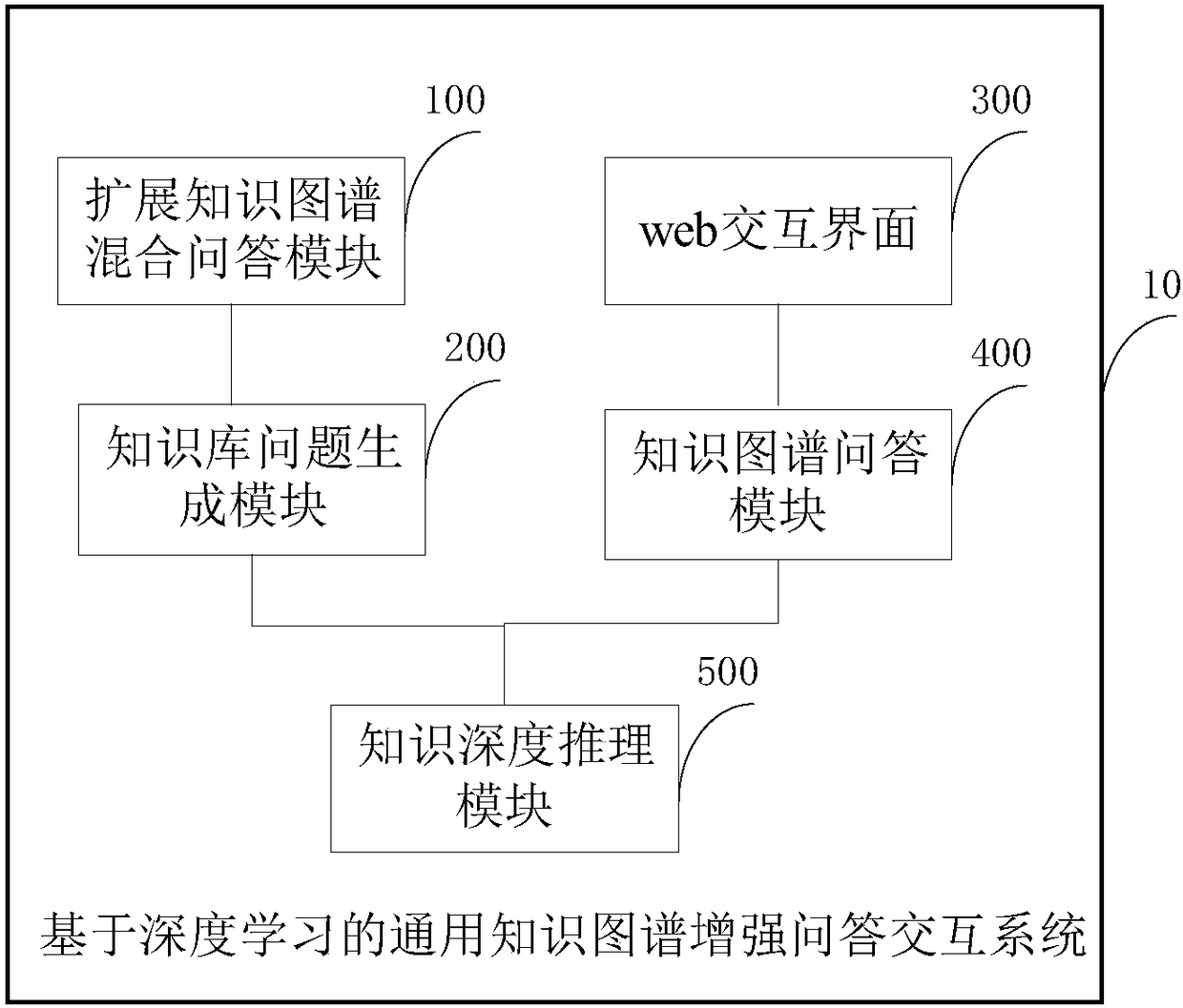

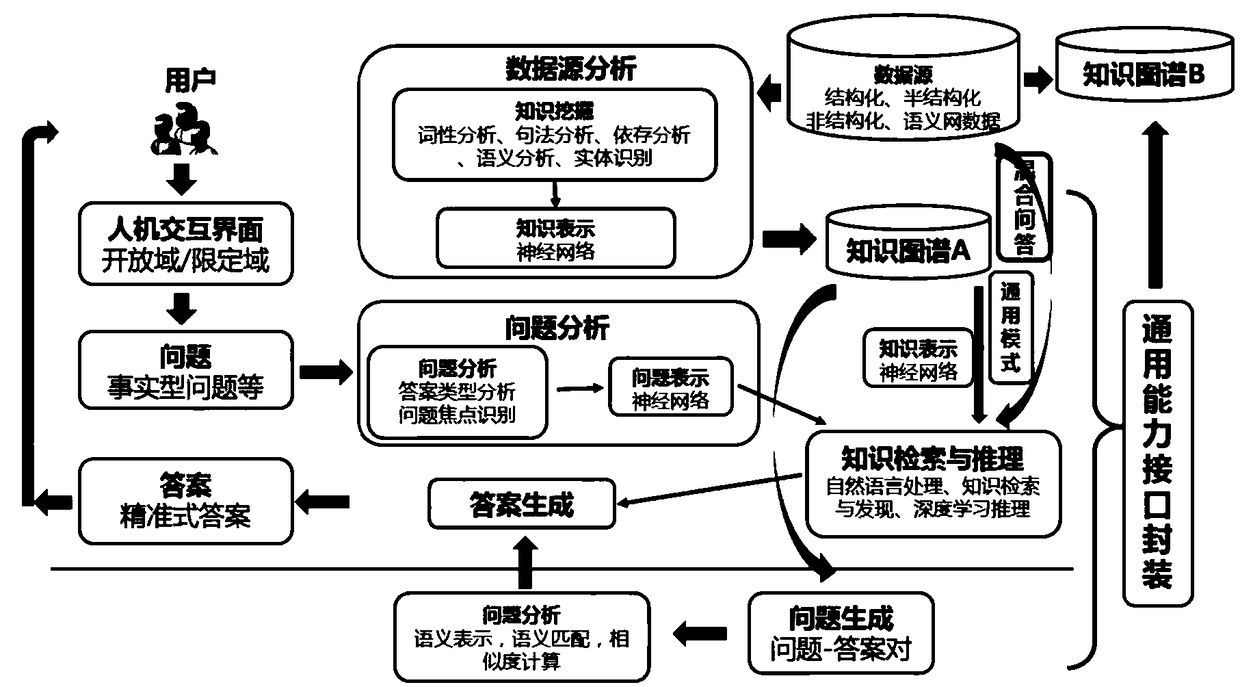

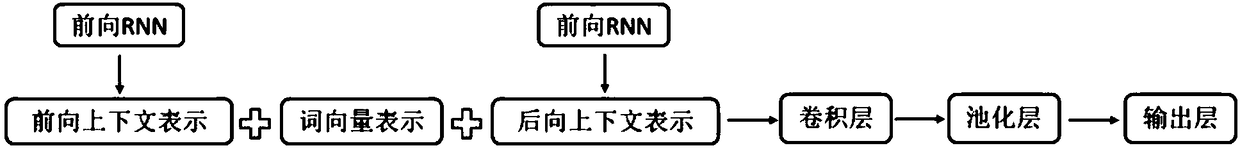

Enhanced question answering interaction system and method for universal mapping knowledge domain on the basis of deep learning

Active | CN108509519A | Improve performance; Easy to operate; Semantic analysis; Knowledge representation; Interaction systems; Question generation

The invention discloses an enhanced question answering interaction system and method for a universal mapping knowledge domain on the basis of deep learning. The system comprises five modules:

- The expanded mapping knowledge domain hybrid question answering module obtains an expanded mapping knowledge domain.
- The knowledge base question generation module independently generates answers to different questions, producing a plurality of question-answer pairs.
- The web interaction module obtains a user question.
- The mapping knowledge domain question answering module determines the answer type for the user question and obtains a numerical vector for the user question.
- The knowledge depth reasoning module performs knowledge retrieval and reasoning on that answer type and numerical vector. It then obtains the target answer to the user question from the retrieval and reasoning results and the question-answer pairs.

The system can effectively improve the performance, operability, semantic comprehension and analysis, question-answering extensibility and general technology-sharing capability of the question answering system, as well as the accuracy of answer generation.

Owner:BEIJING UNIV OF POSTS & TELECOMM

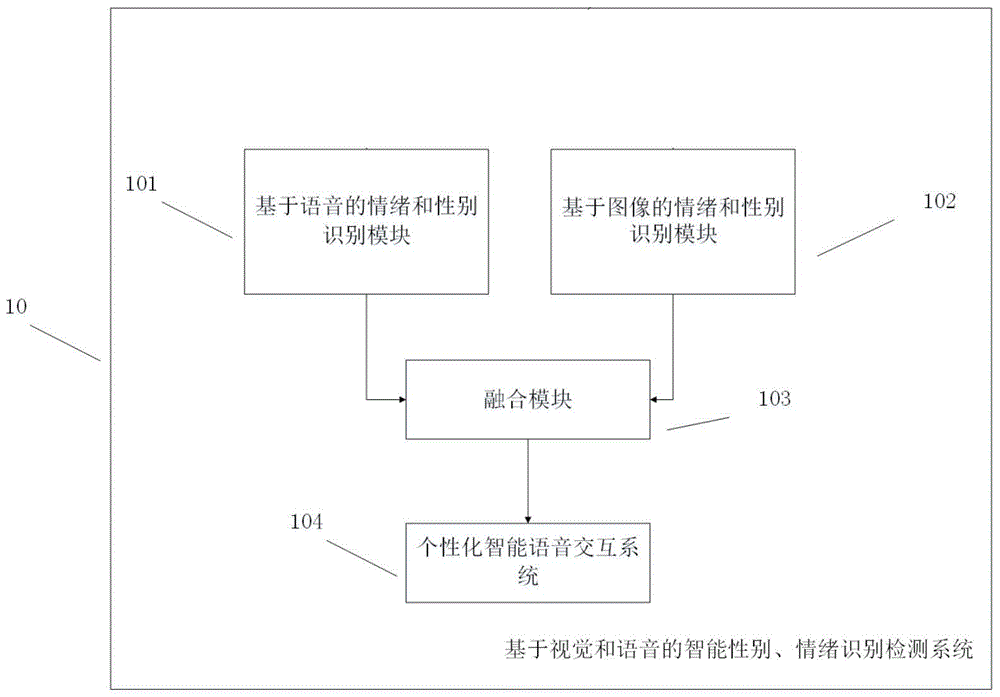

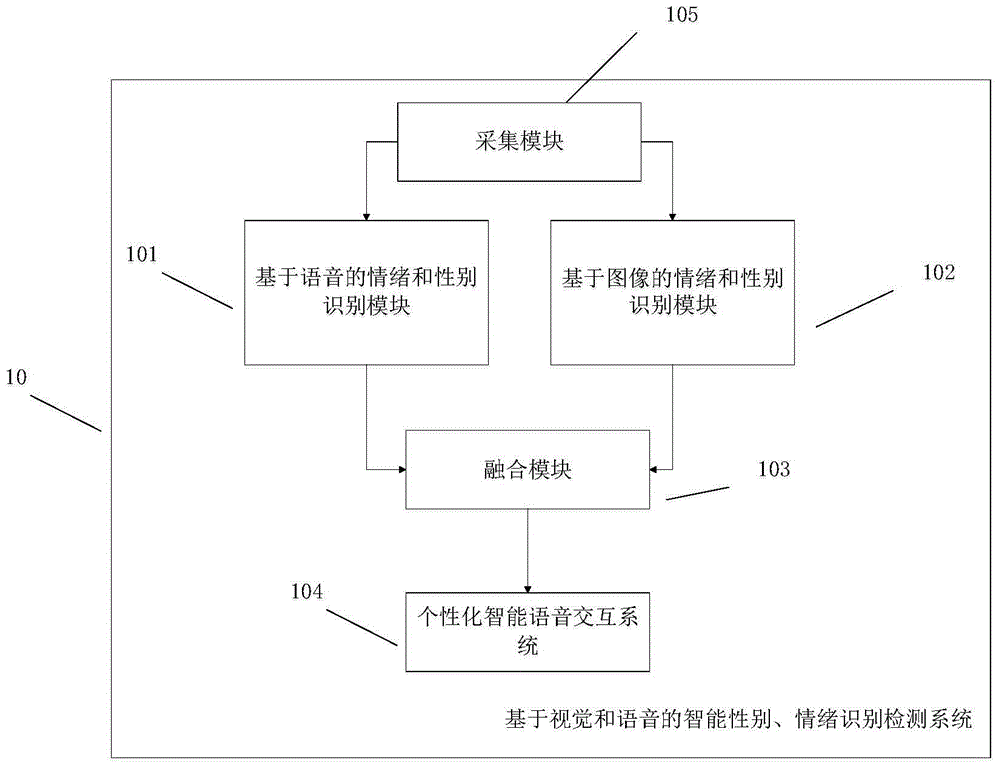

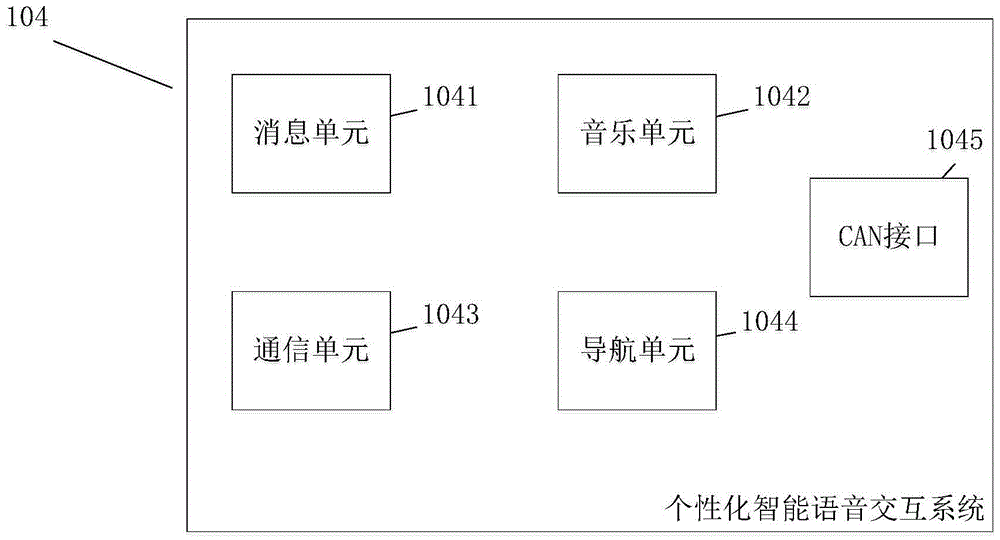

Intelligent gender and emotion recognition detection system and method based on vision and voice

Inactive | CN105700682A | Automatic gender/mood detection; High precision; Input/output for user-computer interaction; Character and pattern recognition; Pattern recognition; Interaction systems

The invention discloses an intelligent gender and emotion recognition detection system and method based on vision and voice. The system comprises four parts:

- An image-based emotion and gender recognition module recognizes the emotion and gender of a person in a vehicle from a face image.
- A voice-based emotion and gender recognition module recognizes the emotion and gender of that person from their voice.
- A fusion module matches the gender recognition results, fuses the emotion recognition results, and sends the results to the personalized intelligent voice interaction system.
- The personalized intelligent voice interaction system carries out the voice interaction.

Fusing the image and voice recognition results improves gender/emotion recognition accuracy. The personalized voice interaction system improves the driving experience and driving safety, and voice interaction makes vehicle-mounted equipment more enjoyable to use and improves the accuracy of information services.

Owner:BEIJING ILEJA TECH CO LTD

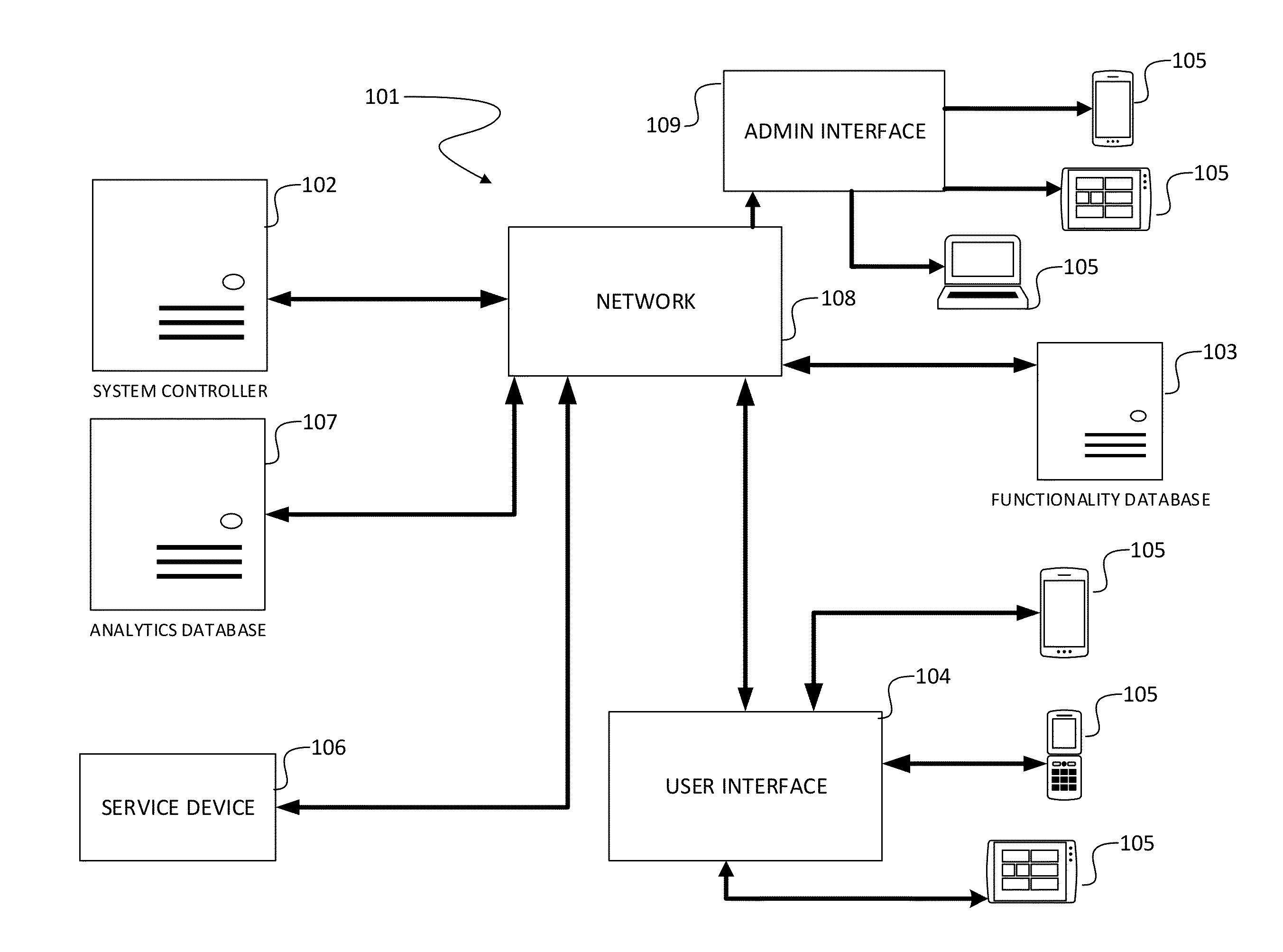

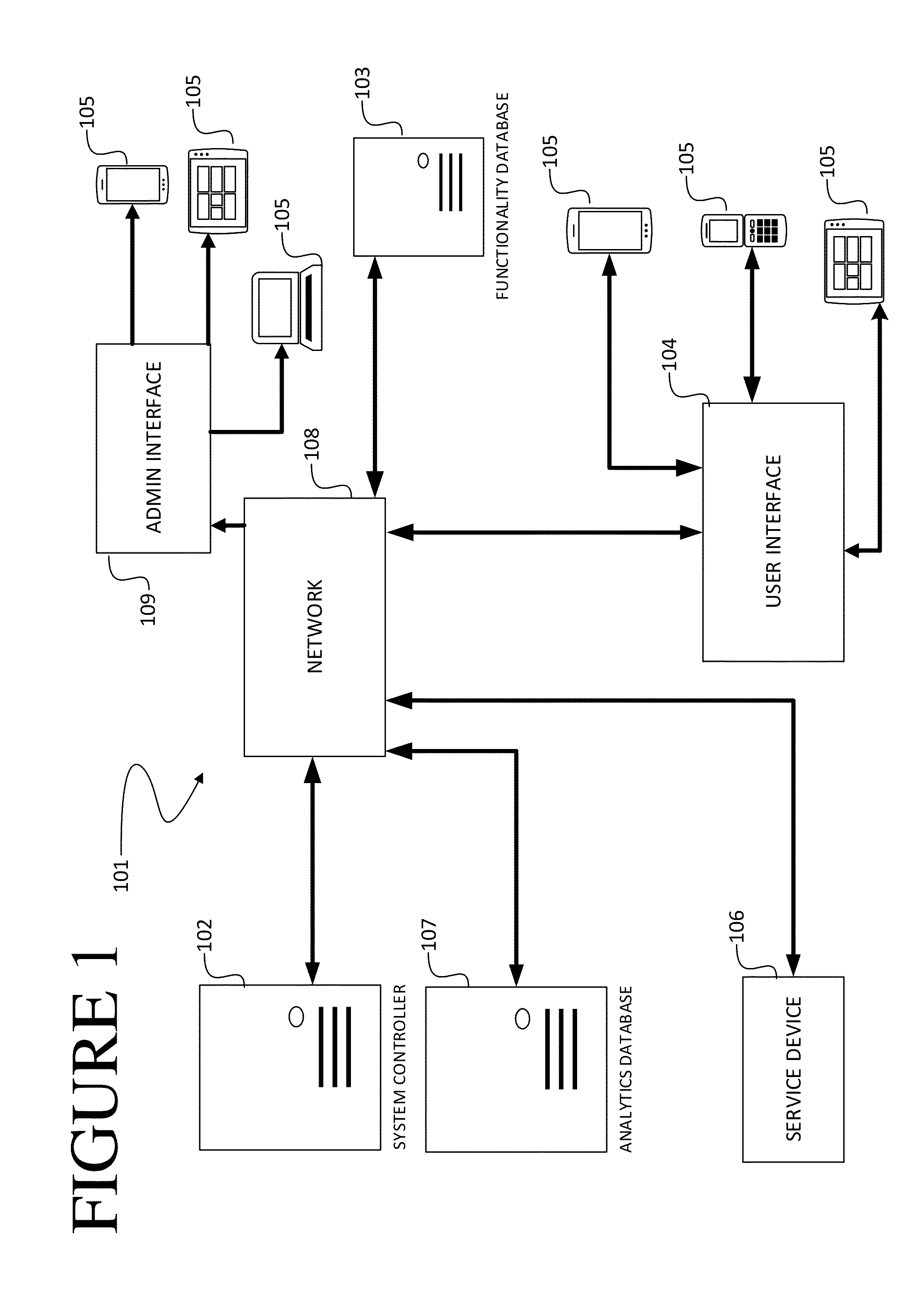

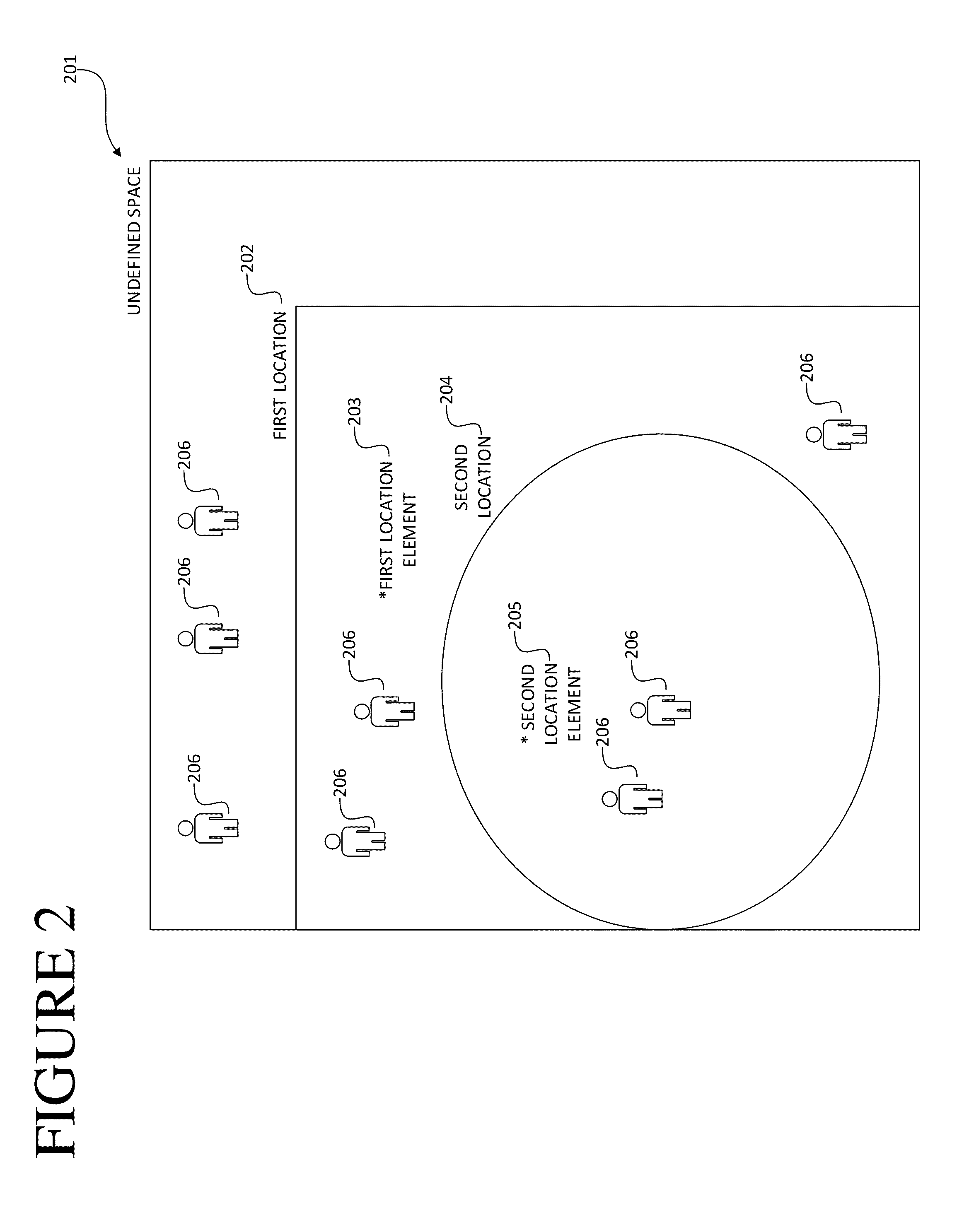

Location-based communication and interaction system

Inactive | US20140201367A1 | Digital computer details; Location information based service; Interaction systems; Feature set

A system comprising three parts:

- A database containing first and second location elements, a feature set, and a first unique identifier associated with the second location element.
- A system controller coupled to the database. It is configured to establish the first location element with a first physical location, establish the second location element with a second physical location, and associate the first unique identifier with the second location element.
- A user interface coupling a first user device with the system controller, the first user device being associated with the first location element.

The system controller is further configured to transmit an initiated action between the first and second users and to transmit the unique identifier to the first user in relation to the action from the second user. After that transmission, it associates the first user with the second location element based on the first user's possession of the unique identifier.

Owner:SOCIAL ORDER

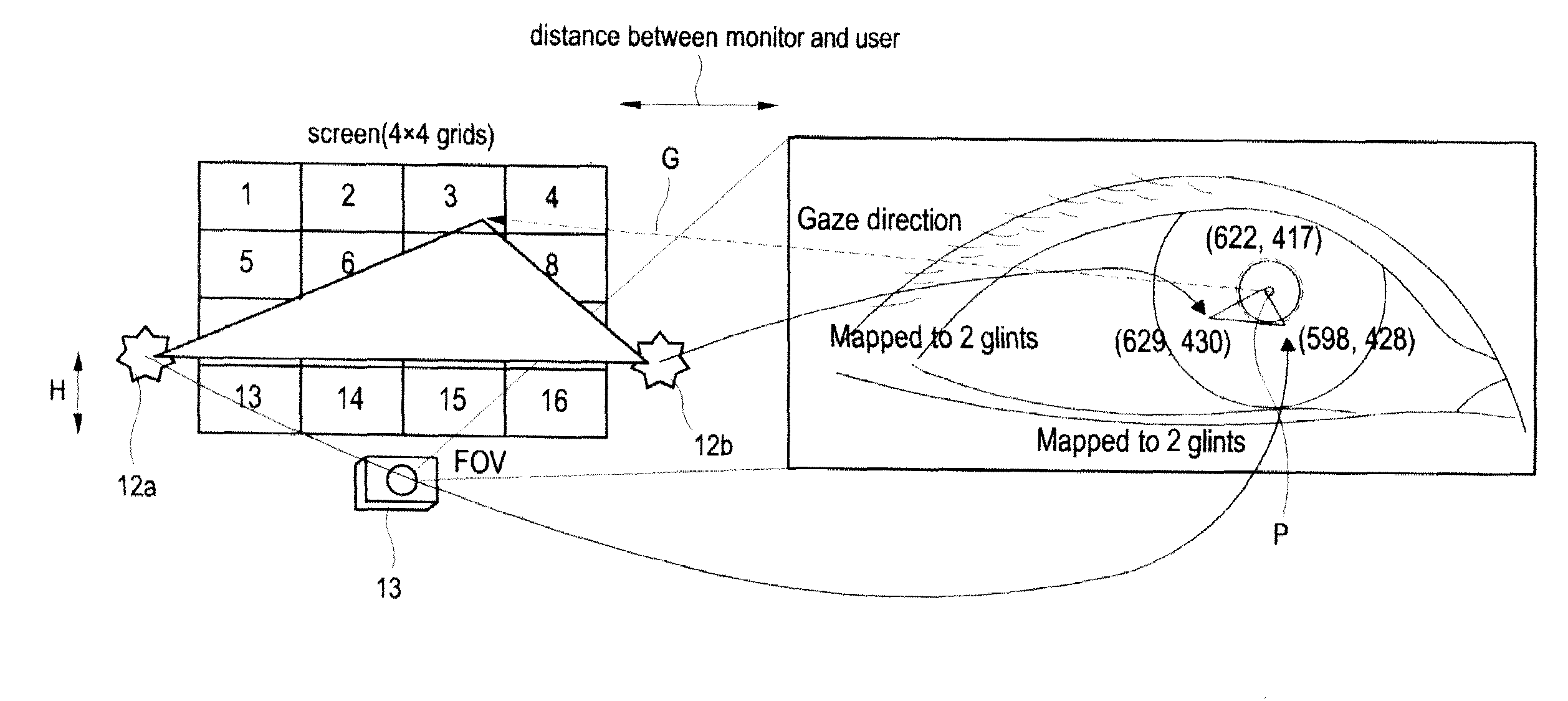

System and method for three-dimensional interaction based on gaze, and system and method for tracking three-dimensional gaze

A gaze-based three-dimensional (3D) interaction system and method, as well as a 3D gaze tracking system and method, are disclosed. The gaze direction is determined by using the image of one eye of the operator who gazes at a 3D image, while the gaze depth is determined by the distance between pupil centers from both eyes of the operator shown in an image of both eyes of the operator.

Owner:KOREA INST OF SCI & TECH
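
The depth cue in the abstract is the distance between the two pupil centres. One simple way to turn that distance into a depth is per-user calibration followed by piecewise-linear interpolation; this approach is assumed here rather than taken from the patent. The calibration values are made up for illustration, and the single-eye gaze-direction step is not shown.

```python
# Hypothetical sketch: gaze depth from the distance between pupil centres.
import math


def pupil_distance(left_center, right_center):
    """Distance in pixels between the two pupil centres in the two-eye image."""
    return math.dist(left_center, right_center)


def gaze_depth(distance_px, calibration):
    """Piecewise-linear map from pupil distance (px) to gaze depth (m).

    calibration: list of (distance_px, depth_m) pairs recorded while the user
    fixated targets at known depths; values outside the range are clamped.
    """
    pts = sorted(calibration)
    if distance_px <= pts[0][0]:
        return pts[0][1]
    if distance_px >= pts[-1][0]:
        return pts[-1][1]
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if x0 <= distance_px <= x1:
            return y0 + (y1 - y0) * (distance_px - x0) / (x1 - x0)


# Hypothetical calibration: eyes converge for near targets, so pupils sit closer together.
calibration = [(58.0, 0.3), (62.0, 1.0), (64.0, 3.0)]
d = pupil_distance((100.0, 200.0), (160.0, 200.0))
depth = gaze_depth(d, calibration)
```

With these made-up calibration points, a 60 px pupil distance falls halfway between the 0.3 m and 1.0 m samples. It therefore maps to roughly 0.65 m.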

© 2025 PatSnap. All rights reserved.